2094 results about "Binocular distance" patented technology

The close focus distance varies from about 0.5 m to 30 m, depending on the design of the binoculars. If the close focus distance is short relative to the magnification, the binoculars can also be used to see details not visible to the naked eye.

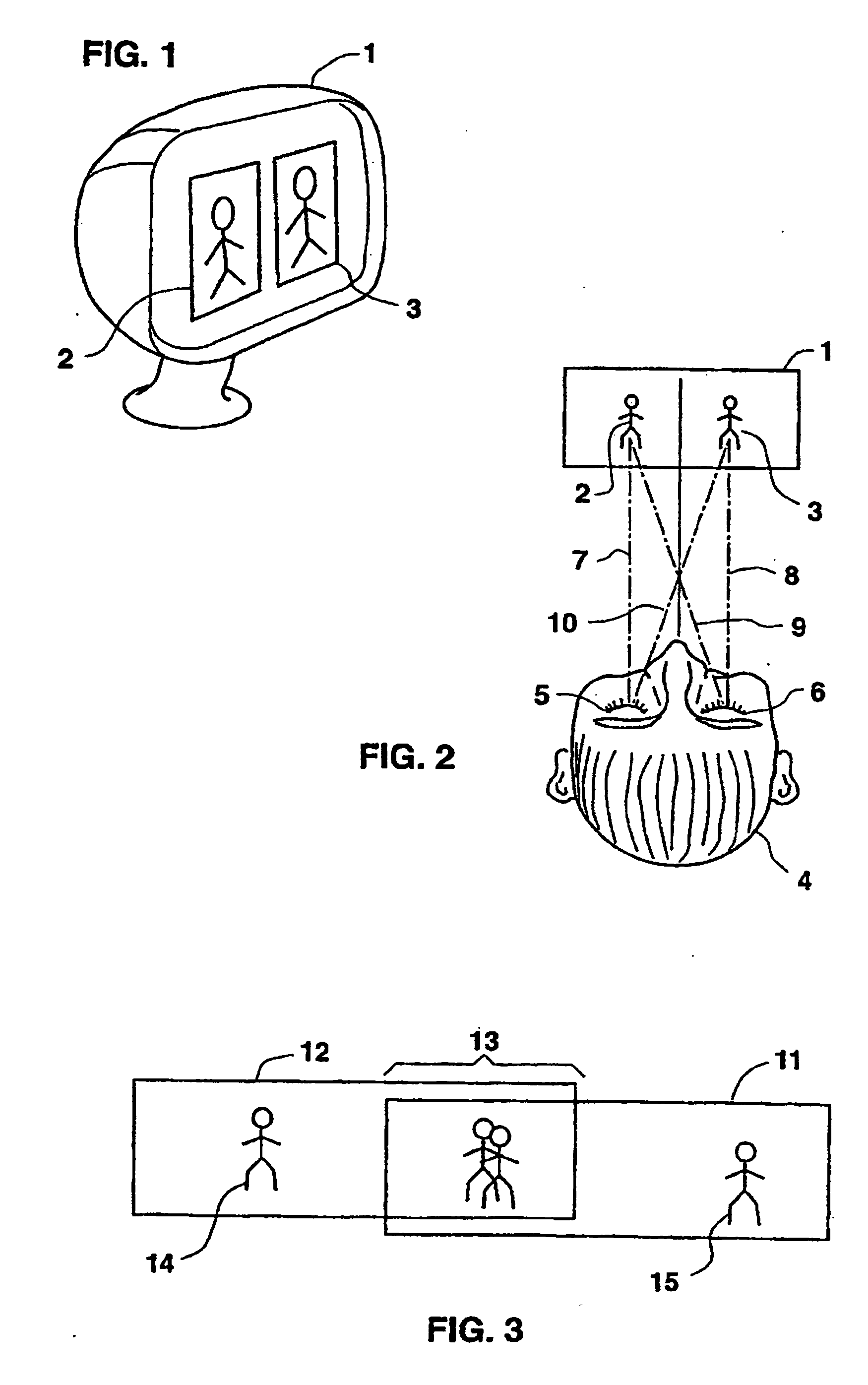

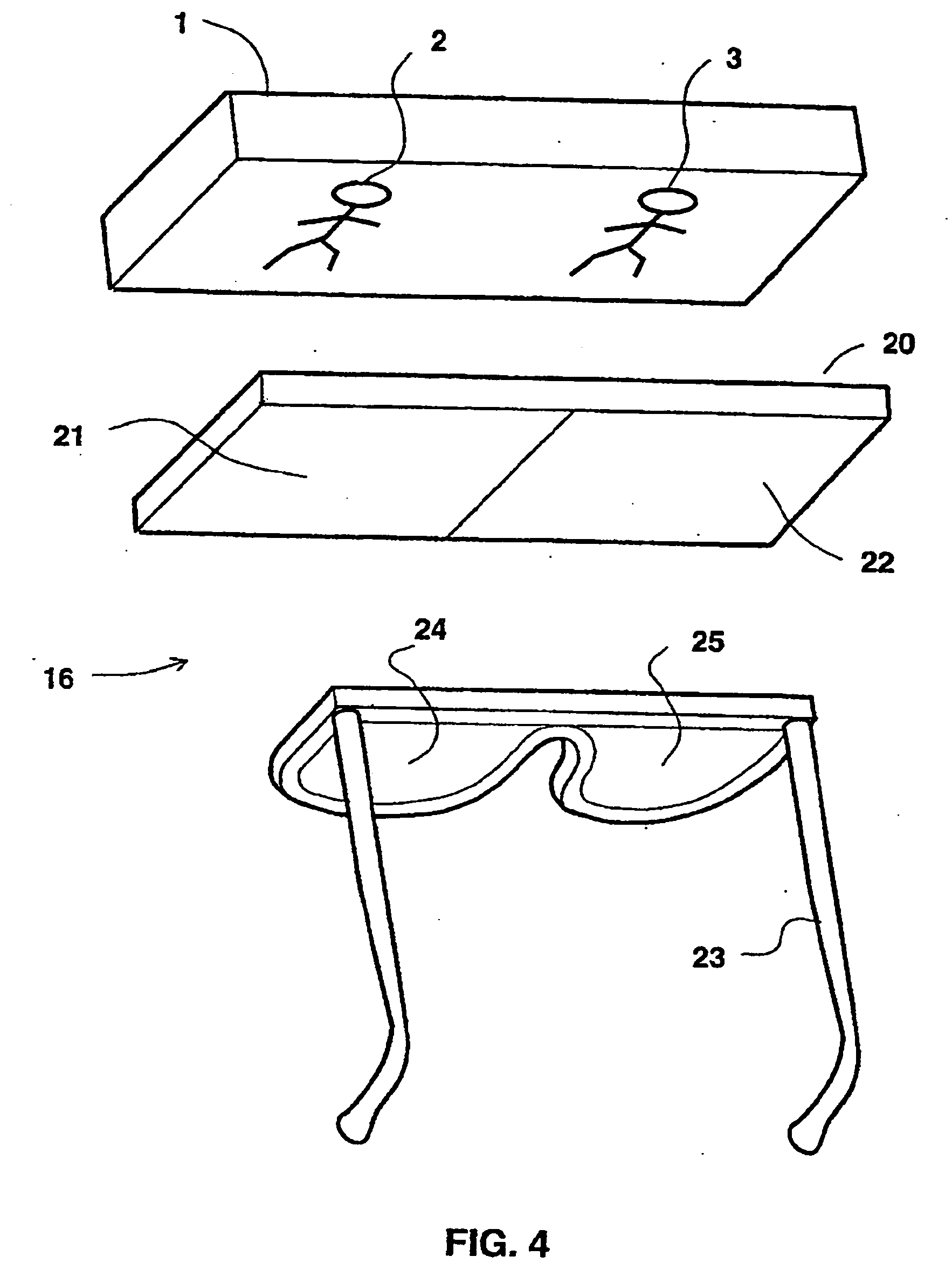

Vision Measurement and Training System and Method of Operation Thereof

Active | US20070200927A1 | Low cost | Highly effective | Vibration massage | Eye exercisers | Control signal | Computer science

A binocular viewer, a method of measuring and training vision that uses a binocular viewer and a vision measurement and training system that employs a computer to control the binocular viewer. In one embodiment, the binocular viewer has left and right display elements and comprises: (1) a variable focal depth optical subsystem located in an optical path between the display elements and a user when the user uses the binocular viewer and (2) a control input coupled to the left and right display elements and the variable focal depth optical subsystem and configured to receive control signals operable to place images on the left and right display elements and vary a focal depth of the variable focal depth optical subsystem. In another embodiment, the binocular viewer lacks the variable focal depth optical subsystem, but the images include at least one feature unique to one of the left and right display elements.

Owner:GENENTECH INC

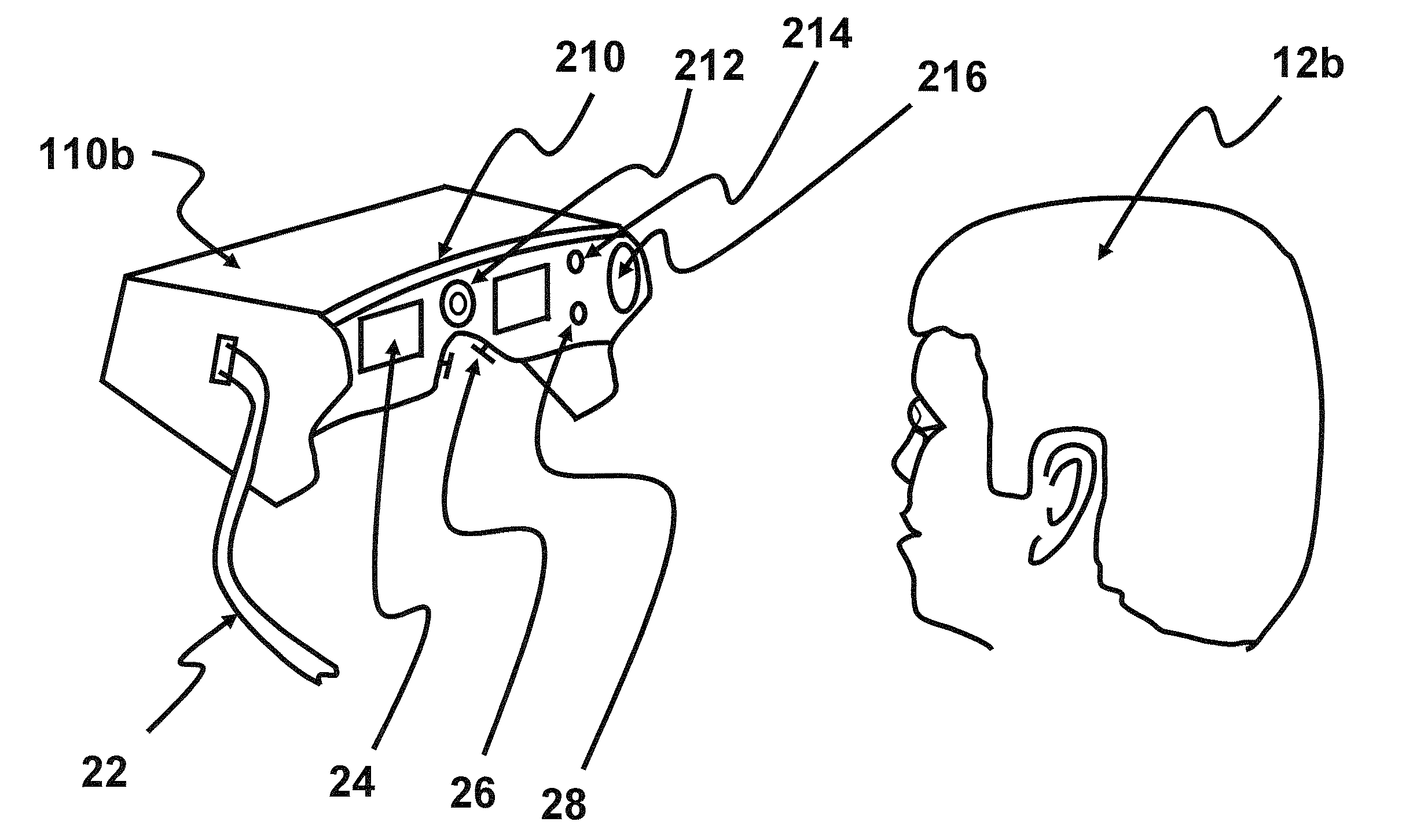

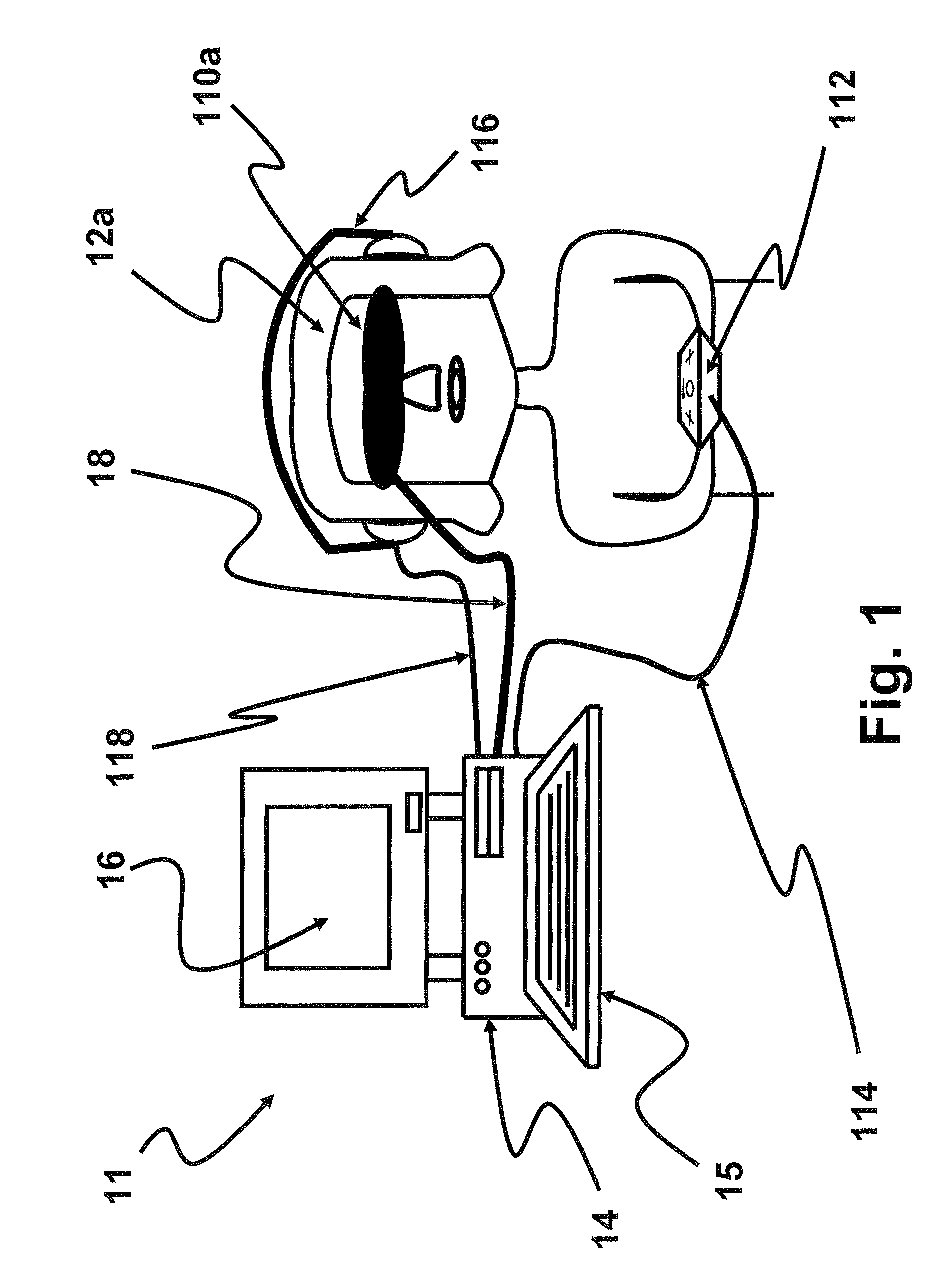

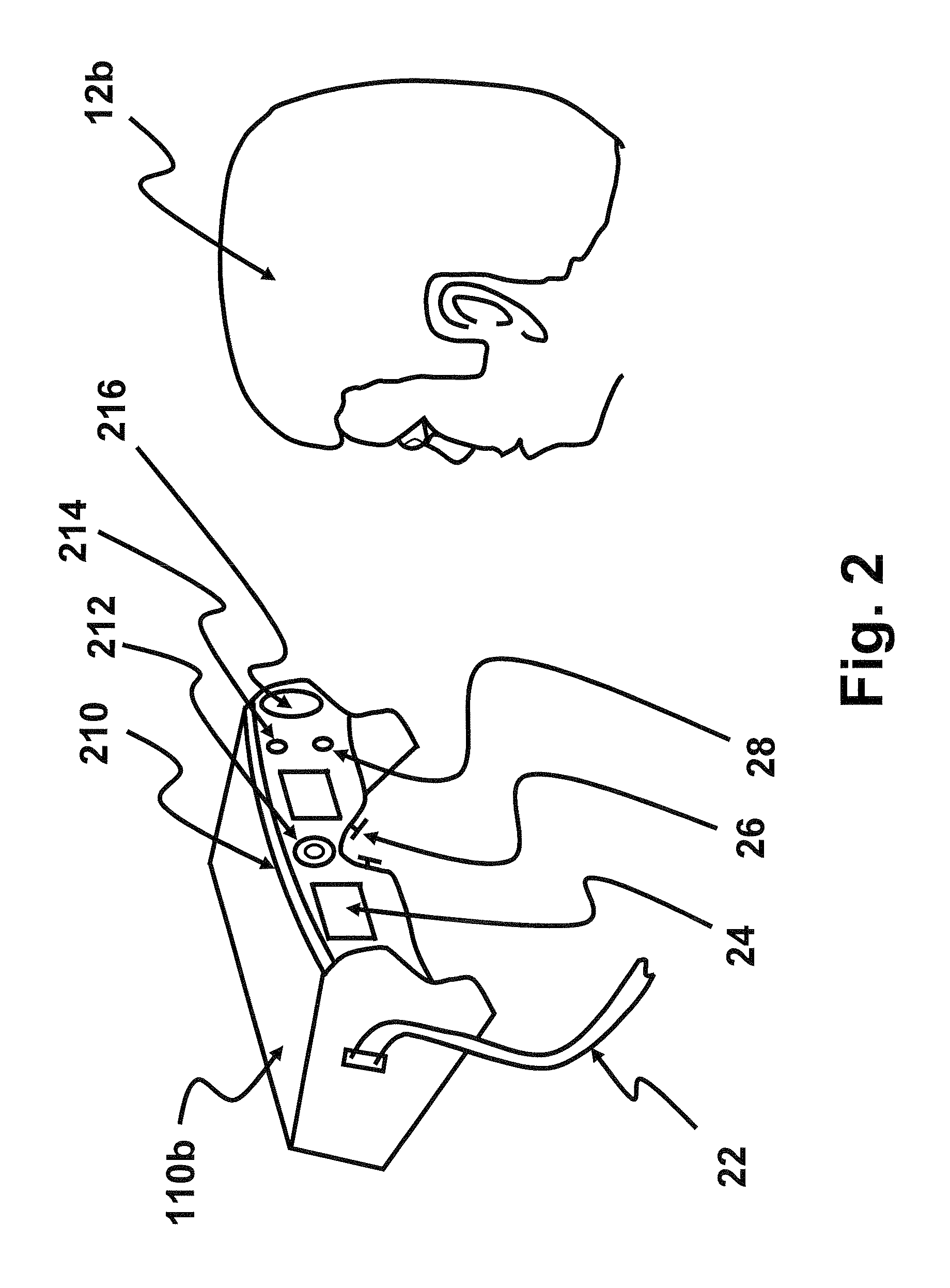

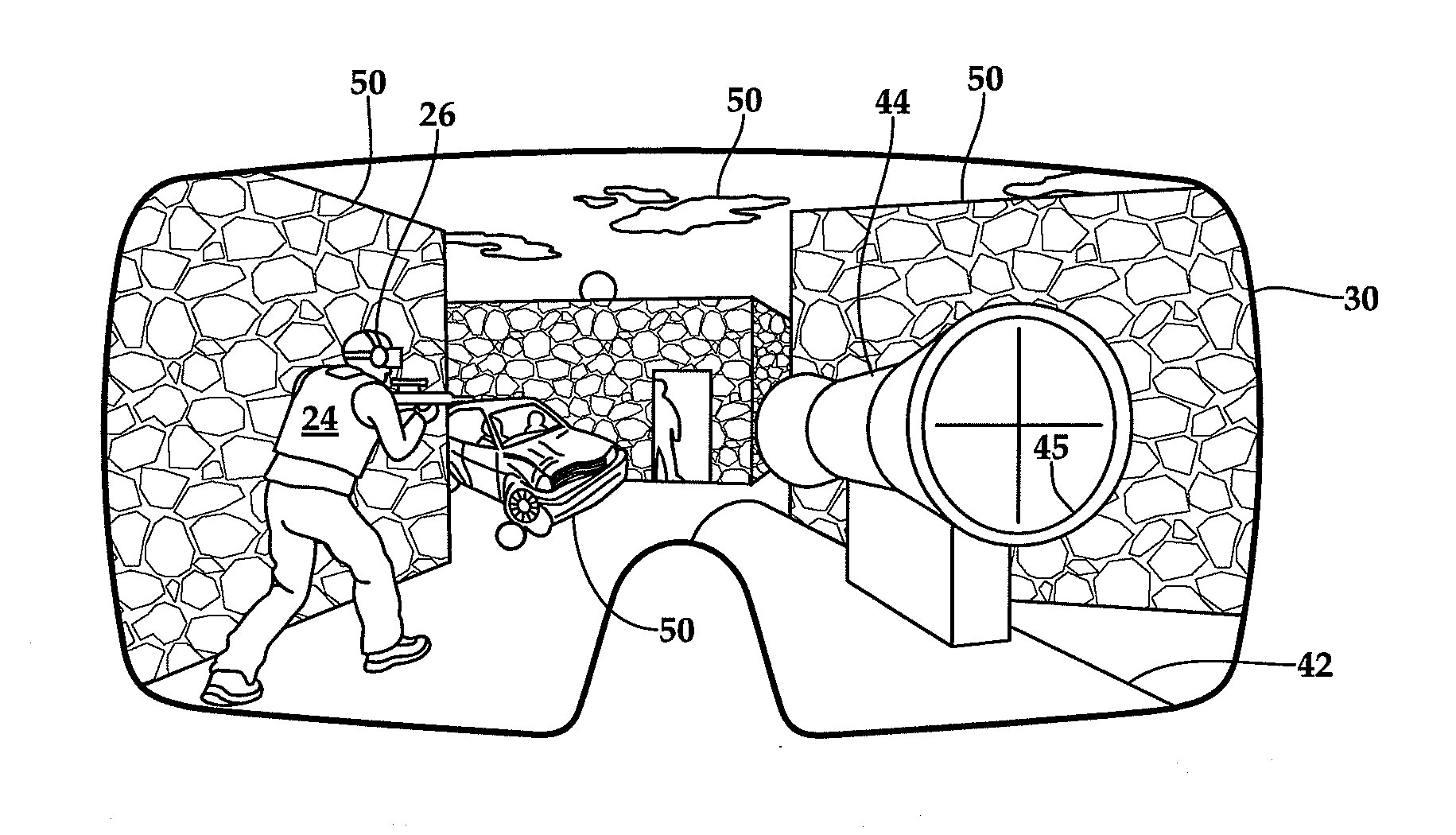

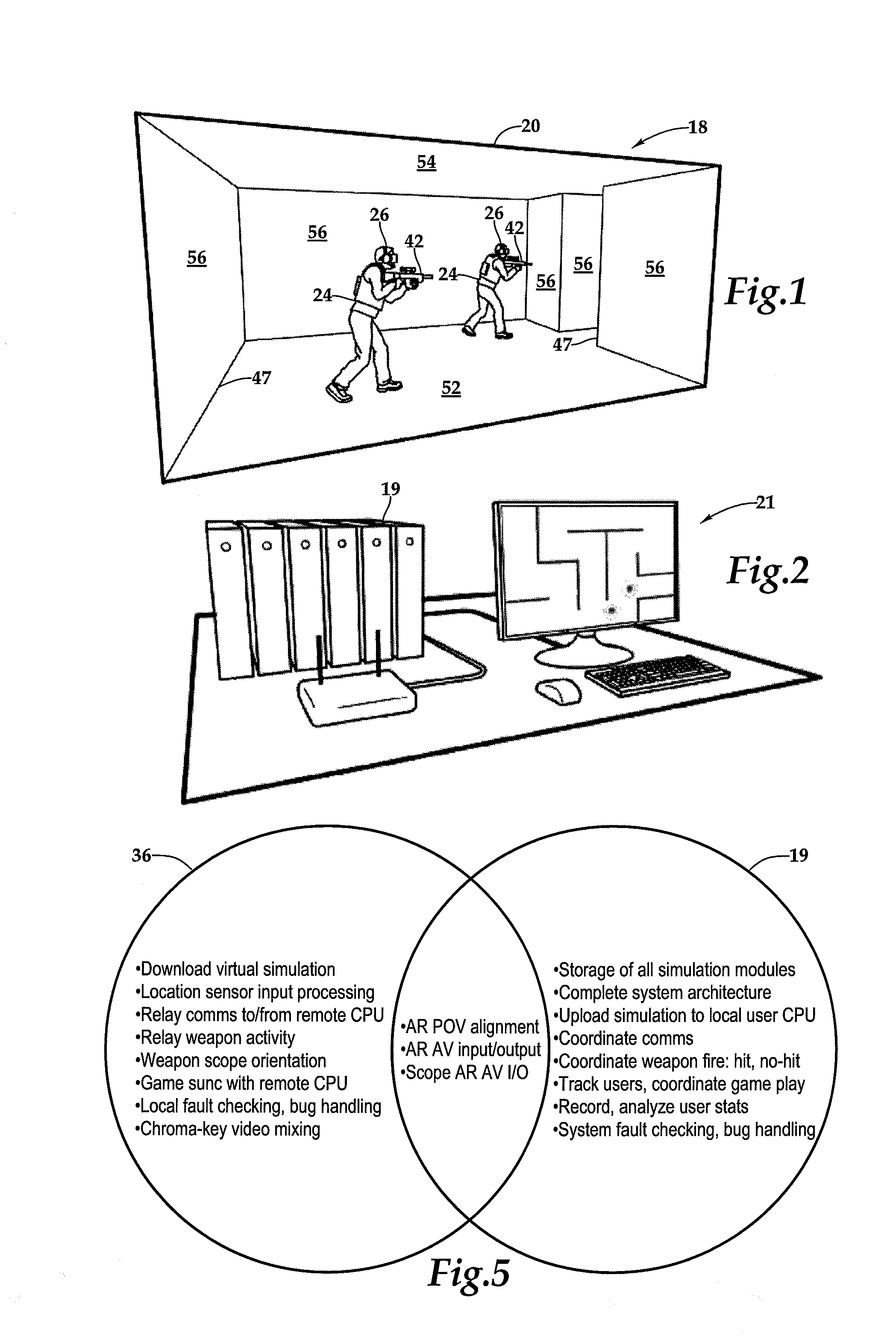

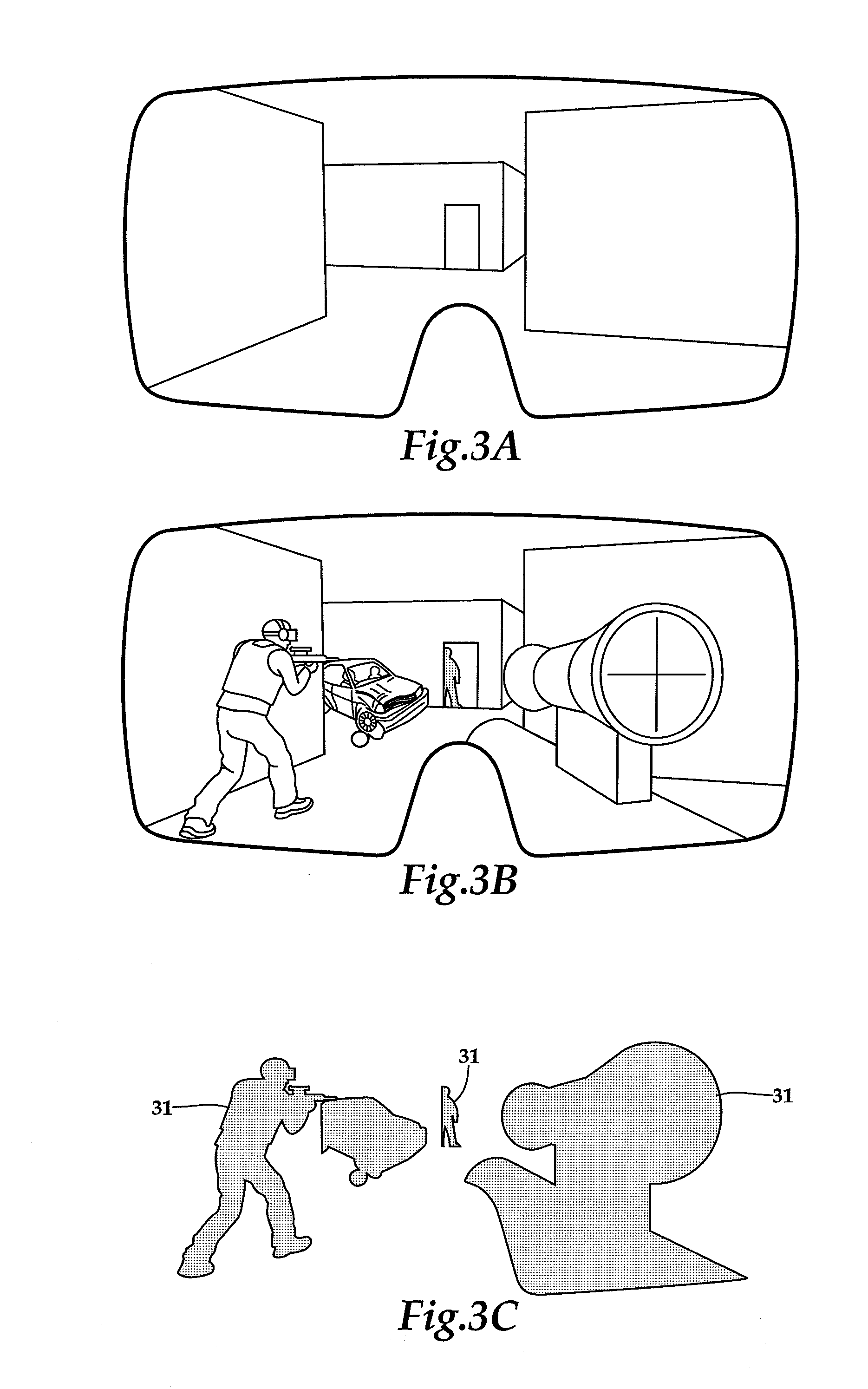

Augmented Reality Simulator

Active | US20150260474A1 | Lower latency | Improve security | Input/output for user-computer interaction | Cosmonautic condition simulations | Display device | Video image

An augmented reality system in which video imagery of a physical environment is combined with video images output by a game engine by the use of a traveling matte, which identifies portions of the visible physical environment by techniques such as computer vision or chroma keying and replaces them with the video images output by the video game engine. The composited imagery of the physical environment and the video game imagery is supplied to a trainee through a head-mounted display screen. Additionally, peripheral vision is preserved either by providing complete binocular display to the limits of peripheral vision, or by providing a visual path to the peripheral vision which is matched in luminance to the higher-resolution augmented reality images provided by the binocular displays. A software/hardware element comprising a server control station and a controller onboard the trainee performs the modeling, scenario generation, communications, tracking, and metric generation.

Owner:LINEWEIGHT

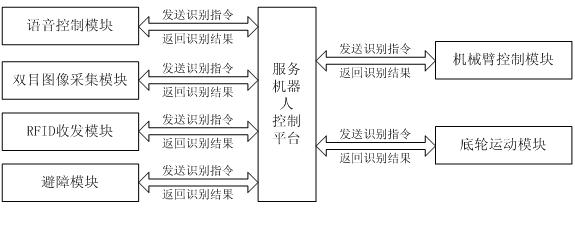

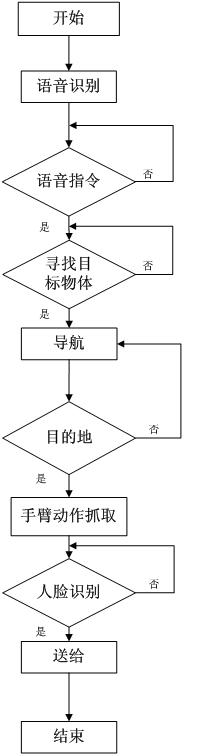

Service robot control platform system and multimode intelligent interaction and intelligent behavior realizing method thereof

Inactive | CN102323817A | Enhance the ability of intelligent grabbing | Intelligent service mode | Vehicle position/course/altitude control | Position/direction control | Face detection | Engineering

The invention discloses a service robot control platform system and a method for realizing multimode intelligent interaction and intelligent behavior with it. The service robot control platform system comprises a voice control module, a binocular image acquisition module, an RFID (radio frequency identification) transceiver module, a bottom wheel movement module, an obstacle avoidance module and a mechanical arm control module. The method comprises the following steps: 1, voice interaction; 2, autonomous navigation and localization; 3, mechanical arm control; and 4, face detection and recognition. According to the invention, the robot carries out intelligent interaction and intelligent behaviors, and its intelligent grabbing capability is enhanced. In addition to recognition of specific persons, discrimination between a photographed face and a real face is added, which effectively eliminates the influence of photographed faces on the program. The anthropomorphic mechanical arm grabs along a specific human-like path, so that the service mode of the robot is more intelligent and humanized.

Owner:SHANGHAI UNIV

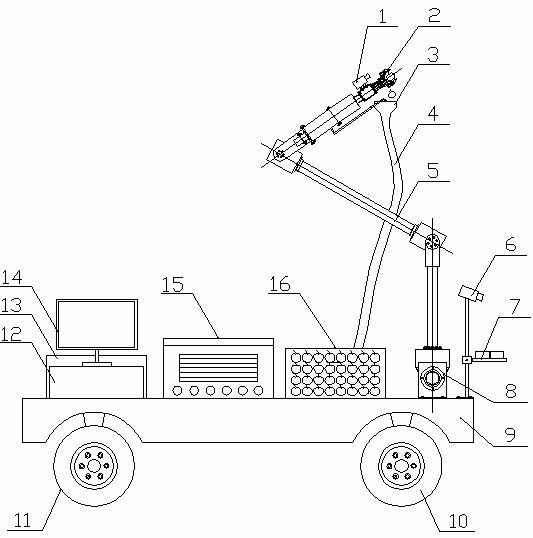

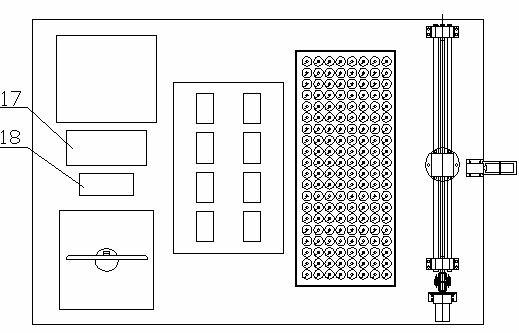

Wheel type mobile fruit picking robot and fruit picking method

Inactive | CN102124866A | Reduce energy consumption | Shorten speed | Programme-controlled manipulator | Picking devices | Ultrasonic sensor | Data acquisition

Owner:NANJING AGRICULTURAL UNIVERSITY

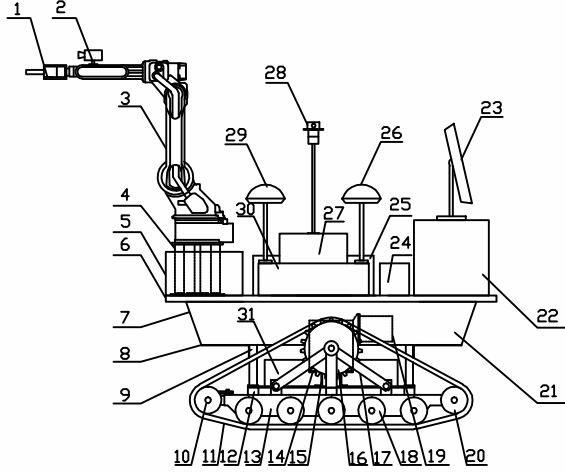

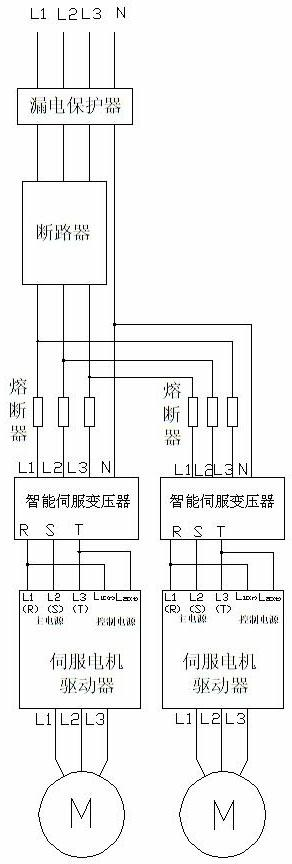

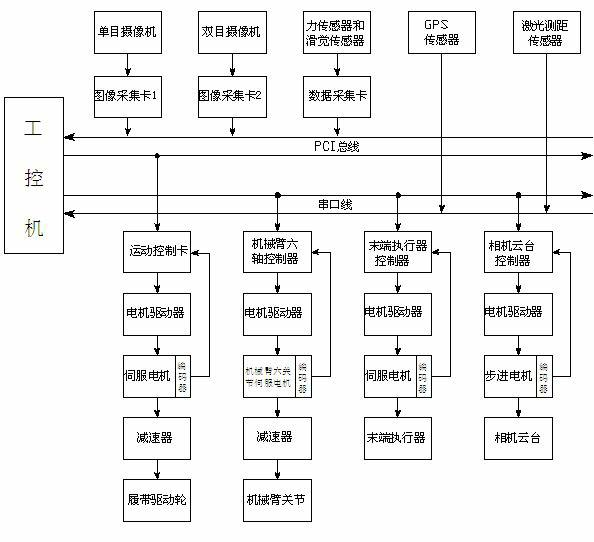

Automatic-navigation crawler-type mobile fruit picking robot and fruit picking method

Inactive | CN102165880A | Fully automated picking | Simple structure | Programme-controlled manipulator | Picking devices | Simulation | Actuator

The invention discloses an automatic-navigation crawler-type mobile fruit picking robot which comprises a mechanical execution system and a control system. The mechanical execution system comprises an intelligent movable platform, a fruit picking mechanical arm and a two-finger manipulator, wherein the intelligent movable platform comprises two crawler assemblies, an experimental facility fixing rack, a supporting column, a cross beam, a speed reducer and the like. The control system comprises an industrial personal computer, a motion control card, a data acquisition card, an image acquisition card, an encoder, a GPS (global positioning system) receiver, a monocular zoom camera assembly, a binocular camera, a laser ranging sensor, a control circuit and the like. The robot integrates the fruit picking mechanical arm, the two-finger manipulator, the intelligent movable platform and the sensor system, and combines multiple key technologies such as fruit identification, motion of the picking mechanical arm, grabbing by the end effector, and automatic navigation and obstacle avoidance of the movable platform, thereby realizing automatic and humanized fruit picking.

Owner:NANJING AGRICULTURAL UNIVERSITY

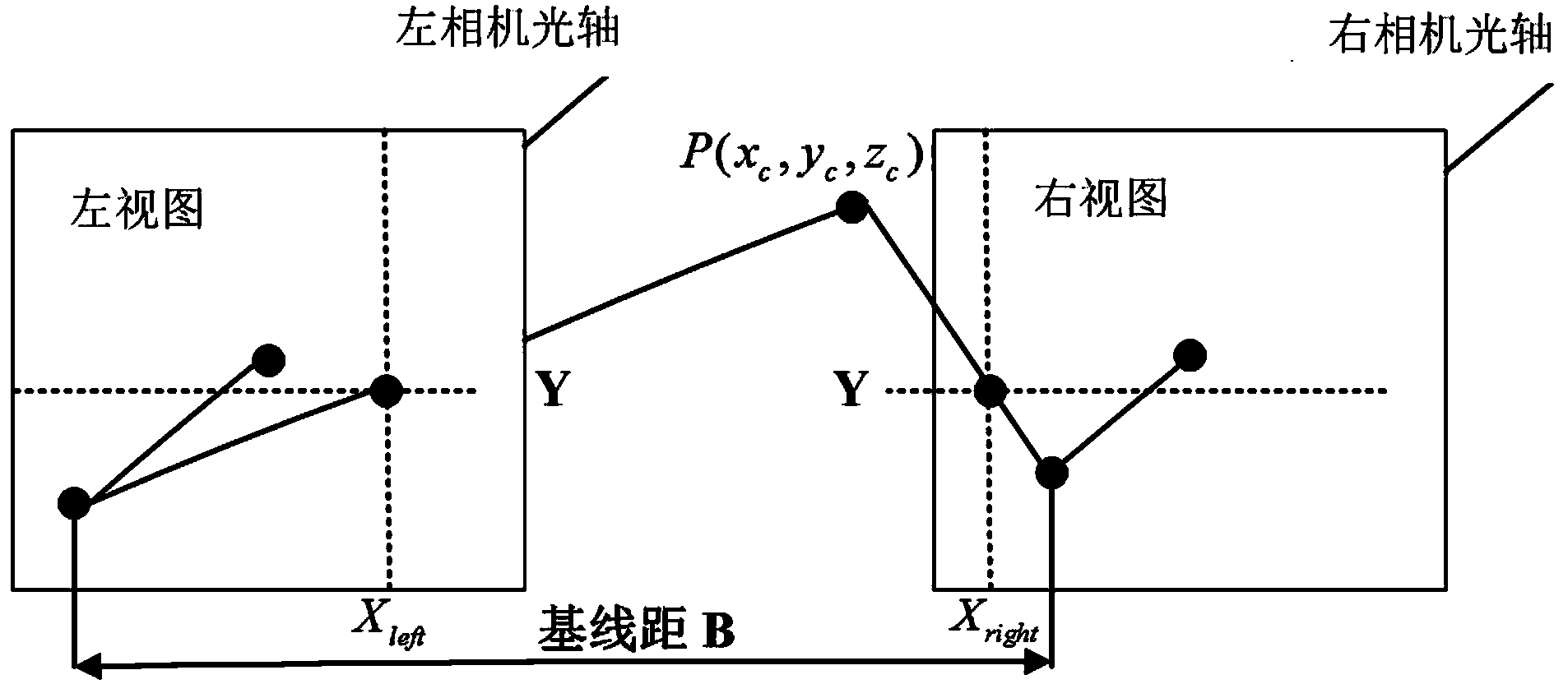

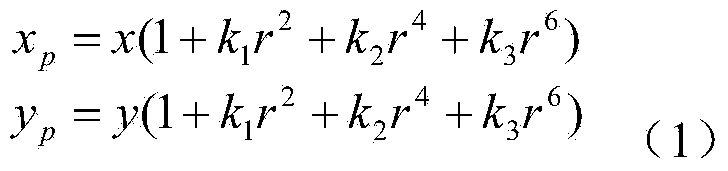

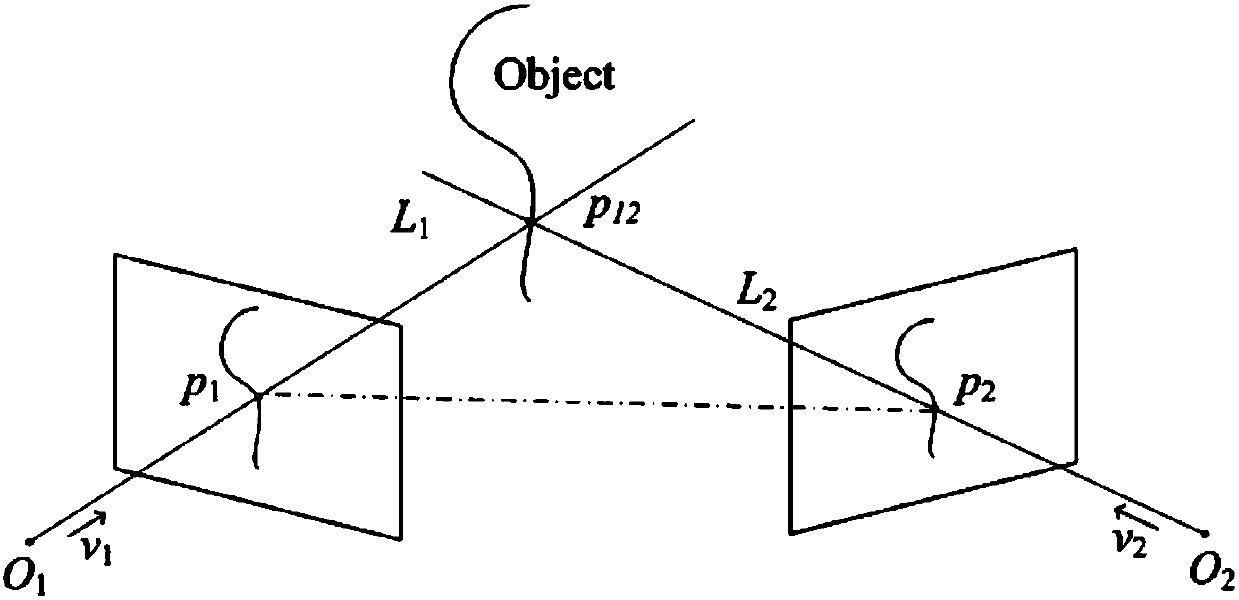

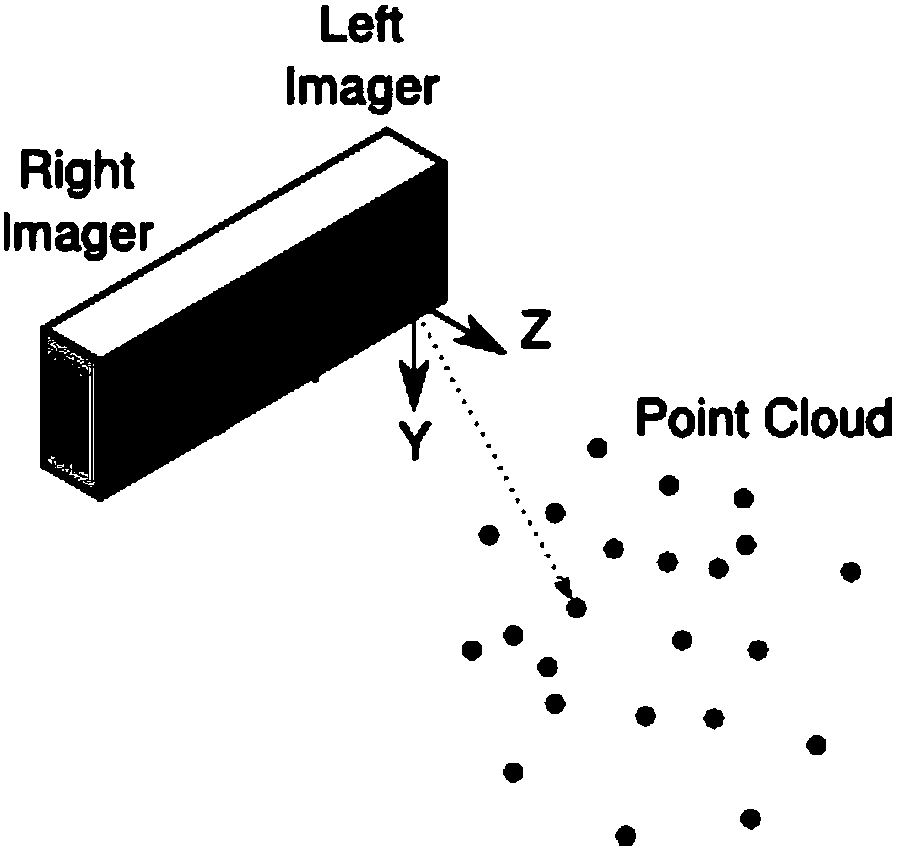

Parallax optimization algorithm-based binocular stereo vision automatic measurement method

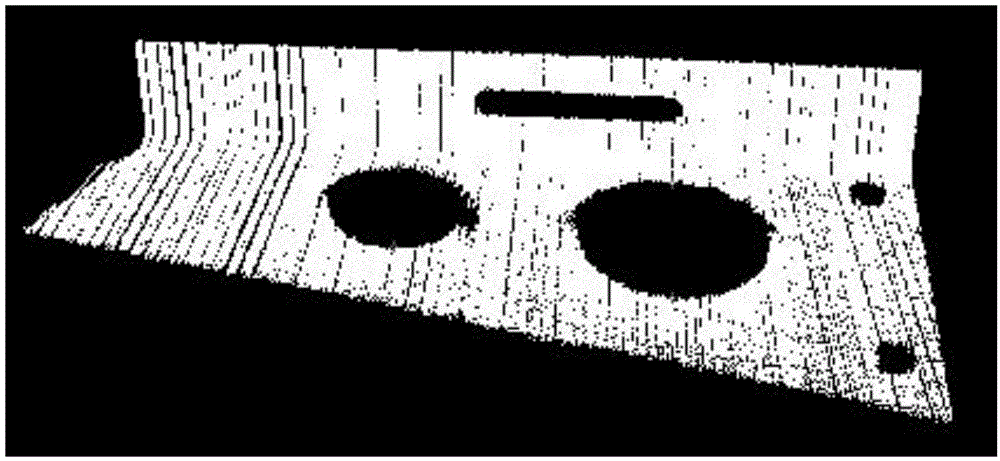

Inactive | CN103868460A | Accurate and automatic acquisition | Complete 3D point cloud information | Image analysis | Using optical means | Binocular stereo | Non targeted

The invention discloses a parallax optimization algorithm-based binocular stereo vision automatic measurement method. The method comprises the steps of 1, obtaining a corrected binocular view; 2, matching by using a stereo matching algorithm and taking a left view as a base map to obtain a preliminary disparity map; 3, for the corrected left view, enabling a target object area to be a colorized master map and other non-target areas to be wholly black; 4, acquiring a complete disparity map of the target object area according to the target object area; 5, for the complete disparity map, obtaining a three-dimensional point cloud according to a projection model; 6, performing coordinate reprojection on the three-dimensional point cloud to compound a coordinate related pixel map; 7, using a morphology method to automatically measure the length and width of a target object. By adopting the method, a binocular measuring operation process is simplified, the influence of specular reflection, foreshortening, perspective distortion, low textures and repeated textures on a smooth surface is reduced, automatic and intelligent measuring is realized, the application range of binocular measuring is widened, and technical support is provided for subsequent robot binocular vision.
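Steps 5 and 7 of this abstract can be illustrated with a minimal Python sketch, assuming a rectified pinhole stereo pair in which depth follows Z = f·B/d. The function names, the parameters (`focal_px`, `baseline_m`, `cx`, `cy`) and the axis-aligned extent measurement are illustrative assumptions, not details from the patent; the abstract names a morphology method for step 7, which is not reproduced here.

```python
def disparity_to_points(disparity, focal_px, baseline_m, cx, cy):
    """Reproject a rectified disparity map (list of rows, in pixels) to 3D points.

    Assumed pinhole model for a rectified pair:
        Z = f * B / d,  X = (u - cx) * Z / f,  Y = (v - cy) * Z / f
    Pixels with non-positive disparity (e.g. blacked-out non-target areas)
    are skipped.
    """
    points = []
    for v, row in enumerate(disparity):
        for u, d in enumerate(row):
            if d <= 0:
                continue
            z = focal_px * baseline_m / d
            x = (u - cx) * z / focal_px
            y = (v - cy) * z / focal_px
            points.append((x, y, z))
    return points


def bounding_extent(points):
    """Return (width, height) of the axis-aligned extent of the points in X and Y.

    This is a simplified stand-in for a real length/width measurement.
    """
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return max(xs) - min(xs), max(ys) - min(ys)
```

Calling `disparity_to_points` on the masked disparity map of the target area and passing the result to `bounding_extent` gives a rough length and width in the same units as `baseline_m`.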

Owner:GUILIN UNIV OF ELECTRONIC TECH

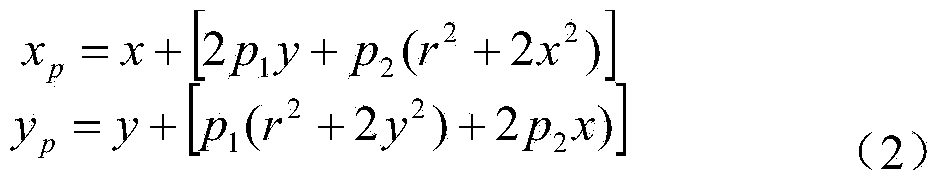

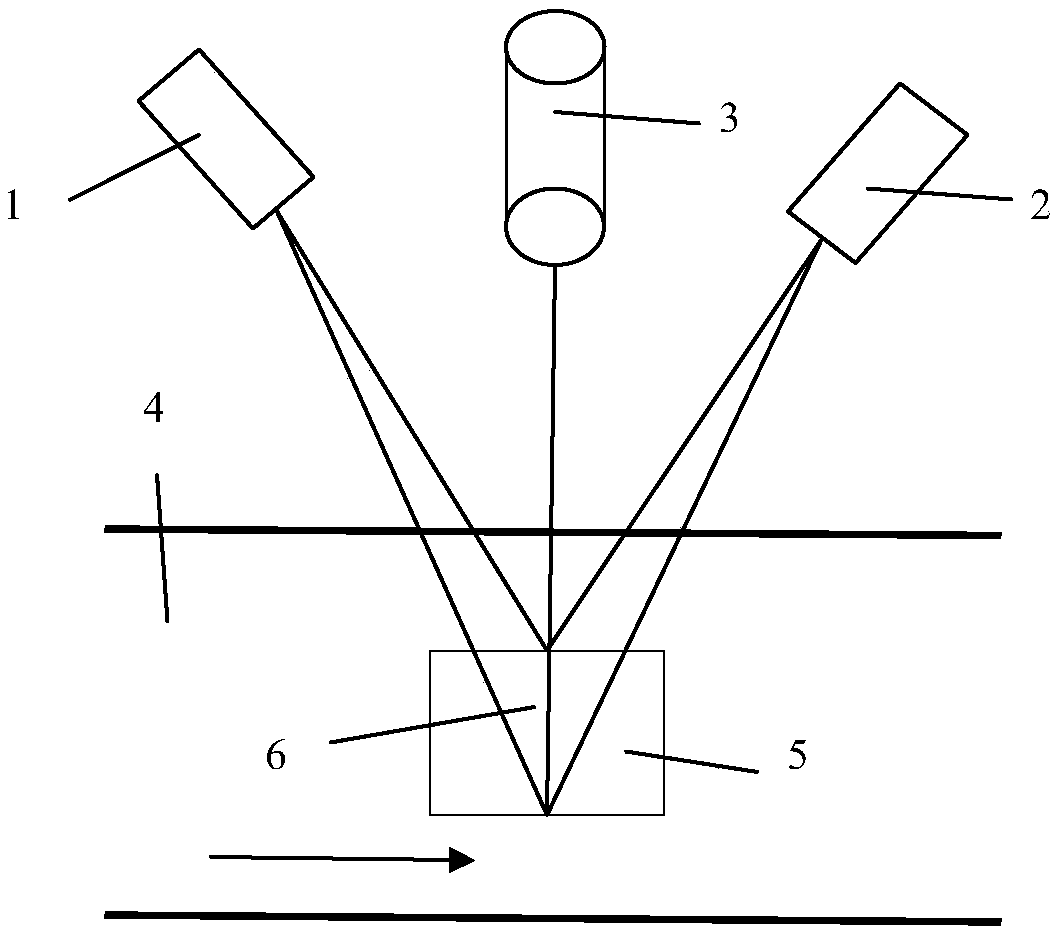

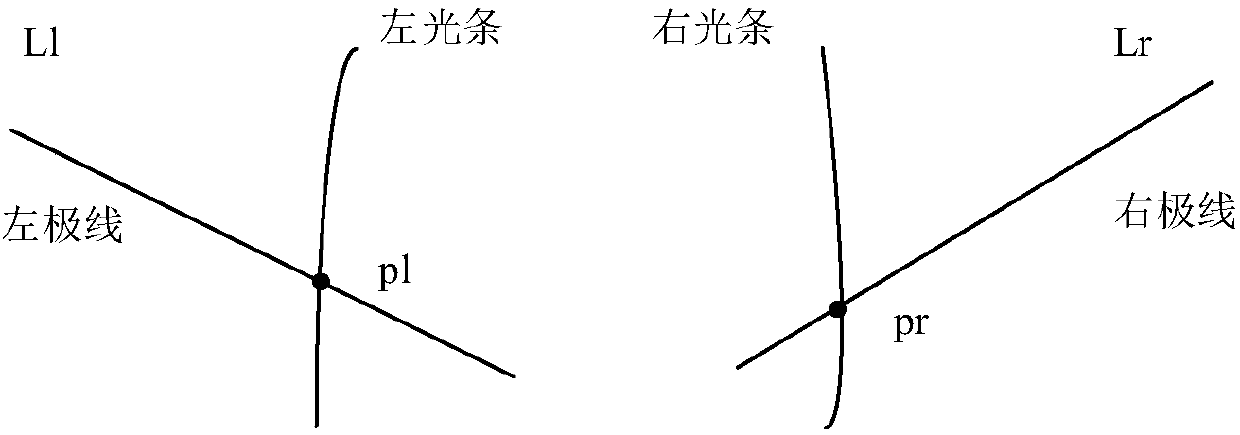

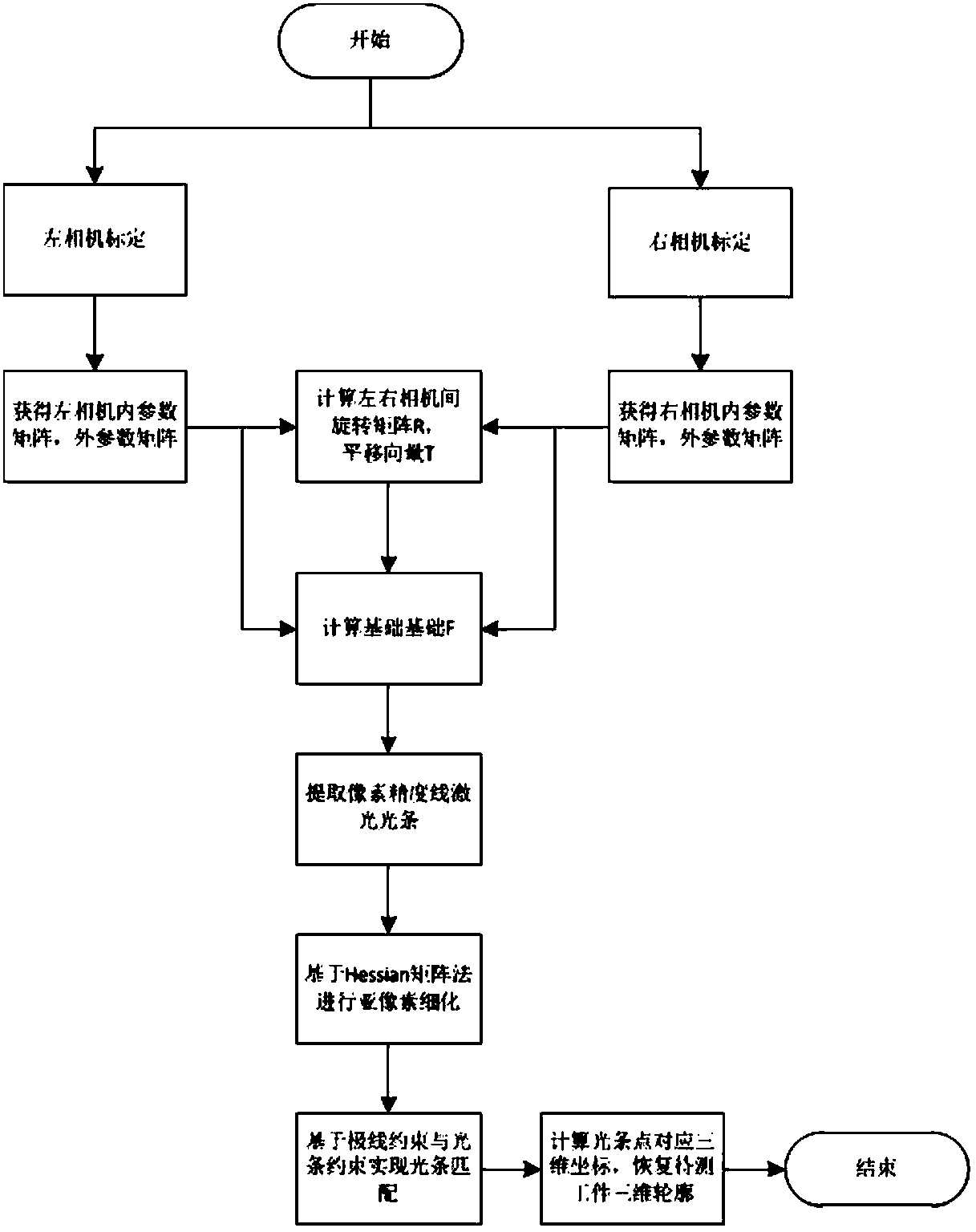

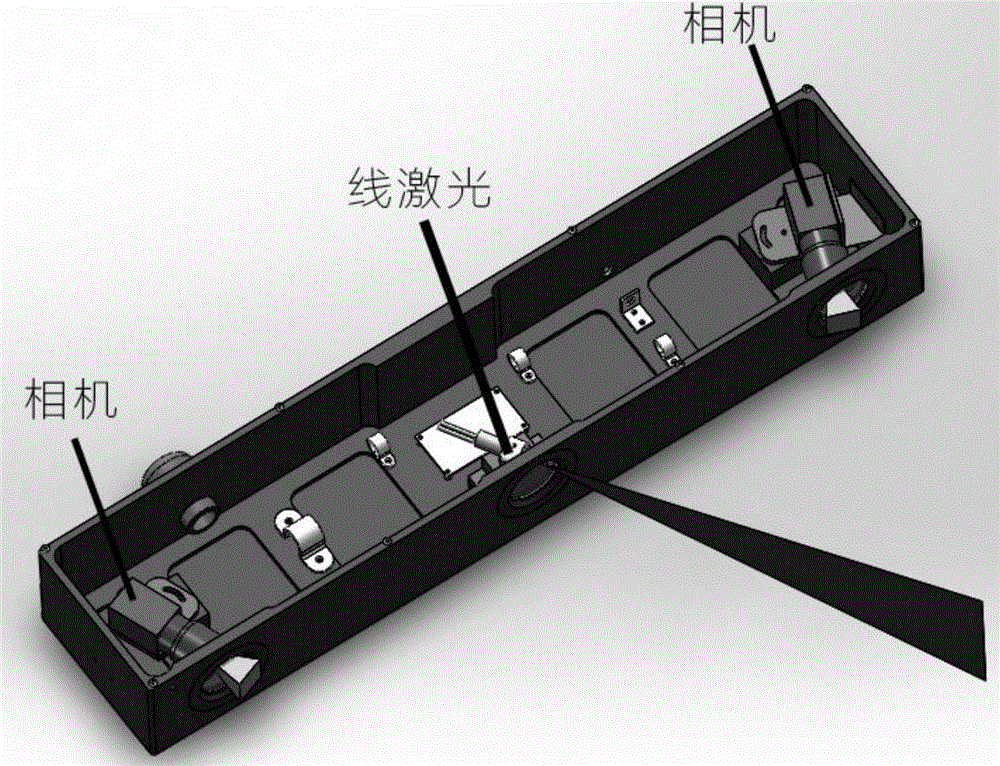

Binocular stereo vision three-dimensional measurement method based on line structured light scanning

Inactive | CN107907048A | Reduce the difficulty of matching | Improve robustness | Using optical means | Three dimensional measurement | Laser scanning

The invention discloses a binocular stereo vision three-dimensional measurement method based on line structured light scanning, which comprises the steps of performing stereo calibration on binocular industrial cameras, projecting laser light bars by using a line laser, respectively acquiring left and right laser light bar images, extracting light bar center coordinates with sub-pixel accuracy based on a Hessian matrix method, performing light bar matching according to an epipolar constraint principle, and calculating a laser plane equation; secondly, acquiring a line laser scanning image of a workpiece to be measured, extracting coordinates of the image of the workpiece to be measured, calculating world coordinates of the workpiece to be measured by combining binocular camera calibration parameters and the laser plane equation, and recovering the three-dimensional surface topography of the workpiece to be measured. Compared with a common three-dimensional measurement system combining a monocular camera and line structured light, the binocular stereo vision three-dimensional measurement method avoids complicated laser plane calibration. Compared with the traditional stereo vision method, the binocular stereo vision three-dimensional measurement method reduces the difficulty of stereo matching in binocular stereo vision while ensuring the measurement accuracy, and improves the robustness and the usability of a visual three-dimensional measurement system.
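The step of combining camera calibration parameters with the laser plane equation can be sketched as a ray-plane intersection. This is a minimal Python illustration, assuming a pinhole camera with focal length `focal_px` and principal point (`cx`, `cy`), and a laser plane expressed in camera coordinates as a·x + b·y + c·z + d = 0. All names are hypothetical, and the Hessian-based sub-pixel extraction of the light bar center is not shown.

```python
def intersect_ray_with_plane(u, v, focal_px, cx, cy, plane):
    """Intersect the viewing ray through pixel (u, v) with a laser plane.

    plane is (a, b, c, d) with a*x + b*y + c*z + d = 0 in camera coordinates.
    Returns the 3D point (x, y, z) in camera coordinates.
    """
    a, b, c, d = plane
    # Direction of the viewing ray through the pixel, with z normalised to 1.
    dx = (u - cx) / focal_px
    dy = (v - cy) / focal_px
    dz = 1.0
    denom = a * dx + b * dy + c * dz
    if abs(denom) < 1e-12:
        raise ValueError("viewing ray is parallel to the laser plane")
    # The ray starts at the optical center (origin), so the point is t * direction.
    t = -d / denom
    return (t * dx, t * dy, t * dz)
```

Applying this to every extracted light bar center pixel, for each scan position, would yield one profile of the surface per image.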

Owner:CHANGSHA XIANGJI HAIDUN TECH CO LTD

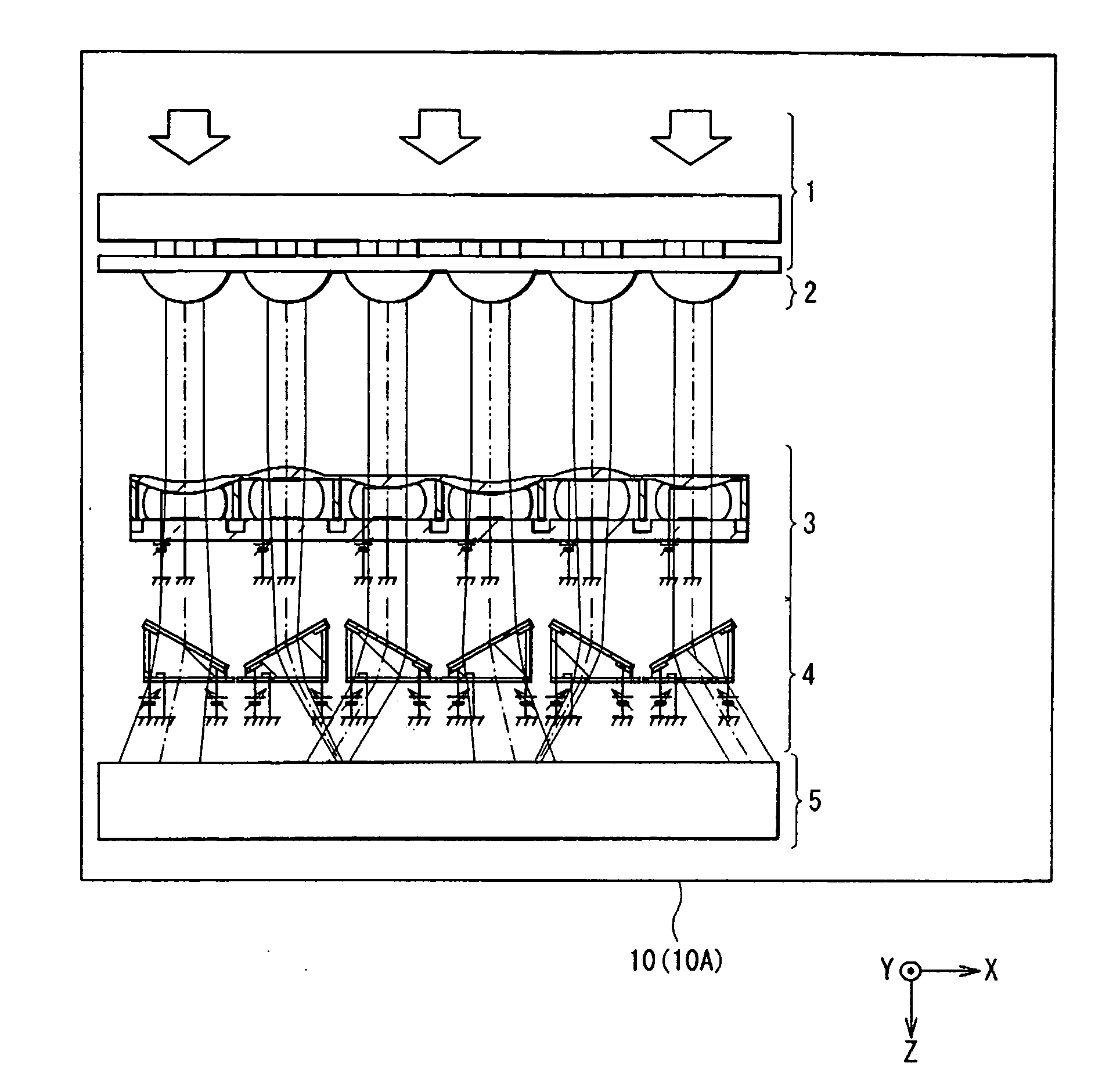

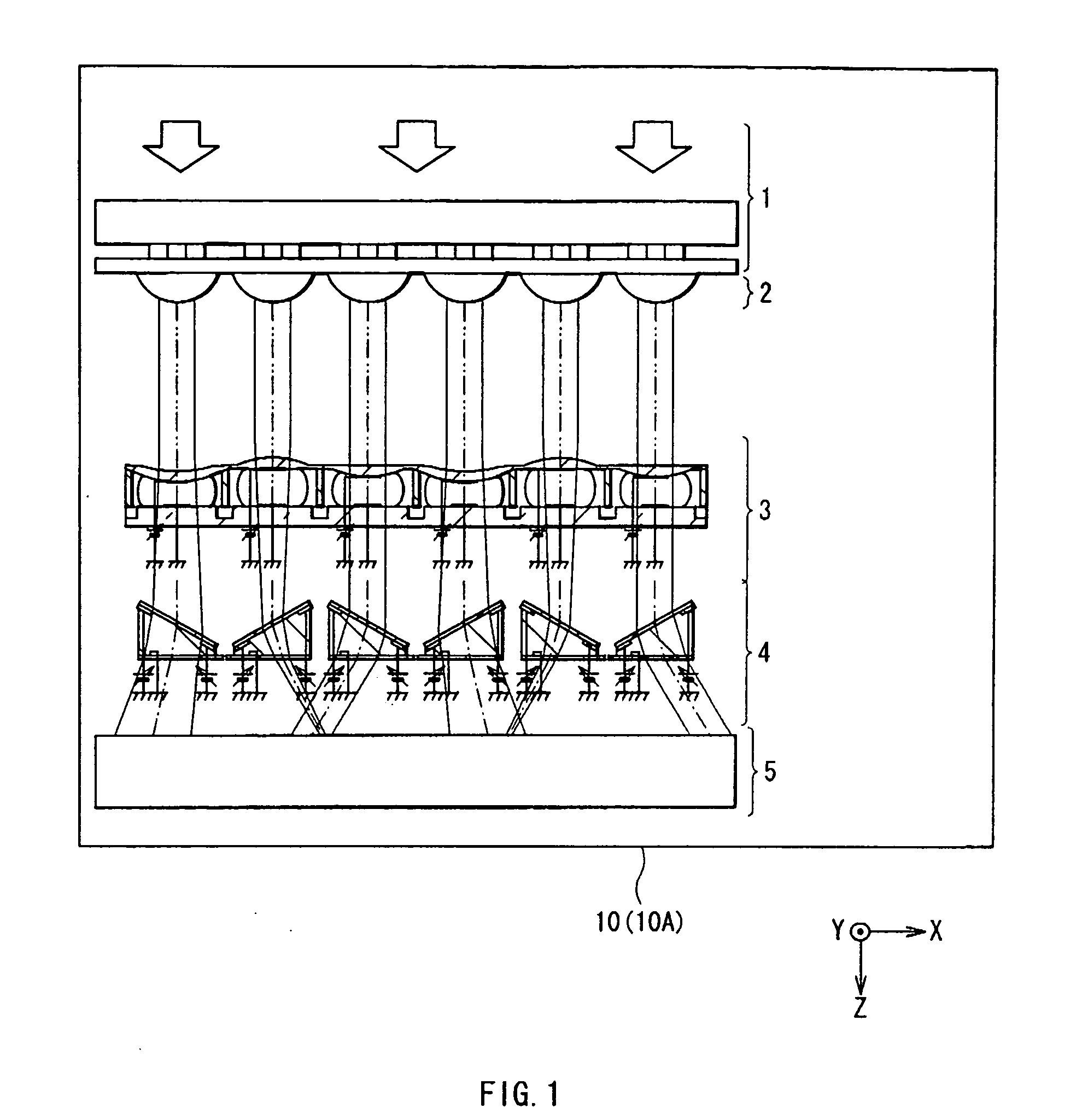

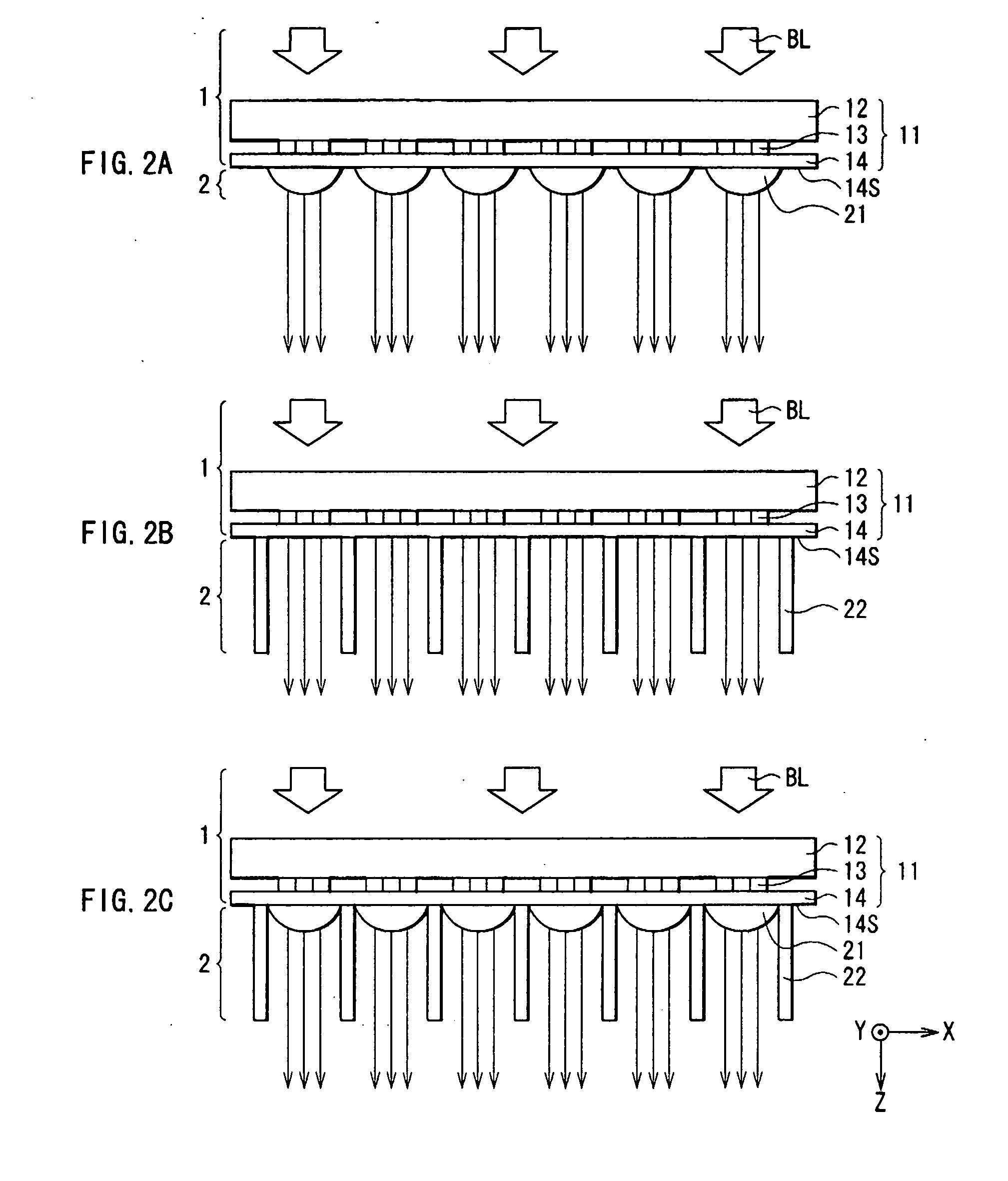

Three-dimensional display

In the three-dimensional display, a two-dimensional display section generates a two-dimensional display image based on an image signal, and a lens array converts the wavefront of the display image light from the two-dimensional display section into a wavefront having a curvature which allows the display image light to focus upon a focal point where an optical path length from an observation point to the focal point is equal to an optical path length from the observation point to a virtual object point, so a viewer can obtain information about an appropriate focal length in addition to information about binocular parallax and a convergence angle. Therefore, consistency between the information about binocular parallax and a convergence angle and the information about an appropriate focal length can be ensured, and a desired stereoscopic image can be perceived without physiological discomfort.

Owner:SONY CORP

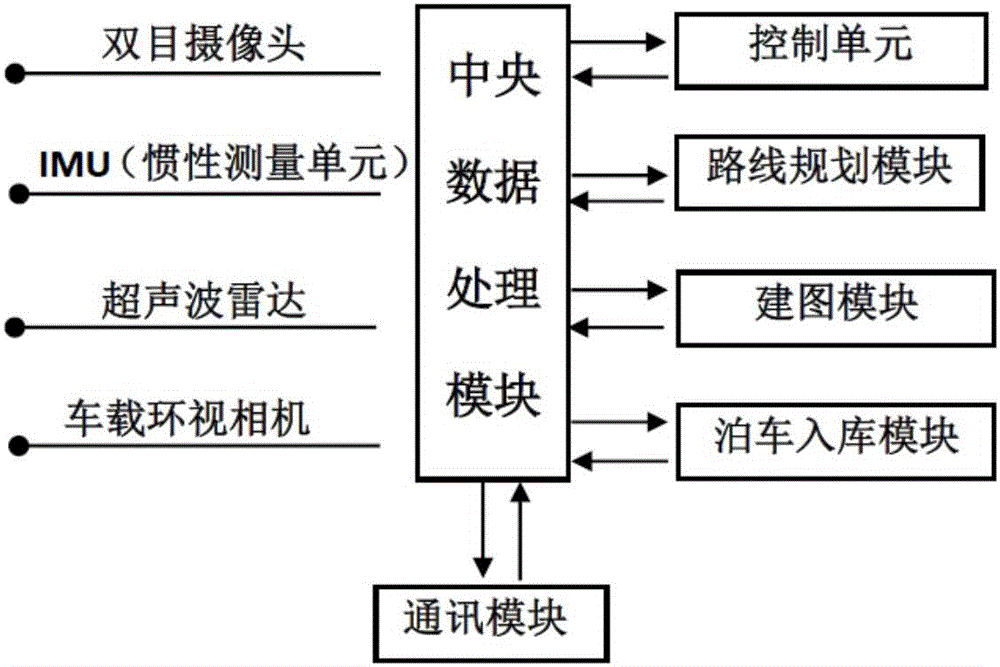

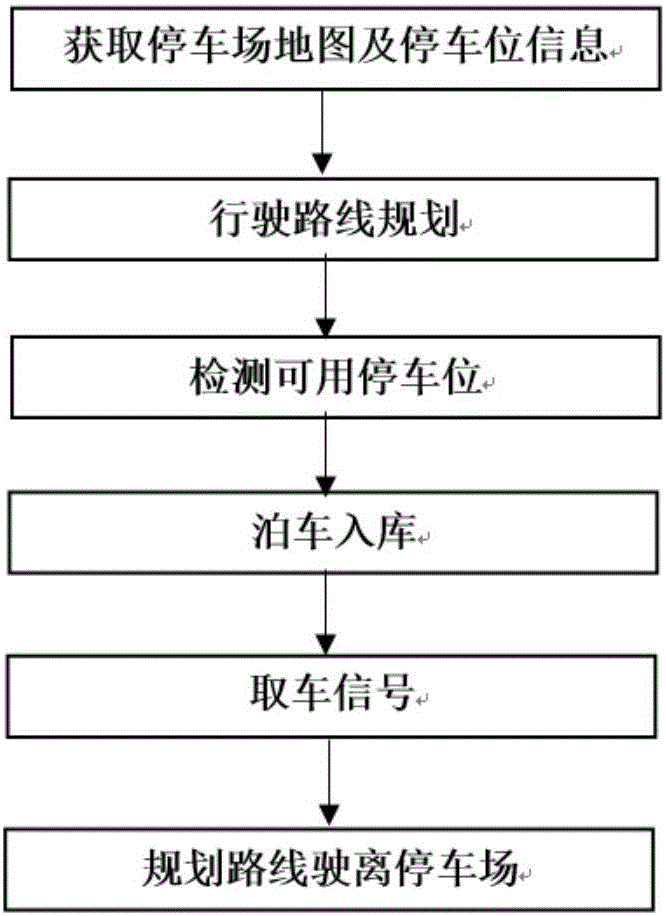

Long-distance automatic parking system and method based on multi-sensor fusion

Active | CN105946853A | Low cost | Scene recognition | External condition input parameters | Computer science | Wave radar

The invention discloses a long-distance automatic parking system and method based on multi-sensor fusion. The system comprises a central data processing module, a path planning module, a mapping module, a parking module, a communication module and a control unit. The sensors include, but are not limited to, an IMU, a vehicle-mounted surround-view camera, an ultrasonic radar and a binocular camera. According to an embodiment of the invention, paths are planned on the obtained parking lot map; obstacle information, parking space information and road information within the vehicle's driving range are determined from a disparity map obtained by the binocular camera and fed back to the central data processing module in real time; after calculation, the central data processing module issues an instruction to the control unit, so as to realize long-distance automatic parking from the vehicle entering the parking lot to parking in the parking space.

Owner:SUN YAT SEN UNIV

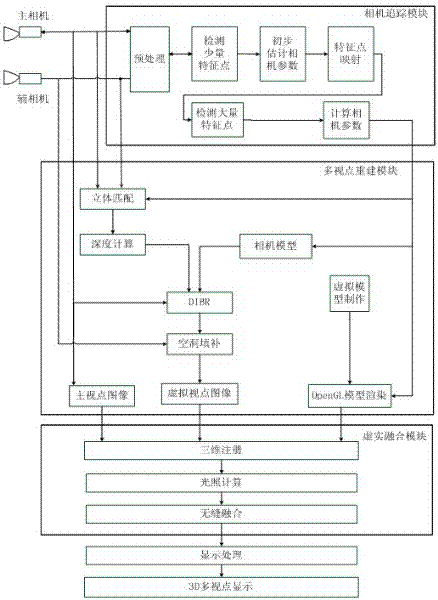

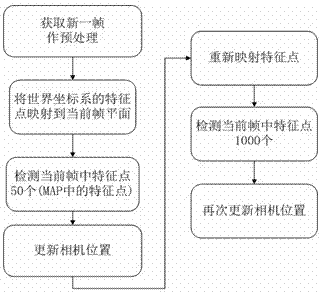

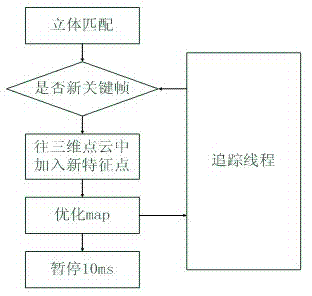

Three-dimensional enhancing realizing method for multi-viewpoint free stereo display

Active | CN102568026A | Realistic | Achieve consistency | Stereoscopic systems | 3D-image rendering | Viewpoints | Display device

The invention discloses a three-dimensional enhancing realizing method for multi-viewpoint free stereo display, which comprises the following steps: 1) stereoscopically shooting a natural scene by using a binocular camera; 2) extracting and matching feature points of the main camera image, generating a three-dimensional point cloud of the natural scene in real time, and calculating the camera parameters; 3) calculating a depth image corresponding to the main camera image, rendering virtual viewpoint images and their depth images, and performing hole filling; 4) using three-dimensional modeling software to draw a three-dimensional virtual model and using a virtual-real fusion module to fuse the virtual and real content of the multi-viewpoint images; 5) suitably combining the multiple channels of virtual-real fused images; and 6) providing multi-viewpoint stereo display on a 3D display device. Because the binocular camera is used for stereoscopic shooting and a feature extraction and matching technique with good real-time performance is adopted, no markers are required in the natural scene; the virtual-real fusion module achieves illumination consistency and seamless fusion of the virtual and real scenes; and the 3D display device provides a multi-user, multi-angle, naked-eye, multi-viewpoint stereo display effect.

Owner:万维显示科技(深圳)有限公司

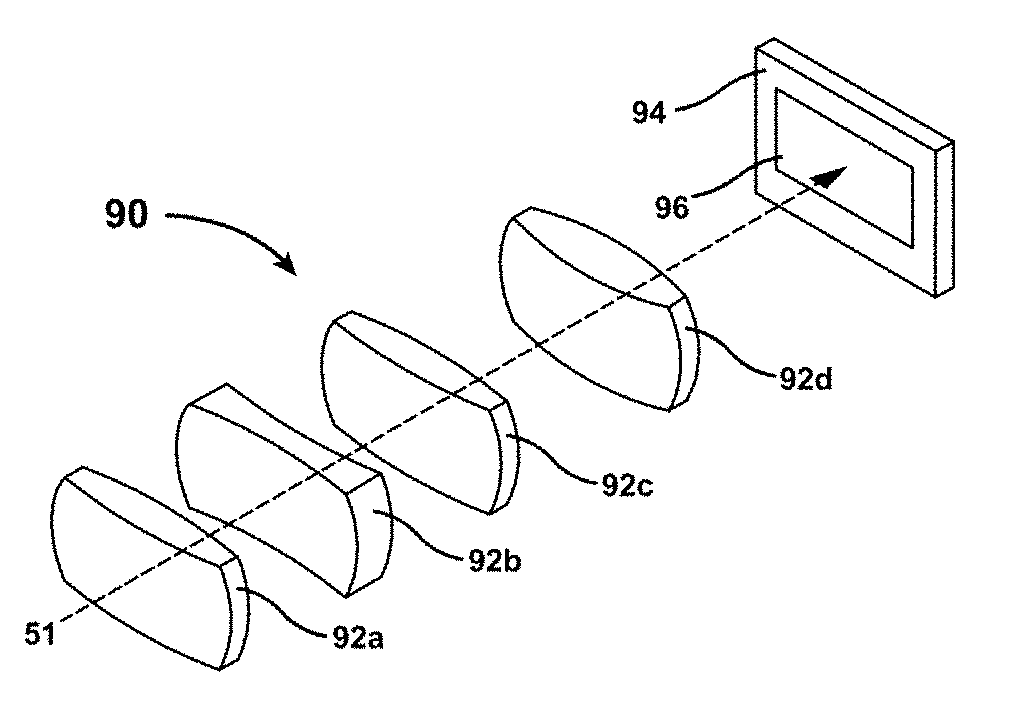

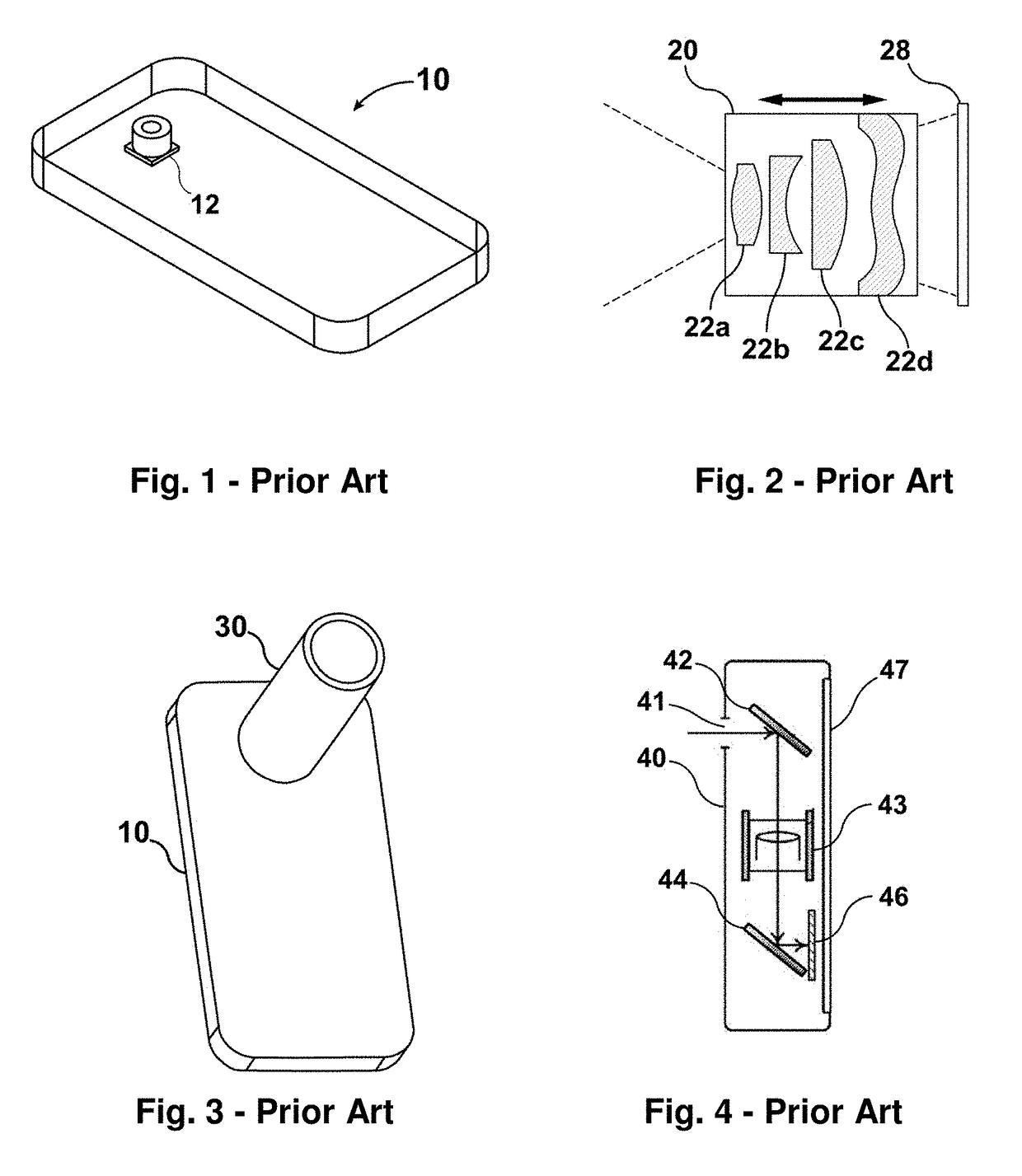

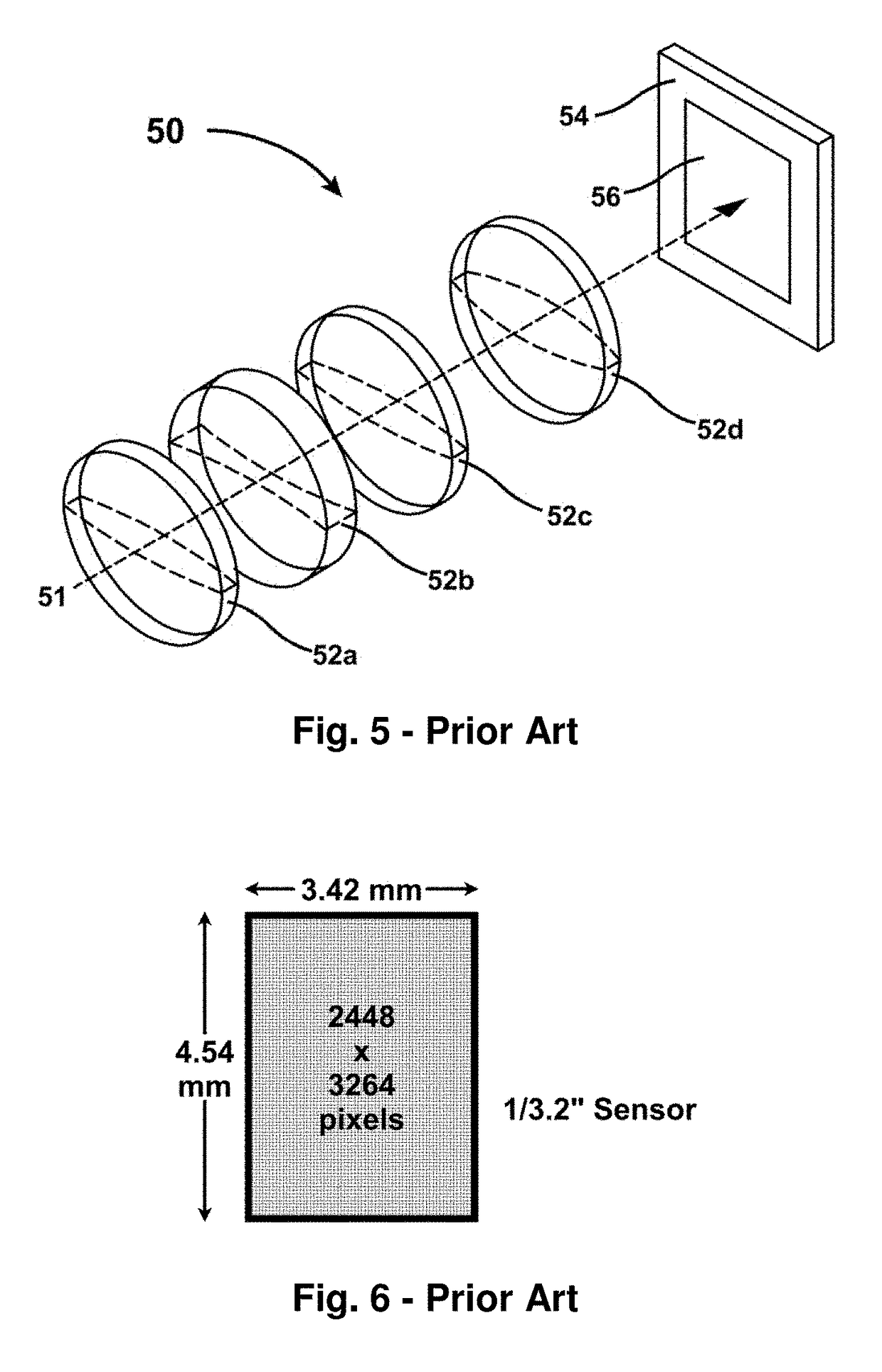

Thin optical system and camera

Inactive | US20170242225A1 | Improve scanning rate | Reduced Power Requirements | Mountings | Camera lens | Scanning mirror

A camera module (170) includes a miniature scanning mirror (120), lens elements (163a to 163d) corresponding to thin lateral lens slices, and a short, wide imaging sensor (165). As the scanning mirror (120) pivots to scan a scene, the imaging sensor (165) captures successive image segments. Multiple image segments are stitched together by software running on a digital processor to provide a complete image. The assembly of lens elements (163a to 163d) may include moveable elements to allow variable focus, variable magnification and image stabilization, and may utilize refraction, reflection, diffraction and / or planar optical elements. The camera module (170) may be less than 5 millimeters thick while allowing long focal length lenses and increased light collecting area. Other embodiments include a switchable scan mirror with two apertures and a dual-camera system that provides binocular images and video.

Owner:FISKE ORLO JAMES

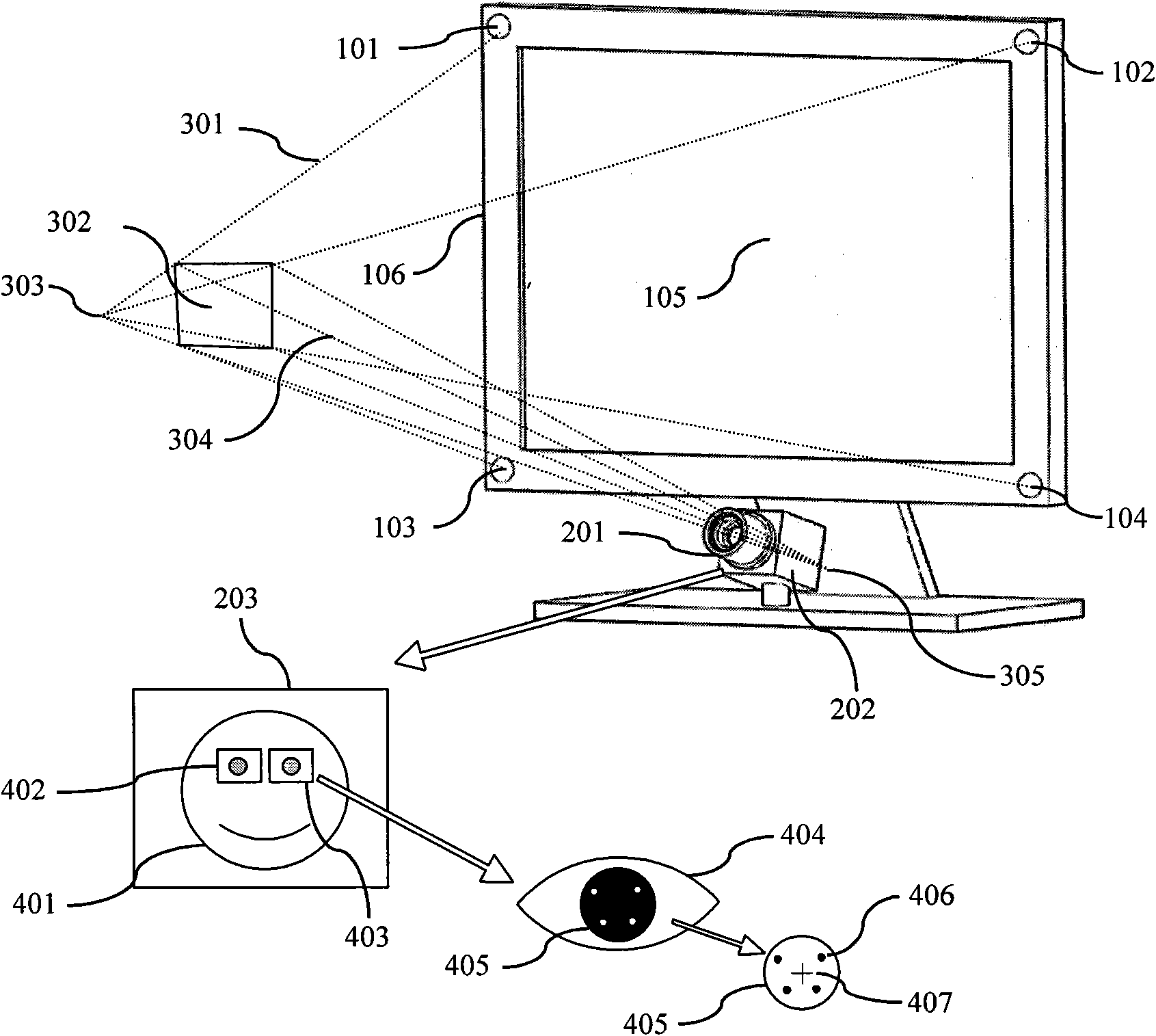

Human-computer interaction device and method adopting eye tracking in video monitoring

Active | CN101866215A | Reduce the impact | Enhanced interaction | Input/output for user-computer interaction | Television system details | Video monitoring | Human–machine interface

The invention belongs to the technical field of video monitoring and in particular relates to a human-computer interaction device and a human-computer interaction method adopting eye tracking in video monitoring. The device comprises a non-invasive facial eye image video acquisition unit, a monitoring screen, an eye tracking image processing module and a human-computer interaction interface control module, wherein infrared reference light sources are arranged around the monitoring screen; and the eye tracking image processing module separates binocular sub-images of the left eye and the right eye from a captured facial image, identifies the two sub-images respectively and estimates the gaze position of the eyes on the monitoring screen. The invention also provides an efficient human-computer interaction way based on eye tracking characteristics. The unified human-computer interaction way disclosed by the invention can be used for selecting a function menu with the eyes, switching monitoring video contents, adjusting the focus and viewing angle of a remote monitoring camera and the like, improving the efficiency of operating video monitoring equipment and video monitoring systems.

Owner:FUDAN UNIV

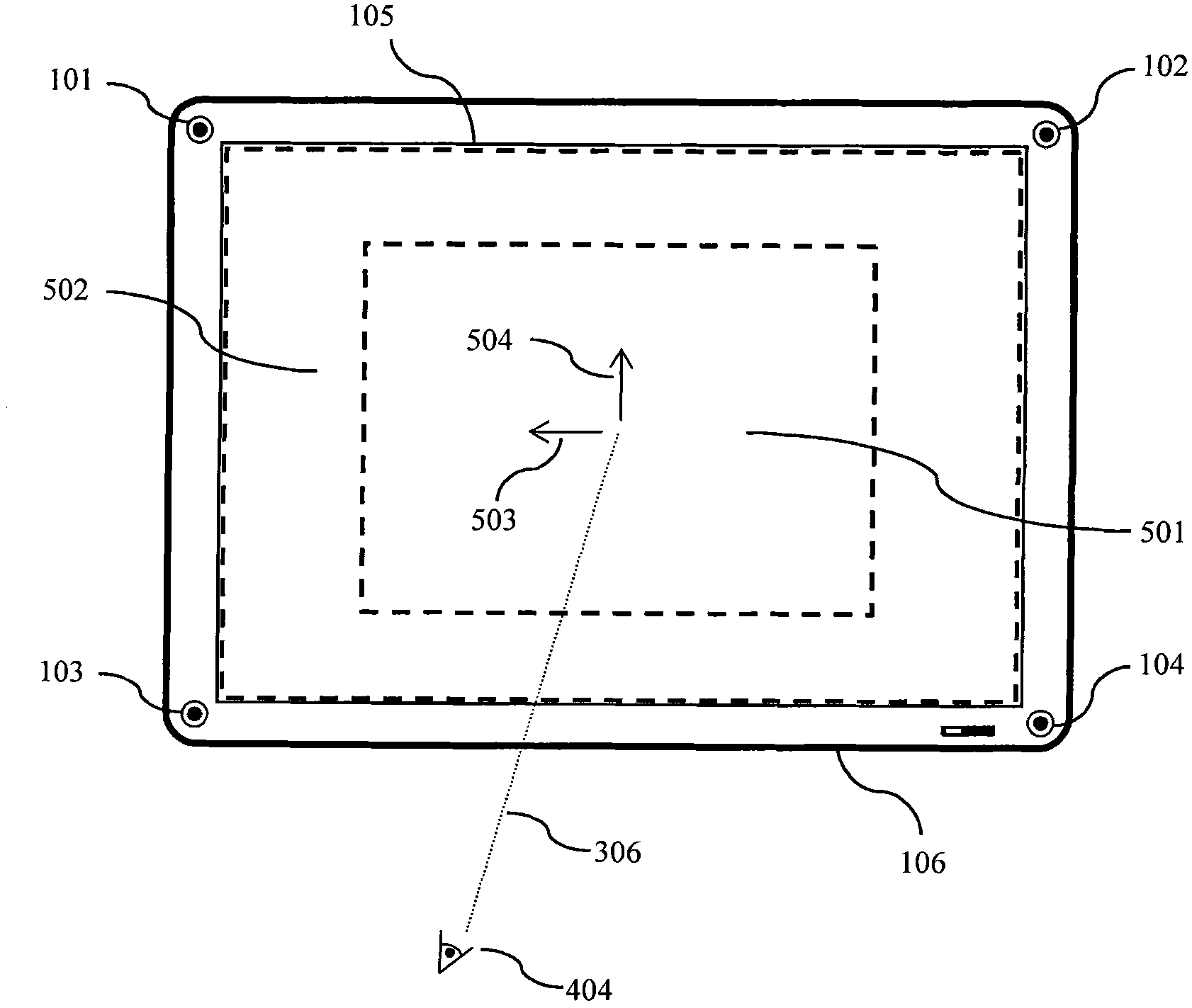

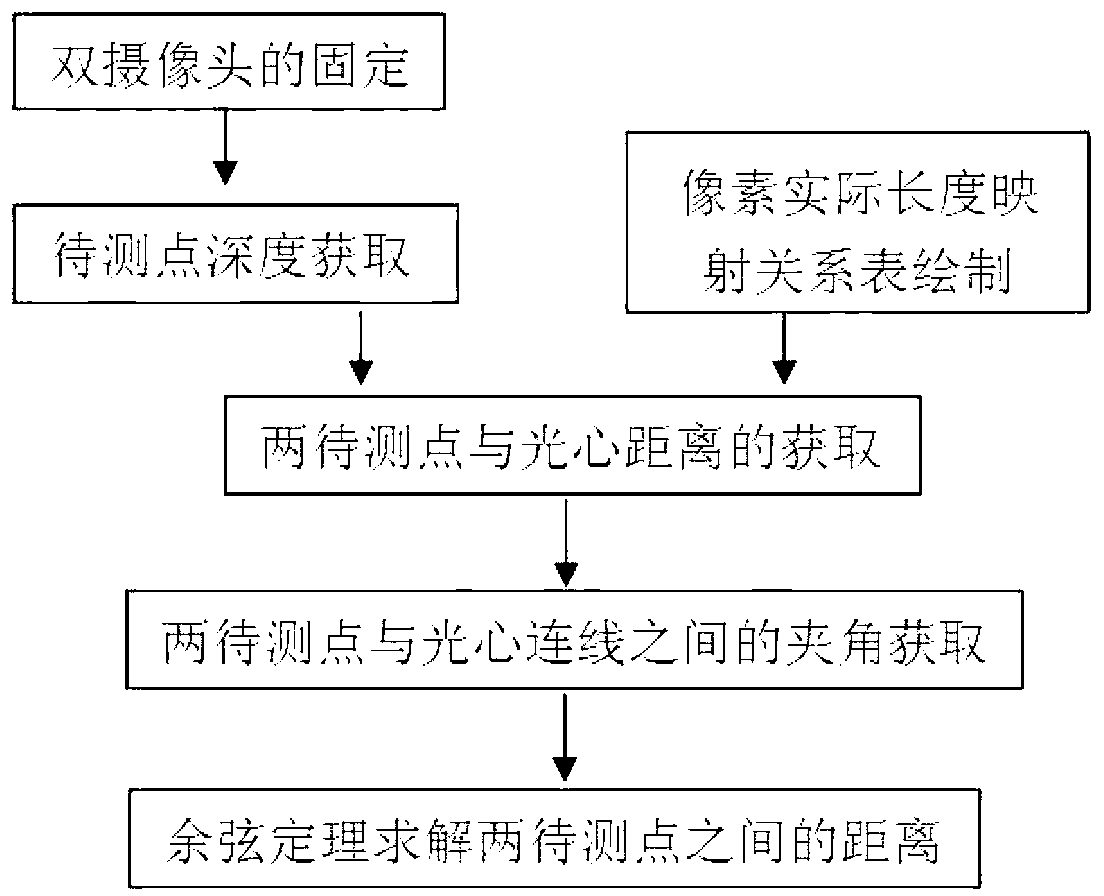

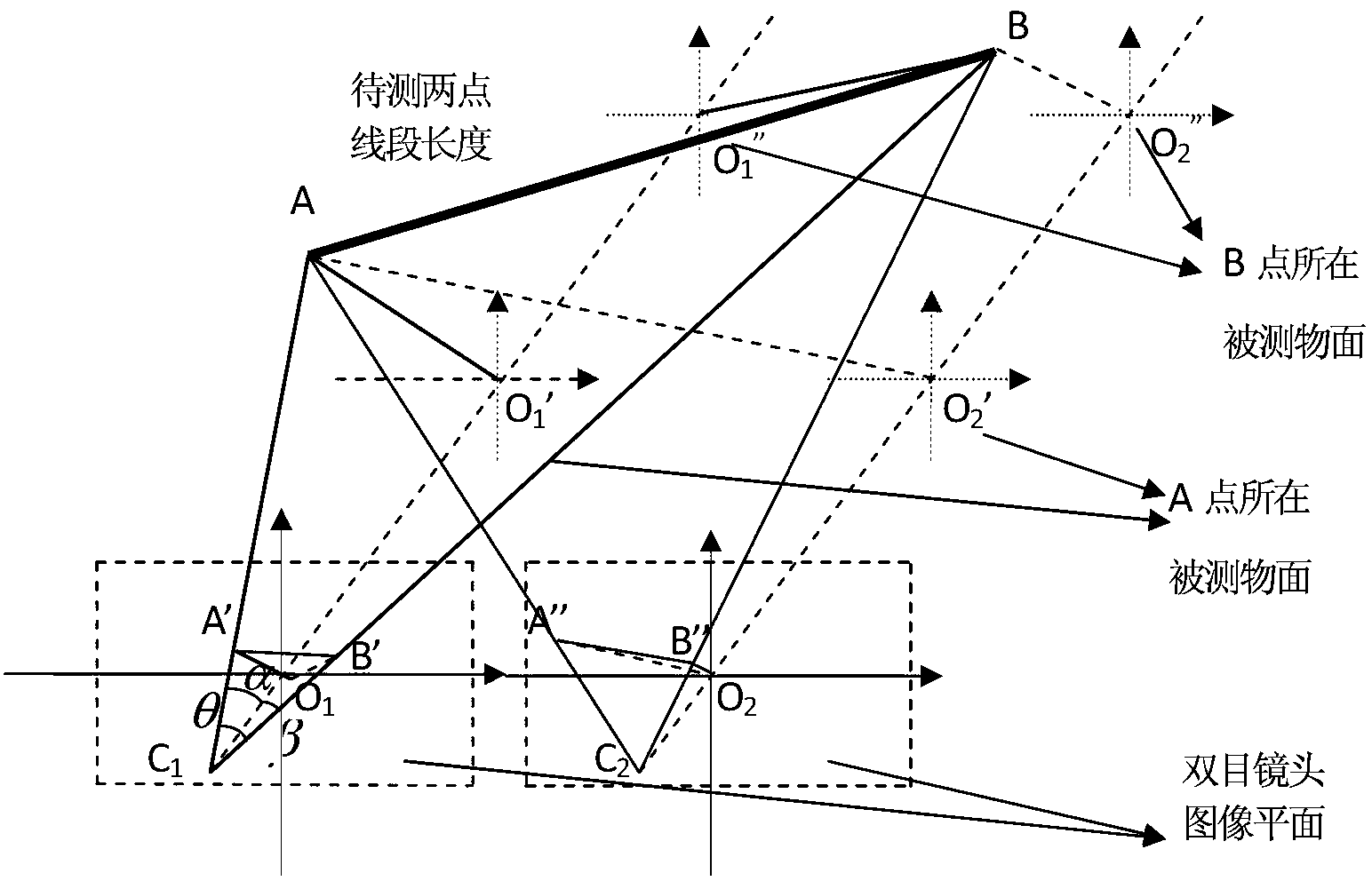

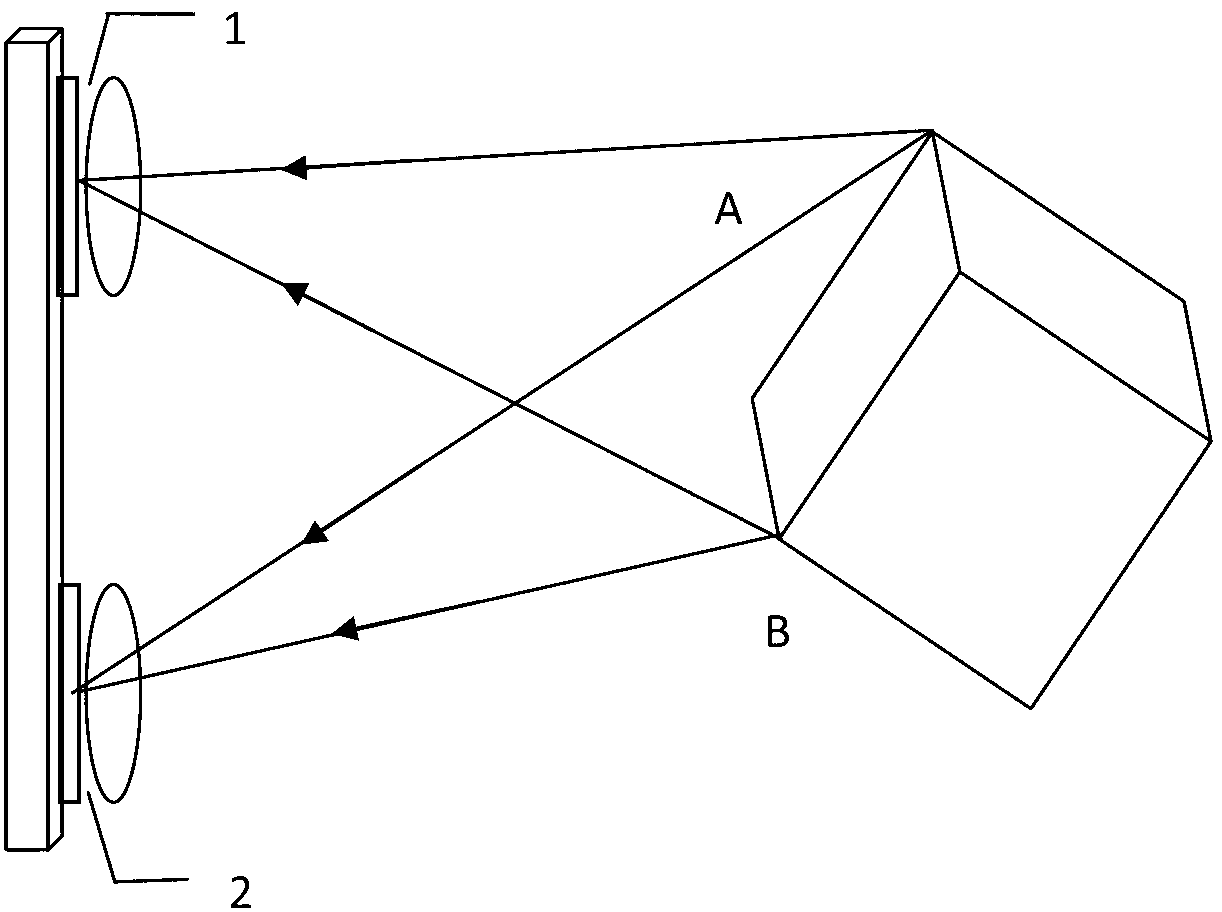

Distance measuring method applying binocular visual parallax error distance-measuring principle

Inactive | CN103292710A | Simple process | Easy to operate | Using optical means | Shortest distance | Visual perception

The invention discloses a distance measuring method applying the binocular visual parallax distance-measuring principle. By adopting different baseline lengths to meet the measurement accuracy requirements of different distance ranges, the method is applicable to precise size measurement of small parts at short distances. On the basis of a conventional binocular measuring method, a pair of cameras with parallel optical axes, positioned side by side, is used. For any two visible points to be measured in the two images, the depth values of the two points are obtained via the inversely proportional relationship between binocular parallax and depth. The distances from the two points to the optical center, and the angle between the lines connecting the two points to the optical center, can then be determined from the depth values and the focal length of the cameras, and the actual distance between the two points is determined via the law of cosines. Compared with conventional methods, the method has the advantages of simple operation, strong practicability, high precision and ease of adoption.
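The chain of reasoning in this abstract (depth from parallax, range and angle from depth and focal length, then the law of cosines) can be written out as a short Python sketch. It assumes a rectified pinhole pair with depth Z = f·B/d; the function names and the parameters `focal_px`, `baseline_m`, `cx` and `cy` are illustrative assumptions rather than details from the patent.

```python
import math


def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth is inversely proportional to disparity: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def point_distance(p1, p2, focal_px, baseline_m, cx, cy):
    """Distance between two scene points given as (u, v, disparity_px).

    Pixel coordinates refer to the left image of the rectified pair.
    """
    rays, ranges = [], []
    for u, v, d in (p1, p2):
        z = depth_from_disparity(d, focal_px, baseline_m)
        ray = (u - cx, v - cy, focal_px)        # viewing ray through the pixel
        norm = math.sqrt(sum(c * c for c in ray))
        rays.append((ray, norm))
        ranges.append(z * norm / focal_px)      # range from the optical center
    (ray1, n1), (ray2, n2) = rays
    # Cosine of the angle between the two viewing rays.
    cos_theta = sum(a * b for a, b in zip(ray1, ray2)) / (n1 * n2)
    r1, r2 = ranges
    # Law of cosines; clamp at zero to guard against rounding error.
    squared = r1 * r1 + r2 * r2 - 2.0 * r1 * r2 * cos_theta
    return math.sqrt(max(0.0, squared))
```

For example, with `focal_px=1000`, `baseline_m=0.1` and the principal point at the origin, two points at pixels (0, 0) and (500, 0) that both have a disparity of 50 px lie at a depth of 2 m and are about 1 m apart.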

Owner:SOUTH CHINA UNIV OF TECH

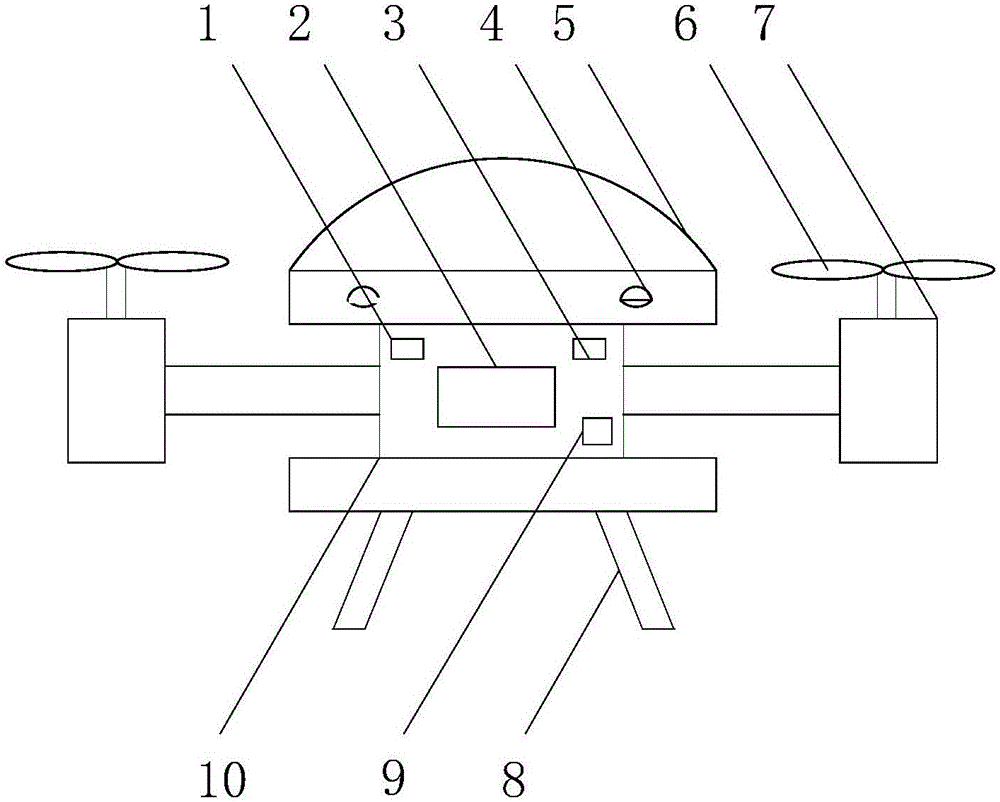

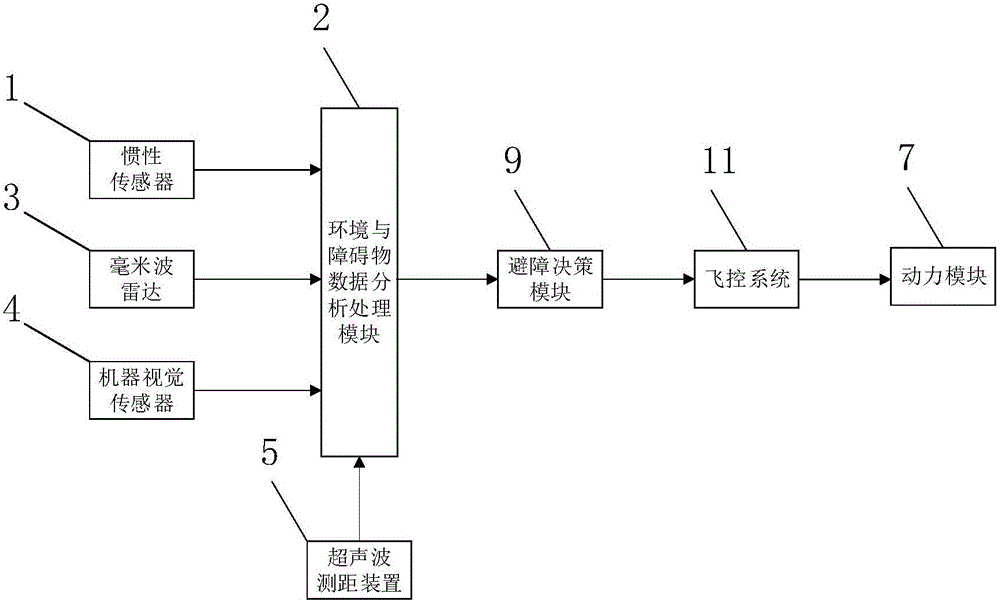

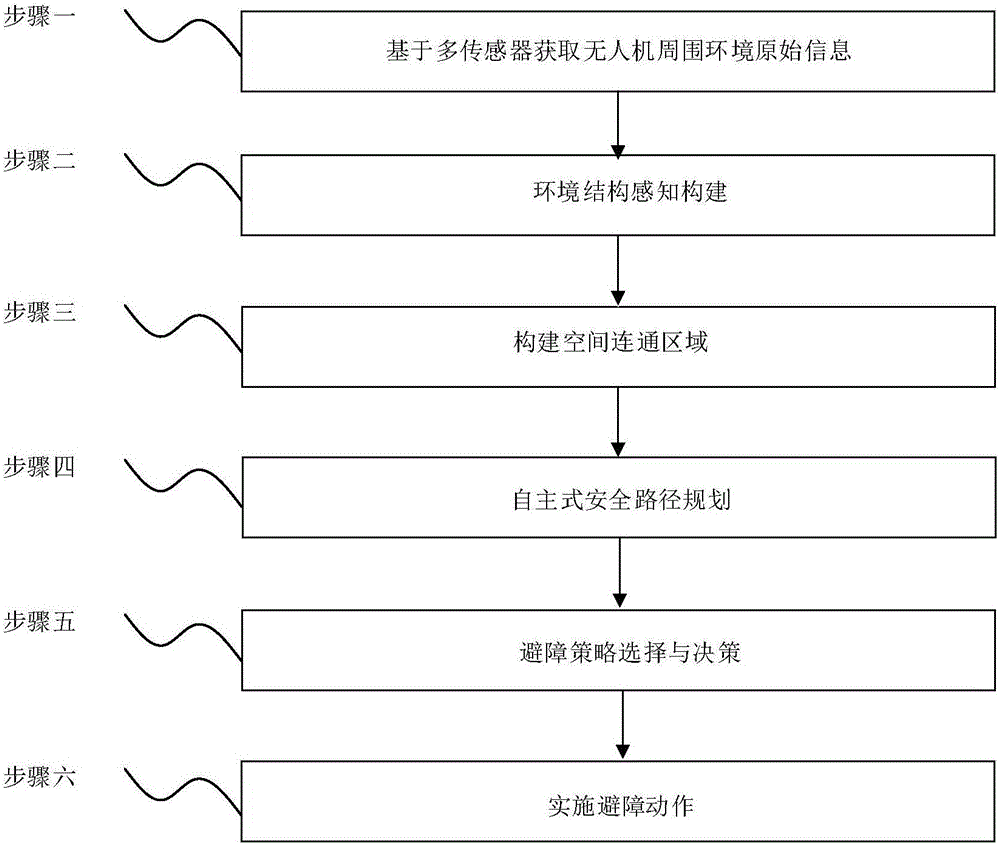

Multi-sensor fusion-based autonomous obstacle avoidance unmanned aerial vehicle system and control method

Active | CN105892489A | Avoid collision | Improve real-time detection | Position/course control in three dimensions | Multi sensor | Binocular distance

The invention discloses a multi-sensor fusion-based autonomous obstacle avoidance unmanned aerial vehicle system and a control method. The system includes an environment information real-time detection module, which detects the surrounding environment in real time using multi-sensor fusion and transmits the detected information to an obstacle data analysis processing module; the obstacle data analysis processing module, which builds a structural model of the surrounding environment from the received information so as to identify obstacles; and an obstacle avoidance decision-making module, which determines an obstacle avoidance decision according to the output of the obstacle data analysis processing module, so that the flight control system drives the power modules to make the unmanned aerial vehicle avoid the obstacle. Binocular machine vision systems are arranged around the body of the unmanned aerial vehicle, so that 3D space reconstruction can be realized; an ultrasonic device and a millimeter wave radar facing the direction of travel are used in cooperation, so that obstacle avoidance is more comprehensive. The system has the advantages of high real-time performance of obstacle detection, long visual detection distance and high resolution.

Owner:STATE GRID INTELLIGENCE TECH CO LTD

Human-machine interaction method and system based on binocular stereoscopic vision

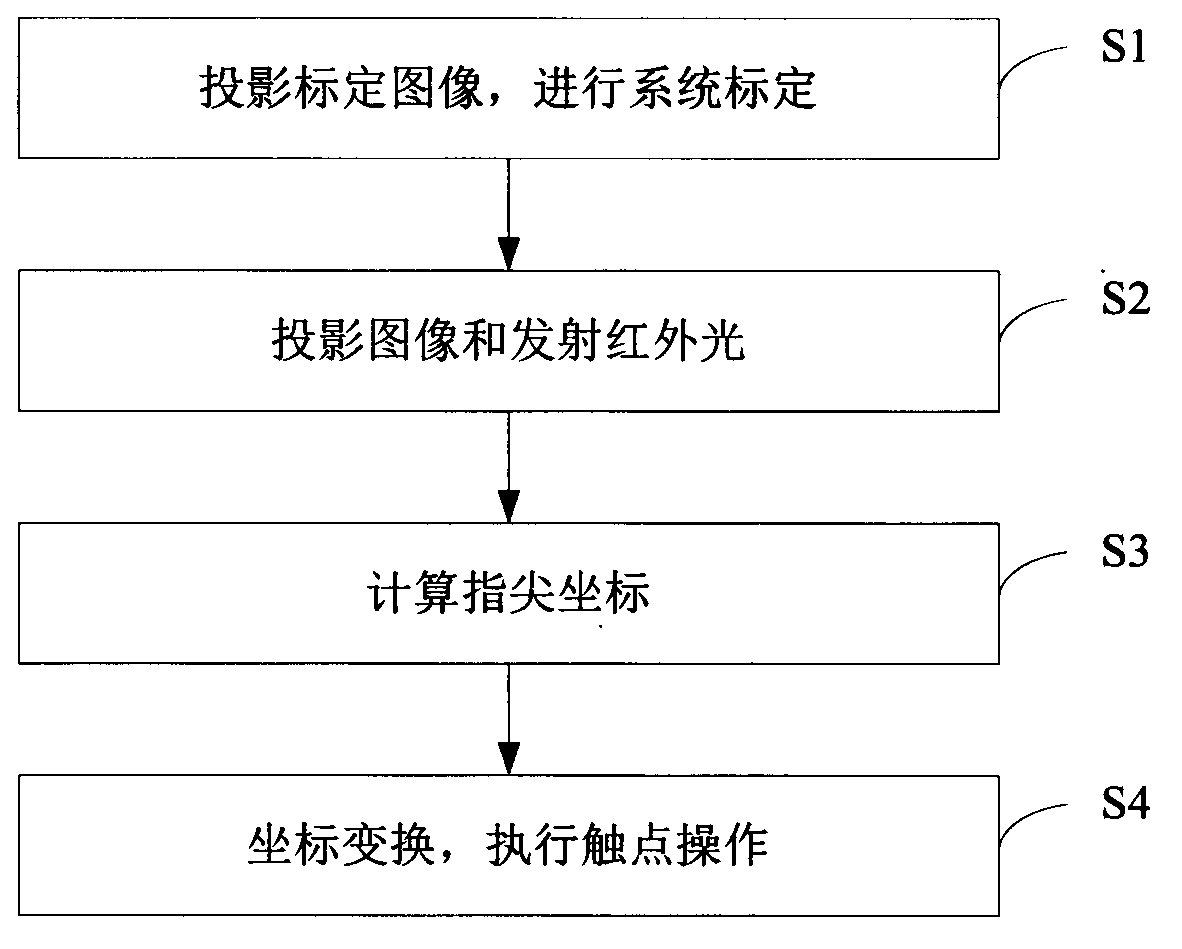

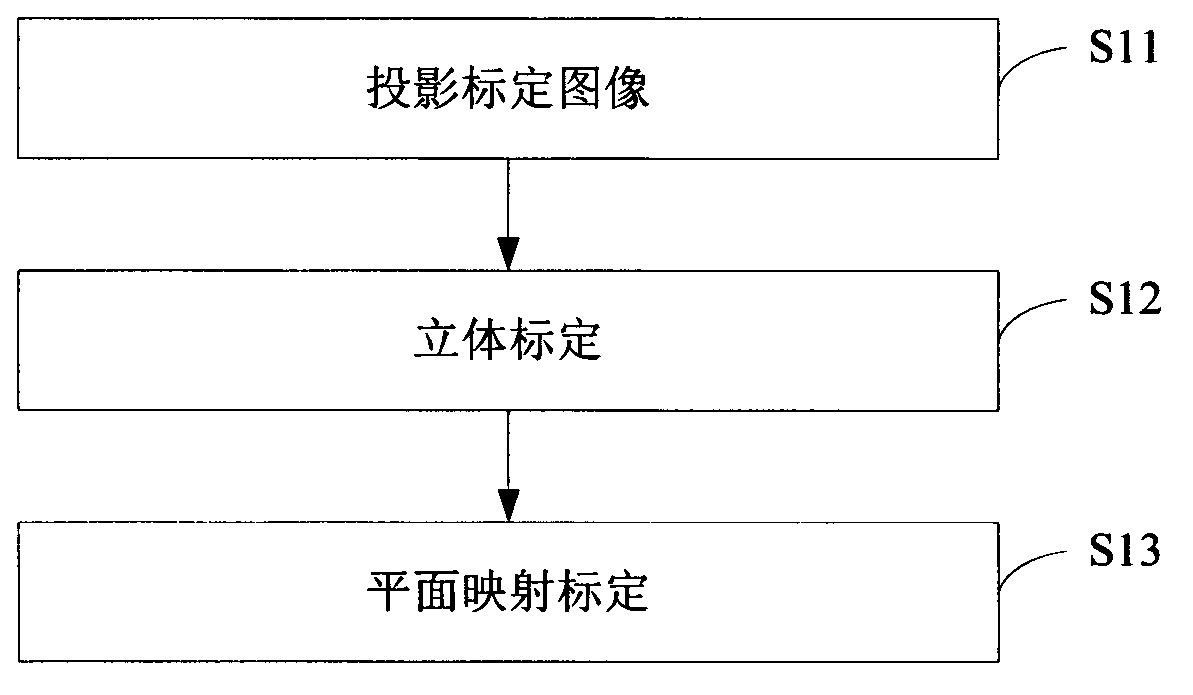

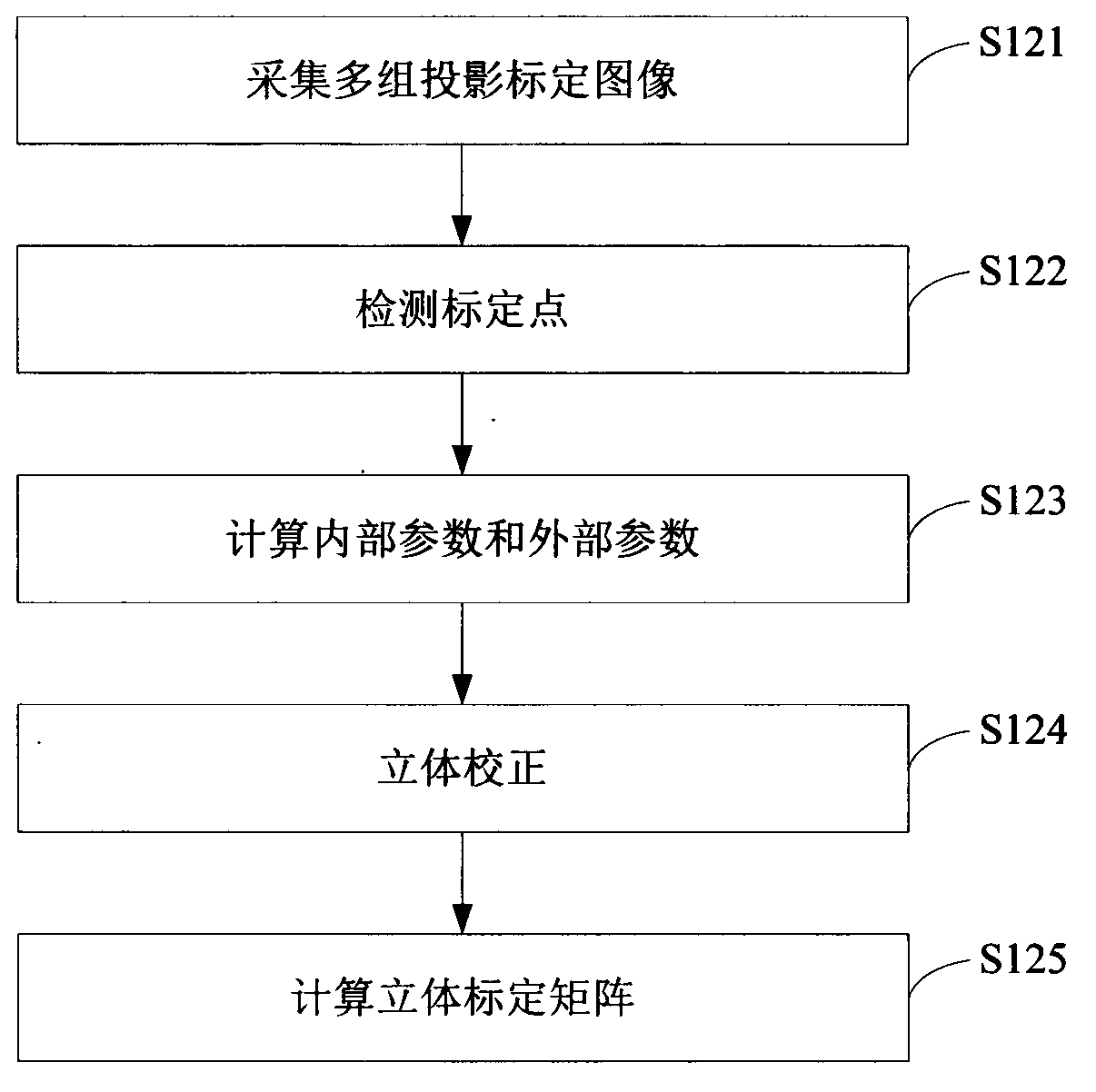

Active | CN102799318A | Improve human-computer interaction | Enhanced interaction | Input/output processes for data processing | Human–robot interaction | Projection plane

The invention relates to the technical field of human-machine interaction and provides a human-machine interaction method and a human-machine interaction system based on binocular stereoscopic vision. The human-machine interaction method comprises the following steps: projecting a screen calibration image onto a projection plane and acquiring the calibration image on the projection plane for system calibration; projecting an image and emitting infrared light onto the projection plane, wherein the infrared light forms an infrared spot outlining the human hand when it meets the hand; acquiring an image containing the hand-outline infrared spot on the projection plane and calculating the fingertip coordinate of the hand according to the system calibration; and converting the fingertip coordinate into a screen coordinate according to the system calibration and executing the operation of the touch point corresponding to the screen coordinate. According to the invention, the position and coordinate of the fingertip are obtained by system calibration and infrared detection; a user can interact more conveniently and quickly by touching an ordinary projection plane with a finger; no special panels or auxiliary positioning devices are needed on the projection plane; and the human-machine interaction device is simple, convenient to install and use, and low in cost.

Owner:SHENZHEN INST OF ADVANCED TECH

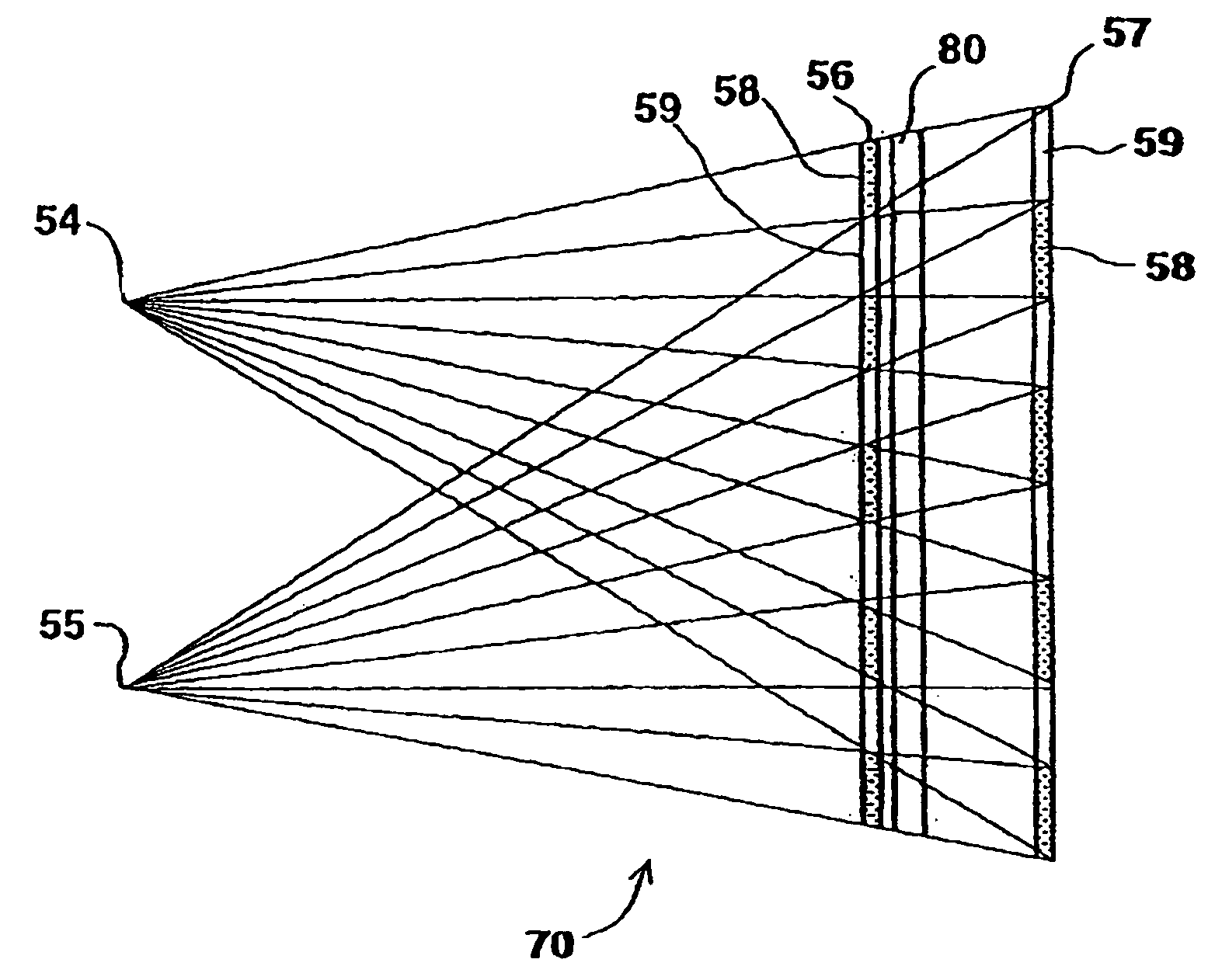

Systems for three-dimensional viewing and projection

Systems for three-dimensional viewing and projection aimed at full-color flat-screen binocular stereoscopic viewing without the use of eyeglasses for the viewer. According to the invention, light emanating from a display or projected thereat presenting a left image and a right image is directed only to the appropriate left or right eyes of at least one viewer using various combinations of light polarizing layers and layers of light rotating means.

Owner:TAMIRAS PER PTE LTD LLC

Autonomous obstacle avoidance and navigation method for unmanned aerial vehicle-based field search and rescue

Inactive | CN107656545A | Guaranteed accuracy | Satellite radio beaconing | Target-seeking control | Ultrasonic sensor | Detect and avoid

An autonomous obstacle avoidance and navigation method for unmanned aerial vehicle (UAV)-based field search and rescue provided by the invention includes global navigation based on GPS signals and an autonomous obstacle avoidance and navigation algorithm based on binocular vision and ultrasound. First, a UAV carries out search under the navigation of GPS signals according to a planned path. After a target is found, the autonomous obstacle avoidance and navigation algorithm based on binocular vision and ultrasound is adopted. A binocular camera acquires a disparity map containing depth information. The disparity map is re-projected to a 3D space to get a point cloud. A cost map is established according to the point cloud. The path is re-planned using the cost map to avoid obstacles. When there is no GPS signal, a visual inertial odometry (VIO) is used to ensure that the UAV flies according to the planned path, and an ultrasonic sensor is used to detect and avoid obstacles below the UAV to approach the rescued target. UAV autonomous obstacle avoidance and navigation in a field search and rescue scene is realized.
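The abstract does not say how the cost map is built from the point cloud. One simple possibility, sketched here in Python purely as an assumption, is a binary occupancy grid over the horizontal plane: a cell is marked occupied when any point within a height band around the UAV falls into it. The function name, the grid layout and all parameters are hypothetical.

```python
import math


def build_cost_map(points, cell_size, width_cells, depth_cells, max_height):
    """Build a binary occupancy grid from camera-frame points.

    points: iterable of (x, y, z) with x to the right, y down, z forward.
    The grid has depth_cells rows (forward) and width_cells columns (lateral),
    with the camera centered laterally at column width_cells // 2.
    Points farther than max_height above or below the camera are ignored.
    """
    grid = [[0] * width_cells for _ in range(depth_cells)]
    half = width_cells // 2
    for x, y, z in points:
        if abs(y) > max_height:
            continue  # outside the height band the vehicle flies through
        col = int(math.floor(x / cell_size)) + half
        row = int(math.floor(z / cell_size))
        if 0 <= row < depth_cells and 0 <= col < width_cells:
            grid[row][col] = 1  # occupied cell: a planner should avoid it
    return grid
```

A path planner would then treat cells equal to 1 as blocked; a practical system would usually also inflate obstacles by the vehicle radius, which is omitted here.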

Owner:WUHAN UNIV

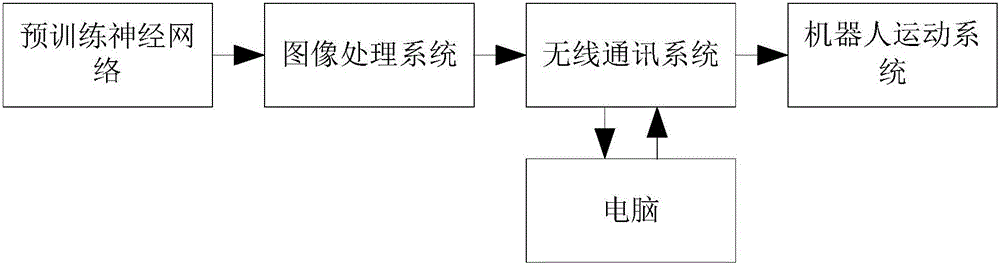

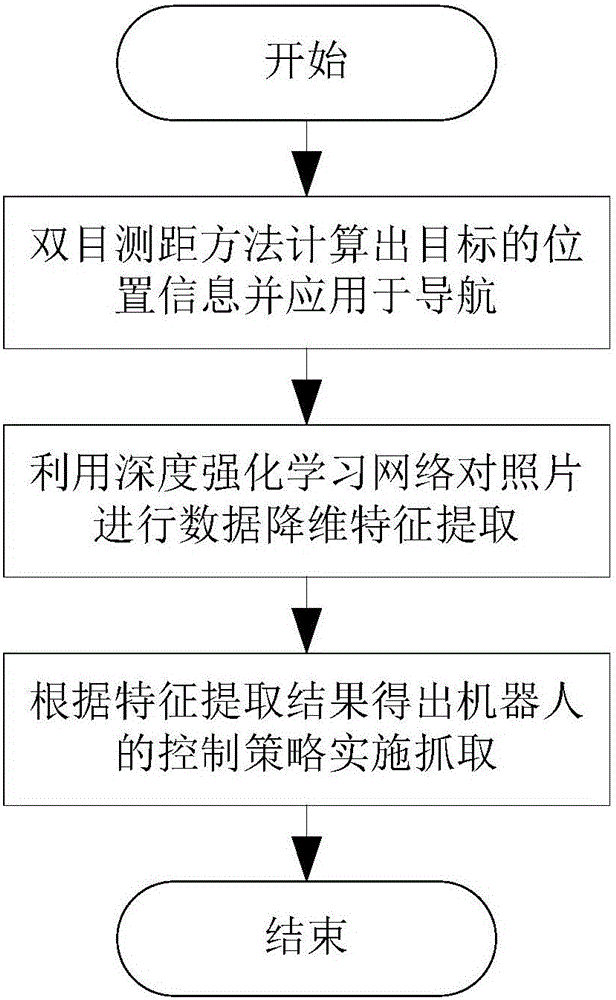

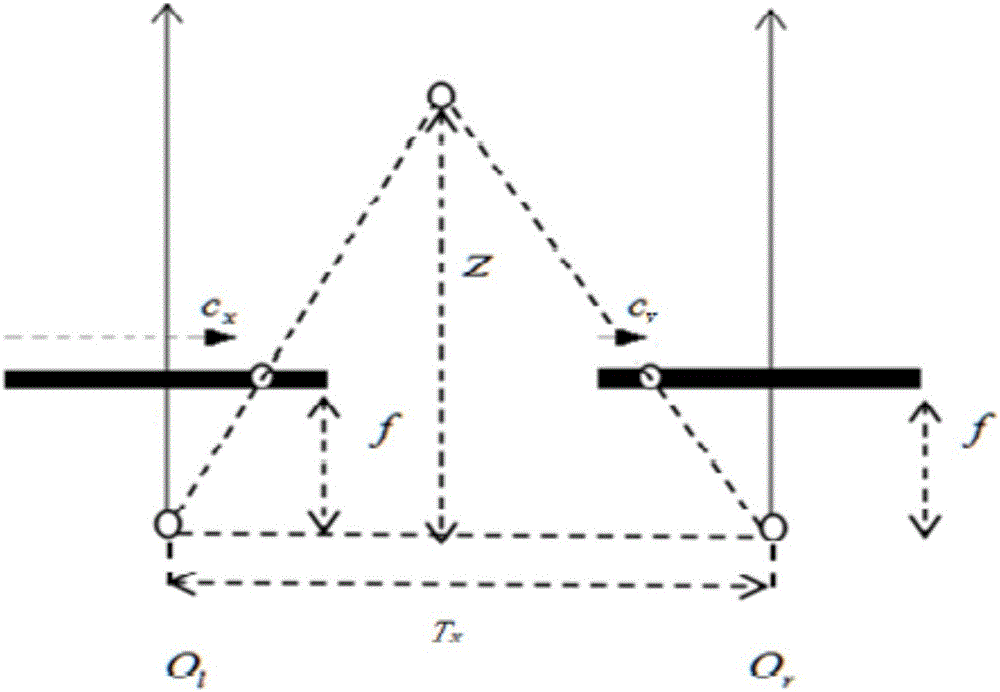

Robot adaptive grabbing method based on deep reinforcement learning

Inactive | CN106094516A | Guaranteed convergence | Solve problems that require each other independently | Adaptive control | Feature extraction | Robotic arm

The invention provides a robot adaptive grabbing method based on deep reinforcement learning. The method comprises the following steps: when the robot is some distance away from the object to be grabbed, it obtains a picture of the target through its front camera, calculates the position of the target from the picture by a binocular distance measurement method, and uses the calculated position for robot navigation; when the target comes within the grabbing range of the manipulator, the robot takes another picture of the target through the front camera and performs dimension reduction and feature extraction on the picture using a pre-trained DDPG-based deep reinforcement learning network; and according to the feature extraction result, a control strategy for the robot is obtained, and the robot uses the control strategy to control the movement path and posture of the manipulator so as to realize adaptive grabbing of the target. The method can adaptively grab objects of different sizes and shapes that are not in fixed positions, and has good market application prospects.

Owner:NANJING UNIV

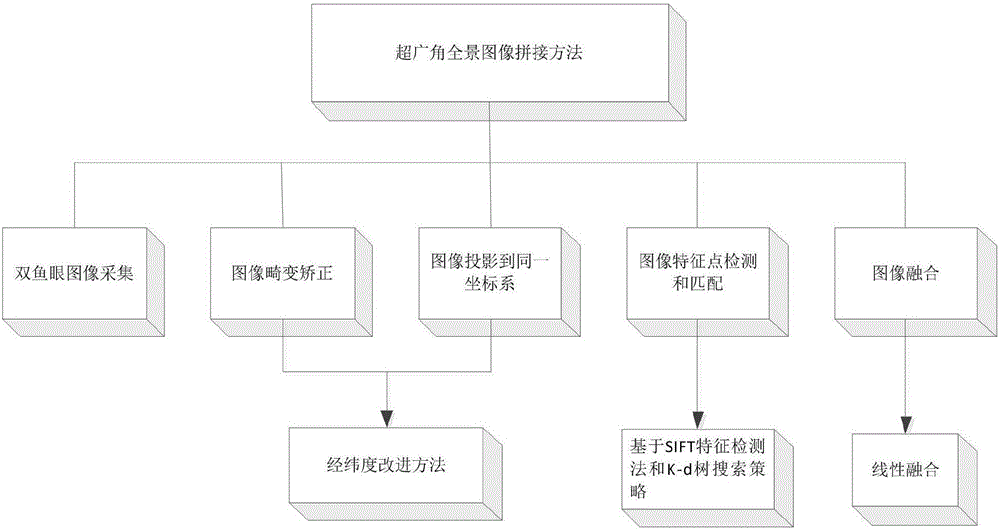

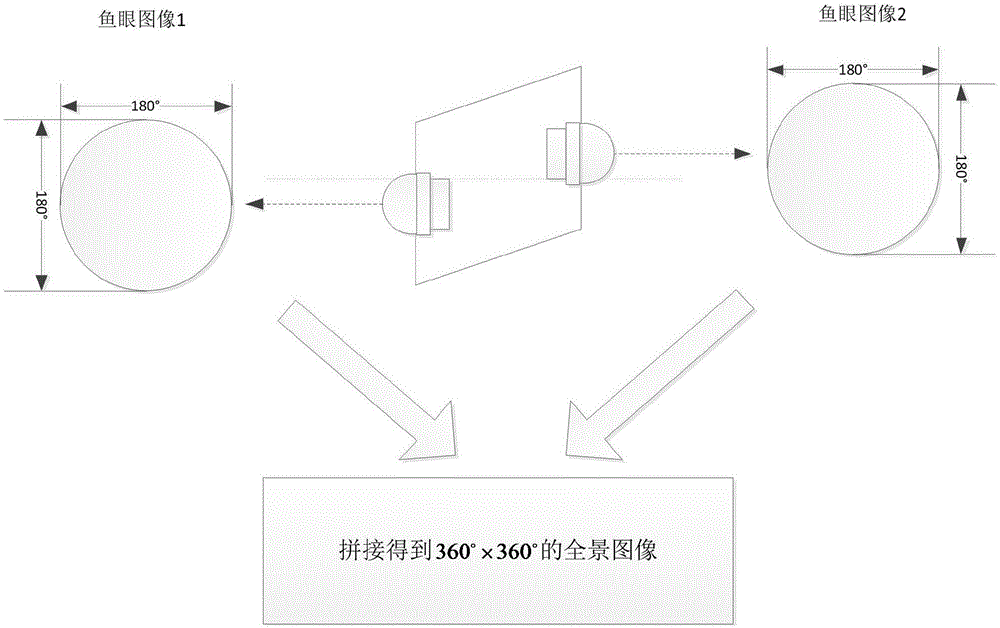

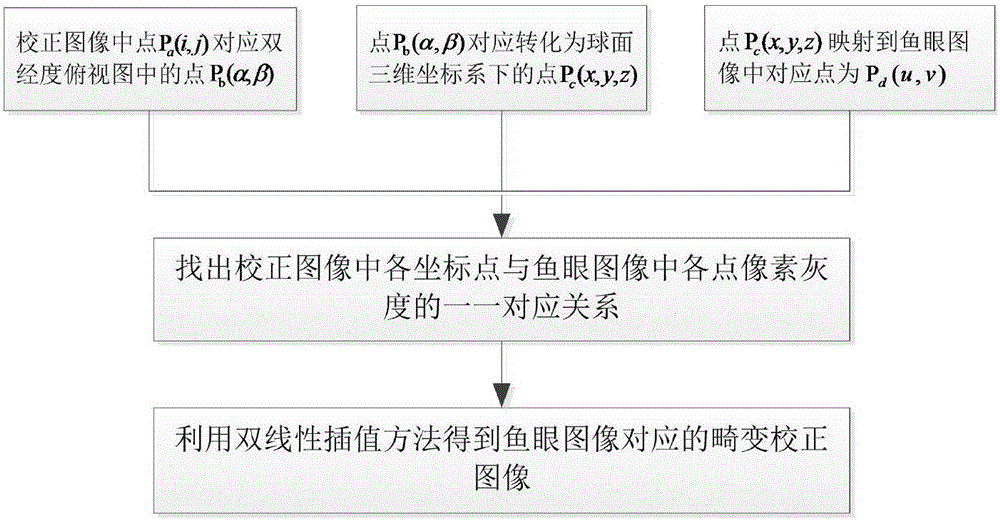

Binocular camera-based panoramic image splicing method

Inactive | CN106683045A | Improve operational efficiency | Achieve a natural transition effect | Image enhancement | Image analysis | Near neighbor | Longitude

The invention provides a binocular camera-based panoramic image splicing method. According to the method, a binocular camera is placed at a viewpoint in space and obtains two fisheye images in a single shot; the traditional latitude-longitude correction method is improved to address its insufficient distortion correction in the horizontal direction; the corrected images are projected into the same coordinate system using a spherical orthographic projection method, so that fast correction of the fisheye images is realized; feature points in the overlapping area of the two projected images are extracted with an SIFT feature point detection method; a K-D tree search strategy is adopted to find the Euclidean nearest neighbors of the feature points, so that feature point matching can be performed; an RANSAC (random sample consensus) algorithm is used to de-noise the feature points and eliminate mismatched points, so that image splicing can be completed; and a linear fusion method is adopted to fuse the spliced images, so that abrupt color and scene changes in the image transition area are avoided.
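The final linear fusion step can be illustrated on a single image row. The Python sketch below assumes the two images are already aligned, that their last and first `overlap` pixels cover the same scene, and that the blending weight ramps linearly across the overlap; the function name and this particular weighting are assumptions, since the abstract does not give the formula.

```python
def linear_blend_row(left_row, right_row, overlap):
    """Blend two aligned image rows across an overlap of `overlap` pixels.

    The last `overlap` pixels of left_row and the first `overlap` pixels of
    right_row are assumed to show the same scene. Inside the overlap the
    weight of the right image rises linearly, which avoids a visible seam.
    """
    if not 0 < overlap <= min(len(left_row), len(right_row)):
        raise ValueError("overlap must be between 1 and the shorter row length")
    start = len(left_row) - overlap
    out = list(left_row[:start])            # left-only region
    for i in range(overlap):
        w = (i + 1) / (overlap + 1)         # weight of the right image
        out.append((1 - w) * left_row[start + i] + w * right_row[i])
    out.extend(right_row[overlap:])         # right-only region
    return out
```

For a full image the same function would be applied to every row (and every color channel) of the spliced pair.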

Owner:深圳市优象计算技术有限公司

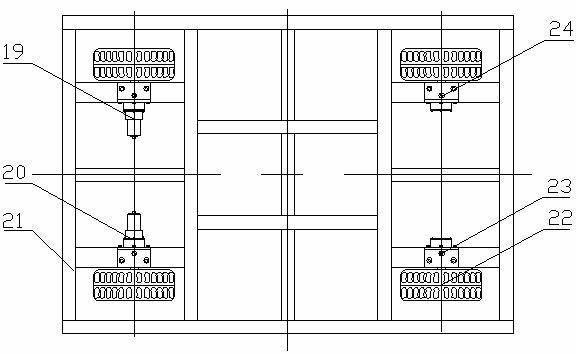

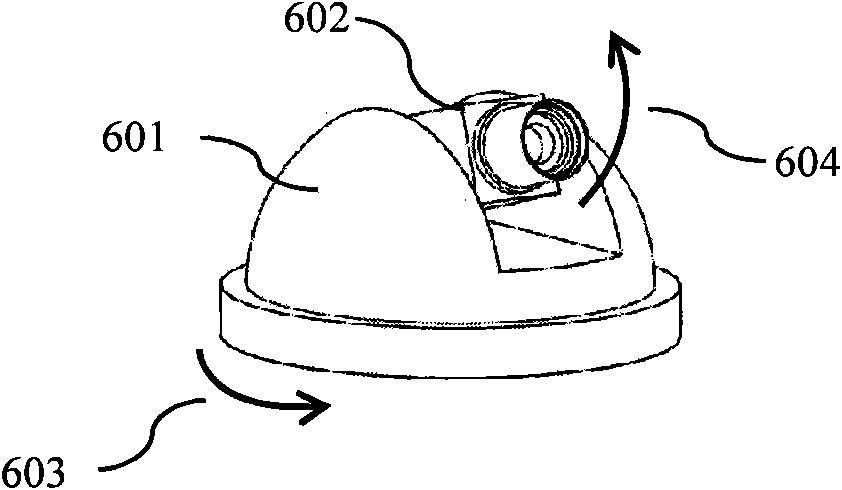

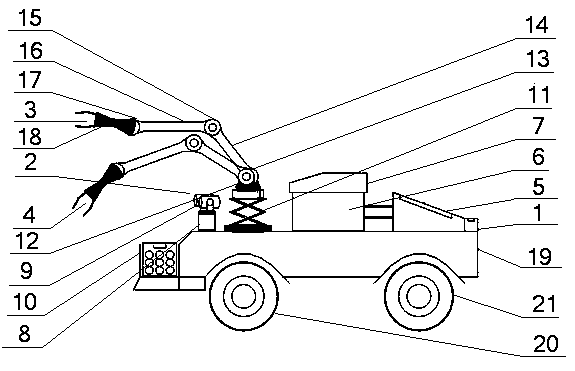

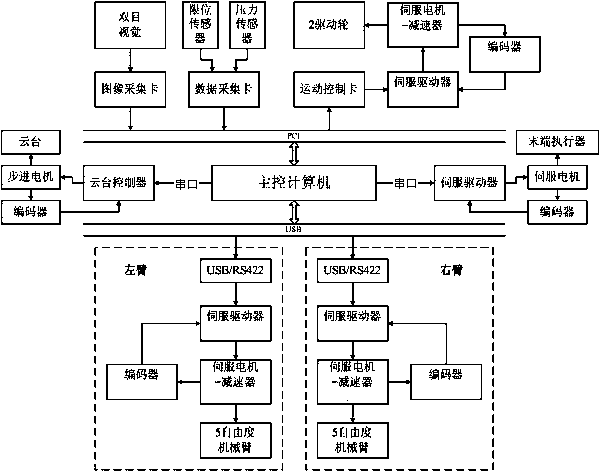

Double-manipulator fruit and vegetable harvesting robot system and fruit and vegetable harvesting method thereof

Active | CN103503639A | Realize automatic harvesting | Improve work efficiency | Programme-controlled manipulator | Picking devices | Simulation | Actuator

The invention discloses a double-manipulator fruit and vegetable harvesting robot system and a fruit and vegetable harvesting method using it. In the system, a binocular stereoscopic vision system is used for visual navigation of the robot's walking motion and for obtaining position information of harvesting targets and obstacles; a manipulator device grasps and separates the targets according to the positions of the harvesting targets and the obstacles; a robot movement platform moves autonomously in the operating environment; and a main control computer serves as the control center, integrating the control interface and all software modules and controlling the whole system. The binocular stereoscopic vision system comprises two color cameras, an image acquisition card and an intelligently controlled pan-tilt unit; the manipulator device comprises two five-degree-of-freedom manipulator bodies, joint servo drivers, actuator motors and the like; the robot movement platform comprises a wheeled body, a power source and power control device, and a fruit and vegetable collecting device. Binocular vision and human-like double manipulators are used to build the fruit and vegetable harvesting robot, achieving autonomous navigation and automatic harvesting of fruit and vegetable targets.

Owner:溧阳常大技术转移中心有限公司

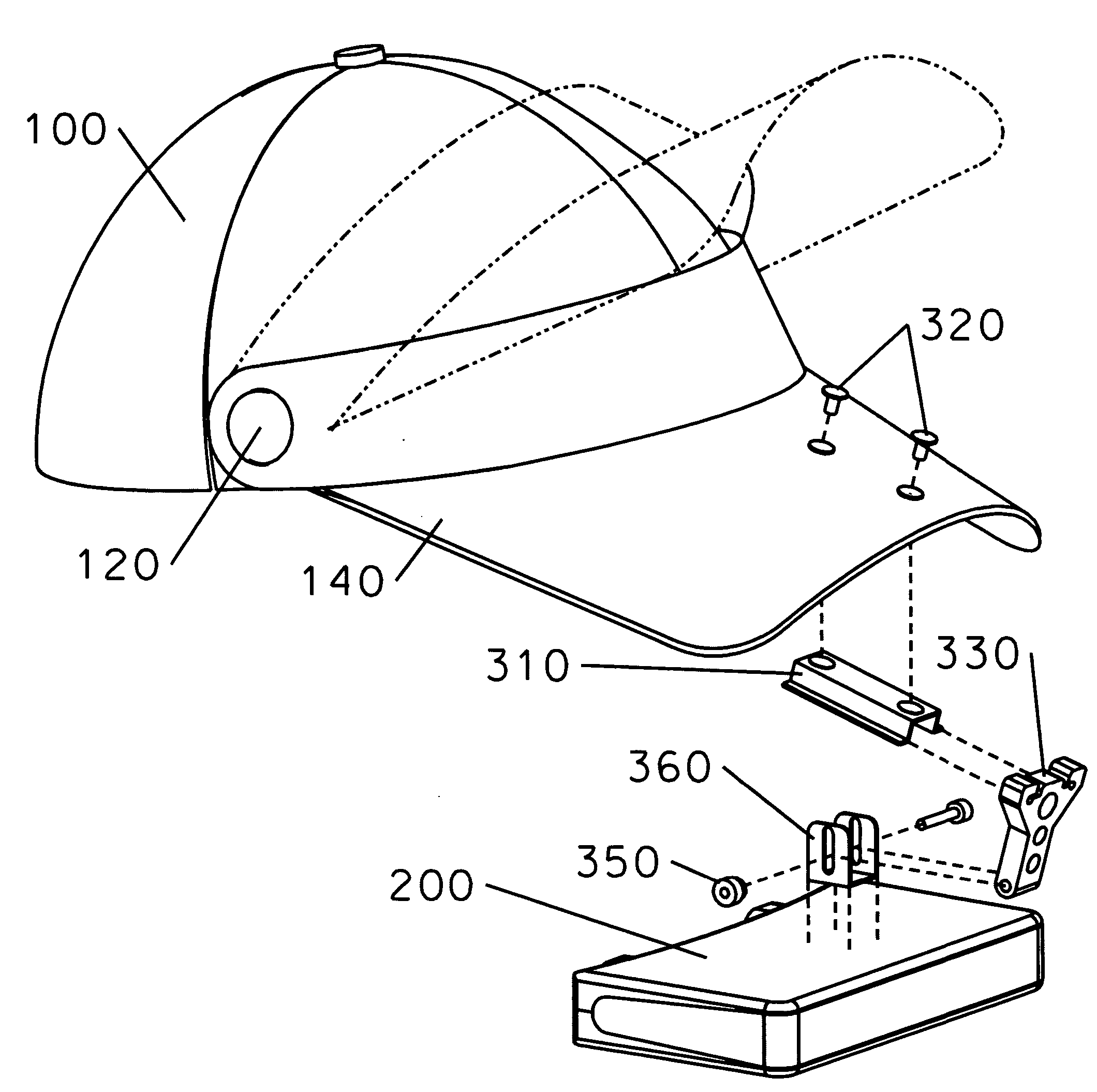

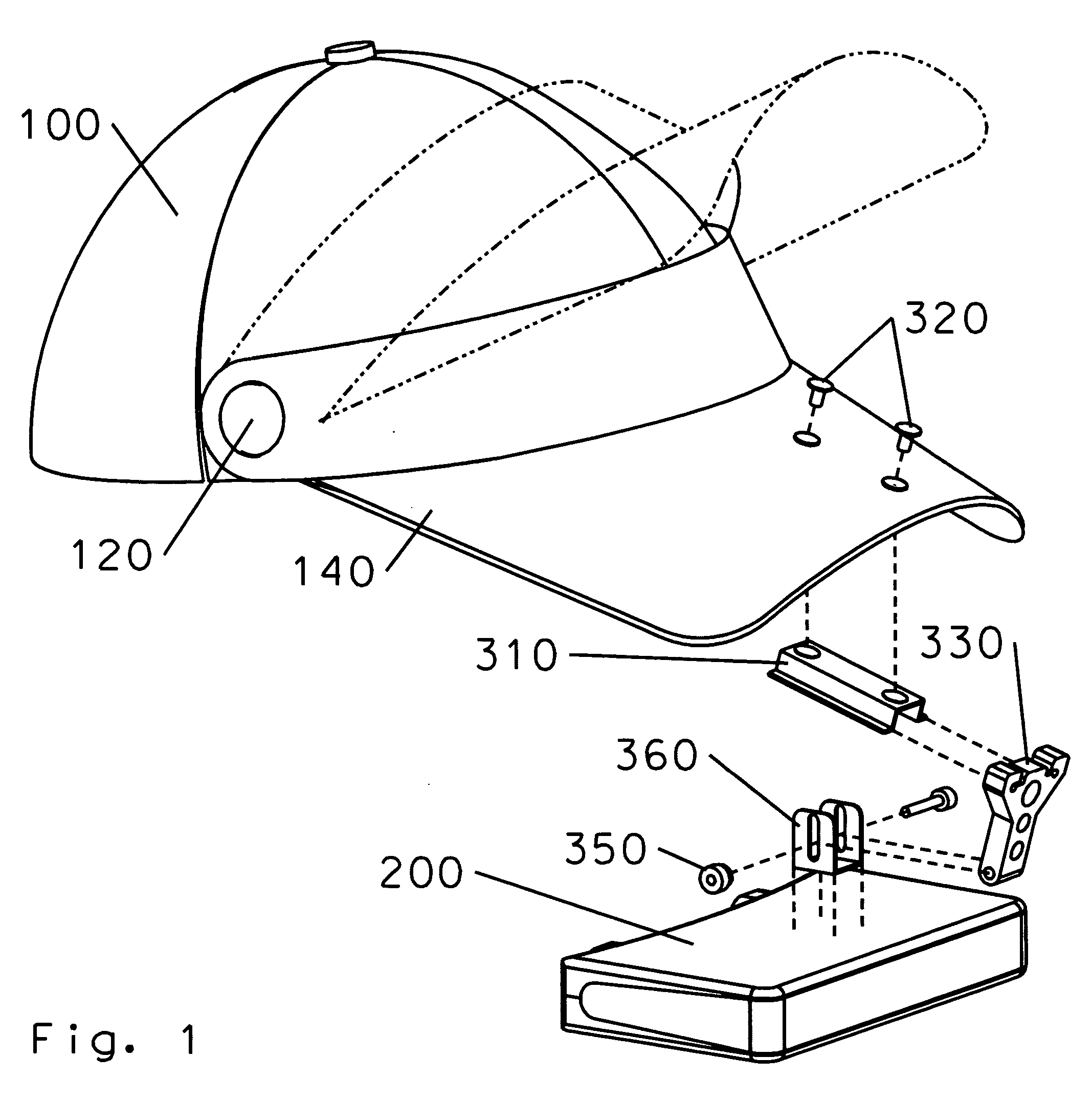

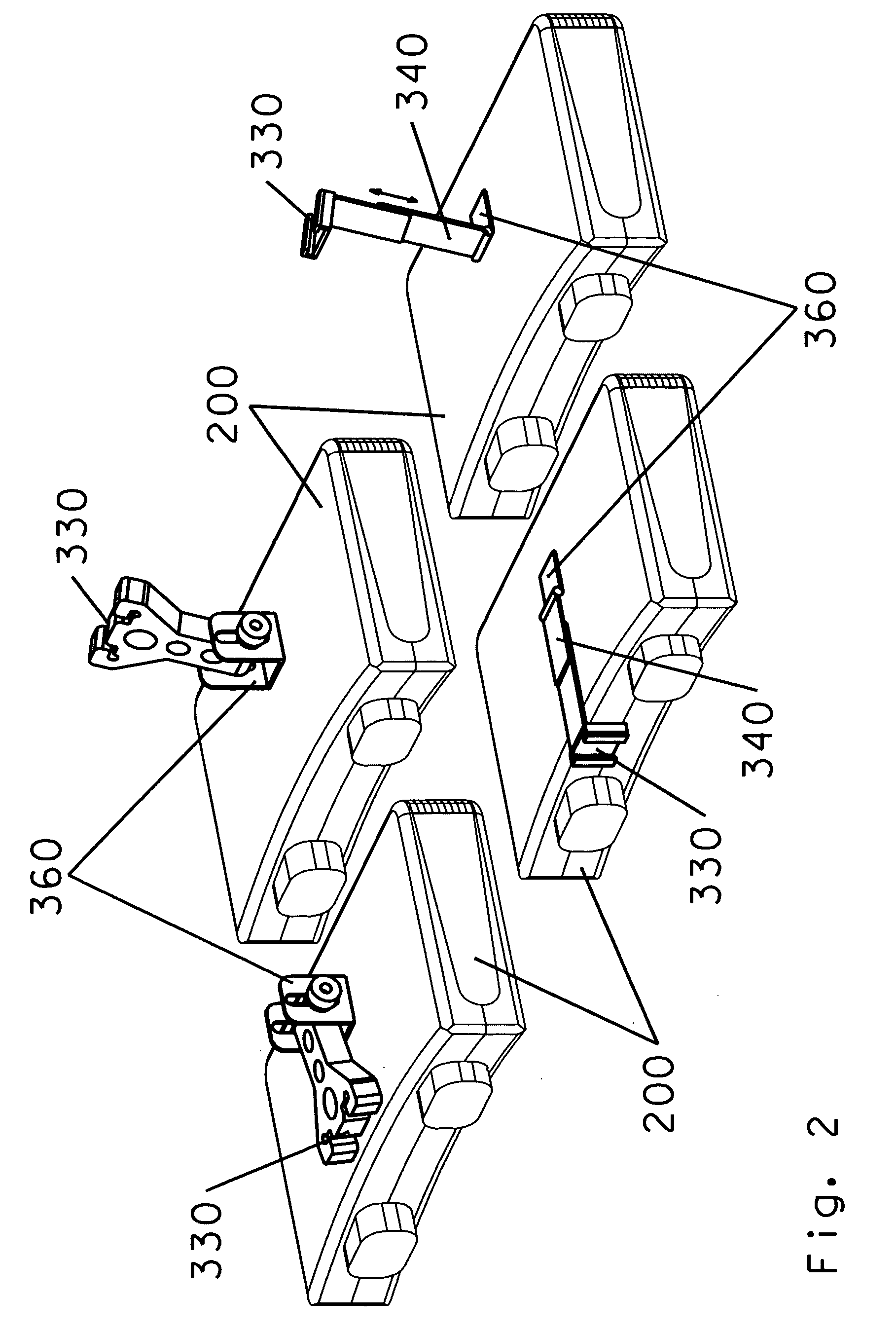

Binocular to hat attachment

Inactive | US20060037125A1 | Removing objectionable limitation | Quick removal | Hats | Headwear caps | Continuous use | Engineering

A quick and easy attachment system for linking lightweight headgear and a binocular for steady, hands-free viewing without fatigue. The headgear functions as an attractive hat or cap with visor and is comfortable for continuous use with or without the binocular attached. The attaching system offers multiple embodiments, functions and adjustments for differing conditions and wearers. Options include a flip-up or fixed visor.

Owner:MCDOWELL ANTHONY

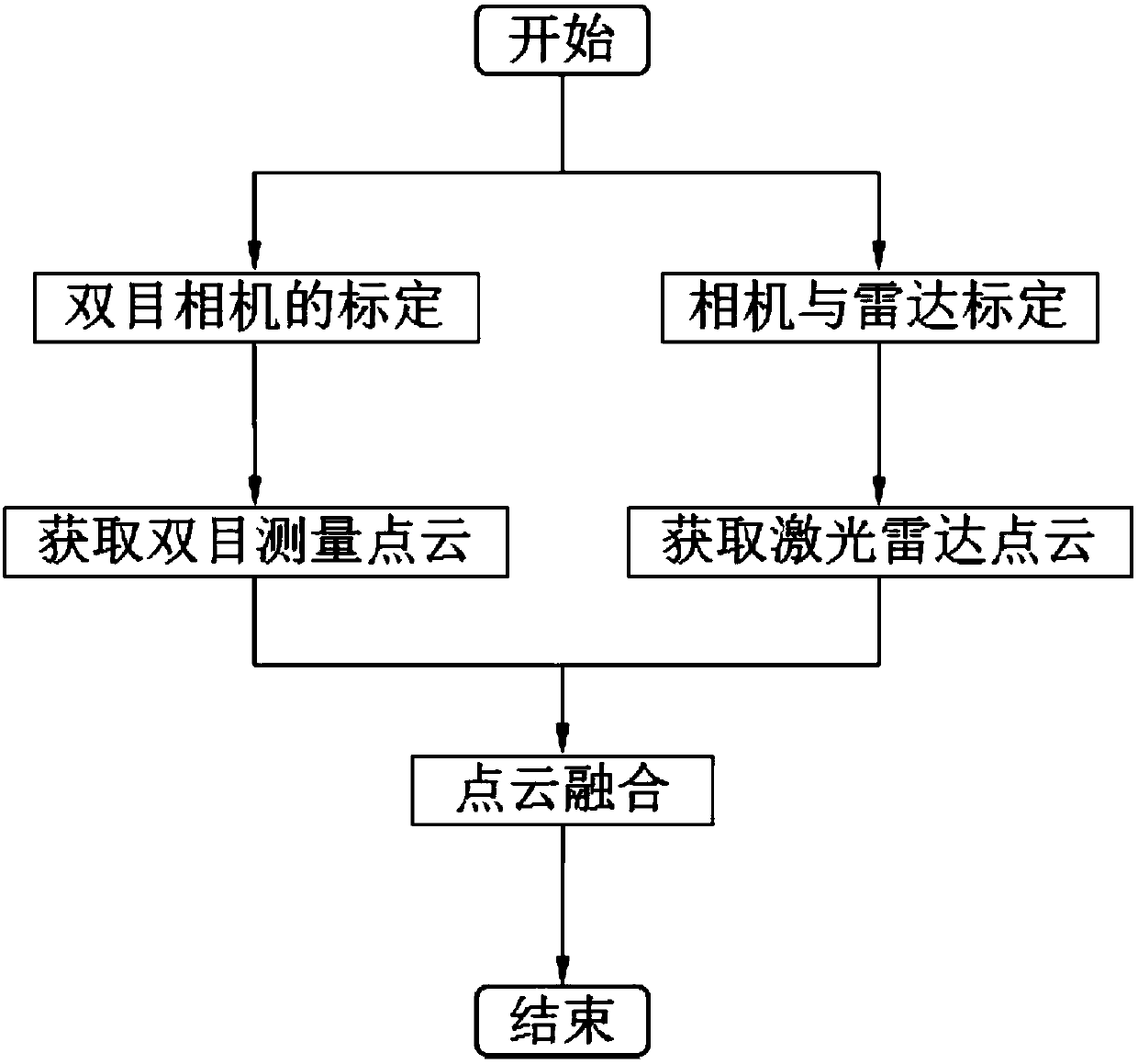

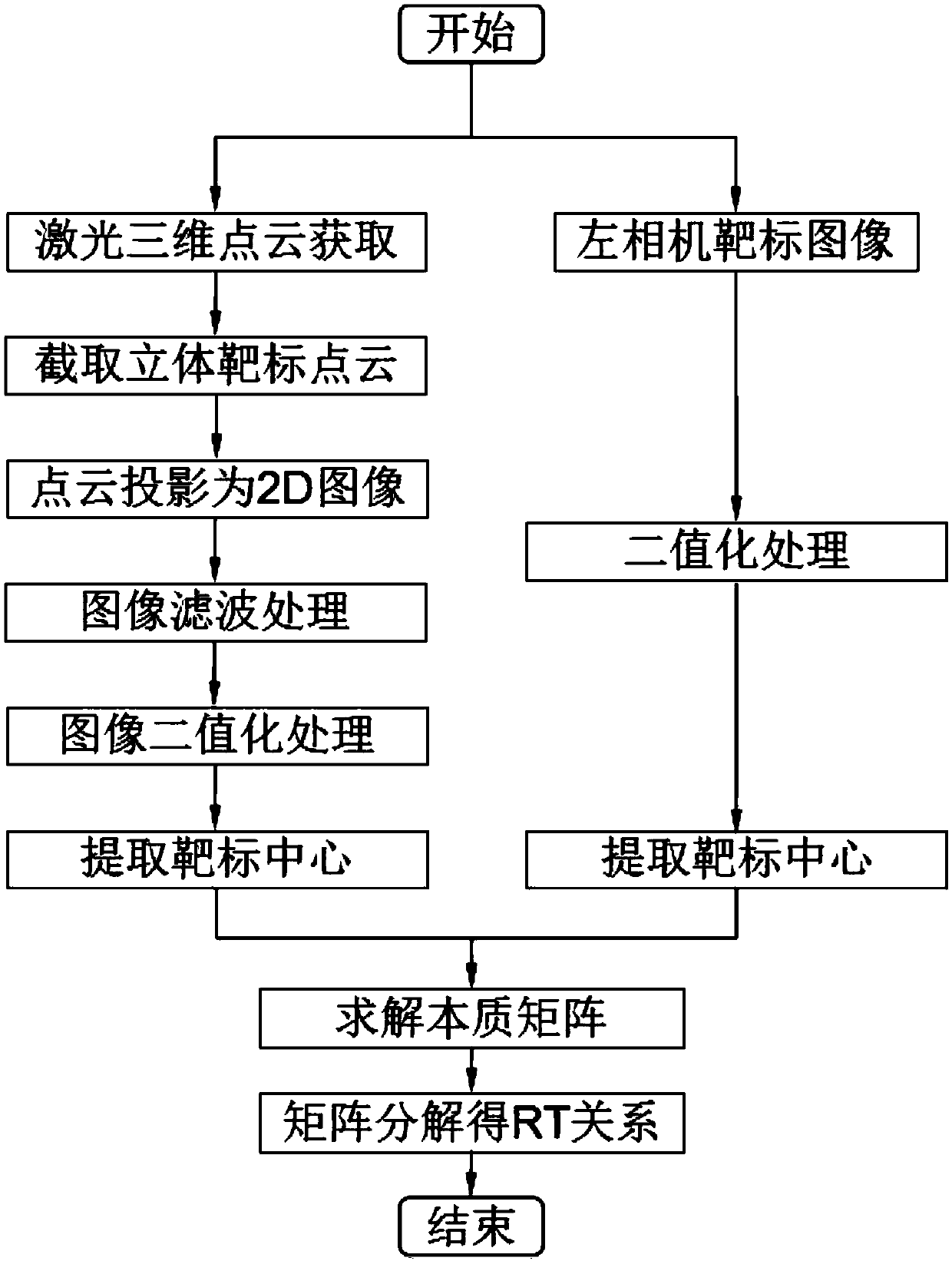

Joint measurement method based on laser radar and binocular visible light camera

Active | CN108828606A | Accurate location | Increase workload | Photogrammetry/videogrammetry | Electromagnetic wave reradiation | Binocular stereo | Visual perception

The invention provides a joint measurement method based on a laser radar and a binocular visible light camera to obtain accurate and dense three-dimensional information simply and efficiently. The joint measurement method comprises treating the laser radar as a camera device with fixed intrinsic parameters; directly projecting the three-dimensional point cloud data into a two-dimensional image and calculating the rotation and translation between the laser radar device and the binocular camera by image processing and by matching between the two-dimensional images; introducing the matrix norm and the matrix trace to solve for the rotation matrix; and finally fusing the laser radar and binocular stereo vision point cloud data. The method can not only obtain accurate position and attitude information, but also reconstruct the texture and feature information of a target surface, which has high application value for spacecraft docking and hostile satellite capture in the military field and for workpiece measurement and unmanned driving in the civilian field.

Owner:XI'AN INST OF OPTICS & FINE MECHANICS - CHINESE ACAD OF SCI

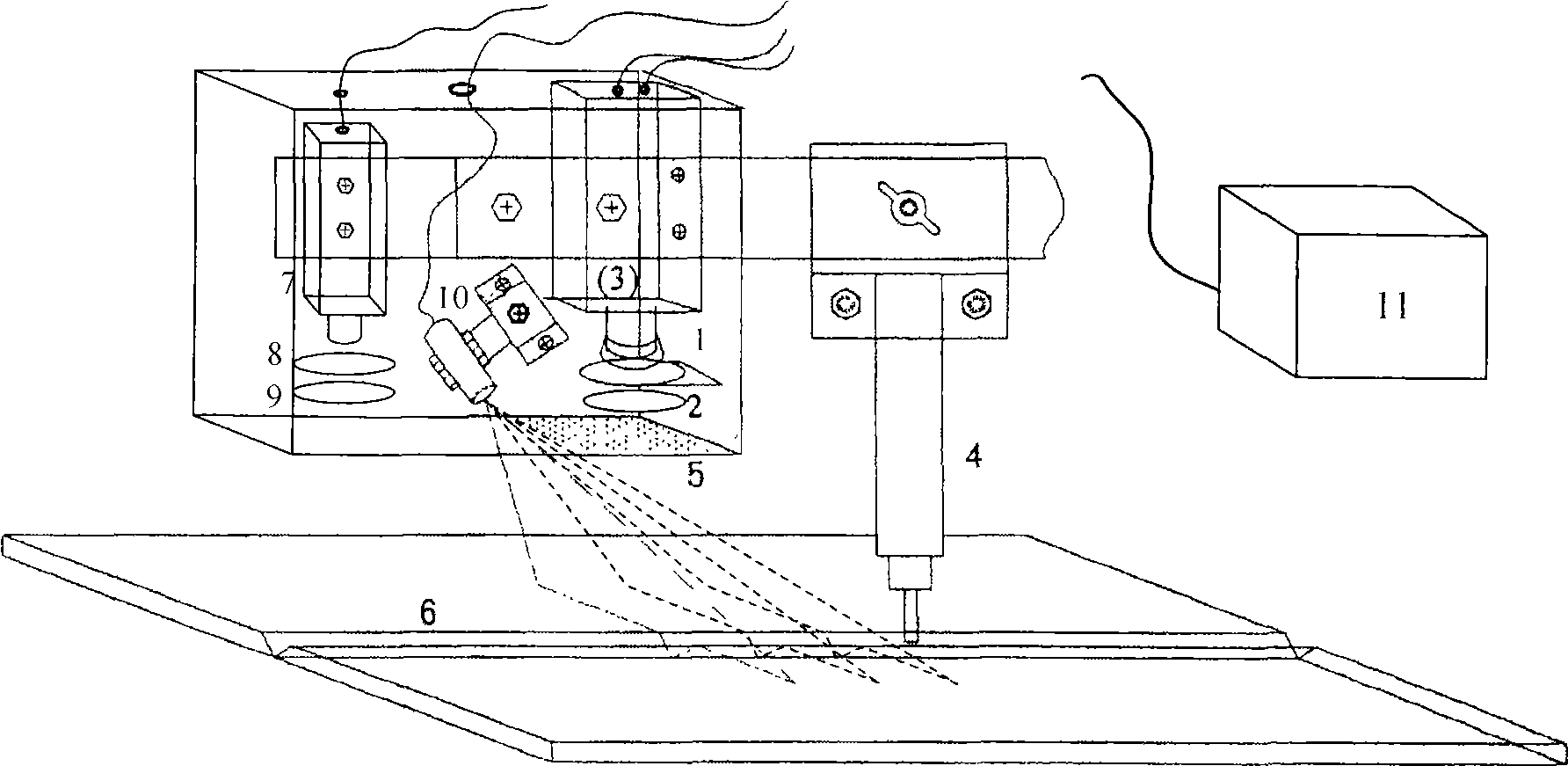

Multi-structured light binocular composite vision weld joint tracking method and device

Inactive | CN101486124A | Enable seam tracking | Improve anti-interference ability | Welding/cutting auxiliary devices | Arc welding apparatus | Bandpass filtering | Engineering

The invention relates to a multi-structured light binocular composite vision weld joint tracking method and device, in the technical field of automatic welding and in particular the vision sensing of welding robots. The device comprises a multi-line laser generator (10) for projecting structured light stripes onto the weld joint region, a camera A (3) which is provided with a narrow bandpass filter (1) and a neutral density filter A (2) and is used for collecting laser stripe images of the weld joint features, a camera B (7) which is provided with a bandpass filter (8) and a neutral density filter B (9) and is used for collecting images that do not include the laser stripes, and a controller (11) connected with camera A (3) and camera B (7). The method integrates the image information of the two cameras and calculates the current ideal position of the welding gun so as to obtain the difference between the ideal position and the actual position; the controller converts the difference into an analog signal or a wireless communication output, and a motor is controlled to correct the deviation of the welding gun, so that automatic tracking of the weld joint is realized.

Owner:NANJING INST OF TECH

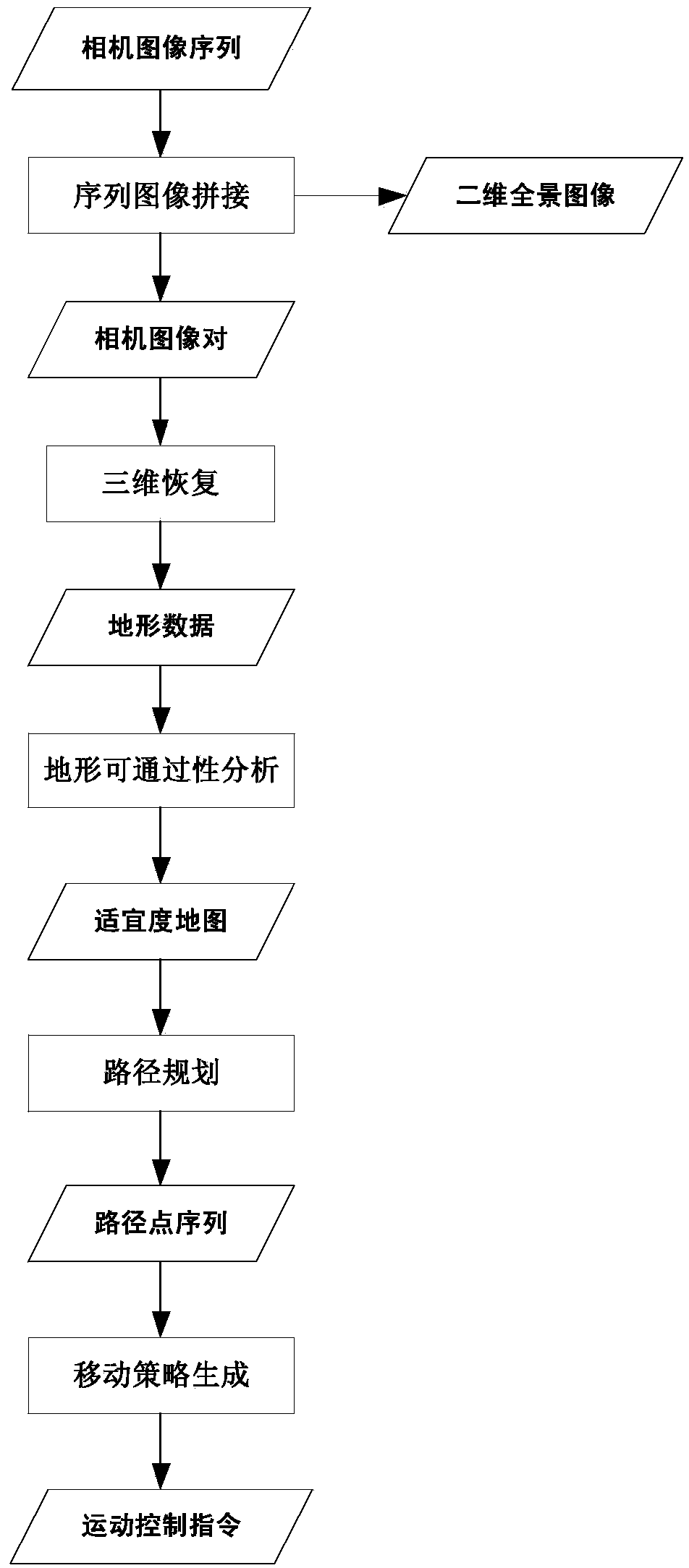

Ground navigation planning control method of rover

ActiveCN103869820AReduce calculationImprove planning efficiencyPosition/course control in three dimensionsTerrainEnvironmental perception

A ground navigation planning control method of a rover comprises the steps of sequential image stitching, three-dimensional terrain recovery, terrain traversability analysis, path planning and movement strategy generation. The method performs ground navigation planning control of the rover on the basis of a binocular stereoscopic vision system. Only the images that contain the detection targets are selected for processing, according to the positions of the rover's detection targets and the practical situation, so that unnecessary calculation is reduced and planning efficiency is high. A combined automatic and manual denoising mode is provided for processing the three-dimensional terrain data, which improves environmental perception accuracy and facilitates high-precision navigation planning control of the rover. A data fusion mode is provided for stitching multiple sets of terrain data, which enlarges the environmental perception range and improves the safety of navigation planning. The terrain traversability analysis, path planning and movement strategy generation schemes provided in the method remain suitable for rovers that use other sensors for environmental perception.

Owner:BEIJING INST OF CONTROL ENG

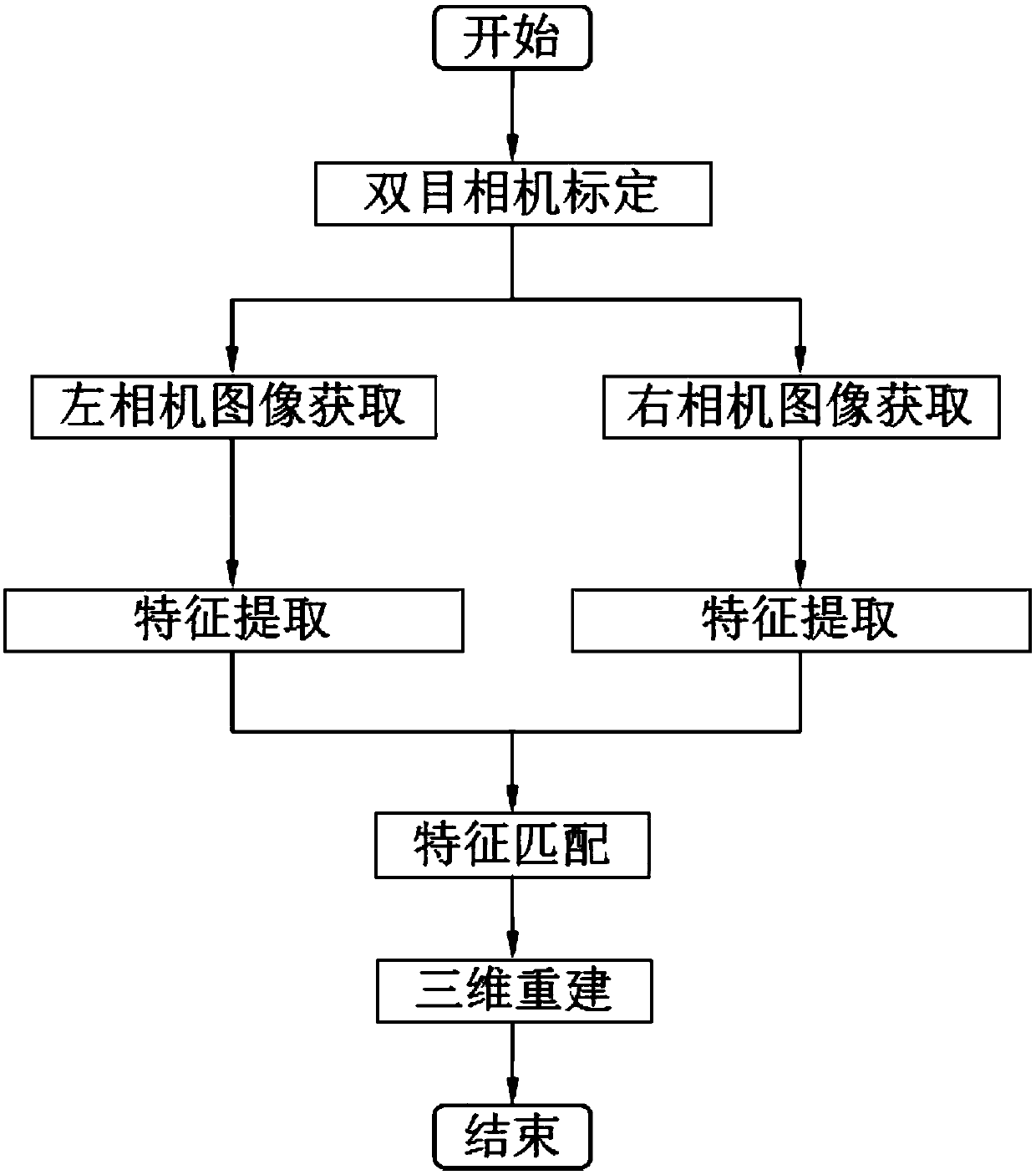
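The traversability analysis and path planning steps named in the rover abstract above can be illustrated with a small grid example. This is only a sketch under stated assumptions: the terrain is a regular elevation grid, a cell counts as traversable when its height differs from every 4-neighbour by at most `max_step`, and the planner is a plain breadth-first search. The patent does not state which criteria or planner it uses, and the names `traversable_mask` and `plan_path` are hypothetical.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]
NEIGHBOURS = ((1, 0), (-1, 0), (0, 1), (0, -1))


def traversable_mask(elevation: List[List[float]], max_step: float) -> List[List[bool]]:
    """Mark a cell traversable if no 4-neighbour differs in height by more than max_step."""
    rows, cols = len(elevation), len(elevation[0])
    mask = [[True] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            for dr, dc in NEIGHBOURS:
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols:
                    if abs(elevation[r][c] - elevation[nr][nc]) > max_step:
                        mask[r][c] = False
    return mask


def plan_path(mask: List[List[bool]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Breadth-first search over traversable cells; returns a shortest path or None."""
    if not (mask[start[0]][start[1]] and mask[goal[0]][goal[1]]):
        return None
    rows, cols = len(mask), len(mask[0])
    parent: Dict[Cell, Optional[Cell]] = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path: List[Cell] = []
            node: Optional[Cell] = cell
            while node is not None:  # walk back to the start via parent links
                path.append(node)
                node = parent[node]
            return path[::-1]
        for dr, dc in NEIGHBOURS:
            nxt = (cell[0] + dr, cell[1] + dc)
            if (0 <= nxt[0] < rows and 0 <= nxt[1] < cols
                    and mask[nxt[0]][nxt[1]] and nxt not in parent):
                parent[nxt] = cell
                queue.append(nxt)
    return None


if __name__ == "__main__":
    terrain = [[0.0] * 5 for _ in range(5)]
    terrain[2][2] = 5.0  # a rock in the middle of the map
    mask = traversable_mask(terrain, max_step=1.0)
    print(plan_path(mask, (2, 0), (2, 4)))
```

The height-step rule is deliberately conservative: it blocks both the obstacle cell and the cells directly next to it, which keeps the planned path away from steep edges.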

Control method of manipulator grabbing control system based on binocular stereoscopic vision

ActiveCN106041937AAchieve placementRealize the assemblyProgramme-controlled manipulatorPoint cloudTriangulation

The invention discloses a control method of a manipulator grabbing control system based on binocular stereoscopic vision. The control method comprises the following steps: the camera parameters are calibrated, and a teach pendant is used to obtain the conversion between the camera coordinate system and the manipulator coordinate system; a line laser performs an arc-surface scanning motion while the cameras acquire images; the laser lines in the images shot by the cameras are extracted separately; the positions of the laser lines are determined precisely using a sub-pixel algorithm; corresponding (homologous) points are matched between the laser lines extracted in the images; the three-dimensional coordinates of all points on the laser lines are calculated by the triangulation principle, completing the scan of the workpieces in the scene and yielding point cloud data for the cameras' field of view; a workpiece point cloud template is built, a manipulator grabbing point is selected on it, and the acquired point cloud data is matched with the template to calculate the transformation between the two; the grabbing point in the template is transformed into the current camera coordinates, then into the manipulator coordinate system, and is sent to the manipulator; and the manipulator executes the grabbing action.

Owner:HENAN ALSONTECH INTELLIGENT TECH CO LTD

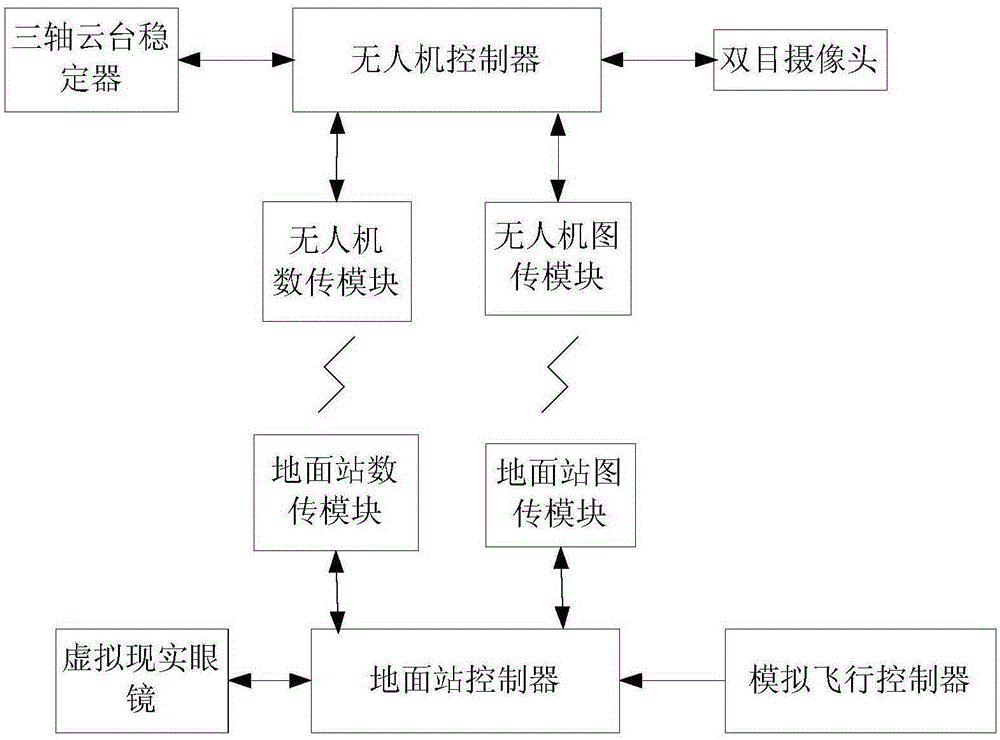

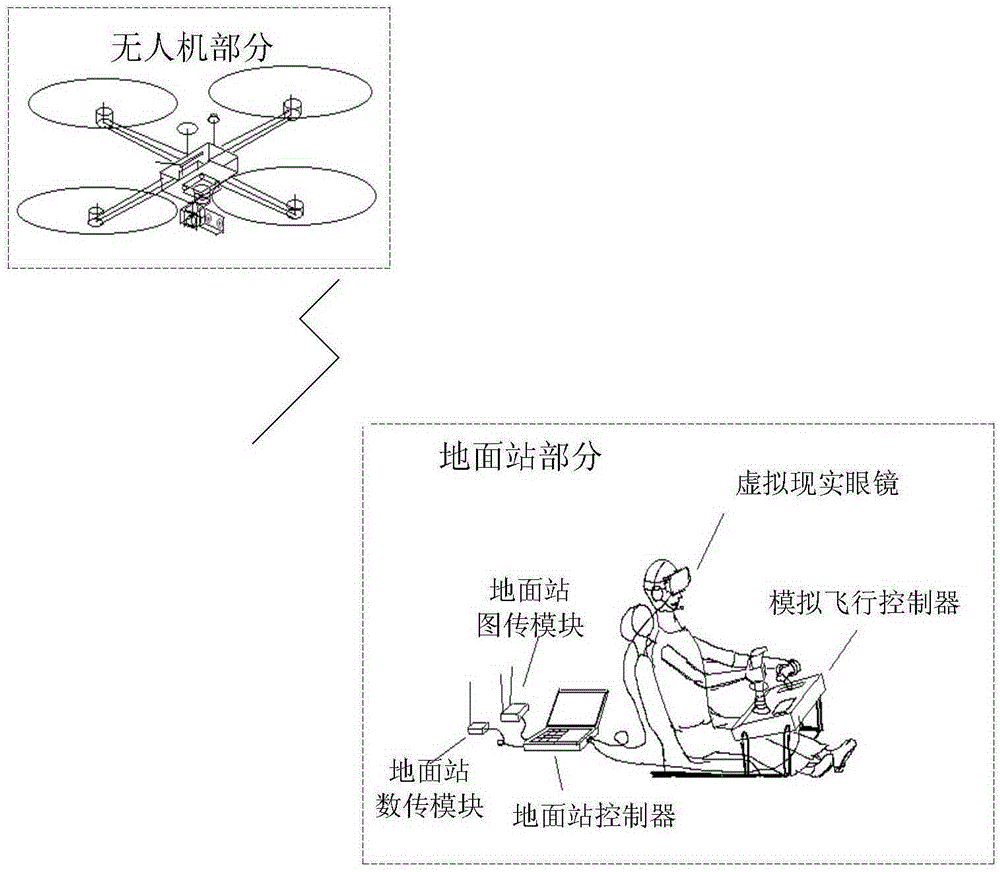
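The triangulation step in the manipulator abstract above can be sketched for the simplest case. The example assumes an ideal rectified stereo pair (parallel optical axes, matched points on the same image row), which the patent does not specify; the function name `triangulate_rectified` and all parameter names are hypothetical.

```python
from typing import Tuple


def triangulate_rectified(x_left: float, x_right: float, y: float,
                          focal_px: float, baseline_m: float,
                          cx: float, cy: float) -> Tuple[float, float, float]:
    """Recover a 3D point in the left camera frame from one matched pixel pair.

    Assumes a rectified pair, so the match lies on the same row y and
    depth follows Z = f * B / d with disparity d = x_left - x_right.
    """
    disparity = x_left - x_right
    if disparity <= 0.0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    z = focal_px * baseline_m / disparity
    x = (x_left - cx) * z / focal_px
    y_cam = (y - cy) * z / focal_px
    return (x, y_cam, z)


if __name__ == "__main__":
    # One sub-pixel laser-line point matched between the left and right images.
    print(triangulate_rectified(420.0, 400.0, 240.0,
                                focal_px=800.0, baseline_m=0.1, cx=320.0, cy=240.0))
```

Because depth is inversely proportional to disparity, a fixed matching error costs more accuracy at long range, which is one reason the abstract locates the laser line with sub-pixel precision before matching.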

First-person immersive unmanned aerial vehicle driving system realized by virtue of virtual reality and binocular vision technology and driving method

InactiveCN105222761ARealize Virtual MigrationTrack syncSimulator controlPicture taking arrangementsUncrewed vehicleEyewear

A first-person immersive unmanned aerial vehicle driving system realized by virtue of virtual reality and binocular vision technology, and a driving method, belong to the technical field of unmanned aerial vehicles and aim to solve the problems that the real-time flying state cannot be grasped through existing unmanned aerial vehicle driving systems and that errors are liable to occur. In the driving system and the driving method, the unmanned aerial vehicle body carries a binocular camera on an existing three-axis gimbal stabilizer, an image transmission module on the unmanned aerial vehicle sends the images back, and the three-axis gimbal stabilizer is synchronized with the movements of the driver on the ground by means of a data transmission module and a pair of virtual reality goggles. The driver on the ground wears the virtual reality goggles and sits in a simulated cabin resembling a real helicopter cockpit to view the pictures taken by the binocular camera together with the aircraft flying information, and controls the flight of the unmanned aerial vehicle through the data transmission module. The driving system and the driving method are suitable for the manipulation of unmanned aerial vehicles.

Owner:HARBIN INST OF TECH

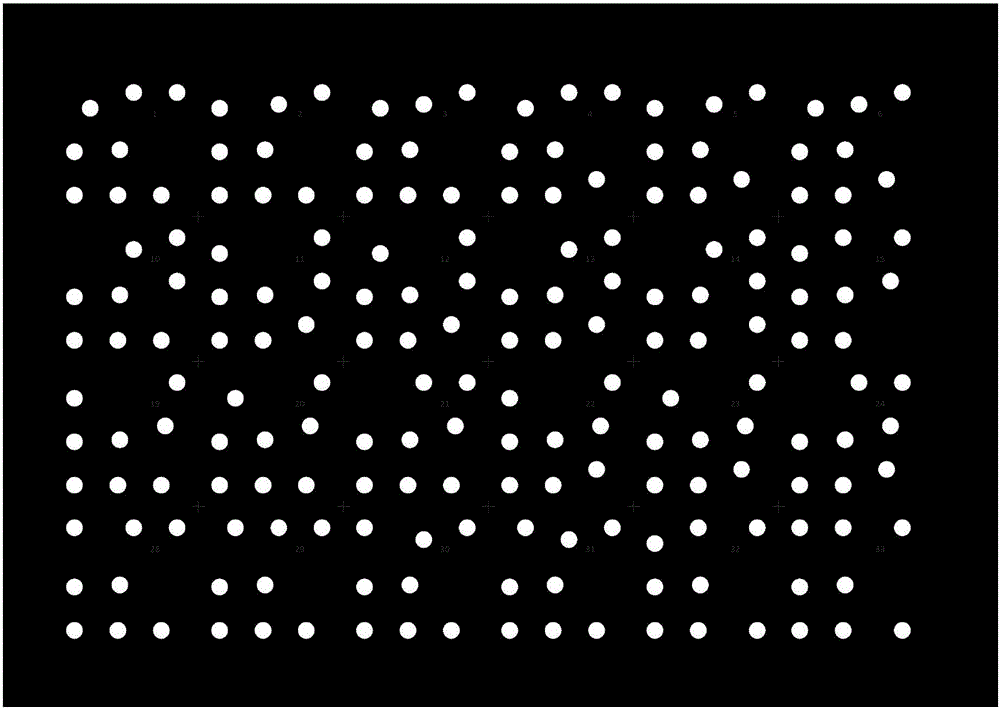

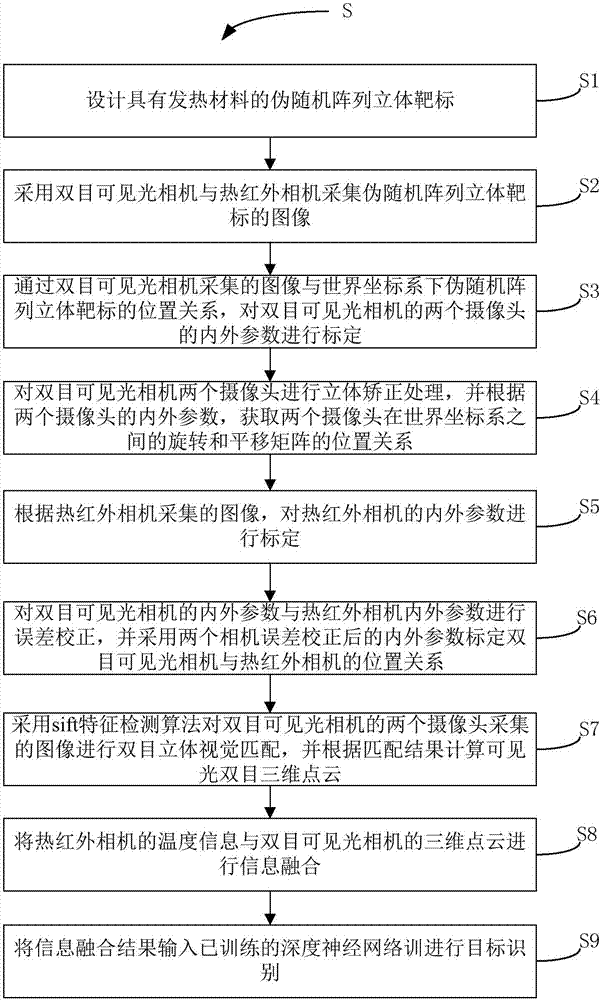

Binocular visible light camera and thermal infrared camera-based target identification method

ActiveCN108010085AImprove recognition rateDetect fasterImage enhancementImage analysisVisual matchingPoint cloud

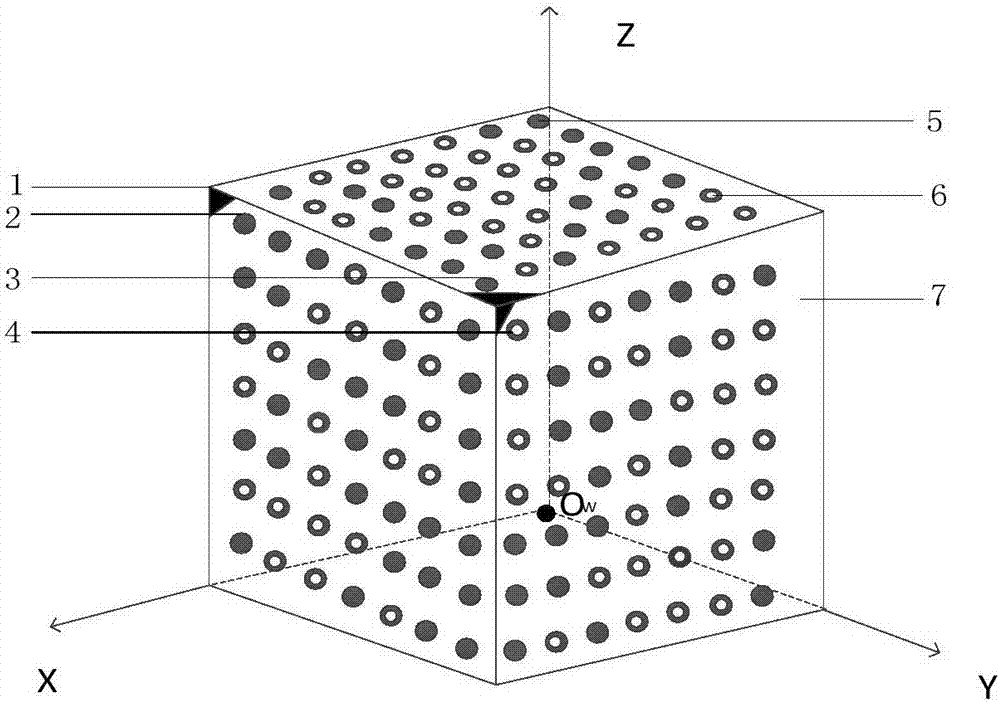

The invention discloses a binocular visible light camera and thermal infrared camera-based target identification method. The method comprises the steps of calibrating the internal and external parameters of the two cameras of a binocular visible light camera through the position relationship between an image collected by the binocular visible light camera and a pseudo-random array stereoscopic target in a world coordinate system, and obtaining the rotation and translation matrix relating the two cameras in the world coordinate system; calibrating the internal and external parameters of a thermal infrared camera according to an image collected by the thermal infrared camera; calibrating the position relationship between the binocular visible light camera and the thermal infrared camera; performing binocular stereoscopic visual matching on the images collected by the two cameras of the binocular visible light camera using a SIFT feature detection algorithm, and calculating a visible light binocular three-dimensional point cloud from the matching result; fusing the temperature information of the thermal infrared camera with the three-dimensional point cloud of the binocular visible light camera; and inputting the fusion result to a trained deep neural network for target identification.

Owner:SOUTHWEAT UNIV OF SCI & TECH

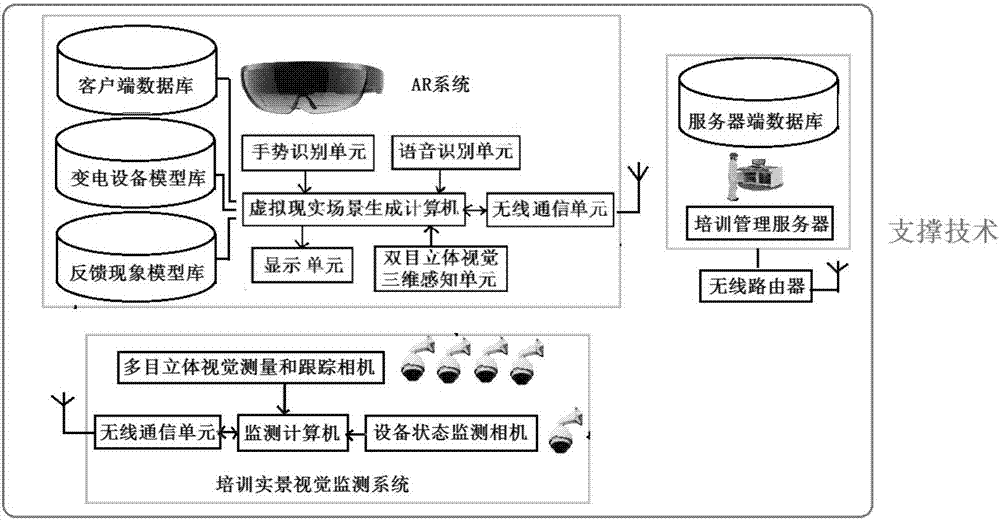

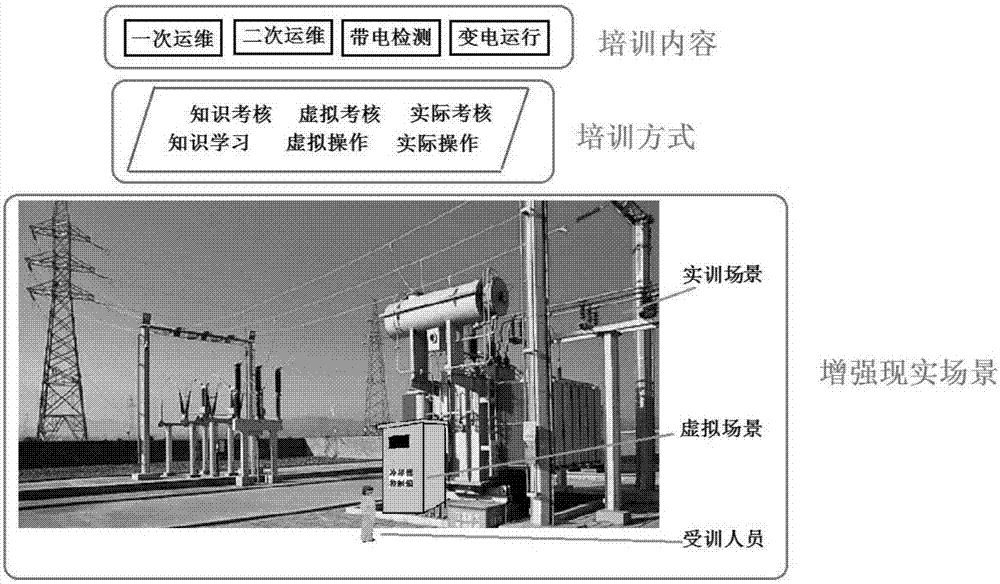

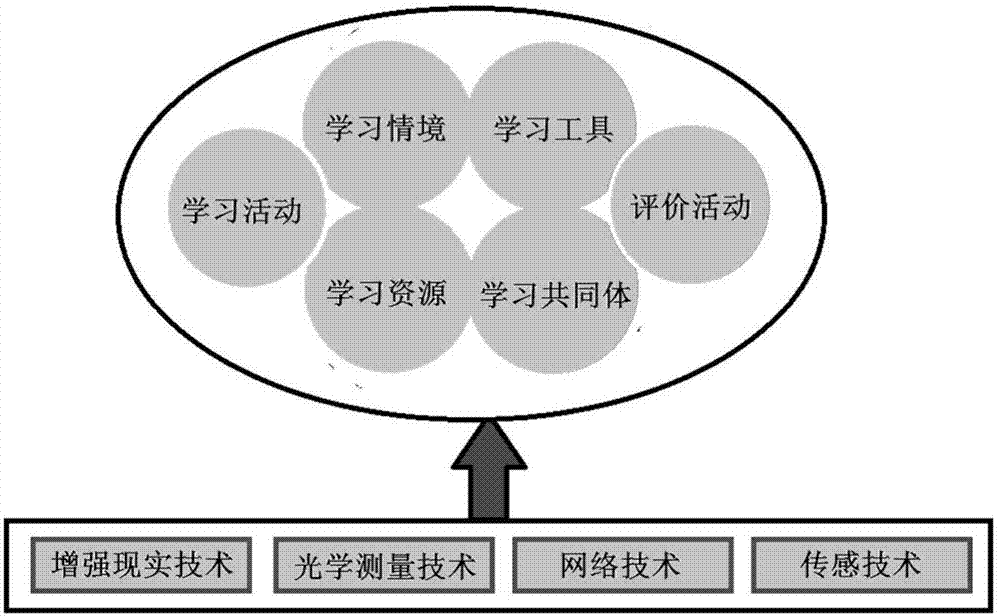
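The information fusion step in the thermal infrared abstract above (attaching temperature to the binocular point cloud) can be sketched as a reprojection. The example assumes the calibrated relationship between the two sensors is available as a rotation matrix and translation vector, and that the thermal camera follows an ideal pinhole model; the patent does not give its fusion procedure in this detail, and `fuse_temperature` and its parameters are hypothetical names.

```python
from typing import List, Sequence, Tuple

FusedPoint = Tuple[float, float, float, float]  # (x, y, z, temperature)


def fuse_temperature(points: Sequence[Sequence[float]],
                     rotation: Sequence[Sequence[float]],
                     translation: Sequence[float],
                     fx: float, fy: float, cx: float, cy: float,
                     thermal: Sequence[Sequence[float]]) -> List[FusedPoint]:
    """Attach a temperature to each 3D point by projecting it into the thermal image.

    rotation (3x3) and translation (3) map points from the visible-light
    point cloud frame into the thermal camera frame: q = R p + t.
    Points behind the thermal camera or outside its image are dropped.
    """
    height, width = len(thermal), len(thermal[0])
    fused: List[FusedPoint] = []
    for p in points:
        q = [sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
             for i in range(3)]
        if q[2] <= 0.0:
            continue
        u = int(round(fx * q[0] / q[2] + cx))
        v = int(round(fy * q[1] / q[2] + cy))
        if 0 <= u < width and 0 <= v < height:
            fused.append((p[0], p[1], p[2], thermal[v][u]))
    return fused


if __name__ == "__main__":
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    thermal_image = [[20.0, 21.0, 22.0], [23.0, 36.5, 25.0], [26.0, 27.0, 28.0]]
    cloud = [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0), (5.0, 0.0, 1.0)]
    print(fuse_temperature(cloud, identity, [0.0, 0.0, 0.0],
                           1.0, 1.0, 1.0, 1.0, thermal_image))
```

Each output tuple carries geometry plus temperature, a form that could be passed on to a classifier such as the deep neural network the abstract mentions.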

Substation equipment operation and maintenance simulation training system and method based on augmented reality

InactiveCN107331220ACosmonautic condition simulationsElectrical appliancesLive actionPattern perception

The invention provides a substation equipment operation and maintenance simulation training system and method based on augmented reality. The system comprises a training management server, an AR subsystem and a training live-action visual monitoring subsystem. The AR subsystem comprises a binocular stereoscopic vision three-dimensional perception unit used for identifying, tracking and registering target equipment, a display unit that overlays the displayed virtual scene on the actual scene, a gesture recognition unit used for recognizing the actions of a user, and a voice recognition unit used for recognizing the voice of the user. The training live-action visual monitoring subsystem comprises a multi-view stereoscopic vision measurement and tracking camera and an equipment state monitoring video camera, both arranged in the actual scene. The equipment state monitoring video camera is used for recognizing the working condition of the substation equipment, and the multi-view stereoscopic vision measurement and tracking camera is used for obtaining the three-dimensional coordinates of the substation equipment in the actual scene, measuring the three-dimensional coordinates of trainees on the training site, and tracking and measuring the walking trajectories of the trainees.

Owner:JINZHOU ELECTRIC POWER SUPPLY COMPANY OF STATE GRID LIAONING ELECTRIC POWER SUPPLY +3

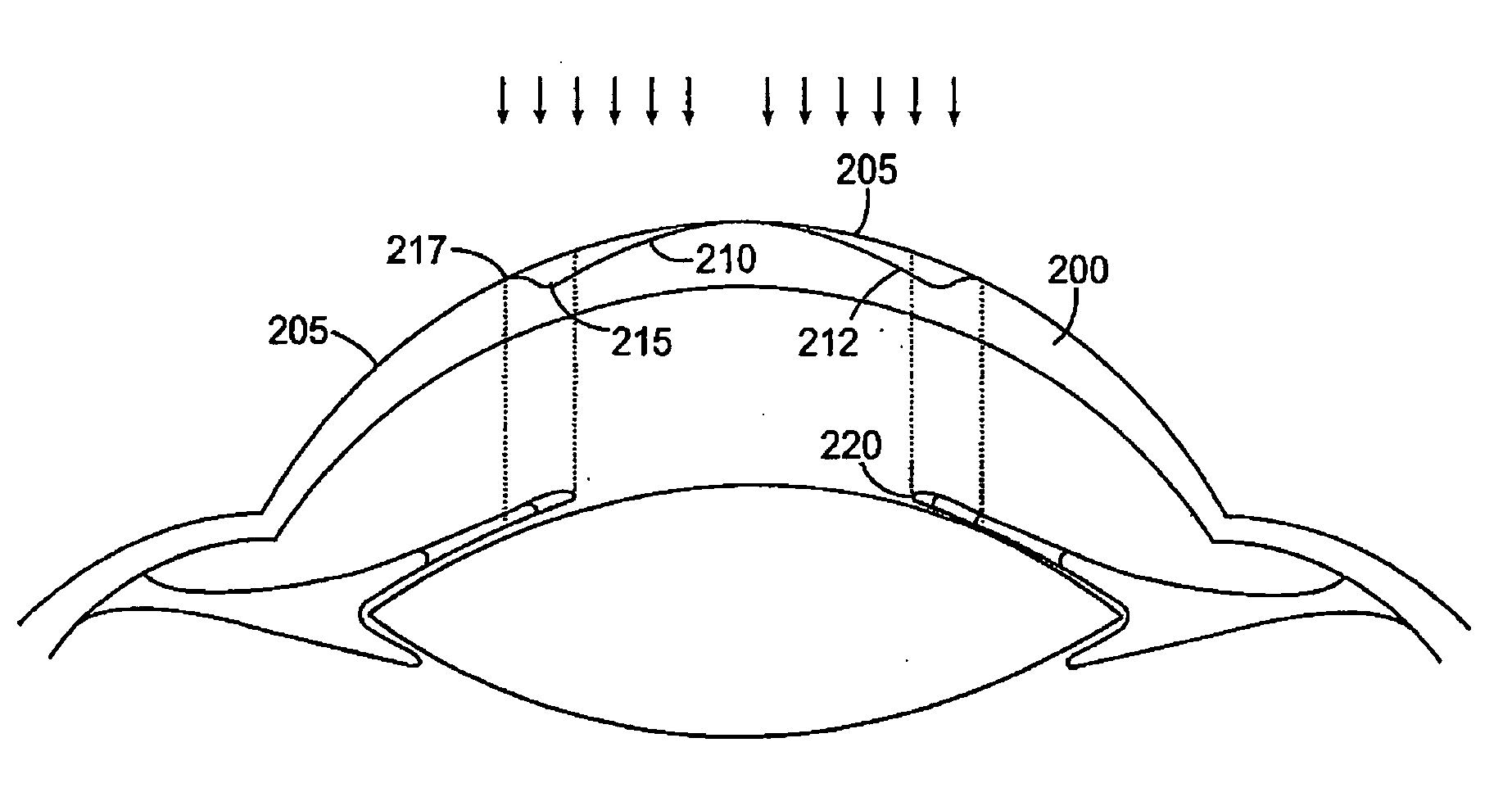

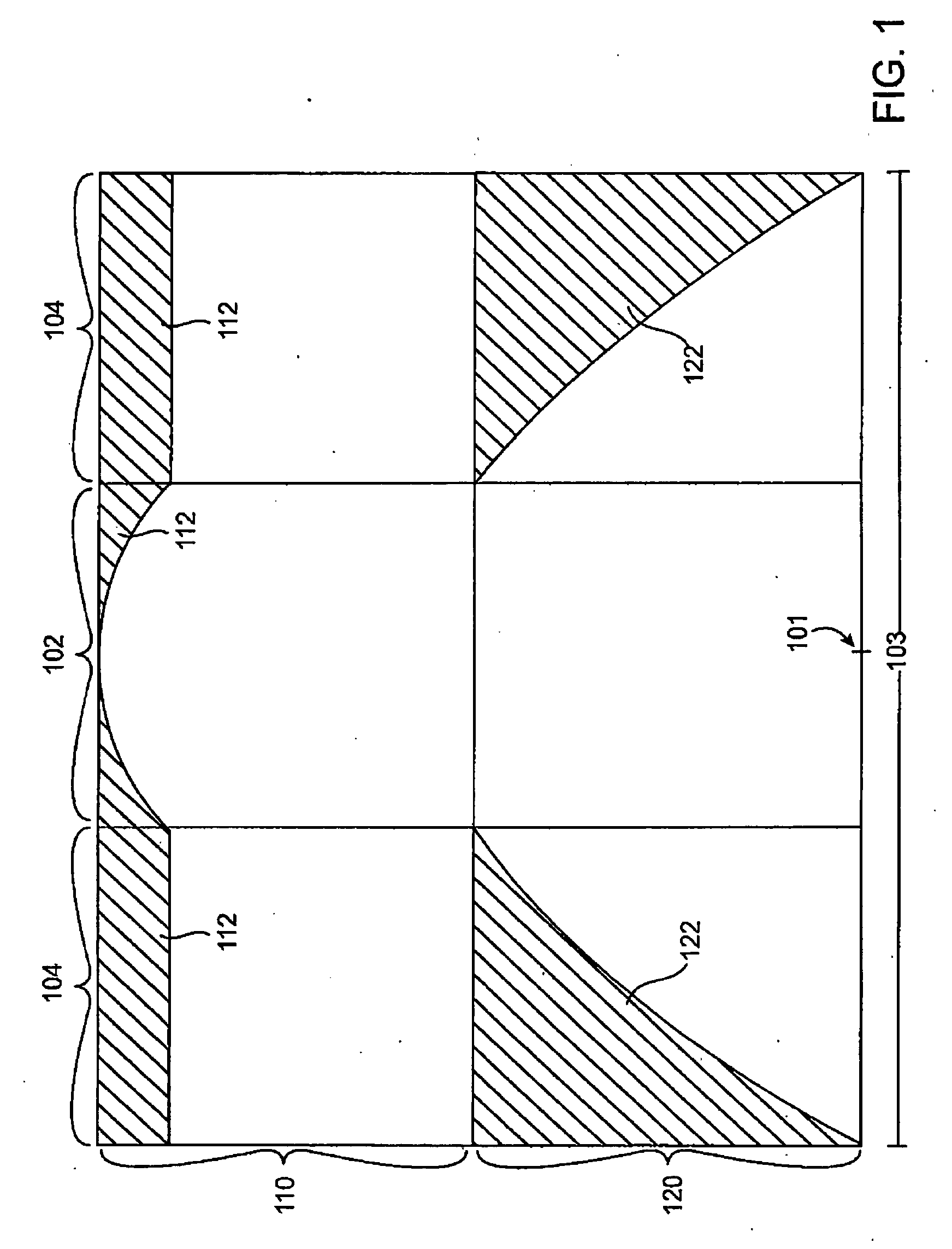

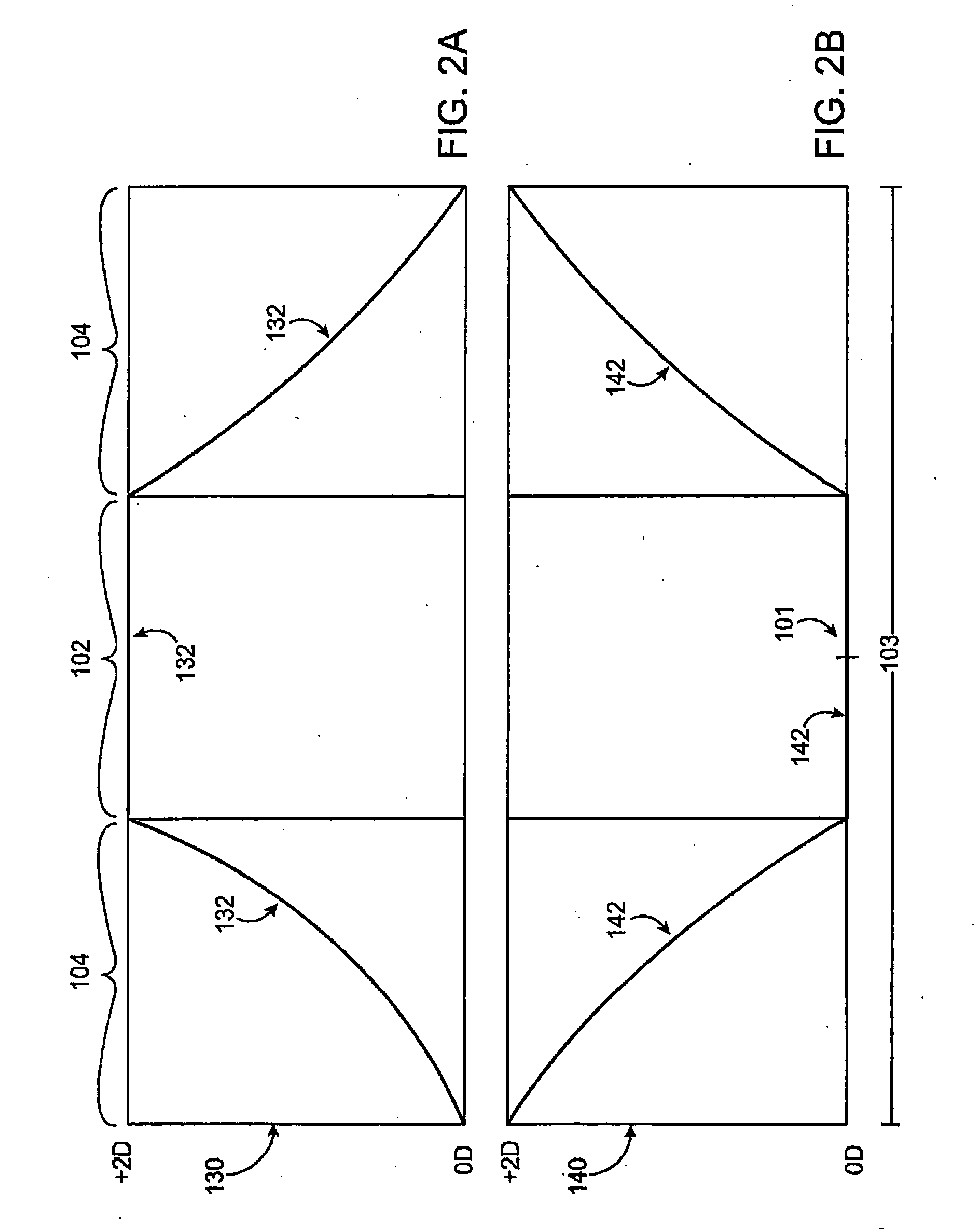

Binocular optical treatment for presbyopia

InactiveUS20050261752A1Improve abilitiesAmelioration of presbyopiaLaser surgeryTherapeutic coolingCorneal surfaceOptical power

Methods and systems for treating presbyopia involve ablating a corneal surface of a first eye of a patient to enhance vision of near objects through a central zone of the first eye and ablating a second eye of the patient to enhance vision of near objects through a peripheral zone of the second eye. The optical power of the first eye is increased in the central zone, while the optical power of the second eye is increased in the peripheral zone. In the first eye, a peripheral zone is used primarily for distance vision. In the second eye, a central zone is used primarily for distance vision. Systems include a laser device and a processor for directing the laser device to ablate the two eyes of the patient.

Owner:AMO MFG USA INC

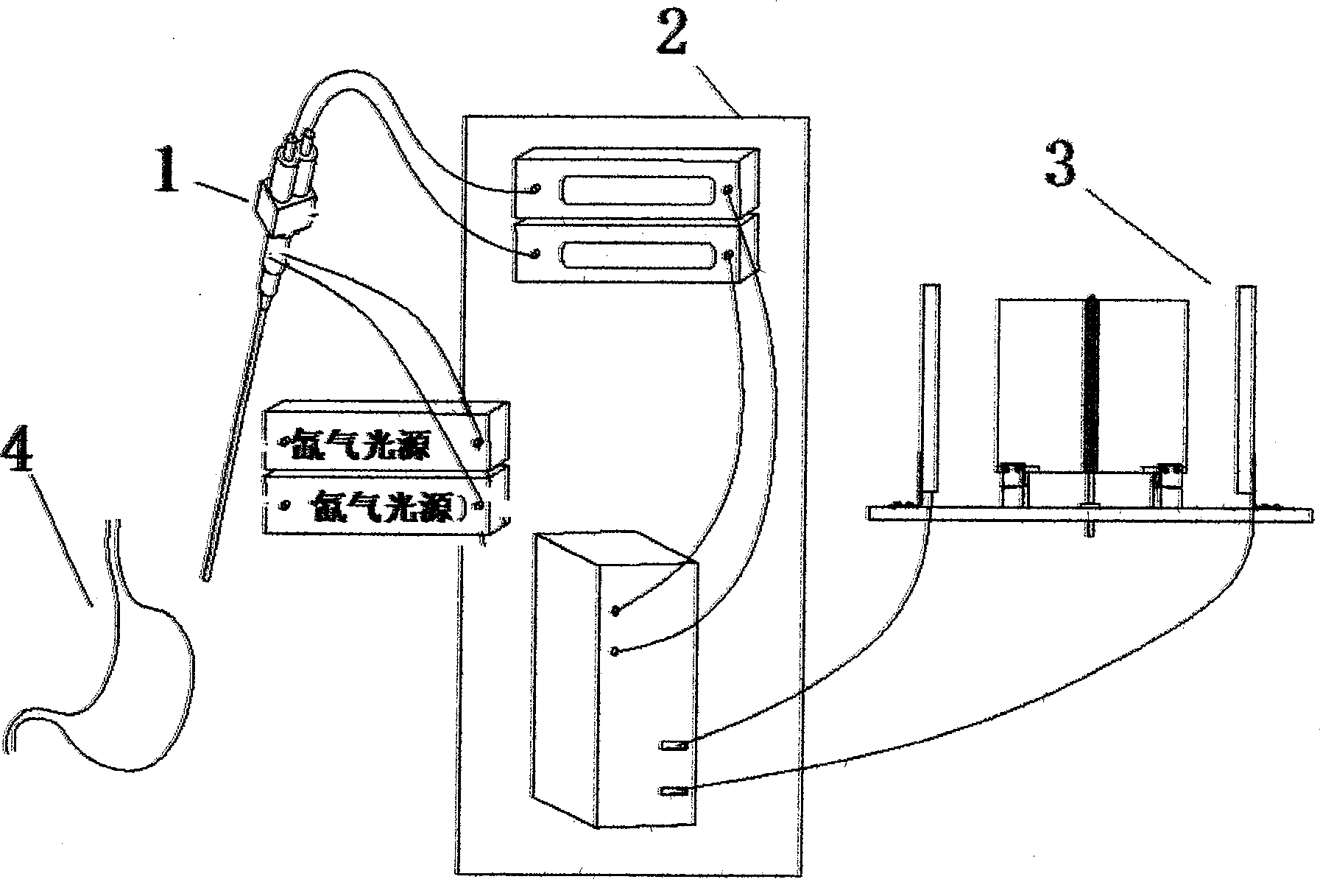

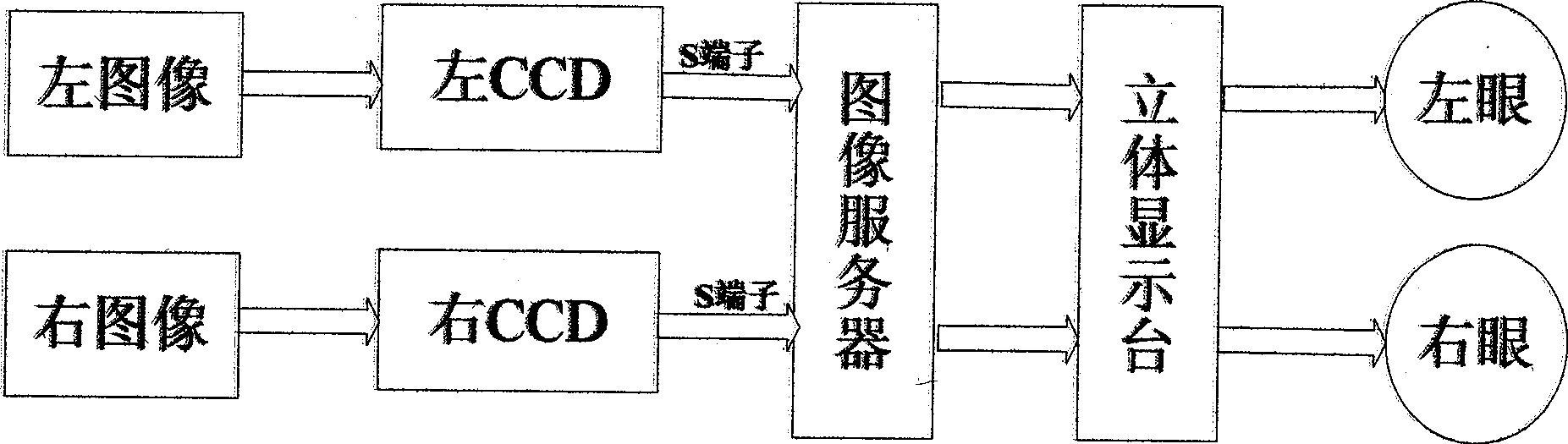

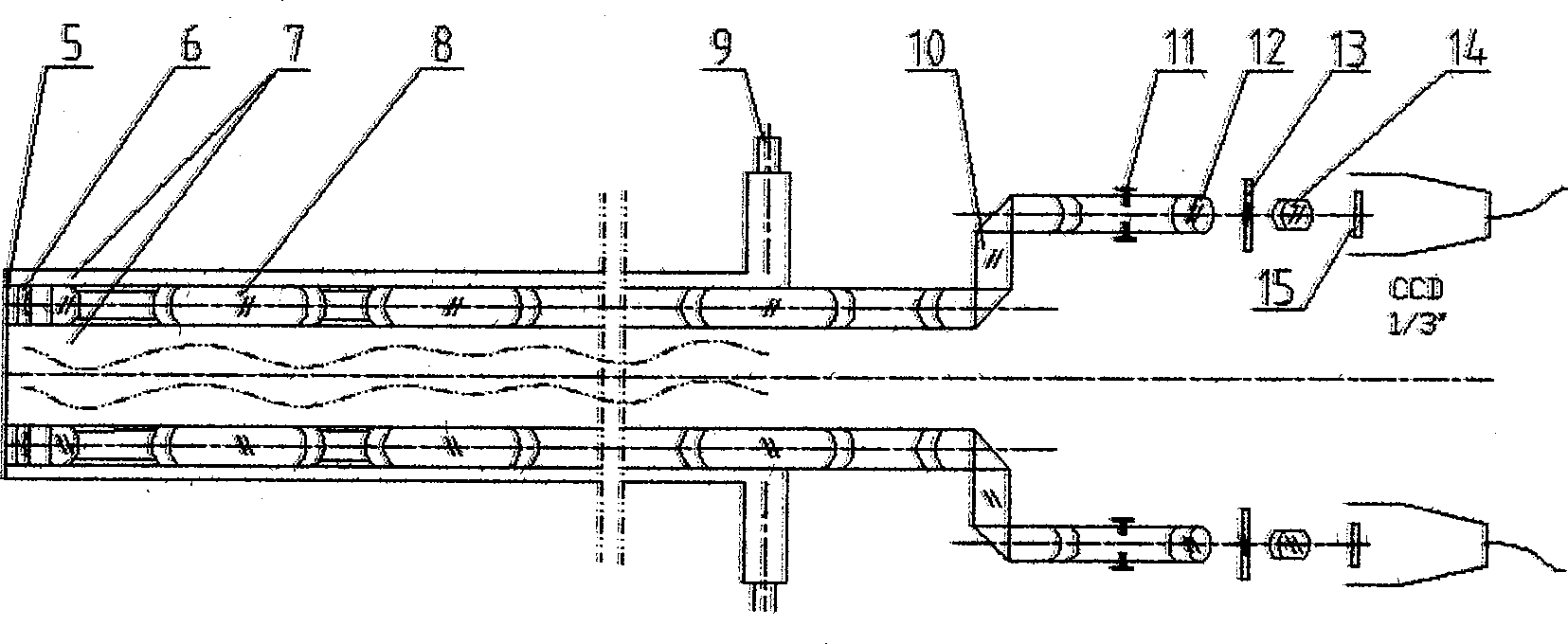

Binocular endoscope operation visual system

InactiveCN101518438AMeet the needs of 3D stereo visionStereoscopic effect is goodLaproscopesEndoscopesVideo memoryLaparoscopy

A binocular endoscope operation visual system mainly comprises a binocular endoscope, an image server and an endoscope stereo display unit. The binocular endoscope provides two channels of clear abdominal cavity video signals; the two video streams enter the main memory or the video memory of the image processing server through a two-channel image capture card; and the processed left and right images are displayed on a left display and a right display respectively. Through plane mirrors, the contents of the left display and the right display reach the left eye and the right eye of the observer respectively, producing the stereo effect. The system is suitable for medical surgical endoscope operations, fully satisfies the stereoscopic vision requirements of laparoscopic operations performed by minimally invasive surgical robots, and realizes real-time binocular stereoscopic display during laparoscopic operations. It has reliable performance, a simple structure and a high level of commercialization, and is suited to the sterile conditions of the operating environment.

Owner:NANKAI UNIV


© 2025 PatSnap. All rights reserved.