Human-machine interaction method and system based on binocular stereoscopic vision

A human-computer interaction method and system based on binocular stereo vision. The technology addresses the inconvenient installation and use and the high hardware cost of existing solutions, and offers simple, convenient installation and use, low cost, and convenient, fast human-computer interaction.



Examples

Embodiment 1

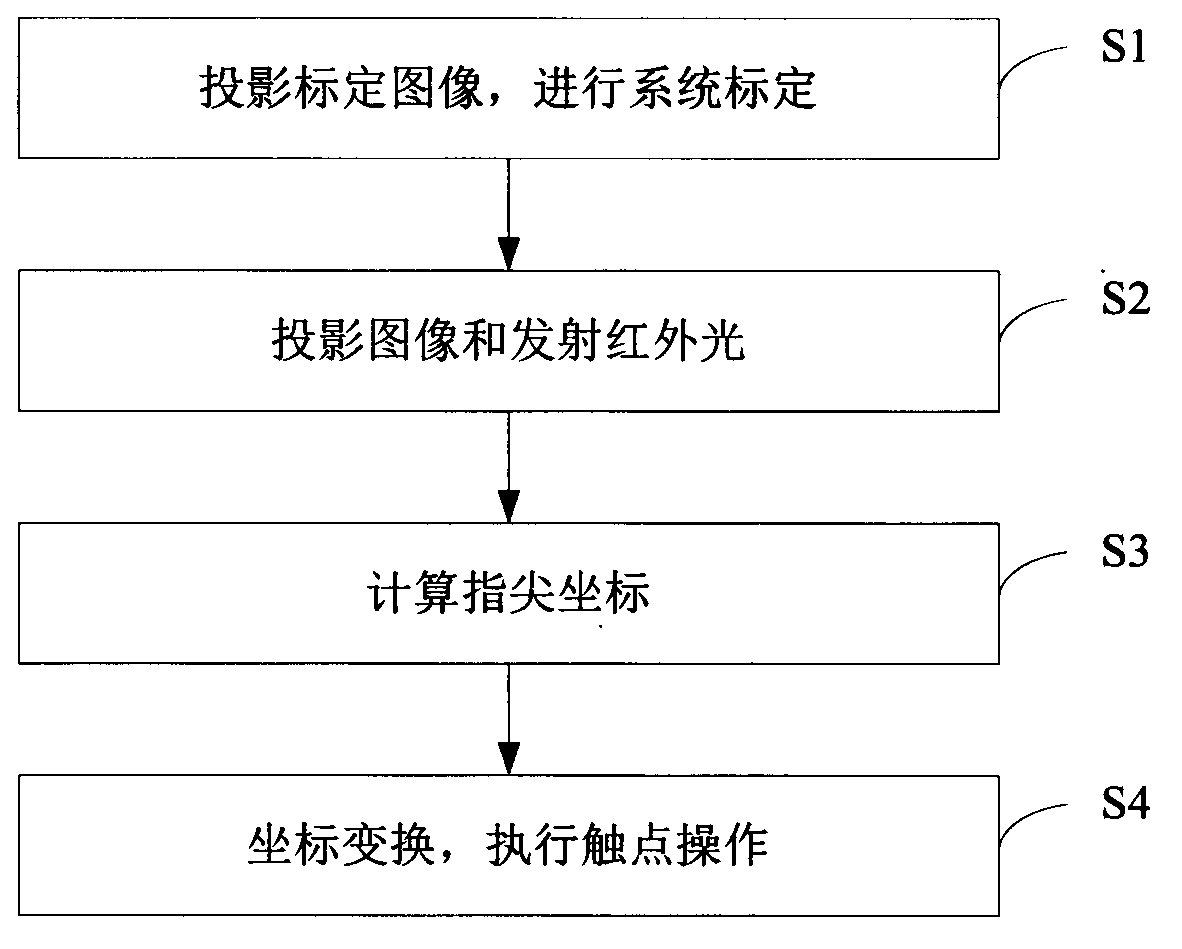

[0068] Figure 1 is a flow chart of the human-computer interaction method based on binocular stereo vision in Embodiment 1 of the present invention. The method comprises the following steps:

[0069] Step S1: Projecting a calibration image to perform system calibration.

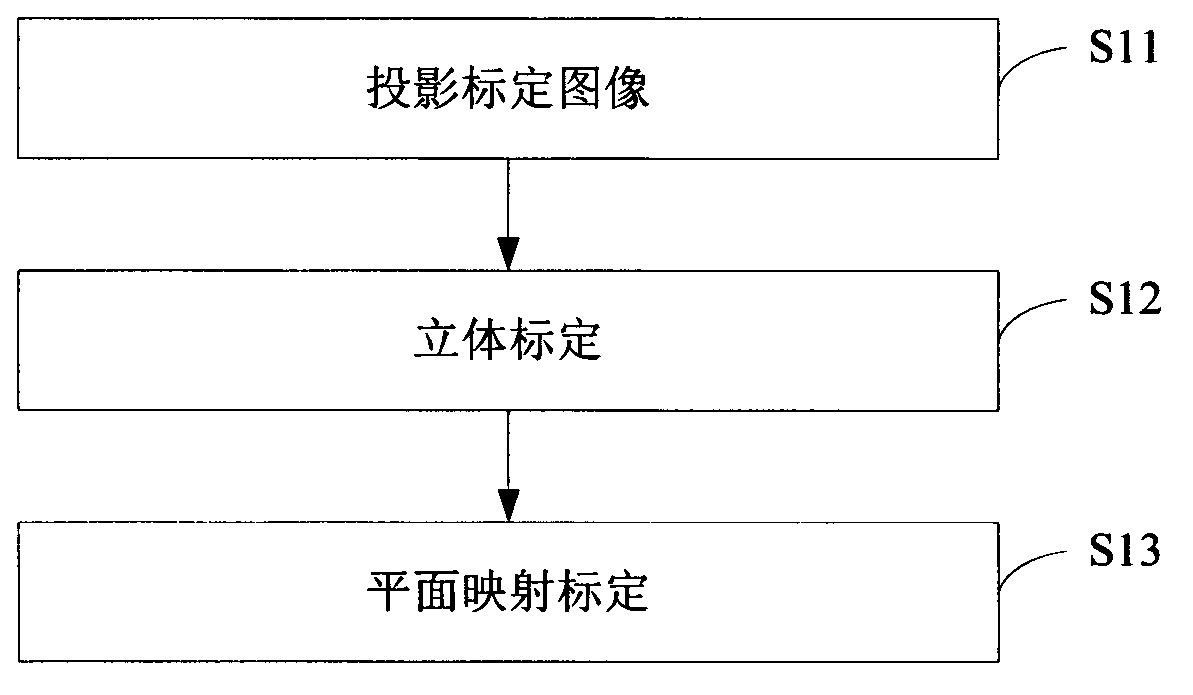

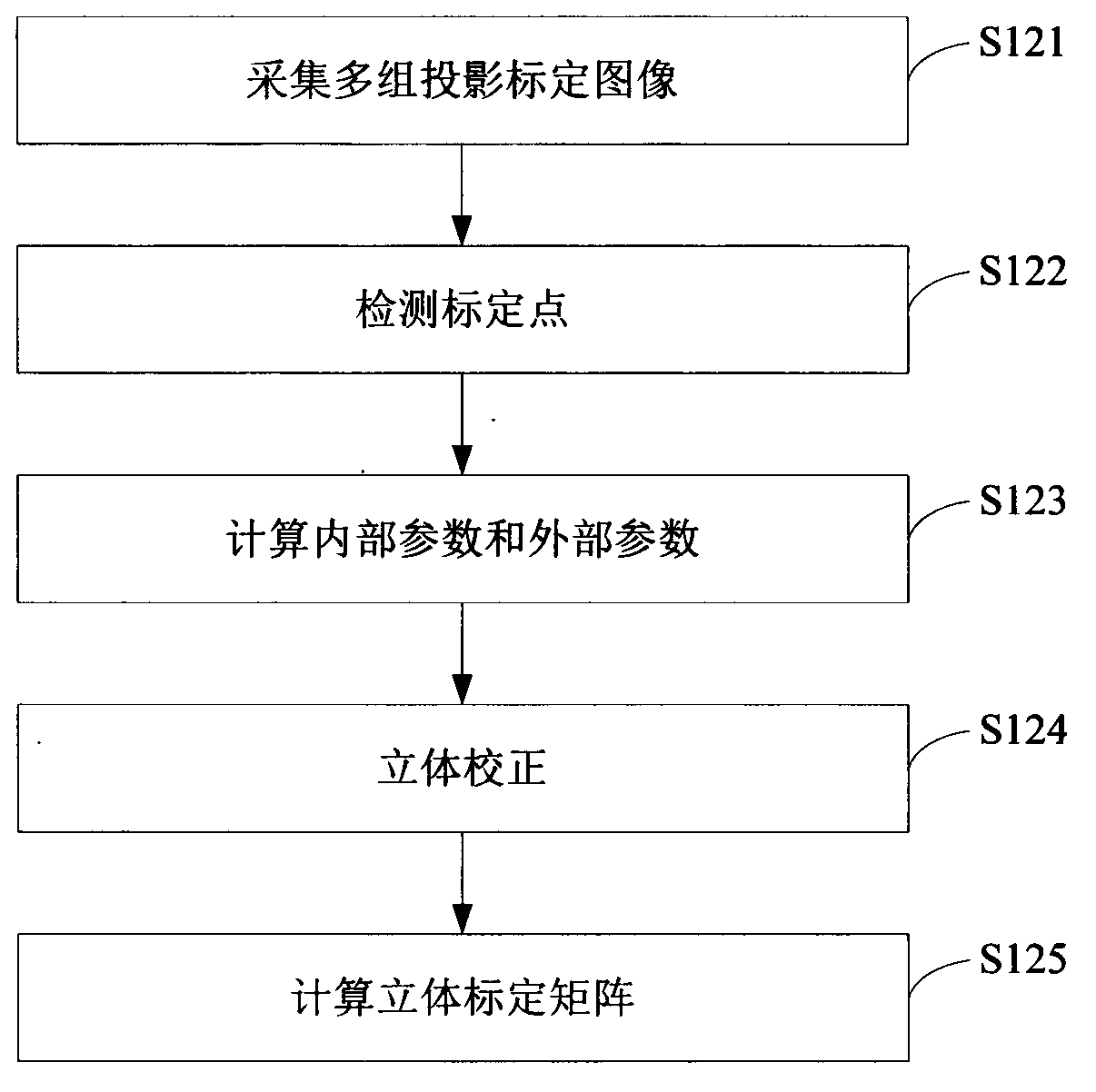

[0070] In this step, the calibration image is projected onto the projection surface, and the projected calibration image is captured for system calibration. The method of this embodiment places no special requirements on the material of the projection surface; ordinary desktops and walls can serve as the projection surface. The calibration image can be projected by a projector and can be a checkerboard image. The final result of system calibration is a stereo calibration matrix, from which three-dimensional coordinates can be calculated, and a projection transformation matrix that can convert fingertip coordinates (i.e., the...
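As an illustration of how a projection transformation matrix can map a fingertip position to screen coordinates, the following minimal sketch applies a 3x3 matrix in homogeneous coordinates. It assumes the matrix is a planar homography; the function name `apply_homography` and the numeric values of `H_example` are hypothetical and are not taken from the patent.

```python
def apply_homography(H, point):
    """Map a 2D point through a 3x3 matrix H using homogeneous coordinates.

    Assumption: H is a planar homography given as a 3x3 nested list.
    """
    x, y = point
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    if abs(w) < 1e-12:
        raise ValueError("point maps to infinity under H")
    # Divide by the homogeneous scale to return to 2D coordinates.
    return (u / w, v / w)


# Hypothetical matrix: scale by 2 and shift by (100, 50) pixels.
H_example = [
    [2.0, 0.0, 100.0],
    [0.0, 2.0, 50.0],
    [0.0, 0.0, 1.0],
]

screen_xy = apply_homography(H_example, (10.0, 20.0))
print(screen_xy)
```

In a real system the entries of the matrix would come from the calibration step (for example, from the detected checkerboard corners) rather than being written by hand.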

Embodiment 2

[0138] This embodiment differs from Embodiment 1 in that, after the three-dimensional coordinates of the fingertip are calculated and before the fingertip coordinates are converted into screen coordinates, the method first judges whether the fingertip lies within the preset projection plane. When it does, the human hand is considered to have issued a touch command, and the fingertip coordinates are then converted into screen coordinates and mouse motion information through the projection transformation matrix H. Figure 8 is a flow chart of the human-computer interaction method based on binocular stereo vision in Embodiment 2 of the present invention. For convenience of description, the figure lists only the steps starting from acquiring frame images; the earlier steps are similar to those in Embodiment 1 and are not described in detail here. The method mainly incl...
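One possible way to judge whether a fingertip lies within the preset projection plane is to compare its distance from the plane with a small tolerance. The sketch below is only an assumption about how such a test might look: the plane representation `(a, b, c, d)` for `ax + by + cz + d = 0`, the helper names, the millimetre units and the 5 mm tolerance are all hypothetical and do not come from the patent.

```python
import math


def distance_to_plane(point, plane):
    """Return the distance from a 3D point to the plane ax + by + cz + d = 0."""
    a, b, c, d = plane
    x, y, z = point
    norm = math.sqrt(a * a + b * b + c * c)
    if norm == 0.0:
        raise ValueError("plane normal must be non-zero")
    return abs(a * x + b * y + c * z + d) / norm


def is_touch(point, plane, tolerance_mm=5.0):
    """Treat the fingertip as touching when it is within tolerance_mm of the plane.

    Assumption: coordinates are in millimetres in the camera frame.
    """
    return distance_to_plane(point, plane) <= tolerance_mm


# Hypothetical desktop plane located 600 mm from the cameras along z.
desk_plane = (0.0, 0.0, 1.0, -600.0)

print(is_touch((120.0, 80.0, 597.0), desk_plane))  # fingertip 3 mm from the plane
print(is_touch((120.0, 80.0, 560.0), desk_plane))  # fingertip 40 mm from the plane
```

Only when the test passes would the fingertip coordinates be passed on for conversion through the projection transformation matrix H, which keeps a hovering hand from generating mouse events.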

Embodiment 3

[0154] Figure 9 is a flow chart of the human-computer interaction system based on binocular stereo vision in Embodiment 3 of the present invention. The system includes electronic equipment, a projection device, an image acquisition device, an infrared light source and a projection surface. In this embodiment, the electronic equipment is a computer 1, the projection device is a micro-projector 2, the image acquisition device is two cameras 3, the infrared light source is two LED infrared lamps 4, and the projection surface 5 is formed on an ordinary desktop 6. The projection mode is vertical projection: the micro-projector 2, the two cameras 3 and the two LED infrared lamps 4 are installed vertically above the projection surface 5. The computer 1 is connected to the micro-projector 2 and to each of the two cameras 3. The two cameras 3 can be ordinary cameras, but each must be fitted with an infrared filter (not shown in th...
