587 results about "Interaction technique" patented technology

An interaction technique, user interface technique or input technique is a combination of hardware and software elements that provides a way for computer users to accomplish a single task. For example, one can go back to the previously visited page on a Web browser by either clicking a button, pressing a key, performing a mouse gesture or uttering a speech command. It is a widely used term in human-computer interaction. In particular, the term "new interaction technique" is frequently used to introduce a novel user interface design idea.

Method, system, and computer program product for visualizing a data structure

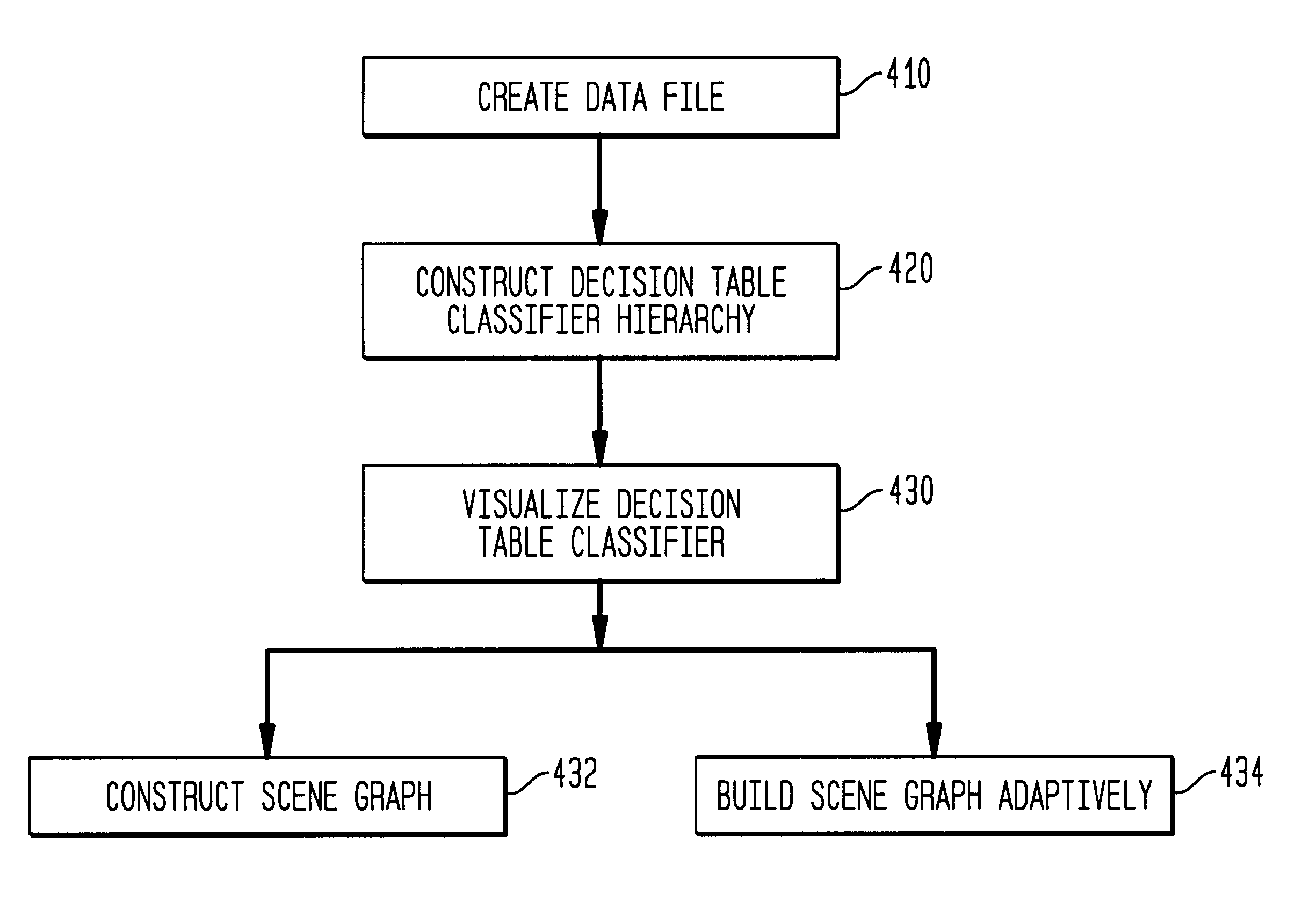

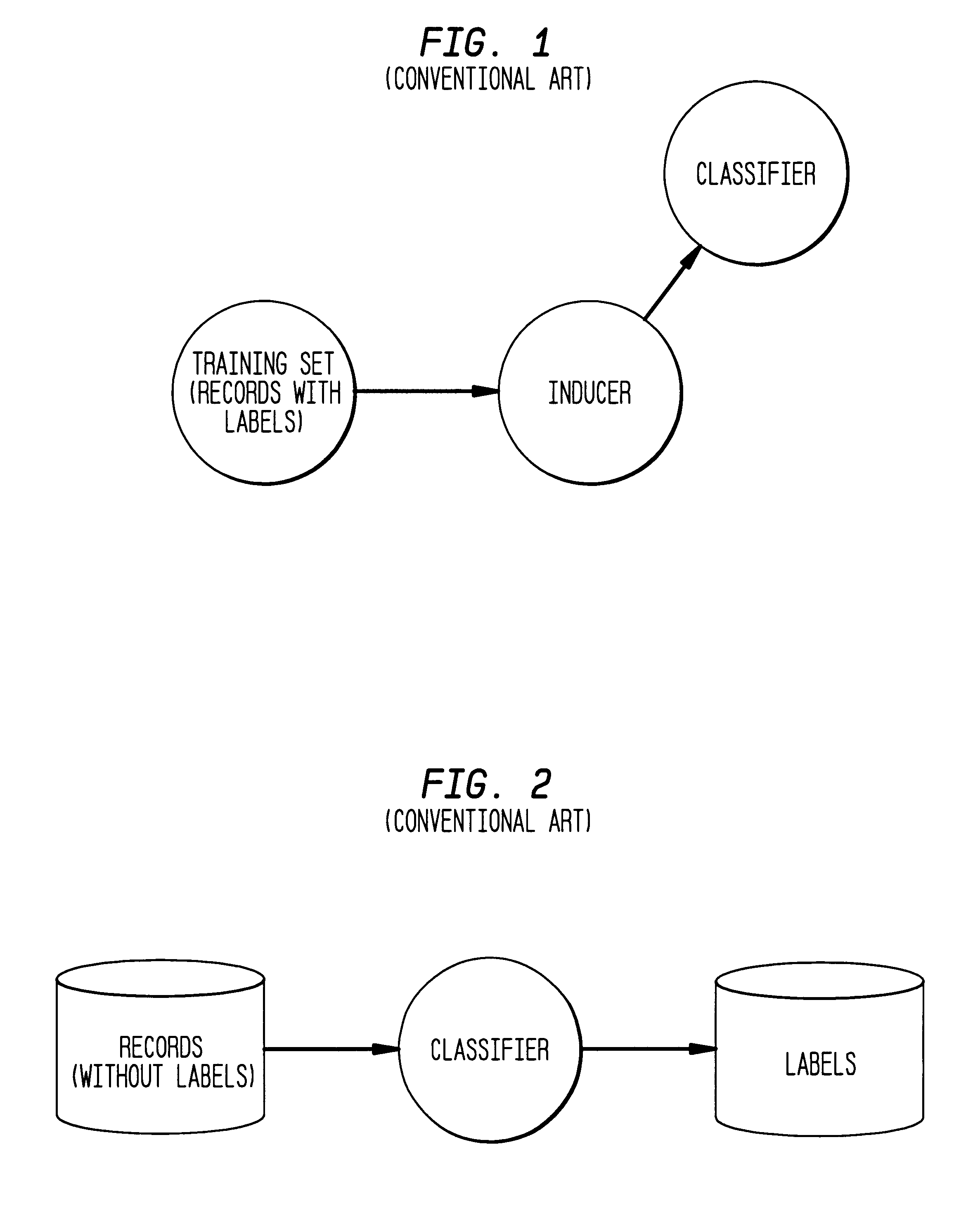

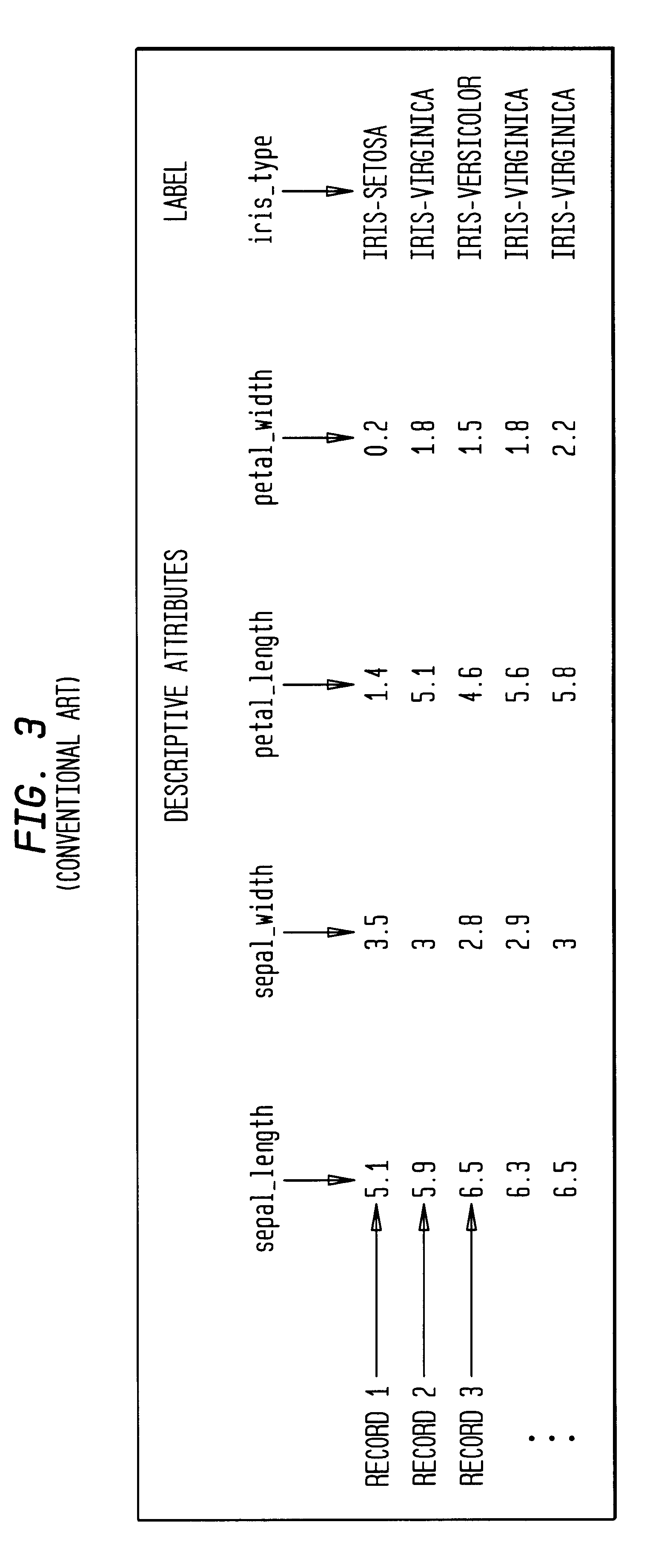

A data structure visualization tool visualizes a data structure such as a decision table classifier. A data file based on a data set of relational data is stored as a relational table, where each row represents an aggregate of all the records for each combination of values of the attributes used. Once loaded into memory, an inducer is used to construct a hierarchy of levels, called a decision table classifier, where each successive level in the hierarchy has two fewer attributes. Besides a column for each attribute, there is a column for the record count (or more generally, sum of record weights), and a column containing a vector of probabilities (each probability gives the proportion of records in each class). Finally, at the top-most level, a single row represents all the data. The decision table classifier is then passed to the visualization tool for display. By building a representative scene graph adaptively, the visualization application never loads the whole data set into memory. Interactive techniques, such as drill-down and drill-through, are used to view further levels of detail or to retrieve some subset of the original data. The decision table visualizer helps a user understand the importance of specific attribute values for classification.
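The aggregation described in this abstract can be illustrated with a minimal Python sketch. It is not the patented inducer: the function names, the `label` field, and the rule of simply dropping the last two attributes at each level are assumptions made for illustration (a real inducer would choose which attributes to drop).

```python
from collections import Counter, defaultdict


def aggregate_level(records, attributes, class_key="label"):
    """Group records by the given attributes; one row per value combination.

    Each row holds the record count and a vector of class proportions.
    `class_key` is a hypothetical field name for the class label.
    """
    groups = defaultdict(Counter)
    for rec in records:
        key = tuple(rec[a] for a in attributes)
        groups[key][rec[class_key]] += 1
    classes = sorted({rec[class_key] for rec in records})
    rows = {}
    for key, counts in groups.items():
        total = sum(counts.values())
        rows[key] = {
            "count": total,
            "probs": [counts[c] / total for c in classes],
        }
    return classes, rows


def build_hierarchy(records, attributes, step=2):
    """Build levels from most detailed down to a single all-data row.

    Assumption: each level drops the last `step` attributes in list order.
    """
    levels = []
    attrs = list(attributes)
    while True:
        _, rows = aggregate_level(records, attrs)
        levels.append((tuple(attrs), rows))
        if not attrs:
            break
        attrs = attrs[:-step]
    return levels
```

With four attributes this yields three levels (four, two, and zero attributes); the last level contains the single row keyed by the empty tuple that summarizes all records.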

Owner:RPX CORP +1

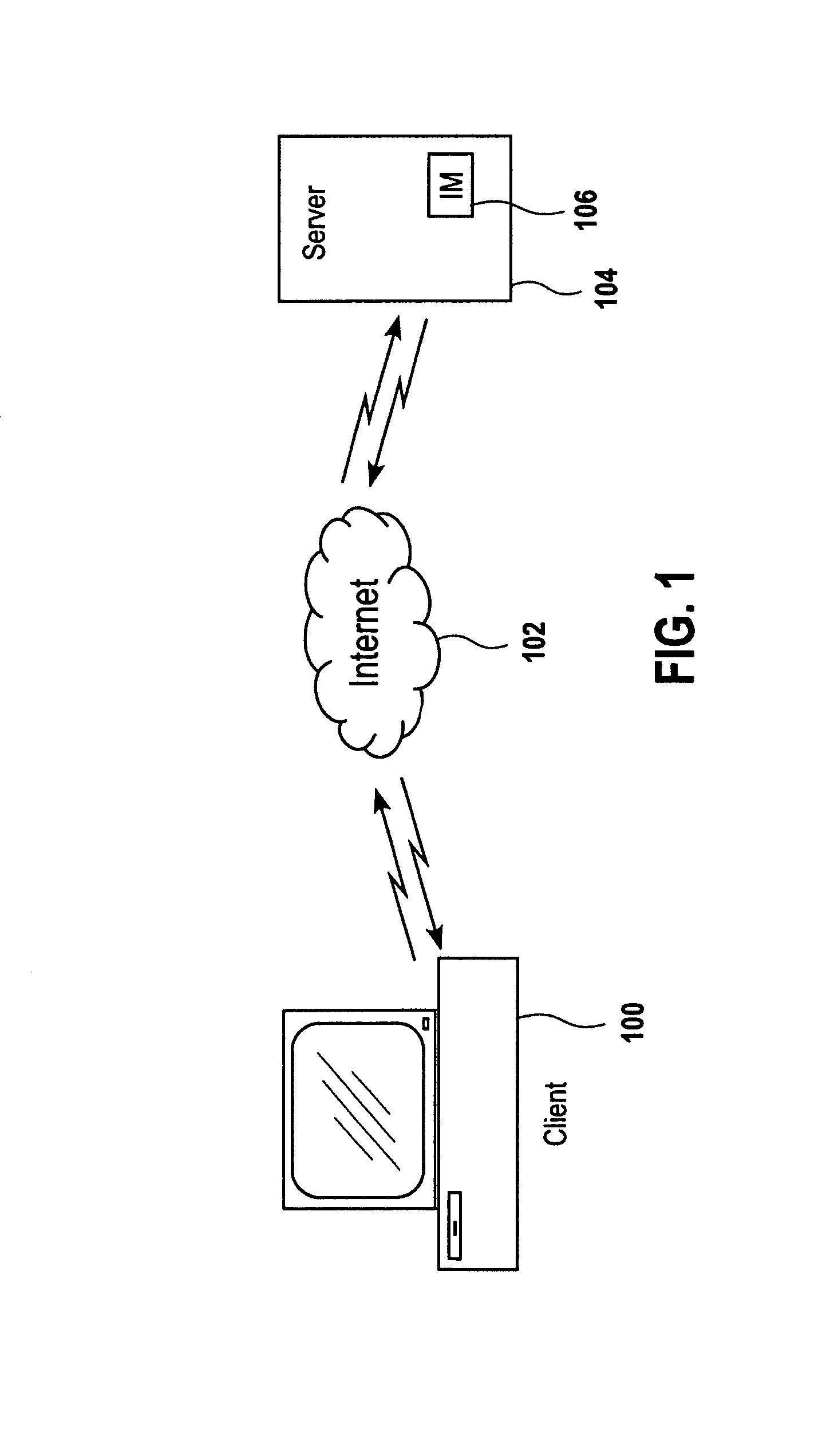

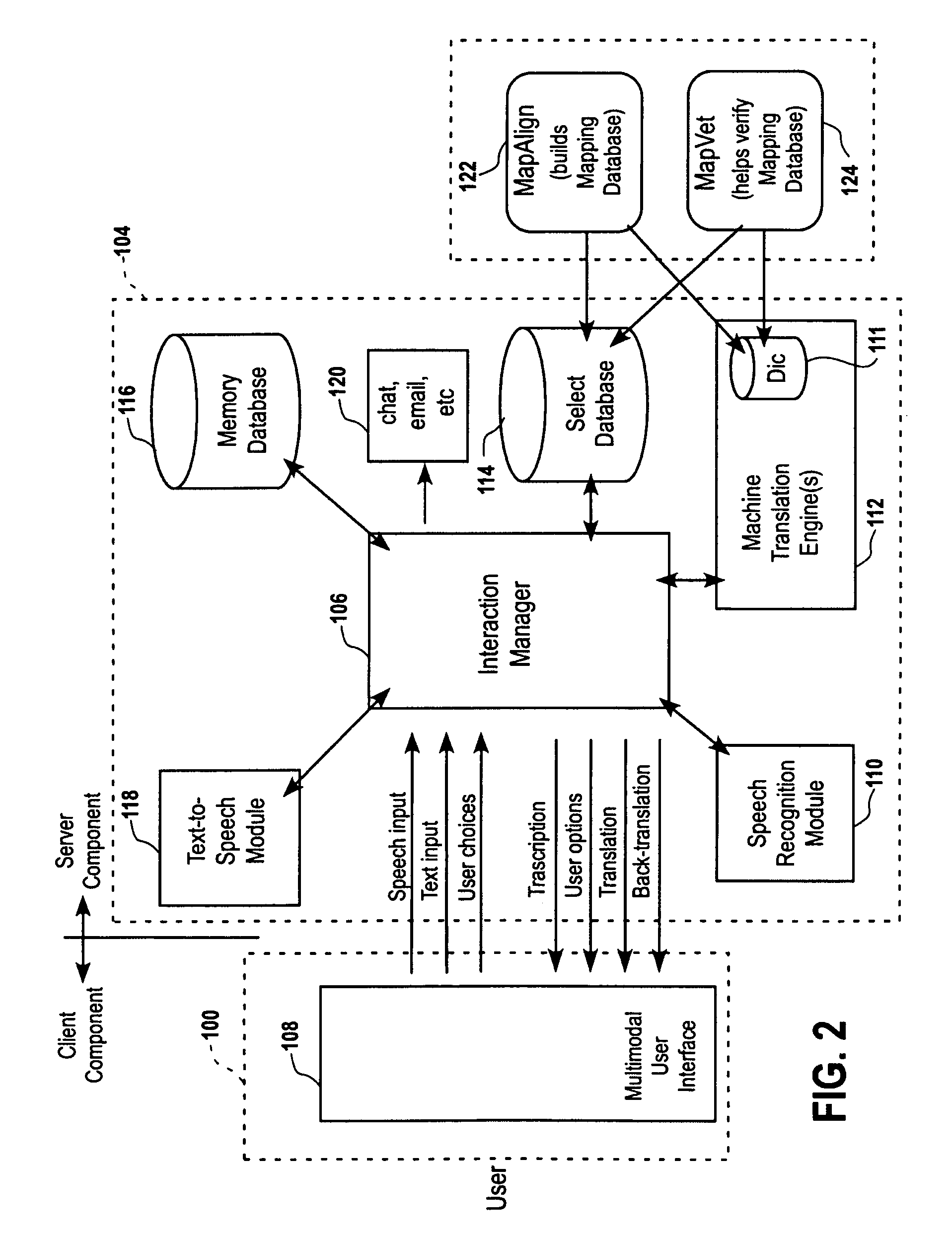

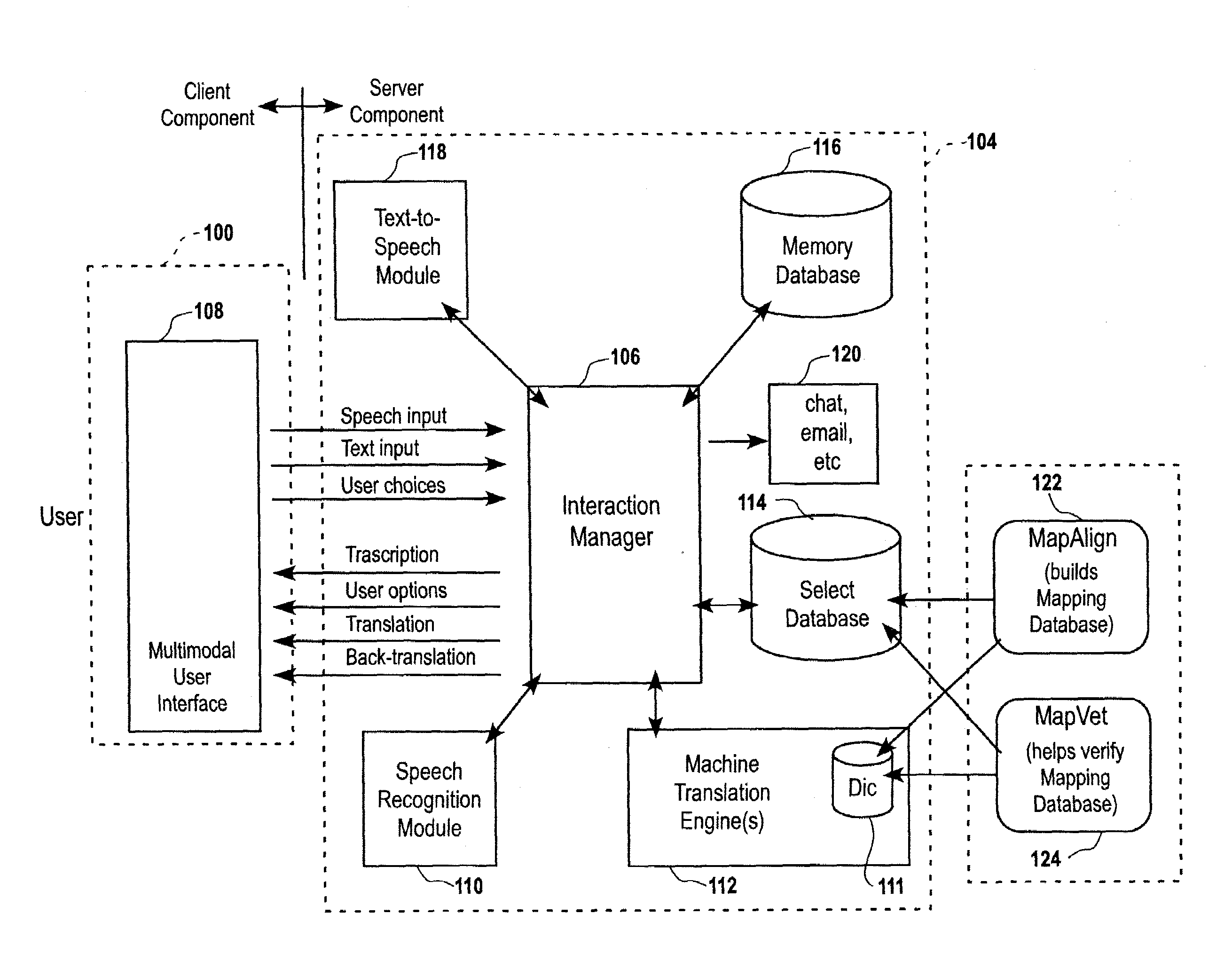

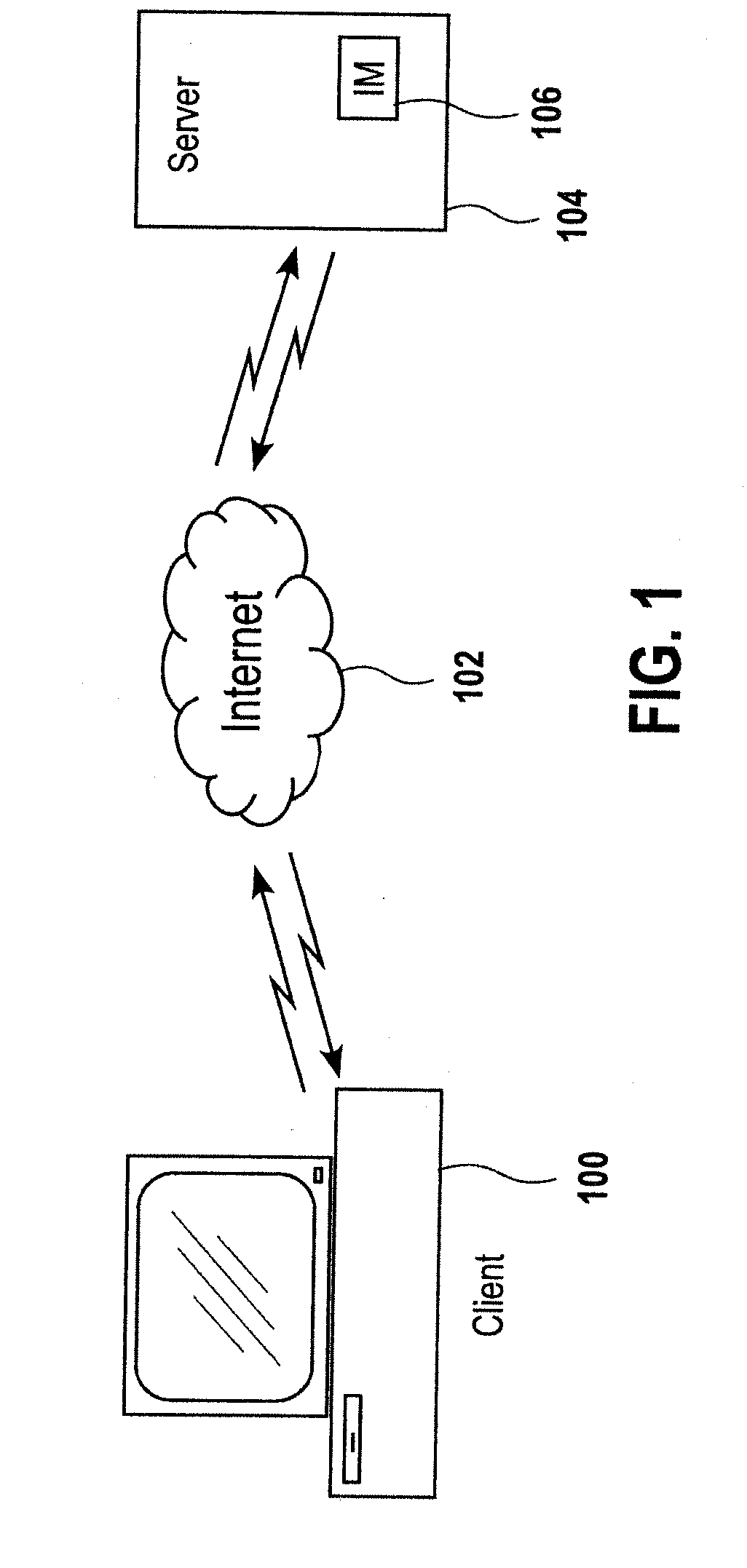

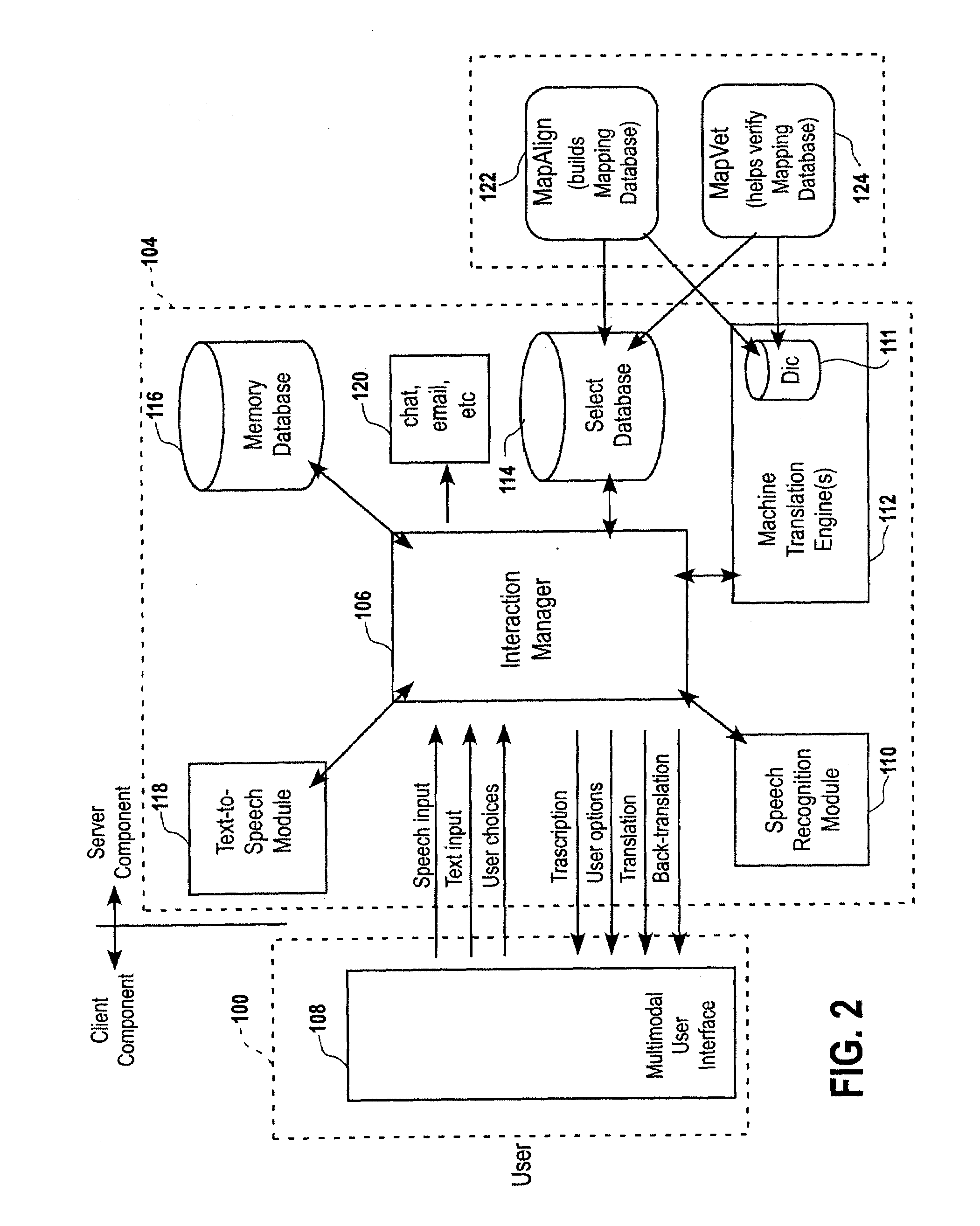

Speech-enabled language translation system and method enabling interactive user supervision of translation and speech recognition accuracy

Active | US7539619B1 | Natural language translation | Speech recognition | Speech to speech translation | Ambiguity

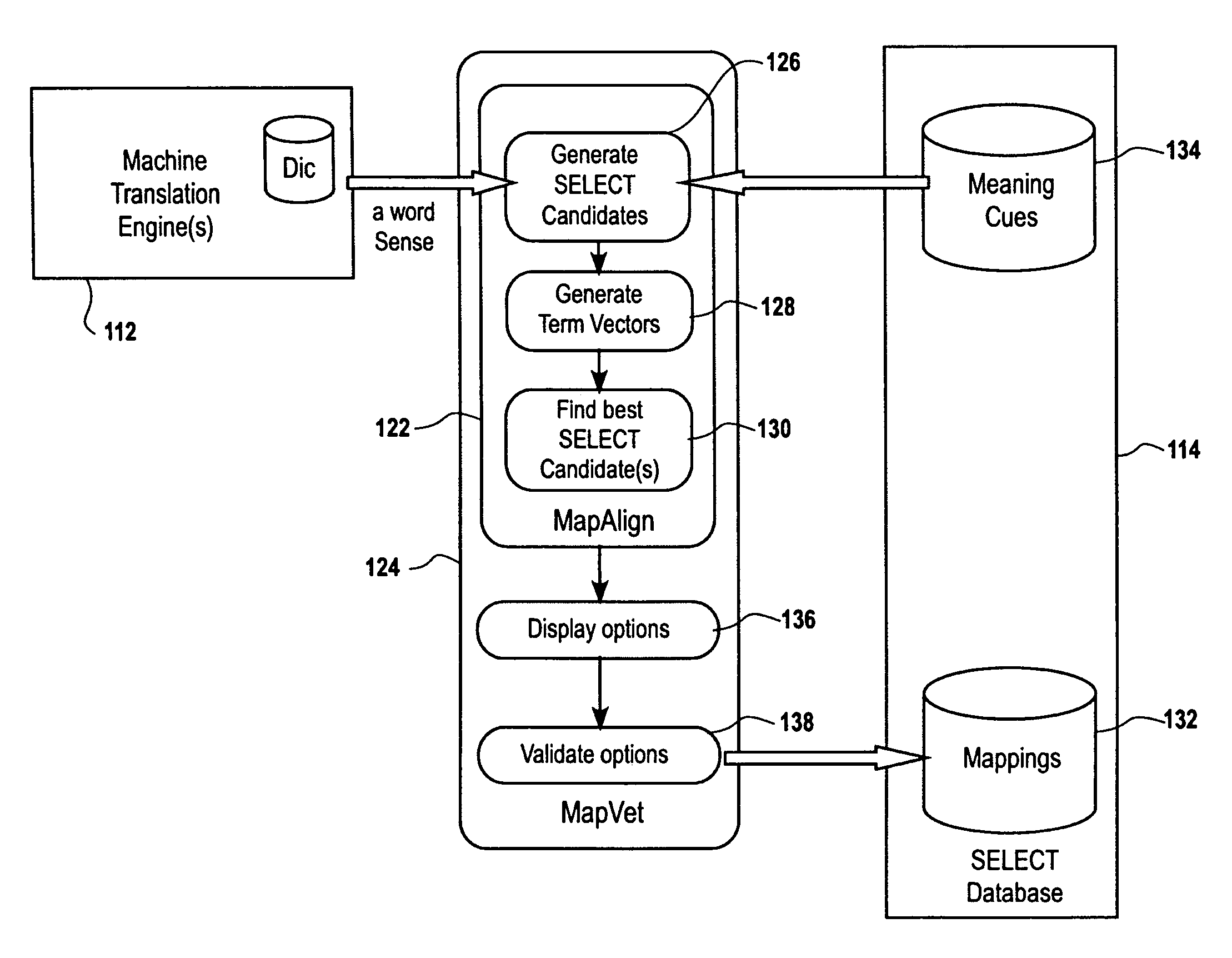

A system and method for a highly interactive style of speech-to-speech translation is provided. The interactive procedures enable a user to recognize, and if necessary correct, errors in both speech recognition and translation, thus providing more robust translation output than would otherwise be possible. The interactive techniques for monitoring and correcting word ambiguity errors during automatic translation, search, or other natural language processing tasks depend upon the correlation of Meaning Cues and their alignment with, or mapping into, the word senses of third party lexical resources, such as those of a machine translation or search lexicon. This correlation and mapping can be carried out through the creation and use of a database of Meaning Cues, i.e., SELECT. Embodiments described above permit the intelligent building and application of this database, which can be viewed as an interlingua, or language-neutral set of meaning symbols, applicable for many purposes. Innovative techniques for interactive correction of server-based speech recognition are also described.

Owner:ZAMA INNOVATIONS LLC

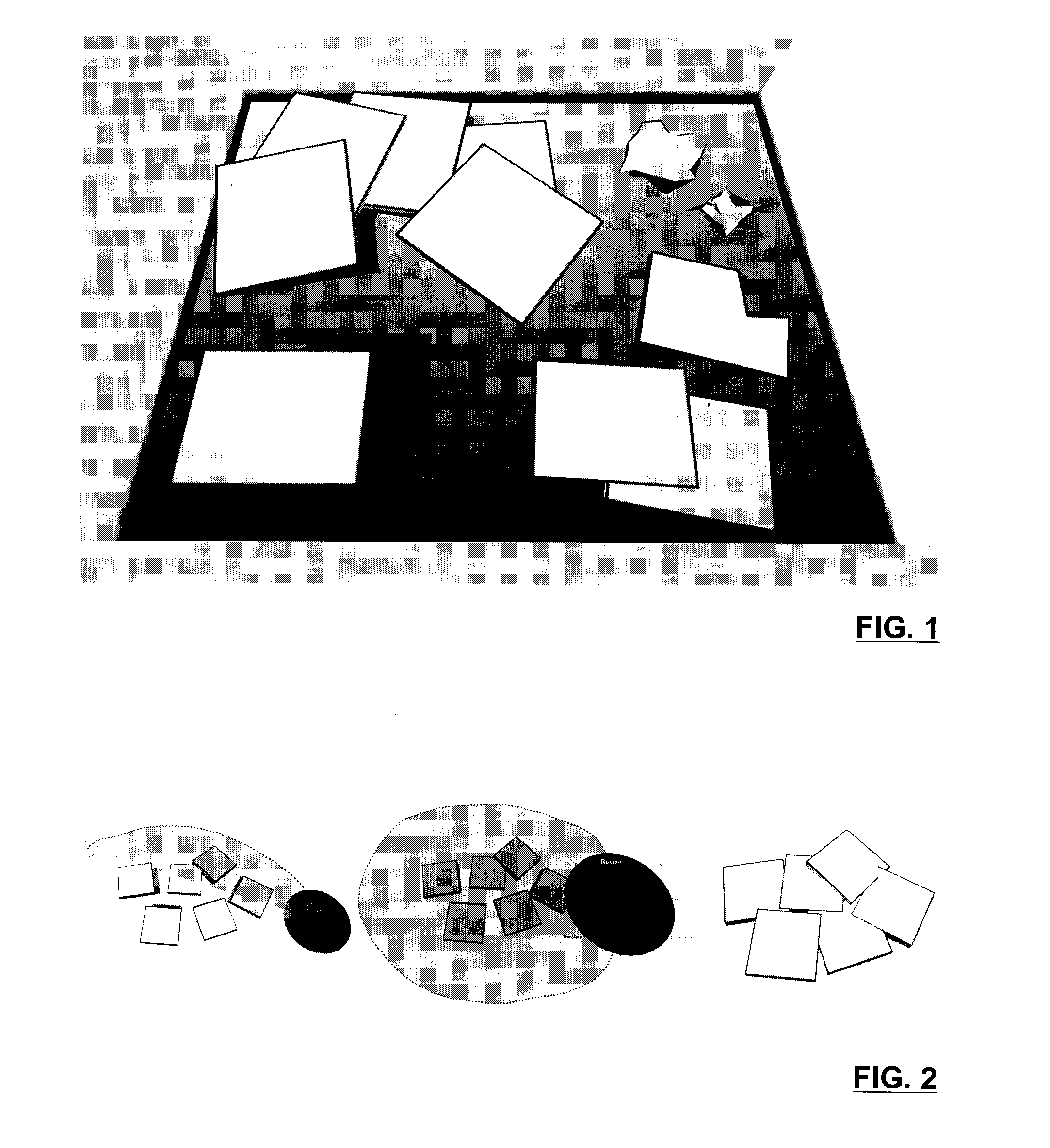

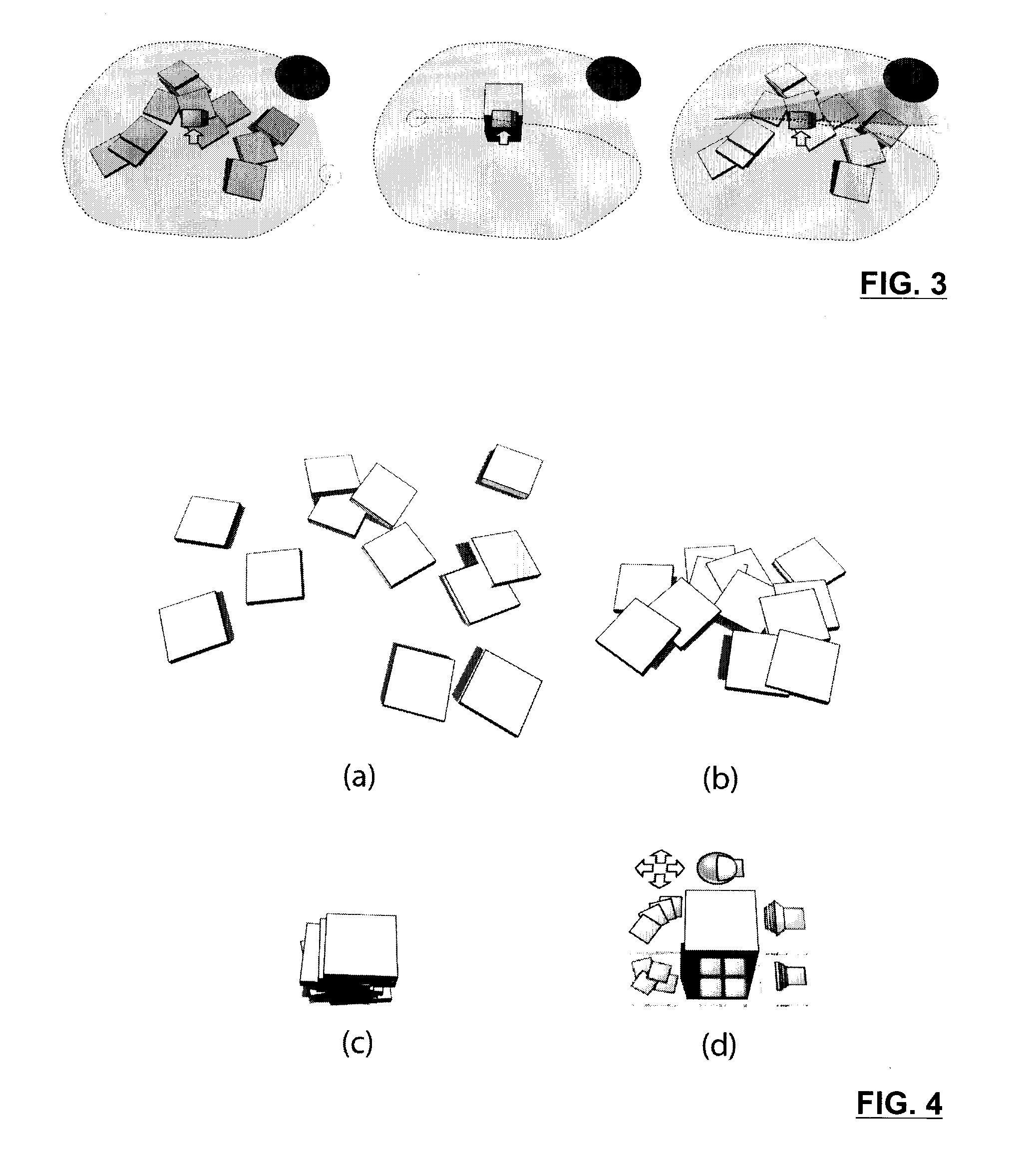

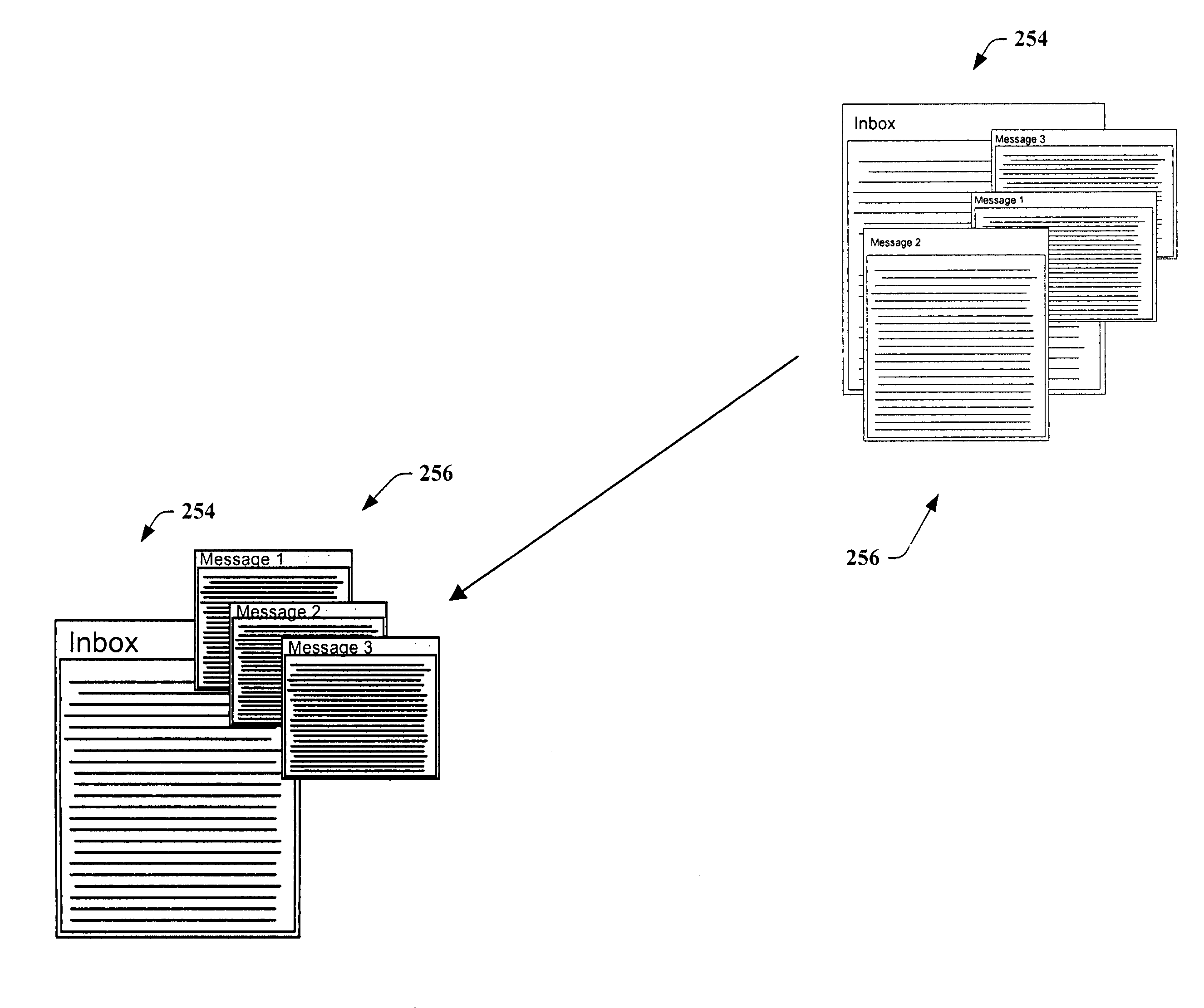

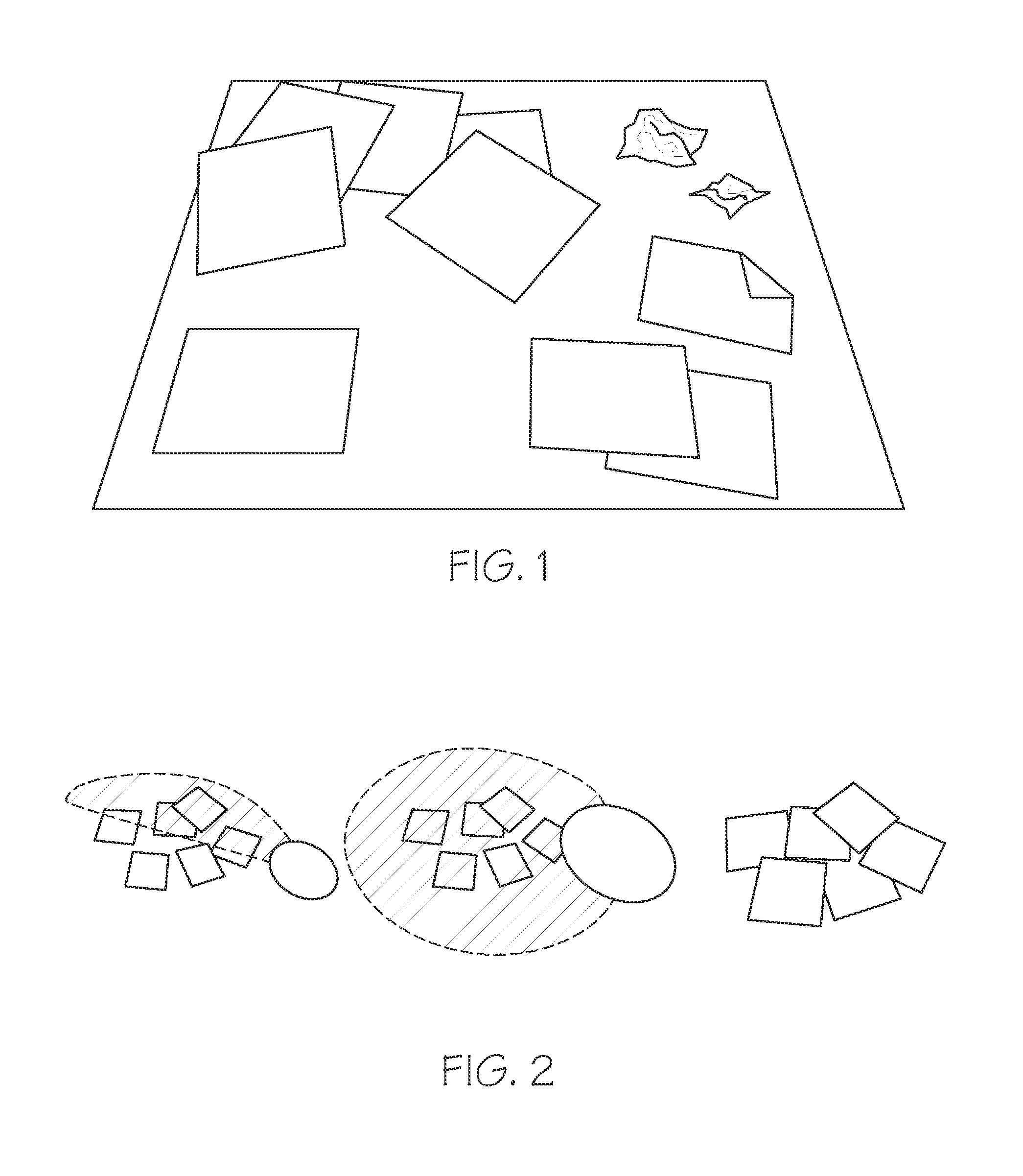

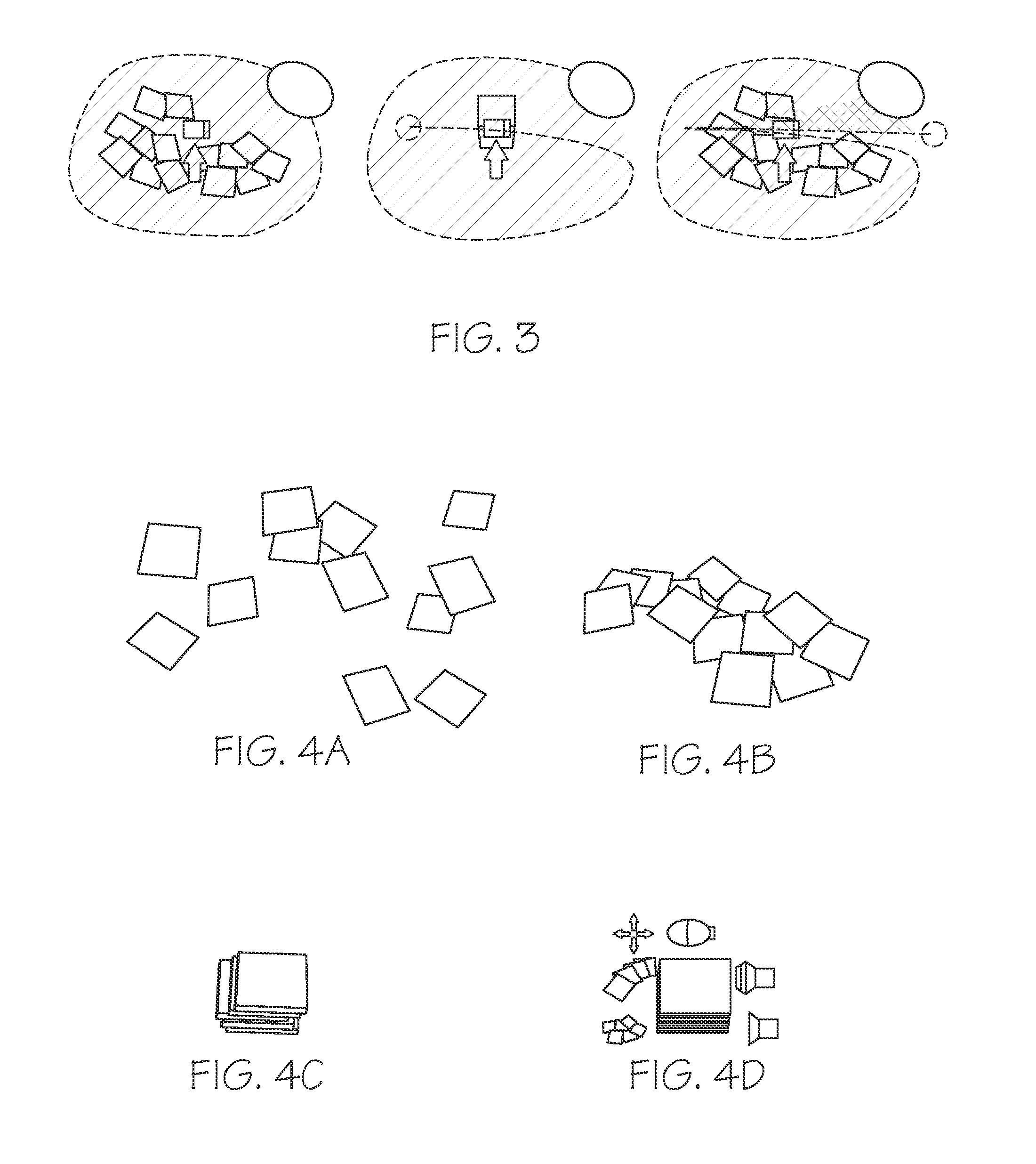

System for organizing and visualizing display objects

Inactive | US20090307623A1 | Enhanced interaction | Simple technology | Input/output processes for data processing | Human–computer interaction | Computer program

A method, system and computer program for organizing and visualizing display objects within a virtual environment is provided. In one aspect, attributes of display objects define the interaction between display objects according to pre-determined rules, including rules simulating real world mechanics, thereby enabling enriched user interaction. The present invention further provides for the use of piles as an organizational entity for desktop objects. The present invention further provides for fluid interaction techniques for committing actions on display objects in a virtual interface. A number of other interaction and visualization techniques are disclosed.

Owner:GOOGLE LLC

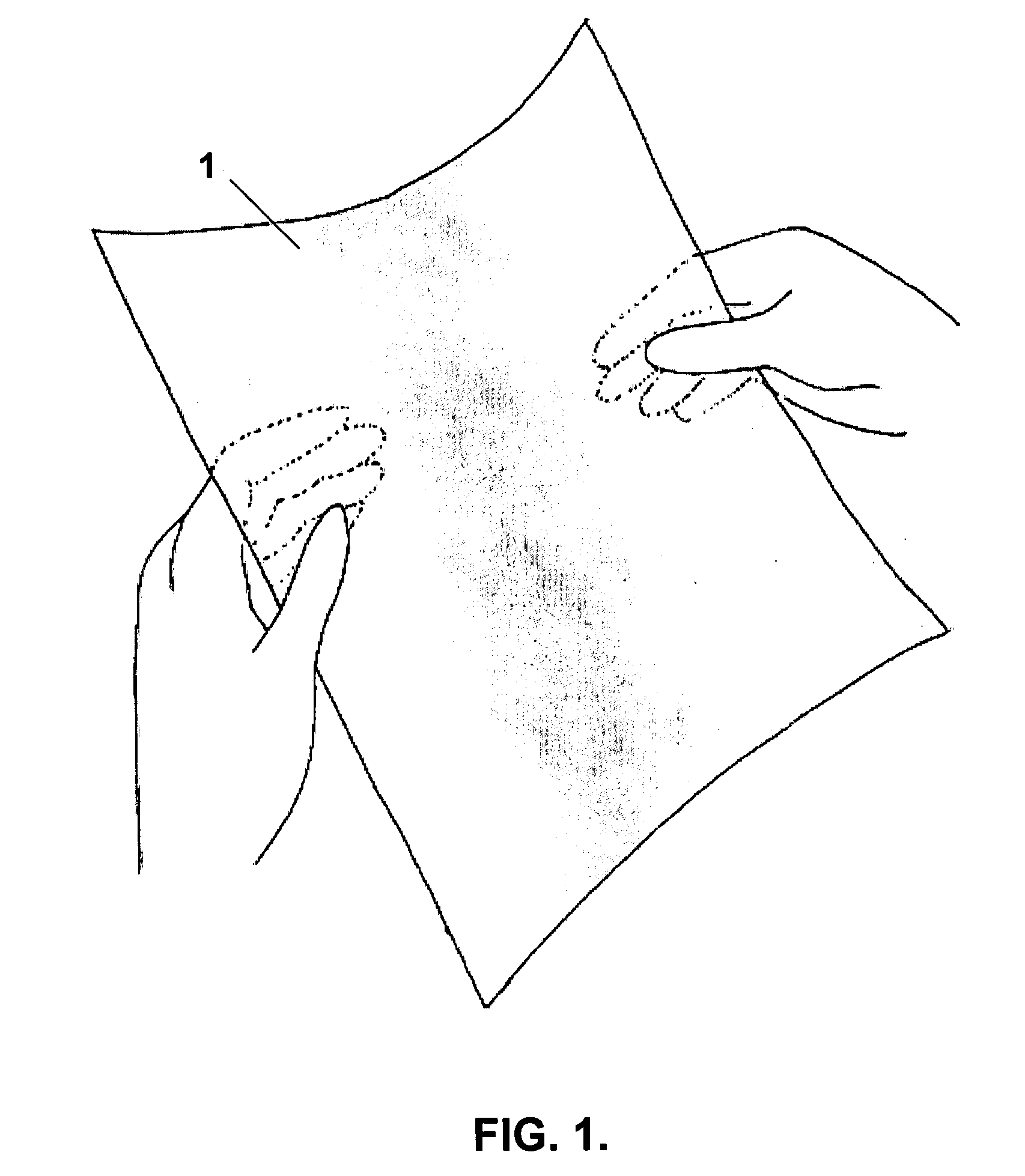

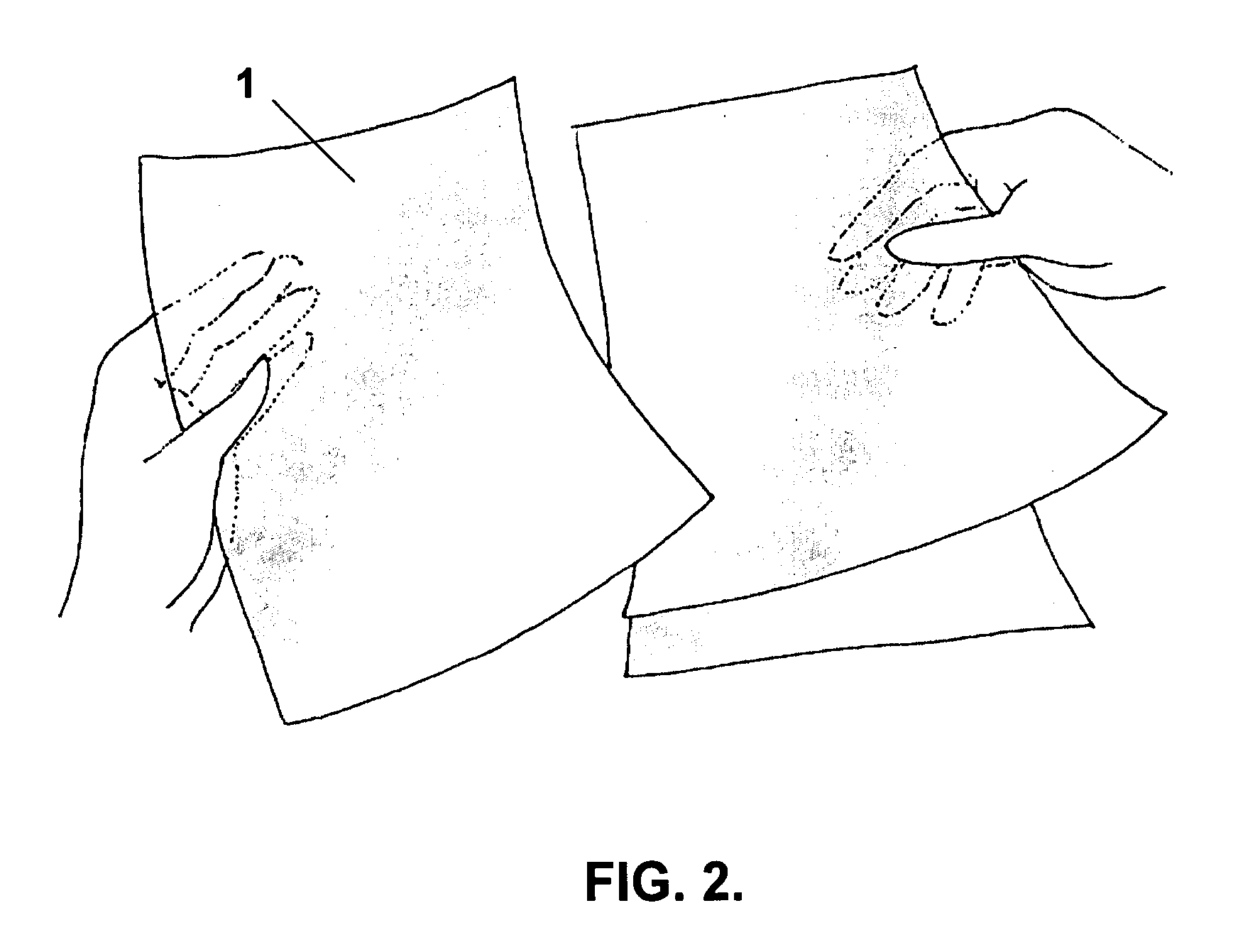

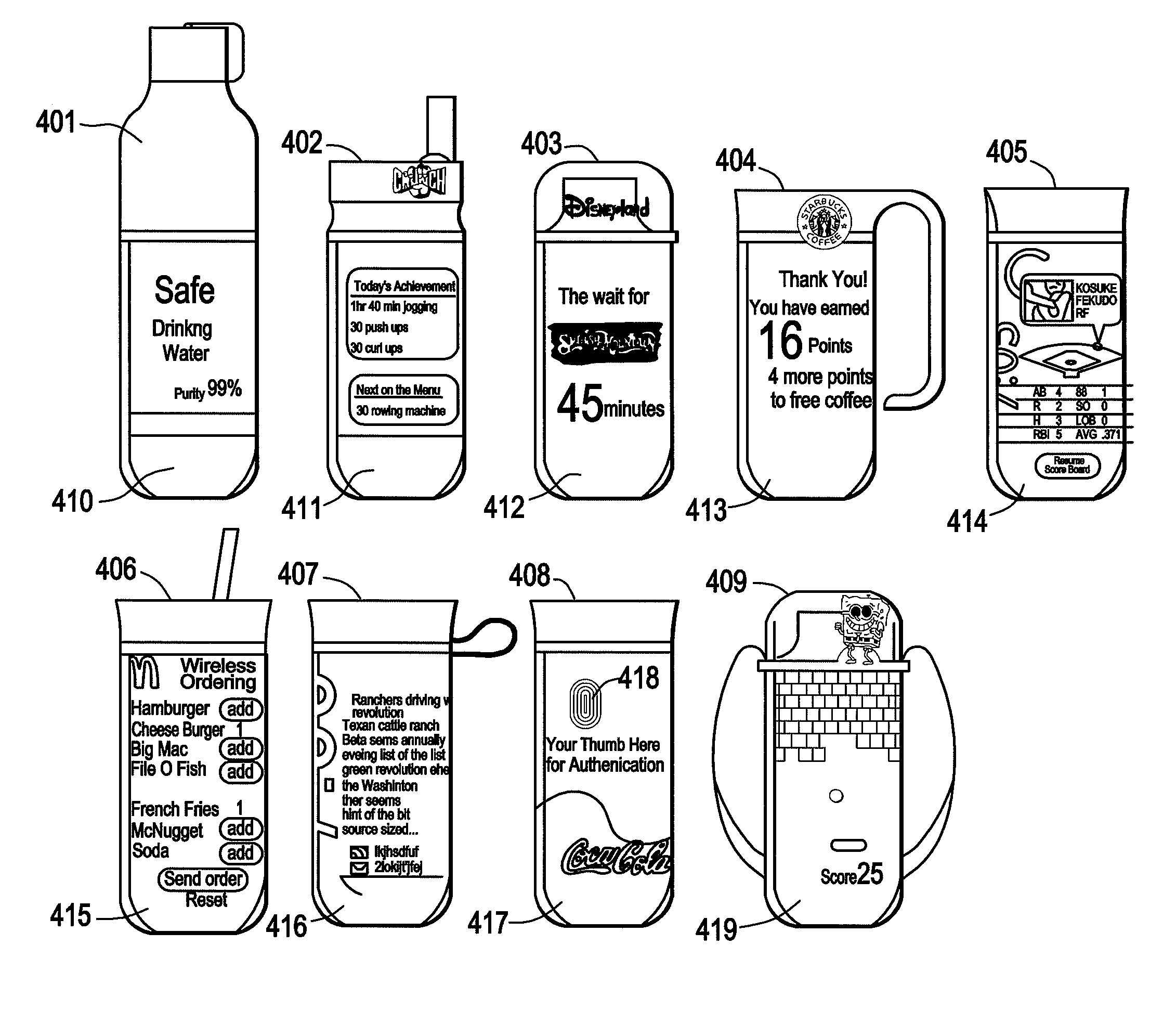

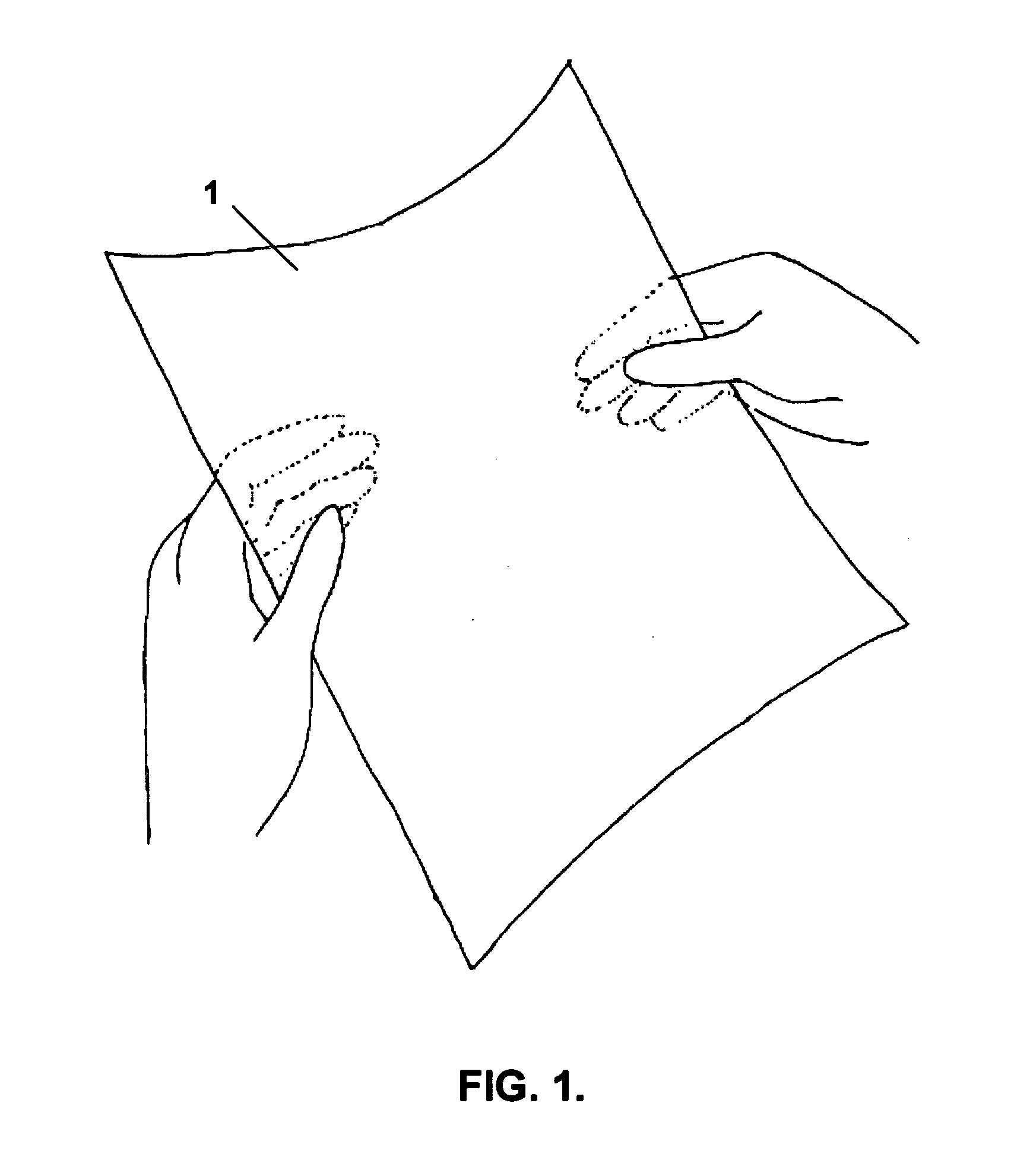

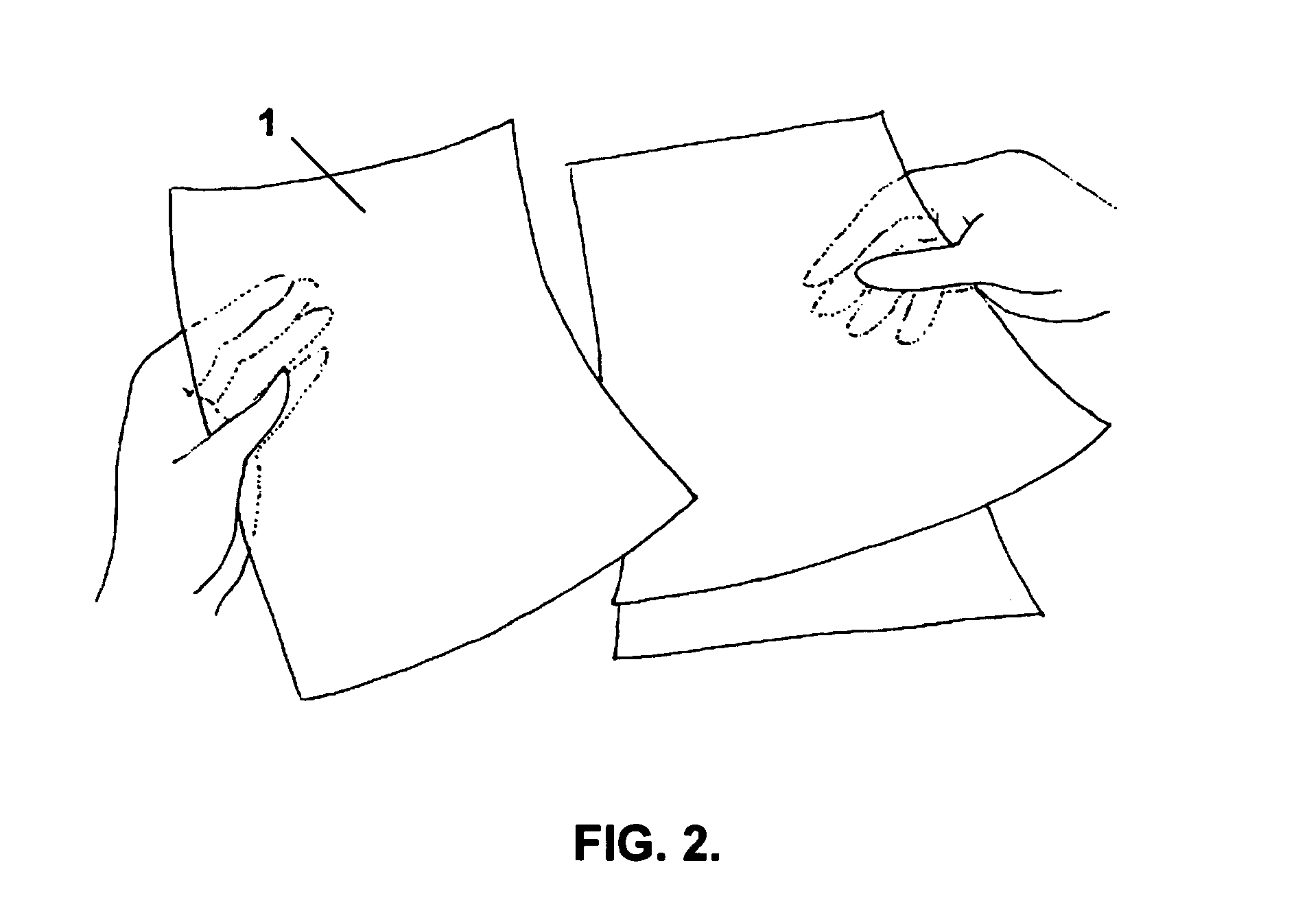

Interaction techniques for flexible displays

Inactive | US20070247422A1 | Input/output for user-computer interaction | Digital data processing details | Graphics | Graphical content

The invention relates to a set of interaction techniques for obtaining input to a computer system based on methods and apparatus for detecting properties of the shape, location and orientation of flexible display surfaces, as determined through manual or gestural interactions of a user with said display surfaces. Such input may be used to alter graphical content and functionality displayed on said surfaces or some other display or computing system.

Owner:XUUK

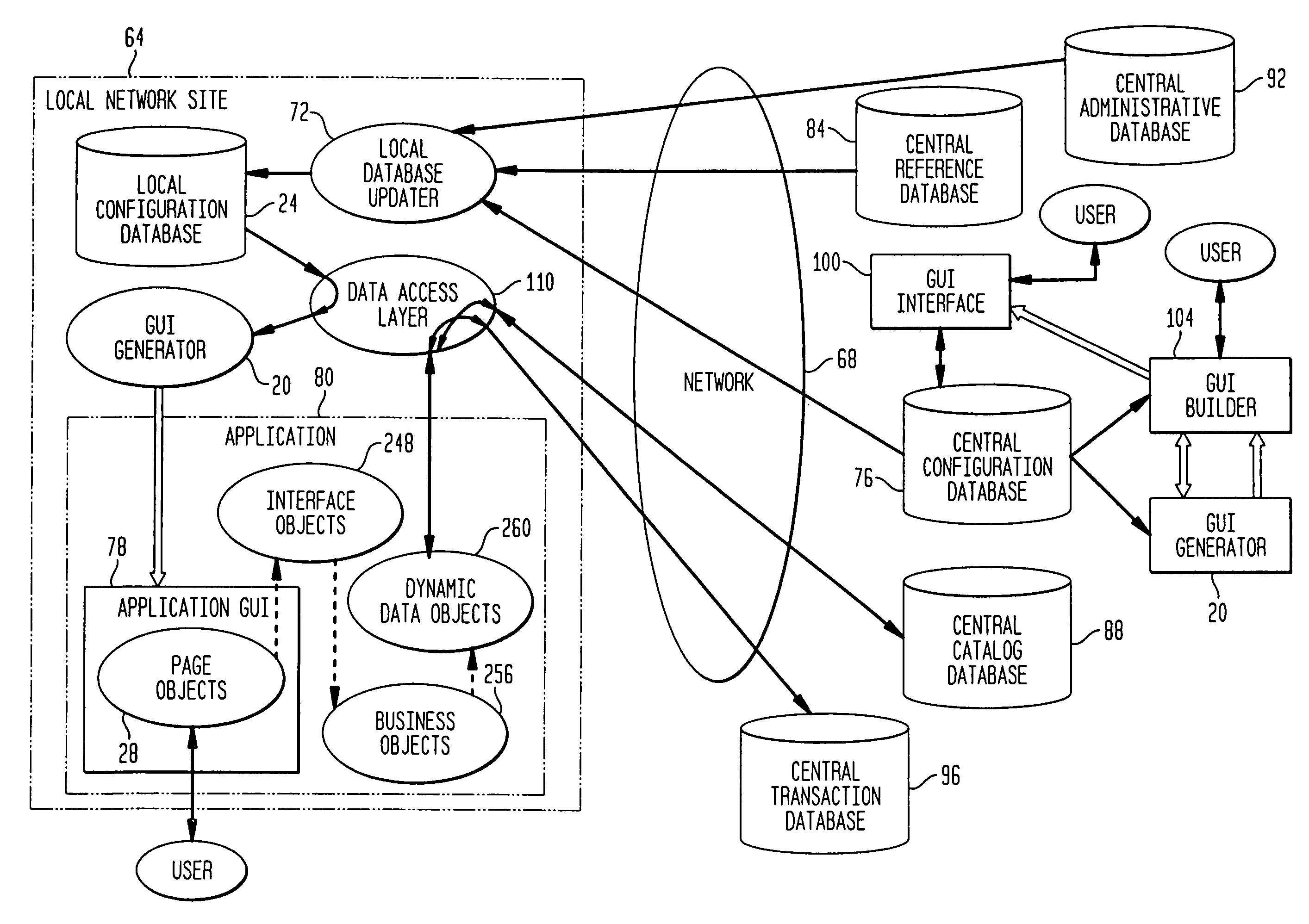

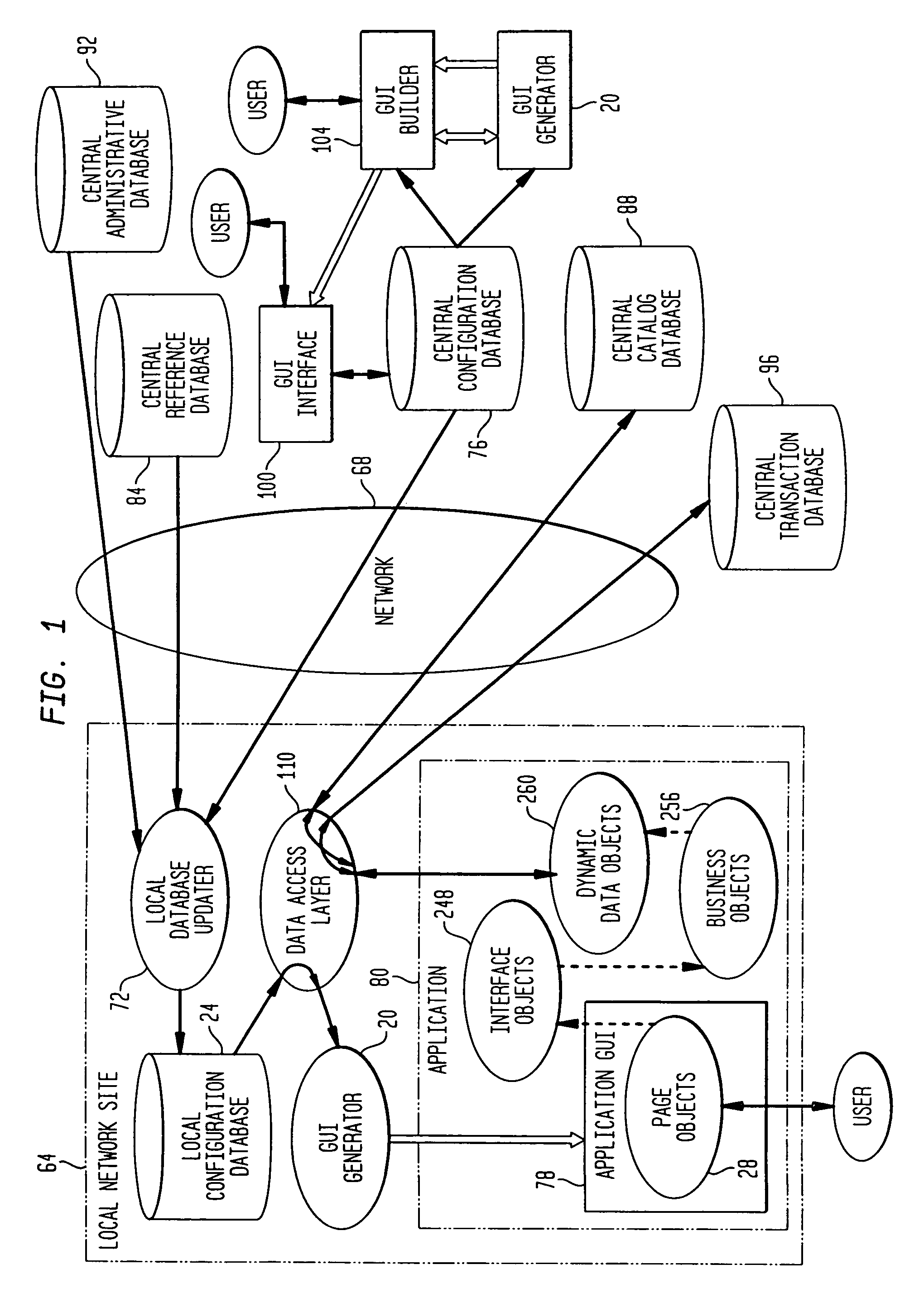

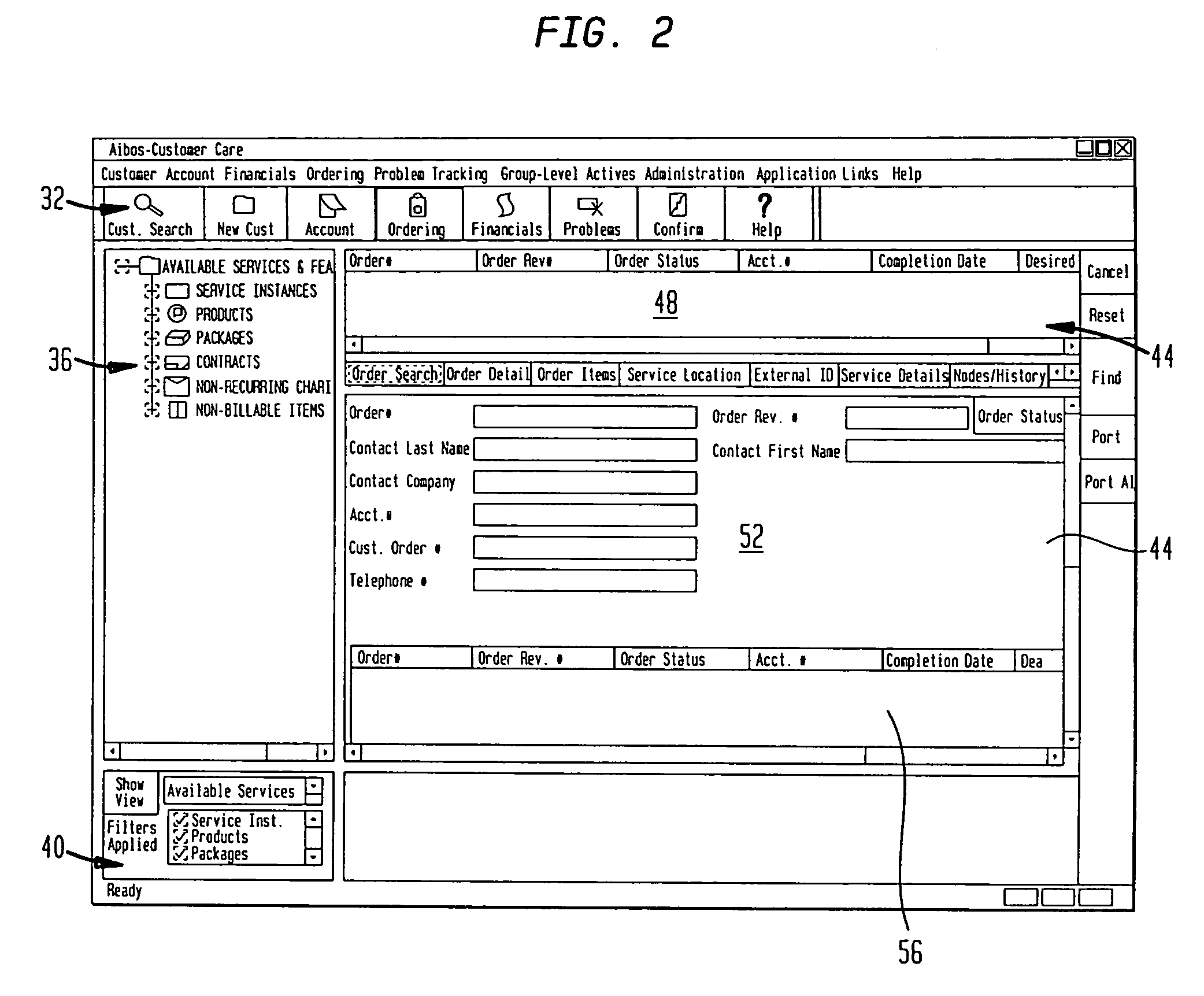

Computer user interfaces that are generated as needed

Inactive | US7039875B2 | Maximal functionality | Specific program execution arrangements | Input/output processes for data processing | Graphics | Computer users

A computer user interface generation system and method is disclosed, wherein computer user interfaces can be generated dynamically during activation of the computer application for which the generated user interface provides user access to the functional features of the application. The generated user interface may be a graphical user interface (GUI) that uses instances of various user interaction techniques. A user interface specification is provided in a configuration database for generating the user interface, and by changing the user interface specification in the configuration database, the user interface for the computer application can be changed during activation of the application.

Owner:ALCATEL-LUCENT USA INC

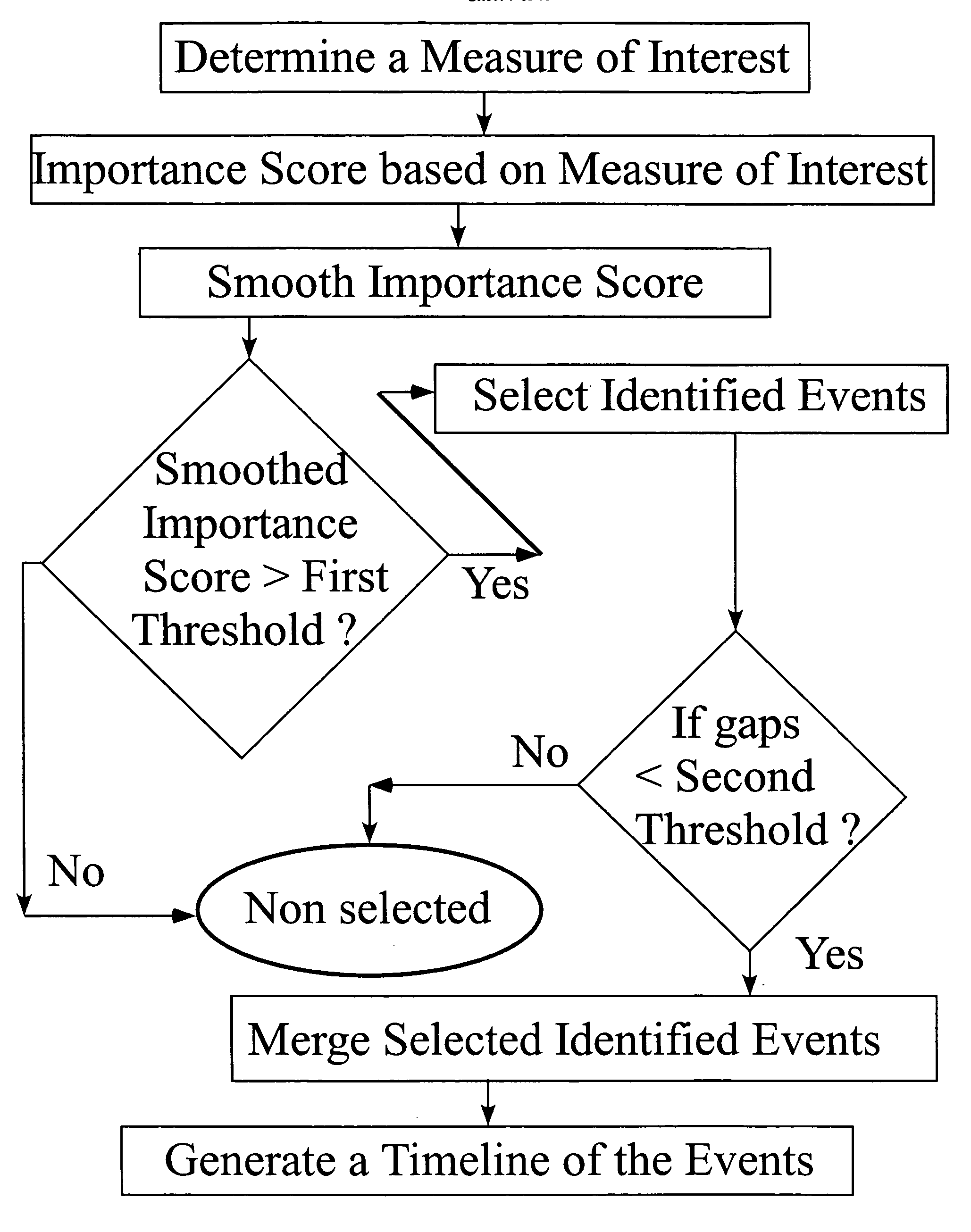

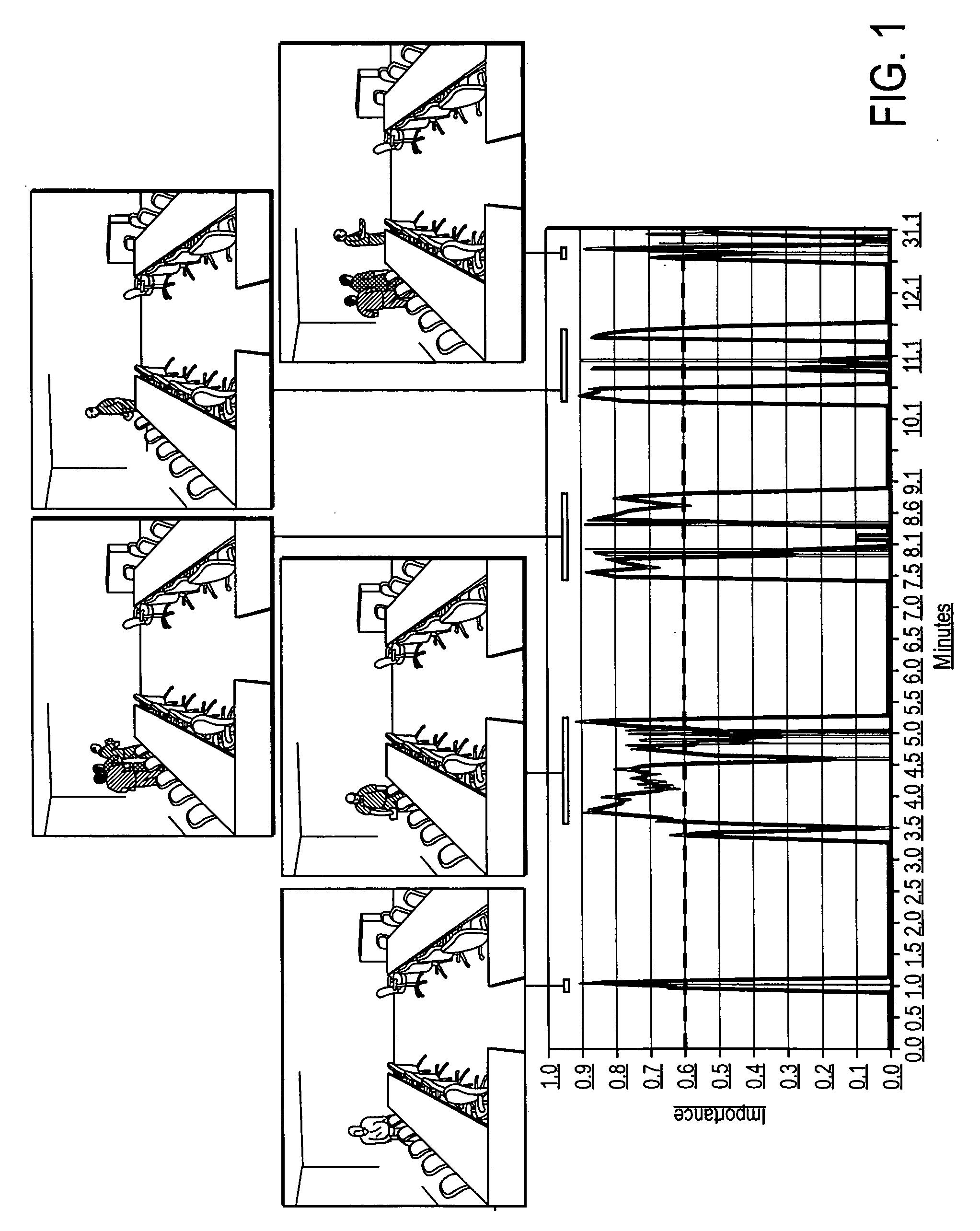

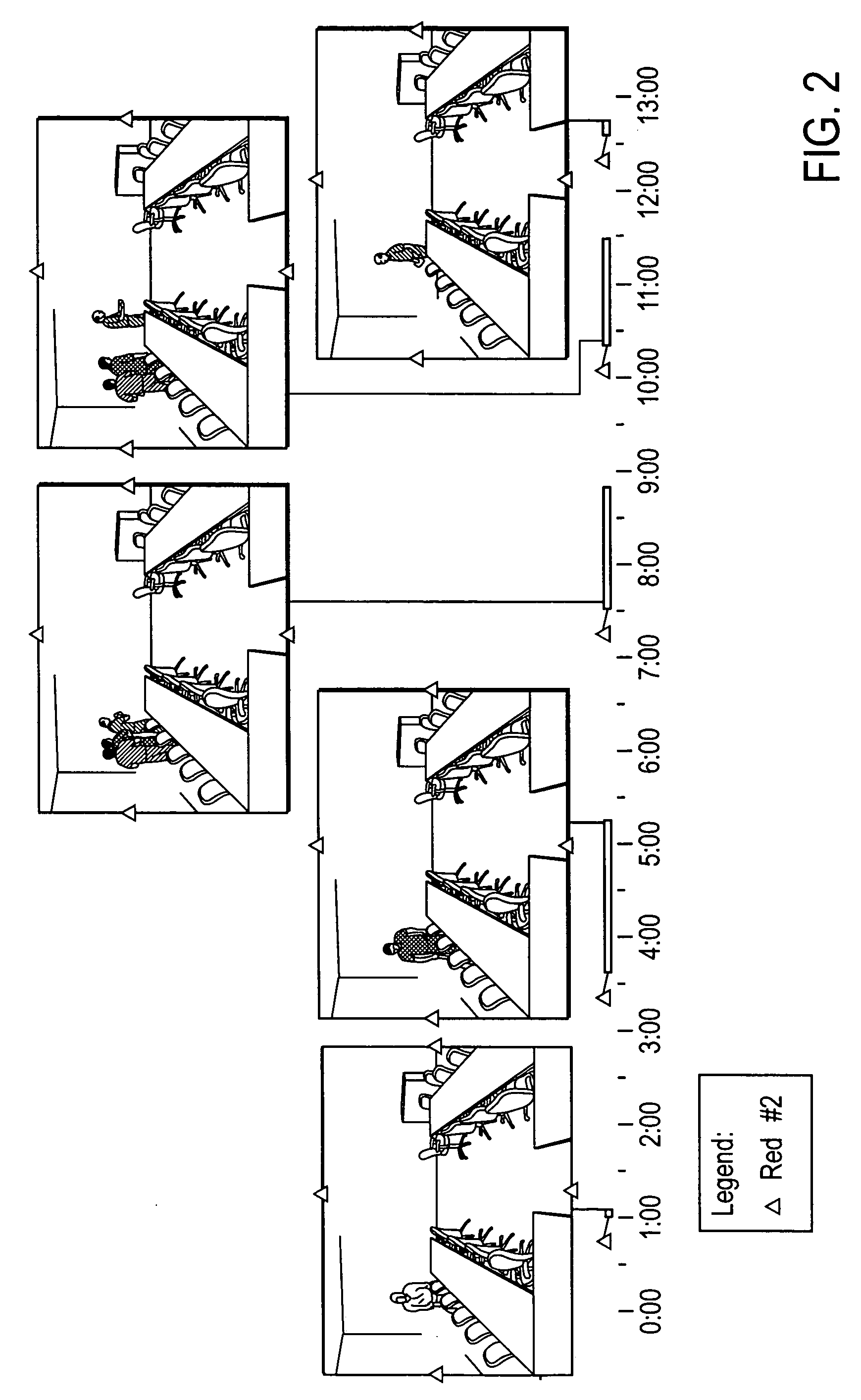

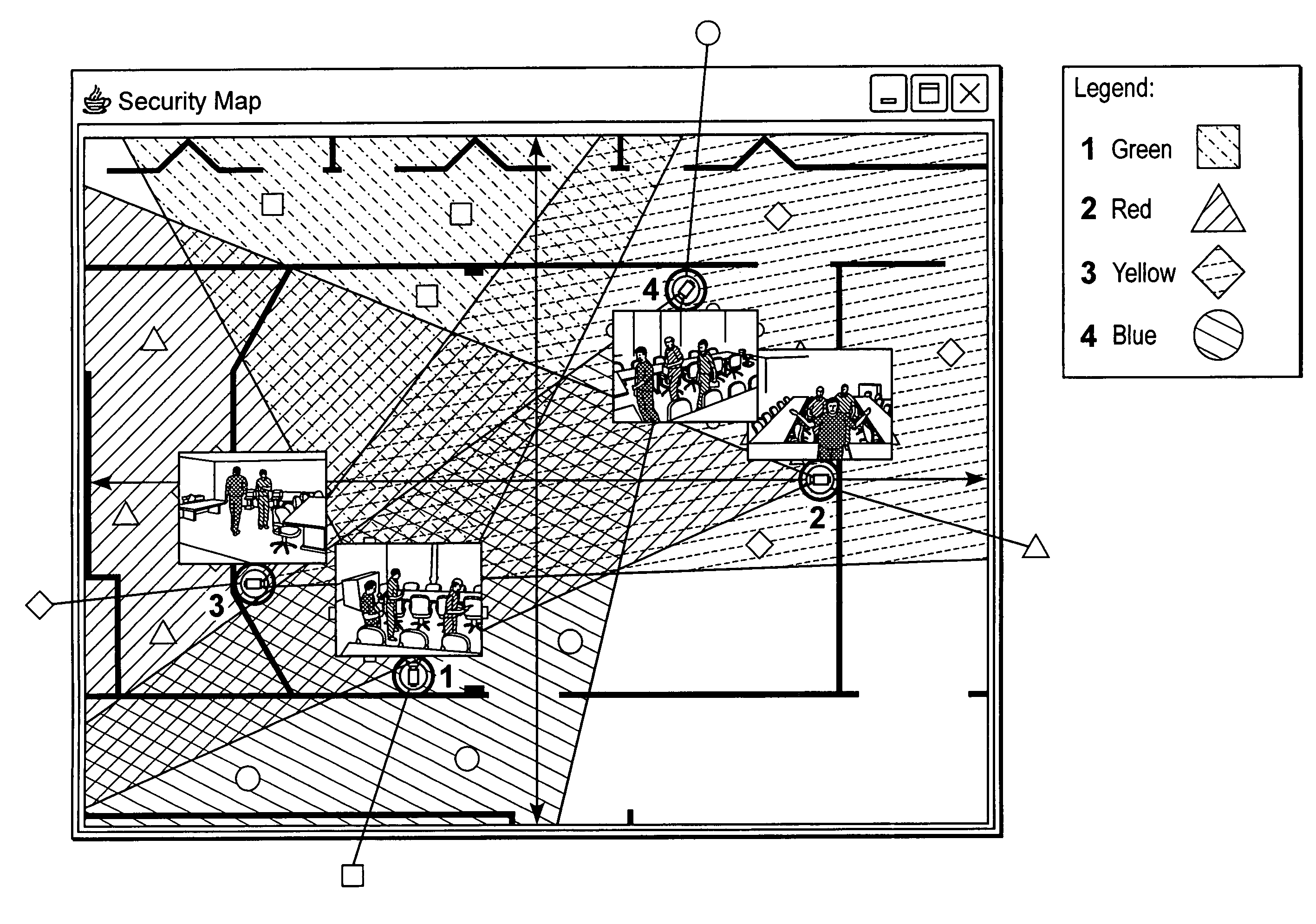

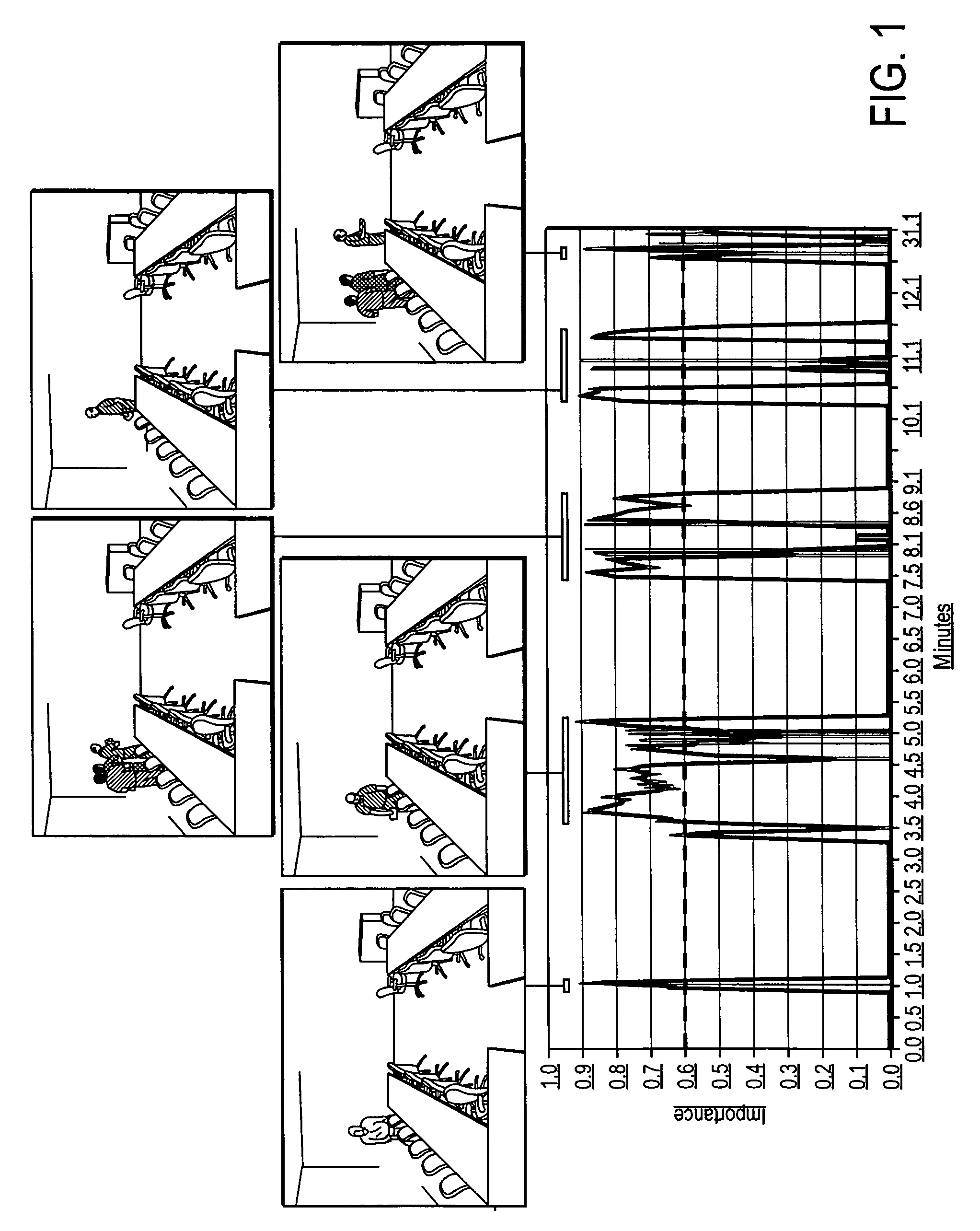

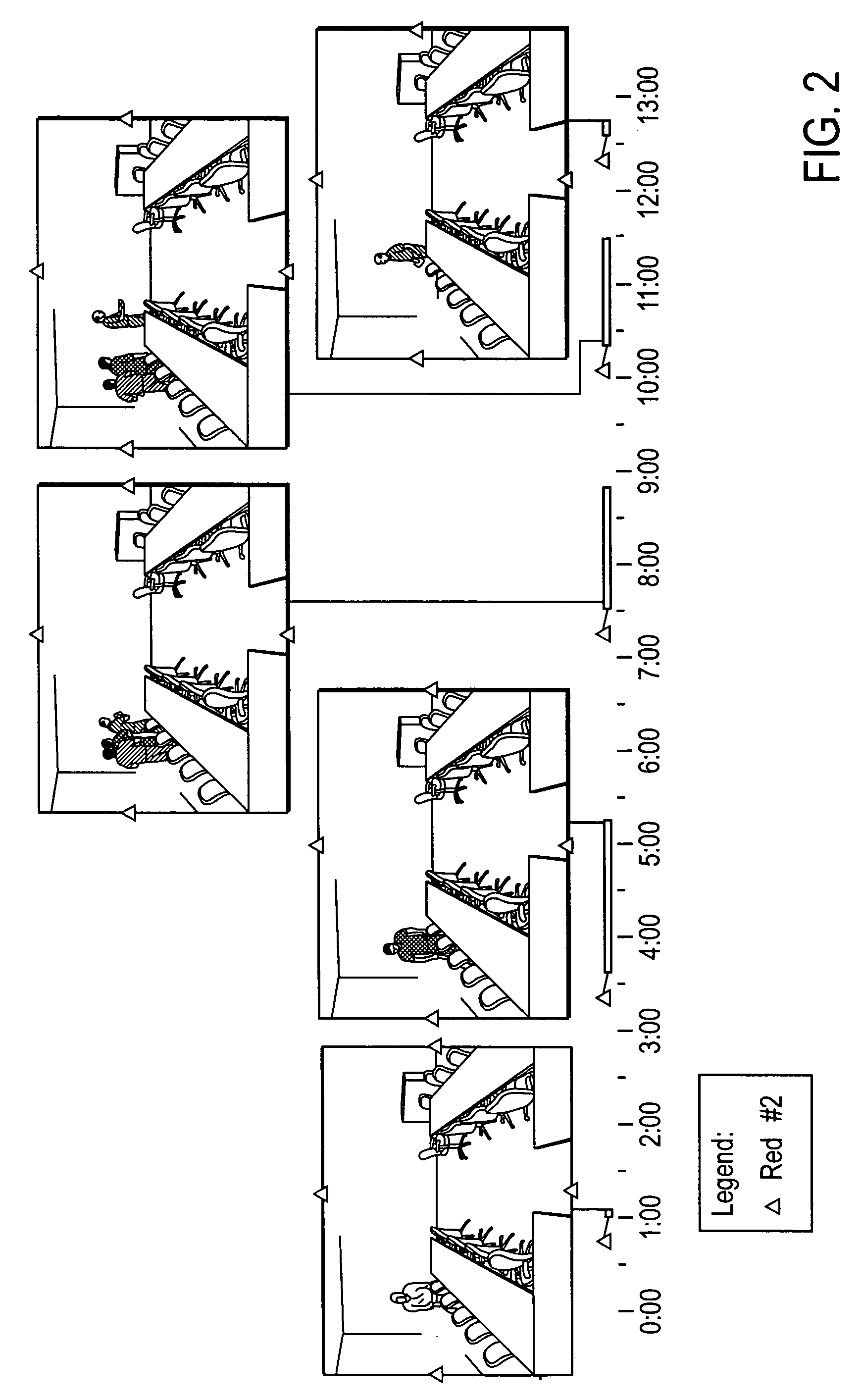

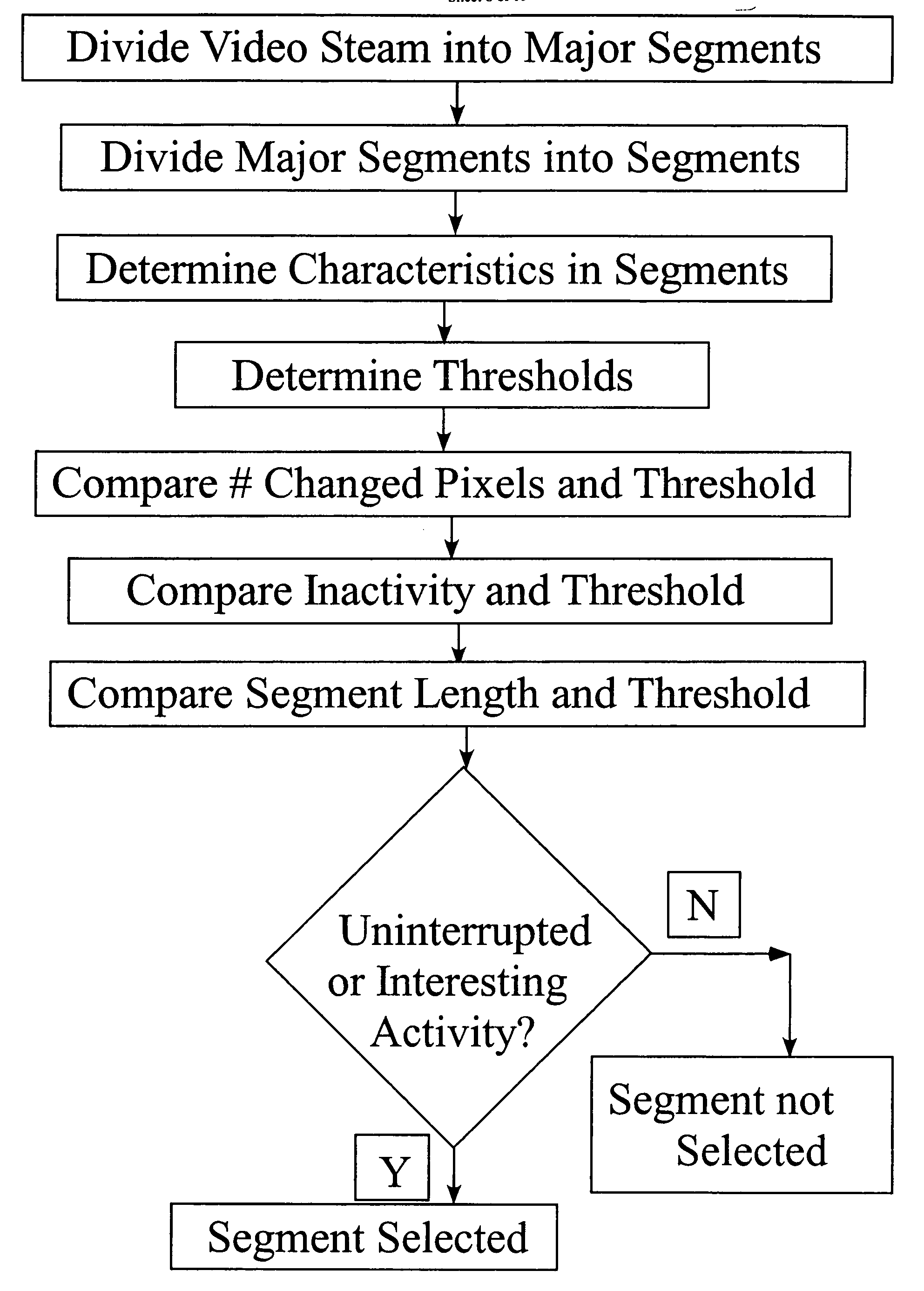

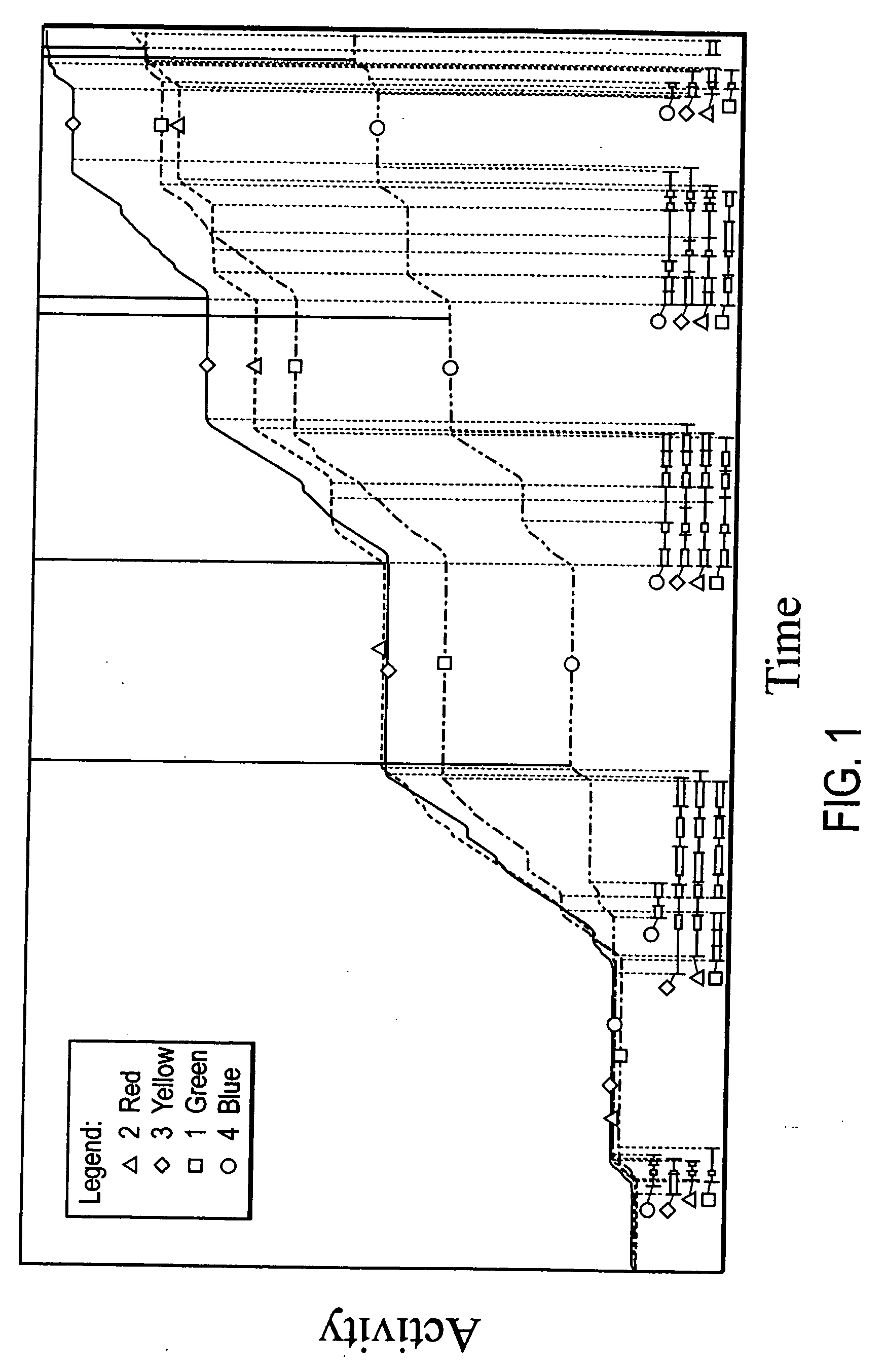

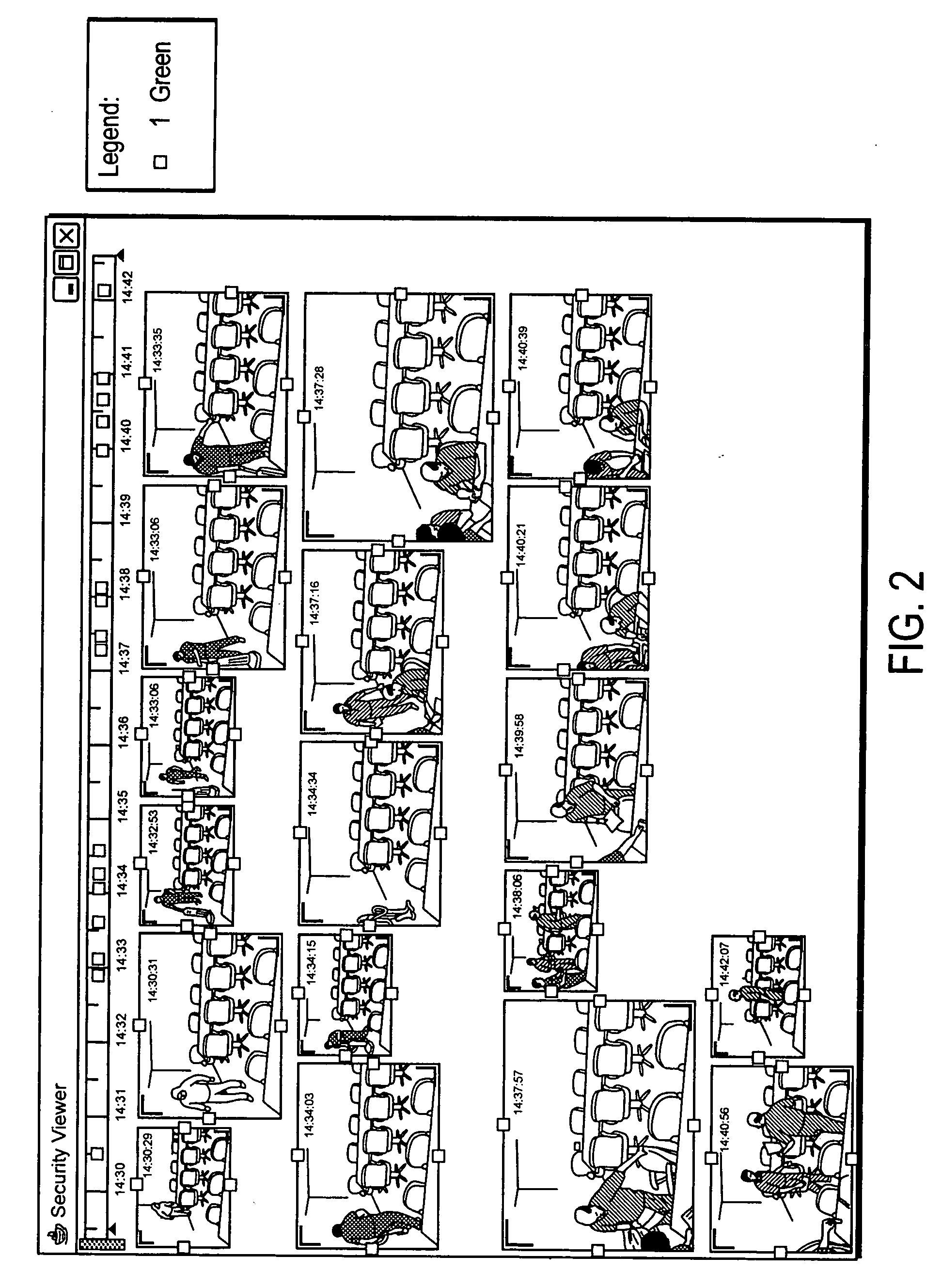

Methods and interfaces for event timeline and logs of video streams

Active | US20060288288A1 | Recording carrier details | Digital data information retrieval | Time line | Fixed position

Techniques for generating timelines and event logs from one or more fixed-position cameras based on the identification of activity in the video are presented. Various embodiments of the invention include an assessment of the importance of the activity, the creation of a timeline identifying events of interest, and interaction techniques for seeing more details of an event or alternate views of the video. In one embodiment, motion detection is used to determine activity in one or more synchronized video streams. In another embodiment, events are determined based on periods of activity and assigned importance assessments based on the activity, important locations in the video streams, and events from other sensors. In different embodiments, the interface consists of a timeline, event log, and map.
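As a rough illustration of turning detected activity into timeline events, the sketch below groups per-frame activity scores into events. The threshold, the gap-merging rule, and the use of summed activity as the importance score are all assumptions; the abstract also mentions important locations and other sensors, which are not modeled here.

```python
def detect_events(activity, threshold=0.2, max_gap=1):
    """Group per-frame activity scores into (start, end, importance) events.

    A frame is active when its score is >= threshold. Active frames separated
    by more than `max_gap` inactive frames start a new event. Importance is
    the summed activity over the event (an illustrative choice only).
    """
    spans = []
    start = None
    last_active = None
    for i, score in enumerate(activity):
        if score < threshold:
            continue
        if start is None:
            start = i
        elif i - last_active - 1 > max_gap:
            spans.append((start, last_active))
            start = i
        last_active = i
    if start is not None:
        spans.append((start, last_active))
    return [(s, e, sum(activity[s:e + 1])) for s, e in spans]
```

Each returned tuple could back one entry in an event log, with `start` and `end` giving its extent on the timeline.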

Owner:FUJIFILM BUSINESS INNOVATION CORP

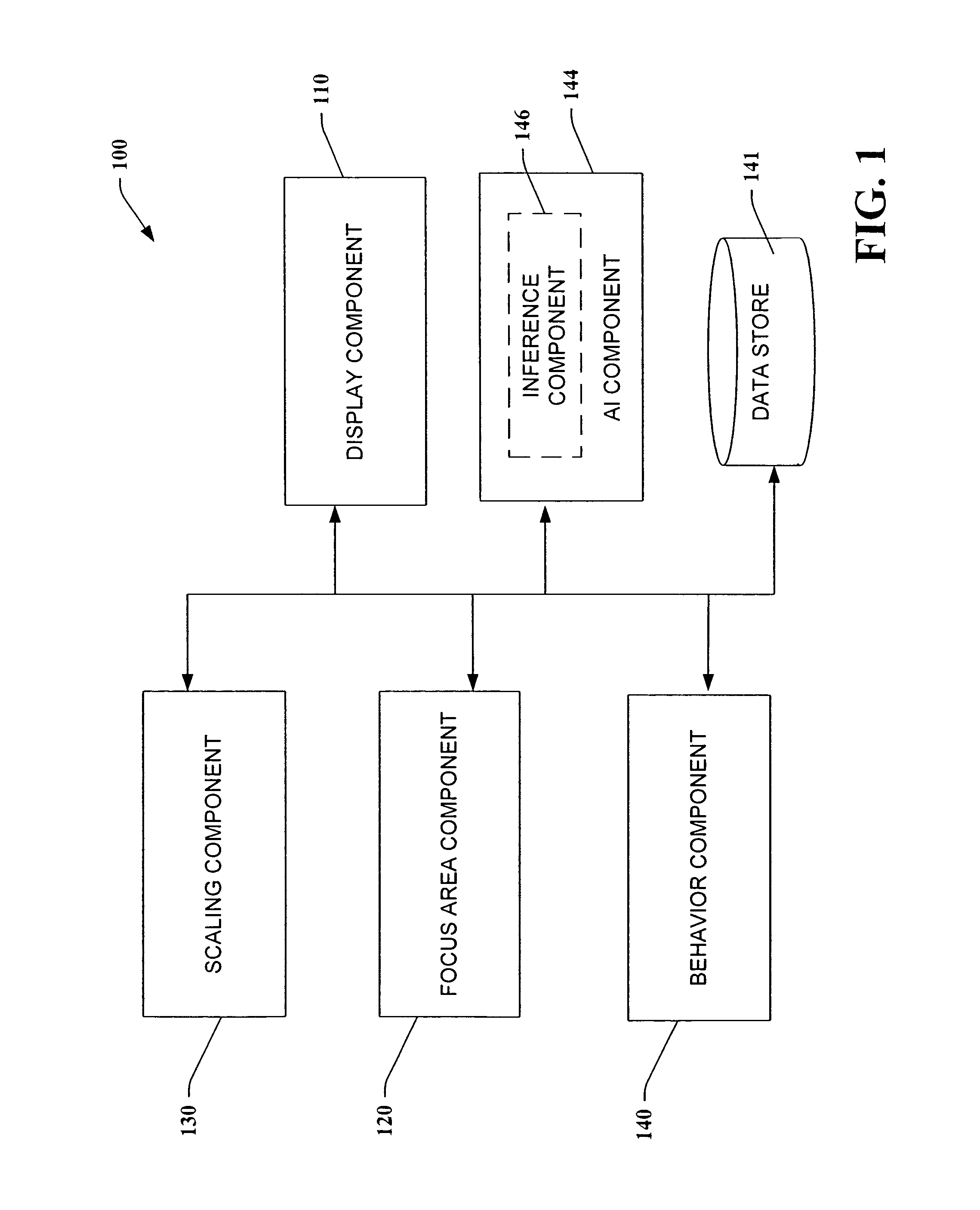

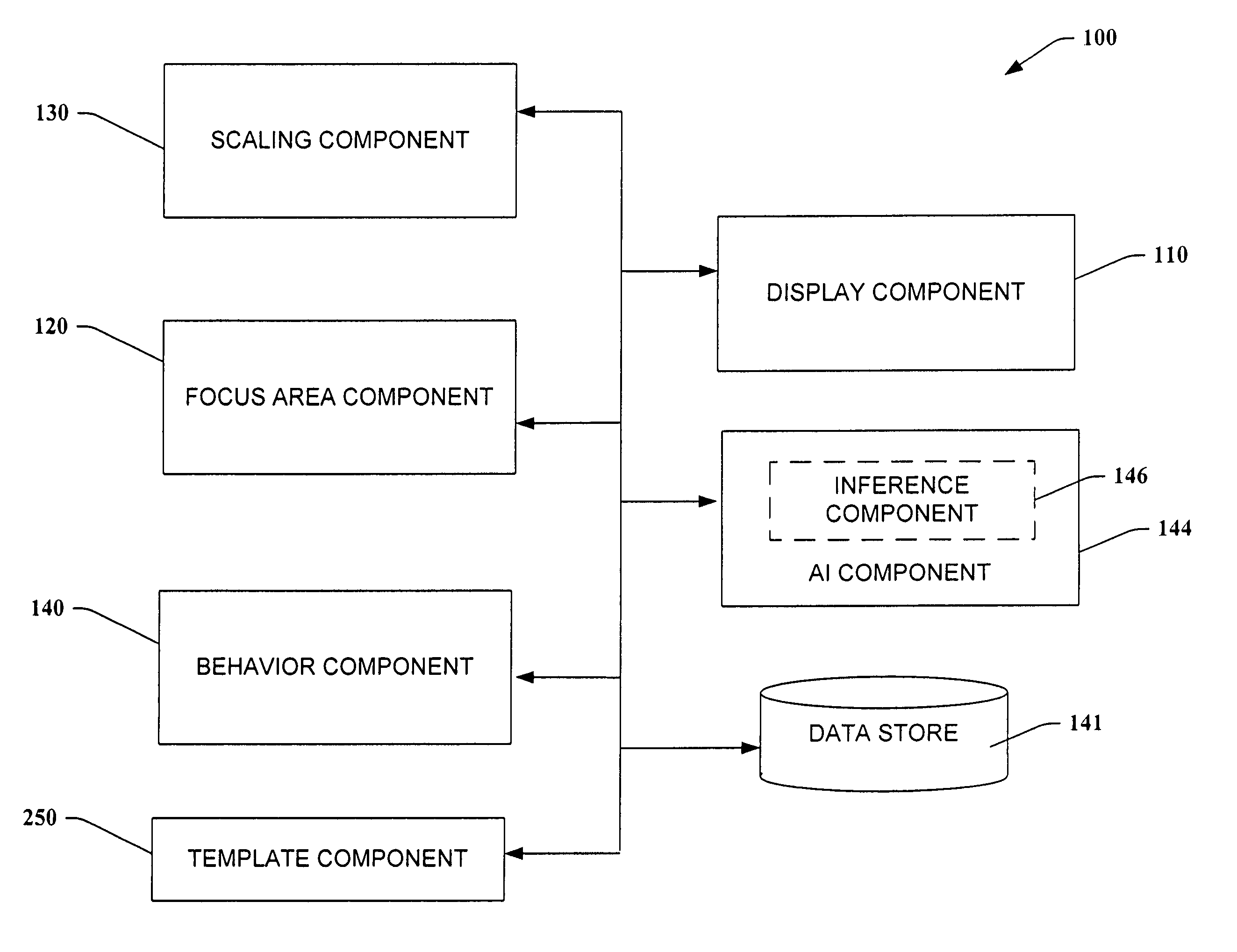

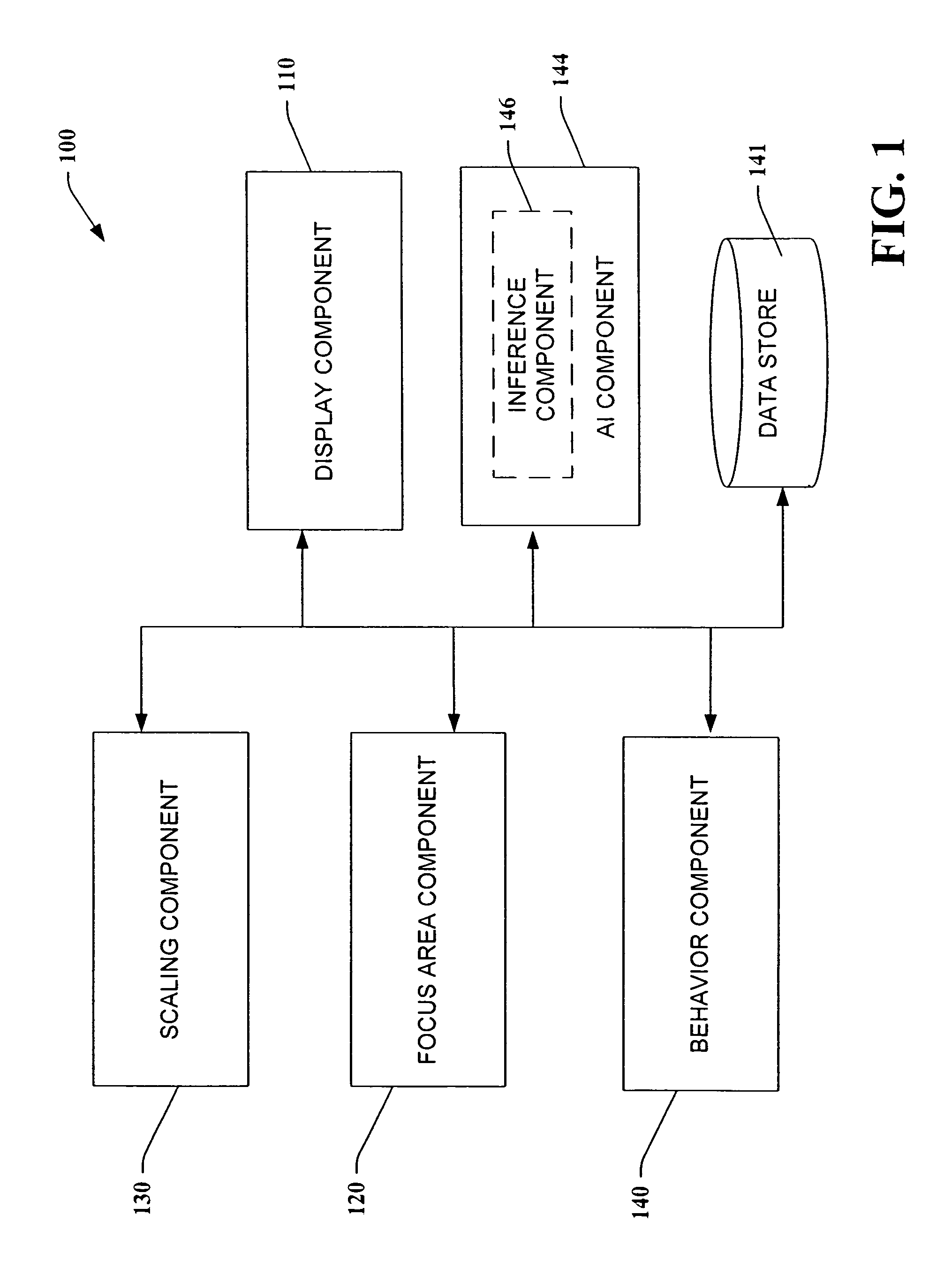

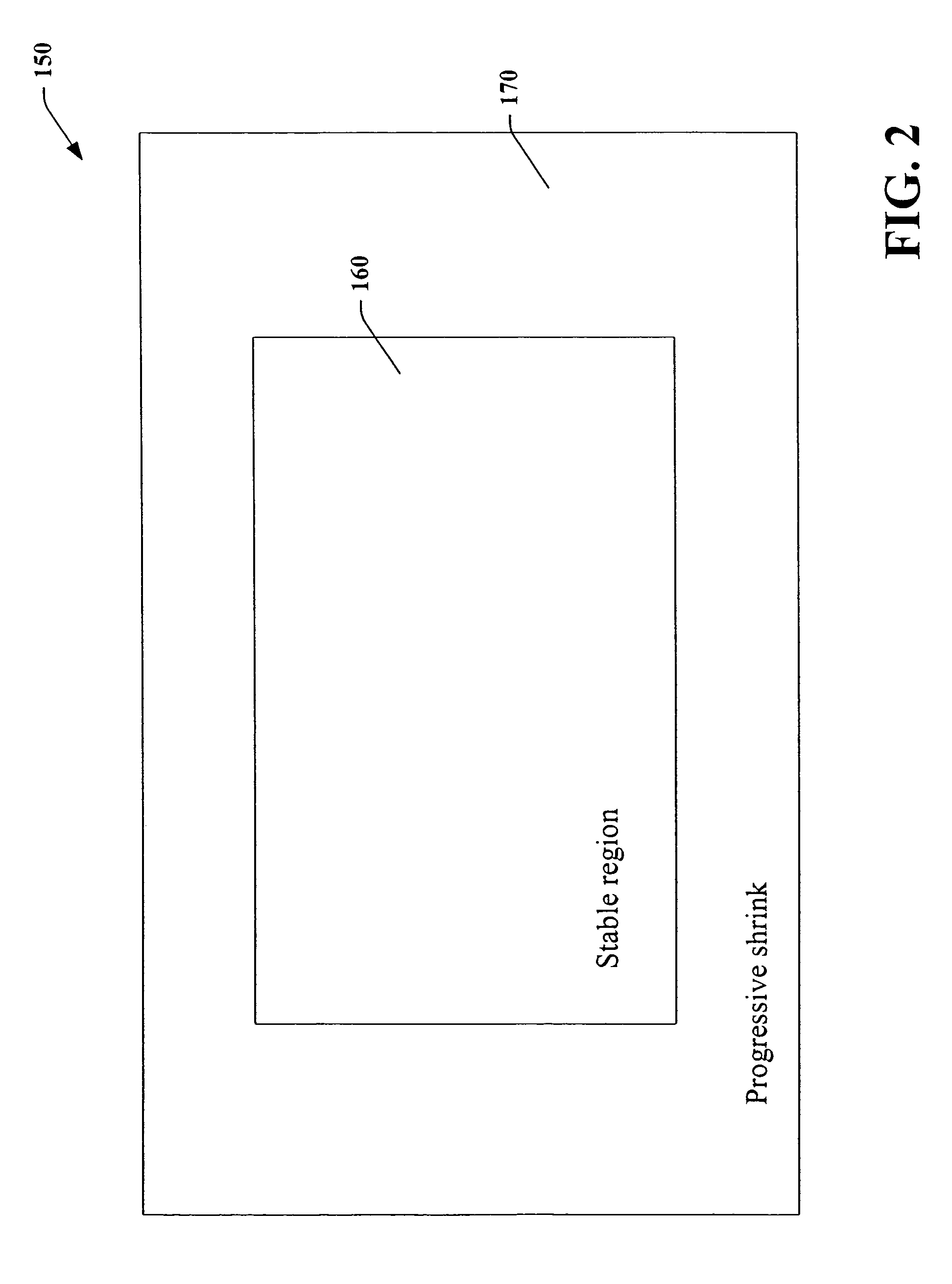

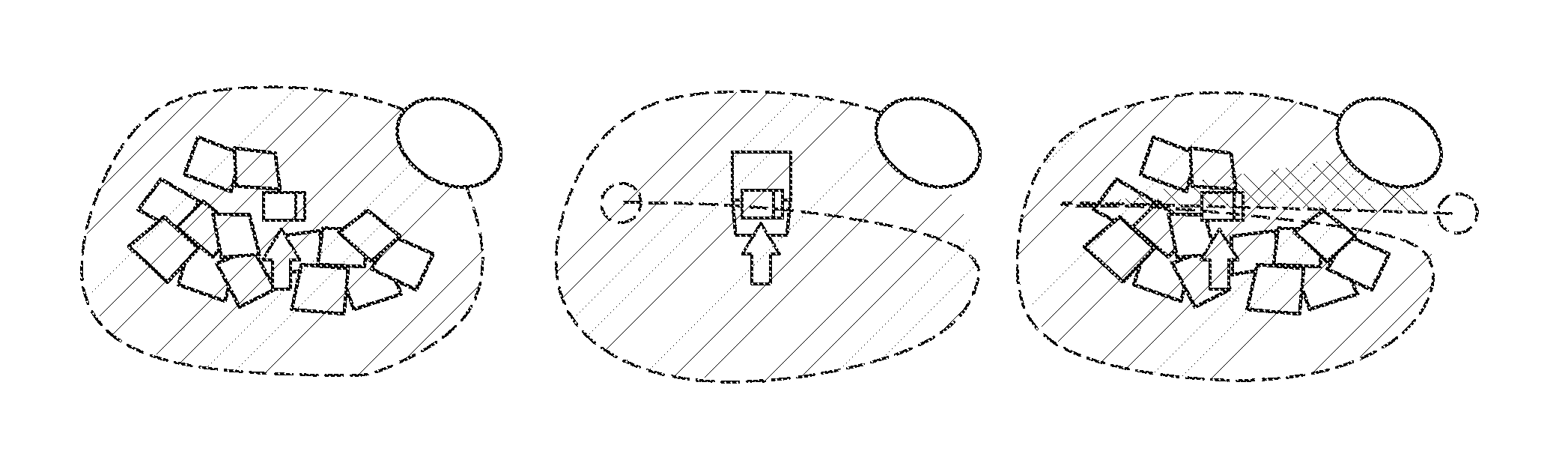

System and method that facilitates computer desktop use via scaling of displayed objects with shifts to the periphery

Active | US7386801B1 | Precise positioning | Reduce decrease | Digital computer details | Digital output to display device | Visibility | Object based

The present invention relates to a system that facilitates multi-tasking in a computing environment. A focus area component defines a focus area within a display space, the focus area occupying a subset area of the display space area. A scaling component scales display objects as a function of proximity to the focus area, and a behavior modification component modifies respective behavior of the display objects as a function of their location in the display space. More particularly, the subject invention provides for interaction technique(s) and user interface(s) in connection with managing display objects on a display surface. One aspect of the invention defines a central focus area where the display objects are displayed and behave as usual, and a periphery outside the focus area where the display objects are reduced in size based on their location, getting smaller as they near an edge of the display surface so that many more objects can remain visible. In addition or alternatively, the objects can fade as they move toward an edge, with fading increasing as a function of distance from the focus area and/or use of the object and/or priority of the object. Objects in the periphery can also be modified to have different interaction behavior (e.g., lower refresh rate, fading, reconfigured to display sub-objects based on relevance and/or visibility, static, etc.) as they may be too small for standard rendering. The methods can provide a flexible, scalable surface when coupled with automated policies for moving objects into the periphery, in response to the introduction of new objects or the resizing of pre-existing objects by a user or autonomous process.
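One way to picture scaling as a function of proximity to the focus area is the sketch below. The linear falloff, the `min_scale` floor, and all names are assumptions chosen for illustration; the abstract does not specify a particular scaling function.

```python
from dataclasses import dataclass


@dataclass
class Rect:
    left: float
    top: float
    right: float
    bottom: float


def distance_outside(focus: Rect, x: float, y: float) -> float:
    """Euclidean distance from (x, y) to the focus rectangle; 0 if inside."""
    dx = max(focus.left - x, 0.0, x - focus.right)
    dy = max(focus.top - y, 0.0, y - focus.bottom)
    return (dx * dx + dy * dy) ** 0.5


def scale_for(display: Rect, focus: Rect, x: float, y: float,
              min_scale: float = 0.2) -> float:
    """Return 1.0 inside the focus area, shrinking toward min_scale at the edge."""
    d = distance_outside(focus, x, y)
    if d == 0:
        return 1.0
    # Distance from the object to the nearest edge of the display surface.
    edge = min(x - display.left, display.right - x,
               y - display.top, display.bottom - y)
    edge = max(edge, 0.0)
    t = d / (d + edge)  # 0 at the focus boundary, 1 at the display edge
    return 1.0 - t * (1.0 - min_scale)
```

The same `t` value could drive fading or a reduced refresh rate for peripheral objects, since the abstract describes those behaviors as also depending on location.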

Owner:MICROSOFT TECH LICENSING LLC

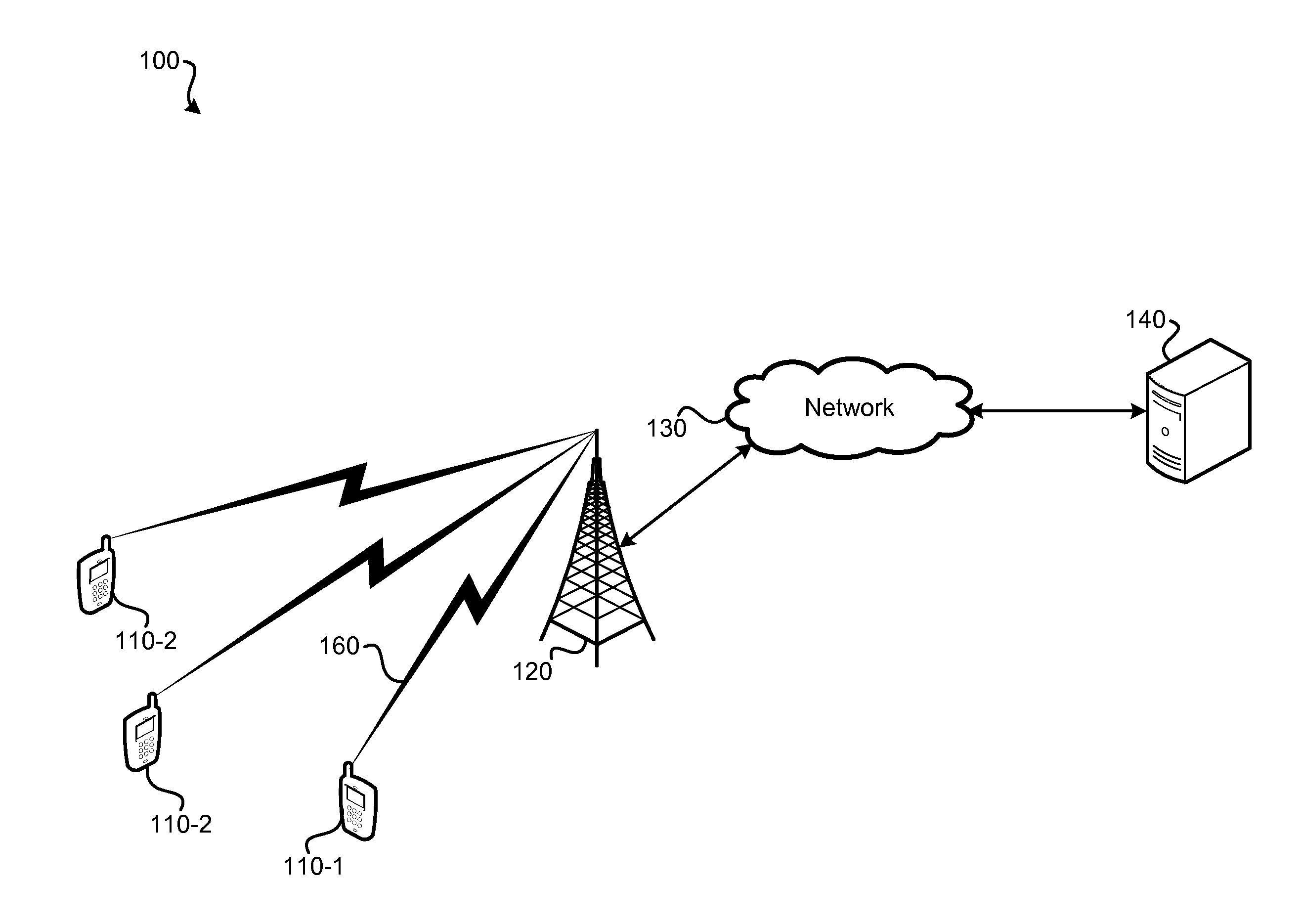

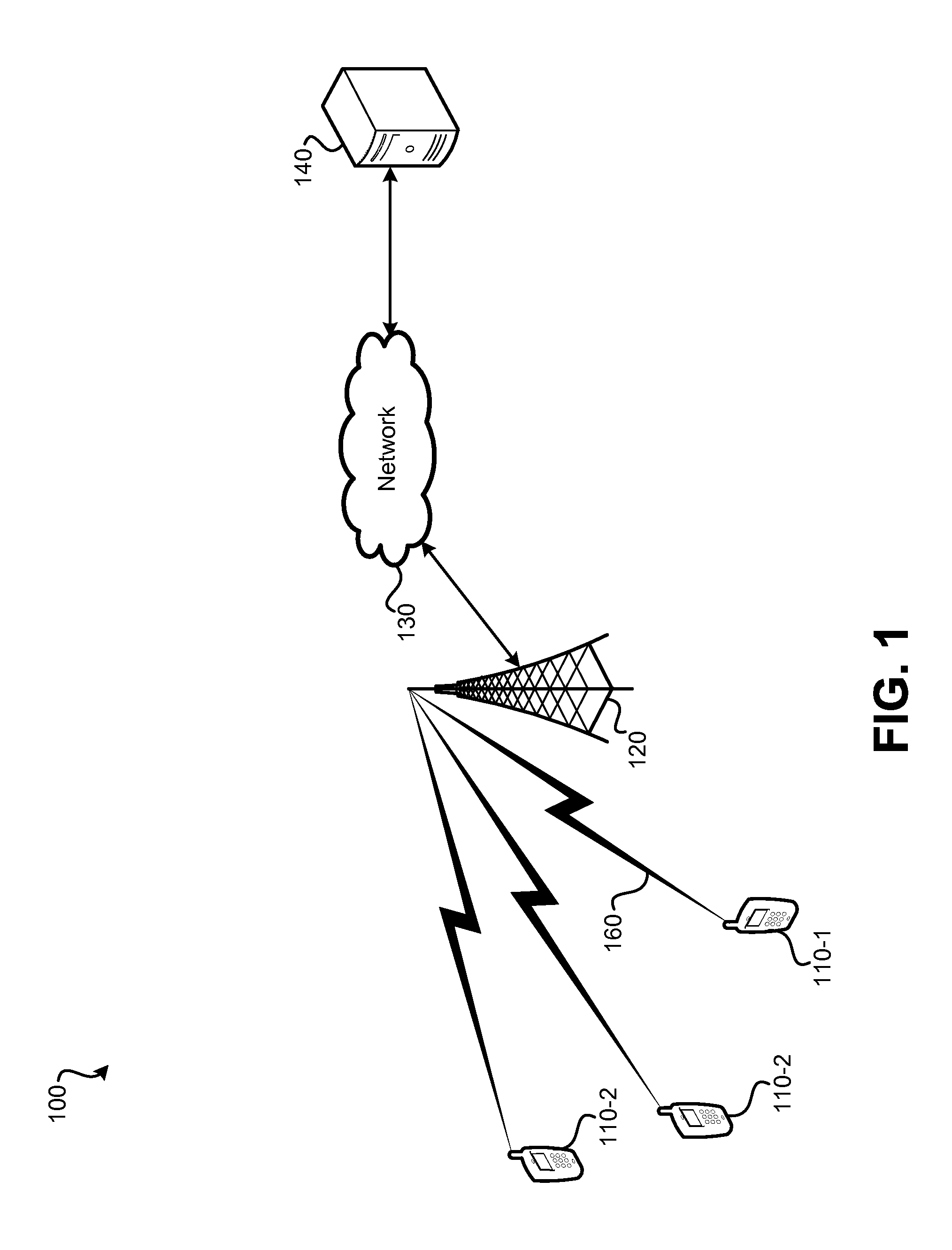

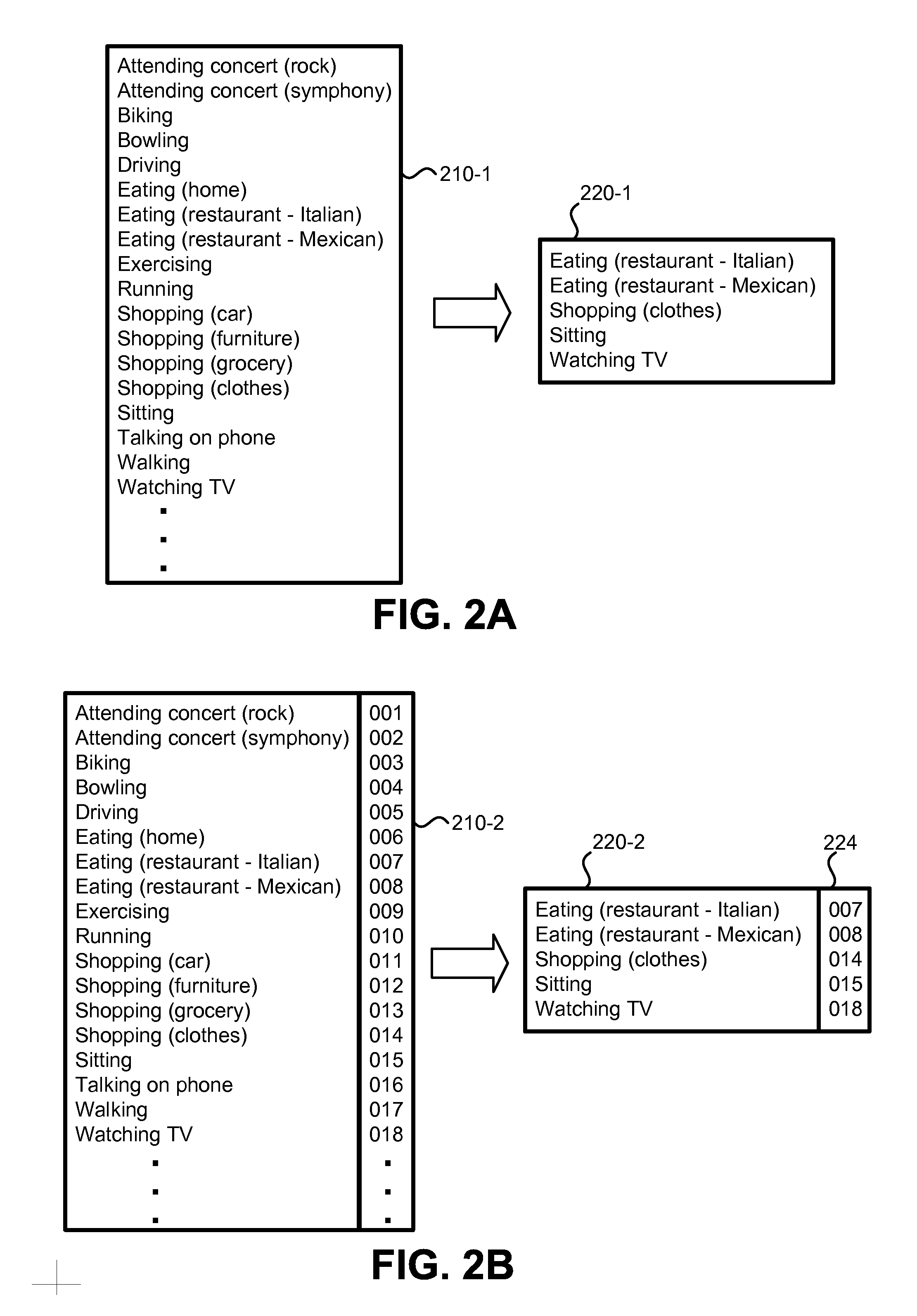

Becoming more "aware" through use of crowdsourcing and device interaction

Inactive | US20130084882A1 | Reduce power consumption | High speed | Location information based service | Transmission | Activity list | Mobile device

Techniques disclosed herein provide for assisted context determination through the use of one or more servers remote to a mobile device. The one or more servers can receive location and/or other information from the mobile device and select, from a list of possible activities, a smaller list of activities a mobile device user is likely engaged in. The one or more servers can return the smaller list to the mobile device, which can use the smaller list to make a faster context determination. In creating the smaller list, the one or more servers can utilize information regarding a region in which the mobile device is located, which can be updated and modified using information received from mobile devices. Furthermore, the one or more servers can gather and share information from nearby mobile devices, enabling a mobile device to use information from nearby mobile devices to facilitate a context determination.

Owner:QUALCOMM INC

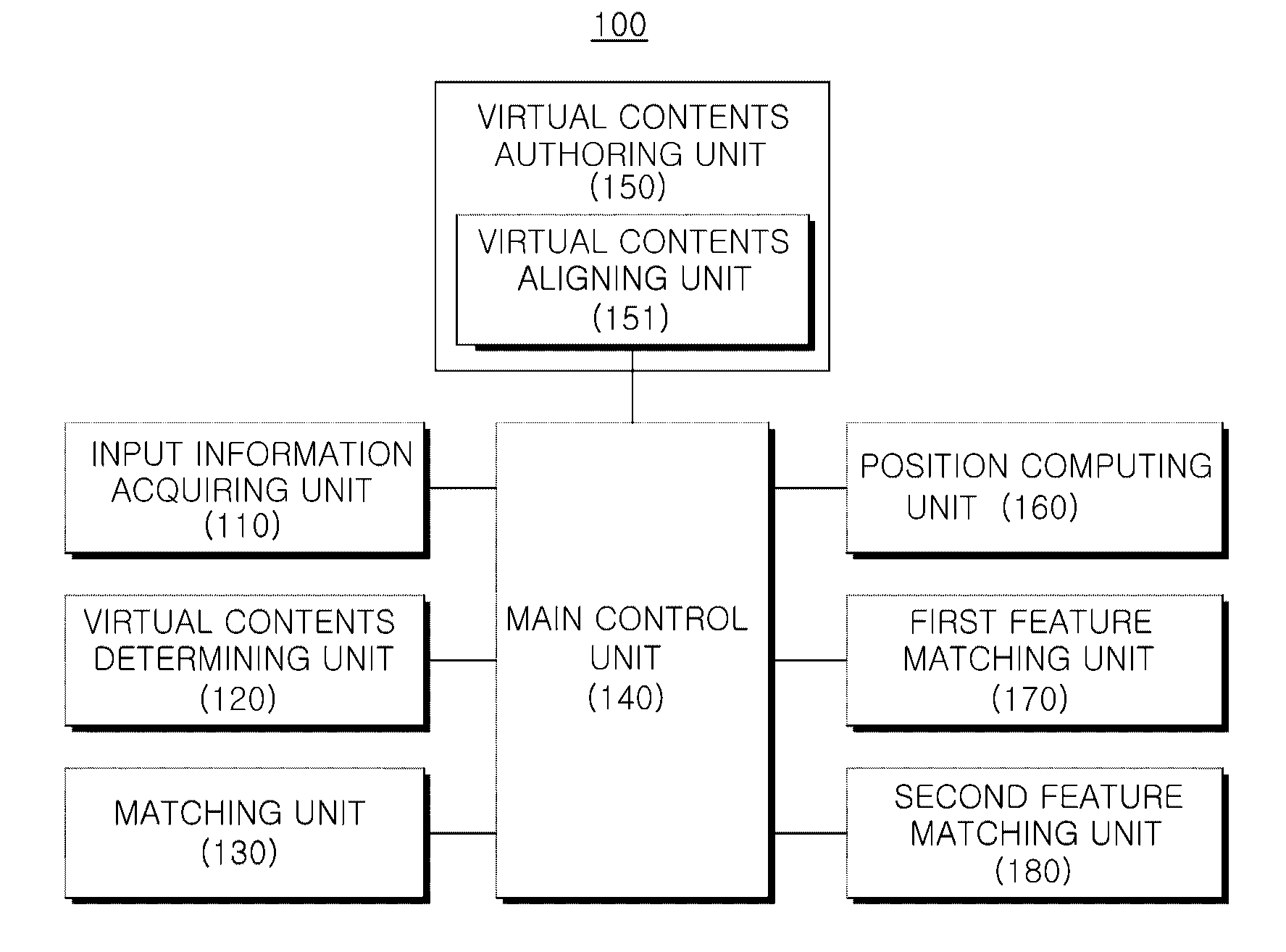

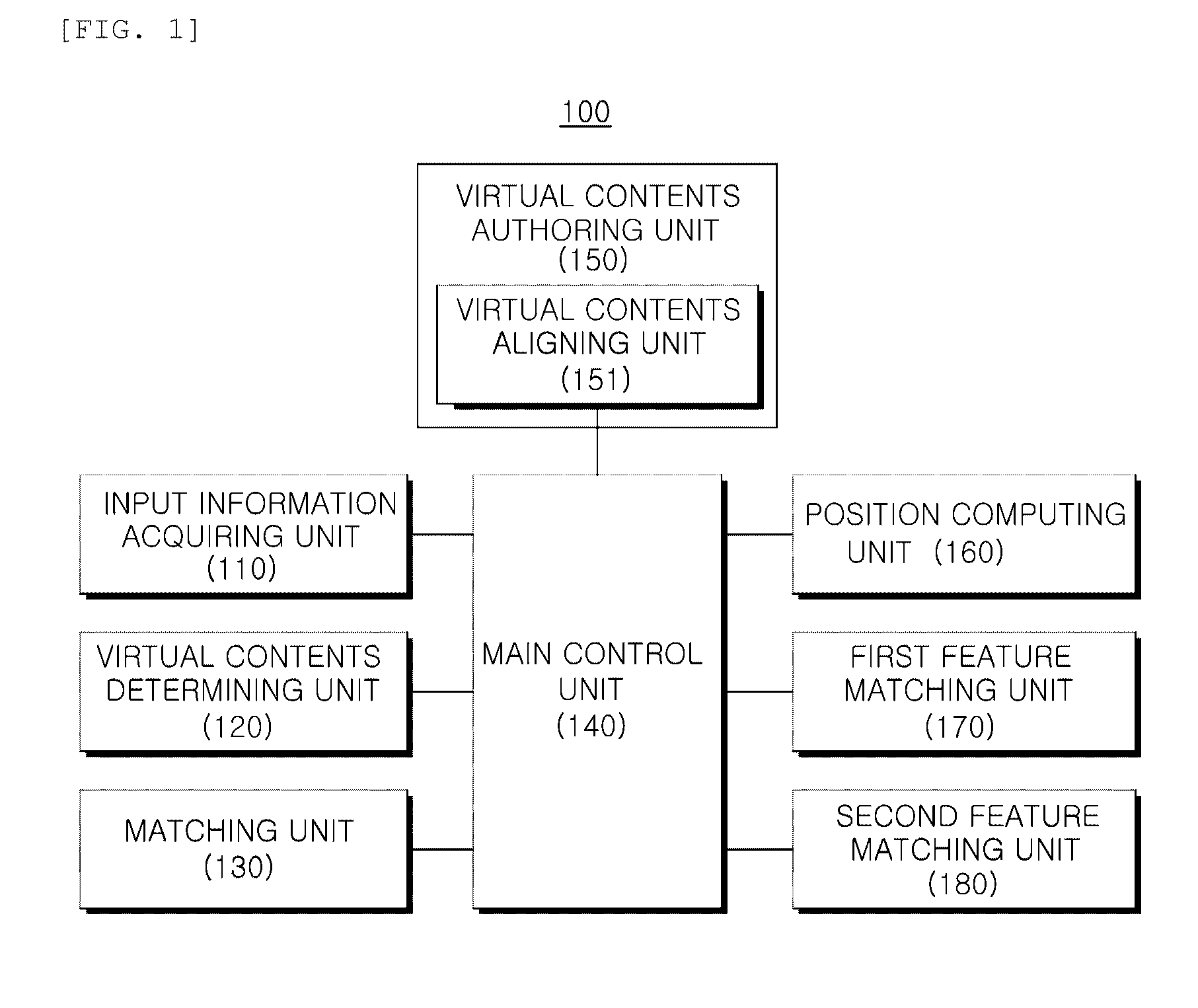

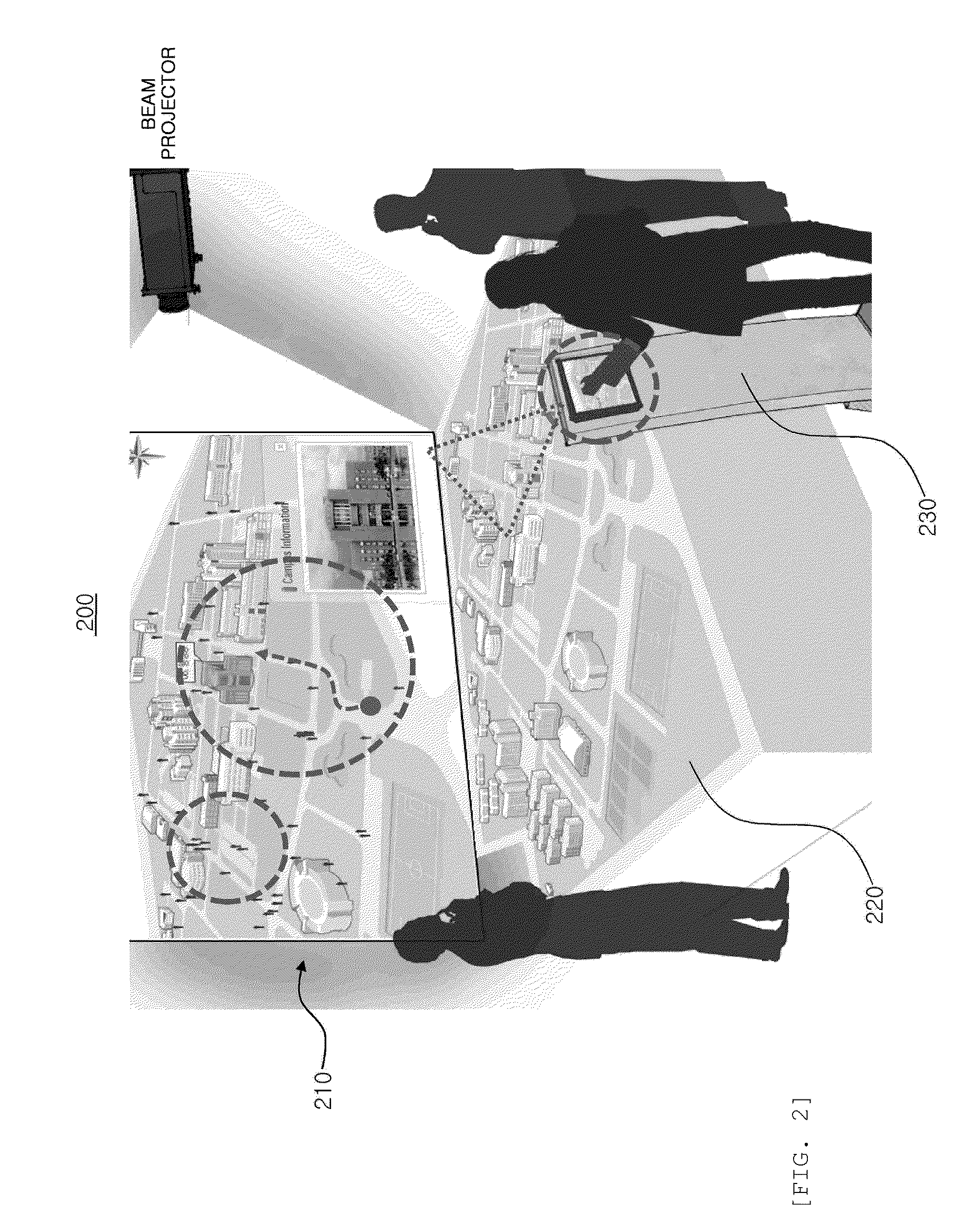

Real-time interactive augmented reality system and method and recording medium storing program for implementing the method

Inactive | US20110216090A1 | Improve immersion | Immersive realization | Character and pattern recognition | Cathode-ray tube indicators | Program planning | Digital content

The present invention relates to a real-time interactive system and method regarding an interactive technology between miniatures in real environment and digital contents in virtual environment, and a recording medium storing a program for performing the method. An exemplary embodiment of the present invention provides a real-time interactive augmented reality system including: an input information acquiring unit acquiring input information for an interaction between real environment and virtual contents in consideration of a planned story; a virtual contents determining unit determining the virtual contents according to the acquired input information; and a matching unit matching the real environment and the virtual contents by using an augmented position of the virtual contents acquired in advance. According to the present invention, an interaction between the real environment and the virtual contents can be implemented without tools, and improved immersive realization can be obtained by augmentation using natural features.

Owner:GWANGJU INST OF SCI & TECH

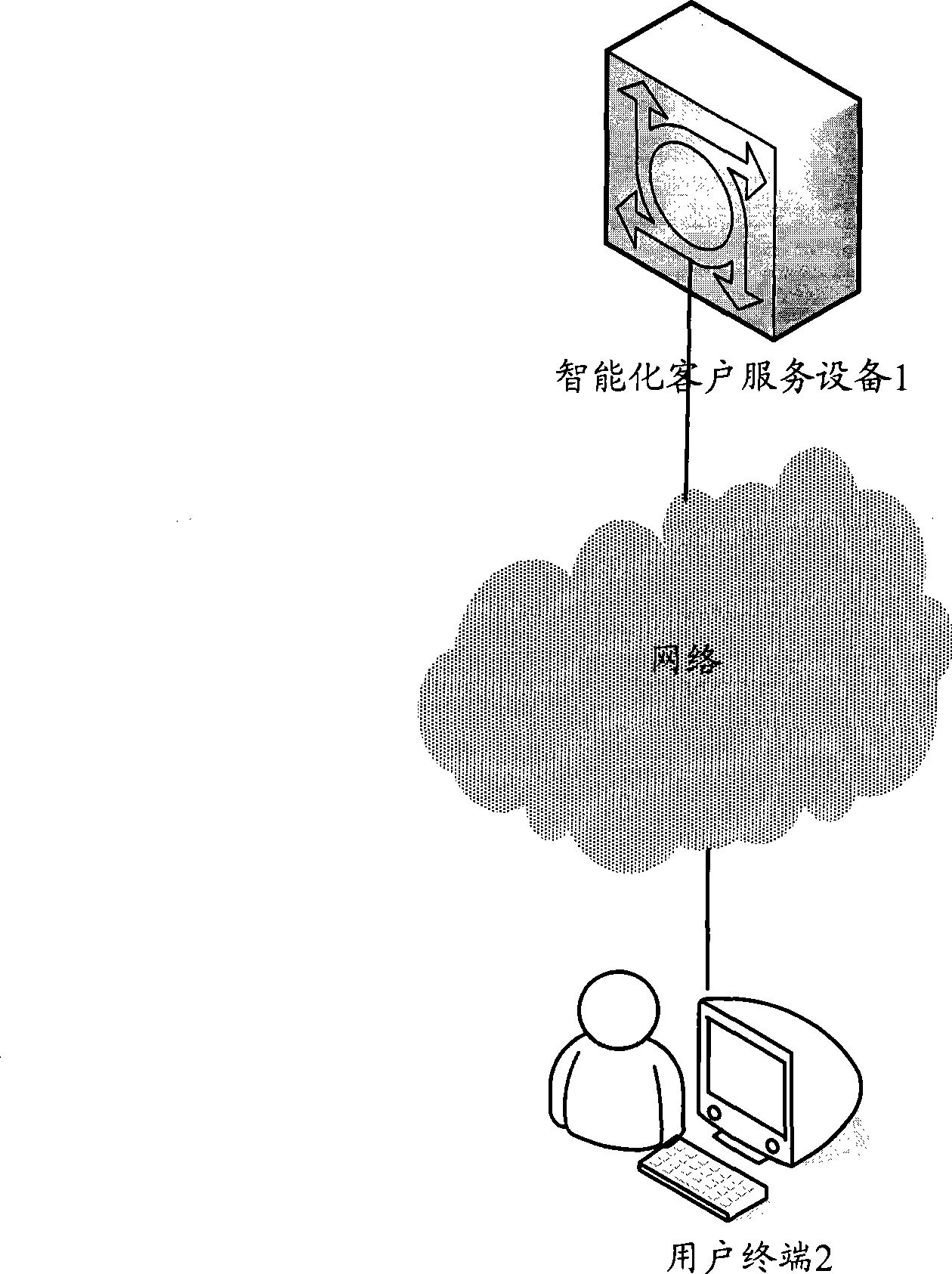

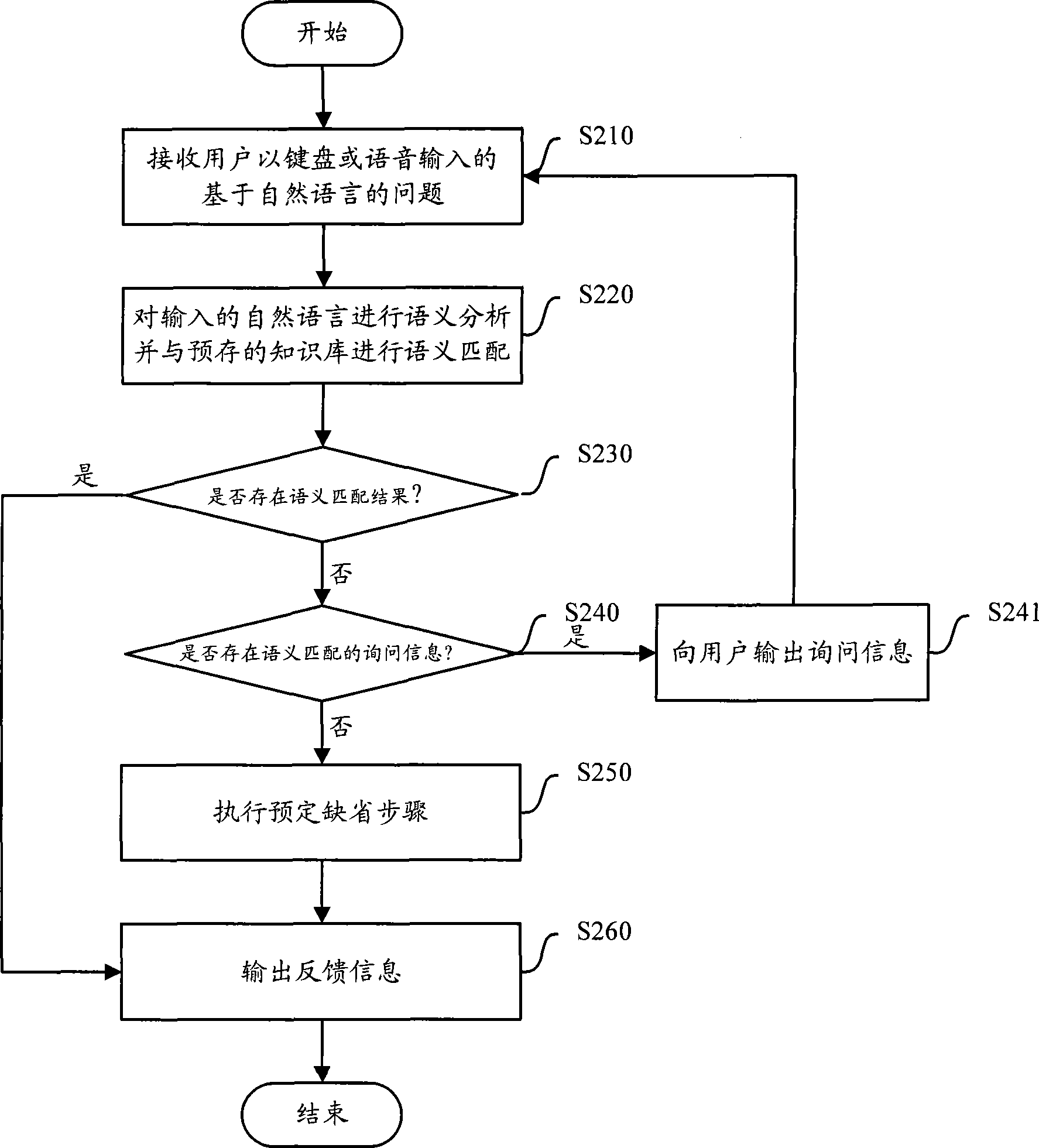

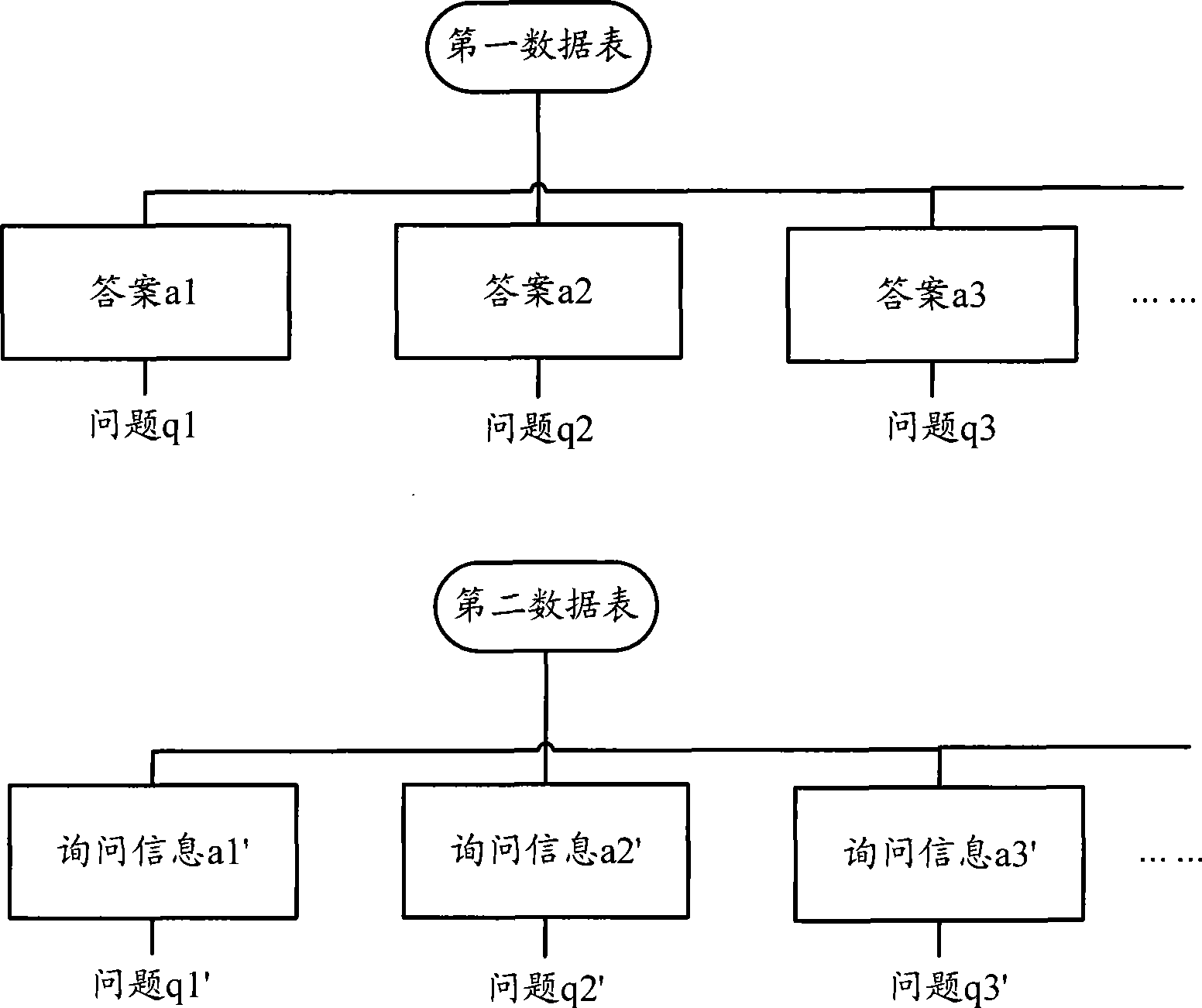

Method and equipment for implementing automatic customer service through human-machine interaction technology

Active | CN101431573A | Reduce reinvestment | Low running cost | Special service for subscribers | Supervisory/monitoring/testing arrangements | Human–robot interaction | Human system interaction

The invention provides a method for implementing automatic customer service using human-computer interaction technology, and equipment implementing the method. The method includes: receiving a question entered in natural language by the user; converting the natural language into information recognizable by the computer using human-computer interaction technology; and outputting feedback information according to the information recognized by the computer. In addition, the invention also provides the equipment implementing the method.

Owner:SHANGHAI XIAOI ROBOT TECH CO LTD

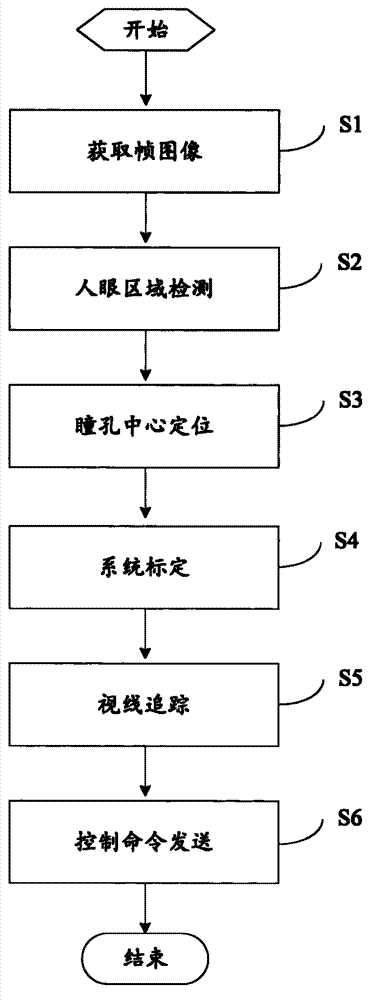

Man-machine interaction method and system based on sight judgment

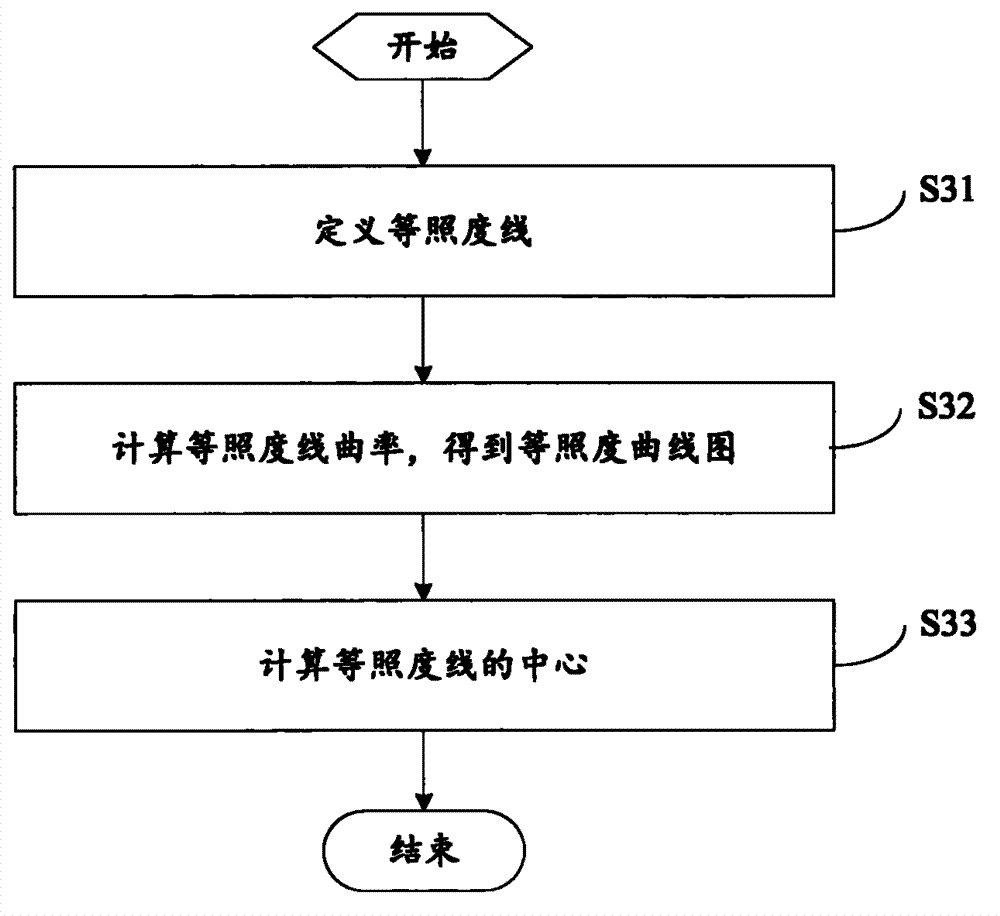

Active | CN102830797A | Realize the operation | Easy to operate | Input/output for user-computer interaction | Graph reading | Eye closure | Pupil

The invention relates to the technical field of man-machine interaction and provides a man-machine interaction method based on sight judgment, to realize the operation on an electronic device by a user. The method comprises the following steps of: obtaining a facial image through a camera, carrying out human eye area detection on the image, and positioning a pupil center according to the detected human eye area; calculating a corresponding relationship between an image coordinate and an electronic device screen coordinate system; tracking the position of the pupil center, and calculating a view point coordinate of the human eye on an electronic device screen according to the corresponding relationship; and detecting an eye blinking action or an eye closure action, and issuing corresponding control orders to the electronic device according to the detected eye blinking action or the eye closure action. The invention further provides a man-machine interaction system based on sight judgment. With the adoption of the man-machine interaction method, the stable sight focus judgment on the electronic device is realized through the camera, and control orders are issued through eye blinking or eye closure, so that the operation on the electronic device by the user becomes simple and convenient.
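The coordinate mapping and blink detection steps can be sketched as follows. This is a simplified stand-in, not the patented method: it assumes a per-axis linear calibration from two pupil positions (recorded while the user looks at the top-left and bottom-right screen corners) and assumes an upstream detector already supplies per-frame open/closed flags. All names and the `blink_max` threshold are hypothetical.

```python
def make_mapper(pupil_tl, pupil_br, screen_w, screen_h):
    """Build a function mapping pupil-center image coordinates to screen coordinates.

    pupil_tl / pupil_br: pupil centers observed at the top-left and
    bottom-right screen corners during calibration (assumed procedure).
    """
    (x0, y0), (x1, y1) = pupil_tl, pupil_br

    def to_screen(px, py):
        sx = (px - x0) / (x1 - x0) * screen_w
        sy = (py - y0) / (y1 - y0) * screen_h
        # Clamp so the view point always lies on the screen.
        return (min(max(sx, 0), screen_w), min(max(sy, 0), screen_h))

    return to_screen


def classify_eye_events(open_flags, blink_max=3):
    """Label each completed run of closed-eye frames as 'blink' or 'closure'.

    open_flags: per-frame booleans, True when the eye is open.
    A closed run still in progress at the end of the input is not reported.
    """
    events = []
    run = 0
    for is_open in open_flags:
        if not is_open:
            run += 1
        elif run:
            events.append("blink" if run <= blink_max else "closure")
            run = 0
    return events
```

Each reported event could then be translated into a control order, for example treating a blink as a click at the current view point.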

Owner:SHENZHEN INST OF ADVANCED TECH

Interaction techniques for flexible displays

Owner:VERTEGAAL ROEL +3

Methods and interfaces for event timeline and logs of video streams

Inactive | US7996771B2 | Digital data information retrieval | Color television details | Time line | Fixed position

Owner:FUJIFILM BUSINESS INNOVATION CORP

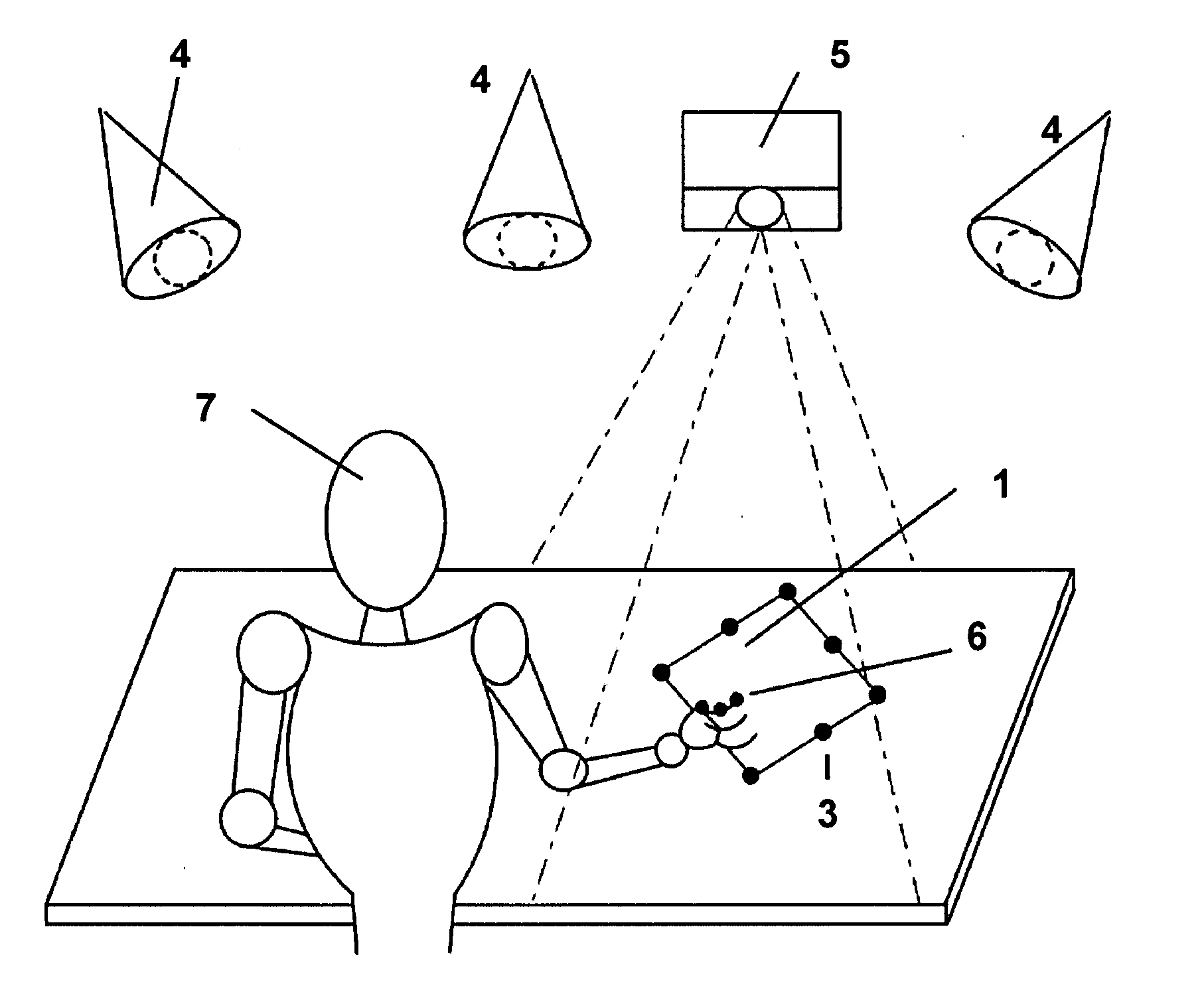

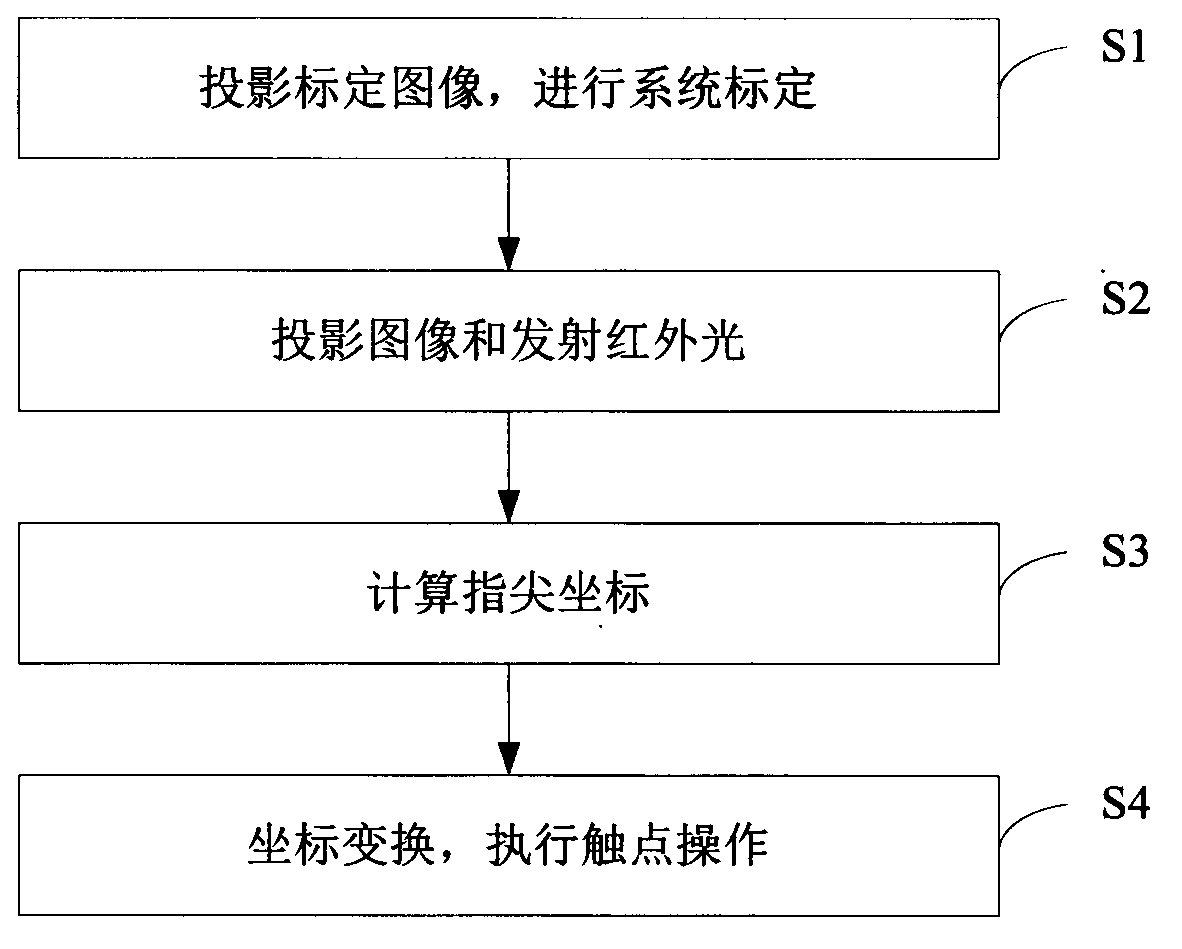

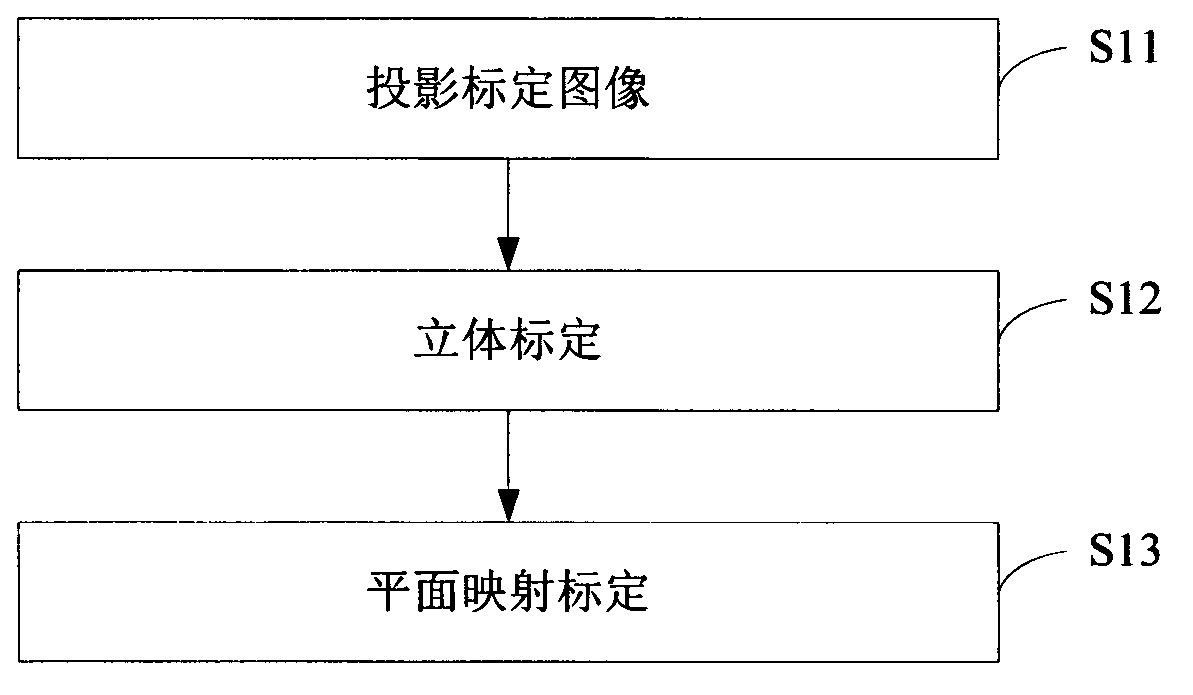

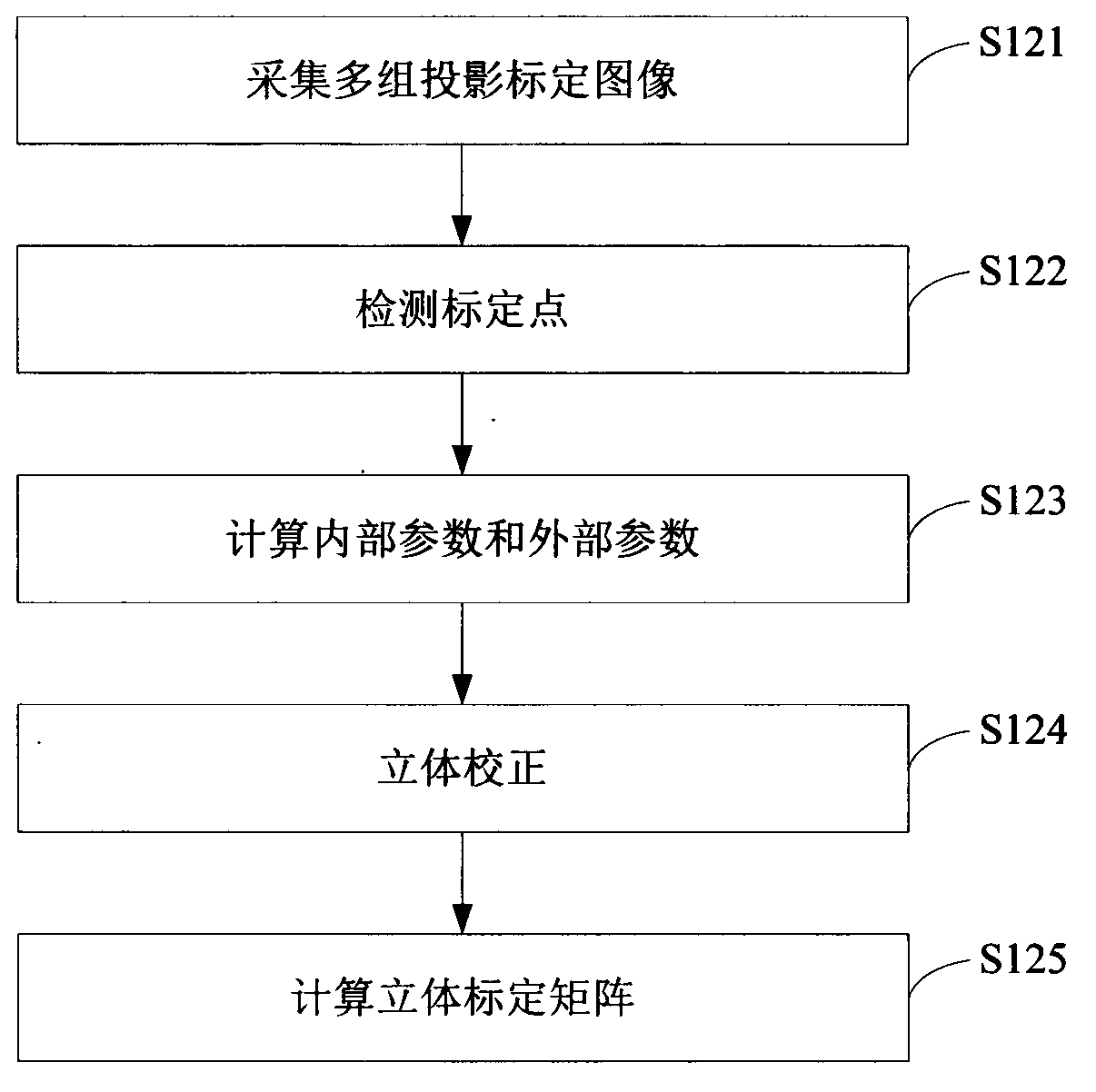

Human-machine interaction method and system based on binocular stereoscopic vision

Active | CN102799318A | Improve human-computer interaction | Enhanced interaction | Input/output processes for data processing | Human–robot interaction | Projection plane

The invention relates to the technical field of human-machine interaction and provides a human-machine interaction method and a human-machine interaction system based on binocular stereoscopic vision. The human-machine interaction method comprises the following steps: projecting a screen calibration image to a projection plane and acquiring the calibration image on the projection plane for system calibration; projecting an image and transmitting infrared light to the projection plane, wherein the infrared light forms a human hand outline infrared spot after meeting a human hand; acquiring an image with the human hand outline infrared spot on the projection plane and calculating a fingertip coordinate of the human hand according to the system calibration; and converting the fingertip coordinate into a screen coordinate according to the system calibration and executing the operation of a contact corresponding to the screen coordinate. According to the invention, the position and the coordinate of the fingertip are obtained by the system calibration and infrared detection; a user can carry out human-machine interaction more conveniently and quickly on the basis of touch operation of the finger on a general projection plane; no special panels or auxiliary positioning devices are needed on the projection plane; and the human-machine interaction device is simple and convenient to mount and use, and lower in cost.

Owner:SHENZHEN INST OF ADVANCED TECH

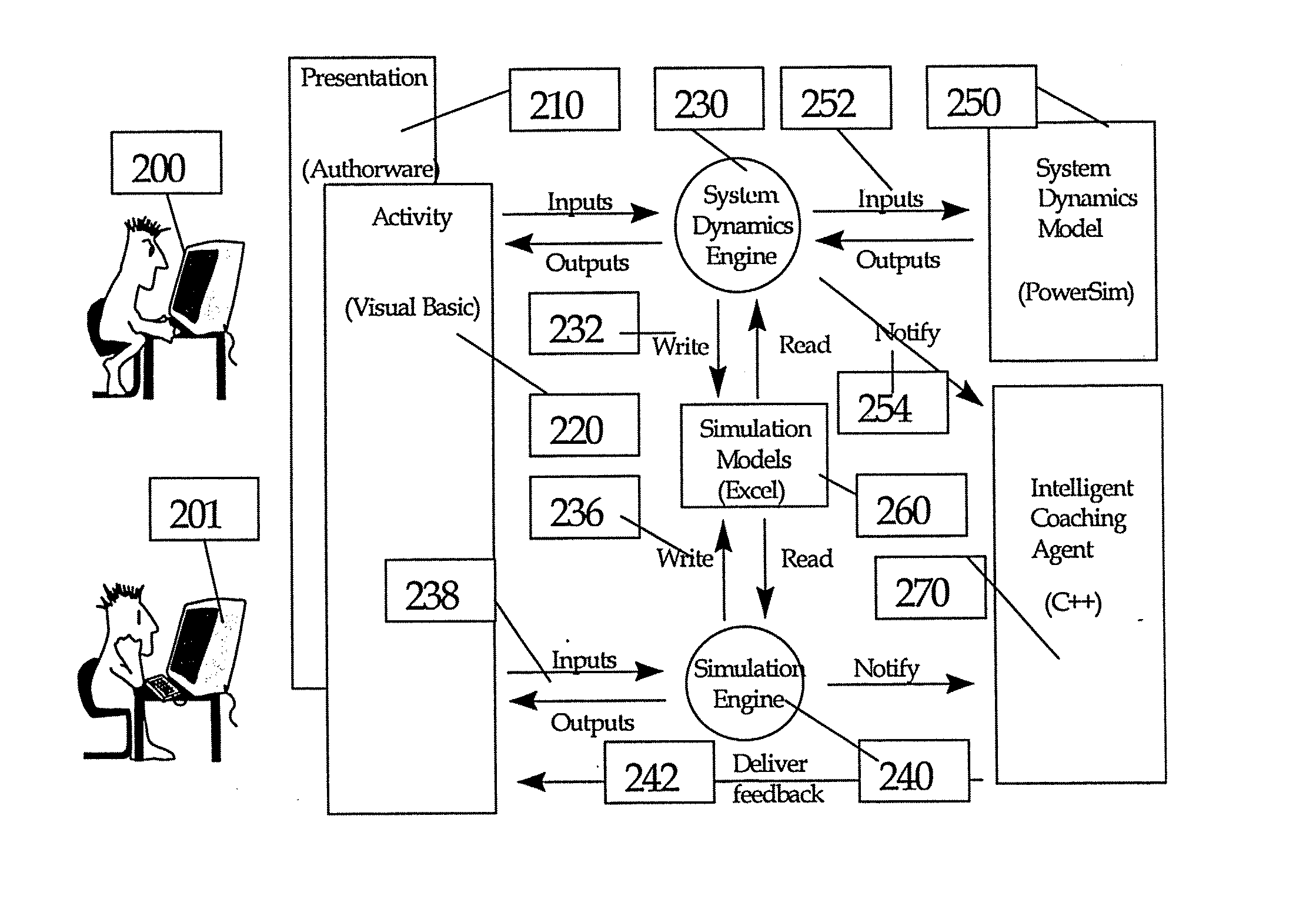

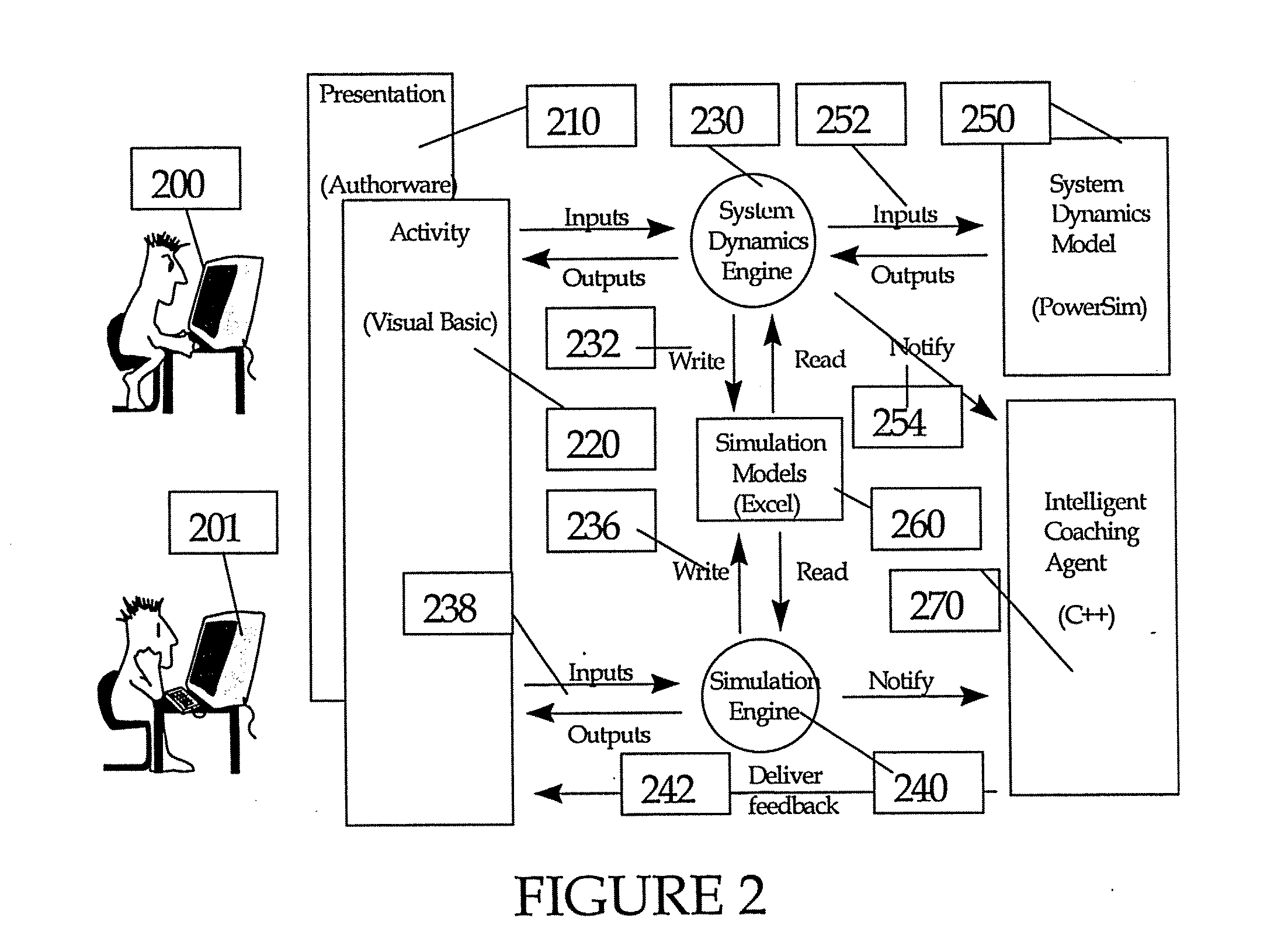

Creating a Virtual University Experience

Inactive | US20070255805A1 | Multiple digital computer combinations | Electrical appliances | Human–computer interaction | Virtual classroom

A system is disclosed that provides a goal based learning system utilizing a rule based expert training system to provide a cognitive educational experience. The system provides the user with a simulated environment that presents a training opportunity to understand and solve optimally. The technique establishes a virtual university by connecting a virtual university server and one or more users, selects a destination within the virtual university server to interact with the one or more users, couples the one or more users through the virtual university server based on the selected destination. The interaction techniques include rules for one to one correspondence and one to many. The destinations include a virtual classroom, administrative offices, virtual library and virtual student union. Additional support is provided for distributing grades, tests, homework materials, directory information and other classroom materials electronically.

Owner:ACCENTURE GLOBAL SERVICES LTD

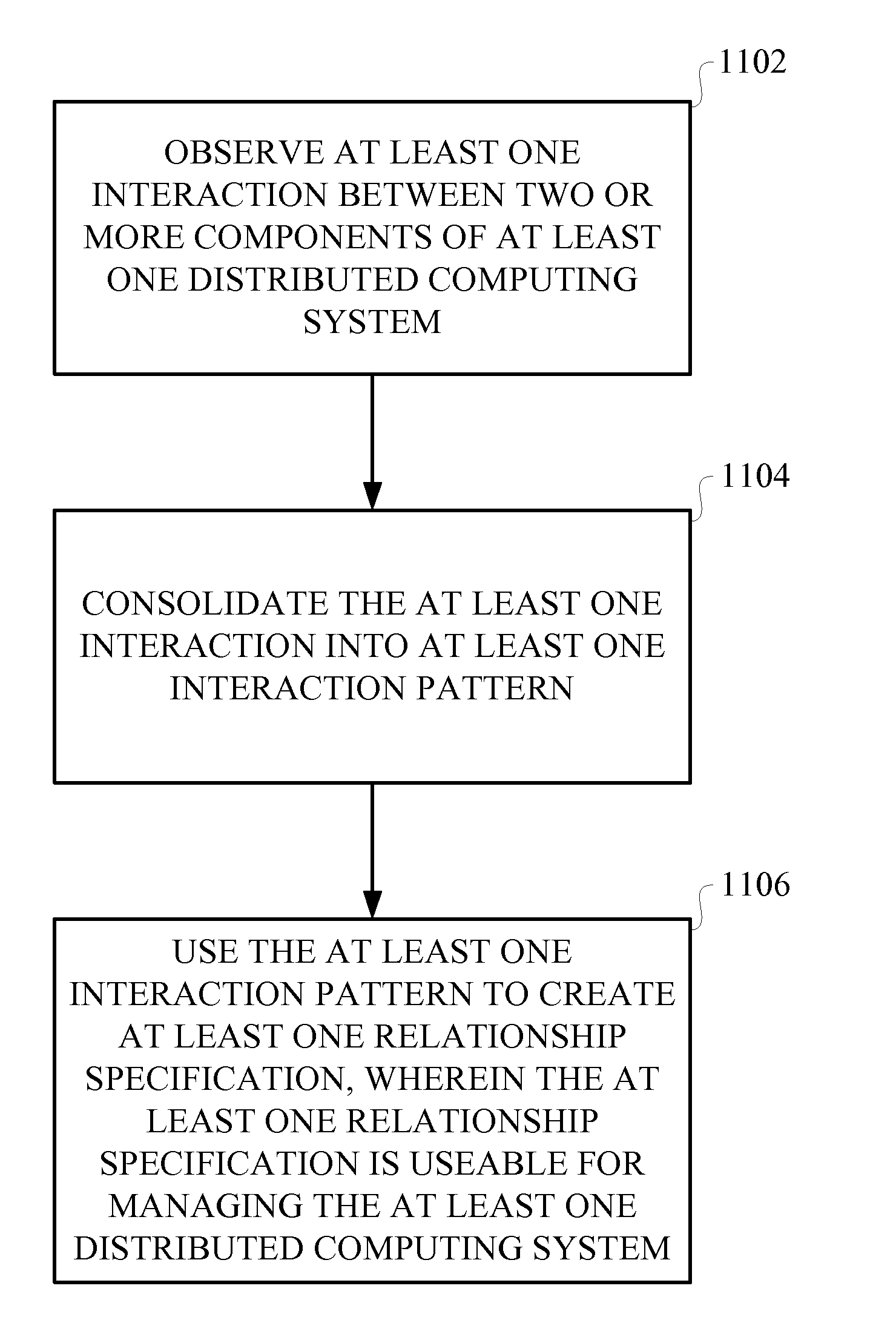

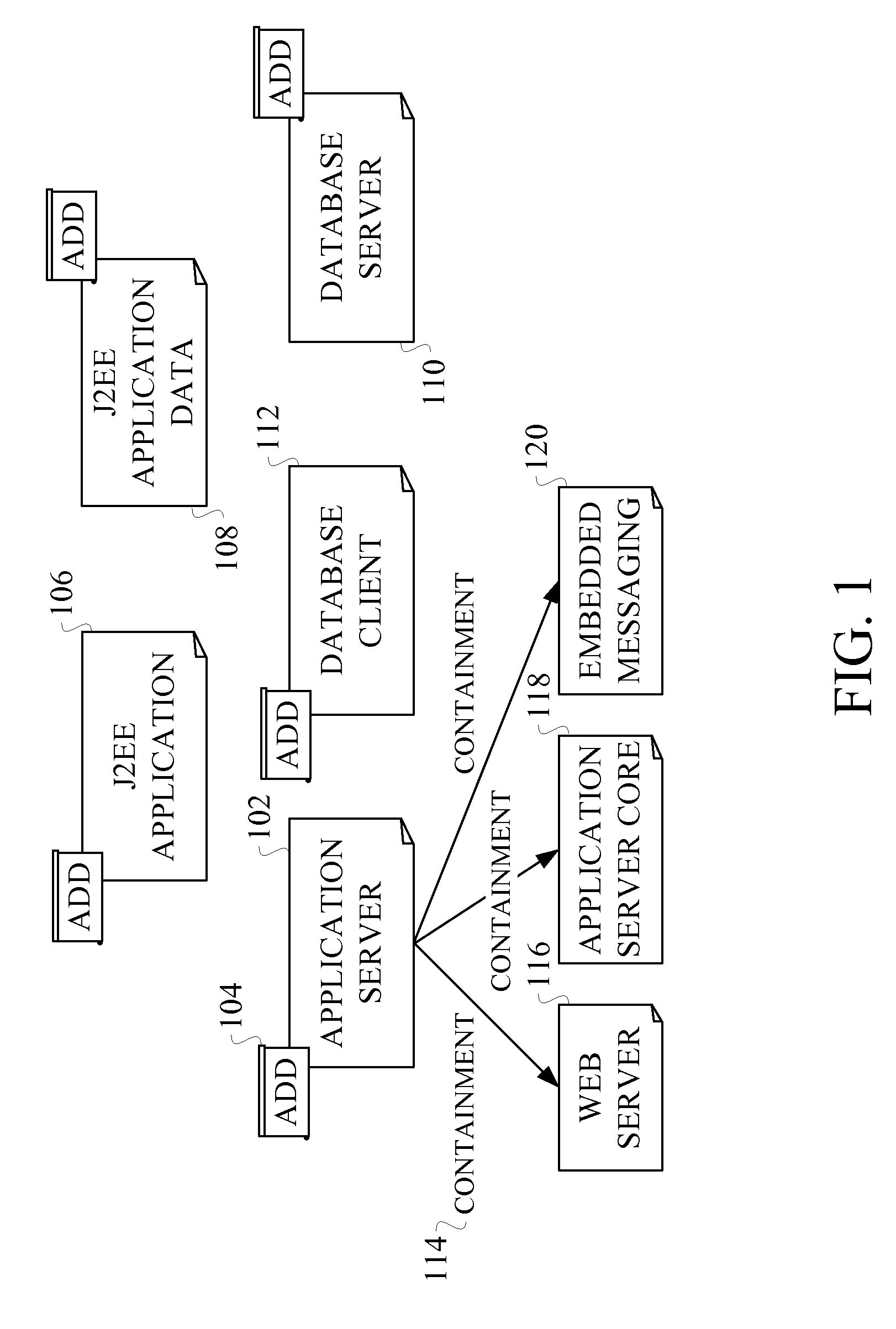

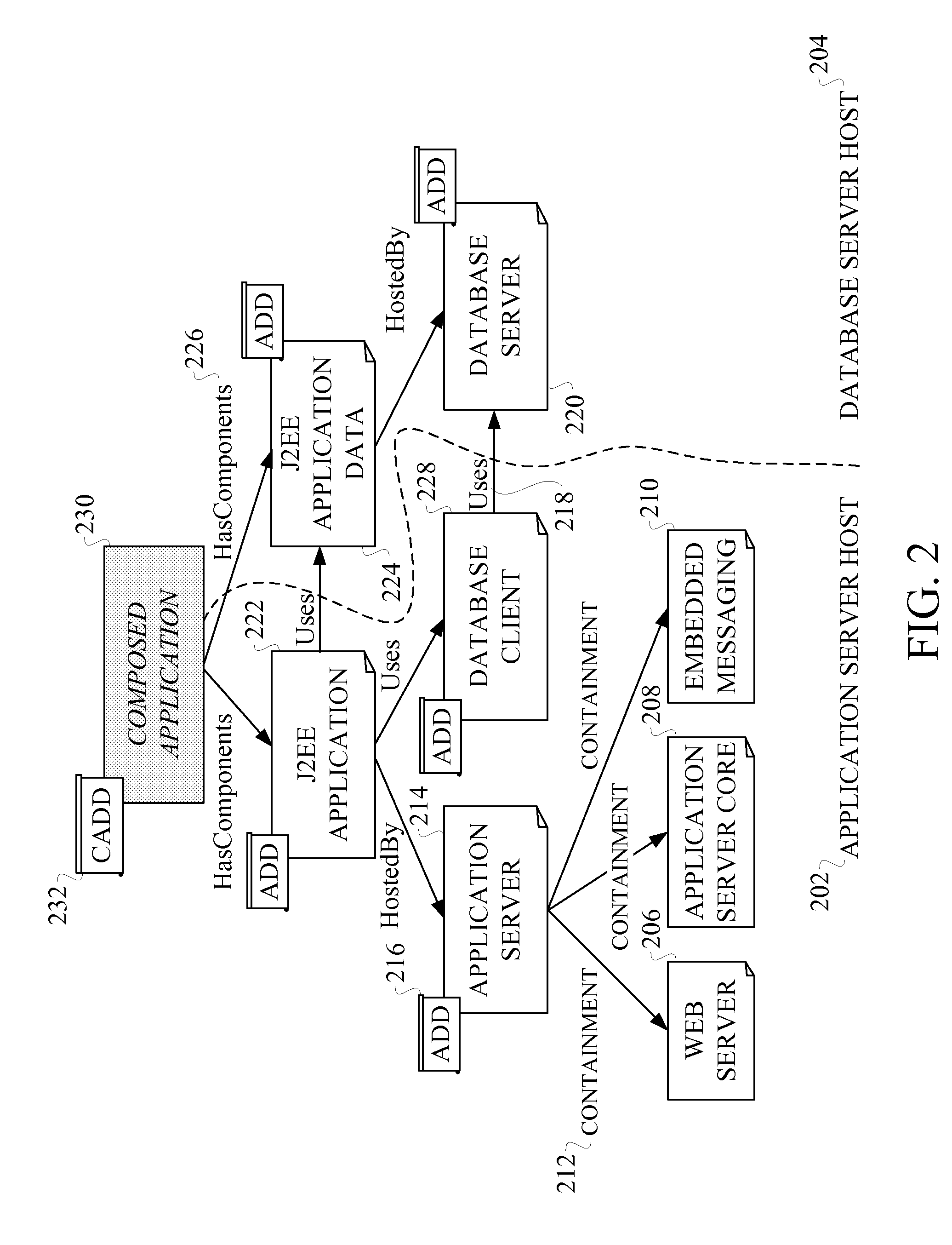

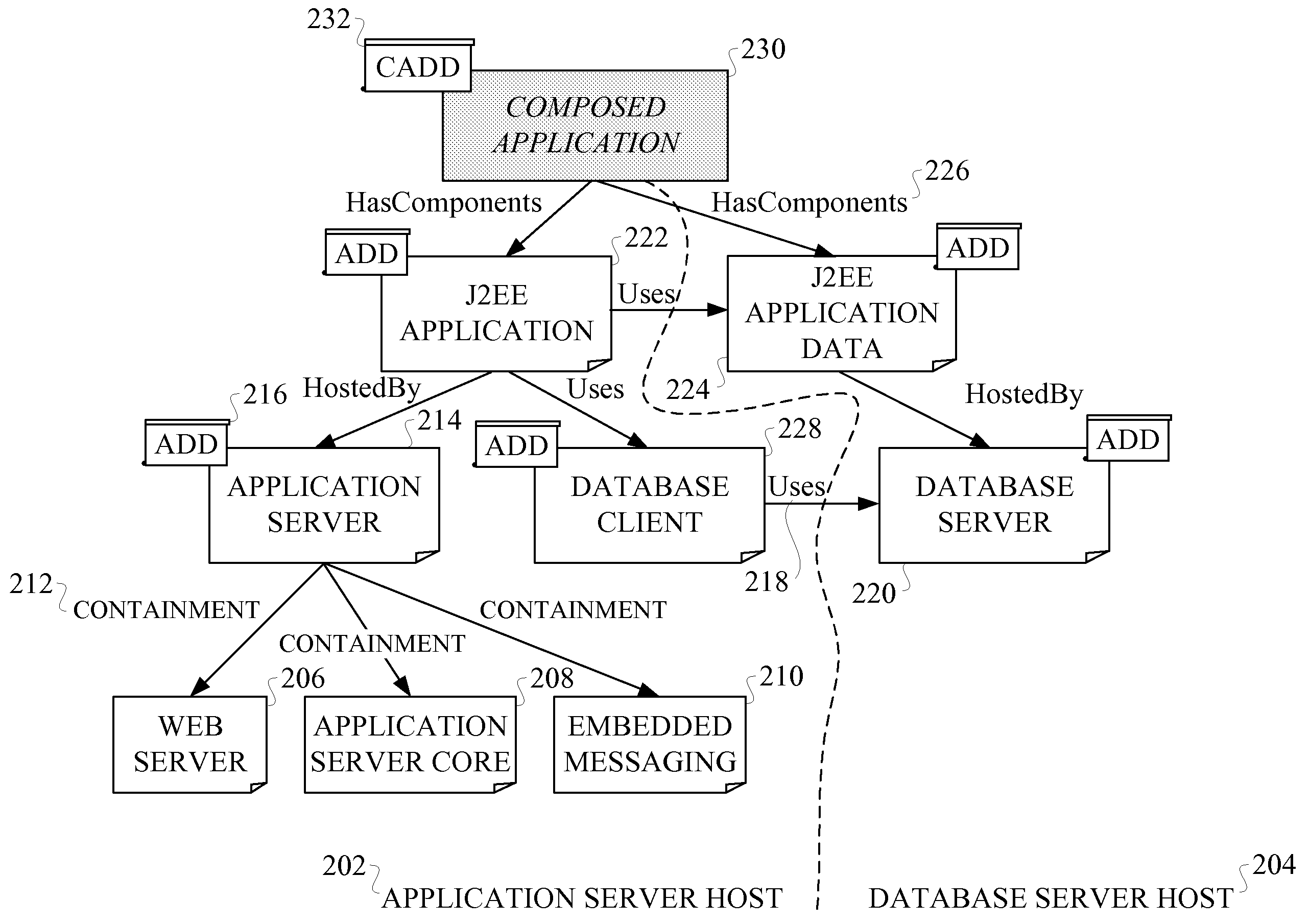

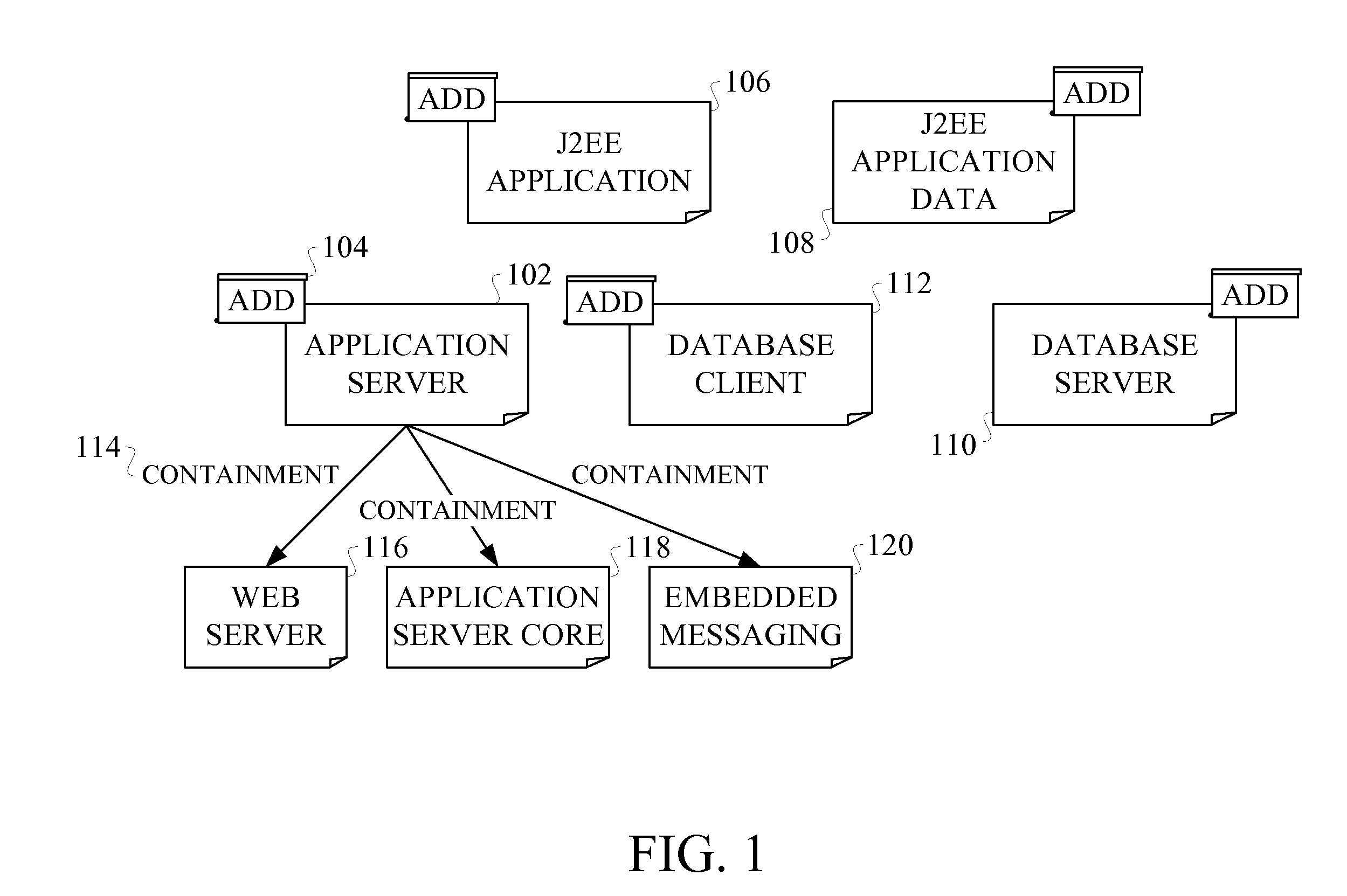

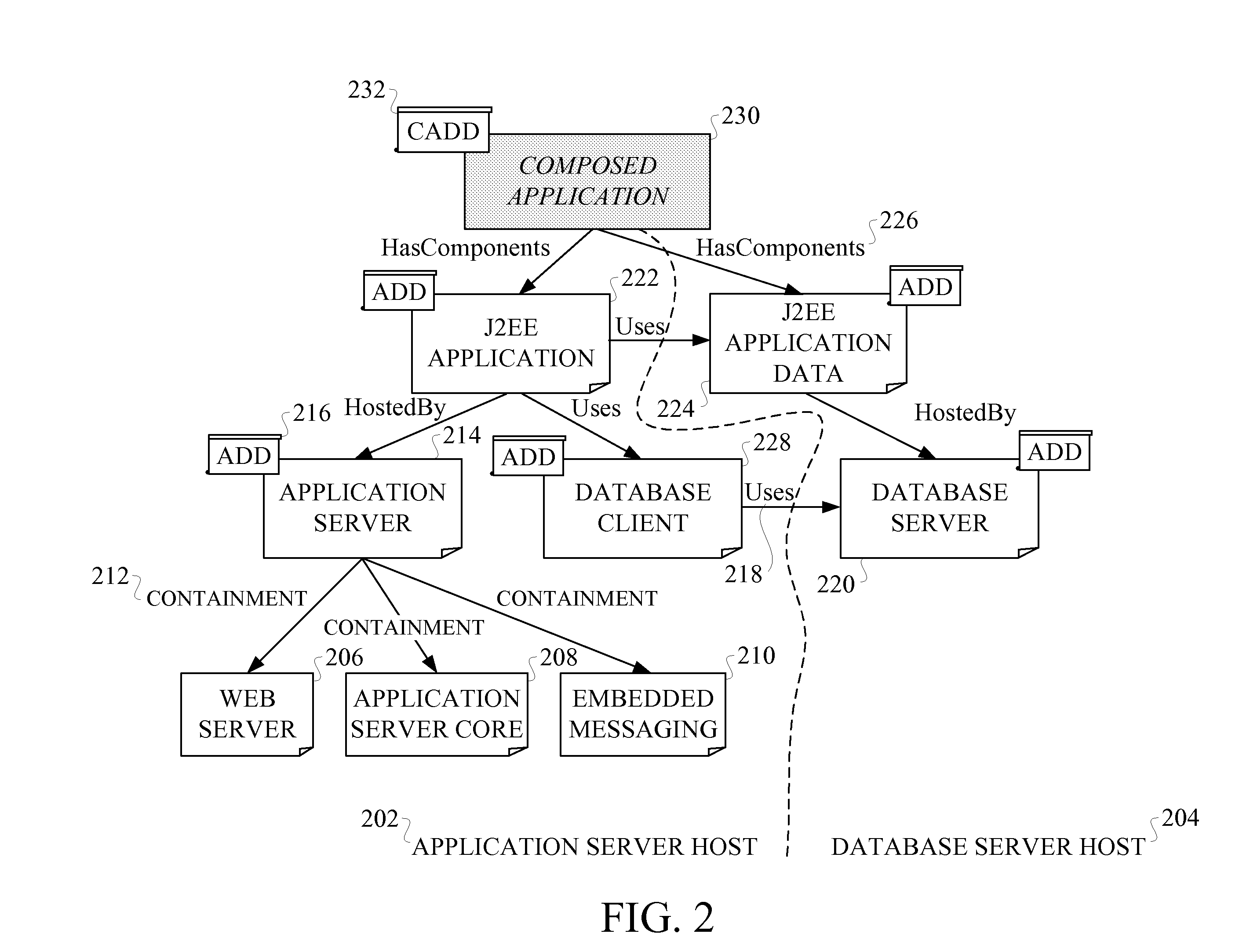

Systems and methods for constructing relationship specifications from component interactions

Inactive | US8037471B2 | Eliminate need | Eliminate requirements | Digital computer details | Program loading/initiating | Database | Interaction technique

Techniques for automatically creating at least one relationship specification are provided. For example, one computer-implemented technique includes observing at least one interaction between two or more components of at least one distributed computing system, consolidating the at least one interaction into at least one interaction pattern, and using the at least one interaction pattern to create at least one relationship specification, wherein the at least one relationship specification is useable for managing the at least one distributed computing system.
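The observe, consolidate, and specify steps can be pictured with a small sketch. The data shapes are assumptions: each observed interaction is a `(source, target, operation)` tuple, a pattern is a distinct tuple with its observation count, and the output dictionary is a made-up format standing in for a relationship specification.

```python
from collections import Counter


def consolidate(interactions):
    """Collapse observed (source, target, operation) tuples into counted patterns."""
    return Counter(interactions)


def relationship_spec(patterns, min_count=2):
    """Turn patterns seen at least `min_count` times into a per-component spec.

    The output layout (depends_on / via / observed) is hypothetical.
    """
    spec = {}
    for (src, dst, op), n in sorted(patterns.items()):
        if n >= min_count:
            spec.setdefault(src, []).append(
                {"depends_on": dst, "via": op, "observed": n}
            )
    return spec
```

The `min_count` filter is one possible way to keep one-off interactions out of the specification; a management tool could then read the result to decide, for instance, the order in which components are started.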

Owner:INT BUSINESS MASCH CORP

System and method that facilitates computer desktop use via scaling of displayed objects with shifts to the periphery

Inactive | US7536650B1 | Precise positioning | Reduce decrease | Input/output processes for data processing | Visibility | Object based

The present invention relates to a system that facilitates multi-tasking in a computing environment. A focus area component defines a focus area within a display space, the focus area occupying a subset area of the display space area. A scaling component scales display objects as a function of proximity to the focus area, and a behavior modification component modifies respective behavior of the display objects as a function of their location in the display space. More particularly, the subject invention provides for interaction technique(s) and user interface(s) in connection with managing display objects on a display surface. One aspect of the invention defines a central focus area where the display objects are displayed and behave as usual, and a periphery outside the focus area where the display objects are reduced in size based on their location, getting smaller as they near an edge of the display surface so that many more objects can remain visible. In addition or alternatively, the objects can fade as they move toward an edge, with fading increasing as a function of distance from the focus area and/or use of the object and/or priority of the object. Objects in the periphery can also be modified to have different interaction behavior (e.g., lower refresh rate, fading, reconfigured to display sub-objects based on relevance and/or visibility, static, etc.) as they may be too small for standard rendering. The methods can provide a flexible, scalable surface when coupled with automated policies for moving objects into the periphery, in response to the introduction of new objects or the resizing of pre-existing objects by a user or autonomous process.

Owner:MICROSOFT TECH LICENSING LLC

Method and apparatus for cross-lingual communication

Active | US20090204386A1 | Natural language translation | Speech recognition | Speech to speech translation | Ambiguity

A system and method for a highly interactive style of speech-to-speech translation is provided. The interactive procedures enable a user to recognize, and if necessary correct, errors in both speech recognition and translation, thus providing more robust translation output than would otherwise be possible. The interactive techniques for monitoring and correcting word ambiguity errors during automatic translation, search, or other natural language processing tasks depend upon the correlation of Meaning Cues and their alignment with, or mapping into, the word senses of third party lexical resources, such as those of a machine translation or search lexicon. This correlation and mapping can be carried out through the creation and use of a database of Meaning Cues, i.e., SELECT. Embodiments described above permit the intelligent building and application of this database, which can be viewed as an interlingua, or language-neutral set of meaning symbols, applicable for many purposes. Innovative techniques for interactive correction of server-based speech recognition are also described.

Owner:ZAMA INNOVATIONS LLC

Interaction Techniques for Flexible Displays

Active | US20120112994A1 | Input/output for user-computer interaction | Digital data processing details | Graphics | Graphical content

The invention relates to a set of interaction techniques for obtaining input to a computer system based on methods and apparatus for detecting properties of the shape, location and orientation of flexible display surfaces, as determined through manual or gestural interactions of a user with said display surfaces. Such input may be used to alter graphical content and functionality displayed on said surfaces or some other display or computing system.

Owner:VERTEGAAL ROEL +1

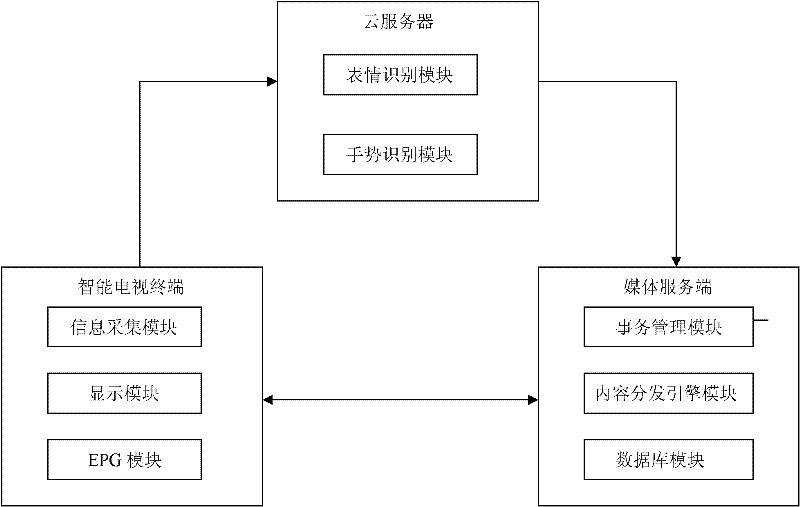

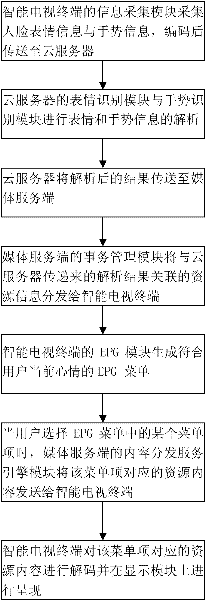

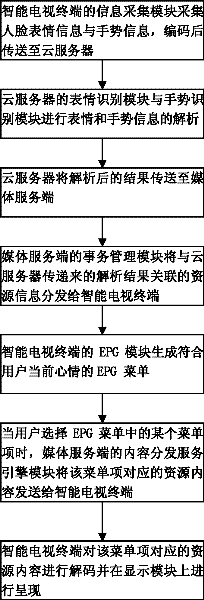

Intelligent television interaction system and interaction method

Inactive | CN102523502A | Humanized | Character and pattern recognition | Selective content distribution | Interaction systems | Resource information

The invention relates to human-computer interaction technology and discloses an intelligent television interaction method that enables the television to understand the mood of a user and recommend corresponding resource content according to that mood, thereby addressing the lack of user-friendliness in conventional interaction methods. The key points of the technical scheme can be summarized as follows: identifying the expression and gestures of the user to judge the current mood of the user; and pushing suitable resource information to an intelligent television terminal according to the current mood of the user and generating a corresponding electronic program guide (EPG) menu for the user to choose from. Furthermore, the invention also discloses an intelligent television interaction system, which is suitable for user-friendly human-computer interaction.

Owner:SICHUAN CHANGHONG ELECTRIC CO LTD

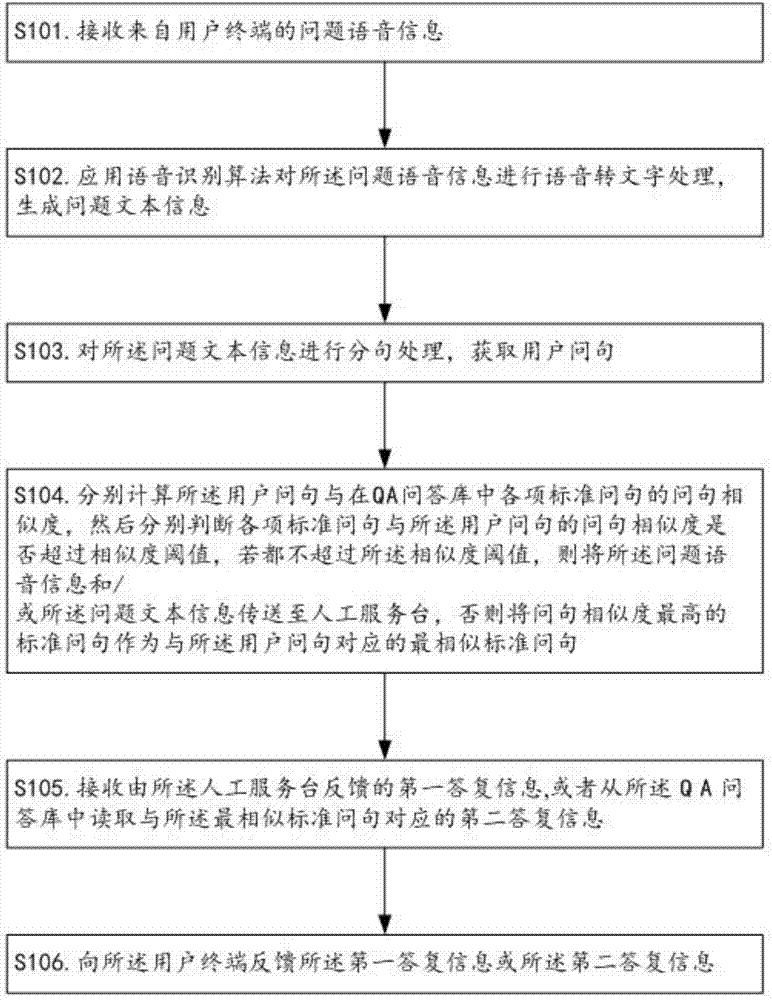

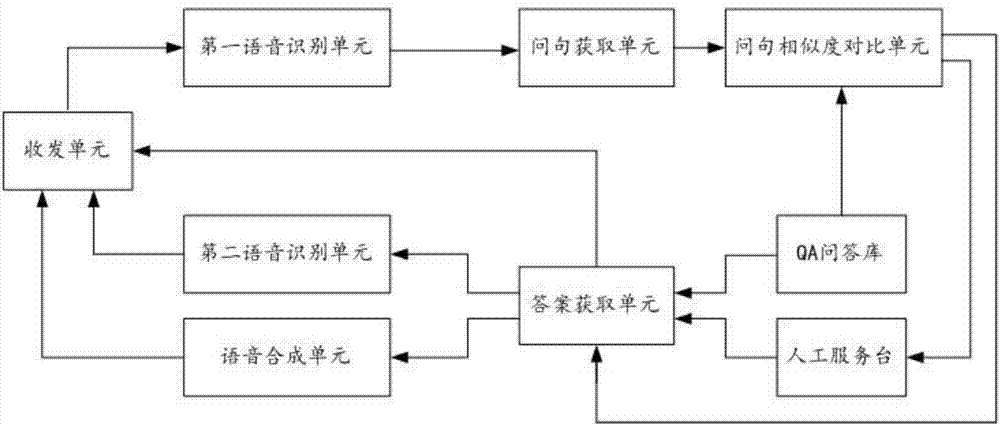

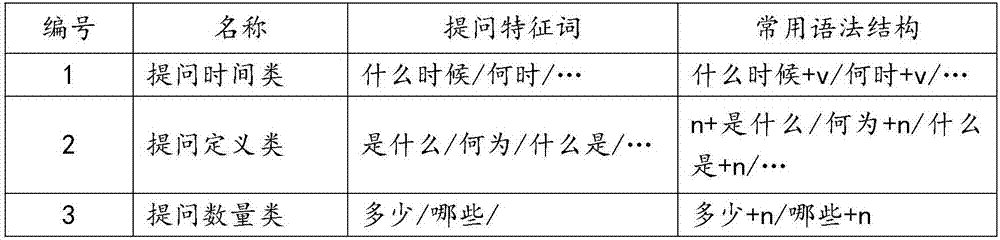

Voice answering method for combining intelligent answer with artificial answer

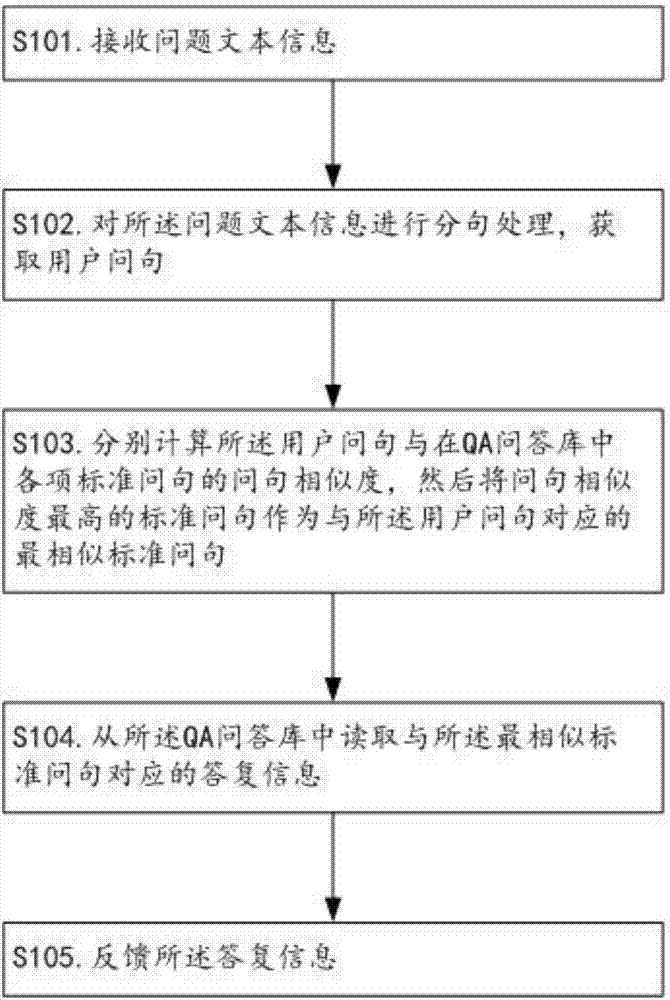

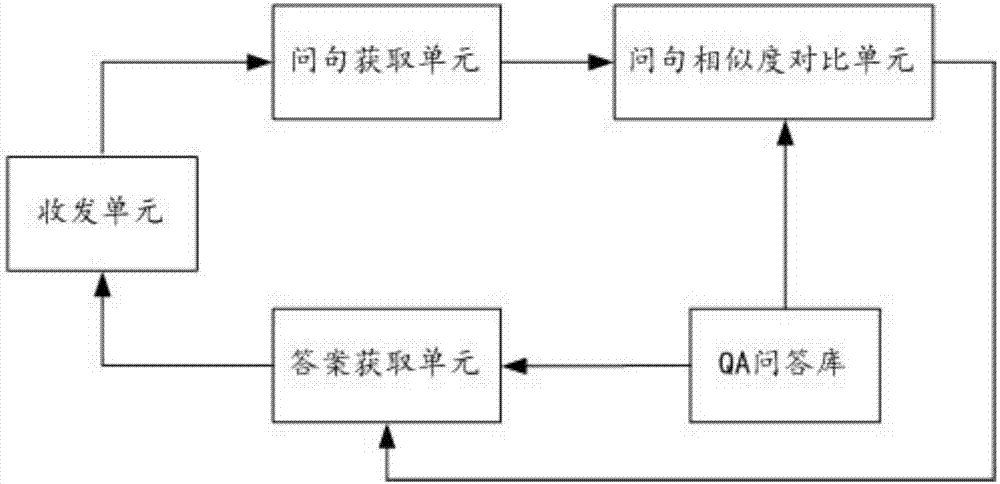

Inactive | CN107315766A | Improve experience | Solve questions in time | Natural language data processing | Speech recognition | Natural language processing | Sentence segmentation

The invention relates to the technical field of human-computer interaction, and discloses a voice answering method for combining intelligent answer with artificial answer. The core idea of the method is as follows: first, a voice recognition algorithm converts the question voice information into question text information; then, the question text information is segmented into sentences to obtain the user's interrogative sentence; finally, based on interrogative sentence similarity, the method determines whether to take the standard interrogative sentence most similar to the user's interrogative sentence, together with its answering information, from a QA library, or to access a manual service desk to obtain the answering information. Voice answering is thereby realized, making it convenient for users to input questions and improving question answering efficiency and user experience. Meanwhile, when a proper answer cannot be found in the QA library, the method can switch to a manual answer so that users' questions are resolved in time.
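The lookup-with-fallback flow in this abstract can be sketched as below. Several simplifications are assumed: the input is already transcribed text (the speech recognition step is omitted), word-set Jaccard similarity stands in for the patent's interrogative sentence similarity, and the `HANDOFF` marker, the threshold, and all function names are hypothetical.

```python
import re

HANDOFF = "<transfer to manual service desk>"  # hypothetical marker


def split_questions(text):
    """Split text into sentences and keep those ending with a question mark."""
    sentences = re.findall(r"[^.!?]+[.!?]", text)
    return [s.strip() for s in sentences if s.strip().endswith("?")]


def jaccard(a, b):
    """Word-set Jaccard similarity; a simple stand-in similarity measure."""
    ta = set(re.findall(r"\w+", a.lower()))
    tb = set(re.findall(r"\w+", b.lower()))
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


def answer(text, qa_library, threshold=0.5):
    """Answer each question from the QA library, or hand off when no match is close."""
    results = []
    for question in split_questions(text):
        best = max(qa_library, key=lambda std: jaccard(question, std), default=None)
        if best is not None and jaccard(question, best) >= threshold:
            results.append((question, qa_library[best]))
        else:
            results.append((question, HANDOFF))
    return results
```

The threshold controls how readily the sketch escalates to a human; a lower value answers more questions automatically at the cost of more mismatched answers.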

Owner:JIANGMEN POWER SUPPLY BUREAU OF GUANGDONG POWER GRID

Information processing method and device for realizing intelligent question answering

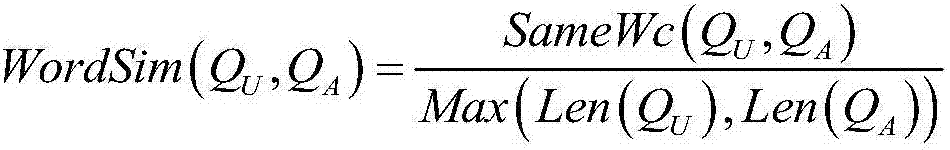

Inactive | CN107273350A | Improve experience | Improve targeting | Semantic analysis | Special data processing applications | Information processing | Natural language understanding

The invention relates to the technical field of man-machine interaction, and discloses an information processing method and device for realizing intelligent question answering. The information processing method comprises the following steps: carrying out sentence segmentation on question text information to obtain a user question; and searching a QA library, on the basis of question similarity, for the standard question most similar to the user question and its corresponding answer information. Compared with existing keyword retrieval-based question answering methods, the disclosed method does not require users to be able to decompose questions into keywords, is automatic throughout, and can greatly enhance the user experience and improve the search effect and the pertinence and effectiveness of answers. Meanwhile, by fusing natural language understanding technologies such as sentence model analysis, lexical analysis and lexical meaning extension, and carrying out comprehensive calculation of multi-dimensional similarity, the method can improve the correctness of the final sentence similarity in a Chinese automatic question answering process, making a Chinese intelligent question answering system feasible.

Owner:JIANGMEN POWER SUPPLY BUREAU OF GUANGDONG POWER GRID

Systems and Methods for Constructing Relationship Specifications from Component Interactions

Inactive | US20080120400A1 | Eliminate need | Eliminate requirements | Multiple digital computer combinations | Program loading/initiating | Computing systems | Distributed computing

Techniques for automatically creating at least one relationship specification are provided. For example, one computer-implemented technique includes observing at least one interaction between two or more components of at least one distributed computing system, consolidating the at least one interaction into at least one interaction pattern, and using the at least one interaction pattern to create at least one relationship specification, wherein the at least one relationship specification is useable for managing the at least one distributed computing system. In another computer-implemented technique, at least one task relationship and at least one corresponding relationship constraint of at least two components of at least one computing system are determined, the at least one task relationship is consolidated with the at least one corresponding relationship constraint, the at least one consolidated task relationship and relationship constraint are used to generate at least one deployment descriptor, and the at least one deployment descriptor is stored, wherein the at least one deployment descriptor is useable for subsequent reuse and processing in managing the at least one distributed computing system and/or one or more different computing systems.

Owner:IBM CORP

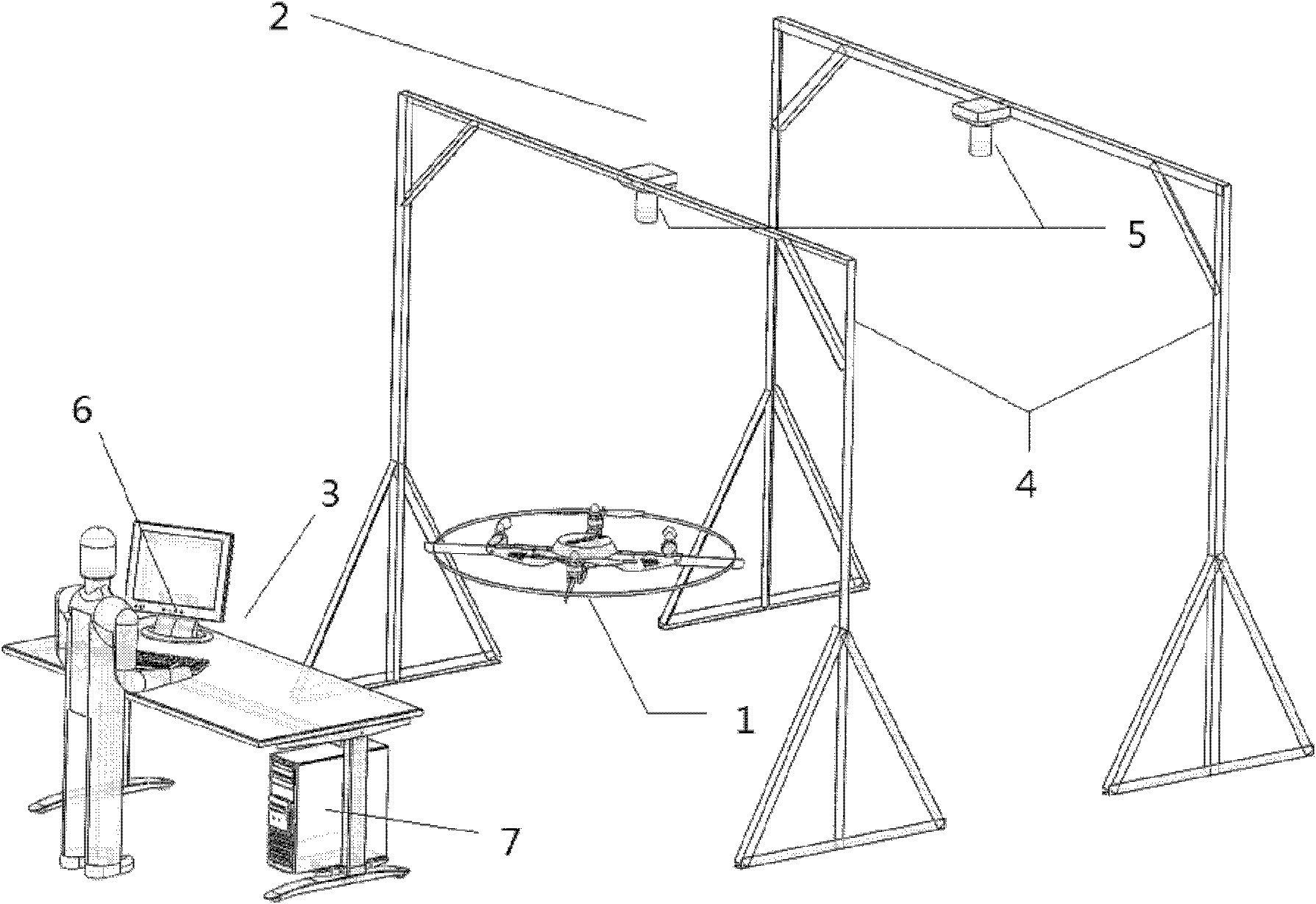

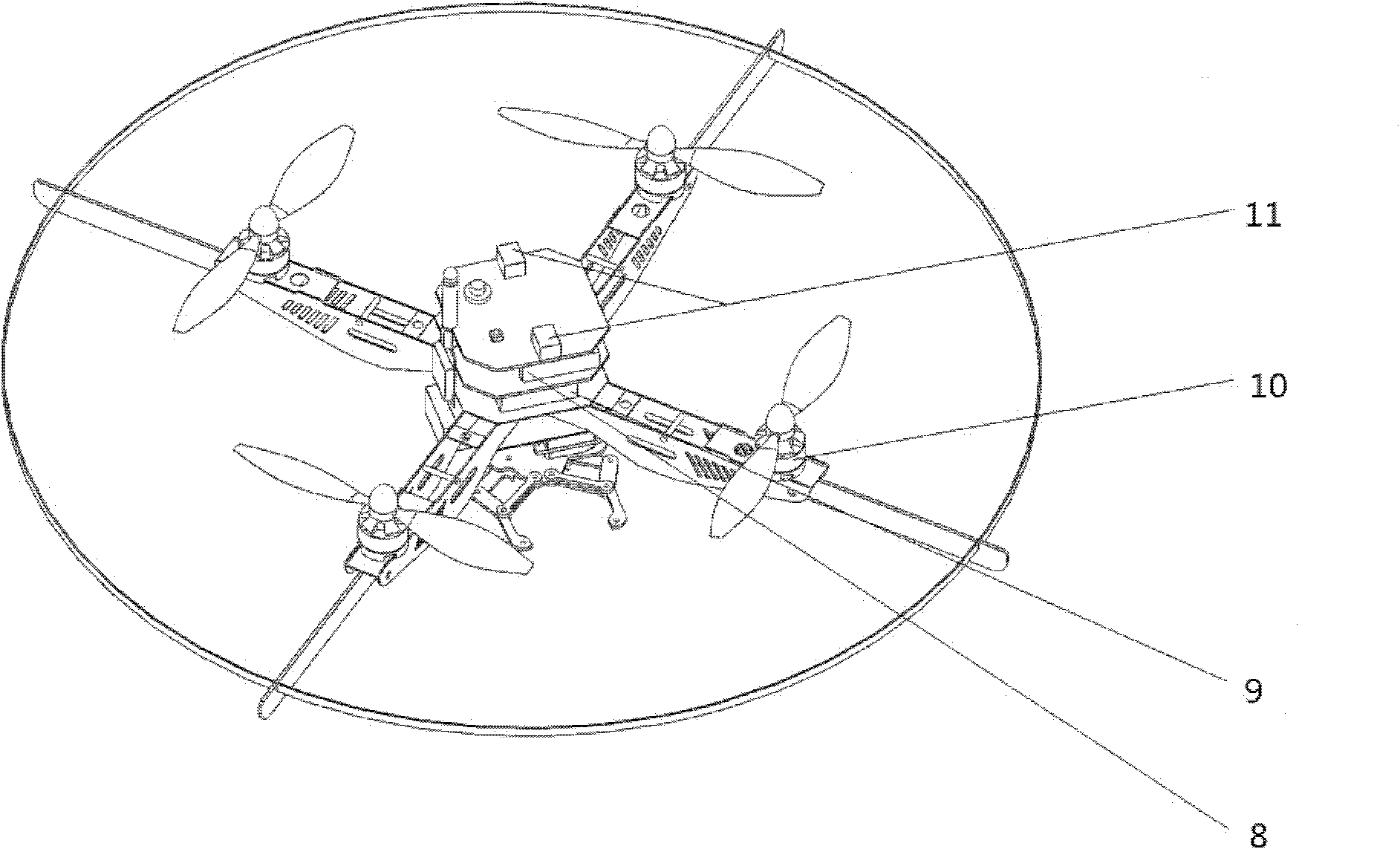

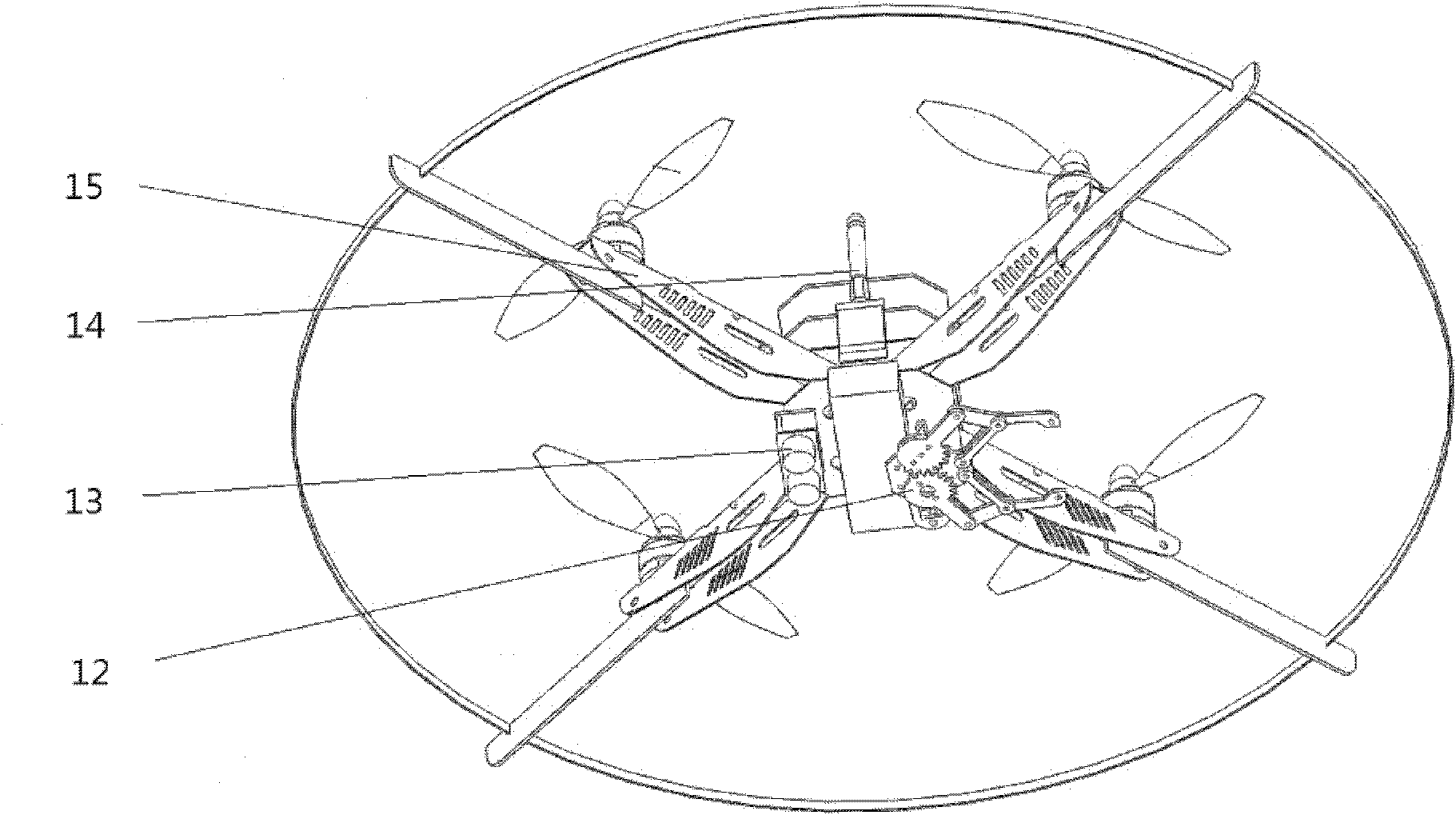

Method for controlling four-rotor aircraft system based on human-computer interaction technology

Inactive | CN102219051A | Flexible operation | Precise flight positioning operation | Image analysis | Actuated automatically | Attitude control | Control signal

A method for controlling a four-rotor aircraft system based on human-computer interaction technology, belonging to the intelligent flying robot field, is characterized in that an operator can control the four-rotor aircraft by gestures. Flight attitude control is accomplished by the cooperative running of the four rotors, which are arranged at the geometrical vertexes of the four-rotor aircraft, with three degrees of freedom: yaw angle, pitch angle and roll angle. The human-computer interaction technology mainly utilizes OpenCV and OpenGL. The system captures depth images of the operator's hands through a depth camera; the depth images are analyzed and processed by the computer to obtain gesture information and generate control signals corresponding to the gesture information; and the control signals are then sent through a radio communication device to the aircraft for execution, so as to accomplish the mapping from the motion state of the operator's hands to the motion state of the aircraft and complete the gesture control. With its long control distance and more intuitive gesture correspondence, the gesture control can be applied to dangerous experiments and industrial production processes that are difficult to execute.

Owner:BEIJING UNIV OF TECH

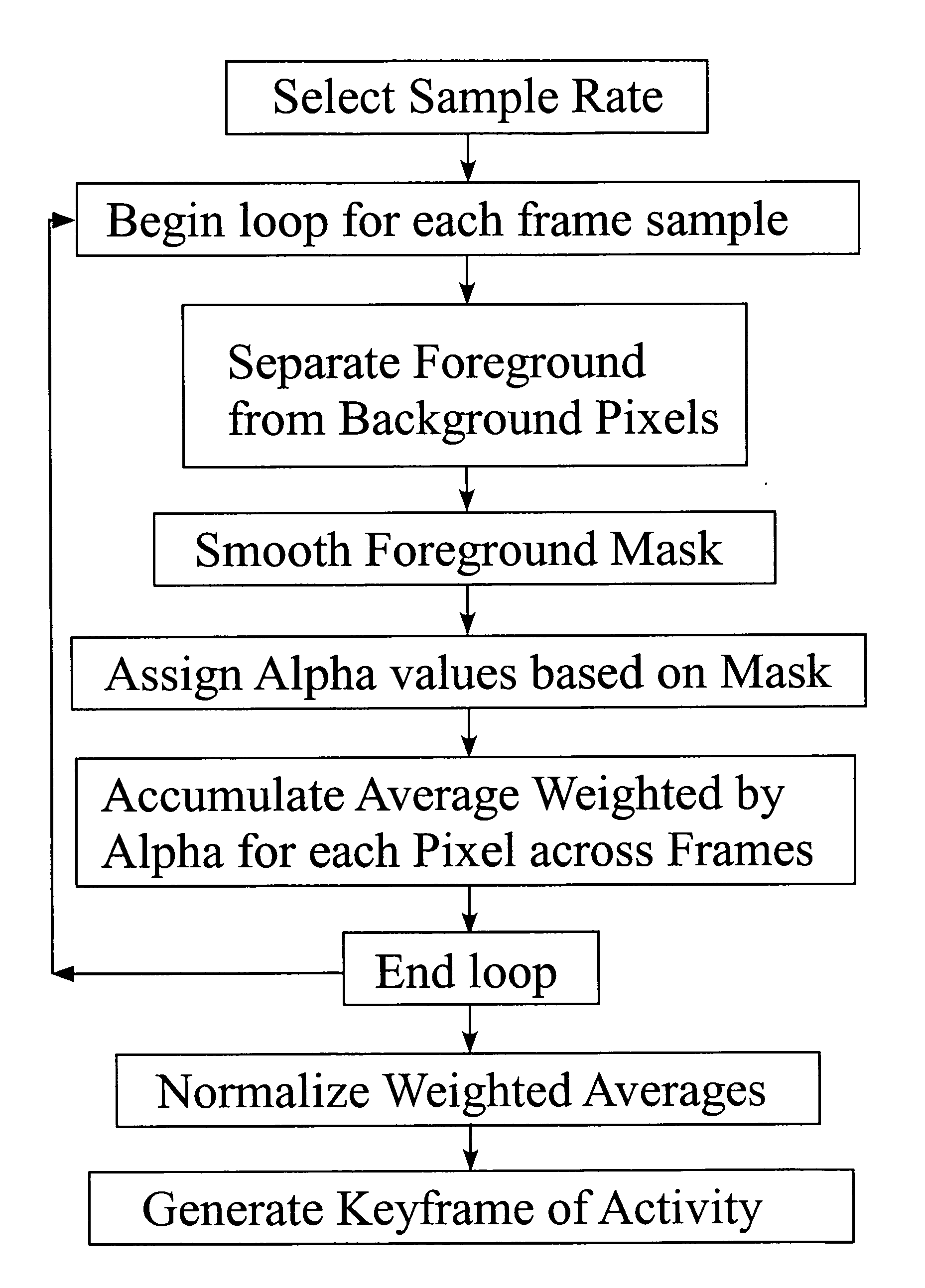

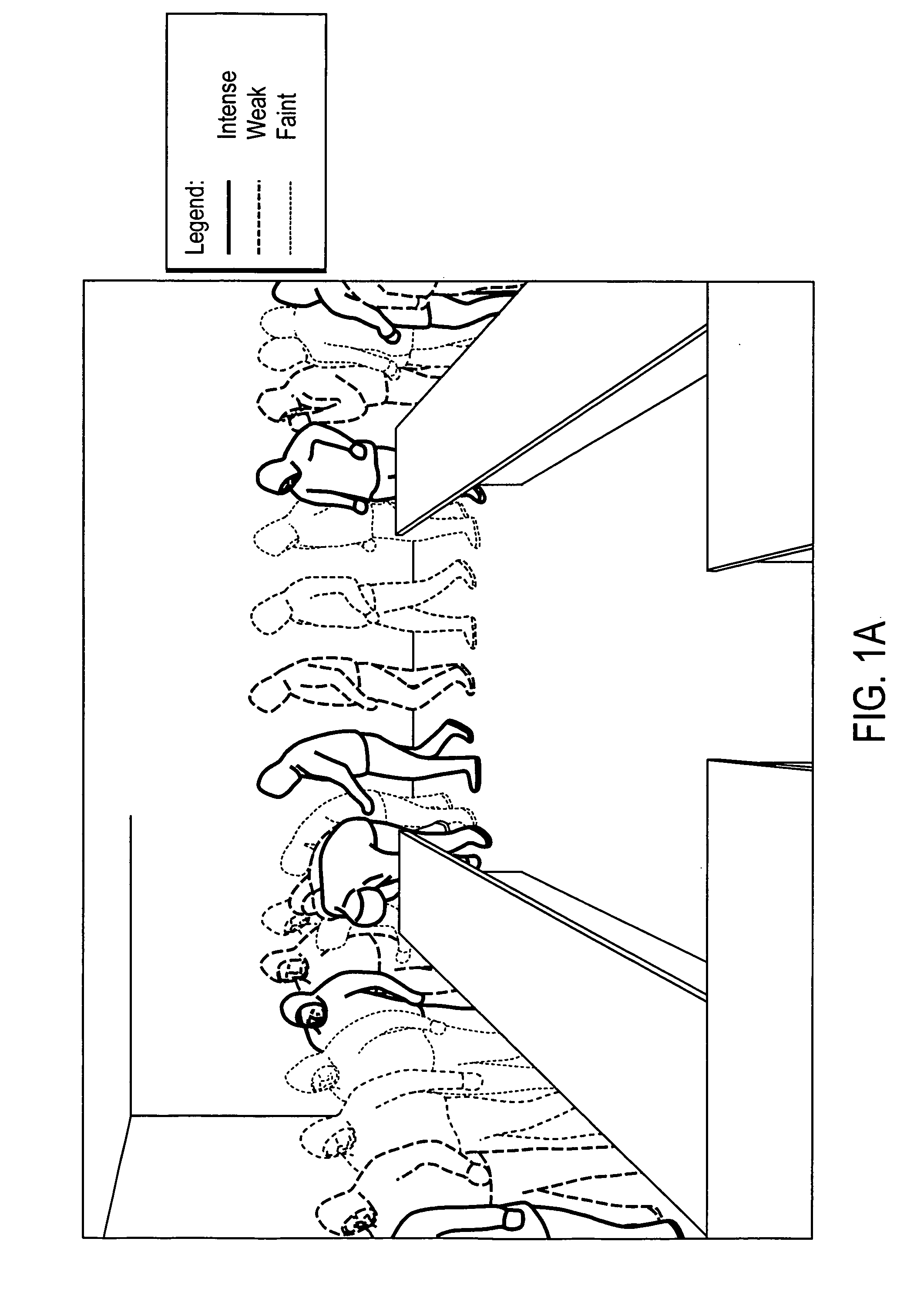

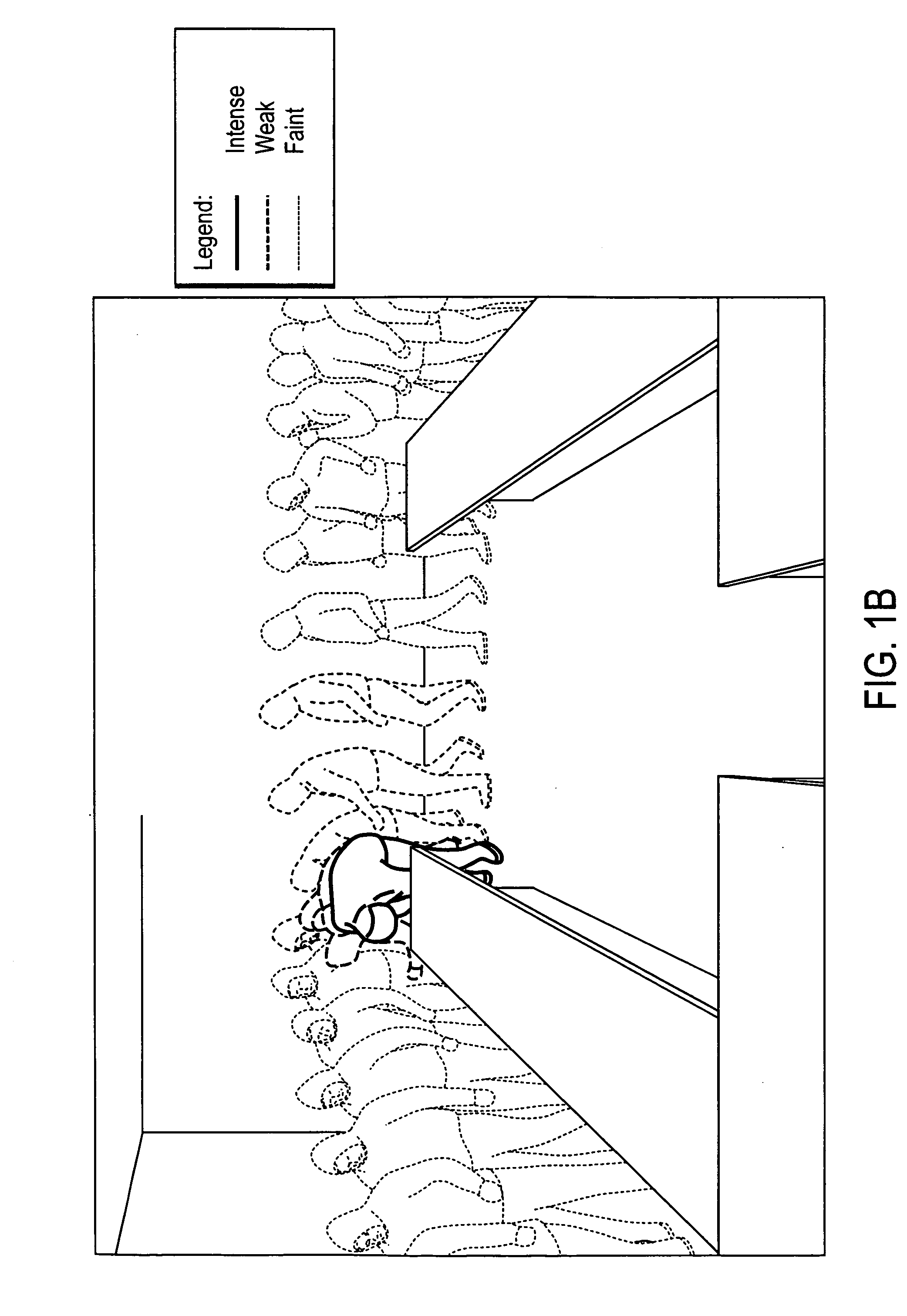

Methods and interfaces for visualizing activity across video frames in an action keyframe

Active | US20060284976A1 | Less valuable | Television system details | Digital data information retrieval | Pattern recognition | Location detection

Owner:FUJIFILM BUSINESS INNOVATION CORP

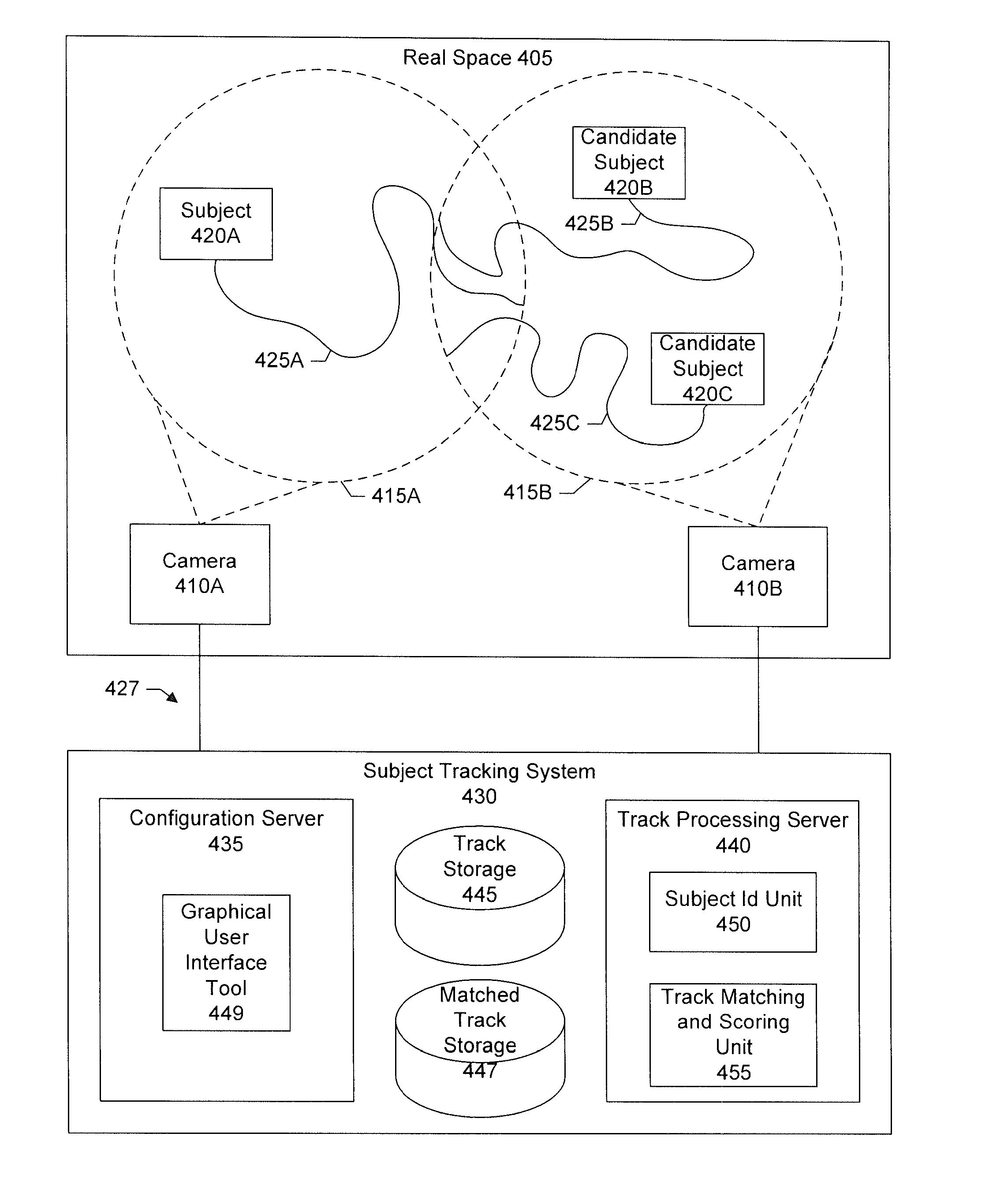

Method and system for analyzing interactions

Active | US20130294646A1 | Accurate and scalable way | Good level of precision | Image analysis | Position fixation | Wi-Fi | Pattern recognition

Owner:RETAILNEXT

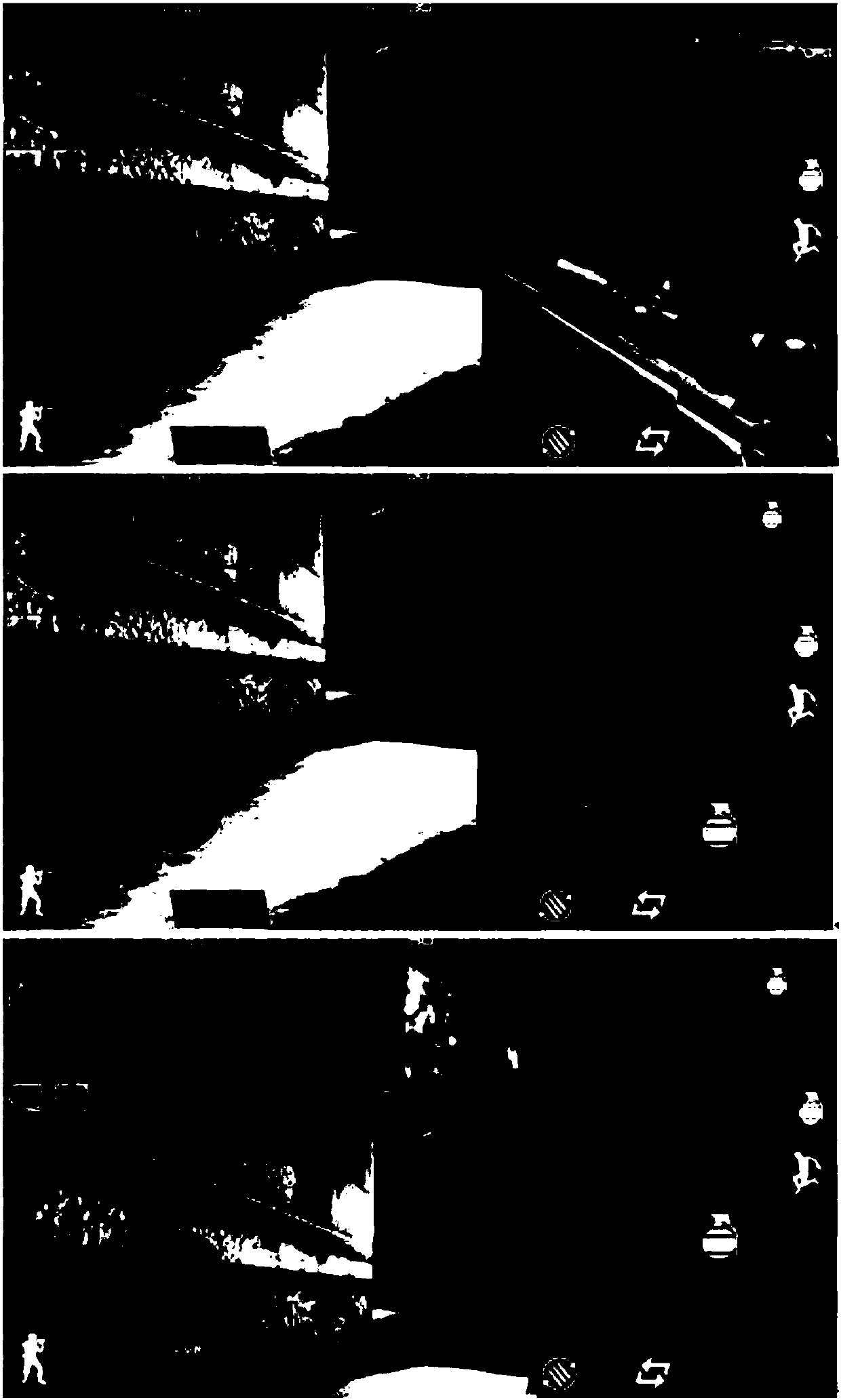

Method and system for analyzing fixed-camera video via the selection, visualization, and interaction with storyboard keyframes

Techniques for generating a storyboard are disclosed. In one embodiment of the invention the storyboard is comprised of videos from one or more cameras based on the identification of activity in the video. Various embodiments of the invention include an assessment of the importance of the activity, the creation of a storyboard presentation based on importance and interaction techniques for seeing more details or alternate views of the video. In one embodiment, motion detection is used to determine activity in one or more synchronized video streams. Periods of activity are recognized and assigned importance assessments based on the activity, important locations in the video streams, and events from other sensors. In different embodiments, the interface consists of a storyboard and a map.

Owner:FUJIFILM BUSINESS INNOVATION CORP
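
The storyboard abstract above says that motion detection is used to determine activity and that periods of activity are recognized and assigned importance. As a rough illustration only, the sketch below uses simple frame differencing on grayscale frames (nested lists of pixel intensities) and scores each period by its summed motion. The function names, both thresholds, and the importance measure are assumptions; the patent also bases importance on important locations and events from other sensors, which this sketch leaves out.

```python
def motion_score(prev_frame, curr_frame, pixel_threshold=25):
    """Fraction of pixels whose intensity changed by more than the threshold."""
    changed = 0
    total = 0
    for row_prev, row_curr in zip(prev_frame, curr_frame):
        for a, b in zip(row_prev, row_curr):
            total += 1
            if abs(a - b) > pixel_threshold:
                changed += 1
    return changed / total if total else 0.0


def activity_periods(scores, activity_threshold=0.1):
    """Group consecutive active frames into (start, end, importance) tuples.

    A frame is active when its motion score reaches the threshold.
    Importance here is just the summed motion over the period.
    """
    periods = []
    start = None
    for i, score in enumerate(scores):
        if score >= activity_threshold:
            if start is None:
                start = i  # a new period of activity begins
        elif start is not None:
            periods.append((start, i - 1, sum(scores[start:i])))
            start = None
    if start is not None:  # activity ran through the last frame
        periods.append((start, len(scores) - 1, sum(scores[start:])))
    return periods
```

Sorting the returned periods by their third element would give one possible ordering for choosing which keyframes to show on a storyboard.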

System for organizing and visualizing display objects

Inactive | US8402382B2 | Avoid difficult choices | Input/output processes for data processing | Human–computer interaction | Computer program

A method, system and computer program for organizing and visualizing display objects within a virtual environment is provided. In one aspect, attributes of display objects define the interaction between display objects according to pre-determined rules, including rules simulating real world mechanics, thereby enabling enriched user interaction. The present invention further provides for the use of piles as an organizational entity for desktop objects. The present invention further provides for fluid interaction techniques for committing actions on display objects in a virtual interface. A number of other interaction and visualization techniques are disclosed.

Owner:GOOGLE LLC

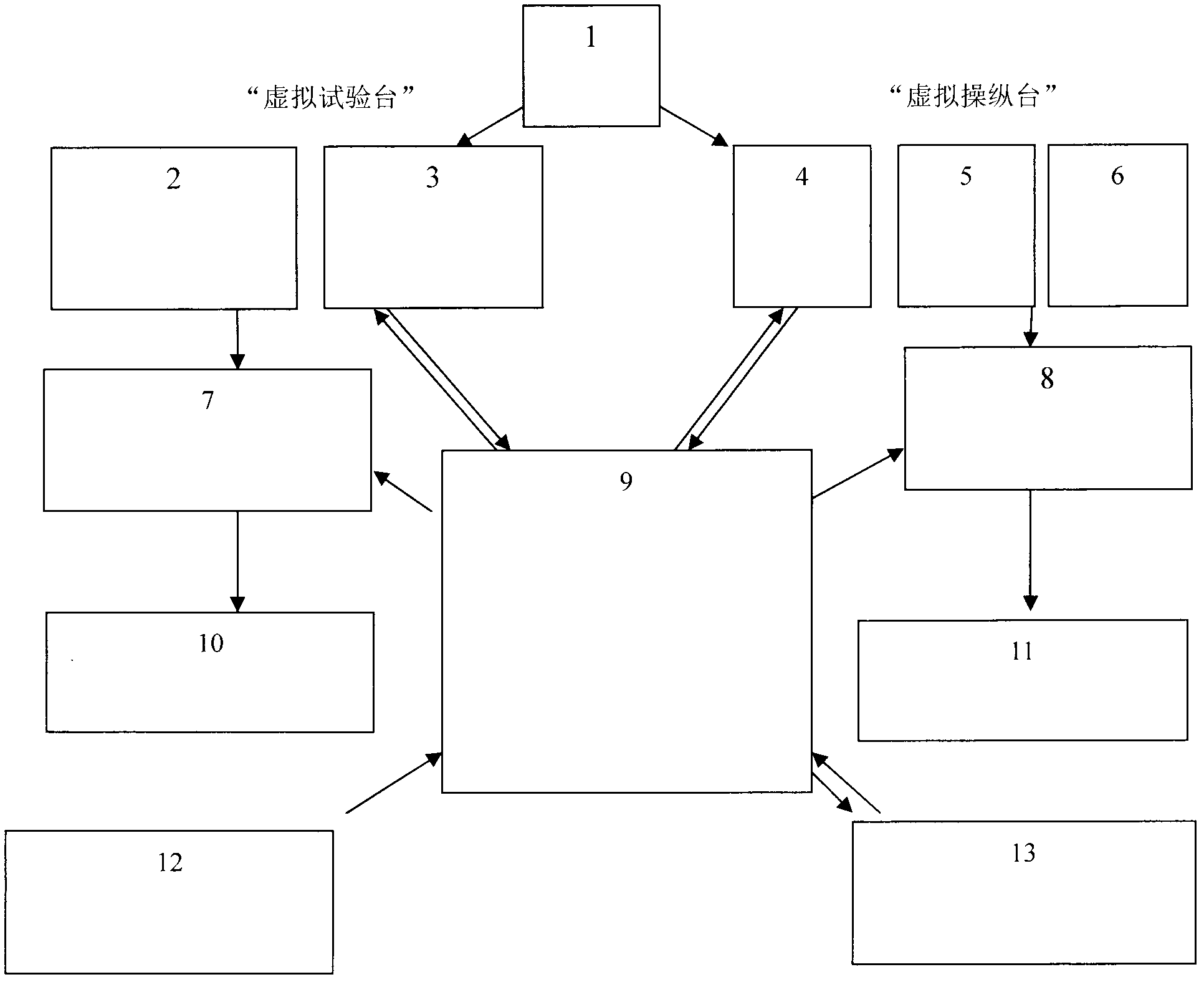

Multi-channel and multi-screen three dimensional immersion simulation system of ship steering and operation

Inactive | CN102663921A | Overcome the cycle | Overcome difficulty | Cosmonautic condition simulations | Simulators | Computer module | Interaction technology

Belonging to the field of naval architecture and ocean engineering, the invention relates to a three dimensional visual simulator system device of ship driving and cabin operation. Based on a distributed mathematical physics platform model and a distributed ship operation principle, the device of the invention comprises six operational modules: (1) a power system mathematical physics equation modeling module, (2) a three dimensional display module, (3) a climate environment change display module, (4) a geographical information environment change display module, (5) a digital glove interactive operation module, and (6) a physical platform simulation operation module. The above modules are integrated into one physical platform, to solve the problem of integration between various modules. Specifically, three dimensional display, the database exchange technology and the digital glove interaction technology are integrated into one platform. Physical operation is used to replace keyboard event response, and scene change is used to replace ship navigation and cabin operation, to achieve the purpose of simulating ship steering and cabin operation.

Owner:JIANGSU KEDA HUIFENG SCI & TECH

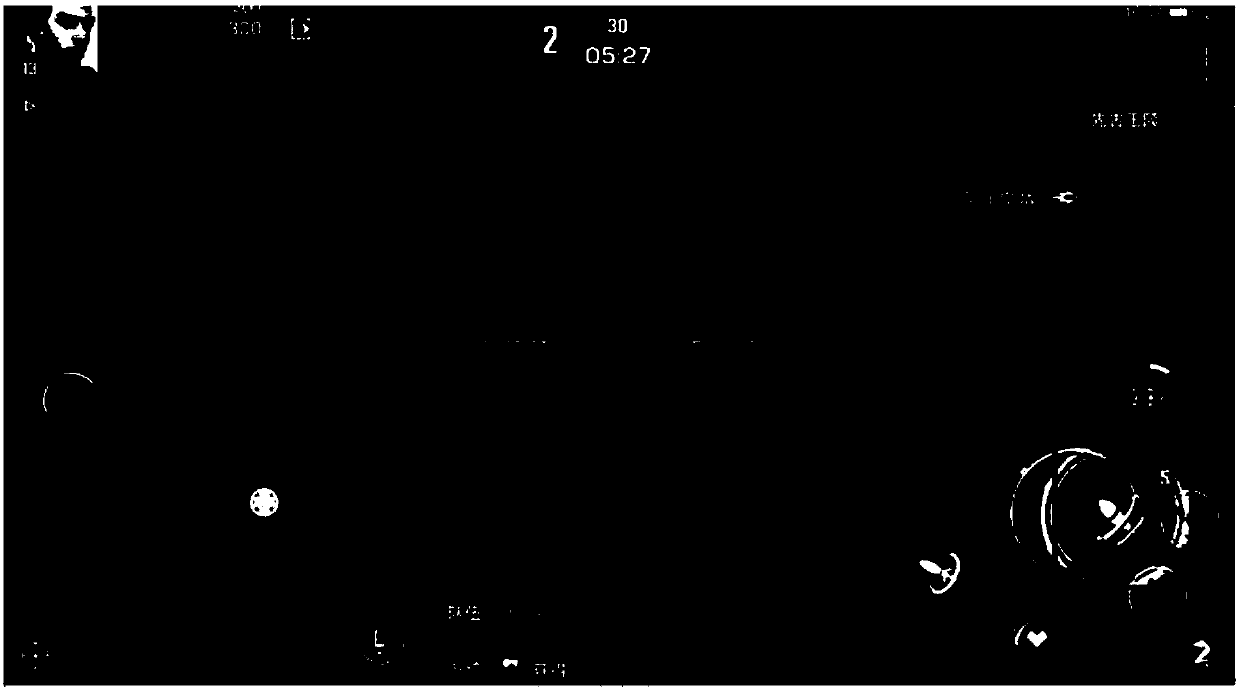

Information processing method and device, electronic equipment and storage medium

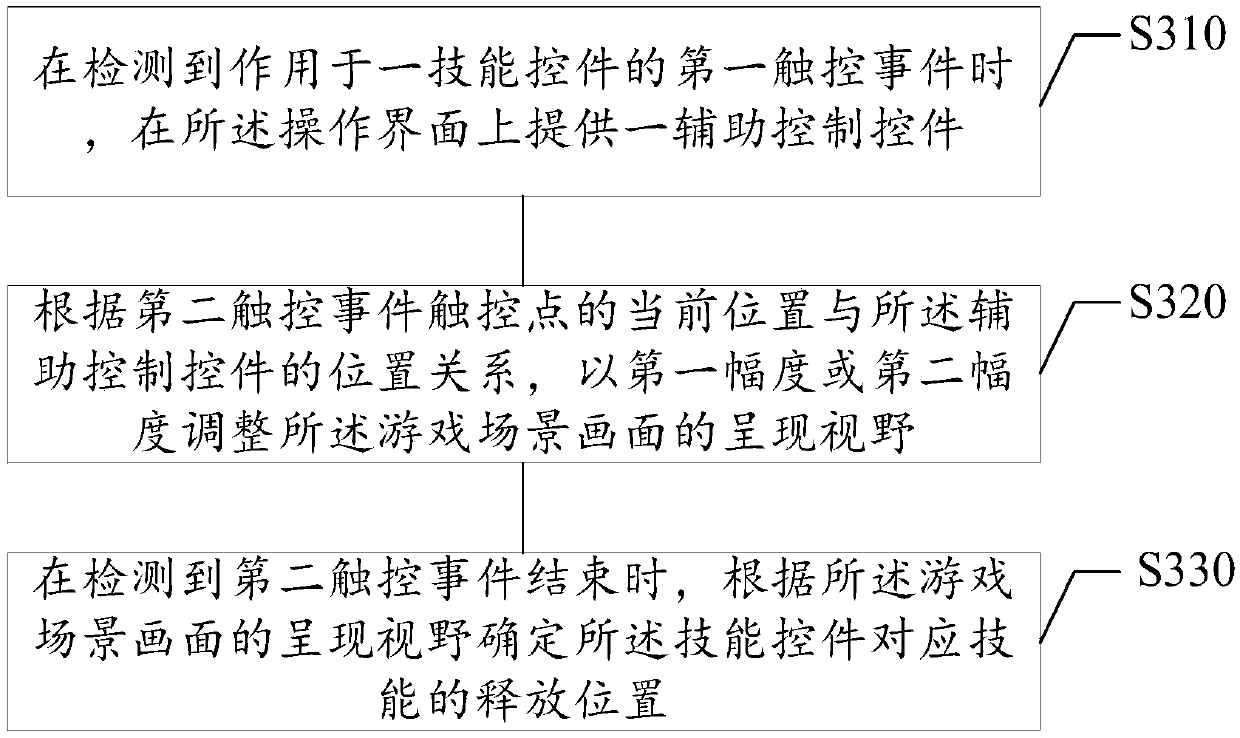

The invention provides an information processing method and device, electronic equipment and a storage medium, and relates to the technical field of human-computer interaction. The method comprises the following steps: when it is detected that a first touch event acts on a skill control part, an auxiliary control part is provided on the operation interface; the presentation field of view of the game scene picture is adjusted at a first amplitude and a second amplitude according to the relation between the current position of the touch point of a second touch event and the position of the auxiliary control part; and when it is detected that the second touch event ends, the release position of the skill corresponding to the skill control part is determined according to the presentation field of view of the game scene picture. With this method, device, electronic equipment and storage medium, skill release accuracy is improved by adjusting the presentation field of view of the game scene picture.

Owner:NETEASE (HANGZHOU) NETWORK CO LTD
