Man-machine interaction method and system based on sight judgment

A human-computer interaction technology based on line-of-sight judgment, in the field of human-computer interaction. It addresses problems such as injury to the human eye, increased cost, and physical burden on the user, and achieves simple and convenient operation, easy implementation, and low cost.


Examples

Embodiment 1

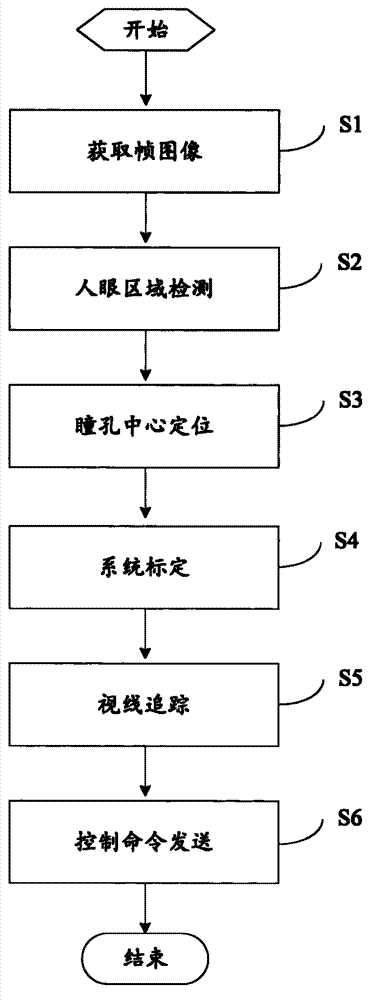

[0074] Figure 1 is a flow chart of the human-computer interaction method based on line-of-sight judgment according to the present invention. The method comprises the following steps:

[0075] Step S1: Acquire frame images.

[0076] In this step, the face image can be obtained in real time through the front camera of the mobile phone.

[0077] Step S2: Human eye region detection.

[0078] When a mobile phone is in use, the distance between the user's eyes and the camera is generally kept between 10 and 30 centimeters, and within this range the face occupies the entire image area. This method therefore skips face detection and performs human-eye region detection directly. The initial positioning of the eye region does not need to be very accurate, so many methods can be used, such as the histogram projection method, the Haar detection method, the frame difference method, the template matching method, and other methods ...
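As a rough illustration of the histogram projection method listed above, the sketch below sums pixel darkness along each row to find the eye band, then along each column within that band to find one eye in each half of the image. It assumes a grayscale face image stored as a list of rows (0 = black, 255 = white), that the eyes form the darkest horizontal band in the upper half of the frame, and that the band height is known in advance; all function names are hypothetical and not taken from the patent.

```python
from typing import List, Tuple

Image = List[List[int]]  # grayscale face image, 0 = black, 255 = white


def horizontal_projection(img: Image) -> List[int]:
    """Darkness (255 - intensity) summed along each row."""
    return [sum(255 - p for p in row) for row in img]


def vertical_projection(img: Image, top: int, bottom: int) -> List[int]:
    """Darkness summed along each column, using only rows top..bottom-1."""
    width = len(img[0])
    return [sum(255 - img[r][c] for r in range(top, bottom)) for c in range(width)]


def locate_eye_band(img: Image, band_height: int) -> Tuple[int, int]:
    """(top, bottom) of the darkest band of `band_height` rows in the upper half."""
    proj = horizontal_projection(img)
    # Assumption: with the face filling the frame, the eyes lie in the upper half.
    upper_half = len(img) // 2
    best_top, best_score = 0, -1
    for top in range(max(1, upper_half - band_height + 1)):
        score = sum(proj[top:top + band_height])
        if score > best_score:
            best_top, best_score = top, score
    return best_top, best_top + band_height


def locate_eye_columns(img: Image, top: int, bottom: int) -> Tuple[int, int]:
    """Darkest column in the left half and in the right half of the eye band."""
    proj = vertical_projection(img, top, bottom)
    mid = len(proj) // 2
    left = max(range(mid), key=lambda c: proj[c])
    right = max(range(mid, len(proj)), key=lambda c: proj[c])
    return left, right
```

Eyebrows and hair also produce dark bands, so a practical version would refine this coarse estimate; the paragraph above notes that the initial positioning need not be very accurate.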

Embodiment 2

[0170] This embodiment provides a human-computer interaction method based on line-of-sight judgment. Steps S1 to S6 of this method are the same as in Embodiment 1 and are not described again here. The difference from Embodiment 1 lies in the specific implementation of step S6, sending the control command: Embodiment 1 uses the blink control method, whereas this embodiment uses the eye-closure control method. The method detects whether a single eye is closed and for how long; when a single eye is closed, the corresponding control command is sent to the electronic device according to a preset relationship between eye-closure durations and control commands. The detailed steps for sending the control command in this method are described below.
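The eye-closure control described above can be sketched as two stages: detect completed closures in which exactly one eye is shut, then look up the command for that eye and duration. This is a minimal sketch assuming per-frame open/closed flags for each eye are already available from the earlier steps and a fixed frame rate; the table `PRESET_COMMANDS`, its thresholds, and its command names are hypothetical placeholders, since the text here only states that a preset mapping exists.

```python
from typing import List, Optional, Sequence, Tuple

# Hypothetical preset table: (minimum closure time in seconds, closed eye, command).
PRESET_COMMANDS: List[Tuple[float, str, str]] = [
    (1.0, "left", "scroll_up"),
    (2.0, "left", "page_back"),
    (1.0, "right", "scroll_down"),
    (2.0, "right", "page_forward"),
]


def closure_events(left_open: Sequence[bool], right_open: Sequence[bool],
                   fps: float) -> List[Tuple[str, float]]:
    """Completed single-eye closures as (eye, duration in seconds)."""
    events: List[Tuple[str, float]] = []
    current: Optional[str] = None
    start = 0
    for i, (l_open, r_open) in enumerate(zip(left_open, right_open)):
        if not l_open and r_open:
            state: Optional[str] = "left"
        elif l_open and not r_open:
            state = "right"
        else:
            state = None  # both open, or both closed (an ordinary blink)
        if state != current:
            if current is not None:
                events.append((current, (i - start) / fps))
            current, start = state, i
    return events  # a closure still in progress at the end is not reported


def command_for(eye: str, duration: float) -> Optional[str]:
    """Command for the longest preset threshold this closure reaches, if any."""
    for min_seconds, preset_eye, command in sorted(PRESET_COMMANDS, reverse=True):
        if preset_eye == eye and duration >= min_seconds:
            return command
    return None


def commands_from_frames(left_open: Sequence[bool], right_open: Sequence[bool],
                         fps: float) -> List[str]:
    """All commands triggered by the single-eye closures in a frame sequence."""
    result: List[str] = []
    for eye, duration in closure_events(left_open, right_open, fps):
        command = command_for(eye, duration)
        if command is not None:
            result.append(command)
    return result
```

Frames in which both eyes are closed reset the state, so an ordinary blink never triggers a command here; whether the patented method handles simultaneous closure the same way is an assumption.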

[0171] Figure 11 is another detailed flow chart of the control command sending of the present invention. The detailed steps of the control command sending method incl...

Embodiment 3

[0182] This embodiment provides a human-computer interaction system based on line-of-sight judgment. Figure 12 is a schematic diagram of a user operating the human-computer interaction system based on line-of-sight judgment according to Embodiment 3 of the present invention. The system provided by Embodiment 3 enables the user to operate the mobile phone without contact. The system includes a mobile phone 2 and a camera 21; the mobile phone 2 has a screen 22, and the camera 21 is the front camera of the mobile phone 2. The system uses the method of Embodiment 1 or Embodiment 2 for human-computer interaction.

[0183] The arrow in the figure indicates the line of sight of the human eye. The front camera 21 of the mobile phone 2 captures images of the head of user 1 in real time. From these images, the coordinates of the pupil centers of both eyes are obtained, and the correspondence between the coordinates of the pupil centers of the two eyes and the x-y...
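One simple way to realize a pupil-center-to-screen correspondence is sketched below: take each pupil center as the centroid of dark pixels in its eye image, average the two eyes, and map the result to screen x-y with a per-axis linear fit from calibration points. The dark-pixel threshold, the averaging of both eyes, the linear model, and all function names are assumptions for illustration; the text is truncated before it specifies the actual correspondence.

```python
from typing import List, Optional, Sequence, Tuple

EyeImage = List[List[int]]  # grayscale eye region, 0 = black, 255 = white


def pupil_center(eye_img: EyeImage, threshold: int = 50) -> Optional[Tuple[float, float]]:
    """Centroid (x, y) of pixels darker than `threshold` (an assumed value)."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(eye_img):
        for x, value in enumerate(row):
            if value < threshold:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None  # no dark pixels, e.g. the eye is closed
    return sum_x / count, sum_y / count


def fit_axis(pupil: Sequence[float], screen: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of screen = a * pupil + b for one axis from calibration samples."""
    n = len(pupil)
    mean_p = sum(pupil) / n
    mean_s = sum(screen) / n
    var = sum((p - mean_p) ** 2 for p in pupil)
    cov = sum((p - mean_p) * (s - mean_s) for p, s in zip(pupil, screen))
    a = cov / var  # needs at least two distinct calibration positions
    return a, mean_s - a * mean_p


def gaze_point(left: Tuple[float, float], right: Tuple[float, float],
               x_map: Tuple[float, float], y_map: Tuple[float, float]) -> Tuple[float, float]:
    """Map the averaged pupil centers of both eyes to screen coordinates."""
    cx = (left[0] + right[0]) / 2
    cy = (left[1] + right[1]) / 2
    return x_map[0] * cx + x_map[1], y_map[0] * cy + y_map[1]
```

In use, the user would look at a few known screen positions during calibration, `fit_axis` would be run once per axis on the recorded pupil coordinates, and `gaze_point` would then be applied to every new frame.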
