176 results about "Anisotropic diffusion" patented technology

In image processing and computer vision, anisotropic diffusion, also called Perona–Malik diffusion, is a technique for reducing image noise without removing significant parts of the image content, typically edges, lines, or other details that are important for interpreting the image. Anisotropic diffusion resembles the process that creates a scale space, in which an image generates a parameterized family of successively more blurred images through a diffusion process. Each image in this family is given by convolving the original image with a 2D isotropic Gaussian filter whose width increases with the parameter. This diffusion process is a linear and space-invariant transformation of the original image. Anisotropic diffusion generalizes it: it also produces a parameterized family of images, but each resulting image is a combination of the original image and a filter that depends on the local content of the original image. As a consequence, anisotropic diffusion is a non-linear and space-variant transformation of the original image.
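A minimal sketch of the classic Perona–Malik scheme may make the description concrete. It assumes a grayscale image stored as a list of rows, the exponential conductance g(d) = exp(-(d/κ)²) (one of the two functions Perona and Malik proposed), and an explicit four-neighbour update. The function name `perona_malik` and the parameters `kappa`, `lam`, and `n_iter` are illustrative and are not taken from any patent listed here.

```python
import math


def perona_malik(img, n_iter=10, kappa=15.0, lam=0.2):
    """Explicit Perona-Malik diffusion of a 2D image given as a list of rows."""
    h, w = len(img), len(img[0])
    u = [list(map(float, row)) for row in img]

    def g(d):
        # Edge-stopping conductance: close to 1 for small differences (noise),
        # close to 0 for differences much larger than kappa (edges).
        return math.exp(-(d / kappa) ** 2)

    for _ in range(n_iter):
        new = [row[:] for row in u]
        for i in range(h):
            for j in range(w):
                c = u[i][j]
                # Differences to the 4 neighbours; outside the image the
                # difference is taken as 0 (zero-flux boundary).
                diffs = (
                    u[i - 1][j] - c if i > 0 else 0.0,
                    u[i + 1][j] - c if i < h - 1 else 0.0,
                    u[i][j - 1] - c if j > 0 else 0.0,
                    u[i][j + 1] - c if j < w - 1 else 0.0,
                )
                # lam <= 0.25 keeps this explicit 4-neighbour update stable.
                new[i][j] = c + lam * sum(g(d) * d for d in diffs)
        u = new
    return u
```

Differences much smaller than `kappa` are smoothed almost as in linear diffusion, while neighbours differing by much more than `kappa` exchange almost no intensity, which is what keeps edges sharp.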

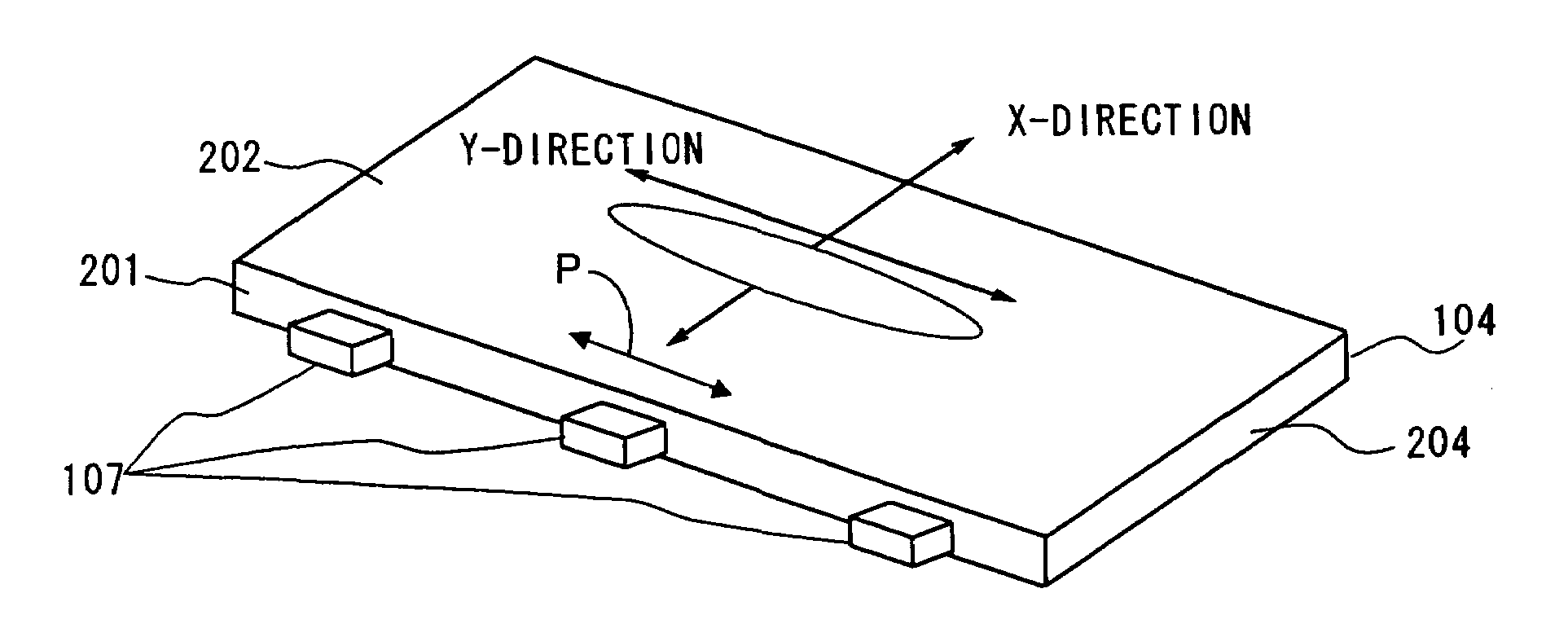

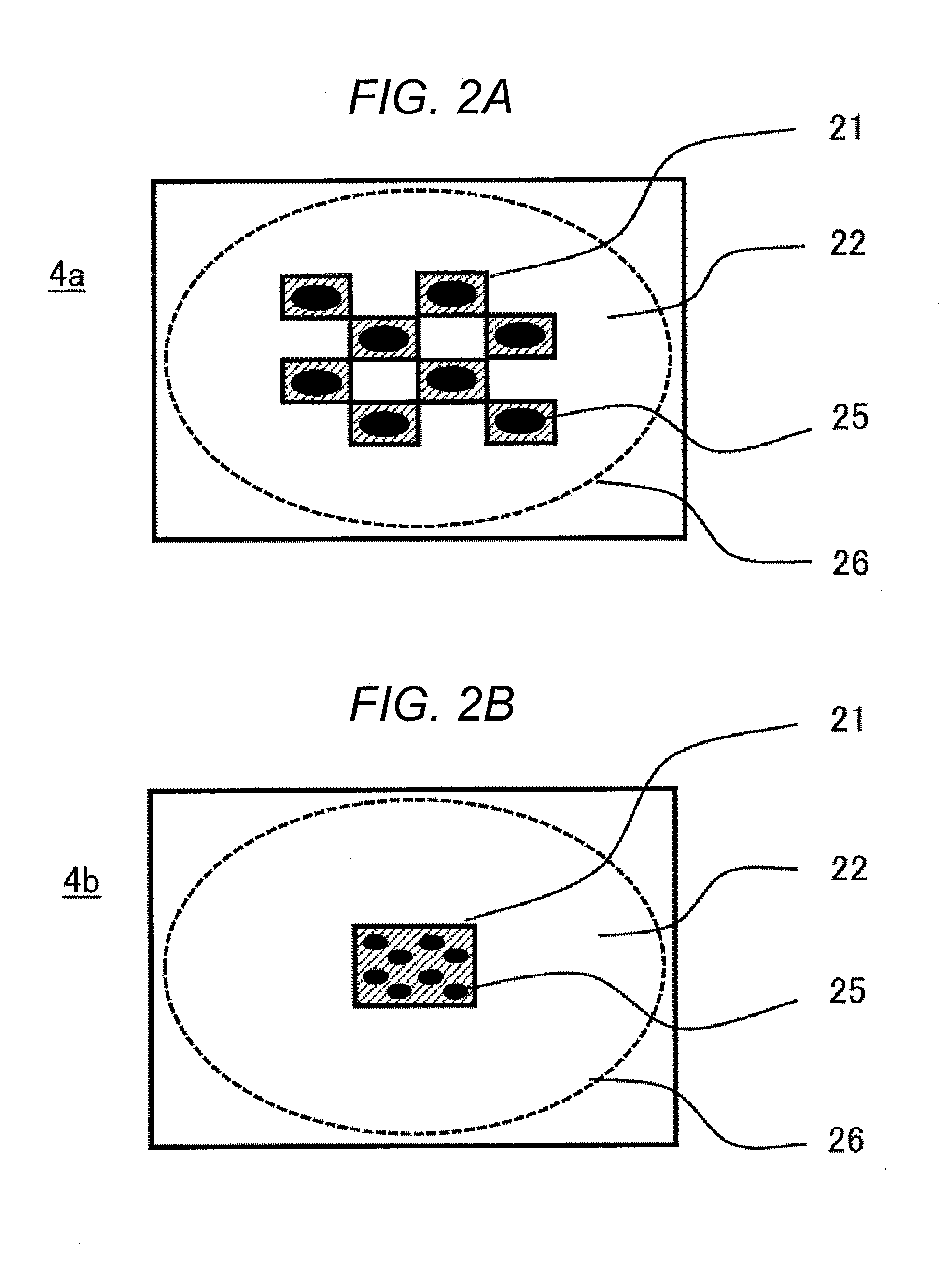

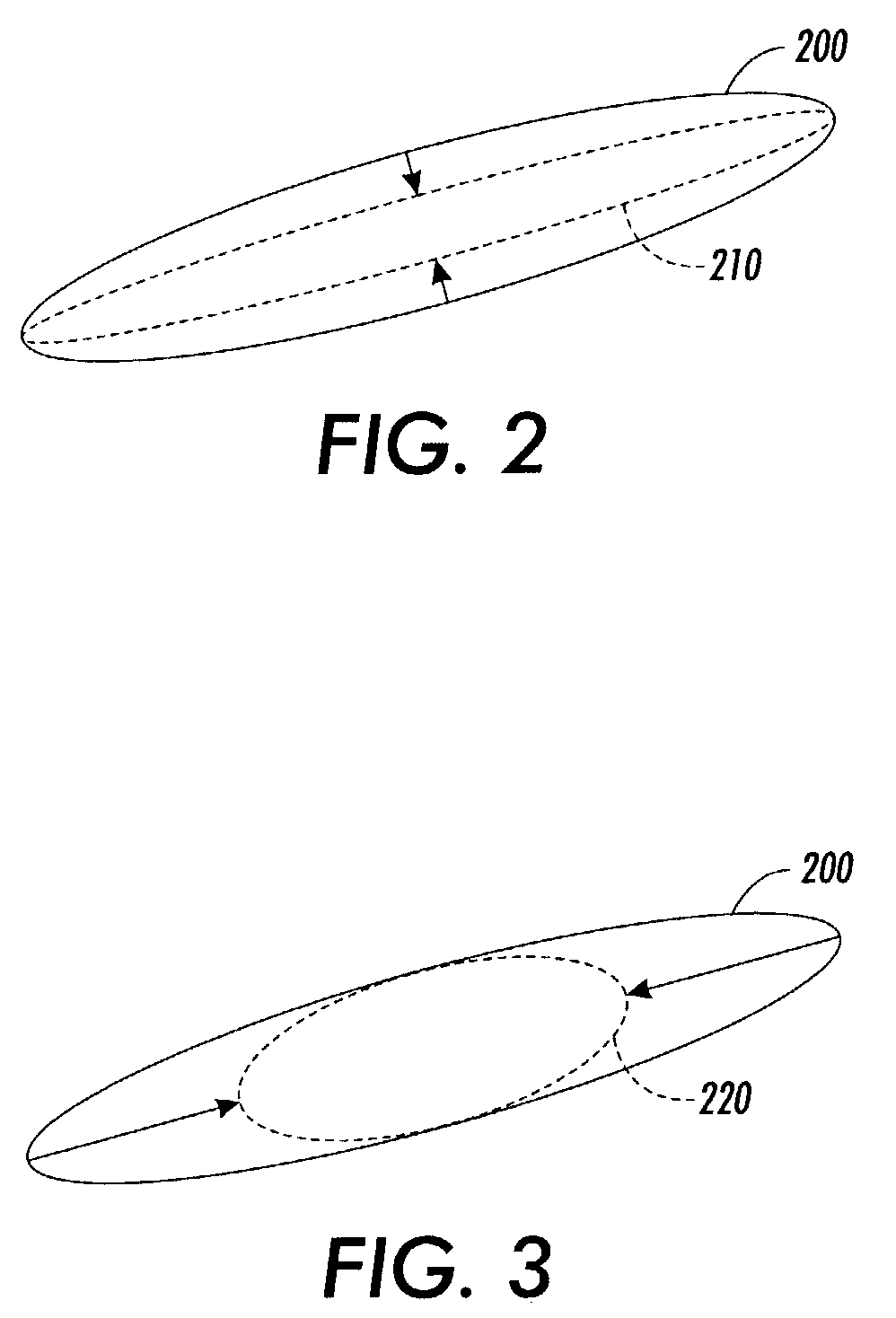

Structured materials with tailored isotropic and anisotropic Poisson's ratios including negative and zero Poisson's ratios

The invention described herein relates to structured porous materials in which the porous structure provides tailored Poisson's ratio behavior. In particular, the structures of this invention are tailored to provide Poisson's ratios ranging from negative to zero. Two exemplary structures, each consisting of a pattern of elliptical or elliptical-like voids in an elastomeric sheet, are presented. The Poisson's ratios are imparted to the substrate by the mechanics of void deformation (stretching, opening, and closing) and by the mechanics of the material (rotation, translation, bending, and stretching). The geometry of the voids and of the remaining substrate is not limited to that presented in the models and experiments of the exemplars but can vary over a wide range of sizes and shapes. The invention applies to both two-dimensional and three-dimensional structured materials.

Owner:BOYCE CHRISTOPHER M +3

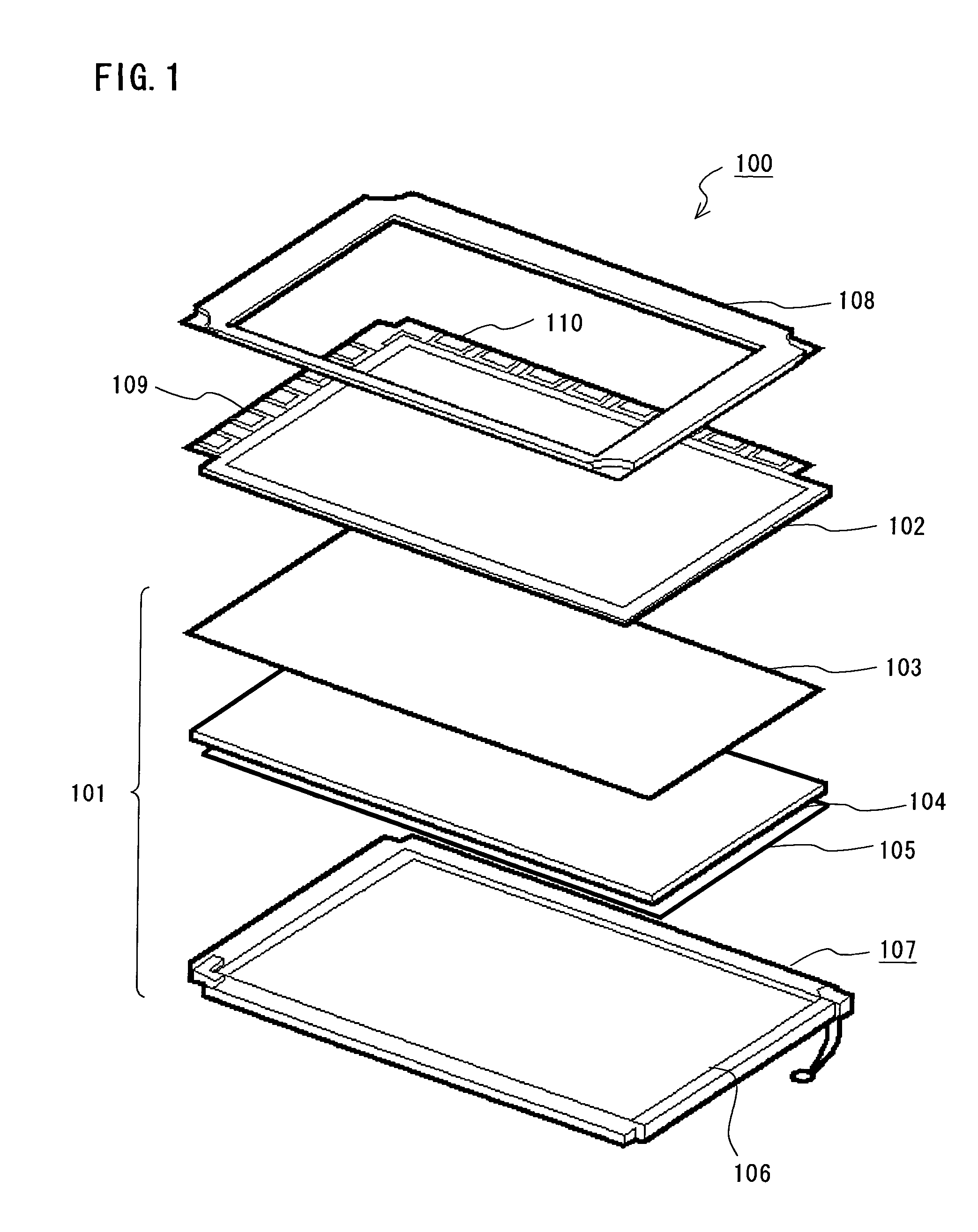

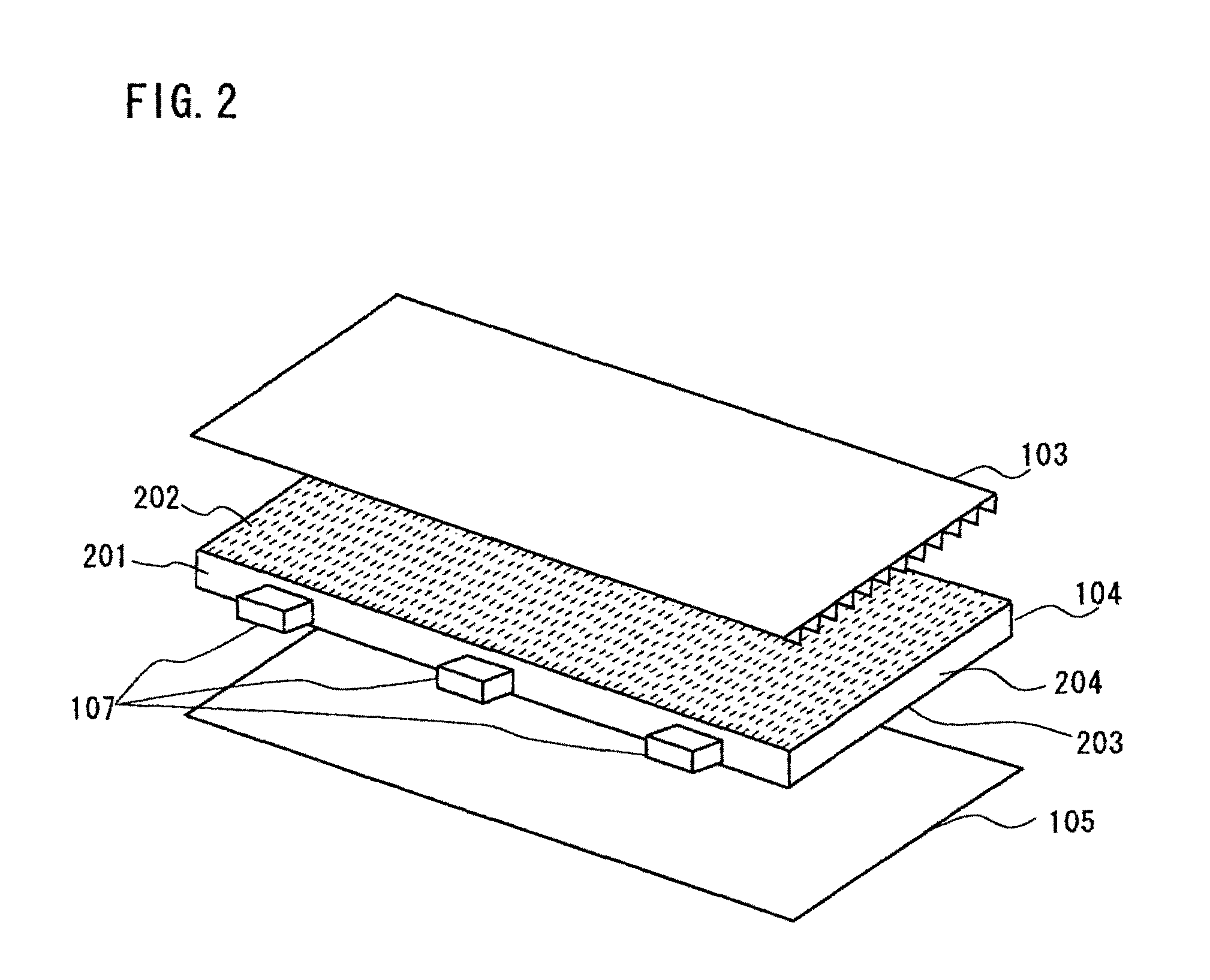

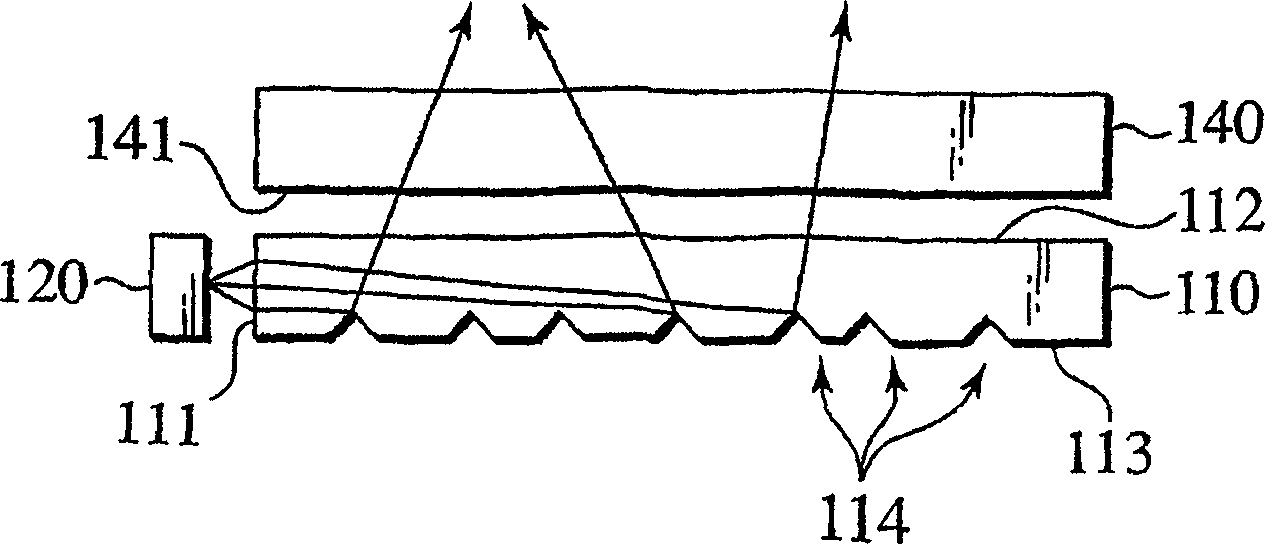

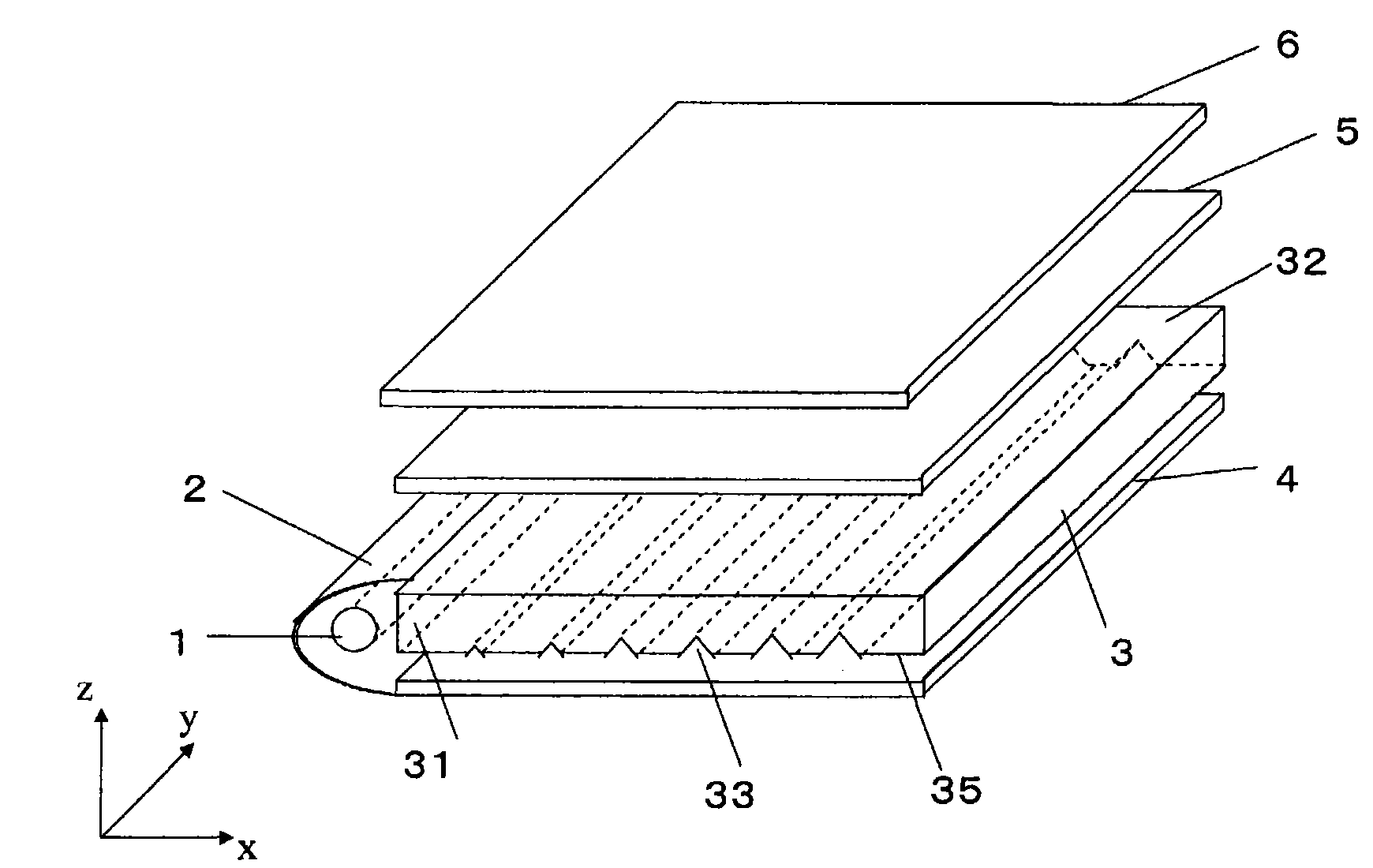

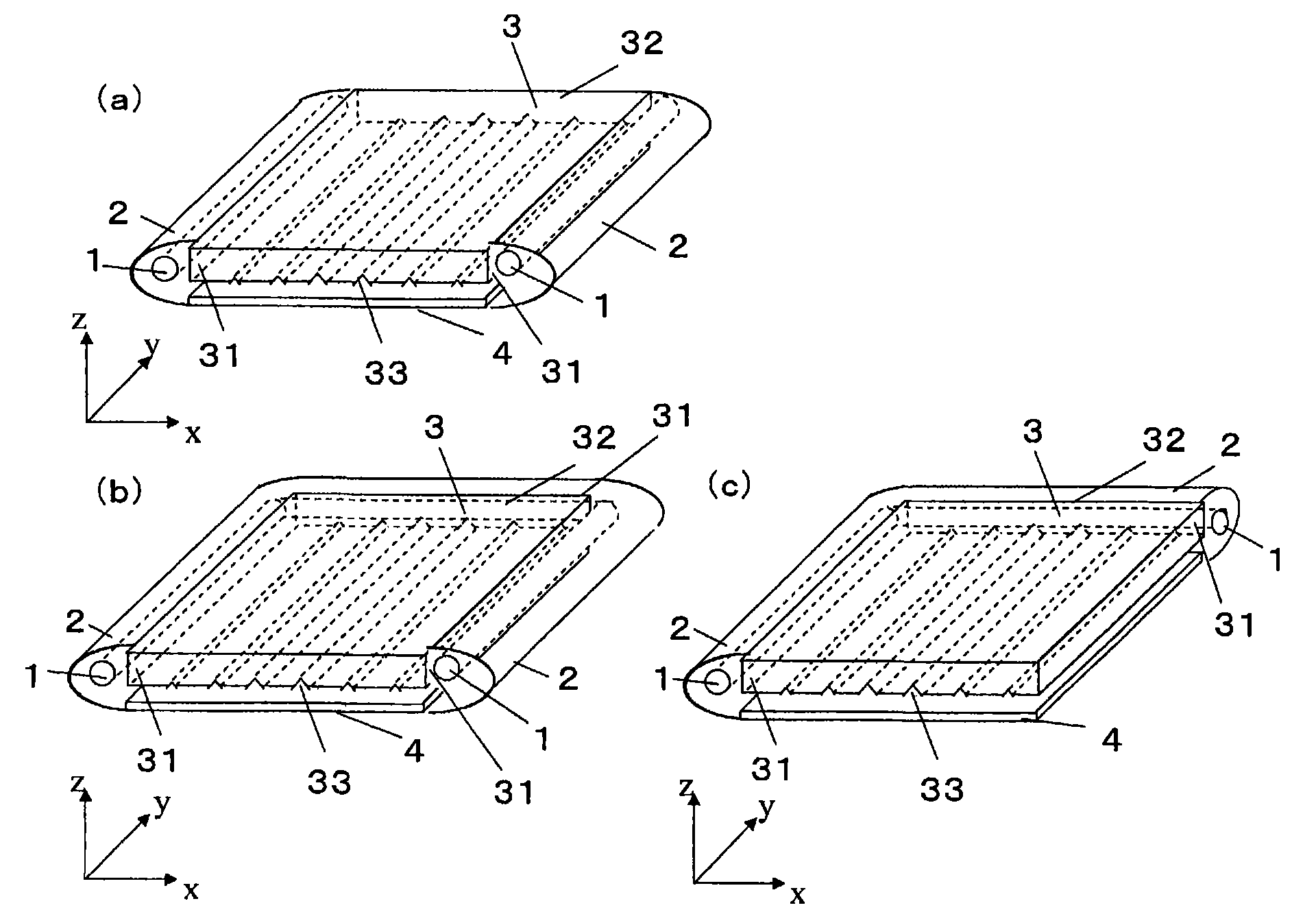

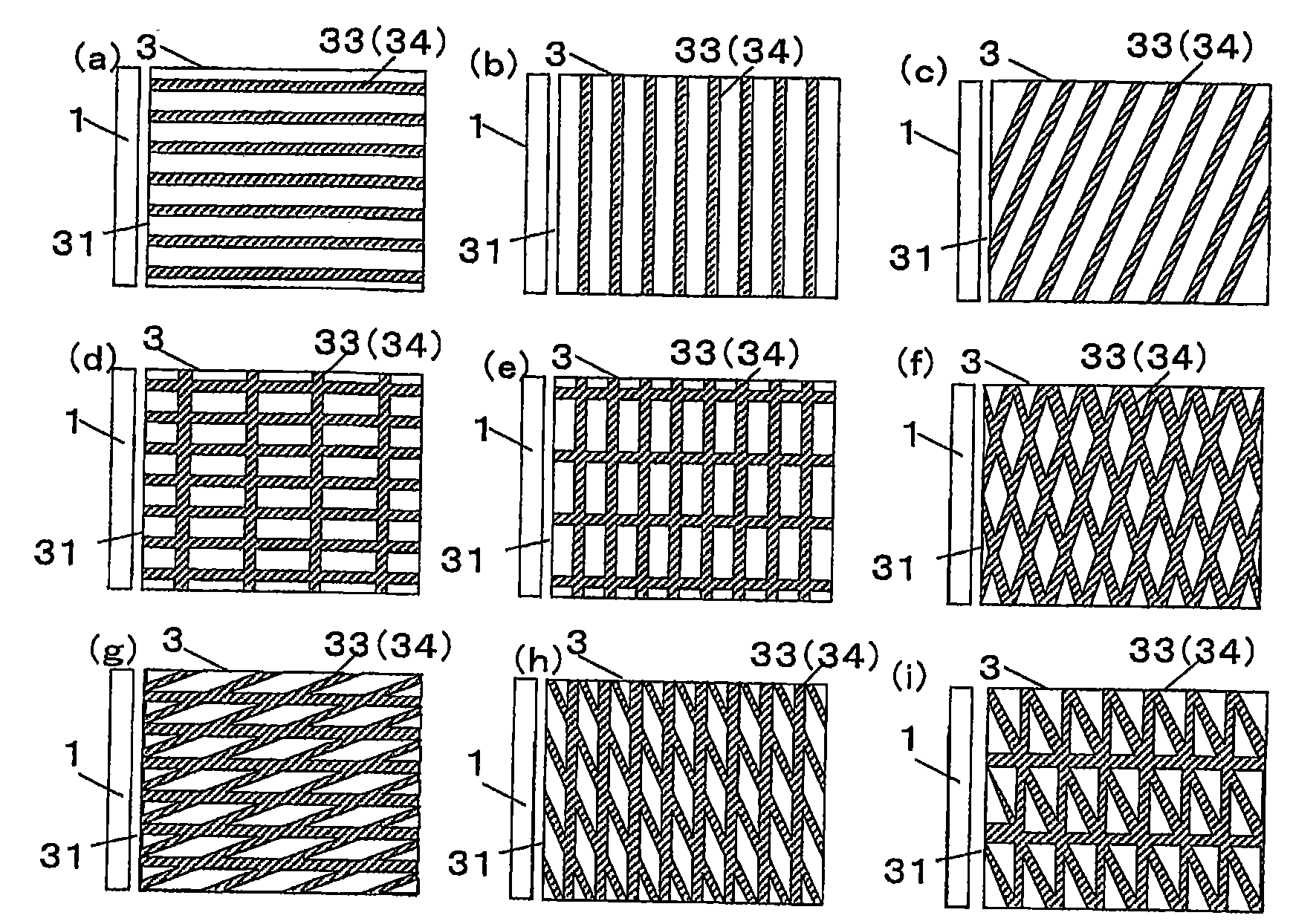

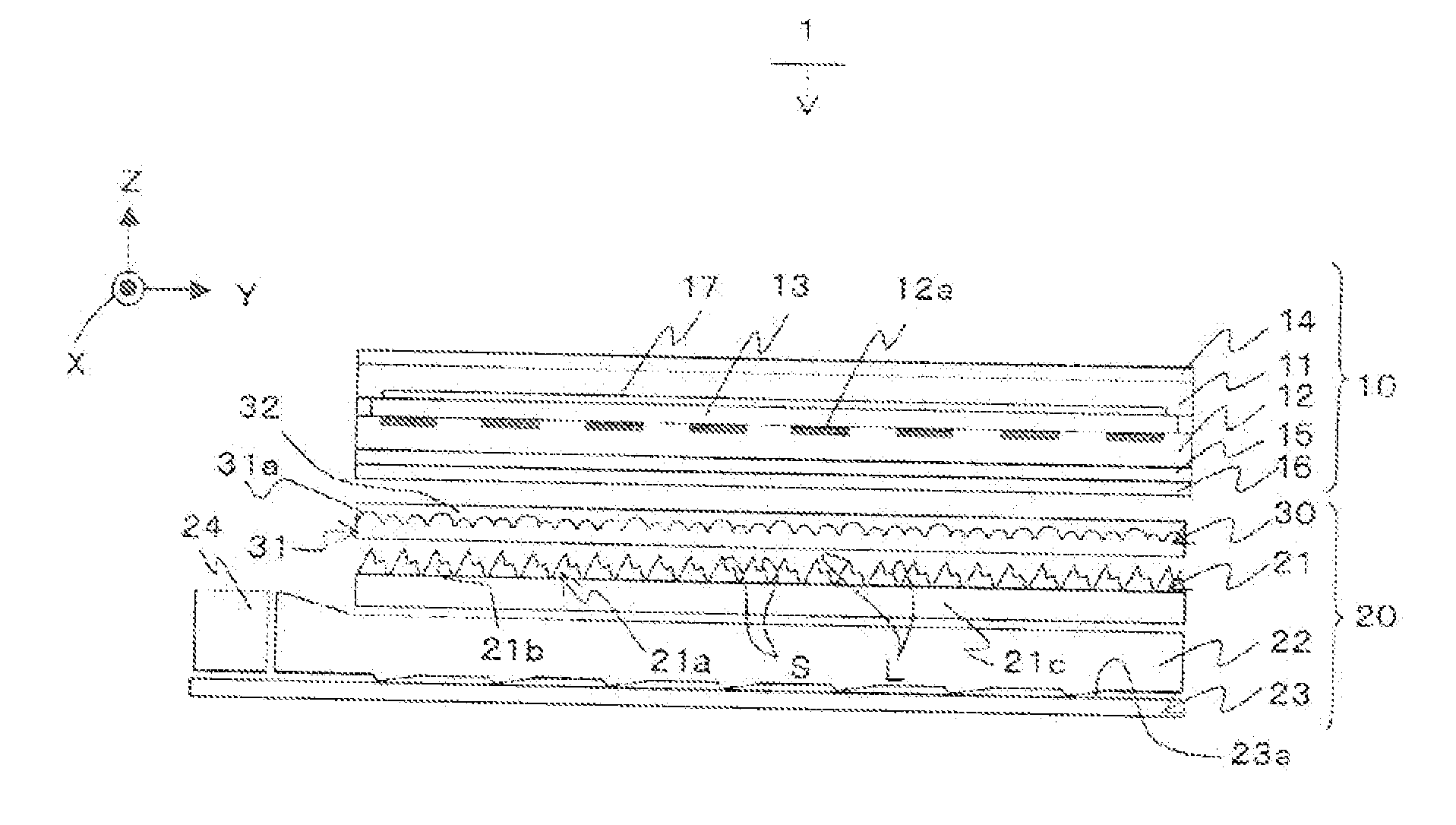

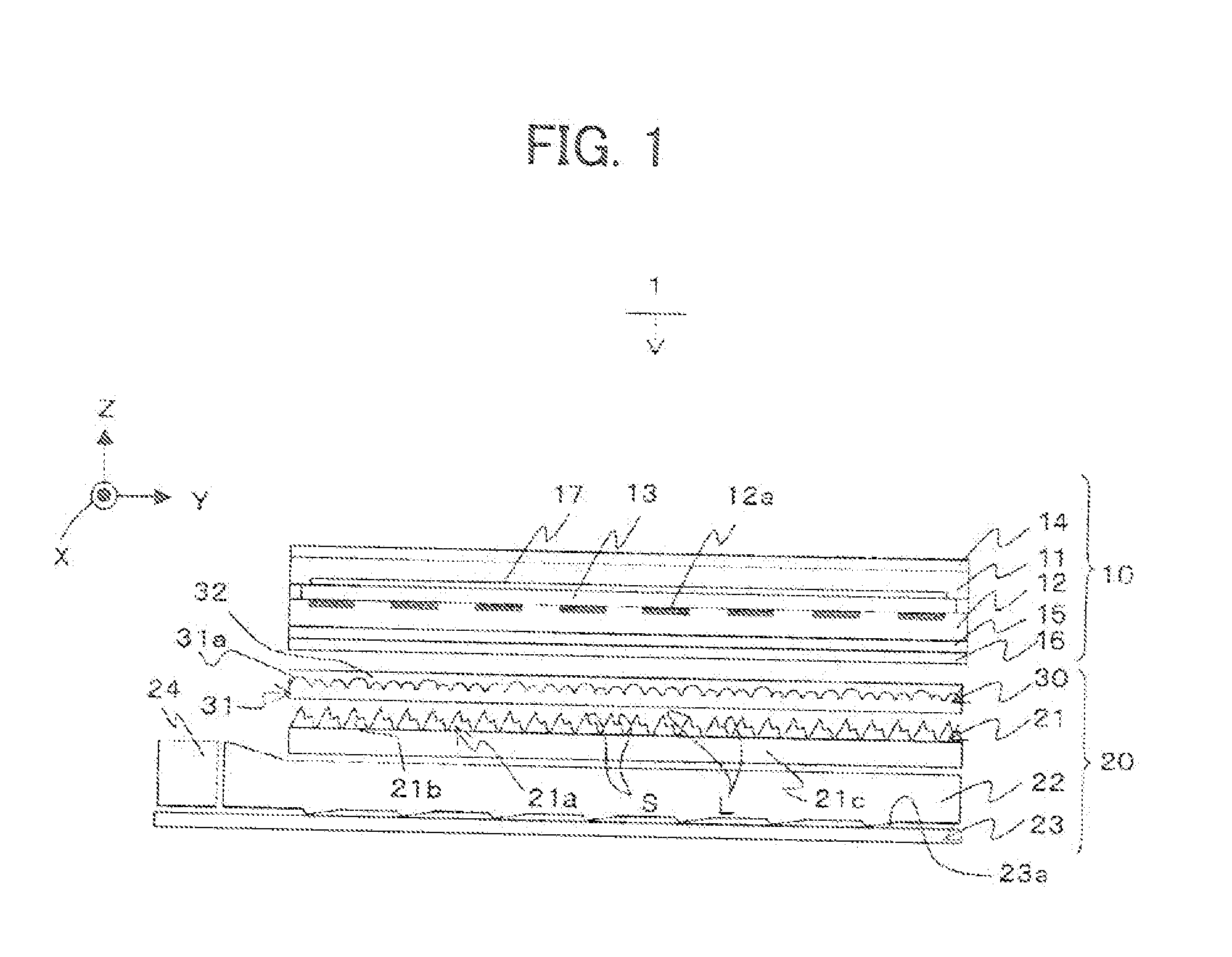

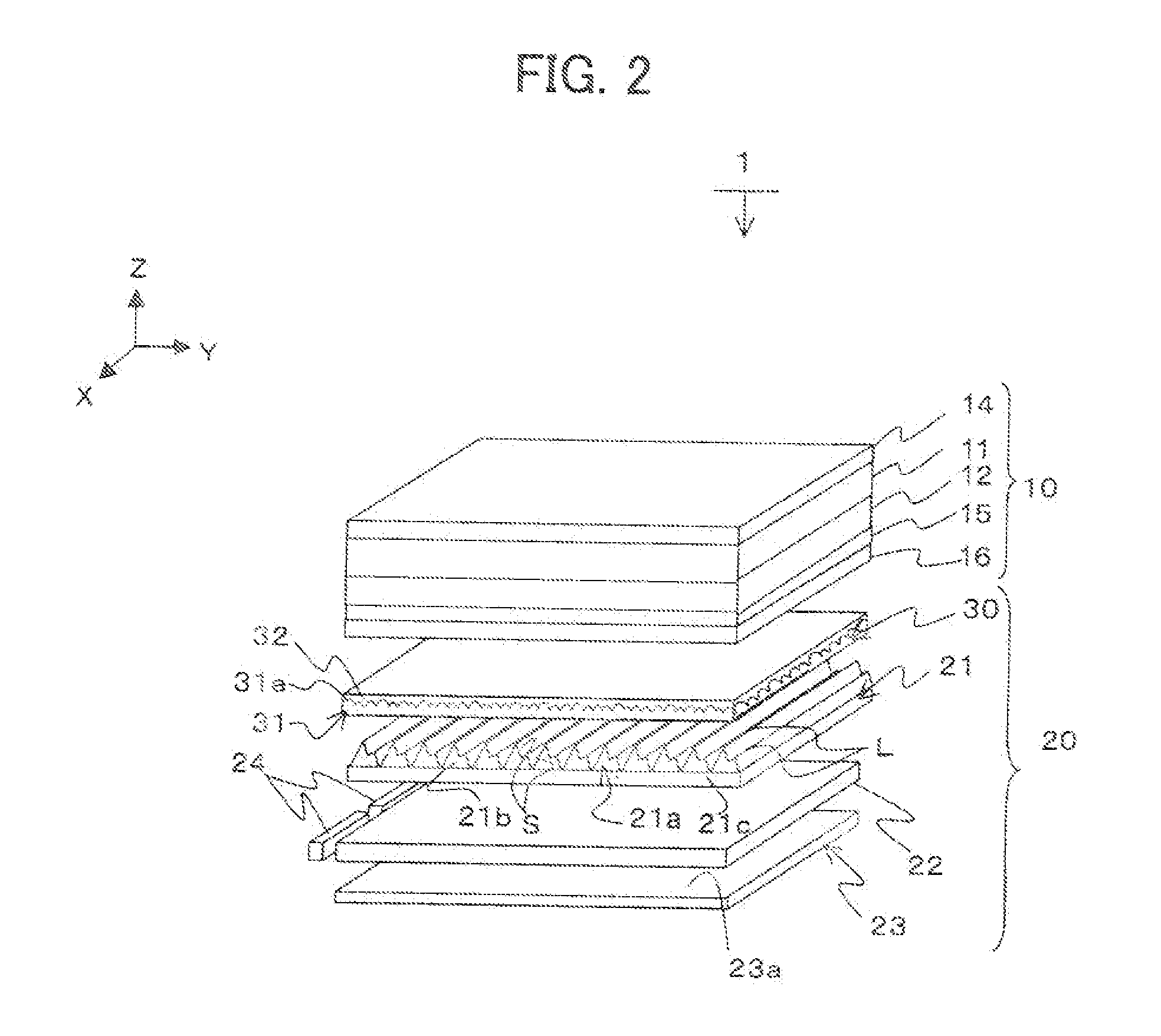

Planar light source unit and display device

Status: Inactive · Publication: US6991358B2 · Tags: Improve light utilization efficiency; Excellent luminous properties; Prisms; Mechanical apparatus; Liquid-crystal display; Light guide

A light guide plate has a hologram pattern on a light exit surface. The hologram pattern anisotropically diffuses light in the direction parallel with a light incident surface of the light guide plate. On a back surface of the light guide plate are formed mirror-polished prism structures extending parallel with the light incident surface. A downward-pointing prism sheet with a given prism apex angle is placed on the light exit surface of the light guide plate. The use of the downward-pointing prism sheet together with the light guide plate having the anisotropic diffusion hologram pattern integrally formed thereon produces a liquid crystal display device with enhanced luminance properties.

Owner:MITSUBISHI ELECTRIC CORP

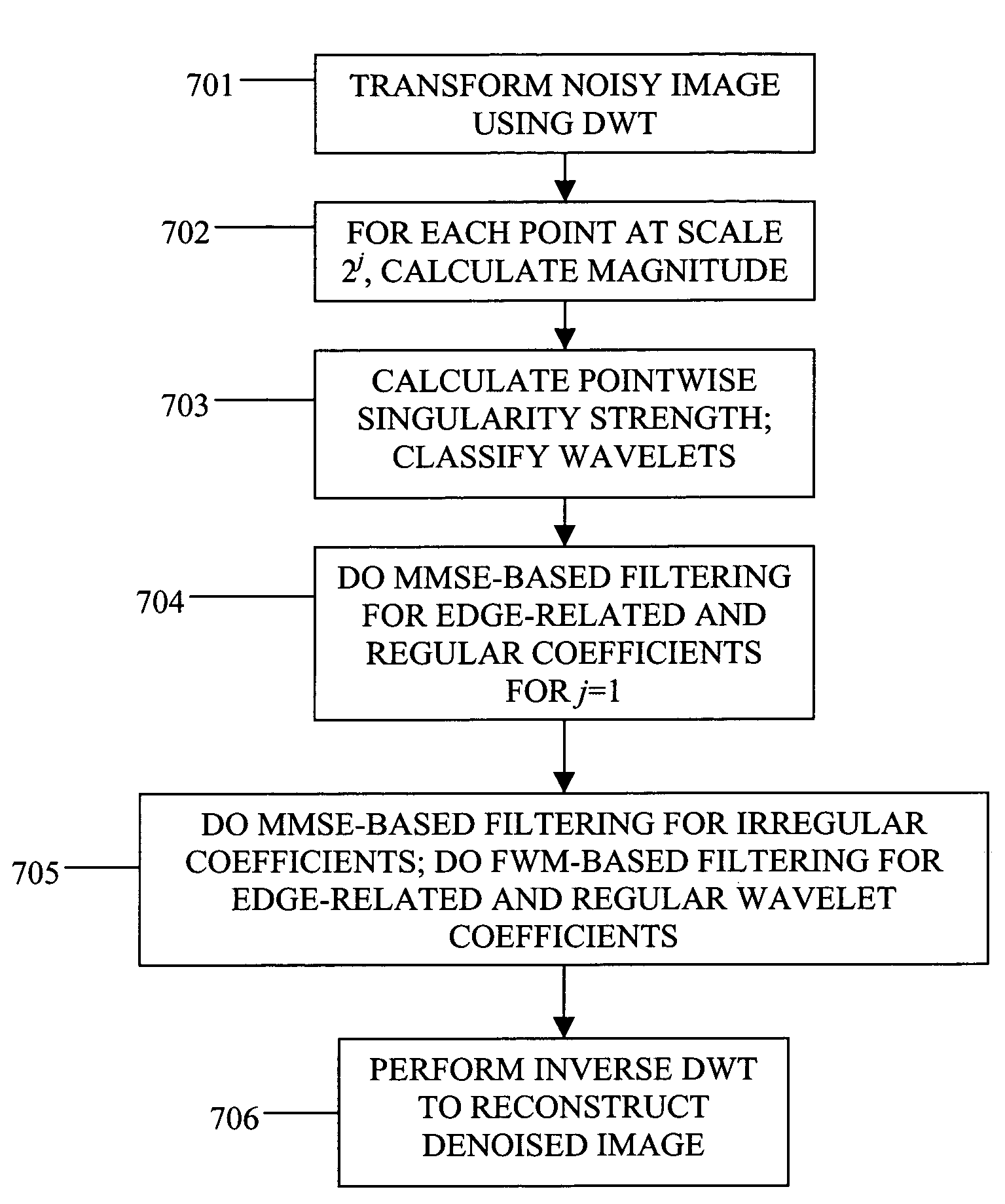

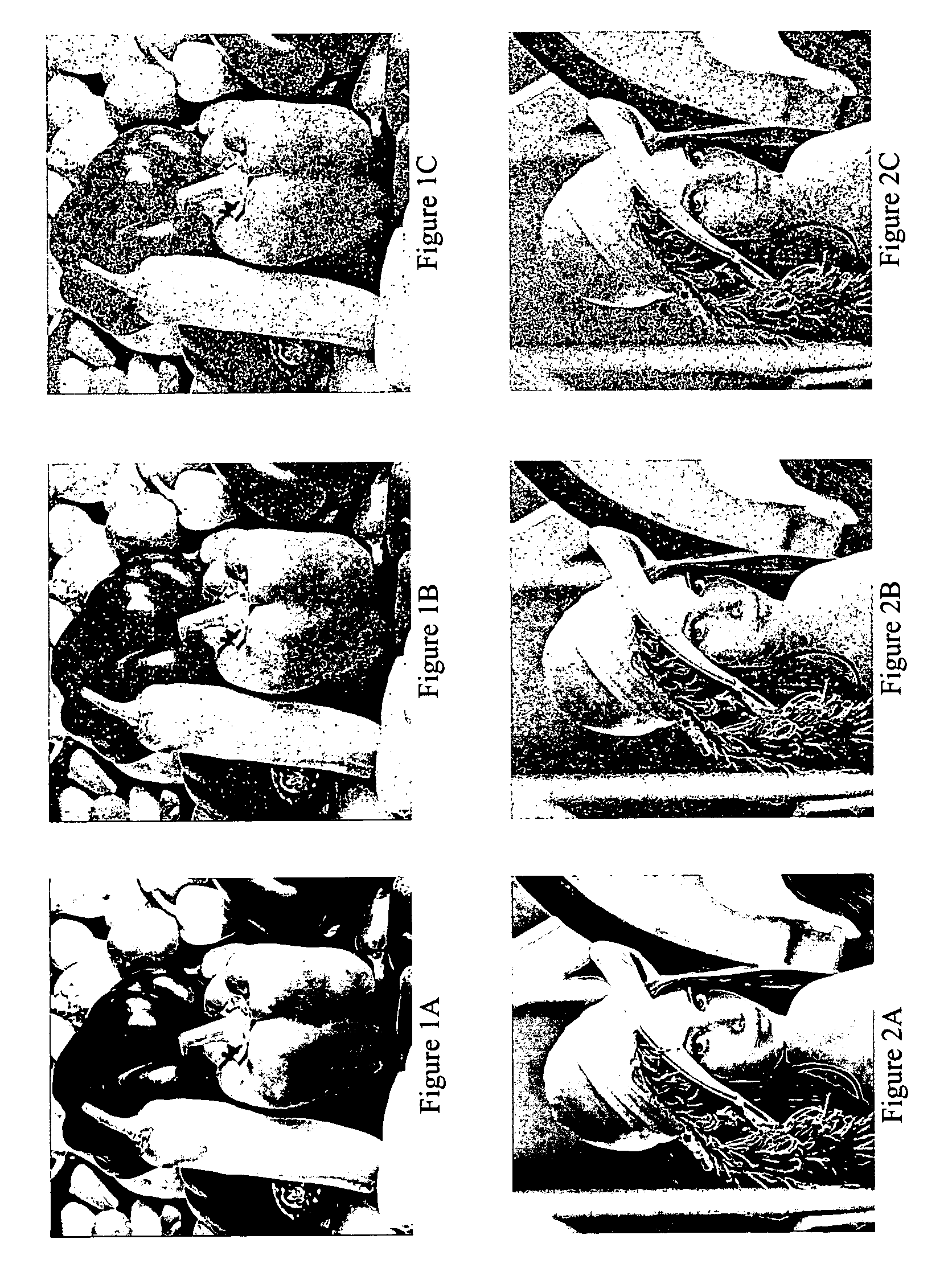

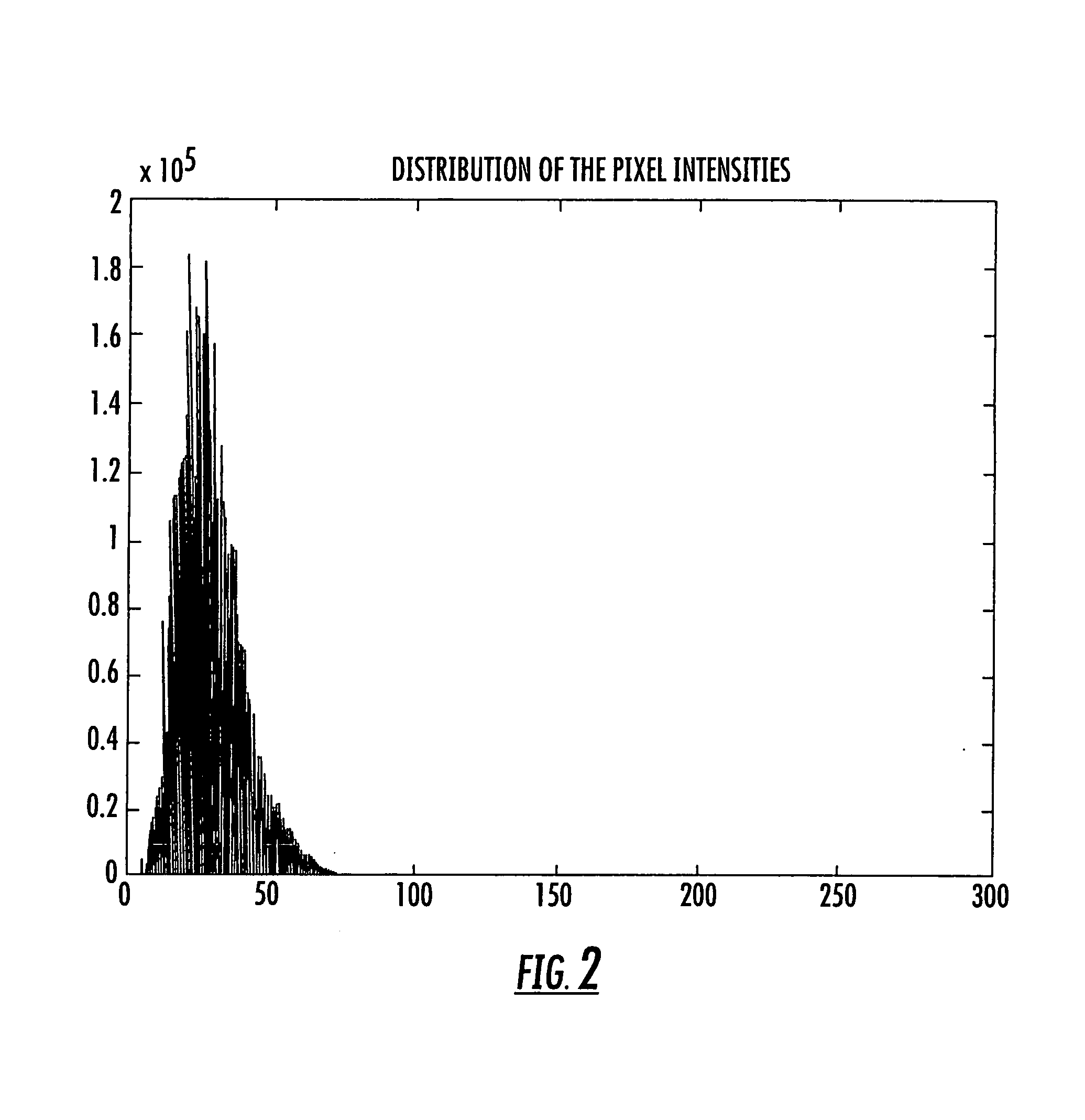

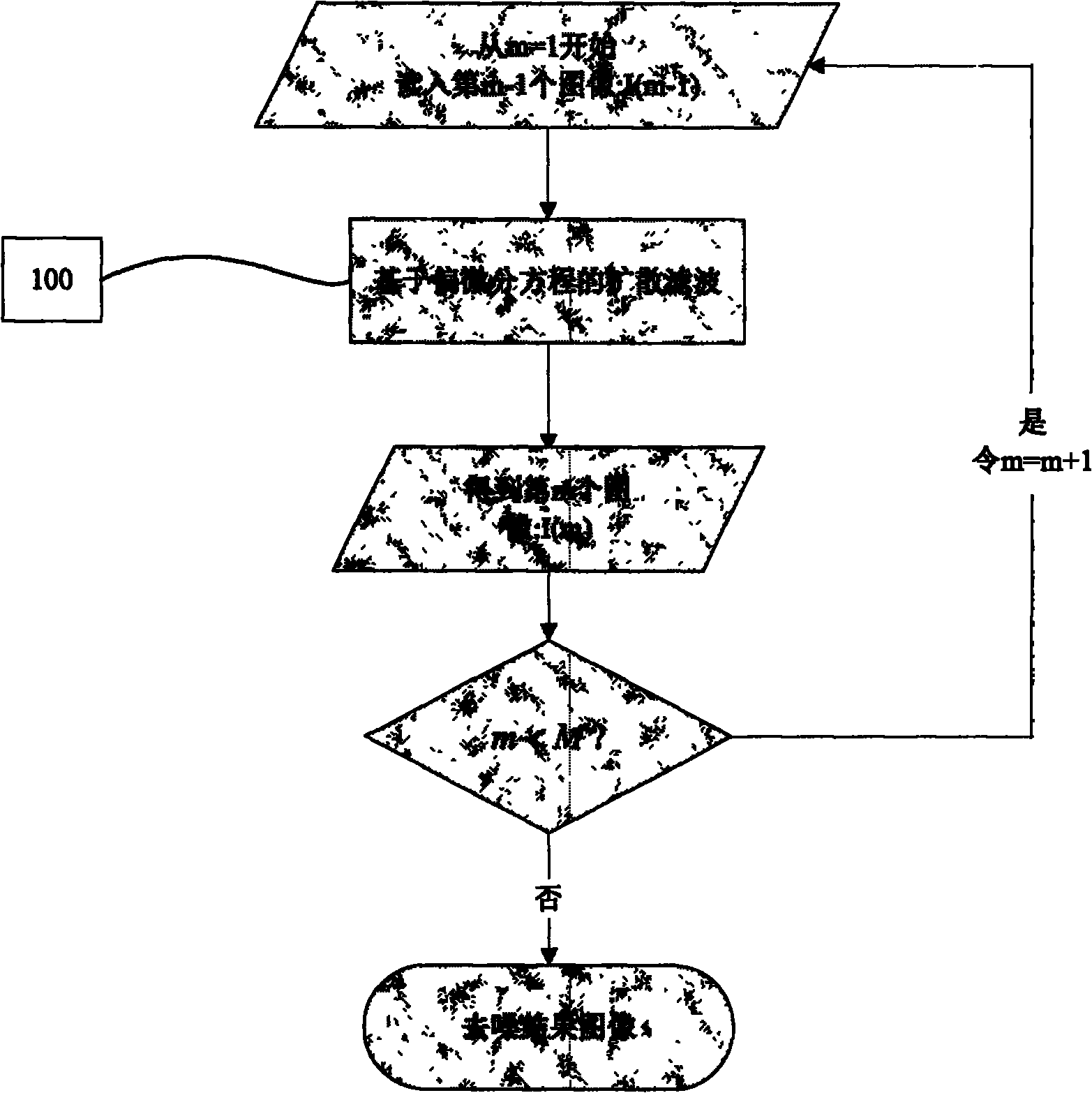

Image denoising based on wavelets and multifractals for singularity detection and multiscale anisotropic diffusion

Status: Inactive · Publication: US7515763B1 · Tags: Reduce noise; Restore signal component; Image enhancement; Image analysis; Denoising algorithm; Wavelet transform

A noisy image is decomposed by a wavelet transform into multiple scales. At each scale, the wavelet coefficients are classified into two categories: irregular (edge-related) coefficients and regular coefficients. Different denoising algorithms are applied to the two classes. Alternatively, a denoising technique is applied at the finest scale, and the wavelet coefficients at all scales are denoised through anisotropic diffusion.

Owner:UNIVERSITY OF ROCHESTER
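The two-class idea can be sketched in one dimension with a Haar wavelet. This is only an illustration under stated assumptions: the hypothetical `classify_and_denoise` labels a detail coefficient as edge-related purely by its magnitude (`edge_thresh`), standing in for the multifractal singularity detection named in the title, keeps those coefficients unchanged, and soft-thresholds the regular ones with `noise_thresh`.

```python
import math

SQRT2 = math.sqrt(2.0)


def haar_step(x):
    """One level of the orthonormal Haar transform (len(x) must be even)."""
    half = len(x) // 2
    approx = [(x[2 * k] + x[2 * k + 1]) / SQRT2 for k in range(half)]
    detail = [(x[2 * k] - x[2 * k + 1]) / SQRT2 for k in range(half)]
    return approx, detail


def haar_inverse_step(approx, detail):
    """Invert one Haar level, interleaving the reconstructed sample pairs."""
    out = []
    for a, d in zip(approx, detail):
        out.extend([(a + d) / SQRT2, (a - d) / SQRT2])
    return out


def soft_threshold(c, t):
    return math.copysign(max(abs(c) - t, 0.0), c)


def classify_and_denoise(signal, levels=2, edge_thresh=3.0, noise_thresh=0.5):
    if len(signal) % (2 ** levels):
        raise ValueError("signal length must be divisible by 2**levels")
    approx, details = list(signal), []
    for _ in range(levels):
        approx, d = haar_step(approx)
        details.append(d)
    # Class 1 (edge-related, |c| >= edge_thresh): kept unchanged.
    # Class 2 (regular): soft-thresholded to suppress noise.
    cleaned = [
        [c if abs(c) >= edge_thresh else soft_threshold(c, noise_thresh) for c in d]
        for d in details
    ]
    # Reconstruct from the coarsest level back to the finest.
    for d in reversed(cleaned):
        approx = haar_inverse_step(approx, d)
    return approx
```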

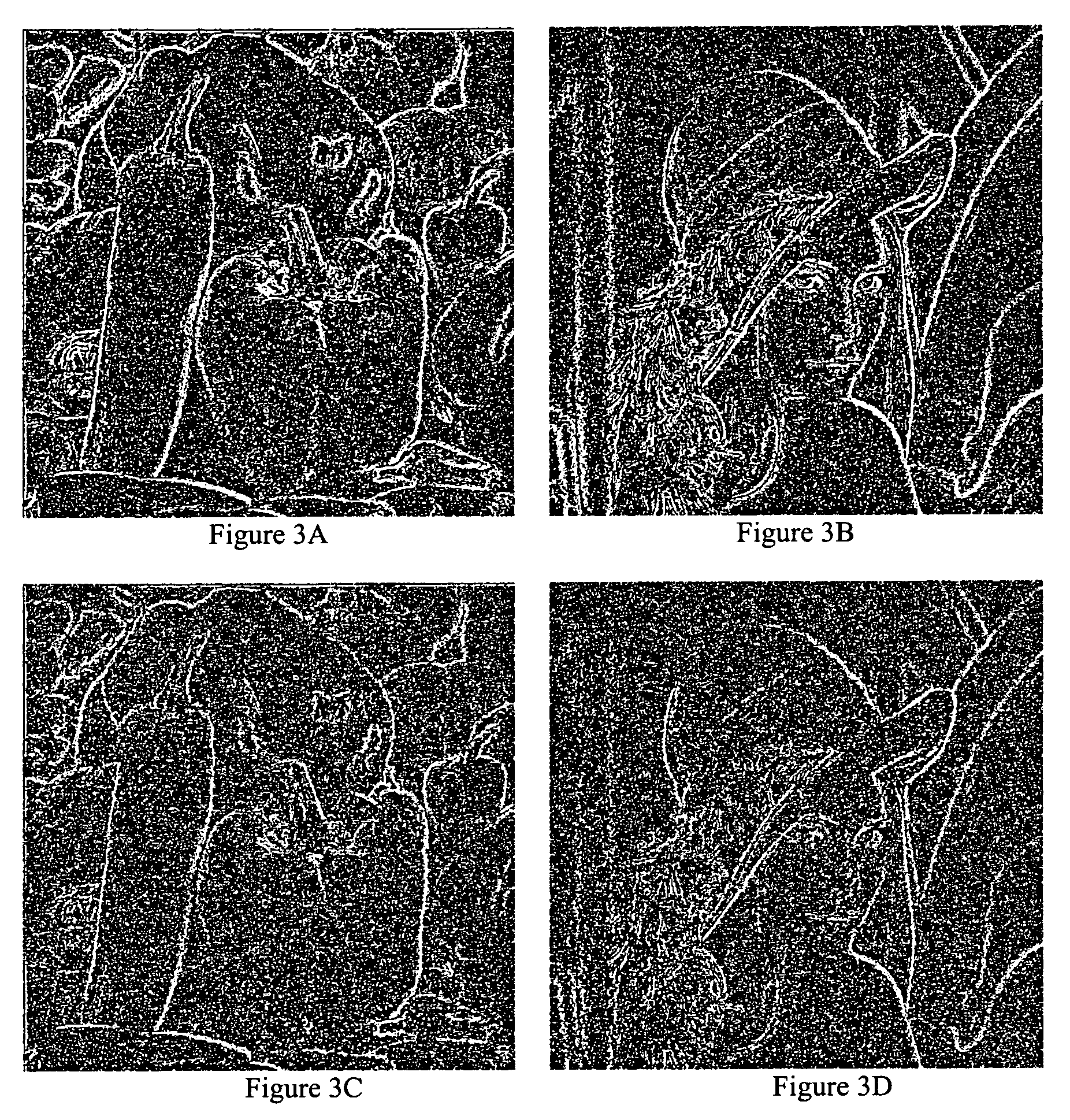

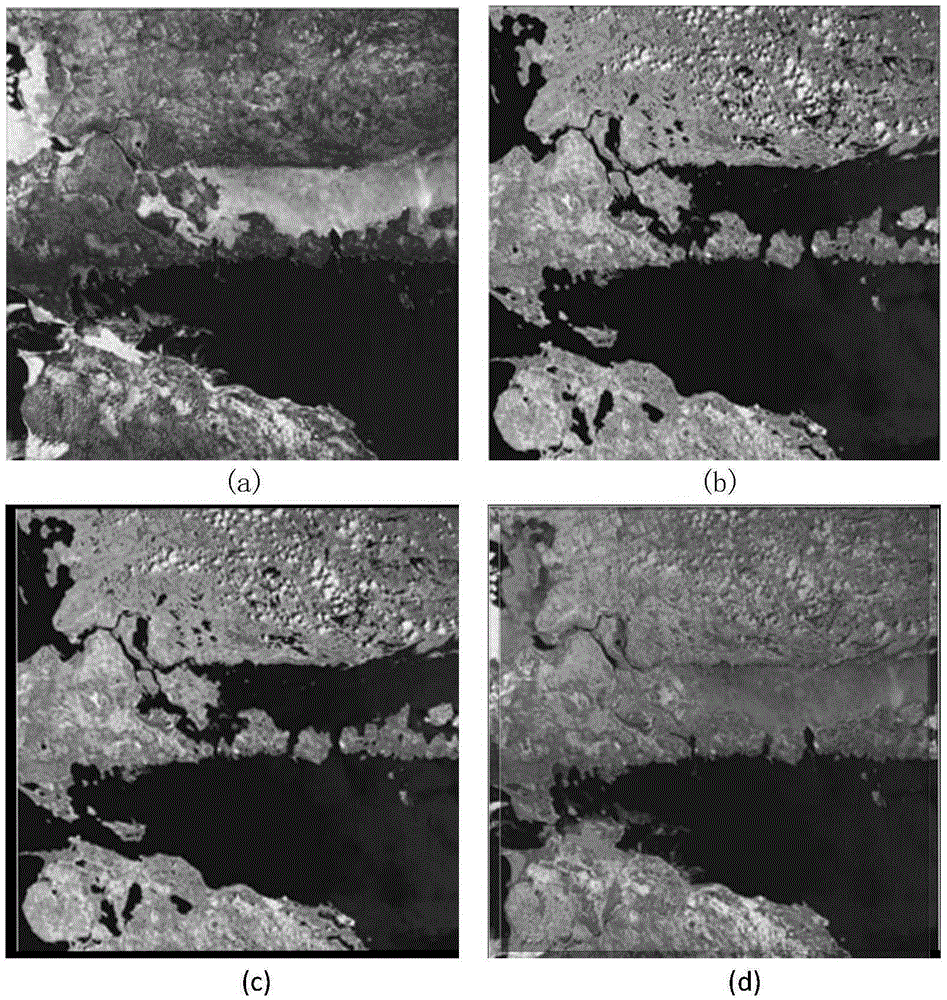

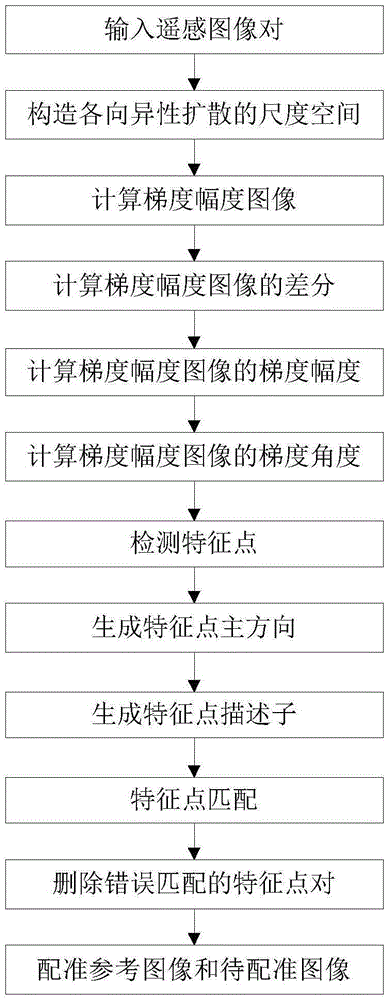

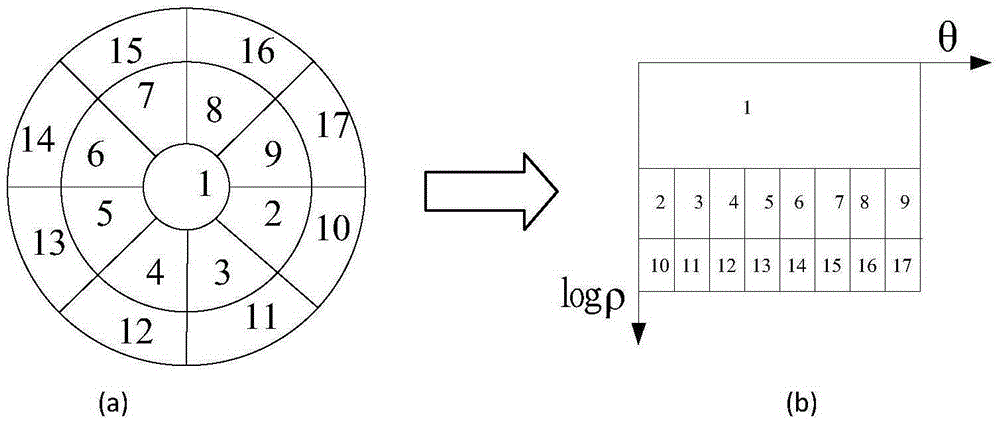

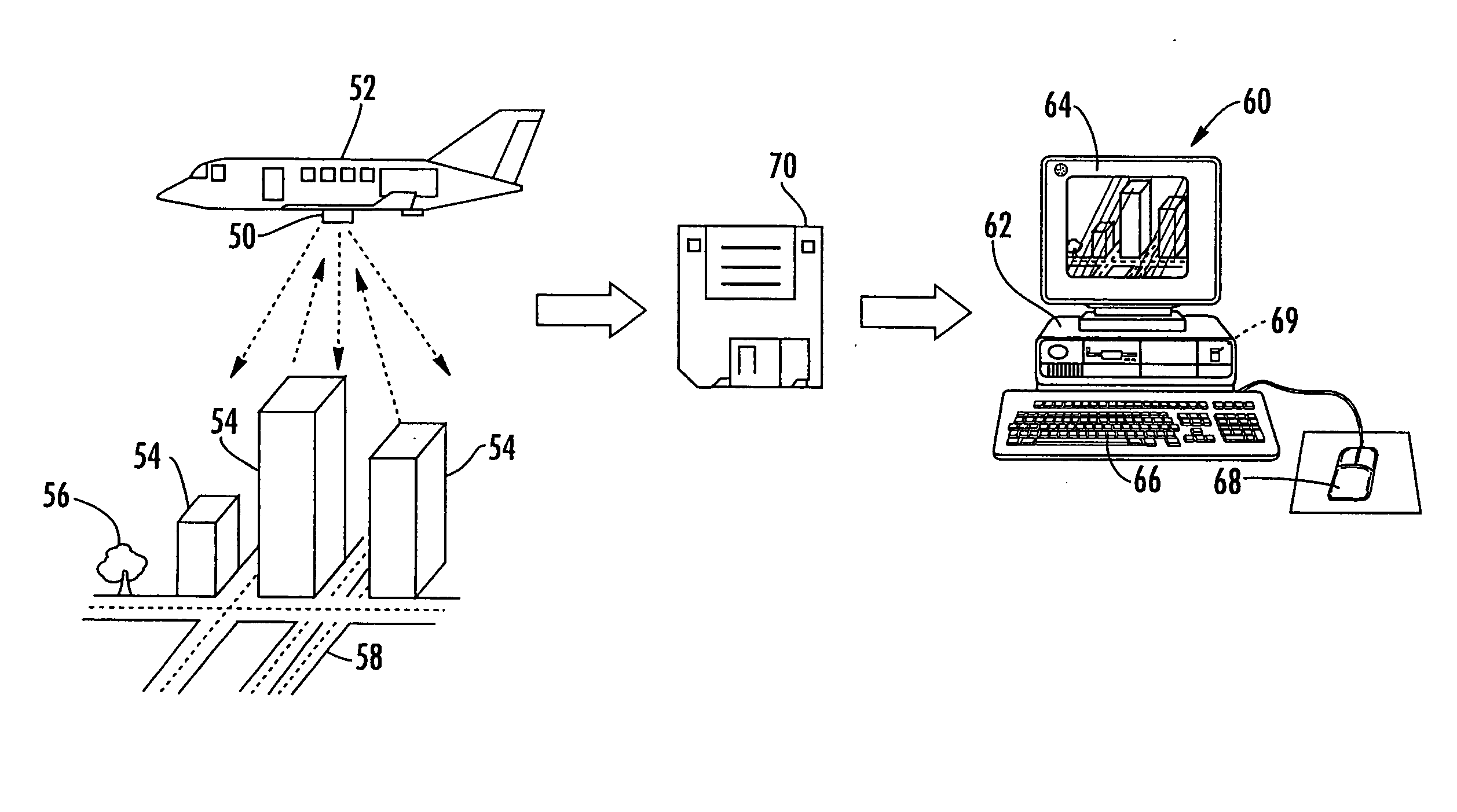

Remote sensing image registration method based on anisotropic gradient dimension space

Status: Active · Publication: CN105427298A · Tags: Improve correct match rate; Overcome the problem of large nonlinear changes in brightness; Image enhancement; Image analysis; Reference image; Image pair

The invention discloses a remote sensing image registration method based on an anisotropic gradient scale space, which mainly addresses the relatively low correct-match rate obtained when the brightness of remote sensing images changes strongly and nonlinearly. The method is implemented in the following steps: (1) input a pair of remote sensing images; (2) construct an anisotropic diffusion scale space; (3) compute a gradient magnitude image; (4) detect feature points; (5) assign a main orientation to each feature point; (6) generate a descriptor for each feature point; (7) match the feature points; (8) delete wrongly matched feature point pairs; and (9) register the image to be registered to the reference image. Because feature point detection, main orientation assignment, and descriptor generation are all carried out on the gradient magnitude image in the anisotropic scale space, the method copes efficiently with strong nonlinear brightness changes and can be applied to the registration of complex multisource and multispectral remote sensing images.

Owner:XIDIAN UNIV

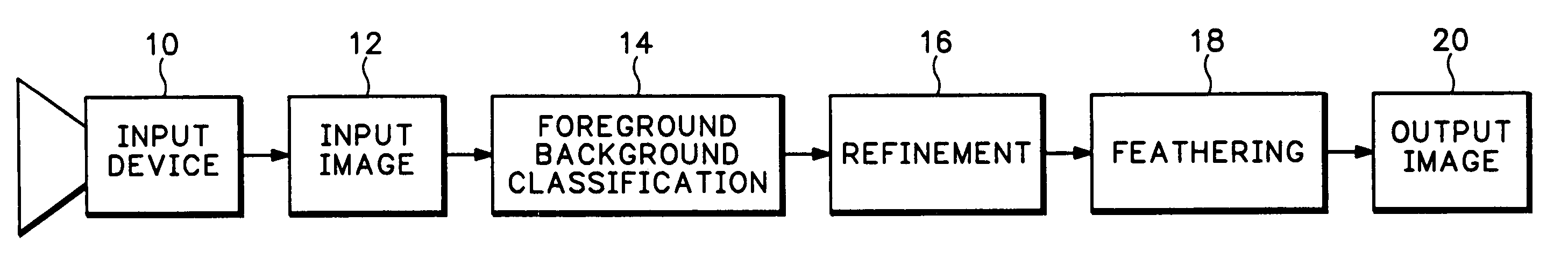

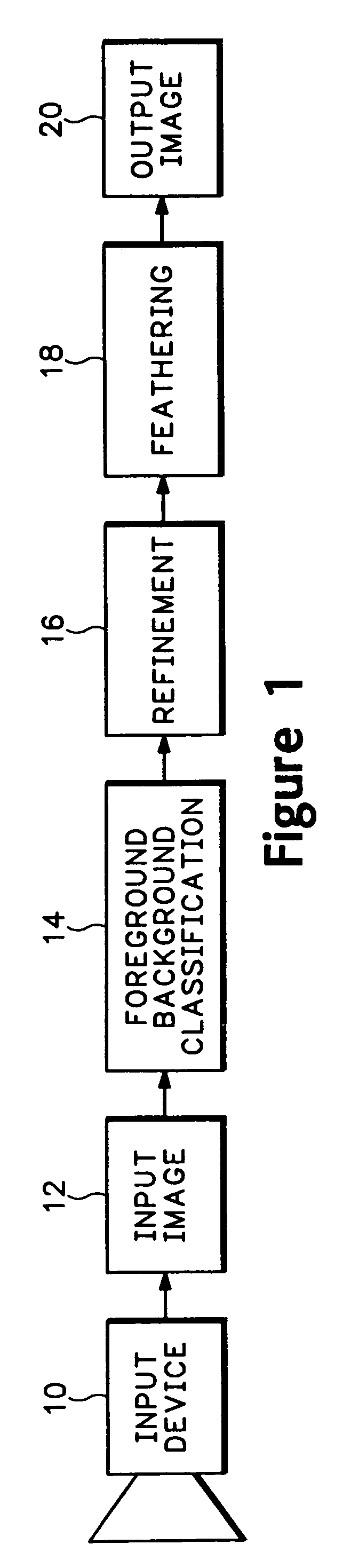

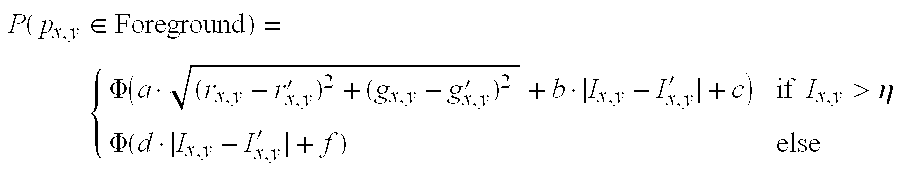

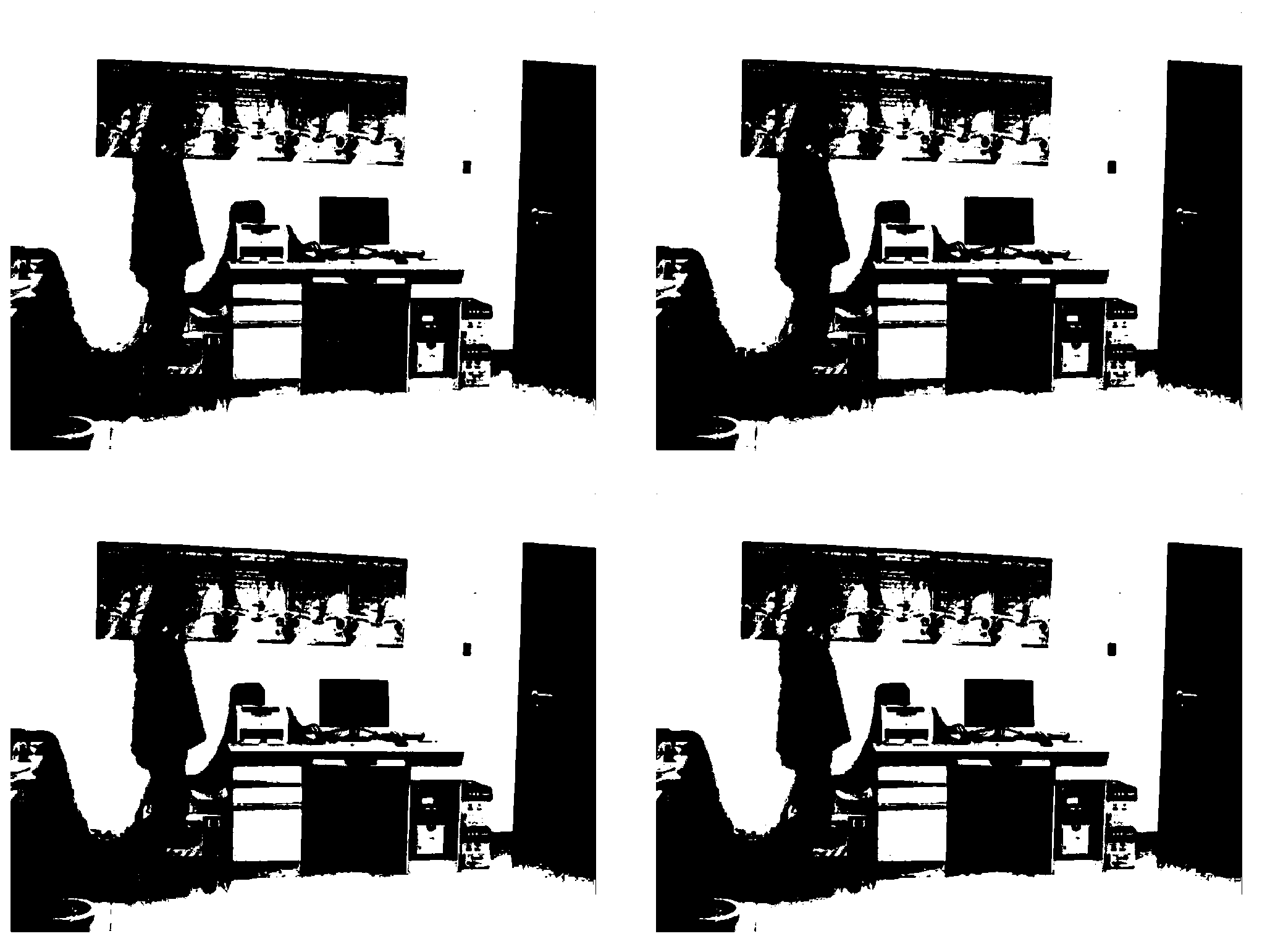

Method of image background replacement

Status: Inactive · Publication: US6950130B1 · Tags: Quick change; Real-time processing; Television system details; Color signal processing circuits; Morphological filtering; Method of images

A method for background replacement. The method takes an input image of one or more frames of video, or a still image, and performs an initial classification of the pixels (14) as foreground or background pixels. The classification is refined (16) using one of several techniques, including anisotropic diffusion or morphological filtering. After the refined classification is completed, a feathering process (18) is used to overlay the foreground pixels from the original image on the pixels of the new background, resulting in a new output image (20).

Owner:RAKUTEN INC
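The abstract does not describe its feathering process in detail. One common way to feather a hard foreground/background classification is to blur the binary mask into soft alpha values and then alpha-blend the original and new images. The sketch below assumes a box blur of a hypothetical `radius`; the function names are illustrative.

```python
def feather_mask(mask, radius=1):
    """Box-blur a binary mask (1 = foreground, 0 = background) into alpha values."""
    h, w = len(mask), len(mask[0])
    alpha = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            total, count = 0.0, 0
            # Average over the (2*radius+1)^2 window, clipped at the image border.
            for y in range(max(0, i - radius), min(h, i + radius + 1)):
                for x in range(max(0, j - radius), min(w, j + radius + 1)):
                    total += mask[y][x]
                    count += 1
            alpha[i][j] = total / count
    return alpha


def composite(foreground, background, mask, radius=1):
    """Overlay foreground pixels on a new background using the feathered mask."""
    alpha = feather_mask(mask, radius)
    return [
        [a * f + (1.0 - a) * b for a, f, b in zip(a_row, f_row, b_row)]
        for a_row, f_row, b_row in zip(alpha, foreground, background)
    ]
```

Pixels deep inside either region are copied unchanged, and only a band about `radius` pixels wide around the classification boundary is blended.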

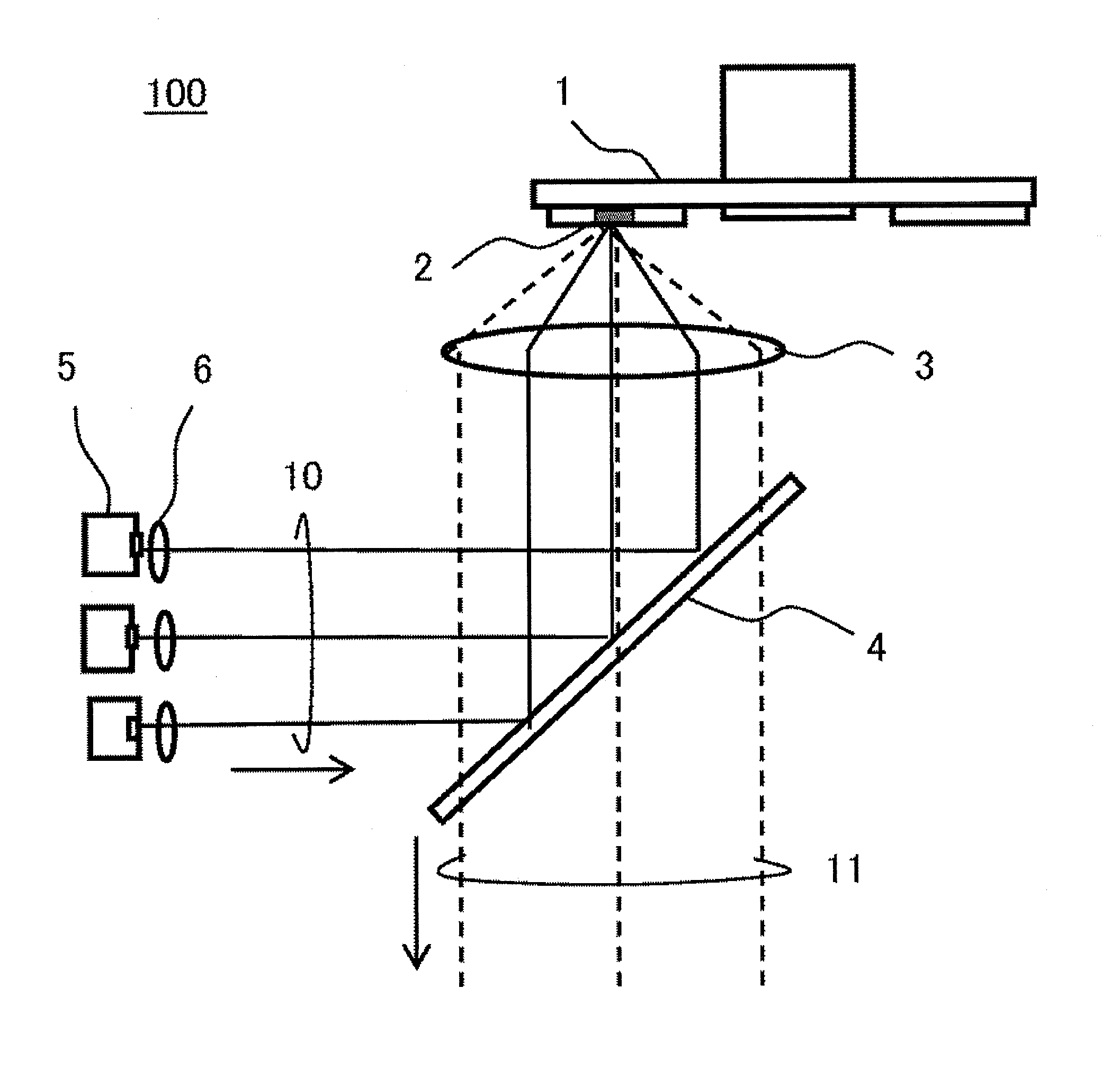

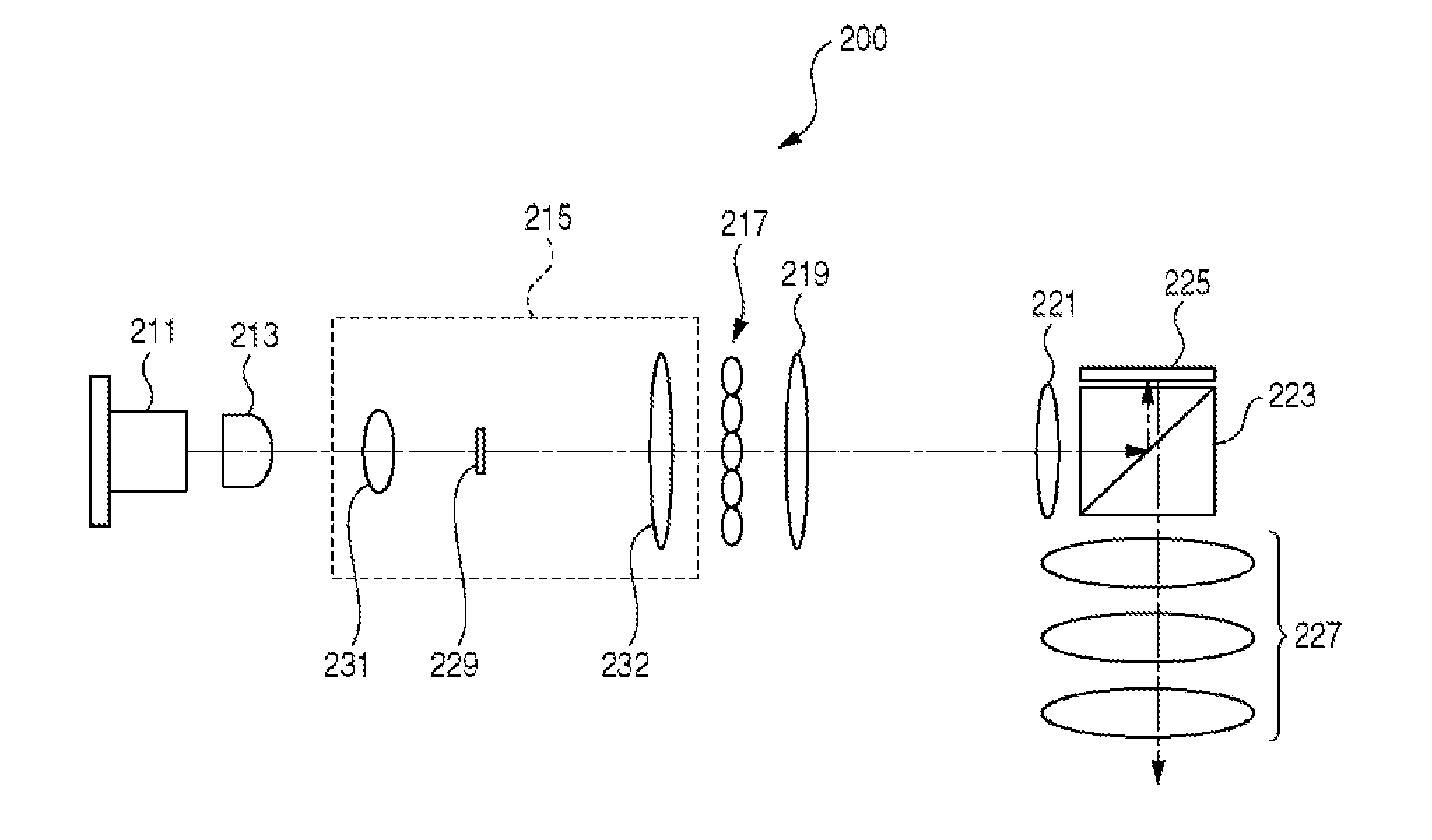

Light source device

A light source device includes an excitation light source that generates excitation light, a phosphor wheel including a phosphor segment that generates fluorescent light by excitation of the excitation light, and a mirror that guides the excitation light from the excitation light source to the phosphor wheel and emits the fluorescent light from the phosphor wheel as illumination light. The phosphor wheel further includes an anisotropic diffusion and reflection unit that diffuses and reflects incident excitation light such that an optical path for the incident excitation light and an optical path for the diffused excitation light after incidence of the excitation light are not overlapped. The mirror includes a first region that reflects the excitation light and transmits the fluorescent light and a second region that transmits the fluorescent light and the diffused excitation light which the anisotropic diffusion and reflection unit has diffused and reflected.

Owner:MAXELL HLDG LTD

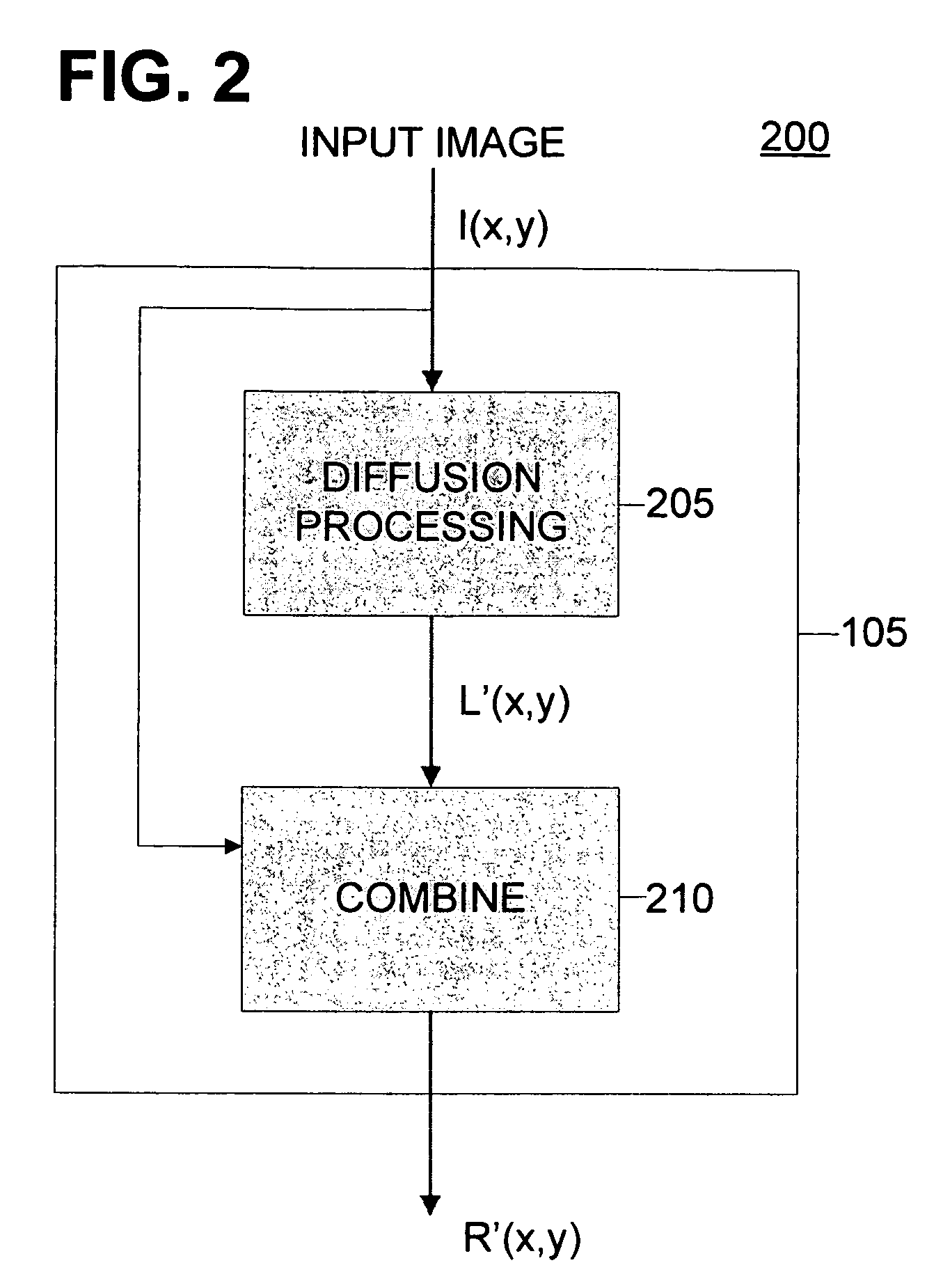

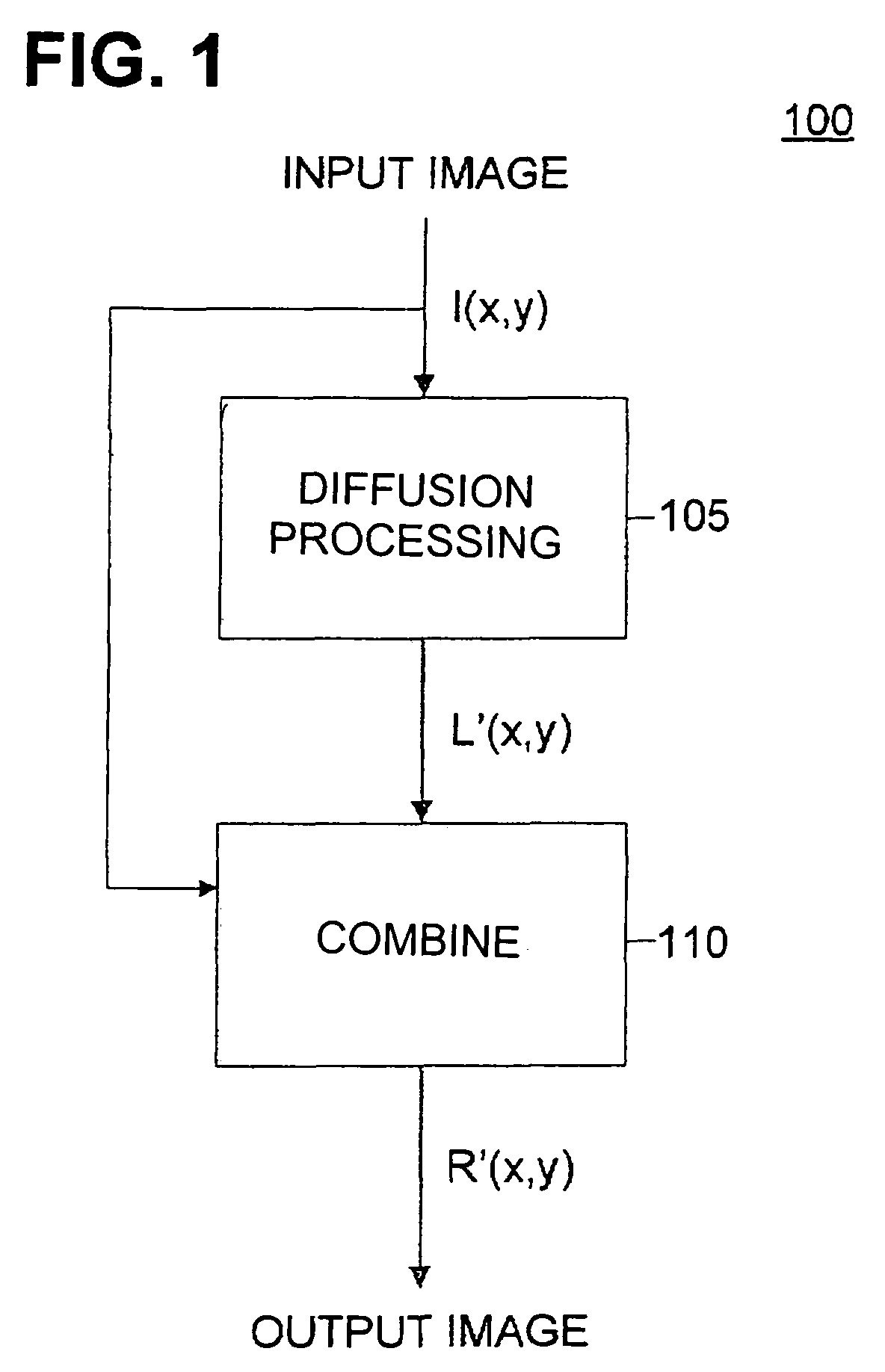

Method and apparatus for diffusion based image relighting

A method for image relighting is presented which receives an input image having at least one spurious edge directly resulting from a first illumination present when the input image was acquired, performs anisotropic diffusion on the input image to form a diffusion image, removes the first illumination using the diffusion image to generate a reflectance image, and applies a second illumination to the reflectance image. An apparatus for relighting is presented which includes a processor operably coupled to memory storing an input image having a first illumination present when the input image was acquired, and functional processing including an anisotropic diffusion module to perform anisotropic diffusion on the input image to form a diffusion image, a combination module which removes the first illumination using the diffusion image to generate a reflectance image, a second illumination module which generates a second illumination, and a lighting application module which applies the second illumination.

Owner:FUJIFILM CORP
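The removal and re-application of illumination can be sketched under a multiplicative image model, image = reflectance × illumination. This is an assumption (a Retinex-style model), not something the abstract states. The diffusion image is treated as the estimate of the first illumination and is passed in precomputed; `relight` and `eps` are hypothetical names.

```python
def relight(image, diffusion_image, new_illumination, eps=1e-6):
    """Relight an image assuming image = reflectance * illumination (pixel-wise).

    diffusion_image: anisotropically diffused copy of `image`, used here as the
    estimate of the original illumination. eps avoids division by zero.
    """
    relit = []
    for img_row, ill_row, new_row in zip(image, diffusion_image, new_illumination):
        # Remove the first illumination to obtain a reflectance estimate...
        reflectance = [p / (ill + eps) for p, ill in zip(img_row, ill_row)]
        # ...then apply the second illumination.
        relit.append([r * n for r, n in zip(reflectance, new_row)])
    return relit
```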

Method and apparatus for processing complex interferometric SAR data

Status: Active · Publication: US20080231504A1 · Tags: Improves subsidence measurement; Improve boundary quality; Image enhancement; Computer control; Data set; Interferometric synthetic aperture radar

A computer system for processing interferometric synthetic aperture radar (SAR) images includes a database for storing SAR images to be processed, and a processor for processing interferometric SAR images from the database. The processing includes receiving first and second complex SAR data sets of the same scene, with the second complex SAR data set being offset in phase with respect to the first complex SAR data set. Each complex SAR data set includes a plurality of pixels. An interferogram is formed based on the first and second complex SAR data sets for providing a phase difference therebetween. A complex anisotropic diffusion algorithm is applied to the interferogram. The interferogram includes a real and an imaginary part for each pixel. A shock filter is applied to the interferogram. The processing further includes performing a two-dimensional variational phase unwrapping on the interferogram after application of the shock filter.

Owner:HARRIS CORP
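Forming an interferogram amounts to multiplying the first complex image by the complex conjugate of the second, so the phase of each product is the wrapped phase difference. The sketch below (hypothetical helper names) also shows why filtering the real and imaginary parts, as a complex diffusion does, behaves better near the ±π wrap than filtering phase values directly.

```python
import cmath


def interferogram(s1, s2):
    """Pixel-wise complex interferogram s1 * conj(s2) of two co-registered images."""
    return [[a * b.conjugate() for a, b in zip(r1, r2)] for r1, r2 in zip(s1, s2)]


def wrapped_phase(ifg):
    """Phase difference per pixel, wrapped to [-pi, pi]."""
    return [[cmath.phase(z) for z in row] for row in ifg]


def complex_mean_phase(values):
    """Phase of the mean of complex samples (averaging real and imaginary parts)."""
    return cmath.phase(sum(values) / len(values))
```

Averaging the phases 3.0 and -3.0 directly gives 0, whereas `complex_mean_phase` on the corresponding unit phasors gives a phase of ±π, which is the consistent answer for two nearly identical wrapped phases.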

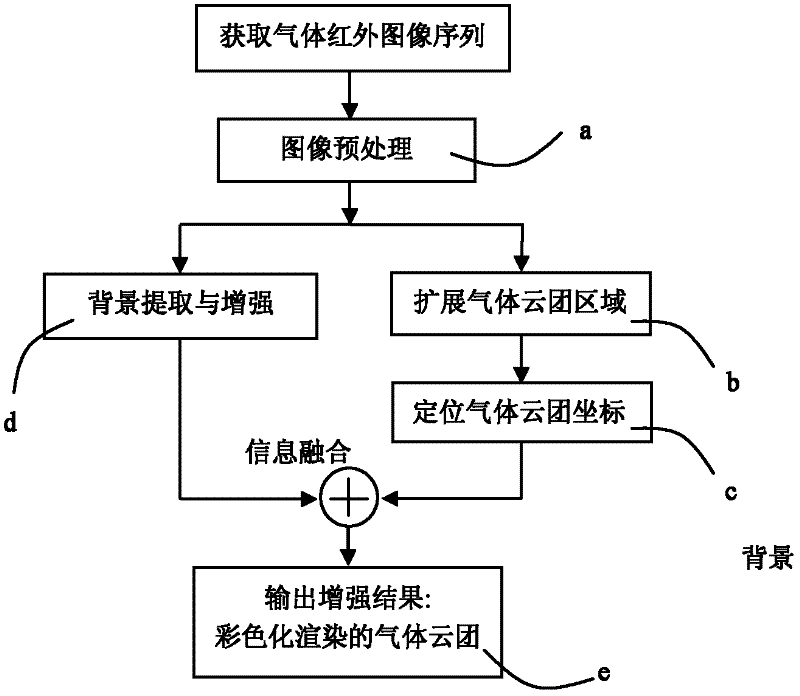

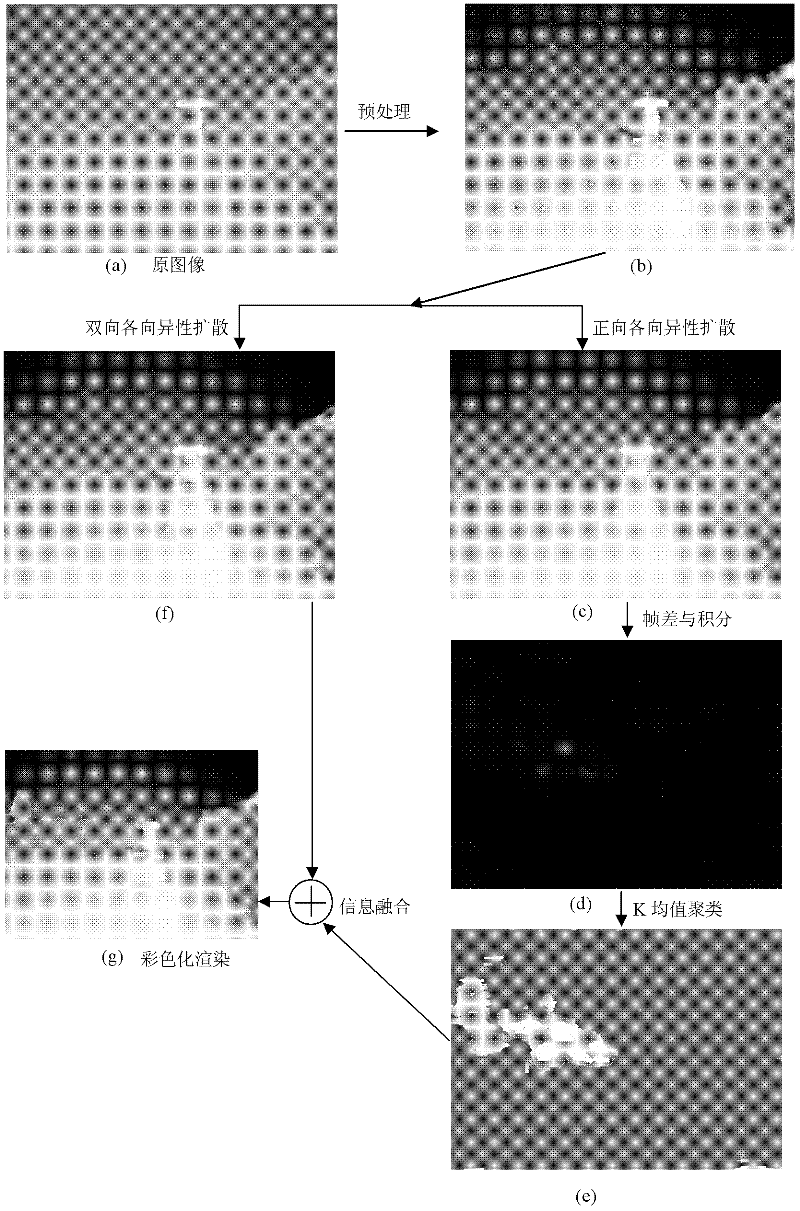

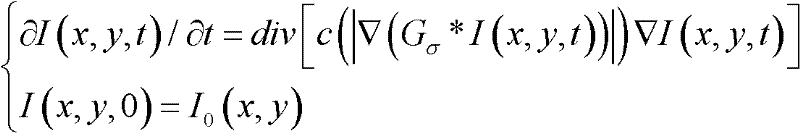

Gas infrared image enhancing method based on anisotropic diffusion

Status: Inactive · Publication: CN102609906A · Tags: Quality improvement; Increase signal strength; Image enhancement; Frame difference; Forming gas

The invention relates to a gas infrared image enhancement method based on anisotropic diffusion, in the field of gas detection. First, a gas infrared video sequence is preprocessed and then processed along two paths: one applies a forward anisotropic diffusion algorithm to spread the gas cloud region, and the other applies a bidirectional anisotropic diffusion algorithm to reduce noise while preserving and enhancing the detail and edges of the image background. Next, discontinuous frame differencing is applied to the result of the first path, and the differences are accumulated. The gas cloud region is then labeled by K-means clustering of the accumulated result, its position coordinates are determined, and finally the gas cloud is rendered in color at the corresponding coordinates in the result of the second path. This noticeably improves the interpretability of the gas cloud and the quality of the gas infrared image, so that human observers can quickly detect a gas cloud forming when gas leaks. The method can be used to detect leaks of invisible hazardous gases.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
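The frame-difference accumulation and K-means labeling steps of the gas detection method might look as follows. The frame spacing `stride` and the two-cluster, one-dimensional K-means in `two_means_labels` are assumptions for illustration; the abstract gives neither the spacing nor the clustering details.

```python
def accumulate_frame_differences(frames, stride=2):
    """Sum |frame[t + stride] - frame[t]| over t = 0, stride, 2*stride, ..."""
    h, w = len(frames[0]), len(frames[0][0])
    acc = [[0.0] * w for _ in range(h)]
    for t in range(0, len(frames) - stride, stride):
        prev, nxt = frames[t], frames[t + stride]
        for i in range(h):
            for j in range(w):
                acc[i][j] += abs(nxt[i][j] - prev[i][j])
    return acc


def two_means_labels(acc, n_iter=20):
    """Label pixels 1 (changing, e.g. gas) or 0 (static) by 1D K-means with K = 2."""
    values = [v for row in acc for v in row]
    lo, hi = min(values), max(values)  # initial cluster centres
    for _ in range(n_iter):
        low = [v for v in values if abs(v - lo) <= abs(v - hi)]
        high = [v for v in values if abs(v - lo) > abs(v - hi)]
        if low:
            lo = sum(low) / len(low)
        if high:
            hi = sum(high) / len(high)
    return [[1 if abs(v - lo) > abs(v - hi) else 0 for v in row] for row in acc]
```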

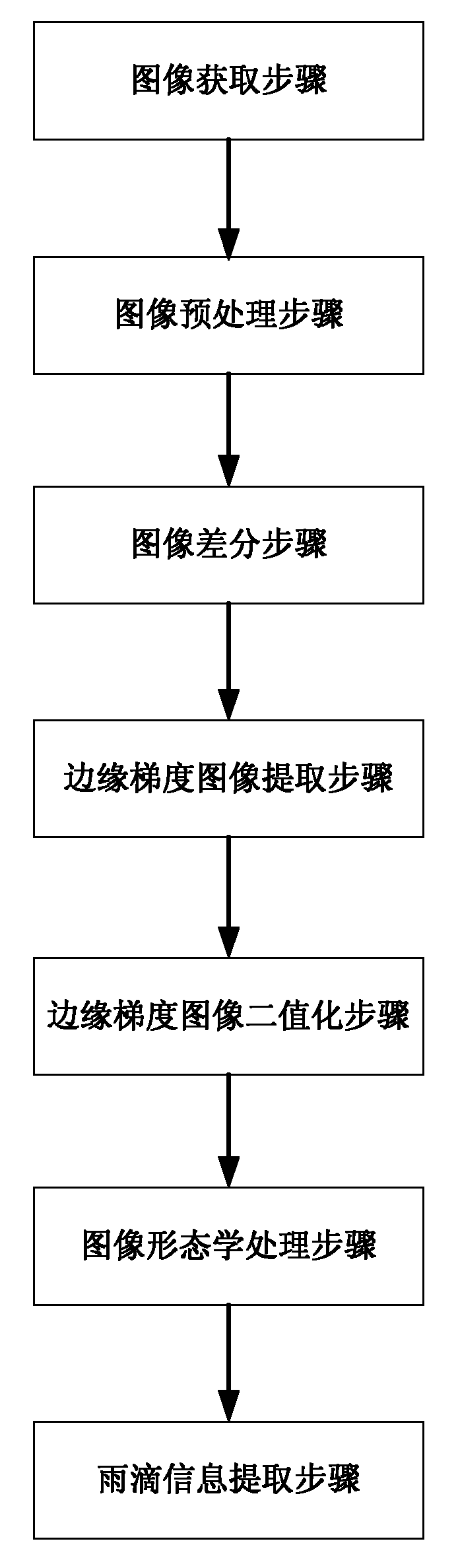

Raindrop identifying method

The invention provides a raindrop identification method in the field of digital image recognition. It aims to automatically identify raindrop targets in raindrop images and extract the related raindrop information, improving the automation and precision of rain observation, and it is applied to the automatic observation and acquisition of raindrop spectra in rain observation. The method comprises the following steps in sequence: 1, image acquisition; 2, image preprocessing; 3, image differentiation; 4, extraction of the edge gradient image; 5, binarization of the edge gradient image; 6, morphological image processing; and 7, extraction of the raindrop information. The method uses an anisotropic diffusion smoothing filter for image preprocessing, uses edge gradient information as the effective feature of raindrop targets, and applies morphological processing to identify raindrop targets and extract the related raindrop information, thereby improving the automation and precision of rain observation.

Owner:HUAZHONG UNIV OF SCI & TECH

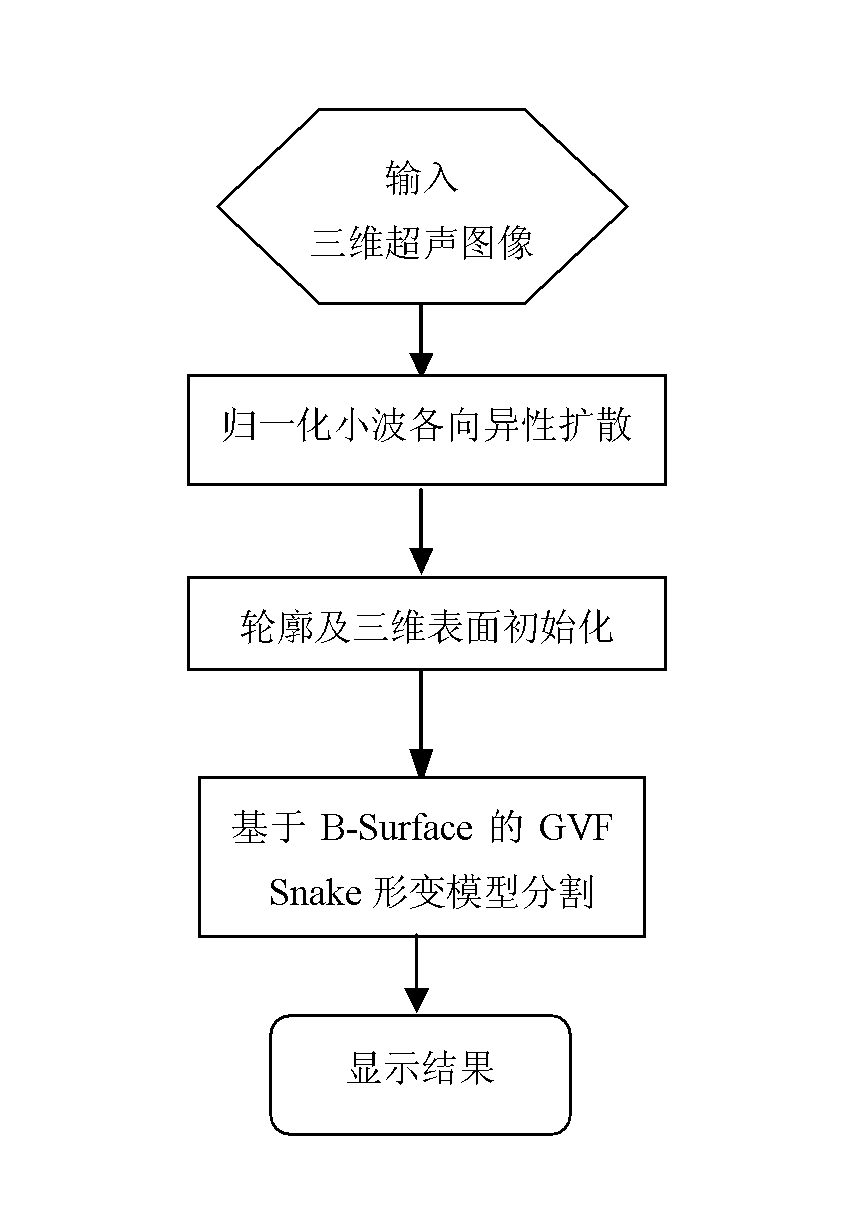

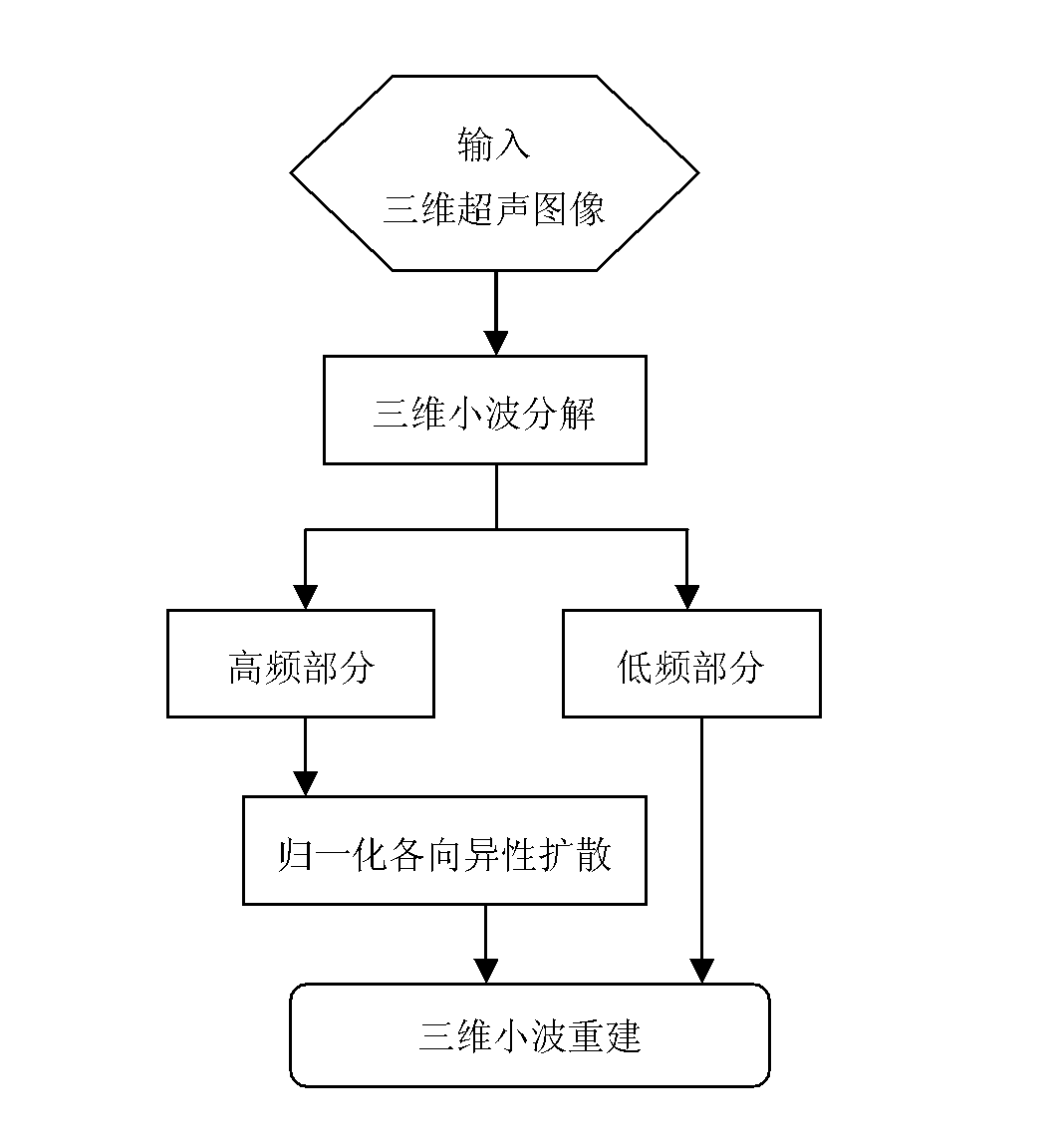

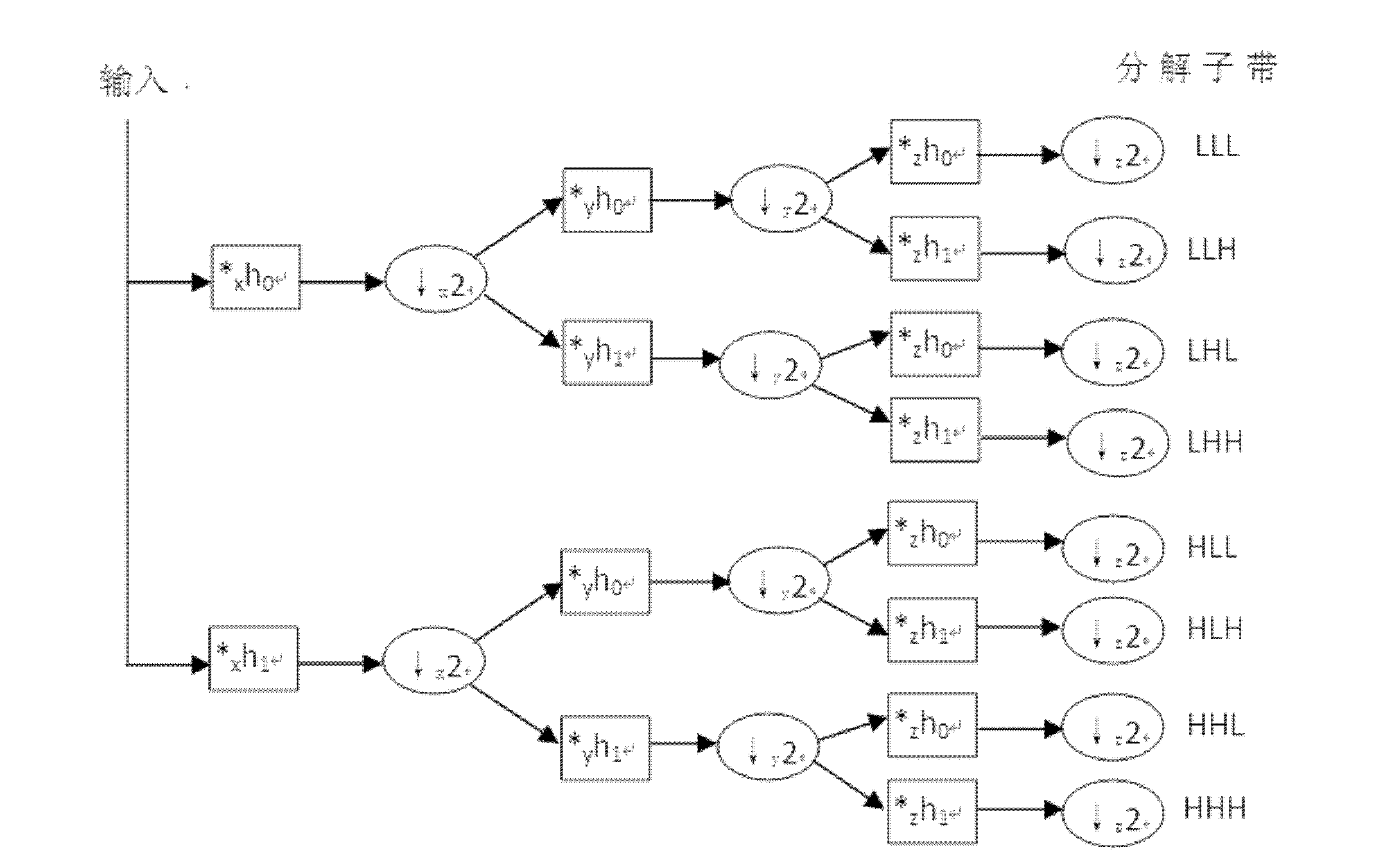

Method for segmenting three-dimensional ultrasonic image

Status: Inactive · Publication: CN102402788A · Tags: Effective smoothing; Accurate segmentation; Image enhancement; Image analysis; Computed tomography; Resonance

The invention discloses a method for segmenting a three-dimensional ultrasound image, in the technical field of digital image processing. The method comprises the following steps: (1) preprocessing the three-dimensional ultrasound image with a normalized anisotropic diffusion method in the three-dimensional wavelet domain, chosen for the characteristics of such images, to remove speckle noise; (2) initializing the preprocessed image with the Canny edge detection operator; and (3) segmenting the image in three dimensions with a three-dimensional deformable model based on a B-surface and GVF Snake. The method segments three-dimensional ultrasound images rapidly and accurately and is particularly robust to noise. It can also be used to segment other three-dimensional images such as CT (computed tomography), MRI (magnetic resonance imaging), and PET (positron emission tomography) images, which gives it high application value.

Owner:SOUTH CHINA UNIV OF TECH

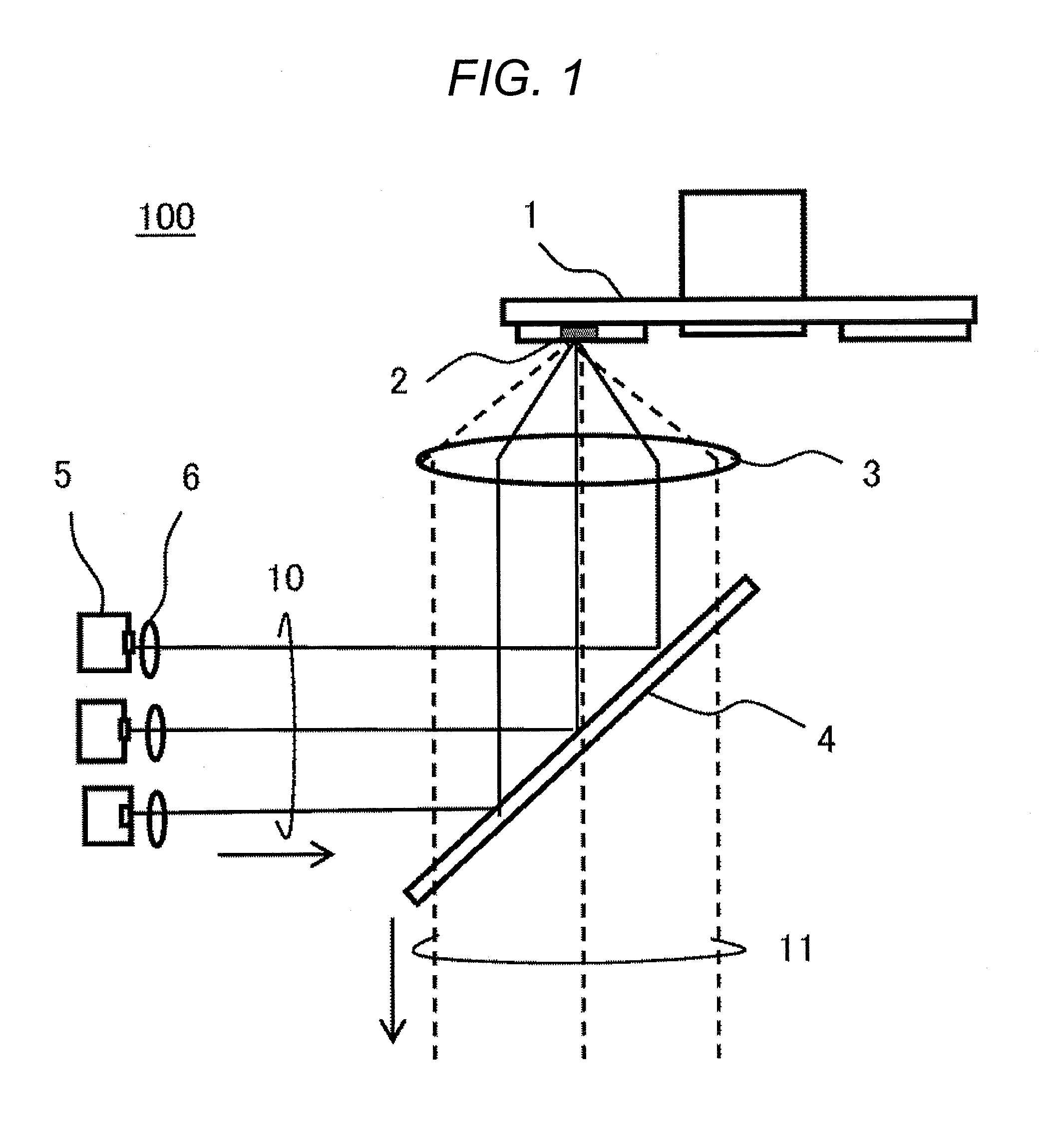

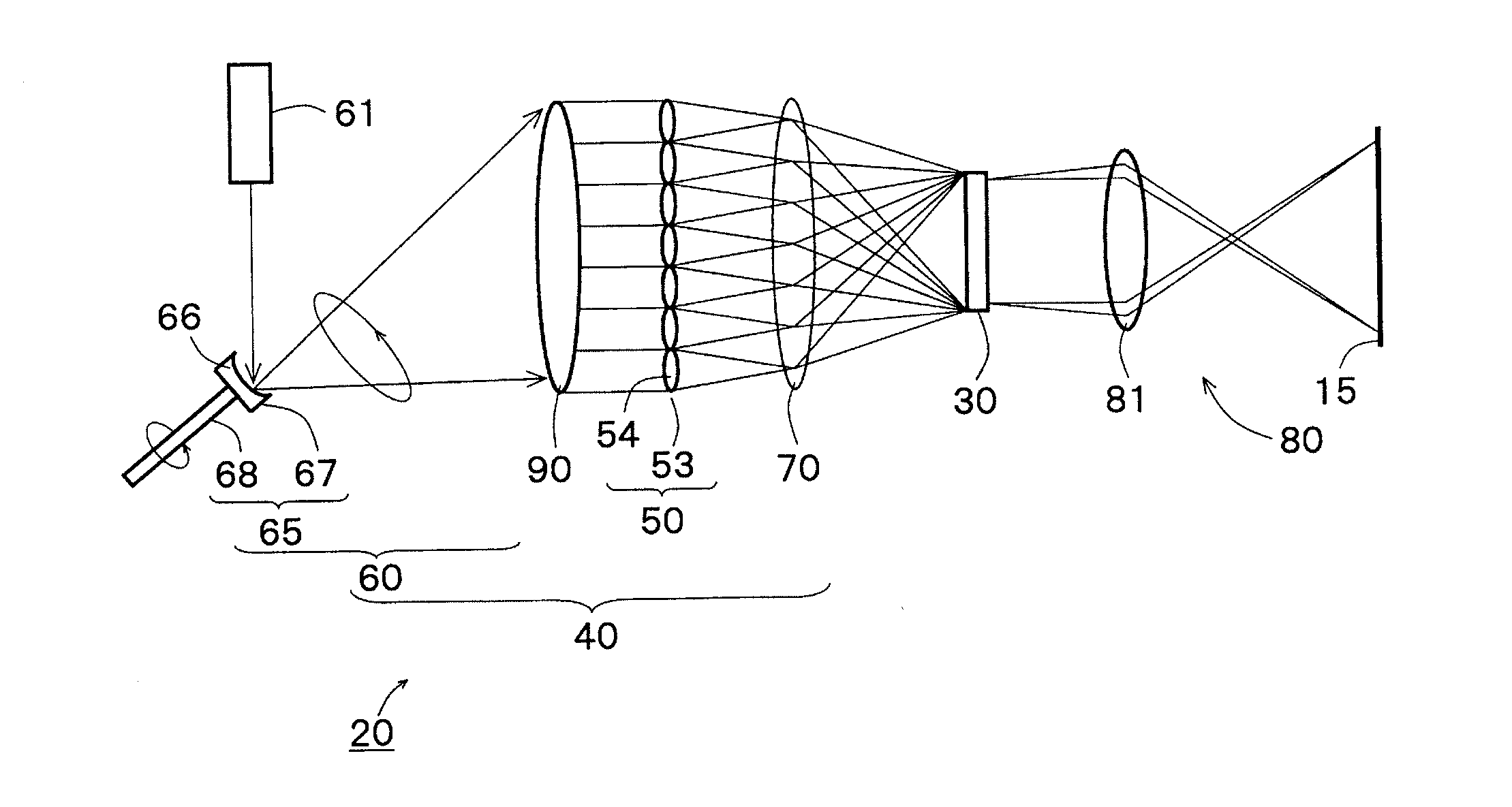

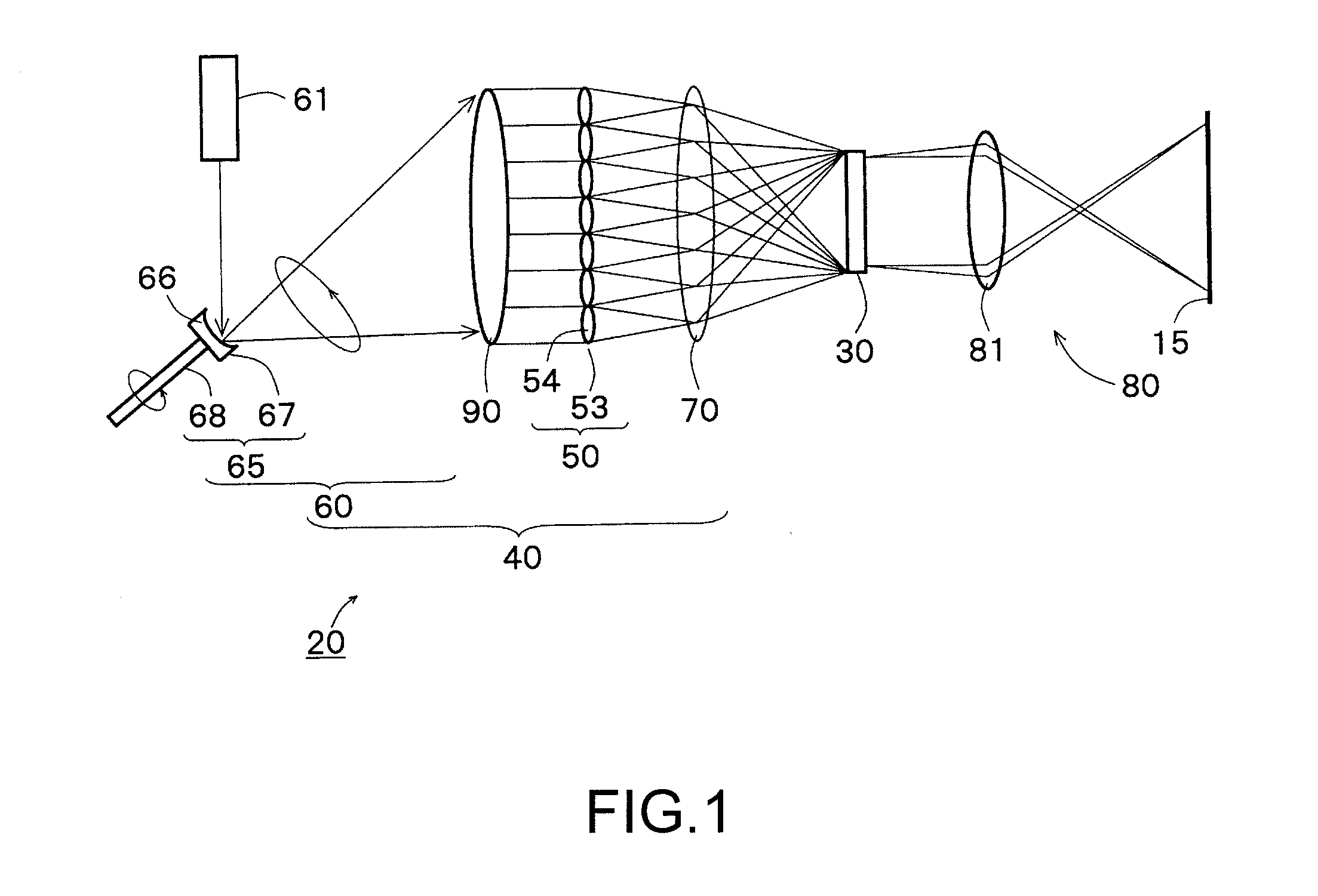

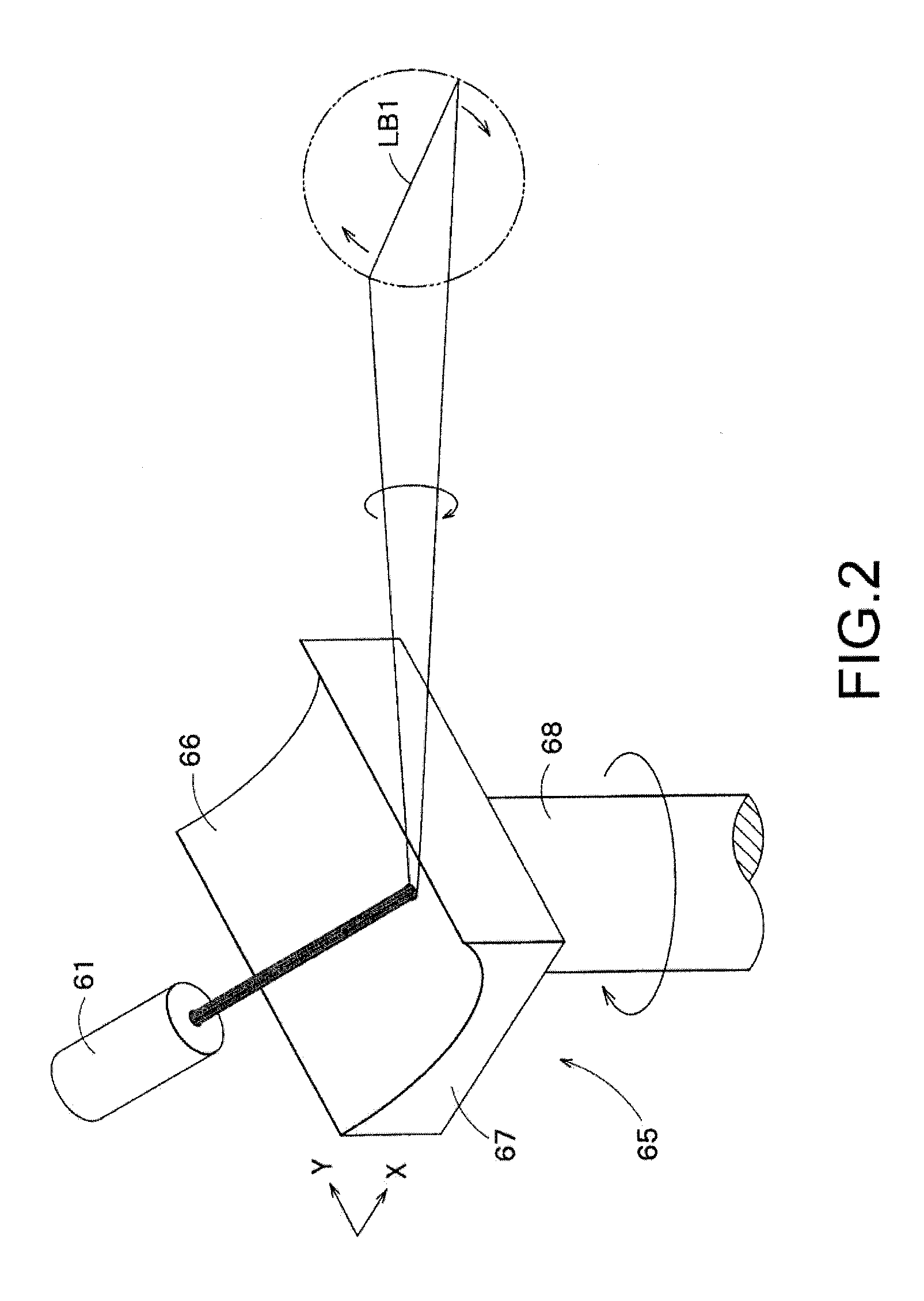

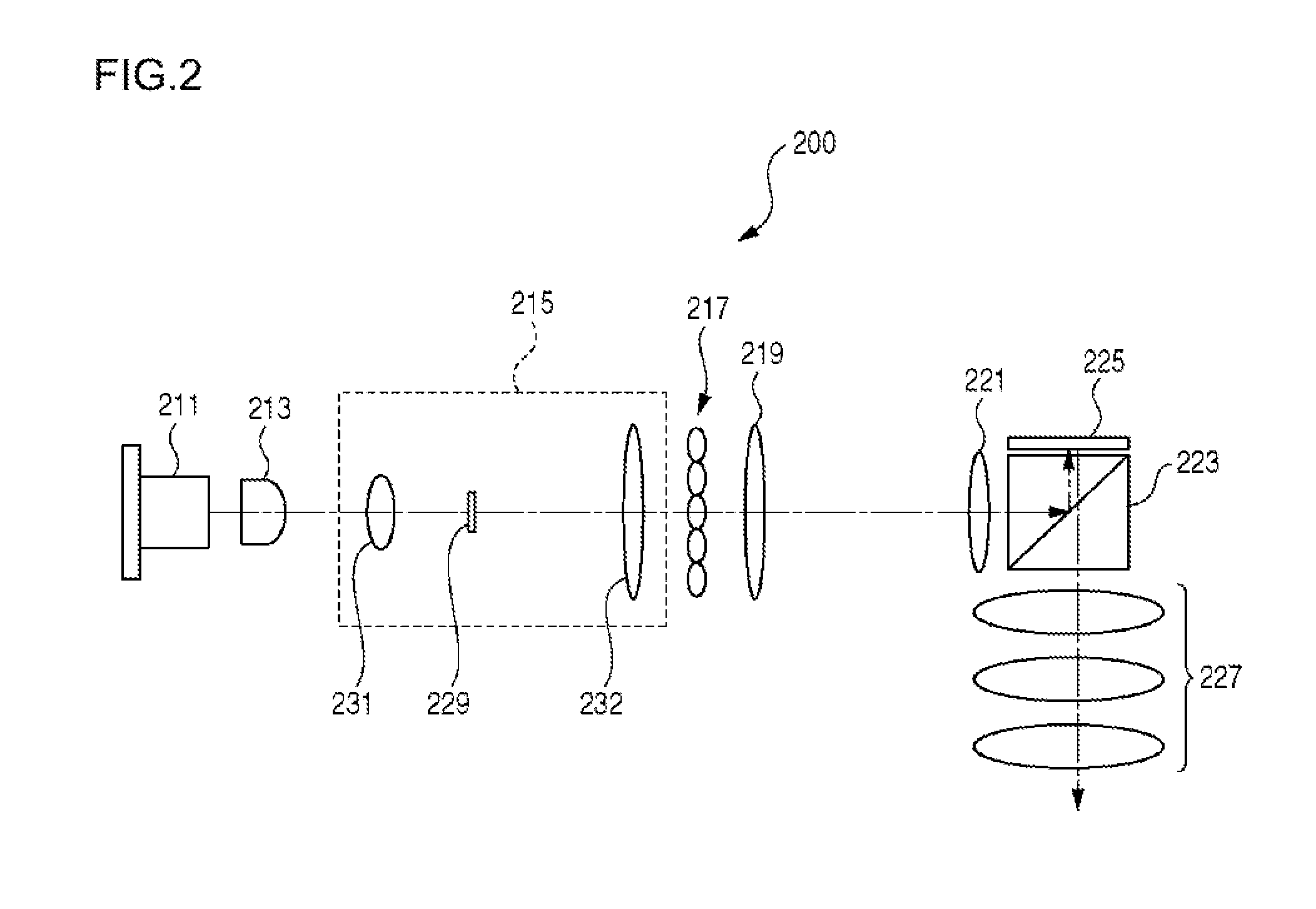

Optical scanning device, illumination device, projection apparatus and optical device

Status: Active · Publication: US20160313567A1 · Tags: Speckle reduction; Maximum speckle reduction effect; Diffusing elements; Projectors; Diffusion; Light beam

An illumination device includes a diffusion member having an anisotropic diffusion surface, a rotary shaft member configured to rotate the anisotropic diffusion surface while a coherent light beam from a light source is illuminated on the anisotropic diffusion surface, and an optical device that further diffuses a coherent light beam diffused on the anisotropic diffusion surface, wherein the coherent light beam diffused on the anisotropic diffusion surface is diffused in a form of line and the diffused coherent light beam in the form of line is configured to move to draw a locus of rotation in one direction in accordance with the rotation of the anisotropic diffusion surface.

Owner:DAI NIPPON PRINTING CO LTD

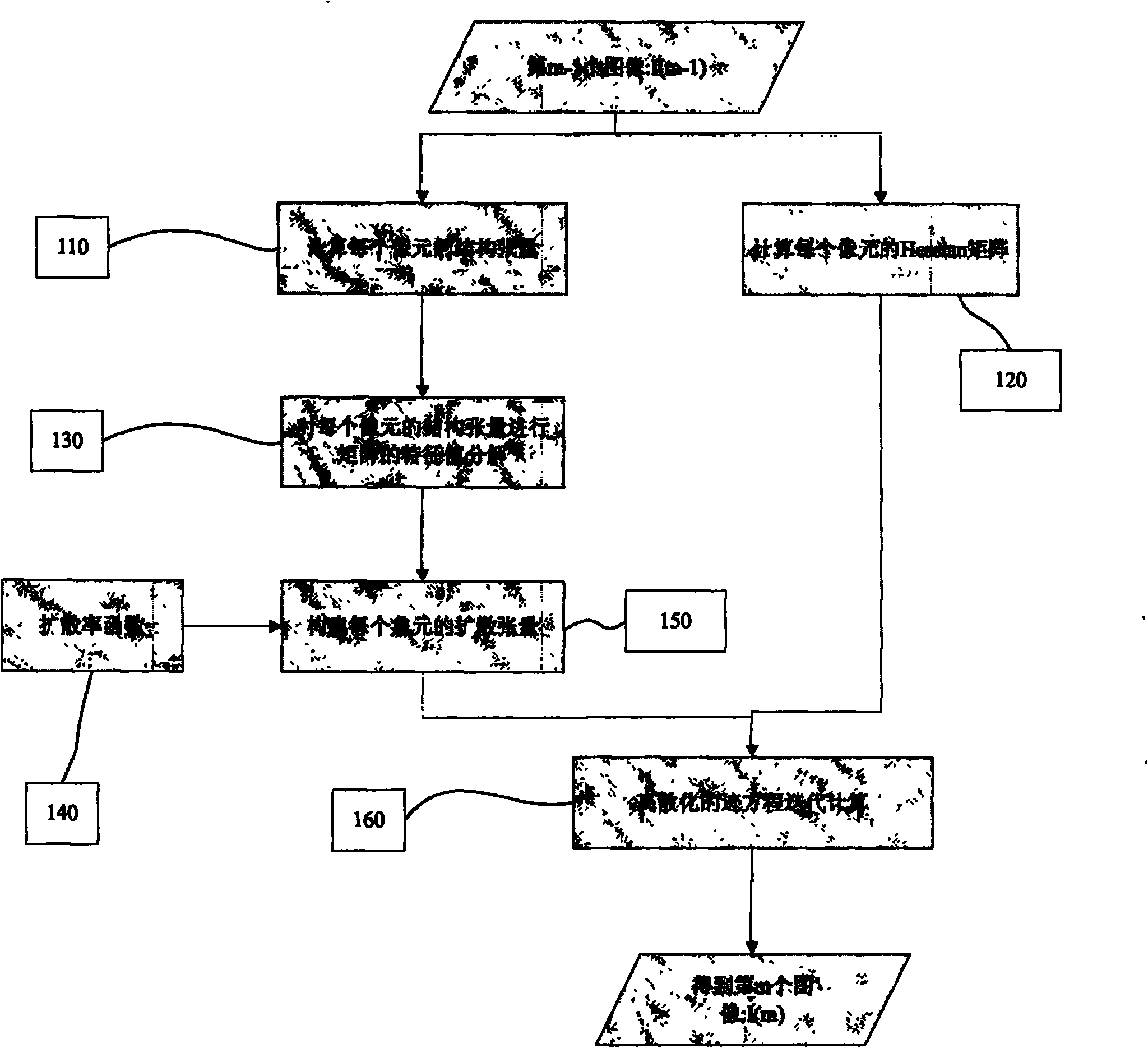

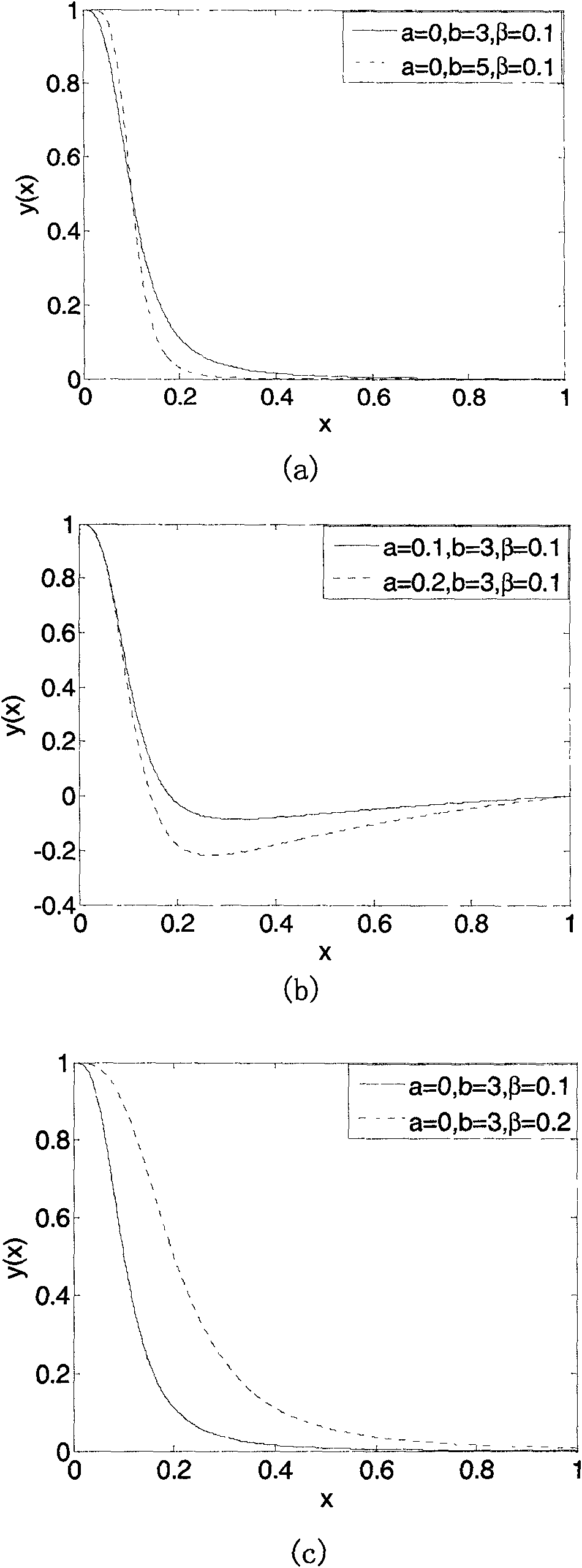

Method for denoising and enhancing anisotropic diffusion image with controllable diffusion degree

The invention discloses a method, based on a track model, for denoising and enhancing images by anisotropic diffusion with a controllable degree of diffusion. The method comprises the following steps: first, computing the structure tensor and the Hessian matrix at each pixel; second, computing the eigenvalue decomposition of the structure tensor at each pixel; third, constructing a diffusion tensor at each pixel whose eigenvectors are those of the structure tensor and whose eigenvalues (the diffusion rates) are functions of the structure tensor's eigenvalues, so that the degree of diffusion can be controlled by adjusting a parameter of these functions; and finally, solving the track model iteratively. The method denoises and enhances images effectively.

Owner:REMOTE SENSING APPLIED INST CHINESE ACAD OF SCI +1
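For a single pixel, the tensor construction in the third step can be sketched as below. The rate function 1 / (1 + μ/k), with `k` playing the role of the adjustable parameter, is a hypothetical choice, since the abstract does not give the actual function. The Gaussian smoothing normally applied to the structure tensor entries is omitted, and the helper names are illustrative.

```python
import math


def structure_tensor(gx, gy):
    """Unsmoothed structure tensor (a, b, c) = [[a, b], [b, c]] of one gradient."""
    return gx * gx, gx * gy, gy * gy


def eig_sym2(a, b, c):
    """Eigenpairs of the symmetric 2x2 matrix [[a, b], [b, c]], largest first."""
    mean = 0.5 * (a + c)
    disc = math.hypot(0.5 * (a - c), b)
    theta = 0.5 * math.atan2(2.0 * b, a - c)  # angle of the major eigenvector
    v1 = (math.cos(theta), math.sin(theta))
    v2 = (-math.sin(theta), math.cos(theta))
    return (mean + disc, v1), (mean - disc, v2)


def diffusion_tensor(a, b, c, k=1.0):
    """Same eigenvectors as the structure tensor; eigenvalues = diffusion rates."""
    (mu1, v1), (mu2, v2) = eig_sym2(a, b, c)
    # Hypothetical rate function: strong structure -> slow diffusion; k tunes it.
    lam1 = 1.0 / (1.0 + mu1 / k)
    lam2 = 1.0 / (1.0 + mu2 / k)
    d11 = lam1 * v1[0] ** 2 + lam2 * v2[0] ** 2
    d12 = lam1 * v1[0] * v1[1] + lam2 * v2[0] * v2[1]
    d22 = lam1 * v1[1] ** 2 + lam2 * v2[1] ** 2
    return d11, d12, d22
```

For the gradient (3, 0) and k = 1, the resulting tensor diffuses ten times less across the edge than along it; raising `k` brings the two rates closer and makes the diffusion more isotropic.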

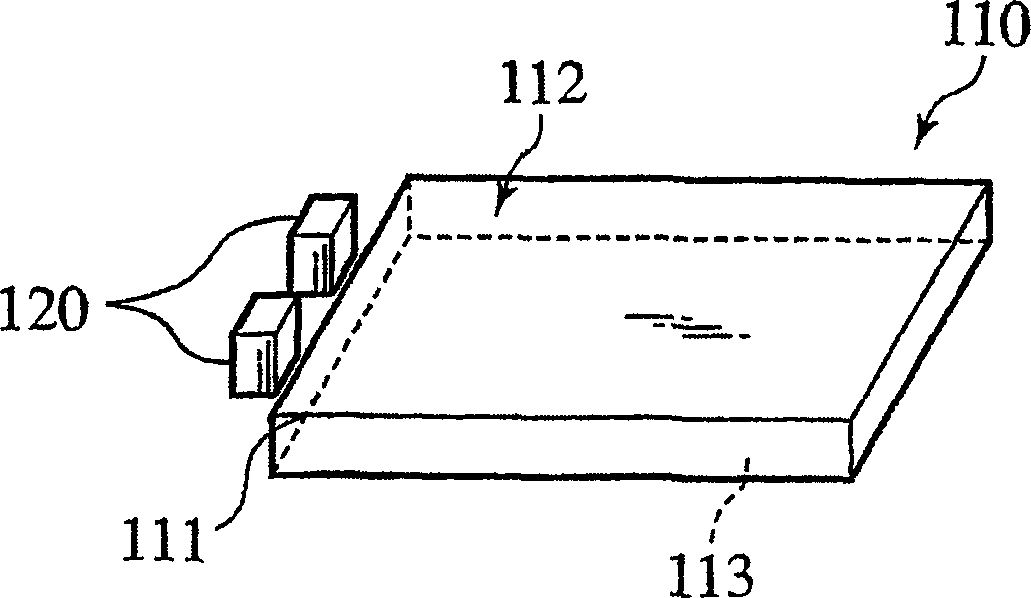

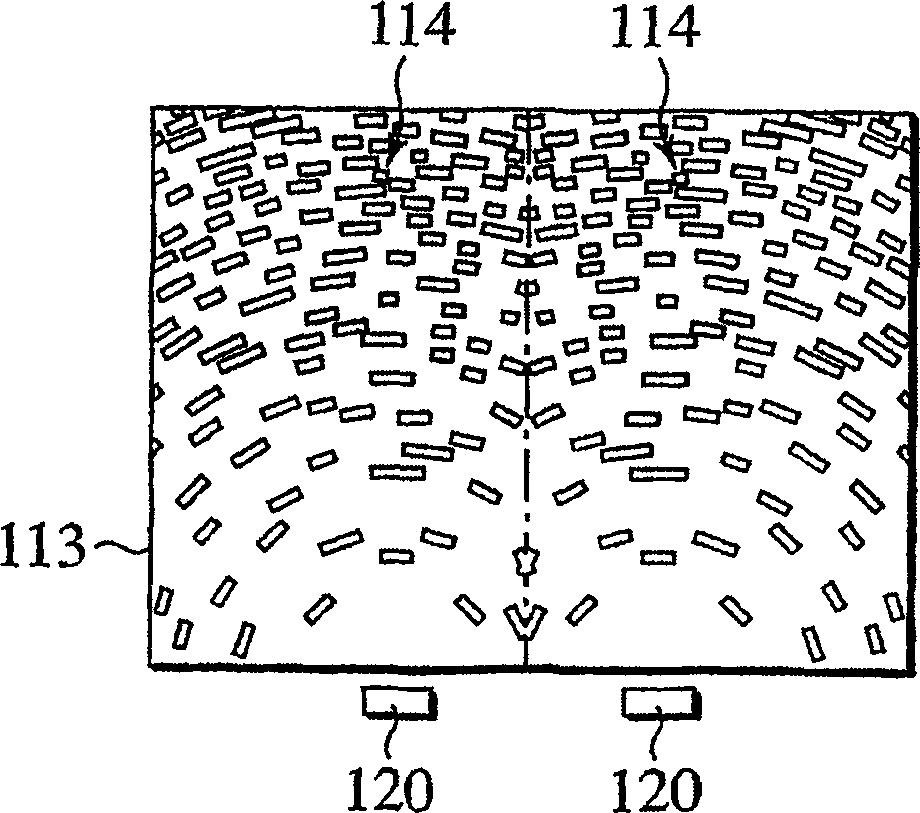

Light guide plate and backlight device

Status: Inactive · Publication: CN1678866A · Tags: Improve utilization efficiency; Mechanical apparatus; Light guides for lighting systems; Diffusion; Light guide

A light guide plate 10 comprises an entry surface 11 on at least one side surface, a rectangular exit surface 12 orthogonal to the entry surface 11, and a reflecting surface 13. The reflecting surface 13 has a deflection pattern that receives a ray of light entering from the entry surface 11 or the exit surface 12 at a certain angle to the normal to the exit surface 12 and outputs the ray at a reduced angle to the normal. The deflection pattern has a plurality of arcuate deflection pattern elements formed on a plurality of concentric circles. The exit surface 12 has an anisotropic diffusion pattern that transmits and spreads rays of light arriving from the deflection pattern at angles up to a specified angle with respect to the normal to the exit surface 12; the pattern spreads such rays so that the width of diffusion in the direction orthogonal to the radial direction of the concentric arcs is larger than that in the radial direction. The center of the concentric circles is positioned on one of the side surfaces of the light guide plate 10 or in its vicinity.

Owner:HITACHI CHEM CO LTD

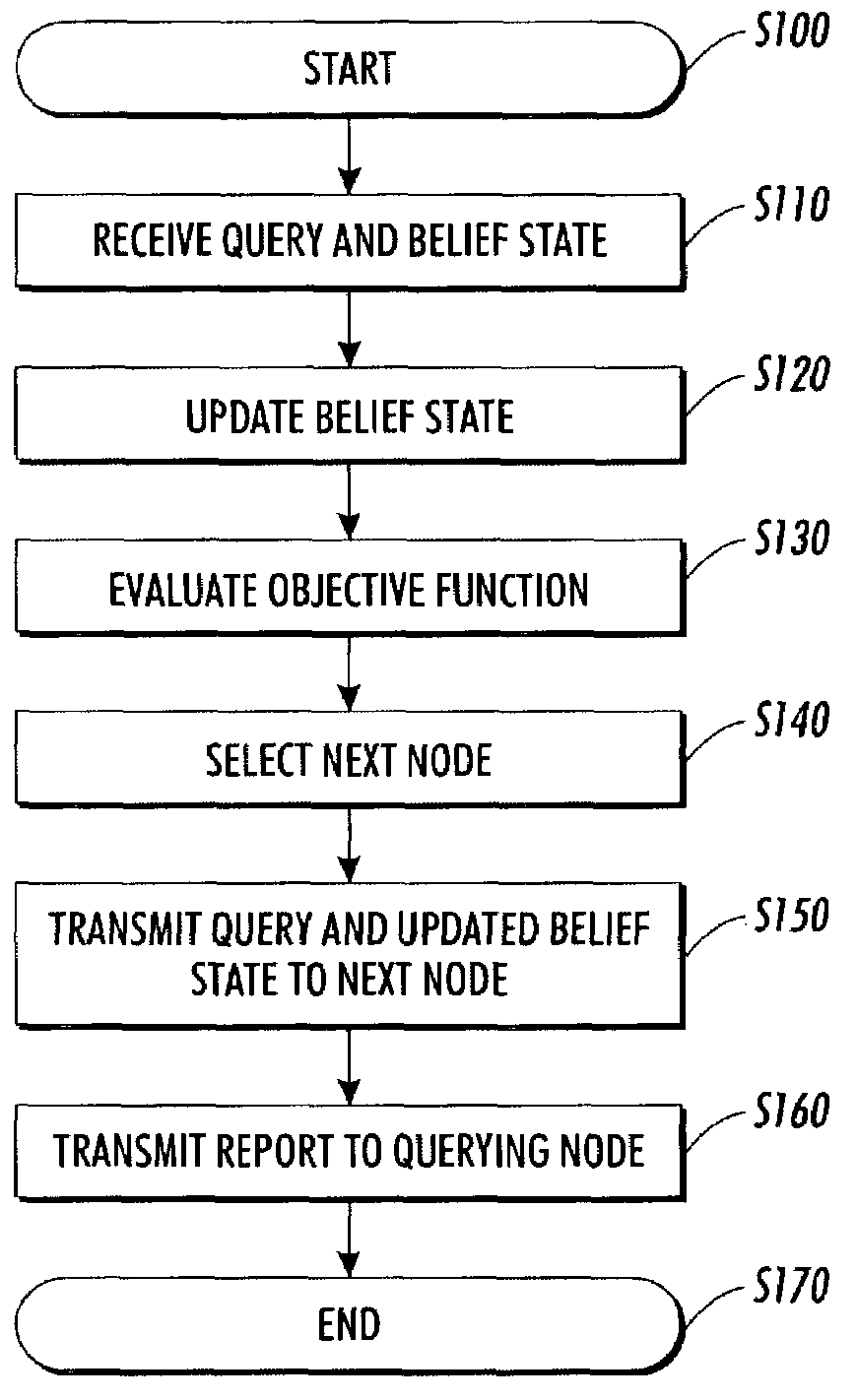

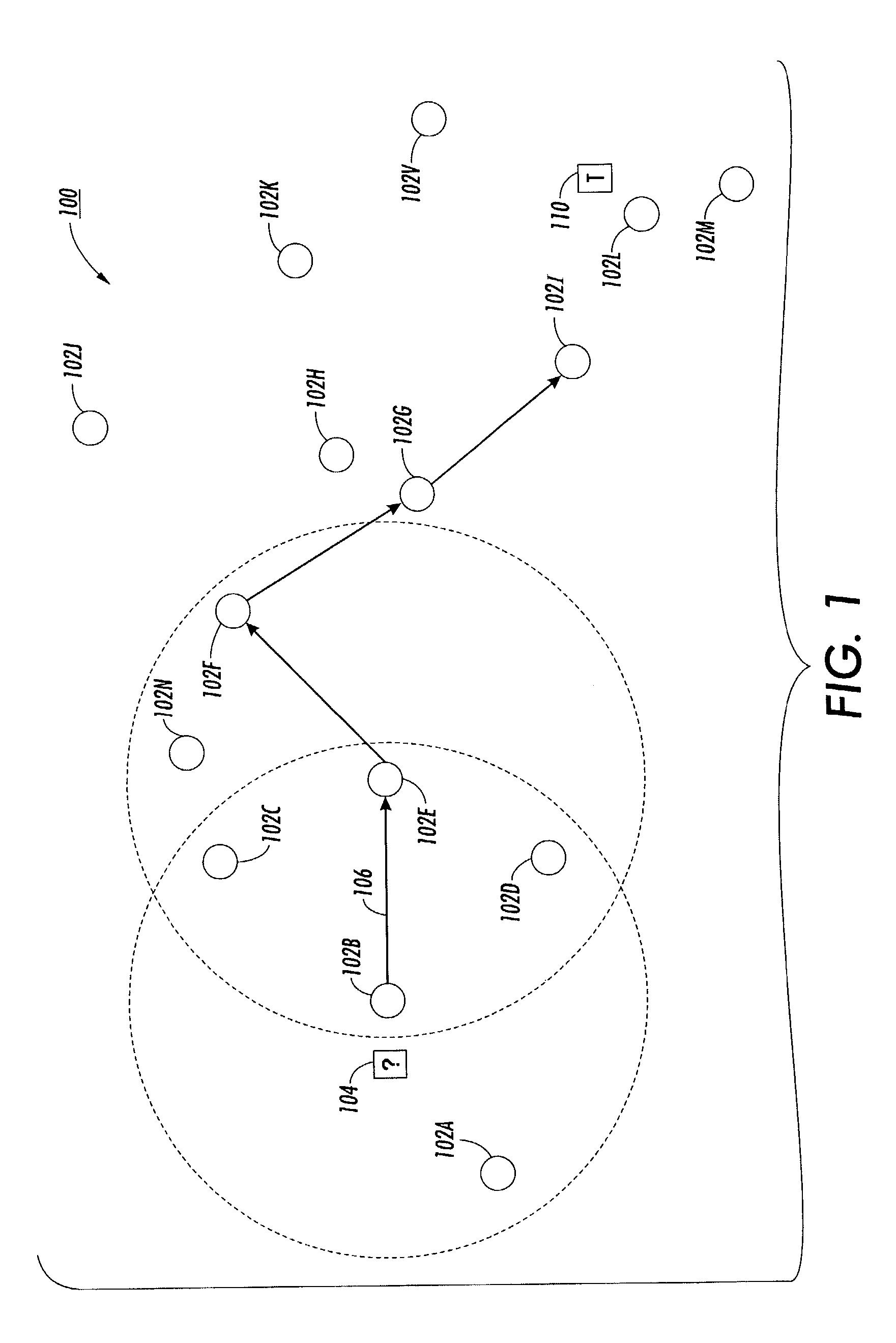

Systems and methods for constrained anisotropic diffusion routing within an ad hoc network

Status: Active · Publication: US7194463B2 · Tags: Improve detection accuracy; Improve scalability; Energy efficient ICT; Error prevention; Extensibility; Communication bandwidth

This invention describes methods and systems for tasking nodes in a distributed ad hoc network. A node is selected to participate in a data gathering or a routing task based on its potential contribution to information gain and the cost associated with performing the task such as communication bandwidth usage. This invention describes methods and systems for implementing various selection strategies at each node, using local knowledge about the network. For resource-limited sensor networks, the information-driven data gathering and routing strategies significantly improve the scalability and quality of sensing systems, while minimizing resource cost.

Owner:XEROX CORP

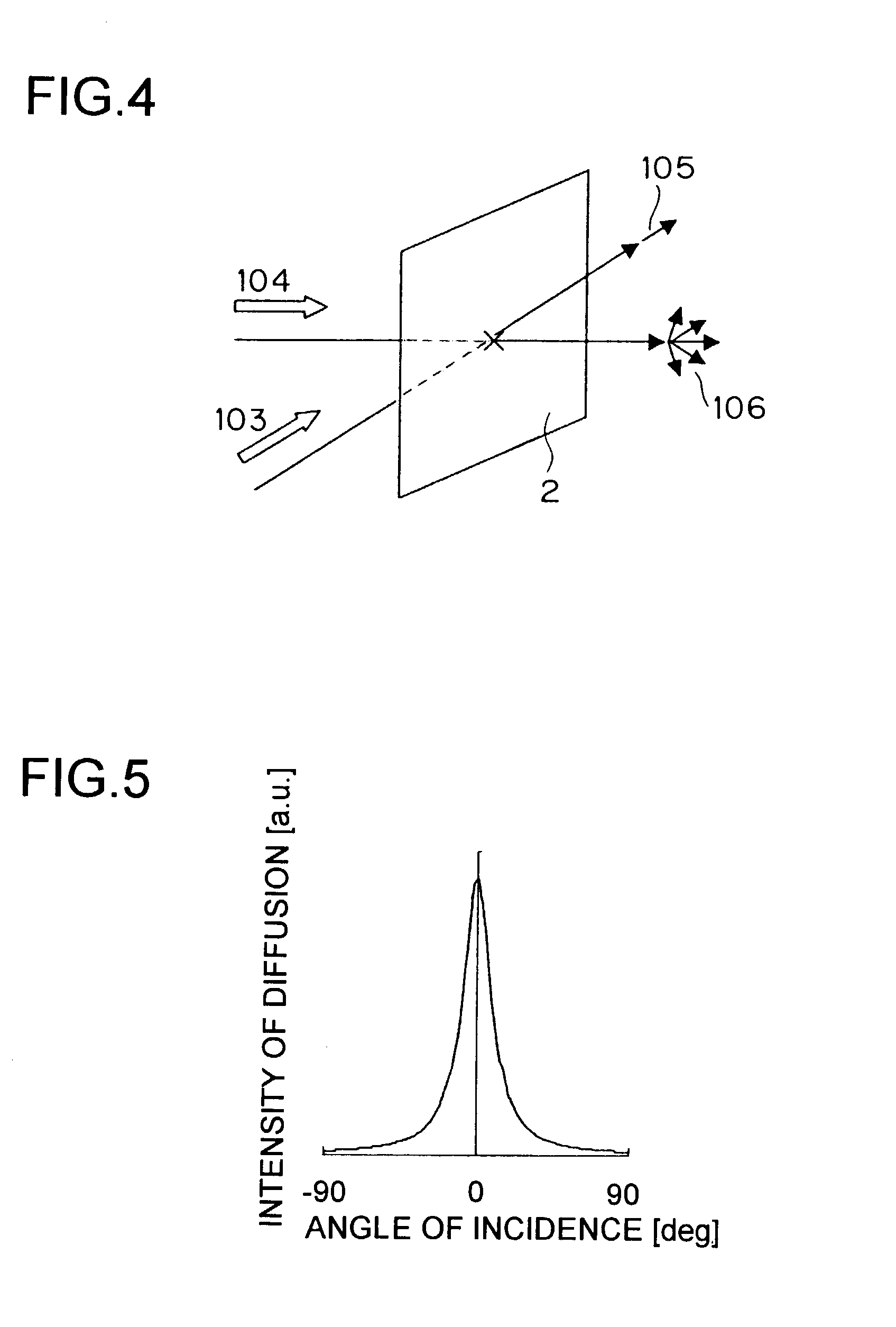

Antiglare film, method for fabricating the same, polarizer element and display device employing the same, and internal diffusion film

Status: Inactive · Publication: US6992827B2 · Tags: Reduce flicker; Lowering transmitted image sharpness; Layered products; Diffusing elements; Display device; Refractive index

An antiglare film has an internal diffusion layer, composed of a transparent matrix and a transparent diffusive material, and surface irregularities. The diffusive material has a different refractive index from the transparent matrix, exhibits anisotropic diffusion resulting from the anisotropic shape of the particles thereof, and is dispersed in the transparent matrix so that the particles are oriented parallel to one another and to a normal to the film.

Owner:SHARP KK

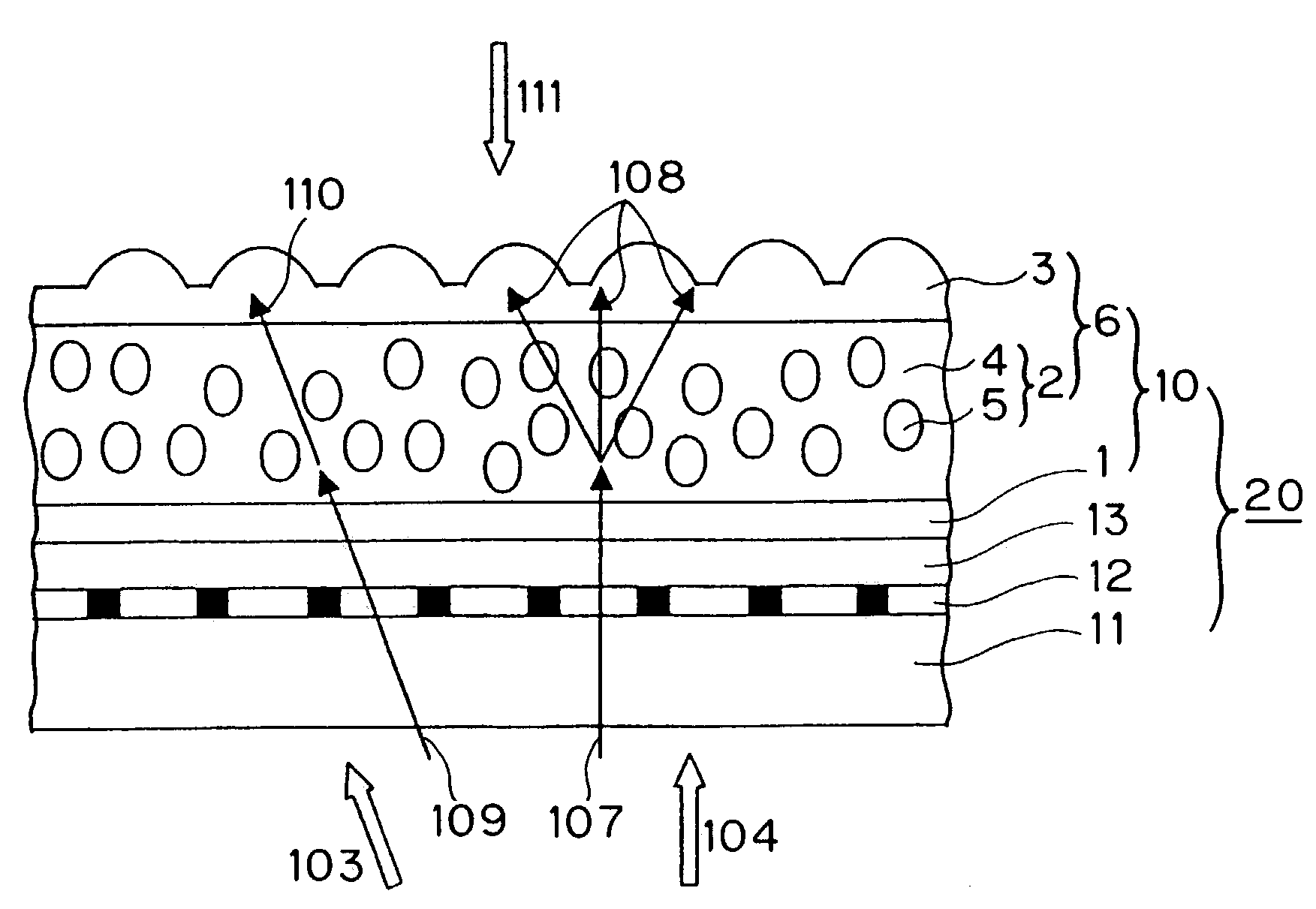

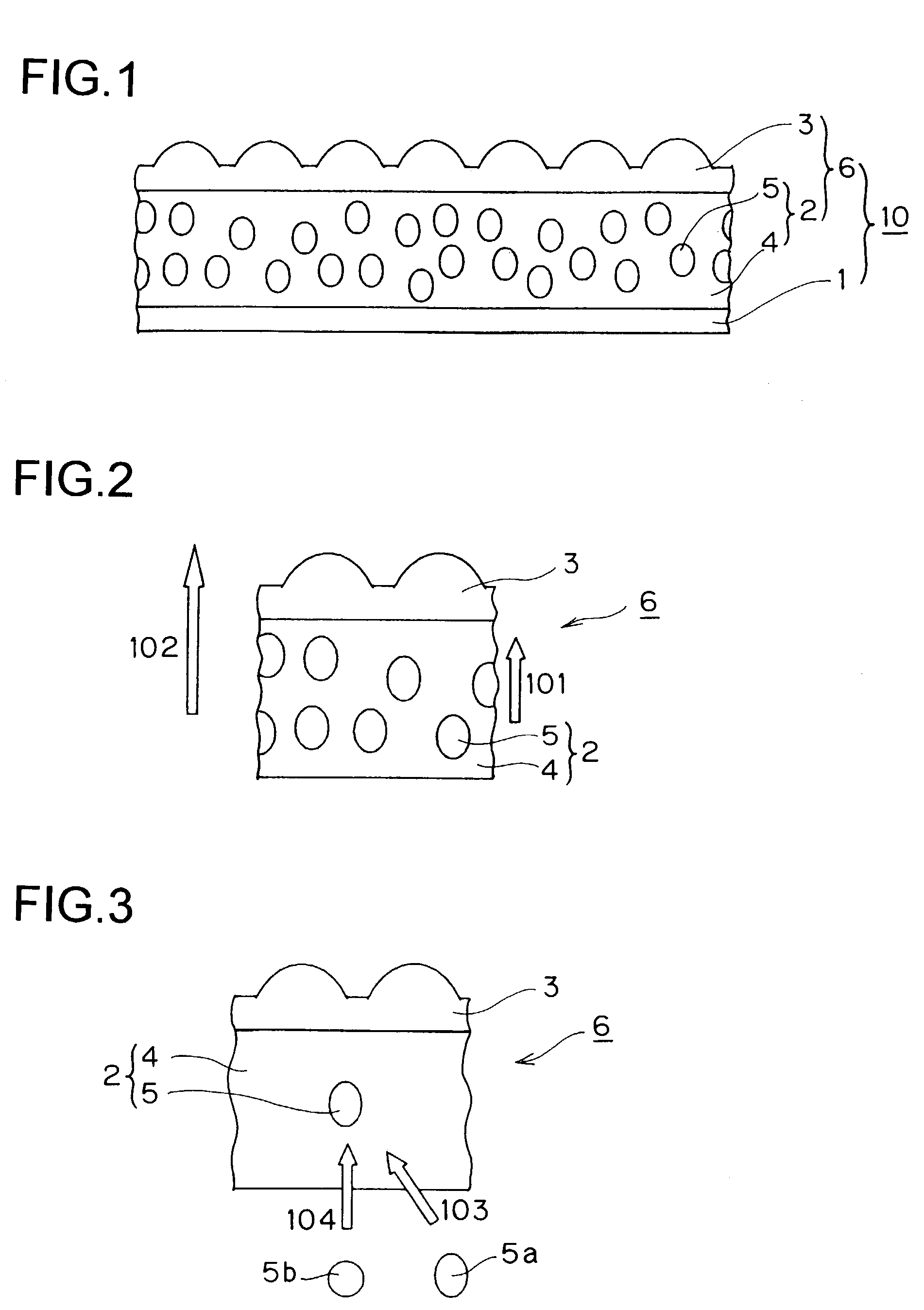

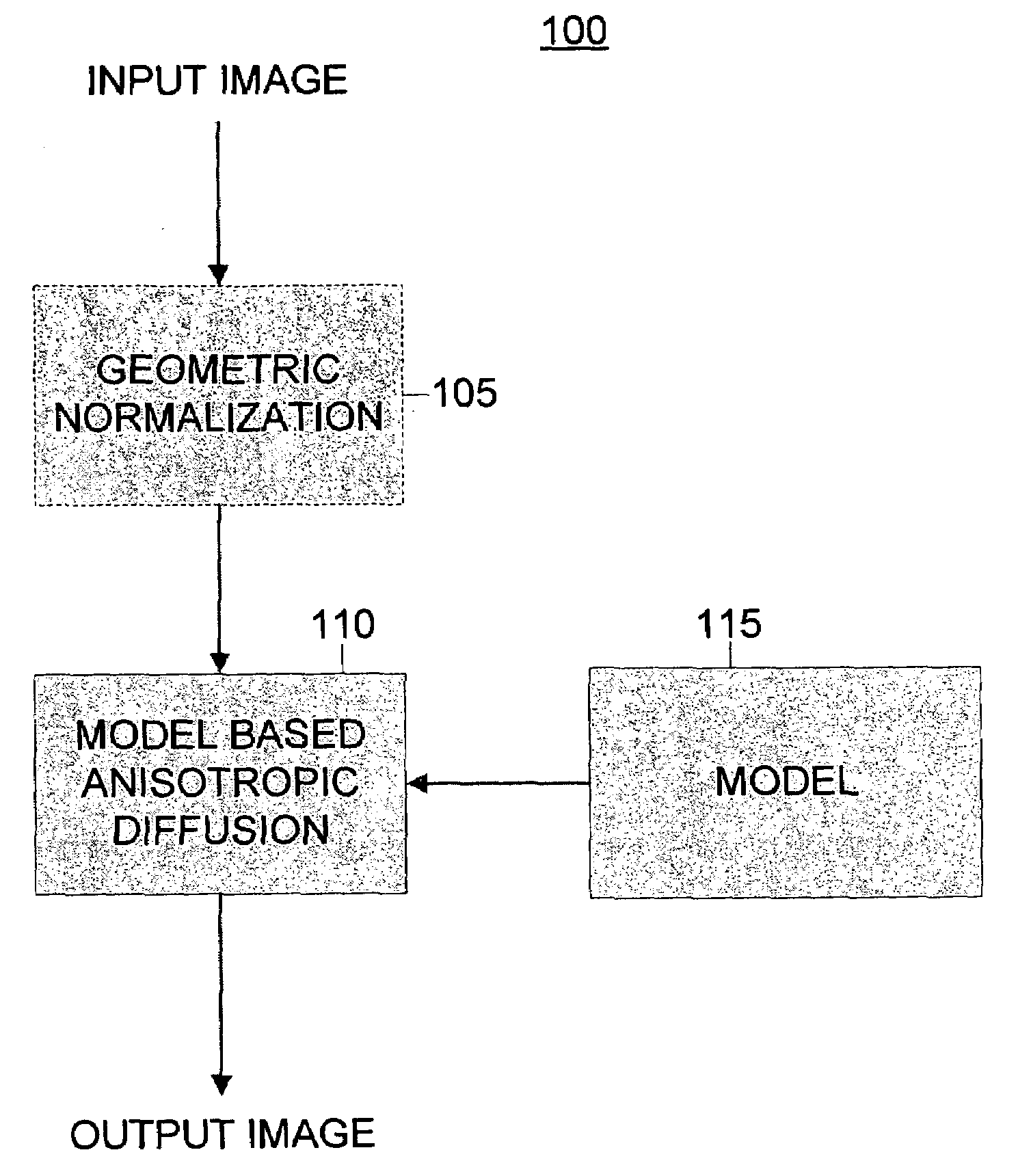

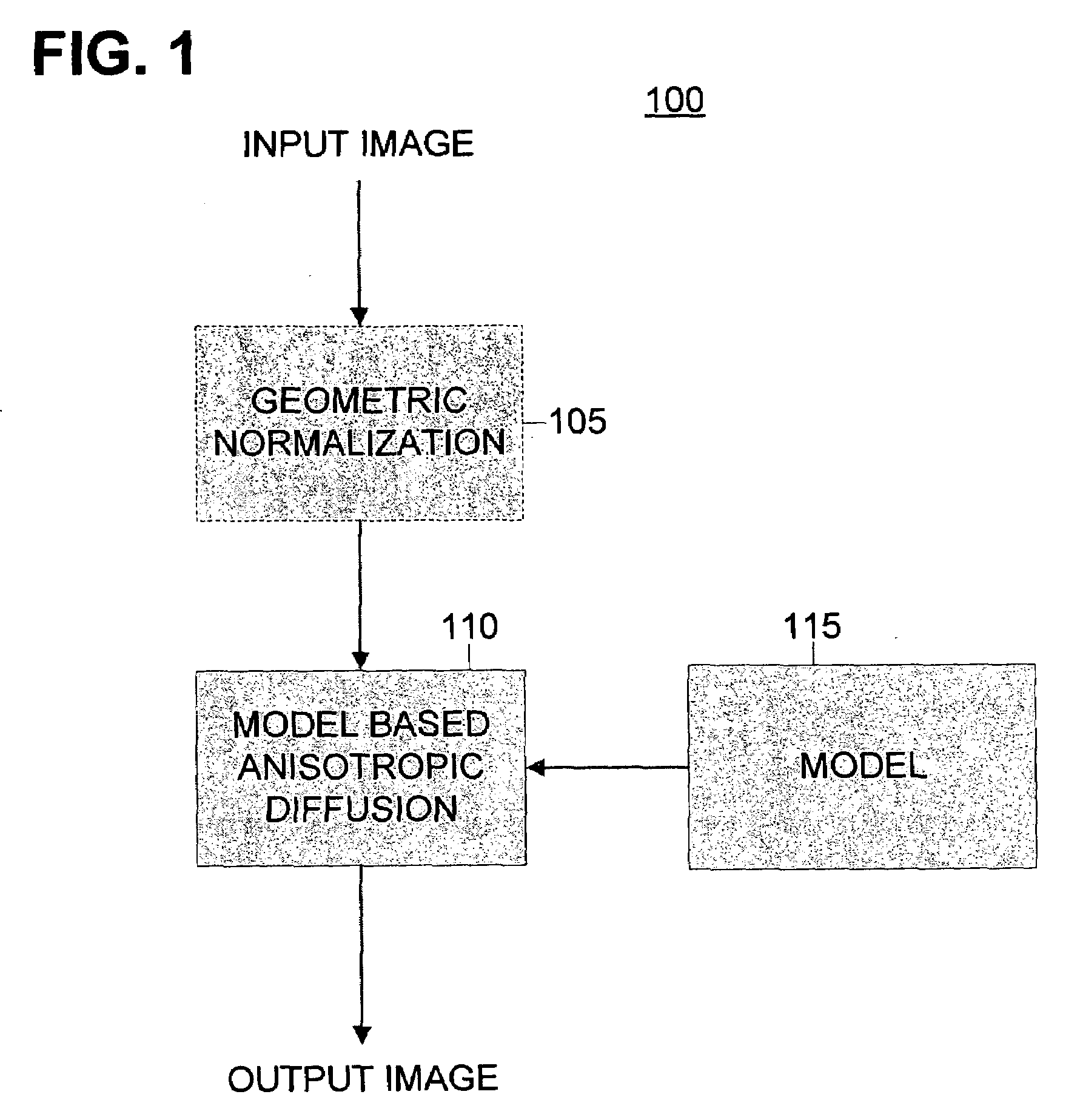

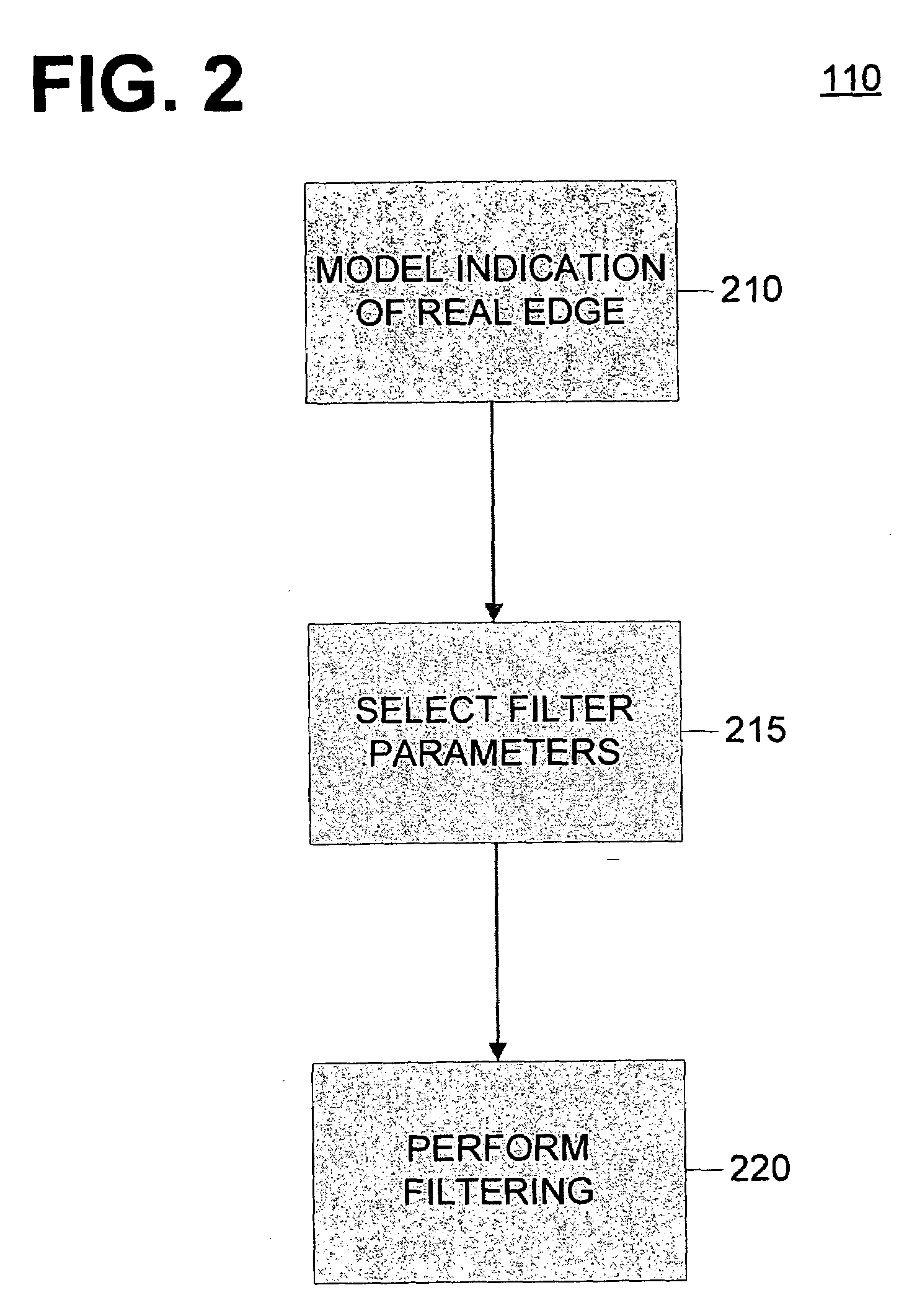

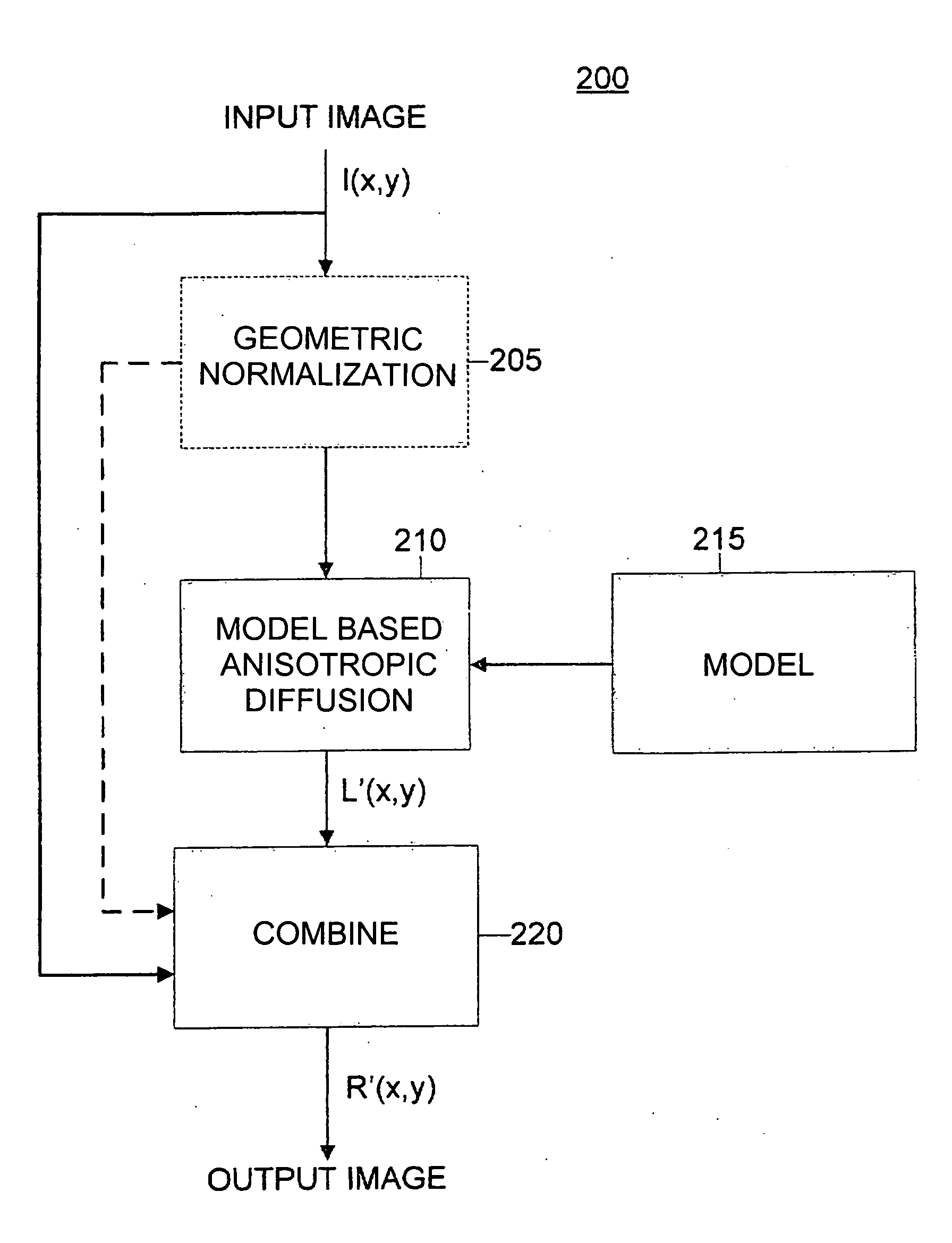

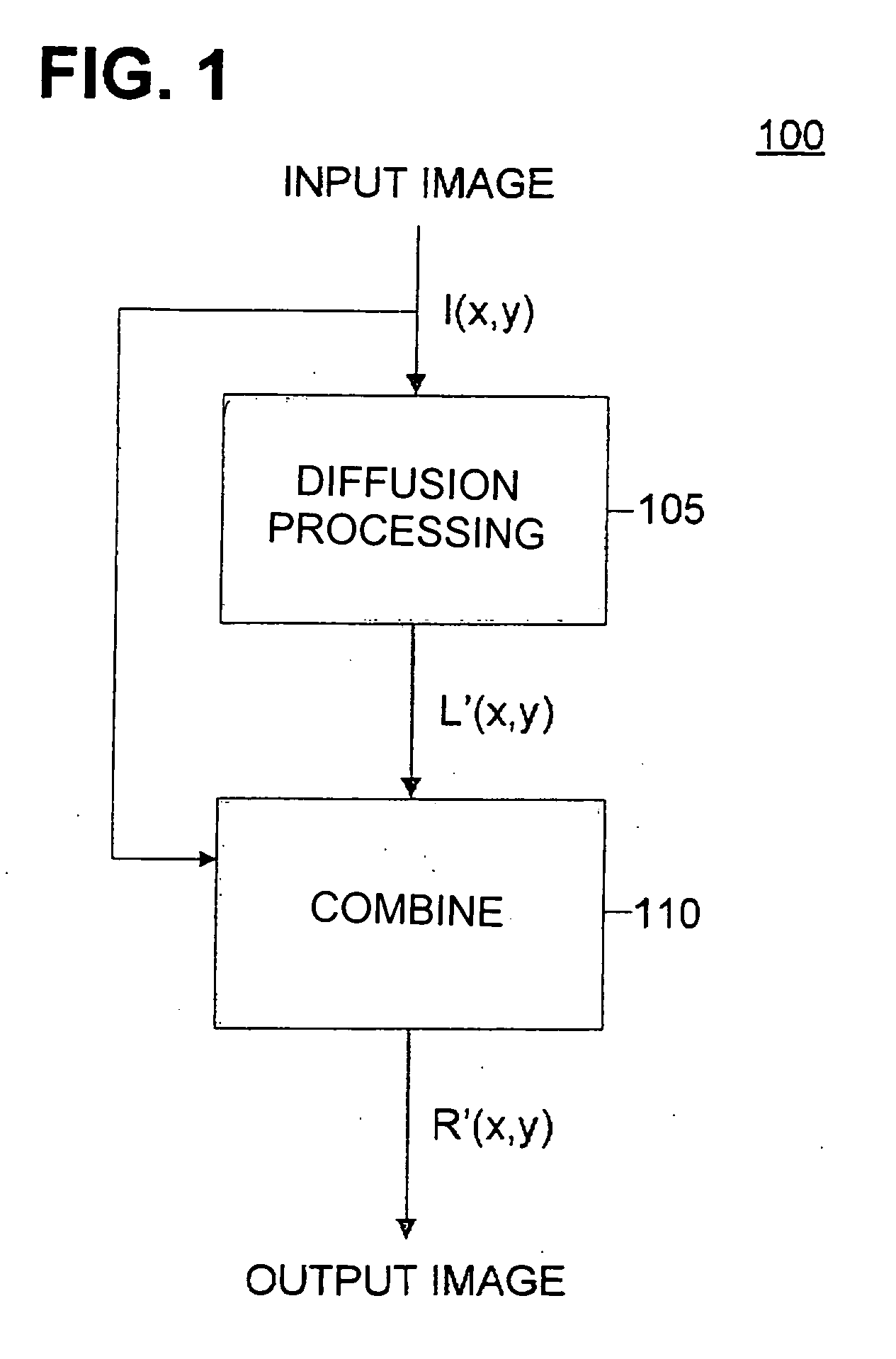

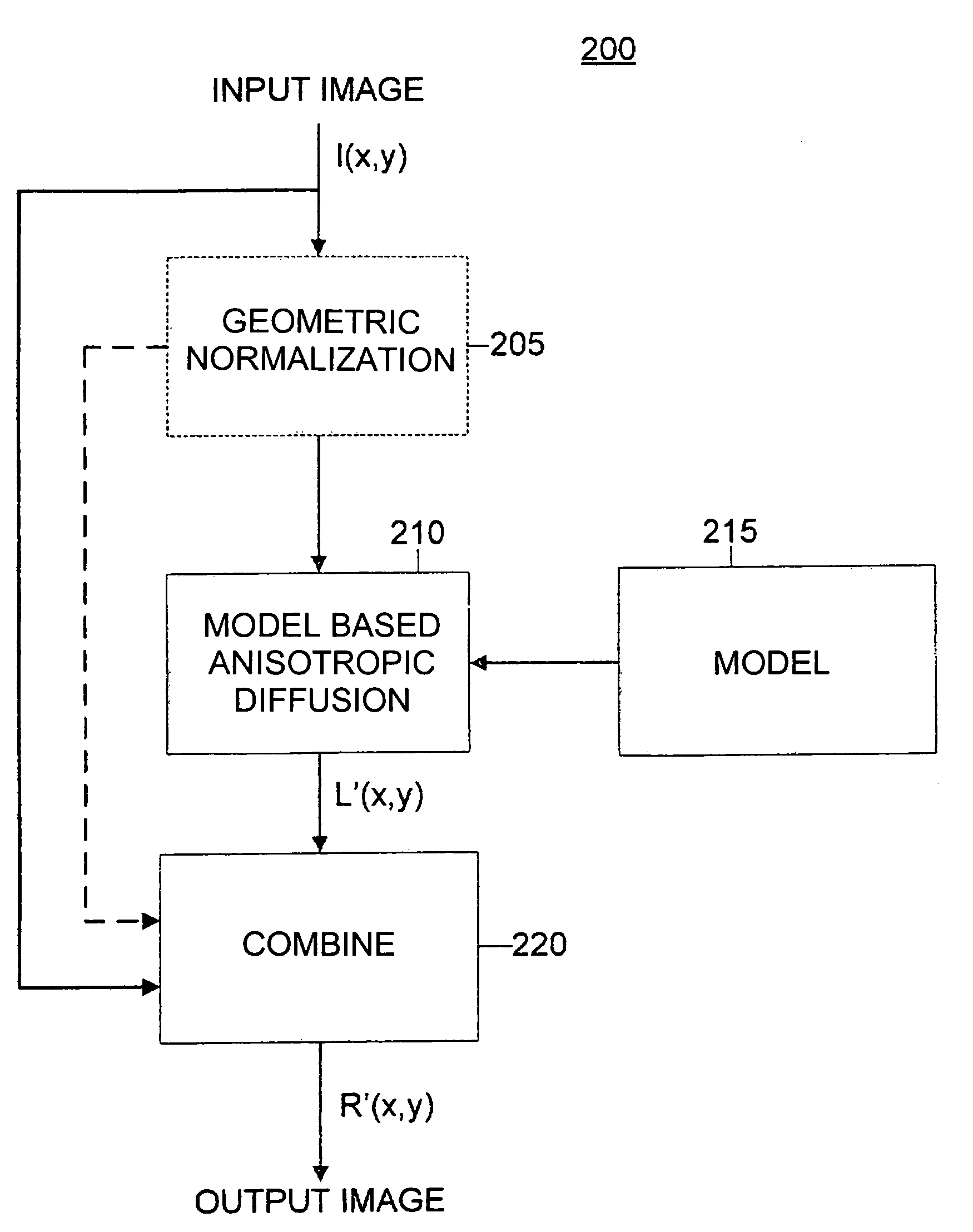

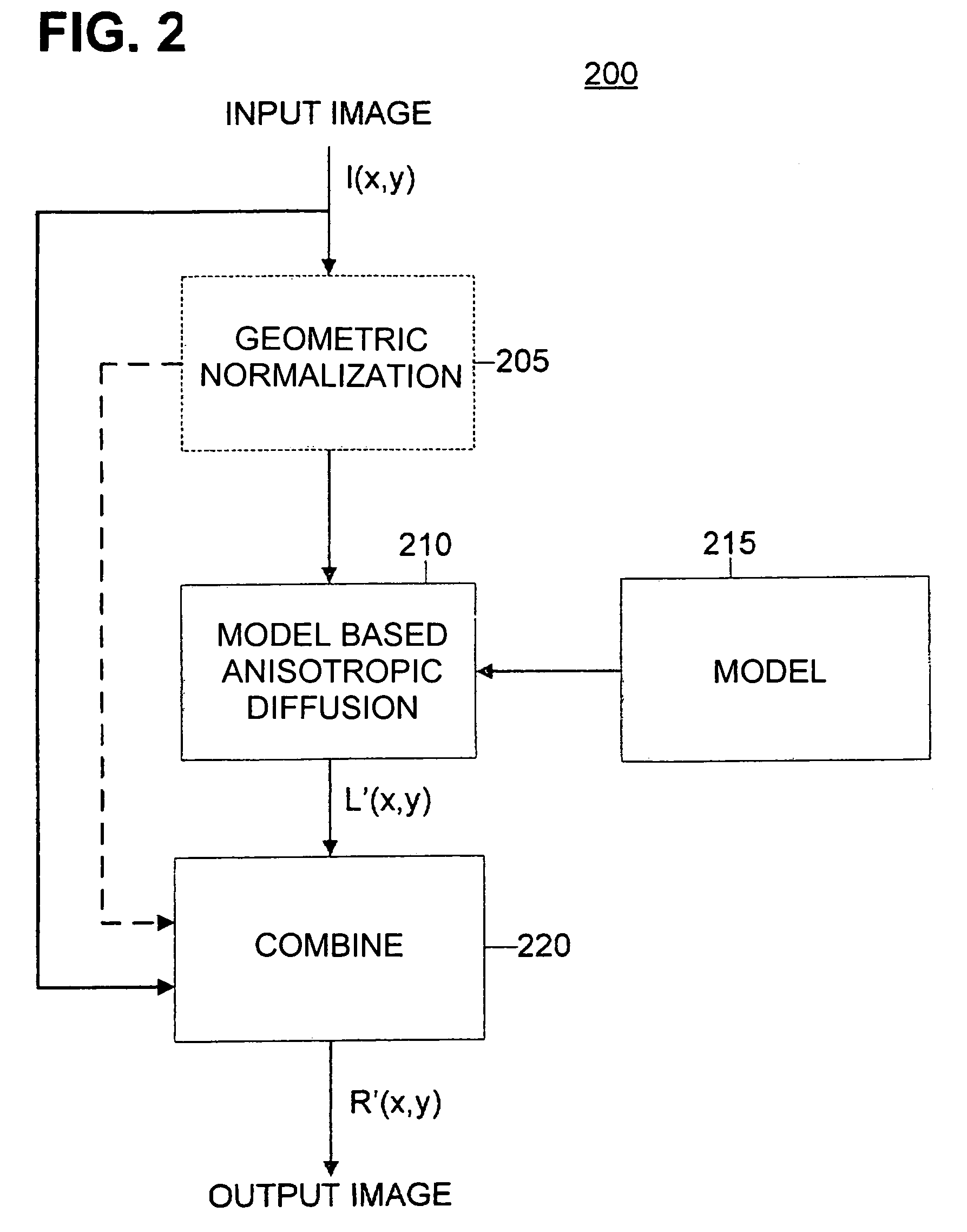

Method and apparatus for model based anisotropic diffusion

Status: Inactive · Publication: US20080007747A1 · Tags: Image enhancement; Digitally marking record carriers; Imaging processing; Digital image data

Methods and apparatuses for image processing are presented. An exemplary method is provided which provides a model which includes information not found in the digital image, accessing digital image data and the model, and performing anisotropic diffusion on the digital image data utilizing the model. An apparatus for processing a digital image is presented which includes a processor operably coupled to memory storing digital image data, a model which includes information not found in the digital image data, and functional processing units for controlling image processing, where the functional processing units include a model generation module, and a model-based anisotropic diffusion module which performs anisotropic diffusion on the digital image data utilizing the information provided by the model.

Owner:FUJIFILM CORP

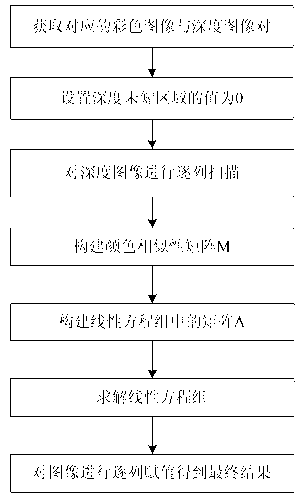

Depth image enhancement method based on anisotropic diffusion

Status: Active · Publication: CN103198486A · Tags: Overcoming blurry edges; Easy to fill; Image enhancement; Image analysis; Diffusion; Color image

The invention discloses a depth image enhancement method based on anisotropic diffusion. The similarity between pixels of the color image corresponding to a depth image is used as the basis for depth diffusion, and the missing regions of the depth image are filled starting from its known depths. Compared with traditional interpolation-based depth enhancement, the method avoids blurred object edges; compared with filtering-based depth enhancement, it removes the limitation imposed by the filter window size. The method is highly general and robust and can be applied to a wide variety of depth images with missing regions: given a corresponding color image and depth image, it fills the missing depth regions well.

Owner:ZHEJIANG UNIV
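A color-guided diffusion fill in the spirit of this abstract can be sketched as an iteration in which each missing depth pixel takes a weighted average of its already-filled 4-neighbours. The Gaussian similarity weight with scale `sigma`, the single grayscale guide channel, and the name `fill_depth` are assumptions; measured depths are held fixed.

```python
import math


def fill_depth(depth, guide, sigma=10.0, n_iter=50):
    """Fill missing depths (None) by guide-weighted diffusion from 4-neighbours.

    depth: rows of floats or None; guide: rows of grayscale intensities.
    """
    h, w = len(depth), len(depth[0])
    known = [[d is not None for d in row] for row in depth]
    cur = [row[:] for row in depth]
    for _ in range(n_iter):
        nxt = [row[:] for row in cur]
        for i in range(h):
            for j in range(w):
                if known[i][j]:
                    continue  # measured depths stay fixed
                num = den = 0.0
                for y, x in ((i - 1, j), (i + 1, j), (i, j - 1), (i, j + 1)):
                    if 0 <= y < h and 0 <= x < w and cur[y][x] is not None:
                        # Similar guide intensity -> weight near 1; across a
                        # color edge -> weight near 0, so depth does not leak.
                        wgt = math.exp(-((guide[i][j] - guide[y][x]) / sigma) ** 2)
                        num += wgt * cur[y][x]
                        den += wgt
                if den > 0.0:
                    nxt[i][j] = num / den
        cur = nxt
    return cur
```

With depths `[1.0, None, None, None, 5.0]` and guide intensities `[0, 0, 0, 100, 100]`, the hole is filled with values close to 1.0 up to the color edge and close to 5.0 after it, instead of a linear ramp.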

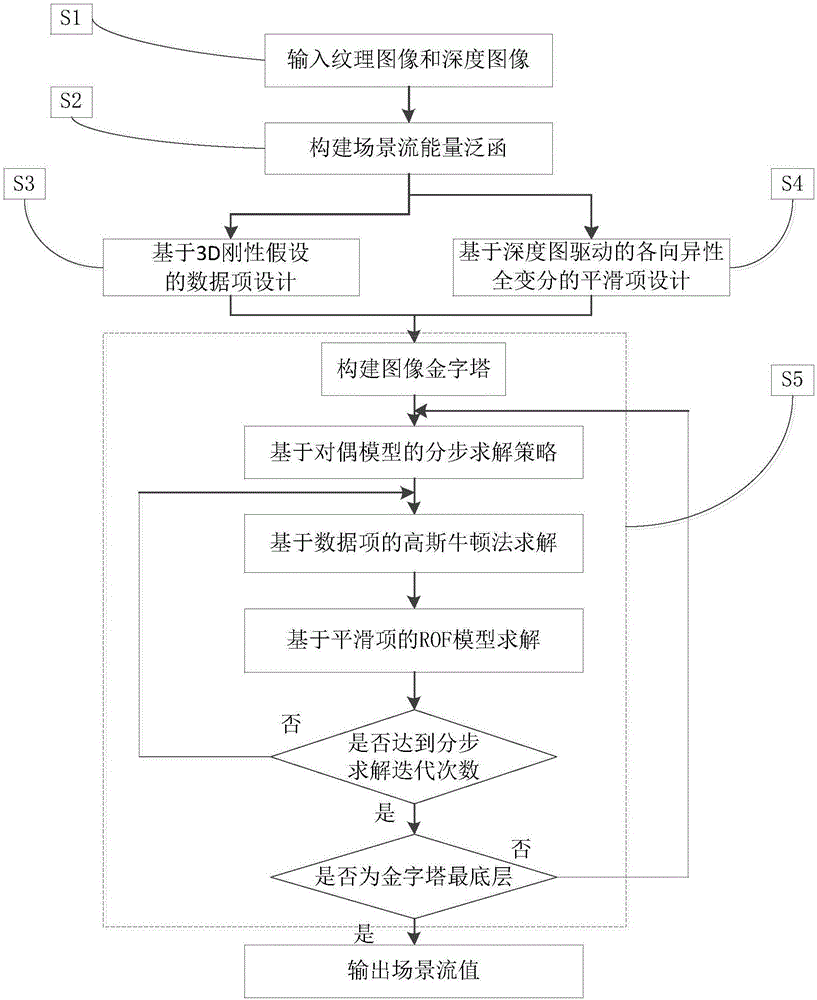

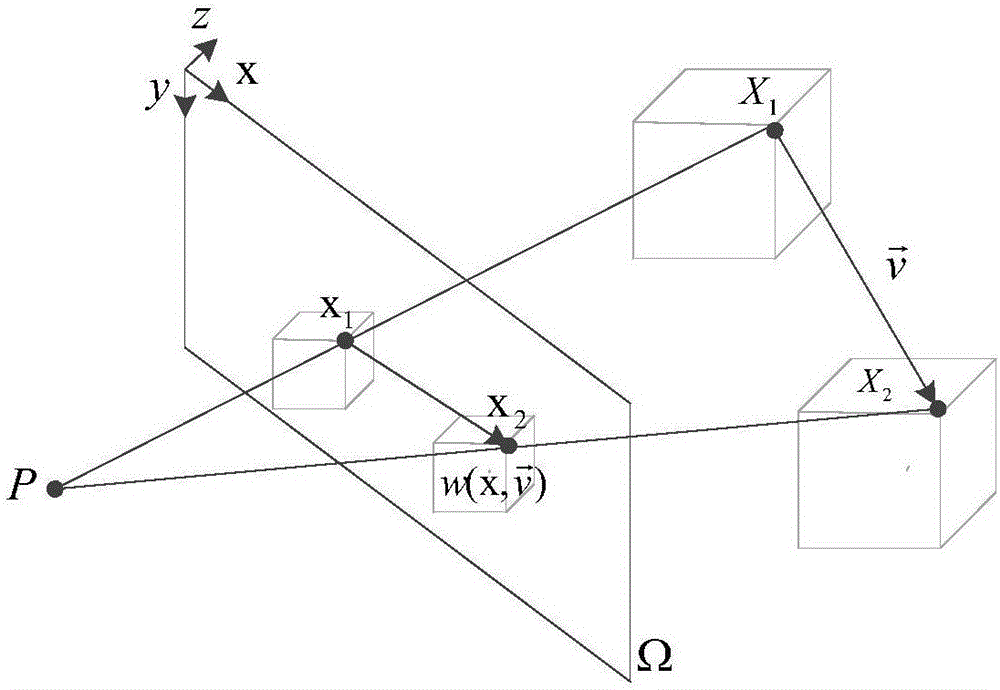

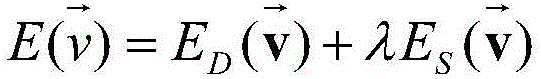

Scene flow estimation method based on 3D local rigidity and depth map guided anisotropic smoothing

Status: Active · Publication: CN106485675A · Tags: Solve the problem of edge distortion; Reduce repair errors; Image enhancement; Image analysis; Color image; Energy functional

The invention relates to a scene flow estimation method based on 3D local rigidity and depth-map-guided anisotropic smoothing. The method comprises the following steps: S1, acquiring a texture image and a depth image aligned in time with an RGB-D sensor; S2, building a scene flow energy functional and computing a dense scene flow with a globally constrained method based on a 3D locally rigid surface hypothesis (the form of the energy function is given in the description); S3, designing data terms based on the texture image, the depth image, and the 3D locally rigid surface hypothesis; S4, designing smoothness terms based on a depth-map-driven anisotropic diffusion tensor and total variation regularization; S5, building an image pyramid and adopting a coarse-to-fine solution strategy; and S6, computing the scene flow by a duality method with auxiliary scene flow variables. According to the invention, the weight of the spatial-domain filter is determined by both the color difference and the positional relationship between pixels of the color image, which solves the problem of edge distortion during repair. To reduce repair error, the weight of the range-domain filter is determined by the color information and a structural similarity coefficient.

Owner:HARBIN ENG UNIV

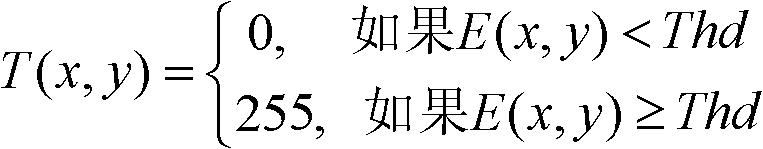

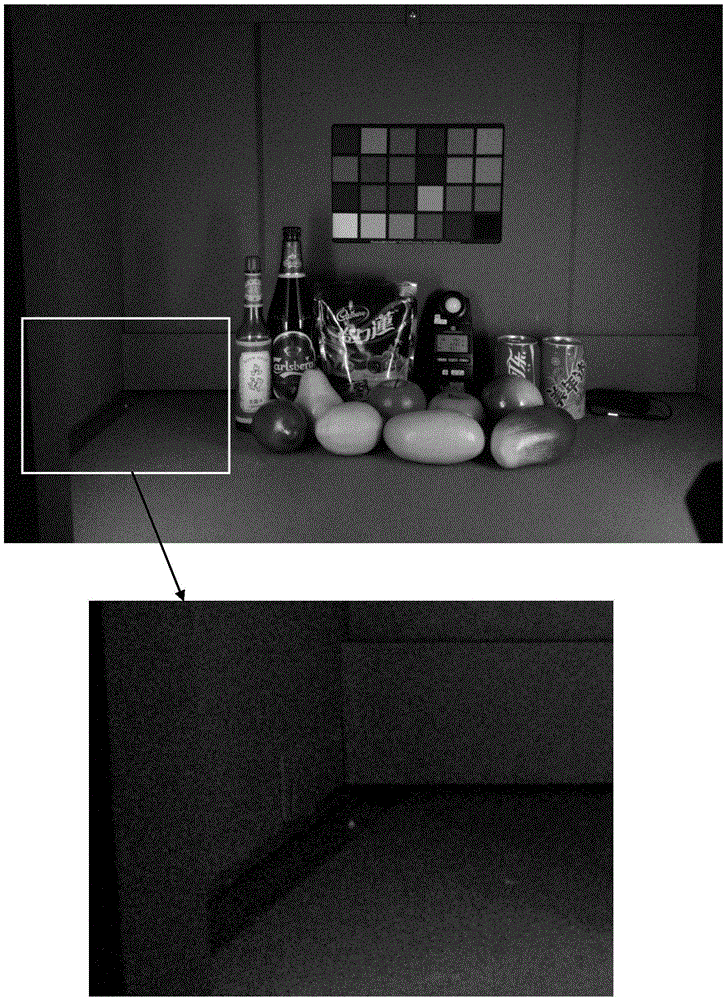

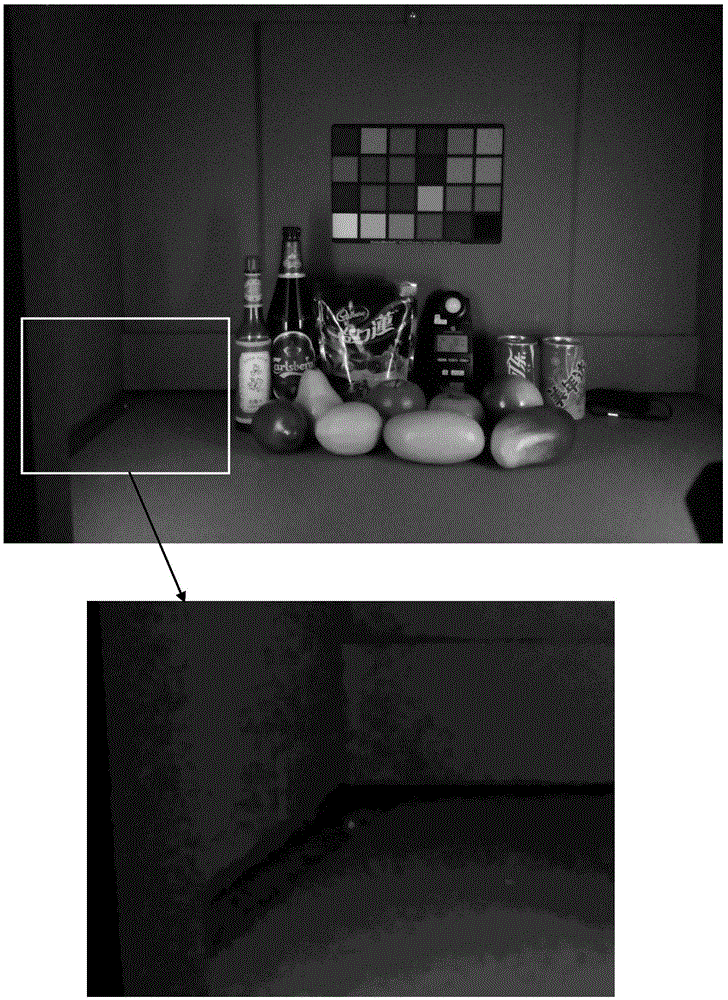

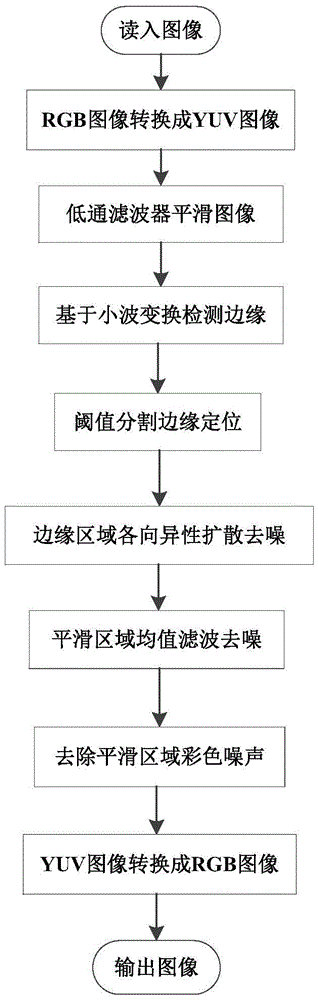

Mobile phone image denoising method based on wavelet transform edge detection

Status: Active · Publication: CN105654445A · Tags: High precision; Reduce misjudgment; Image enhancement; Image analysis; Pattern recognition; Image denoising

The invention discloses a mobile phone image denoising method based on wavelet transform edge detection, which smooths noise while preserving image edge details and removing colour noise, giving a good denoising result. The method comprises the following steps: first, reading a colour noisy image and converting it from the RGB colour space to the YUV colour space; second, smoothing the image with a Gaussian filter, low-pass filtering the Y channel three times; third, detecting image edges with a wavelet transform algorithm; fourth, obtaining a binary edge image by threshold segmentation; fifth, smoothing noise in the edge regions of the image by anisotropic diffusion; sixth, denoising the smooth regions of the image by mean filtering; seventh, further removing colour noise in the smooth regions; and eighth, converting the image from the YUV colour space back to RGB to obtain the final denoised image.

Owner:SOUTHEAST UNIV
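The colour conversions in the first and last steps can be sketched with the full-range BT.601 YCbCr equations used by JPEG/JFIF. The abstract says only "YUV", so this particular variant is an assumption, and the function names are illustrative.

```python
def rgb_to_ycbcr(r, g, b):
    """Full-range BT.601 (JPEG/JFIF) conversion for 8-bit RGB values."""
    y = 0.299 * r + 0.587 * g + 0.114 * b          # luma: the channel denoised most
    cb = 128.0 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128.0 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def ycbcr_to_rgb(y, cb, cr):
    """Inverse of rgb_to_ycbcr (up to coefficient rounding)."""
    r = y + 1.402 * (cr - 128.0)
    g = y - 0.344136 * (cb - 128.0) - 0.714136 * (cr - 128.0)
    b = y + 1.772 * (cb - 128.0)
    return r, g, b
```

The published coefficients are rounded, so a round trip reproduces 8-bit RGB values to within a few thousandths rather than exactly; the intermediate steps of the method would operate on the luma and chroma values between the two calls.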

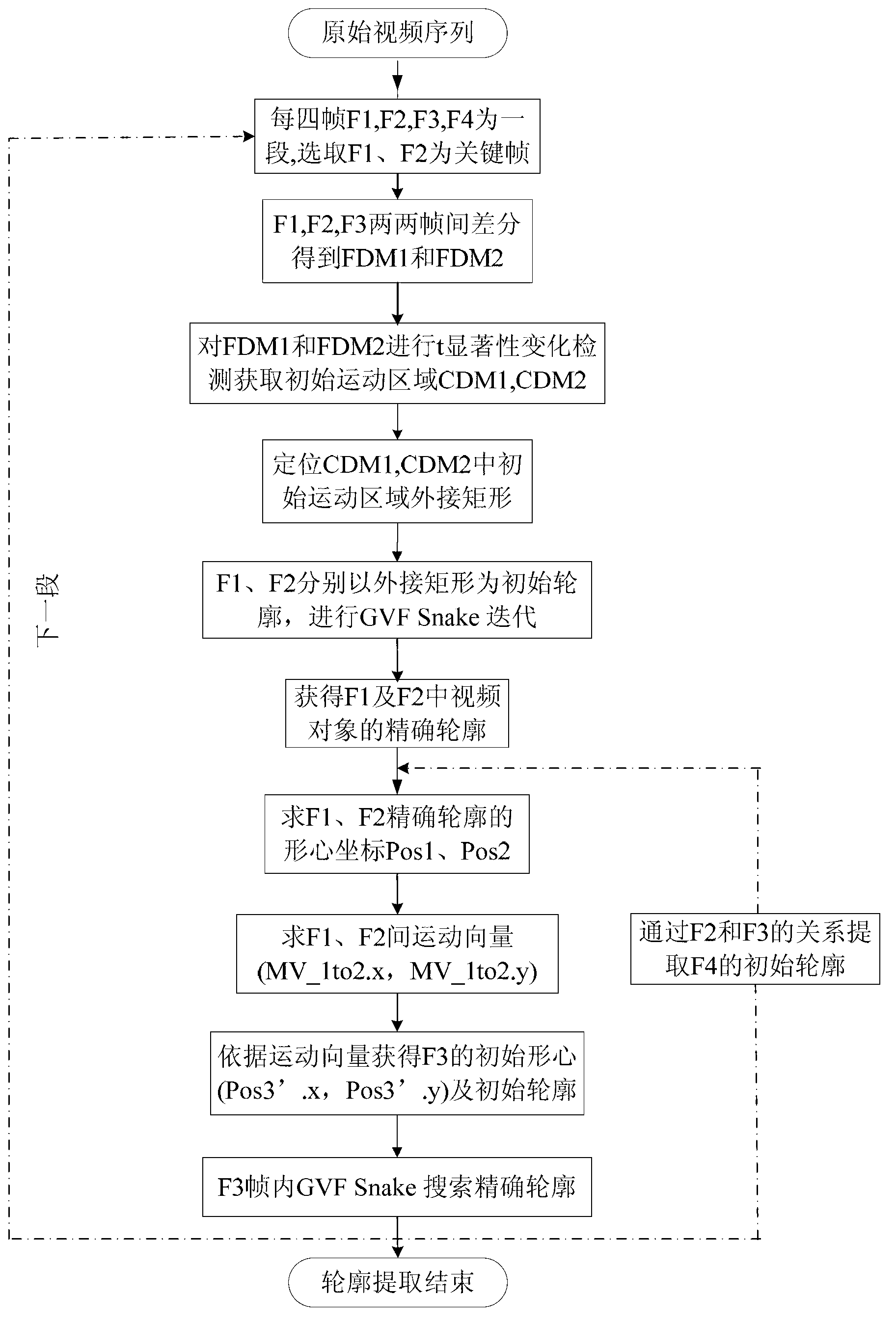

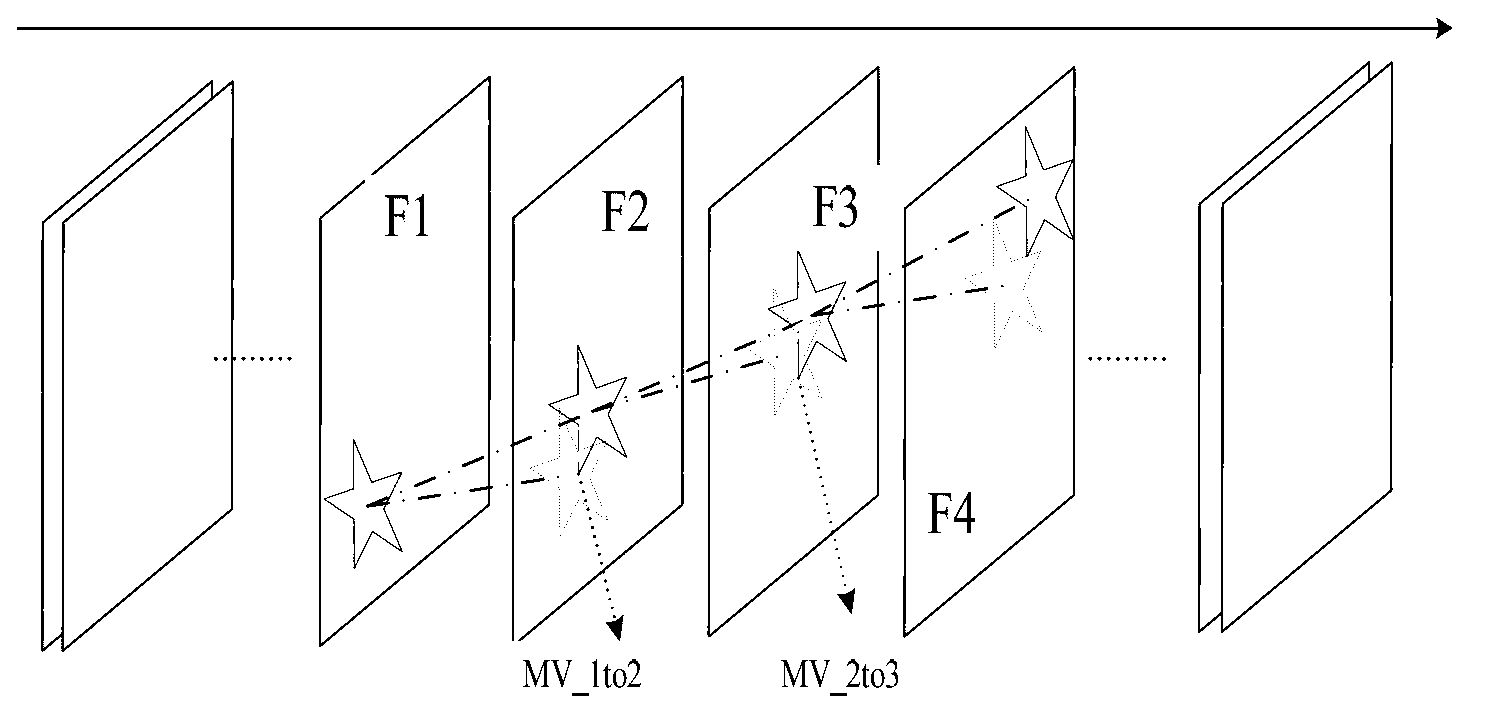

Method for extracting video object contour based on centroid tracking and improved GVF Snake

The invention discloses a method for extracting video object contours based on centroid tracking and an improved GVF Snake. Because the motion of adjacent frames is similar over short intervals, the video sequence is divided into sections of k frames each. The first two frames of each section are taken as key frames; background edges are eliminated by change detection based on a t significance test, yielding an initial motion region. A bounding quadrilateral is extracted as the initial contour of each key frame, and intra-frame GVF Snake evolution is performed to find the exact contour. The initial contours of the subsequent frames are then predicted from the motion vector between the centroids of the moving-object contours in the key frames, and exact intra-frame GVF Snake contour localization is performed on those frames; proceeding in this way, the object contour is extracted in every frame. The improved model uses four-direction anisotropic diffusion and a rapidly decreasing fidelity-term coefficient to improve convergence into concavities while retaining convergence to weak edges. The method overcomes the drawback of having to obtain initial contours manually.

Owner:丰县新中牧饲料有限公司 (Fengxian Xinzhongmu Feed Co., Ltd.)

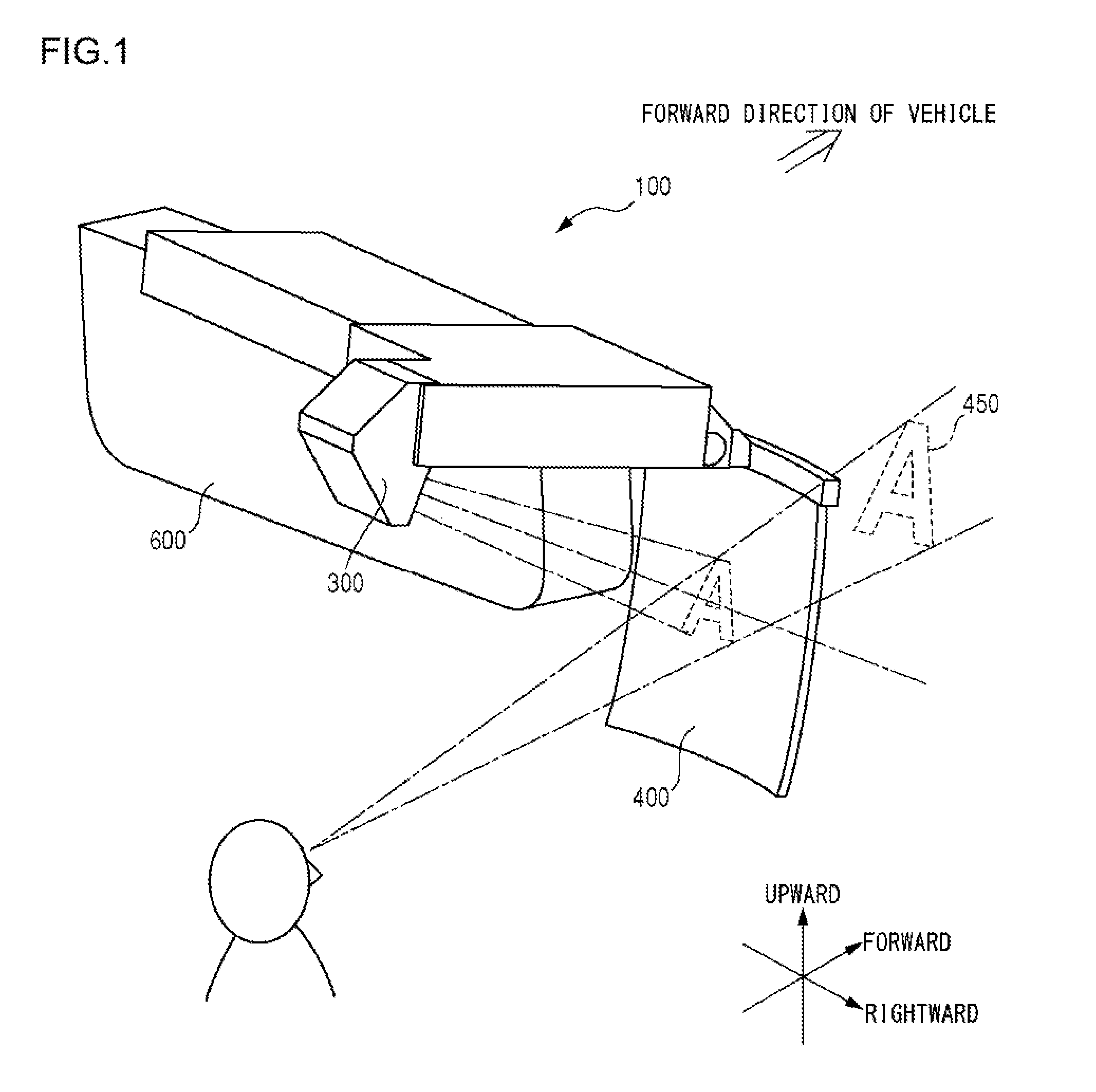

Image display device

Status: Active · Publication: US20150124227A1 · Tags: Improve utilization efficiency; Reduce the impact; Diffusing elements; Projectors; Display device; Light beam

Owner:JVC KENWOOD CORP A CORP OF JAPAN

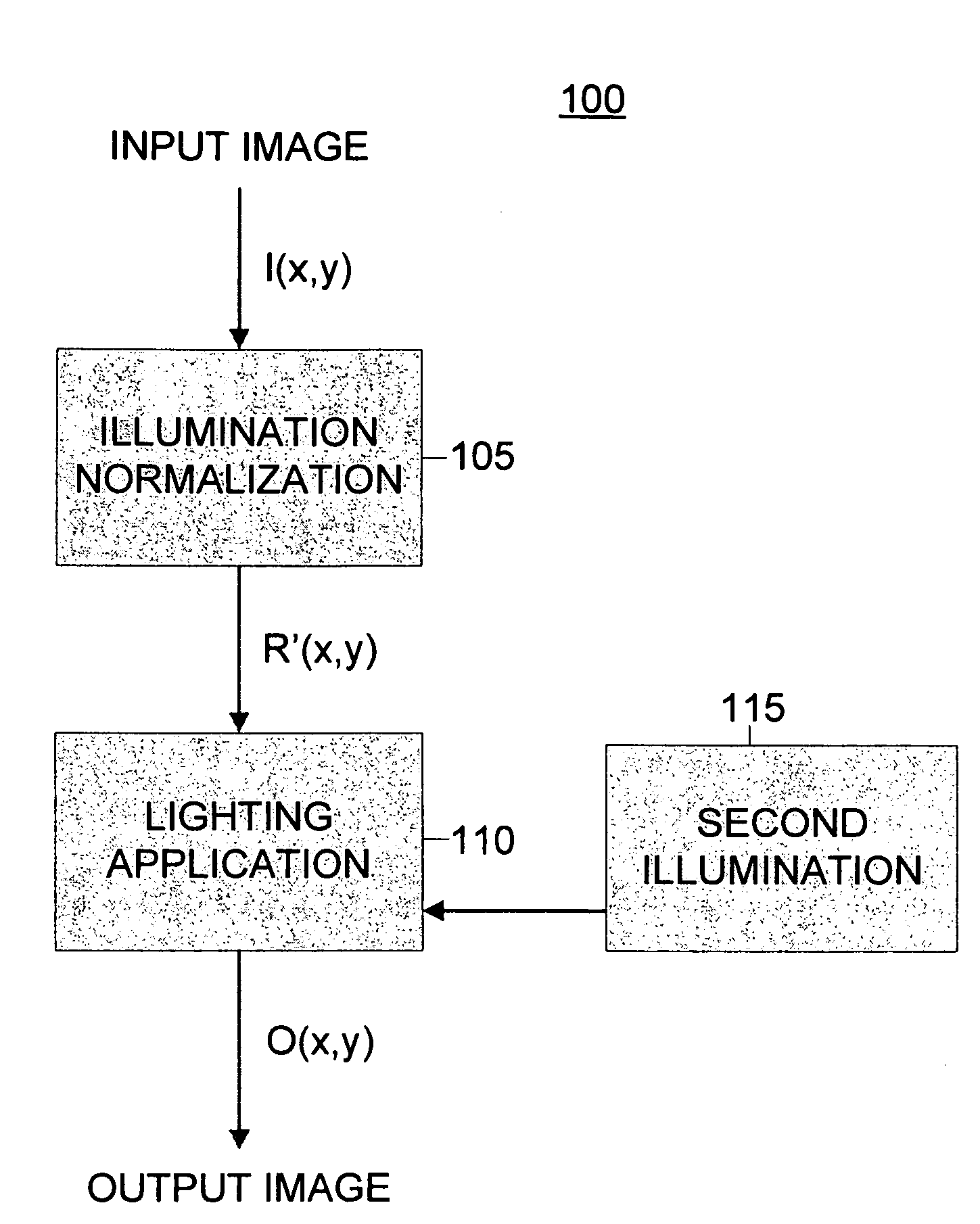

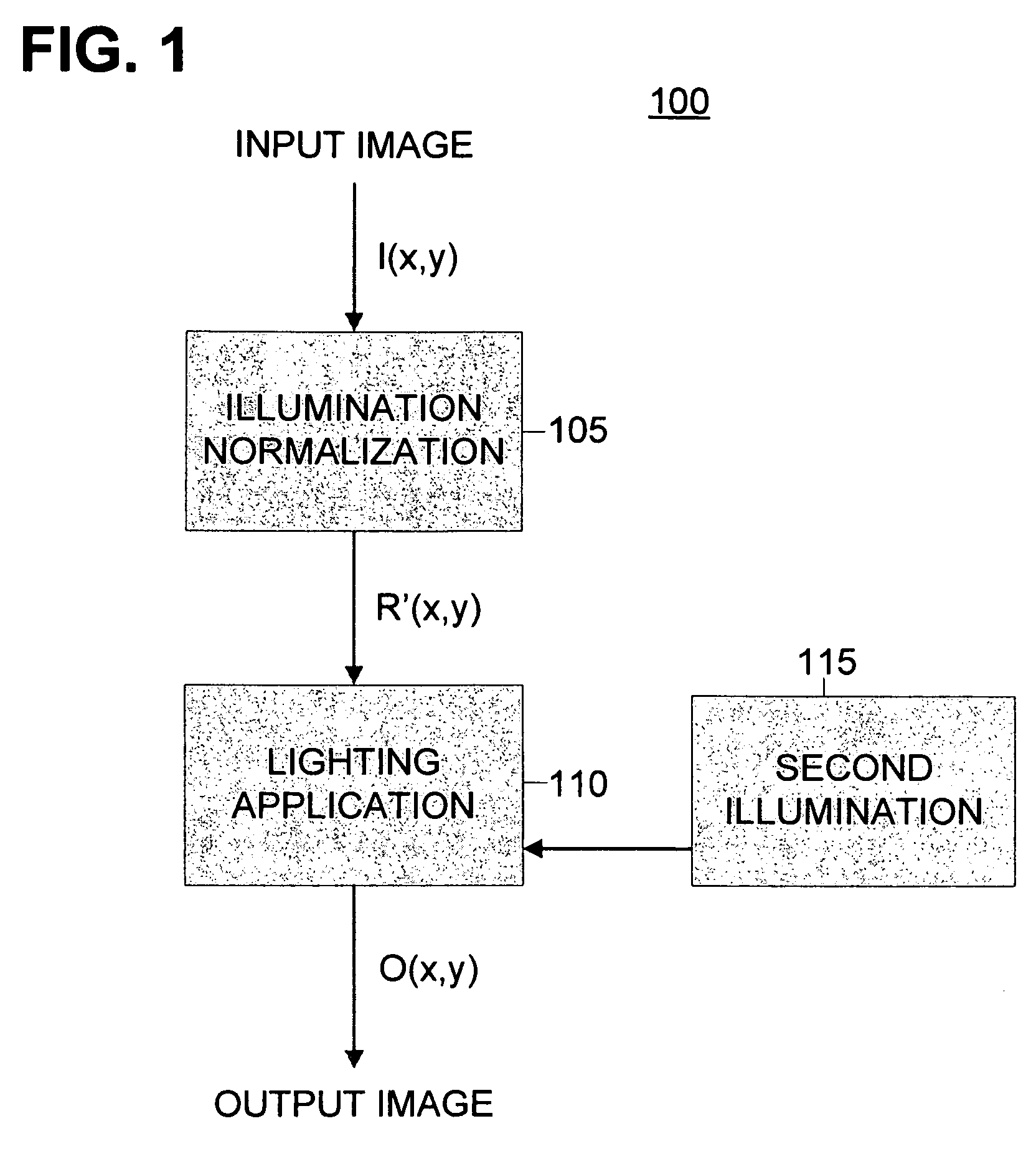

Method and apparatus for diffusion based illumination normalization

An exemplary illumination normalization method is provided which includes receiving an input image having at least one spurious edge directly resulting from illumination, performing anisotropic diffusion on the input image to form a diffusion image, and removing the at least one spurious edge using the diffusion image. Another embodiment consistent with the invention is an apparatus for performing illumination normalization in an image which includes a processor operably coupled to a memory storing input image data which contains an object of interest having at least one spurious edge directly resulting from illumination, a model of a representative object of interest, and functional processing units for controlling image processing, wherein the functional processing units further include a model-based anisotropic diffusion module which predicts edge information regarding the object of interest based upon the model, and produces a reflectance estimation utilizing the predicted edge information.

Owner:FUJIFILM CORP

Surface light source and liquid crystal display device using the same

Status: Inactive · Publication: CN101606020A · Tags: Increase brightness; Improve brightness uniformity; Mechanical apparatus; Elongate light sources; Liquid-crystal display; Light guide

Provided are a side-light type surface light source with high light use efficiency, high luminance, and a wide viewing angle, and a liquid crystal display device using it. The surface light source comprises a light source; a light guide having at least one light incoming surface facing the light source and a light outgoing surface substantially orthogonal to the light incoming surface; and a first optical film facing the light outgoing surface. A plurality of linear grooves or linear protrusions are arranged substantially parallel to one another on the light outgoing surface of the light guide or on the rear surface opposite it. The first optical film has anisotropic diffusion characteristics and is oriented so that the direction of maximum anisotropic diffusion is substantially parallel to the longitudinal direction of the linear grooves or linear protrusions.

Owner:TORAY IND INC +1

Automatic precise partition method for prostate ultrasonic image

The invention discloses an automatic, precise segmentation method for prostate ultrasound images, in the field of computer-aided diagnosis. The method comprises the following steps: extracting texture features of the prostate ultrasound image at different scales with Gabor filters, in a multi-scale space constructed by anisotropic diffusion; meanwhile, constructing a prostate shape space by non-parametric kernel density estimation and searching it with mean shift; coarsely segmenting the prostate contour under the dual constraints of the texture features and the shape space; and finally, refining the segmentation within an adaptive detection range using active contour models combined with oriented gradients, yielding a stable and accurate segmentation. The method addresses the low contrast of ultrasound images and the strong interference of speckle noise and shadow regions during segmentation, segments prostate ultrasound images accurately, adapts to ultrasound machines of different manufacturers and models, and is insensitive to the parameter settings of the ultrasound imaging system.

Owner:刘怡光 (Liu Yiguang)

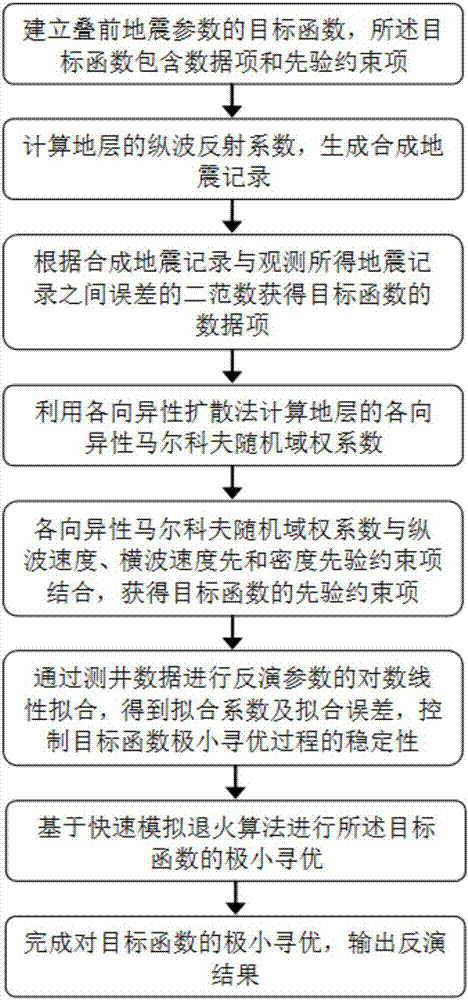

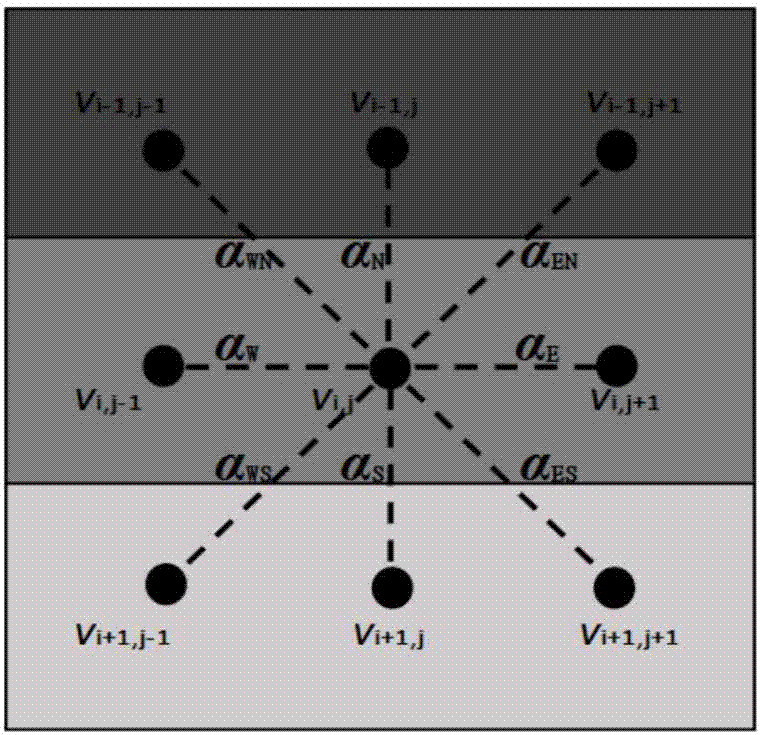

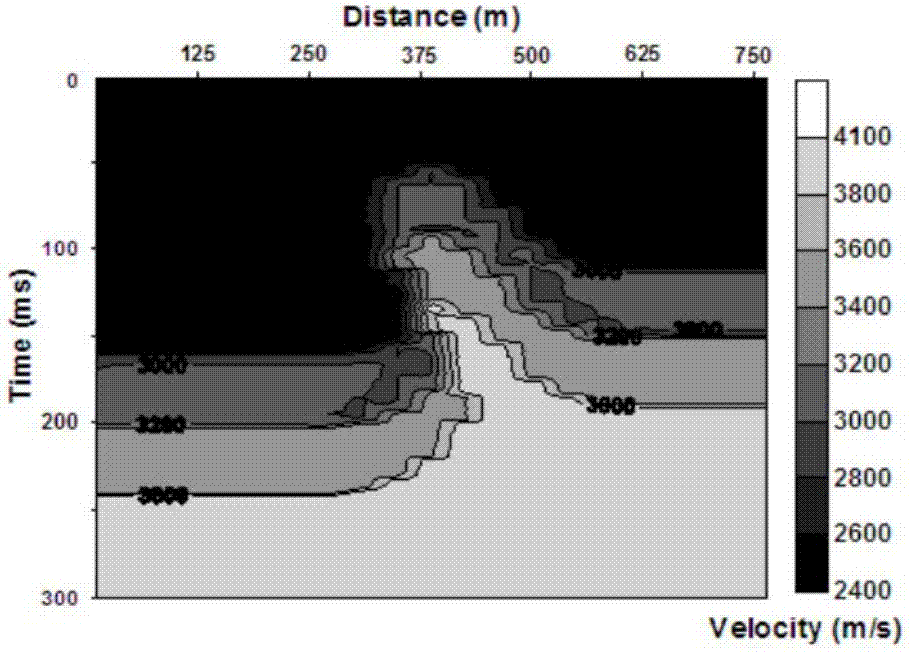

Pre-stack seismic parameter inversion method based on anisotropic Markov random field

Status: Inactive · Publication: CN106932819A · Tags: Accurately reflect layered features; Improve protection; Seismic signal processing; Weight coefficient; Longitudinal wave

The invention discloses a pre-stack seismic parameter inversion method based on an anisotropic Markov random field (MRF). The method comprises: establishing an objective function for the pre-stack seismic parameters; calculating P-wave (longitudinal-wave) reflection coefficients from the Zoeppritz equations and forming the data term of the objective function from the 2-norm of the error between the measured seismic record and the synthetic seismic record; obtaining anisotropic MRF weight coefficients for data points in different directions by an anisotropic diffusion method; obtaining the prior constraint term of the objective function; extracting well-log data for the region to be inverted and performing log-linear fitting of the inversion parameters on those data; assembling the objective function and minimizing it with a fast simulated annealing algorithm; and completing the iterative optimization and outputting the inversion result. The anisotropic MRF weight coefficients correct for the influence of stratigraphic anisotropy on the inversion result, so the layered character of the strata is reflected accurately and faults and boundaries are well preserved.

Owner:HOHAI UNIV
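
The abstract does not say how the anisotropic diffusion method yields directional MRF weights. One plausible reading, sketched here purely as an assumption, is to take a Perona–Malik conductance g(d) = 1/(1 + (d/κ)²) of the local contrast toward each of the four neighbours and normalise it per sample, so the prior smooths strongly along layers and weakly across layer boundaries and faults. `directional_weights`, `mrf_prior`, `_neighbour_diffs` and `kappa` are hypothetical names.

```python
import numpy as np

def _neighbour_diffs(a):
    """Differences to the up, down, left and right neighbours (edge-replicated)."""
    p = np.pad(a, 1, mode="edge")
    return np.stack([p[:-2, 1:-1] - a, p[2:, 1:-1] - a,
                     p[1:-1, :-2] - a, p[1:-1, 2:] - a])

def directional_weights(section, kappa=1.0):
    """Per-sample weights for the four directions from the conductance
    g(d) = 1 / (1 + (d/kappa)^2), normalised to sum to 1 at each sample."""
    g = 1.0 / (1.0 + (_neighbour_diffs(np.asarray(section, dtype=float)) / kappa) ** 2)
    return g / g.sum(axis=0, keepdims=True)

def mrf_prior(model, weights):
    """Weighted smoothness prior: sum over samples and directions of w * difference^2."""
    d = _neighbour_diffs(np.asarray(model, dtype=float))
    return float(np.sum(weights * d ** 2))
```

In an inversion of this kind, a term such as `mrf_prior(model, w)` could be added to the data misfit, with `w` computed from a reference section; which section and which conductance function the patent actually uses is not stated in the abstract.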

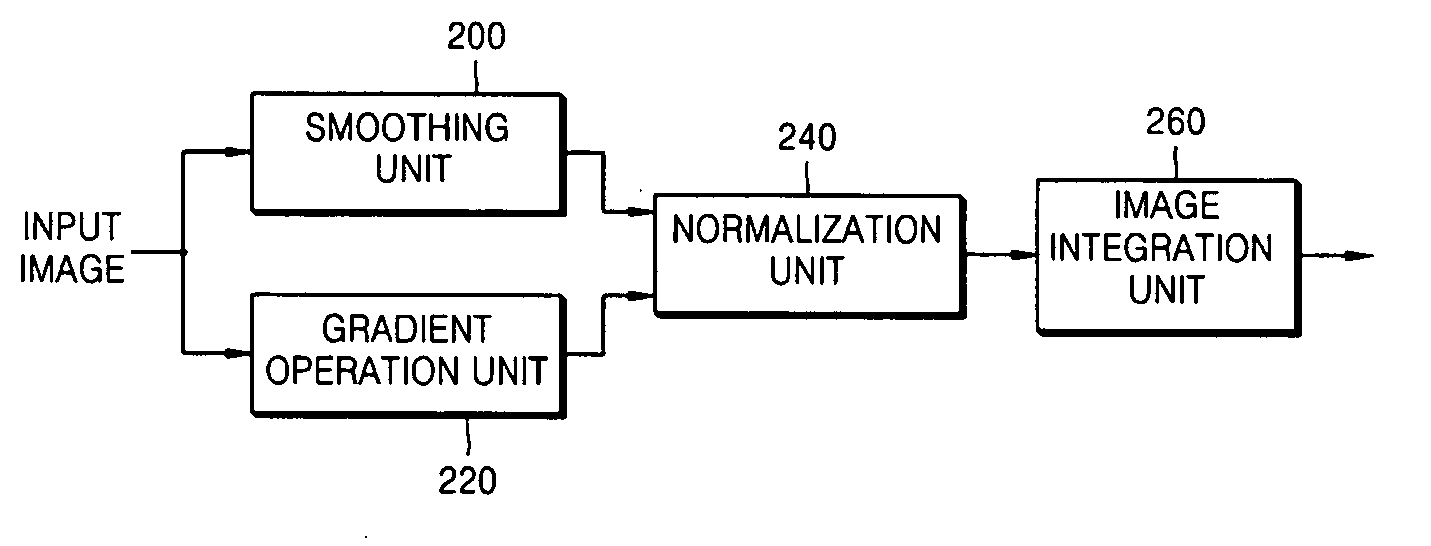

Method, apparatus, and medium for removing shading of image

Inactive · US20060285769A1 · Improve performance · Compensation is simple · Image enhancement · Image analysis · Gradient operators · Smoothing kernel

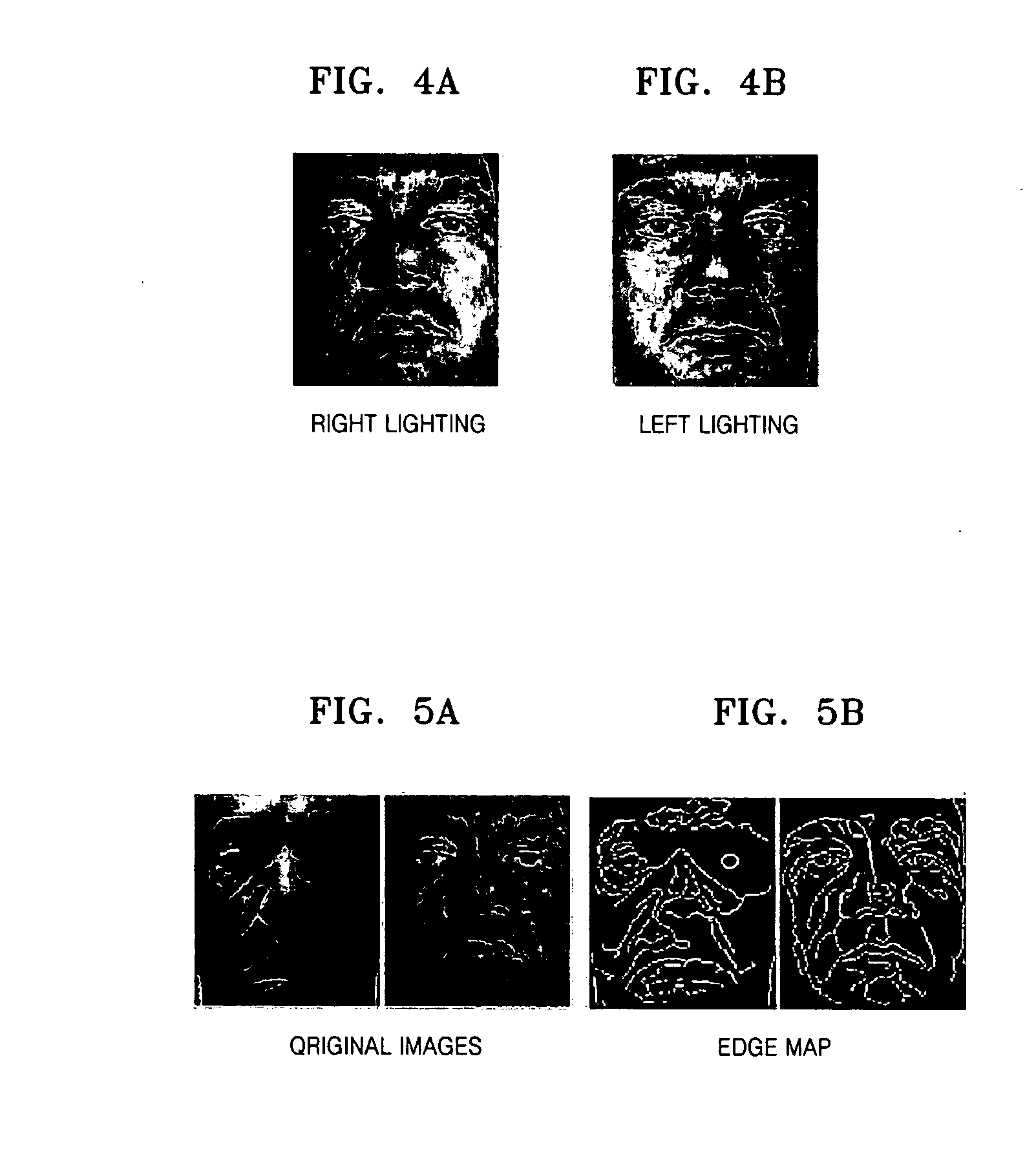

A method, apparatus, and medium for removing shading from an image are provided. The method comprises: smoothing an input image; performing a gradient operation on the input image; performing normalization using the smoothed image and the gradient images; and integrating the normalized images. The apparatus comprises: a smoothing unit that smooths the input image with a predetermined smoothing kernel; a gradient operation unit that applies a predetermined gradient operator to the input image; a normalization unit that performs normalization using the smoothed image and the gradient images; and an image integration unit that integrates the normalized images. By analyzing a face image model in terms of intrinsic and extrinsic factors and adopting a reasonable assumption, the method yields an integrated normalized gradient image that is insensitive to illumination. Using an anisotropic diffusion method also avoids moiré artifacts in the edge regions of the image.

Owner:SAMSUNG ELECTRONICS CO LTD
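
The first three steps of the method above (smooth, take gradients, normalize) can be sketched as follows. The sketch assumes the image is reflectance times a slowly varying illumination, so dividing the gradient by a local mean largely cancels the illumination. The box kernel (an isotropic stand-in for the anisotropic smoothing the abstract mentions), `np.gradient` as the gradient operator, and the names `box_smooth` and `normalized_gradient` are assumptions; the patent only calls its kernel and operator "predetermined". The final integration step, which recovers an image from the normalized gradient field, is not shown.

```python
import numpy as np

def box_smooth(img, radius=2):
    """Mean filter over a (2*radius+1)^2 window with edge replication.
    Stands in for the unspecified 'predetermined smoothing kernel'."""
    img = np.asarray(img, dtype=float)
    k = 2 * radius + 1
    padded = np.pad(img, radius, mode="edge")
    out = np.zeros_like(img)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (k * k)

def normalized_gradient(img, radius=2, eps=1e-8):
    """Image gradient divided by the local mean; for img = reflectance * illumination
    with slowly varying illumination, the illumination factor approximately cancels."""
    img = np.asarray(img, dtype=float)
    smoothed = box_smooth(img, radius)
    gy, gx = np.gradient(img)  # central differences along rows (y) and columns (x)
    return gx / (smoothed + eps), gy / (smoothed + eps)
```

Scaling the input by a constant leaves the output unchanged, which is the illumination invariance the abstract aims for, in its simplest (spatially uniform) form.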

Method and apparatus for diffusion based illumination normalization

An exemplary illumination normalization method is provided that includes receiving an input image having at least one spurious edge resulting directly from illumination, performing anisotropic diffusion on the input image to form a diffusion image, and removing the at least one spurious edge using the diffusion image. Another embodiment consistent with the invention is an apparatus for performing illumination normalization on an image. It includes a processor operably coupled to a memory that stores: input image data containing an object of interest with at least one spurious edge resulting directly from illumination; a model of a representative object of interest; and functional processing units for controlling image processing. The functional processing units include a model-based anisotropic diffusion module that predicts edge information for the object of interest from the model and produces a reflectance estimate using the predicted edge information.

Owner:FUJIFILM CORP
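
A generic, non-model-based variant of the first embodiment can be sketched in the log domain: treat the anisotropically diffused log-image as the illumination estimate and the residual as reflectance. This is an illustration under stated assumptions, not the patented module. Plain Perona–Malik diffusion cannot tell an illumination edge from an object edge, which is presumably why the patent adds model-predicted edge information. `diffuse`, `reflectance_estimate` and their parameter values are hypothetical.

```python
import numpy as np

def diffuse(u, n_iter=20, kappa=0.05, dt=0.2):
    """Explicit Perona-Malik diffusion (exponential conductance, 4 neighbours,
    zero-flux borders)."""
    u = np.array(u, dtype=float)
    for _ in range(n_iter):
        p = np.pad(u, 1, mode="edge")
        flux = np.zeros_like(u)
        for d in (p[:-2, 1:-1] - u, p[2:, 1:-1] - u, p[1:-1, :-2] - u, p[1:-1, 2:] - u):
            flux += np.exp(-(d / kappa) ** 2) * d
        u = u + dt * flux
    return u

def reflectance_estimate(image, n_iter=20, kappa=0.05, eps=1e-12):
    """Log-domain quotient log R = log I - log L, with L the diffused image.
    eps only guards against log(0)."""
    log_i = np.log(np.asarray(image, dtype=float) + eps)
    log_l = diffuse(log_i, n_iter, kappa)  # edge-preserving illumination estimate
    return np.exp(log_i - log_l)
```

Working in the log domain turns a global illumination gain into an additive constant, which diffusion passes through unchanged, so the reflectance estimate does not depend on overall brightness.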

Liquid crystal display device

Active · US20120069272A1 · Improve reuse efficiency · Reduce power consumption · Non-linear optics · Optical elements · Liquid-crystal display · Bi-isotropic material

The backlight has an anisotropic diffusion sheet disposed between the reflective polarizing plate and the optical path converter. The anisotropic diffusion sheet includes a refractive-index-anisotropic sheet, stretched in the absorption-axis direction of the reflective polarizing plate and having a concave-convex portion formed on its surface, and an isotropic material part laminated on the surface of the concave-convex portion. The isotropic material part has an isotropic refractive index equal to the refractive index of the anisotropic sheet in its transmission-axis direction, which is perpendicular to the stretching direction.

Owner:JAPAN DISPLAY INC

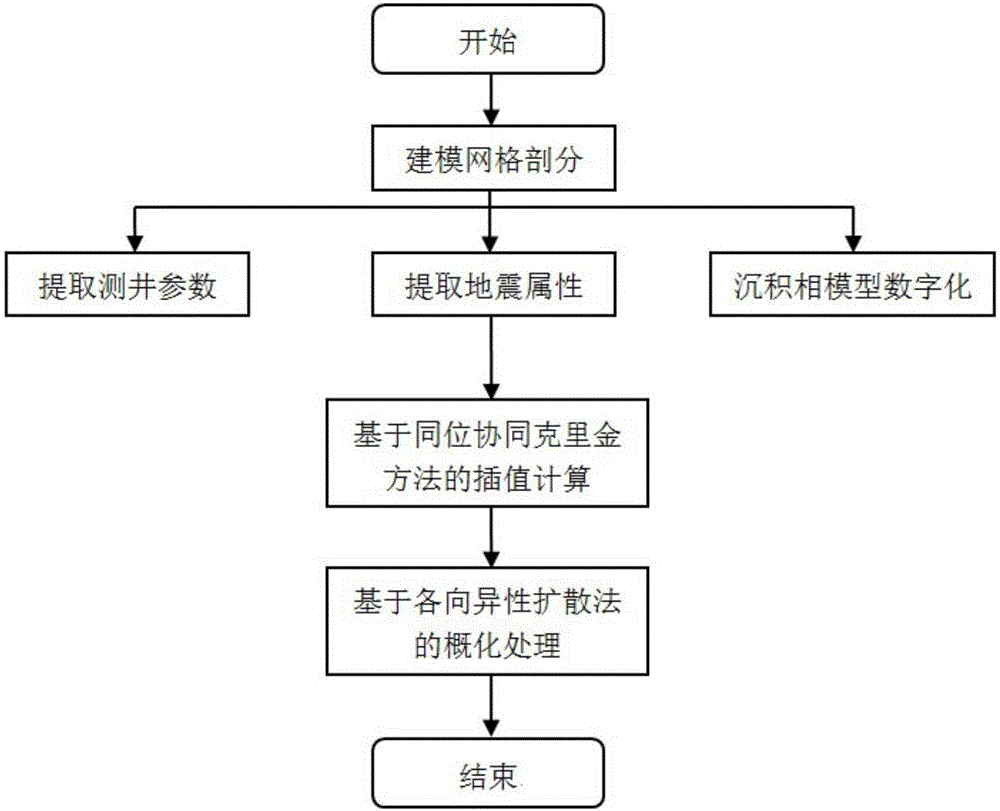

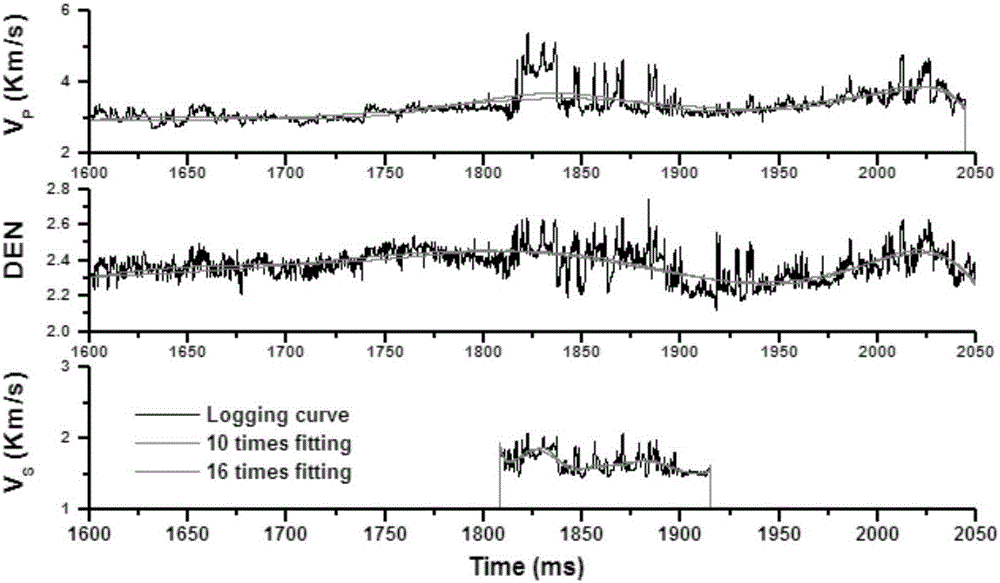

Phase-control modeling method for seismic elastic parameters on basis of coordinate multi-phase cooperation Kriging

Inactive · CN106772587A · High precision · Improve accuracy · Seismic signal processing · Well logging · Seismic attribute

The invention discloses a phase-control modeling method for seismic elastic parameters based on coordinate multi-phase cooperation Kriging. The method includes: dividing the target layers into modeling grids by equal-proportion grid division, forming a plurality of planes that serve as target locations for modeling the elastic parameters; extracting well-log elastic parameters, seismic attribute parameters and sedimentary phase information as modeling data, and selecting a primary variable together with first and second cooperative variables; using the primary variable and the first and second cooperative variables as calculation parameters and interpolating by coordinate multi-phase cooperation Kriging to obtain parameter values at all points to be estimated on the planes, these values serving as the multi-source data fusion modeling results; and applying generalization processing by anisotropic diffusion to obtain the final seismic elastic parameter modeling results. The method eliminates computational outliers and noisy boundary points, improves the precision of multi-information synthetic modeling, and fuses a large amount of real geological information, giving the phase-control modeling good applicability.

Owner:HOHAI UNIV
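
The interpolation step can be illustrated with plain ordinary kriging, a deliberately simpler stand-in for the patent's multi-variable ("cooperation") kriging: it uses only the primary variable, an assumed exponential variogram with illustrative `sill` and `vrange` values, and no phase control or anisotropic-diffusion post-processing. `exp_variogram` and `ordinary_kriging` are hypothetical names.

```python
import numpy as np

def exp_variogram(h, sill=1.0, vrange=10.0):
    """Assumed exponential variogram gamma(h) = sill * (1 - exp(-h / vrange)), no nugget."""
    return sill * (1.0 - np.exp(-np.asarray(h, dtype=float) / vrange))

def ordinary_kriging(xy, z, targets, sill=1.0, vrange=10.0):
    """Ordinary kriging estimates of z at each target location (2-D coordinates)."""
    xy = np.asarray(xy, dtype=float)
    z = np.asarray(z, dtype=float)
    targets = np.atleast_2d(np.asarray(targets, dtype=float))
    n = len(z)
    # Left-hand side [[Gamma, 1], [1^T, 0]]: semivariances between samples plus
    # the unbiasedness constraint (weights sum to 1) via a Lagrange multiplier.
    dist = np.linalg.norm(xy[:, None, :] - xy[None, :, :], axis=-1)
    lhs = np.ones((n + 1, n + 1))
    lhs[:n, :n] = exp_variogram(dist, sill, vrange)
    lhs[n, n] = 0.0
    estimates = np.empty(len(targets))
    for k, t in enumerate(targets):
        rhs = np.ones(n + 1)
        rhs[:n] = exp_variogram(np.linalg.norm(xy - t, axis=1), sill, vrange)
        weights = np.linalg.solve(lhs, rhs)[:n]  # last entry is the multiplier
        estimates[k] = weights @ z
    return estimates
```

With a zero-nugget variogram the estimator honours the data exactly at sample locations, and because the weights are constrained to sum to one, a constant field is reproduced everywhere.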

© 2025 PatSnap. All rights reserved.