194 results about how to "Improve performance and efficiency" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

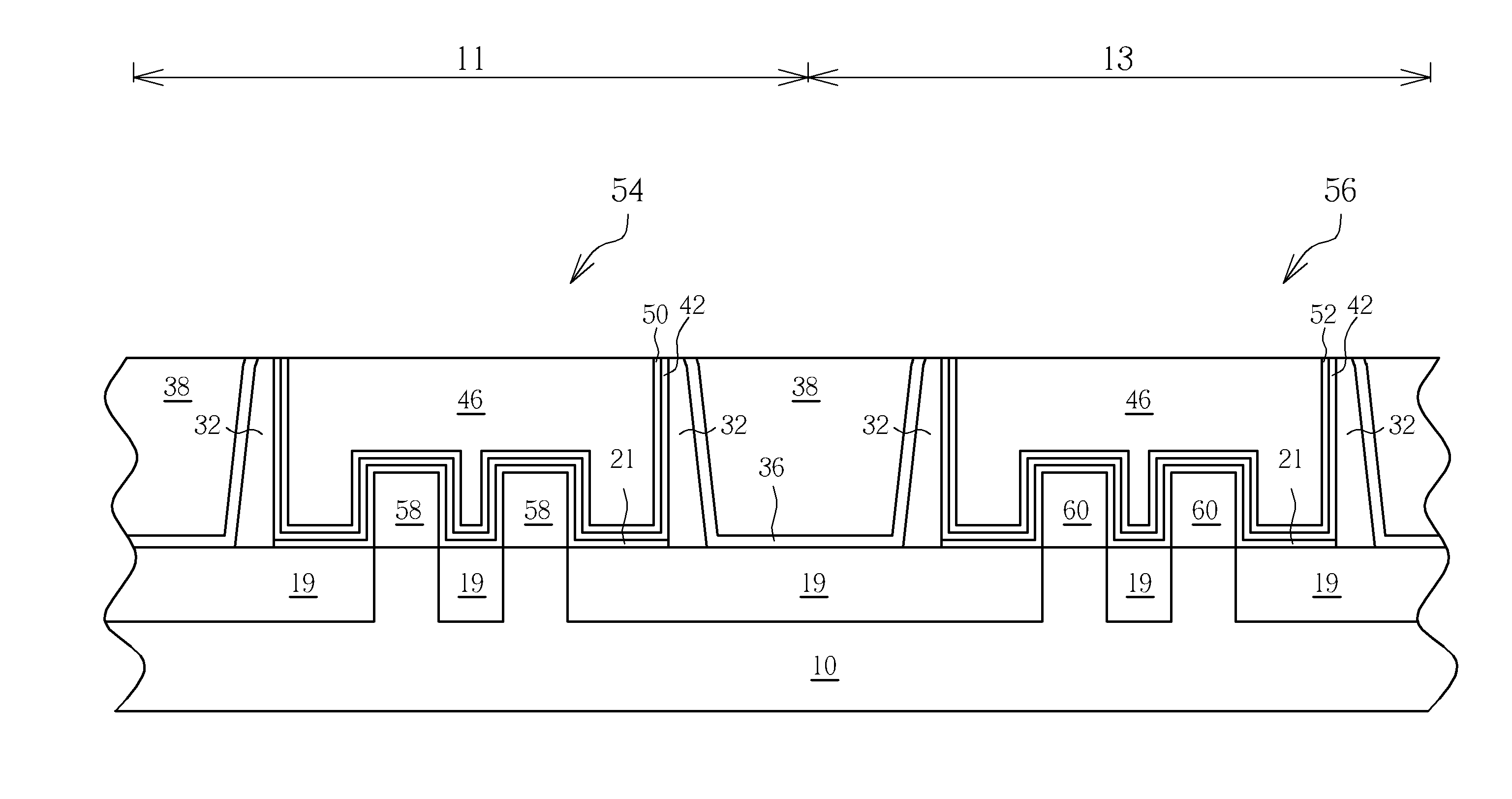

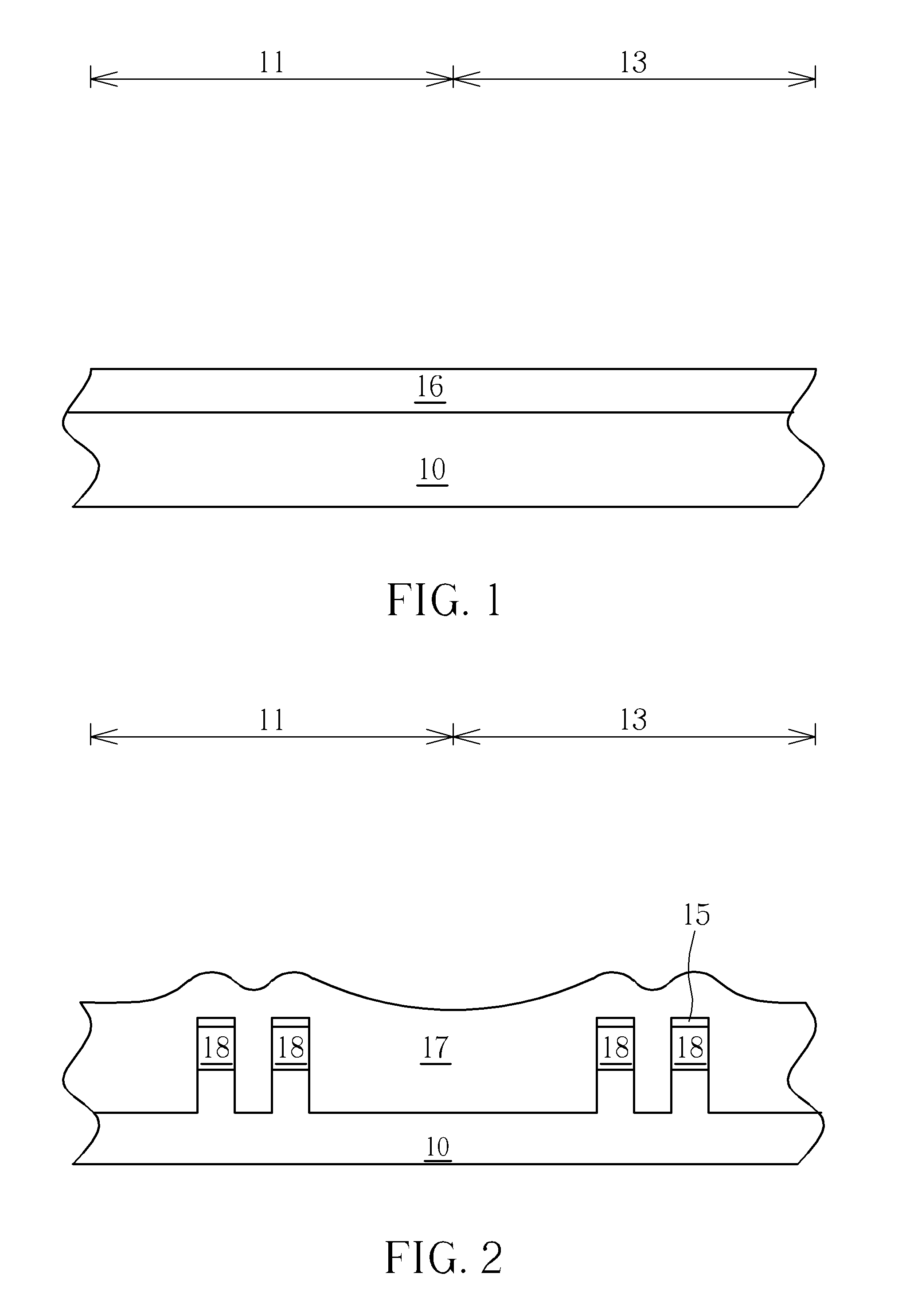

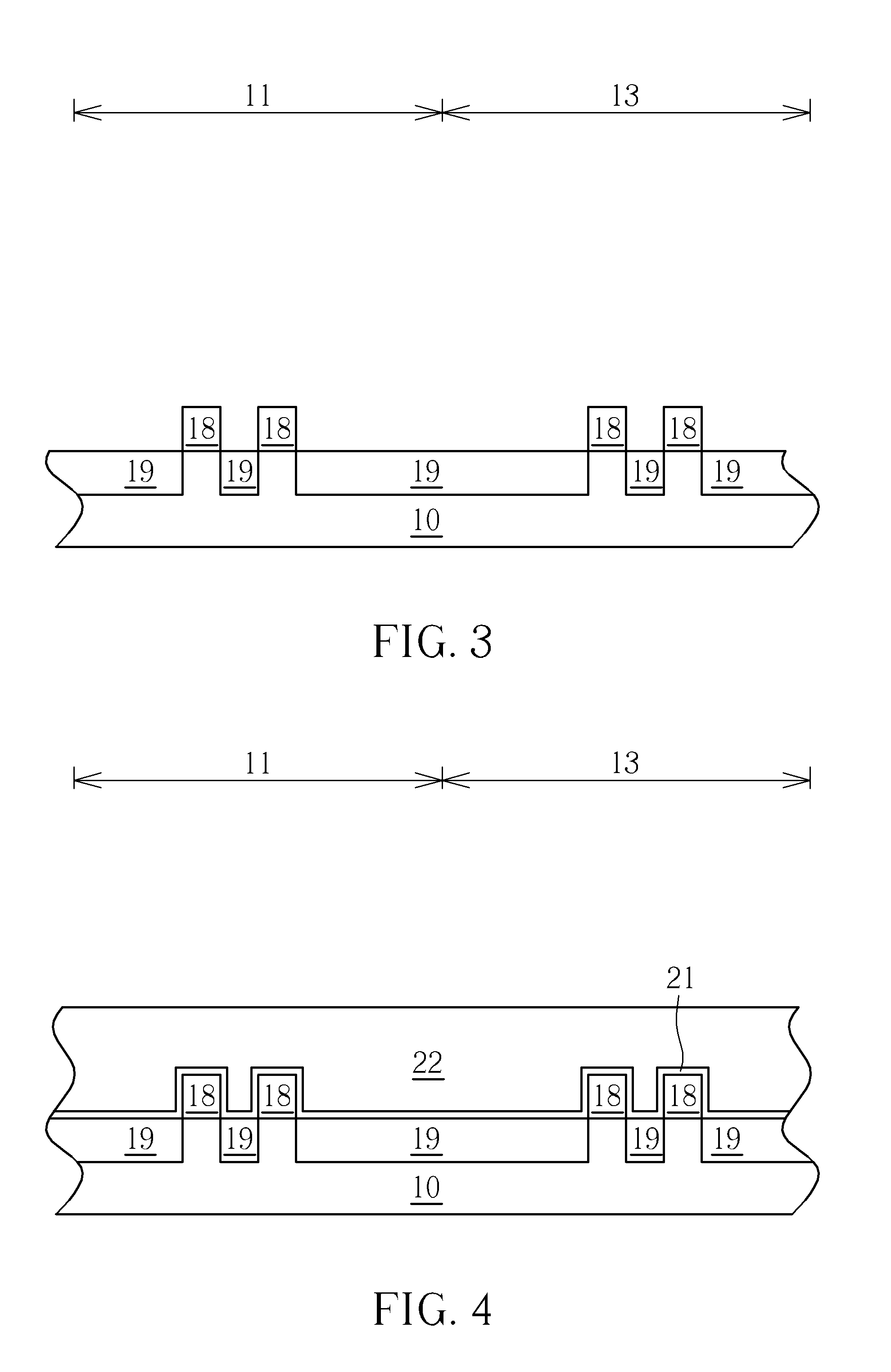

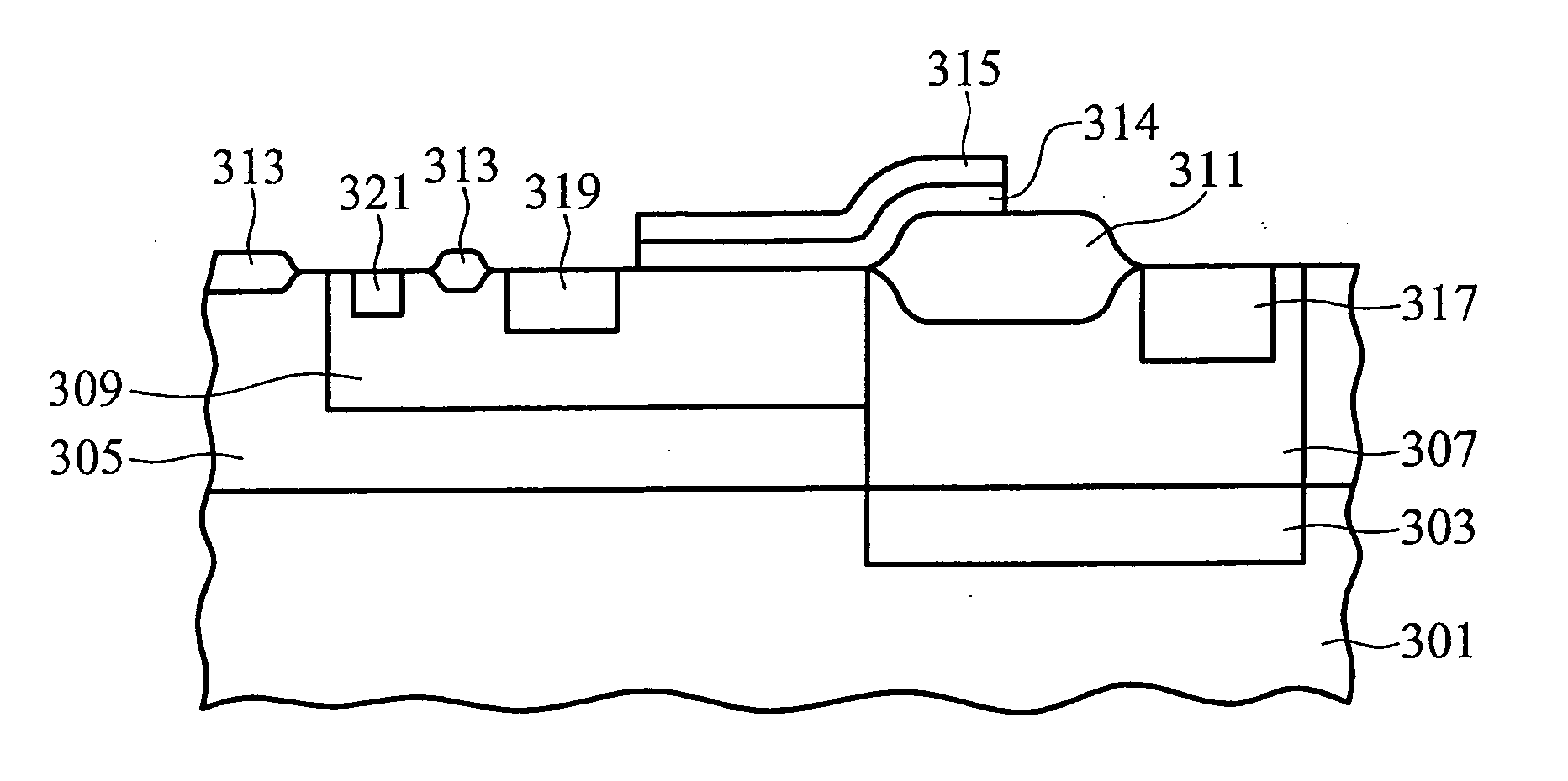

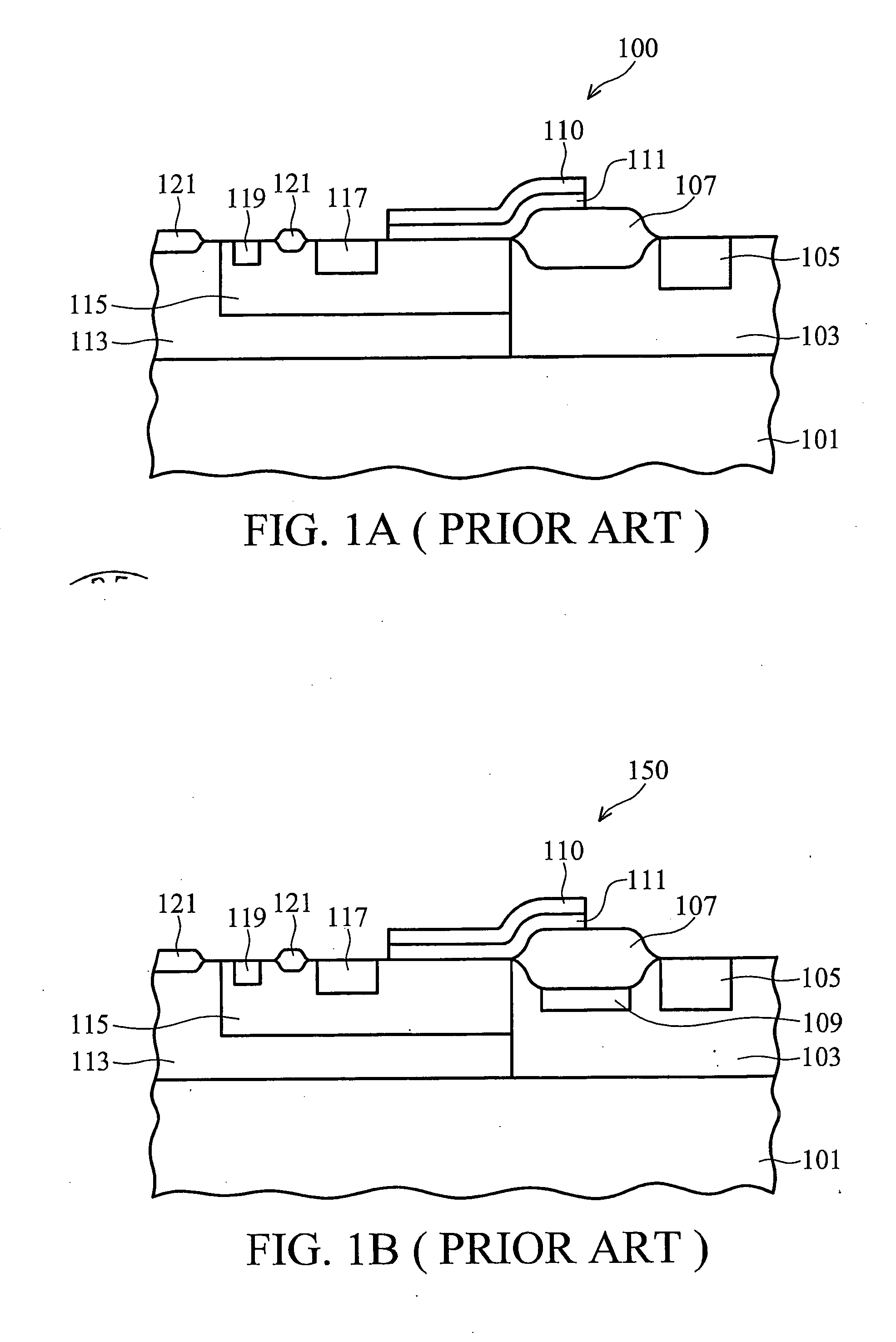

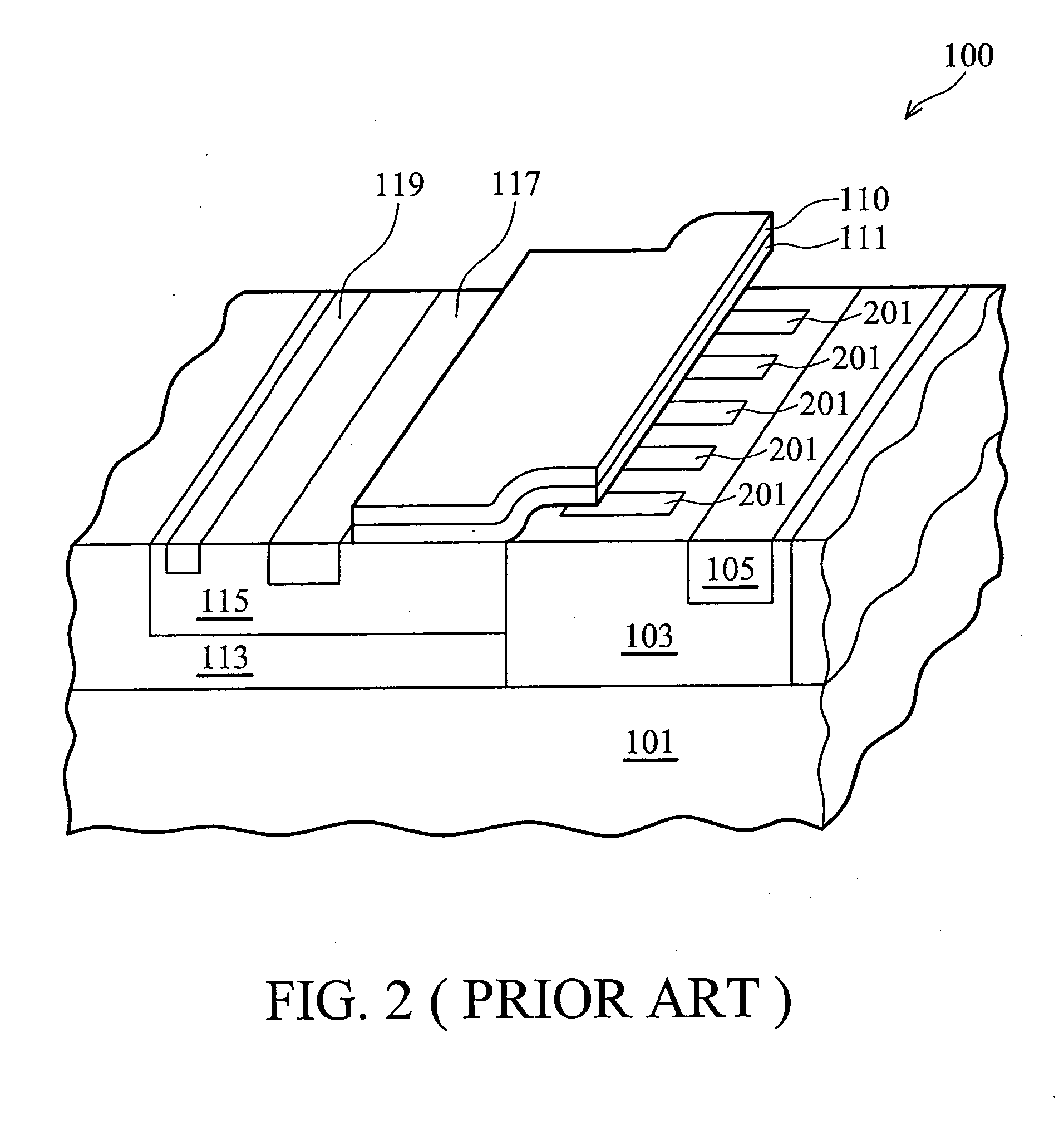

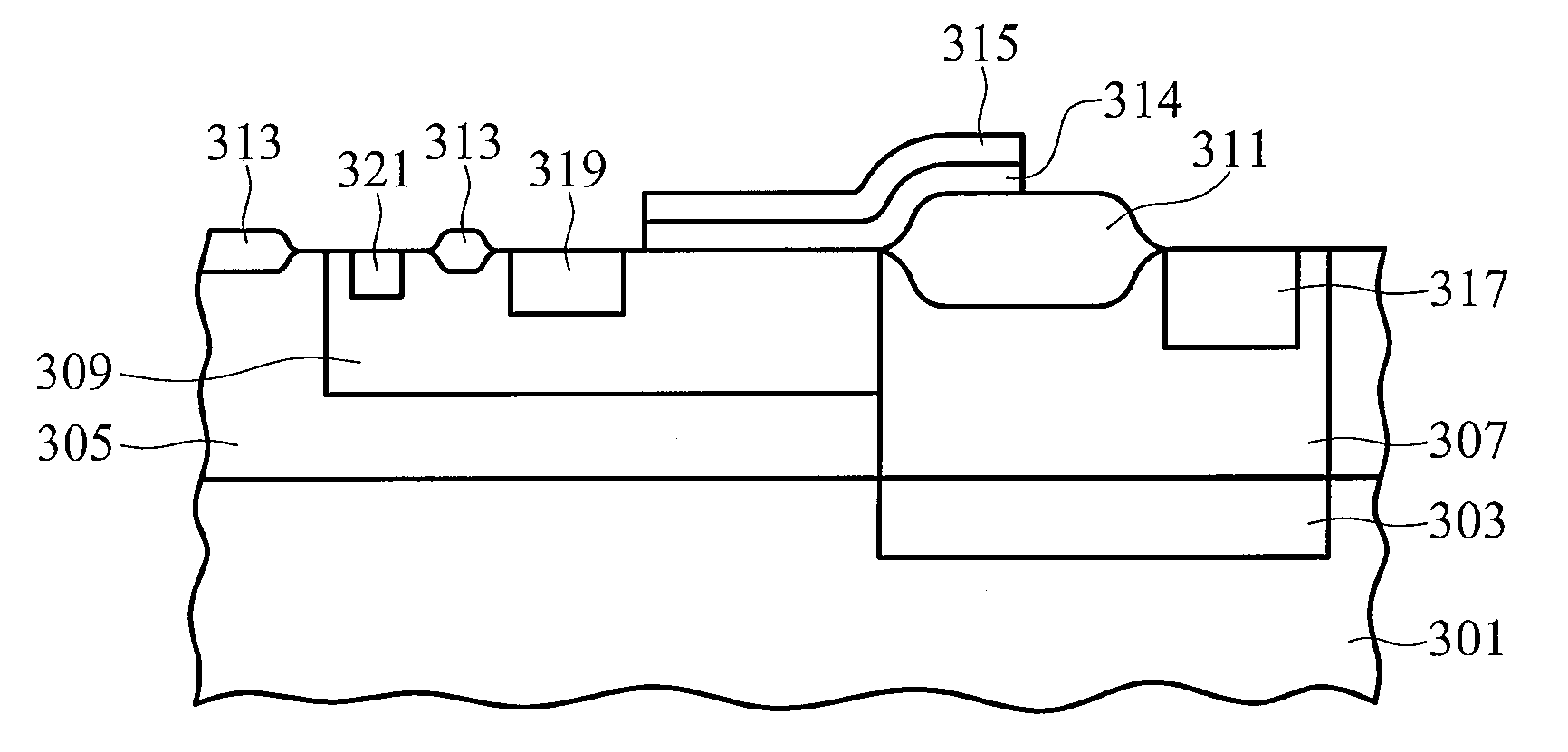

Semiconductor device and method of making the same

Active | US20130037886A1 | Improve efficiency | Improve performance | Transistor | Solid-state devices | Power semiconductor device | Engineering

A semiconductor device includes a semiconductor substrate, at least a first fin structure, at least a second fin structure, a first gate, a second gate, a first source / drain region and a second source / drain region. The semiconductor substrate has at least a first active region to dispose the first fin structure and at least a second active region to dispose the second fin structure. The first / second fin structure partially overlapped by the first / second gate has a first / second stress, and the first stress and the second stress are different from each other. The first / second source / drain region is disposed in the first / second fin structure at two sides of the first / second gate.

Owner:UNITED MICROELECTRONICS CORP

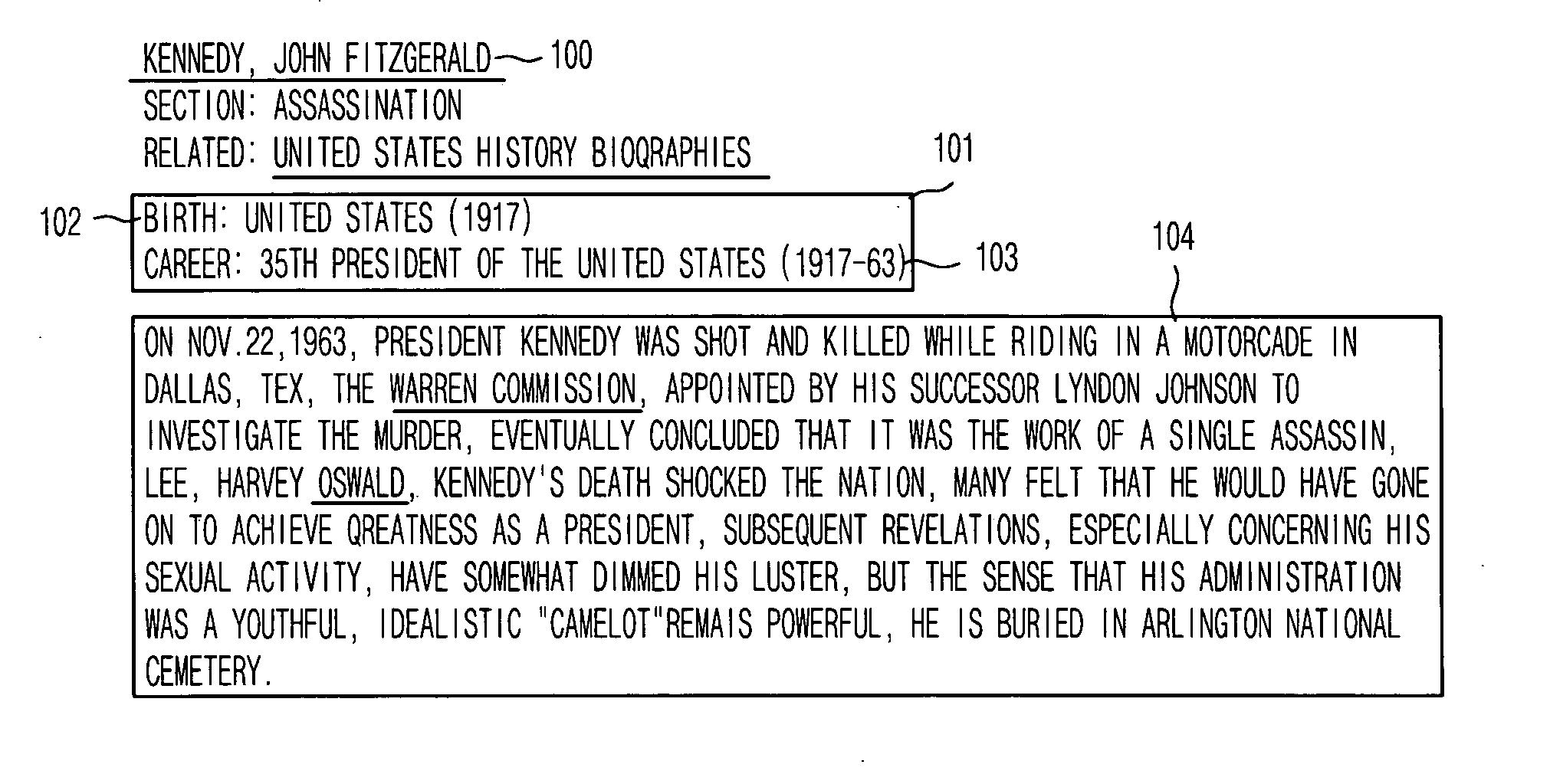

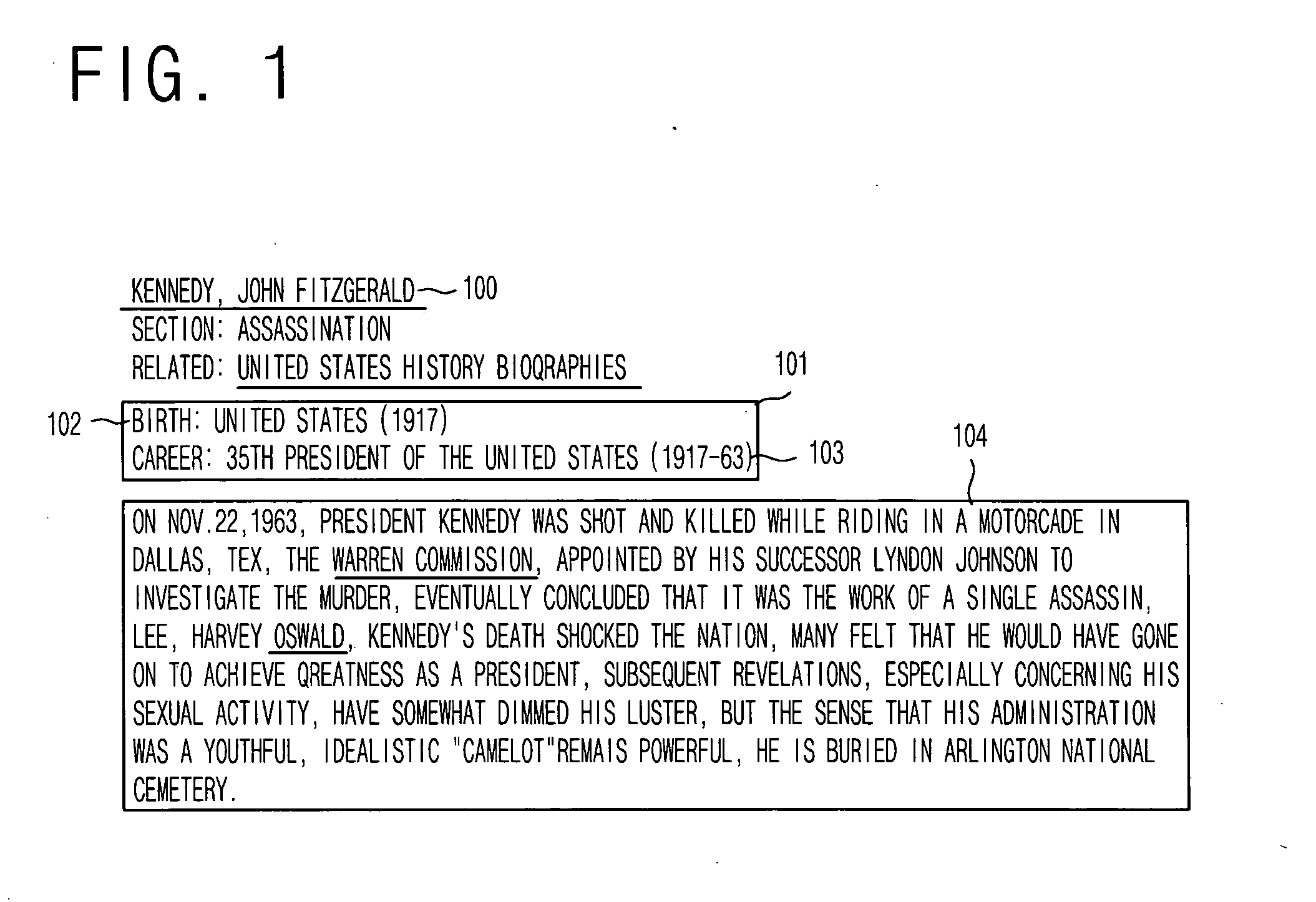

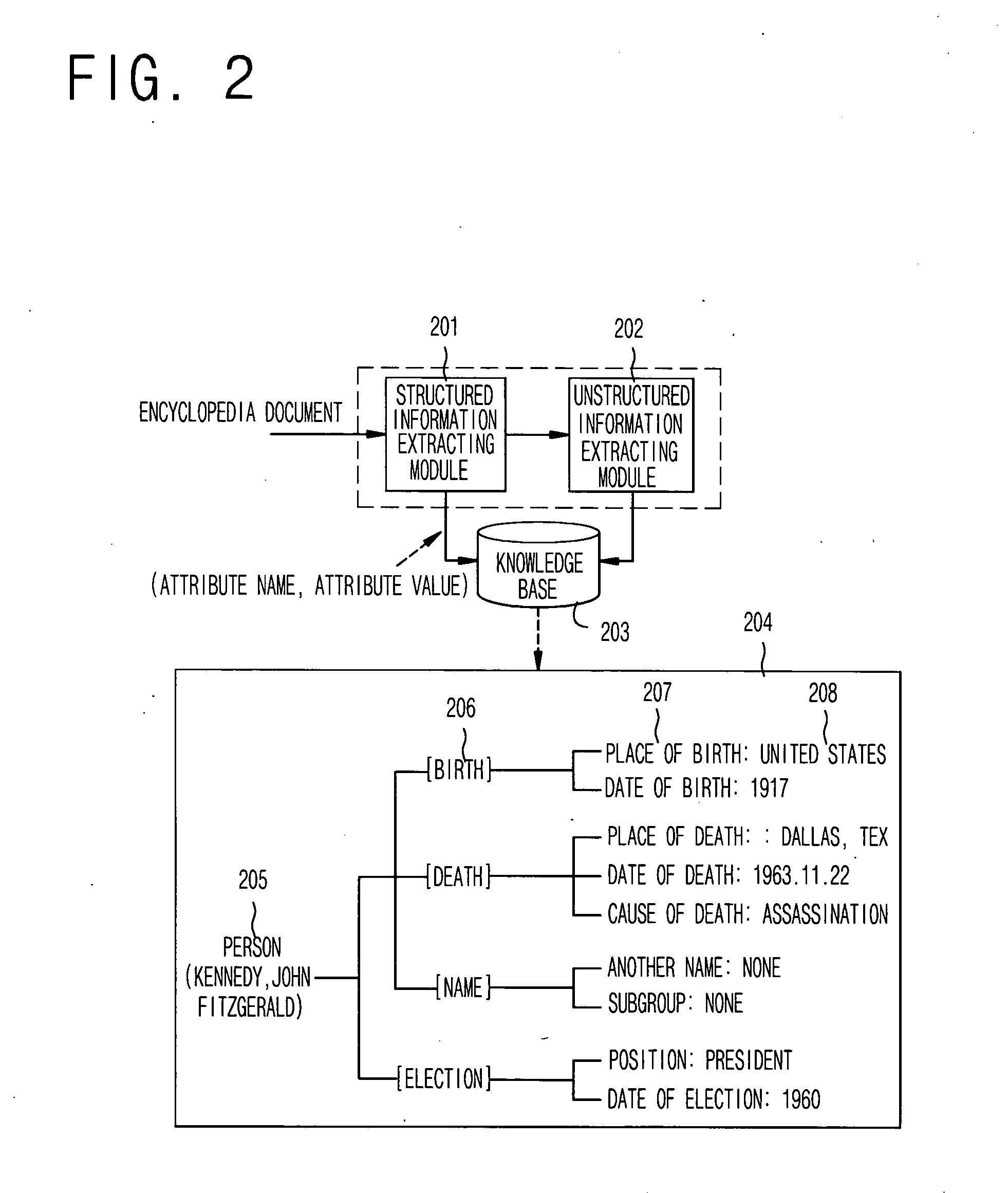

Semi-automatic construction method for knowledge base of encyclopedia question answering system

Inactive | US20050086222A1 | Saving cost to construct | Shorten the time | Digital data processing details | Special data processing applications | Semi automatic | Question answer

The present invention relates to a semi-automatic construction method for the knowledge base of an encyclopedia question answering system, in which concept-oriented systematic templates are designed and important fact information related to entries is automatically extracted from the summary information and body of the encyclopedia to semi-automatically construct the knowledge base. The method comprises the steps of: (a) designing the structure of the knowledge base with a plurality of templates for each entry and a plurality of attributes related to each of the templates; (b) extracting structured information including the entry, an attribute name and attribute values from summary information of the encyclopedia; (c) extracting unstructured information including an attribute name and attribute values of the entry from a body of the encyclopedia; and (d) storing the structured information and the unstructured information in the corresponding template and attribute of the knowledge base according to the entry.

Owner:ELECTRONICS & TELECOMM RES INST
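
Steps (a) through (d) of the entry above can be pictured as a nested store keyed by entry, template and attribute. The following is only a minimal sketch under stated assumptions, not the patented method: the template names, attribute names, the `store_fact` helper and the sample values are all hypothetical, and the extraction steps (b) and (c) are replaced by hand-written facts.

```python
# Hypothetical template design for step (a): template name -> allowed attributes.
TEMPLATES = {
    "person": ["birth_place", "occupation"],
    "place": ["location", "population"],
}


def store_fact(kb, templates, entry, template, attribute, value, source):
    """Step (d): file one extracted fact under entry -> template -> attribute.

    `source` records whether the fact came from the summary (structured)
    or the body (unstructured) of the encyclopedia article.
    """
    if attribute not in templates.get(template, []):
        raise ValueError(f"{attribute!r} is not defined for template {template!r}")
    slot = kb.setdefault(entry, {}).setdefault(template, {}).setdefault(attribute, [])
    slot.append({"value": value, "source": source})


# Stand-ins for the output of steps (b) and (c); the values are invented.
kb = {}
store_fact(kb, TEMPLATES, "Example Entry", "person", "birth_place", "Example City", "summary")
store_fact(kb, TEMPLATES, "Example Entry", "person", "occupation", "engineer", "body")
```

Keeping the source label on each stored value is a design choice of this sketch; it lets a question answering layer prefer summary-derived facts over body-derived ones if they disagree.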

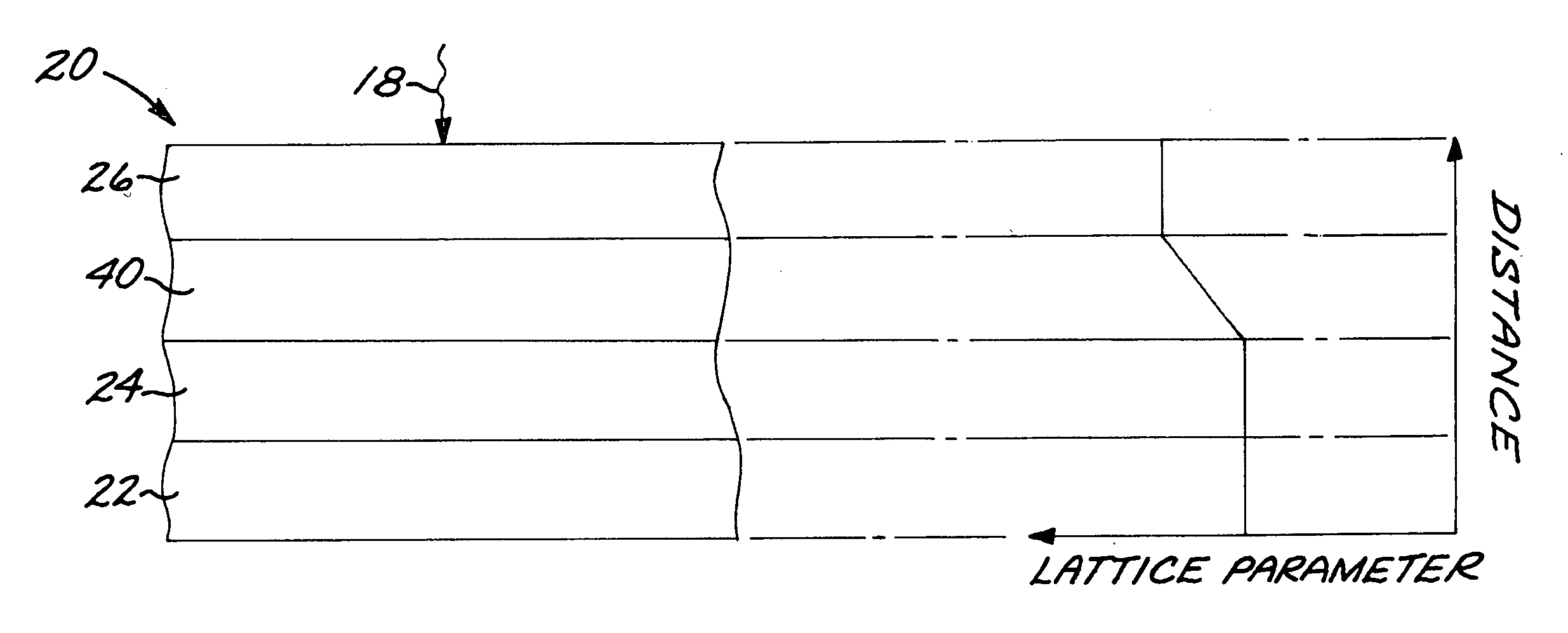

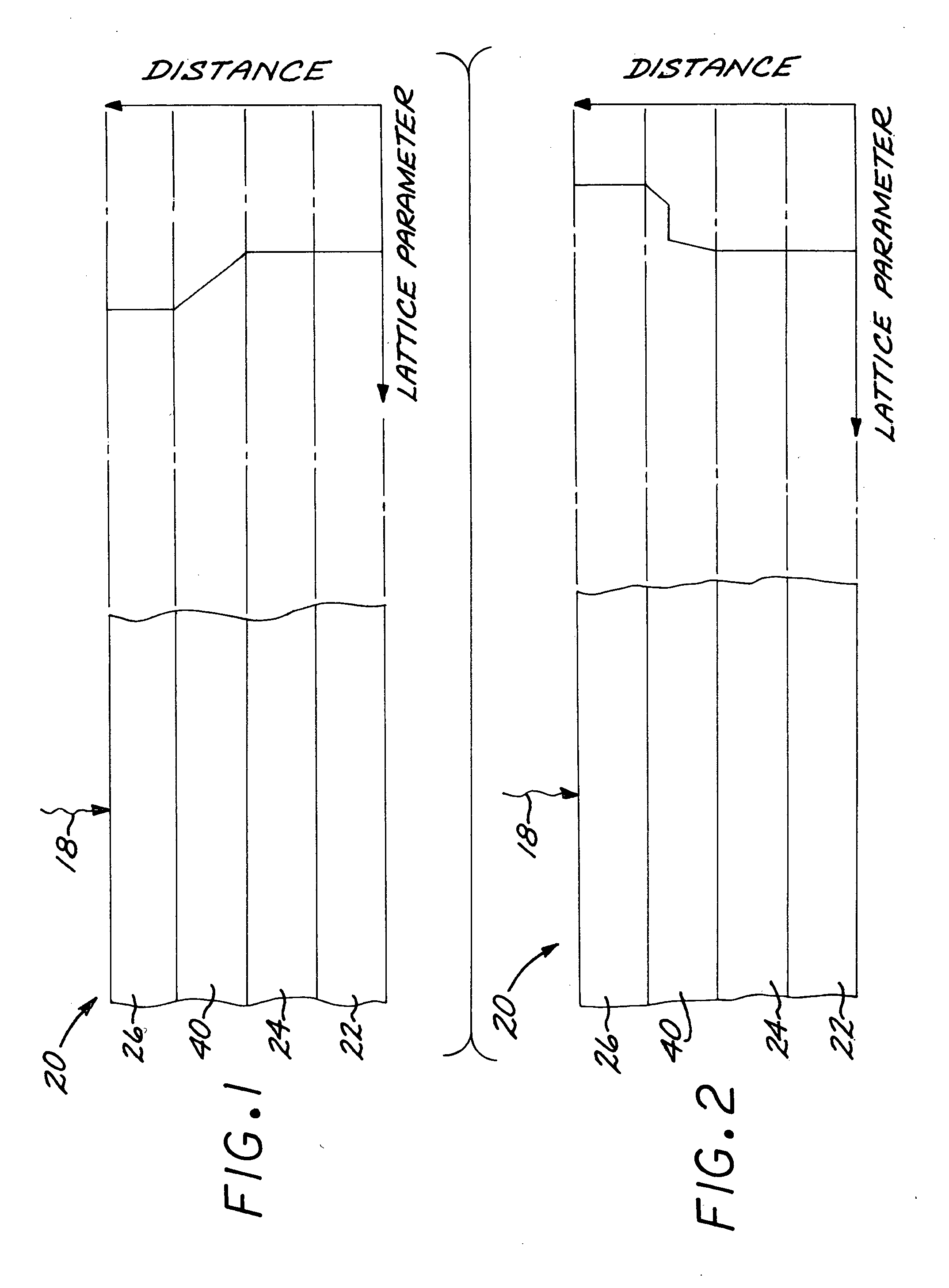

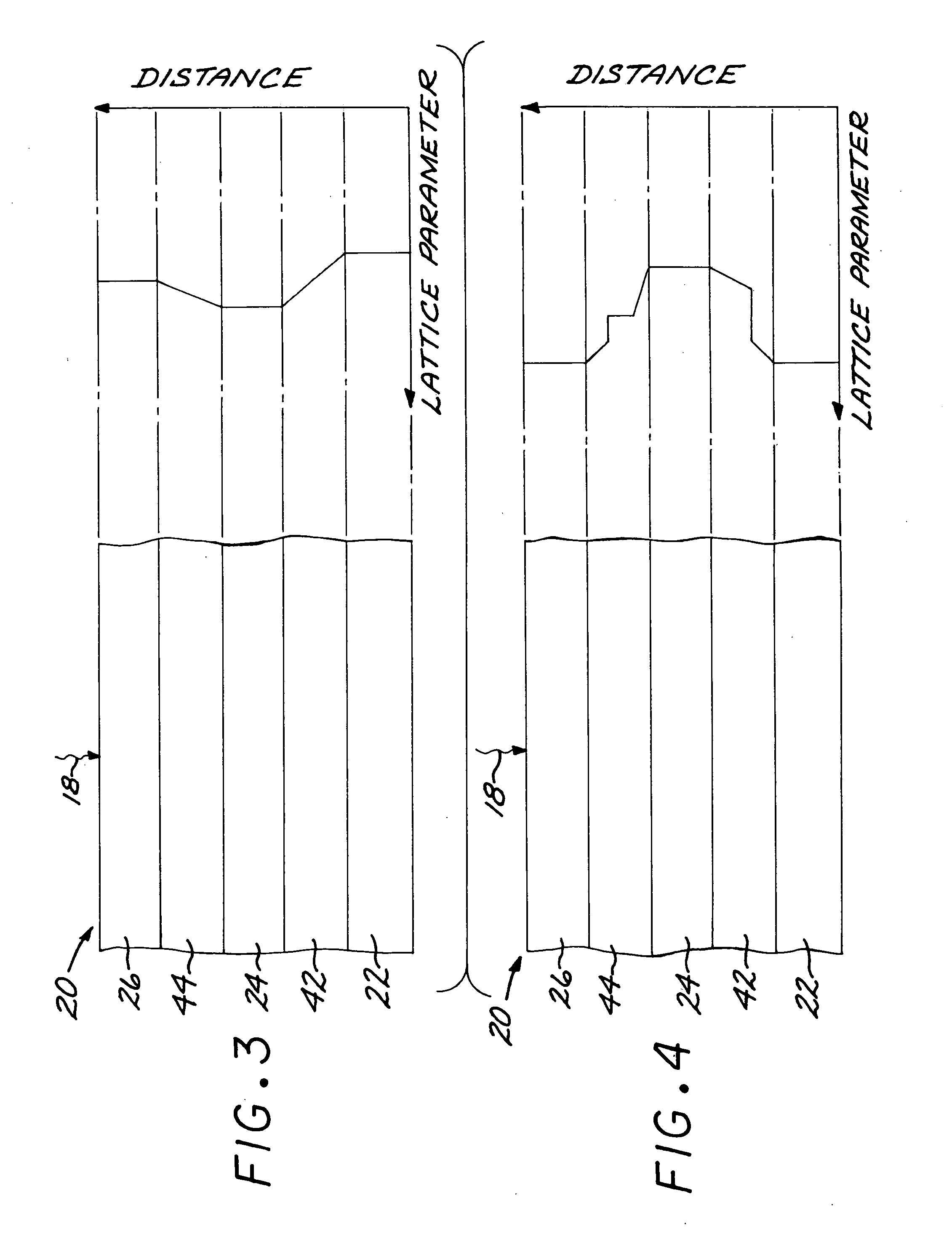

Solar cells having a transparent composition-graded buffer layer

Active | US20050274411A1 | Improve performance and efficiency | Low cost of change | PV power plants | Photovoltaic energy generation | Solar cell | Secondary layer

A solar cell includes a first layer having a first-layer lattice parameter, a second layer having a second-layer lattice parameter different from the first-layer lattice parameter, wherein the second layer includes a photoactive second-layer material; and a third layer having a third-layer lattice parameter different from the second-layer lattice parameter, wherein the third layer includes a photoactive third-layer material. A transparent buffer layer extends between and contacts the second layer and the third layer and has a buffer-layer lattice parameter that varies with increasing distance from the second layer toward the third layer, so as to lattice match to the second layer and to the third layer. There may be additional subcell layers and buffer layers in the solar cell.

Owner:THE BOEING CO
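
The abstract above only says that the buffer-layer lattice parameter varies with distance so that it matches the second layer at one face and the third layer at the other. As an illustration, the sketch below assumes a linear grading profile; the linear form, the function name and the numeric values in the usage lines are assumptions, not details from the patent.

```python
def graded_lattice_parameter(a_second, a_third, thickness, z):
    """Lattice parameter at depth z inside the buffer layer.

    z = 0 is the face touching the second layer and z = thickness is the
    face touching the third layer. A linear profile is assumed here.
    """
    if thickness <= 0:
        raise ValueError("thickness must be positive")
    if not 0 <= z <= thickness:
        raise ValueError("z must lie inside the buffer layer")
    fraction = z / thickness
    # Weighted form so both end points are reproduced exactly.
    return a_second * (1 - fraction) + a_third * fraction


# Hypothetical lattice parameters (in angstroms) and thickness (in micrometres).
a_mid = graded_lattice_parameter(5.65, 5.87, thickness=2.0, z=1.0)
```

At `z = 0` the function returns `a_second` and at `z = thickness` it returns `a_third`, which is the lattice-matching condition the abstract describes at each interface.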

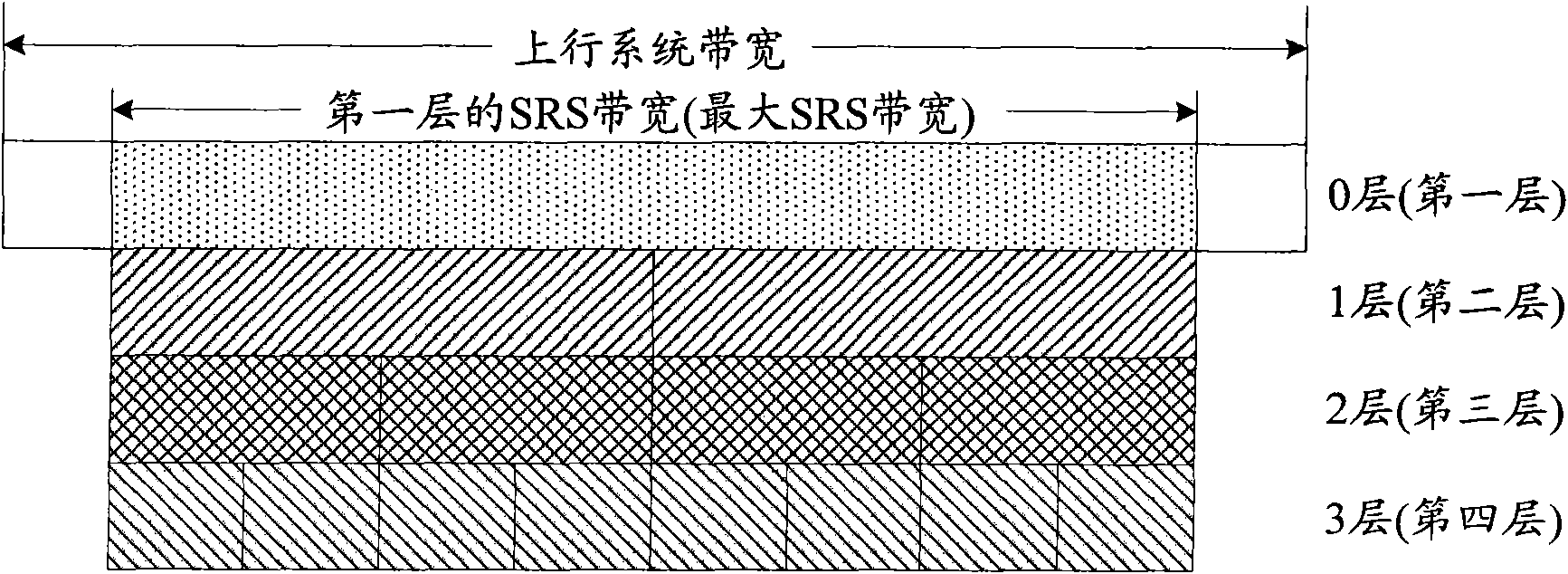

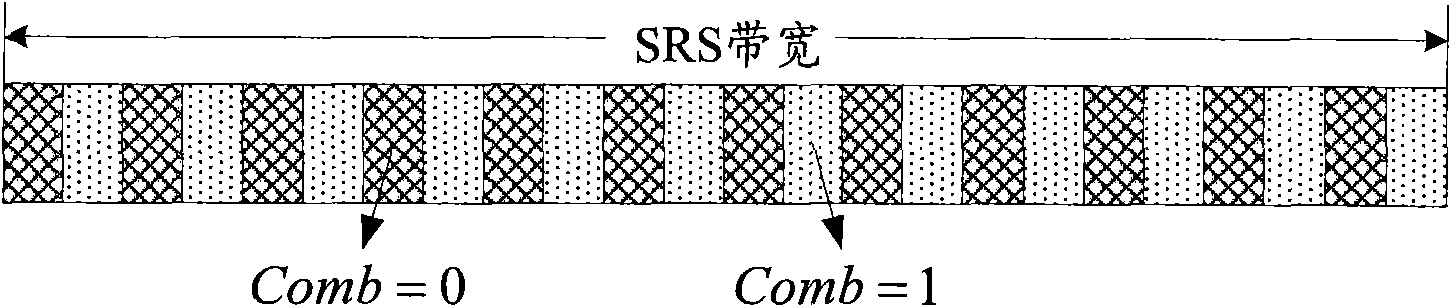

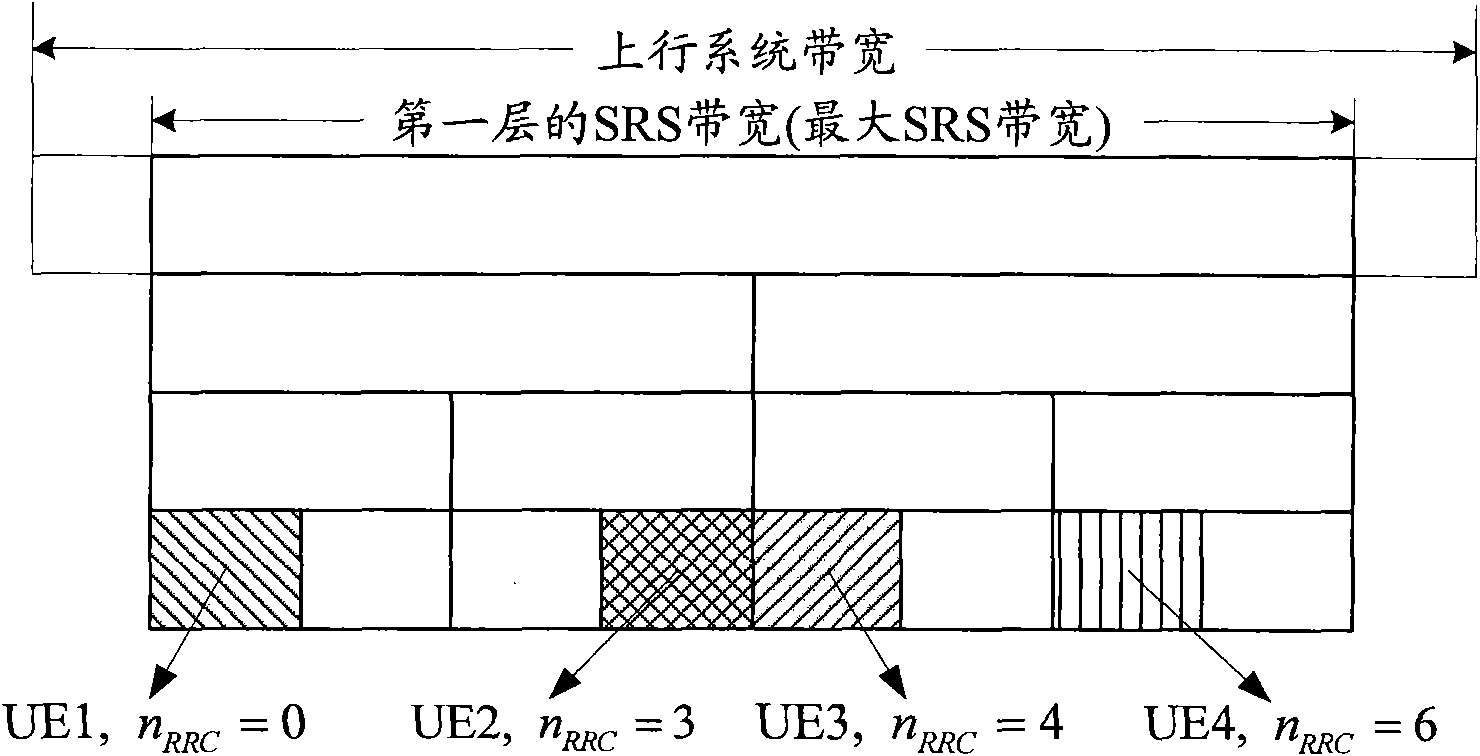

Multi-antenna sending method and device for measuring reference signal

Active | CN101540631A | Improve performance and efficiency | Improve efficiency | Spatial transmit diversity | Modulated-carrier systems | Engineering | Multi antenna

The invention discloses a multi-antenna sending method and device for a measuring reference signal (SRS). When the uplink SRS is sent with precoding, the UE can provide orthogonal resources for different precoding matrices by CDM, TDM, FDM, or a combination of these; when the uplink SRS is sent without precoding, the UE can send the SRS on orthogonal resources by CDM, TDM, FDM, or a combination of these. The invention provides a workable way to send the uplink SRS in a multi-antenna configuration.

Owner:ZTE CORP

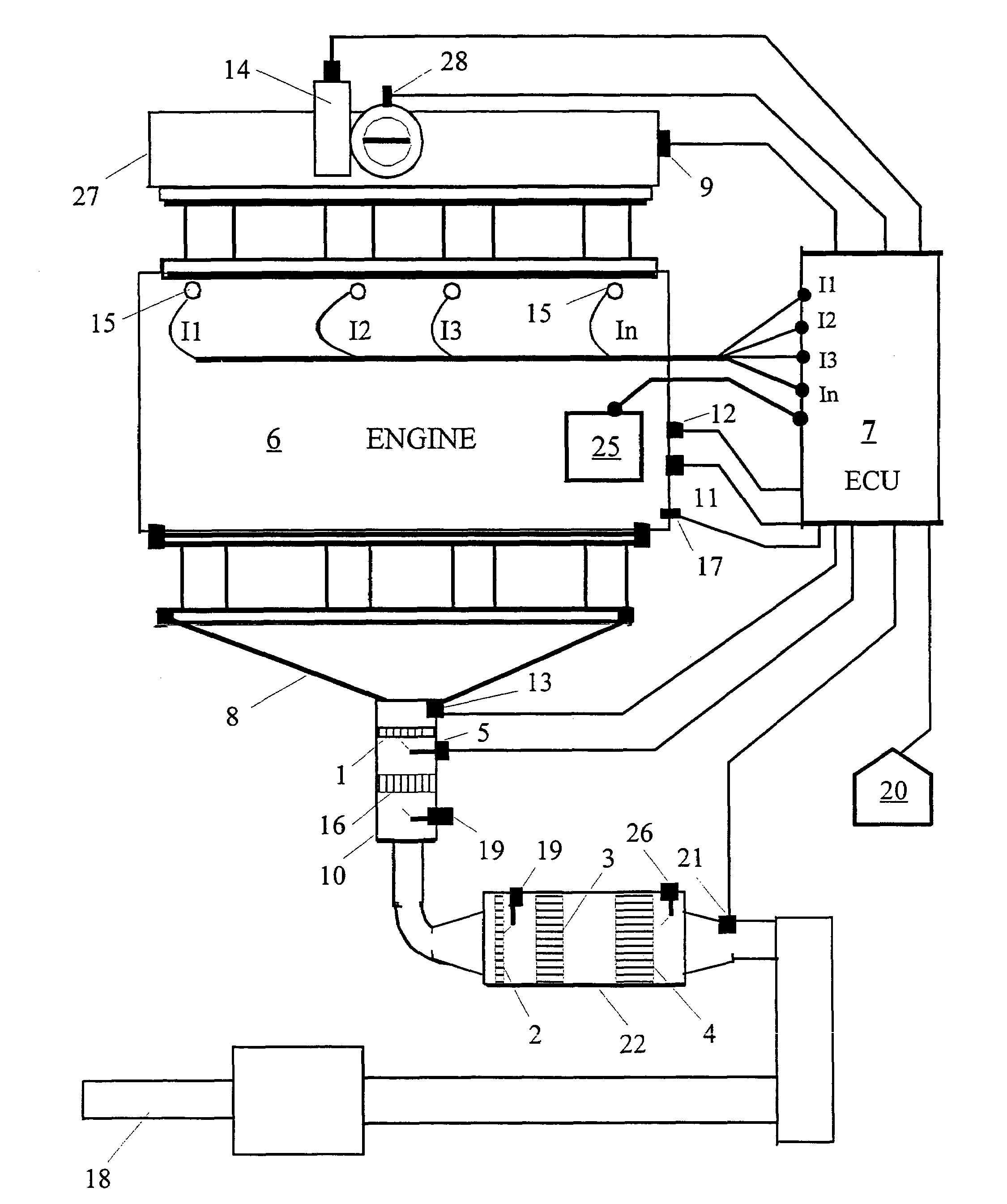

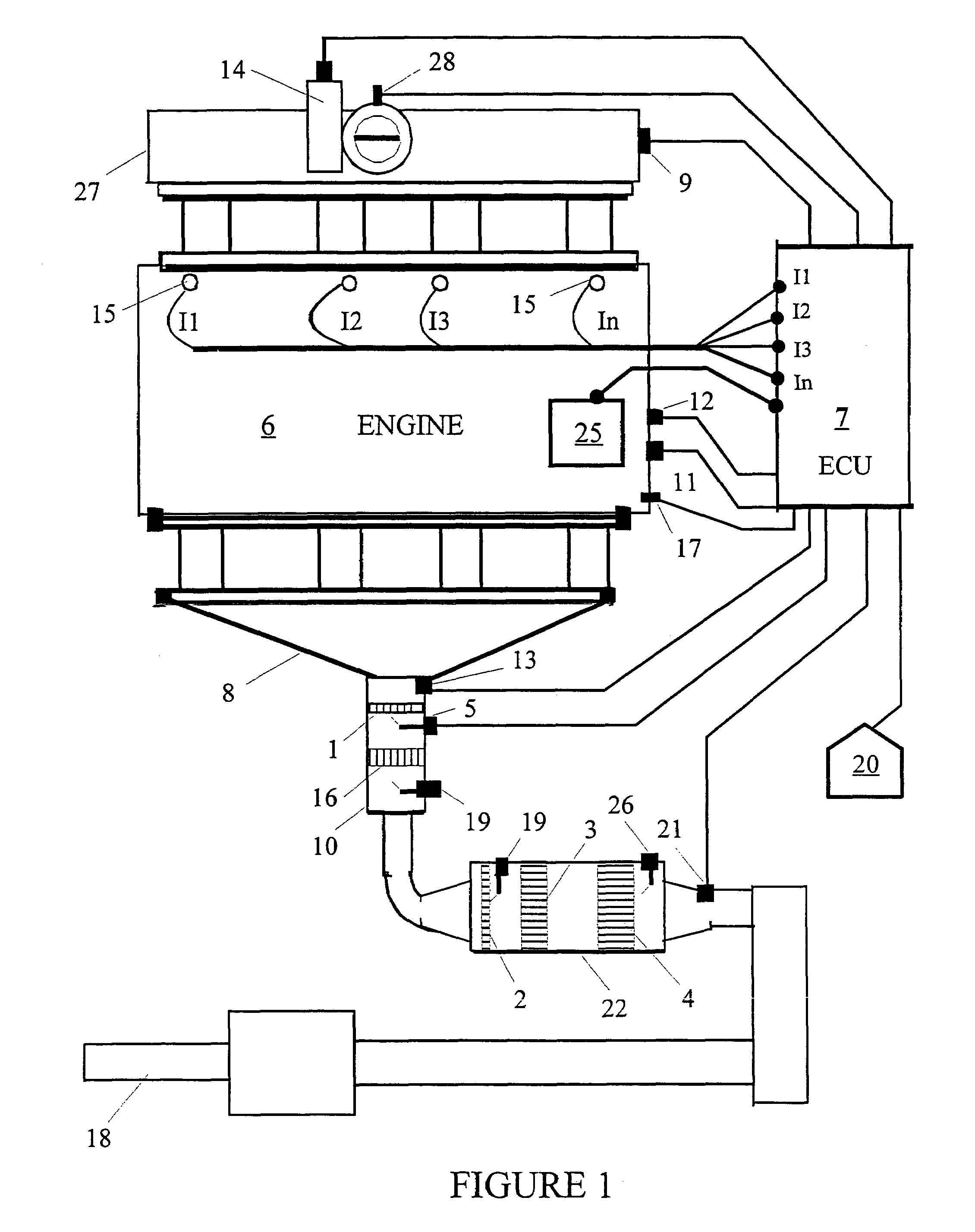

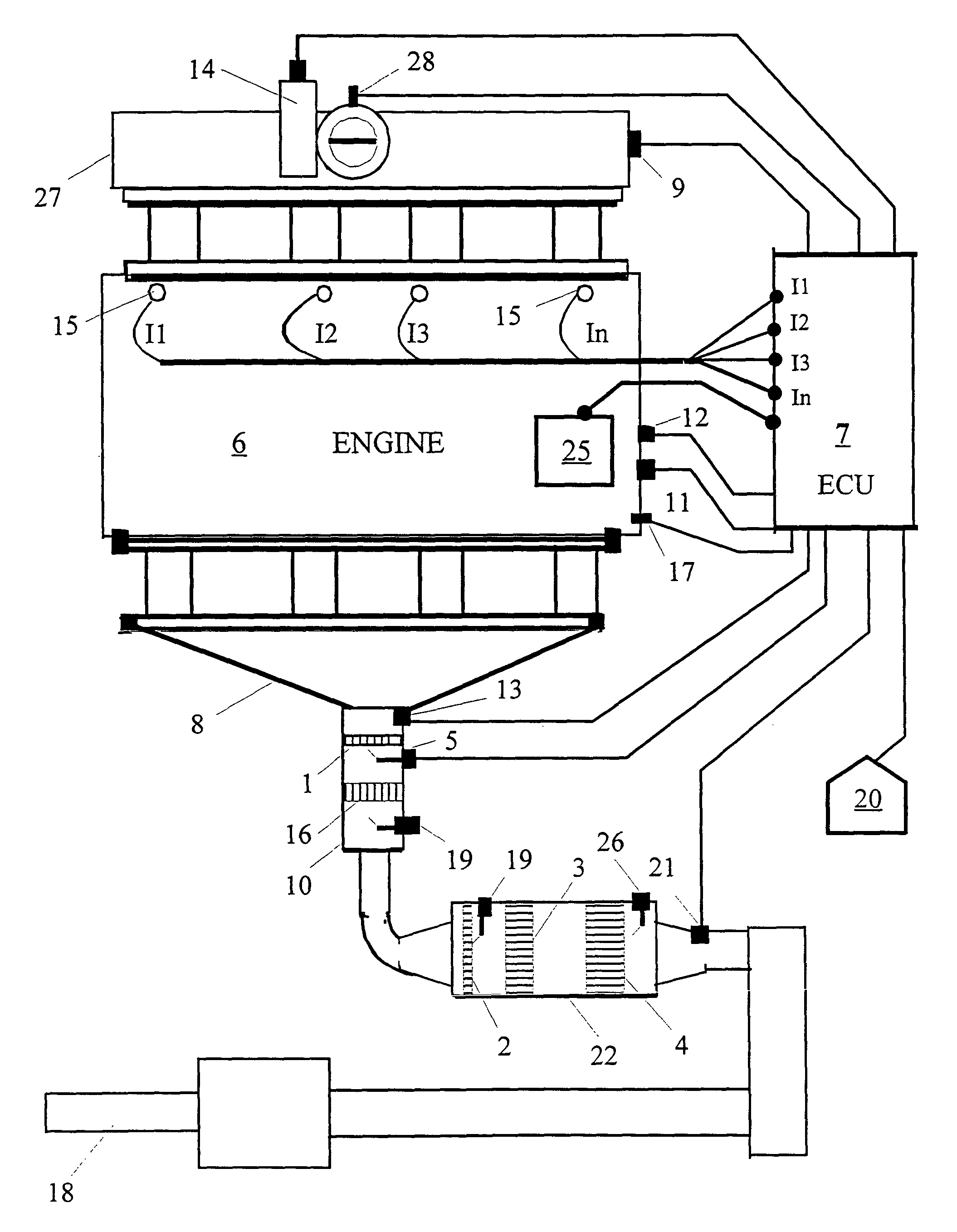

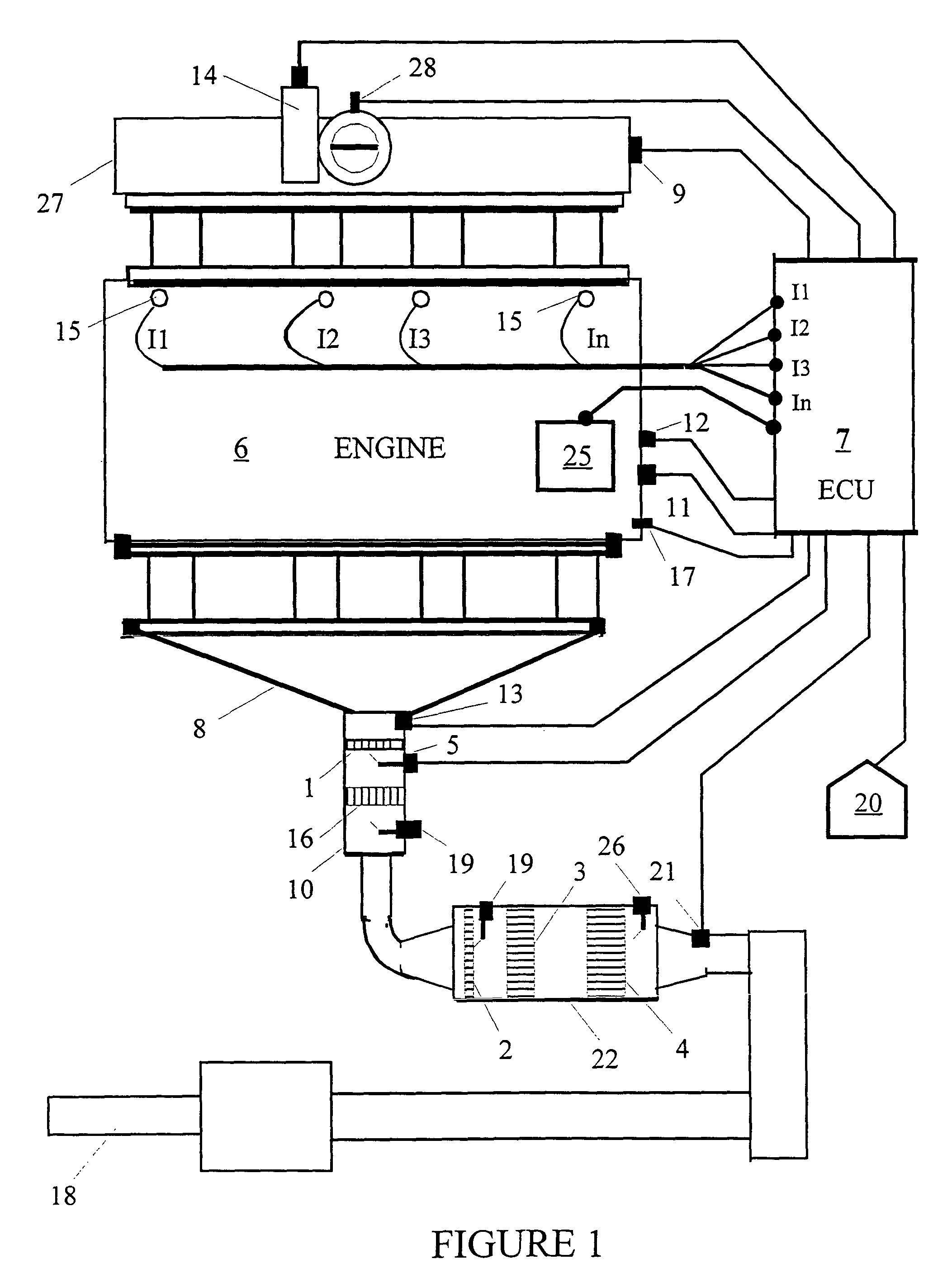

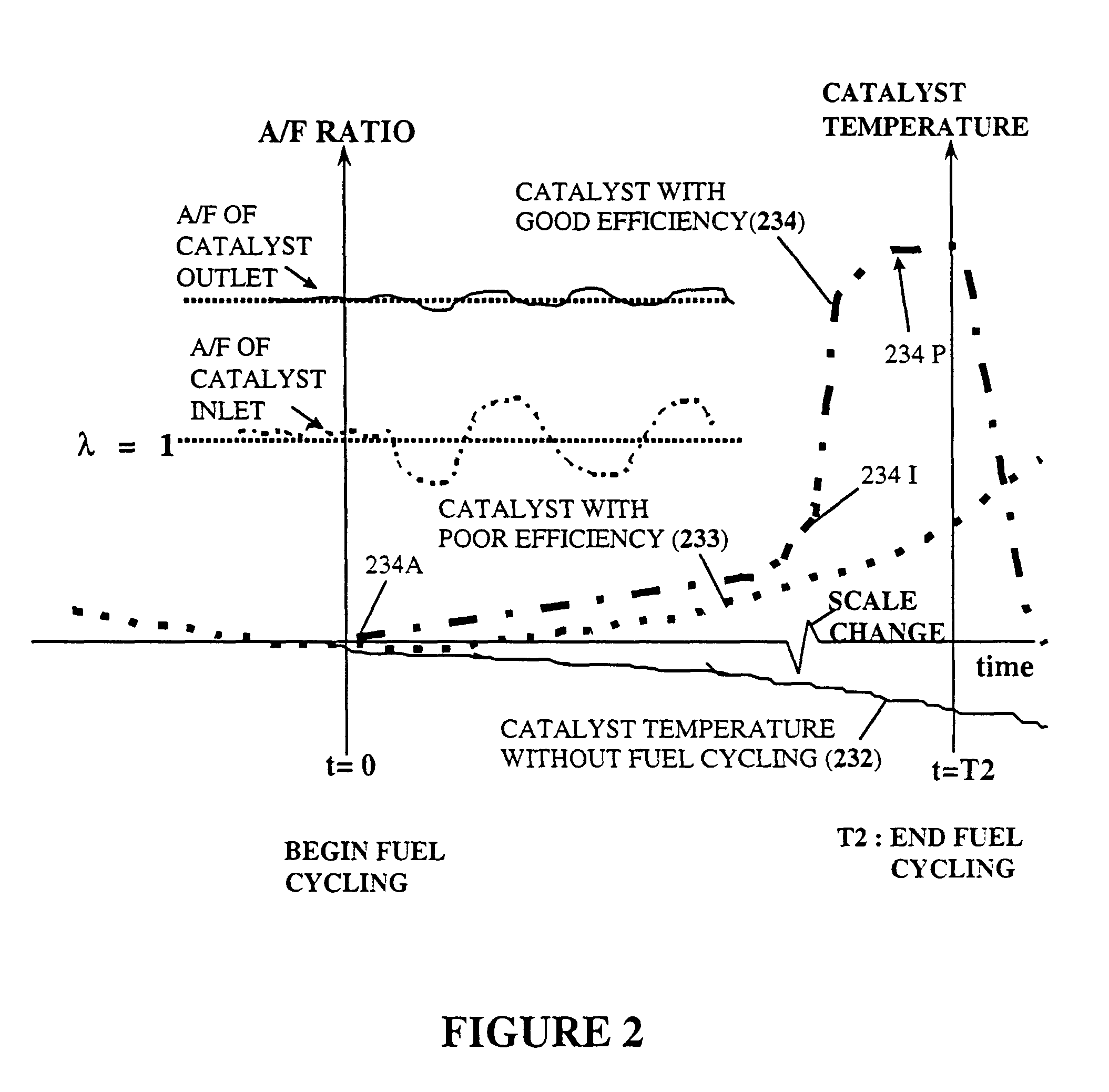

Control methods for improved catalytic converter efficiency and diagnosis

Inactive | US7707821B1 | Improve efficiency | Improve performance | Electrical control | Internal combustion piston engines | Closed loop | Process engineering

A controlling method for adjusting concentrations of, for example, individual cylinder's exhaust gas constituents to provide engine functions such as catalytic converter diagnosis, increased overall catalytic converter efficiency and rapid catalyst heating, before and / or after initiating closed loop fuel injection control, using a selected temperature sensor location within a low thermal mass catalytic converter design.

Owner:LEGARE JOSEPH E

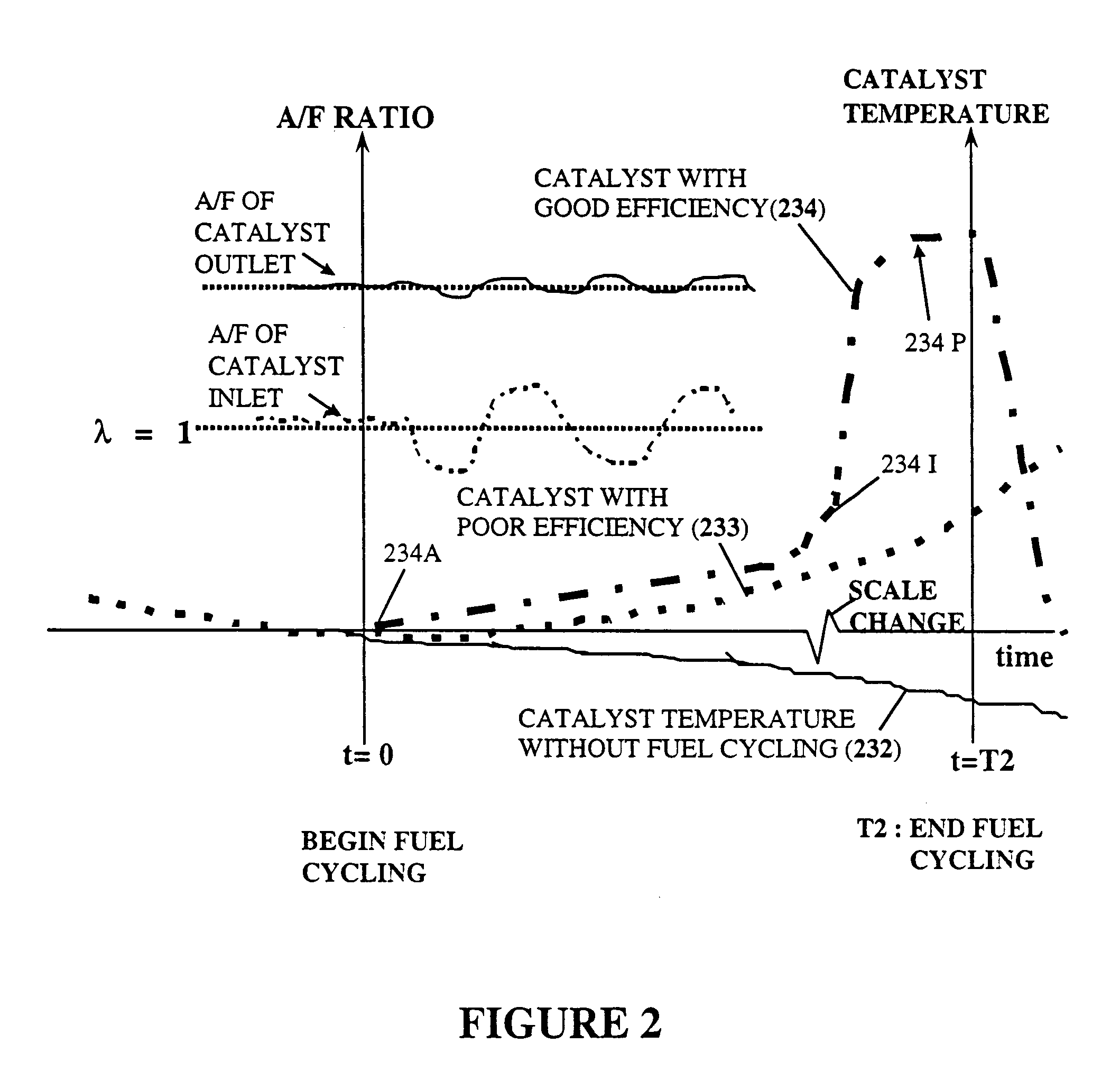

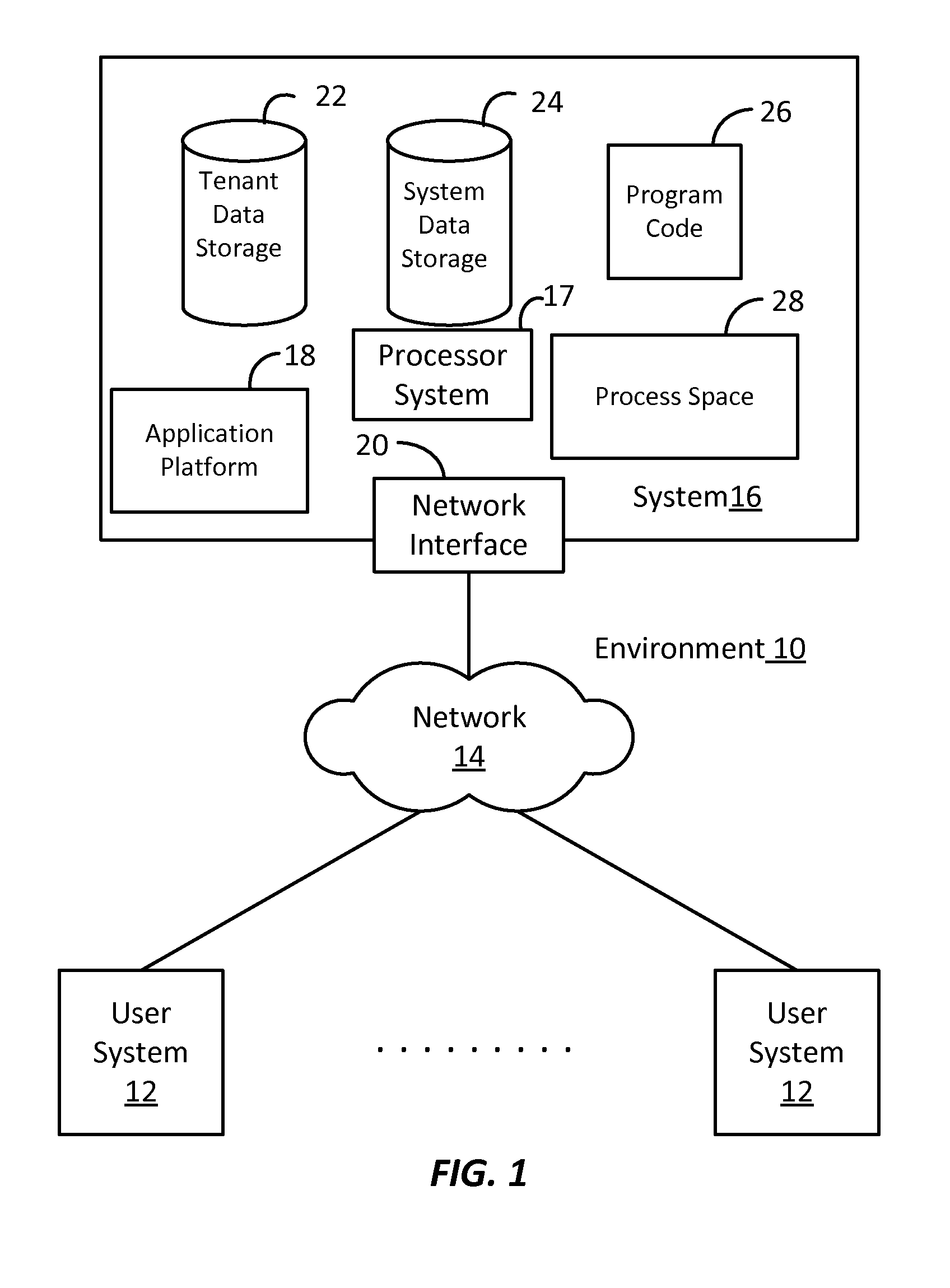

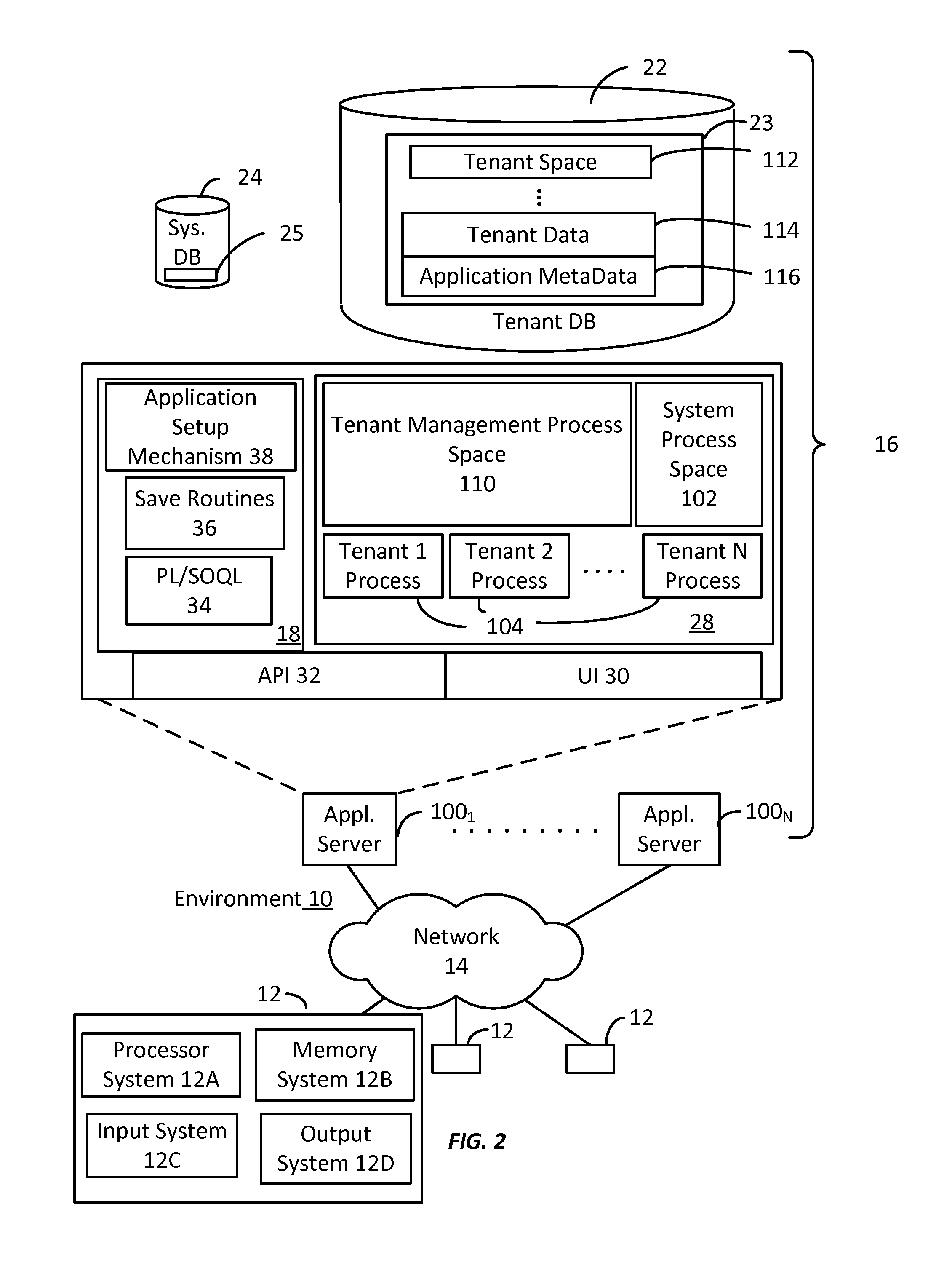

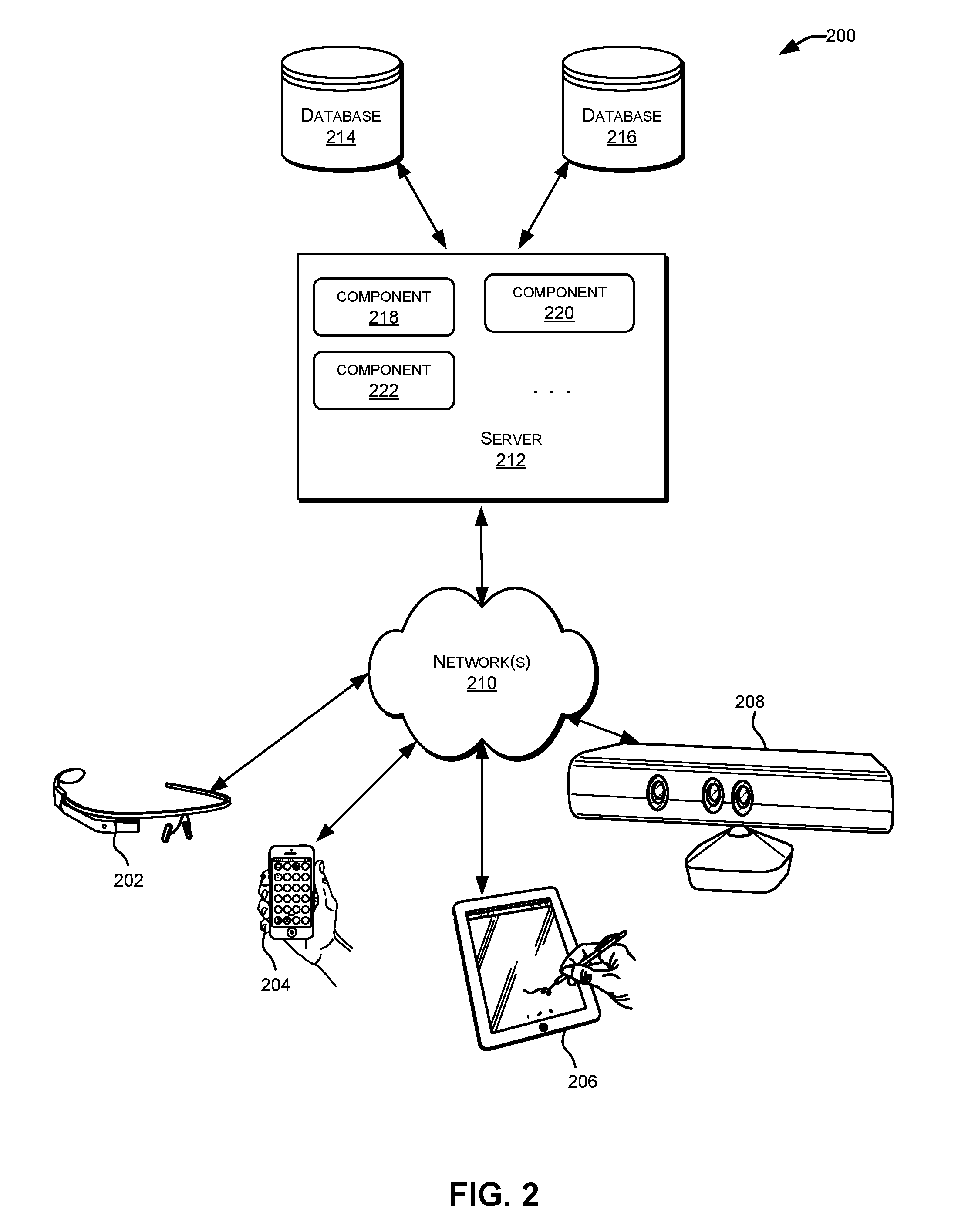

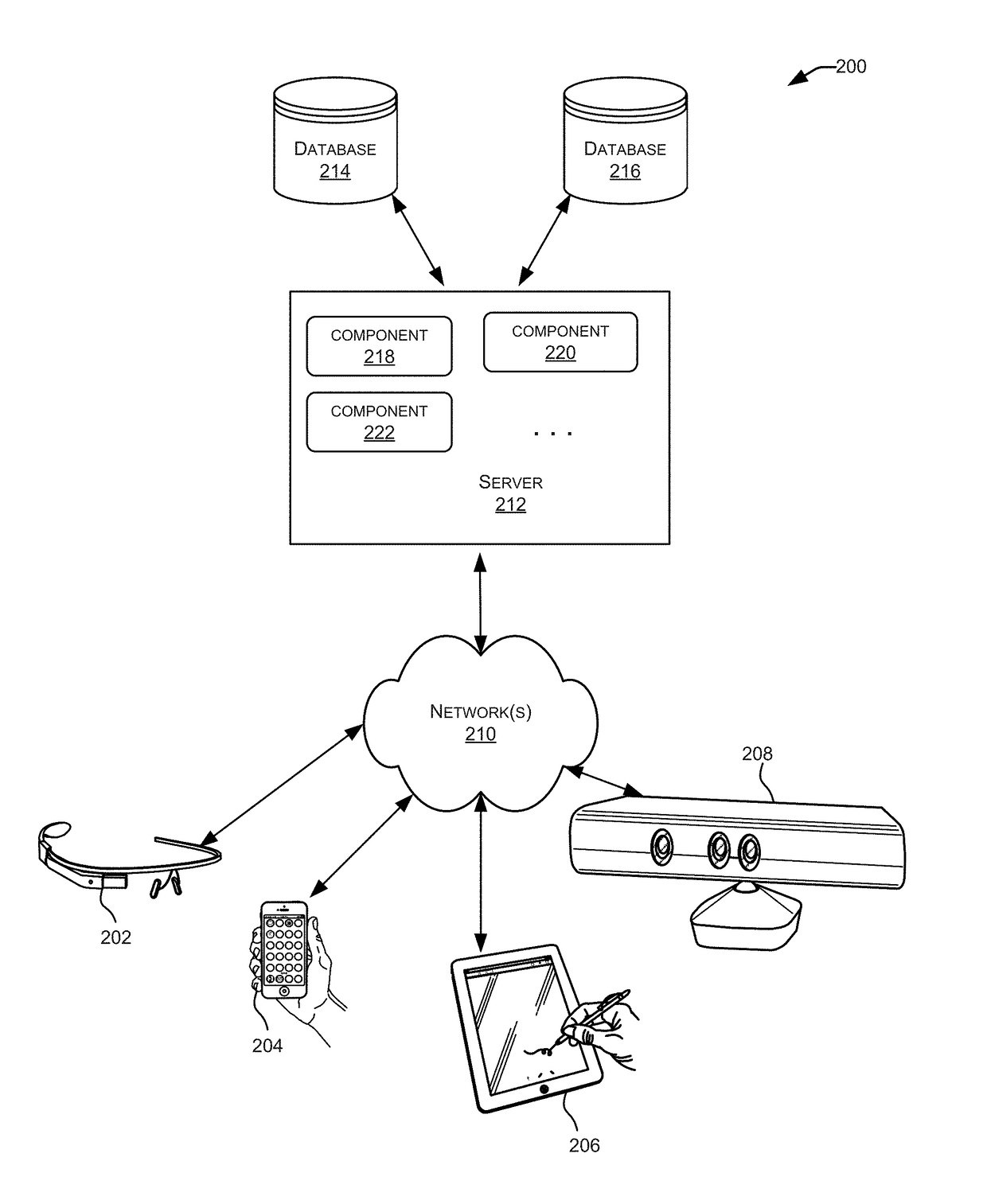

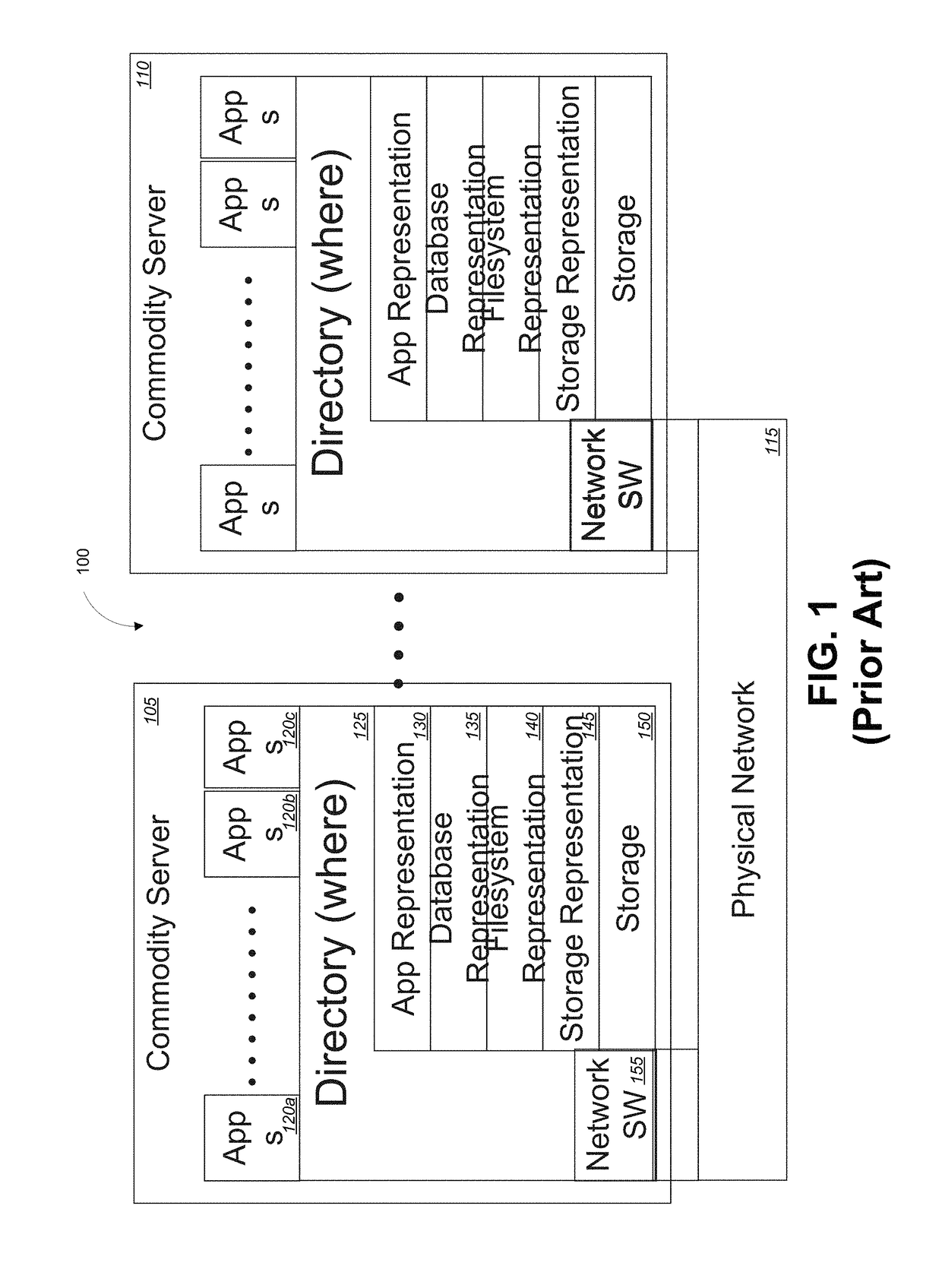

Method and System for Optimizing Queries in a Multi-Tenant Database Environment

Active | US20110282864A1 | Improve performance and efficiency | High expression | Digital data information retrieval | Digital data processing details | Query optimization

In accordance with embodiments, there are provided mechanisms and methods for query optimization in a database system. These mechanisms and methods for query optimization in a database system can enable embodiments to optimize OR expression filters referencing different logical tables. The ability of embodiments to optimize OR expression filters referencing different logical tables can enable optimization that is dynamic and specific to the particular tenant for whom the query is run and improve the performance and efficiency of the database system in response to query requests.

Owner:SALESFORCE COM INC

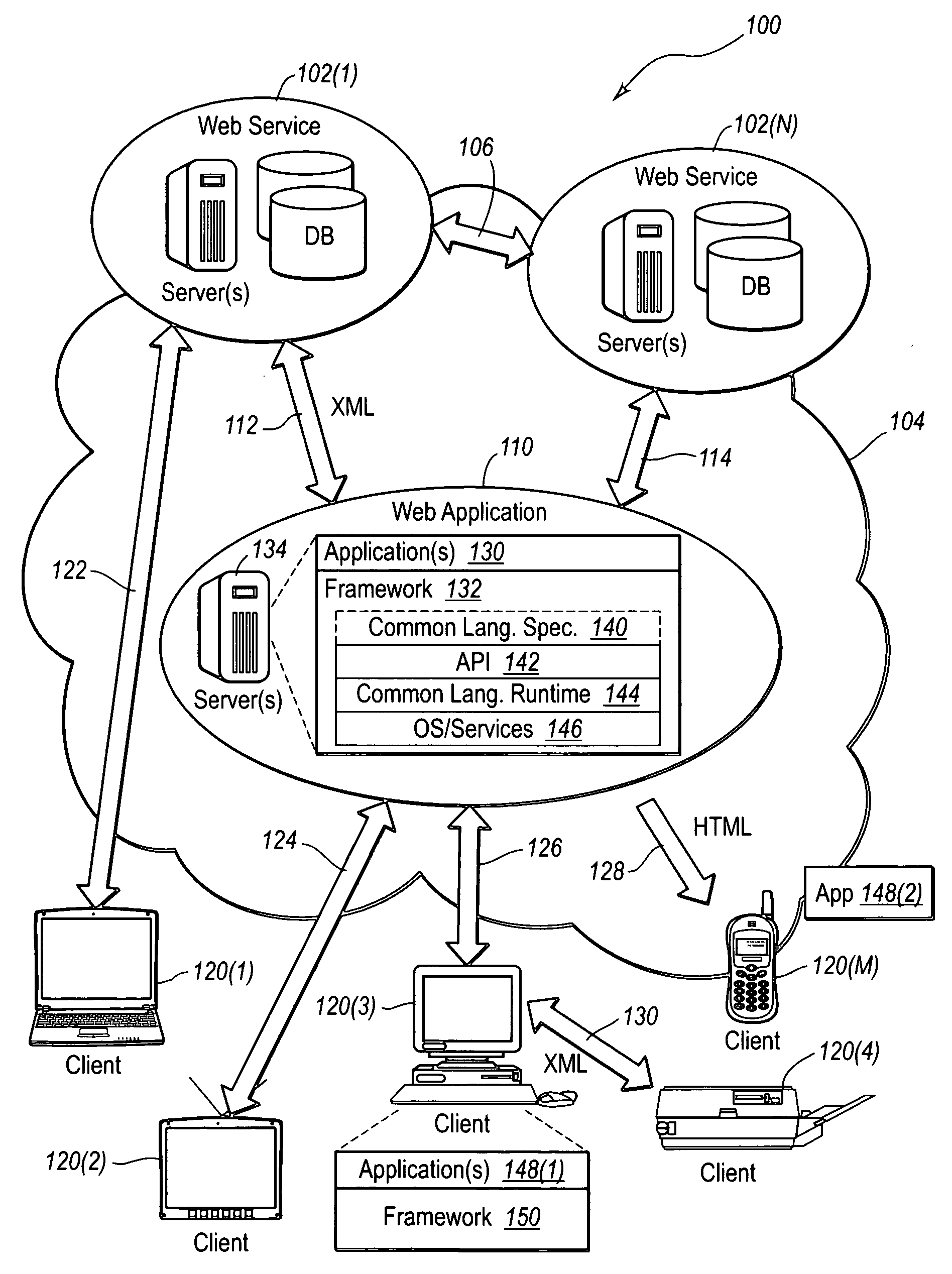

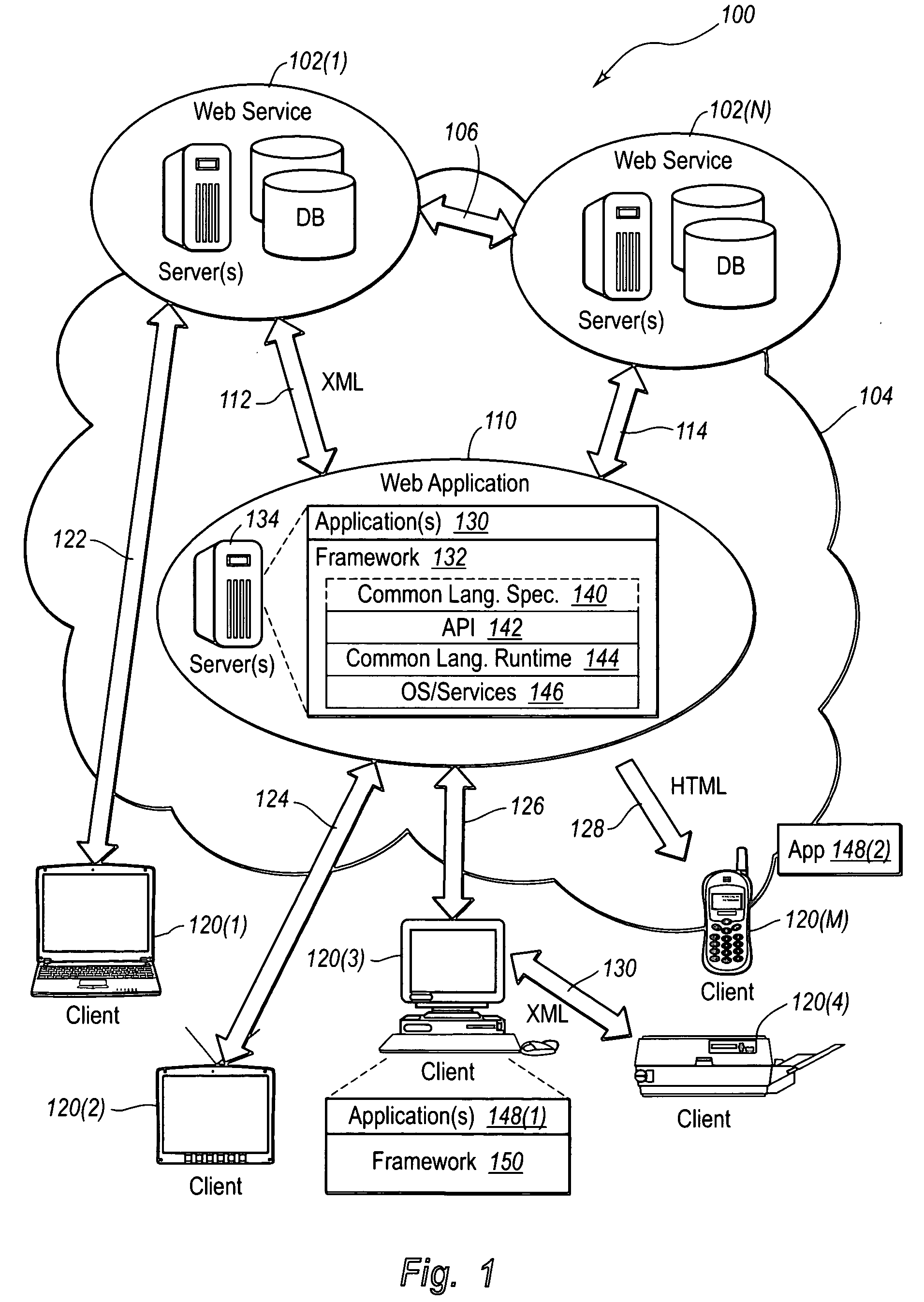

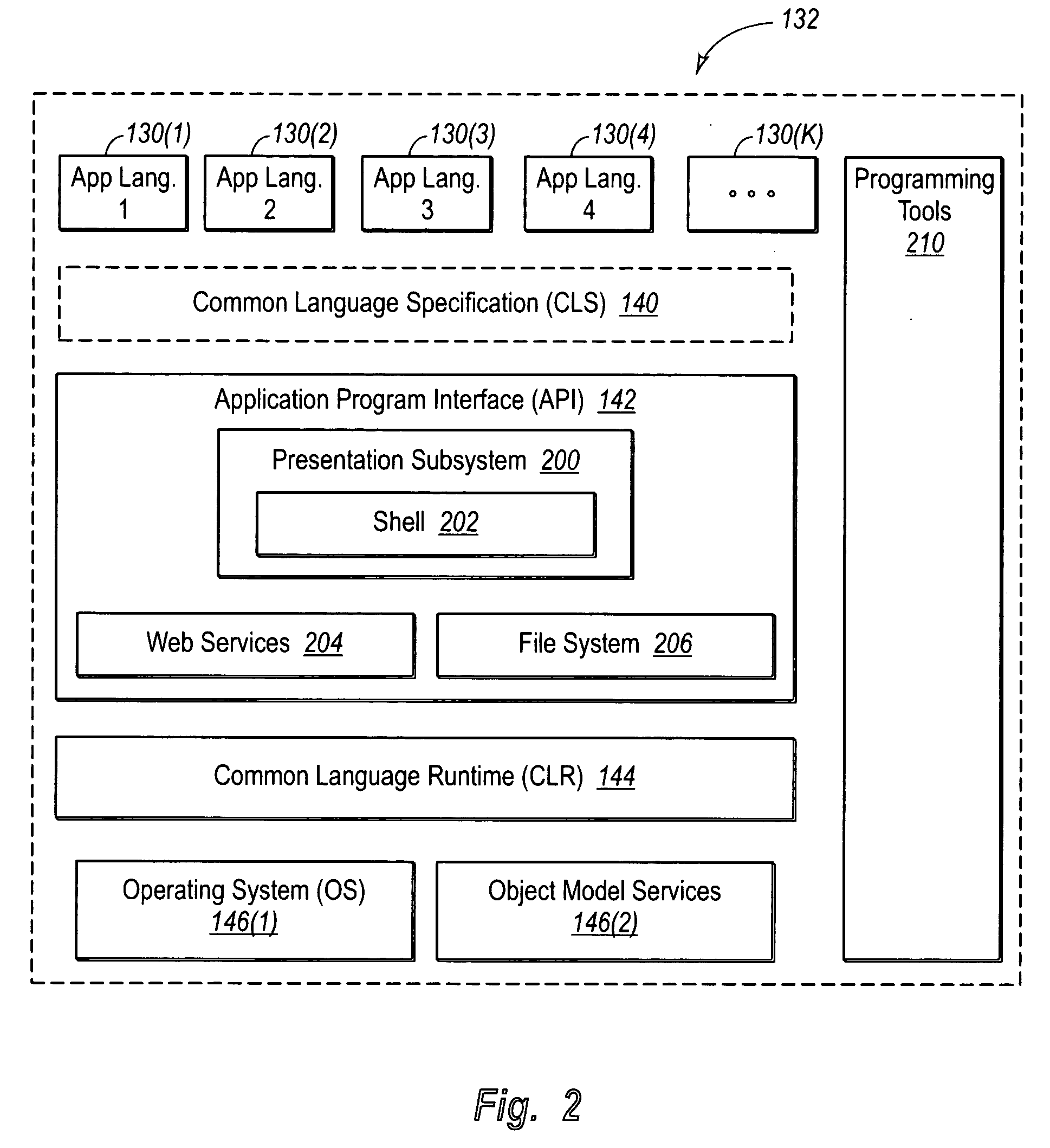

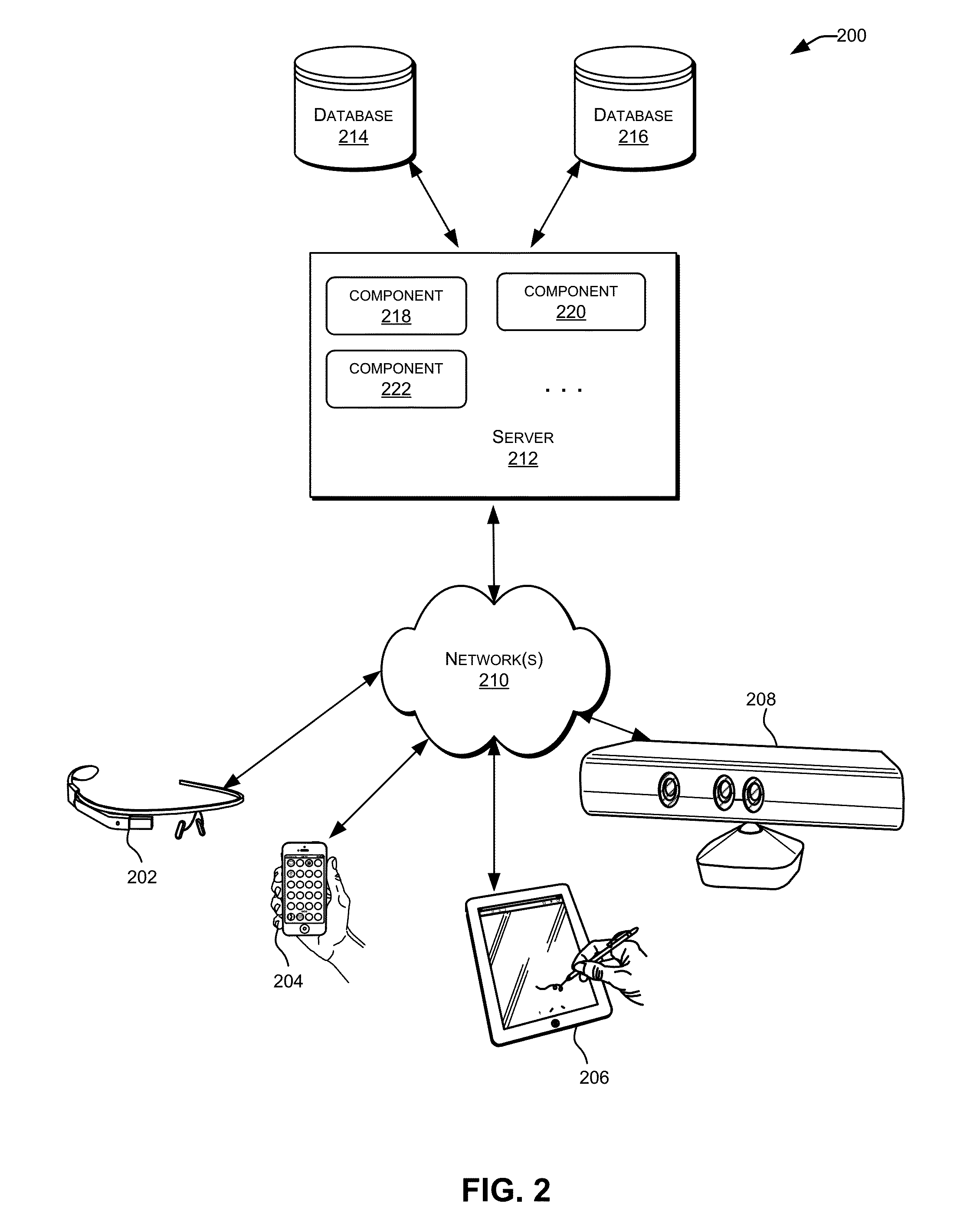

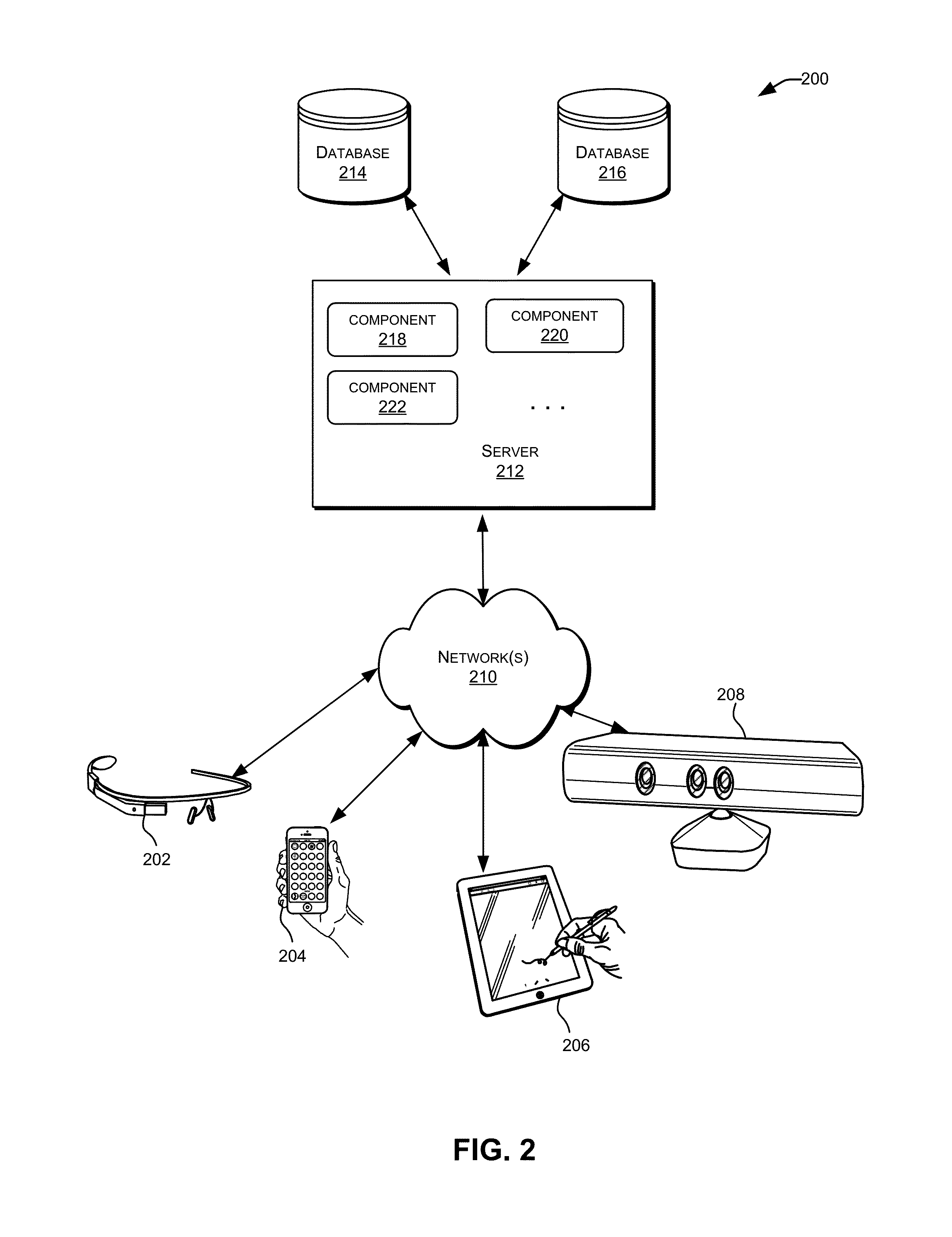

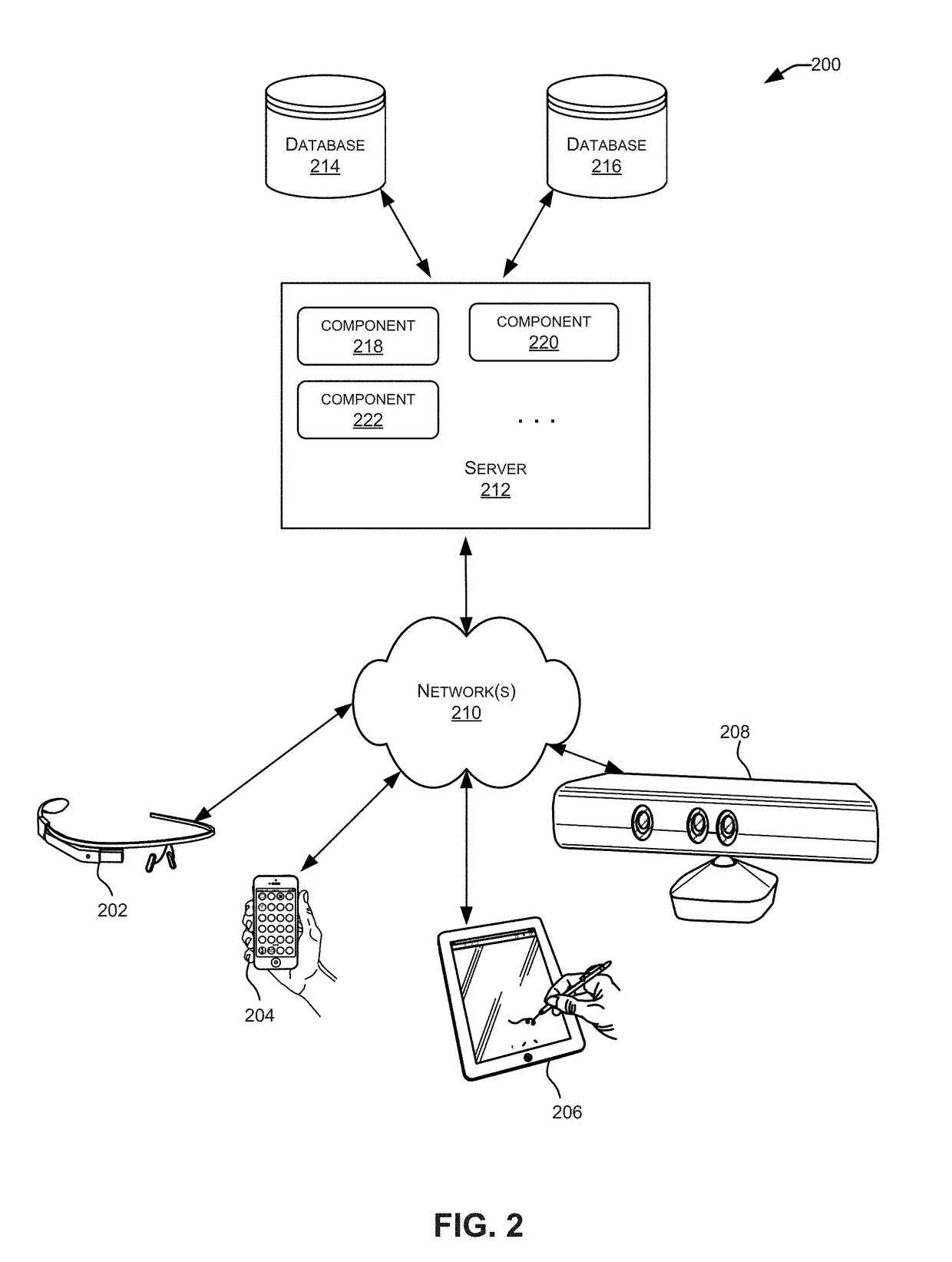

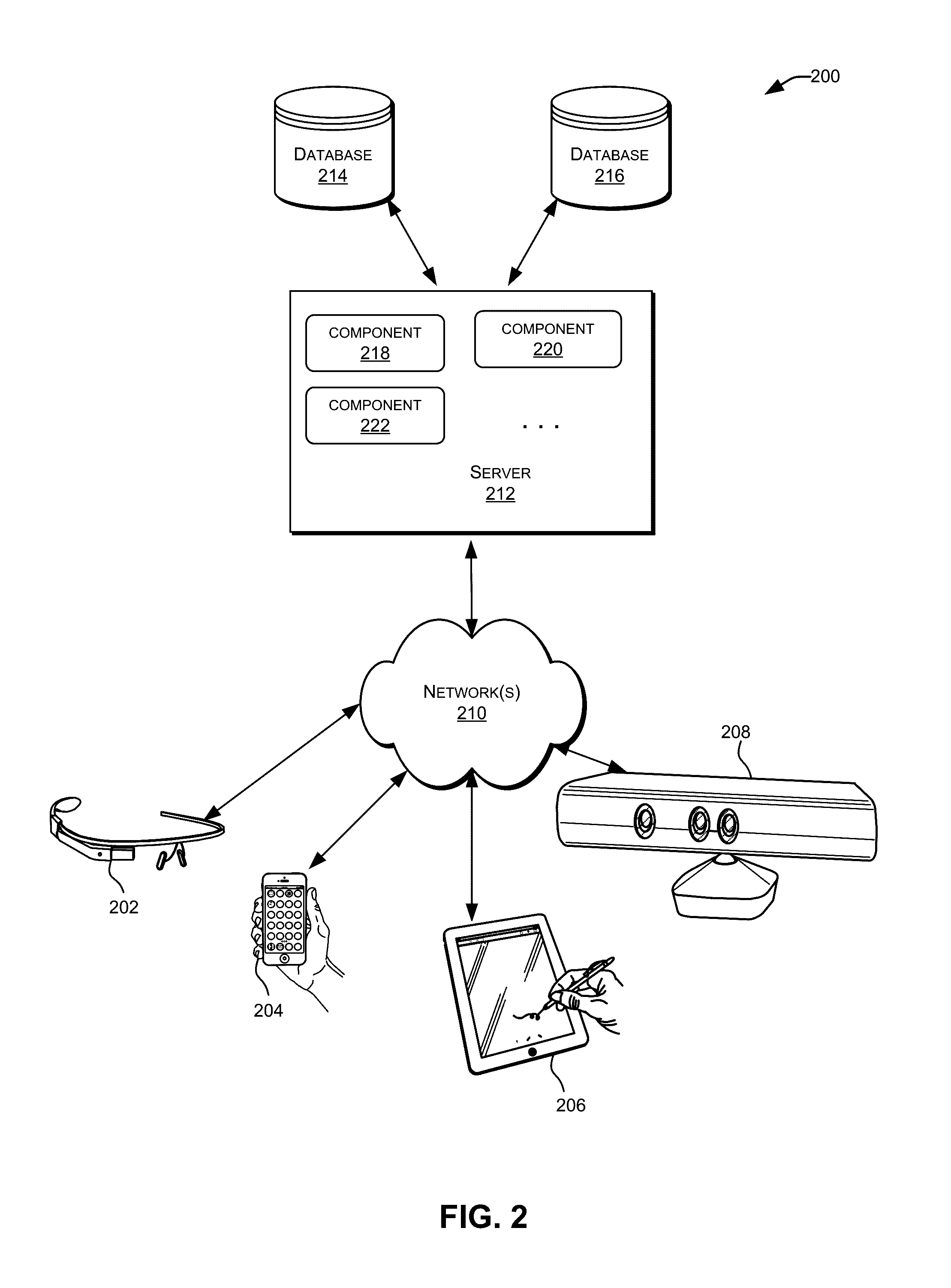

Interface infrastructure for creating and interacting with web services

Inactive | US20060150200A1 | Improve performance and efficiency | Improve efficiency | Multiprogramming arrangements | Multiple digital computer combinations | Message passing | Application software

A web services namespace pertains to an infrastructure for enabling creation of a wide variety of applications. The infrastructure provides a foundation for building message-based applications of various scale and complexity. The infrastructure or framework provides APIs for basic messaging, secure messaging, reliable messaging and transacted messaging. In some embodiments, the associated APIs are factored into a hierarchy of namespaces in a manner that balances utility, usability, extensibility and versionability.

Owner:MICROSOFT TECH LICENSING LLC

Control methods for improved catalytic converter efficiency and diagnosis

Active | US7886523B1 | Improve performance and efficiency | Increase speed | Electrical control | Internal combustion piston engines | Closed loop | Process engineering

A controlling method for adjusting concentrations of, for example, individual cylinder's exhaust gas constituents to provide engine functions such as catalytic converter diagnosis, increased overall catalytic converter efficiency and rapid catalyst heating, before and / or after initiating closed loop fuel injection control, using a selected temperature sensor location within a low thermal mass catalytic converter design.

Owner:LEGARE JOSEPH E

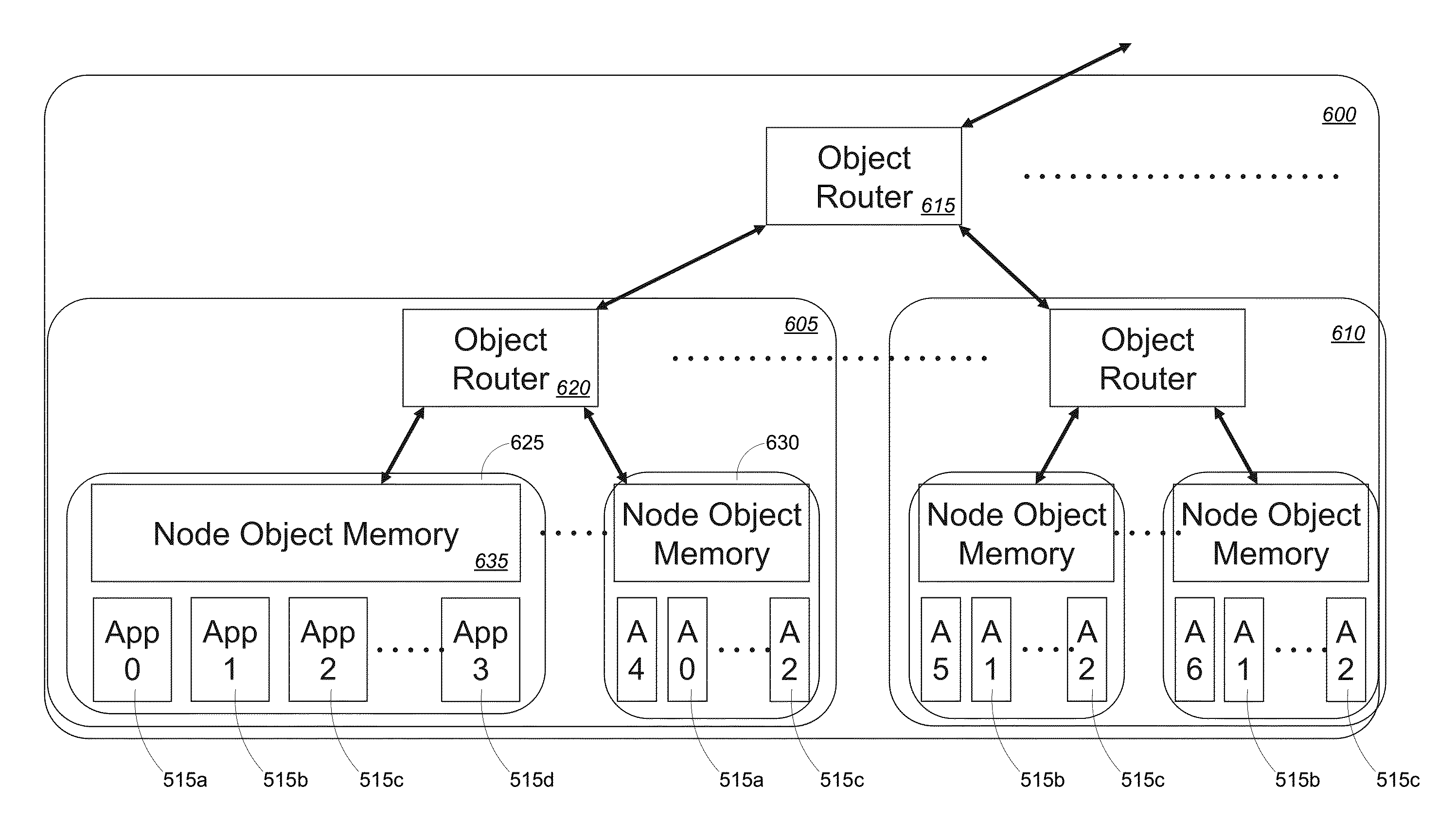

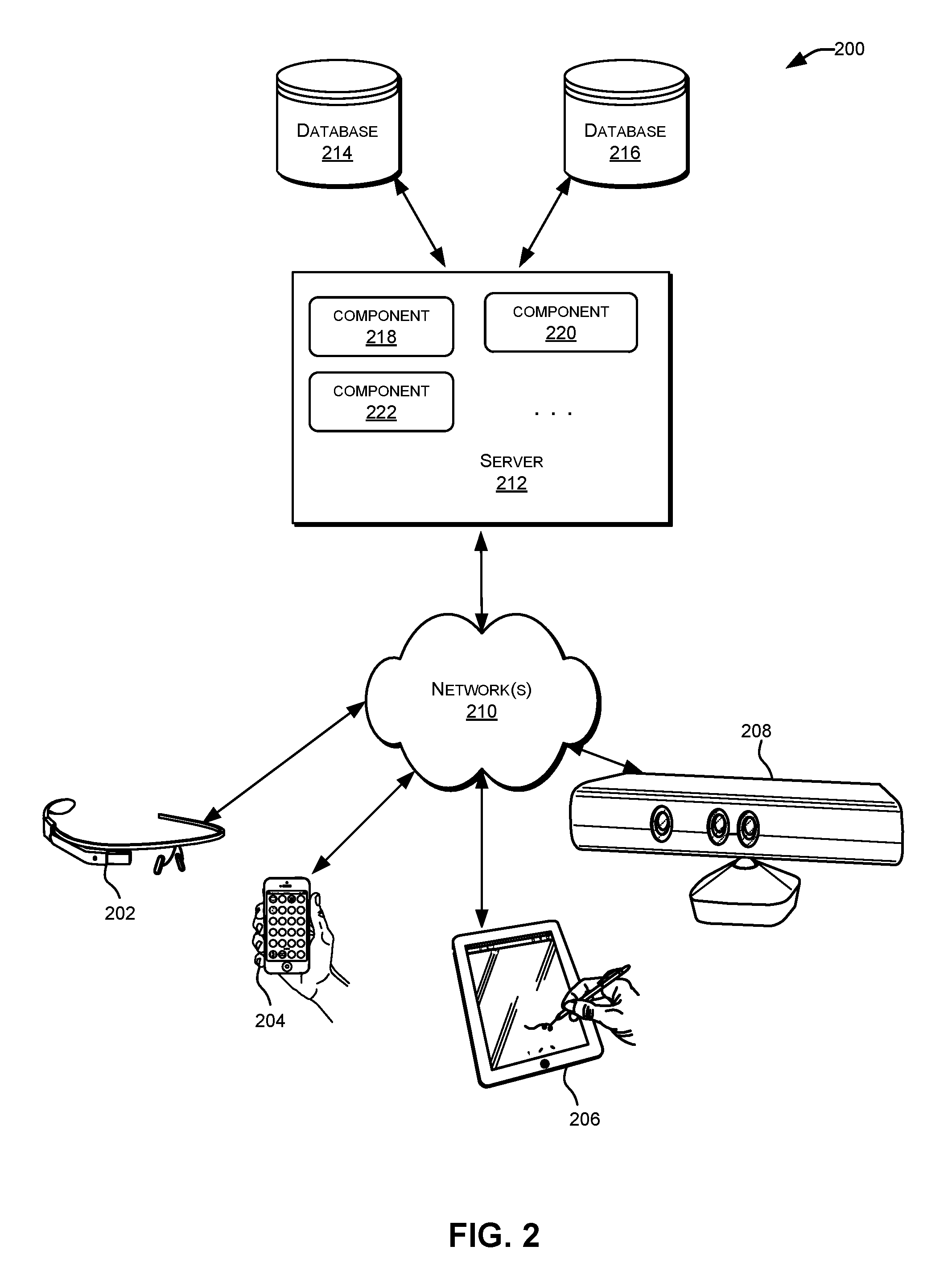

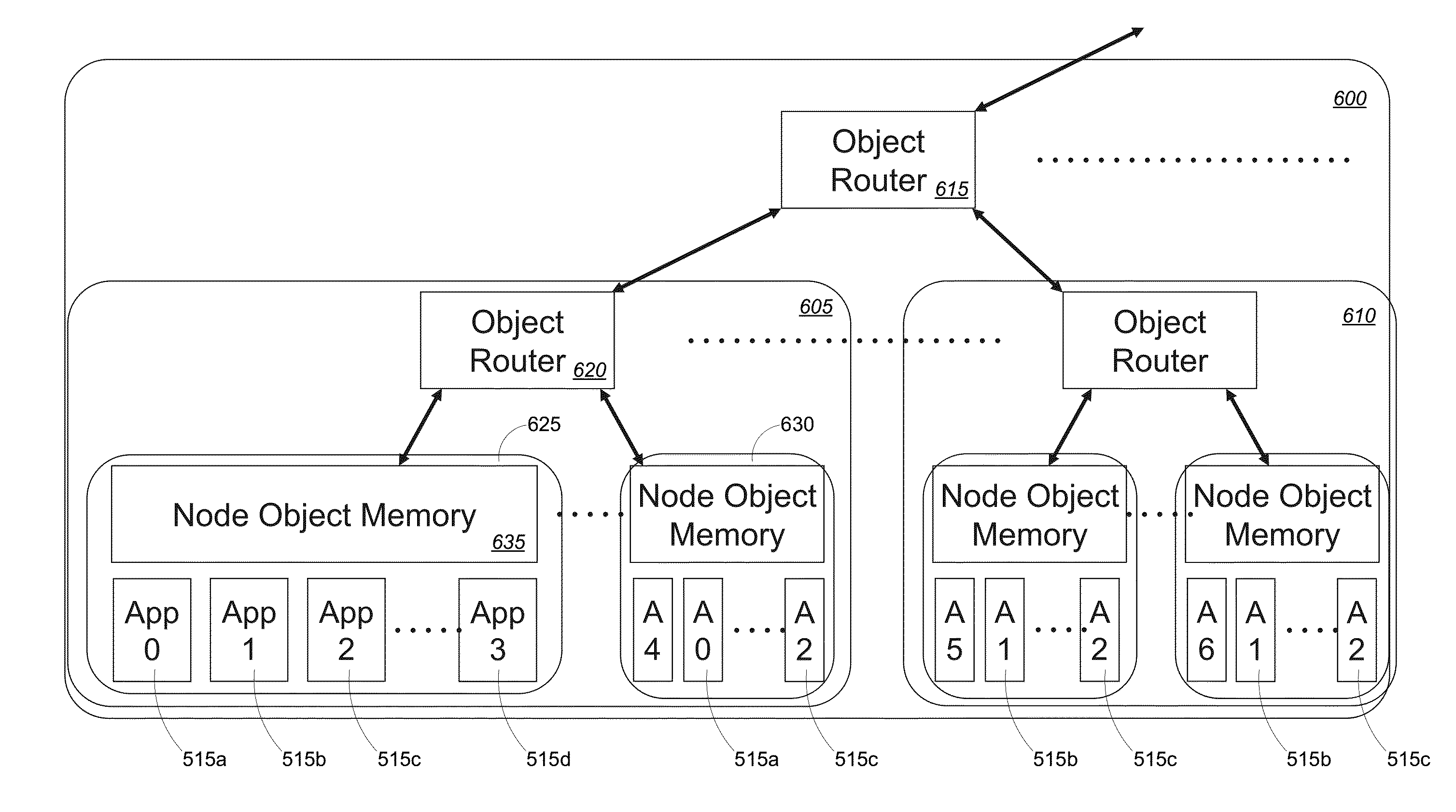

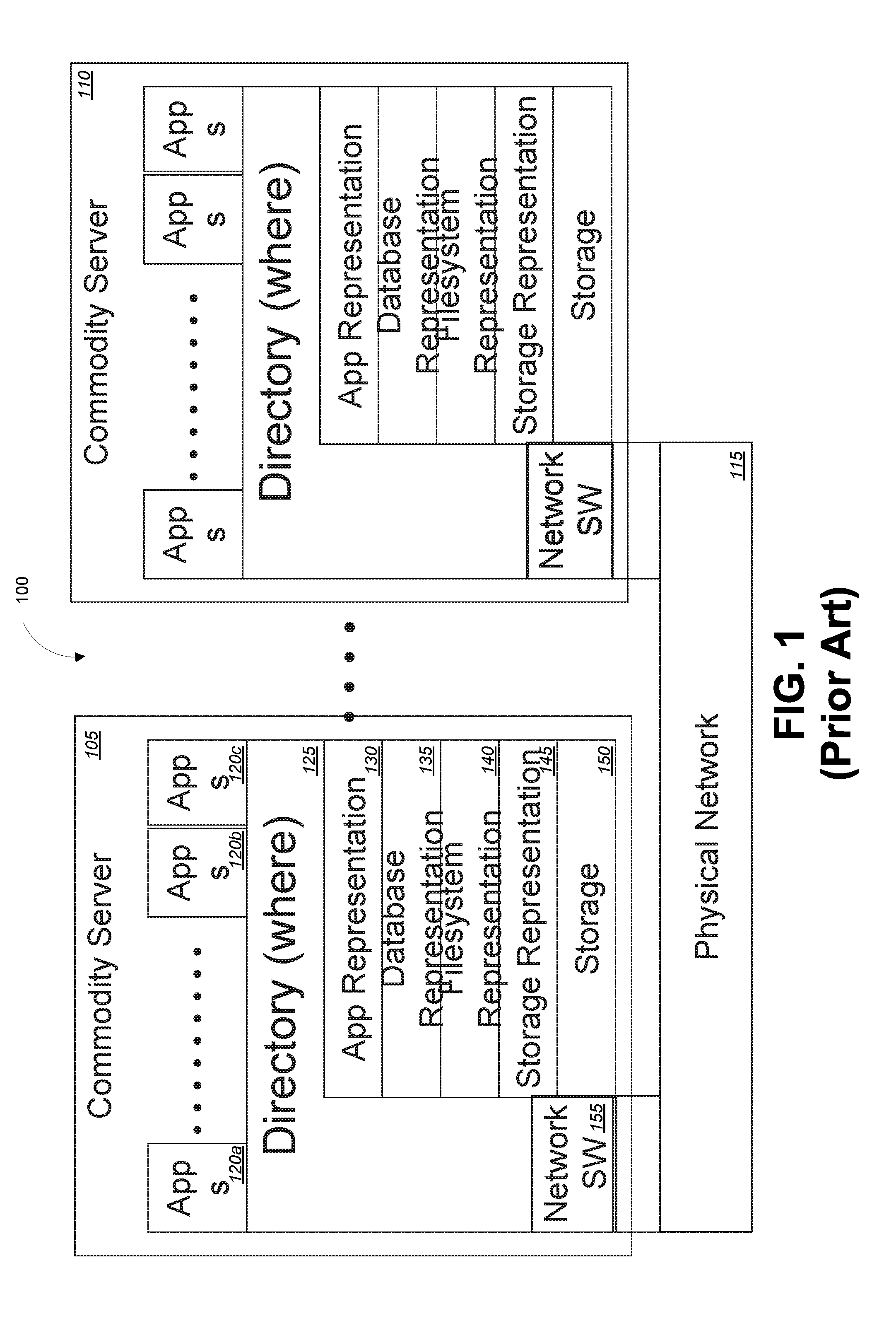

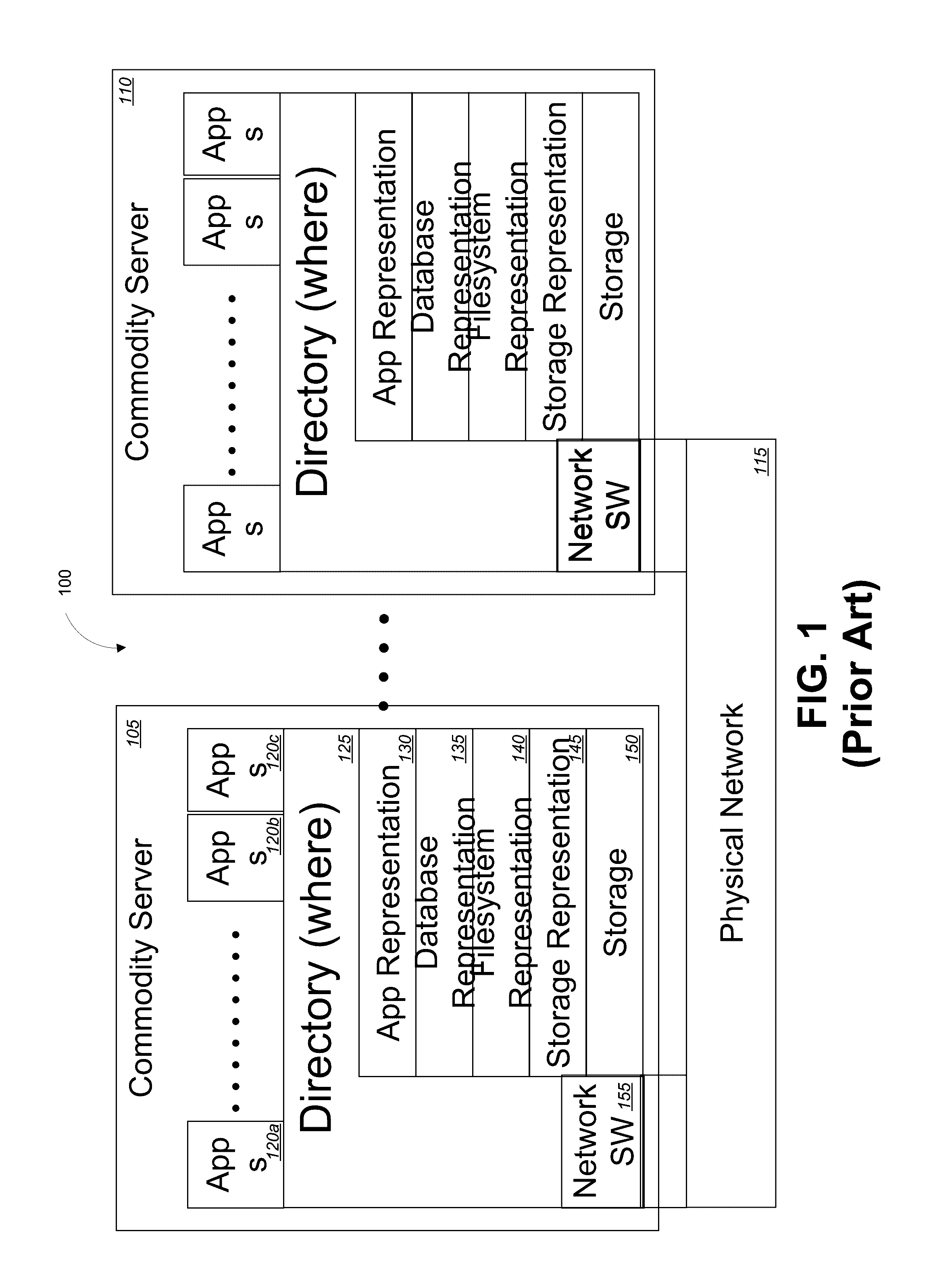

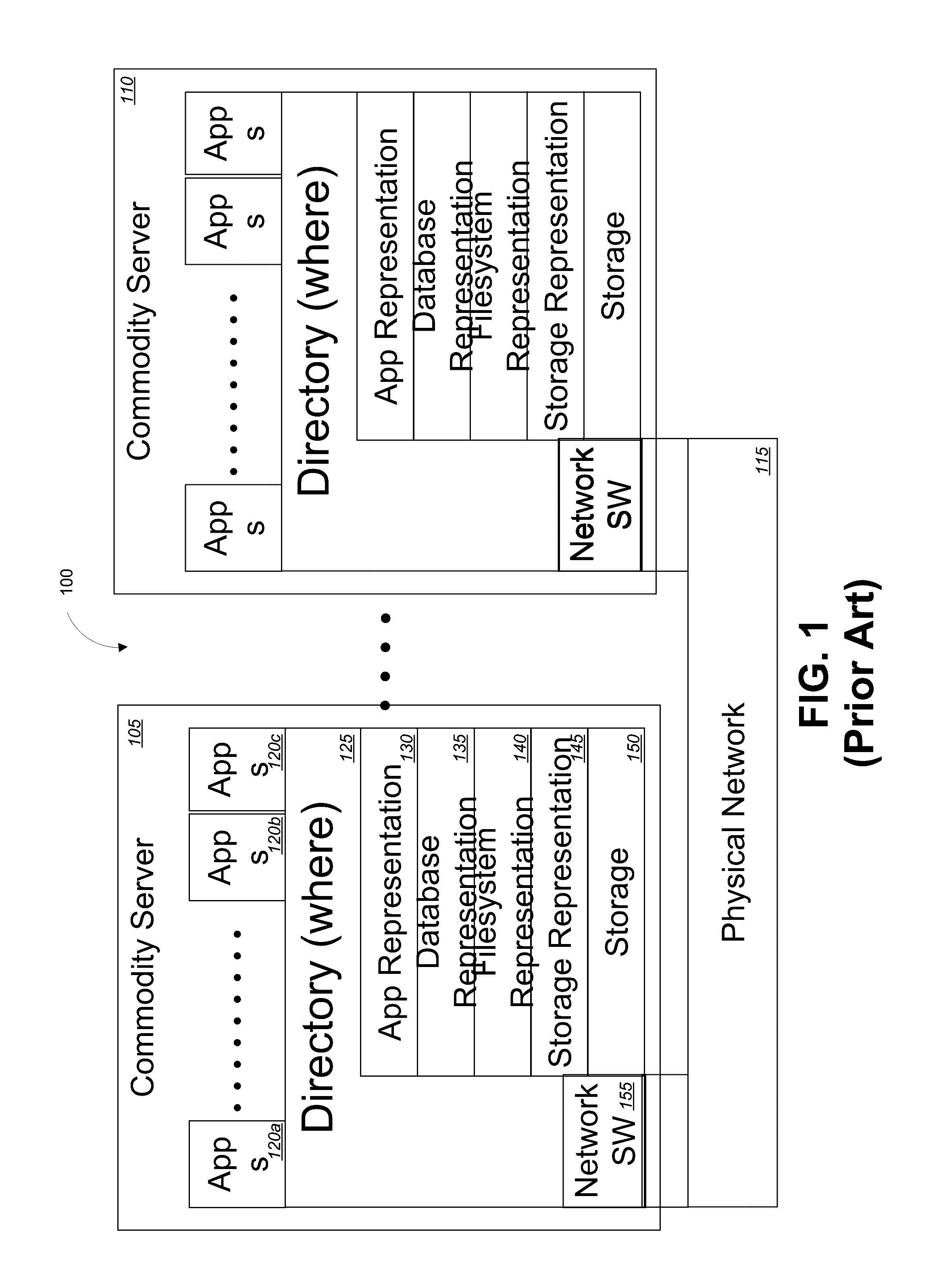

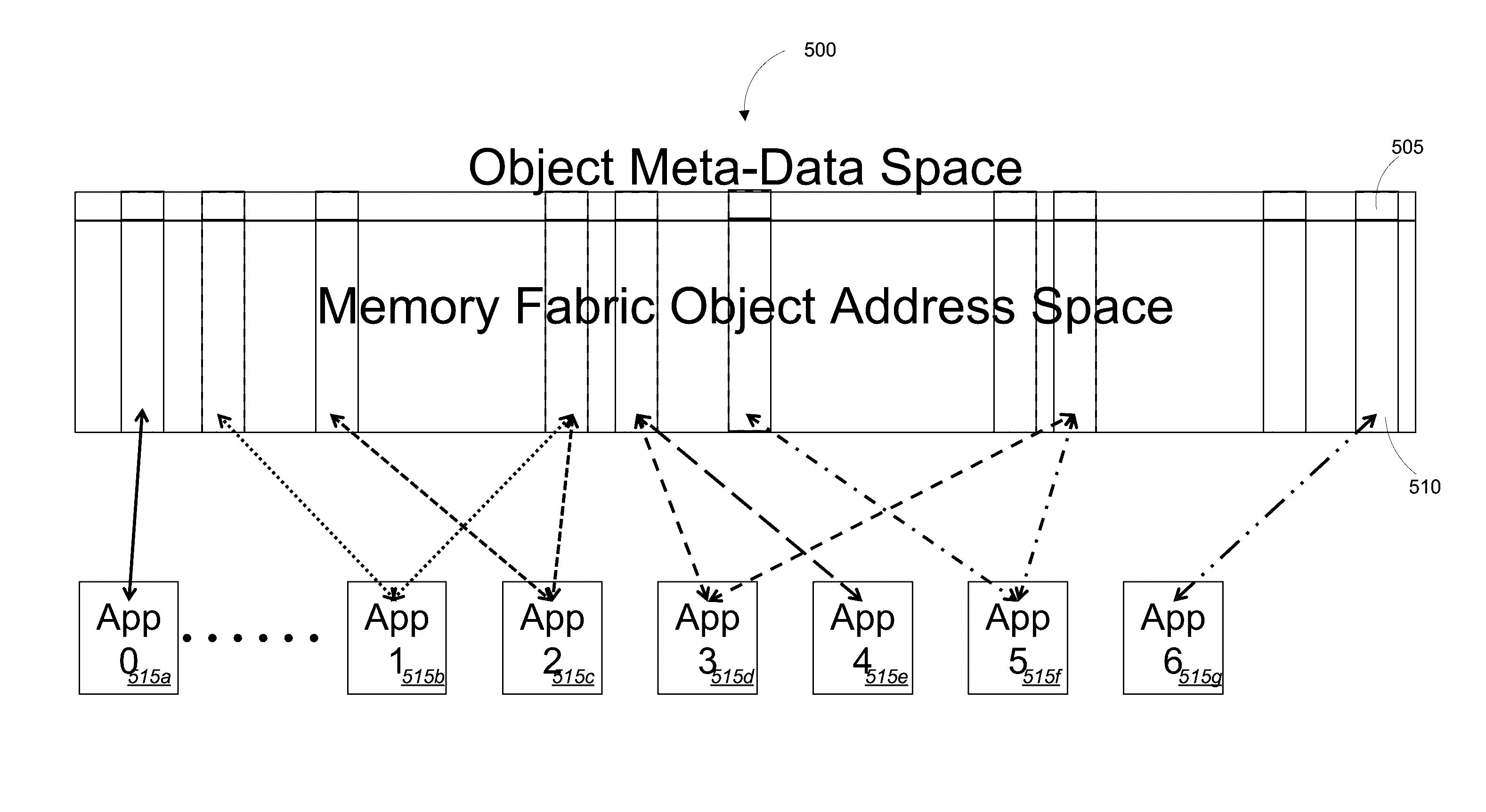

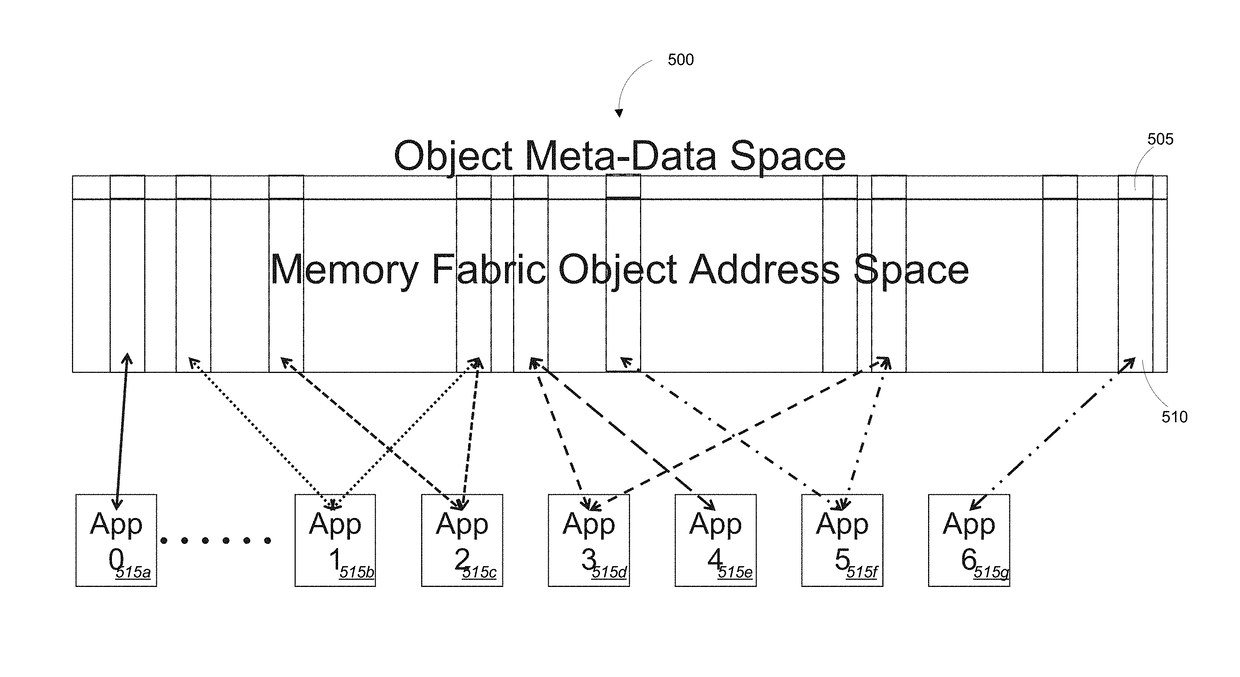

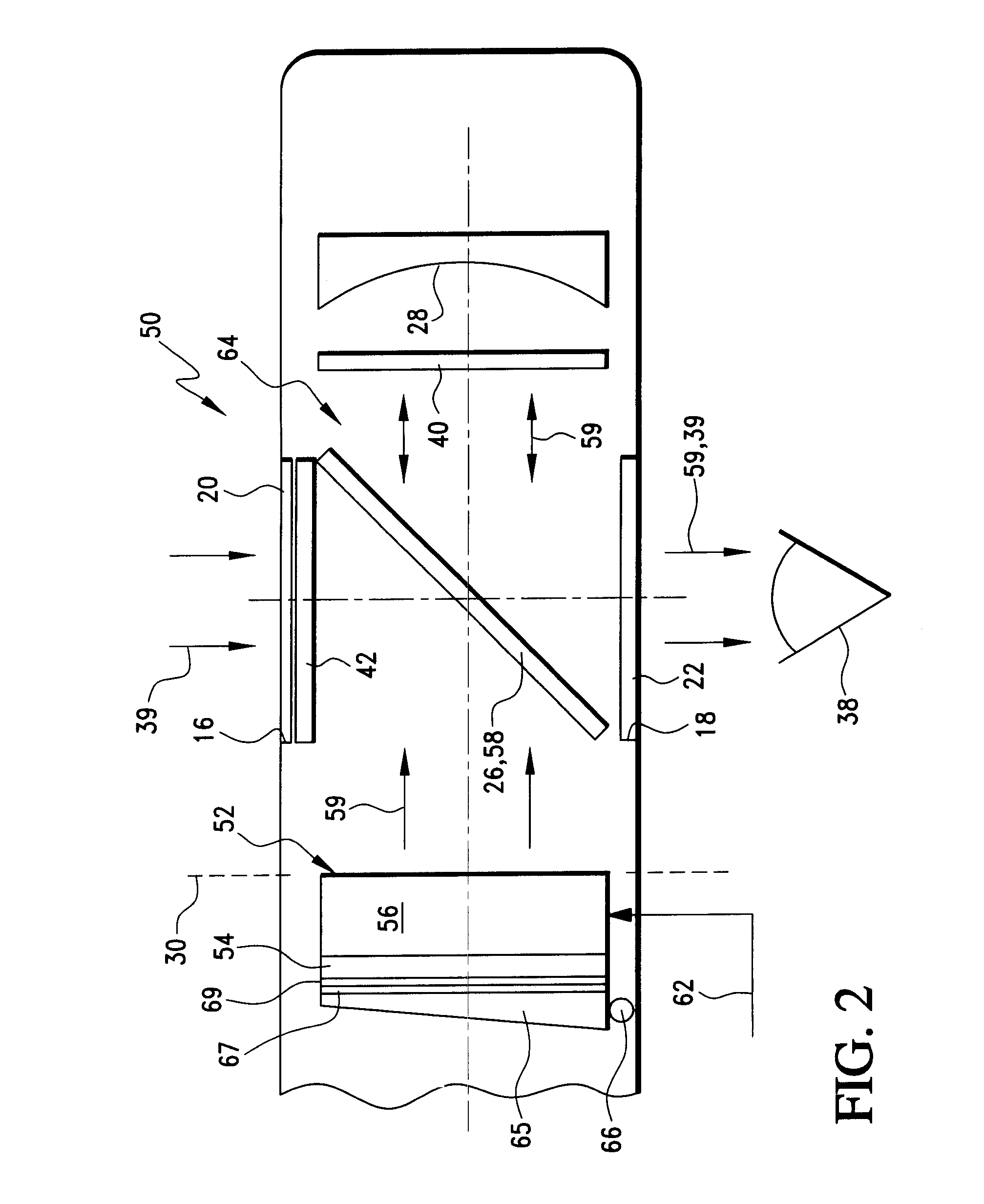

Object memory data flow instruction execution

Active | US20160210080A1 | Improve efficiency | Improve performance | Memory architecture accessing/allocation | Input/output to record carriers | Memory object | Data access

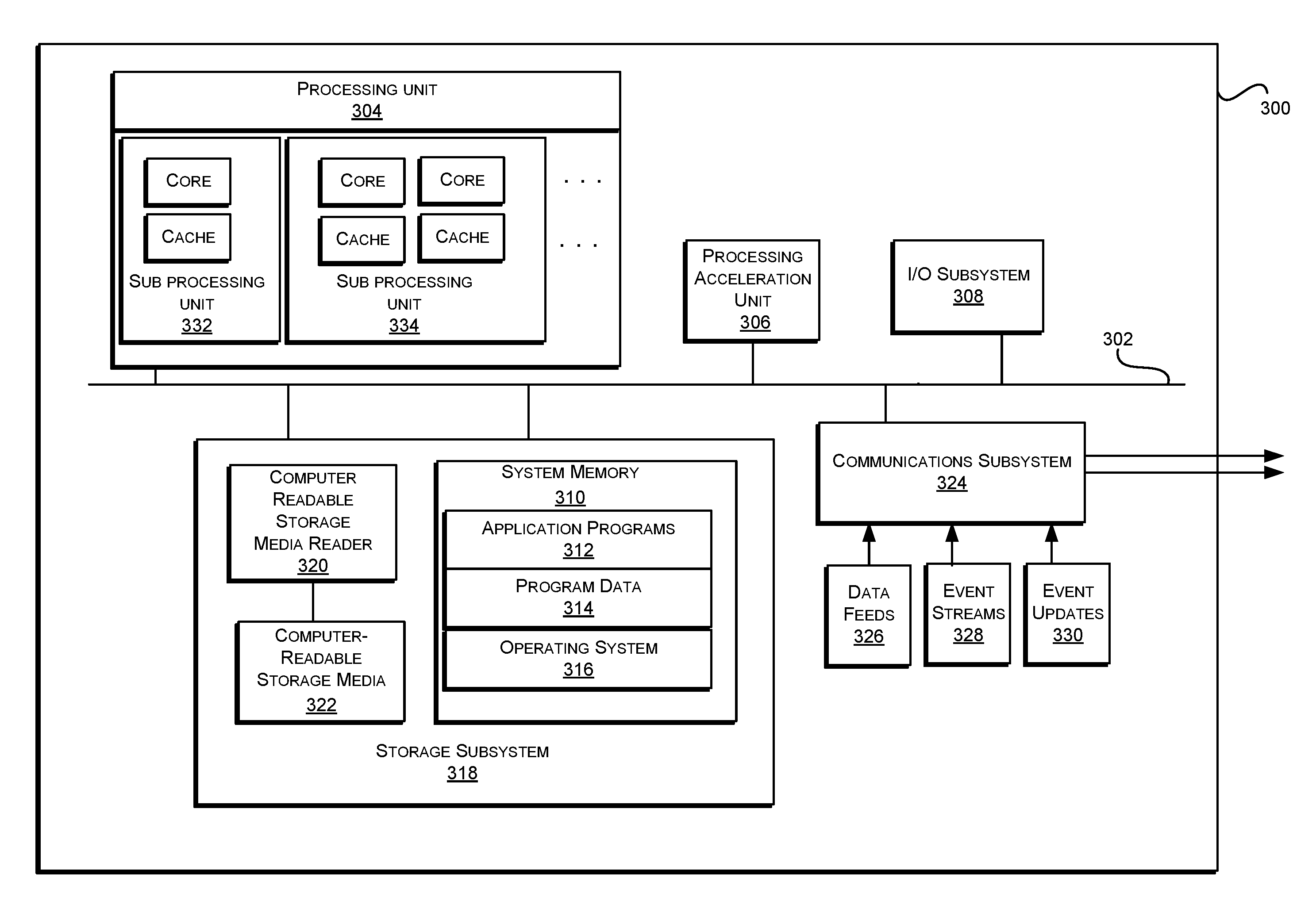

Embodiments of the invention provide systems and methods for managing processing, memory, storage, network, and cloud computing to significantly improve the efficiency and performance of processing nodes. More specifically, embodiments of the present invention are directed to an instruction set of an object memory fabric. This object memory fabric instruction set can be used to provide a unique instruction model based on triggers defined in metadata of the memory objects. This model represents a dynamic dataflow method of execution in which processes are performed based on actual dependencies of the memory objects. This provides a high degree of memory and execution parallelism which in turn provides tolerance of variations in access delays between memory objects. In this model, sequences of instructions are executed and managed based on data access. These sequences can be of arbitrary length but short sequences are more efficient and provide greater parallelism.

Owner:ULTRATA LLC

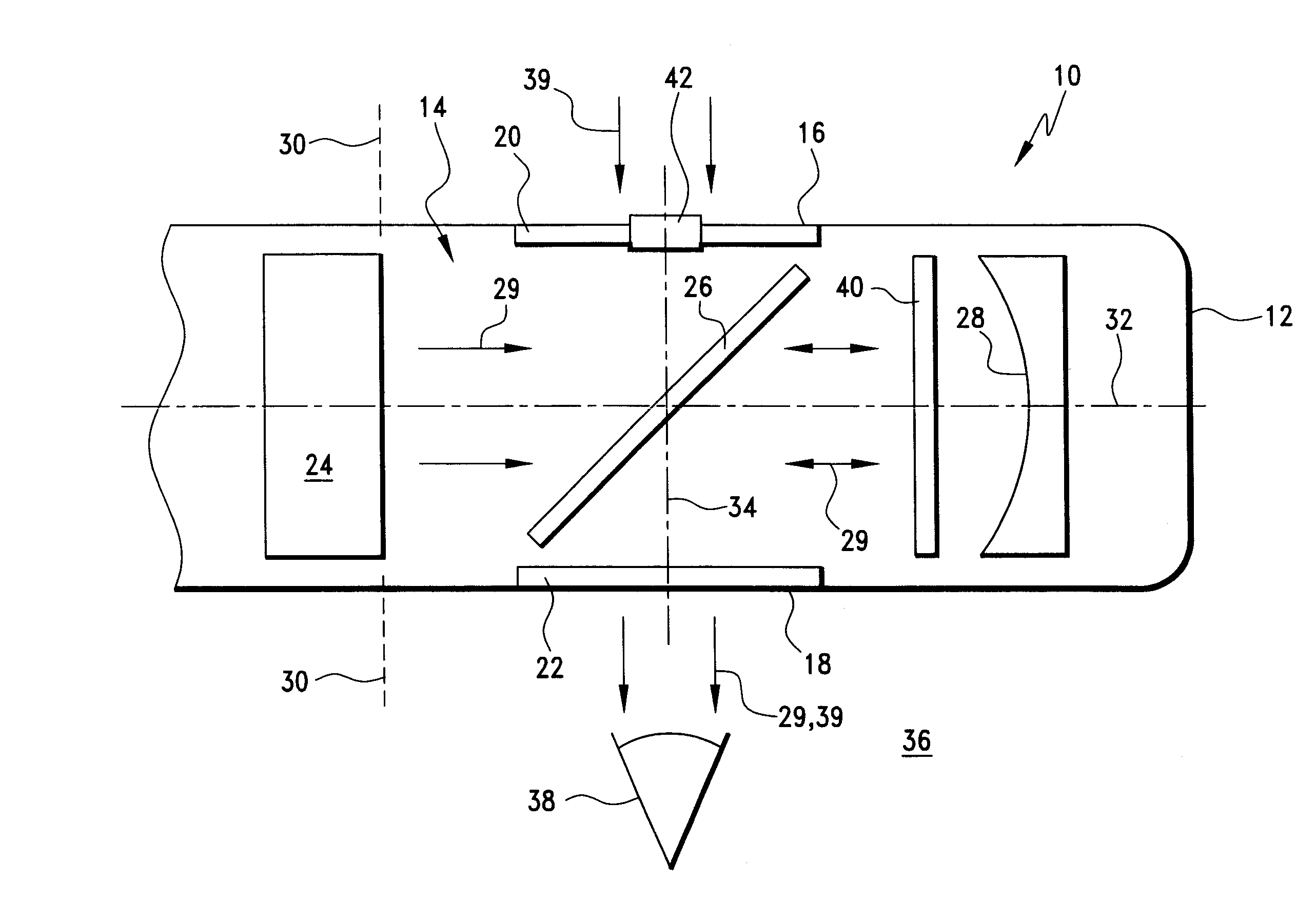

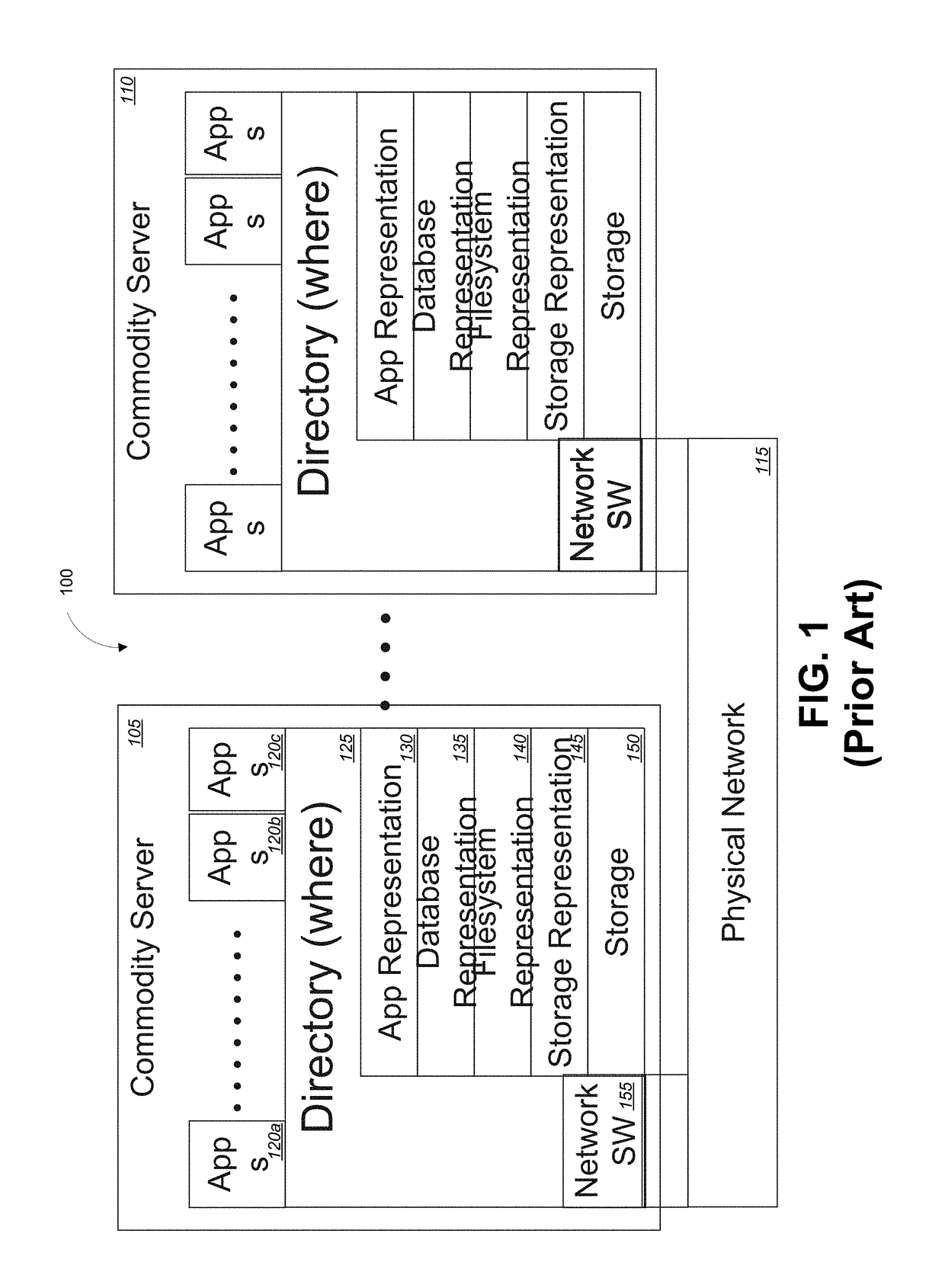

Micro-display engine

Active | US20050180021A1 | Improve efficiency | Improve performance | Polarising elements | Cathode-ray tube indicators | Catoptrics | Image formation

Virtual displays with micro-display engines are arranged in compact, lightweight configurations that generate clear virtual images for an observer. The displays are particularly suitable for portable devices, such as head-mounted displays adapted to non-immersive or immersive applications. The non-immersive applications feature reflective optics moved out of the direct line of sight of the observer and provide for differentially modifying the amount or form of ambient light admitted from the forward environment with respect to image light magnified within the display. Micro-display engines suitable for both non-immersive and immersive display applications and having LCD image sources displace polarization components of the LCD image sources along the optical paths of the engines for simplifying engine design. A compound imaging system for micro-display engines features the use of reflectors in sequence to expand upon the imaging possibilities of the new micro-display engines. Polarization management also provides for differentially regulating the transmission of ambient light with respect to image light and for participating in the image formation function of the image source.

Owner:TDG ACQUISITION COMPANY

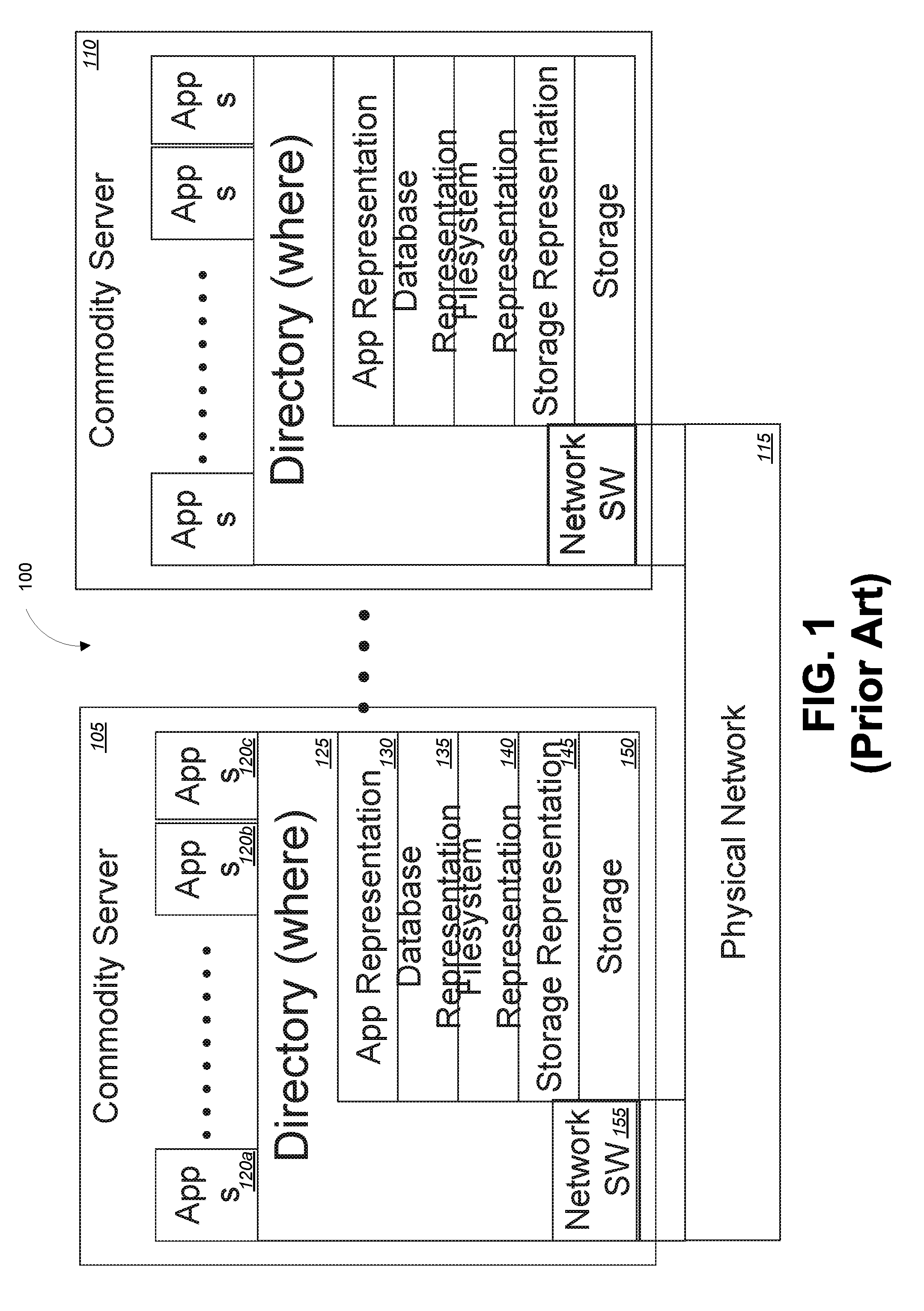

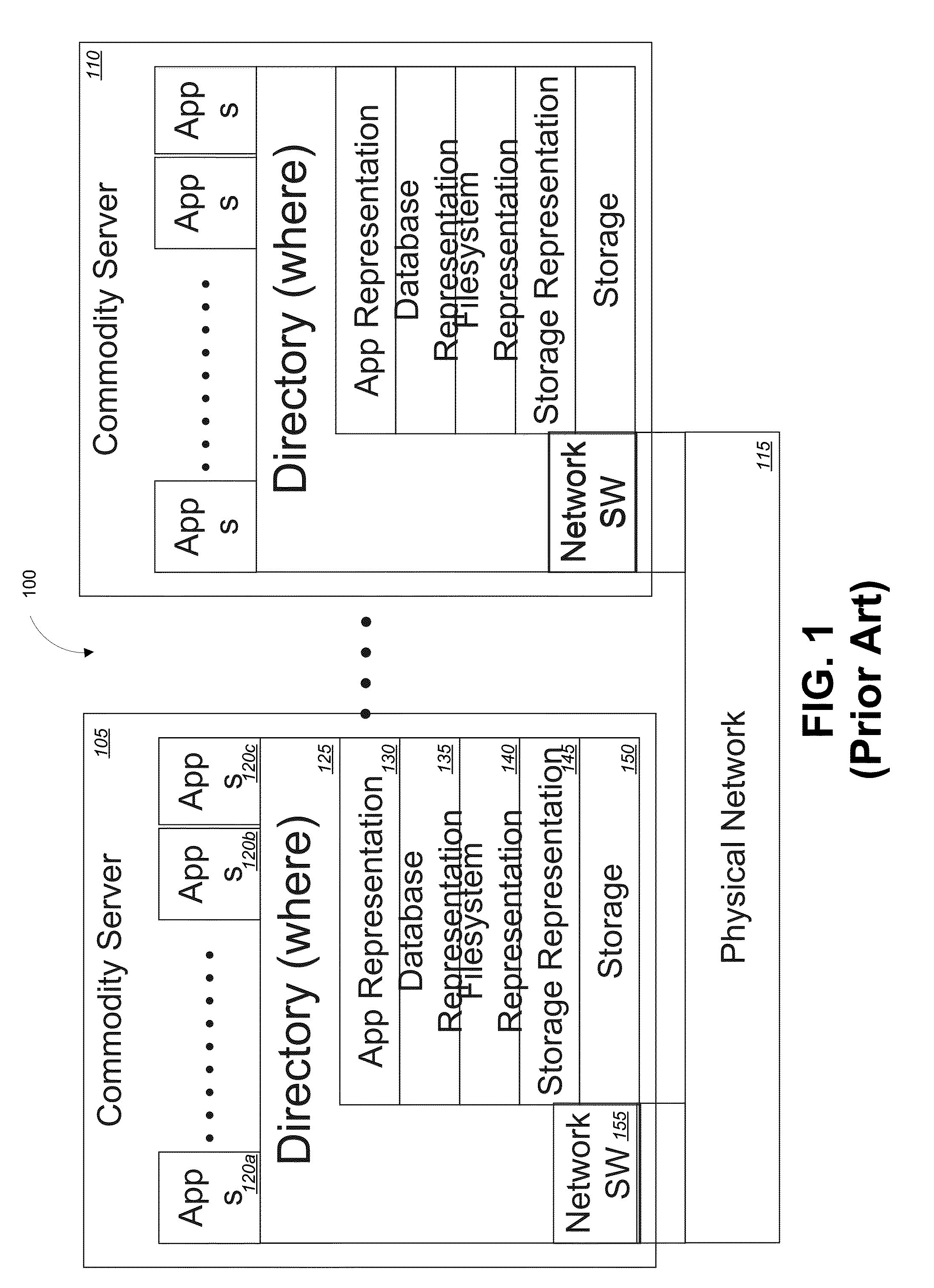

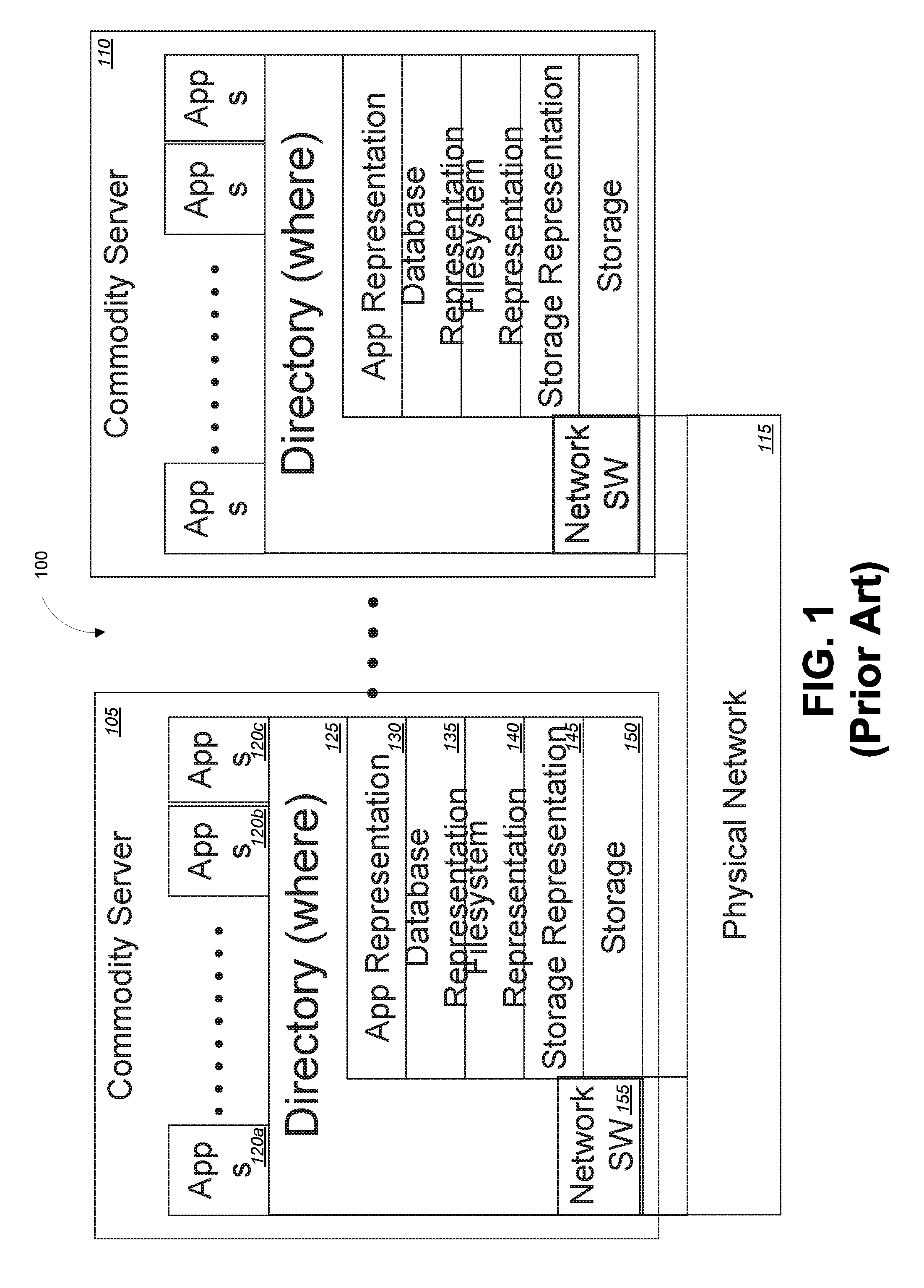

Object memory fabric performance acceleration

Inactive | US20160210079A1 | Improve efficiency | Improve performance | Input/output to record carriers | Memory addressing/allocation/relocation | Parallel computing | Memory object

Embodiments of the invention provide systems and methods for managing processing, memory, storage, network, and cloud computing to significantly improve the efficiency and performance of processing nodes. Embodiments described herein can provide transparent and dynamic performance acceleration, especially with big data or other memory intensive applications, by reducing or eliminating overhead typically associated with memory management, storage management, networking, and data directories. Rather, embodiments manage memory objects at the memory level which can significantly shorten the pathways between storage and memory and between memory and processing, thereby eliminating the associated overhead between each.

Owner:ULTRATA LLC

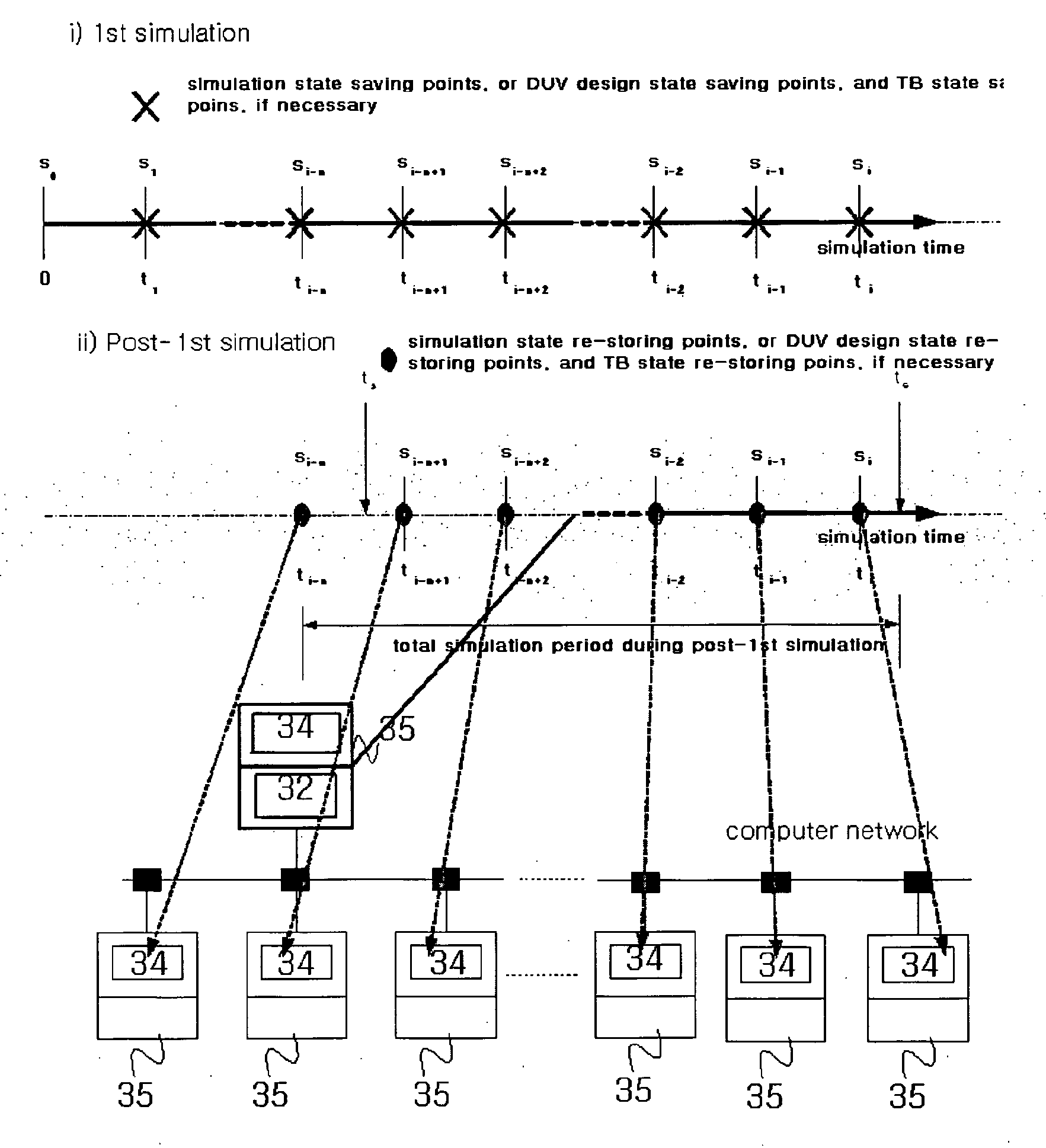

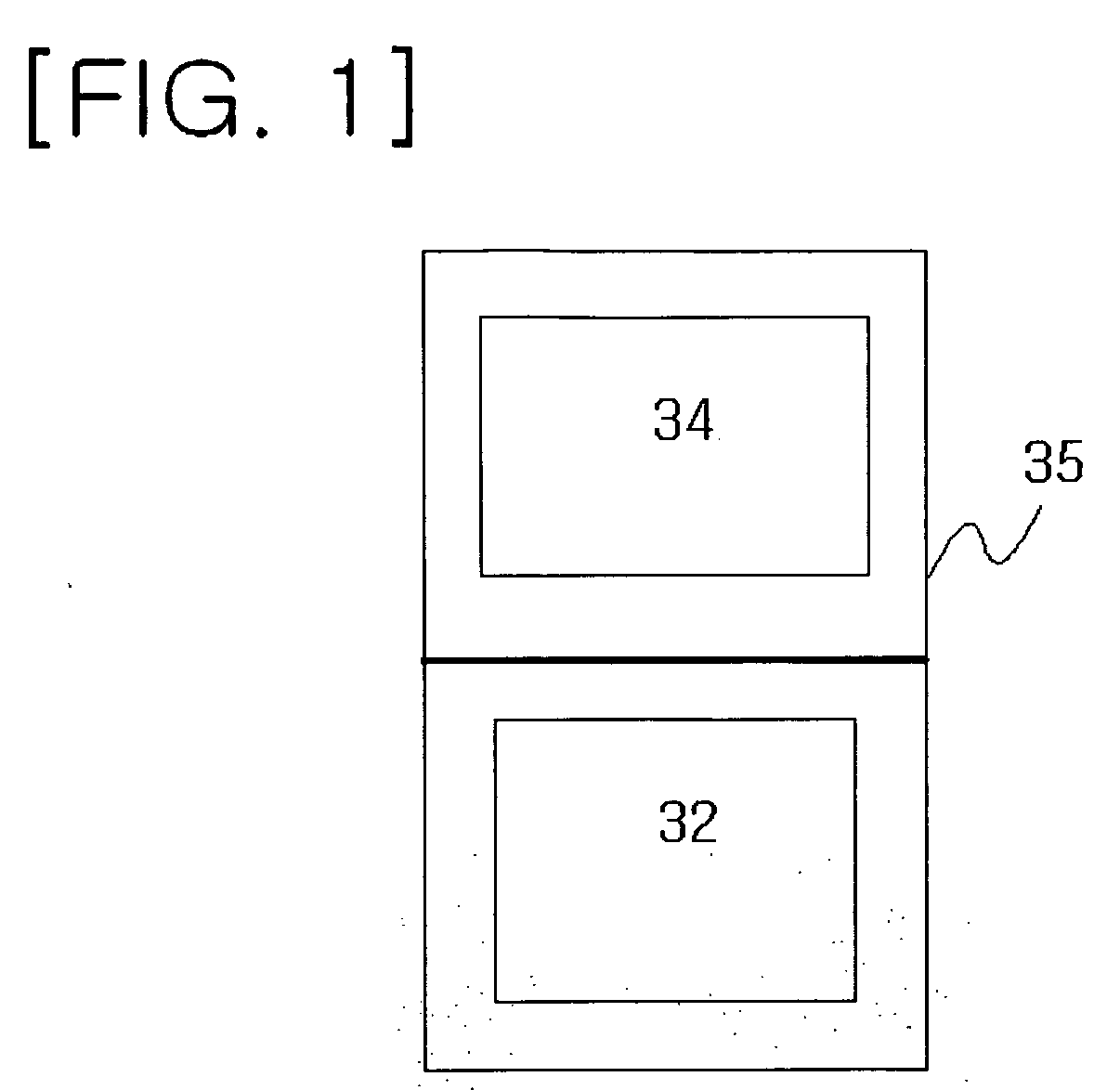

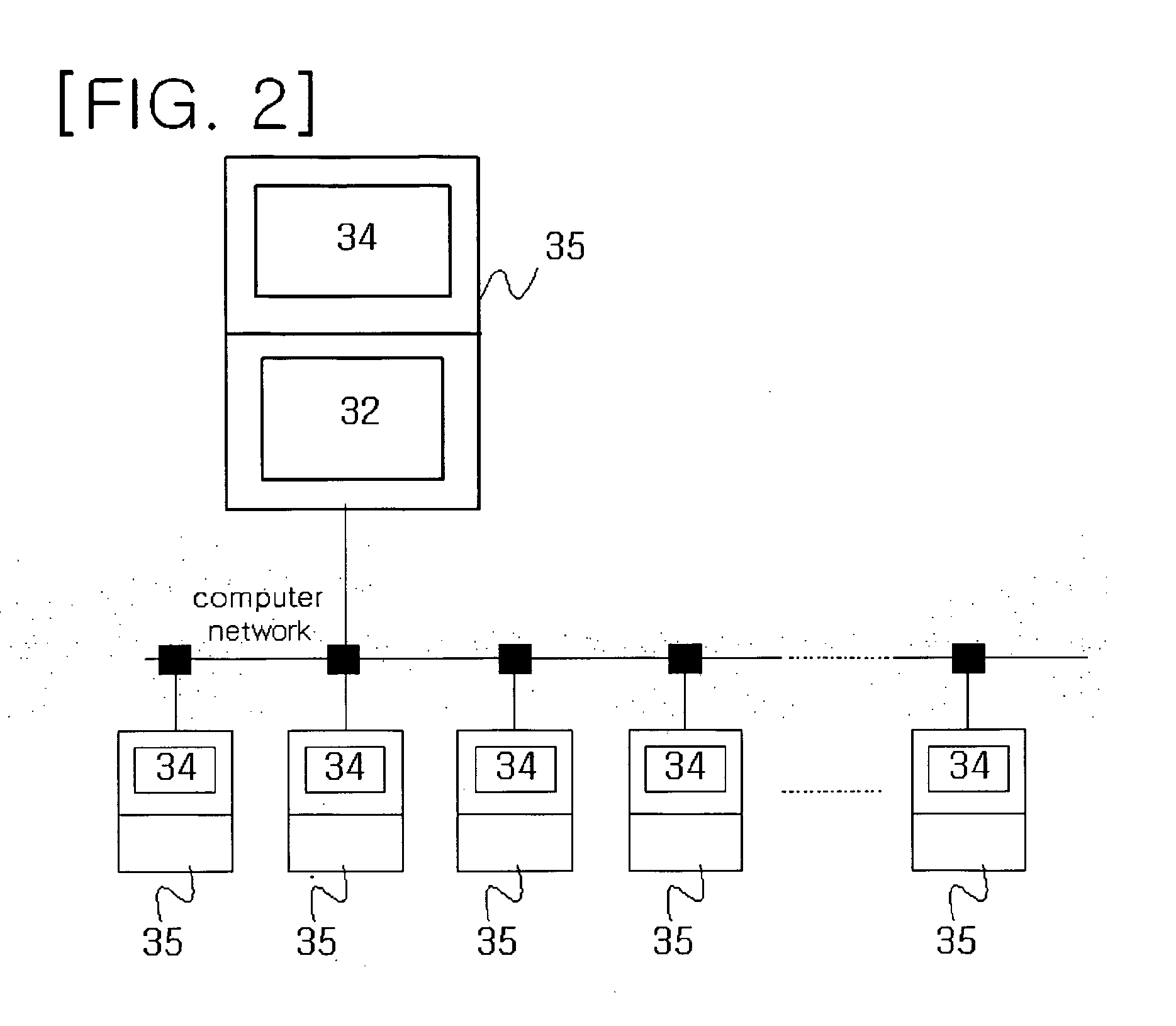

Dynamic-Verification-Based Verification Apparatus Achieving High Verification Performance and Verification Efficiency and the Verification Methodology Using the Same

Inactive | US20080306721A1 | Improve efficiency | Improve performance | Functional testing | Special data processing applications | Simulation based | Hardware emulation

The present invention relates to a simulation-based verification apparatus and a verification method that greatly enhance simulation performance and efficiency when verifying a digital system containing at least a million gates. The present invention also relates to a simulation-based verification apparatus and a verification method used together with formal verification, simulation acceleration, hardware emulation, and prototyping to achieve high verification performance and efficiency when verifying a digital system containing at least a million gates.

Owner:YANG SEI YANG

Object memory instruction set

Pending | US20160210075A1 | Improve efficiency | Improve performance | Memory architecture accessing/allocation | Input/output to record carriers | Control flow | Term memory

Embodiments of the present invention are directed to an instruction set of an object memory fabric. This object memory fabric instruction set can be used to define arbitrary, parallel functionality such as: direct object address manipulation and generation without the overhead of complex address translation and software layers to manage differing address space; direct object authentication with no runtime overhead that can be set based on secure 3rd party authentication software; object related memory computing in which, as objects move between nodes, the computing can move with them; and parallelism that is dynamic and transparent based on scale and activity. These instructions are divided into three conceptual classes: memory reference including load, store, and special memory fabric instructions; control flow including fork, join, and branches; and execute including arithmetic and comparison instructions.

Owner:ULTRATA LLC

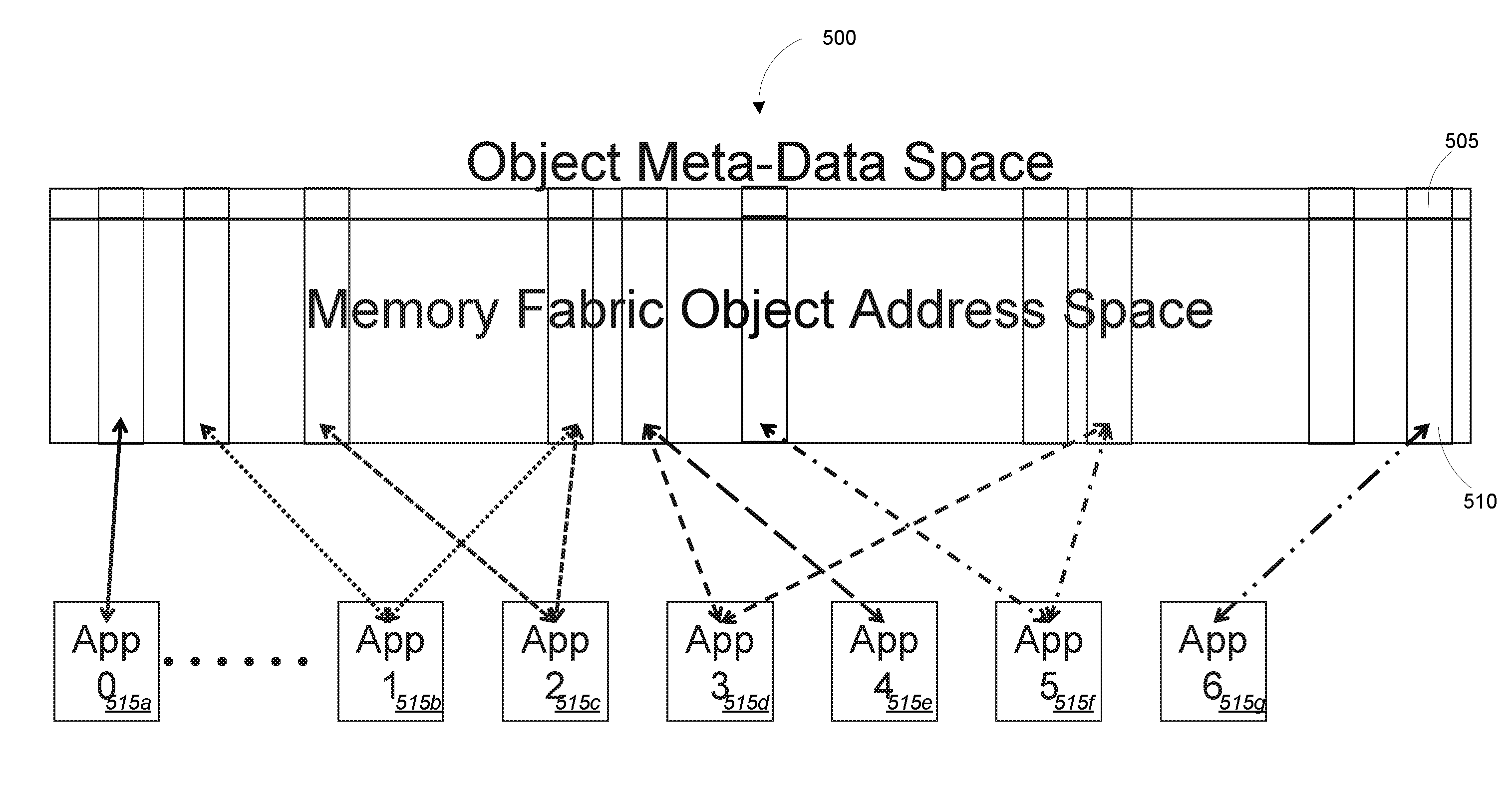

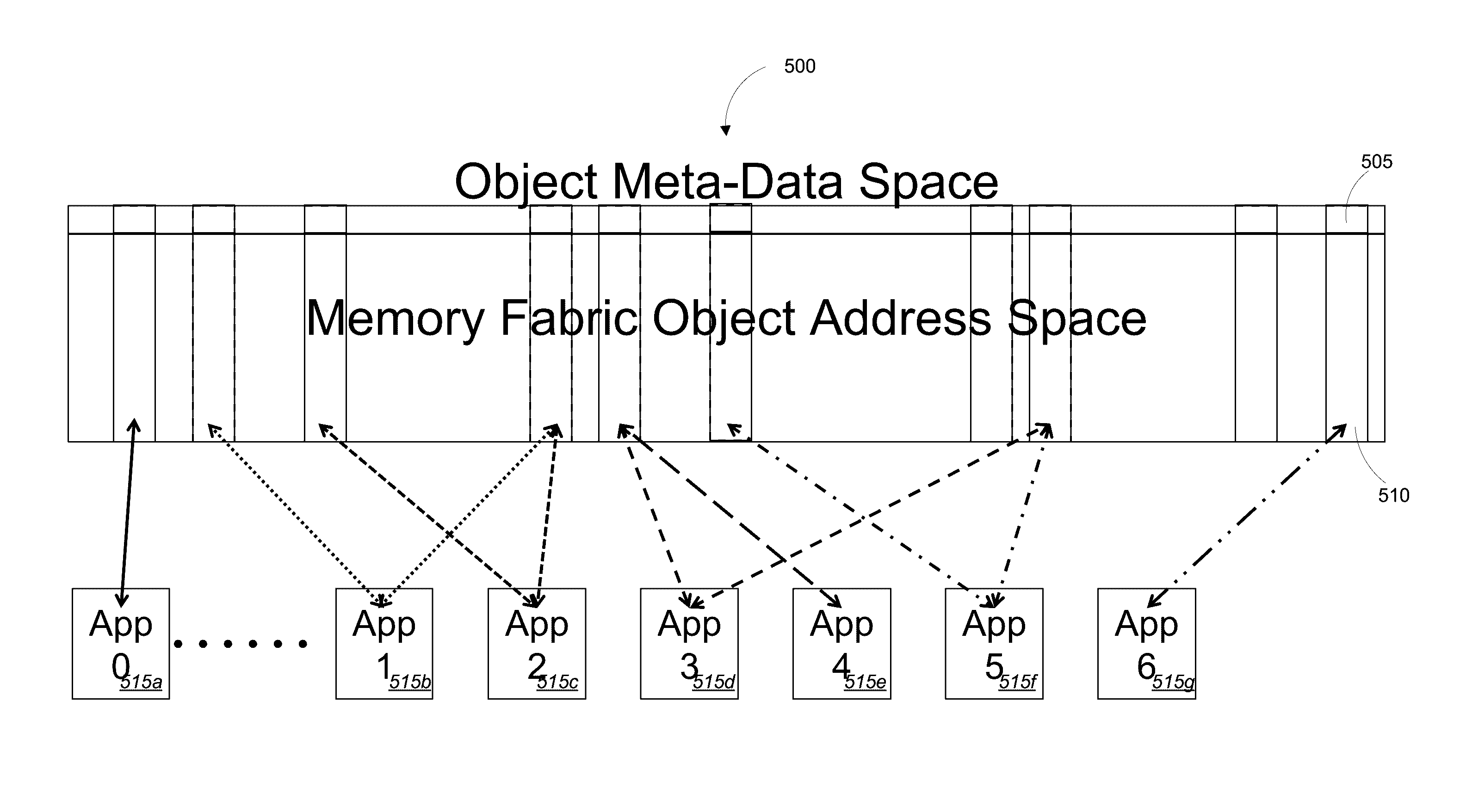

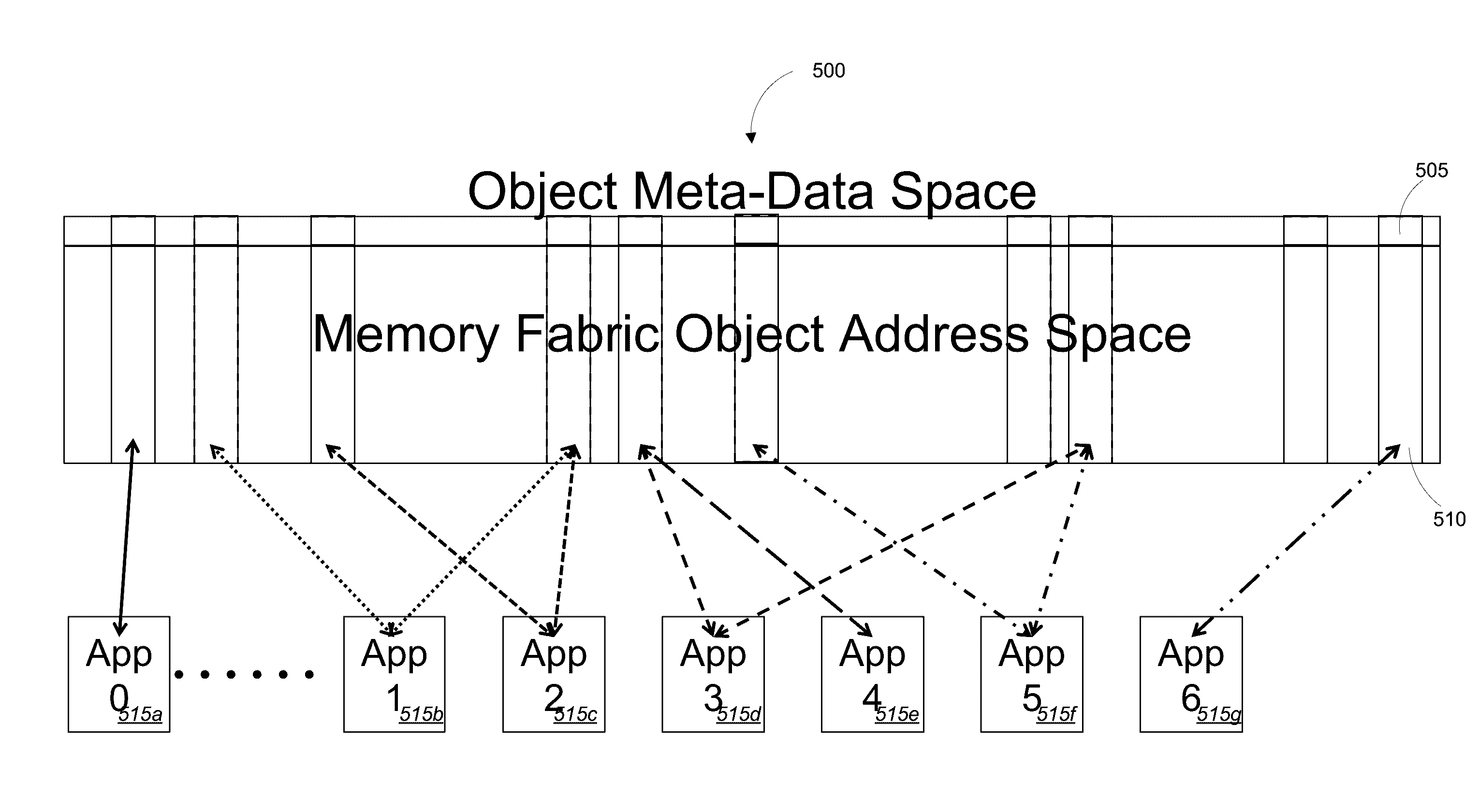

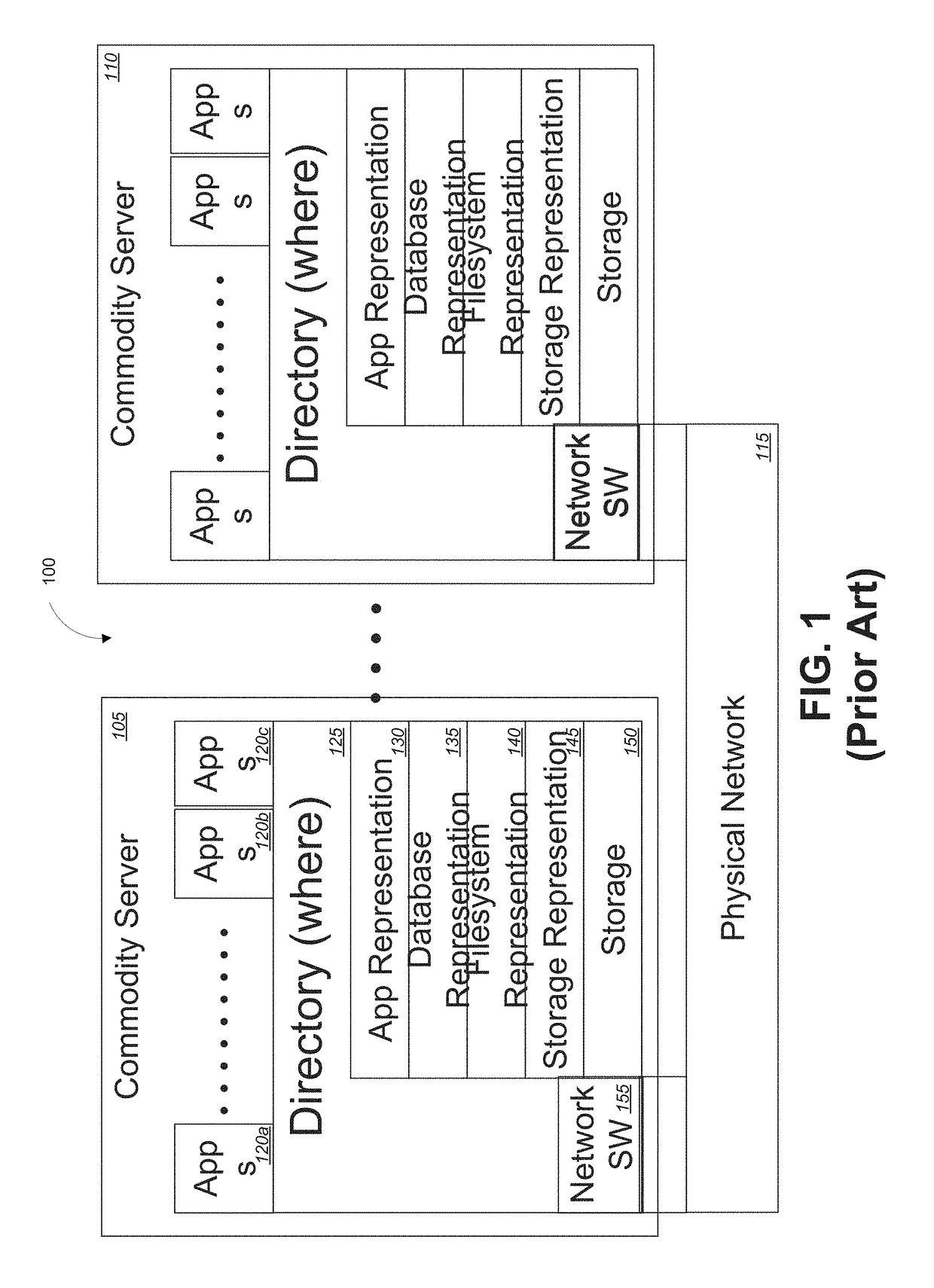

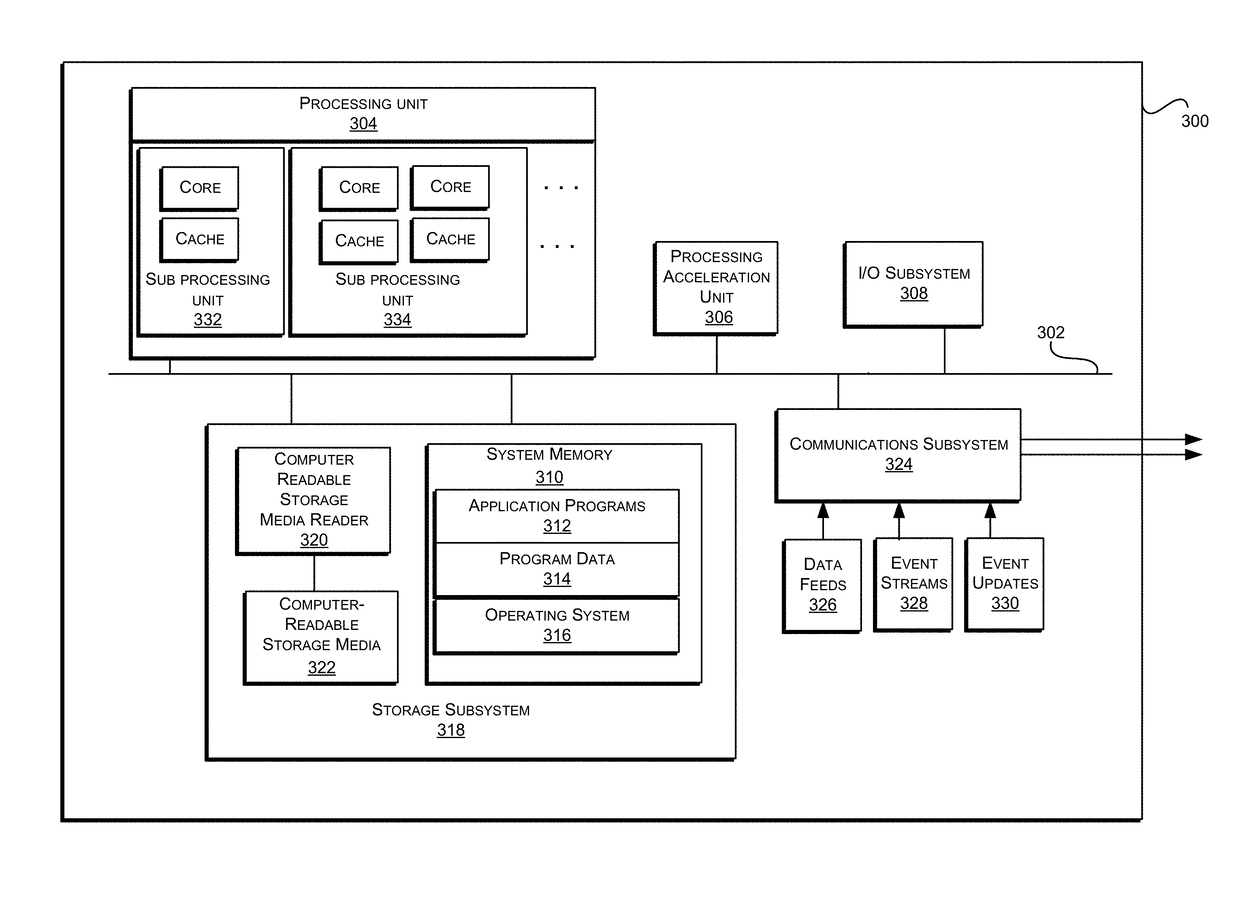

Universal single level object memory address space

Inactive | US20160210078A1 | Improve efficiency | Improve performance | Input/output to record carriers | Memory addressing/allocation/relocation | Memory address | Memory object

Embodiments of the invention provide systems and methods for managing processing, memory, storage, network, and cloud computing to significantly improve the efficiency and performance of processing nodes. Embodiments described herein can eliminate typical size constraints on memory space of commodity servers and other commodity hardware imposed by address sizes. Rather, physical addressing can be managed within the memory objects themselves and the objects can be in turn accessed and managed through the object name space.

Owner:ULTRATA LLC

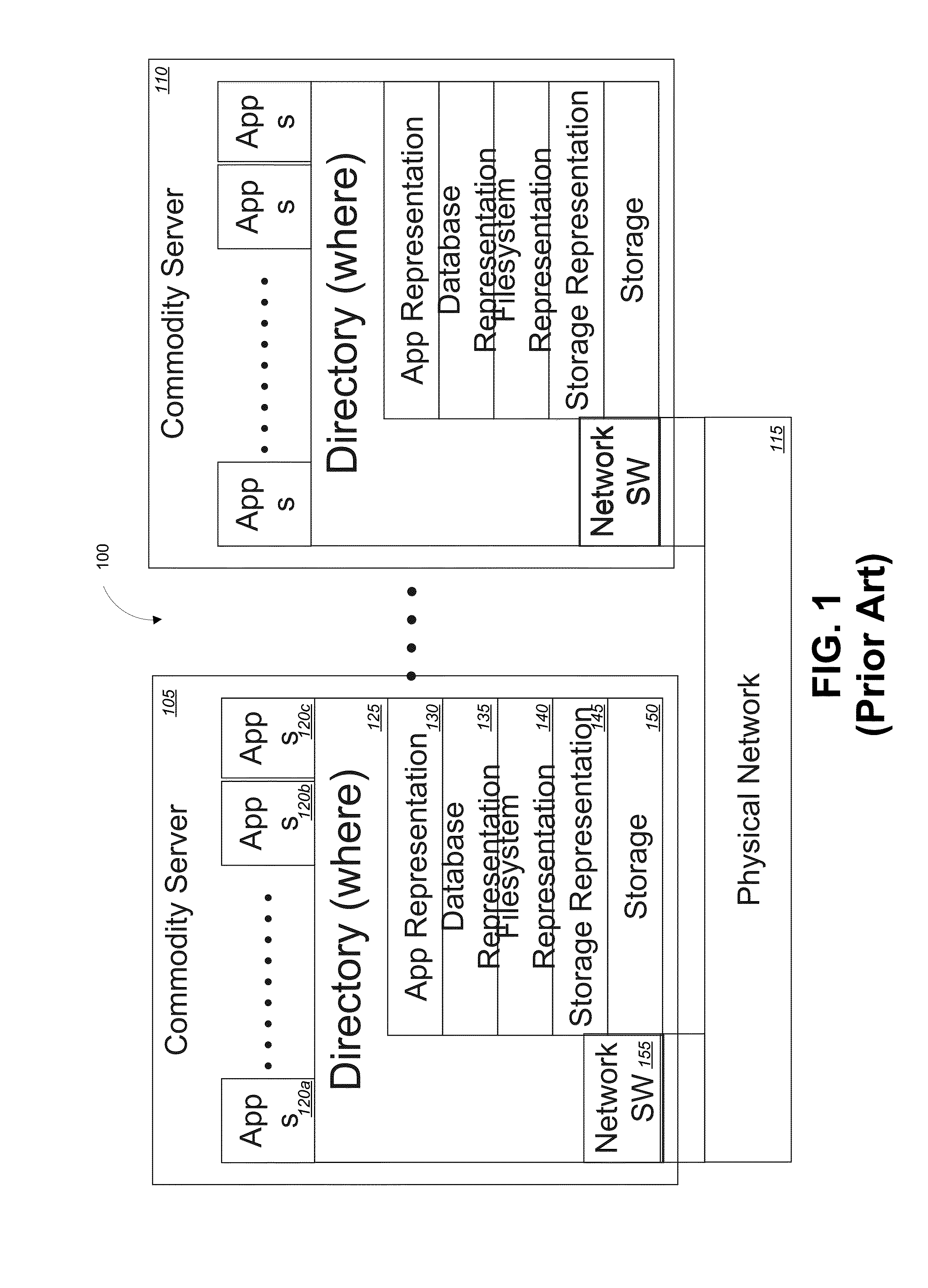

Object based memory fabric

Inactive | US20160210076A1 | Improve efficiency | Improve performance | Input/output to record carriers | Memory addressing/allocation/relocation | Object based | Parallel computing

Embodiments of the invention provide systems and methods for managing processing, memory, storage, network, and cloud computing to significantly improve the efficiency and performance of processing nodes. Embodiments described herein can implement an object-based memory which manages the objects within the memory at the memory layer rather than in the application layer. That is, the objects and associated properties can be implemented and managed natively in memory enabling the object memory system to provide increased functionality without any software and increasing performance by dynamically managing object characteristics including, but not limited to persistence, location and processing. Object properties can also propagate up to higher application levels.

Owner:ULTRATA LLC

Object memory data flow triggers

Active | US20160210048A1 | Improve efficiency | Improve performance | Memory architecture accessing/allocation | Input/output to record carriers | Memory object | Object storage

Embodiments of the invention provide systems and methods for managing processing, memory, storage, network, and cloud computing to significantly improve the efficiency and performance of processing nodes. More specifically, embodiments of the present invention are directed to an instruction set of an object memory fabric. This object memory fabric instruction set can include trigger instructions defined in metadata for a particular memory object. Each trigger instruction can comprise a single instruction and action based on reference to a specific object to initiate or perform defined actions such as pre-fetching other objects or executing a trigger program.

Owner:ULTRATA LLC
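
The trigger idea in the entry above (metadata attached to a memory object that fires an action when the object is referenced) can be mimicked in software. This is a toy sketch only: the patent describes a hardware instruction set, whereas the `MemoryObject` and `ObjectStore` classes, the `"prefetch"` and `"run"` action names and the object names used here are all hypothetical.

```python
class MemoryObject:
    def __init__(self, name, payload, triggers=None):
        self.name = name
        self.payload = payload
        # Triggers live in the object's metadata as (action, target) pairs.
        self.metadata = {"triggers": list(triggers or [])}


class ObjectStore:
    def __init__(self):
        self.objects = {}
        self.prefetched = []  # names of prefetched objects, in order

    def add(self, obj):
        self.objects[obj.name] = obj

    def reference(self, name):
        """Return the payload and fire every trigger defined on the object."""
        obj = self.objects[name]
        for action, target in obj.metadata["triggers"]:
            if action == "prefetch":
                if target in self.objects and target not in self.prefetched:
                    self.prefetched.append(target)
            elif action == "run":
                target(obj)  # target is a callable "trigger program"
        return obj.payload


log = []
store = ObjectStore()
store.add(MemoryObject("page-1", "first page"))
store.add(MemoryObject("index", "table of contents",
                       triggers=[("prefetch", "page-1"),
                                 ("run", lambda o: log.append(o.name))]))
payload = store.reference("index")
```

Referencing `index` returns its payload, marks `page-1` as prefetched and runs the small trigger program, which is the reference-driven behaviour the abstract outlines.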

Trans-cloud object based memory

Inactive | US20160210077A1 | Improve efficiency | Improve performance | Input/output to record carriers | Memory addressing/allocation/relocation | Object based | Parallel computing

Embodiments of the invention provide systems and methods for managing processing, memory, storage, network, and cloud computing to significantly improve the efficiency and performance of processing nodes. Embodiments described herein can eliminate the distinction between memory (temporary) and storage (persistent) by implementing and managing both within the objects. These embodiments can eliminate the distinction between local and remote memory by transparently managing the location of objects (or portions of objects) so all objects appear simultaneously local to all nodes. These embodiments can also eliminate the distinction between processing and memory through methods of the objects to place the processing within the memory itself.

Owner:ULTRATA LLC

Infinite memory fabric hardware implementation with memory

Active | US20160364172A1 | Improve efficiency | Improve performance | Input/output to record carriers | Interprogram communication | Data set | Memory object

Embodiments of the invention provide systems and methods for managing processing, memory, storage, network, and cloud computing to significantly improve the efficiency and performance of processing nodes. More specifically, embodiments of the present invention are directed to a hardware-based processing node of an object memory fabric. The processing node may include a memory module storing and managing one or more memory objects, the one or more memory objects each include at least a first memory and a second memory, wherein the first memory has a lower latency than the second memory, and wherein each memory object is created natively within the memory module, and each memory object is accessed using a single memory reference instruction without Input / Output (I / O) instructions, wherein a set of data is stored within the first memory of the memory module; wherein the memory module is configured to receive an indication of a subset of the set of data that is eligible to be transferred between the first memory and the second memory; and wherein the memory module dynamically determines which of the subset of data will be transferred to the second memory based on access patterns associated with the object memory fabric.

Owner:ULTRATA LLC
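
The entry above describes a memory module that receives an eligible subset of data and then decides, from access patterns, which of it moves from the lower-latency first memory to the second memory. The abstract does not name a policy, so the sketch below assumes a least-frequently-accessed rule; the function name, the `max_to_move` parameter and the sample counts are hypothetical.

```python
def choose_for_second_memory(eligible, access_counts, max_to_move):
    """Pick which eligible items to move to the slower second memory.

    Assumed policy: move the least frequently accessed items first,
    breaking ties by key so the result is deterministic.
    """
    ranked = sorted(eligible, key=lambda key: (access_counts.get(key, 0), key))
    return ranked[:max_to_move]


# Hypothetical access counts observed by the memory module.
counts = {"a": 10, "b": 1, "c": 4, "d": 7}
to_move = choose_for_second_memory(["a", "b", "c"], counts, max_to_move=2)
```

Item `d` is never considered because it was not flagged as eligible, which mirrors the two-stage decision in the abstract: eligibility is indicated first, and the module then chooses within that subset.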

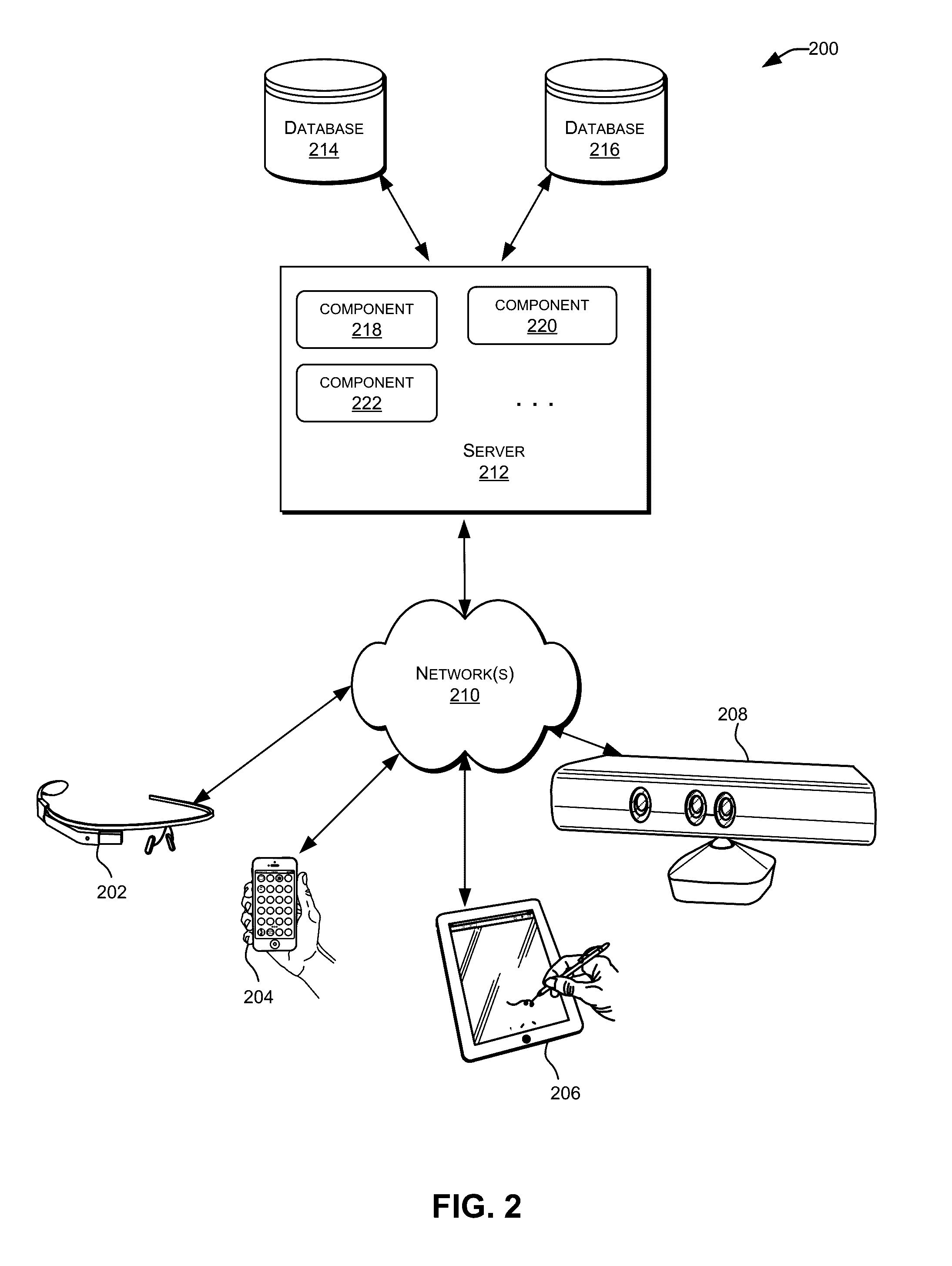

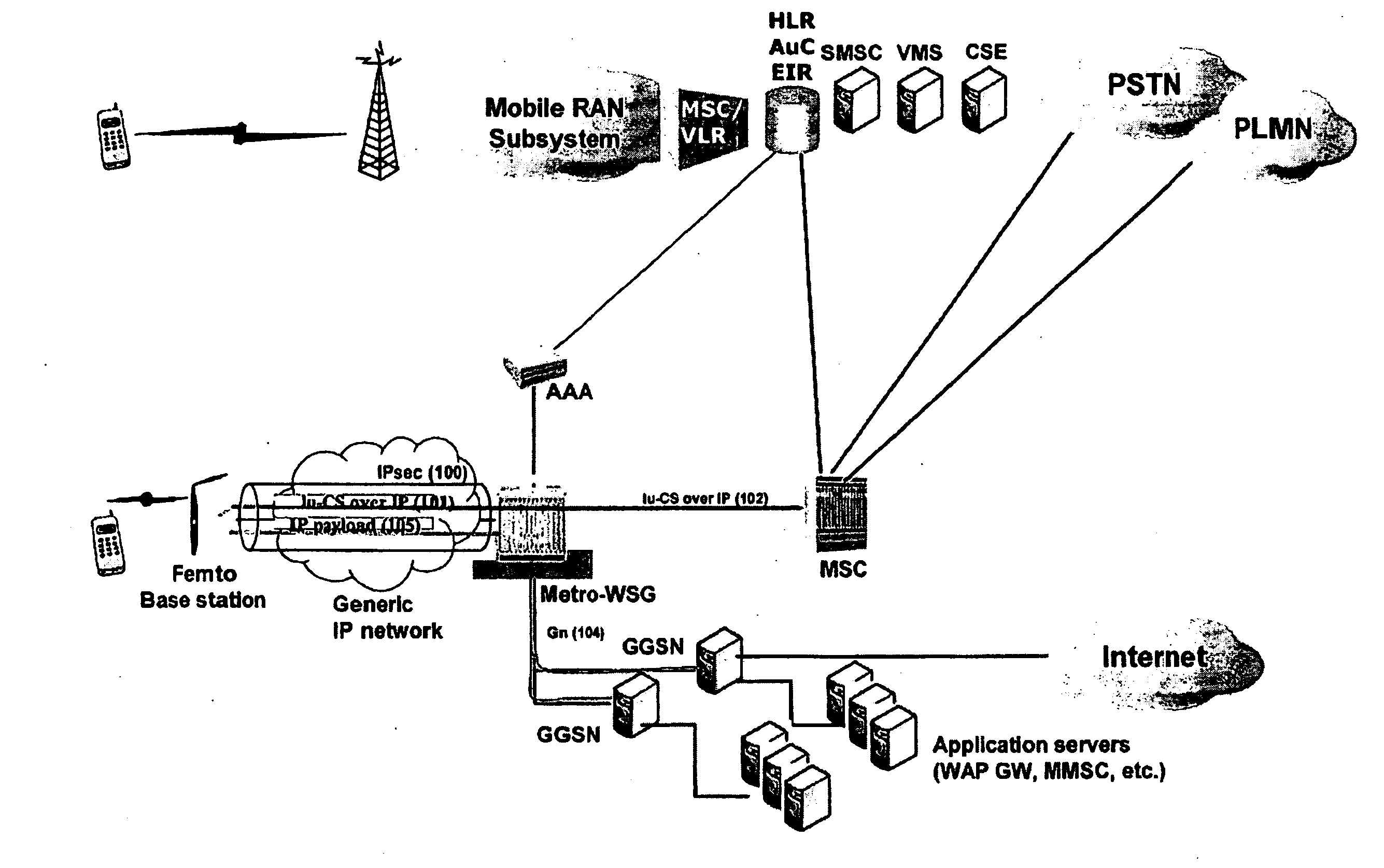

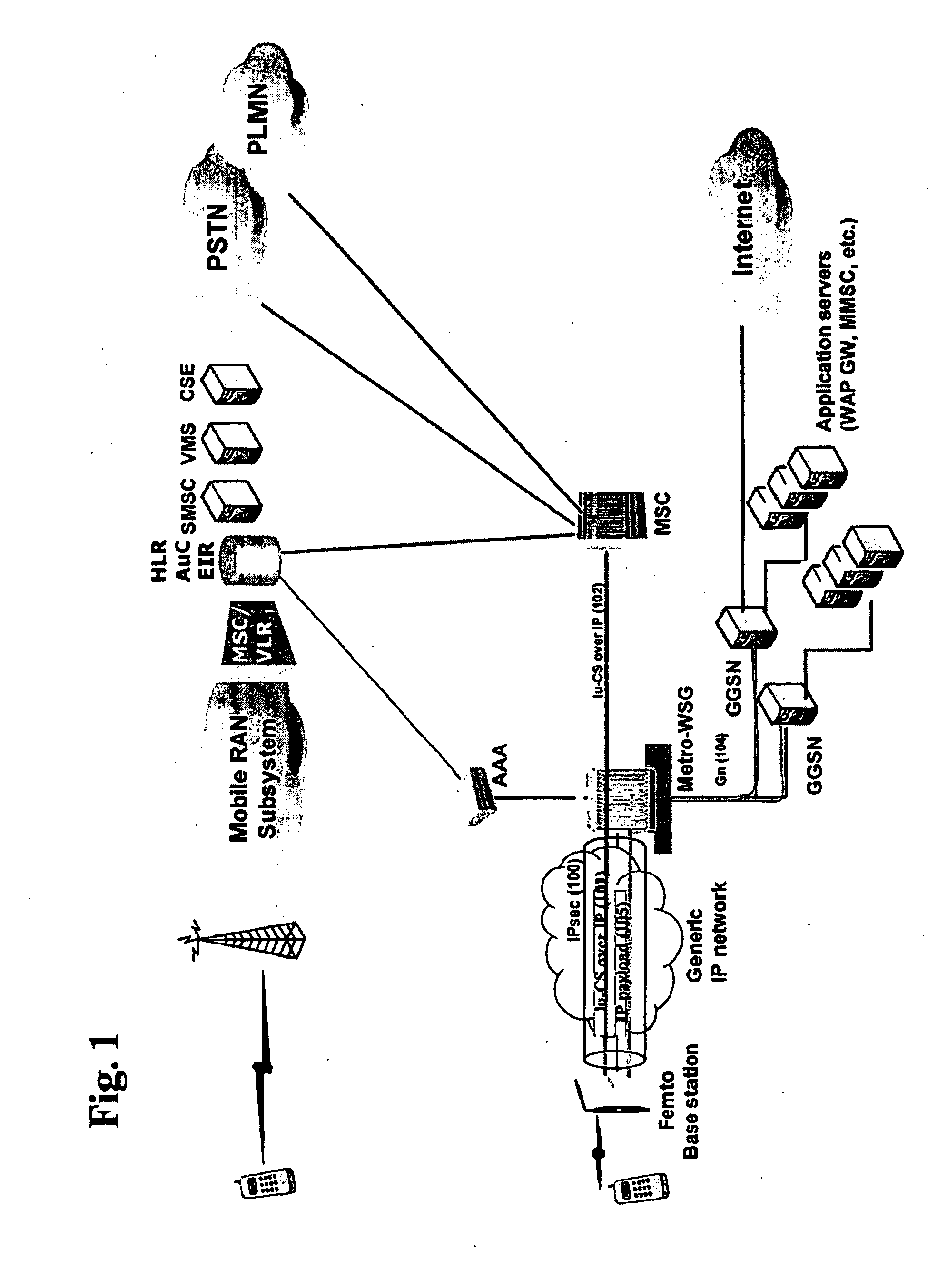

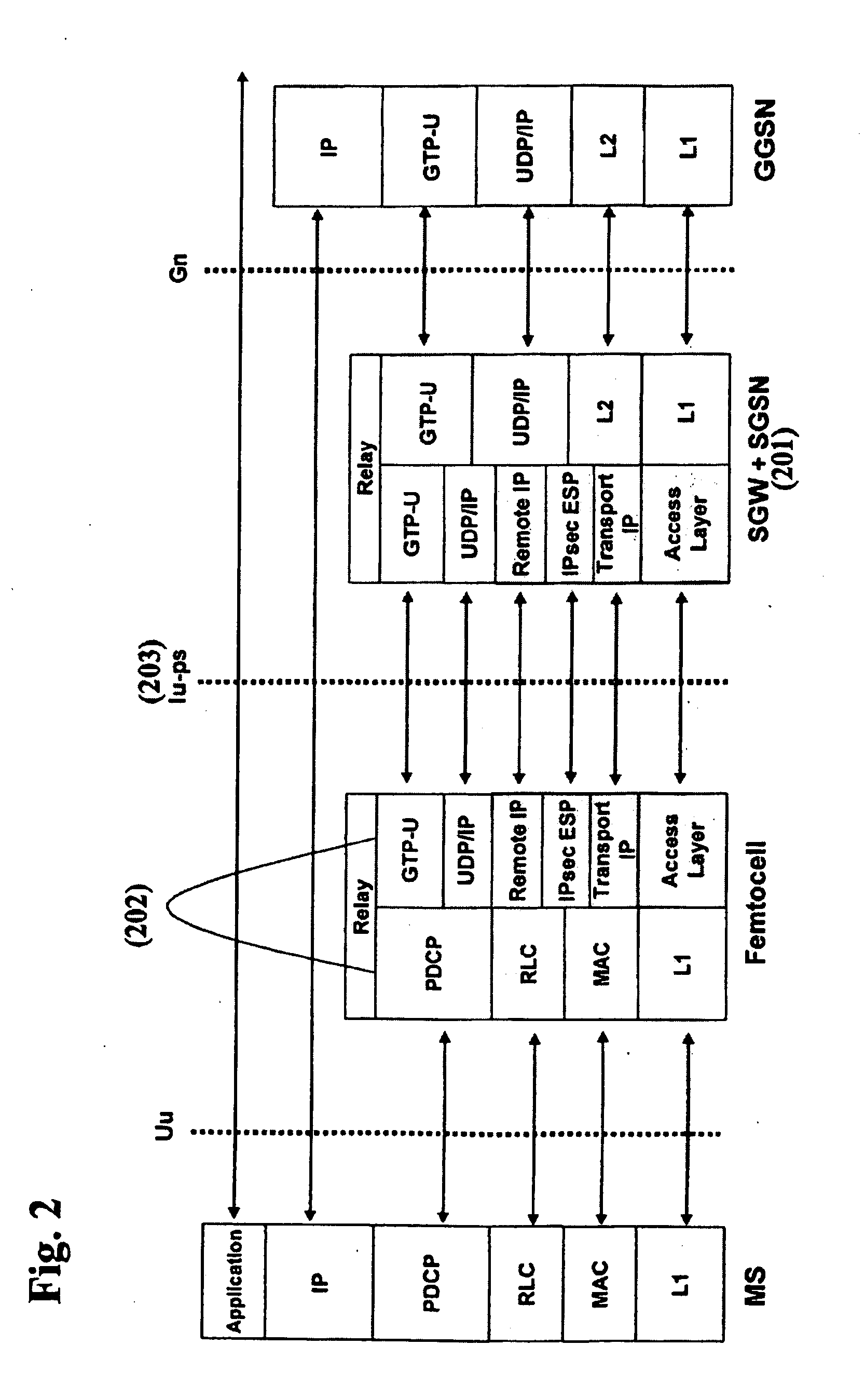

Femtocell architecture in support of voice and data communications

Active | US20090135795A1 | Significant overhead | Easy to handle | Network topologies | Wireless communication services | Third generation | Voice traffic

Methods and systems for providing voice and data services in a femtocell wireless network. The proposed approach integrates IWLAN architecture into femtocell architecture by introducing a gateway to serve both IWLAN and femtocell users. The approach handles voice and data differently, so that it enhances data handling efficiency while re-using existing MSC investment. It carries the data traffic from a femtocell base station to the gateway as native IP packets, instead of encapsulating them in 3G data, thus enhancing the efficiency and performance for the data traffic. The data traffic can then be sent to the GGSN or directly to the packet data network. The approach tunnels voice traffic to the MSC through the gateway as in the conventional Iu-CS approach.

Owner:BISON PATENT LICENSING LLC

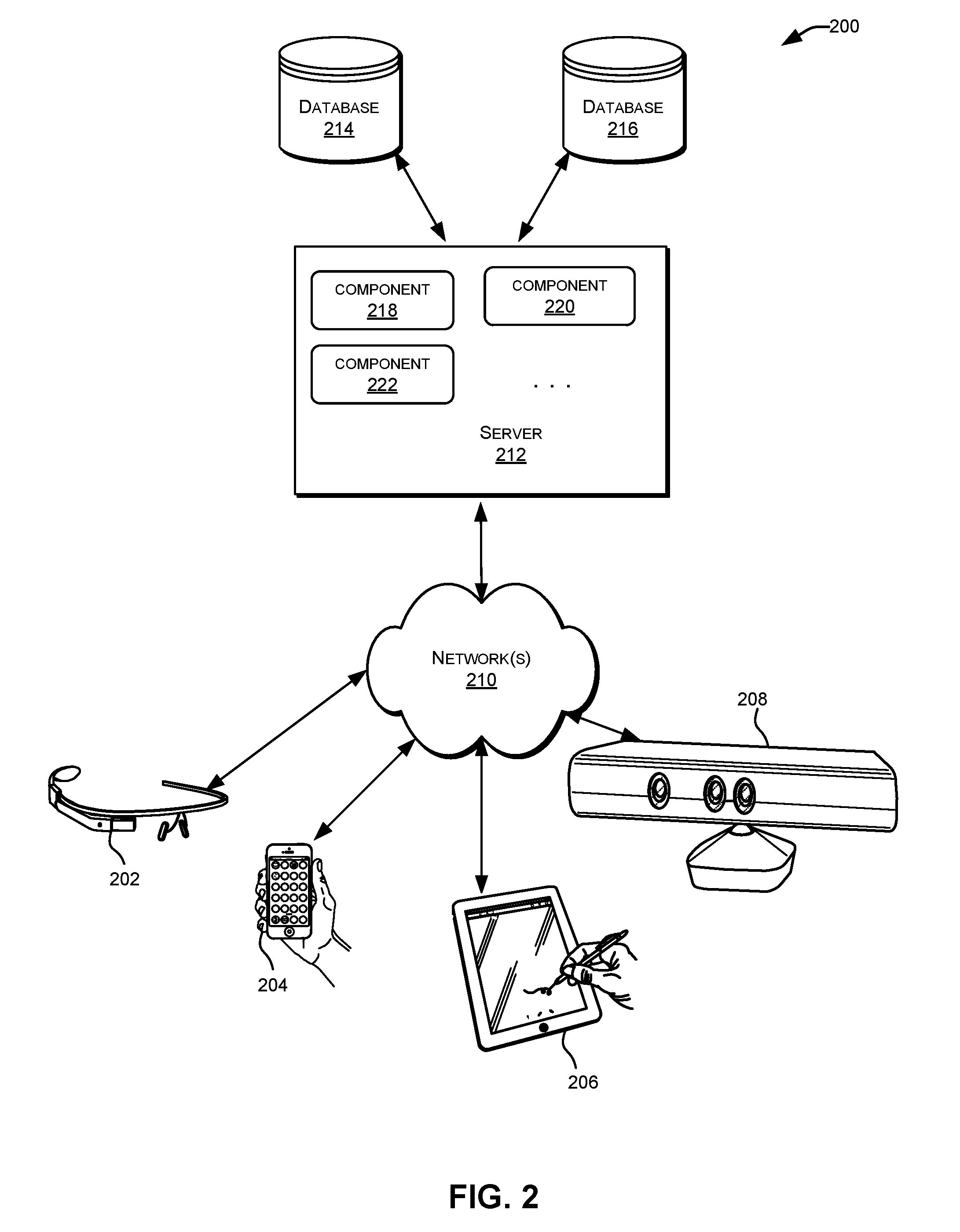

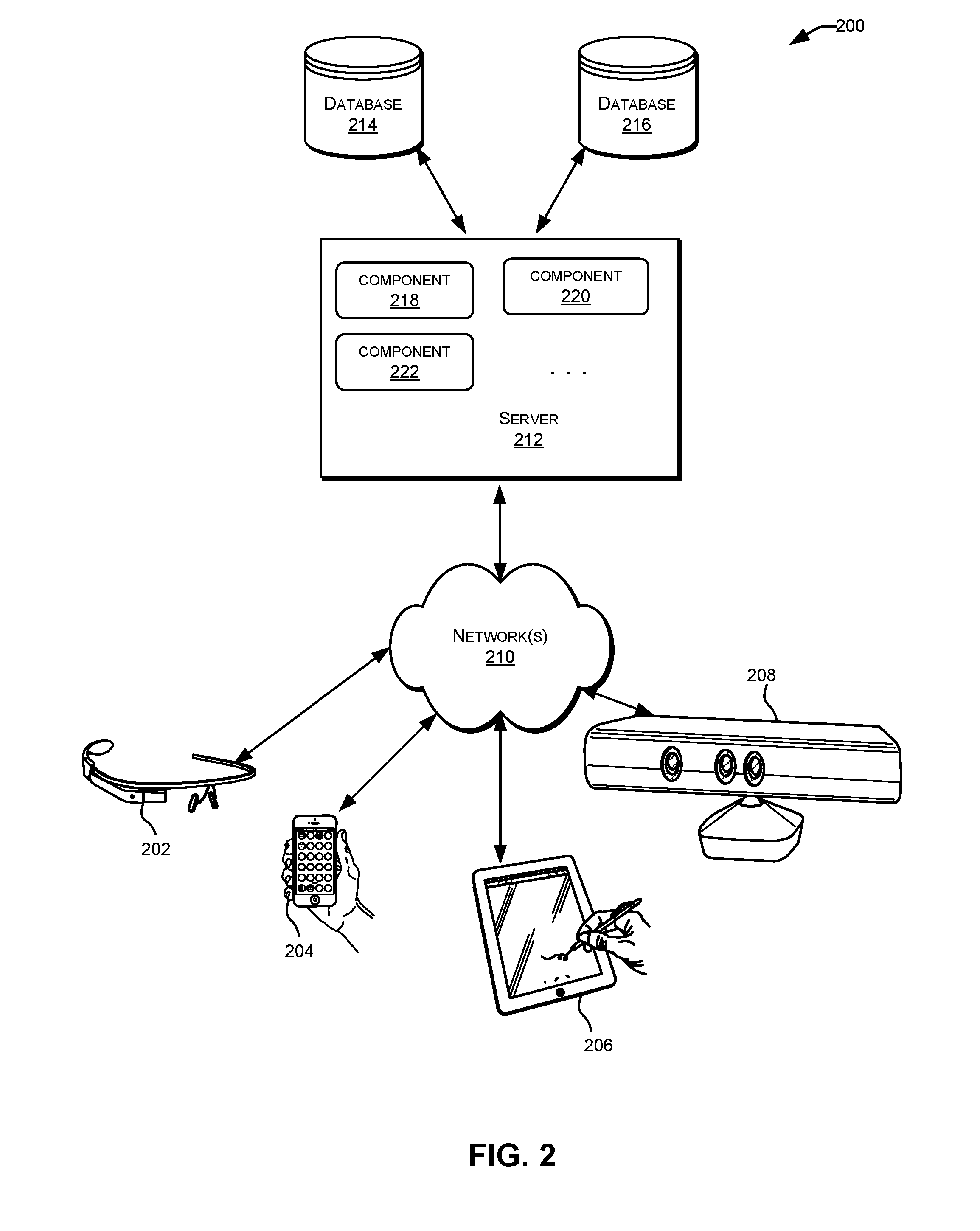

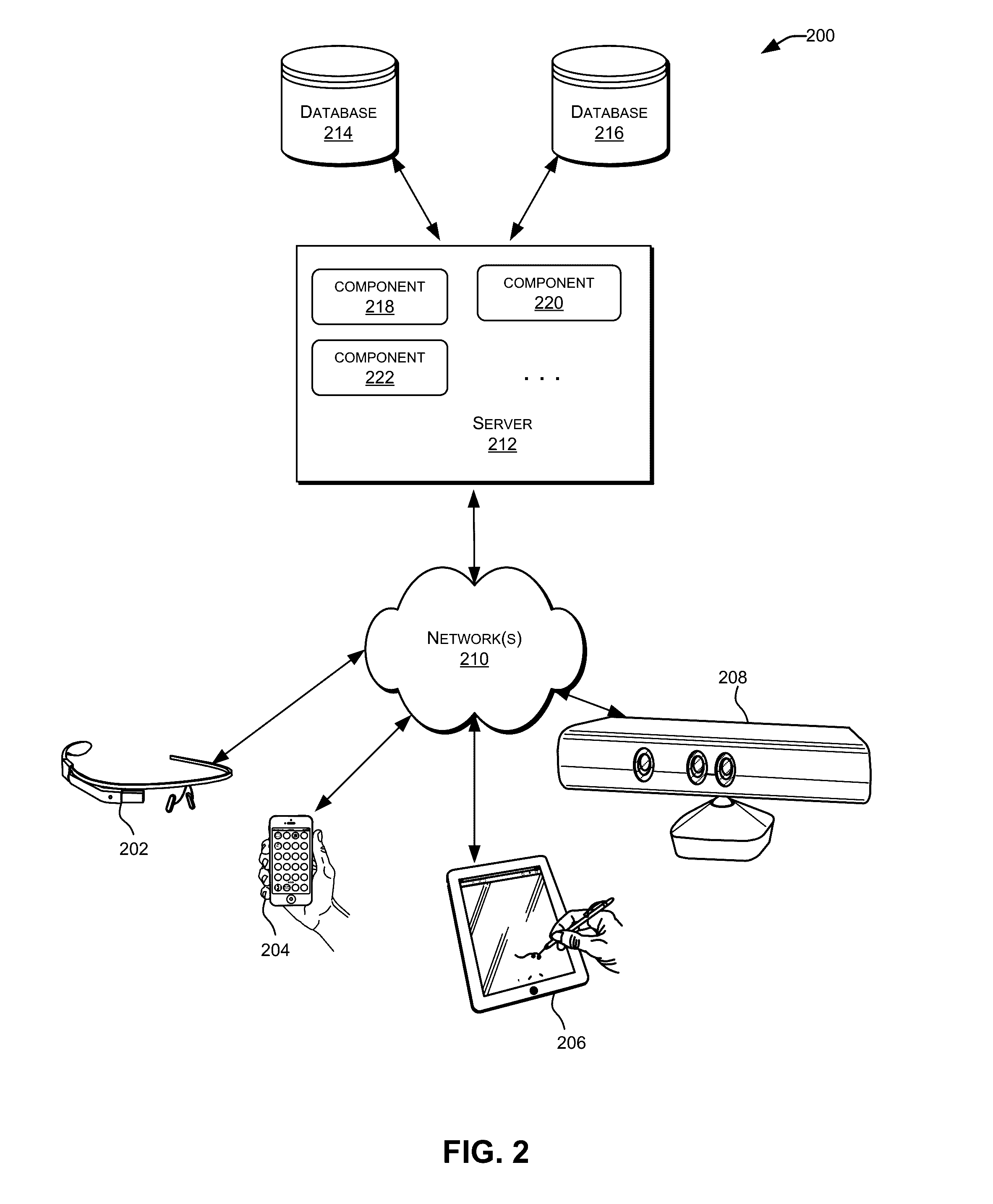

Infinite memory fabric streams and APIs

Active | US20160364171A1 | Improve efficiency | Improve performance | Input/output to record carriers | Interprogram communication | Application programming interface | Distributed object

Embodiments of the invention provide systems and methods for managing processing, memory, storage, network, and cloud computing to significantly improve the efficiency and performance of processing nodes. More specifically, embodiments of the present invention are directed to object memory fabric streams and application programming interfaces (APIs) that correspond to a method to implement a distributed object memory and to support hardware, software, and mixed implementations. The stream API may be defined from any point as two one-way streams in opposite directions. Advantageously, the stream API can be implemented with a variety of topologies. The stream API may handle object coherency so that any device can then move or remotely execute arbitrary functions, since functions are within object meta-data, which is part of a coherent object address space.

Owner:ULTRATA LLC

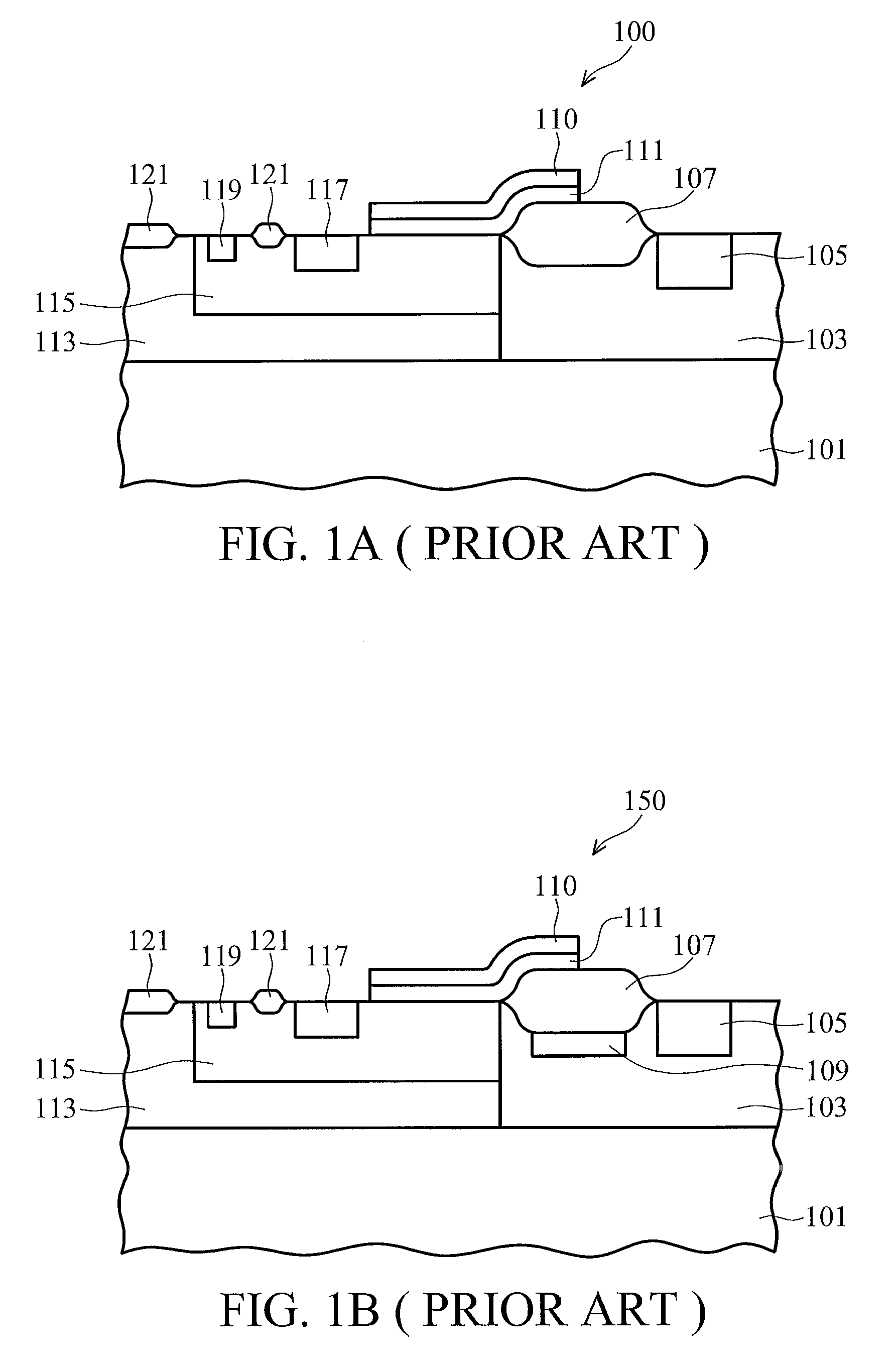

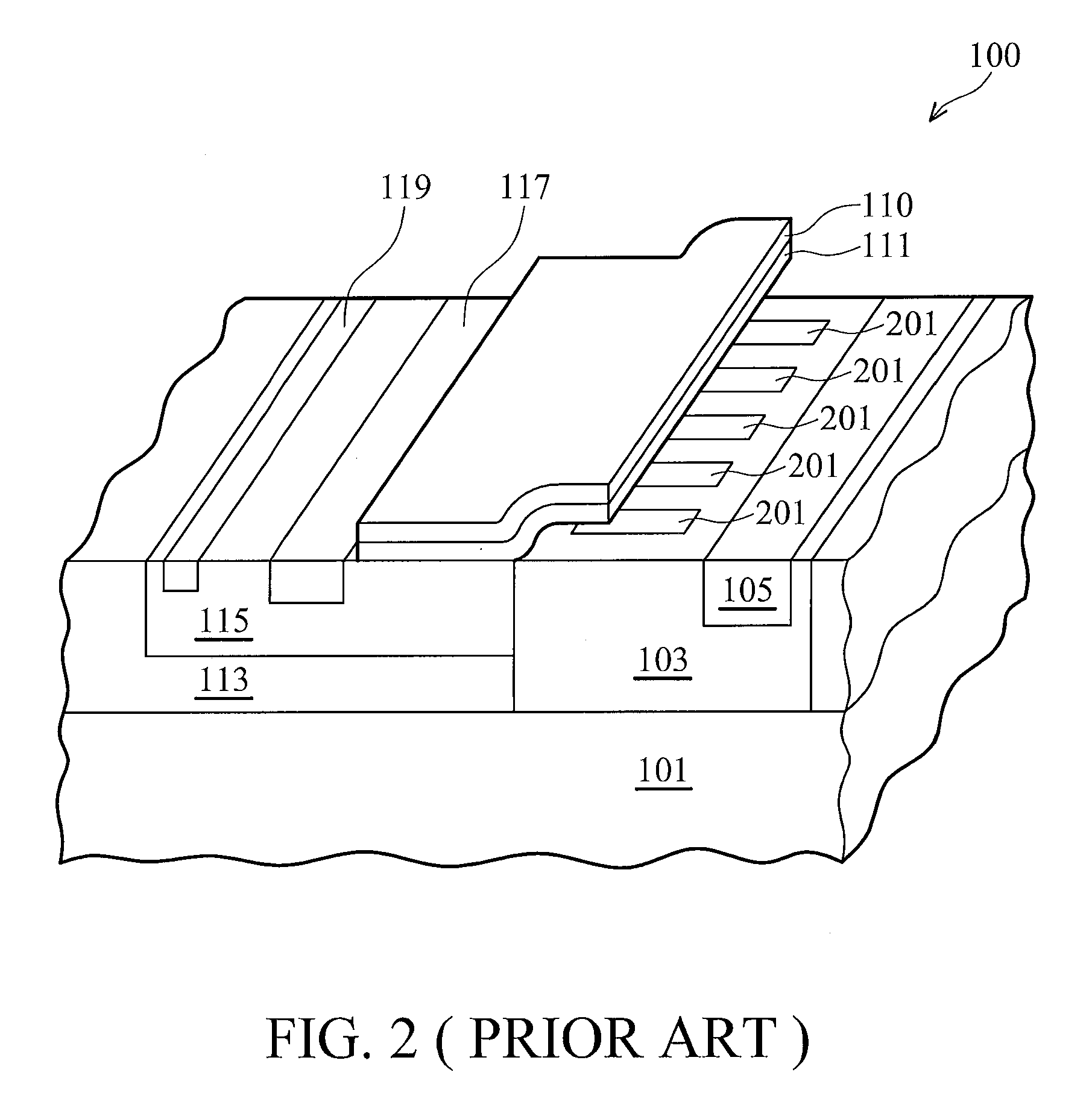

Lateral power MOSFET with high breakdown voltage and low on-resistance

Active | US20080090347A1 | Improve performance and efficiency | On-resistance of the device is reduced | Semiconductor/solid-state device manufacturing | Semiconductor devices | Dielectric | Gate dielectric

A semiconductor device with high breakdown voltage and low on-resistance is provided. An embodiment comprises a substrate having a buried layer in a portion of the top region of the substrate in order to extend the drift region. A layer is formed over the buried layer and the substrate, and high-voltage N-well and P-well regions are formed adjacent to each other. Field dielectrics are located over portions of the high-voltage N-wells and P-wells, and a gate dielectric and a gate conductor are formed over the channel region between the high-voltage P-well and the high-voltage N-well. Source and drain regions for the transistor are located in the high-voltage P-well and high-voltage N-well. Optionally, a P field ring is formed in the N-well region under the field dielectric. In another embodiment, a lateral power superjunction MOSFET with partition regions located in the high-voltage N-well is manufactured with an extended drift region.

Owner:TAIWAN SEMICON MFG CO LTD

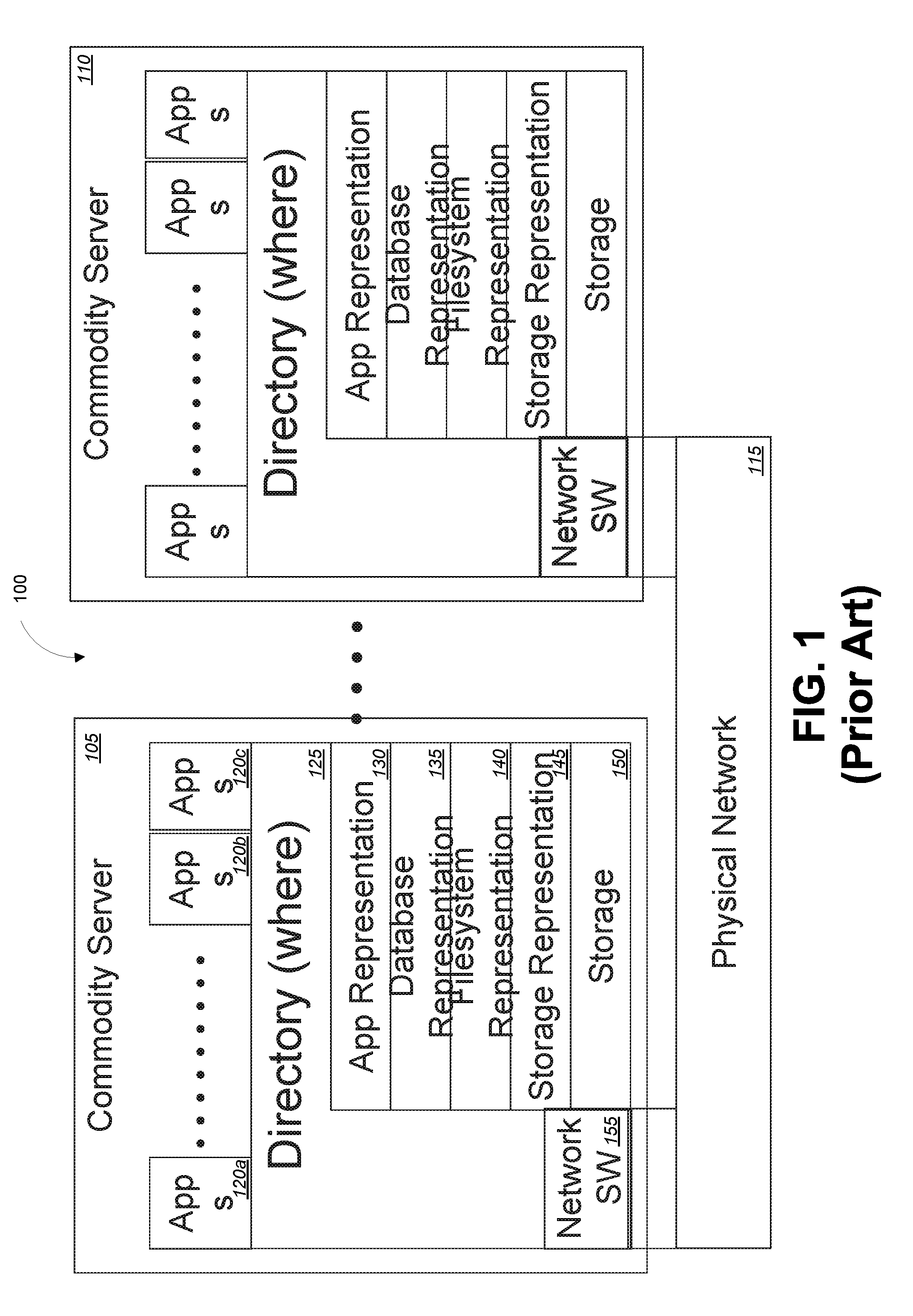

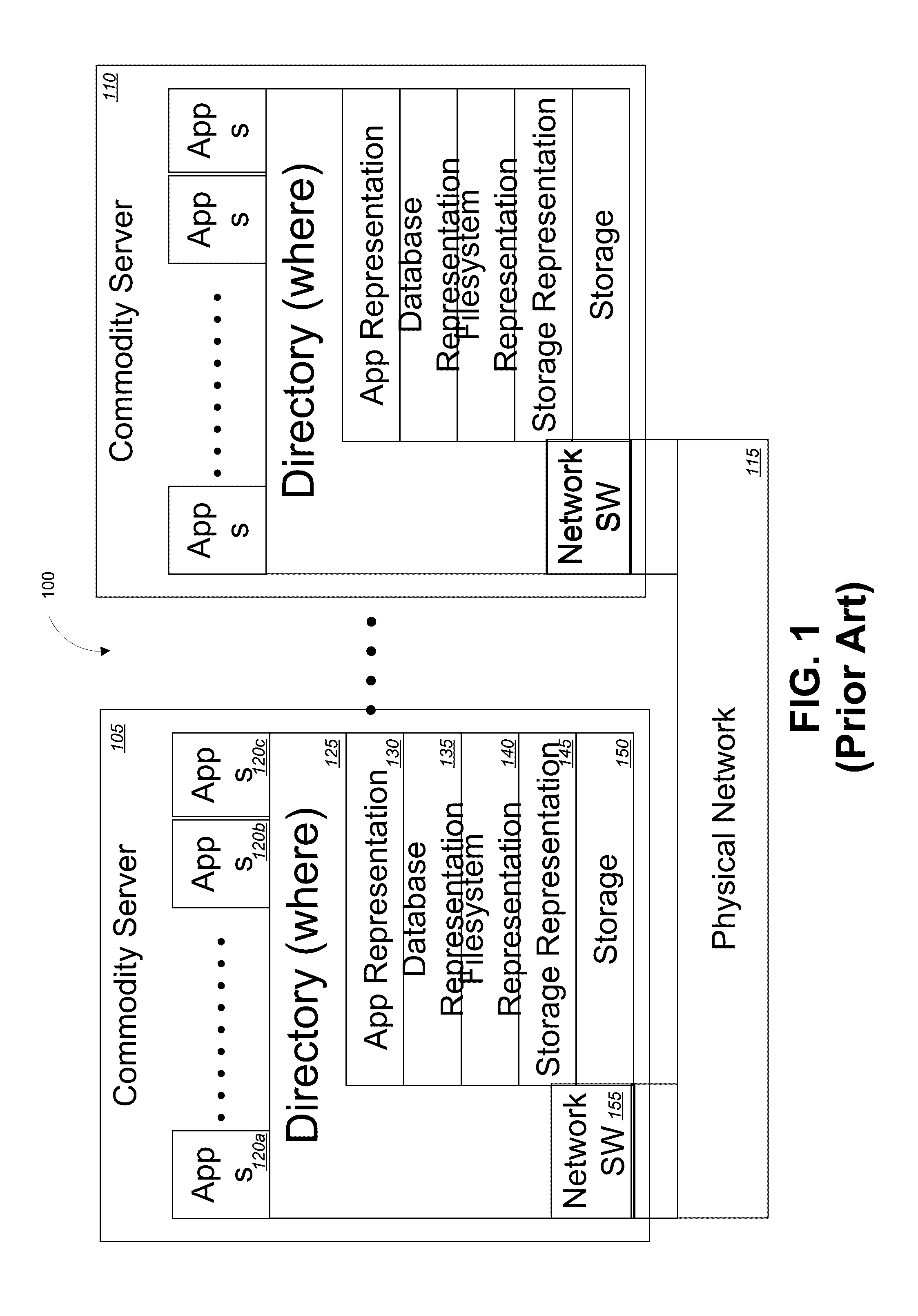

Memory fabric software implementation

Active | US20170199815A1 | Improve efficiency | Improve performance | Input/output to record carriers | Memory addressing/allocation/relocation | Object based | Computer module

A hardware-based processing node of an object memory fabric can comprise a memory module storing and managing one or more memory objects within an object-based memory space. Each memory object can be created natively within the memory module, accessed using a single memory reference instruction without Input / Output (I / O) instructions, and managed by the memory module at a single memory layer. The memory module can provide an interface layer below an application layer of a software stack. The interface layer can comprise one or more storage managers managing hardware of a processor and controlling portions of the object-based memory space visible to a virtual address space and physical address space of the processor. The storage managers can further provide an interface between the object-based memory space and an operating system executed by the processor and an alternate object memory based storage transparent to software using the interface layer.

Owner:ULTRATA LLC

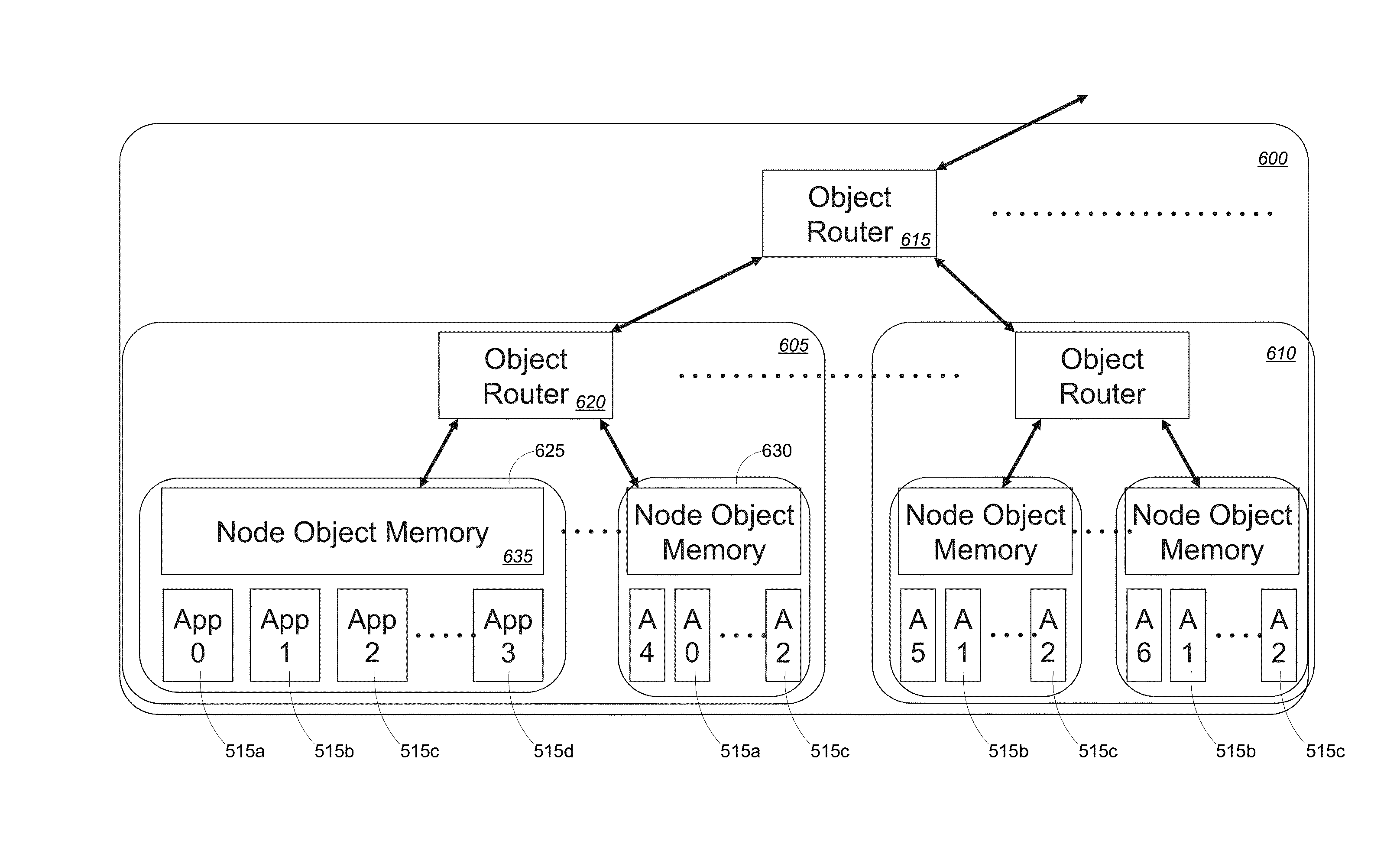

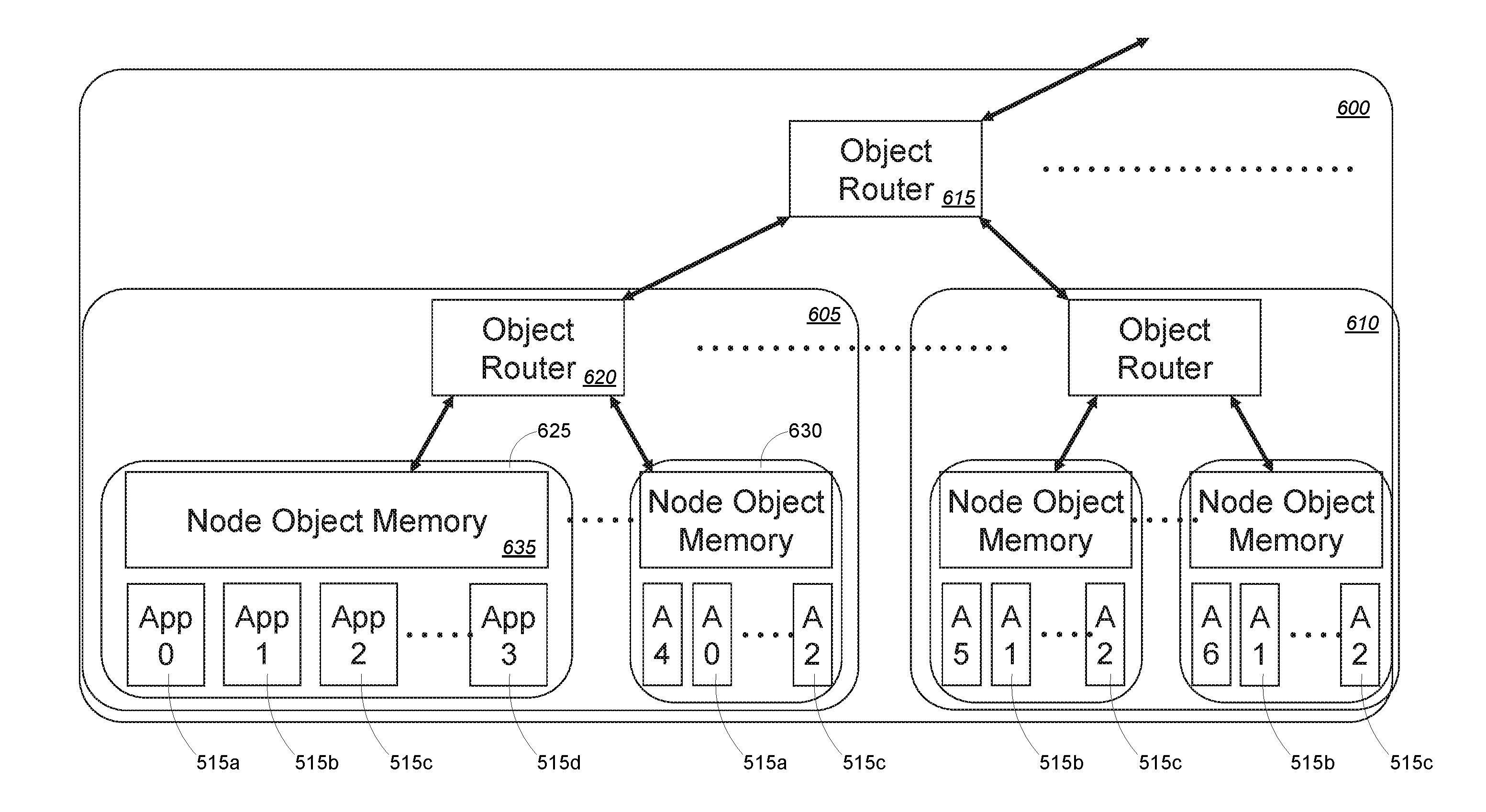

Infinite memory fabric hardware implementation with router

Active | US20160364173A1 | Improve efficiency | Improve performance | Memory architecture accessing/allocation | Input/output to record carriers | Memory object | Parallel computing

Embodiments of the invention provide systems and methods for managing processing, memory, storage, network, and cloud computing to significantly improve the efficiency and performance of processing nodes. More specifically, embodiments of the present invention are directed to a hardware-based processing node of an object memory fabric. The processing node may include a memory module storing and managing one or more memory objects, the one or more memory objects each include at least a first memory and a second memory, wherein: each memory object is created natively within the memory module, and each memory object is accessed using a single memory reference instruction without Input / Output (I / O) instructions; and a router configured to interface between a processor on the memory module and the one or more memory objects; wherein a set of data is stored within the first memory of the memory module; wherein the memory module dynamically determines that at least a portion of the set of data will be transferred from the first memory to the second memory; and wherein, in response to the determination that at least a portion of the set of data will be transferred from the first memory to the second memory, the router is configured to identify the portion to be transferred and to facilitate execution of the transfer.

Owner:ULTRATA LLC

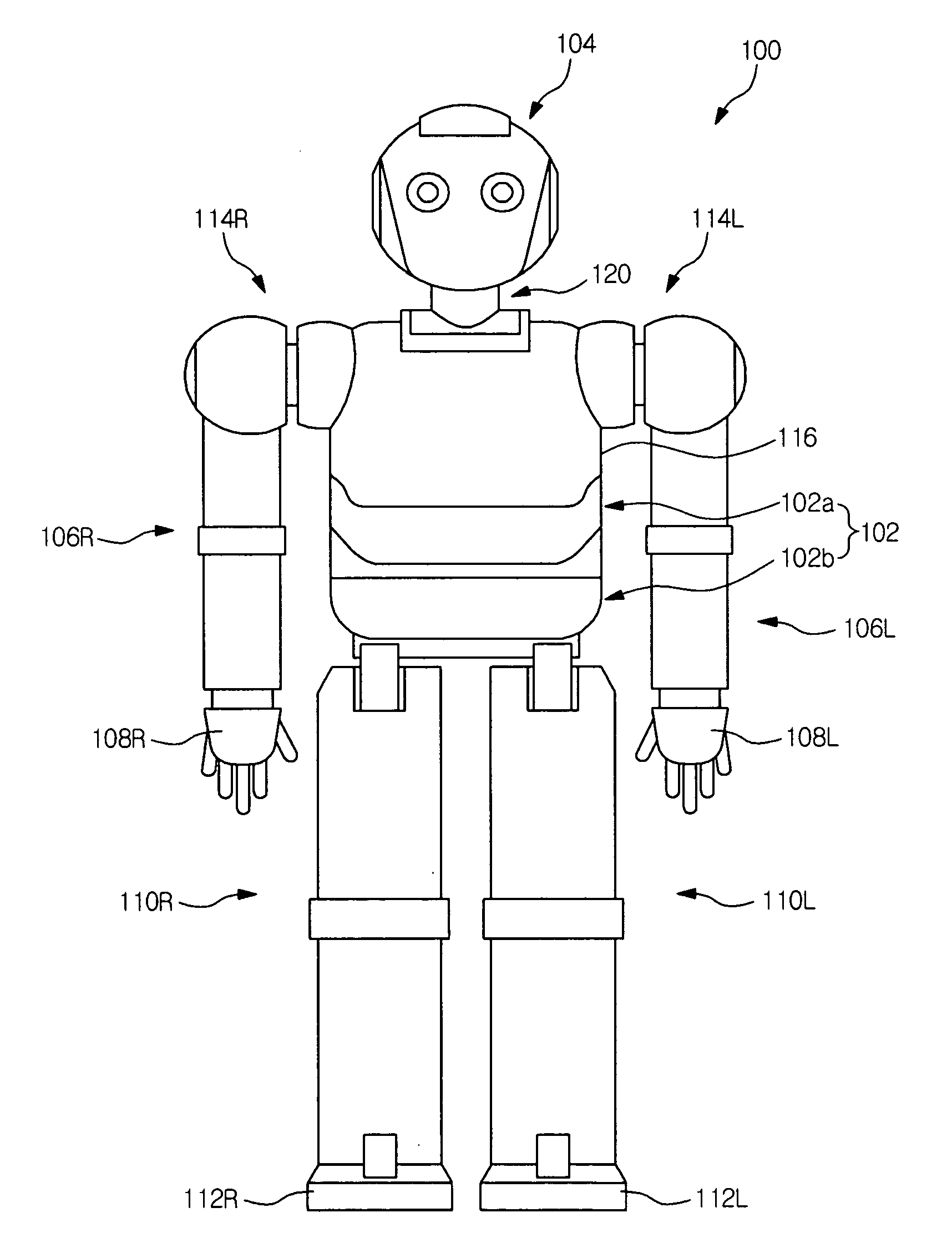

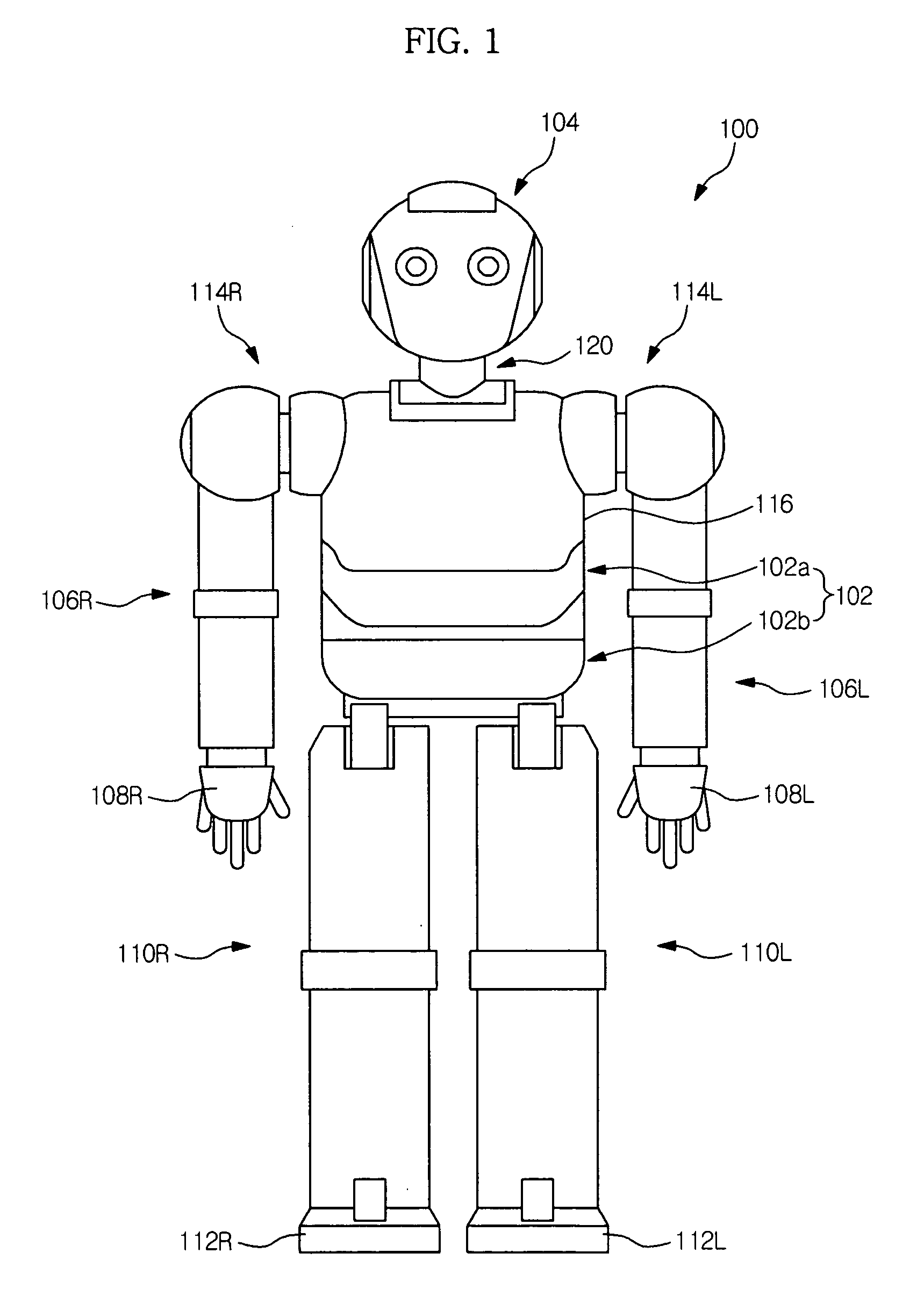

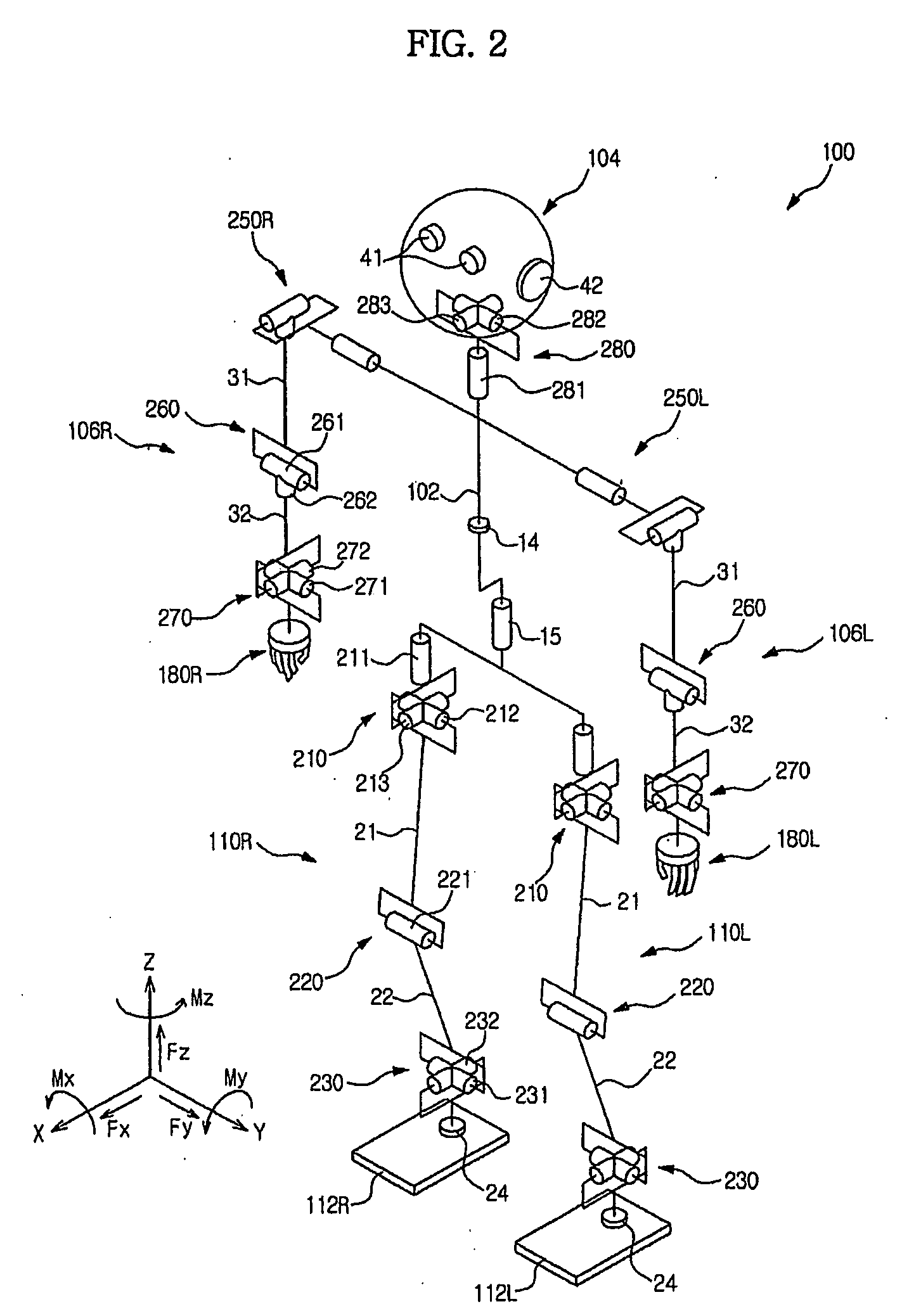

Walking robot and method of controlling the same

Inactive | US20090321150A1 | Improve efficiency | Improve performance | Programme-controlled manipulator | Computer control | Control mode | Robot

Disclosed are a walking robot and a method of controlling the same, in which one method is selected from a ZMP control method and a FSM control method. Based on characteristics of a motion to be performed, the current control mode of the walking robot is converted into a different control mode, and the motion is performed based on the converted control mode, to enhance the efficiency and performance of the walking robot. The method includes receiving an instruction to perform a motion; selecting any one mode, which is determined to be more proper to perform the instructed motion, out of a position-based first control mode and a torque-based second control mode; and performing the instructed motion according to the selected control mode.

Owner:SAMSUNG ELECTRONICS CO LTD
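
The mode-selection flow in the entry above (receive a motion instruction, pick the more suitable control mode, switch if needed, then perform the motion) can be sketched as follows. This sketch assumes that the ZMP method corresponds to the position-based first mode and the FSM method to the torque-based second mode; the motion names and the rule for which motions count as precision motions are hypothetical and not taken from the patent.

```python
from enum import Enum


class ControlMode(Enum):
    POSITION_BASED = "ZMP"  # assumed to be the first control mode
    TORQUE_BASED = "FSM"    # assumed to be the second control mode


# Hypothetical rule: motions needing precise foot placement use position control.
PRECISION_MOTIONS = {"climb_stairs", "step_over_obstacle"}


def select_mode(motion):
    """Pick the control mode judged more suitable for the instructed motion."""
    if motion in PRECISION_MOTIONS:
        return ControlMode.POSITION_BASED
    return ControlMode.TORQUE_BASED


def perform(current_mode, motion):
    """Return the mode used for the motion and whether a switch was needed."""
    selected = select_mode(motion)
    switched = selected is not current_mode
    return selected, switched


mode, switched = perform(ControlMode.TORQUE_BASED, "climb_stairs")
```

Returning the `switched` flag separately keeps the conversion step visible, since the abstract treats converting the current control mode as its own action before the motion is performed.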

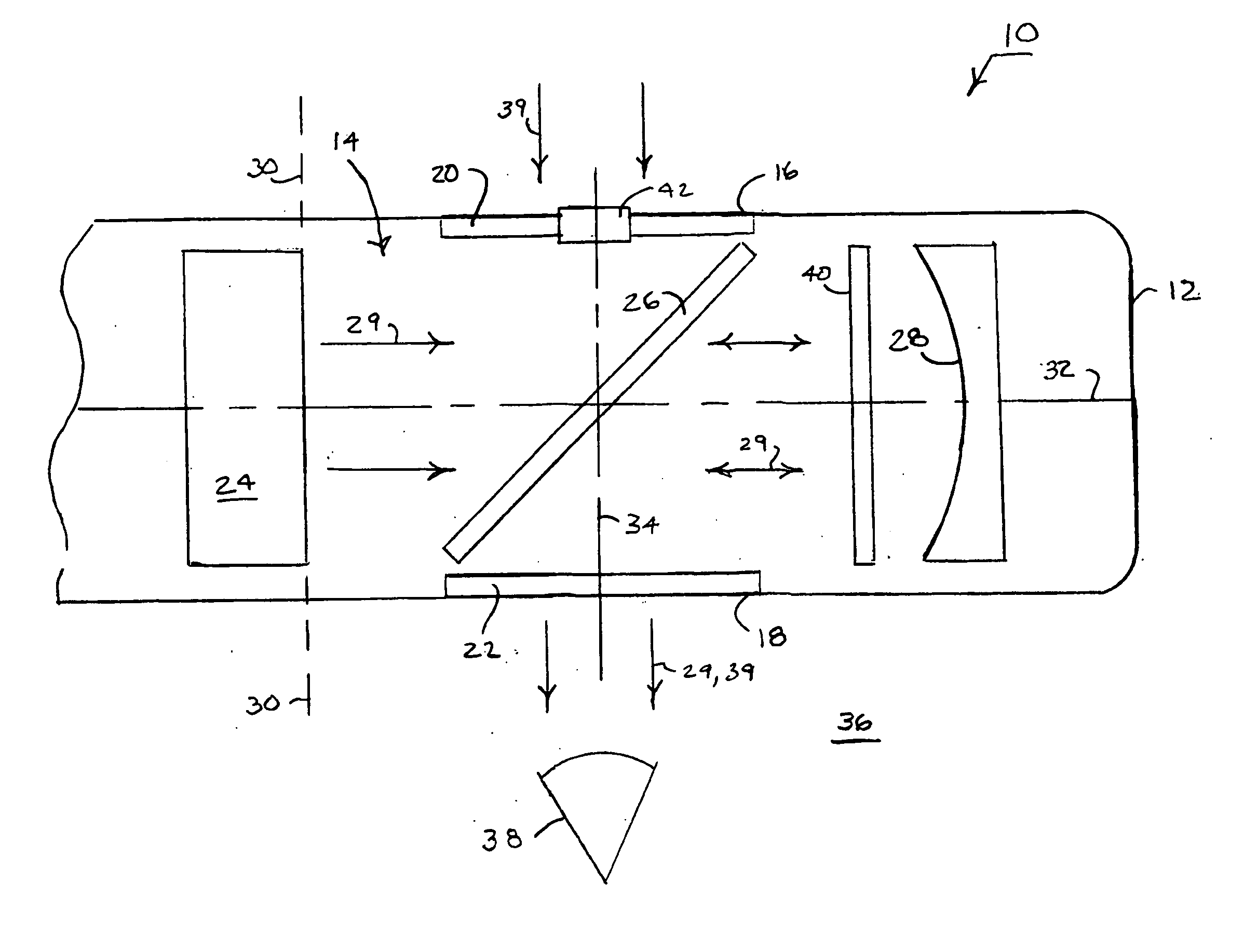

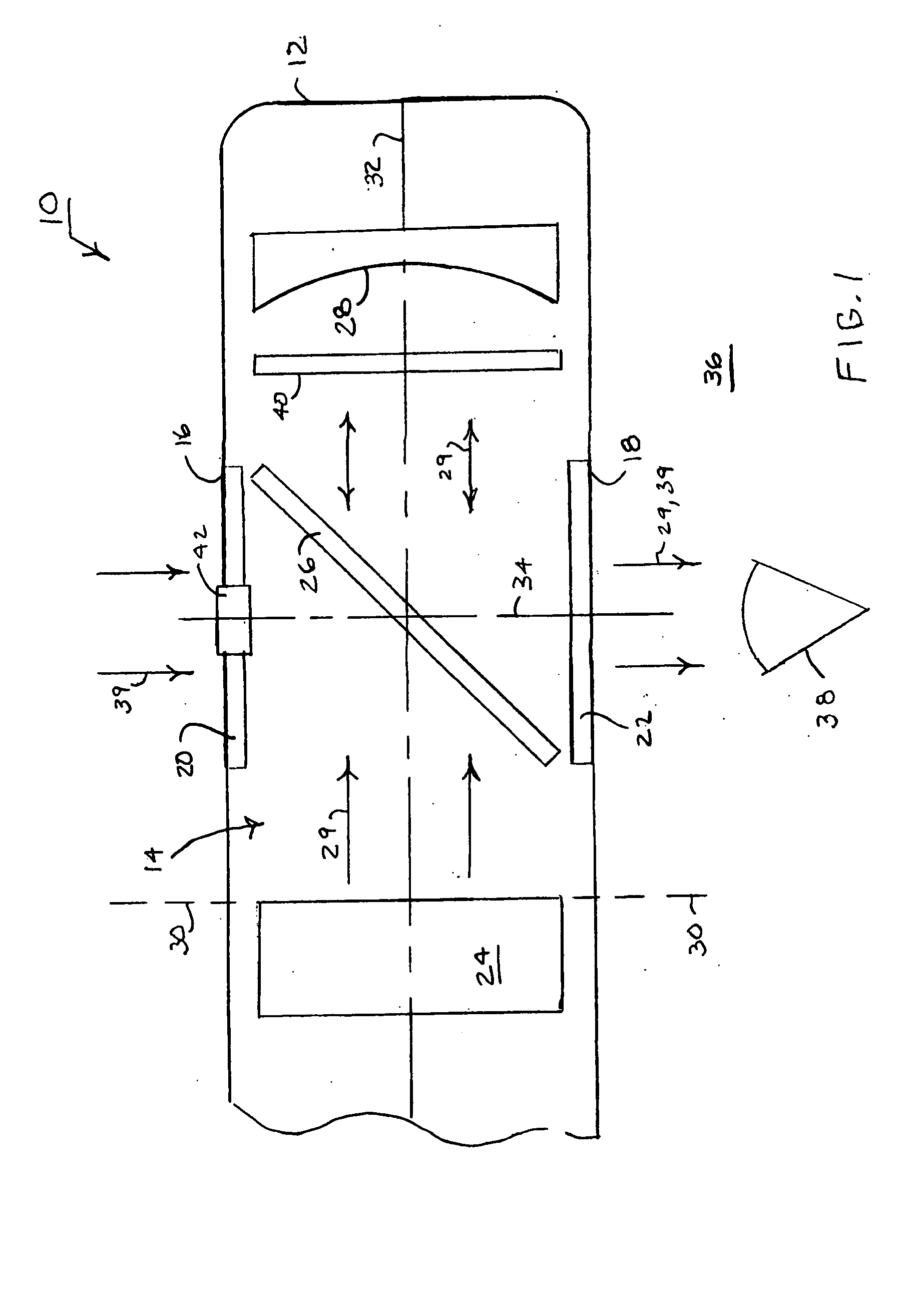

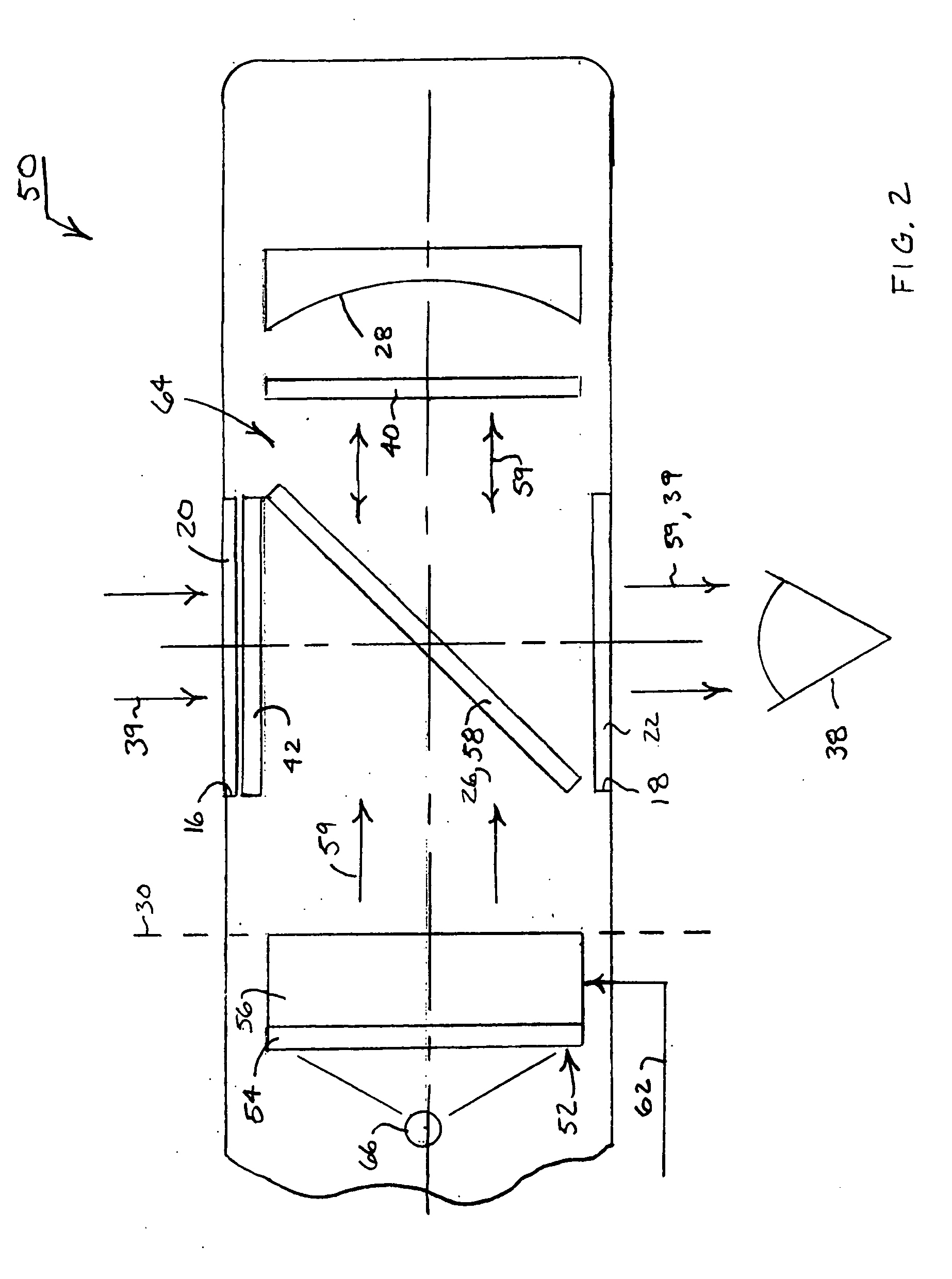

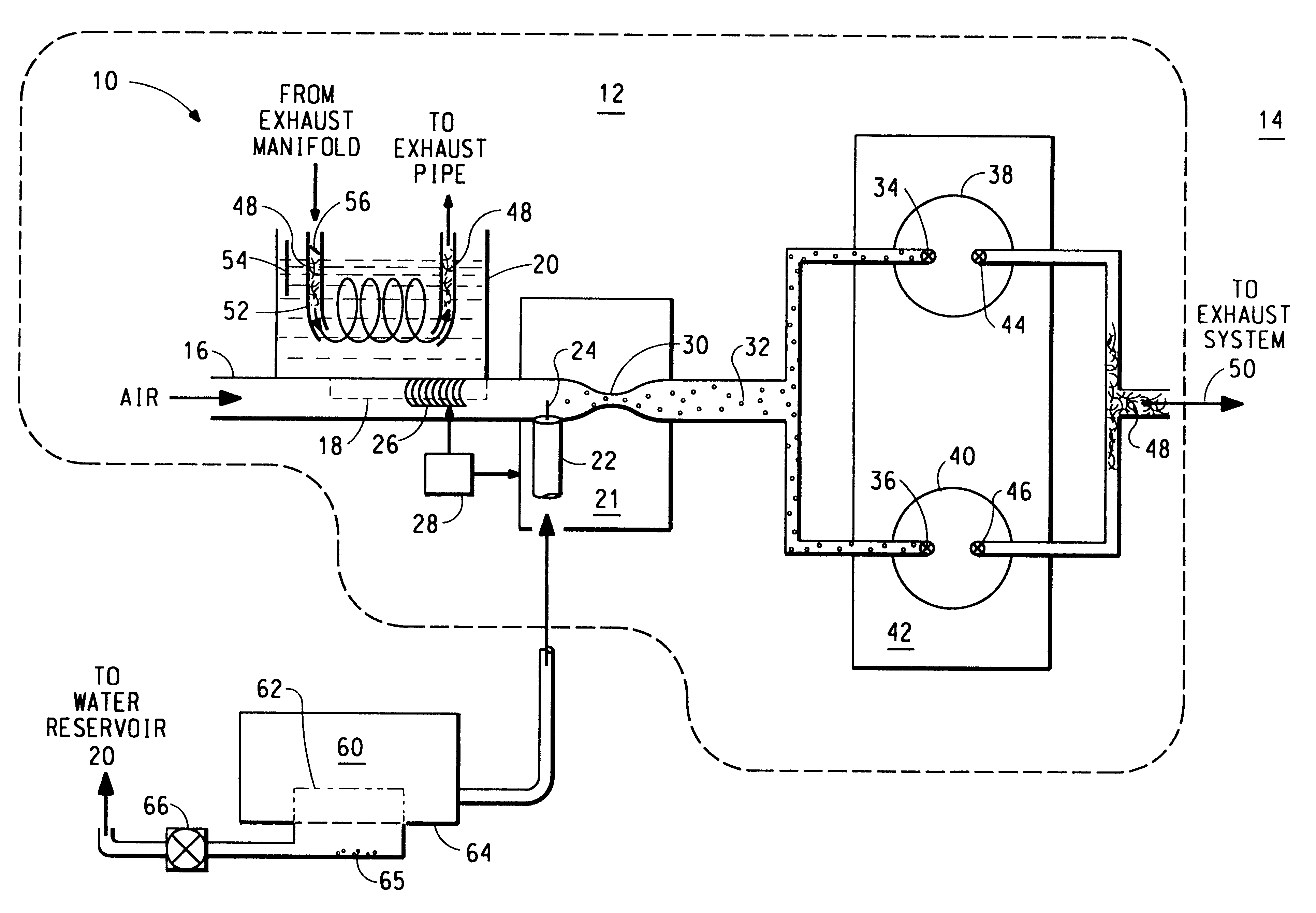

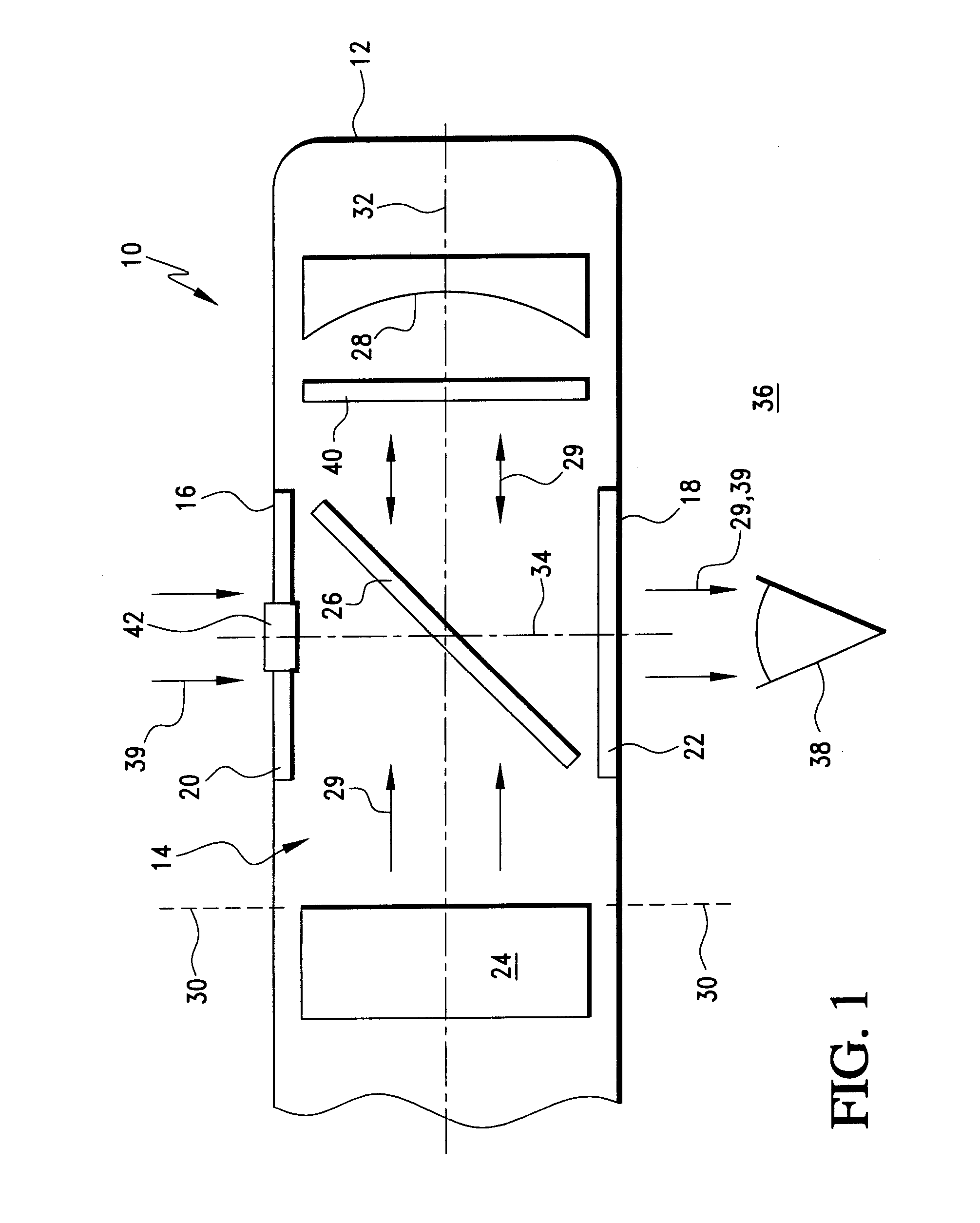

Humidifying gas induction or supply system

Inactive | US6511052B1 | Maximize efficiency | Reduce the amount required | Internal combustion piston engines | Using liquid separation agent | Water vapor | Gas induction

Water vapor is introduced into an inlet air stream (16) of an engine (12), for example, by a pervaporation process through a non-porous hydrophilic membrane (18). A water reservoir (20), which can contain contaminated water, provides a vapor pressure gradient across the hydrophilic membrane (18) into the inlet air stream (16), while the rate of delivery of the water vapor to a cylinder (38-40) is self-regulated by the rate of flow of air across the membrane. The hydrophilic membrane (18) therefore also filters the water from the water reservoir (20) to an extent that pure water vapor is provided to the air inlet stream (16). Delivery of water vapor can nevertheless be controlled using a hood (26) that slides over the hydrophilic membrane to limit its exposed surface area. Alternatively, water vapor is introduced into one or more of the gas streams of a fuel cell by separating the gas stream from the wet exhaust gas stream by a hydrophilic membrane such that moisture passes across the membrane to moisten the gas stream and thereby prevent drying out of the proton exchange membrane.

Owner:DESIGN TECH & INNOVATION

Infinite memory fabric hardware implementation with router

Active | US9886210B2 | Improve performance and efficiency | Easy to implement | Memory architecture accessing/allocation | Input/output to record carriers | Memory object | Parallel computing

Embodiments of the invention provide systems and methods for managing processing, memory, storage, network, and cloud computing to significantly improve the efficiency and performance of processing nodes. More specifically, embodiments of the present invention are directed to a hardware-based processing node of an object memory fabric. The processing node may include a memory module storing and managing one or more memory objects, the one or more memory objects each include at least a first memory and a second memory, wherein: each memory object is created natively within the memory module, and each memory object is accessed using a single memory reference instruction without Input / Output (I / O) instructions; and a router configured to interface between a processor on the memory module and the one or more memory objects; wherein a set of data is stored within the first memory of the memory module; wherein the memory module dynamically determines that at least a portion of the set of data will be transferred from the first memory to the second memory; and wherein, in response to the determination that at least a portion of the set of data will be transferred from the first memory to the second memory, the router is configured to identify the portion to be transferred and to facilitate execution of the transfer.

Owner:ULTRATA LLC

Lateral Power MOSFET with High Breakdown Voltage and Low On-Resistance

Active | US20090085101A1 | Improve performance and efficiency | On-resistance of the device is reduced | Semiconductor/solid-state device manufacturing | Semiconductor devices | Dielectric | Electrical conductor

A semiconductor device with high breakdown voltage and low on-resistance is provided. An embodiment comprises a substrate having a buried layer in a portion of the top region of the substrate in order to extend the drift region. A layer is formed over the buried layer and the substrate, and high-voltage N-well and P-well regions are formed adjacent to each other. Field dielectrics are located over portions of the high-voltage N-wells and P-wells, and a gate dielectric and a gate conductor are formed over the channel region between the high-voltage P-well and the high-voltage N-well. Source and drain regions for the transistor are located in the high-voltage P-well and high-voltage N-well. Optionally, a P field ring is formed in the N-well region under the field dielectric. In another embodiment, a lateral power superjunction MOSFET with partition regions located in the high-voltage N-well is manufactured with an extended drift region.

Owner:TAIWAN SEMICON MFG CO LTD

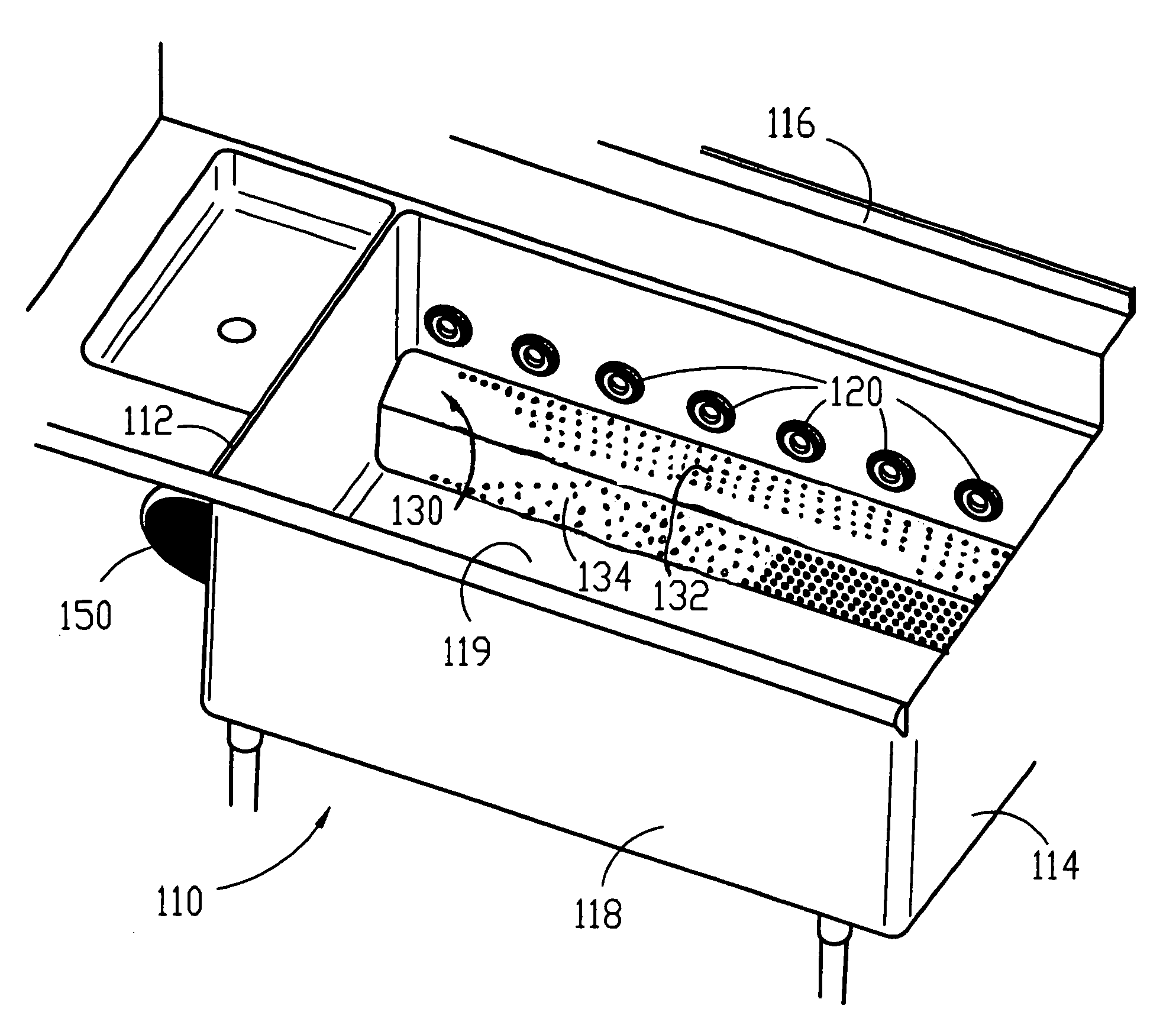

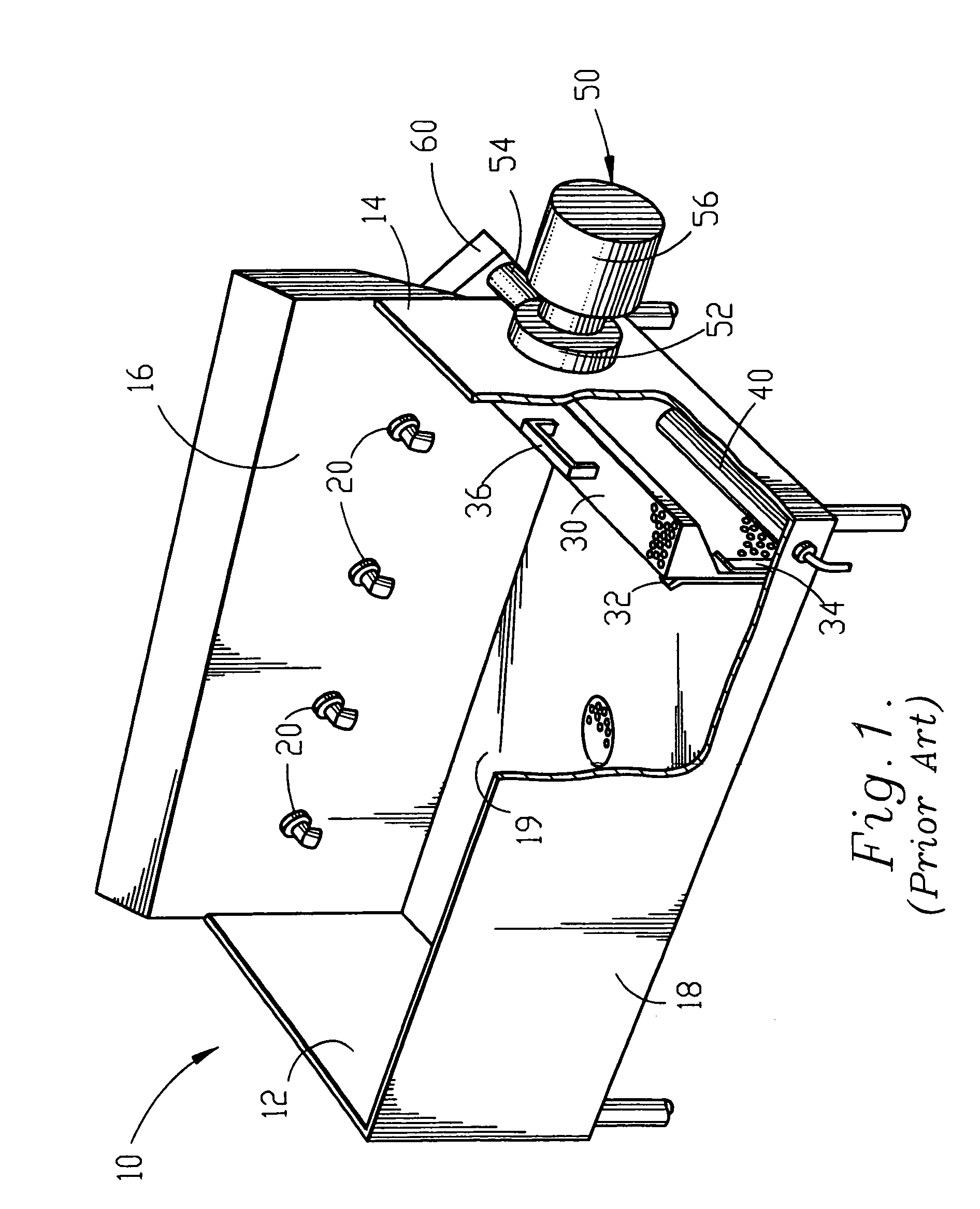

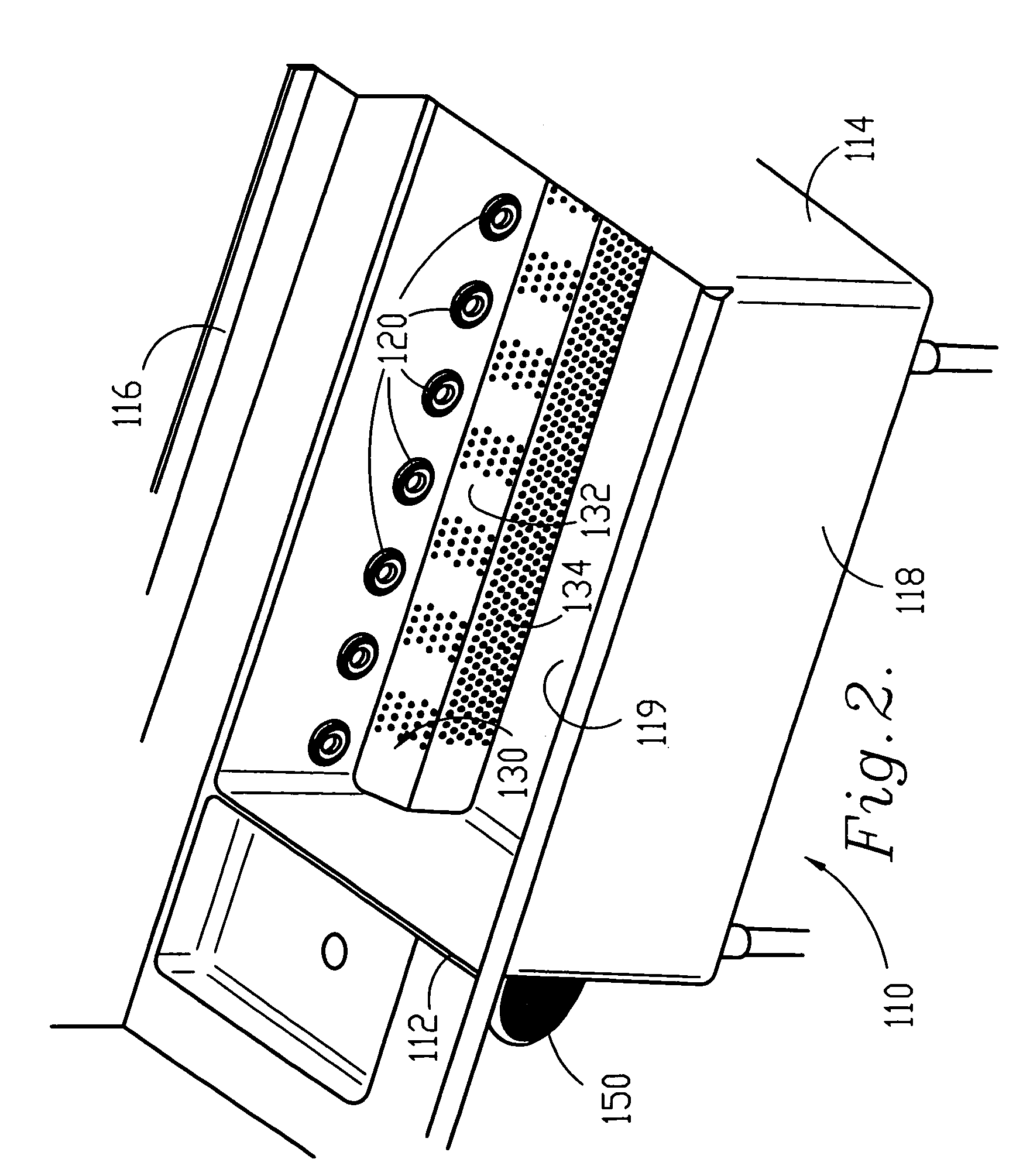

Pot and pan washing machine, components, and methods of washing items

Inactive | US7246624B2 | Eliminates potential | Less turbulence | Tableware washing/rinsing machine details | Pump components | Biomedical engineering | Inlet manifold

An improved pot and pan washing machine is provided including a low suction intake manifold and a partition for capturing a substantial portion of the wash action of the washing machine within a segregated area. The intake manifold of the instant invention includes a plurality of voids having a void concentration that increases as the distance from the source of suction (such as a pump or intake inlet) increases. The partition (or divider) of the instant invention can be removed and repositioned within the wash tank through the use of channels along the walls of the wash tank that receive the partition.

Owner:UNIFIED BRANDS

Micro-display engine

Active | US7133207B2 | Improve performance and efficiency | Reduce in quantity | Polarising elements | Cathode-ray tube indicators | Image formation | Display device

Virtual displays with micro-display engines are arranged in compact, lightweight configurations that generate clear virtual images for an observer. The displays are particularly suitable for portable devices, such as head-mounted displays adapted to non-immersive or immersive applications. The non-immersive applications feature reflective optics moved out of the direct line of sight of the observer and provide for differentially modifying the amount or form of ambient light admitted from the forward environment with respect to image light magnified within the display. Micro-display engines suitable for both non-immersive and immersive display applications and having LCD image sources displace polarization components of the LCD image sources along the optical paths of the engines for simplifying engine design. A compound imaging system for micro-display engines features the use of reflectors in sequence to expand upon the imaging possibilities of the new micro-display engines. Polarization management also provides for differentially regulating the transmission of ambient light with respect to image light and for participating in the image formation function of the image source.

Owner:TDG ACQUISITION COMPANY

Infinite memory fabric streams and APIs

Active | US9971542B2 | Improve performance and efficiency | Input/output to record carriers | Interprogram communication | Application programming interface | Distributed object

Embodiments of the invention provide systems and methods for managing processing, memory, storage, network, and cloud computing to significantly improve the efficiency and performance of processing nodes. More specifically, embodiments of the present invention are directed to object memory fabric streams and application programming interfaces (APIs) that correspond to a method to implement a distributed object memory and to support hardware, software, and mixed implementations. The stream API may be defined from any point as two one-way streams in opposite directions. Advantageously, the stream API can be implemented with a variety of topologies. The stream API may handle object coherency so that any device can then move or remotely execute arbitrary functions, since functions are within object meta-data, which is part of a coherent object address space.

Owner:ULTRATA LLC
