42 results about how to "Improve Parallel Processing Efficiency" patented technology

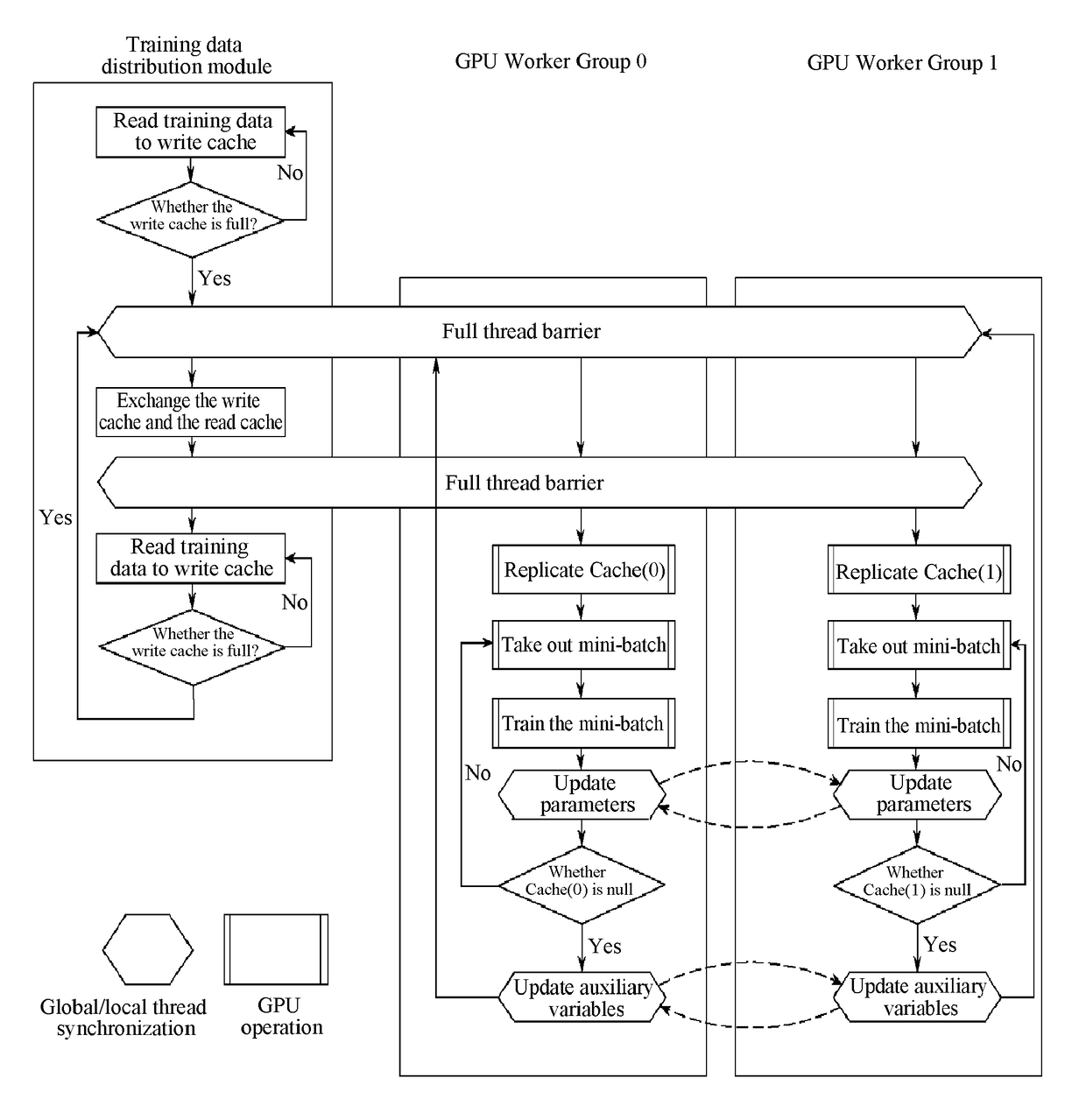

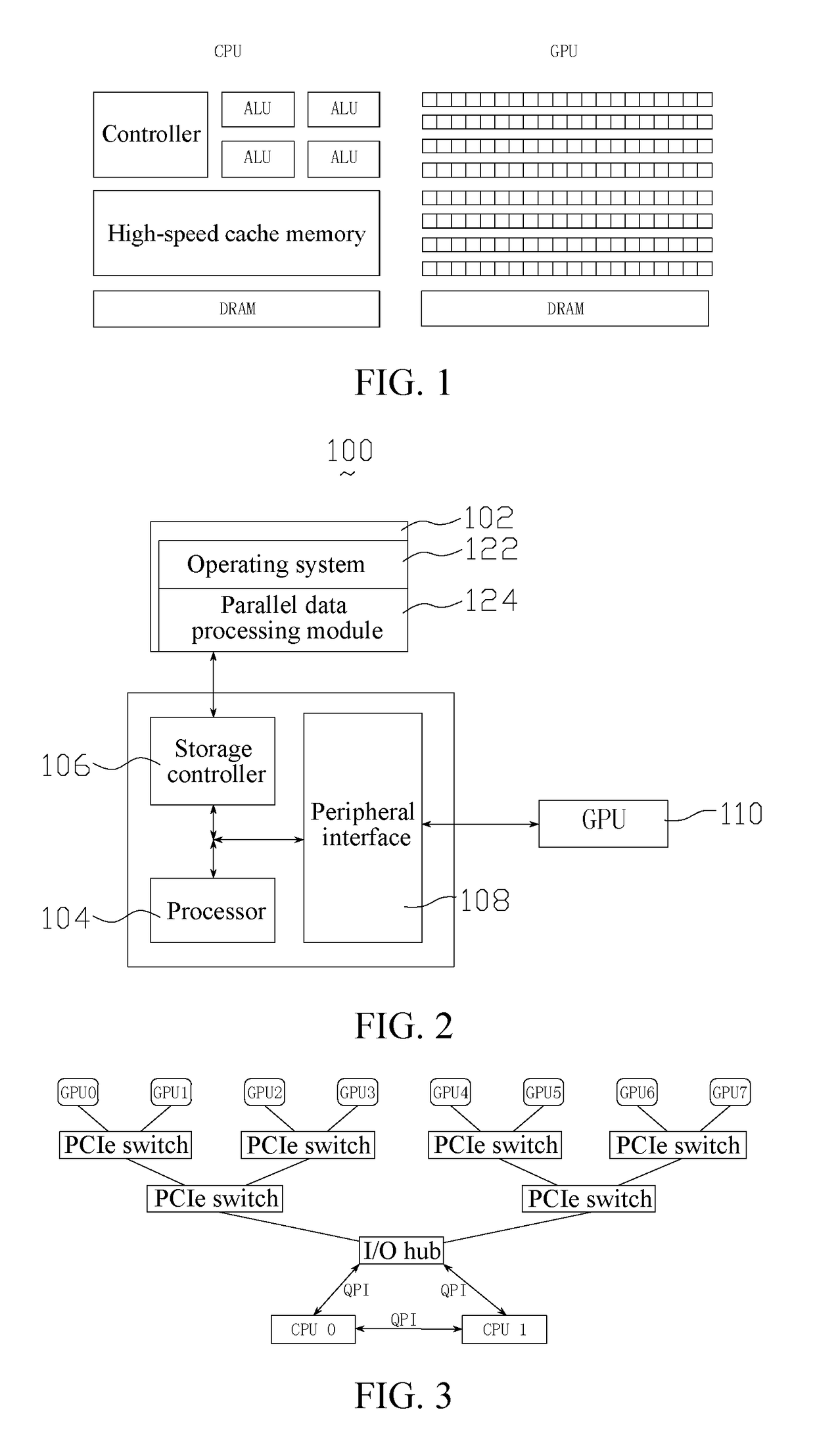

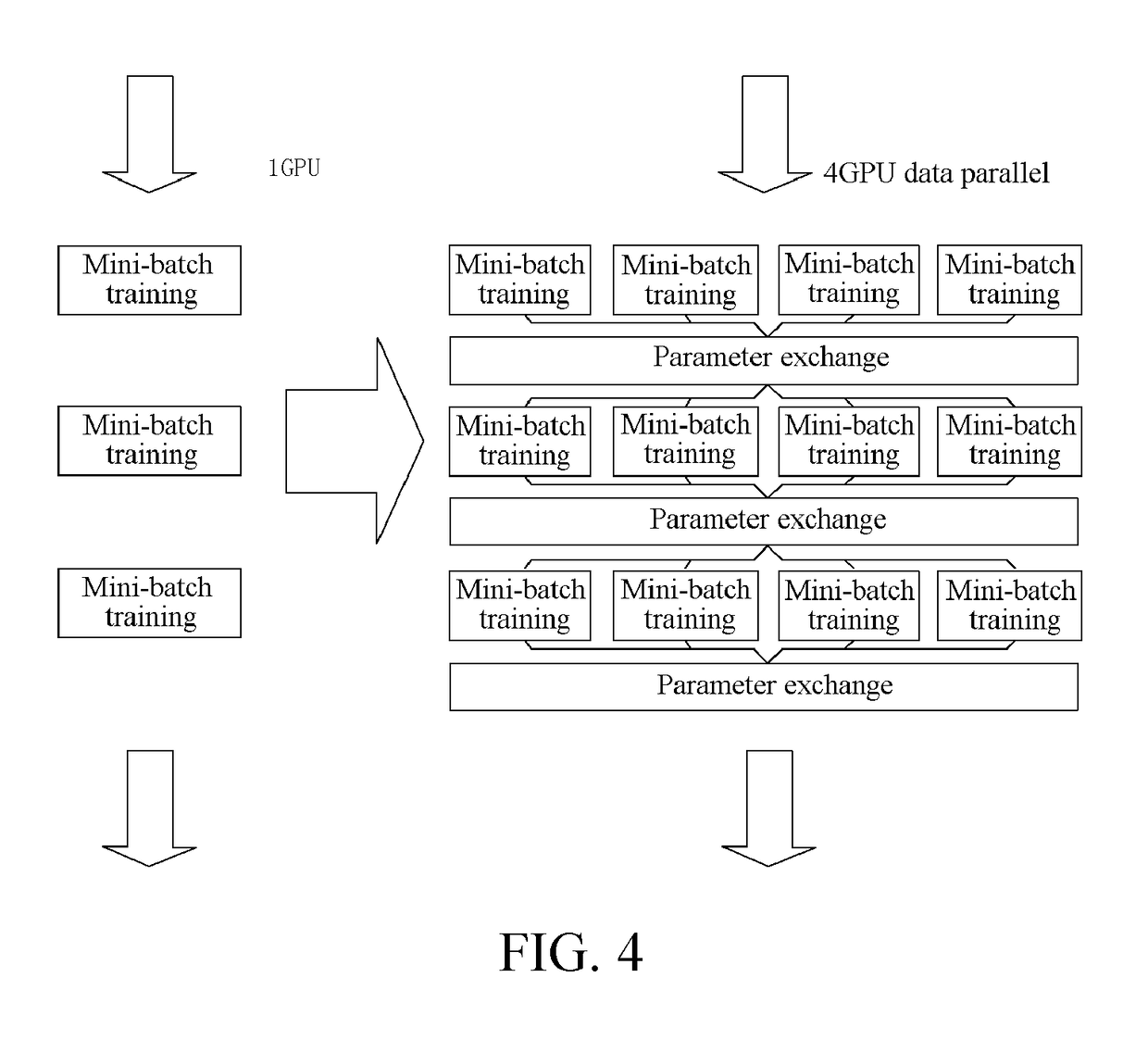

Data parallel processing method and apparatus based on multiple graphic processing units

Active | US20160321777A1 | Enhance data parallel processing efficiency | Improve Parallel Processing Efficiency | Resource allocation | Program synchronisation | Graphics | Video memory

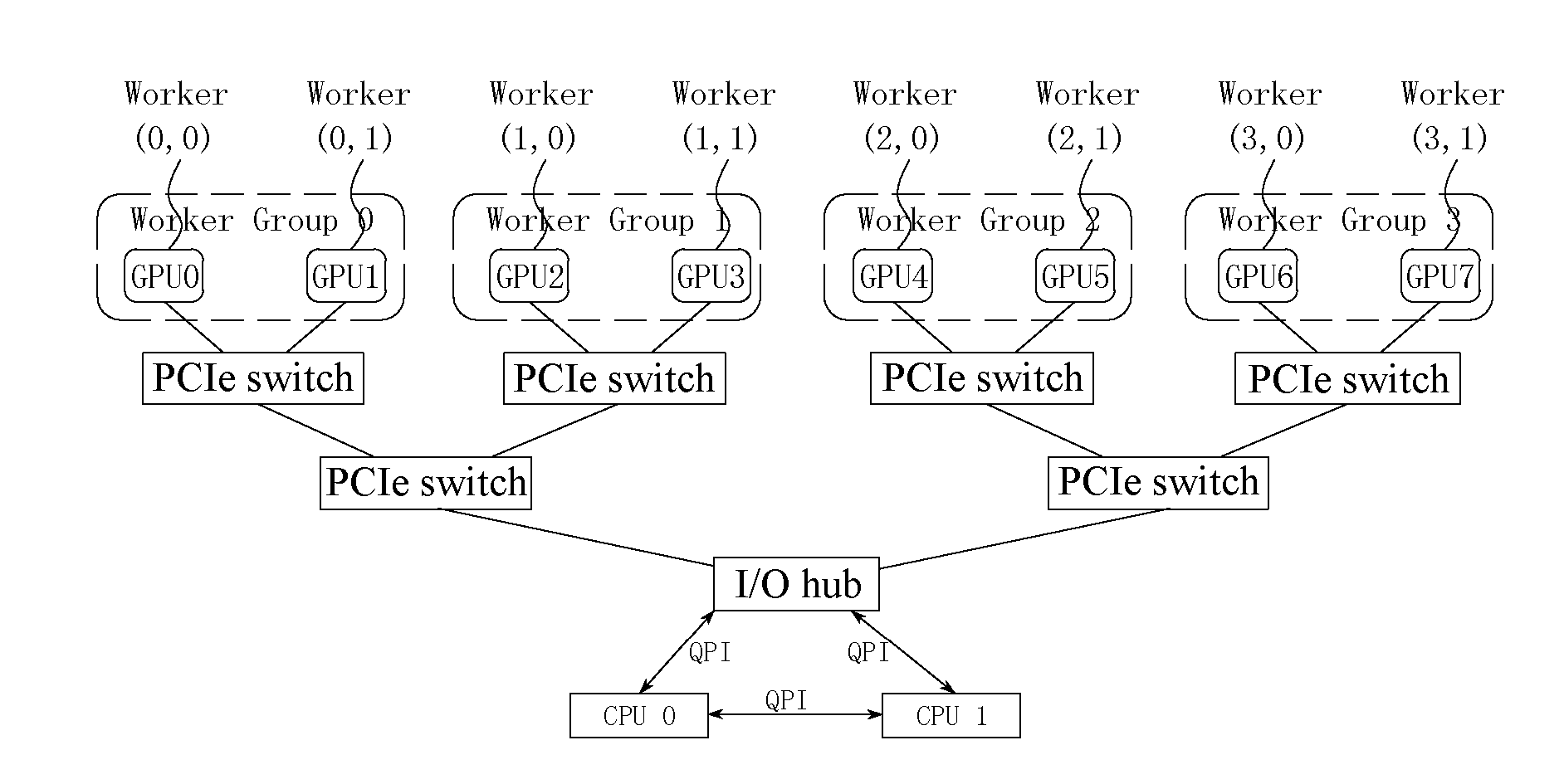

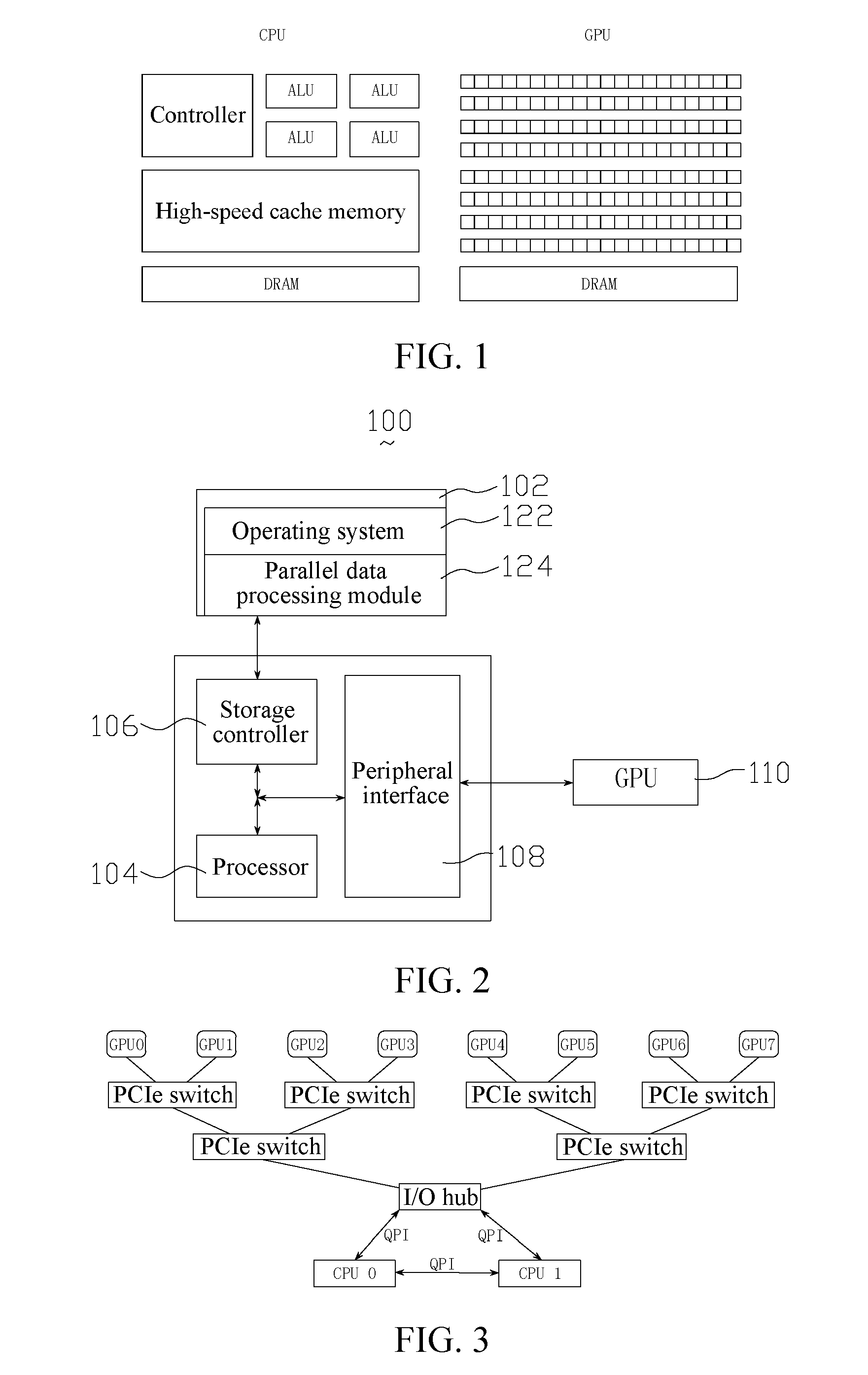

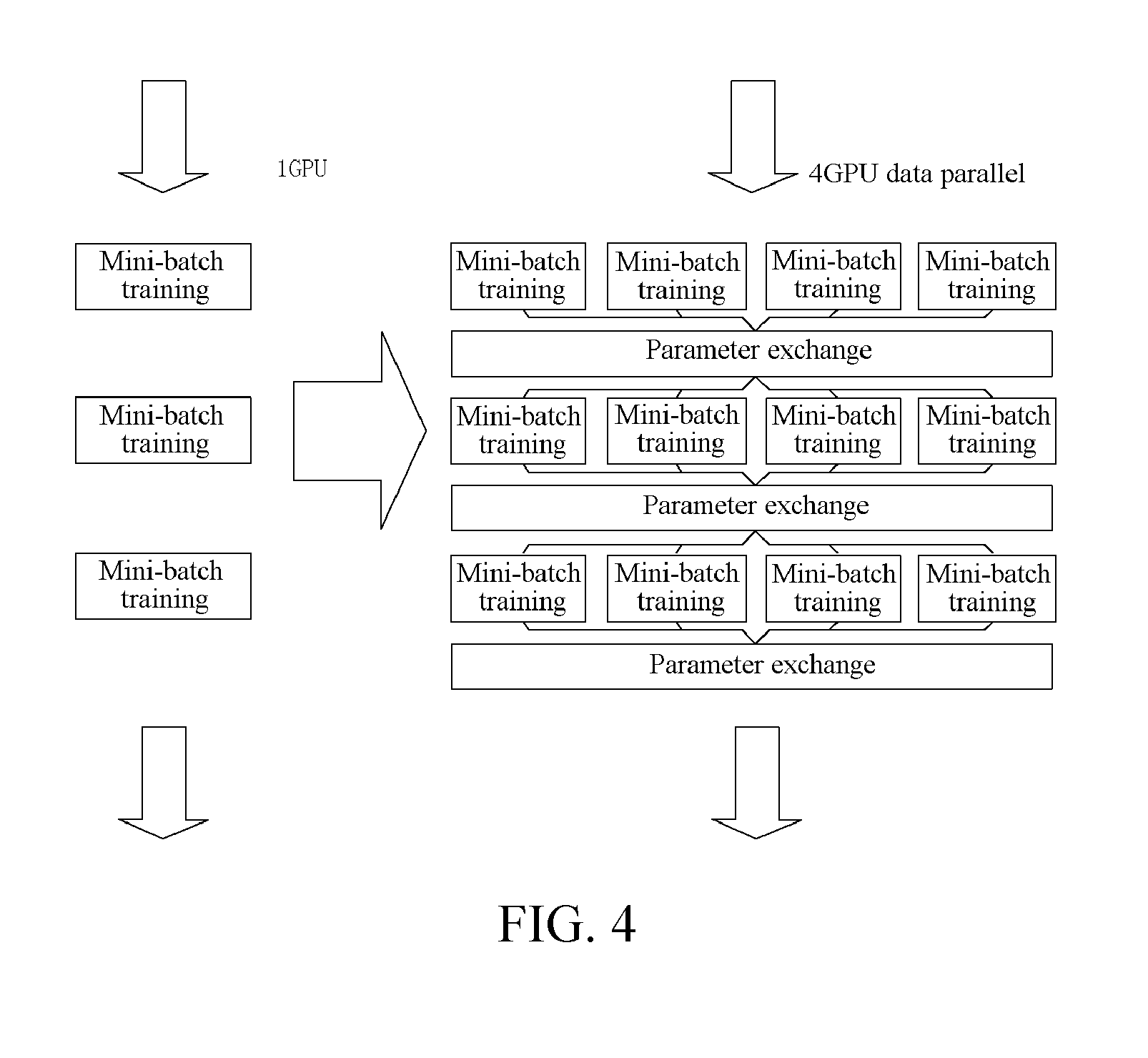

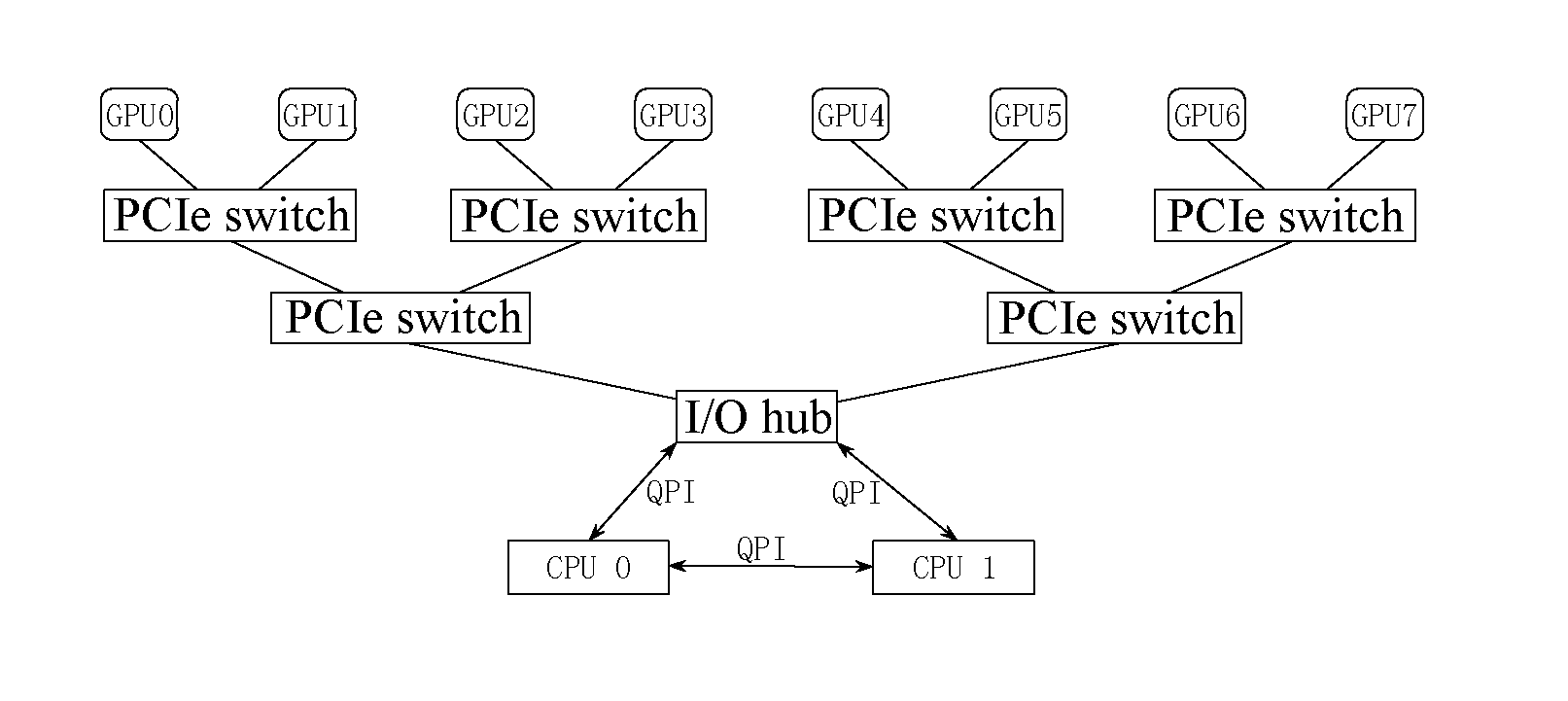

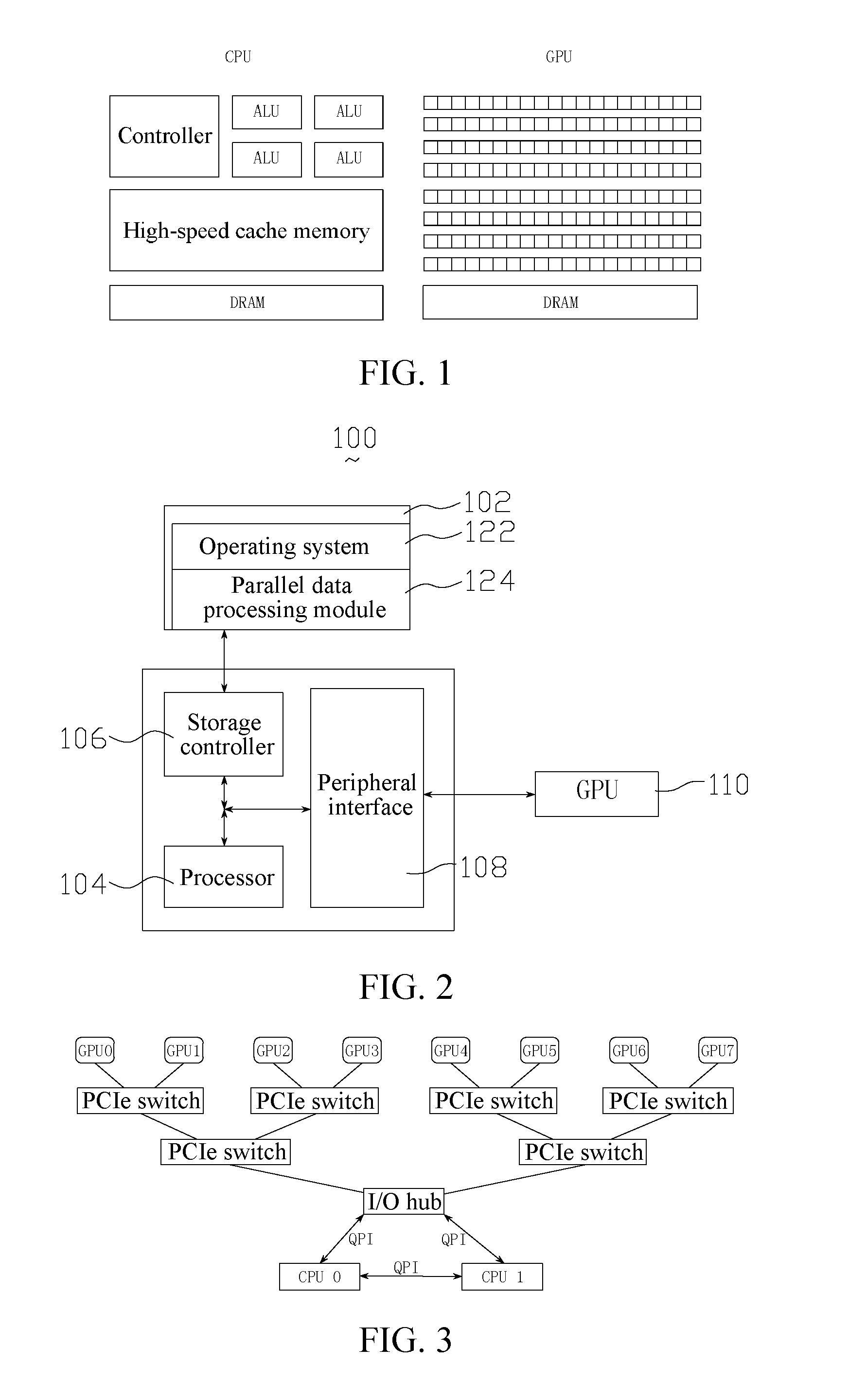

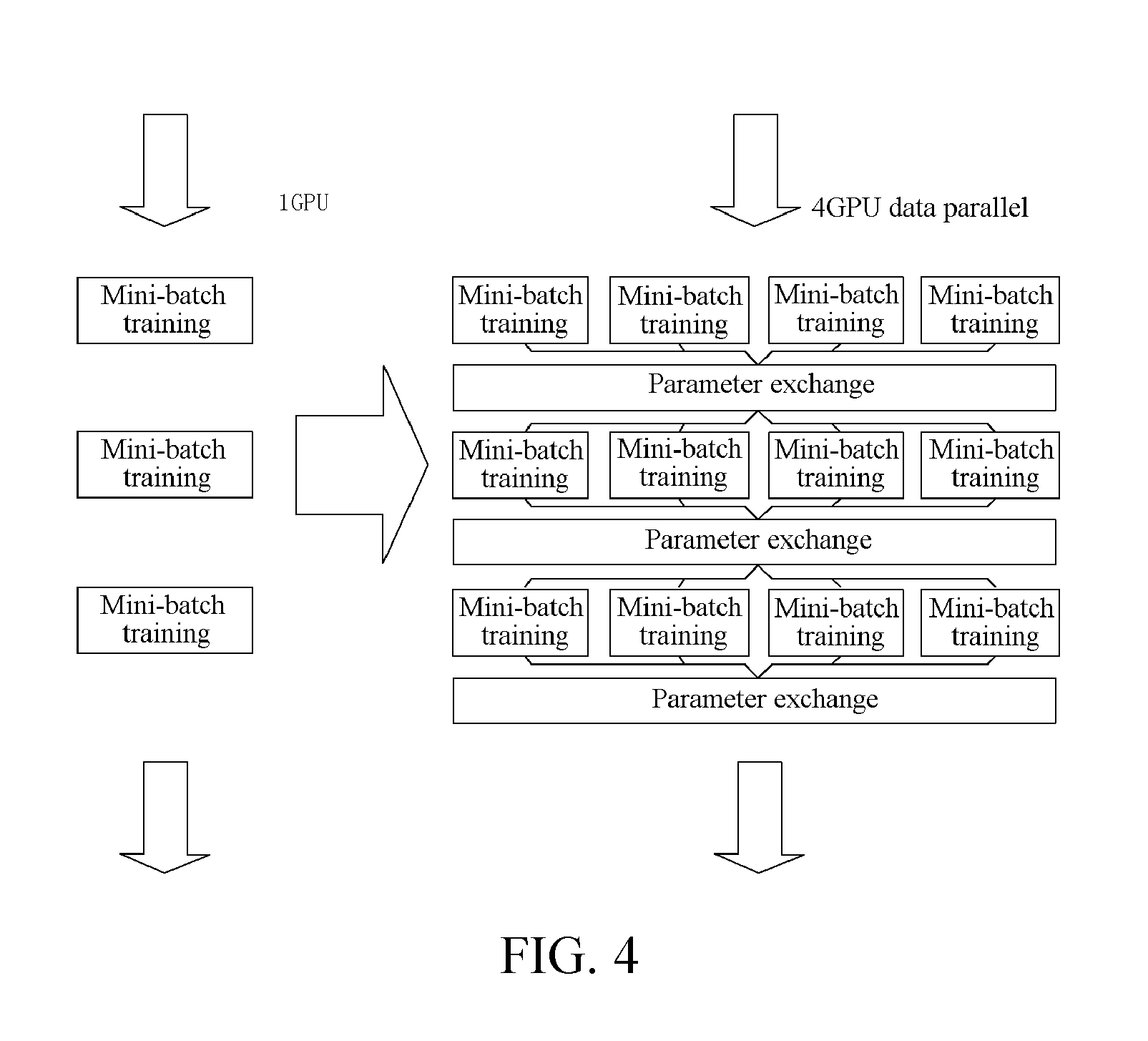

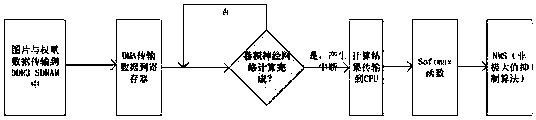

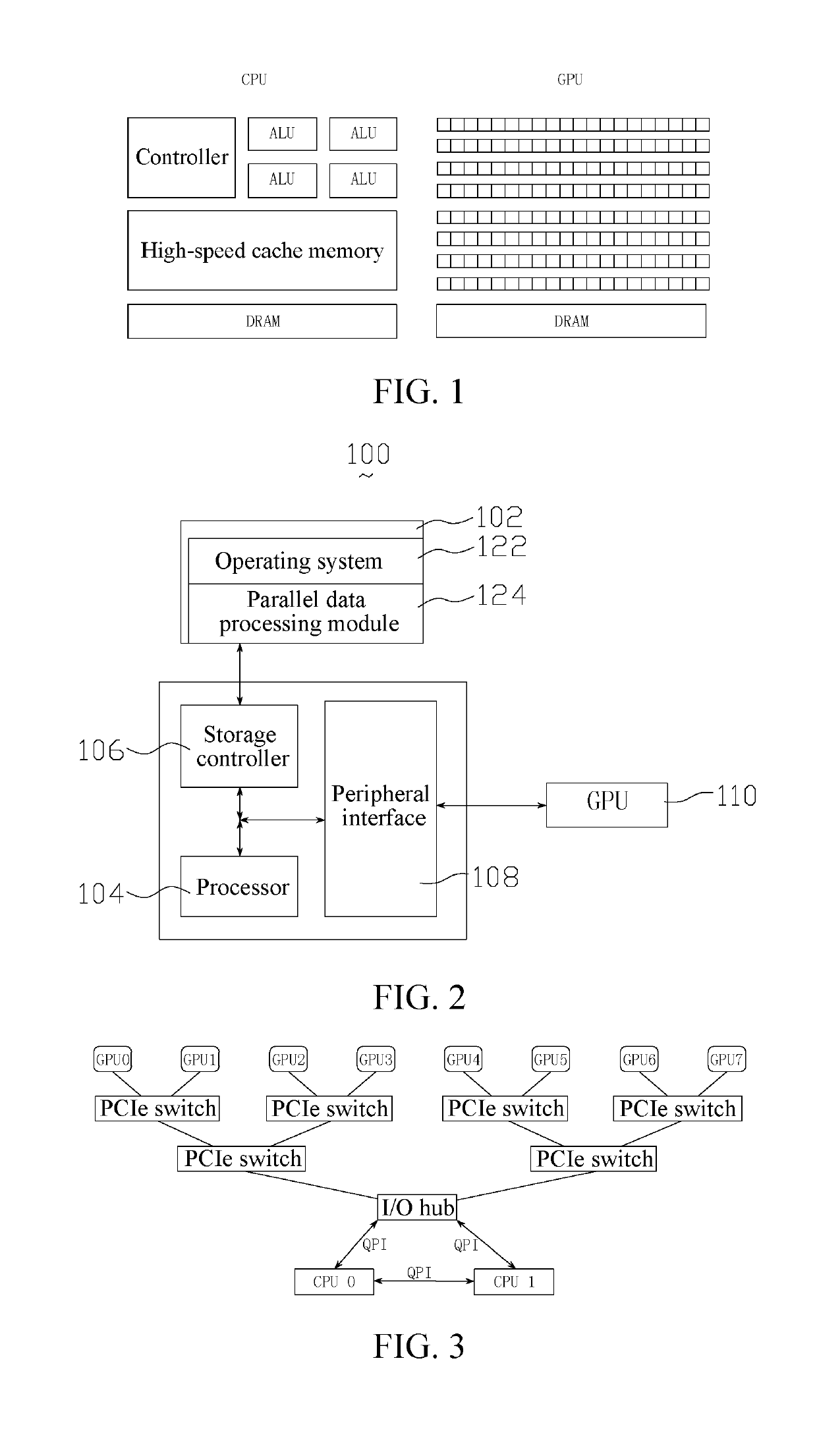

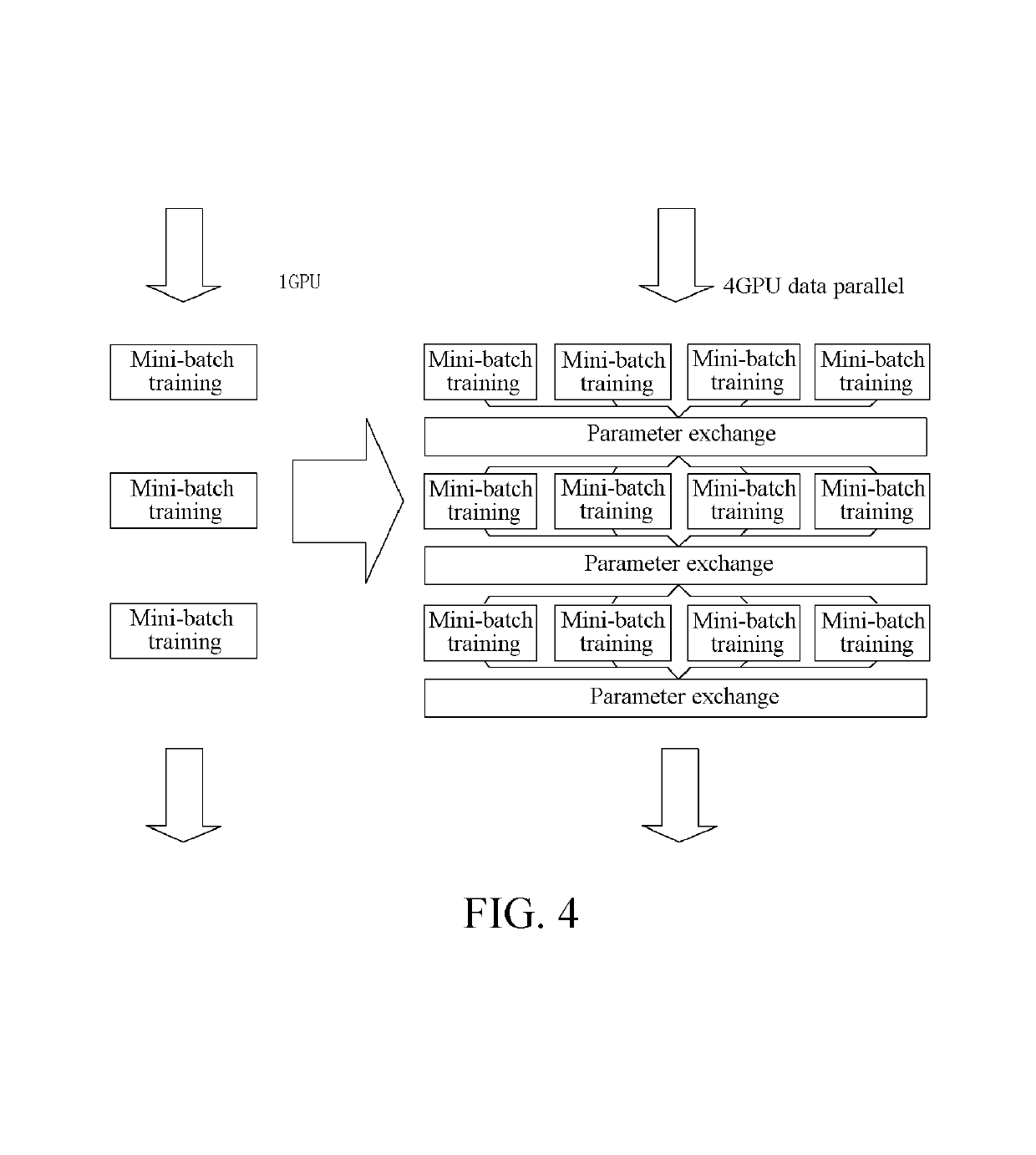

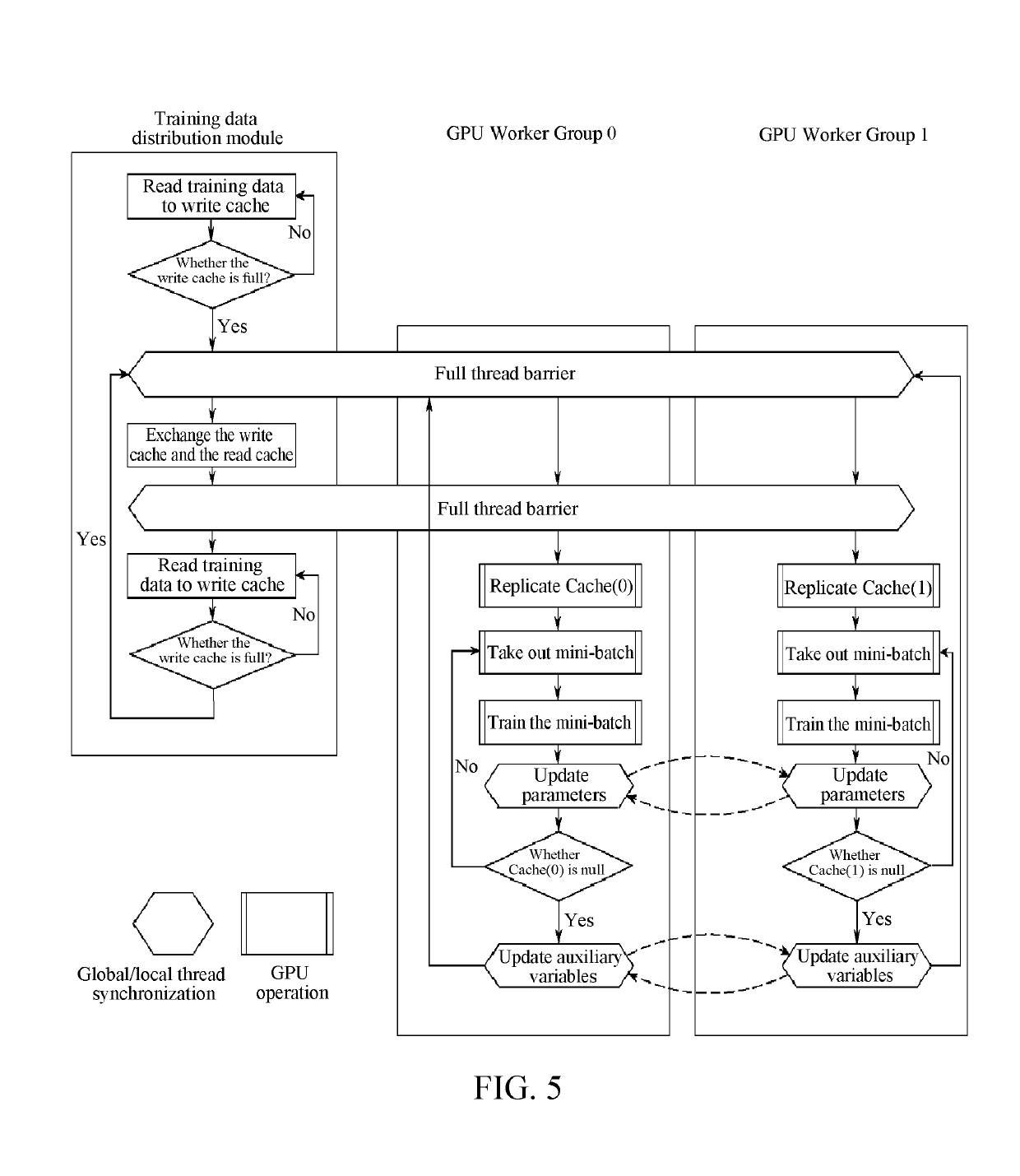

A parallel data processing method based on multiple graphic processing units (GPUs) is provided, including: creating, in a central processing unit (CPU), a plurality of worker threads for controlling a plurality of worker groups respectively, the worker groups including one or more GPUs; binding each worker thread to a corresponding GPU; loading a plurality of batches of training data from a nonvolatile memory to GPU video memories in the plurality of worker groups; and controlling the plurality of GPUs to perform data processing in parallel through the worker threads. The method can enhance efficiency of multi-GPU parallel data processing. In addition, a parallel data processing apparatus is further provided.

Owner:TENCENT TECH (SHENZHEN) CO LTD
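
As a rough illustration of the worker-thread arrangement described in this abstract (not code from the patent), the sketch below uses plain Python threads. The function name `run_data_parallel`, the round-robin batch distribution, and the `sum` placeholder for per-batch work are all assumptions made for the example; no real GPU is involved.

```python
import threading
from queue import Queue


def run_data_parallel(batches, num_workers):
    """Give each worker thread its own simulated device and a share of the batches."""
    queues = [Queue() for _ in range(num_workers)]
    # Assumption: round-robin distribution stands in for loading batches
    # into the video memory of each worker group.
    for i, batch in enumerate(batches):
        queues[i % num_workers].put(batch)

    results = [[] for _ in range(num_workers)]

    def worker(device_id):
        # The thread is "bound" to device_id: it reads only its own queue
        # and writes only its own result slot, so no locking is needed.
        q = queues[device_id]
        while not q.empty():
            batch = q.get()
            results[device_id].append(sum(batch))  # placeholder for GPU work

    threads = [threading.Thread(target=worker, args=(d,)) for d in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

Calling `run_data_parallel([[1, 2], [3, 4], [5, 6], [7, 8]], 2)` hands batches 0 and 2 to the first worker and batches 1 and 3 to the second.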

Model Parallel Processing Method and Apparatus Based on Multiple Graphic Processing Units

Active | US20160321776A1 | Improve Parallel Processing Efficiency | Improve data processing efficiency | Processor architectures/configuration | Program control | Video memory | Graphics

A parallel data processing method based on multiple graphic processing units (GPUs) is provided, including: creating, in a central processing unit (CPU), a plurality of worker threads for controlling a plurality of worker groups respectively, the worker groups including a plurality of GPUs; binding each worker thread to a corresponding GPU; loading one batch of training data from a nonvolatile memory to a GPU video memory corresponding to one worker group; transmitting, between a plurality of GPUs corresponding to one worker group, data required by data processing performed by the GPUs through peer to peer; and controlling the plurality of GPUs to perform data processing in parallel through the worker threads.

Owner:TENCENT TECH (SHENZHEN) CO LTD

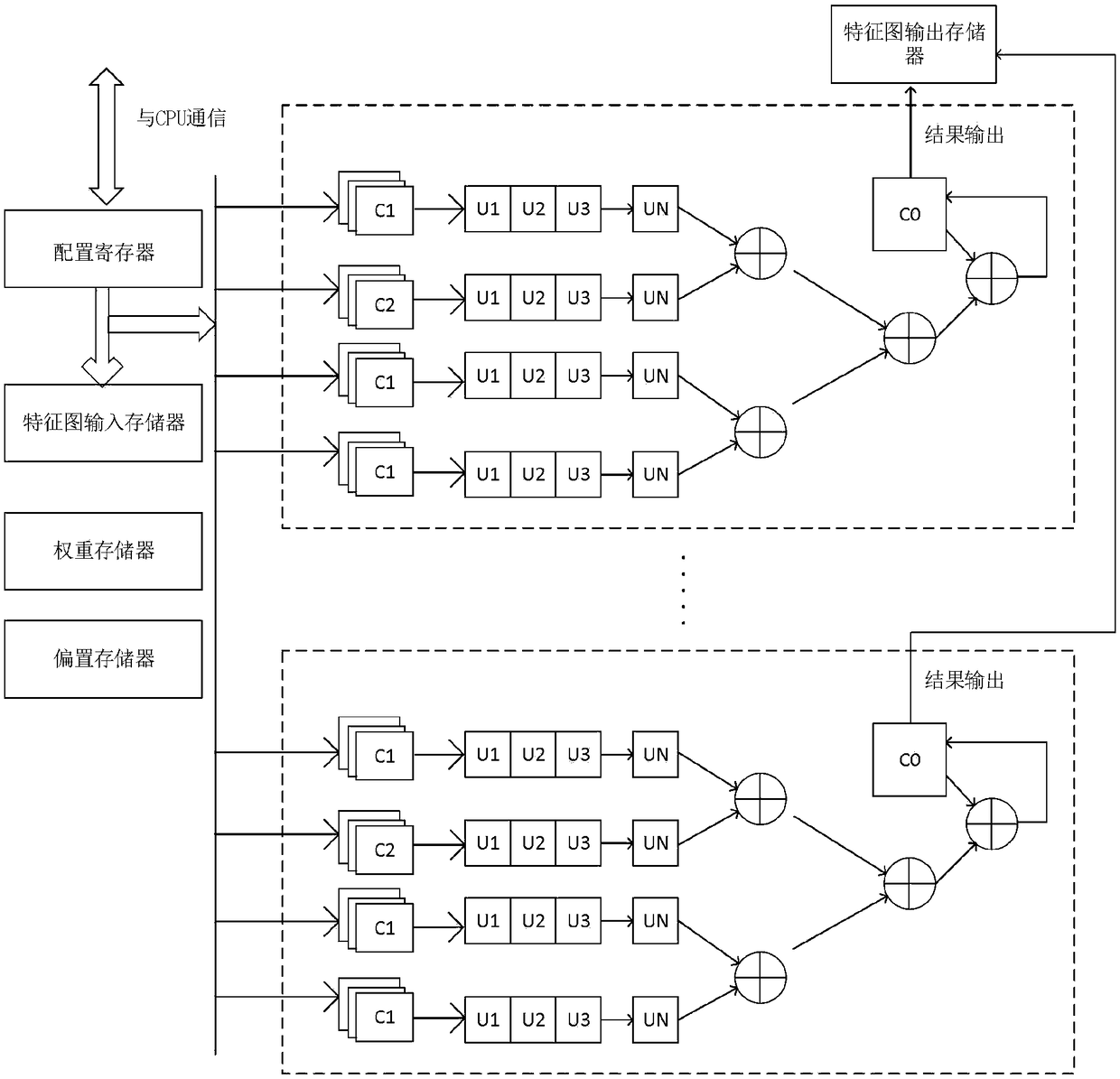

A convolution neural network accelerator based on PSoC

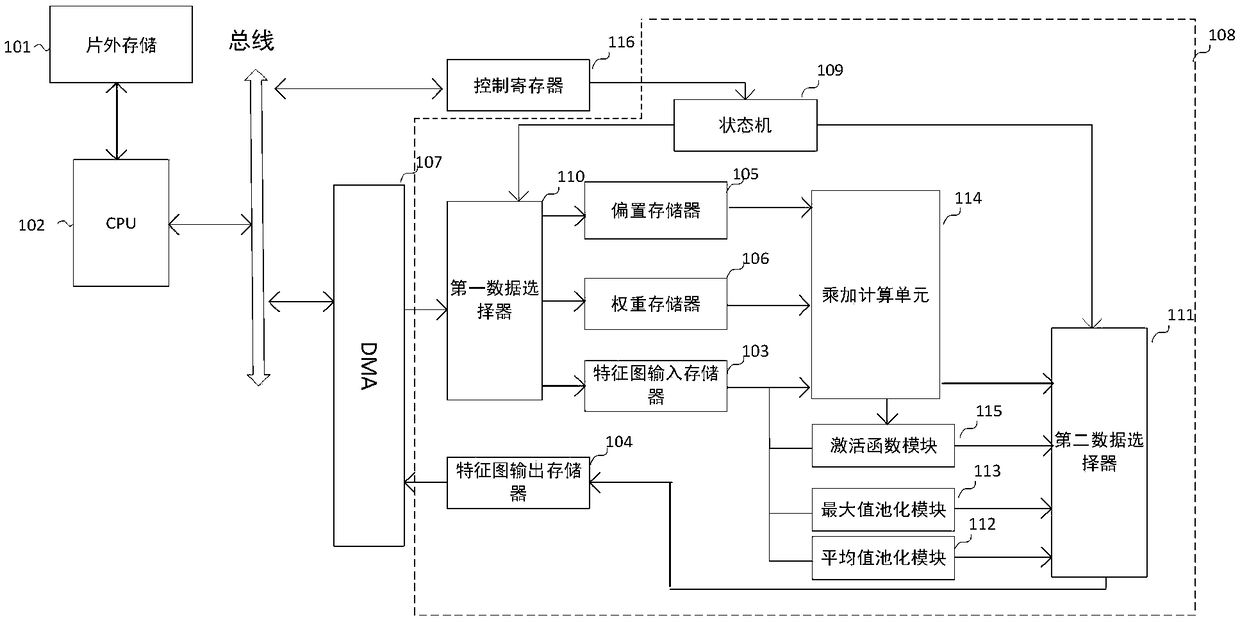

Active | CN109102065A | Solve the large amount of calculation | Improve Parallel Processing Efficiency | Neural architectures | Physical realisation | Neural network system | Activation function

This patent discloses a convolutional neural network accelerator based on a PSoC (Programmable System on Chip) device, including an off-chip memory, a CPU, a feature map input memory, a feature map output memory, a bias memory, a weight memory, a direct memory access (DMA) unit, and as many calculation units as there are neurons. Each calculation unit comprises a first-in first-out queue, a state machine, a data selector, an average pooling module, a maximum pooling module, a multiply-add calculation module and an activation function module. The calculations in the multiply-add module are executed in parallel, and the accelerator can be used for convolutional neural network systems of various architectures. The invention uses the programmable part of the PSoC device to implement the computation-heavy, highly parallel part of the convolutional neural network, and uses the CPU to implement the serial algorithm and the state control.

Owner:GUANGDONG UNIV OF TECH +1

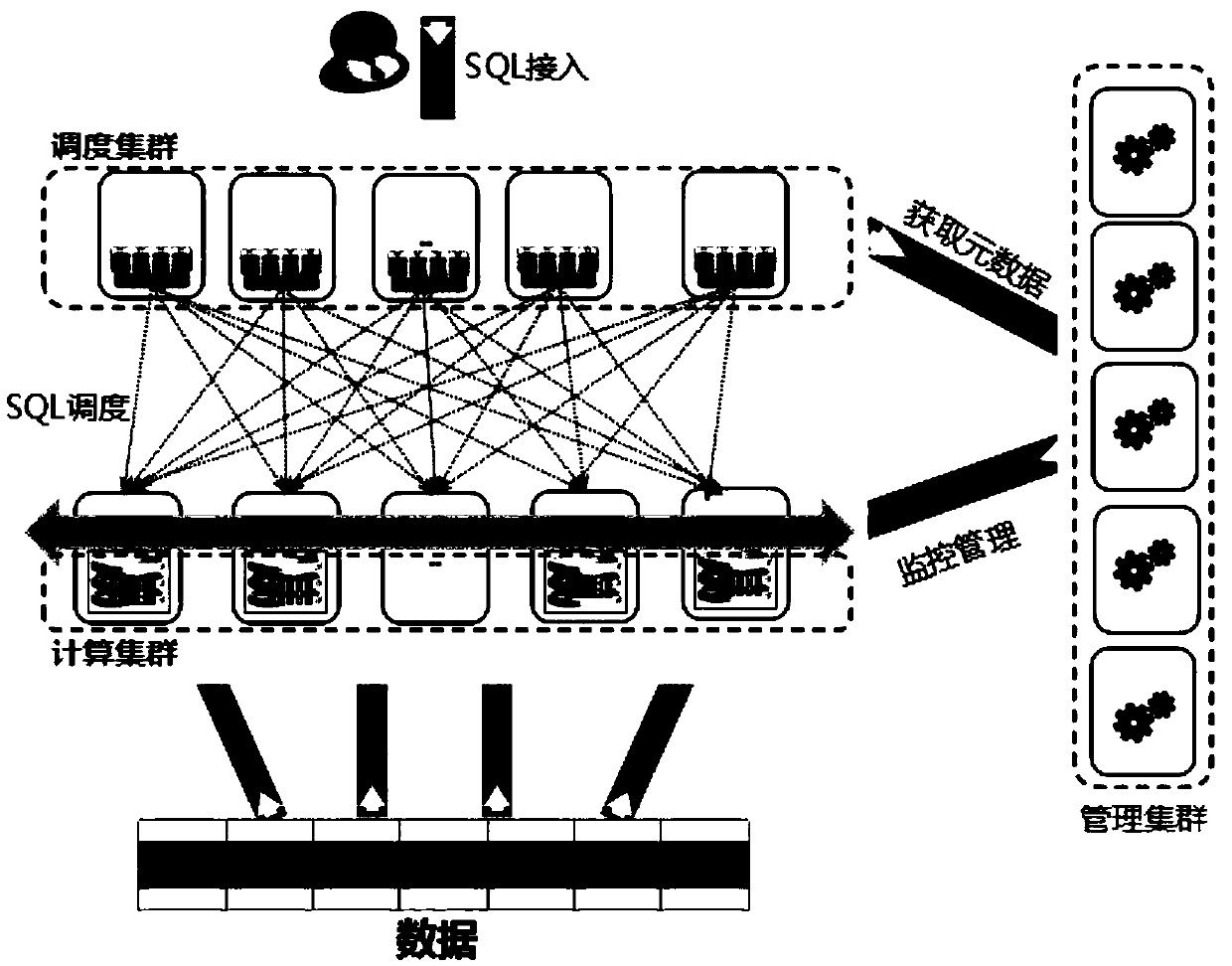

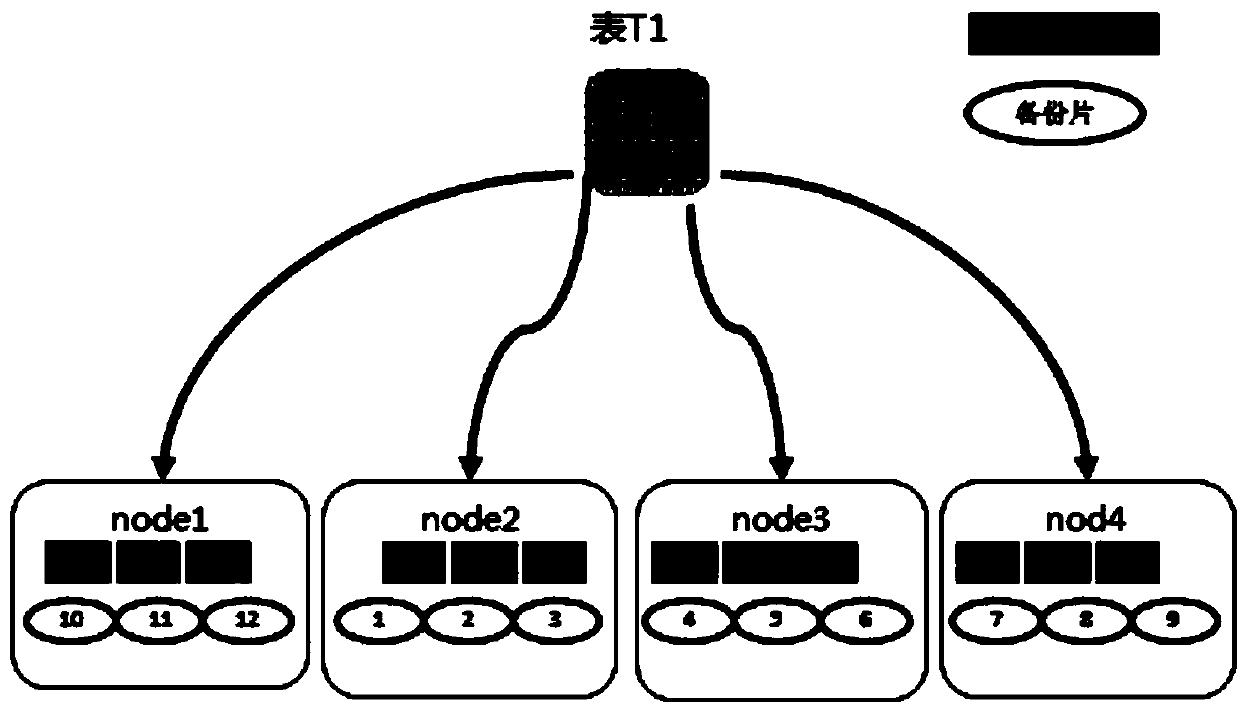

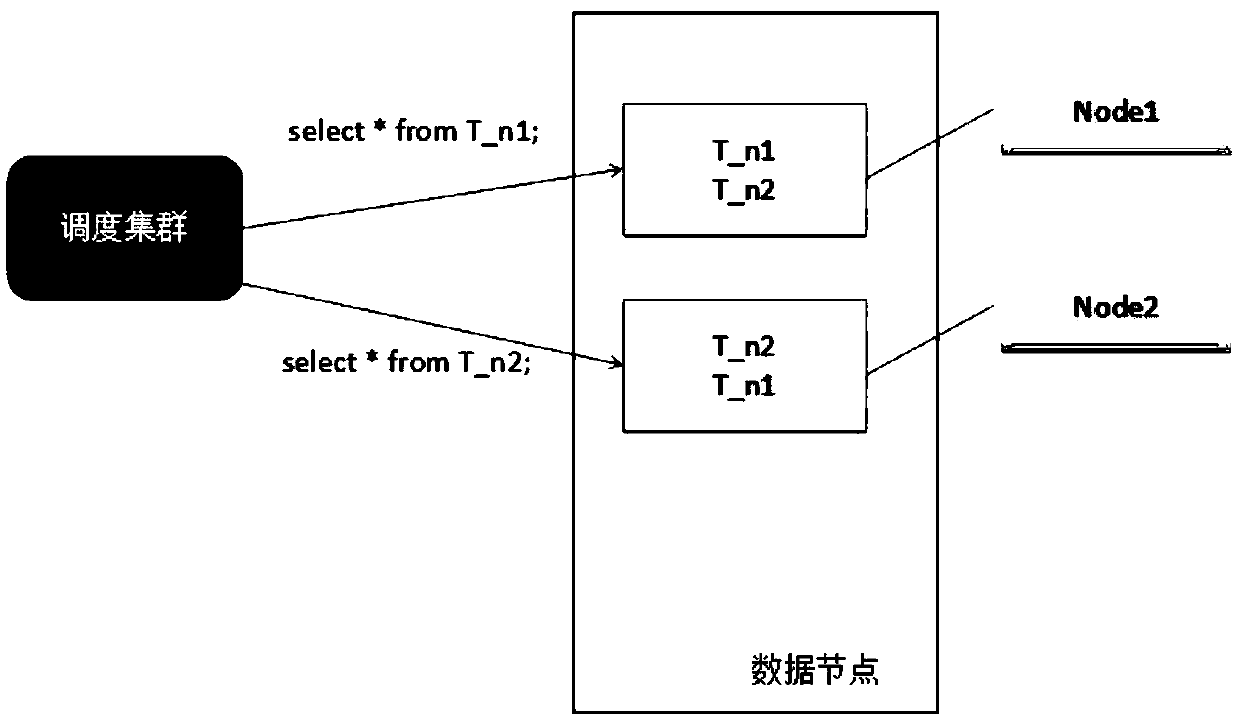

Distributed parallel processing database system and a data processing method thereof

Active | CN109656911A | Guaranteed automatic recovery | Solve the problem of low storage capacity | Database distribution/replication | Energy efficient computing | Data operations | Theoretical computer science

The invention discloses a distributed parallel processing database system and a data processing method thereof. A data fragment distribution rule, a redundant backup rule and a redo log mechanism provide reliable data support for data recovery. Depending on the data operation type, redo-log recovery or inter-node consistency recovery is carried out to recover data automatically. Distributed deployment is supported, automatic data recovery is achieved, and a stable storage and computing service is provided.

Owner:CHINA REALTIME DATABASE +2

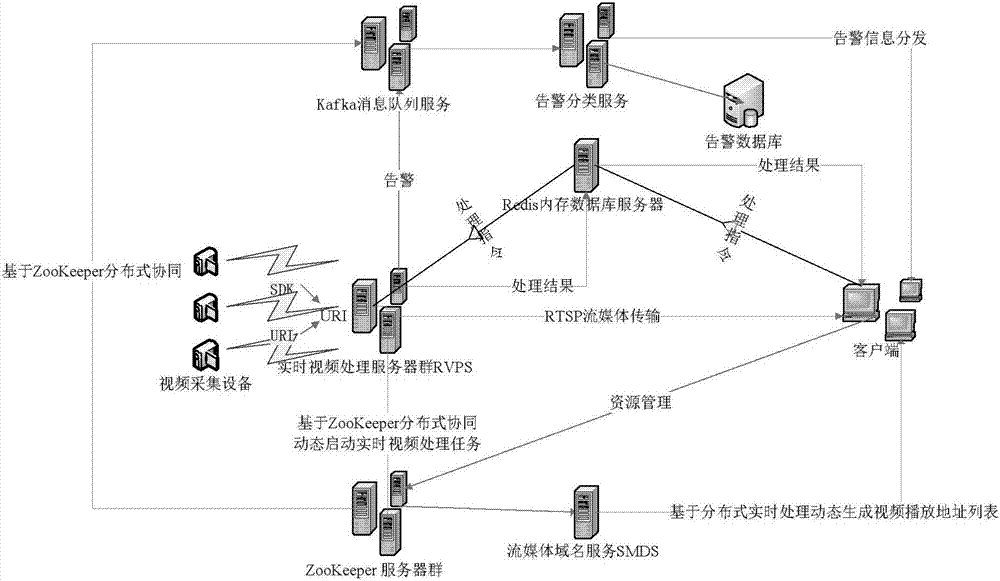

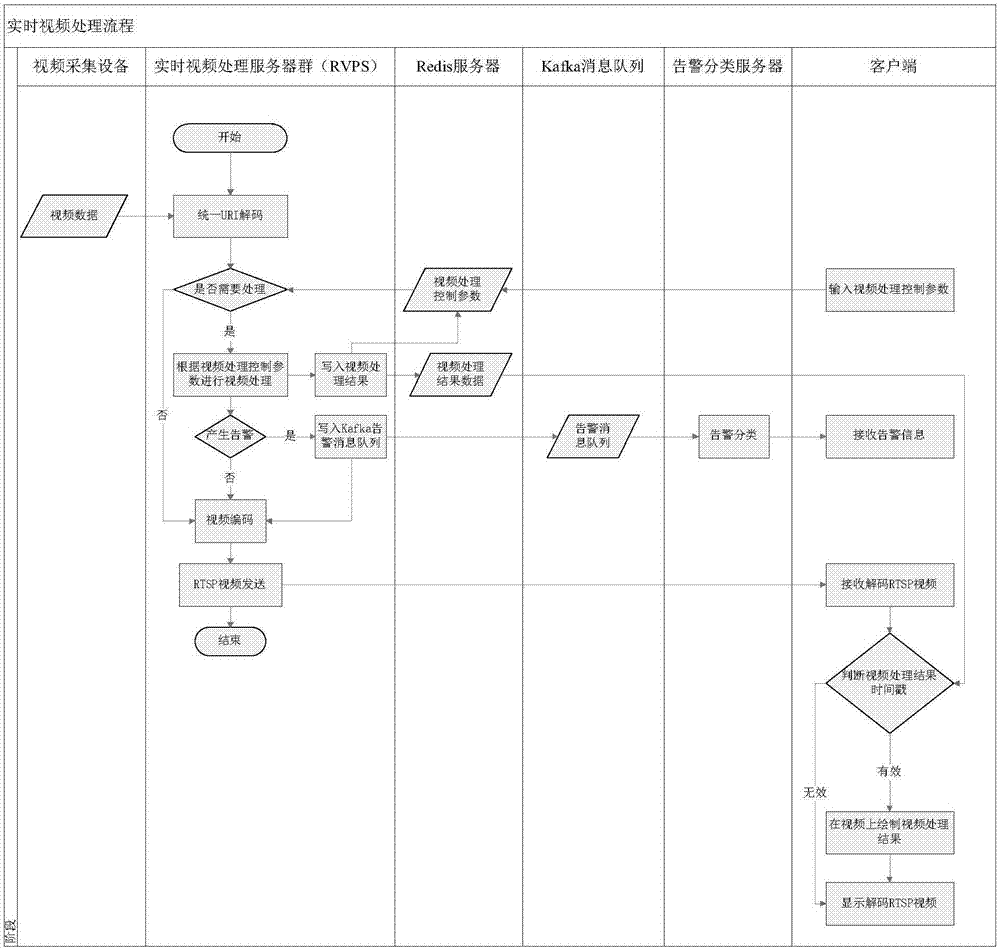

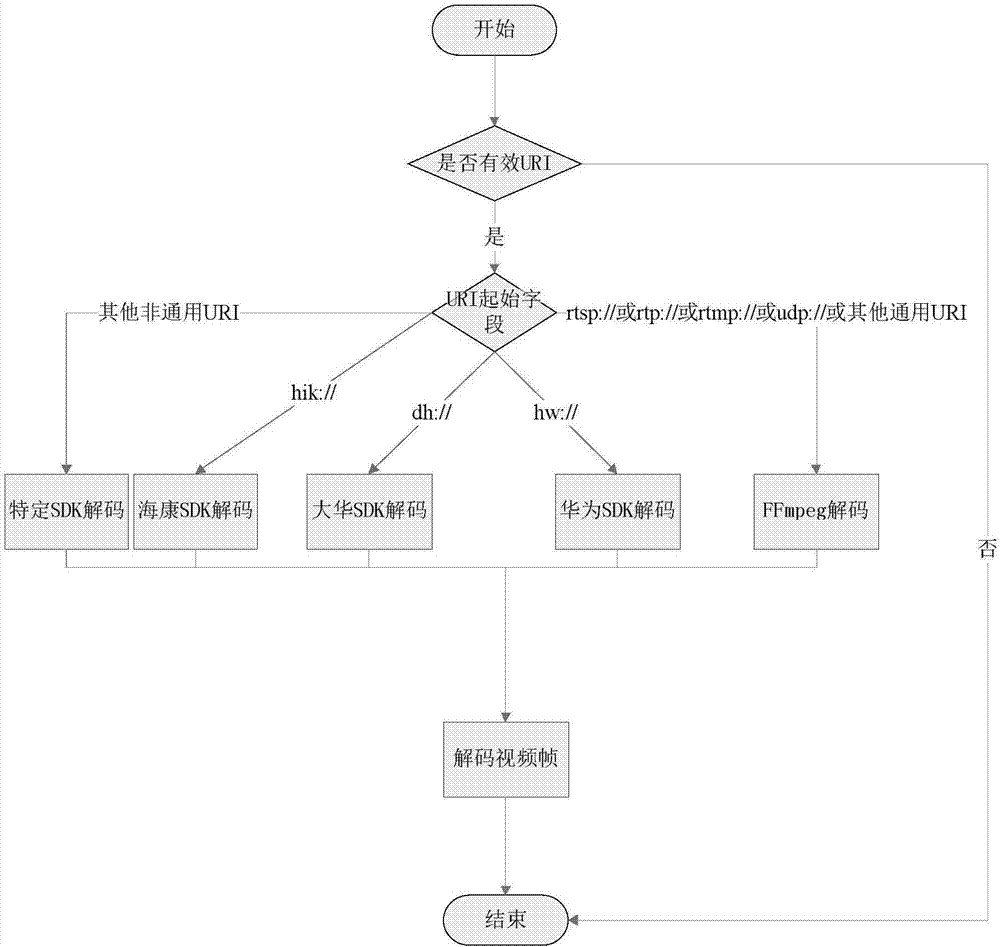

Distributed real-time video monitoring processing system based on ZooKeeper

Active | CN107104961A | Improve Parallel Processing Efficiency | Easy access | Closed circuit television systems | Transmission | Domain name | Processing Instruction

The invention discloses a distributed real-time video monitoring processing system based on ZooKeeper. The system comprises video acquisition equipment, a ZooKeeper server farm, a memory database server, a real-time video processing server farm (RVPS), a streaming media domain name service and a client. The ZooKeeper server farm provides high availability of the cluster, resource distribution and calculation, and load balancing. The client sends an operation instruction to the RVPS through the memory database server and can modify the instruction at any time. The RVPS accesses a real-time video stream from the video acquisition equipment and processes the real-time video by polling the processing instruction stored in the memory database server. The RVPS sends the original coded video to the client through the RTSP streaming media protocol and feeds the processing result back to the client through the memory database server, so that the video and the processing result are kept separate. Through the streaming media domain name service, the client accesses the RTSP video stream under an unchanged domain name even when the IP address changes.

Owner:THE 28TH RES INST OF CHINA ELECTRONICS TECH GROUP CORP

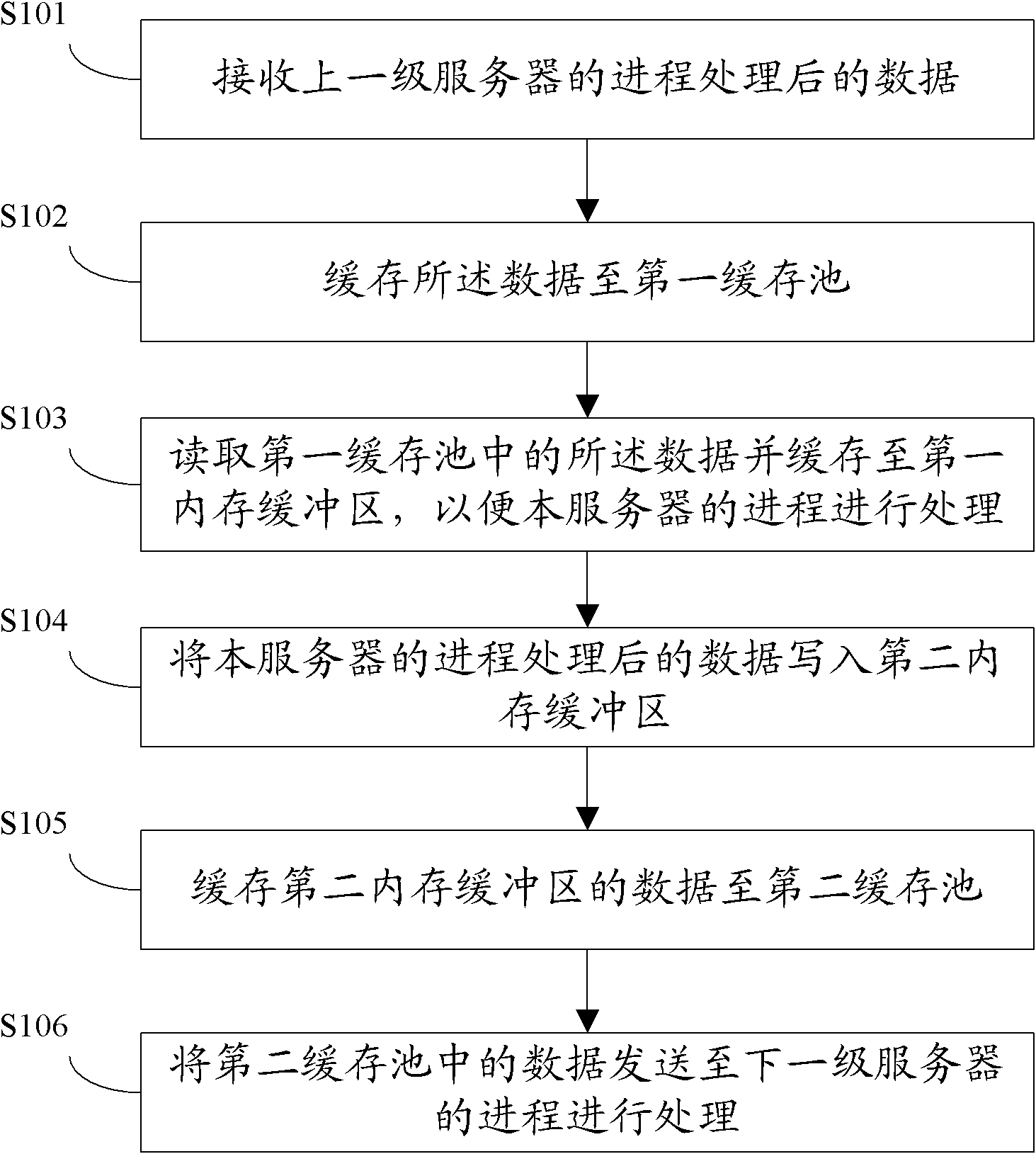

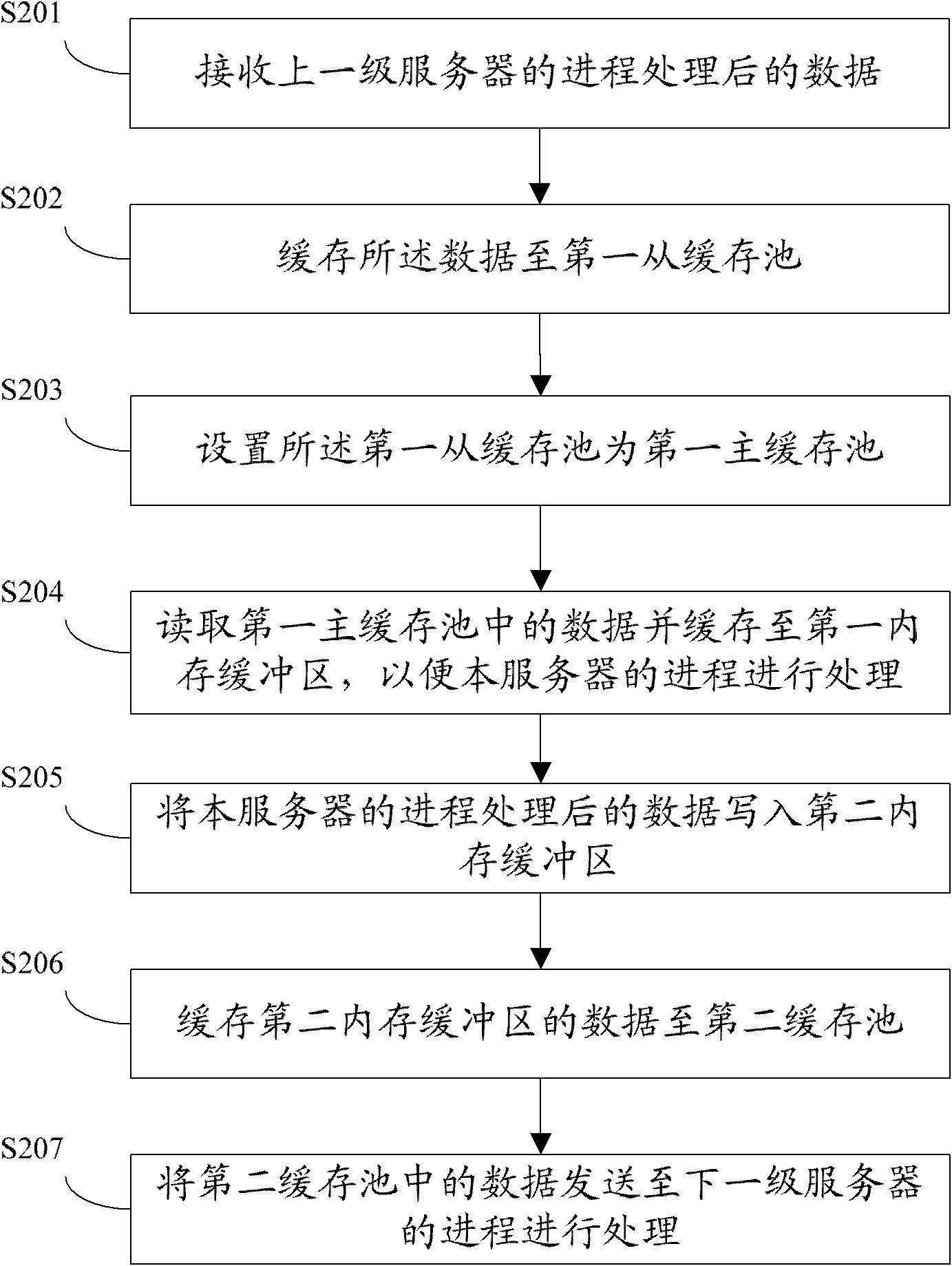

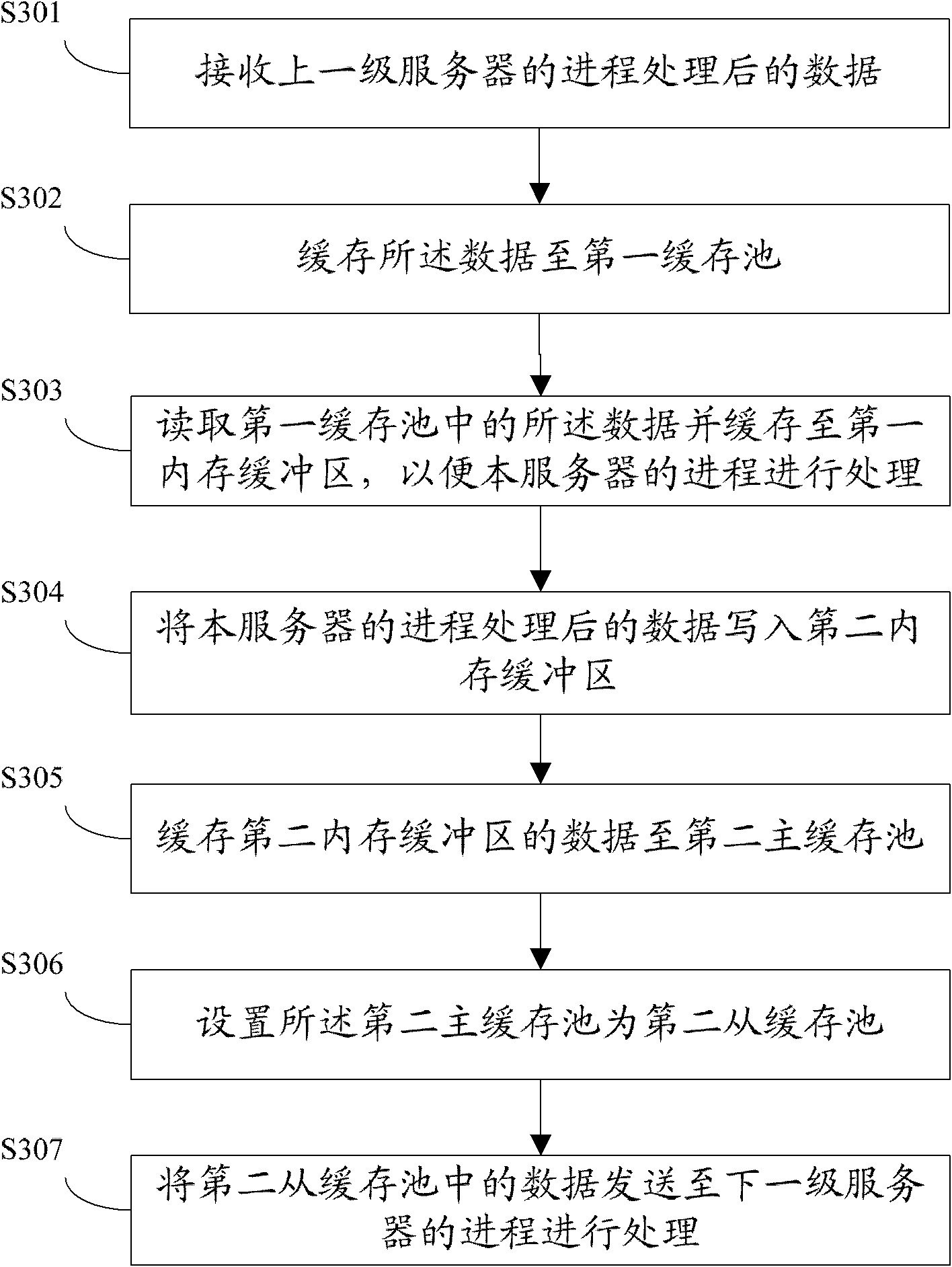

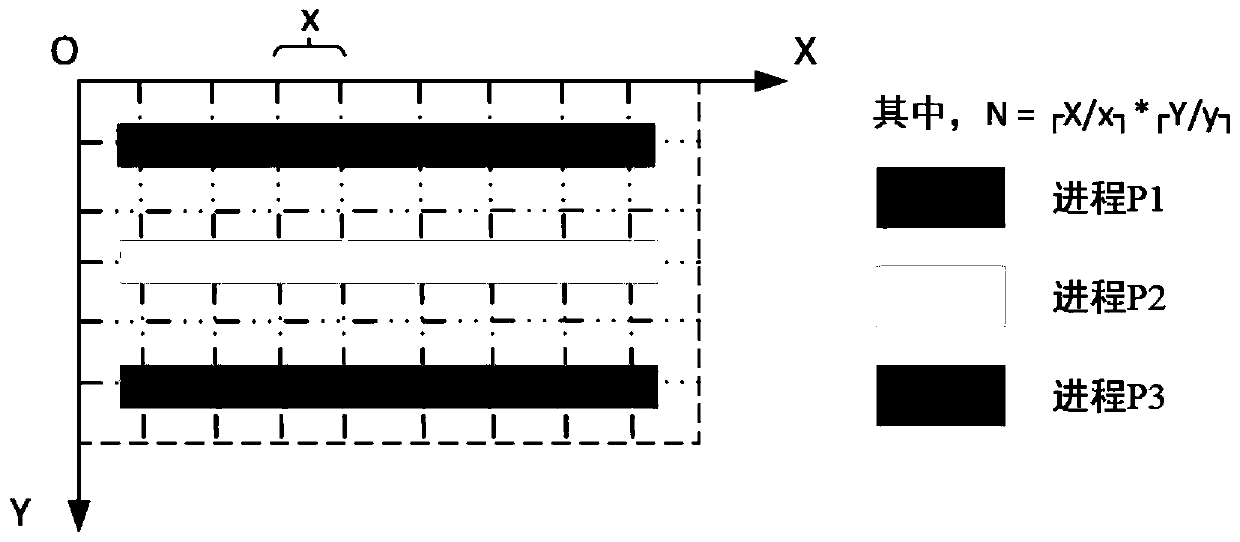

Pipe-type communication method and system for interprocess communication

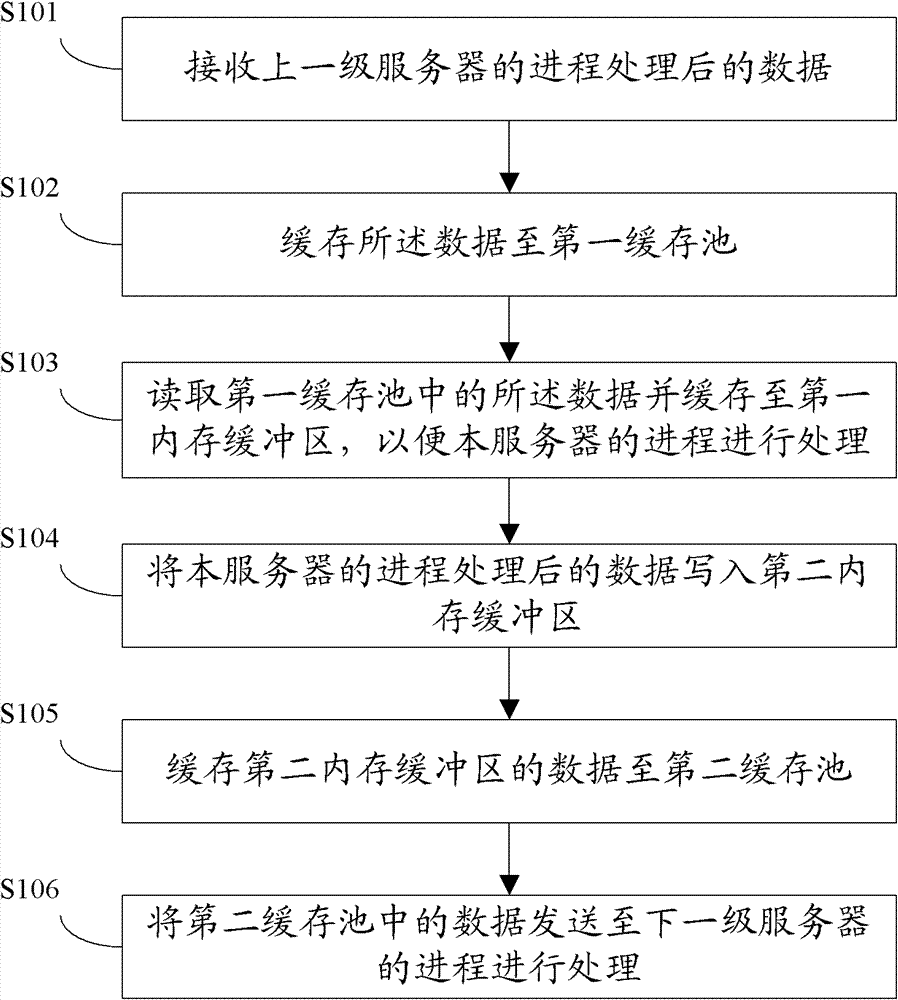

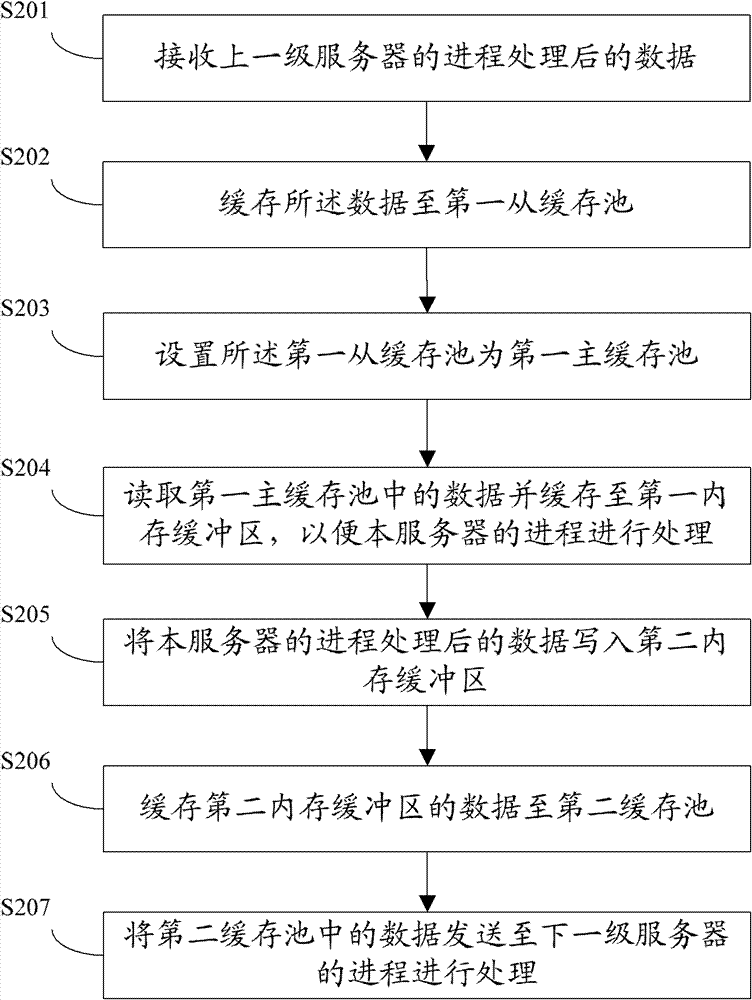

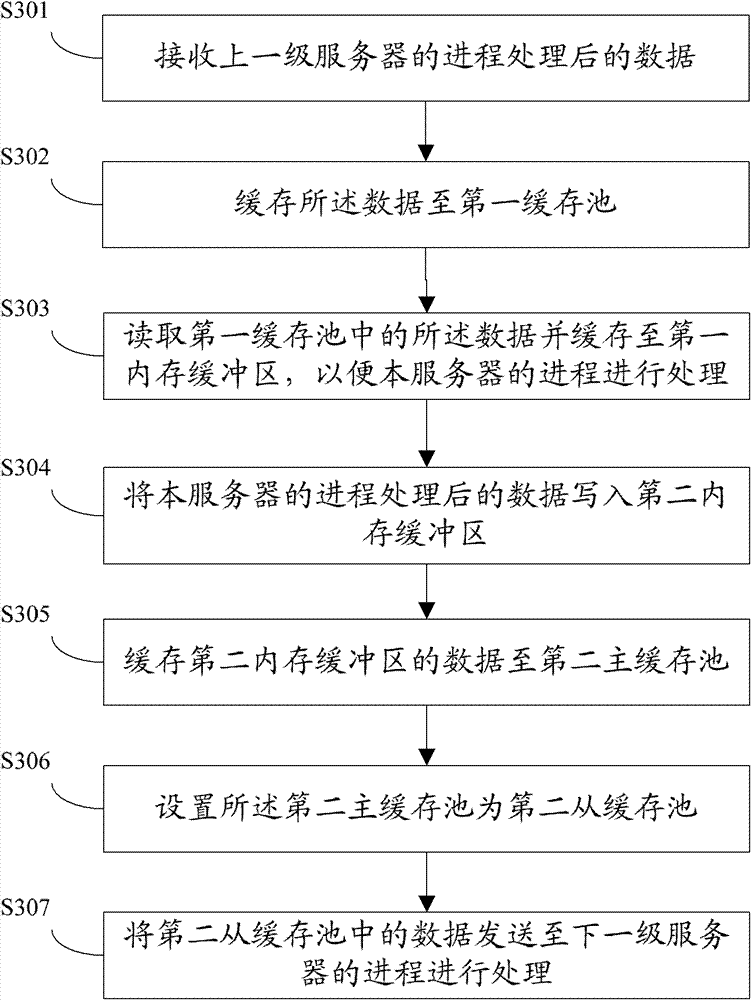

Inactive | CN102122256A | Improve reliability | Improve efficiency | Interprogram communication | Digital computer details | Mass storage | Communications system

The invention discloses a pipe-type communication method for interprocess communication, comprising the following steps: receiving the data processed by the server process at the previous level; caching the data in a first caching pool; reading the data from the first caching pool and caching it in a first memory buffer zone for the local server process to work on; writing the data processed by the server process into a second memory buffer zone; caching the data from the second memory buffer zone in a second caching pool; and sending the data in the second caching pool to the server process at the next level for processing, wherein the first caching pool and the second caching pool are storage spaces arranged on an external memory. The invention also discloses a pipe-type communication system for interprocess communication. According to the method and the system, data exchanged through pipe-type communication during pipelined concurrent processing is cached in the large-capacity storage space of the external memory, thereby improving the reliability and efficiency of data transmission during pipe-type communication.

Owner:NAT UNIV OF DEFENSE TECH

Model parallel processing method and apparatus based on multiple graphic processing units

Active | US9607355B2 | Improve Parallel Processing Efficiency | Improve data processing efficiency | Processor architectures/configuration | Program control | Video memory | Computer architecture

A parallel data processing method based on multiple graphic processing units (GPUs) is provided, including: creating, in a central processing unit (CPU), a plurality of worker threads for controlling a plurality of worker groups respectively, the worker groups including a plurality of GPUs; binding each worker thread to a corresponding GPU; loading one batch of training data from a nonvolatile memory to a GPU video memory corresponding to one worker group; transmitting, between a plurality of GPUs corresponding to one worker group, data required by data processing performed by the GPUs through peer to peer; and controlling the plurality of GPUs to perform data processing in parallel through the worker threads.

Owner:TENCENT TECH (SHENZHEN) CO LTD

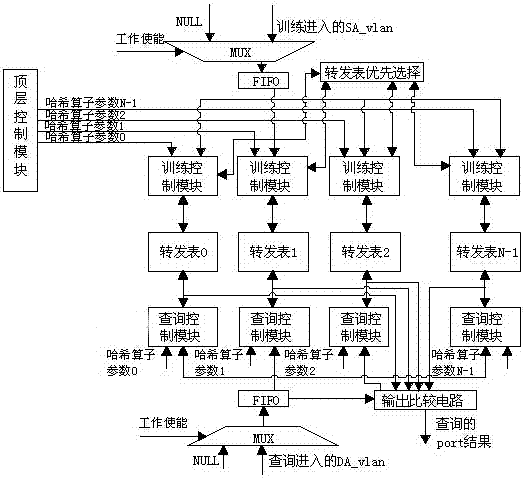

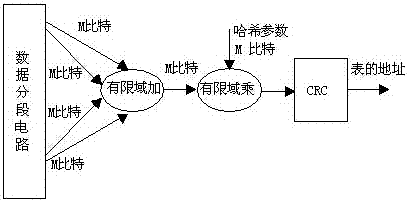

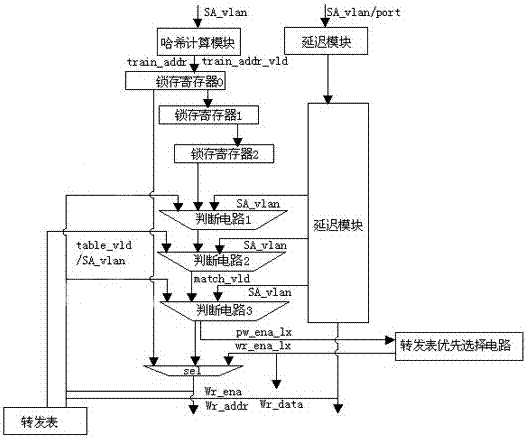

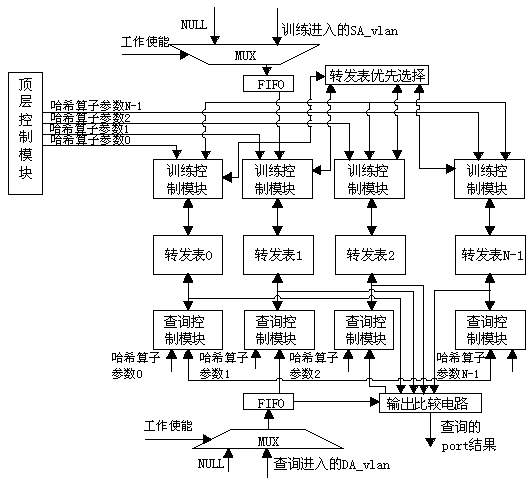

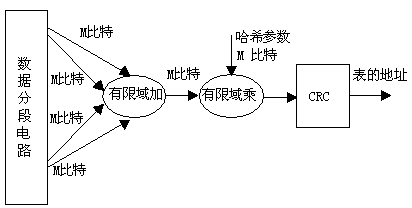

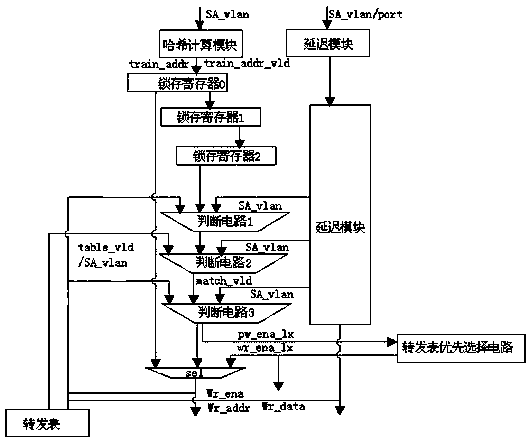

Maintenance method for distributed dynamic double-layer forwarding table

Active | CN107171960A | Realize the function of forwarding | Increase irrelevance | Networks interconnection | Forwarding plane | Real-time computing

The invention discloses a maintenance method for a distributed dynamic double-layer forwarding table. The method comprises the training, querying and aging processes of a forwarding table, wherein the training process of the forwarding table comprises the steps: S1), inputting a real random number from the outside; S2), receiving a source address and a virtual local area network SA_vlan; S3), carrying out the Hash calculation of the real random number and the virtual local area network SA_vlan, and calculating a to-be-written address; S4), transmitting a signal indication of an item corresponding to the address downwards; S5), carrying out no writing of the item if a to-be-written item is set to be invalid, or else writing the virtual local area network SA_vlan into the item in an sel circuit; S6), carrying out the Hash calculation of a target address DA_vlan and the real random number, obtaining the address of the item, and reading a to-be-queried forwarding port. According to the invention, a plurality of small forwarding tables employ the same Hash calculation rule and form different Hash mappings with different real random numbers. The resulting item load rate is far greater than that of one unified forwarding table, and the precious BRAM resources in an FPGA are greatly saved.

Owner:HUAXIN SAIMU CHENGDU TECH CO LTD
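
The idea of several small tables sharing one hash rule but using different random numbers can be sketched in software as follows. This is a minimal sketch, not the patented hardware design: the class name `MultiHashForwardingTable`, the use of `hashlib.blake2b` as the hash rule, the `secrets` module as the random source, and the "first free slot wins" write policy are all assumptions made for illustration, and aging is omitted.

```python
import hashlib
import secrets


class MultiHashForwardingTable:
    def __init__(self, num_tables=4, slots_per_table=64, salts=None):
        self.slots = slots_per_table
        # One random salt per small table (assumption: `secrets` stands in
        # for the external random number input).
        if salts is None:
            salts = [secrets.token_bytes(8) for _ in range(num_tables)]
        self.salts = salts
        self.tables = [[None] * slots_per_table for _ in self.salts]

    def _address(self, salt, mac, vlan):
        # Same hash rule for every table; only the salt differs.
        digest = hashlib.blake2b(
            f"{mac}/{vlan}".encode(), key=salt, digest_size=4
        ).digest()
        return int.from_bytes(digest, "big") % self.slots

    def learn(self, mac, vlan, port):
        """Training: record which port the (source address, VLAN) pair was seen on."""
        for salt, table in zip(self.salts, self.tables):
            addr = self._address(salt, mac, vlan)
            entry = table[addr]
            if entry is None or entry[0] == (mac, vlan):
                table[addr] = ((mac, vlan), port)
                return True
        return False  # every candidate slot is held by another address

    def lookup(self, mac, vlan):
        """Querying: return the forwarding port for (destination address, VLAN)."""
        for salt, table in zip(self.salts, self.tables):
            entry = table[self._address(salt, mac, vlan)]
            if entry is not None and entry[0] == (mac, vlan):
                return entry[1]
        return None
```

Because each table maps the same key to a different slot, a key that collides in one table usually finds a free slot in another, which is the intuition behind the higher load rate the abstract claims.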

Data parallel processing method and apparatus based on multiple graphic processing units

Active | US10282809B2 | Improve Parallel Processing Efficiency | Improve data processing efficiency | Resource allocation | Program synchronisation | Video memory | Video storage

A parallel data processing method based on multiple graphic processing units (GPUs) is provided, including: creating, in a central processing unit (CPU), a plurality of worker threads for controlling a plurality of worker groups respectively, the worker groups including one or more GPUs; binding each worker thread to a corresponding GPU; loading a plurality of batches of training data from a nonvolatile memory to GPU video memories in the plurality of worker groups; and controlling the plurality of GPUs to perform data processing in parallel through the worker threads. The method can enhance efficiency of multi-GPU parallel data processing. In addition, a parallel data processing apparatus is further provided.

Owner:TENCENT TECH (SHENZHEN) CO LTD

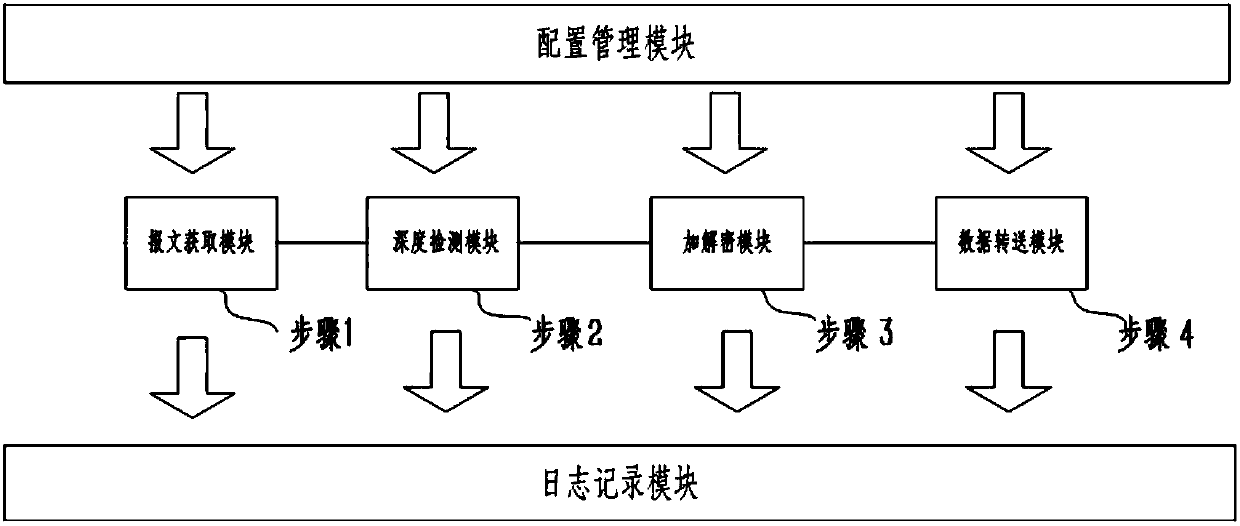

Transparent encryption method and apparatus of network application layer

The invention provides a transparent encryption method and apparatus for the network application layer. Data at the network application layer is encrypted during transmission through the cooperation of a message obtaining module, a depth detection module, an encryption/decryption module and a data transfer module, and transparent encryption is achieved by the processing mode specific to the method. The method is efficient and reliable: the encryption and decryption granularity is small, the data size is small, and the parallel processing efficiency is higher. Encryption and decryption do not change the network message structure of the original data message, so data transmission is not affected, the possibility of message errors is reduced, and the impact of encryption and decryption on the current network environment is reduced. Rules can be freely configured according to different service requirements, so the method and apparatus are flexible and applicable to different network environments.

Owner:宝牧科技(天津)有限公司

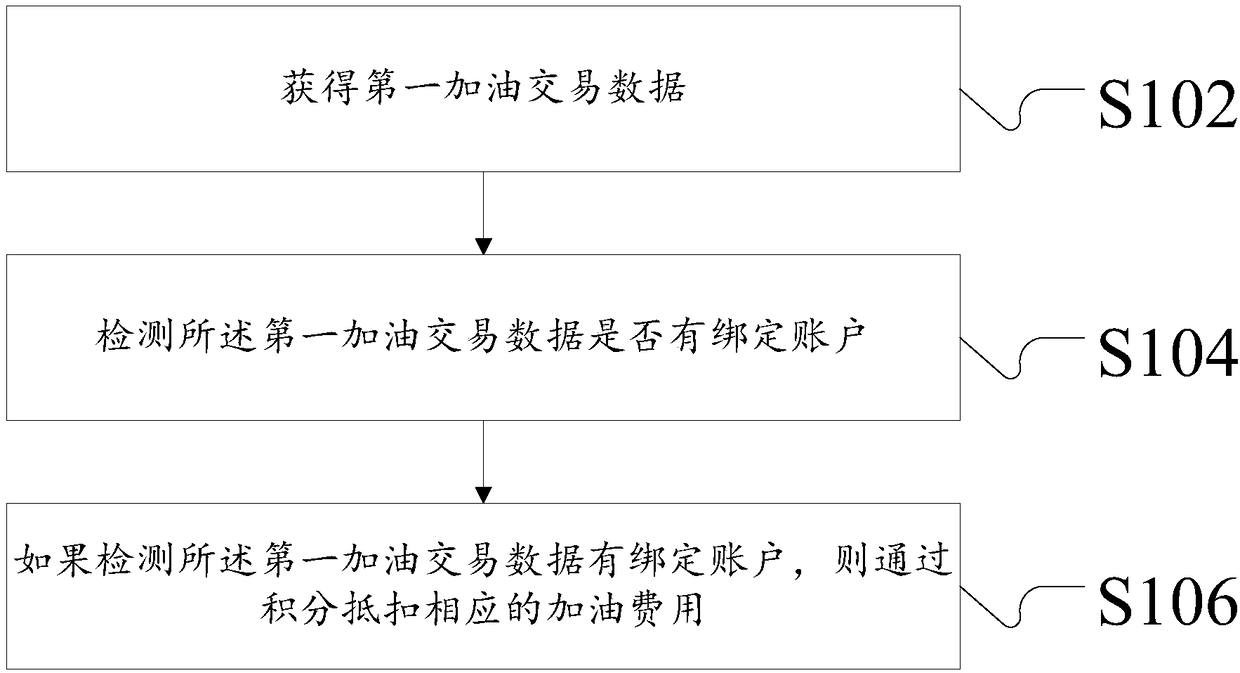

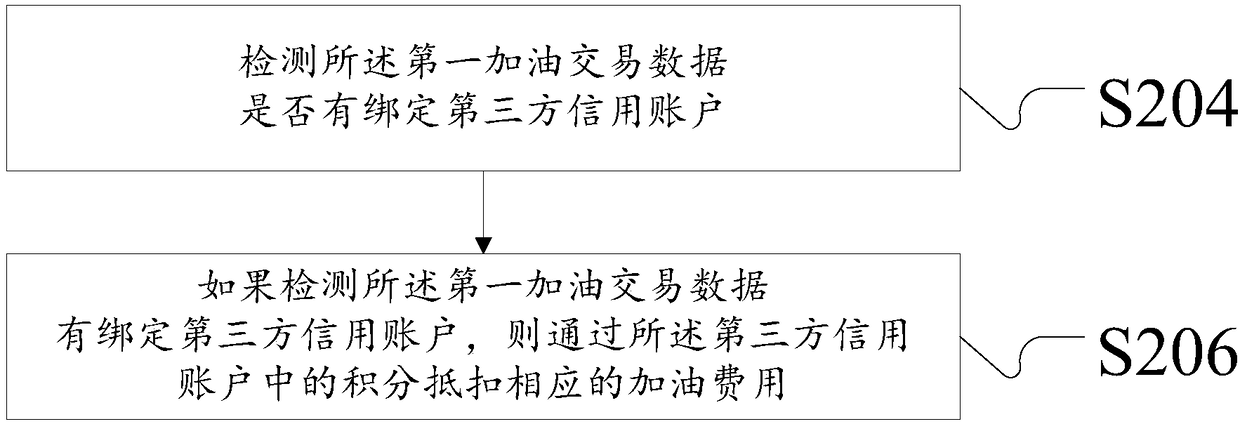

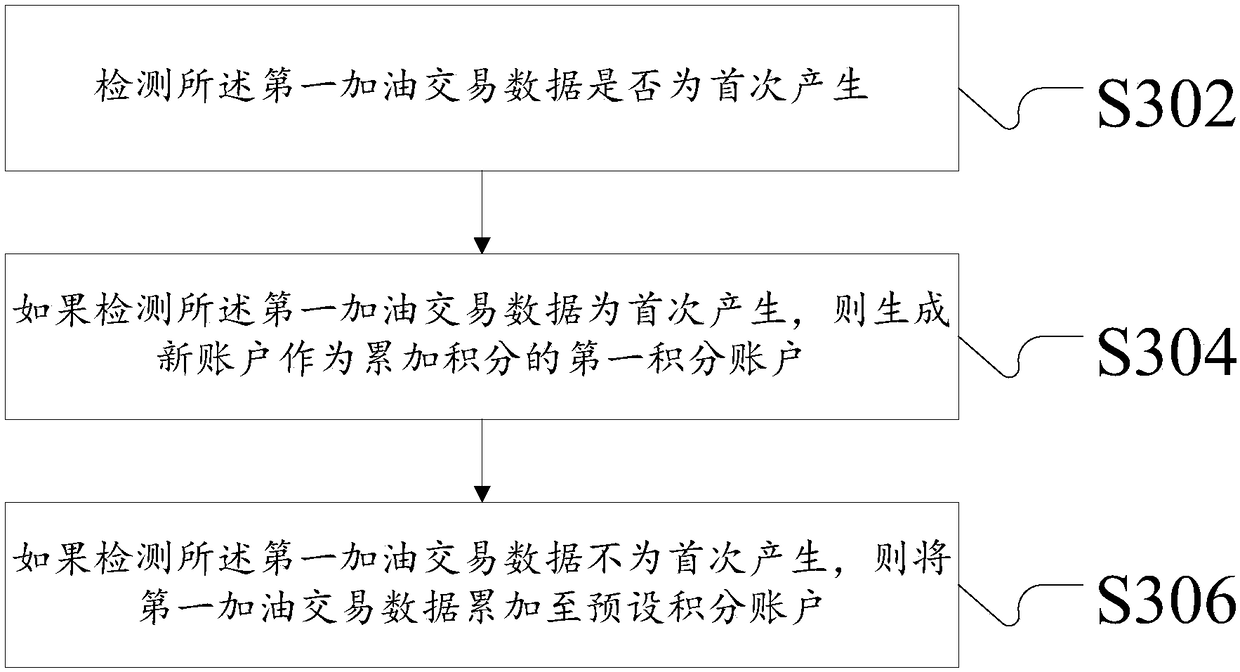

Payment method and device used for refueling

Inactive | CN108320151A | Convenient parallel payment | Solve technical problems that are more inconvenient to pay | Payment architecture | Marketing | Payment | Transaction data

The invention discloses a payment method and device used for refueling. The method comprises the following steps: acquiring first refueling transaction data; detecting whether the first refueling transaction data is bound to an account; and, if it is, using points to deduct the corresponding refueling cost. The invention solves the technical problem that payment is inconvenient during refueling, so that payment and points accumulation can be completed conveniently and in parallel during the refueling process.

Owner:北京微油科技服务有限公司 +1

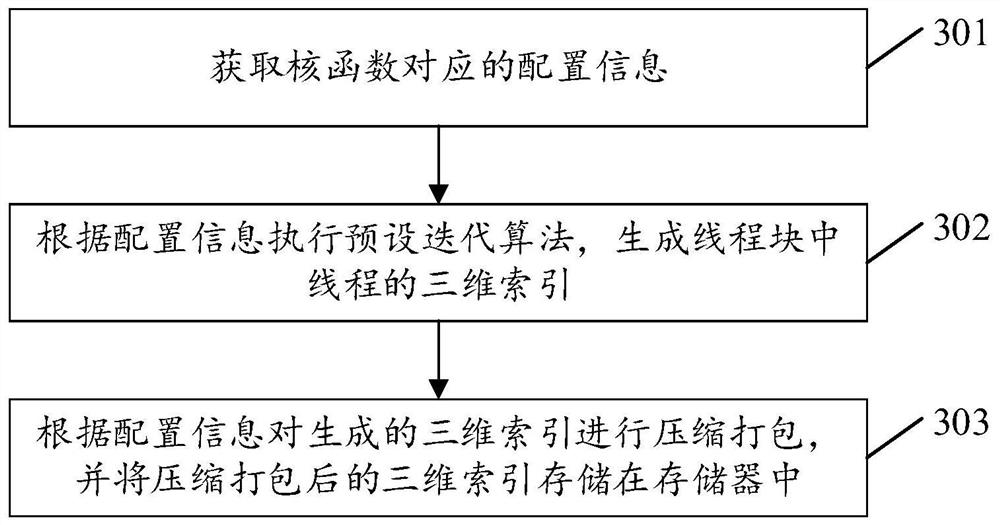

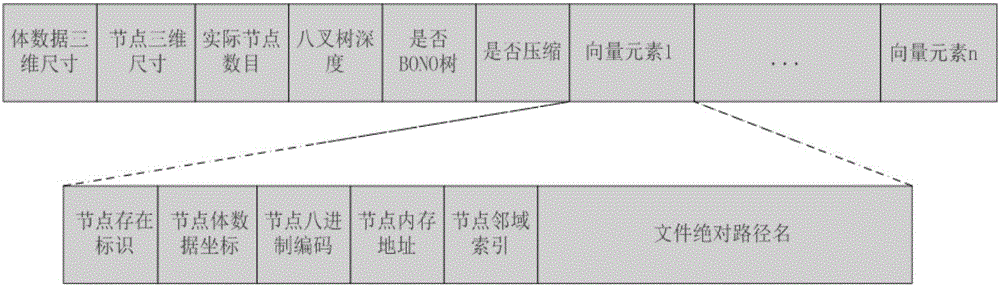

CUDA multi-thread processing method and system and related equipment

Active | CN114020333A | Improve execution efficiency | Improve Parallel Processing Efficiency | Concurrent instruction execution | Processor architectures/configuration | Software engineering | Multithreading

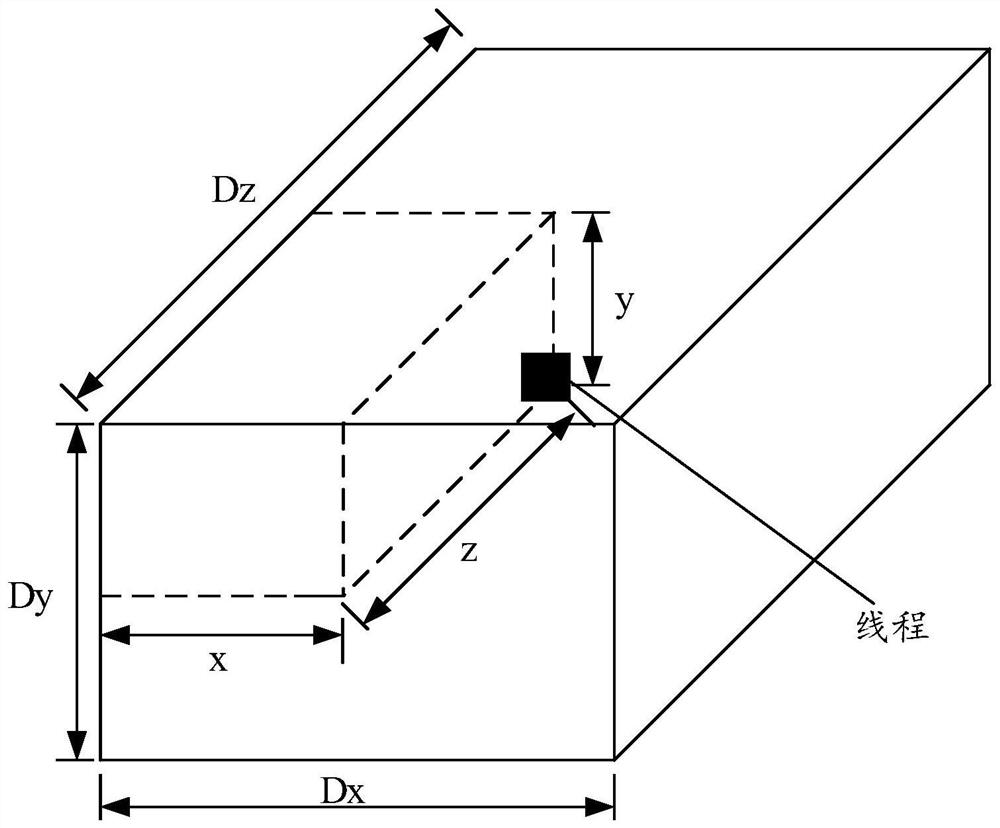

The invention provides a CUDA (Compute Unified Device Architecture) multi-thread processing method and system and related equipment, and the method comprises the following steps: obtaining configuration information corresponding to a kernel function; under the condition that the target historical configuration information matched with the configuration information does not exist in the historical configuration information, generating a three-dimensional index of the thread according to the configuration information; compressing and packaging the generated three-dimensional index according to the configuration information, and storing the compressed and packaged three-dimensional index in a memory; under the condition that the target historical configuration information exists in the historical configuration information, obtaining a historical three-dimensional index corresponding to the target historical configuration information; and compressing and packaging the historical three-dimensional index according to the target historical configuration information, and storing the compressed and packaged historical three-dimensional index in a memory. According to the embodiment of the invention, the multi-thread parallel processing efficiency in the CUDA can be improved.

Owner:AZURENGINE TECH ZHUHAI INC
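
The reuse of previously generated thread indices can be illustrated with a small cache keyed by the launch configuration. This is only a sketch under stated assumptions: the names `thread_indices` and `pack_indices` are hypothetical, the configuration is reduced to a block dimension triple, and packing three 10-bit components into one integer is a stand-in for the "compress and package" step, whose actual format the abstract does not specify.

```python
from itertools import product

_index_cache = {}  # block dimensions -> list of 3-D thread indices


def thread_indices(block_dim):
    """Return the (x, y, z) index of every thread in a block, reusing earlier results."""
    if block_dim not in _index_cache:
        bx, by, bz = block_dim
        # x varies fastest, matching the order in which CUDA linearizes threads.
        _index_cache[block_dim] = [
            (x, y, z) for z, y, x in product(range(bz), range(by), range(bx))
        ]
    return _index_cache[block_dim]


def pack_indices(indices, bits=10):
    """Pack each (x, y, z) into one integer, `bits` bits per component (assumed format)."""
    return [x | (y << bits) | (z << (2 * bits)) for x, y, z in indices]
```

A second call with the same `block_dim` returns the cached list instead of regenerating it, which mirrors the "matching historical configuration" branch of the abstract.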

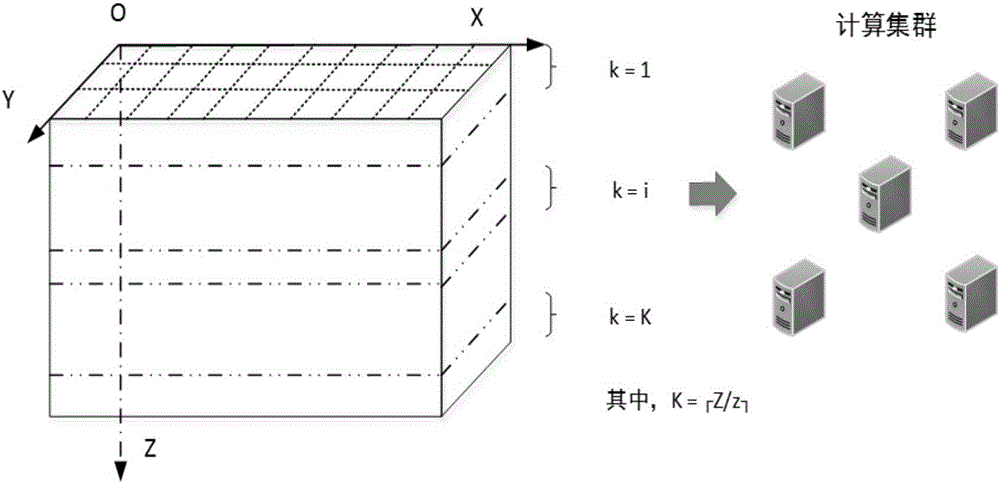

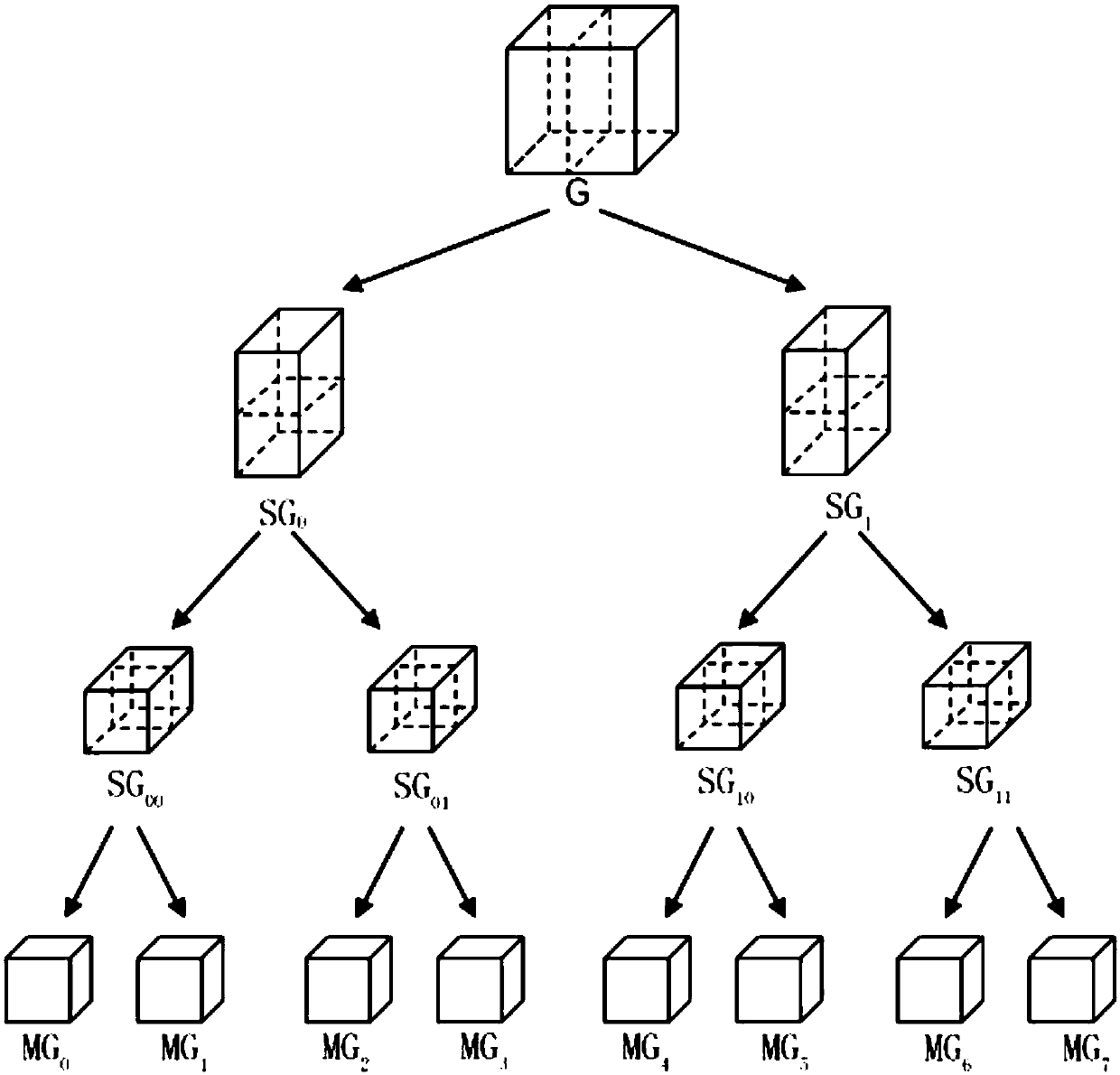

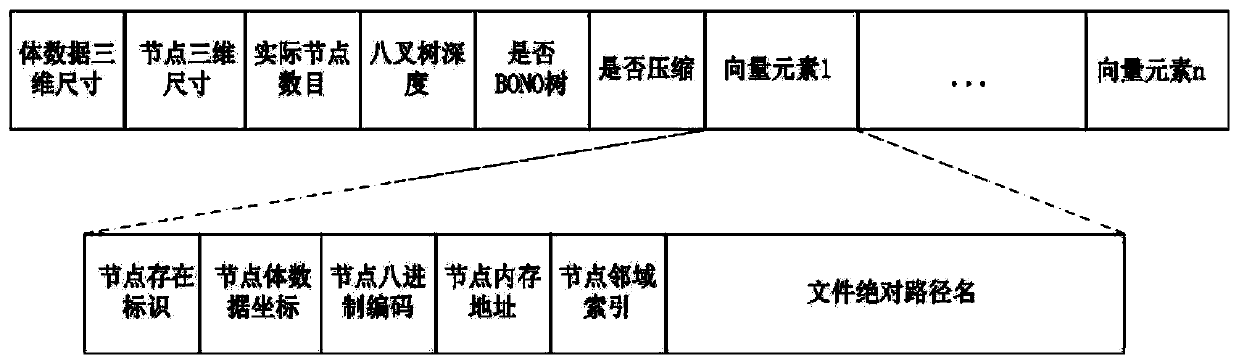

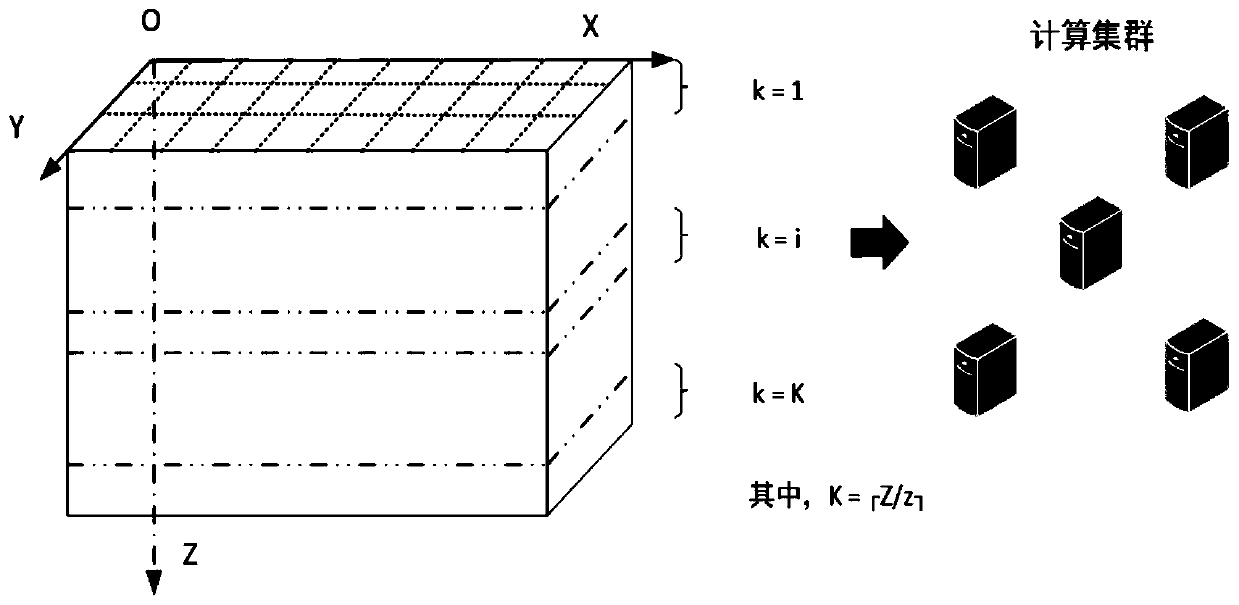

Octree parallel construction method for visual reconstruction of CT slice data

Active | CN106846457A | Reduce duplicate data I/O overhead | Improve data reuse effect | Image memory management | Processor architectures/configuration | NODAL | Parallel programming model

The invention relates to the fields of parallel computing applications and high-performance scientific computing, in particular to an octree parallel construction method for visual reconstruction of CT slice data with a TB-level data processing ability. Following the branch-on-need octree (BONO) scheme of construction on demand, the octree is built in parallel according to the actual three-dimensional size of the volume data, based on an MPI+OpenMP parallel programming model and exploiting characteristics such as the mesh partitioning of the original volume data and the absence of dependencies between octree node data. On the one hand, waste of computation and storage resources and I/O overhead during construction are reduced; on the other hand, parallel computation enables rapid construction of the octree data structure for TB-level CT slice data under different resolution requirements. The method has a good parallel speedup ratio and good parallel efficiency.

Owner:国家超级计算天津中心

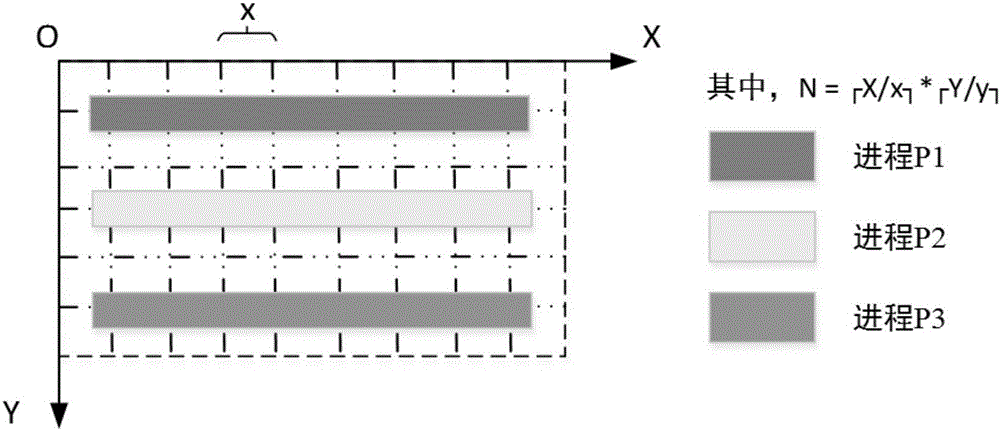

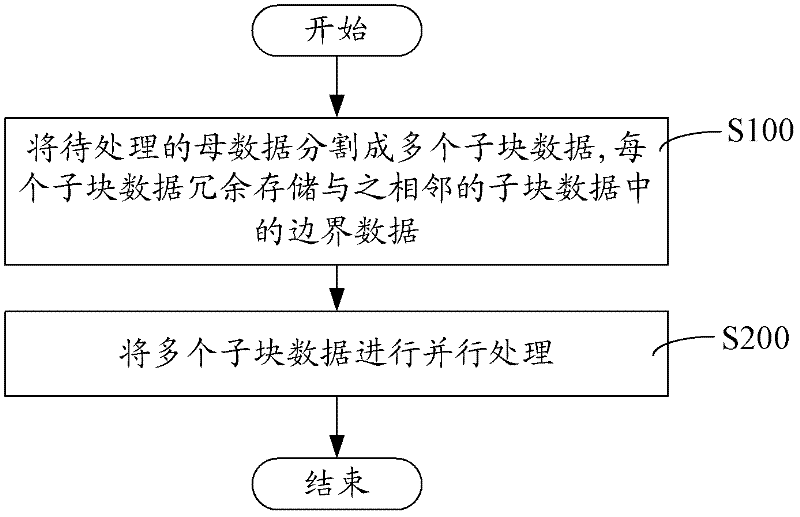

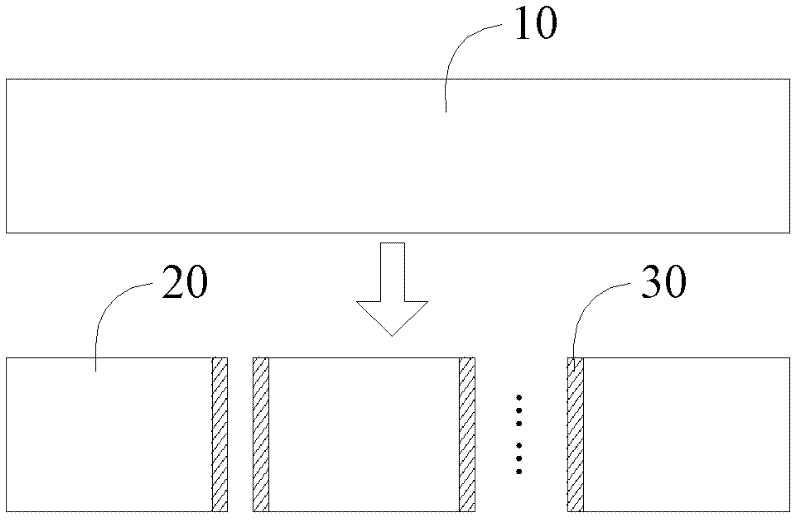

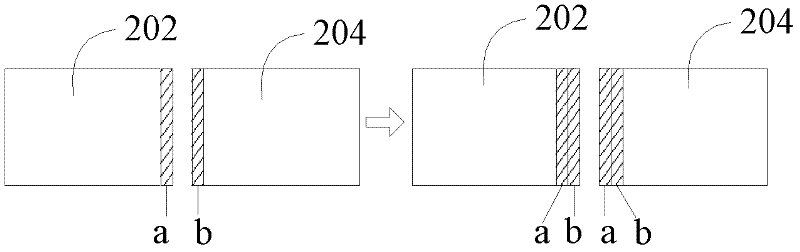

Data area overlapped boundary data zero communication parallel computing method and system

Inactive | CN102393851A | Reduce waiting time | Improve Parallel Processing Efficiency | Data switching networks | Special data processing applications | Data exchange | Parallel processing

The invention discloses a data area overlapped boundary data zero communication parallel computing method, which comprises the following steps of: partitioning mother data to be processed into a plurality of sub blocks of data, wherein each sub block of data stores boundary data of the adjacent sub block of data in a redundant way; and carrying out parallel processing to the sub blocks of data. The invention also discloses a data area overlapped boundary data zero communication parallel computing system, which comprises a data partitioning module which is used for carrying out redundant partitioning to the mother data, and a parallel processing unit which is used for carrying out parallel processing to the sub blocks of data, and also comprises a data partitioning module which is used for carrying out non-redundant partitioning to the mother data, a data exchange module which is used for exchanging the boundary data of the adjacent sub blocks of data and carrying out redundant storage to the boundary data, and a parallel processing unit which is used for carrying out parallel processing to the sub blocks of data. According to the method and the system, disclosed by the invention, the waiting time during the data transmission can be saved, and the efficiency of the parallel processing can be improved.

Owner:SHENZHEN INST OF ADVANCED TECH CHINESE ACAD OF SCI +1
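
Redundant storage of neighbouring boundary data can be shown on a one-dimensional array. The sketch below is an illustration under assumptions, not the patented system: the function names, the 3-point sum used as the per-block computation, and the thread pool are all chosen for the example. Each block carries a copy of one element from each neighbour, so blocks never exchange data while they run.

```python
from concurrent.futures import ThreadPoolExecutor


def partition_with_halo(data, num_blocks, halo):
    """Split data into blocks; each block also stores `halo` neighbouring elements per side."""
    n = len(data)
    size = -(-n // num_blocks)  # ceiling division
    blocks = []
    for start in range(0, n, size):
        end = min(start + size, n)
        lo, hi = max(start - halo, 0), min(end + halo, n)
        # (values incl. halo, offset of the owned part, number of owned elements)
        blocks.append((data[lo:hi], start - lo, end - start))
    return blocks


def smooth_block(block):
    """3-point sum over the owned elements, reading halo copies instead of communicating."""
    values, offset, length = block
    out = []
    for i in range(offset, offset + length):
        left = values[i - 1] if i > 0 else 0
        right = values[i + 1] if i + 1 < len(values) else 0
        out.append(left + values[i] + right)
    return out


def parallel_smooth(data, num_blocks=4):
    blocks = partition_with_halo(data, num_blocks, halo=1)
    with ThreadPoolExecutor() as pool:
        parts = pool.map(smooth_block, blocks)  # map preserves block order
    return [v for part in parts for v in part]
```

The cost of this design is the extra memory for the duplicated boundary elements; the benefit is that no block waits on a neighbour during the computation.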

Multi-parallel strategy convolutional network accelerator based on FPGA

Pending | CN112070210A | Reduce usage | Solve the problem of computational redundancy | Neural architectures | Physical realisation | Computational science | Random access memory

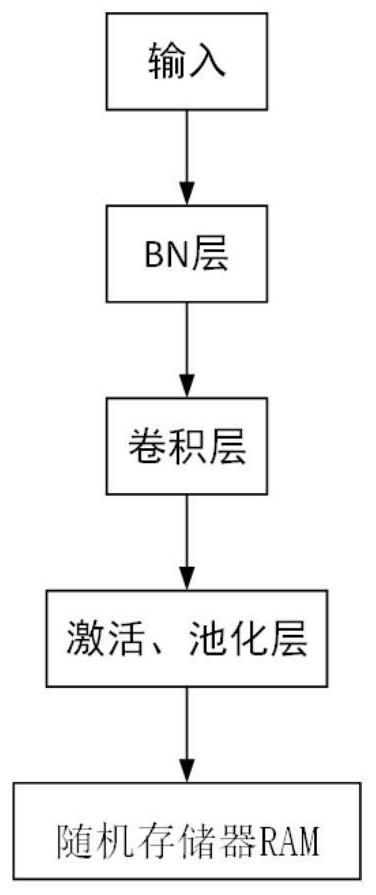

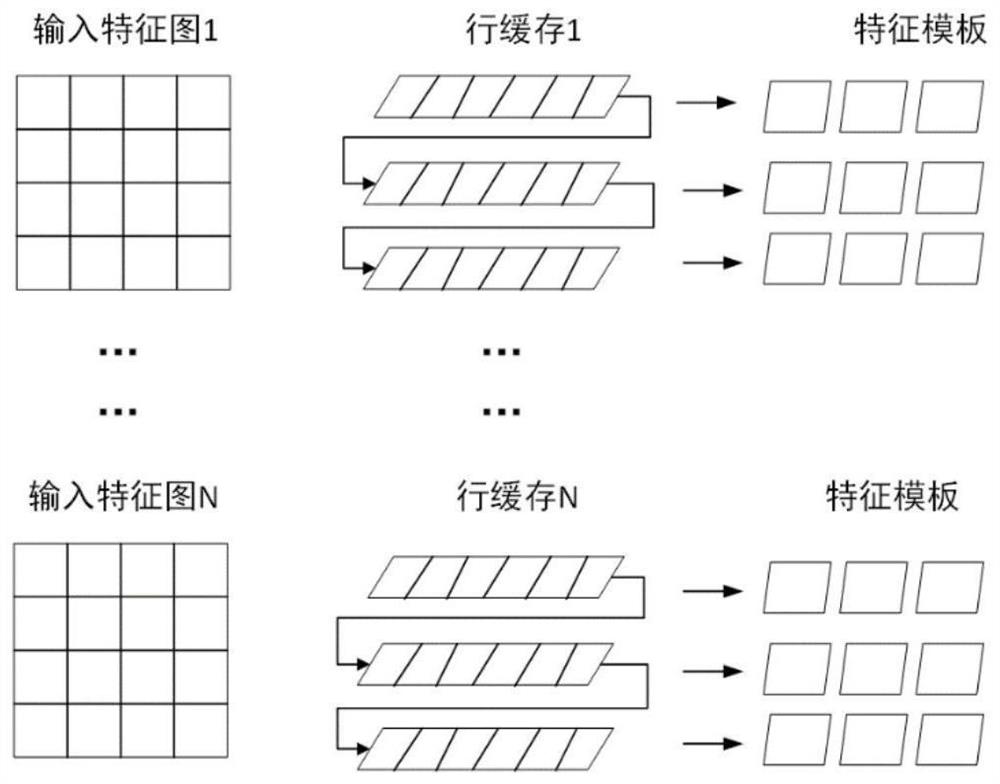

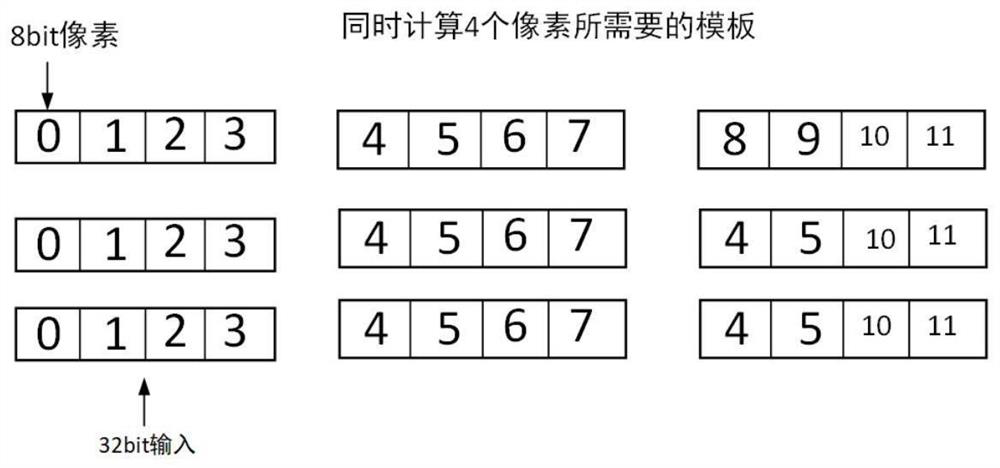

The invention discloses a multi-parallel strategy convolutional network accelerator based on an FPGA, and relates to the field of network computing. The system comprises a single-layer network computing structure made up of a BN layer, a convolution layer, an activation layer and a pooling layer; these four layers form a pipeline structure. The BN layer merges input data. The convolution layer carries out a large number of multiplication and addition operations; it comprises a first convolution layer, a middle convolution layer and a last convolution layer, and the convolution operation uses one or more of input parallelism, pixel parallelism and output parallelism. The activation layer and the pooling layer carry out pipelined calculation on the output of the convolution layer, and the final pooled and activated result is stored in a RAM (Random Access Memory). The three parallel structures can be freely combined and configured with different degrees of parallelism, which gives high flexibility and high parallel processing efficiency.

Owner:REDNOVA INNOVATIONS INC

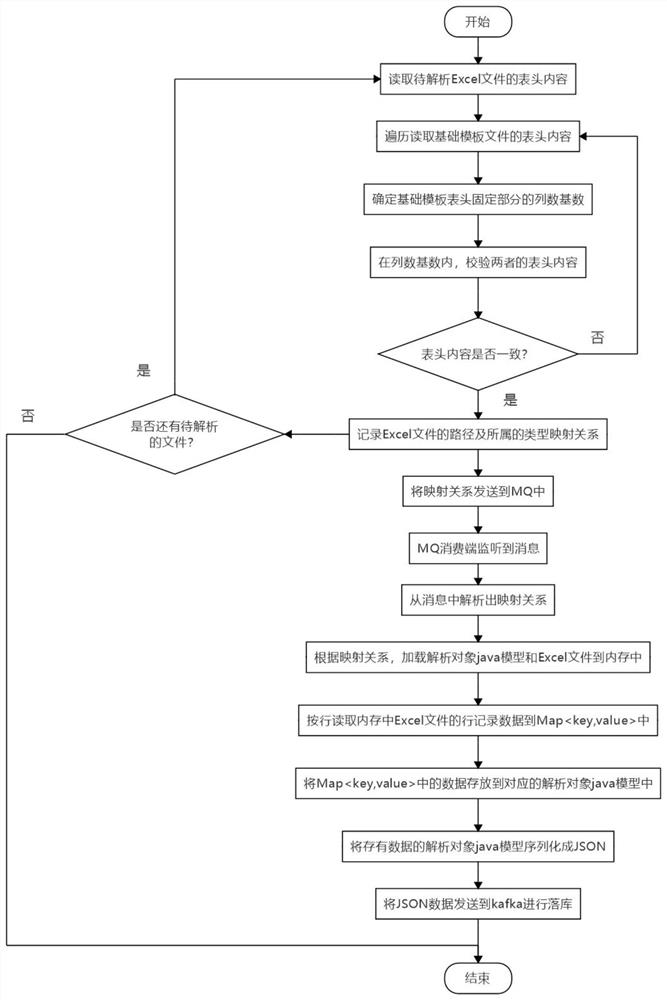

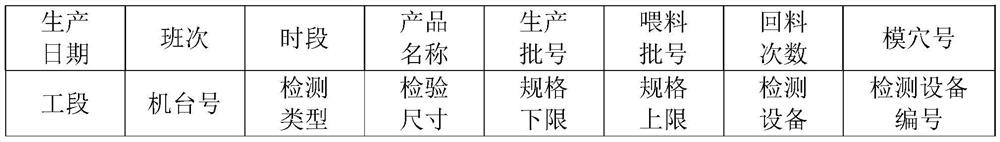

Variable column Excel file analysis method and system

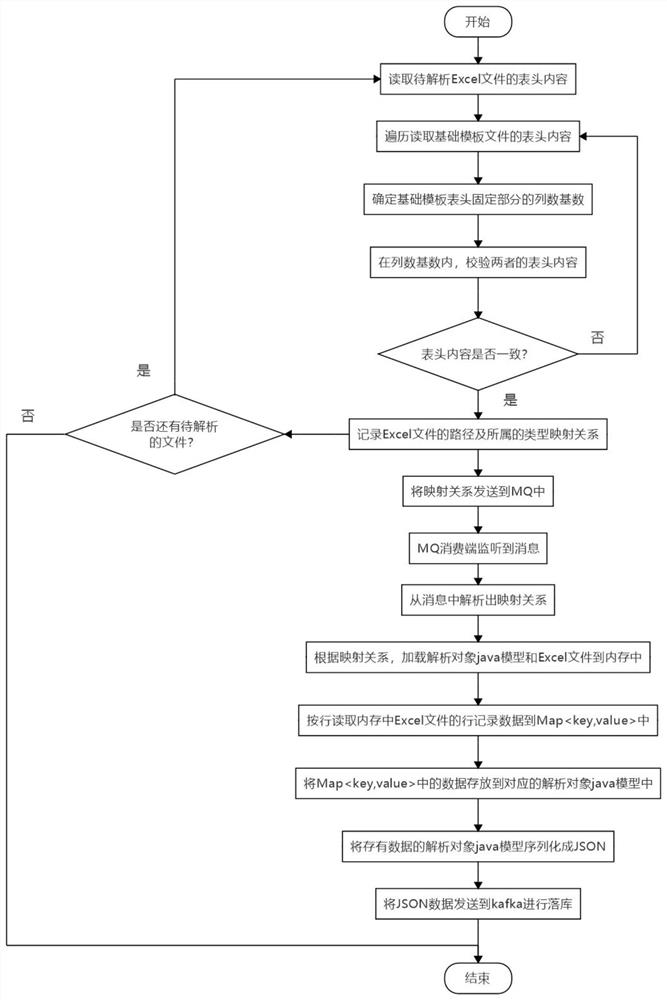

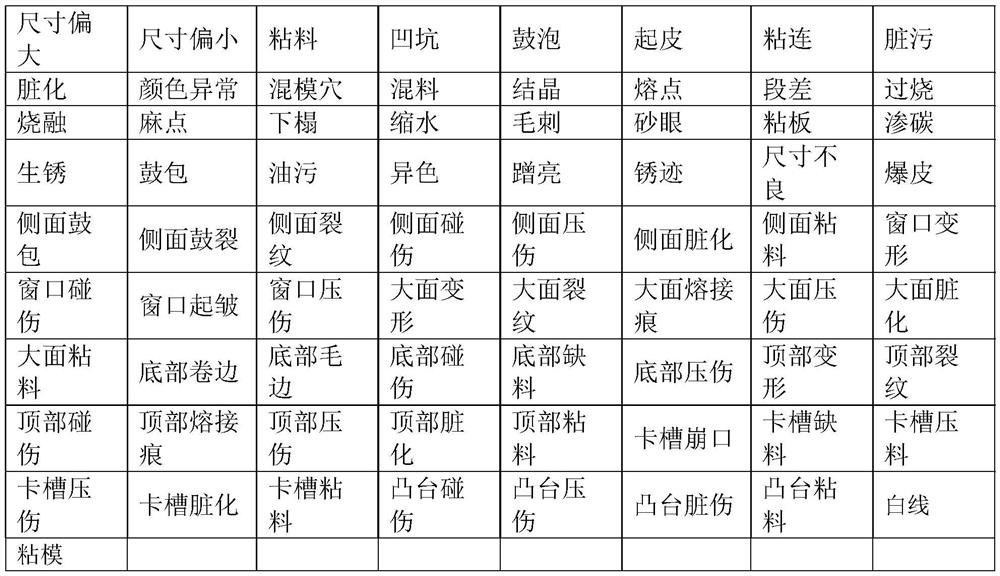

Active | CN111931460A | Solve storage problems | Solve the problem of detecting data into the database | Text processing | Java | Engineering

The invention provides a variable column Excel file analysis method and system. The method comprises the steps of: verifying the header of a to-be-analyzed Excel file against a basic template file, determining the type of product detection data, recording the mapping relation between the file path and the type, and sending the mapping relation to the message-oriented middleware MQ; after the MQ consumer receives the message, obtaining the mapping relation, using the type to load a pre-established analysis object Java model, and loading the Excel file corresponding to the file path into memory; reading each row of data of the Excel file in memory and storing the data in the corresponding analysis object Java model; and serializing the analysis object Java model holding the data into a JSON string and sending the JSON string to Kafka to be persisted to the database. The variable column Excel file analysis method solves the problem of getting factory product detection data into a database and facilitates the digitization of that data.

Owner:上海微亿智造科技有限公司

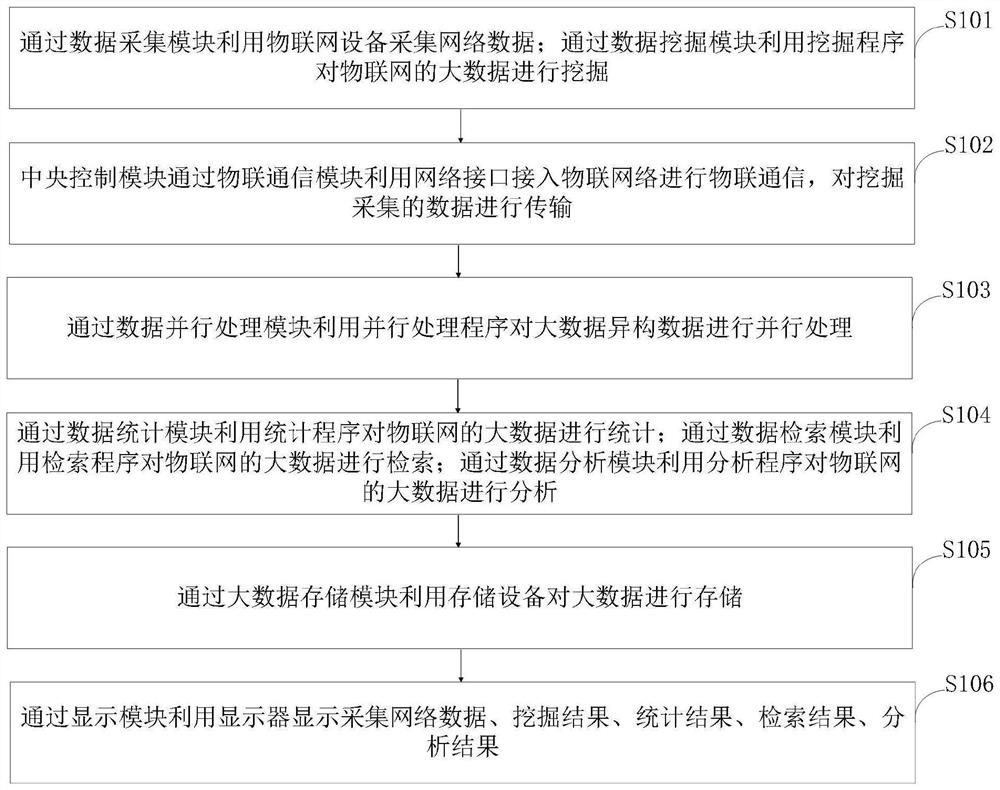

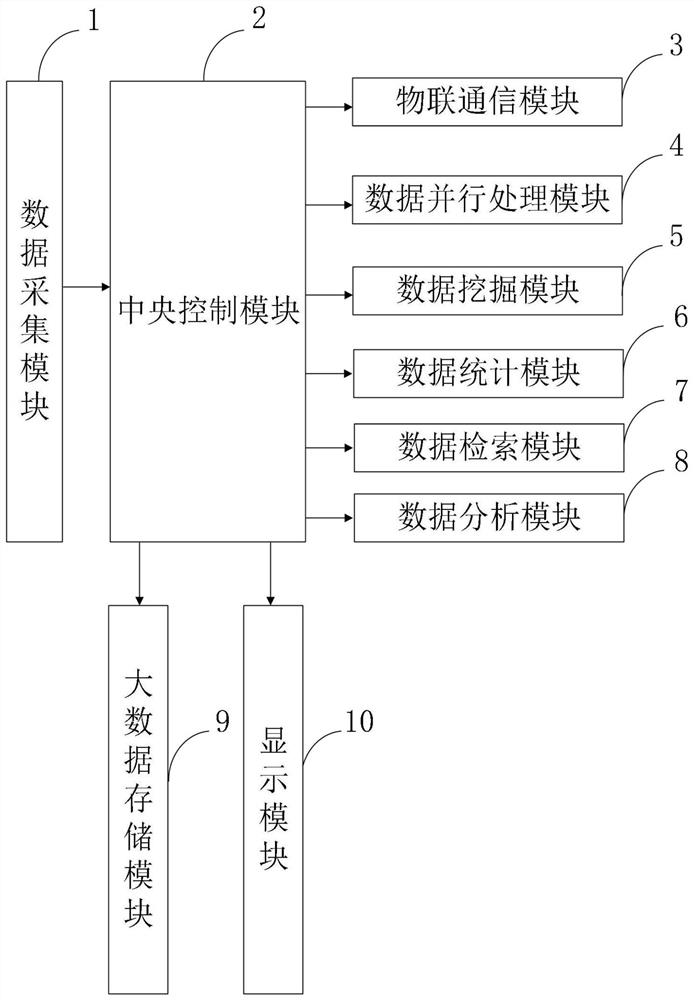

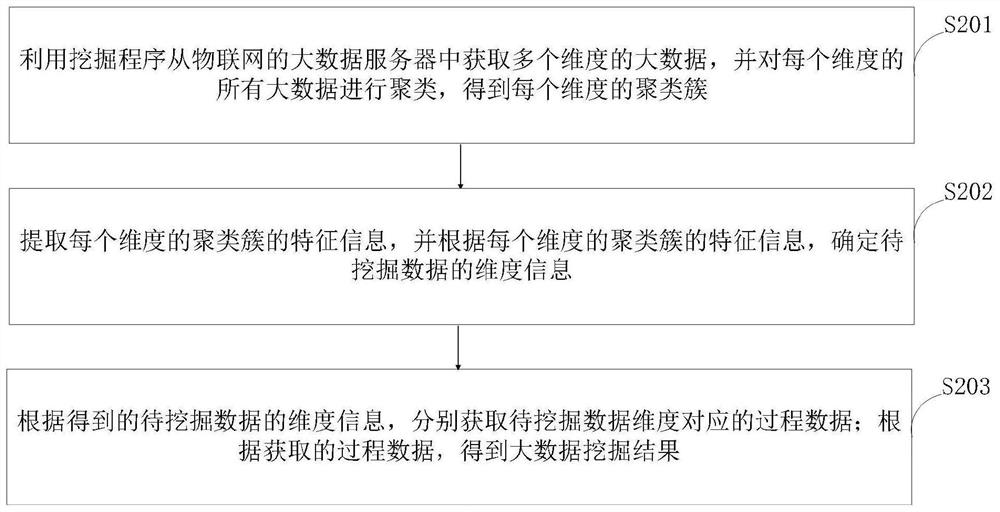

Big data application system and method based on Internet of Things

Inactive | CN111858722A | High data efficiency | Improve Parallel Processing Efficiency | Database updating | Database management systems | Data retrieval | The Internet

The invention belongs to the technical field of big data application, and discloses a big data application system and method based on the Internet of Things. The system comprises a data acquisition module, a central control module, an Internet of Things communication module, a data parallel processing module, a data mining module, a data statistics module, a data retrieval module, a data analysis module, a big data storage module and a display module. The data parallel processing module greatly improves the parallel processing efficiency of heterogeneous data. The big data storage module is designed for the big data industry: it can be used independently as a main means of data storage and collection, or in cooperation with a data service, so that big data is put to practical use, mass data is stored quickly, and data efficiency is improved.

Owner:HUAIAN COLLEGE OF INFORMATION TECH

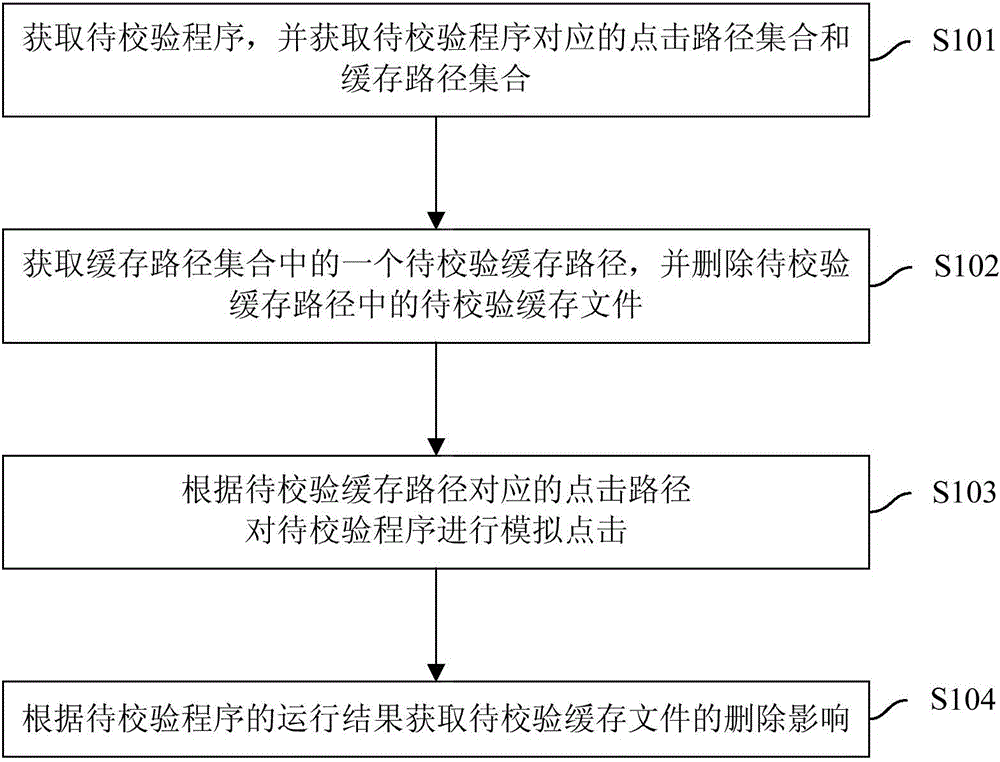

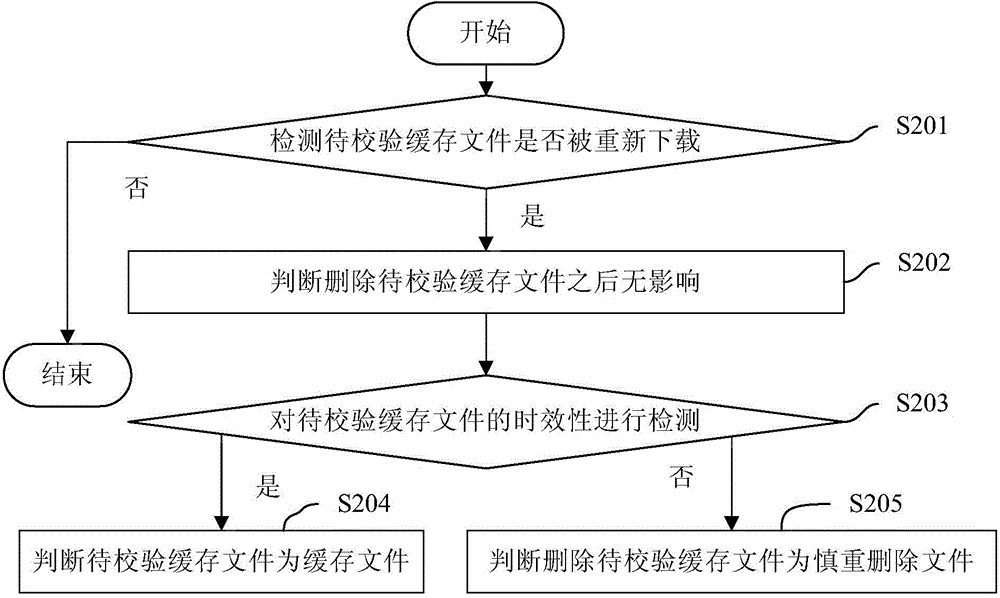

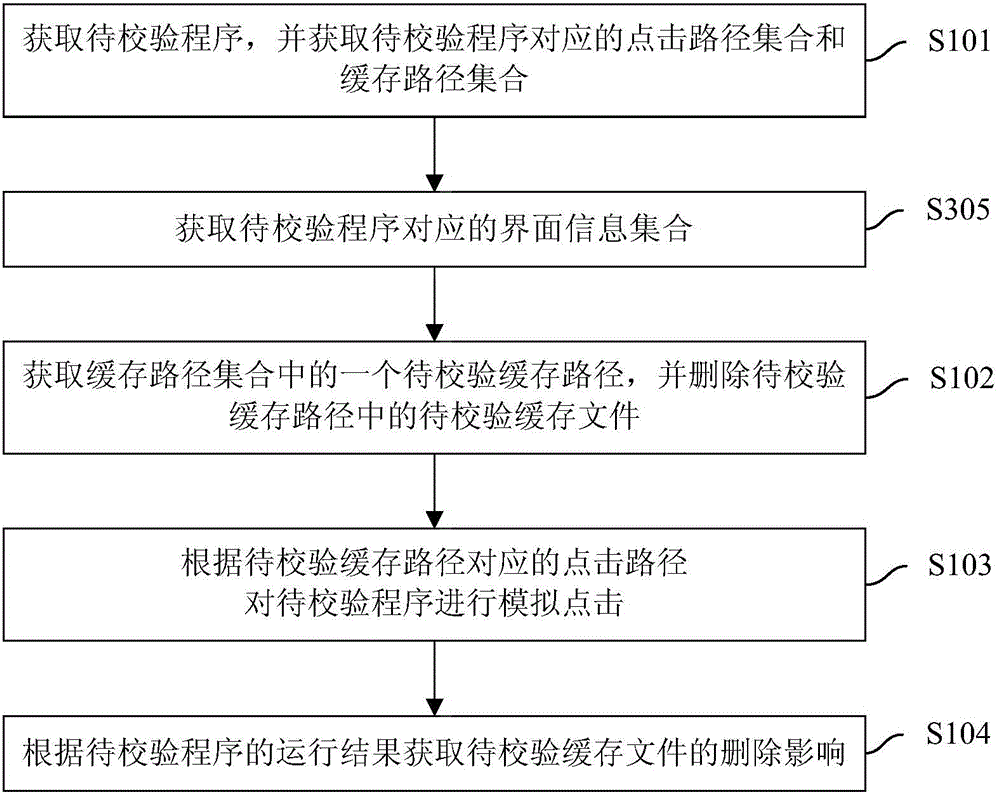

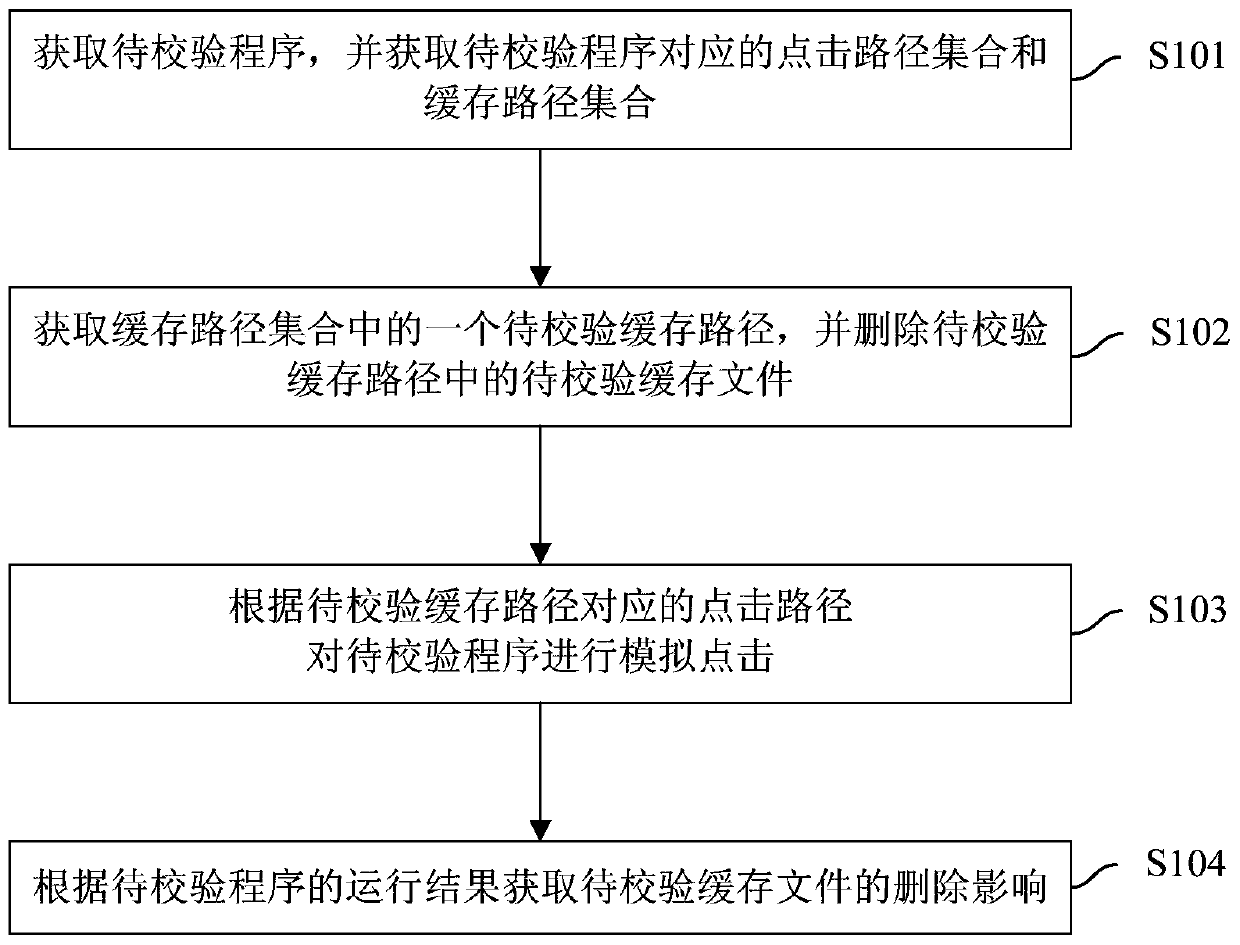

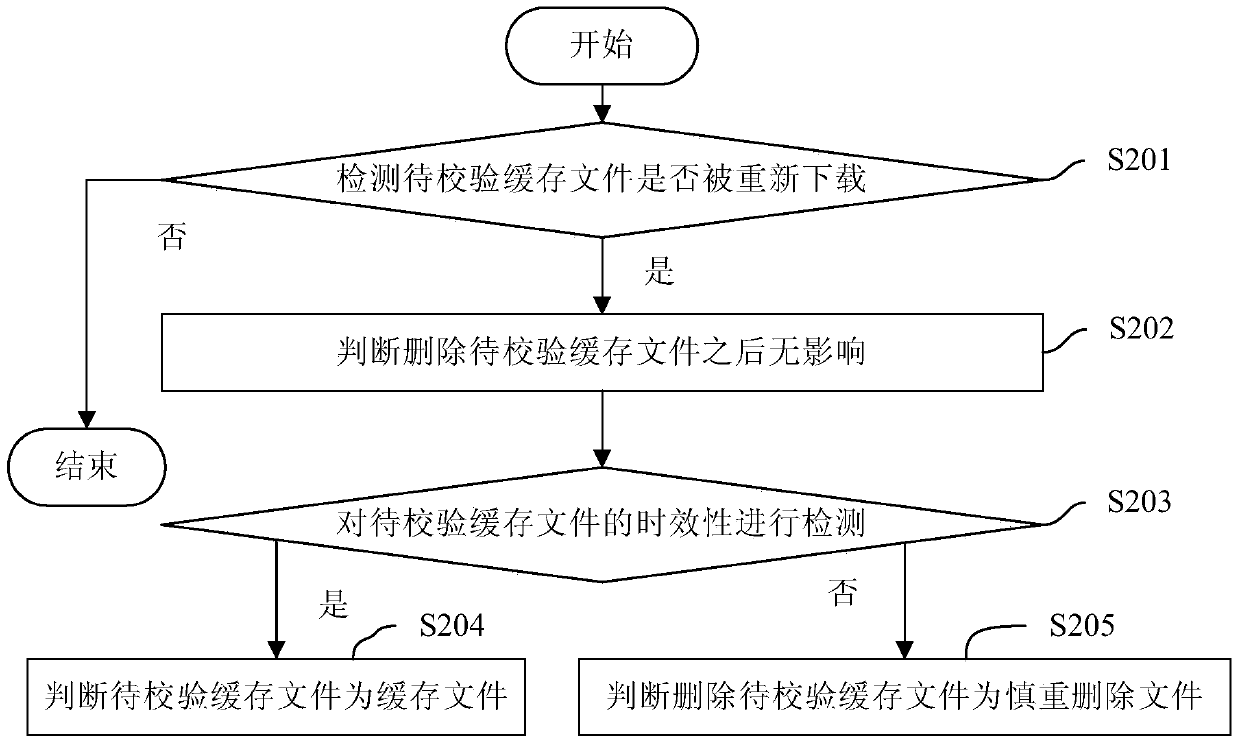

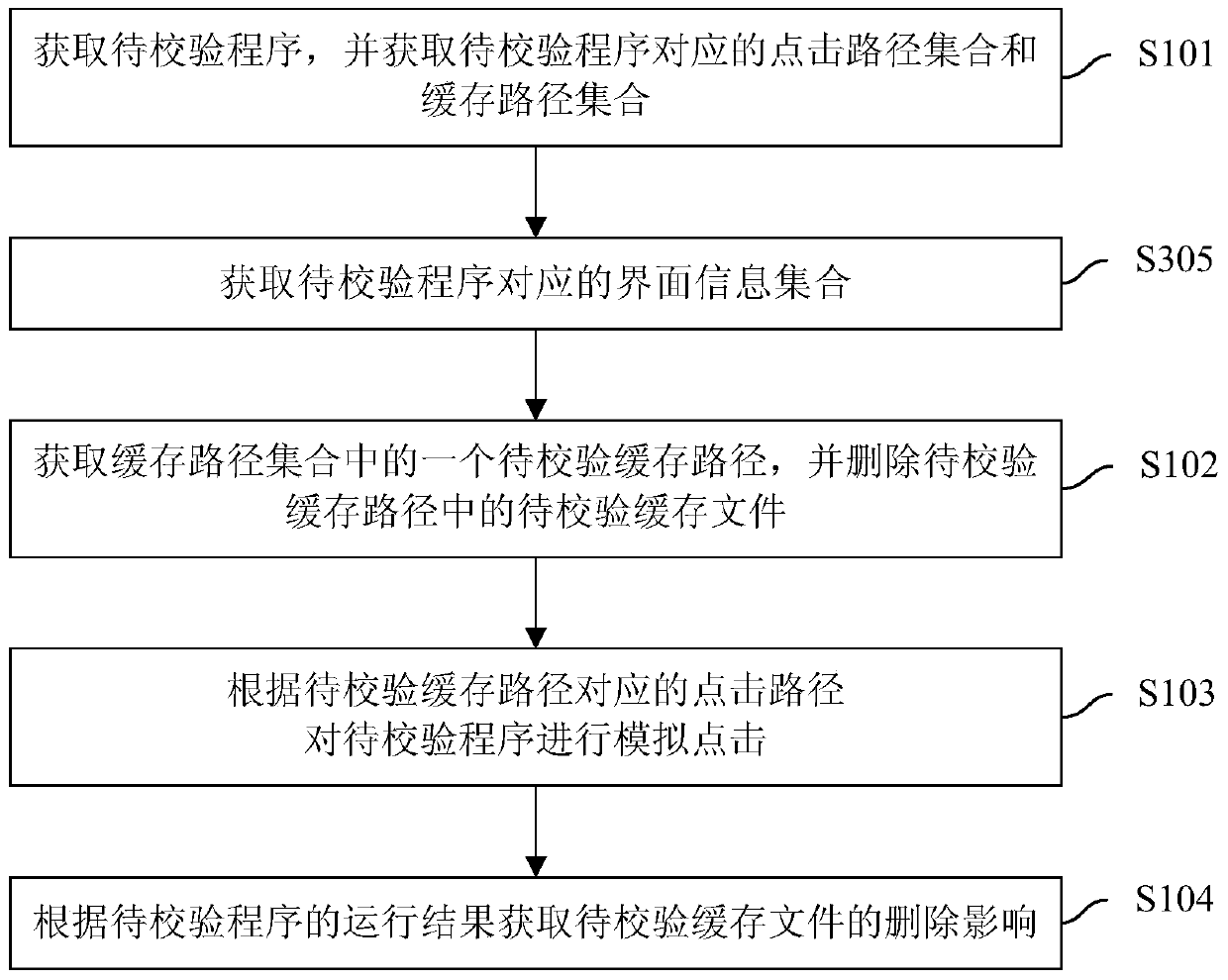

Cache file delete influence verification method, device and mobile terminal

Active | CN105446864A | Improve Parallel Processing Efficiency | Reduce labor costs | Software testing/debugging | Cache memory details | Computer terminal | Parallel processing

The invention discloses a cache file delete influence verification method, a device and a mobile terminal. The method comprises the following steps: obtaining a to-be-verified program, and obtaining a click path set and a cache path set corresponding to it, wherein the click path set comprises a plurality of click paths, the cache path set comprises a plurality of cache paths, and each click path is matched with one cache path; obtaining one to-be-verified cache path from the cache path set, and deleting the to-be-verified cache file under that cache path; simulating clicks on the to-be-verified program according to the click path corresponding to the to-be-verified cache path; and obtaining the delete influence of the to-be-verified cache file from the running result of the to-be-verified program. The method needs only a simple device to detect automatically whether deleting a cache file affects the program, thus improving the parallel processing rate and reducing labor cost.

Owner:BEIJING KINGSOFT INTERNET SECURITY SOFTWARE CO LTD

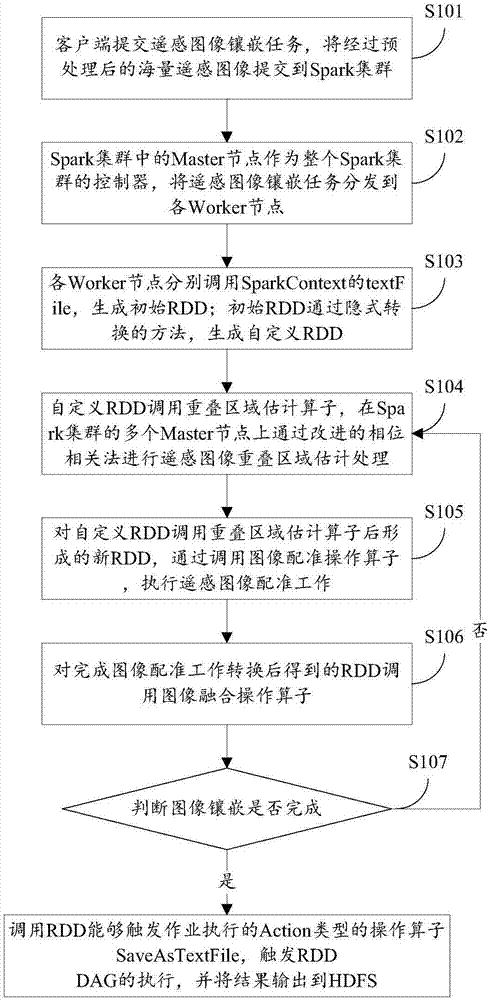

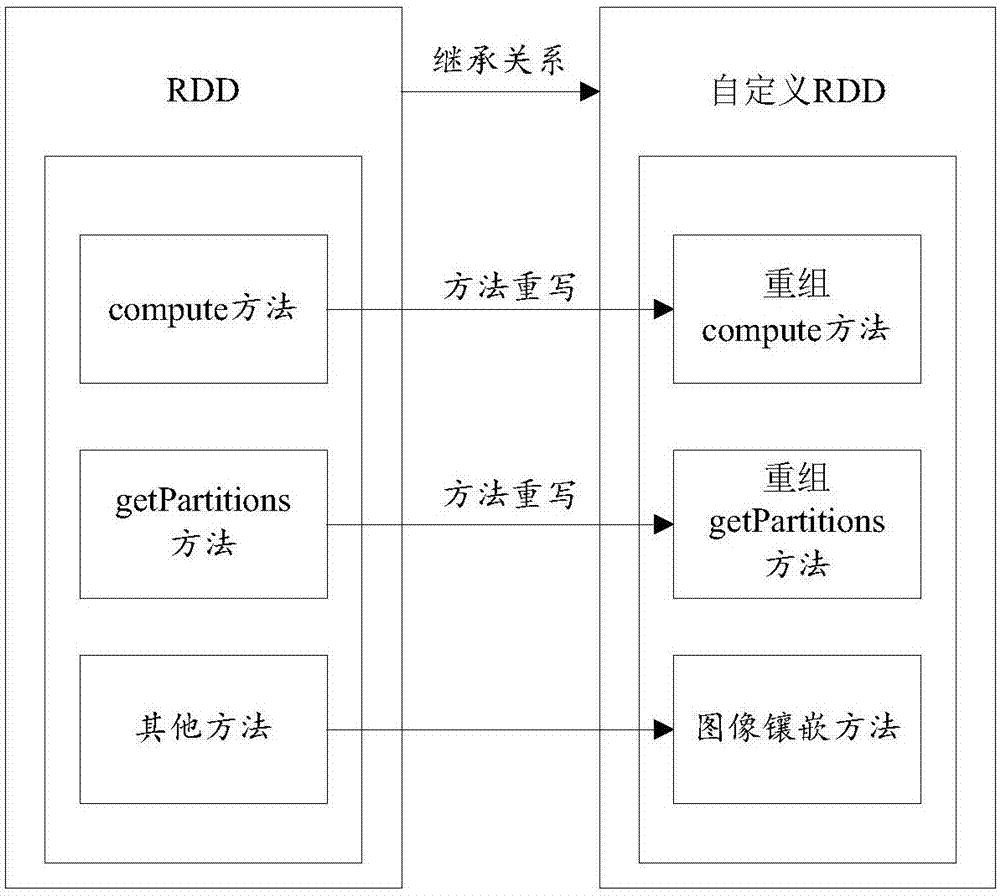

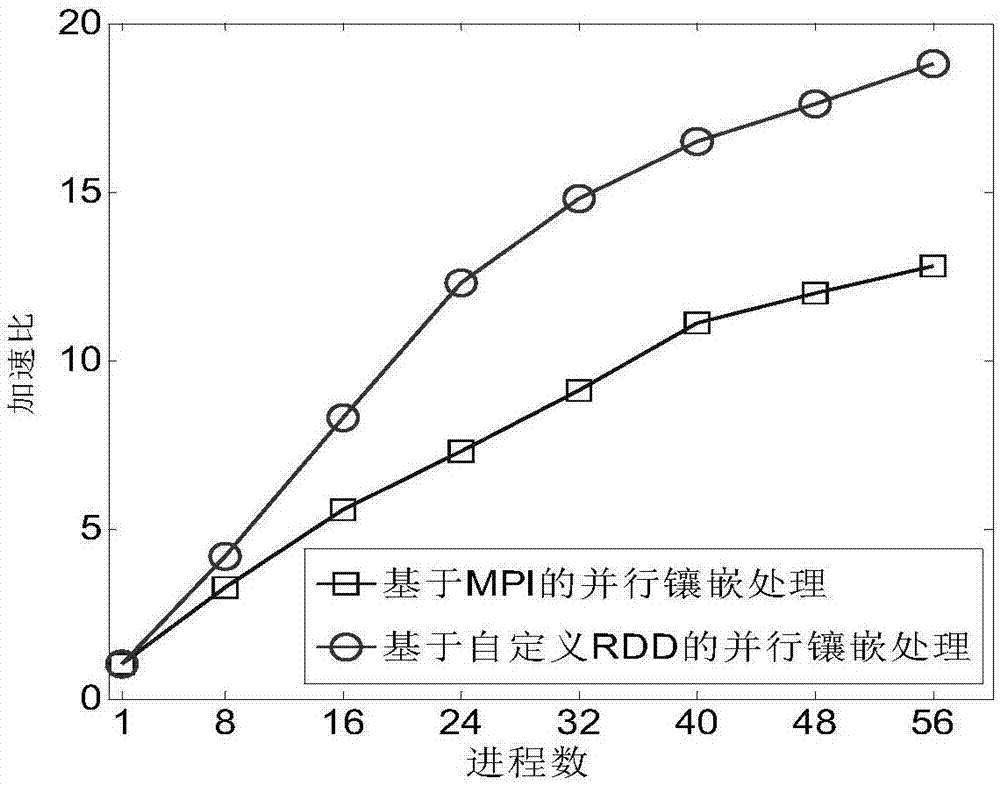

Parallel mosaic method and system for massive remote sensing images based on Spark

Inactive | CN107481191A | Improve Parallel Processing Efficiency | Solve the problem of frequent data read and write operations | Image enhancement | Image analysis | Parallel processing | Computer science

The invention provides a parallel mosaic method and system for massive remote sensing images based on Spark. The Master node, acting as the controller of the entire Spark cluster, distributes the remote sensing image mosaic task to the worker nodes, and the image mosaic is completed by calling custom RDD operators. The method overrides the compute and getPartitions methods of Spark's RDD to define a custom RDD for remote sensing image processing, and implements the three key steps of image mosaicking (overlap area estimation, image registration and image fusion) as operations on the custom RDD, so that sub-images are mosaicked in parallel. The method and system effectively increase the mosaic efficiency for a large number of images while preserving mosaic quality.

Owner:NORTHEAST FORESTRY UNIVERSITY

Request processing method and device

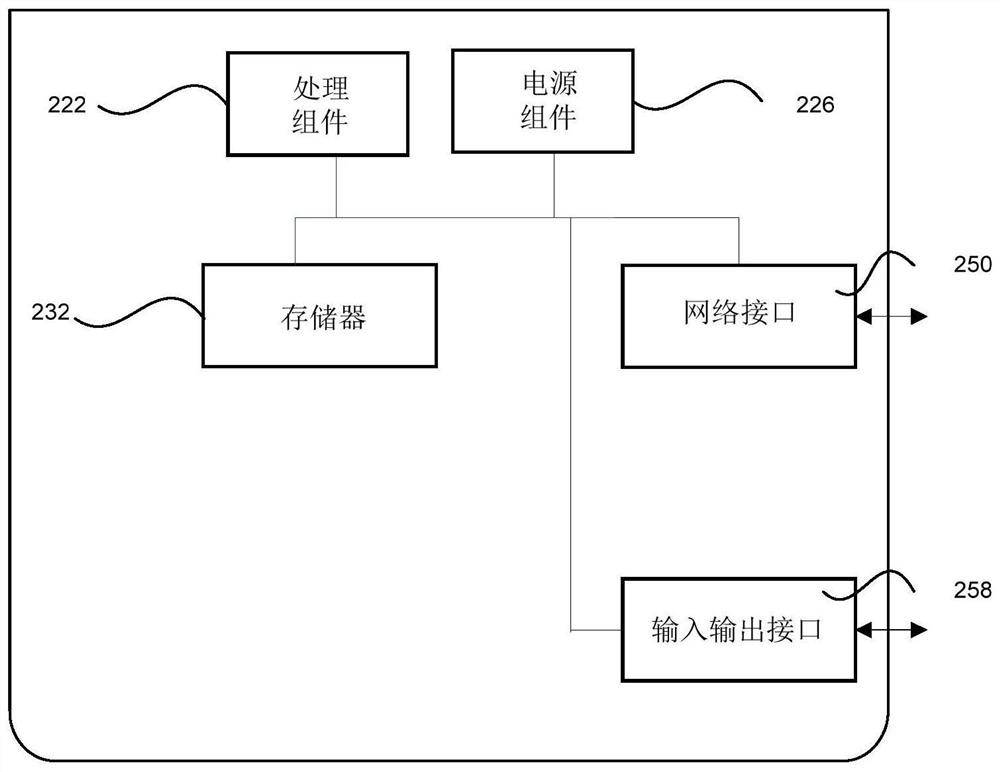

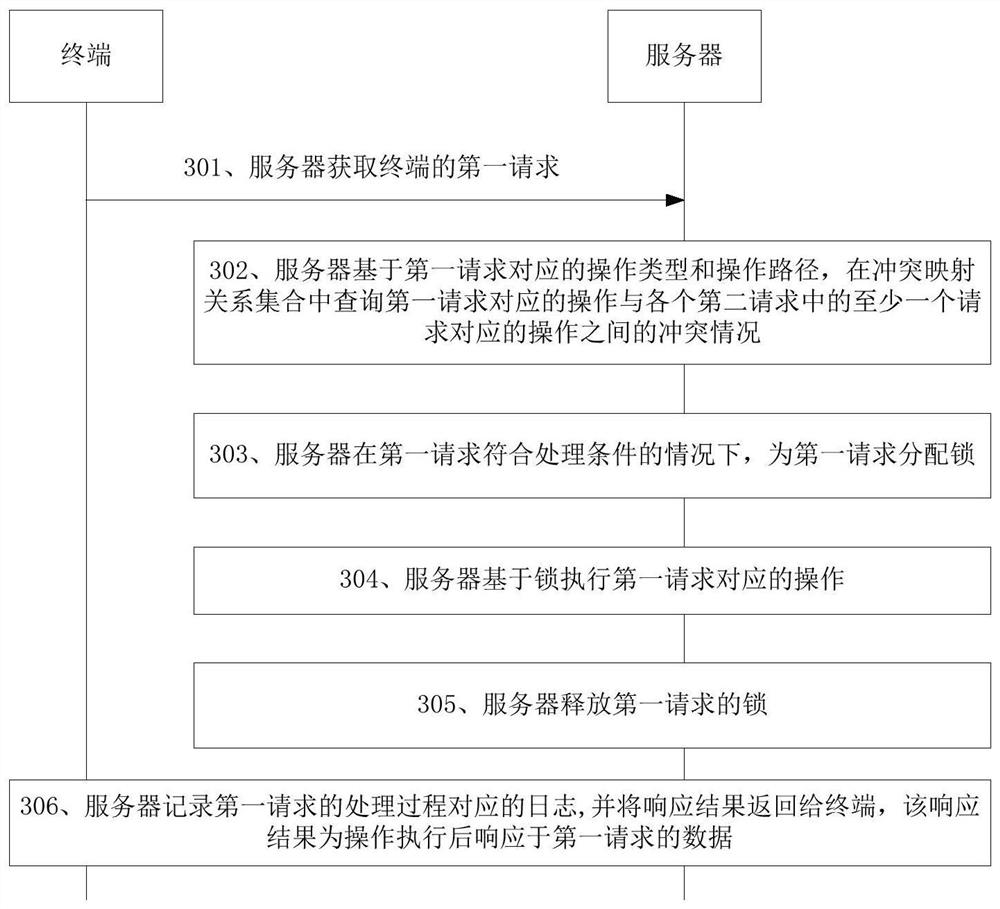

Active | CN110019057B | Operation refinement | Improve Parallel Processing Efficiency | Digital data information retrieval | Special data processing applications | PathPing | Server allocation

The disclosure provides a request processing method and device, which belong to the technical field of computers. The method includes: acquiring a first request of a terminal; when the first request meets the processing conditions, assigning a lock to the first request based on the operation type and operation path corresponding to the first request, where the operation type is the type of operation the first request asks to perform and the operation path is the storage path of the operation object indicated by the first request; and executing the operation corresponding to the first request based on the lock. The processing conditions include that the operation corresponding to the first request does not conflict with the operation corresponding to any second request, where a second request is a request to which the server has already allocated a lock at the time the lock is allocated for the first request. The present disclosure improves parallel processing efficiency.

Owner:HUAWEI TECH CO LTD
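
Lock assignment by operation type and operation path might look like the sketch below. The abstract does not define when two operations conflict, so the rule used here is an assumption: two requests conflict when one path equals or contains the other and at least one of them is a write. The class name `PathLockTable` and the `"read"`/`"write"` type strings are likewise hypothetical.

```python
import threading


class PathLockTable:
    def __init__(self):
        self._held = []  # (op_type, path parts) of requests that already hold a lock
        self._mutex = threading.Lock()

    @staticmethod
    def _overlaps(a, b):
        # True when one path is equal to, or an ancestor of, the other.
        shorter = min(len(a), len(b))
        return a[:shorter] == b[:shorter]

    def try_acquire(self, op_type, path):
        """Return a lock token, or None if the request conflicts with a held lock."""
        parts = tuple(p for p in path.split("/") if p)
        with self._mutex:
            for held_type, held_parts in self._held:
                # Assumed conflict rule: overlapping paths and at least one write.
                if self._overlaps(parts, held_parts) and "write" in (op_type, held_type):
                    return None
            token = (op_type, parts)
            self._held.append(token)
            return token

    def release(self, token):
        with self._mutex:
            self._held.remove(token)
```

Requests on unrelated paths, or concurrent reads of the same path, all receive locks and can run in parallel; only genuinely conflicting requests are held back.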

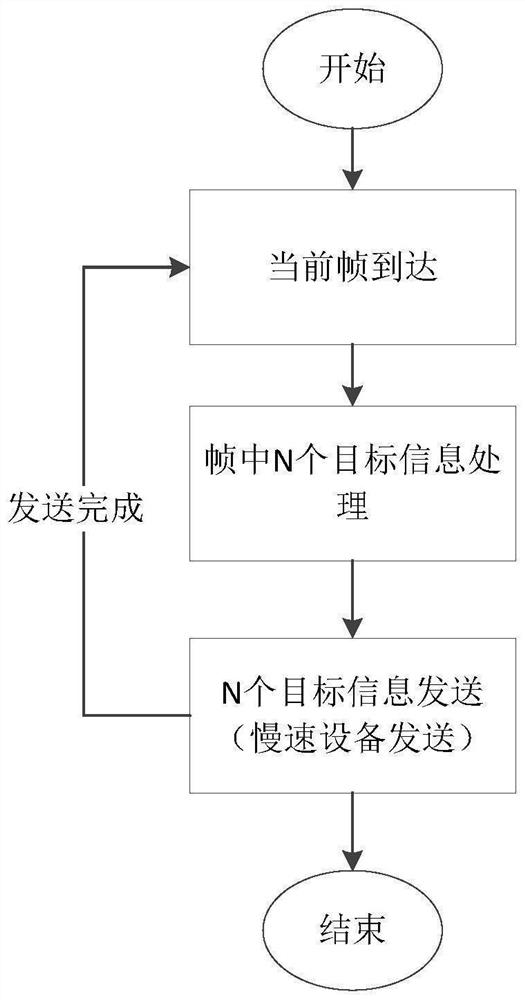

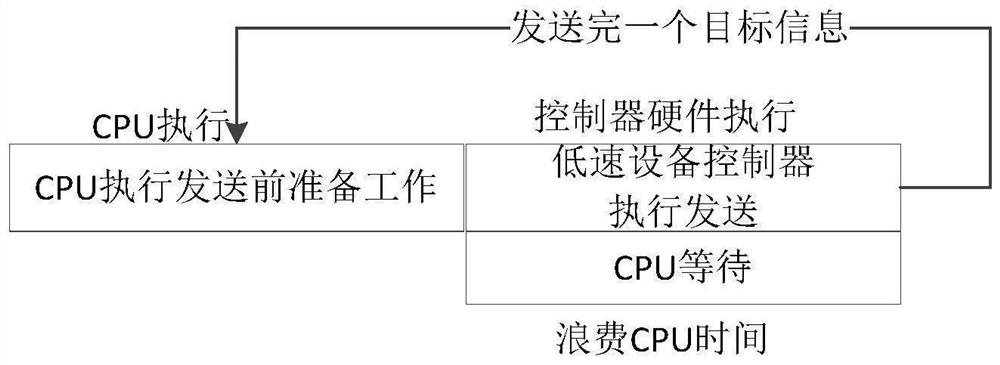

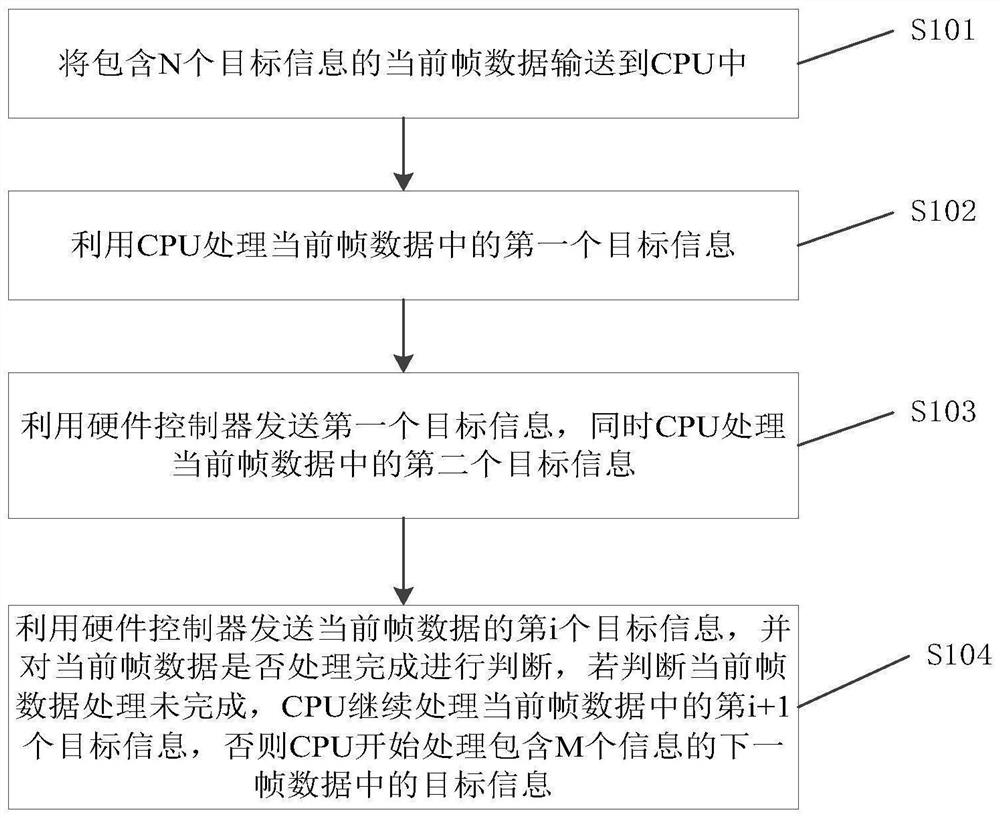

Method and device for improving parallel processing efficiency of bare computer system, medium and equipment

Pending | CN114546496A | Improve Parallel Processing Efficiency | Shorten the time | Concurrent instruction execution | Energy efficient computing | Computer architecture | Engineering

The invention discloses a method and device for improving the parallel processing efficiency of a bare computer system, a medium and equipment, and belongs to the field of computer processing. The method comprises the following steps: transmitting current frame data containing N pieces of target information to a CPU (Central Processing Unit); processing the first target information in the current frame data with the CPU; sending the first target information with a hardware controller while the CPU processes the second target information in the current frame data; and sending the i-th target information of the current frame data with the hardware controller and judging whether the current frame data has been fully processed. If it has not, the CPU continues with the (i+1)-th target information in the current frame data; otherwise, the CPU starts processing the target information in the next frame data, which contains M pieces of information. The method and the device solve the problems that CPU time is wasted while low-speed equipment sends data and that the overall task processing period is prolonged because other work cannot be processed in parallel.

Owner:北京木牛领航科技有限公司

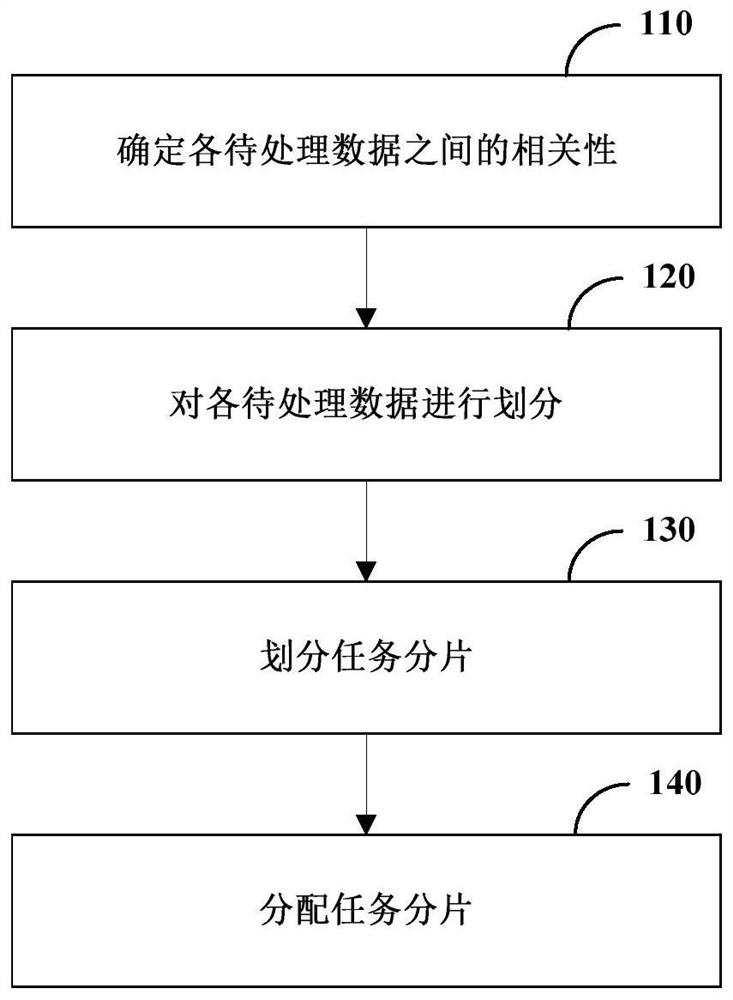

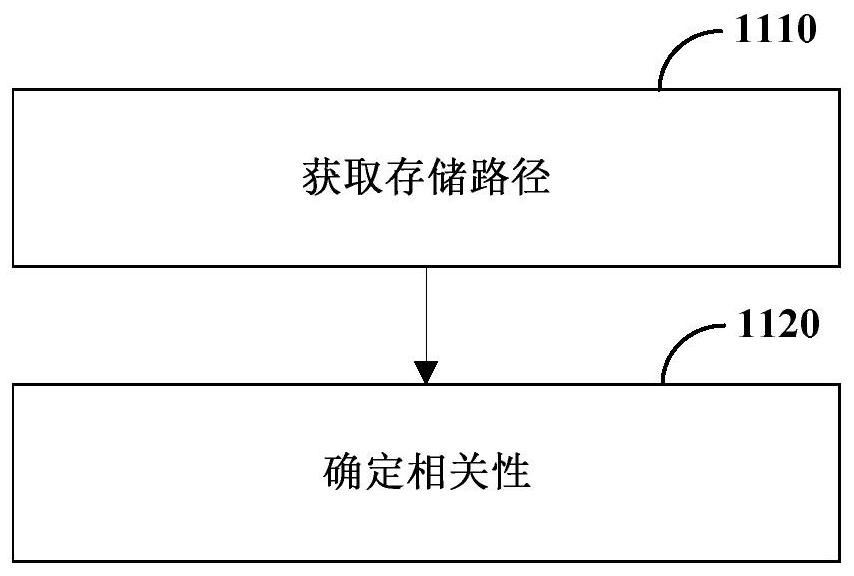

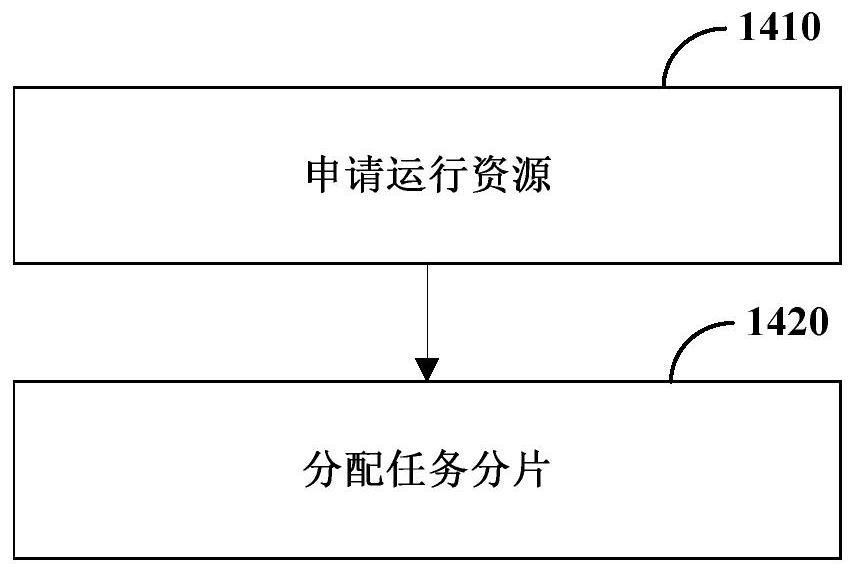

Application program processing method, device and system and computer readable storage medium

Pending | CN111782348A | Improve Parallel Processing Efficiency | Program initiation/switching | Resource allocation | Computer hardware | Computer engineering

The invention relates to an application program processing method, device and system and a computer readable storage medium, and relates to the technical field of computers. The method comprises the steps of determining correlation between to-be-processed data of an application program in response to operation starting of the application program submitted by a user; dividing the to-be-processed data according to the correlation; dividing the application program into a plurality of task fragments according to a division result; and allocating each task fragment to each corresponding actuator node in the actuator node cluster for parallel processing so as to obtain a processing result of each task fragment. According to the technical scheme, the parallel processing efficiency can be improved.

Owner:BEIJING WODONG TIANJUN INFORMATION TECH CO LTD
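
One way to picture "dividing the data according to correlation" is to group correlated items into the same task fragment and run the fragments in parallel. The sketch below is an assumption-laden illustration: correlation is modelled as an explicit list of related pairs, grouping uses a union-find structure, and a thread pool stands in for the actuator node cluster. None of these choices come from the patent.

```python
from concurrent.futures import ThreadPoolExecutor


def split_by_correlation(items, related_pairs):
    """Group items so that correlated items land in the same fragment (union-find)."""
    parent = {item: item for item in items}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for a, b in related_pairs:
        parent[find(a)] = find(b)

    groups = {}
    for item in items:
        groups.setdefault(find(item), []).append(item)
    return list(groups.values())


def run_fragments(fragments, task, max_workers=4):
    """Run `task` on every fragment in parallel; results keep fragment order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(task, fragments))
```

Because no correlated pair is split across fragments, each fragment can be processed without coordinating with the others.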

Method, device and mobile terminal for checking impact of cache file deletion

Active | CN105446864B | Improve Parallel Processing Efficiency | Reduce labor costs | Software testing/debugging | Cache memory details | PathPing | Parallel processing

The invention discloses a method, a device and a mobile terminal for checking the impact of cache file deletion. The method includes: obtaining a program to be checked, and obtaining a set of click paths and a set of cache paths corresponding to the program, wherein the set of click paths includes multiple click paths, the set of cache paths includes multiple cache paths, and each click path corresponds to one cache path; obtaining a cache path to be verified from the set of cache paths, and deleting the cache file to be verified under that cache path; simulating clicks on the program according to the click path corresponding to the cache path to be verified; and obtaining the deletion effect of the cache file from the running result of the program. The method of the embodiment of the present invention can detect automatically whether a deleted cache file affects the program with only simple additional equipment, thereby improving the parallel processing efficiency and reducing the labor cost.

Owner:BEIJING KINGSOFT INTERNET SECURITY SOFTWARE CO LTD

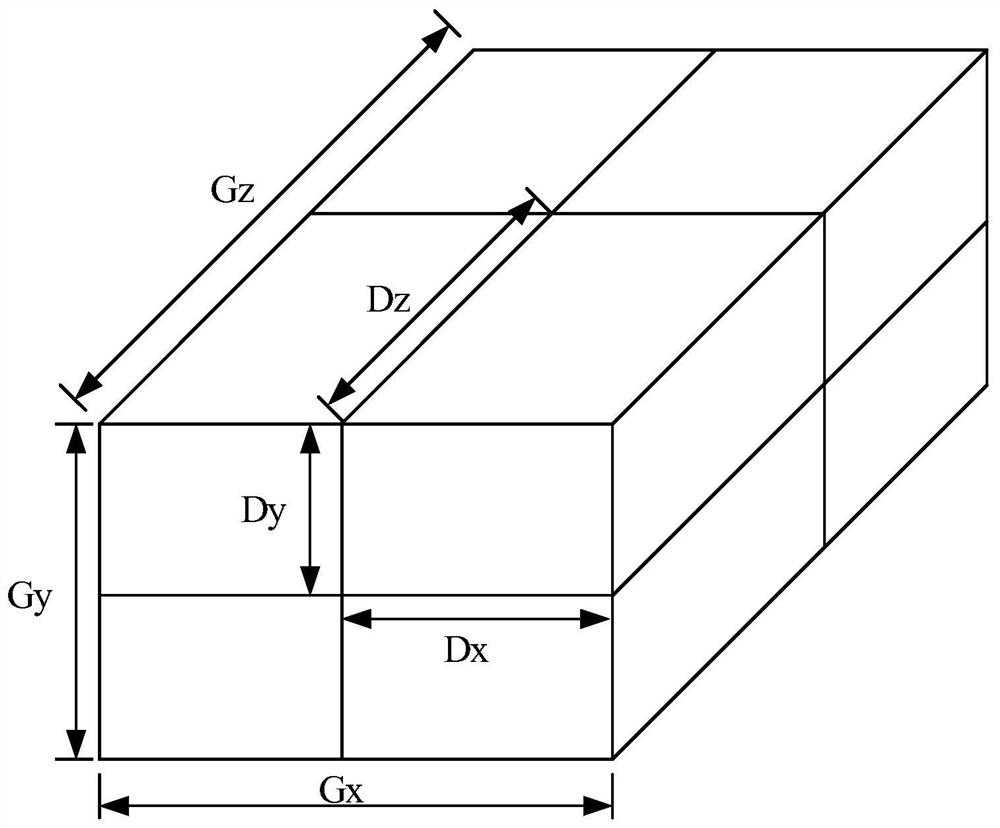

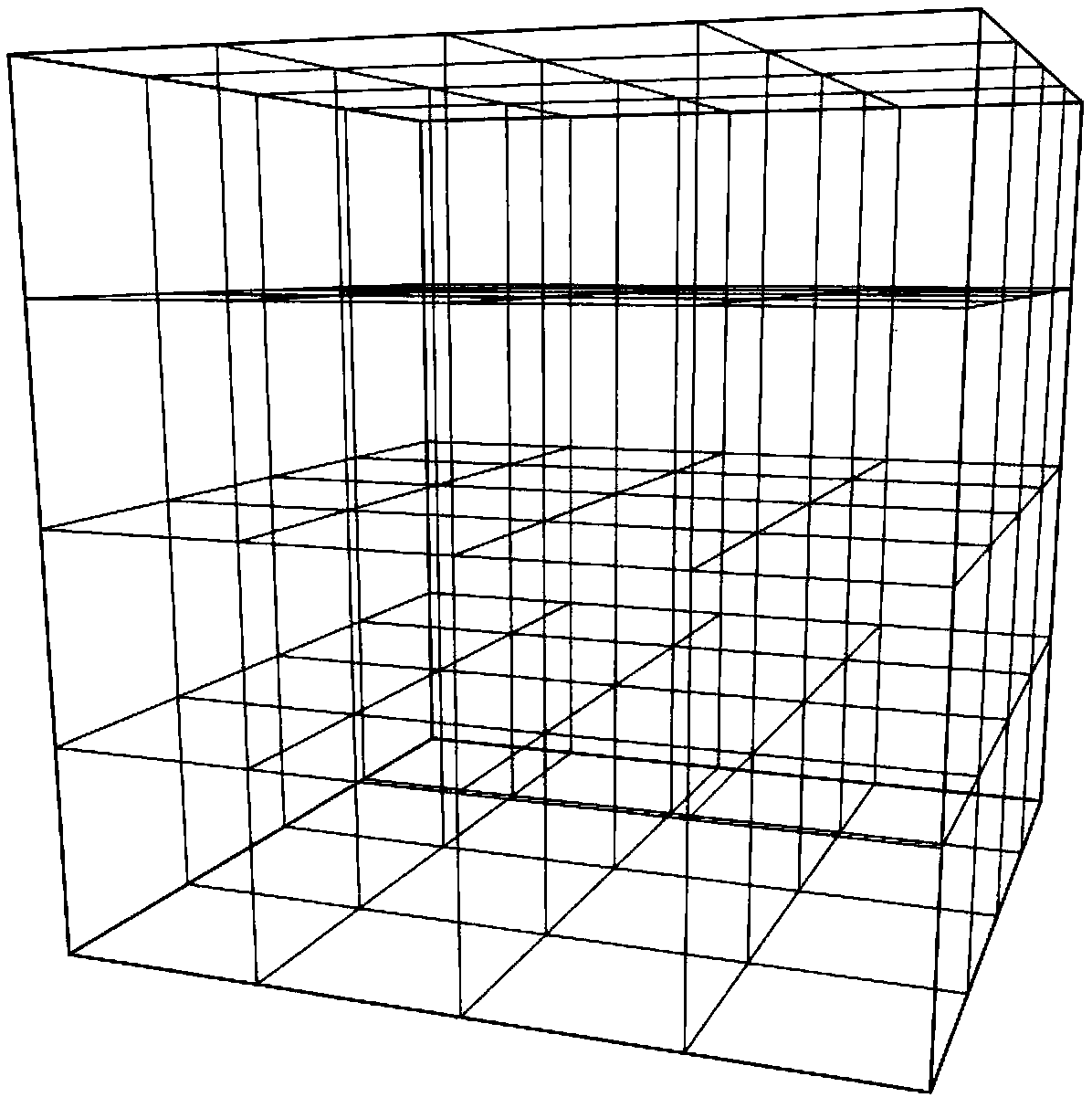

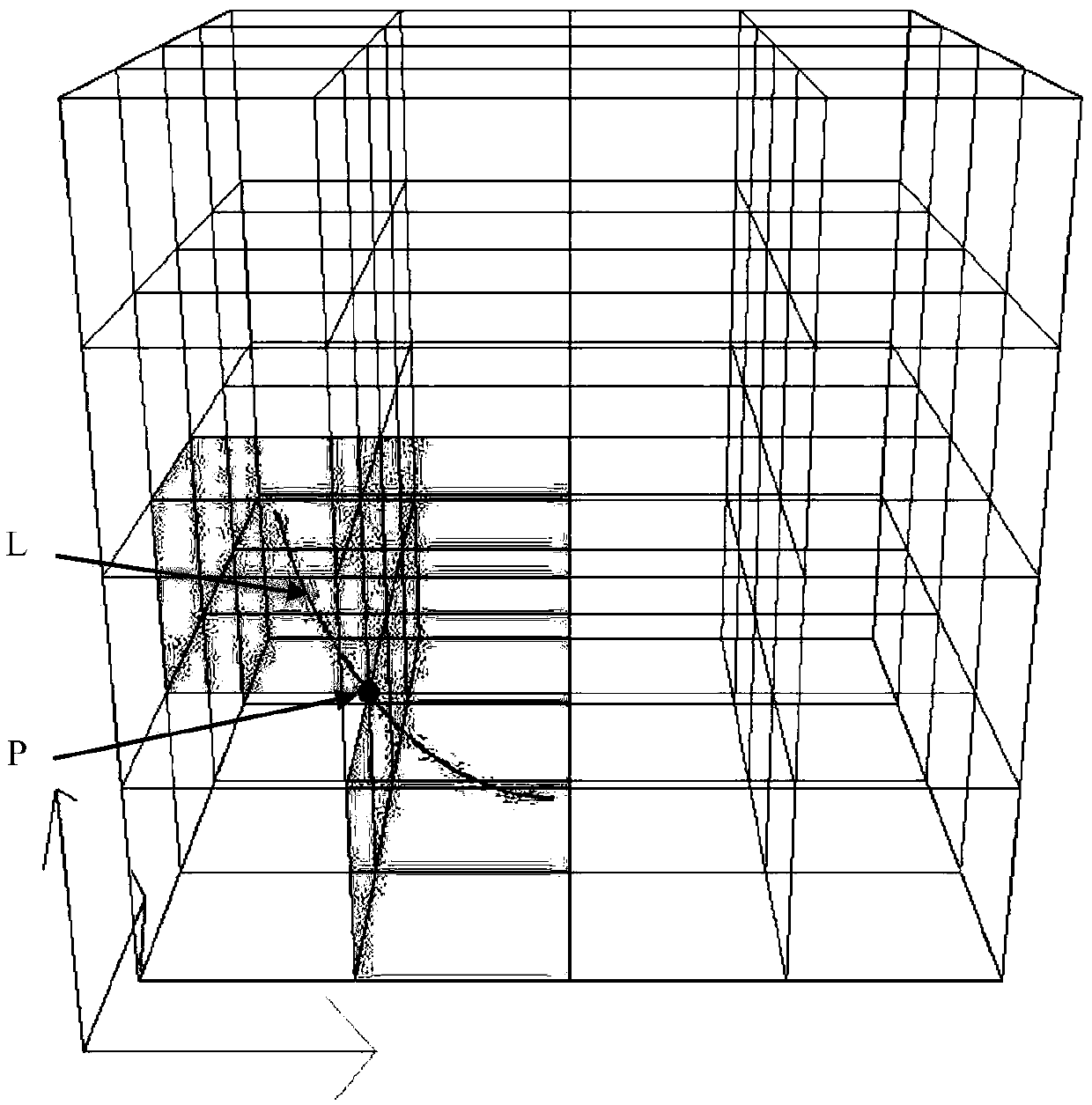

A parallel placement method of three-dimensional streamlines with uniform distribution

ActiveCN109544678AEasy to placeImprove placement efficiency3D modellingParallel processingPlacement method

The invention relates to a parallel placement method of three-dimensional streamlines with uniform distribution, and belongs to the technical field of three-dimensional streamline field visualization in scientific computing visualization. The method first establishes a corresponding three-dimensional orthogonal control grid for a given three-dimensional flow field. The control grid is then divided into a series of sub-grids using a two-layer nested partitioning strategy, after which several parallel processing units are started to place streamlines in different sub-grids and merge the streamlines layer by layer. Through parallel processing, the method accelerates the three-dimensional streamline placement process and improves placement efficiency, while keeping the streamline distribution basically uniform and free of pseudo boundaries.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

Method and system for parsing Excel files with variable columns

ActiveCN111931460BSolve storage problemsSolve the problem of detecting data into the databaseText processingJavaEngineering

The present invention provides a method and system for parsing Excel files that support variable columns. The header of the Excel file to be parsed is verified against the basic template file, the type of the product inspection data is determined, the mapping relationship between the file path and the type is recorded, and the mapping relationship is sent to the message middleware MQ; the MQ consumer listens for the message and obtains the mapping relationship, uses the type to load the pre-established Java model of the parsing object, and loads the Excel file corresponding to the file path into memory; each row of data in the in-memory Excel file is read and stored in the corresponding Java model; the Java model containing the data is serialized into a JSON string and sent to Kafka for storage. The variable-column Excel parsing method addresses the problem of loading factory product inspection data into the database and supports the digitization of that data.

Owner:上海微亿智造科技有限公司
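
The header check and JSON serialization steps from the abstract above might look roughly like the sketch below. The abstract does not say how variable columns are represented, so this assumes that a fixed set of template columns leads the header and any further columns are variable measurements. The column names, `validate_header` and `rows_to_json` are hypothetical, rows are taken as already loaded into memory, and the MQ and Kafka steps are left out.

```python
import json

# Hypothetical fixed columns defined by the basic template file.
TEMPLATE_HEADER = ["serial_no", "inspected_at"]


def validate_header(header, template=TEMPLATE_HEADER):
    """The template columns must lead the header; extra columns may follow."""
    return header[:len(template)] == template


def rows_to_json(header, rows):
    """Map each row onto the fixed fields plus a dict of variable columns."""
    if not validate_header(header):
        raise ValueError("header does not match the basic template")
    fixed = len(TEMPLATE_HEADER)
    records = []
    for row in rows:
        record = dict(zip(header[:fixed], row[:fixed]))
        record["measurements"] = dict(zip(header[fixed:], row[fixed:]))
        records.append(record)
    return json.dumps(records)


header = ["serial_no", "inspected_at", "width_mm", "height_mm"]
rows = [["A001", "2020-08-01", 12.5, 7.0]]
payload = rows_to_json(header, rows)
```

Keeping the variable columns in a nested dictionary means a file with more or fewer measurement columns yields the same record shape, so downstream consumers do not need a schema change per file.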

Pipe-type communication method and system for interprocess communication

InactiveCN102122256BImprove reliabilityImprove efficiencyInterprogram communicationDigital computer detailsMass storageCommunications system

The invention discloses a pipe-type communication method for interprocess communication, comprising the following steps: receiving the data processed by the server process at the previous level; caching the data in a first caching pool; reading the data from the first caching pool and caching it in a first memory buffer zone for the server process to work on; writing the data processed by the server process into a second memory buffer zone; caching the data from the second memory buffer zone in a second caching pool; and sending the data in the second caching pool to the server process at the next level for processing, wherein the first caching pool and the second caching pool are storage spaces arranged on an external memory. The invention also discloses a pipe-type communication system for interprocess communication. According to the method and the system, data exchanged by pipe-type communication during pipelined concurrent processing are cached in the large-capacity storage space of the external memory, thereby improving the reliability and efficiency of data transmission during pipe-type communication.

Owner:NAT UNIV OF DEFENSE TECH
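
The data flow in the abstract above (first caching pool, first memory buffer, processing, second memory buffer, second caching pool) can be illustrated with a small single-process sketch. It assumes one file per item in a temporary directory as the external-memory pool. `DiskPool` and `run_stage` are hypothetical names, and a real system would run the stages as separate processes.

```python
import os
import tempfile
from collections import deque


class DiskPool:
    """FIFO caching pool that keeps each item in a file on external storage."""

    def __init__(self, directory):
        os.makedirs(directory, exist_ok=True)
        self.directory = directory
        self.pending = deque()  # file paths in arrival order
        self.counter = 0

    def put(self, data):
        path = os.path.join(self.directory, f"{self.counter:08d}.bin")
        self.counter += 1
        with open(path, "wb") as handle:
            handle.write(data)
        self.pending.append(path)

    def get(self):
        path = self.pending.popleft()
        with open(path, "rb") as handle:
            data = handle.read()
        os.remove(path)
        return data

    def __len__(self):
        return len(self.pending)


def run_stage(incoming, process, workdir):
    """One pipeline level: pool -> memory buffer -> process -> buffer -> pool."""
    in_pool = DiskPool(os.path.join(workdir, "in"))
    out_pool = DiskPool(os.path.join(workdir, "out"))
    for item in incoming:  # data received from the previous level
        in_pool.put(item)
    while len(in_pool):
        in_buffer = in_pool.get()        # first memory buffer
        out_buffer = process(in_buffer)  # second memory buffer
        out_pool.put(out_buffer)
    # Drain the second pool, as if sending to the next level.
    return [out_pool.get() for _ in range(len(out_pool))]


with tempfile.TemporaryDirectory() as workdir:
    sent = run_stage([b"abc", b"de"], bytes.upper, workdir)
```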

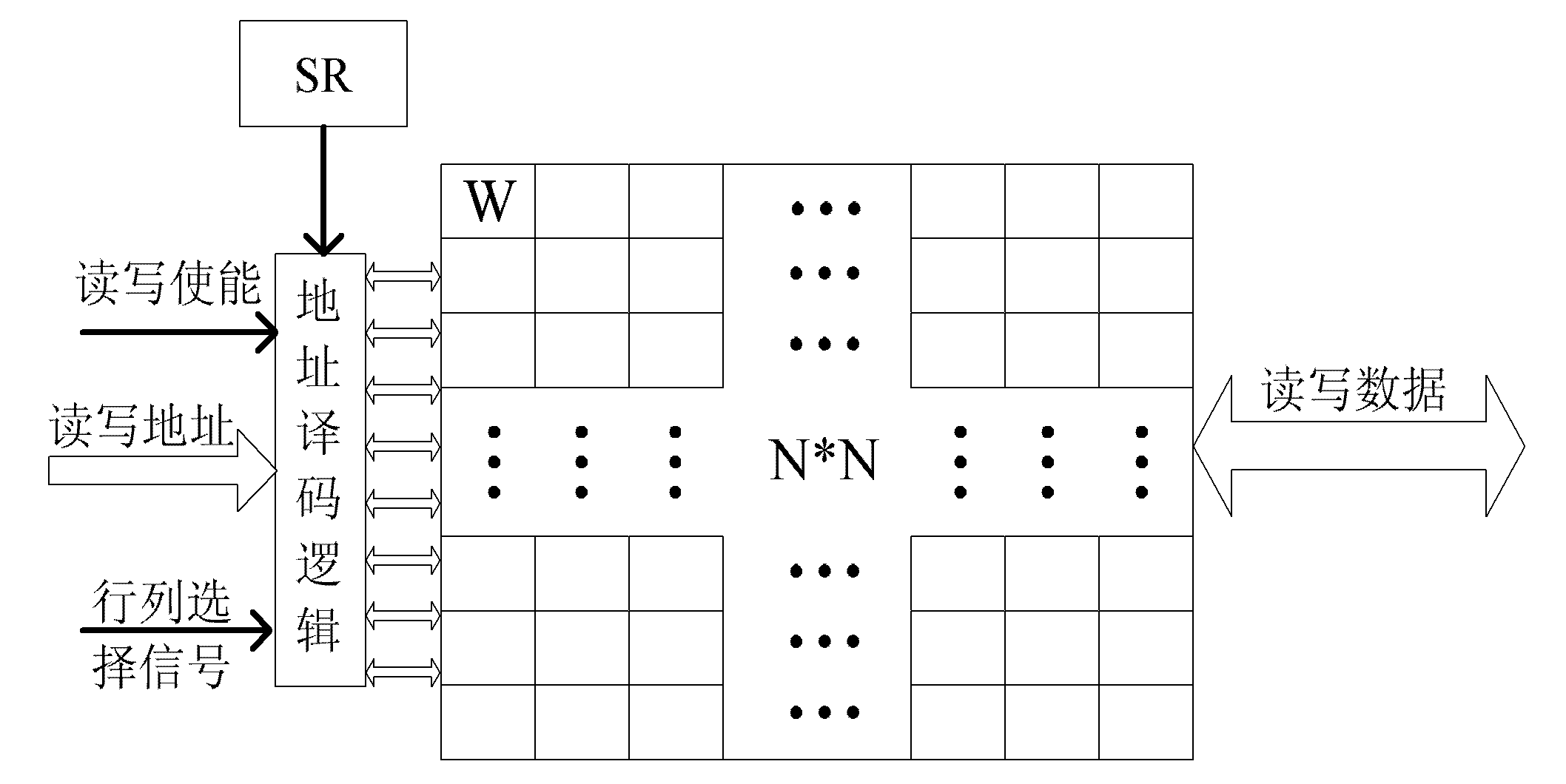

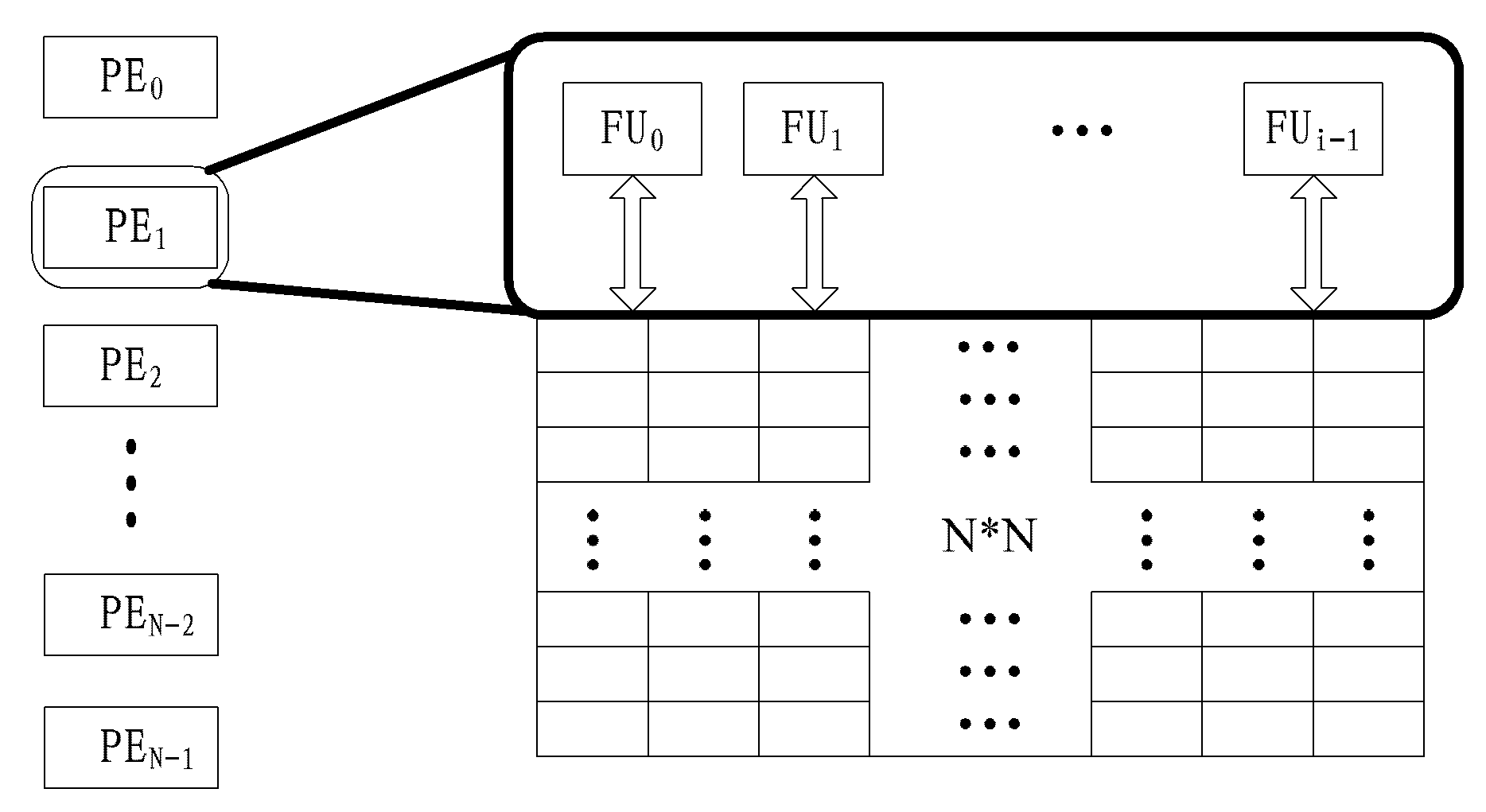

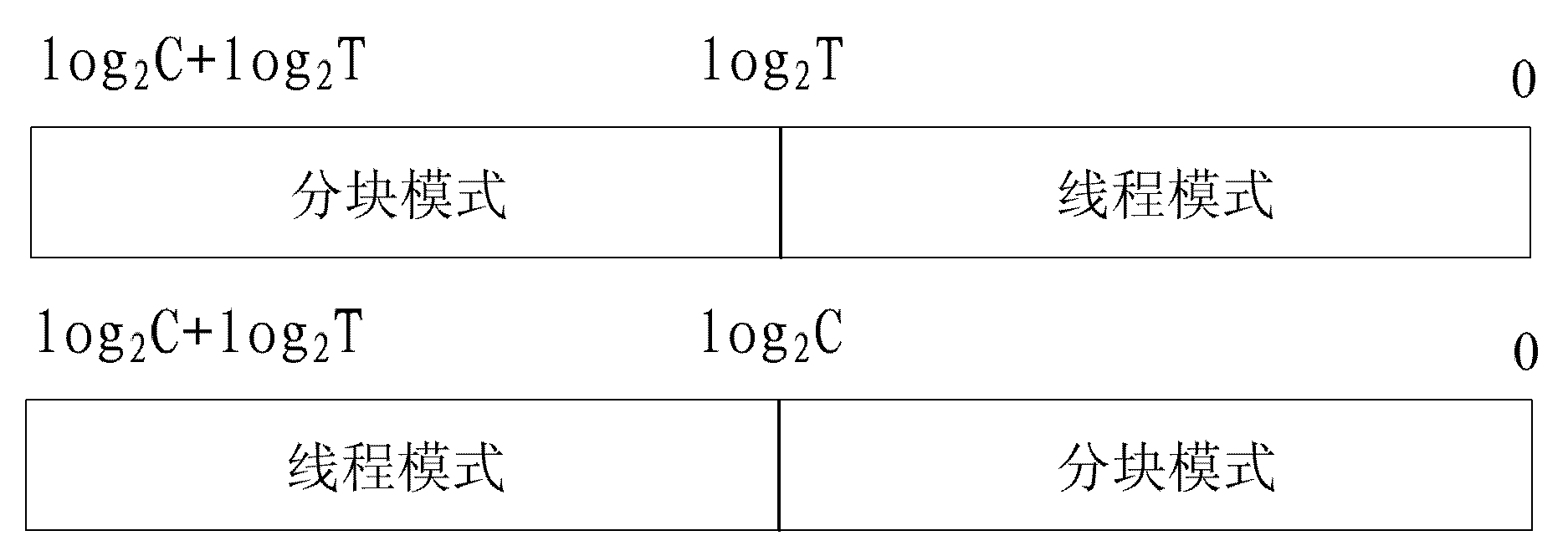

Configurable matrix register unit for supporting multi-width SIMD and multi-granularity SIMT

ActiveCN102012803BFlexible configurationImprove Parallel Processing EfficiencyConcurrent instruction executionSingle instruction, multiple threadsProcessor register

Owner:NAT UNIV OF DEFENSE TECH

A Parallel Octree Construction Method for Visual Reconstruction of CT Slice Data

ActiveCN106846457BMeet the purpose of 3D visualization reconstructionImprove the efficiency of serial number positioningImage memory managementProcessor architectures/configurationComputational scienceConcurrent computation

Owner:国家超级计算天津中心

A Maintenance Method of Distributed Dynamic Layer 2 Forwarding Table

ActiveCN107171960BRealize the function of forwardingIncrease irrelevanceNetworks interconnectionVirtual LANTheoretical computer science

The invention discloses a method for maintaining a distributed dynamic layer 2 forwarding table, covering training, query and aging of the forwarding table. Training of the forwarding table includes: S1) receiving externally supplied true random numbers; S2) receiving the source address and virtual local area network, SA_vlan; S3) hashing the true random number together with SA_vlan to compute the address to be written; S4) passing the signal instruction of the entry at that address downward; S5) if the entry to be written is marked invalid, not writing the entry, and otherwise writing SA_vlan into the entry in the sel circuit; S6) hashing the destination address DA_vlan with the true random number to obtain the entry address, and then reading out the forwarding port to be queried. The multiple small forwarding tables in the invention use the same hash calculation rule but form different hash mappings with different true random numbers, which yields a much higher load rate than a single unified forwarding table and greatly saves scarce BRAM resources inside the FPGA.

Owner:HUAXIN SAIMU CHENGDU TECH CO LTD
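
The idea of several small tables sharing one hash rule but using different random numbers can be sketched in software as below. This is an illustration only, not the FPGA design: `secrets.token_bytes` stands in for the external true random number source, SHA-256 stands in for the hardware hash, the free-slot placement policy is an assumption, and aging is omitted. `SaltedTable` and `ForwardingTable` are hypothetical names.

```python
import hashlib
import secrets


class SaltedTable:
    """One small table: same hash rule as its siblings, but its own salt."""

    def __init__(self, size, salt):
        self.size = size
        self.salt = salt
        self.slots = [None] * size  # each slot holds (key, port) or None

    def address(self, key):
        digest = hashlib.sha256(self.salt + key).digest()
        return int.from_bytes(digest[:4], "big") % self.size


class ForwardingTable:
    def __init__(self, tables=4, size=64):
        self.tables = [
            SaltedTable(size, secrets.token_bytes(8)) for _ in range(tables)
        ]

    def learn(self, sa_vlan, port):
        """Training: refresh an existing entry, else take the first free slot."""
        for table in self.tables:
            addr = table.address(sa_vlan)
            entry = table.slots[addr]
            if entry is not None and entry[0] == sa_vlan:
                table.slots[addr] = (sa_vlan, port)
                return True
        for table in self.tables:
            addr = table.address(sa_vlan)
            if table.slots[addr] is None:
                table.slots[addr] = (sa_vlan, port)
                return True
        return False  # every candidate slot is taken by another key

    def lookup(self, da_vlan):
        """Query: probe the one candidate slot in each small table."""
        for table in self.tables:
            entry = table.slots[table.address(da_vlan)]
            if entry is not None and entry[0] == da_vlan:
                return entry[1]
        return None


fib = ForwardingTable()
key = bytes.fromhex("001122334455") + (10).to_bytes(2, "big")  # MAC + VLAN id
fib.learn(key, port=3)
```

Because each table maps the same key to a different address, a key that collides in one table usually finds a free slot in another, which is the intuition behind the higher load rate claimed in the abstract.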

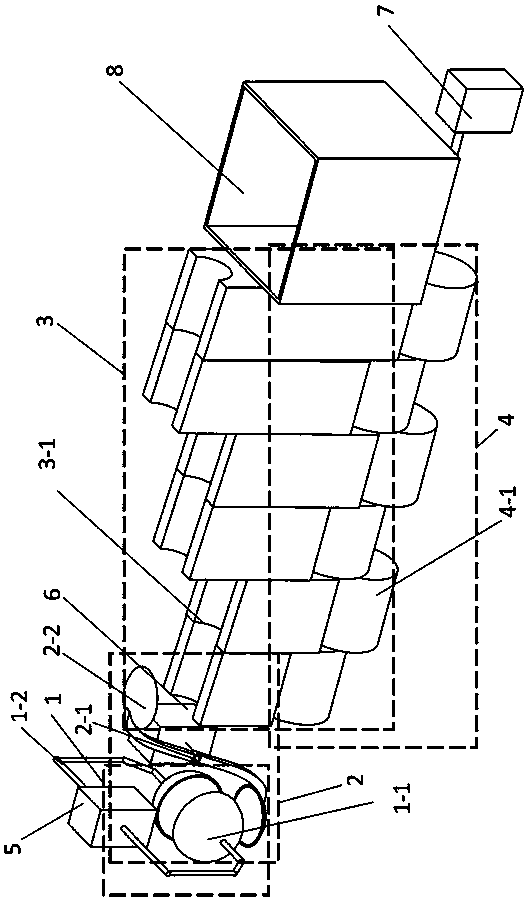

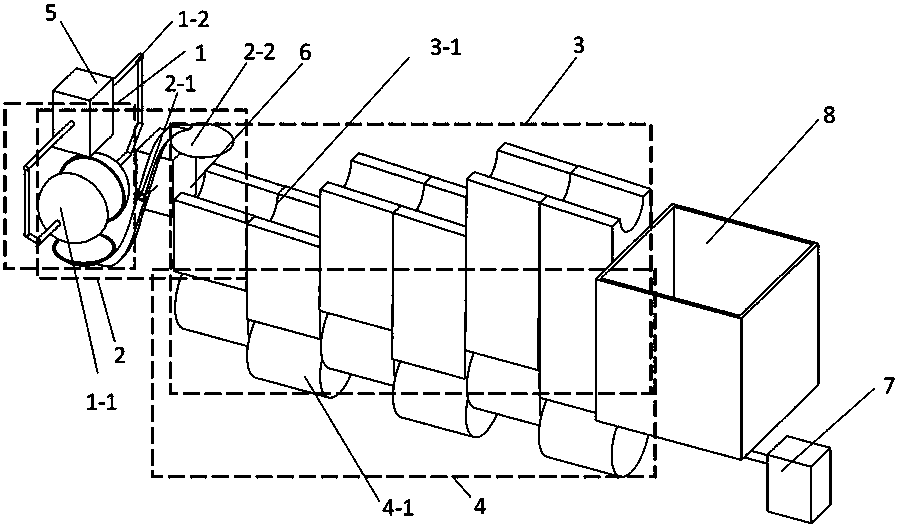

Automobile chassis with accumulated snow ball compressing transmission device

InactiveCN110004866AImplement the collection functionReduce collection processSnow cleaningSnowMechanical transmission

The invention provides an automobile chassis with an accumulated snow ball compressing transmission device, and belongs to the technical fields of environmental protection, mechanical transmission, road accumulated snow removal equipment and the like. An opening is formed in an automobile chassis body, the accumulated snow ball compressing transmission device is installed in the opening, and is composed of a snow ball integrating unit, an accumulated snow ball ejecting unit, an inclined plane transmission unit, a rolling wheel set, a snow ball integrating driving unit, a snow ball ejecting driving unit, a rolling wheel set driving unit and an accumulated snow ball collection unit. According to the automobile chassis with the accumulated snow ball compressing transmission device, the functions of transmission and collection of accumulated snow can be realized.

Owner:邢雪梅

