Pipe-type communication method and system for interprocess communication

An inter-process communication technique, applied in the field of pipeline communication methods and systems, that can solve problems such as limited memory storage space, reduced reliability and efficiency of pipeline communication, and memory buffer occupation.


Examples

Embodiment 1

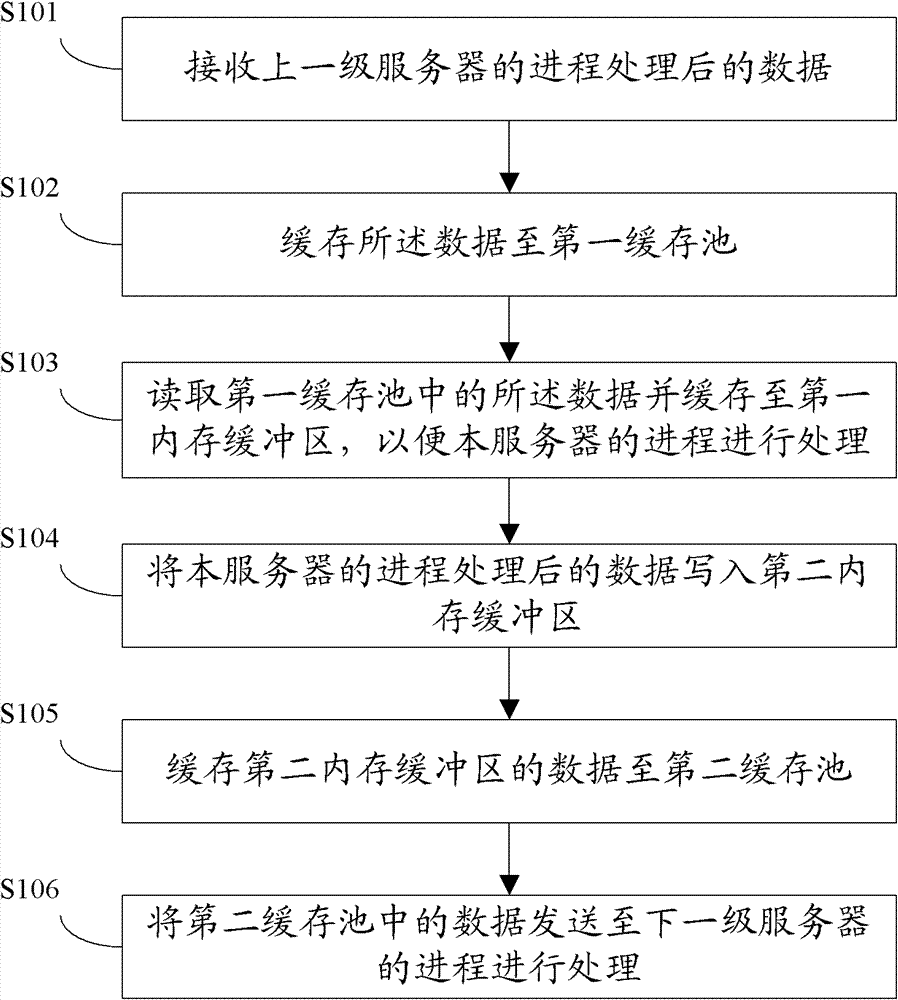

[0148] This method is executed by any one of multiple servers that perform pipeline parallel processing on data. Figure 1 is a flowchart of the pipeline communication method according to Embodiment 1 of the present invention. As shown in Figure 1, the method includes the following steps:

[0149] S101: Receive the data processed by the process of the upper-level server;

[0150] The upper-level server is the server that handles the preceding processing step among the multiple servers that perform pipeline parallel processing on the data. For example, if this server is responsible for processing the data in Step 3, the upper-level server is the server that processes the data in Step 2.

[0151] S102: Cache the data to the first cache pool;

[0152] The first cache pool is a storage space set up on external storage. External storage refers to storage other than computer memory and CPU cache. Usually, the storage space on the external...
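Steps S101 and S102 can be illustrated with a minimal Python sketch. It assumes the cache pool is a directory on disk holding one file per received chunk; the names `DiskCachePool`, `receive_and_cache`, and `make_temp_pool`, as well as the file naming scheme, are hypothetical and do not come from the patent text.

```python
import tempfile
from pathlib import Path


class DiskCachePool:
    """Hypothetical cache pool that stores each received chunk as a file on disk."""

    def __init__(self, directory):
        self.directory = Path(directory)
        self.directory.mkdir(parents=True, exist_ok=True)
        self._next_write = 0  # sequence number of the next chunk to store
        self._next_read = 0   # sequence number of the next chunk to hand out

    def put(self, chunk: bytes) -> None:
        """S102: cache one chunk of received data on external storage."""
        path = self.directory / f"{self._next_write:08d}.chunk"
        path.write_bytes(chunk)
        self._next_write += 1

    def get(self):
        """Return the oldest cached chunk, or None if the pool is empty."""
        if self._next_read >= self._next_write:
            return None
        path = self.directory / f"{self._next_read:08d}.chunk"
        chunk = path.read_bytes()
        path.unlink()  # free the disk space once the chunk has been consumed
        self._next_read += 1
        return chunk


def receive_and_cache(incoming, pool):
    """S101 + S102: take chunks from the upper-level server and cache them.

    `incoming` is any iterable of bytes; a real system would read from a
    network connection instead (assumption).
    """
    count = 0
    for chunk in incoming:
        pool.put(chunk)
        count += 1
    return count


def make_temp_pool():
    """Create a pool in a fresh temporary directory (for experimentation only)."""
    return DiskCachePool(tempfile.mkdtemp())
```

Because the chunks live on disk rather than in memory, the amount of data waiting between two pipeline stages is bounded by disk capacity rather than by the size of a memory buffer.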

Embodiment 2

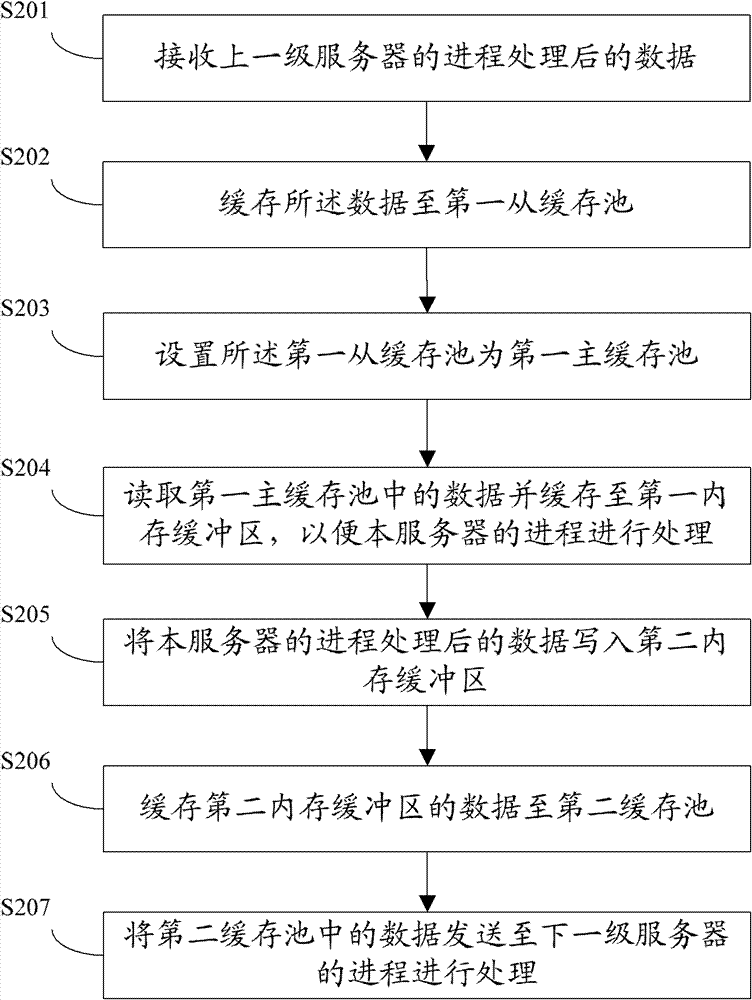

[0163] Figure 2 is a flowchart of the pipeline communication method according to Embodiment 2 of the present invention. As shown in Figure 2, the method includes the following steps:

[0164] S201: Receive the data processed by the process of the upper-level server;

[0165] S202: Cache the data to the first slave cache pool;

[0166] In this embodiment, the first cache pool includes a first slave cache pool and a first primary cache pool. The first slave cache pool is a storage space set up on external storage, and is used to cache the incoming data processed by the process of the upper-level server.

[0167] S203: Set the first slave cache pool as the first primary cache pool;

[0168] The first primary cache pool is a storage space set up on external storage, and is used to cache data to be read by the first memory buffer. Since the storage space on the external storage can be either read or written, but not both, at any given time, the setting of the fi...
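The role switch in S203 resembles double buffering, and can be sketched as follows. This is only an illustration under stated assumptions: both pools are directories on disk, the drained primary pool is reused as the next slave pool, and the switch is refused while the primary still holds unread data. The source paragraph is truncated, so none of these details are confirmed by it; the names `DoublePool`, `promote`, `drain_primary`, and `make_temp_double_pool` are hypothetical.

```python
import tempfile
from pathlib import Path


class DoublePool:
    """Hypothetical pair of on-disk pools: writes go to the slave, reads come from the primary."""

    def __init__(self, root):
        root = Path(root)
        self.slave = root / "pool_a"
        self.primary = root / "pool_b"
        for directory in (self.slave, self.primary):
            directory.mkdir(parents=True, exist_ok=True)
        self._seq = 0  # global sequence number keeps chunks ordered

    def cache(self, chunk: bytes) -> None:
        """S202: cache received data in the slave pool only."""
        (self.slave / f"{self._seq:08d}.chunk").write_bytes(chunk)
        self._seq += 1

    def promote(self) -> None:
        """S203: the filled slave pool becomes the primary pool.

        Assumption: the drained old primary is reused as the new slave.
        """
        if any(self.primary.iterdir()):
            raise RuntimeError("primary pool still has unread data")
        self.slave, self.primary = self.primary, self.slave

    def drain_primary(self):
        """Read every chunk of the primary pool into an in-memory list, oldest first."""
        memory_buffer = []
        for path in sorted(self.primary.iterdir()):
            memory_buffer.append(path.read_bytes())
            path.unlink()
        return memory_buffer


def make_temp_double_pool():
    """Create a pool pair in a fresh temporary directory (for experimentation only)."""
    return DoublePool(tempfile.mkdtemp())
```

With this arrangement, receiving only ever writes to one directory while the memory buffer only ever reads from the other, so no single pool is read and written at the same time.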

Embodiment 3

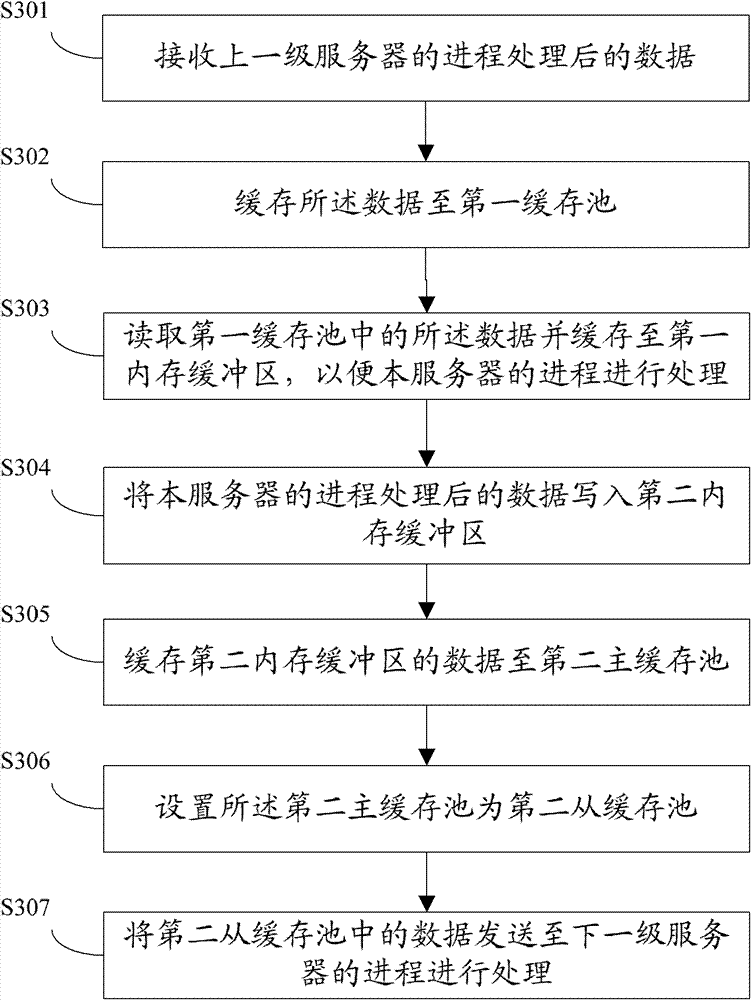

[0177] Figure 3 is a flowchart of the pipeline communication method according to Embodiment 3 of the present invention. As shown in Figure 3, the method includes the following steps:

[0178] S301: Receive the data processed by the process of the upper-level server;

[0179] S302: Cache the data to the first cache pool;

[0180] S303: Read the data in the first cache pool and cache it in the first memory buffer, so that the process of this server can process it;

[0181] S304: Write the data processed by the process of the server into the second memory buffer;

[0182] S305: Cache the data of the second memory buffer to the second master cache pool;

[0183] S306: Set the second master cache pool as the second slave cache pool;

[0184] S307: Send the data in the second slave cache pool to the process of the next-level server for processing.

[0185] In this embodiment, the second cache pool includes a second master cache pool and a second slave cache pool. Both t...
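The full sequence S301 to S307 for one server can be sketched end to end. To keep the example short, in-memory deques stand in for the cache pools that the patent places on external storage, and plain callables stand in for the local process and for the link to the next-level server. The function name `run_stage` and its parameters are hypothetical, and the role swap in S306 is an assumption because the source paragraph is truncated.

```python
from collections import deque


def run_stage(incoming, process, send):
    """Run one pipeline stage over an iterable of chunks (steps S301 to S307).

    incoming: iterable of chunks from the upper-level server
    process:  callable applied to each chunk by this server's process
    send:     callable that delivers one chunk to the next-level server
    Returns the number of chunks sent downstream.
    """
    first_pool = deque()     # stand-in for the first cache pool
    second_master = deque()  # stand-in for the second master cache pool
    second_slave = deque()   # stand-in for the second slave cache pool

    # S301 + S302: receive upstream data and cache it.
    for chunk in incoming:
        first_pool.append(chunk)

    # S303: move cached data into the first memory buffer for processing.
    first_memory_buffer = list(first_pool)
    first_pool.clear()

    # S304: the local process writes its results to the second memory buffer.
    second_memory_buffer = [process(chunk) for chunk in first_memory_buffer]

    # S305: cache the results in the second master cache pool.
    second_master.extend(second_memory_buffer)

    # S306: swap roles so the filled master pool becomes the slave pool.
    second_master, second_slave = second_slave, second_master

    # S307: send the slave pool's contents to the next-level server.
    sent = 0
    while second_slave:
        send(second_slave.popleft())
        sent += 1
    return sent
```

For example, `run_stage([b"ab", b"cd"], bytes.upper, results.append)` with `results = []` passes each chunk through the stage in arrival order and appends the processed chunks to `results`.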
