A convolutional neural network accelerator based on PSoC

A convolutional neural network accelerator technology, applied in the field of convolutional neural network accelerators, which addresses the problems of large computational load, high bandwidth requirements, and the inability to be used in neural networks, thereby reducing bandwidth requirements, handling large computational loads, and improving parallel processing efficiency.


Examples

Embodiment 1

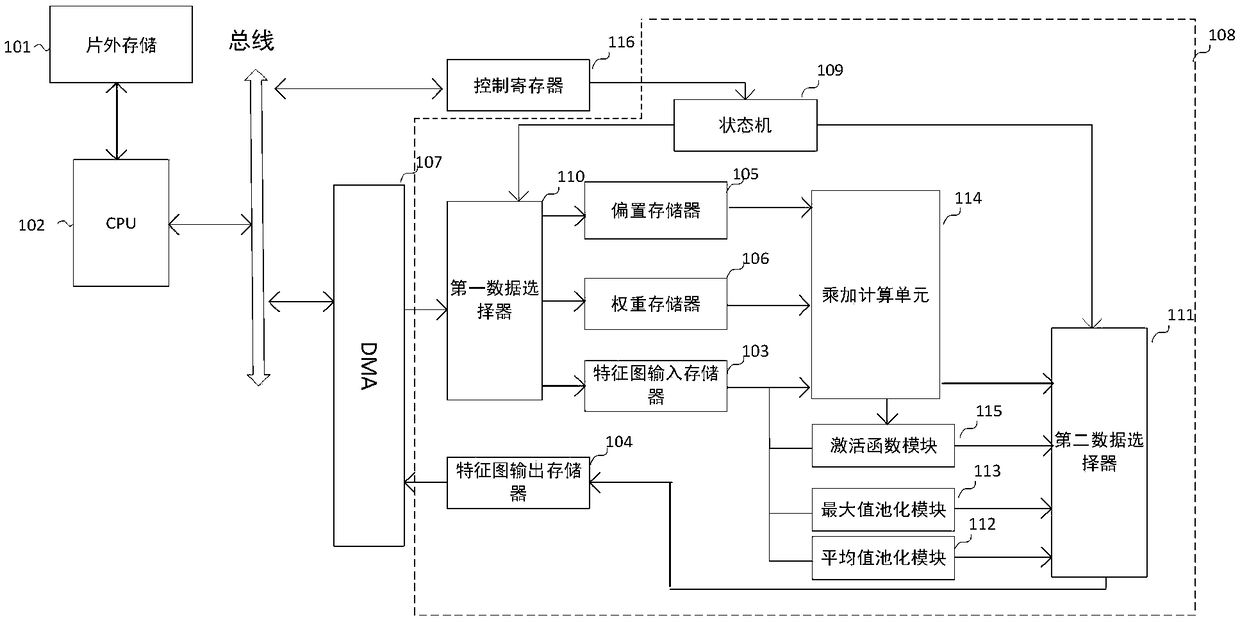

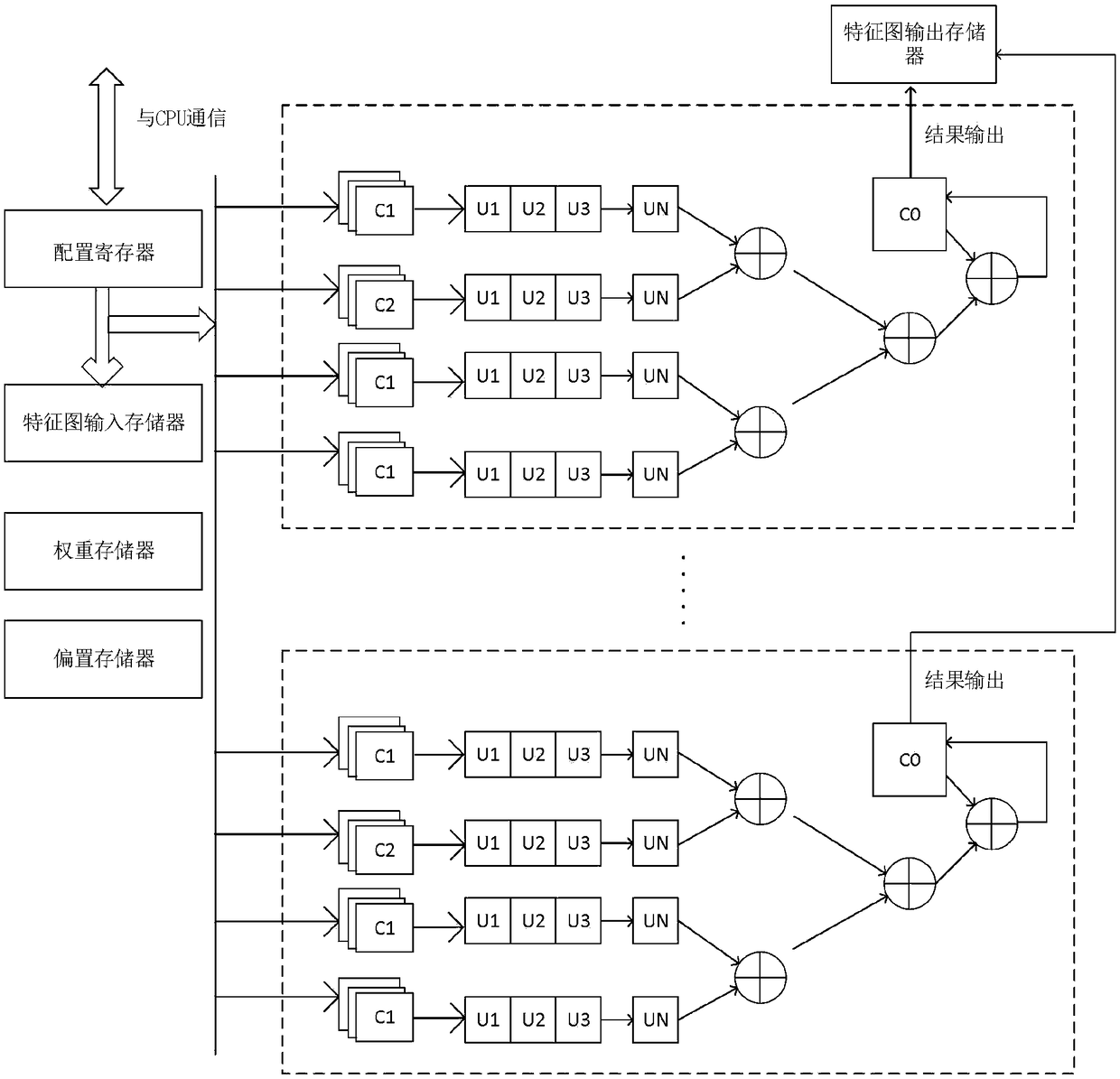

[0023] To handle the large computational load of convolutional neural networks, improve parallel processing efficiency, and reduce bandwidth requirements, the present invention provides a PSoC-based convolutional neural network accelerator 100, as shown in Figure 1, including: off-chip memory 101, CPU 102, feature map input memory 103, feature map output memory 104, bias memory 105, weight memory 106, direct memory access (DMA) 107, and computing units 108 equal in number to the neurons.
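The component list above can be illustrated with a small software model. This is a sketch under stated assumptions, not the patented hardware: it assumes each computing unit 108 holds one kernel and one bias and produces one output feature map (one "neuron"), that inputs have a single channel, and that convolution is valid (no padding) with stride 1. The class names `ComputeUnit` and `Accelerator` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List

Matrix = List[List[float]]


@dataclass
class ComputeUnit:
    """Hypothetical model of one computing unit 108: a multiply-accumulate
    engine that produces one output feature map (one 'neuron')."""
    weights: Matrix  # k x k kernel; a single input channel is assumed
    bias: float

    def run(self, fmap: Matrix) -> Matrix:
        # Valid (no padding), stride-1 convolution as used in CNN layers.
        k = len(self.weights)
        rows = len(fmap) - k + 1
        cols = len(fmap[0]) - k + 1
        out: Matrix = []
        for r in range(rows):
            row = []
            for c in range(cols):
                acc = float(self.bias)
                for i in range(k):
                    for j in range(k):
                        acc += fmap[r + i][c + j] * self.weights[i][j]
                row.append(acc)
            out.append(row)
        return out


@dataclass
class Accelerator:
    weight_memory: List[Matrix]  # stand-in for weight memory 106
    bias_memory: List[float]     # stand-in for bias memory 105
    units: List[ComputeUnit] = field(init=False)

    def __post_init__(self) -> None:
        # Instantiate as many computing units as there are neurons.
        self.units = [ComputeUnit(w, b)
                      for w, b in zip(self.weight_memory, self.bias_memory)]

    def layer(self, fmap_in: Matrix) -> List[Matrix]:
        # In hardware every unit reads the shared input feature map and runs
        # concurrently; this software model simply iterates over them.
        return [unit.run(fmap_in) for unit in self.units]


acc = Accelerator(weight_memory=[[[1, 0], [0, 1]], [[0, 1], [1, 0]]],
                  bias_memory=[0.0, 1.0])
outputs = acc.layer([[1, 2, 3], [4, 5, 6], [7, 8, 9]])
```

Because every unit reads the same input feature map but its own weights and bias, the units have no data dependencies on one another, which is what allows them to operate in parallel.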

[0024] Under the control of CPU 102, the direct memory access DMA 107 reads data from off-chip memory 101 and transfers it to feature map input memory 103, bias memory 105, and weight memory 106, or writes data from feature map output memory 104 back to off-chip memory 101. The CPU 102 controls the storage locations of the input feature maps, biases, weights, and output feature maps in the off-chip memory, as well as the parameter transmission of the multi-layer convolutional neural ...
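The division of labor in [0024] (the CPU decides where data lives off-chip, the DMA moves it) can be sketched as follows. This is a minimal model assuming word-addressed off-chip memory and contiguous regions; the classes `Region`, `DMA`, and `CPU` and the method `next_layer` are hypothetical, and chaining one layer's output region to the next layer's input is an illustrative assumption, since the source text describing multi-layer parameter transmission is truncated.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Region:
    base: int    # word offset in off-chip memory
    length: int  # number of words


class DMA:
    """Hypothetical model of DMA 107: copies blocks of words between the
    off-chip memory and on-chip buffers."""

    def __init__(self, off_chip: List[int]) -> None:
        self.off_chip = off_chip

    def read(self, region: Region) -> List[int]:
        return self.off_chip[region.base:region.base + region.length]

    def write(self, region: Region, data: List[int]) -> None:
        if len(data) != region.length:
            raise ValueError("data length does not match region length")
        self.off_chip[region.base:region.base + region.length] = data


class CPU:
    """The CPU owns the off-chip layout and issues DMA transfers."""

    def __init__(self, dma: DMA, layout: Dict[str, Region]) -> None:
        self.dma = dma
        self.layout = layout

    def load_layer(self) -> Tuple[List[int], List[int], List[int]]:
        fmap_in = self.dma.read(self.layout["input"])   # -> feature map input memory 103
        bias = self.dma.read(self.layout["bias"])       # -> bias memory 105
        weight = self.dma.read(self.layout["weight"])   # -> weight memory 106
        return fmap_in, bias, weight

    def store_layer(self, fmap_out: List[int]) -> None:
        # feature map output memory 104 -> off-chip memory 101
        self.dma.write(self.layout["output"], fmap_out)

    def next_layer(self, bias: Region, weight: Region, output: Region) -> None:
        # Assumed chaining: this layer's output region becomes the next input.
        self.layout = {"input": self.layout["output"], "bias": bias,
                       "weight": weight, "output": output}


off_chip = list(range(16))
cpu = CPU(DMA(off_chip), {"input": Region(0, 4), "bias": Region(4, 2),
                          "weight": Region(6, 4), "output": Region(10, 4)})
fmap_in, bias, weight = cpu.load_layer()
cpu.store_layer([100, 101, 102, 103])
```

Keeping the layout in the CPU means the DMA only needs a base address and a length per transfer, which matches the text's statement that the CPU controls where each kind of data is stored.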

Embodiment 2

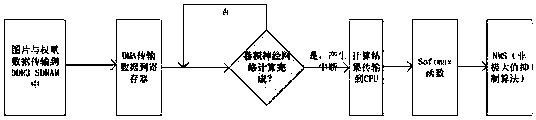

[0031] Correspondingly, the method flow of convolutional neural network computation using the PSoC-based convolutional neural network accelerator is further described with reference to Figure 6.

[0032] The CPU is programmed through embedded software, so that the deep convolutional neural network is constructed in software; the CPU then transmits configuration command values over the bus to the control registers of the relevant processing units.
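Writing configuration command values into control registers over a bus can be sketched as below. The patent's actual command table is not reproduced in this excerpt, so the register names, offsets, 32-bit little-endian width, and the trailing "start" write in `REG_OFFSETS` and `configure_layer` are all hypothetical; a `bytearray` stands in for the memory-mapped register block.

```python
import struct
from typing import Dict

# Hypothetical register map; not taken from the patent's command table.
REG_OFFSETS: Dict[str, int] = {
    "input_addr": 0x00,
    "weight_addr": 0x04,
    "bias_addr": 0x08,
    "output_addr": 0x0C,
    "in_size": 0x10,
    "kernel_size": 0x14,
    "start": 0x18,
}


class ControlRegisters:
    """Stand-in for a memory-mapped control register block reached over the bus."""

    def __init__(self, size: int = 0x20) -> None:
        self.mem = bytearray(size)

    def write32(self, offset: int, value: int) -> None:
        struct.pack_into("<I", self.mem, offset, value)

    def read32(self, offset: int) -> int:
        return struct.unpack_from("<I", self.mem, offset)[0]


def configure_layer(regs: ControlRegisters, cfg: Dict[str, int]) -> None:
    # Write every parameter first and the start flag last, so the hardware
    # never begins with a half-written configuration.
    for name, value in cfg.items():
        if name != "start":
            regs.write32(REG_OFFSETS[name], value)
    regs.write32(REG_OFFSETS["start"], 1)


regs = ControlRegisters()
configure_layer(regs, {"input_addr": 0x1000, "kernel_size": 3})
```

Writing the start flag last is a common convention for register-programmed accelerators; the source does not state which ordering its command sequence uses.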

[0033] Examples of configuration commands are shown in the following table:

[0034] The inputs of the first layer are the x1 input feature map data and the x3 weight data; the calculation results are passed to the max pooling module and the activation function module to obtain the x2 output feature map data.
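The first-layer data flow in [0034] (convolution, then max pooling, then activation) can be sketched as follows. The text does not name the activation function, so ReLU is an assumption here, as are valid stride-1 convolution, a single channel, and non-overlapping 2×2 pooling; the function names are hypothetical and x1, x2, x3 are reused only as variable names.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(x: Matrix, w: Matrix, bias: float = 0.0) -> Matrix:
    """Valid, stride-1 2-D convolution (cross-correlation, as in CNNs)."""
    k = len(w)
    return [[bias + sum(x[r + i][c + j] * w[i][j]
                        for i in range(k) for j in range(k))
             for c in range(len(x[0]) - k + 1)]
            for r in range(len(x) - k + 1)]


def max_pool(x: Matrix, size: int = 2) -> Matrix:
    """Non-overlapping size x size max pooling; leftover rows/columns are dropped."""
    return [[max(x[r * size + i][c * size + j]
                 for i in range(size) for j in range(size))
             for c in range(len(x[0]) // size)]
            for r in range(len(x) // size)]


def relu(x: Matrix) -> Matrix:
    # Assumed activation function; the source does not specify one.
    return [[max(0.0, v) for v in row] for row in x]


def first_layer(x1: Matrix, x3: Matrix, bias: float = 0.0) -> Matrix:
    # x1: input feature map, x3: weights; the result plays the role of x2.
    return relu(max_pool(conv2d(x1, x3, bias)))


x1 = [[5 * r + c for c in range(5)] for r in range(5)]
x3 = [[0, 0], [0, 1]]
x2 = first_layer(x1, x3, bias=-13.0)
```

For any non-decreasing activation such as ReLU, max(f(a), f(b)) = f(max(a, b)), so pooling before activation gives the same result as activating first while applying the activation to four times fewer values with 2×2 pooling.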

[0035]

[0036] The output feature map of the convolutional layer is stored in off-chip memory in M layers, where M takes the values 1, 3, 5, 7, .... The output feature map ...
