486 results about "Cache memory details" patented technology

Device with embedded high-bandwidth, high-capacity memory using wafer bonding

Active | US20200243486A1 | Tags: Allowed to operate; Fast boot; Memory architecture accessing/allocation; Input/output to record carriers; Computer architecture; Electrical conductor

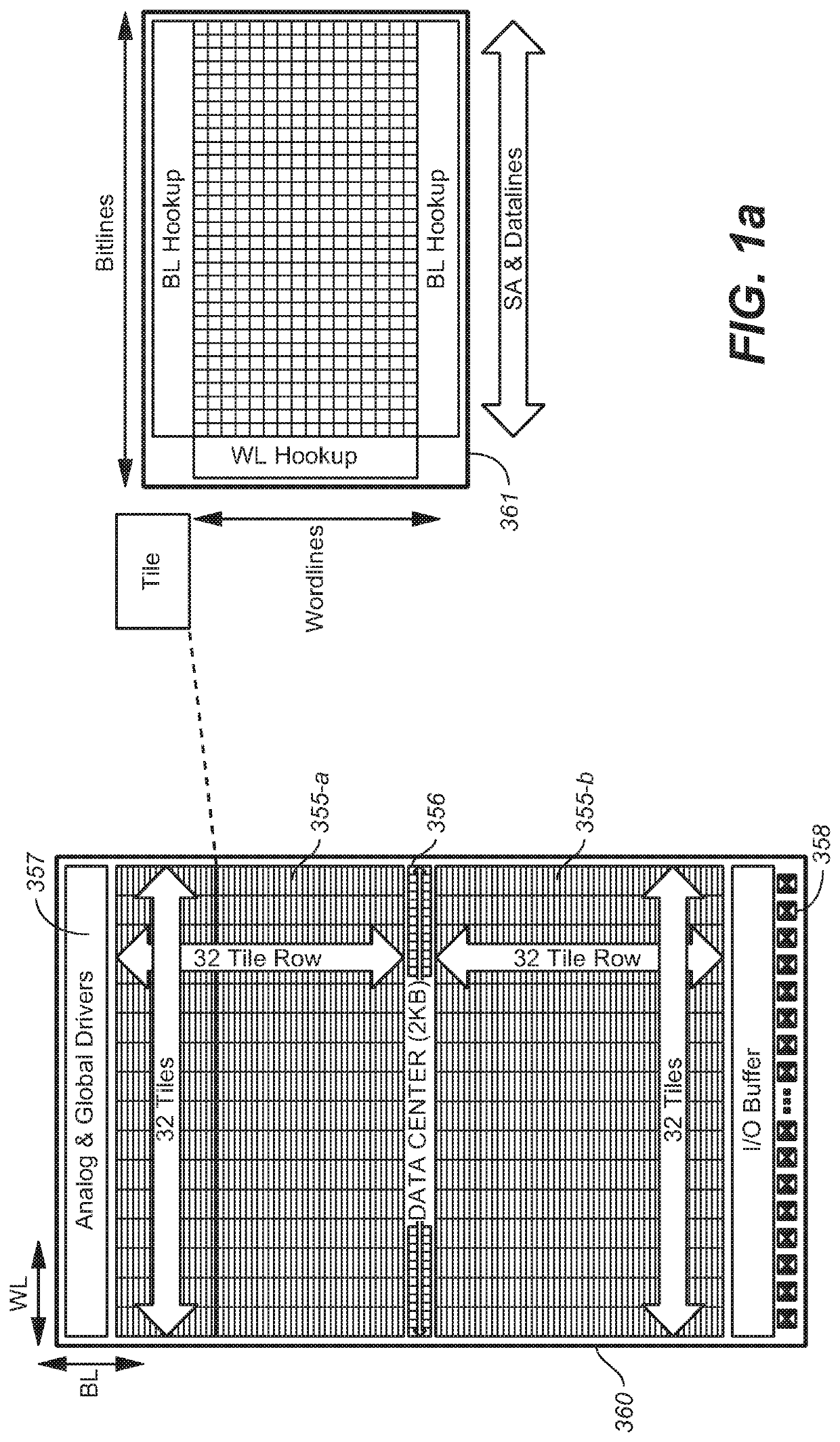

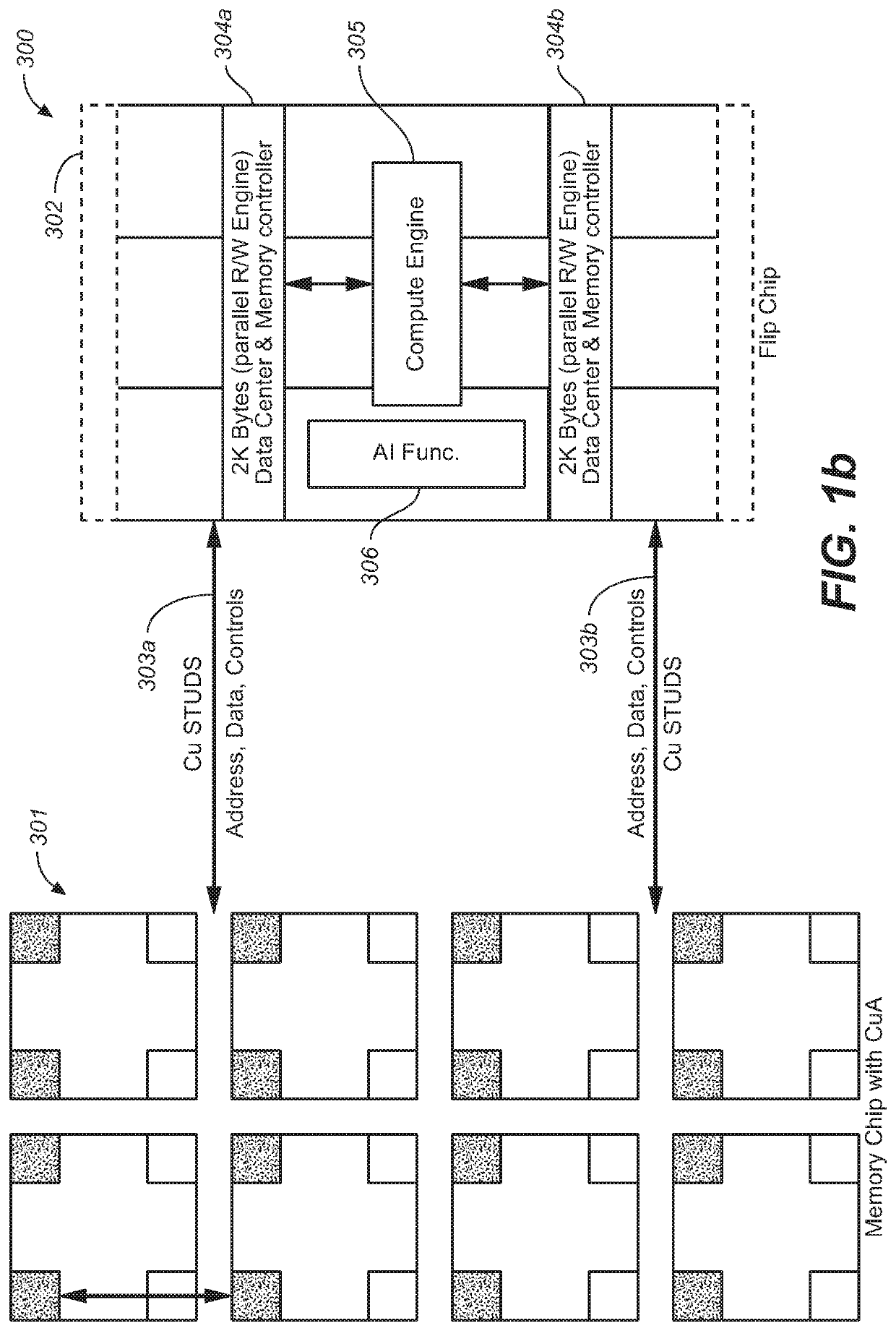

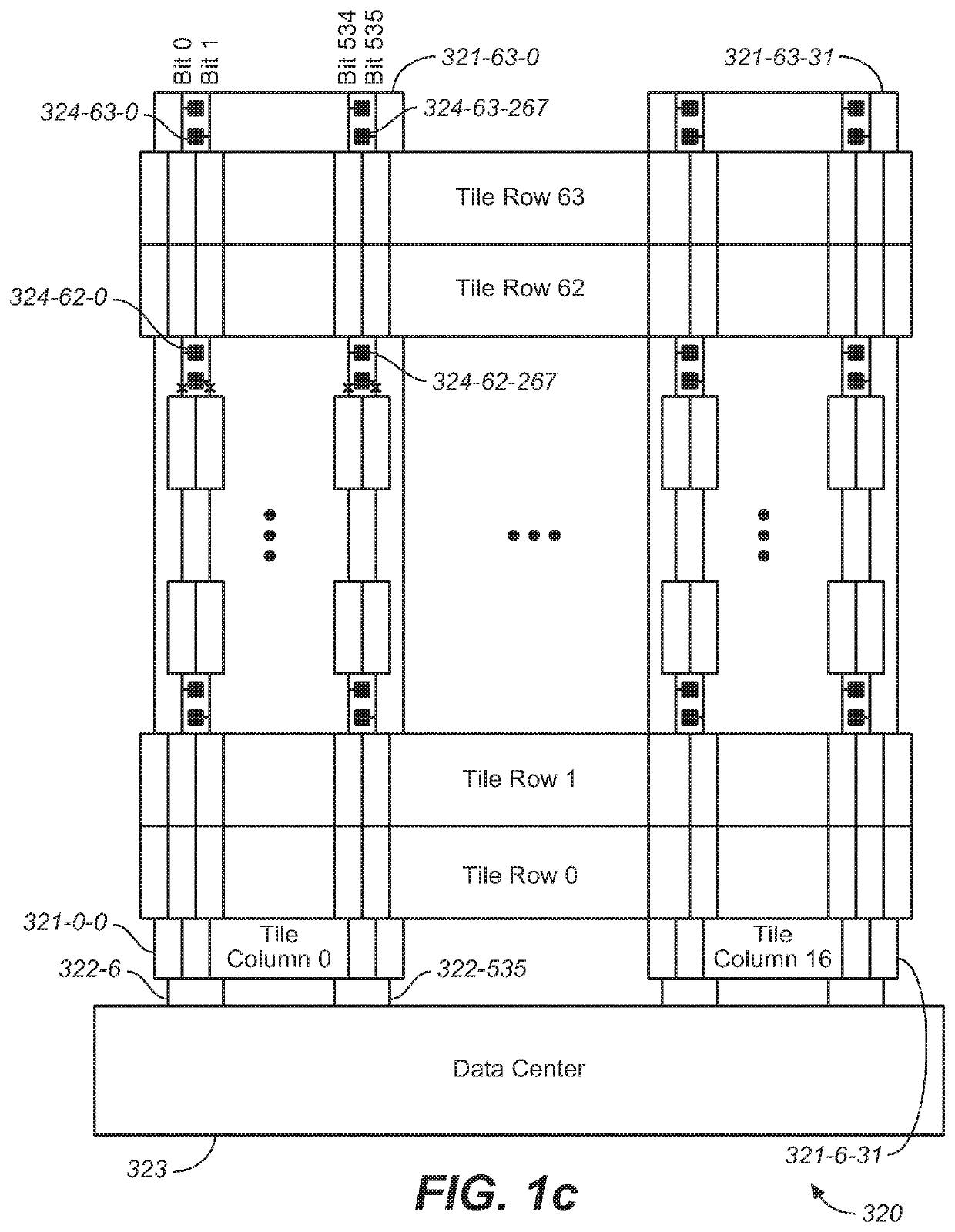

An electronic device with embedded access to a high-bandwidth, high-capacity fast-access memory includes (a) a memory circuit fabricated on a first semiconductor die, wherein the memory circuit includes numerous modular memory units, each modular memory unit having (i) a three-dimensional array of storage transistors and (ii) a group of conductors exposed at a surface of the first semiconductor die, the group of conductors being configured for communicating control, address, and data signals associated with the memory unit; and (b) a logic circuit fabricated on a second semiconductor die, wherein the logic circuit also includes conductors each exposed at a surface of the second semiconductor die, and wherein the first and second semiconductor dies are wafer-bonded such that each conductor exposed at the surface of the first semiconductor die is electrically connected to a corresponding conductor exposed at the surface of the second semiconductor die. The three-dimensional array of storage transistors may be formed by NOR memory strings.

Owner:SUNRISE MEMORY CORP

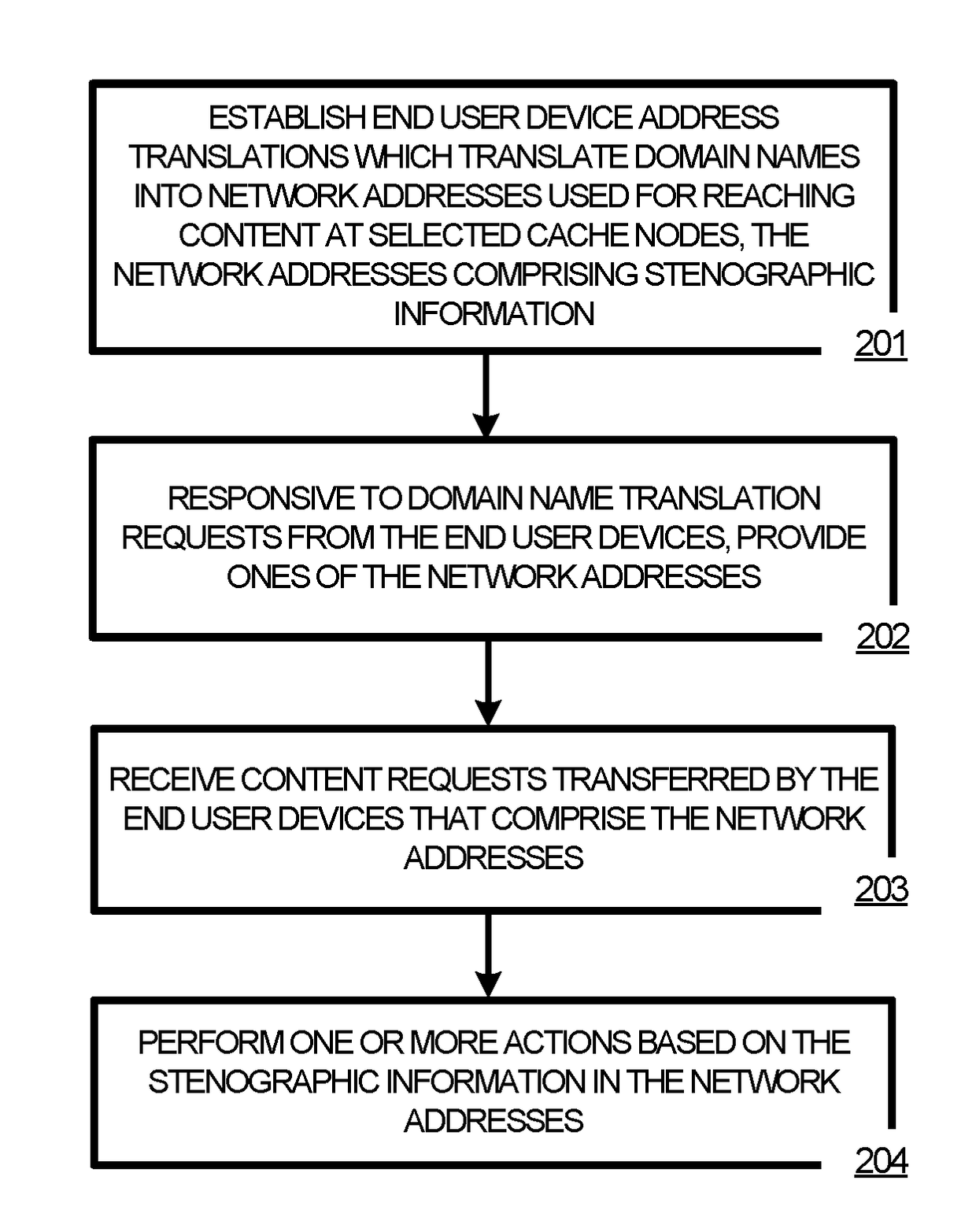

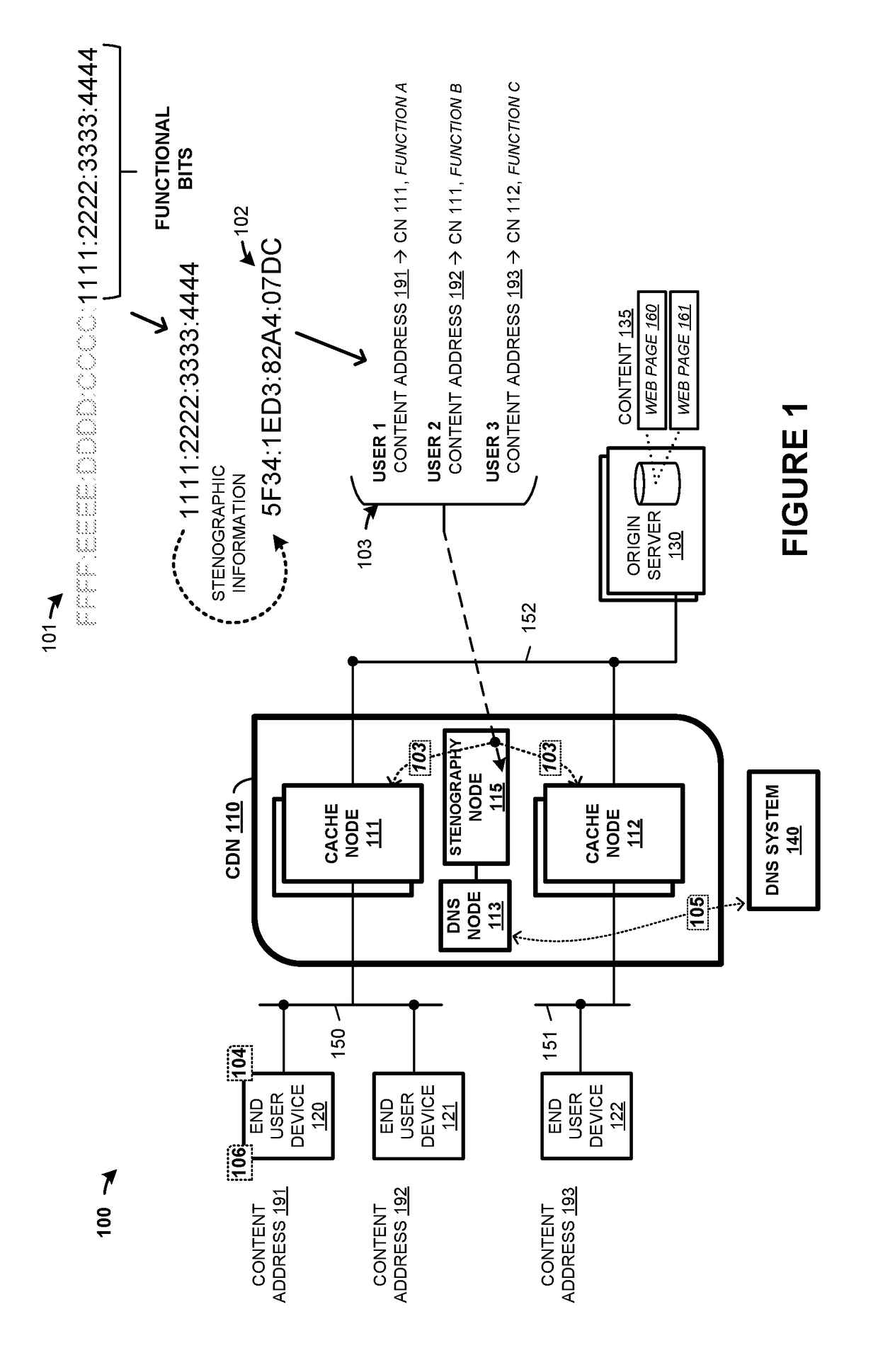

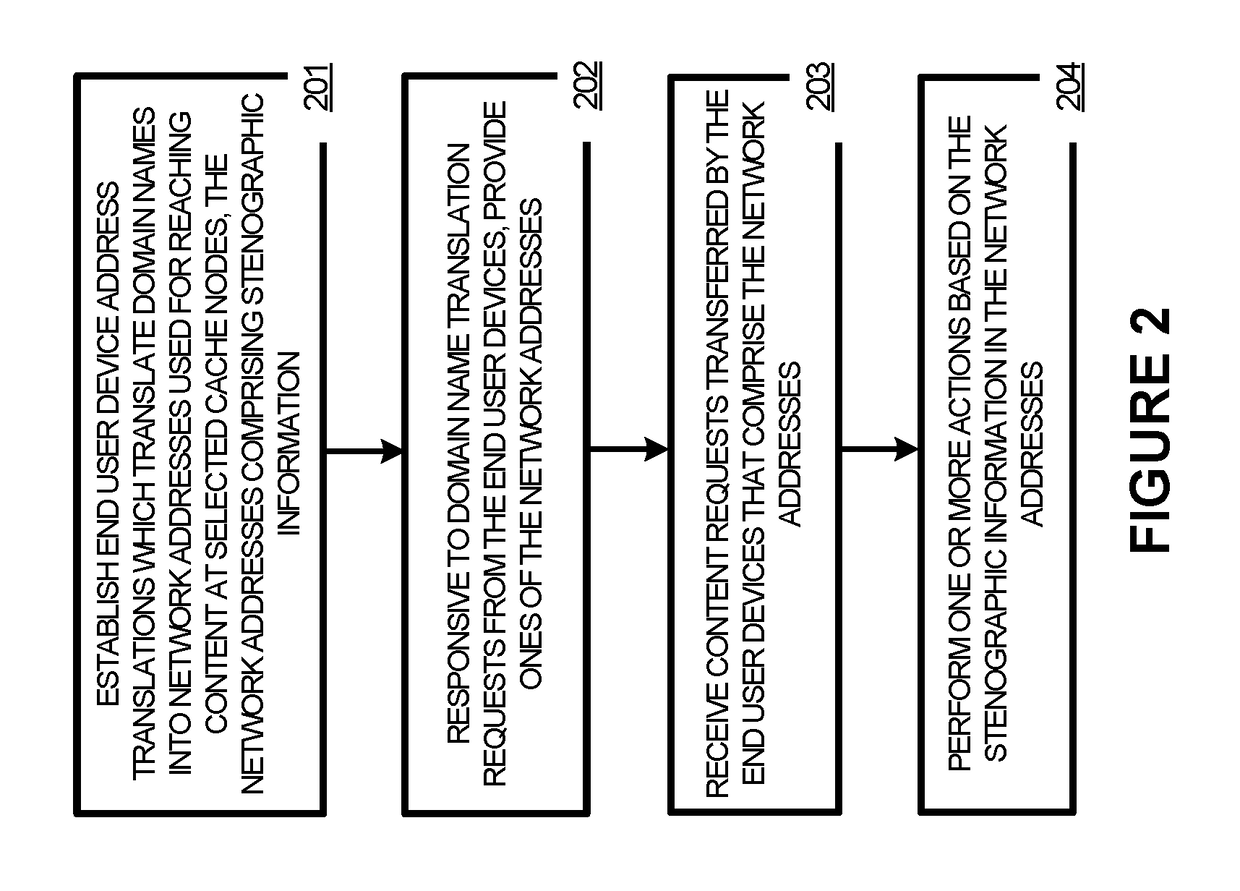

Anonymized network addressing in content delivery networks

Active | US20170153980A1 | Tags: Memory architecture accessing/allocation; Memory addressing/allocation/relocation; User device; Network addressing

Systems, methods, apparatuses, and software are presented for a content delivery network (CDN) that caches content for delivery to end user devices. In one example, a CDN has a plurality of cache nodes that cache content for delivery to end user devices. The CDN includes an anonymization node configured to establish anonymized network addresses for transferring content to the cache nodes from one or more origin servers, which store the content before it is cached by the CDN. The anonymization node is configured to provide a routing node of the CDN with indications of the relationships between the anonymized network addresses and the cache nodes. The routing node is configured to route the content that the one or more origin servers transfer in response to content requests from the cache nodes, based on those indicated relationships between the anonymized network addresses and the cache nodes.

Owner:FASTLY

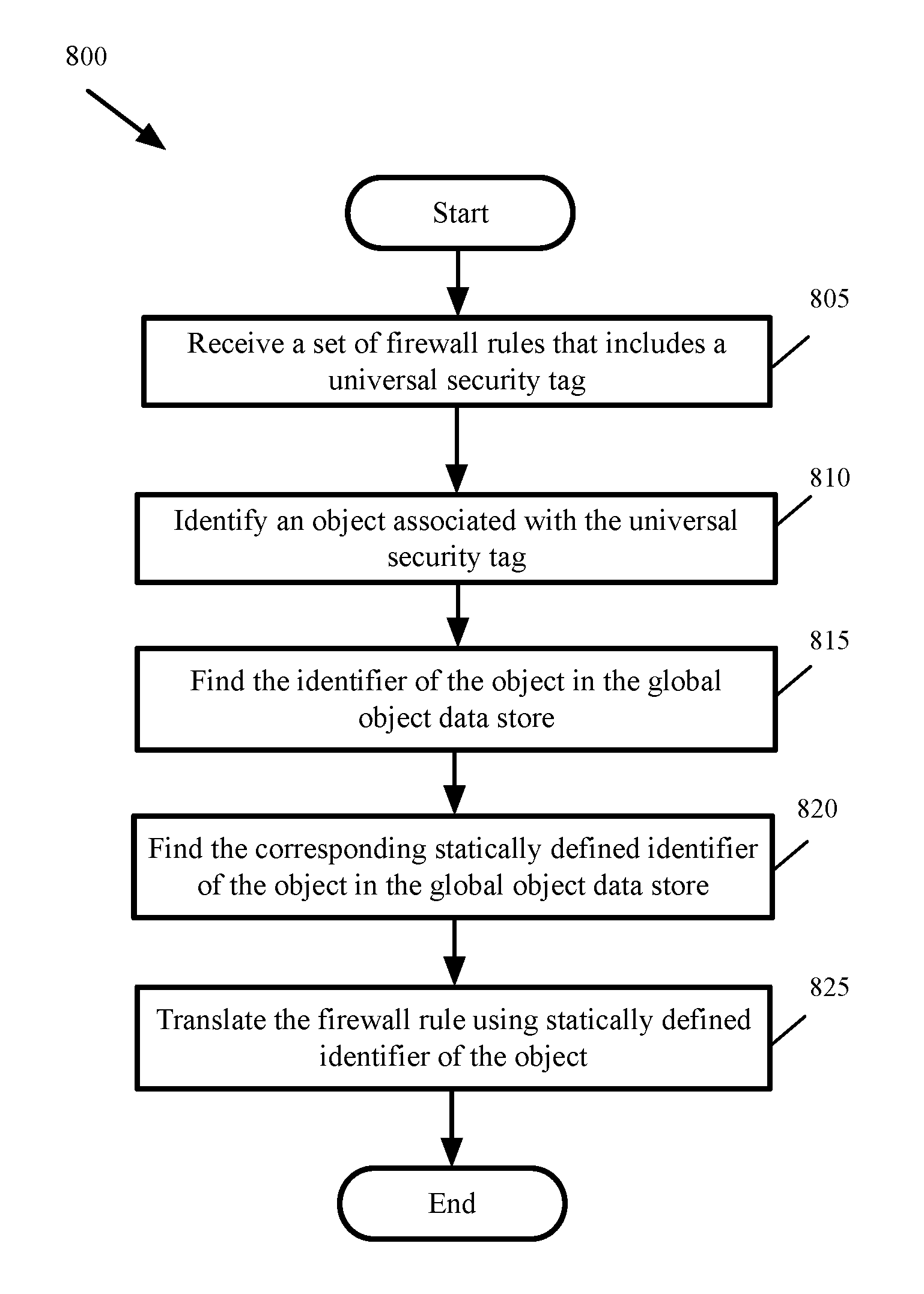

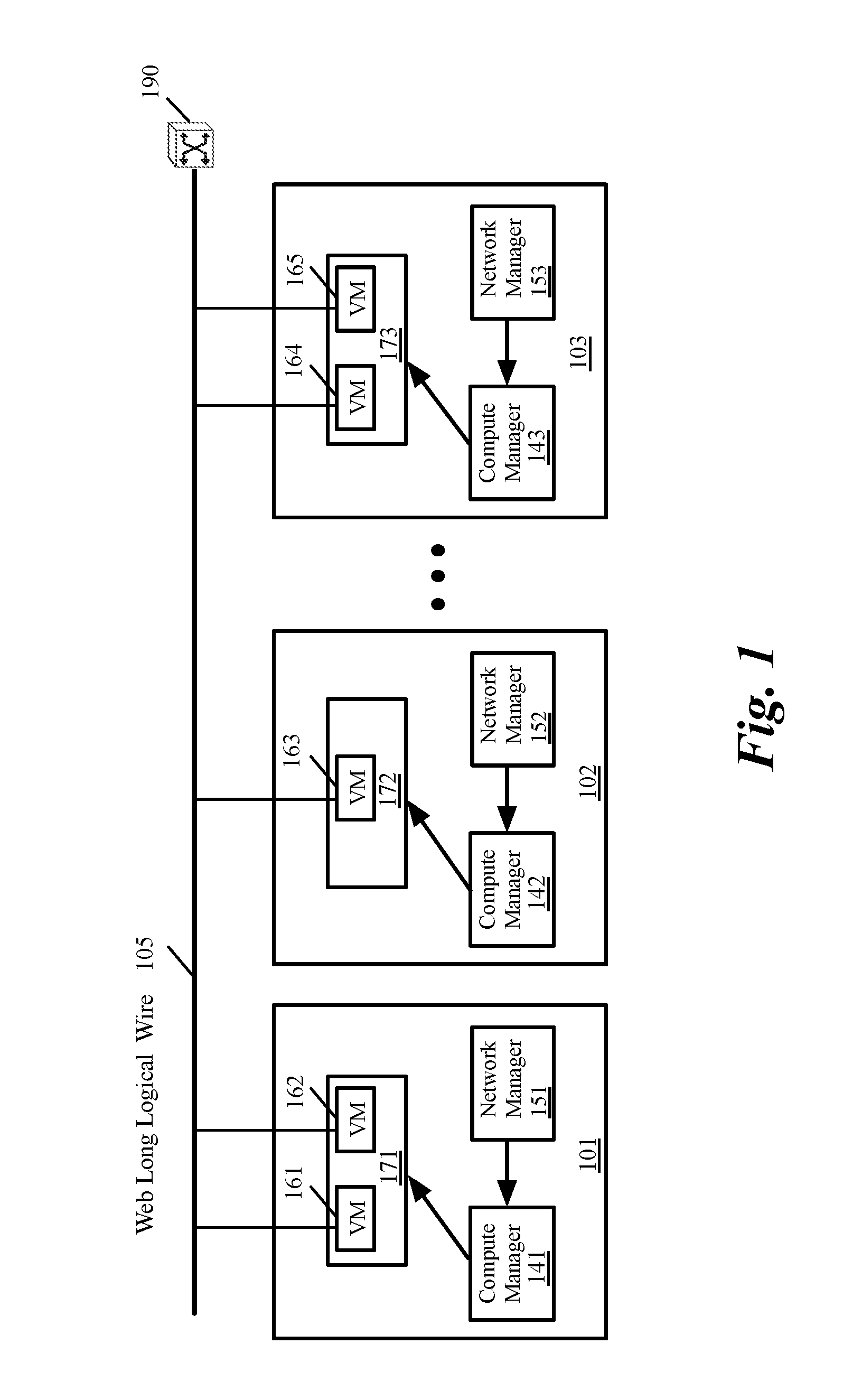

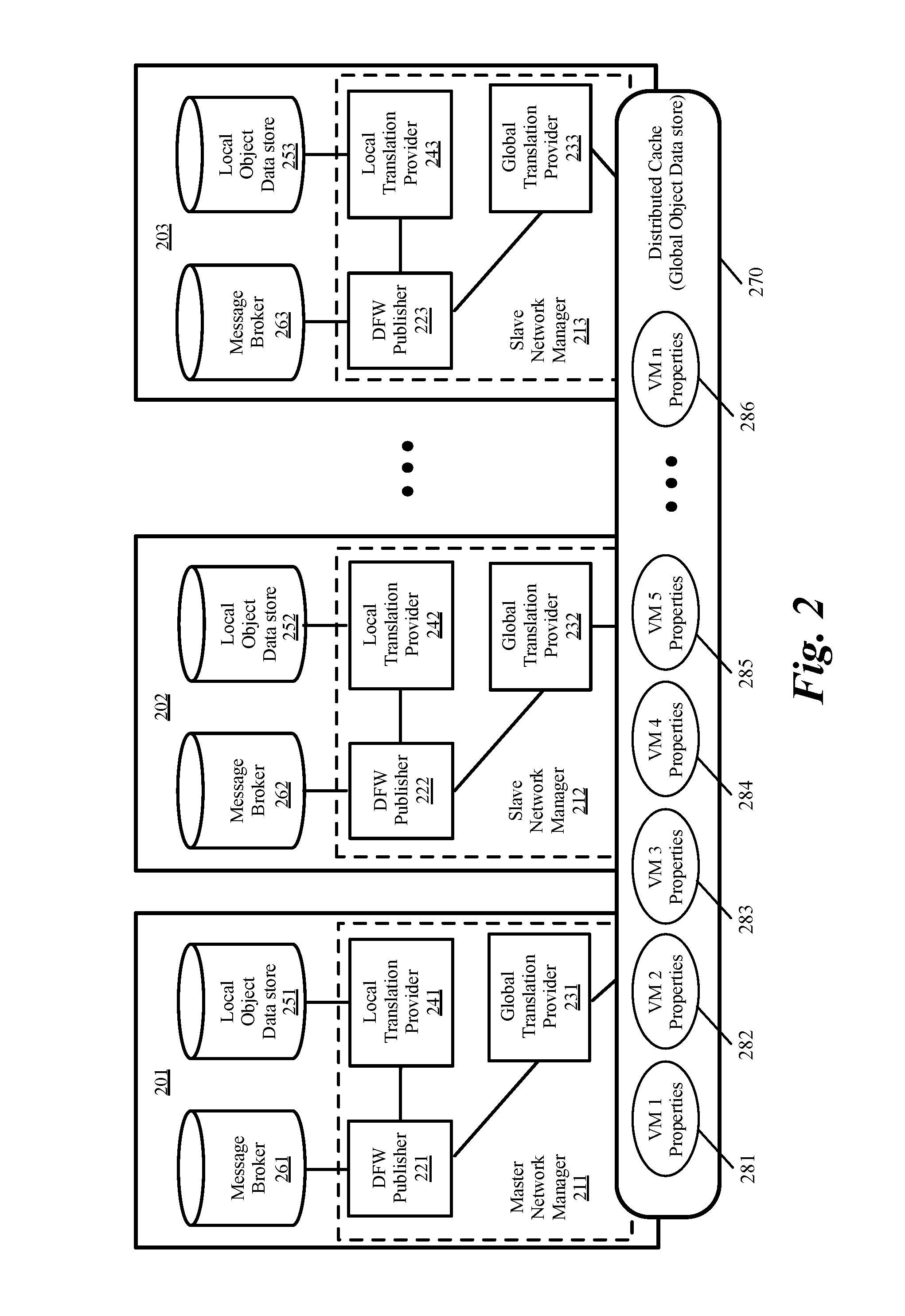

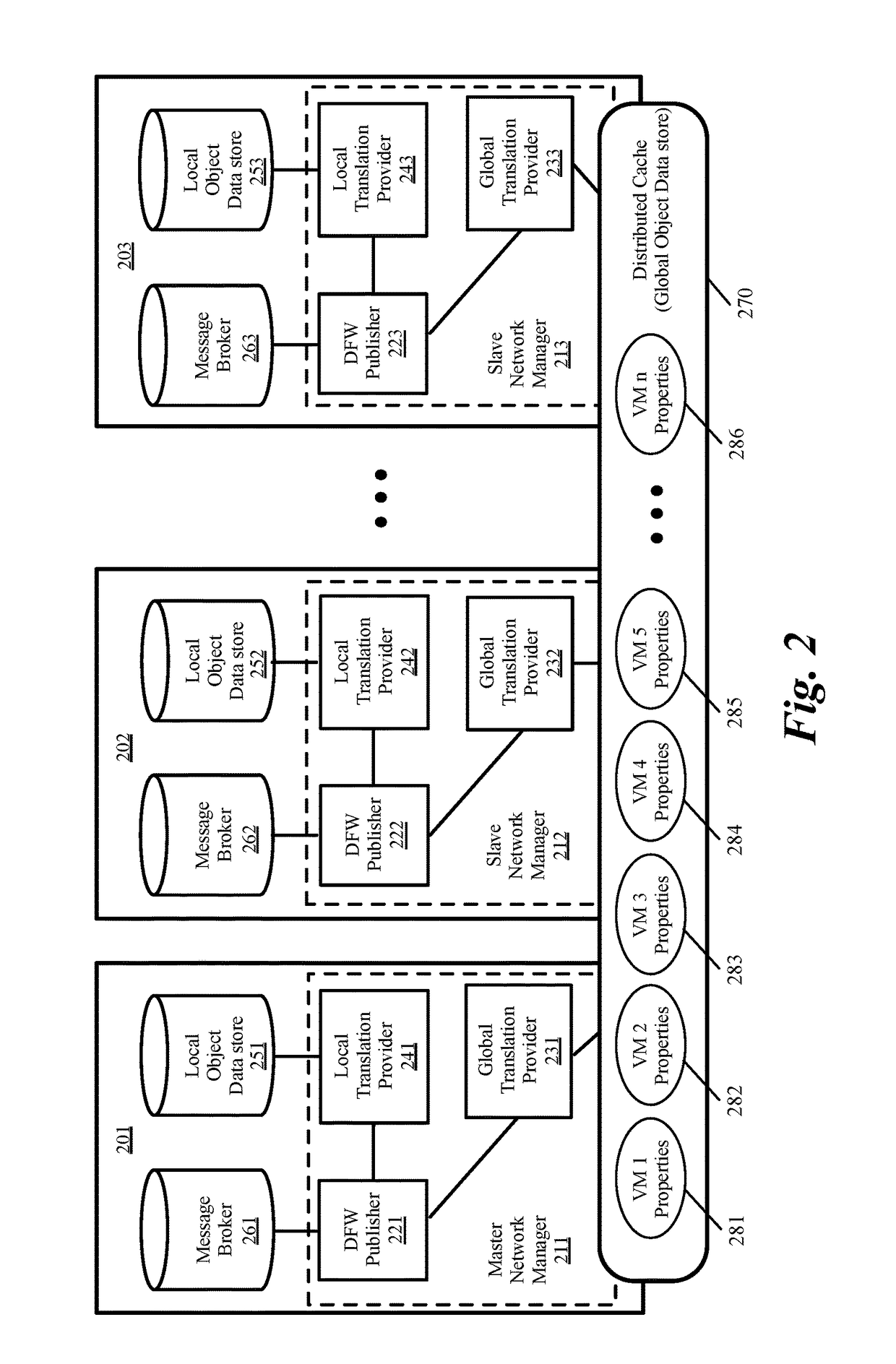

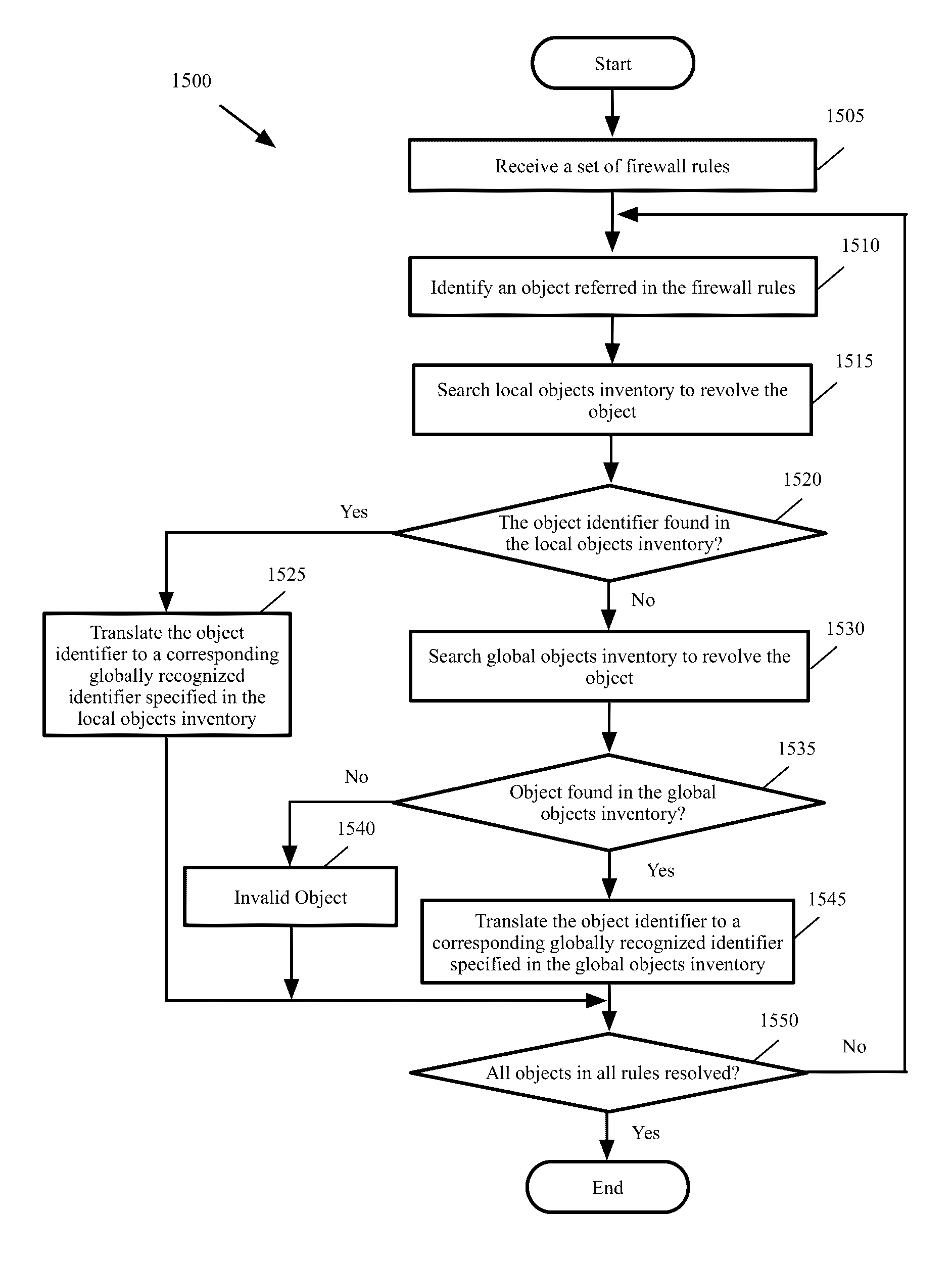

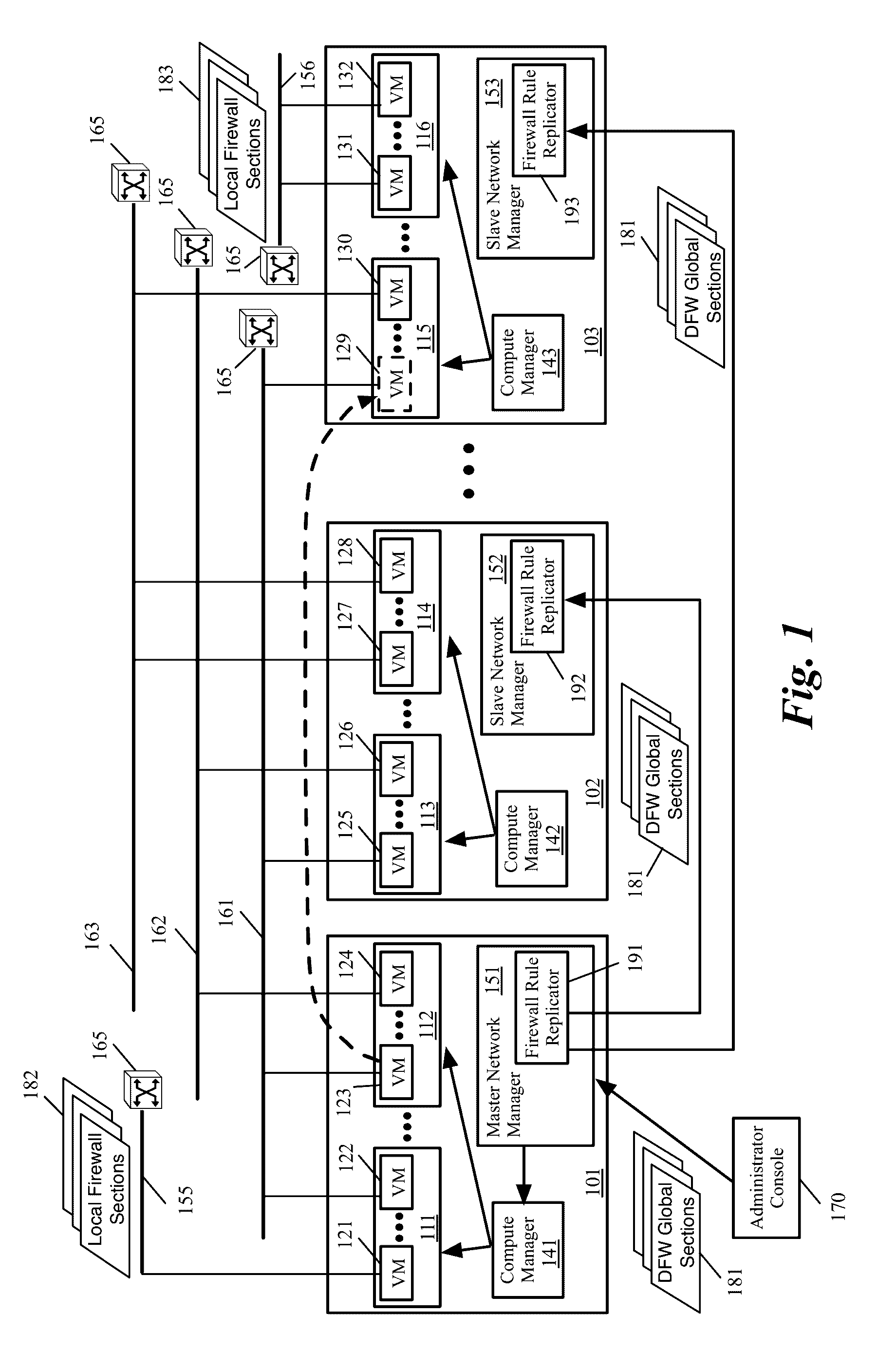

Global objects for federated firewall rule management

Active | US20170005988A1 | Tags: Memory architecture accessing/allocation; Database management systems; Computer network; Unique identifier

A method of defining distributed firewall rules in a group of datacenters is provided. Each datacenter includes a group of data compute nodes (DCNs). The method sends a set of security tags from a particular datacenter to the other datacenters. At each datacenter, the method associates a unique identifier of one or more of that datacenter's DCNs with each security tag. The method associates one or more security tags with each security group in a set of security groups at the particular datacenter and defines a set of distributed firewall rules at the particular datacenter based on the security tags. The method sends the set of distributed firewall rules from the particular datacenter to the other datacenters. At each datacenter, the method translates the firewall rules by mapping the unique identifier of each DCN in a distributed firewall rule to a corresponding static address associated with that DCN.

Owner:NICIRA
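
The last step, translating a distributed firewall rule from DCN identifiers to static addresses, can be sketched in Python. This is a minimal illustration, not the patented method: it assumes each datacenter holds a local table from DCN unique identifier to static IP address that covers every identifier appearing in the rules, and the names `Rule`, `LocalRule`, and `translate_rules` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Hypothetical distributed rule: endpoints are global DCN identifiers."""
    src_ids: tuple
    dst_ids: tuple
    action: str  # e.g. "allow" or "drop"


@dataclass(frozen=True)
class LocalRule:
    """The same rule after translation to this datacenter's static addresses."""
    src_addrs: tuple
    dst_addrs: tuple
    action: str


def translate_rules(rules, address_of):
    """Map every DCN identifier in each rule to its static address.

    `address_of` is the local identifier -> address table; a missing
    identifier raises KeyError rather than silently changing the rule.
    """
    return [
        LocalRule(
            src_addrs=tuple(address_of[i] for i in rule.src_ids),
            dst_addrs=tuple(address_of[i] for i in rule.dst_ids),
            action=rule.action,
        )
        for rule in rules
    ]
```

Failing on an unknown identifier is a deliberate choice in this sketch: dropping it could leave an empty endpoint list and change what the rule matches.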

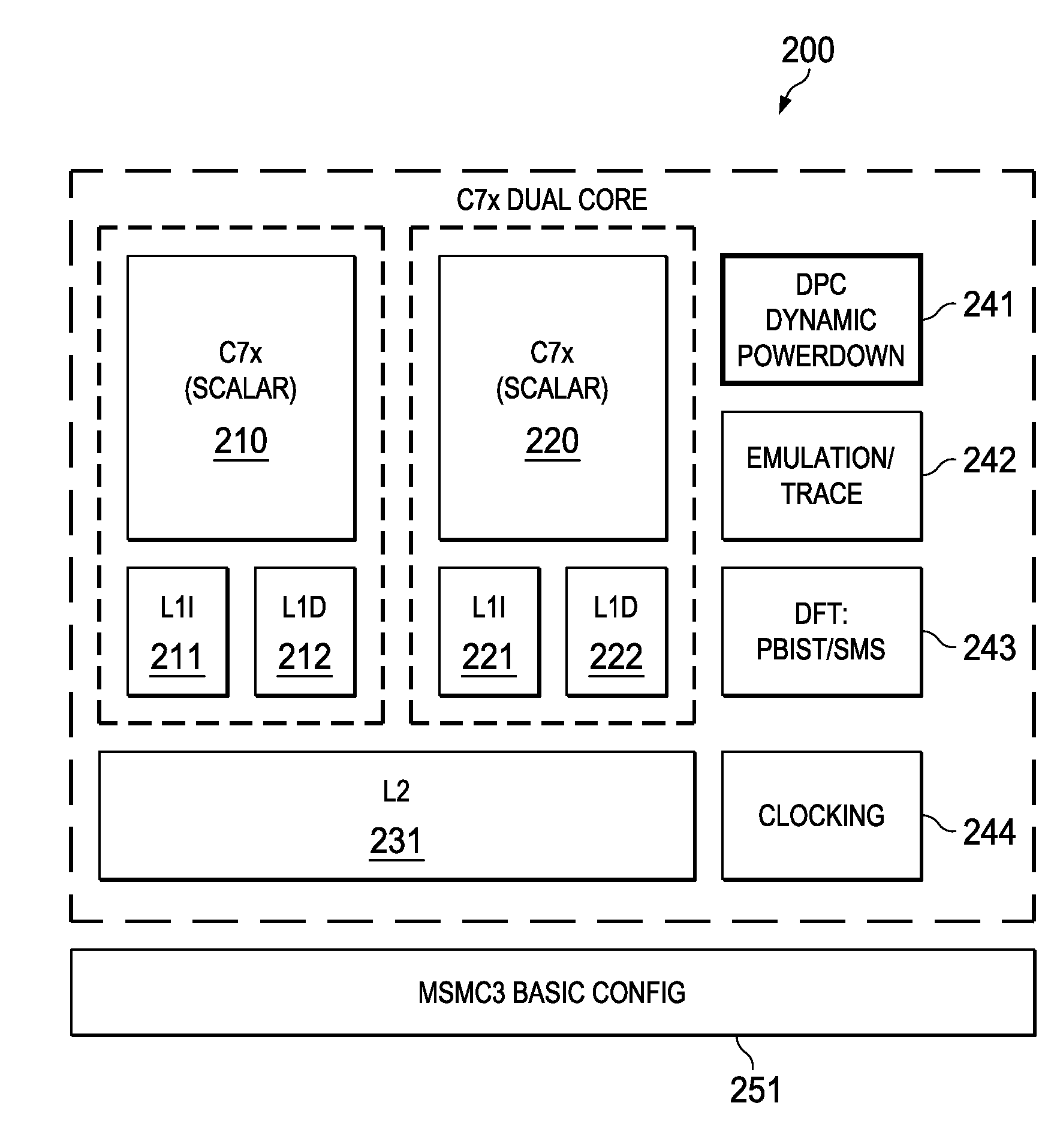

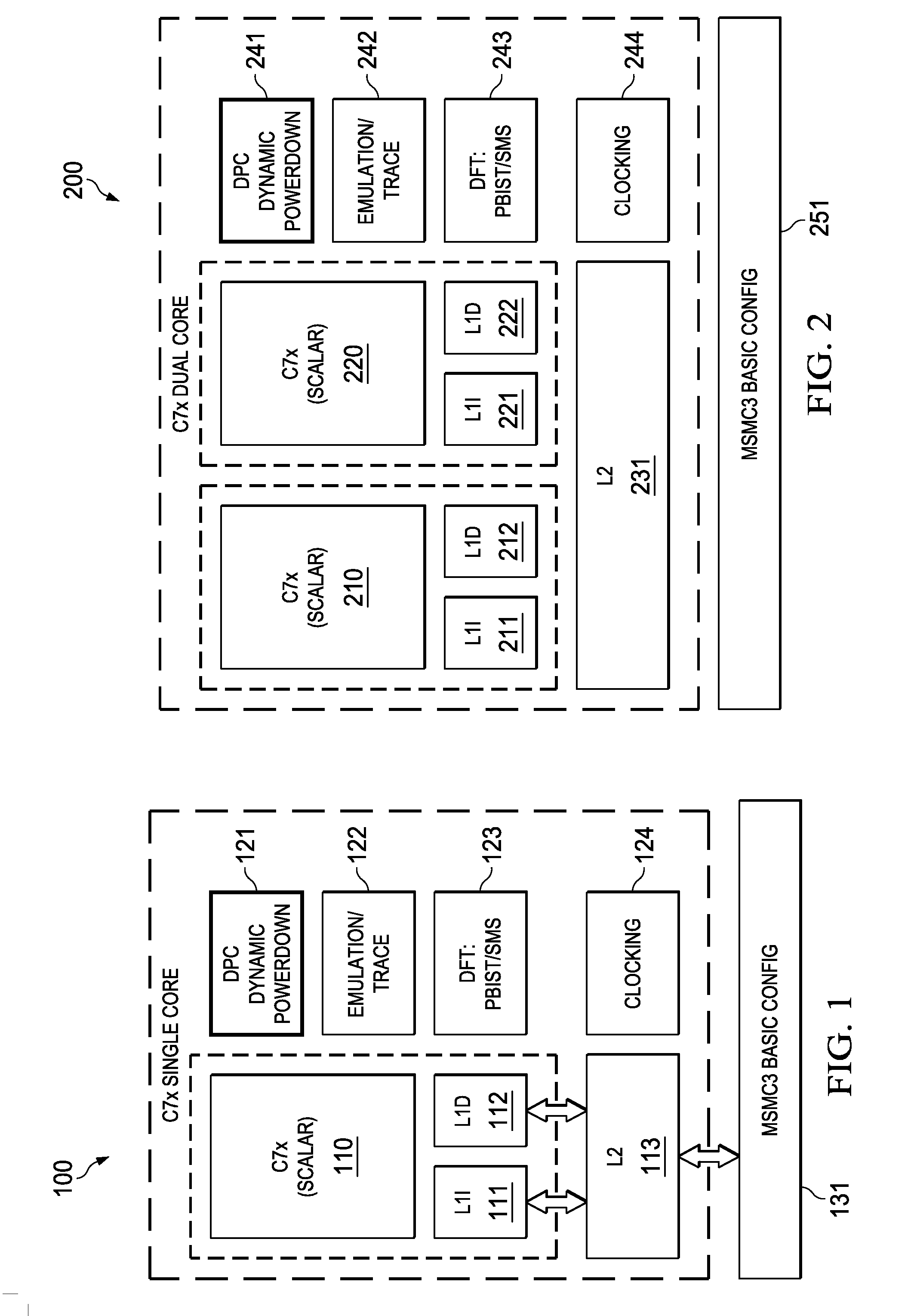

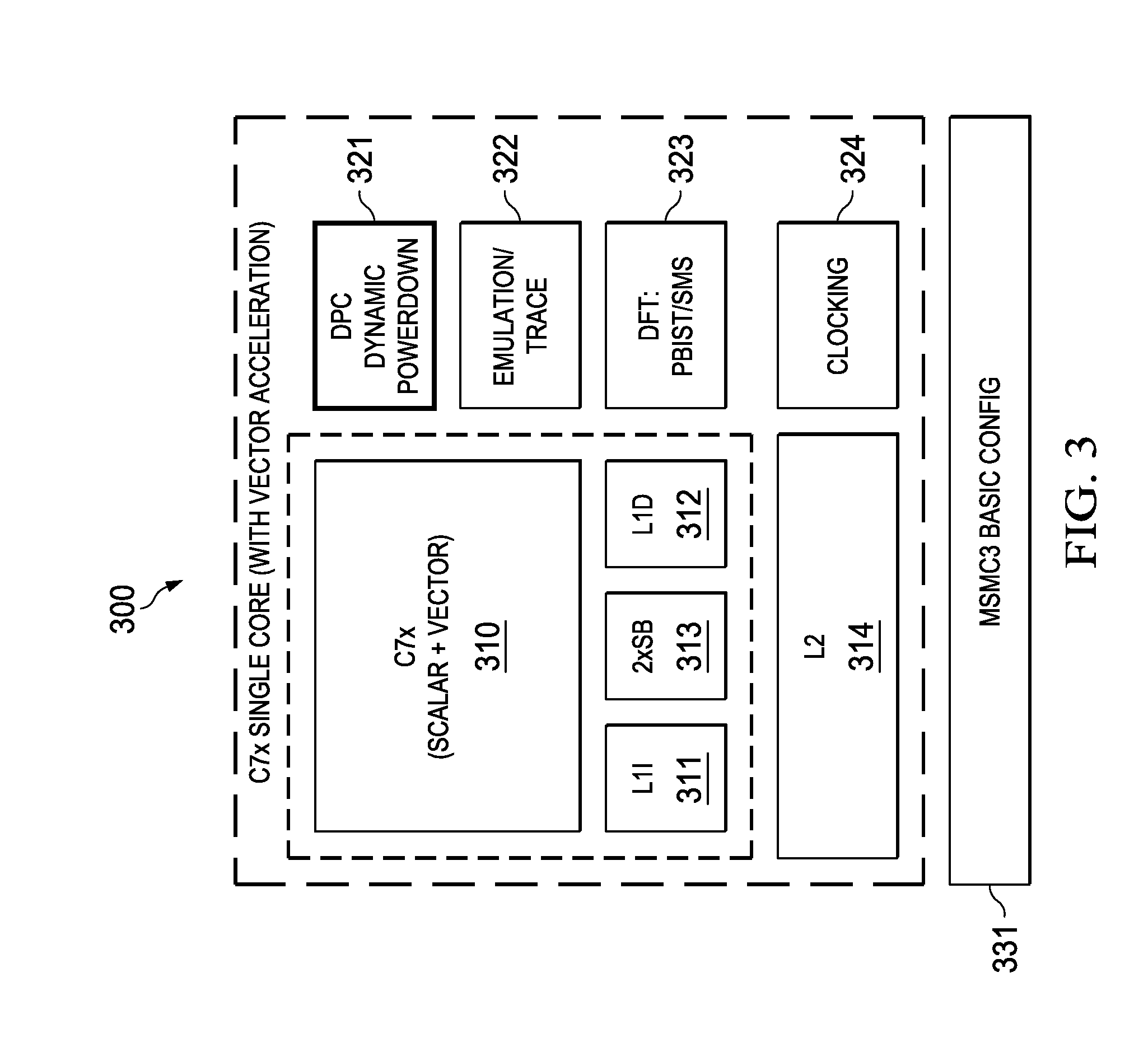

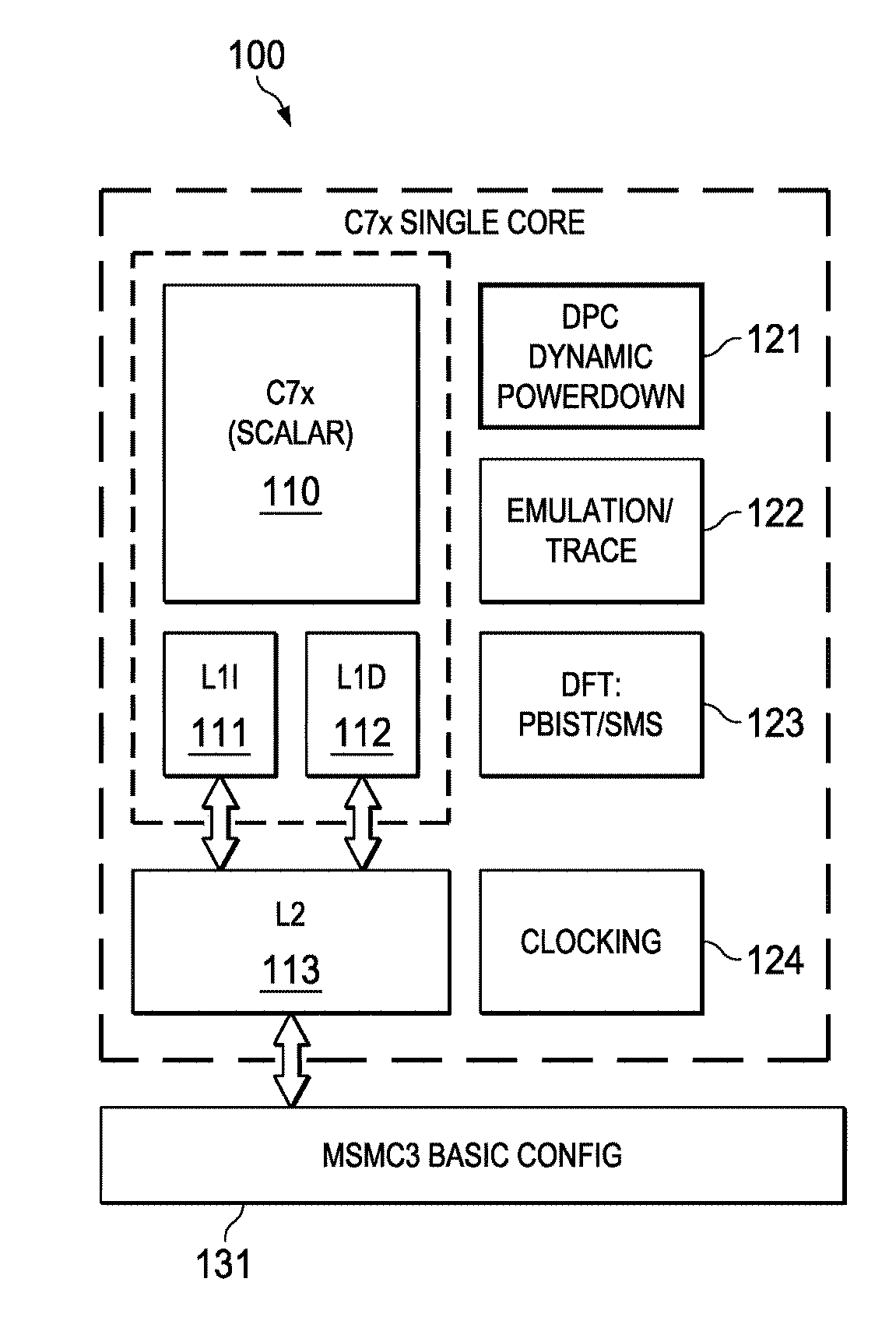

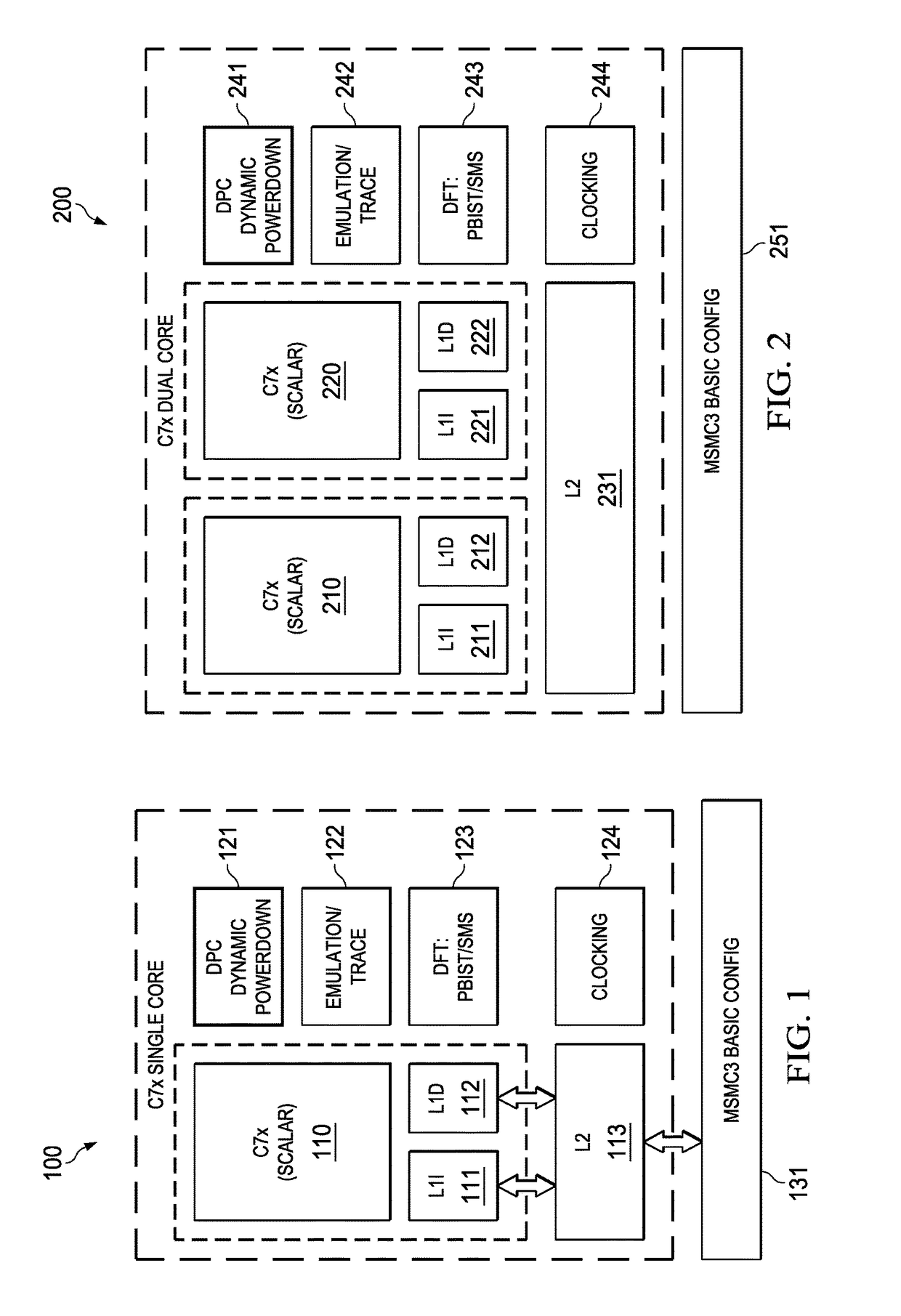

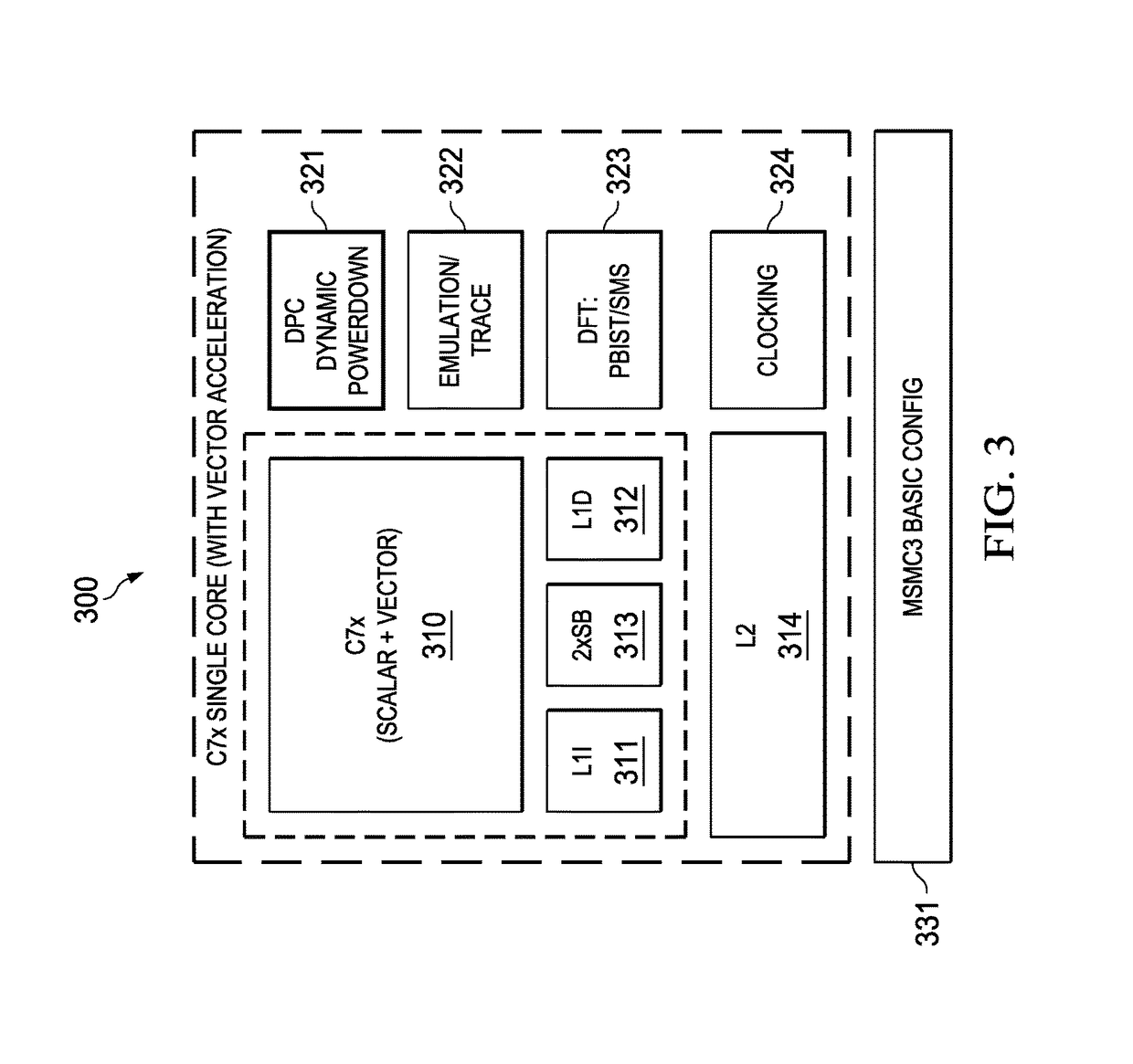

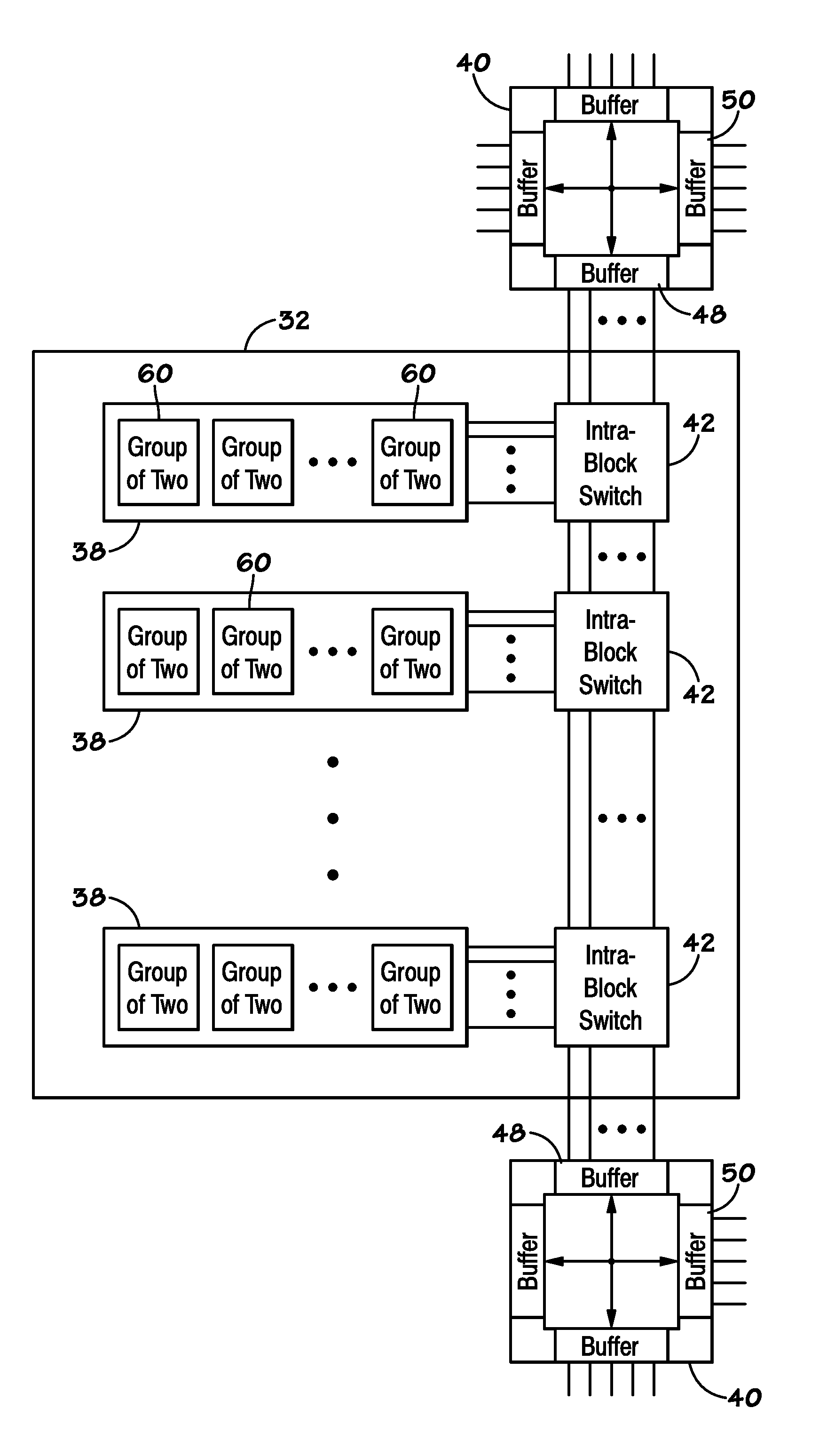

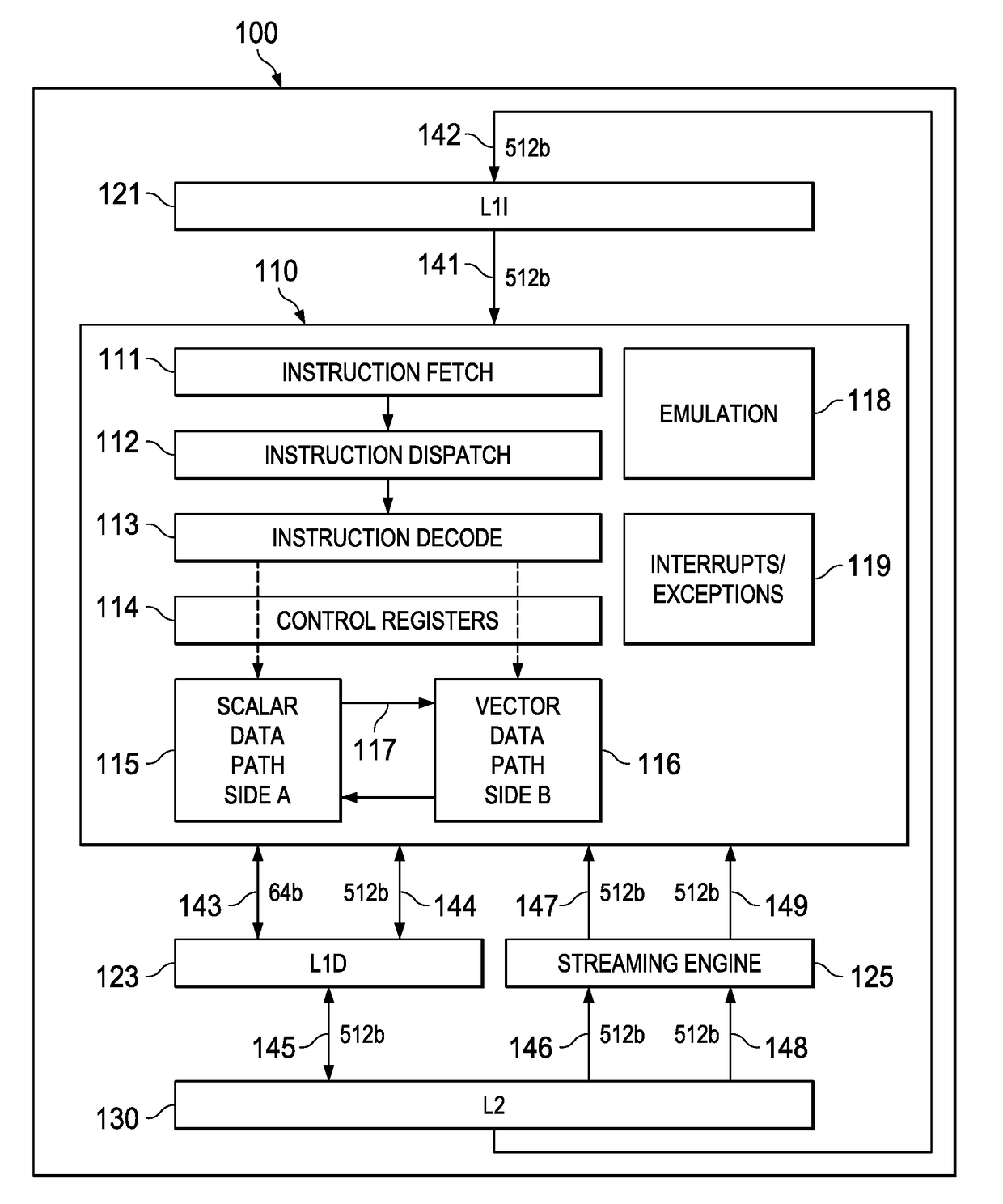

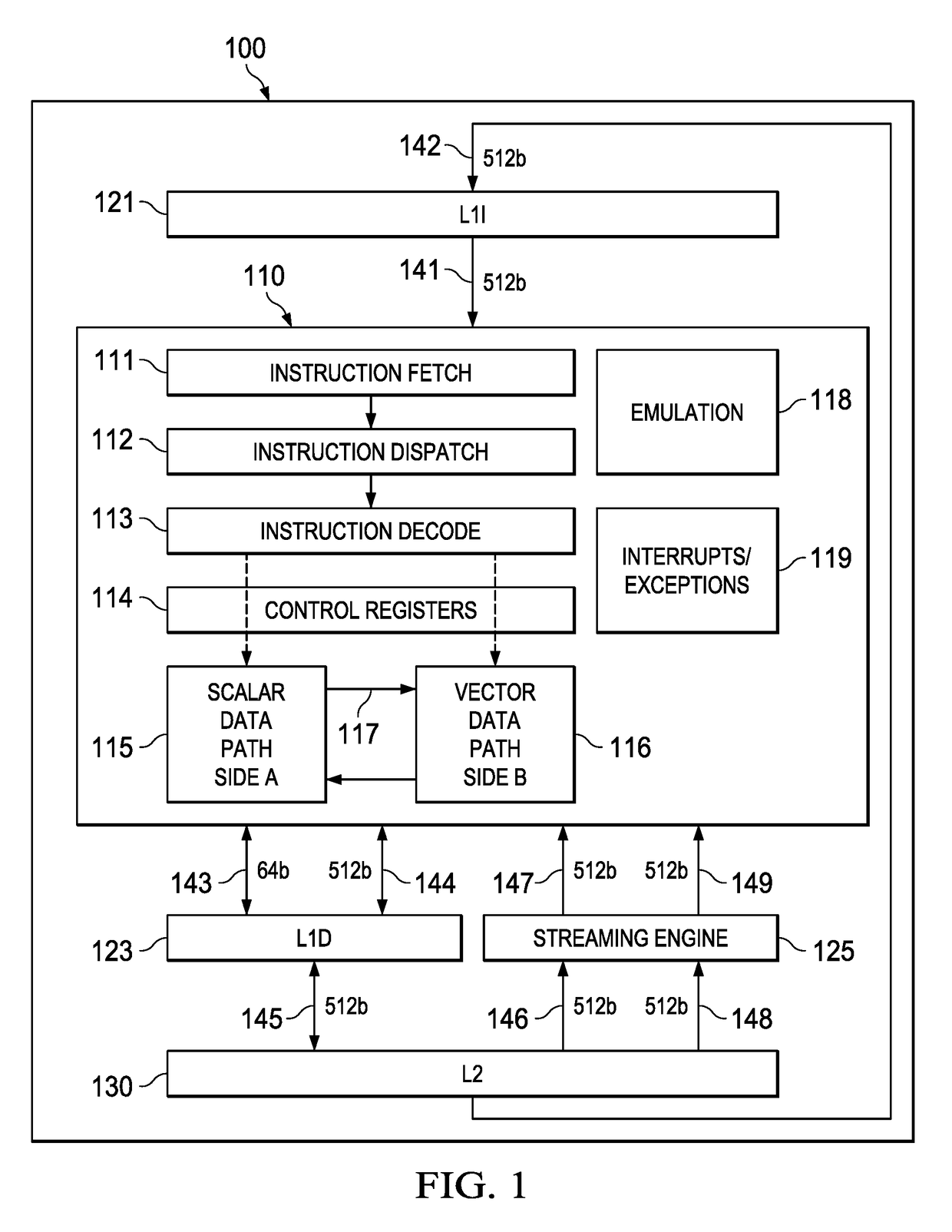

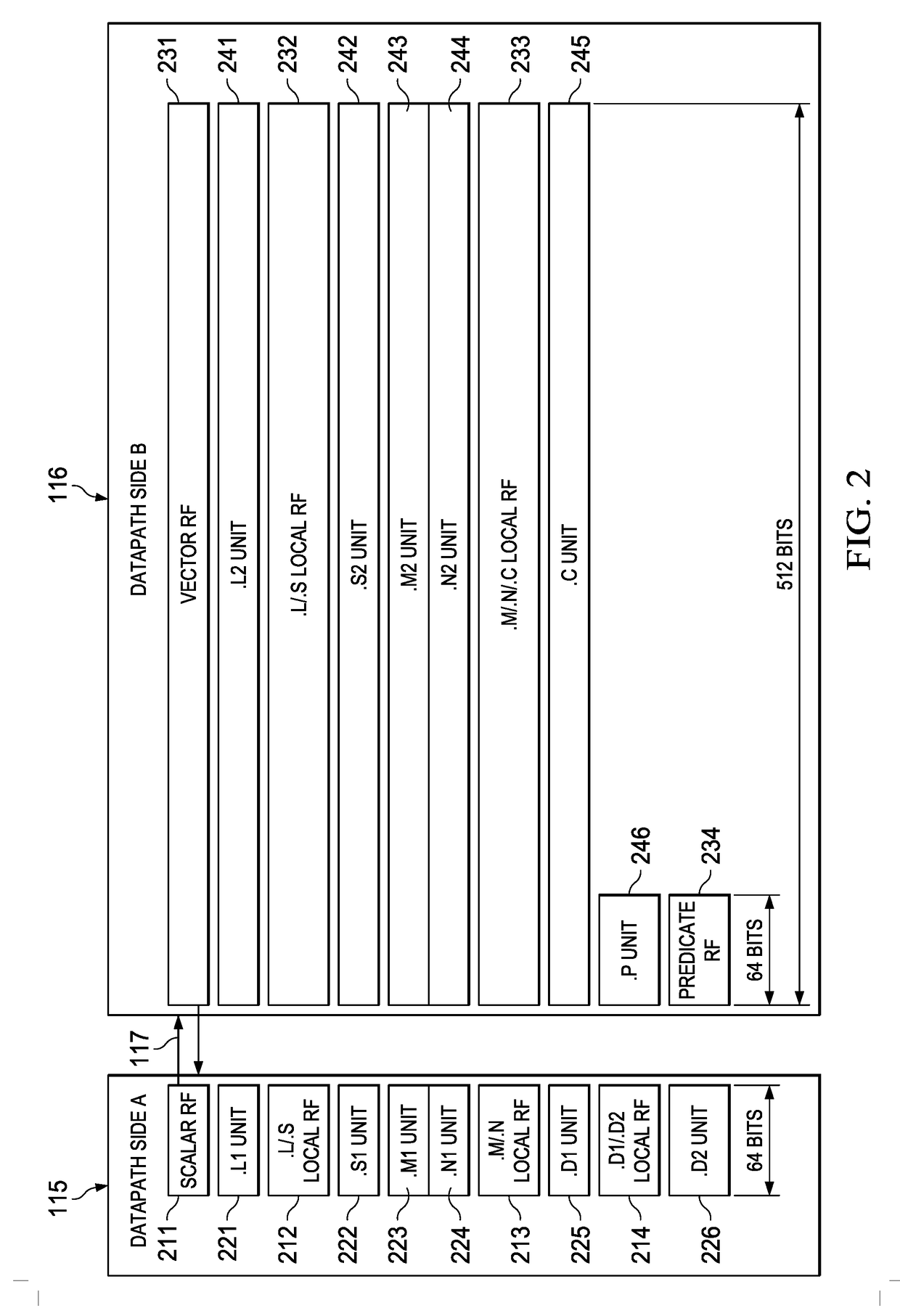

Highly Integrated Scalable, Flexible DSP Megamodule Architecture

Active | US20150019840A1 | Tags: High compute to bandwidth ratio; Excessive latency; Memory architecture accessing/allocation; Program initiation/switching; Semi automatic; Data error

This invention integrates a range of technologies into a single block. Each DSP CPU has a streaming engine (SE). The streaming engines include: an SE-to-L2 interface that can request 512 bits/cycle from L2; a loose binding between the SE and the L2 interface, allowing a single stream to peak at 1024 bits/cycle; one-way coherence, where the SE sees all earlier writes cached in the system but not writes that occur after the stream opens; and full protection against single-bit data errors within its internal storage via single-bit parity, with semi-automatic restart on a parity error.

Owner:TEXAS INSTR INC

Highly integrated scalable, flexible DSP megamodule architecture

Active | US9606803B2 | Tags: Excessive latency; Improve bus utilization; Program initiation/switching; Computation using non-contact making devices; Internal memory; Semi automatic

Abstract identical to that of the preceding entry, US20150019840A1.

Owner:TEXAS INSTR INC

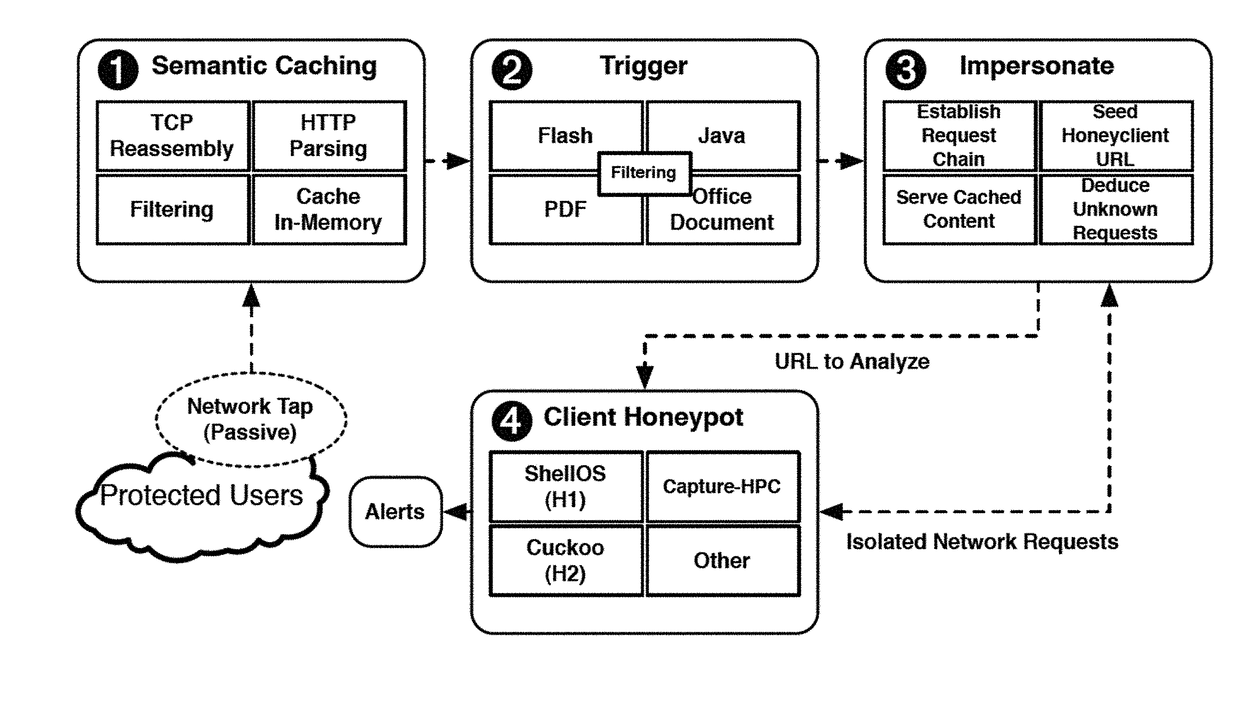

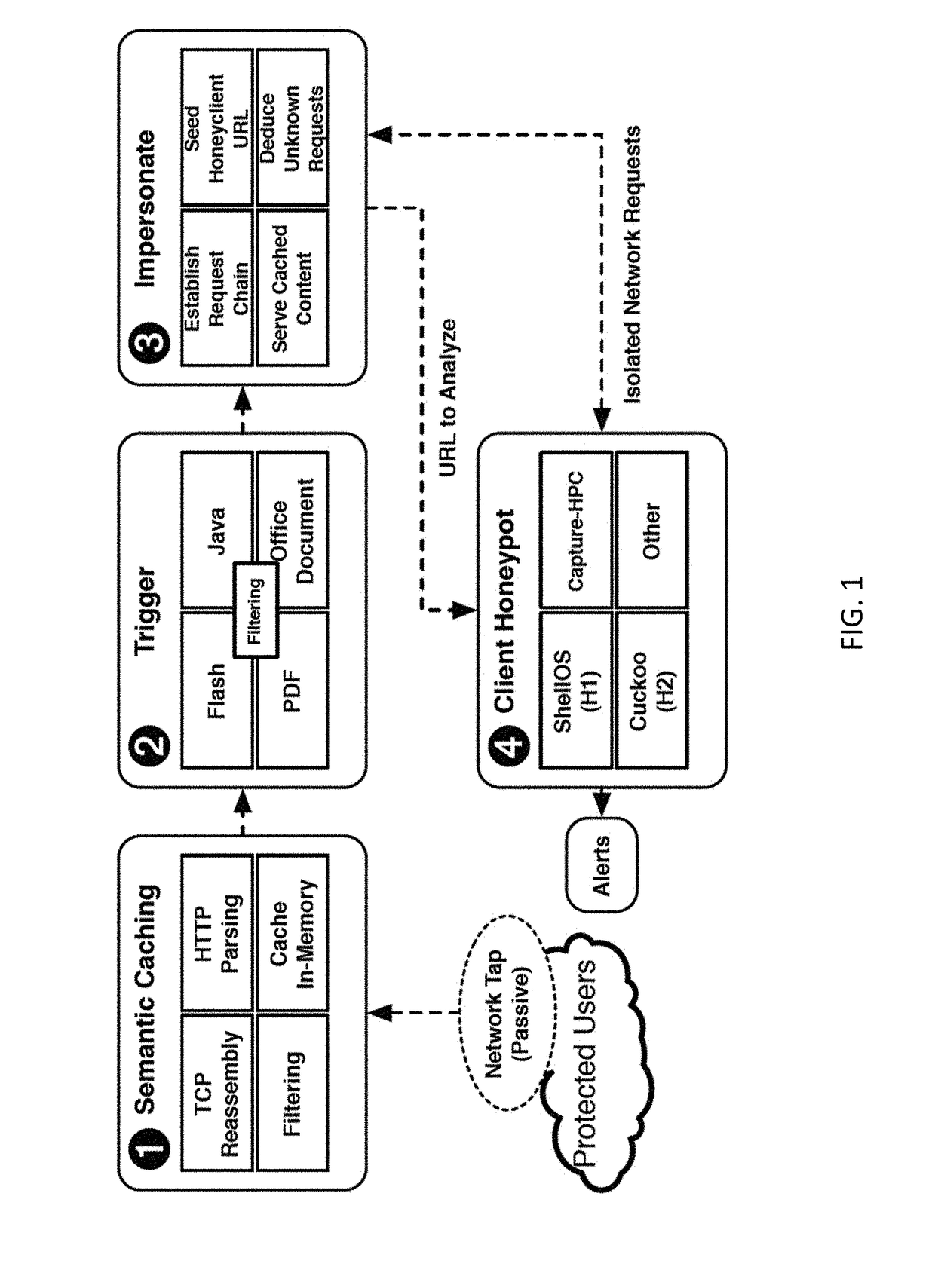

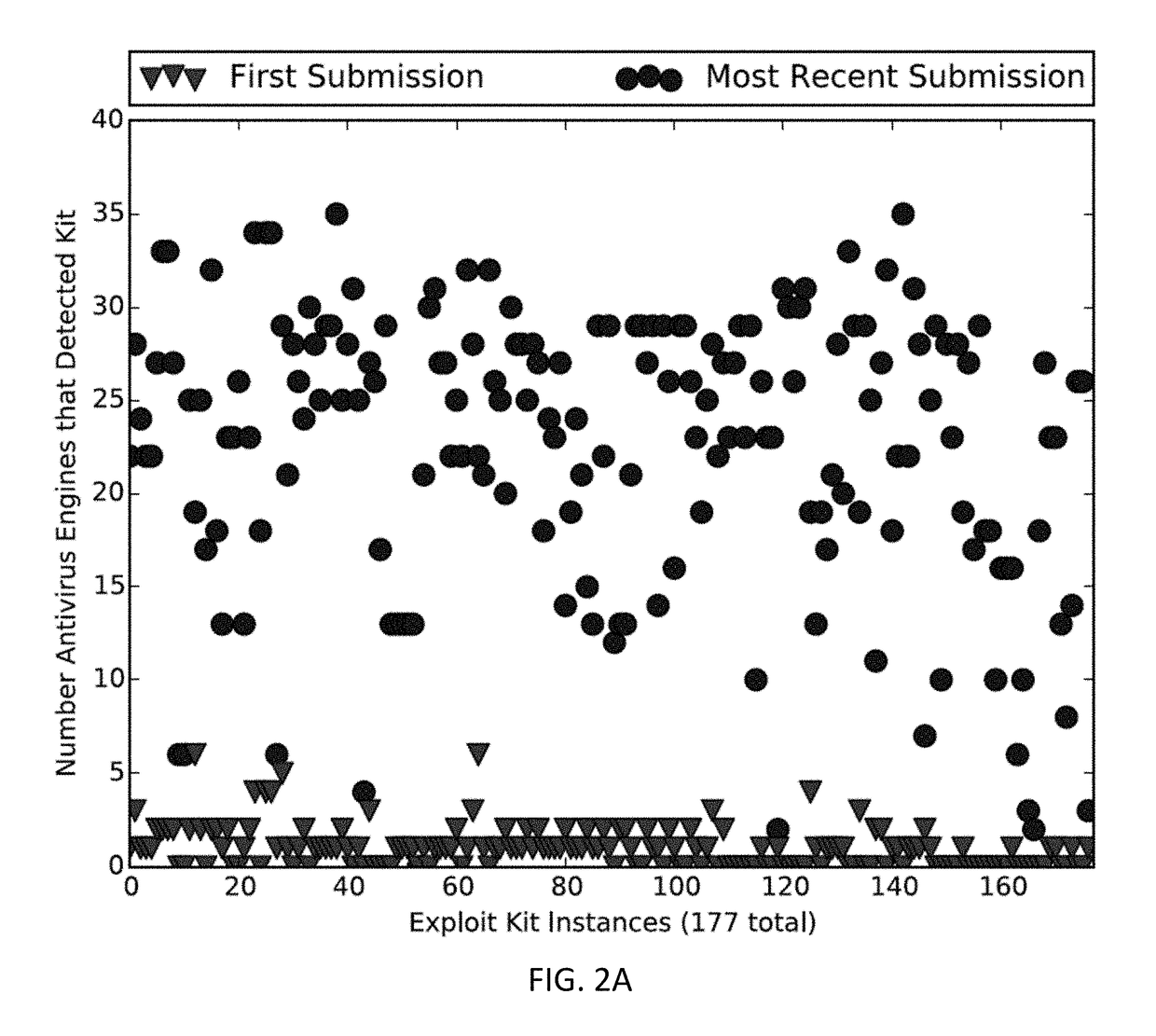

Methods, systems, and computer readable media for detecting malicious network traffic

Active | US20170195353A1 | Tags: Memory architecture accessing/allocation; Cache memory details; Traffic capacity; Uniform resource locator

Methods, systems, and computer readable media for detecting malicious network traffic are disclosed. According to one method, the method includes caching network traffic transmitted between a client and a server, wherein the network traffic includes a uniform resource locator (URL) for accessing at least one file from the server. The method also includes determining whether the at least one file is suspicious. The method further includes in response to determining that the at least one file is suspicious, determining whether the at least one file is malicious by replaying the network traffic using an emulated client and an emulated server.

Owner:THE UNIV OF NORTH CAROLINA AT CHAPEL HILL

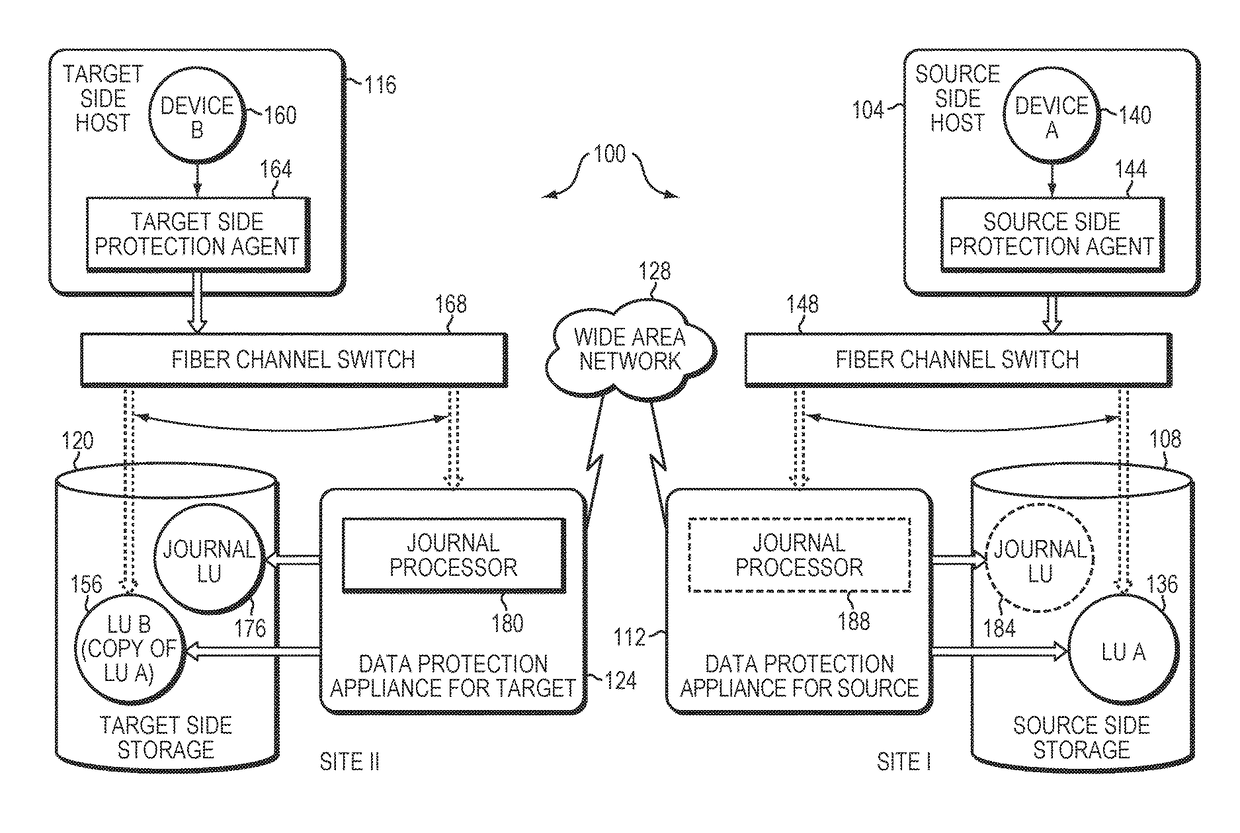

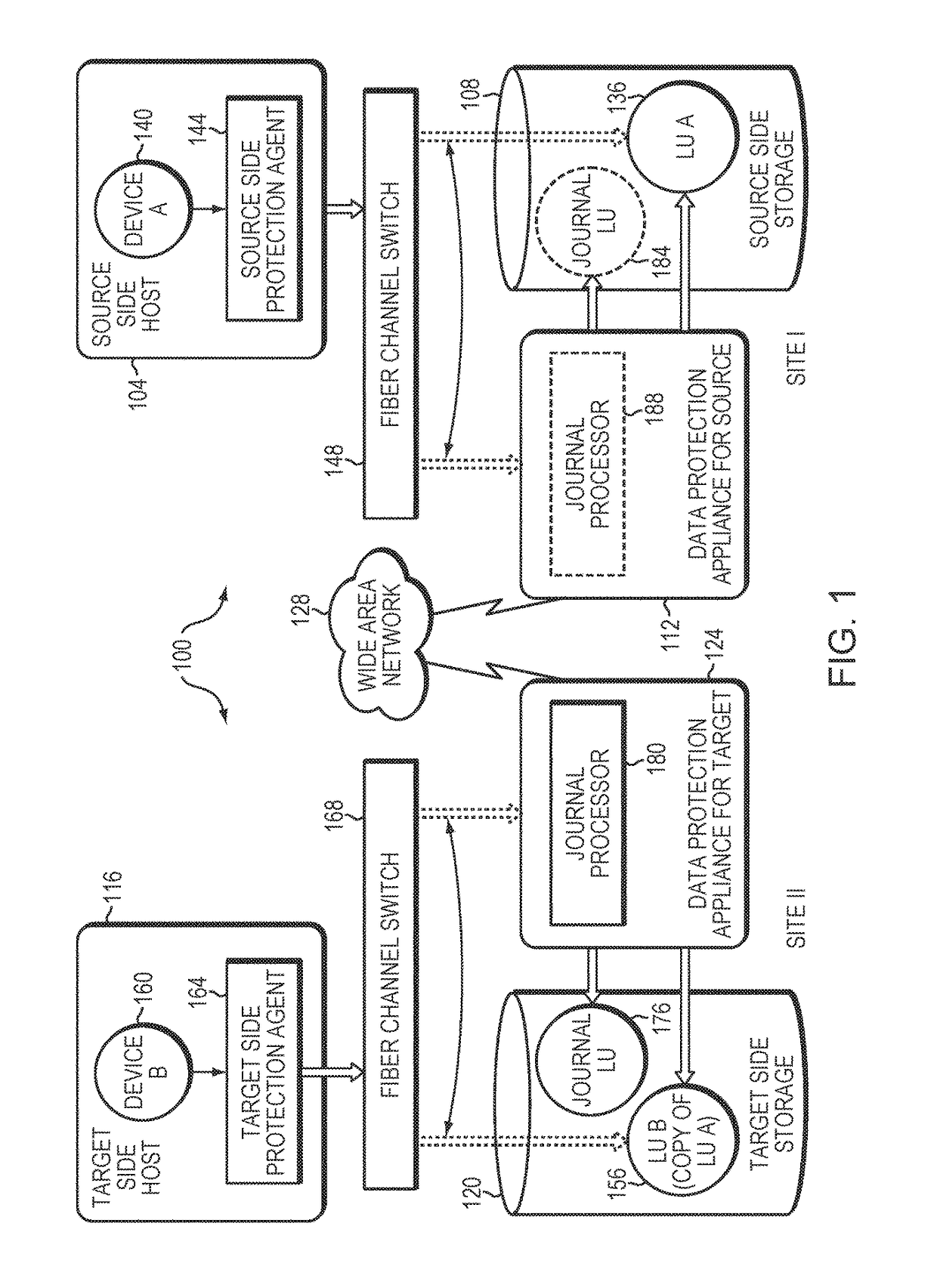

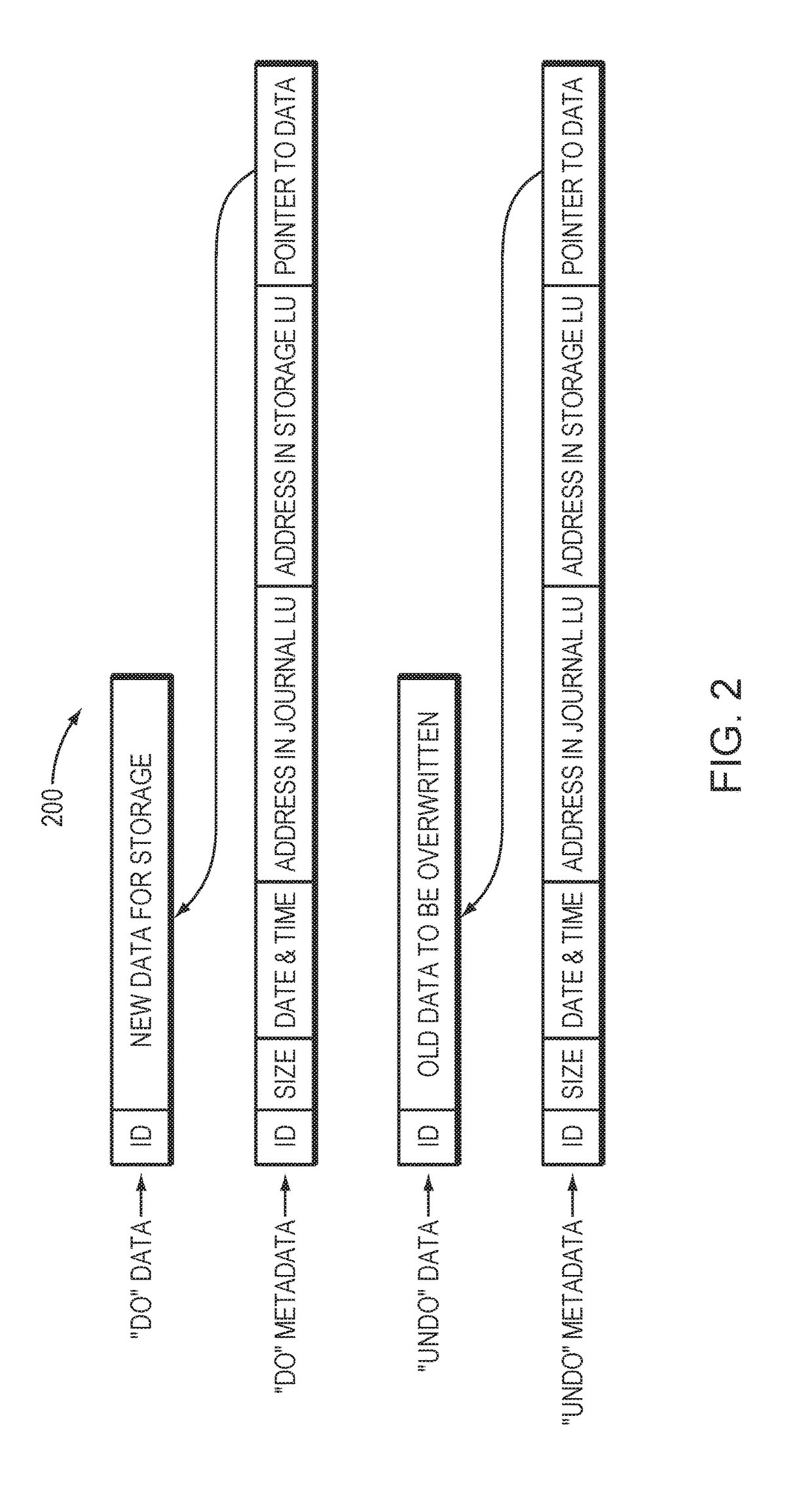

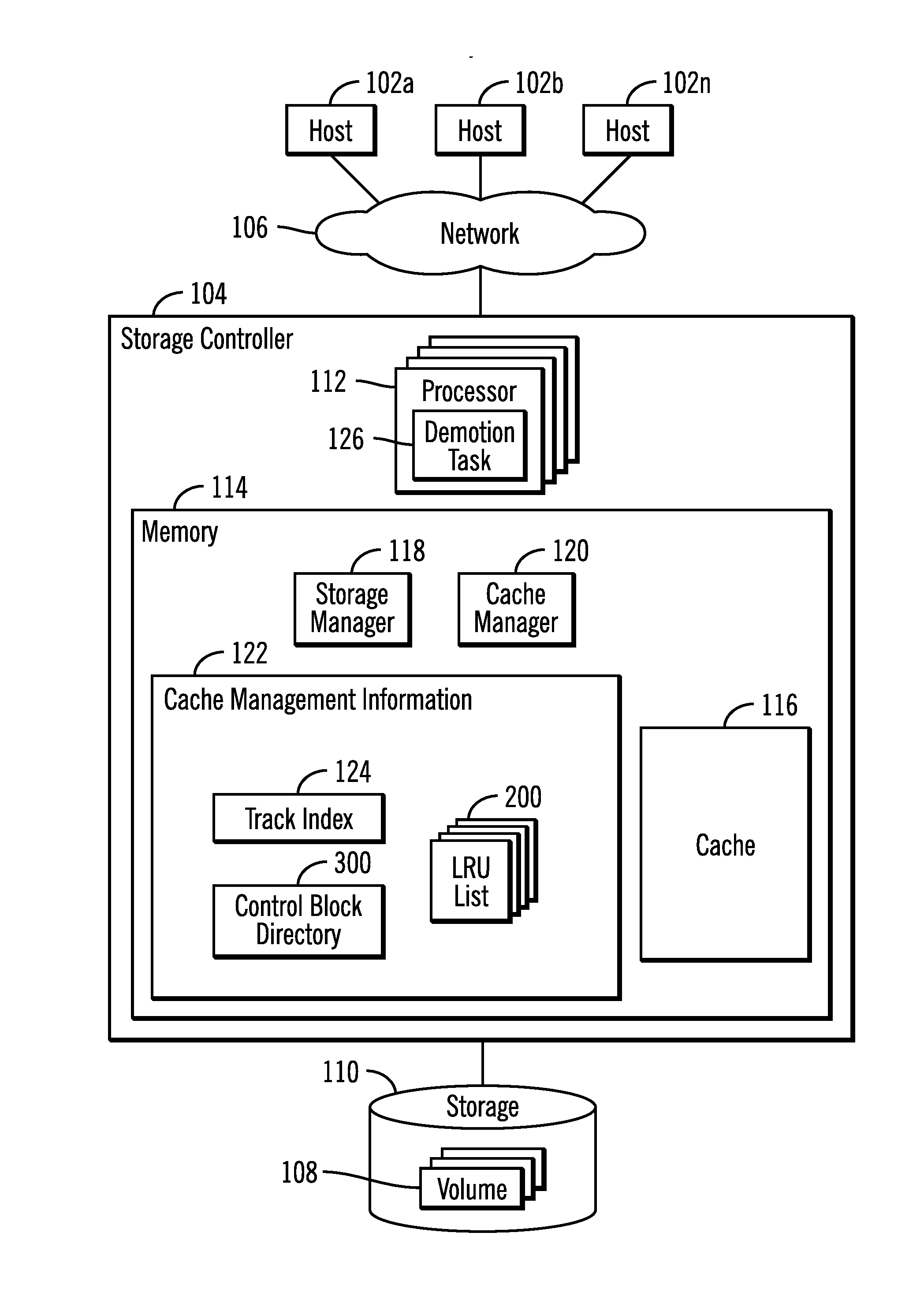

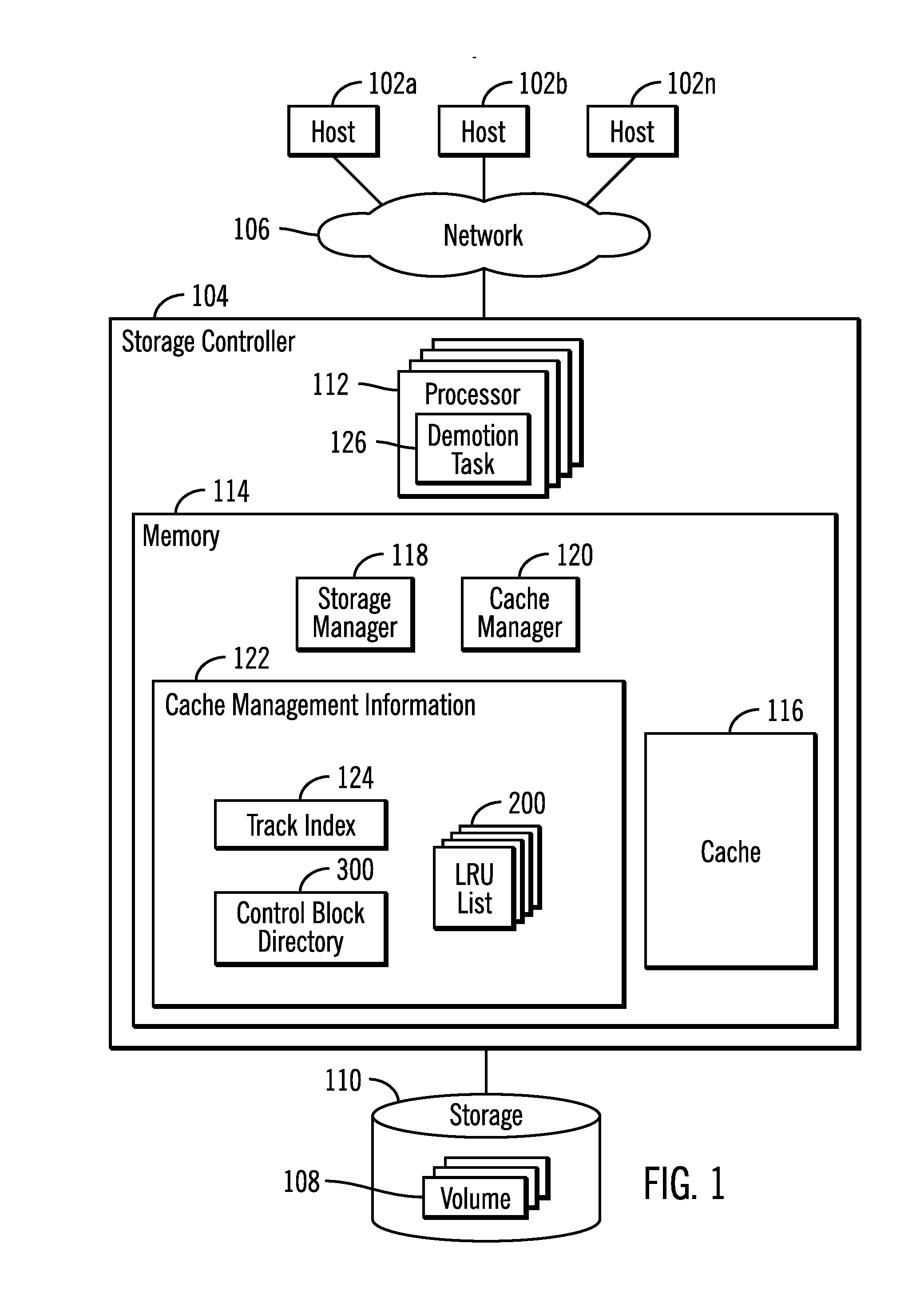

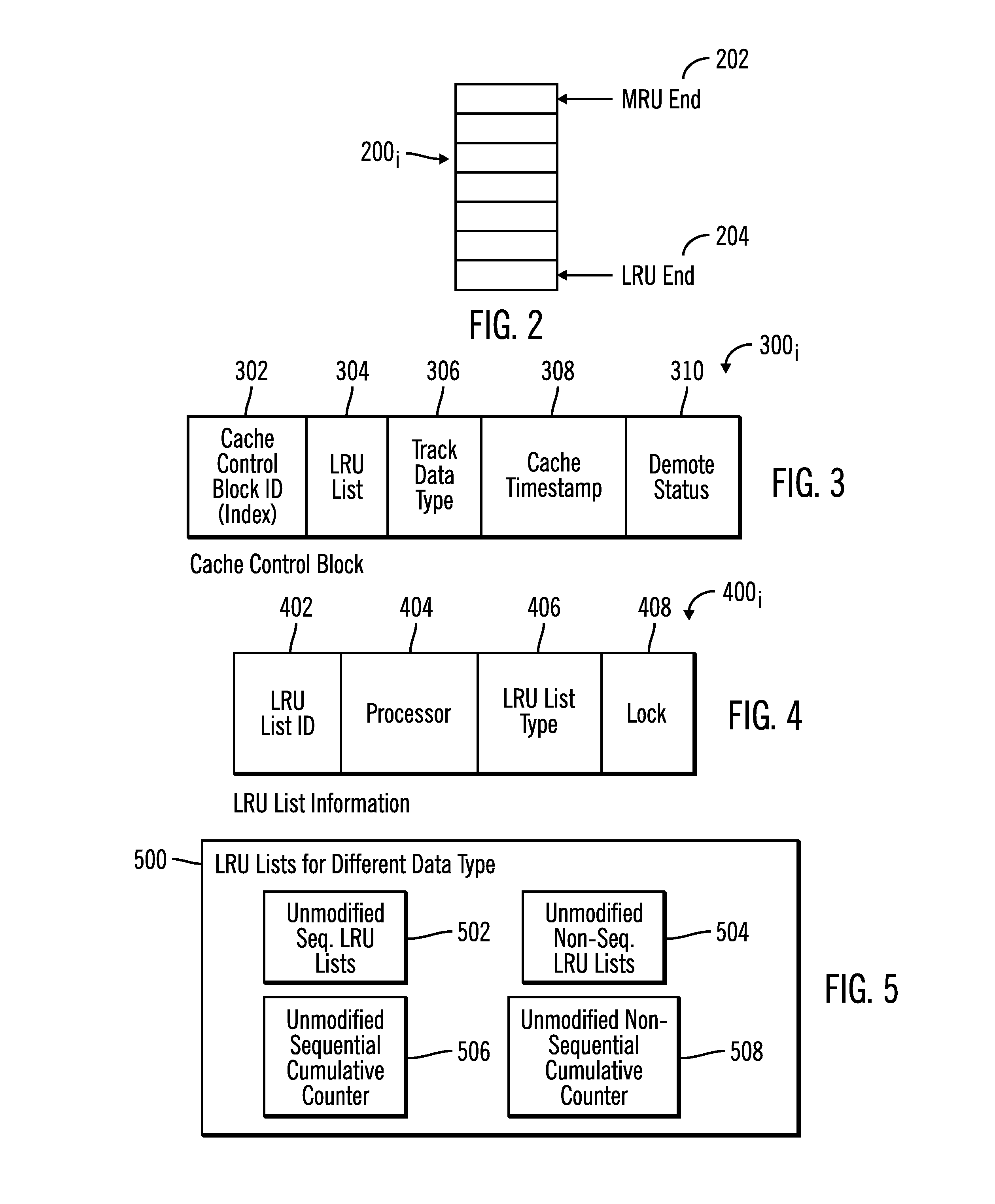

Using cache lists for processors to determine tracks to demote from a cache

Inactive | US20170052898A1 | Tags: Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Timestamp; Parallel computing

Provided are a computer program product, system, and method for using cache lists for processors to determine tracks in a storage to demote from a cache. Tracks in the storage stored in the cache are indicated in lists. There is one list for each of a plurality of processors. Each of the processors processes the list for that processor to process the tracks in the cache indicated on the list. There is a timestamp for each of the tracks indicated in the lists indicating a time at which the track was added to the cache. Tracks indicated in each of the lists having timestamps that fall within a range of timestamps are demoted.

Owner:IBM CORP
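
A minimal Python sketch of the per-processor lists and timestamp-range demotion follows. The abstract does not say how the timestamp range is chosen, so here the caller supplies it (for example, an interval covering the oldest entries); the class and function names are hypothetical, and the lists are processed sequentially rather than by separate processors.

```python
from dataclasses import dataclass, field


@dataclass
class TrackEntry:
    track_id: int
    timestamp: float  # time at which the track was added to the cache


@dataclass
class ProcessorCacheList:
    """One list per processor; each processor scans only its own list."""
    entries: list = field(default_factory=list)

    def add(self, track_id: int, timestamp: float) -> None:
        self.entries.append(TrackEntry(track_id, timestamp))

    def demote_range(self, low: float, high: float) -> list:
        """Remove and return the tracks whose timestamp lies in [low, high]."""
        in_range = [e for e in self.entries if low <= e.timestamp <= high]
        self.entries = [e for e in self.entries if not low <= e.timestamp <= high]
        return [e.track_id for e in in_range]


def demote_from_all(lists, low, high):
    """Apply the same timestamp range to every processor's list."""
    demoted = []
    for per_processor in lists:
        demoted.extend(per_processor.demote_range(low, high))
    return demoted
```

Keeping one list per processor means no processor has to lock or scan another processor's list; the shared timestamp range is what keeps demotion roughly age-ordered across all of them.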

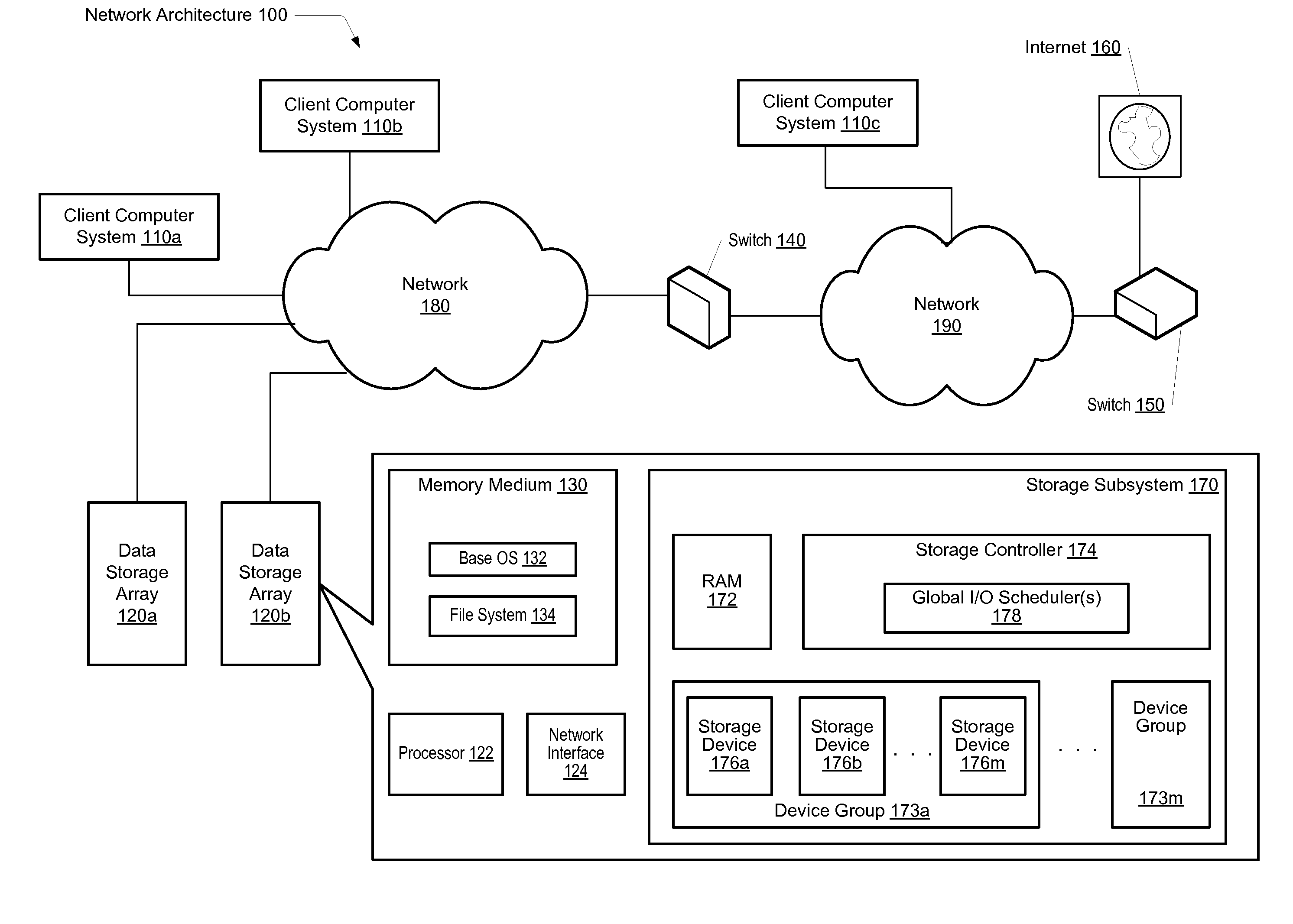

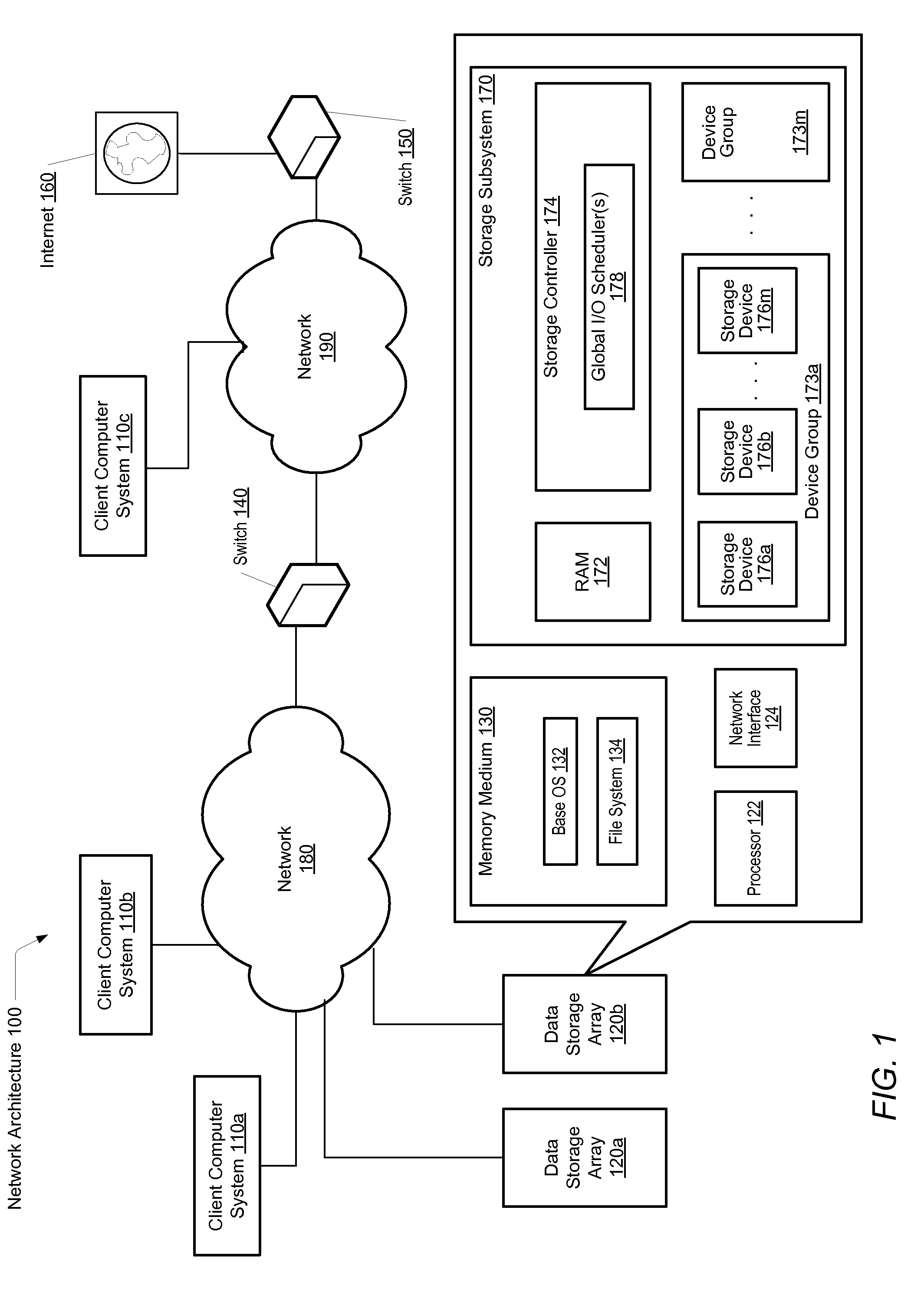

Scheduling of I/O writes in a storage environment

Active | US20120066435A1 | Tags: Read operation; Input/output to record carriers; Memory addressing/allocation/relocation; Solid-state storage; Control store

A system and method for effectively scheduling read and write operations among a plurality of solid-state storage devices. A computer system comprises client computers and data storage arrays coupled to one another via a network. A data storage array utilizes solid-state drives and Flash memory cells for data storage. A storage controller within a data storage array comprises an I/O scheduler. The data storage controller is configured to receive requests targeted to the data storage medium, said requests including a first type of operation and a second type of operation. The controller is further configured to schedule requests of the first type for immediate processing by said plurality of storage devices, and queue requests of the second type for later processing by the plurality of storage devices. Operations of the first type may correspond to operations with an expected relatively low latency, and operations of the second type may correspond to operations with an expected relatively high latency.

Owner:PURE STORAGE
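
The split between immediate and deferred requests can be sketched as below. It assumes, as one reading of the abstract, that reads are the low-latency first type and writes the high-latency second type; `Request`, `IOScheduler`, and the `drain` trigger are hypothetical, and a real controller would decide when to drain based on device state.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable


@dataclass
class Request:
    kind: str  # "read" (assumed low latency) or "write" (assumed high latency)
    lba: int


class IOScheduler:
    """Hypothetical two-class scheduler: dispatch reads, queue writes."""

    def __init__(self, device: Callable[[Request], None]):
        self.device = device     # executes one request on the storage devices
        self.deferred = deque()  # second-type requests awaiting later processing

    def submit(self, req: Request) -> None:
        if req.kind == "read":
            self.device(req)           # first type: immediate processing
        else:
            self.deferred.append(req)  # second type: queue for later

    def drain(self, max_ops: int) -> int:
        """Dispatch up to max_ops queued requests in FIFO order."""
        dispatched = 0
        while self.deferred and dispatched < max_ops:
            self.device(self.deferred.popleft())
            dispatched += 1
        return dispatched
```

For example, with `log = []` and `IOScheduler(log.append)`, submitting a write and then a read puts only the read in `log` until `drain` is called.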

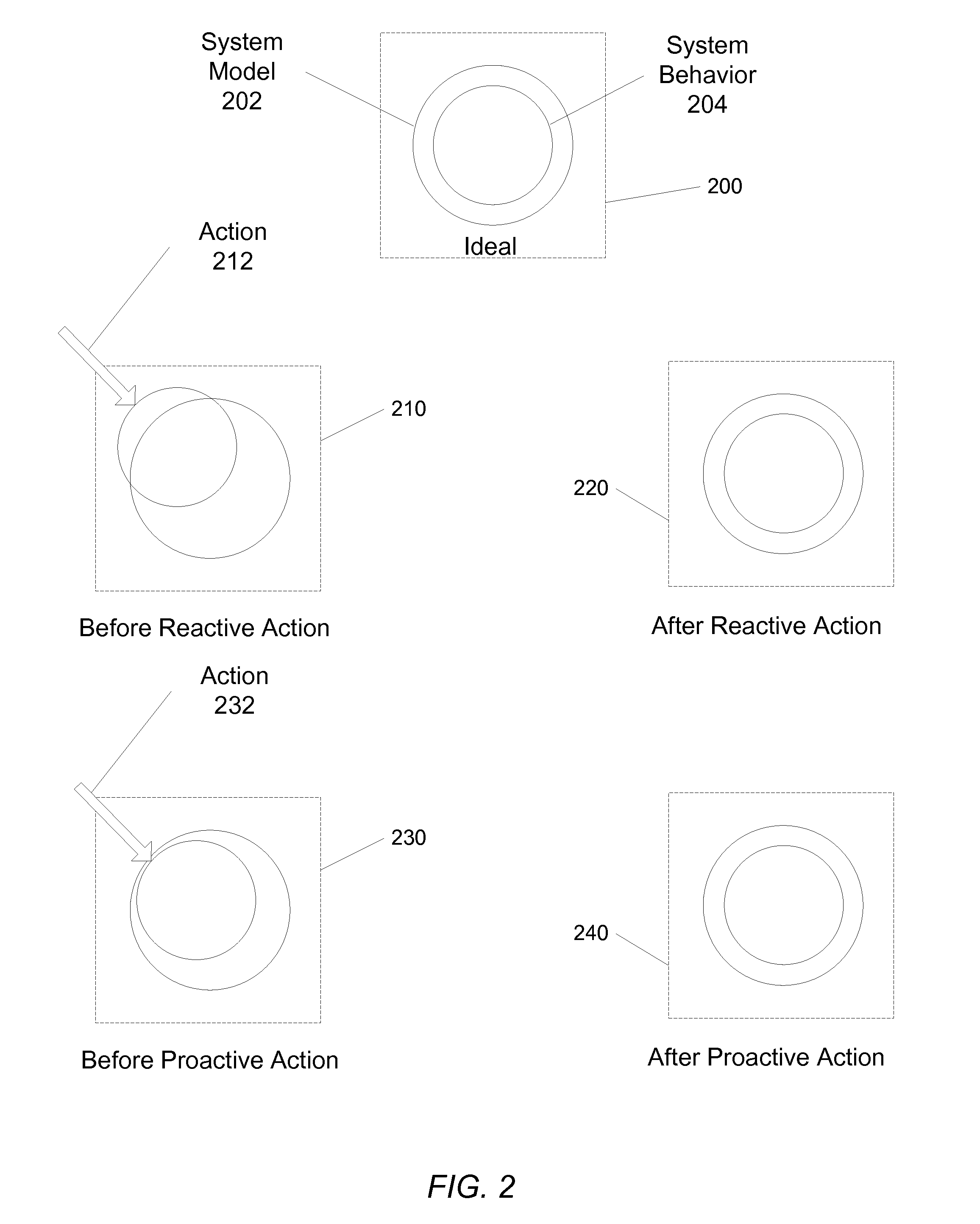

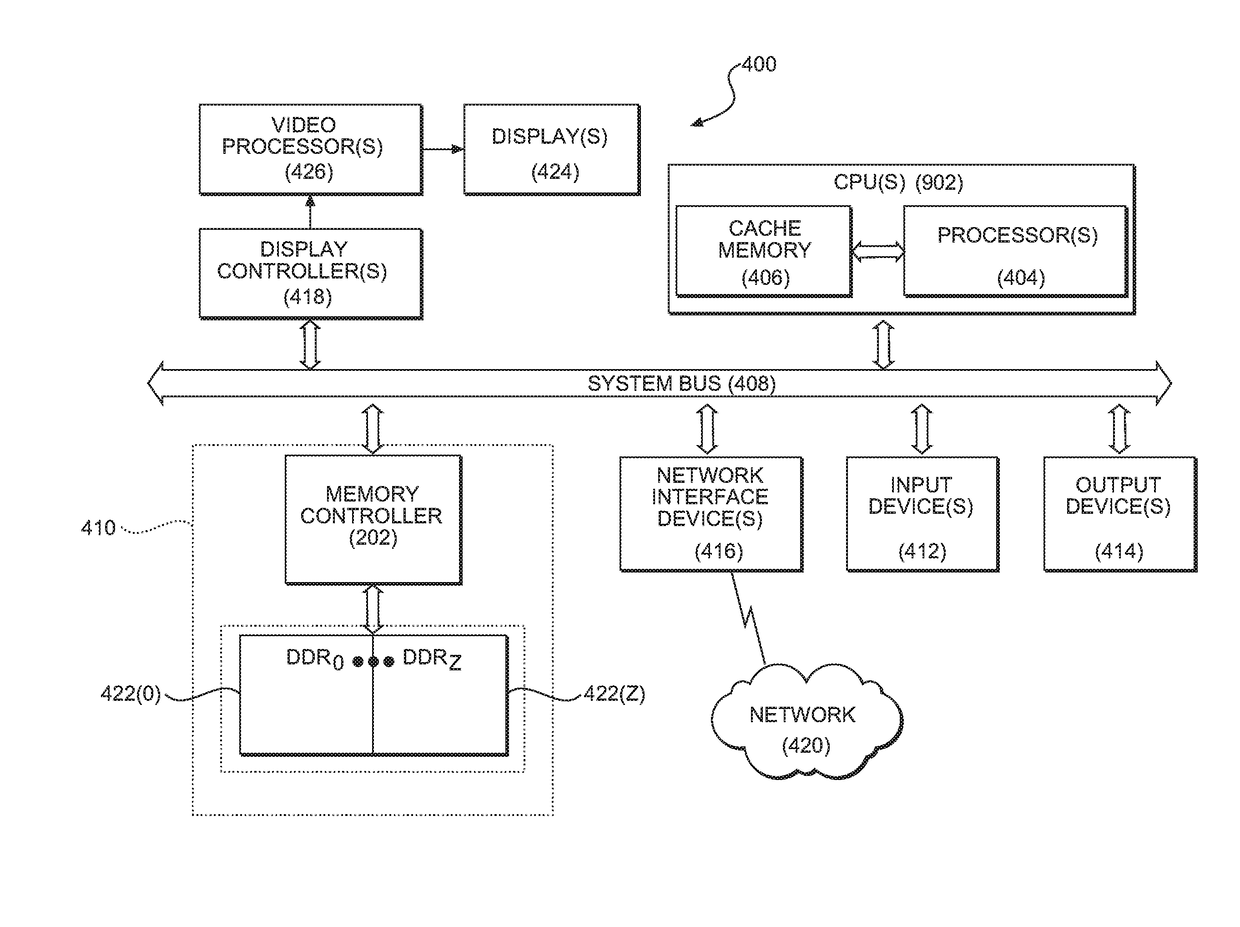

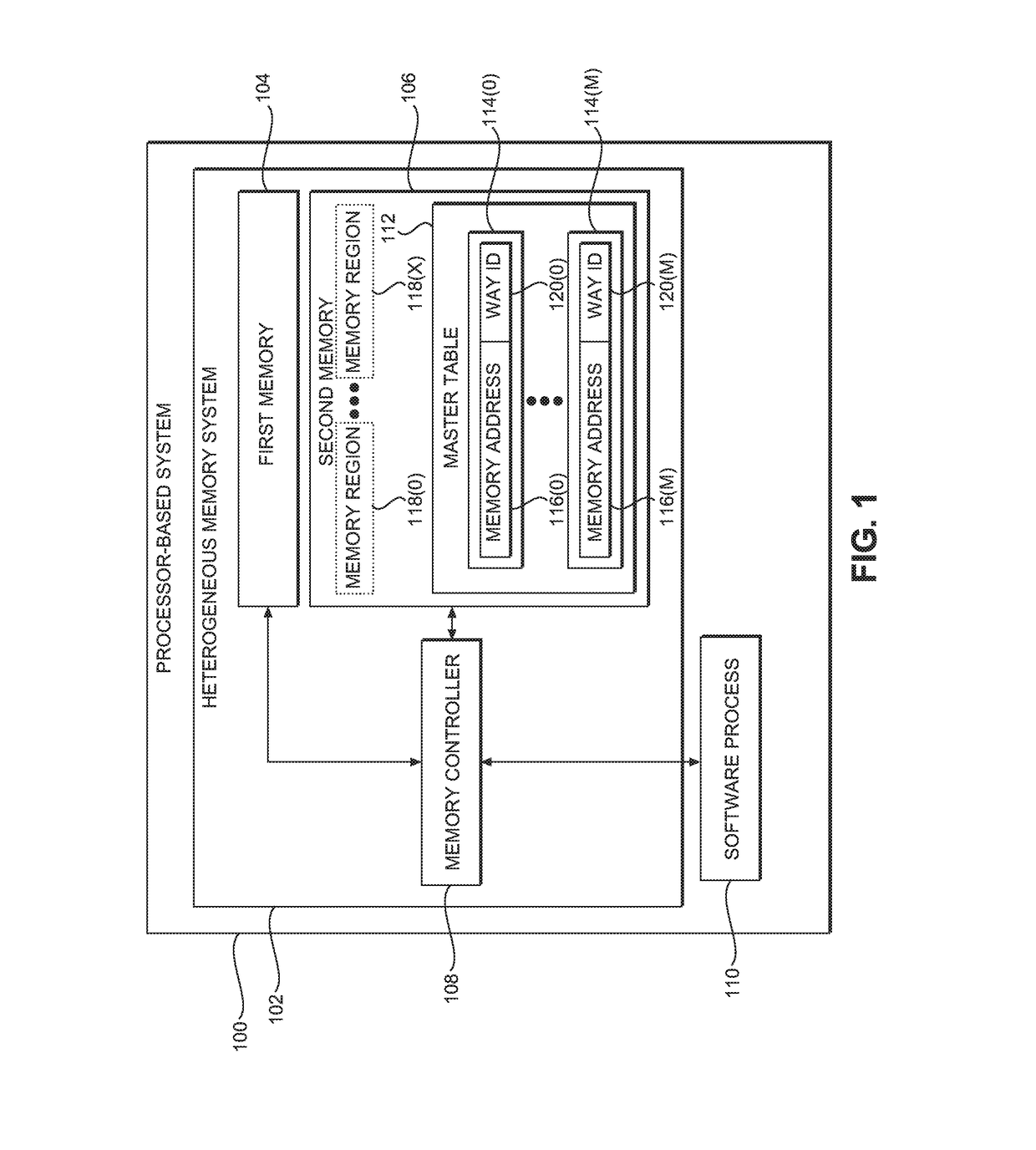

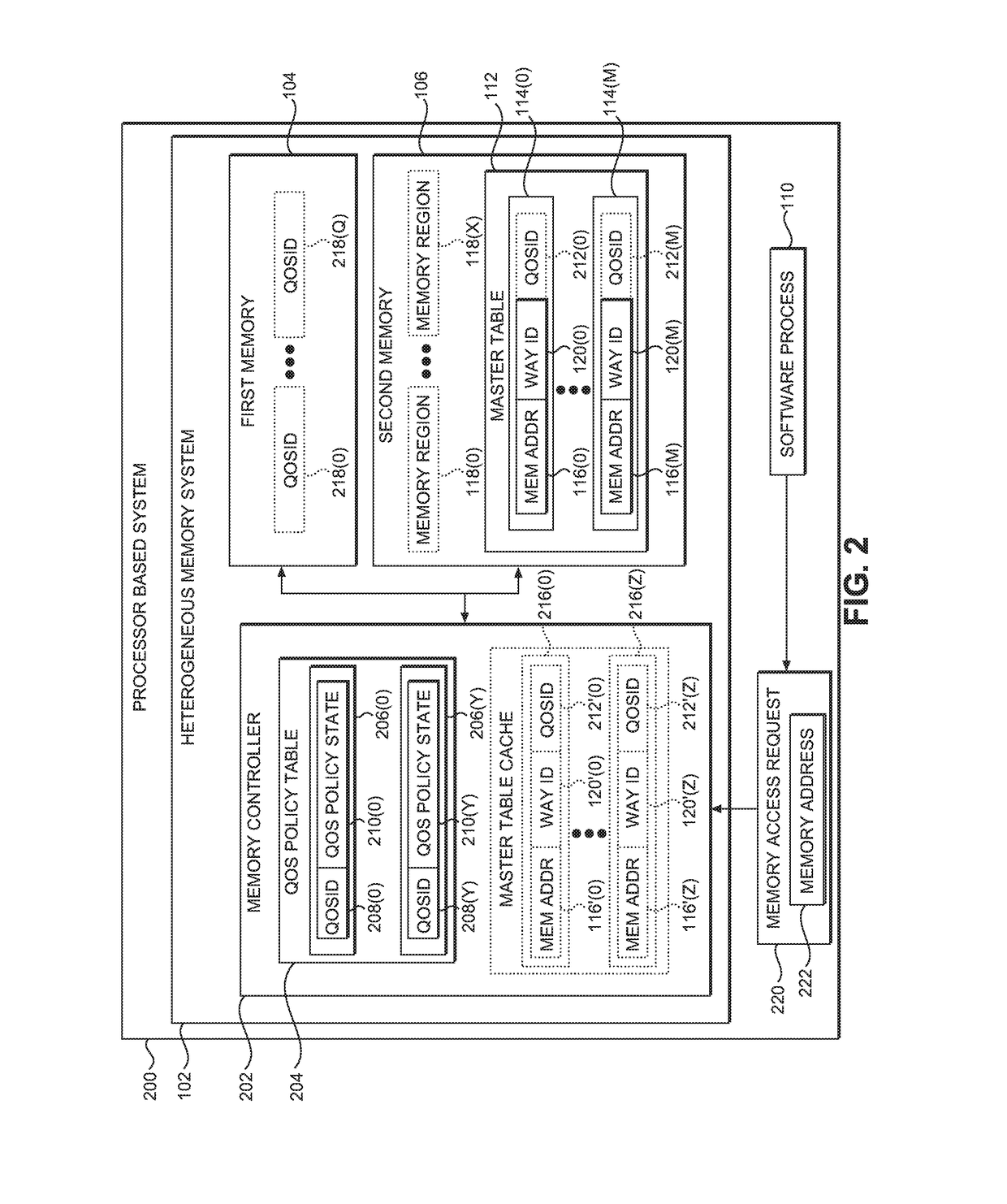

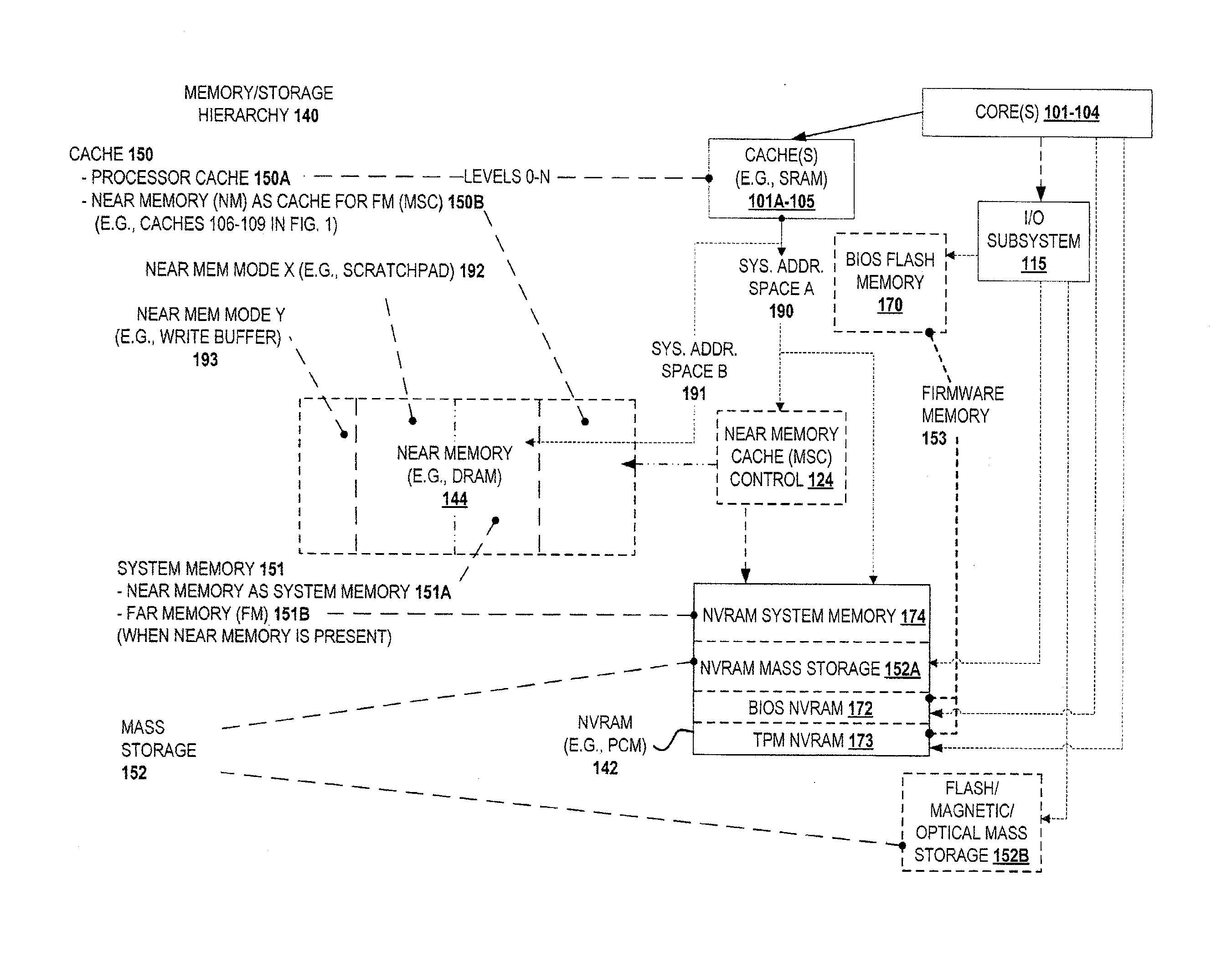

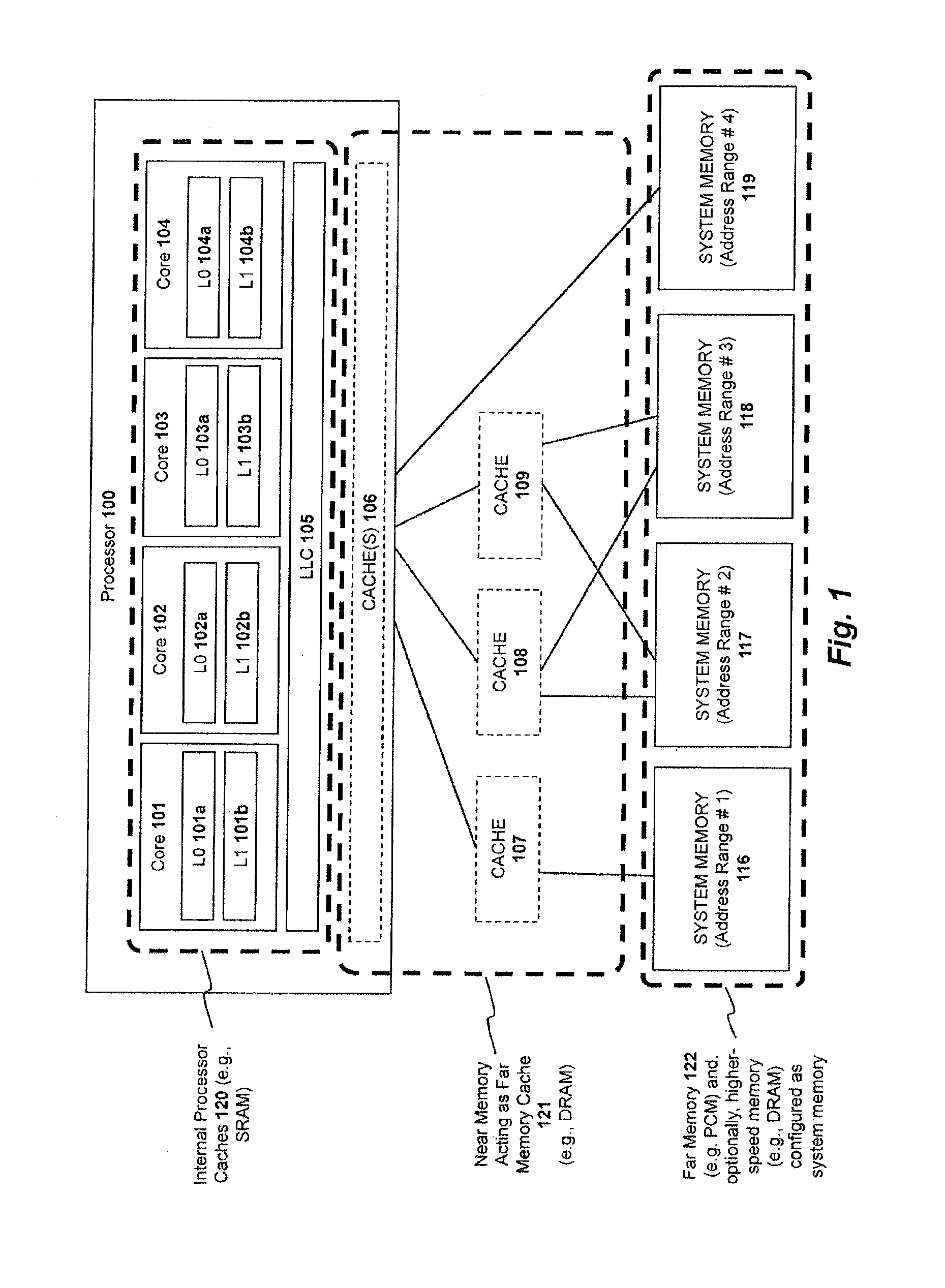

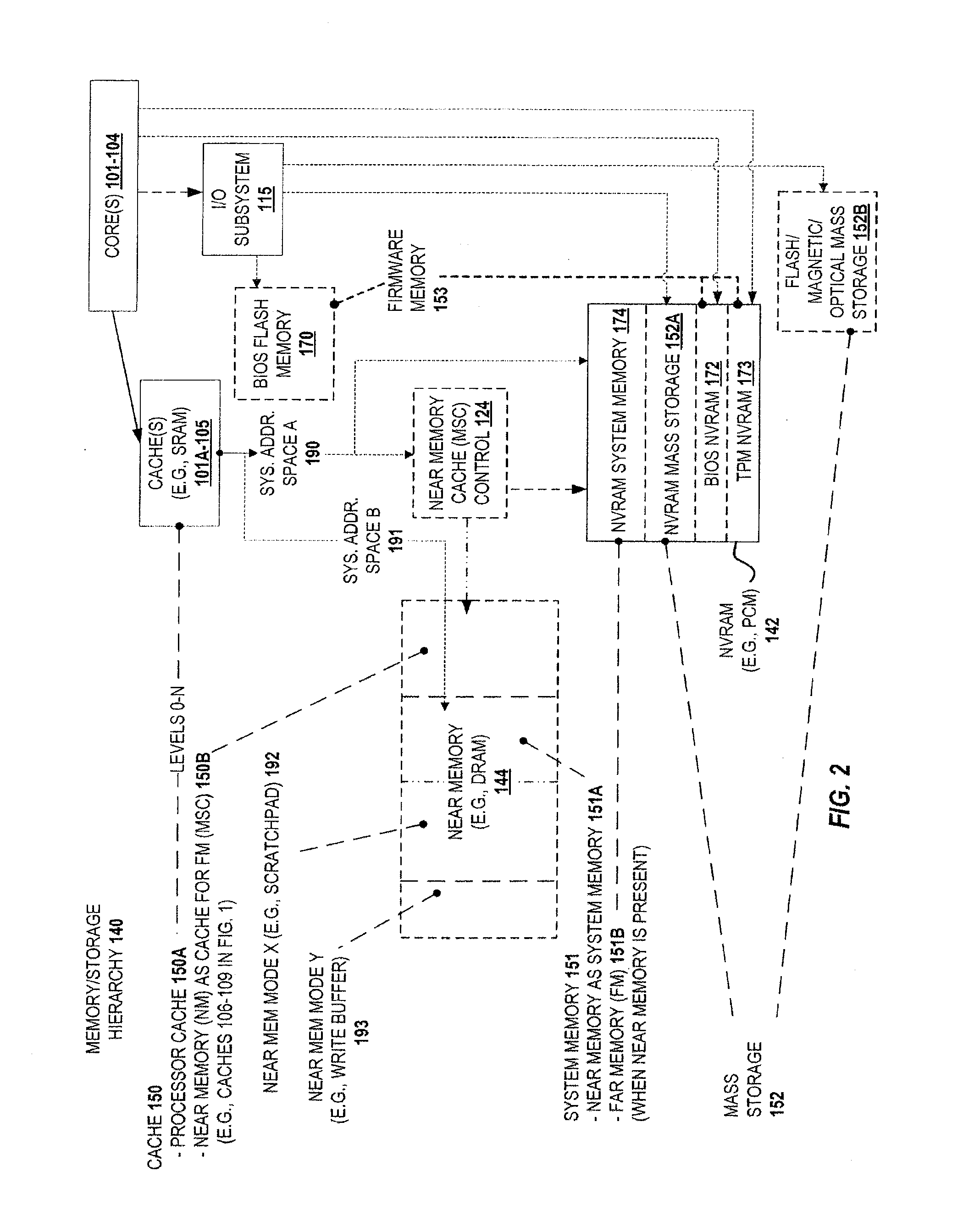

PROVIDING FLEXIBLE MANAGEMENT OF HETEROGENEOUS MEMORY SYSTEMS USING SPATIAL QUALITY OF SERVICE (QoS) TAGGING IN PROCESSOR-BASED SYSTEMS

Active | US20180081579A1 | Tags: Influence data; Influence memory allocation; Memory architecture accessing/allocation; Input/output to record carriers; Memory address; State dependent

Providing flexible management of heterogeneous memory systems using spatial Quality of Service (QoS) tagging in processor-based systems is disclosed. In one aspect, a heterogeneous memory system of a processor-based system includes a first memory and a second memory. The heterogeneous memory system is divided into a plurality of memory regions, each associated with a QoS identifier (QoSID), which may be set and updated by software. A memory controller of the heterogeneous memory system provides a QoS policy table, which operates to associate each QoSID with a QoS policy state, and which also may be software-configurable. Upon receiving a memory access request including a memory address of a memory region, the memory controller identifies a software-configurable QoSID associated with the memory address, and associates the QoSID with a QoS policy state using the QoS policy table. The memory controller then applies the QoS policy state to perform the memory access operation.

Owner:QUALCOMM INC
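
The two-step lookup in the abstract (memory address to QoSID, then QoSID to QoS policy state) can be sketched in Python. Fixed-size memory regions, a default QoSID of 0 for untagged regions, and the fields of `QosPolicy` are all assumptions made for illustration; the names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QosPolicy:
    """Hypothetical policy state applied to an access."""
    target_memory: str  # which memory of the heterogeneous system to use
    priority: int


class MemoryController:
    def __init__(self, region_size: int, default_qosid: int = 0):
        self.region_size = region_size
        self.default_qosid = default_qosid
        self.region_qosid = {}  # region index -> QoSID, set by software
        self.policy_table = {}  # QoSID -> QosPolicy, set by software

    def tag_region(self, region: int, qosid: int) -> None:
        self.region_qosid[region] = qosid

    def set_policy(self, qosid: int, policy: QosPolicy) -> None:
        self.policy_table[qosid] = policy

    def policy_for(self, address: int) -> QosPolicy:
        """Resolve the policy state the controller would apply to an access."""
        region = address // self.region_size
        qosid = self.region_qosid.get(region, self.default_qosid)
        return self.policy_table[qosid]
```

The indirection is what makes the scheme flexible: retagging a region or editing one policy-table row changes behavior for every address it covers without touching the others.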

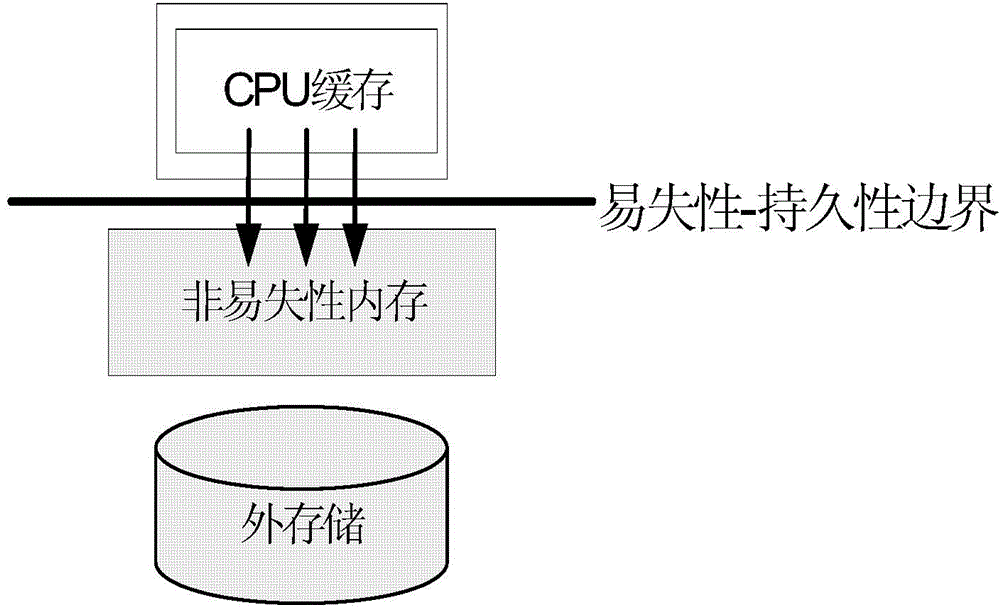

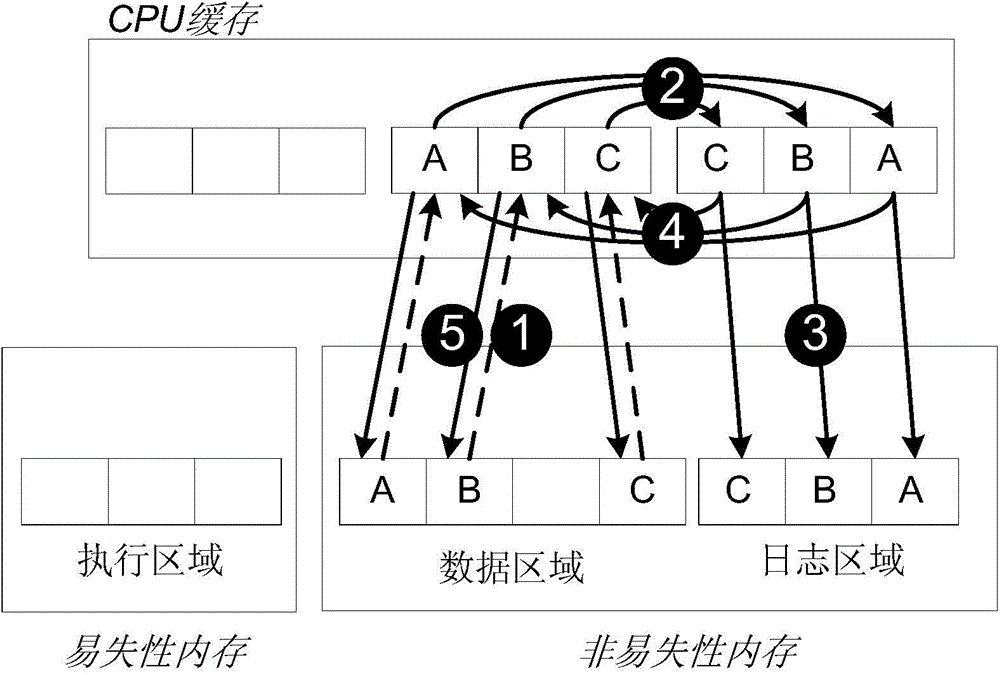

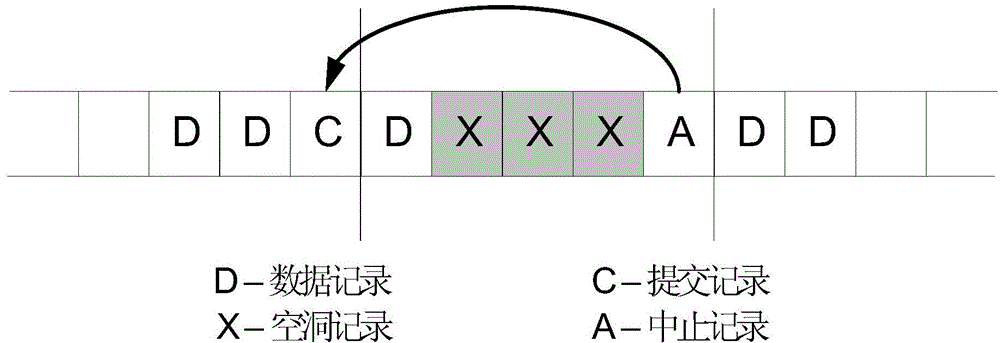

Persistent internal memory transaction processing cache management method and device

Active | CN104881371A | Tags: Reduce the cost of state tracking; Reduce persistence overhead; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Internal memory; Original data

The invention provides a cache management method and device for transaction processing in persistent memory. The method comprises: before a transaction starts, reading the original data from the non-volatile memory into the processor cache, and executing the transaction on the processor; during execution, allocating space for the new data the transaction produces, and adopting a cache "steal" write-back policy that allows uncommitted data to be persisted; forcibly persisting the transaction data or state to the non-volatile memory when the transaction commits or aborts; after the transaction data or state has been persisted, writing the transaction data back to the original data addresses, and adopting a cache "no-force" write-back policy so that committed data is not forcibly persisted to the non-volatile memory; periodically persisting cached data to the non-volatile memory through a forced full flush; and performing recovery processing on the transaction data when a system failure occurs. The method can reduce the frequency of data replication and data persistence in persistent memory.

Owner:TSINGHUA UNIV

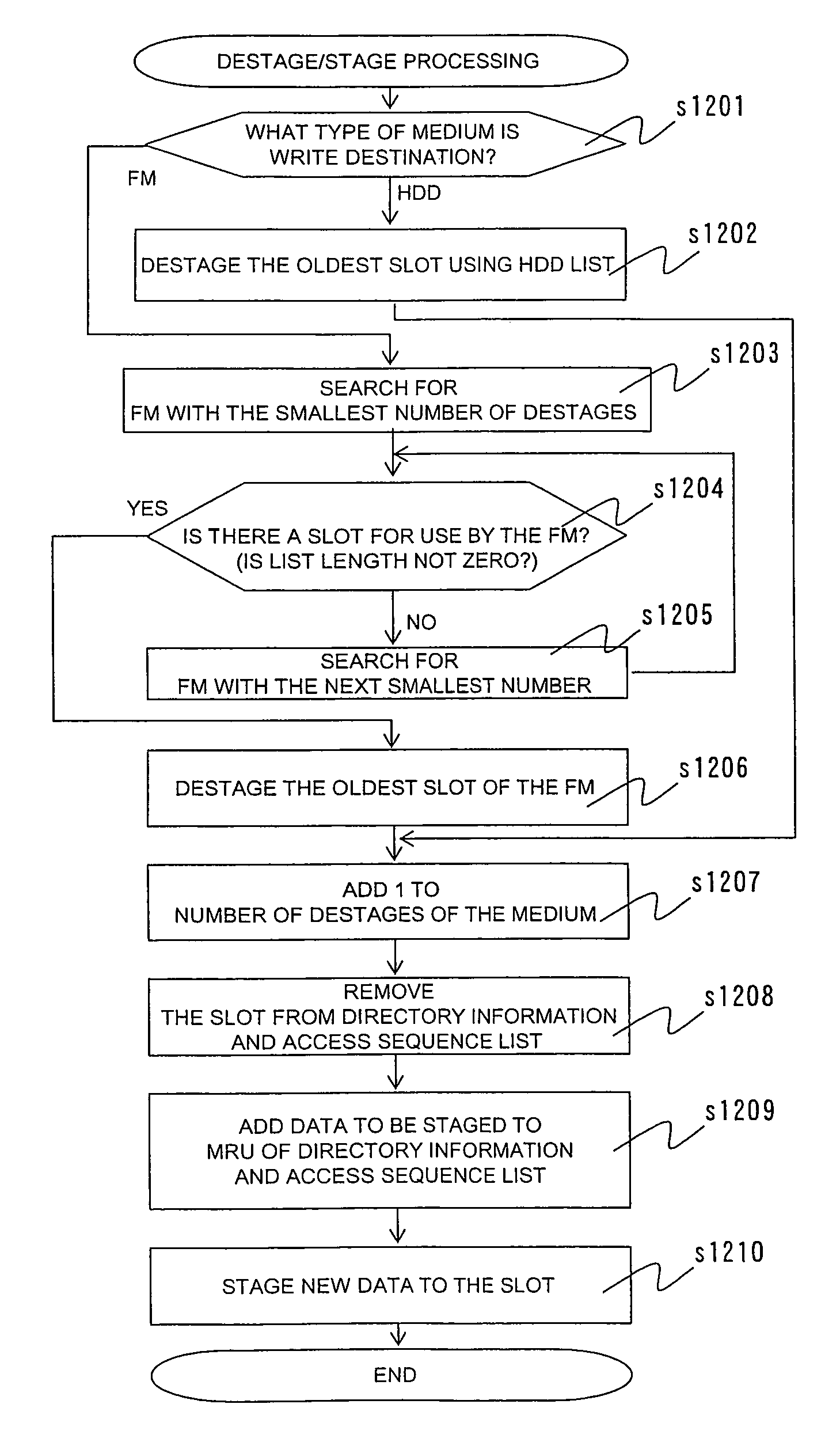

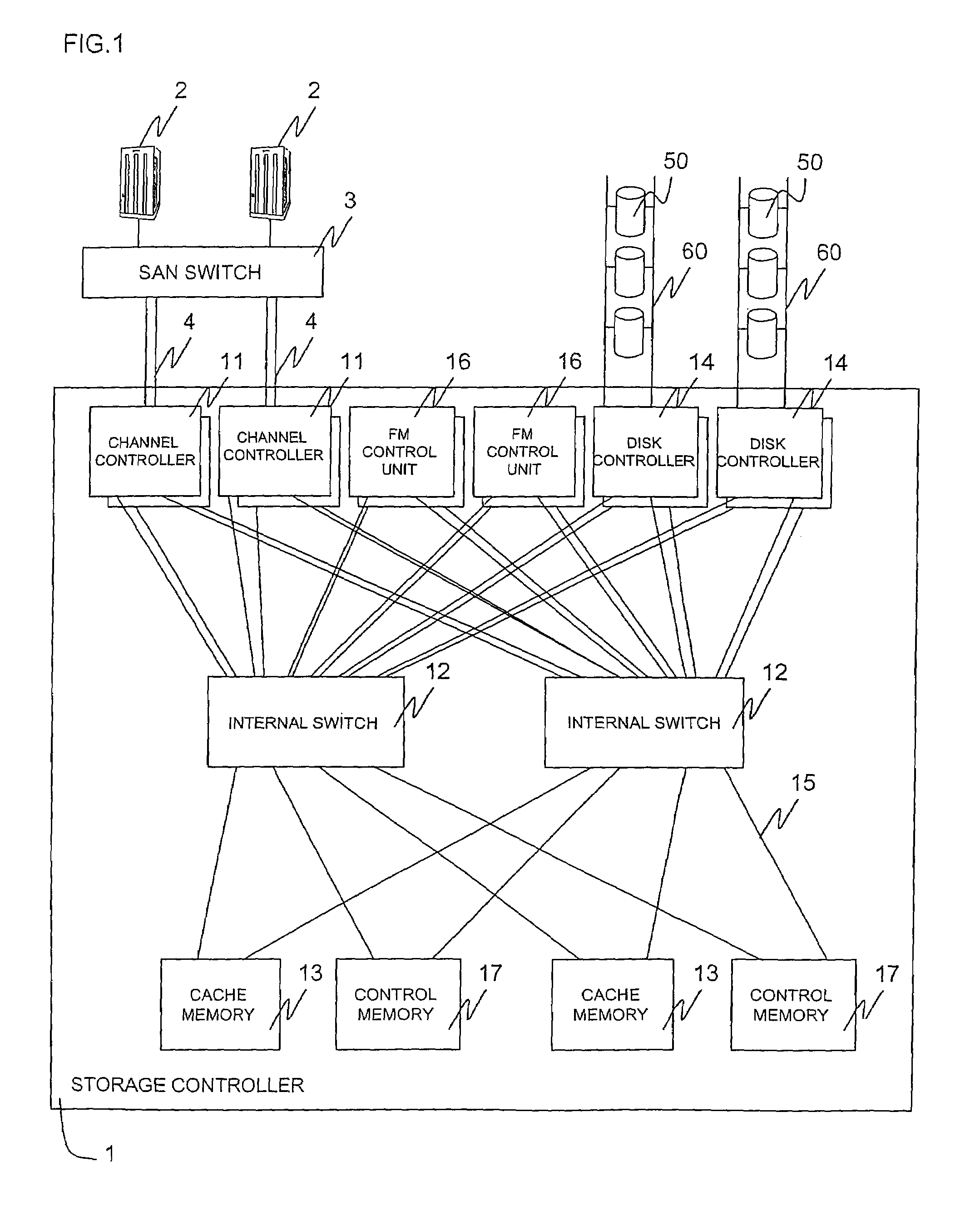

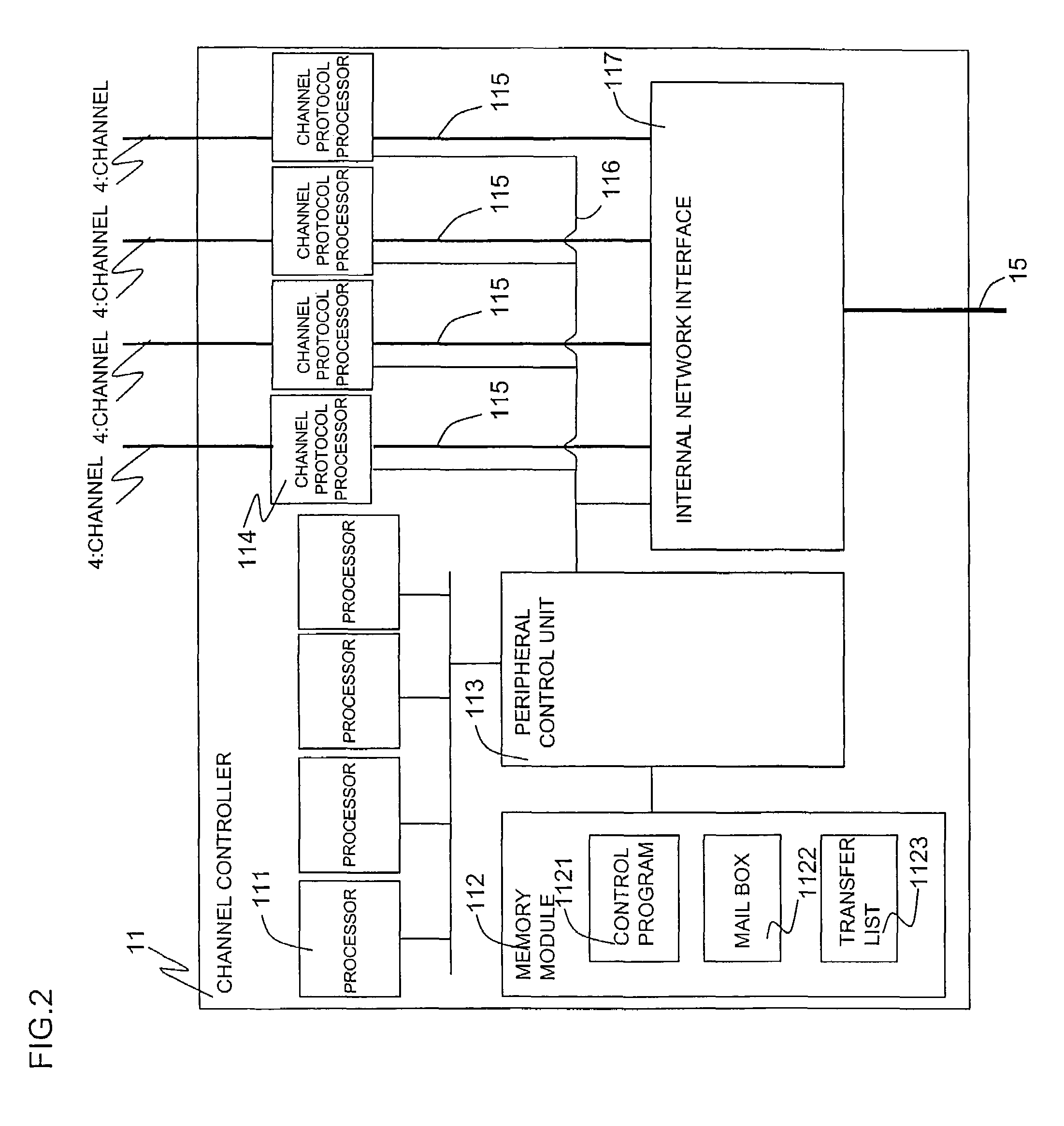

Storage system, storage device, and control method thereof

Inactive | US7464221B2 | Tags: Reduce power consumption; Reduce area; Memory architecture accessing/allocation; Energy efficient ICT; Embedded system; Temporary storage

Owner:HITACHI LTD

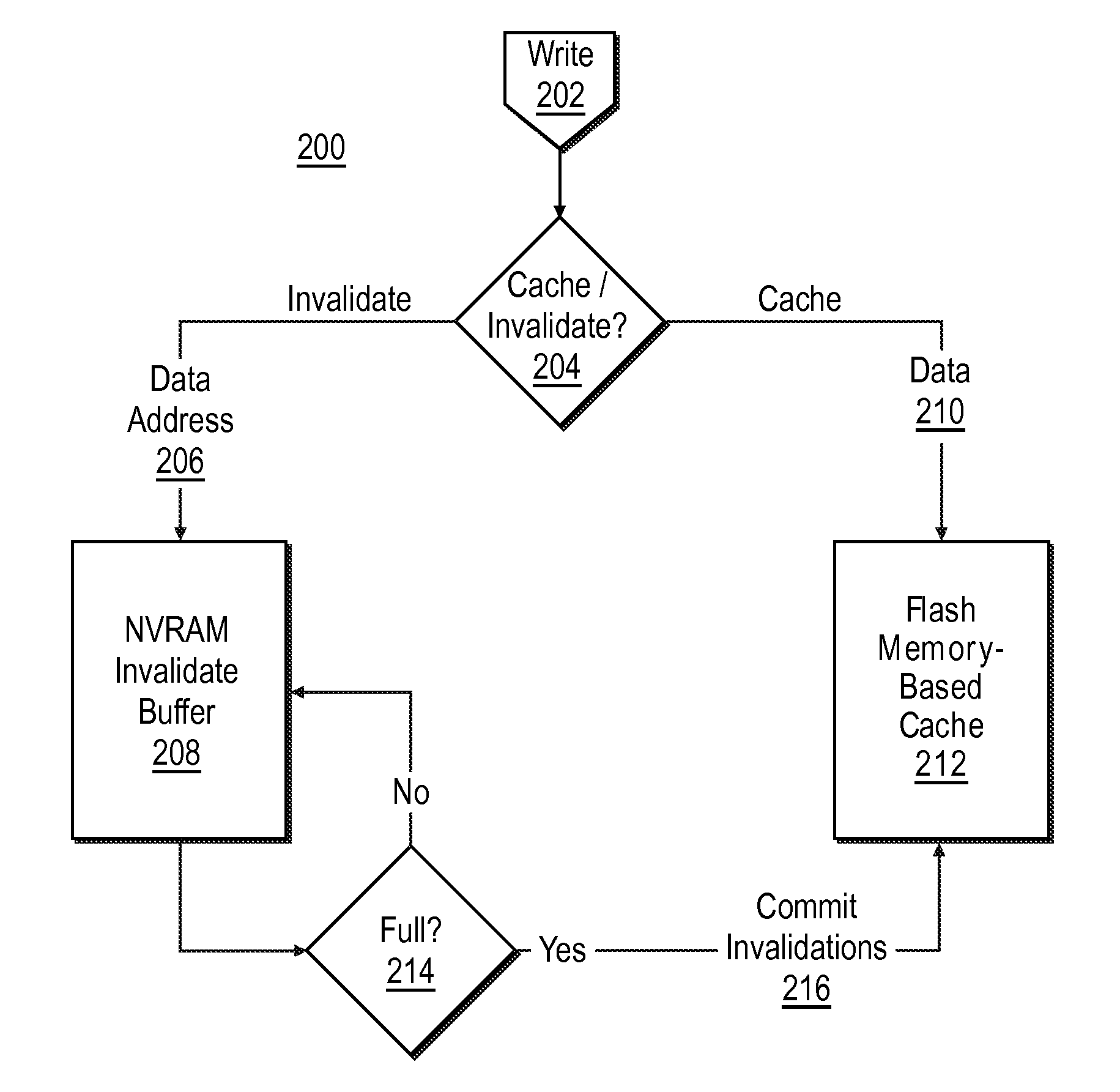

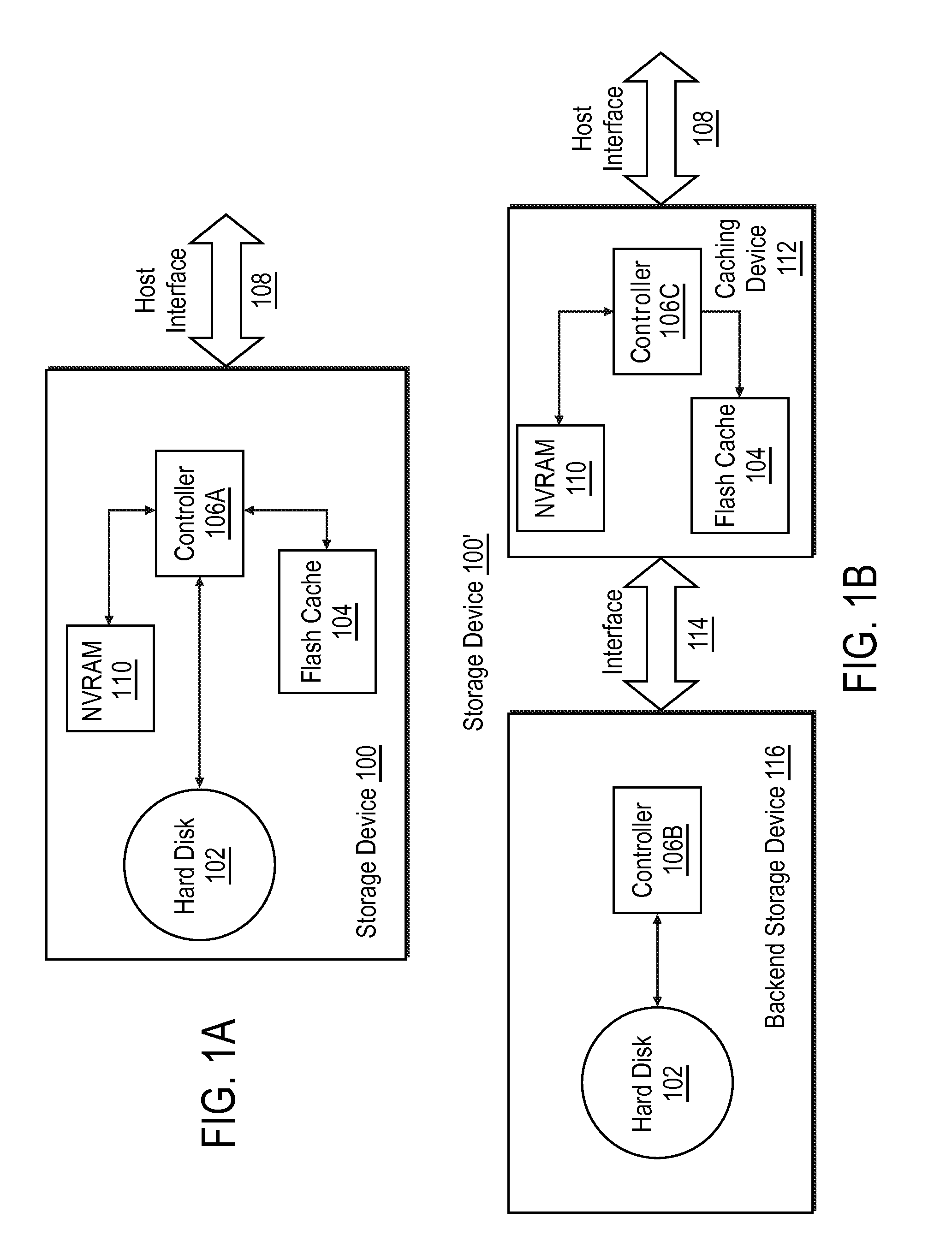

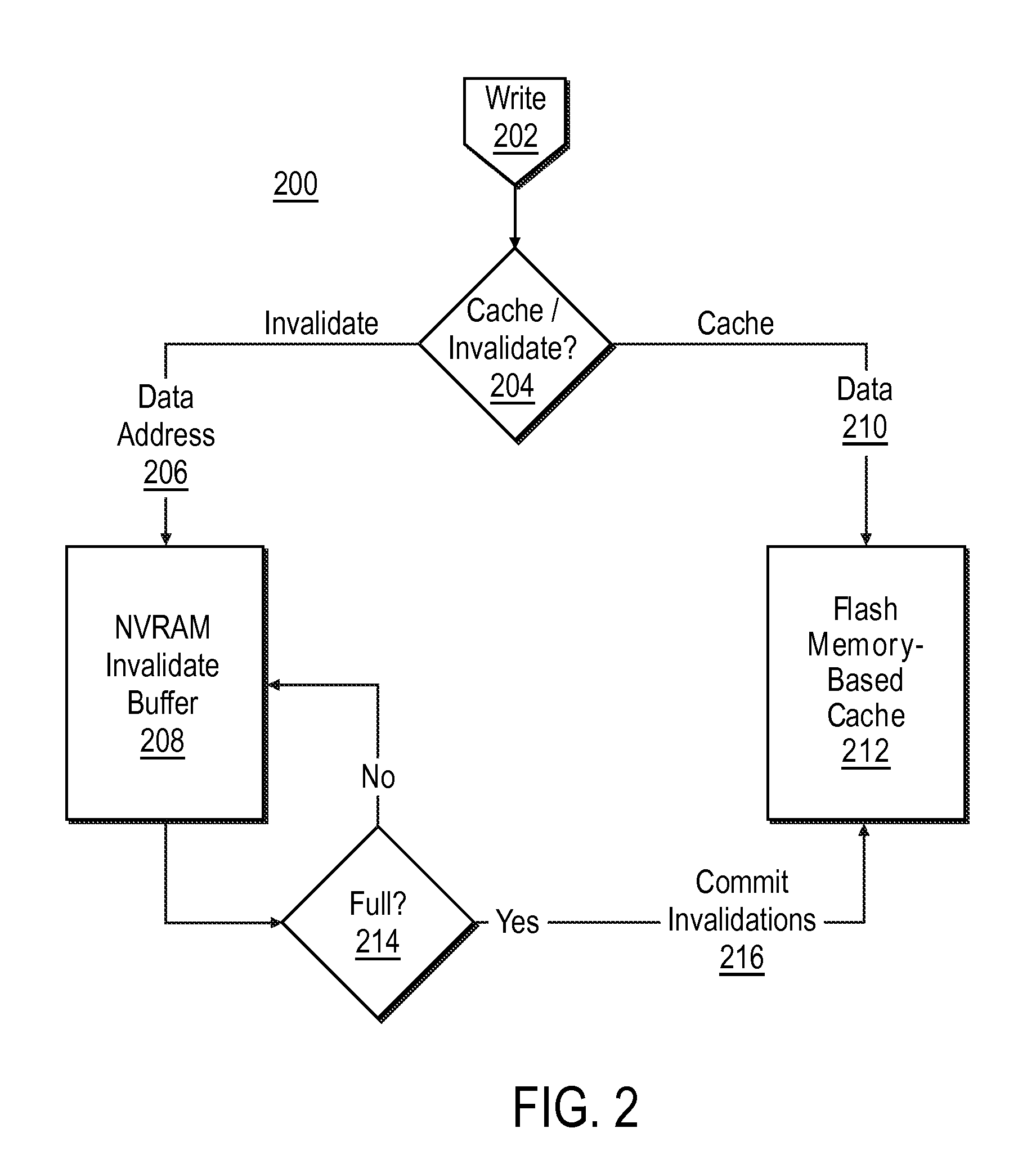

Methods and systems for reducing churn in flash-based cache

Active | US20120017034A1 | Tags: Control rate; Slow write time; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Data store; Flash memory

A storage device includes a flash memory-based cache for a hard disk-based storage device and a controller that is configured to limit the rate of cache updates through a variety of mechanisms, including determinations that the data is not likely to be read back from the storage device within a time period that justifies its storage in the cache, compressing data prior to its storage in the cache, precluding storage of sequentially-accessed data in the cache, and/or throttling storage of data to the cache within predetermined write periods and/or according to user instruction.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP
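
Three of the listed mechanisms (skipping sequentially accessed data, compressing before caching, and throttling writes within a period) can be combined into one admission check. This Python sketch is illustrative only: the `likely_reread` flag stands in for whatever re-read prediction a controller uses, "sequential" simply means the block address follows the previous one, and the byte budget per period is an assumed throttling policy. All names are hypothetical.

```python
import zlib


class FlashCacheAdmission:
    """Hypothetical admission filter that limits writes into a flash cache."""

    def __init__(self, budget_bytes_per_period: int):
        self.budget = budget_bytes_per_period
        self.written_this_period = 0
        self.last_lba = None
        self.cache = {}  # block address -> compressed contents

    def start_period(self) -> None:
        self.written_this_period = 0

    def maybe_cache(self, lba: int, data: bytes, likely_reread: bool) -> bool:
        sequential = self.last_lba is not None and lba == self.last_lba + 1
        self.last_lba = lba
        if sequential or not likely_reread:
            return False  # keep sequential or cold data out of the cache
        payload = zlib.compress(data)  # compress before storing
        if self.written_this_period + len(payload) > self.budget:
            return False  # throttle: this period's write budget is used up
        self.cache[lba] = payload
        self.written_this_period += len(payload)
        return True
```

Each rejected write is a flash program/erase cycle avoided, which is the churn the title refers to; the checks are ordered so the cheap ones run before compression.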

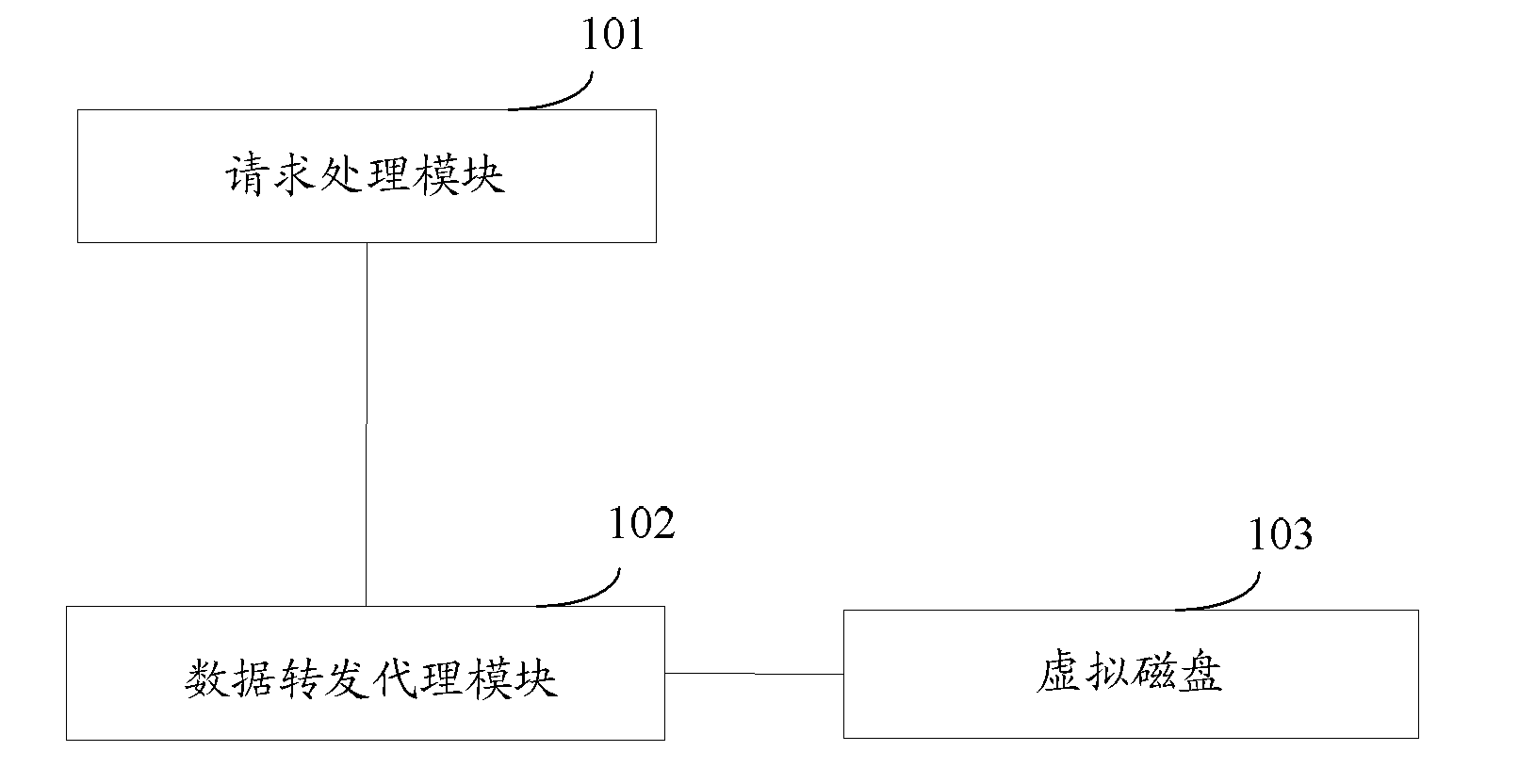

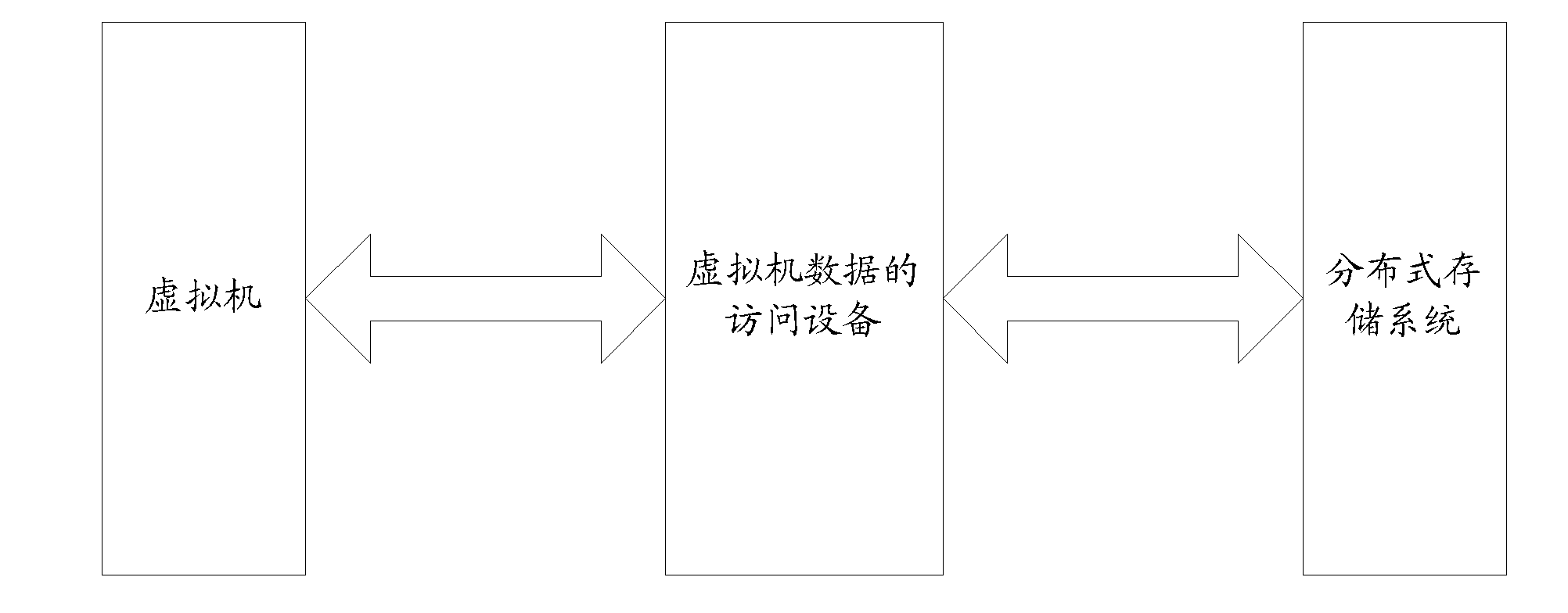

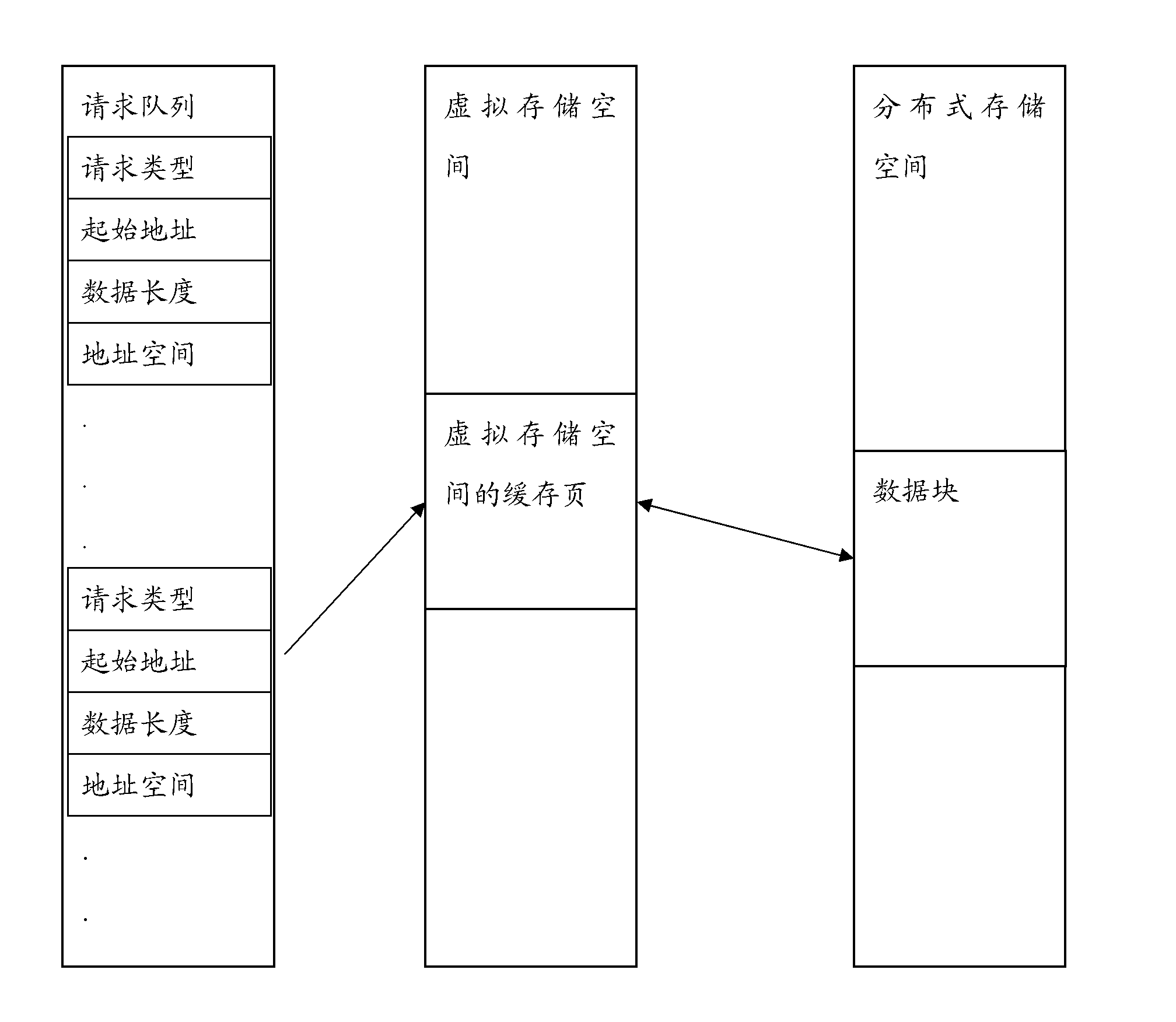

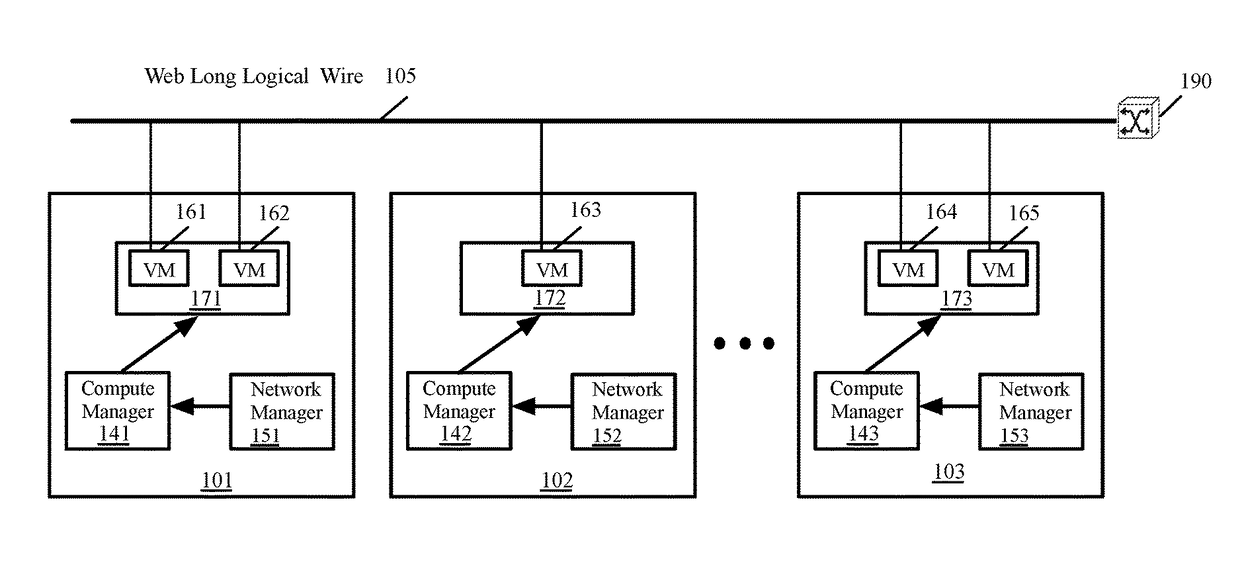

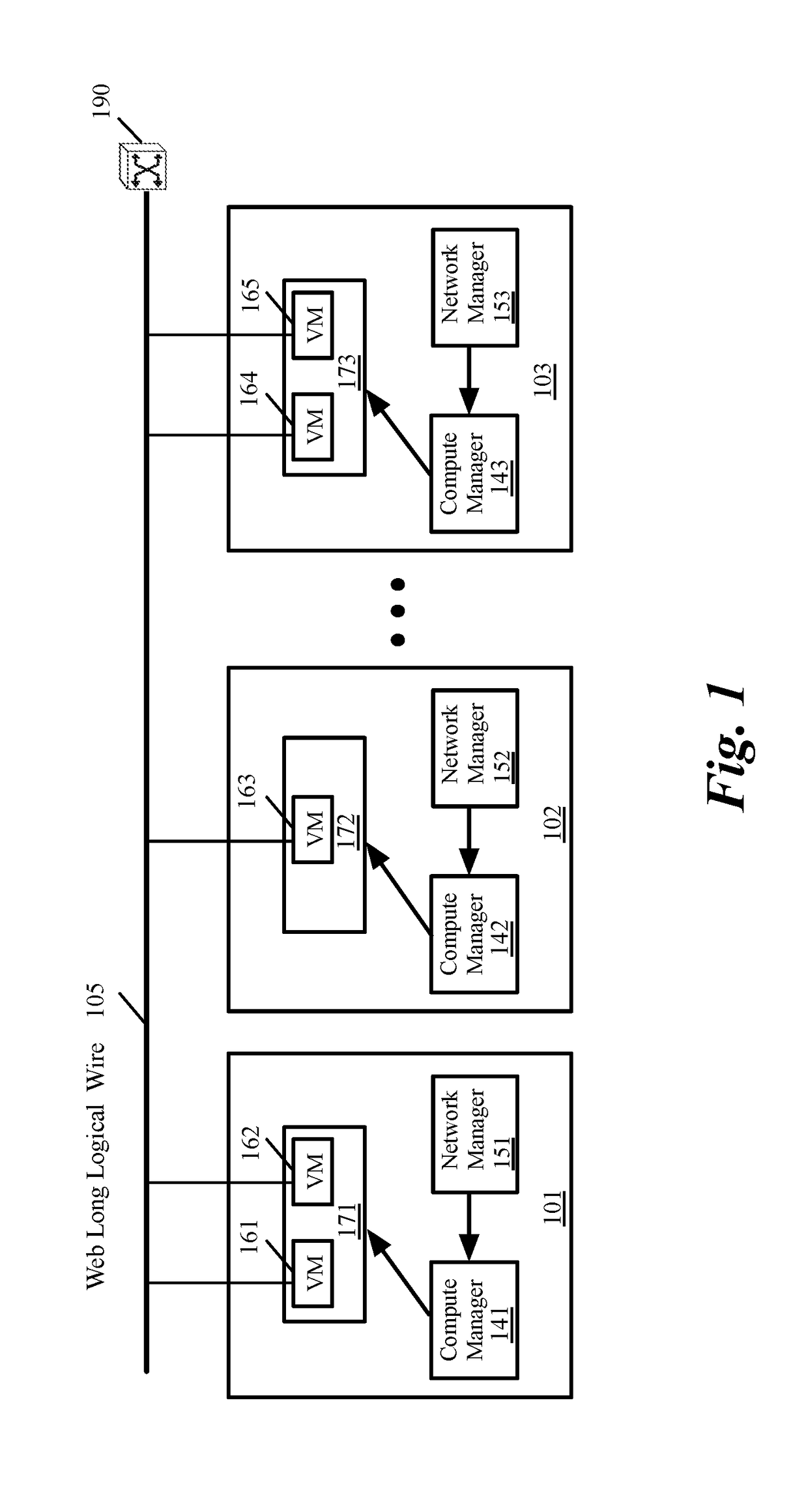

Method and device for accessing data of virtual machine

Active | CN102467408A | Tags: Improve security; Improve reliability; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Access method; Data access

An embodiment of the invention discloses a method and a device for accessing the data of a virtual machine. The device comprises an access request processing module, a data forwarding proxy module, and a virtual disk. The access request processing module receives a data access request sent by the virtual machine and adds it to a request queue; data access requests in the request queue are mapped to a virtual storage space of the virtual disk, and the virtual storage space is mapped to a physical storage space of a distributed storage system. The data forwarding proxy module obtains a data access request from the request queue, maps it to the corresponding virtual storage space, maps that virtual storage space to the corresponding physical storage space, and executes the corresponding data access operation in that physical storage space according to the type of the data access request. The embodiment can improve the security and reliability of storing virtual machine data.

Owner:ALIBABA GRP HLDG LTD

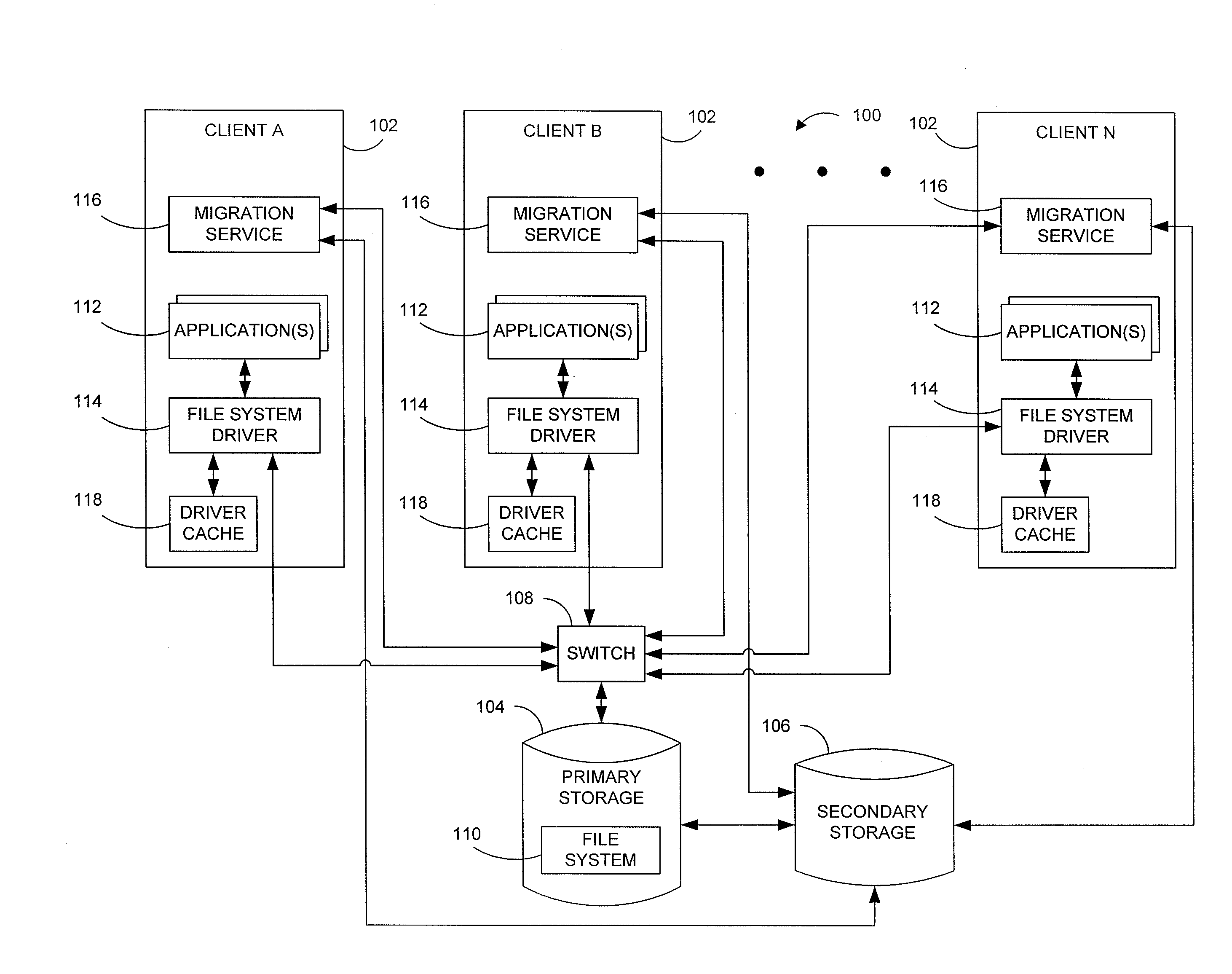

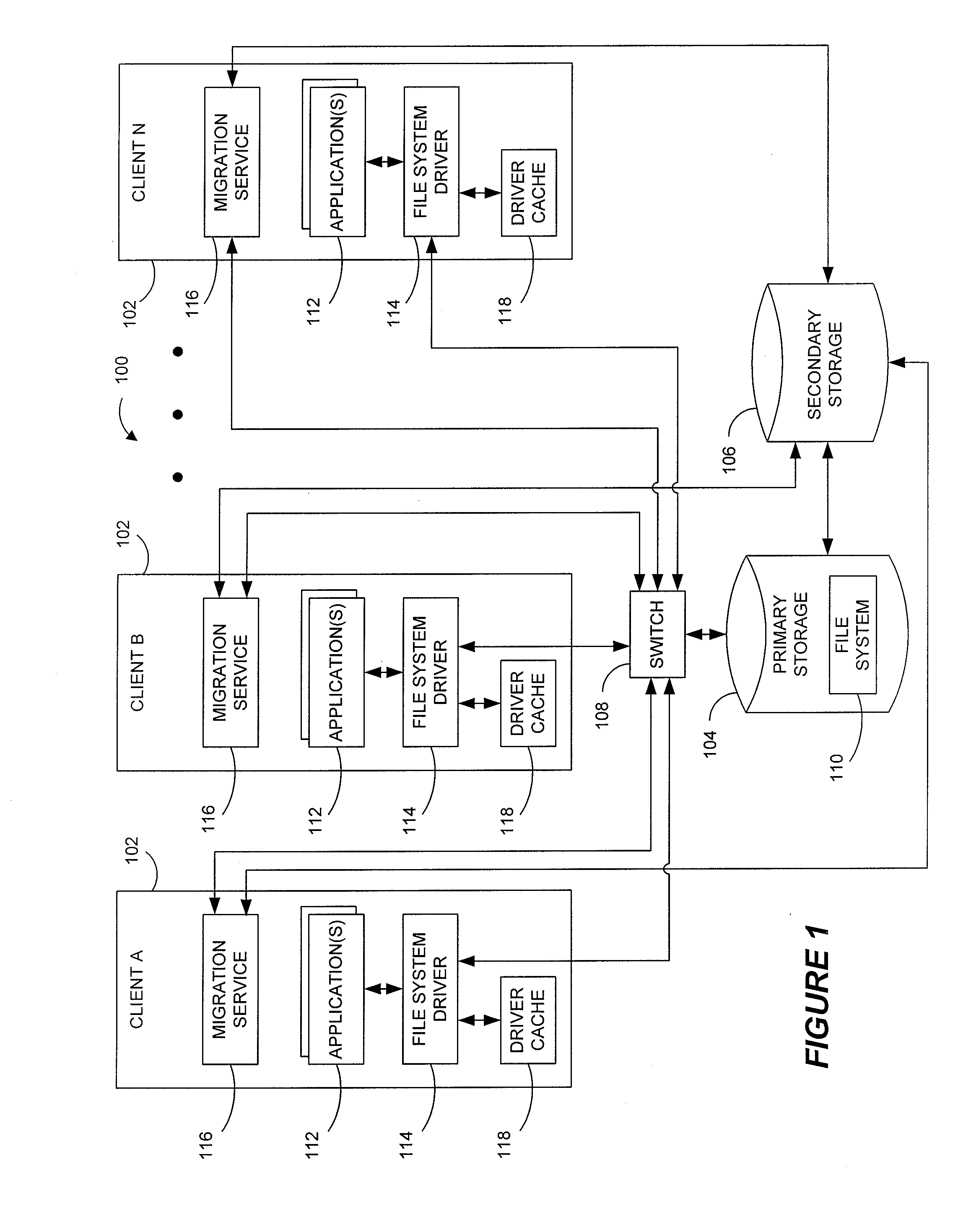

Systems and methods for data migration in a clustered file system

Active | US8209307B2 | Tags: Digital data information retrieval; Digital data processing details; File system; Clustered file system

Owner:COMMVAULT SYST INC

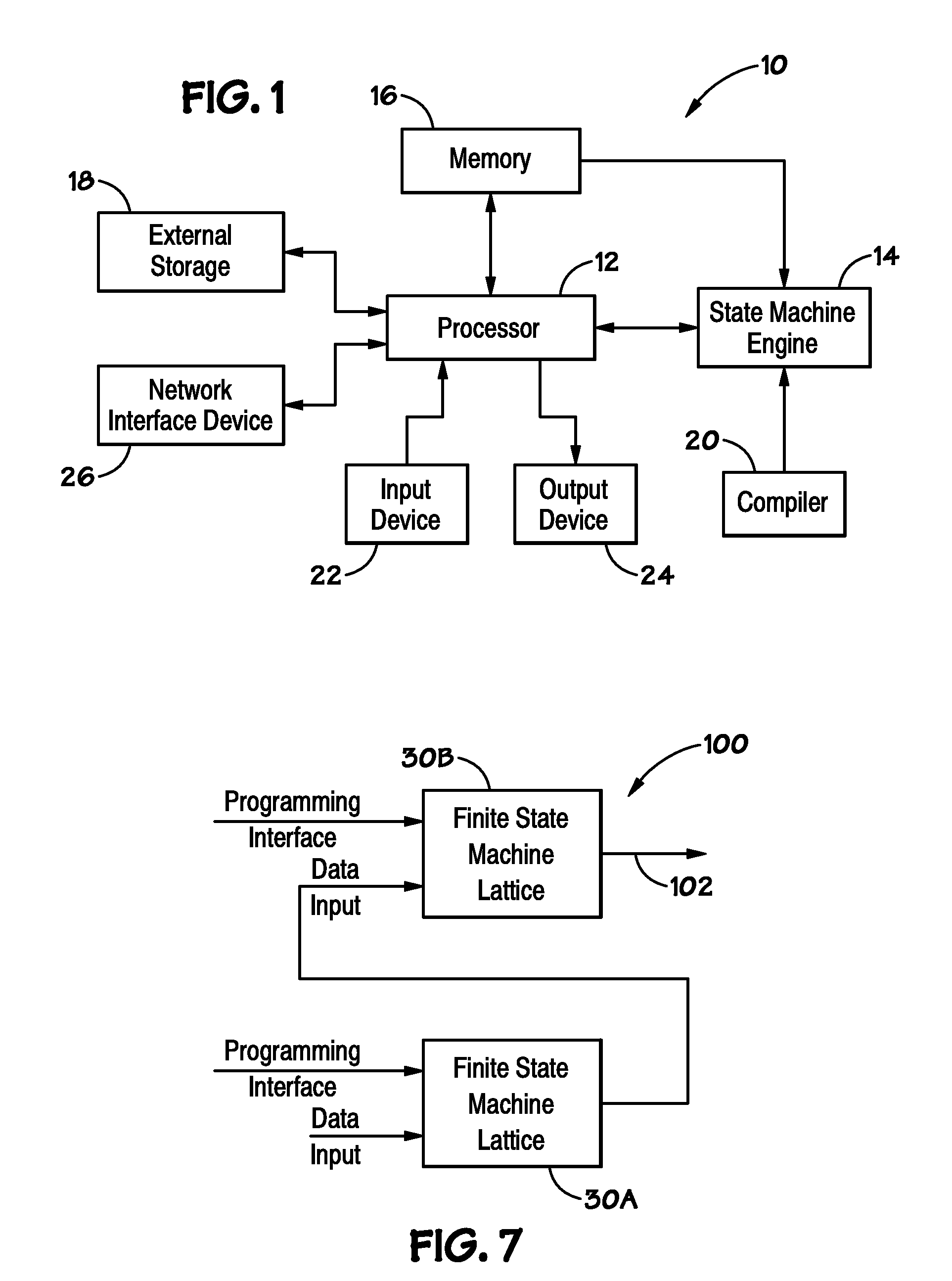

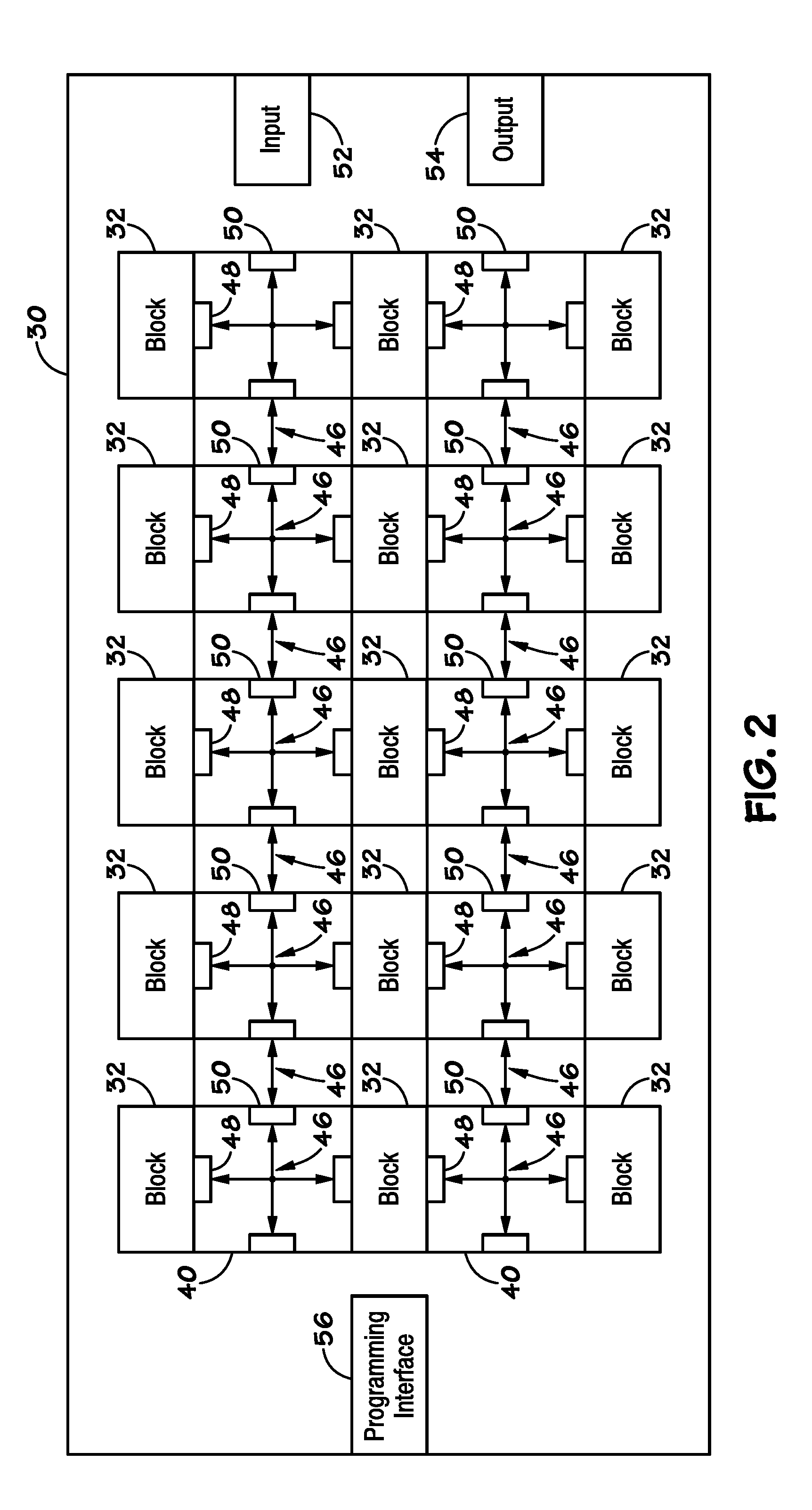

Methods and systems for handling data received by a state machine engine

Active | US9235798B2 | Tags: Biological neural network models; Cache memory details; Data analysis system; Data buffer

A data analysis system to analyze data. The data analysis system includes a data buffer configured to receive data to be analyzed. The data analysis system also includes a state machine lattice. The state machine lattice includes multiple data analysis elements and each data analysis element includes multiple memory cells configured to analyze at least a portion of the data and to output a result of the analysis. The data analysis system includes a buffer interface configured to receive the data from the data buffer and to provide the data to the state machine lattice.

Owner:MICRON TECH INC

Global object definition and management for distributed firewalls

Active | US20170104720A1 | Tags: Memory architecture accessing/allocation; Database management systems; Computer network; Object definition

Same abstract as "Global objects for federated firewall rule management" (US20170005988A1) above.

Owner:NICIRA

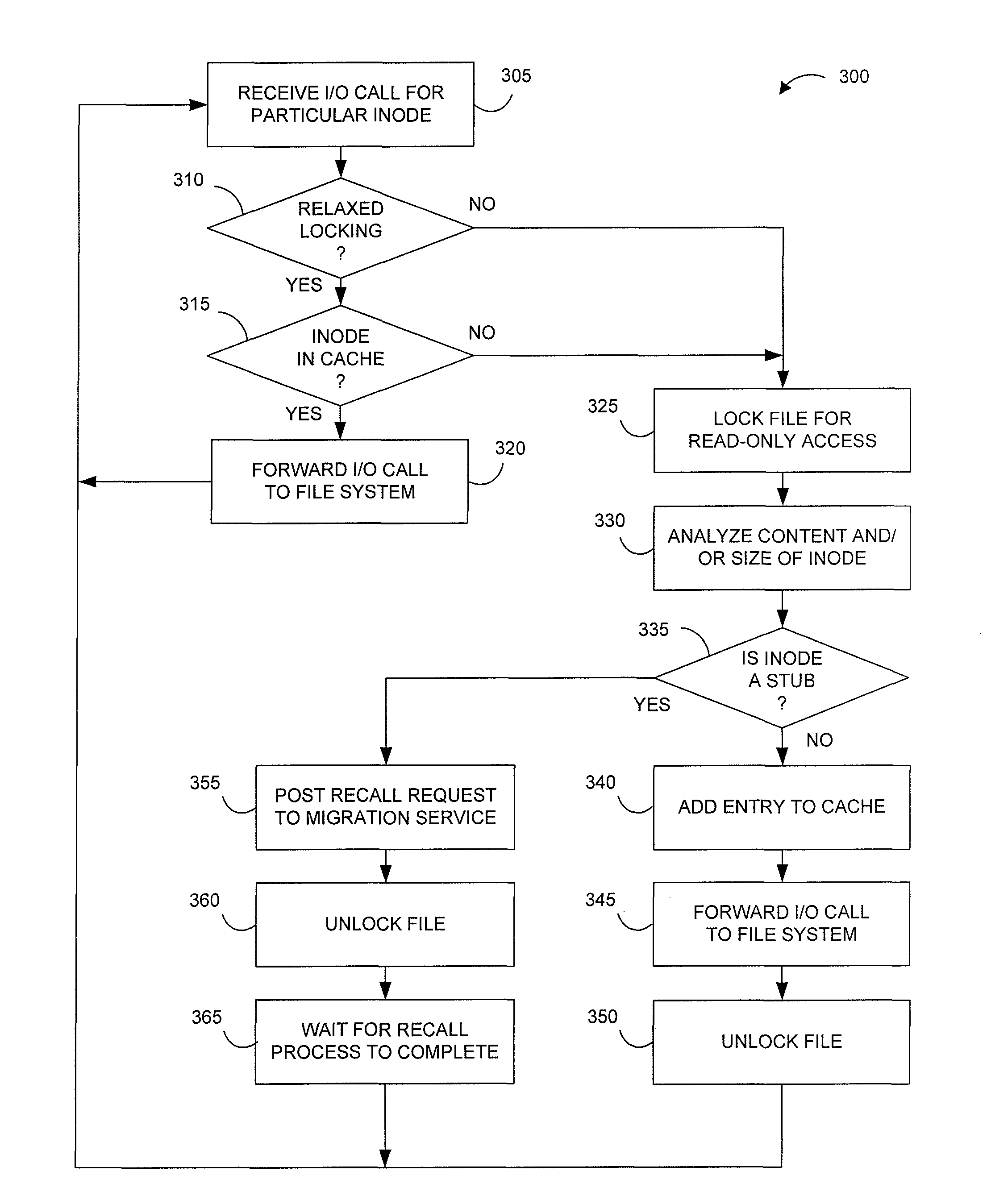

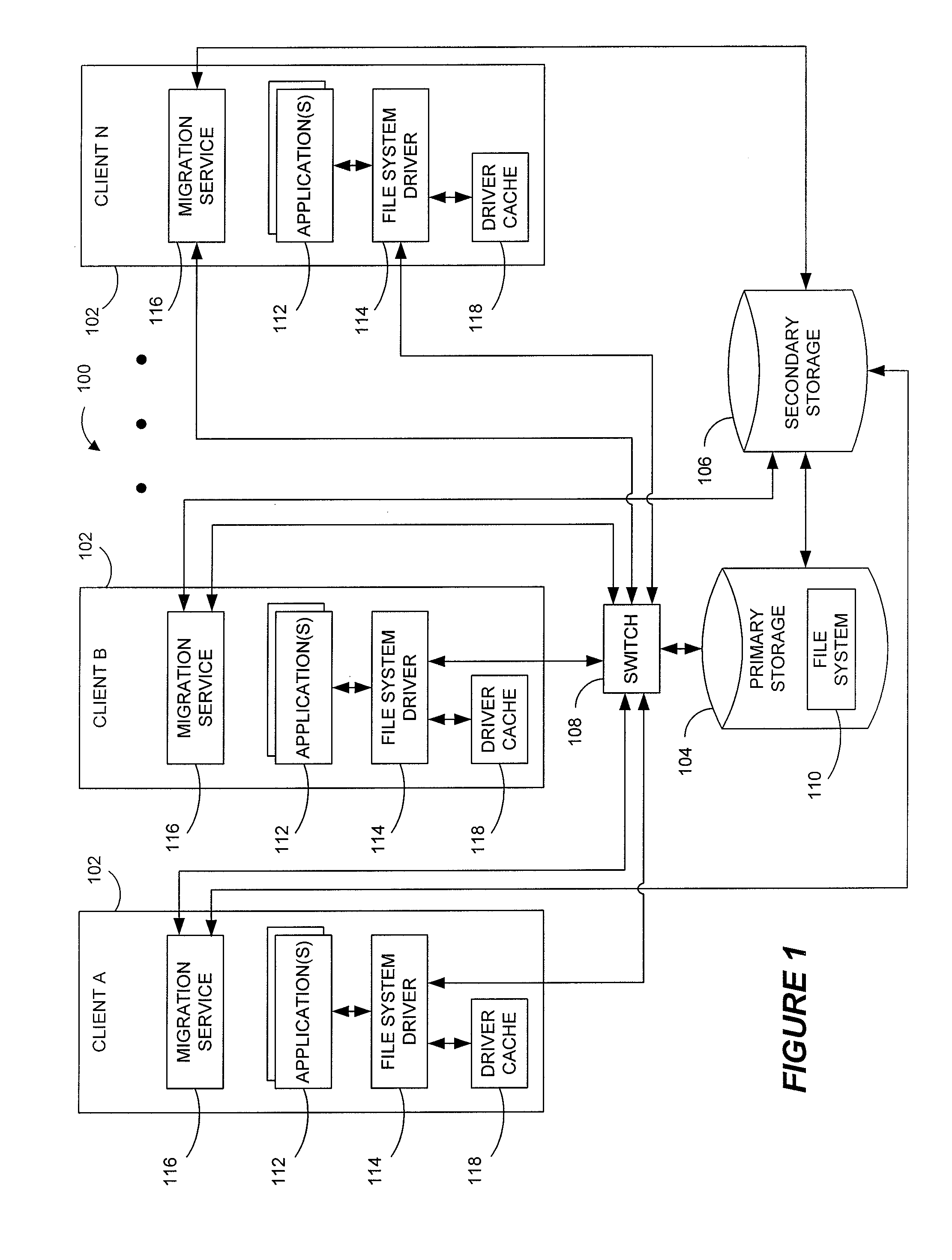

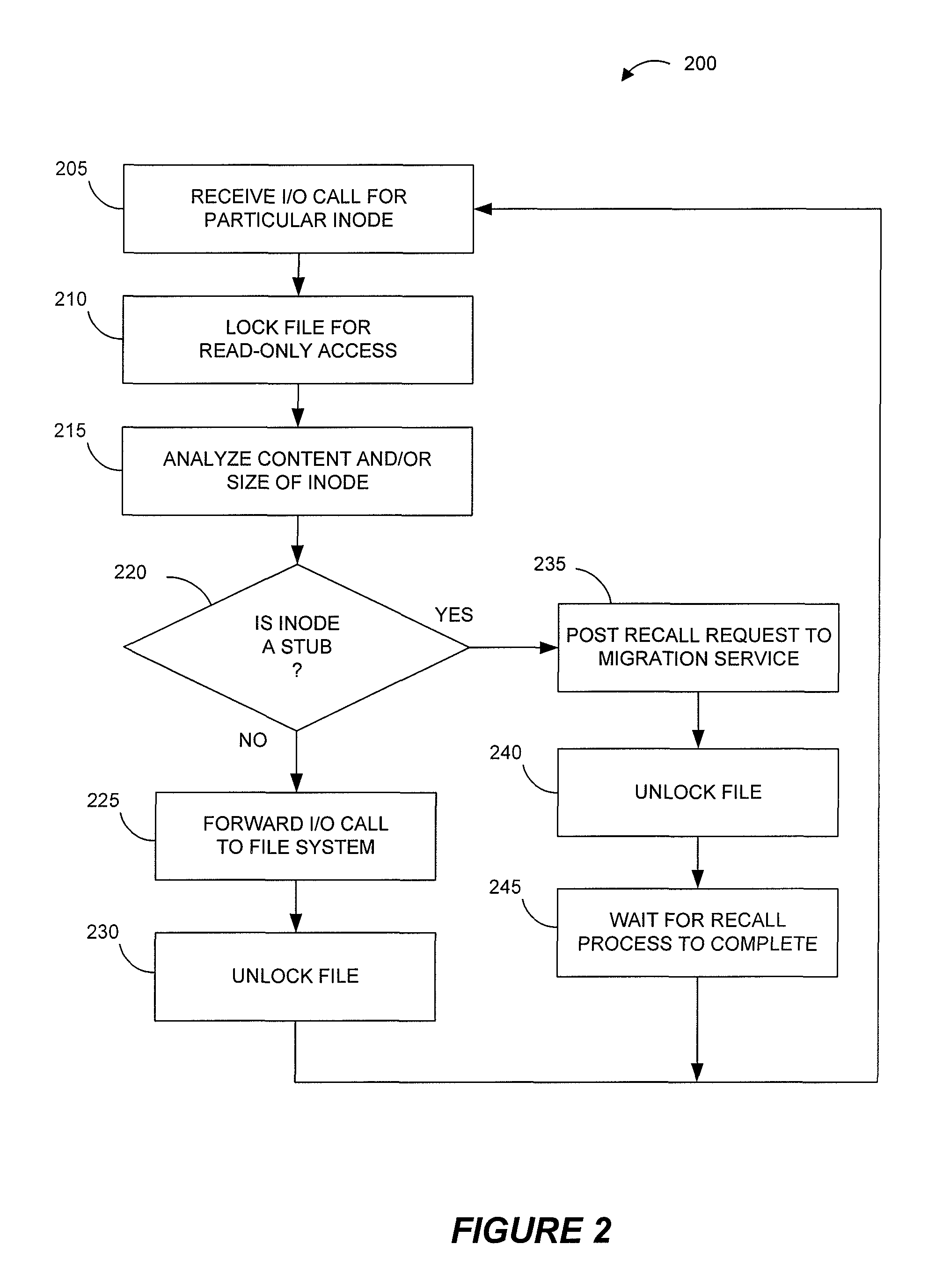

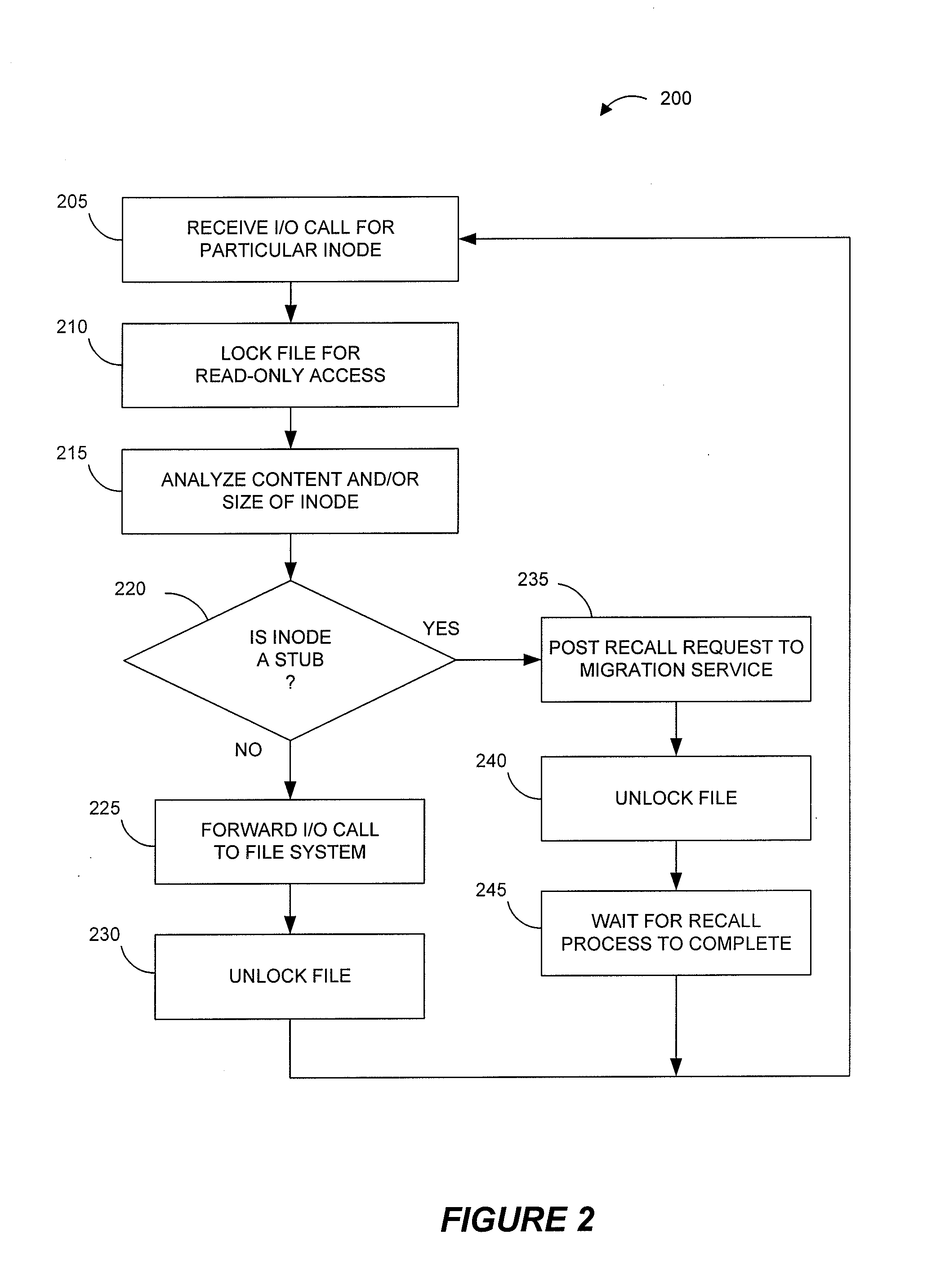

Systems and methods for data migration in a clustered file system

Active | US20100250508A1 | Tags: Digital data information retrieval; Digital data processing details; File system; Clustered file system

Systems and methods for providing more efficient handling of I/O requests for clustered file system data subject to data migration or the like. For instance, exemplary systems can more quickly determine if certain files on primary storage represent actual file data or stub data for recalling file data from secondary storage. Certain embodiments utilize a driver cache on each cluster node to maintain a record of recently accessed files that represent regular files (as opposed to stubs). A dual-locking process, using both strict locking and relaxed locking, maintains consistency between driver caches on different nodes and the data of the underlying clustered file system, while providing improved access to the data by the different nodes. Moreover, a signaling process can be used, such as with zero-length files, for alerting drivers on different nodes that data migration is to be performed and/or that the driver caches should be flushed.

Owner:COMMVAULT SYST INC

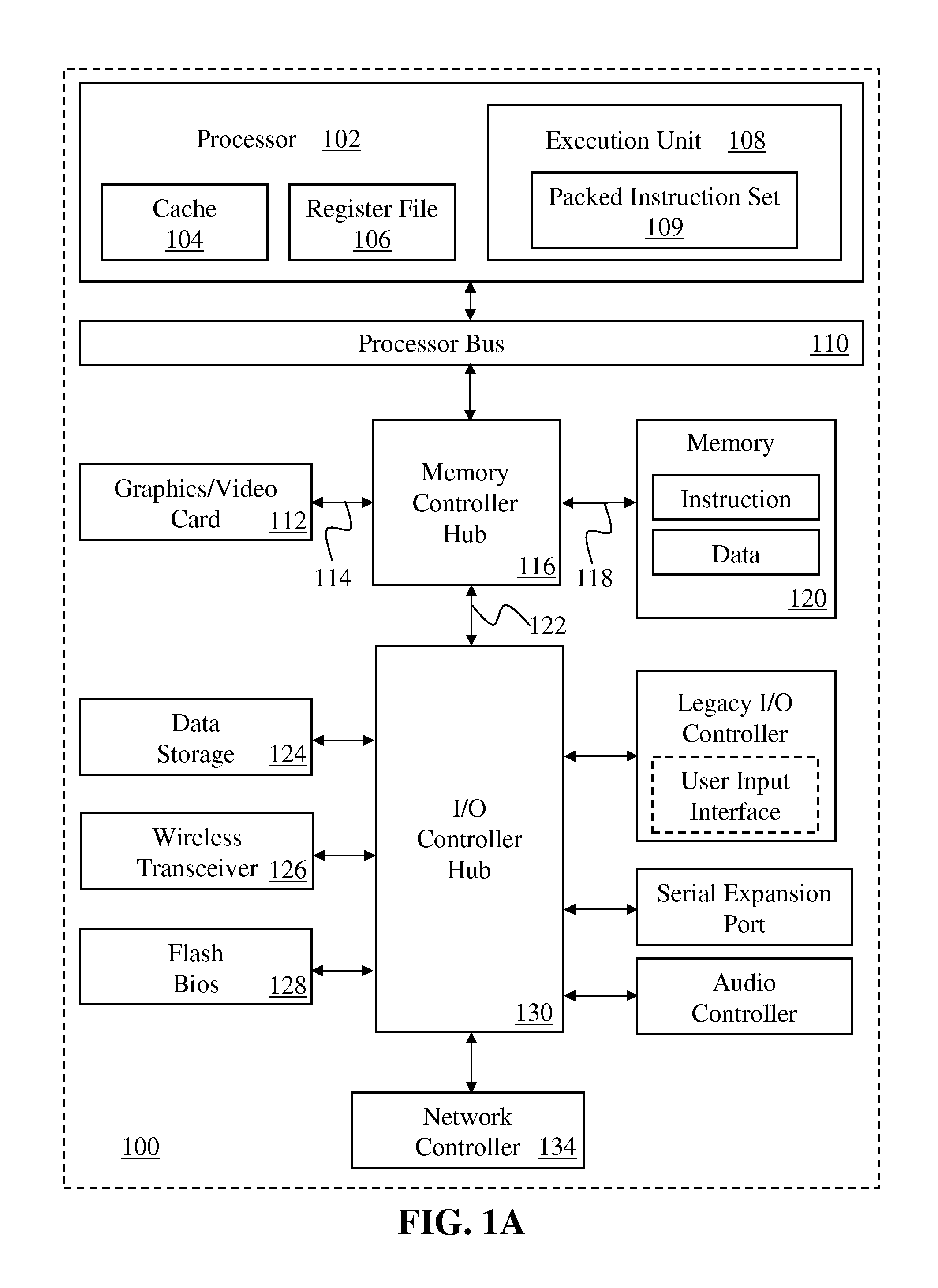

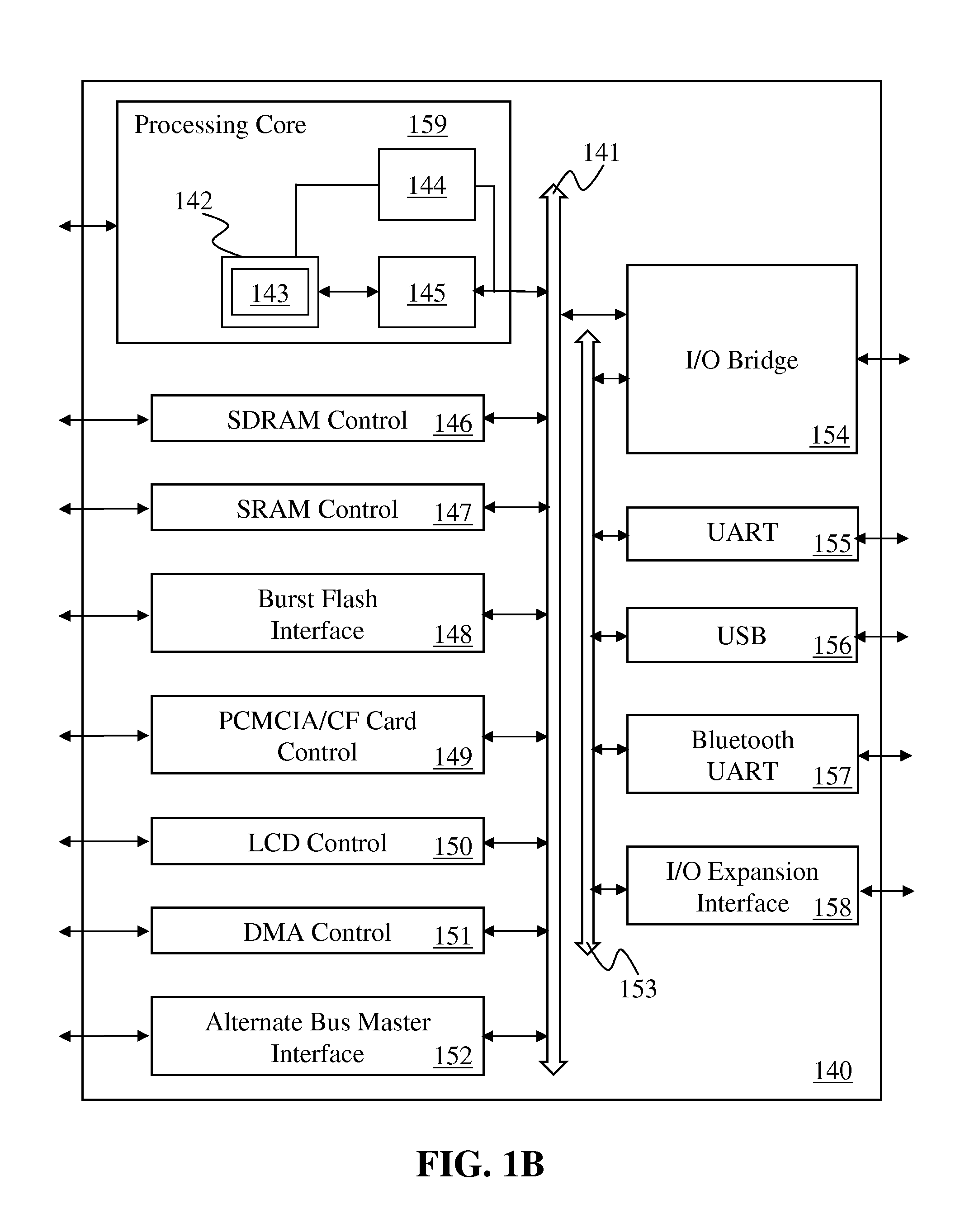

Instruction and logic to provide pushing buffer copy and store functionality

Inactive | US20140149718A1 | Tags: Memory architecture accessing/allocation; Digital computer details; Memory address; Hardware thread

Instructions and logic provide pushing buffer copy and store functionality. Some embodiments include a first hardware thread or processing core, a second hardware thread or processing core, and a cache to store cache-coherent data in a cache line for a shared memory address accessible by the second hardware thread or processing core. Responsive to decoding an instruction specifying a source data operand, said shared memory address as a destination operand, and one or more owners of said shared memory address, one or more execution units copy data from the source data operand to the cache-coherent data in the cache line for said shared memory address accessible by said second hardware thread or processing core in the cache when said one or more owners include said second hardware thread or processing core.

Owner:INTEL CORP

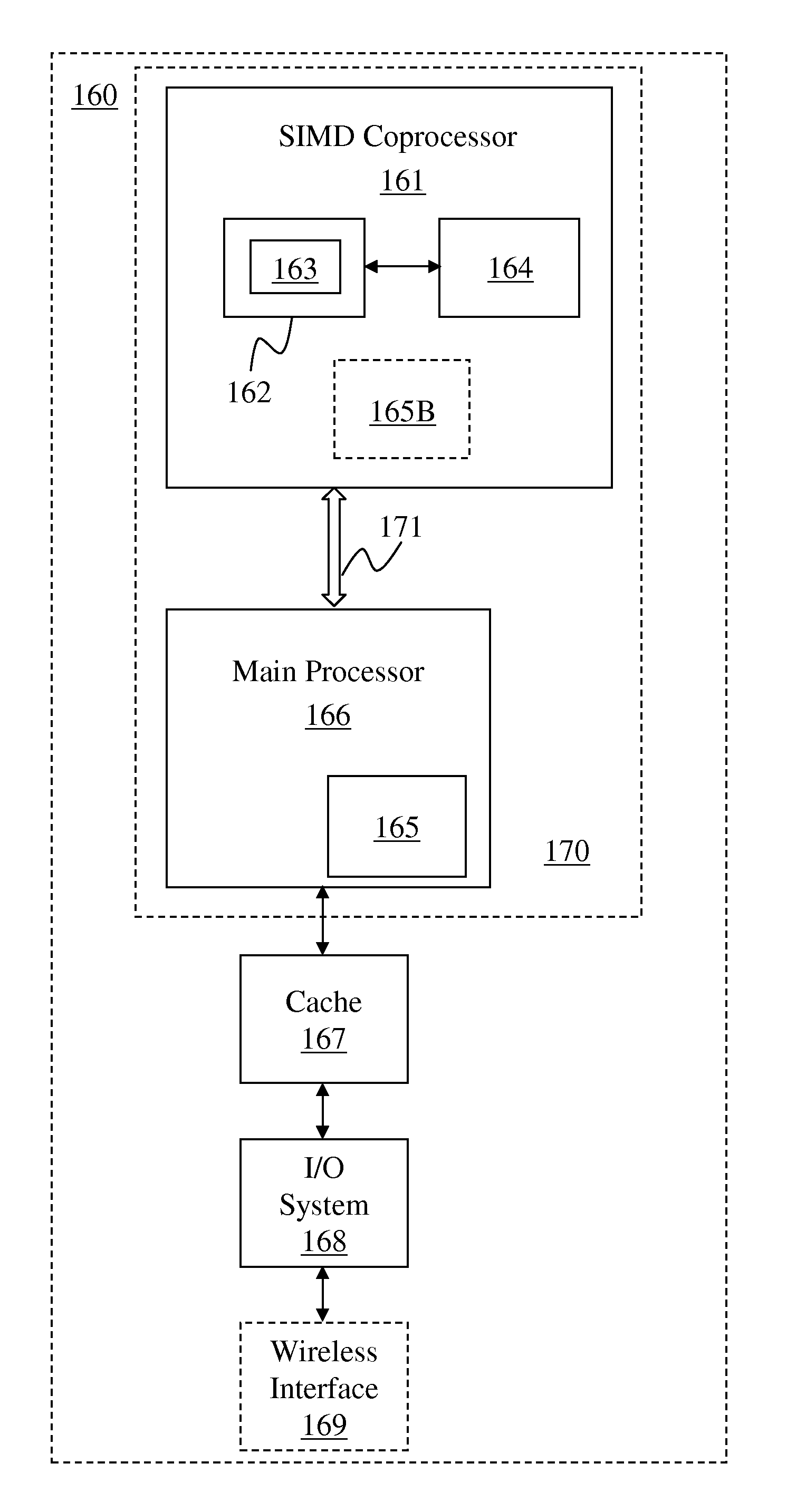

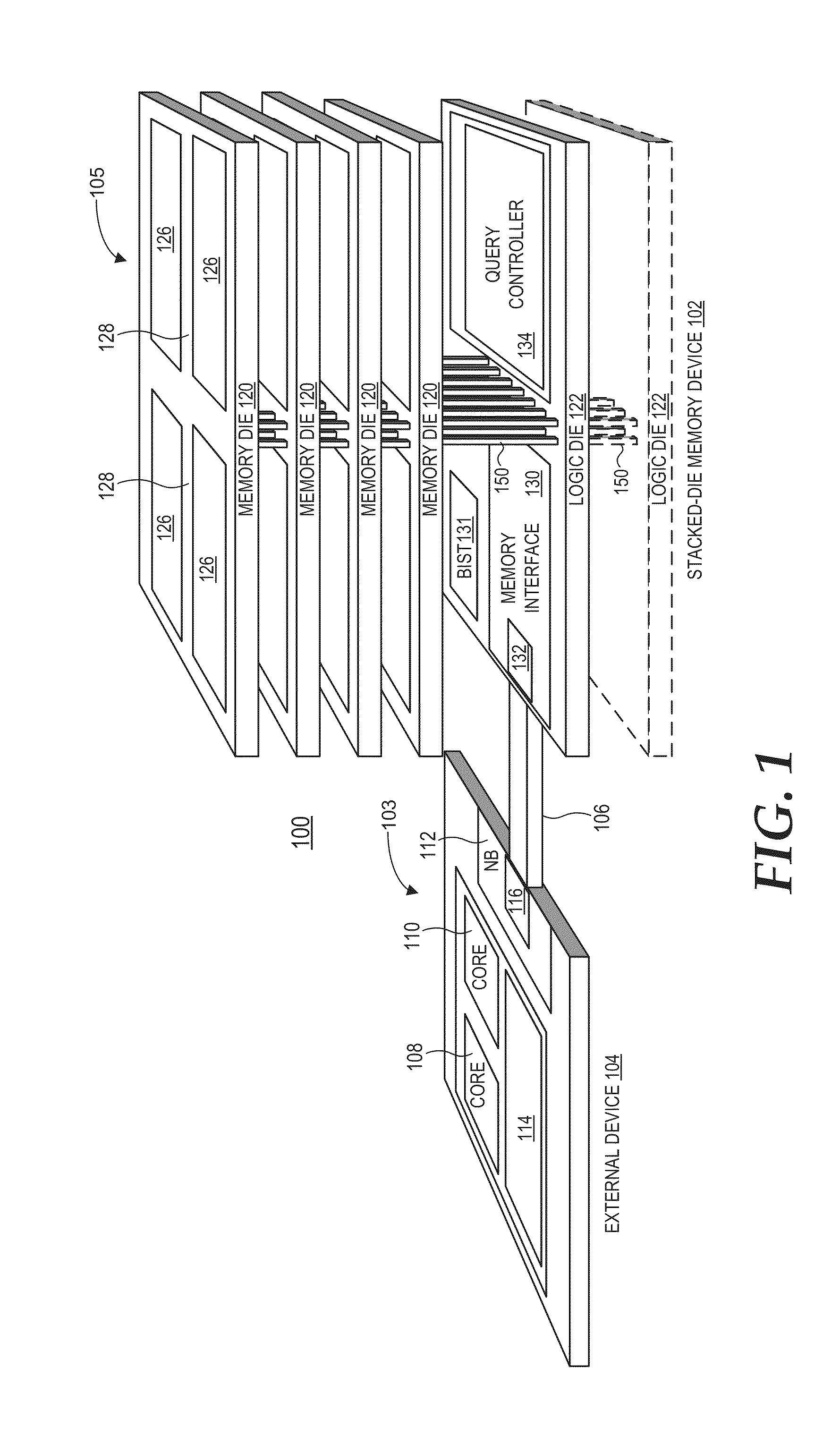

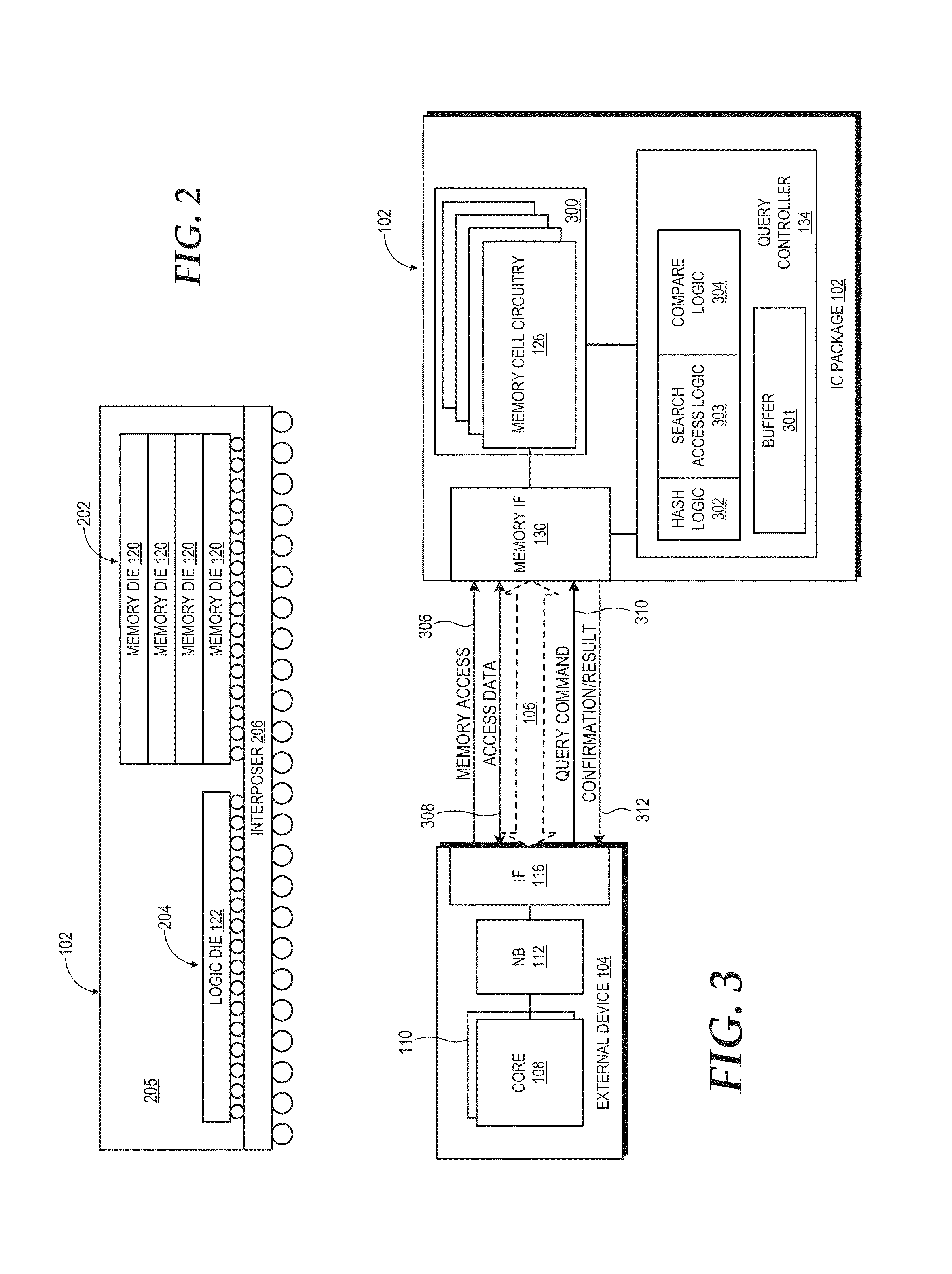

Query operations for stacked-die memory device

Active | US20150016172A1 | Tags: Solid-state devices; Memory addressing/allocation/relocation; Memory controller; Data storing

An integrated circuit (IC) package includes a stacked-die memory device. The stacked-die memory device includes a set of one or more stacked memory dies implementing memory cell circuitry. The stacked-die memory device further includes a set of one or more logic dies electrically coupled to the memory cell circuitry. The set of one or more logic dies includes a query controller and a memory controller. The memory controller is coupleable to at least one device external to the stacked-die memory device. The query controller is to perform a query operation on data stored in the memory cell circuitry responsive to a query command received from the external device.

Owner:ADVANCED MICRO DEVICES INC

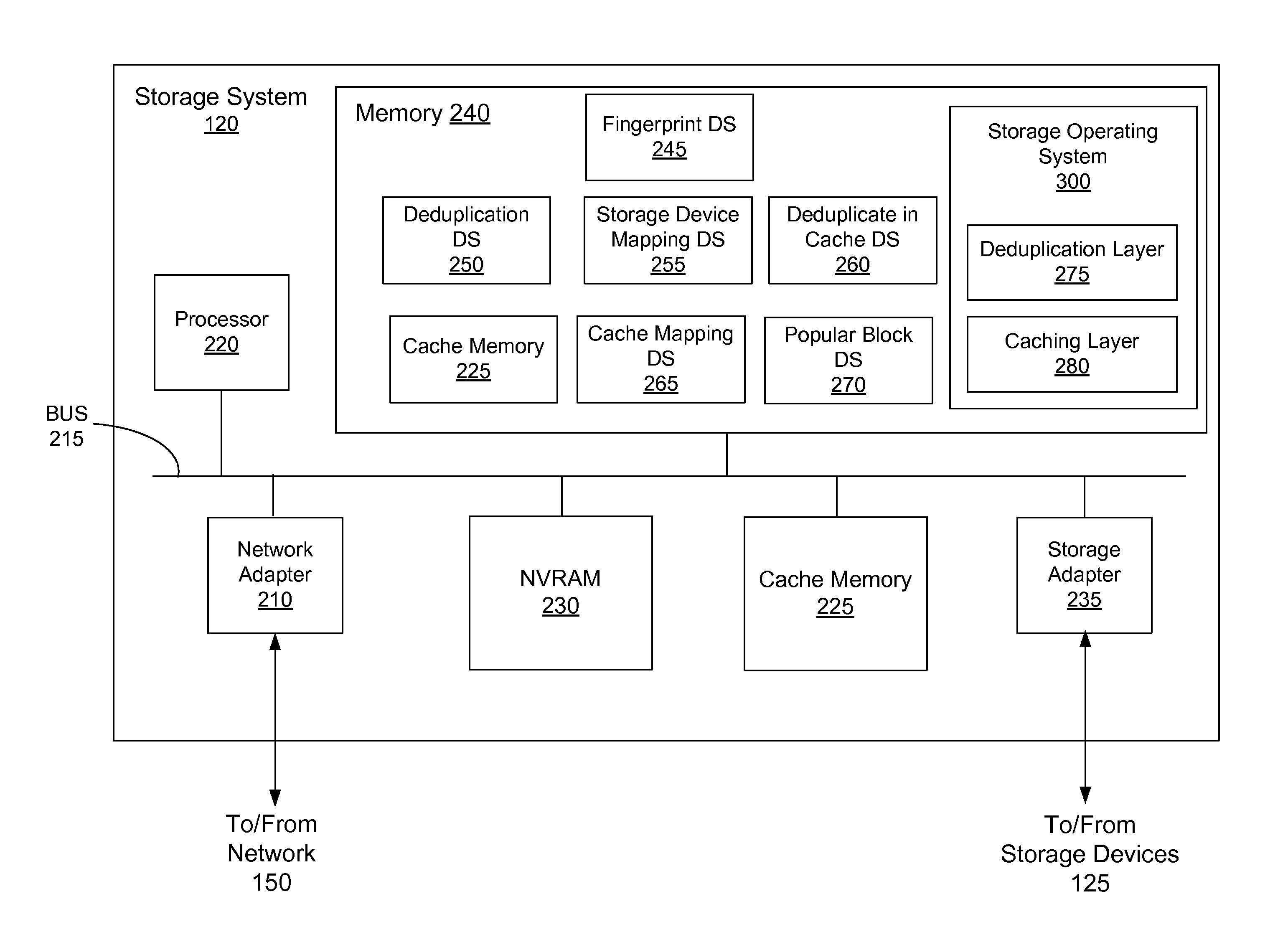

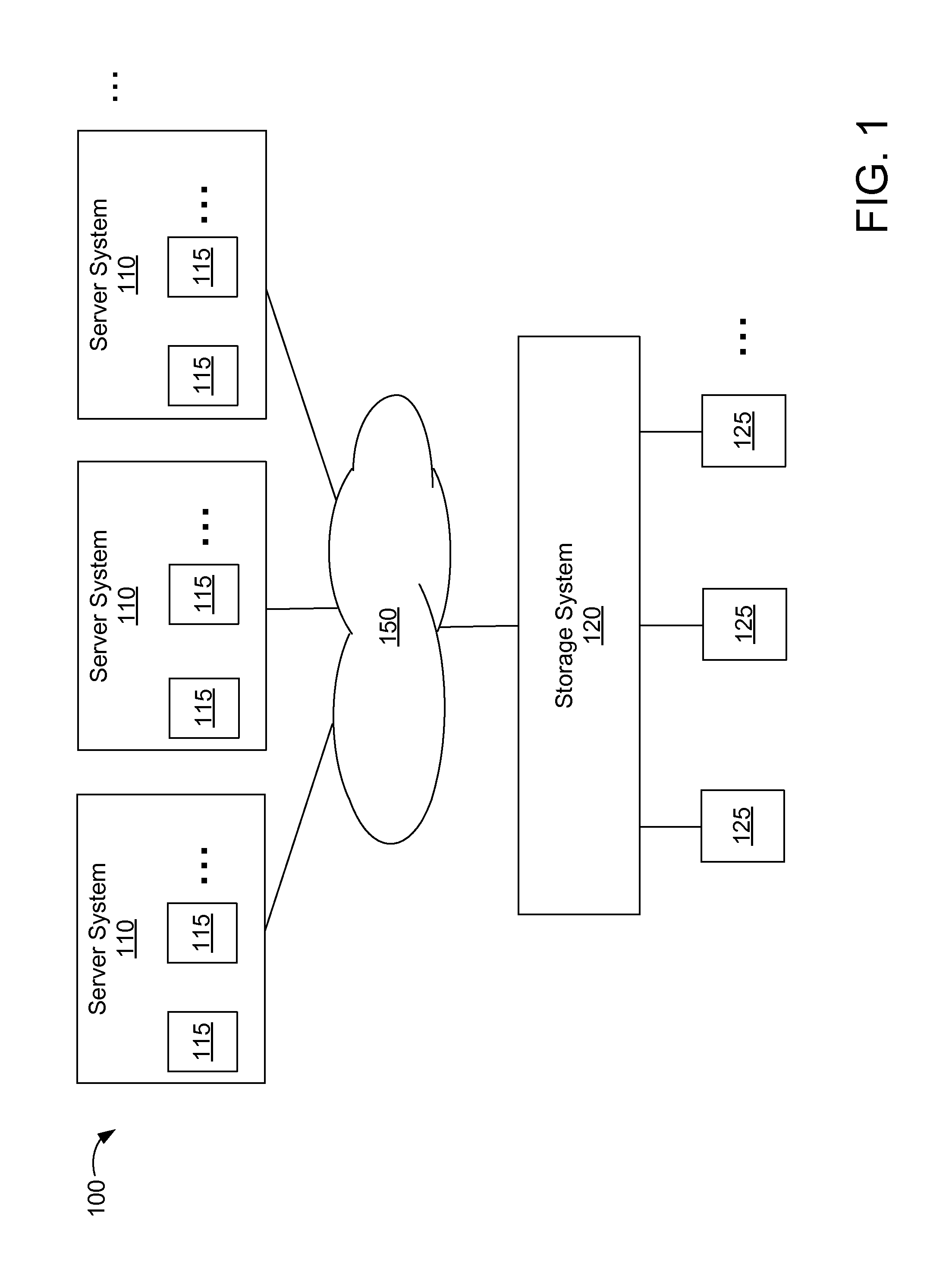

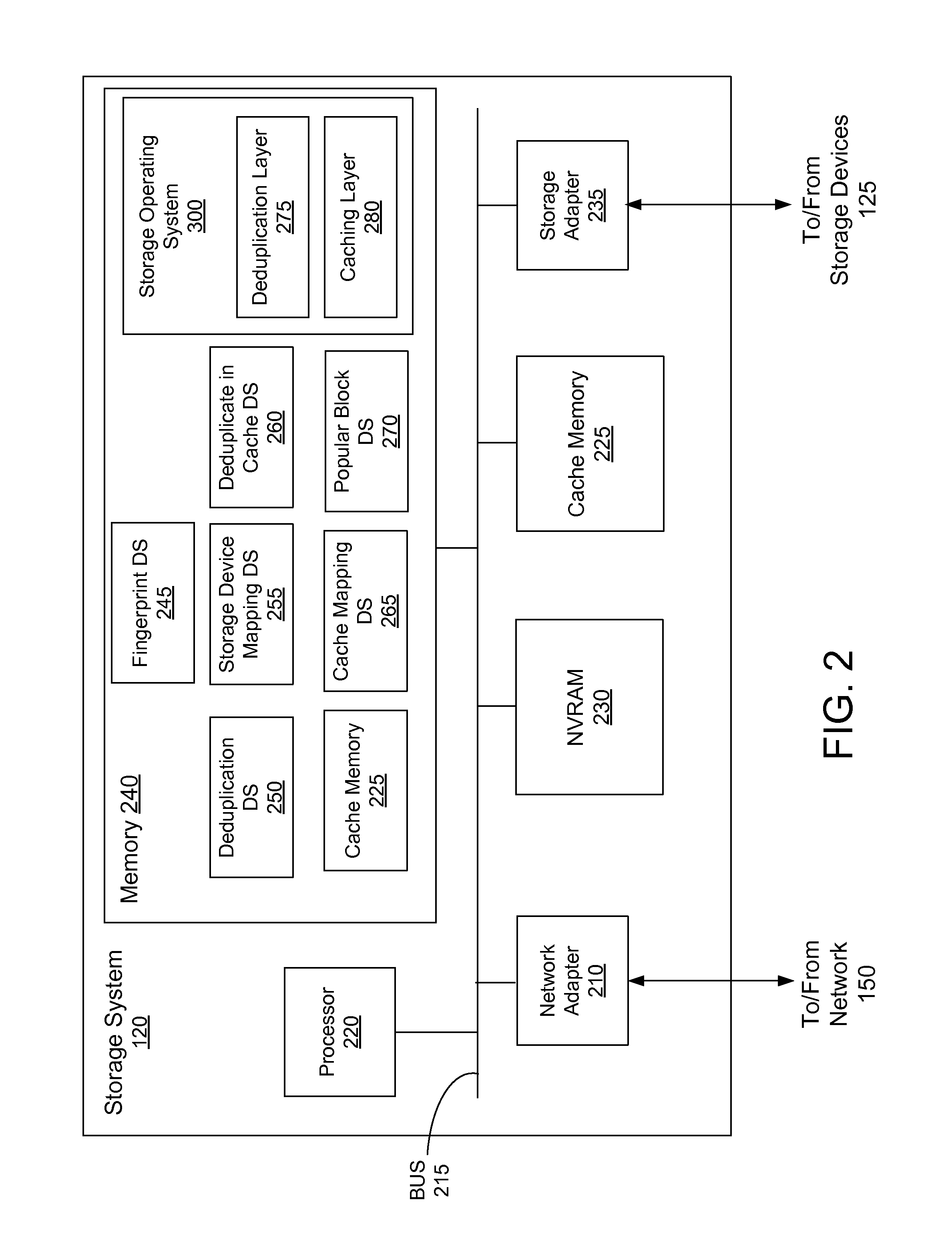

Caching and deduplication of data blocks in cache memory

Active | US8706971B1 | Tags: Save on storage; Lower read latency; Memory architecture accessing/allocation; Error detection/correction; Operational system; Computer science

A storage system comprises a cache for caching data blocks and storage devices for storing blocks. A storage operating system may deduplicate sets of redundant blocks on the storage devices based on a deduplication requirement. Blocks in cache are typically deduplicated based on the deduplication on the storage devices. Sets of redundant blocks that have not met the deduplication requirement for storage devices and have not been deduplicated on the storage devices and cache are targeted for further deduplication processing. Sets of redundant blocks may be further deduplicated based on their popularity (number of accesses) in cache. If a set of redundant blocks in cache is determined to have a combined number of accesses being greater than a predetermined threshold number of accesses, the set of redundant blocks is determined to be “popular.” Popular sets of redundant blocks are selected for deduplication in cache and the storage devices.

Owner:NETWORK APPLIANCE INC
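
The popularity test can be sketched as: group cached blocks by content, sum the access counts of each redundant set, and treat sets above a threshold as popular. This is a minimal Python illustration; it identifies duplicates by SHA-256 digest alone (a real system might also compare bytes), and the function names are hypothetical.

```python
import hashlib
from collections import defaultdict


def popular_duplicate_sets(cache_blocks, access_counts, threshold):
    """Return sets of block ids with identical content whose combined
    access count exceeds `threshold`.

    cache_blocks: block id -> bytes; access_counts: block id -> int.
    """
    by_digest = defaultdict(list)
    for block_id, data in cache_blocks.items():
        by_digest[hashlib.sha256(data).hexdigest()].append(block_id)
    popular = []
    for ids in by_digest.values():
        if len(ids) < 2:
            continue  # a single copy is not redundant
        if sum(access_counts.get(i, 0) for i in ids) > threshold:
            popular.append(sorted(ids))
    return popular


def deduplicate(cache_blocks, dup_set):
    """Keep the first block as the single copy; return a remap for the rest."""
    keeper, *others = dup_set
    for other in others:
        del cache_blocks[other]
    return {other: keeper for other in others}
```

Summing accesses across the whole redundant set, rather than per block, is what lets several moderately used copies qualify together.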

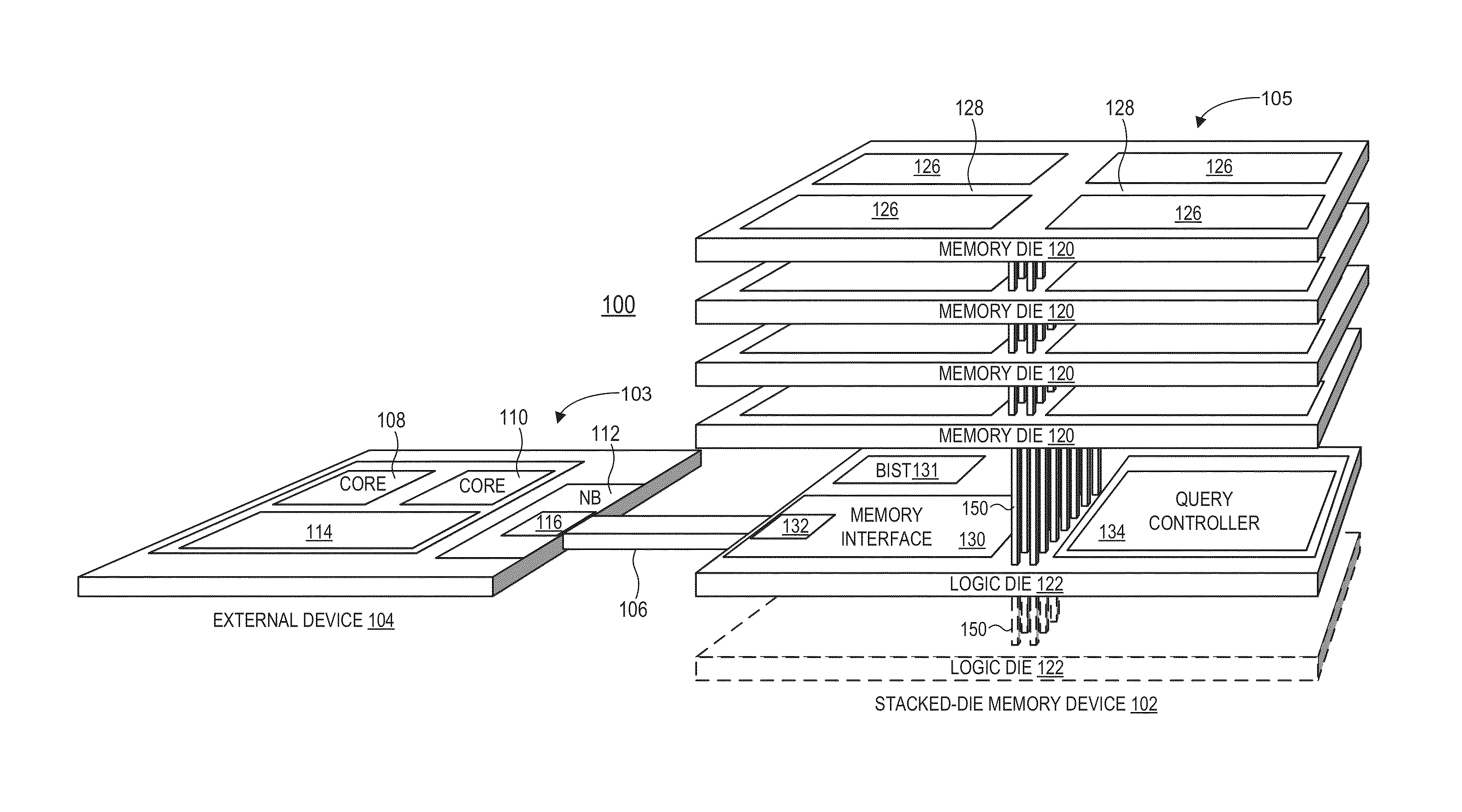

Streaming engine with stream metadata saving for context switching

Active | US20170308381A1 | Tags: Memory architecture accessing/allocation; Register arrangements; Streaming data; Digital data

A streaming engine employed in a digital data processor specifies a fixed read-only data stream defined by plural nested loops. An address generator produces the addresses of data elements. A stream head register stores the data elements next to be supplied to functional units for use as operands. Stream metadata is stored in response to a stream store instruction, and stored stream metadata is restored to the streaming engine in response to a stream restore instruction. An interrupt changes an open stream to a frozen state, discarding stored stream data. A return from interrupt changes a frozen stream to an active state.

Owner:TEXAS INSTR INC
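
Why saving metadata rather than buffered data is enough can be sketched in Python: the address of every element follows from the loop definition and a position counter, so saving those lets the stream resume after an interrupt, and discarded data can simply be refetched. The loop encoding (innermost loop first, strides in bytes) and all names are assumptions for illustration, not the device's register layout.

```python
import math
from dataclasses import dataclass, replace


@dataclass
class StreamMetadata:
    """Hypothetical saved state: stream definition plus progress."""
    base: int
    counts: tuple   # iteration count per loop, innermost first
    strides: tuple  # byte stride per loop, innermost first
    position: int   # number of elements already delivered


class StreamingEngine:
    def __init__(self):
        self.meta = None

    def open(self, base, counts, strides):
        self.meta = StreamMetadata(base, tuple(counts), tuple(strides), 0)

    def _address(self, n):
        # Decompose the linear element index into per-loop indices.
        offset = 0
        for count, stride in zip(self.meta.counts, self.meta.strides):
            n, i = divmod(n, count)
            offset += i * stride
        return self.meta.base + offset

    def next_address(self):
        if self.meta.position >= math.prod(self.meta.counts):
            raise IndexError("stream exhausted")
        addr = self._address(self.meta.position)
        self.meta.position += 1
        return addr

    def save(self):
        return replace(self.meta)  # copy, so later progress does not alter it

    def restore(self, meta):
        self.meta = replace(meta)
```

For instance, a stream opened with `counts=[3, 2]` and `strides=[4, 100]` walks offsets 0, 4, 8, 100, 104, 108 from its base, and a `save`/`restore` pair in the middle resumes at the same point.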

Efficient incremental backup and restoration of file system hierarchies with cloud object storage

Active | US20180196816A1 | Tags: Improve nature; Ensure consistency; Key distribution for secure communication; Input/output to record carriers; File system; Semantics

Techniques described herein relate to systems and methods of data storage, and more particularly to providing layering of file system functionality on an object interface. In certain embodiments, file system functionality may be layered on cloud object interfaces to provide cloud-based storage while allowing for functionality expected by legacy applications. For instance, POSIX interfaces and semantics may be layered on cloud-based storage while providing access to data in a manner consistent with file-based access, with data organized in name hierarchies. Various embodiments also may provide for memory mapping of data so that memory map changes are reflected in persistent storage, while ensuring consistency between memory map changes and writes. For example, by transforming ZFS disk-based storage into ZFS cloud-based storage, the ZFS file system gains the elastic nature of cloud storage.

Owner:ORACLE INT CORP

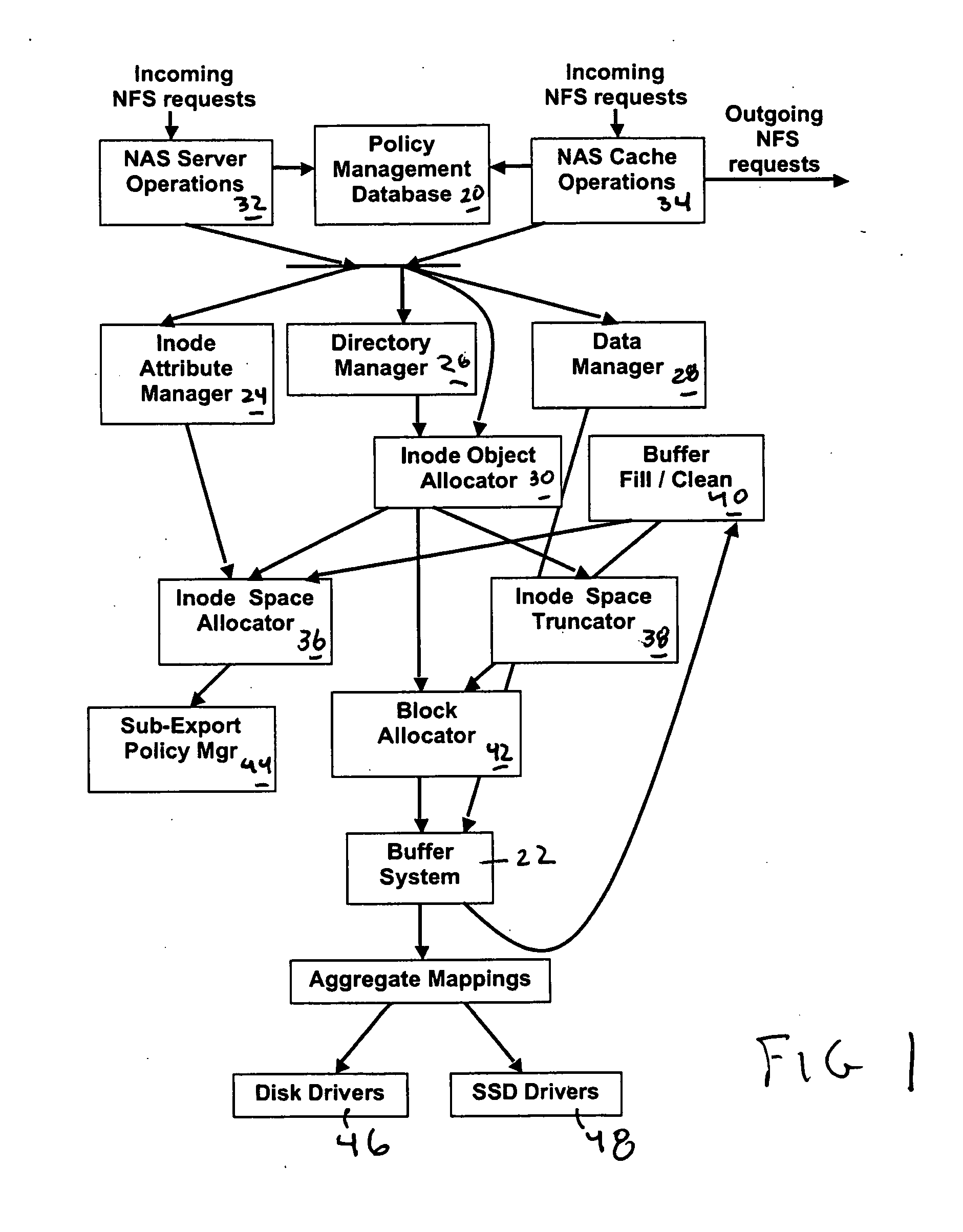

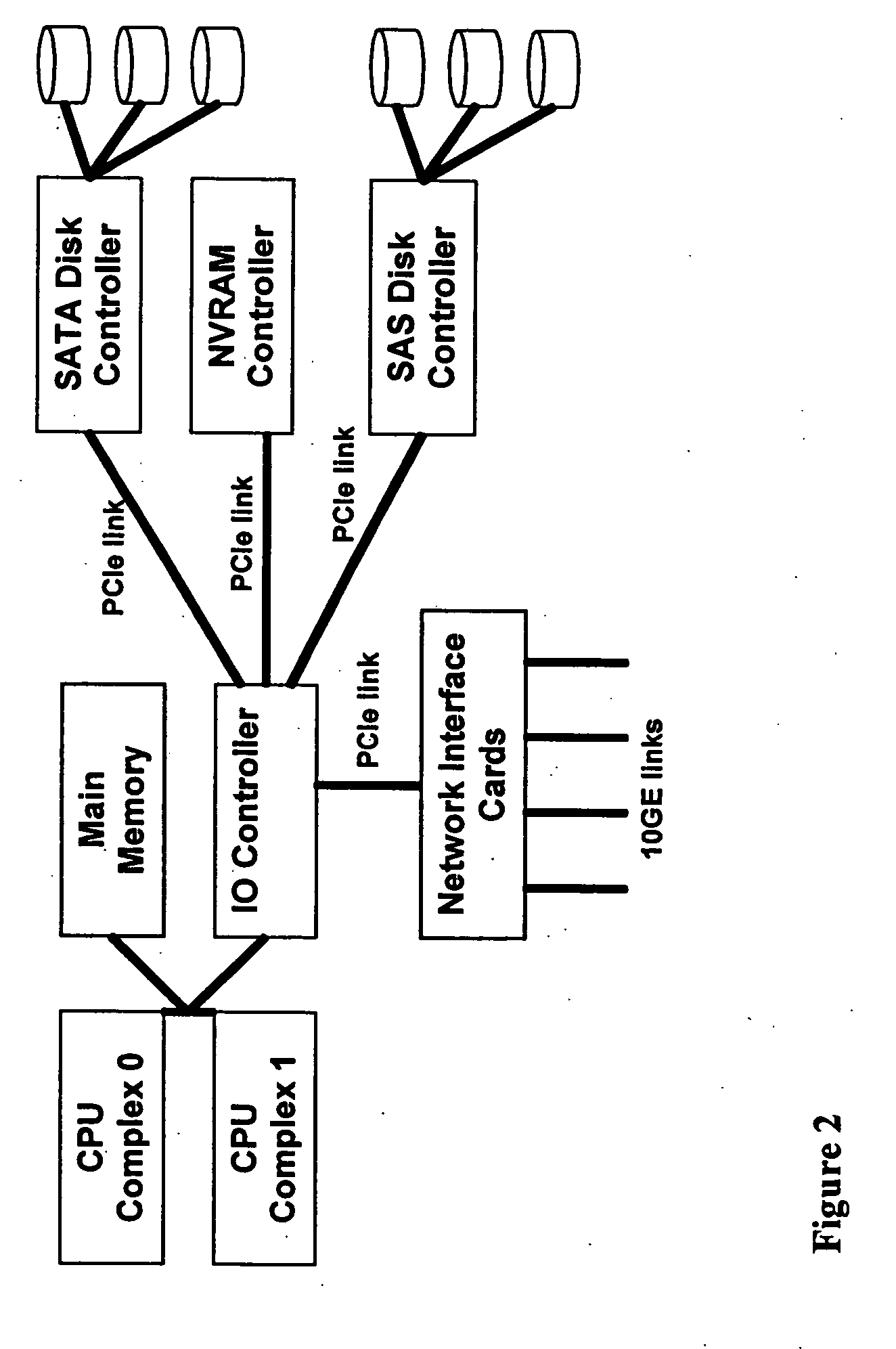

Method and apparatus for tiered storage

Active | US20110246491A1 | Tags: Memory architecture accessing/allocation; Digital data processing details; Network interface

A system for storing file data and directory data received over a network includes a network interface, in communication with the network, which receives NAS requests from the network containing data to be written to files. The system includes a first type of storage and a second type of storage different from the first. The system includes a policy specification which specifies that a first portion of one or more files' data, less than all of the files' data, is stored in the first type of storage and that a second portion of the data, also less than all of the files' data, is stored in the second type of storage. The system comprises a processing unit which executes the policy and causes the first portion to be stored in the first type of storage and the second portion to be stored in the second type of storage. A method for storing file data and directory data received over a network is also disclosed.

Owner:MICROSOFT TECH LICENSING LLC
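
One simple policy of this kind splits each file at a fixed byte offset, keeping the first portion on one storage type and the rest on the other. The Python sketch below routes a single write request under that assumed policy; the boundary-based policy and the `route_write` name are hypothetical, and the abstract does not say how its portions are chosen.

```python
def route_write(offset: int, data: bytes, boundary: int):
    """Split one write into (tier, file_offset, bytes) pieces.

    Bytes before `boundary` go to the first storage type ("tier1");
    bytes at or after it go to the second storage type ("tier2").
    """
    end = offset + len(data)
    pieces = []
    if offset < boundary and end > offset:
        cut = min(end, boundary)
        pieces.append(("tier1", offset, data[: cut - offset]))
    if end > boundary:
        start = max(offset, boundary)
        pieces.append(("tier2", start, data[start - offset :]))
    return pieces
```

A write that straddles the boundary, such as 6 bytes at offset 10 with a boundary of 12, yields two pieces: 2 bytes for the first tier and 4 bytes for the second.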

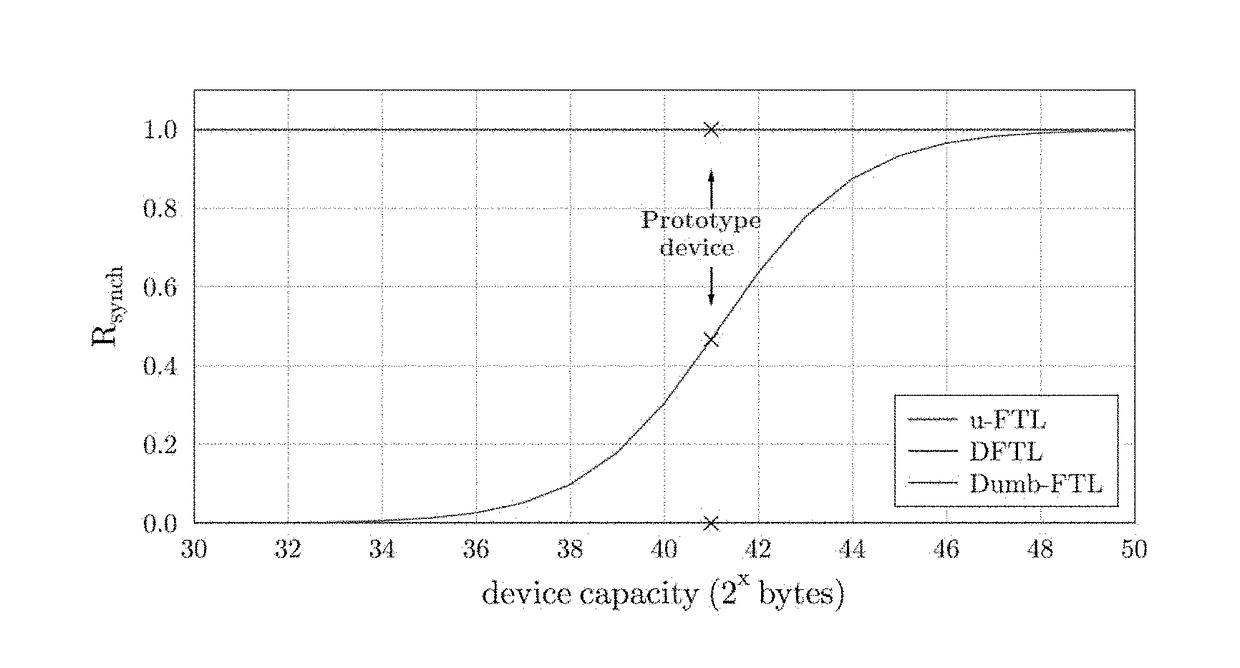

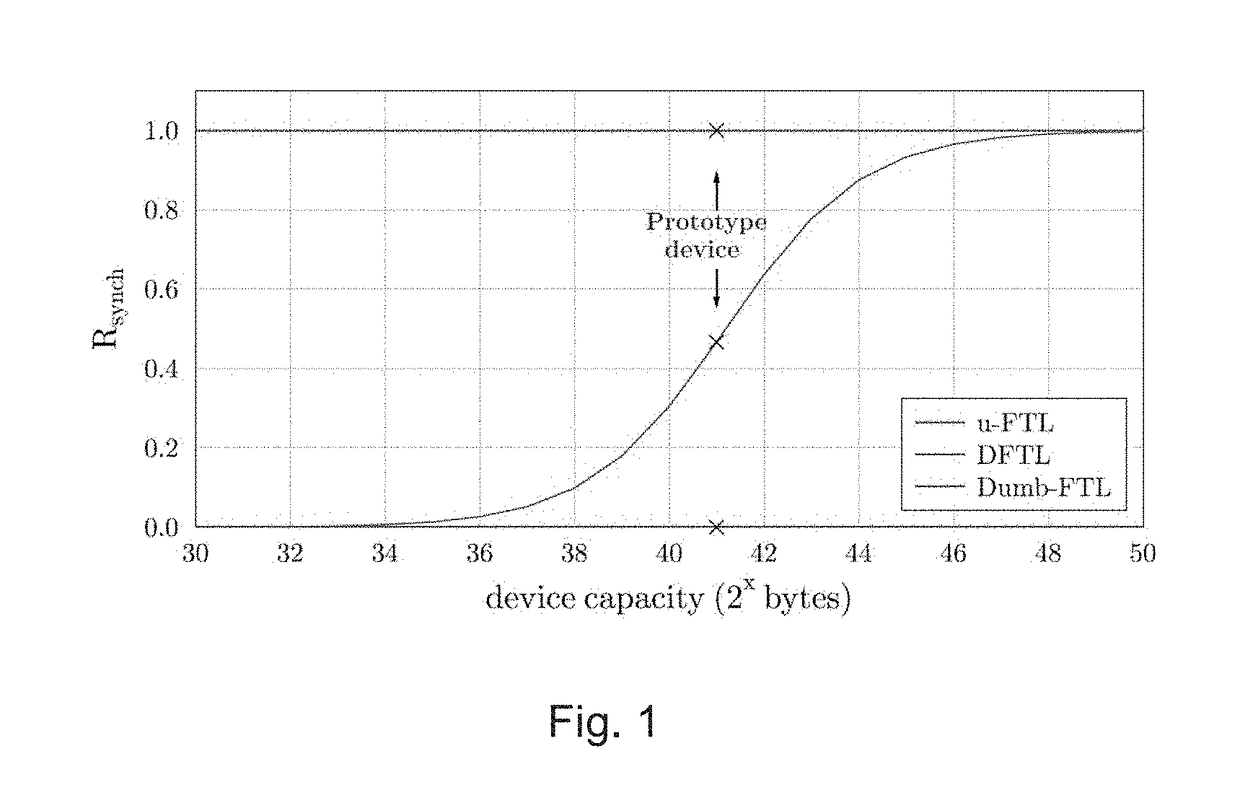

Solid-state storage device flash translation layer

Inactive | US20170249257A1 | Tags: Double the device's lifetime and throughput; Write amplification is very low; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Solid-state storage; Random access memory

Embodiments of the present invention include a method for storing a data page d on a solid-state storage device, wherein the solid-state storage device is configured to maintain a mapping table in a Log-Structured Merge (LSM) tree having a C0 component which is a random access memory (RAM) device and a C1 component which is a flash-based memory device. Methods comprise: writing the data page d at a physical storage page having physical storage page address P in the storage device in response to receiving a write request to store the data page d at a logical storage page having a logical storage page address L; caching a new mapping entry e(L,P) associating the logical storage page address L with the physical storage page address P; providing an update indication for the cached new mapping entry to indicate that the cached new mapping entry shall be inserted in the C1 component; and evicting the new mapping entry from the cache. A corresponding solid-state storage device is also provided.

Owner:ITU BUSINESS DEV AS
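
The write path can be sketched in Python: write the page out of place, cache the new mapping e(L,P) with an update indication, and insert flagged entries into C1 only when they are evicted. In this toy model the mapping cache is an insertion-ordered dict with oldest-first eviction, C1 is a plain dict standing in for the flash-resident component (no LSM merging is modeled), and all names are hypothetical.

```python
class ToyFTL:
    """Hypothetical FTL with a small mapping cache in front of a C1 component."""

    def __init__(self, cache_capacity: int):
        self.capacity = cache_capacity
        self.next_free_page = 0
        self.cache = {}  # L -> (P, needs_c1_insert), insertion-ordered
        self.c1 = {}     # stand-in for the flash-resident mapping component
        self.flash = {}  # physical page -> data

    def write(self, logical: int, data: bytes) -> int:
        physical = self.next_free_page  # out-of-place write to a fresh page
        self.next_free_page += 1
        self.flash[physical] = data
        self.cache.pop(logical, None)   # re-insert so the entry counts as newest
        self.cache[logical] = (physical, True)  # e(L, P) with update indication
        self._evict_if_full()
        return physical

    def read(self, logical: int) -> bytes:
        if logical in self.cache:
            physical = self.cache[logical][0]
        else:
            physical = self.c1[logical]
            self.cache[logical] = (physical, False)  # clean: already in C1
            self._evict_if_full()
        return self.flash[physical]

    def _evict_if_full(self) -> None:
        while len(self.cache) > self.capacity:
            logical = next(iter(self.cache))  # oldest entry
            physical, needs_insert = self.cache.pop(logical)
            if needs_insert:
                self.c1[logical] = physical
```

The update indication is what separates new mappings from ones merely loaded for a read: only flagged entries cost a C1 insertion on eviction.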

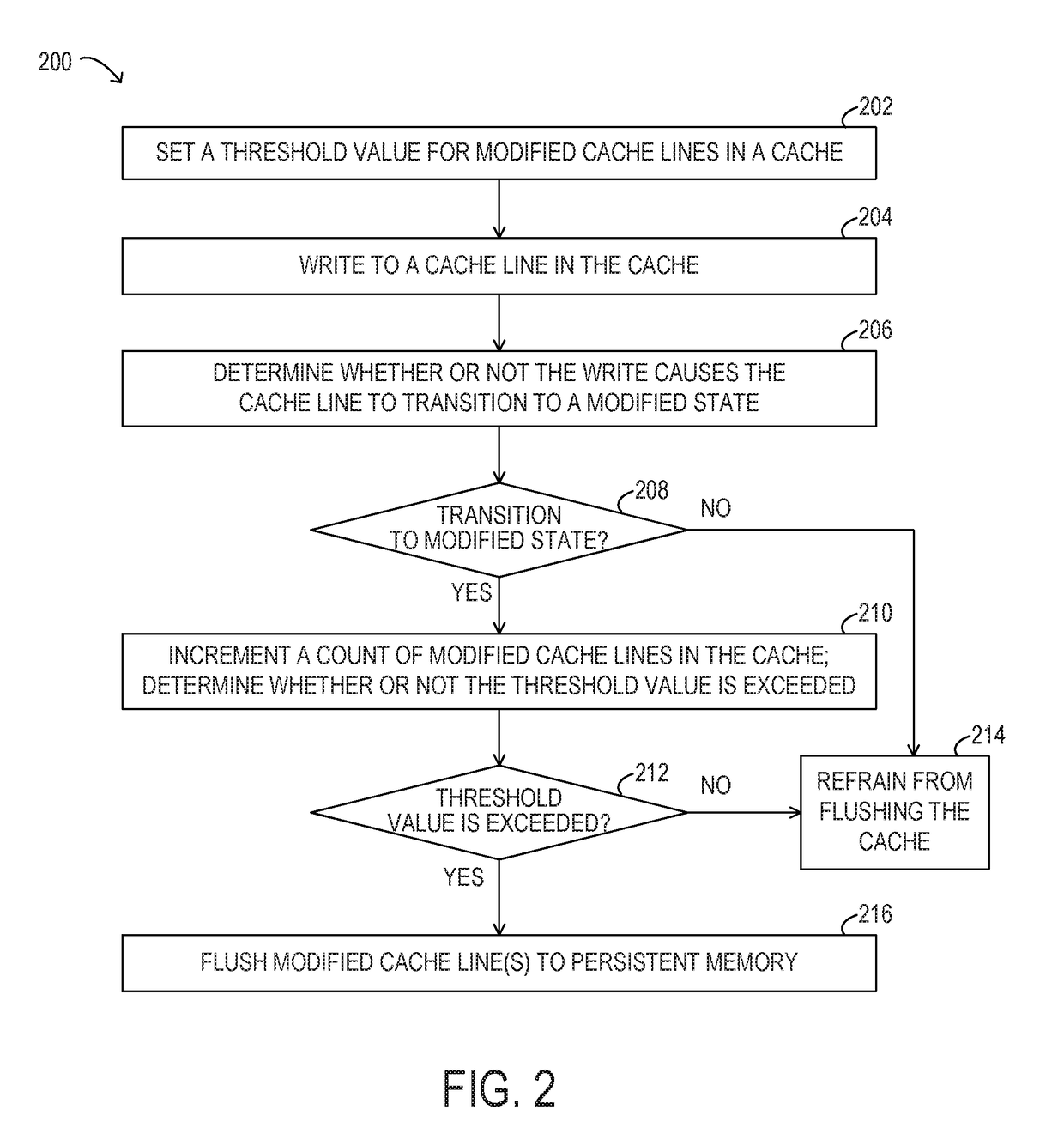

System and method for controlling cache flush size

Active | US20180032439A1 | Tags: Increase the number of; Memory architecture accessing/allocation; Cache memory details; Parallel computing; Handling system

An information handling system may implement a method for controlling cache flush size by limiting the amount of modified cached data in a data cache at any given time. The method may include keeping a count of the number of modified cache lines (or modified cache lines targeted to persistent memory) in the cache, determining that a threshold value for modified cache lines is exceeded and, in response, flushing some or all modified cache lines to persistent memory. The threshold value may represent a maximum number or percentage of modified cache lines. The cache controller may include a field for each cache line indicating whether it targets persistent memory. Limiting the amount of modified cached data at any given time may reduce the number of cache lines to be flushed in response to a power loss event to a number that can be flushed using the available hold-up energy.

Owner:DELL PROD LP
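
The counting-and-threshold scheme can be sketched in Python. This toy write-back cache records, per line, whether it is modified and whether it targets persistent memory, and flushes all such lines once their count exceeds the threshold; the abstract allows flushing "some or all", so flushing all is a simplifying choice here, and the class name is hypothetical.

```python
class WriteBackCache:
    """Hypothetical cache that caps modified lines targeting persistent memory."""

    def __init__(self, max_dirty_pmem_lines: int, backing_store: dict):
        self.max_dirty = max_dirty_pmem_lines
        self.store = backing_store  # stands in for persistent memory
        self.lines = {}             # address -> (data, modified, targets_pmem)
        self.dirty_pmem = 0         # modified lines that target persistent memory

    def write(self, addr: int, data: bytes, targets_pmem: bool = True) -> None:
        _, was_modified, was_pmem = self.lines.get(addr, (None, False, False))
        if was_modified and was_pmem:
            self.dirty_pmem -= 1    # line is being overwritten; recount below
        self.lines[addr] = (data, True, targets_pmem)
        if targets_pmem:
            self.dirty_pmem += 1
        if self.dirty_pmem > self.max_dirty:
            self.flush_pmem()

    def flush_pmem(self) -> None:
        """Write every modified persistent-memory line back and mark it clean."""
        for addr, (data, modified, pmem) in list(self.lines.items()):
            if modified and pmem:
                self.store[addr] = data
                self.lines[addr] = (data, False, pmem)
        self.dirty_pmem = 0
```

Because the count never stays above `max_dirty_pmem_lines`, the worst-case flush on power loss is bounded by that value, which is the property the abstract ties to the available hold-up energy.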

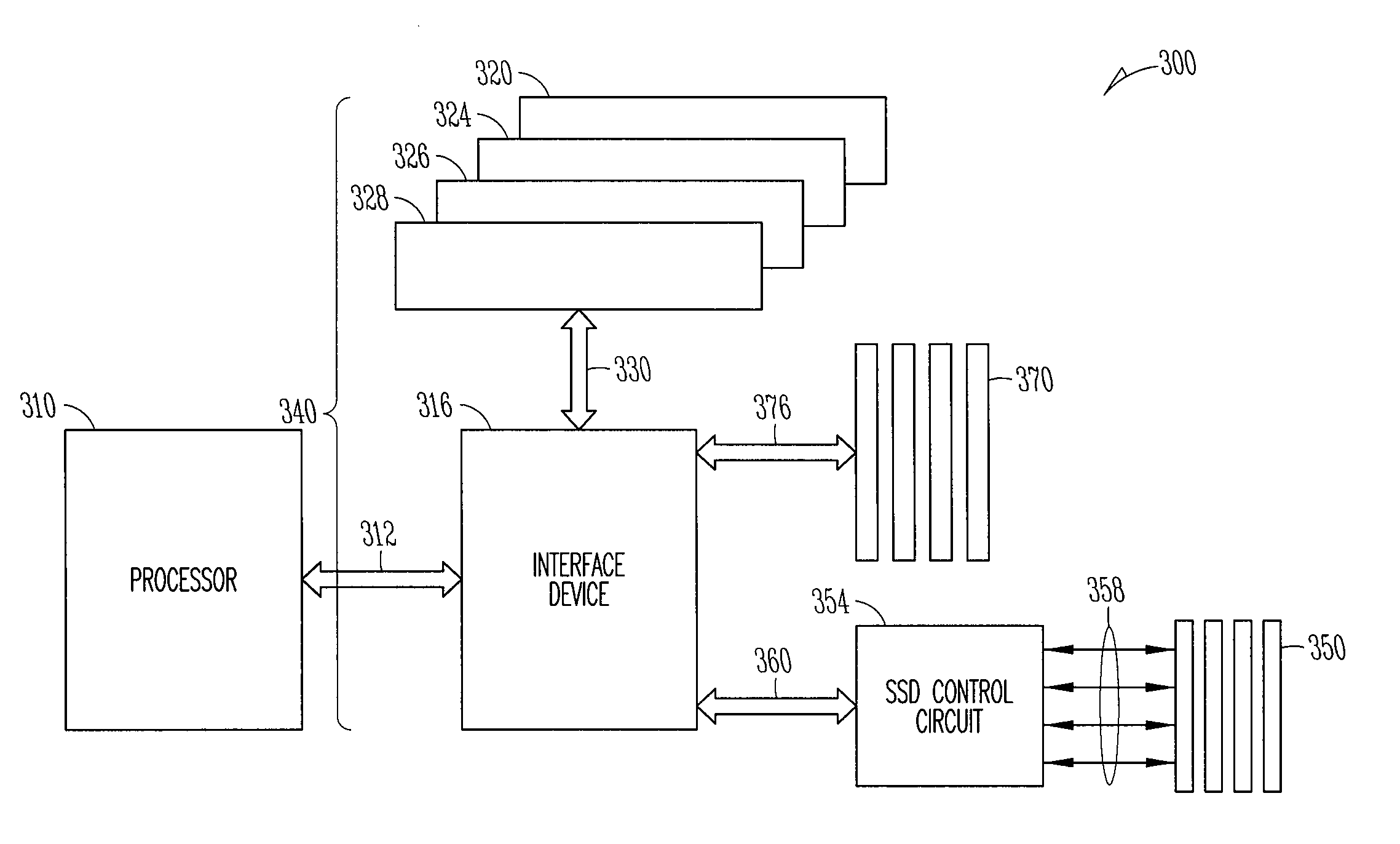

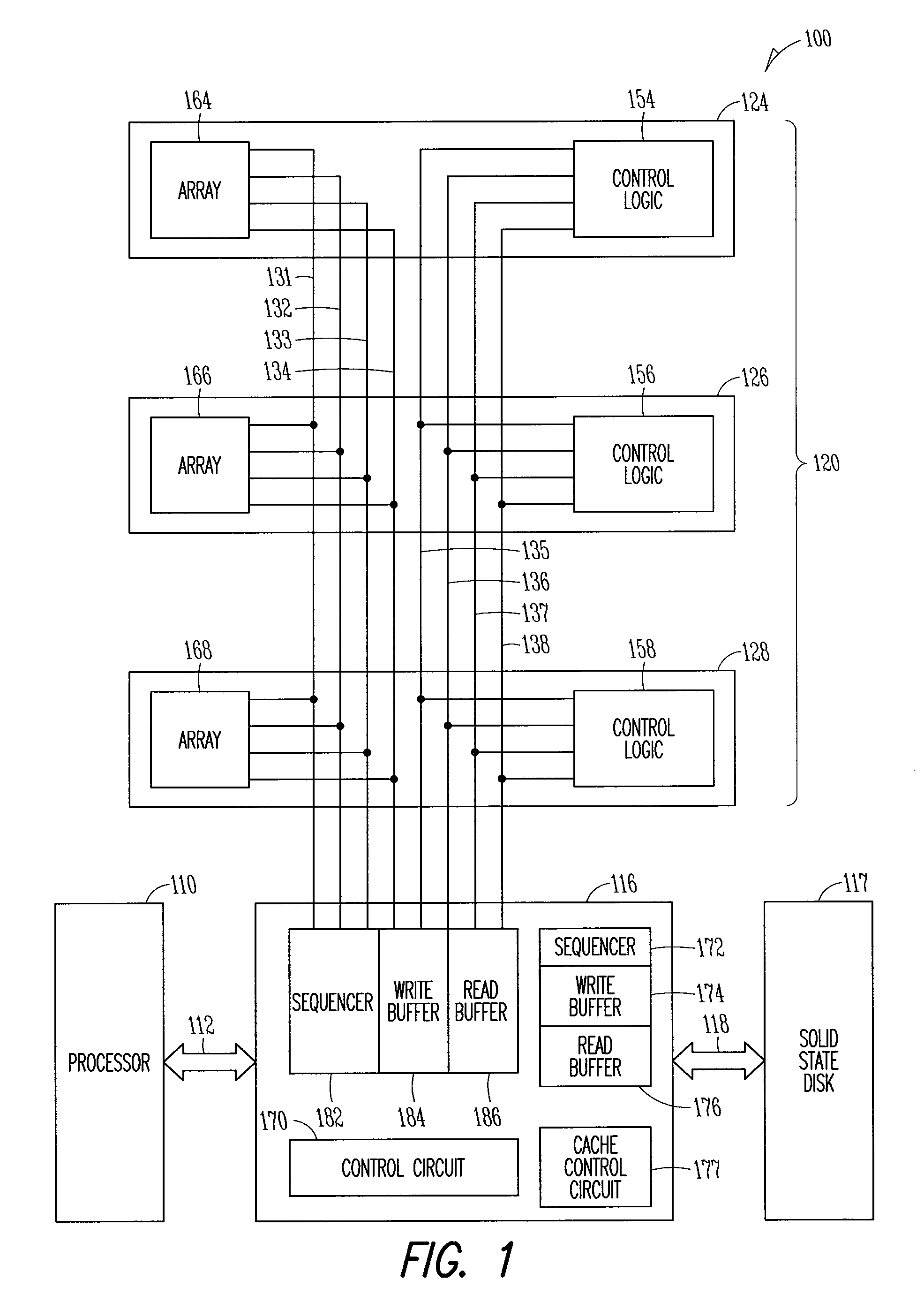

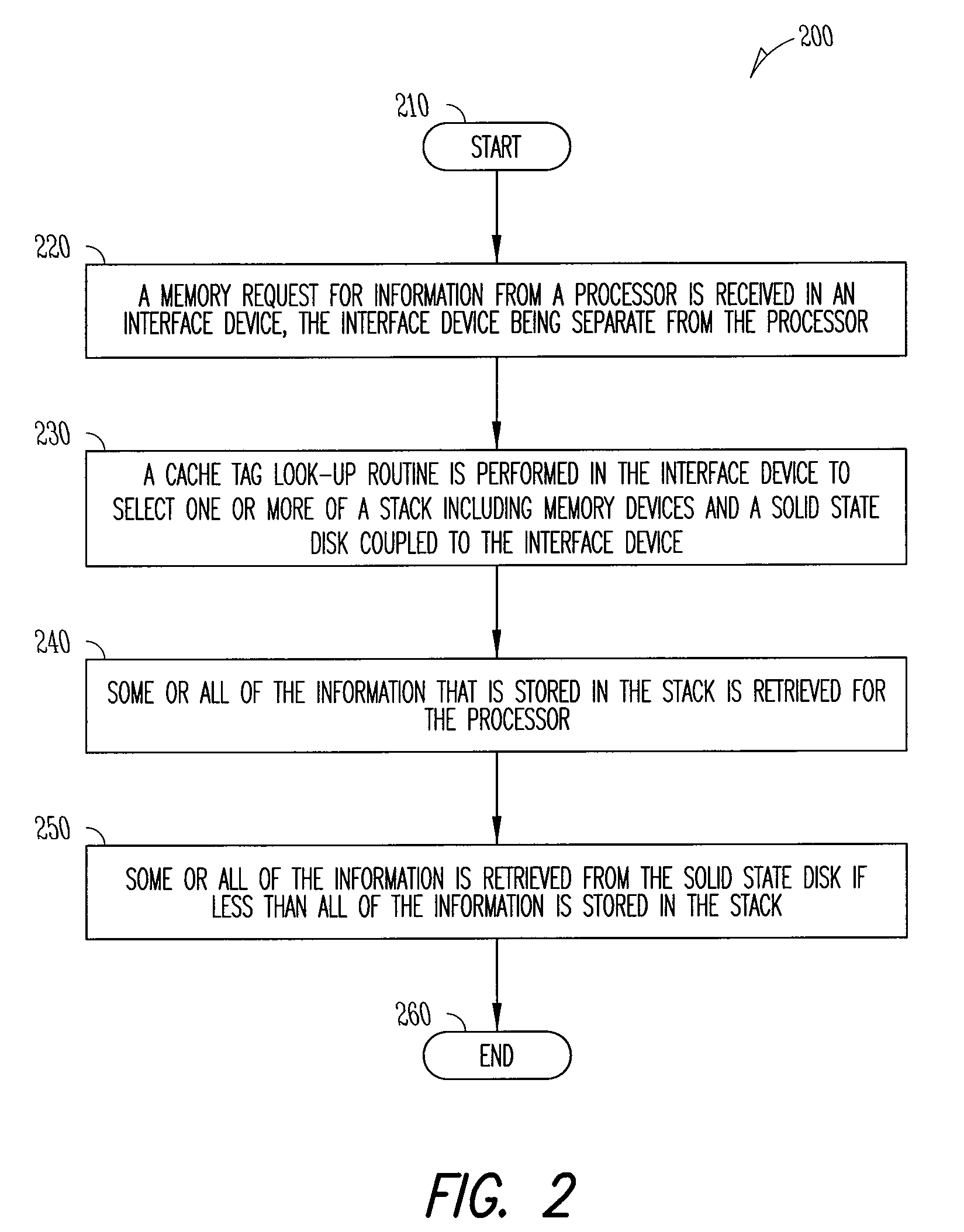

Interface device for memory in a stack, storage devices and a processor

Active | US8281074B2 | Tags: Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Computer hardware; Request for information

Owner:LODESTAR LICENSING GRP LLC

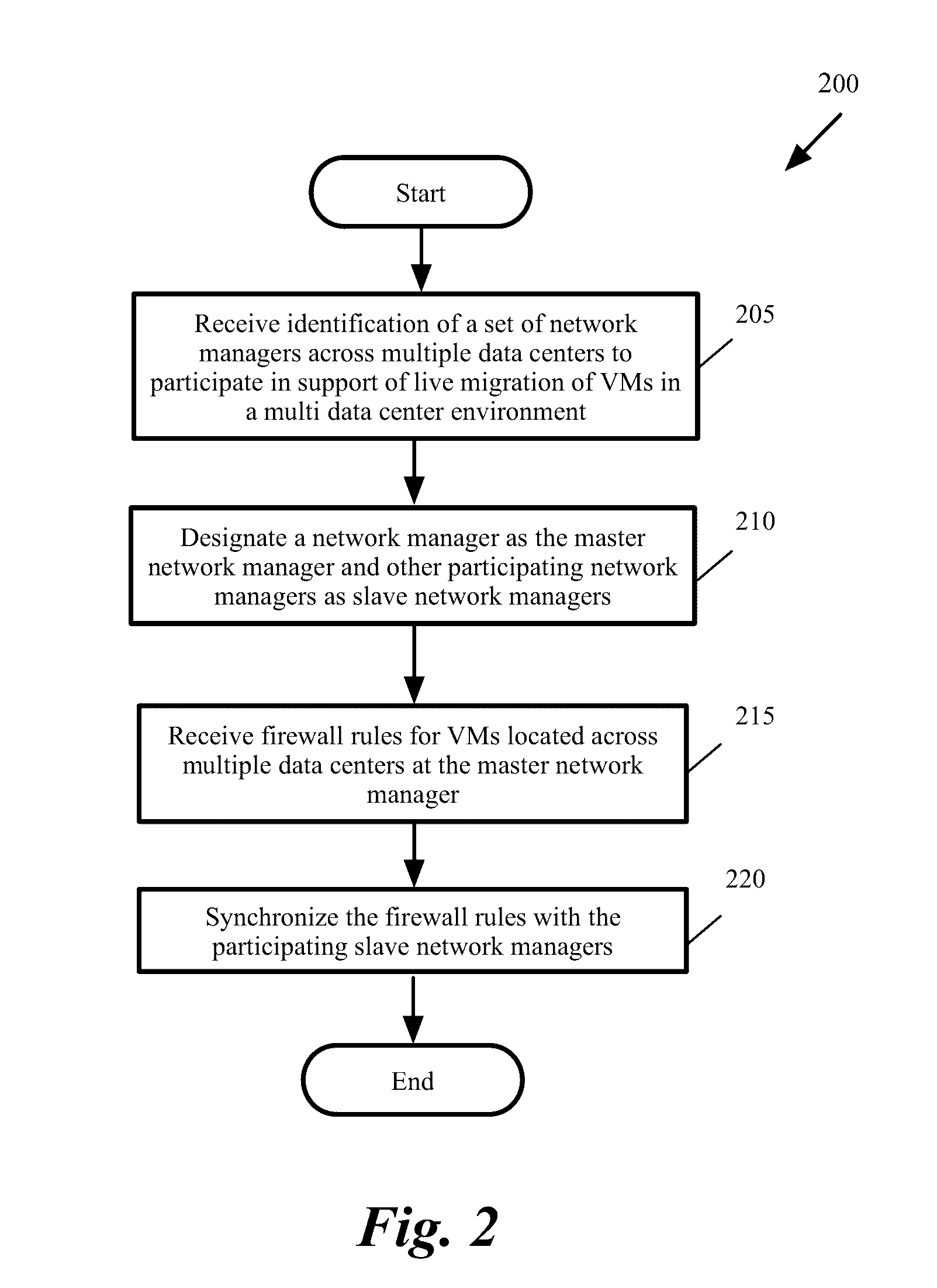

Federated firewall management for moving workload across data centers

Active | US20170005987A1 | Tags: Memory architecture accessing/allocation; Database management systems; Unique identifier; Engineering

A method of providing firewall support for moving a data compute node (DCN) across data centers is provided. The method receives a set of global firewall rules to enforce across multiple data centers. The set of global firewall rules utilizes unique identifiers that are recognized by the network manager of each data center. The method distributes the specified set of global firewall rules to the network managers. The method receives an indication that a DCN operating on a first host in a first data center is migrating to a second host operating in a second data center, and sends a set of firewall session states to the network manager of the second data center. The method then receives an indication that the DCN on the second host has started, and enforces firewall rules for the DCN on the second host by using the firewall session states and the global firewall rules.

Owner:NICIRA

© 2025 PatSnap. All rights reserved.