35 results about "Significant latency" patented technology

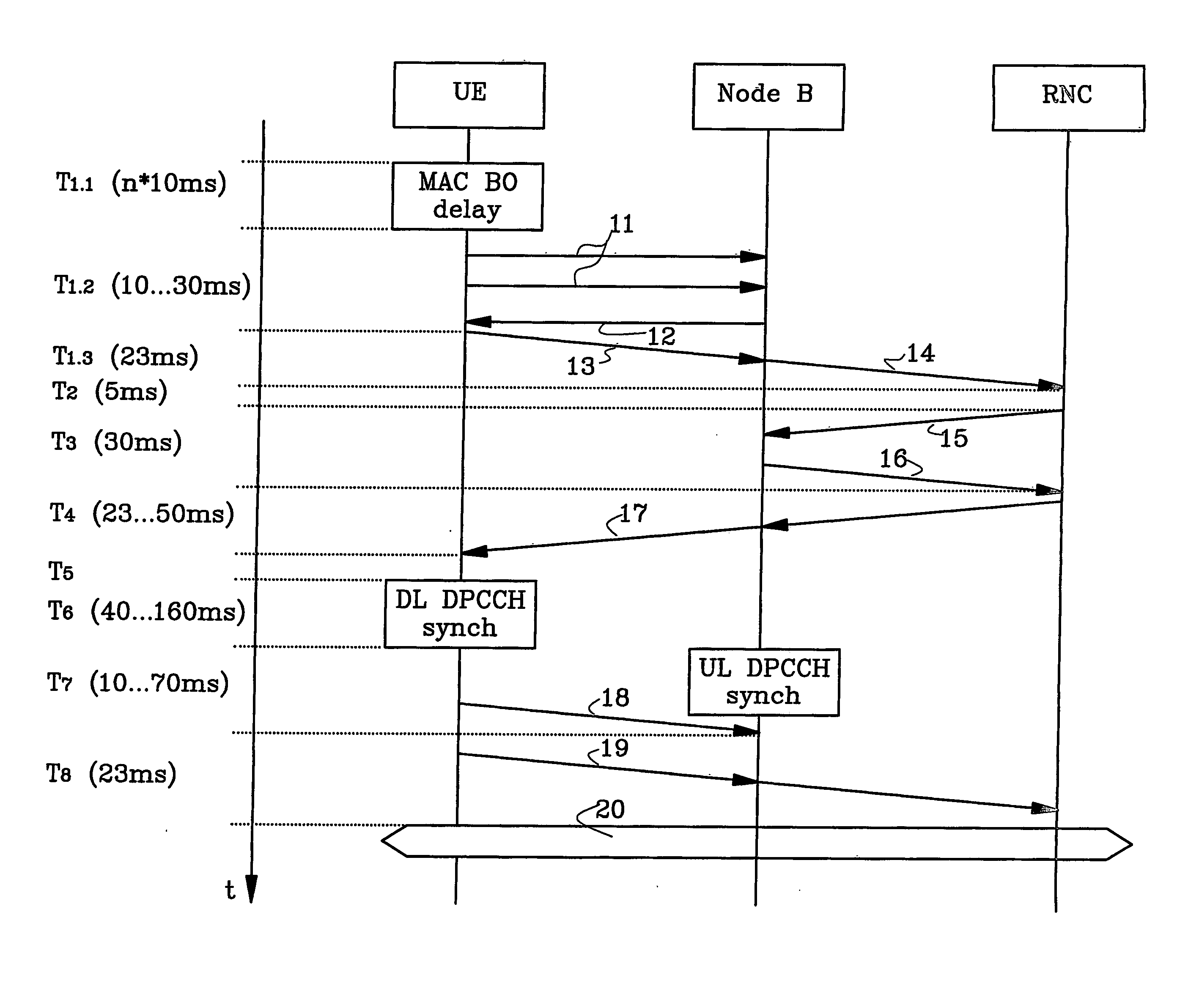

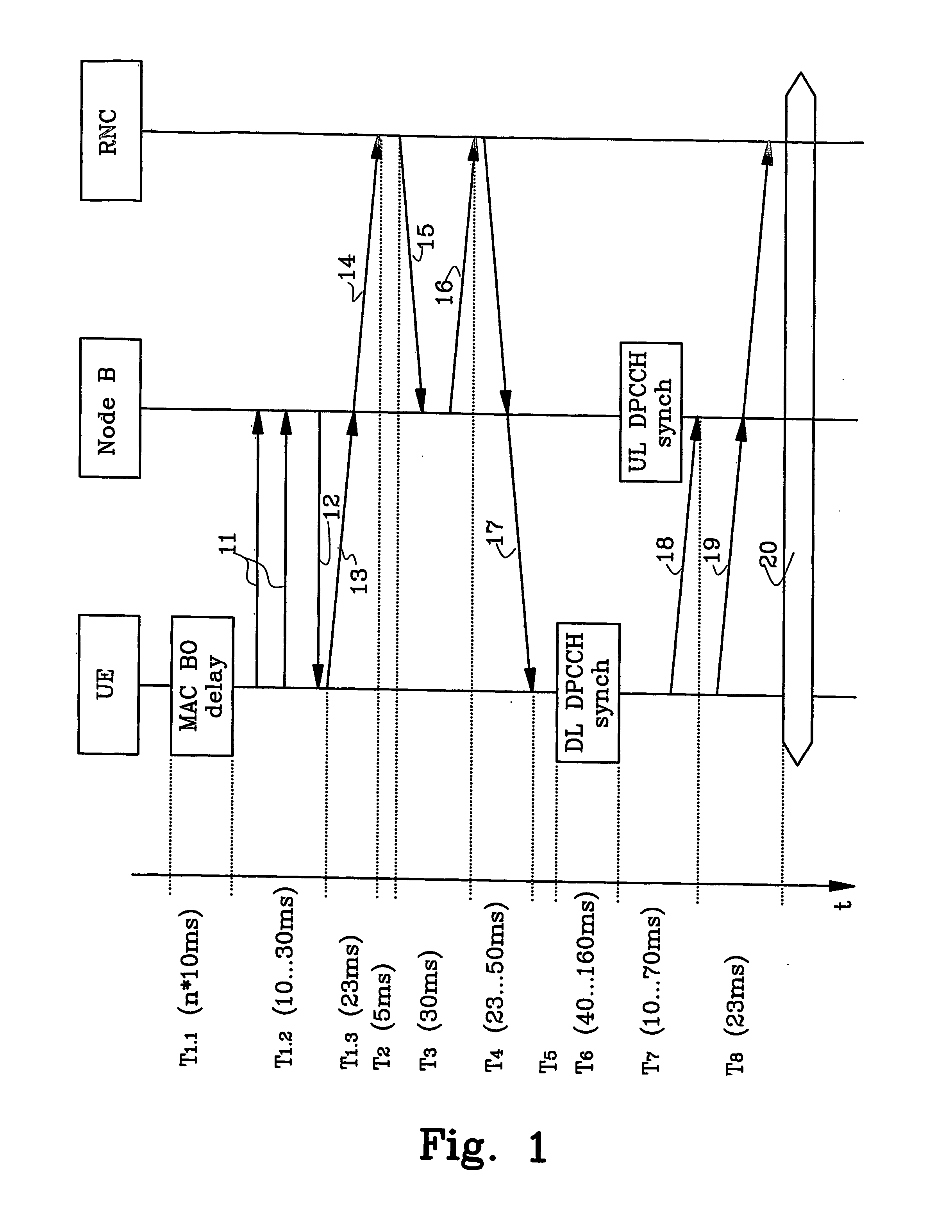

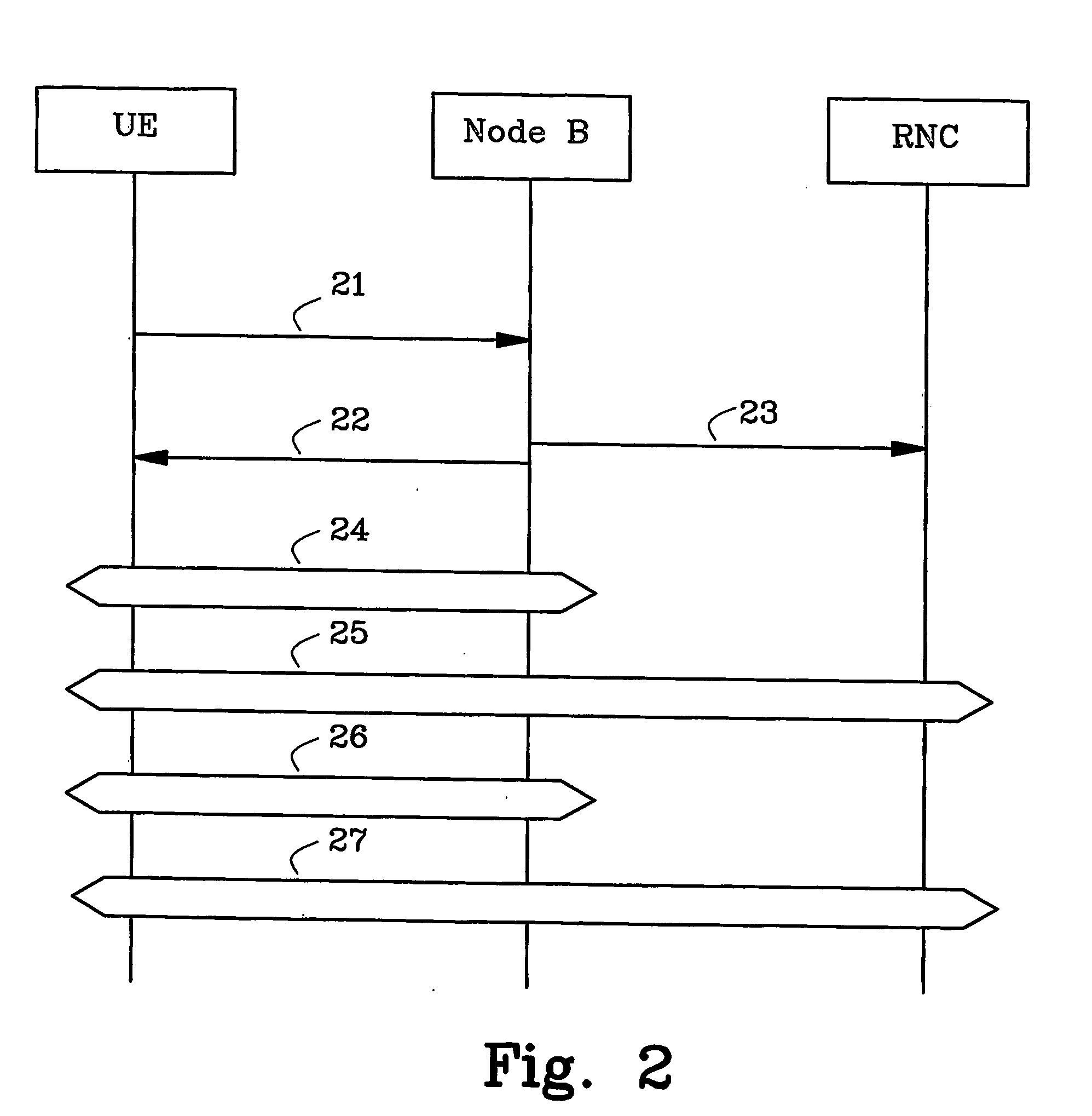

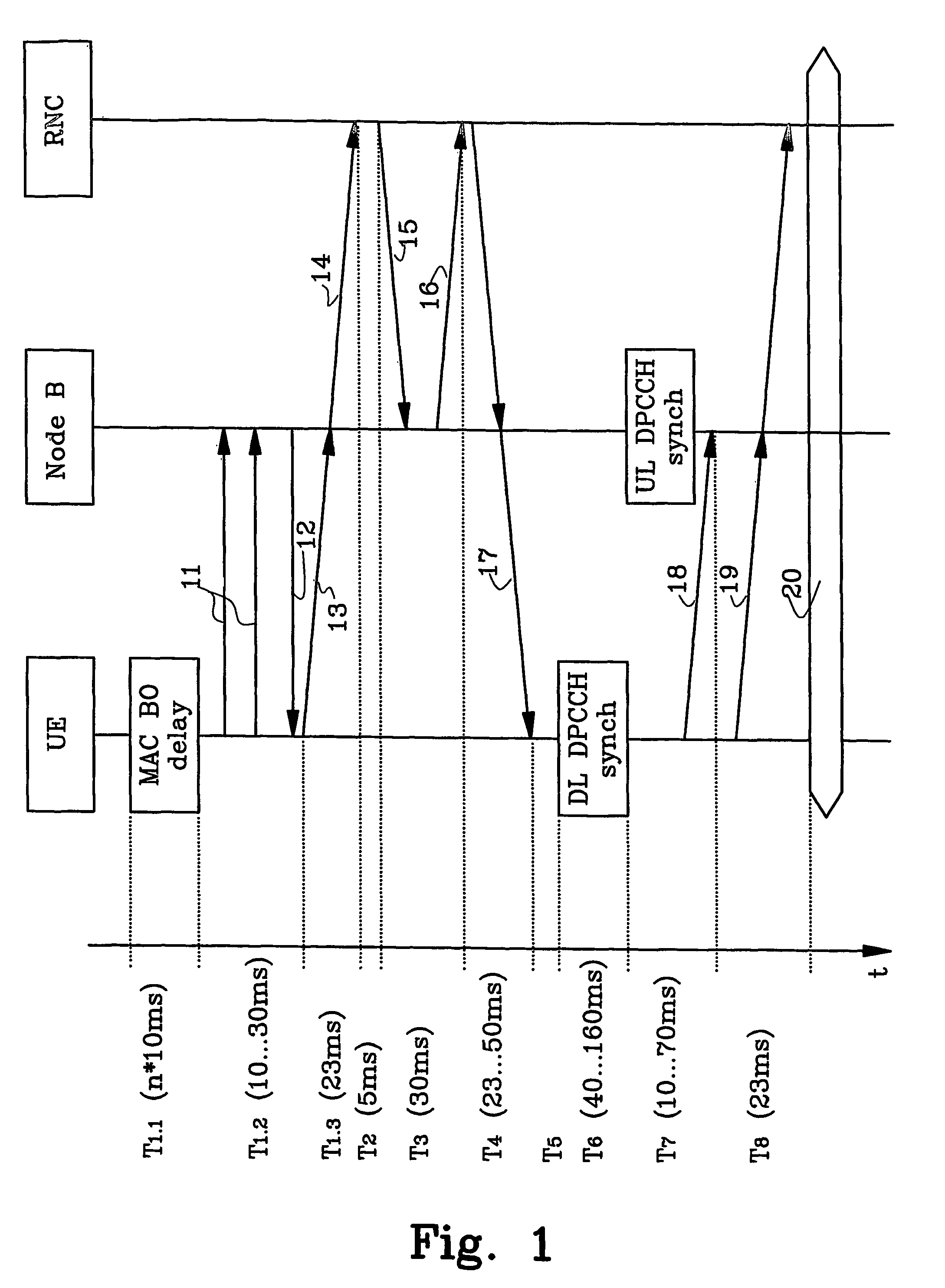

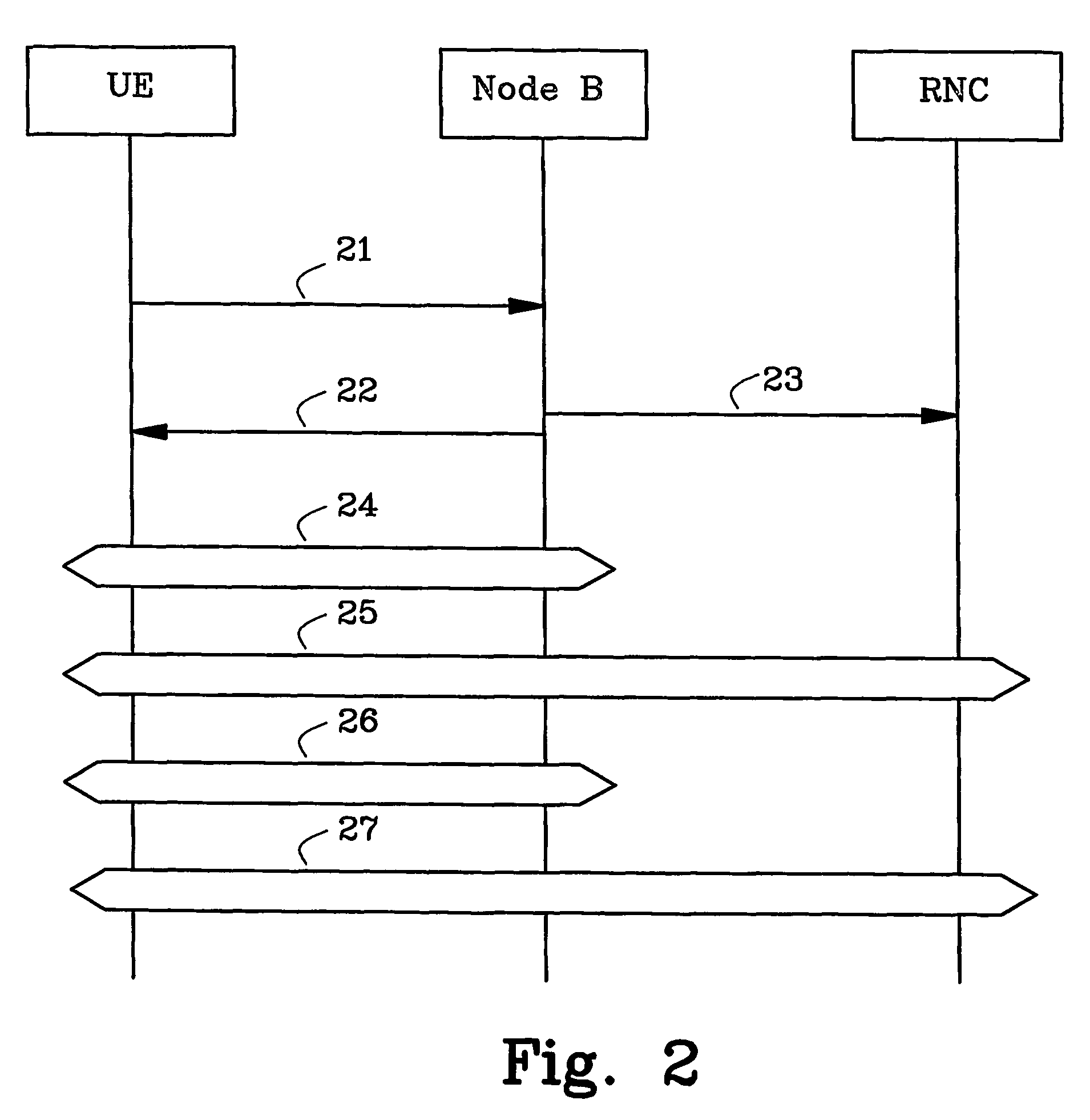

Fast Setup Of Physical Communication Channels

Inactive · US20080123585A1 · High speed of setting · Avoid inefficiency · Error prevention · Network traffic/resource management · Telecommunications network · Radio networks

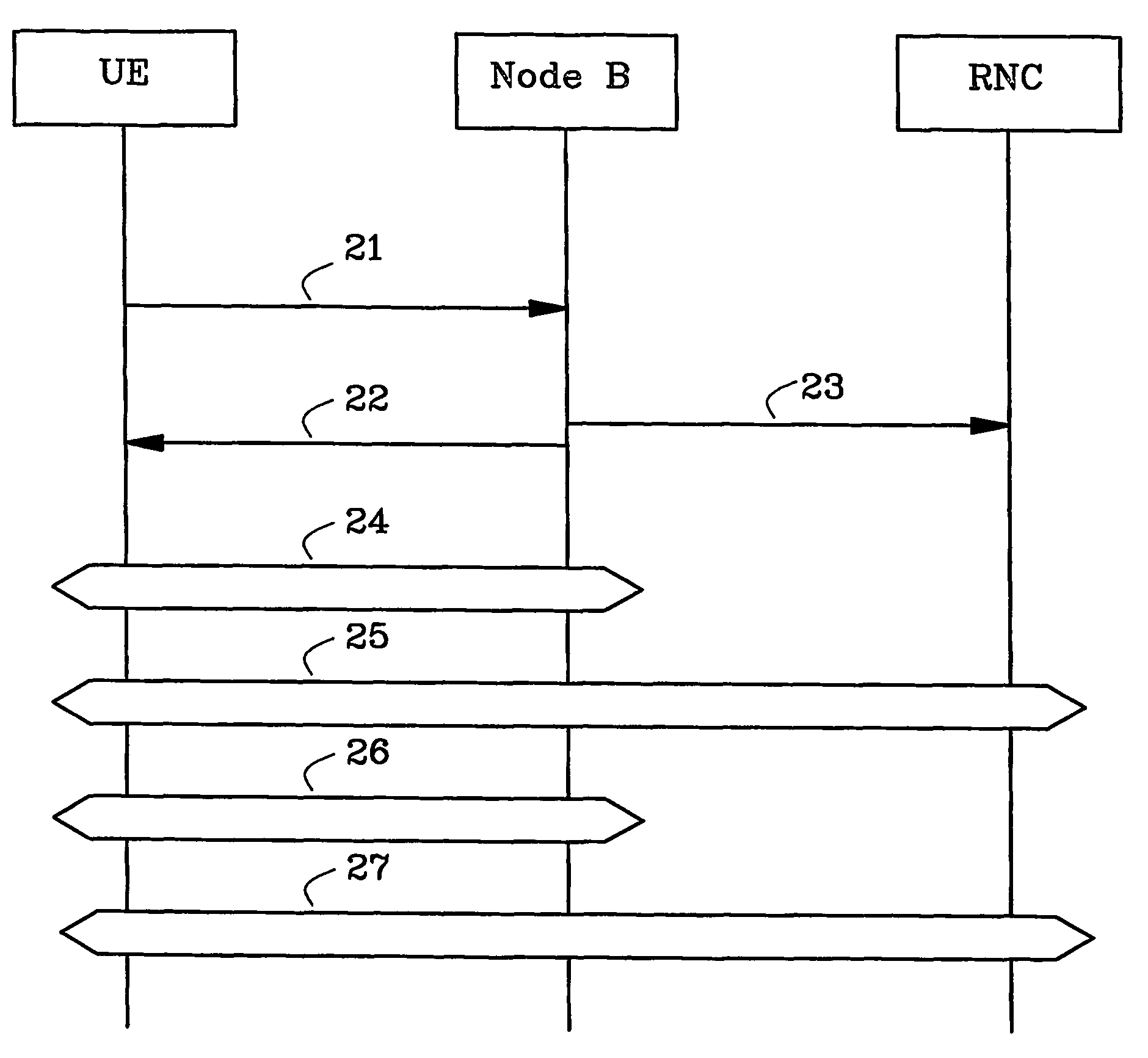

The present invention relates to improvements for a fast setup of physical communication channels in a CDMA-based communication system. A Node B of a telecommunication network is permitted to manage and assign a certain share of the downlink transmission resources of a radio network controller (RNC) without inquiry of said radio network controller. On reception of a resource request message from a user equipment, the Node B derives and specifies a certain amount of said resources that can be allocated to the user equipment. In a preferred embodiment of the present invention, said resources are only assigned temporarily until the ordinary RL setup procedure, which involves the RNC, has been successfully finished.

Owner:TELEFON AB LM ERICSSON (PUBL)

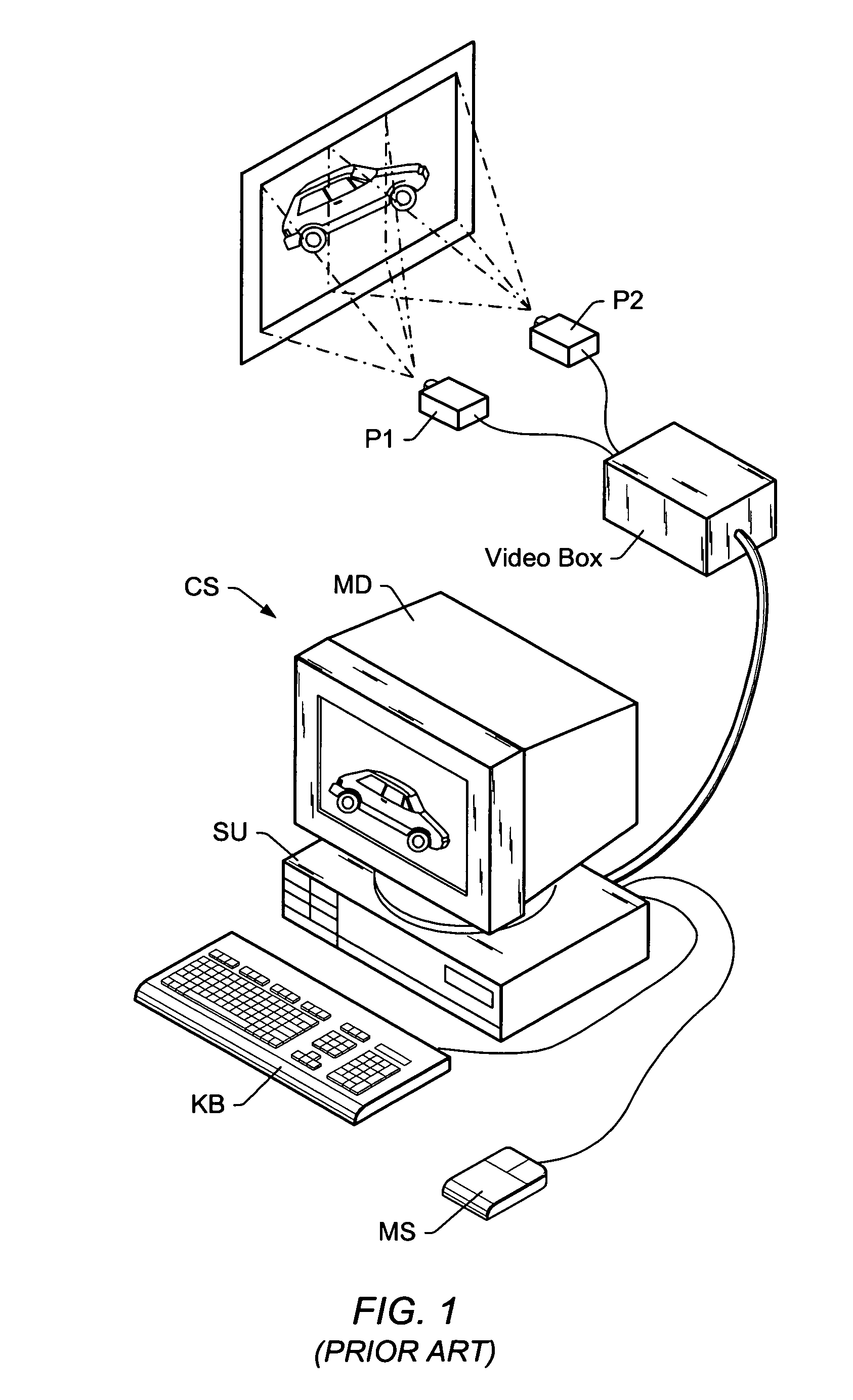

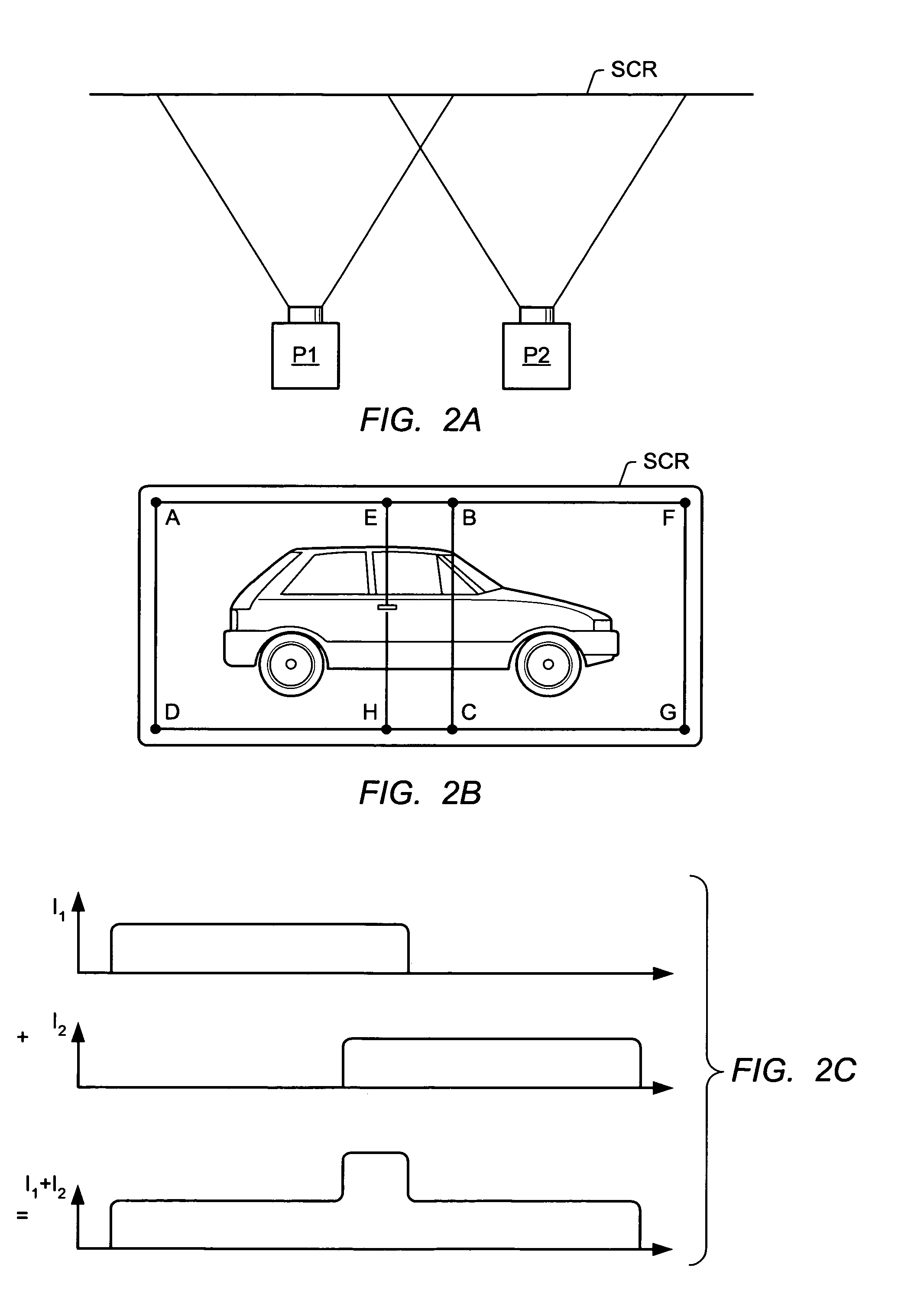

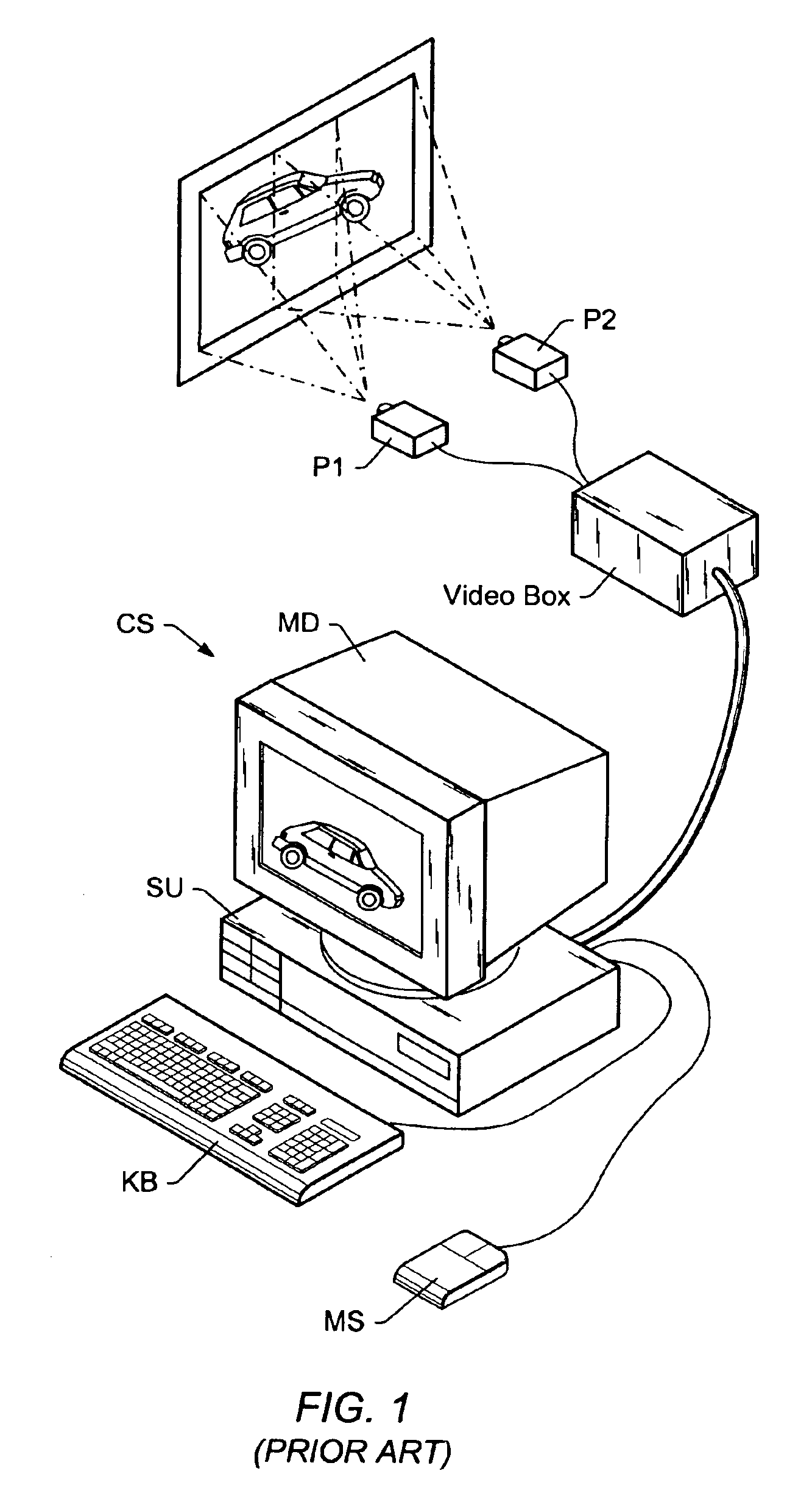

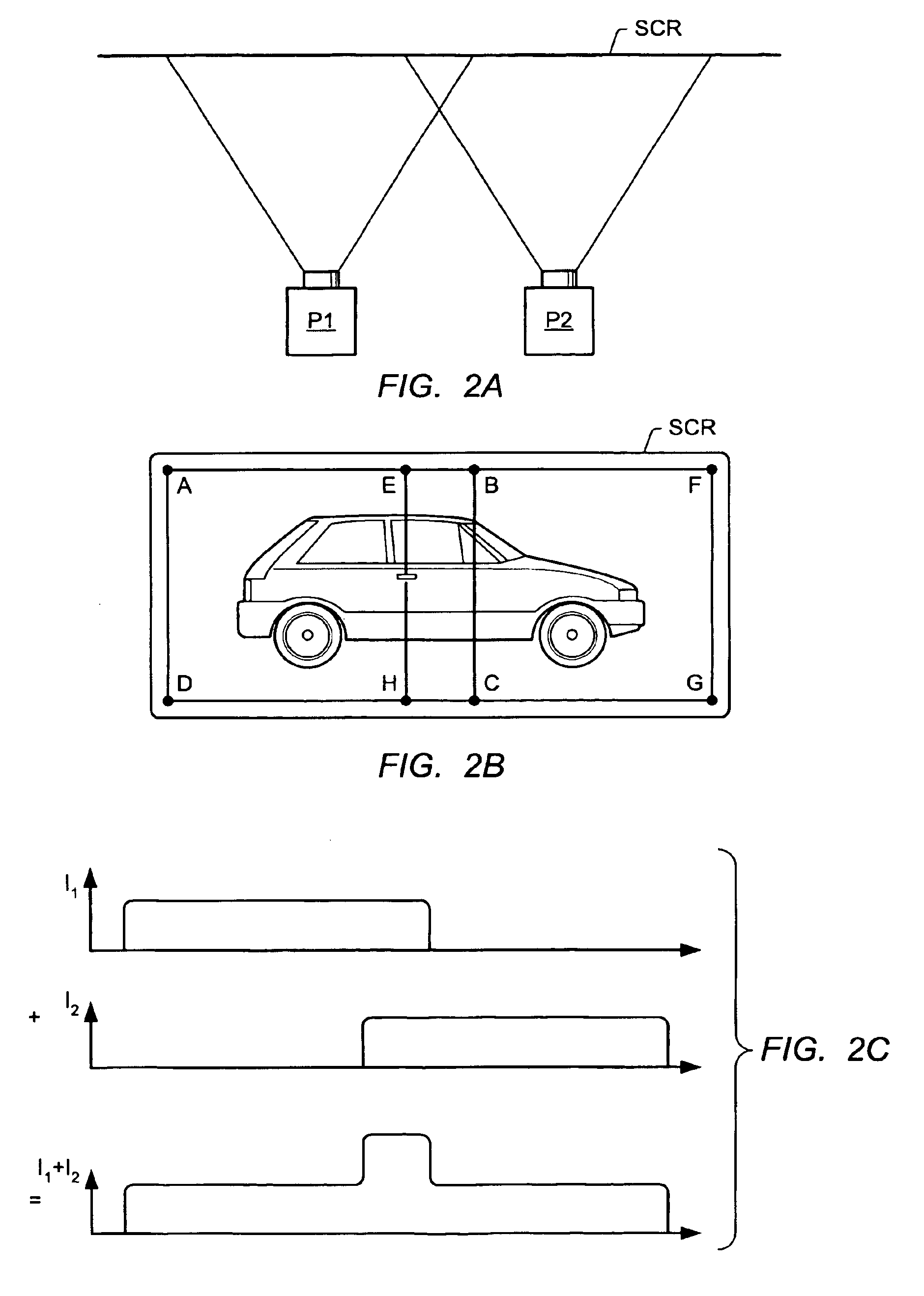

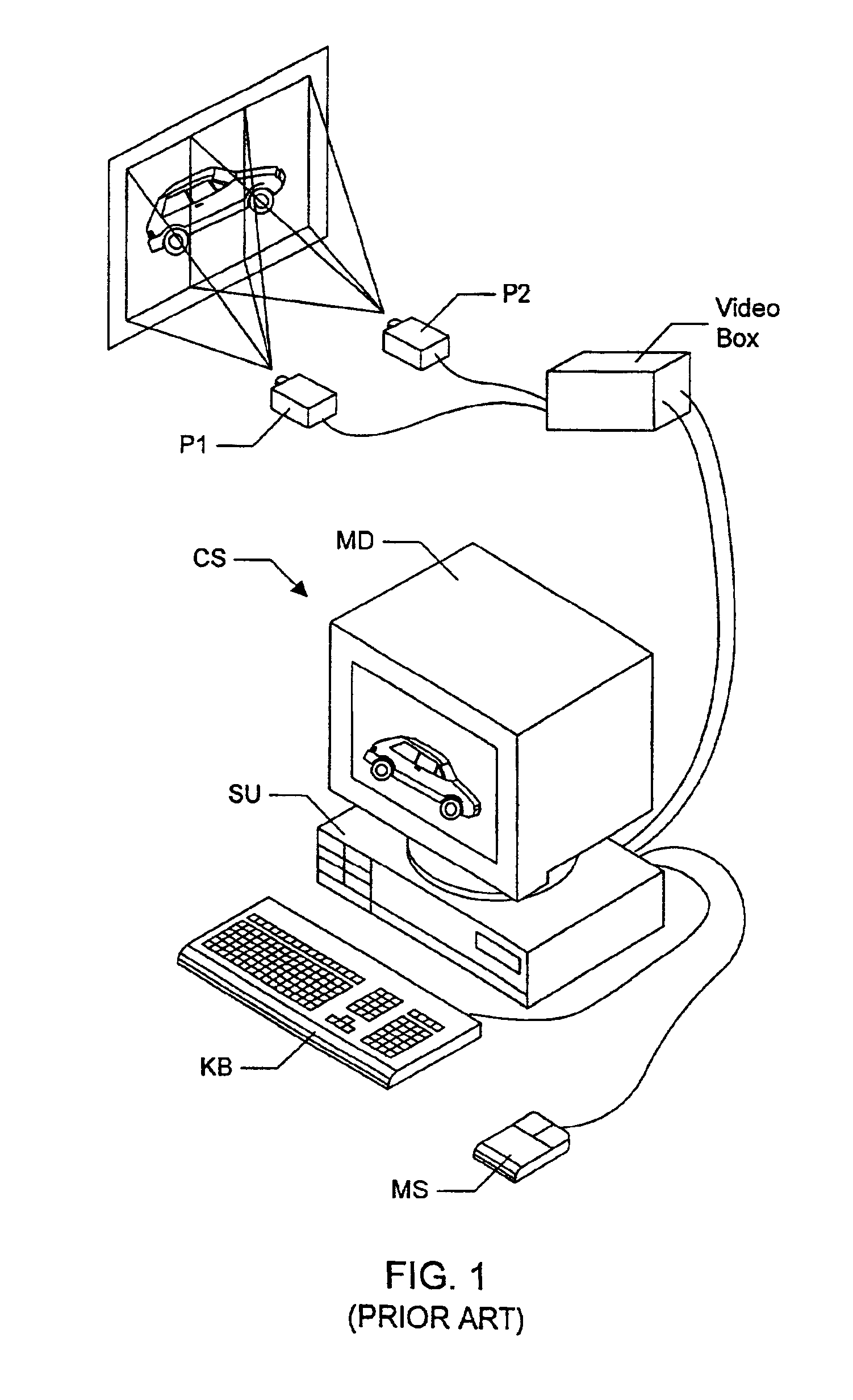

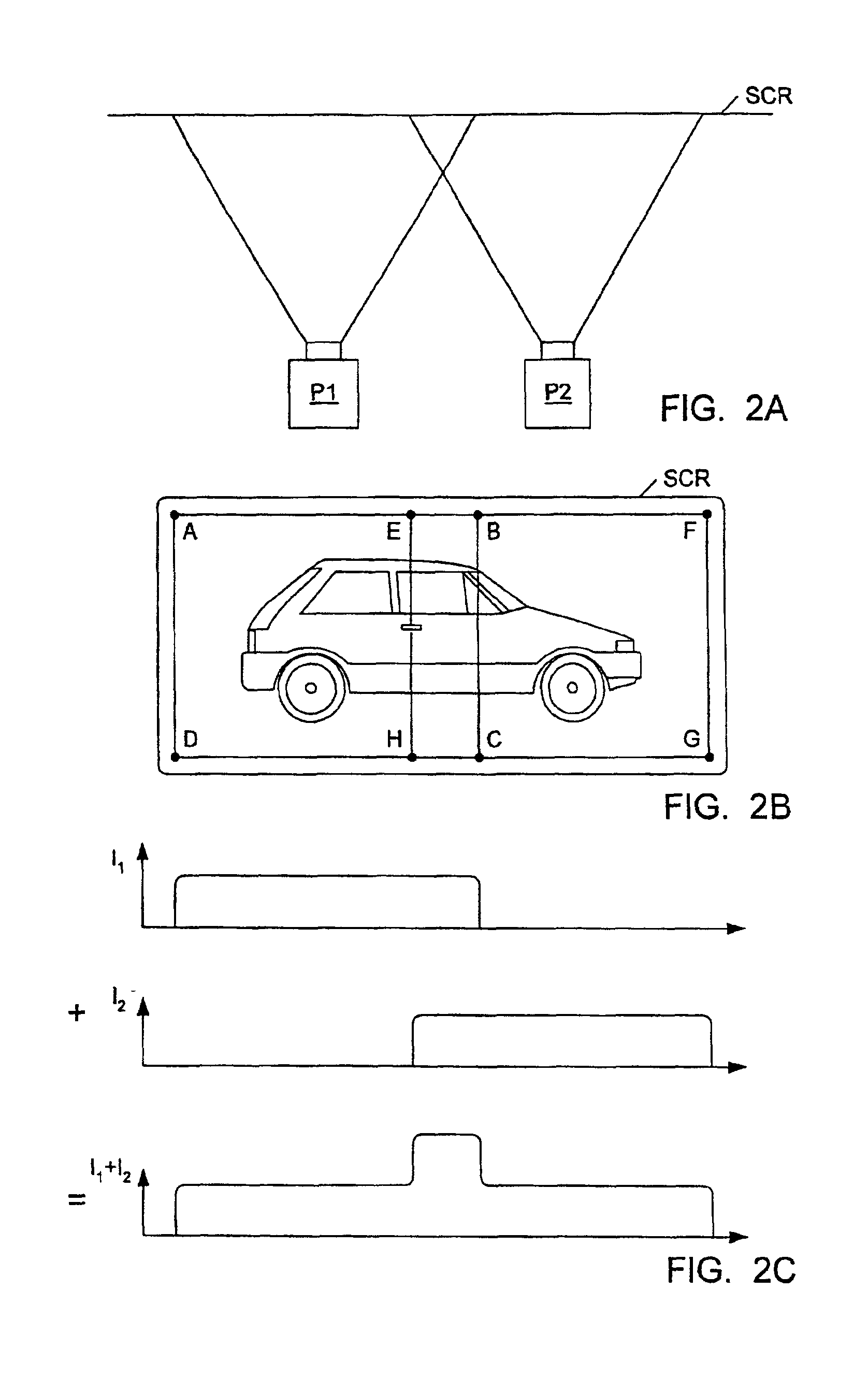

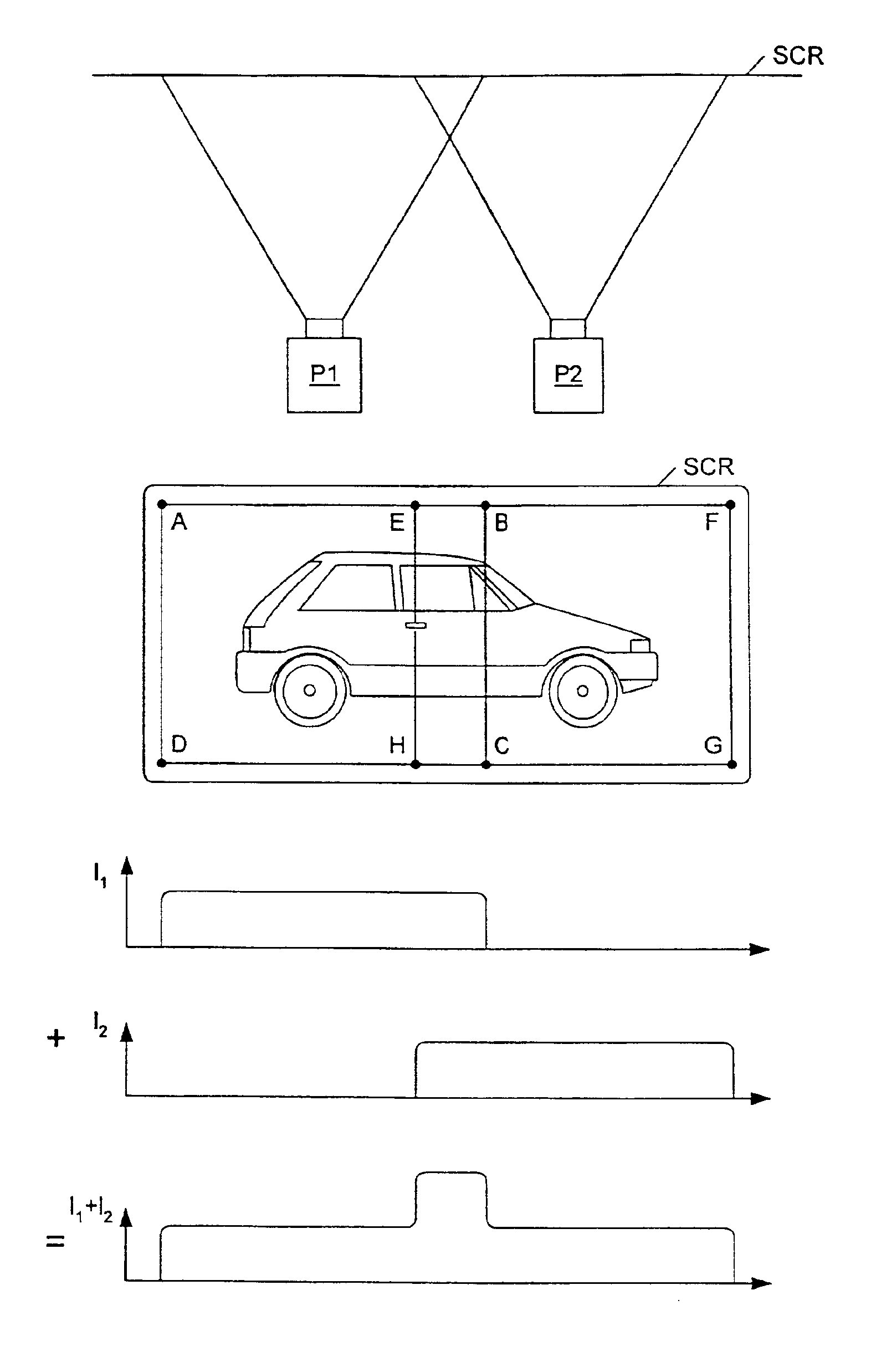

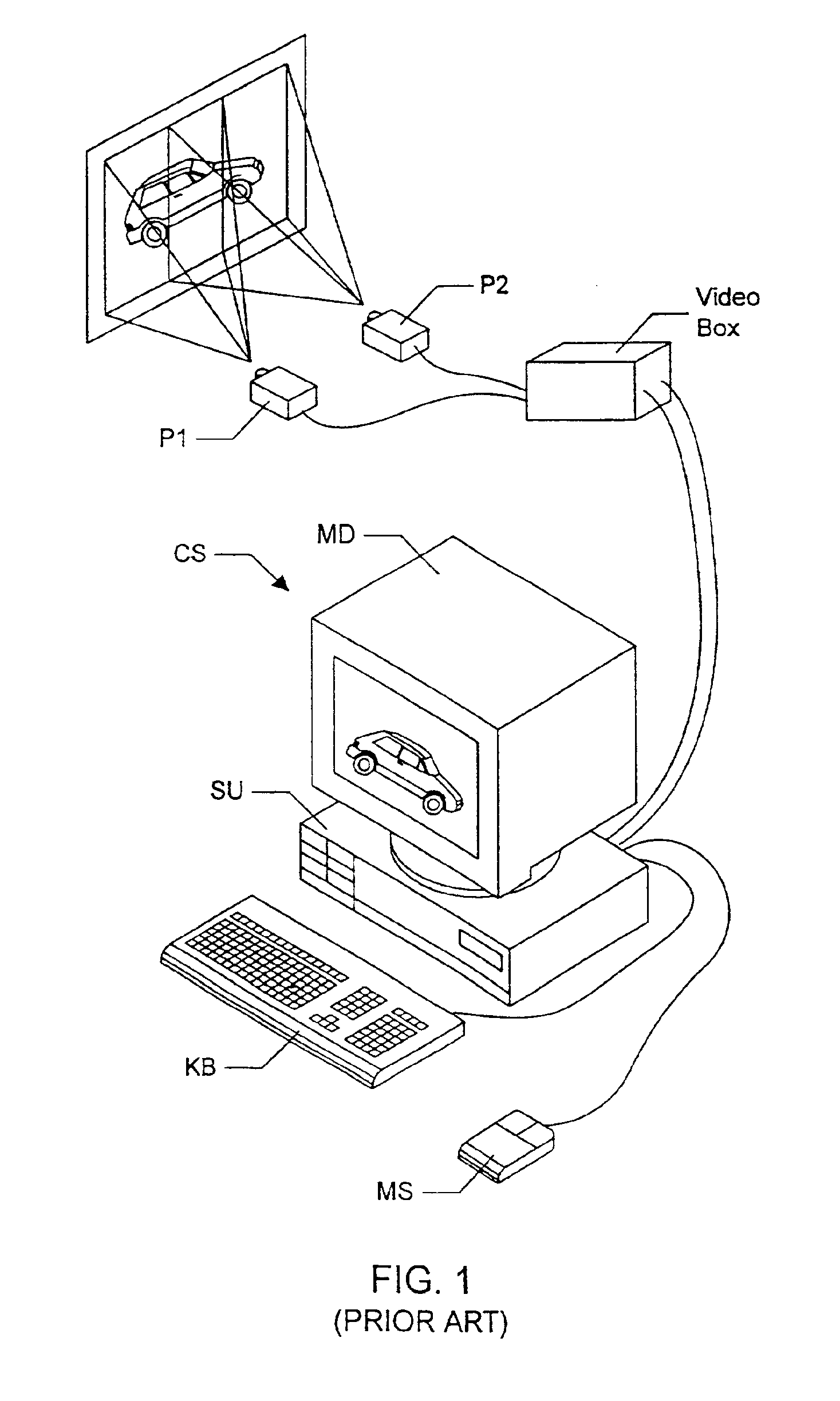

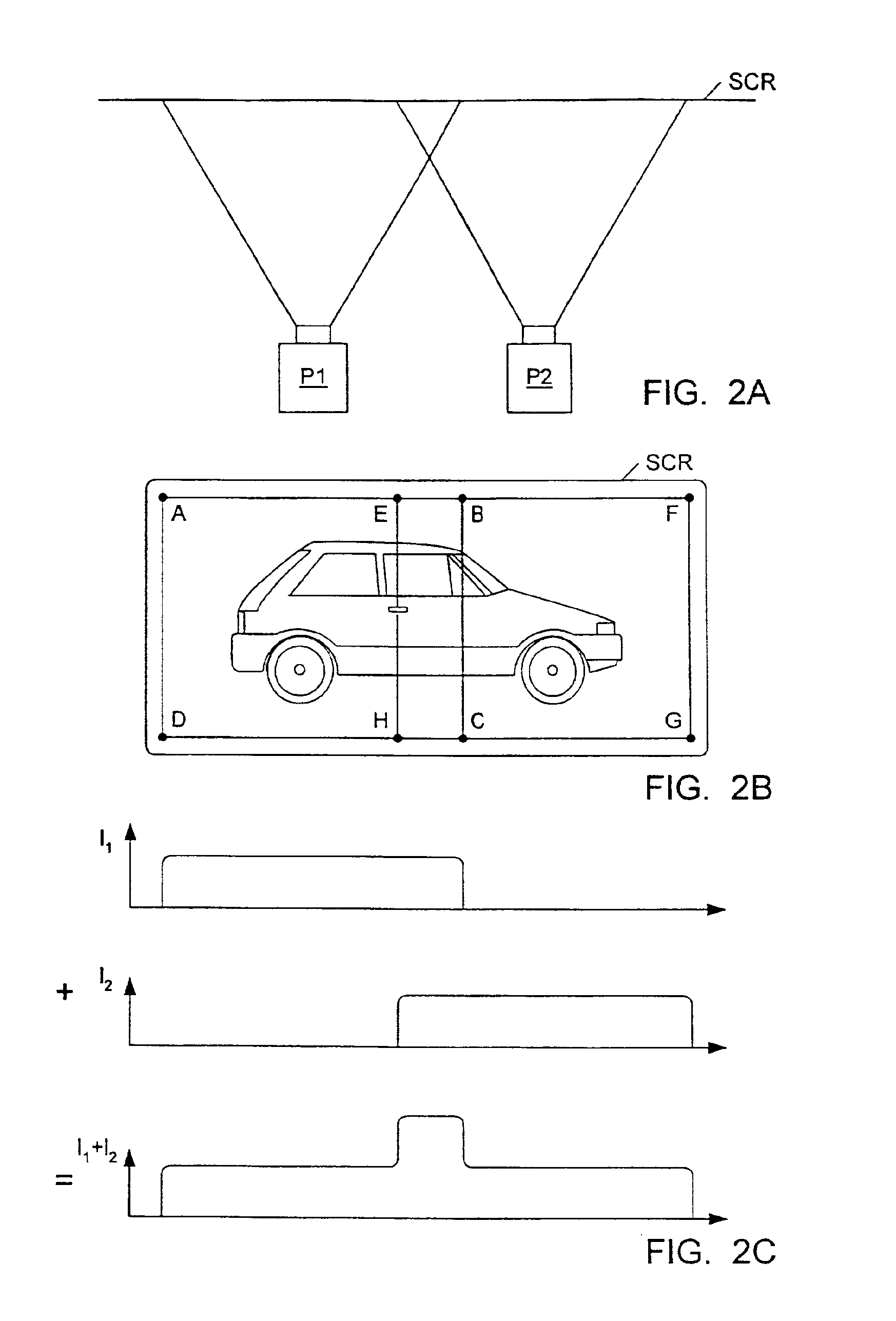

Matching the edges of multiple overlapping screen images

Inactive · US7079157B2 · Large latency of a frame buffer may be avoided · Significant latency · Image enhancement · Image analysis · Graphics · Graphic system

Owner:ORACLE INT CORP

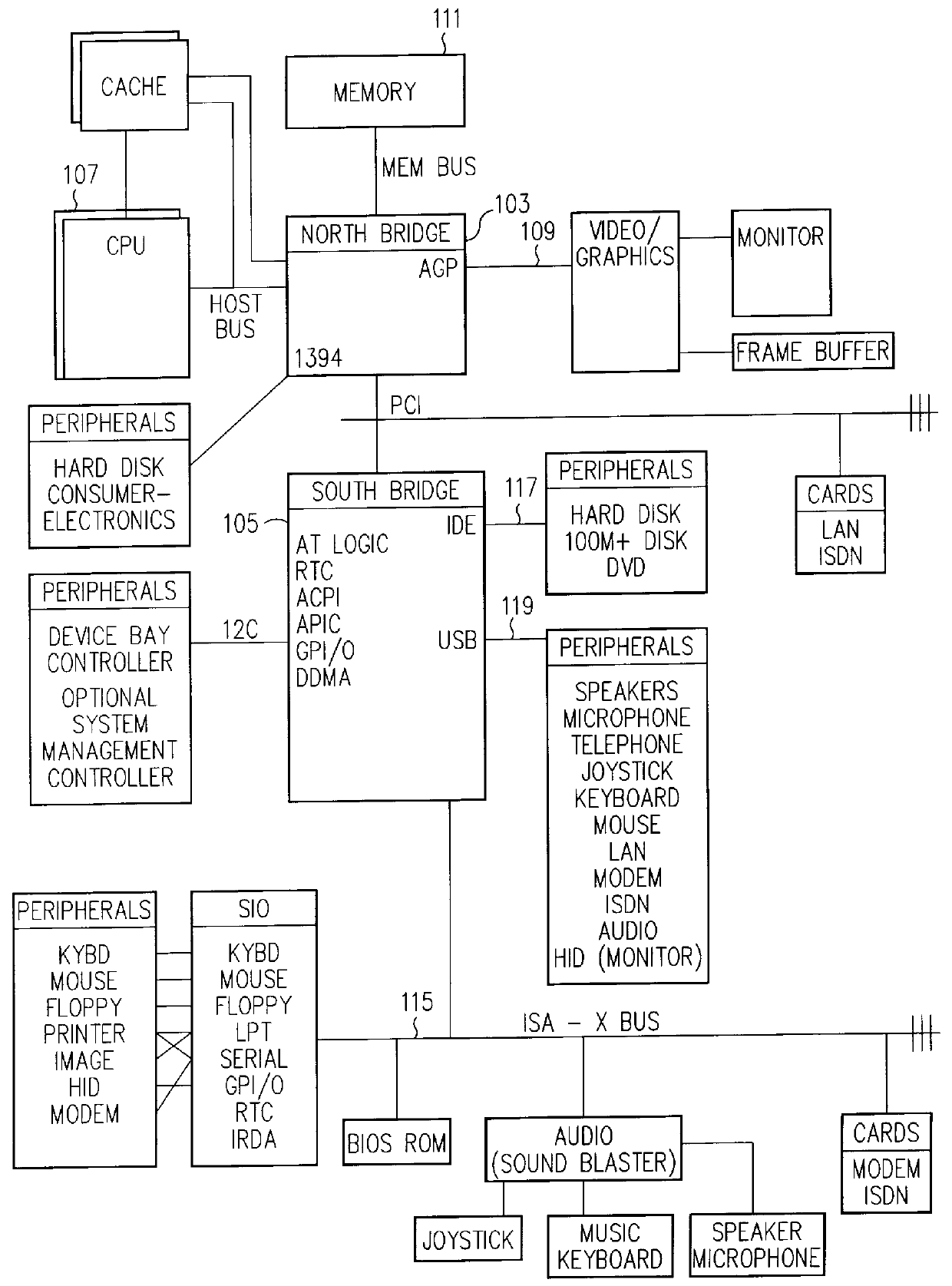

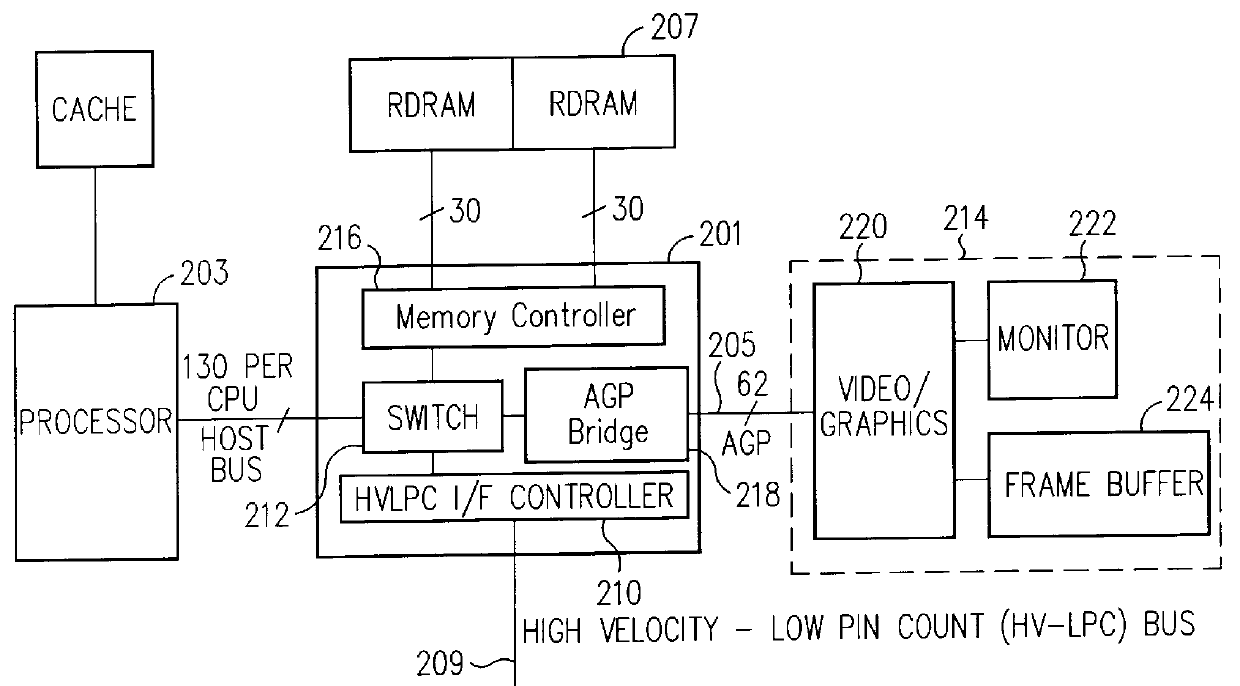

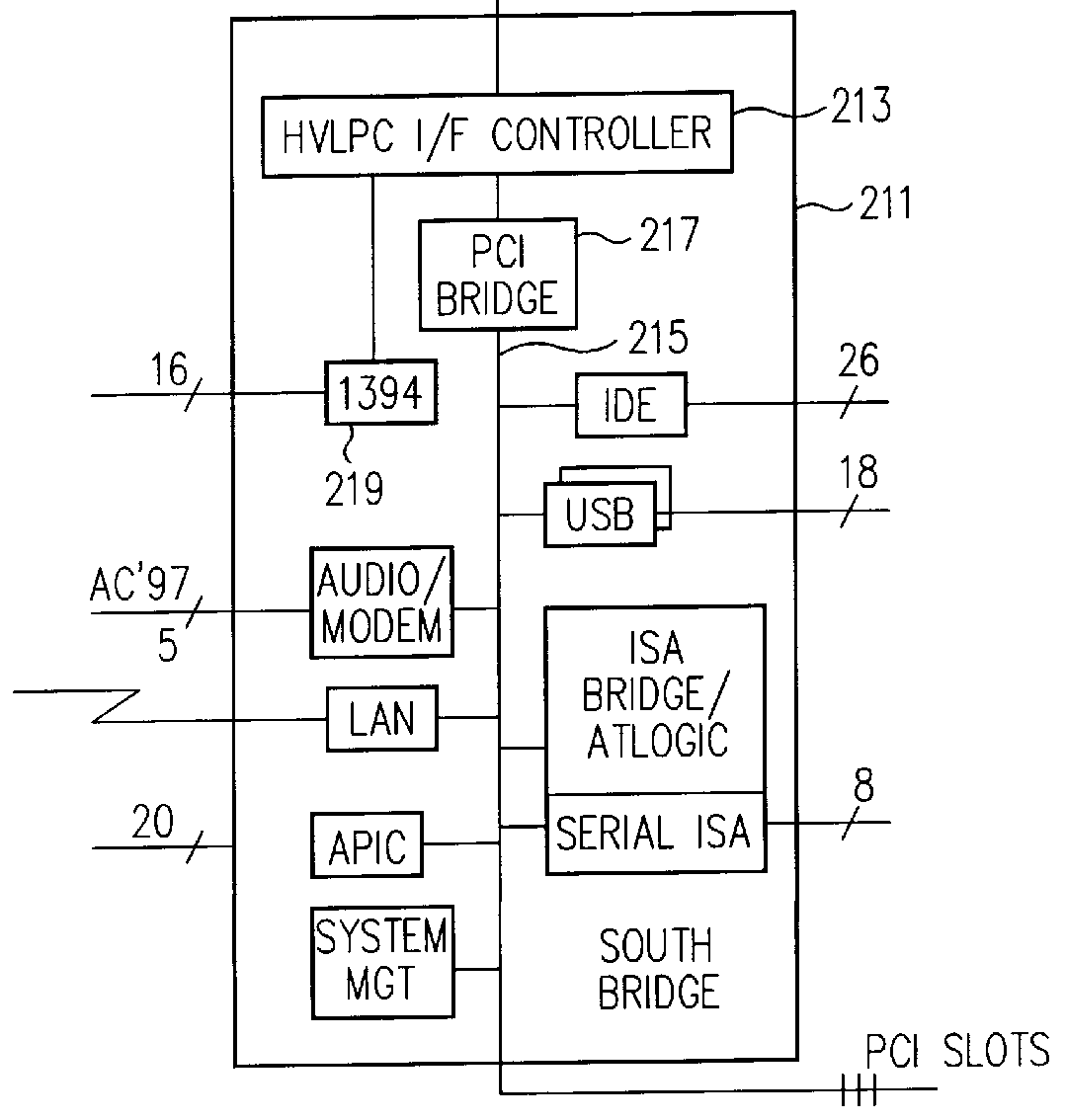

Communication link with isochronous and asynchronous priority modes coupling bridge circuits in a computer system

Inactive · US6151651A · Significant latency · Minimum throughput · Hybrid switching systems · Electric digital data processing · Computer hardware · Telecommunications link

A computer system includes a first processor integrated circuit. A first bridge integrated circuit is coupled to the processor via a host bus. The computer system includes an interconnection bus that couples the first bridge circuit to a second bridge circuit. The interconnection bus provides a first transfer mode for asynchronous data and a second transfer mode for isochronous data. The interconnection bus provides for a maximum latency and a guaranteed throughput for asynchronous and isochronous data.

Owner:GLOBALFOUNDRIES INC

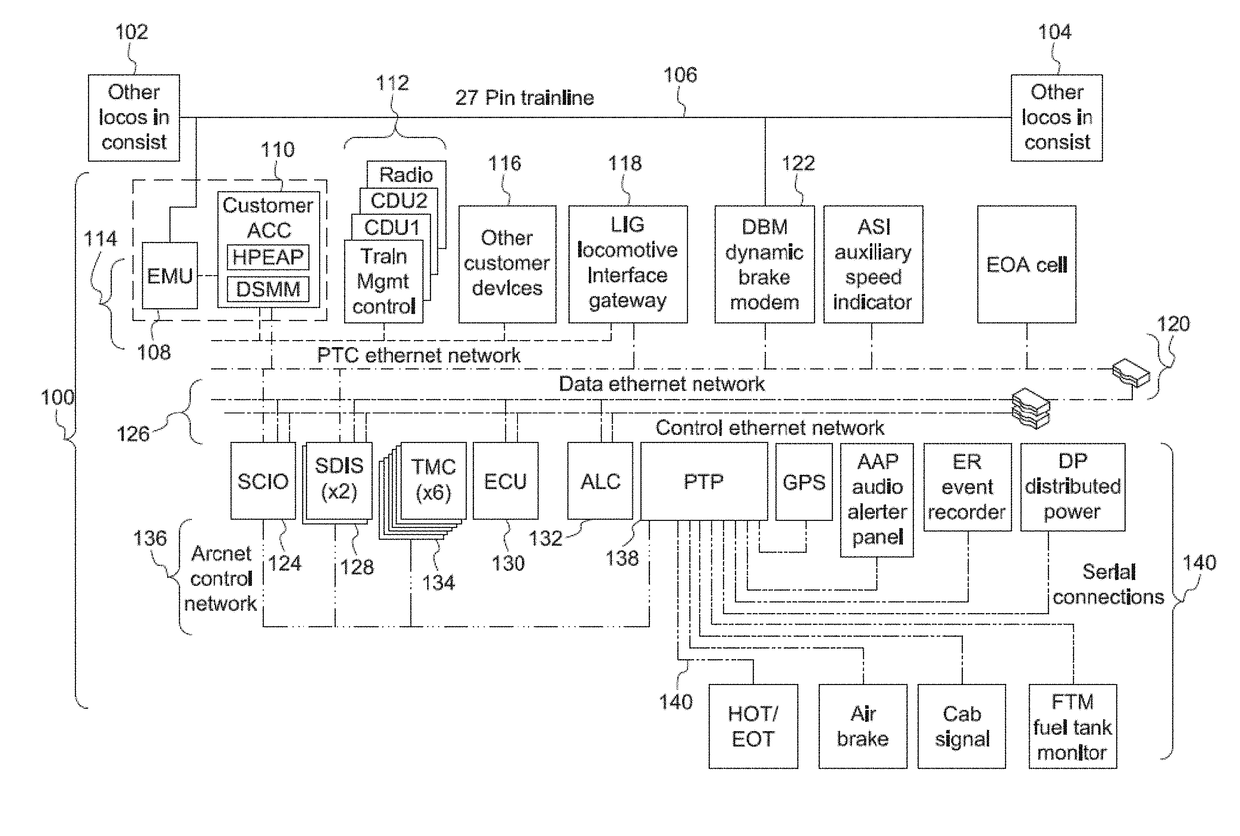

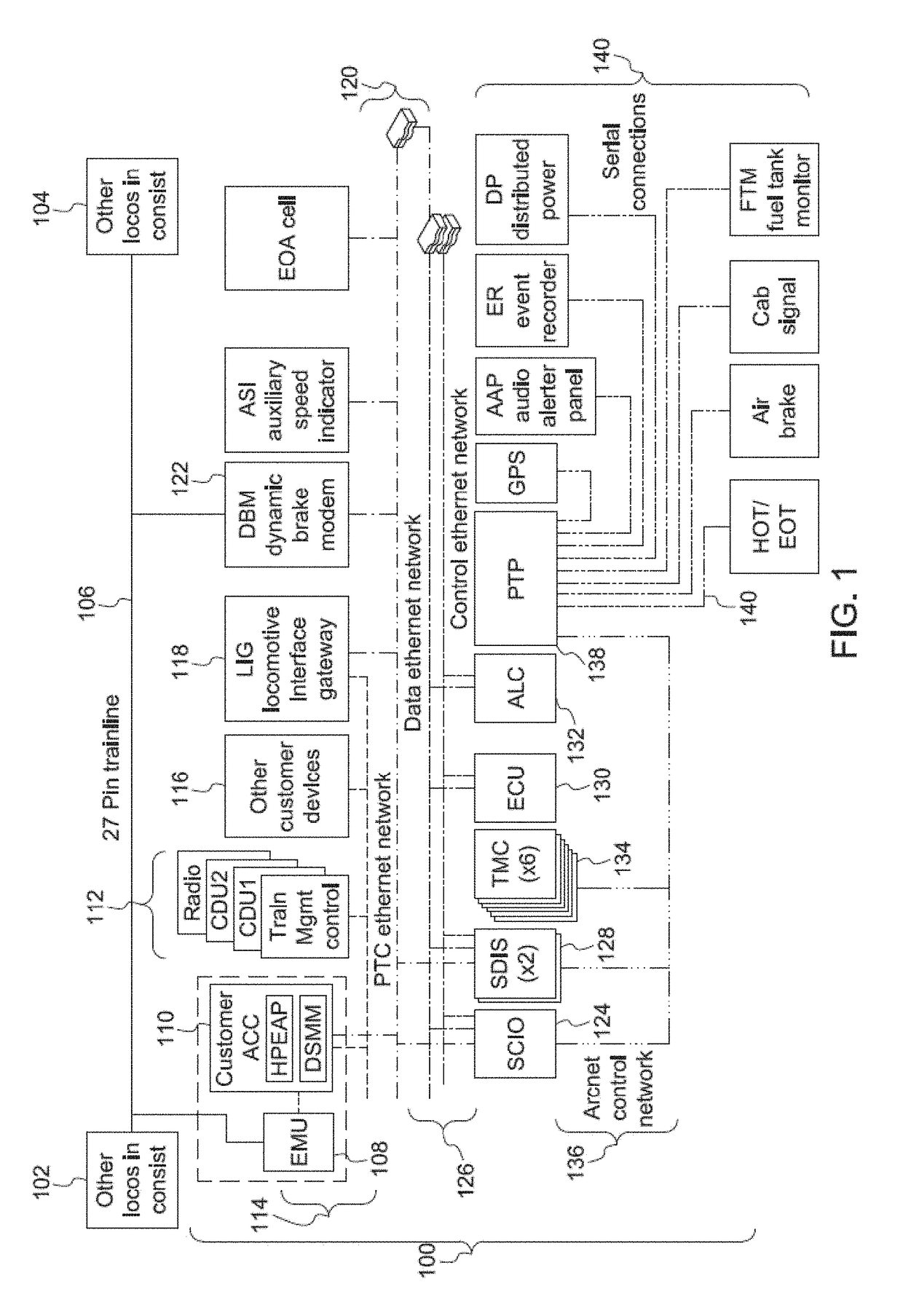

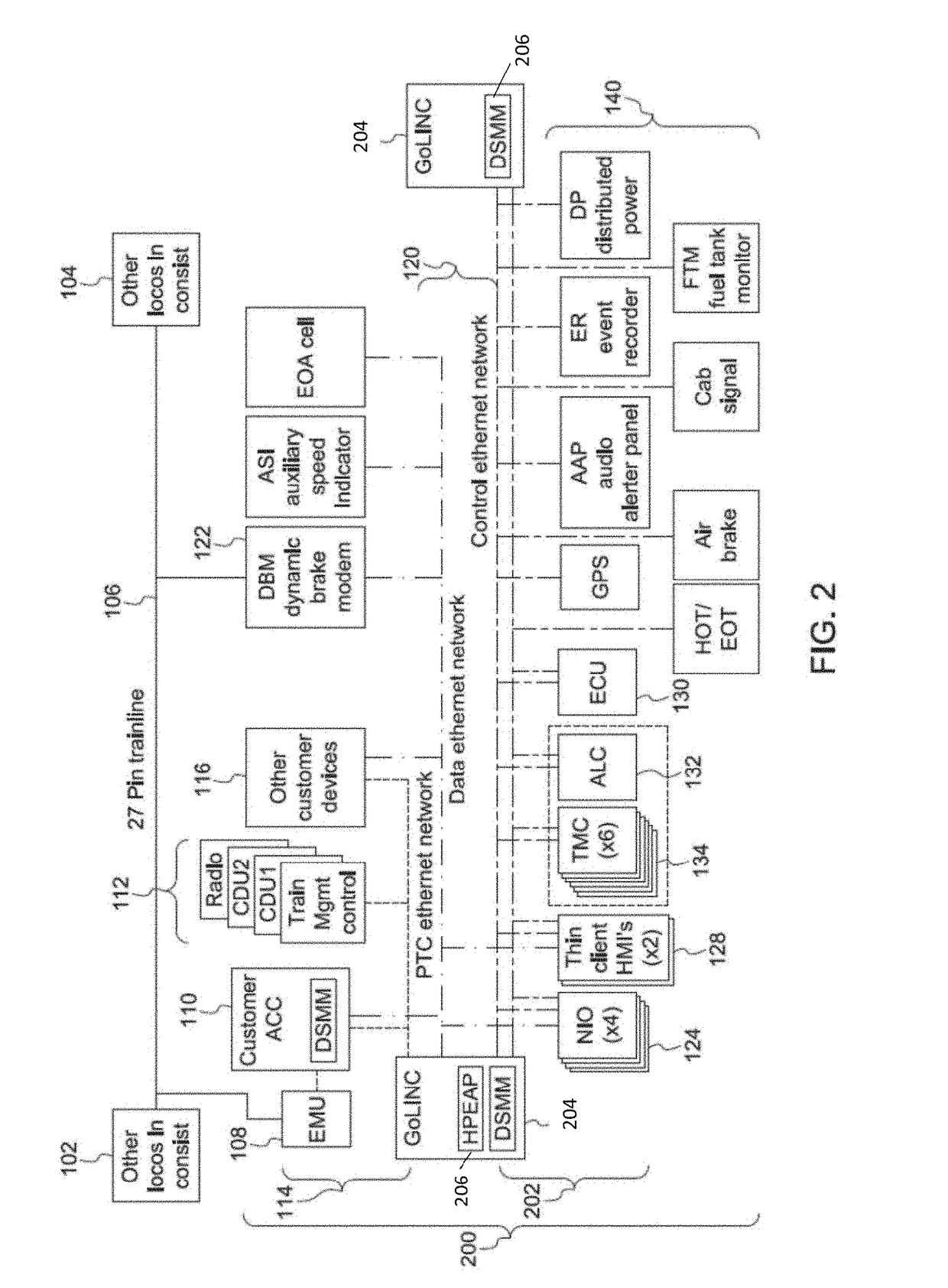

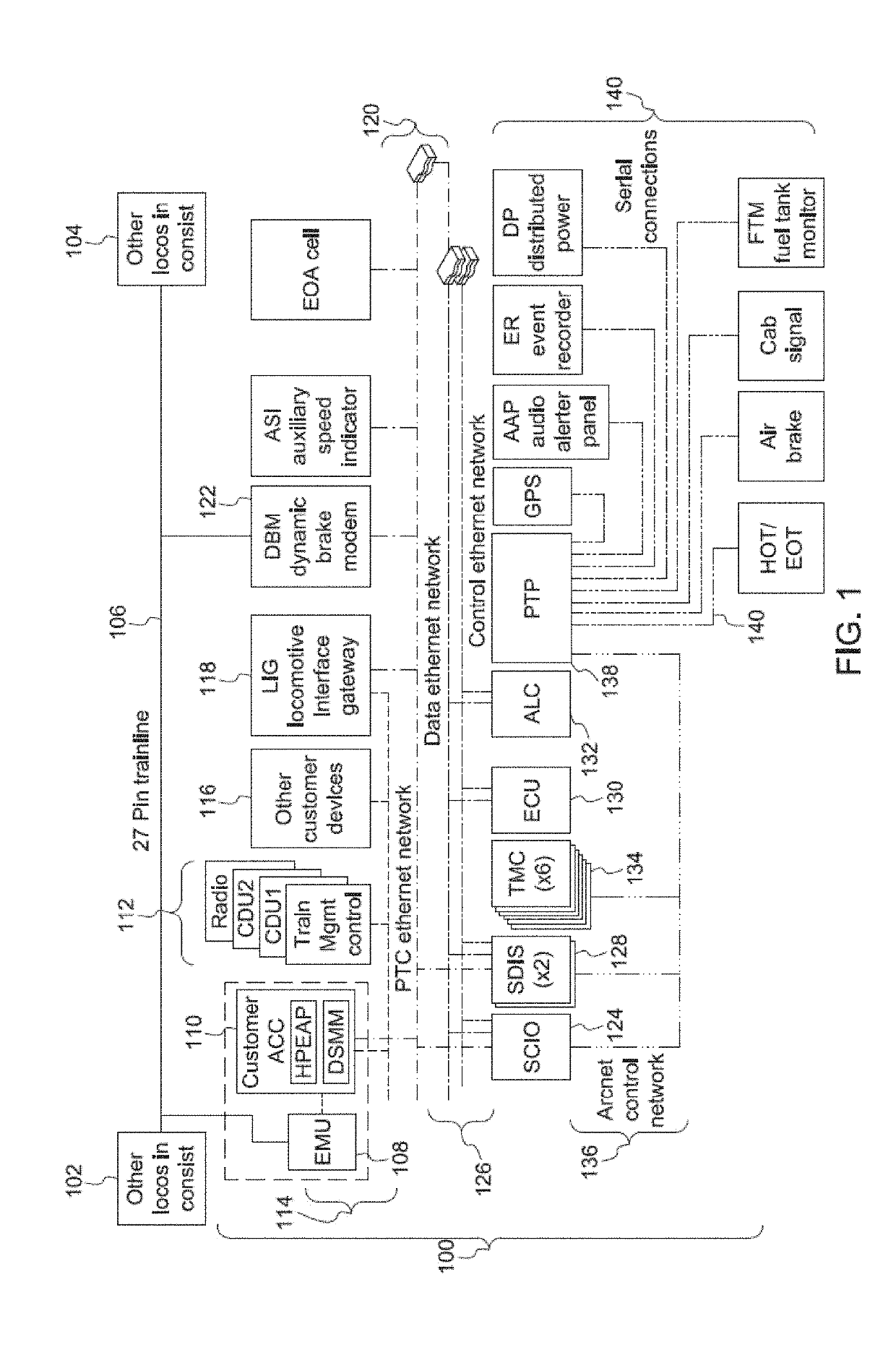

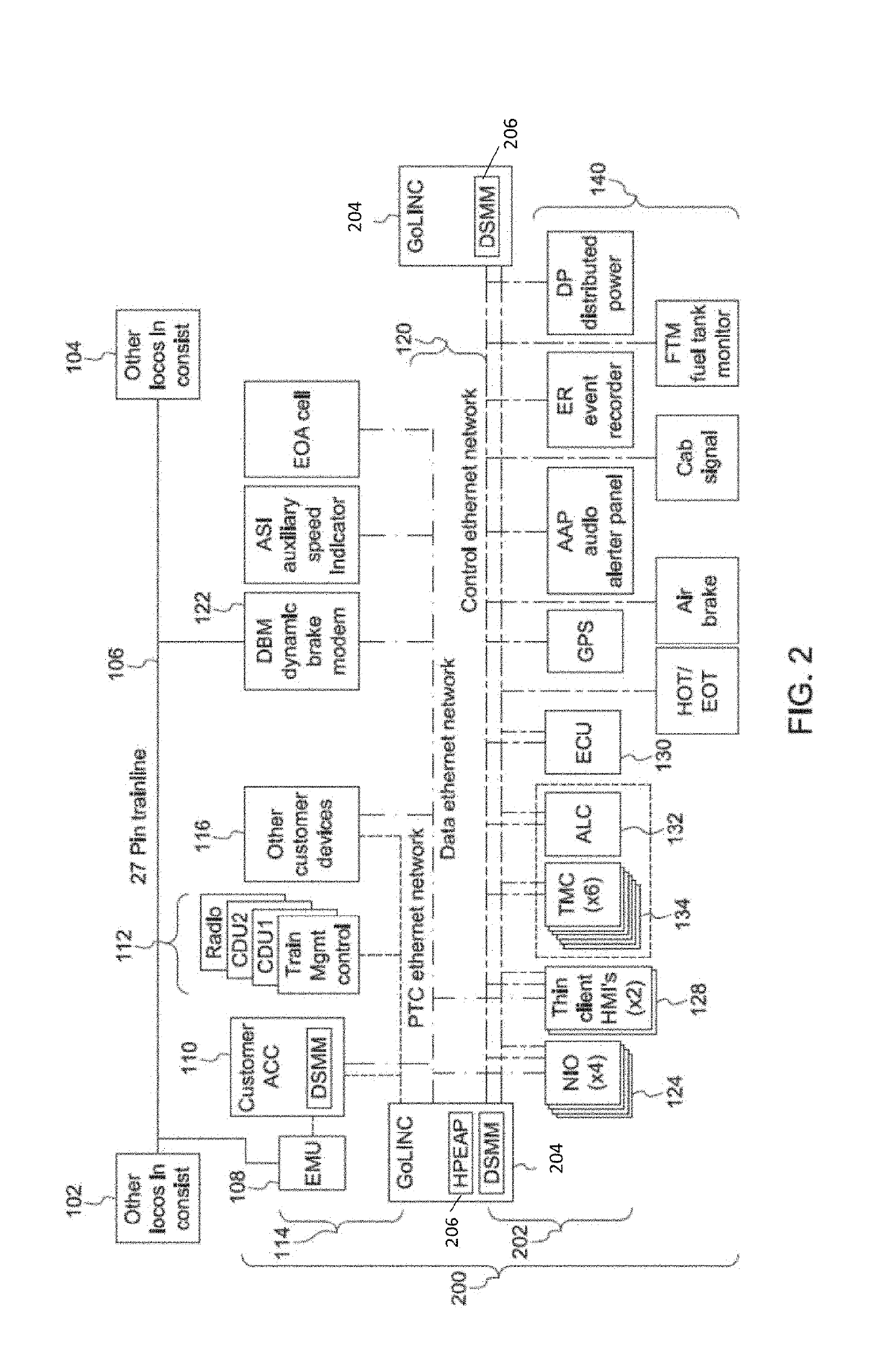

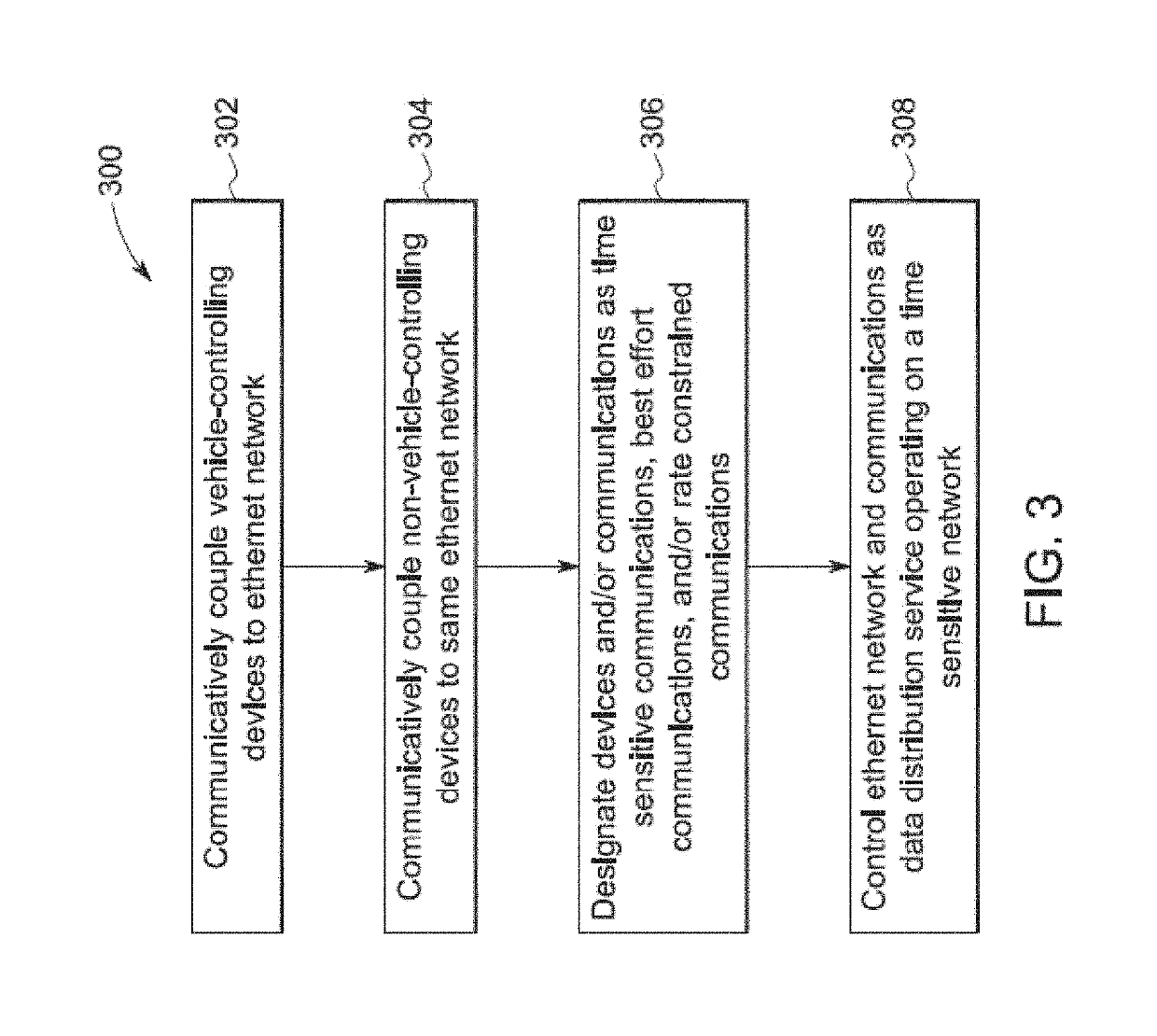

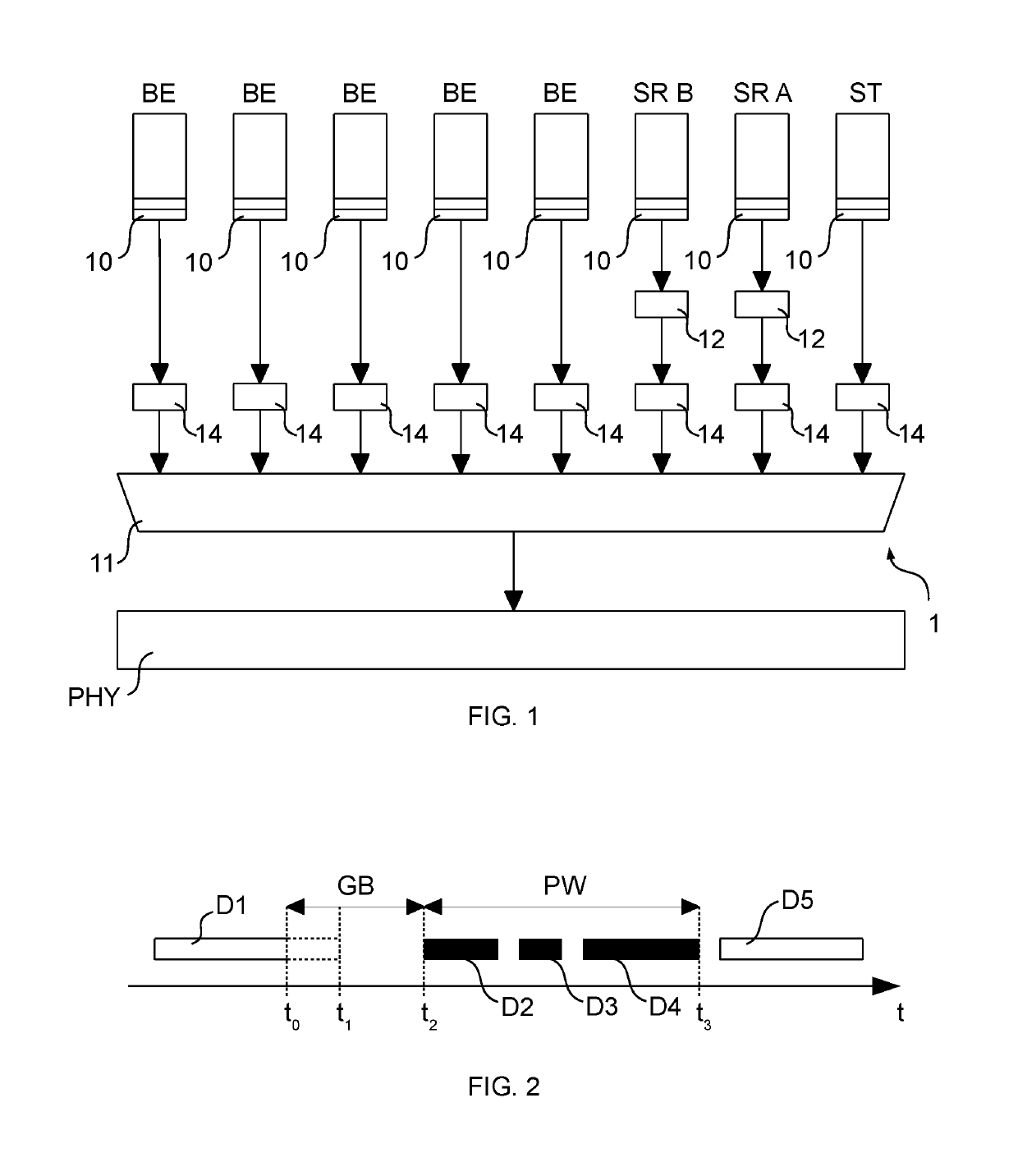

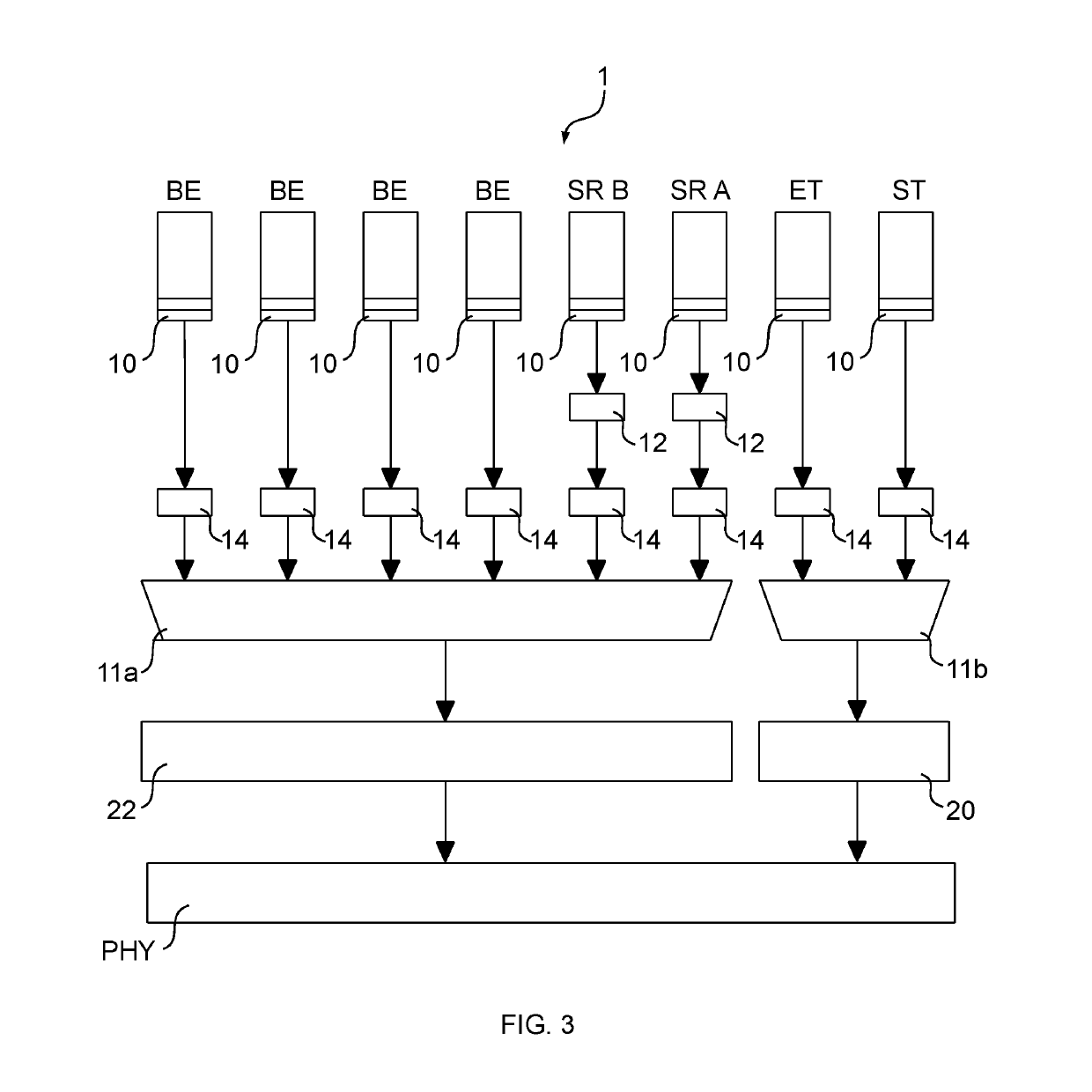

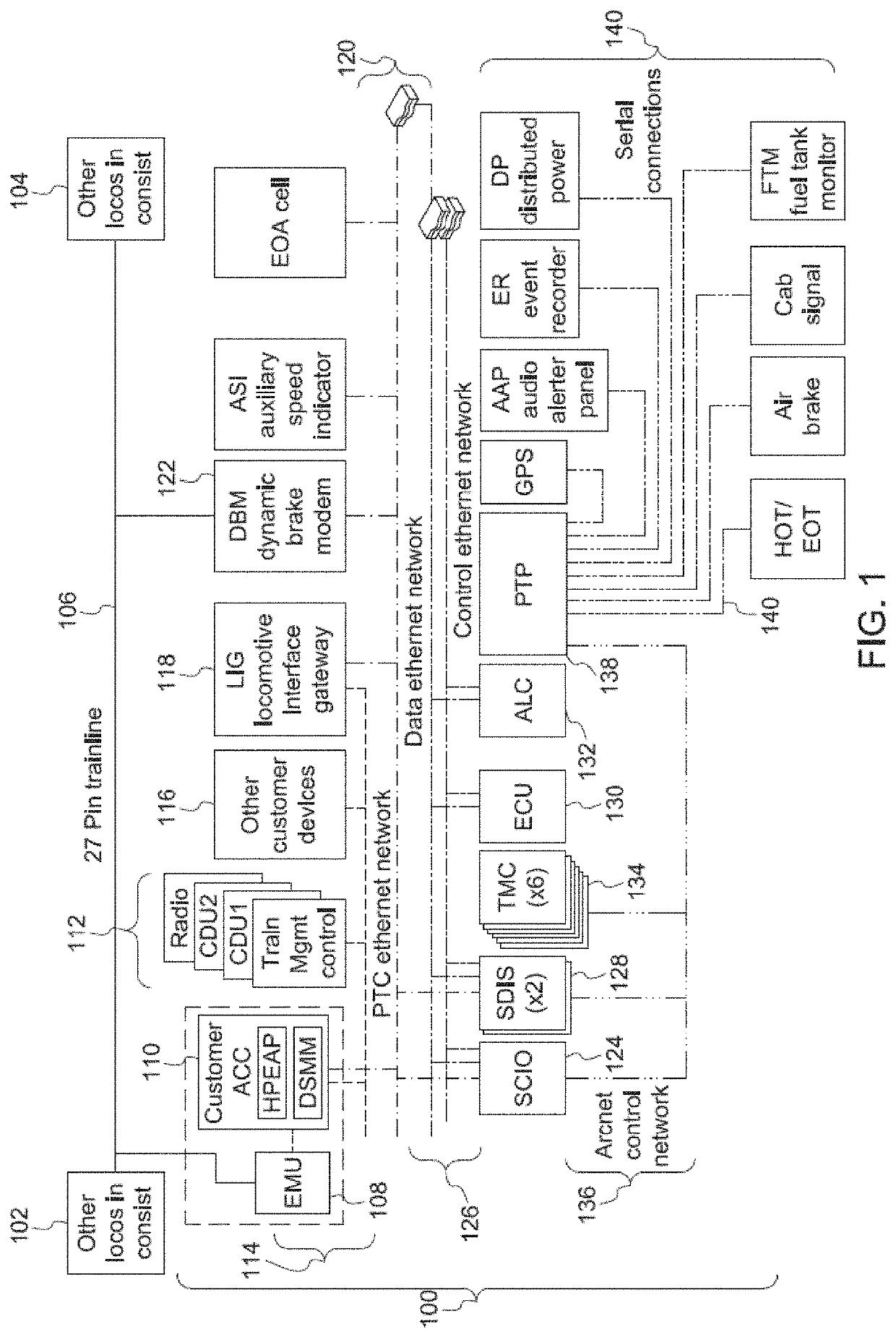

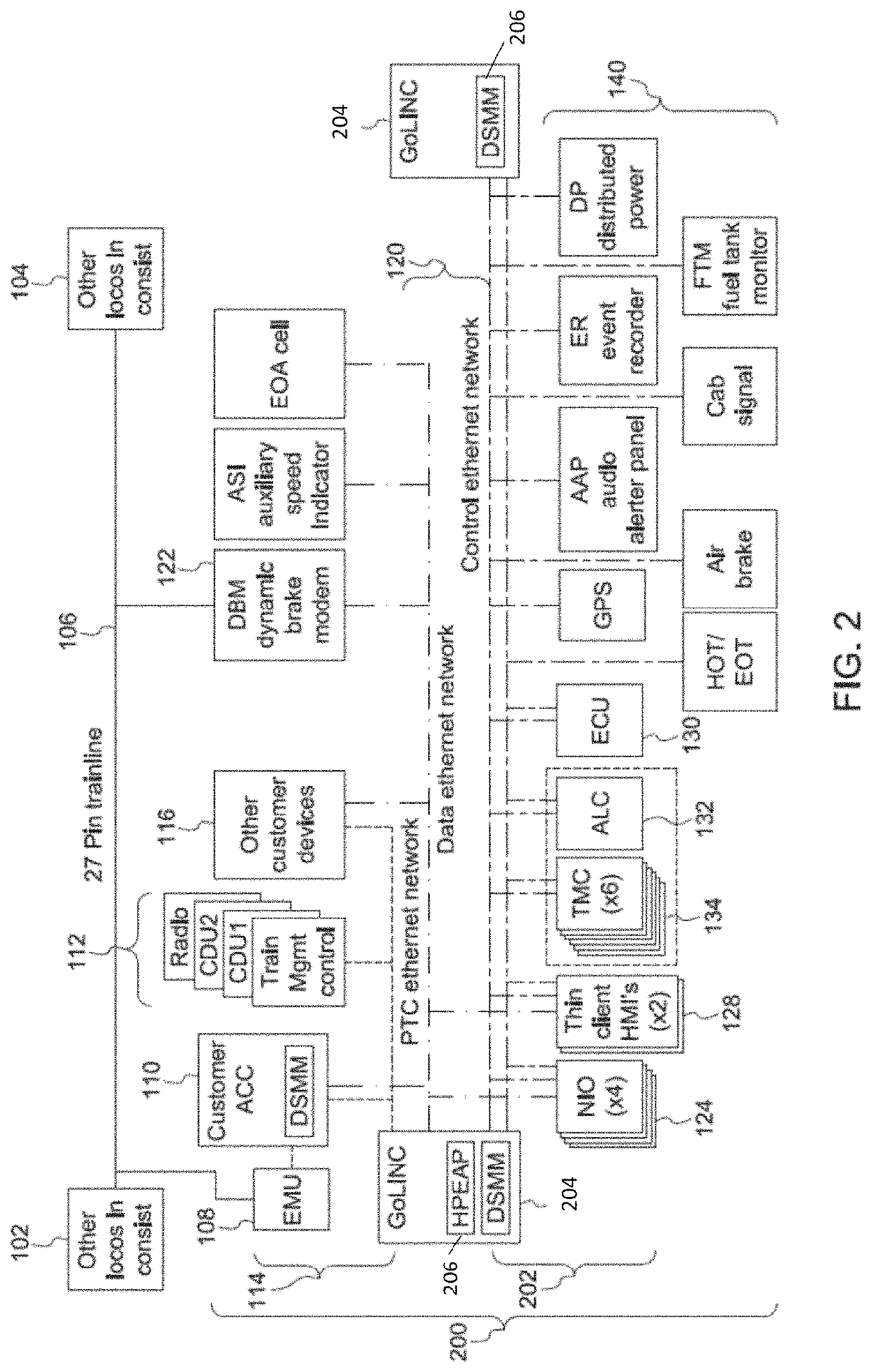

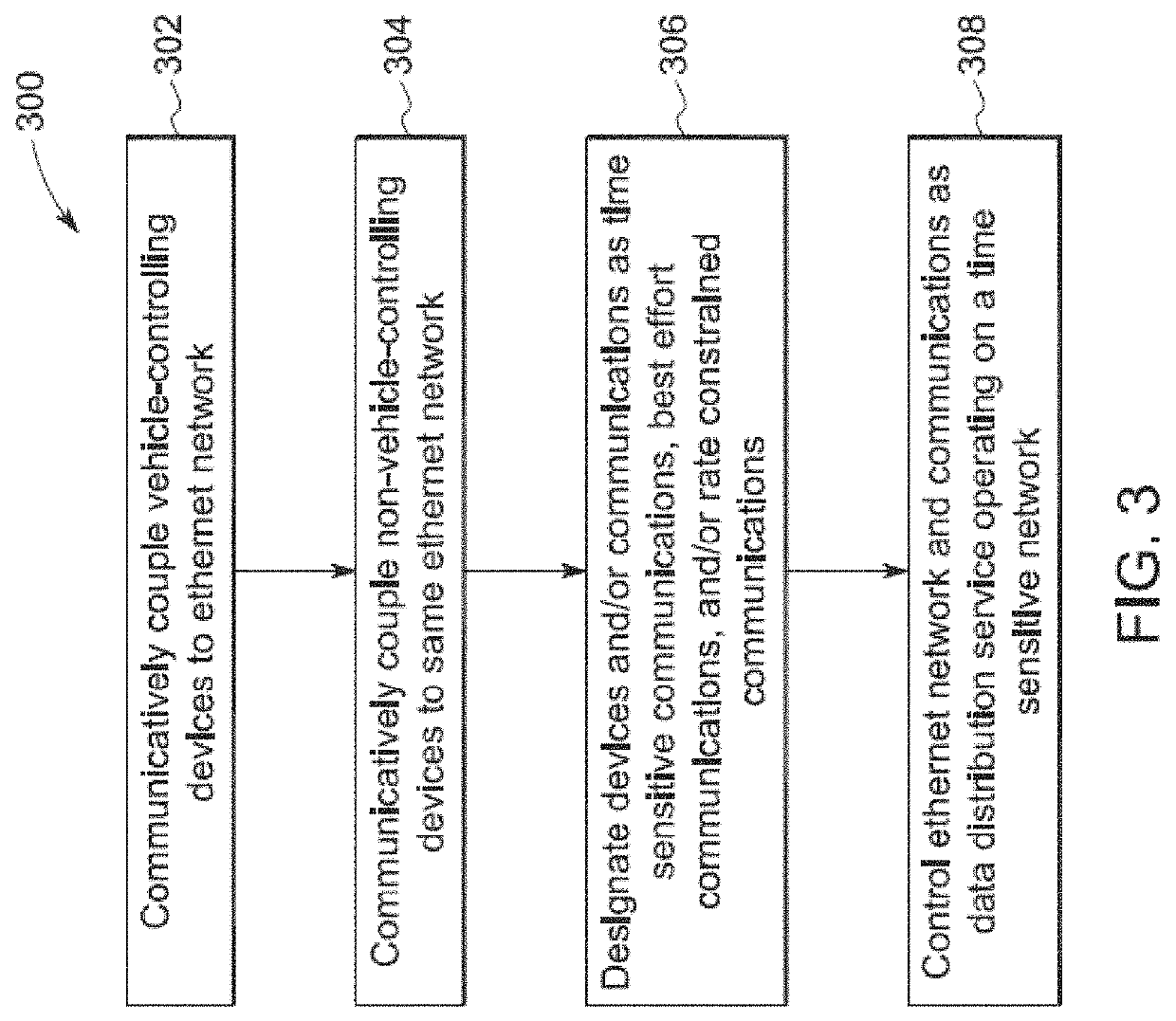

Locomotive control system

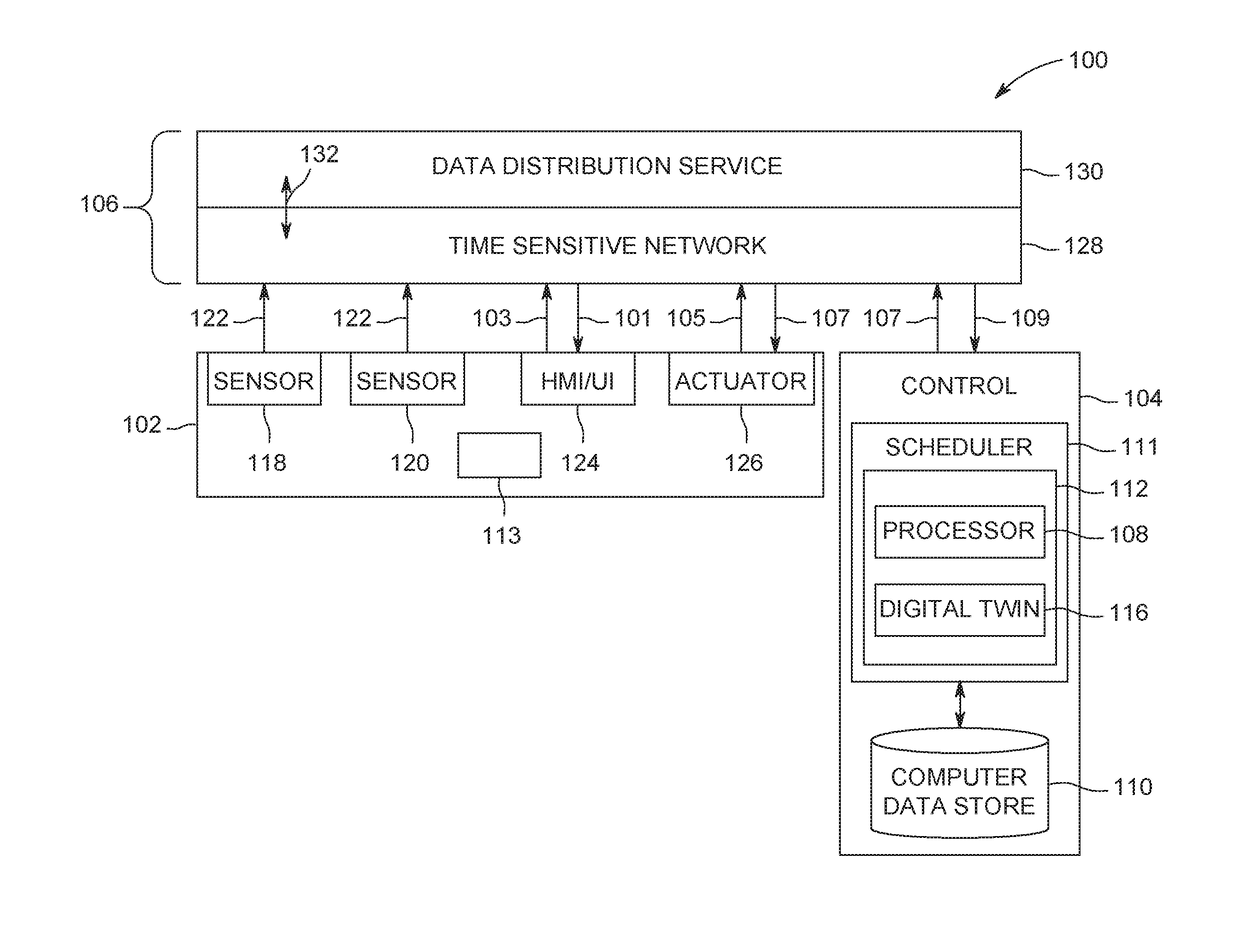

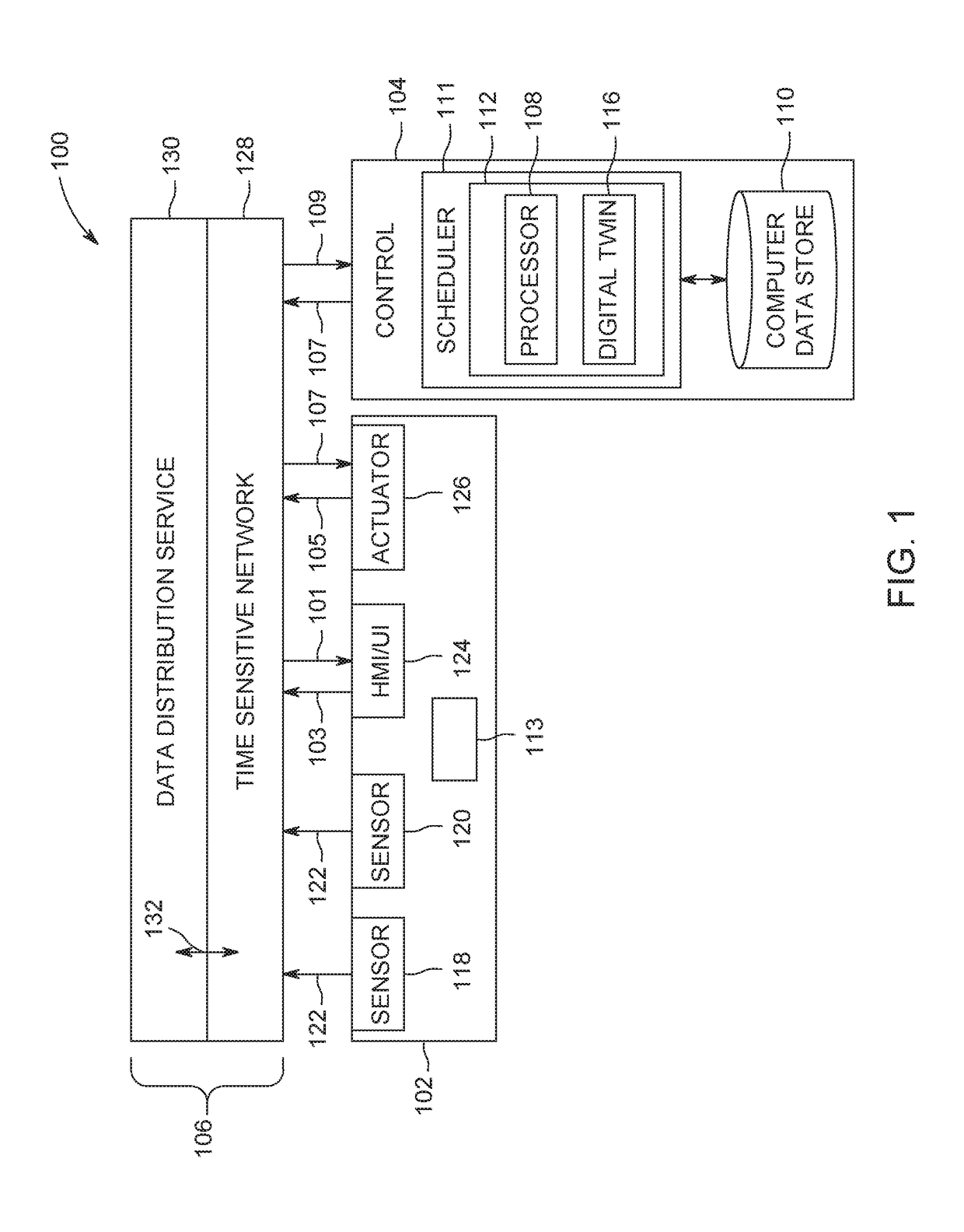

Active · US20180237039A1 · Significant latency · Signalling indicators on vehicle · Store-and-forward switching systems · Control communications · Control system

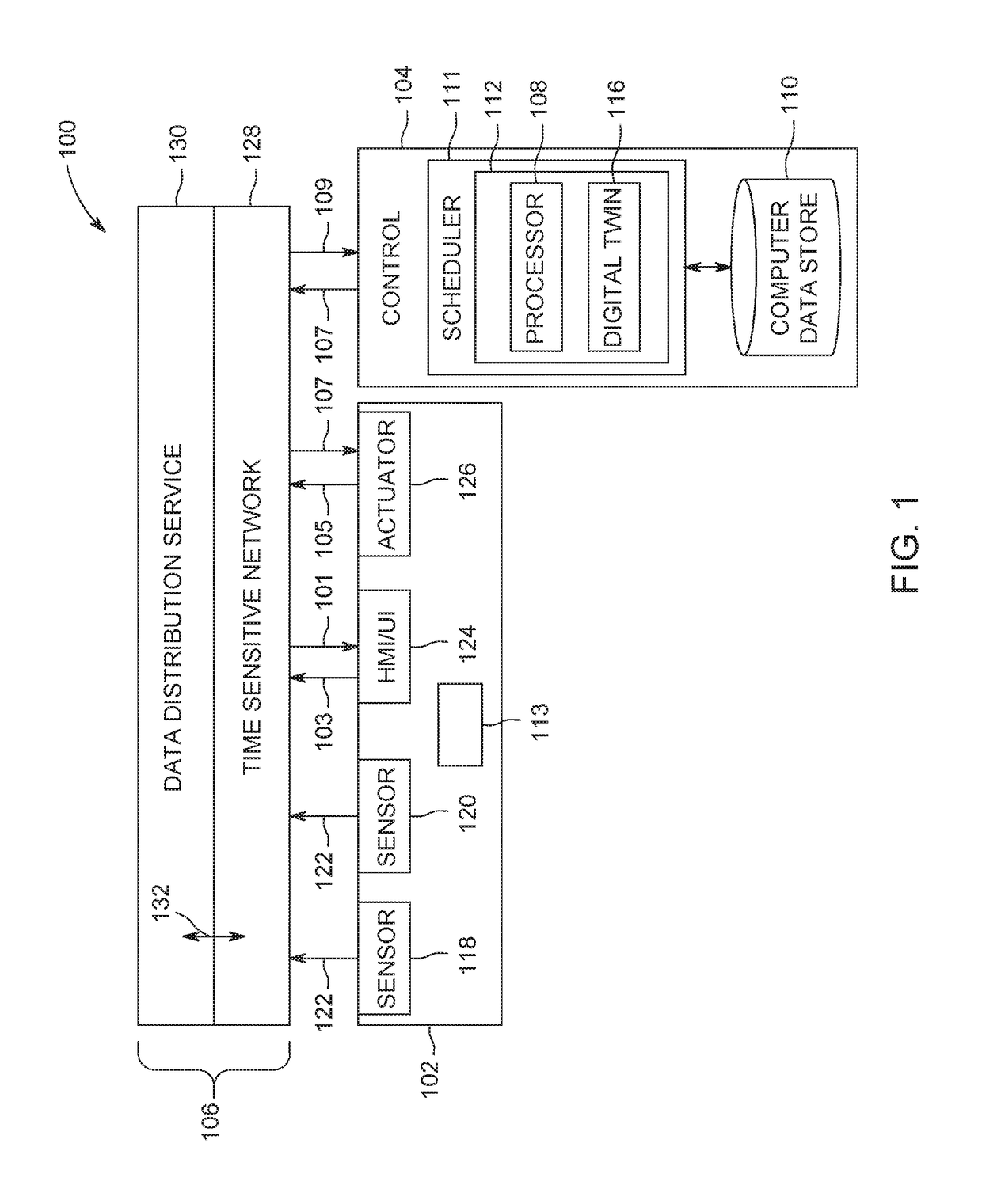

A locomotive control system includes a controller configured to control communication between or among plural locomotive devices that control movement of a locomotive via a network that communicatively couples the vehicle devices. The controller also is configured to control the communication using a data distribution service (DDS) and with the network operating as a time sensitive network (TSN). The controller is configured to direct a first set of the locomotive devices to communicate using time sensitive communications, a different, second set of the locomotive devices to communicate using best effort communications, and a different, third set of the locomotive devices to communicate using rate constrained communications.

Owner:GE GLOBAL SOURCING LLC

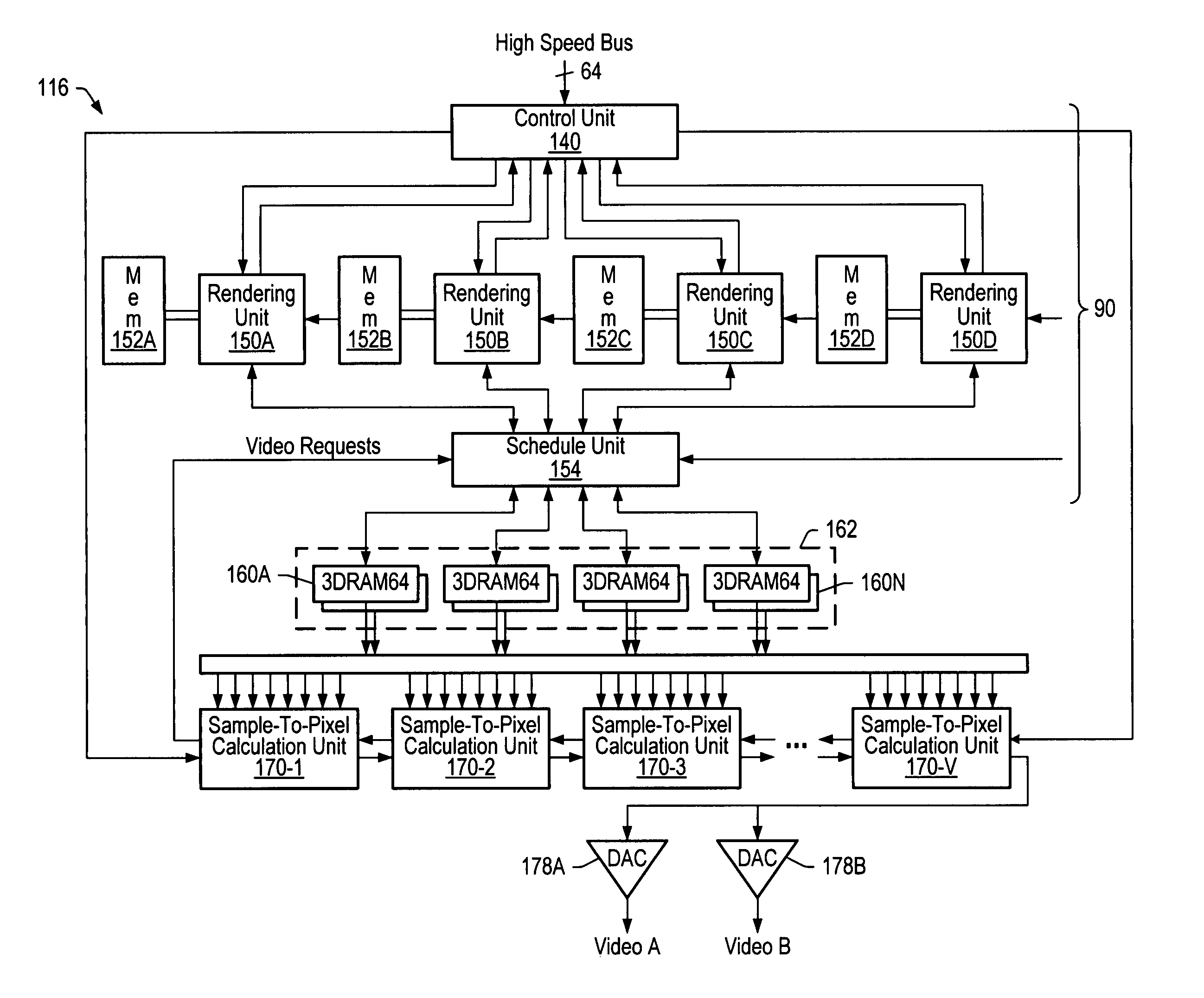

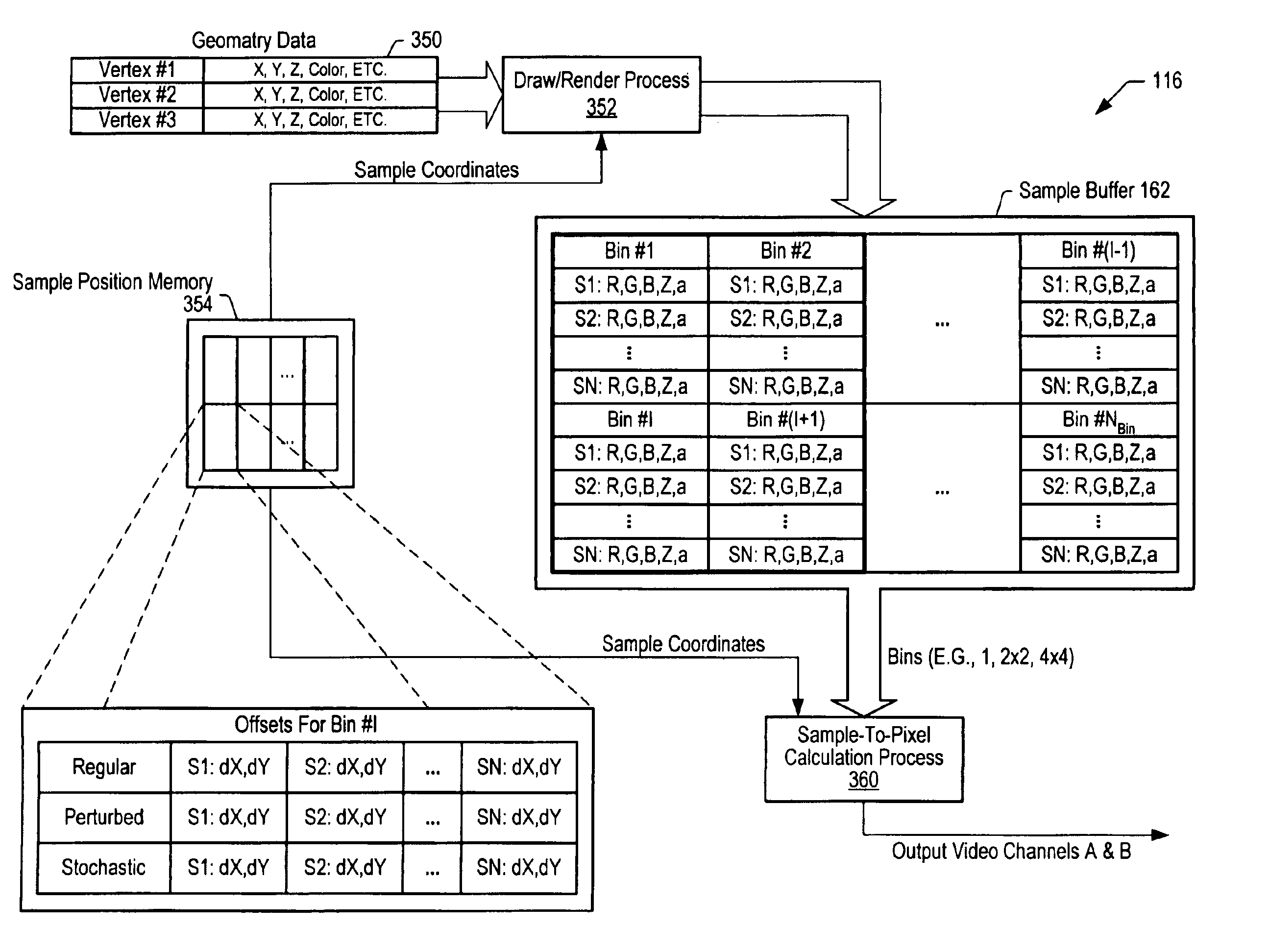

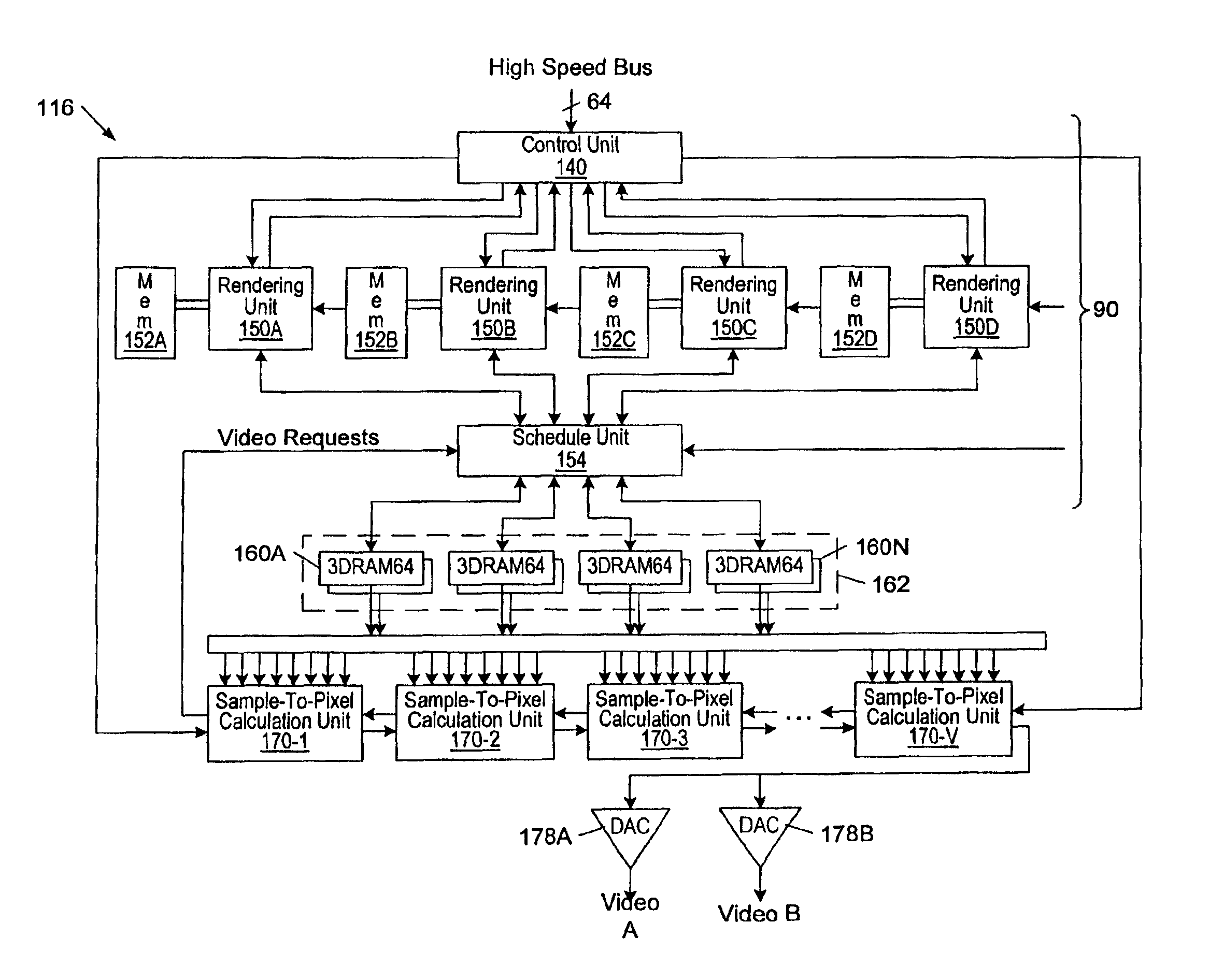

Graphics system configured to perform distortion correction

Inactive · US6940529B2 · Large latency of a frame buffer may be avoided · Significant latency · Image enhancement · Details involving antialiasing · Graphics · Graphic system

A graphics system comprises pixel calculation units and a sample buffer which stores a two-dimensional field of samples. Each pixel calculation unit selects positions in the two-dimensional field at which pixel values (e.g. red, green, blue) are computed. The pixel computation positions are selected to compensate for image distortions introduced by a display device and/or display surface. Non-uniformities in a viewer's perceived intensity distribution from a display surface (e.g. hot spots, overlap brightness) are corrected by appropriately scaling pixel values prior to transmission to display devices. Two or more sets of pixel calculation units driving two or more display devices adjust their respective pixel computation centers to align the edges of two or more displayed images. Physical barriers prevent light spillage at the interface between any two of the display images. Separate pixel computation positions may be used for distinct colors to compensate for color distortions.

Owner:ORACLE INT CORP

Locomotive control system

Inactive · US20190322299A1 · Significant latency · Signalling indicators on vehicle · Store-and-forward switching systems · Control system · Control communications

A locomotive control system includes a controller configured to control communication between or among plural locomotive devices that control movement of a locomotive via a network that communicatively couples the vehicle devices. The controller also is configured to control the communication using a data distribution service (DDS) and with the network operating as a time sensitive network (TSN). The controller is configured to direct a first set of the locomotive devices to communicate using time sensitive communications, a different, second set of the locomotive devices to communicate using best effort communications, and a different, third set of the locomotive devices to communicate using rate constrained communications.

Owner:GE GLOBAL SOURCING LLC

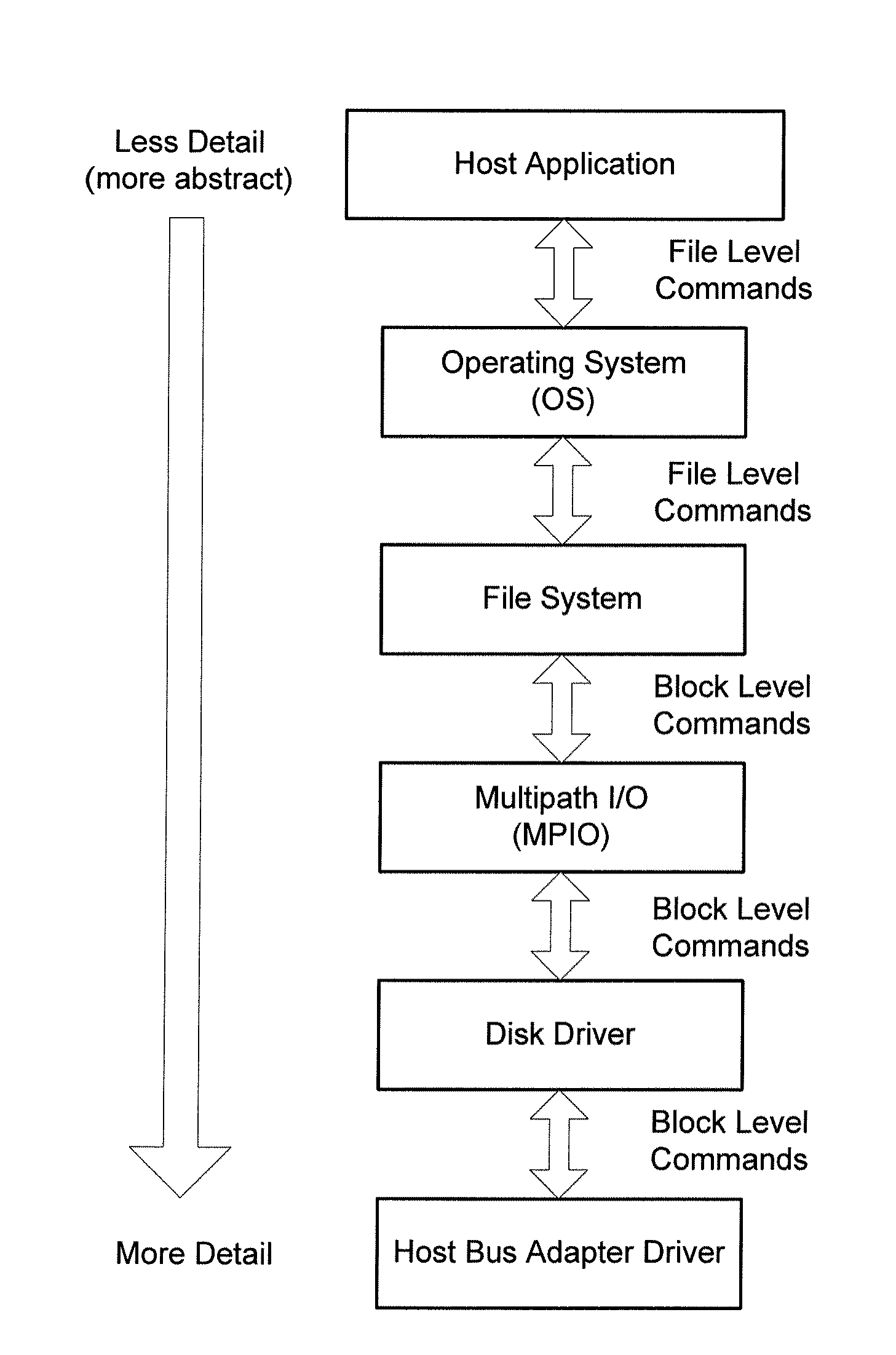

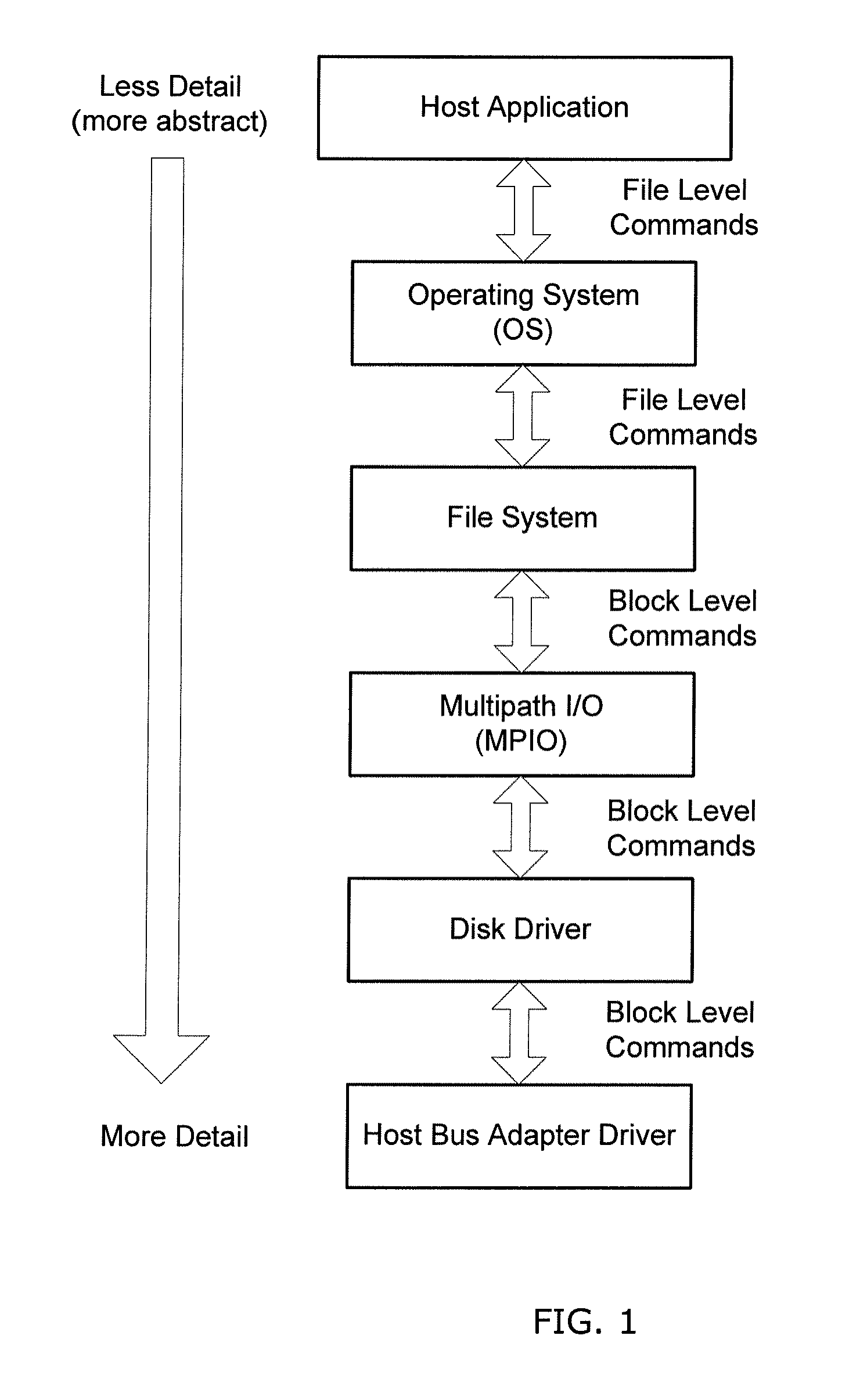

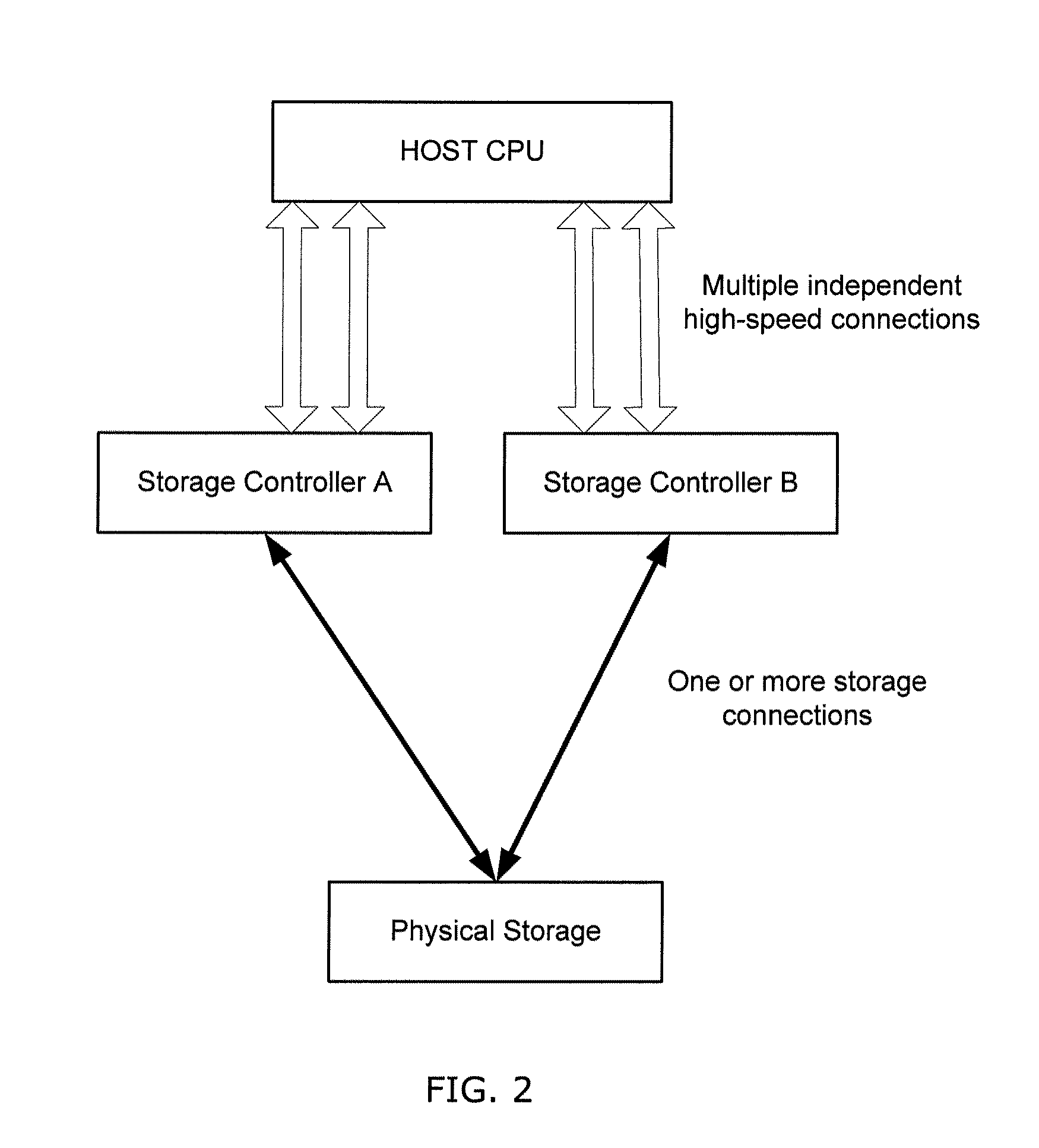

System and method for enhanced load balancing in a storage system

Inactive · US20100070656A1 · Reduced transit time · Improve throughput · Input/output processes for data processing · File system · Hybrid storage system

In association with a storage system, dividing or splitting file system I/O commands, or generating I/O subcommands, in a multi-connection environment. In one aspect, a host device is coupled to disk storage by a plurality of high speed connections, and a host application issues an I/O command which is divided or split into multiple subcommands, based on attributes of data on the target storage, a weighted path algorithm and/or target, connection or other characteristics. Another aspect comprises a method for generating a queuing policy and/or manipulating queuing policy attributes of I/O subcommands based on characteristics of the initial I/O command or target storage. I/O subcommands may be sent on specific connections to optimize available target bandwidth. In other aspects, responses to I/O subcommands are aggregated and passed to the host application as a single I/O command response.

Owner:ATTO TECHNOLOGY
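
The splitting and aggregation described in the abstract above can be illustrated with a minimal sketch. It is not the patented method: the proportional-share rule, the `SubCommand` type, and the function names `split_io` and `aggregate` are all assumptions chosen for illustration, and the abstract's data-attribute and queuing-policy inputs are left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SubCommand:
    connection: str  # hypothetical connection label
    offset: int      # starting byte offset on the target
    length: int      # number of bytes covered by this subcommand


def split_io(offset, length, weights):
    """Split one I/O range across connections in proportion to their weights.

    `weights` maps a connection name to a positive weight (an assumed
    stand-in for the "weighted path algorithm" mentioned in the abstract).
    """
    items = [(c, w) for c, w in weights.items() if w > 0]
    total = sum(w for _, w in items)
    if length < 0 or total <= 0:
        raise ValueError("need a non-negative length and a positive weight")
    subs, cursor, remaining = [], offset, length
    for i, (conn, w) in enumerate(items):
        # The last connection takes whatever integer rounding left over.
        share = remaining if i == len(items) - 1 else length * w // total
        if share > 0:
            subs.append(SubCommand(conn, cursor, share))
        cursor += share
        remaining -= share
    return subs


def aggregate(responses):
    """Join (offset, data) responses into a single payload in offset order."""
    return b"".join(data for _, data in sorted(responses))
```

For instance, `split_io(0, 100, {"a": 3, "b": 1})` assigns the first 75 bytes to connection `a` and the remaining 25 to `b`; the host application would then see only the output of `aggregate`.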

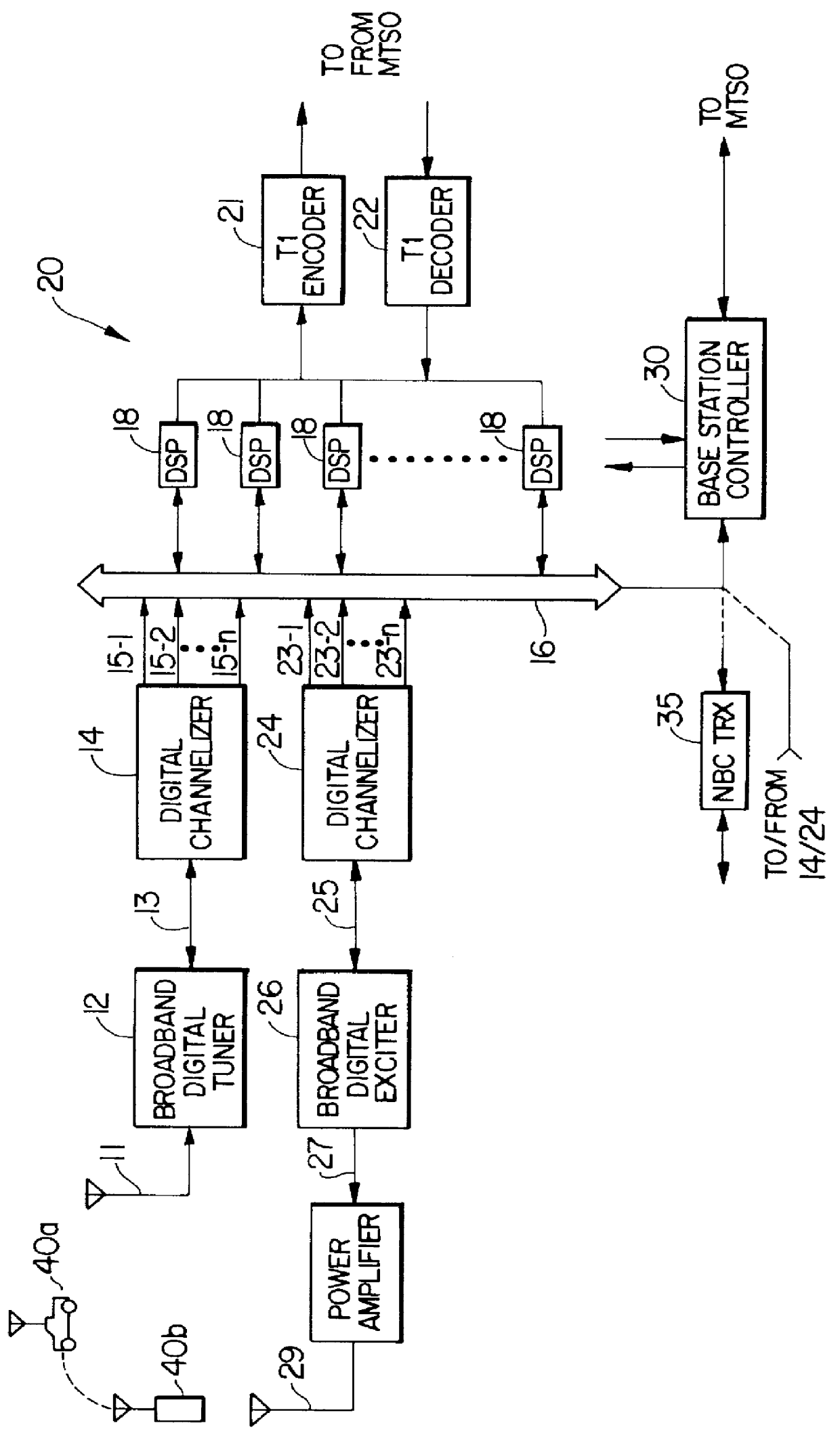

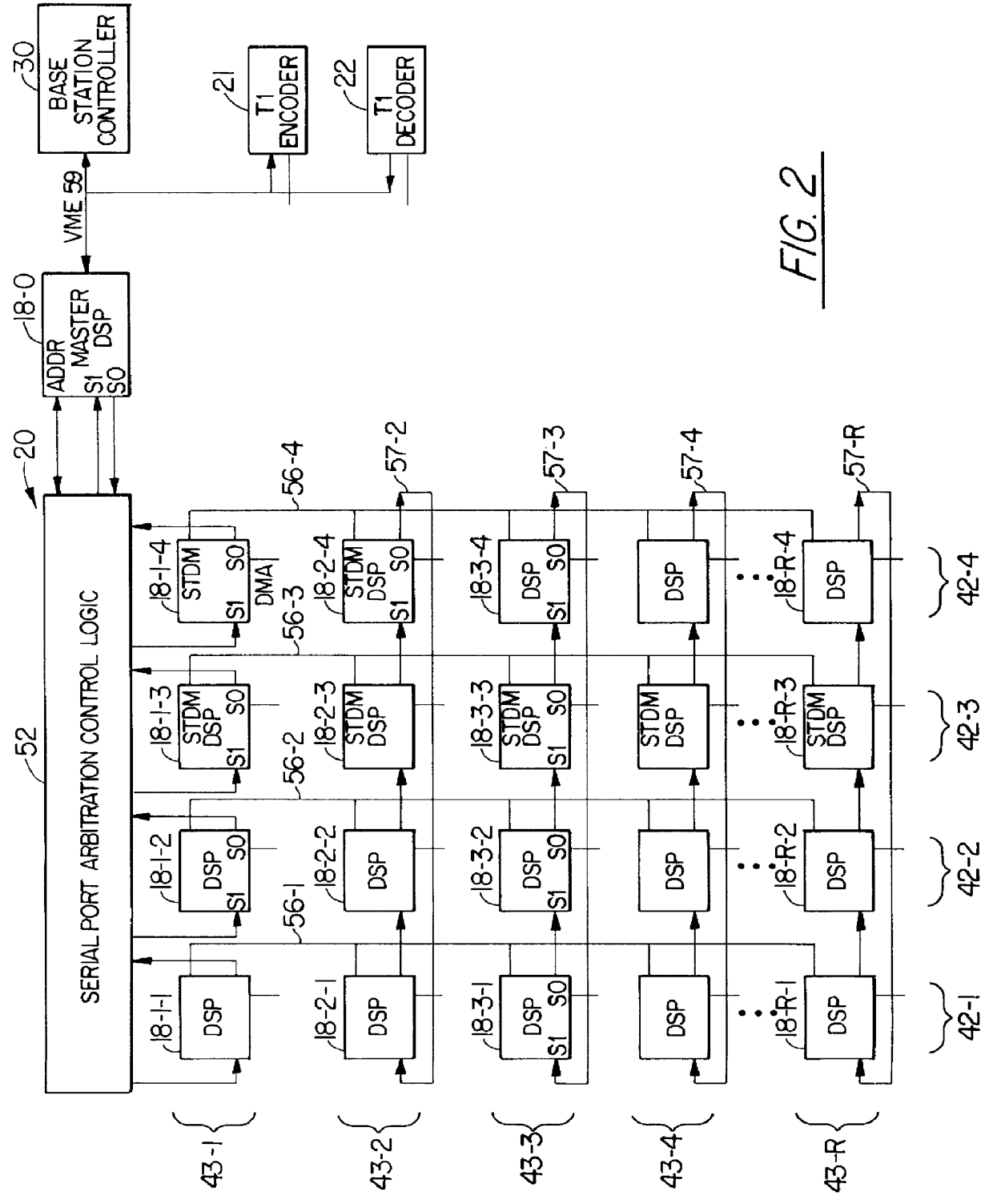

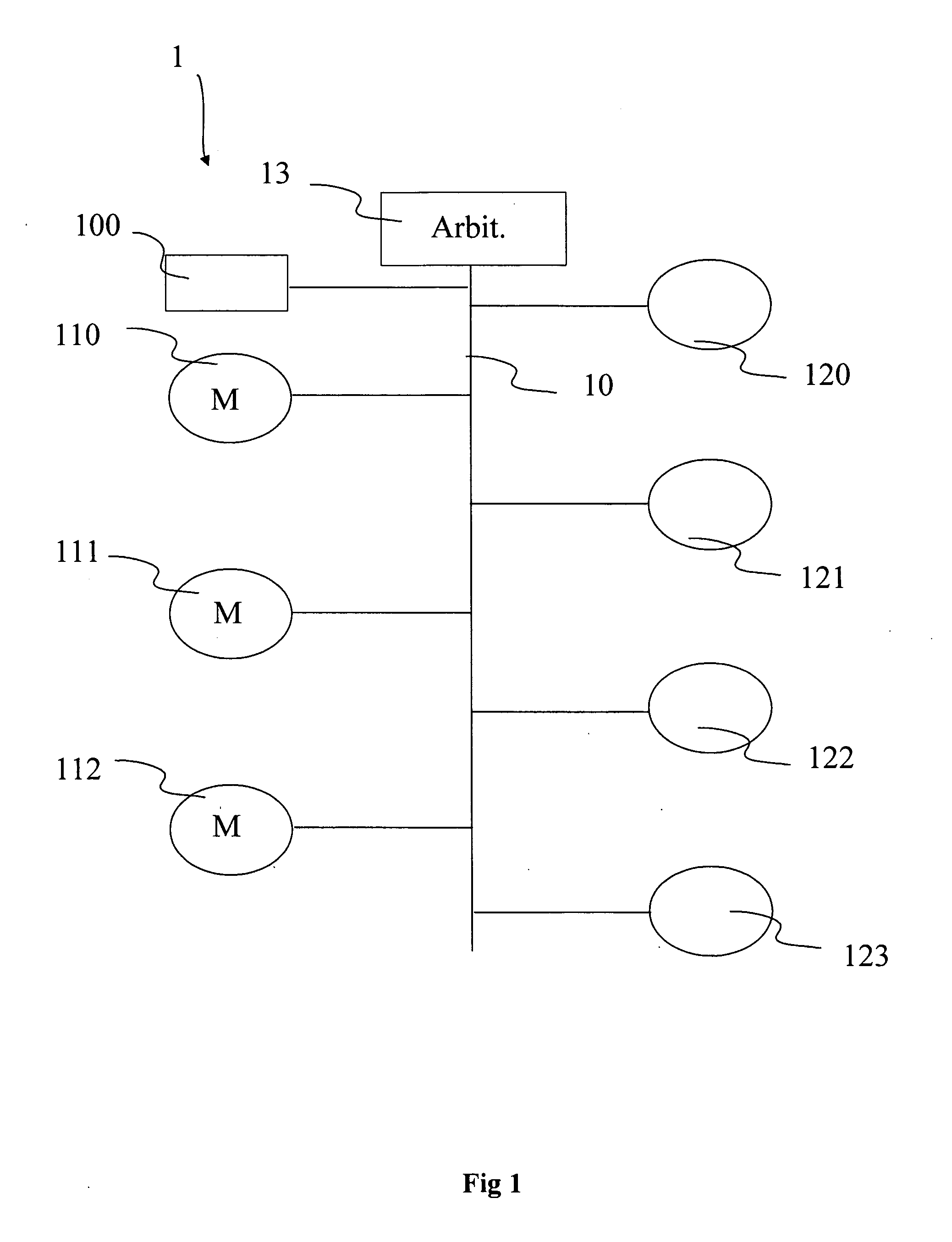

Multichannel broadband transceiver system making use of a distributed control architecture for digital signal processor array

Inactive · US6134229A · Message is thus very limited · Reliable reception · Time-division multiplex · Radio/inductive link selection arrangements · Digital signal processing · Transceiver

A control message communication mechanism for use in a broadband transceiver system that includes multiple digital signal processors for performing real time signal processing tasks. One of the digital signal processors (DSPs) is designated as a master DSP. The remainder of the DSPs are arranged in rows and columns to provide a two-dimensional array. A pair of bit-serial interfaces on each DSP are connected in a vertical bus and horizontal loop arrangement. The vertical bus arrangement provides a primary mechanism for the master DSP to communicate control messages to the array DSPs. The horizontal loop mechanism provides a secondary way for DSPs to communicate control information with one another, without involving the master DSP, such as may be required to handle a particular call, without interrupting the more time critical primary connectivity mechanism.

Owner:RATEZE REMOTE MGMT LLC

Compensating for the chromatic distortion of displayed images

Inactive · US6924816B2 · Large latency of a frame buffer may be avoided · Significant latency · Image enhancement · Details involving antialiasing · Graphics · Graphic system

Owner:ORACLE INT CORP

Blending the edges of multiple overlapping screen images

Inactive · US7002589B2 · Large latency of a frame buffer may be avoided · Significant latency · Image enhancement · Details involving antialiasing · Graphics · Graphic system

A graphics system comprises pixel calculation units and a sample buffer which stores a two-dimensional field of samples. Each pixel calculation unit selects positions in the two-dimensional field at which pixel values (e.g. red, green, blue) are computed. The pixel computation positions are selected to compensate for image distortions introduced by a display device and/or display surface. Non-uniformities in a viewer's perceived intensity distribution from a display surface (e.g. hot spots, overlap brightness) are corrected by appropriately scaling pixel values prior to transmission to display devices. Two or more sets of pixel calculation units driving two or more display devices adjust their respective pixel computation centers to align the edges of two or more displayed images. Physical barriers prevent light spillage at the interface between any two of the display images. Separate pixel computation positions may be used for distinct colors to compensate for color distortions.

Owner:ORACLE INT CORP

Method for managing traffic in a network based upon ethernet switches, vehicle, communication interface, and corresponding computer program product

Active · US20190199641A1 · Overcomes drawback · Significant latency · Data switching details · Traffic capacity · Communication interface

A method for managing traffic in a network based upon Ethernet switches, which are compliant with the IEEE 802.1Q and TSN communication standards, including the steps of dividing frames received into queues; scheduling transmission of the frames contained in the queues on the basis of the respective priorities of the traffic classes; and, if frames are present belonging to a traffic class in the plurality of traffic classes that has a higher priority than frames belonging to the event-triggered traffic class and when at least one protected time window occurs, transmitting the frames that belong to the traffic class with higher priority and blocking transmission of the frames that belong to the remaining traffic classes; and if the queue dedicated to the event-triggered traffic class is empty, transmitting a frame belonging to one of the remaining traffic classes that have frames with a priority lower than the frames belonging to the event-triggered traffic class.

Owner:UNIVERSITY OF CATANIA +1

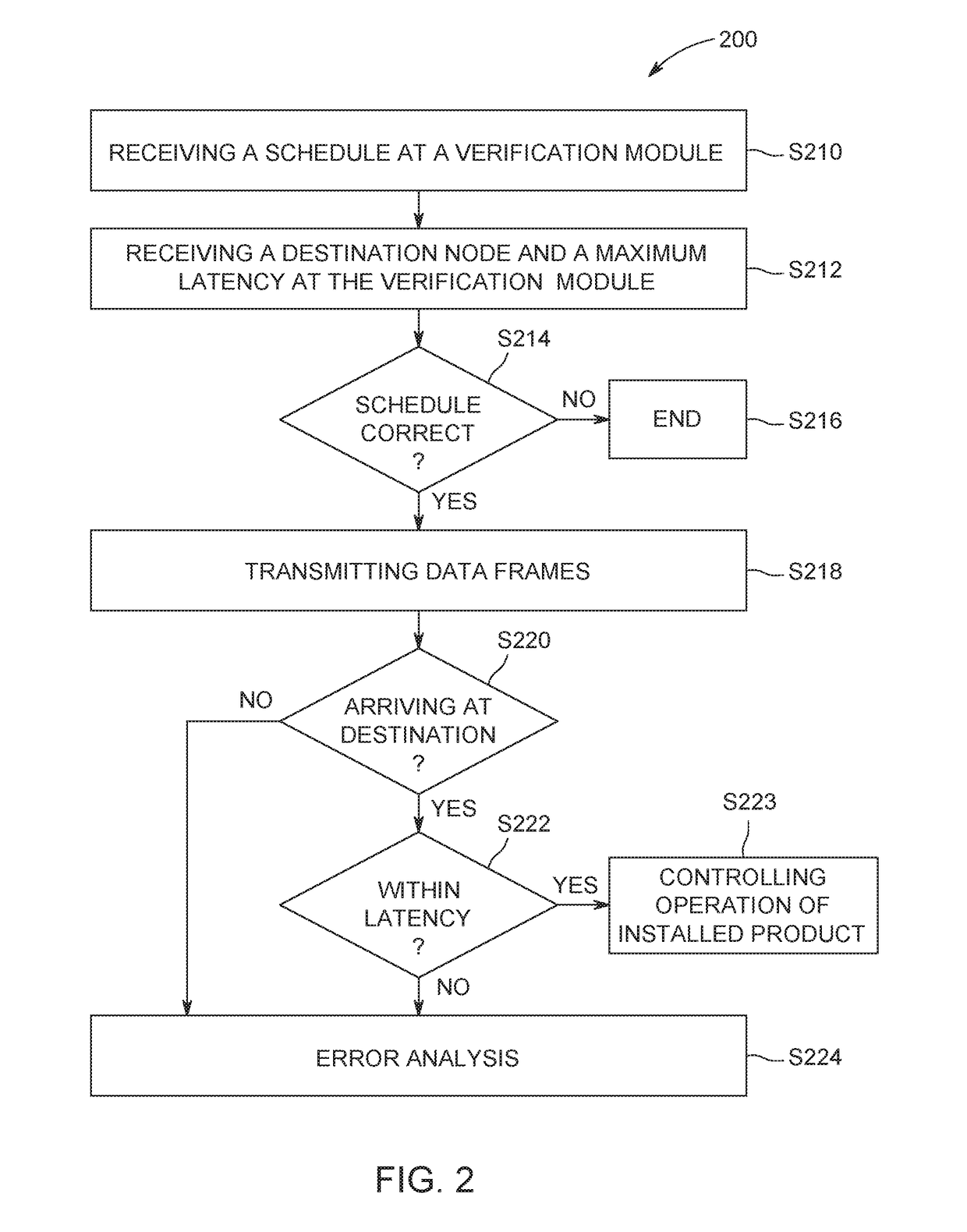

Time sensitive network (TSN) scheduler with verification

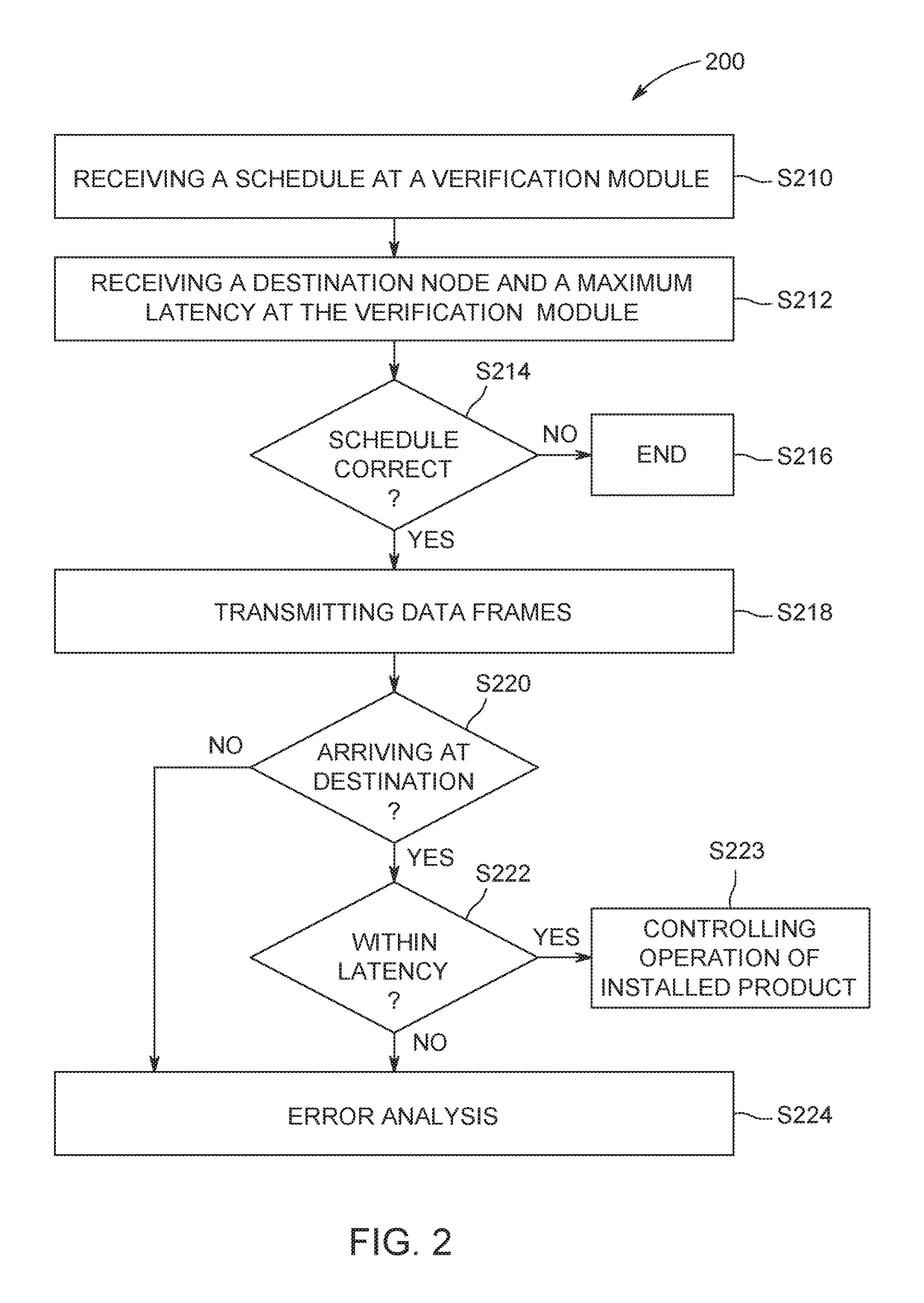

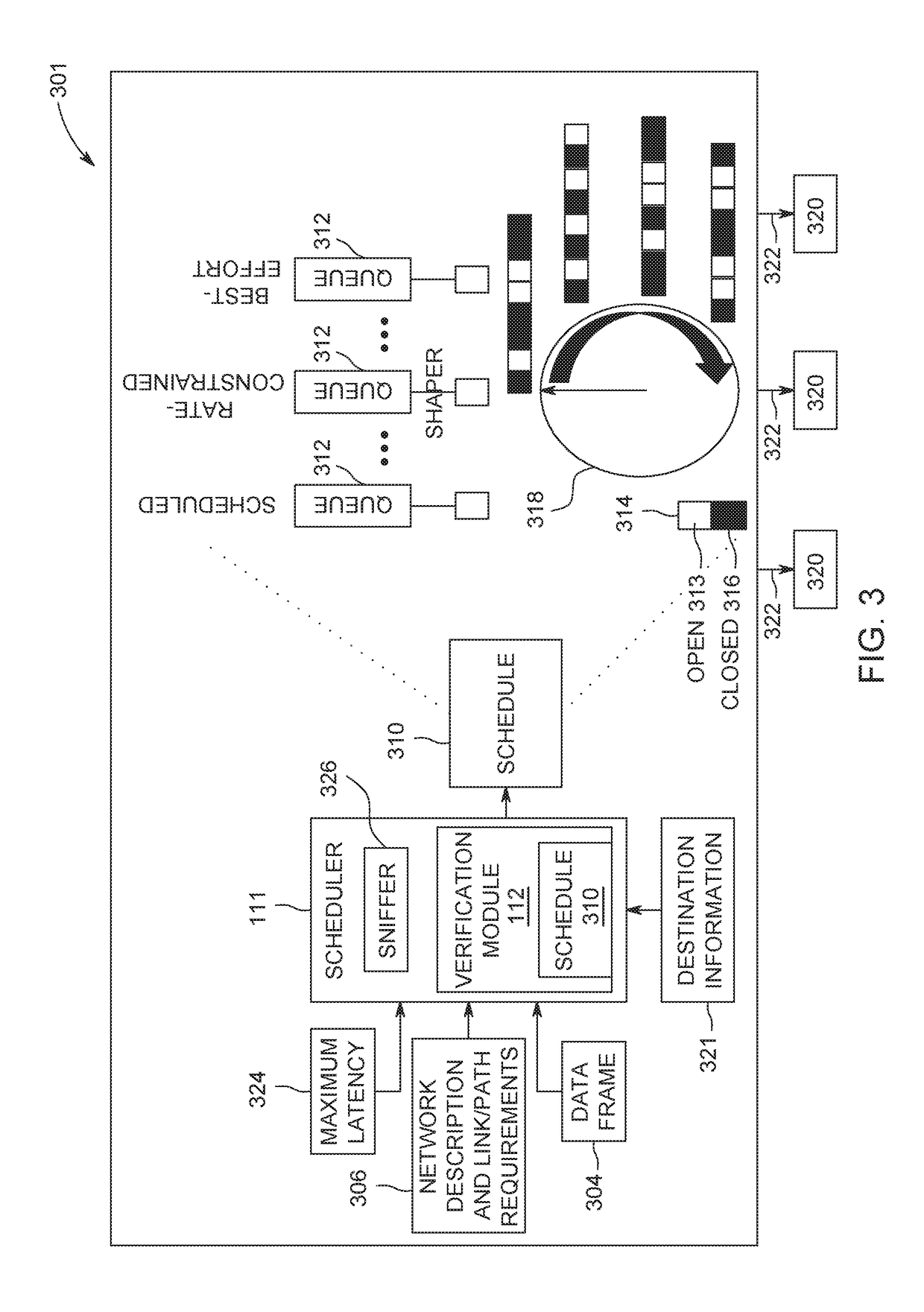

According to some embodiments, system and methods are provided, comprising receiving, at a verification module, a schedule for transmission of one or more data frames to one or more destination nodes via a Time Sensitive Network (TSN); receiving, at the verification module, a destination for each data frame; receiving, at the verification module, a maximum tolerable latency for each data frame; determining, via the verification module, the received schedule is correct; transmitting one or more data frames according to the schedule; accessing, via the verification module, the one or more destination nodes; verifying, via the verification module, the one or more data frames were transmitted to the one or more destination nodes within a maximum tolerable latency, based on accessing the one or more destination nodes; and controlling one or more operations of an installed product based on the transmitted one or more data frames. Numerous other aspects are provided.

Owner:GENERAL ELECTRIC CO
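
The latency check at the heart of the verification step above can be sketched as follows. This covers only the "delivered within a maximum tolerable latency" test; the `FrameRecord` fields, the microsecond timestamps, and the function name `verify_delivery` are hypothetical, and how timestamps are collected from destination nodes is not specified by the abstract.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class FrameRecord:
    frame_id: str
    destination: str
    sent_at_us: int                 # scheduled transmit time, microseconds
    received_at_us: Optional[int]   # None if the destination never saw it
    max_latency_us: int             # maximum tolerable latency for this frame


def verify_delivery(records: List[FrameRecord]) -> List[str]:
    """Return the ids of frames that were lost or exceeded their latency bound."""
    violations = []
    for r in records:
        lost = r.received_at_us is None
        late = not lost and (r.received_at_us - r.sent_at_us) > r.max_latency_us
        if lost or late:
            violations.append(r.frame_id)
    return violations
```

An empty result would indicate that every frame in the sample met its bound; any other result lists the frames a controller might act on.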

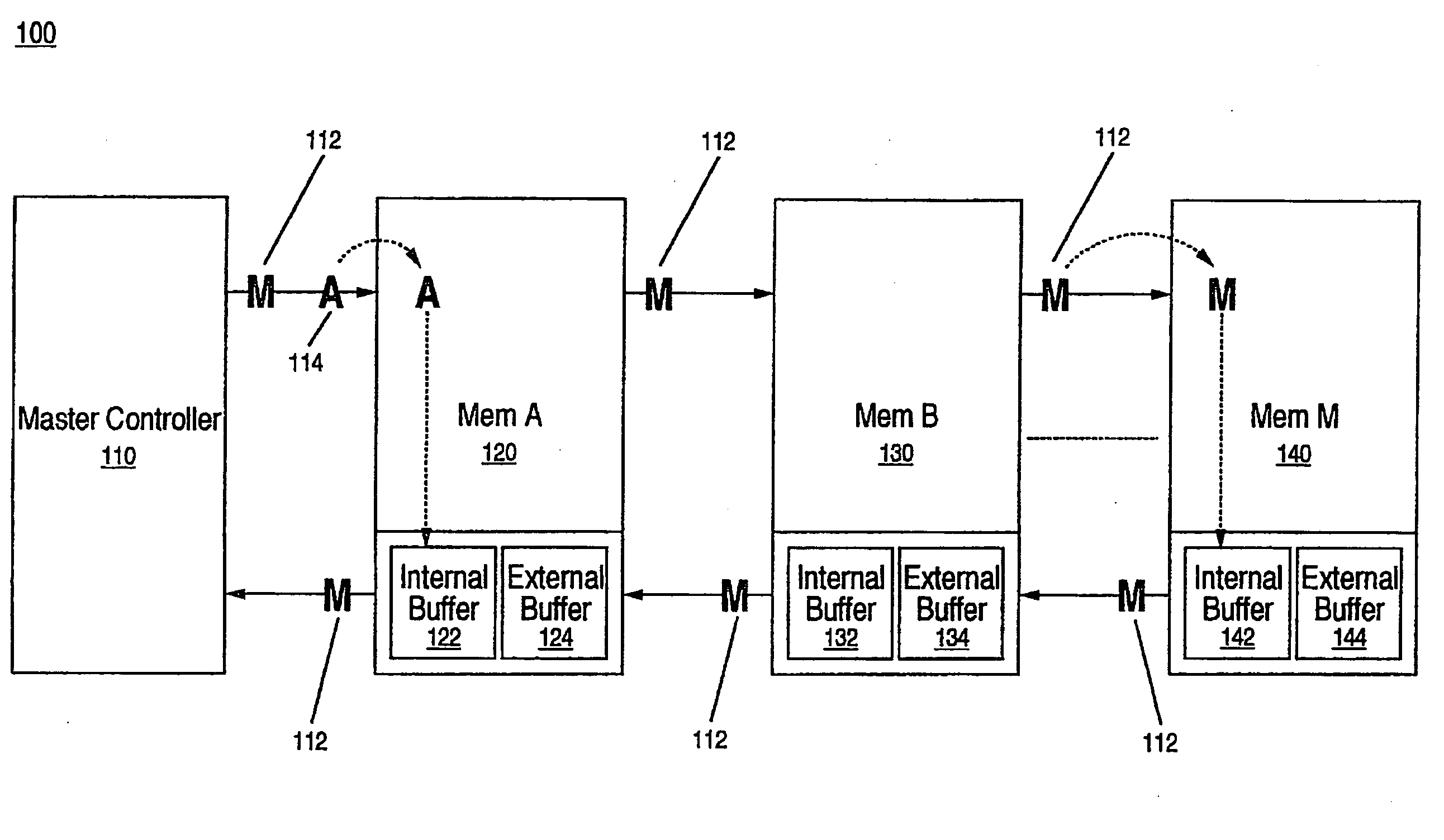

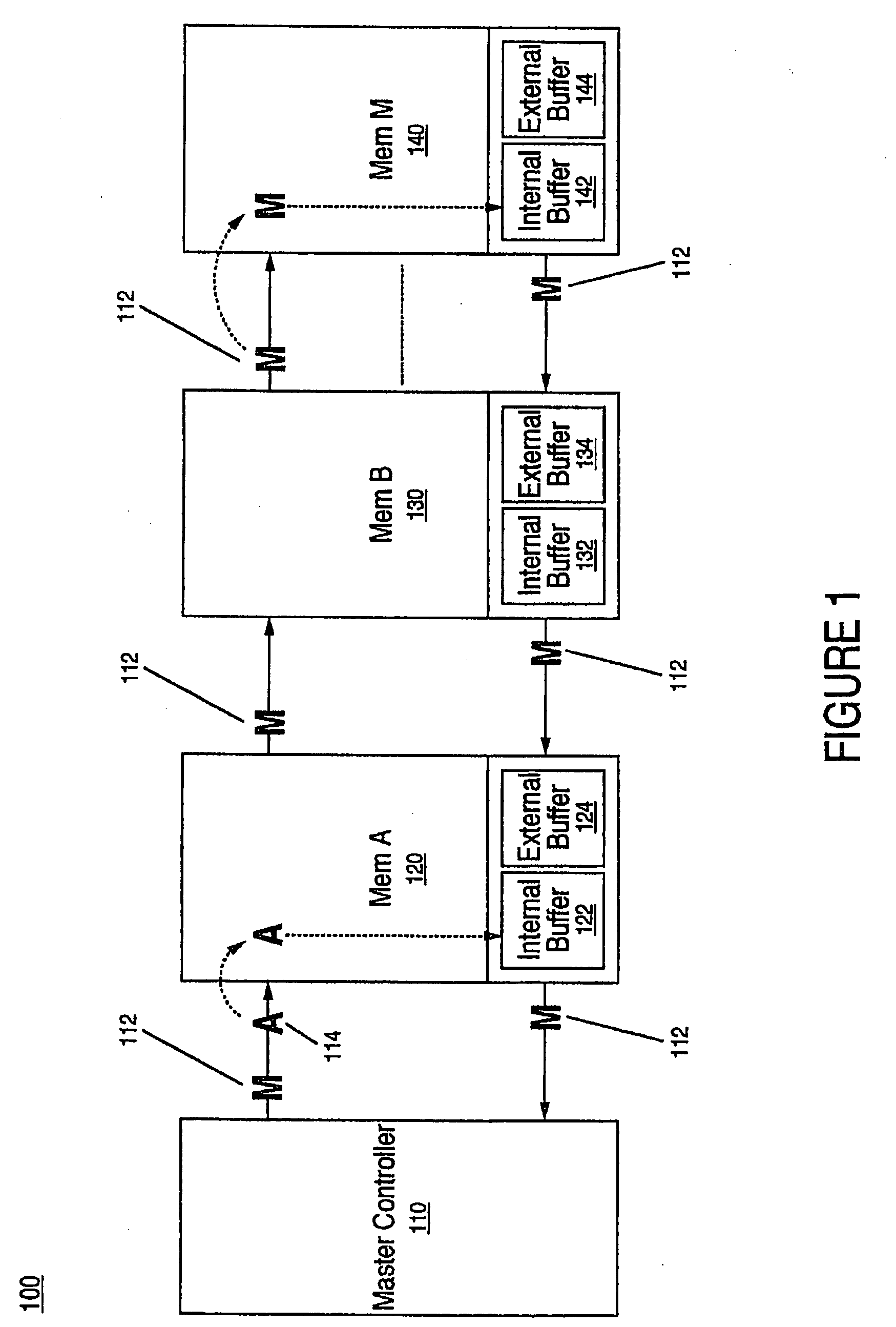

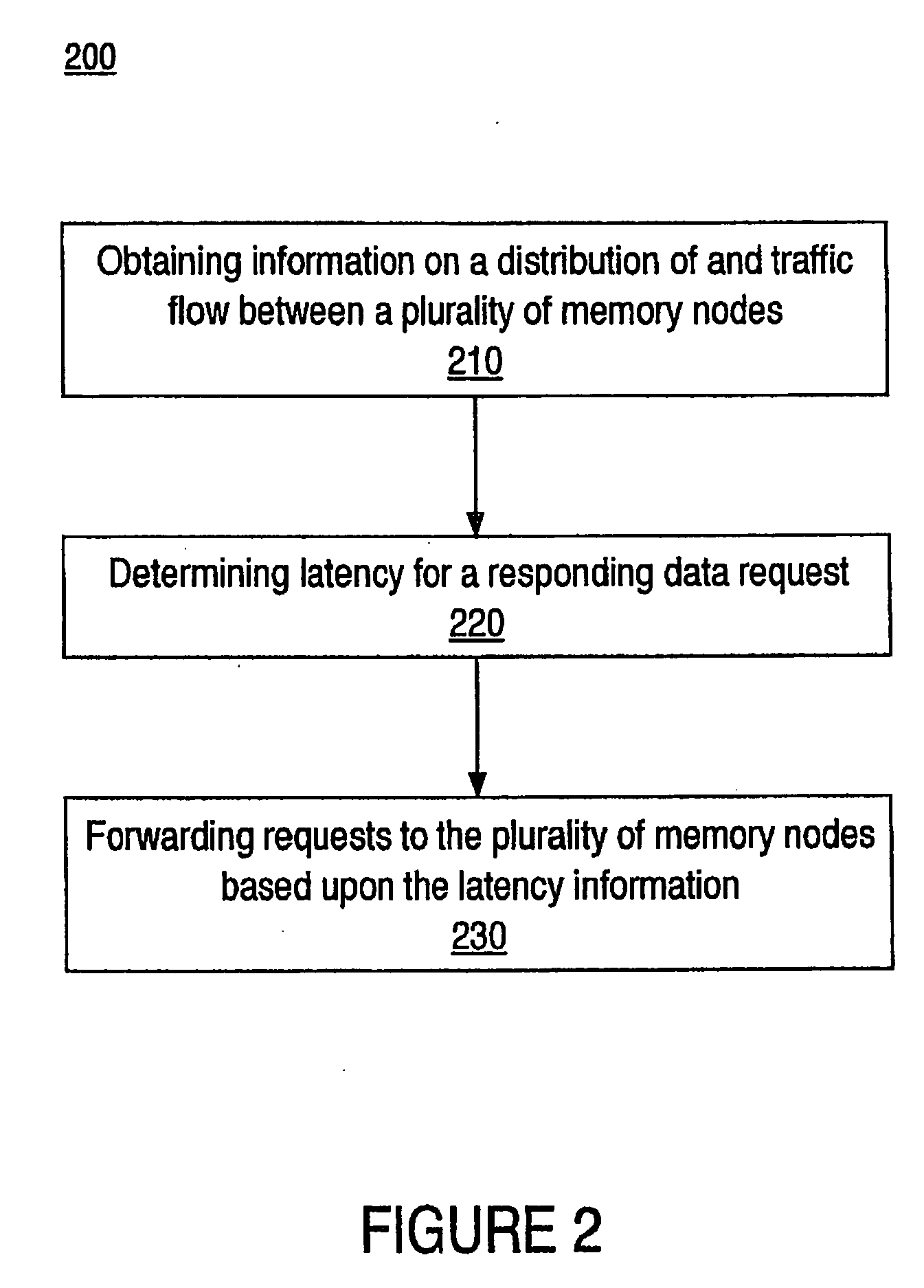

Method for setting parameters and determining latency in a chained device system

Active · US20090138570A1 · Short response time · Significant latency · Multiple digital computer combinations · Electric digital data processing · Maximum latency · Master controller

A storage system and method for setting parameters and determining latency in a chained device system. Storage nodes store information and the storage nodes are organized in a daisy chained network. At least one of the storage nodes includes an upstream communication buffer. Flow of information to the storage nodes is based upon constraints of the communication buffer within the storage nodes. In one embodiment, communication between the master controller and the plurality of storage nodes has a determined maximum latency.

Owner:SPANSION LLC +1

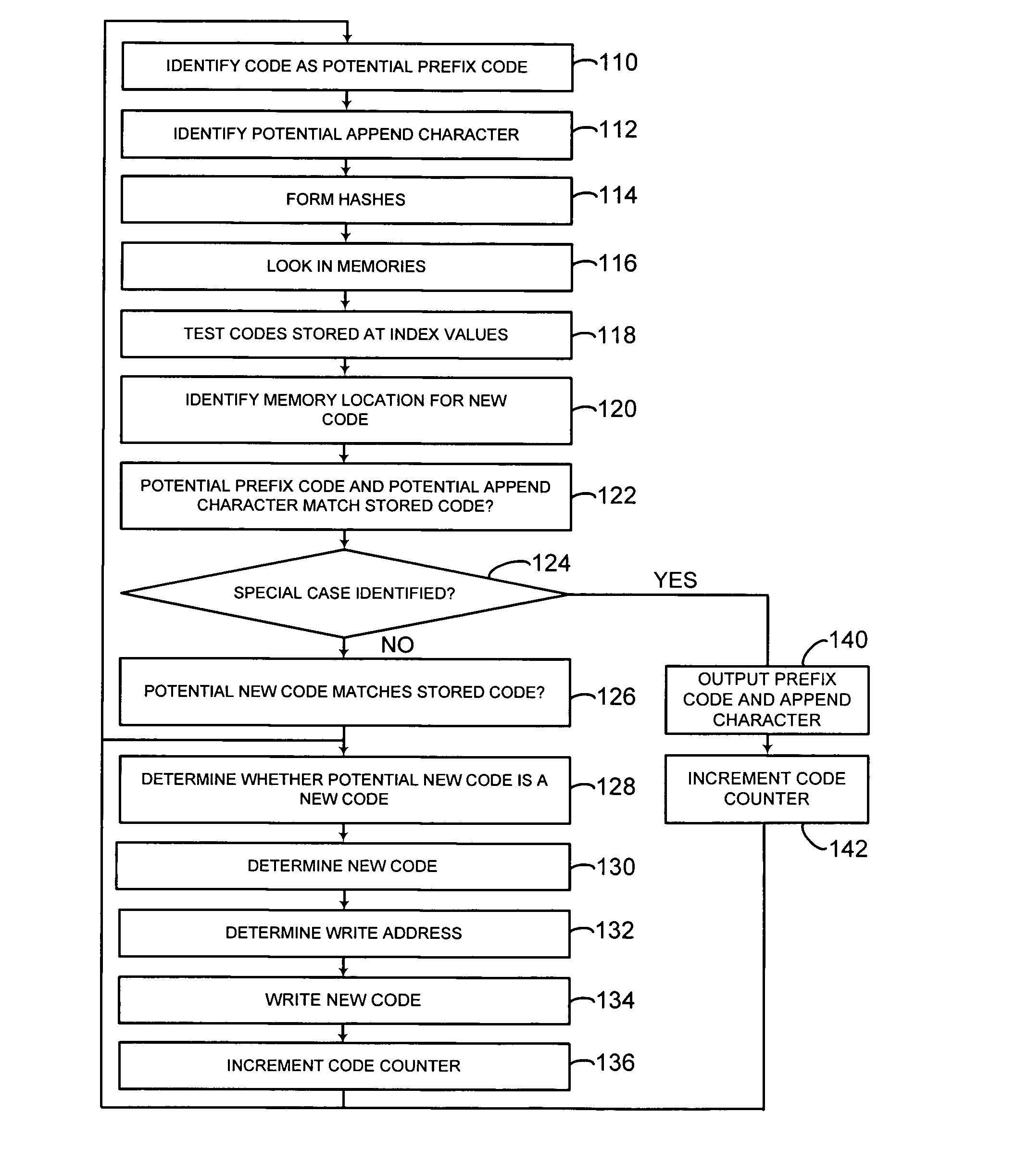

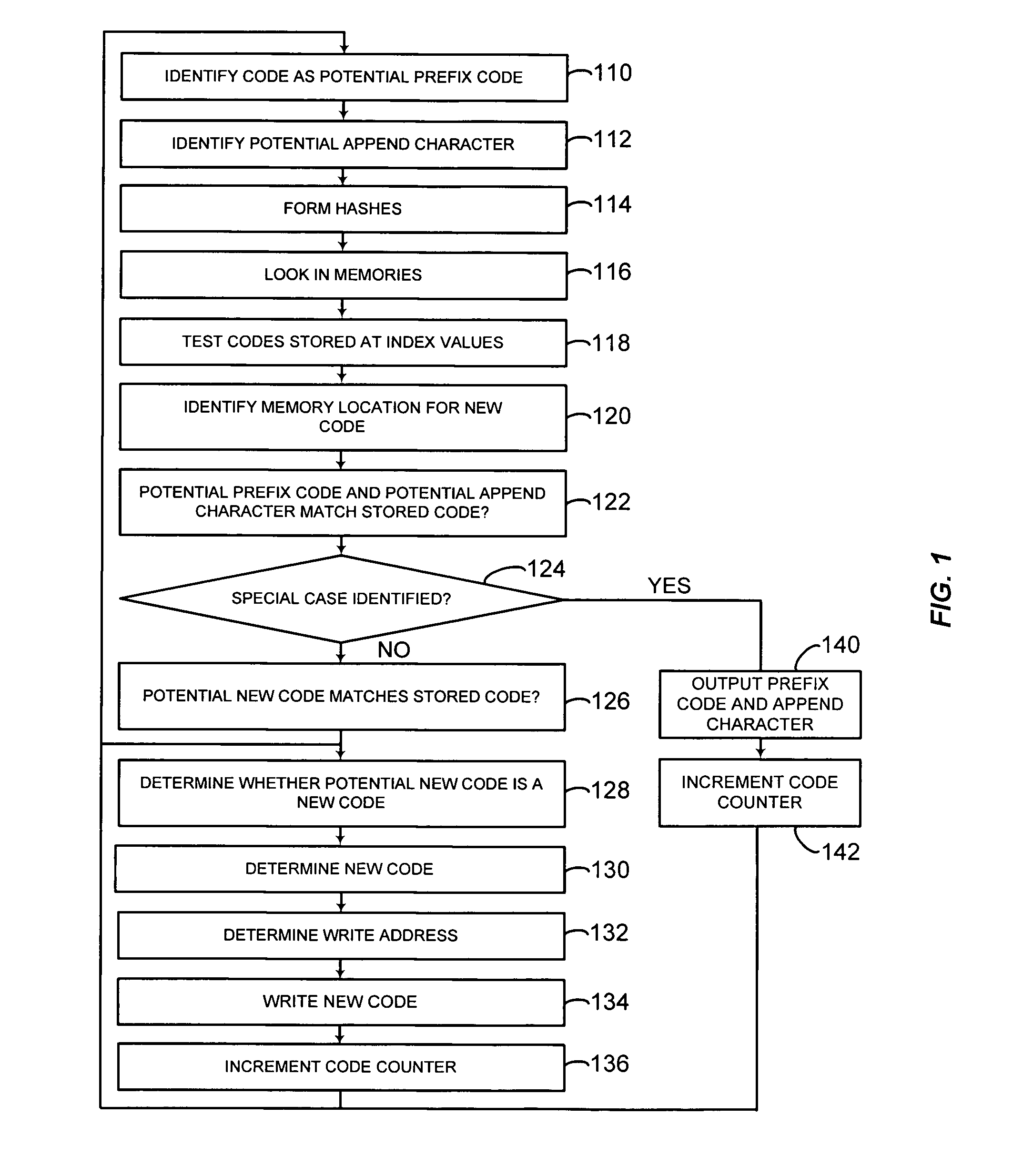

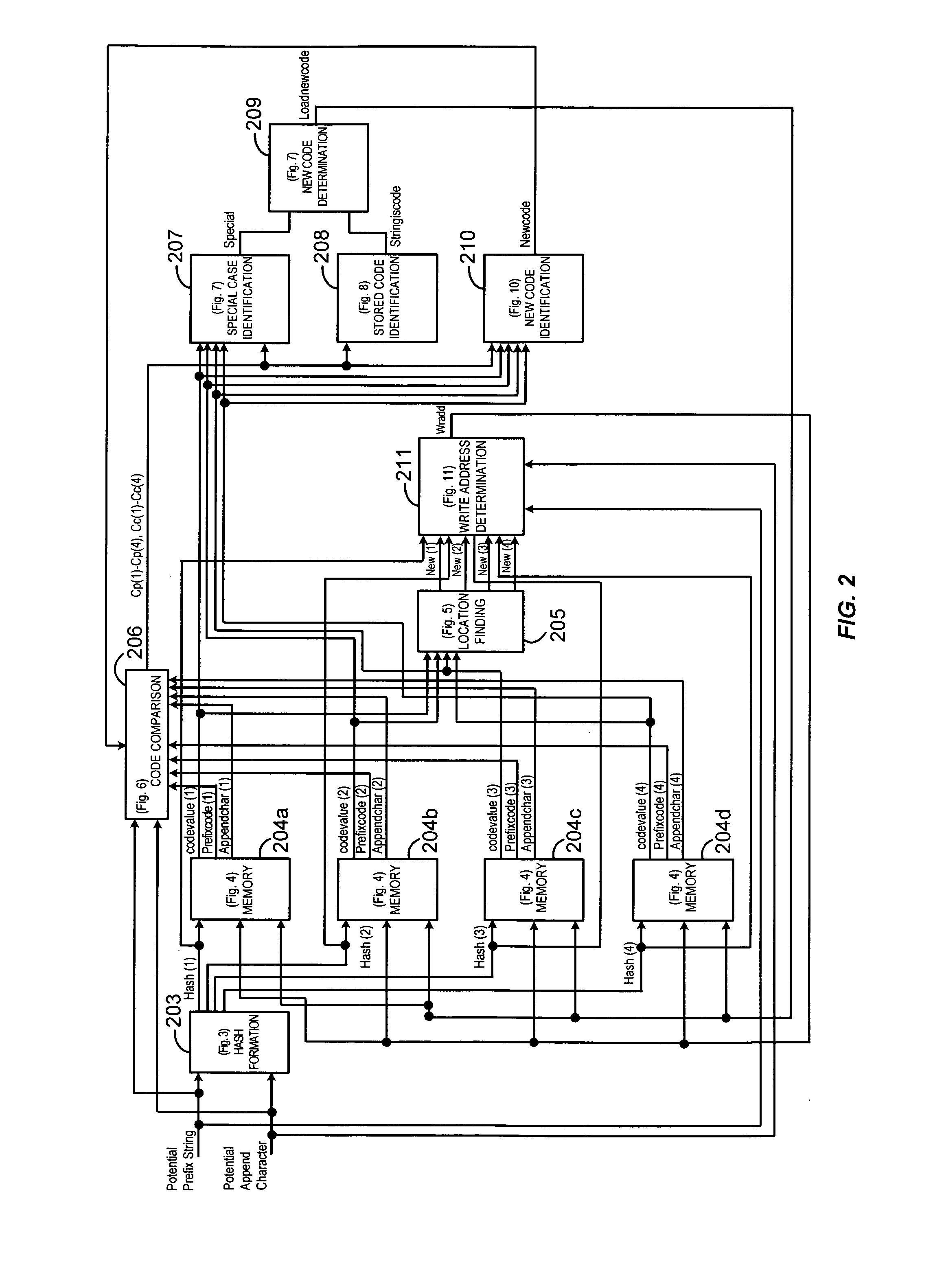

Data compression using dummy codes

Inactive · US7256715B1 · Significant latency · Guaranteed Latency · Code conversion · Computer hardware · Data compression

Received data is compressed according to an algorithm, which is a modification of the conventional LZW algorithm. A limited number of hash values are formed, and a code memory is searched at locations corresponding to the hash values. If all of the locations contain a valid code, but none of them contains a valid code corresponding to a potential new code, a dummy code is created, and a counter, which indicates the number of codes created, is incremented. The algorithm can be implemented in parallel in hardware.

Owner:ALTERA CORP

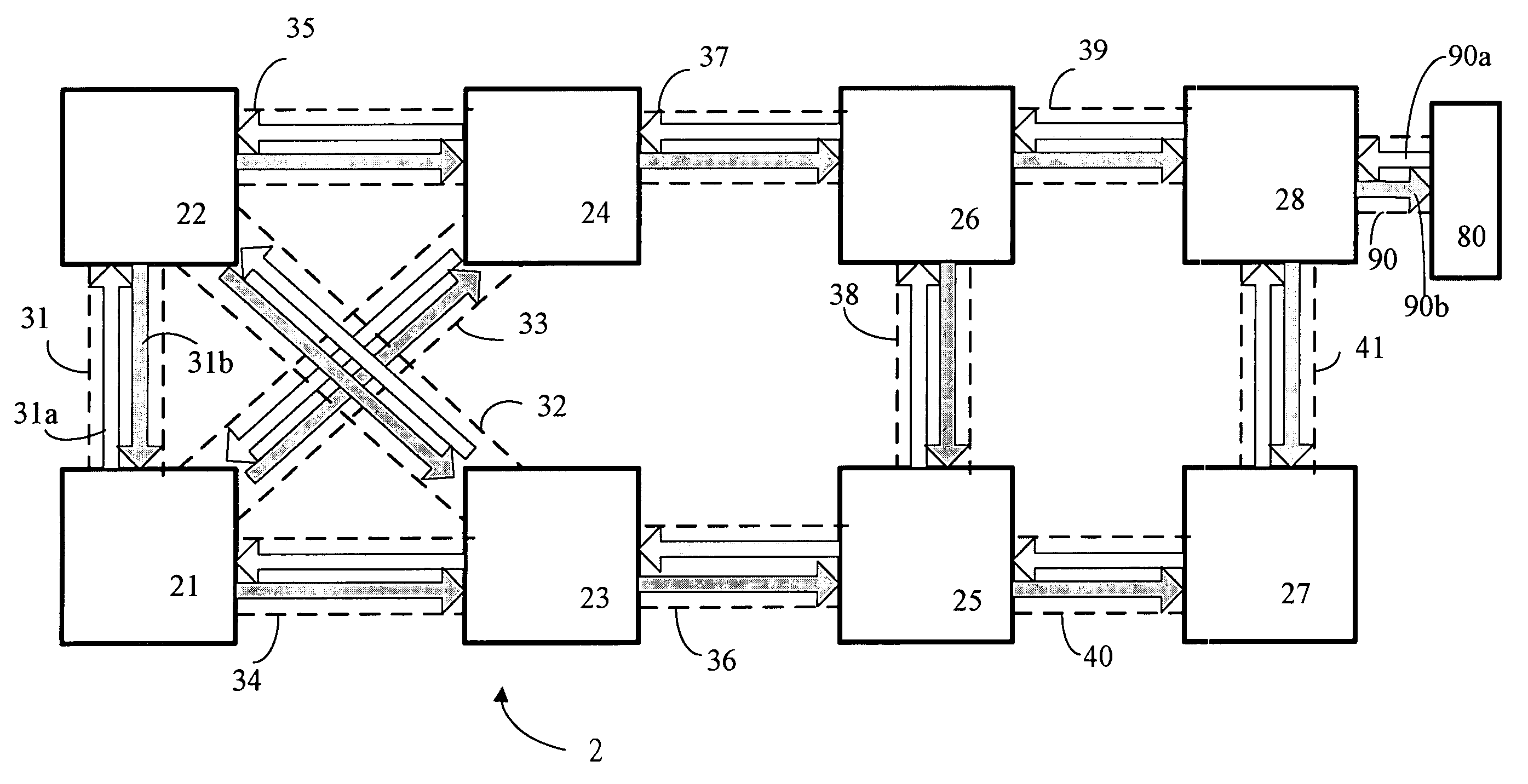

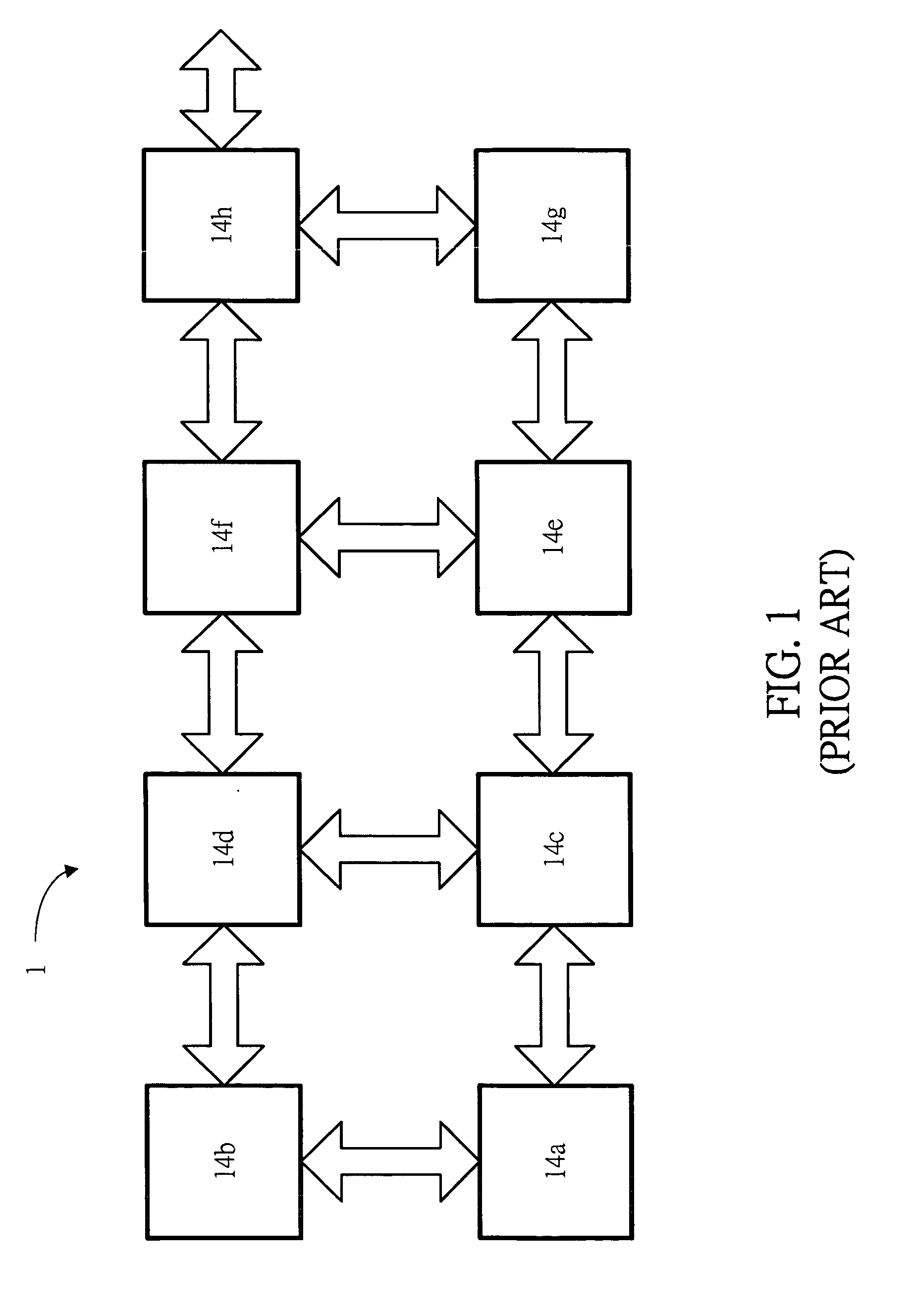

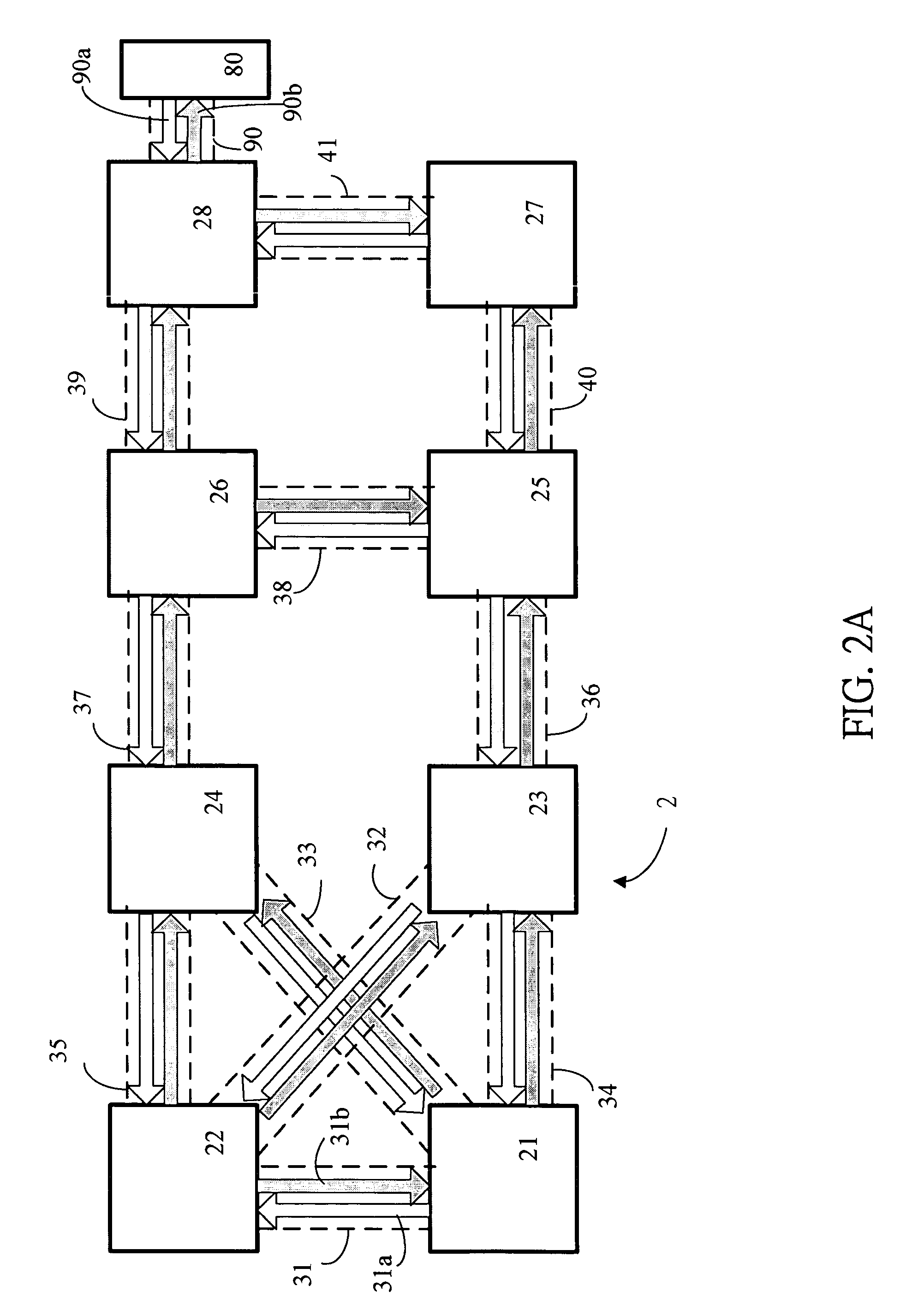

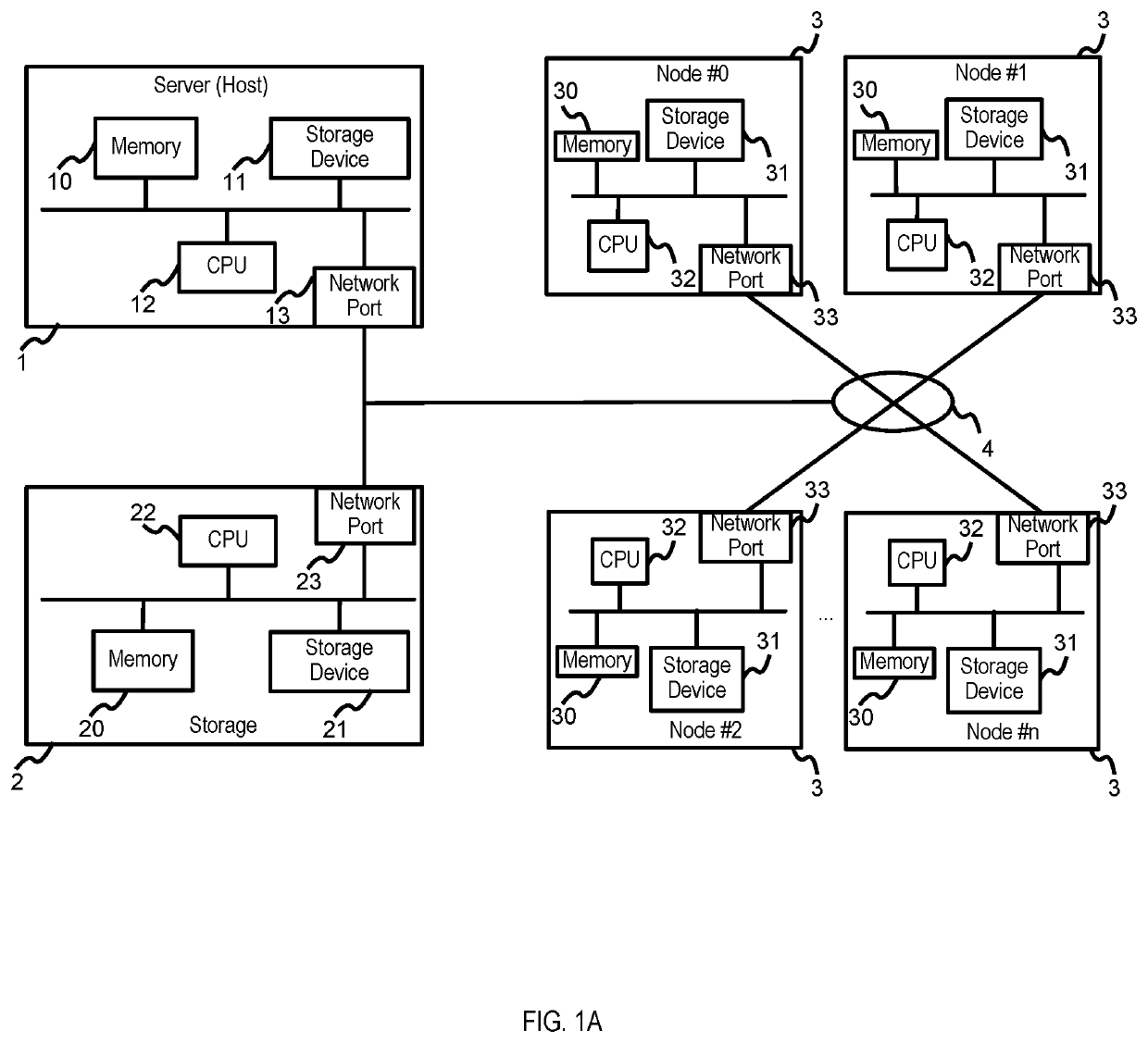

Multiprocessor system

Inactive · US20070079046A1 · Lower latency · Significant latency · Digital computer details · Electric digital data processing · Multi processor · Interconnection

A multiprocessor system is disclosed, which comprises a plurality of processor units, such as eight processor units, and a plurality of interconnection buses, each of which may be a dual unidirectional point-to-point bus. Every interconnection bus connects a predetermined two of the processor units. In particular, at least two of the interconnection buses cross each other.

Owner:TYAN COMP

Time sensitive network (TSN) scheduler with verification

According to some embodiments, system and methods are provided, comprising receiving, at a verification module, a schedule for transmission of one or more data frames to one or more destination nodes via a Time Sensitive Network (TSN); receiving, at the verification module, a destination for each data frame; receiving, at the verification module, a maximum tolerable latency for each data frame; determining, via the verification module, the received schedule is correct; transmitting one or more data frames according to the schedule; accessing, via the verification module, the one or more destination nodes; verifying, via the verification module, the one or more data frames were transmitted to the one or more destination nodes within a maximum tolerable latency, based on accessing the one or more destination nodes; and controlling one or more operations of an installed product based on the transmitted one or more data frames. Numerous other aspects are provided.

Owner:GENERAL ELECTRIC CO

Fast setup of physical communication channels

Inactive · US8014782B2 · Significant latency · Reduce setup time · Error prevention · Network traffic/resource management · Communications system · Telecommunications network

The present invention relates to improvements for a fast setup of physical communication channels in a CDMA-based communication system. A Node B of a telecommunication network is permitted to manage and assign a certain share of the downlink transmission resources of a radio network controller (RNC) without inquiry of said radio network controller. On reception of a resource request message from a user equipment, the Node B derives and specifies a certain amount of said resources that can be allocated to the user equipment. In a preferred embodiment of the present invention, said resources are only assigned temporarily until the ordinary RL setup procedure, which involves the RNC, has been successfully finished.

Owner:TELEFON AB LM ERICSSON (PUBL)

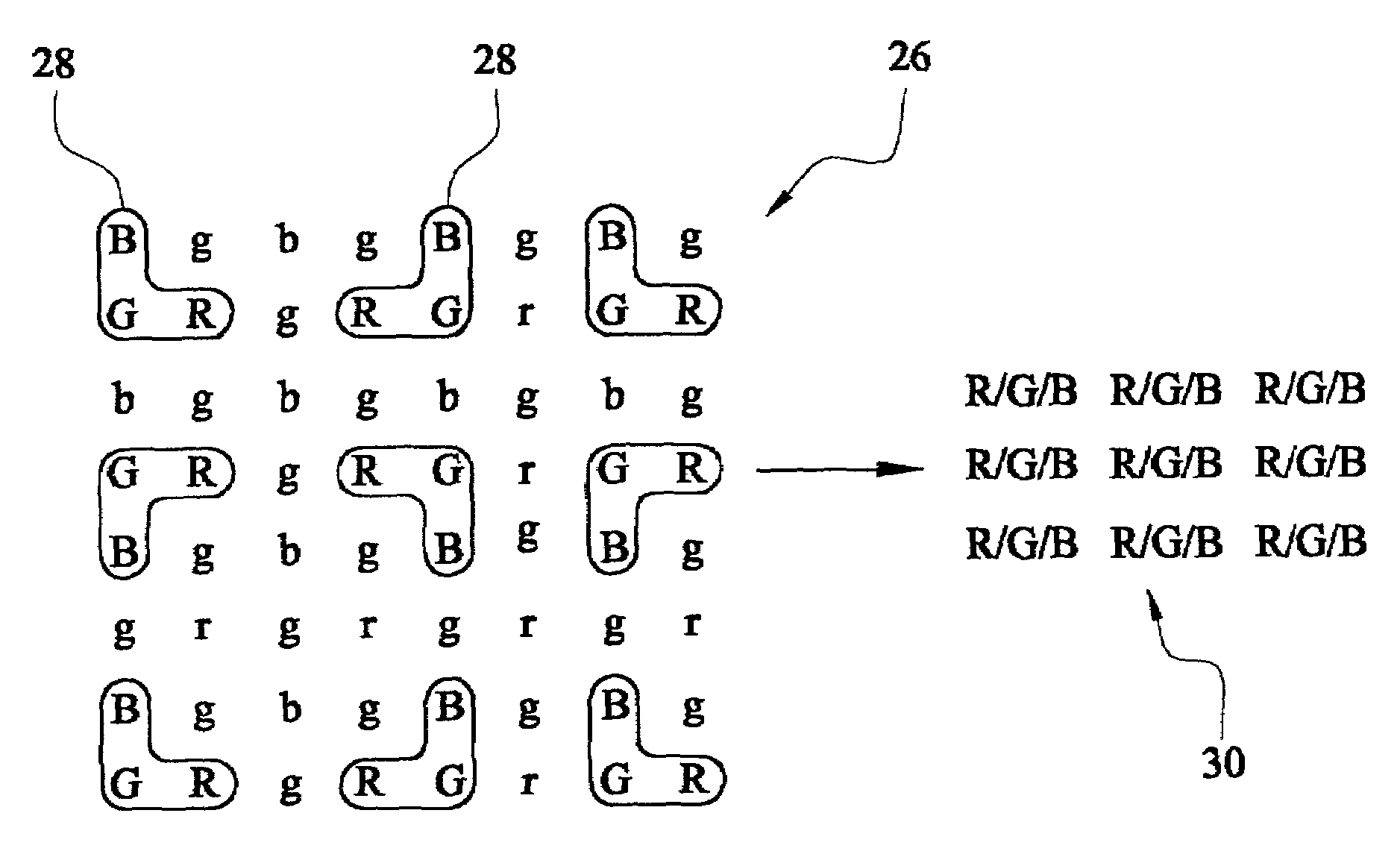

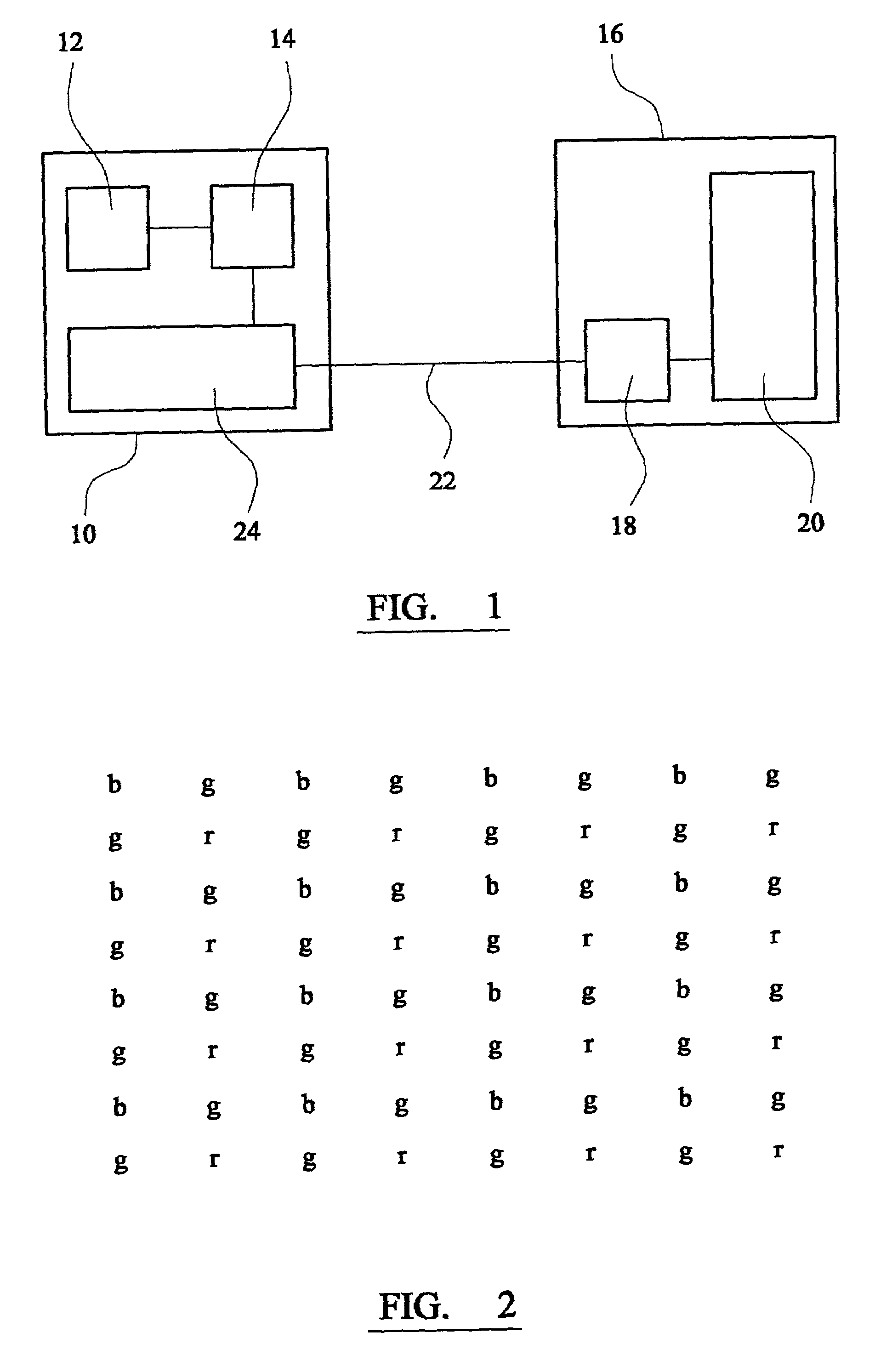

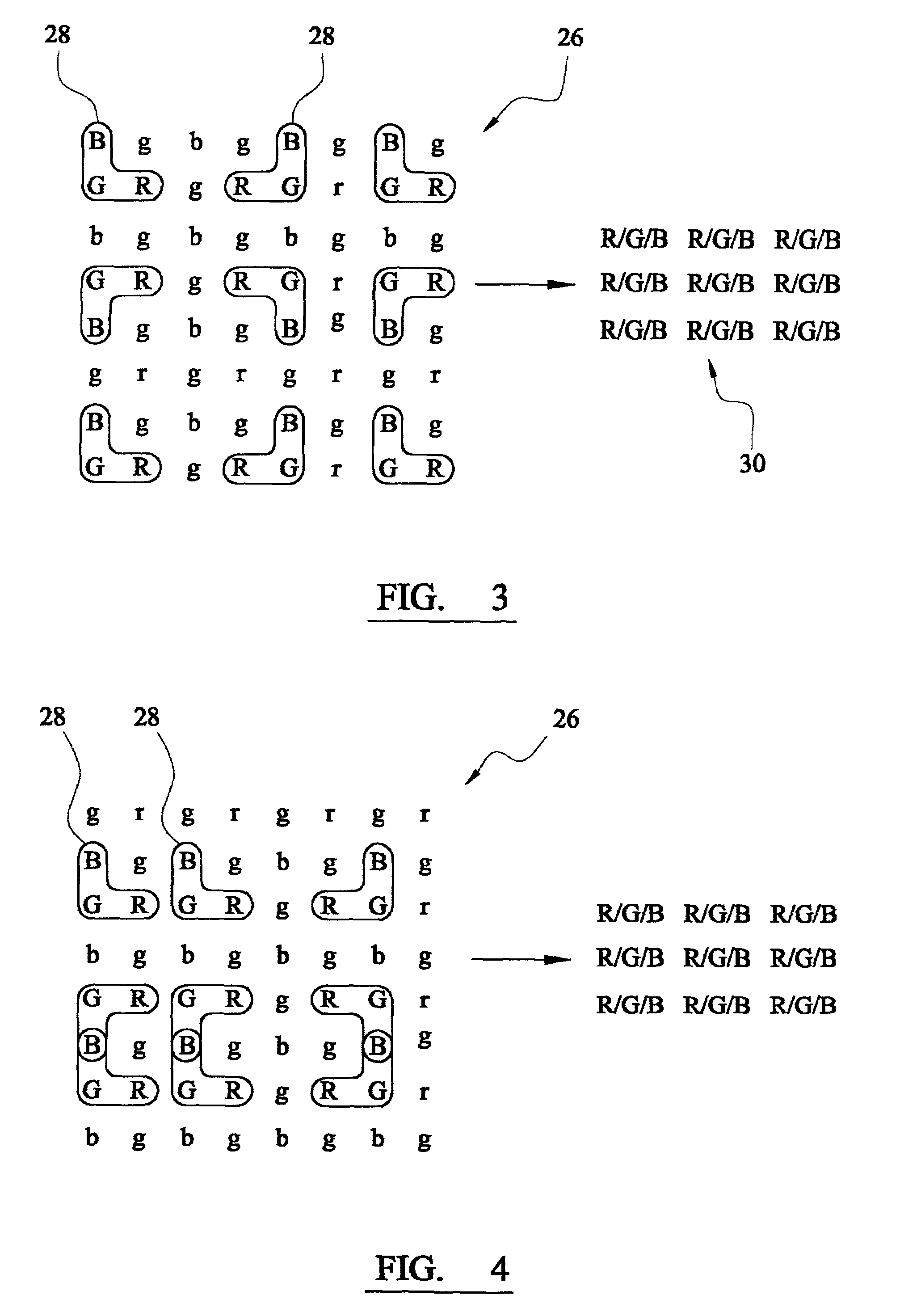

Method of and apparatus for digital image processing

Inactive · US7551189B2 · Minimize impact · Minimal processing · Geometric image transformation · Cathode-ray tube indicators · Digital signal processing · Diagonal

An image incident on a pixel array having a Bayer pattern is compressed by grouping only some of the pixels in the Bayer pattern into sets, each including a triad of pixels of the Bayer pattern. The triad of pixels of each set includes the three primary colors, and its pixels are next to each other in the rows, columns and diagonally within the Bayer pattern. The pixels in each set are combined to form a single pixel of the compressed array.

Owner:HEWLETT PACKARD DEV CO LP

Vehicle control system

Active · US11072356B2 · Significant latency · Signalling indicators on vehicle · Store-and-forward switching systems · Control communications · Control system

A locomotive control system includes a controller configured to control communication between or among plural locomotive devices that control movement of a locomotive via a network that communicatively couples the vehicle devices. The controller also is configured to control the communication using a data distribution service (DDS) and with the network operating as a time sensitive network (TSN). The controller is configured to direct a first set of the locomotive devices to communicate using time sensitive communications, a different, second set of the locomotive devices to communicate using best effort communications, and a different, third set of the locomotive devices to communicate using rate constrained communications.

Owner:GE GLOBAL SOURCING LLC

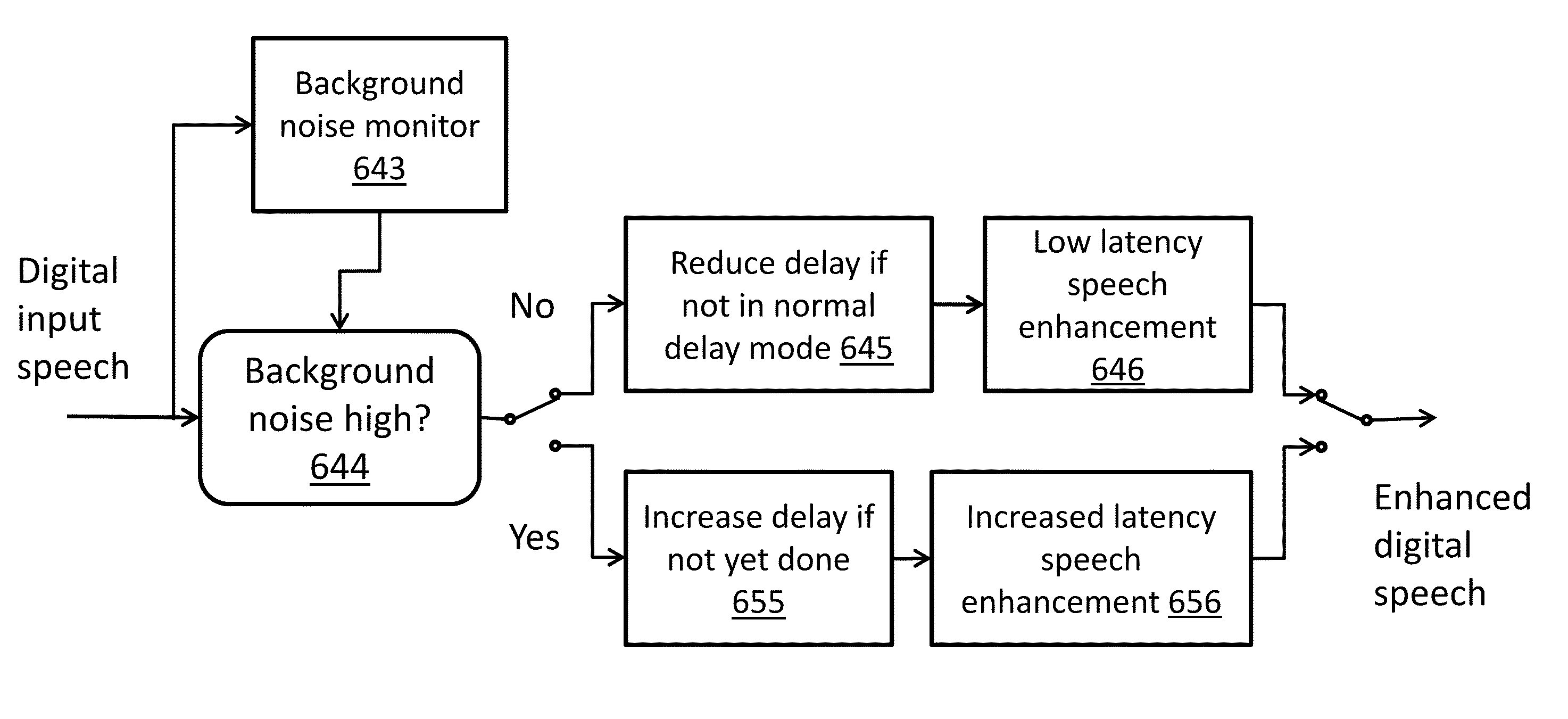

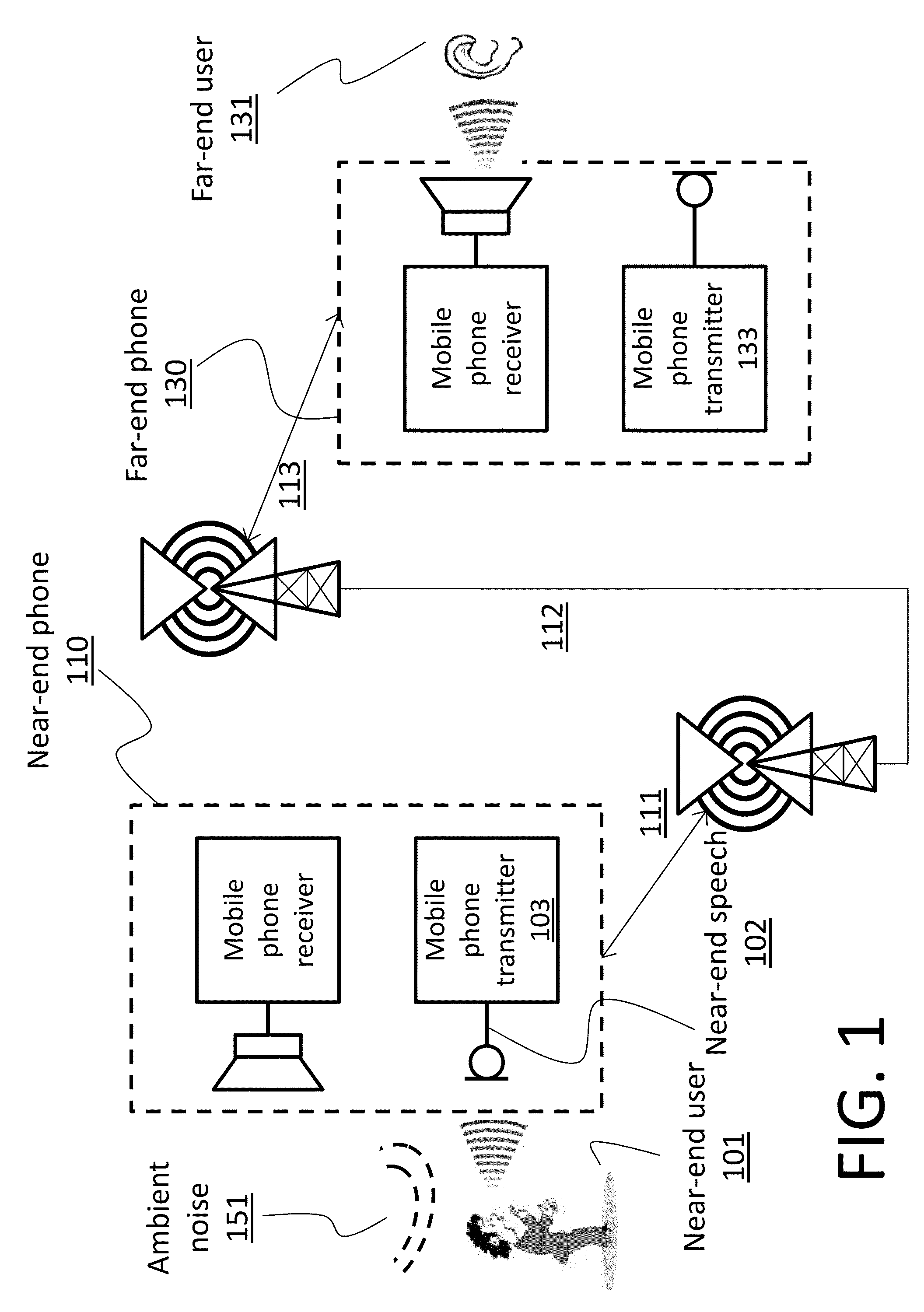

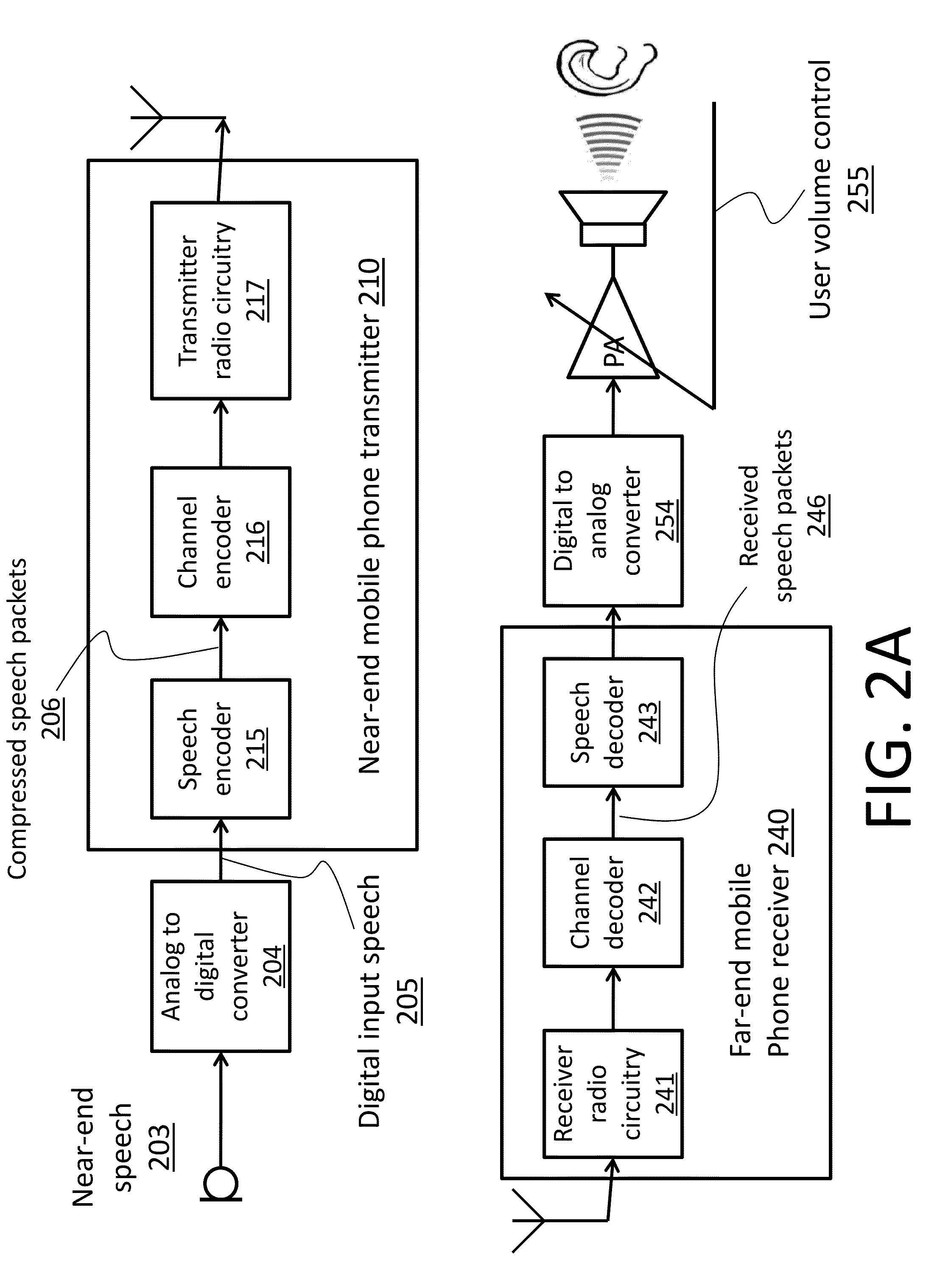

Adaptive delay for enhanced speech processing

Active · US9437211B1 · Improve performance · Lower performance requirements · Speech analysis · Voice communication · Latency (engineering)

Provided is a system, method, and computer program product for improving the quality of voice communications on a mobile handset device by dynamically and adaptively adjusting the latency of a voice call to accommodate an optimal speech enhancement technique in accordance with the current ambient noise level. The system, method and computer program product improves the quality of a voice call transmitted over a wireless link to a communication device by dynamically increasing the latency of the voice call when the ambient noise level is above a predetermined threshold in order to use a more robust high-latency voice enhancement technique and by dynamically decreasing the latency of the voice call when the ambient noise level is below a predetermined threshold to use the low-latency voice enhancement techniques. The latency periods are adjusted by adding or deleting voice samples during periods of unvoiced activity.

Owner:QOSOUND
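
The two ideas in the abstract above, choosing an enhancement mode from the ambient noise level and changing latency only during unvoiced periods, can be sketched roughly as below. The function names, the mode labels, the use of a single threshold, and the choice to repeat or drop whole samples are illustrative assumptions; the abstract does not say how the case of noise exactly equal to the threshold is handled, so this sketch treats it as low-latency.

```python
def select_mode(noise_db, threshold_db):
    """Pick the enhancement mode from the ambient noise level.

    Above the threshold the high-latency technique is used; otherwise
    (including equality, an assumption) the low-latency one.
    """
    return "high_latency" if noise_db > threshold_db else "low_latency"


def adjust_latency(samples, voiced_flags, delta):
    """Lengthen (delta > 0) or shorten (delta < 0) a stream by |delta| samples.

    Samples are only repeated or dropped where `voiced_flags` is False,
    so voiced speech is passed through untouched.
    """
    out = []
    remaining = delta
    for sample, voiced in zip(samples, voiced_flags):
        if not voiced and remaining < 0:
            remaining += 1          # drop this unvoiced sample
            continue
        out.append(sample)
        if not voiced and remaining > 0:
            out.append(sample)      # repeat this unvoiced sample once
            remaining -= 1
    return out
```

If the stream contains fewer unvoiced samples than `abs(delta)`, the sketch simply applies as much of the adjustment as it can.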

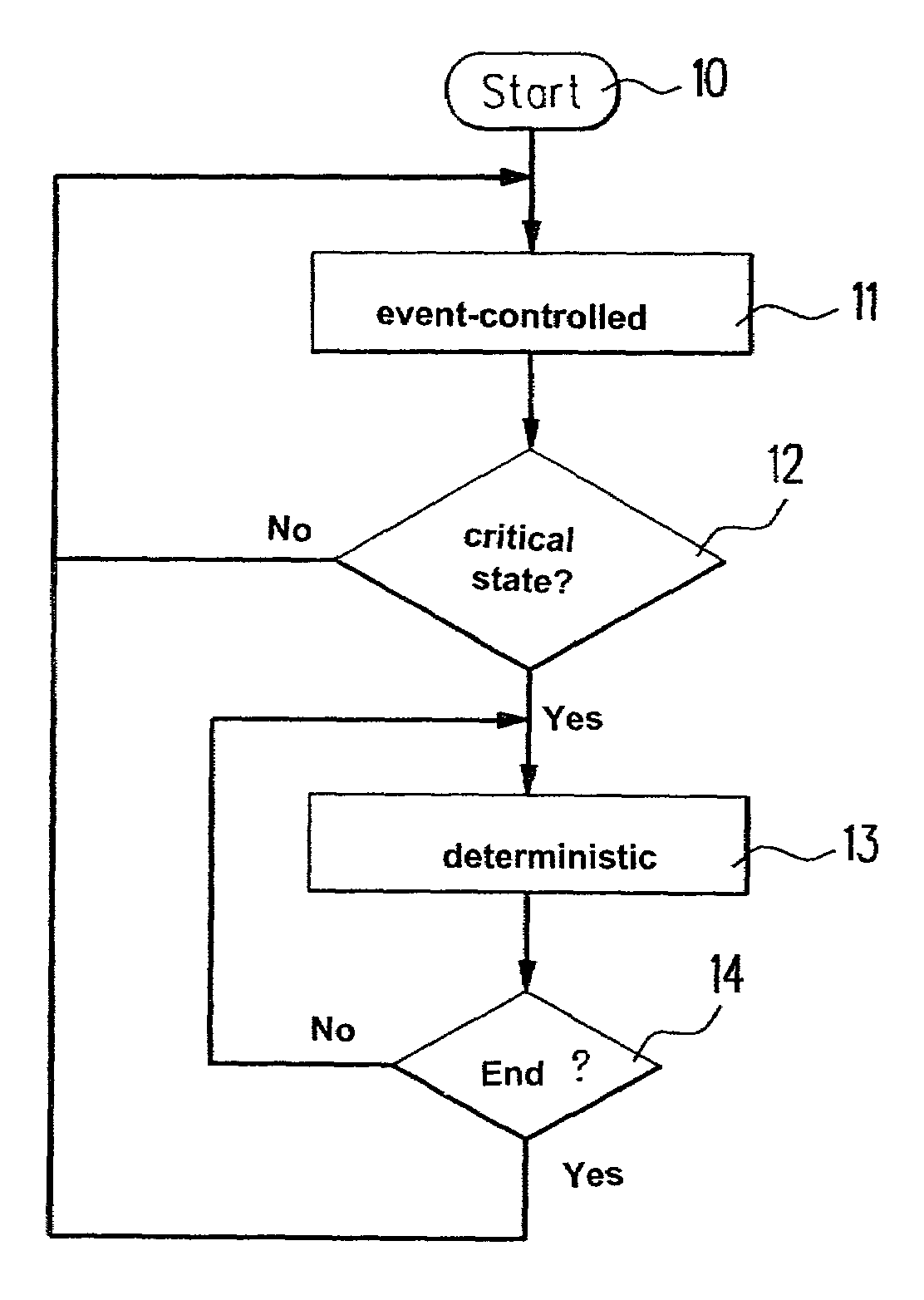

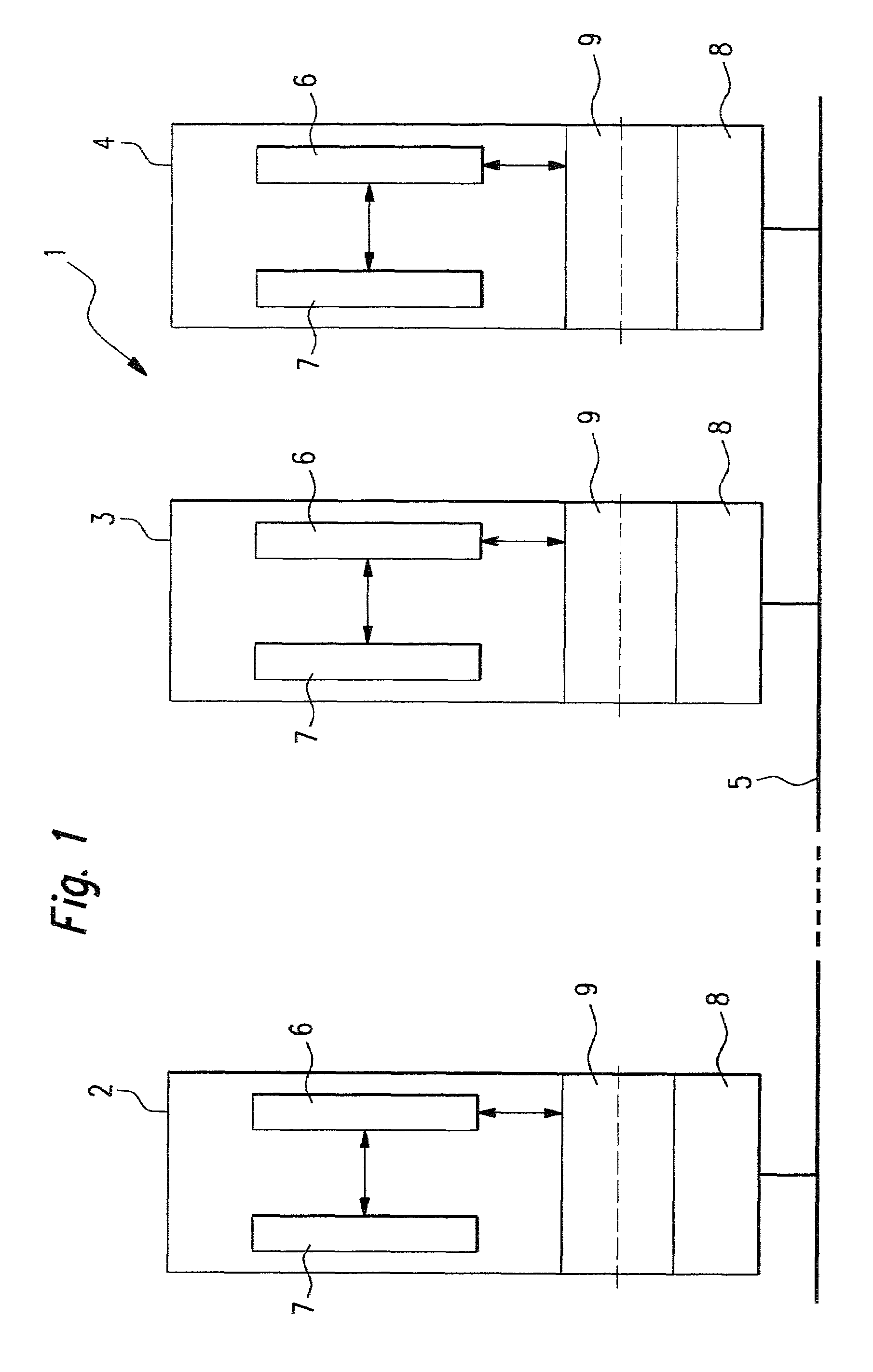

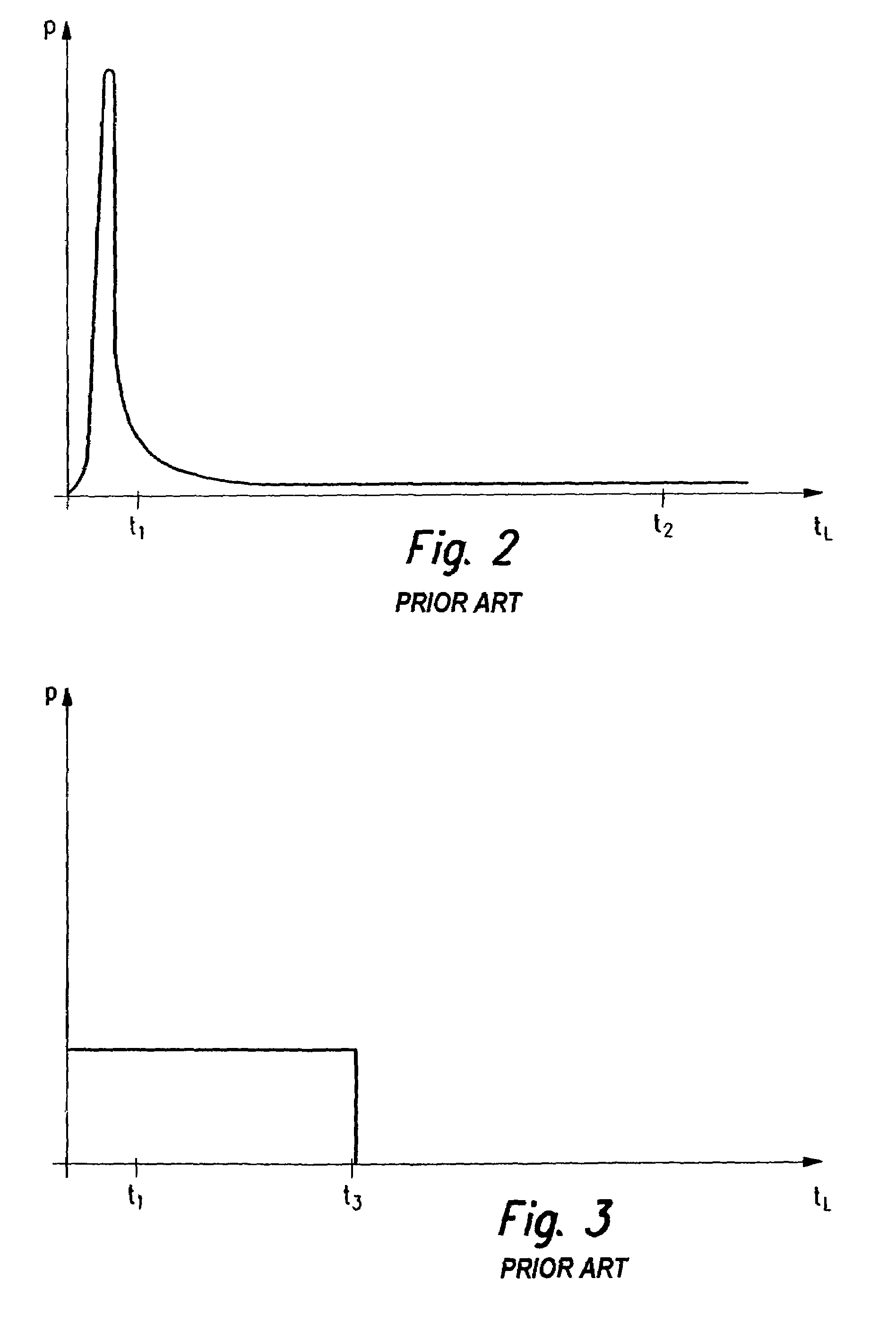

Method and communication system for data exchange among multiple users interconnected over a bus system

Active · US7406531B2 · Additional exchange · Increase probability · Multiprogramming arrangements · Frequency-division multiplex · Deterministic method · Communications system

A method and a communication system for exchanging data between at least two users interconnected over a bus system are described. The data is contained in messages which are transmitted by the users over the bus system. To improve data exchange among users so that in the normal case, there is a high probability that it will be possible to transmit messages with a low latency, while on the other hand, in the worst case, a finite maximum latency can be guaranteed, the data is transmitted in an event-oriented method over the bus system as long as a preselectable latency period elapsing between a transmission request by a user and the actual transmission operation of the user can be guaranteed for each message to be transmitted as a function of the utilization of capacity of the bus system, and otherwise the data is transmitted over the bus system by a deterministic method.

Owner:ROBERT BOSCH GMBH

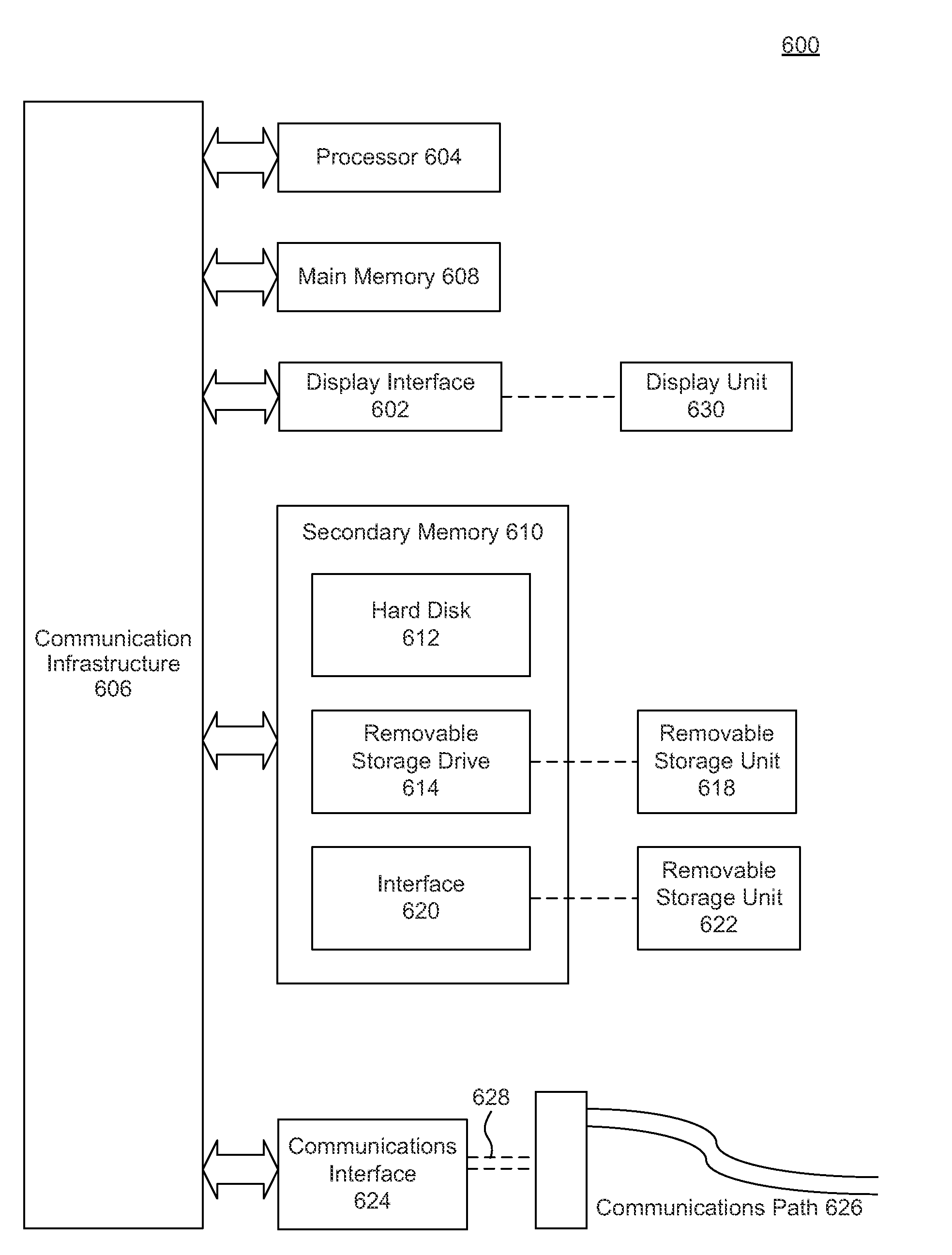

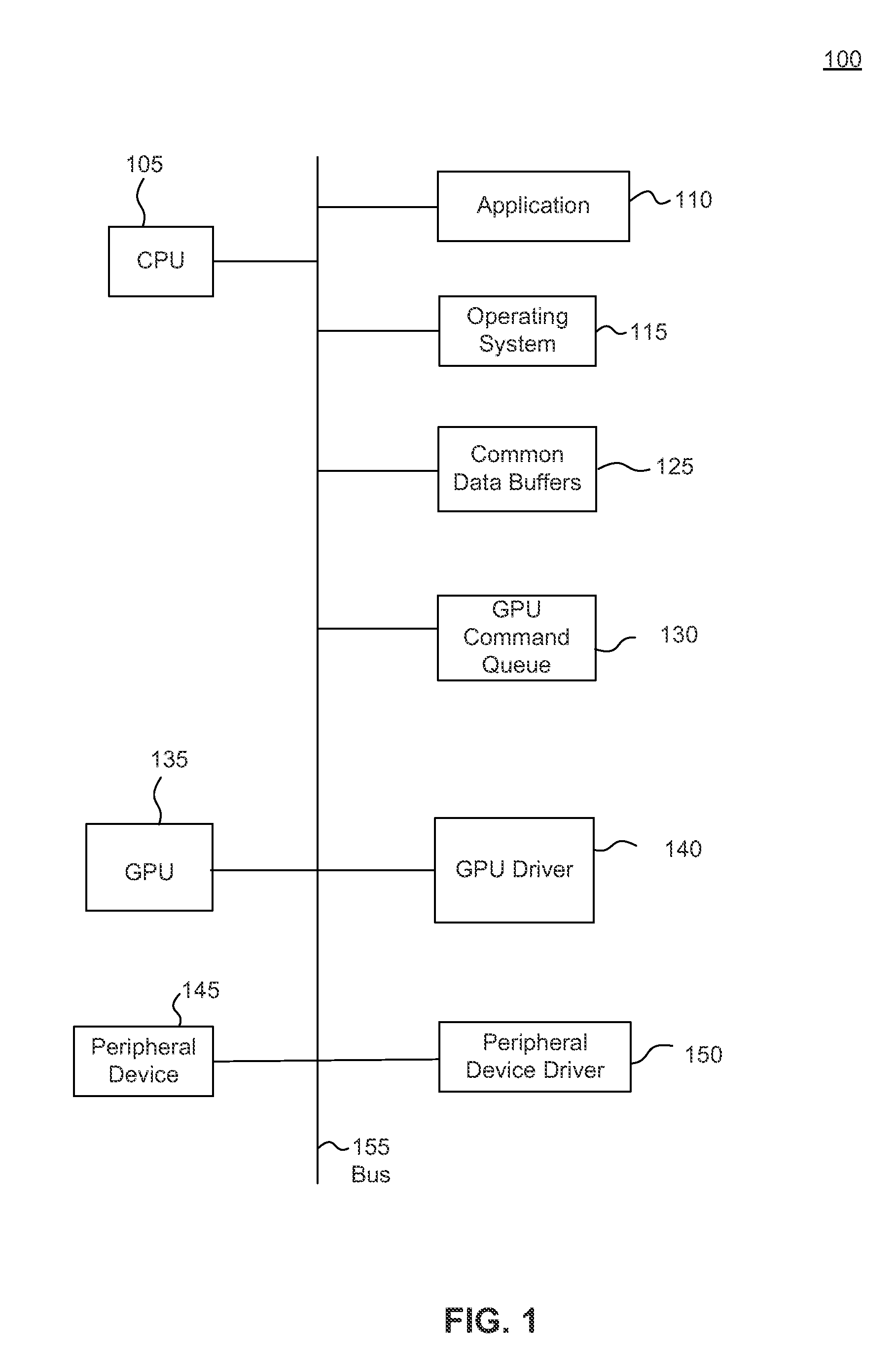

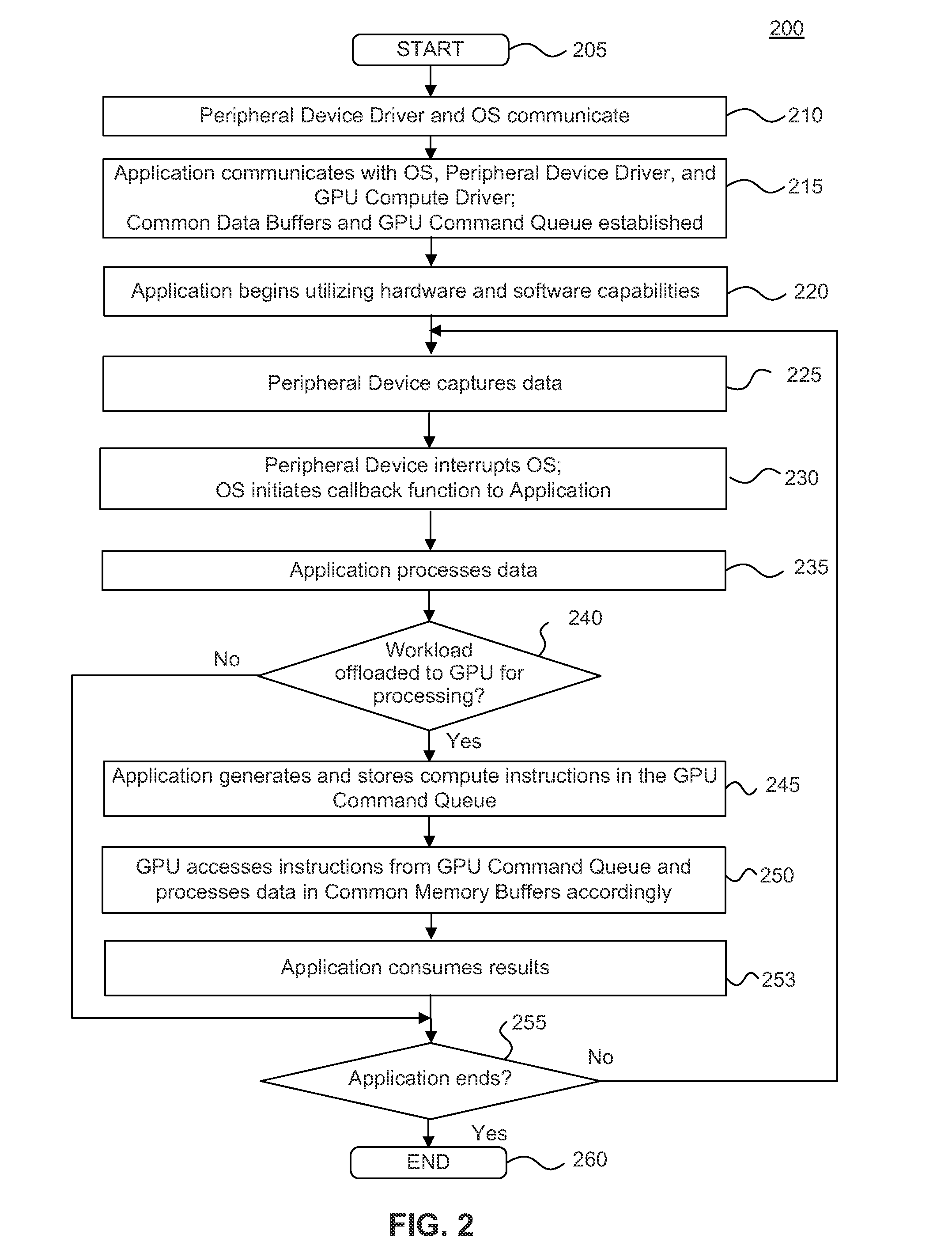

Minimizing latency from peripheral devices to compute engines

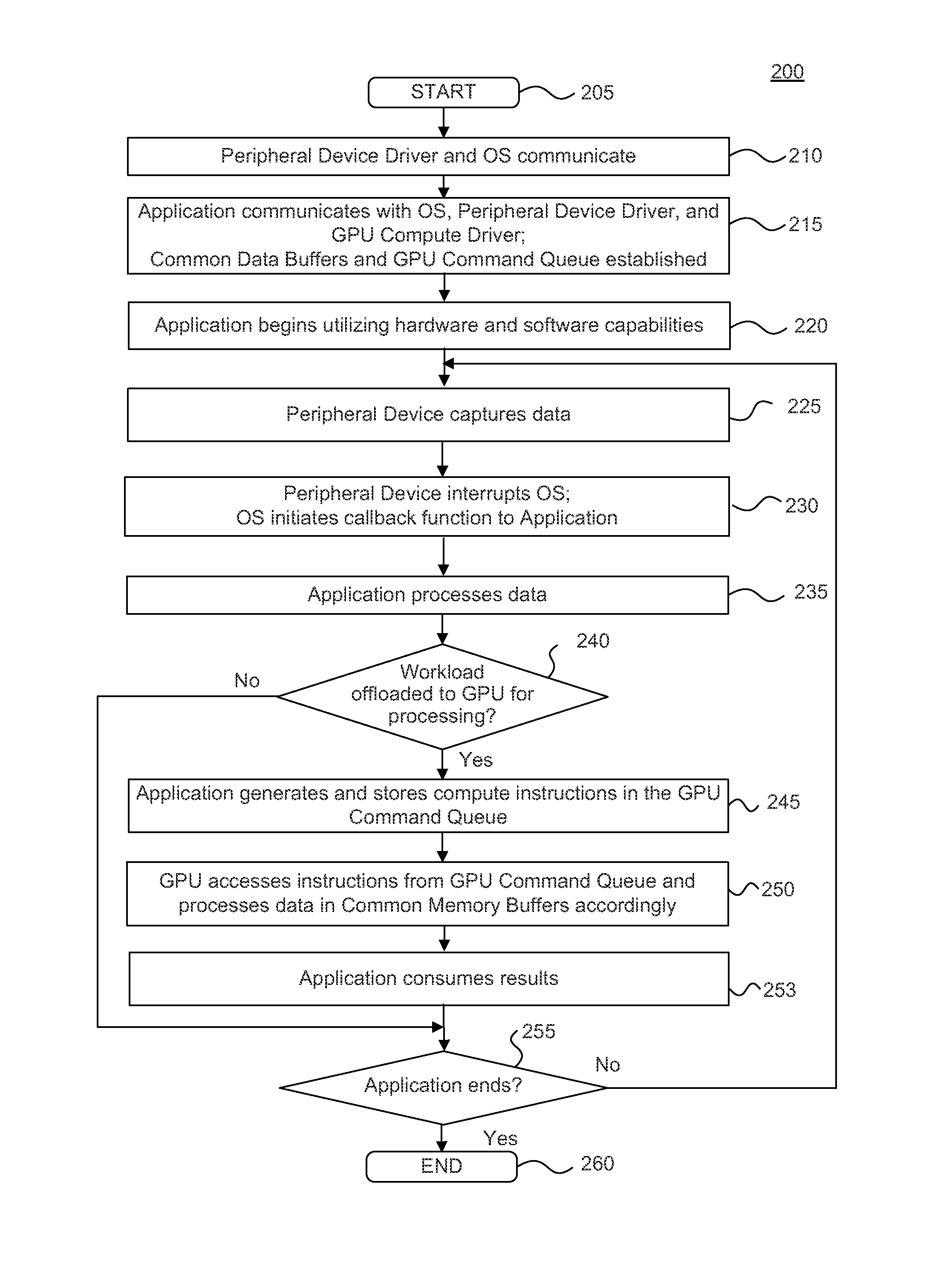

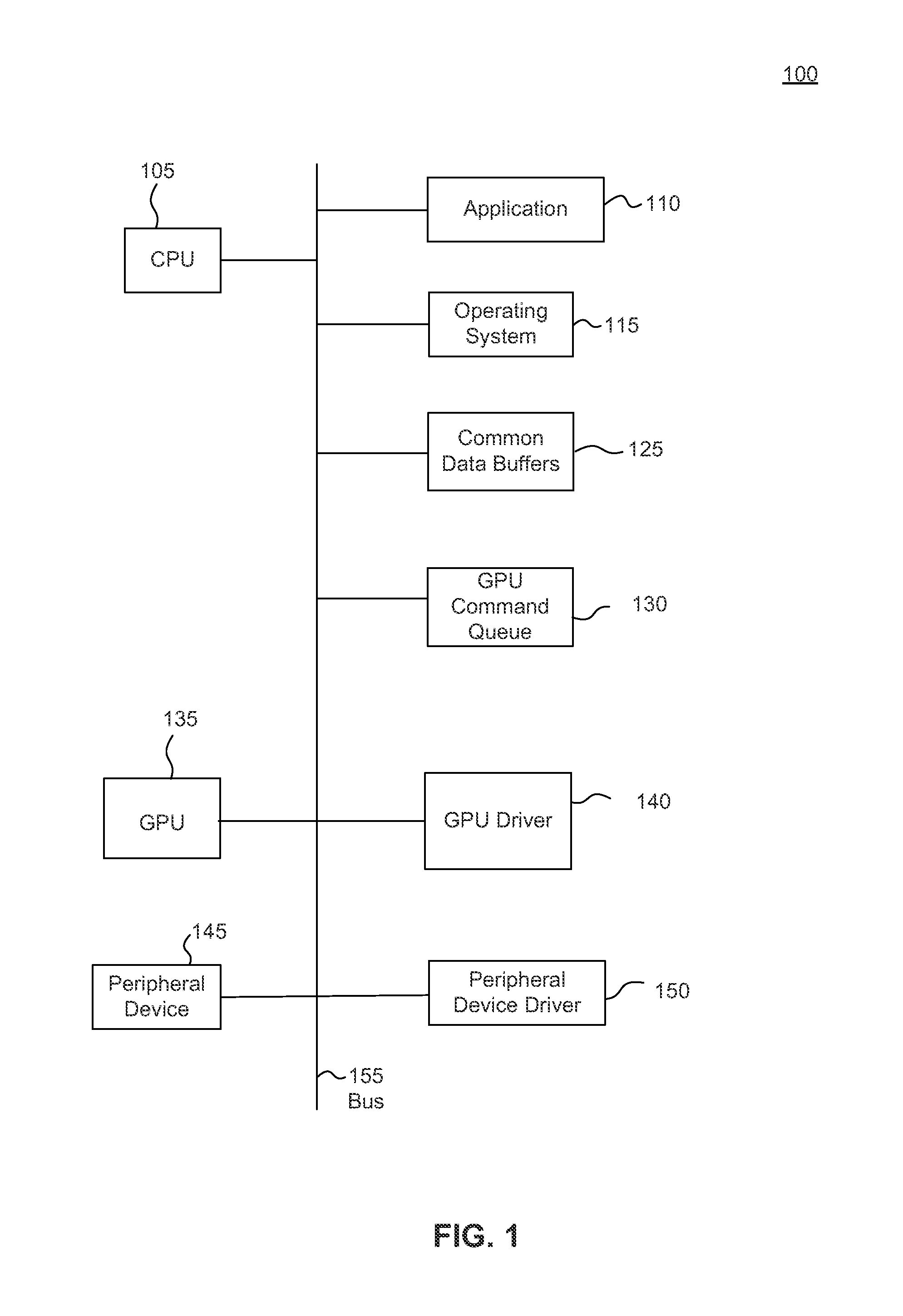

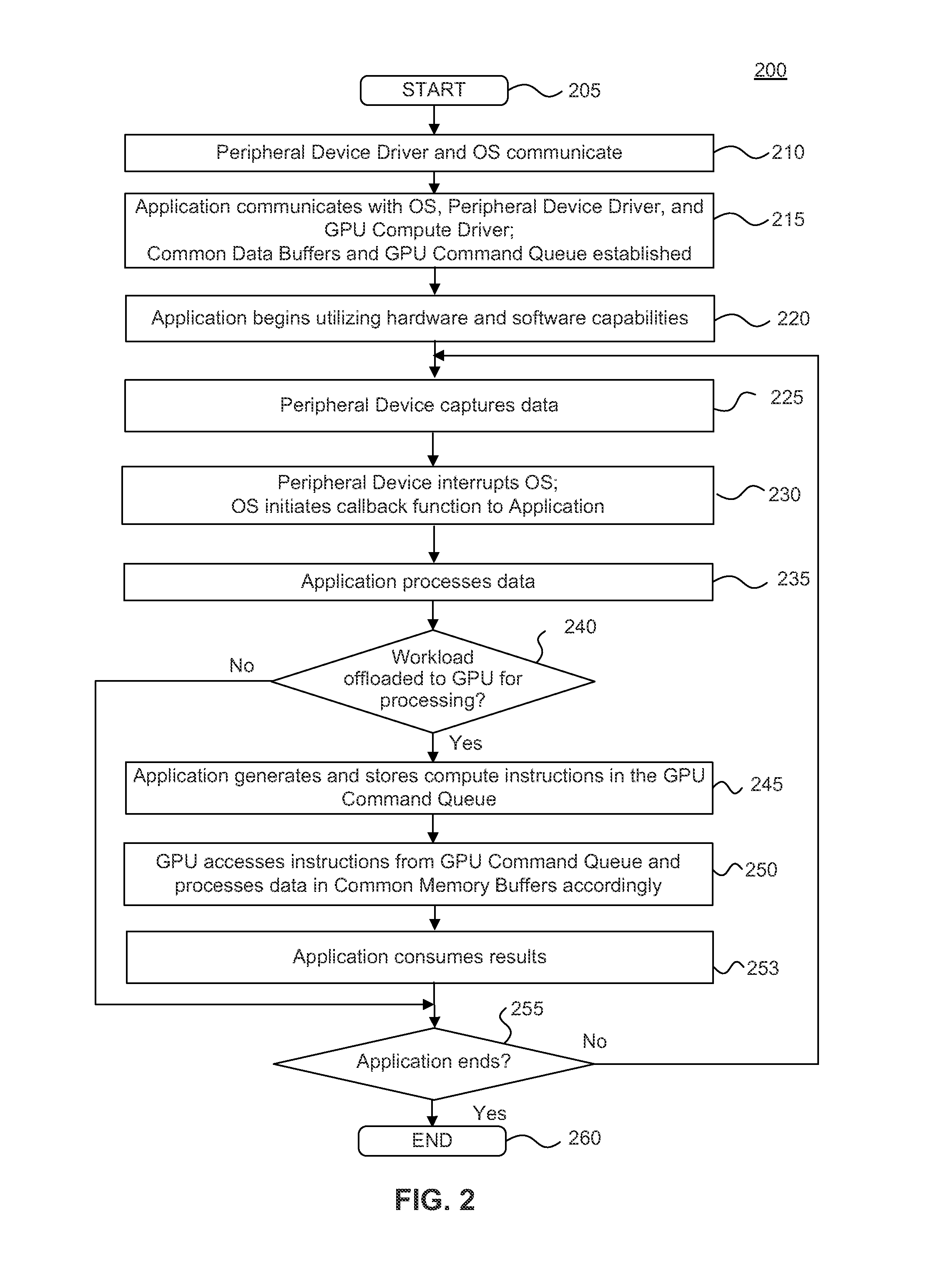

Active · US20140317316A1 · Lower latency · Minimum delay · Static indicating devices · Digital output to display device · Computer architecture · Visual feedback

Methods, systems, and computer program products are provided for minimizing latency in an implementation where a peripheral device is used as a capture device and a compute device such as a GPU processes the captured data in a computing environment. In embodiments, a peripheral device and GPU are tightly integrated and communicate at a hardware/firmware level. Peripheral device firmware can determine and store compute instructions specifically for the GPU, in a command queue. The compute instructions in the command queue are understood and consumed by firmware of the GPU. The compute instructions include but are not limited to generating low latency visual feedback for presentation to a display screen, and detecting the presence of gestures to be converted to OS messages that can be utilized by any application.

Owner:ADVANCED MICRO DEVICES INC

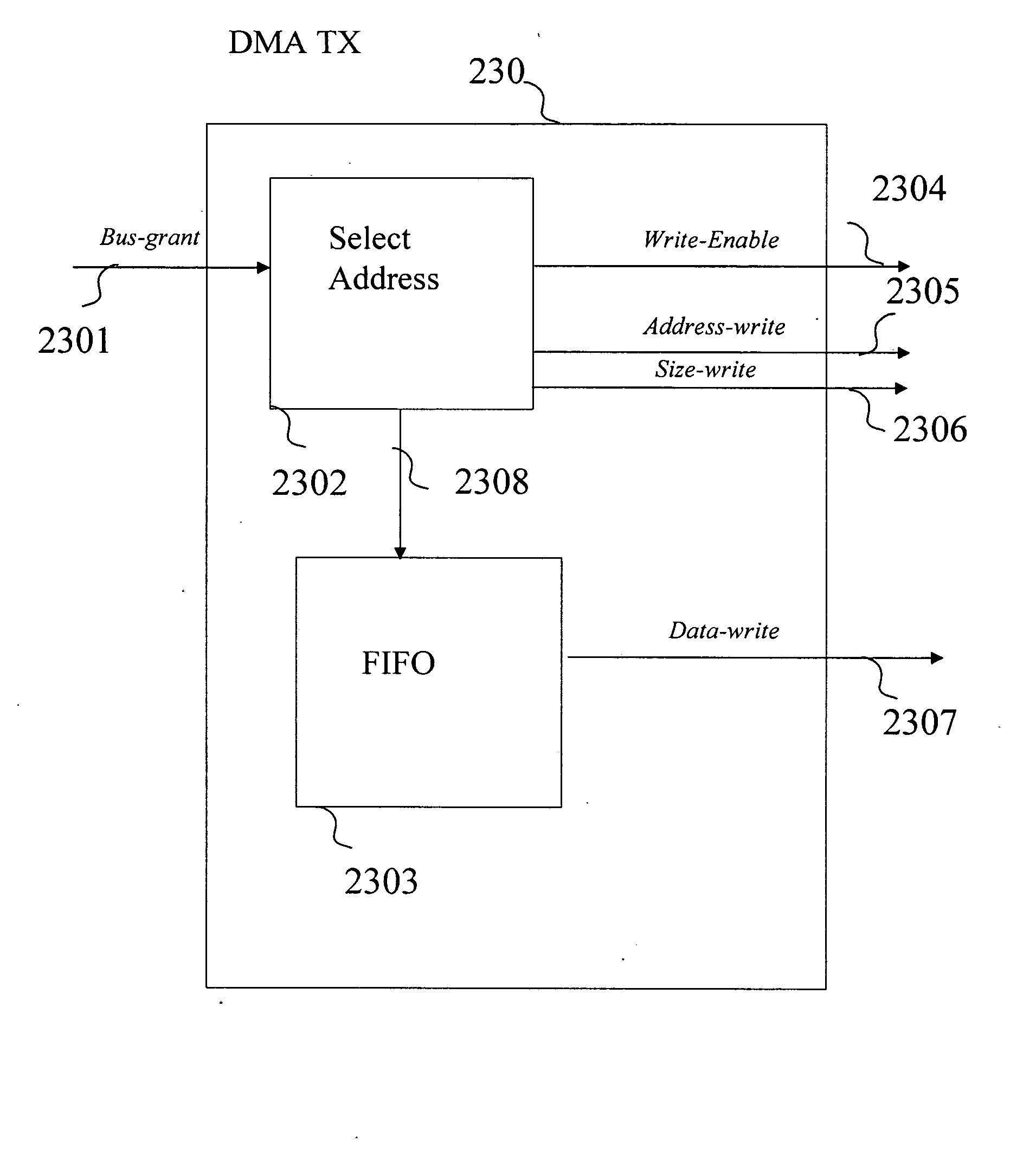

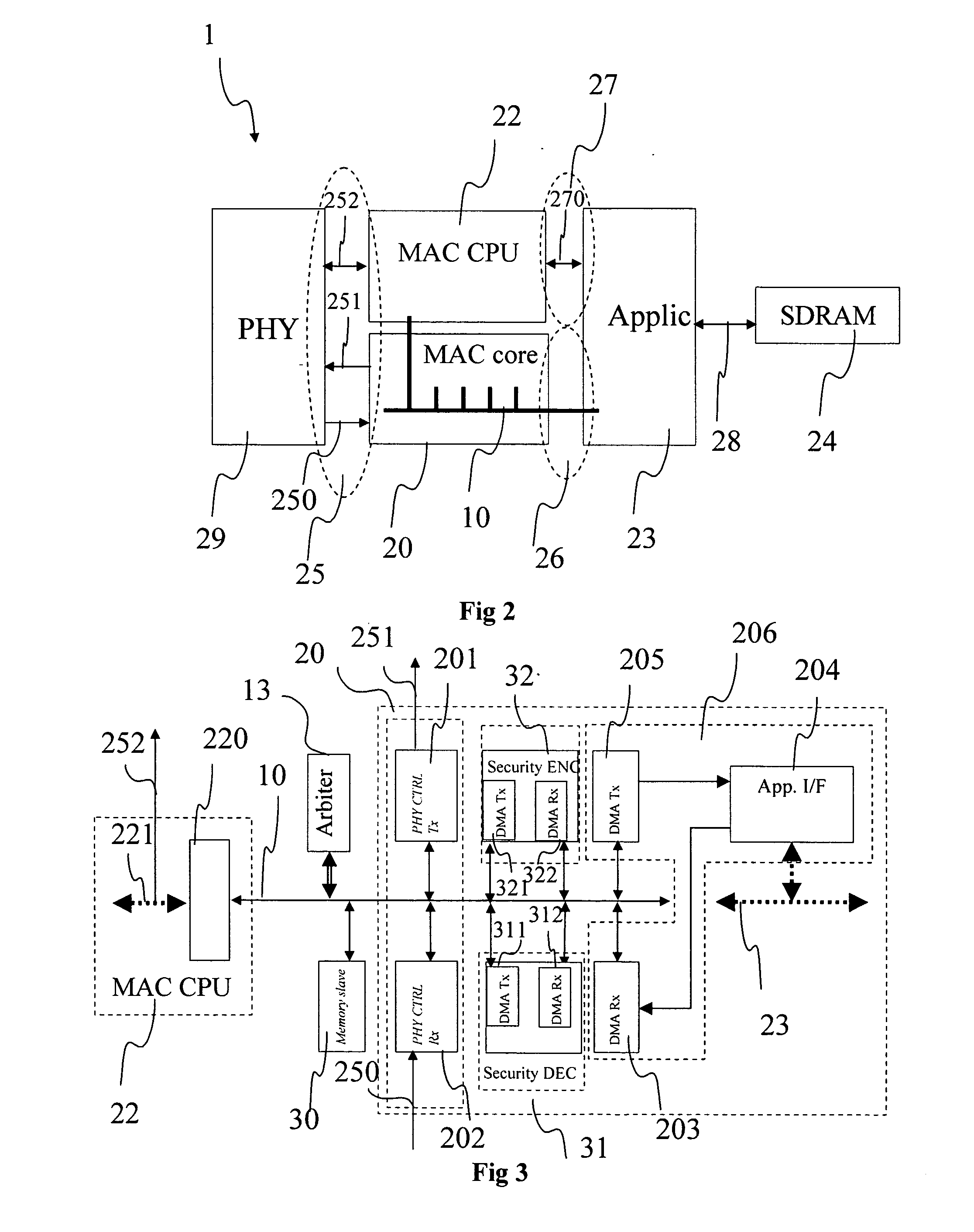

Method for Accessing a Data Transmission Bus, Corresponding Device and System

Inactive · US20100122000A1 · Bit rate maximum · Maximum latency · Electric digital data processing · Maximum latency · Data transmission

The invention relates to a bus, which is connectable to a primary master and to secondary masters, the bus being suitable for the transmission of data between the peripherals. In order to ensure a minimum rate and/or maximum latency between the secondary masters, when the primary master uses a small time fraction available on the bus, said primary master is provided with the highest priority and comprises means for wirelessly accessing a medium. The inventive method for accessing the bus consists in authorising the primary master to access the bus upon its request and in selecting the access to the bus for the secondary masters when the primary master peripheral does not request said access to the bus.

Owner:THOMSON LICENSING SA

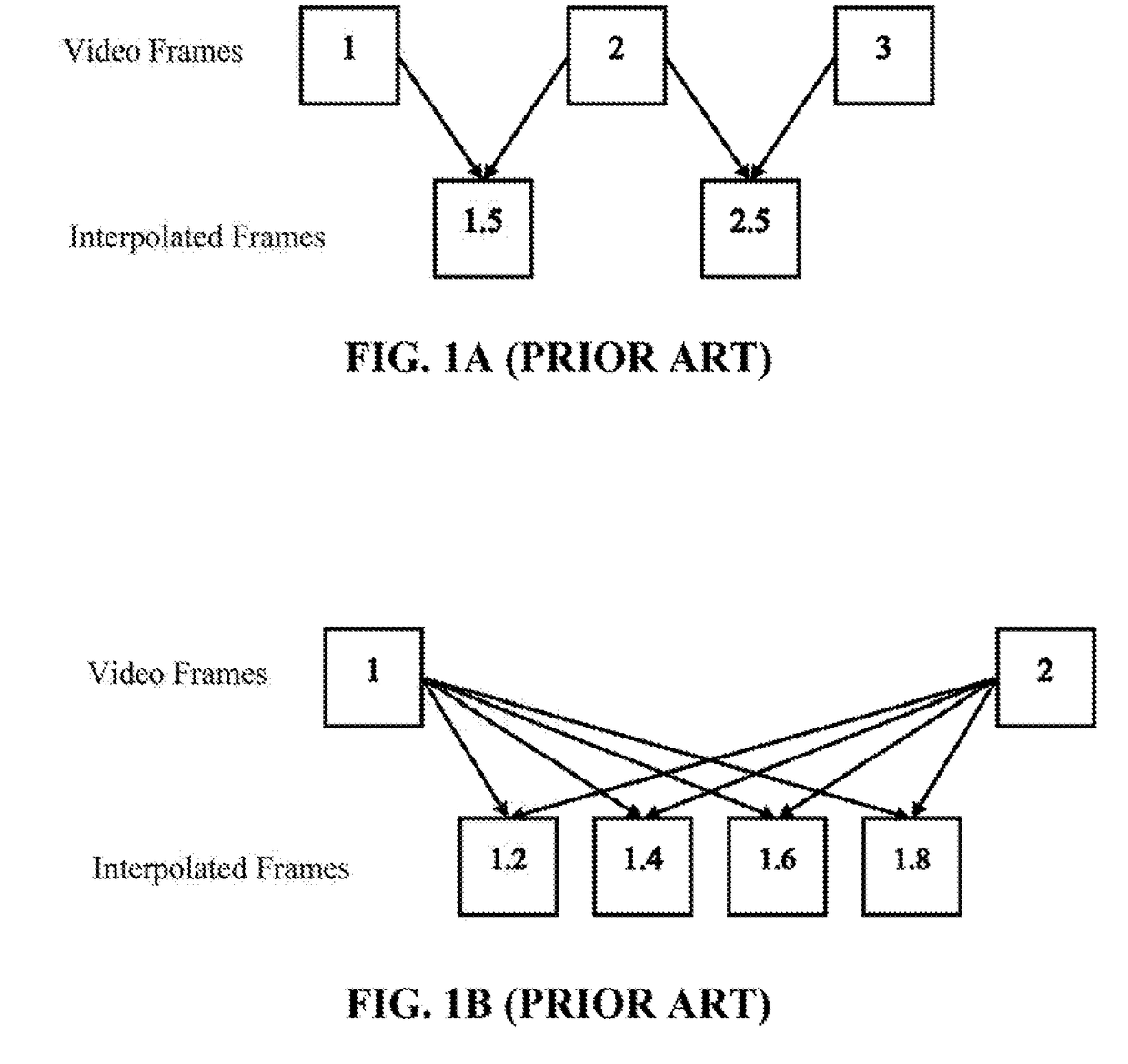

Apparatus and methods for frame interpolation

Inactive · US20180063551A1 · Consume resources · Avoiding significant · Digital video signal modification · Data switching networks · Computation complexity · Computer vision

Apparatus and methods for generating interpolated frames in digital image or video data. In one embodiment, the interpolation is based on a hierarchical tree sequence. At each level of the tree, an interpolated frame may be generated using original or interpolated frames of the video, such as those closest in time to the desired time of the frame to be generated. The sequence proceeds through lower tree levels until a desired number of interpolated frames, a desired video length, a desired level, or a desired visual quality for the video is reached. In some implementations, the sequence may use different interpolation algorithms (e.g., of varying computational complexity or types) at different levels of the tree. The interpolation algorithms can include for example those based on frame repetition, frame averaging, motion compensated frame interpolation, and motion blending.

Owner:GOPRO
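
A minimal sketch of the level-by-level idea in the abstract above: at each level, a new frame is generated between every pair of temporally adjacent frames, whether original or previously interpolated. Only frame averaging is shown, one of the algorithms the abstract lists; the `(time, pixels)` representation and the function names are assumptions for illustration.

```python
def average_frames(a, b):
    """Frame averaging: element-wise mean of two equally sized pixel lists."""
    return [(x + y) / 2 for x, y in zip(a, b)]


def interpolate_levels(frames, levels):
    """Insert midpoint frames between neighbors, once per tree level.

    `frames` is a time-ordered list of (time, pixels) tuples. Each level
    roughly doubles the frame count by interpolating between the frames
    closest in time, which may themselves be interpolated frames.
    """
    seq = list(frames)
    for _ in range(levels):
        if len(seq) < 2:
            break  # nothing to interpolate between
        nxt = []
        for (t0, f0), (t1, f1) in zip(seq, seq[1:]):
            nxt.append((t0, f0))
            nxt.append(((t0 + t1) / 2, average_frames(f0, f1)))
        nxt.append(seq[-1])
        seq = nxt
    return seq
```

A different function could be substituted for `average_frames` at deeper levels, which is one way to read the abstract's remark about using different interpolation algorithms at different levels of the tree.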

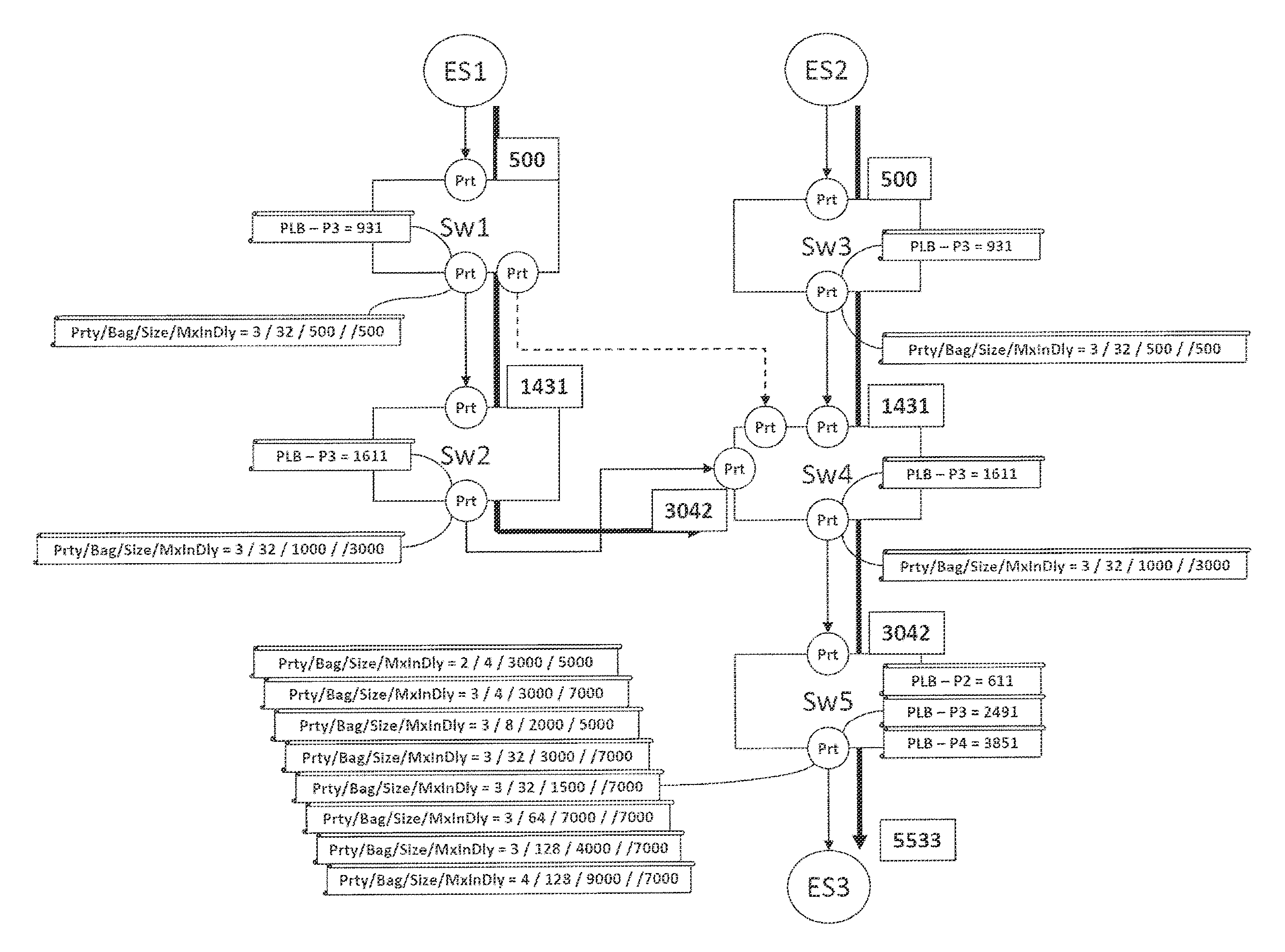

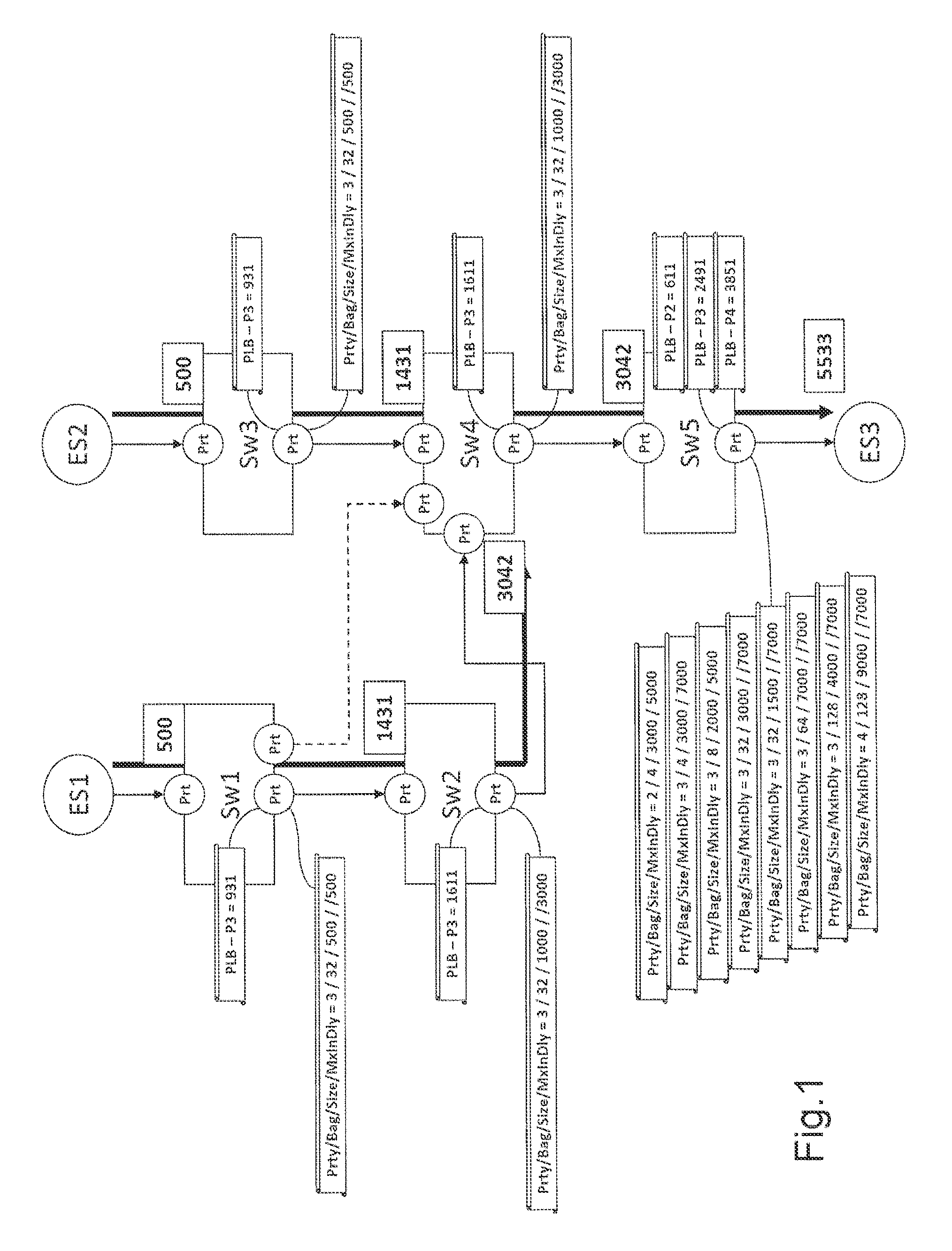

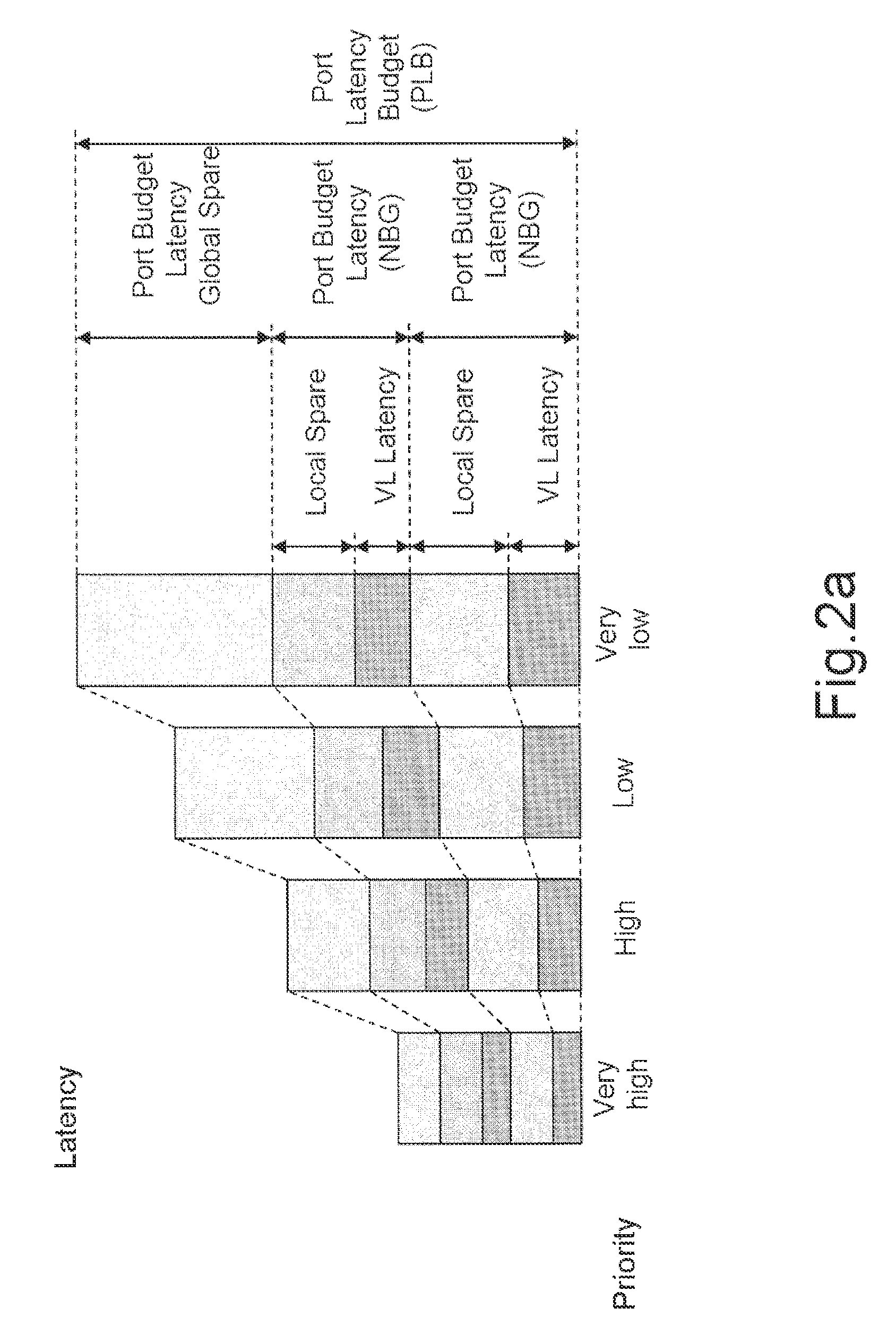

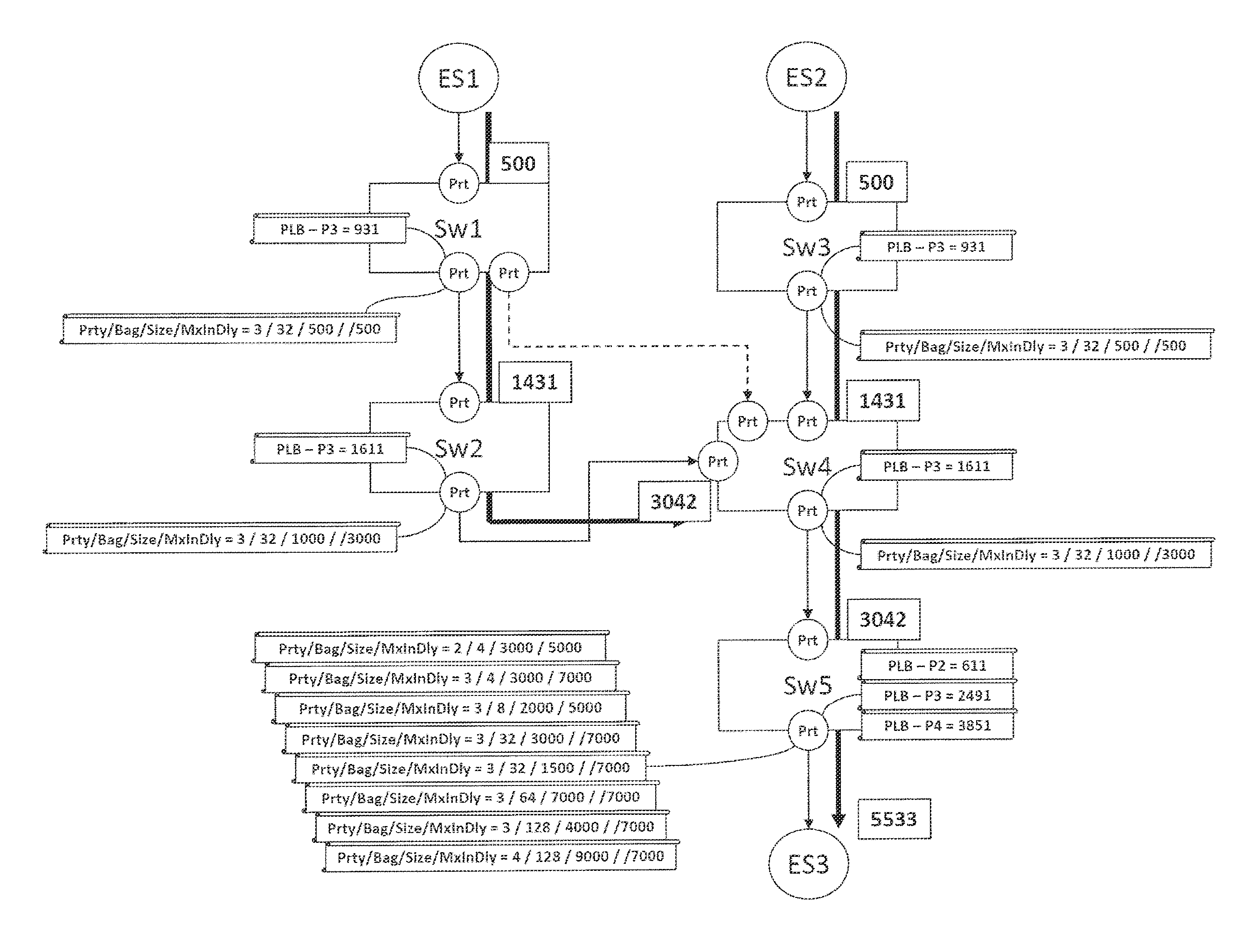

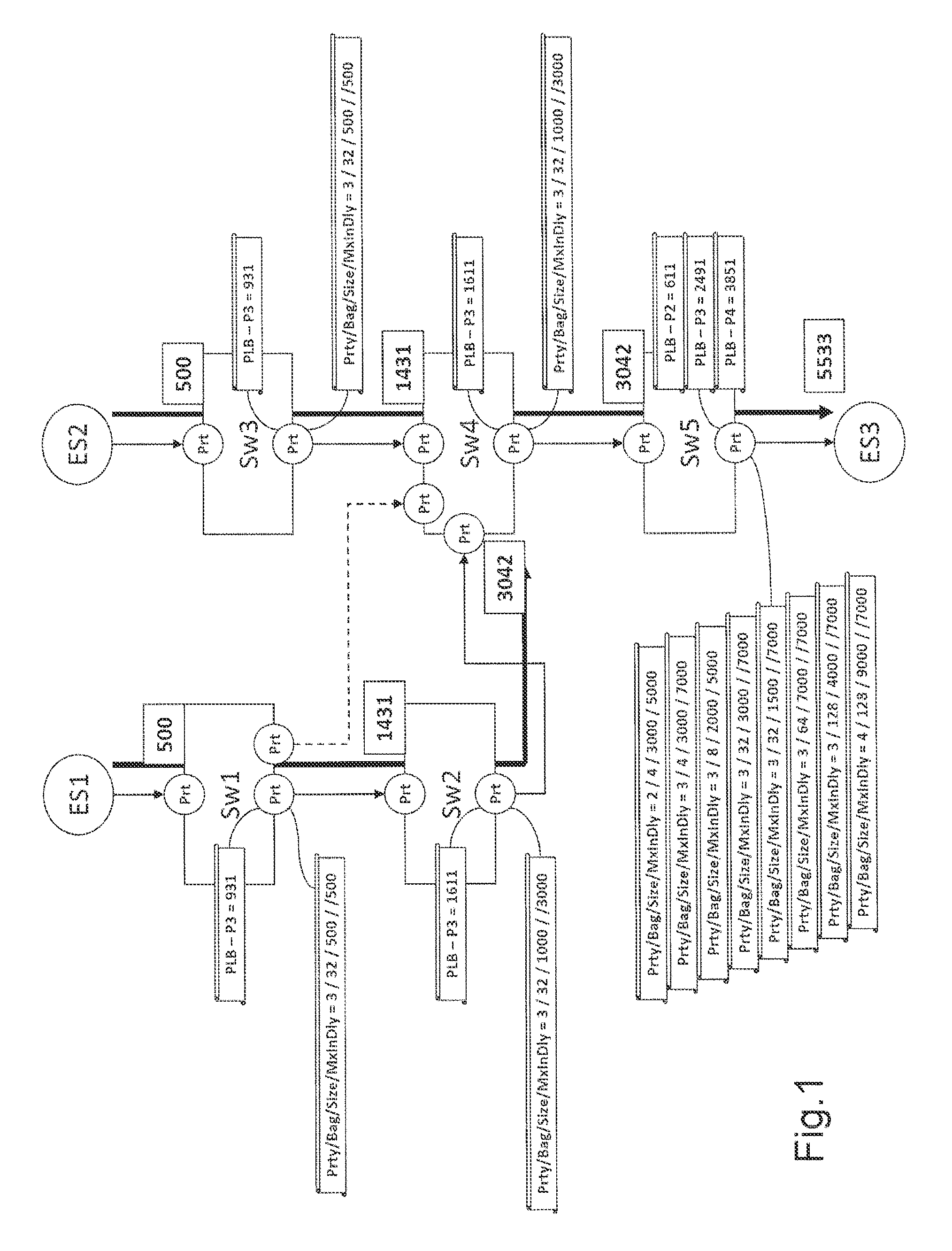

Method and device for the validation of networks

Active · US9071515B2 · Significant latency · Error prevention · Transmission systems · Computer science · Waiting time

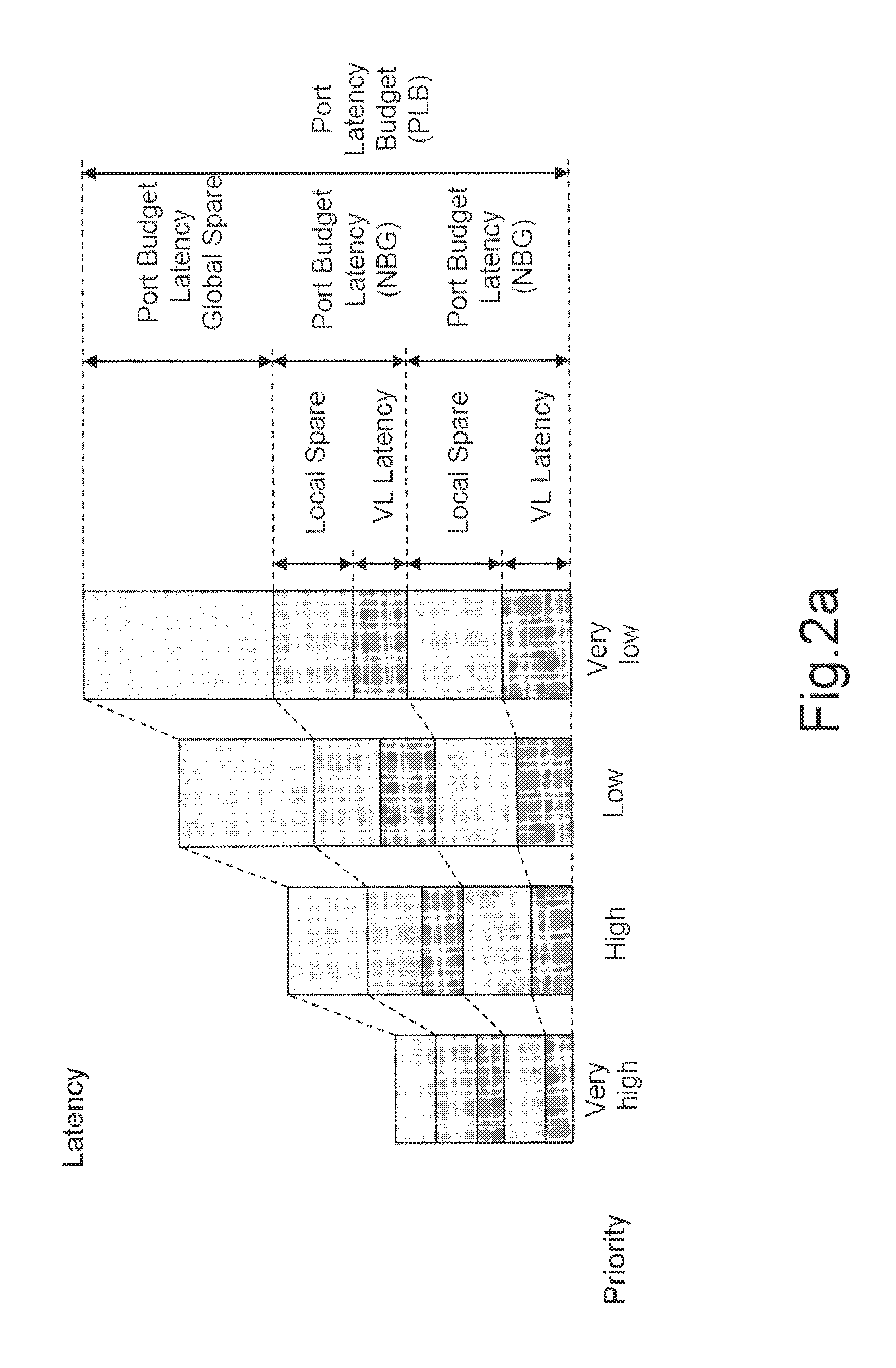

A method is provided for the validation of a network by a checking module, the network comprising a plurality of routers, each of the routers comprising a plurality of output ports, each of the output ports of the routers being associated with a bandwidth budget, a priority latency budget and a plurality of network budget grains. The method comprises, for each of the ports of each of the routers, steps of: calculation of a latency consumed on the output port of the router on the basis of the network budget grains and the bandwidth budget; checking of the compatibility of the latency consumed on the output port with the priority latency budget grains of the output port of the router; and, transmission by the checking module of a signal indicating the result of the check.

Owner:THALES SA

Method and Device for the Validation of Networks

Active · US20130163456A1 · Significant latency · Error prevention · Transmission systems · Waiting time · Real-time computing

A method is provided for the validation of a network by a checking module, the network comprising a plurality of routers, each of the routers comprising a plurality of output ports, each of the output ports of the routers being associated with a bandwidth budget, a priority latency budget and a plurality of network budget grains. The method comprises, for each of the ports of each of the routers, steps of: calculation of a latency consumed on the output port of the router on the basis of the network budget grains and the bandwidth budget; checking of the compatibility of the latency consumed on the output port with the priority latency budget grains of the output port of the router; and, transmission by the checking module of a signal indicating the result of the check.

Owner:THALES SA

Minimizing latency from peripheral devices to compute engines

Active · US9558133B2 · Lower latency · Minimum delay · Input/output for user-computer interaction · Static indicating devices · Waiting time · Computer program

Methods, systems, and computer program products are provided for minimizing latency in an implementation where a peripheral device is used as a capture device and a compute device such as a GPU processes the captured data in a computing environment. In embodiments, a peripheral device and GPU are tightly integrated and communicate at a hardware/firmware level. Peripheral device firmware can determine and store compute instructions specifically for the GPU, in a command queue. The compute instructions in the command queue are understood and consumed by firmware of the GPU. The compute instructions include but are not limited to generating low latency visual feedback for presentation to a display screen, and detecting the presence of gestures to be converted to OS messages that can be utilized by any application.

Owner:ADVANCED MICRO DEVICES INC

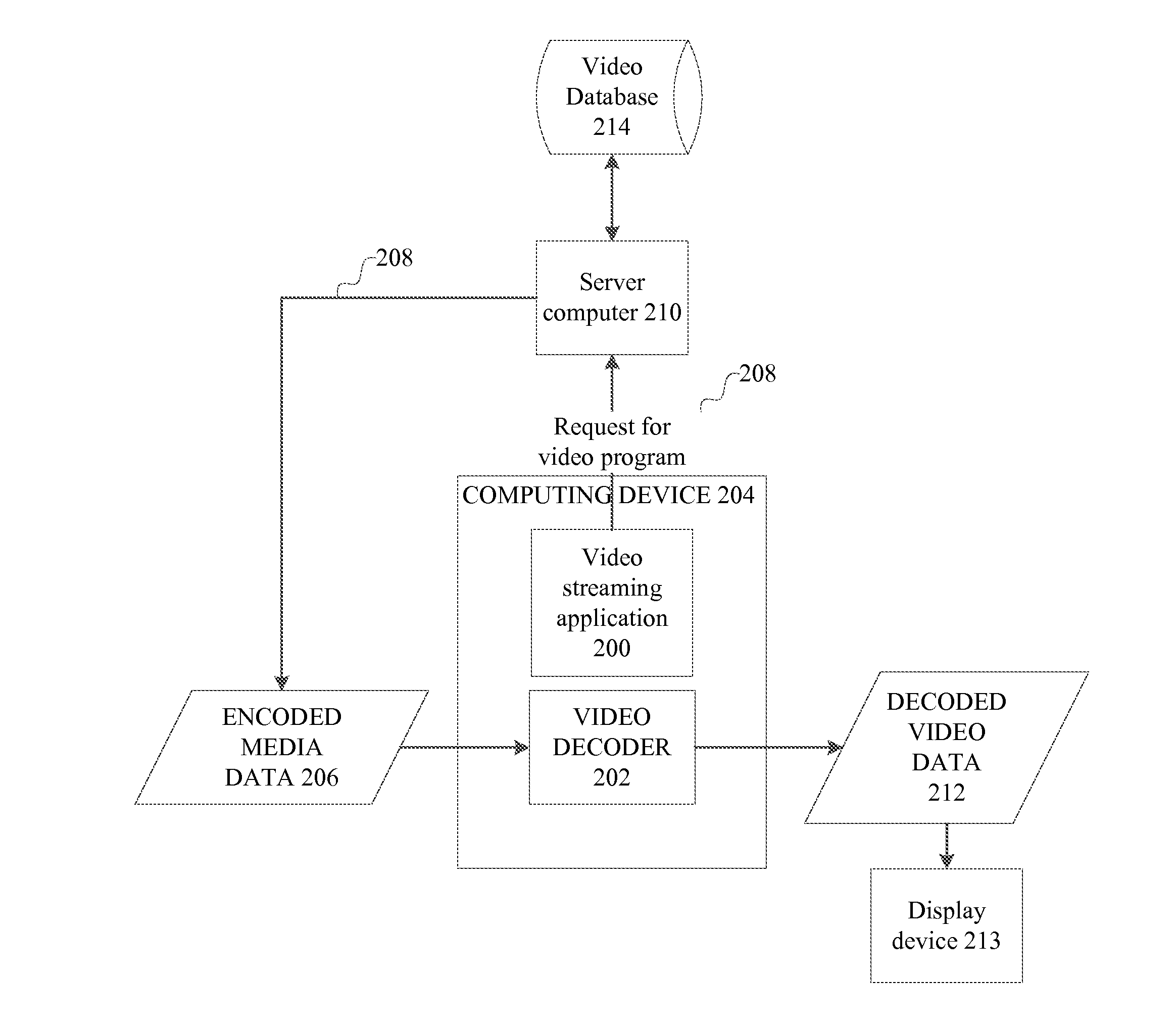

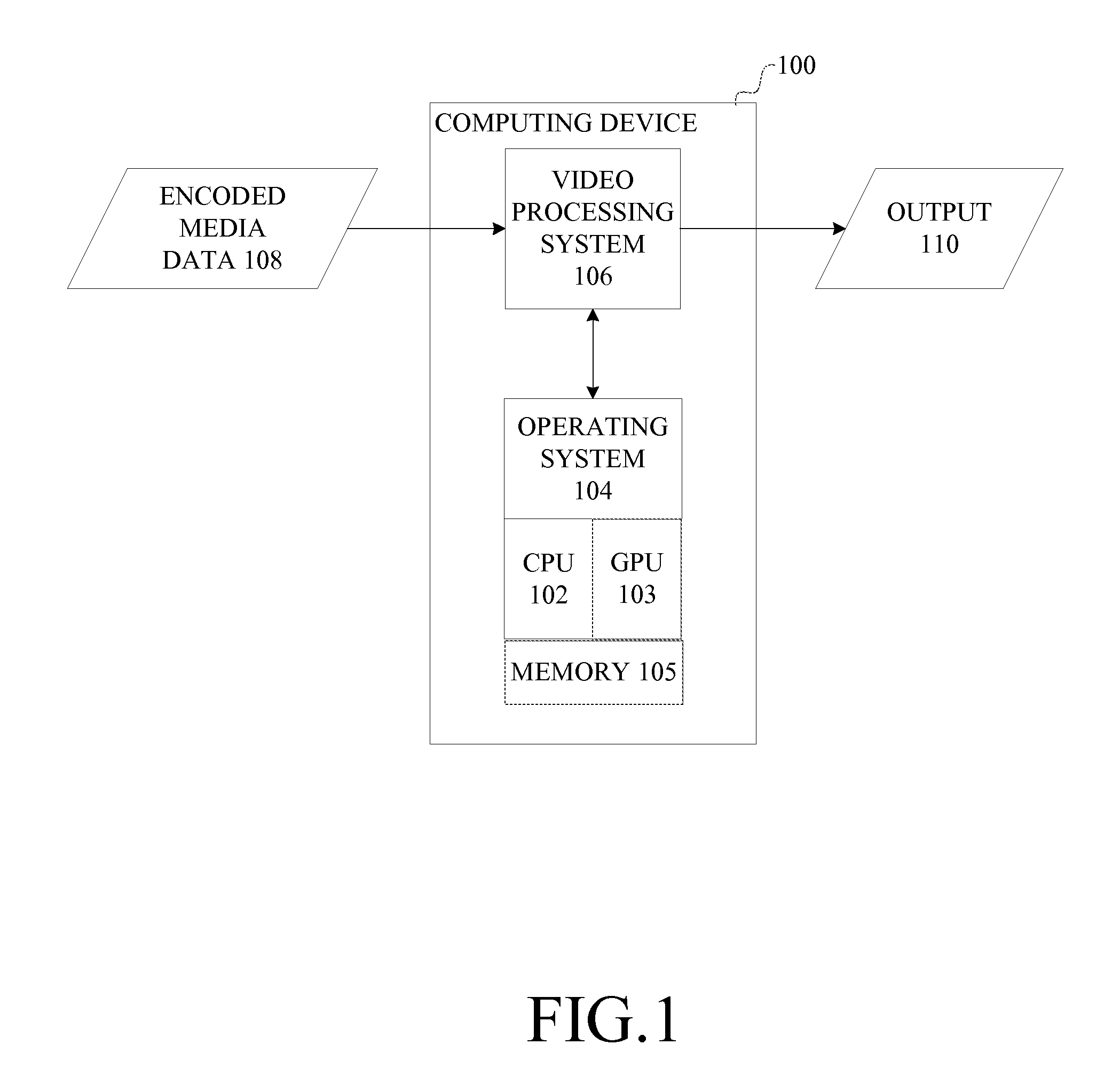

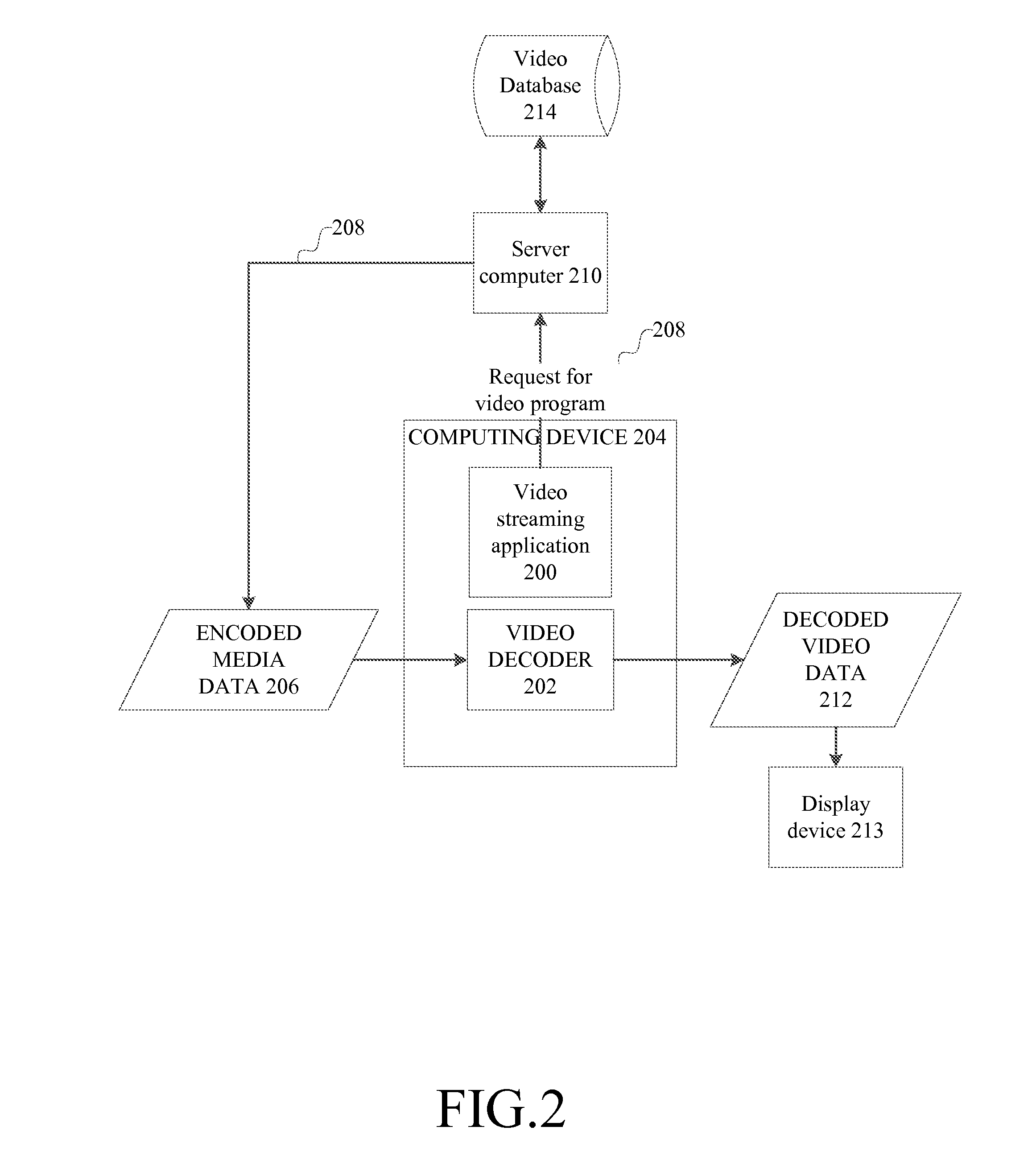

Multiple Bit Rate Video Decoding

Active · US20160366430A1 · Reduces interframe redundancy · Significant latency · Digital video signal modification · Selective content distribution · Video processing · Video decoder

In a video processing system including a video decoder, to handle frequent changes in the bit rate of an encoded bitstream, a video decoder can be configured to process a change in bit rates without reinitializing. The video decoder can be configured to reduce memory utilization. The video decoder can be configured both to process a change in bit rate without reinitializing and to reduce memory utilization. In one implementation, the video processing system can include an interface between an application running on a host processor and the video decoder which allows the video decoder to communicate with the host application about the configuration of the video decoder.

Owner:MICROSOFT TECH LICENSING LLC

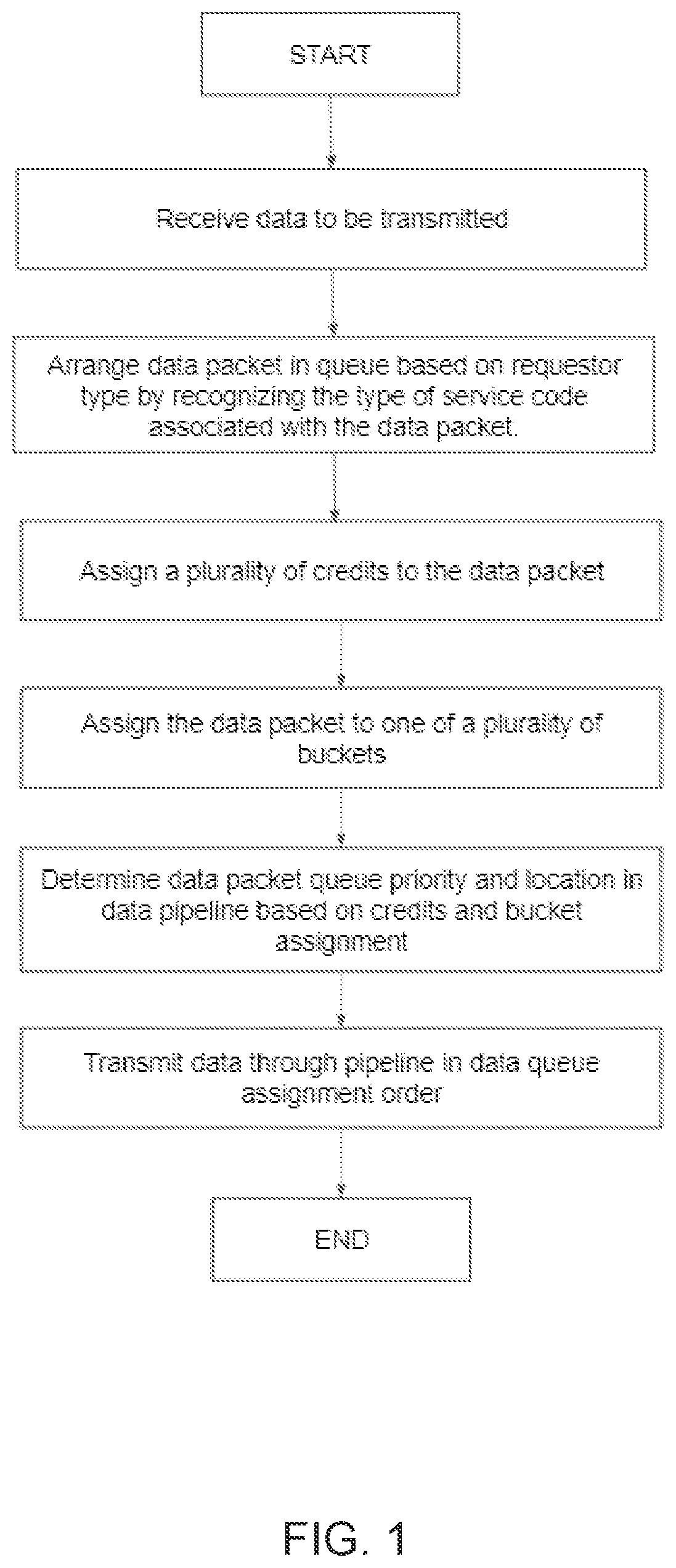

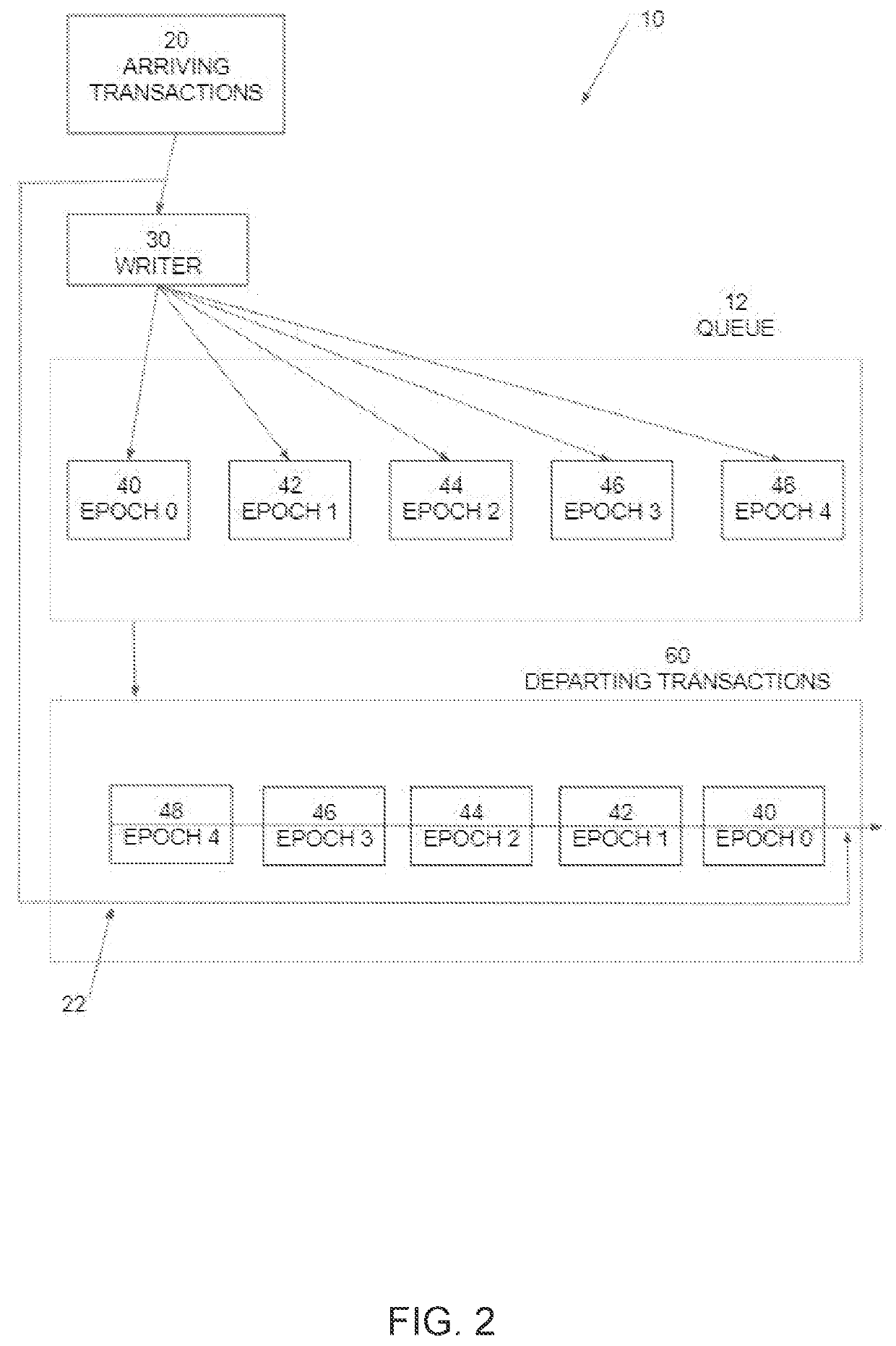

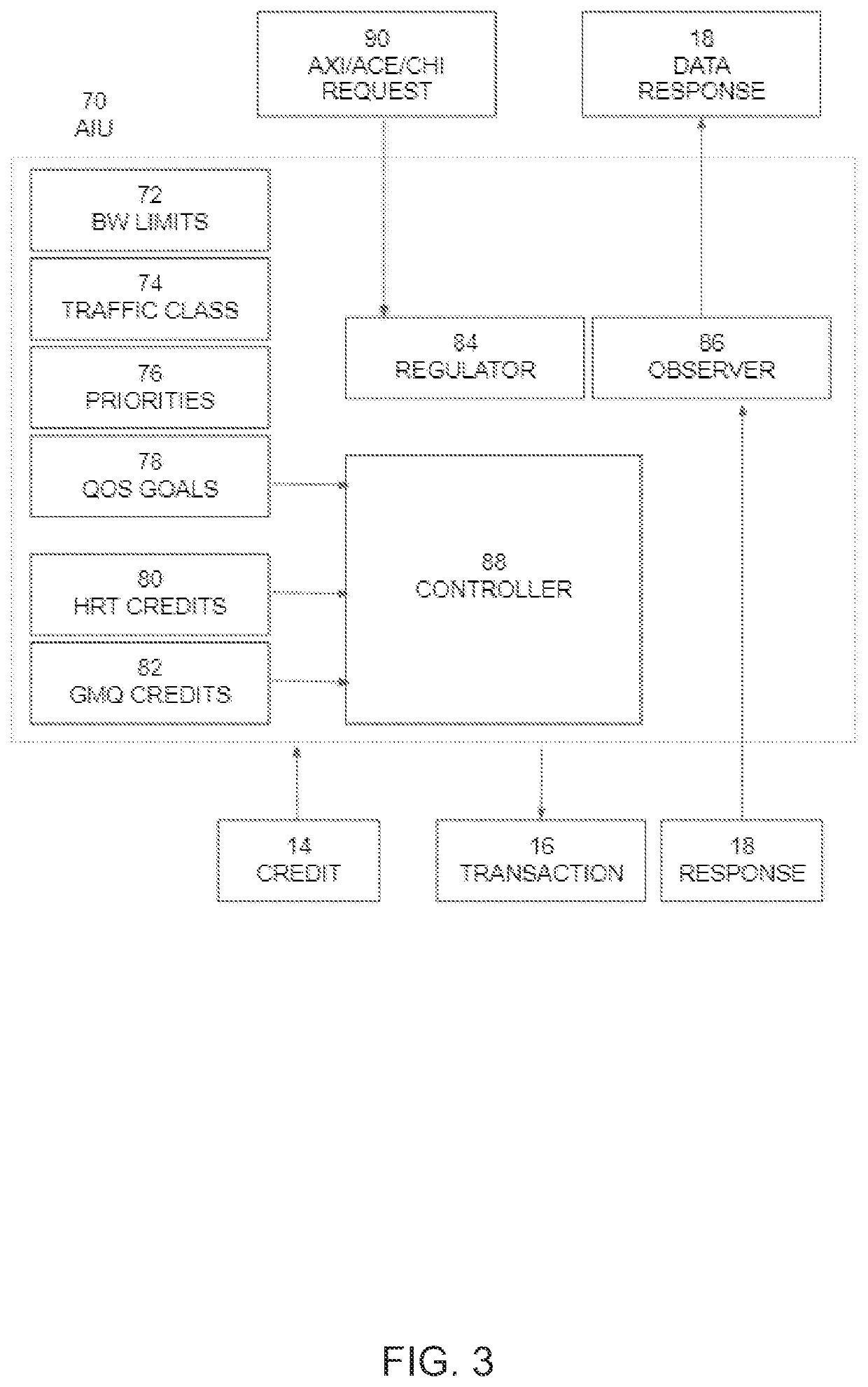

Queue management system, starvation and latency management system, and methods of use

Active · US20220210089A1 · Significant latency · Long duration · Data switching networks · Qos management · Qos quality of service

A quality of service (QoS) management system and guarantee is presented. The QoS management system can be used for end to end data. More specifically, and without limitation, the invention relates to the management of traffic and priorities in a queue and relates to grouping transactions in a queue providing solutions to queue starvation and transmission latency.

Owner:ARTERIS

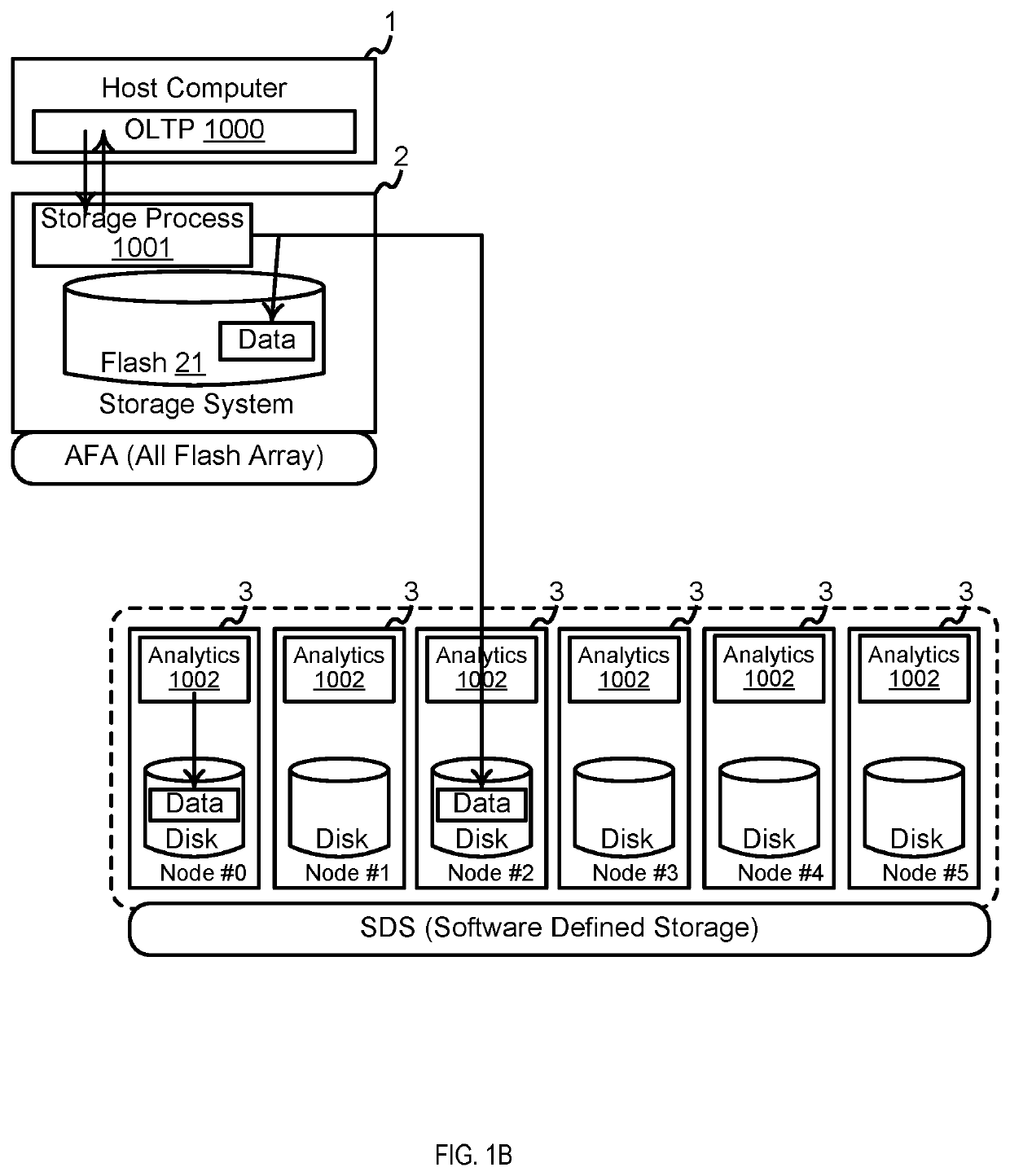

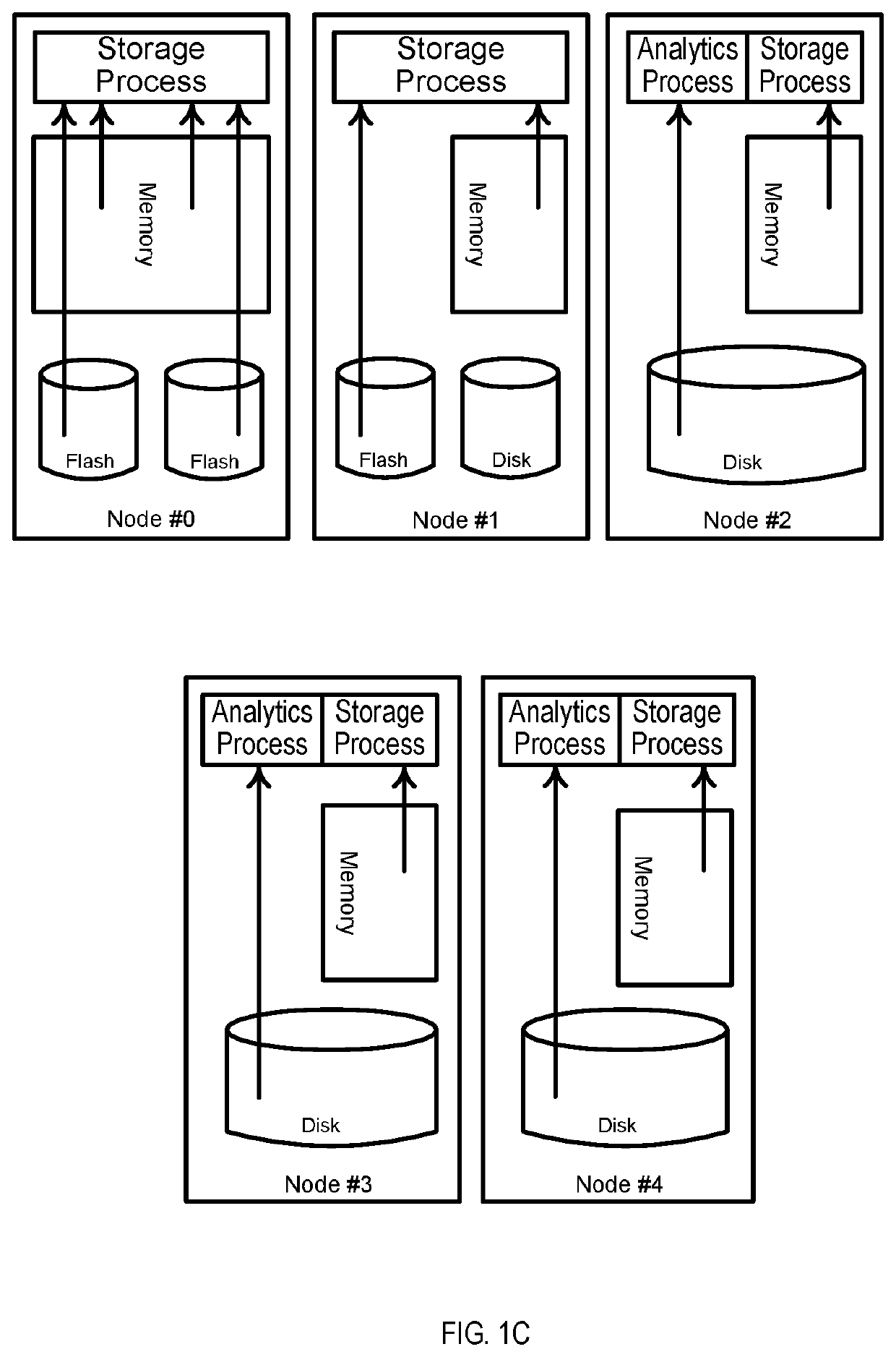

Method for latency improvement of storages using low cost hardware

Active · US10983882B2 · Significant latency · Shorten speed · Input/output to record carriers · Redundant hardware error correction · Failover · Resource assignment

Example implementations described herein are directed to systems and methods involving an application running on a host computer and configured to manage storage infrastructure. The application not only manages resources already allocated to itself, but also manages the allocation and de-allocation of resources to itself. The resources can be allocated and de-allocated based on the type of process being executed, wherein upon occurrence of a failure on a primary storage system, higher priority processes that are executed on connected failover storage system nodes are given priority while the lower priority processes of such failover storage system nodes are disabled.

Owner:HITACHI LTD


© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com