1,255 results about "Image rendering" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

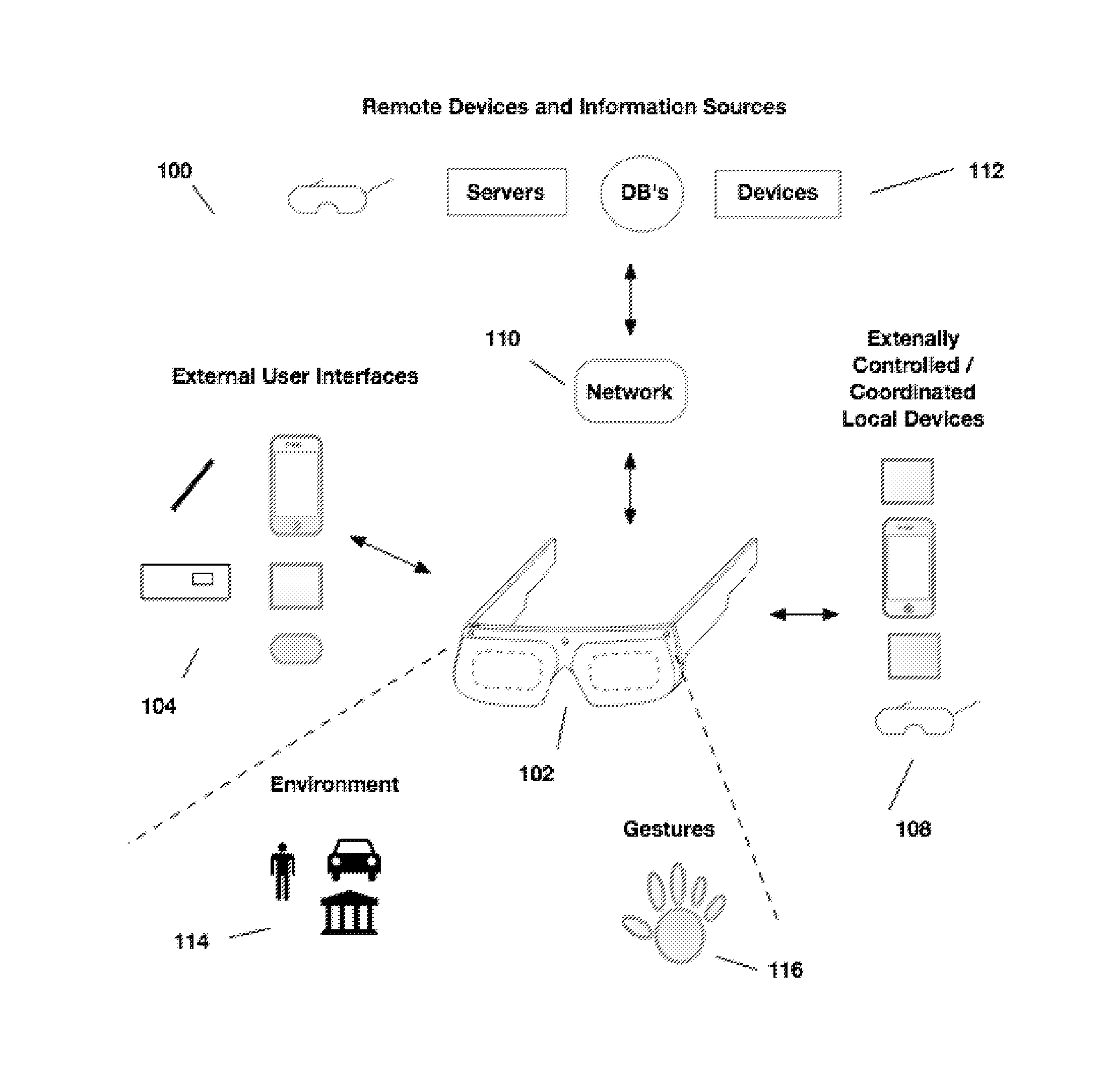

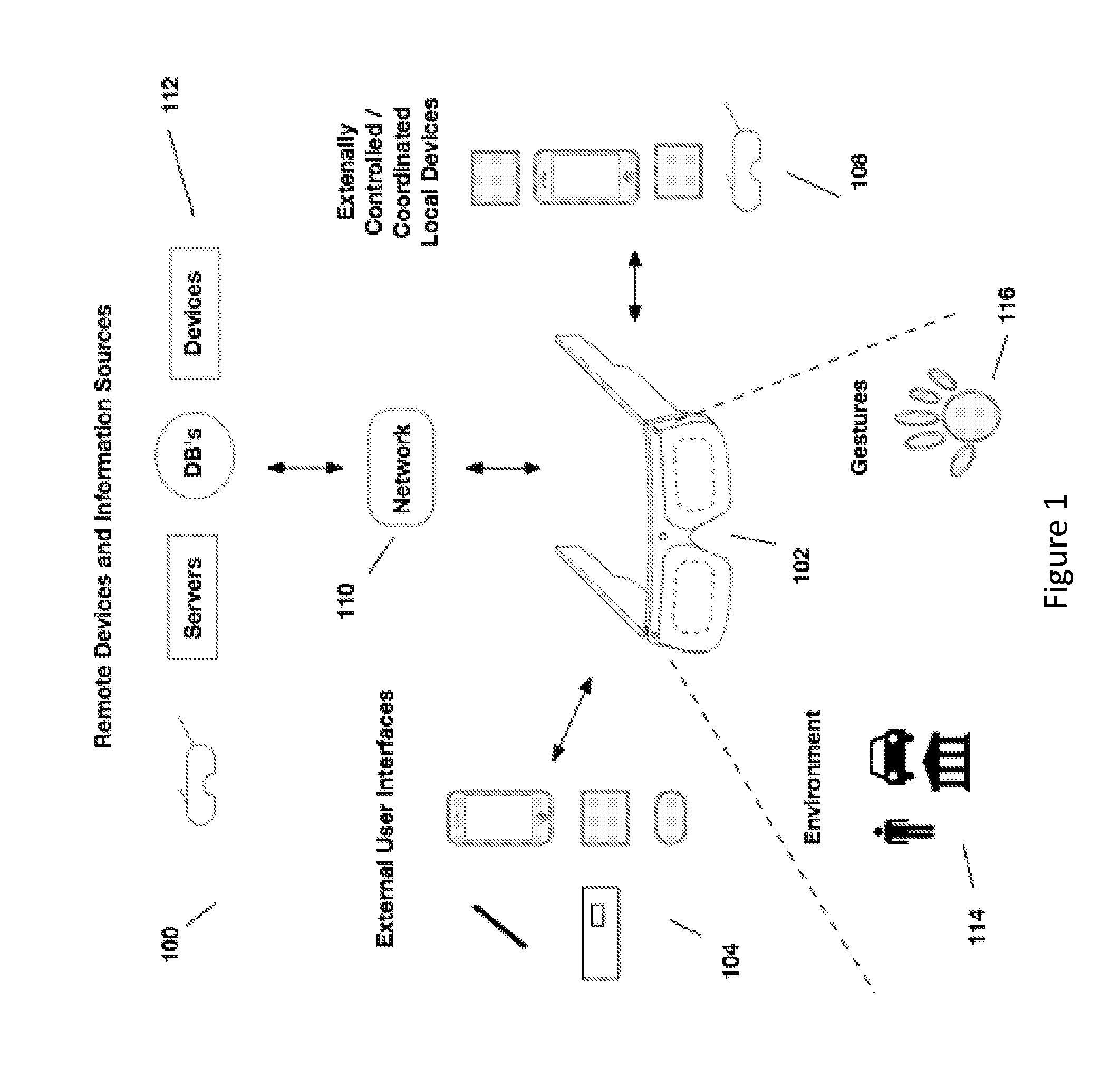

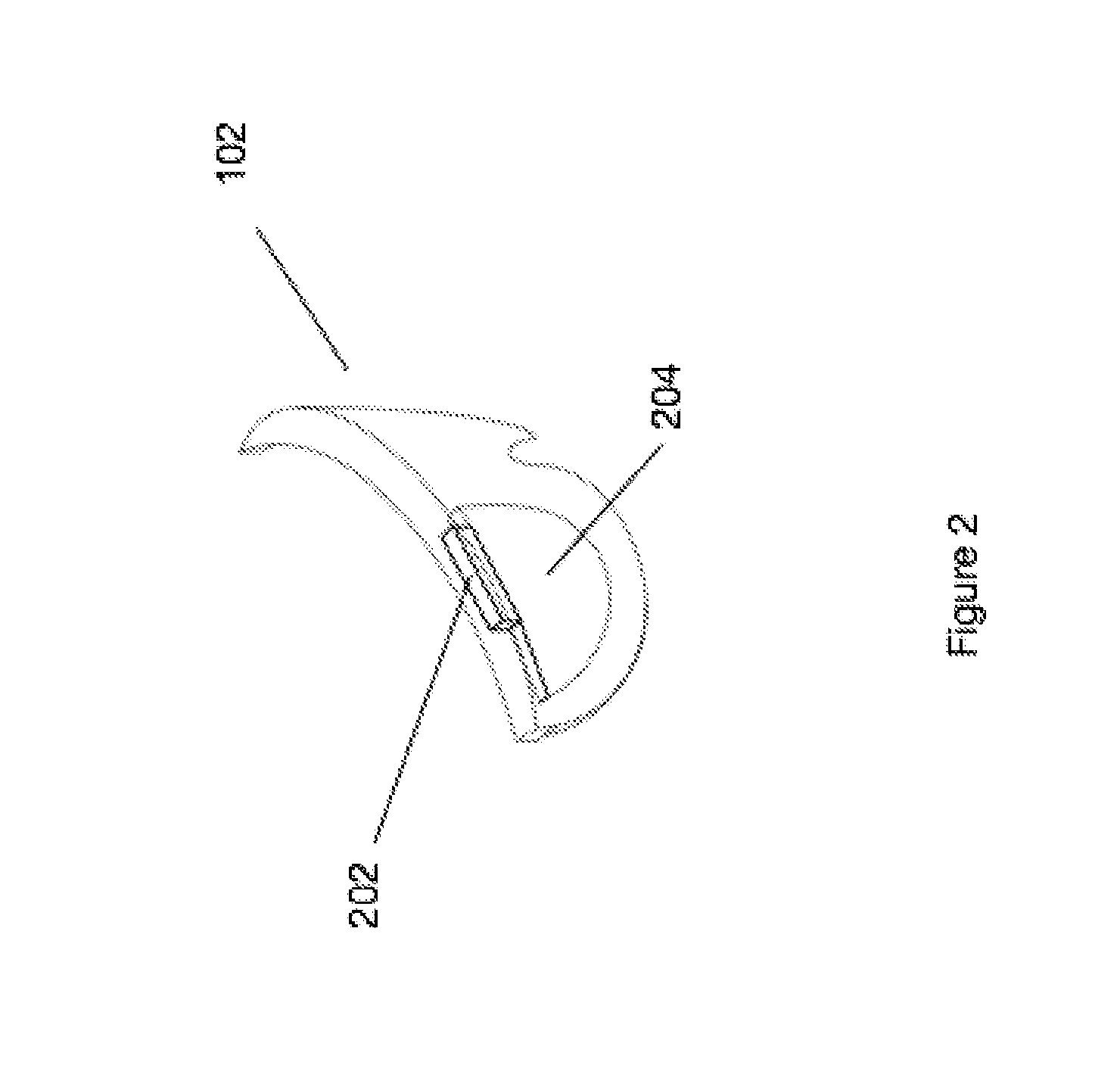

Anchoring virtual images to real world surfaces in augmented reality systems

Status: Active | US20120249741A1 | Television system details, Color television details, Sensor array, Augmented reality systems

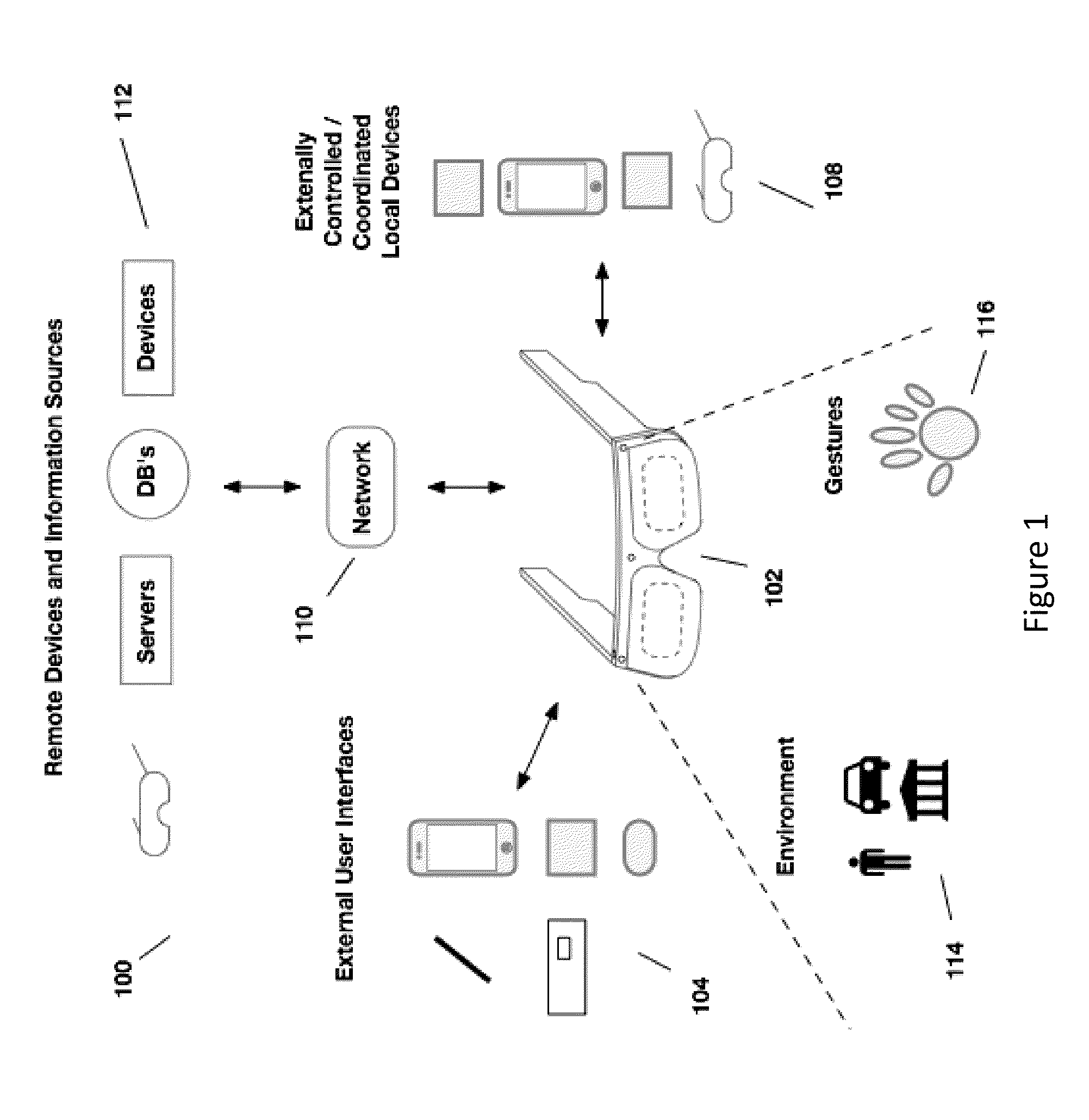

A head mounted device provides an immersive virtual or augmented reality experience for viewing data and enabling collaboration among multiple users. Rendering images in a virtual or augmented reality system may include capturing an image and spatial data with a body mounted camera and sensor array, receiving an input indicating a first anchor surface, calculating parameters with respect to the body mounted camera and displaying a virtual object such that the virtual object appears anchored to the selected first anchor surface. Further operations may include receiving a second input indicating a second anchor surface within the captured image that is different from the first anchor surface, calculating parameters with respect to the second anchor surface and displaying the virtual object such that the virtual object appears anchored to the selected second anchor surface and moved from the first anchor surface.

Owner: QUALCOMM INC

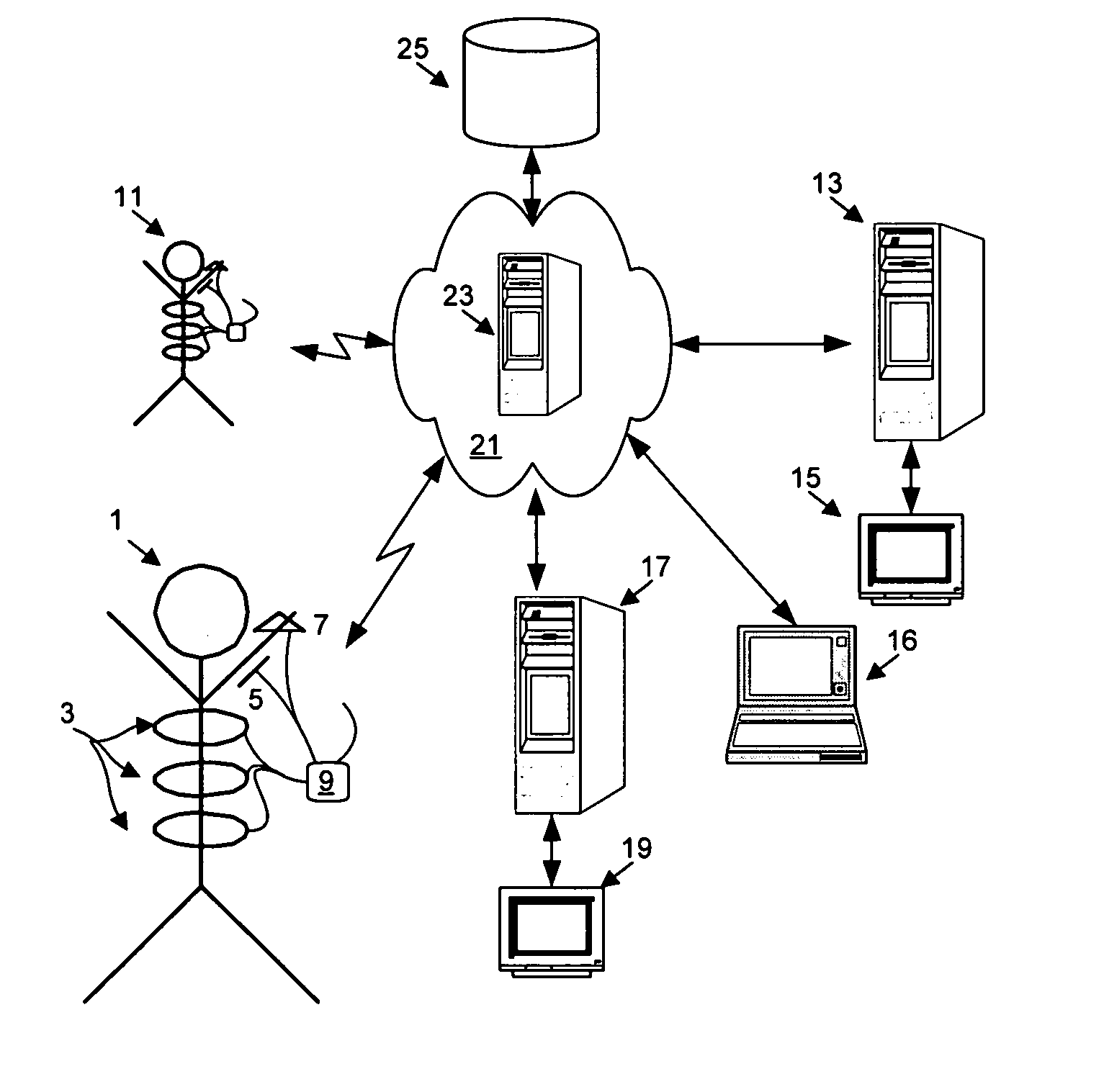

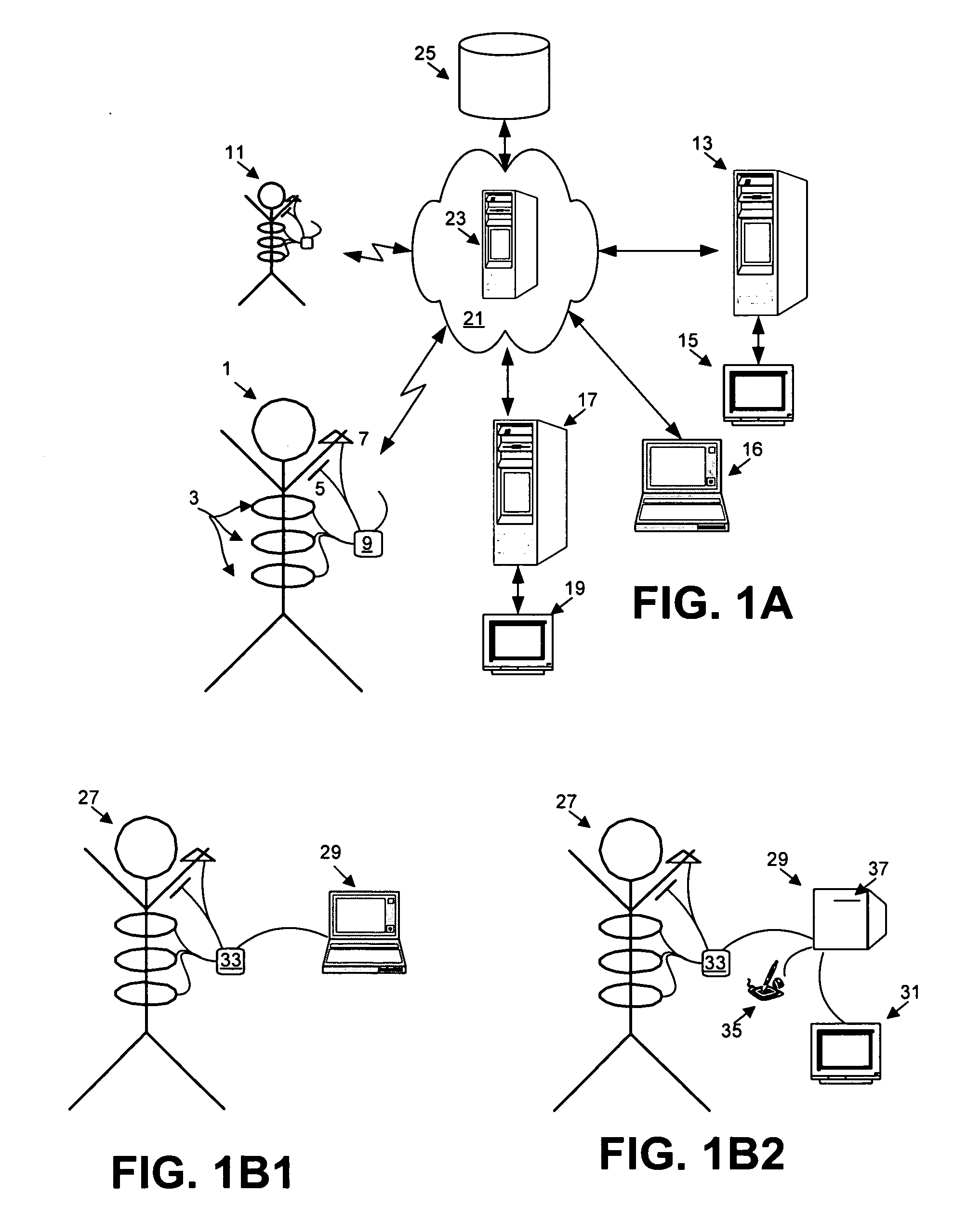

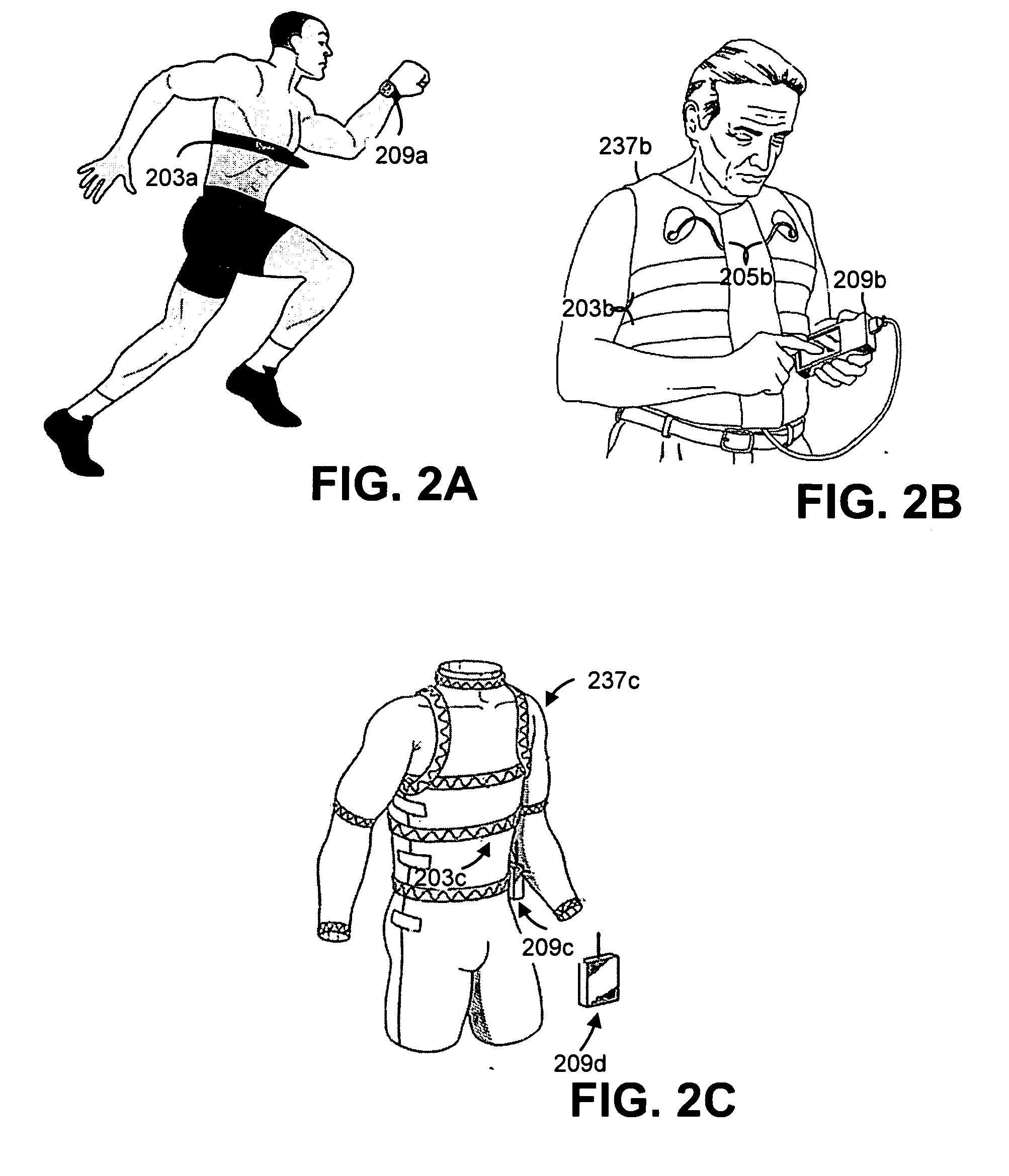

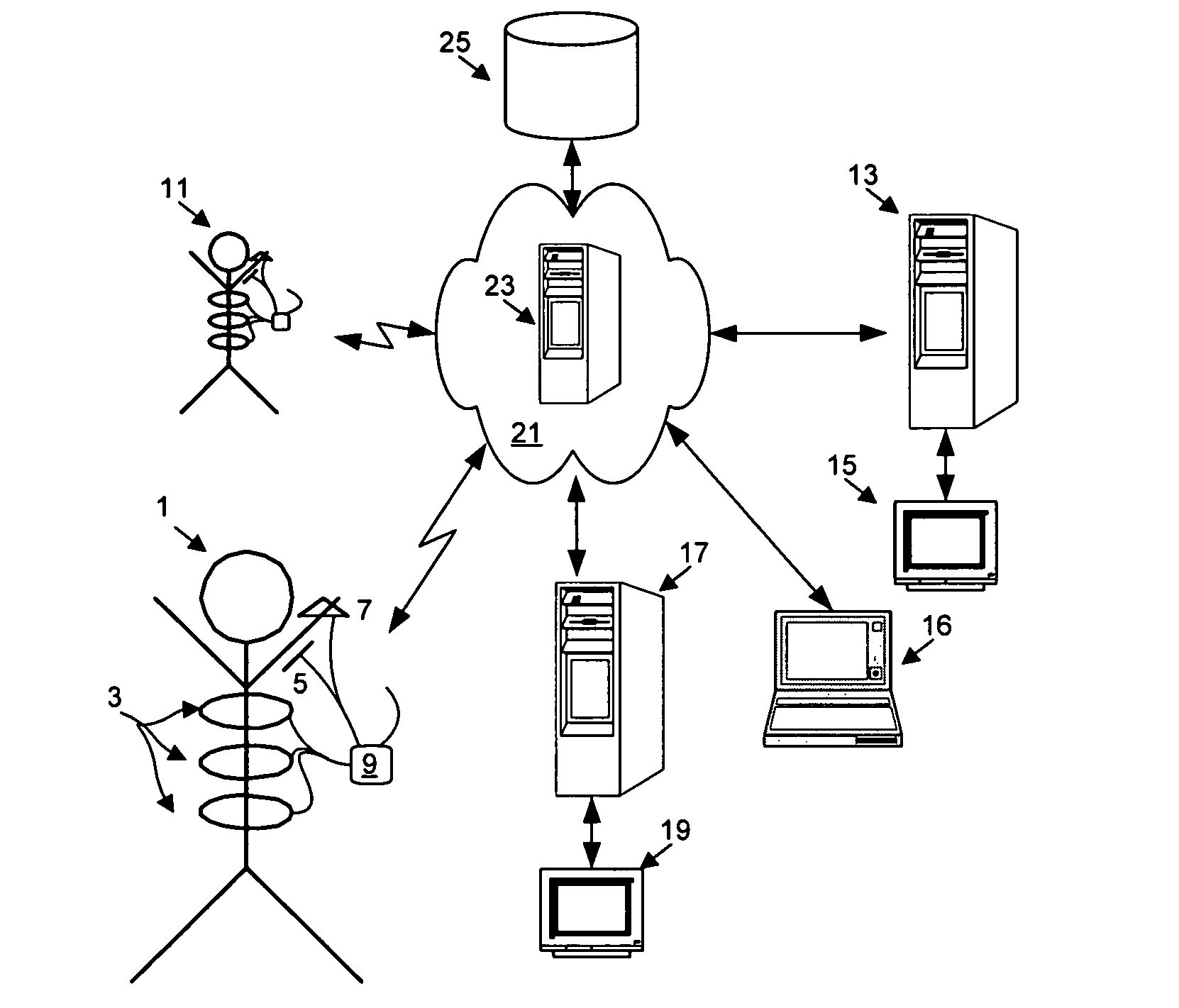

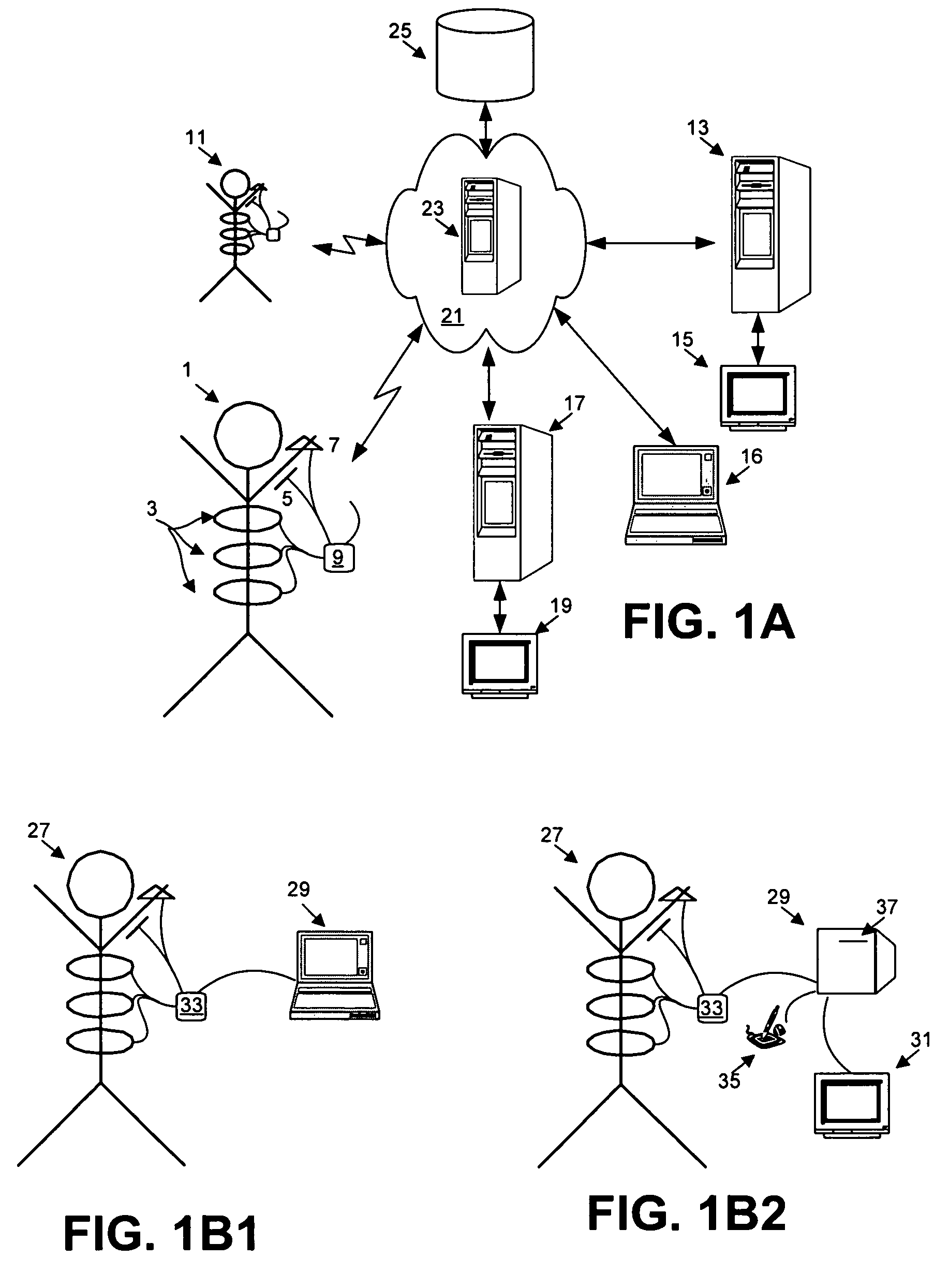

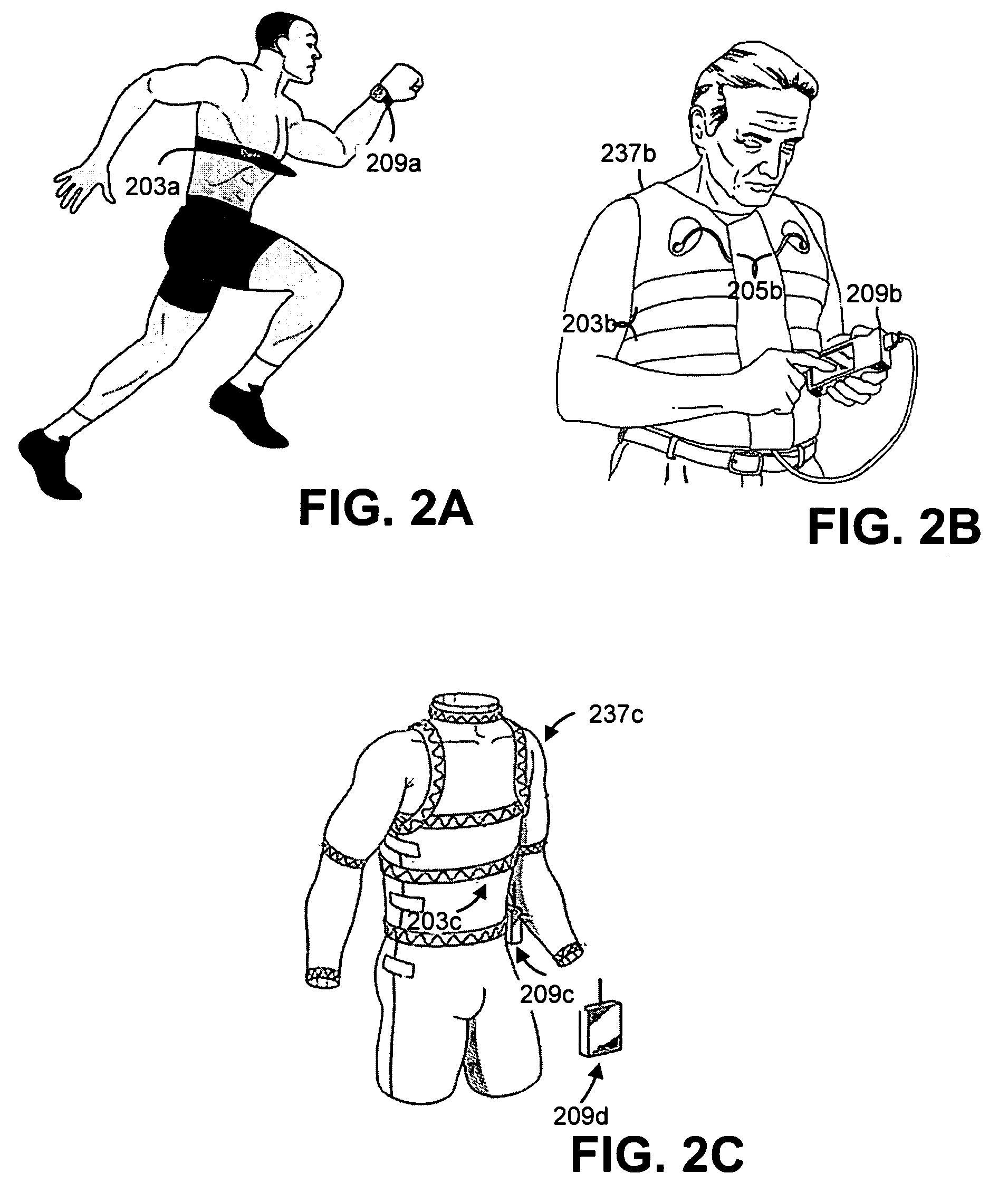

Computer interfaces including physiologically guided avatars

This invention provides user interfaces that more intuitively display physiological data obtained from physiological monitoring of one or more subjects. Specifically, the user interfaces of this invention create and display one or more avatars having behaviors guided by physiological monitoring data. The monitoring data is preferably obtained while the subject is performing normal tasks without substantial restraint. This invention provides a range of implementations that accommodate users having varying processing and graphics capabilities, e.g., from handheld electronic devices to ordinary PC-type computers and to systems with enhanced graphics capabilities.

Owner: ADIDAS

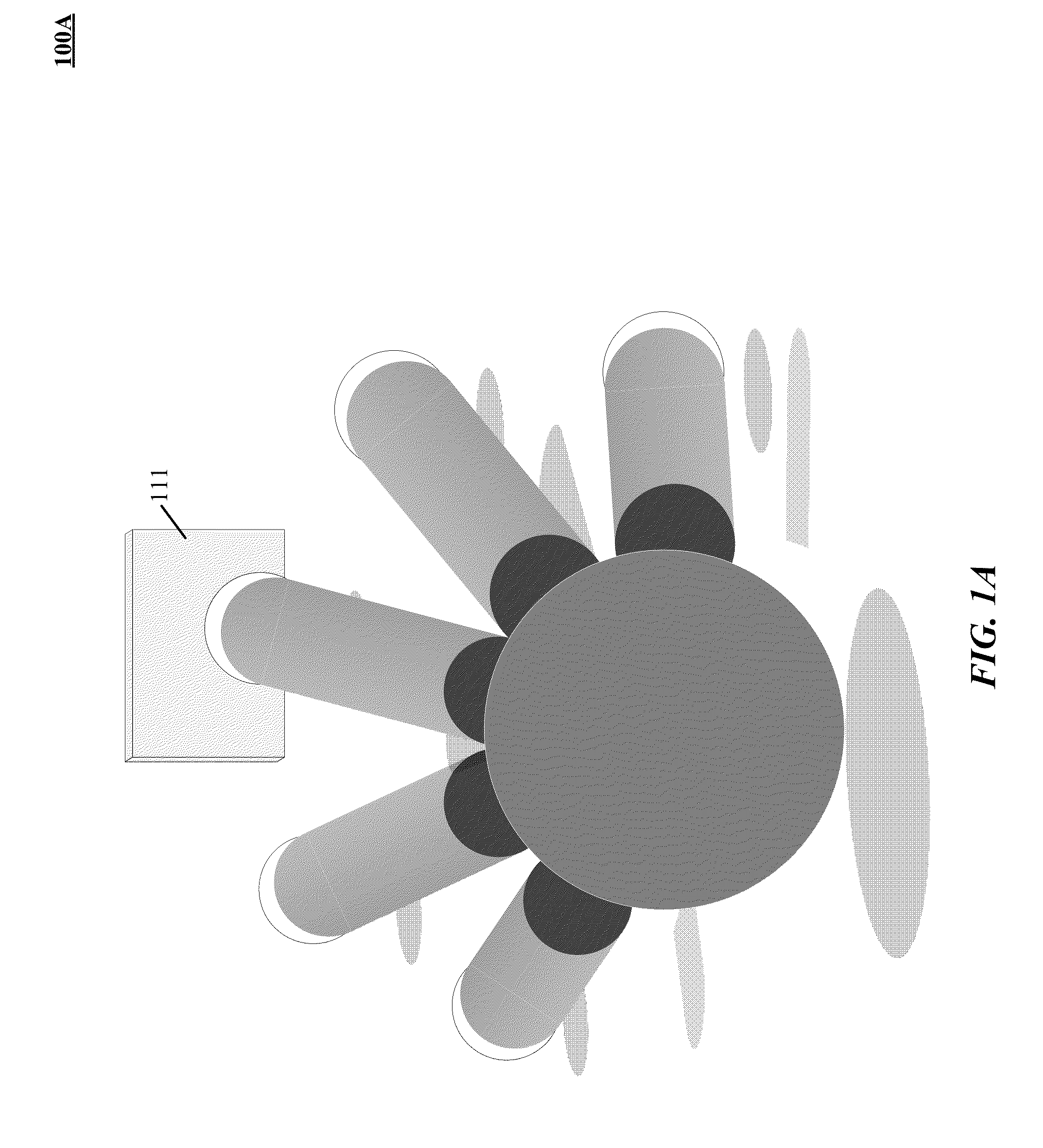

Modular mobile connected pico projectors for a local multi-user collaboration

The various embodiments include systems and methods for rendering images in a virtual or augmented reality system that may include capturing scene images of a scene in a vicinity of a first and a second projector, capturing spatial data with a sensor array in the vicinity of the first and second projectors, analyzing captured scene images to recognize body parts, and projecting images from each of the first and the second projectors with a shape and orientation determined based on the recognized body parts. Additional rendering operations may include tracking movements of the recognized body parts, applying a detection algorithm to the tracked movements to detect a predetermined gesture, applying a command corresponding to the detected predetermined gesture, and updating the projected images in response to the applied command.

Owner: QUALCOMM INC

Method for inter-scene transitions

Status: Inactive | US20060132482A1 | Television system details, Geometric image transformation, Computer graphics (images), Display device

A method and system for creating a transition between a first scene and a second scene on a computer system display, simulating motion. The method includes determining a transformation that maps the first scene into the second scene. Motion between the scenes is simulated by displaying transitional images that include a transitional scene based on a transitional object in the first scene and in the second scene. The rendering of the transitional object evolves according to specified transitional parameters as the transitional images are displayed. A viewer receives a sense of the connectedness of the scenes from the transitional images. Virtual tours of broad areas, such as cityscapes, can be created using inter-scene transitions among a complex network of pairs of scenes.

Owner: EVERYSCAPE

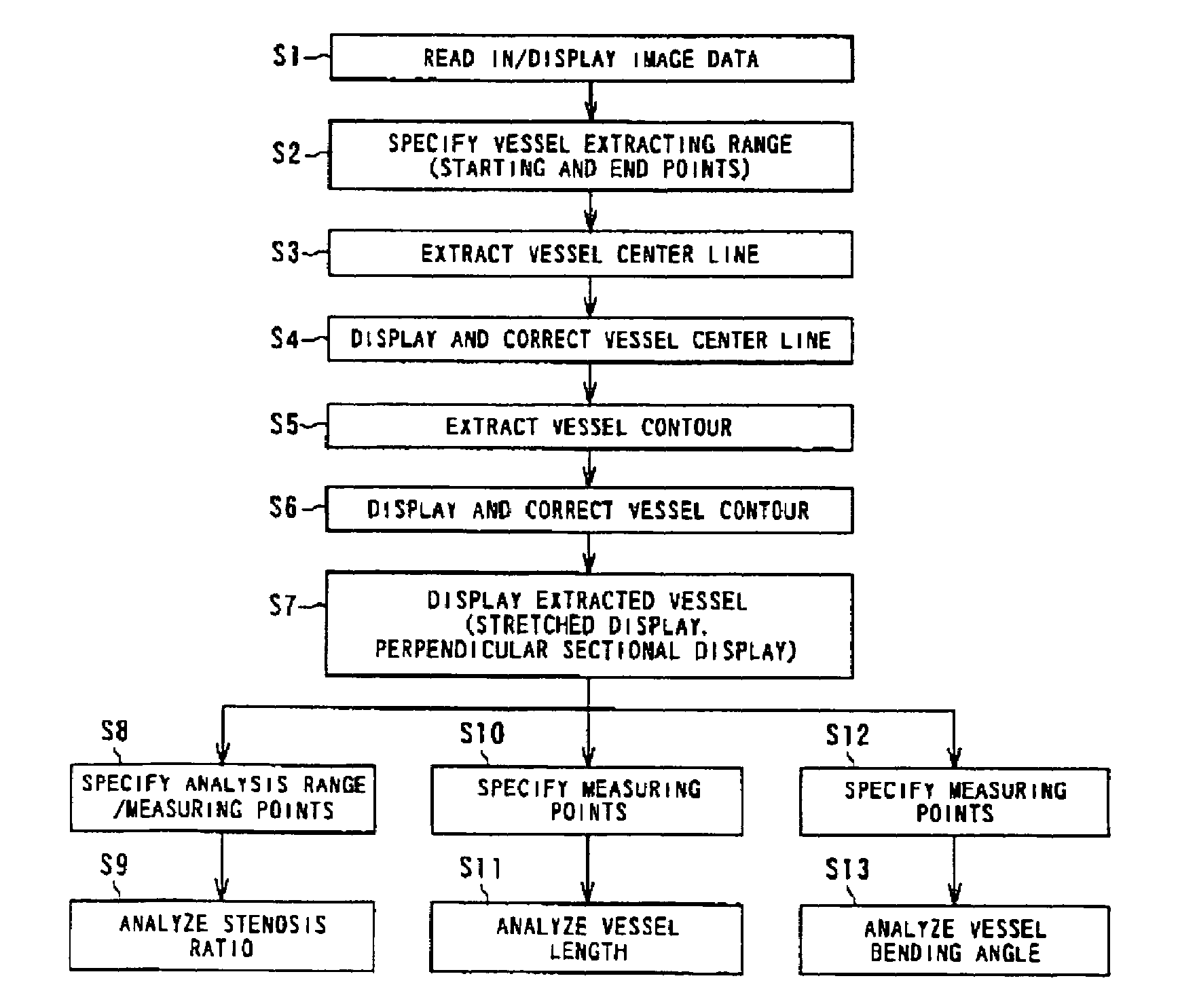

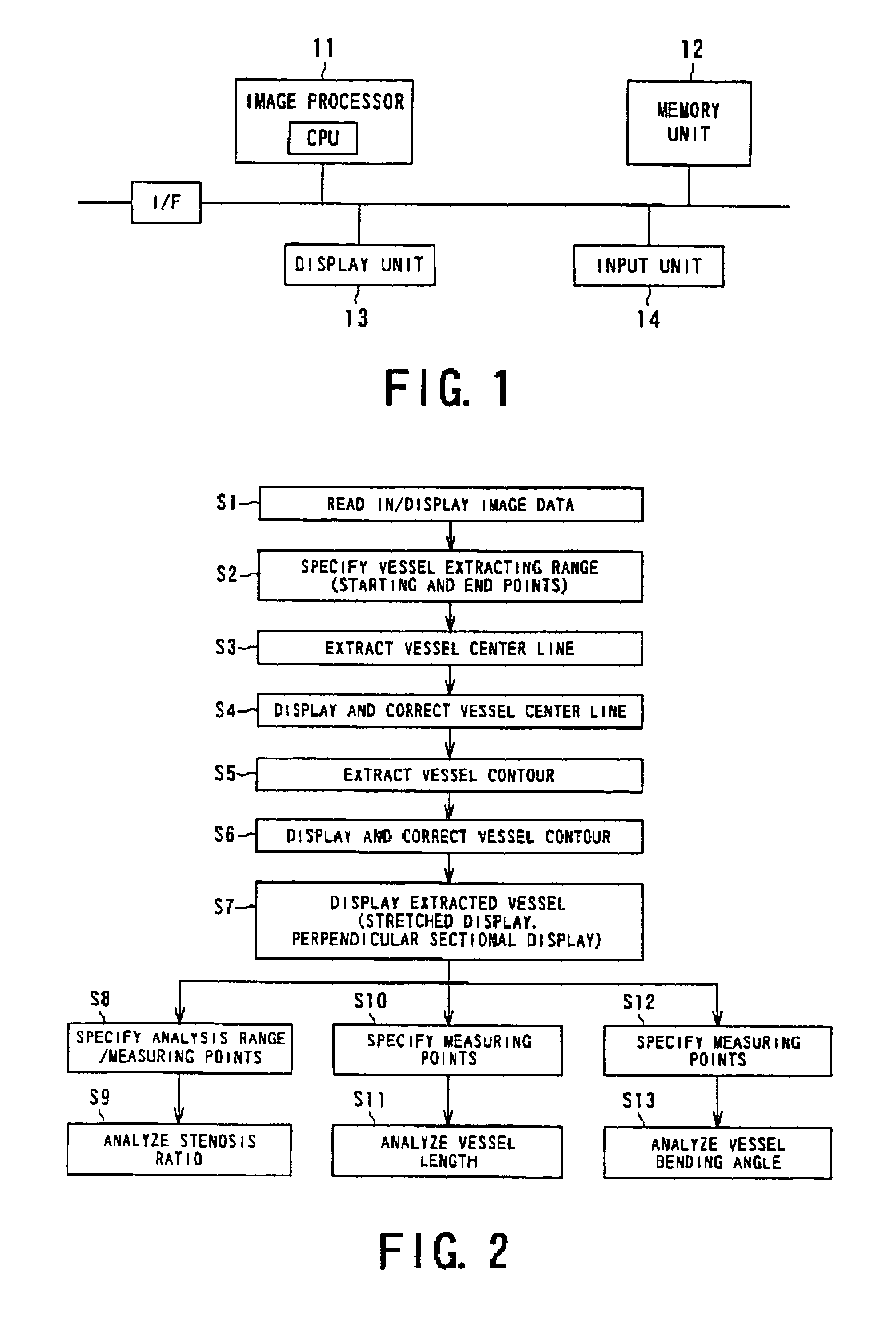

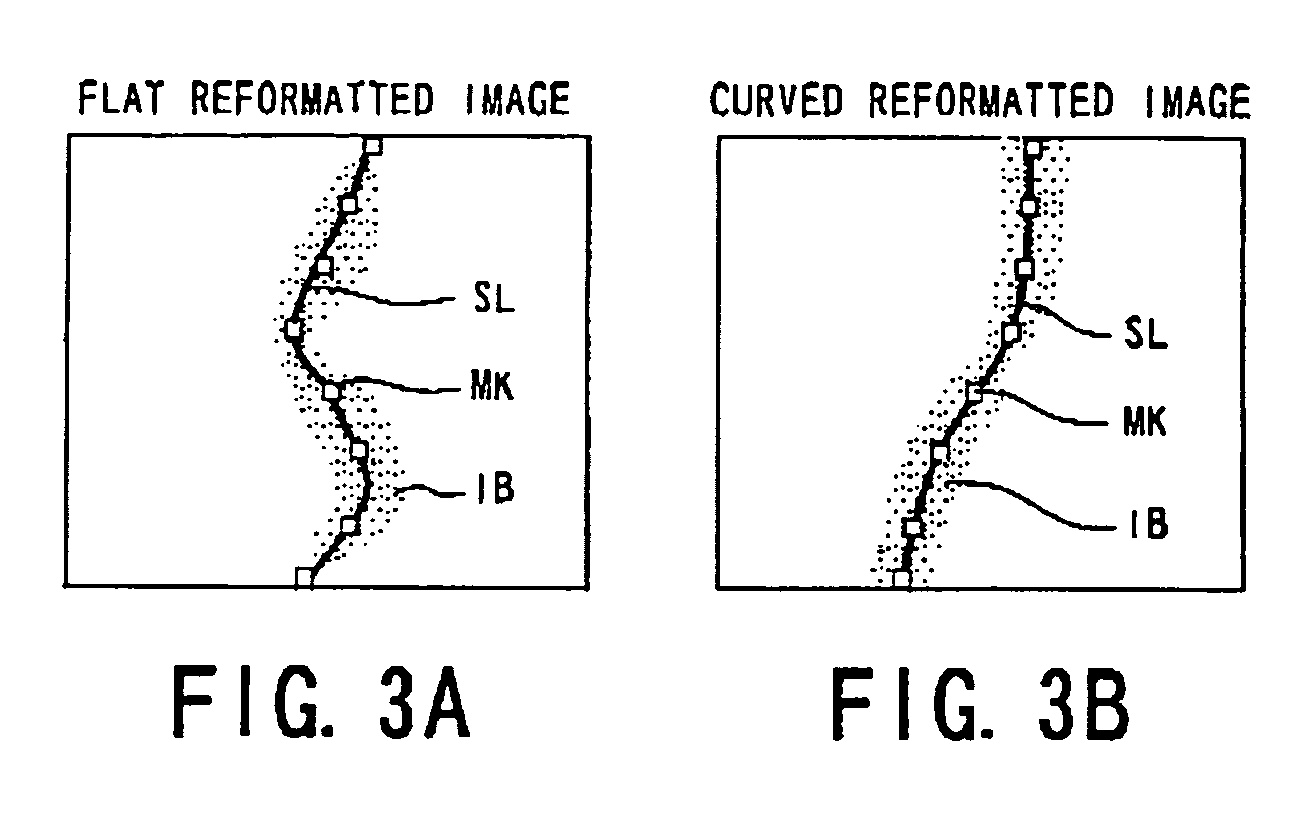

Processor for analyzing tubular structure such as blood vessels

Status: Inactive | US7369691B2 | Reduce operational burden, Easy to master, Ultrasonic/sonic/infrasonic diagnostics, Image enhancement, Reference image, Imaging data

Using medical three-dimensional image data, at least one of a volume-rendering image, a flat reformatted image on an arbitrary section, a curved reformatted image, and an MIP (maximum value projection) image is prepared as a reference image. Vessel center lines are extracted from this reference image, and at least one of a vessel stretched image based on the center line and a perpendicular sectional image substantially perpendicular to the center line is prepared. The shape of vessels is analyzed on the basis of the prepared image, and the prepared stretched image and / or the perpendicular sectional image is compared with the reference image, and the result is displayed together with the images. Display of the reference image with the stretched image or the perpendicular sectional image is made conjunctive. Correction of the center line by the operator and automatic correction of the image resulting from the correction are also possible.

Owner: TOSHIBA MEDICAL SYST CORP

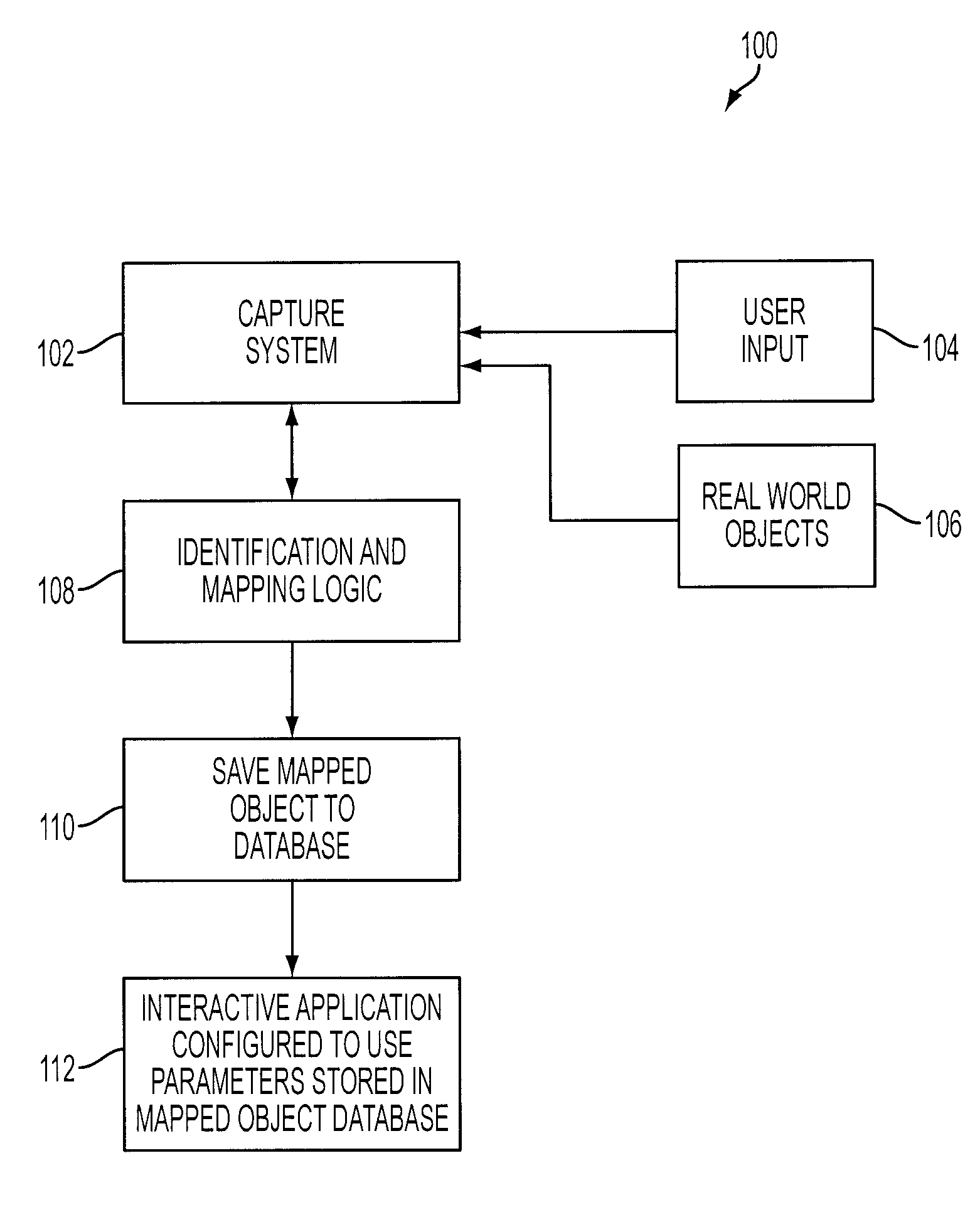

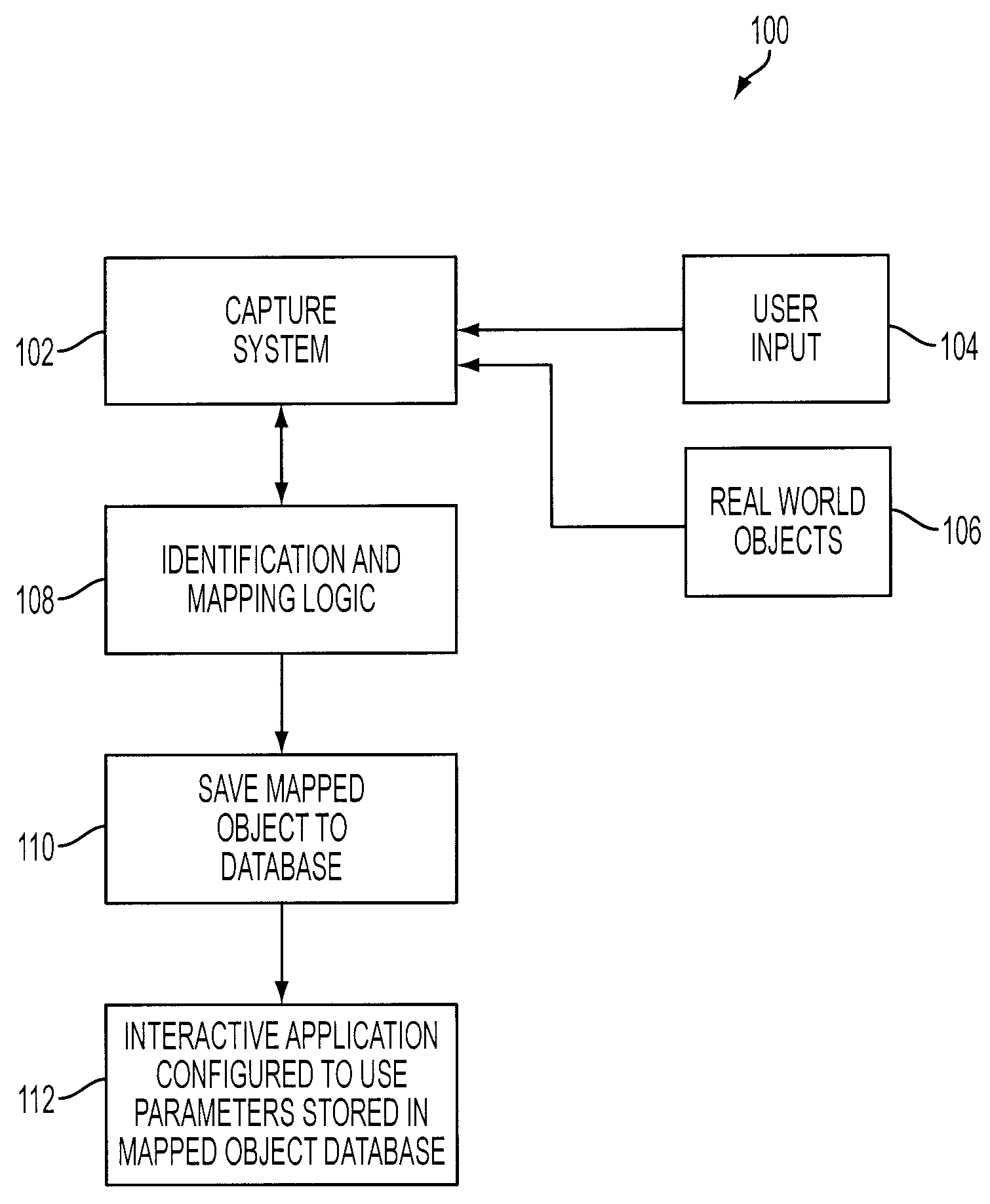

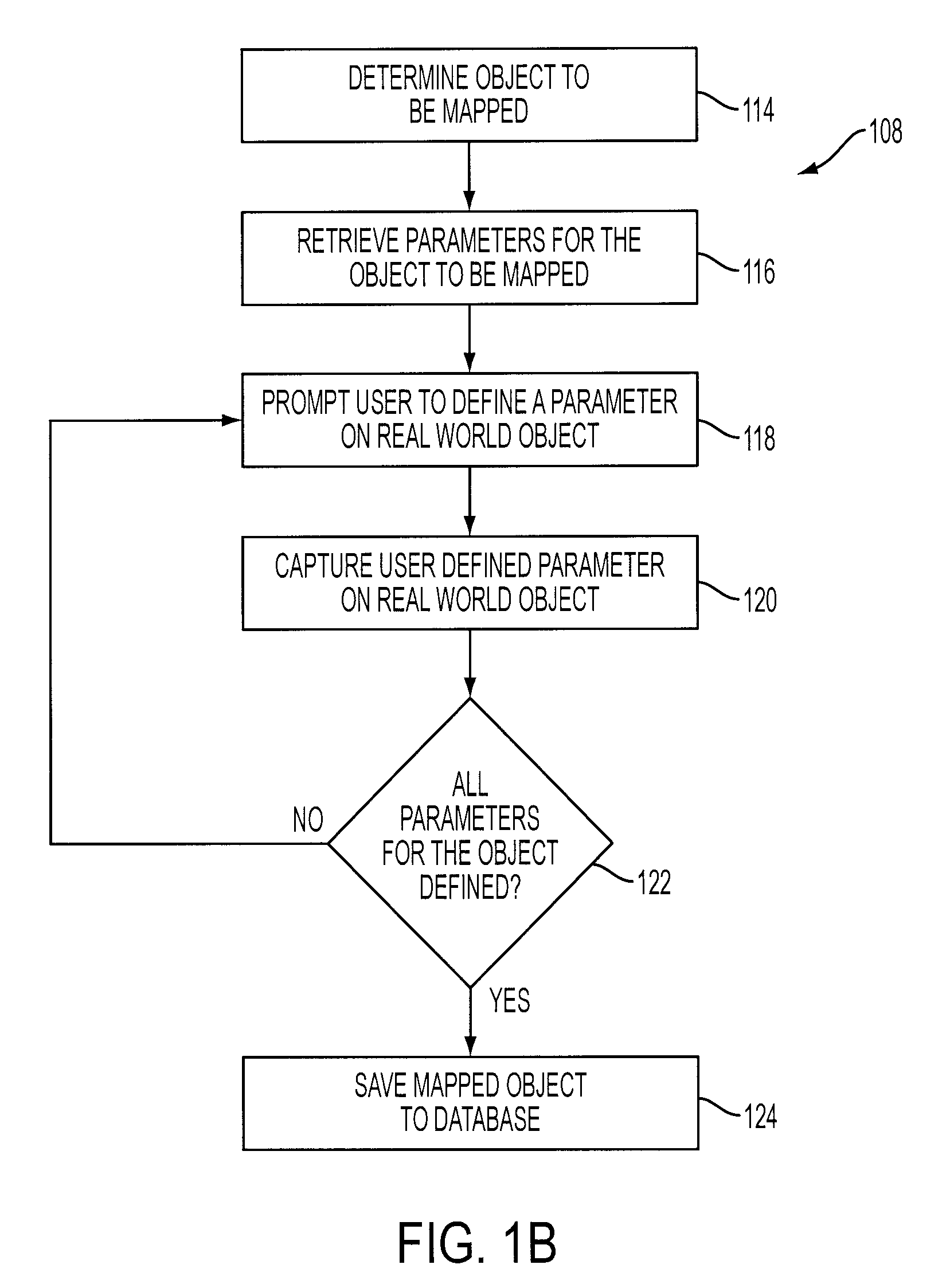

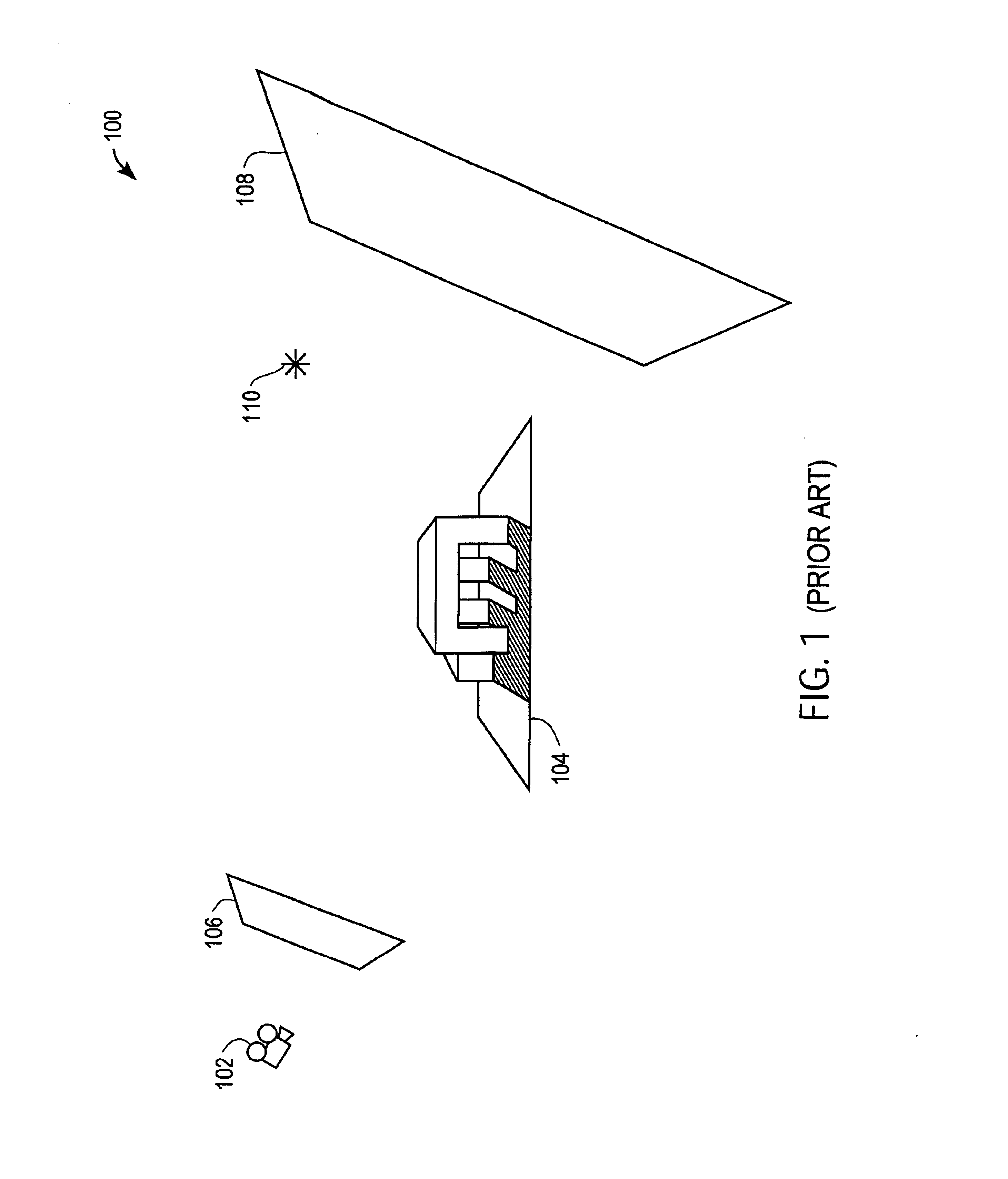

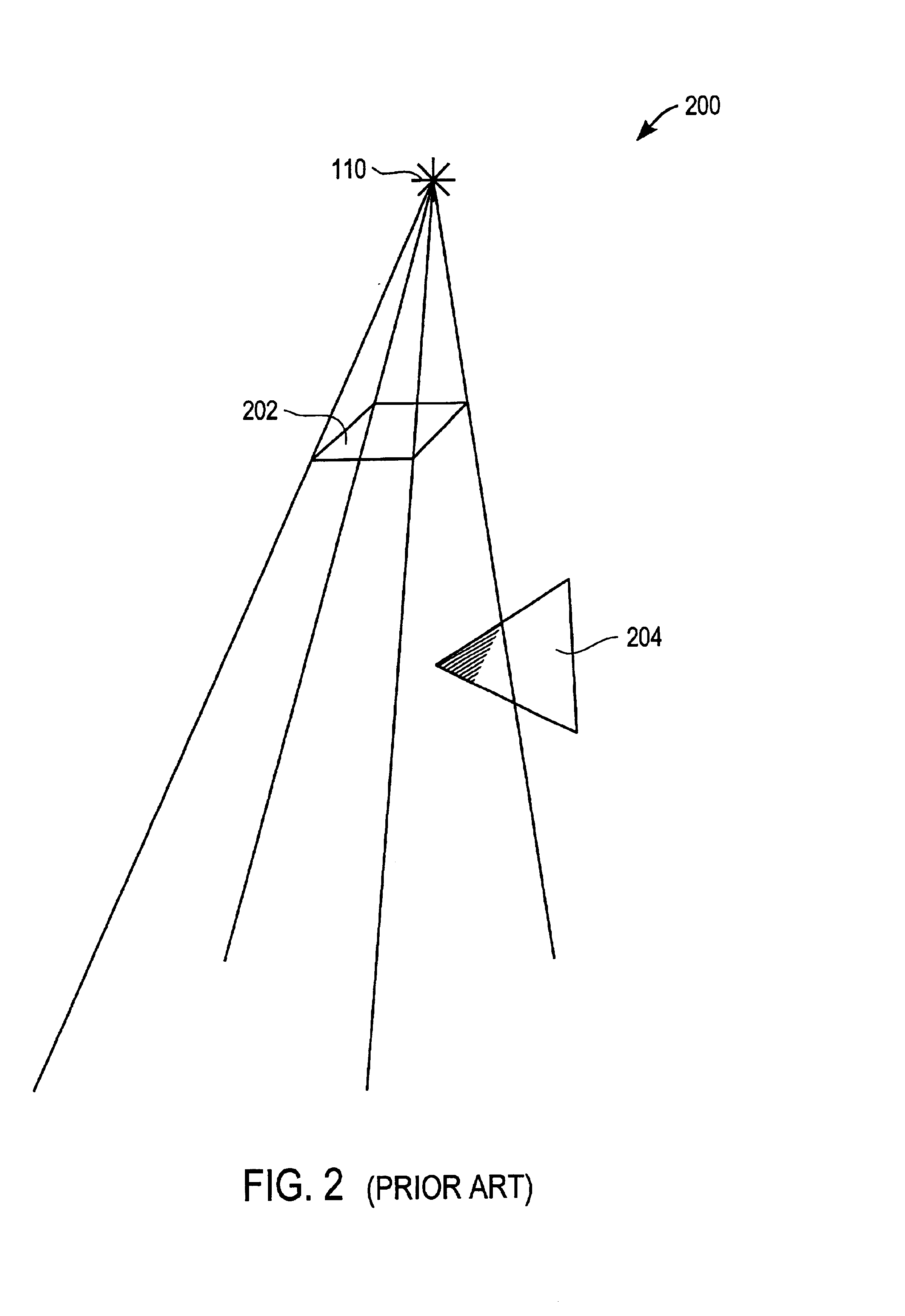

Selective interactive mapping of real-world objects to create interactive virtual-world objects

A method for interactively defining a virtual-world space based on real-world objects in a real-world space is disclosed. In one operation, one or more real-world objects in the real-world space are captured to define the virtual-world space. In another operation, one of the real-world objects is identified as an object to be characterized as a virtual-world object. In yet another operation, a user is prompted to identify one or more object locations to enable extraction of parameters for the real-world object, and the object locations are identified relative to an identifiable reference plane in the real-world space. In another operation, the extracted parameters of the real-world object may be stored in memory. The virtual-world object can then be generated in the virtual-world space from the stored extracted parameters of the real-world object.

Owner: SONY COMP ENTERTAINMENT EURO +1

Computer interfaces including physiologically guided avatars

This invention provides user interfaces that more intuitively display physiological data obtained from physiological monitoring of one or more subjects. Specifically, the user interfaces of this invention create and display one or more avatars having behaviors guided by physiological monitoring data. The monitoring data is preferably obtained when the subject is performing normal tasks without substantial restraint. This invention provides a range of implementations that accommodate user having varying processing and graphics capabilities, e.g., from handheld electronic devices to ordinary PC-type computers and to systems with enhanced graphics capabilities.

Owner:ADIDAS

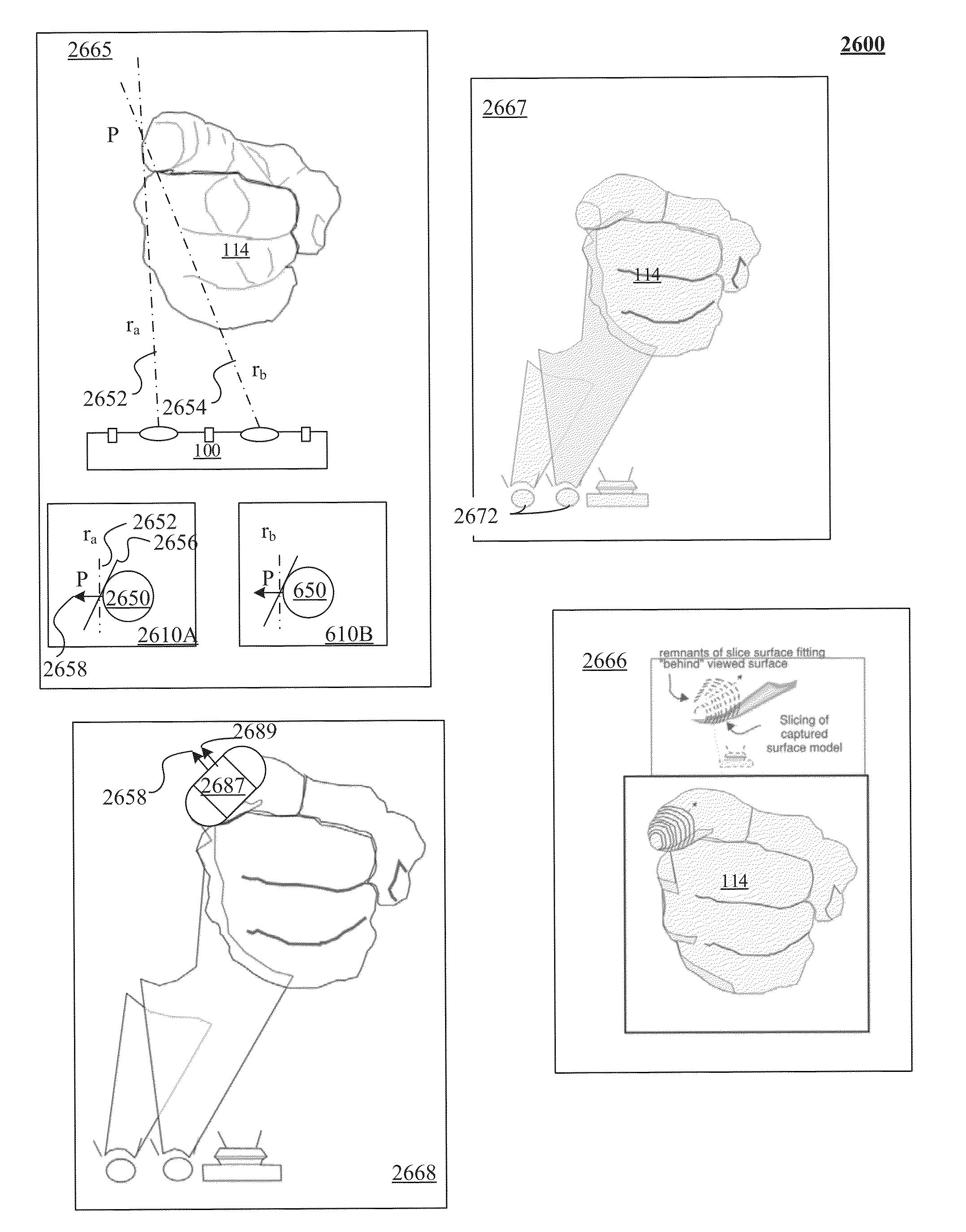

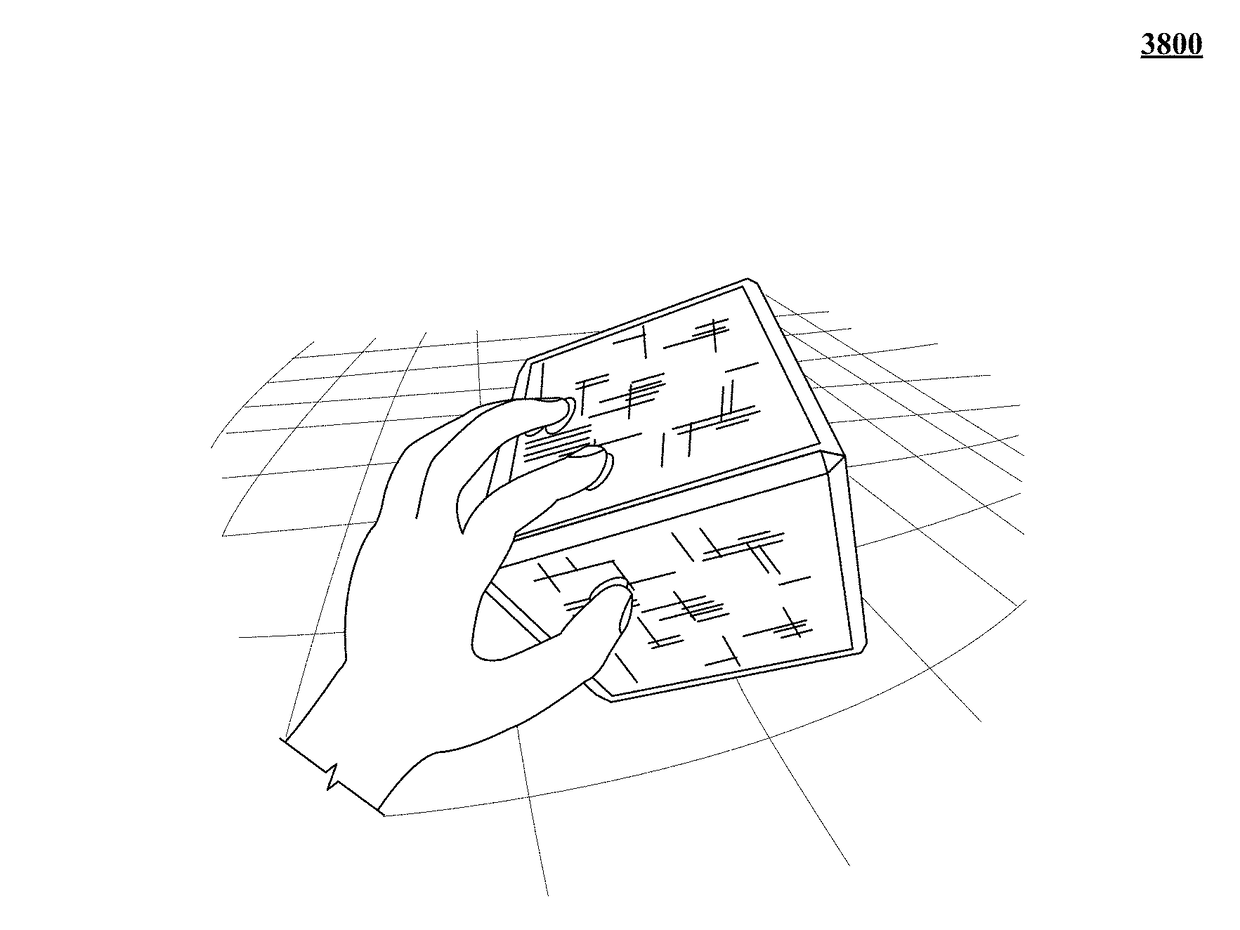

Systems and methods of creating a realistic grab experience in virtual reality/augmented reality environments

Status: Active | US20160239080A1 | Minimize extent, Input/output for user-computer interaction, Details for portable computers, Virtual space, Free form

The technology disclosed relates to a method of realistically rotating a virtual object during an interaction between a control object in a three-dimensional (3D) sensory space and the virtual object in a virtual space with which the control object interacts. In particular, it relates to detecting free-form gestures of a control object in the 3D sensory space and generating for display a 3D solid control object model of the control object during the free-form gestures, including sub-components of the control object; and, in response to detecting a two sub-component free-form gesture of the control object in the 3D sensory space in virtual contact with the virtual object, depicting, in the generated display, the virtual contact and the resulting rotation of the virtual object by the 3D solid control object model.

Owner: ULTRAHAPTICS IP TWO LTD

Method and device for real-time mapping and localization

Status: Active | US20180075643A1 | Efficient storage, Improve system robustness, Image enhancement, Image analysis, Reference map, Laser ranging

A method for constructing a 3D reference map usable in real-time mapping, localization and/or change analysis, wherein the 3D reference map is built using a 3D SLAM (Simultaneous Localization And Mapping) framework based on a mobile laser range scanner. Also disclosed are a method for real-time mapping, localization and change analysis, in particular in GPS-denied environments, and a mobile laser scanning device for implementing said methods.

Owner: EURATOM

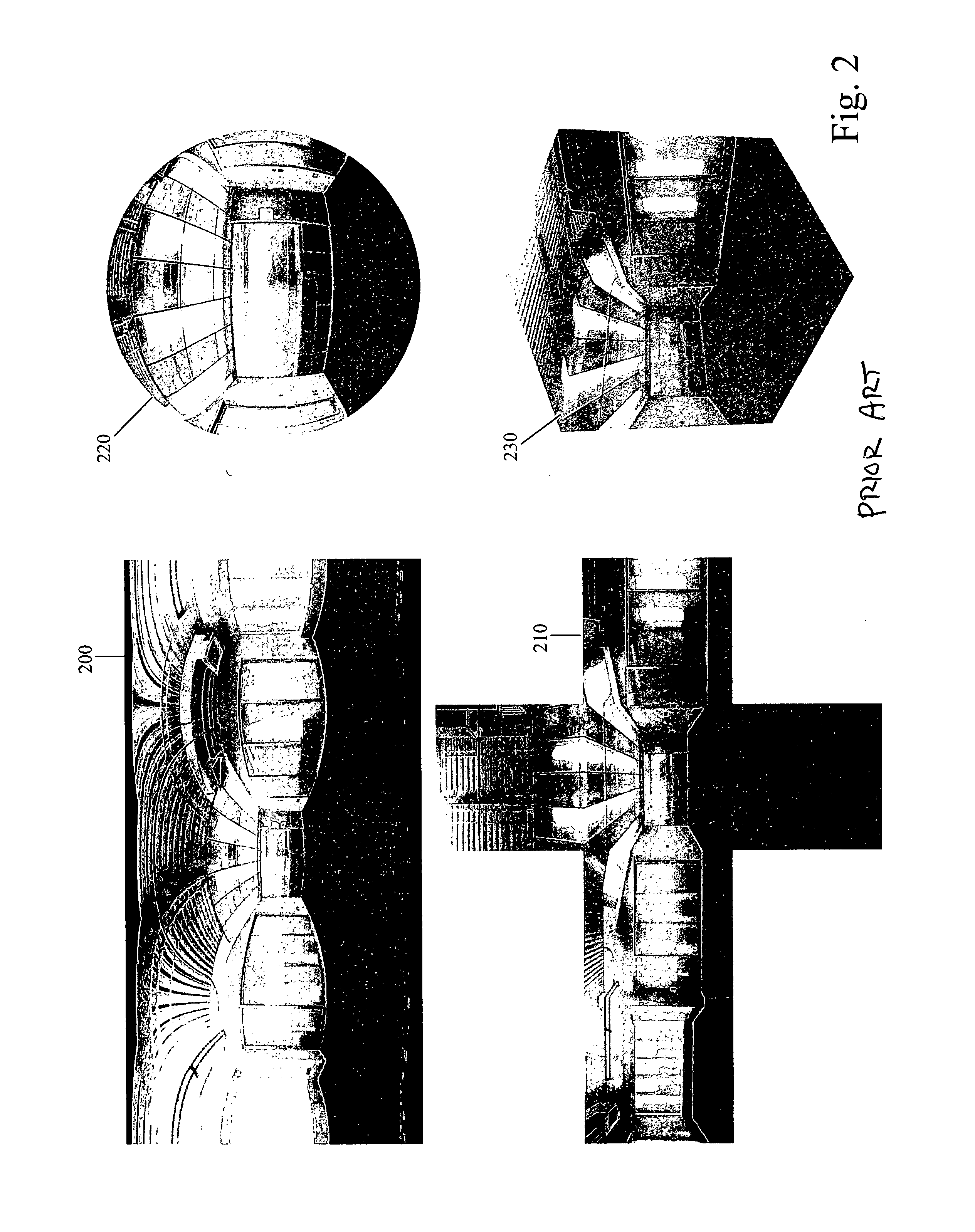

Omnidirectional shadow texture mapping

An invention is provided for rendering using an omnidirectional light. A shadow cube texture map having six cube faces centered on a light source is generated. Each cube face comprises a shadow texture holding depth data from the perspective of the light source. In addition, each cube face is associated with an axis of a three-dimensional coordinate system. For each object fragment rendered from the camera's perspective, a light-to-surface vector is defined from the light source to the object fragment, and particular texels within particular cube faces are selected based on the light-to-surface vector. The texel values are tested against a depth value computed from the light-to-surface vector, and the object fragment is textured as lit or shadowed according to the outcome of the test.

Owner: NVIDIA CORP
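The face-selection and depth-test steps in the abstract above can be illustrated with a small CPU-side sketch. It is not NVIDIA's implementation: the `ShadowCube` class and helper names are hypothetical, the stored depth is assumed to be the Euclidean light-to-point distance, and the per-face (u, v) layout is a simplified assumption rather than the exact cube-map convention of OpenGL or Direct3D. Real renderers perform the lookup on the GPU in a fragment shader.

```python
import math

FACES = ("+x", "-x", "+y", "-y", "+z", "-z")


def select_face(v):
    """Pick the cube face whose axis dominates the light-to-surface vector v."""
    x, y, z = v
    ax, ay, az = abs(x), abs(y), abs(z)
    if ax >= ay and ax >= az:
        return ("+x" if x >= 0 else "-x"), ax
    if ay >= az:
        return ("+y" if y >= 0 else "-y"), ay
    return ("+z" if z >= 0 else "-z"), az


def face_uv(v, face, major):
    """Map the two minor components into [0, 1] texture coordinates.

    Simplified layout (an assumption), not a graphics API's exact convention.
    """
    x, y, z = v
    if face[1] == "x":
        a, b = y, z
    elif face[1] == "y":
        a, b = x, z
    else:
        a, b = x, y
    return (a / major + 1) / 2, (b / major + 1) / 2


class ShadowCube:
    """Hypothetical omnidirectional shadow map: six depth textures around a light."""

    def __init__(self, light, size=64):
        self.light = light
        self.size = size
        # Every texel starts as "nothing occludes along this direction".
        self.faces = {f: [[math.inf] * size for _ in range(size)] for f in FACES}

    def _lookup(self, p):
        # The light-to-surface vector selects the face and the texel within it.
        # (A point exactly at the light position is undefined and not handled.)
        v = tuple(pc - lc for pc, lc in zip(p, self.light))
        face, major = select_face(v)
        u, w = face_uv(v, face, major)
        i = min(int(u * self.size), self.size - 1)
        j = min(int(w * self.size), self.size - 1)
        return face, i, j, math.dist(p, self.light)

    def add_occluder_point(self, p):
        """Shadow pass: keep the nearest distance seen through each texel."""
        face, i, j, d = self._lookup(p)
        self.faces[face][j][i] = min(self.faces[face][j][i], d)

    def in_light(self, p, bias=1e-3):
        """Camera pass: lit unless something nearer blocks the same texel."""
        face, i, j, d = self._lookup(p)
        return d <= self.faces[face][j][i] + bias
```

With the light at the origin and an occluder at (1, 0, 0), the point (2, 0, 0) falls in the same +x texel at a greater distance and is reported as shadowed, while (0, 2, 0) on the +y face stays lit. The small `bias` guards against a surface shadowing itself because of depth quantization.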

Systems and methods of creating a realistic grab experience in virtual reality/augmented reality environments

Status: Active | US9696795B2 | Minimize extent, Input/output for user-computer interaction, Details for portable computers, Virtual space, Free form

The technology disclosed relates to a method of realistically rotating a virtual object during an interaction between a control object in a three-dimensional (3D) sensory space and the virtual object in a virtual space with which the control object interacts. In particular, it relates to detecting free-form gestures of a control object in the 3D sensory space and generating for display a 3D solid control object model of the control object during the free-form gestures, including sub-components of the control object; and, in response to detecting a two sub-component free-form gesture of the control object in the 3D sensory space in virtual contact with the virtual object, depicting, in the generated display, the virtual contact and the resulting rotation of the virtual object by the 3D solid control object model.

Owner: ULTRAHAPTICS IP TWO LIMITED

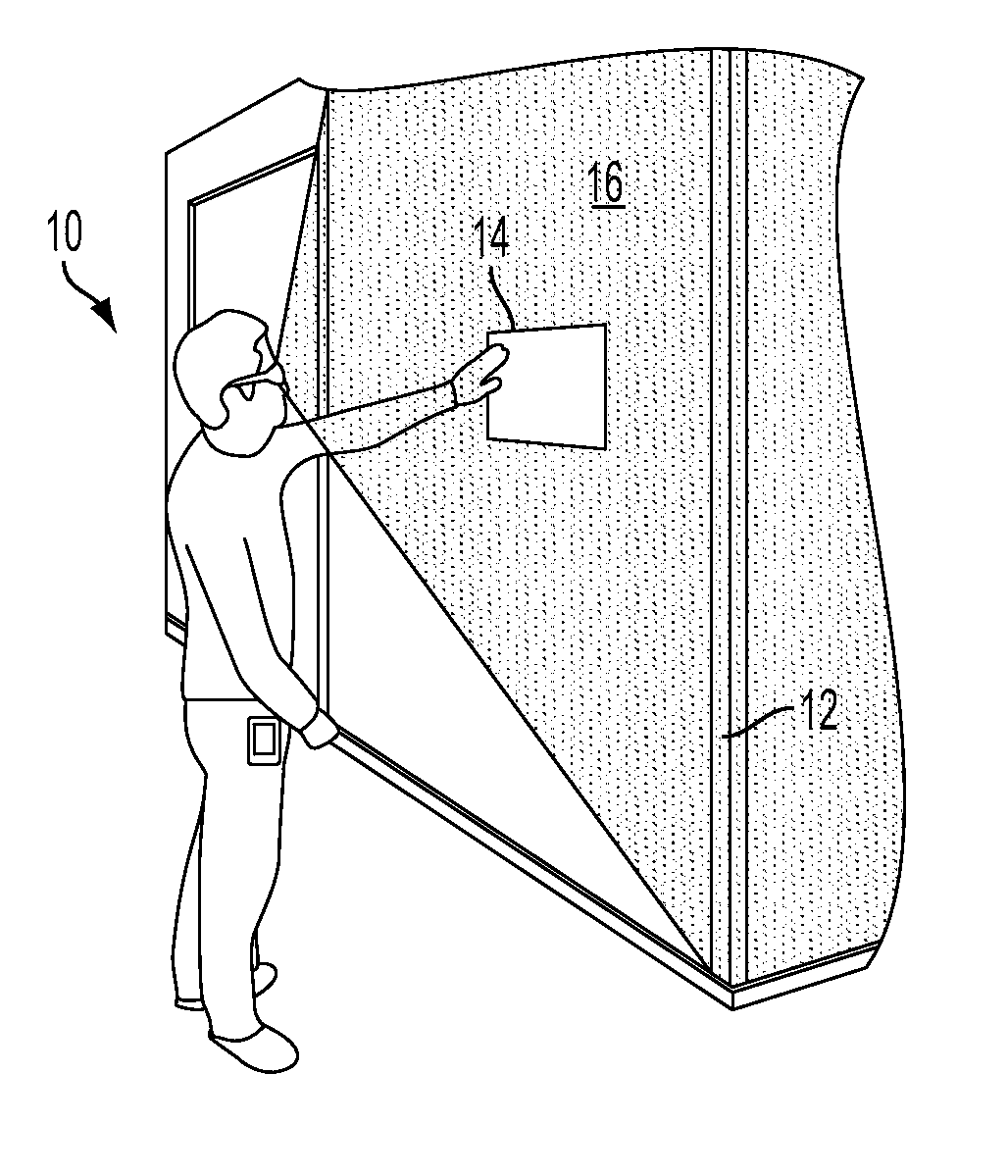

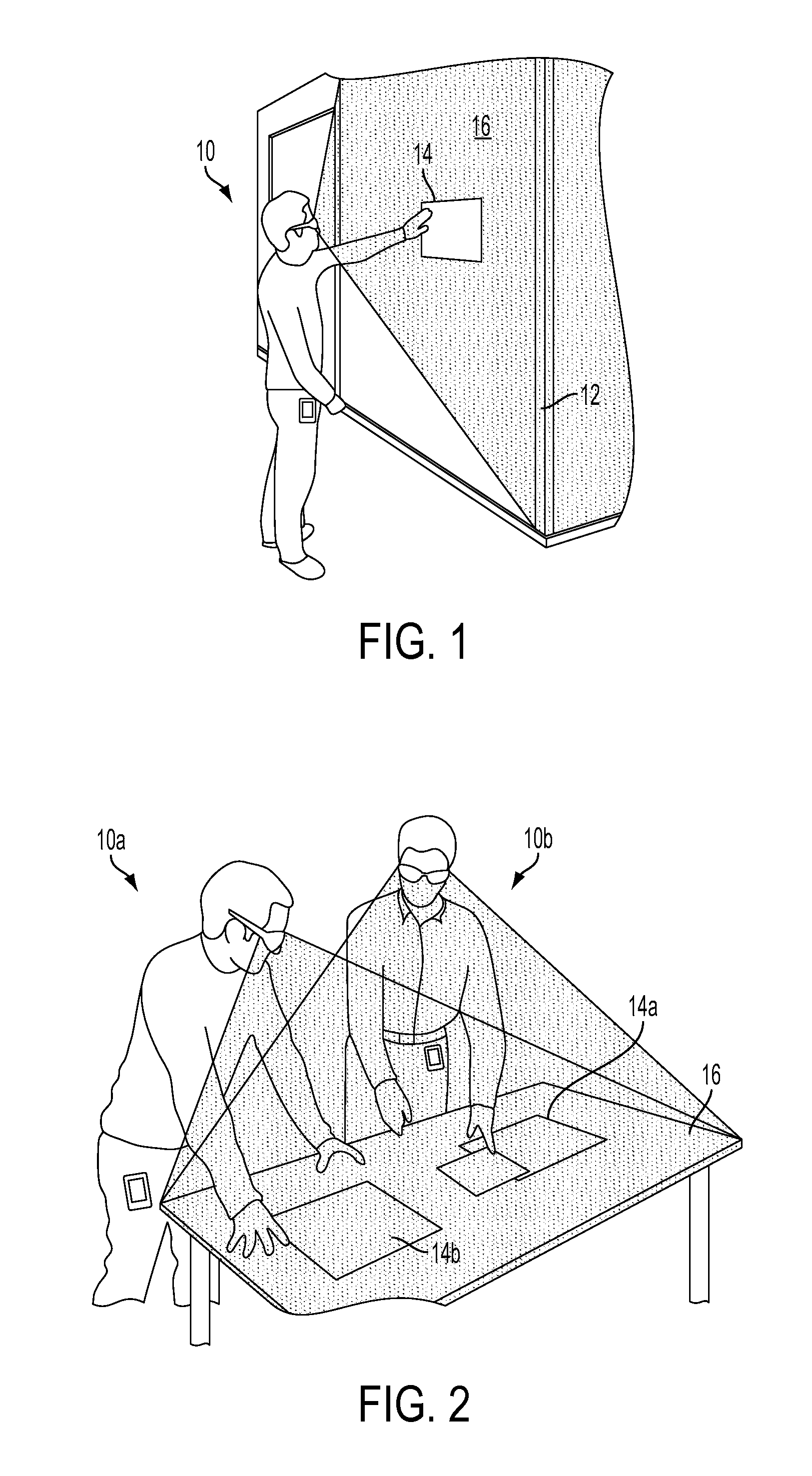

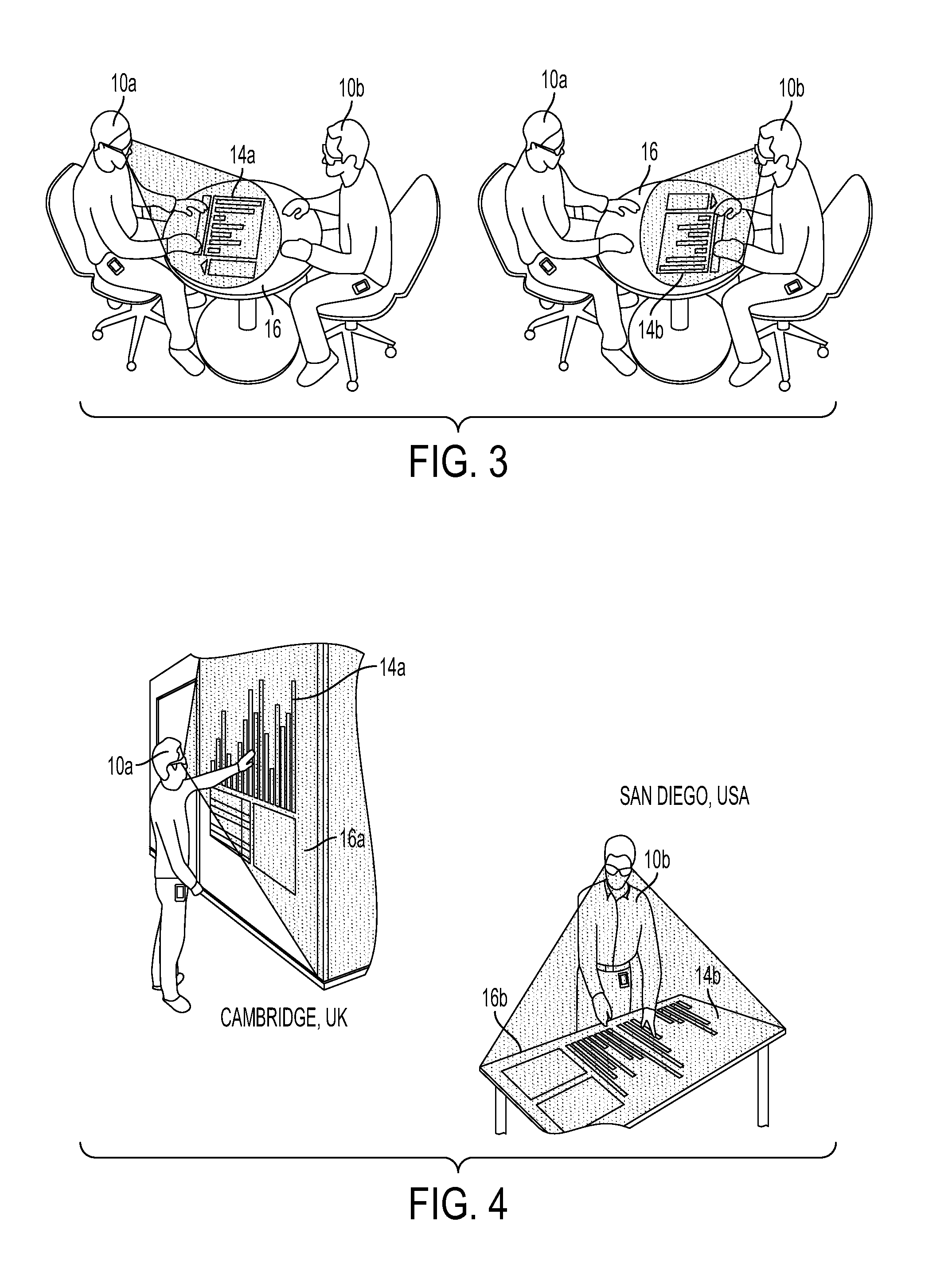

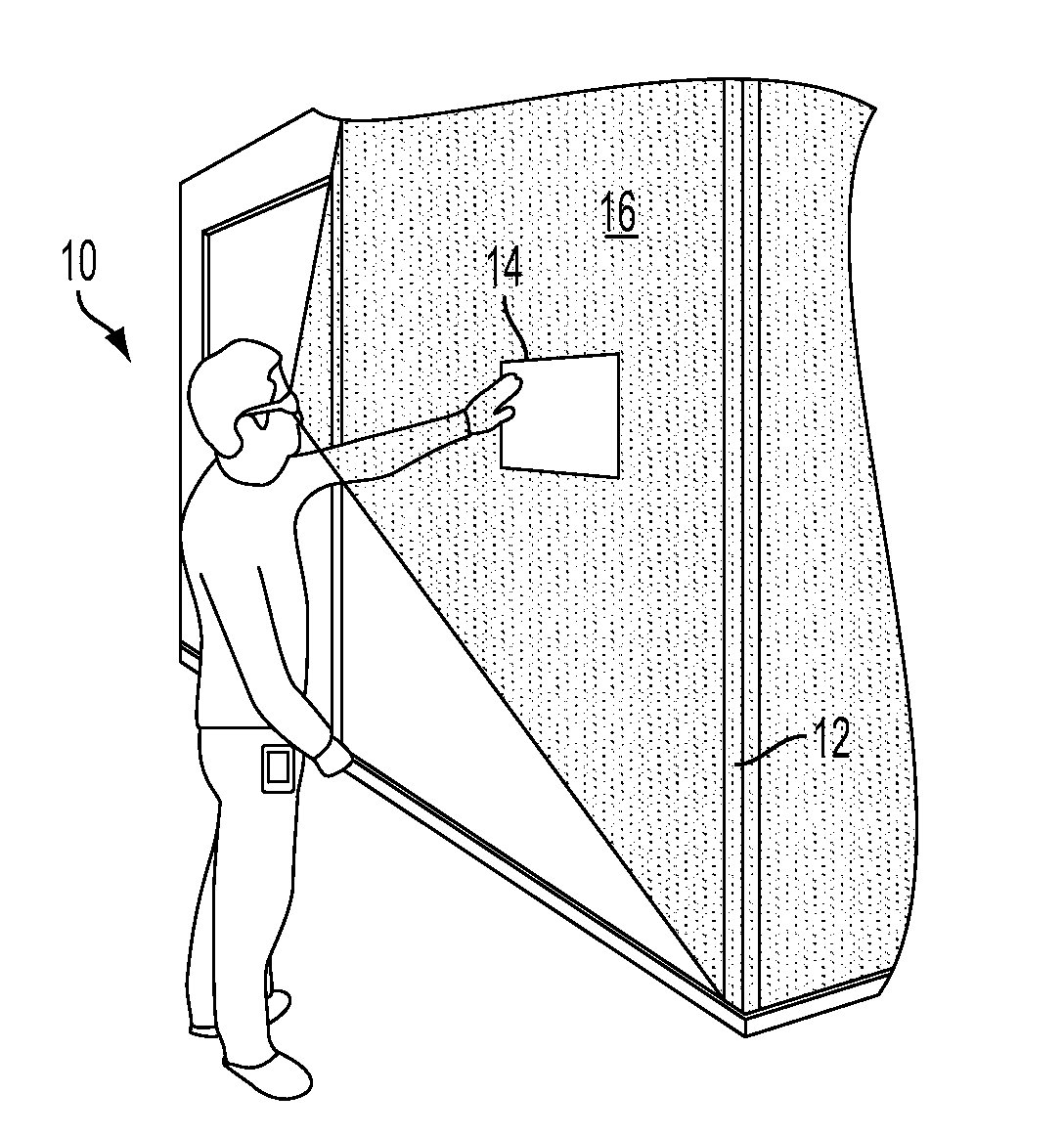

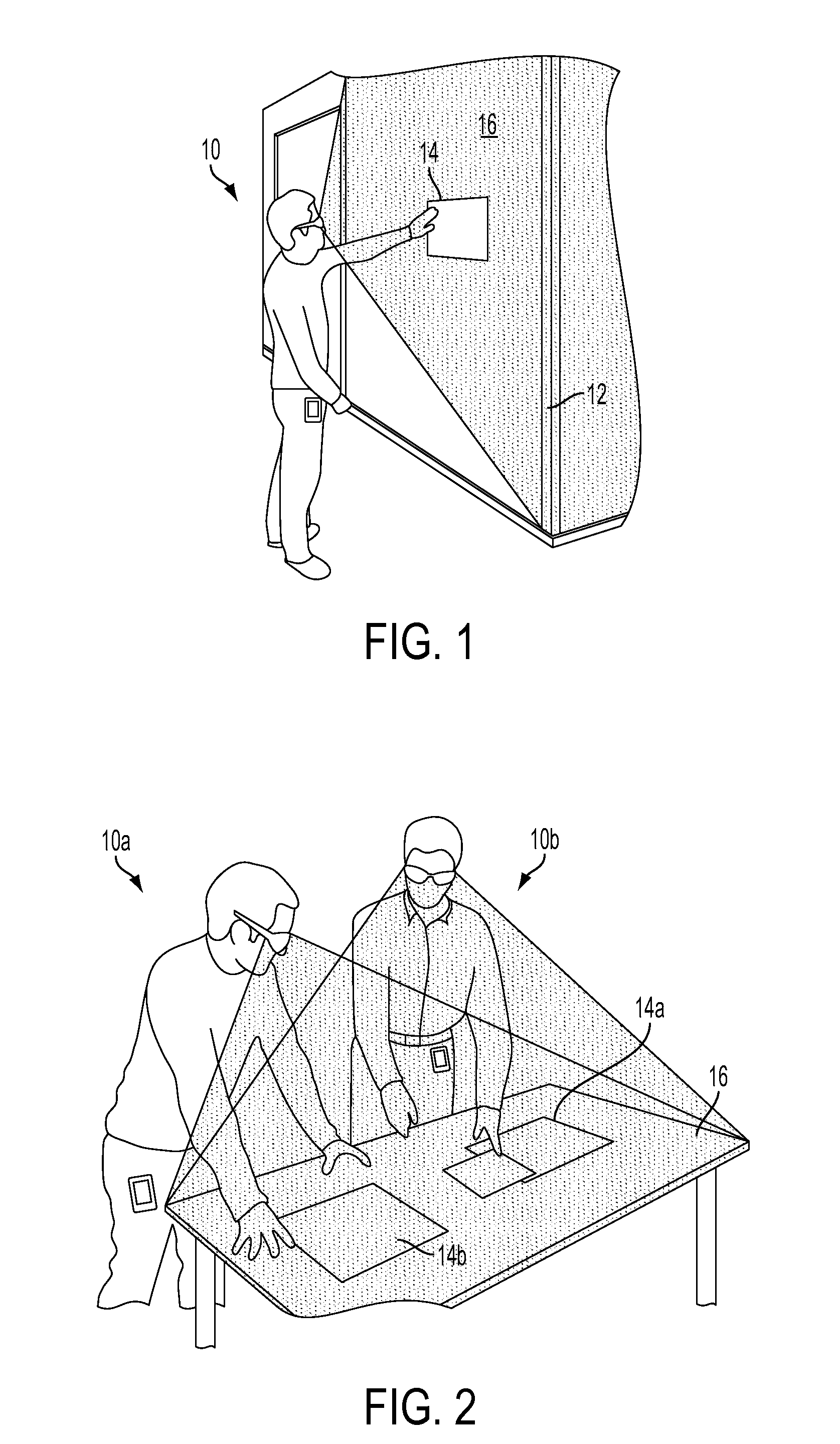

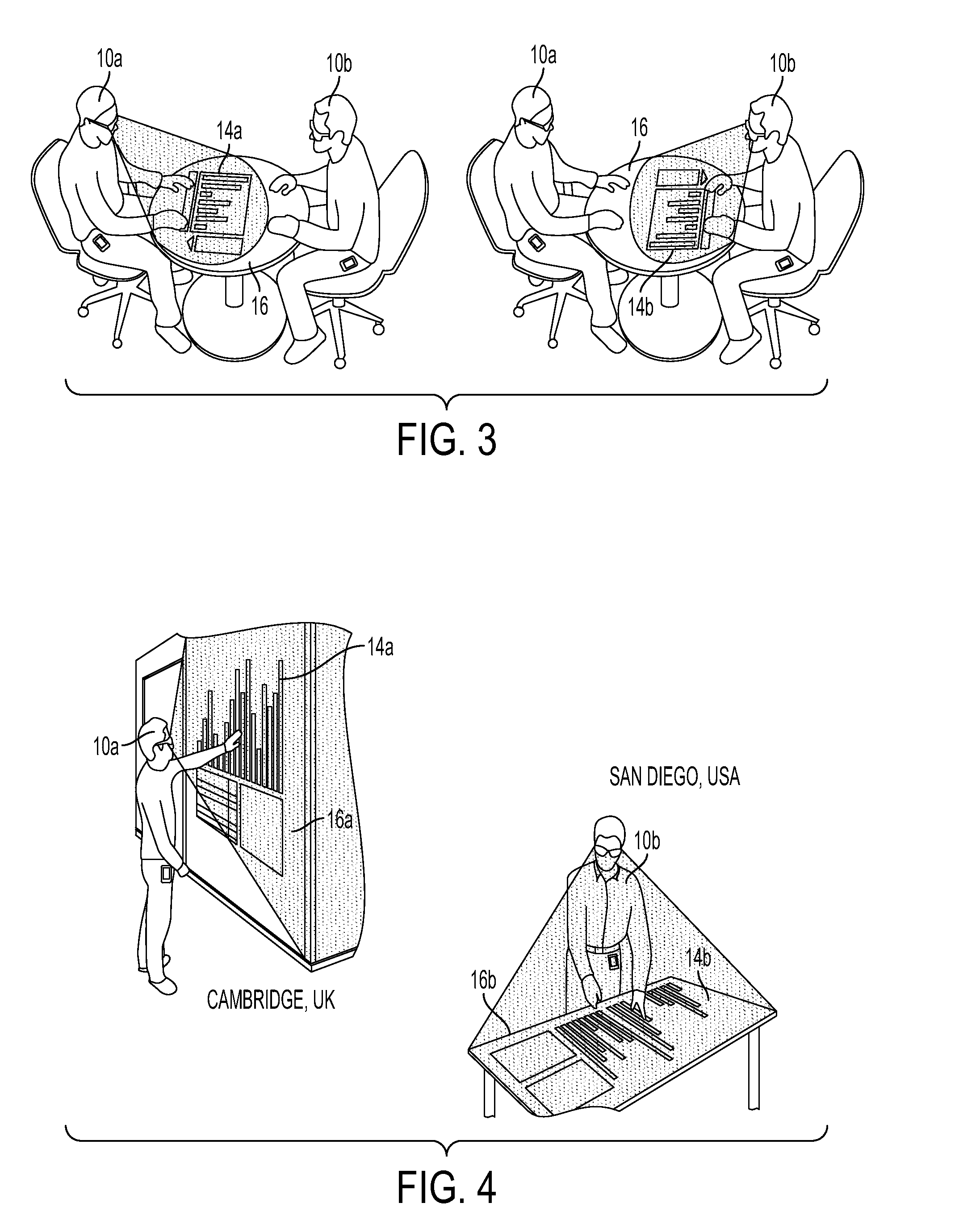

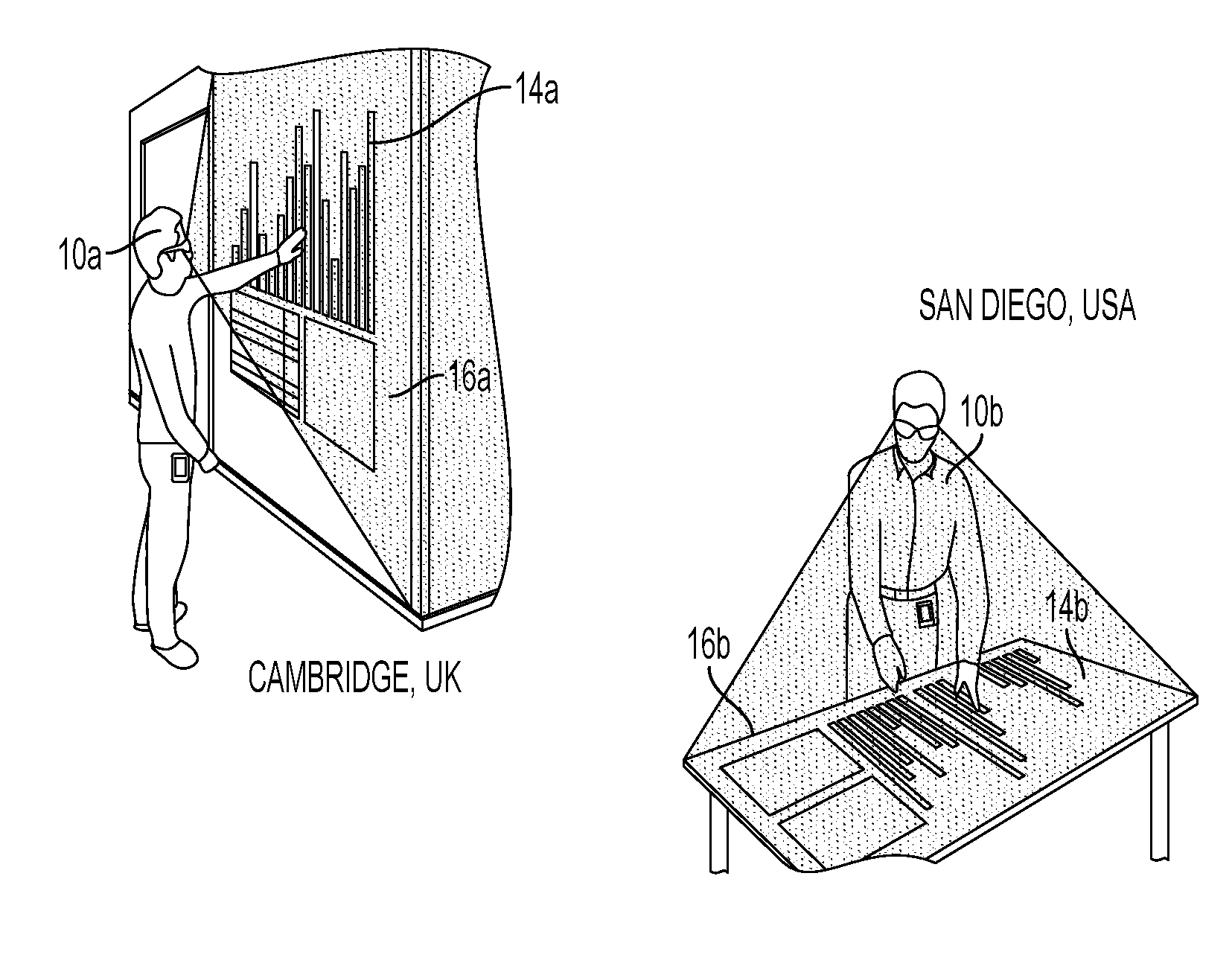

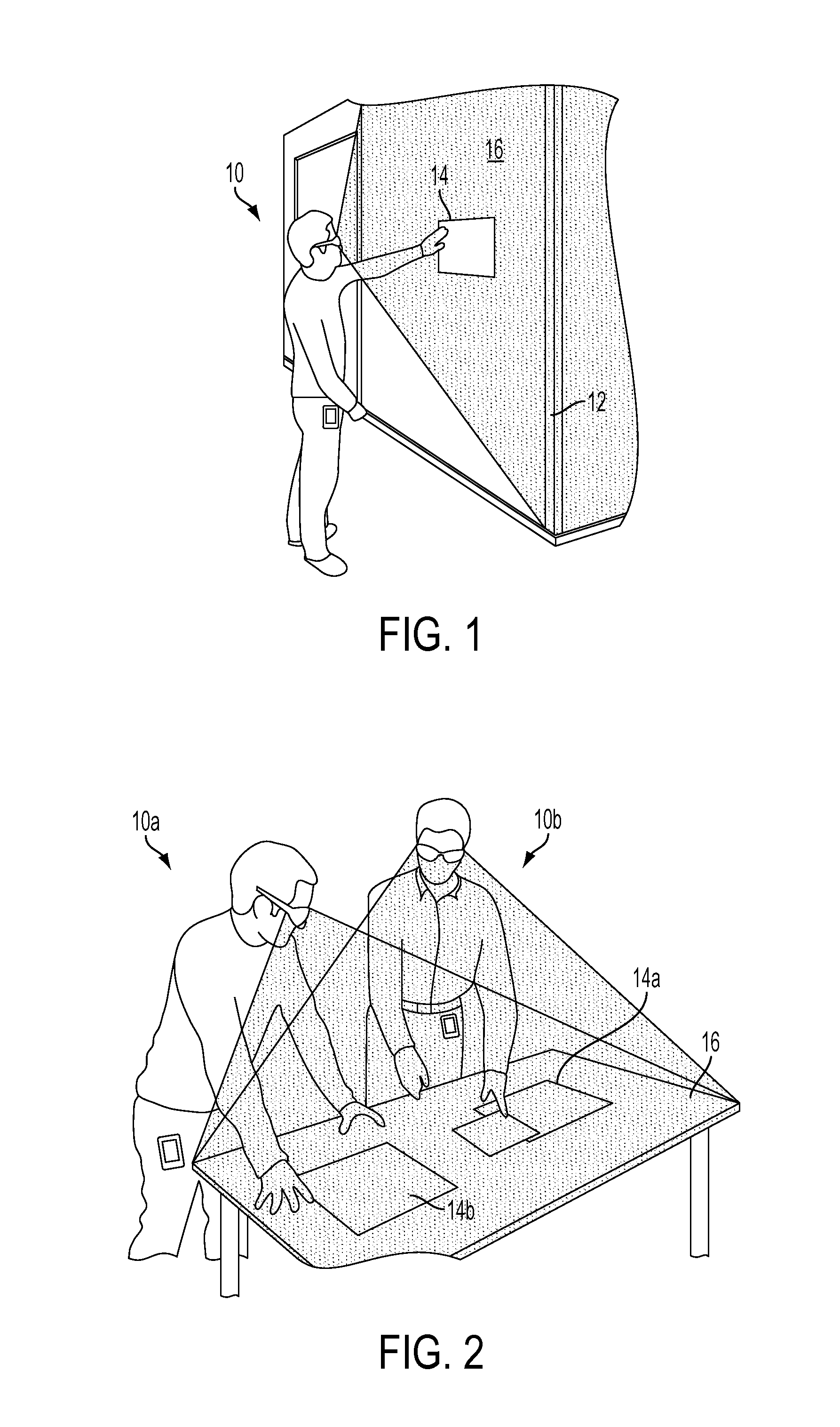

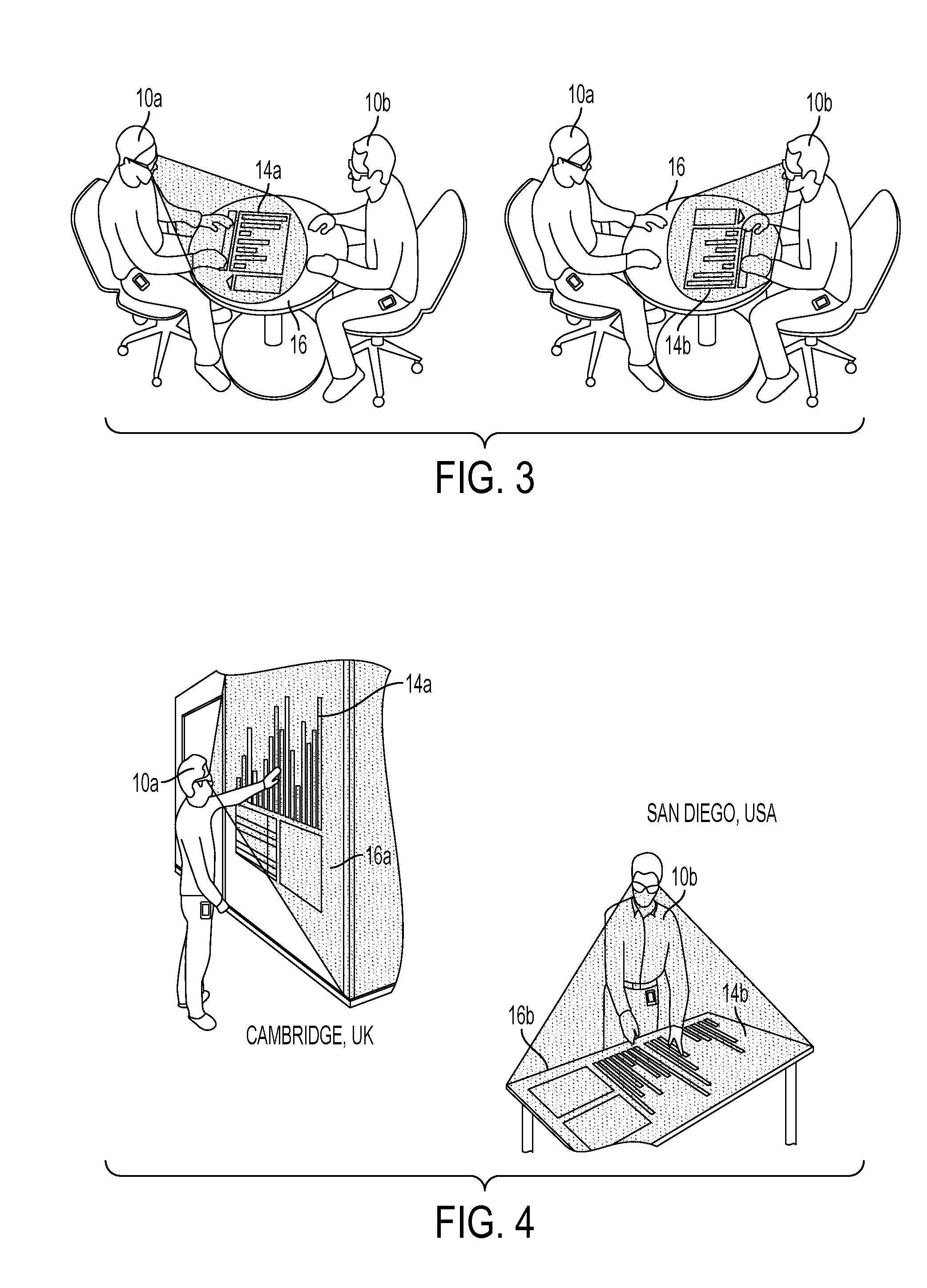

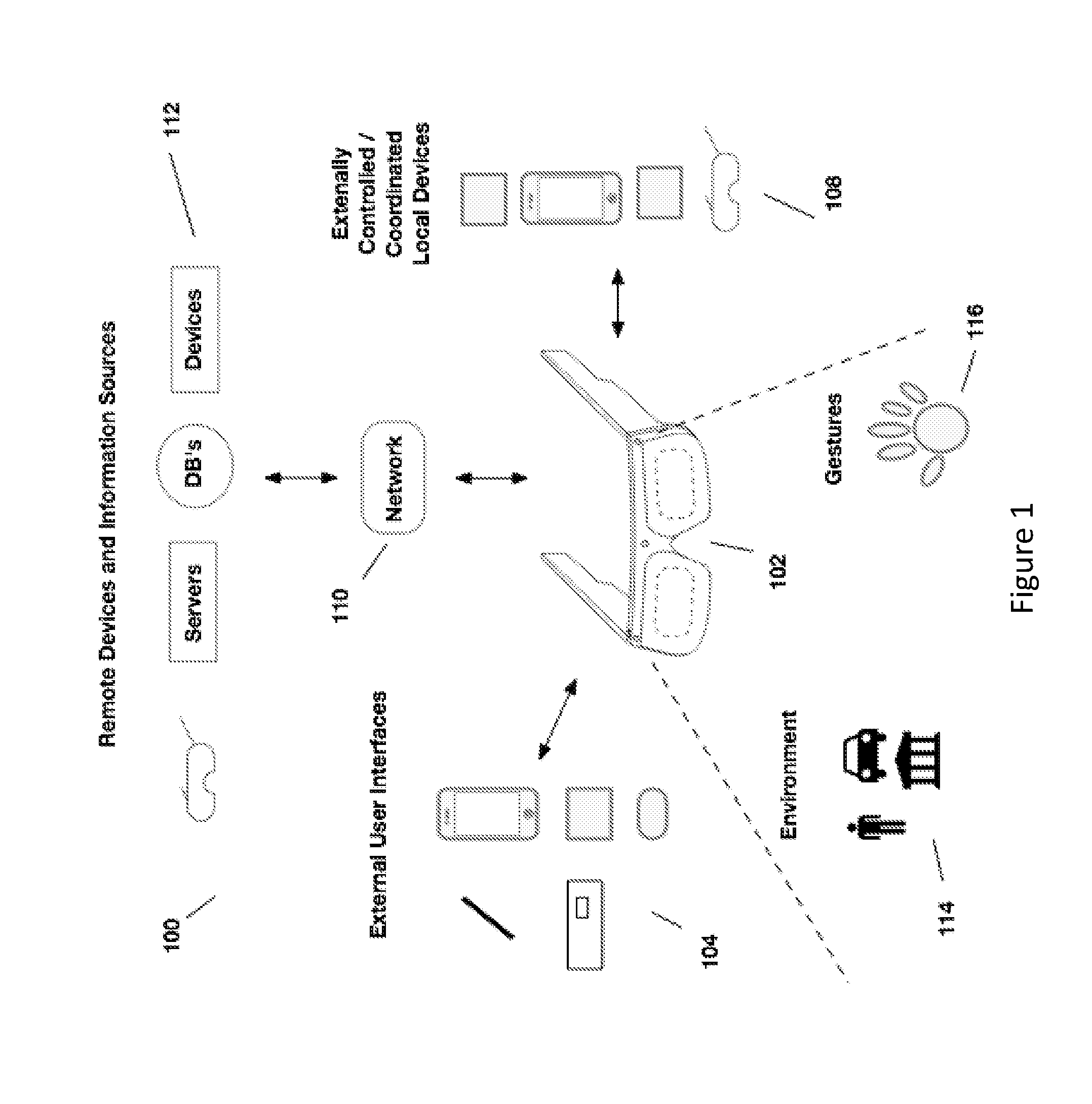

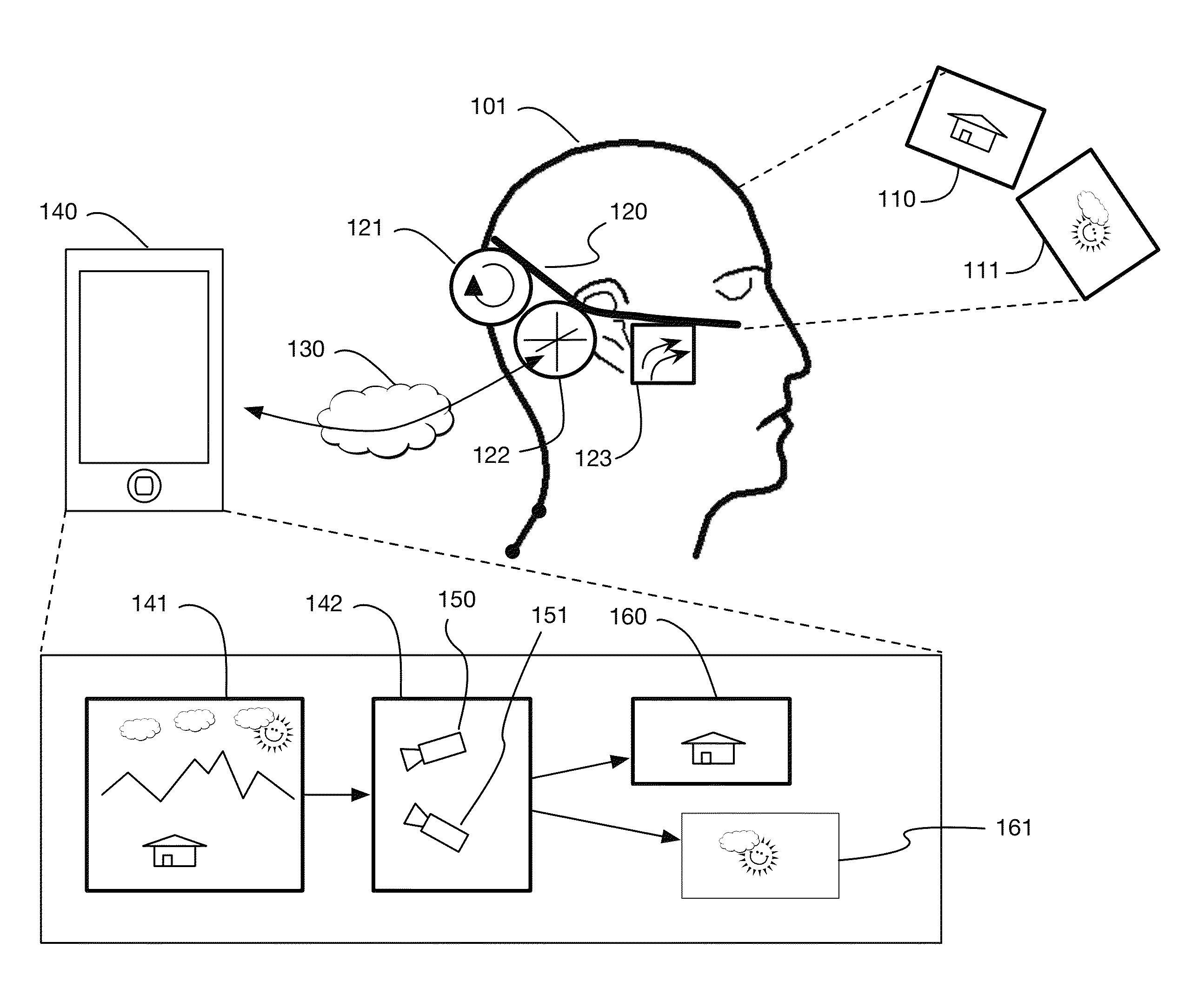

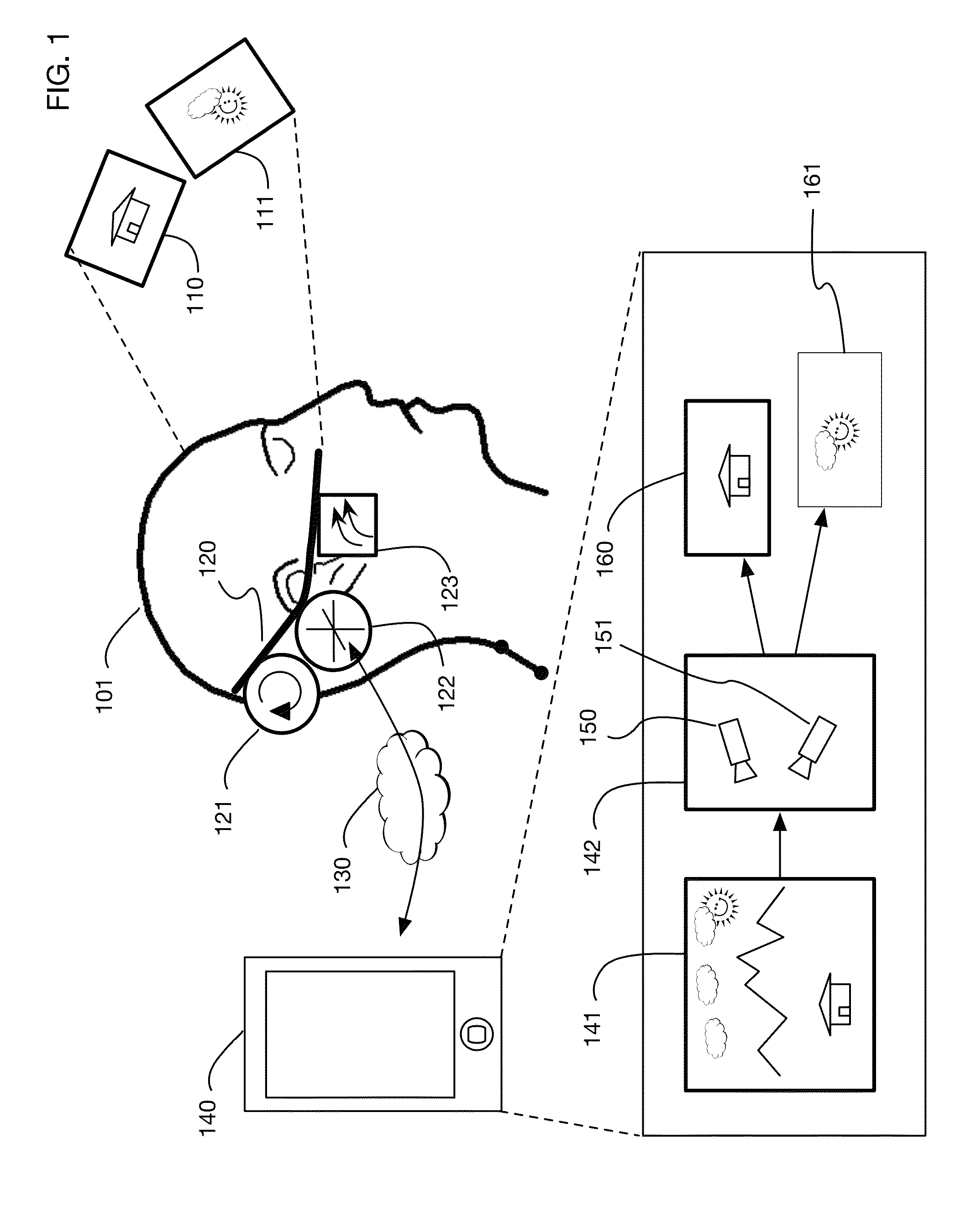

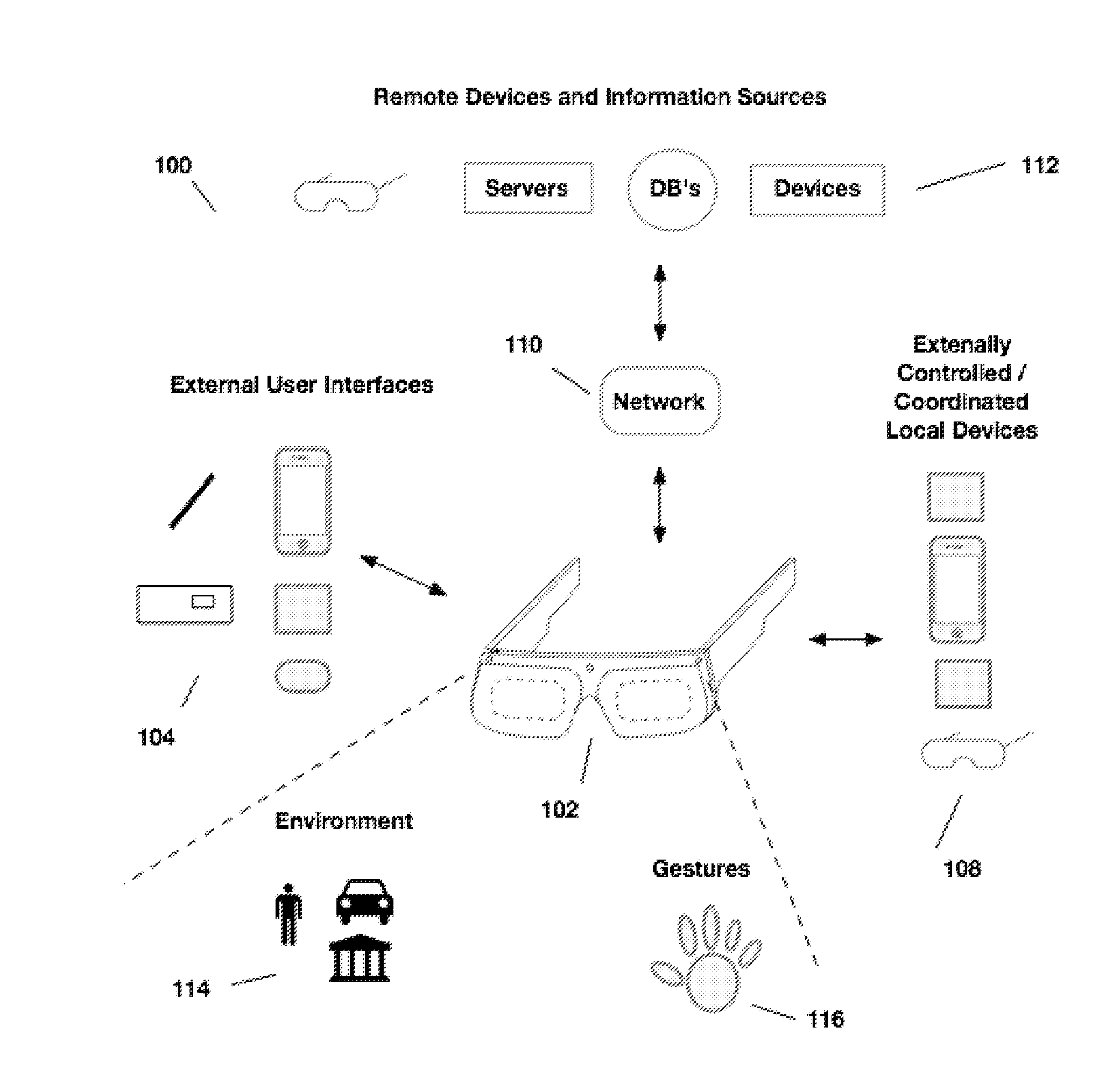

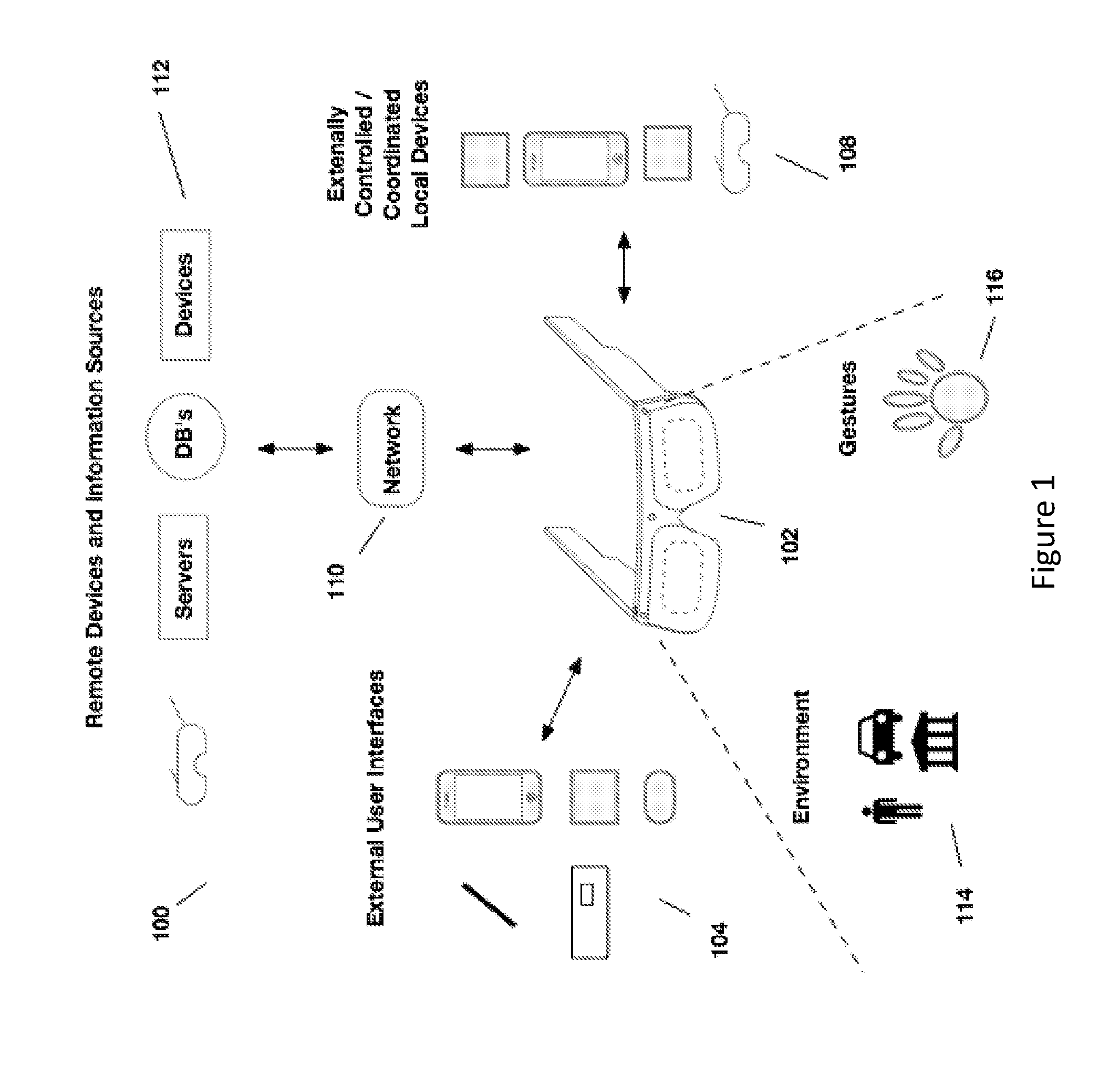

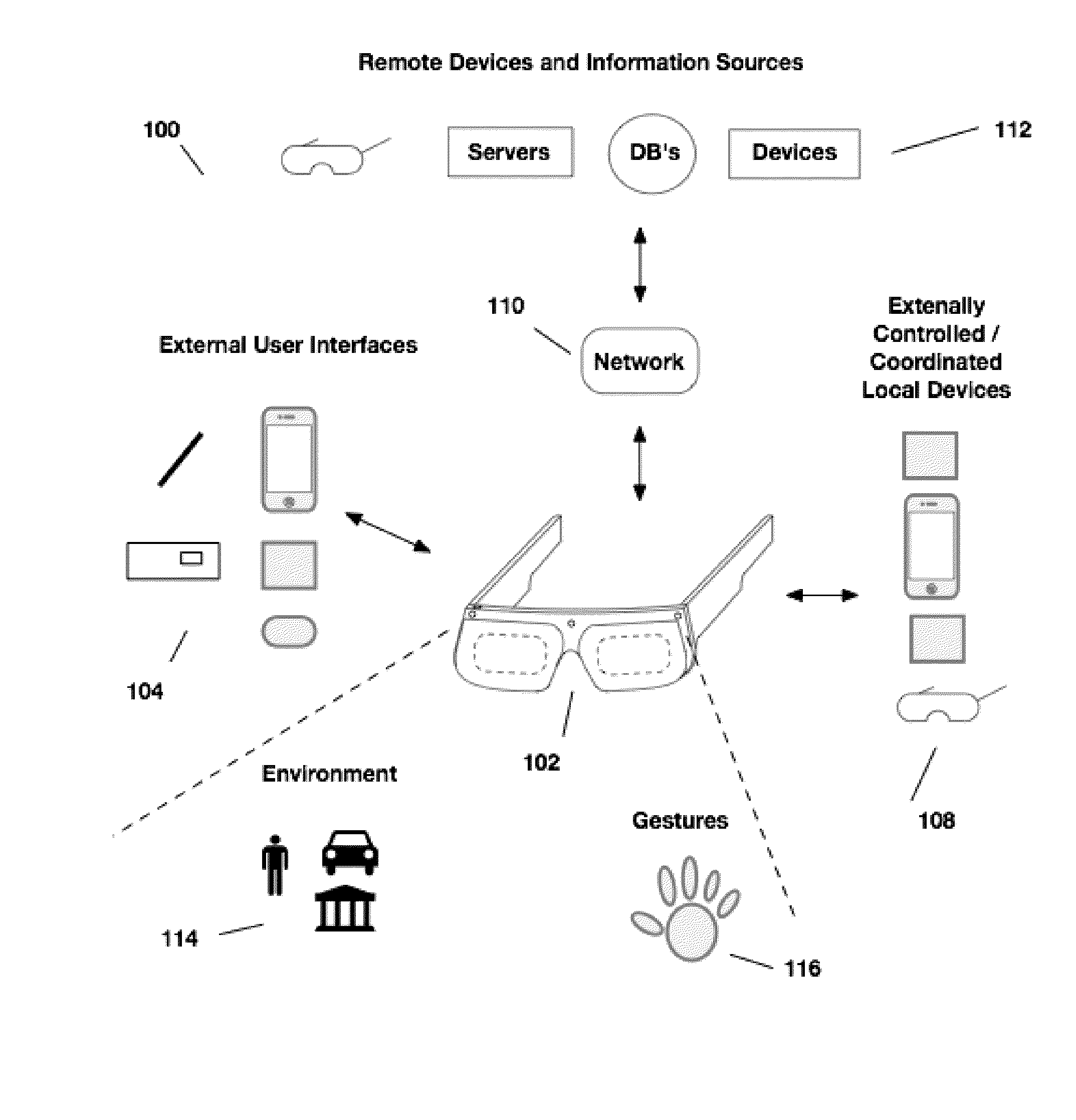

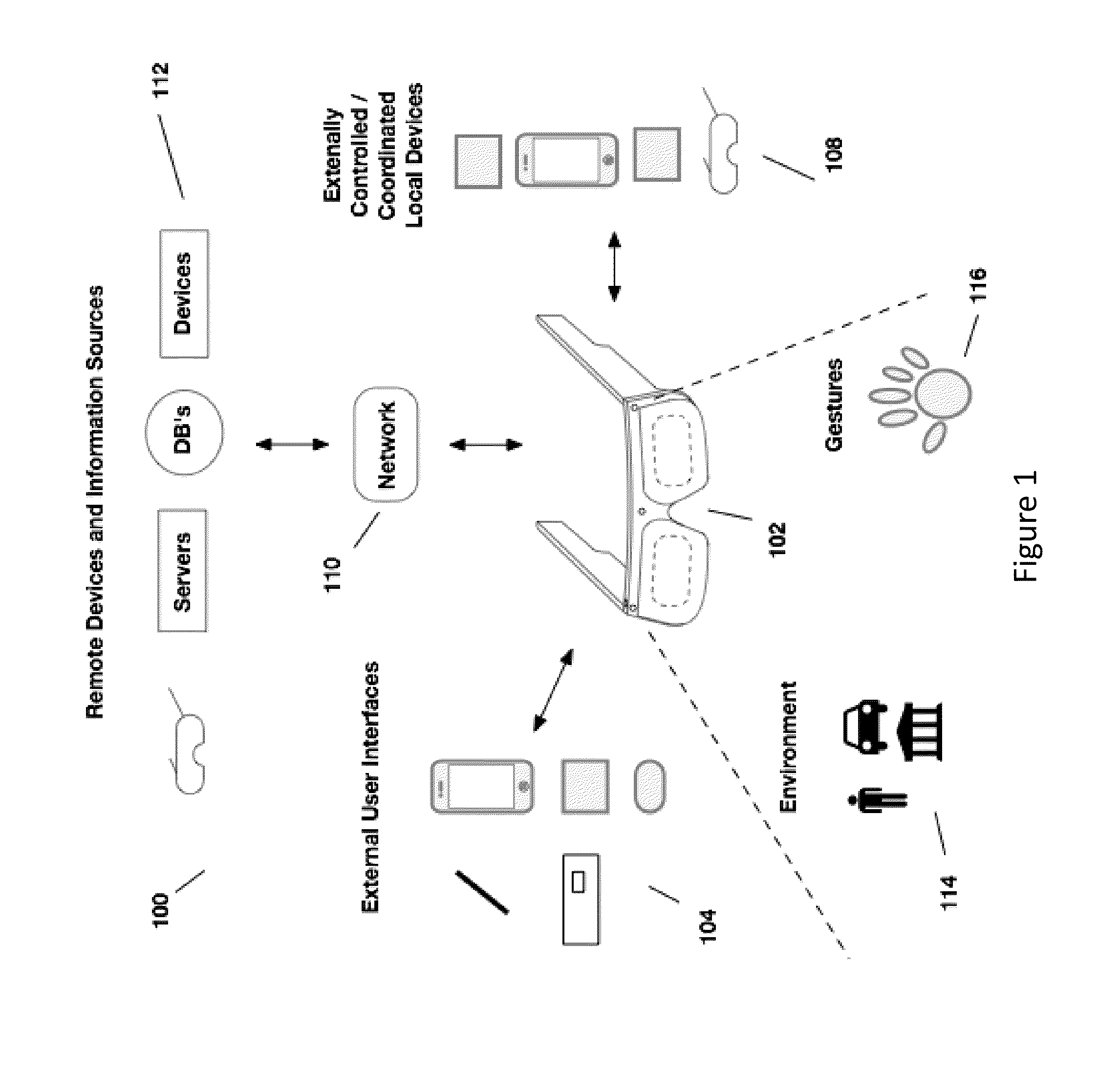

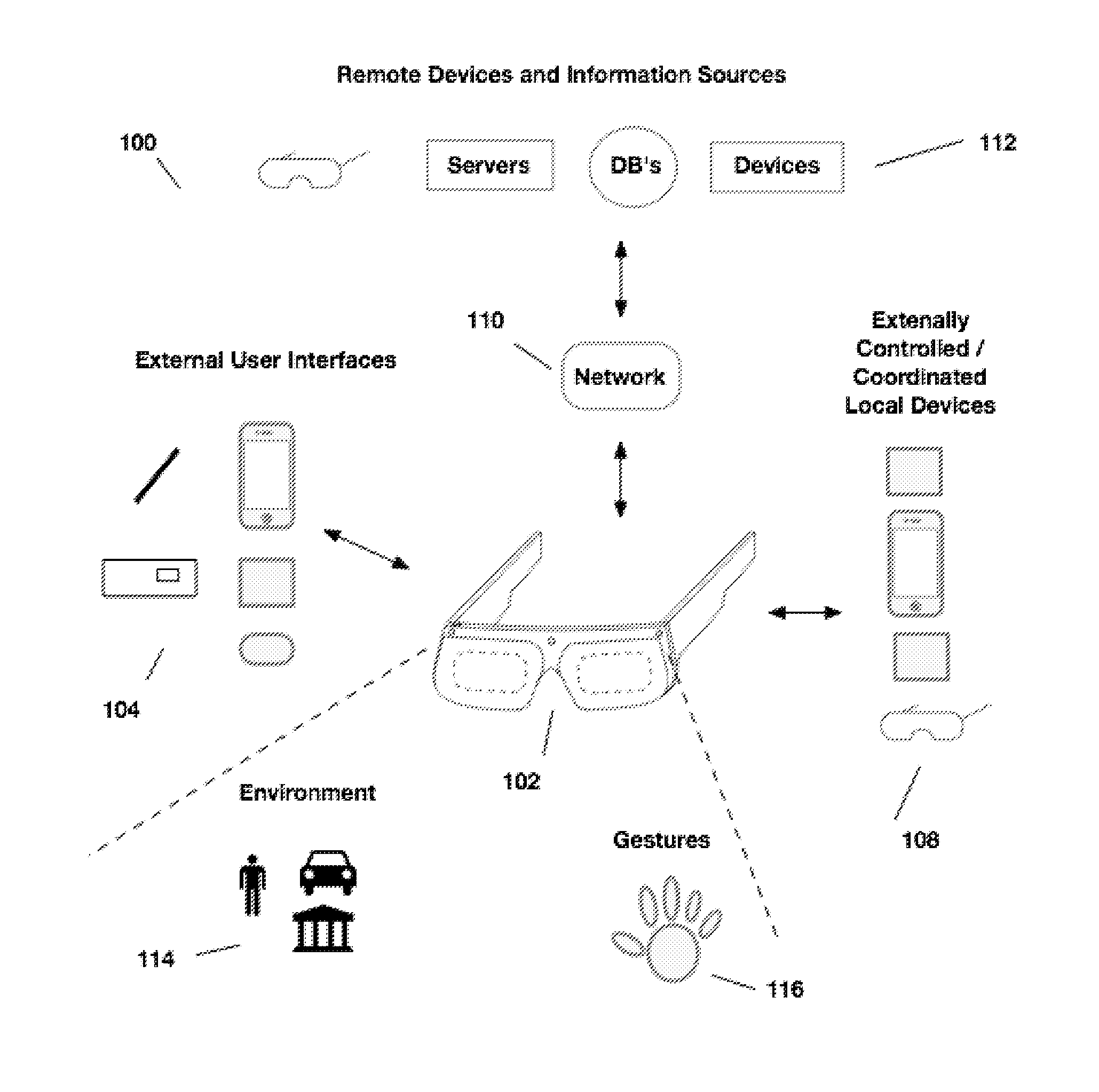

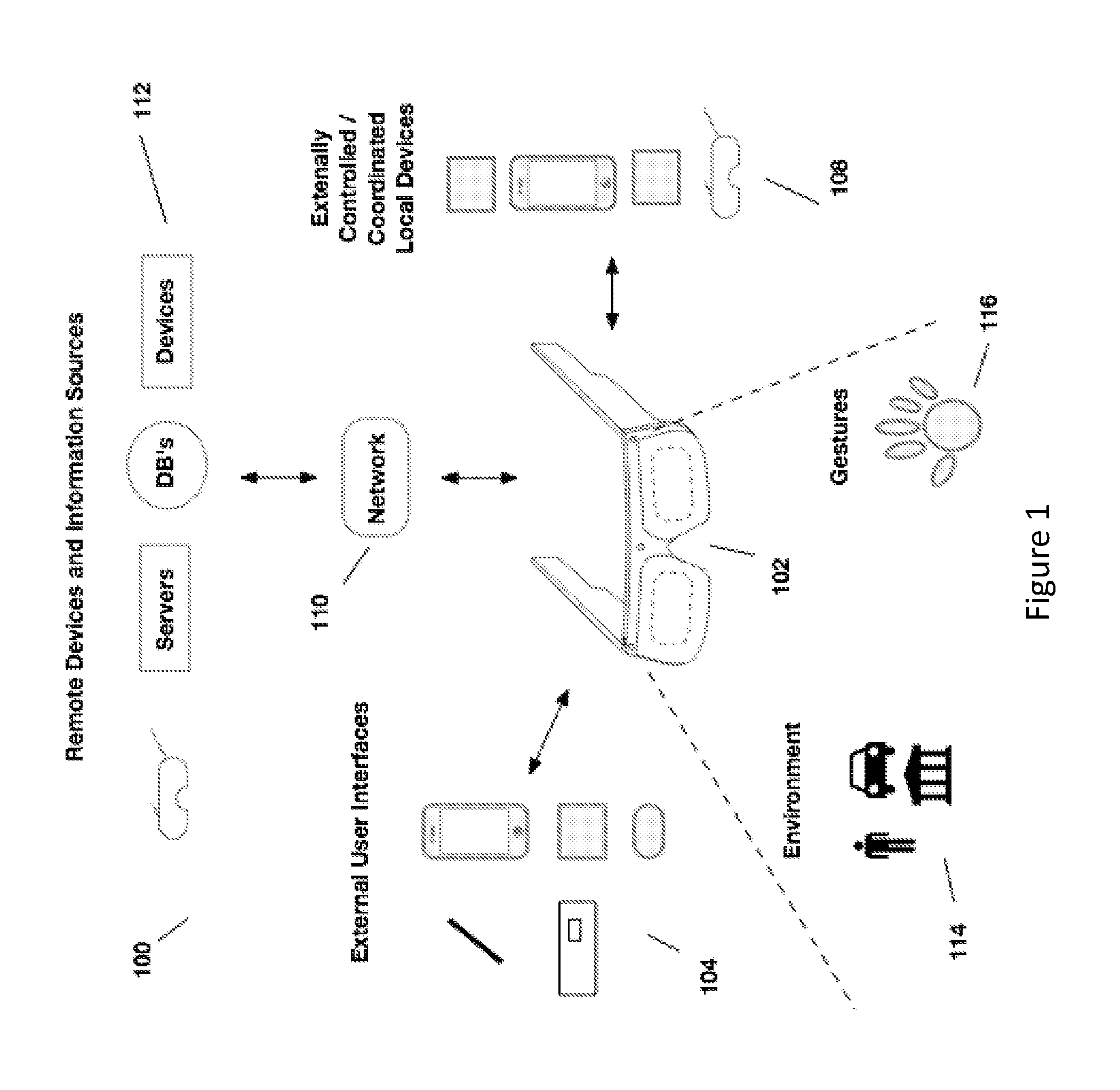

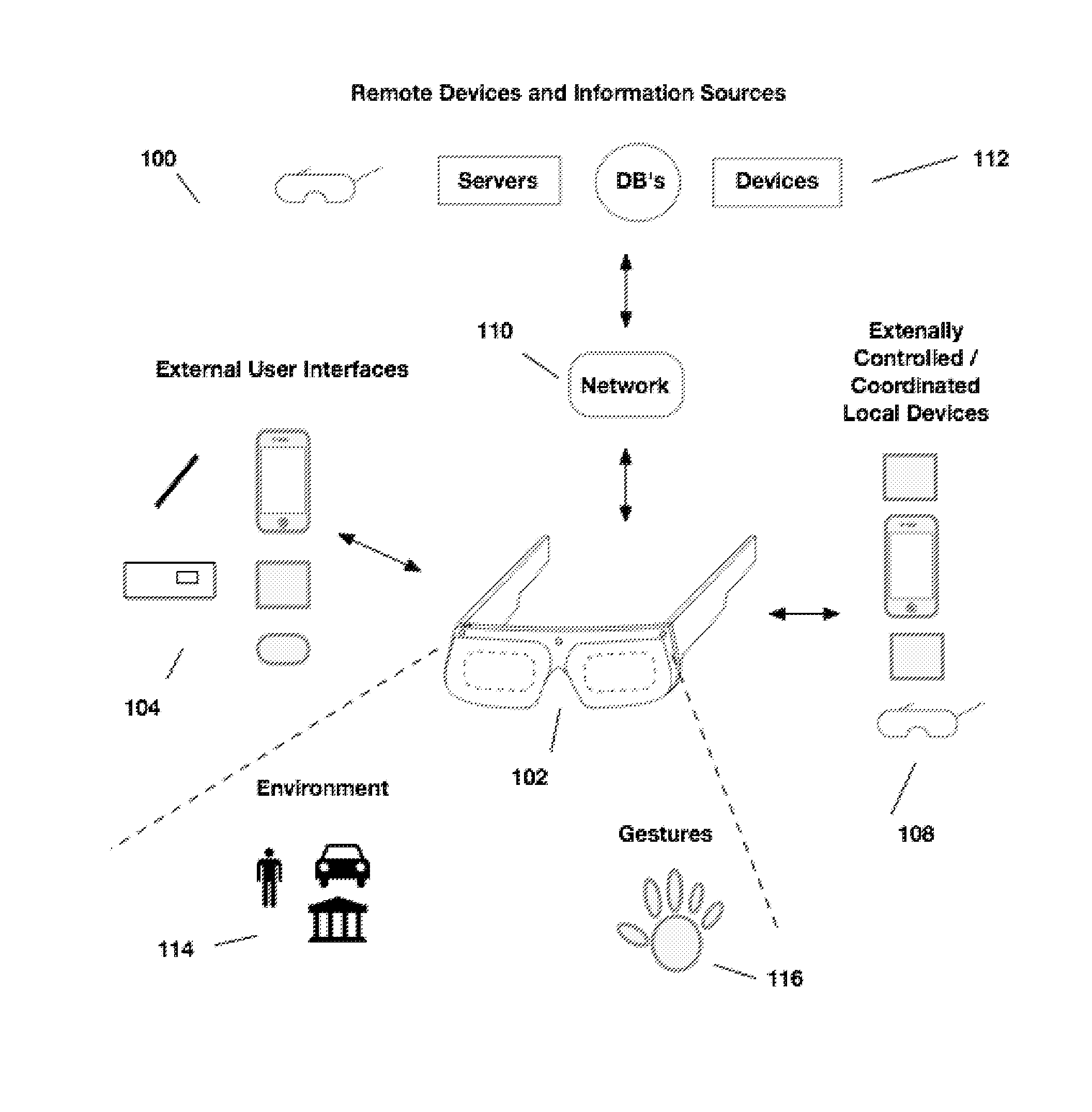

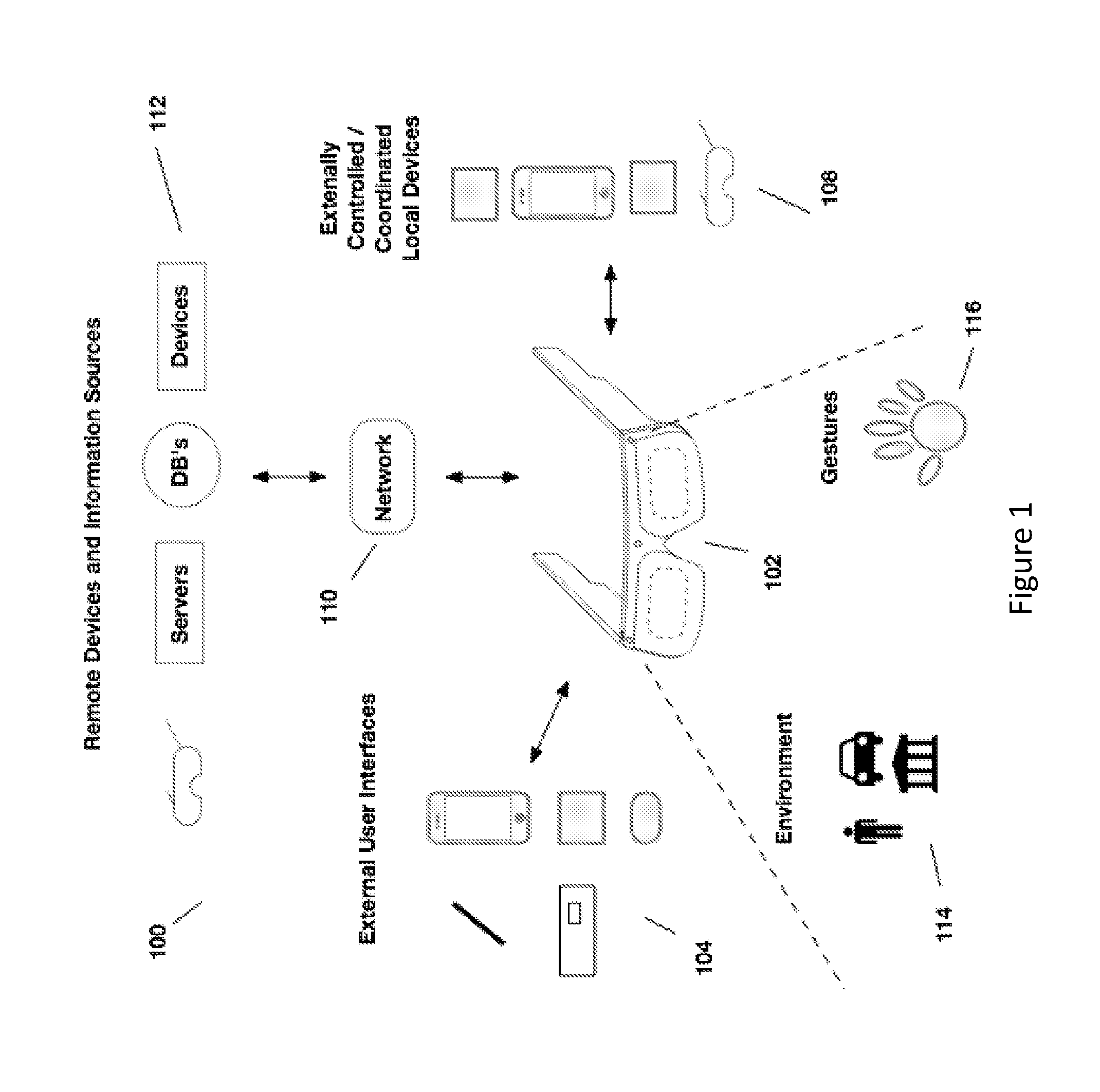

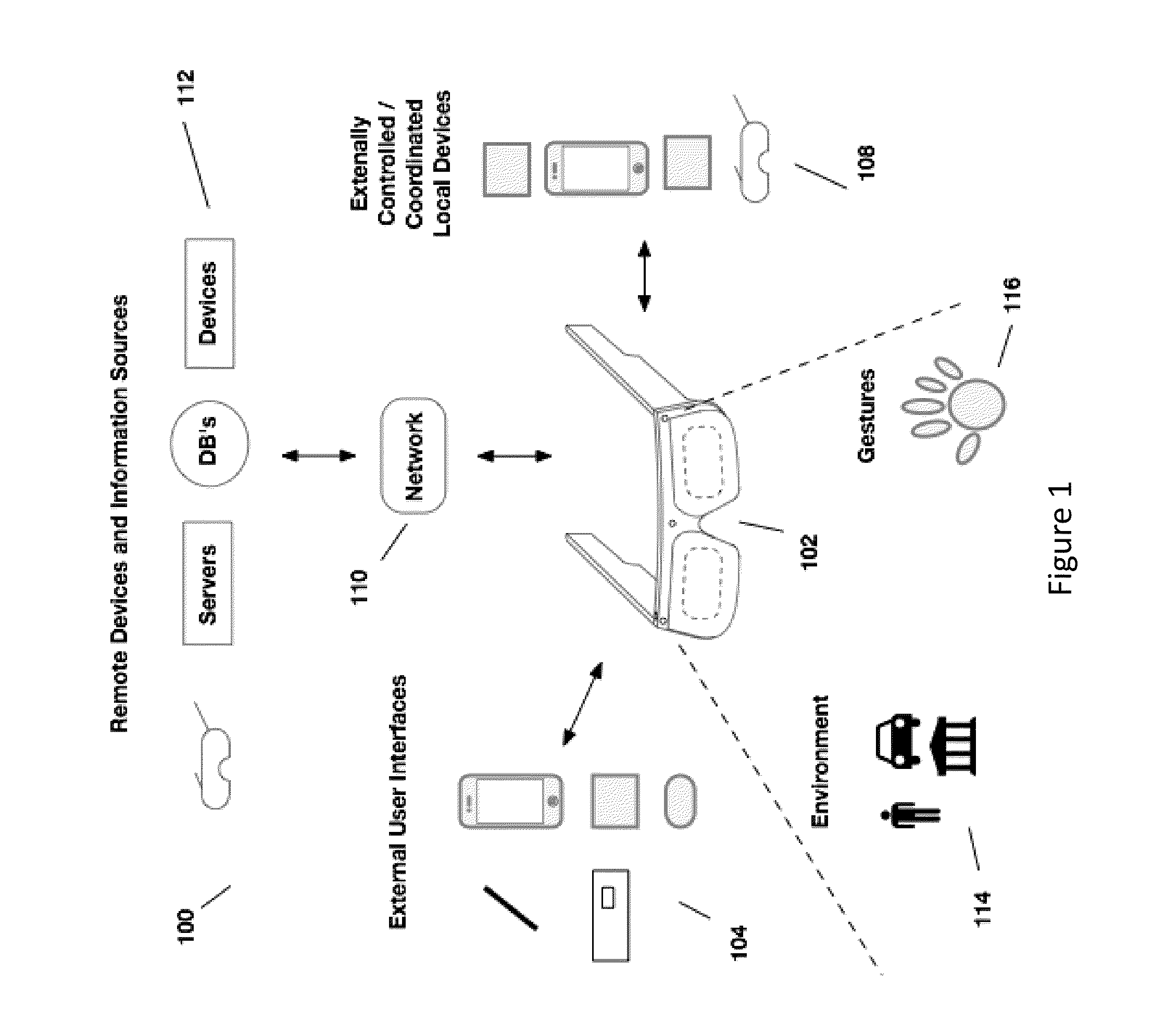

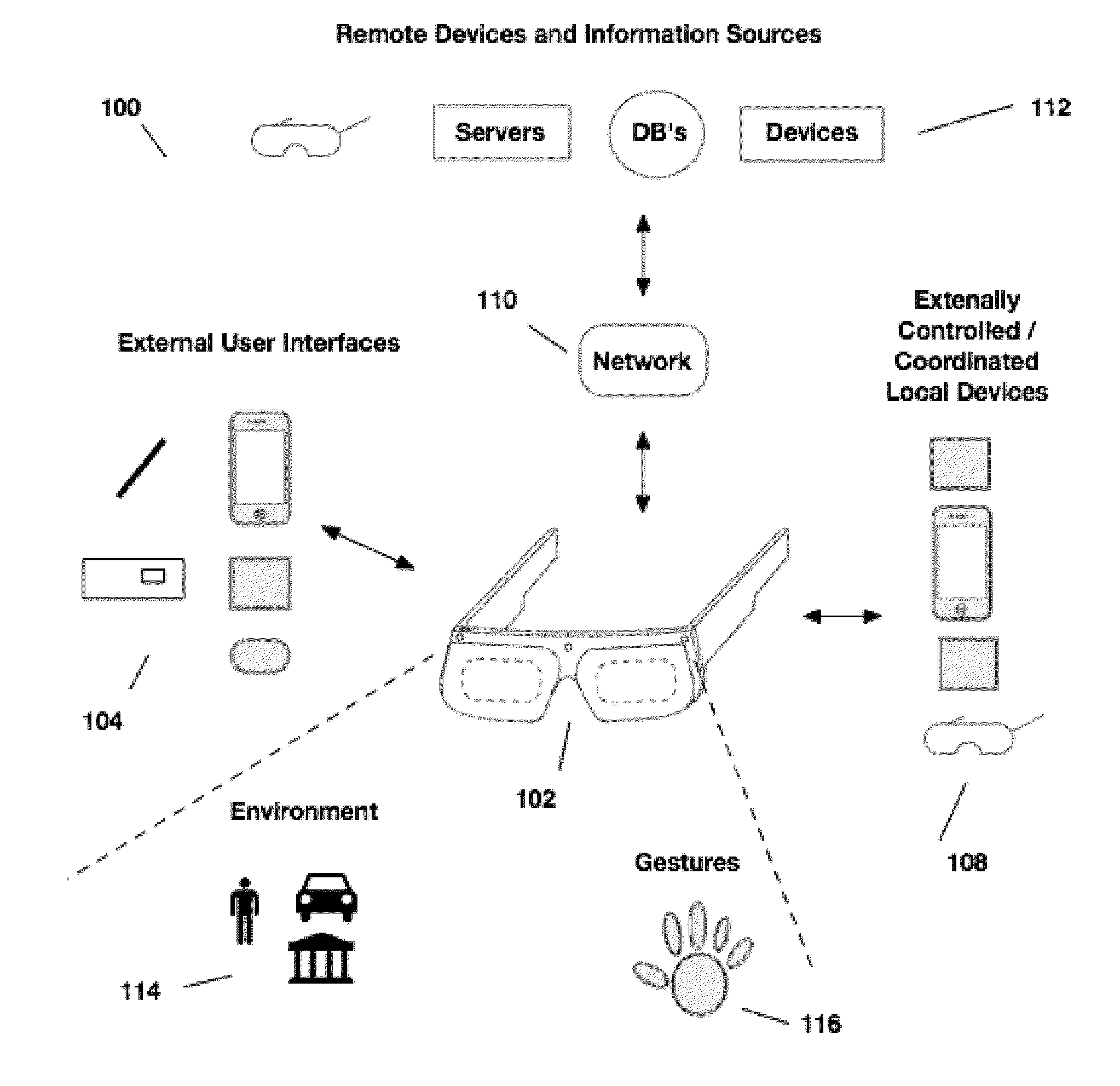

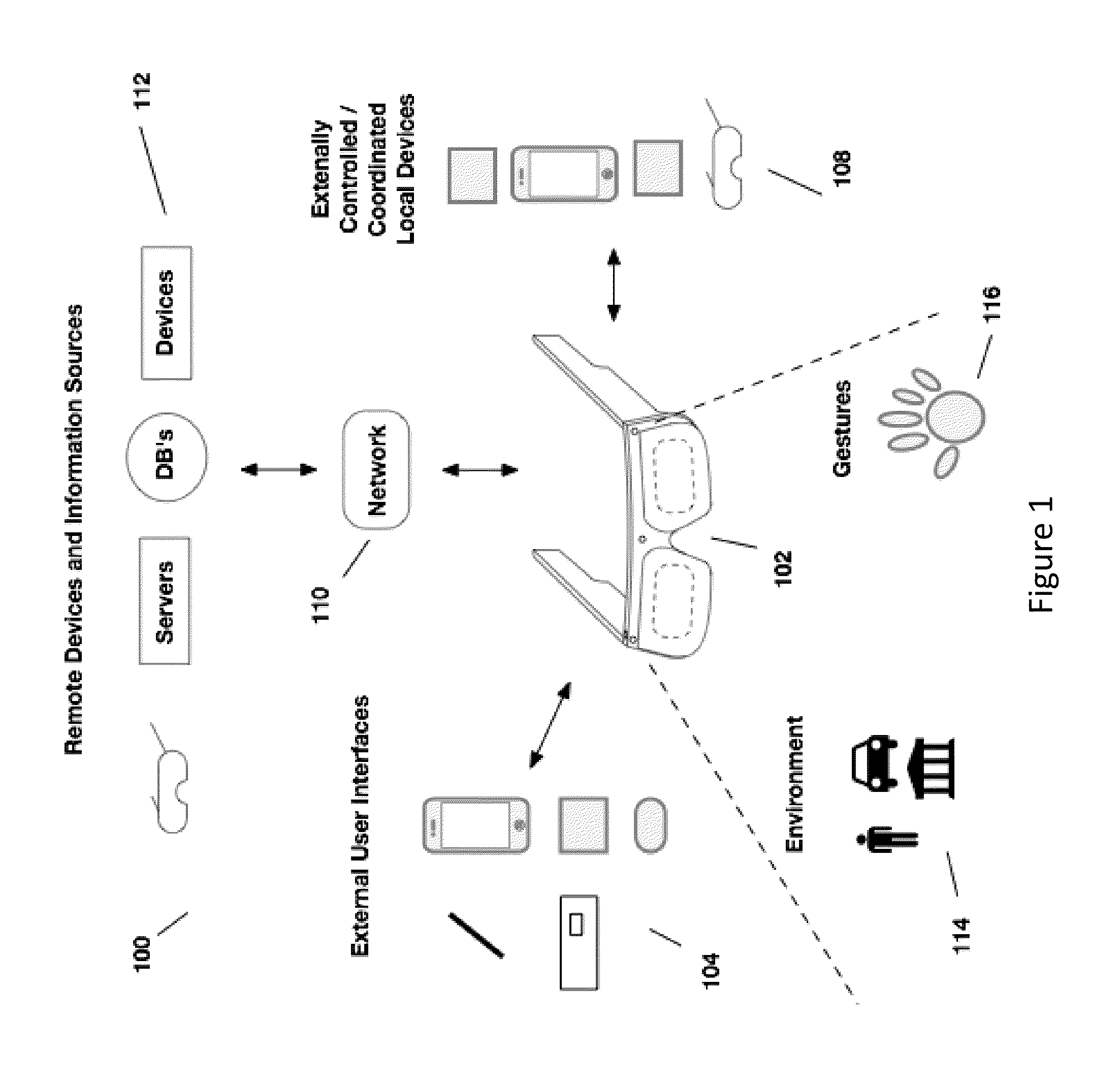

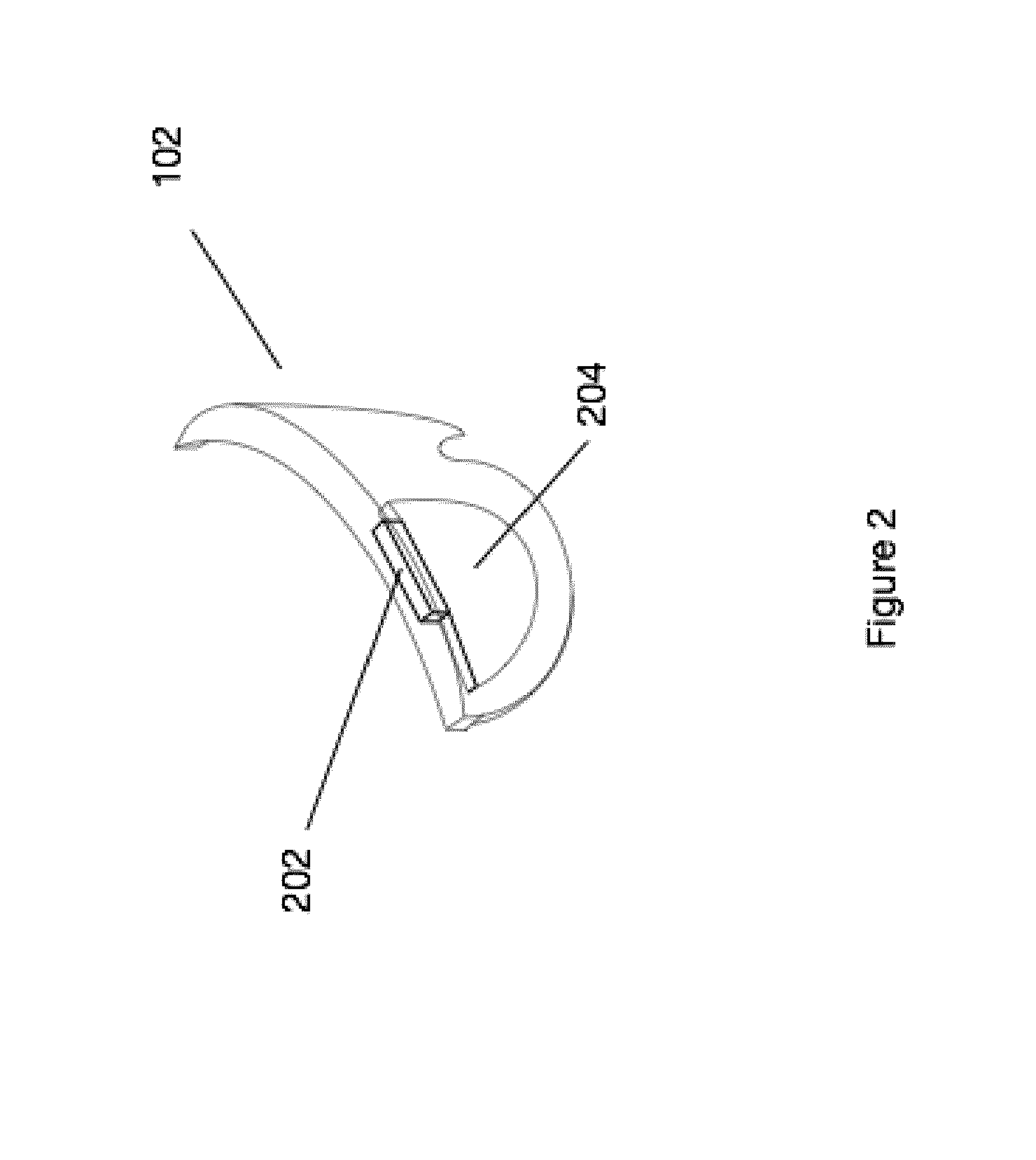

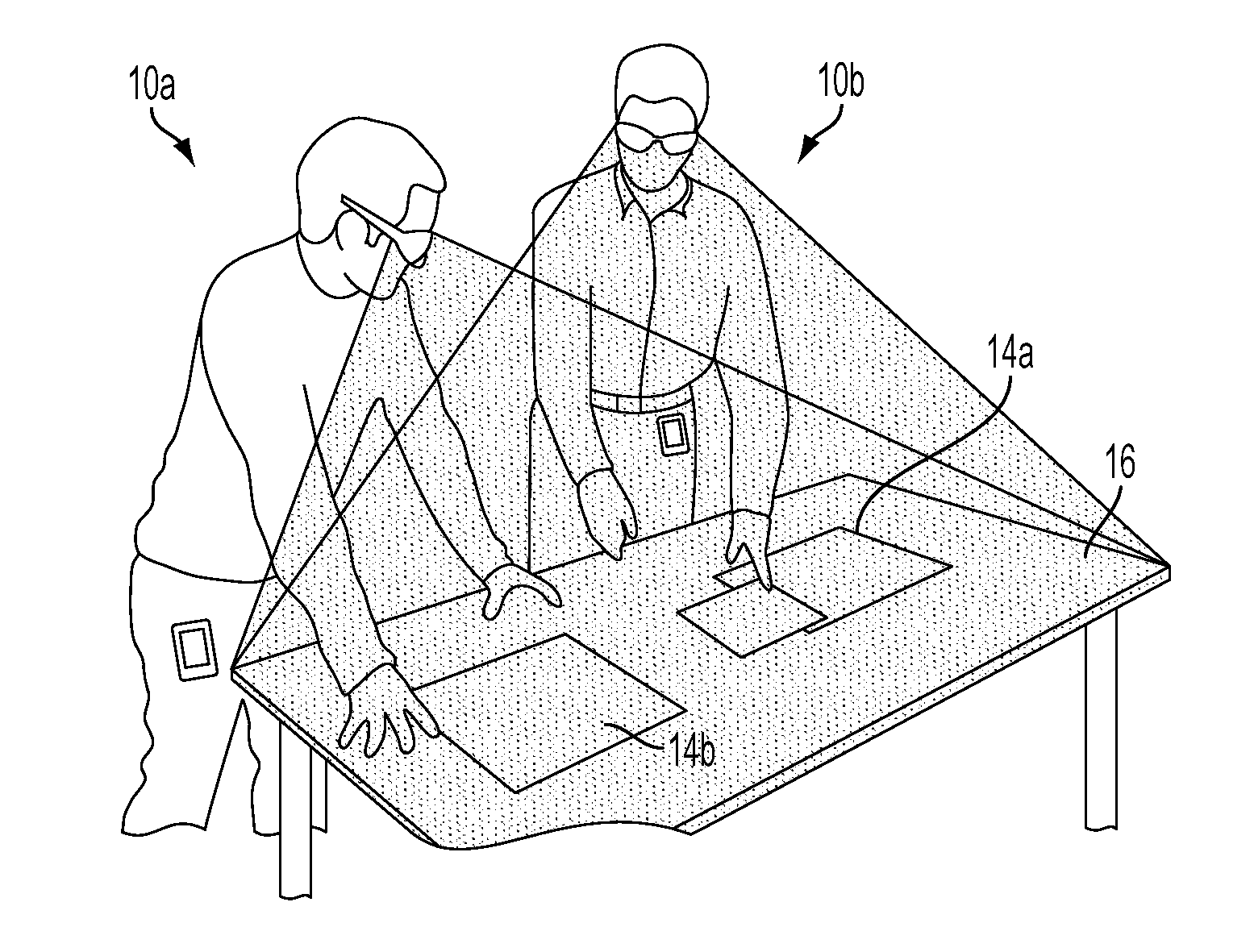

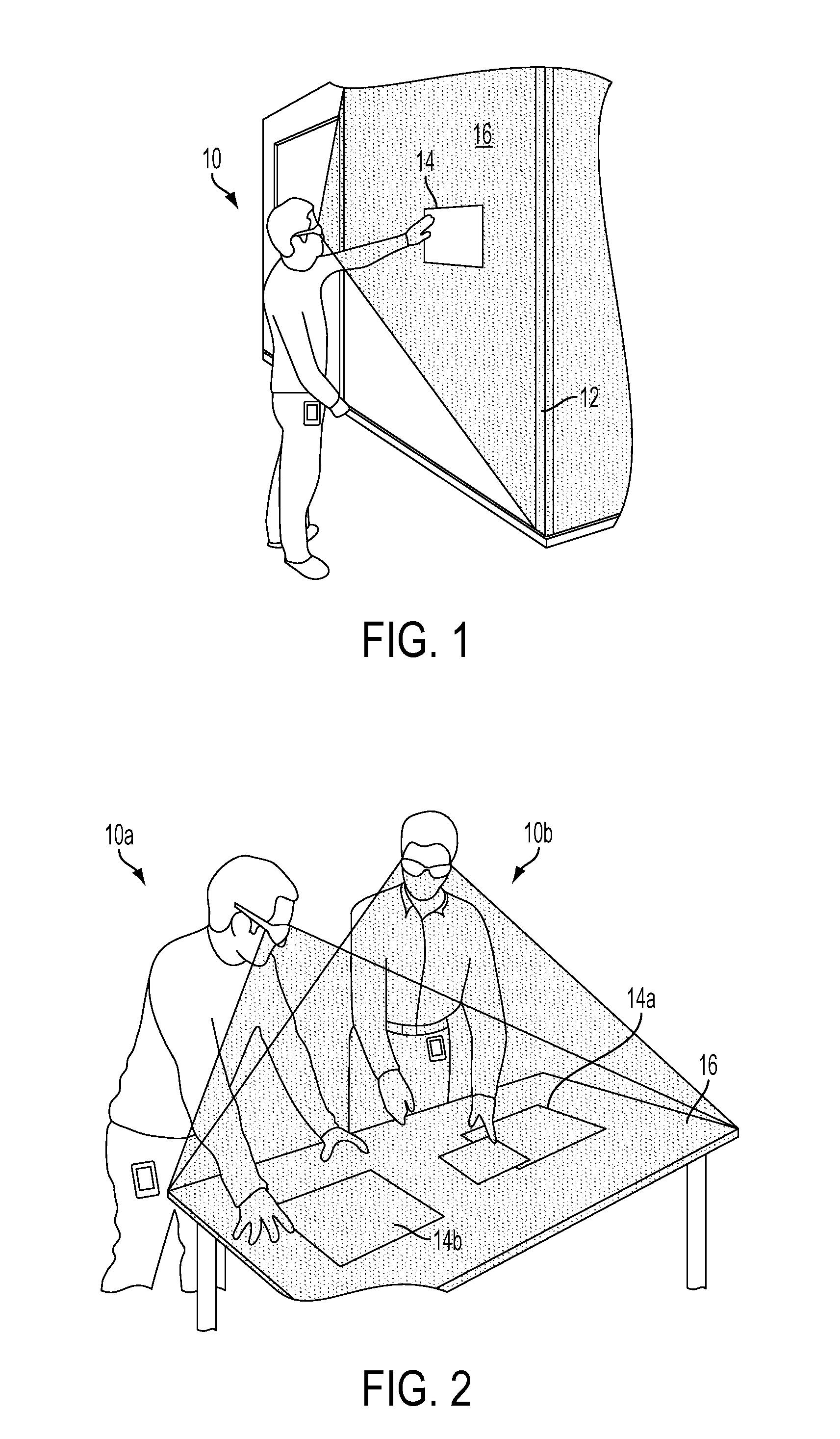

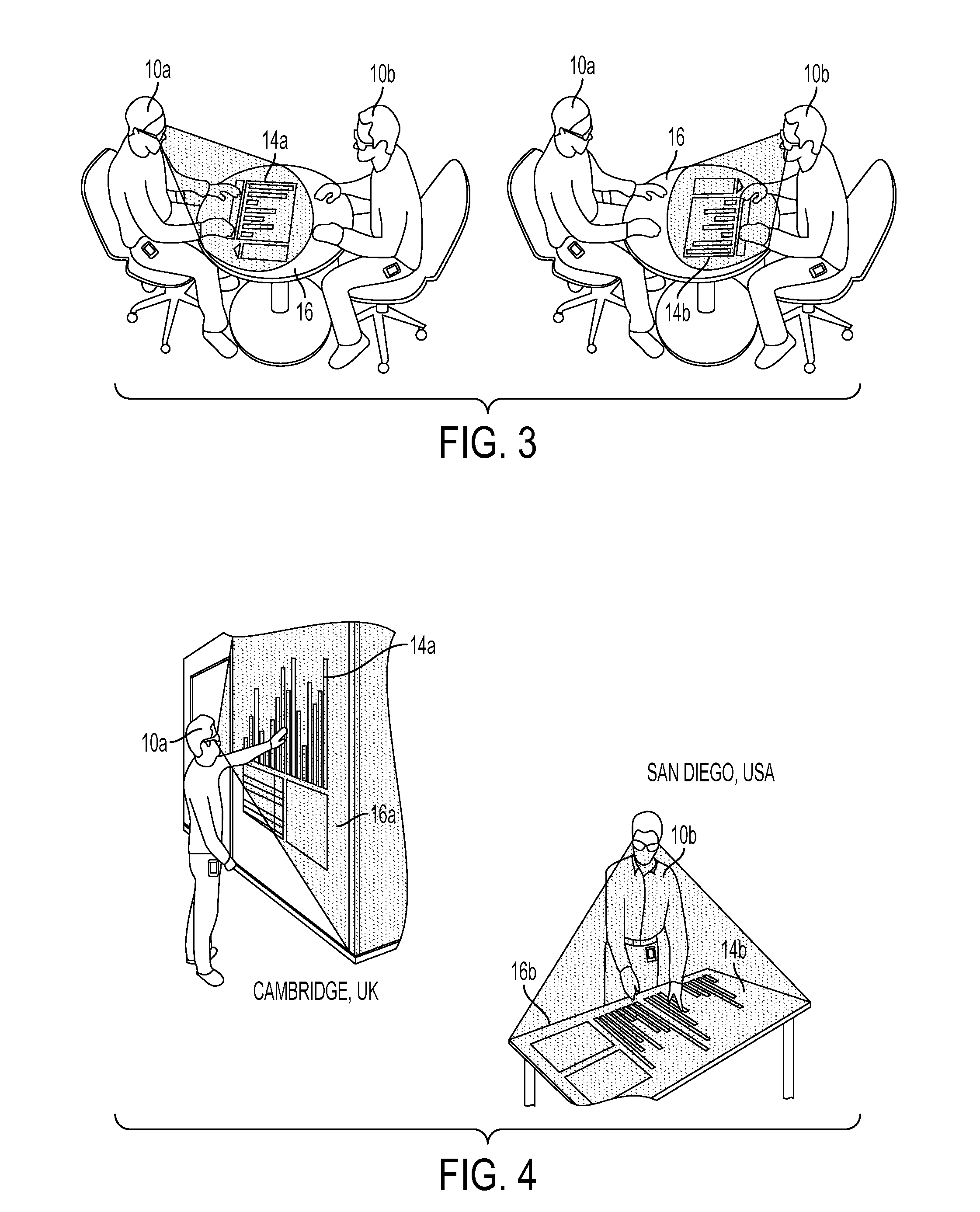

System for the rendering of shared digital interfaces relative to each user's point of view

A head mounted device provides an immersive virtual or augmented reality experience for viewing data and enabling collaboration among multiple users. Rendering images in a virtual or augmented reality system may include capturing an image and spatial data with a body mounted camera and sensor array, receiving input indicating a first anchor surface, calculating parameters with respect to the body mounted camera and displaying a virtual object such that the virtual object appears anchored to the selected first anchor surface. Further rendering operations may include receiving a second input indicating a second anchor surface within the captured image that is different from the first anchor surface, calculating parameters with respect to the second anchor surface and displaying the virtual object such that the virtual object appears anchored to the selected second anchor surface and moved from the first anchor surface.

Owner: QUALCOMM INC

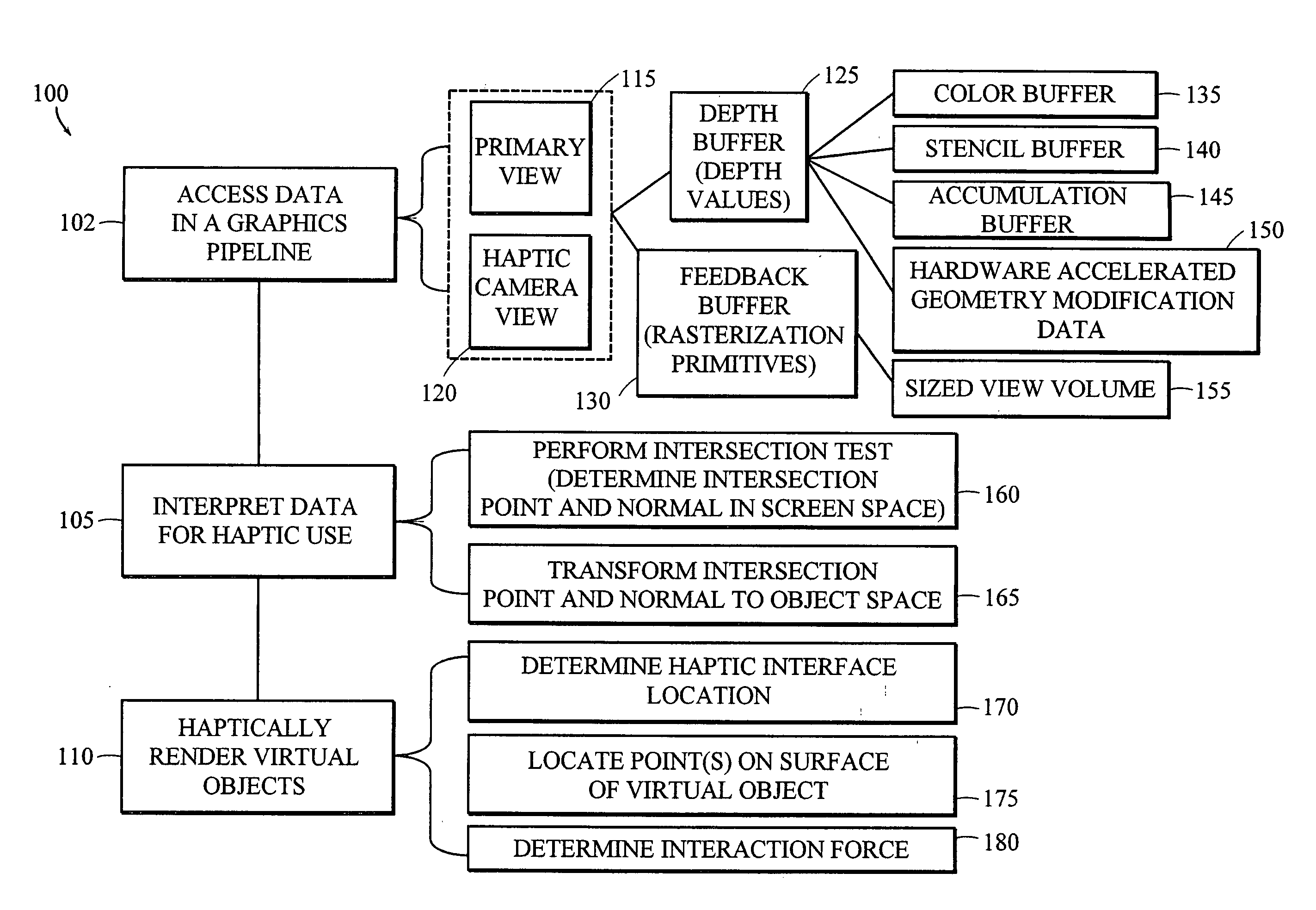

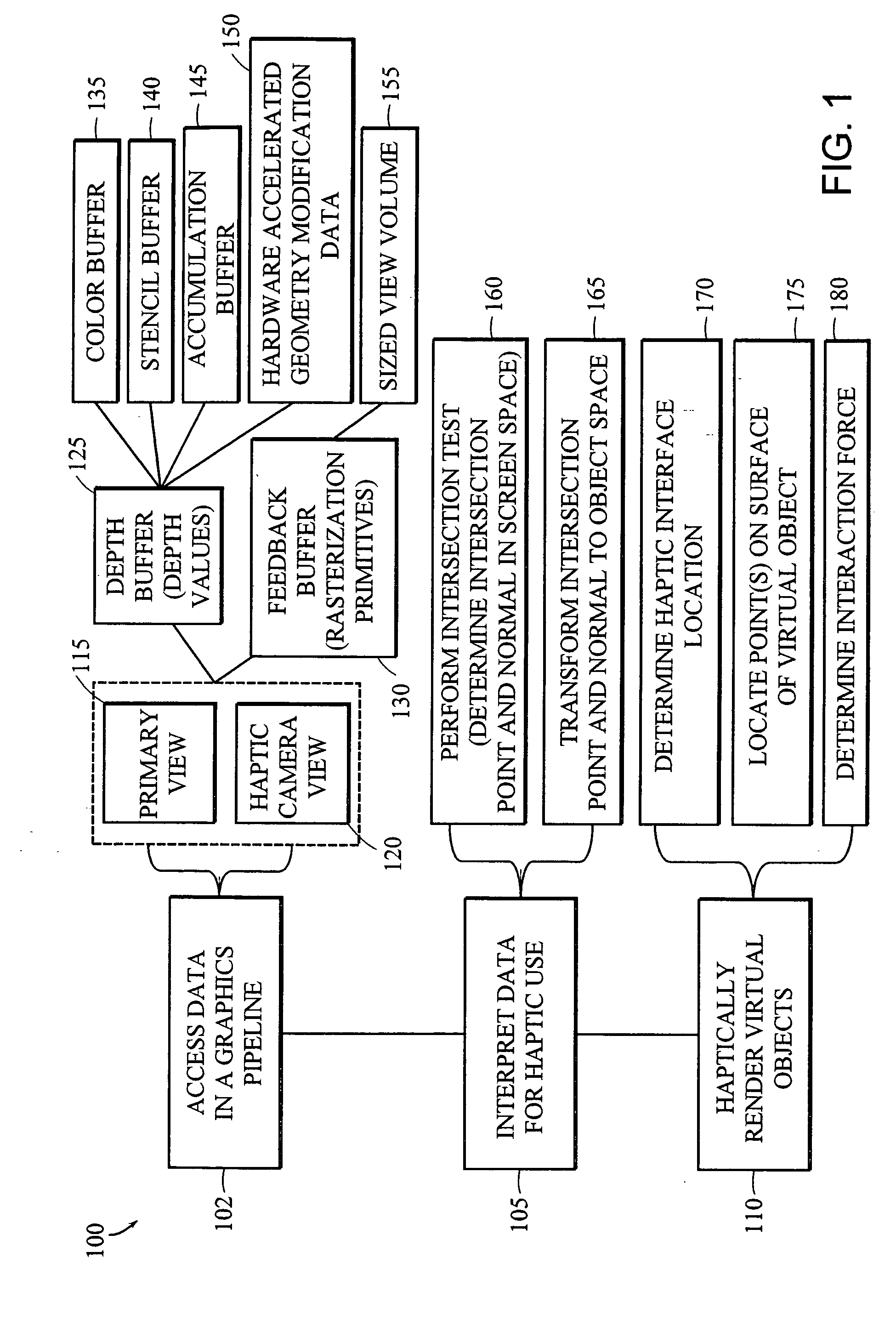

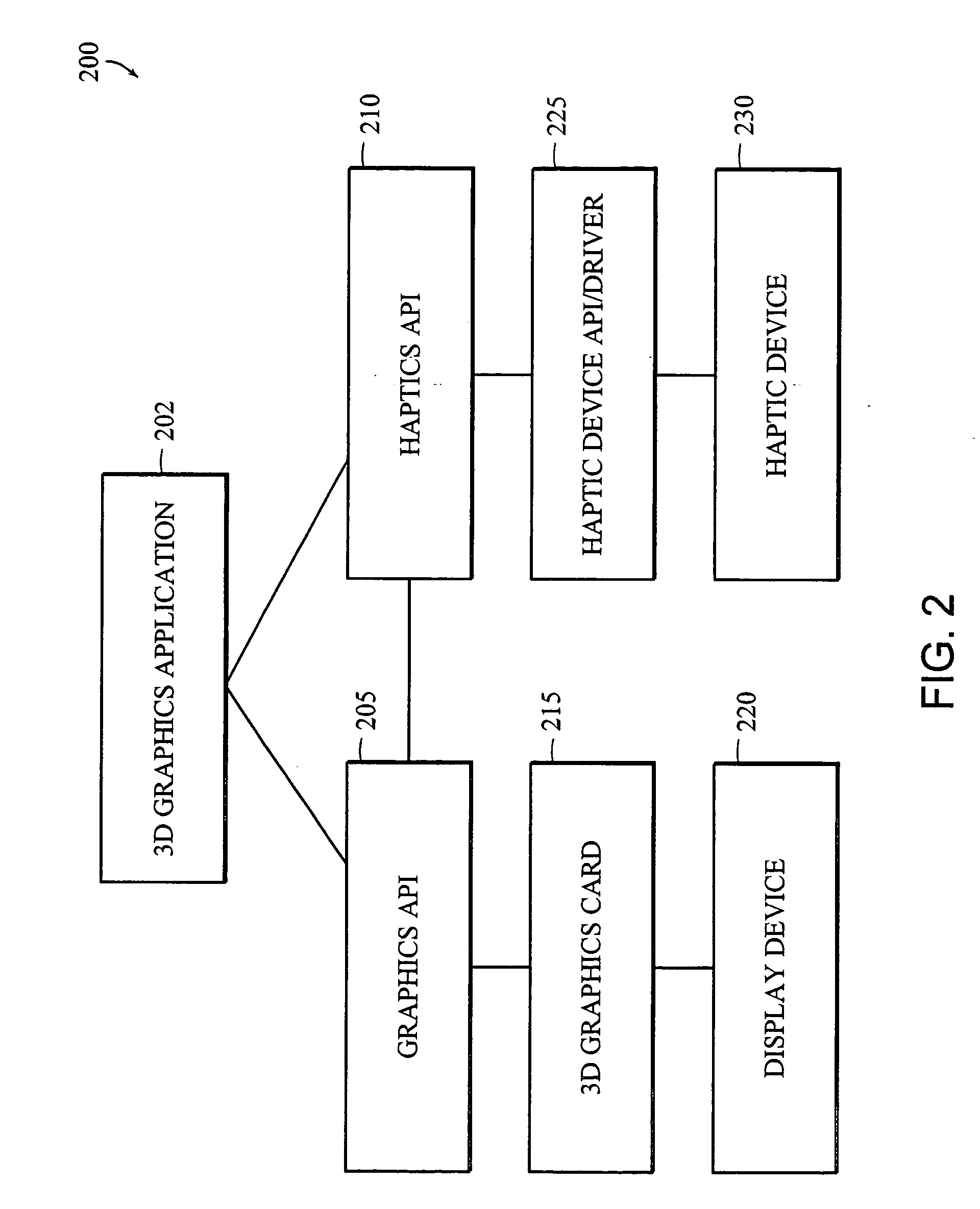

Apparatus and methods for haptic rendering using data in a graphics pipeline

Status: Active | US20060109266A1 | Unleash processing performance, Quick fix, Gearworks, Musical toys, Graphics, Line tubing

The invention provides methods for leveraging data in the graphics pipeline of a 3D graphics application for use in a haptic rendering of a virtual environment. The invention provides methods for repurposing graphical information for haptic rendering. Thus, at least part of the work that would have been performed by a haptic rendering process to provide touch feedback to a user is obviated by work performed by the graphical rendering process.

Owner: 3D SYST INC

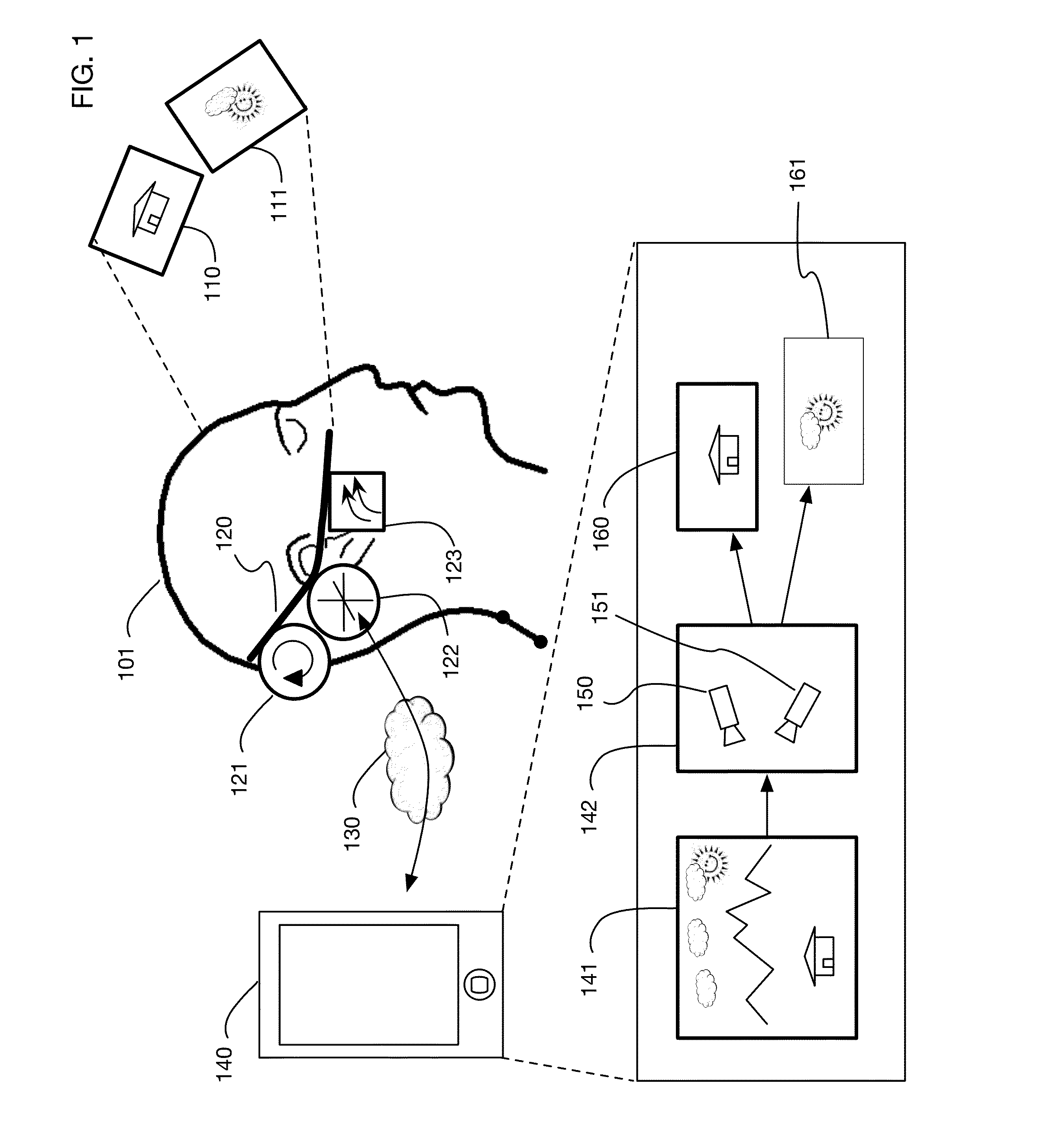

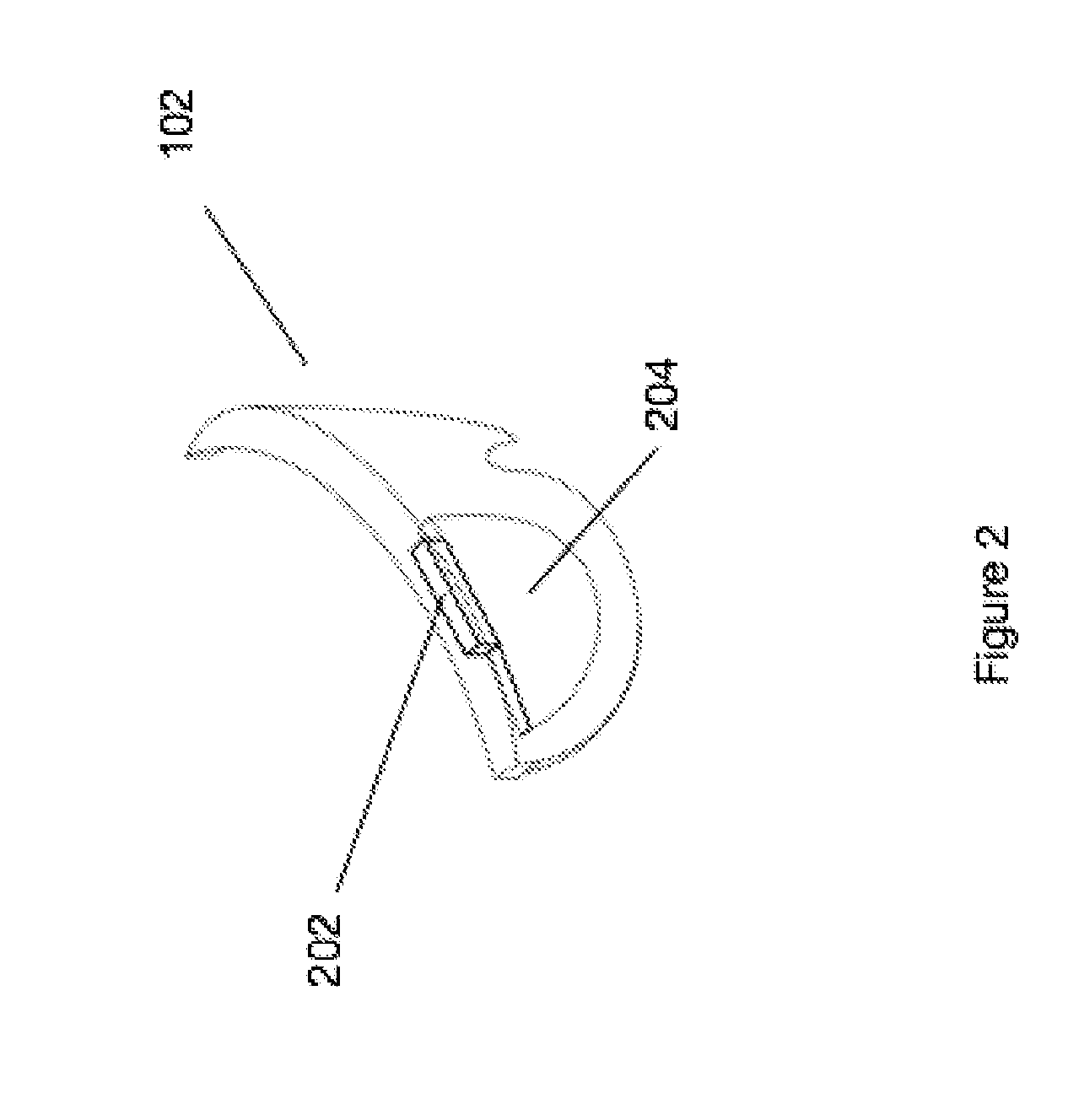

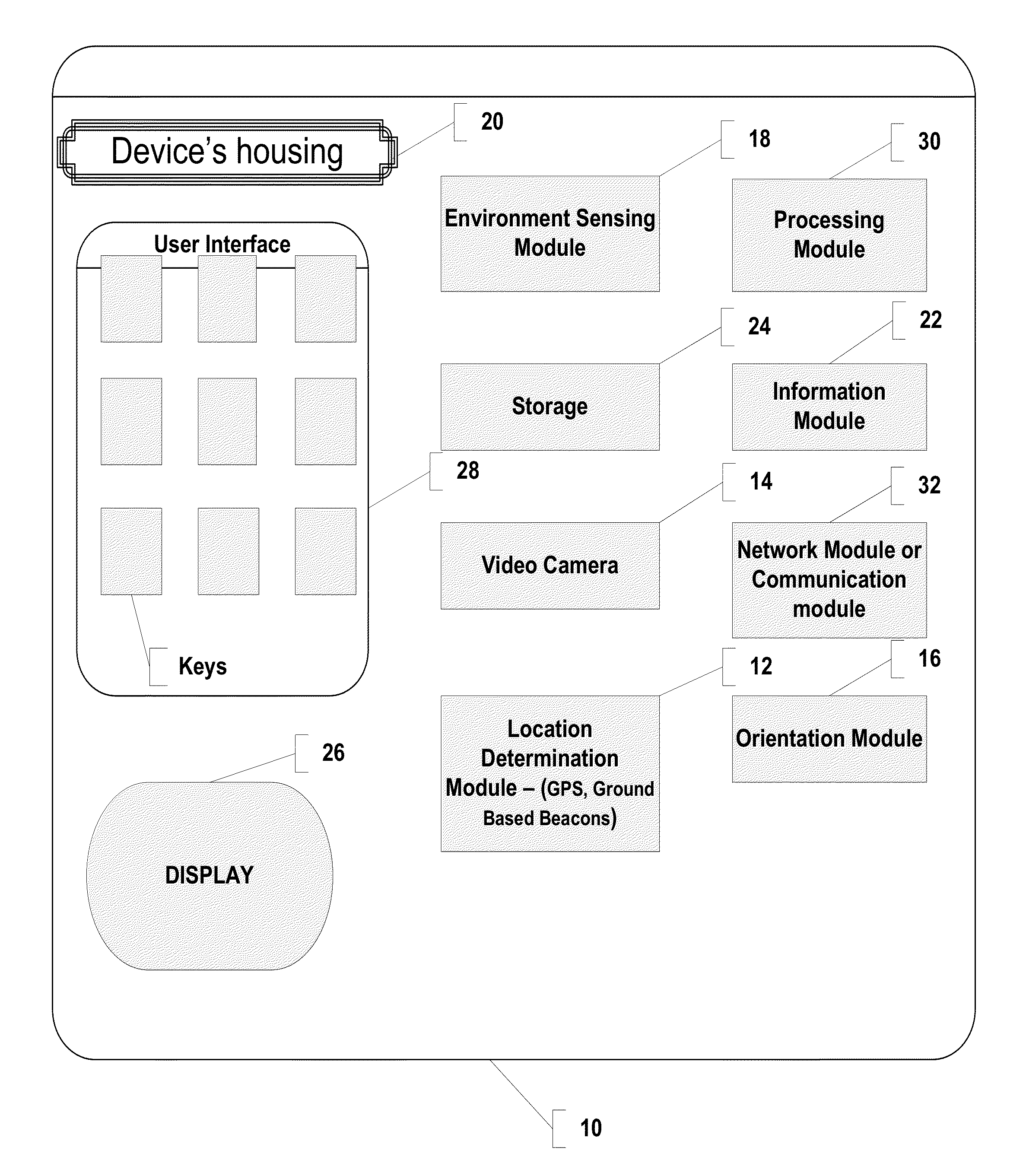

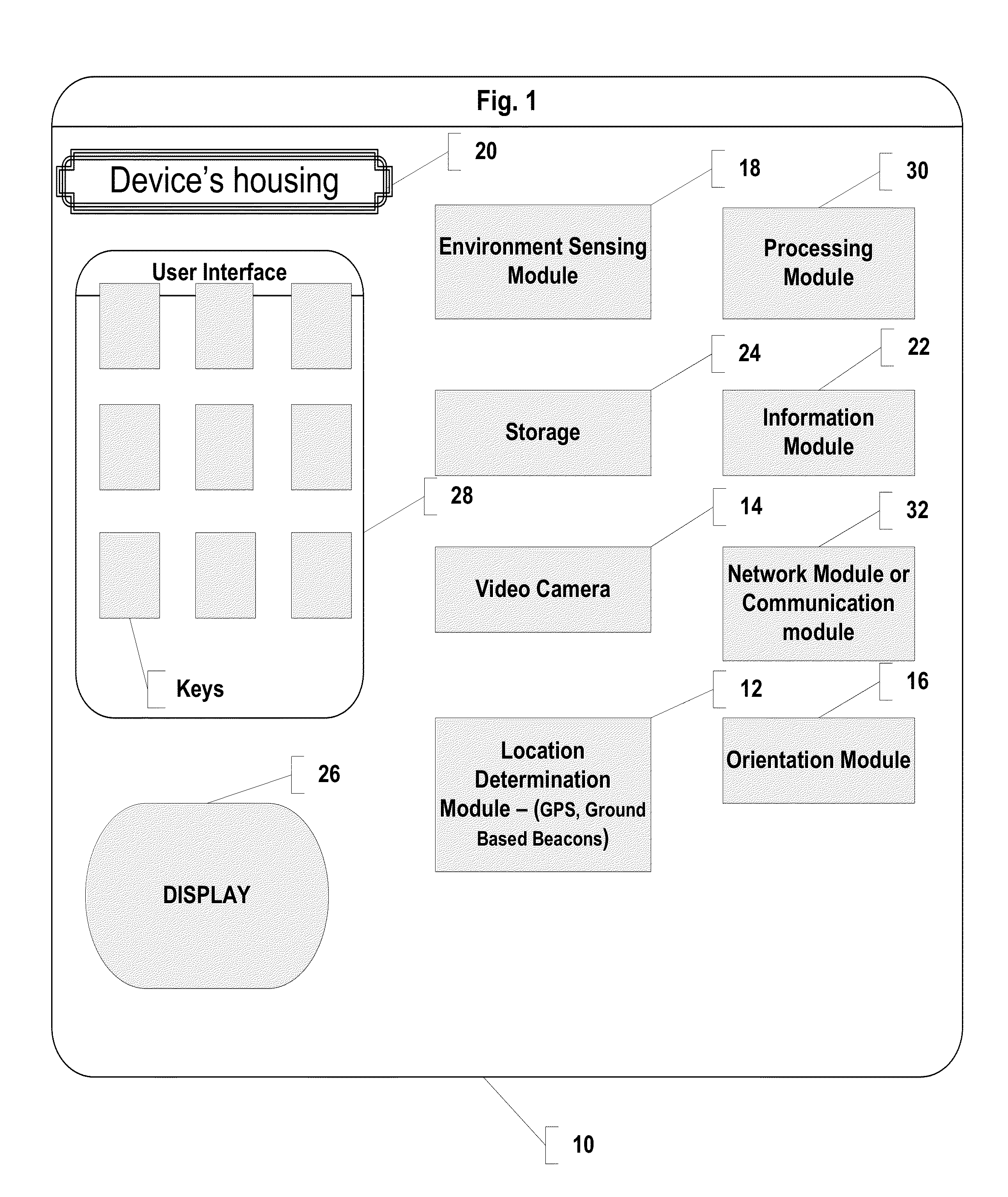

Sensor dependent content position in head worn computing

Status: Inactive | US20150277113A1 | Input/output for user-computer interaction, Image memory management, Computer vision

Owner: OSTERHOUT GROUP INC

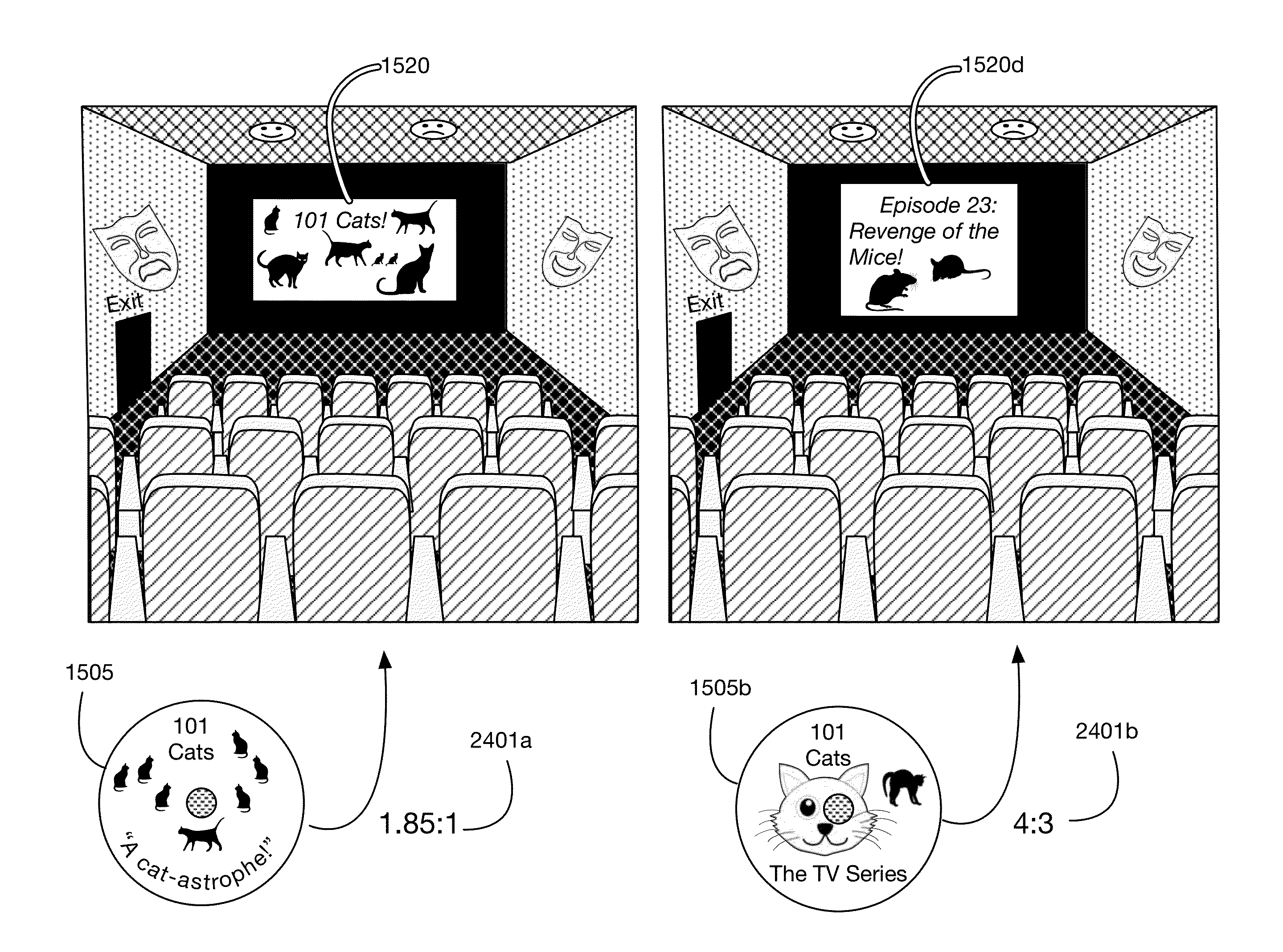

Virtual reality virtual theater system

Status: Inactive | US9396588B1 | Latency display update, Efficient rerendering, Geometric image transformation, Stereophonic systems, Loudspeaker, Virtual theater

A virtual reality virtual theater system that generates or otherwise displays a virtual theater, for example in which to view videos, such as movies or television. Videos may be for example 2D or 3D movies or television programs. Embodiments create a virtual theater environment with elements that provide a theater-like viewing experience to the user. Virtual theaters may be for example indoor movie theaters, drive-in movie theaters, or home movie theaters. Virtual theater elements may include for example theater fixtures, décor, and audience members; these elements may be selectable or customizable by the user. One or more embodiments also render sound by placing virtual speakers in a virtual theater and projecting sounds from these virtual speakers onto virtual microphones corresponding to the position and orientation of the user's ears. Embodiments may also employ minimal latency processing to improve the theater experience.

Owner: LI ADAM
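The sound-rendering step described above, in which virtual speakers project sound onto virtual microphones at the user's ears, can be approximated with simple free-field acoustics. This is only an illustrative sketch under stated assumptions, not the patented method: a fixed speed of sound of 343 m/s, 1/r distance attenuation, a hypothetical ear spacing of 0.18 m, head rotation limited to yaw, and no head-related transfer function. The function names are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 degrees C (assumption)


def ear_positions(head, yaw, ear_spacing=0.18):
    """Left/right ear positions for a head at `head` (x east, y north, z up).

    `yaw` is in radians; yaw = 0 faces +y and positive yaw turns toward +x.
    `ear_spacing` (metres) is a hypothetical typical value.
    """
    # Unit vector pointing out of the listener's right ear.
    rx, ry = math.cos(yaw), -math.sin(yaw)
    half = ear_spacing / 2.0
    x, y, z = head
    left = (x - rx * half, y - ry * half, z)
    right = (x + rx * half, y + ry * half, z)
    return left, right


def speaker_to_ears(speaker, head, yaw, ref_distance=1.0):
    """Return ((delay_s, gain) for the left ear, (delay_s, gain) for the right ear)."""
    result = []
    for ear in ear_positions(head, yaw):
        d = math.dist(speaker, ear)
        delay = d / SPEED_OF_SOUND
        # Free-field 1/r falloff, clamped to unity gain inside ref_distance.
        gain = ref_distance / max(d, ref_distance)
        result.append((delay, gain))
    return tuple(result)
```

Each ear's signal would then be the sum, over all virtual speakers, of that speaker's audio delayed by `delay` and scaled by `gain`. Recomputing these values whenever the head tracker reports a new pose keeps the soundstage fixed to the virtual theater rather than to the listener's head.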

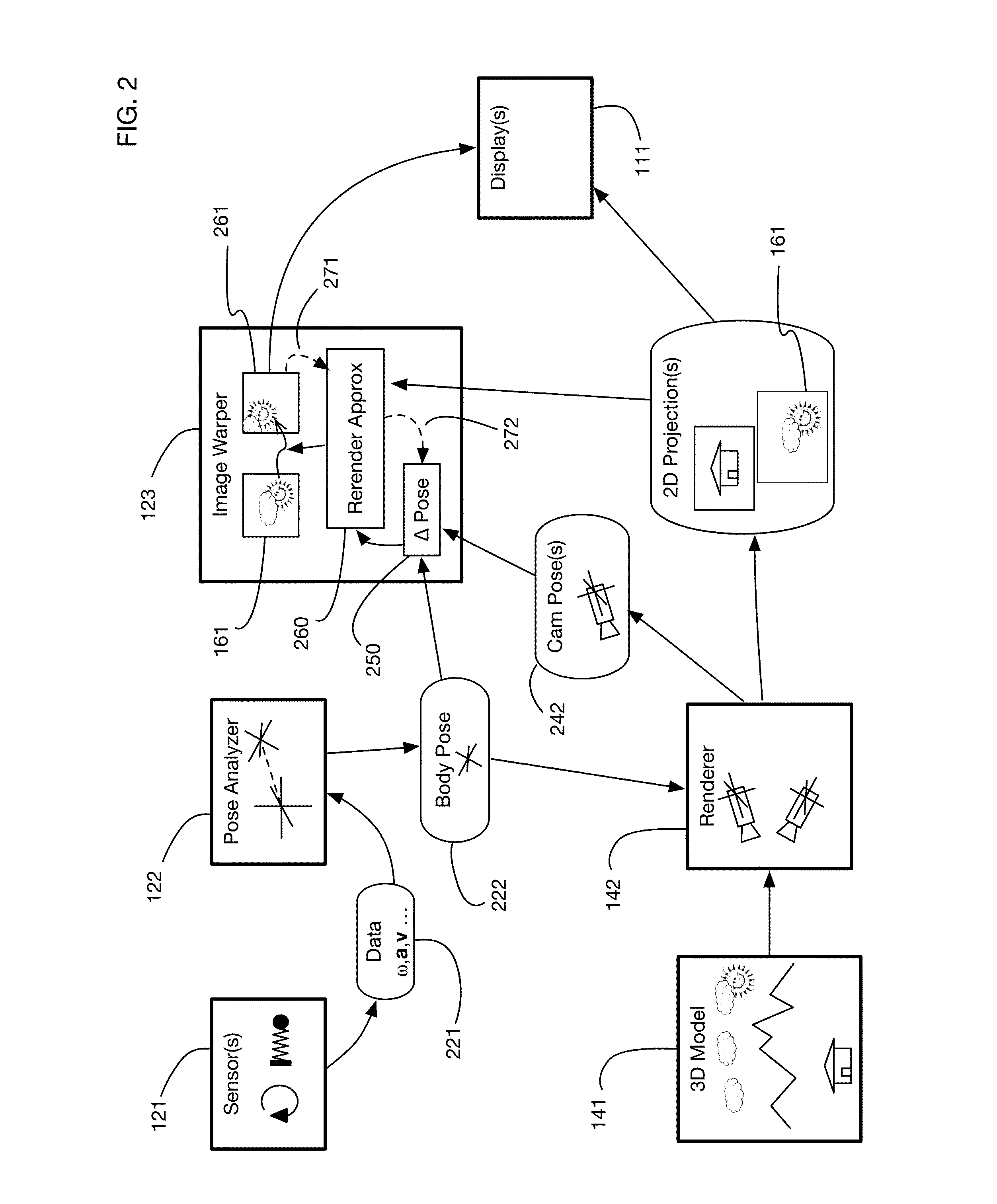

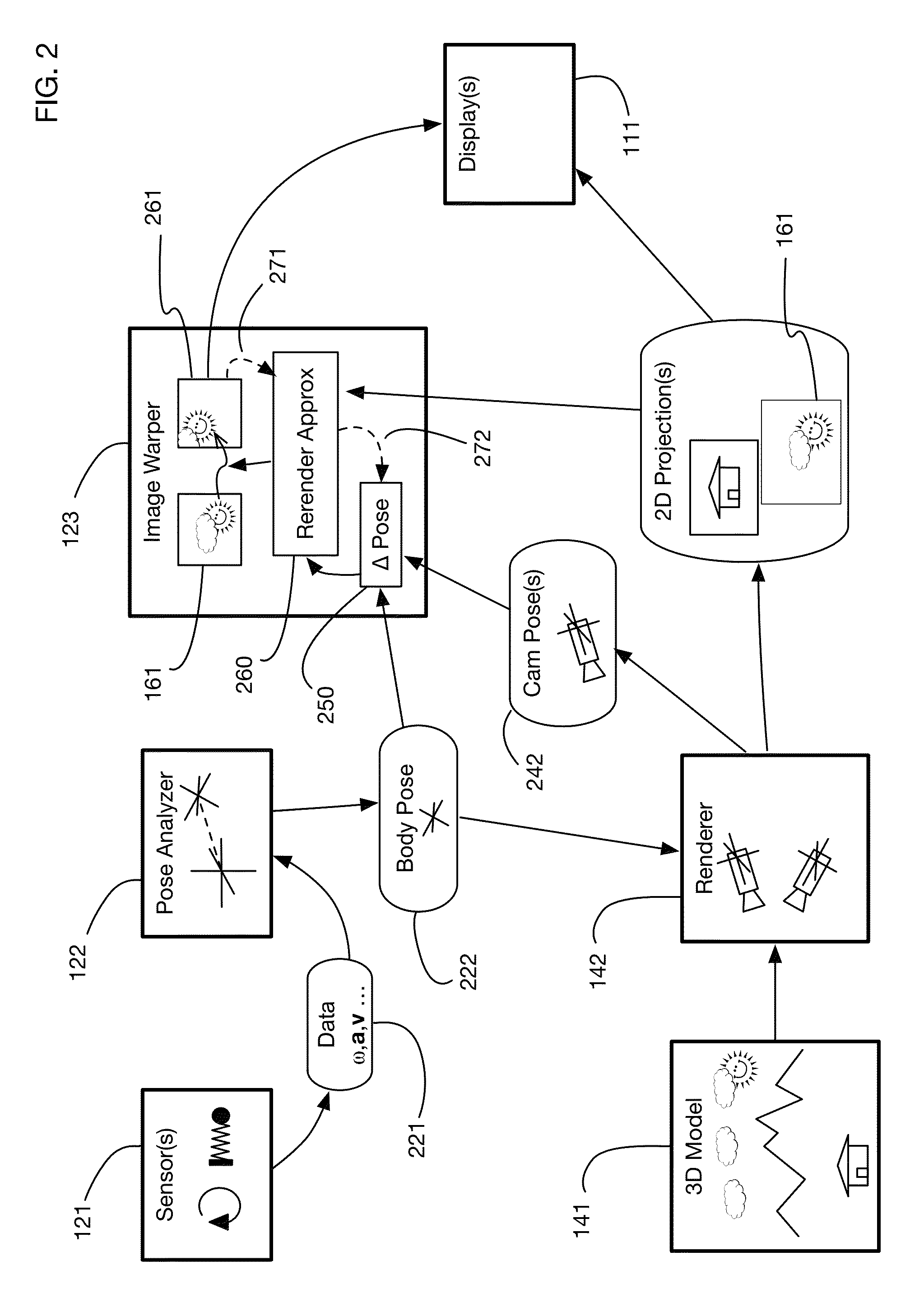

Low-latency virtual reality display system

Status: Inactive | US9240069B1 | Enhance the virtual reality experience, Efficient and rapid rerendering reduces latency, Image rendering, Input/output processes for data processing, Computer graphics (images), Display device

A low-latency virtual reality display system that provides rapid updates to virtual reality displays in response to a user's movements. Display images generated by rendering a 3D virtual world are modified using an image warping approximation, which allows updates to be calculated and displayed quickly. Image warping is performed using various rerendering approximations that use simplified 3D models and simplified 3D to 2D projections. An example of such a rerendering approximation is a shifting of all pixels in the display by a pixel translation vector that is calculated based on the user's movements; pixel translation may be done very quickly, possibly using hardware acceleration, and may be sufficiently realistic particularly for small changes in a user's position and orientation. Additional features include techniques to fill holes in displays generated by image warping, synchronizing full rendering with approximate rerendering, and using prediction of a user's future movements to reduce apparent latency.

Owner: LI ADAM
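The simplest rerendering approximation named in the abstract above, shifting every pixel by one translation vector derived from the user's movement, might look like the following for a small pure head rotation. Assumptions: a pinhole projection with focal length `focal_px` in pixels, image rows increasing downward, and uncovered pixels left at a fill value (the hole-filling techniques the abstract mentions are not shown). The function names are hypothetical.

```python
import math


def pixel_translation(d_yaw, d_pitch, focal_px):
    """Approximate whole-image shift (dx, dy) in pixels for a small head rotation.

    Assumes a pinhole camera with focal length `focal_px` (pixels), yaw and
    pitch deltas in radians, x increasing rightward and y increasing downward.
    Turning right moves the scene left; looking up moves the scene down.
    """
    dx = -focal_px * math.tan(d_yaw)
    dy = focal_px * math.tan(d_pitch)
    return round(dx), round(dy)


def translate_image(img, dx, dy, fill=0):
    """Shift every pixel of a row-major image by (dx, dy).

    Pixels whose source falls outside the old frame become `fill`; these are
    the holes a real system would then fill in.
    """
    h, w = len(img), len(img[0])
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        sy = y - dy
        if not 0 <= sy < h:
            continue
        for x in range(w):
            sx = x - dx
            if 0 <= sx < w:
                out[y][x] = img[sy][sx]
    return out
```

Because a single vector is applied to the whole frame, the update is far cheaper than a full re-render, which is also why, as the abstract notes, it is realistic mainly for small changes in position and orientation.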

Sensor dependent content position in head worn computing

Owner:OSTERHOUT GROUP INC

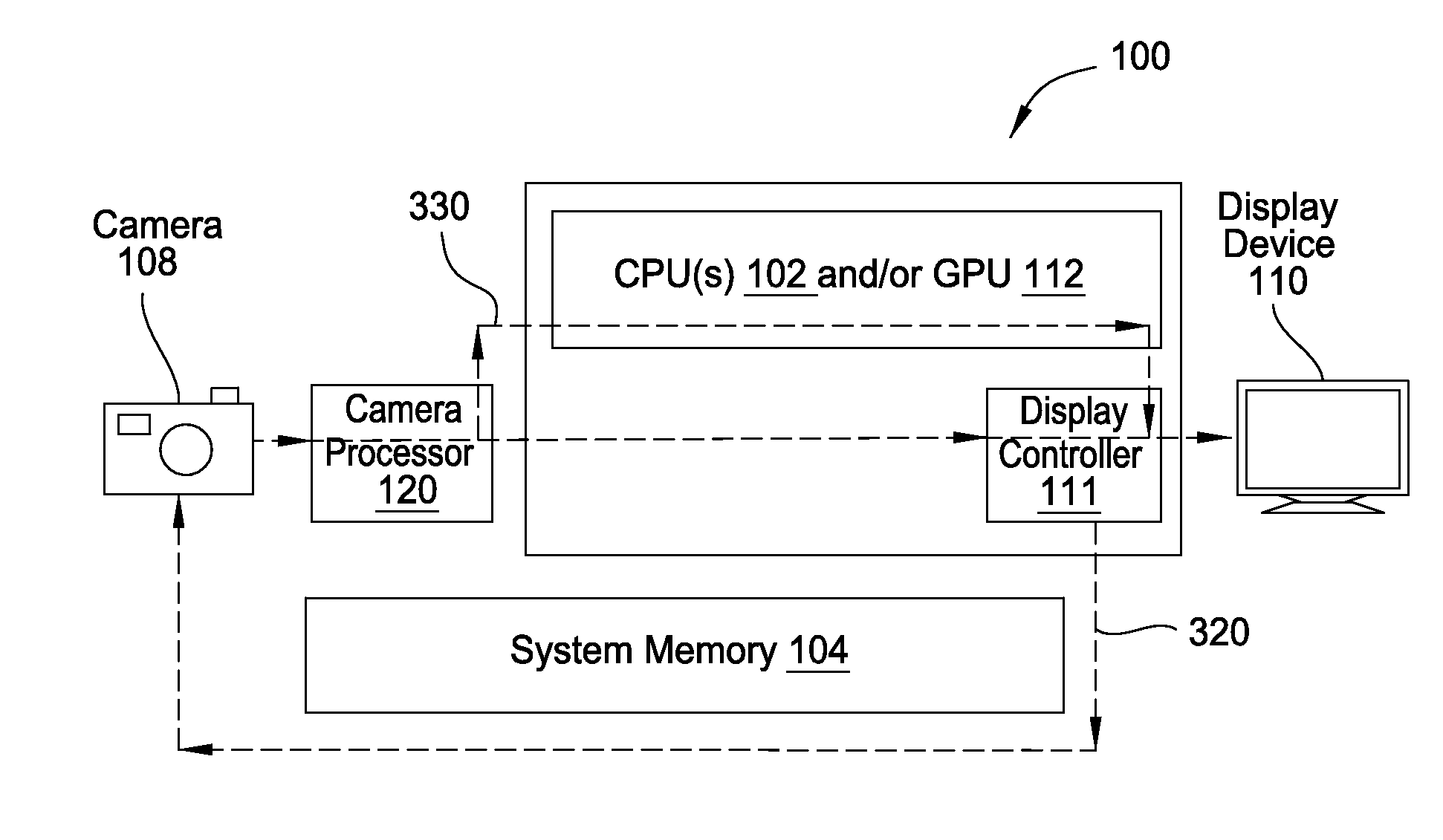

Generating a low-latency transparency effect

Status: Inactive | US20150194128A1 | Efficiently display device, Transparency effect, Image enhancement, Television system details, Display device, Latency (engineering)

One embodiment of the present invention sets forth a technique for generating a transparency effect for a computing device. The technique includes transmitting, to a camera, a synchronization signal associated with a refresh rate of a display. The technique further includes determining a line of sight of a user relative to the display, acquiring a first image based on the synchronization signal, and processing the first image based on the line of sight of the user to generate a first processed image. Finally, the technique includes compositing first visual information and the first processed image to generate a first composited image, and displaying the first composited image on the display.

Owner: NVIDIA CORP

Sensor dependent content position in head worn computing

Status: Inactive | US20150277549A1 | Input/output for user-computer interaction, Image memory management, Computer vision

Owner: OSTERHOUT GROUP INC

Sensor dependent content position in head worn computing

Status: Inactive | US20150277122A1 | Input/output for user-computer interaction, Image memory management, Computer vision

Owner: OSTERHOUT GROUP INC

Sensor dependent content position in head worn computing

Status: Inactive | US20150277118A1 | Input/output for user-computer interaction, Image memory management, Computer vision

Owner: OSTERHOUT GROUP INC

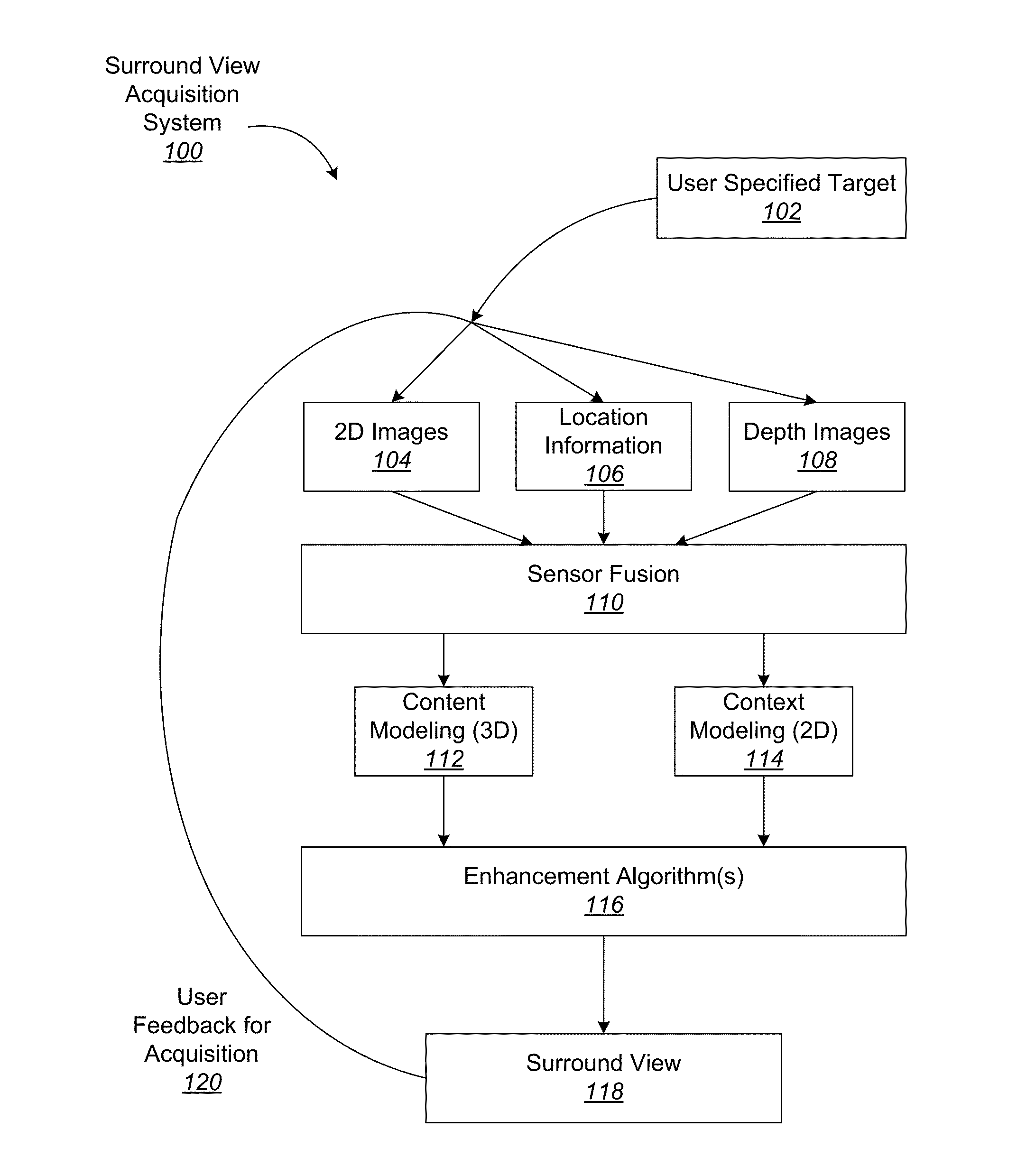

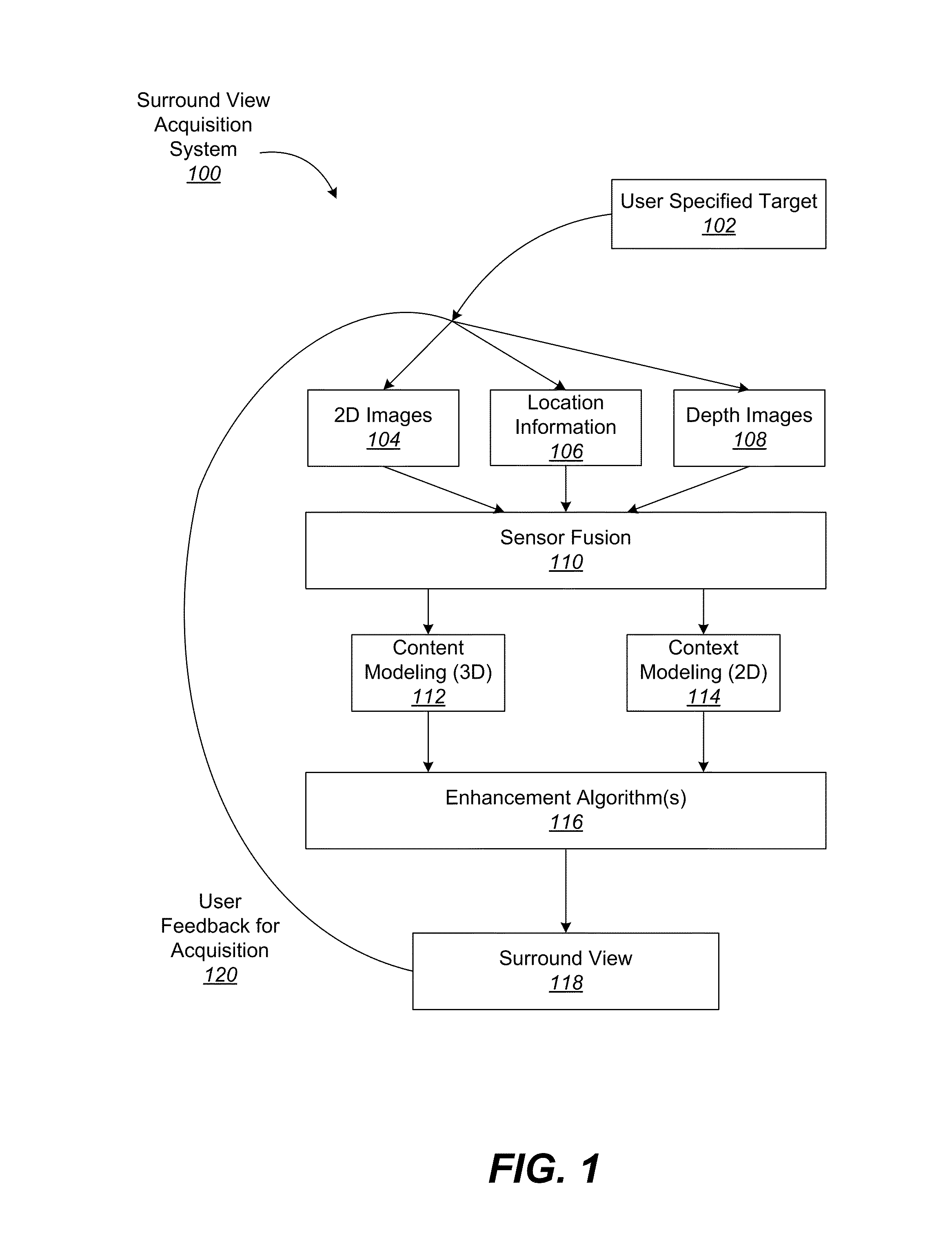

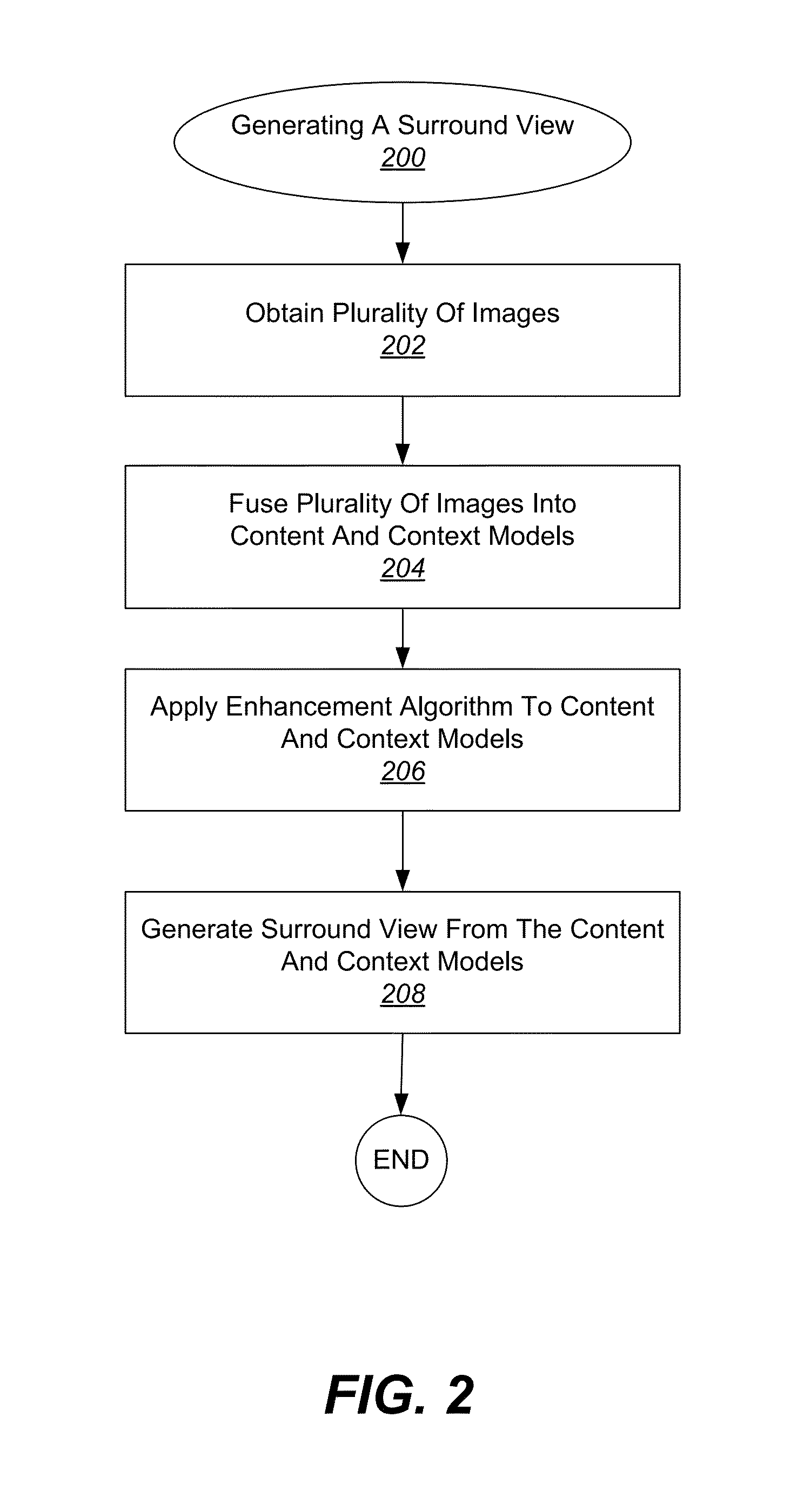

Analysis and manipulation of images and video for generation of surround views

Various embodiments of the present invention relate generally to systems and methods for analyzing and manipulating images and video. According to particular embodiments, the spatial relationship between multiple images and video is analyzed together with location information data, for purposes of creating a representation referred to herein as a surround view. In particular embodiments, the surround view reduces redundancy in the image and location data, and presents a user with an interactive and immersive viewing experience.

Owner: FUSION

Sensor dependent content position in head worn computing

Owner:OSTERHOUT GROUP INC

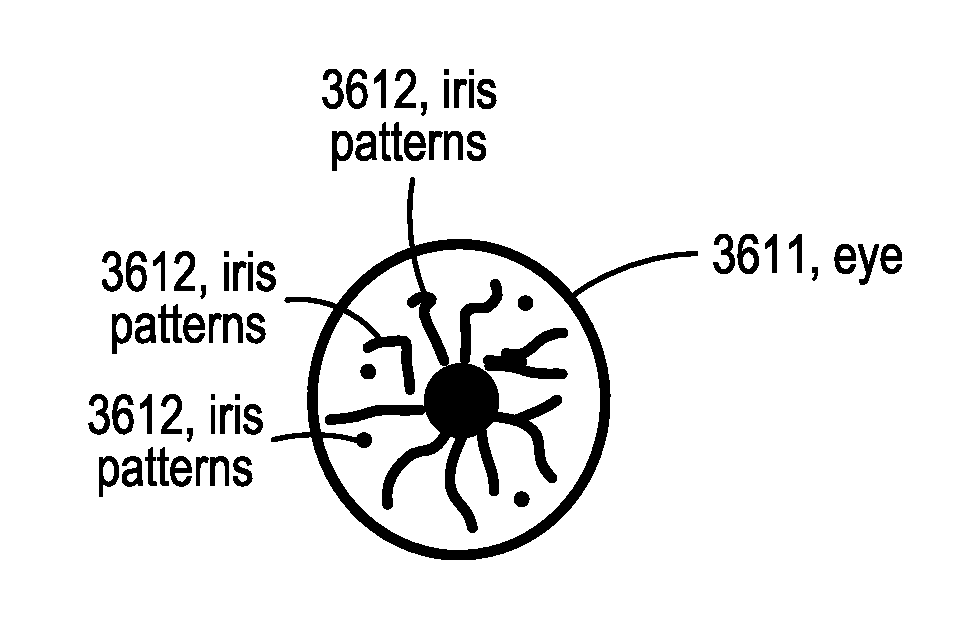

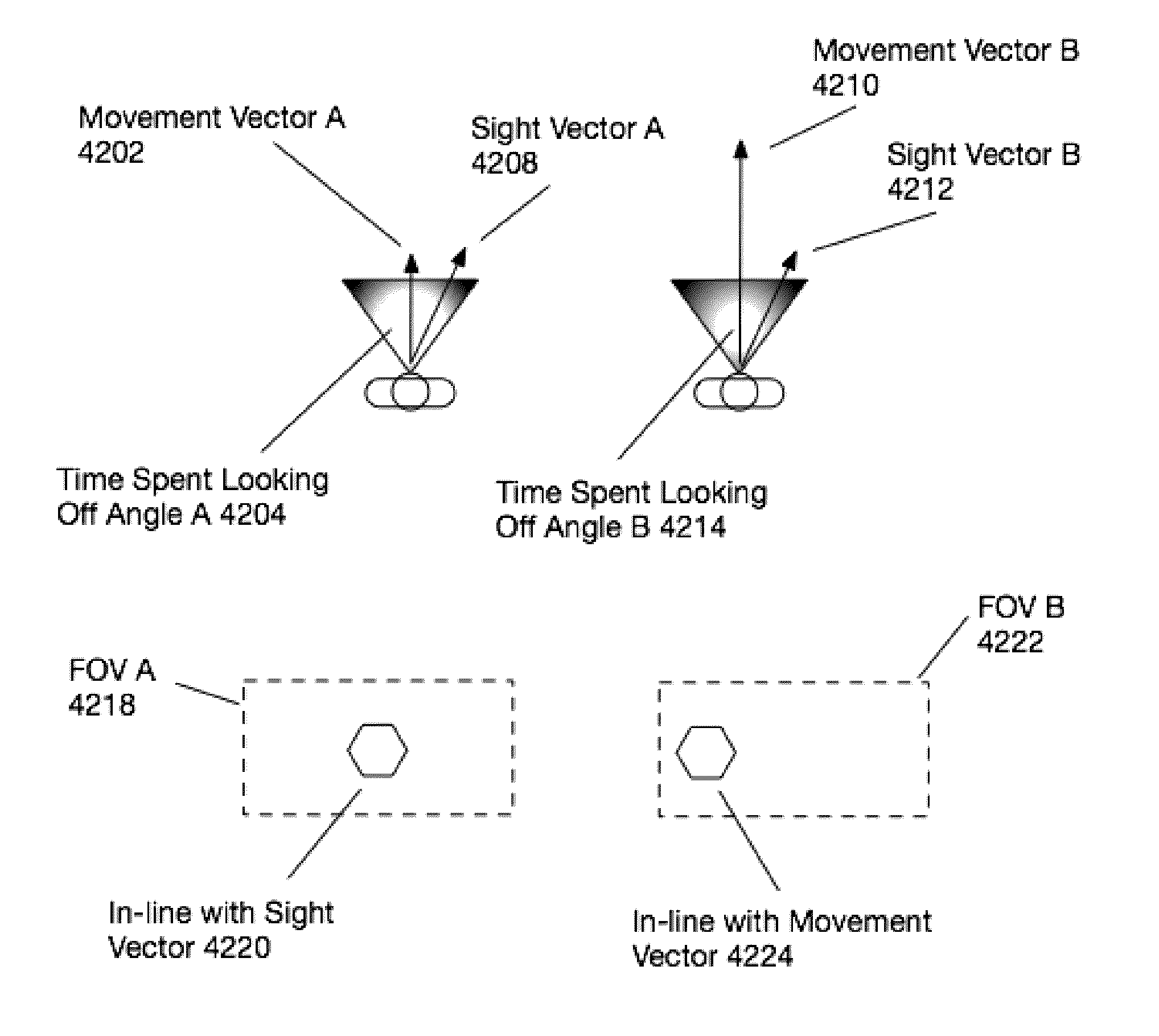

Sight information collection in head worn computing

Status: Active | US20150287048A1 | Input/output for user-computer interaction, Weapon components, Computer science, Visual perception

Aspects of the present invention relate to methods and systems for collecting and using eye heading and sight heading information in head worn computing.

Owner: OSTERHOUT GROUP INC

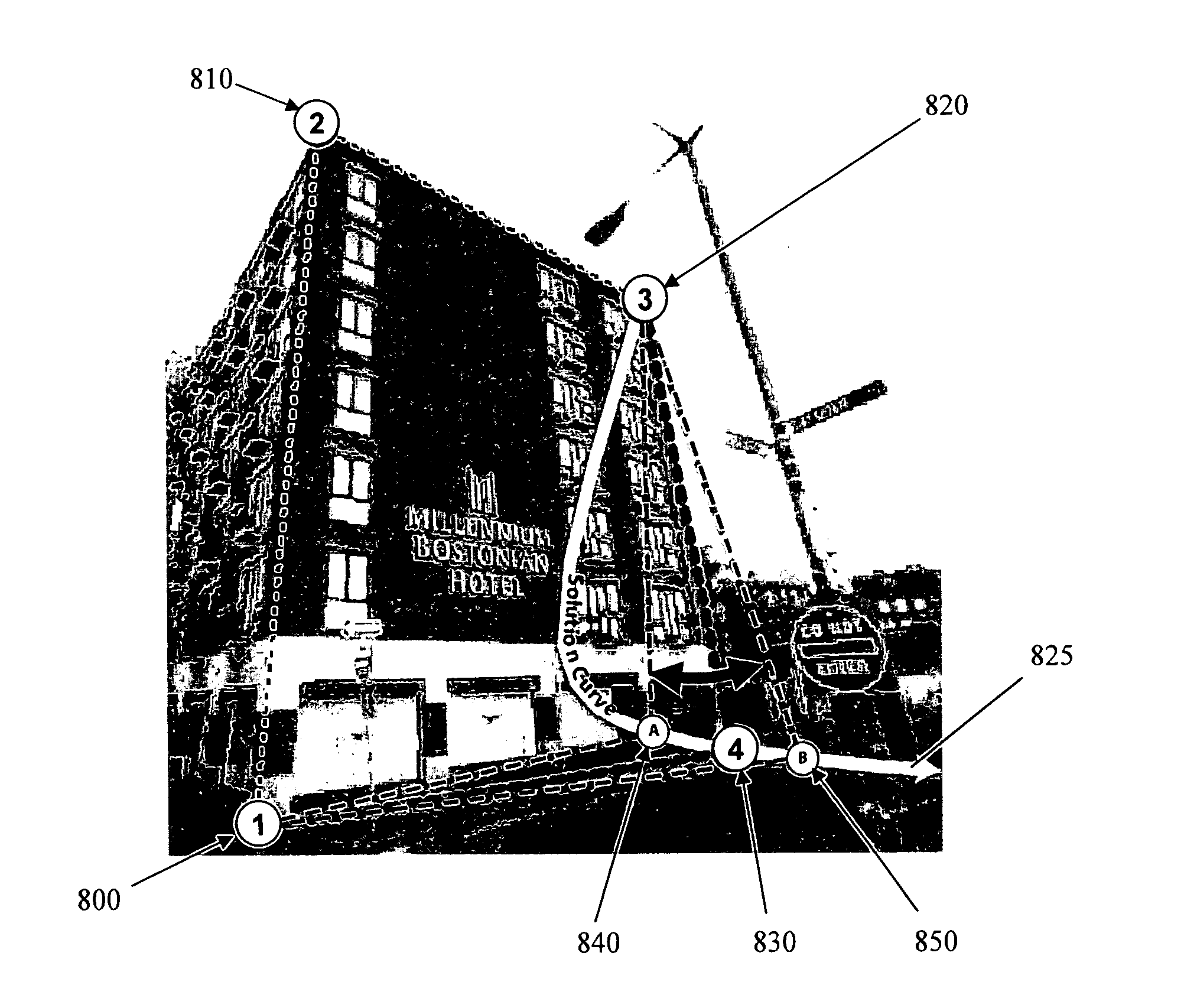

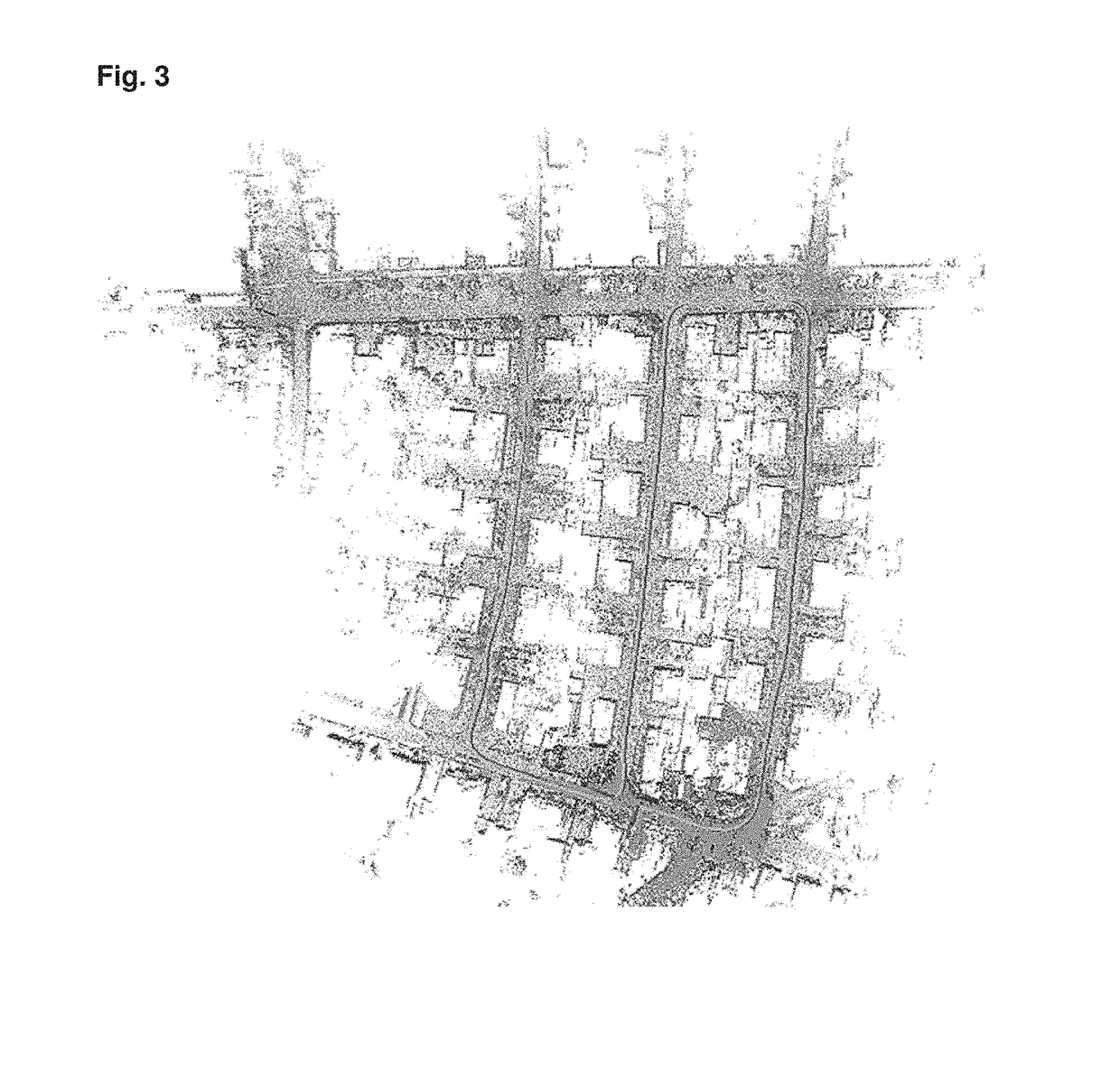

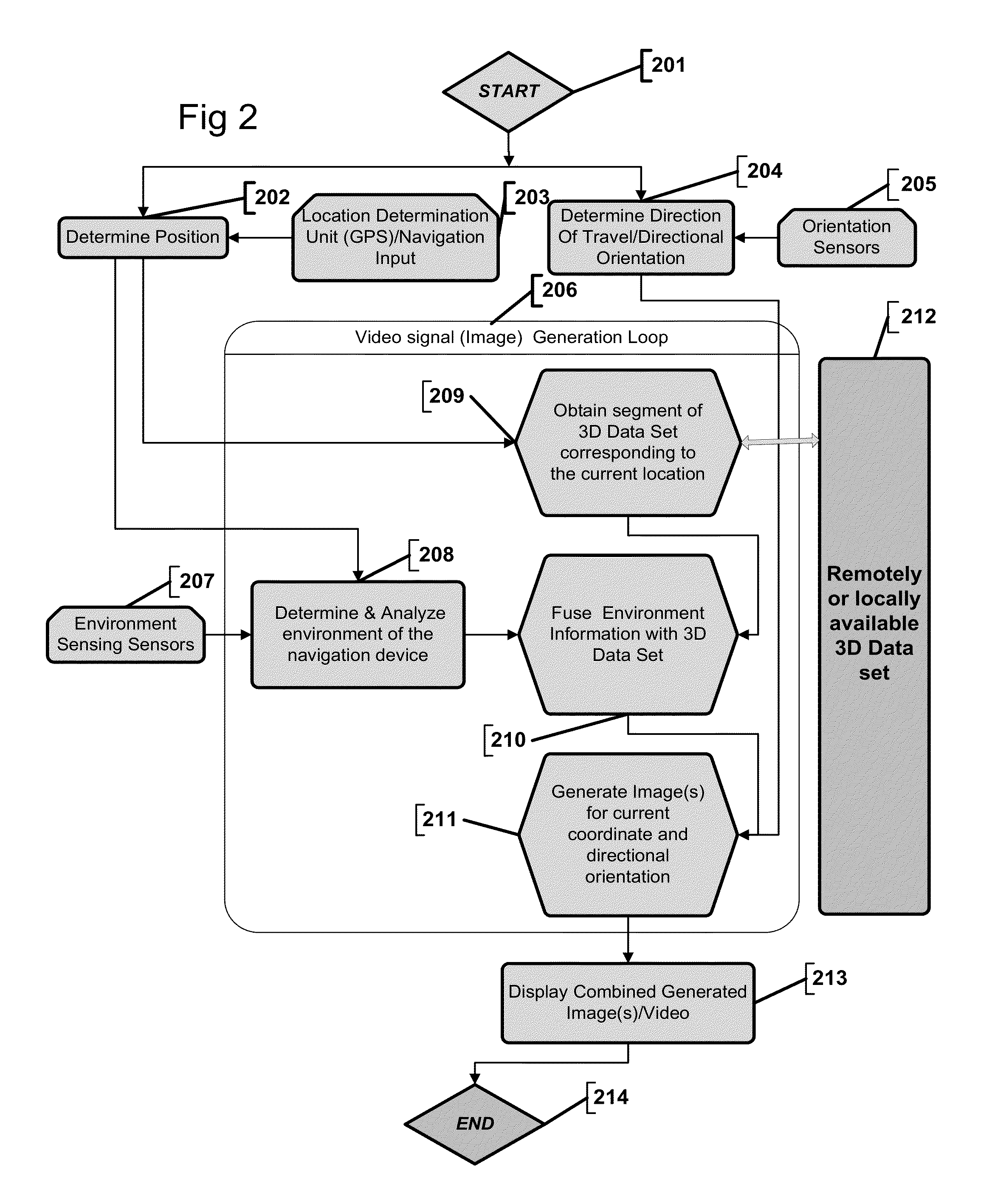

Systems and methods for navigation

Status: Active | US20140172296A1 | Provide situational awareness, Navigation instruments, Satellite radio beaconing, Pattern recognition, Point cloud

The present invention relates to a navigation device. The navigation device is arranged to dynamically generate multidimensional video signals based on location and directional information of the navigation device by processing a pre-generated 3D data set (3D model or 3D point cloud). The navigation device is further arranged to superimpose navigation directions and/or environment information about surrounding objects onto the generated multidimensional view.

Owner: RAAYONNOVA

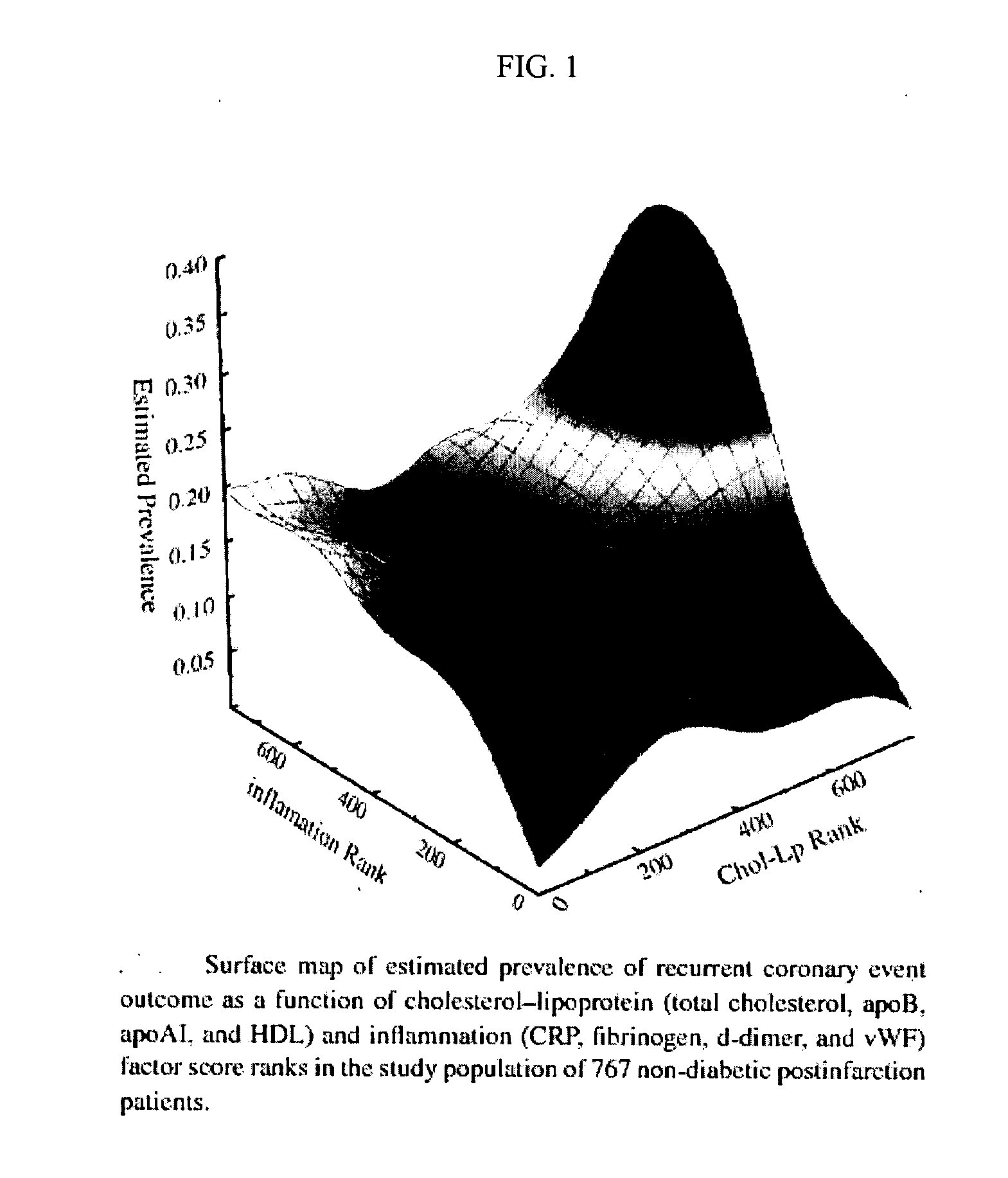

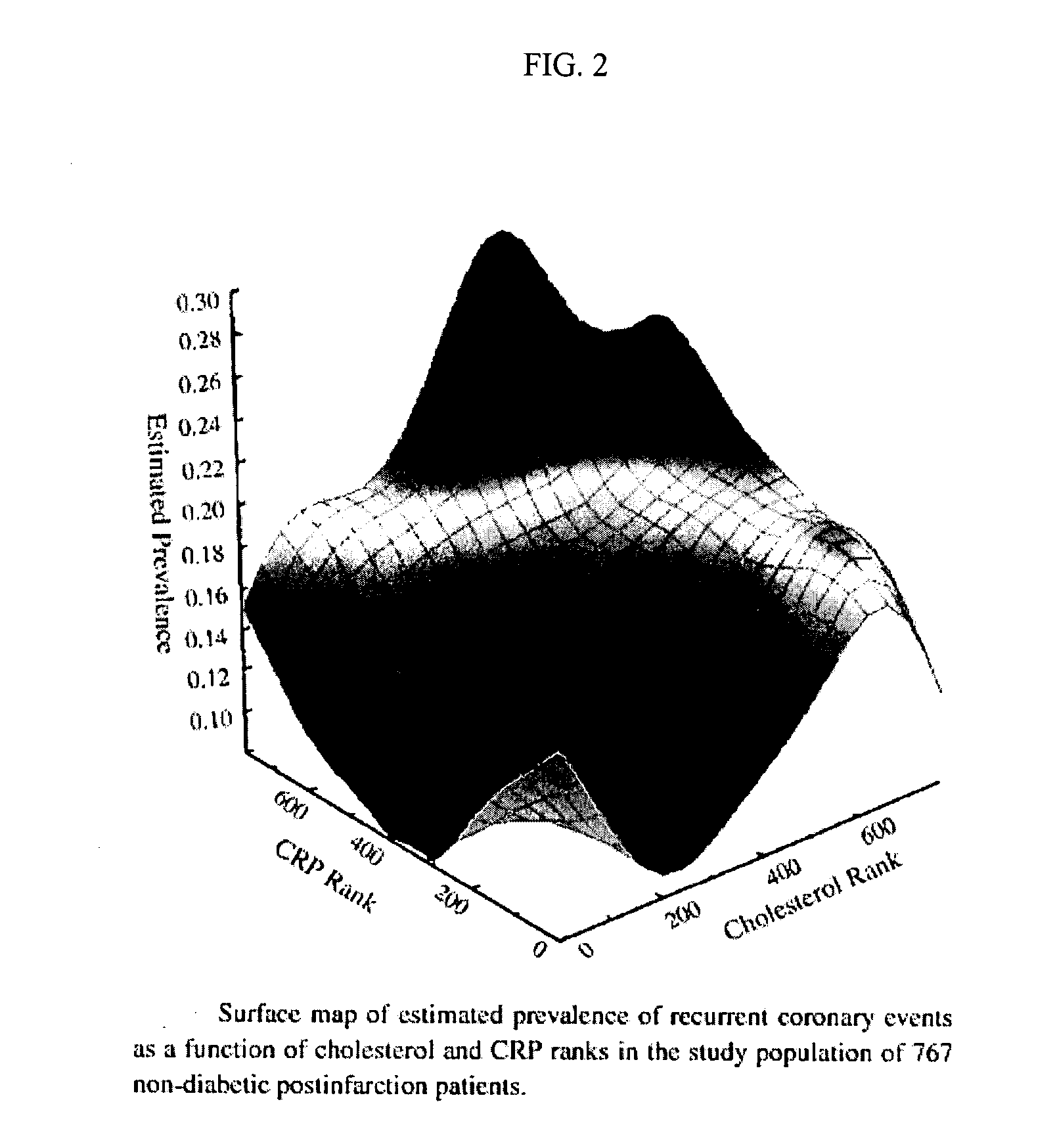

Identifying risk of a medical event

Status: Inactive | US20080009684A1 | Data processing applications, Health-index calculation, Multiple risk factors, Analysis method

Described is a method of determining the relative risk of an outcome based on an analysis of multiple risk factors. A graphical method is used to take values corresponding to risk parameters and an event outcome to produce a smoothed surface map representing relative risk over an entire space defined by n risk factors. Applying a query data point to the surface map permits the determination of the estimated outcome probability for the query data point, based on its location on the surface map. The method then reports a relative risk or other probability measure associated with the query data point. Also described is a method of analysis in which subpopulations previously identified as high-risk can be further analyzed with respect to risk posed by additional factors.

Owner: UNIVERSITY OF ROCHESTER
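One common way to build a smoothed surface of outcome probability over n risk factors, and then read off a query point, is kernel smoothing. The sketch below swaps in a Gaussian-kernel Nadaraya-Watson estimator, which may differ from the graphical method actually claimed; the bandwidth default and the function names are assumptions. Relative risk is reported here as the smoothed local probability divided by the overall event rate.

```python
import math


def smoothed_probability(query, samples, outcomes, bandwidth=1.0):
    """Gaussian-kernel (Nadaraya-Watson) estimate of P(outcome | risk factors).

    `samples` are points in the n-dimensional risk-factor space, `outcomes`
    are 0/1 event indicators, and `bandwidth` controls how smooth the surface is.
    """
    num = den = 0.0
    for x, y in zip(samples, outcomes):
        d2 = sum((q - xi) ** 2 for q, xi in zip(query, x))
        w = math.exp(-d2 / (2.0 * bandwidth ** 2))
        num += w * y
        den += w
    if den == 0.0:
        # Query lies so far from every sample that all weights underflowed.
        raise ValueError("query point is outside the support of the data")
    return num / den


def relative_risk(query, samples, outcomes, bandwidth=1.0):
    """Local smoothed probability divided by the overall event rate."""
    baseline = sum(outcomes) / len(outcomes)
    return smoothed_probability(query, samples, outcomes, bandwidth) / baseline
```

Evaluating `smoothed_probability` over a grid of points would produce the full surface map; evaluating it at a single query point gives the estimated outcome probability for that point directly.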

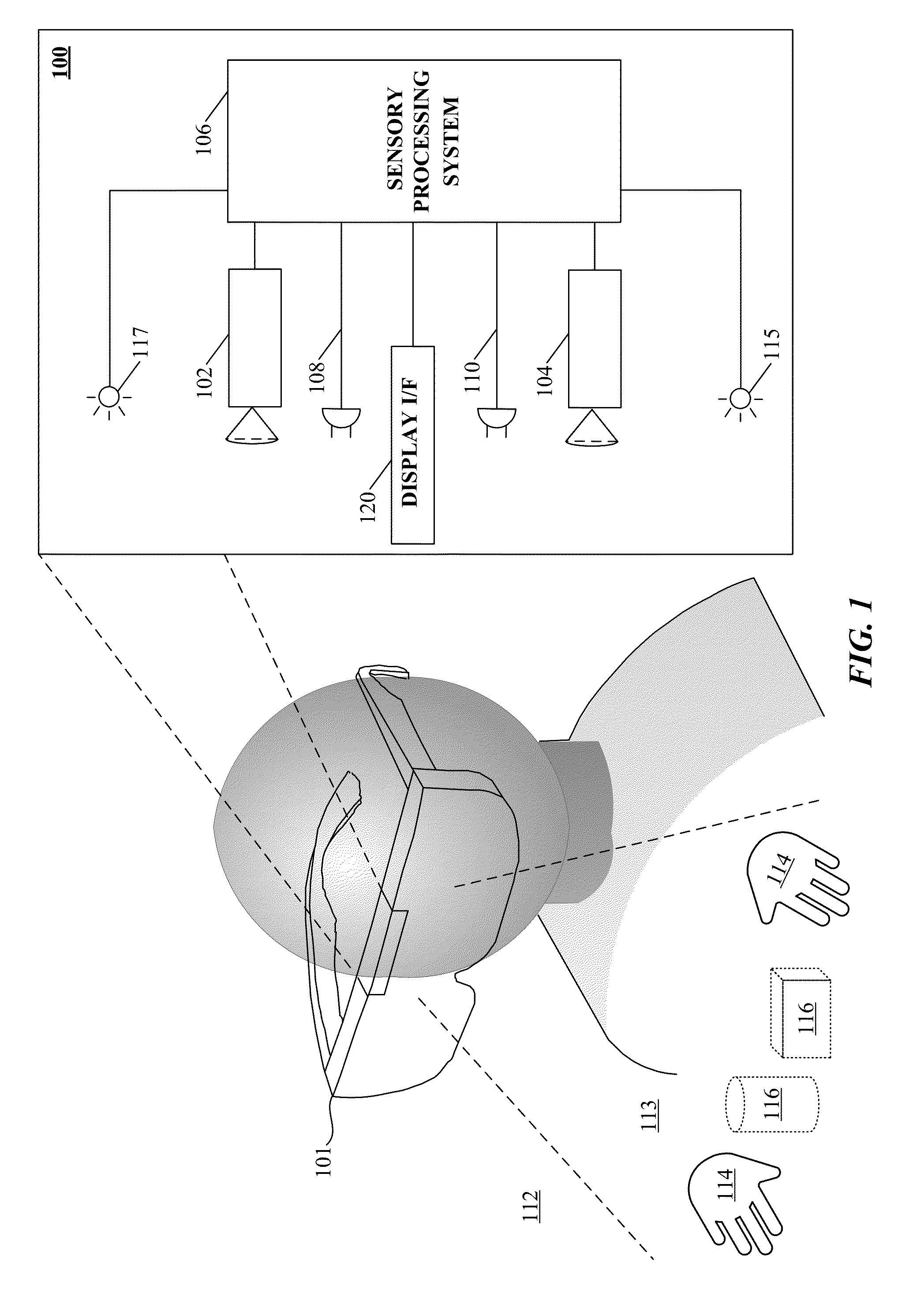

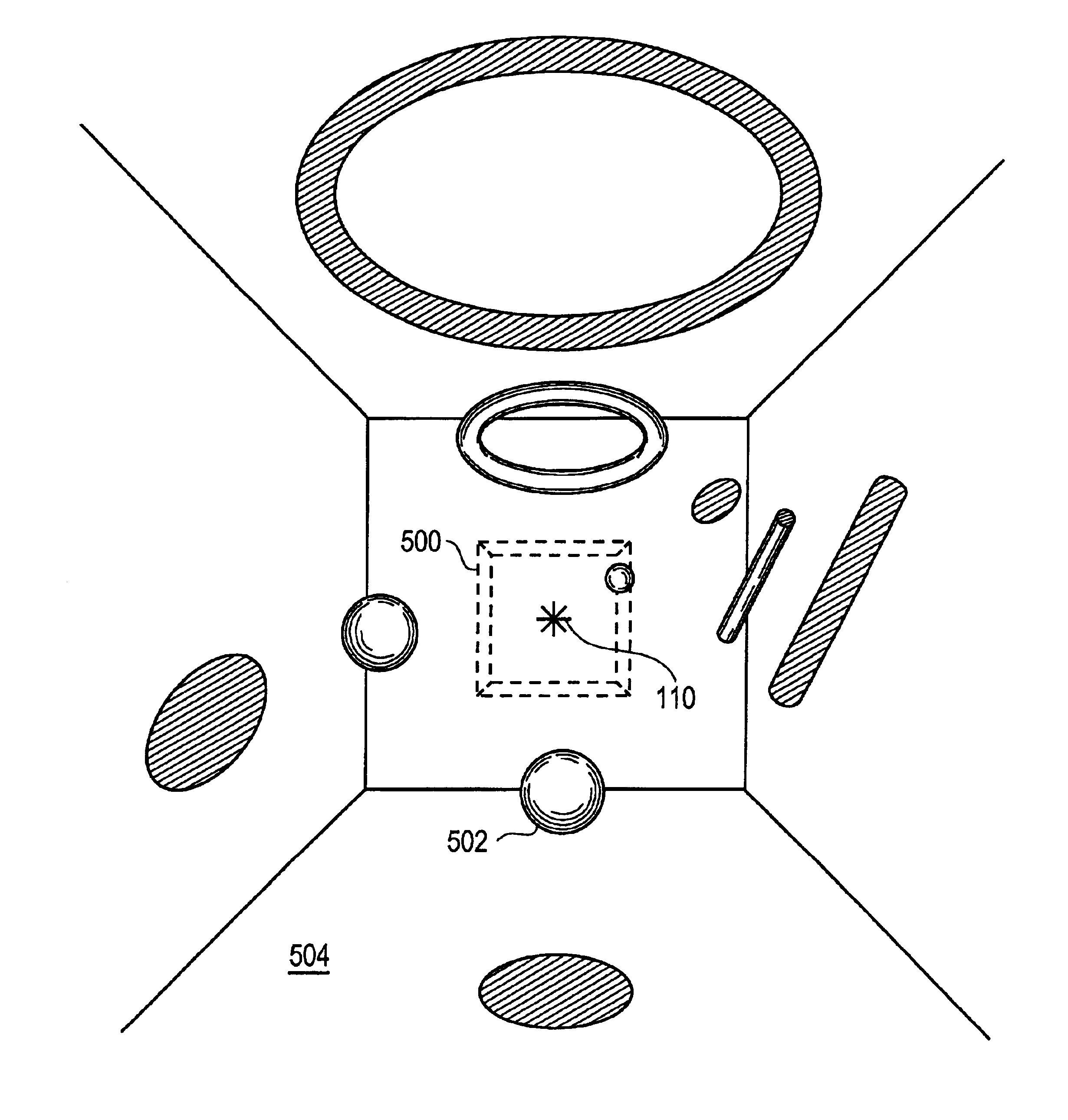

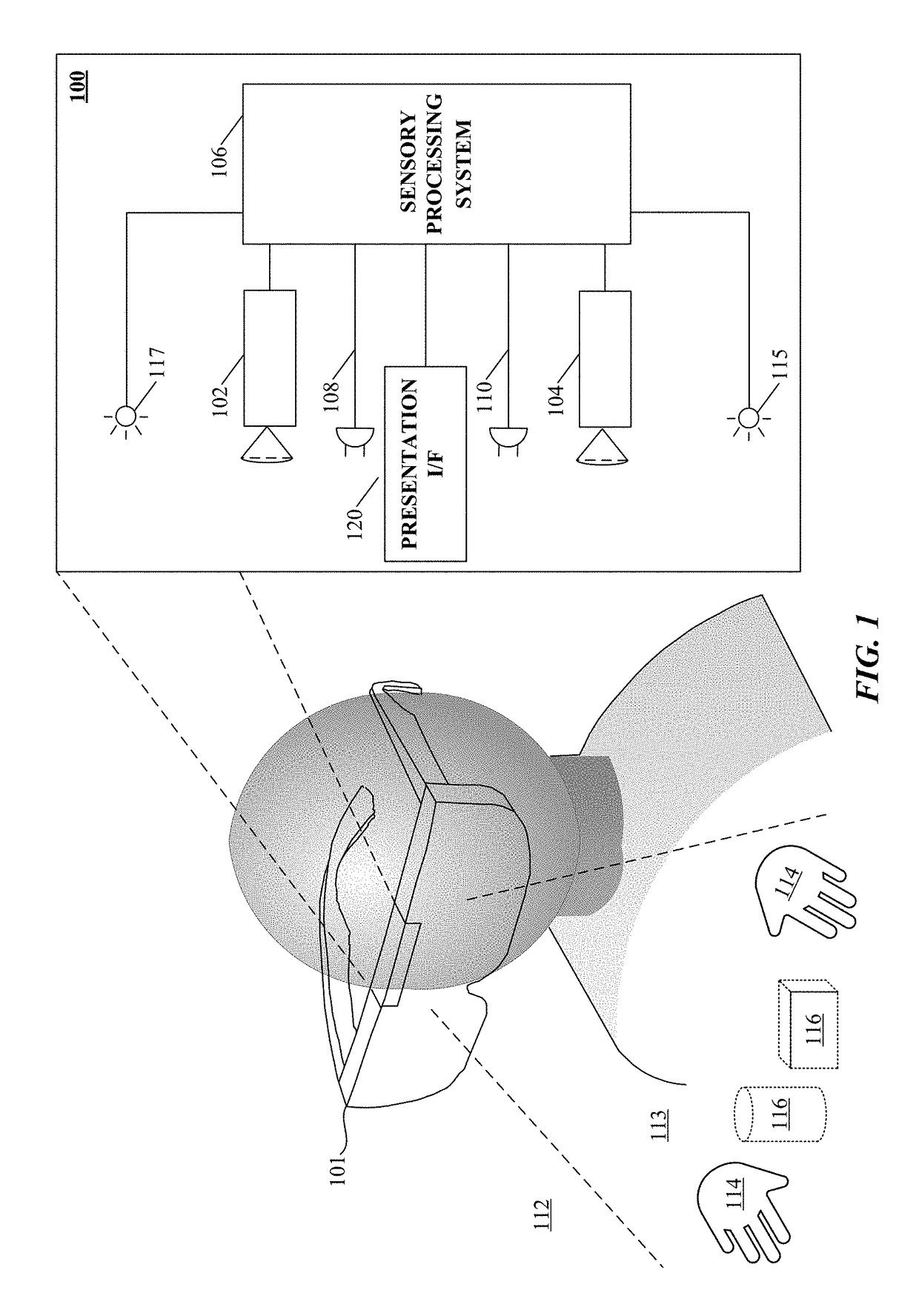

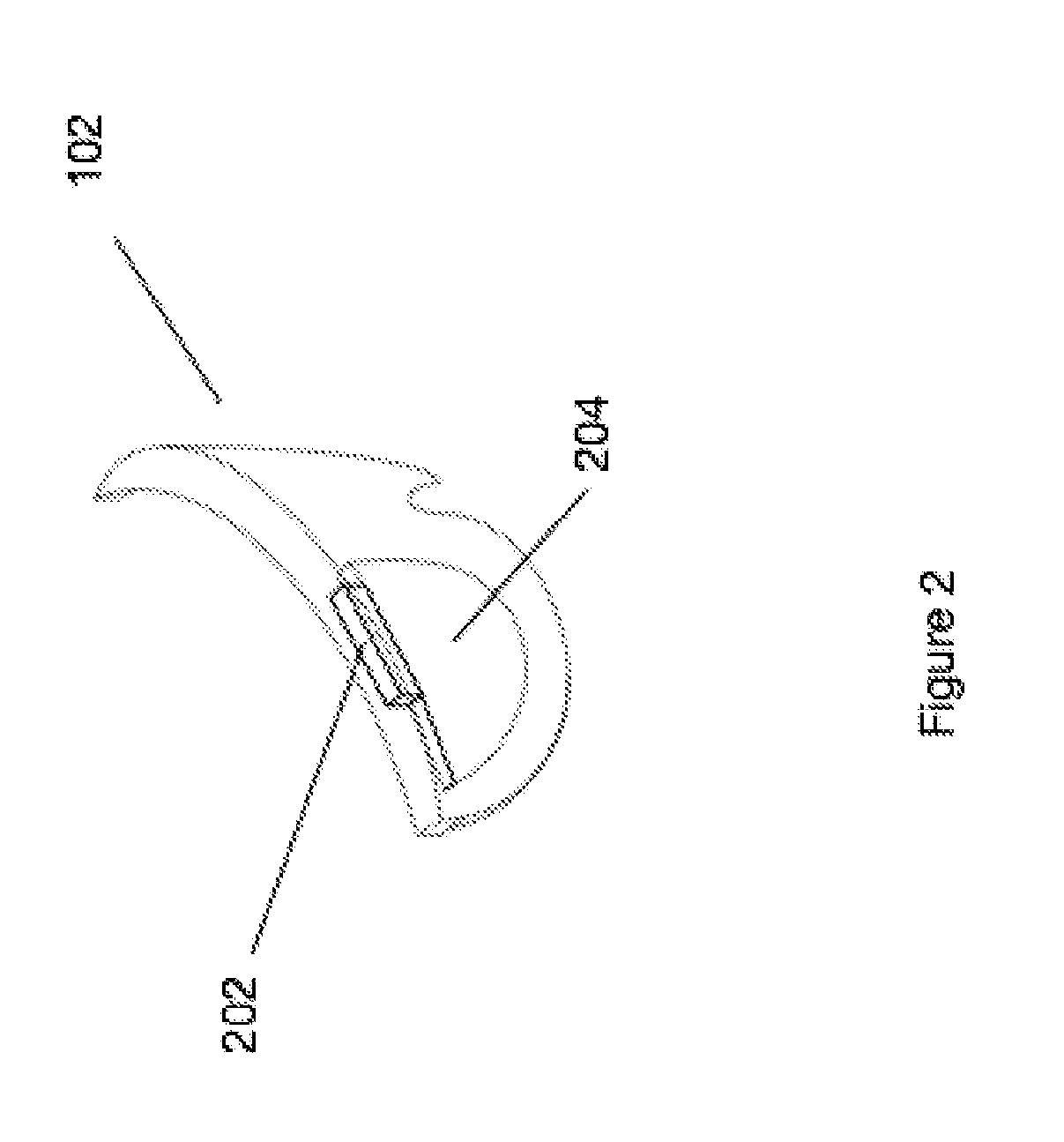

Selective hand occlusion over virtual projections onto physical surfaces using skeletal tracking

Status: Active | US20120249590A1 | Television system details, Character and pattern recognition, Sensor array, Object based

A head mounted device provides an immersive virtual or augmented reality experience for viewing data and enabling collaboration among multiple users. Rendering images in a virtual or augmented reality system may include performing operations for capturing an image of a scene in which a virtual object is to be displayed, recognizing a body part present in the captured image, and adjusting a display of the virtual object based upon the recognized body part. The rendering operations may also include capturing an image with a body mounted camera, capturing spatial data with a body mounted sensor array, recognizing objects within the captured image, determining distances to the recognized objects within the captured image, and displaying the virtual object on a head mounted display.

Owner: QUALCOMM INC

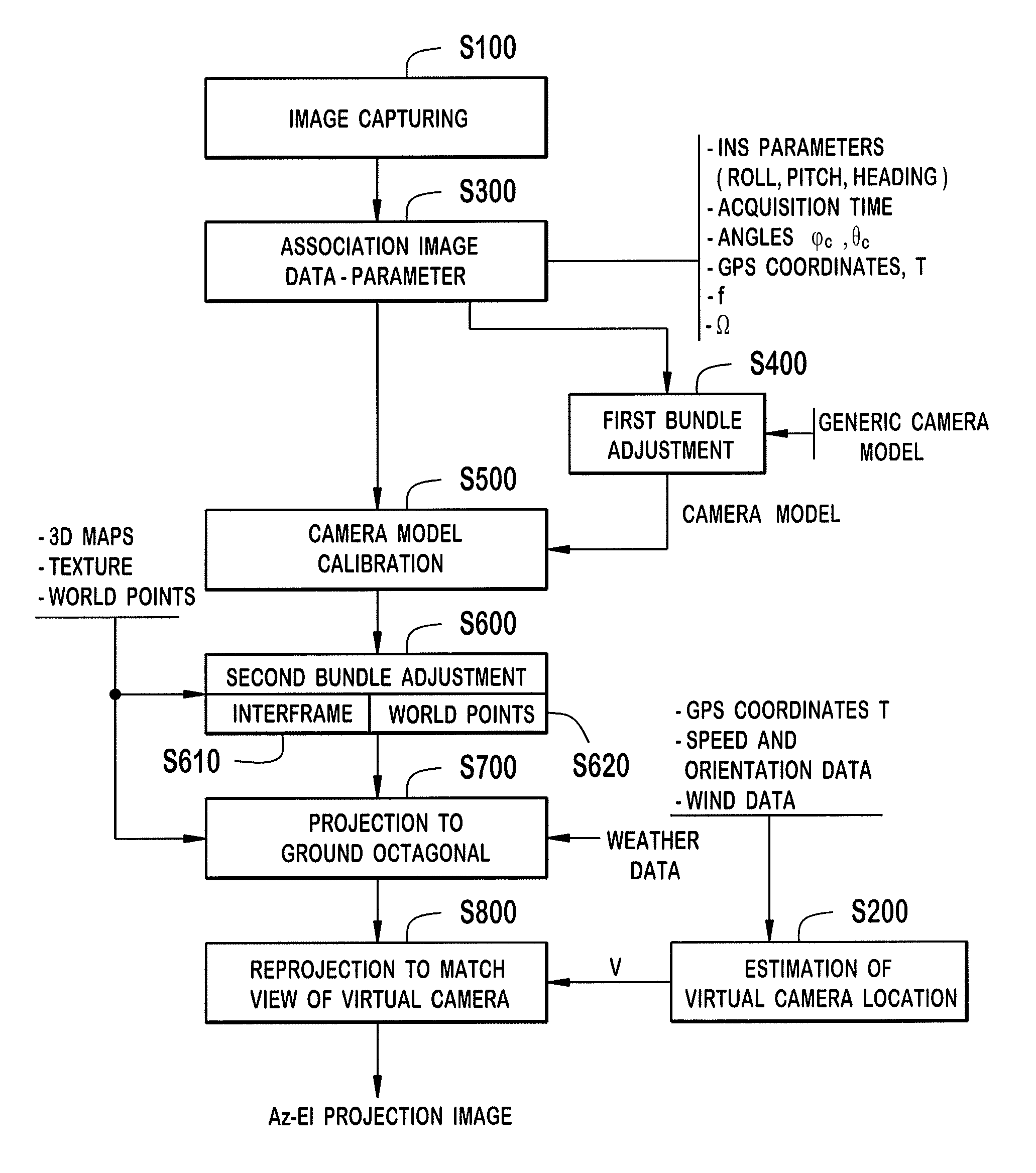

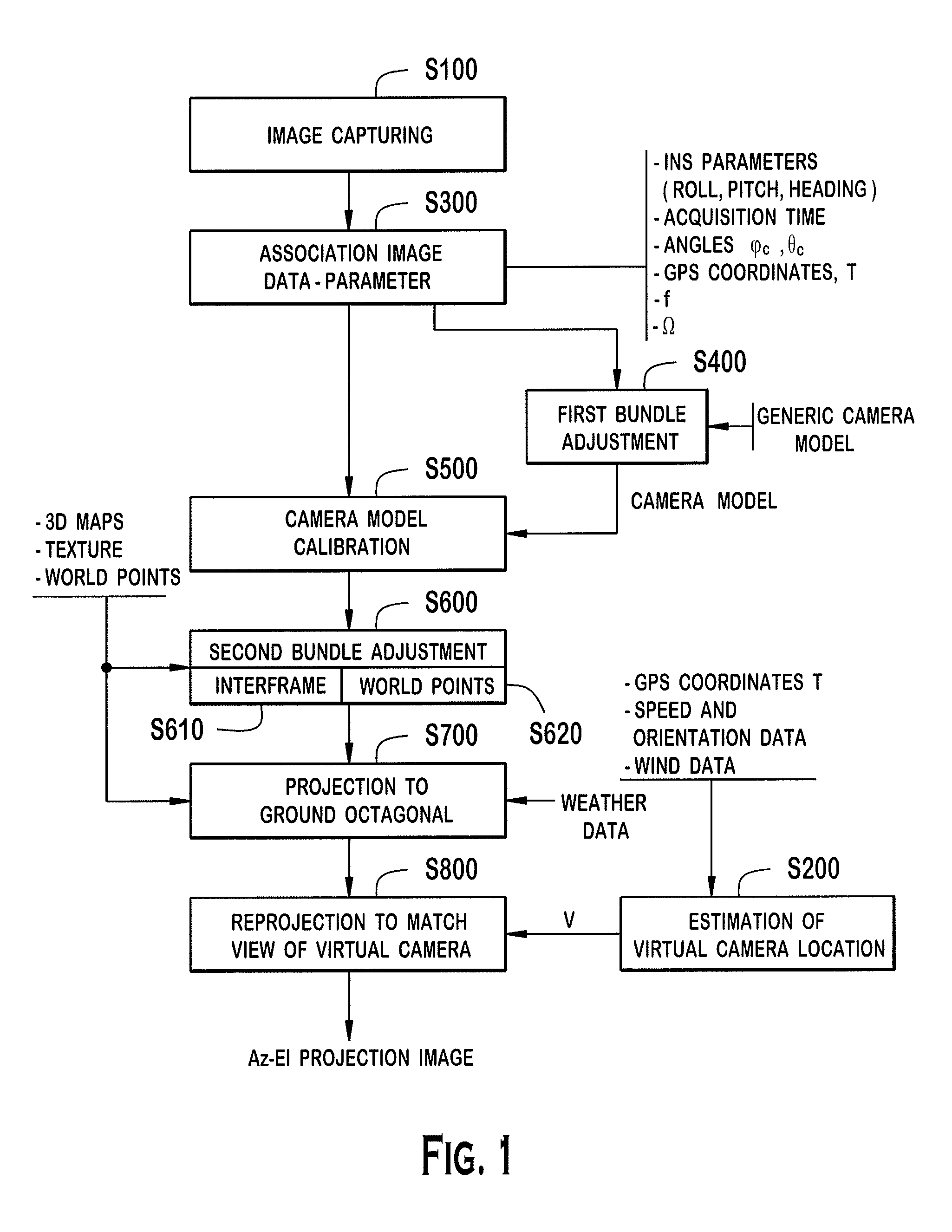

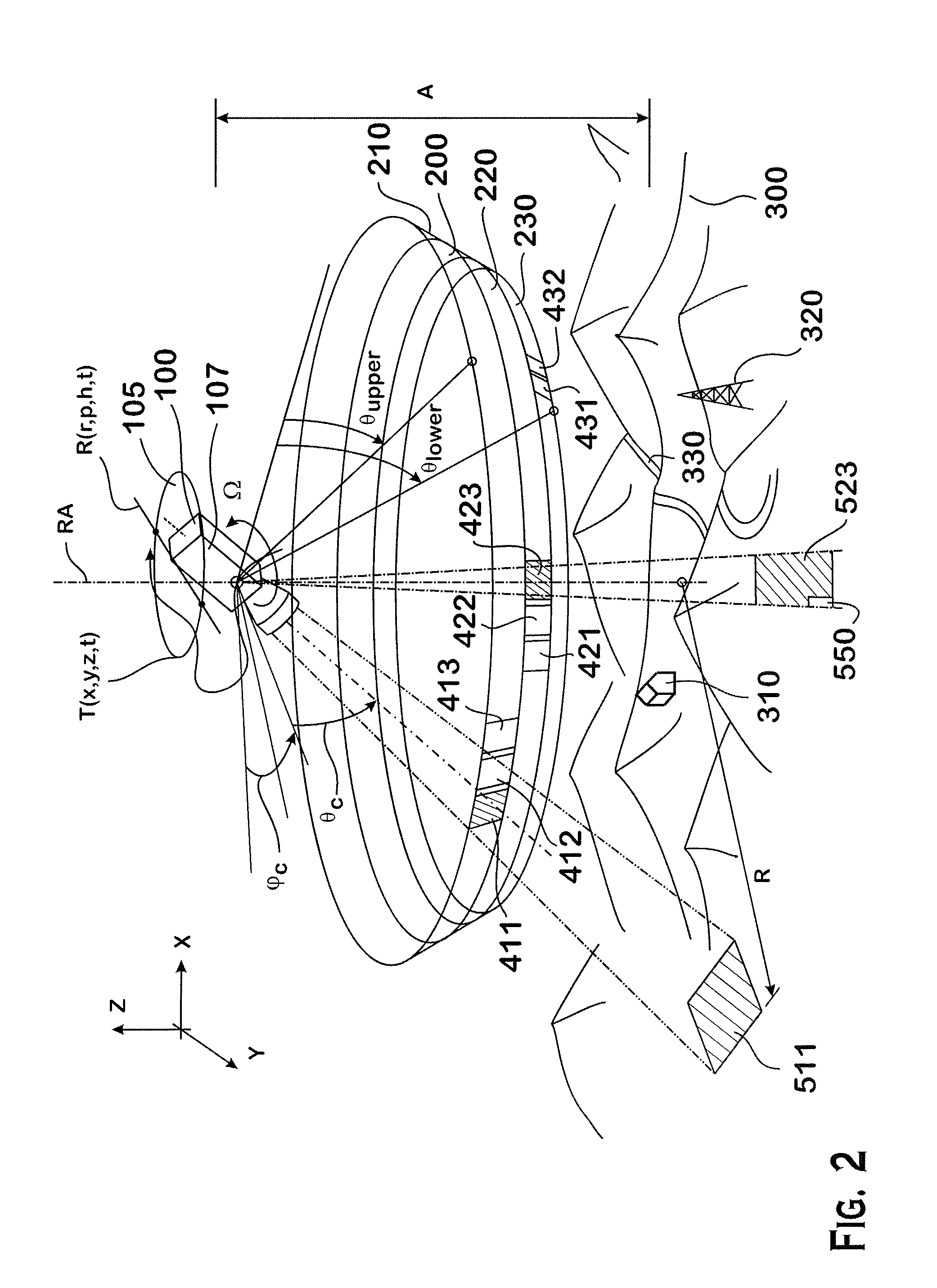

Method, device, and system for computing a spherical projection image based on two-dimensional images

Status: Inactive | US20140340427A1 | Television system details, Image enhancement, Computer graphics (images), Three-dimensional space

An image projection method for generating a panoramic image, the method including the steps of: accessing images that were captured by a camera located at a source location, each of the images being captured from a different angle of view and the source location being variable as a function of time; calibrating the images collectively to create a camera model that encodes orientation, optical distortion, and variable defects of the camera; matching overlapping areas of the images to generate calibrated image data; accessing a three-dimensional map; first projecting pixel coordinates of the calibrated image data into a three-dimensional space using the three-dimensional map to generate three-dimensional pixel data; and second projecting the three-dimensional pixel data to an azimuth-elevation coordinate system that is referenced from a fixed virtual to generate the panoramic image.

Owner: LOGOS TECH
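The second projection step above, mapping 3D pixel data into an azimuth-elevation coordinate system referenced from a fixed point, can be sketched as follows. Assumptions, none taken from the patent: an east-north-up frame (x east, y north, z up), azimuth measured clockwise from north, a hypothetical `viewpoint` argument for the fixed reference point, and an equirectangular panorama whose columns span 0 to 360 degrees of azimuth and whose rows span +90 to -90 degrees of elevation.

```python
import math


def to_azimuth_elevation(point, viewpoint):
    """Azimuth (0-360 deg, clockwise from north) and elevation (-90..90 deg)
    of a 3D point as seen from a fixed viewpoint in an east-north-up frame."""
    dx, dy, dz = (p - v for p, v in zip(point, viewpoint))
    azimuth = math.degrees(math.atan2(dx, dy)) % 360.0  # 0 = north, 90 = east
    elevation = math.degrees(math.atan2(dz, math.hypot(dx, dy)))
    return azimuth, elevation


def to_panorama_pixel(azimuth, elevation, width, height):
    """Column/row in an equirectangular panorama (row 0 = straight up)."""
    col = min(int(azimuth / 360.0 * width), width - 1)
    row = min(int((90.0 - elevation) / 180.0 * height), height - 1)
    return col, row
```

For example, a point 10 m due north of the viewpoint maps to azimuth 0 and elevation 0, which lands in column 0 and the middle row of a 360 by 180 panorama. Splatting every 3D pixel this way, and resolving collisions by keeping the nearest point, is one plausible way to assemble the final image.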

© 2025 PatSnap. All rights reserved.