Bit allocation and rate control method for depth video coding

A bit allocation and rate control method for depth video, applied in the field of 3D video coding. It addresses the problem that rate control has not been combined with the regional characteristics of depth video, and it improves coding quality and meets application requirements.



Examples

Embodiment 1

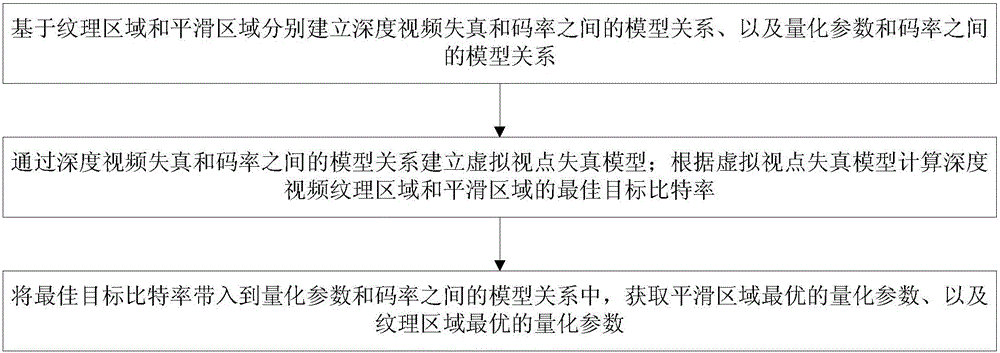

[0031] 101: Establish a model relationship between depth video distortion and bit rate, and a model relationship between the quantization parameter and bit rate, for the texture area and the smooth area;

[0032] 102: Establish a virtual viewpoint distortion model from the model relationship between depth video distortion and bit rate, and calculate the optimal target bit rates of the depth video texture area and smooth area according to the virtual viewpoint distortion model;

[0033] 103: Substitute the optimal target bit rates into the model relationship between the quantization parameter and bit rate to obtain the optimal quantization parameter for the smooth area and the optimal quantization parameter for the texture area.
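Steps 101 to 103 can be sketched numerically. The excerpt does not give the model forms, so everything below is an assumption made for illustration only: a power-law distortion model D(R) = c·R^(-k) for each region, a virtual viewpoint distortion that is a weighted sum of the two region distortions, and a logarithmic model QP = a·ln(R) + b. All function names and parameter values are hypothetical and are not taken from the patent.

```python
import math

def region_distortion(rate, c, k):
    """Assumed power-law model D(R) = c * R**(-k); not the patent's model."""
    return c * rate ** (-k)

def virtual_view_distortion(r_ta, r_sa, params):
    """Assumed weighted sum of texture-area (TA) and smooth-area (SA) distortion."""
    d_ta = region_distortion(r_ta, params["c_ta"], params["k_ta"])
    d_sa = region_distortion(r_sa, params["c_sa"], params["k_sa"])
    return params["w_ta"] * d_ta + params["w_sa"] * d_sa

def allocate_bits(total_rate, params, steps=3000):
    """Step 102: grid-search the TA/SA split that minimises the assumed distortion."""
    best = None
    for i in range(1, steps):
        r_ta = total_rate * i / steps
        r_sa = total_rate - r_ta
        d = virtual_view_distortion(r_ta, r_sa, params)
        if best is None or d < best[0]:
            best = (d, r_ta, r_sa)
    return best[1], best[2]

def rate_to_qp(rate, a, b, qp_min=0, qp_max=51):
    """Step 103: assumed model QP = a * ln(R) + b, clipped to the 8-bit HEVC QP range."""
    qp = a * math.log(rate) + b
    return max(qp_min, min(qp_max, round(qp)))

if __name__ == "__main__":
    # Hypothetical parameters: TA distortion weighted four times as heavily as SA.
    params = {"c_ta": 1.0, "k_ta": 1.0, "c_sa": 1.0, "k_sa": 1.0,
              "w_ta": 4.0, "w_sa": 1.0}
    r_ta, r_sa = allocate_bits(300.0, params)
    print("TA rate:", r_ta, "SA rate:", r_sa)
    print("TA QP:", rate_to_qp(r_ta, -6.0, 60.0),
          "SA QP:", rate_to_qp(r_sa, -6.0, 60.0))
```

With these toy parameters the region whose distortion is weighted more heavily receives more bits and therefore a lower QP. A closed-form solution could replace the grid search once the actual model forms are known.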

[0034] The texture area in step 101 is obtained by dividing the depth video into regions; it consists of the depth boundaries and the smallest coding units;

[0035] The smooth area in step 101 is the area other than the texture ...

Embodiment 2

[0041] The scheme of Embodiment 1 is described in detail below, together with the specific calculation formulas and underlying principles:

[0042] 201: Perform region division on the depth video;

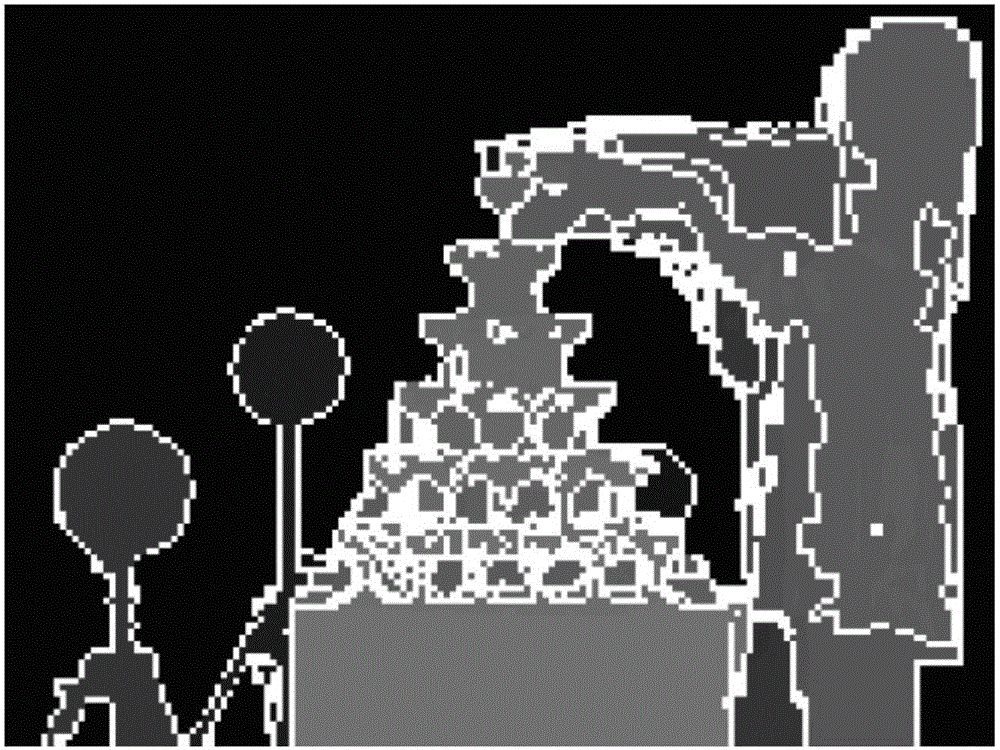

[0043] The depth video is divided into regions: the depth boundaries and the smallest coding units (Coding Unit, CU) are marked as the texture area (Texture Area, TA), and the remaining areas are marked as the smooth area (Smooth Area, SA). The depth boundaries are extracted with the Canny operator. The smallest CUs of the current coding tree unit (Coding Tree Unit, CTU) are marked according to the partitioning of the already coded CTU at the same position in a picture of the same temporal layer. Typically, the CTU size is 64×64 and the minimum CU size is 8×8. The selection of the minimum coding unit is well known to those skilled in the art and is not described in detail in this embodiment of the present invention.
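The region division in step 201 can be sketched at block level. This is a minimal illustration under stated assumptions: a simple depth-difference threshold stands in for the Canny operator named in the text, boundary pixels are mapped to the 8×8 block that contains them, and the set of smallest-CU positions is assumed to have been read already from the co-located coded CTU. The function names, the threshold, and the toy data are hypothetical.

```python
MIN_CU = 8  # minimum CU size stated in the text

def edge_map(depth, threshold=10):
    """Stand-in for Canny: flag a pixel if its right or lower neighbour
    differs from it by more than `threshold` depth levels."""
    h, w = len(depth), len(depth[0])
    edges = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            right = abs(depth[y][x] - depth[y][x + 1]) if x + 1 < w else 0
            down = abs(depth[y][x] - depth[y + 1][x]) if y + 1 < h else 0
            edges[y][x] = max(right, down) > threshold
    return edges

def label_regions(edges, min_cu_blocks):
    """Label each 8x8 block 'TA' if it contains a boundary pixel or is listed
    as a smallest CU, otherwise 'SA'. Partial blocks at the border are ignored."""
    rows, cols = len(edges) // MIN_CU, len(edges[0]) // MIN_CU
    labels = [["SA"] * cols for _ in range(rows)]
    for by in range(rows):
        for bx in range(cols):
            has_edge = any(
                edges[y][x]
                for y in range(by * MIN_CU, (by + 1) * MIN_CU)
                for x in range(bx * MIN_CU, (bx + 1) * MIN_CU)
            )
            if has_edge or (by, bx) in min_cu_blocks:
                labels[by][bx] = "TA"
    return labels

if __name__ == "__main__":
    # Toy 16x16 depth map: a near object on the right, background on the left.
    depth = [[50] * 8 + [200] * 8 for _ in range(16)]
    # Hypothetical: block (row 1, col 1) was coded as a smallest CU.
    for row in label_regions(edge_map(depth), {(1, 1)}):
        print(row)
```

In practice the edge map would come from a real Canny implementation and the smallest-CU set from the encoder's partition data; only the final union of the two sources into a TA/SA map is shown here.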

[0044] 202: Esta...

Embodiment 3

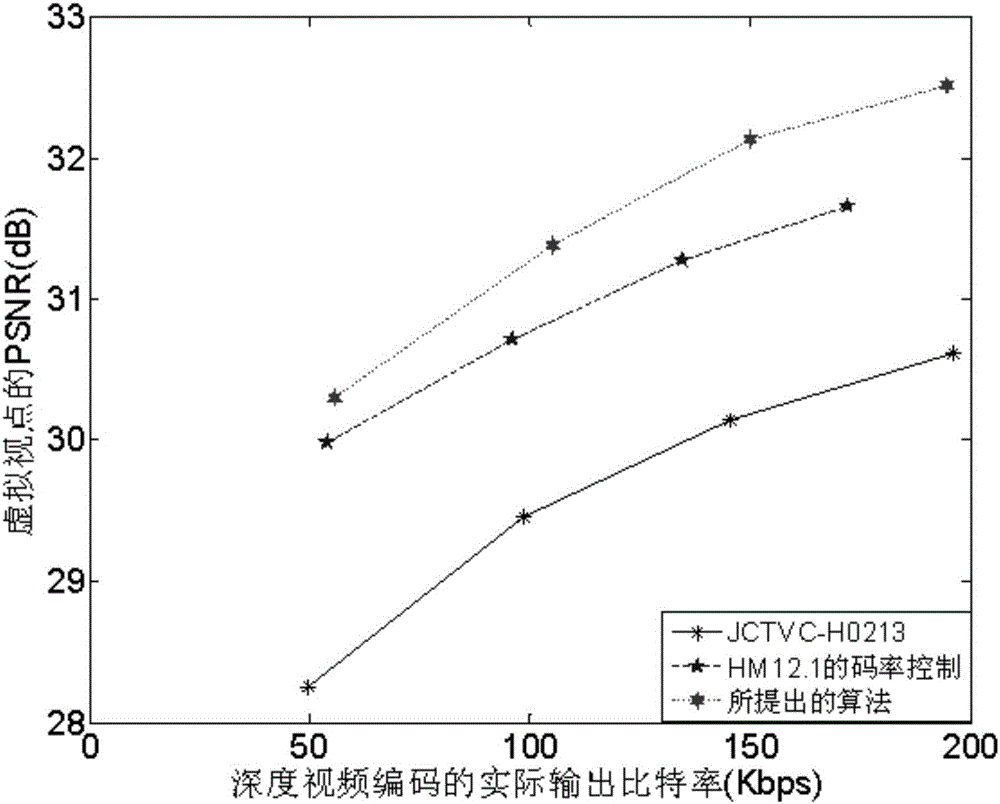

[0086] The feasibility of the schemes in Embodiments 1 and 2 is verified below with reference to Figures 2 and 3 and experimental data:

