Three-dimensional video fusion method and system based on WebGL

A WebGL-based three-dimensional video fusion method and system, applied in the field of video fusion, that addresses problems such as poor display effect, narrow applicability across scenarios, and uncorrected camera distortion.

Examples

Embodiment 1

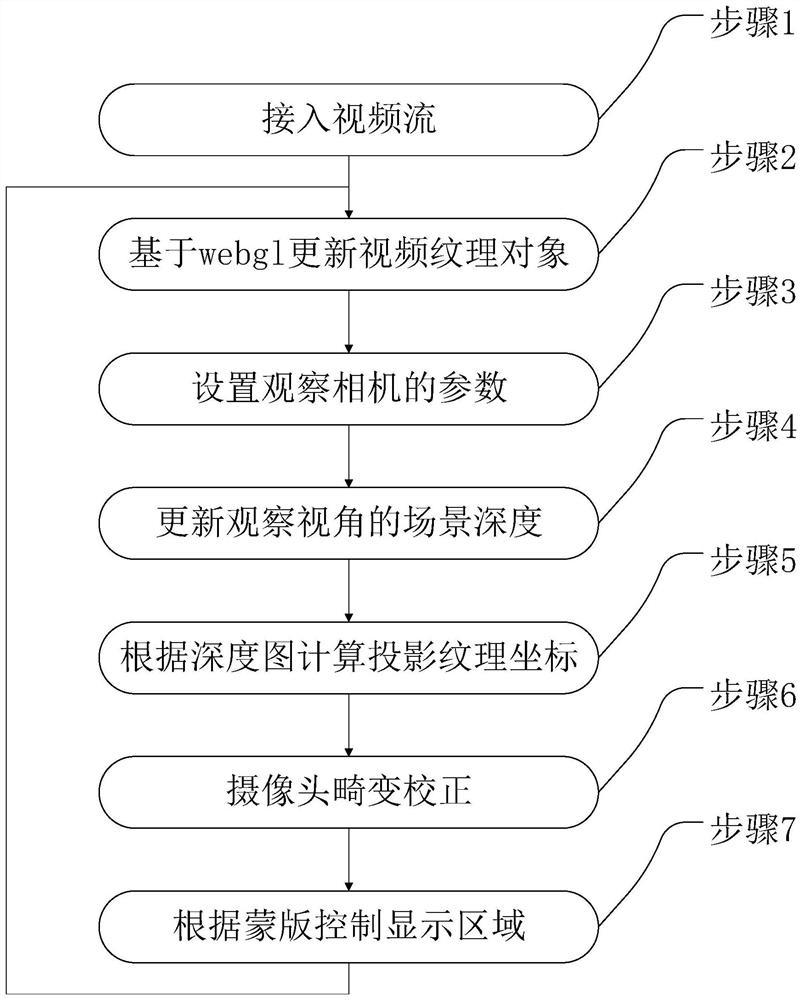

[0045] Embodiment 1 of the present invention provides a WebGL-based 3D video fusion method which, as shown in Figure 1, includes the following steps:

[0046] Step 1: Access the video stream.

[0047] In a specific implementation, video stream access supports both local video files and network video data delivered over HTTP, and the HTML5 video element (HTMLVideoElement) is used to store the video object.
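
A minimal sketch of this access step follows. It is not taken from the patent: the function name `createVideoSource`, the injected `doc` parameter, and the choice of `muted`, `loop`, and `playsInline` settings are assumptions made for illustration.

```javascript
// Hypothetical helper: wrap a local file path or an HTTP(S) URL in an
// HTMLVideoElement. `doc` is normally the browser's `document`; it is passed
// in so the function can also be exercised with a stub outside a browser.
function createVideoSource(doc, src) {
  const video = doc.createElement("video");
  // Request CORS-enabled loading before assigning the source, so that frames
  // from another origin can later be uploaded as a WebGL texture.
  video.crossOrigin = "anonymous";
  video.muted = true;        // assumption: muted playback, which browsers generally allow to autoplay
  video.loop = true;         // assumption: replay the clip continuously
  video.playsInline = true;  // assumption: keep playback inline on mobile browsers
  video.src = src;
  return video;
}
```

In a page this might be called as `createVideoSource(document, "videos/camera1.mp4")` followed by `video.play()`; the path shown is a placeholder.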

[0048] Step 2: Update the video texture object based on WebGL.

[0049] In a specific implementation, rendering follows the WebGL 3D drawing standard, which offers high graphics rendering efficiency and runs directly in the browser, making access convenient and fast. For the video source connected in Step 1, a canvas copies the current image of the HTMLVideoElement on every frame, and the canvas contents are used to update the video texture object rendered into the scene, so that the WebGL texture object is updated for each frame of the video.
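
The per-frame update described above can be sketched as follows. This is an illustrative outline rather than the patented implementation: the function names `updateVideoTexture` and `startVideoTextureLoop`, the `drawScene` callback, and the readiness check are assumptions. The WebGL calls used (`bindTexture`, and `texImage2D` with a canvas as its source) are standard WebGL 1 calls.

```javascript
// Copy the current video frame into a 2D canvas, then upload the canvas
// contents into an existing WebGL texture. Returns false if no frame is ready.
function updateVideoTexture(gl, texture, canvas, ctx2d, video) {
  const HAVE_CURRENT_DATA = 2; // HTMLMediaElement.readyState: a frame is available
  if (video.readyState < HAVE_CURRENT_DATA) return false;

  // Keep the canvas the same size as the decoded video frame.
  if (canvas.width !== video.videoWidth || canvas.height !== video.videoHeight) {
    canvas.width = video.videoWidth;
    canvas.height = video.videoHeight;
  }

  ctx2d.drawImage(video, 0, 0, canvas.width, canvas.height);
  gl.bindTexture(gl.TEXTURE_2D, texture);
  gl.texImage2D(gl.TEXTURE_2D, 0, gl.RGBA, gl.RGBA, gl.UNSIGNED_BYTE, canvas);
  return true;
}

// Hypothetical render loop: refresh the texture, then redraw the scene.
// `drawScene` stands in for whatever renders the 3D scene with the texture.
function startVideoTextureLoop(gl, texture, canvas, video, drawScene) {
  const ctx2d = canvas.getContext("2d");
  function tick() {
    if (updateVideoTexture(gl, texture, canvas, ctx2d, video)) drawScene();
    requestAnimationFrame(tick);
  }
  requestAnimationFrame(tick);
}
```

In WebGL 1, video frames usually have non-power-of-two dimensions, so the texture would typically be created with `CLAMP_TO_EDGE` wrapping and `LINEAR` filtering without mipmaps.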

[0050] Step 3: Set the parameters of the observation cam...

Embodiment 2

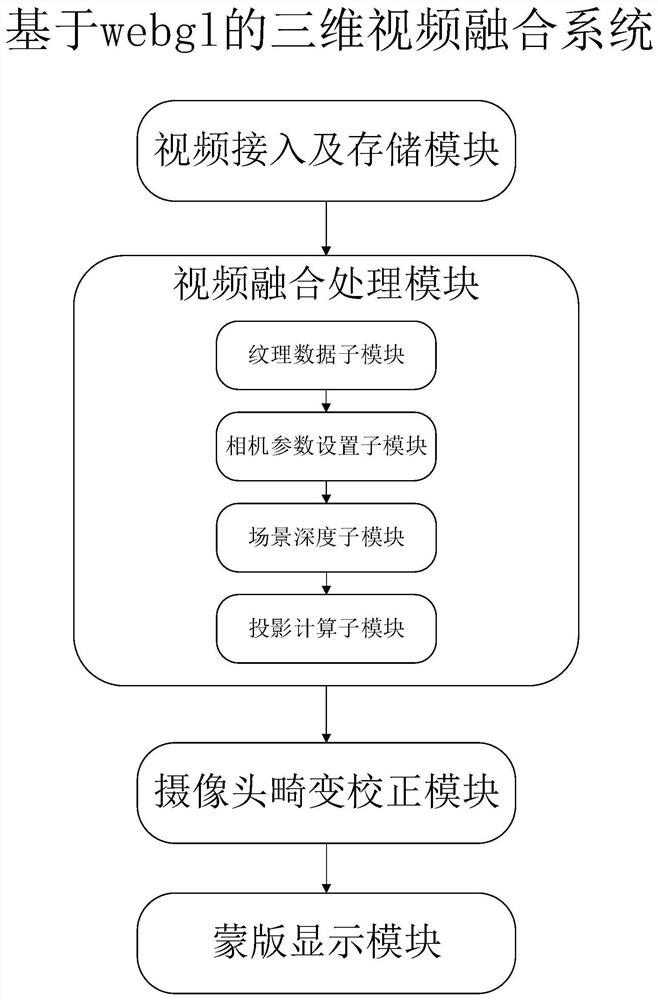

[0072] Embodiment 2 of the present invention, as shown in Figure 2, provides a WebGL-based three-dimensional video fusion system comprising a video access and storage module, a video fusion processing module, a camera distortion correction module, and a mask display module. The video access and storage module accesses video and stores the video objects. The video fusion processing module fuses the video with the scene based on WebGL. The camera distortion correction module performs distortion correction on the fused video according to the camera's internal parameters and distortion parameters. The mask display module selectively displays the distortion-corrected fused video based on a mask image, according to the extent of the area to be displayed, so that the video area is cropped for display.
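
To make the last two modules more concrete, the sketch below shows one way the per-pixel logic could look. The text above does not specify the distortion model or the mask format, so everything here is an assumption: a two-coefficient radial model (`k1`, `k2`) with intrinsics `fx`, `fy`, `cx`, `cy`, a mask value in the range 0 to 1, and the hypothetical function names `distortPixel` and `applyMask`. In a WebGL pipeline the same arithmetic would normally live in a fragment shader; plain JavaScript is used here only for readability.

```javascript
// Assumed radial model: for a pixel (u, v) of the corrected output image,
// compute where to sample in the distorted source frame.
function distortPixel(u, v, cam) {
  // Normalize with the camera intrinsics (focal lengths and principal point).
  const x = (u - cam.cx) / cam.fx;
  const y = (v - cam.cy) / cam.fy;
  const r2 = x * x + y * y;
  // Radial scaling with two coefficients (an assumption, not from the source).
  const scale = 1 + cam.k1 * r2 + cam.k2 * r2 * r2;
  return [x * scale * cam.fx + cam.cx, y * scale * cam.fy + cam.cy];
}

// Assumed mask rule: keep the fused color where the mask value reaches the
// threshold, otherwise make the pixel fully transparent (cropped out).
function applyMask(rgba, maskValue, threshold = 0.5) {
  const [r, g, b, a] = rgba;
  return [r, g, b, maskValue >= threshold ? a : 0];
}
```

With all coefficients set to zero, `distortPixel` returns its input unchanged, which is a quick sanity check when wiring in real calibration values.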

[0073] During specific implementation, the described video fusion processing module includes a texture data submo...
