Multi-scale image semantic segmentation method

A multi-scale semantic segmentation technology applied in the field of computer vision. It addresses the loss of detail in segmentation results, the inefficient use of receptive-field features, and insufficient segmentation robustness, with the effect of reducing the amount of computation and the number of parameters while improving the utilization of receptive-field features.

Examples

Embodiment

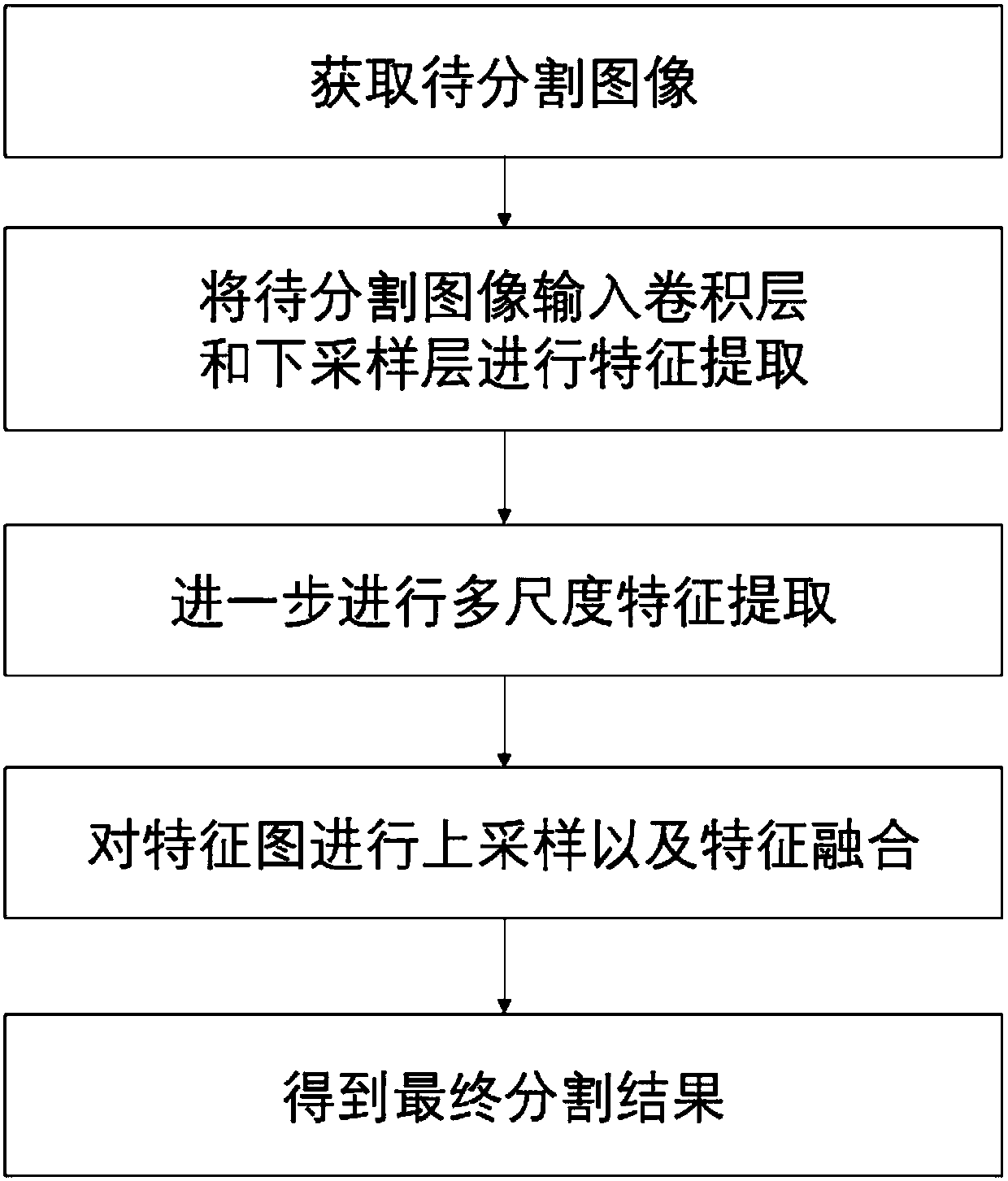

[0047] As shown in Figure 1, a multi-scale image semantic segmentation method includes the following steps:

[0048] S1. Obtain an image to be segmented and its corresponding label. The image to be segmented is a three-channel color image, and the label gives the category of each pixel position.
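The data layout described in step S1 can be illustrated with a small consistency check. This is only a sketch under stated assumptions: the nested-list representation, the function name `check_sample`, and the `num_classes` parameter are hypothetical and do not come from the patent text.

```python
def check_sample(image, label, num_classes):
    """Validate one (image, label) pair as described in step S1.

    image       : H x W x 3 nested lists (three-channel color image)
    label       : H x W nested lists of integer class ids, one per pixel
    num_classes : assumed number of categories (hypothetical parameter)
    Returns (H, W) when the pair is consistent, otherwise raises ValueError.
    """
    h = len(image)
    w = len(image[0])
    # The label map must cover exactly the same pixel grid as the image.
    if len(label) != h or any(len(row) != w for row in label):
        raise ValueError("label must match the image height and width")
    for row in image:
        if len(row) != w:
            raise ValueError("image rows must all have the same width")
        for pixel in row:
            if len(pixel) != 3:
                raise ValueError("each pixel must have three channels")
    # Every pixel position carries exactly one valid category id.
    for row in label:
        for class_id in row:
            if not 0 <= class_id < num_classes:
                raise ValueError("class id out of range")
    return h, w
```

For example, a 2×3 image paired with a 2×3 label map passes the check, while a label map of a different size is rejected.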

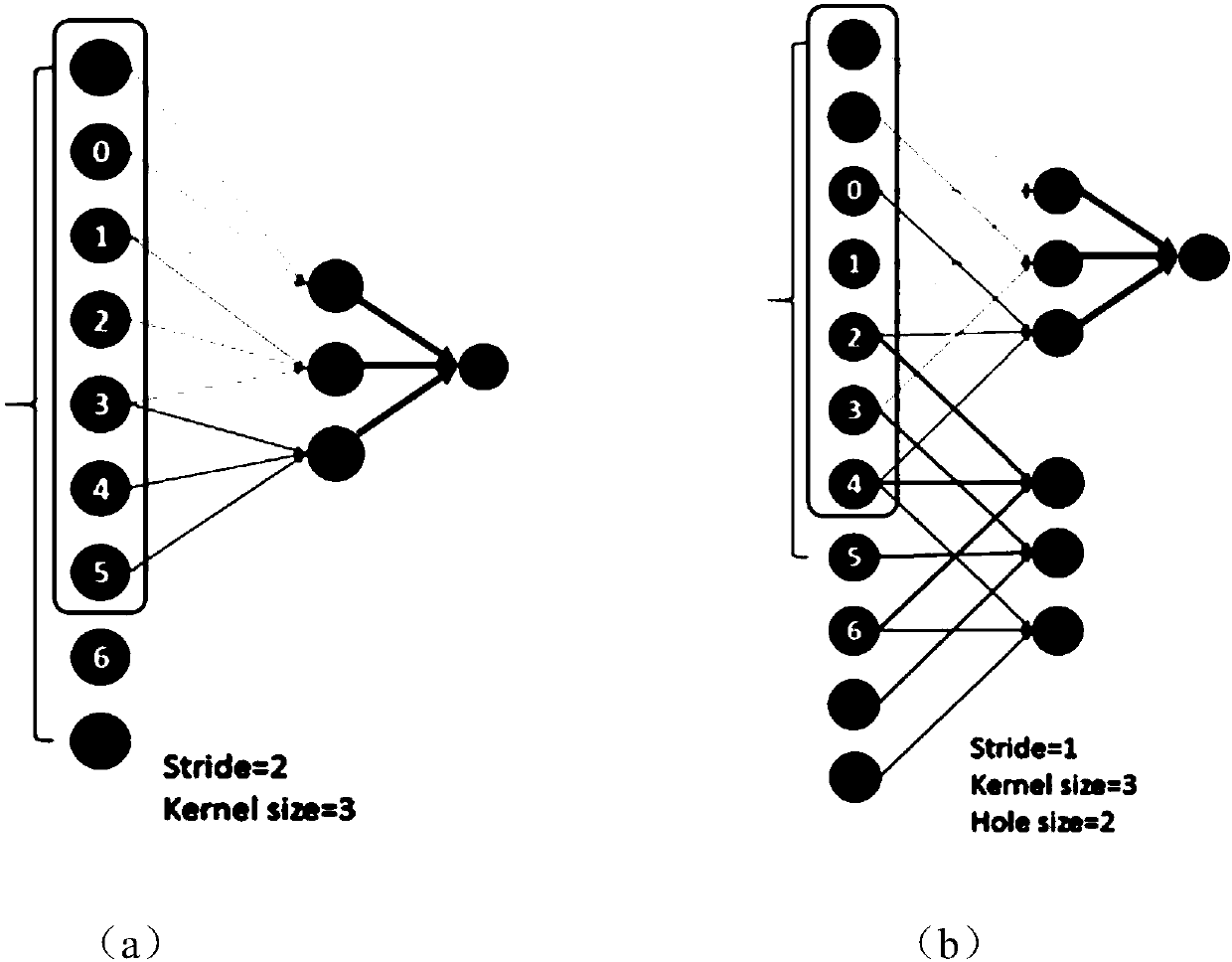

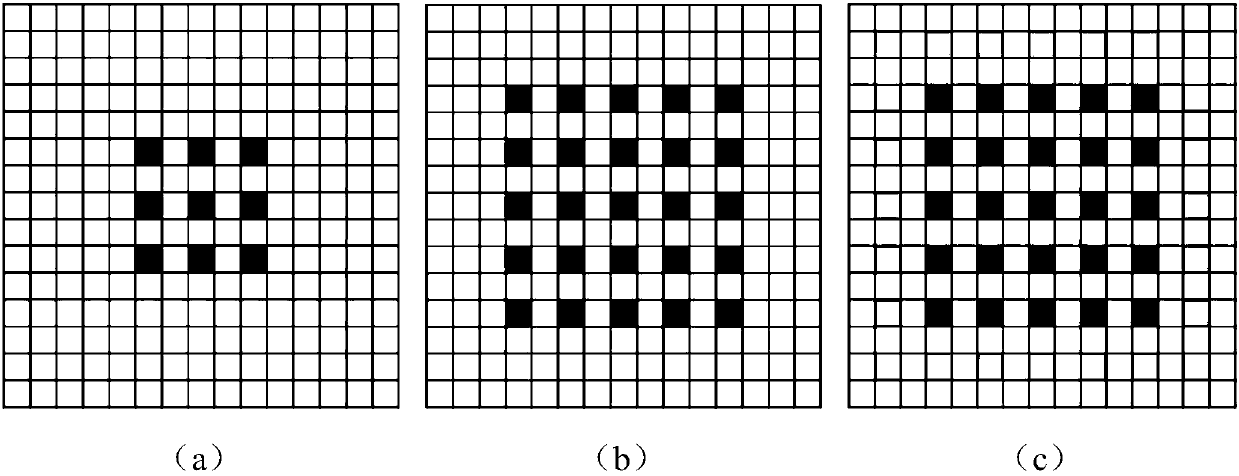

[0049] S2. Construct a fully convolutional deep neural network. As shown in Figure 4, the fully convolutional deep neural network includes a convolution module, an atrous convolution module, a pyramid pooling module, a 1×1×depth convolution layer, and a deconvolution structure. The atrous convolution module includes several groups of multi-scale atrous convolution structures. Each multi-scale atrous convolution structure is provided with atrous convolution kernels of different dilation rates and extracts information about low-, medium-, and high-resolution targets from the feature image. Step S2 specifically includes the following steps:
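The idea of applying atrous kernels at several dilation rates to the same feature image can be sketched in plain Python. This is a minimal illustration, not the patented network: the single-channel input, the zero padding, the shared kernel, and the rates `(1, 2, 4)` are all assumptions, and the function names are hypothetical. A k-tap kernel with dilation rate r spans k + (k − 1)(r − 1) pixels, so larger rates see a wider context with the same number of weights.

```python
def effective_kernel_size(k, rate):
    """Span in pixels covered by a k-tap kernel whose taps are `rate` apart."""
    return k + (k - 1) * (rate - 1)


def atrous_conv2d(image, kernel, rate):
    """Same-size 2D atrous convolution (cross-correlation) with zero padding.

    image  : H x W nested lists, a single-channel feature map
    kernel : k x k nested lists, k odd
    rate   : dilation rate; rate=1 is an ordinary convolution
    """
    h, w = len(image), len(image[0])
    k = len(kernel)
    half = k // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for i in range(k):
                for j in range(k):
                    # Kernel taps are spaced `rate` pixels apart.
                    yy = y + (i - half) * rate
                    xx = x + (j - half) * rate
                    # Positions outside the image count as zero.
                    if 0 <= yy < h and 0 <= xx < w:
                        acc += kernel[i][j] * image[yy][xx]
            out[y][x] = acc
    return out


def multi_scale_atrous(image, kernel, rates=(1, 2, 4)):
    """Apply one kernel at several dilation rates; returns {rate: feature map}."""
    return {r: atrous_conv2d(image, kernel, r) for r in rates}
```

With a 3×3 kernel, rates 1, 2, and 4 cover 3, 5, and 9 pixels respectively, which is how a single structure can respond to targets of different sizes without adding parameters. In a real network each rate would normally have its own learned kernel and the resulting maps would be fused; the text shown here does not specify how.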

[0050] S21. The fully convoluti...
