Data deep fusion image segmentation method for multispectral rescue robot

This invention relates to rescue robots and multispectral imaging technology, in the field of data deep fusion image segmentation for multispectral rescue robots. It addresses poor scene understanding and low work efficiency. Its stated effects are shorter disaster relief time, lower labor costs, and higher accuracy.

Detailed Description of the Embodiments

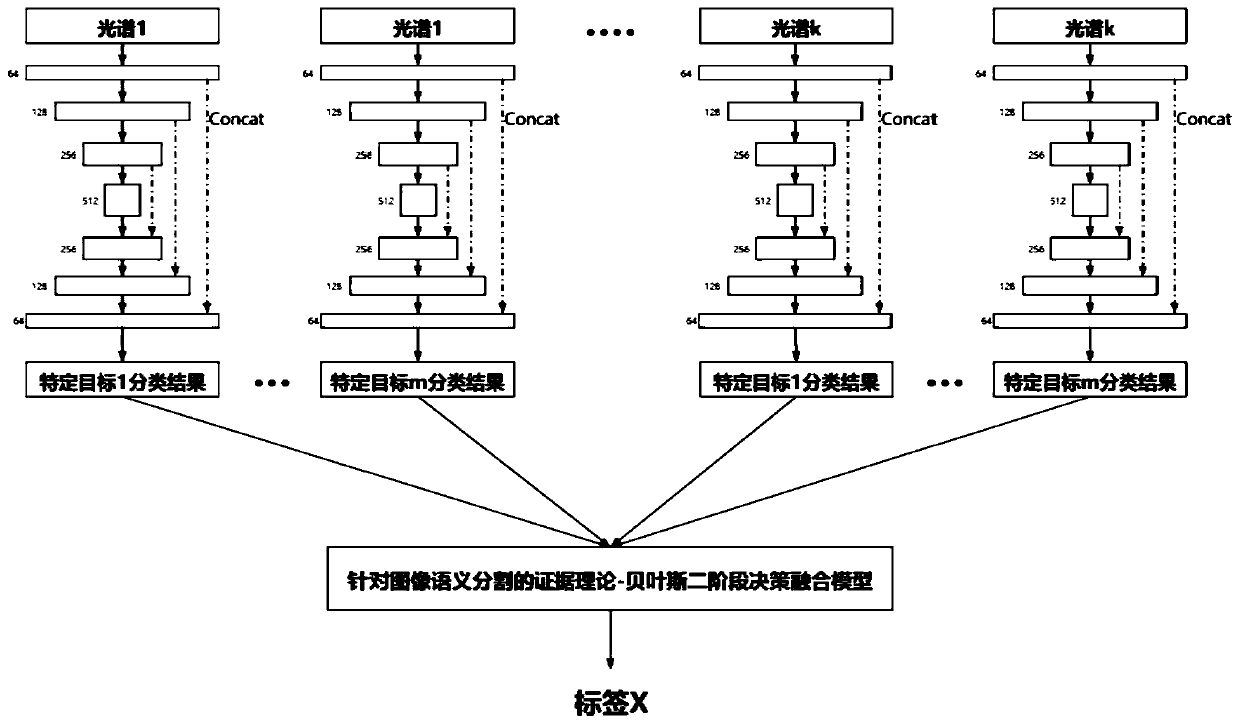

[0031] The data deep fusion image segmentation method for multispectral rescue robots proposed by the present invention comprises the following steps:

[0032] Step 1. Generate a training dataset for target segmentation:

[0033] (1) Information collection: the rescue robot uses its onboard camera to collect information about the external environment. It converts the collected geographic location and environmental status information into multispectral image data for storage;
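The description does not state which spectral bands the camera captures or how the location and status information is encoded into image data. The following Python sketch shows one possible storage layout and rests on three assumptions. First, each capture yields several co-registered spectral bands of equal size. Second, the bands are stored together with the robot's latitude/longitude. Third, the environmental readings are kept in a dictionary. The class `MultispectralFrame`, its fields, and the band names used below are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List

Band = List[List[float]]  # one spectral band as an H x W grid of intensities


@dataclass
class MultispectralFrame:
    """Hypothetical storage record for one capture: co-registered bands plus metadata."""
    bands: Dict[str, Band]   # band name -> H x W grid (names are illustrative)
    latitude: float          # geographic location at capture time
    longitude: float
    status: Dict[str, float] = field(default_factory=dict)  # environmental readings

    def shape(self):
        """Return (height, width, channels), checking that all bands share one size."""
        sizes = {(len(b), len(b[0])) for b in self.bands.values()}
        if len(sizes) != 1:
            raise ValueError("all bands must have the same height and width")
        h, w = sizes.pop()
        return h, w, len(self.bands)

    def pixel_vector(self, row: int, col: int) -> List[float]:
        """Stack every band's value at one pixel into a C-length feature vector."""
        return [band[row][col] for band in self.bands.values()]
```

Take a frame built from a 2×2 `"visible"` band and a 2×2 `"thermal_ir"` band. It reports `shape() == (2, 2, 2)`. Calling `pixel_vector(1, 0)` returns the two band values at that pixel, in band insertion order. Keeping the bands in one record means each pixel carries all of its spectral values together, which is the form a per-pixel segmentation model consumes.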

[0034] (2) Manual annotation of the dataset: to train the neural network, the multispectral images are labeled manually, pixel by pixel, after data collection. Each label indicates the object category to which the current pixel belongs. The object categories are standing human bodies, lying human bodies, common flammables, common explosives, radioactive devices, structures, falling rocks, and common objects found at disaster sites.
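The pixel-wise labels described above could be encoded as in the minimal sketch below. It assumes every pixel receives exactly one of the listed categories, with no separate background class. It also assumes the categories are numbered in the order the text lists them. The integer IDs and the helper names `encode_mask`, `check_mask` and `class_pixel_counts` are hypothetical. The sketch converts a grid of category names into an integer mask, checks that the mask matches the image size, and counts pixels per class.

```python
from collections import Counter
from typing import List

# Categories as listed in the method; numbering them 0..7 in this order is an assumption.
CATEGORIES = [
    "standing human body",
    "lying human body",
    "common flammable",
    "common explosive",
    "radioactive device",
    "structure",
    "falling rock",
    "common disaster-site object",
]
CATEGORY_TO_ID = {name: i for i, name in enumerate(CATEGORIES)}


def encode_mask(names: List[List[str]]) -> List[List[int]]:
    """Convert a per-pixel grid of category names into a per-pixel grid of class IDs."""
    return [[CATEGORY_TO_ID[name] for name in row] for row in names]


def check_mask(mask: List[List[int]], height: int, width: int) -> None:
    """Verify that the mask holds exactly one valid label for every image pixel."""
    if len(mask) != height or any(len(row) != width for row in mask):
        raise ValueError("label mask must match the image height and width")
    if any(not 0 <= v < len(CATEGORIES) for row in mask for v in row):
        raise ValueError("label outside the known category range")


def class_pixel_counts(mask: List[List[int]]) -> Counter:
    """Count labeled pixels per category, e.g. to spot rare classes before training."""
    return Counter(CATEGORIES[v] for row in mask for v in row)
```

Per-class pixel counts are useful here because categories such as radioactive devices are likely to cover far fewer pixels than structures. Class imbalance of this kind is commonly handled with loss weighting during training.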

[0035] Step 2. Construct a U-shaped network that recognizes single-...
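The network is not described in full here, so the sketch below assumes a generic U-Net-style layout rather than the patent's own architecture. The layout has an encoder that halves resolution and doubles channels at each level. It has a decoder that upsamples and concatenates the matching encoder feature map through a skip connection. A final 1×1 convolution produces one score per class per pixel. The sketch only traces feature-map shapes. Several parameters are hypothetical: the function name, the depth, the base channel width, "same" padding, and the 4-channel input in the usage. Setting `num_classes=8` assumes one output per category listed in Step 1.

```python
from typing import List, Tuple

Shape = Tuple[int, int, int]  # (height, width, channels)


def unet_shapes(height: int, width: int, in_ch: int, num_classes: int,
                base_ch: int = 64, depth: int = 4) -> List[Tuple[str, Shape]]:
    """Trace feature-map shapes through a generic U-Net-style encoder-decoder.

    Assumes 'same'-padded convolutions, 2x2 pooling and 2x upsampling, so the
    height and width must be divisible by 2**depth.
    """
    if height % (2 ** depth) or width % (2 ** depth):
        raise ValueError("height and width must be divisible by 2**depth")
    trace: List[Tuple[str, Shape]] = [("input", (height, width, in_ch))]
    skips: List[Shape] = []
    h, w, c = height, width, base_ch
    # Encoder (contracting path): convolve, keep a skip copy, then halve resolution.
    for level in range(depth):
        trace.append((f"enc{level}", (h, w, c)))
        skips.append((h, w, c))
        h, w, c = h // 2, w // 2, c * 2
    trace.append(("bottleneck", (h, w, c)))
    # Decoder (expanding path): upsample, concatenate the matching skip, convolve.
    for level in reversed(range(depth)):
        sh, sw, sc = skips[level]
        h, w = h * 2, w * 2
        assert (h, w) == (sh, sw)  # a skip connection needs matching resolution
        up_c = c // 2              # the up-convolution halves the channel count
        trace.append((f"dec{level}_concat", (h, w, up_c + sc)))
        c = sc                     # convolutions reduce back to the skip's width
    # A 1x1 convolution maps the final features to one score per class per pixel.
    trace.append(("logits", (h, w, num_classes)))
    return trace
```

Consider a 64×64 input with 4 bands. The trace reaches a 4×4×1024 bottleneck and returns to 64×64 before the 64×64×8 logits layer. The skip connections keep fine spatial detail from the encoder, which matters for outlining small objects such as a lying human body.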
