Human body behavior recognition method based on RGB video and skeleton sequence

A human behavior recognition method based on RGB video and skeleton sequences, applied in the field of computer vision and pattern recognition. It addresses problems such as overfitting and the high dimensionality of global descriptors, which degrade the performance of human behavior recognition, and it achieves good performance while reducing the number of parameters.

Embodiment Construction

[0056] The present invention is further described below with reference to the embodiments and the accompanying drawings:

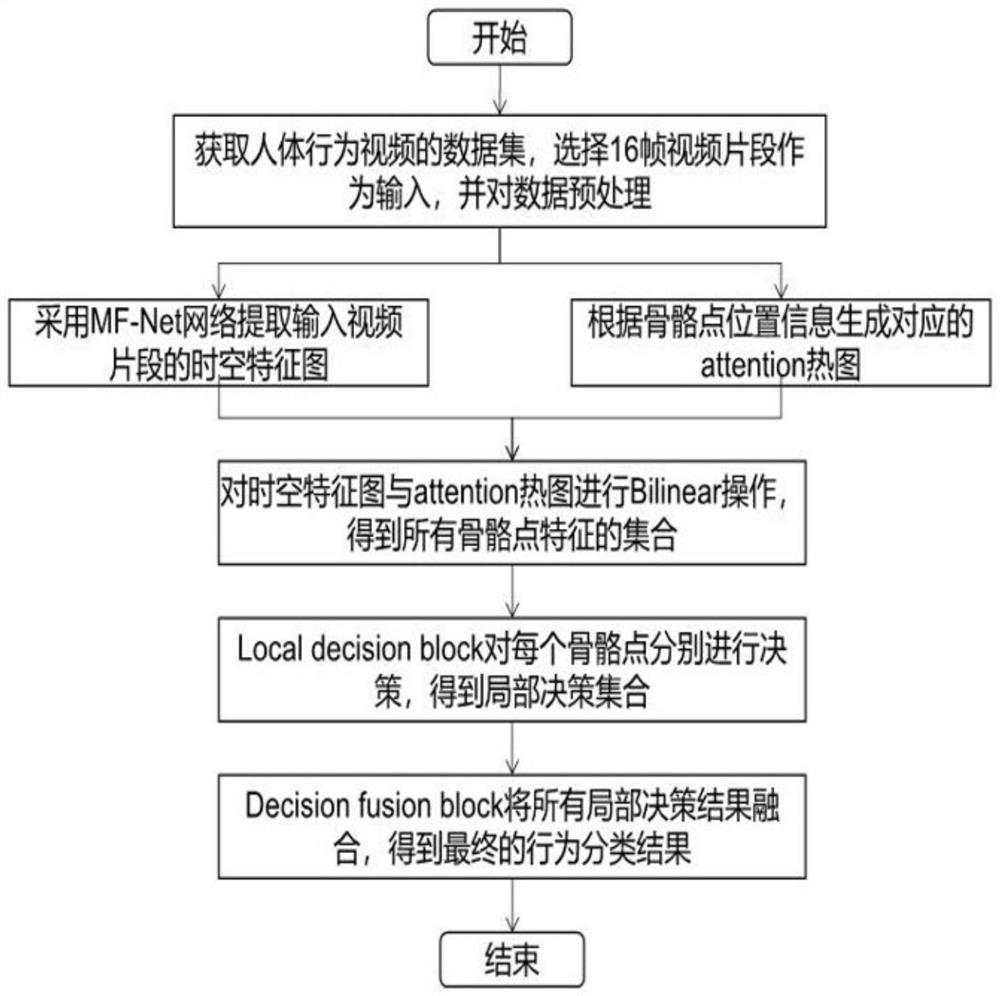

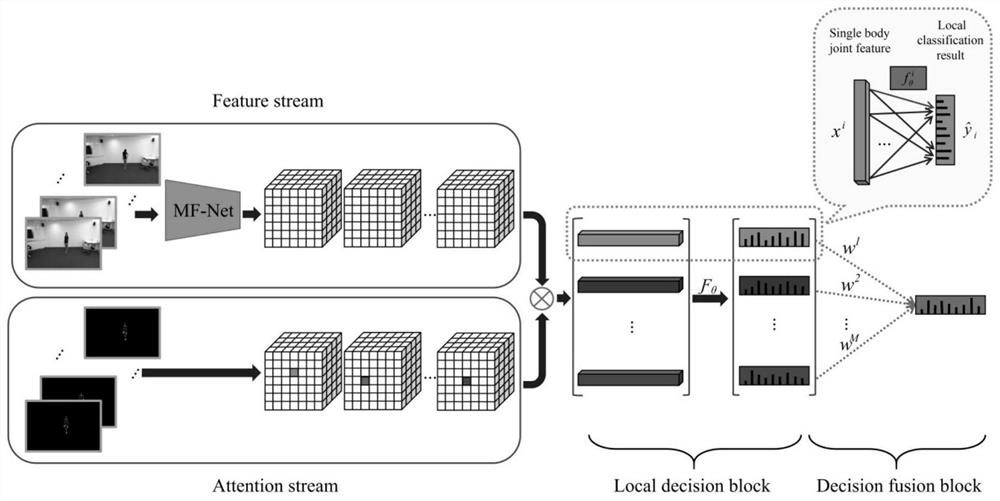

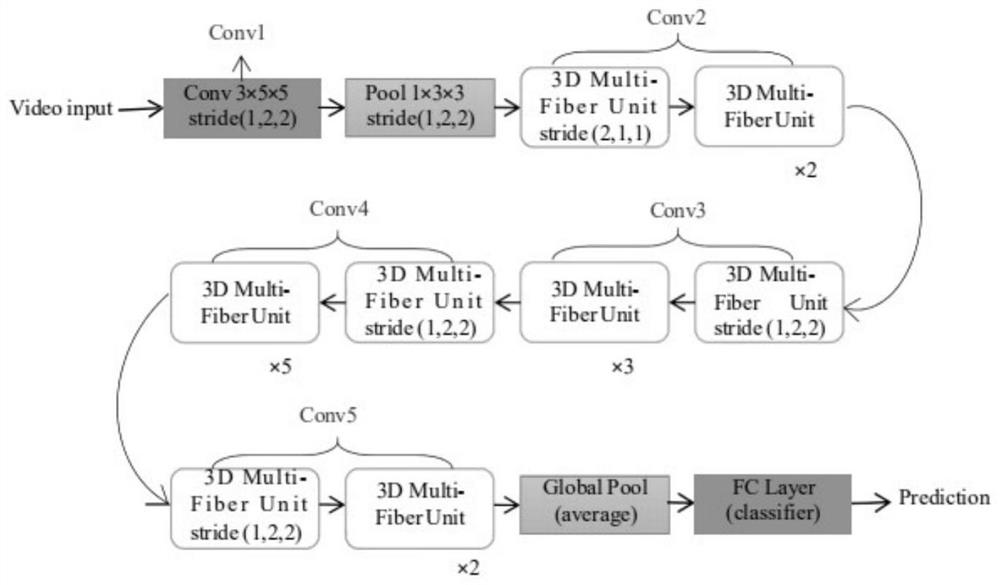

[0057] The present invention proposes a two-stream network structure, called LD-Net, which includes two modules: a local decision block and a decision fusion block. One stream of LD-Net is the feature stream, in which a multi-fiber network (Multi-Fiber Networks, MF-Net) is selected to extract the spatio-temporal features of the video clip. Because MF-Net has a multi-fiber structure, it can effectively reduce the number of parameters of the three-dimensional network and avoid overfitting. The MF-Net network framework is shown in image 3. The other stream is the attention stream, which uses the positions corresponding to the human skeleton points as the attention area. These positions are used because skeleton point information reflects the posture characteristics of the human body and, at the same time, largely eliminates information that is useless for the target. For the extracted key region features,...
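As an illustration of how skeleton point positions can serve as an attention area over the feature stream, the following is a minimal sketch in NumPy. It is not the implementation of the invention: the function name `gather_joint_features`, the tensor layout `(C, T, H, W)`, the use of normalized `(x, y)` joint coordinates, and nearest-cell sampling are all assumptions made for this example.

```python
import numpy as np


def gather_joint_features(feature_map, joints_xy):
    """Pick the feature vector located at each skeleton joint (hypothetical helper).

    feature_map: array of shape (C, T, H, W), spatio-temporal features of a clip
                 (assumed layout; a real backbone may differ).
    joints_xy:   array of shape (T, J, 2), normalized (x, y) joint positions
                 in [0, 1] for each of the T feature time steps (assumption).
    Returns an array of shape (T, J, C): one C-dimensional feature per joint.
    """
    C, T, H, W = feature_map.shape

    # Map normalized coordinates to the nearest cell of the H x W feature grid.
    cols = np.clip(np.rint(joints_xy[..., 0] * (W - 1)).astype(int), 0, W - 1)
    rows = np.clip(np.rint(joints_xy[..., 1] * (H - 1)).astype(int), 0, H - 1)

    # Time index of shape (T, 1) broadcasts against the (T, J) row/col indices.
    t_idx = np.arange(T)[:, None]

    # Advanced indexing yields shape (C, T, J); move channels to the last axis.
    feats = feature_map[:, t_idx, rows, cols]
    return np.transpose(feats, (1, 2, 0))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    clip_features = rng.standard_normal((8, 4, 7, 7))  # C=8, T=4, 7x7 grid
    joints = rng.uniform(0.0, 1.0, size=(4, 15, 2))    # 15 joints per step
    key_region_features = gather_joint_features(clip_features, joints)
    print(key_region_features.shape)
```

Sampling only the grid cells under the joints keeps the descriptor size proportional to the number of joints rather than to the full feature map, which is one plausible reading of why joint positions are used as the attention area.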
