345 results about "Cosine Distance" patented technology

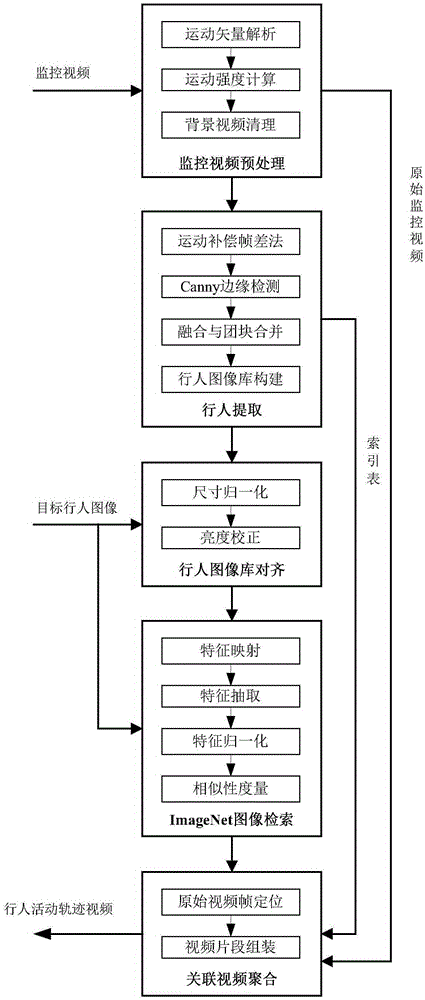

Surveillance video pedestrian re-recognition method based on ImageNet retrieval

Active | CN105354548A | Overcoming adaptability | Overcoming perspective | Image analysis | Character and pattern recognition | Hidden layer | Frame difference

The present invention discloses a surveillance video pedestrian re-recognition method based on ImageNet retrieval. The pedestrian re-recognition problem is transformed into a retrieval problem over a moving-target image database so as to utilize the powerful classification ability of ImageNet hidden layer features. The method comprises the steps of: preprocessing a surveillance video and removing the large amount of irrelevant static background video; separating moving targets from the dynamic video frames by a motion compensation frame difference method and forming a pedestrian image database and an organization index table; aligning the size and brightness of the images in the pedestrian image database with those of a target pedestrian image; training hidden features of the target pedestrian image and of the images in the image database by using an ImageNet deep learning network, and performing image retrieval based on cosine distance similarity; and, in time sequence, merging the relevant videos containing recognition results into a video clip that reproduces the pedestrian's activity trace. The method adapts better to changes in lighting, perspective, posture and scale, and so effectively improves the accuracy and robustness of pedestrian recognition results in a cross-camera environment.

Owner:WUHAN UNIV
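
As a rough illustration of the retrieval step described above, the following Python sketch ranks database images by the cosine similarity between their feature vectors and the feature vector of the target pedestrian. The feature values, the frame names and the function `rank_gallery` are invented for illustration; the abstract does not say how the hidden-layer features are stored or compared beyond "cosine distance similarity".

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # convention chosen here for zero vectors
    return dot / (norm_a * norm_b)

def rank_gallery(query, gallery):
    """Return gallery keys sorted from most to least similar to the query."""
    return sorted(
        gallery,
        key=lambda name: cosine_similarity(query, gallery[name]),
        reverse=True,
    )

# Hypothetical 3-dimensional features; real hidden-layer features are much longer.
gallery = {
    "frame_012": [0.9, 0.1, 0.0],
    "frame_340": [0.0, 1.0, 0.2],
    "frame_777": [0.7, 0.6, 0.1],
}
ranking = rank_gallery([1.0, 0.0, 0.0], gallery)
print(ranking)
```

Because cosine similarity ignores vector length, two images whose features differ only by a scale factor rank equally.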

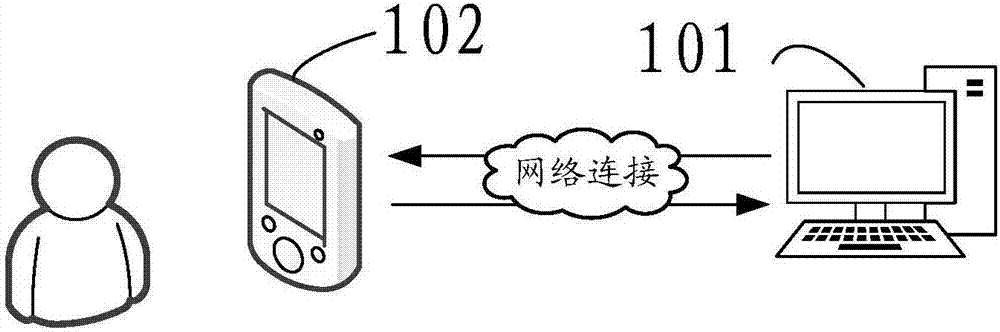

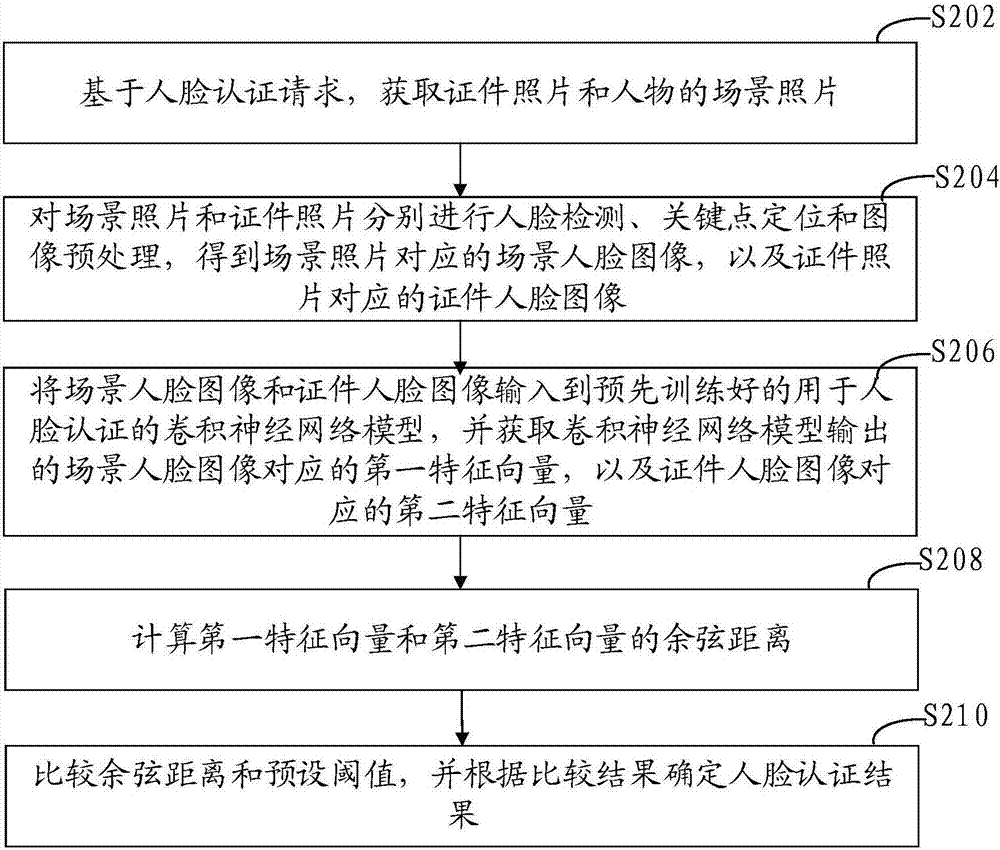

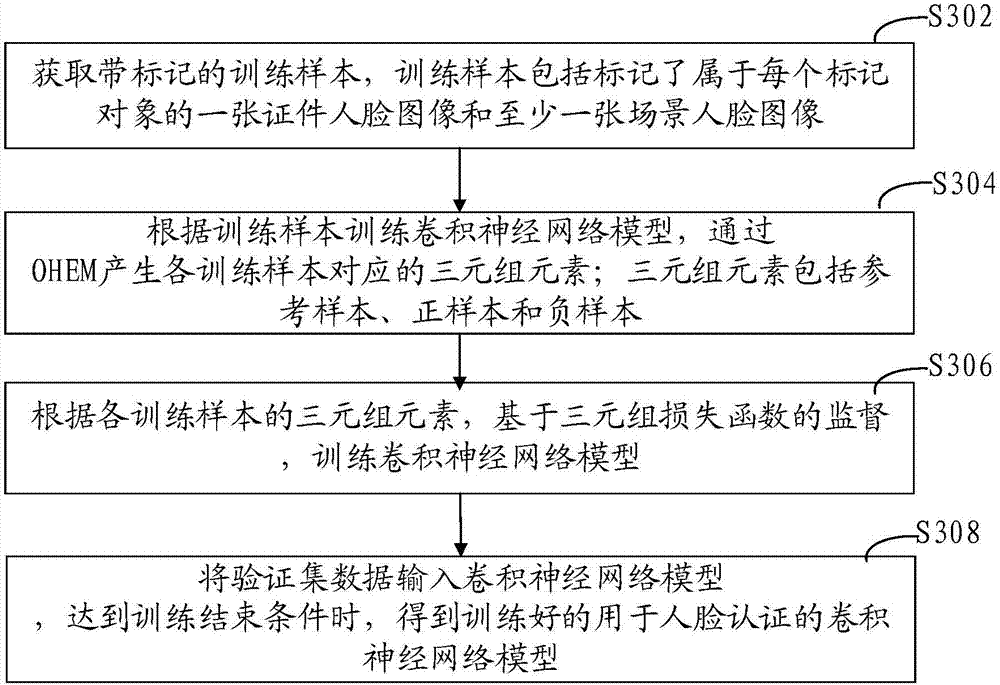

Face verification method and device based on Triplet Loss, computer device and storage medium

Active | CN108009528A | Improve reliability | Conform to the distribution properties | Character and pattern recognition | Face detection | Feature vector

The present invention relates to a face verification method and device based on the Triplet Loss, a computer device and a storage medium. The method comprises the steps of: based on a face verification request, obtaining a certificate photo and a scene photo of the person; performing face detection, key point positioning and image preprocessing on the scene photo and the certificate photo to obtain a scene face image corresponding to the scene photo and a certificate face image corresponding to the certificate photo; inputting the scene face image and the certificate face image into a pre-trained convolutional neural network model used for face verification, and obtaining a first feature vector, output by the model for the scene face image, and a second feature vector, output by the model for the certificate face image; calculating the cosine distance between the first feature vector and the second feature vector; and comparing the cosine distance with a preset threshold and determining a face verification result according to the comparison result. The method improves the reliability of face verification.

Owner:GRG BAKING EQUIP CO LTD
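
The final comparison step can be sketched as below. This is only an illustration under stated assumptions: the score is treated as a cosine similarity (larger means more alike), the threshold of 0.5 is an arbitrary placeholder, and the two short vectors stand in for the network outputs. None of these values come from the patent.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0

def verify_face(scene_vec, certificate_vec, threshold=0.5):
    """Return (is_same_person, score); the threshold is a placeholder value."""
    score = cosine_similarity(scene_vec, certificate_vec)
    return score >= threshold, score

# Hypothetical feature vectors for a scene photo and a certificate photo.
match, score = verify_face([0.2, 0.9, 0.4], [0.25, 0.85, 0.35])
mismatch, _ = verify_face([1.0, 0.0, 0.0], [0.0, 1.0, 0.0])
print(match, round(score, 4), mismatch)
```

In practice the threshold would be chosen on held-out pairs to trade false accepts against false rejects.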

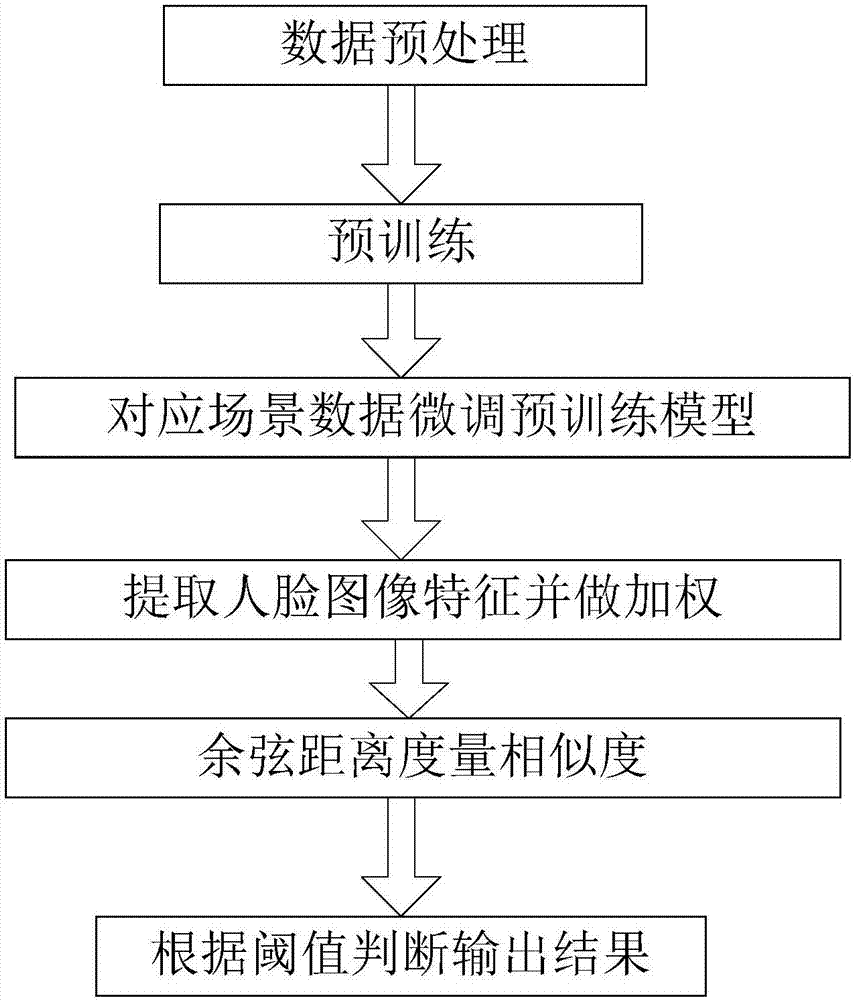

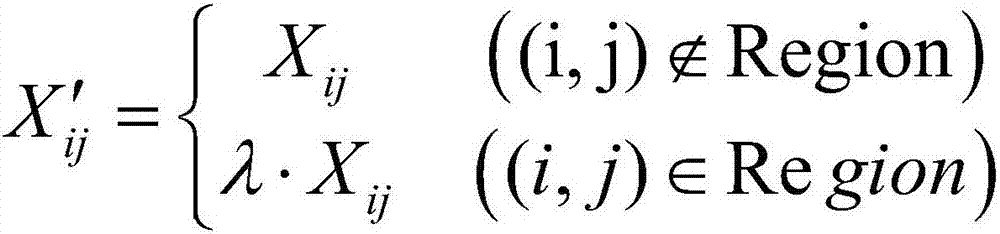

Method for face recognition scene adaptation based on convolutional neural network

Active | CN107886064A | Scenario Adaptability Guarantee | Improve accuracy | Character and pattern recognition | Neural architectures | Image extraction | Feature vector

A method for face recognition scene adaptation based on a convolutional neural network comprises the steps of: 1) collecting face data and making classification tags, performing preprocessing and enhancement of the data, and dividing the data into a training set and a verification set; 2) sending the data in the training set into a designed convolutional neural network for training, and obtaining a pre-training model; 3) testing the pre-training model by employing the data in the verification set, and adjusting the training parameters to retrain according to the test result; 4) repeating step 3) to obtain an optimum pre-training model; 5) collecting face image data from different application scenes, fine-tuning the pre-training model on the newly collected data, and obtaining a new scene-adapted model; 6) extracting features of a face image to be tested by employing the scene-adapted model, weighting the parts of the features that correspond to the five sense organs of the face, and obtaining final feature vectors; and 7) measuring the final feature vectors by employing a cosine distance, determining whether the face is a target face or not, and outputting a result. The method ensures both the accuracy of face recognition and the scene adaptability of the model.

Owner:ANHUI UNIVERSITY

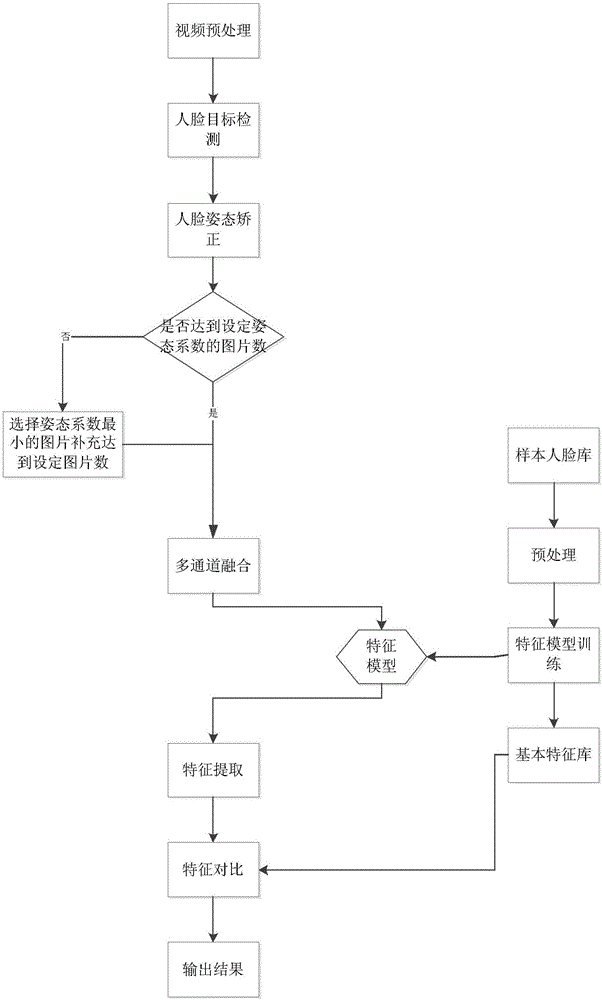

Multi-channel network-based video human face detection and identification method

Active | CN106845357A | Improve accuracy | Take advantage of the maximum | Character and pattern recognition | Feature vector | Temporal information

The invention discloses a multi-channel network-based video human face detection and identification method. The method comprises the following steps: S1, performing video preprocessing: adding time information to each frame image; S2, detecting a target human face and calculating a pose coefficient; S3, correcting the human face pose: for the m human faces obtained in step S2, performing pose adjustment; S4, extracting human face features based on a deep neural network; and S5, comparing the human face features: for an input human face, obtaining its feature vector by step S4 and measuring its degree of match with the vectors in a feature library by cosine distance; a class is added to the candidate classes when the cosine distance between that class and the to-be-identified human face is greater than a set threshold phi; if the cosine distances between the feature of the to-be-identified human face and the central features of all classes are all smaller than phi, the database is regarded as storing no information on the person and the identification ends. The method provides video human face detection and identification with relatively high accuracy.

Owner:ENJOYOR COMPANY LIMITED
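
Step S5 can be sketched as follows. Note the assumptions: the score is a cosine similarity, so "greater than phi" means "close enough"; the class centres and the value of phi are invented; and returning the best-scoring candidate is this sketch's choice, since the abstract only says that qualifying classes are added to the candidate classes.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0

def identify(face_vec, class_centers, phi):
    """Return the best-matching class name, or None if no class exceeds phi."""
    scores = {
        name: cosine_similarity(face_vec, center)
        for name, center in class_centers.items()
    }
    candidates = {name: s for name, s in scores.items() if s > phi}
    if not candidates:
        return None  # the library holds no information on this person
    return max(candidates, key=candidates.get)

# Hypothetical 2-dimensional class centres.
centers = {"person_a": [1.0, 0.0], "person_b": [0.0, 1.0]}
known = identify([0.9, 0.2], centers, phi=0.8)
unknown = identify([0.7, 0.7], centers, phi=0.8)
print(known, unknown)
```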

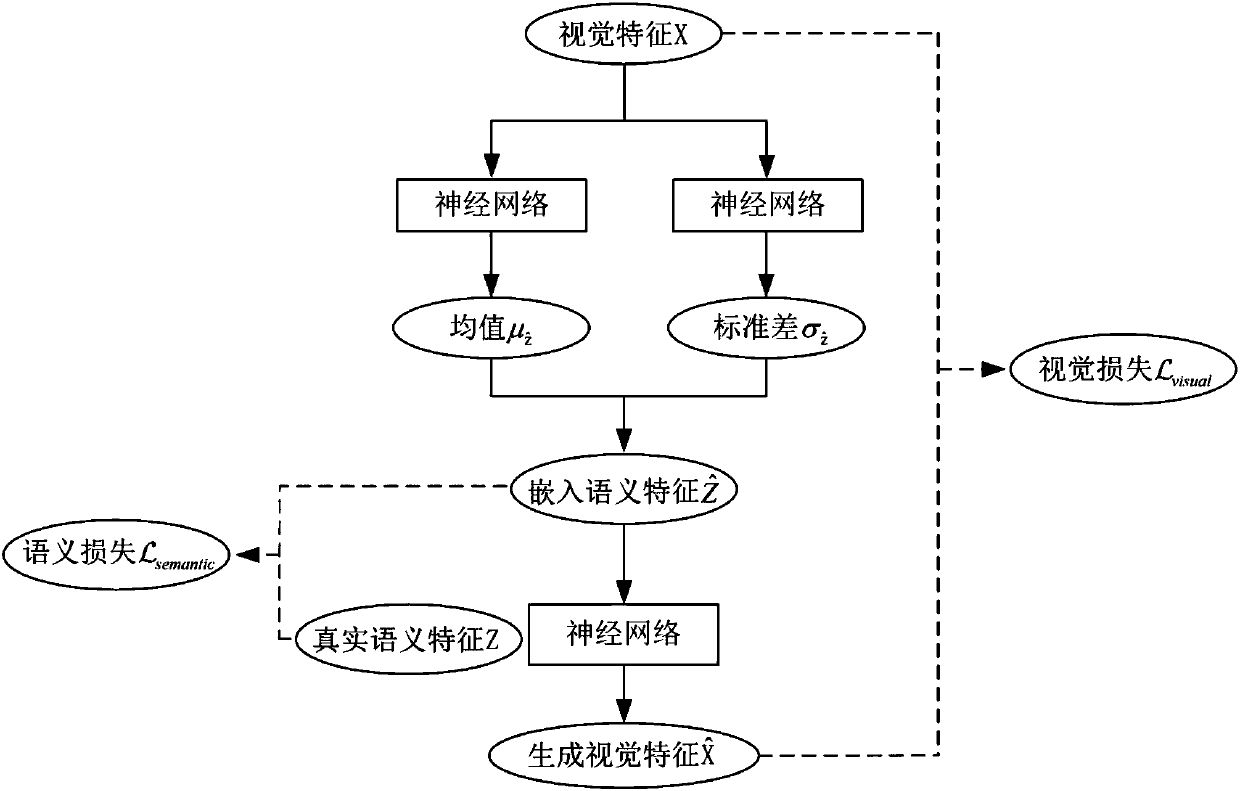

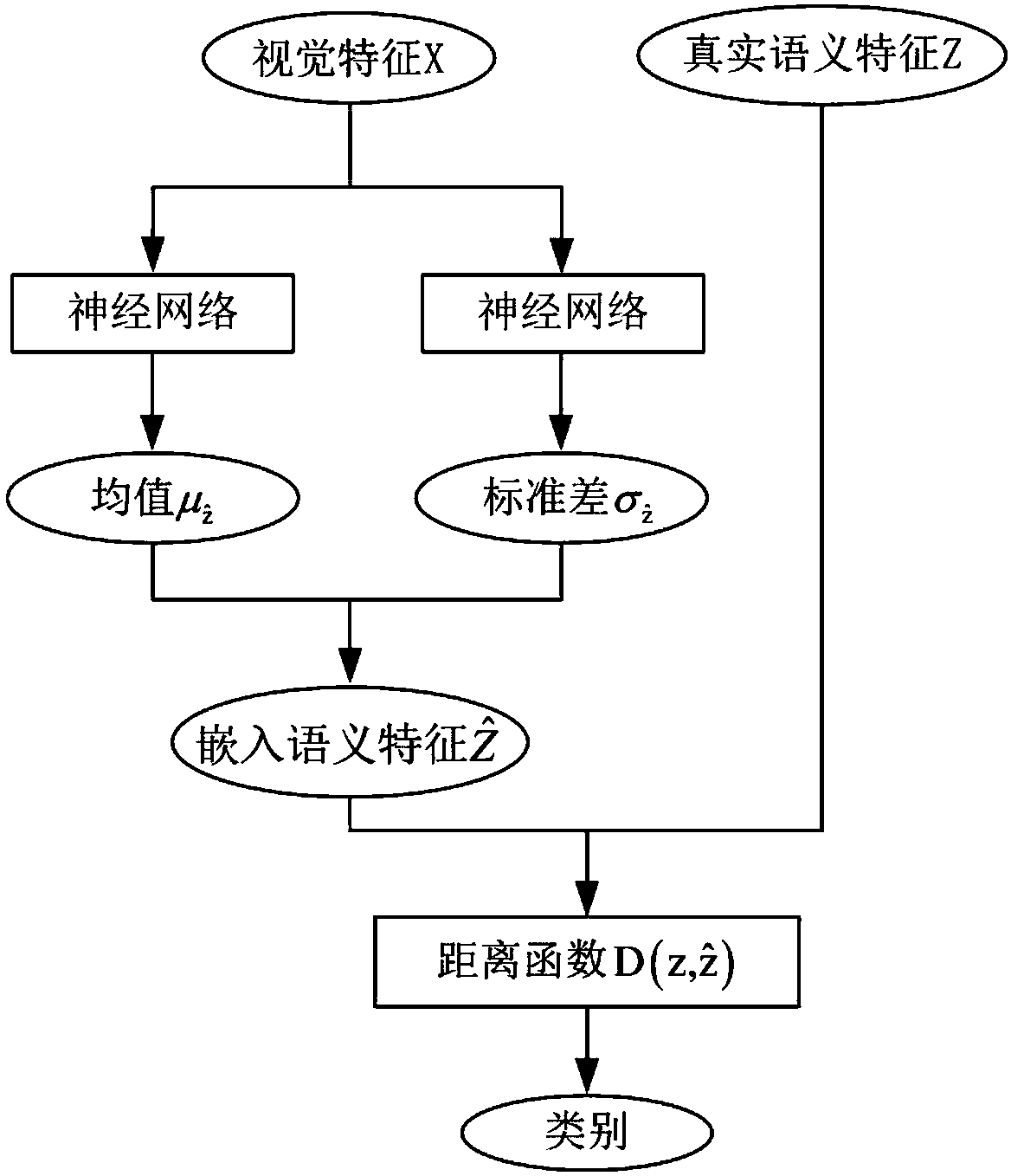

Variational automatic encoder-based zero-sample image classification method

Inactive | CN107679556A | Effective semantic association | Fully consider the probability distribution characteristics | Character and pattern recognition | Neural architectures | Classification methods | Sample image

The present invention relates to zero-sample classification technology in the computer vision field, and in particular to a variational automatic encoder-based zero-sample image classification method. The method fits the distribution of the mappings of the semantic features and visual features of categories in a semantic space, and builds more effective semantic associations between the visual features and the category semantics. A variational automatic encoder is adopted to generate embedded semantic features on the basis of the visual features: the variational automatic encoder has a latent variable $\hat{Z}$, and the latent variable $\hat{Z}$ is adopted as the embedded semantic feature. For a zero-sample image classification task and the visual feature $x_j$ of a sample of unknown category, the encoding network of the variational automatic encoder, trained on the visible categories, is used to calculate the latent variable $\hat{Z}_j$ generated through encoding; $\hat{Z}_j$ is adopted as the embedded semantic feature, and the cosine distances between $\hat{Z}_j$ and the semantic feature of each invisible category are calculated, where the semantic feature of each invisible category is represented by a symbol given in the description of the invention; the category whose semantic feature has the smallest distance to $\hat{Z}_j$ is taken as the category of the visual sample. The method is mainly applied to video classification scenarios.

Owner:TIANJIN UNIV

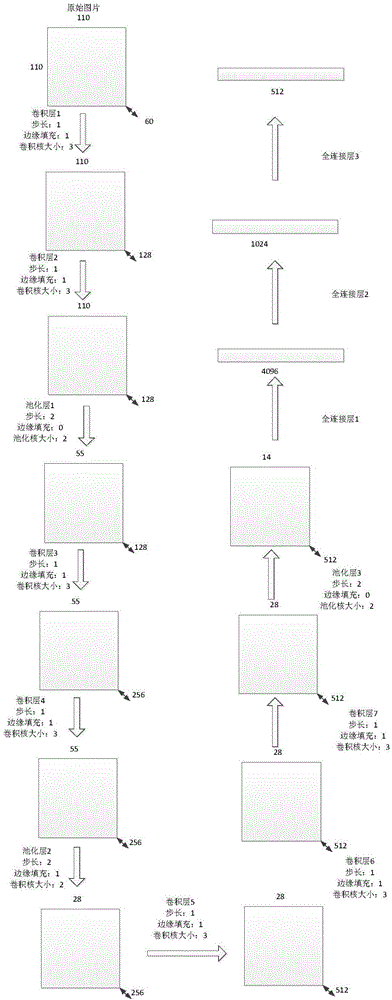

CNN model, CNN training method and vein identification method based on CNN

Active | CN106971174A | Reduce demand | Easy to identify | Still image data retrieval | Neural learning methods | Biometric data | Feature data

The invention discloses a CNN model, a CNN training method and a vein identification method based on CNN. The CNN model comprises multiple convolution layers, a full connection stair layer and a SoftMax layer. In a CNN training process, a database is firstly expanded and multiple biological feature databases including similar features are combined to carry out a training of a model; the full connection layer and the SoftMax layer serve as a multi-classification classifier together; a multi-classification neural network is trained so that the neural network is allowed to learn features capable of identifying vein features; and after the training is finished, the previous layer of the full connection layer is output to serve as feature, and similarity of a pair of images is measured by calculating cosine distance of the features. According to the invention, multi-mode biological feature data is fused and used for training a network, so a problem of insufficient training samples is solved and retrieval speed can be greatly improved in a super large identity identification database.

Owner:SOUTH CHINA UNIV OF TECH

Voice data processing method and device

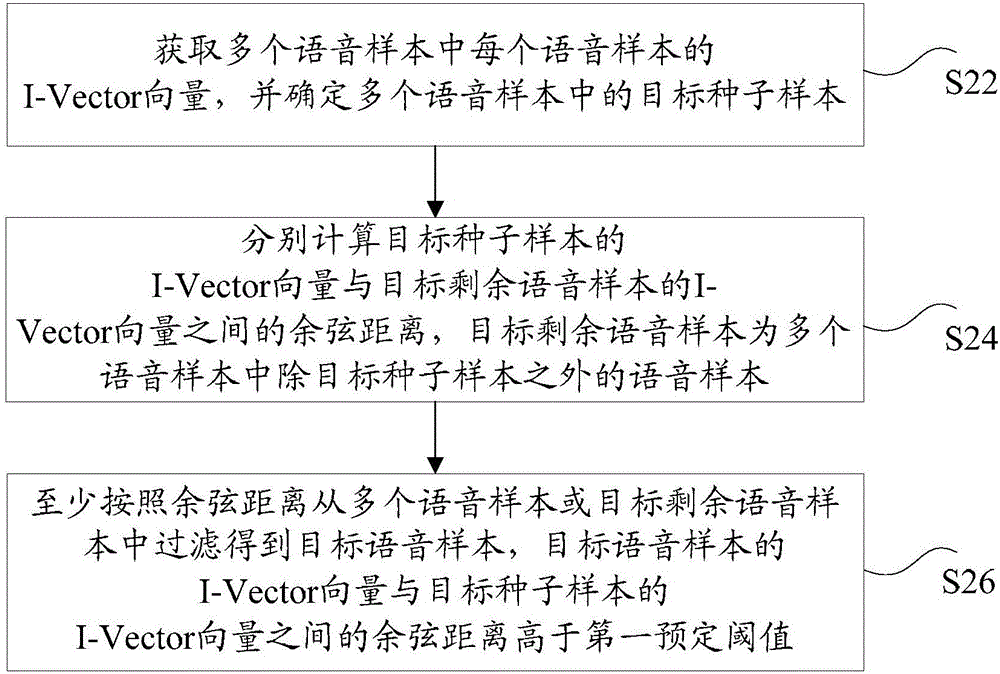

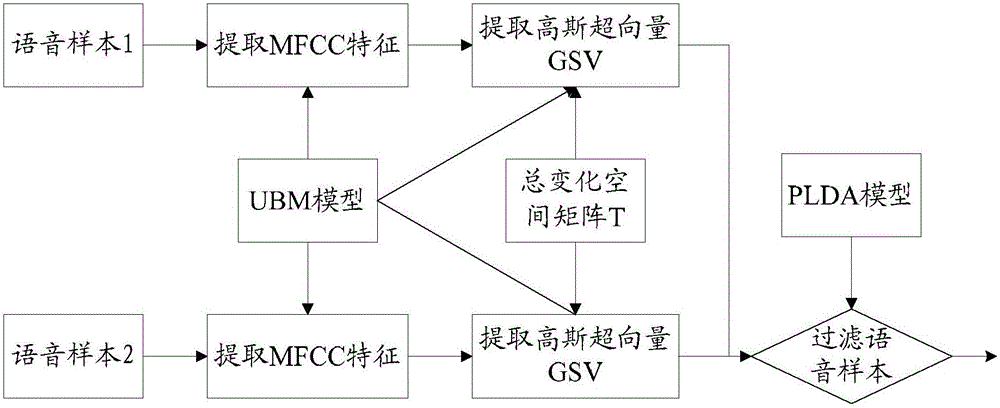

Active | CN105869645A | Improve cleaning efficiency | Solve the technical problem of low cleaning efficiency | Speech analysis | Manual annotation | Seed sample

The invention discloses a voice data processing method and a voice data processing device. The voice data processing method comprises the following steps: acquiring the I-Vector of each of a plurality of voice samples, and determining a target seed sample in the plurality of voice samples; respectively calculating the cosine distances between the I-Vector of the target seed sample and the I-Vectors of the target residual voice samples, wherein the target residual voice samples are the voice samples besides the target seed sample in the plurality of voice samples; and at least filtering from the plurality of voice samples or the target residual voice samples according to the cosine distances to obtain a target voice sample, wherein the cosine distance between the I-Vector of the target voice sample and the I-Vector of the target seed sample is higher than a first preset threshold value. With the adoption of the method and the device, the technical problem that in the relevant technologies, cleansing can not be carried out on voice data by adopting a manual annotation method, so that the voice data cleansing efficiency is low is solved.

Owner:TENCENT TECH (SHENZHEN) CO LTD

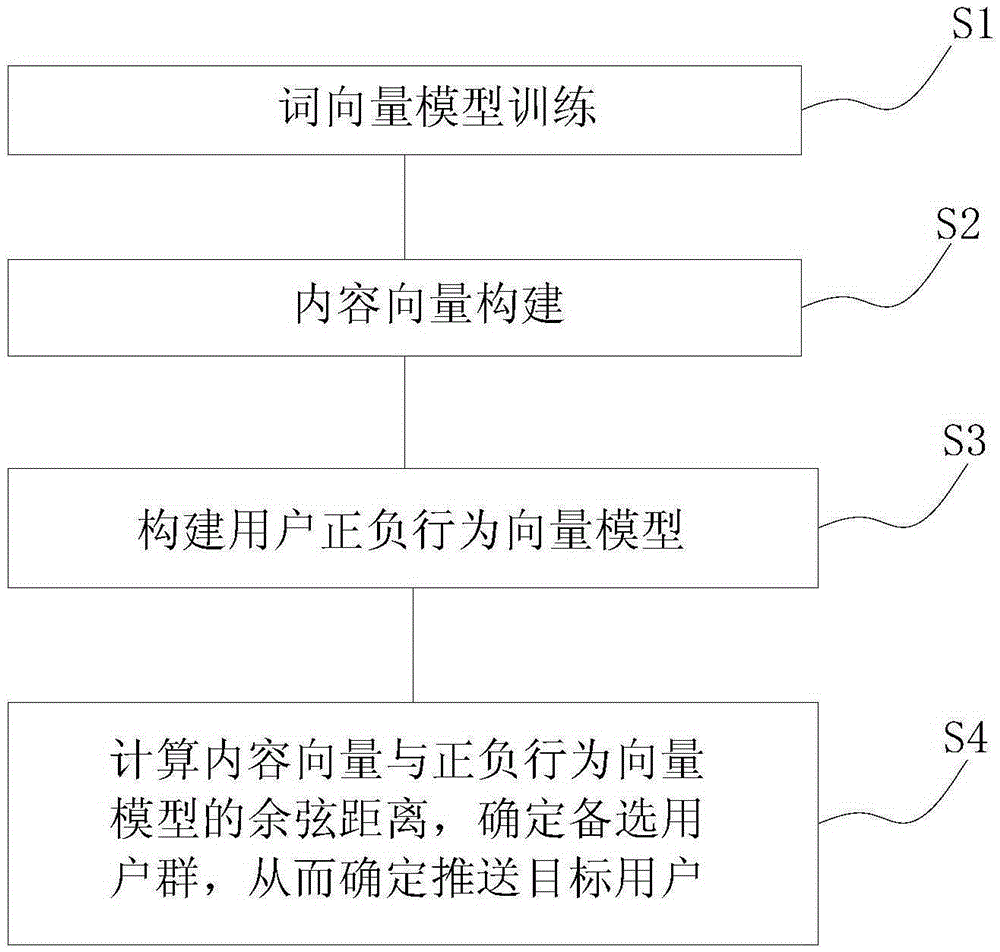

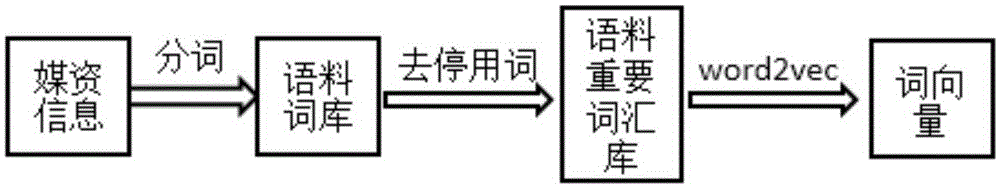

Online content recommending method based on deep neural network

Inactive | CN105279288A | Inhibition bias | Improve recommendations | Biological neural network models | Special data processing applications | High dimensional | Cosine Distance

The invention discloses an online content recommending method based on a deep neural network. On the traditional basis of content recommendation, a deep neural network (DNN) word vector tool is introduced, content and users are mapped to high-dimensional vector space according to content text information to be recommended and the historical behaviors of the users, the cosine distance between vectors is calculated, and user groups interested in the recommended content are screened and filtered. Experiments in a large-scale moving content service system prove that compared with random recommendation, a Content KNN, Item CF and other algorithms, the recommending effect of the recommending strategy is remarkably improved.

Owner:SHENZHEN UNIV

Problem similarity calculation method based on a plurality of features

Active | CN109344236A | Increase diversity | Improve generalization ability | Digital data information retrieval | Semantic analysis | Semantic gap | Semantic feature

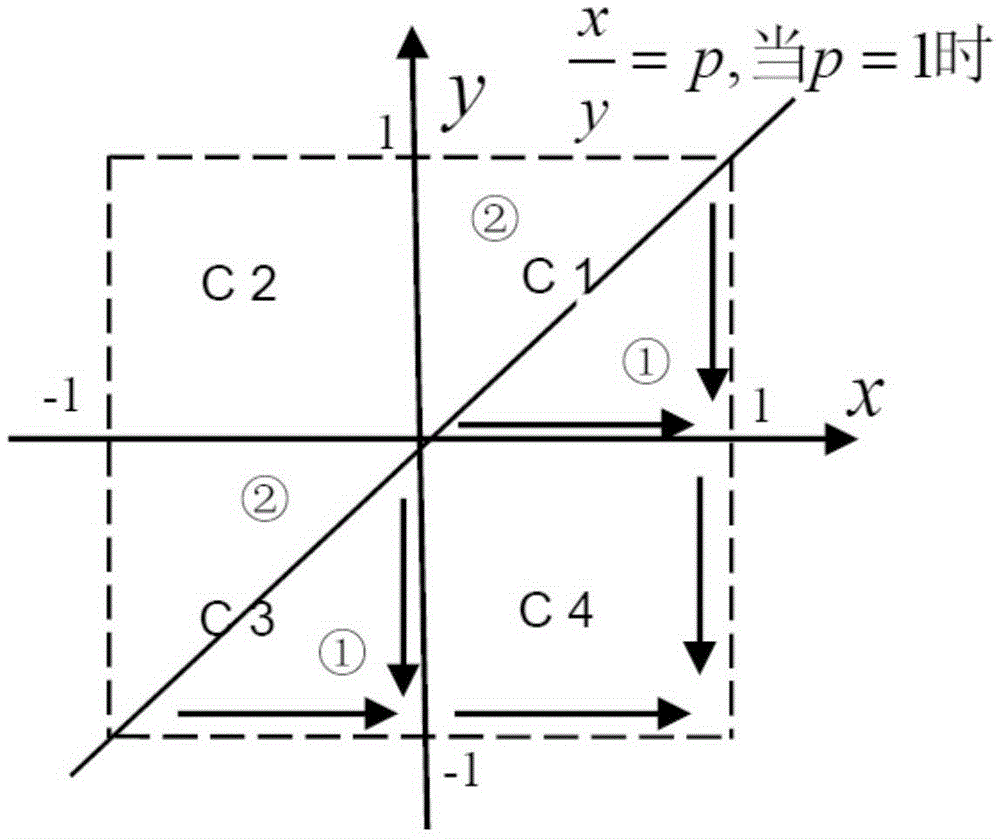

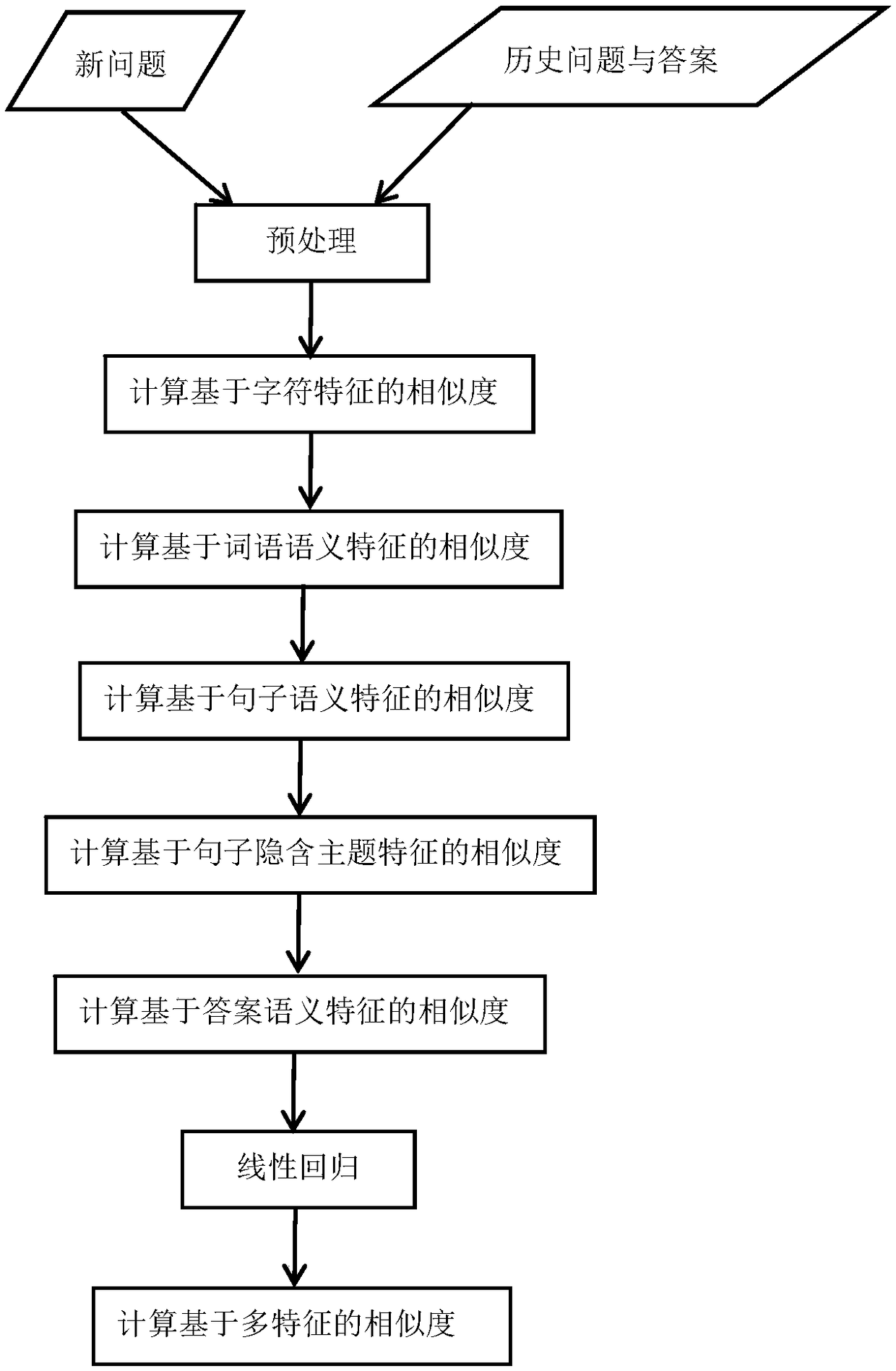

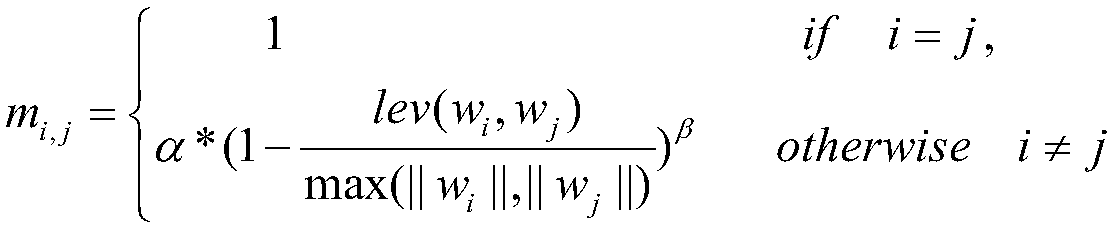

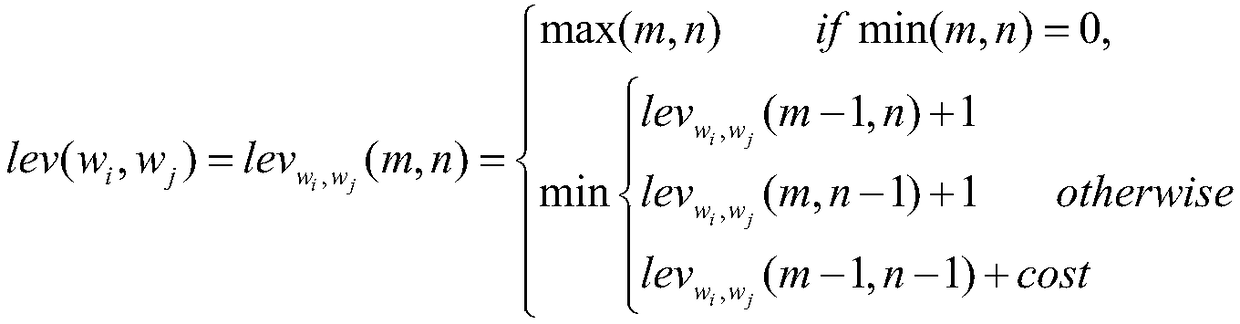

The invention discloses a problem similarity calculation method based on a plurality of features. The method comprises the steps of: comparing a newly input question sentence with the stored historical questions and their corresponding answers, and calculating the similarities between the new question and the historical questions based on character features, semantic features of words, semantic features of sentences, implied topic features of sentences, and semantic features of answers. The final similarity combines the above five similarities with their corresponding weights, which are trained by a linear regression method. The invention adopts a plurality of features to increase the diversity of sample attributes and improve the generalization ability of the model. At the same time, the soft cosine distance is used to fuse TF-IDF with edit distance, word semantics and other information, which overcomes the semantic gap between words and improves the accuracy of the similarity calculation.

Owner:JINAN UNIVERSITY
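
To show why a soft cosine measure can bridge the gap between different but related words, here is a minimal sketch of the standard soft cosine formula, in which a term-similarity matrix weights every pair of vector components. The three-word vocabulary and the matrix `S` are invented; how the patent derives its term similarities from TF-IDF, edit distance and word semantics is not given in the abstract.

```python
import math

def soft_cosine(a, b, sim):
    """Soft cosine of a and b, where sim[i][j] is the similarity of terms i and j."""
    def bilinear(u, v):
        return sum(
            sim[i][j] * u[i] * v[j]
            for i in range(len(u))
            for j in range(len(v))
        )
    denom = math.sqrt(bilinear(a, a)) * math.sqrt(bilinear(b, b))
    return bilinear(a, b) / denom if denom else 0.0

# Hypothetical vocabulary: ["refund", "reimbursement", "weather"]
S = [
    [1.0, 0.8, 0.0],
    [0.8, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

q1 = [1.0, 0.0, 0.0]  # question that mentions only "refund"
q2 = [0.0, 1.0, 0.0]  # question that mentions only "reimbursement"

plain = soft_cosine(q1, q2, IDENTITY)  # reduces to the ordinary cosine
soft = soft_cosine(q1, q2, S)
print(plain, soft)
```

With the identity matrix the two questions share no terms and score zero; with `S` the related terms contribute, so the score rises to the similarity assigned to that word pair.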

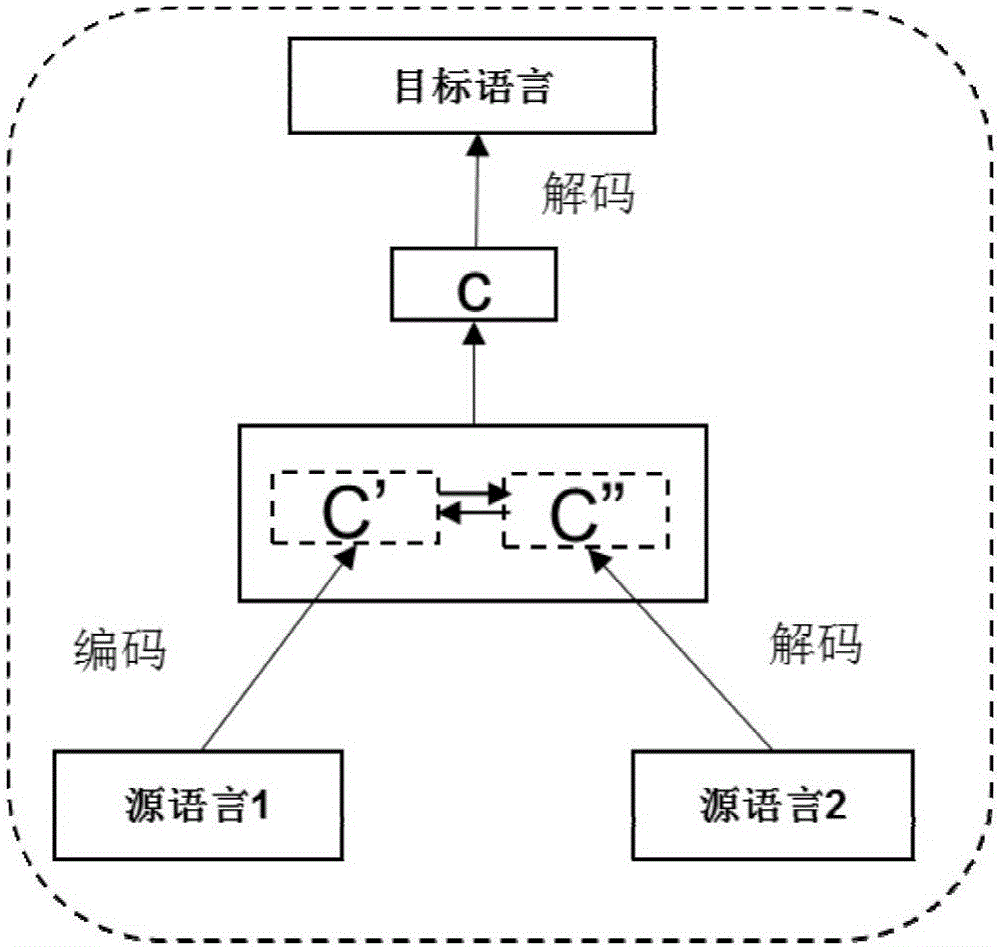

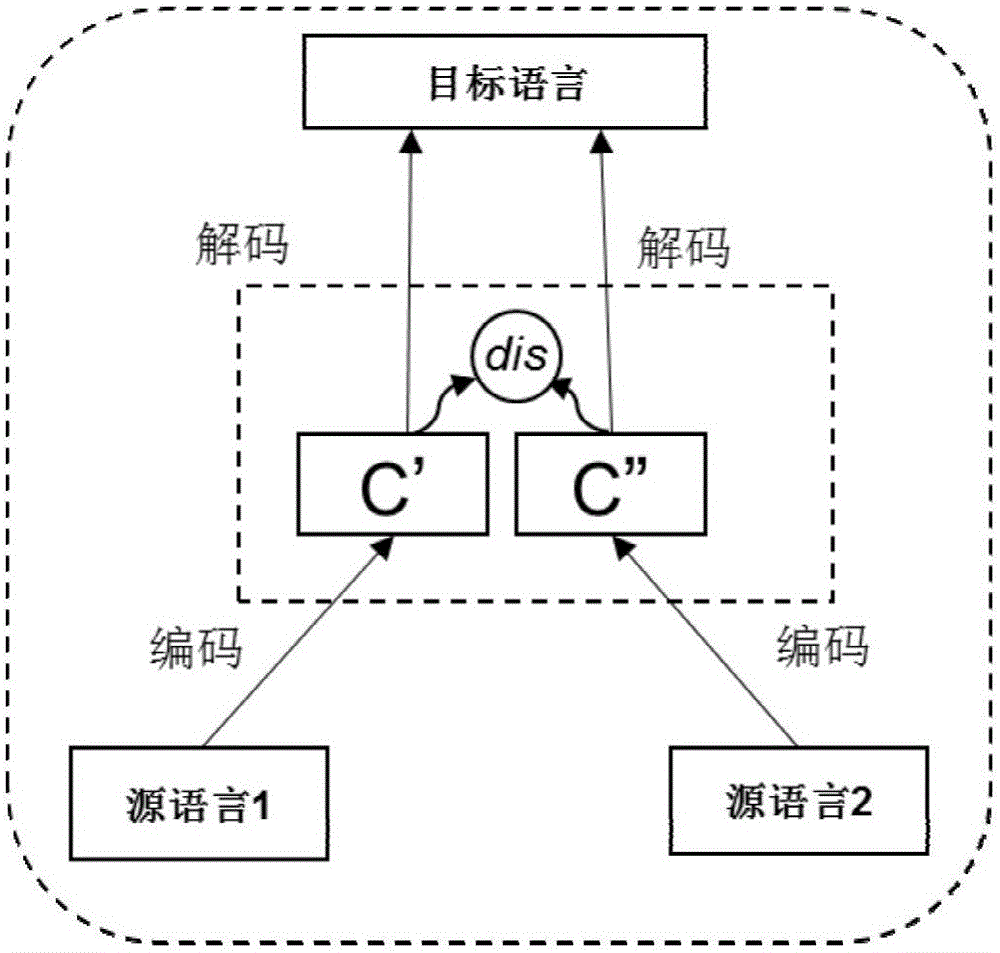

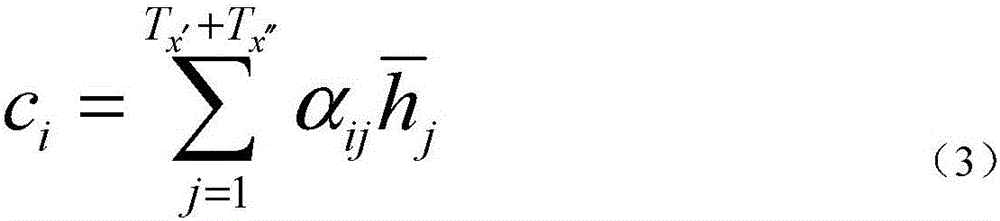

Machine translation method for semantic vector based on multilingual parallel corpus

Active | CN106202068A | Improve performance | Natural language translation | Semantic analysis | Semantic vector | Machine translation

The invention provides a machine translation method for a semantic vector based on multilingual parallel corpus, and relates to machine translation methods. The problem to be solved by the invention is that the semantic information obtained from a bilingual parallel corpus is usually limited. The machine translation method comprises the following steps: 1, inputting parallel source languages 1, 2 and a target language; 2, carrying out calculation according to a formula (1) to a formula (6) to obtain implicit states h' and h''; 3, calculating the vector c; 4, generating the target language; or, 1, inputting the source languages 1, 2 and the target language; 2, calculating a normalized cosine distance of the vector c1 and the vector c2; 3, comparing the similarity of the vector c1 and the vector c2; 4, requiring dis(c1, c2) to be greater than a threshold delta, and, given a sentence set S1 of the source language 1 and a sentence set S2 of the source language 2, expressing this as a constrained optimization problem; and 5, establishing a final target function. The machine translation method provided by the invention is applied to the machine translation field.

Owner:Heilongjiang Industrial Technology Research Institute Asset Management Co., Ltd. (黑龙江省工研院资产经营管理有限公司)

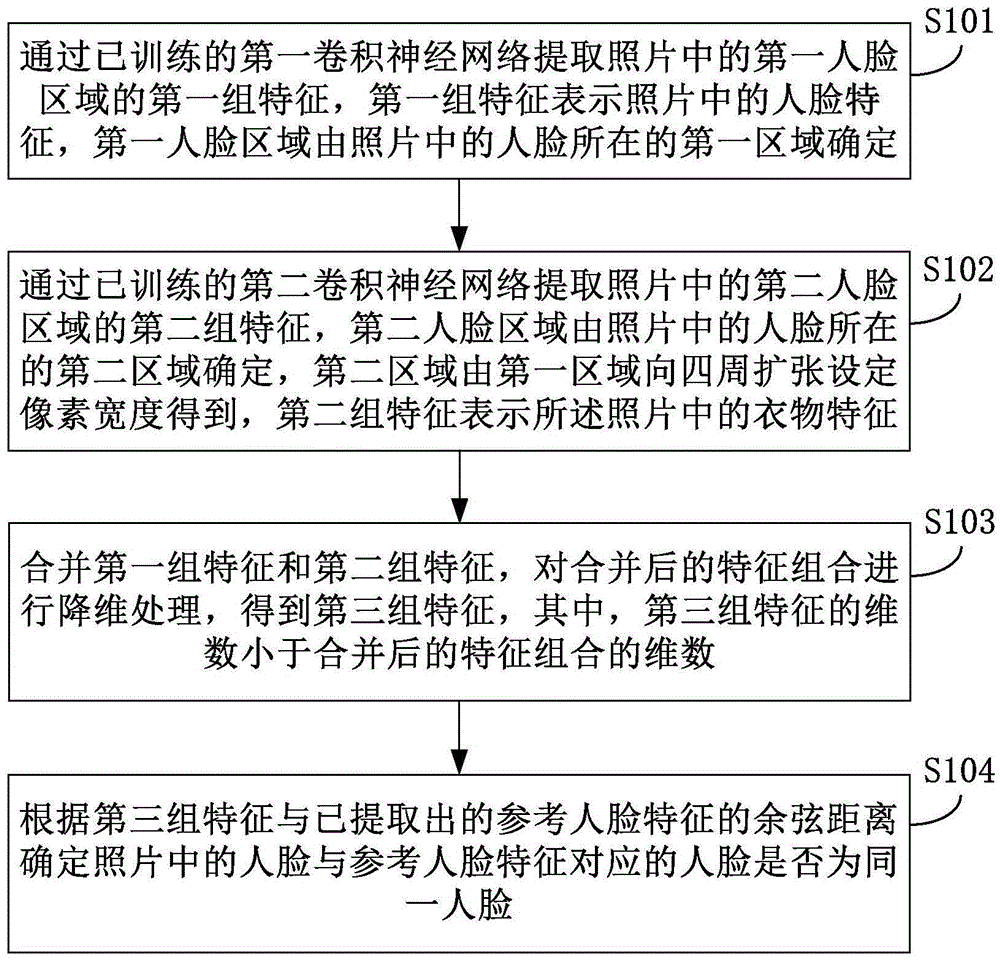

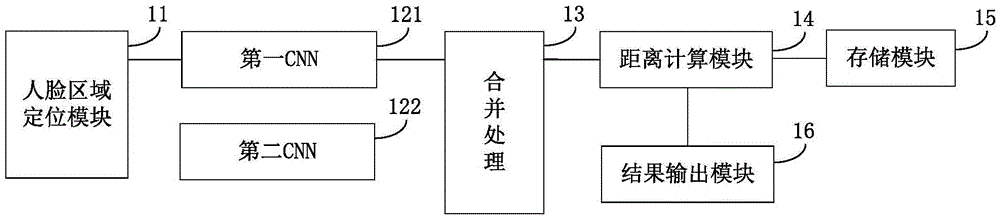

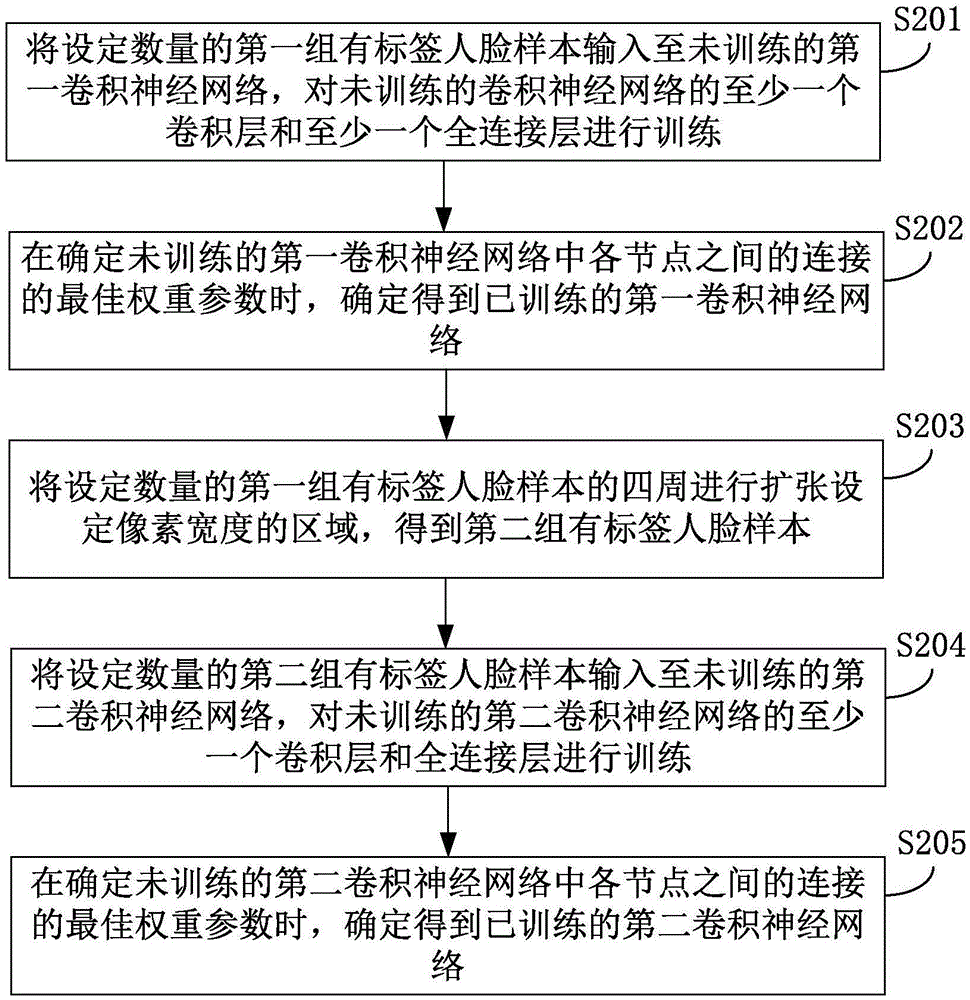

Method and device for human face recognition

Active | CN105631403A | Improve accuracy | Reduce computational complexity | Character and pattern recognition | Cosine Distance | Convolution

The invention discloses a method and a device for human face recognition. The method comprises steps: through an already-trained first convolutional neural network, a first feature group for a first human face area in a picture is extracted, wherein the first feature group presents human face features in the picture; through an already-trained second convolutional neural network, a second feature group for a second human face area in the picture is extracted, wherein the second human face area is determined by a second area where the human face in the picture is, and the second feature group presents clothes features in the picture; the first feature group and the second feature group are combined, dimension reduction processing is carried out on the feature combination after the combination, and a third feature group is obtained; and according to the cosine distance between the third feature group and already-extracted reference human face features, whether the human face in the picture and the human face corresponding to the reference human face features are the same human face is determined. According to the technical scheme of the invention, the peripheral clothes and ornaments of the user face area can be combined with the user face features for human face recognition, and the human face recognition accuracy is greatly improved.

Owner:XIAOMI INC

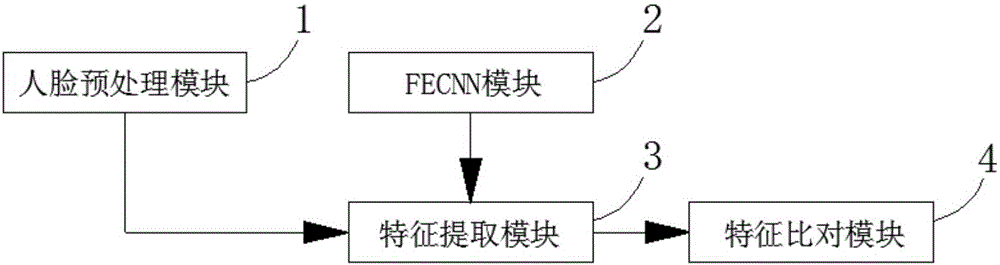

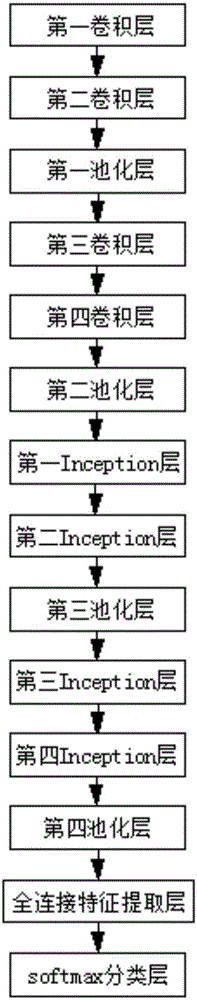

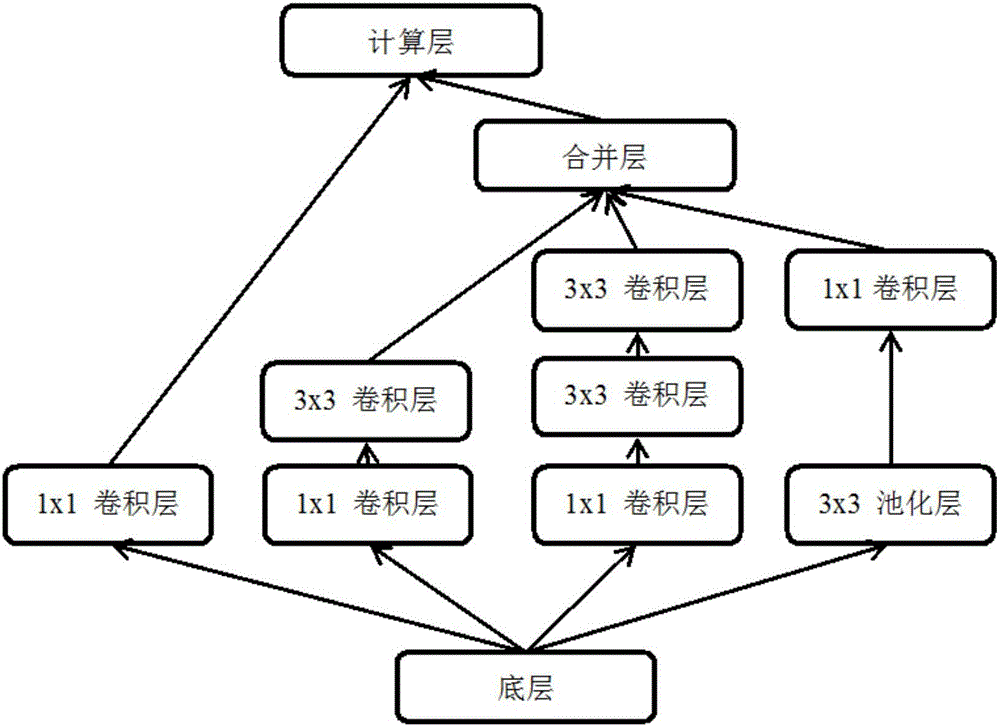

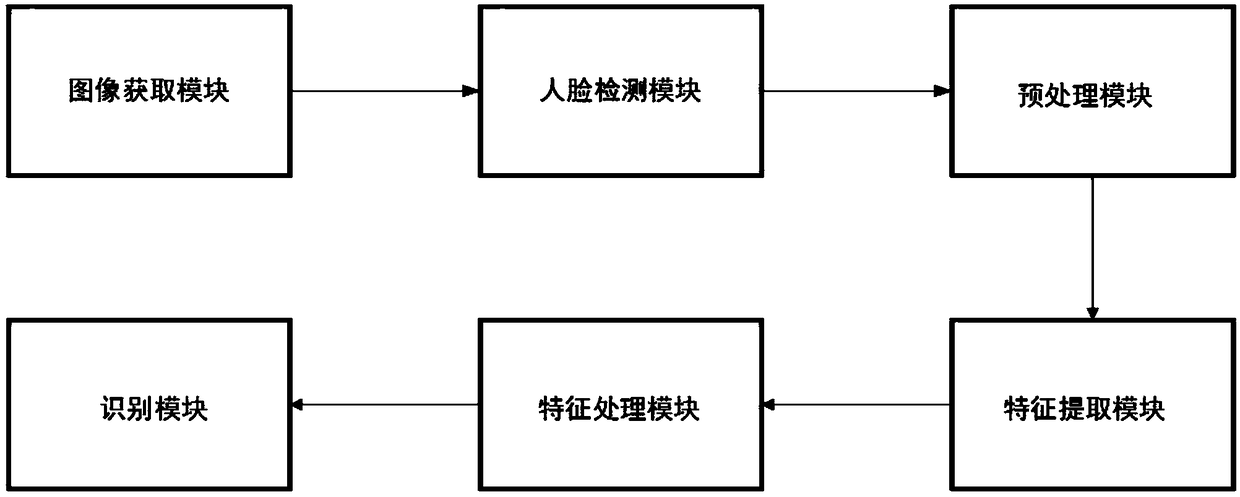

Facial feature extraction system and method based on FECNN

Active | CN106557743A | Fast convergence | Reduce usage | Character and pattern recognition | Neural architectures | Face detection | Feature extraction

The invention relates to a facial feature extraction system and a method based on FECNN. The system comprises a face pretreatment module, an FECNN module, a feature extraction module and a feature comparison module, wherein the face pretreatment module is used for carrying out face detection on a face image and carrying out cutting, positioning and aligning on the detected face image; the FECNN module builds an FECNN frame for feature extraction and carries out training until the FECNN is converged, and an FECNN parameter model is obtained; the feature extraction module sends face key points and the face image to the FECNN parameter model to extract face features; and the feature comparison module uses a cosine distance to calculate the face features, when the distance is larger than a set threshold t, the same person is judged, and when the distance is smaller than the set threshold t, different persons are judged. Compared with the prior art, the system and the method of the invention use few parameters, the network model can be quickly converged, and robust face features can be extracted.

Owner:GUILIN UNIV OF ELECTRONIC TECH

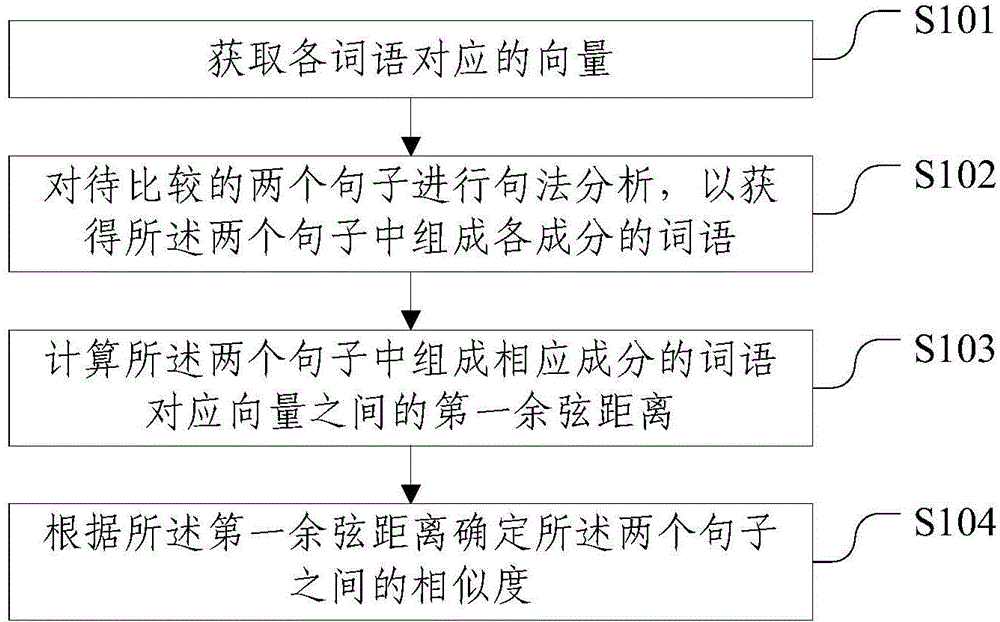

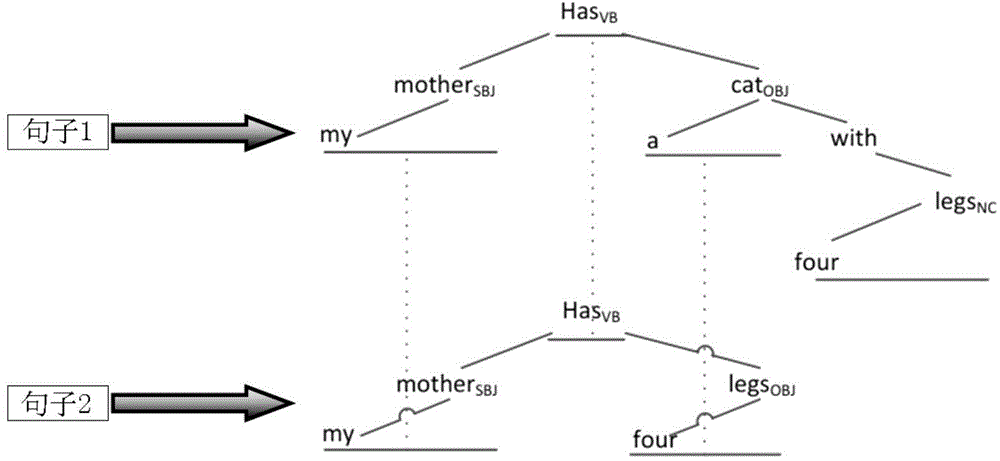

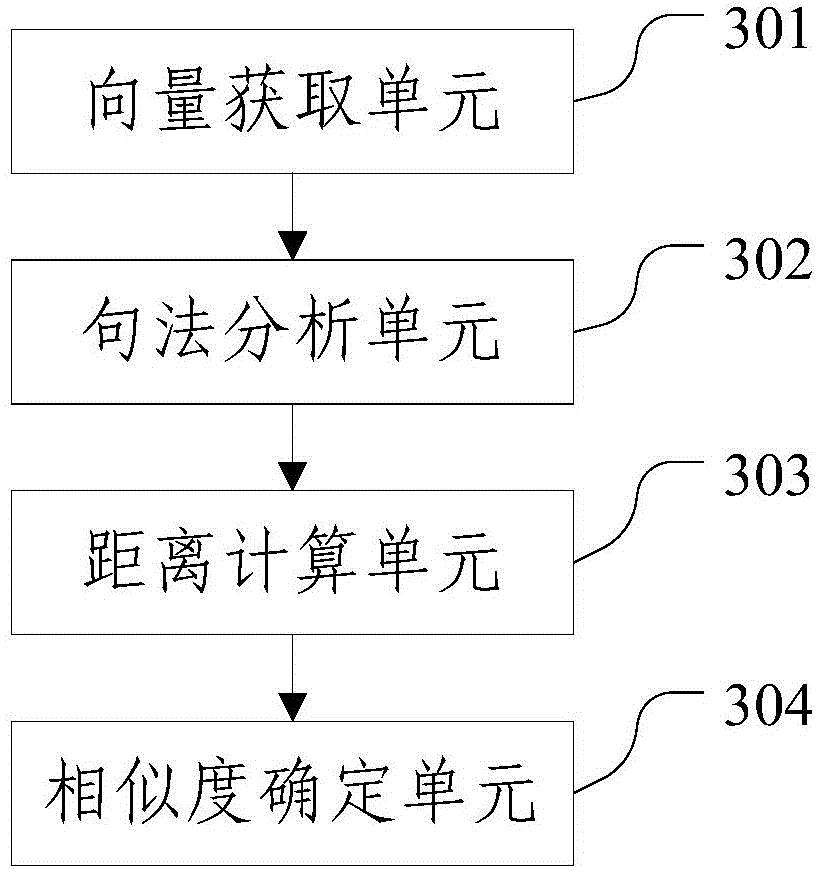

Sentence similarity calculation method and apparatus

Inactive | CN105183714A | Calculate approximation accurately | Accurate calculation | Special data processing applications | Natural language processing | Semantics

The present invention discloses a sentence similarity calculation method and apparatus and relates to the technical field of automatic correcting. The method comprises: acquiring a vector corresponding to each word; performing syntax analysis on two sentences to be compared so as to acquire the words forming the compositions of the two sentences; calculating a first cosine distance between the vectors corresponding to the words forming the corresponding compositions of the two sentences; and according to the first cosine distance, determining the similarity between the two sentences. According to the method provided by the present invention, by performing syntax analysis on the sentences and structurally capturing the semantics of the sentences, the similarity between the sentences is more accurately calculated; in addition, word vectors based on a neural network model are adopted to represent the words, thereby more accurately calculating the similarity between the words and removing the dependence on a near-synonym dictionary.

Owner:BEIJING FOCUSEDU INT EDUCATION CONSULTATION

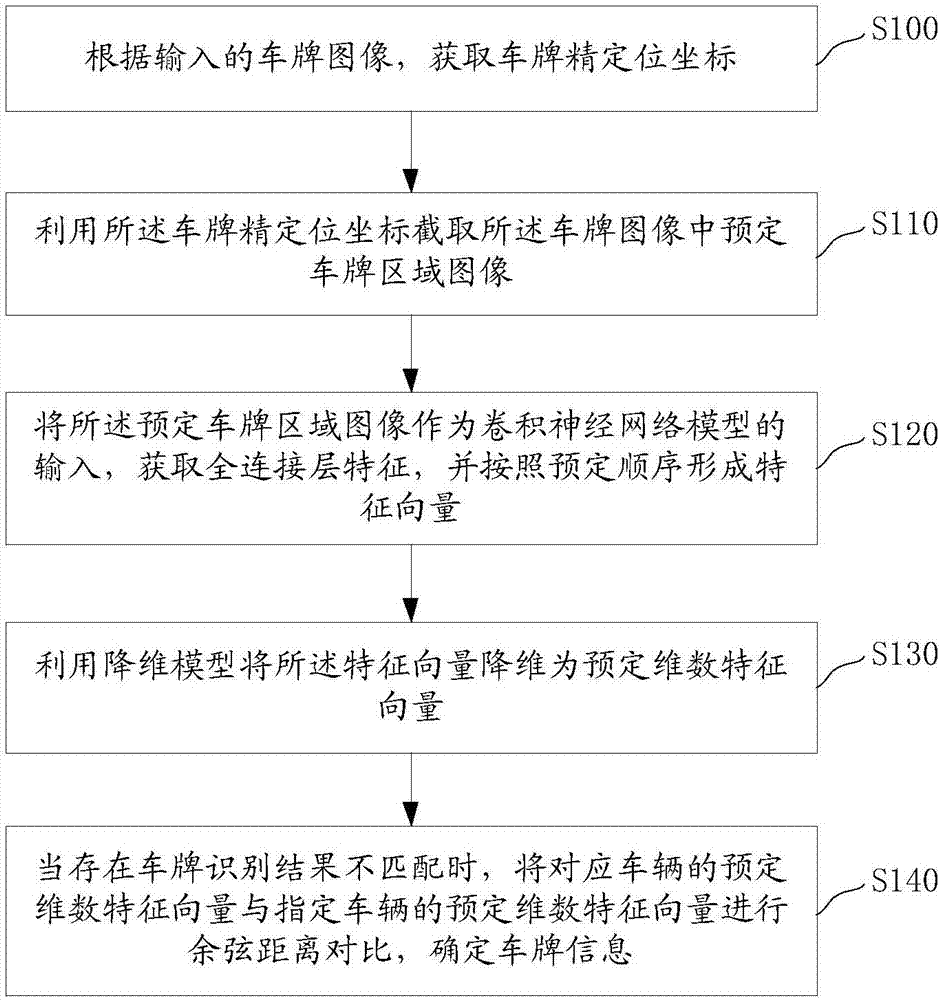

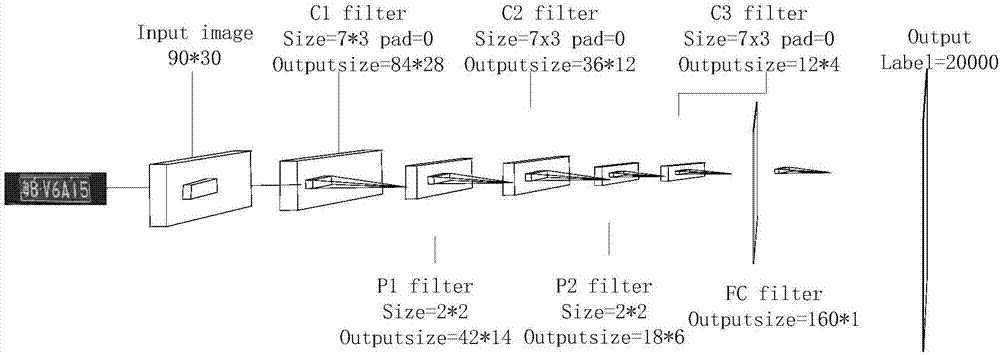

Method and system for retrieving license plate

Inactive | CN106934396A | Reduce in quantity | Achieve retrieval | Character and pattern recognition | Neural architectures | Feature vector | Dimensionality reduction

The invention discloses a method for retrieving a license plate. The method comprises a step of obtaining license plate precise positioning coordinates according to an inputted license plate image, a step of intercepting a predetermined license plate area image in the license plate image by using the license plate precise positioning coordinates, a step of taking the predetermined license plate area image as the input of a convolutional neural network model, obtaining a full connection layer characteristic, and forming a characteristic vector according to a predetermined order, a step of using a dimension reduction model to carry out dimension reduction of the characteristic vector into a predetermined dimension characteristic vector, and a step of carrying out cosine distance comparison of the predetermined dimension characteristic vector of a corresponding vehicle and a predetermined dimension characteristic vector of a specified vehicle, and determining license plate information. According to the method, in the condition of license plate matching failure, the license plate information corresponding to the vehicle can be accurately retrieved in a specified range, the license plate retrieval is realized, and the number of security personnel arranged at each gate of a parking lot is reduced. The invention also discloses a system for retrieving a license plate with the above advantages.

Owner:SHENZHEN JIESHUN SCI & TECH IND

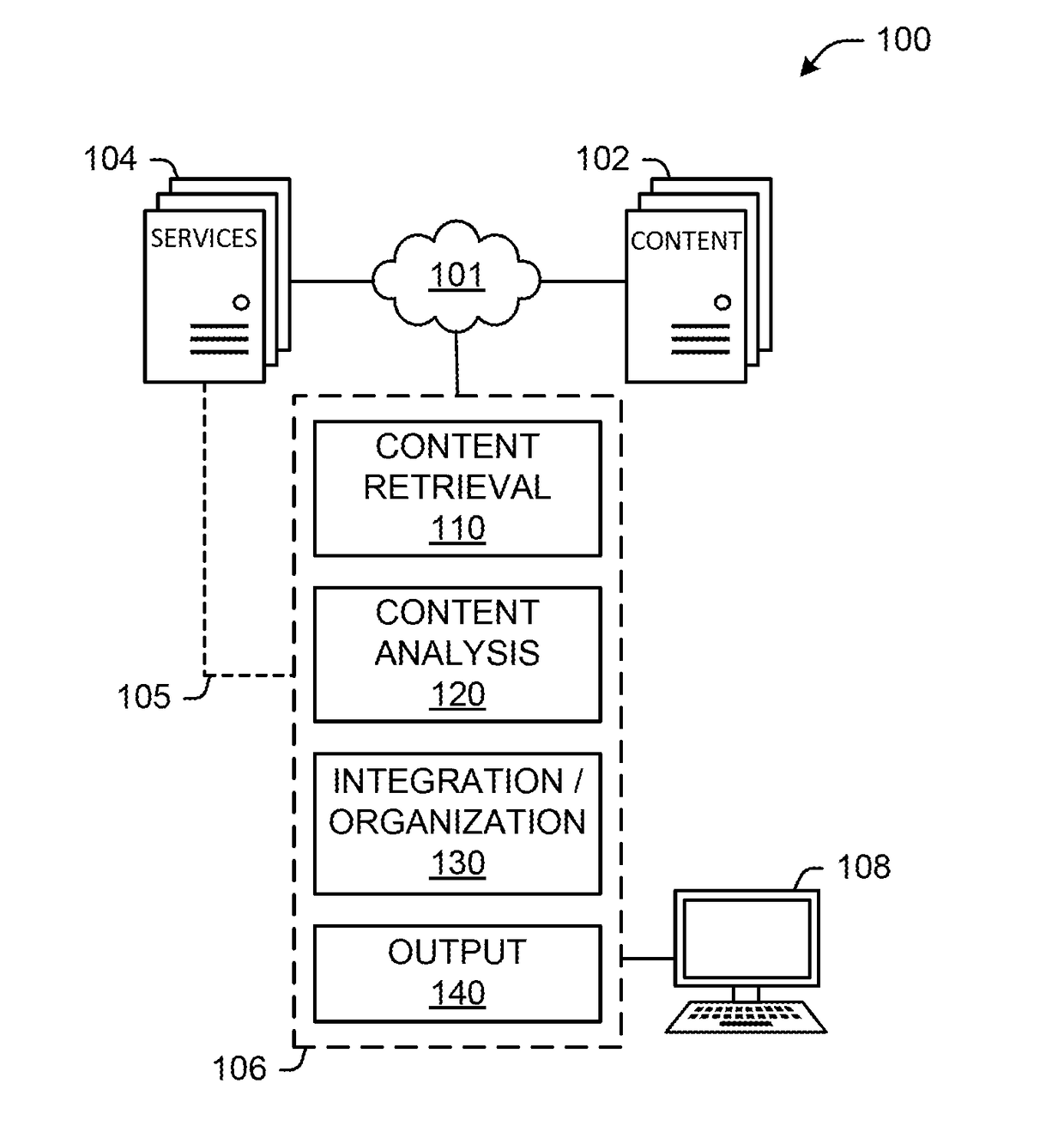

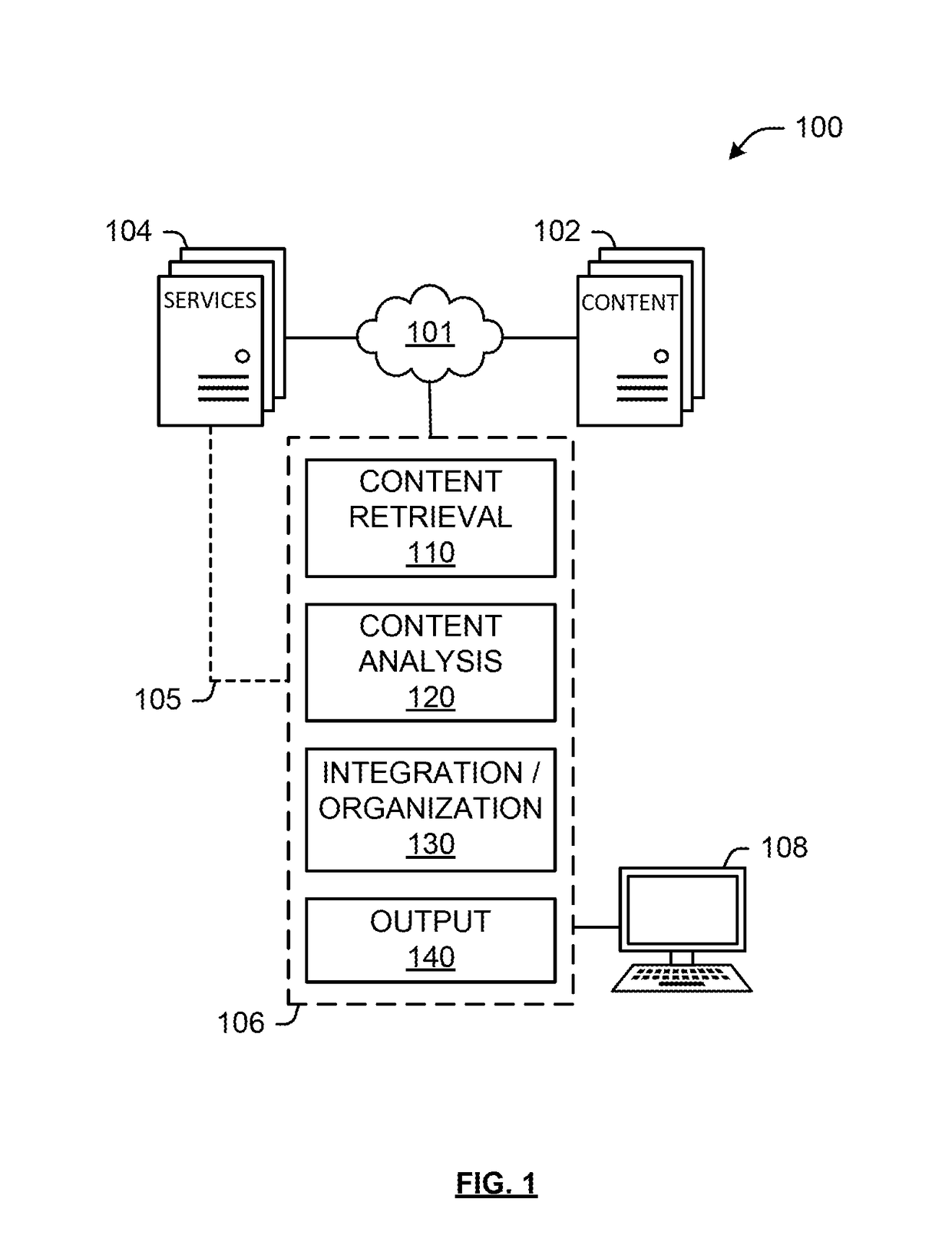

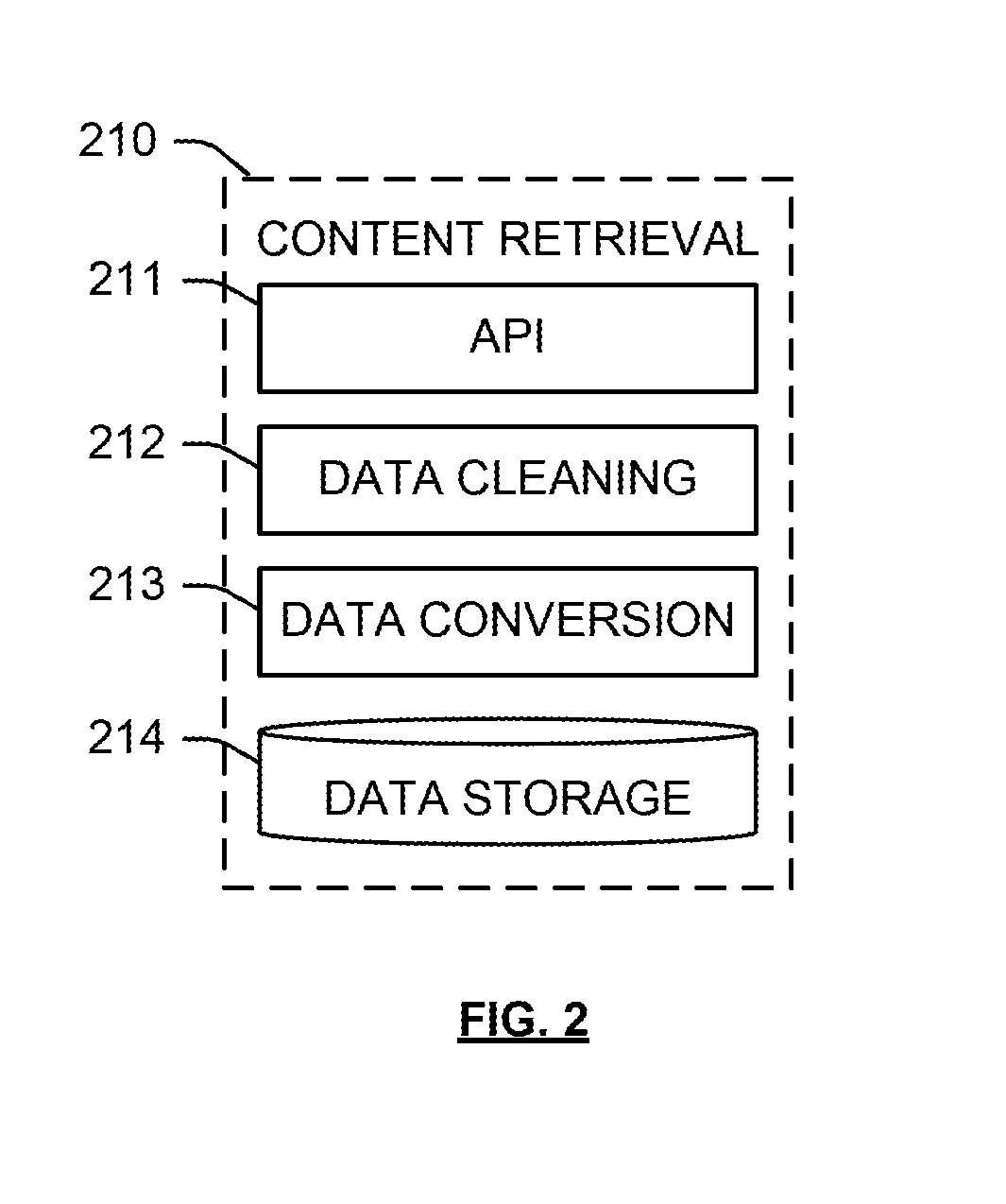

Performing semantic analyses of user-generated textual and voice content

Performing semantic analysis on a user-generated text string includes training a neural network model with a plurality of known text strings to obtain a first distributed vector representation of the known text strings and a second distributed vector representation of a plurality of words in the known text strings, computing a relevance matrix of the first and second distributed representations based on a cosine distance between each of the plurality of words and the plurality of known text strings, and performing a latent dirichlet allocation (LDA) operation using the relevance matrix as an input to obtain a distribution of topics associated with the plurality of known text strings.

Owner:CONDUENT BUSINESS SERVICES LLC

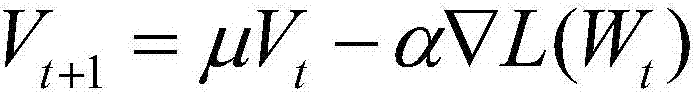

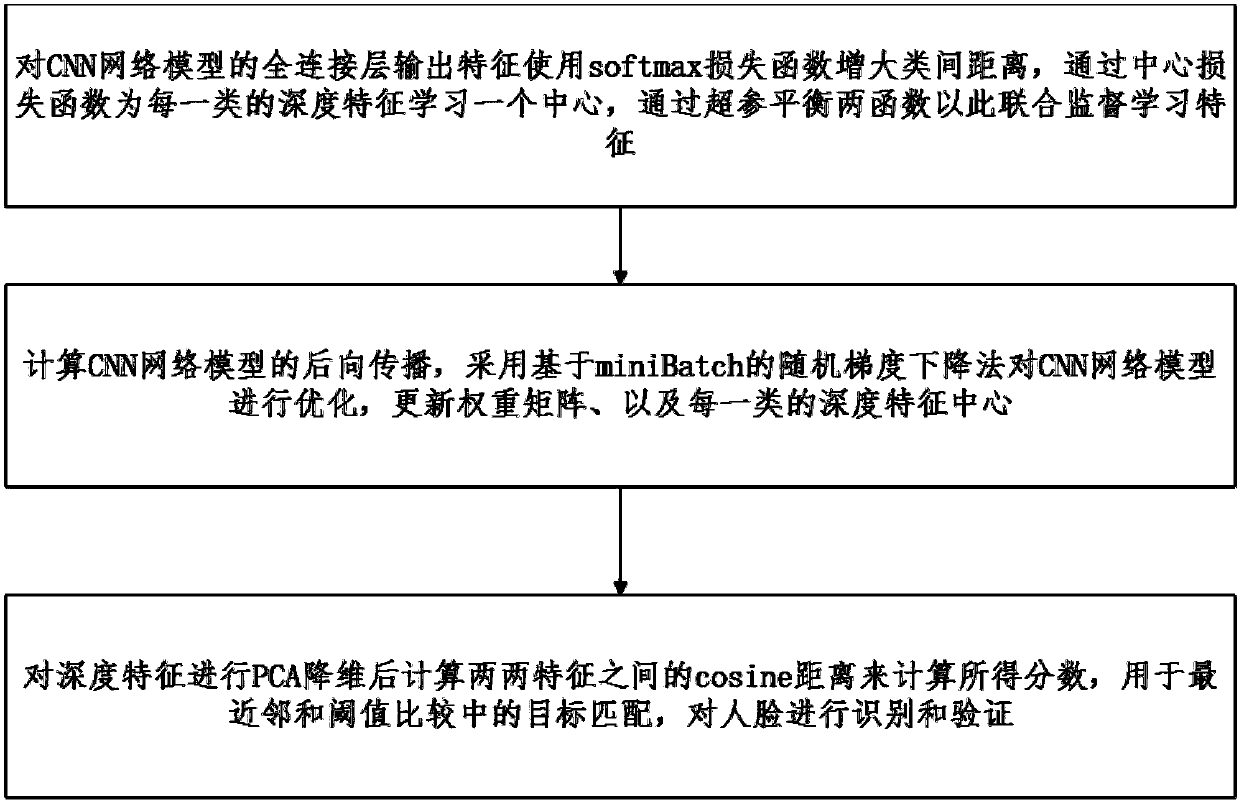

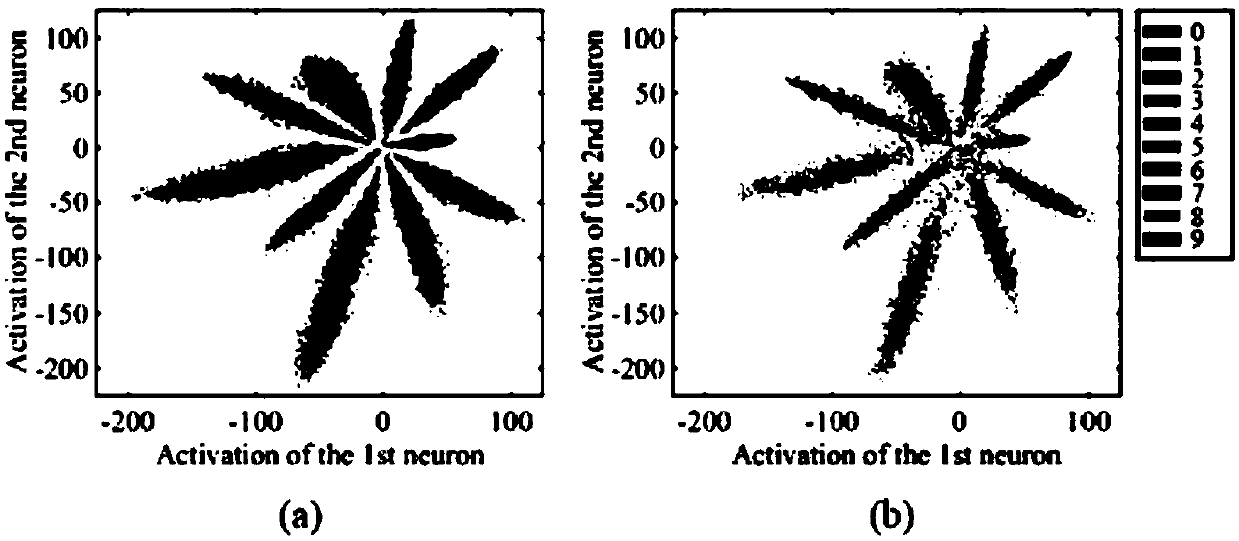

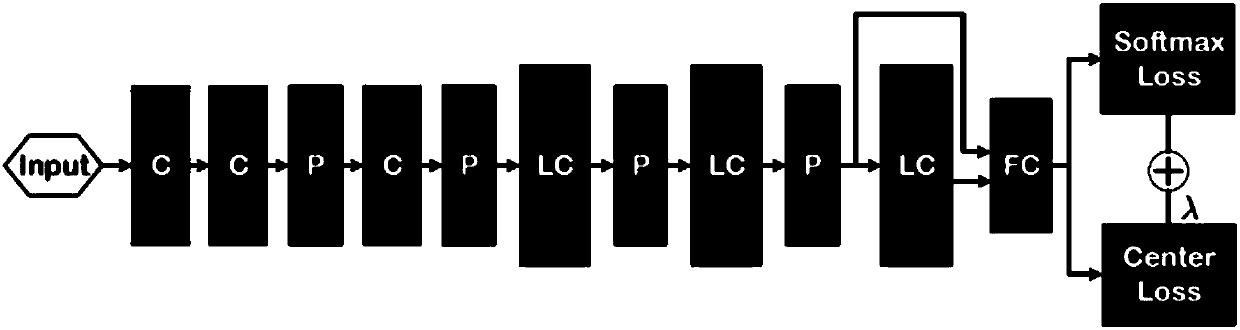

Deep learning-based face recognition and face verification supervised learning method

Inactive | CN108256450A | Highly identifiable features | Easy to identify | Character and pattern recognition | Neural architectures | Nerve network | Batch processing

The invention discloses a deep learning-based supervised learning method for face recognition and face verification. The method comprises the following steps: for the fully connected layer output features of a convolutional neural network model, a softmax loss function is used to increase the between-class distance, and a center is learned for the deep features of each class through a center loss function; a hyperparameter balances the two functions so that they jointly supervise the feature learning; back propagation through the convolutional neural network model is calculated, a mini-batch stochastic gradient descent algorithm is adopted to optimize the model, and the weight matrix and the deep feature center of each class are updated; and after the deep features are reduced in dimension by principal component analysis, the cosine distance between each pair of features is calculated as a score, which is used for target matching by nearest neighbor and threshold comparison so that a face is recognized and verified. The method effectively improves the discriminative power of the features learned by the neural network and yields a robust mode of face feature recognition and face verification.

Owner:TIANJIN UNIV
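
For orientation, joint supervision by a softmax loss and a center loss is commonly written as below. This is the widely used textbook form, given here as an assumption; the abstract does not state the patent's exact formula. The symbols are introduced only for this illustration: $x_i$ is the deep feature of sample $i$ with label $y_i$, $W_j$ and $b_j$ are the last-layer weights and bias for class $j$, $c_{y_i}$ is the learned center of class $y_i$, $m$ is the mini-batch size, $n$ is the number of classes, and $\lambda$ is the balancing hyperparameter.

$$
L = L_S + \lambda L_C, \qquad
L_S = -\sum_{i=1}^{m} \log \frac{e^{W_{y_i}^{\top} x_i + b_{y_i}}}{\sum_{j=1}^{n} e^{W_j^{\top} x_i + b_j}}, \qquad
L_C = \frac{1}{2} \sum_{i=1}^{m} \lVert x_i - c_{y_i} \rVert_2^2
$$

Setting $\lambda = 0$ recovers plain softmax training; larger values pull the features of each class more tightly toward their center.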

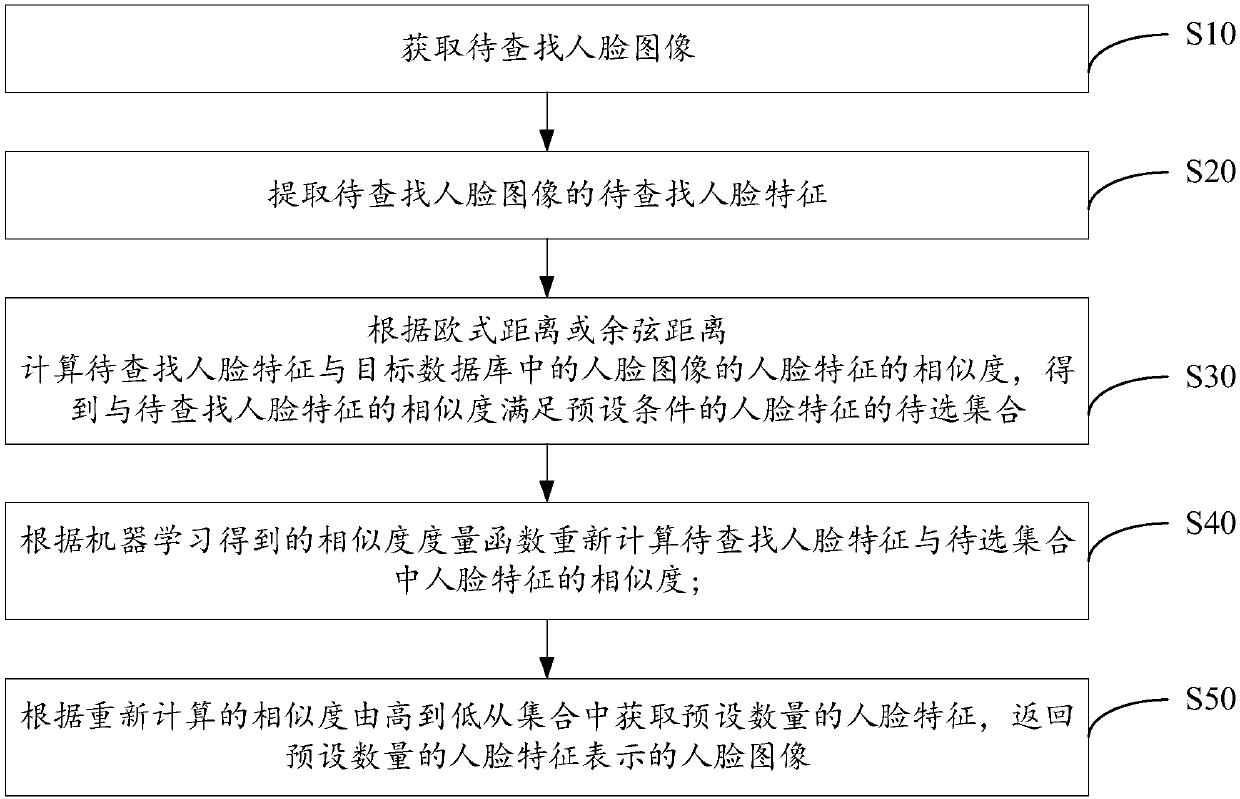

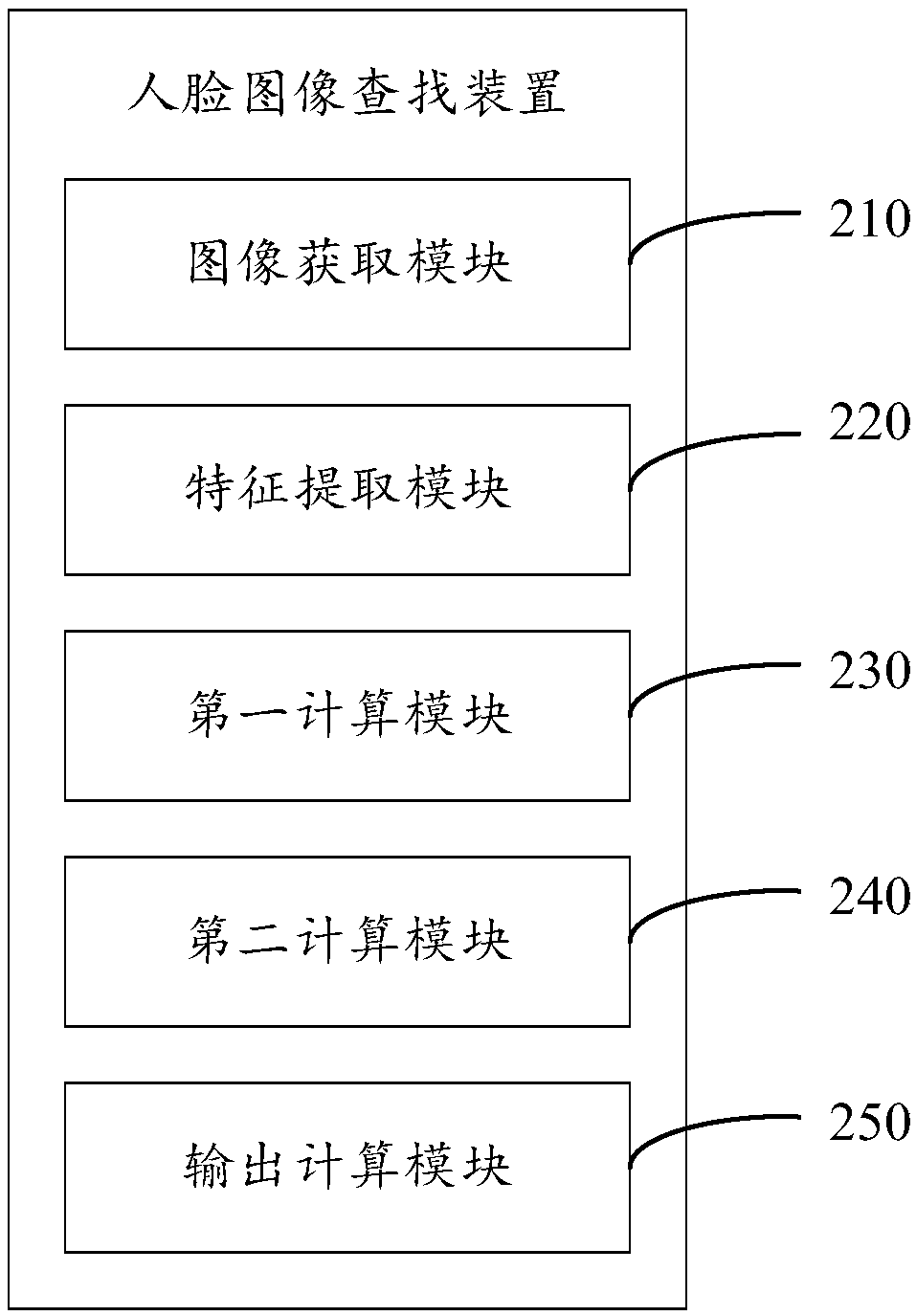

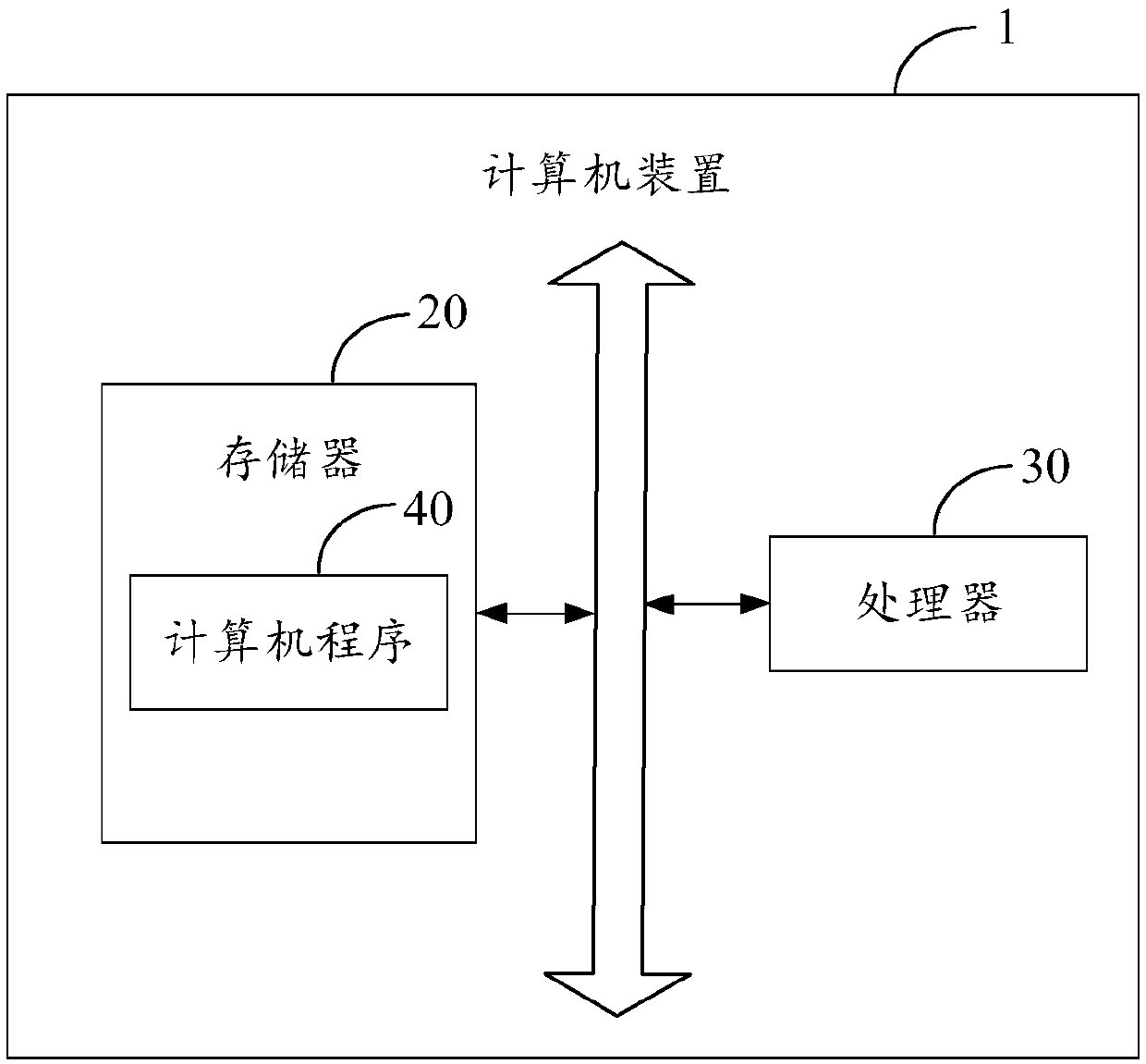

Human face image finding method and device, computer device and storage medium

Active | CN107944020A | Shorten the time | Improve accuracy | Matching and classification | Special data processing applications | Euclidean distance | Similarity measure

The invention discloses a human face image finding method. The method comprises the following steps: obtaining a to-be-found human face image; extracting to-be-found human face features from the to-be-found human face image; calculating, according to a Euclidean distance or a cosine distance, the similarity between the to-be-found human face features and the human face features of the human face images in a target database, to obtain a to-be-selected set of human face features whose similarity to the to-be-found human face features satisfies preset conditions; re-calculating, according to a similarity measure function obtained by machine learning, the similarity between the to-be-found human face features and the human face features in the to-be-selected set; and, according to the re-calculated similarity, taking a preset number of human face features from the set in order from high to low and returning the human face images they represent. The invention further discloses a human face image finding device, a computer device and a readable storage medium. The method improves the accuracy and efficiency of human face image finding.

Owner:SHENZHEN INTELLIFUSION TECHNOLOGIES CO LTD
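
The coarse-then-fine search described above can be sketched as follows. The cosine pre-filter follows the abstract; everything else is assumed for illustration: the threshold, the toy database, and in particular `stand_in_metric`, a negative squared Euclidean distance that merely stands in for the learned similarity measure function, whose form the abstract does not give.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0

def find_faces(query, database, coarse_threshold, learned_similarity, top_n):
    """Shortlist by cosine similarity, then re-rank the shortlist with a second metric."""
    shortlist = [
        (name, vec)
        for name, vec in database.items()
        if cosine_similarity(query, vec) >= coarse_threshold
    ]
    reranked = sorted(
        shortlist,
        key=lambda item: learned_similarity(query, item[1]),
        reverse=True,
    )
    return [name for name, _ in reranked[:top_n]]

def stand_in_metric(a, b):
    """Placeholder for a learned similarity: negative squared Euclidean distance."""
    return -sum((x - y) ** 2 for x, y in zip(a, b))

# Hypothetical 2-dimensional face features.
database = {"a": [1.0, 0.0], "b": [0.9, 0.1], "c": [0.0, 1.0]}
top_two = find_faces([1.0, 0.0], database, 0.5, stand_in_metric, top_n=2)
top_one = find_faces([1.0, 0.0], database, 0.5, stand_in_metric, top_n=1)
print(top_two, top_one)
```

The cheap first pass keeps the expensive metric from being evaluated on the whole database, which is where the time saving comes from.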

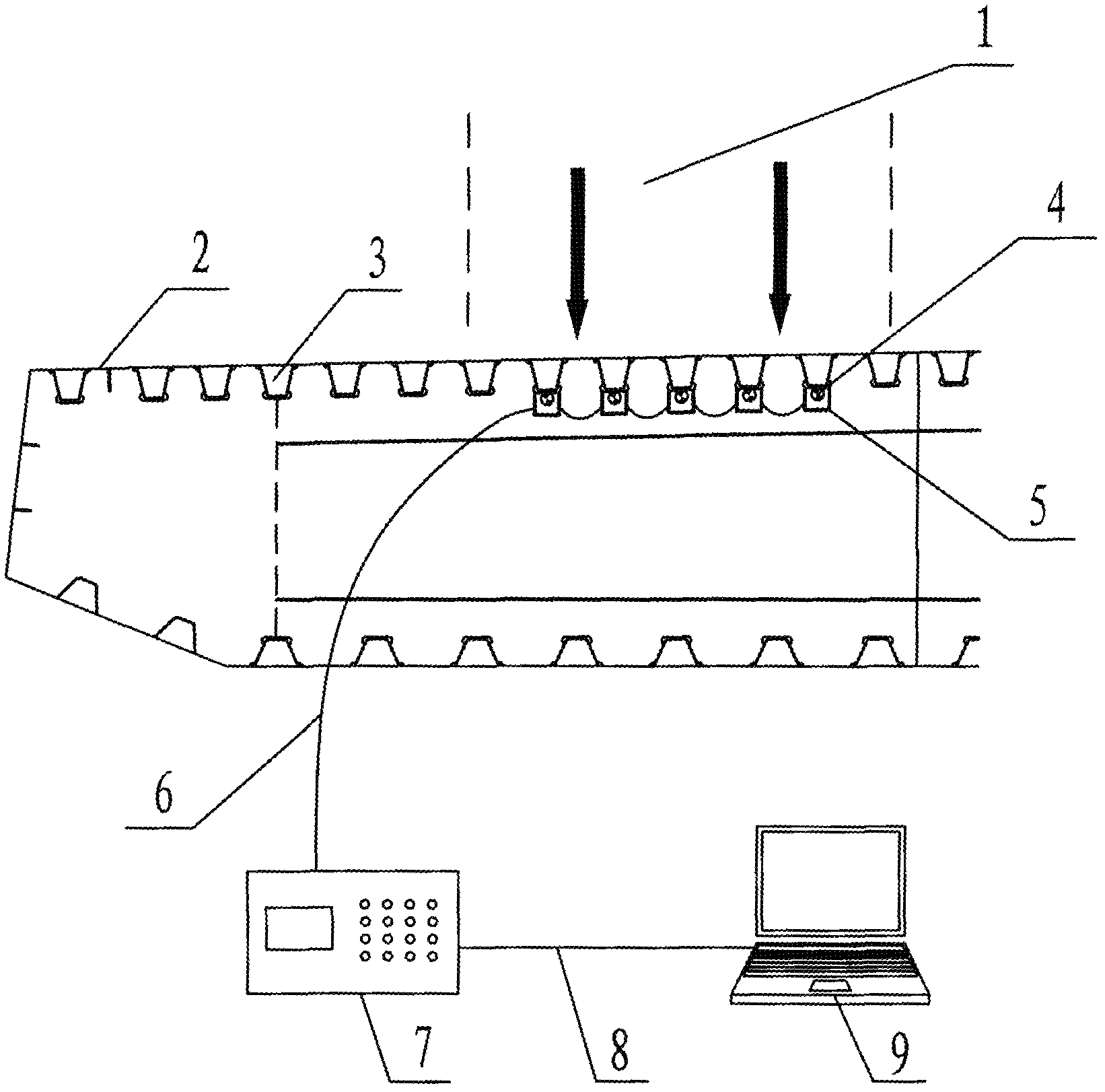

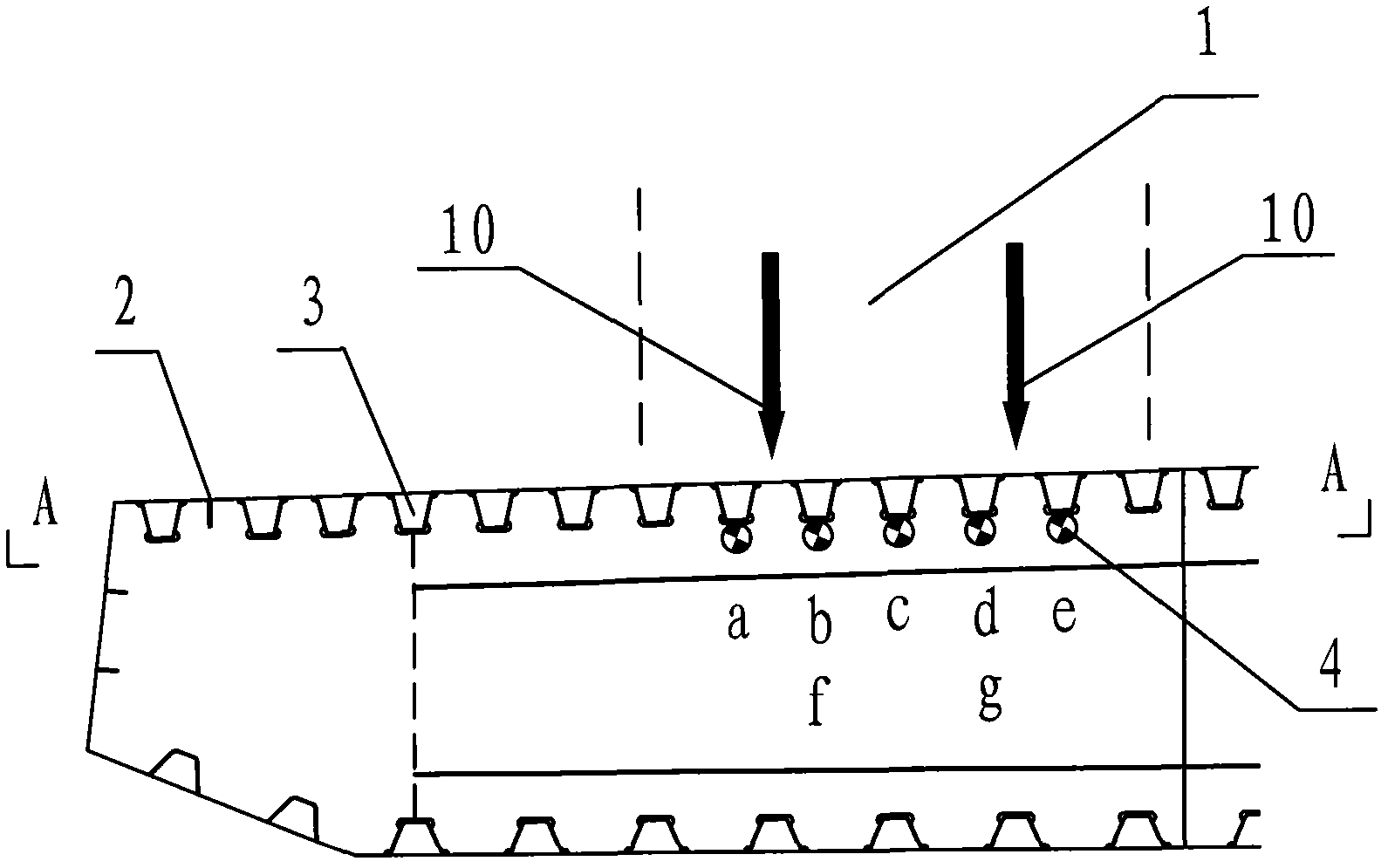

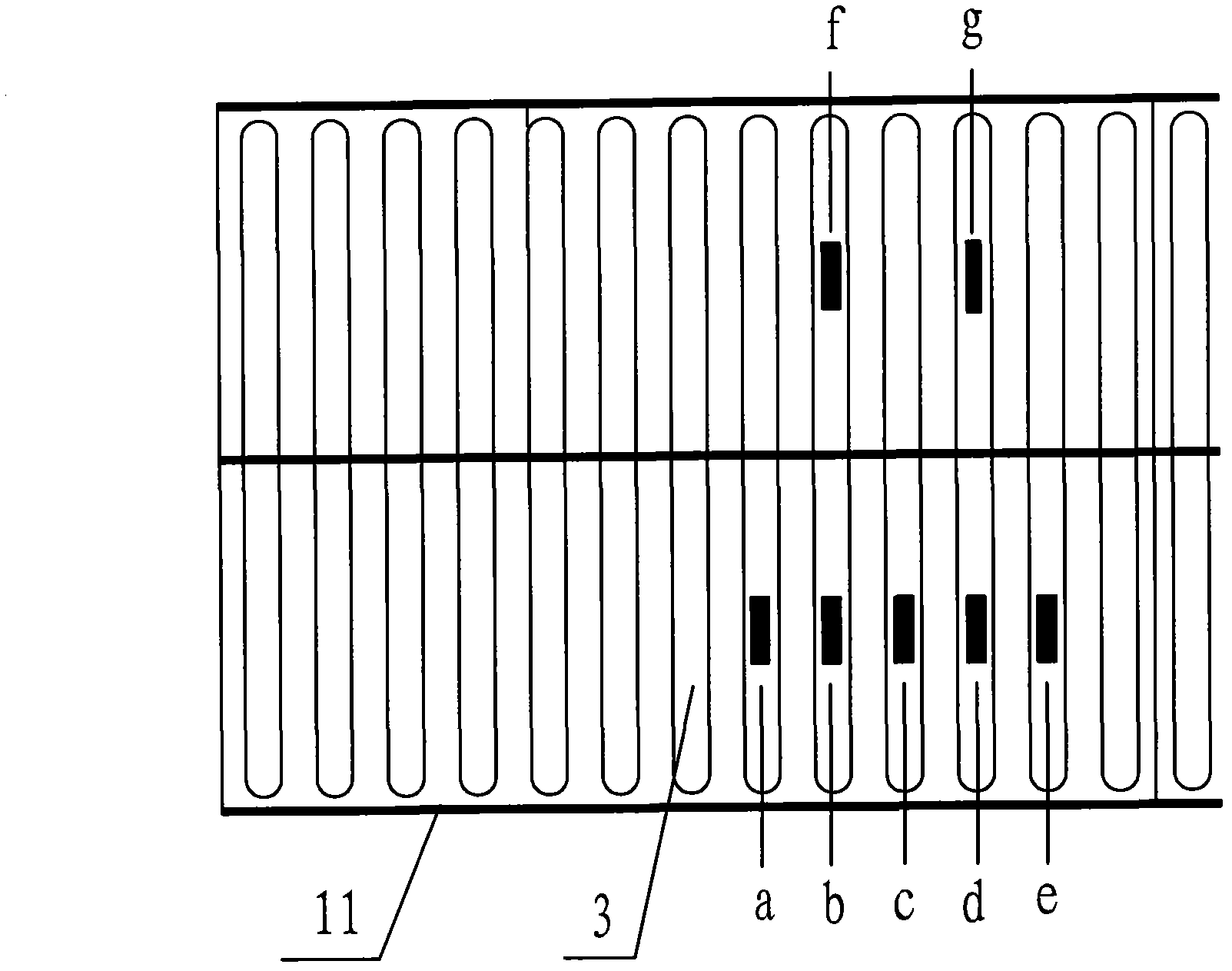

Vehicle load dynamic weighing method for orthotropic bridge deck steel box girder bridge

Active | CN102628708A | Does not affect driving | High sampling frequency | Using optical means | Special purpose weighing apparatus | Cross correlation analysis | Bridge deck

The invention discloses a vehicle load dynamic weighing method for an orthotropic bridge deck steel box girder bridge, which relates to the field of bridge health monitoring. The method comprises the following steps of: mounting a fiber grating strain sensor at the bottom of a U-shaped rib of an internal top plate of an orthotropic bridge deck steel box girder; measuring longitudinal bridge strain of the U-shaped rib when a vehicle passes through the position of the sensor; converting the strain into an optical signal by the sensor; demodulating the optical signal by using a fiber grating demodulator; carrying out cross-correlation analysis on actually measured strains of measuring points on the same U-shaped rib in the steel box girder at different sections so as to determine vehicle speed of the vehicle; analyzing actually measured strain area vectors of measuring points on different U-shaped ribs in the steel box girder at the same section; and carrying out angle Cosine distance analysis by using a strain effect linear area vector of the U-shaped rib of the steel box girder so as to figure out transverse acting position and weight of each vehicle on a running lane. The vehicle load dynamic weighing method for the orthotropic bridge deck steel box girder bridge, disclosed by the invention, has the advantages of convenience for mounting, low manufacturing price, no need of interrupting transportation, no excavation or damage of the road surface and capability of achieving nondestructive and automatic dynamic weighing of the bridge vehicle load.

Owner:CHINA RAILWAY BRIDGE SCI RES INST LTD +1
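
The speed estimate from cross-correlation can be illustrated with a small sketch: the strain pulse recorded at a downstream section is a delayed copy of the upstream one, so the lag that maximises their cross-correlation gives the travel time between sections. The signals, the 3 m sensor spacing and the 20 Hz sampling rate are all invented for this example, and the helper names are hypothetical.

```python
def best_lag(upstream, downstream, max_lag):
    """Lag in samples (1..max_lag) at which the cross-correlation peaks."""
    def correlation(lag):
        return sum(
            upstream[i] * downstream[i + lag]
            for i in range(len(upstream))
            if i + lag < len(downstream)
        )
    return max(range(1, max_lag + 1), key=correlation)

def vehicle_speed(upstream, downstream, spacing_m, sample_rate_hz, max_lag):
    """Speed in m/s = sensor spacing divided by the travel time (lag / sample rate)."""
    lag = best_lag(upstream, downstream, max_lag)
    return spacing_m * sample_rate_hz / lag

# Hypothetical strain pulses: the downstream pulse arrives 3 samples later.
upstream = [0.0, 0.0, 1.0, 3.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0]
downstream = [0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 3.0, 1.0, 0.0, 0.0]

speed = vehicle_speed(upstream, downstream, spacing_m=3.0, sample_rate_hz=20.0, max_lag=6)
print(speed)
```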

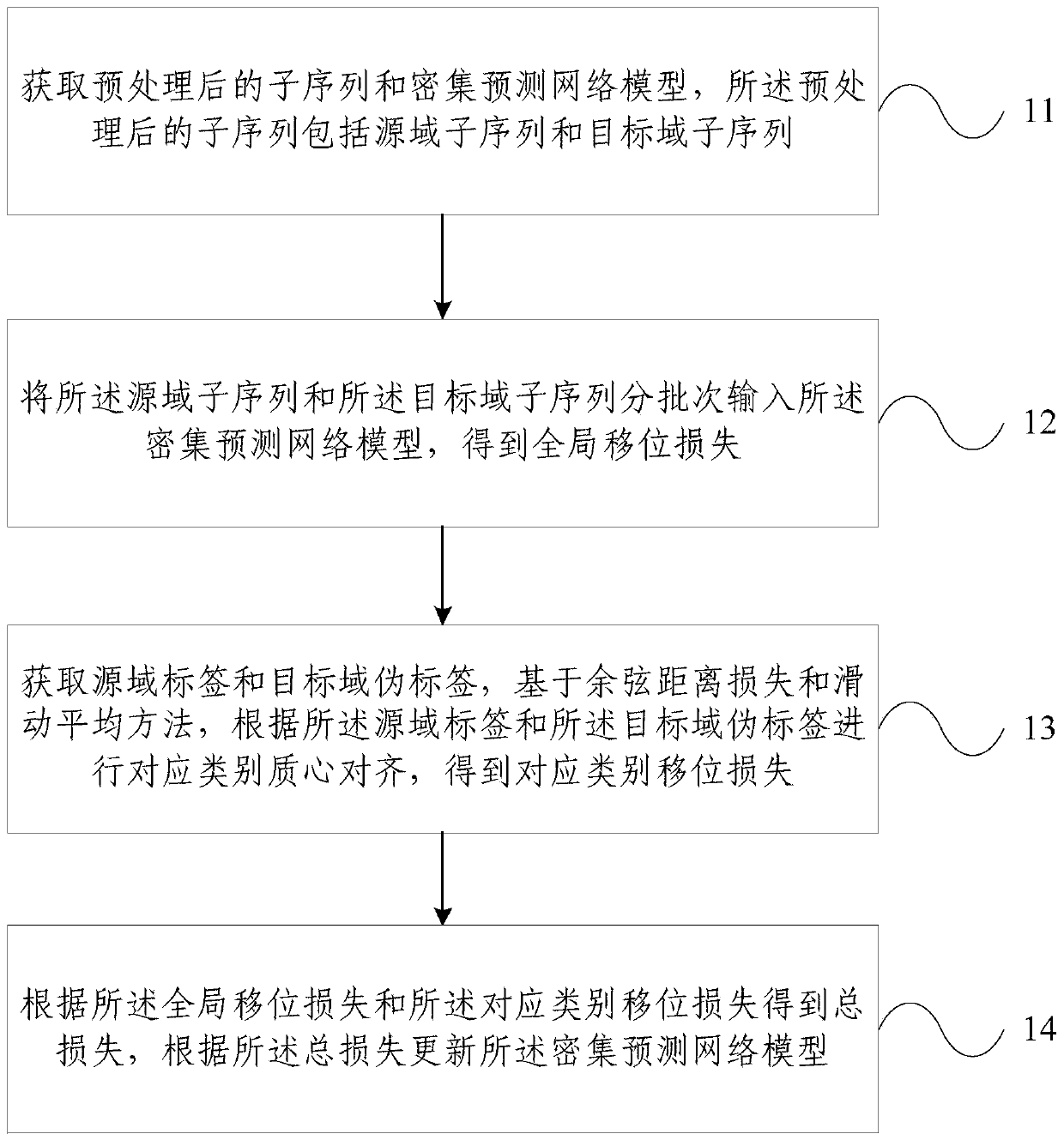

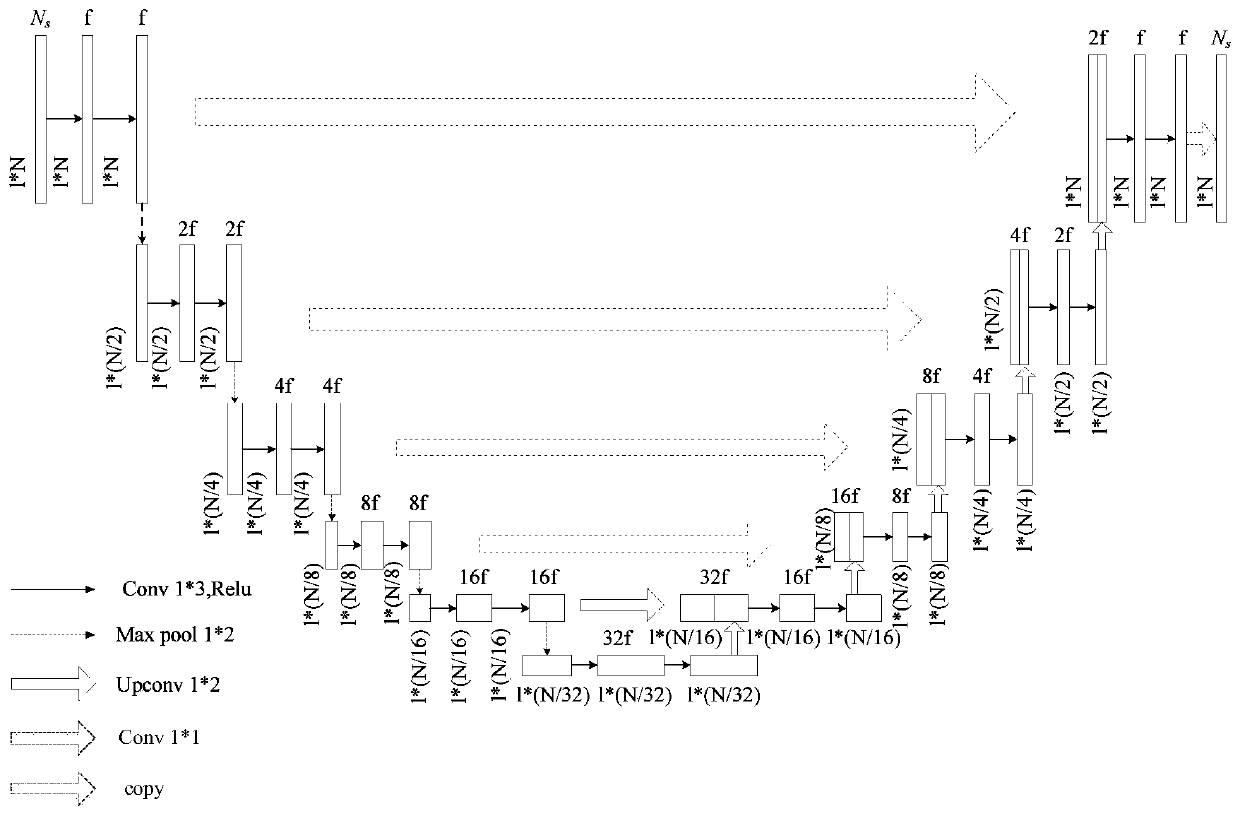

A transfer learning method and device

Inactive | CN109948741A | Improve performance | Improve accuracy | Character and pattern recognition | Moving average | Algorithm

The embodiment of the invention provides a transfer learning method and device. The method comprises the steps of: obtaining a preprocessed sub-sequence and a dense prediction network model, wherein the preprocessed sub-sequence comprises a source domain sub-sequence and a target domain sub-sequence; inputting the source domain sub-sequence and the target domain sub-sequence into the dense prediction network model in batches to obtain a global shift loss; obtaining a source domain label and a target domain pseudo label, and aligning the centroids of corresponding categories according to the source domain label and the target domain pseudo label, on the basis of a cosine distance loss and a moving average method, to obtain a corresponding category shift loss; and obtaining a total loss from the global shift loss and the corresponding category shift loss, and updating the dense prediction network model according to the total loss. The transfer learning method and device provide a multi-level unsupervised domain adaptation method that aligns both the marginal distributions and the conditional distributions, realizes the transfer of a dense prediction model for time series data, and achieves better performance.

Owner:BEIJING UNIV OF POSTS & TELECOMM
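
One plausible reading of the centroid alignment step is sketched below: per-category centroids are tracked with an exponential moving average, and the category shift loss is the mean cosine distance between matching source and target centroids. The momentum value, the category names and the averaging over categories are assumptions of this sketch, not details taken from the patent.

```python
import math

def cosine_distance(a, b):
    """1 minus the cosine similarity; 1.0 is used if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norms if norms else 1.0

def update_centroid(old, batch_mean, momentum=0.9):
    """Exponential moving average of a category centroid across batches."""
    return [momentum * o + (1.0 - momentum) * m for o, m in zip(old, batch_mean)]

def category_shift_loss(source_centroids, target_centroids):
    """Mean cosine distance between source and target centroids of shared categories."""
    shared = sorted(set(source_centroids) & set(target_centroids))
    if not shared:
        return 0.0
    total = sum(
        cosine_distance(source_centroids[c], target_centroids[c]) for c in shared
    )
    return total / len(shared)

# Hypothetical 2-dimensional centroids for two categories.
source = {"walk": [1.0, 0.0], "run": [0.0, 1.0]}
target = {"walk": [1.0, 0.0], "run": [1.0, 0.0]}

# A new target batch whose pseudo-labelled "run" samples average to [0.0, 1.0].
target["run"] = update_centroid(target["run"], [0.0, 1.0])
loss = category_shift_loss(source, target)
print(target["run"], round(loss, 4))
```

The moving average damps the noise that wrong pseudo labels in a single batch would otherwise inject into the target centroids.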

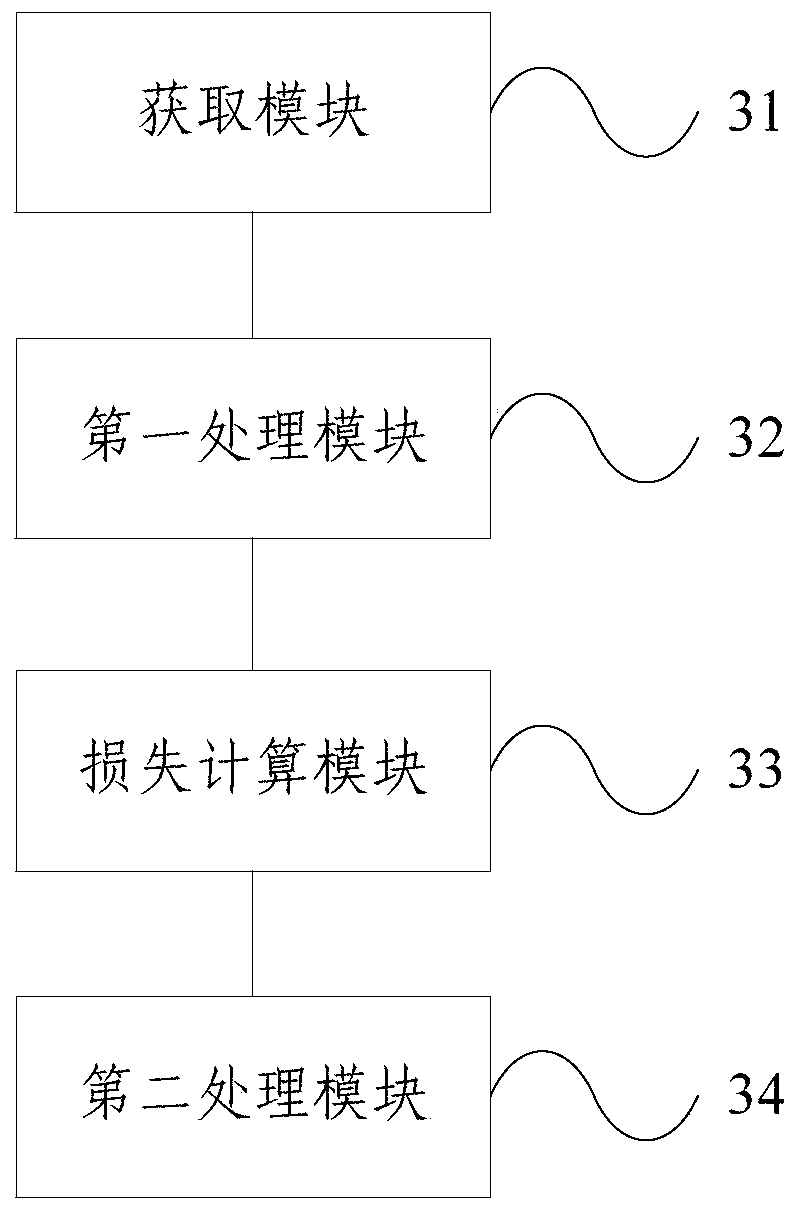

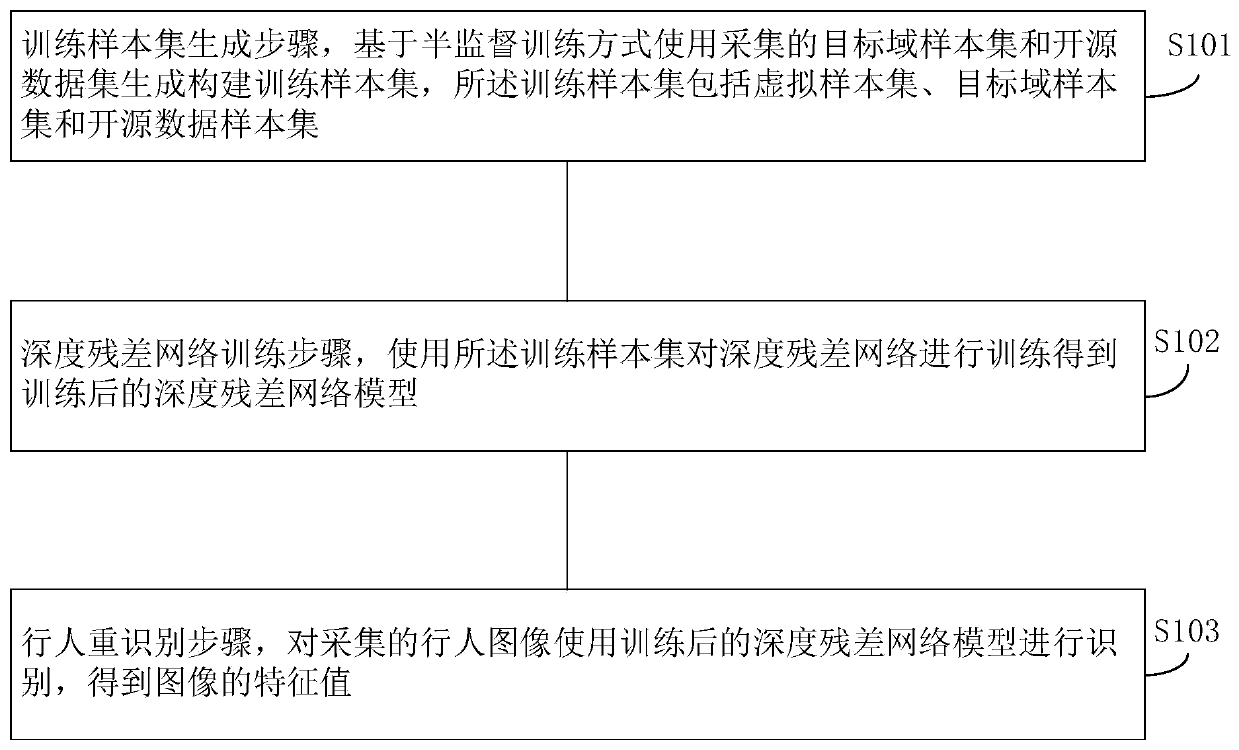

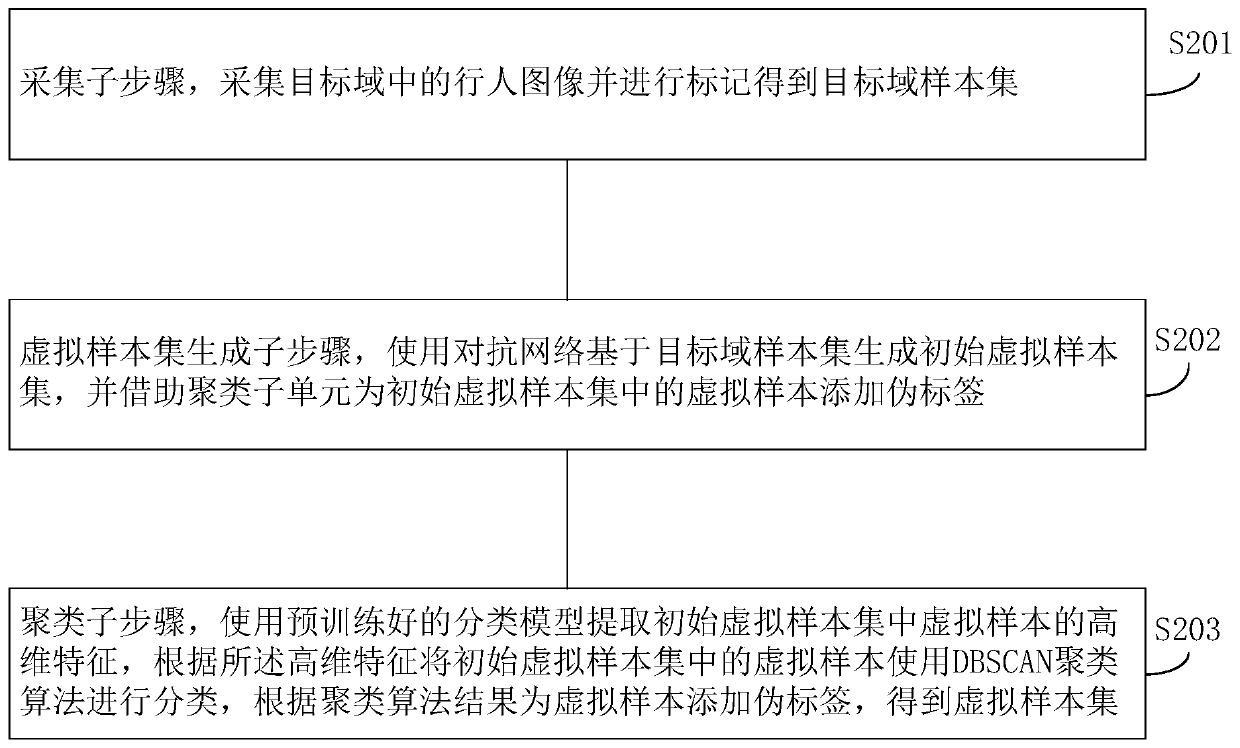

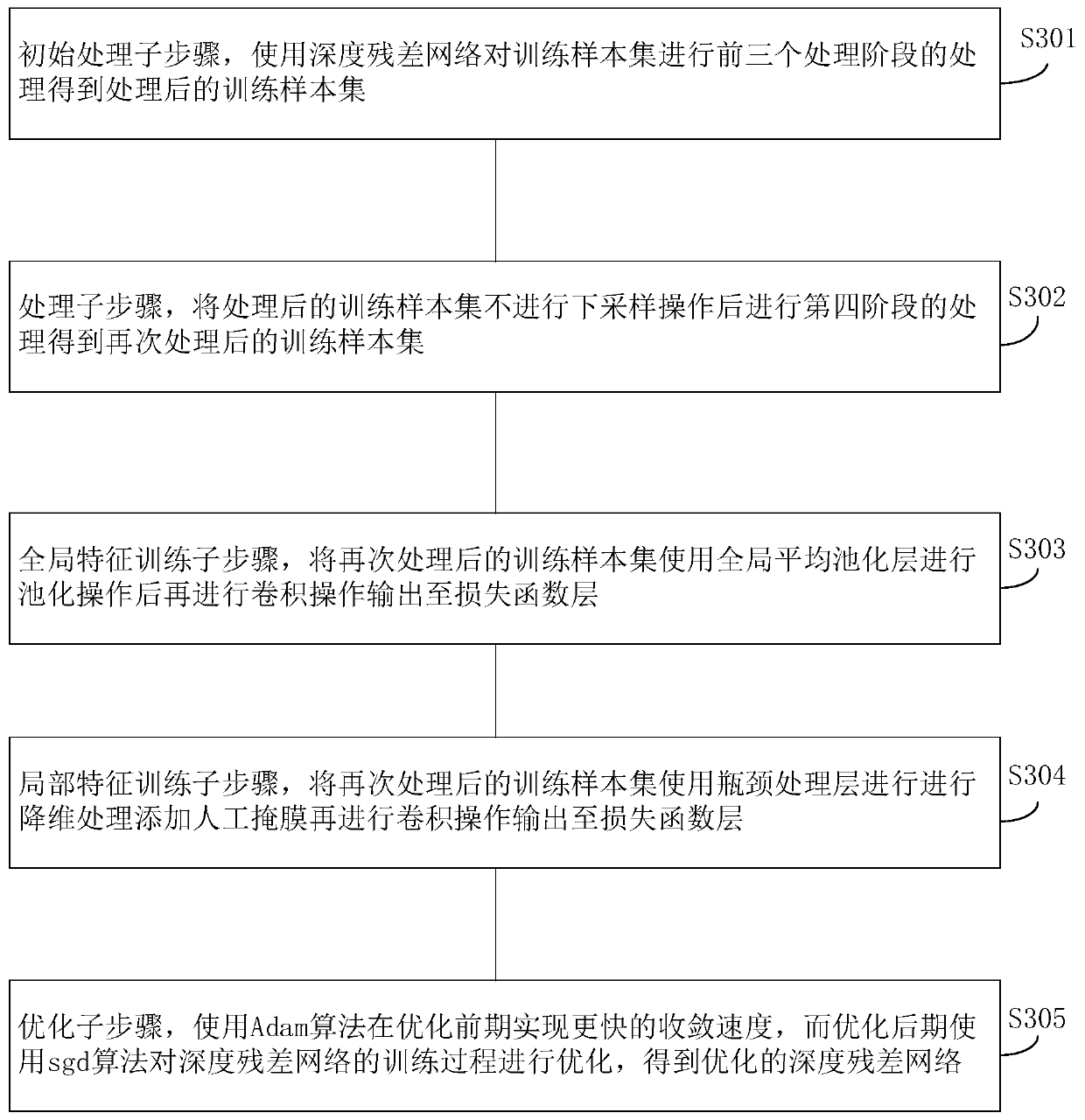

Pedestrian re-identification method and device based on semi-supervised training mode and medium

Active | CN110555390A | Increase the number of | Accurate identification | Character and pattern recognition | Neural architectures | Data set | Virtual sample

The invention provides a pedestrian re-identification method and device based on a semi-supervised training mode and a storage medium. The method comprises the following steps: constructing a training sample set from the collected target domain sample set and an open source data set based on a semi-supervised training mode; training a deep residual network with the training sample set to obtain a trained deep residual network model; identifying the acquired pedestrian images with the trained model to obtain feature values of the pedestrian images; and determining whether the pedestrian images belong to the same person according to the cosine distances among the feature values. In the invention, virtual samples are generated, and a smoothing function is constructed when they are generated; a DBSCAN clustering algorithm adds pseudo labels to the virtual samples; local features and global features are both used in the deep neural network; and a joint loss function with different weight combinations is adopted, so that the trained deep neural network is more accurate and reliable in recognition.

Owner:XIAMEN MEIYA PICO INFORMATION

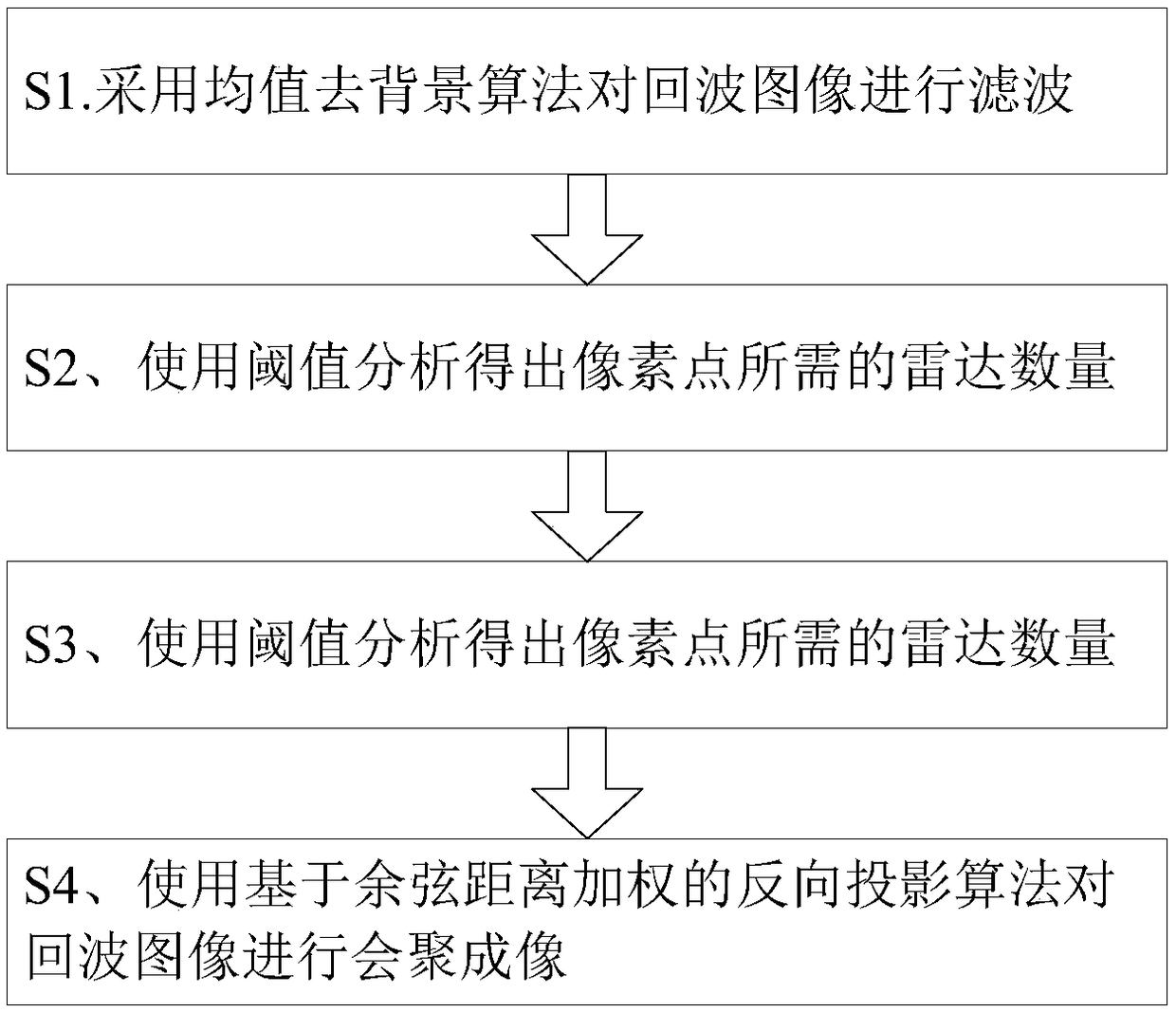

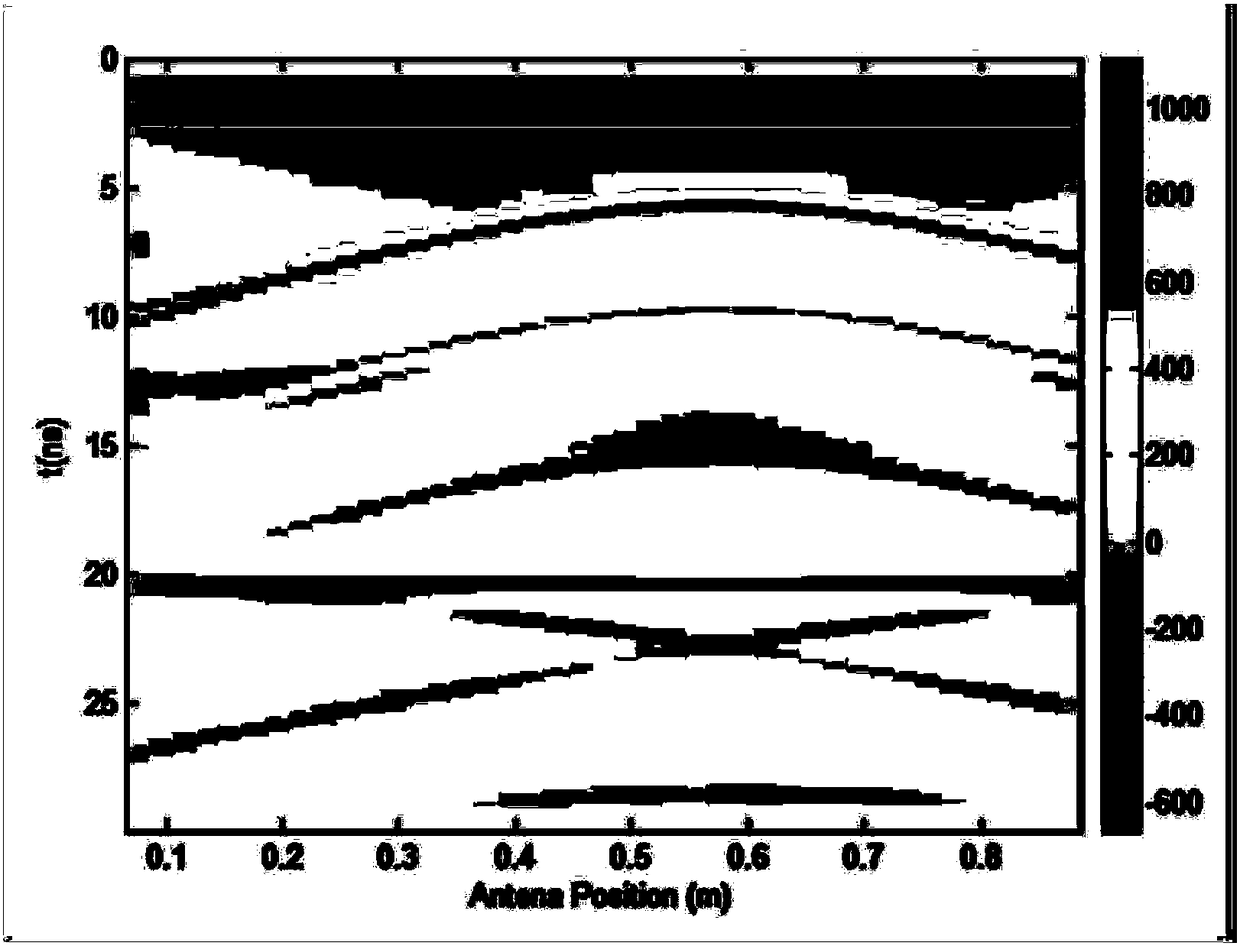

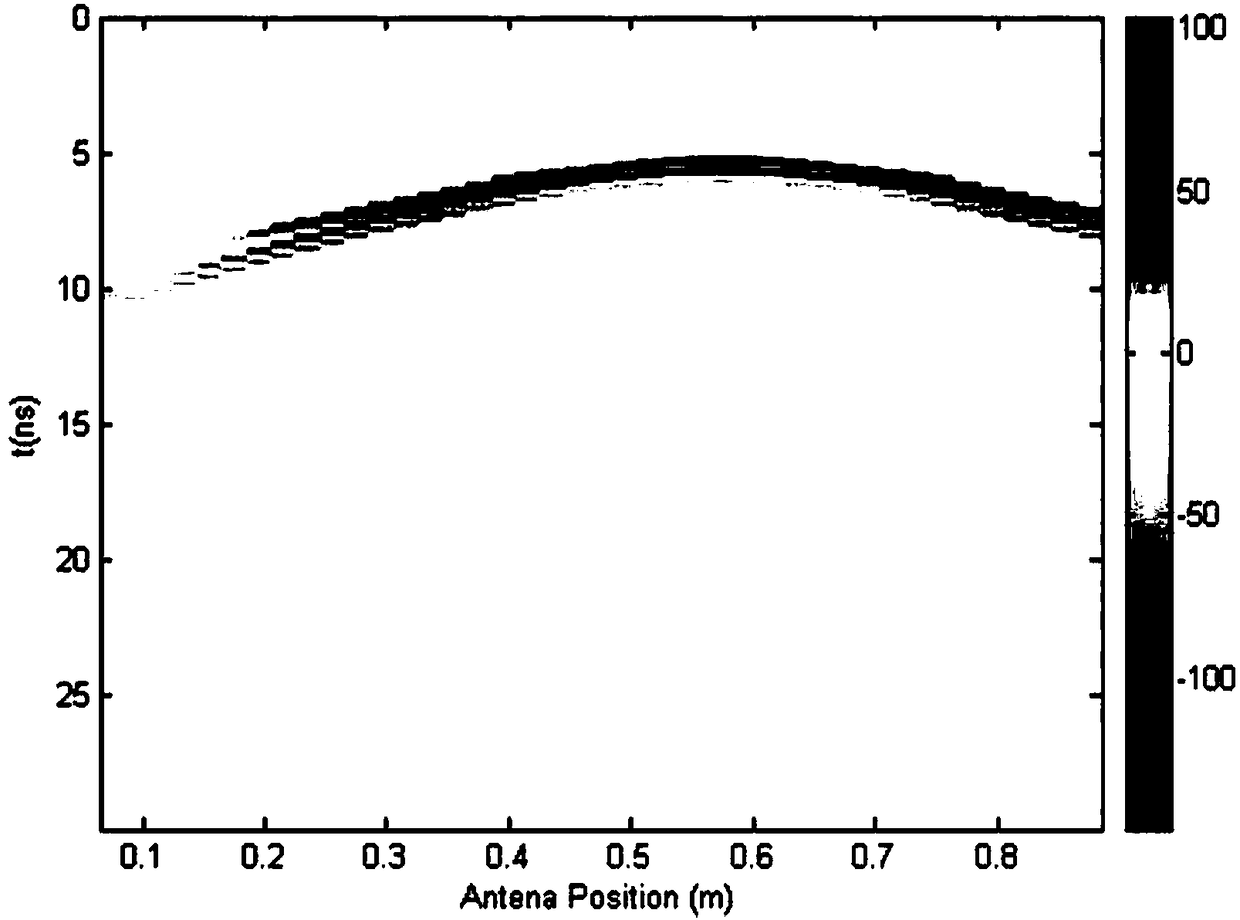

Automatic convergence imaging method based on ground penetrating radar echo data

Active | CN108387896A | Good stickiness | Fast and efficient automation | Detection using electromagnetic waves | Radio wave reradiation/reflection | Projection algorithms | Back projection

The invention discloses an automatic convergence imaging method based on ground penetrating radar echo data. The method comprises the following steps: S1) carrying out filtering on an echo image through a mean de-background algorithm to remove ground clutters; S2) removing echo image noise through a median filtering algorithm; S3) obtaining the number of radars required by pixel points through threshold analysis; and S4) carrying out convergence imaging on the echo image through a back-projection algorithm based on cosine distance weighting. The method can quickly and automatically carry out convergence imaging on the ground penetrating radar echo image, and can still converge echo signals quickly and effectively under the complex urban environment by utilizing the back-projection algorithm based on cosine distance and threshold analysis image segmentation technology, thereby meeting practical and application requirements.

Owner:XIAMEN UNIV
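Steps S1 and S2 of the abstract above can be sketched in a few lines. This is an illustration only, not the patent's implementation. It assumes the echo image is a list of rows (one row per time sample, one column per trace), that "mean de-background" means subtracting each row's mean across traces, and that the median filter uses a square window with edge replication. All function names are hypothetical.

```python
from statistics import median

def remove_mean_background(bscan):
    """S1 (assumed form): subtract each row's mean over all traces."""
    out = []
    for row in bscan:
        mean = sum(row) / len(row)
        out.append([v - mean for v in row])
    return out

def median_filter(image, radius=1):
    """S2 (assumed form): square-window median filter, edges replicated."""
    rows, cols = len(image), len(image[0])
    out = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            # Clamp indices so border pixels reuse the nearest valid sample.
            window = [
                image[min(max(r + dr, 0), rows - 1)][min(max(c + dc, 0), cols - 1)]
                for dr in range(-radius, radius + 1)
                for dc in range(-radius, radius + 1)
            ]
            out_row.append(median(window))
        out.append(out_row)
    return out
```

A horizontal band that is constant across traces, which is how ground clutter typically appears, is removed by the first function, while an isolated spike is suppressed by the second.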

Human face recognition method and human face recognition system

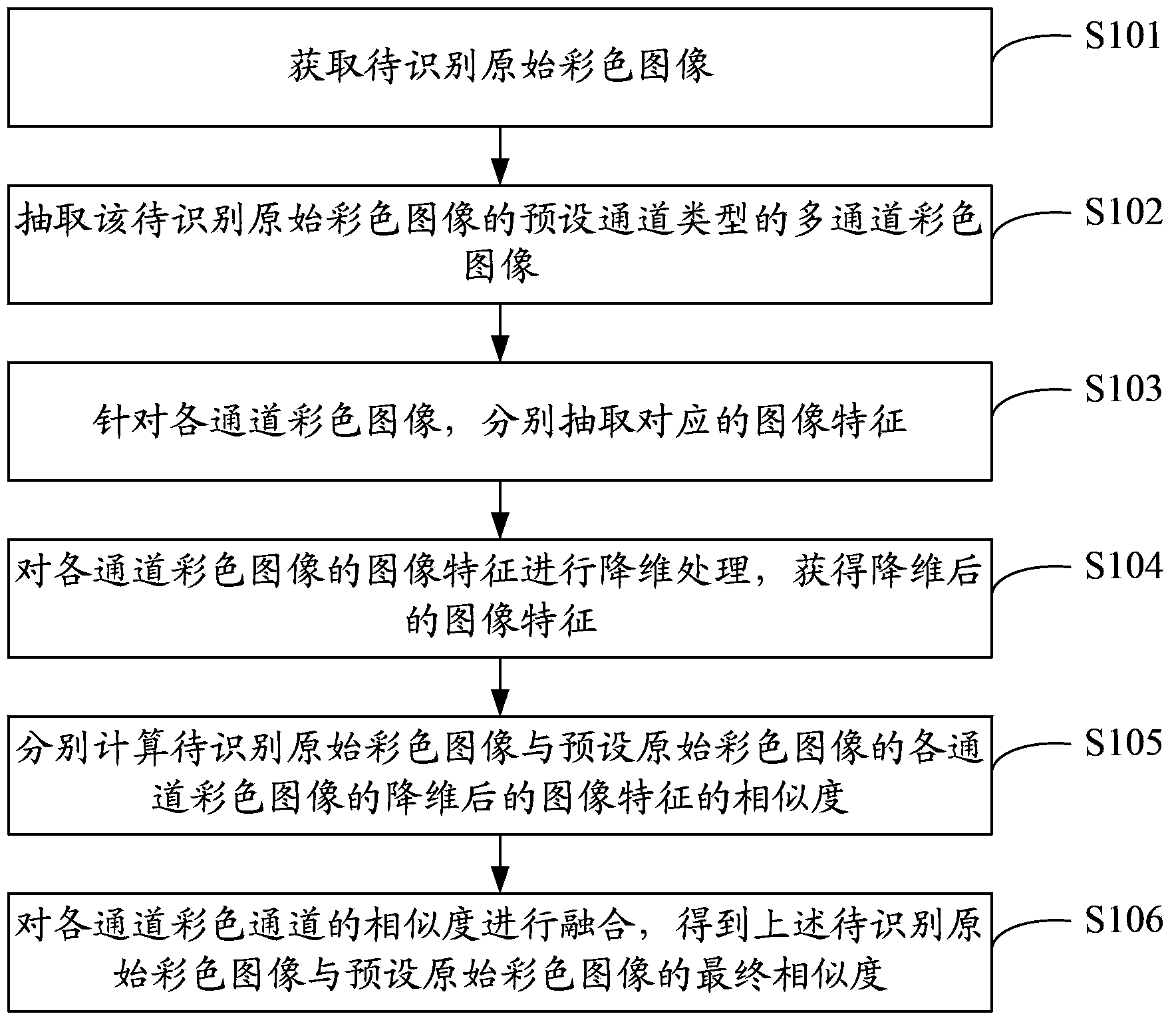

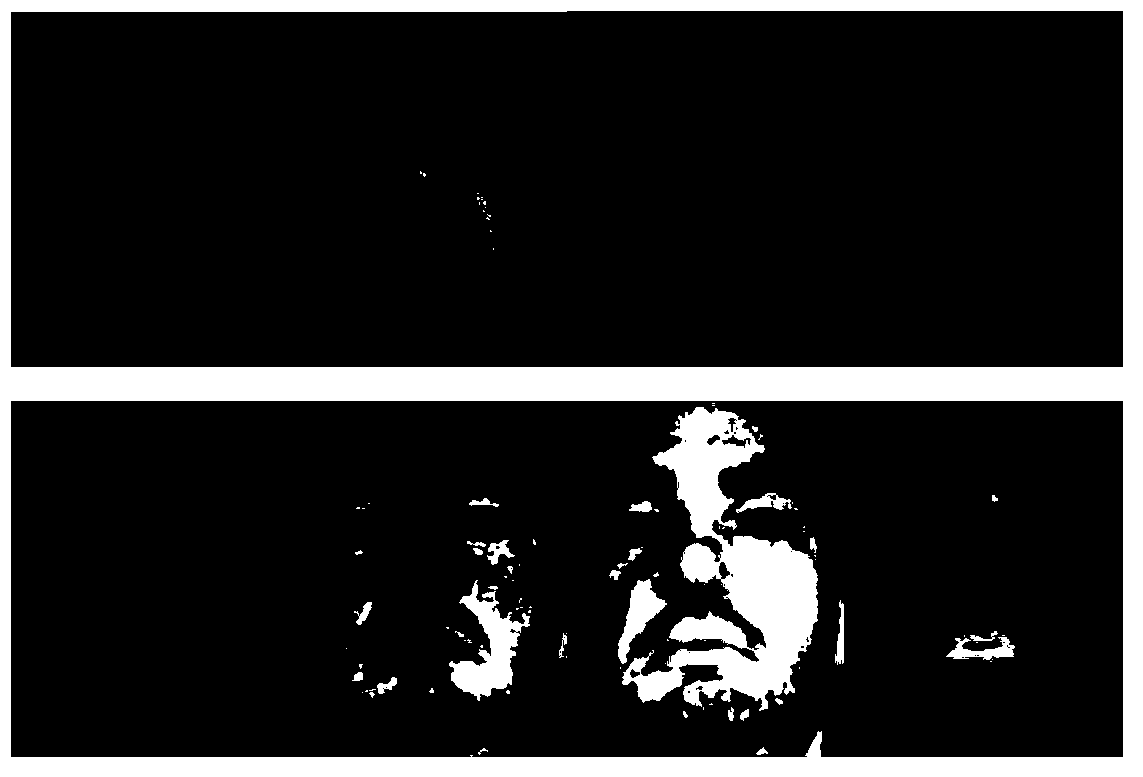

Active | CN103839042A | Improve accuracy | Character and pattern recognition | Color image | Dimensionality reduction

Provided are a human face recognition method and a human face recognition system. The method comprises the steps of: acquiring the original color images to be recognized; extracting, from those images, channel color images of preset channel types; extracting the corresponding image characteristics of each channel color image; carrying out dimension reduction on the image characteristics of all the channel color images; carrying out cosine distance calculation on the dimension-reduced characteristics of all the channel color images; and obtaining the final similarities of the original images through SVM fusion. The scheme describes human face information from different angles, improves the accuracy of the description of human face image information, and can be applied conveniently in a variety of face verification and recognition scenarios.

Owner:TENCENT CLOUD COMPUTING BEIJING CO LTD

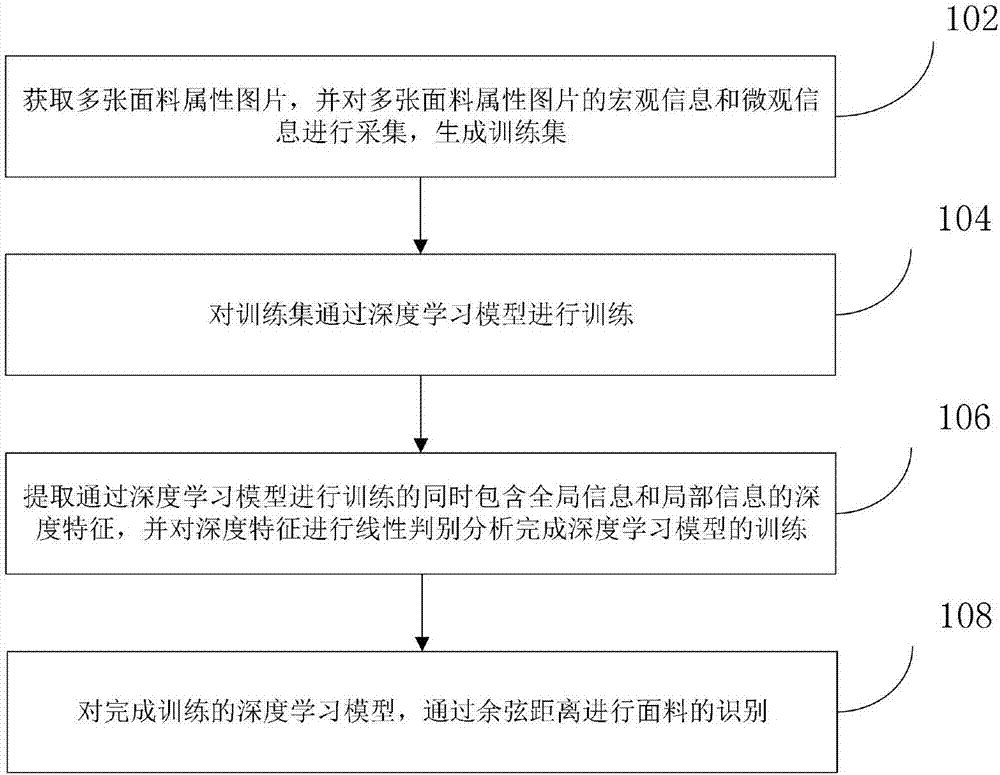

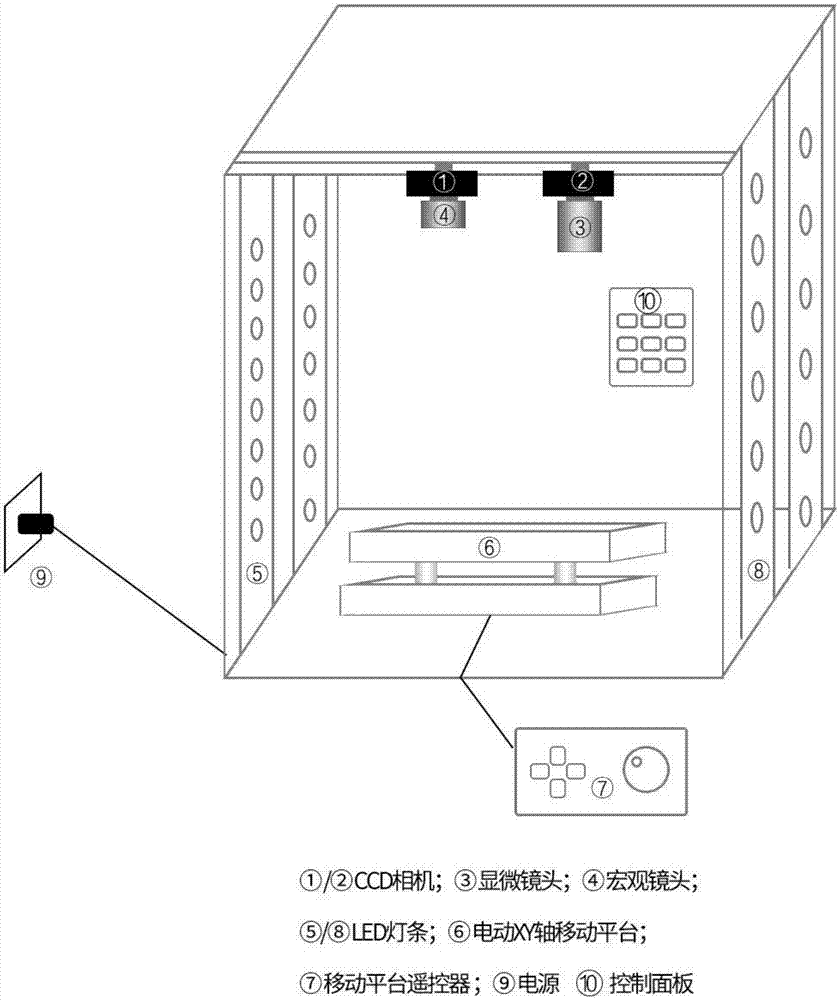

Fabric property picture collection and recognition method and system based on deep learning

Pending | CN107463965A | Solve the problem of attribute identification | Easy to identify | Character and pattern recognition | Neural learning methods | Local pattern | Global information

The invention discloses a fabric property picture collection and recognition method based on deep learning. The method comprises the following steps: multiple fabric property pictures are acquired, macro information and micro information of the pictures are collected, and a training set is generated; a deep learning model is trained on the training set; deep features that contain both global information and local information are extracted from the trained model, and linear discriminant analysis is performed on the deep features to complete training of the deep learning model; and fabric recognition is performed through a cosine distance according to the trained deep learning model. The method addresses multiple fabric property recognition problems, including weaving process, background color process, surface process, printing process and spinning process problems. Because the trained model contains local and global information at the same time, the recognition accuracy and the matching rate for both local and global fabric patterns are increased. The invention furthermore discloses a fabric property picture collection and recognition system based on deep learning.

Owner:湖州易有科技有限公司

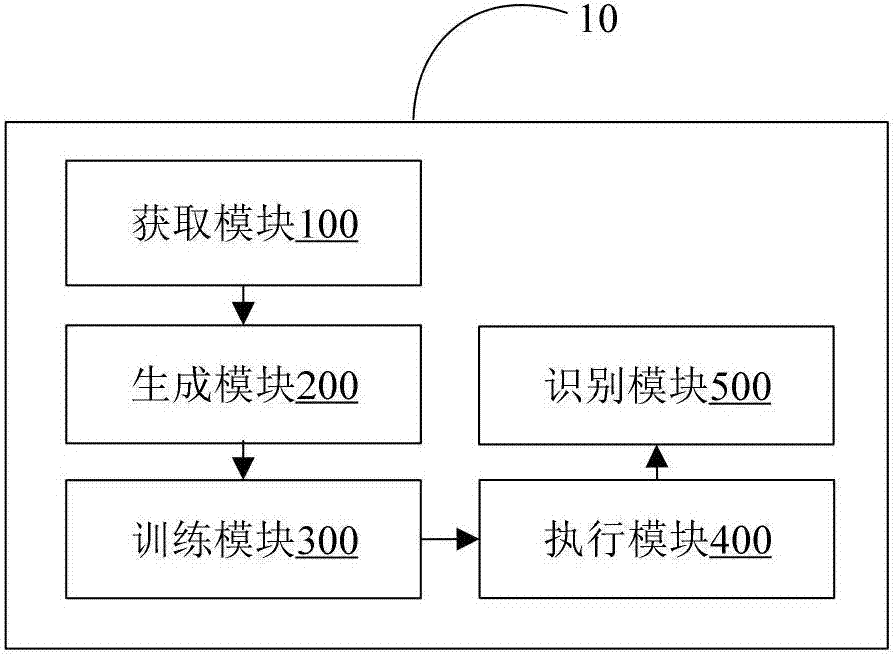

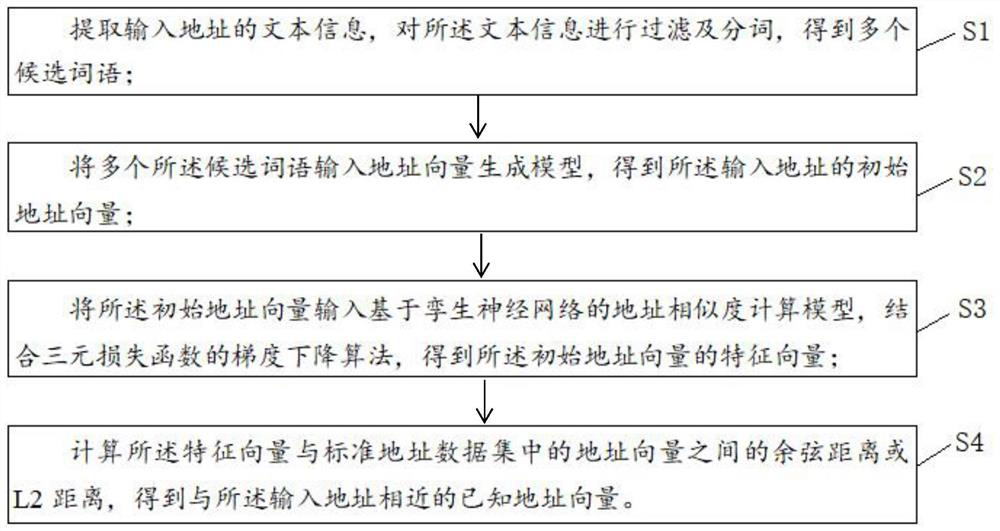

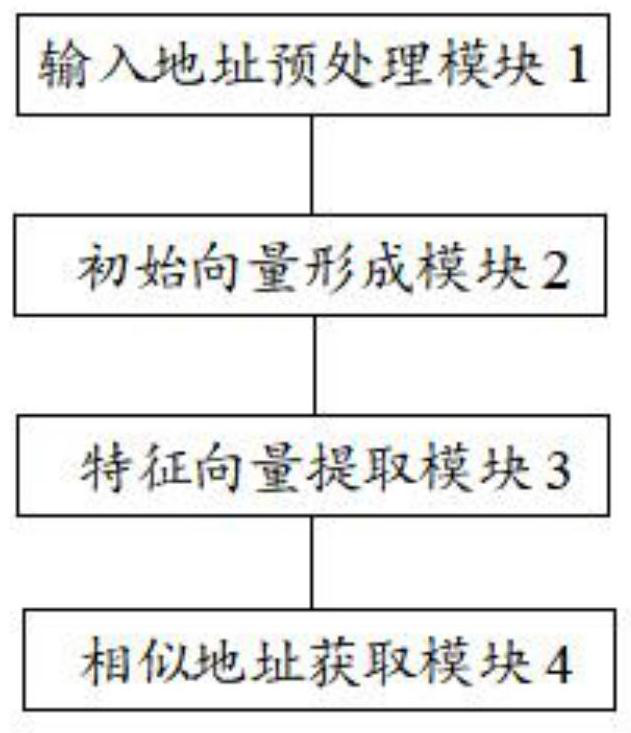

Address similarity calculation method and device, equipment and storage medium

Pending | CN111783419A | Improve the matching success rate | Improve word segmentation accuracy | Natural language data processing | Neural architectures | Feature vector | Data set

The invention discloses an address similarity calculation method and device, equipment and a storage medium. A solution is proposed for the problems that address matching rules are complex, that existing matching algorithms are neither fast nor accurate in retrieval, and that address matching efficiency is low. Input address information is expressed by a proper initial vector; an address similarity calculation model based on a twin (Siamese) neural network, combined with gradient descent on a ternary (triplet) loss function, yields a feature vector for the initial address vector; finally, the cosine distance or L2 distance between the feature vector and each address vector in a standard address data set is calculated, and the known address vector closest to the input address vector is obtained. The address matching rule is thereby simplified, the preferred accuracy of the same address is improved, and the retrieval speed and accuracy of the matching algorithm are further improved.

Owner:SHANGHAI DONGPU INFORMATION TECH CO LTD
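The last step of the abstract above, finding the standard address whose vector is closest under cosine or L2 distance, can be sketched as a nearest-neighbour search. This is a minimal sketch, assuming the feature vectors have already been produced by the trained model. The function names and the dictionary layout of the standard address set are hypothetical.

```python
import math

def cosine_distance(a, b):
    """1 - cosine similarity; assumes neither vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)

def l2_distance(a, b):
    """Euclidean (L2) distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def nearest_address(query_vec, standard_vectors, metric=cosine_distance):
    """Return the address text whose vector is closest to query_vec.

    standard_vectors maps address text -> feature vector (hypothetical layout).
    """
    return min(standard_vectors,
               key=lambda addr: metric(query_vec, standard_vectors[addr]))
```

Passing `metric=l2_distance` switches the comparison without changing the search itself. A linear scan like this is only practical for small address sets.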

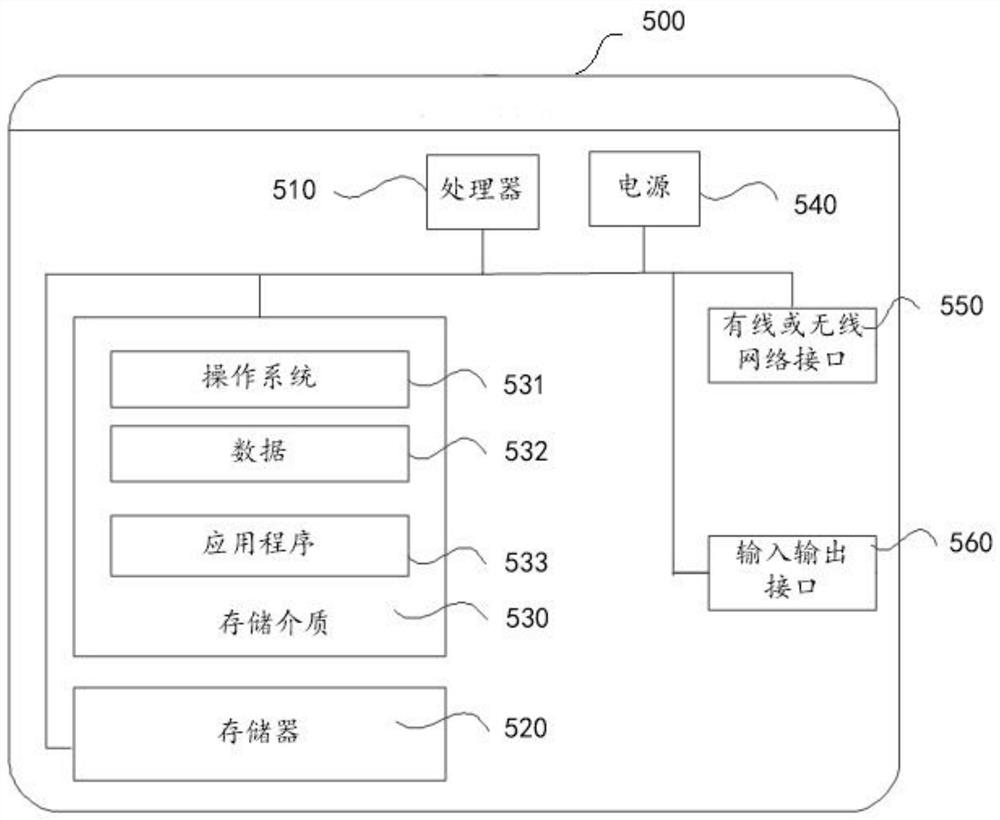

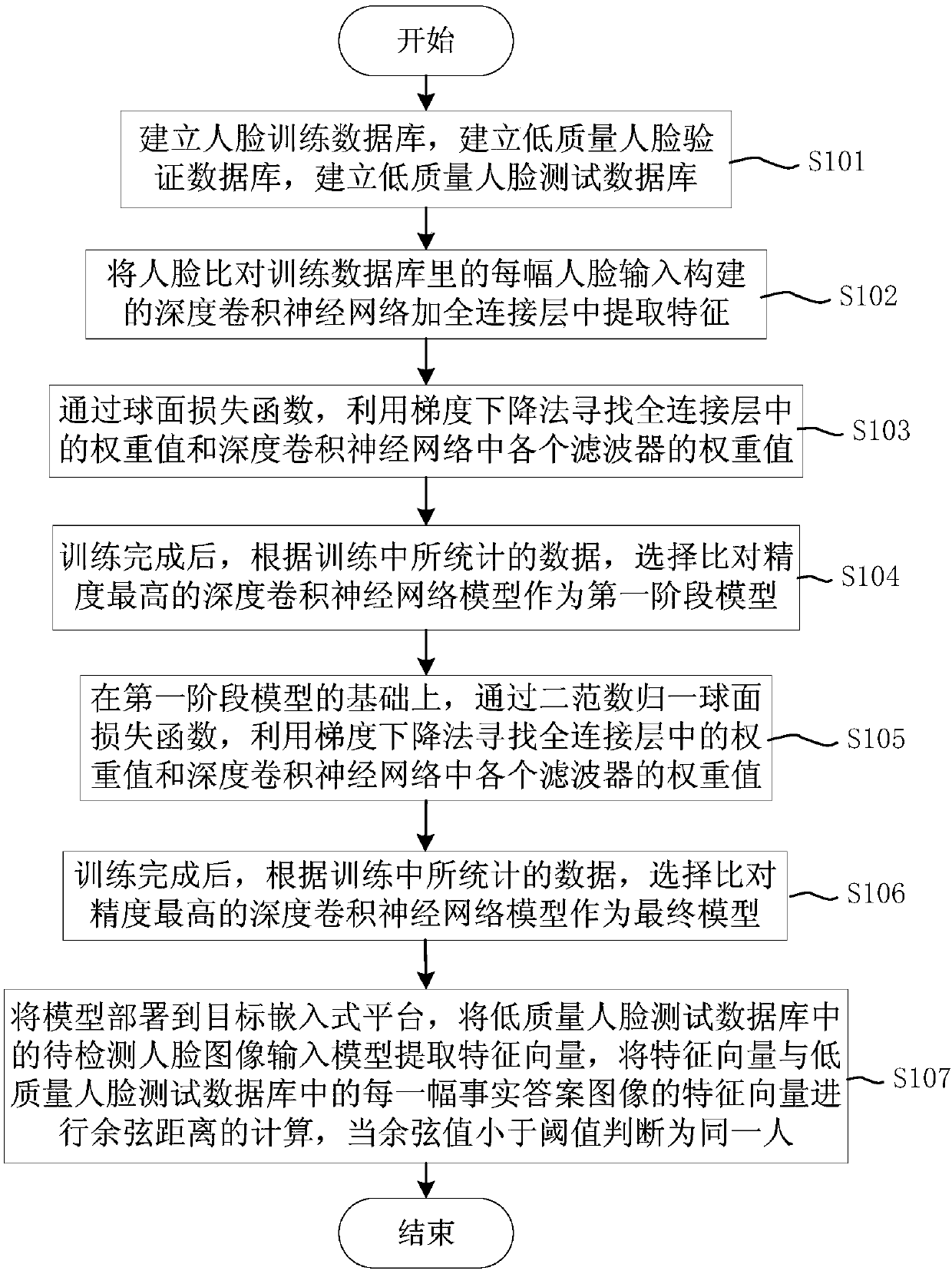

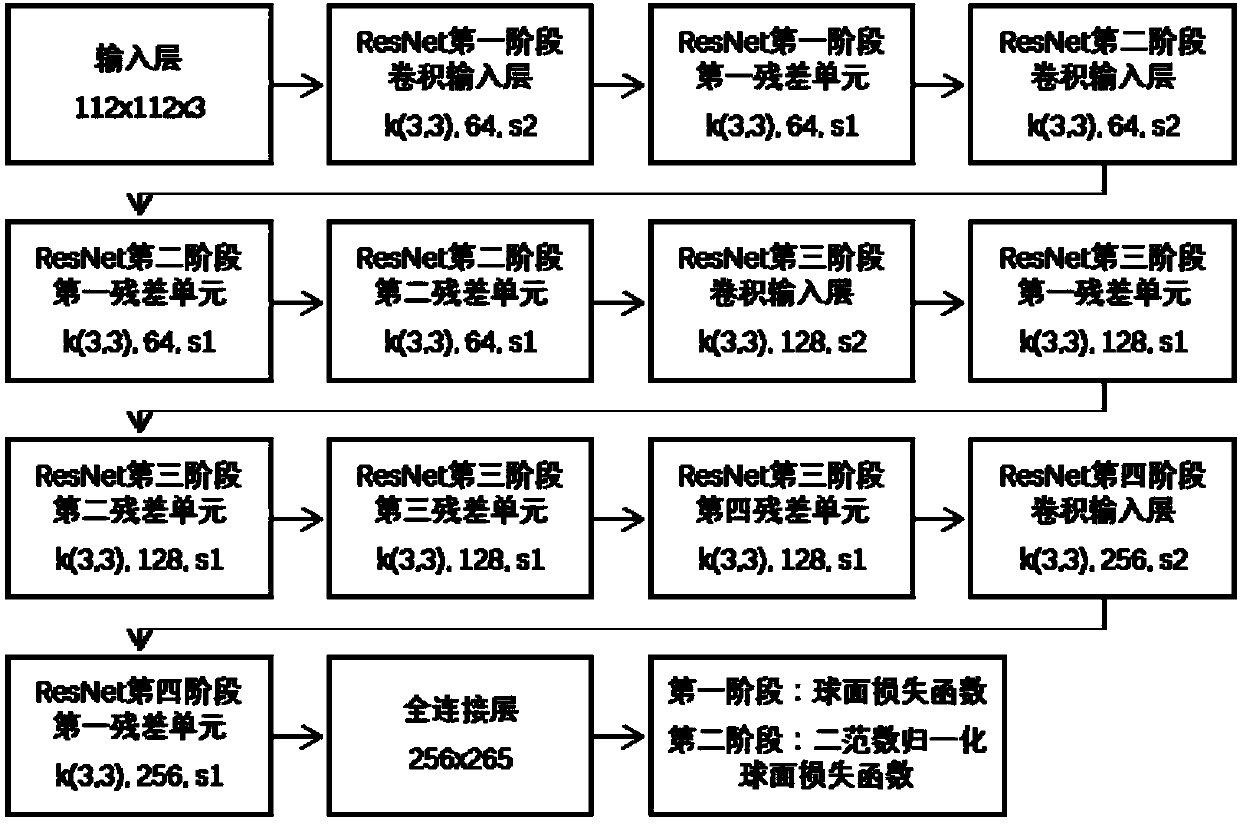

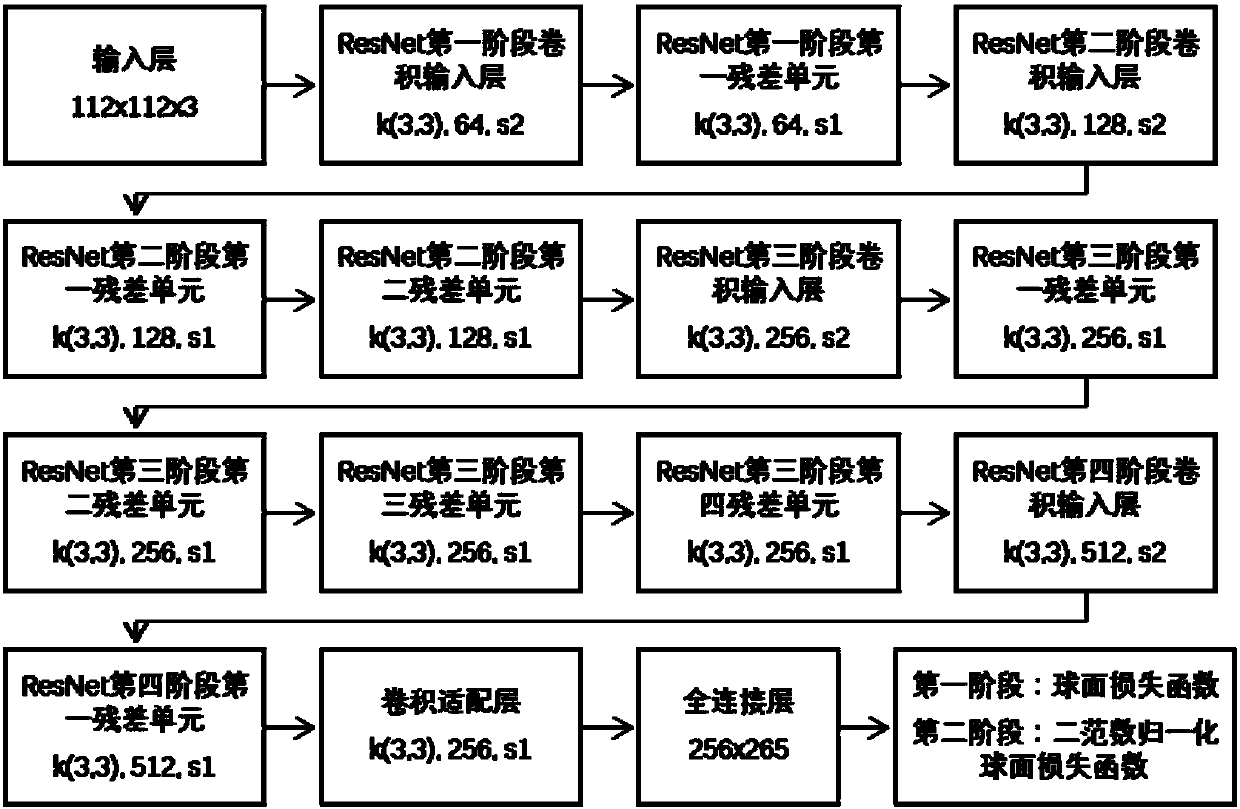

Low-quality face comparison method based on deep convolution neural network

Inactive | CN108573243A | Efficient comparison | Taking into account the accuracy | Character and pattern recognition | Neural architectures | Hat matrix | Pass rate

The invention discloses a low-quality face comparison method based on a deep convolution neural network. The method comprises the following steps: each image in a face training database is sent to a constructed deep convolution neural network for feature extraction; the extracted features are input to a fully-connected layer and projected through an affine projection matrix into a low-dimensional space; the feature vectors calculated with the projection matrix are trained through a two-norm (L2) normalized spherical loss function; through a gradient descent method, the weight value of each filter in the fully-connected layer and the deep convolution neural network is found, and the deep convolution neural network with the highest comparison pass rate is selected; and cosine distance calculation is performed between the feature vector of a to-be-detected face image and the feature vector of each reference (ground-truth) image in a low-quality face test database, and the two are judged to be the same person if the cosine value is smaller than a threshold. The method compares low-quality faces efficiently with fewer computing resources, balancing face comparison precision and comparison speed.

Owner:上海敏识网络科技有限公司

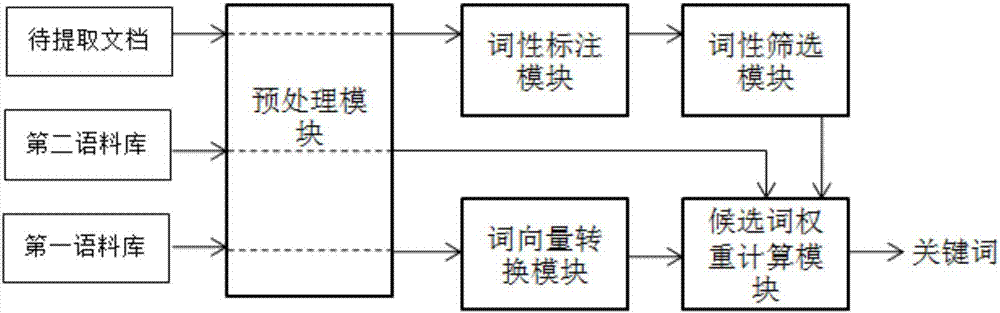

Keyword extraction system

Inactive | CN106997344A | Guaranteed Extraction Direction | Expand the scope of investigation | Natural language data processing | Special data processing applications | Part of speech | Document preparation

The invention relates to the field of natural language processing, in particular to a keyword extraction system. The system comprises a preprocessing module, a word vector conversion module, a part-of-speech tagging module, a part-of-speech screening module and a candidate word weight calculation module. The system restricts the parts of speech eligible for keyword extraction through part-of-speech screening, which adjusts the keyword extraction direction, and trains word vectors on a large-scale corpus. Using those word vectors, the weights of candidate words in a to-be-extracted document are calculated by combining a cosine distance with a TF-IDF weight. Introducing an external corpus expands the investigation range of keyword extraction, so that the extraction result is more reasonable and a new tool is provided for extracting keywords effectively.

Owner:成都数联铭品科技有限公司
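The abstract above does not say how the cosine term and the TF-IDF weight are combined, so the following is only one plausible sketch. It assumes that a candidate's weight is its TF-IDF score multiplied by the cosine similarity between its word vector and the mean vector of all candidates. That product rule, the smoothed IDF formula and every function name are assumptions made for illustration.

```python
import math

def tf_idf(term, doc_tokens, corpus):
    """Term frequency times a smoothed inverse document frequency."""
    tf = doc_tokens.count(term) / len(doc_tokens)
    df = sum(1 for doc in corpus if term in doc)
    idf = math.log((1 + len(corpus)) / (1 + df)) + 1.0
    return tf * idf

def cosine_similarity(a, b):
    """Cosine of the angle between a and b; assumes non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def candidate_weights(candidates, doc_tokens, corpus, vectors):
    """Assumed rule: weight = TF-IDF * cosine(word vector, mean candidate vector)."""
    dim = len(vectors[candidates[0]])
    doc_vec = [sum(vectors[w][i] for w in candidates) / len(candidates)
               for i in range(dim)]
    return {w: tf_idf(w, doc_tokens, corpus) * cosine_similarity(vectors[w], doc_vec)
            for w in candidates}
```

Under this rule a word scores highly only if it is both frequent in the document relative to the corpus and semantically close to the document's other candidates.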

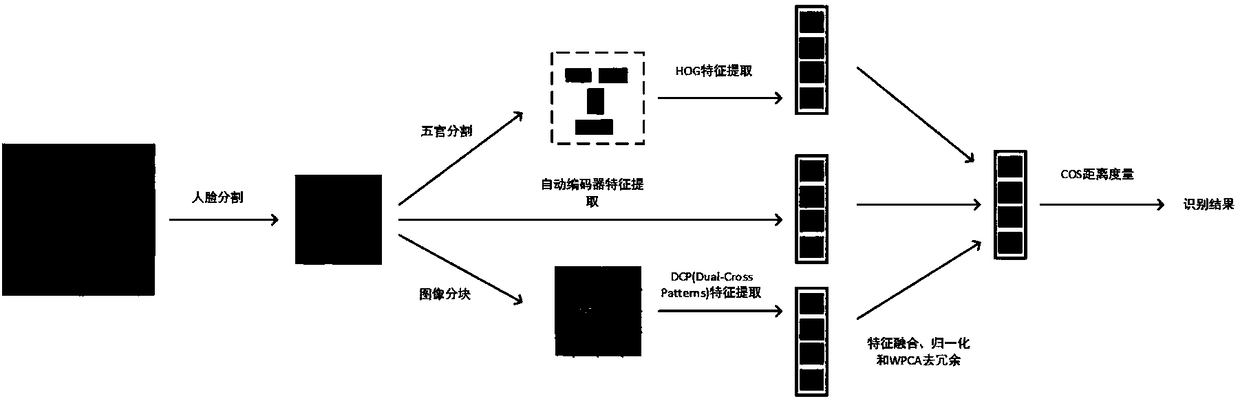

Multi-model multi-channel face identification method and system

Inactive | CN108171223A | Accurate face recognition | Good effect | Character and pattern recognition | Image extraction | Face detection

The invention relates to a multi-model multi-channel face identification method and system, and belongs to the technical field of face identification. Different face identification models generate different face characteristics, the different characteristics carry different information, and fusing the characteristics for face identification can improve the identification rate to a large degree. A common camera obtains a face image, a face detection algorithm detects whether a face appears, the face area is segmented if a face exists, and the segmented images are preprocessed. The characteristics corresponding to the different models are extracted from the preprocessed images and then processed, and a cosine distance is used to measure the similarity between the characteristics of an unidentified person and those of people registered in a database. The method overcomes the low accuracy of present methods and their low robustness to changes in the face environment such as illumination, expression, attitude and shielding, and can effectively improve face identification accuracy.

Owner:北京中晟信达科技有限公司 +1

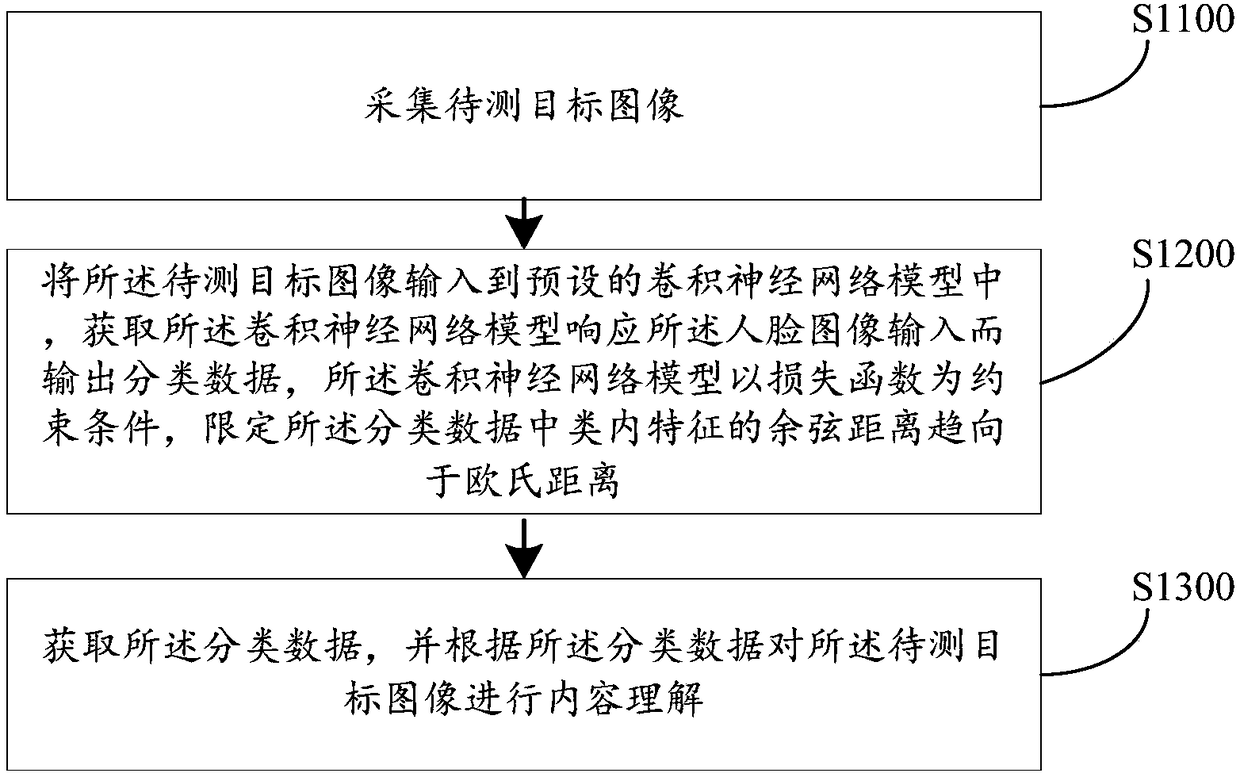

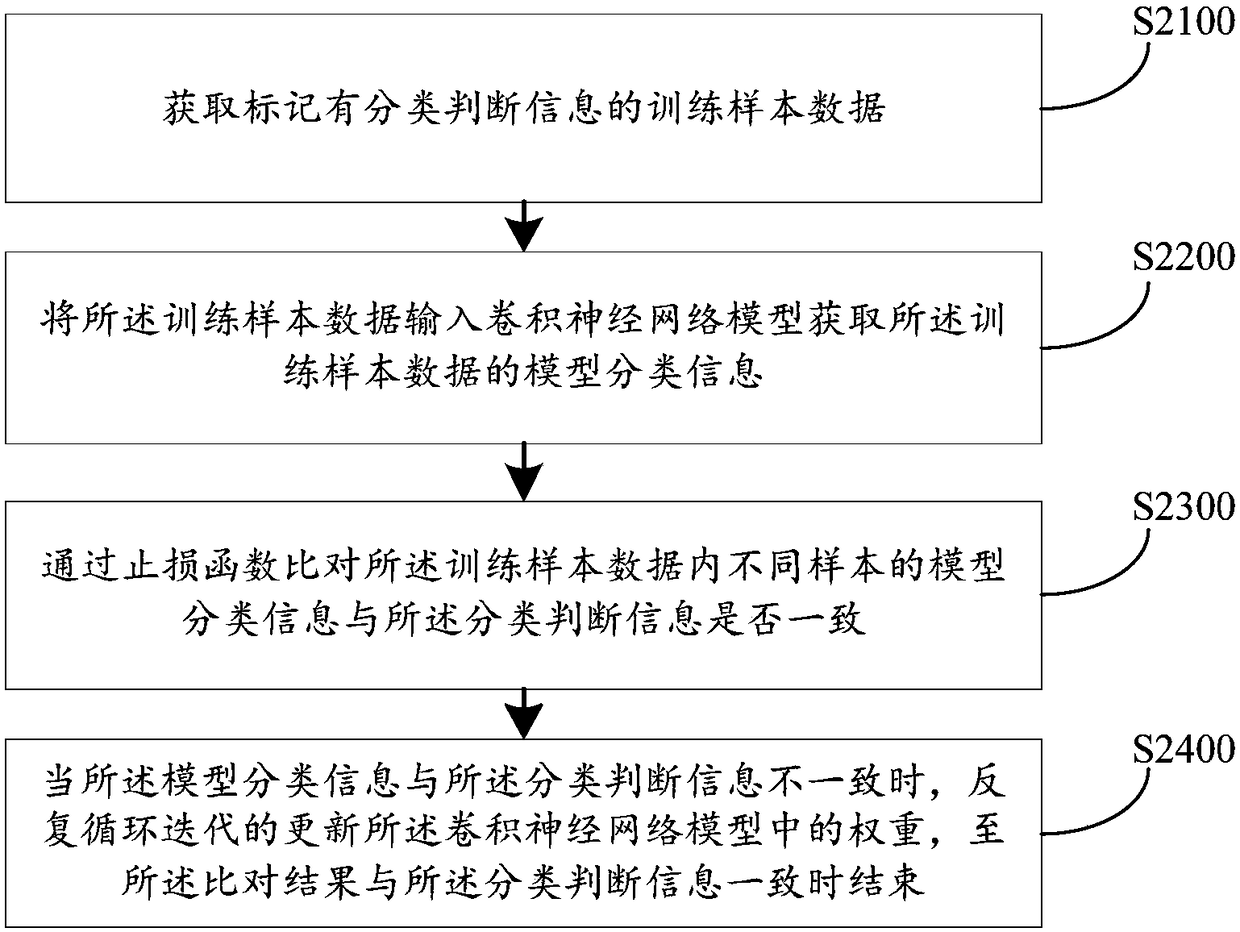

Learning type image processing method, system and server

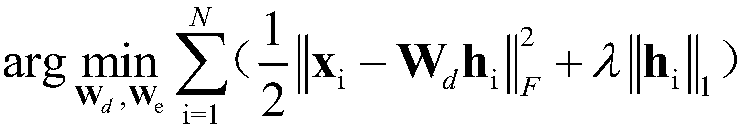

Active | CN108108807A | The classification result is accurate | Improve convergence | Neural architectures | Neural learning methods | Imaging processing | Network model

The embodiment of the invention discloses a learning type image processing method, a system and a server. The method comprises the following steps: acquiring a to-be-detected target image; inputting the to-be-detected target image into a preset convolution neural network model; obtaining classification data outputted by the convolution neural network model in response to the input of a human face image, wherein the convolution neural network model takes a loss function as a constraint condition and the cosine distance of the in-class features in the classification data is defined to tend to be the Euclidean distance; and acquiring the classification data and performing content understanding on the to-be-detected target image according to the classification data. According to the invention, the classification data are screened through a loss function based on the cosine distance in the joint loss function. The cosine distance in the classification data is maximized. However, the color in a simple image is single, and the maximization of the cosine distance with relatively strong internal convergence is achieved. The cosine distance can tend to be a calculation result of the Euclidean distance, so that the implementation complexity is simplified.

Owner:BEIJING DAJIA INTERNET INFORMATION TECH CO LTD
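One standard identity may help in reading the statement above that the cosine distance can "tend to" the Euclidean distance. Whether the patent relies on this identity is an assumption. For feature vectors $a$ and $b$ normalized to unit length, with $\theta$ the angle between them and $d_{\cos}(a, b) = 1 - \cos\theta$:

$$
\lVert a - b \rVert_2^2 = \lVert a \rVert_2^2 + \lVert b \rVert_2^2 - 2\, a \cdot b = 2 - 2\cos\theta = 2\, d_{\cos}(a, b)
$$

So on unit-length features, ranking by cosine distance and ranking by Euclidean distance give the same order, and either one can be computed from the other.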

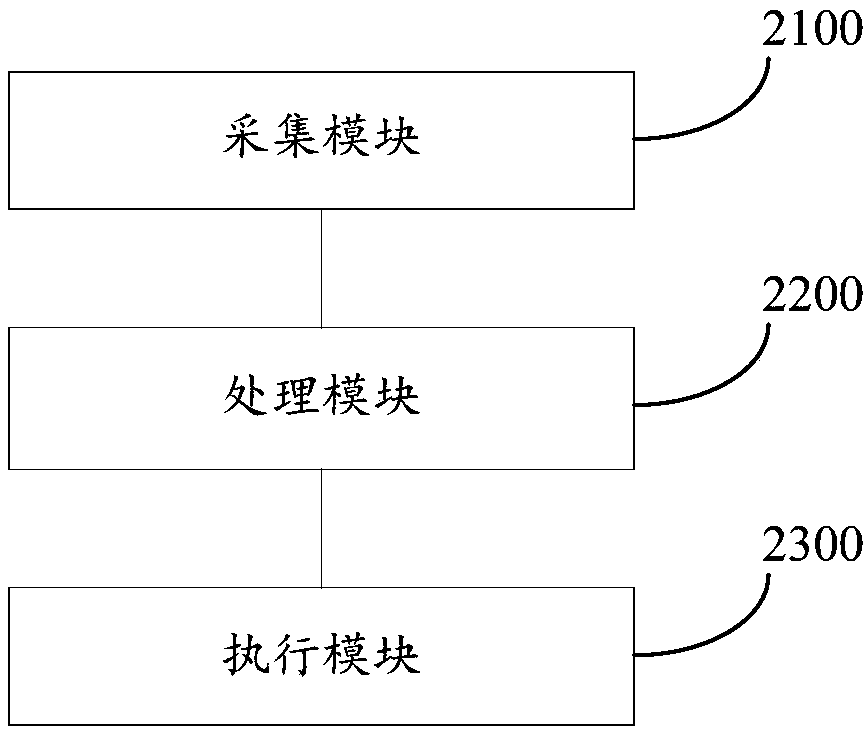

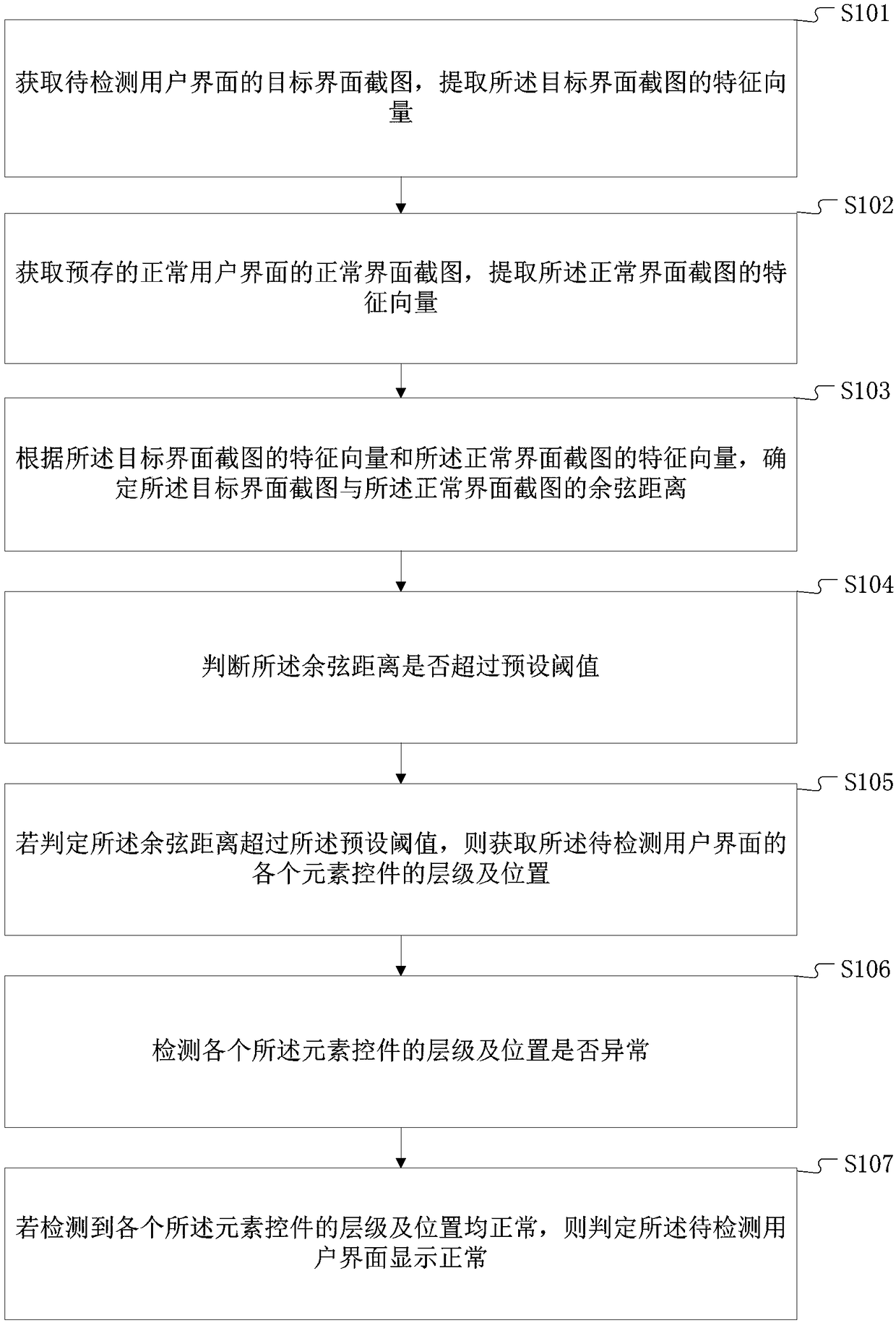

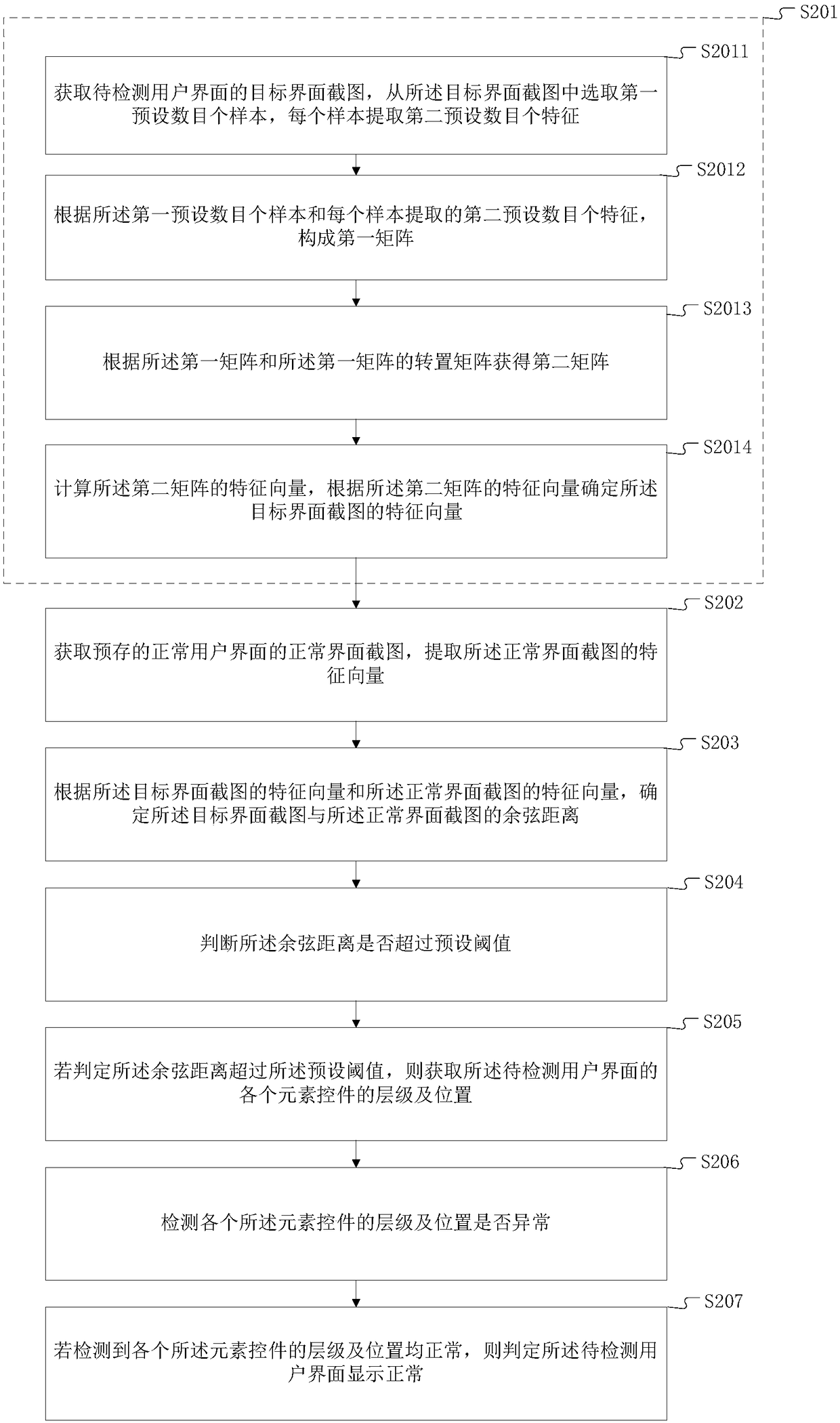

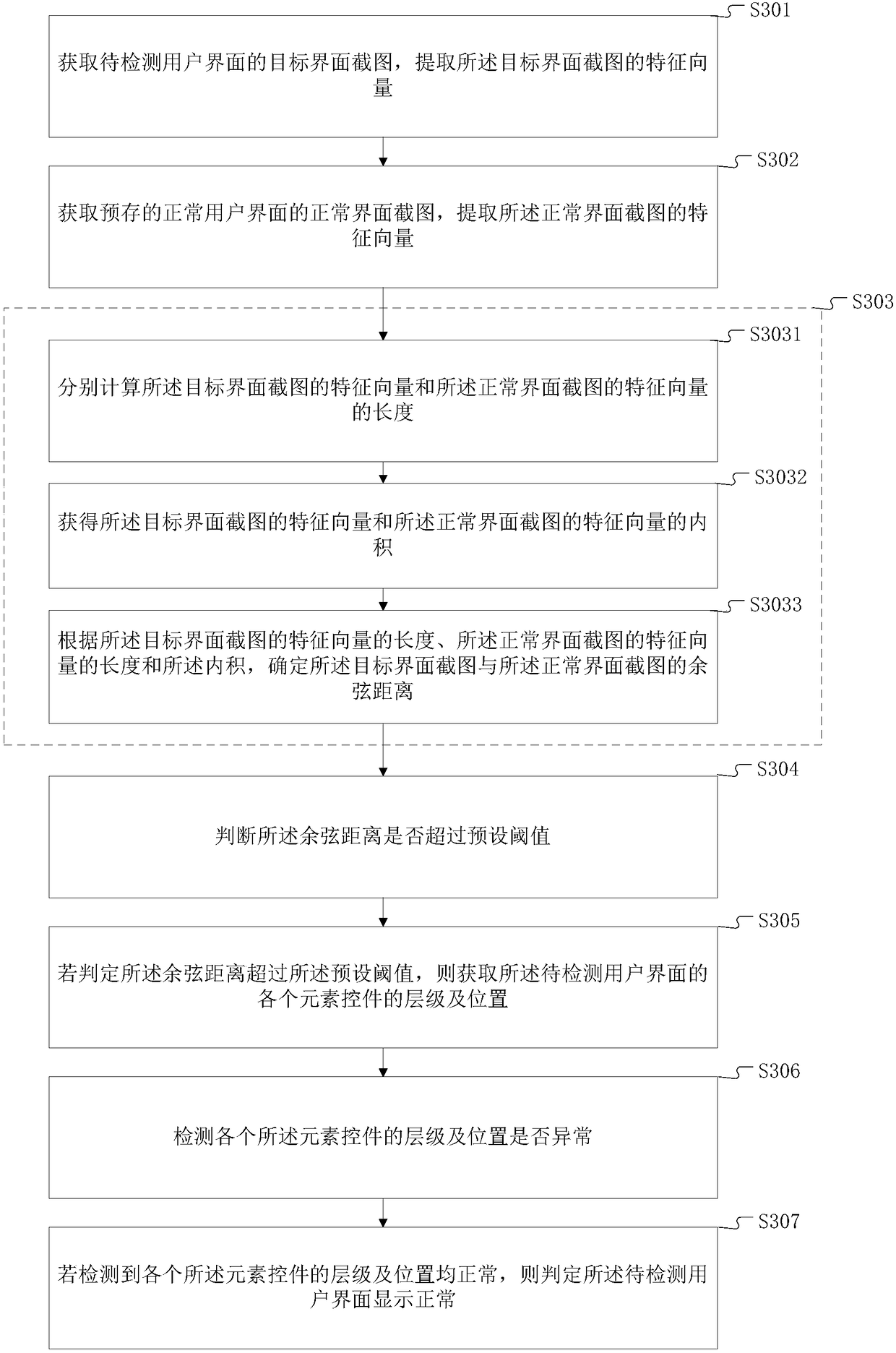

User interface display identification method and terminal device

Active | CN108363599A | Improve display recognition accuracy | Solve the problem of low script maintenance efficiency | Execution for user interfaces | Feature vector | Graphical user interface

The invention is suitable for the technical field of mobile terminals, and provides a user interface display identification method and a terminal device. The method comprises the steps of: obtaining a target interface screenshot of a to-be-detected user interface, and extracting an eigenvector of the target interface screenshot; obtaining a pre-stored normal interface screenshot of a normal user interface, and extracting an eigenvector of the normal interface screenshot; according to the eigenvectors of the target interface screenshot and the normal interface screenshot, determining a cosine distance between the target interface screenshot and the normal interface screenshot; judging whether the cosine distance exceeds a preset threshold or not; if the cosine distance is judged to exceed the preset threshold, obtaining levels and positions of element controls of the to-be-detected user interface; detecting whether the levels and the positions of the element controls are abnormal or not; and if the levels and the positions of the element controls are detected to be normal, judging that the display of the to-be-detected user interface is normal. The problem of low script maintenance efficiency of an existing user interface display detection method is solved.

Owner:ONE CONNECT SMART TECH CO LTD SHENZHEN

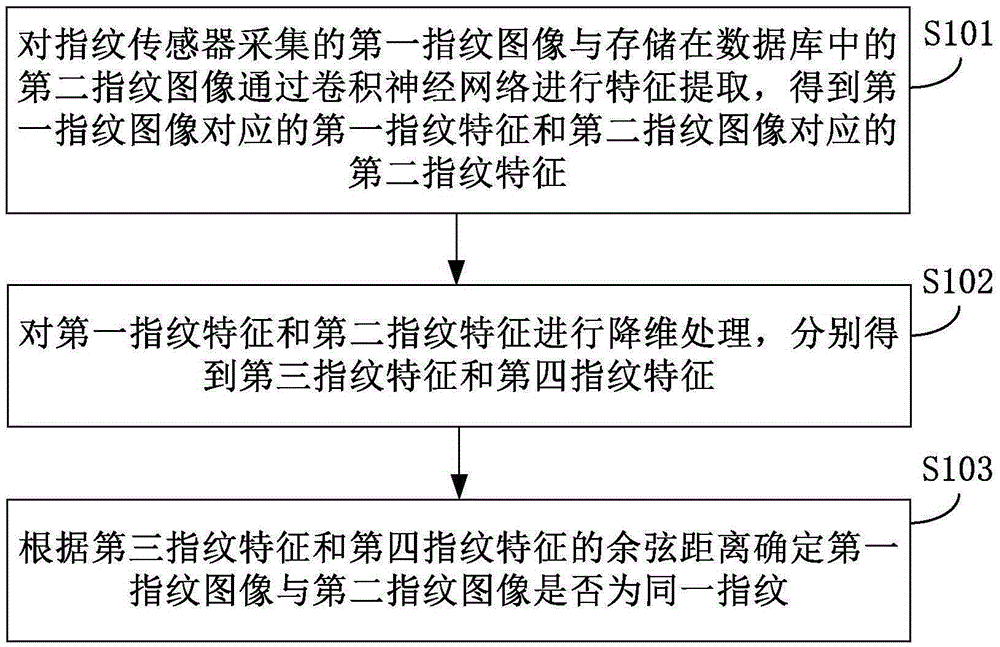

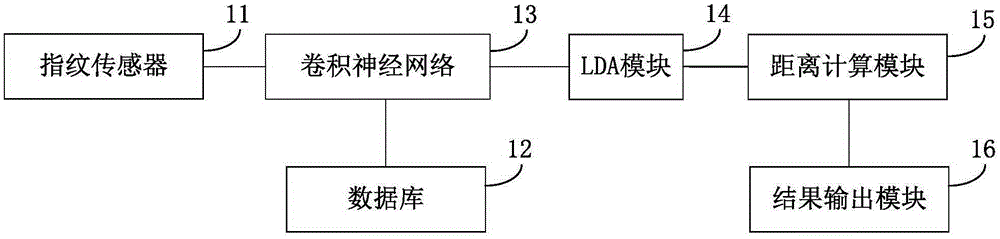

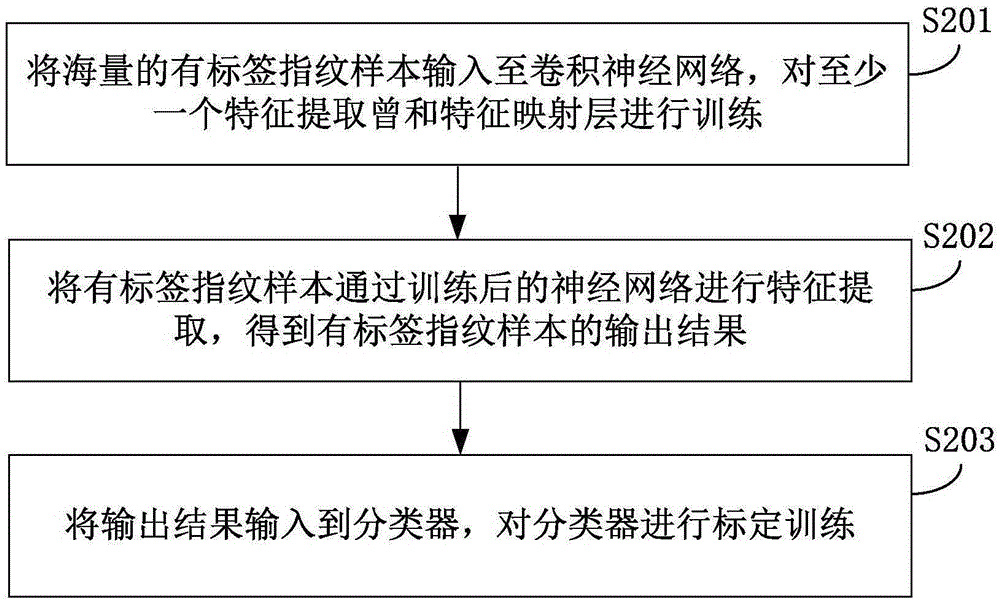

Fingerprint identification method and device

Inactive | CN105354560A | Improve accuracy | Reduce computational complexity | Matching and classification | Feature extraction | Dimensionality reduction

The invention discloses a fingerprint identification method and device. The method comprises the following steps: extracting features, by a convolutional neural network, from a first fingerprint image collected by a fingerprint sensor and a second fingerprint image stored in a database, to obtain a first fingerprint feature corresponding to the first fingerprint image and a second fingerprint feature corresponding to the second fingerprint image, wherein the dimensions of the first fingerprint feature and the second fingerprint feature are the same; carrying out dimensionality reduction on the first fingerprint feature and the second fingerprint feature to obtain a third fingerprint feature and a fourth fingerprint feature respectively, wherein the dimensions of the third fingerprint feature and the fourth fingerprint feature are the same; and determining whether the first fingerprint image and the second fingerprint image are the same fingerprint according to the cosine distance between the third fingerprint feature and the fourth fingerprint feature. The disclosed method and device avoid the prior-art limitation that a fingerprint can be identified only by its global and local feature points, and improve identification accuracy for low-quality fingerprint images.

Owner:XIAOMI INC

© 2025 PatSnap. All rights reserved.