Video face detection and recognition method based on a multi-channel network

A face detection and recognition method, applied in the field of deep-learning-based video face recognition, that addresses problems such as the failure to consider temporal and spatial relationships and low accuracy.

Embodiment Construction

[0071] The present invention is further described below with reference to the accompanying drawings.

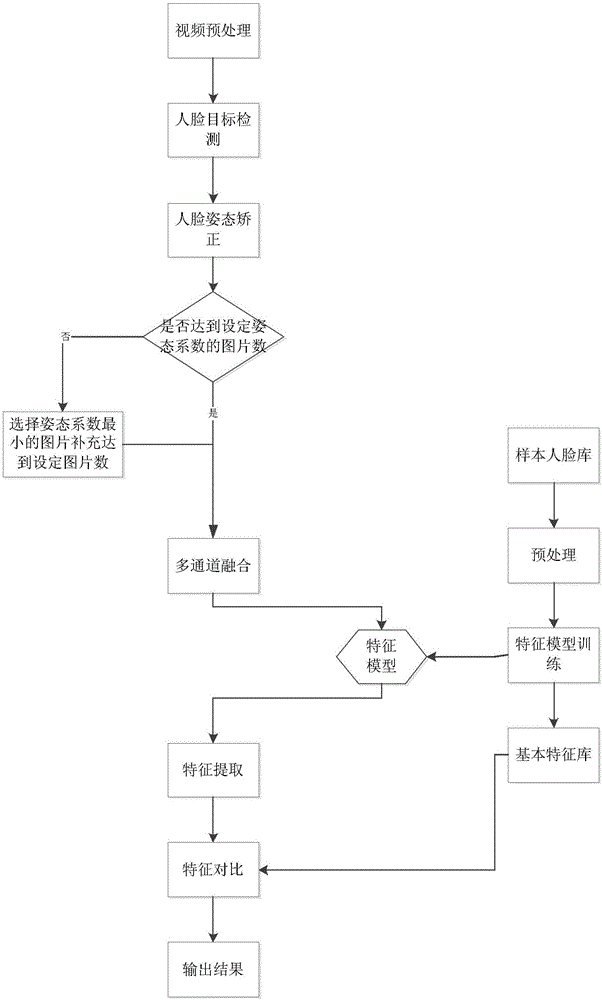

[0072] Referring to Figure 1 and Figure 2, a video face detection and recognition method based on a multi-channel network comprises the following steps:

[0073] S1: Video preprocessing

[0074] Receive the video data collected by the monitoring equipment and decompose it into individual frame images, adding time information to each frame. Specifically, the first frame image in the received video is image 1, and, following the time sequence, the t-th frame image in the video is image t. In the following description, I_t denotes the t-th frame image and I denotes the set of frame images from the same video. Once preprocessing is complete, the decomposed images are passed to the face target detection module in chronological order, earliest first.
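The indexing in step S1 can be illustrated with a minimal Python sketch. It is not taken from the patent: the names `TimedFrame`, `preprocess_video`, and `detect_faces` are hypothetical, and the sketch assumes the frames have already been decoded by some video library, so the placeholder strings stand in for real pixel data. It only shows the 1-based time index t being attached to each frame and the frames being handed on in chronological order.

```python
from dataclasses import dataclass
from typing import Any, Iterable, Iterator


@dataclass(frozen=True)
class TimedFrame:
    """One frame I_t with its time information (hypothetical container)."""
    t: int      # 1-based index: the first frame of the video is image 1
    image: Any  # decoded pixel data for I_t (placeholder type)


def preprocess_video(frames: Iterable[Any]) -> Iterator[TimedFrame]:
    """Attach a time index to each decoded frame, preserving time order."""
    for t, image in enumerate(frames, start=1):
        yield TimedFrame(t=t, image=image)


def detect_faces(frame: TimedFrame) -> None:
    """Hypothetical stand-in for the face target detection module of S2."""
    print(f"received I_{frame.t}")


if __name__ == "__main__":
    # Assumption: three already-decoded frames, represented by placeholders.
    decoded = ["frame-a", "frame-b", "frame-c"]
    for timed in preprocess_video(decoded):
        detect_faces(timed)
```

Using a generator means frames are passed downstream one at a time, earliest first, without holding the whole set I in memory; collecting the generator into a list would give the full set if a later step needs it.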

[0075] S2: Target face detection and attitude coefficient calculation ...
