485 results about "Local binary patterns" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

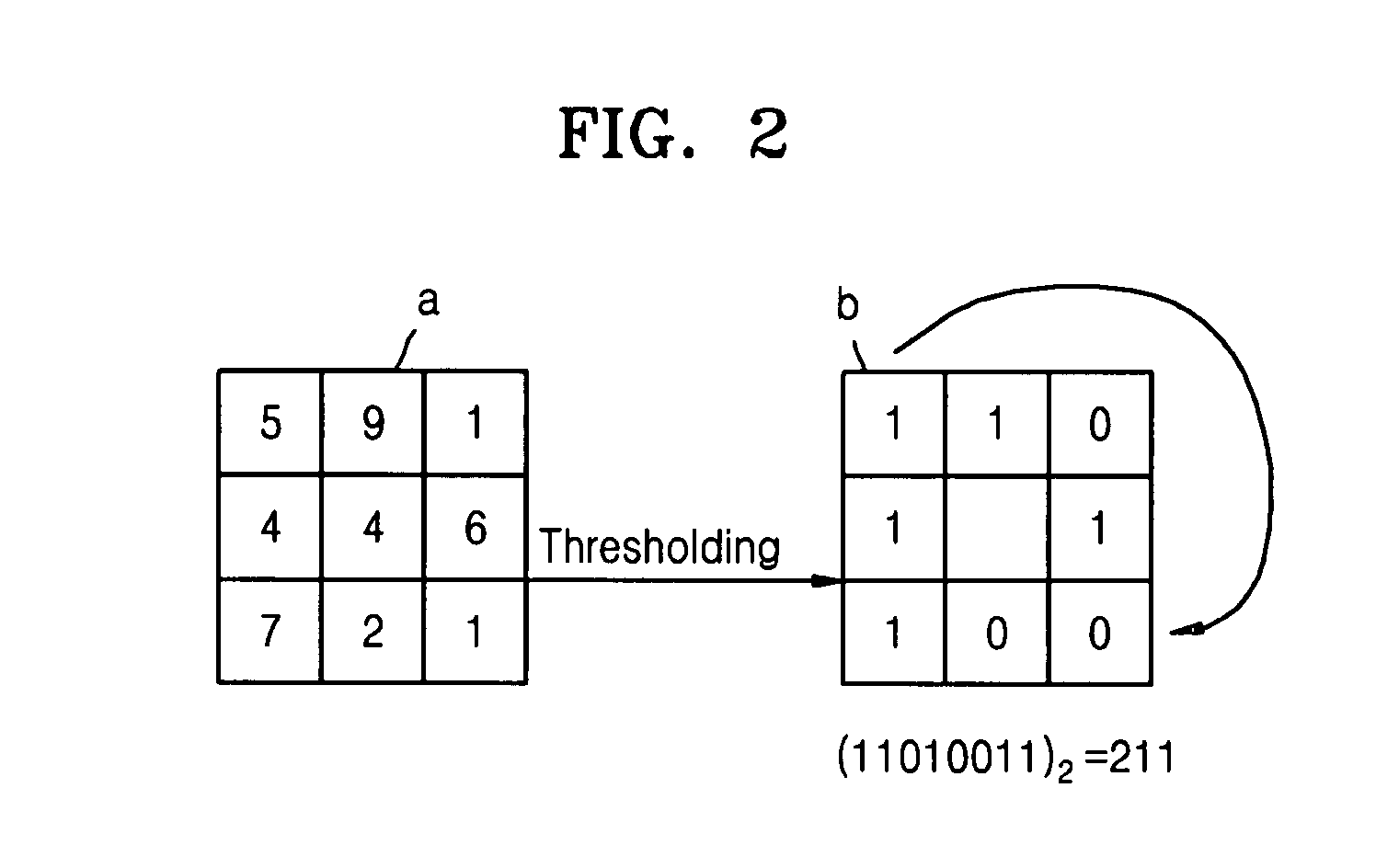

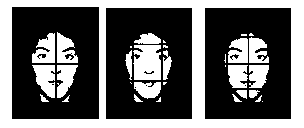

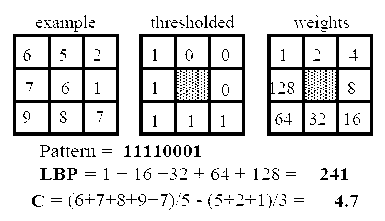

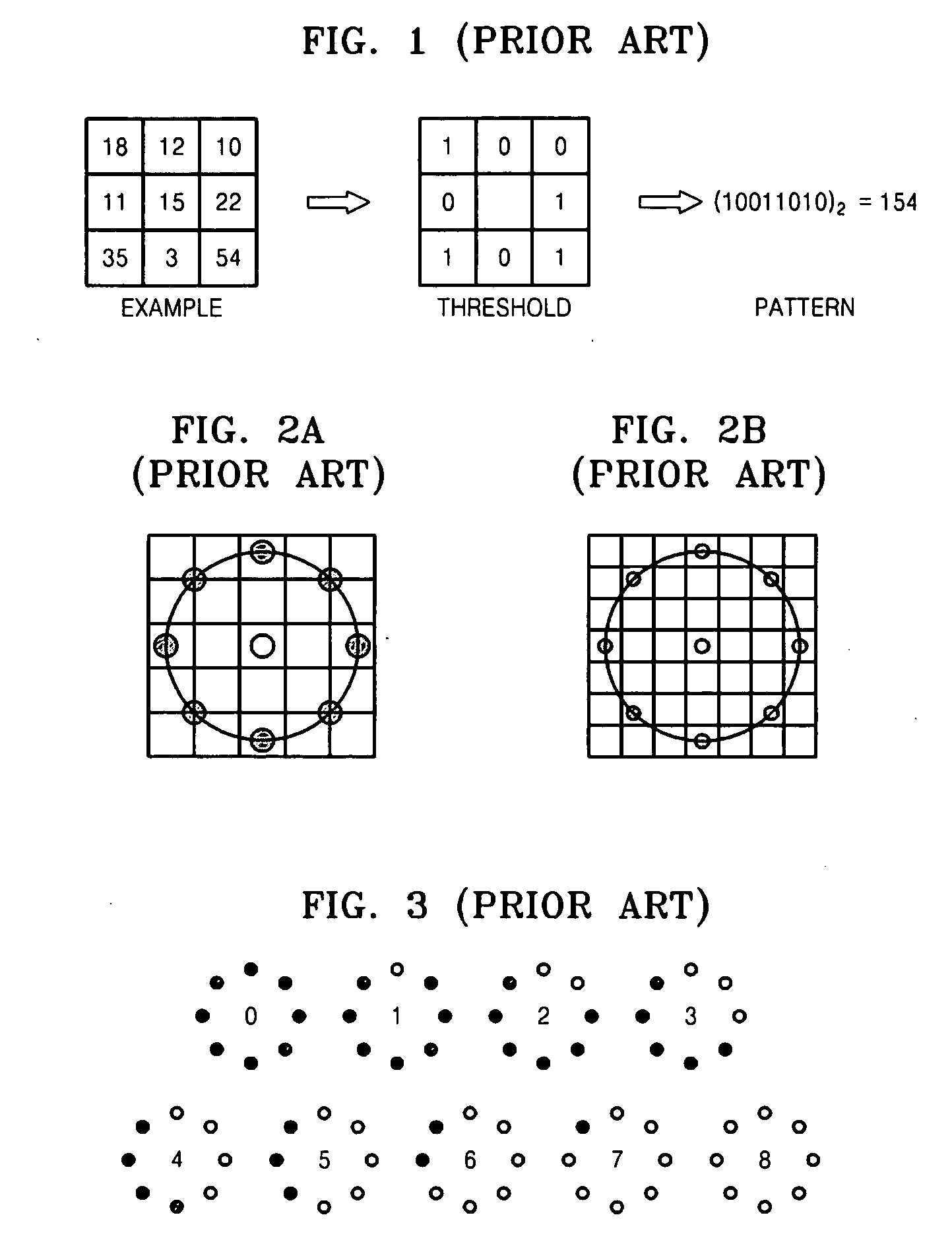

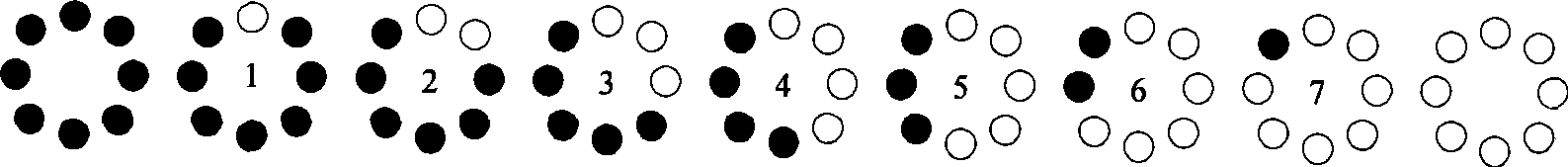

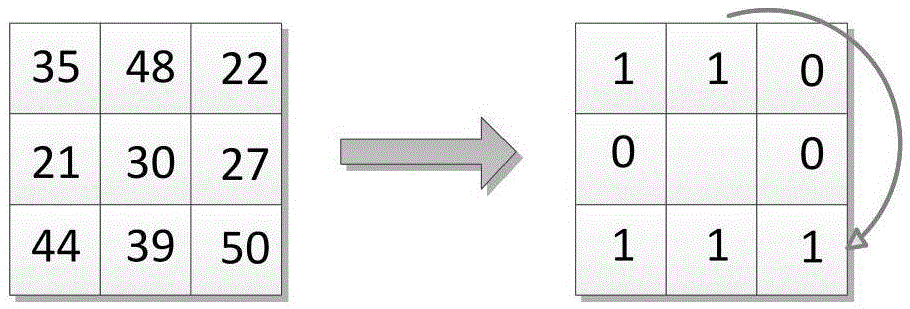

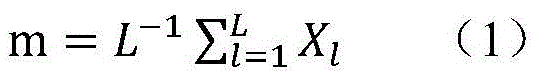

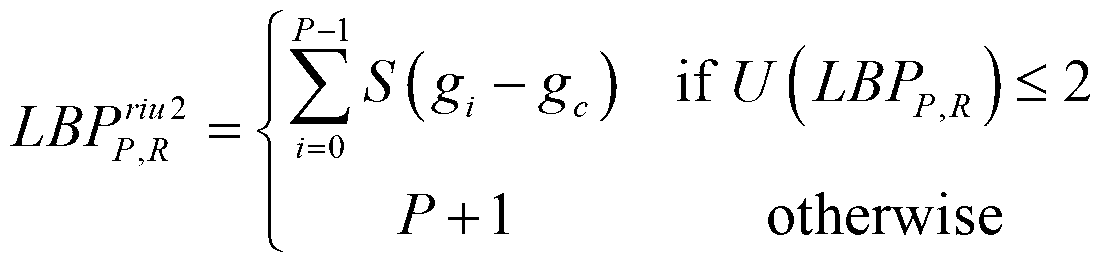

Local binary patterns (LBP) is a type of visual descriptor used for classification in computer vision. LBP is a particular case of the Texture Spectrum model proposed in 1990, and was first described in 1994. It has since been found to be a powerful feature for texture classification; combining LBP with the histogram of oriented gradients (HOG) descriptor has further been found to improve detection performance considerably on some datasets. Silva et al. compared several improvements of the original LBP for background subtraction in 2015, and a full survey of the different versions of LBP can be found in Bouwmans et al.
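As a rough illustration of the descriptor described above, the following minimal sketch computes the basic 8-neighbor LBP code of a pixel and a 256-bin histogram of codes. The neighbor ordering, the `>=` comparison, and the function names `lbp_code` and `lbp_histogram` are assumptions chosen for illustration; implementations differ on these conventions.

```python
# Neighbour offsets, clockwise from the top-left corner (an assumed ordering).
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(img, r, c):
    """Return the 8-bit LBP code of pixel (r, c) in a 2-D list of gray values."""
    center = img[r][c]
    code = 0
    for bit, (dr, dc) in enumerate(OFFSETS):
        # A neighbour at least as bright as the centre contributes a 1 bit.
        if img[r + dr][c + dc] >= center:
            code |= 1 << bit
    return code


def lbp_histogram(img):
    """Return a 256-bin histogram of LBP codes over all interior pixels."""
    hist = [0] * 256
    for r in range(1, len(img) - 1):
        for c in range(1, len(img[0]) - 1):
            hist[lbp_code(img, r, c)] += 1
    return hist


patch = [
    [6, 5, 2],
    [7, 6, 1],
    [9, 8, 7],
]
print(lbp_code(patch, 1, 1))  # 241 with the ordering assumed above
```

The histogram, rather than the individual codes, is what is typically passed to a classifier as the texture feature.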

Method and apparatus for generating face descriptor using extended local binary patterns, and method and apparatus for face recognition using extended local binary patterns

Inactive | US20080166026A1 | Reduce processing time | High error rate | Character and pattern recognition | Supervised learning | Authentication

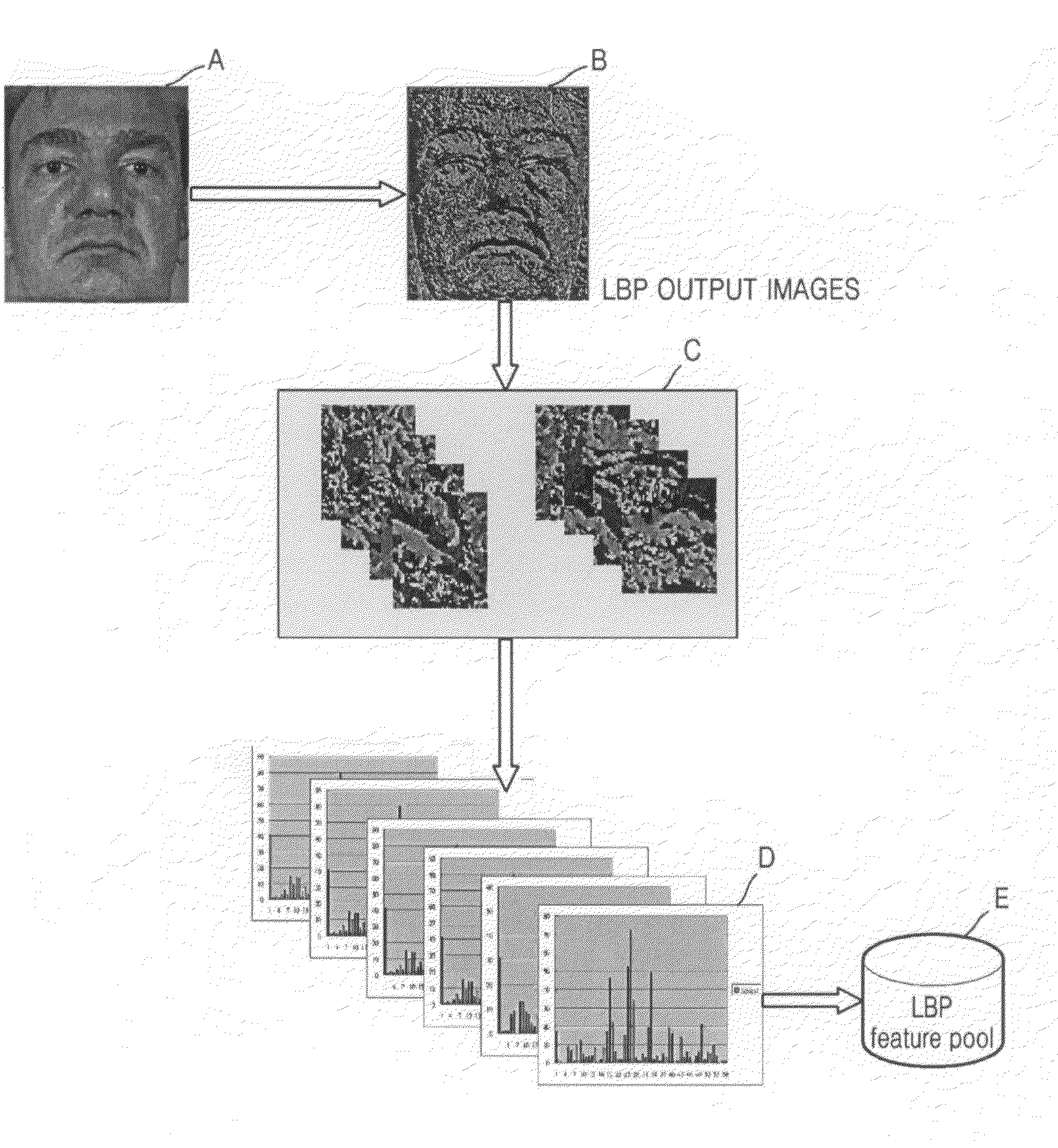

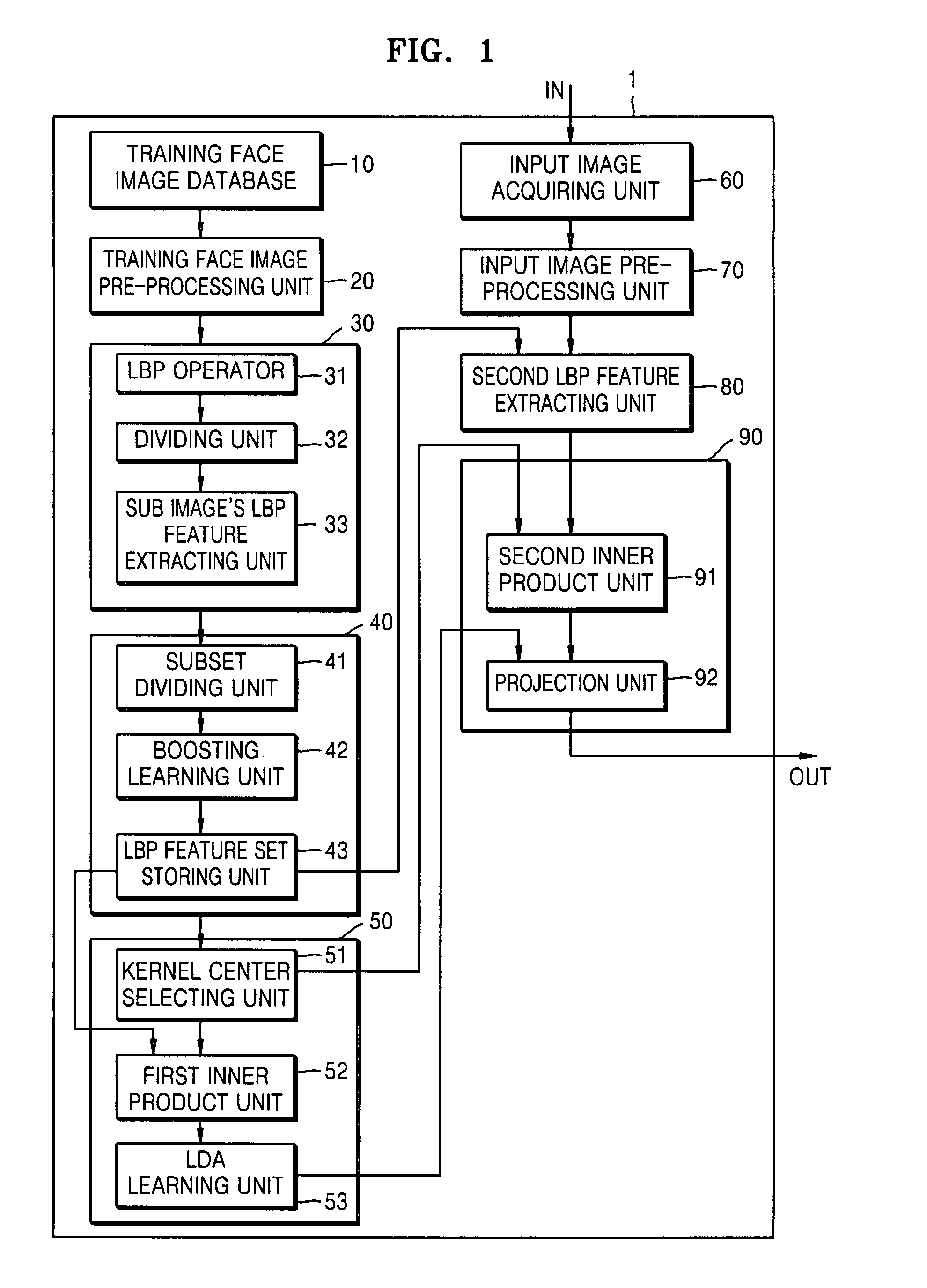

A face descriptor generating method and apparatus and a face recognition method and apparatus using extended local binary patterns (LBP) are provided. Because LBP features are selected by performing a supervised learning process on the extended LBP features, and the selected extended LBP features are used in face recognition, it is possible to reduce errors in face recognition or identity verification and to increase face recognition efficiency. In addition, using the extended LBP features makes it possible to overcome the time consumption of the process.

Owner:SAMSUNG ELECTRONICS CO LTD

Face recognition method based on Gabor wavelet transform and local binary pattern (LBP) optimization

Inactive | CN102024141A | Reduce distractions | Short storage time | Character and pattern recognition | Gabor wavelet transform | High dimensional

The invention relates to a face recognition method based on Gabor wavelet transform and local binary pattern (LBP) optimization. The two-dimensional Gabor wavelet transform associates pixels of adjacent areas, so it reflects how image gray values change within a local range across different frequency scales and directions. Feature extraction and classification are carried out on the two-dimensional Gabor wavelet transform coefficients of the face image. For the high-dimensional Gabor wavelet transform coefficients, overall histogram features are extracted with LBP; the image is then divided into blocks using prior knowledge, and a local LBP histogram is extracted for each block. The method has a better recognition rate and better robustness to illumination, and has wide application prospects in biometric recognition and public security monitoring.

Owner:SHANGHAI UNIV

Face detecting and tracking method and device

Inactive | CN103116756A | Solve the problem of susceptibility to light intensity | Conform to the visual characteristics | Character and pattern recognition | Face detection | Track algorithm

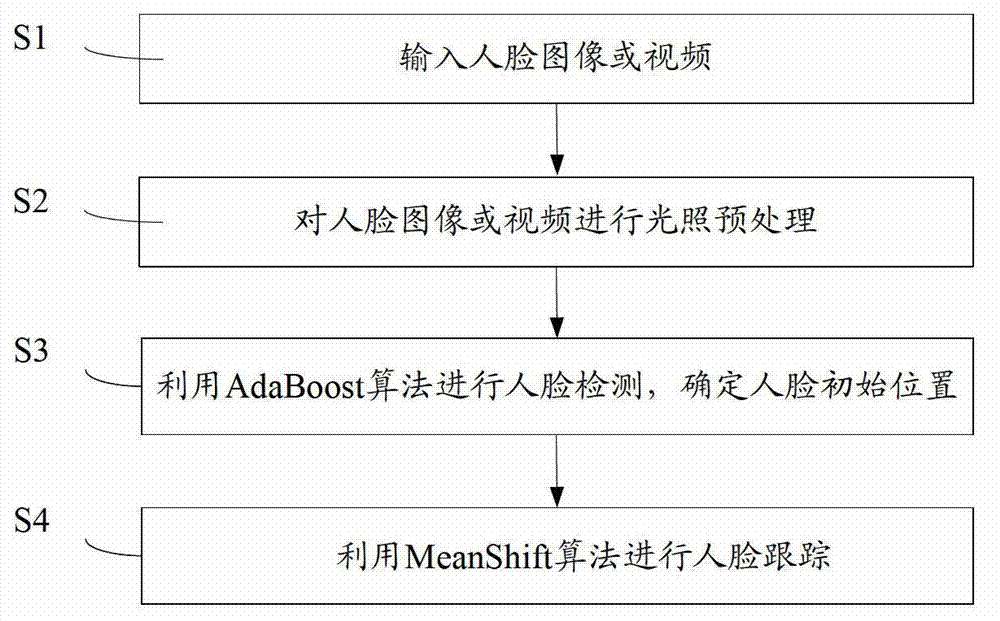

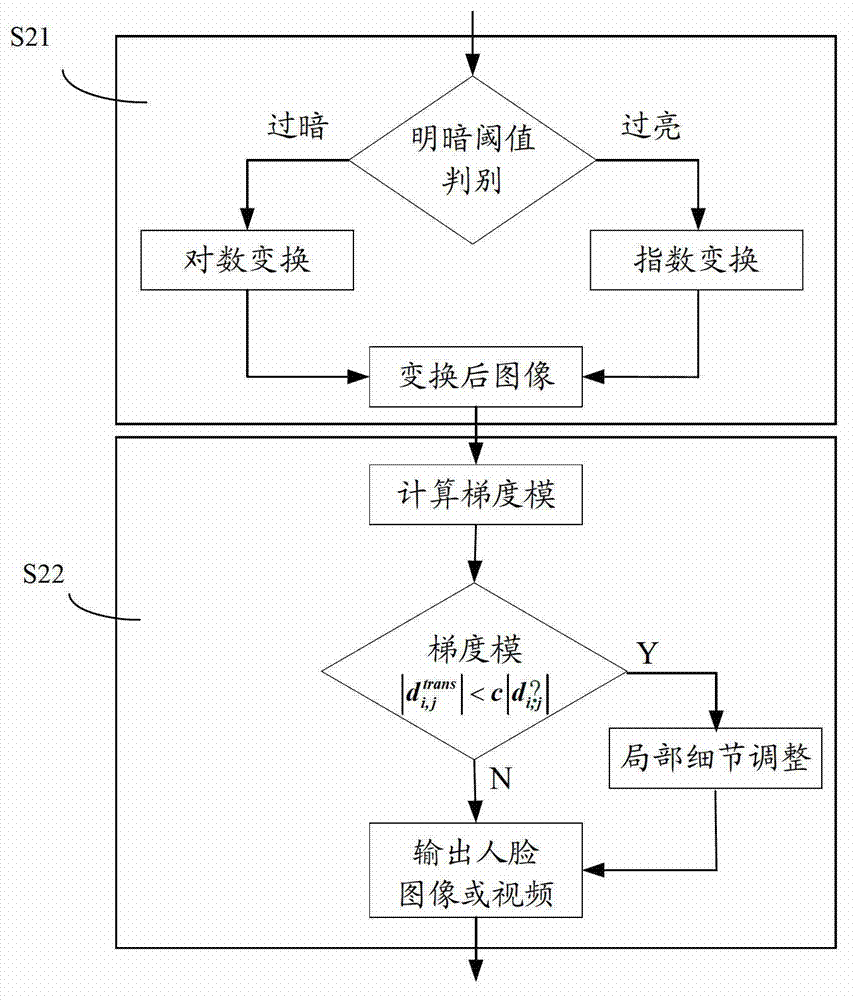

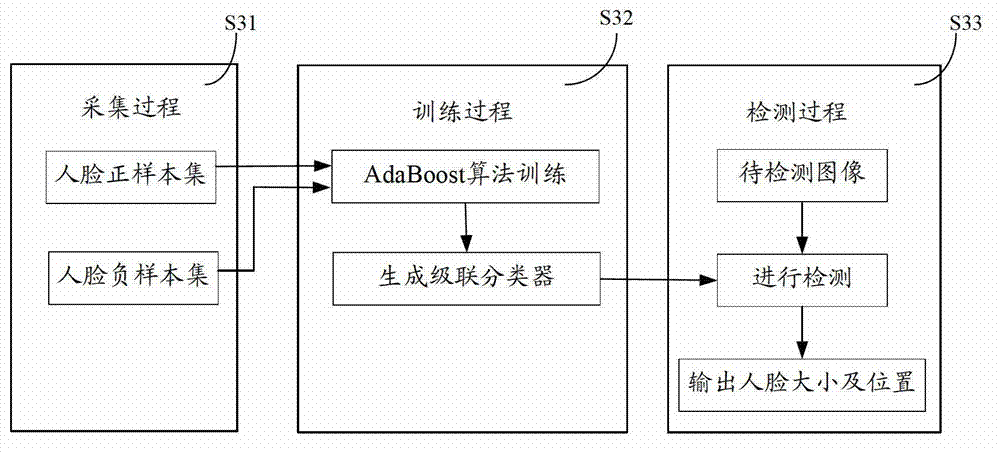

The invention provides a face detecting and tracking method and device. The method comprises: inputting a face image or face video; applying illumination preprocessing to it; detecting the face with the AdaBoost algorithm to determine its initial position; and tracking the face with the Mean Shift algorithm. During preprocessing, an adaptive local contrast enhancement method enhances image detail. During detection, frontal face samples under different illumination are added to the training samples to increase robustness to illumination conditions, and the AdaBoost algorithm increases detection accuracy. During tracking, to overcome the Mean Shift algorithm's reliance on color alone, gradient features and local binary pattern (LBP) texture features are integrated into the Mean Shift tracking algorithm; the LBP texture features additionally use LBP local variance to express changes in image contrast. The accuracy of face detection and face tracking is thereby improved.

Owner:BEIJING TECHNOLOGY AND BUSINESS UNIVERSITY

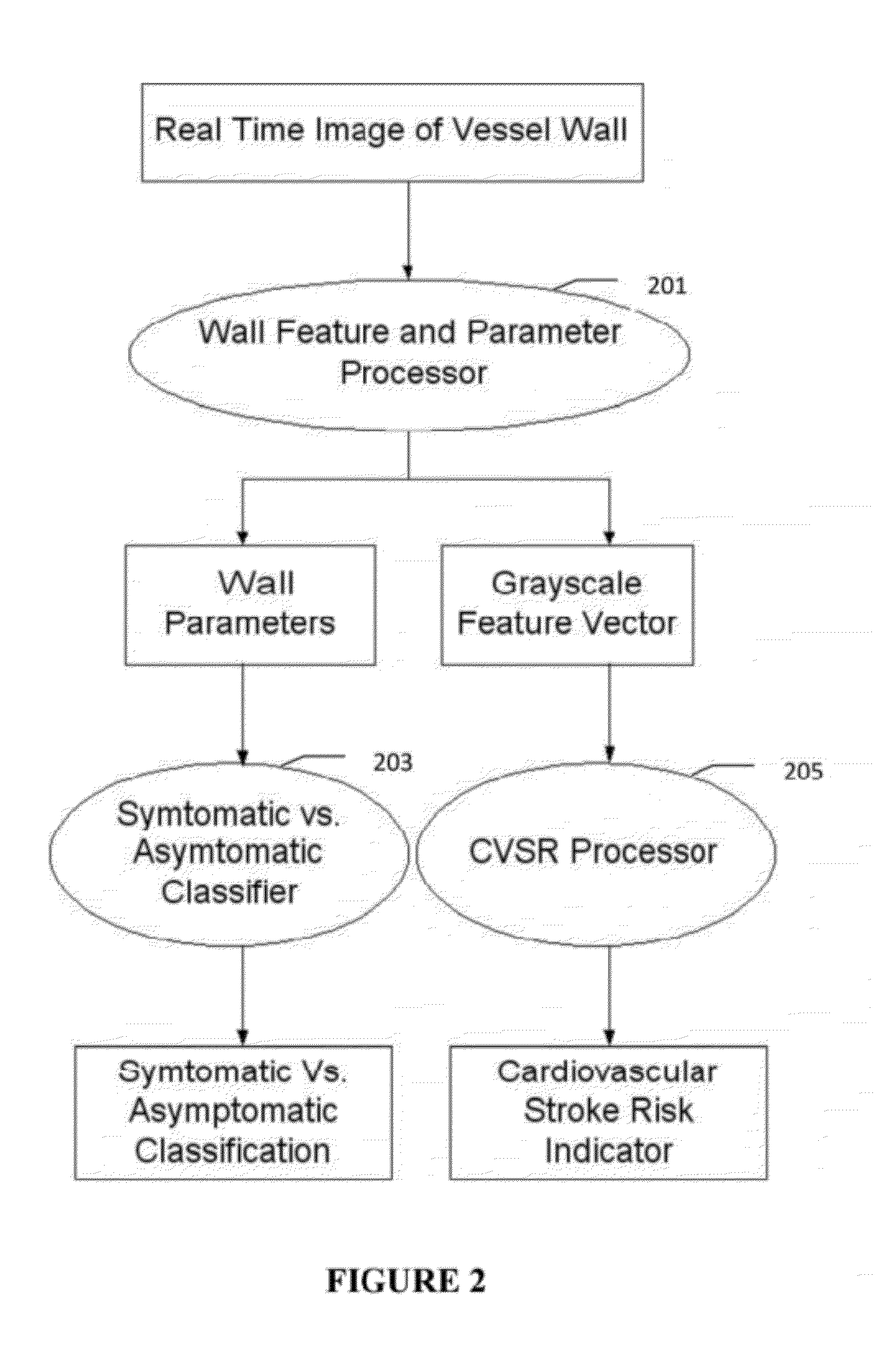

Imaging Based Symptomatic Classification Using a Combination of Trace Transform, Fuzzy Technique and Multitude of Features

Inactive | US20120078099A1 | Improve accuracy | Maximum accuracy | Ultrasonic/sonic/infrasonic diagnostics | Magnetic measurements | Gray level | Radiology

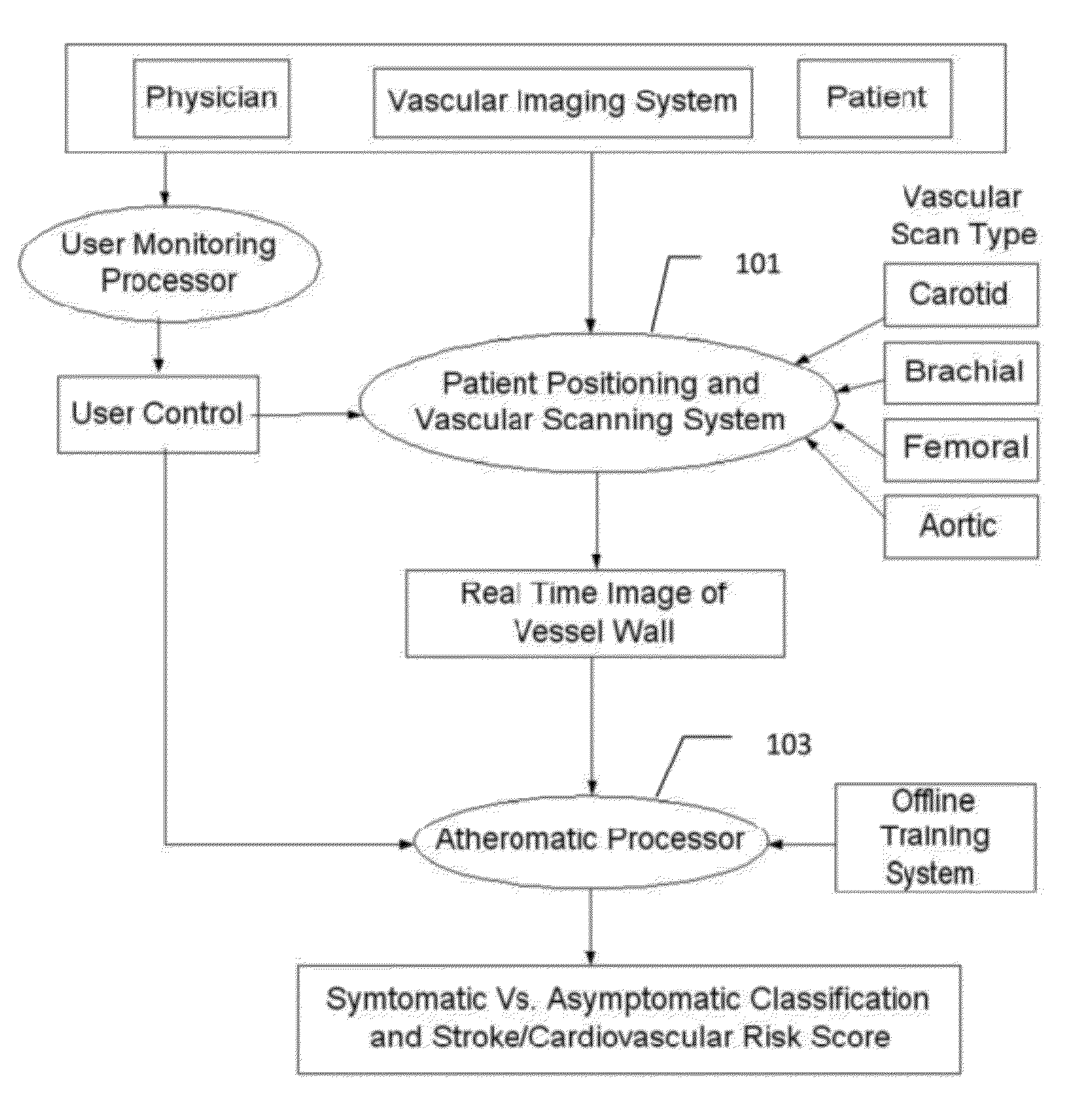

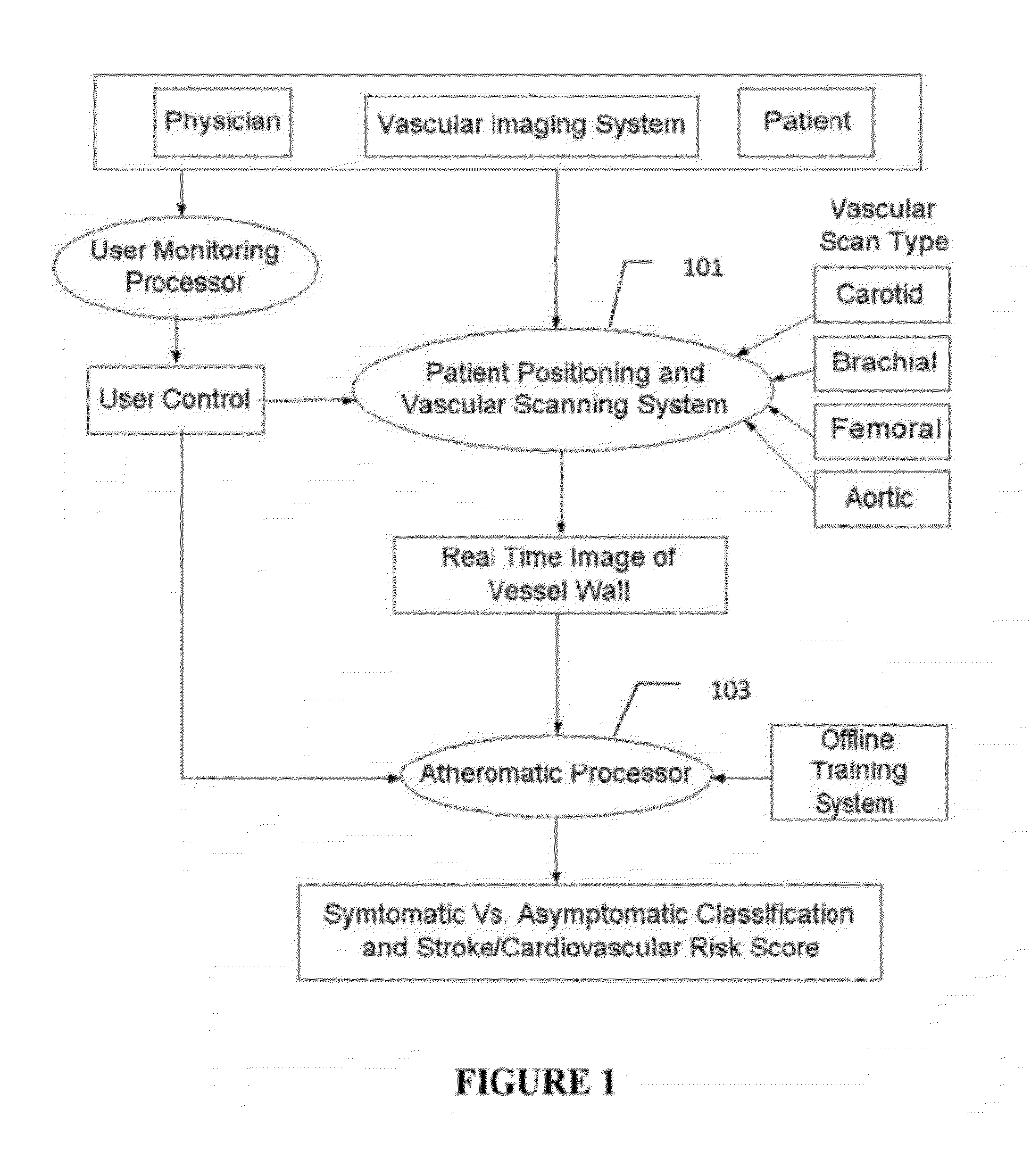

A statistical computer-aided diagnostic (CAD) technique is described that (a) automatically classifies symptomatic versus asymptomatic plaque in carotid ultrasound images and (b) computes a cardiovascular risk score. It is demonstrated for longitudinal ultrasound, CT, and MR modalities and is extendable to 3D carotid ultrasound. The on-line system consists of atherosclerotic wall region estimation using AtheroEdge™ for longitudinal ultrasound, Athero-CTView™ for CT, or Athero-MRView for MR. This grayscale wall region is then fed to a feature extraction processor, which uses one of several feature combinations. One combination uses (a) higher order spectra, (b) discrete wavelet transform (DWT), (c) texture, and (d) wall variability. Another uses (a) local binary pattern, (b) Laws' mask energy, and (c) wall variability. Another uses (a) trace transform, (b) fuzzy gray-level co-occurrence matrix, and (c) wall variability. The output of the feature processor is fed to the classifier, which is trained off-line.

Owner:ATHEROPOINT

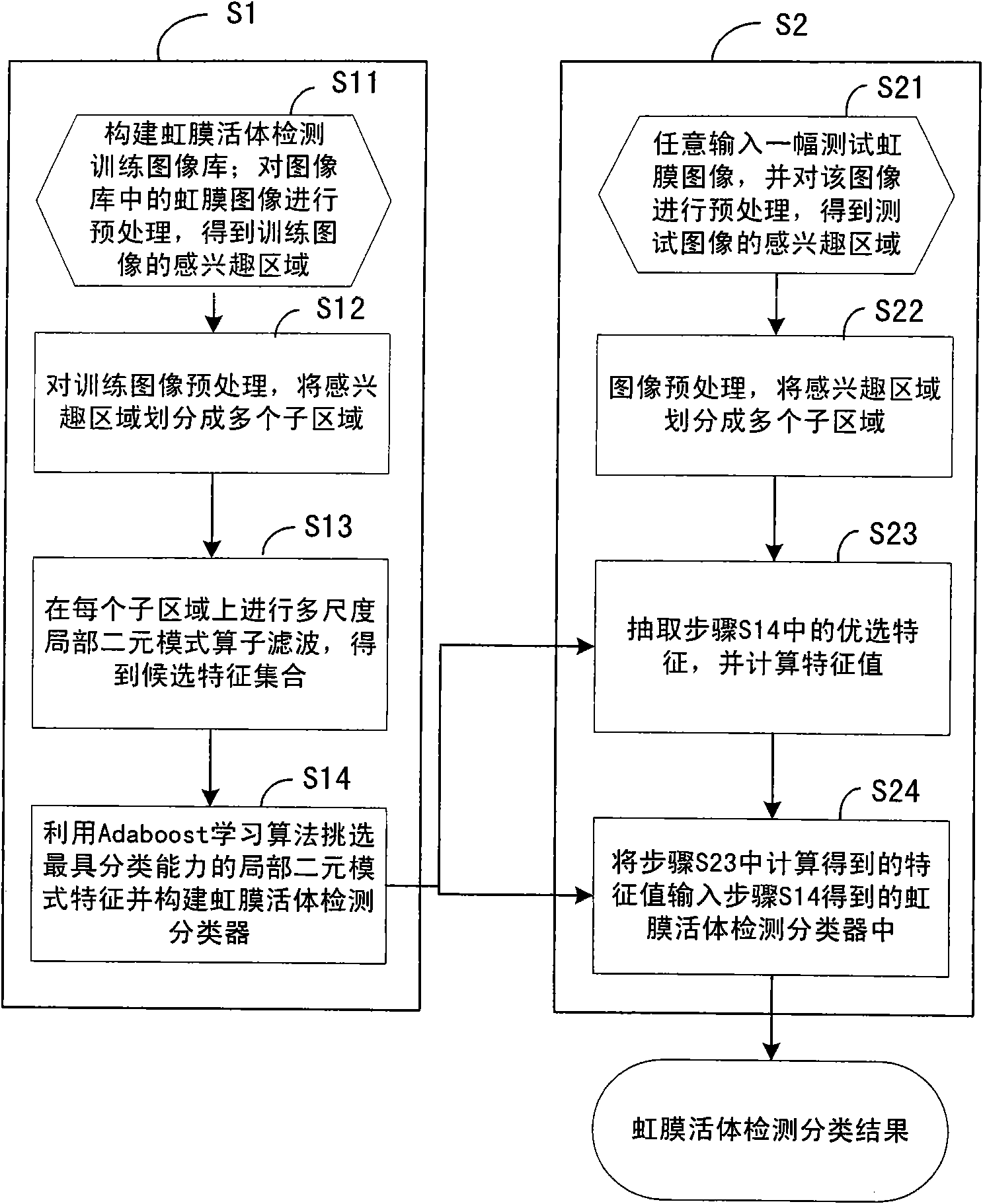

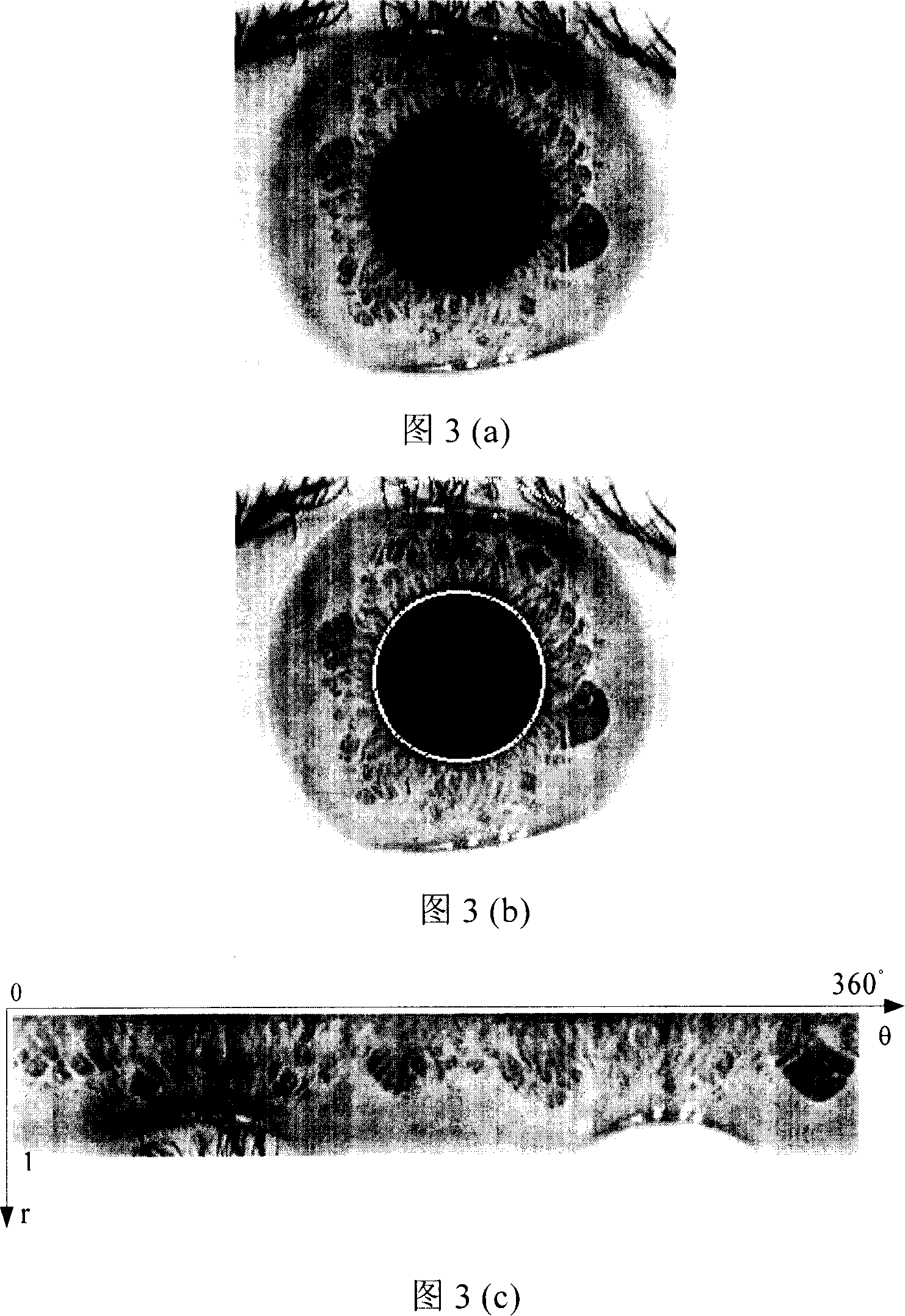

Living iris detection method

Active | CN101833646A | High precision | Improve security | Character and pattern recognition | Pattern recognition | Medicine

The invention relates to a living iris detection method comprising the following steps. S1: preprocess the living iris images and artificial iris images in a training image library; extract multi-scale local binary pattern features in the resulting region of interest; select the preferred features from the candidates using an adaptive reinforcement learning algorithm; and build a classifier for living iris detection. S2: preprocess an arbitrary input test iris image; compute the preferred local binary pattern features in the resulting region of interest; feed the computed feature values to the classifier obtained in step S1; and judge from the classifier output whether the test image comes from a living iris. The invention can perform effective anti-forgery detection and alarm for iris images and reduces the error rate of iris recognition. It is widely applicable to identification and security systems that use iris recognition.

Owner:BEIJING IRISKING

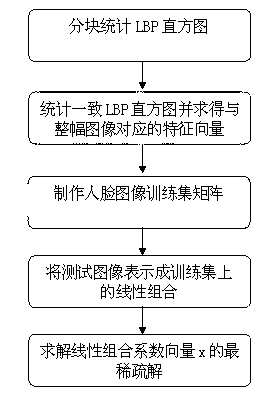

Single-training sample face recognition method based on blocking consistency LBP (Local Binary Pattern) and sparse coding

Inactive | CN102799870A | Accurate extraction | Keep more | Character and pattern recognition | Cluster algorithm | Feature vector

The invention belongs to the technical field of digital image processing and pattern recognition, and in particular relates to a face recognition method based on blocking consistency LBP (Local Binary Pattern) and sparse coding. The method first segments a face image into 16 equally sized subregions in a 4*4 grid, computes for each a consistency LBP histogram with a radius of one pixel and 8 neighbors, and concatenates the LBP histograms of the 16 subregions into a column vector that serves as the feature vector of the face image. The image to be tested is then represented as the sparsest linear combination over the training set, and the face image is recognized. Compared with traditional feature extraction and clustering algorithms, the invention extracts the structural information of a face well, and shows a higher recognition rate and robustness with a single training sample and under occlusion.

Owner:SHANGHAI JILIAN NETWORK TECH CO LTD
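The block-wise descriptor in the abstract above can be sketched as follows. This is a minimal illustration, not the patented method: it reads "consistency LBP" as the common uniform LBP variant (patterns with at most two 0/1 transitions), which is an assumption, and the function names and neighbor ordering are hypothetical. The sparse-coding classification step is not shown.

```python
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def transitions(code):
    """Count 0/1 transitions in the circular 8-bit pattern."""
    rotated = ((code >> 1) | ((code & 1) << 7)) & 0xFF
    return bin(code ^ rotated).count("1")


# Each uniform pattern gets its own bin; all other patterns share one extra bin.
UNIFORM_BIN = {}
for _code in range(256):
    if transitions(_code) <= 2:
        UNIFORM_BIN[_code] = len(UNIFORM_BIN)
NON_UNIFORM_BIN = len(UNIFORM_BIN)  # 58 uniform patterns, so this is bin 58


def lbp_code(img, r, c):
    """8-neighbour, radius-1 LBP code of pixel (r, c)."""
    code = 0
    for bit, (dr, dc) in enumerate(OFFSETS):
        if img[r + dr][c + dc] >= img[r][c]:
            code |= 1 << bit
    return code


def block_histogram(img, r0, r1, c0, c1):
    """59-bin uniform LBP histogram of rows r0..r1-1, cols c0..c1-1 (image border skipped)."""
    hist = [0] * (NON_UNIFORM_BIN + 1)
    for r in range(max(r0, 1), min(r1, len(img) - 1)):
        for c in range(max(c0, 1), min(c1, len(img[0]) - 1)):
            hist[UNIFORM_BIN.get(lbp_code(img, r, c), NON_UNIFORM_BIN)] += 1
    return hist


def face_descriptor(img, grid=4):
    """Concatenate the histograms of a grid x grid partition into one feature vector."""
    rows, cols = len(img), len(img[0])
    feature = []
    for i in range(grid):
        for j in range(grid):
            feature += block_histogram(
                img,
                i * rows // grid, (i + 1) * rows // grid,
                j * cols // grid, (j + 1) * cols // grid,
            )
    return feature


# Synthetic 16x16 "image" used only to exercise the code.
image = [[(r * 7 + c * 13) % 256 for c in range(16)] for r in range(16)]
print(len(face_descriptor(image)))  # 16 blocks * 59 bins = 944
```

Under this reading, each of the 16 blocks contributes 59 bins, so the concatenated vector has 944 entries regardless of image size.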

Crowd exceptional event detection method based on LBP (Local Binary Pattern) weighted social force model

Active | CN102682303A | Improve features | Reduce complexity | Image analysis | Character and pattern recognition | Support vector machine | Frequency spectrum

The invention discloses a crowd exceptional event detecting method based on an LBP (Local Binary Pattern) weighted social force model. The method comprises the following steps: computing the optical flow vector of each sampled point with a block-matching method; extracting the dynamic texture of each sampled point with a spatio-temporal local binary pattern and performing Fourier spectral analysis on it; computing the social force of each sampled point with the LBP weighted social force model; and quantizing the social force into histograms and classifying the video sequence with a support vector machine to detect exceptional behavior. By combining optical flow with the LBP frequency spectrum to calculate the social force, the method detects exceptional crowd behavior without background modeling, foreground detection, or target detection and tracking. This improves robustness and reduces the amount of computation, and makes the method particularly suitable for occasions with high crowd density and complicated environments.

Owner:联通(上海)产业互联网有限公司

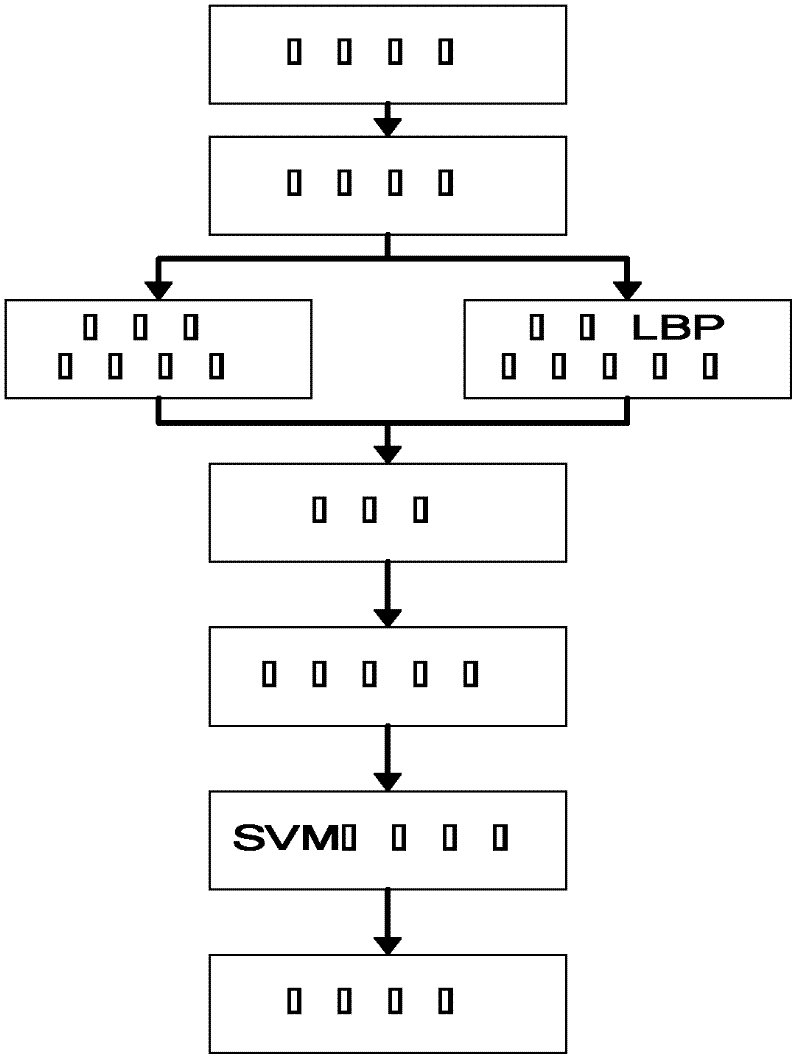

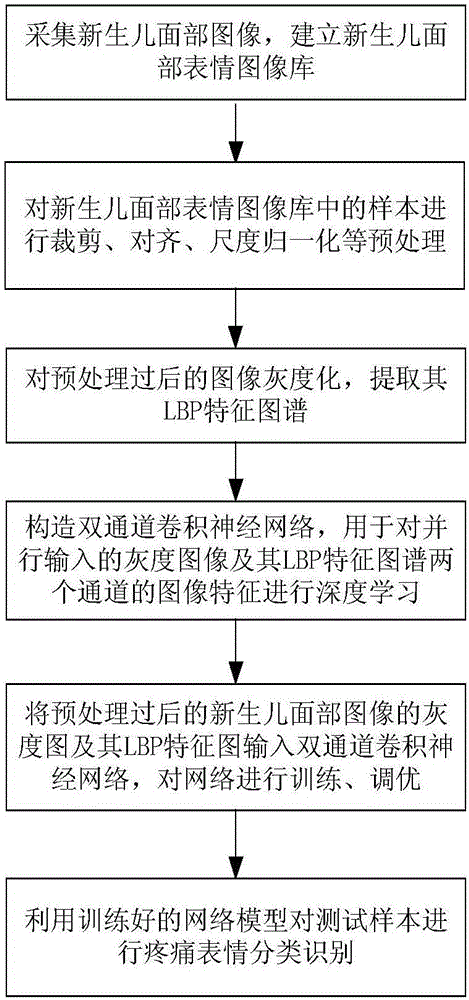

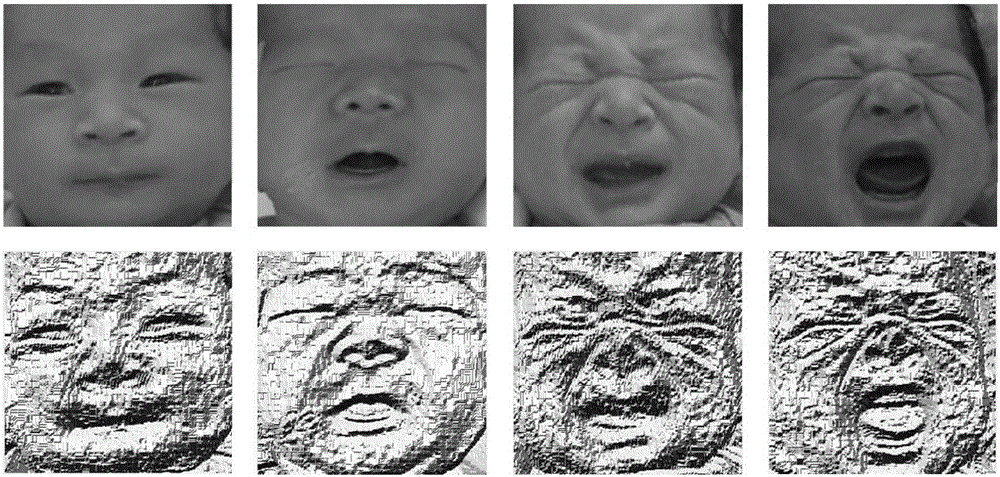

Newborn-painful-expression recognition method based on dual-channel-characteristic deep learning

Active | CN106682616A | Efficient identification | Maintain stability | Neural architectures | Acquiring/recognising facial features | Illumination problem | Neonatal pain

The invention discloses a newborn-painful-expression recognition method based on dual-channel-characteristic deep learning. First, newborn facial images are converted to grayscale and a Local Binary Pattern (LBP) feature map is extracted. Second, the grayscale image and its LBP feature map are input in parallel as two channels, and their features are learned with a dual-channel convolutional neural network. Finally, the fused features of the two channels are classified by a softmax-based classifier into four expressions: calmness, crying, mild pain, and acute pain. By combining the feature information of the grayscale image and its LBP feature map, the method effectively recognizes these four expressions, is robust to illumination, noise, and occlusion in newborn facial images, and provides a new approach for developing a newborn-painful-expression recognition system.

Owner:NANJING UNIV OF POSTS & TELECOMM

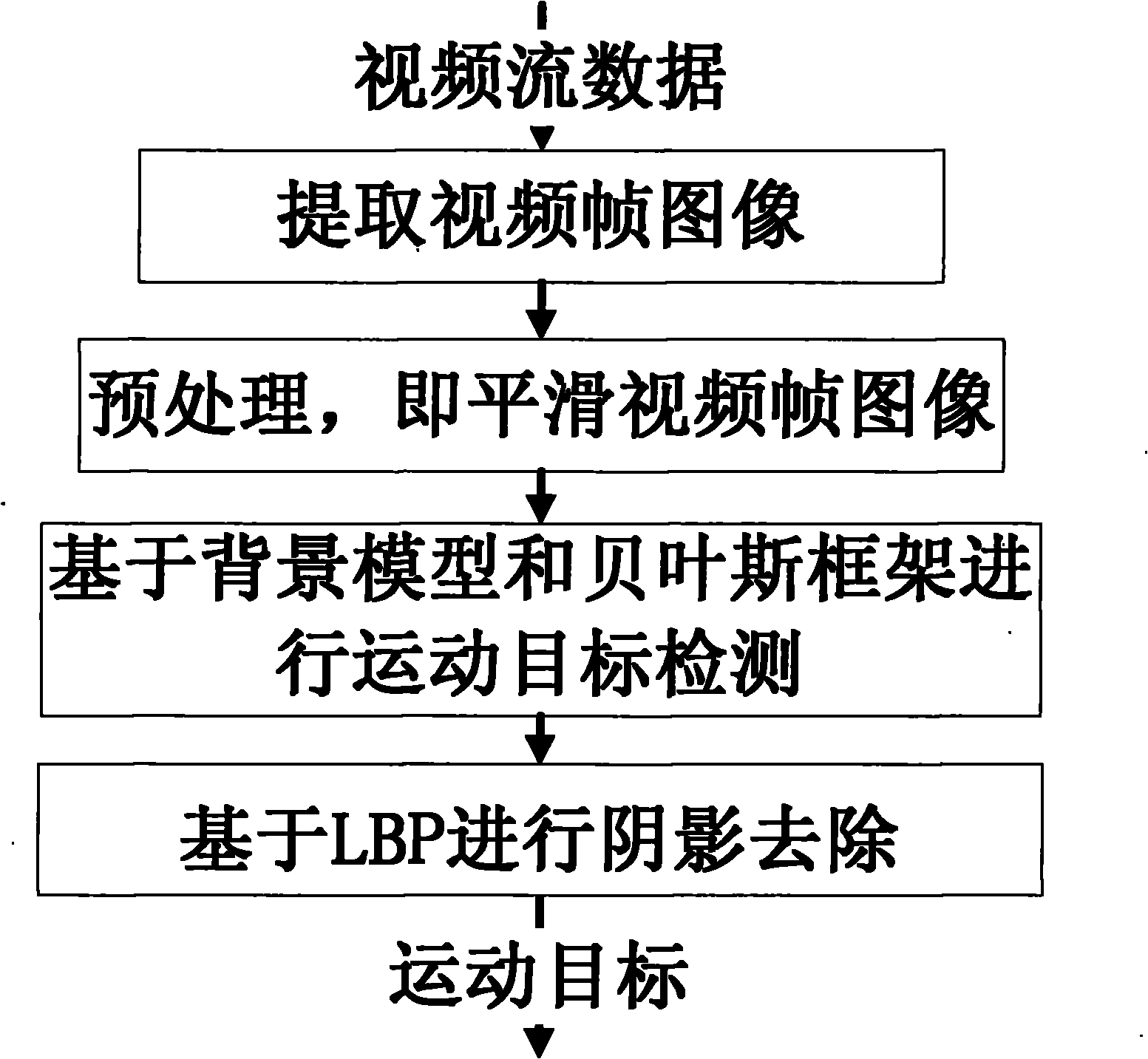

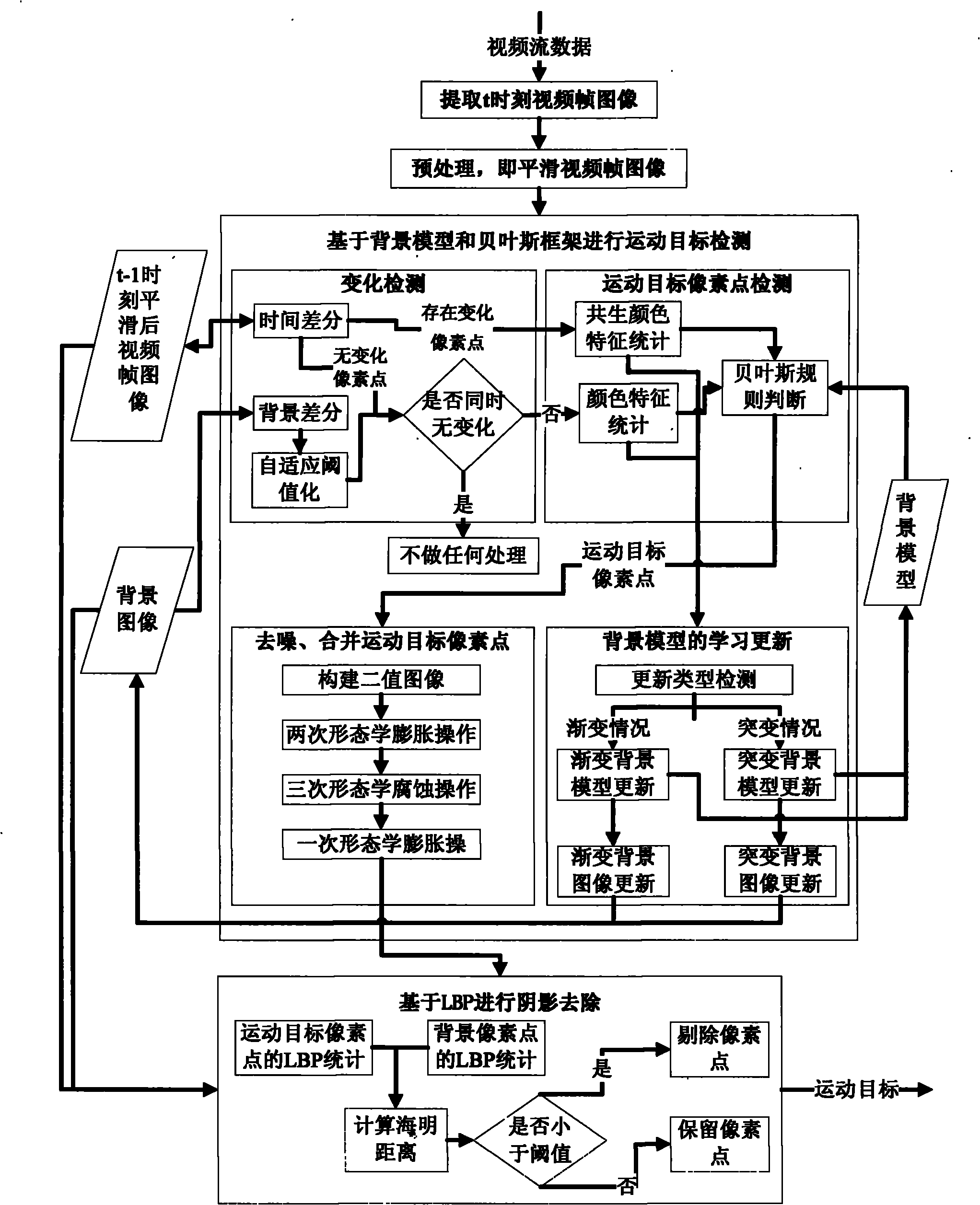

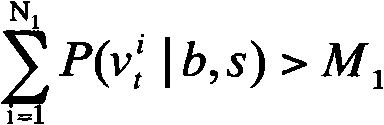

Moving object detecting method based on Bayesian frame and LBP (Local Binary Pattern)

Inactive | CN101916448A | Eliminate distractions | High speed | Image analysis | Closed circuit television systems | Video monitoring | Background image

The invention discloses a moving object detecting method based on a Bayesian frame and an LBP (Local Binary Pattern), which relates to the technical field of intelligent video monitoring. The method comprises the following steps: 1. extracting a video frame from a video stream; 2. preprocessing the video frame to eliminate interference from slight illumination changes and other disturbances; 3. detecting moving objects based on a background model and the Bayesian frame, where, before detection, pixels with unchanged temporal difference and background difference are filtered out and the color dimensionality is reduced during modeling, which improves the speed of the whole method, and where the background model comprises a color characteristic and a symbiotic color characteristic and can reliably detect moving and static objects in a video; and 4. removing shadows, by using an LBP descriptor to extract the texture information of each detected moving object area and of the corresponding background image area, and comparing the two to remove shadows from the moving object detection result.

Owner:云南清眸科技有限公司

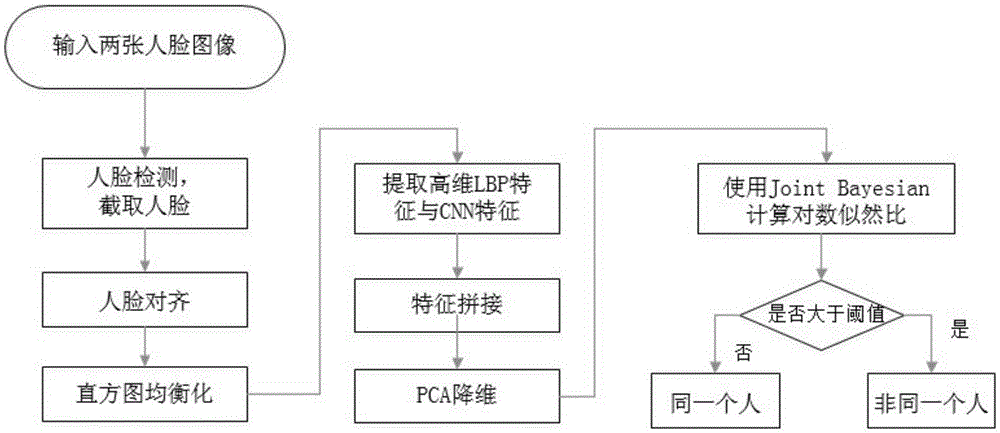

Face comparison method based on high-dimensional LBP (Local Binary Patterns) and convolutional neural network feature fusion

Inactive | CN105550658A | Improve accuracy | Solve the problem of weak generalization ability | Character and pattern recognition | Dimensionality reduction | Principal component analysis

The invention provides a face comparison method based on high-dimensional LBP (Local Binary Patterns) and convolutional neural network feature fusion. The method comprises the following steps: first, two face images are input and preprocessed independently; then the high-dimensional LBP features and the CNN (Convolutional Neural Network) features of each image are extracted, and the two feature sets are combined and reduced in dimensionality via PCA (Principal Component Analysis); finally, a Joint Bayesian method is used to obtain the similarity of the two images. Because the high-dimensional LBP extracts local information and the CNN extracts global information, fusing the two makes the extracted information complete. Compared with using the high-dimensional LBP or the CNN separately, the method is more accurate and more robust, and achieves a real-time face comparison rate.

Owner:王华锋 +6

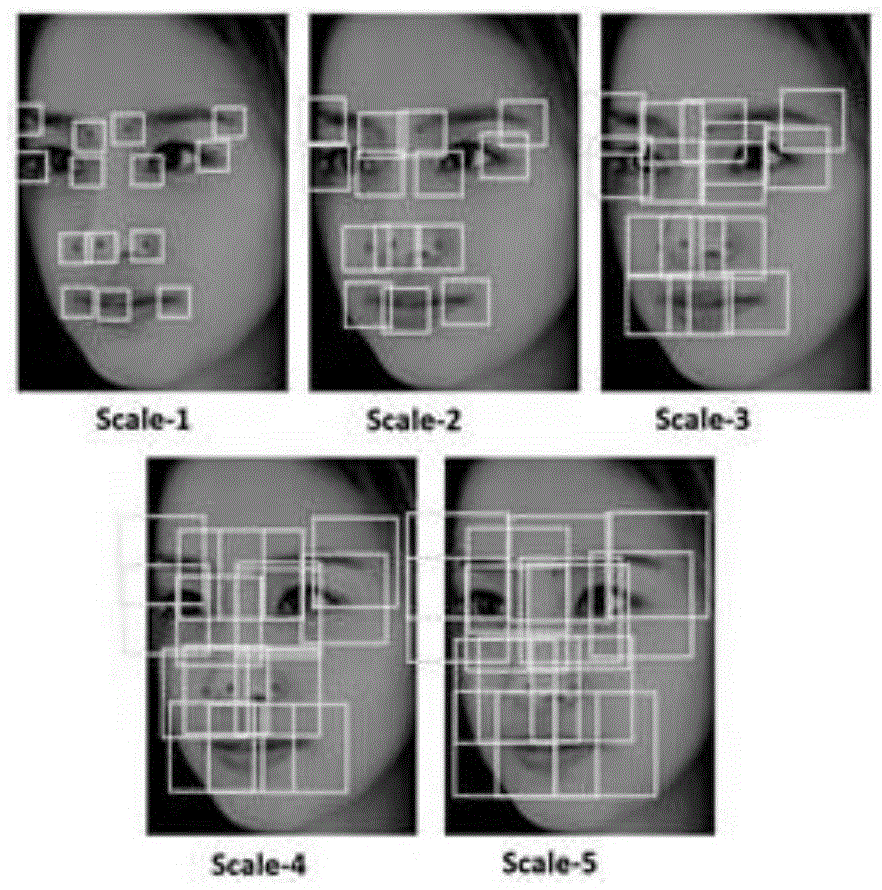

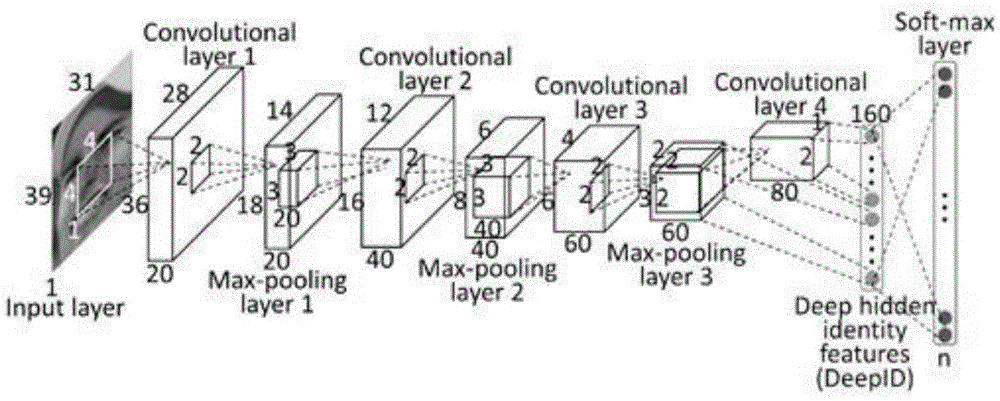

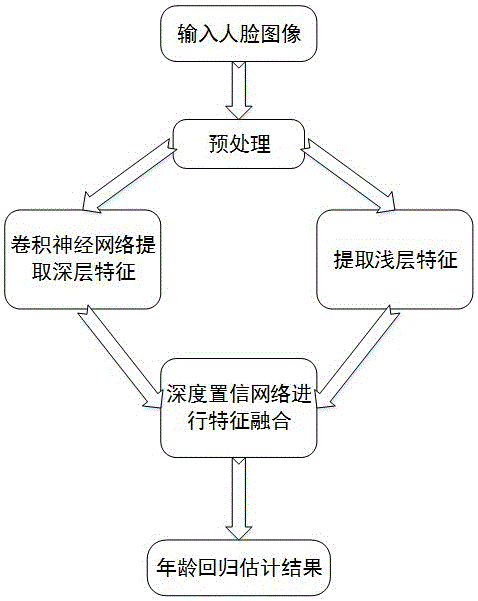

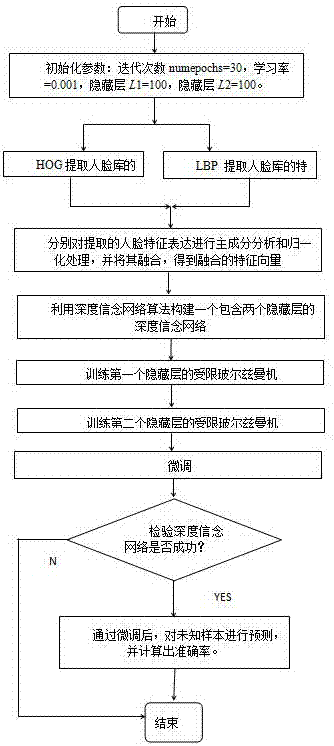

Human face age estimation method based on fusion of deep characteristics and shallow characteristics

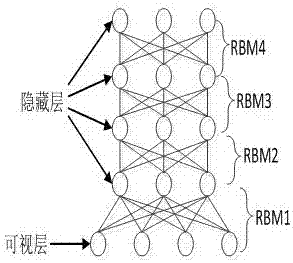

Active | CN106778584A | Improve reliability | Simple structure | Character and pattern recognition | Deep belief network | Data set

The invention discloses a human face age estimation method based on the fusion of deep characteristics and shallow characteristics. The method comprises the following steps: preprocessing each face sample image in a face sample dataset; training a constructed initial convolutional neural network and selecting a convolutional neural network used for face recognition; fine-tuning the selected network on a face dataset with age labels to obtain a plurality of convolutional neural networks used for age estimation; extracting the multi-level age characteristics corresponding to each face and outputting them as the deep characteristics; extracting the HOG (Histogram of Oriented Gradient) and LBP (Local Binary Pattern) characteristics of each face image as the shallow characteristics; constructing a deep belief network to fuse the deep and shallow characteristics; and performing age regression on the fused characteristics in the deep belief network to output an age estimation result. The method improves age estimation accuracy and provides a highly accurate face image age estimation capability.

Owner:NANJING UNIV OF POSTS & TELECOMM

Multi-feature fusion-based deep learning face recognition method

Inactive | CN107578007A | Improve accuracy | Reduce operation | Character and pattern recognition | Neural architectures | Deep belief network | Feature vector

The invention discloses a multi-feature fusion-based deep learning face recognition method. The method comprises: performing feature extraction on images in the ORL (Olivetti Research Laboratory) database with a local binary pattern algorithm and a histogram of oriented gradients algorithm; fusing the acquired texture features and gradient features by concatenating the two feature vectors into one; and recognizing the feature vector with a deep belief network, taking the fused features as the network input, training the network layer by layer, and completing face recognition. By fusing multiple features, the method can improve accuracy, algorithm stability, and applicability to complex scenes.

Owner:HANGZHOU DIANZI UNIV

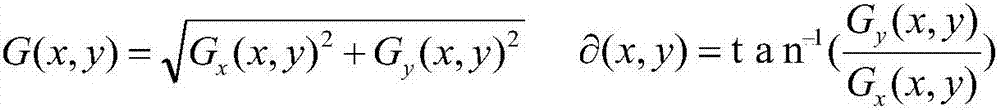

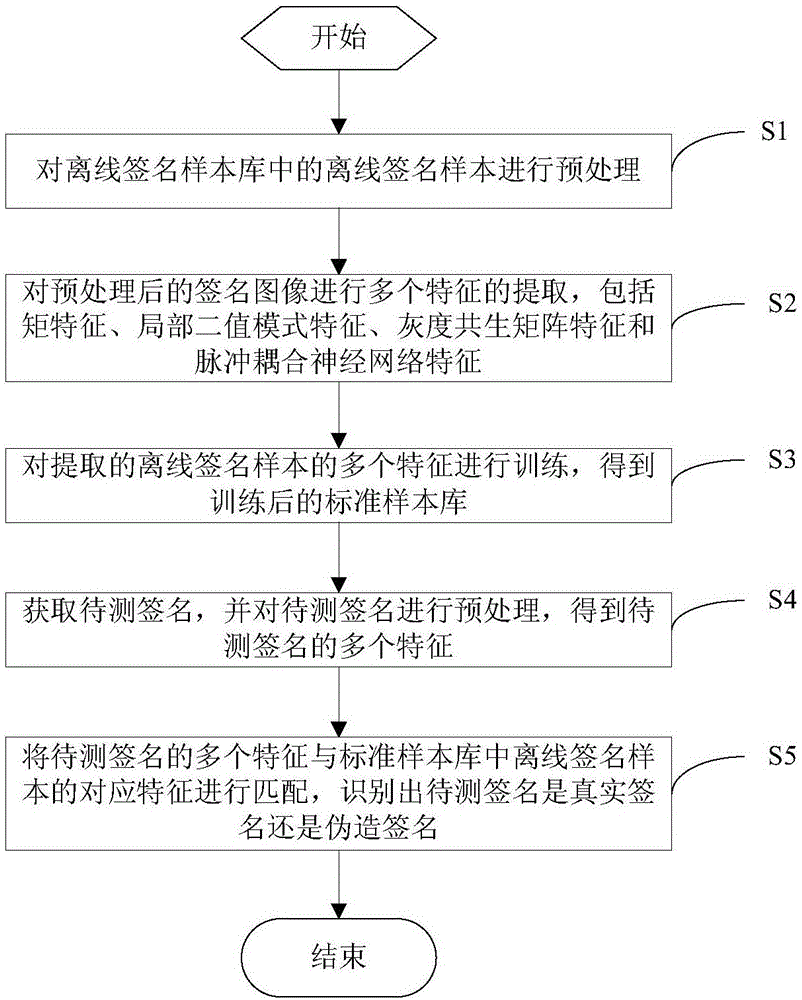

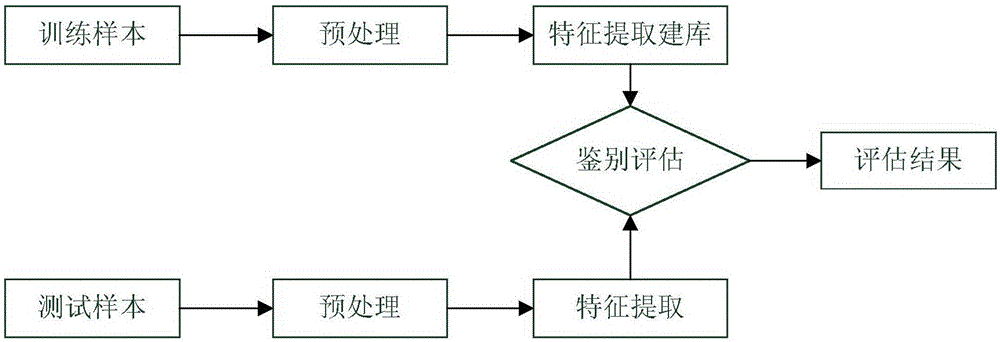

Method and system for identifying offline handwritten signature

Inactive | CN106778586A | Accurate identification | Eliminate the influence of external factors | Character and pattern recognition | Pattern recognition | Co-occurrence matrix

The invention discloses a method and a system for identifying an offline handwritten signature. The method comprises the following steps of S1, preprocessing an offline signature sample in an offline signature sample library; S2, extracting a plurality of characteristics for a preprocessed signature image, wherein the characteristics include moment characteristics, local binary pattern characteristics, gray-level co-occurrence matrix characteristics and pulse coupled neural network characteristics; S3, training the plurality of extracted characteristics of the offline signature sample to obtain a trained standard sample library; S4, acquiring a signature to be detected, and preprocessing the signature to be detected to obtain the plurality of characteristics of the signature to be detected; and S5, matching the plurality of characteristics of the signature to be detected with corresponding characteristics of the offline signature sample in the standard sample library, and identifying whether the signature to be detected is a genuine signature or a forged signature. The method and the system can effectively and accurately identify an offline signature to be detected.

Owner:WUHAN UNIV OF TECH

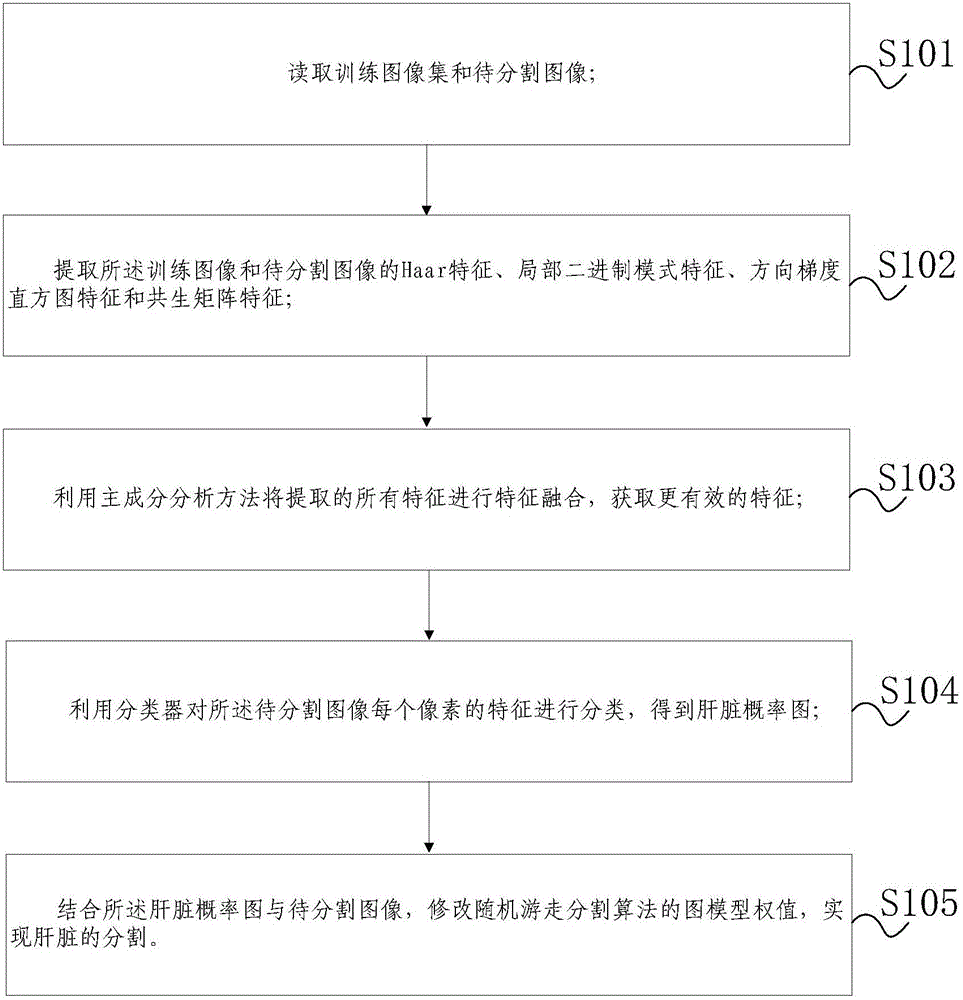

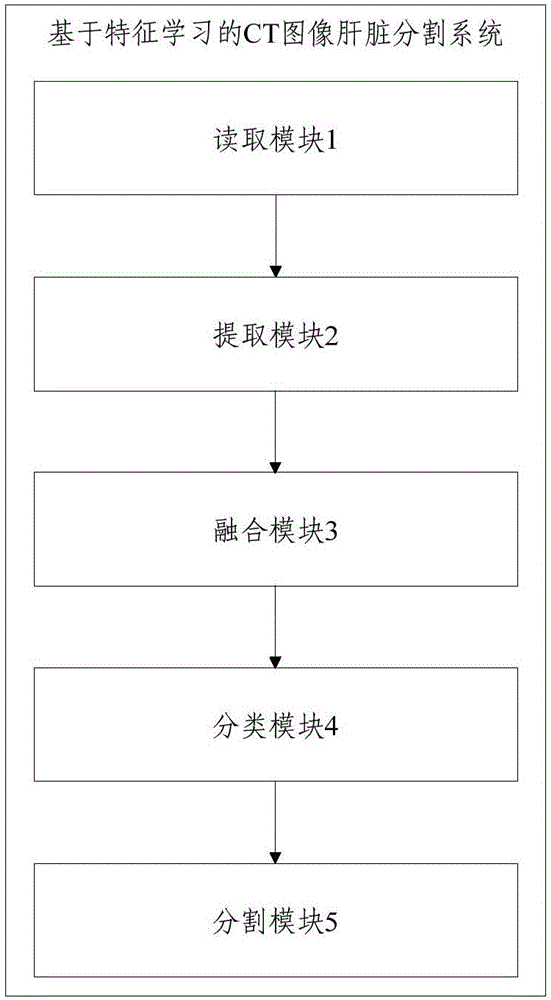

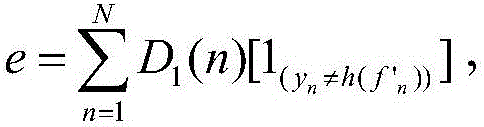

CT image liver segmentation method and system based on characteristic learning

Active | CN105894517A | Improve Segmentation Accuracy | Good segmentation result | Image enhancement | Image analysis | Principal component analysis | Histogram of oriented gradients

The invention discloses a CT image liver segmentation method and system based on characteristic learning, which can effectively improve the segmentation precision of the liver in a CT image. The method comprises the following steps. S101: reading a training image set and an image to be segmented, both consisting of abdominal CT images. S102: extracting, from the training images and the image to be segmented, the Haar characteristic, the local binary pattern characteristic, the histogram of oriented gradients, and the co-occurrence matrix characteristic. S103: using principal component analysis to fuse all the extracted characteristics into more effective characteristics. S104: using a classifier to classify the characteristics of each pixel of the image to be segmented, yielding a liver probability map. S105: combining the liver probability map with the image to be segmented and modifying the graph model weights of a random walk segmentation algorithm to segment the liver.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

Image verification method, medium, and apparatus using a kernel based discriminant analysis with a local binary pattern (LBP)

Inactive | US20070112699A1 | Improve verification capabilities | Image analysis | Digital computer details | Validation methods | Kernel Fisher discriminant analysis

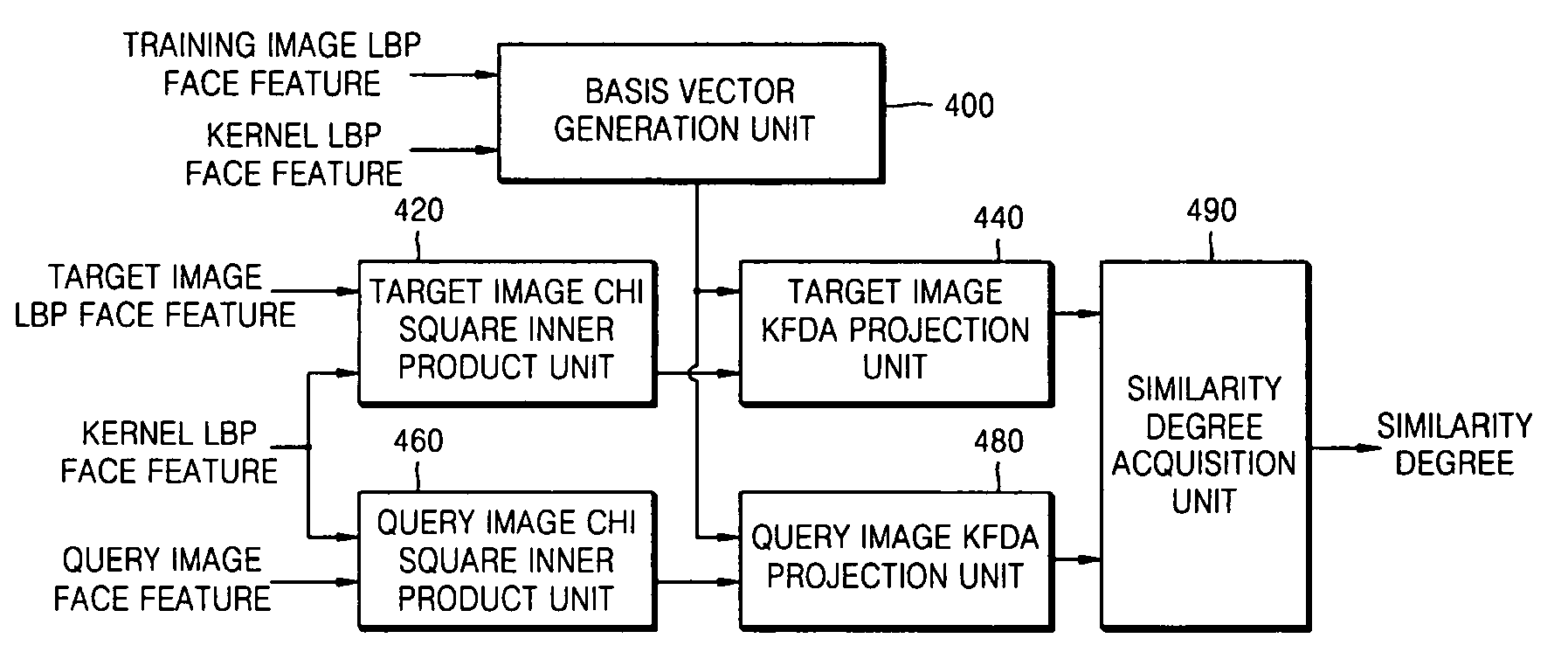

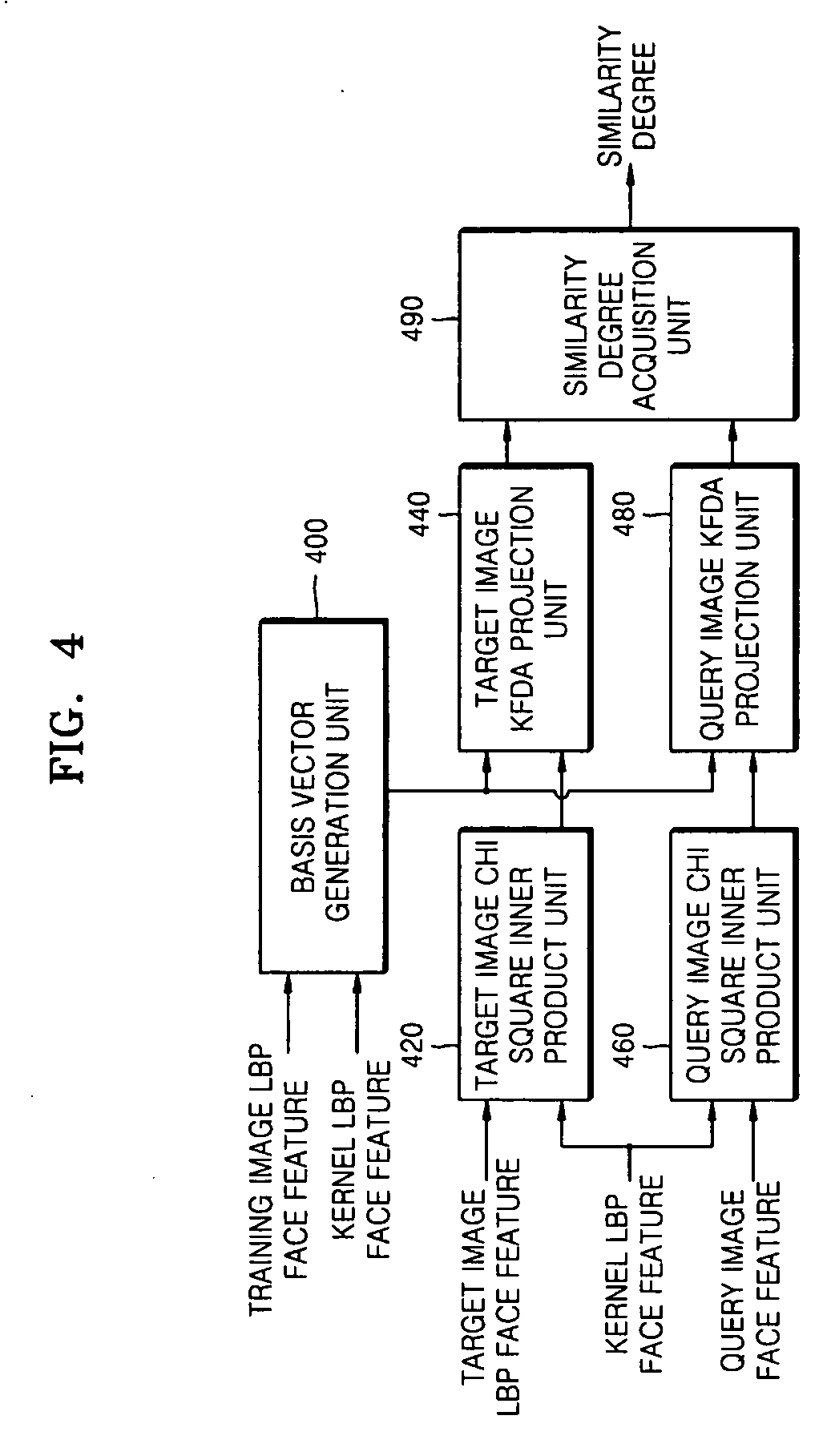

An image verification method, medium, and apparatus using a local binary pattern (LBP) discriminant technique. The verification method includes: generating a kernel Fisher discriminant analysis (KFDA) basis vector by using the LBP feature of an input image; obtaining a Chi square inner product by using the LBP feature of an image registered in advance and a kernel LBP feature, and projecting it to a KFDA basis vector; obtaining a Chi square inner product by using the LBP feature of a query image and a kernel LBP feature, and projecting it to a KFDA basis vector; and obtaining the degree of similarity between the target image and the query image from the Chi square inner product results projected to the KFDA basis vector. According to the method, medium, and apparatus, the KFDA-based LBP shows superior performance over conventional LBP, KFDA, and biometric experimentation environment (BEE) baseline algorithms.

Owner:SAMSUNG ELECTRONICS CO LTD
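The abstract above does not spell out its "Chi square inner product", so the sketch below only shows the ordinary chi-square statistic that is commonly used to compare two LBP histograms. The function name `chi_square_distance` and the small `eps` guard are assumptions for illustration, and the KFDA projection is not shown.

```python
def chi_square_distance(h1, h2, eps=1e-10):
    """Chi-square statistic between two equal-length histograms (0 means identical)."""
    if len(h1) != len(h2):
        raise ValueError("histograms must have the same number of bins")
    # eps avoids division by zero for bins that are empty in both histograms.
    return sum((a - b) ** 2 / (a + b + eps) for a, b in zip(h1, h2))


registered = [4, 0, 3, 1]
query_same = [4, 0, 3, 1]
query_diff = [0, 4, 1, 3]
print(chi_square_distance(registered, query_same))
print(chi_square_distance(registered, query_diff))
```

A smaller value indicates more similar histograms; normalizing the histograms first makes the statistic independent of image size.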

Method for recognizing iris with matched characteristic and graph based on partial binary mode

Inactive | CN101154265A | Improve computing efficiency | Meet real-time requirements | Character and pattern recognition | Light irradiation | Iris image

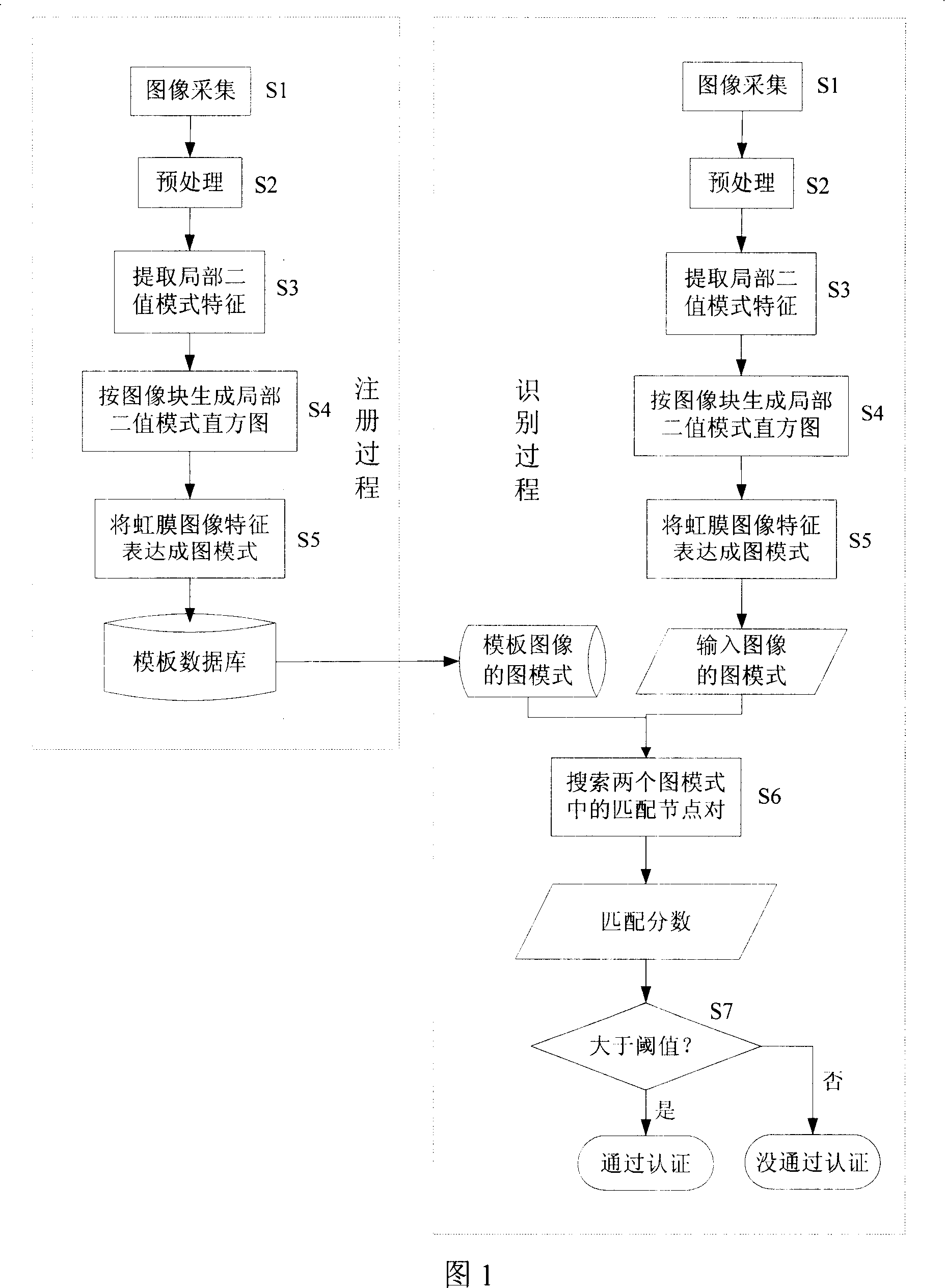

The invention discloses an iris recognition method based on local binary pattern features and graph matching. First, local binary pattern codes are extracted from the ordinal relation between pairs of pixel gray values in a neighborhood of the iris image, describing iris texture features that are invariant to illumination. Second, the iris image is divided into a number of blocks, and the local binary pattern histogram of each block is computed, giving a statistical texture description that is robust to translation and deformation. Each block is treated as a node with its local binary pattern histogram as the node attribute, so that each iris image is represented as a graph. During recognition, graph matching searches for matching node pairs between two graphs, and the number of matching node pairs measures the similarity between the two graphs, from which the identity of the user is determined. The invention is used for automatic identification in applications such as entrance guard, attendance check, and clearance.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

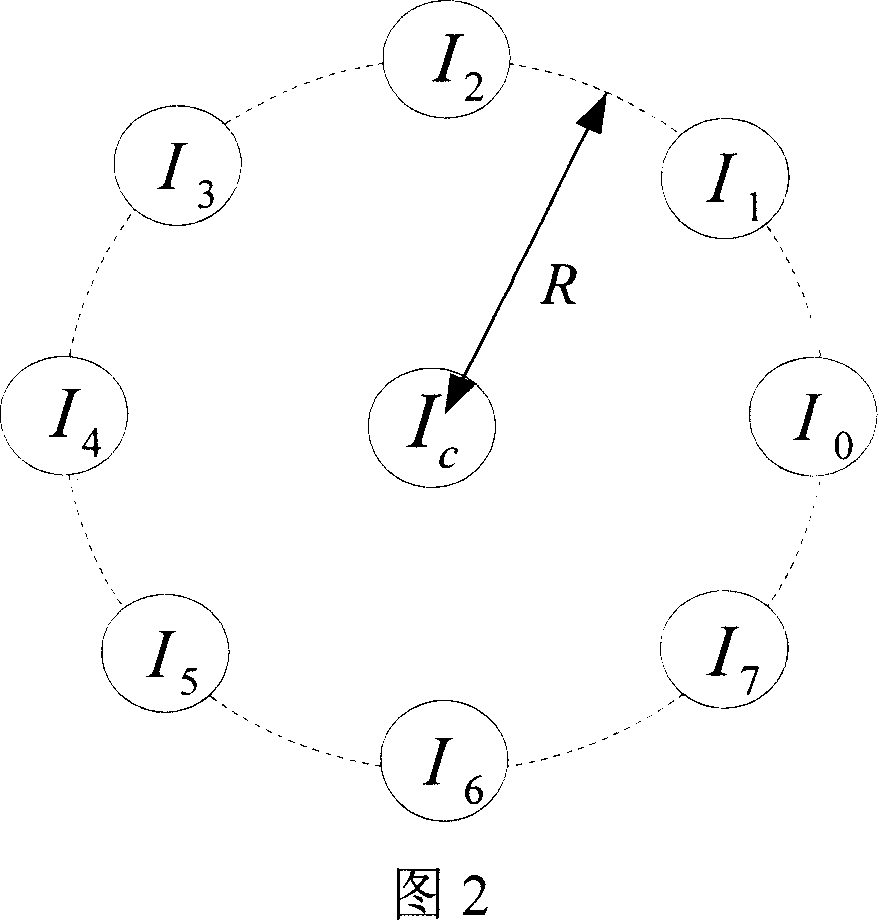

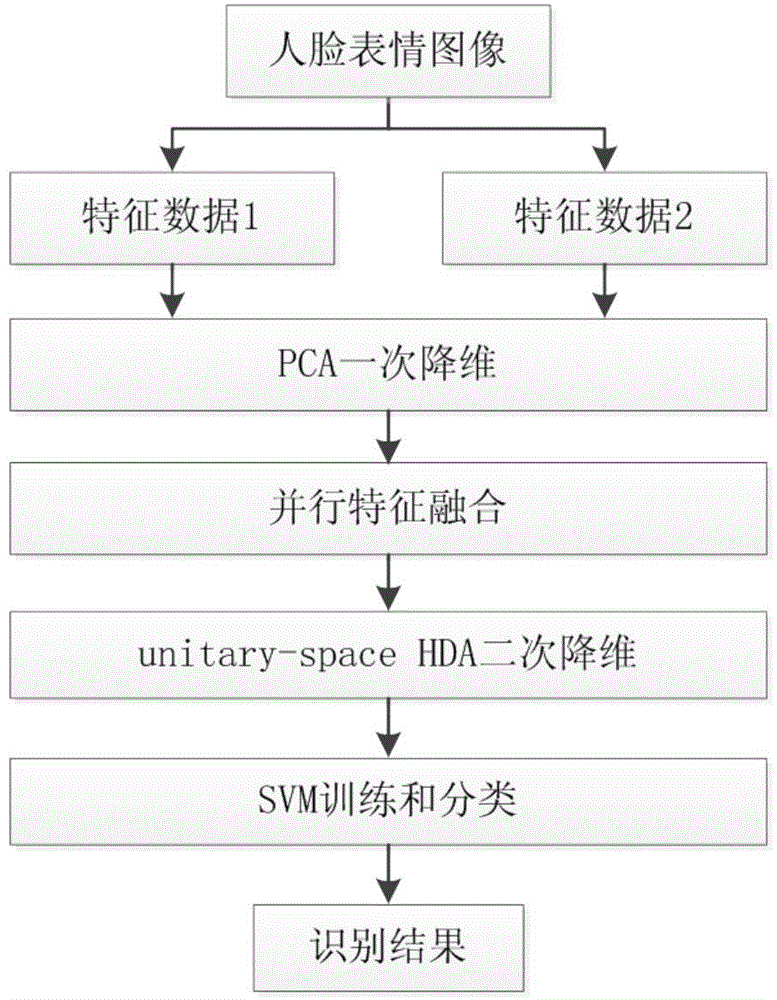

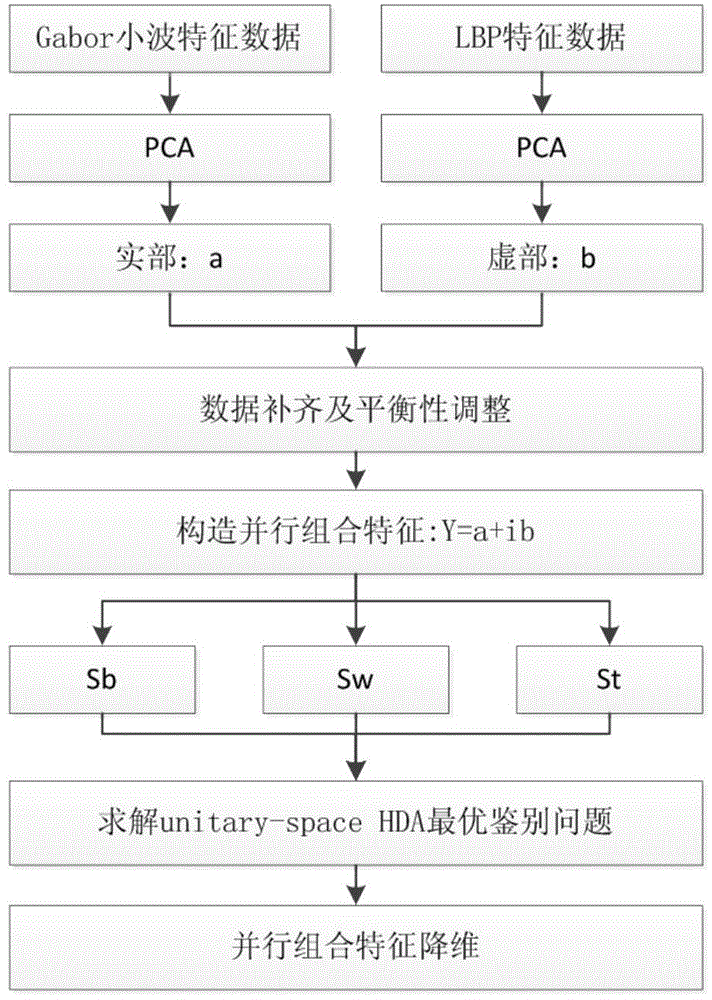

Identification method for human facial expression based on two-step dimensionality reduction and parallel feature fusion

Active | CN104408440A | Realize identification | Reduce dimensionality | Character and pattern recognition | Video monitoring | Principal component analysis

The invention claims a method for identifying human facial expressions based on two-step dimensionality reduction and parallel feature fusion. The two-step dimensionality reduction works as follows: first, principal component analysis (PCA) performs a first dimensionality reduction, in the real number field, on each of the two kinds of facial expression features to be fused, and the reduced features are then fused in parallel in a unitary space; second, a hybrid discriminant analysis (HDA) method based on the unitary space is provided as the dimensionality reduction method for that space. Local binary pattern (LBP) and Gabor wavelet features are extracted as the two kinds of features, the two-step dimensionality reduction framework is applied, and a support vector machine (SVM) is finally used for training and classification. The method effectively reduces the dimensionality of the parallel fused features, recognizes six kinds of human facial expressions, and effectively improves the recognition rate. It avoids the shortcomings of recognition methods based on serial feature fusion or a single feature, and can be widely applied in pattern recognition fields such as security video monitoring of public places, safe-driving monitoring of vehicles, psychological research, and medical monitoring.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

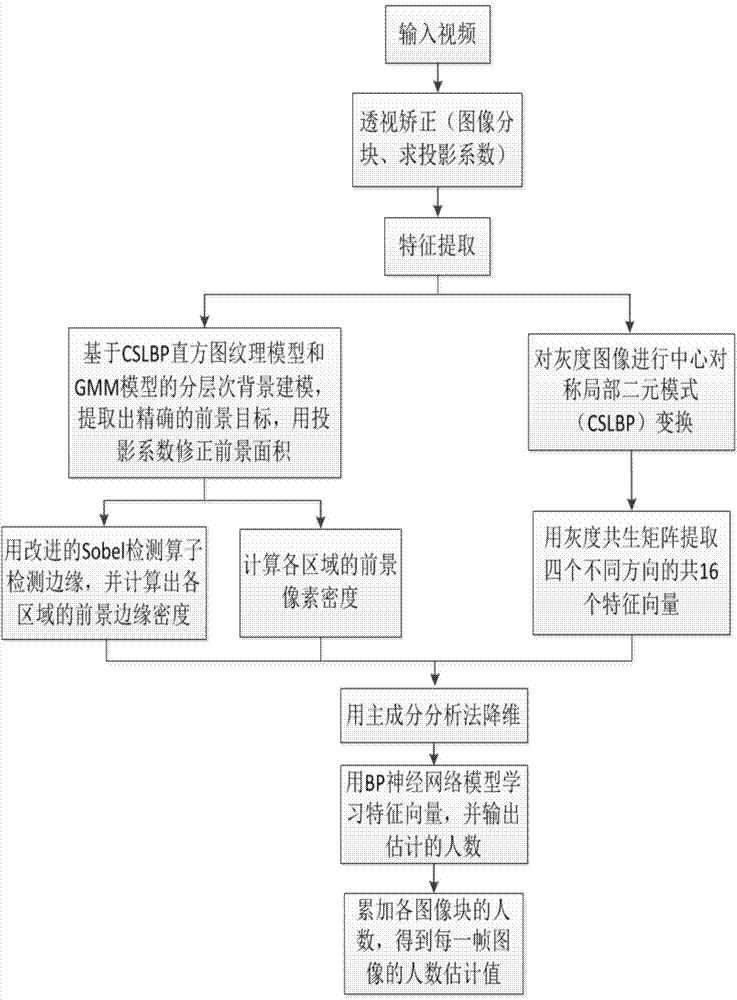

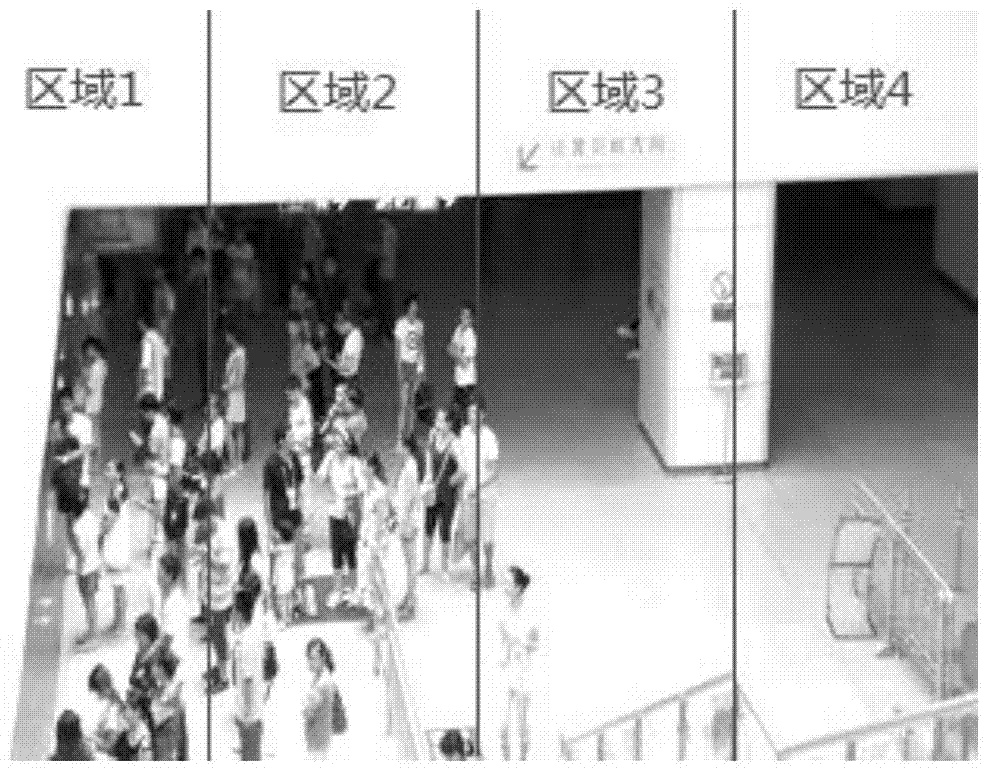

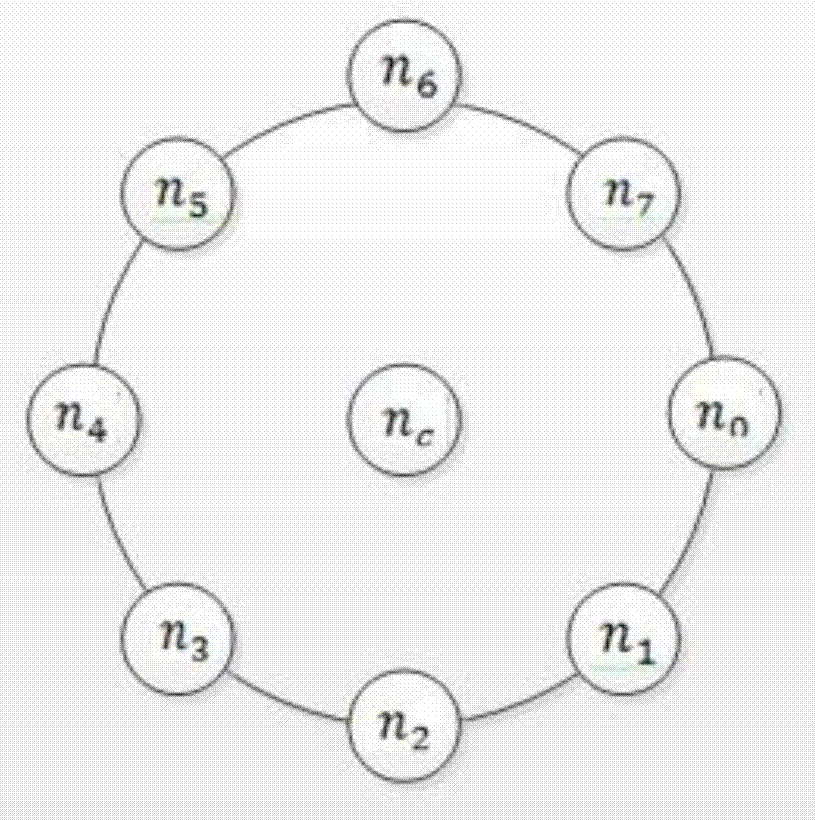

Dense population estimation method and system based on multi-feature fusion

Active | CN104504394A | Improve accuracy | Good effect | Biological neural network models | Biometric pattern recognition | Nerve network | Principal component analysis

The invention provides a dense population estimation method and system based on multi-feature fusion. The method comprises the following steps: partitioning an image into N equal sub-blocks; performing hierarchical background modeling on the image with a CSLBP (Center-Symmetric Local Binary Pattern) histogram texture model combined with mixture-of-Gaussians background modeling; extracting the foreground area of each sub-block after perspective correction; detecting the edge density of each sub-block with an improved Sobel edge detection operator; extracting four important texture feature vectors in different directions, which describe the image texture, by combining the CSLBP transform with a gray-level co-occurrence matrix; reducing the dimensionality of the extracted foreground partition feature vectors and texture feature vectors through principal component analysis; feeding the reduced feature vectors to the input layer of a neural network model and reading the population estimate of each sub-block from the output layer; and summing the estimates to obtain the total population. The method and system have high accuracy and robustness, and achieved good results in a population counting experiment on subway station monitoring videos.

Owner:HARBIN INST OF TECH SHENZHEN GRADUATE SCHOOL
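The CSLBP operator named in the abstract above compares the four center-symmetric pairs of neighbors instead of comparing each neighbor with the center, which gives a 4-bit code and a 16-bin histogram. The following minimal sketch illustrates that idea only; the pair ordering, the `threshold` parameter, and the function names are assumptions, and the background modeling and neural network stages are not shown.

```python
# The four centre-symmetric neighbour pairs of a 3x3 window (assumed ordering).
PAIRS = [
    ((-1, -1), (1, 1)),
    ((-1, 0), (1, 0)),
    ((-1, 1), (1, -1)),
    ((0, 1), (0, -1)),
]


def cs_lbp_code(img, r, c, threshold=0):
    """Return the 4-bit CS-LBP code of pixel (r, c) in a 2-D list of gray values."""
    code = 0
    for bit, ((dr1, dc1), (dr2, dc2)) in enumerate(PAIRS):
        # Set the bit when one neighbour exceeds its opposite by more than threshold.
        if img[r + dr1][c + dc1] - img[r + dr2][c + dc2] > threshold:
            code |= 1 << bit
    return code


def cs_lbp_histogram(img, threshold=0):
    """Return a 16-bin histogram of CS-LBP codes over all interior pixels."""
    hist = [0] * 16
    for r in range(1, len(img) - 1):
        for c in range(1, len(img[0]) - 1):
            hist[cs_lbp_code(img, r, c, threshold)] += 1
    return hist


patch = [
    [9, 5, 2],
    [1, 6, 7],
    [3, 8, 4],
]
print(cs_lbp_code(patch, 1, 1))  # 9 with the pair ordering assumed above
```

The shorter histogram is the usual motivation for this variant: 16 bins per region instead of 256 for the basic operator.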

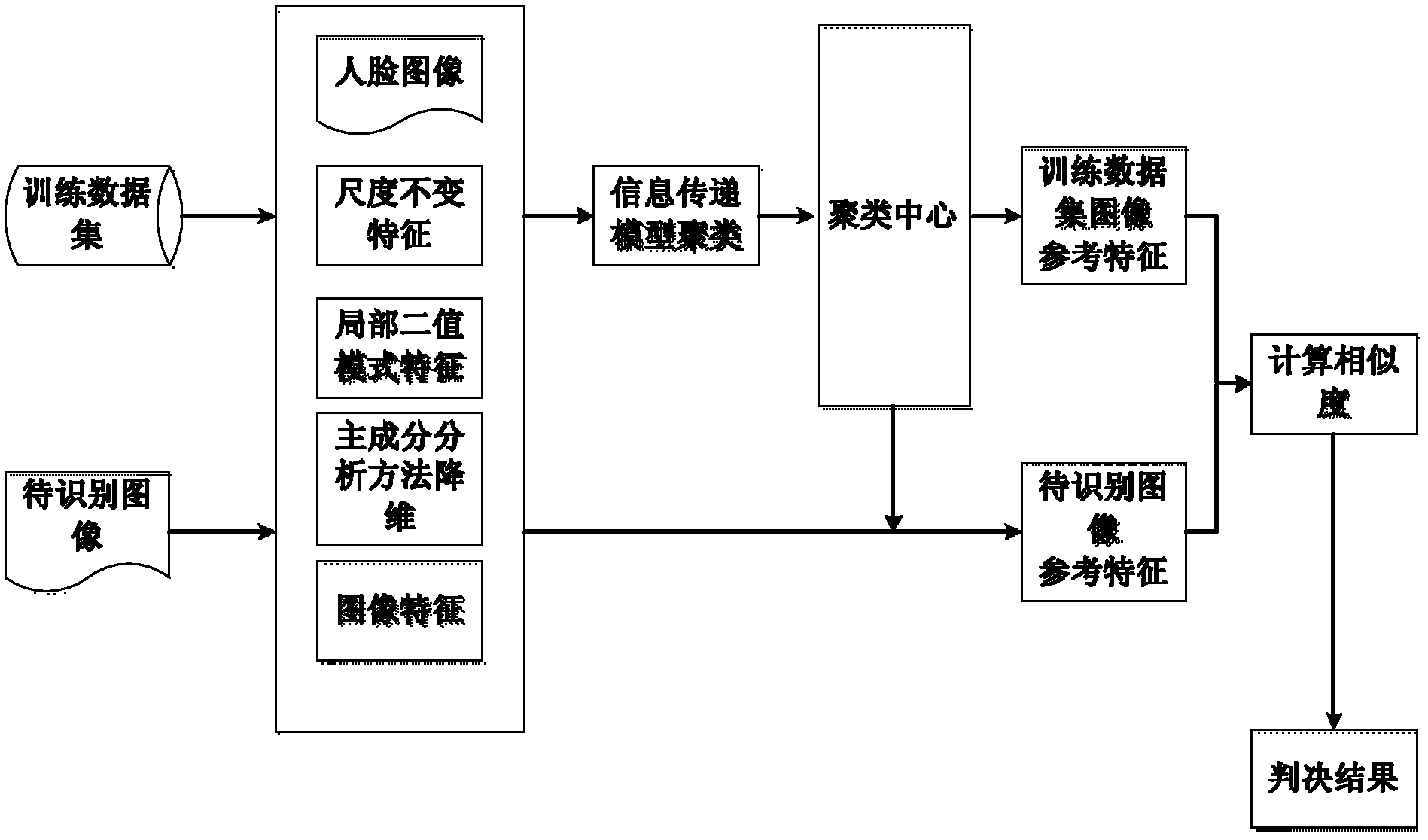

Face recognition method based on reference features

Active | CN102637251A | Comprehensive representation | Simplify the feature extraction process | Character and pattern recognition | Kernel principal component analysis | Principal component analysis

The invention discloses a face recognition method based on reference features. The method comprises the following steps: scale-invariant features and local binary pattern features of the face image to be recognized are extracted; principal component analysis is used for dimensionality reduction to obtain the image features of the face image; the similarity of these image features to each cluster center is calculated to obtain the reference features of the face image; and the similarity between the reference features of the face image to be recognized and the reference features in the training data set is calculated to obtain the recognition result. Because the reference features contain both the texture information and the structure information of the face image, the method represents the face more comprehensively than prior-art methods that represent only one of the two. The feature extraction process is simple and easy to implement, the recognition result is highly precise, and a high recognition rate is achieved across different facial poses of the same person.

Owner:HUAZHONG UNIV OF SCI & TECH

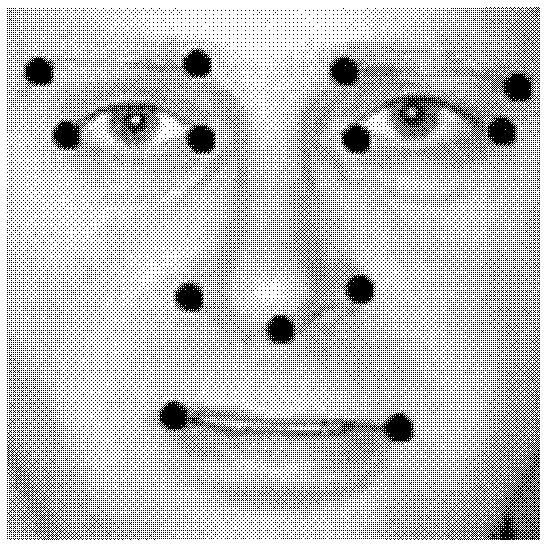

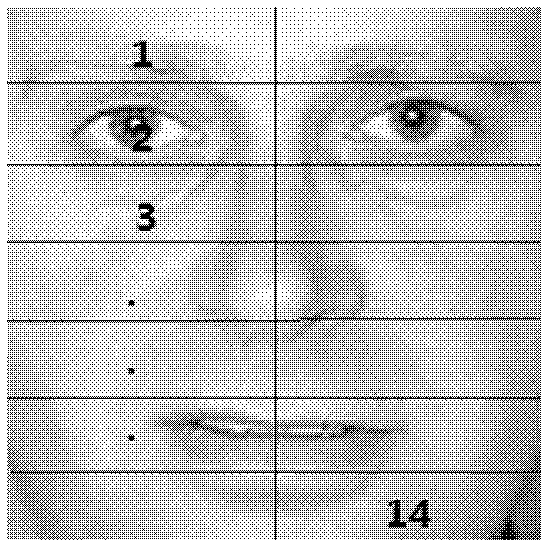

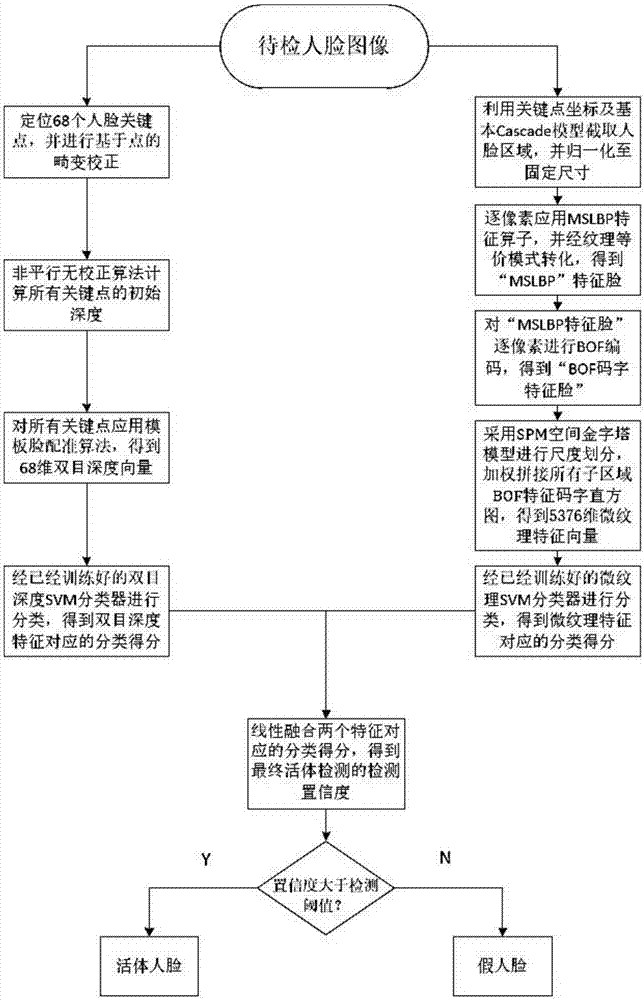

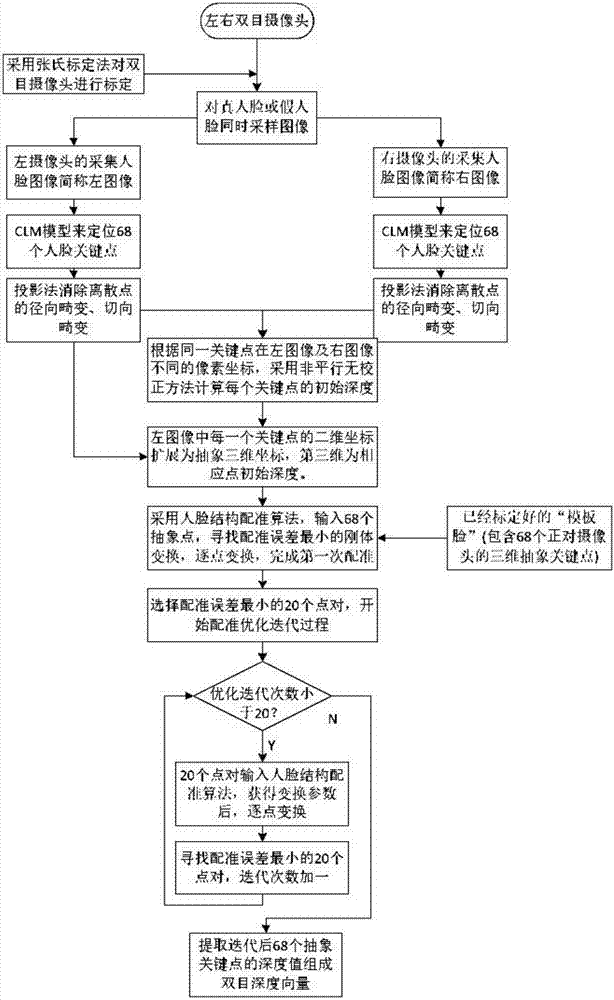

Binocular vision depth feature and apparent feature-combined face living body detection method

The present invention provides a binocular vision depth feature and apparent feature-combined face living body detection method. The method includes the following steps that: step 1, a binocular vision system is established; step 2, a face is detected through the binocular vision system, so that a plurality of key points can be obtained; step 3, a binocular vision depth feature and a classification score corresponding to the binocular vision depth feature are obtained; step 4, a complete face area is intercepted from a left image, the complete face area is normalized to a fixed size, and a local binary pattern (LBP) feature is extracted so as to be adopted as an apparent feature descriptor; step 5, a face living body detection score corresponding to a micro-texture feature is obtained; and step 6, the classification score corresponding to the binocular vision depth feature obtained in the step 3 and the face living body detection score corresponding to the micro-texture feature obtained in the step 5 are fused in a decision-making layer, so that whether an image to be detected is a live body can be judged. The binocular vision depth feature and apparent feature-combined face living body detection method of the invention has the advantages of simple algorithm, high operation speed, high precision and the like. With the method adopted, a new and reliable method can be provided for living body face detection.

Owner:SHANGHAI JIAO TONG UNIV
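The abstract above says only that the depth-feature score and the micro-texture (LBP) score are fused at the decision level, without giving the rule. One simple possibility is a weighted sum followed by a threshold, sketched below; the function name `fuse_scores`, the equal default weights, and the 0.5 threshold are all hypothetical choices, not values from the patent.

```python
def fuse_scores(depth_score, texture_score, depth_weight=0.5, threshold=0.5):
    """Weighted-sum fusion of two scores in [0, 1]; returns (fused score, is_live)."""
    if not 0.0 <= depth_weight <= 1.0:
        raise ValueError("depth_weight must be in [0, 1]")
    fused = depth_weight * depth_score + (1.0 - depth_weight) * texture_score
    return fused, fused >= threshold


# Hypothetical scores from the two classifiers for one test image.
fused, is_live = fuse_scores(depth_score=0.9, texture_score=0.7)
print(round(fused, 3), is_live)
```

In practice the weight and threshold would be tuned on validation data; other decision-level rules, such as requiring both classifiers to agree, are equally plausible readings.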

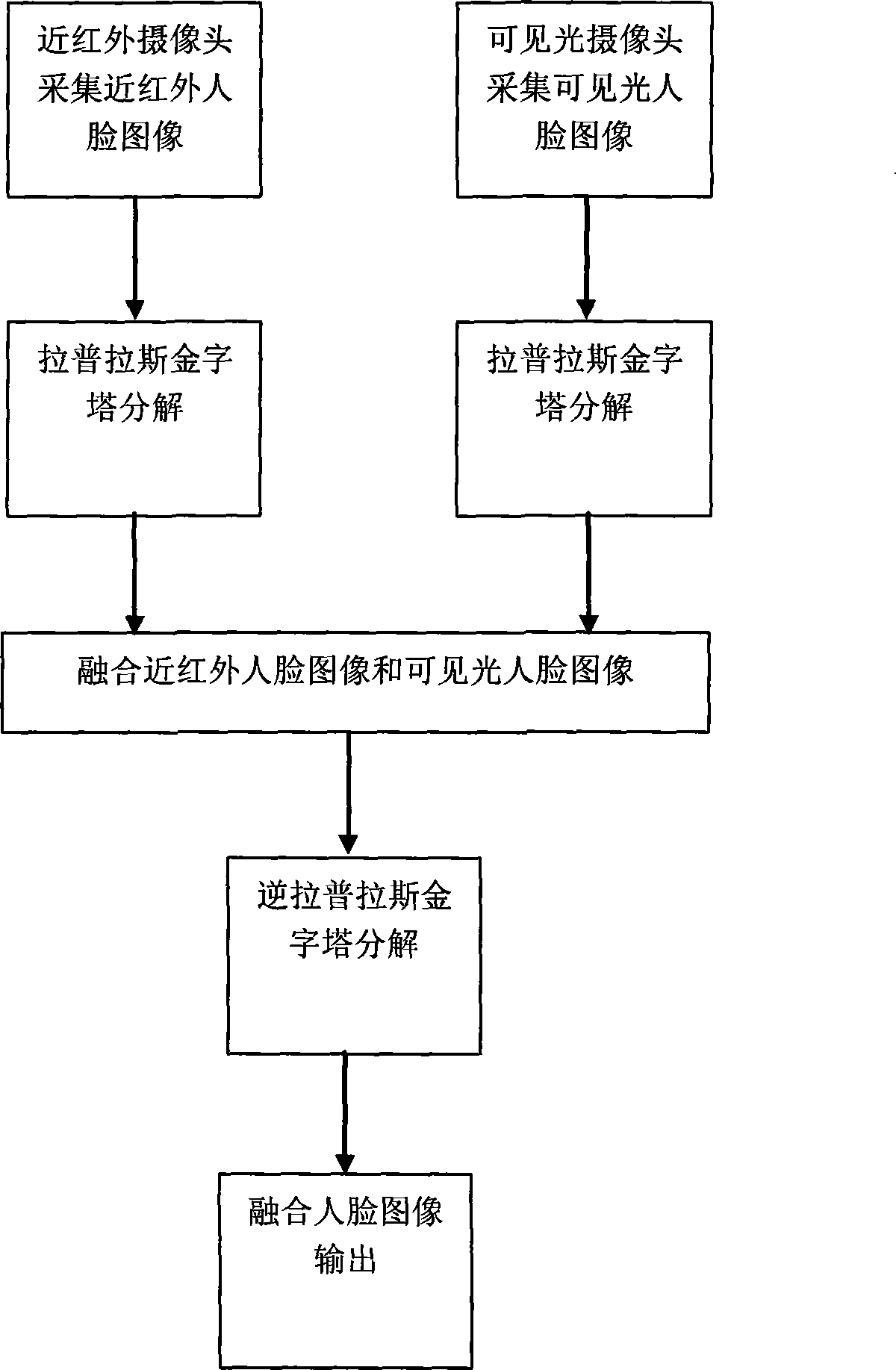

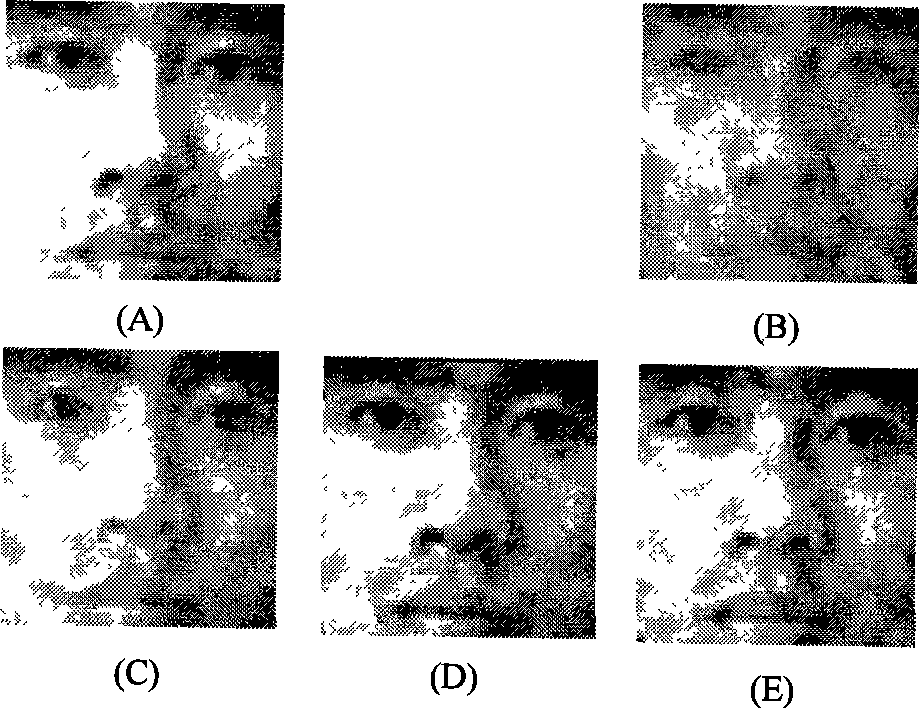

Face image fusing method based on laplacian-pyramid

Inactive | CN101425137A | Rich in details | Easy to observe | Image enhancement | Character and pattern recognition | Mean square | Weight coefficient

The invention relates to a human face image fusing method based on the Laplacian pyramid. Building on Laplacian pyramid decomposition, the invention applies a local binary pattern operator in the fusion rule. For the infrared and visible-light face images in each layer of the Laplacian pyramid, the average gradient of the image, the standard deviation of the image histogram, and a Chi square statistic similarity measure are used to determine the respective weighting coefficients of the two images, and the images are fused with these coefficients to obtain a fused image that is rich in detail, clear, stable, and easy for human eyes to observe. Compared with the visible-light and near-infrared images, the fused image provided by the invention has higher information entropy but lower average cross-entropy and root-mean-square cross-entropy, showing a more pronounced fusion effect.

Owner:NORTH CHINA UNIVERSITY OF TECHNOLOGY +1

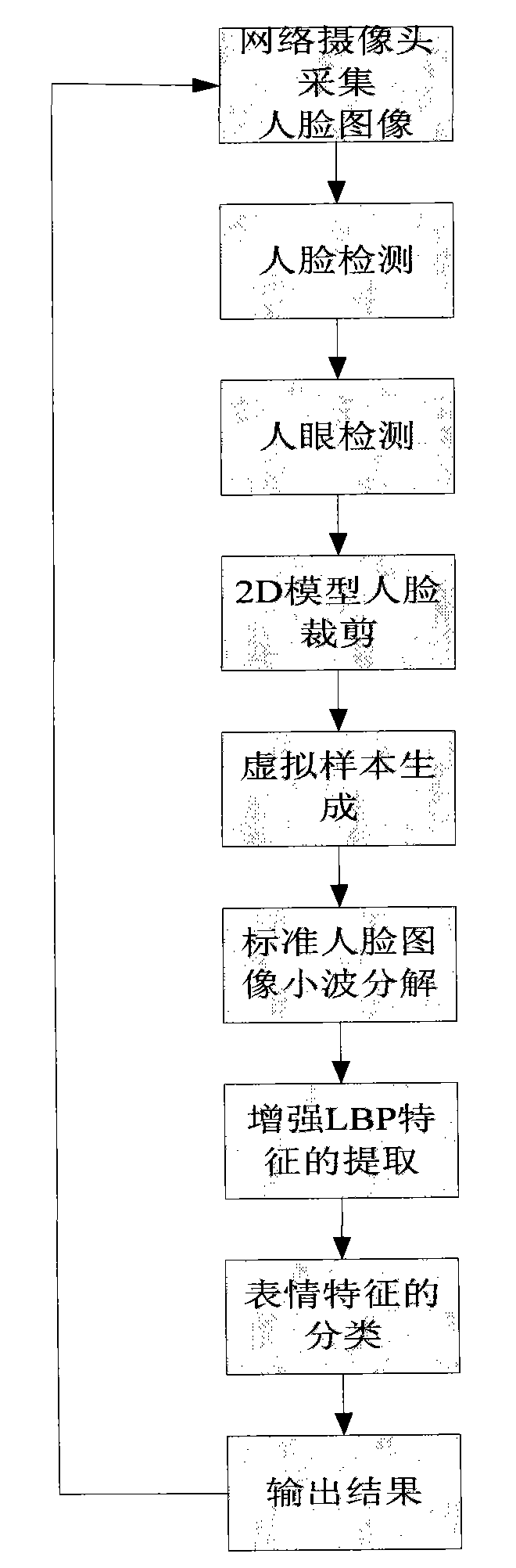

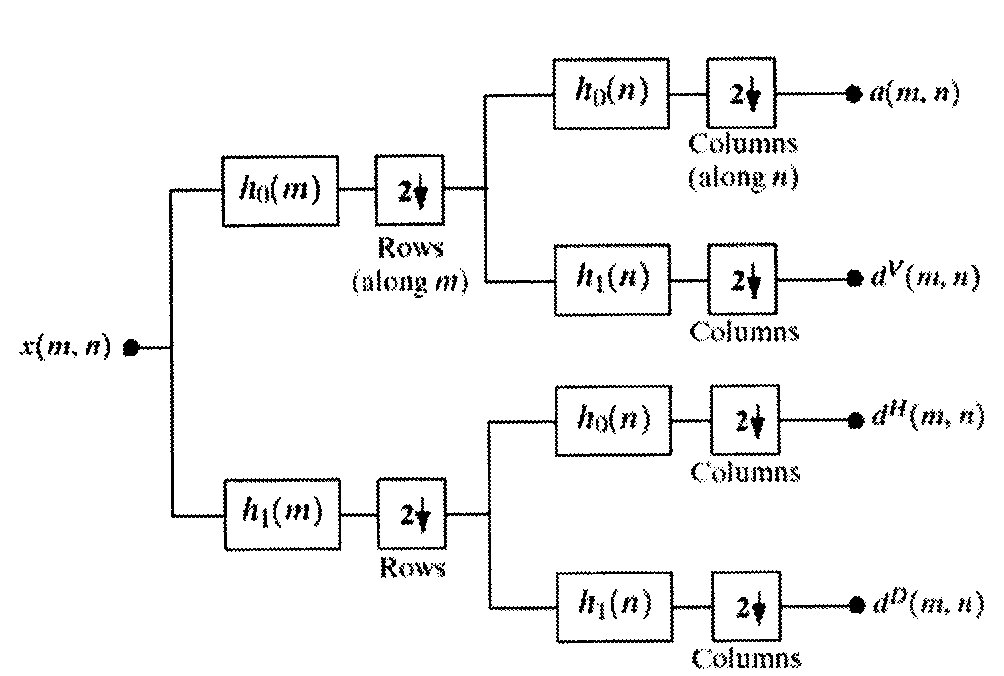

Expression recognition method based on AVR and enhanced LBP

Inactive | CN101615245A | Improve accuracy | Character and pattern recognition | Face detection | Pattern recognition

An expression recognition method based on AVR and enhanced LBP, in the technical field of pattern recognition, comprises the following steps: collecting an original image; expanding virtual samples; carrying out wavelet decomposition on the face image; extracting local binary pattern (LBP) features; calculating the enhanced variance ratio (AVR) value of each feature and adding a penalty factor; then extracting several groups of features of different dimensionality ranked by AVR value, testing the classification accuracy of a support vector machine on each group, and taking the feature dimensionality with the highest accuracy, together with the corresponding feature values, as the LBP feature. The method integrates image acquisition, face detection and eye detection, enhances the LBP feature through wavelet decomposition, and improves accuracy by using the AVR method to select effective features.

Owner:SHANGHAI JIAO TONG UNIV +1

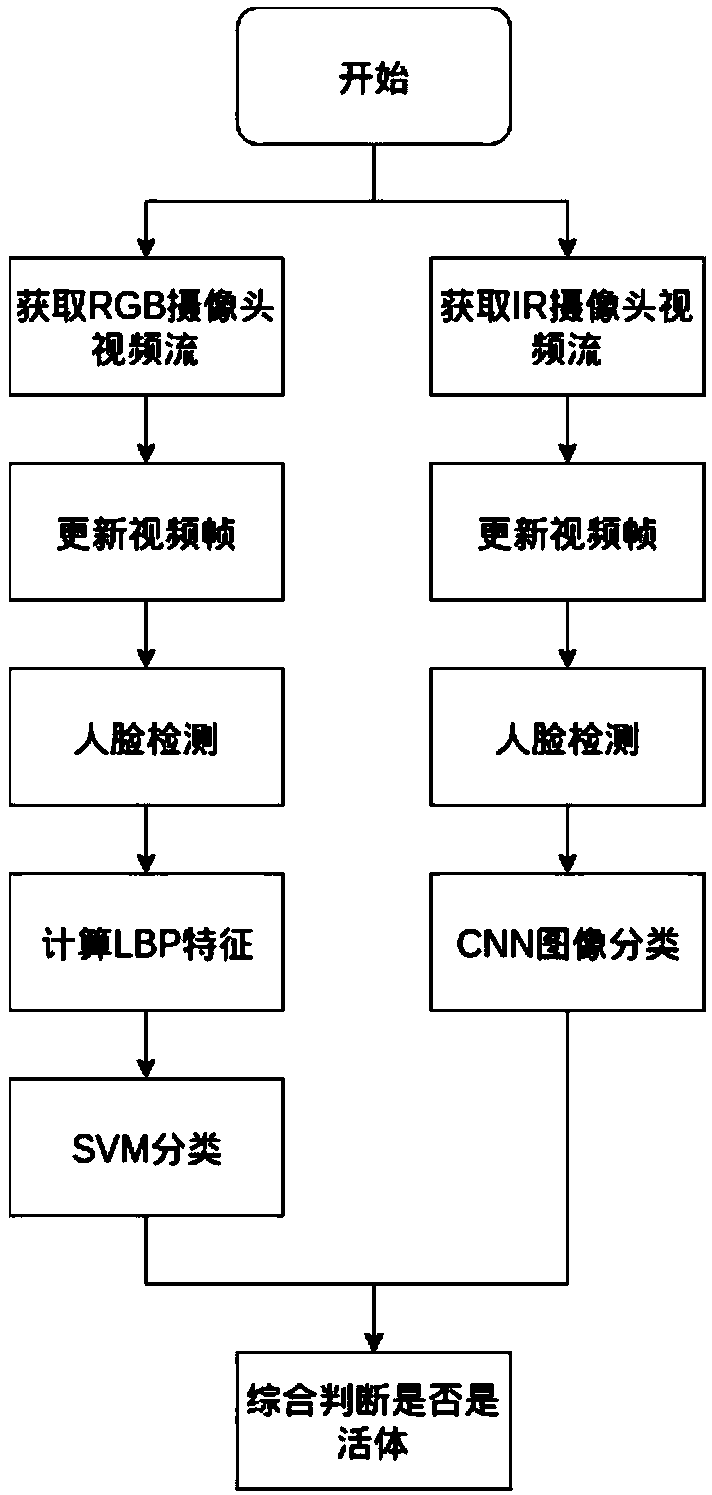

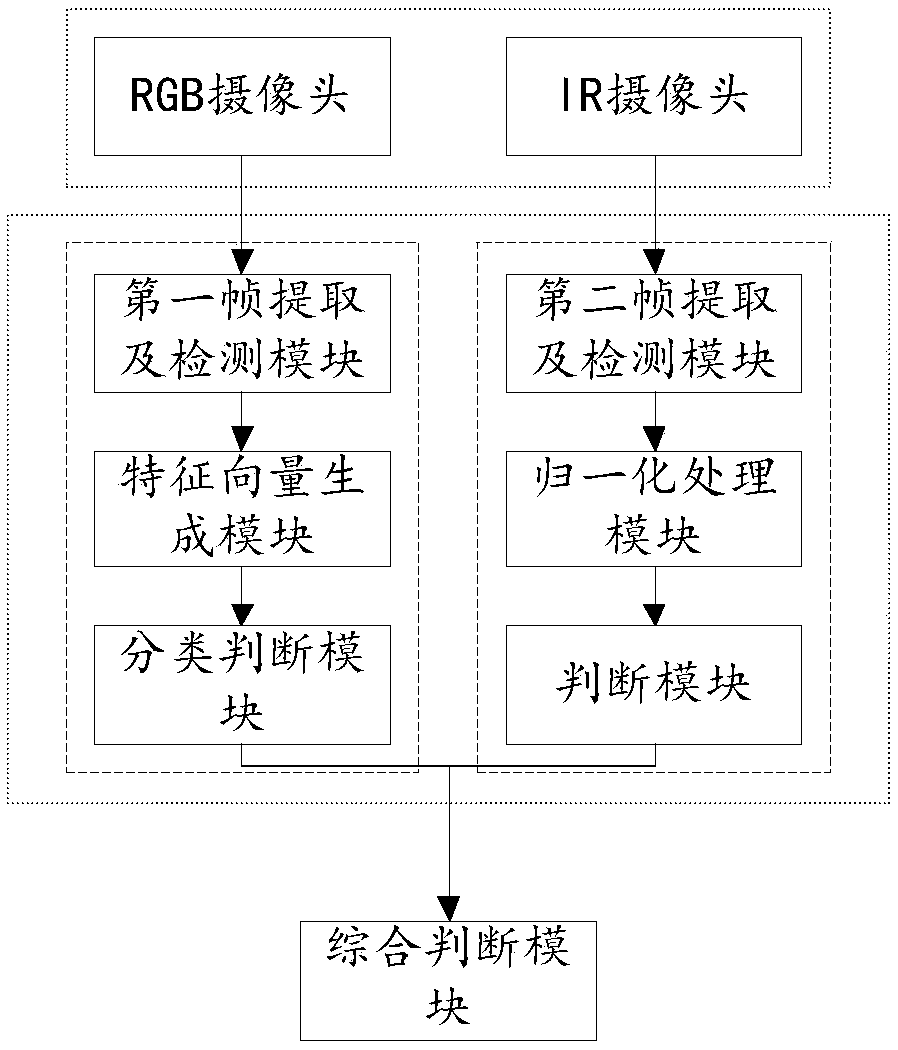

RGB (Red Green Blue) and IR (Infrared) binocular camera-based living body detecting method and device

The invention relates to an RGB (Red Green Blue) and IR (Infrared) binocular camera-based living body detecting method and device. The method comprises the steps of obtaining two sets of video streams through an RGB camera and an IR camera respectively; carrying out face detection and living body judgement on video frames in the two sets of video streams; and, when both sets of video frames are judged to show living bodies, regarding the face in the current video frame as a living human face. Specifically, the method collects face videos with the two cameras and carries out face detection to obtain the faces under RGB and IR respectively; for the RGB color face image, it extracts LBP (Local Binary Pattern) features with a traditional image processing algorithm and judges whether the face is a living human face through SVM (Support Vector Machine) classification; meanwhile, the IR face image is fed directly into a trained CNN (convolutional neural network), which classifies whether the face is a living human face; and only if both faces are judged to be living human faces is the face finally judged to be a living face. The method is described as offering high robustness, low cost and convenience for large-scale use.
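The LBP feature extraction mentioned for the RGB branch can be illustrated with the basic 3×3 operator. This is a minimal sketch of the textbook operator on a grayscale image stored as a list of lists; the neighbour ordering, the `>=` comparison and the function names are assumptions, and the SVM and CNN stages of the patent are not shown.

```python
# Neighbour offsets, clockwise from the top-left pixel (an assumed ordering).
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(img, r, c):
    """8-bit LBP code of pixel (r, c): bit i is set if neighbour i >= centre."""
    center = img[r][c]
    code = 0
    for bit, (dr, dc) in enumerate(OFFSETS):
        if img[r + dr][c + dc] >= center:
            code |= 1 << bit
    return code


def lbp_histogram(img):
    """Normalized 256-bin histogram of LBP codes over all interior pixels."""
    hist = [0] * 256
    for r in range(1, len(img) - 1):
        for c in range(1, len(img[0]) - 1):
            hist[lbp_code(img, r, c)] += 1
    total = sum(hist)
    return [h / total for h in hist] if total else hist


if __name__ == "__main__":
    patch = [[1, 2, 3],
             [8, 5, 4],
             [7, 6, 9]]
    print(lbp_code(patch, 1, 1))
```

The resulting histogram is the kind of feature vector that would be passed to a classifier. The final decision rule in the abstract is a logical AND: the face is accepted as live only when both the RGB branch and the IR branch say so.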

Owner:深圳神目信息技术有限公司

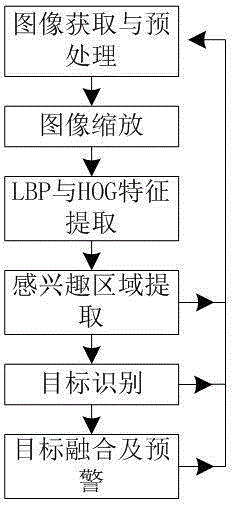

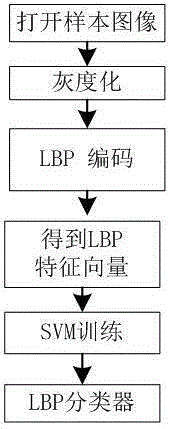

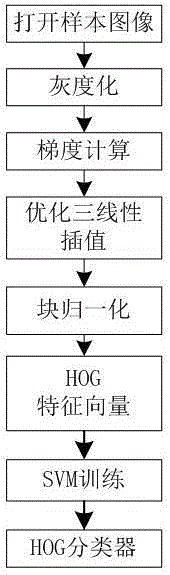

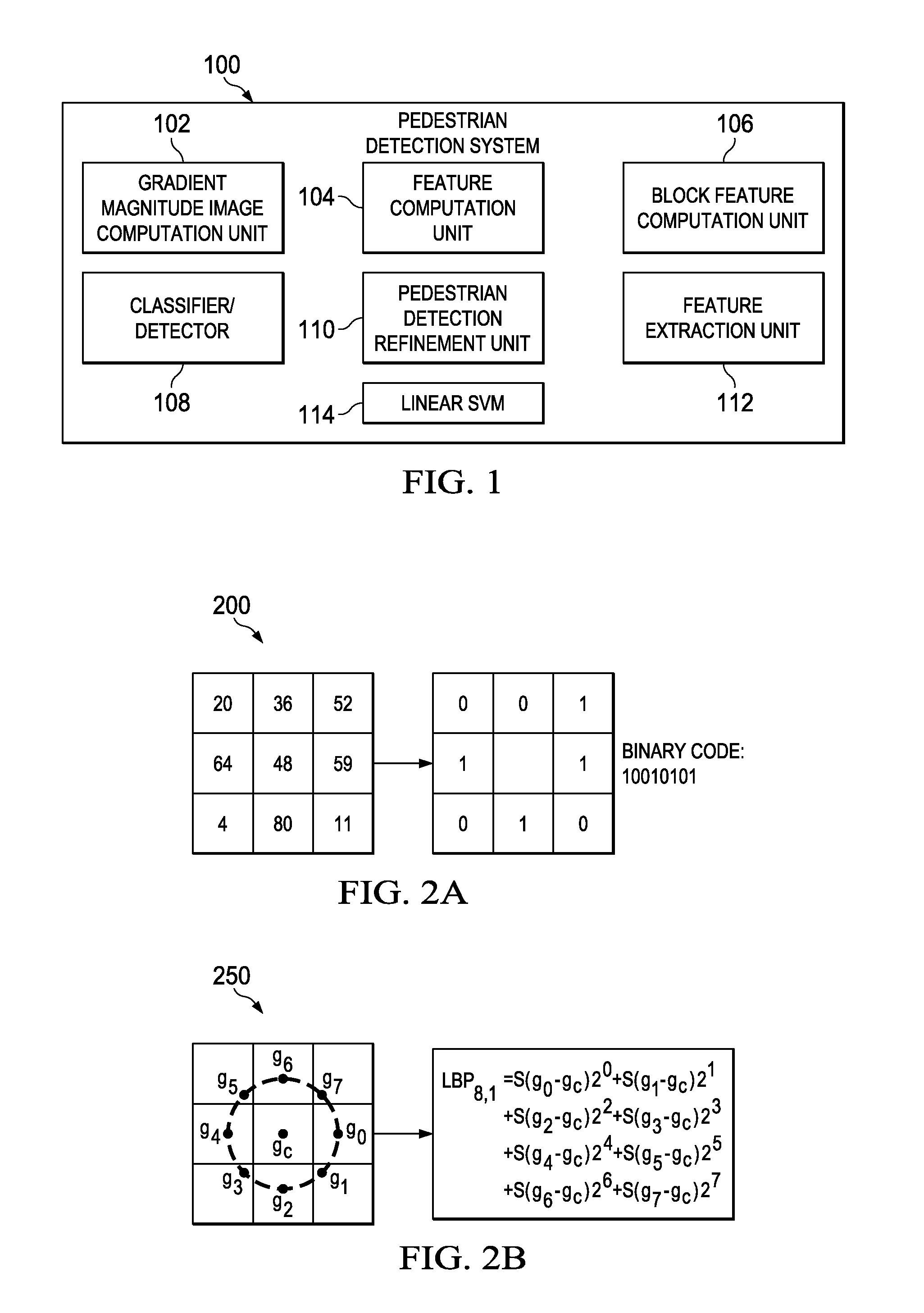

Method and system for detecting pedestrian in front of vehicle

Inactive | CN105260712A | Improve accuracy | Improve security | Biometric pattern recognition | Driver/operator | Histogram of oriented gradients

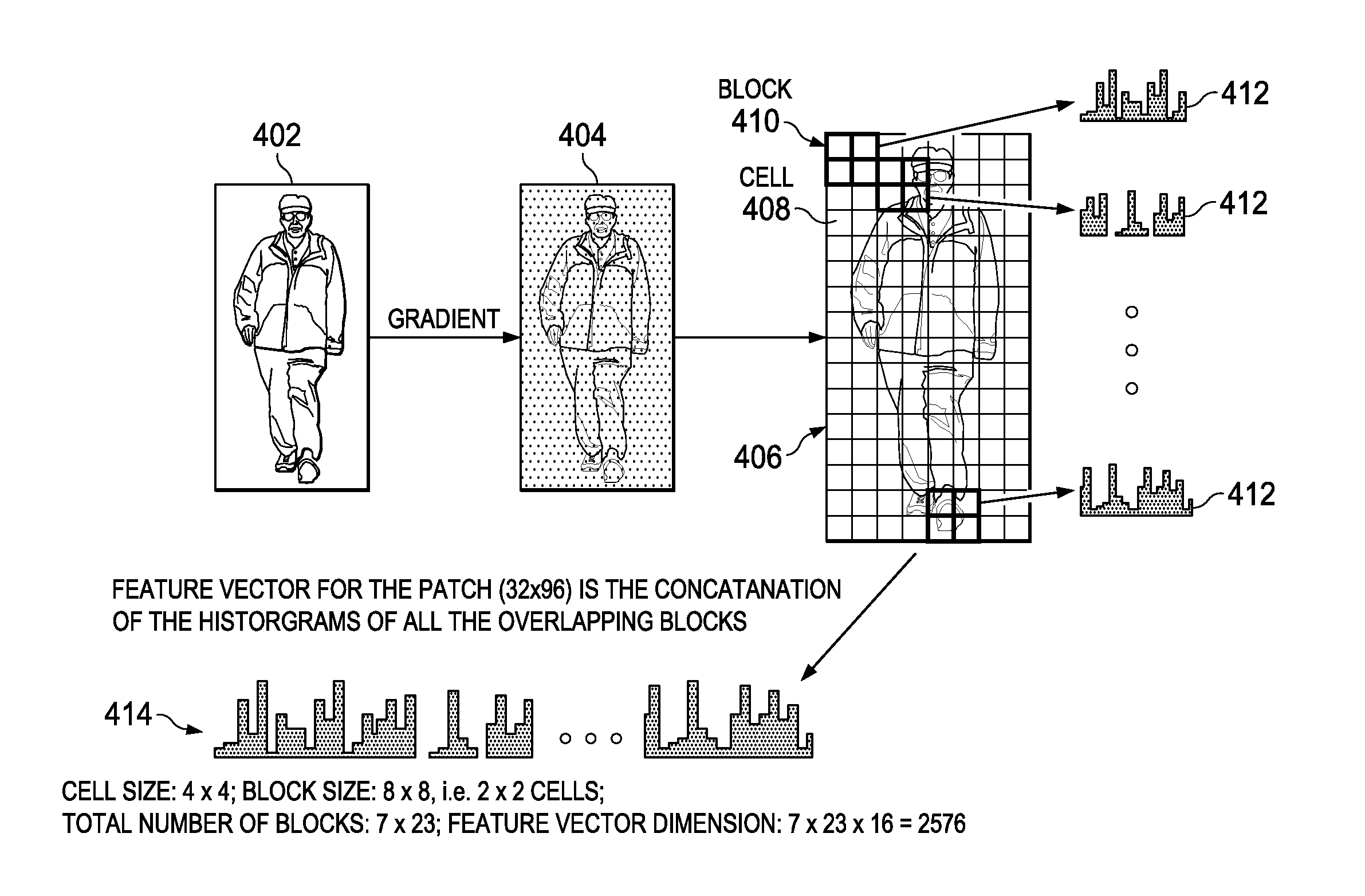

The invention discloses a method and a system for detecting a pedestrian in front of a vehicle. The method comprises the steps of image acquisition and preprocessing, image scaling, LBP (Local Binary Pattern) and HOG (Histogram of Oriented Gradients) feature extraction, region of interest extraction, target identification, and target fusion and early warning, so that the driver is alerted promptly when a pedestrian is present in front of the vehicle. The system comprises three parts: an image acquisition unit, an SOPC (System on Programmable Chip) unit and an ASIC (Application Specific Integrated Circuit) unit. The image acquisition unit is a camera unit; the SOPC unit comprises an image preprocessing unit, a region of interest extraction unit, a target identification unit, and a target fusion and early warning unit; and the ASIC unit comprises an image scaling unit, an LBP feature extraction unit and an HOG feature extraction unit. According to the invention, LBP features and HOG features are used jointly, and the two-level detection improves the overall accuracy of pedestrian detection; HOG feature extraction is dynamically adjusted according to the classification results of an LBP-based SVM (Support Vector Machine), which reduces the amount of calculation, increases the calculation speed and improves driving safety.
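One way to read the two-level detection described here is as a cascade in which the cheaper LBP-based classifier filters candidate windows and HOG is computed only for the survivors. The sketch below assumes that reading; `cascade_detect`, the scoring callables and the thresholds are hypothetical placeholders, not the patent's actual design.

```python
def cascade_detect(windows, lbp_score, hog_score, lbp_thresh=0.0, hog_thresh=0.0):
    """Two-stage detection sketch.

    windows:   iterable of candidate windows (any objects)
    lbp_score: callable returning a first-stage score for a window
    hog_score: callable returning a second-stage score for a window
    Returns (detections, hog_calls) so the saved HOG work is visible.
    """
    detections = []
    hog_calls = 0
    for window in windows:
        # Stage 1: reject early on the LBP-based score.
        if lbp_score(window) < lbp_thresh:
            continue
        # Stage 2: HOG is evaluated only for windows that passed stage 1.
        hog_calls += 1
        if hog_score(window) >= hog_thresh:
            detections.append(window)
    return detections, hog_calls


if __name__ == "__main__":
    candidates = [1, 2, 3, 4]
    found, calls = cascade_detect(
        candidates,
        lbp_score=lambda w: w - 2.5,               # toy score: passes 3 and 4
        hog_score=lambda w: 1.0 if w == 4 else -1.0,
    )
    print(found, calls)
```

In this arrangement the number of HOG evaluations depends on how many windows the first stage lets through, which is one plausible source of the reduced calculation the abstract claims.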

Owner:SHANGHAI UNIV

Face authentication method and device

Active | CN105138972A | Solve few problems | Reduce time complexity | Character and pattern recognition | Feature Dimension | Imaging processing

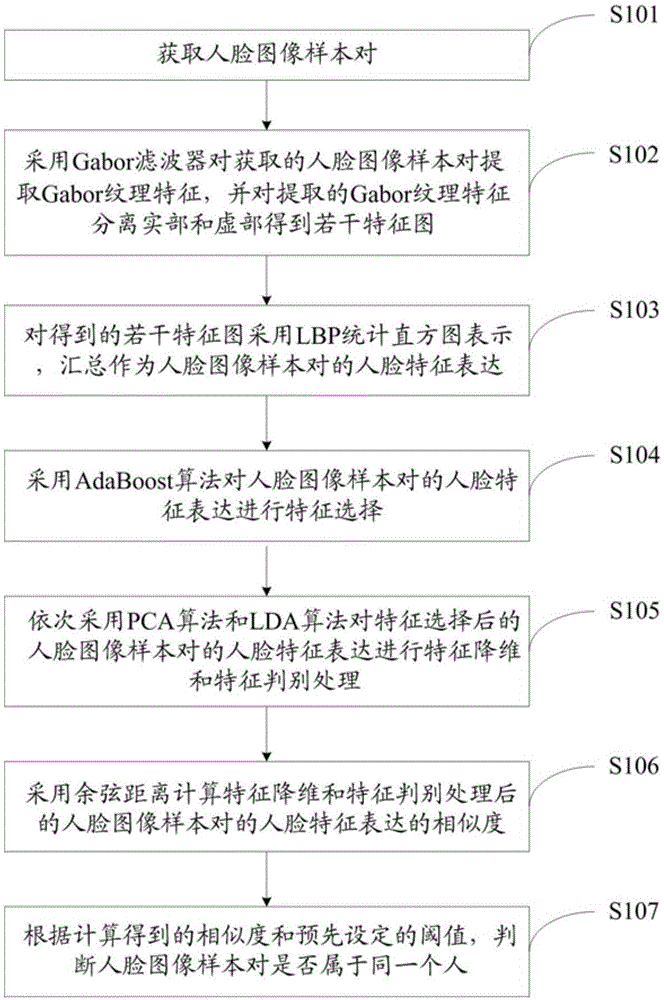

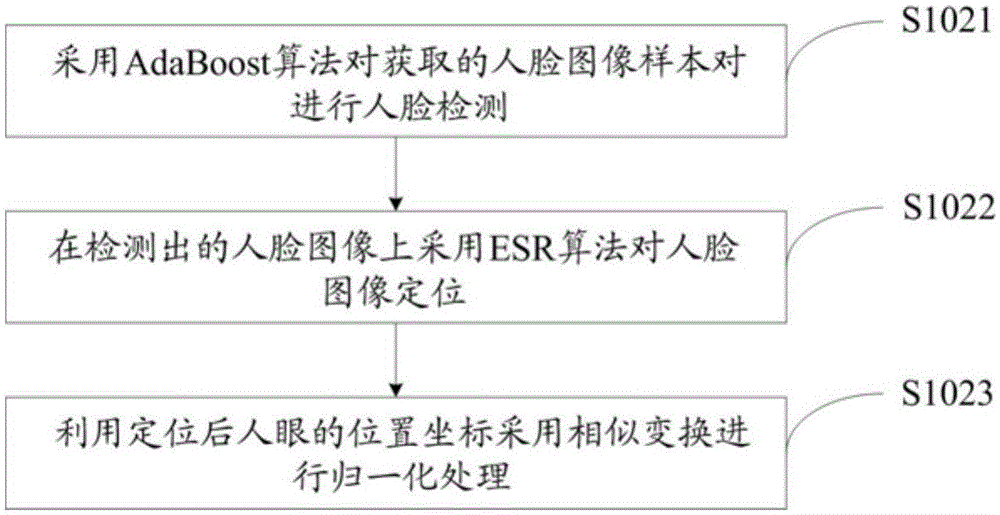

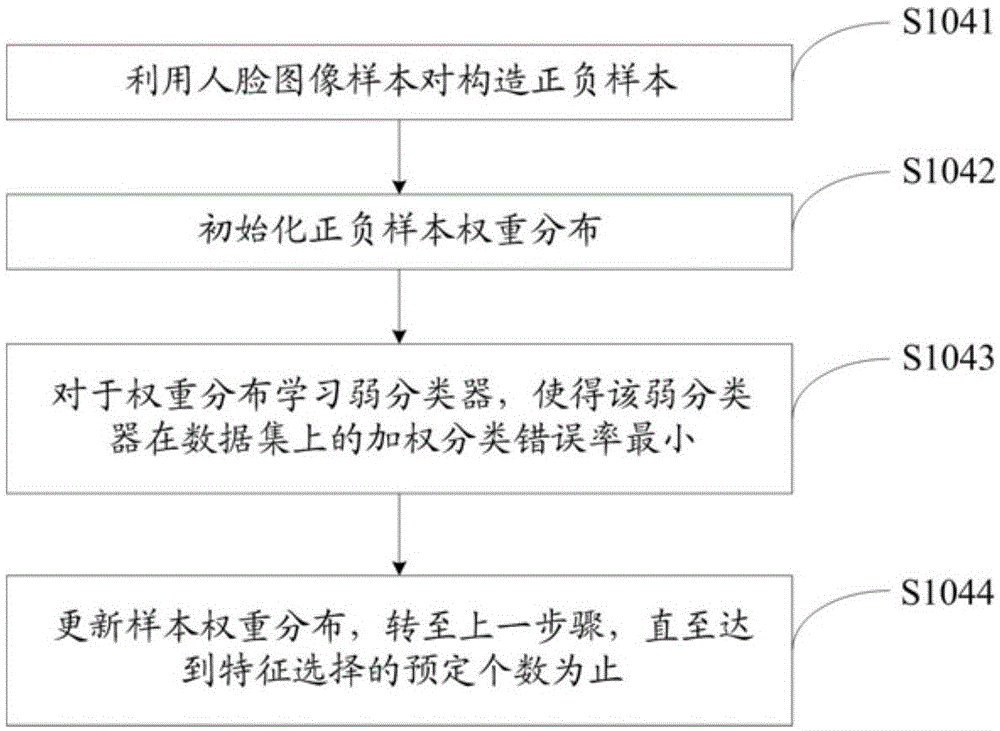

The invention provides a face authentication method and device, and belongs to the field of image processing and pattern recognition. The method comprises the following steps: extracting Gabor texture features of an acquired face image sample pair through a Gabor filter, and separating the real parts from the imaginary parts of the extracted Gabor texture features to obtain a plurality of feature maps; summarizing the feature maps through an LBP (Local Binary Pattern) statistical histogram to serve as the face feature representation of the face image pair; performing feature selection on that representation through the AdaBoost (Adaptive Boosting) algorithm; and then performing feature dimension reduction, feature discrimination and related processing on the selected features through a PCA (Principal Component Analysis) algorithm and an LDA (Linear Discriminant Analysis) algorithm in sequence. Compared with the prior art, the face authentication method is described as extracting sample texture information fully, requiring few samples, and having short running time and low space complexity.

Owner:BEIJING EYECOOL TECH CO LTD +1

Kernel collaborative representation-based hyperspectral image classification method

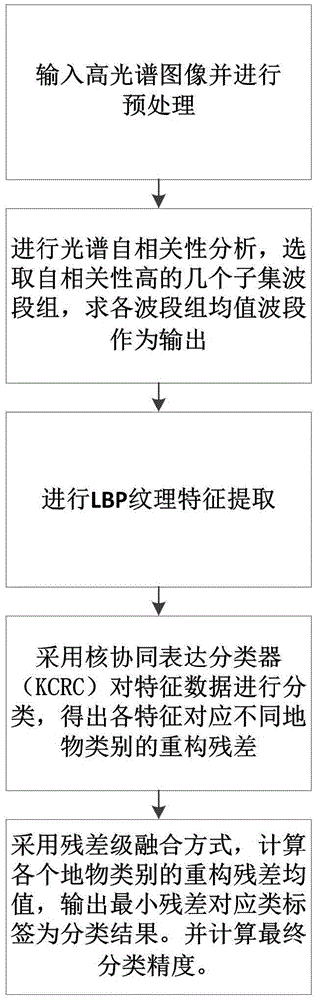

Active | CN105608433A | Good divisibility | Rotation invariant | Scene recognition | Operability | Hyperspectral image classification

The invention discloses a hyperspectral image classification method based on kernel collaborative representation. The method comprises the following steps: carrying out feature selection with a readily applicable band selection strategy; carrying out local binary pattern spatial feature extraction on each of the selected feature groups; carrying out kernel collaborative representation classification; and finally fusing the classification results of the feature groups with a residual-level fusion strategy to obtain the final high-precision classification result. The method incorporates the texture features extracted by the LBP operator; LBP offers rotation invariance and gray-level invariance and is simple to compute, which further increases the robustness of the features to be classified. Finally, a kernel collaborative representation classifier is used for classification; it is computationally more efficient than the traditional sparse approach and can classify nonlinear data, so the application range is wider and the performance is better.
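The rotation invariance attributed to LBP here is usually obtained by mapping each code to the smallest value among its circular bit rotations. The abstract does not say which variant is used, so this is a minimal sketch of that standard mapping for 8-bit codes; the function names are illustrative.

```python
def rotate_right(code, bits=8):
    """Circularly rotate a `bits`-wide code one position to the right."""
    mask = (1 << bits) - 1
    return ((code >> 1) | ((code & 1) << (bits - 1))) & mask


def rotation_invariant_code(code, bits=8):
    """Smallest value among all circular rotations of `code`."""
    best = code
    current = code
    for _ in range(bits - 1):
        current = rotate_right(current, bits)
        best = min(best, current)
    return best


if __name__ == "__main__":
    # 0b00000110 and 0b10000001 are rotations of the same pattern.
    print(rotation_invariant_code(0b00000110), rotation_invariant_code(0b10000001))
    # Number of distinct rotation-invariant patterns for 8 neighbours.
    print(len({rotation_invariant_code(c) for c in range(256)}))
```

Gray-level invariance comes from the operator itself: each bit depends only on whether a neighbour is at least as bright as the centre, so adding a constant to every pixel leaves the code unchanged.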

Owner:BEIJING UNIV OF CHEM TECH

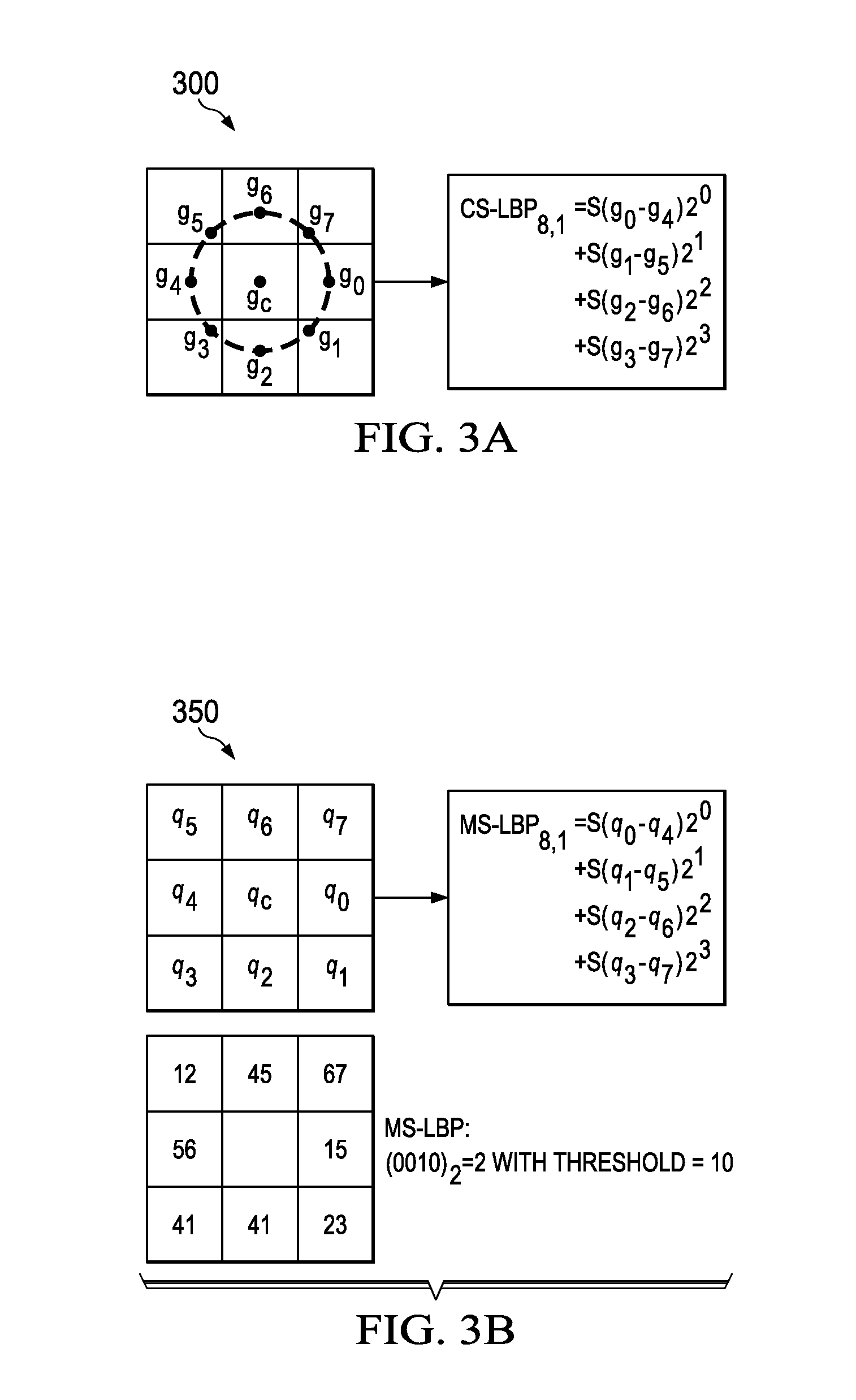

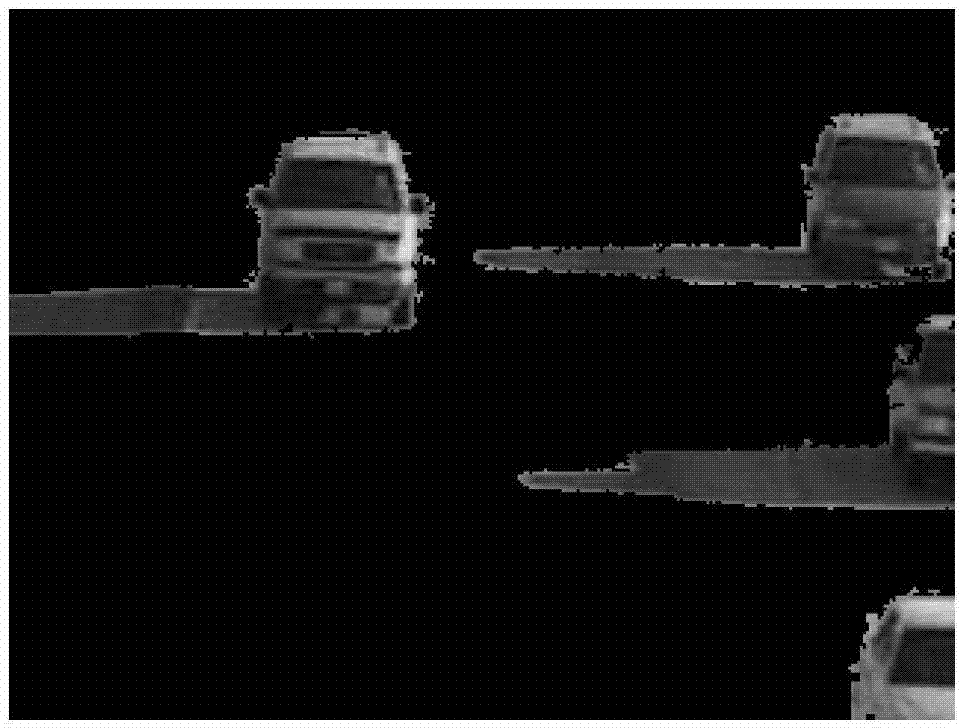

Systems and Methods for Pedestrian Detection in Images

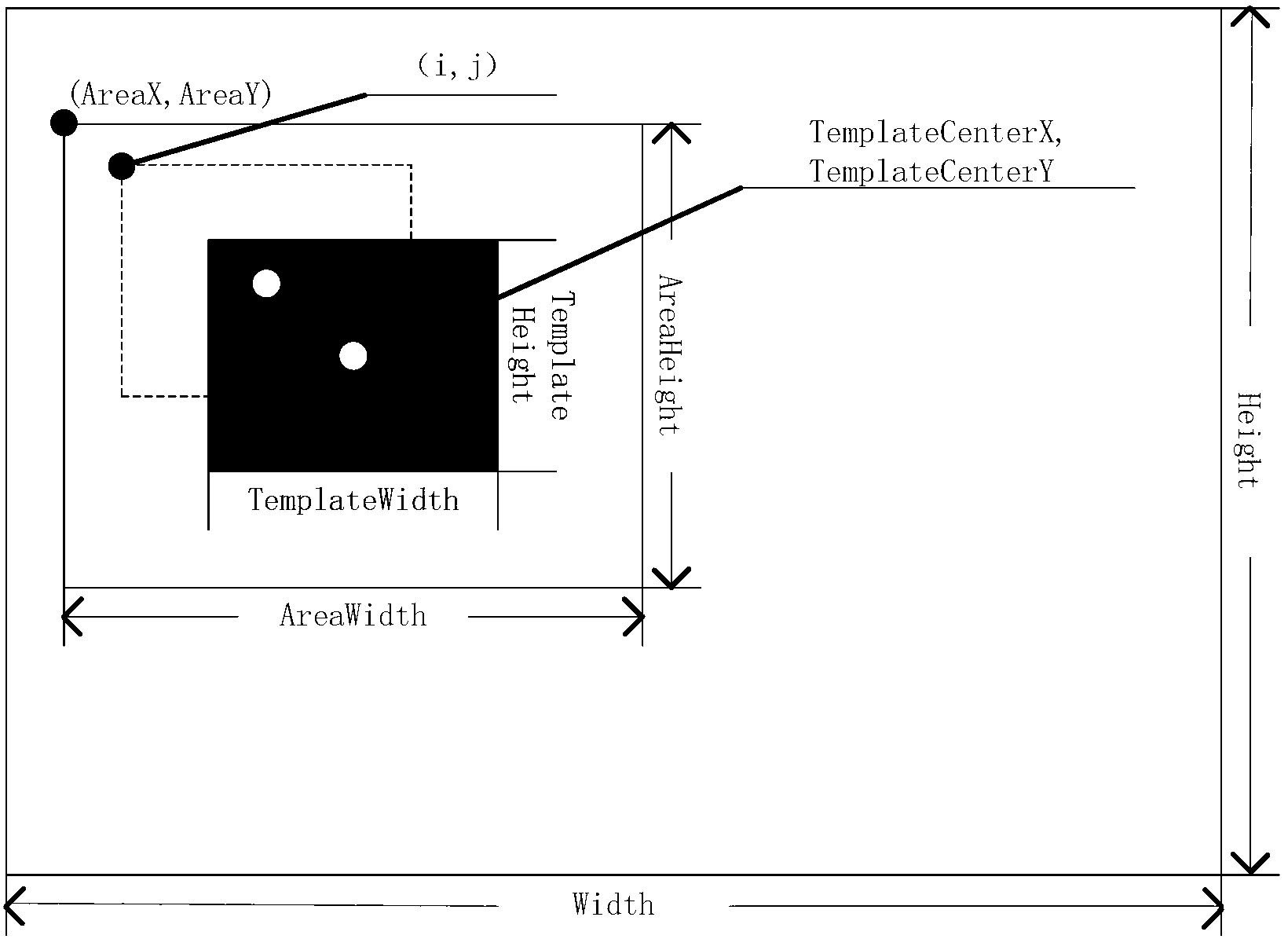

System, apparatus, and method embodiments are provided for detecting the presence of a pedestrian in an image. In an embodiment, a method for determining whether a person is present in an image includes receiving a plurality of images, wherein each image comprises a plurality of pixels and determining a modified center symmetric local binary pattern (MS-LBP) for the plurality of pixels for each image, wherein the MS-LBP is calculated on a gradient magnitude map without using an interpolation process, and wherein a value for each pixel is a gradient magnitude.
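For orientation, a standard center-symmetric LBP compares the four pairs of opposite neighbours in a 3×3 window, giving a 4-bit code. The sketch below applies that standard operator to a gradient magnitude map using only integer-grid neighbours, so no interpolation is needed. The abstract does not specify how the patent's modified variant differs, so the central-difference gradient, the pair ordering and the threshold are all assumptions.

```python
import math


def gradient_magnitude(img):
    """Central-difference gradient magnitude; border pixels are left at 0."""
    h, w = len(img), len(img[0])
    mag = [[0.0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            gx = (img[r][c + 1] - img[r][c - 1]) / 2.0
            gy = (img[r + 1][c] - img[r - 1][c]) / 2.0
            mag[r][c] = math.hypot(gx, gy)
    return mag


# Four centre-symmetric neighbour pairs on the integer 3x3 grid.
PAIRS = [((-1, -1), (1, 1)), ((-1, 0), (1, 0)), ((-1, 1), (1, -1)), ((0, 1), (0, -1))]


def cs_lbp_code(mag, r, c, threshold=0.0):
    """4-bit code: bit i is set if the first pixel of pair i exceeds the second
    by more than `threshold`."""
    code = 0
    for bit, ((dr1, dc1), (dr2, dc2)) in enumerate(PAIRS):
        if mag[r + dr1][c + dc1] - mag[r + dr2][c + dc2] > threshold:
            code |= 1 << bit
    return code


if __name__ == "__main__":
    image = [[2 * c for c in range(5)] for _ in range(5)]  # horizontal ramp
    magnitudes = gradient_magnitude(image)
    print(magnitudes[2][2], cs_lbp_code(magnitudes, 2, 2))
```

Because only opposite neighbours are compared, the code has 16 possible values instead of 256, which keeps the resulting histograms short.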

Owner:HUAWEI TECH CO LTD

Accurate moving shadow detection method based on multi-feature fusion

Active | CN103035013A | Achieve mutual complementarity | High precision | Image analysis | Video image | Brightness perception

The invention relates to an accurate moving shadow detection method based on multi-feature fusion, belonging to the field of video image processing. First, the foreground image in a video is extracted, and six features covering the brightness, color and texture of the foreground image are extracted. To describe these features as comprehensively as possible, color information is extracted from multiple color spaces and from multi-scale images under a brightness constraint, while texture information is described by both entropy and a local binary pattern. Second, the features are fused to generate a feature map. Then, moving shadows are roughly determined on the feature map. Finally, to obtain an accurate shadow detection result, misclassified pixels are corrected through spatial adjustment. Extensive experiments and comparisons are reported to show that the method performs well and outperforms existing shadow detection methods.
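The entropy texture cue mentioned above can be illustrated with the Shannon entropy of the gray levels in a local patch. This is a minimal sketch of that general measure only; the abstract does not state the patch size or how entropy is compared between foreground and background, and `patch_entropy` is an illustrative name.

```python
import math
from collections import Counter


def patch_entropy(patch):
    """Shannon entropy (in bits) of the gray-level distribution of a 2-D patch."""
    values = [v for row in patch for v in row]
    n = len(values)
    counts = Counter(values)
    # Sum of -p * log2(p) over the gray levels that actually occur.
    return sum(-(k / n) * math.log2(k / n) for k in counts.values())


if __name__ == "__main__":
    print(patch_entropy([[7, 7], [7, 7]]))   # uniform patch
    print(patch_entropy([[0, 1], [2, 3]]))   # four equally likely levels
```

A flat region has zero entropy, while a patch with many equally frequent gray levels has high entropy, which is why the measure can serve as a simple texture descriptor alongside LBP.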

Owner:NORTHEAST NORMAL UNIVERSITY

Face identification method based on wavelet multi-scale analysis and local binary pattern

Inactive | CN102663426A | Reduce dimensionality | Small amount of calculation | Character and pattern recognition | Feature vector | Imaging processing

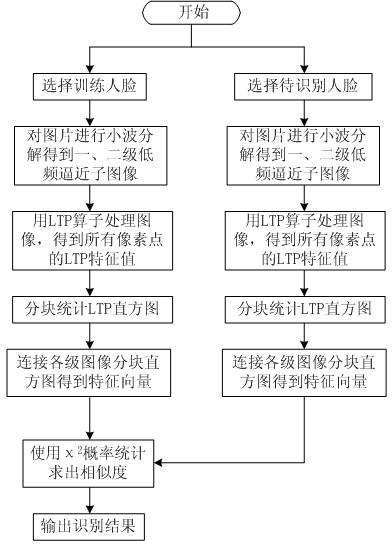

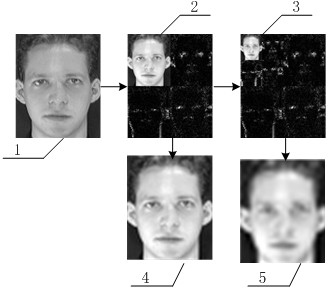

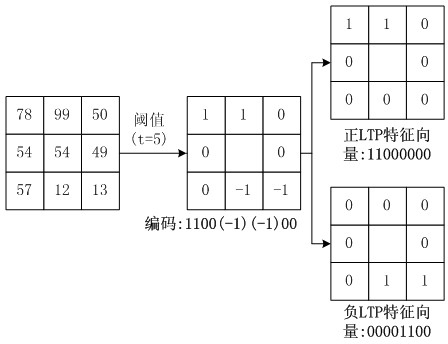

The invention, which relates to technical fields including pattern recognition, image processing and computer vision, provides a face identification method based on wavelet multi-scale analysis and a local binary pattern (LTP). The method comprises the following steps: selecting an appropriate face image; carrying out multi-scale wavelet analysis on a training image to obtain a first-level and a second-level low-frequency approximation image; applying an LTP algorithm to the low-frequency approximation images to obtain the LTP feature values of all pixels; computing LTP histograms of the images block by block and concatenating the block histograms of the two levels to obtain the feature vector representation of the face image; and, for a face to be identified, obtaining the feature vector of its image and then using the χ² (chi-square) probability statistic to complete face identification. The method is described as effectively reducing the effect of image noise and enhancing the extraction of image texture features, with good robustness, a high identification rate, fast calculation and important practical value.
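The block-histogram and chi-square steps can be sketched as follows. This assumes the per-pixel pattern codes have already been computed and stored as a 2-D list of integers, and it uses the common symmetric chi-square distance; the block size, bin count and function names are illustrative rather than taken from the patent.

```python
def block_histograms(codes, block_size, n_bins):
    """Concatenate per-block histograms of a 2-D map of integer pattern codes."""
    h, w = len(codes), len(codes[0])
    feature = []
    for r0 in range(0, h, block_size):
        for c0 in range(0, w, block_size):
            hist = [0] * n_bins
            for r in range(r0, min(r0 + block_size, h)):
                for c in range(c0, min(c0 + block_size, w)):
                    hist[codes[r][c]] += 1
            feature.extend(hist)
    return feature


def chi_square_distance(h1, h2):
    """Symmetric chi-square distance: sum of (a - b)^2 / (a + b) over non-empty bins."""
    if len(h1) != len(h2):
        raise ValueError("histograms must have the same length")
    return sum((a - b) ** 2 / (a + b) for a, b in zip(h1, h2) if a + b > 0)


if __name__ == "__main__":
    code_map = [[0, 0, 1, 1],
                [0, 0, 1, 1]]
    vec = block_histograms(code_map, block_size=2, n_bins=2)
    print(vec, chi_square_distance(vec, vec))
```

Identification would then amount to computing this distance between the probe vector and each enrolled vector and picking the smallest. Concatenating block histograms, rather than pooling one global histogram, keeps coarse spatial layout in the feature.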

Owner:SOUTHEAST UNIV

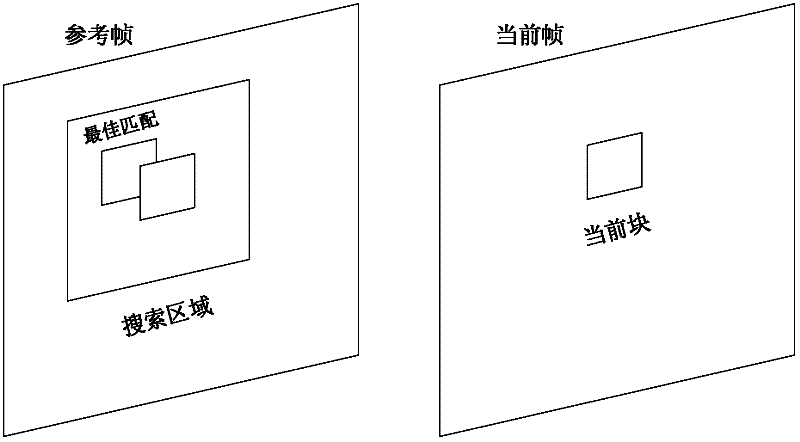

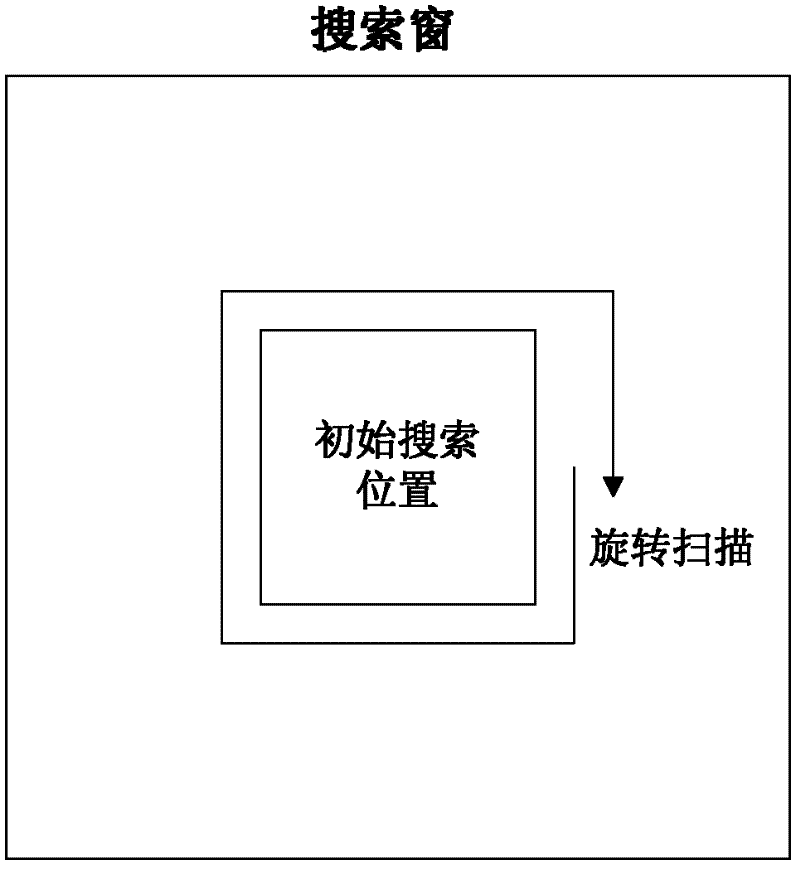

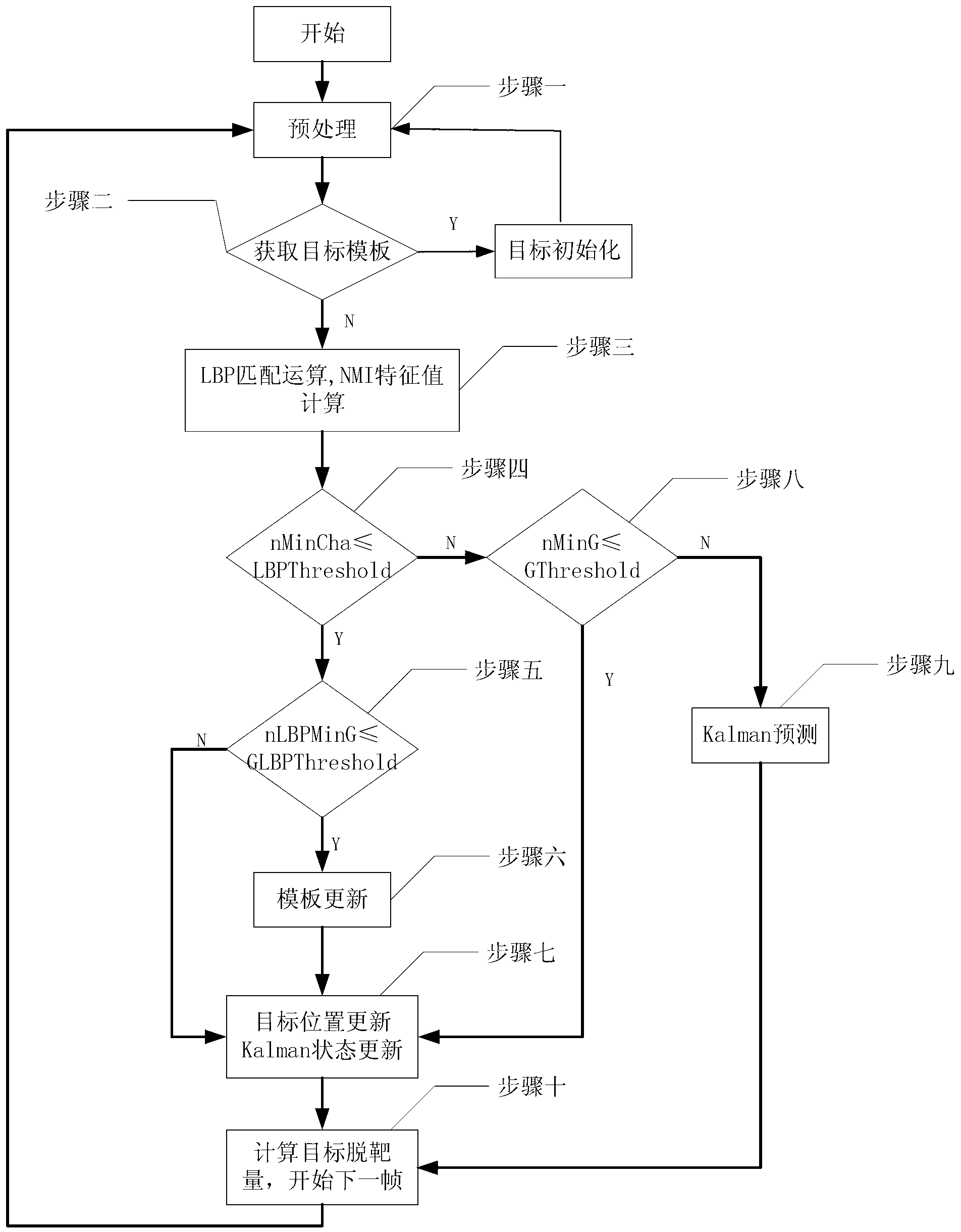

Video target tracking method under circumstance of scale change and shielding

Inactive | CN103325126A | Improve real-time performance | Overcoming the vulnerability to loss | Image analysis | Imaging processing | Moment of inertia

The invention relates to a video target tracking method for conditions of scale change and occlusion (shielding) and belongs to the field of image processing. It aims to solve the poor tracking performance of the LBP (local binary pattern) tracking algorithm under scale change and occlusion. The invention provides an optimized target tracking method that combines the LBP algorithm, NMI (normalized moment of inertia) features and Kalman filtering. The NMI features are used to determine the update strategy of a module and to address target loss caused by target rotation, scale change and similar effects. The Kalman filtering is used to overcome the tendency to lose the target under occlusion.
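The role of Kalman filtering under occlusion can be illustrated with a constant-velocity filter that keeps predicting when no measurement is available. This is a minimal one-dimensional sketch under assumed noise parameters, with `None` standing for an occluded frame; a 2-D tracker could run one such filter per axis. It does not reproduce the patent's combination with LBP and NMI.

```python
class ConstantVelocityKalman1D:
    """Kalman filter with state (position, velocity) and position-only measurements."""

    def __init__(self, position=0.0, velocity=0.0, p_var=100.0, q=0.01, r=1.0):
        self.pos, self.vel = position, velocity
        self.P = [[p_var, 0.0], [0.0, p_var]]  # state covariance
        self.q, self.r = q, r                  # assumed process / measurement noise

    def predict(self, dt=1.0):
        (p00, p01), (p10, p11) = self.P
        self.pos += self.vel * dt
        # P <- F P F^T + Q with F = [[1, dt], [0, 1]] and Q = q * I
        self.P = [
            [p00 + dt * (p10 + p01) + dt * dt * p11 + self.q, p01 + dt * p11],
            [p10 + dt * p11, p11 + self.q],
        ]

    def update(self, z):
        (p00, p01), (p10, p11) = self.P
        s = p00 + self.r                 # innovation variance
        k0, k1 = p00 / s, p10 / s        # Kalman gain
        y = z - self.pos                 # innovation
        self.pos += k0 * y
        self.vel += k1 * y
        self.P = [
            [(1 - k0) * p00, (1 - k0) * p01],
            [p10 - k1 * p00, p11 - k1 * p01],
        ]


def track(measurements):
    """Predict every frame; update only when a measurement is available."""
    kf = ConstantVelocityKalman1D()
    estimates = []
    for z in measurements:
        kf.predict()
        if z is not None:        # None marks an occluded frame
            kf.update(z)
        estimates.append(kf.pos)
    return estimates


if __name__ == "__main__":
    observed = [float(t) for t in range(10)] + [None, None, None]
    print(track(observed)[-3:])
```

During the occluded frames the estimate continues along the learned velocity, so the search window stays near the target's likely position until it reappears.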

Owner:CHINA UNIV OF PETROLEUM (EAST CHINA)

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com