Patented technology related to "model inference": 69 results

Inference, or model scoring, is the phase in which a deployed model is used to make predictions. Using GPUs instead of CPUs offers performance advantages for highly parallelizable computation. Tip: although the code snippets in this article use a TensorFlow model, you can apply the information to any machine learning framework that supports GPUs.

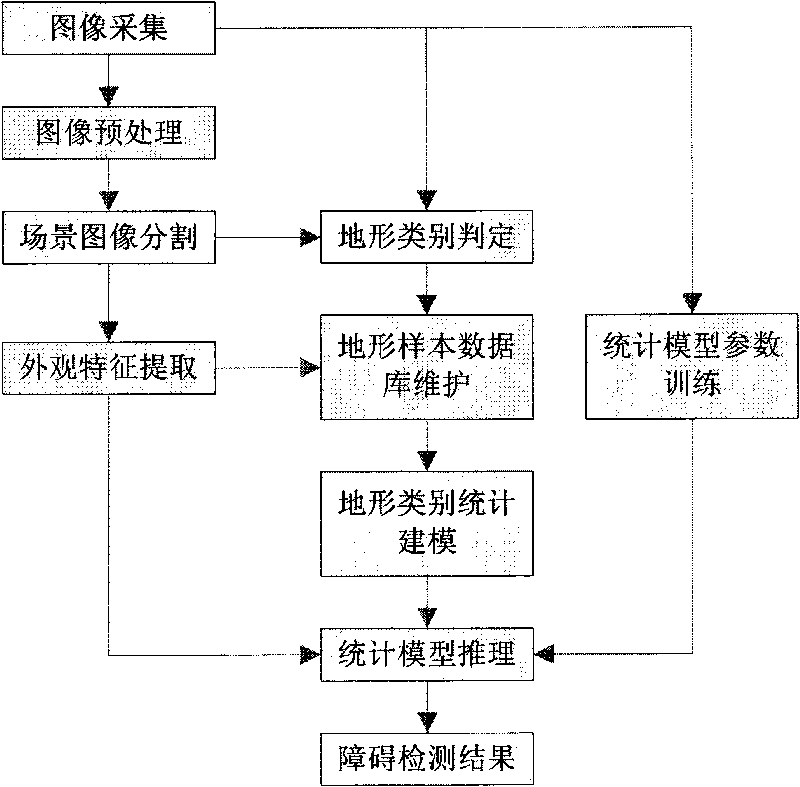

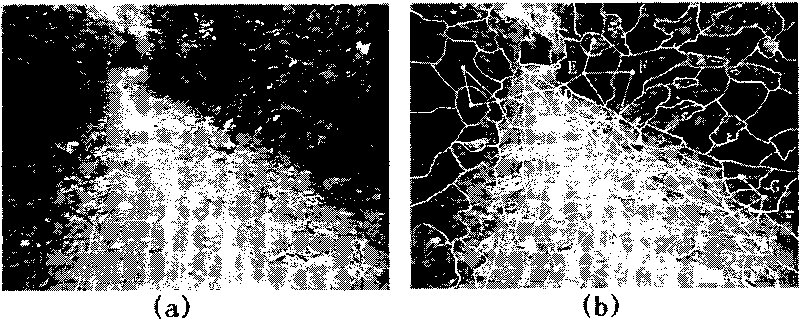

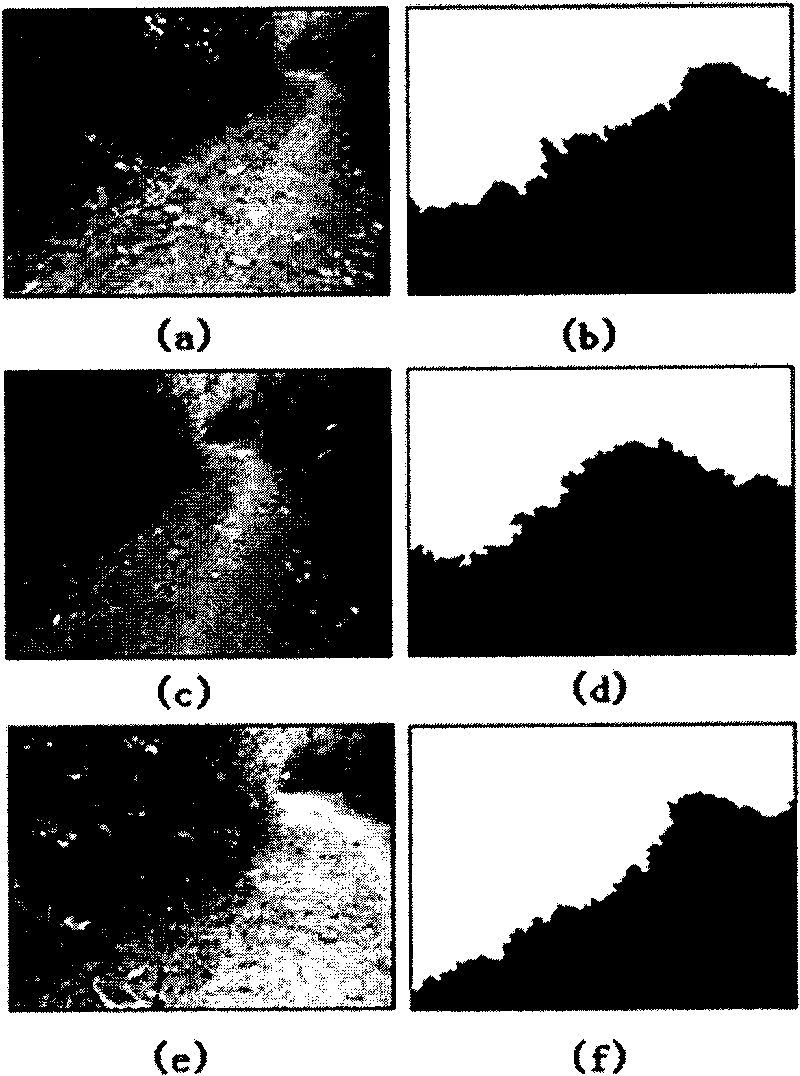

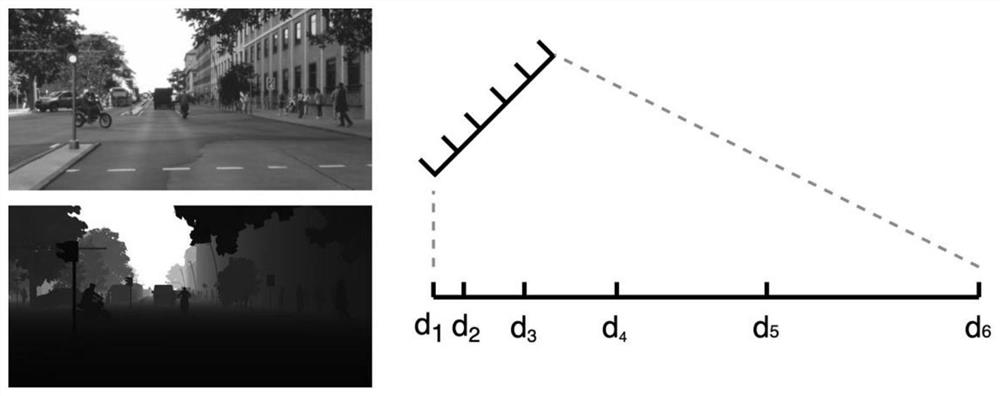

Method for detecting long-distance barrier

Status: Inactive | Publication: CN101701818A | Tags: To achieve effective detection, Improve accuracy, Image analysis, Picture interpretation, Pattern recognition, Ambiguity

The invention provides a method for detecting long-distance barriers (obstacles) and belongs to the technical field of robotics. The method comprises the following steps: image acquisition, image pre-processing, scene image segmentation, appearance feature extraction, terrain pattern judgment, terrain sample database maintenance, statistical modeling of terrain patterns, statistical model parameter training, and statistical model inference. The invention achieves effective detection of multi-mode barriers, improves barrier detection accuracy when samples are unbalanced, and improves the adaptability of barrier detection to changes in online real-time scenes. The terrain pattern modeling integrates independent smooth feature functions, eliminating the pattern ambiguity caused by overlapping features; it also integrates correlated smooth feature functions, improving the online self-adaptability of the detection results to real-time illumination changes. Finally, the statistical terrain pattern modeling integrates not only the features of the scene regions but also, in principle, the spatial relationships between them, improving the stability of barrier detection under mapping deviation.

Owner:SHANGHAI JIAO TONG UNIV

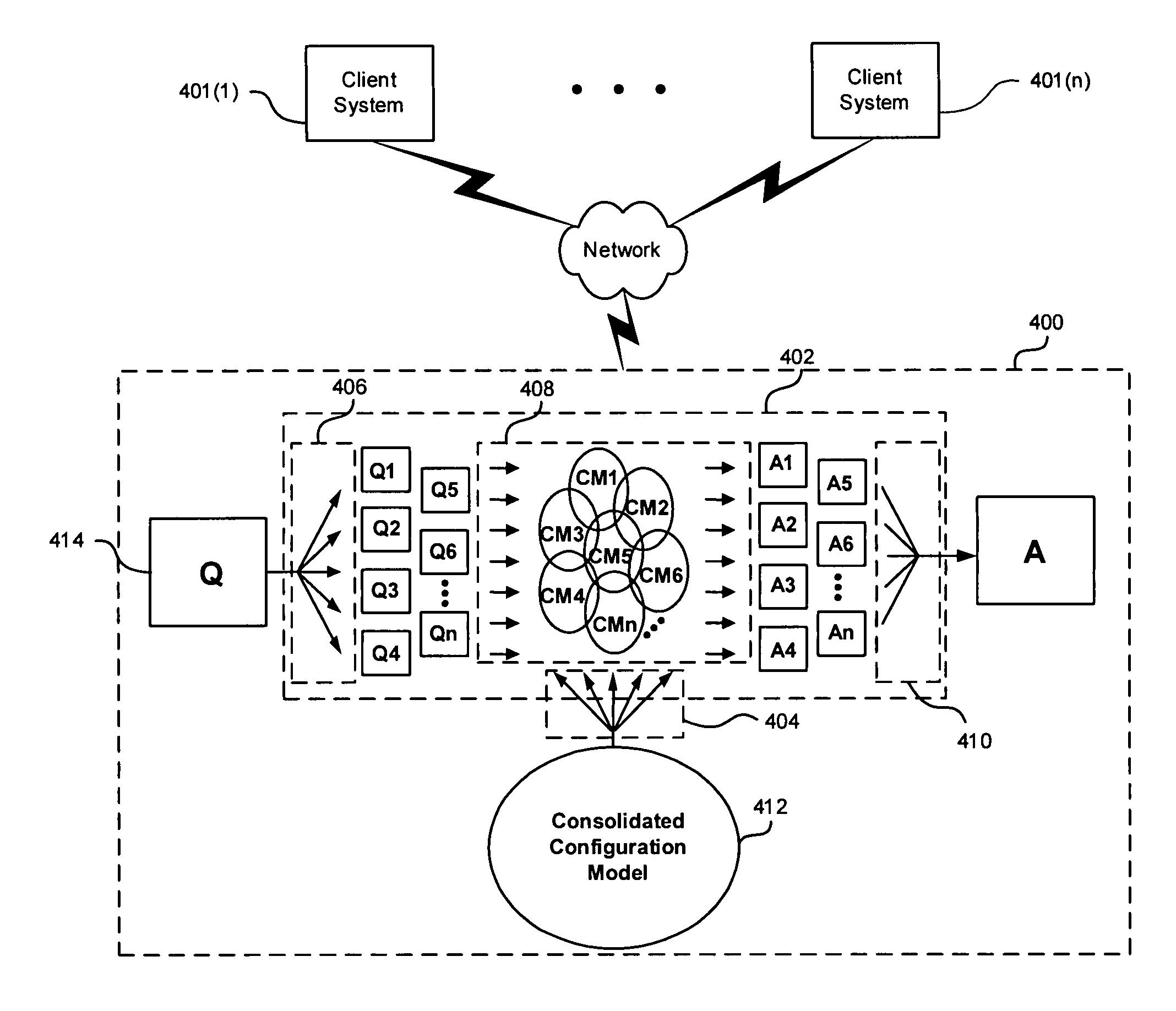

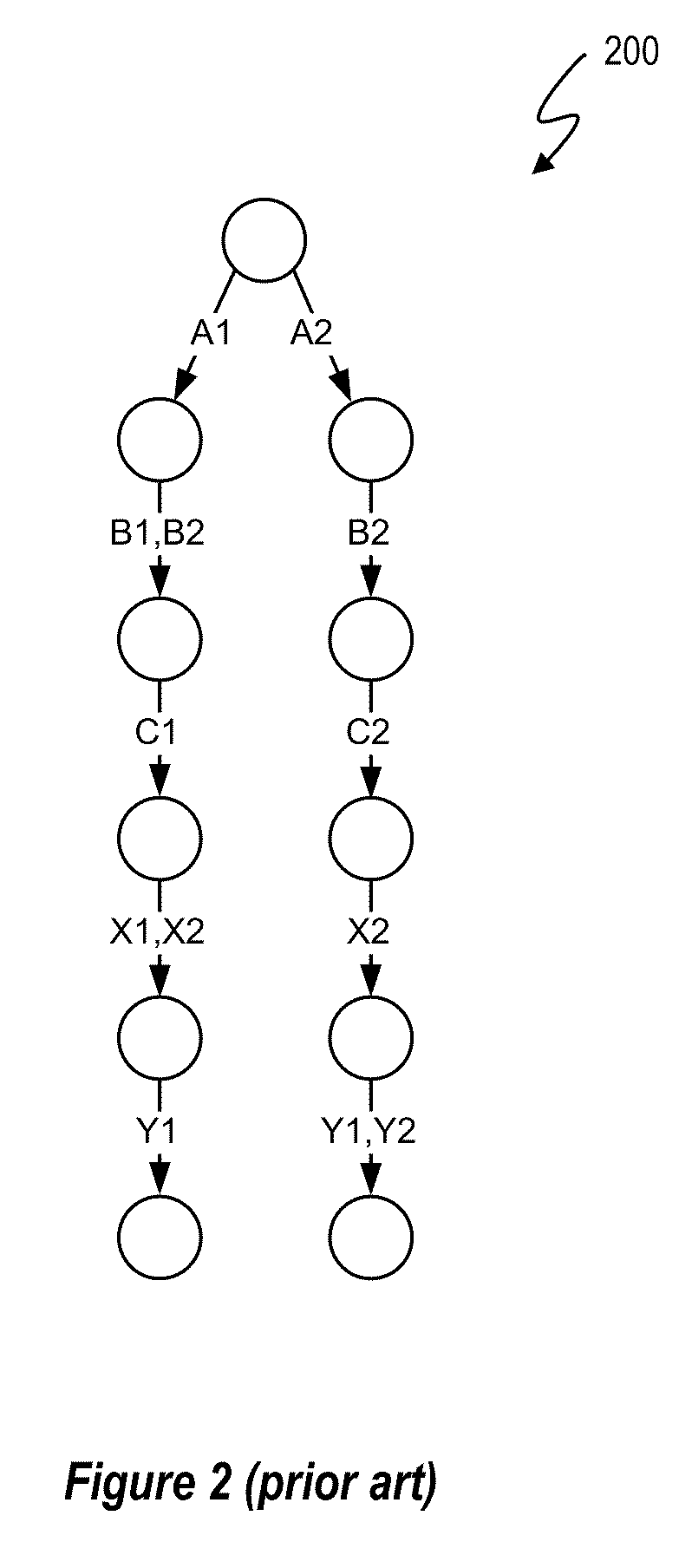

Complex configuration processing using configuration sub-models

Status: Active | Publication: US7882057B1 | Tags: Solve the real problem, Resources, Computer aided design, Data processing system, Algorithm

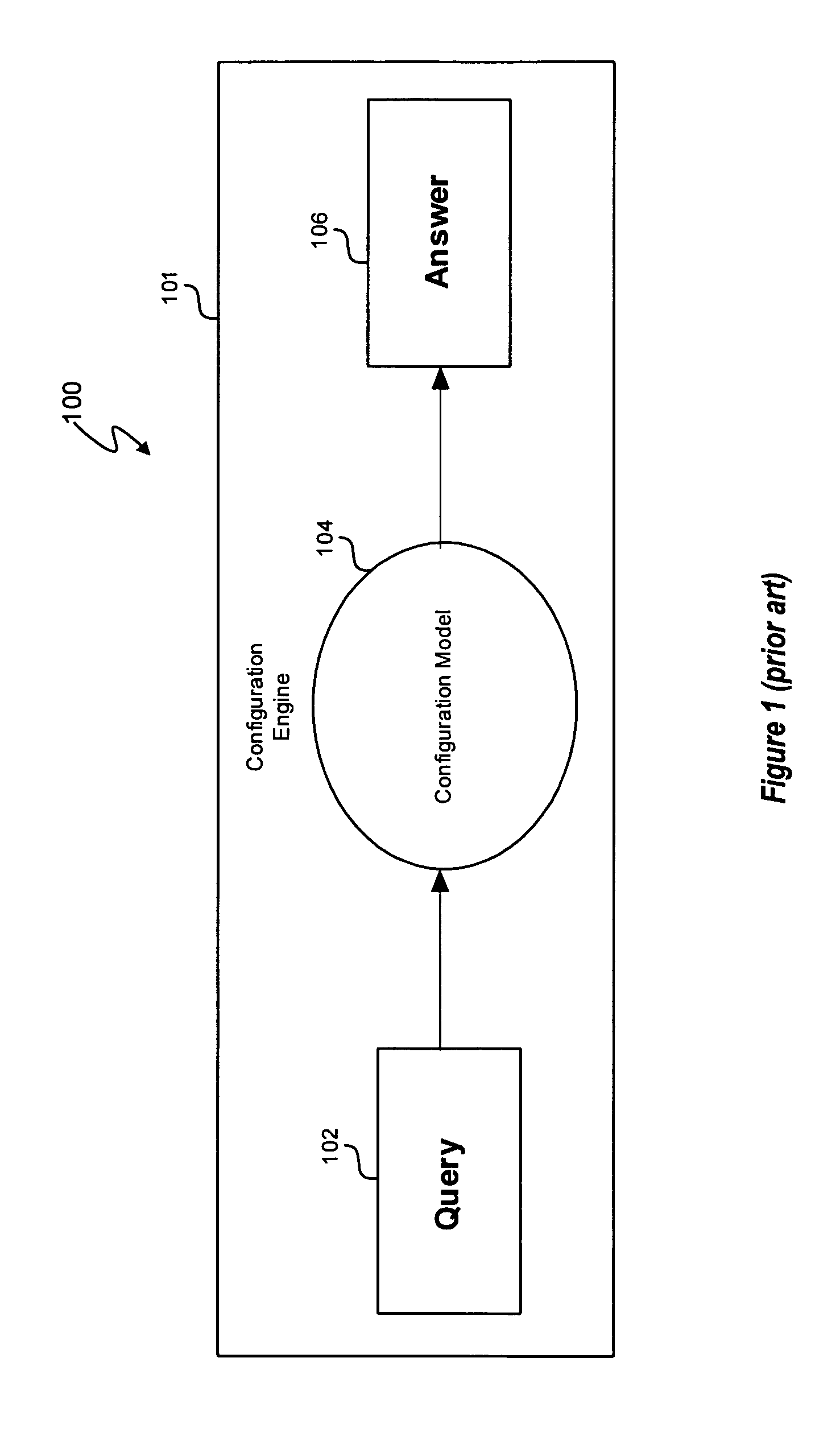

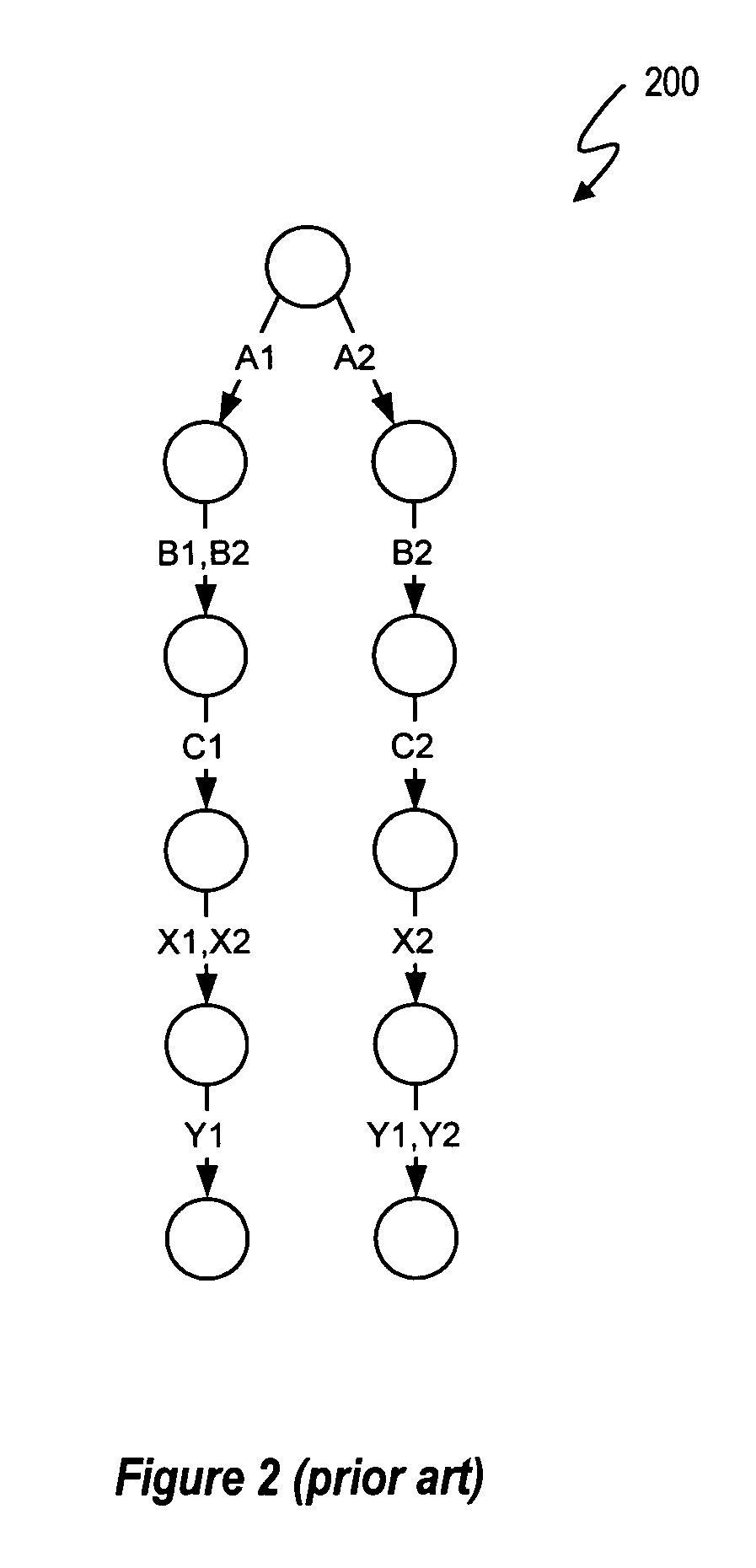

A configuration model dividing and configuration sub-model inference processing system and procedure addresses the issue of configuration model and query complexity by breaking a configuration problem down into a set of smaller problems, solving them individually and recombining the results into a single result that is equivalent to a conventional inference procedure. In one embodiment, a configuration model is divided into configuration sub-models that can respectively be processed using existing data processing resources. A sub-model inference procedure provides a way to scale queries to larger and more complicated configuration models. Thus, the configuration model dividing and configuration sub-model processing system and inference procedure allows processing by a data processing system of configuration models and queries whose collective complexity exceeds the complexity of otherwise unprocessable conventional, consolidated configuration models and queries.

Owner:VERSATA DEV GROUP
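
The divide, solve, and recombine idea can be illustrated with a small Python sketch. It assumes (this is not from the patent) that a configuration model is a set of finite-domain variables plus constraints over subsets of them, and that sub-models are the connected components of the constraint graph, so each one can be solved independently and the partial results recombined by Cartesian product. `split_into_submodels`, `solve`, and `solve_by_submodels` are hypothetical names, and the brute-force solver stands in for whatever inference engine a real configurator uses.

```python
from itertools import product

# Hypothetical representation (not from the patent): a configuration model is a list of
# variables, a dict of finite domains, and constraints given as (scope, predicate) pairs.


def split_into_submodels(variables, constraints):
    """Group variables into independent sub-models: connected components of the constraint graph."""
    parent = {v: v for v in variables}

    def find(v):
        while parent[v] != v:
            parent[v] = parent[parent[v]]  # path halving keeps the union-find trees shallow
            v = parent[v]
        return v

    for scope, _ in constraints:
        for other in scope[1:]:
            parent[find(other)] = find(scope[0])

    groups = {}
    for v in variables:
        groups.setdefault(find(v), []).append(v)
    submodels = []
    for members in groups.values():
        member_set = set(members)
        own = [c for c in constraints if set(c[0]) <= member_set]
        submodels.append((members, own))
    return submodels


def solve(variables, domains, constraints):
    """Brute-force every valid assignment of a (sub-)model."""
    solutions = []
    for values in product(*(domains[v] for v in variables)):
        assignment = dict(zip(variables, values))
        if all(pred(*(assignment[v] for v in scope)) for scope, pred in constraints):
            solutions.append(assignment)
    return solutions


def solve_by_submodels(variables, domains, constraints):
    """Solve each sub-model on its own, then recombine the partial results."""
    partial = [solve(members, domains, own)
               for members, own in split_into_submodels(variables, constraints)]
    combined = []
    for parts in product(*partial):
        merged = {}
        for part in parts:
            merged.update(part)
        combined.append(merged)
    return combined


if __name__ == "__main__":
    variables = ["cpu", "ram", "color", "case"]
    domains = {"cpu": ["i5", "i7"], "ram": [8, 16, 32],
               "color": ["black", "silver"], "case": ["small", "large"]}
    constraints = [
        (("cpu", "ram"), lambda cpu, ram: cpu != "i7" or ram >= 16),
        (("color", "case"), lambda color, case: not (color == "silver" and case == "small")),
    ]
    print(len(solve_by_submodels(variables, domains, constraints)))  # 15
    print(len(solve(variables, domains, constraints)))               # 15
```

Because no constraint links the two components in the example, the recombined result equals the consolidated one (5 × 3 = 15 configurations). Models whose sub-models share variables would need an extra consistency check when merging, which this sketch does not attempt.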

Diesel engine lubricating system fault diagnosis method based on Bayes network

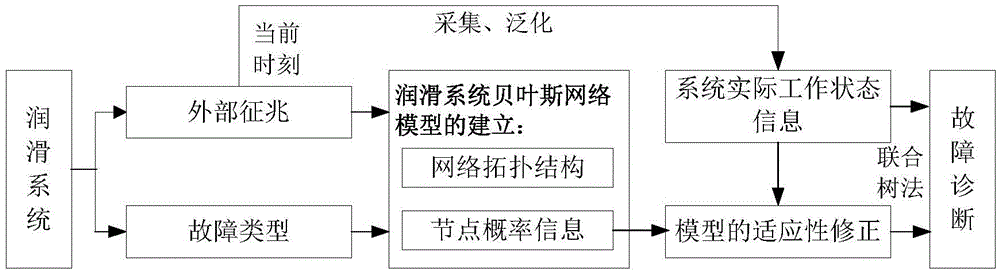

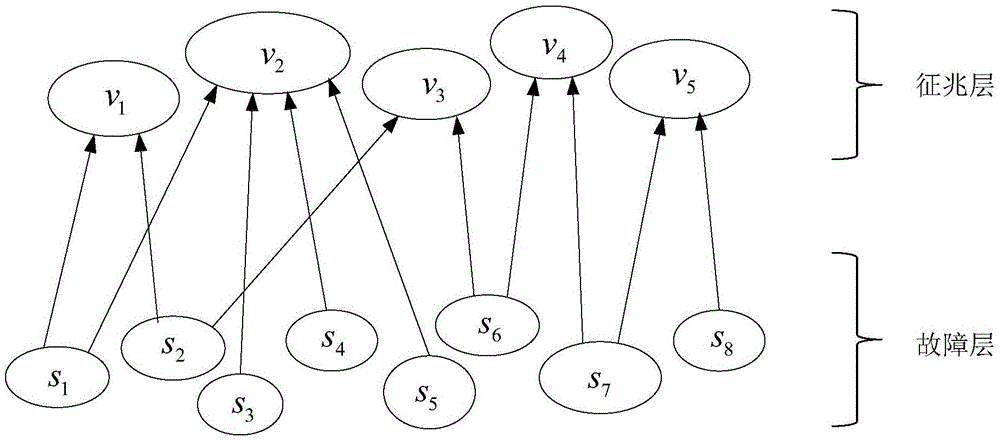

Status: Active | Publication: CN105547717A | Tags: Reflect actual performance, Reduce uncertainty, Structural/machines measurement, Data acquisition, Network model

The invention relates to a Bayesian network-based fault diagnosis method for a diesel engine lubricating system. Fault types and external symptoms of the lubricating system are abstracted into fault-layer nodes and symptom-layer nodes, and a Bayesian network model of the lubricating system is established. A data acquisition system measures the performance parameters of the lubricating system, a linear proportional transformation is used to classify these parameters, and the actual working state of the lubricating system is obtained. The Hugin junction tree algorithm is used to convert the corrected Bayesian network model into a junction tree. Before diagnostic inference, the prior probabilities of the fault-layer nodes are reset according to the actual working state of the lubricating system, which adaptively corrects the Bayesian network model. The model can therefore accurately describe the actual working state of the lubricating system, the uncertainty of model inference is reduced, and fault diagnosis accuracy is improved.

Owner:HARBIN ENG UNIV
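
A two-layer fault/symptom network of this kind can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the patented method: symptom probabilities use a noisy-OR model, the posterior is computed by exact enumeration (a simpler stand-in for the Hugin junction tree algorithm, practical only for a handful of fault nodes), and `classify` is a hypothetical reading of the "linear proportional transformation". All node names and numbers are illustrative.

```python
from itertools import product


def classify(value, normal, fault_limit, threshold=0.5):
    """Hypothetical linear proportional scaling: 0 at the normal value, 1 at the fault limit, then thresholded."""
    ratio = (value - normal) / (fault_limit - normal)
    ratio = min(max(ratio, 0.0), 1.0)
    return ratio >= threshold


def symptom_probability(active_faults, causes, leak):
    """Noisy-OR CPT (an assumption): P(symptom present | active faults)."""
    p_absent = 1.0 - leak
    for fault, strength in causes.items():
        if fault in active_faults:
            p_absent *= 1.0 - strength
    return 1.0 - p_absent


def fault_posteriors(priors, links, evidence, leak=0.01):
    """Exact P(fault | observed symptoms) by enumerating every combination of fault states."""
    faults = list(priors)
    mass = {f: 0.0 for f in faults}
    evidence_prob = 0.0
    for states in product([False, True], repeat=len(faults)):
        active = {f for f, on in zip(faults, states) if on}
        weight = 1.0
        for f, on in zip(faults, states):
            weight *= priors[f] if on else 1.0 - priors[f]
        for symptom, present in evidence.items():
            p = symptom_probability(active, links[symptom], leak)
            weight *= p if present else 1.0 - p
        evidence_prob += weight
        for f in active:
            mass[f] += weight
    return {f: mass[f] / evidence_prob for f in faults}


if __name__ == "__main__":
    links = {"low_oil_pressure": {"pump_wear": 0.8, "filter_clog": 0.6},
             "high_oil_temp": {"filter_clog": 0.7}}
    evidence = {"low_oil_pressure": classify(0.15, normal=0.4, fault_limit=0.1),
                "high_oil_temp": classify(105.0, normal=80.0, fault_limit=110.0)}
    print(fault_posteriors({"pump_wear": 0.05, "filter_clog": 0.10}, links, evidence))
    # "Adaptive correction": reset the fault-layer priors to reflect the observed working state.
    print(fault_posteriors({"pump_wear": 0.5, "filter_clog": 0.10}, links, evidence))
```

Resetting a fault node's prior before inference, as the abstract describes, is just a different `priors` argument here; a junction tree engine would be needed once the fault layer grows beyond a few nodes.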

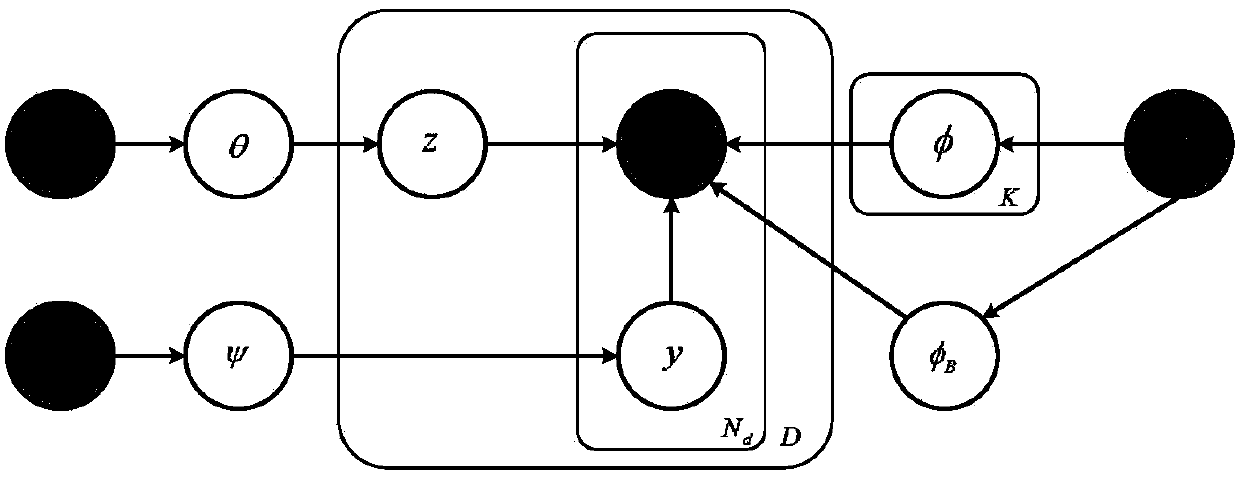

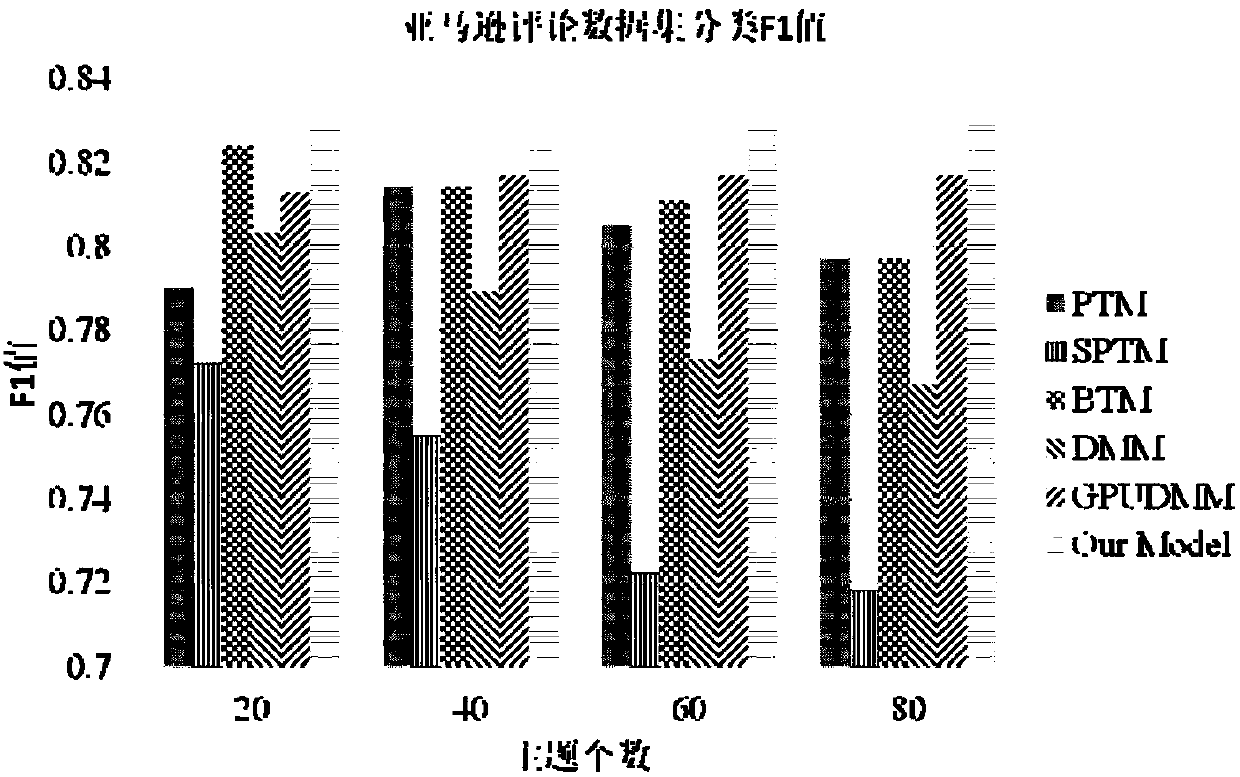

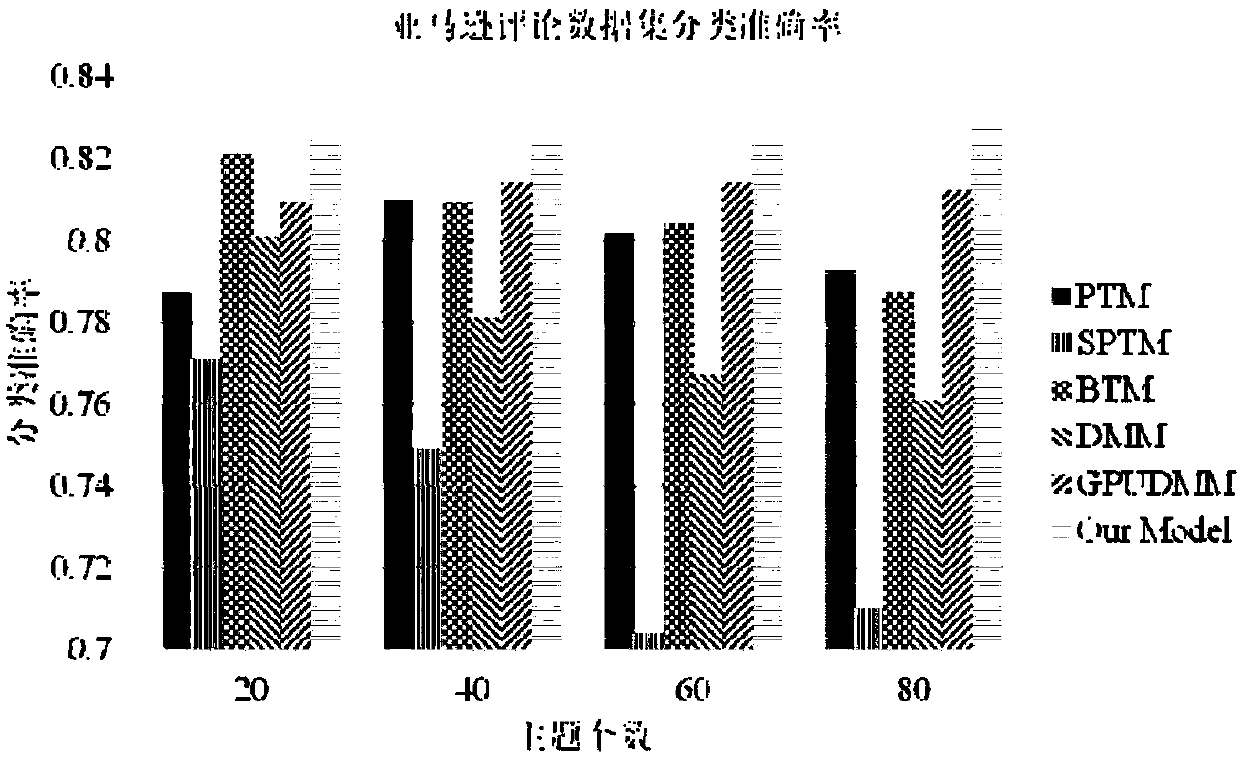

Word vector and context information-based short text topic model

Status: Inactive | Publication: CN108415901A | Tags: Enhancing Semantic Consistency, Improve efficiency, Semantic analysis, Special data processing applications, Data set, Co-occurrence

The invention discloses a short text topic model based on word vectors and context information. Semantic relationships between words are extracted from word vectors; obtaining these relationships explicitly compensates for the sparse word co-occurrence of short text data. The semantic relationships are further filtered using the training set so that the model can be trained better on the data set. A background topic is added to the generative process to model noise words in a document. In model inference, the model is solved with Gibbs sampling, and a generalized Pólya urn sampling strategy increases the probability of words with relatively high semantic correlation within related topics, which greatly improves the semantic consistency of the words in each topic. A series of experiments shows that the method improves topic semantic consistency to a greater extent, providing a new approach to short text topic modeling.

Owner:DALIAN UNIV OF TECH
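
The generalized Pólya urn (GPU) step can be sketched in Python: when a word is (un)assigned to a topic, the counts of semantically related words in that topic are also promoted (or demoted). This covers only that bookkeeping, under assumptions: relatedness is taken as word-vector cosine similarity above a threshold, related words receive a fixed promotion weight `mu`, and `build_promotion`, `gpu_update`, and `word_given_topic` are hypothetical names. The full collapsed Gibbs sampler and the background topic are not shown.

```python
import math
from collections import defaultdict


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def build_promotion(vectors, threshold=0.7, mu=0.3):
    """Promotion weights: each word promotes itself by 1 and its embedding neighbours by `mu`."""
    promotion = {}
    for word, vec in vectors.items():
        related = {word: 1.0}
        for other, other_vec in vectors.items():
            if other != word and cosine(vec, other_vec) >= threshold:
                related[other] = mu
        promotion[word] = related
    return promotion


def gpu_update(topic_word, topic_total, word, topic, promotion, sign=1):
    """Add (sign=+1) or remove (sign=-1) one assignment of `word` to `topic`, GPU-style."""
    for related, weight in promotion.get(word, {word: 1.0}).items():
        topic_word[topic][related] += sign * weight
        topic_total[topic] += sign * weight


def word_given_topic(topic_word, topic_total, word, topic, vocab_size, beta=0.01):
    """Smoothed P(word | topic) from the promoted counts, the term a Gibbs step would use."""
    return (topic_word[topic][word] + beta) / (topic_total[topic] + vocab_size * beta)


if __name__ == "__main__":
    vectors = {"apple": [1.0, 0.0], "pear": [0.9, 0.1], "car": [0.0, 1.0]}
    promotion = build_promotion(vectors)
    topic_word = defaultdict(lambda: defaultdict(float))
    topic_total = defaultdict(float)
    gpu_update(topic_word, topic_total, "apple", 0, promotion)
    print(dict(topic_word[0]))  # "apple" and its neighbour "pear" both gain count in topic 0
```

Promoting neighbours means that sampling "apple" into a topic also raises the probability of "pear" in that topic, which is how the sampling strategy pushes semantically related words together.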

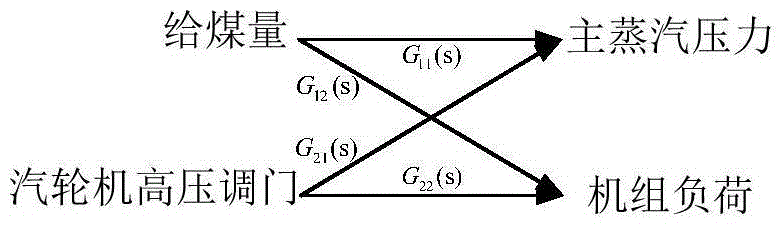

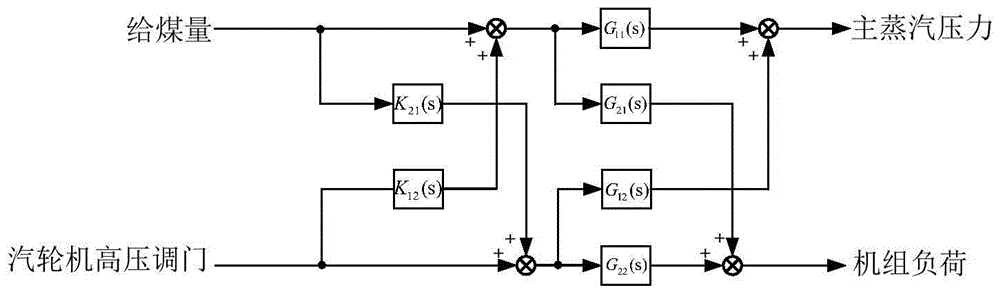

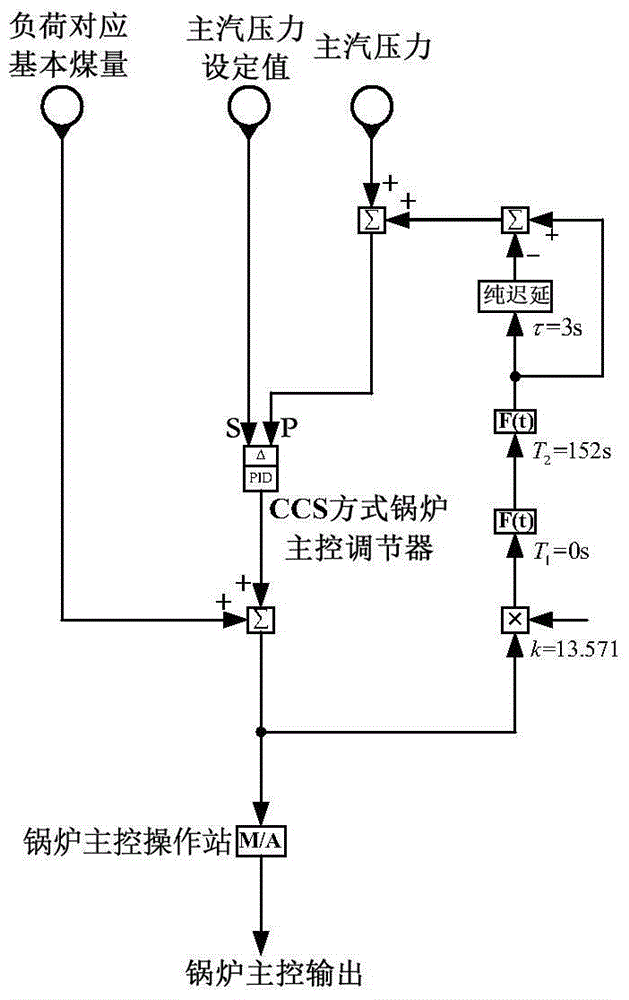

Unit coordinated control master control system decoupling compensation control method

Status: Active | Publication: CN105739309A | Tags: Does not cause fluctuations in the high-pressure valve, Run fast, Adaptive control, Transfer function model, Control manner

The invention discloses a decoupling compensation control method for the master control system of unit coordinated control. Data are acquired from an on-site coal feed rate step test of the unit and a step test of the steam turbine high-pressure regulating valve. A boiler master control decoupling compensation transfer function model is established with a genetic identification algorithm. A simplified boiler master control compensation model is also established and coupled into the boiler master control through decoupling compensation model inference, overcoming the large delay and high inertia of the unit's boiler master control system. Compared with conventional cascade control, the method performs main steam pressure compensation regulation more rapidly without causing fluctuation of the high-pressure regulating valve, enabling safer, more economical, and more energy-efficient operation of the unit.

Owner:XIAN TPRI THERMAL CONTROL TECH +1

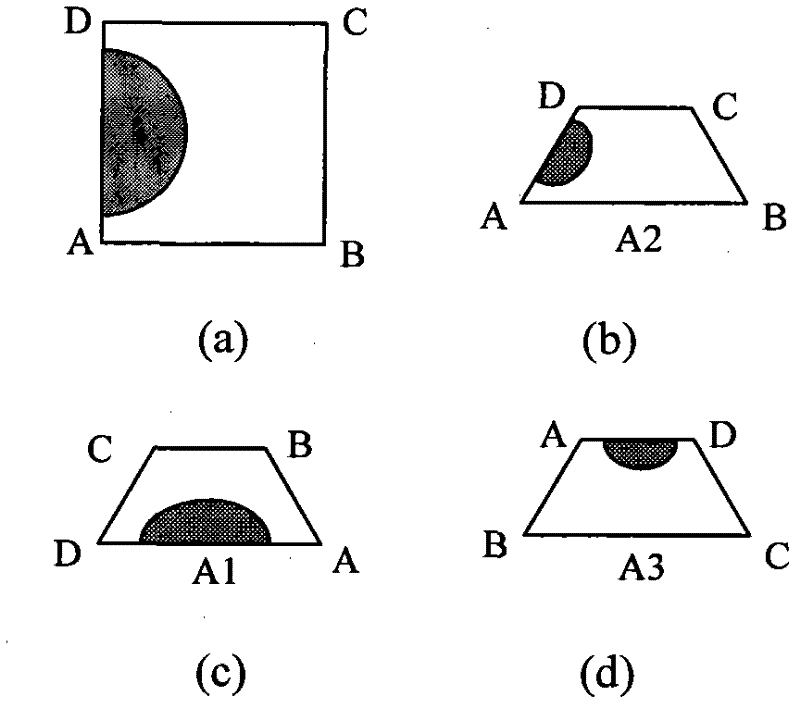

A multi-agent cooperative target recognition method based on MSBN

The invention discloses a multi-agent cooperative target identification method based on an MSBN (Multiply Sectioned Bayesian Network). It treats each agent of a multi-agent system as a BN (Bayesian network) subnet of the MSBN and addresses the problem of accurate target identification. Taking the target identification type nodes as the overlapping subdomain, the BN subnets are assembled into an MSBN, so that the multi-agent system can solve the problem cooperatively through BN model inference. The multi-agent cooperative target identification algorithm mainly uses a belief communication algorithm to update the beliefs of the whole MSBN; this supports probability queries on the hidden target-type node of the target to be identified in the corresponding MSBN, which accomplishes target identification. The invention improves the identification capability of the system while strengthening its timeliness, and greatly improves target identification speed and accuracy.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

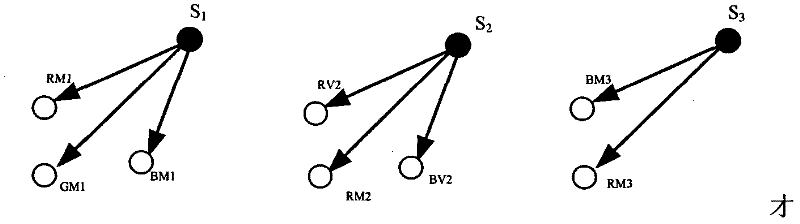

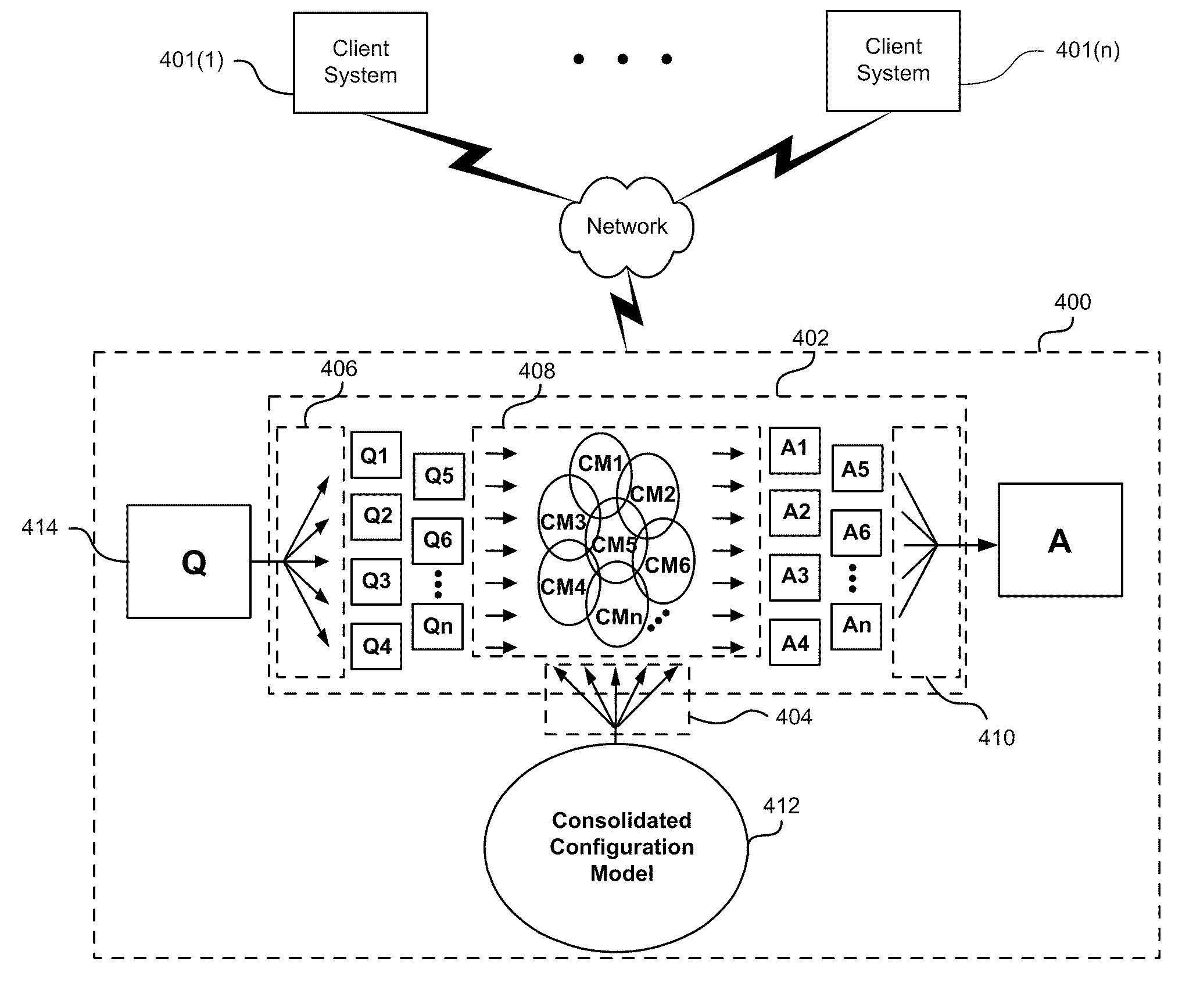

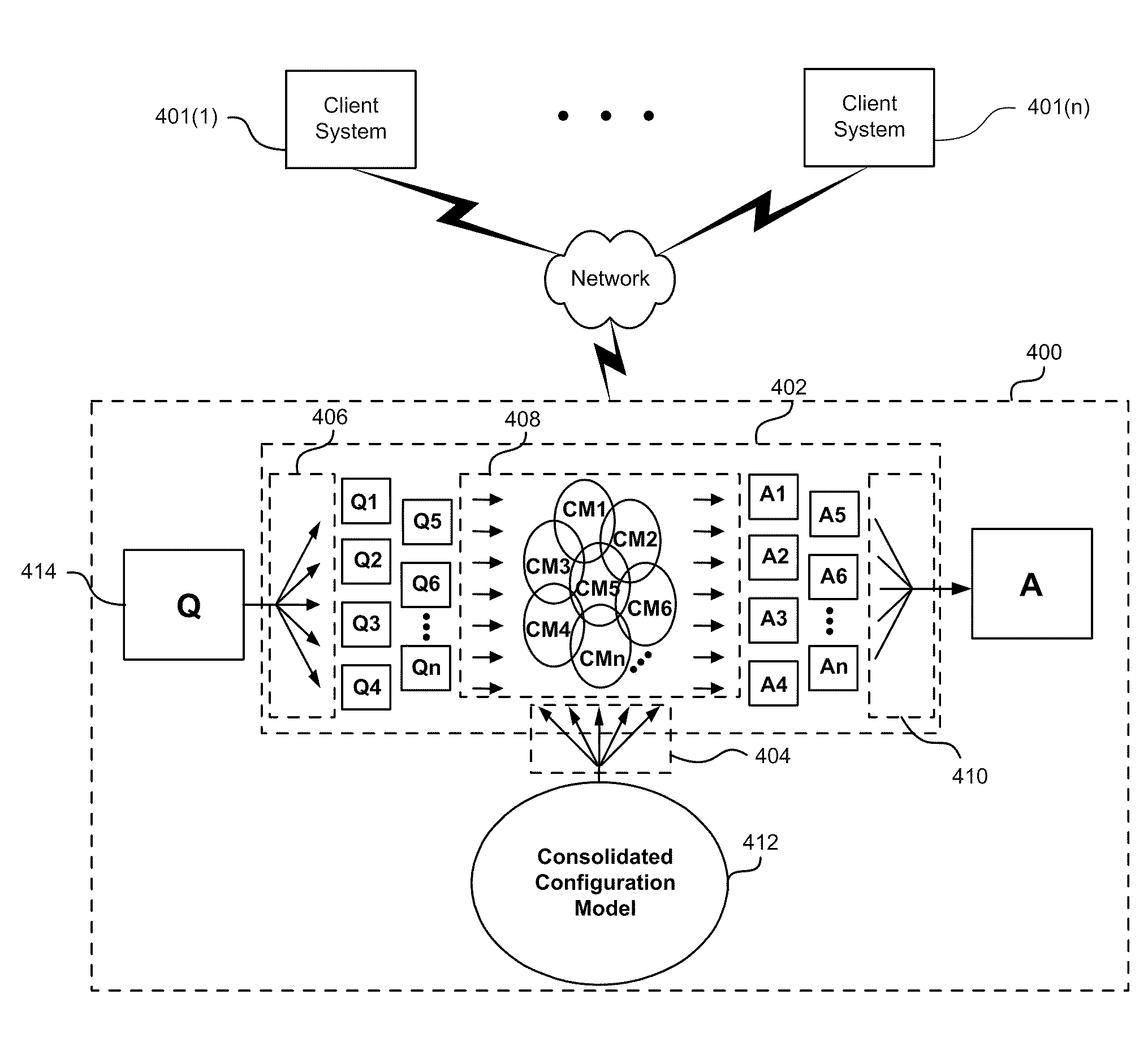

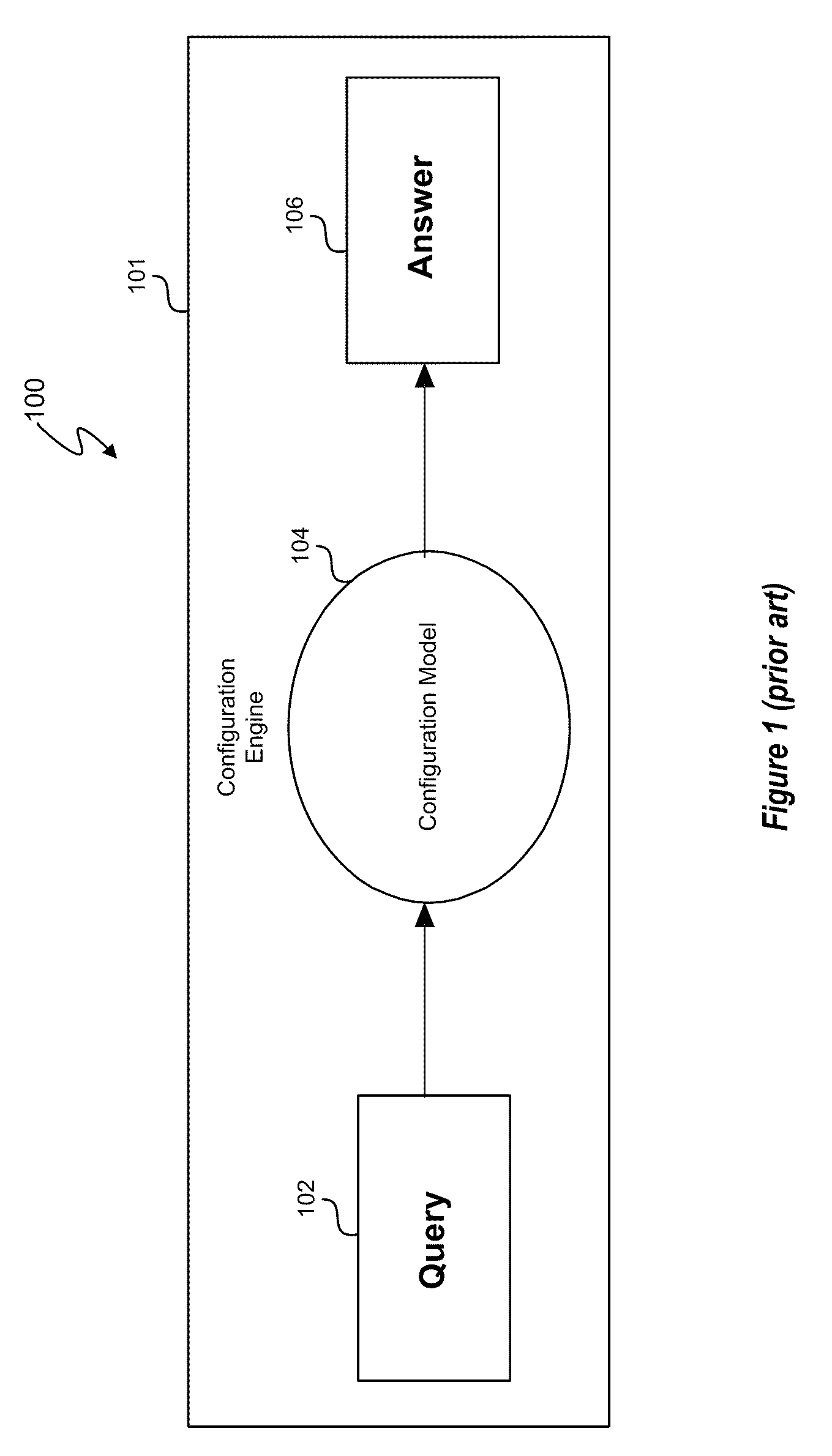

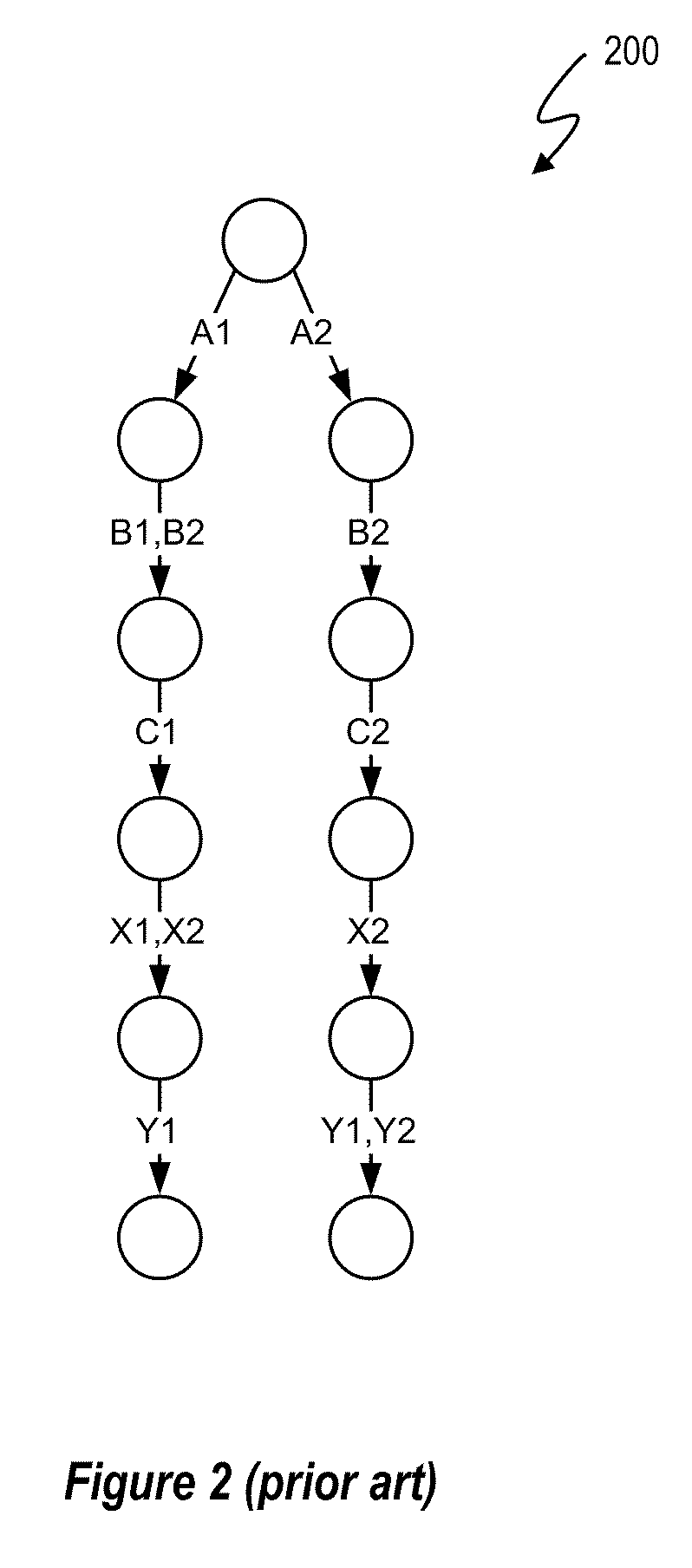

Complex Configuration Processing Using Configuration Sub-Models

Status: Active | Publication: US20110082675A1 | Tags: Resources, Computer aided design, Data processing system, Theoretical computer science

A configuration model dividing and configuration sub-model inference processing system and procedure addresses the issue of configuration model and query complexity by breaking a configuration problem down into a set of smaller problems, solving them individually and recombining the results into a single result that is equivalent to a conventional inference procedure. In one embodiment, a configuration model is divided into configuration sub-models that can respectively be processed using existing data processing resources. A sub-model inference procedure provides a way to scale queries to larger and more complicated configuration models. Thus, the configuration model dividing and configuration sub-model processing system and inference procedure allows processing by a data processing system of configuration models and queries whose collective complexity exceeds the complexity of otherwise unprocessable conventional, consolidated configuration models and queries.

Owner:VERSATA DEV GROUP

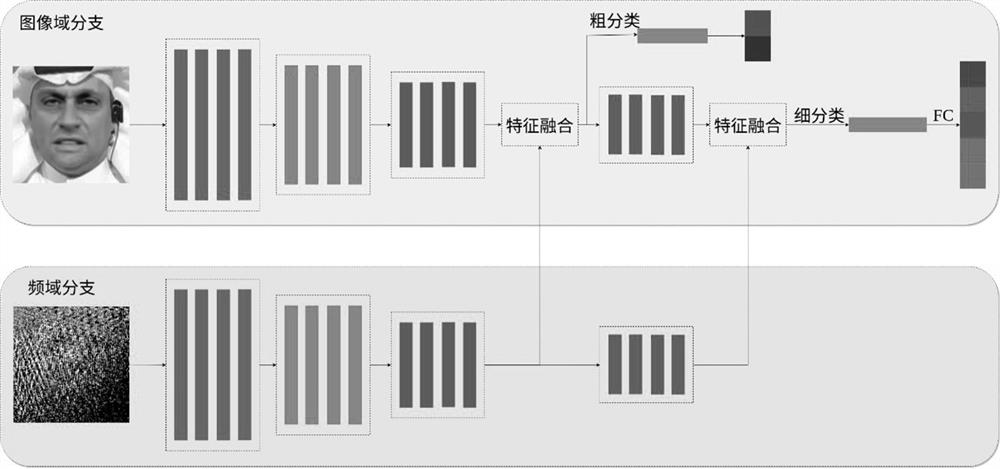

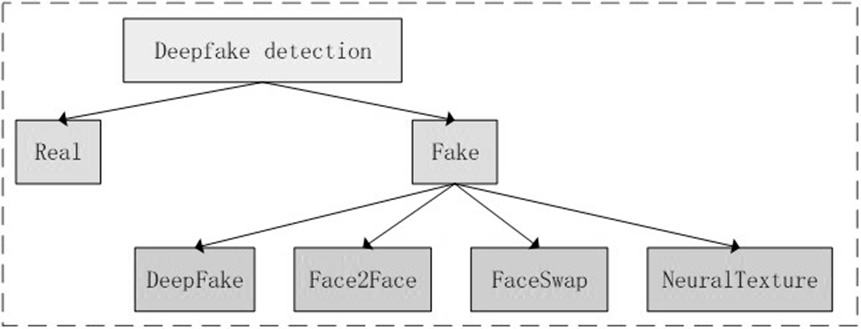

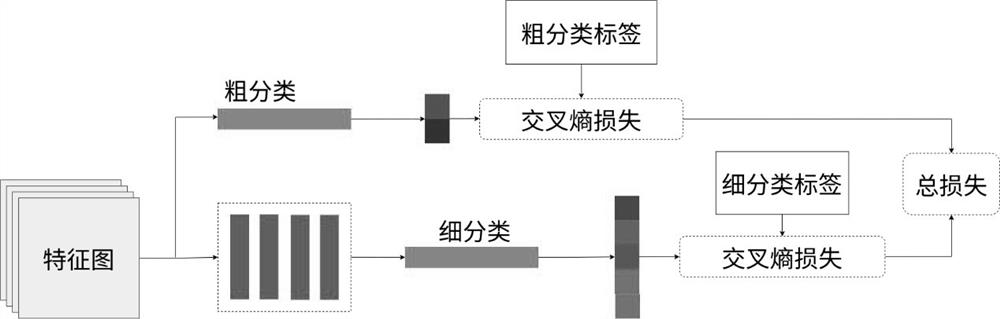

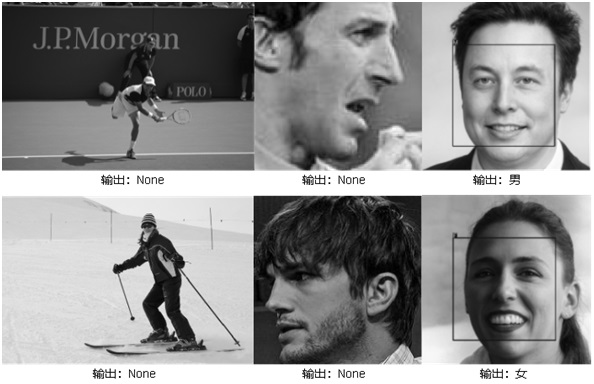

Face forgery detection method based on image domain and frequency domain double-flow network

Status: Pending | Publication: CN113723295A | Tags: Implement hierarchical classification, Improve detection accuracy, Character and pattern recognition, Neural architectures, Visual technology, Engineering

The invention relates to the technical field of computer vision, in particular to a face forgery detection method based on an image domain and frequency domain two-stream network. The method comprises a model training stage and a model inference stage. In the model training stage, a server with high computing performance trains the network model, optimizing the network parameters by reducing the network loss function until the network converges, which yields a two-stream network model based on the image domain and the frequency domain. In the model inference stage, the trained network model is used to judge whether a new image is a forged face image. Compared with methods that treat face forgery detection as a binary classification problem, this method performs hierarchical classification and achieves better detection precision by using the diverse supervision information available for forged images.

Owner:ZHEJIANG UNIV

Complex configuration processing using configuration sub-models

Status: Active | Publication: US9020880B2 | Tags: Chaos models, Non-linear system models, Data processing system, Theoretical computer science

A configuration model dividing and configuration sub-model inference processing system and procedure addresses the issue of configuration model and query complexity by breaking a configuration problem down into a set of smaller problems, solving them individually and recombining the results into a single result that is equivalent to a conventional inference procedure. In one embodiment, a configuration model is divided into configuration sub-models that can respectively be processed using existing data processing resources. A sub-model inference procedure provides a way to scale queries to larger and more complicated configuration models. Thus, the configuration model dividing and configuration sub-model processing system and inference procedure allows processing by a data processing system of configuration models and queries whose collective complexity exceeds the complexity of otherwise unprocessable conventional, consolidated configuration models and queries.

Owner:VERSATA DEV GROUP

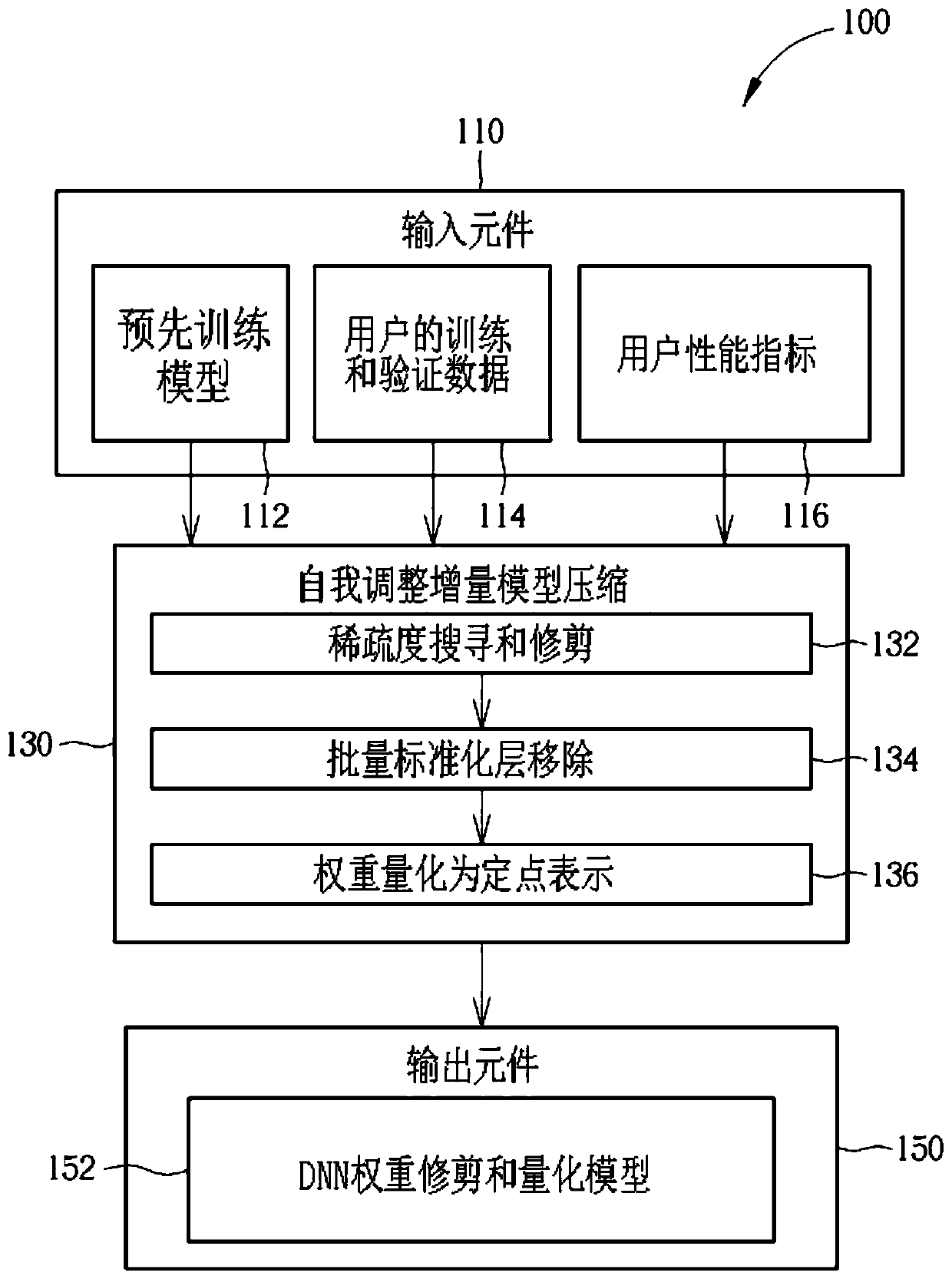

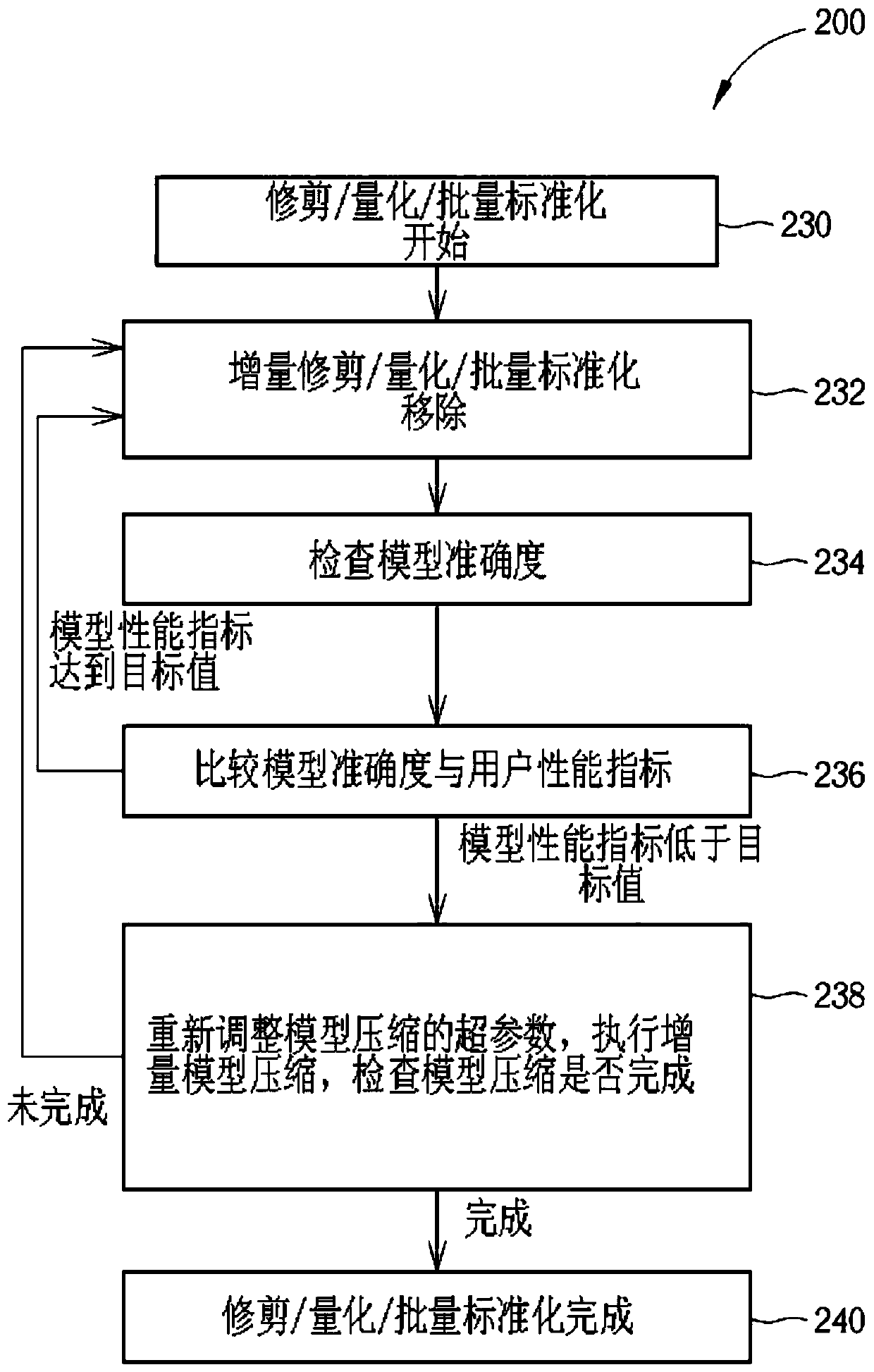

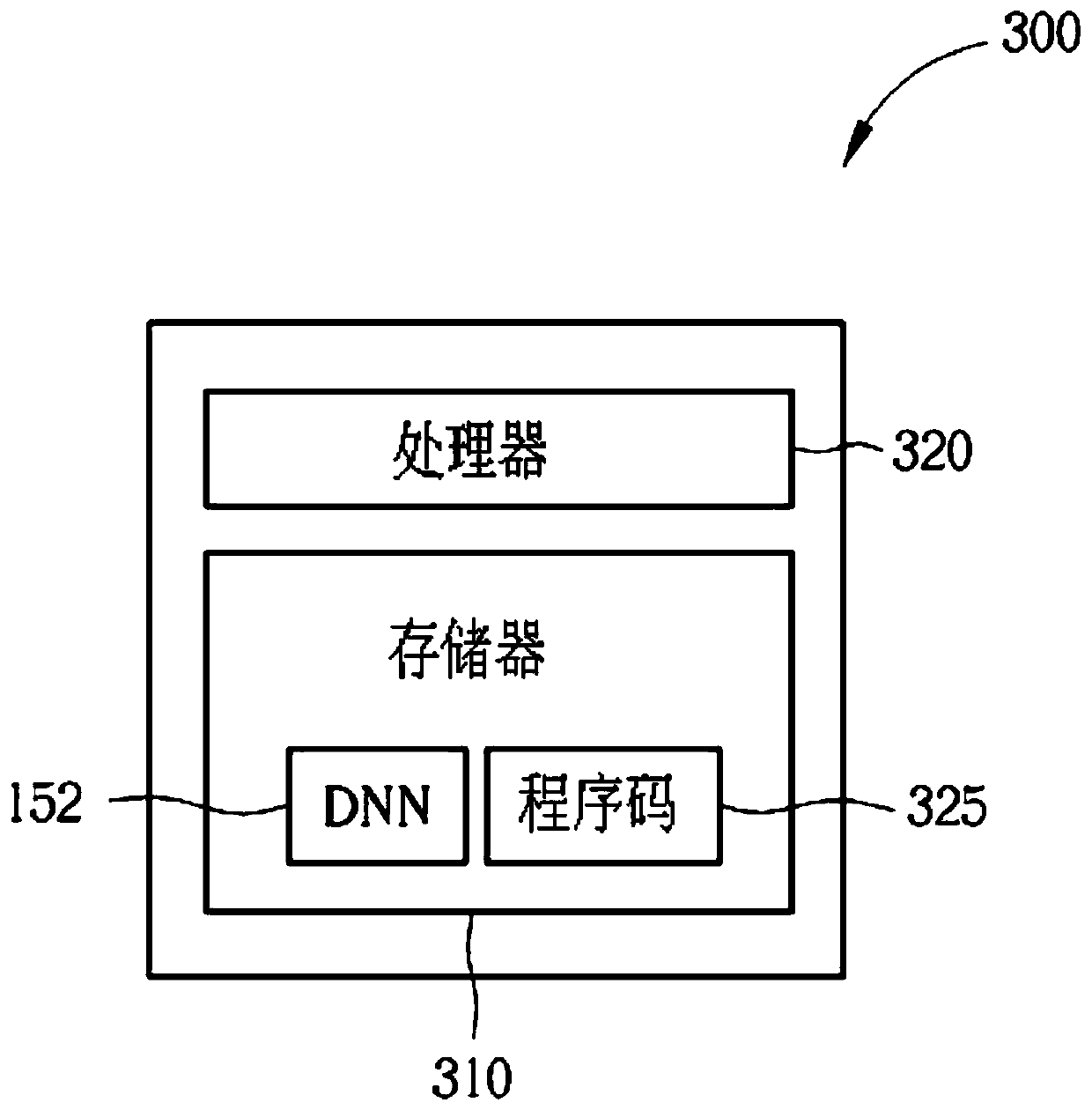

Method of compressing a pre-trained deep neural network model

Status: Inactive | Publication: CN110555510A | Tags: Small scale, Reduce computing requirements, Kernel methods, Neural architectures, Data set, Network model

A method of compressing a pre-trained deep neural network model includes inputting the pre-trained deep neural network model as a candidate model. The candidate model is compressed by increasing its sparsity, removing at least one batch normalization layer present in the candidate model, and quantizing all remaining weights into fixed-point representation to form a compressed model. The accuracy of the compressed model is then determined using an end-user training and validation data set. Compression of the candidate model is repeated when the accuracy improves. The method reduces the size of the DNN and therefore also its implementation requirements, such as memory, hardware, or processing needs. Inference can be performed at much higher speed and with much lower computational requirements. Finally, because of the disclosed compression method, these benefits are obtained while guaranteeing accuracy during inference.

Owner:KNERON TAIWAN CO LTD
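
The sparsify, quantize, and re-check loop described in the abstract can be sketched in plain Python on a flat list of weights. Several choices here are assumptions rather than the patent's: sparsity is increased by magnitude pruning in fixed steps, weights are rounded to 8-bit signed fixed point with 6 fractional bits, and a step is accepted only if accuracy does not drop (our reading of "repeated when the accuracy improves"). The batch normalization removal step is not modelled, and `evaluate` is a caller-supplied, hypothetical accuracy function.

```python
def prune(weights, sparsity):
    """Magnitude pruning: zero the `sparsity` fraction of weights with the smallest |w|."""
    k = round(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def to_fixed_point(weights, frac_bits=6, total_bits=8):
    """Round to signed fixed point (total_bits wide, frac_bits fractional), saturating at the range limits."""
    scale = 1 << frac_bits
    lo, hi = -(1 << (total_bits - 1)), (1 << (total_bits - 1)) - 1
    return [max(lo, min(hi, round(w * scale))) / scale for w in weights]


def compress(weights, evaluate, levels=10):
    """Raise sparsity one step at a time; keep a step only if accuracy does not drop (assumed rule)."""
    best = to_fixed_point(weights)
    best_acc = evaluate(best)
    for step in range(1, levels):
        candidate = to_fixed_point(prune(weights, step / levels))
        acc = evaluate(candidate)
        if acc < best_acc:
            break  # accuracy fell: stop and keep the previous compressed model
        best, best_acc = candidate, acc
    return best, best_acc
```

In practice `evaluate` would run the compressed network on the end-user validation set; with a toy `evaluate` that only checks whether the six largest of ten weights survive, the loop stops at 40% sparsity.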

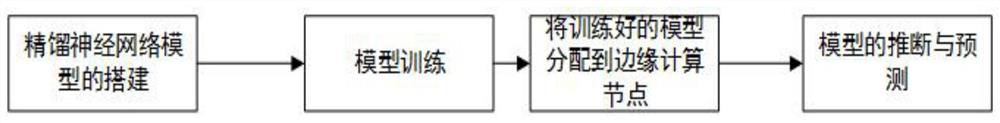

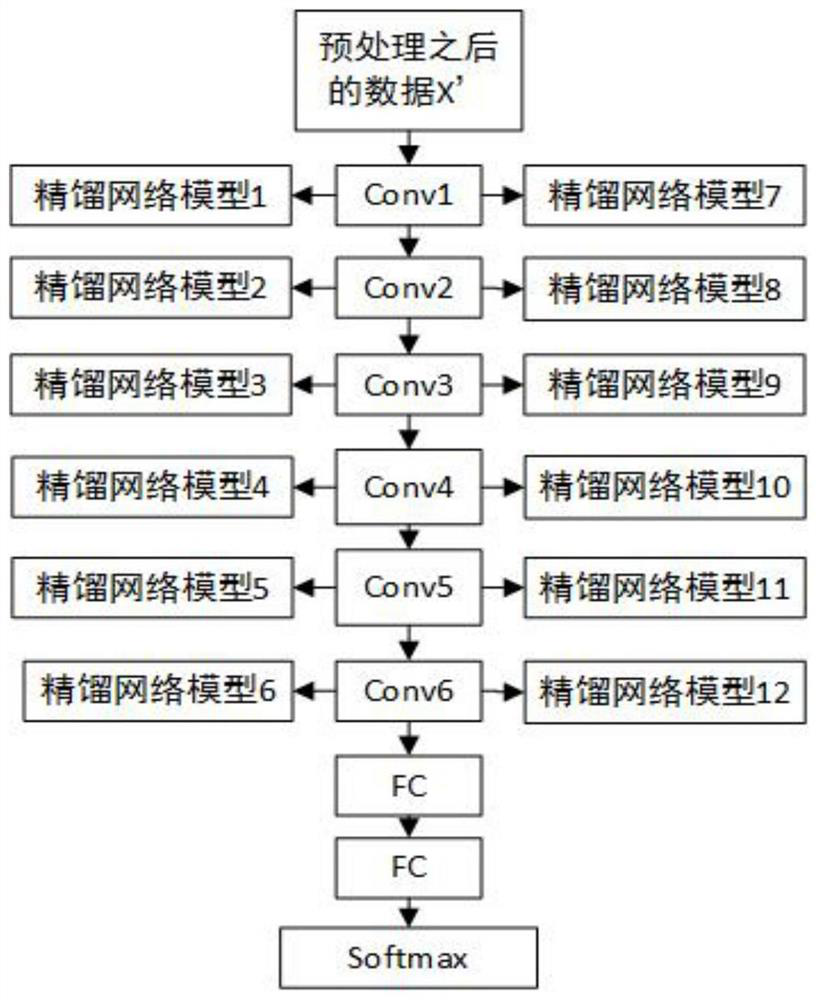

Edge computing node distribution and quit method based on branch neural network

The invention discloses an edge computing node distribution and exit method based on a branch neural network, intended to improve the security of an artificial intelligence model under edge computing and to accelerate its computation. The method has three main steps: training a neural network model, deploying a branch neural network model on edge computing nodes, and selecting a model exit point. First, the neural network model is built and trained in combination with a model rectification algorithm. Second, when edge computing nodes are allocated to the trained classification model, suitable nodes are selected with a minimum-delay algorithm. Finally, an appropriate model exit point is selected in the model inference stage to reduce the computation on the edge computing node while improving the precision and accuracy of the classification network's predictions. The result is a neural network model that is more secure, defends against adversarial samples, and computes more efficiently.

Owner:浙江捷瑞电力科技有限公司
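
Two of the three steps, minimum-delay node selection and exit-point selection at inference time, can be sketched in Python. The delay model (transfer time plus compute time), the confidence-threshold exit rule, and the names `pick_edge_node` and `early_exit_inference` are assumptions for illustration; stages and exit heads are plain callables standing in for network blocks and branch classifiers, and the training step is not shown.

```python
import math


def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def pick_edge_node(nodes, payload_mb):
    """Minimum-delay choice under an assumed delay model: transfer time + compute time."""
    return min(nodes, key=lambda n: payload_mb / n["bandwidth_mb_s"] + n["compute_s"])


def early_exit_inference(x, stages, exit_heads, threshold=0.9):
    """Run backbone stages in order; return at the first exit whose top-class probability reaches `threshold`."""
    features = x
    last = len(stages) - 1
    for depth, (stage, head) in enumerate(zip(stages, exit_heads)):
        features = stage(features)
        probs = softmax(head(features))
        confidence = max(probs)
        if confidence >= threshold or depth == last:
            # (predicted class, its probability, index of the exit branch used)
            return probs.index(confidence), confidence, depth
```

Easy inputs leave at an early branch and skip the remaining stages, which is where the computation saving on the edge node comes from; hard inputs fall through to the final exit.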

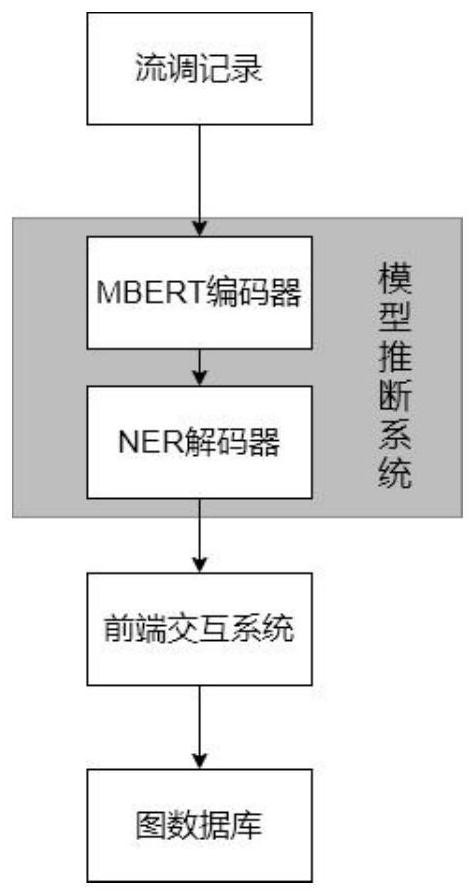

Intelligent epidemiological investigation system

Status: Active | Publication: CN113312915A | Tags: Improve human-computer interaction, Friendly man-machine interface, Epidemiological alert systems, Natural language data processing, Engineering, Data mining

Using a method from the field of information technology, an intelligent epidemiological investigation system is implemented. The system consists of an intelligent epidemiological front-end interaction subsystem, an intelligent epidemiological model inference subsystem, and an intelligent epidemiological graph database storage subsystem. The front-end interaction subsystem lets epidemiological investigators modify and insert investigation events and synchronously visualizes their time and location. The model inference subsystem uses a pre-trained multilingual MBERT model, a mature technology, to infer entities from text, and the inference results are finally stored in the graph database storage subsystem. The system automatically and efficiently extracts information about people and key event locations from epidemiological investigation interview texts or questionnaires, speeding up epidemiological investigations.

Owner:BEIHANG UNIV

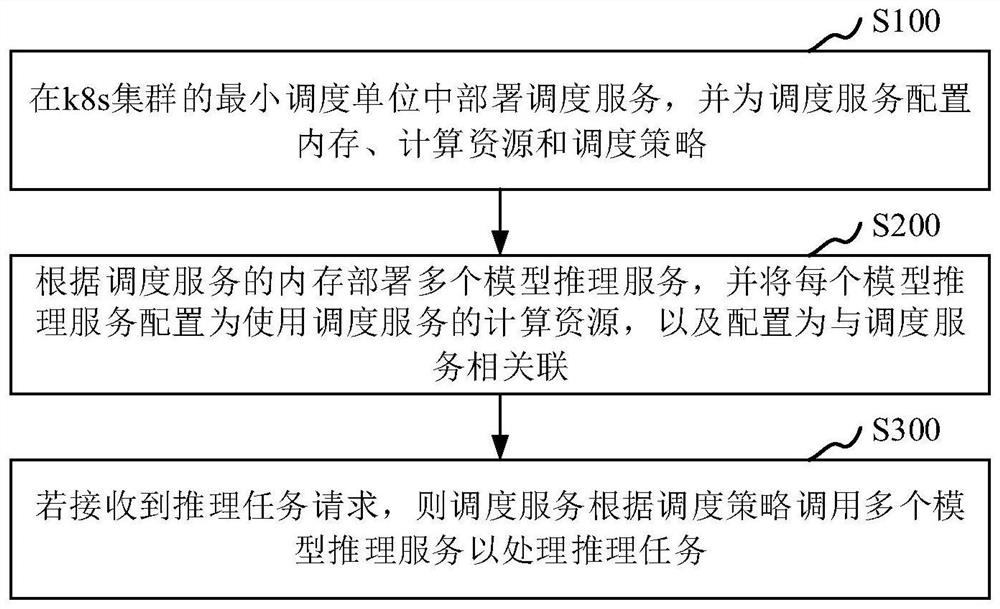

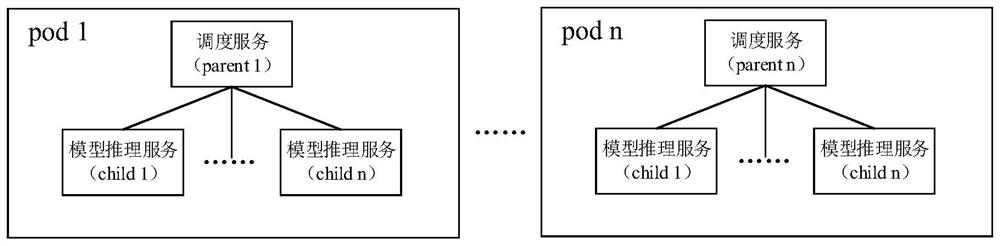

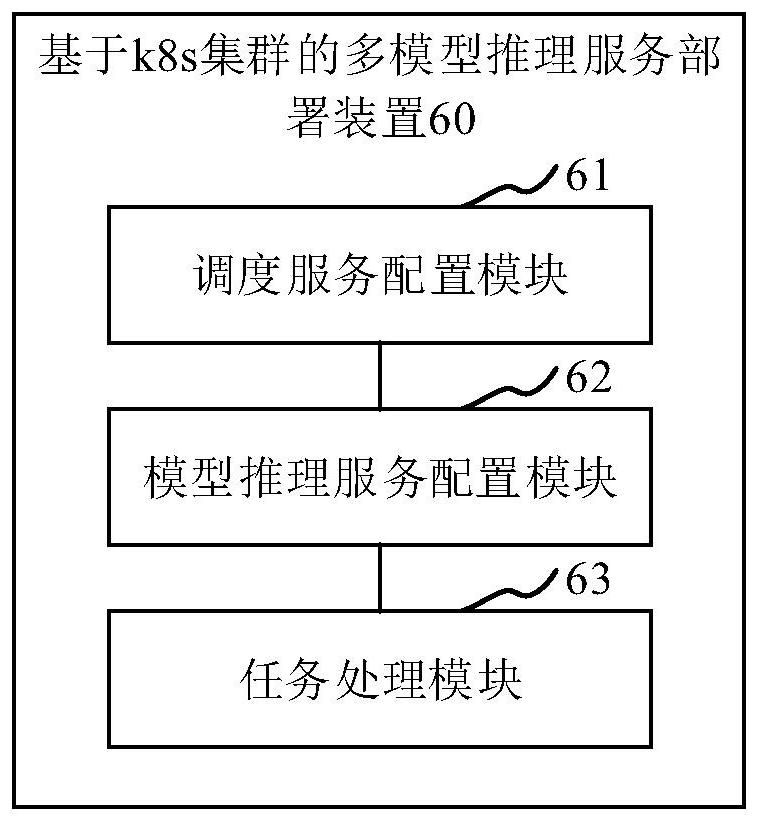

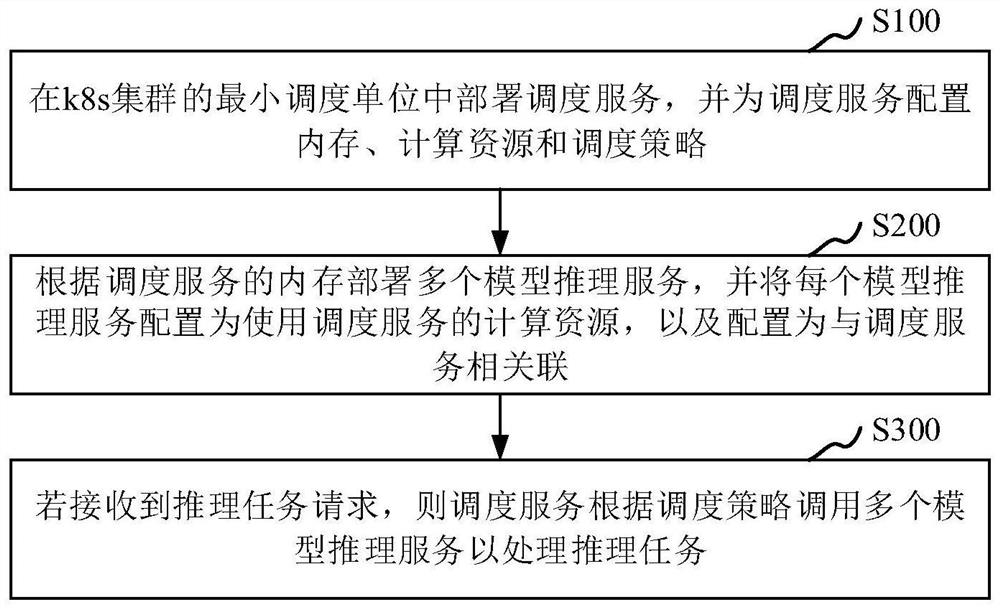

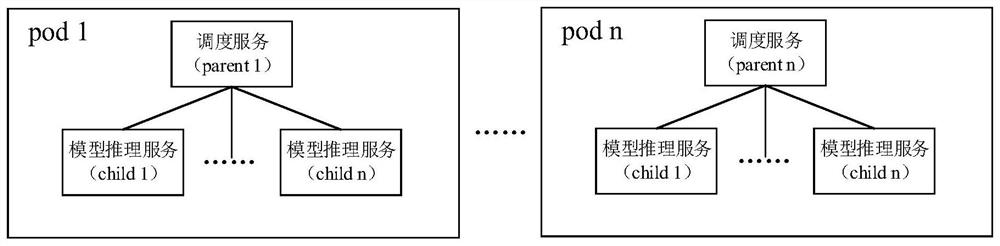

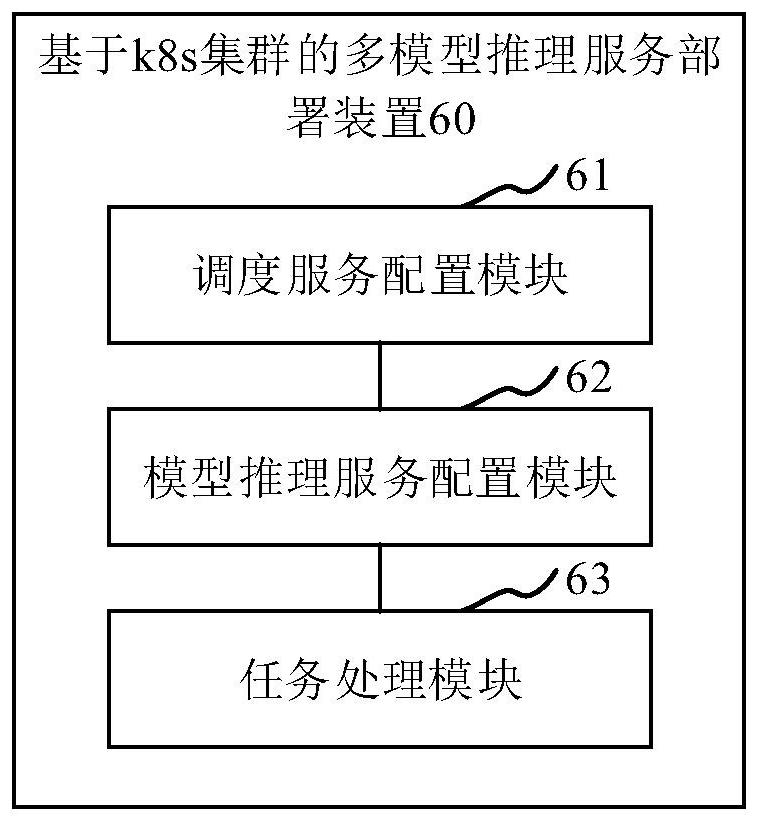

Multi-model reasoning service deployment method and device based on k8s cluster

Status: Active | Publication: CN112231054A | Tags: Ability to implement shared pods, Simplify deployment operations, Inference methods, Software simulation/interpretation/emulation, Autoscaling, Term memory

The invention discloses a multi-model inference service deployment method and device based on a k8s cluster. The method comprises the following steps: deploying a scheduling service in a minimum scheduling unit of the k8s cluster and configuring memory, computing resources, and a scheduling strategy for it; deploying a plurality of model inference services according to the memory of the scheduling service, configuring each model inference service to use the computing resources of the scheduling service and associating it with the scheduling service; and having the scheduling service call the model inference services according to the scheduling strategy to process inference tasks. The scheme lets multiple model inference services share one minimum scheduling unit, allows the multi-model inference service to scale elastically with the service load, and keeps deployment relatively simple.

Owner:INSPUR SUZHOU INTELLIGENT TECH CO LTD
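
The scheduling-service pattern can be sketched as a small in-process Python class. This does not use the Kubernetes API; it only models the idea under assumptions: one pod's memory budget is shared by several model inference services, instances are added or removed to scale with load, and requests are routed round-robin (a stand-in for the unspecified scheduling strategy). `SchedulingService`, `register`, `unregister`, and `dispatch` are hypothetical names.

```python
from collections import defaultdict


class SchedulingService:
    """Hypothetical in-process stand-in for a scheduling service that shares one pod's memory."""

    def __init__(self, memory_mb):
        self.memory_mb = memory_mb
        self.used_mb = 0
        self.instances = defaultdict(list)  # model name -> [(handler, memory_mb), ...]
        self.cursor = defaultdict(int)       # round-robin position per model

    def register(self, model, handler, memory_mb):
        """Scale out: add one inference service instance if the pod still has memory for it."""
        if self.used_mb + memory_mb > self.memory_mb:
            raise MemoryError(f"pod memory exhausted; cannot add {model}")
        self.instances[model].append((handler, memory_mb))
        self.used_mb += memory_mb

    def unregister(self, model):
        """Scale in: remove the most recently added instance of `model` and release its memory."""
        _, memory_mb = self.instances[model].pop()
        self.used_mb -= memory_mb

    def dispatch(self, model, payload):
        """Round-robin strategy (an assumption) across the instances of one model."""
        pool = self.instances.get(model)
        if not pool:
            raise KeyError(f"no inference service registered for {model}")
        index = self.cursor[model] % len(pool)
        self.cursor[model] += 1
        handler, _ = pool[index]
        return handler(payload)
```

Admission control against the pod's memory is what lets several models coexist in one minimum scheduling unit; swapping `dispatch` for a least-loaded or priority rule would change only that method.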

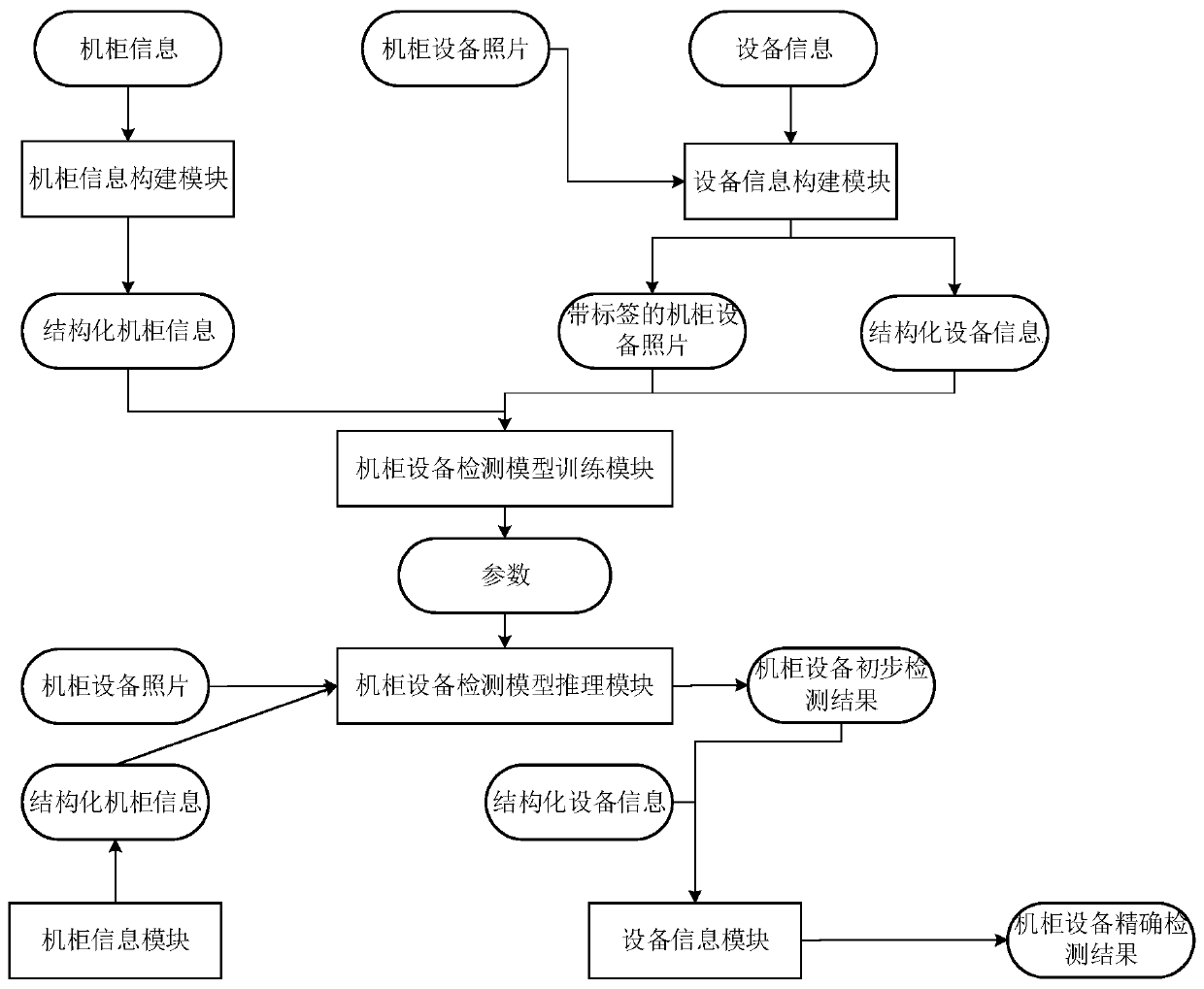

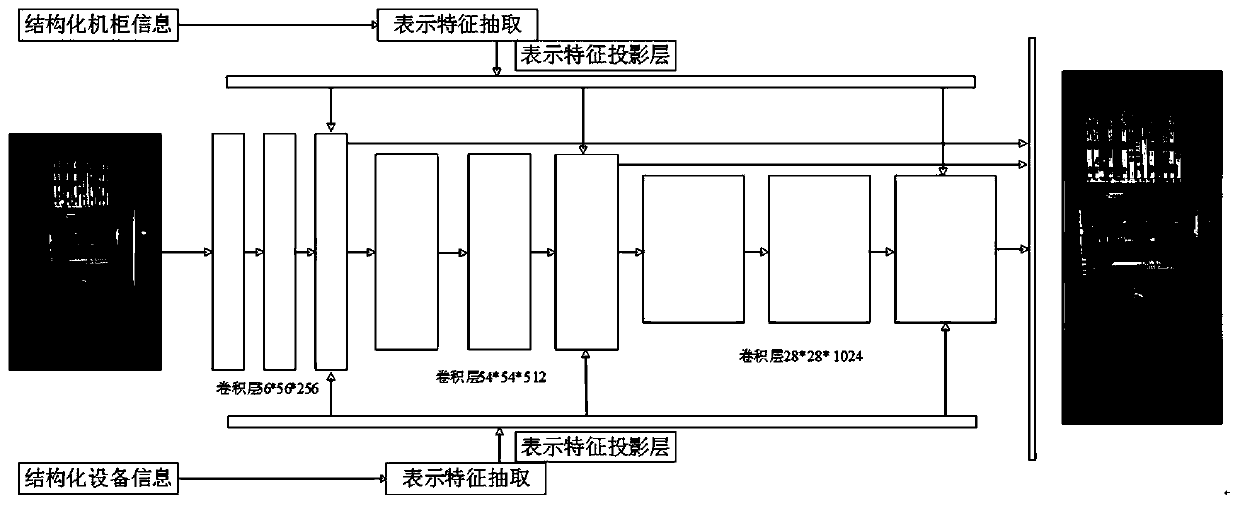

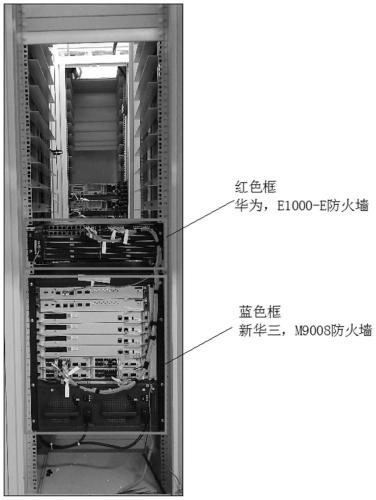

Cabinet equipment detection method

Status: Active | Publication: CN111598121A | Tags: Fast recognition, Improve accuracy, Character and pattern recognition, Computer hardware, Reliability engineering

The invention discloses a cabinet equipment detection method comprising a model training stage and a detection stage. In the model training stage, a cabinet information construction module, an equipment information construction module, and a cabinet equipment detection model training module are implemented; they output structured cabinet information and structured equipment information, and a deep learning-based cabinet equipment detection model trainer is established. In the detection stage, a deep learning-based cabinet equipment detection inference device is established by implementing a cabinet information module, an equipment information module, and a cabinet equipment detection model inference module; this device outputs the cabinet equipment detection result, which comprises the equipment's position in the cabinet and the equipment identification. Compared with the prior art, the method offers high detection accuracy, fast recognition, and broad applicability, and the model can be dynamically iterated and updated.

Owner:CHINA INFORMATION CONSULTING & DESIGNING INST CO LTD

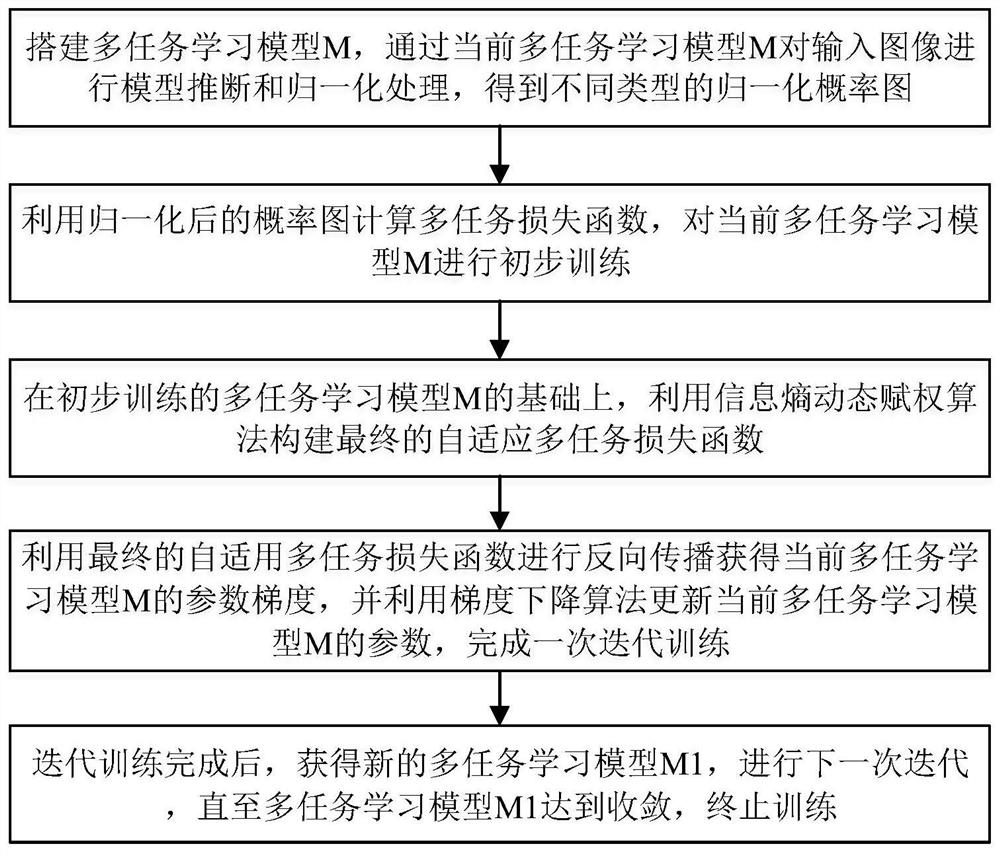

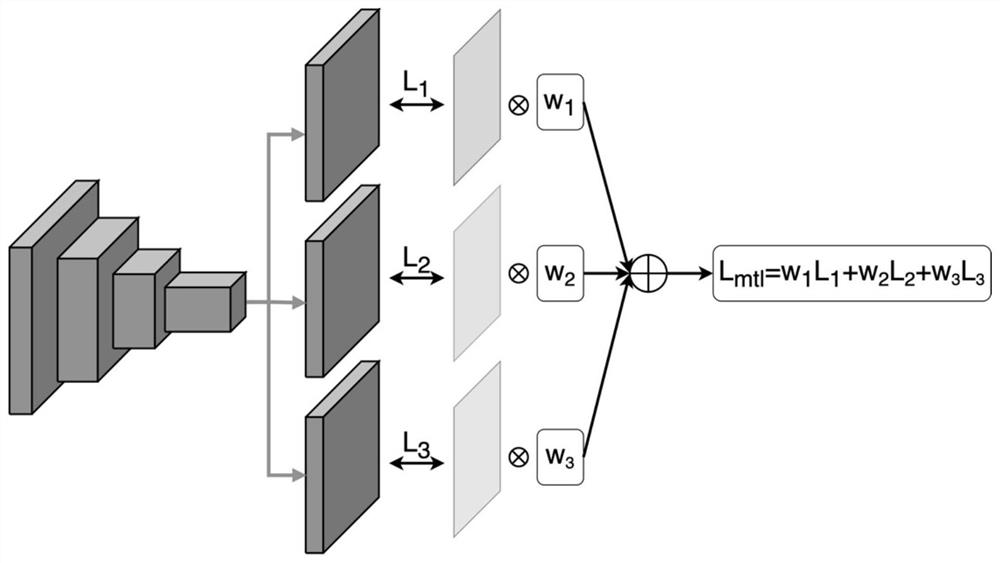

Multi-task learning method based on information entropy dynamic weighting

Status: Pending | Publication: CN113537365A | Tags: Adapt effectively, Respond effectively, Character and pattern recognition, Neural learning methods, Engineering, Multi-task learning

The invention discloses a multi-task learning method based on information entropy dynamic weighting, belonging to the technical field of machine learning. The method comprises the following steps: first, an initial multi-task learning model M is built, model inference is performed on an input image to obtain several task output graphs, and each task output graph is normalized to obtain a corresponding normalized probability graph; then, a fixed-weight multi-task loss function is computed from the normalized probability graphs and used for preliminary training of M; finally, based on the preliminarily trained M, a final adaptive multi-task loss function is constructed with an information entropy dynamic weighting algorithm, and the model is iteratively optimized until it converges, at which point training stops and an optimized multi-task learning model M1 is obtained. The method copes effectively with different types of tasks, adaptively balances the relative importance of each task, is widely applicable, and is simple and efficient.

Owner:BEIHANG UNIV
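
The entropy-based weighting step can be sketched in Python on small per-pixel probability graphs. The abstract does not give the weighting formula, so the rule here, each task's weight proportional to the mean Shannon entropy of its normalized probability graph, is an assumption, as are the names `normalize_output`, `entropy_weights`, and `multitask_loss`. Lists of per-pixel class scores stand in for real output tensors.

```python
import math


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def normalize_output(raw_map):
    """Turn a task's raw output graph (per-pixel class scores) into a normalized probability graph."""
    return [softmax(pixel) for pixel in raw_map]


def mean_entropy(prob_map):
    """Average Shannon entropy (in nats) over all pixels of a probability graph."""
    total = 0.0
    for probs in prob_map:
        total -= sum(p * math.log(p) for p in probs if p > 0.0)
    return total / len(prob_map)


def entropy_weights(prob_maps):
    """Assumed rule: each task's weight is proportional to its mean entropy."""
    entropies = [mean_entropy(m) for m in prob_maps]
    total = sum(entropies)
    if total == 0.0:
        return [1.0 / len(prob_maps)] * len(prob_maps)  # all tasks fully confident: equal weights
    return [h / total for h in entropies]


def multitask_loss(task_losses, weights):
    """Weighted sum of per-task losses."""
    return sum(w * loss for w, loss in zip(weights, task_losses))
```

Under this rule, tasks whose predictions are still uncertain receive more weight in the next optimization step; recomputing the weights each iteration is what makes the loss adaptive.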

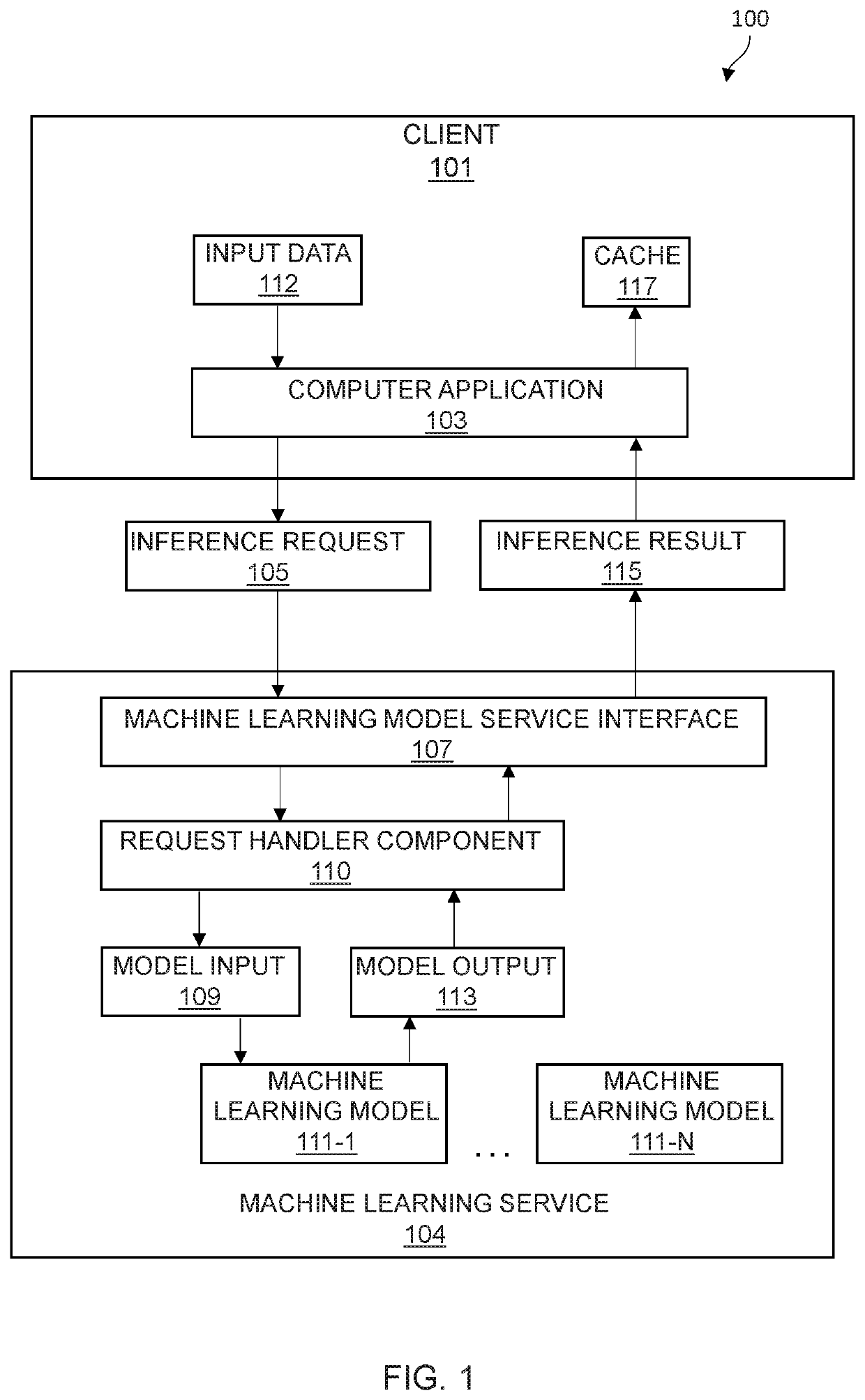

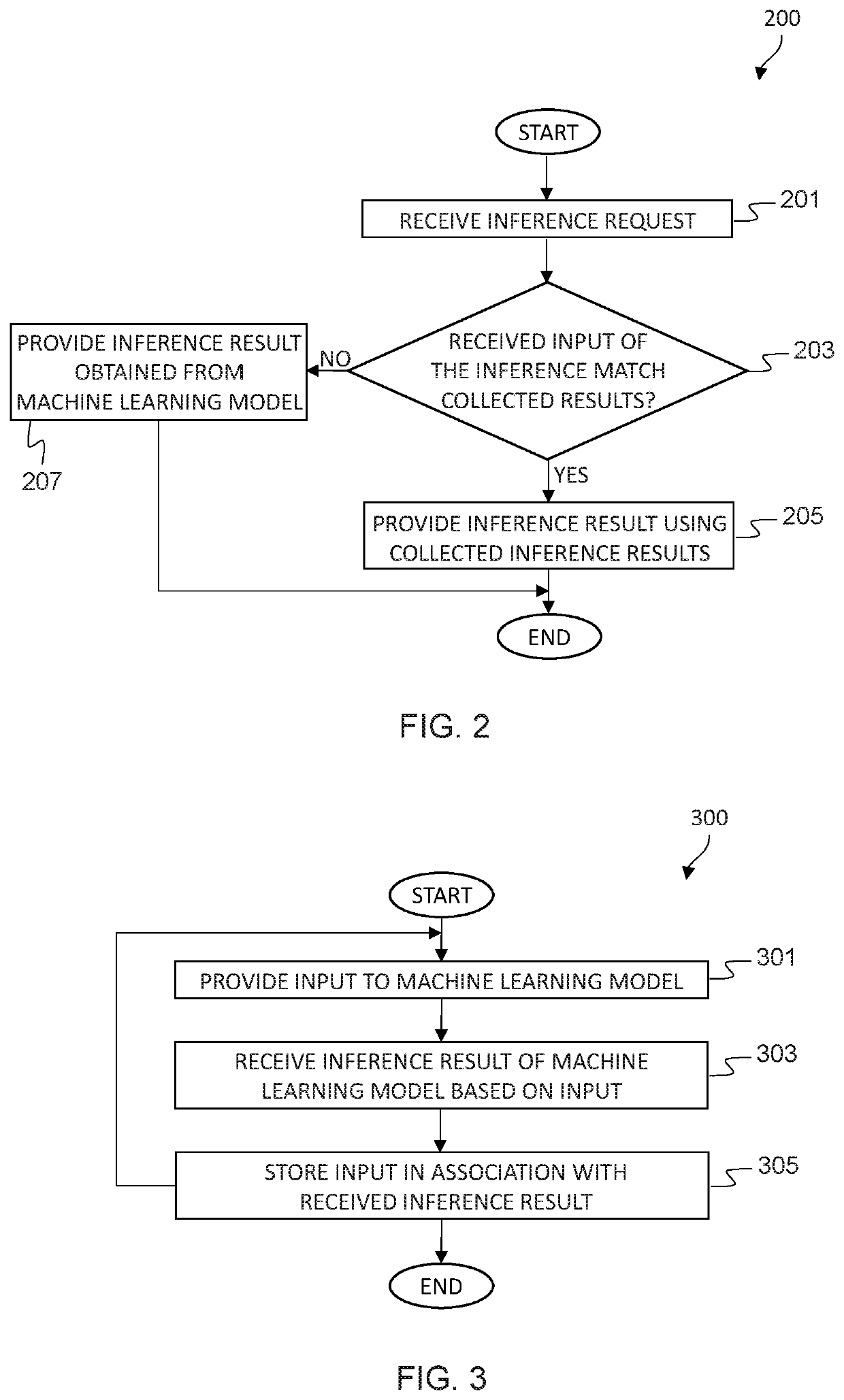

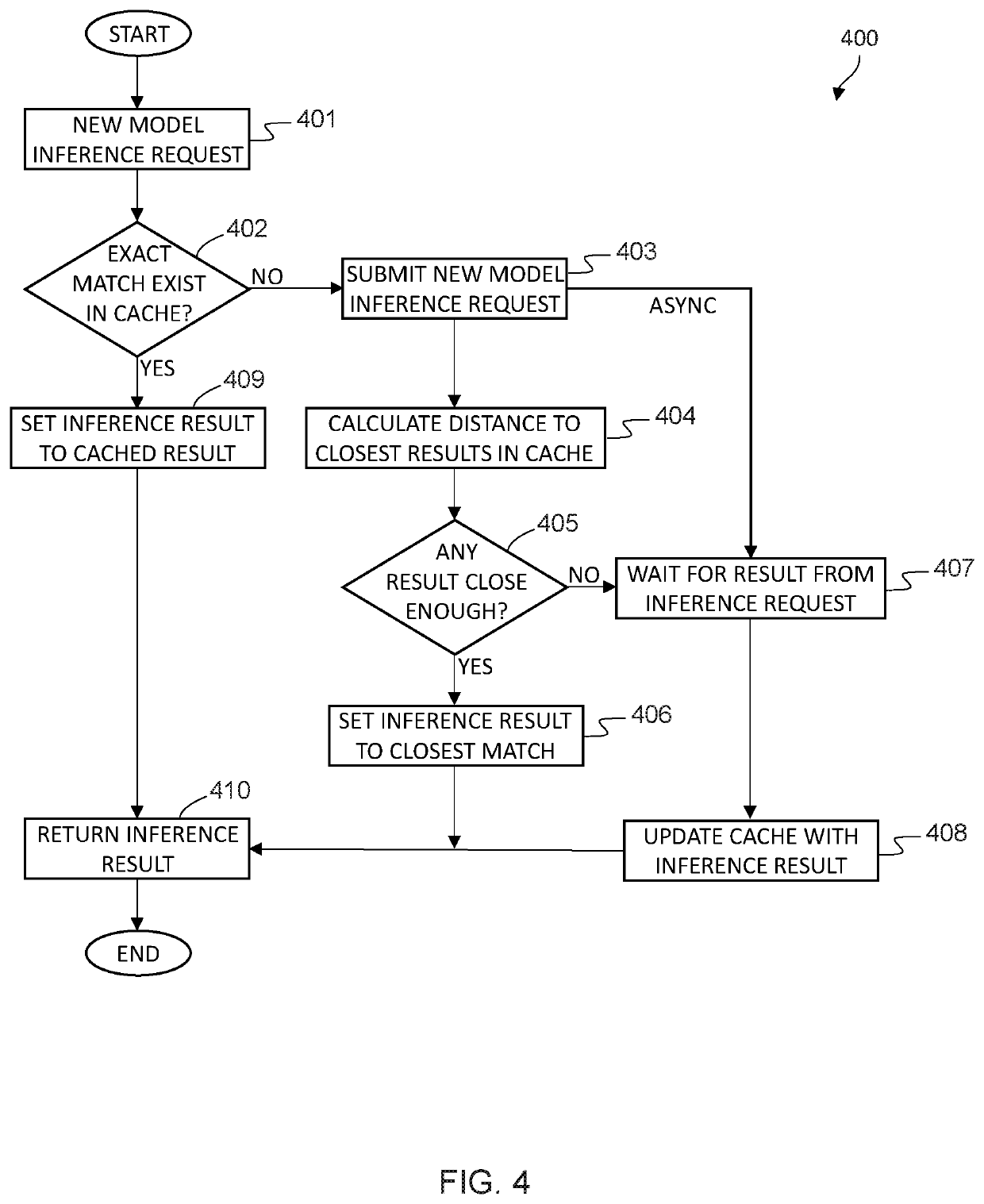

Inference of machine learning models

Inference results of a machine learning model and associated inputs are collected. An inference request is received. A determination is made whether a request input of the inference request matches at least one collected input of a set of collected inputs. In response to determining that the request input matches at least one collected input in the set of collected inputs, an inference result is determined using one or more collected inference results associated with said one or more matching inputs in the set of collected inputs.

Owner:IBM CORP
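
The collect, match, and reuse flow can be sketched in Python. The matching rule (element-wise equality within a tolerance), the way several matching results are combined (majority vote), and the class name `CachedInference` are assumptions for illustration; the abstract only says the result is determined from the collected results associated with the matching inputs.

```python
from collections import Counter


class CachedInference:
    """Hypothetical wrapper: reuse collected inference results when a request input matches a collected input."""

    def __init__(self, model, tolerance=0.0):
        self.model = model
        self.tolerance = tolerance
        self.collected = []  # list of (input tuple, inference result)

    def _matches(self, a, b):
        return len(a) == len(b) and all(abs(x - y) <= self.tolerance for x, y in zip(a, b))

    def infer(self, request_input):
        """Return (result, served_from_collection)."""
        hits = [result for inp, result in self.collected if self._matches(inp, request_input)]
        if hits:
            # Assumed combination rule: majority vote over the matching collected results.
            return Counter(hits).most_common(1)[0][0], True
        result = self.model(request_input)
        self.collected.append((tuple(request_input), result))
        return result, False
```

With `tolerance=0.0` this is exact-match memoization; a positive tolerance trades some fidelity for fewer model calls. The linear scan is fine for a sketch, while a real deployment would index collected inputs.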

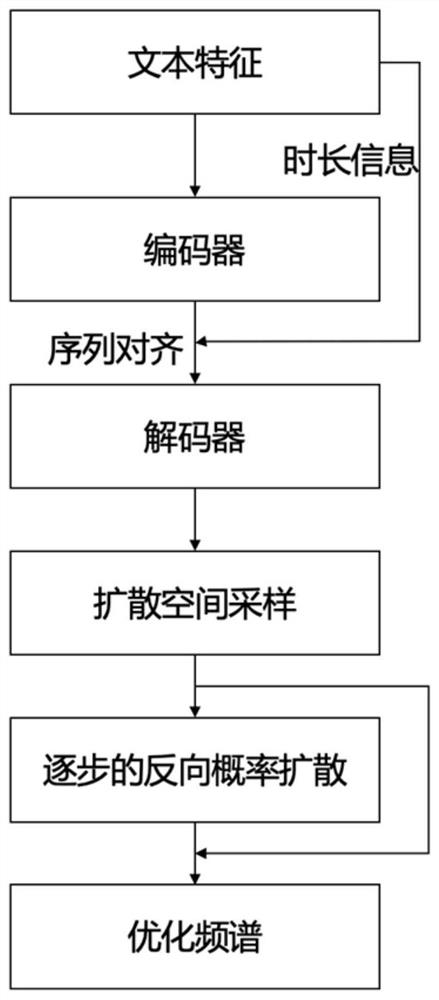

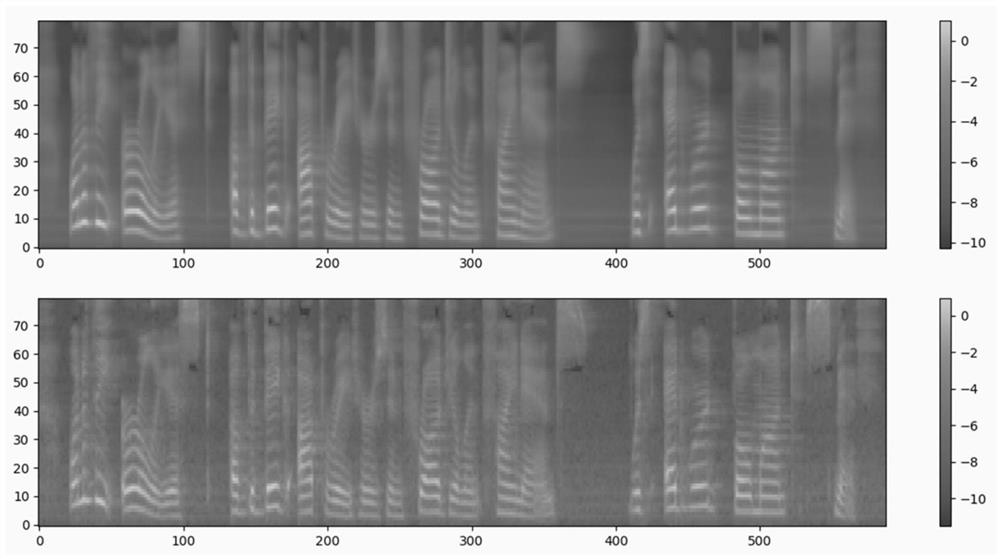

Acoustic model post-processing method based on probability diffusion model, server and readable memory

Status: Pending | Publication: CN114512114A | Tags: Improve composite quality, Improve naturalness, Speech synthesis, Frequency spectrum, Noise

The invention discloses an acoustic model post-processing method based on a probability diffusion model, a server, and a readable memory. The method comprises two stages. Model training: the probability diffusion model is trained on the server, and its parameters are optimized by reducing a loss function until the model converges, yielding the diffusion model weights. Model inference: using the weights obtained in the training stage, the server refines the input predicted spectrum. By learning the feature similarity between the input predicted spectrum and the real spectrum and exploiting the data-fitting capability of the noise estimation network in the model, the method realizes diffusion-based transfer of the probability distribution, bringing the input predicted spectrum closer to the real spectrum. Improving spectrum quality in turn improves the naturalness of the synthesized speech. The method can refine spectral detail for spectra produced by various acoustic models and, compared with other methods, achieves a better spectrum generation effect.

Owner:ZHEJIANG UNIV

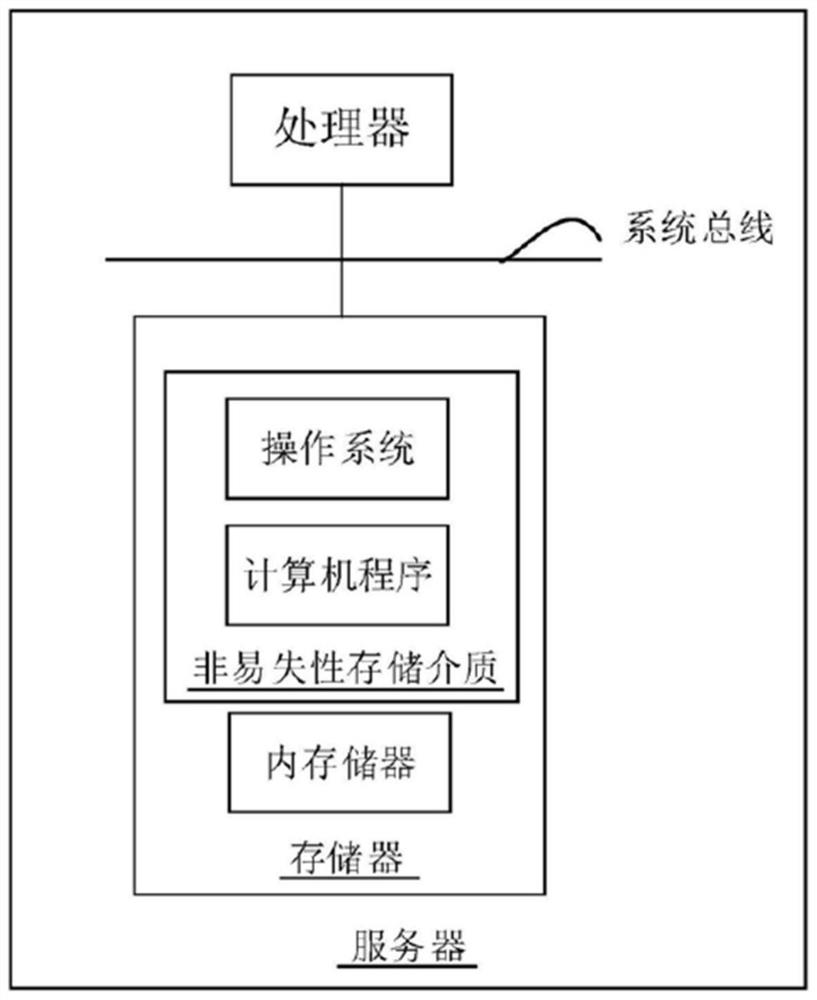

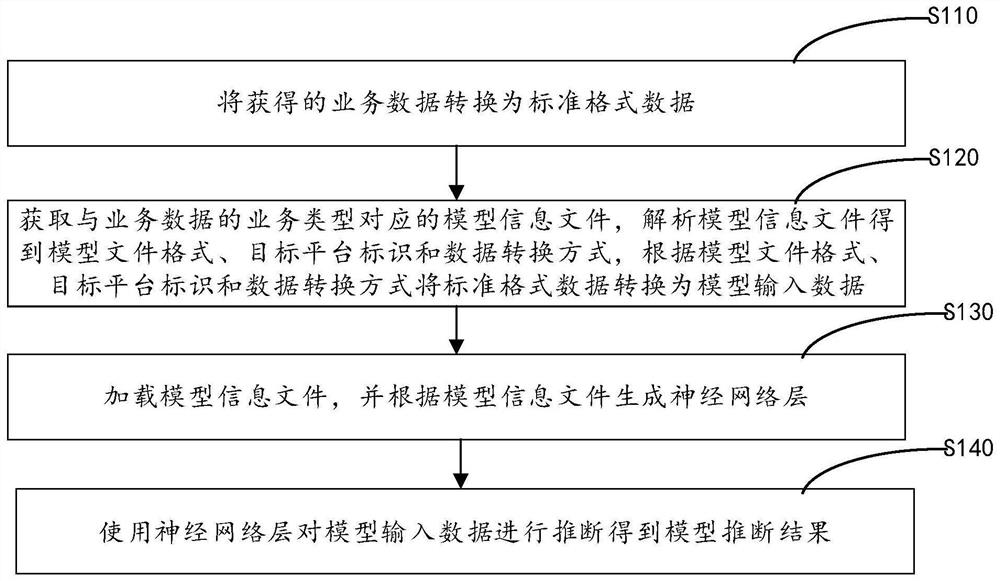

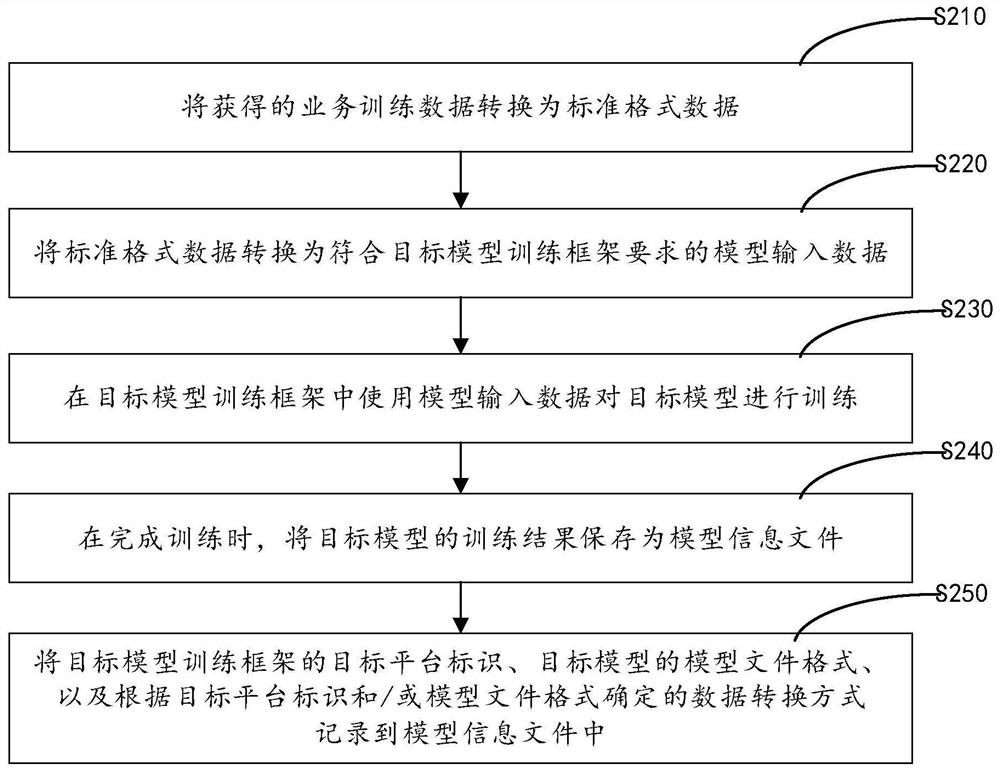

Data processing method and device, computer equipment and storage medium

Status: Pending | Publication: CN113641337A | Tags: Reduce in quantity, Reduce development costs, Digital data information retrieval, Software design, Data transformation, Engineering

The invention relates to the field of machine learning, in particular to a data processing method and device, computer equipment, and a storage medium. The method comprises the following steps: when service data is obtained, it is first converted into standard-format data; a model information file corresponding to the business type of the service data is then obtained and parsed to get a model file format, a target platform identifier, and a data conversion mode; the standard-format data is converted into model input data according to these three items; the model information file is loaded into memory and a neural network layer is generated from it; and the neural network layer performs inference on the model input data to obtain a model inference result. According to the embodiment, model information files in different formats can be parsed and run uniformly with a single tool, reducing development and maintenance costs.

Owner:广州三七互娱科技有限公司

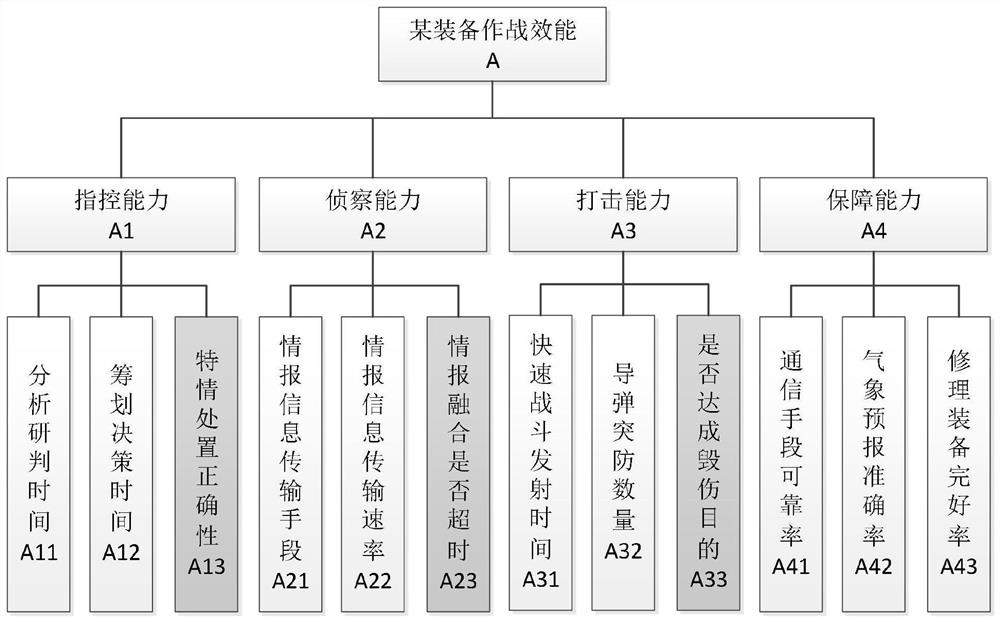

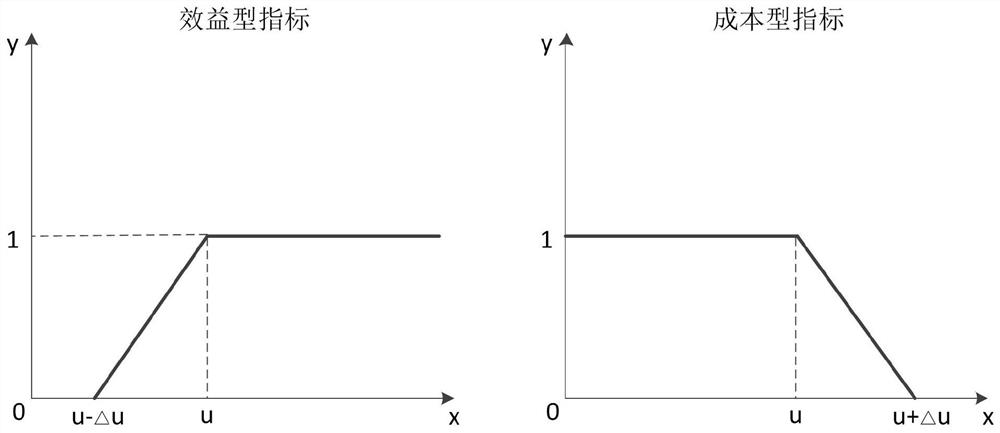

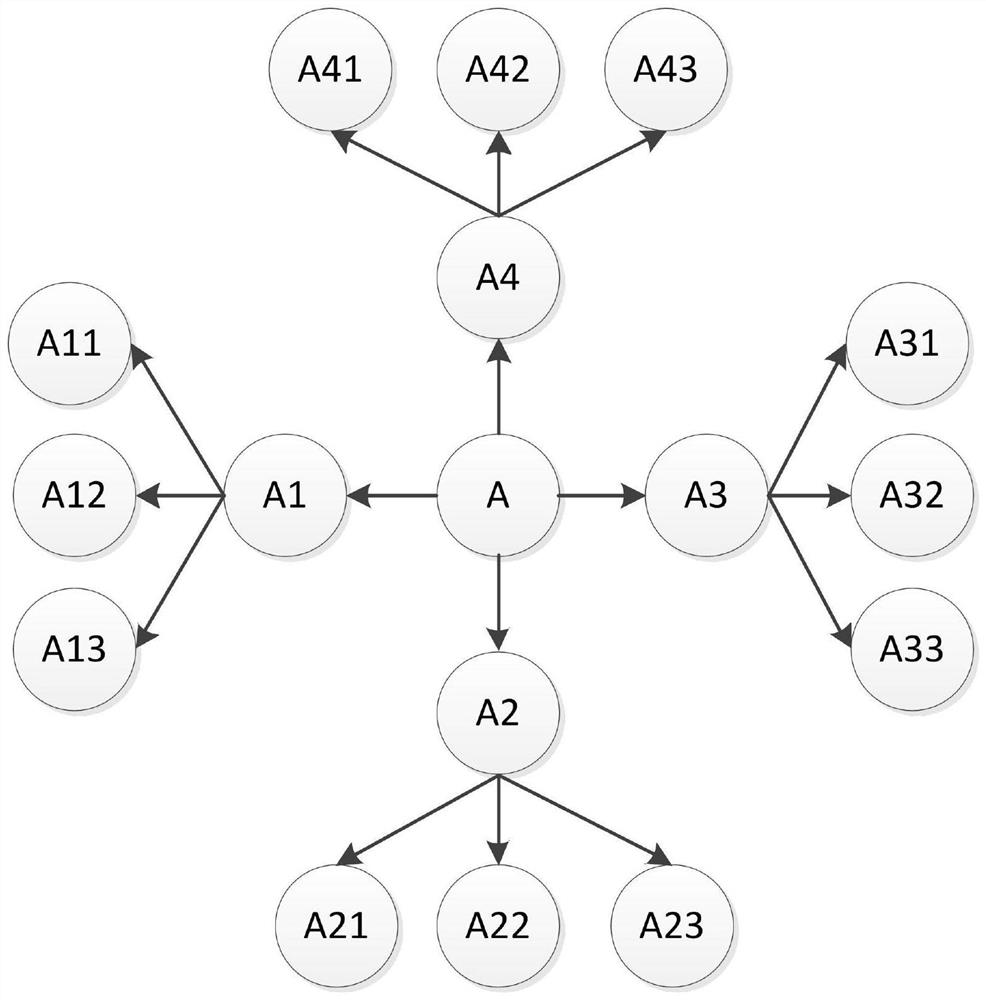

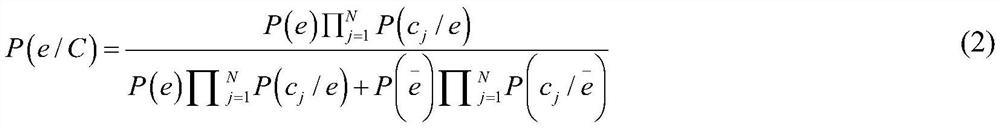

Weighted random mixed semantic method for combat effectiveness evaluation

The invention discloses a weighted random mixed semantic method for combat effectiveness evaluation. The method comprises the following steps: constructing the evaluation index system and weighting the indexes, quantifying and remapping the evaluation indexes, establishing the weighted random mixed semantic model, and performing inference with the data substituted in. Within a Bayesian network framework, weighted random mixed semantics describe the combat effectiveness evaluation process, so quantitative and qualitative indexes can be incorporated together; the two differ only at the level of random semantics, and the latent information provided by qualitative indexes can be fully used. Because the method is essentially Bayesian posterior inference, it inherits the Bayesian tolerance of missing data: quantifiable indexes appear as observed-value nodes in the model, missing indexes appear as unknown parameter nodes, and the parameter nodes are not used as evidence for model inference, which greatly improves inference efficiency. As a result, missing indexes have no influence on the evaluation process or the evaluation result.

Owner:信云领创(北京)科技有限公司

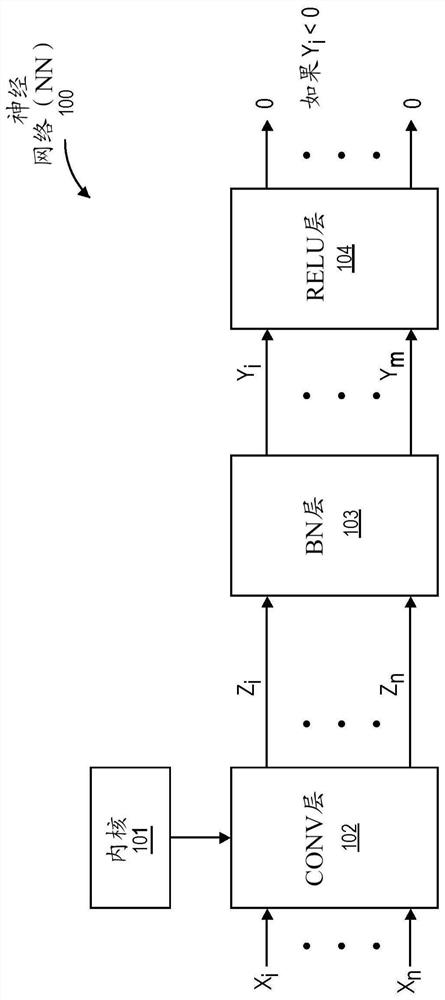

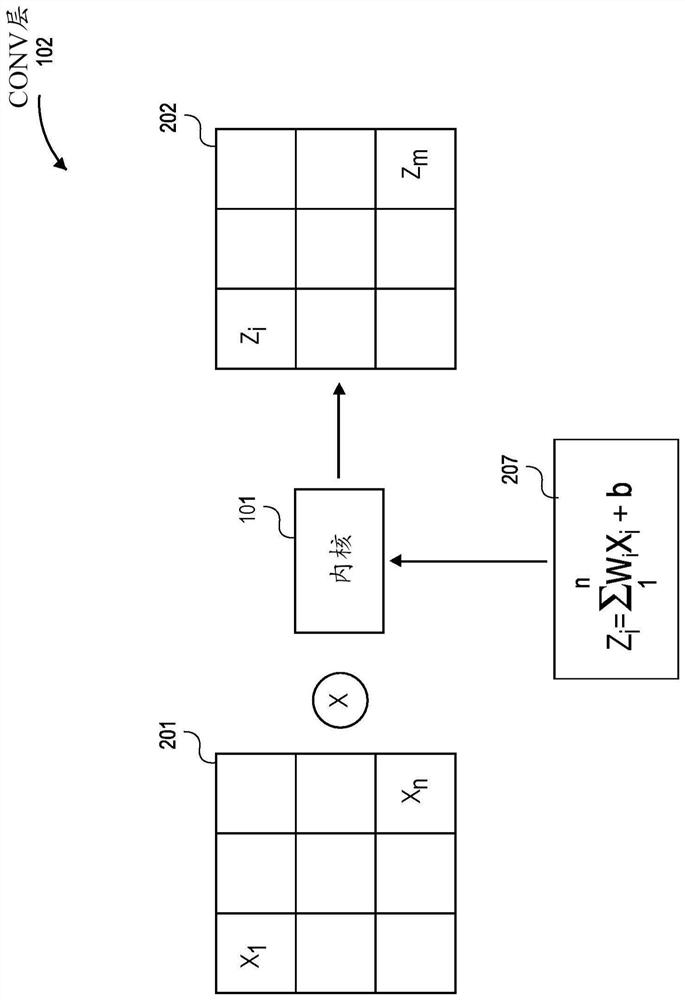

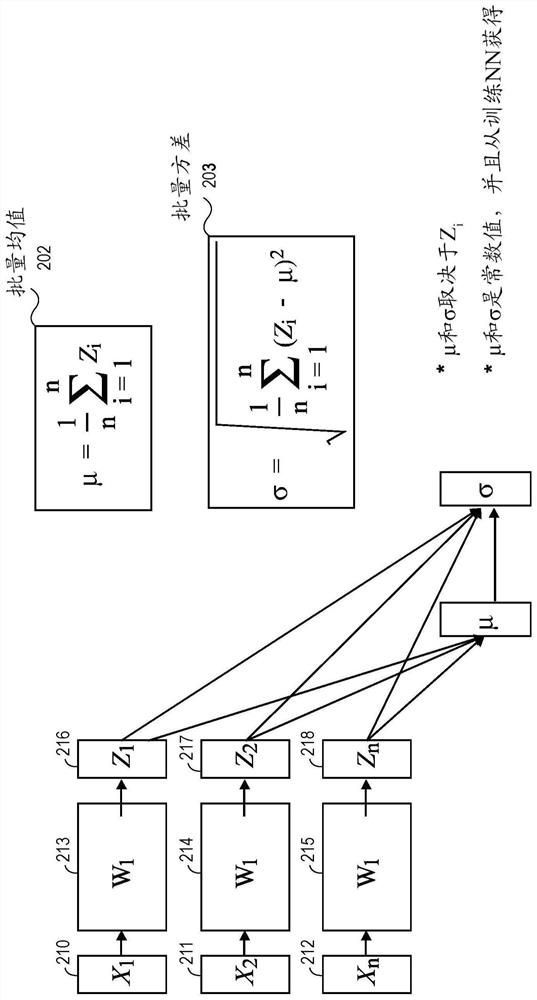

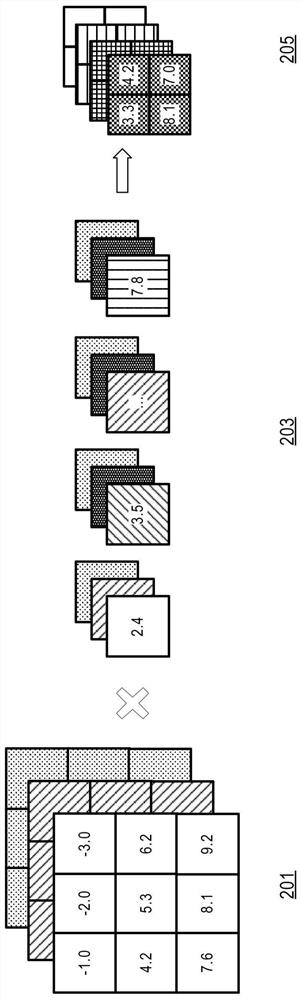

Batch normalization layer fusion and quantization method for model inference in AI neural network engine

Batch normalization (BN) layer fusion and quantization methods for model inference in an artificial intelligence (AI) network engine are disclosed. A method for a neural network (NN) includes merging BN layer parameters with NN layer parameters and computing the merged BN layer and NN layer functions using the merged BN and NN layer parameters. A rectified linear unit (ReLU) function can be merged with the BN and NN layer functions.

Owner:BAIDU USA LLC
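
The merge follows from writing BN applied to a linear layer's output: for output channel o, gamma_o * (W_o·x + b_o - mean_o) / sqrt(var_o + eps) + beta_o. With s_o = gamma_o / sqrt(var_o + eps), this equals W'_o·x + b'_o, where W'_o = s_o * W_o and b'_o = s_o * (b_o - mean_o) + beta_o, so BN disappears at inference time and ReLU can be applied directly to the fused output. Below is a minimal pure-Python sketch of that standard folding for a fully connected layer; it is not Baidu's engine code, and the function names are hypothetical.

```python
import math


def fuse_bn(weights, bias, gamma, beta, mean, var, eps=1e-5):
    """Fold per-output-channel BN into a linear layer: W' = s*W, b' = s*(b - mean) + beta."""
    fused_w, fused_b = [], []
    for o, row in enumerate(weights):
        s = gamma[o] / math.sqrt(var[o] + eps)
        fused_w.append([s * w for w in row])
        fused_b.append(s * (bias[o] - mean[o]) + beta[o])
    return fused_w, fused_b


def linear(x, weights, bias):
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def fused_linear_bn_relu(x, fused_w, fused_b):
    """One pass over the merged parameters, with ReLU applied to each output as it is produced."""
    return [max(0.0, v) for v in linear(x, fused_w, fused_b)]


def unfused_reference(x, weights, bias, gamma, beta, mean, var, eps=1e-5):
    """Original three-step computation (linear, then BN, then ReLU) for comparison."""
    y = linear(x, weights, bias)
    bn = [g * (v - m) / math.sqrt(s + eps) + b for v, g, b, m, s in zip(y, gamma, beta, mean, var)]
    return [max(0.0, v) for v in bn]
```

For a convolution the same per-output-channel scaling applies to every kernel weight of that channel; the fused parameters can then be quantized once instead of quantizing the NN and BN stages separately.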

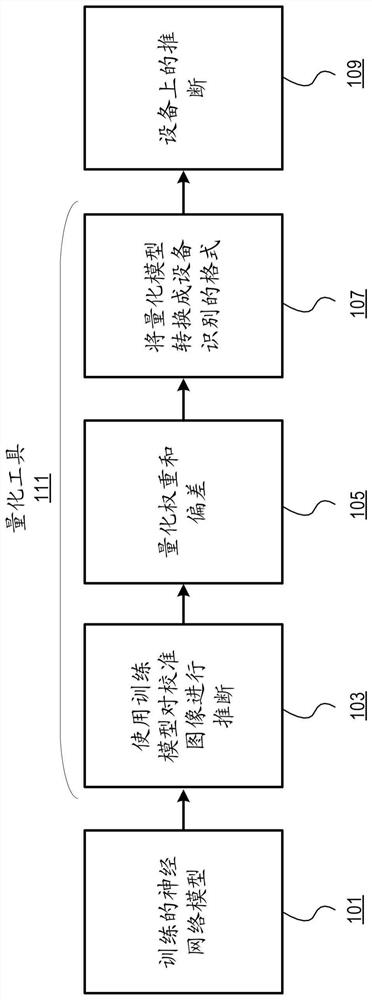

Quantization method of improving the model inference accuracy

The disclosure describes various embodiments for quantizing a trained neural network model. In one embodiment, a two-stage quantization method is described. In the offline stage, statically generated metadata (e.g., weights and bias) of the neural network model is quantized from floating-point numbers to integers of a lower bit width on a per-channel basis for each layer. Dynamically generated metadata (e.g., an input feature map) is not quantized in the offline stage. Instead, a quantization model is generated for the dynamically generated metadata on a per-channel basis for each layer. The quantization models and the quantized metadata can be stored in a quantization meta file, which can be deployed as part of the neural network model to an AI engine for execution. One or more specially programmed hardware components can quantize each layer of the neural network model based on information in the quantization meta file.

Owner:BAIDU USA LLC
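
The two-stage scheme can be sketched in Python. Assumptions, not the disclosure's exact method: weights use symmetric per-channel int8 quantization with scale max|w| / 127; the "quantization model" for dynamic data is taken to be a per-channel maximum absolute activation observed on calibration inputs; and the JSON meta-file layout and all function names are hypothetical.

```python
import json


def quantize_per_channel(weights, bits=8):
    """Offline stage: symmetric per-channel quantization of static weights to signed integers."""
    qmax = (1 << (bits - 1)) - 1
    quantized, scales = [], []
    for channel in weights:
        max_abs = max(abs(w) for w in channel)
        scale = max_abs / qmax if max_abs > 0 else 1.0
        scales.append(scale)
        quantized.append([max(-qmax, min(qmax, round(w / scale))) for w in channel])
    return quantized, scales


def calibrate_activation_ranges(feature_maps):
    """Quantization model for dynamic data (assumed): per-channel max |activation| on calibration inputs."""
    ranges = [0.0] * len(feature_maps[0])
    for fmap in feature_maps:
        for c, values in enumerate(fmap):
            ranges[c] = max(ranges[c], max(abs(v) for v in values))
    return ranges


def quantize_activation(values, channel_range, bits=8):
    """Run time: quantize one channel of an input feature map using its calibrated range."""
    qmax = (1 << (bits - 1)) - 1
    scale = channel_range / qmax if channel_range > 0 else 1.0
    return [max(-qmax, min(qmax, round(v / scale))) for v in values], scale


def write_meta_file(path, quantized, scales, activation_ranges):
    """Hypothetical meta-file layout (JSON) bundling quantized weights and quantization models."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump({"weights": quantized, "weight_scales": scales,
                   "activation_ranges": activation_ranges}, f)
```

Keeping one scale per channel avoids letting a single large-magnitude channel crush the resolution of the others, which is the usual motivation for per-channel rather than per-layer quantization.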

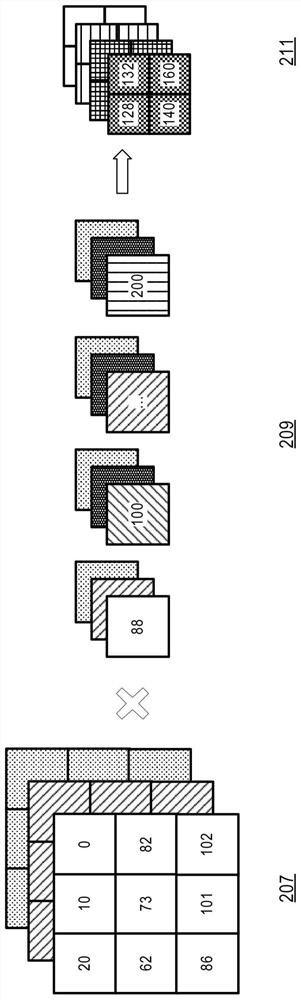

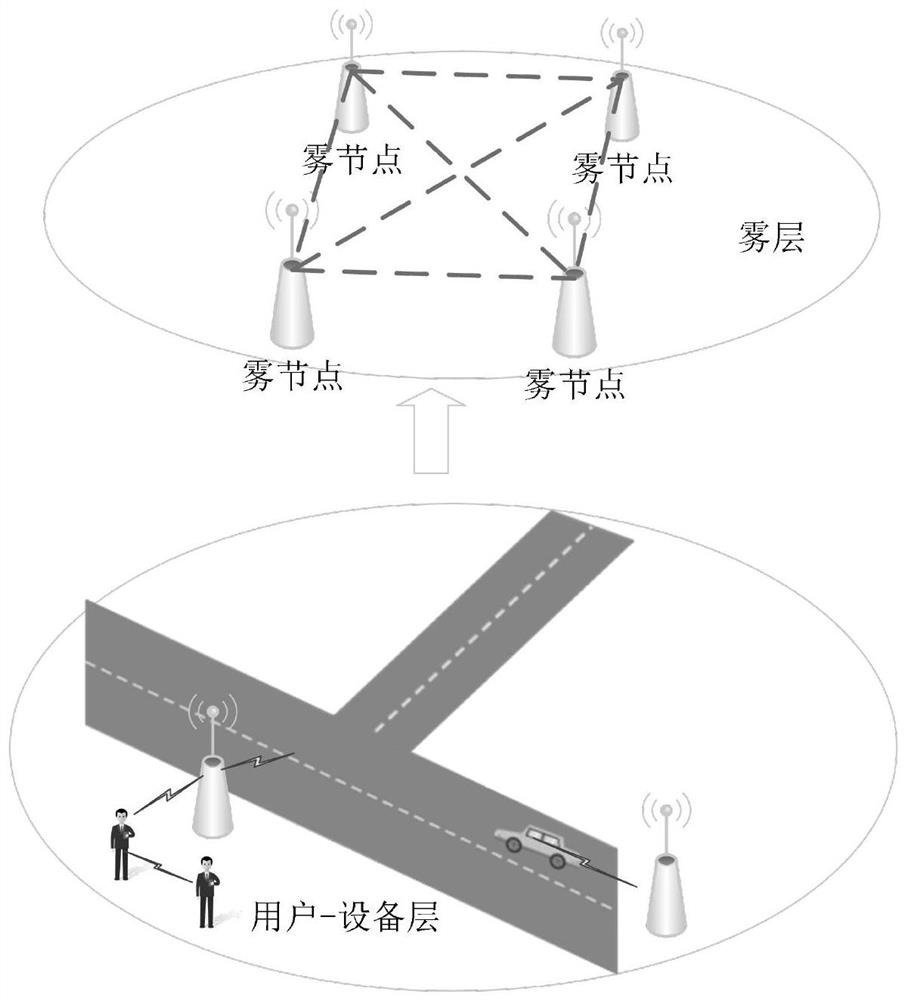

Multi-factor data reliability evaluation method in fog computing

The invention relates to a multi-factor data reliability evaluation method for fog computing and belongs to the technical field of mobile communication. Data sharing lets users obtain important information about their surroundings in time. During data sharing, however, users do not fully trust each other because of their mobility and variability, and devices may spread erroneous information because of sensor faults, virus infection, or even selfish motives. To reduce the influence of such malicious messages on other users, the propagation of false messages must be suppressed. The invention designs a method to quantify data authenticity so that a data requester can obtain more reliable data during data sharing. The fog node pre-judges data authenticity through Bayesian model inference to obtain a credibility value for the data. Building on the fog node's pre-judgment, the requester determines its satisfaction with the data by also incorporating the provider's experience-based trust value and historical interactions, which makes the data reliability evaluation more comprehensive.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
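
The abstract does not give the formulas, so the following Python sketch uses a common Bayesian choice as an assumption: the fog node's credibility value is the posterior mean of a Beta(1, 1) prior updated with supporting and contradicting reports about the data, provider trust is derived the same way from past interactions, and the requester's satisfaction is a weighted blend of the two. All names and the 0.6 weight are hypothetical.

```python
def beta_credibility(supporting, contradicting):
    """Posterior mean of a Beta(1, 1) prior updated with supporting / contradicting reports."""
    return (supporting + 1) / (supporting + contradicting + 2)


def experience_trust(history):
    """Provider trust from past interactions (True = satisfactory), smoothed the same way."""
    good = sum(1 for ok in history if ok)
    return beta_credibility(good, len(history) - good)


def satisfaction(credibility, provider_trust, weight=0.6):
    """Assumed linear blend of the fog node's pre-judgment and the provider's trust."""
    return weight * credibility + (1.0 - weight) * provider_trust
```

For example, 8 supporting and 2 contradicting reports give a credibility of 0.75, a provider with two good and one bad past interaction has trust 0.6, and the blended satisfaction is 0.69. The Beta prior keeps new providers and sparsely reported data near 0.5 rather than at an extreme.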

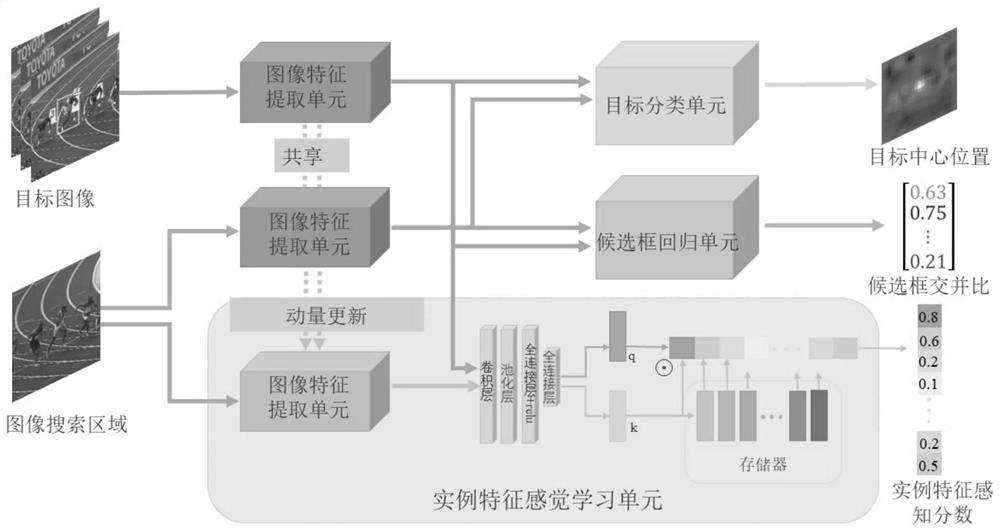

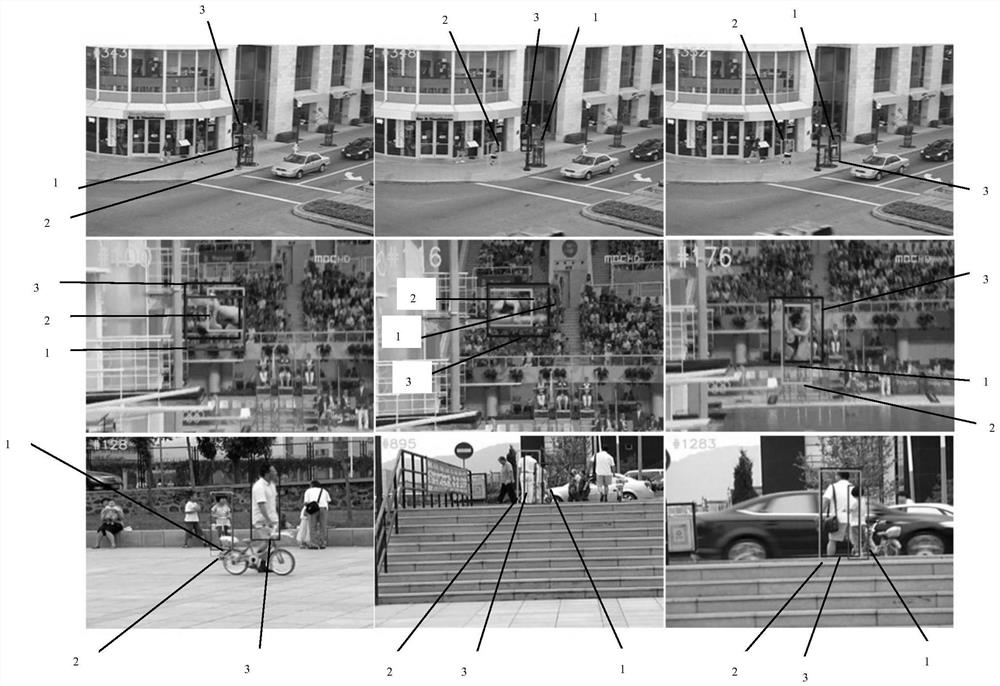

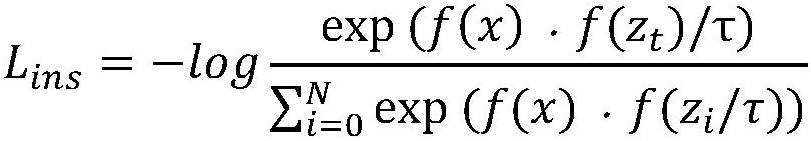

Robust single target tracking method based on instance feature perception

Status: Active | Publication: CN113361329A | Tags: Improve discrimination ability, Improve anti-interference ability, Image enhancement, Image analysis, Pattern recognition, Anti jamming

The invention discloses a robust single-target tracking method based on instance feature perception, and the method comprises the following steps: 1, model training: training a network model through employing a server, optimizing network parameters through reducing a network loss function until the network converges, and obtaining the network weight of robust single-target tracking based on instance feature perception; and 2, model inference: tracking a target in a new video sequence by using the network weight obtained in the training stage. According to the robust single-target tracking method based on instance feature perception, features between similar targets are distinguished through learning, the discrimination capability of a tracker is enhanced, and the anti-interference capability and robustness of the tracking process are improved. According to the method, the target can be accurately and stably tracked in numerous difficult actual scenes, and compared with other methods, a better target tracking and capturing effect is achieved.

Owner:ZHEJIANG UNIV

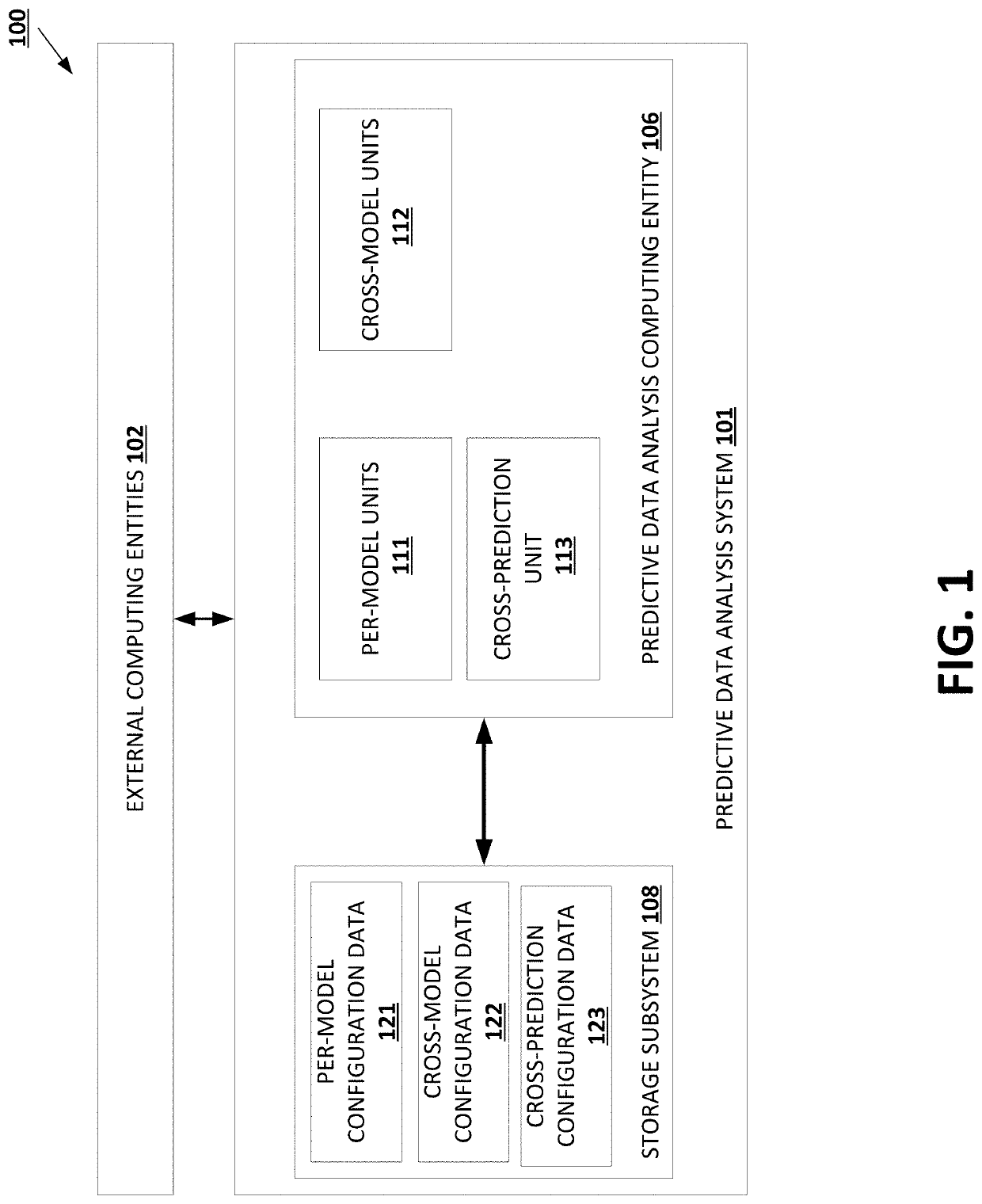

Predictive data analysis with probabilistic updates

There is a need for solutions for more efficient predictive data analysis systems. This need can be addressed, for example, by a system configured to obtain, for each predictive task of a plurality of predictive tasks, a plurality of per-model inferences; generate, for each predictive task, a cross-model prediction based on the plurality of per-model inferences for the predictive task; and generate, based on each cross-model prediction associated with a predictive task, a cross-prediction for the particular predictive task, wherein determining the cross-prediction comprises applying one or more probabilistic updates to the cross-model prediction for the particular predictive task and each probabilistic update is determined based on the cross-model prediction for a related predictive task of the one or more related predictive tasks.

Owner:OPTUM SERVICES IRELAND LTD

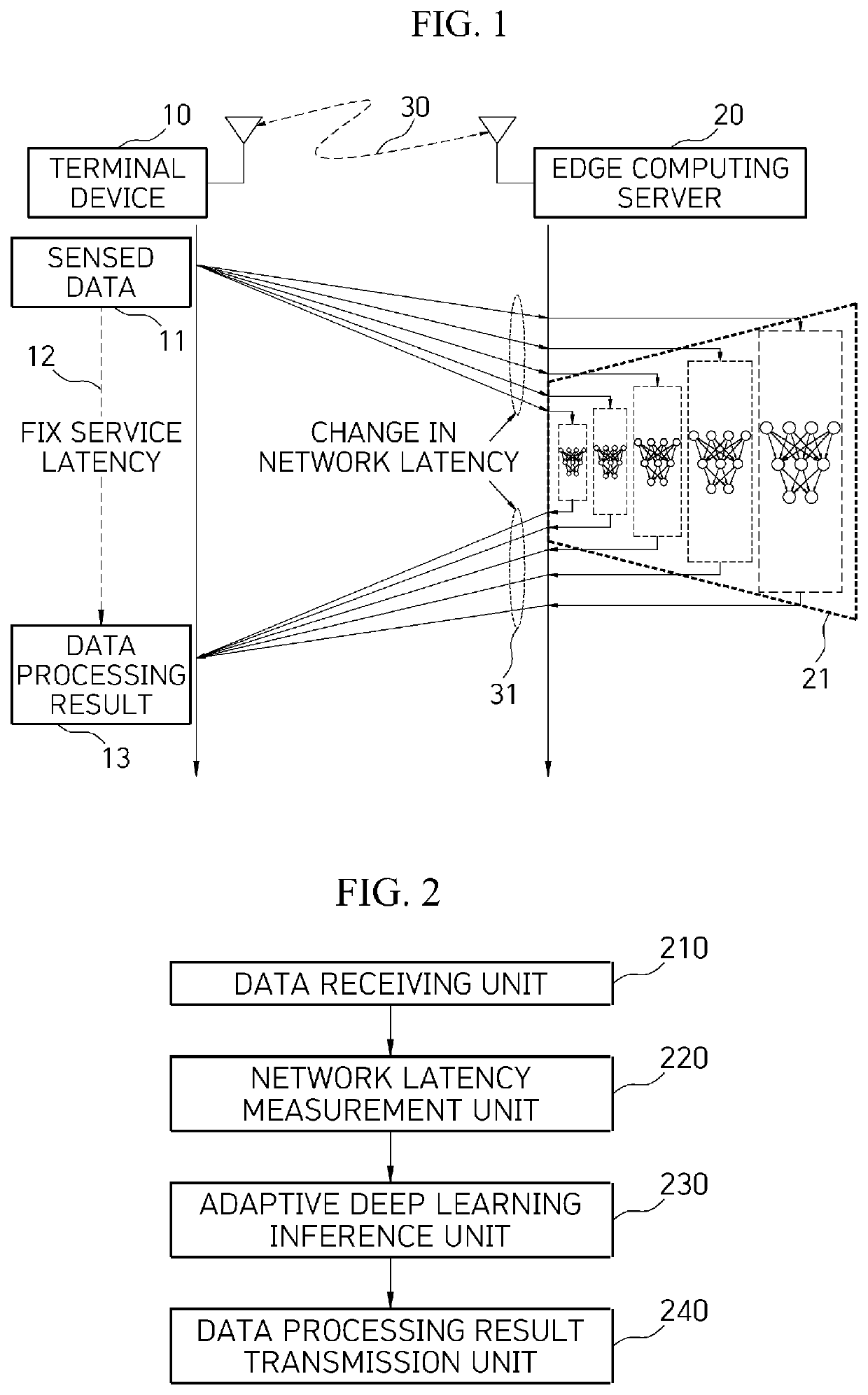

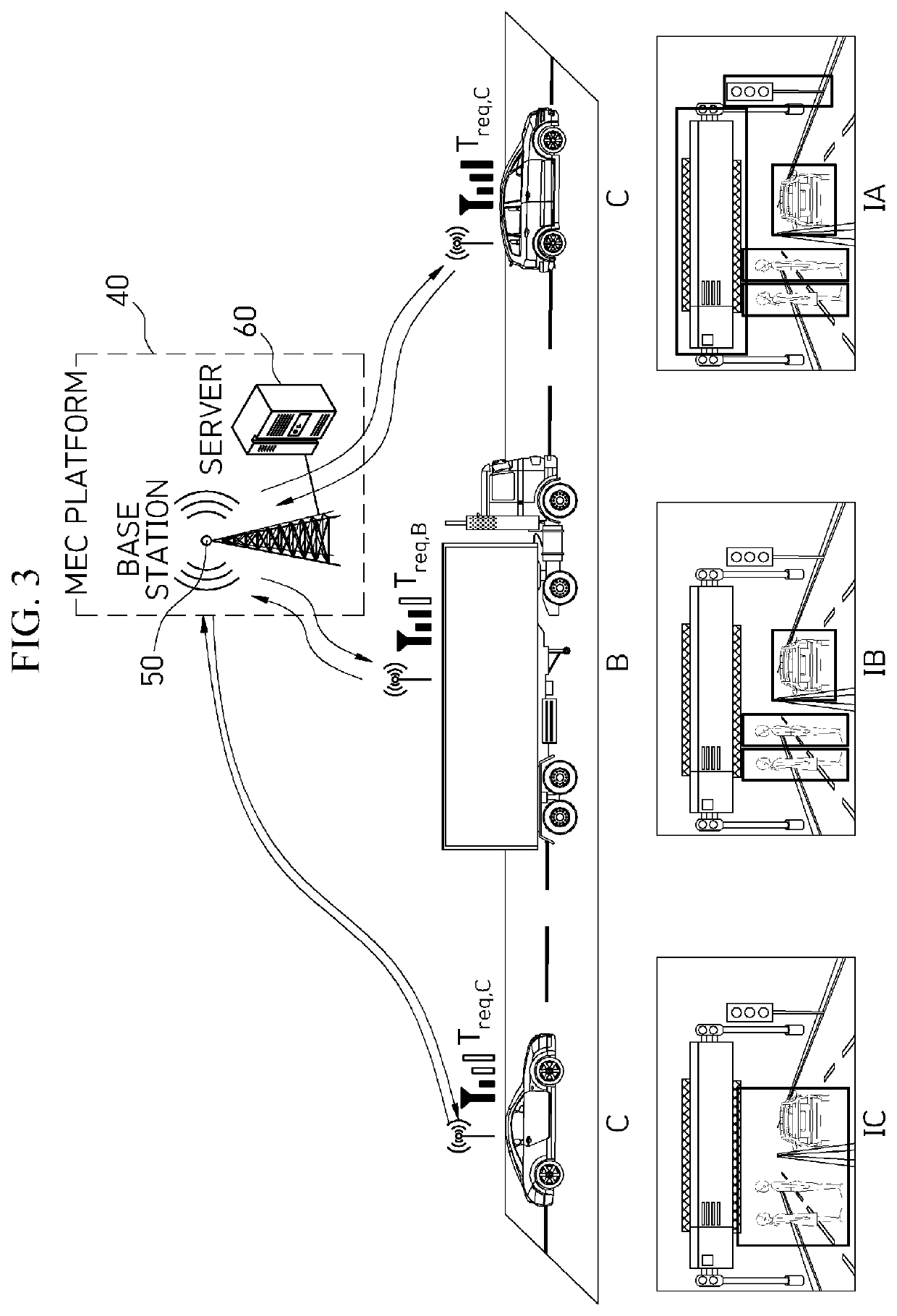

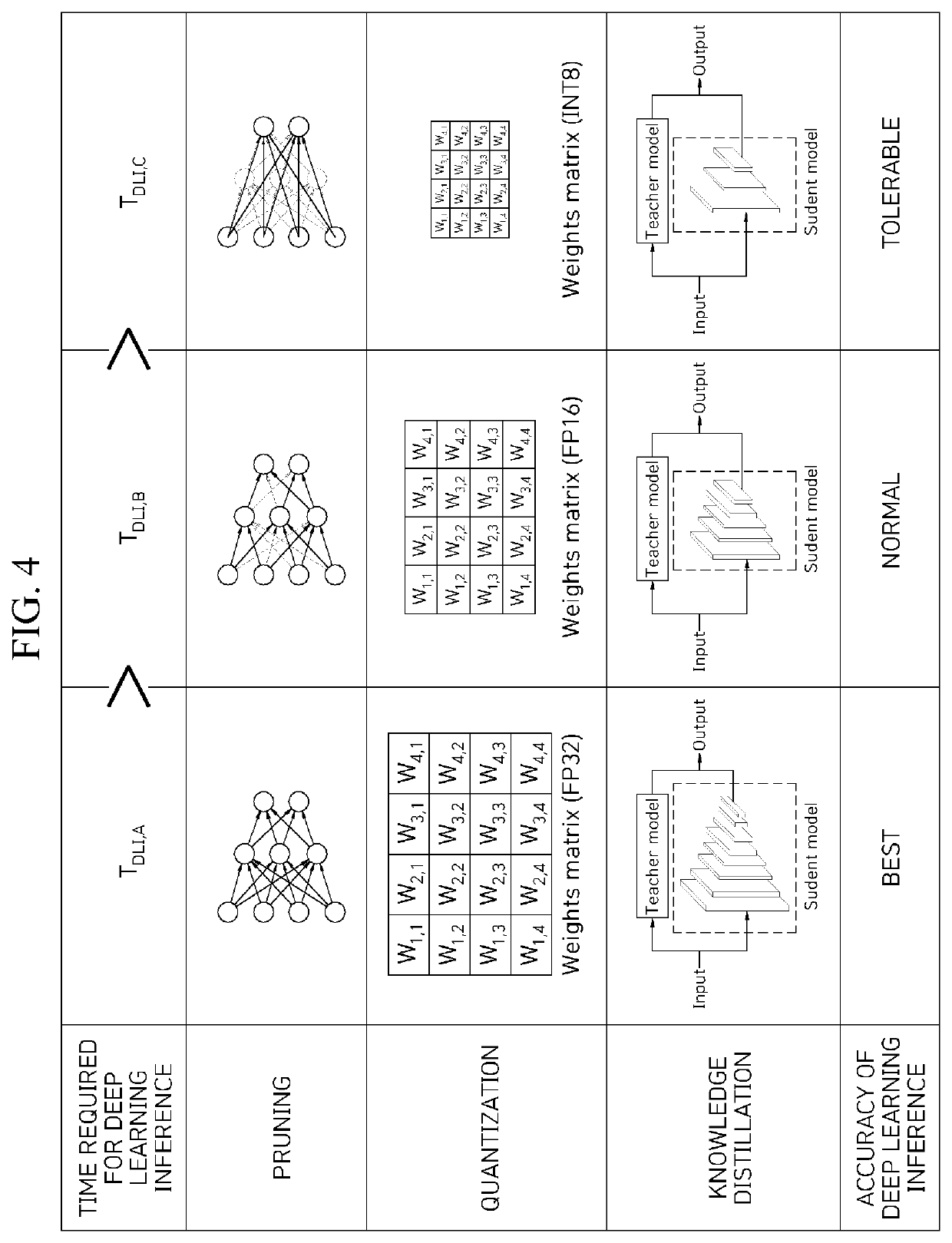

Adaptive deep learning inference apparatus and method in mobile edge computing

Disclosed is an adaptive deep learning inference system that adapts to changing network latency and executes deep learning model inference so as to guarantee end-to-end data processing latency when providing a deep learning inference service in a mobile edge computing (MEC) environment. An apparatus and method are provided for a deep learning inference service performed in an MEC environment that includes a terminal device, a wireless access network, and an edge computing server. When at least one terminal device senses data and requests a deep learning inference service, the apparatus and method adjust the service latency allotted to producing the inference result according to changes in the latency of the wireless access network. Inference results are thereby delivered with deterministic, that is fixed, service latency.

Owner:ELECTRONICS & TELECOMM RES INST
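The abstract does not say how the service latency is adjusted. One common way to realize this is shown here as a hedged sketch, not as the patented mechanism:

1. Keep a fixed end-to-end latency target.
2. Subtract the currently measured network latency to get the remaining budget.
3. Pick the most accurate model variant whose inference time fits that budget.
4. Hold the result for any leftover time, so the delivered latency stays constant.

The `ModelVariant` profiles, the 100 ms target and all timings are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class ModelVariant:
    name: str
    inference_ms: float   # assumed measured inference time on the edge server
    accuracy: float       # assumed offline validation accuracy


def choose_variant(target_ms: float, network_ms: float,
                   variants: List[ModelVariant]) -> Optional[ModelVariant]:
    """Most accurate variant whose inference fits the remaining latency budget."""
    budget_ms = target_ms - network_ms
    feasible = [v for v in variants if v.inference_ms <= budget_ms]
    if not feasible:
        return None  # the target cannot be met at the current network latency
    return max(feasible, key=lambda v: v.accuracy)


def hold_ms(target_ms: float, network_ms: float, variant: ModelVariant) -> float:
    """Time to hold the result so end-to-end latency equals the target exactly."""
    return target_ms - network_ms - variant.inference_ms


VARIANTS = [
    ModelVariant("small", 10.0, 0.70),
    ModelVariant("medium", 25.0, 0.80),
    ModelVariant("large", 60.0, 0.90),
]

for network_ms in (30.0, 60.0):
    v = choose_variant(100.0, network_ms, VARIANTS)
    print(network_ms, v.name, hold_ms(100.0, network_ms, v))
# 30.0 large 10.0
# 60.0 medium 15.0
```

When the wireless link slows down, the sketch trades accuracy for time instead of letting the end-to-end latency drift.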

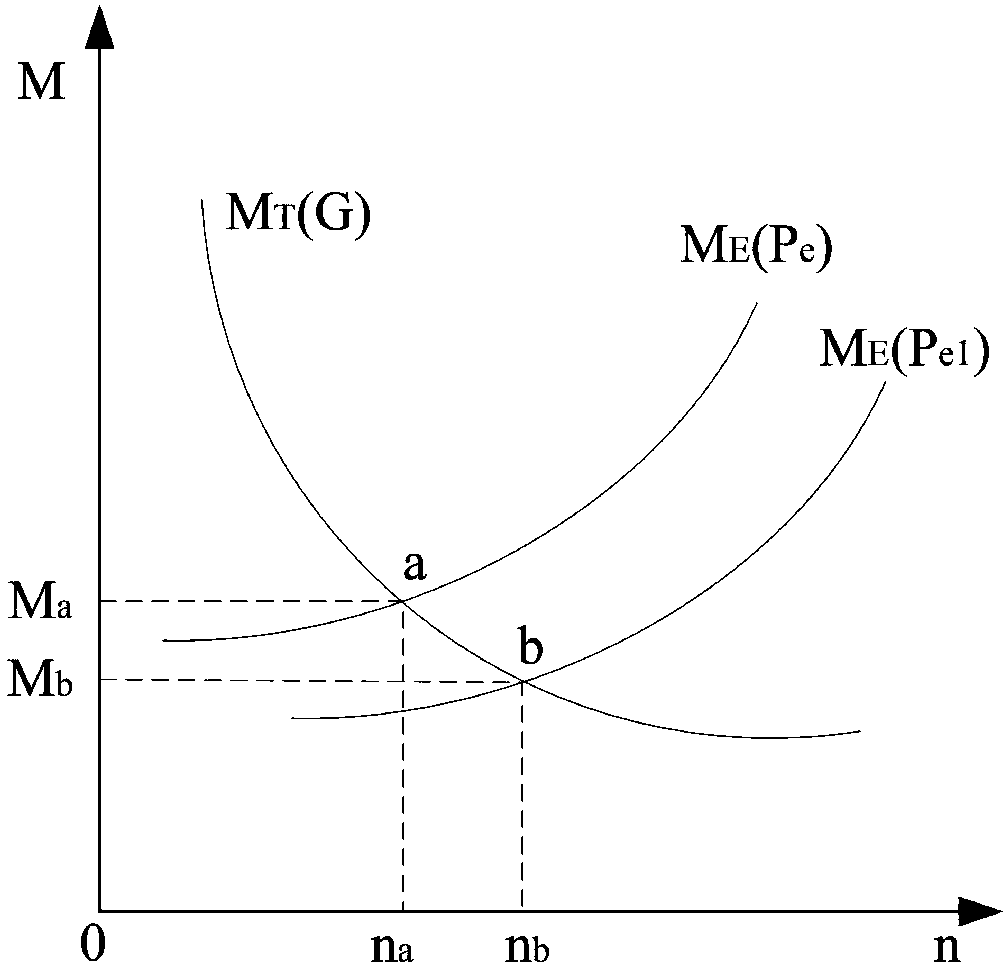

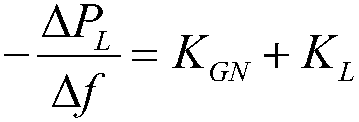

A New Energy Accommodation Method Based on Power Generation Frequency Limit Adjustment

Active | CN107154644B | Improve absorption capacity | Single network parallel feeding arrangements | New energy | Engineering

The invention discloses a new energy accommodation method based on adjusting the power generation frequency limit. During normal operation and low-load periods, the method raises the grid's operating frequency limit within a reasonable range. This smooths the randomness, fluctuation and reverse peak-regulation characteristics of new energy generation and improves the system's capacity to accommodate it. The method comprises the following steps:

- It constructs a new energy accommodation inference model based on frequency limit adjustment. Under this model the system can accommodate more new energy output through primary frequency regulation during normal operation.
- During low-load periods, new energy is accommodated by reducing the technical output of conventional generation.
- Once conventional generation has reached its minimum technical output, any new energy that still cannot be accommodated is handled with an inference formula. The formula solves for the frequency transient and the system's new steady-state frequency after the new energy is connected to the grid.

According to the embodiment, the method improves the grid's new energy accommodation capacity during normal operation and low-load periods through real-time dynamic adjustment of grid frequency.

Owner:STATE GRID LIAONING ELECTRIC POWER RES INST +1
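The abstract refers to an "inference formula" for the frequency transient and the new steady-state value but does not give it. The sketch below uses a textbook single-area approximation, purely as an assumption: governor dynamics are ignored, and per-unit values are used throughout.

After a step power surplus ΔP, the frequency deviation Δf obeys M·dΔf/dt = ΔP - β·Δf, with β = D + 1/R. Solving this gives three relations:

- Transient: Δf(t) = (ΔP/β)(1 - e^(-βt/M)).
- Steady state: the deviation settles at ΔP/β.
- Frequency limit: inverting the steady-state relation, the largest surplus that stays within a limit Δf_max is ΔP_max = β·Δf_max.

The last relation shows why raising the frequency limit lets the grid absorb more new energy. All parameter values are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class AreaModel:
    """Single-area frequency model in per unit (pu); all values are assumptions."""
    inertia_m: float  # M = 2H, in seconds
    damping_d: float  # load damping D, pu power per pu frequency
    droop_r: float    # governor droop R, pu frequency per pu power

    @property
    def beta(self) -> float:
        """Frequency response characteristic beta = D + 1/R."""
        return self.damping_d + 1.0 / self.droop_r


def deviation_at(area: AreaModel, surplus_pu: float, t_s: float) -> float:
    """Frequency deviation t_s seconds after a step surplus (first-order response)."""
    beta = area.beta
    return surplus_pu / beta * (1.0 - math.exp(-beta * t_s / area.inertia_m))


def steady_state_deviation(area: AreaModel, surplus_pu: float) -> float:
    """New steady-state frequency deviation once the transient has settled."""
    return surplus_pu / area.beta


def max_absorbable_surplus(area: AreaModel, freq_limit_pu: float) -> float:
    """Largest surplus that keeps the steady-state deviation within the limit."""
    return freq_limit_pu * area.beta


area = AreaModel(inertia_m=10.0, damping_d=1.0, droop_r=0.05)  # beta = 21
print(max_absorbable_surplus(area, 0.002), max_absorbable_surplus(area, 0.004))
```

Doubling the allowed deviation doubles the surplus that primary regulation can absorb in steady state under this simplified model.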

Method and device for deploying multi-model inference service based on k8s cluster

Active | CN112231054B | Ability to implement shared pods | Simplify deployment operations | Inference methods | Software simulation/interpretation/emulation | Autoscaling | Parallel computing

The invention discloses a multi-model inference service deployment method and device based on a k8s cluster. The method includes the following steps:

1. Deploy a scheduling service in the smallest scheduling unit of the k8s cluster (the Pod), and configure memory, computing resources and a scheduling policy for it.
2. Deploy multiple model inference services according to the memory of the scheduling service. Each model inference service is configured to use the scheduling service's computing resources and is associated with the scheduling service.
3. The scheduling service invokes the model inference services to process inference tasks according to the scheduling policy.

The solution lets multiple model inference services share the smallest scheduling unit and lets the multi-model inference service scale elastically with service load. Deployment remains relatively simple.

Owner:SUZHOU METABRAIN INTELLIGENT TECH CO LTD
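In the abstract, one scheduling service owns the memory, computing resources and scheduling policy of a shared Pod and dispatches tasks to several model inference services. The in-process sketch below mirrors only that structure and does not use the Kubernetes API. The class names, the memory-based admission check and the "fewest-served" dispatch policy are hypothetical choices for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass
class ModelService:
    """One model inference service living inside the shared scheduling unit."""
    name: str
    predict: Callable[[Any], Any]
    memory_mb: int
    served: int = 0


class SchedulingService:
    """Hypothetical in-pod scheduler that owns the memory budget and policy."""

    def __init__(self, memory_budget_mb: int) -> None:
        self.memory_budget_mb = memory_budget_mb
        self.replicas: Dict[str, List[ModelService]] = {}

    def used_memory_mb(self) -> int:
        return sum(s.memory_mb for reps in self.replicas.values() for s in reps)

    def deploy(self, model: str, service: ModelService) -> None:
        """Admit a service only if it fits in the scheduling unit's memory."""
        if self.used_memory_mb() + service.memory_mb > self.memory_budget_mb:
            raise ValueError(f"not enough memory in scheduling unit for {service.name}")
        self.replicas.setdefault(model, []).append(service)

    def infer(self, model: str, payload: Any) -> Any:
        """Assumed policy: route the task to the replica that has served the fewest."""
        replicas = self.replicas.get(model)
        if not replicas:
            raise KeyError(f"no inference service deployed for model {model!r}")
        target = min(replicas, key=lambda s: s.served)
        target.served += 1
        return target.predict(payload)


sched = SchedulingService(memory_budget_mb=1000)
sched.deploy("classifier", ModelService("classifier-0", lambda x: x * 2, 300))
sched.deploy("classifier", ModelService("classifier-1", lambda x: x * 2, 300))
sched.deploy("detector", ModelService("detector-0", lambda x: -x, 300))
print([sched.infer("classifier", i) for i in range(4)])     # [0, 2, 4, 6]
print([s.served for s in sched.replicas["classifier"]])     # [2, 2]
```

Because all services share one memory budget, adding a replica fails early instead of overcommitting the Pod. Elastic scaling would then mean adding or removing whole scheduling units.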

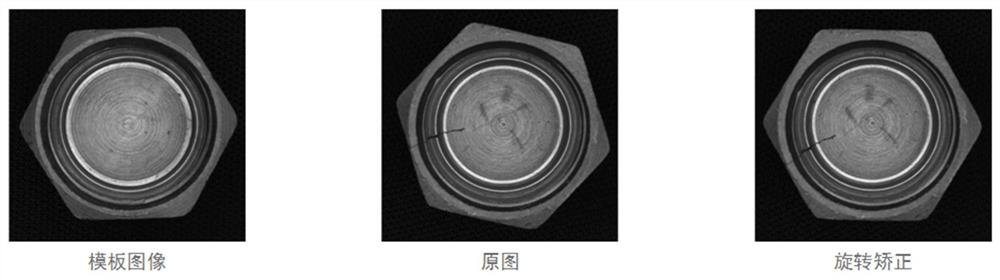

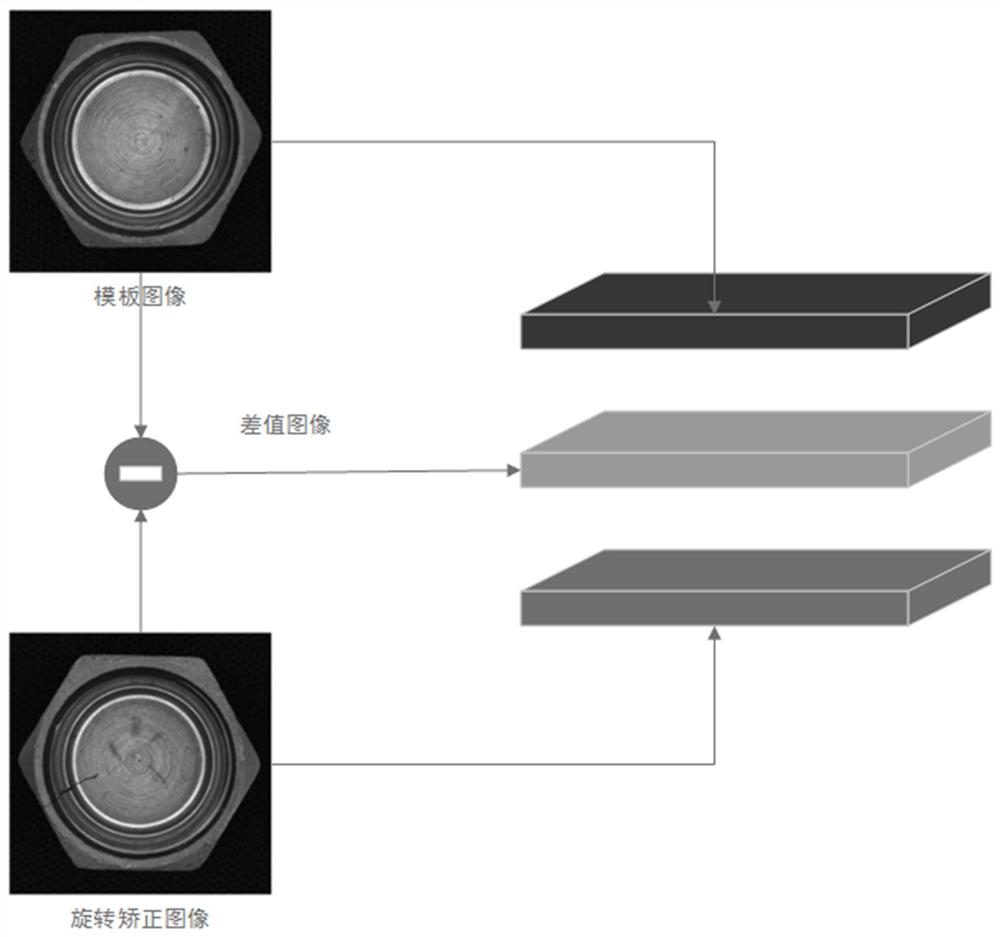

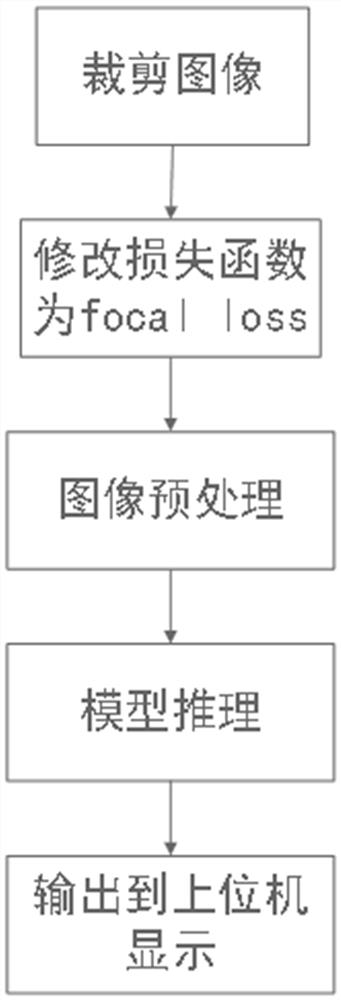

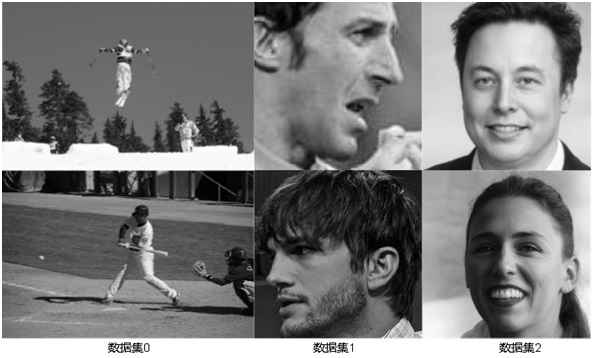

A Classification Method of Nut Surface Defects Based on EfficientNet

Active | CN113052809B | Avoid time-consuming manual adjustments | Improve generalization ability | Image enhancement | Image analysis | Data set | Classification methods

The invention discloses a nut surface defect classification method based on EfficientNet, comprising the following steps:

1. Collect training samples, build a data set, and train the EfficientNet model on it until training converges; the trained model then classifies nut surfaces. Because EfficientNet adjusts the model scale automatically, this avoids time-consuming manual tuning, which rarely reaches the optimal solution.
2. Collect the target image and crop it.
3. Replace the loss function with focal loss and adjust the weights of positive and negative samples. This improves model accuracy and avoids the impact of positive/negative sample imbalance.
4. Preprocess the image. A template image is used to rotate and correct the input image, and the two are subtracted to obtain a difference image. The corrected input, the template and the difference image are then fused into a three-channel image that serves as the model input. This enhances the model's adaptability to the production environment and improves its generalization ability.
5. Perform model inference: the EfficientNet model infers on the input image.
6. Output the inference result to the host computer for display.

Owner:中科海拓(无锡)科技有限公司
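Steps 3 and 4 above can be sketched in isolation.

- **Step 3.** The code uses the standard binary focal loss FL(p_t) = -α_t (1 - p_t)^γ log(p_t), where p_t is the predicted probability of the true class. γ down-weights easy examples and α_t rebalances positive and negative samples.
- **Step 4.** The sketch assumes the input has already been rotation-corrected against the template. It reads the "difference" as the per-pixel absolute difference, though the patent may compute it differently.

The defaults α = 0.25 and γ = 2, the grayscale list-of-rows image type and the function names are assumptions.

```python
import math
from typing import List, Tuple

Image = List[List[int]]  # grayscale image as rows of pixel values


def focal_loss(p: float, y: int, alpha: float = 0.25, gamma: float = 2.0,
               eps: float = 1e-7) -> float:
    """Binary focal loss for one sample; p is the predicted probability of class 1."""
    p = min(max(p, eps), 1.0 - eps)
    p_t = p if y == 1 else 1.0 - p              # probability of the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha  # positive/negative weighting
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


def fuse_three_channel(aligned: Image, template: Image) -> List[List[Tuple[int, int, int]]]:
    """Stack (aligned input, template, |difference|) into one 3-channel image."""
    if len(aligned) != len(template) or any(
            len(ra) != len(rt) for ra, rt in zip(aligned, template)):
        raise ValueError("input and template must have the same shape")
    return [[(a, t, abs(a - t)) for a, t in zip(ra, rt)]
            for ra, rt in zip(aligned, template)]


print(focal_loss(0.9, 1) < focal_loss(0.6, 1))      # True: easy example weighs less
print(fuse_three_channel([[10, 200]], [[12, 190]]))  # [[(10, 12, 2), (200, 190, 10)]]
```

With γ = 0 and α_t = 1 the loss reduces to ordinary cross-entropy, which is a quick sanity check on the implementation.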

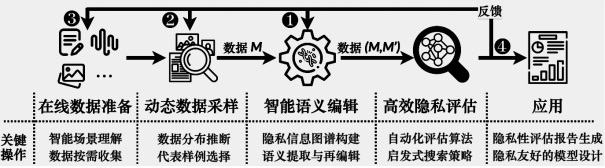

Intelligent system privacy evaluation method and system

Active | CN114091108A | High transparency | Guarantee the right to know | Semantic analysis | Character and pattern recognition | Analysis data | User privacy

The invention discloses an intelligent system privacy evaluation method and system. First, evaluation data is prepared on demand according to the application scenario of the system under evaluation and sampled dynamically according to that system's working requirements. Next, the privacy information in the evaluation data is modified with an intelligent semantic editing algorithm. By evaluating how the modified data affects the model's inference accuracy, the method analyzes the correlation between the data's privacy information and the system's inference accuracy, and then quantitatively evaluates the privacy of the system under evaluation. The method establishes, in a data-driven manner, the relationship between user privacy information and model inference accuracy. This realizes privacy evaluation of intelligent systems and yields a universal, efficient and automatic privacy evaluation system. As a result, the transparency of how intelligent systems use user privacy information is effectively improved, and users' right to know the risks of using their personal privacy information is safeguarded.

Owner:NANJING UNIV
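The evaluation loop above can be sketched under the following assumptions:

- The system under evaluation is a callable that maps an input to a label.
- The "intelligent semantic editing algorithm" is replaced by a trivial placeholder that masks a known name.
- Privacy dependence is scored as the drop in inference accuracy between the original and the edited data.

`toy_model`, `mask_names` and this scoring rule are hypothetical.

```python
from typing import Callable, List, Tuple

Sample = Tuple[str, int]  # (input text, ground-truth label)


def accuracy(model: Callable[[str], int], samples: List[Sample]) -> float:
    """Fraction of samples the model labels correctly."""
    if not samples:
        raise ValueError("evaluation set is empty")
    return sum(model(x) == y for x, y in samples) / len(samples)


def privacy_dependence(model: Callable[[str], int], samples: List[Sample],
                       edit: Callable[[str], str]) -> float:
    """Accuracy drop after privacy fields are edited; larger means more reliance."""
    original = accuracy(model, samples)
    edited = accuracy(model, [(edit(x), y) for x, y in samples])
    return original - edited


def toy_model(text: str) -> int:
    # Hypothetical system that (undesirably) keys on a person's name.
    return 1 if "alice" in text else 0


def mask_names(text: str) -> str:
    # Placeholder for the semantic editor: mask one known private name.
    return text.replace("alice", "[NAME]")


samples = [("alice bought a ticket", 1), ("bob sold a car", 0)]
print(privacy_dependence(toy_model, samples, mask_names))  # 0.5
```

A score near zero would suggest the system's predictions do not depend on the edited private fields.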

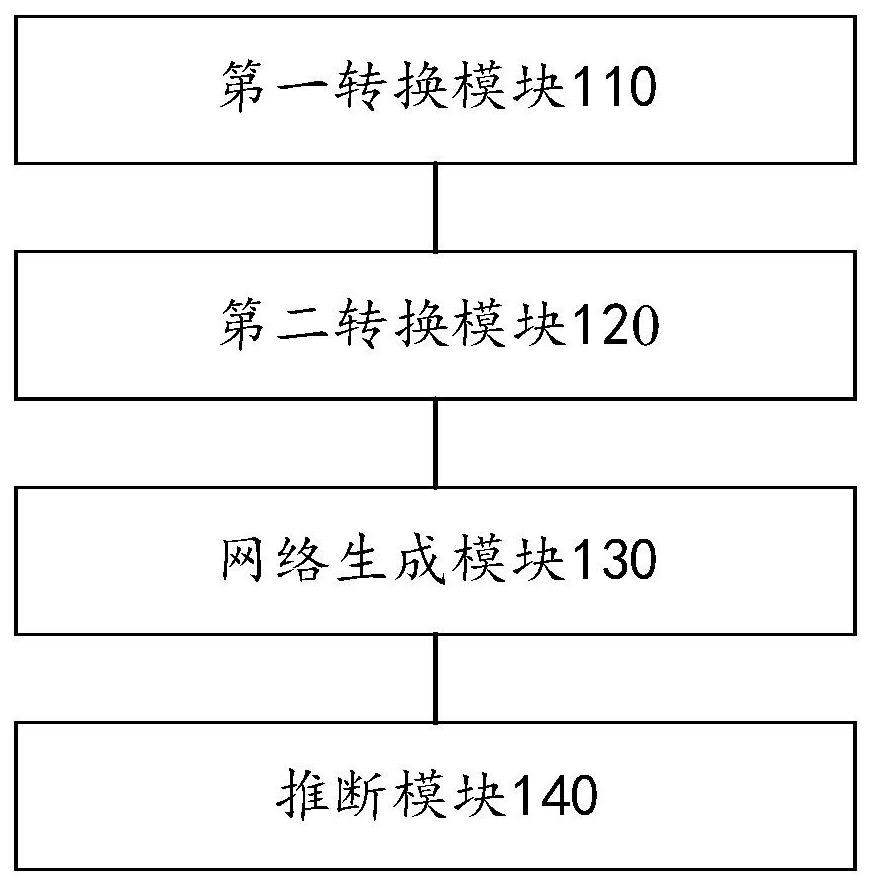

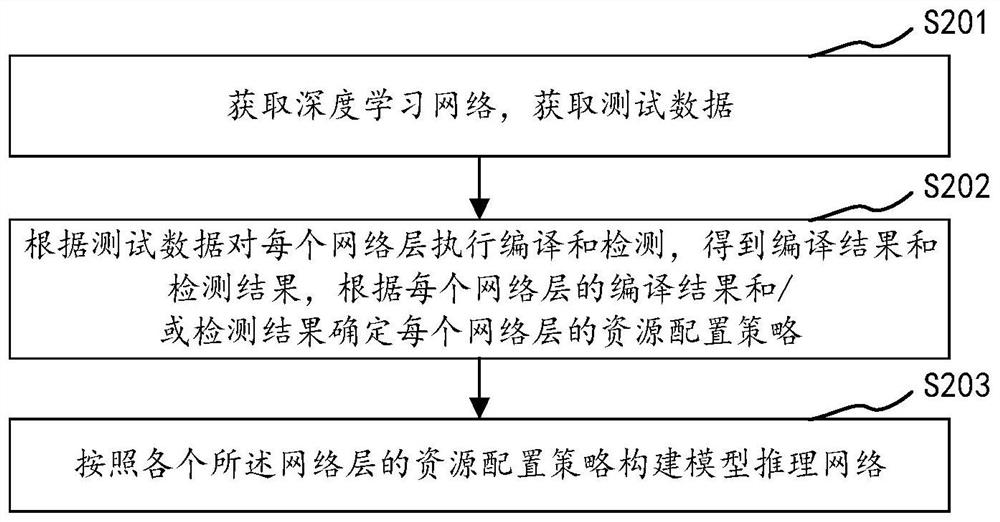

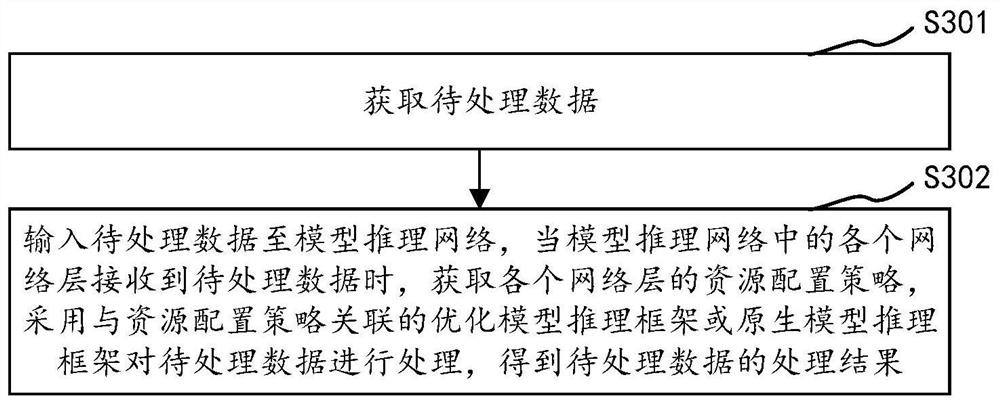

Method, data processing method, device and storage medium for constructing model inference network

Active | CN111162946B | Improve processing efficiency | Improve data processing efficiency | Transmission | Theoretical computer science | Data mining

Owner:BEIJING QIYI CENTURY SCI & TECH CO LTD


© 2025 PatSnap. All rights reserved.