Method of compressing a pre-trained deep neural network model

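A deep neural network and model technique, applied to the field of compressing pre-trained deep neural network models. It addresses problems such as models that cannot be installed on the target device, deep learning models that are too large, and deep learning models that cannot be trained from scratch, and it preserves accuracy while reducing the size of the deep neural network.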



Embodiment Construction

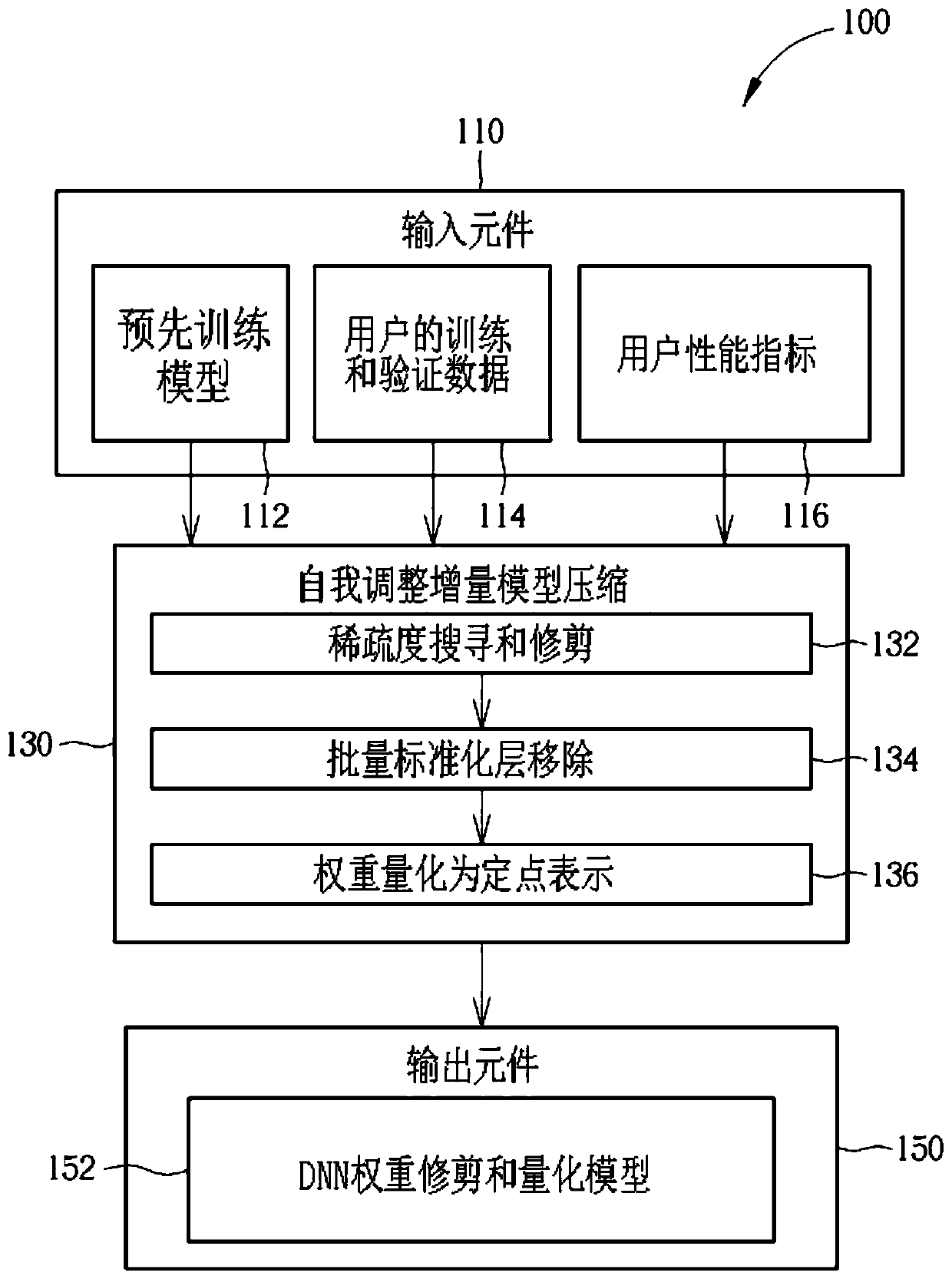

[0025] The model compression method proposed by the present invention removes unnecessary layers from a deep neural network (DNN) and automatically introduces a sparse structure into computation-intensive layers.

[0026] Figure 1 is a schematic diagram of the model compression architecture 100 of an embodiment. The model compression architecture 100 includes an input element 110 and a self-adjusting incremental model compression 130. The input element 110 includes a DNN pre-trained model 112 (such as AlexNet, VGG16, ResNet, MobileNet, GoogLeNet, ShuffleNet, ResNeXt, or Xception), user training and validation data 114, and a user performance indicator 116. The self-adjusting incremental model compression 130 analyzes the sparsity of the pre-trained model 112, automatically prunes and quantizes network redundancy, and removes unnecessary layers in the model compression architecture 100. Meanwhile, the proposed technique can reuse the parameters...
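The excerpt does not state which pruning criterion or quantization scheme the self-adjusting incremental model compression 130 uses, so the following is only a minimal sketch under stated assumptions. It substitutes two common generic techniques, magnitude-based pruning and symmetric uniform quantization, and raises the pruning ratio step by step while a user-supplied score stays above a threshold. All function names are hypothetical; `evaluate` and `min_score` stand in for the user training and validation data 114 and the user performance indicator 116, and a flat list of floats stands in for one layer's weights.

```python
from typing import Callable, List, Tuple


def sparsity(weights: List[float], eps: float = 1e-3) -> float:
    """Fraction of weights whose magnitude is at most eps."""
    if not weights:
        return 0.0
    return sum(1 for w in weights if abs(w) <= eps) / len(weights)


def magnitude_prune(weights: List[float], ratio: float) -> List[float]:
    """Zero out the `ratio` fraction of weights with the smallest magnitude.

    Assumption: magnitude is the pruning criterion (not stated in the source).
    """
    k = int(len(weights) * ratio)
    if k == 0:
        return list(weights)
    # Indices of the k smallest-magnitude weights.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_uniform(weights: List[float], bits: int = 8) -> Tuple[List[int], float]:
    """Symmetric uniform quantization; returns (integer codes, scale)."""
    qmax = 2 ** (bits - 1) - 1
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def compress_incrementally(
    weights: List[float],
    evaluate: Callable[[List[float]], float],
    min_score: float,
    steps: int = 10,
    bits: int = 8,
) -> List[float]:
    """Increase the pruning ratio in `steps` increments, keeping the last
    pruned-and-quantized result whose score is still >= min_score."""
    best = list(weights)
    for i in range(1, steps + 1):
        candidate = magnitude_prune(weights, i / steps)
        codes, scale = quantize_uniform(candidate, bits)
        dequantized = [c * scale for c in codes]
        if evaluate(dequantized) < min_score:
            break  # the performance indicator is no longer met
        best = dequantized
    return best
```

In practice `evaluate` would run the compressed model on held-out validation data; a real system would also fine-tune between steps, which this sketch omits.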
