Batch normalization layer fusion and quantization method for model inference in AI neural network engine

A neural network and normalization technology, applied in biological neural network models, neural learning methods, kernel methods, etc., that can address problems such as reduced computational efficiency of CNNs.

Embodiment Construction

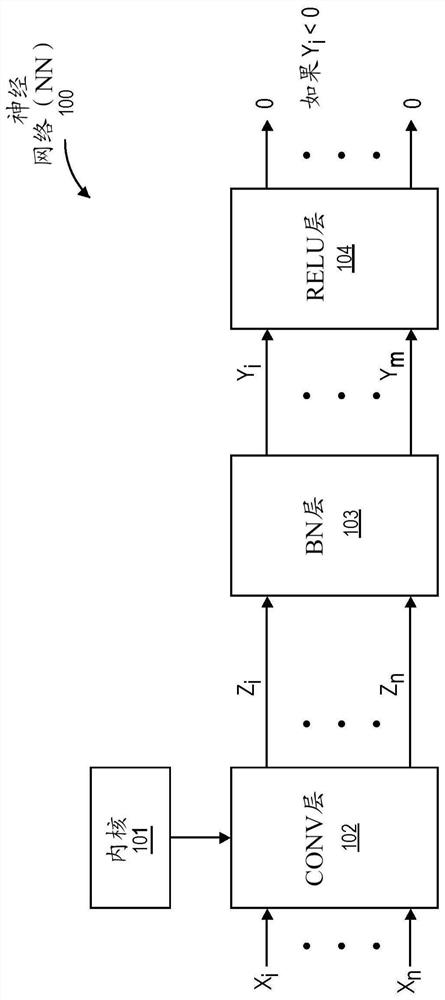

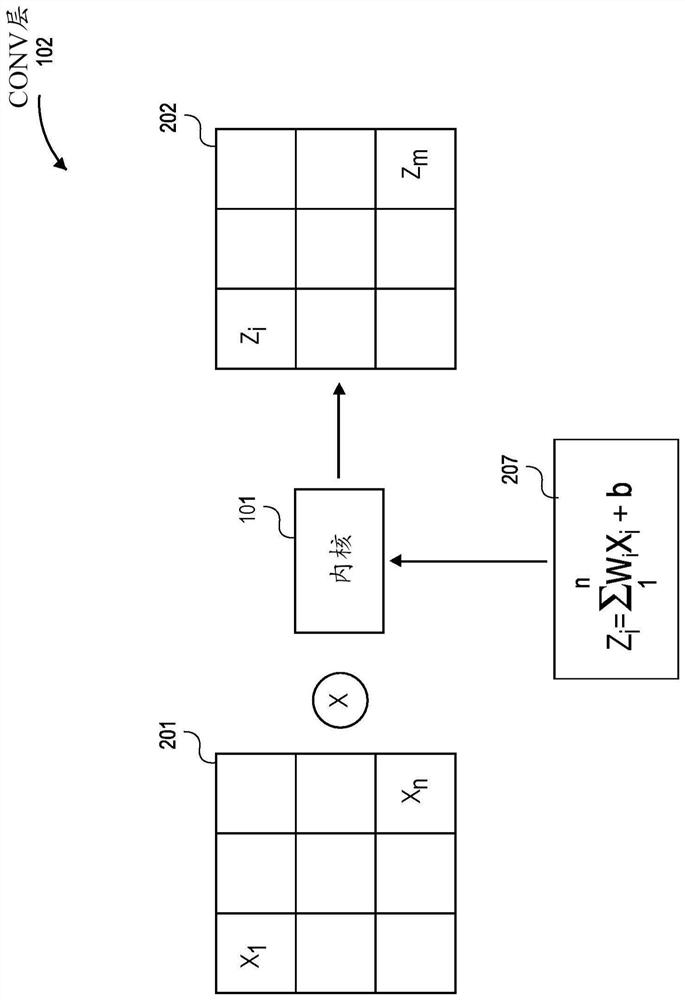

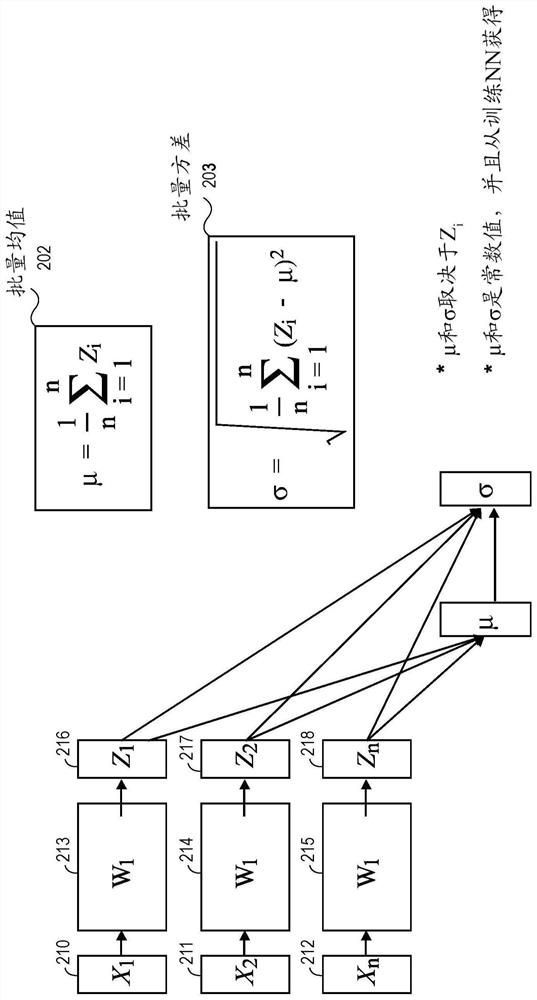

[0019] The following detailed description provides implementations and examples of batch normalization (BN) layer fusion and quantization methods for model inference in an artificial intelligence (AI) network engine. References to neural networks (NN) include any type of neural network that can be used in an AI network engine, including deep neural networks (DNN) and convolutional neural networks (CNN). For example, an NN may be an instance of a machine learning algorithm or process and may be trained to perform a given task, such as classifying input data (e.g., an input image). Training an NN may include determining weights and biases for data passing through the NN, including determining BN parameters for the NN's inference performance.
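The excerpt ends before the fusion step itself is described, so the following is only a minimal sketch of the standard BN-folding identity, not necessarily the exact method claimed here. It assumes a fully connected layer followed by per-channel inference-time BN with learned scale γ, shift β, running mean μ, and running variance σ². Folding rewrites BN(Wx + b) as W'x + b', where s = γ/√(σ² + ε), W' = sW, and b' = s(b − μ) + β. The helper names `linear`, `batchnorm`, and `fold_batchnorm` are hypothetical and chosen for illustration.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def linear(weights: Matrix, bias: Vector, x: Vector) -> Vector:
    """y = Wx + b for a fully connected layer (weights are [out][in])."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def batchnorm(y: Vector, gamma: Vector, beta: Vector,
              mean: Vector, var: Vector, eps: float = 1e-5) -> Vector:
    """Inference-time BN using running statistics, applied per output channel."""
    return [g * (yi - m) / math.sqrt(v + eps) + bt
            for yi, g, bt, m, v in zip(y, gamma, beta, mean, var)]


def fold_batchnorm(weights: Matrix, bias: Vector, gamma: Vector, beta: Vector,
                   mean: Vector, var: Vector, eps: float = 1e-5) -> Tuple[Matrix, Vector]:
    """Fold BN into the preceding layer so inference needs only one linear op."""
    fused_w: Matrix = []
    fused_b: Vector = []
    for o, row in enumerate(weights):
        s = gamma[o] / math.sqrt(var[o] + eps)              # per-channel BN scale
        fused_w.append([w * s for w in row])                 # W' = s * W
        fused_b.append(s * (bias[o] - mean[o]) + beta[o])    # b' = s(b - mu) + beta
    return fused_w, fused_b


if __name__ == "__main__":
    W = [[1.0, 2.0], [-1.0, 0.5]]
    b = [0.1, -0.2]
    gamma, beta = [2.0, 0.5], [0.0, 1.0]
    mean, var = [0.5, -0.5], [4.0, 1.0]
    x = [1.0, 3.0]

    reference = batchnorm(linear(W, b, x), gamma, beta, mean, var)
    fused = linear(*fold_batchnorm(W, b, gamma, beta, mean, var), x)
    # Difference should be zero up to floating-point rounding.
    print(max(abs(r - f) for r, f in zip(reference, fused)))
```

For a convolutional layer, the same per-output-channel scale s would multiply every weight of the corresponding filter; the layer then runs without a separate BN pass at inference.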

[0020] Once trained, an NN can perform a task by computing an output using the parameters, weights, and biases of any number of layers to produce activations that determine the classification or score of the input data. ...
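The title pairs BN fusion with quantization, but the excerpt is truncated before any quantization scheme is given. The sketch below therefore assumes a common per-tensor symmetric int8 scheme (scale = max|v| / 127), which is not necessarily the one used in this patent, to show how a BN-fused layer's output might be computed with integer arithmetic: int8 weights times int8 inputs accumulated as integers, with the bias quantized to the accumulator scale and a single dequantization at the end. The names `quantize_symmetric_int8`, `quantized_linear`, and `float_linear` are hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def quantize_symmetric_int8(values: Vector) -> Tuple[List[int], float]:
    """Per-tensor symmetric quantization: q = clamp(round(v / scale), -127, 127)."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    return [max(-127, min(127, round(v / scale))) for v in values], scale


def quantized_linear(weights: Matrix, bias: Vector, x: Vector) -> Vector:
    """Evaluate a (BN-fused) linear layer with int8 weights/inputs and an integer accumulator."""
    n_in = len(x)
    qw, w_scale = quantize_symmetric_int8([w for row in weights for w in row])
    qx, x_scale = quantize_symmetric_int8(x)
    acc_scale = w_scale * x_scale                    # real value of one unit in the accumulator
    out: Vector = []
    for o, b in enumerate(bias):
        acc = round(b / acc_scale)                   # bias quantized to the accumulator scale
        for i in range(n_in):
            acc += qw[o * n_in + i] * qx[i]          # int8 x int8 products summed as integers
        out.append(acc * acc_scale)                  # dequantize once per output
    return out


def float_linear(weights: Matrix, bias: Vector, x: Vector) -> Vector:
    """Float reference: y = Wx + b."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


if __name__ == "__main__":
    W = [[0.5, -1.0], [2.0, 0.25]]   # e.g. weights after BN folding
    b = [0.1, -0.3]
    x = [1.0, -2.0]
    print(float_linear(W, b, x))
    print(quantized_linear(W, b, x))  # close to the float result, up to quantization error
```

Folding BN first means only one weight tensor and one bias per layer need quantizing. In a deployed engine the input scale would typically be calibrated offline rather than recomputed per input as it is in this simplified sketch.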

© 2025 PatSnap. All rights reserved.