78 results about "Texture enhancement" patented technology

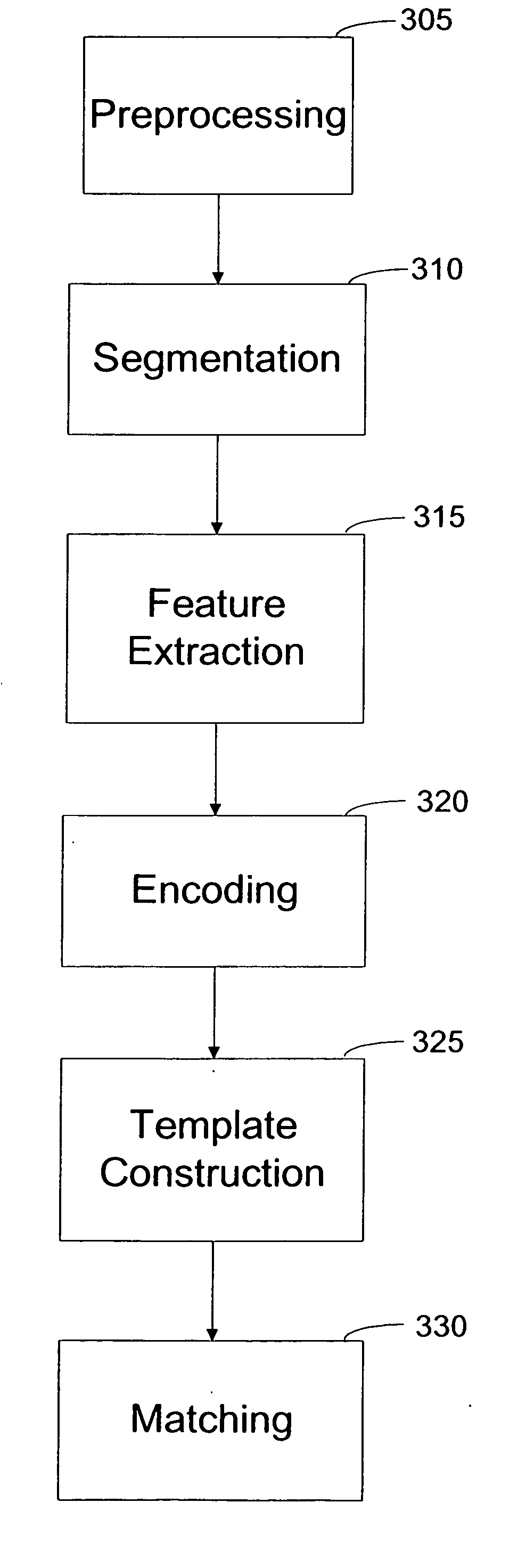

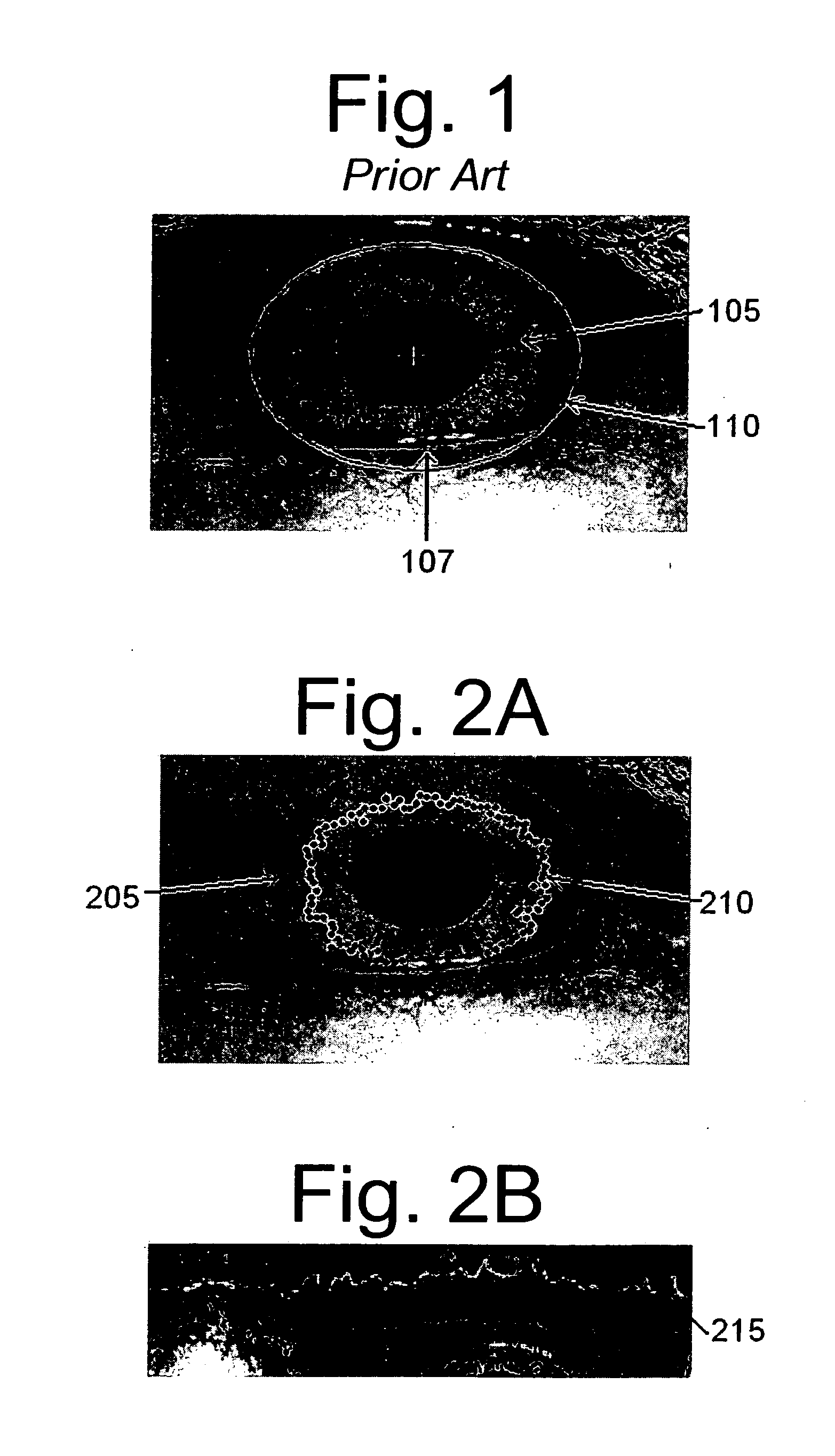

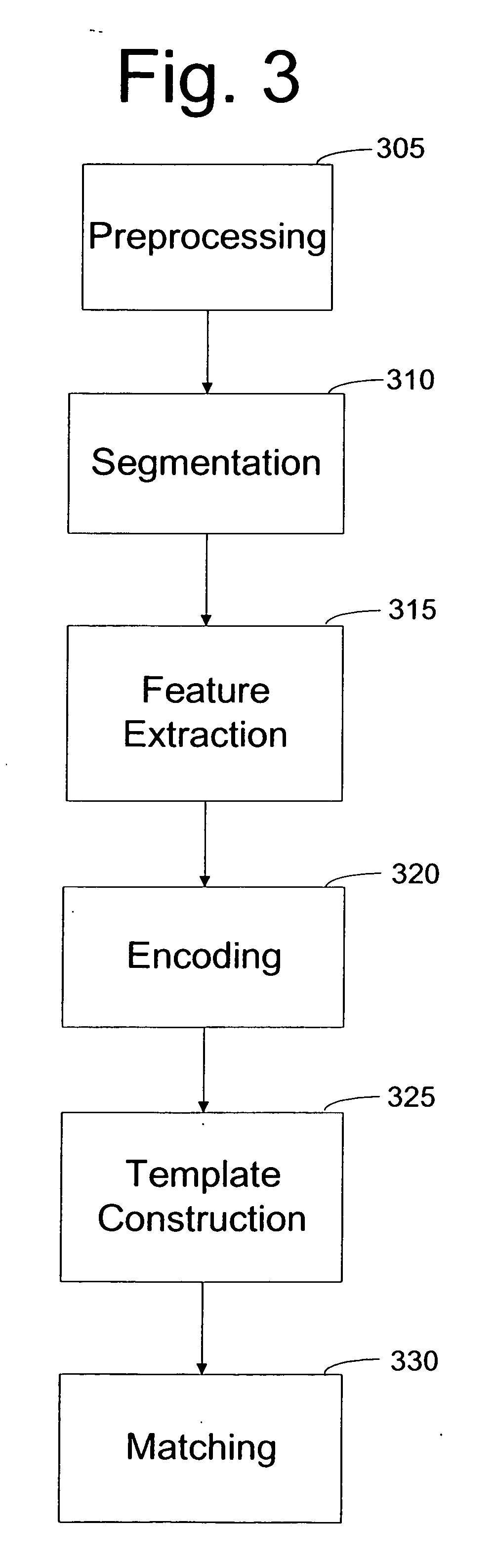

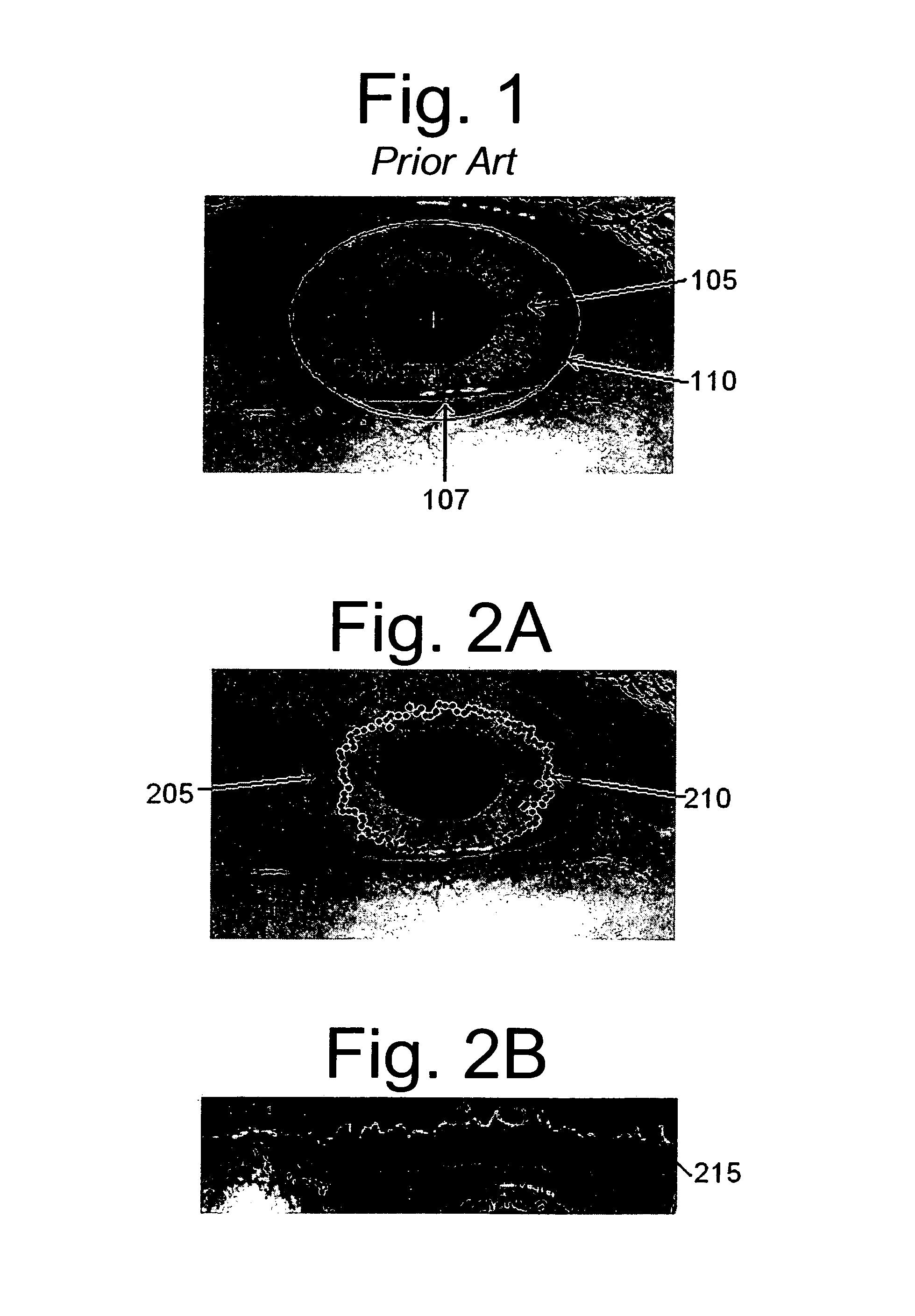

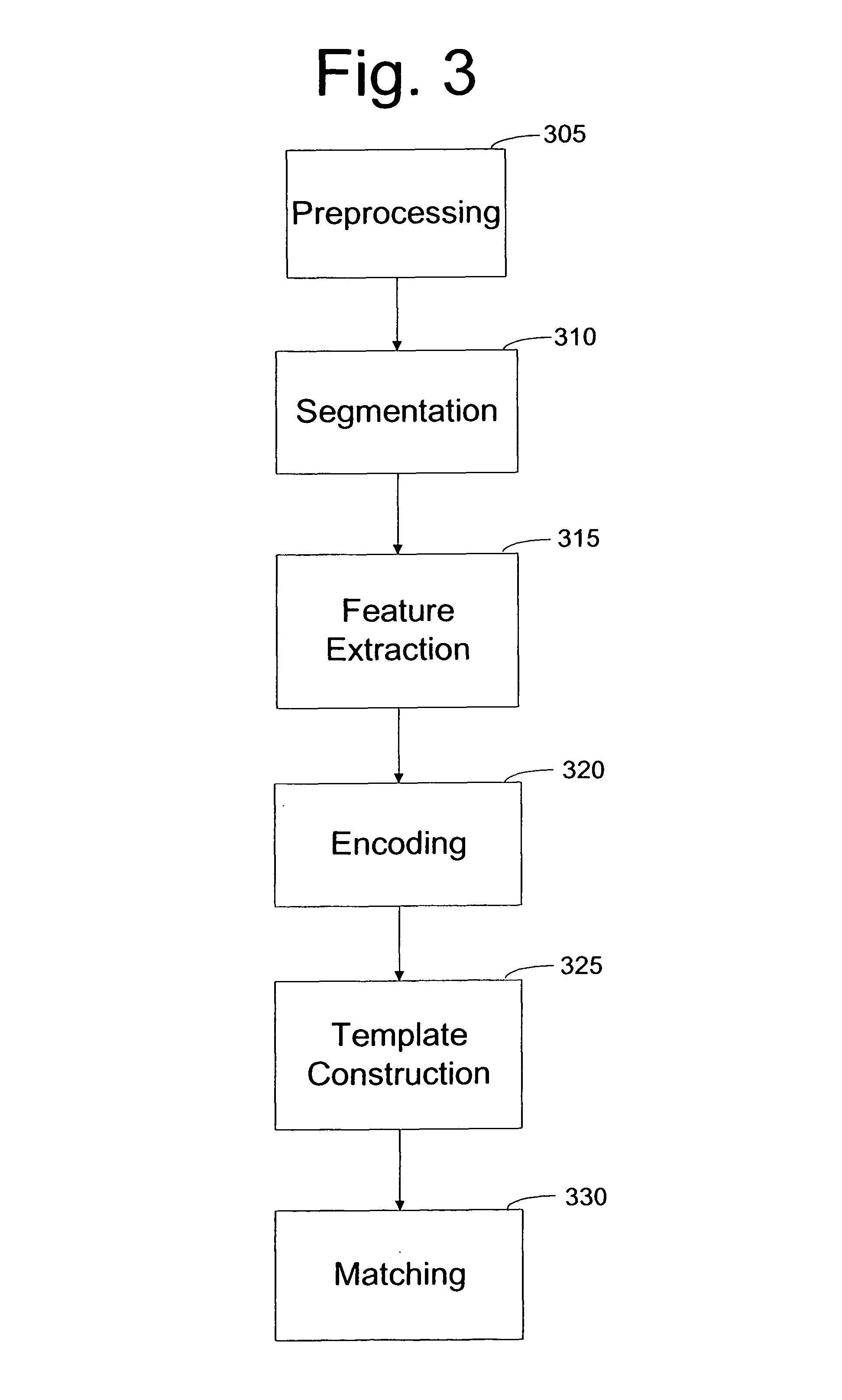

Invariant radial iris segmentation

Active | US20070211924A1 | Improved biometric algorithm | Eliminate need | Acquiring/recognising eyes | Data set | Color intensity

A method and computer product are presented for identifying a subject by biometric analysis of an eye. First, an image of the iris of a subject to be identified is acquired. Texture enhancements may be done to the image as desired, but are not necessary. Next, the iris image is radially segmented into a selected number of radial segments, for example 200 segments, each segment representing 1.8° of the iris scan. After segmenting, each radial segment is analyzed, and the peaks and valleys of color intensity are detected in the iris radial segment. These detected peaks and valleys are mathematically transformed into a data set used to construct a template. The template represents the subject's scanned and analyzed iris, being constructed of each transformed data set from each of the radial segments. After construction, this template may be stored in a database, or used for matching purposes if the subject is already registered in the database.

Owner:GENTEX CORP
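
The segmentation and peak/valley steps of the abstract above can be sketched as follows. This is a minimal illustration, not the patented method: the function names and the sample profile are hypothetical, and the transform from peaks and valleys into a template data set is left out because the abstract does not specify it.

```python
def segment_angles(num_segments=200):
    """Return (start, end) angles in degrees for each radial segment."""
    width = 360.0 / num_segments  # 200 segments -> 1.8 degrees each
    return [(i * width, (i + 1) * width) for i in range(num_segments)]


def peaks_and_valleys(profile):
    """Return indices of strict local maxima and minima of a 1-D intensity profile."""
    peaks, valleys = [], []
    for i in range(1, len(profile) - 1):
        if profile[i - 1] < profile[i] > profile[i + 1]:
            peaks.append(i)
        elif profile[i - 1] > profile[i] < profile[i + 1]:
            valleys.append(i)
    return peaks, valleys


# Hypothetical intensity samples along one radial segment.
profile = [10, 40, 25, 5, 30, 60, 20]
peaks, valleys = peaks_and_valleys(profile)
print(len(segment_angles()), peaks, valleys)
```

A real system would sample the profile from the iris image along each segment; here the profile is supplied directly to keep the sketch self-contained.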

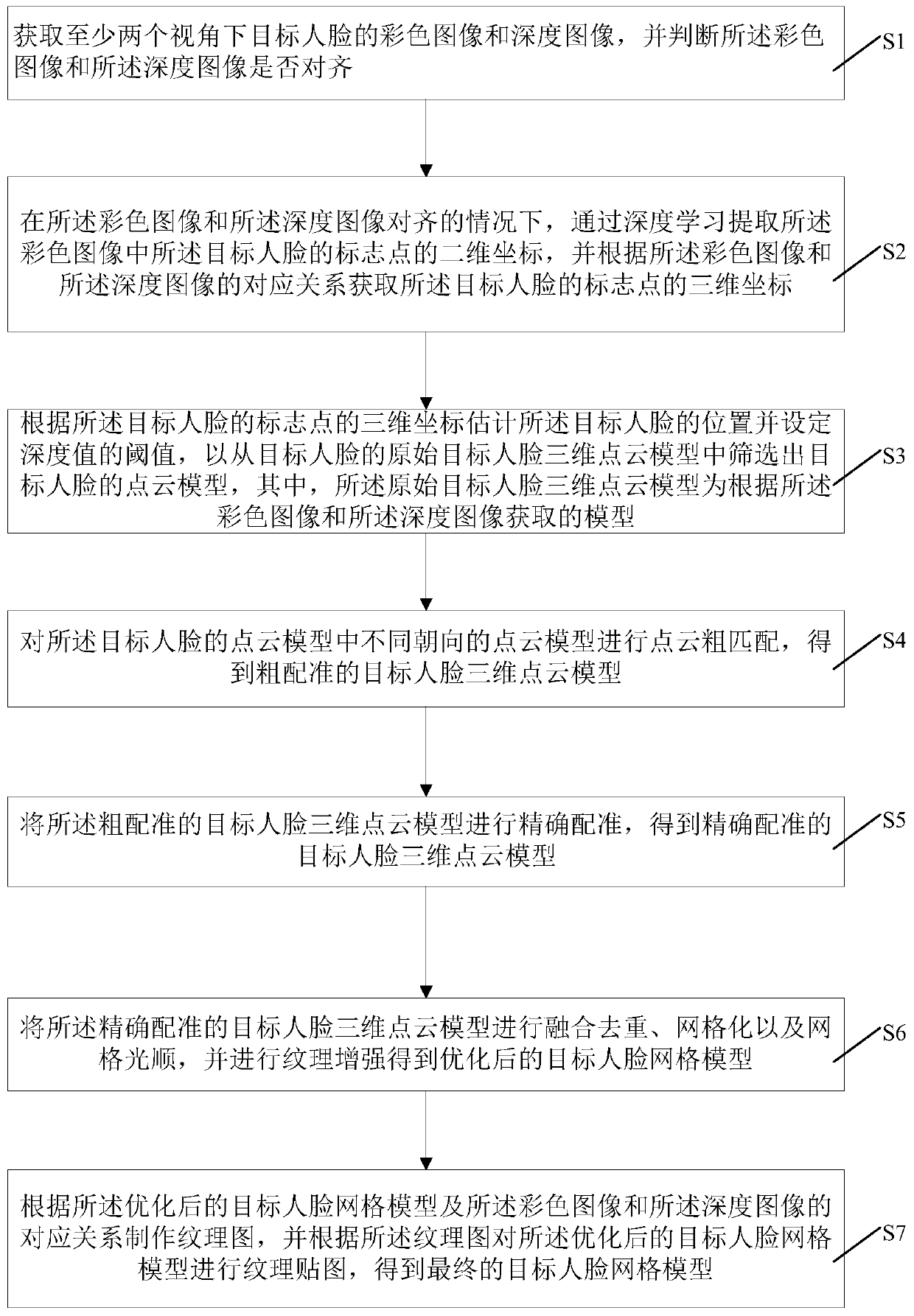

Three-dimensional face reconstruction method and system

The invention provides a three-dimensional face reconstruction method and system. The method comprises the steps of: obtaining a color image and a depth image of a target face under at least two visual angles, and judging whether the color image and the depth image are aligned; acquiring three-dimensional coordinates of the mark points of the target face and an original three-dimensional point cloud model of the target face; screening out a point cloud model of the target face; carrying out rough point cloud matching to obtain a roughly registered three-dimensional point cloud model of the target face; performing accurate registration to obtain an accurately registered three-dimensional point cloud model of the target face; carrying out fusion deduplication, gridding and grid fairing, and then performing texture enhancement to obtain an optimized target face grid model; and making a texture map and performing texture mapping on the optimized target face grid model to obtain a final target face grid model. The reconstructed face model is of higher quality and closer to the appearance of a real face.

Owner:新拓三维技术(深圳)有限公司

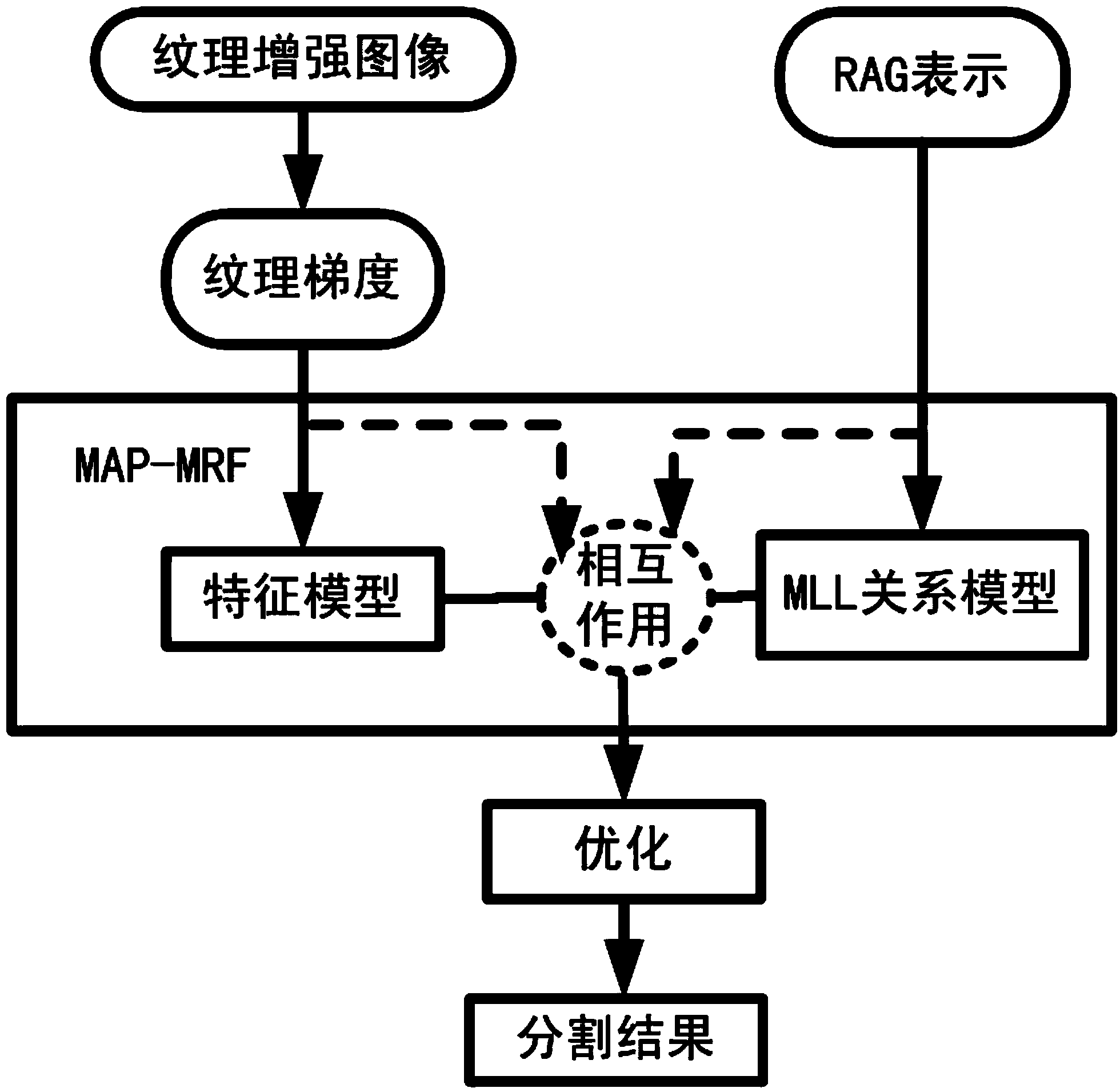

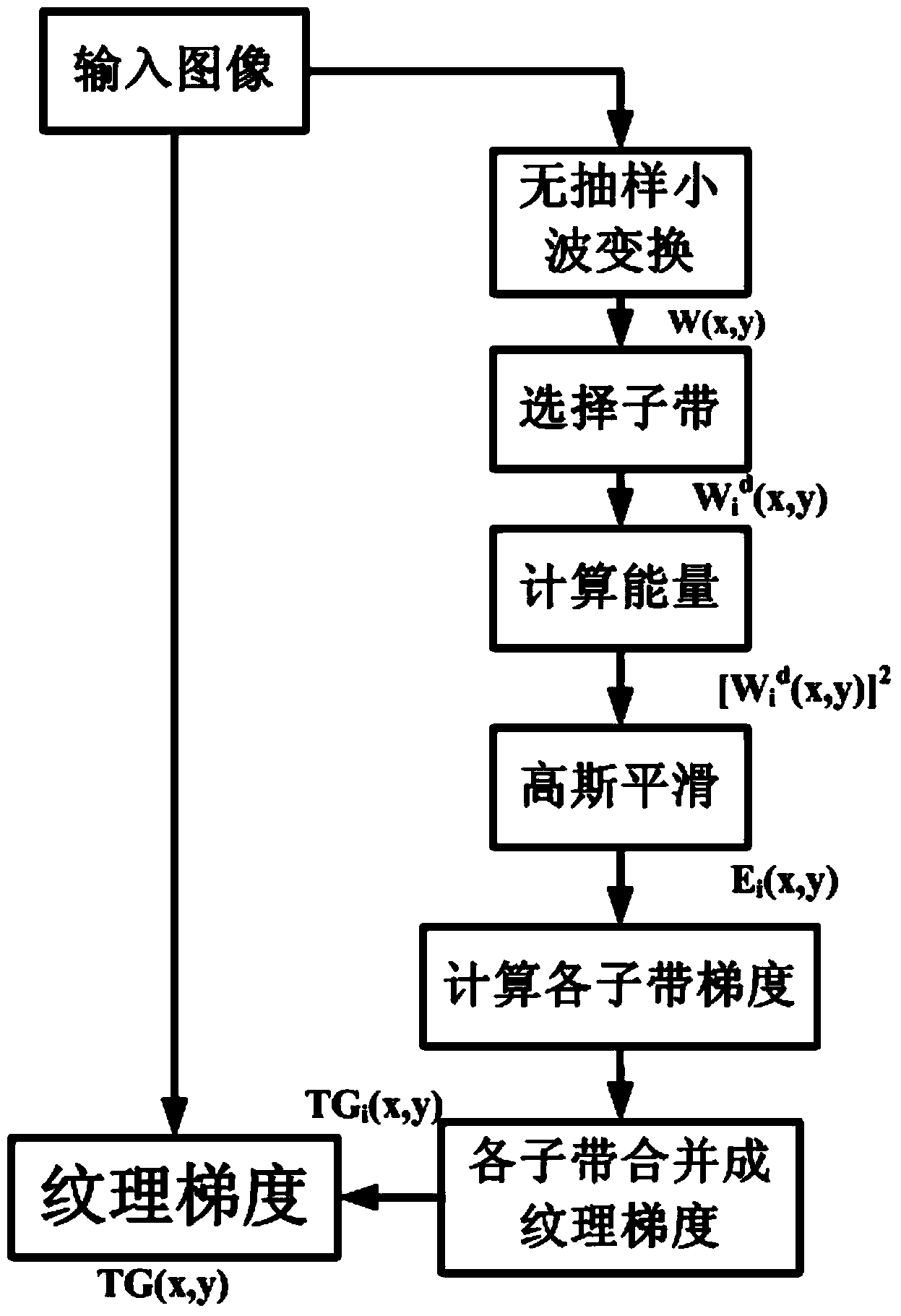

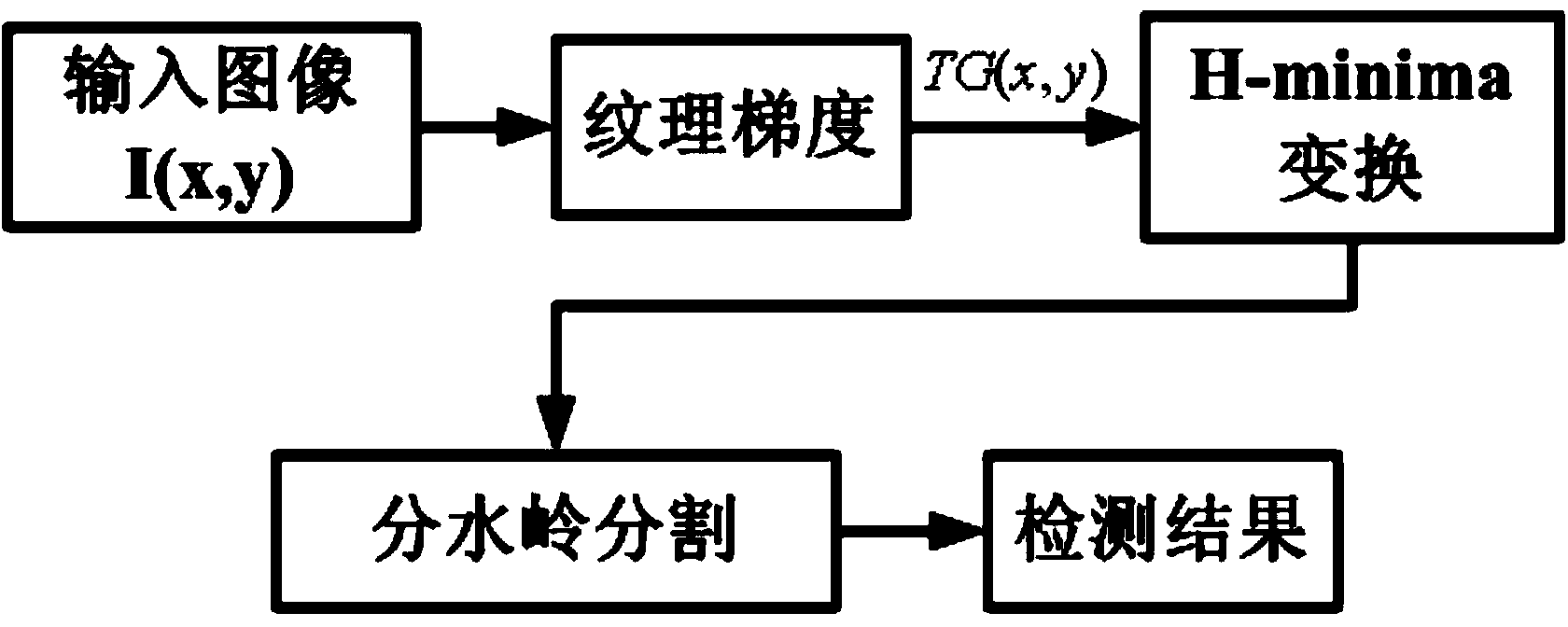

Textile defect detecting algorithm based on texture gradients

Inactive | CN103413314A | Quality improvement | Improve accuracy | Image enhancement | Image analysis | Non local | Texture enhancement

The invention discloses a textile defect detecting algorithm based on texture gradients. Firstly, texture gradients of sub-band characteristics after wavelet transformation are calculated, marked watershed segmentation is carried out on the texture gradient image, and a textile defect detecting system based on the texture gradients is preliminarily completed. Secondly, non-local average filtering is used to eliminate imaging noise in the textile image and the influence of other uncorrelated details, so that useful texture details are highlighted, preprocessing is completed, and texture enhancement is achieved. Finally, on the basis of the texture enhancement and the texture gradients, an MRF model is used to extract the defect zone boundary; texture defects can also be extracted well by this method, and the drawback that a threshold value needs to be chosen manually in watershed marking is eliminated. The textile defect detecting algorithm based on the texture gradients can distinguish defects of textiles rapidly and accurately, is widely applicable, and helps improve the quality of the textiles.

Owner:HEFEI NORMAL UNIV

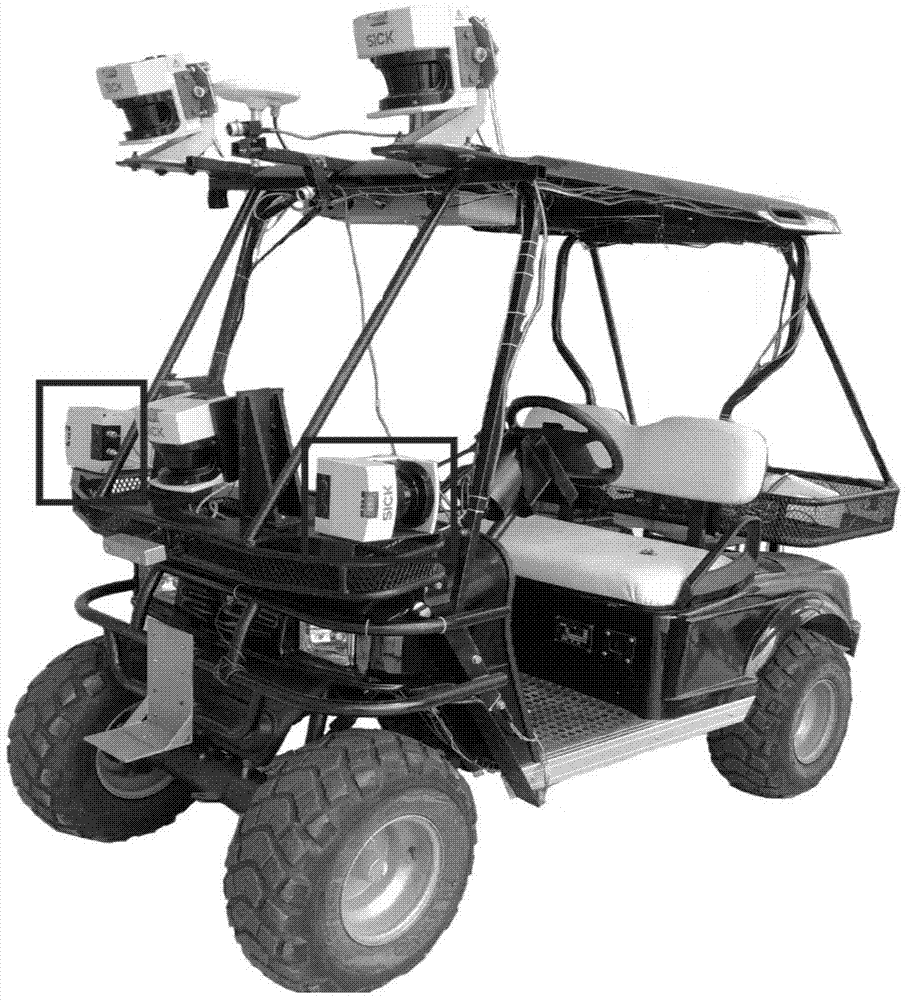

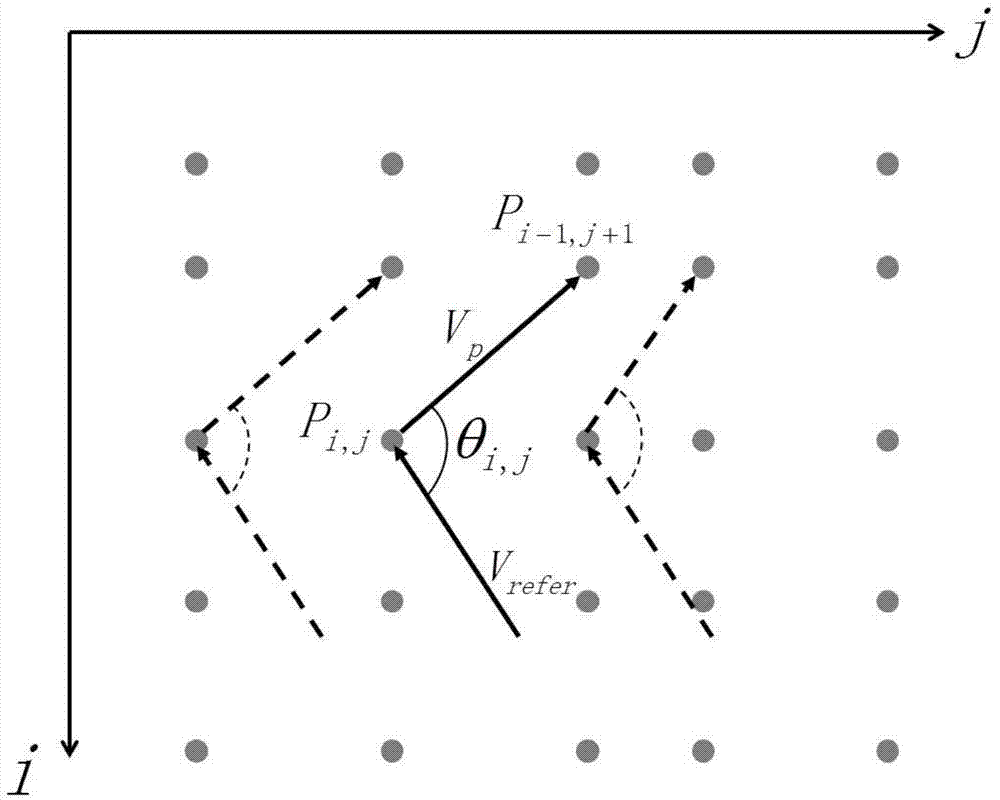

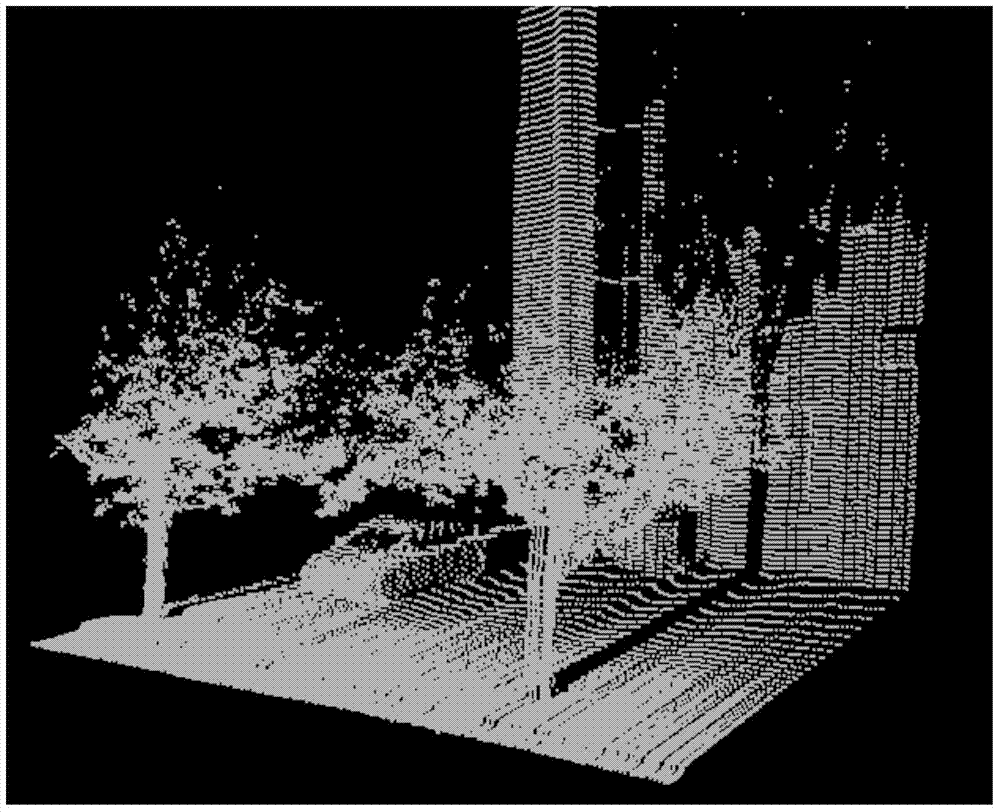

Scanning imaging method for three-dimensional environment in vehicle-mounted two-dimensional laser movement

Active | CN104268933A | For dynamic applications | Image analysis | 3D-image rendering | Reference vector | Laser scanning

The invention discloses a scanning imaging method for the three-dimensional environment in vehicle-mounted two-dimensional laser movement, belongs to the technical field of ranging laser scanning imaging and autonomous environment awareness of unmanned vehicles, and provides a calculation model of a texture enhancement graph. The calculation model can achieve three-dimensional environment scanning imaging based on vehicle-mounted two-dimensional laser ranging data, effectively overcomes image blurring caused by irregular movement of a vehicle body, can obtain a clear two-dimensional image description of three-dimensional point cloud data, and supplements three-dimensional space ranging information. According to the texture enhancement graph, the distinction degree of the gray level of pixels of a generated image is the maximum by calculating out an optimal reference vector, texture details of objects in a scene are highlighted, and therefore scene division, object recognition and scene understanding based on laser scanning data are effectively supported. The scanning imaging method can be applied to the artificial intelligence field of outdoor scene understanding, environment cognition and the like of unmanned vehicles.

Owner:DALIAN UNIV OF TECH

Polyp segmentation method and device, computer equipment and storage medium

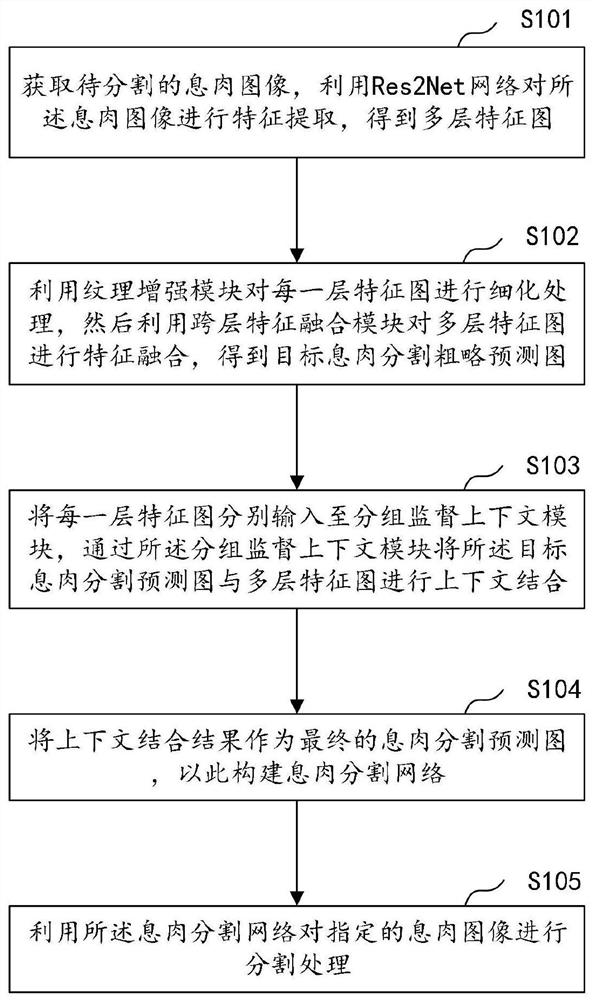

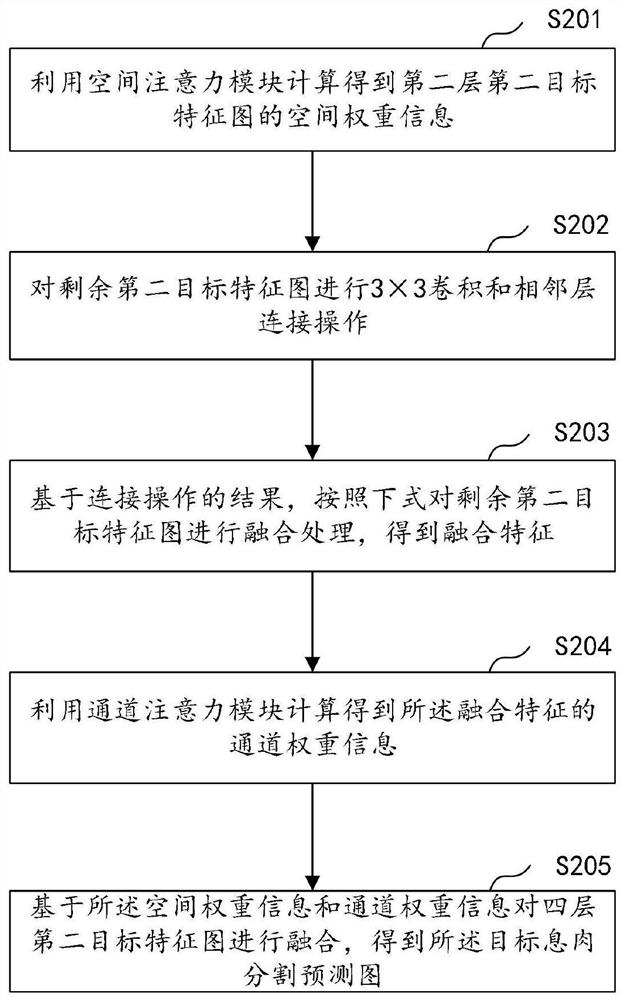

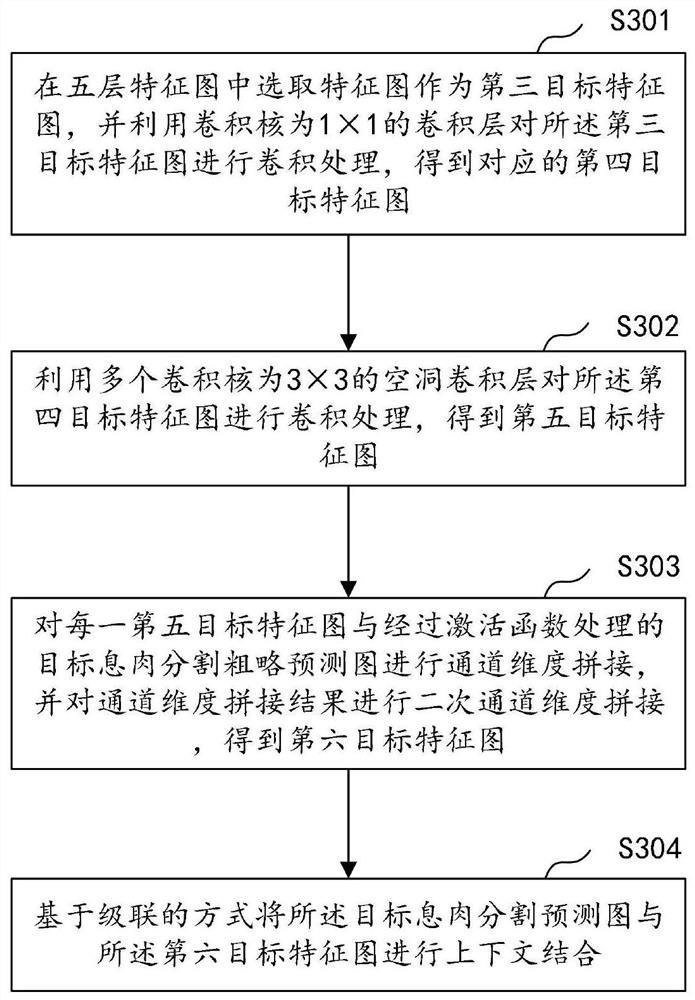

Active | CN113538313A | Improve Segmentation Accuracy | Rich polyp features | Image enhancement | Image analysis | Texture enhancement | Engineering

The invention discloses a polyp segmentation method and device, computer equipment and a storage medium, and the method comprises the steps: obtaining a to-be-segmented polyp image, carrying out the feature extraction of the polyp image through a Res2Net network, and obtaining a multi-layer feature map; performing refining processing on each layer of feature map by using a texture enhancement module, and then performing feature fusion on multiple layers of feature maps by using a cross-layer feature fusion module to obtain a target polyp segmentation rough prediction map; inputting each layer of feature map into a grouping supervision context module, and performing context combination on the target polyp segmentation rough prediction map and the multi-layer feature map through the grouping supervision context module; taking a context combination result as a final polyp segmentation prediction map so as to construct a polyp segmentation network; and performing segmentation processing on the polyp image by using the polyp segmentation network. According to the method, richer polyp features are extracted by considering information complementation between hierarchical feature maps and feature fusion under multiple views, so that the segmentation precision of the polyp image is improved.

Owner:SHENZHEN UNIV

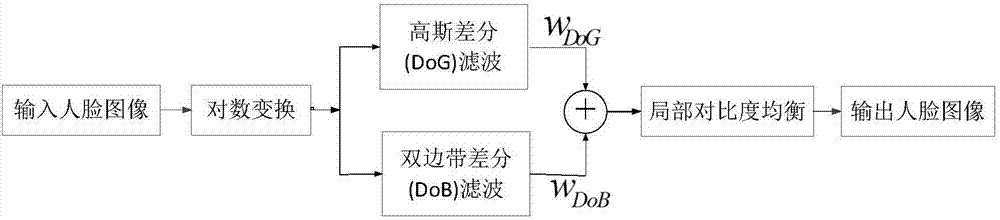

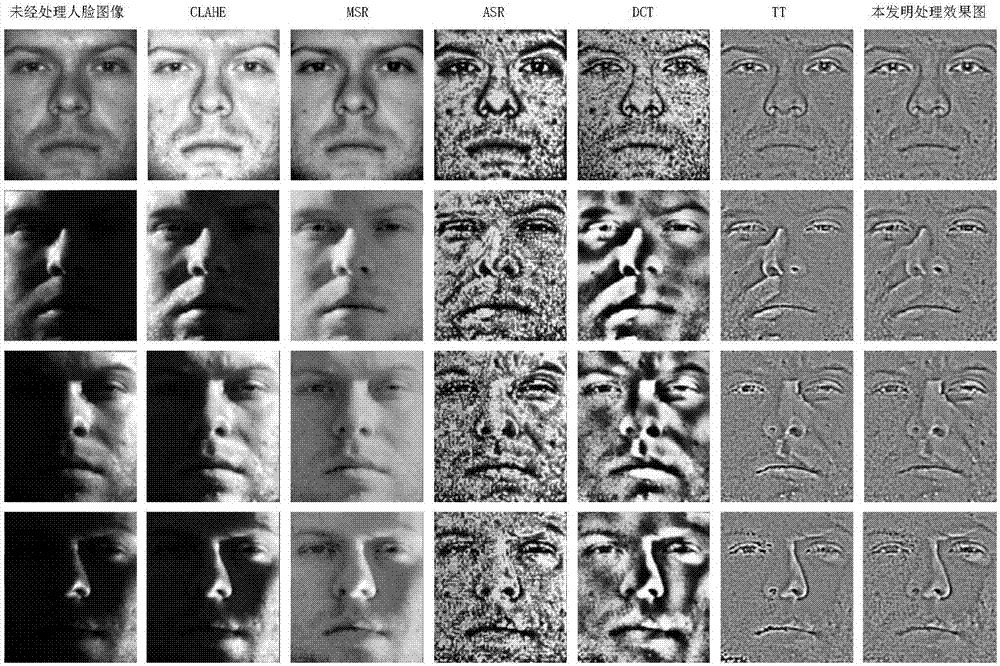

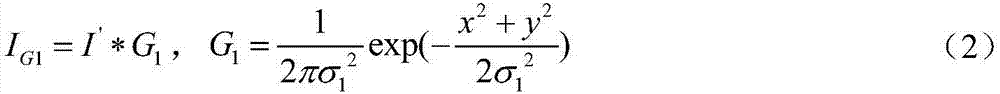

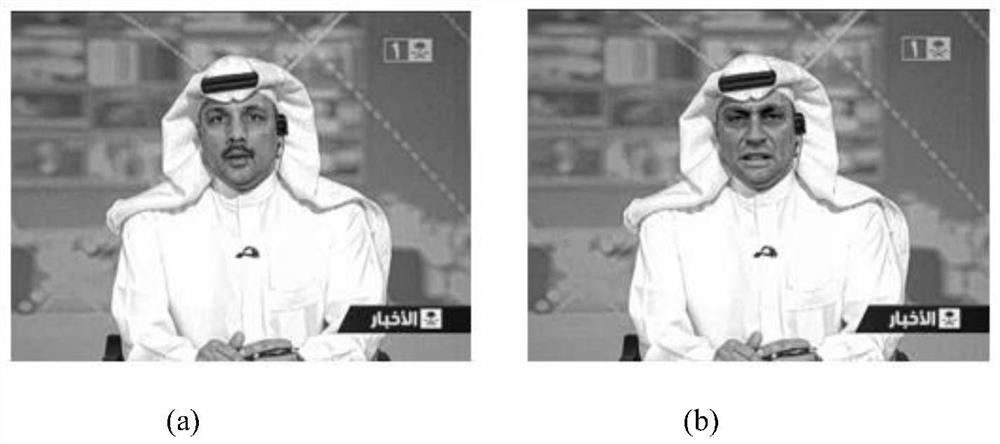

Illumination-robust facial image local texture enhancement method

Active | CN107392866A | Reduce loss | Alleviate the problem of contrast imbalance | Image enhancement | Image analysis | Pattern recognition | Texture enhancement

The present invention relates to an illumination-robust facial image local texture enhancement method, which includes the following steps: logarithmic transformation is performed on the gray values of an inputted original face image I to obtain a logarithmic transformation result image I'; Gaussian differential filtering and bilateral differential filtering are performed on I' to obtain differential filtering result images I_DoG and I_DoB; image information fusion is performed on I_DoG and I_DoB to obtain a fusion result image I"; the fusion result image I" is divided into sub-image blocks, grayscale-equalization processing is performed on each sub-image block by means of a mean normalization method, and the sub-image blocks are spliced according to their division positions; and the pixel gray value range of the spliced image is compressed by means of a hyperbolic tangent function, and the image is outputted. With the method of the invention, facial images captured under different illumination conditions can be processed, illumination influence can be eliminated, local face texture information can be enhanced, and recognition accuracy in face recognition applications can be improved. The method has the advantages of low algorithmic complexity and high robustness to lighting.

Owner:WUHAN UNIV OF SCI & TECH
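
Three of the steps in the abstract above (logarithmic transformation, per-block mean normalization, hyperbolic tangent compression) can be sketched in a few lines. This is a simplified illustration under stated assumptions: the differential filtering and fusion steps are omitted, "mean normalization" is assumed to mean dividing each block by its own mean, `log(1 + v)` is used to avoid `log(0)`, and all function names are hypothetical.

```python
import math


def log_transform(image):
    """Apply log(1 + v) to every gray value (the +1 is an assumption to avoid log(0))."""
    return [[math.log1p(v) for v in row] for row in image]


def mean_normalize_blocks(image, block):
    """Divide every block x block tile by its own mean (assumed normalization)."""
    h, w = len(image), len(image[0])
    out = [row[:] for row in image]
    for r0 in range(0, h, block):
        for c0 in range(0, w, block):
            cells = [(r, c)
                     for r in range(r0, min(r0 + block, h))
                     for c in range(c0, min(c0 + block, w))]
            mean = sum(image[r][c] for r, c in cells) / len(cells)
            for r, c in cells:
                out[r][c] = image[r][c] / mean if mean else 0.0
    return out


def tanh_compress(image, scale=1.0):
    """Compress the gray value range with a hyperbolic tangent."""
    return [[math.tanh(v / scale) for v in row] for row in image]


# Left half dimly lit, right half brightly lit (hypothetical gray values).
image = [[10, 10, 200, 200],
         [10, 10, 200, 200]]
result = tanh_compress(mean_normalize_blocks(log_transform(image), block=2))
print(result)
```

In this toy input both halves are flat, so after block normalization they map to the same output value, which illustrates how per-block normalization removes a difference that is due to illumination alone.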

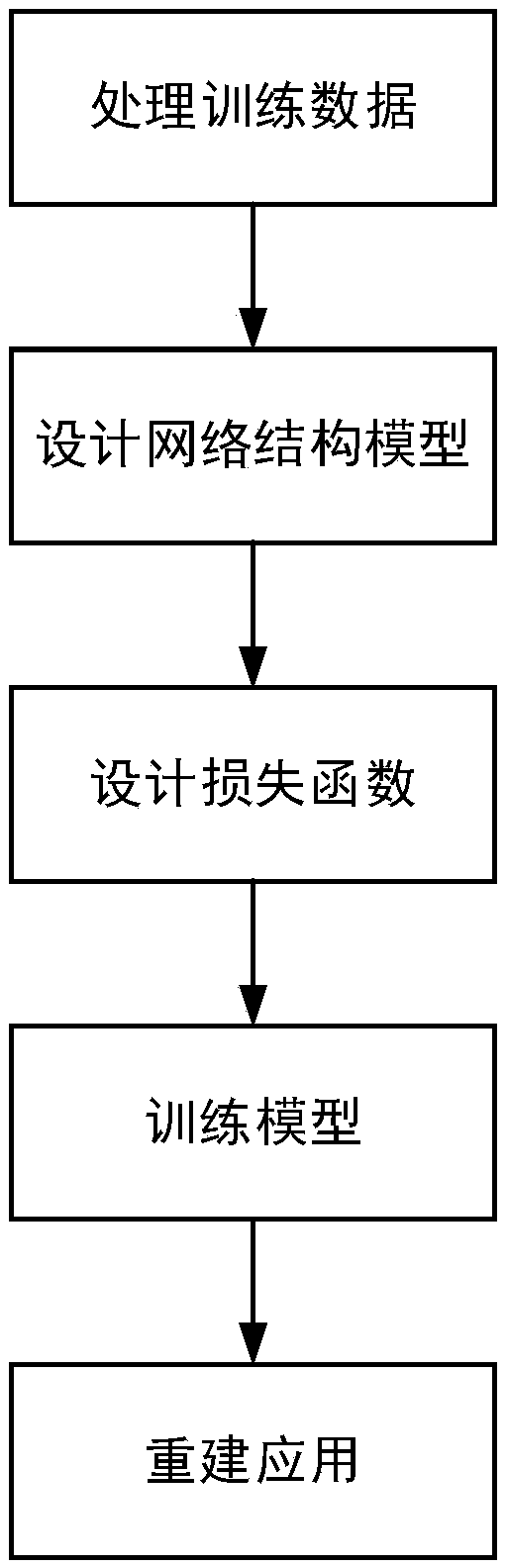

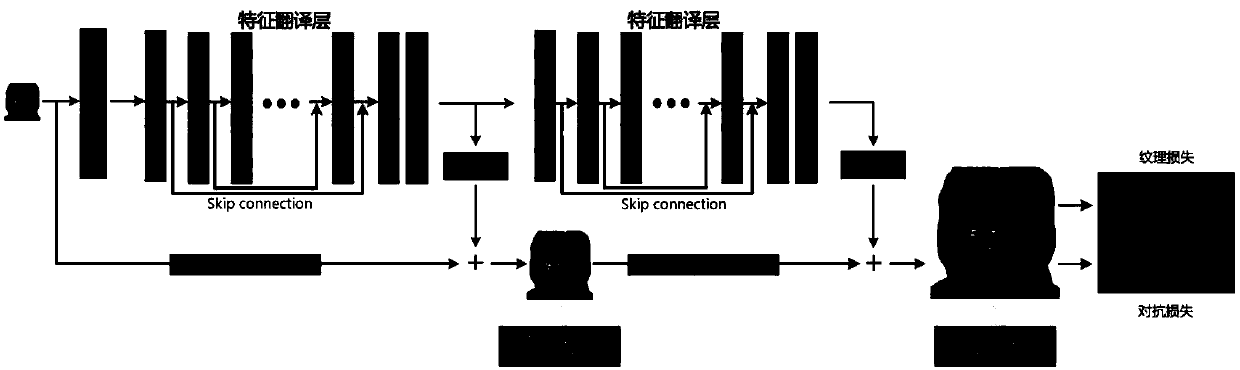

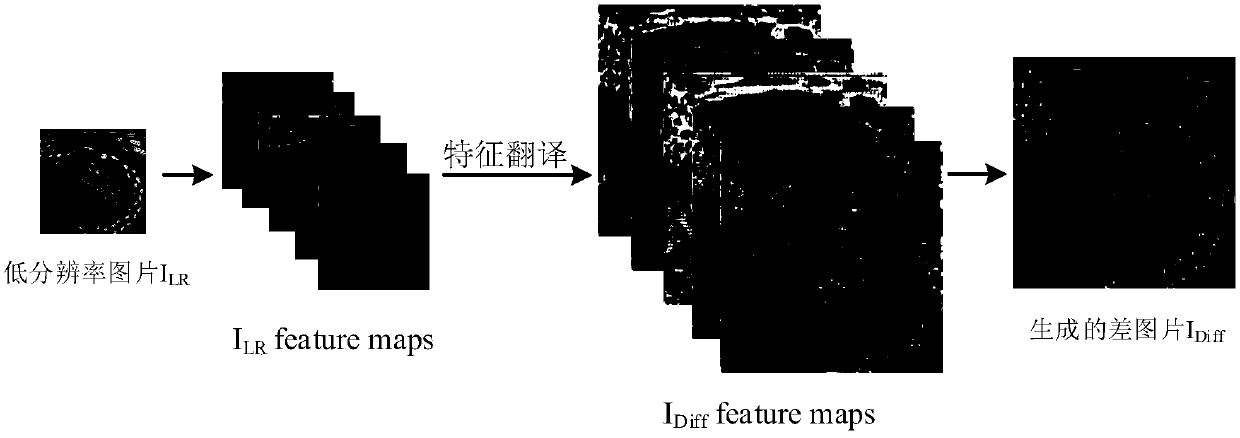

A picture texture enhancement super-resolution method based on a deep feature translation network

Active | CN109671022A | Easy to complete | Promise to be loyal | Geometric image transformation | Character and pattern recognition | Feature extraction | Image resolution

The invention relates to a picture texture enhancement super-resolution method based on a deep feature translation network, and belongs to the technical field of computer vision. The method comprises the steps of: firstly, processing the training data; then designing a network structure model which comprises a super-resolution reconstruction network, a fine-grained texture feature extraction network and a discrimination network; then designing a loss function for training the network by combining various loss functions, and training the network structure model with the processed training data to obtain a super-resolution reconstruction network with a texture enhancement function; and finally, inputting the low-resolution image into the super-resolution reconstruction network and performing reconstruction to obtain a high-resolution image. The method can extract picture texture information at a finer granularity and combines multiple loss functions; compared with other methods, it remains faithful to the original picture, recovers texture feature information, and produces a clearer picture. The method is described as suitable for any picture, with good results and good universality.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

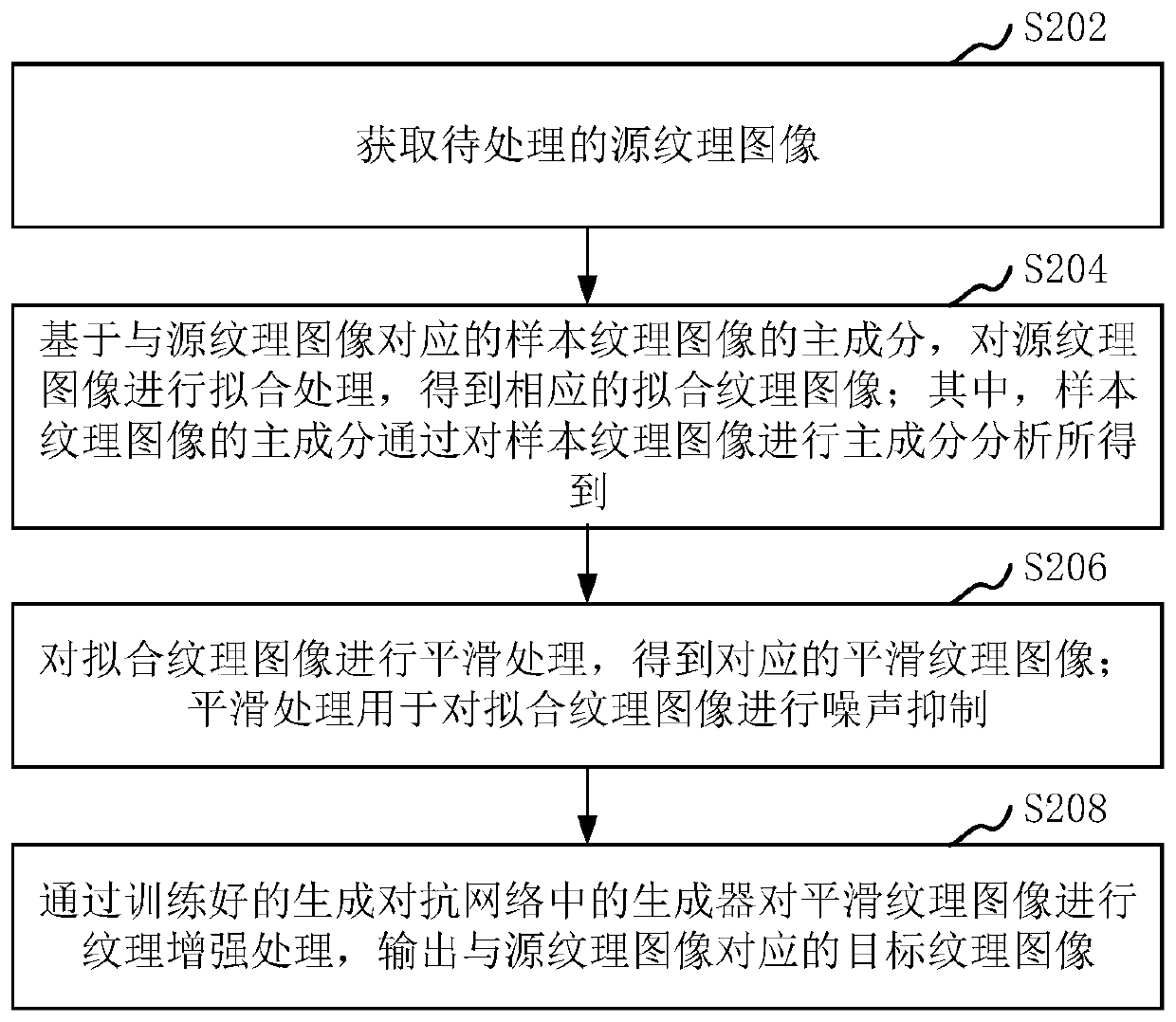

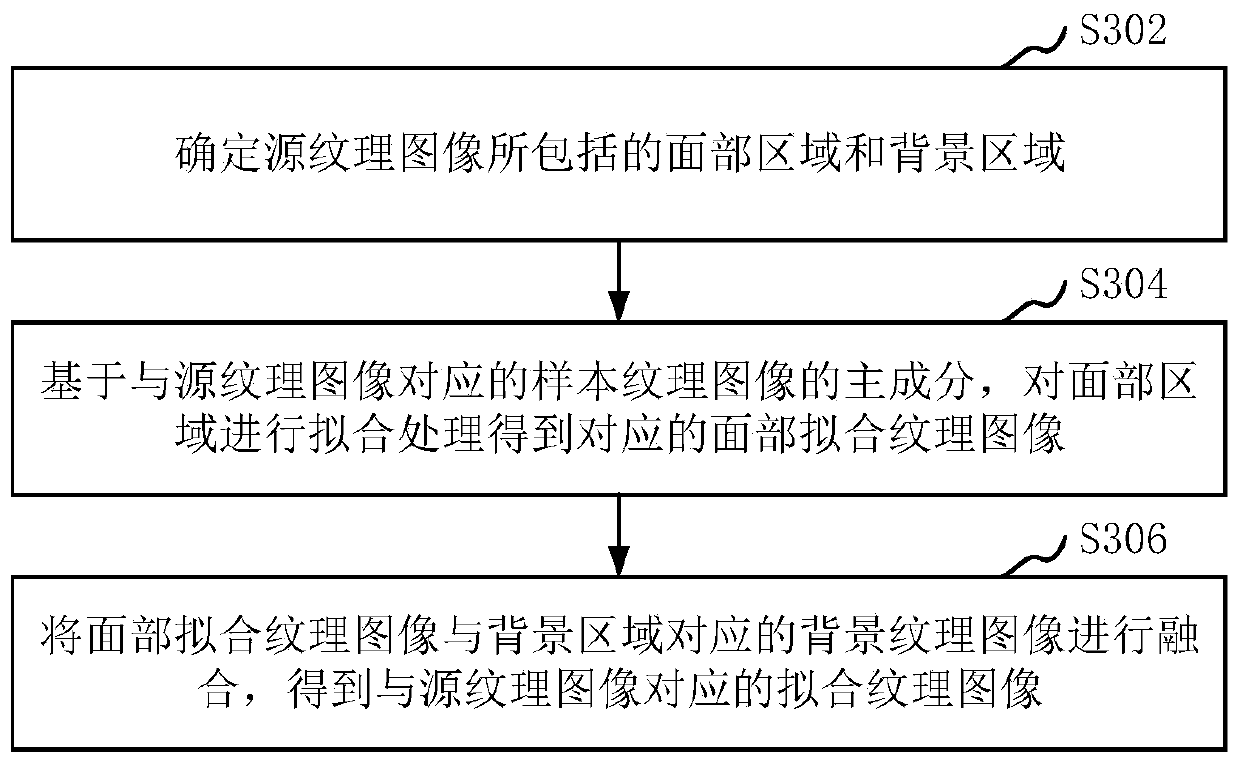

Texture enhancement method and device based on texture image, equipment and storage medium

Active | CN111445410A | Enhancement effect is good | Cancel noise | Image enhancement | Image analysis | Principal component analysis | Radiology

The invention relates to a texture enhancement method and device based on a texture image, computer equipment and a storage medium. The method comprises the steps of: obtaining a to-be-processed source texture image; performing fitting processing on the source texture image based on the principal component of the sample texture image corresponding to the source texture image to obtain a corresponding fitting texture image, wherein the principal component of the sample texture image is obtained by performing principal component analysis on the sample texture image; carrying out smoothing processing on the fitting texture image to obtain a corresponding smooth texture image, wherein the smoothing processing is used for noise suppression on the fitting texture image; and performing texture enhancement processing on the smooth texture image through a generator in a generative adversarial network, and outputting a target texture image corresponding to the source texture image. By adopting the method, the texture enhancement effect can be greatly improved.

Owner:TENCENT TECH (SHENZHEN) CO LTD

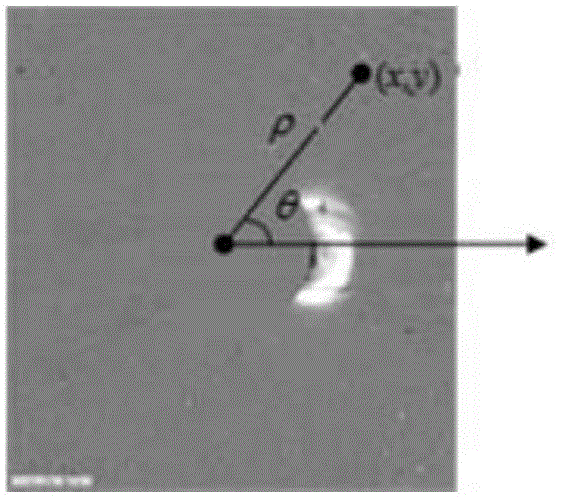

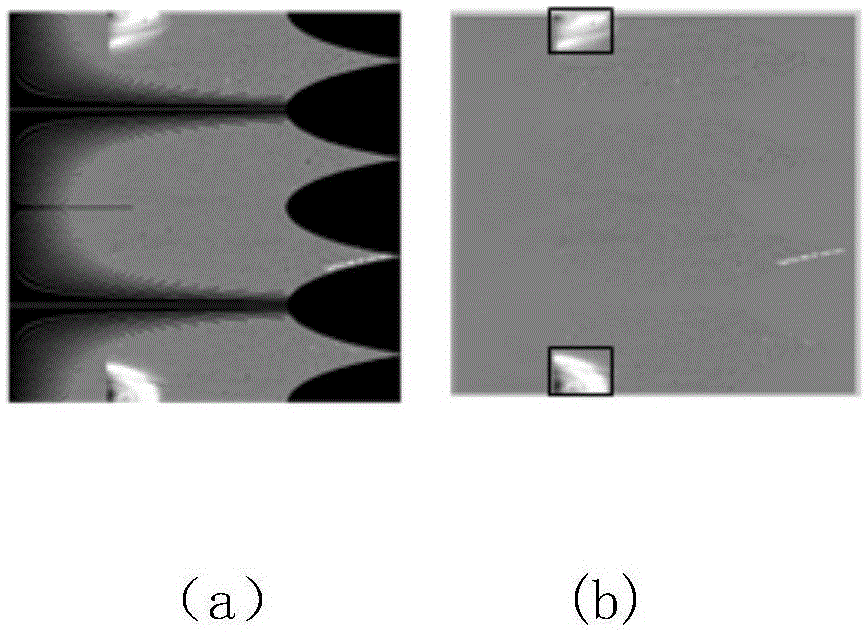

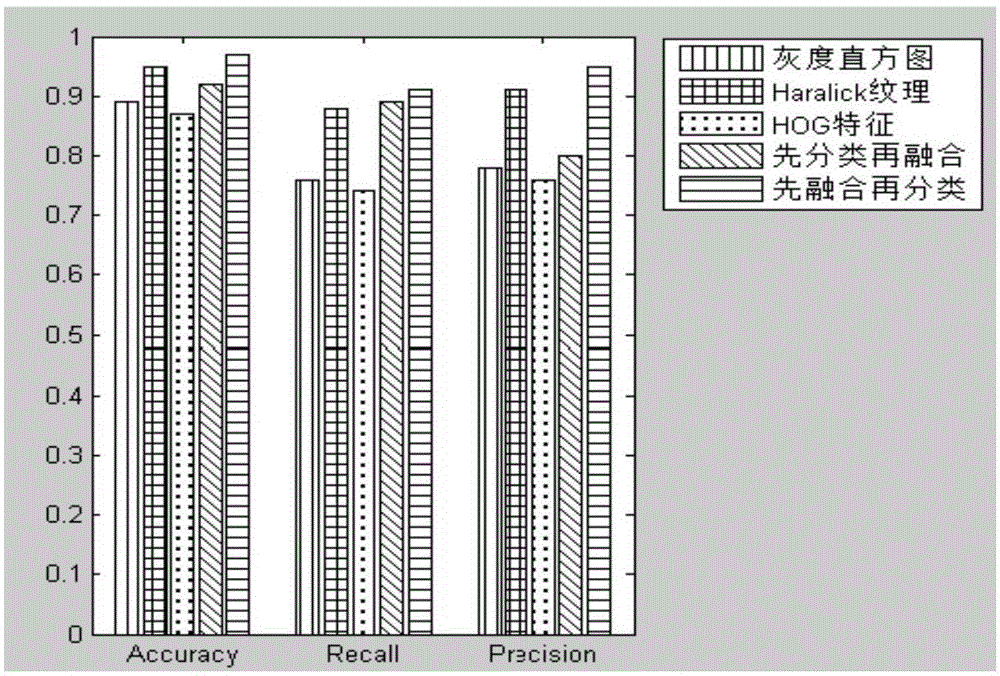

Coronal mass ejection (CME) detection method based on multi-feature fusion

Inactive | CN105046259A | Describe well | High precision | Character and pattern recognition | Coronal mass ejection | Texture enhancement

The invention discloses a coronal mass ejection (CME) detection method based on multi-feature fusion. Through segmenting a CME differential image, CME detection is modeled to be the classification problem of a brightest block in the differential image. The method comprises the following steps: firstly, an original image is converted into polar coordinates for display; secondly, since the typical representation of CME is a bright and complex-texture enhancement structure, the brightest block of the CME is searched for, the brightest block is taken as a representative of the image, the gray scale, texture and HOG features of the brightest block are extracted; and finally, by taking the extracted gray-scale feature, the texture and the HOG feature as a basis, taking a decision tree as a basic classifier, and finishing CME detection by use of an integrated decision tree. An experiment result shows that the CME detection algorithm based on the multi-feature fusion, brought forward by the invention, can obtain a quite good CME detection result.

Owner:UNIV OF JINAN
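
The "brightest block" search in the abstract above can be sketched as a scan over tiles of a differential image. This is a minimal sketch under assumptions: the image is split into non-overlapping square tiles, brightness is the sum of gray values, and the name `brightest_block` is hypothetical; the abstract does not state how blocks are formed.

```python
def brightest_block(image, block):
    """Return (row, col, total) of the non-overlapping block x block tile with the largest sum."""
    h, w = len(image), len(image[0])
    best = None
    for r0 in range(0, h - block + 1, block):
        for c0 in range(0, w - block + 1, block):
            total = sum(image[r][c]
                        for r in range(r0, r0 + block)
                        for c in range(c0, c0 + block))
            if best is None or total > best[2]:
                best = (r0, c0, total)
    return best


# Hypothetical 4x4 differential image.
diff = [[0, 0, 1, 1],
        [0, 0, 1, 2],
        [5, 9, 0, 0],
        [7, 8, 0, 0]]
print(brightest_block(diff, 2))
```

The gray-scale, texture and HOG features described in the abstract would then be computed on the returned tile before classification.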

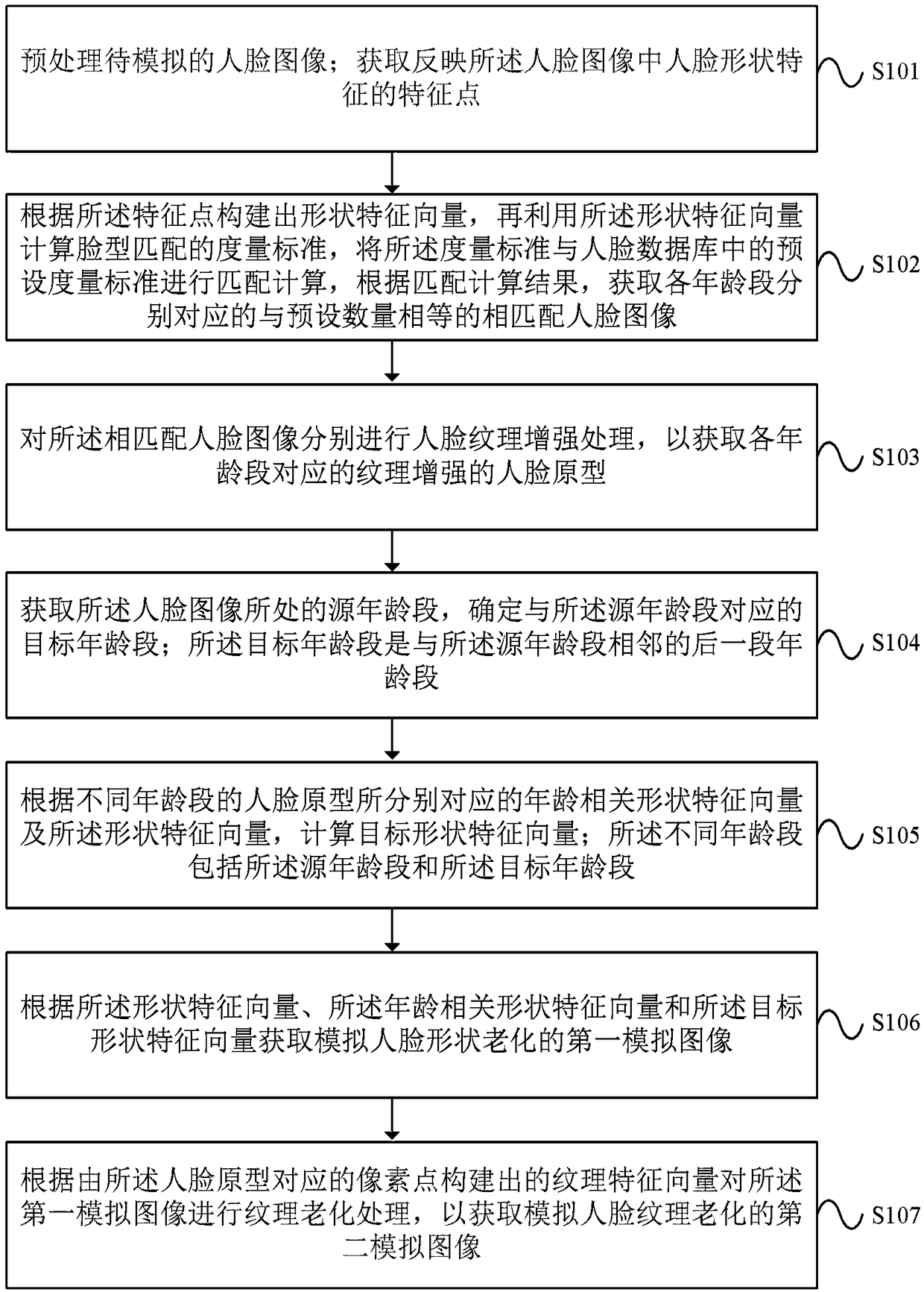

Method and device for simulating human face aging based on homologous continuity

Active | CN109002763A | Simulate the real | Simulate nature | Character and pattern recognition | 3D-image rendering | Feature vector | Transient state

The invention discloses a method and a device for simulating human face aging based on homologous continuity. A shape feature vector is constructed according to the feature points and a metric is calculated. The metric is matched against a preset metric to obtain, for each age group, a preset number of matching face images. A texture-enhanced face prototype is acquired for each age group; the source and target age ranges of the face image are determined; according to the shape feature vectors, the age-related shape feature vectors and the target shape feature vectors corresponding to the face prototype, a first simulated image simulating the aging of the face shape is obtained. According to the texture feature vector constructed from the pixel points corresponding to the face prototype, a second simulated image simulating the aging of the face texture is obtained. The device comprises an electronic device in which a processor and a memory communicate over a bus, and a non-transitory computer-readable storage medium. The device performs the method described above. The method and the device can simulate the aging of a human face realistically and naturally.

Owner:INST OF SEMICONDUCTORS - CHINESE ACAD OF SCI

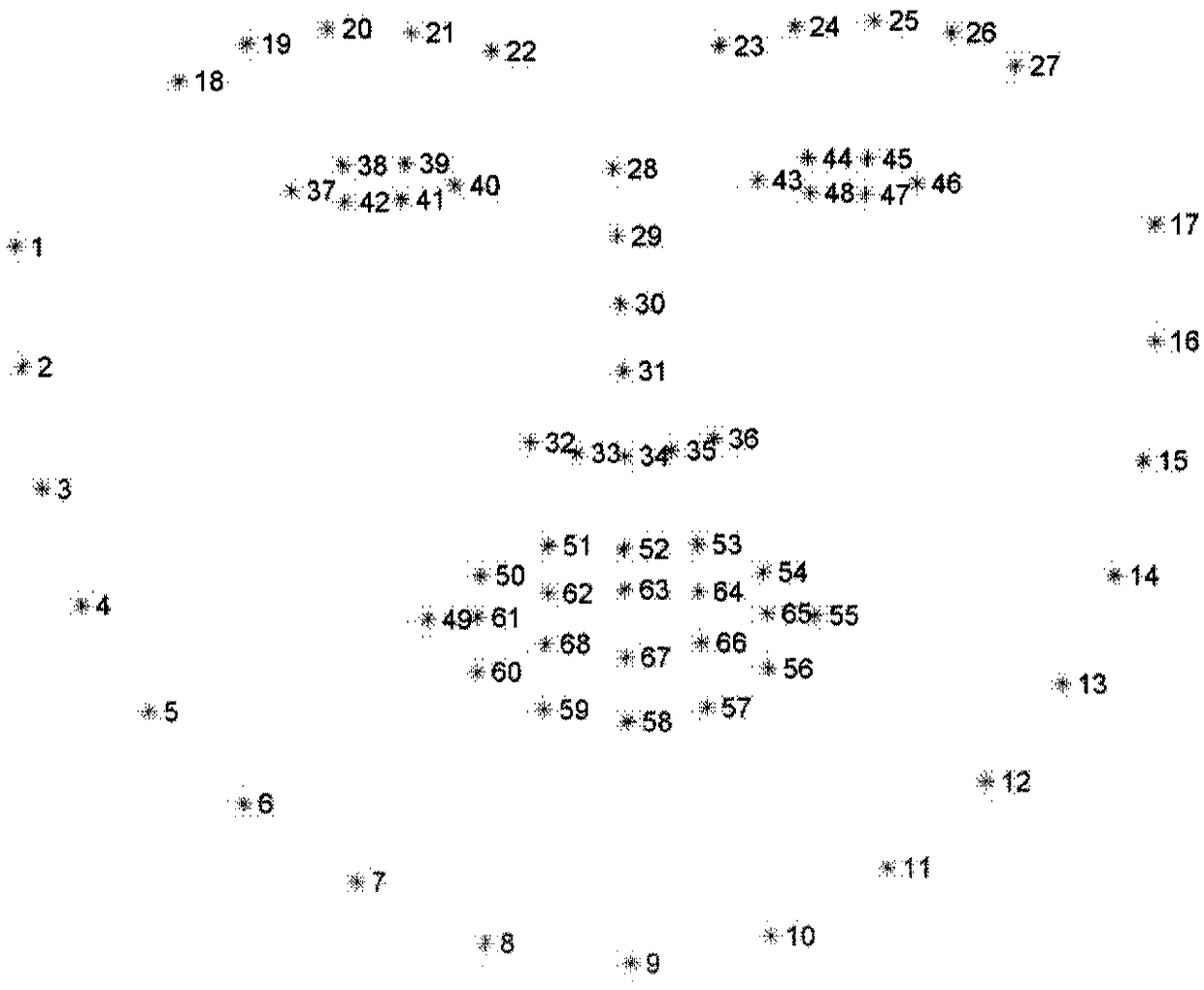

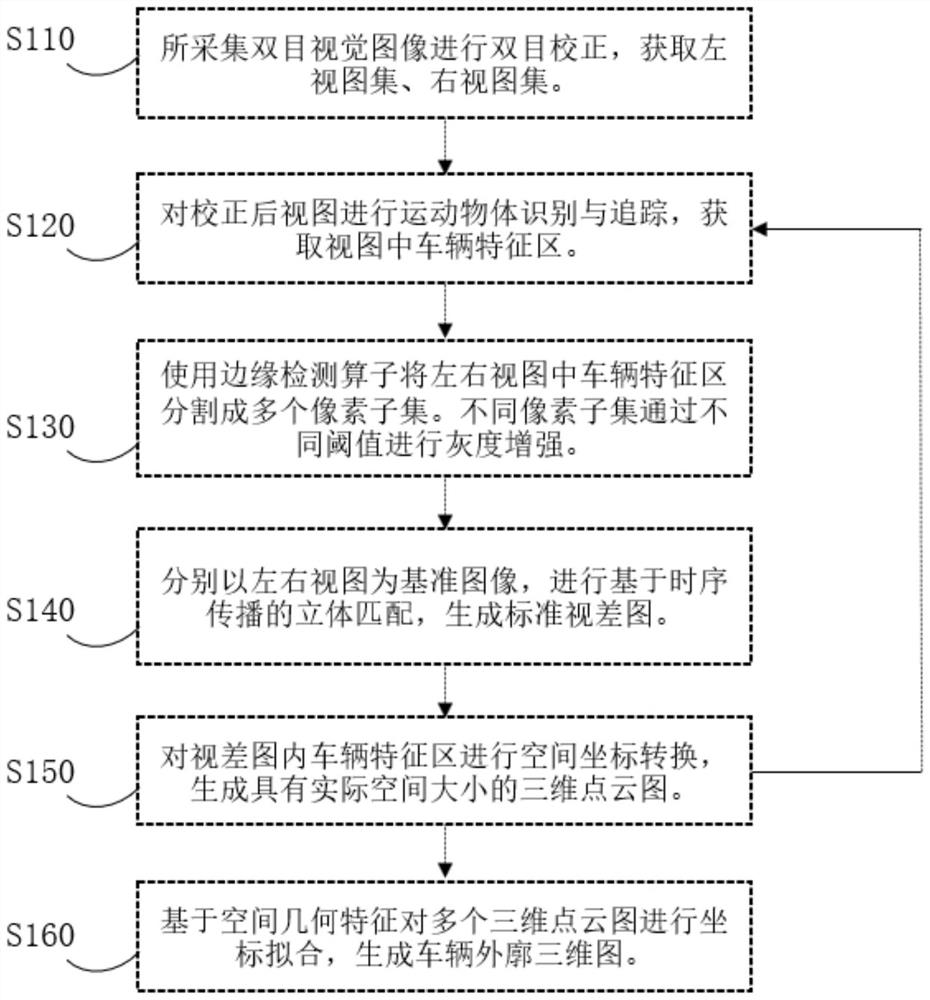

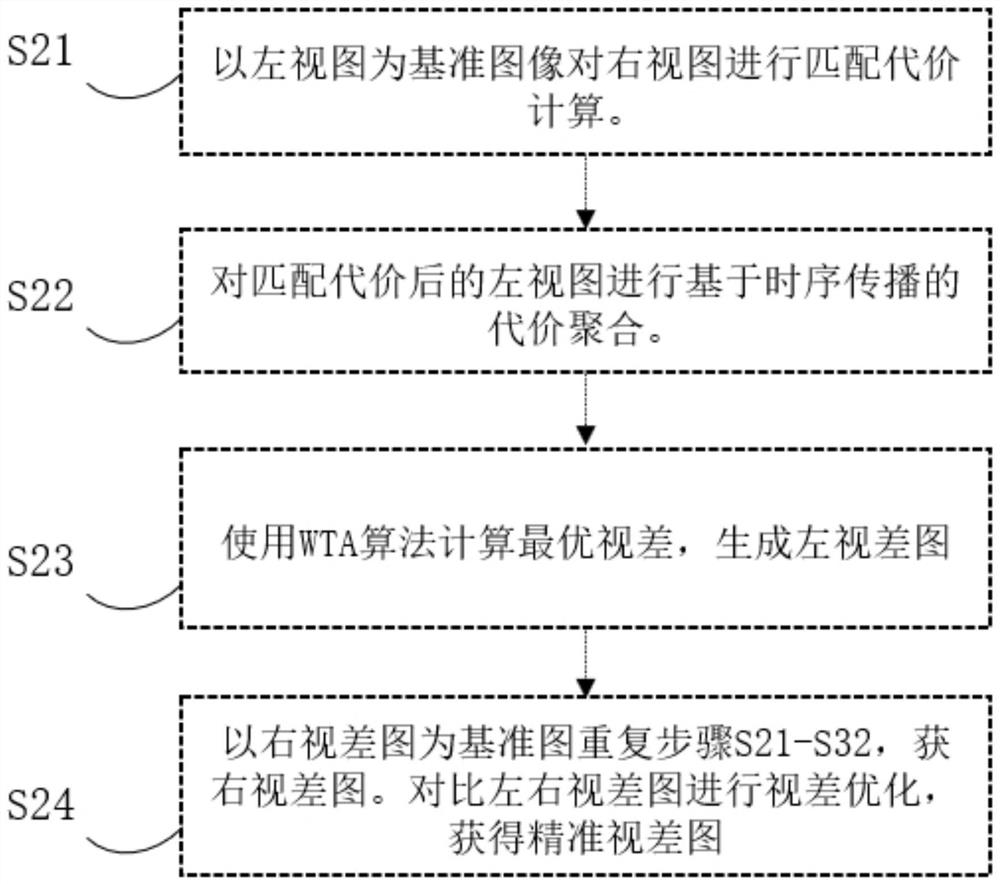

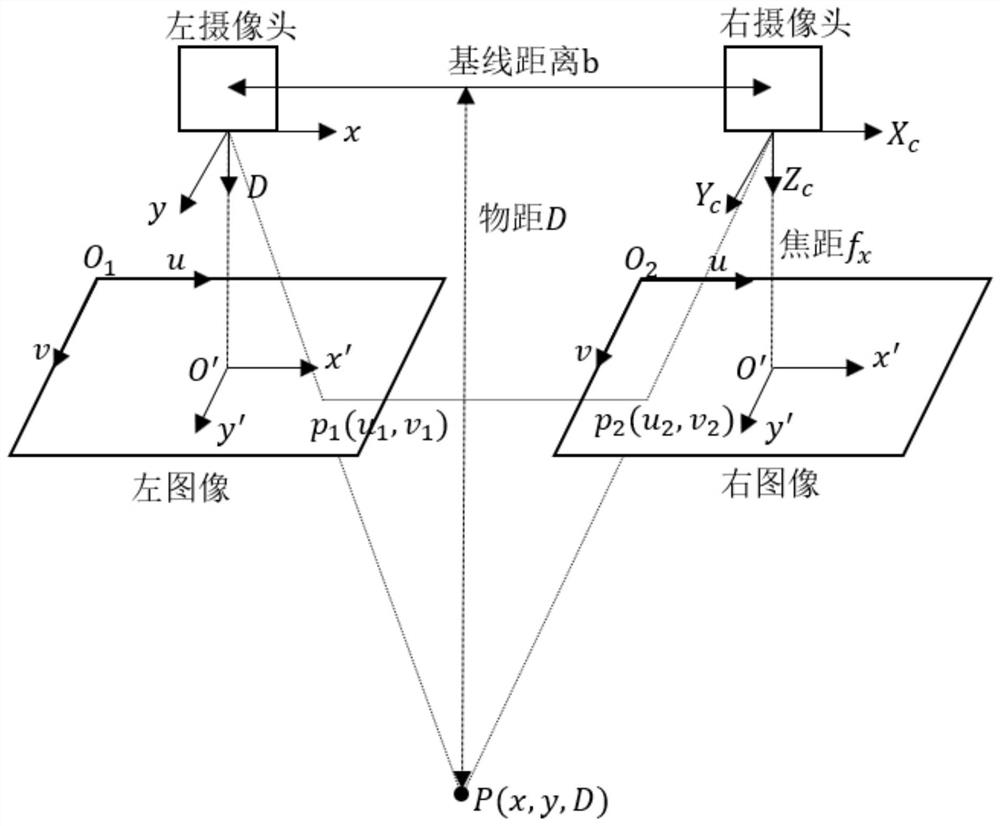

Method for detecting overall dimension of running vehicle based on binocular vision

Pending | CN112991369A | Solve the problem of incomplete contour measurement | Improve relevance | Image enhancement | Image analysis | Pattern recognition | Stereo matching

The invention discloses a method for detecting the overall dimension of a running vehicle based on binocular vision. The method comprises the following steps: calibrating and correcting a binocular camera; performing moving object recognition and tracking on the corrected view to obtain a vehicle feature region; enabling the identified vehicle surface to be subjected to texture enhancement processing, so that the problem of low detection precision of a weak texture surface is solved; based on vehicle driving scene characteristics, providing a stereo matching algorithm based on time sequence propagation to generate a standard disparity map, and improving the vehicle overall dimension measurement precision; performing three-dimensional reconstruction on the generated disparity map to generate a point cloud map; providing a space coordinate fitting algorithm, fitting a plurality of frames of point cloud images of the tracked vehicle, generating a standard vehicle overall dimension image. The problem that the overall dimension of the vehicle cannot be completely displayed through a single frame of point cloud image is solved. The method is not limited by the vehicle speed in measurement effect, high in measurement precision, wide in measurement range and low in cost. The binocular camera has the advantages of being flexible in structure, convenient to install and suitable for measurement of all road sections.

Owner:HUBEI UNIV OF TECH

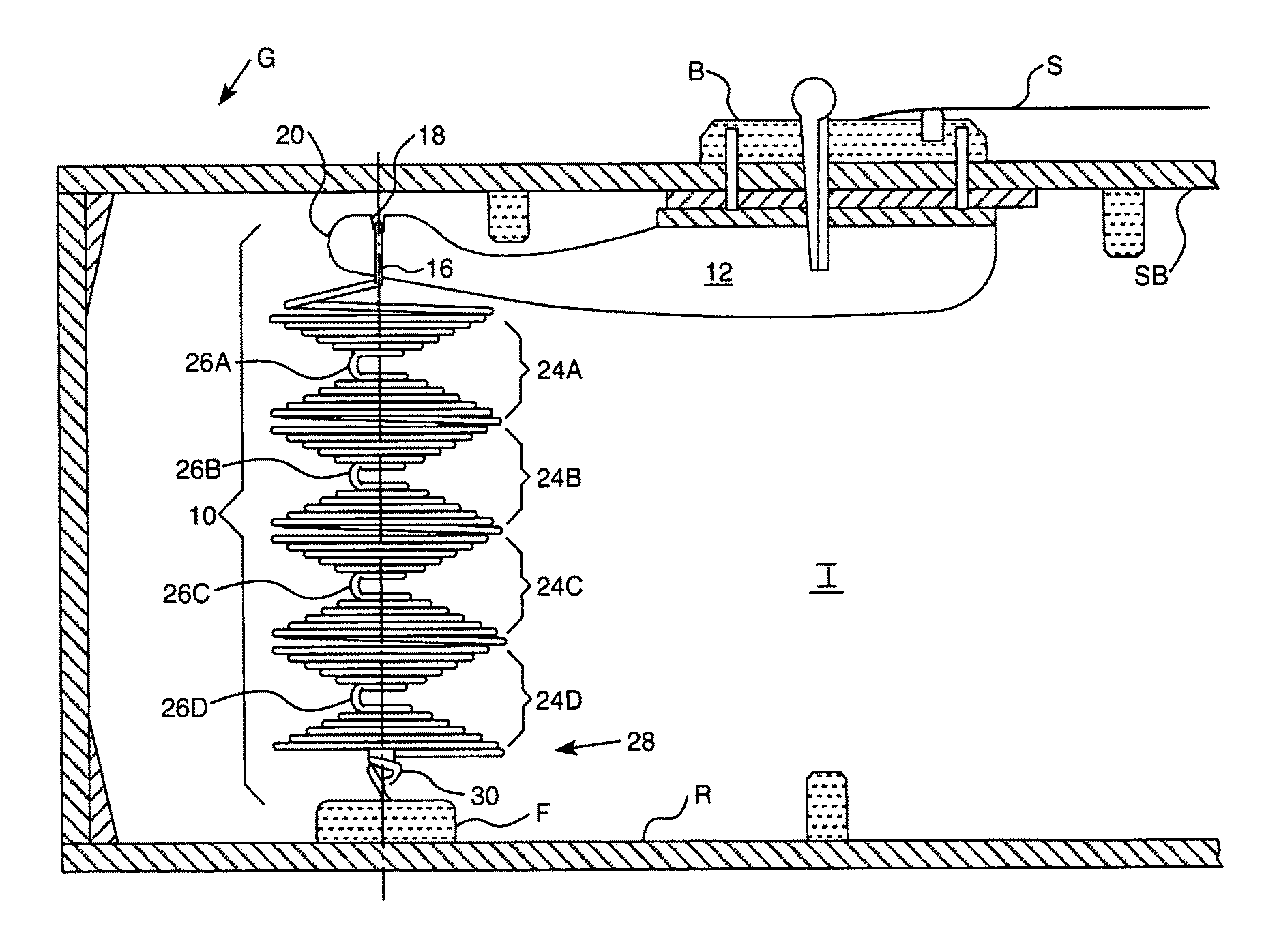

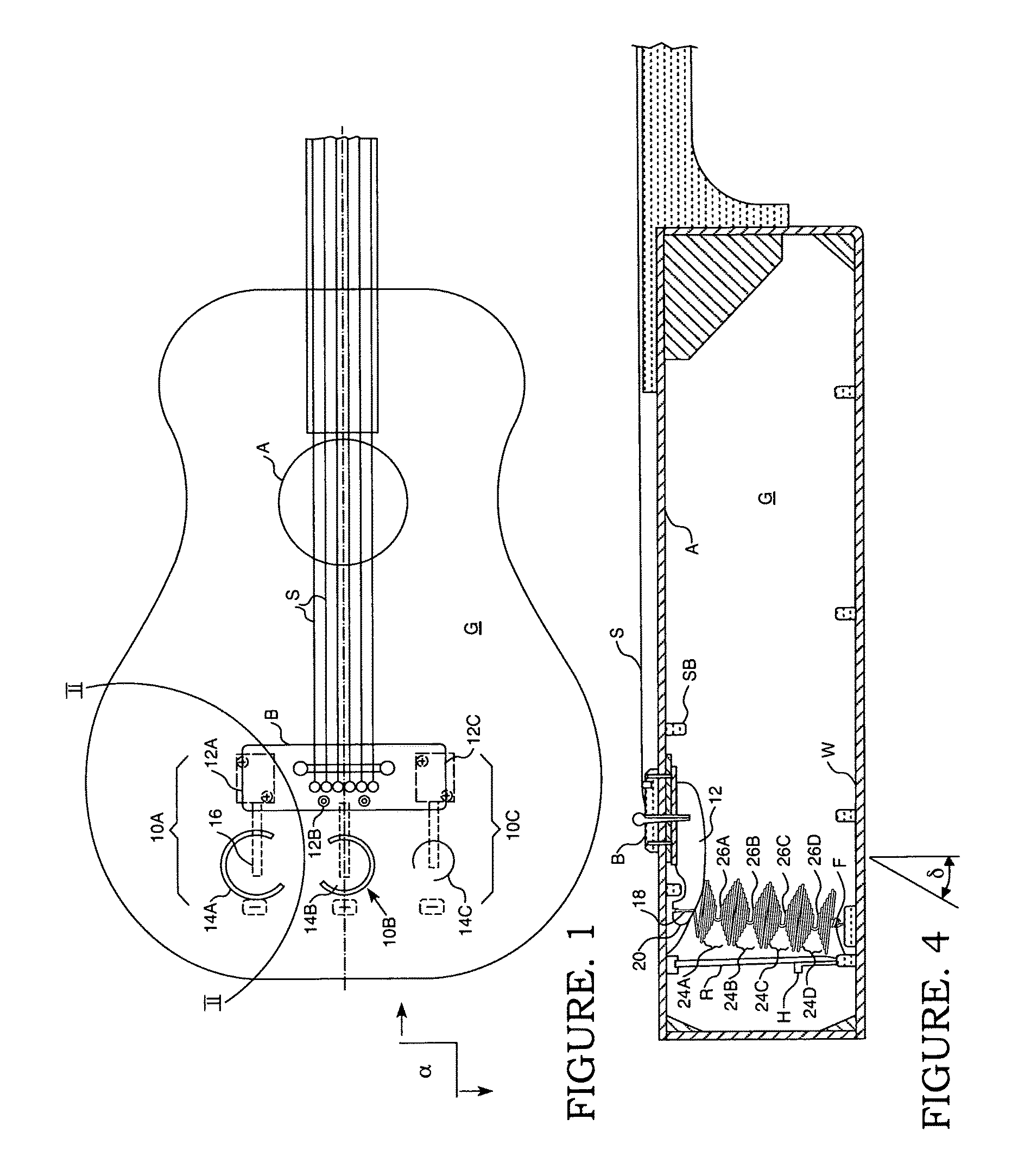

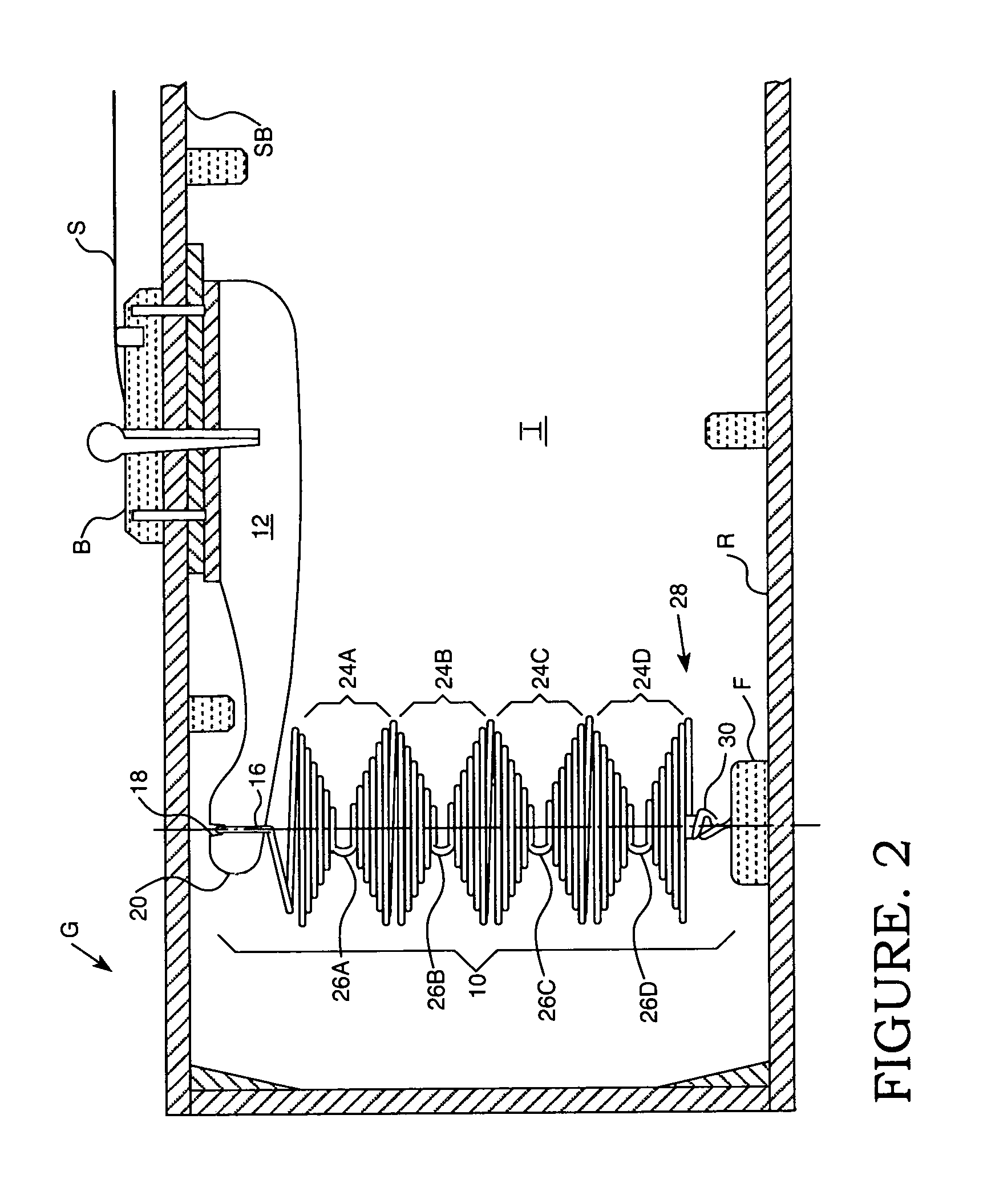

Organic sound texture enhancement and bridge strengthening system for acoustic guitars and other stringed instruments

A sound resonator device for internal connection to a guitar or other musical instrument that has strings tensioned over any type of bridge piece. The resonator device includes a torsion arm affixed to a sound board of the instrument from which is connected a plurality of involute coil sets, each made from a harmonically predetermined thickness (or gauge) of metal wire secured at its opposite end to the instrument interior.

Owner:MOORE DWAINE +2

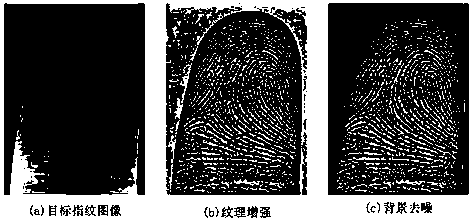

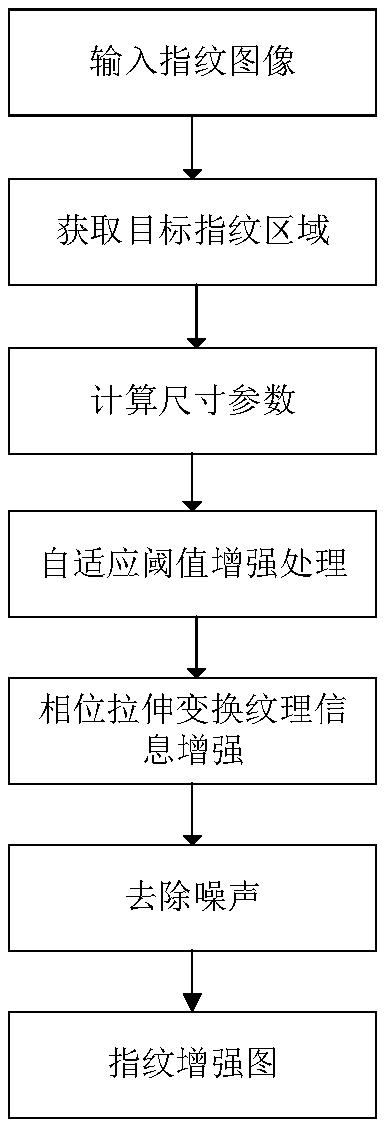

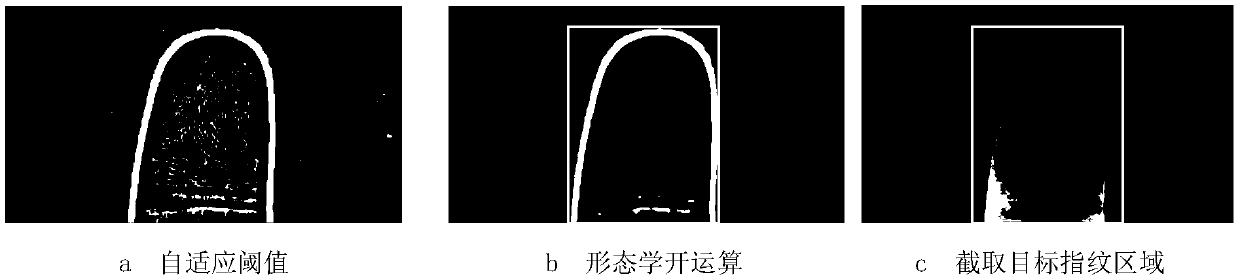

A fingerprint image enhancement method based on phase stretching transformation

Inactive | CN109544474A | Small amount of calculation | Good effect | Image enhancement | Image analysis | Texture enhancement | Histogram equalization

To address shortcomings in computing speed and effective texture enhancement in fingerprint identification, the invention provides a fingerprint image enhancement method for a fingerprint identification system. The method crops the target fingerprint image from the acquired fingerprint image, then uses the contrast-limited histogram equalization method to enhance the overall contrast of the target fingerprint image, and uses the phase stretching transformation method to enhance the texture of the contrast-enhanced target fingerprint image and denoise the background, so as to obtain the enhanced fingerprint image. The invention can effectively enhance the fingerprint texture information in a fingerprint picture, especially a low-quality one, thereby improving the identification performance of the fingerprint identification system.

Owner:SICHUAN CHANGHONG ELECTRIC CO LTD

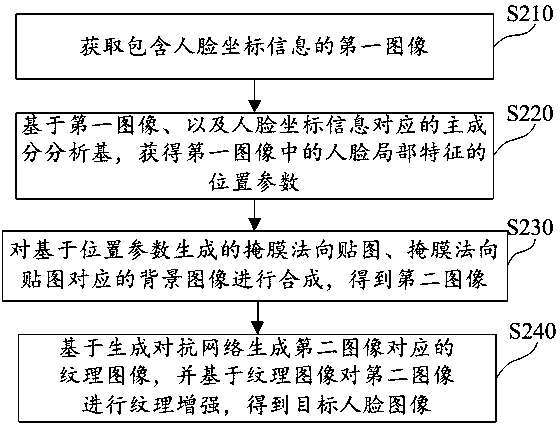

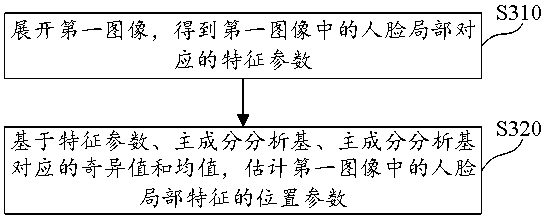

Method and device for generating face image and training model of face image and electronic equipment

Active | CN111192201A | Guaranteed accuracy | Improve consistency | Image enhancement | Image analysis | Principal component analysis | Radiology

The embodiment of the invention provides a method and a device for generating a face image and training a model of the face image, and electronic equipment. The method for generating the human face image comprises the following steps: acquiring a first image containing human face coordinate information; obtaining a position parameter of a face local feature in the first image based on the first image and a principal component analysis base corresponding to the human face coordinate information; synthesizing a mask normal map generated based on the position parameters and a background image corresponding to the mask normal map to obtain a second image; and generating a texture image corresponding to the second image through a generative adversarial network, and performing texture enhancement on the second image based on the texture image to obtain a target human face image containing the human face feature normal information and the human face texture information. According to the invention, the accuracy of the face features is ensured, the consistency of the human face texture information and the normal information is improved, and the details and authenticity of the human face image are ensured.

Owner:TENCENT TECH (SHENZHEN) CO LTD

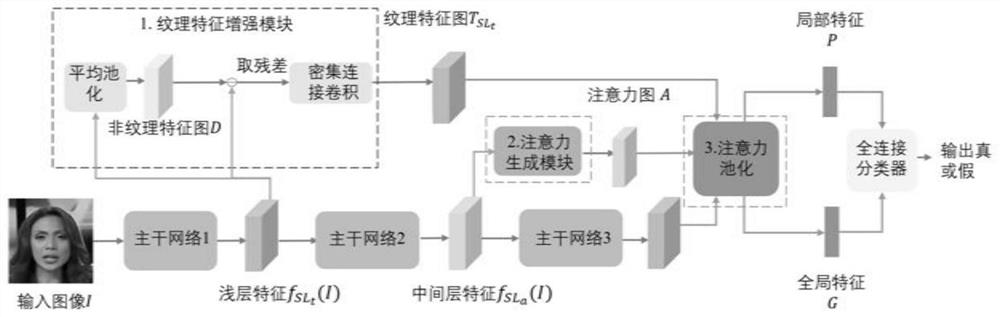

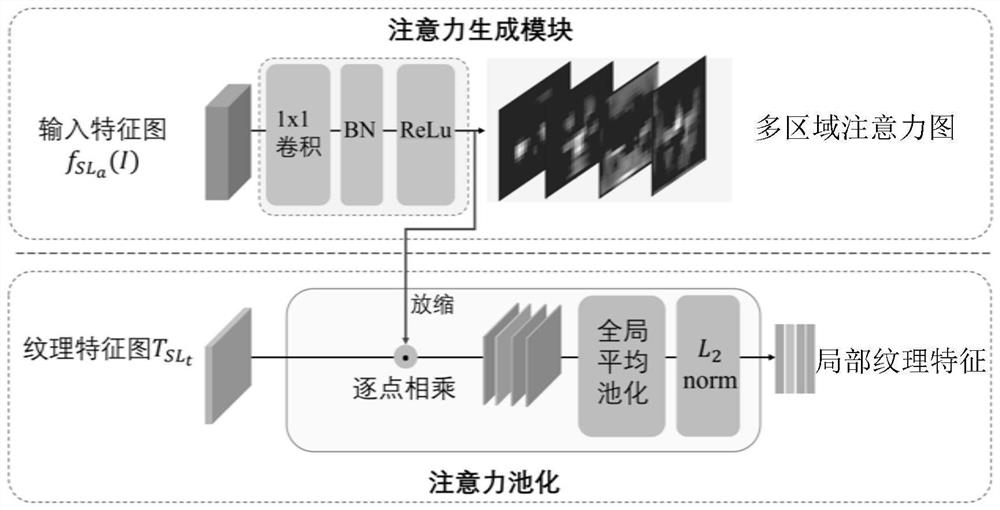

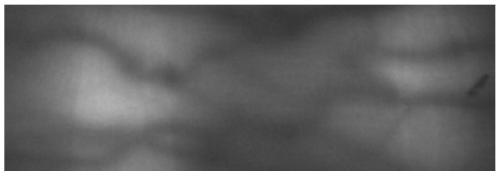

Face forgery detection method based on multi-region attention mechanism

Pending | CN113011332A | Improve accuracy | Image analysis | Neural architectures | Texture enhancement | Forgery detection

The invention discloses a face forgery detection method based on a multi-region attention mechanism. The method comprises the steps: inputting a to-be-detected face image into a convolutional neural network, and obtaining a shallow-layer feature map, a middle-layer feature map and a deep-layer feature map; performing texture enhancement operation on the shallow feature map to obtain a texture feature map; generating a multi-region attention map for the intermediate layer feature map through a multi-attention mechanism; performing attention pooling on the texture feature map by using a multi-region attention map to obtain local texture features, adding the attention maps, and performing attention pooling on the deep feature map to obtain global features; and the global features and the local texture features are fused and then classified to obtain a face forgery detection result. The method has a plurality of attention regions, and each region can extract mutually independent features to enable the network to pay more attention to local texture information, so that the accuracy of a detection result is improved.

Owner:UNIV OF SCI & TECH OF CHINA
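
The attention pooling step in the abstract above can be illustrated with plain lists. This is a sketch under assumptions, not the patented network: pooling is assumed to be an attention-weighted average over spatial positions, feature maps are flattened to one list per channel, and the name `attention_pool` is hypothetical.

```python
def attention_pool(features, attention_maps):
    """Pool a feature map once per attention map.

    features: C channels, each a flat list of N spatial positions.
    attention_maps: K maps, each a flat list of N non-negative weights.
    Returns a K x C list of attention-weighted channel averages.
    """
    pooled = []
    for attn in attention_maps:
        total = sum(attn)
        row = []
        for channel in features:
            weighted = sum(a * f for a, f in zip(attn, channel))
            row.append(weighted / total if total else 0.0)
        pooled.append(row)
    return pooled


# Two channels over four positions, two attention regions (hypothetical values).
features = [[1.0, 2.0, 3.0, 4.0],
            [0.0, 10.0, 0.0, 10.0]]
attention = [[1, 0, 0, 0],
             [0, 0, 1, 1]]
print(attention_pool(features, attention))
```

Each attention map yields its own feature vector, which is the sense in which different regions can contribute separate local texture features before fusion with the global features.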

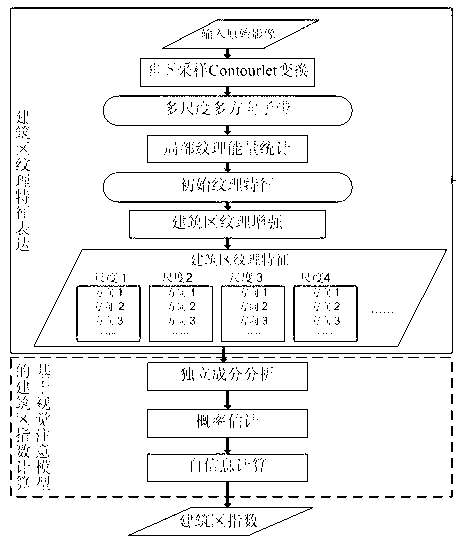

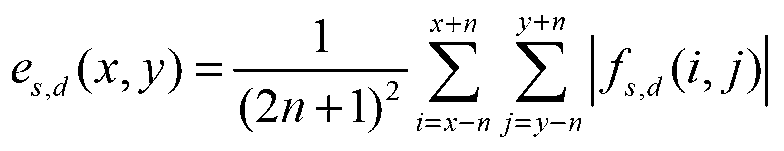

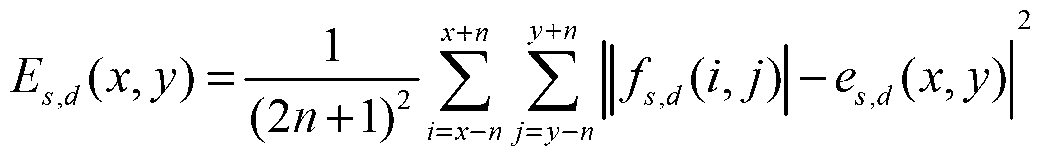

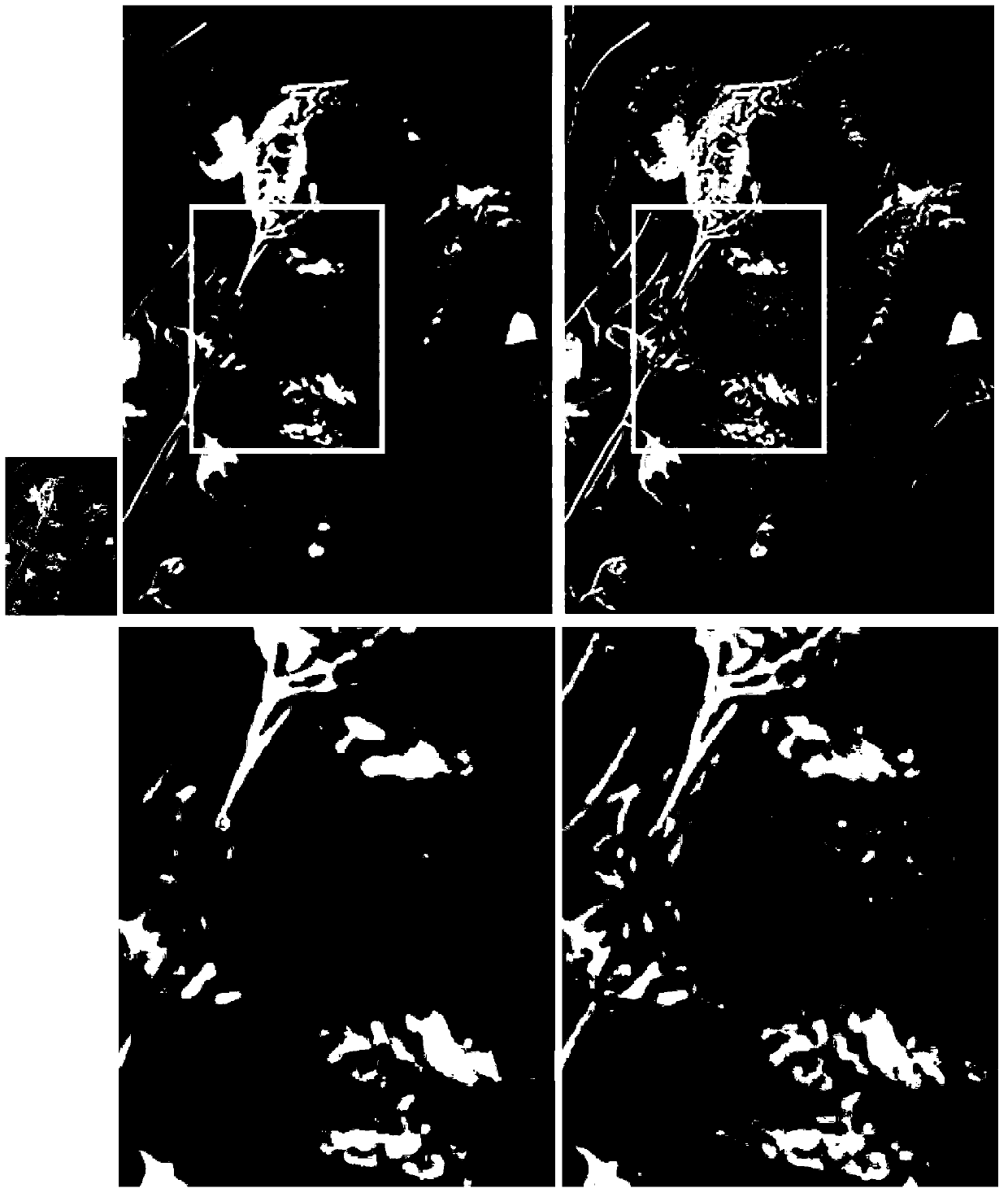

Texture-based method of calculating index of building zone of high-resolution remote sensing image

Active | CN103345739A | Improve extraction accuracy | Intuitive and easy extraction process | Image analysis | Character and pattern recognition | Computing Methodologies | Visual saliency

The invention discloses a texture-based method of calculating an index of a building zone of a high-resolution remote sensing image. The method comprises the following steps that NSCT conversion is conducted on the image, multi-scale and multi-directional sub-band coefficients are formed, partial texture energy of each sub-band coefficient is counted, building zone texture enhancement calculation is conducted on the partial texture energy to make characteristics of the building zone stand out, visual saliency is defined from the perspective of information theory, the index of the building zone is generated by adopting a visual attention mechanism based on self-information maximization, and the larger the index is, the higher significance the building zone has in a human vision process. According to the texture-based method of calculating the index of the building zone of the high-resolution remote sensing image, the characteristics of the building zone in the high-resolution remote sensing image are taken into consideration fully, multi-scale and multi-directional textural features of the building zone are built, the index is calculated according to a visual attention process to describe the building zone, the index can describe the building zone intuitively, the good effect of extracting the building zone from the high-resolution remote sensing image is achieved, and extracting accuracy can also be guaranteed in a complex environment.

Owner:WUHAN UNIV

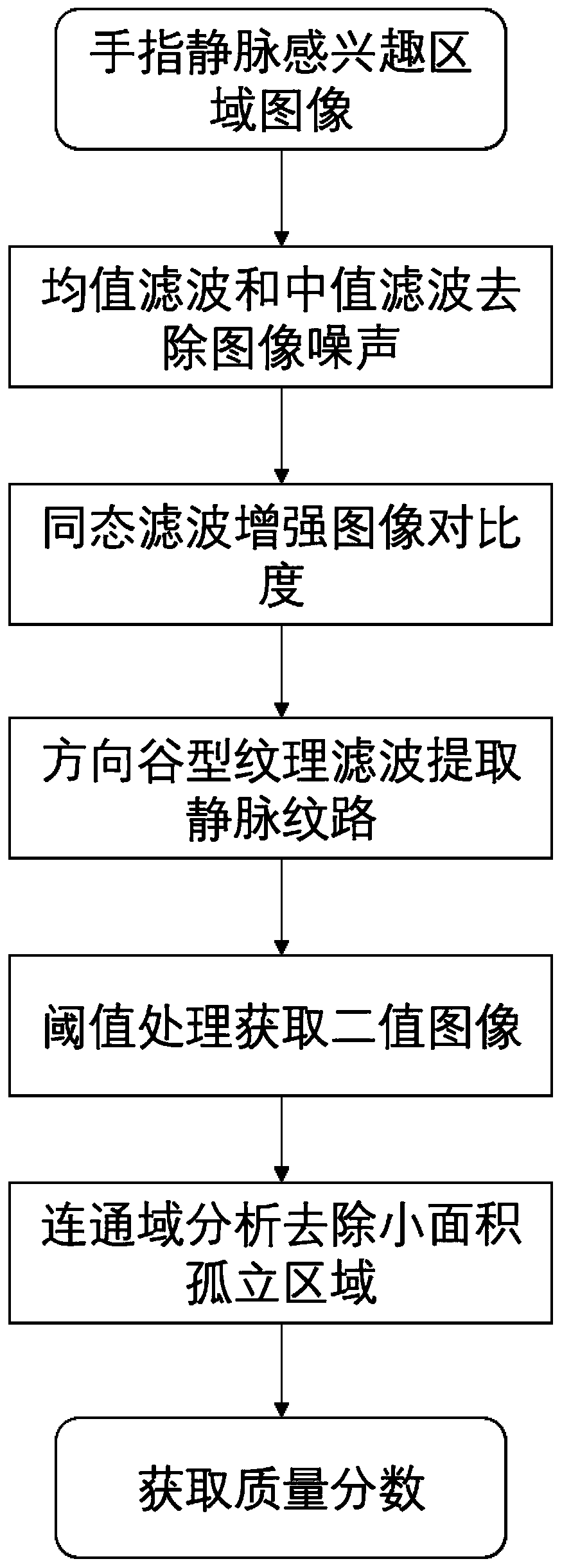

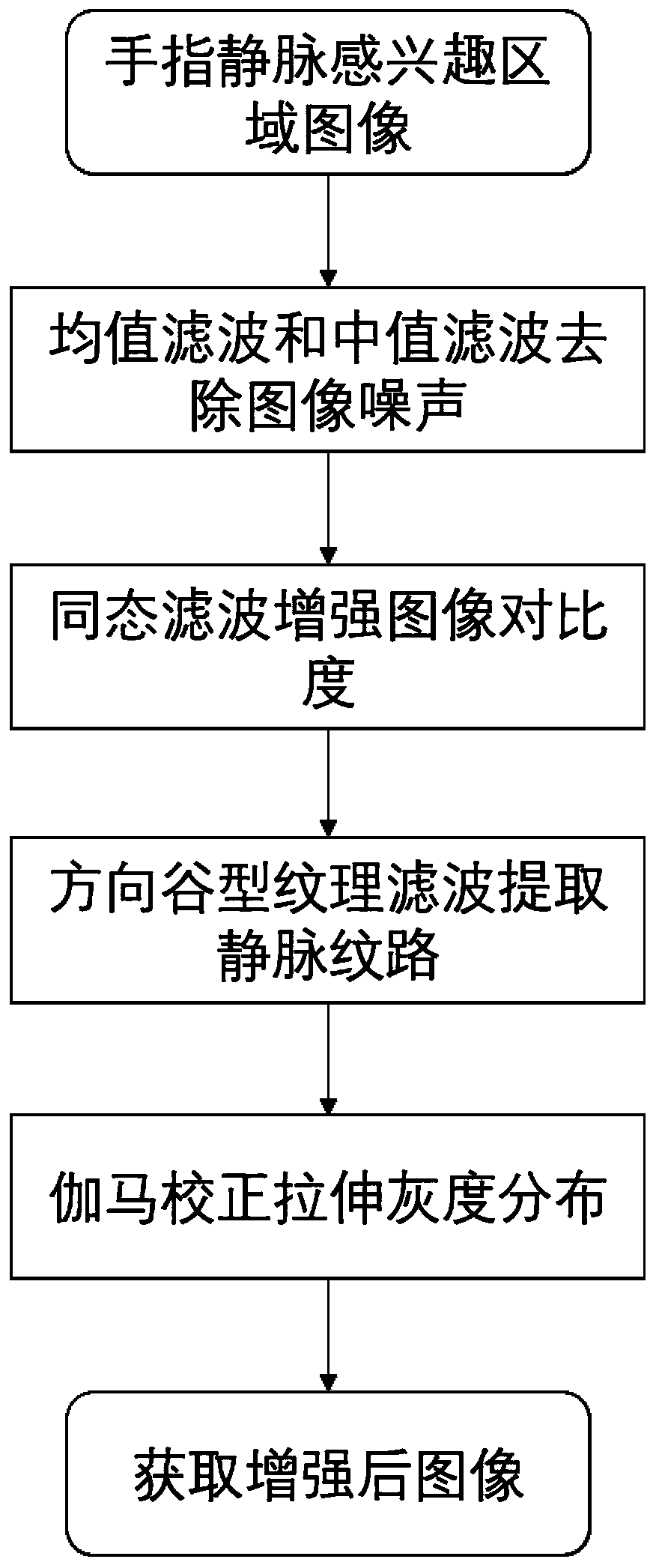

Finger vein image quality evaluation method based on image processing

Active | CN111291709A | Efficient removal | Reduce distractions | Subcutaneous biometric features | Blood vessel patterns | Contrast level | Imaging quality

The invention discloses a finger vein image quality evaluation method based on image processing. The method comprises the following steps: 1) acquiring an originally acquired finger vein region-of-interest picture; 2) filtering image noise by using mean filtering and median filtering to obtain a denoised image; 3) enhancing the contrast of the denoised image by using homomorphic filtering to obtain a contrast-enhanced image; 4) enhancing texture details of the contrast-enhanced image by using directional texture filtering to obtain a texture-enhanced image; 5) setting a threshold value, and performing binarization processing on the image after the texture enhancement to obtain a finger vein binary image; and 6) performing connected domain analysis on the finger vein binary image by using the connected domain information, removing a region with the area smaller than a threshold value, and counting the area of the remaining region to obtain an image quality score. The method can effectively reflect the quality of the collected finger vein image, thereby effectively solving the problem of no universal finger vein image quality evaluation method.

Owner:SOUTH CHINA UNIV OF TECH +1
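
Steps 5 and 6 of the abstract above (binarization, connected domain analysis, small-region removal, area counting) can be sketched as follows. This is a minimal sketch under assumptions: 4-connectivity is used, pixels strictly above the threshold count as foreground, the score is the raw remaining area, and the name `quality_score` is hypothetical. The filtering and enhancement steps 2 to 4 are not shown.

```python
from collections import deque


def quality_score(image, threshold, min_area):
    """Binarize, label 4-connected regions, drop regions smaller than min_area,
    and return the total area of the remaining regions."""
    h, w = len(image), len(image[0])
    binary = [[1 if v > threshold else 0 for v in row] for row in image]
    seen = [[False] * w for _ in range(h)]
    kept_area = 0
    for r in range(h):
        for c in range(w):
            if not binary[r][c] or seen[r][c]:
                continue
            # Breadth-first flood fill to measure one connected region.
            area = 0
            queue = deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                area += 1
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if 0 <= ny < h and 0 <= nx < w and binary[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if area >= min_area:
                kept_area += area
    return kept_area


# Hypothetical texture-enhanced image: two 3-pixel regions and one isolated pixel.
enhanced = [[9, 9, 0, 0],
            [9, 0, 0, 7],
            [0, 0, 0, 0],
            [8, 8, 8, 0]]
print(quality_score(enhanced, threshold=5, min_area=2))
```

With `min_area=2` the isolated pixel is discarded as noise and only the two larger regions contribute to the score.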

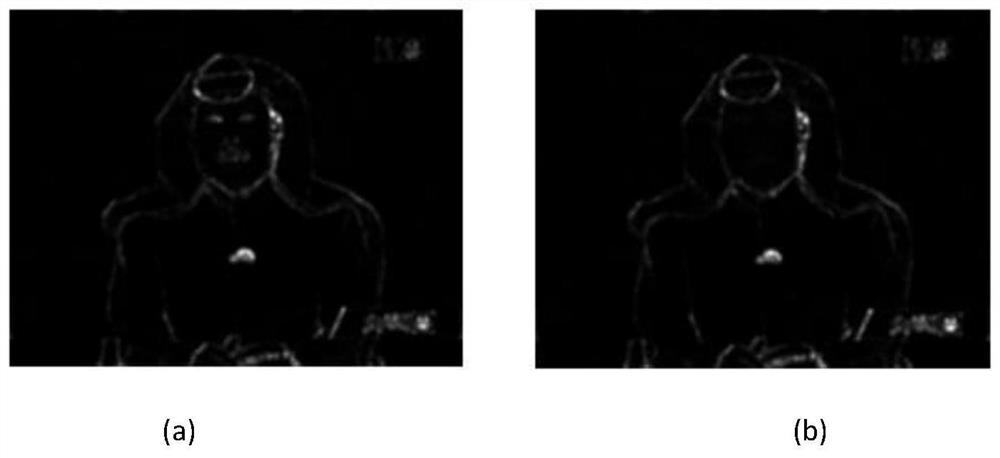

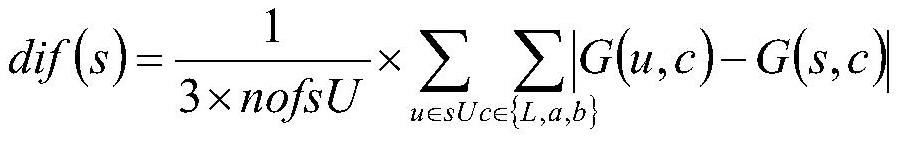

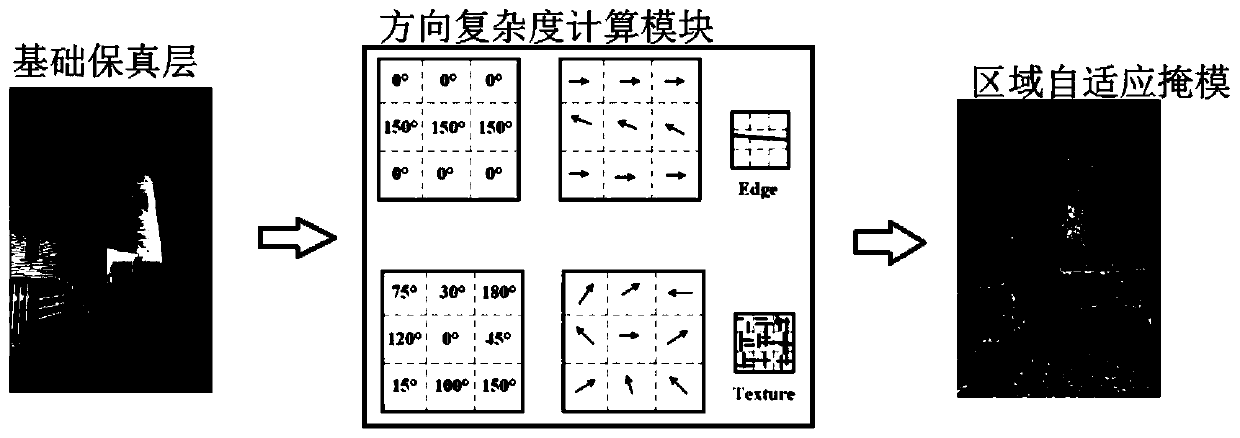

Image authenticity identification method

Active | CN112163511A | Textural differential visualization and enhancement | Increase training speed | Image enhancement | Image analysis | Saliency map | Radiology

The invention relates to an image authenticity identification method, which comprises the following steps: image saliency processing, in which an original image is segmented through the SLIC algorithm to obtain superpixels, and the contrast between each superpixel and the edge superpixels is calculated to obtain a background-based saliency image; segmenting the original image according to a pixel threshold to select a foreground, and calculating the similarity between the original image and the foreground to obtain a foreground-based saliency image; fusing the foreground-based and background-based saliency images, and performing smoothing with a Gaussian filter to obtain a local feature saliency image; obtaining a pixel-level saliency image; obtaining a texture-enhanced image; and training a true and false face image identification network.

Owner:TIANJIN UNIV

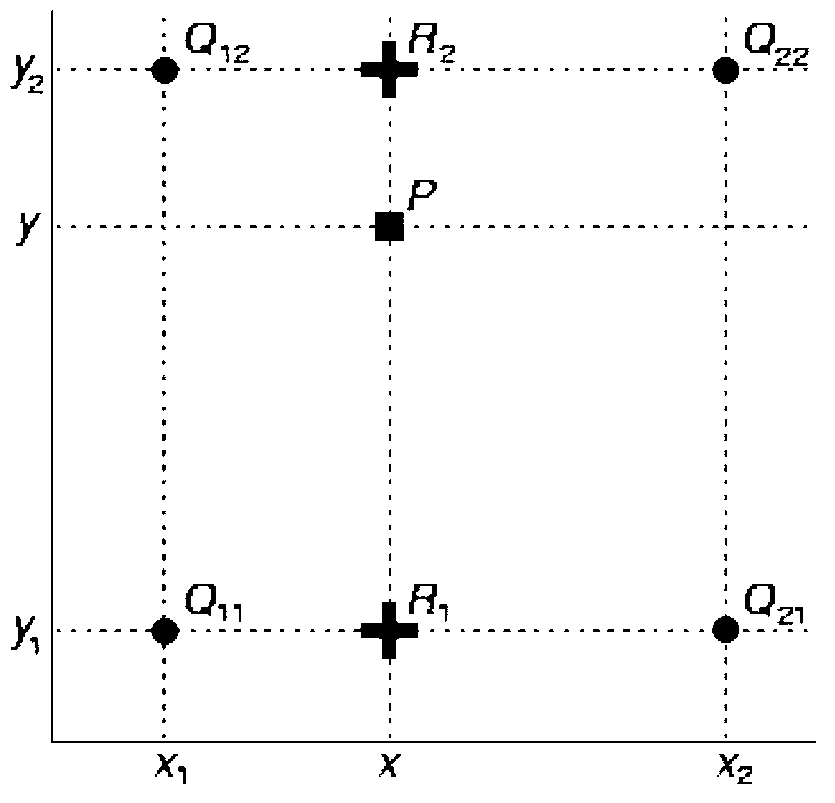

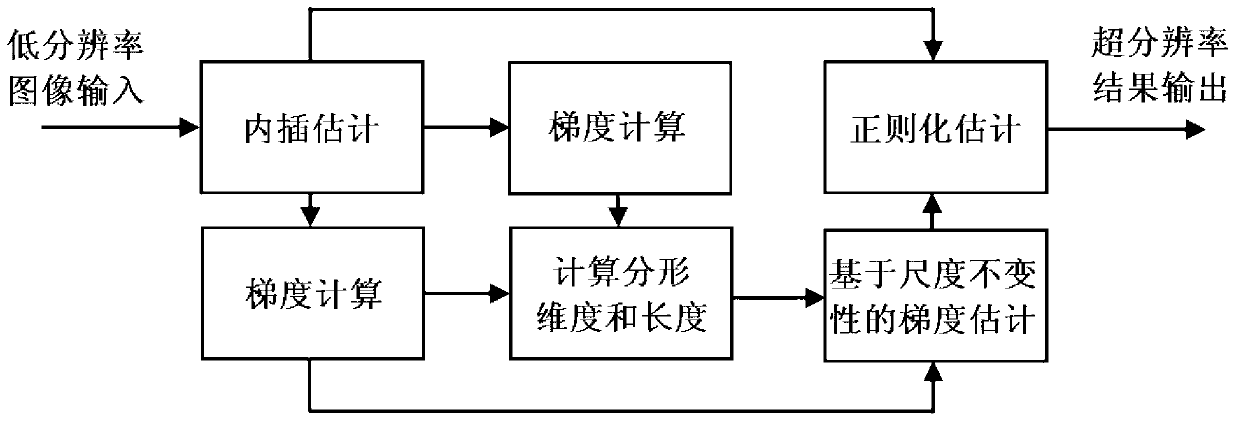

Method for super-resolution of image and video based on fractal analysis, and method for enhancing super-resolution of image and video

The invention discloses a method for super-resolution of images and videos based on fractal analysis, and a method for enhancing the super-resolution of images and videos. The methods comprise the following steps: reading an image or a frame of a video, denoted I, and calculating the gradient Grad_ori of I; performing an interpolation-based super-resolution process on I to obtain an estimate H' of the high-definition image; calculating the gradient Grad_est of H'; calculating the fractal dimensions D_ori and D_est and the fractal lengths L_ori and L_est of corresponding pixels of I and H' from Grad_ori and Grad_est, respectively; re-estimating the gradient Grad_H of the high-definition image according to the scale invariance of the fractal dimensions and the fractal lengths; and re-estimating the high-definition image with H' and Grad_H as constraints. The methods treat the pixels of the image as a fractal set and the gradient at each pixel as a measure on that set, and calculate the local fractal dimensions and local fractal lengths of the image. The super-resolution problem is constrained by the scale invariance of the fractal dimensions. By also introducing a constraint from the scale invariance of the fractal lengths, the methods can be used for quality enhancement of images and videos, and especially for texture enhancement.

Owner:SHANGHAI JIAO TONG UNIV

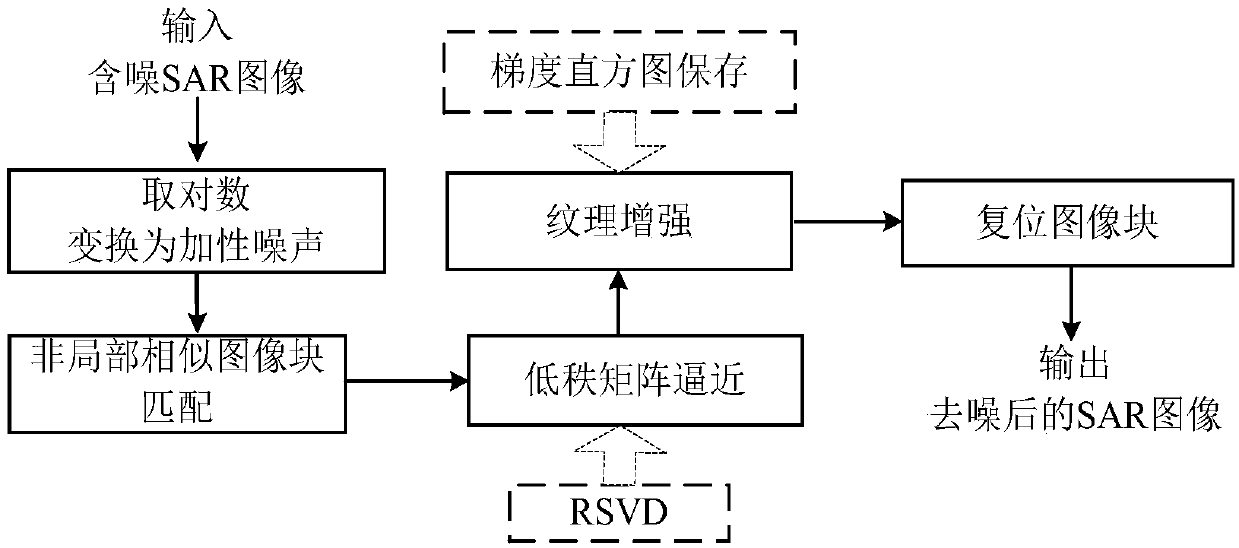

SAR image rapid denoising method based on RSVD and histogram storage

Active | CN109658340A | Improve denoising efficiency | Reduce denoising time | Image enhancement | Image analysis | Singular value decomposition | Image denoising

The invention discloses an SAR image rapid denoising method based on RSVD and histogram storage. The method comprises the following steps: firstly, carrying out logarithmic transformation on an SAR image so that multiplicative noise is converted into additive noise; secondly, carrying out non-local similar image block matching on the SAR image; then carrying out low-rank matrix approximation on the low-rank matrix composed of non-local similar image blocks by adopting random singular value decomposition; carrying out texture enhancement on the image by adopting a gradient histogram storage method; and finally resetting the image blocks to realize rapid denoising of the SAR image. Experimental results on the MSTAR database show that, compared with an existing method, the edge preserving index of the proposed method is clearly improved and the denoising speed is increased by three times.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS
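
The first step of the abstract above rests on a standard identity for logarithms. Writing the observed intensity as $I$, the underlying scene reflectivity as $R$, and the speckle noise as $N$ (symbols introduced here for illustration; they do not appear in the abstract), the multiplicative model becomes additive after taking logarithms:

$$
I = R \cdot N \quad\Longrightarrow\quad \log I = \log R + \log N
$$

This is why methods designed for additive noise, such as low-rank approximation of similar-block matrices, can be applied in the log domain.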

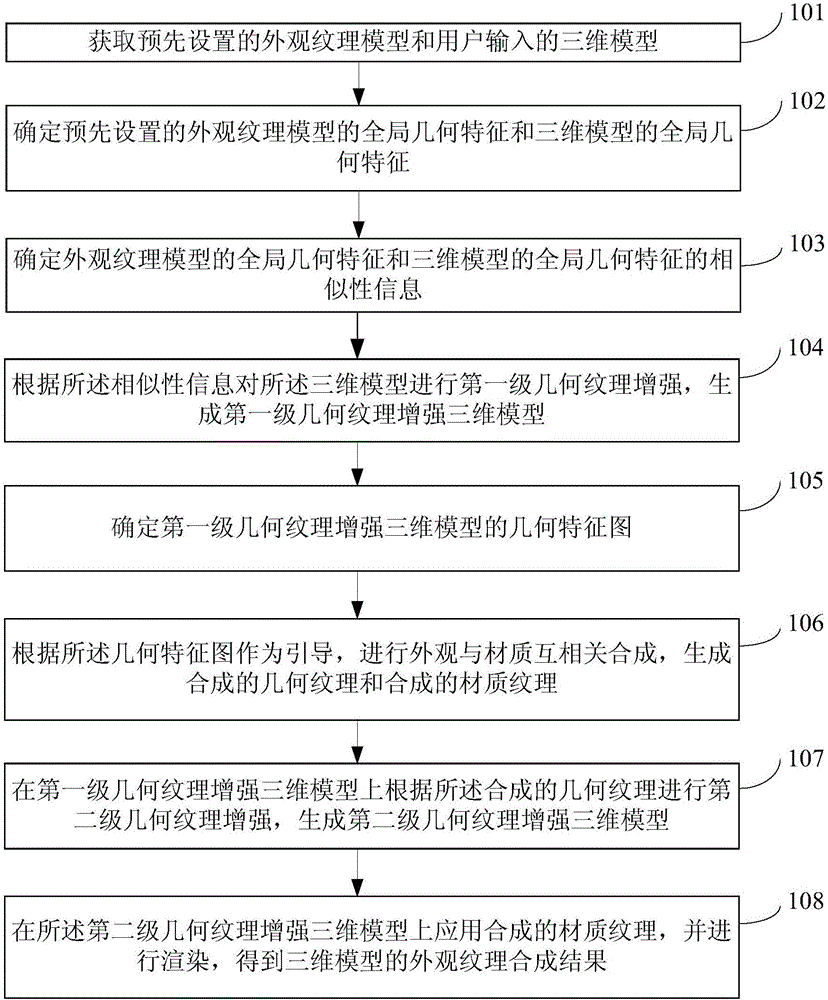

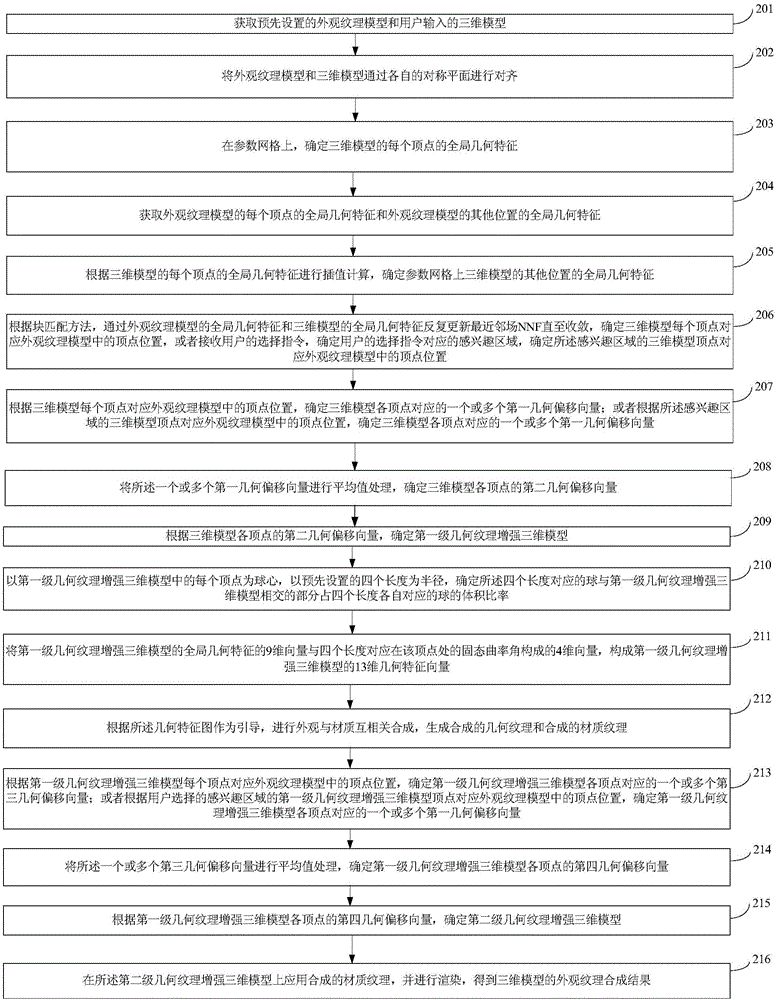

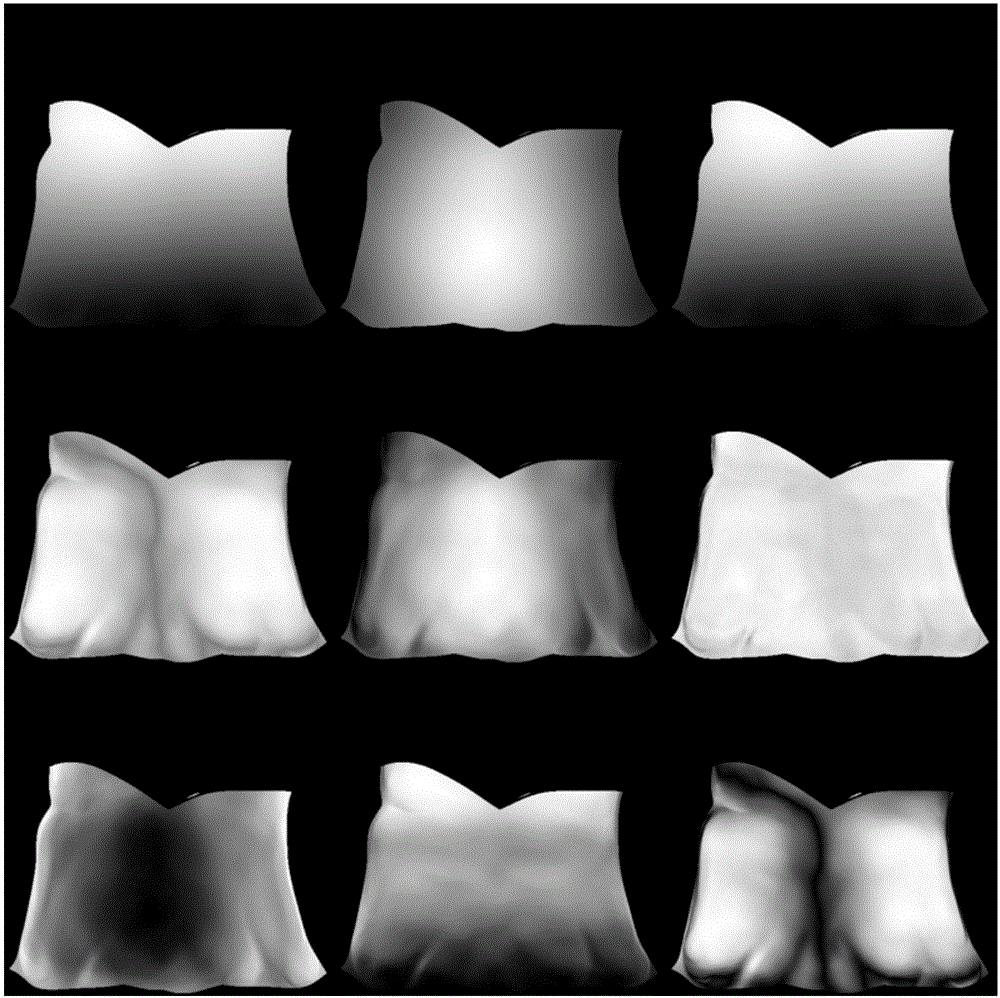

Appearance texture synthesis method and device for three-dimensional model

The invention provides an appearance texture synthesis method and device for a three-dimensional model and relates to the field of three-dimensional model technology. The method comprises the steps that global geometric features of an appearance texture model, global geometric features of the three-dimensional model and similarity information of the appearance texture model and the three-dimensional model are determined; first-stage geometric texture enhancement is performed on the three-dimensional model to generate a first-stage geometric texture enhanced three-dimensional model, and a geometric feature map is determined; appearance and material cross-correlation synthesis is performed under the guidance of the geometric feature map to generate synthesized geometric texture and synthesized material texture; second-stage geometric texture enhancement is performed on the first-stage geometric texture enhanced three-dimensional model according to the synthesized geometric texture to generate a second-stage geometric texture enhanced three-dimensional model; the synthesized material texture is applied to the second-stage geometric texture enhanced three-dimensional model, and rendering is performed to obtain an appearance texture synthesis result of the three-dimensional model. Through the appearance texture synthesis method and device, the problems that in the prior art, appearance texture is not truthful visually, and appearance texture information cannot be captured sufficiently can be solved.

Owner:SHENZHEN UNIV

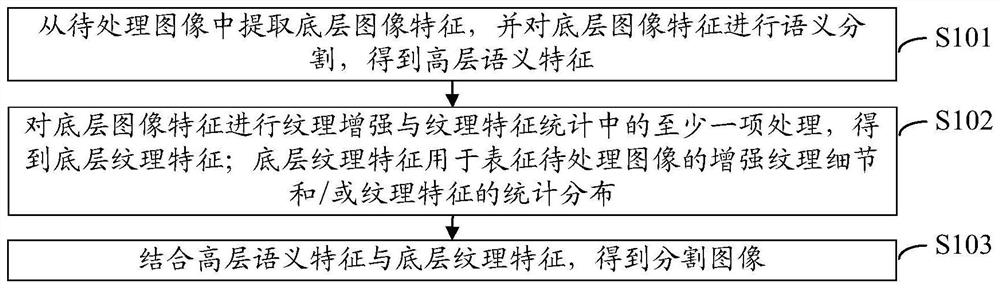

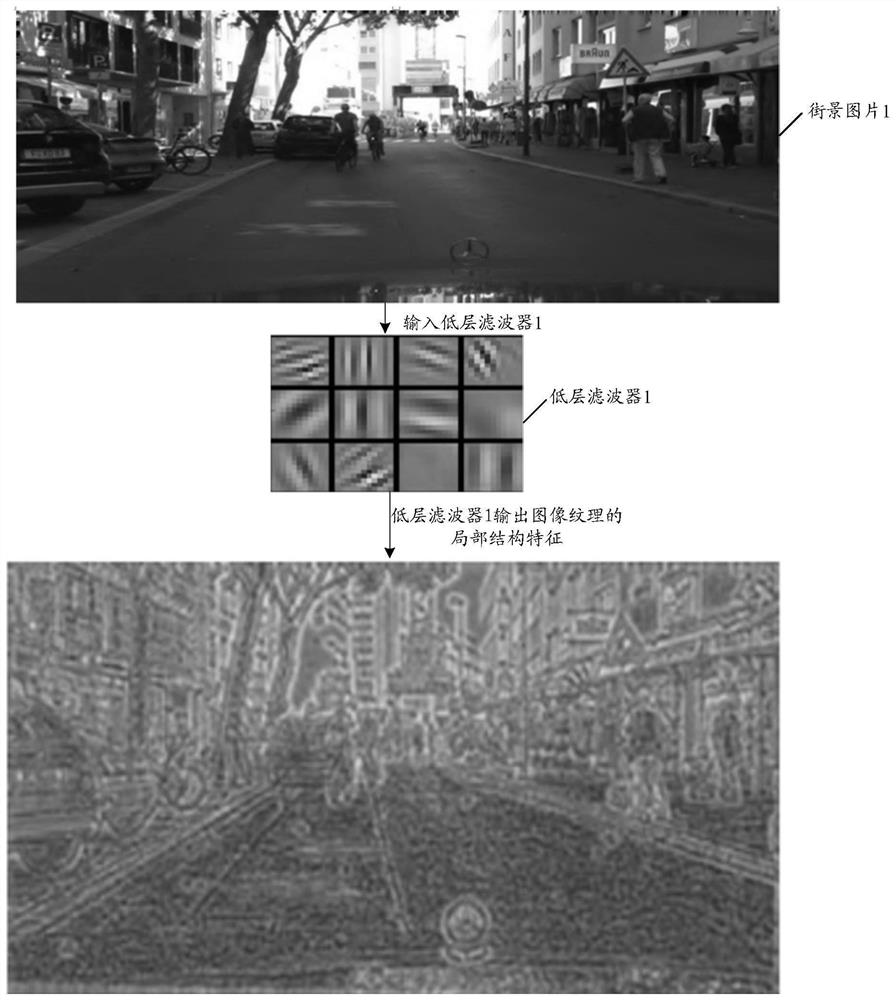

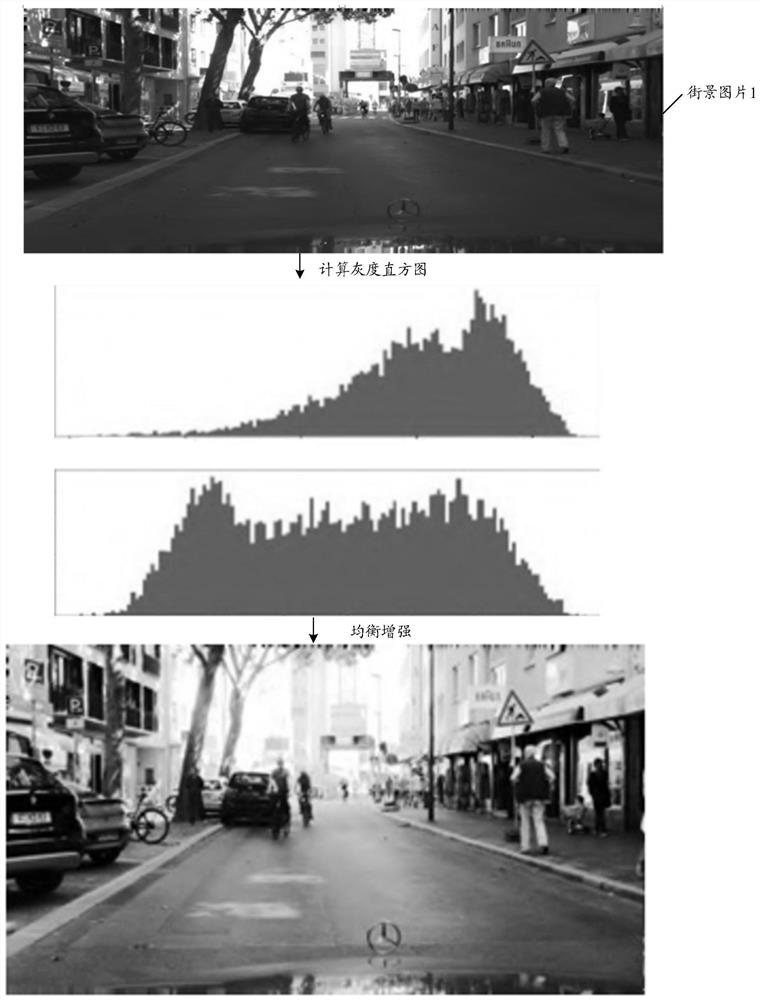

Image segmentation method and device, electronic equipment and computer readable storage medium

Pending | CN113011425A | Enhanced statistical distribution | Improve accuracy | Character and pattern recognition | Neural architectures | Pattern recognition | Computer graphics (images)

The invention provides an image segmentation method and device, electronic equipment and a computer readable storage medium. The method comprises the following steps: extracting bottom-layer image features from a to-be-processed image, and performing semantic segmentation on the bottom-layer image features to obtain high-layer semantic features; carrying out at least one of texture enhancement and texture feature statistics on the bottom-layer image features to obtain bottom-layer texture features, wherein the bottom-layer texture features represent enhanced texture details and/or the statistical distribution of texture features of the to-be-processed image; and combining the high-layer semantic features with the bottom-layer texture features to obtain a semantic segmentation image. According to the invention, the accuracy of image segmentation can be improved.

Owner:SHANGHAI SENSETIME INTELLIGENT TECH CO LTD

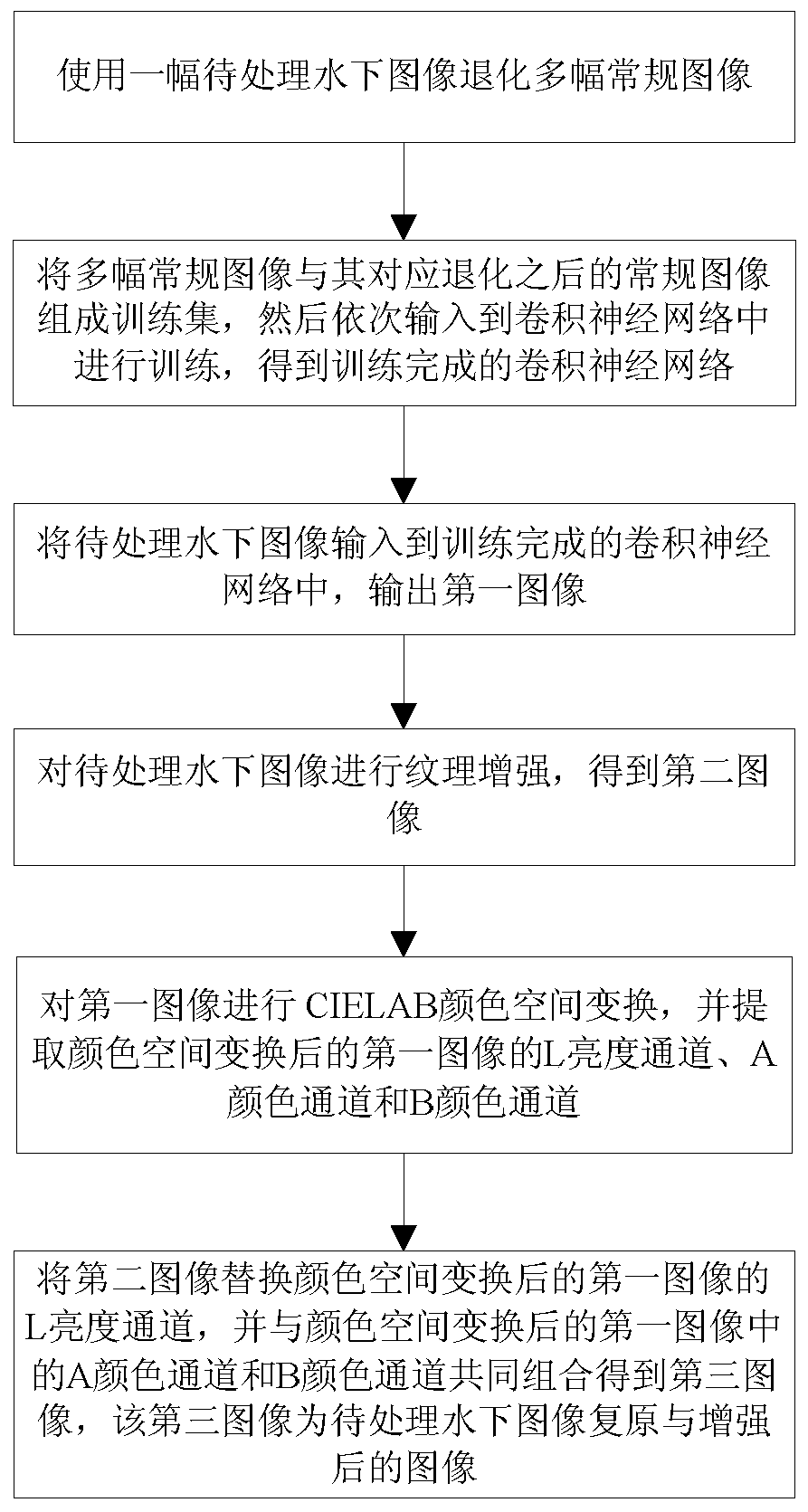

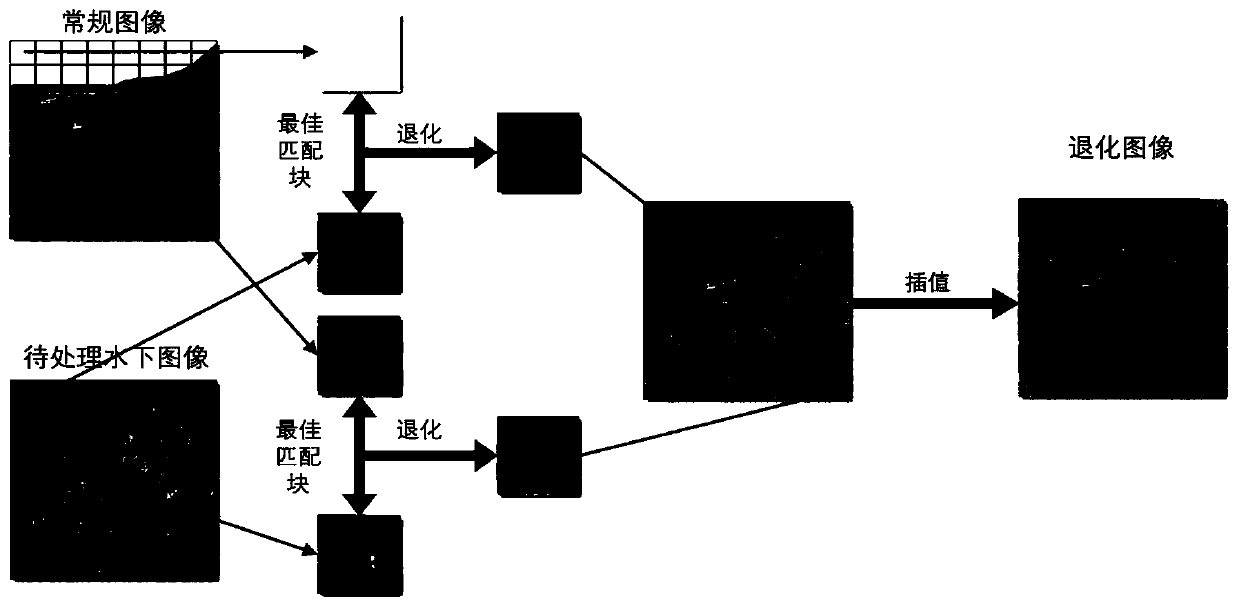

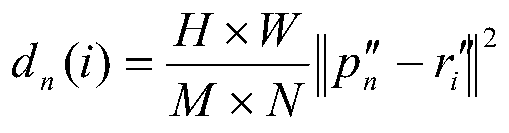

Underwater image enhancement and restoration method based on convolutional neural network

Active | CN111462002A | Solve the problem of missing training data | Quality improvement | Image enhancement | Image analysis | Texture enhancement | Image pair

The invention discloses an underwater image enhancement and restoration method based on a convolutional neural network. The method comprises the following steps: degrading a plurality of conventional images using the to-be-processed underwater image, forming a training set from the conventional images and their corresponding degraded versions, and inputting the training set into the convolutional neural network for training; inputting the to-be-processed underwater image into the trained convolutional neural network and outputting a first image; carrying out a CIELAB color space transformation on the first image and extracting its L brightness channel, A color channel and B color channel; and finally, performing texture enhancement on the to-be-processed underwater image, replacing the L brightness channel of the first image with the texture-enhanced image, and combining it with the A color channel and the B color channel of the first image to obtain a final image. The method addresses the lack of underwater image training data, avoids the complex calculation of a traditional underwater imaging model, and improves the quality of underwater images.

Owner:CHONGQING UNIV OF TECH
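The final recombination step in the abstract above (keep the A and B channels of the network output, swap in a texture-enhanced L channel) might be sketched as follows. This is a minimal illustration, not the patented implementation: the function name `recombine_lab` is hypothetical, and both the CNN output and the texture-enhanced brightness image are assumed to have been computed elsewhere and already expressed in CIELAB.

```python
def recombine_lab(first_lab, enhanced_l):
    """Replace the L channel of a CIELAB image with a texture-enhanced one.

    first_lab  : rows of (L, a, b) tuples, assumed to be the CNN output
                 after CIELAB conversion (hypothetical input format).
    enhanced_l : rows of L values from the texture-enhanced image.
    Returns a new image that keeps a and b from first_lab.
    """
    if len(first_lab) != len(enhanced_l):
        raise ValueError("images must have the same number of rows")
    result = []
    for lab_row, l_row in zip(first_lab, enhanced_l):
        if len(lab_row) != len(l_row):
            raise ValueError("rows must have the same number of pixels")
        # Discard the old L value, keep the chroma channels a and b.
        result.append([(new_l, a, b) for (_, a, b), new_l in zip(lab_row, l_row)])
    return result
```

Working in CIELAB keeps brightness separate from chroma, so the texture detail can be swapped in without disturbing the colors produced by the network.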

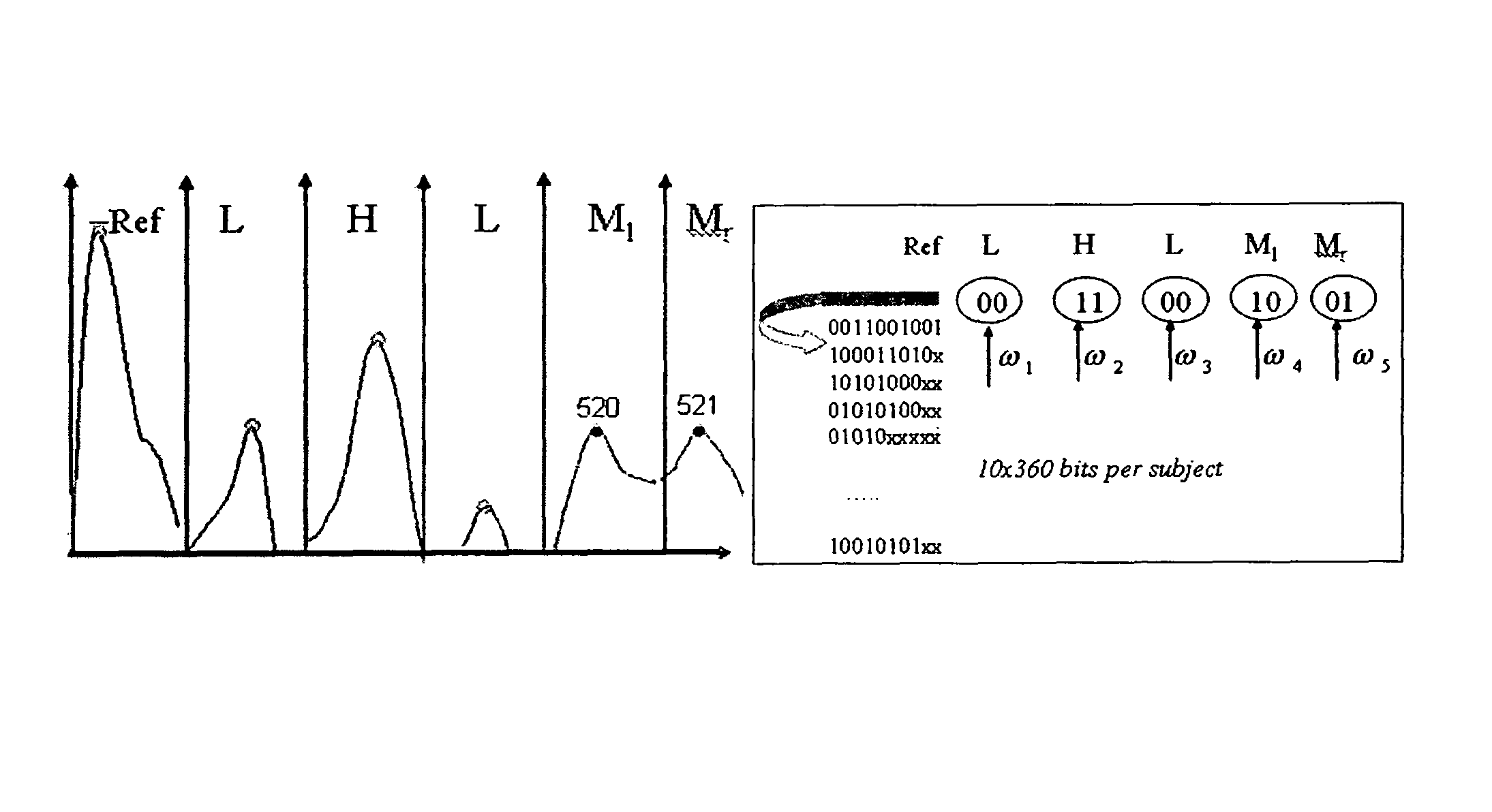

Invariant radial iris segmentation

Active | US8442276B2 | Improved biometric algorithm | Eliminate need | Acquiring/recognising eyes | Data set | Texture enhancement

A method and computer product are presented for identifying a subject by biometric analysis of an eye. First, an image of the iris of a subject to be identified is acquired. Texture enhancements may be done to the image as desired, but are not necessary. Next, the iris image is radially segmented into a selected number of radial segments, for example 200 segments, each segment representing 1.8° of the iris scan. After segmenting, each radial segment is analyzed, and the peaks and valleys of color intensity are detected in the iris radial segment. These detected peaks and valleys are mathematically transformed into a data set used to construct a template. The template represents the subject's scanned and analyzed iris, being constructed of each transformed data set from each of the radial segments. After construction, this template may be stored in a database, or used for matching purposes if the subject is already registered in the database.

Owner:GENTEX CORP
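The segmentation arithmetic and the peak/valley detection described in the abstract above can be illustrated with a short sketch. It assumes each radial segment has already been sampled into a one-dimensional list of intensity values; the function names are hypothetical, and the mathematical transform that turns the detected extrema into a template is not specified in the abstract, so it is left out.

```python
def radial_segment_angles(num_segments=200):
    """Start angle in degrees of each radial segment (200 segments -> 1.8 degrees each)."""
    step = 360.0 / num_segments
    return [i * step for i in range(num_segments)]


def find_peaks_and_valleys(profile):
    """Return (peaks, valleys): indices of strict local maxima and minima.

    profile is the intensity sampled along one radial segment.
    Endpoints are skipped because they have only one neighbor.
    """
    peaks, valleys = [], []
    for i in range(1, len(profile) - 1):
        prev_v, cur, next_v = profile[i - 1], profile[i], profile[i + 1]
        if cur > prev_v and cur > next_v:
            peaks.append(i)
        elif cur < prev_v and cur < next_v:
            valleys.append(i)
    return peaks, valleys
```

For example, the profile `[10, 30, 20, 5, 15, 40, 35]` has peaks at indices 1 and 5 and a valley at index 3. A practical detector would likely smooth the profile first so that sensor noise is not reported as extrema.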

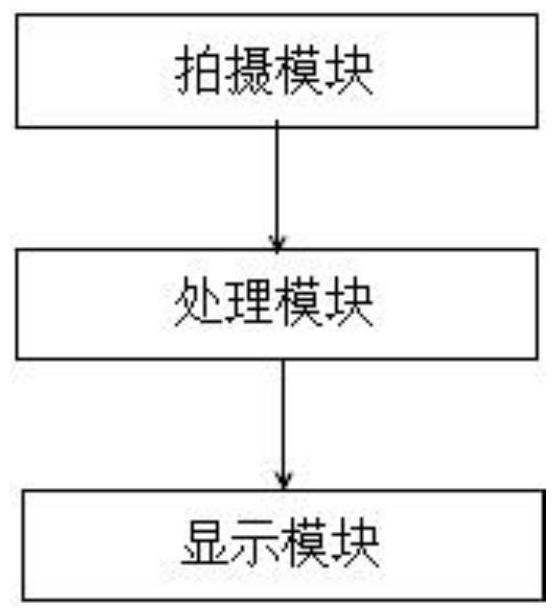

Skin texture detection system

The invention provides a skin texture detection system. The skin texture detection system comprises a shooting module, a processing module and a display module; the shooting module is used for acquiring a skin image of a user; the processing module is used for carrying out filtering processing and texture enhancement processing on the skin image to obtain a preprocessed image, and for obtaining the texture feature information contained in the preprocessed image; and the display module is used for displaying the texture feature information. Because a texture enhancement step is added after the skin image is filtered, more edge detail information is preserved in the preprocessed image, and the accuracy of the skin texture feature information obtained subsequently is improved.

Owner:北京美医医学技术研究院有限公司

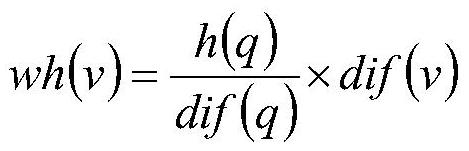

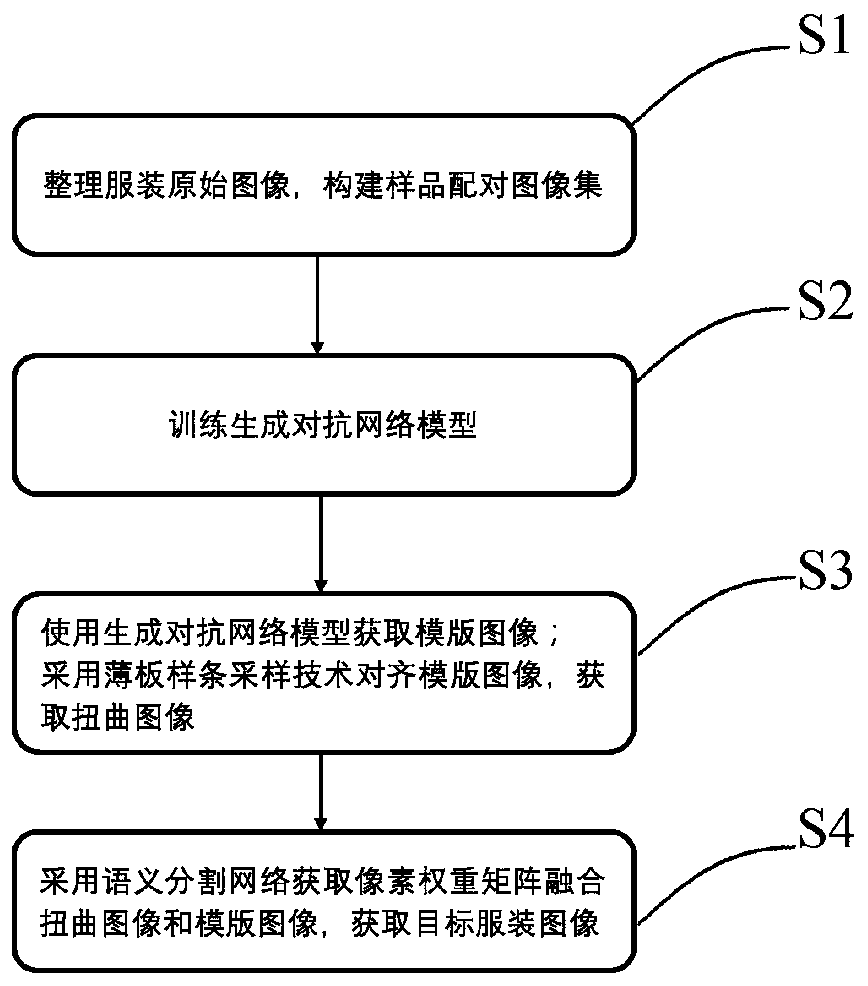

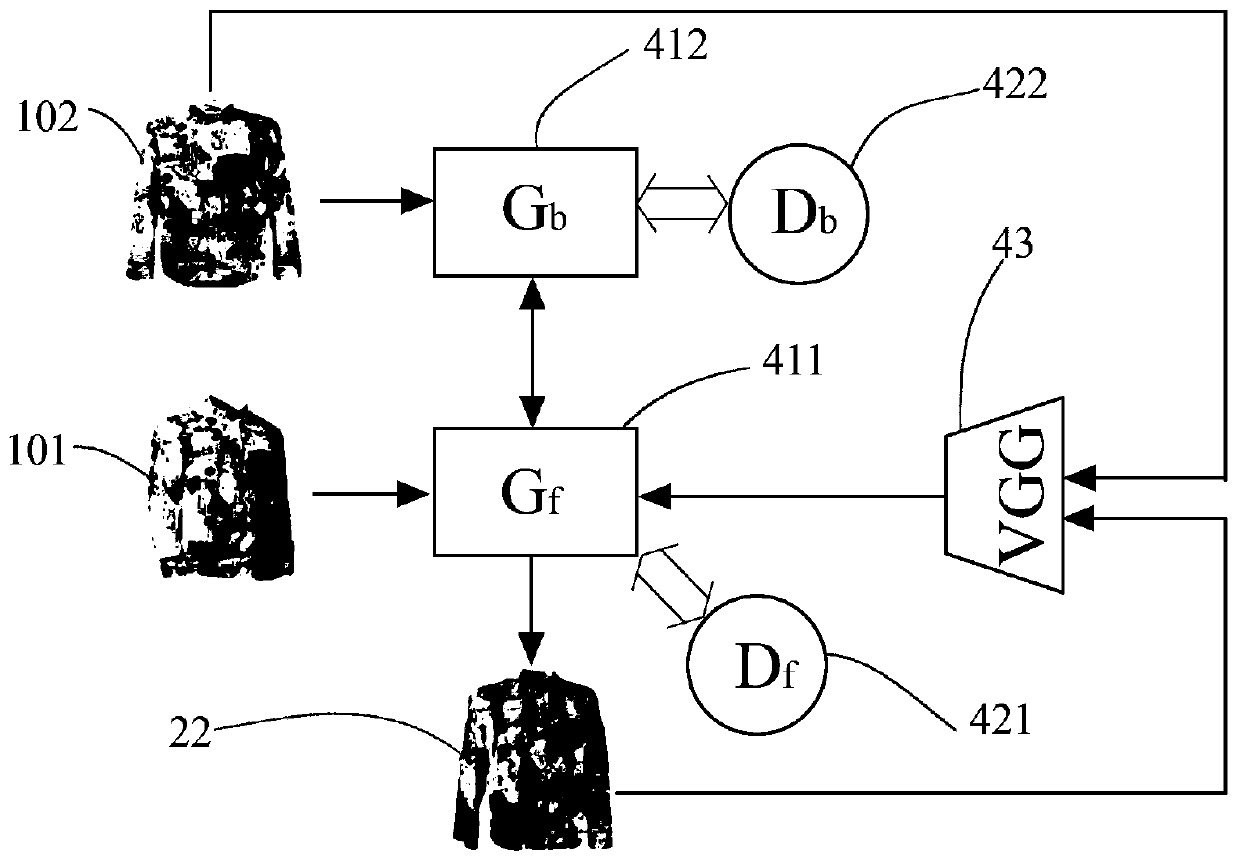

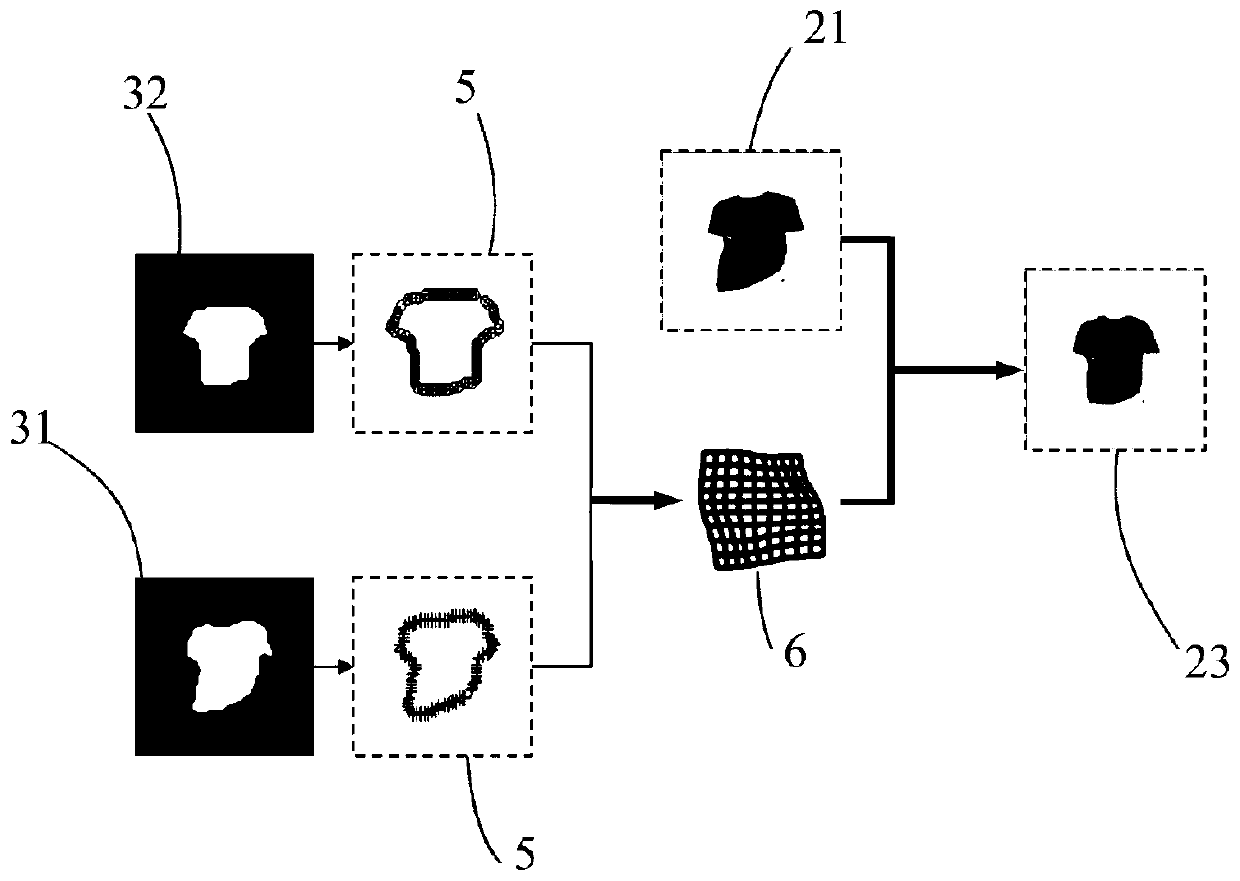

Target clothing image processing method based on generative adversarial network model

Pending | CN111445426A | Remove deformation | Remove noise | Image enhancement | Image analysis | Pattern recognition | Imaging processing

The invention provides a target clothing image processing method based on a generative adversarial network model. The target clothing image processing method comprises the following steps: pairing a sample standard image with corresponding sample area images to form a sample pairing image set; optimizing loss function parameters of the generative adversarial network model according to the sample pairing image set; inputting the to-be-processed area image into the generative adversarial network model, and outputting a template image; stretching and deforming the to-be-processed area image to output a distorted image, so that the distorted image is aligned with the frame of the template image; and obtaining a pixel weight matrix, fusing the distorted image and the template image, and outputting a target clothing image. The method constructs a generative adversarial network model based on a perceptual loss function together with a step-by-step image fusion technique, and converts garment images with different angles and postures into target garment images with regular postures and enhanced textures for search and use by an intelligent system, so that the quality of the target garment images and the retrieval accuracy of the intelligent system are improved.

Owner:HARBIN INSTITUTE OF TECHNOLOGY SHENZHEN (INSTITUTE OF SCIENCE AND TECHNOLOGY INNOVATION HARBIN INSTITUTE OF TECHNOLOGY SHENZHEN)

Nanocrystalline complex phase neodymium iron boron permanent magnet texturing enhancement preparation method

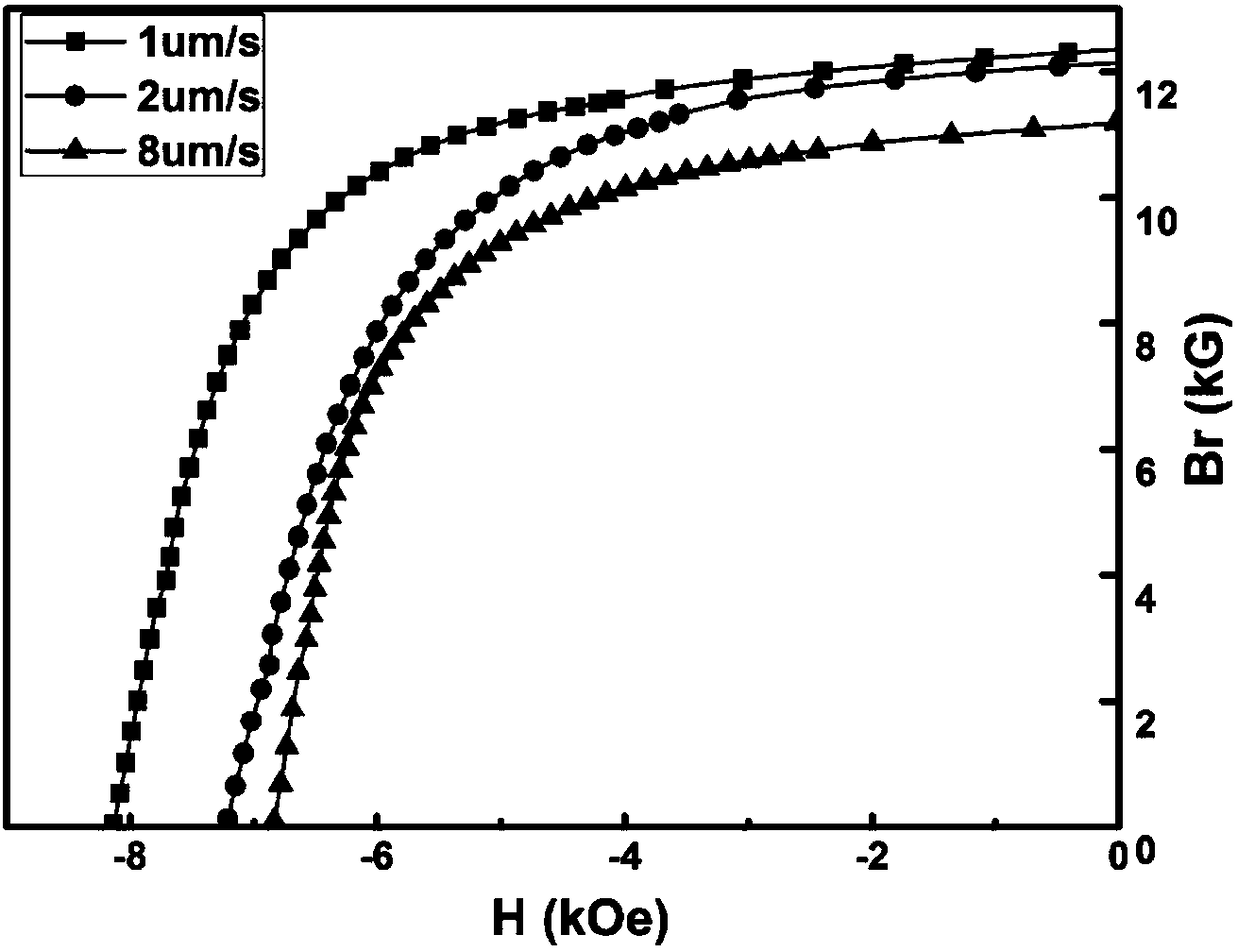

Active | CN108346508A | Improve compactness | Improve magnetic properties | Inorganic material magnetism | Inductances/transformers/magnets manufacture | Magnetic phase | Thermal deformation

The invention provides a texturing enhancement preparation method for a nanocrystalline complex phase neodymium iron boron permanent magnet. According to the method, an NdFeB complex green body obtained by thermal pressing treatment is subjected to thermal deformation treatment at a low temperature and a low rate to obtain a fully compact anisotropic NdFeB complex phase permanent magnet. Compared with the conventional process, the permanent magnet is deformed and oriented at a low temperature, so that grain growth can be suppressed effectively; meanwhile, thermal deformation at a low rate facilitates uniform distribution of the grain boundary phase at the low temperature, so that hard magnetic phase texturing is reinforced, crack formation and expansion during deformation are suppressed, and the compactness and magnetic performance of the block body are improved.

Owner:NINGBO INST OF MATERIALS TECH & ENG CHINESE ACADEMY OF SCI

Finger vein image enhancement method based on image processing

Active | CN111368661A | Efficient removal | Reduce distractions | Internal combustion piston engines | Subcutaneous biometric features | Low contrast | Homomorphic filtering

The invention discloses a finger vein image enhancement method based on image processing. The method comprises the following steps: 1) acquiring an originally acquired finger vein region-of-interest picture; 2) filtering image noise by using mean filtering and median filtering to obtain a denoised image; 3) enhancing the contrast of the denoised image by using homomorphic filtering to obtain a contrast-enhanced image; 4) enhancing texture details of the contrast-enhanced image by using directional texture filtering to obtain a texture-enhanced image; and 5) correcting the gray level distribution of the stretched image by using gamma correction, and enhancing the texture discrimination of the filtered image so as to further enhance its texture pixel values, thereby completing the enhancement of the finger vein image. The method effectively avoids the influence of equipment noise and finger dirt during collection, as well as the poor contrast caused by background illumination; the response of vein lines is enhanced through the directional valley filter, and the problem of finger vein image enhancement under low contrast is effectively solved.

Owner:SOUTH CHINA UNIV OF TECH +1
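Two of the steps listed in the abstract above, median filtering (part of step 2) and gamma correction (step 5), are simple enough to sketch in plain Python. The function names, the 3x3 window size and the 0 to 255 gray range are assumptions made for illustration; the homomorphic and directional valley filters are not detailed in the abstract and are omitted here.

```python
from statistics import median


def median_filter3(img):
    """3x3 median filter on a grayscale image given as a list of rows.

    Border pixels use only the neighbors that exist inside the image.
    """
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for y in range(h):
        for x in range(w):
            window = [
                img[yy][xx]
                for yy in range(max(0, y - 1), min(h, y + 2))
                for xx in range(max(0, x - 1), min(w, x + 2))
            ]
            out[y][x] = median(window)
    return out


def gamma_correct(img, gamma, max_value=255):
    """Map each pixel p to max_value * (p / max_value) ** gamma."""
    return [[max_value * (p / max_value) ** gamma for p in row] for row in img]
```

A single bright outlier surrounded by uniform pixels is removed by `median_filter3`, and a `gamma` below 1 brightens dark regions while leaving 0 and `max_value` unchanged.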

Image processing method and device, electronic equipment and computer readable storage medium

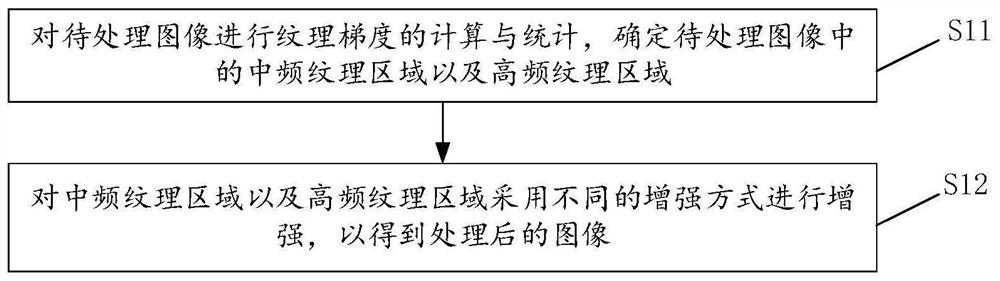

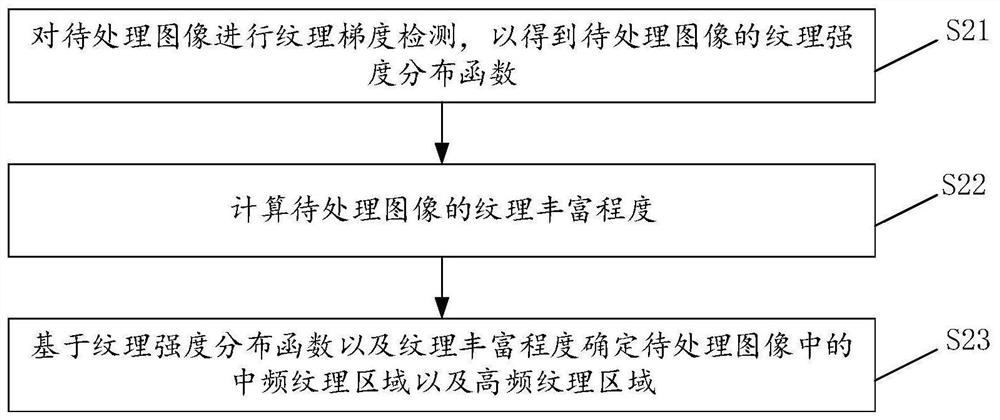

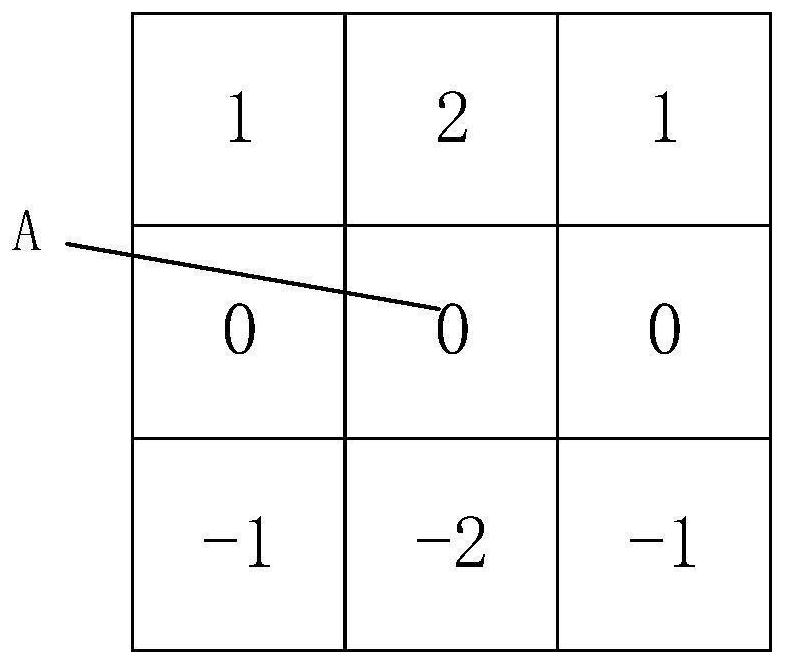

Pending | CN113674165A | Improve subjective quality | Reduce excess | Image enhancement | Image analysis | Quantization (image processing) | Imaging processing

The invention provides an image processing method and device, electronic equipment and a computer readable storage medium. The method comprises the steps of: calculating and collecting statistics on the texture gradient of a to-be-processed image, and determining an intermediate-frequency texture region and a high-frequency texture region in the to-be-processed image; and enhancing the intermediate-frequency texture region and the high-frequency texture region in different enhancement modes to obtain a processed image. Because intermediate-frequency and high-frequency textures are enhanced to different degrees, the method better serves the original purpose of texture enhancement: intermediate-frequency textures are, as far as possible, not lost to a relatively large quantization step size at a low bit rate, and the prediction mode is stabilized by enhancing the textures; strong high-frequency textures are enhanced only weakly, which reduces over-enhancement, so that the subjective quality of the image can be improved.

Owner:ZHEJIANG DAHUA TECH CO LTD
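The idea in the abstract above, classifying pixels by texture gradient and then enhancing intermediate-frequency texture more strongly than high-frequency texture, might look roughly like the sketch below. Everything specific here is an assumption for illustration: the gradient operator, the two thresholds, the gain values and the unsharp-style enhancement are not taken from the patent.

```python
def gradient_magnitude(img, y, x):
    """Sum of absolute horizontal and vertical differences (borders clamped)."""
    h, w = len(img), len(img[0])
    gx = img[y][min(x + 1, w - 1)] - img[y][max(x - 1, 0)]
    gy = img[min(y + 1, h - 1)][x] - img[max(y - 1, 0)][x]
    return abs(gx) + abs(gy)


def classify(grad, mid_threshold=20, high_threshold=80):
    """Label a gradient value as 'flat', 'mid' or 'high' (hypothetical thresholds)."""
    if grad >= high_threshold:
        return "high"
    if grad >= mid_threshold:
        return "mid"
    return "flat"


def enhance(img, gains=None):
    """Boost each pixel's deviation from its 3x3 local mean by a region-specific gain."""
    if gains is None:
        # Assumed gains: strong for mid-frequency texture, weak for high-frequency.
        gains = {"flat": 0.0, "mid": 1.0, "high": 0.3}
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [
                img[yy][xx]
                for yy in range(max(0, y - 1), min(h, y + 2))
                for xx in range(max(0, x - 1), min(w, x + 2))
            ]
            local_mean = sum(window) / len(window)
            region = classify(gradient_magnitude(img, y, x))
            value = img[y][x] + gains[region] * (img[y][x] - local_mean)
            row.append(min(255.0, max(0.0, value)))  # clamp to the 8-bit range
        out.append(row)
    return out
```

Using a smaller gain for the `"high"` class reflects the abstract's point that strong high-frequency texture should be enhanced only weakly to avoid over-enhancement.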

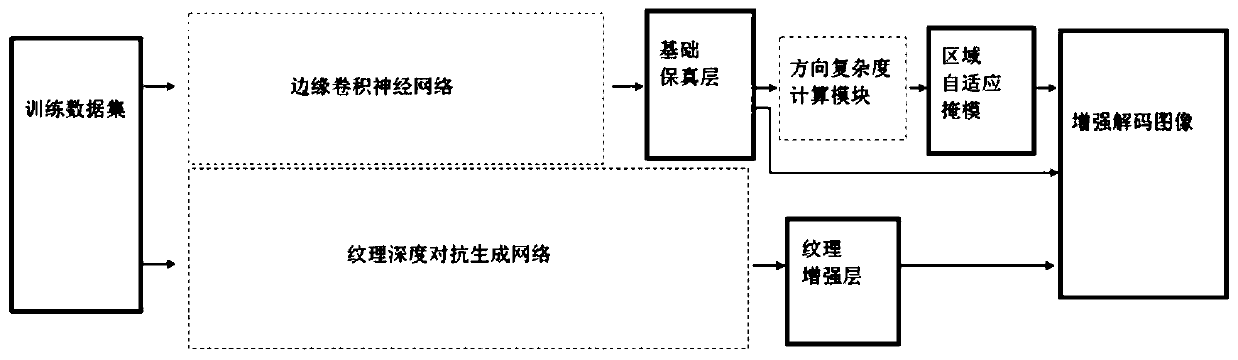

Decoded image enhancement method

Active | CN110246093A | Mass balance | Balanced Signal Fidelity | Image enhancement | Image analysis | Pattern recognition | Imaging algorithm

The invention discloses a decoded image enhancement method, which mainly addresses the problem that current decoded-image enhancement algorithms do not fully consider the visual and transmission requirements of human eyes for different areas of an image and cannot balance the subjective quality of textures against the signal fidelity of edges. Based on the different characteristics of texture regions and edge regions, an edge region-based decoding enhancement method and a texture region-based decoding enhancement method are designed separately to obtain a basic fidelity layer and a texture enhancement layer; the basic fidelity layer and the texture enhancement layer are then adaptively fused by using a region adaptive fusion technology, so that texture subjective quality and edge signal fidelity are balanced, the decoded image is enhanced better, and both the subjective experience of the user and the fidelity of signal transmission are improved.

Owner:PEKING UNIV
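The region adaptive fusion mentioned in the abstract above can be pictured as a per-pixel weighted blend of the two layers. The sketch below assumes a weight map in which 1.0 marks a pure edge pixel and 0.0 a pure texture pixel; how the patent actually derives its fusion weights is not stated in the abstract, so `edge_weight` and the function name are hypothetical.

```python
def fuse_layers(fidelity, texture, edge_weight):
    """Blend two same-sized grayscale layers pixel by pixel.

    fused = w * fidelity + (1 - w) * texture, with w taken from edge_weight.
    A weight near 1 favors the basic fidelity layer (edge regions);
    a weight near 0 favors the texture enhancement layer.
    """
    fused = []
    for f_row, t_row, w_row in zip(fidelity, texture, edge_weight):
        row = []
        for f, t, w in zip(f_row, t_row, w_row):
            if not 0.0 <= w <= 1.0:
                raise ValueError("weights must lie in [0, 1]")
            row.append(w * f + (1.0 - w) * t)
        fused.append(row)
    return fused
```

With `fidelity = [[100, 100]]`, `texture = [[200, 200]]` and `edge_weight = [[1.0, 0.25]]`, the result is `[[100.0, 175.0]]`: the first pixel is taken entirely from the fidelity layer, the second mostly from the texture layer.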
