Method and system for detecting deepfake videos based on temporal inconsistency

A video detection technology based on inter-frame consistency, applied in the field of deepfake video detection. It addresses the high error rate and low detection rate of existing fake-video detection, reducing the probability of misjudgment and improving detection accuracy.

Examples

Example 1

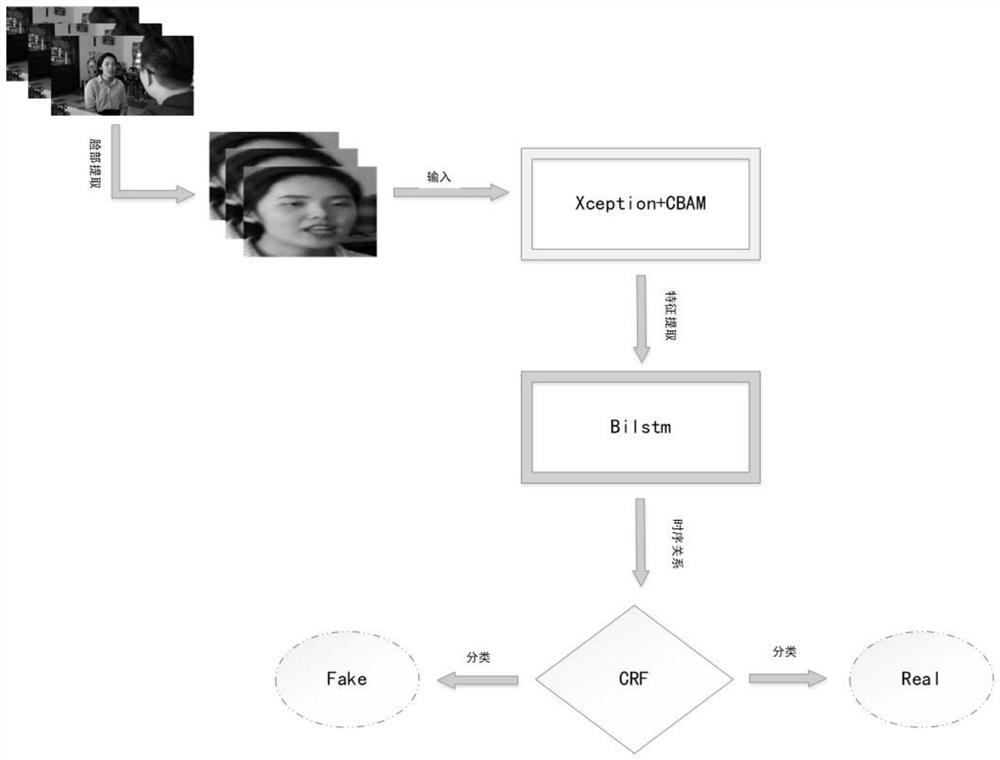

[0061] Figure 1 is a schematic flowchart of a detection method based on temporal inconsistency according to an exemplary embodiment. As shown in Figure 1, the method includes the following steps:

[0062] Step S1: Divide the obtained forged-video dataset into a training set, a validation set, and a test set according to a given ratio, keeping the numbers of real and forged videos equal. Label each real video as 0 and each forged video as 1. Use ffmpeg to extract a certain number of frames from each video according to its frame rate. Use the MTCNN face detector to detect the face region in each extracted frame, align the face region using facial landmarks, save the face images, and normalize them to 240×240 pixels.
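The dataset split and frame sampling in Step S1 can be sketched as follows. This is a minimal illustration, not the patent's implementation: the 8:1:1 ratio, the choice to downsample the larger class so that real and fake counts are equal, and the helper names `balanced_split` and `sample_frame_indices` are all hypothetical. The source only states "a certain ratio" and "a certain number of frames".

```python
import random
from dataclasses import dataclass

REAL, FAKE = 0, 1  # label convention from Step S1: real = 0, fake = 1


@dataclass(frozen=True)
class Sample:
    path: str
    label: int


def balanced_split(real_paths, fake_paths, ratios=(0.8, 0.1, 0.1), seed=0):
    """Split videos into (train, val, test) with equal real/fake counts in each split.

    Assumption: equality is enforced by randomly downsampling the larger class.
    The default 8:1:1 ratio is a hypothetical placeholder.
    """
    if abs(sum(ratios) - 1.0) > 1e-9:
        raise ValueError("ratios must sum to 1")
    n = min(len(real_paths), len(fake_paths))  # per-class count after balancing
    rng = random.Random(seed)
    real = rng.sample(list(real_paths), n)
    fake = rng.sample(list(fake_paths), n)
    n_train = int(n * ratios[0])
    n_val = int(n * ratios[1])
    bounds = [(0, n_train), (n_train, n_train + n_val), (n_train + n_val, n)]
    splits = []
    for lo, hi in bounds:
        # Take the same index range from both classes so each split stays balanced.
        part = [Sample(p, REAL) for p in real[lo:hi]] + [Sample(p, FAKE) for p in fake[lo:hi]]
        rng.shuffle(part)
        splits.append(part)
    return tuple(splits)


def sample_frame_indices(total_frames, fps, frames_per_second=1):
    """Return frame indices taken at a fixed rate derived from the video's frame rate.

    Assumption: "according to the frame rate" is read as sampling
    `frames_per_second` frames for every second of video.
    """
    step = max(1, round(fps / frames_per_second))
    return list(range(0, total_frames, step))
```

For example, `balanced_split` on 10 real and 12 fake paths keeps 10 of each and yields splits of 16, 2, and 2 videos. In practice the frame extraction itself would be done by ffmpeg (for instance with its `fps` video filter); the index helper only shows the sampling arithmetic.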

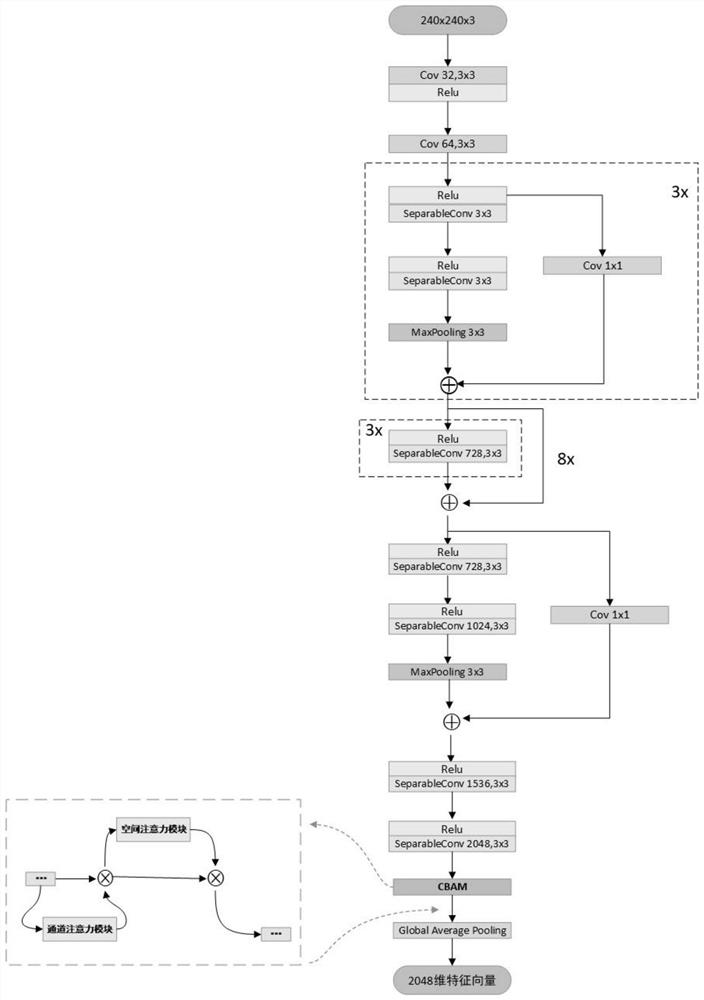

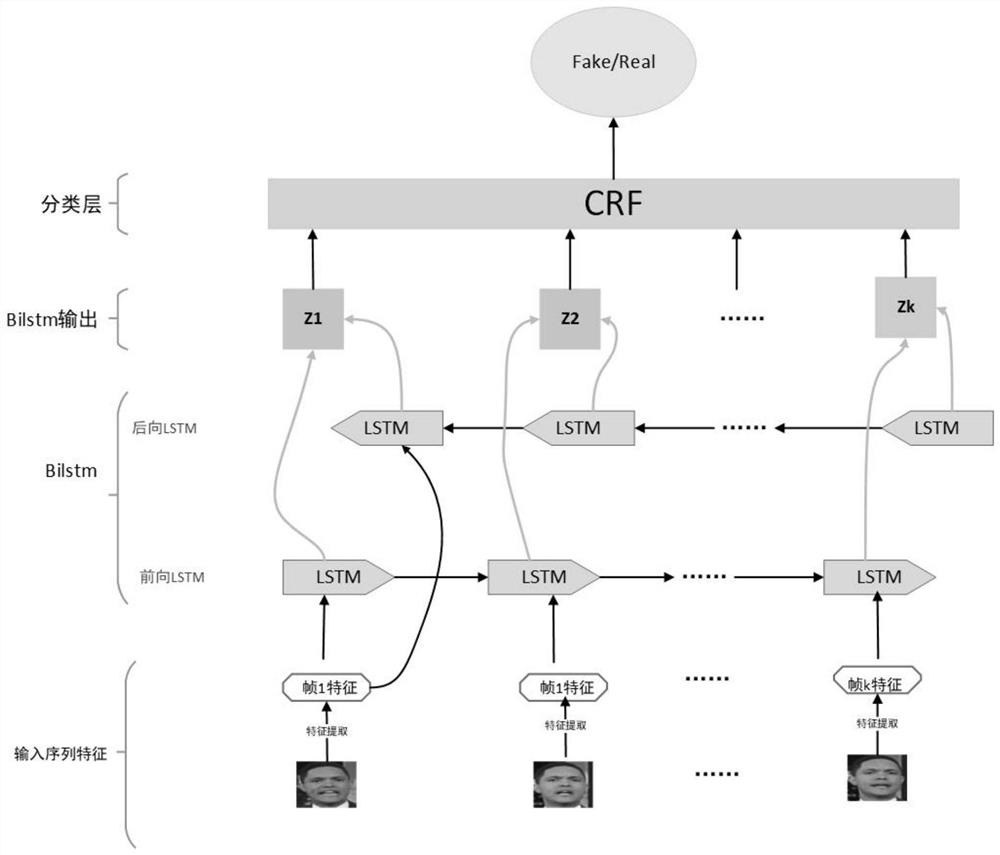

[0063] Step S2: Feed the video frames processed in Step S1 into the Xception network for feature-extraction training. Due to the global pooling layer of the Xception network, some channel and spatial ...
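The passage above is cut off, but it concerns how Xception's global pooling layer affects channel and spatial information. As a generic illustration (not code from the patent), global average pooling reduces each channel of a (C, H, W) feature map to a single mean value, so the spatial arrangement of activations is not preserved. The 2048 channels match the width of Xception's final block; the 8×8 spatial size is a placeholder.

```python
import numpy as np


def global_average_pool(feature_map: np.ndarray) -> np.ndarray:
    """Collapse a (C, H, W) feature map into a C-dimensional vector by averaging over H and W."""
    if feature_map.ndim != 3:
        raise ValueError("expected a (C, H, W) array")
    return feature_map.mean(axis=(1, 2))


rng = np.random.default_rng(0)
fmap = rng.standard_normal((2048, 8, 8))  # placeholder for one frame's final Xception feature map
pooled = global_average_pool(fmap)

# Shuffle the spatial positions identically for every channel. The pooled vector
# is unchanged, which shows that global pooling discards where a feature fired.
flat = fmap.reshape(2048, -1)
perm = rng.permutation(flat.shape[1])
shuffled = flat[:, perm].reshape(fmap.shape)
```

Comparing `global_average_pool(shuffled)` with `pooled` shows the two vectors are equal up to floating-point rounding, even though the maps differ spatially.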

Example 2

[0090] Figure 5 shows a deepfake video detection system based on the consistency between video frames. The system includes the following units: a data preprocessing module, a video frame feature extraction module, a video frame temporal analysis module, and a fake video classification module.
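The data flow between the four modules can be sketched as a pipeline of injected stages. This is a hypothetical skeleton: the class name `DeepfakeDetector` and every `toy_*` function are placeholders invented for illustration. In particular, `toy_extract` stands in for the Xception backbone, and `toy_analyze` and `toy_classify` are not the patent's temporal analysis or classifier.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

import numpy as np

Frame = np.ndarray    # one aligned face crop, e.g. 240x240x3
Feature = np.ndarray  # one per-frame feature vector


@dataclass
class DeepfakeDetector:
    """Chains the four modules named in [0090]; each stage is passed in as a callable."""
    preprocess: Callable[[str], List[Frame]]            # data preprocessing module
    extract: Callable[[Frame], Feature]                 # video frame feature extraction module
    analyze: Callable[[Sequence[Feature]], np.ndarray]  # video frame temporal analysis module
    classify: Callable[[np.ndarray], int]               # fake video classification module

    def predict(self, video_path: str) -> int:
        frames = self.preprocess(video_path)
        features = [self.extract(f) for f in frames]
        summary = self.analyze(features)
        return self.classify(summary)  # 0 = real, 1 = fake


def toy_preprocess(path: str) -> List[Frame]:
    # Placeholder: a real module would decode `path` and crop aligned faces.
    rng = np.random.default_rng(len(path))
    return [rng.random((240, 240, 3)) for _ in range(4)]


def toy_extract(frame: Frame) -> Feature:
    # Placeholder for the Xception backbone: per-channel mean of the frame.
    return frame.mean(axis=(0, 1))


def toy_analyze(features: Sequence[Feature]) -> np.ndarray:
    # Placeholder temporal step: stack per-frame features into a (T, D) sequence.
    return np.stack(features)


def toy_classify(seq: np.ndarray) -> int:
    # Placeholder decision rule on frame-to-frame change, not a trained classifier.
    return int(np.abs(np.diff(seq, axis=0)).mean() > 0.5)
```

Usage: `DeepfakeDetector(toy_preprocess, toy_extract, toy_analyze, toy_classify).predict("clip.mp4")`. Injecting the stages keeps the module boundaries of Figure 5 explicit, so a trained backbone or temporal model could replace any placeholder without changing the pipeline.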

[0091] The data preprocessing module processes the experimental data and consists of three units: dataset division, frame extraction, and face extraction. The dataset is divided into a training set, a validation set, and a test set according to a given ratio; each video is then split into frames; finally, faces are extracted and the resulting face images are normalized to a uniform pixel size. During face extraction, a face detector first draws a bounding box around the face region, and the face is then extracted through facial-landmark alignment, which improves the face detection rate.
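The face-extraction unit can be sketched in NumPy as three small helpers. This is a minimal illustration under stated assumptions. The bounding box is taken as (x1, y1, x2, y2) in pixels. The 20% margin is hypothetical. The eye-line angle is one common input to landmark alignment; actually rotating the image is left to an image library. The nearest-neighbour resize stands in for a library resize. The 240×240 target size comes from Step S1.

```python
import math

import numpy as np


def eye_alignment_angle(left_eye, right_eye):
    """Angle in degrees between the eye line and the horizontal (image y axis points down)."""
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    return math.degrees(math.atan2(dy, dx))


def crop_face(image, box, margin=0.2):
    """Crop box (x1, y1, x2, y2) from an H x W x C image, enlarged by `margin` and clipped to bounds."""
    h, w = image.shape[:2]
    x1, y1, x2, y2 = box
    mx = (x2 - x1) * margin  # hypothetical margin so the crop keeps some context around the face
    my = (y2 - y1) * margin
    x1, y1 = max(0, round(x1 - mx)), max(0, round(y1 - my))
    x2, y2 = min(w, round(x2 + mx)), min(h, round(y2 + my))
    return image[y1:y2, x1:x2]


def resize_nearest(image, size=240):
    """Nearest-neighbour resize to size x size, mapping each output pixel to a source pixel."""
    h, w = image.shape[:2]
    rows = (np.arange(size) * h / size).astype(int)
    cols = (np.arange(size) * w / size).astype(int)
    return image[rows][:, cols]
```

For a 480×640 frame and the box (200, 100, 400, 350), `crop_face` returns a 350×280 crop, and `resize_nearest` brings it to 240×240. An eye pair at (100, 100) and (200, 200) gives an angle of 45 degrees, which an alignment step would rotate back to level.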

[0092] The video frame feature extracti...

© 2025 PatSnap. All rights reserved.