193 results about "Action selection" patented technology

Action selection is a way of characterizing the most basic problem of intelligent systems: what to do next. In artificial intelligence and computational cognitive science, "the action selection problem" is typically associated with intelligent agents and animats—artificial systems that exhibit complex behaviour in an agent environment. The term is also sometimes used in ethology or animal behavior.

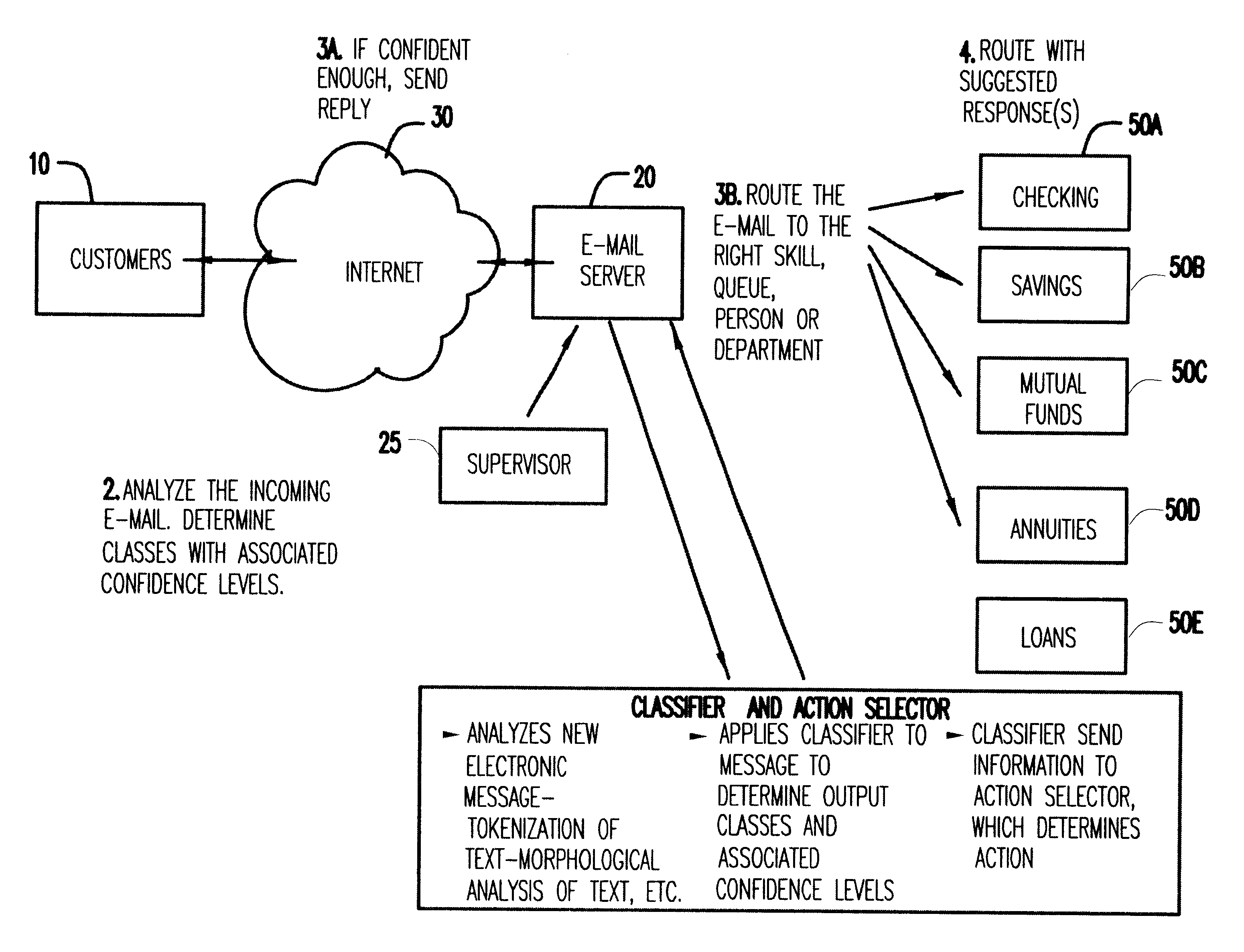

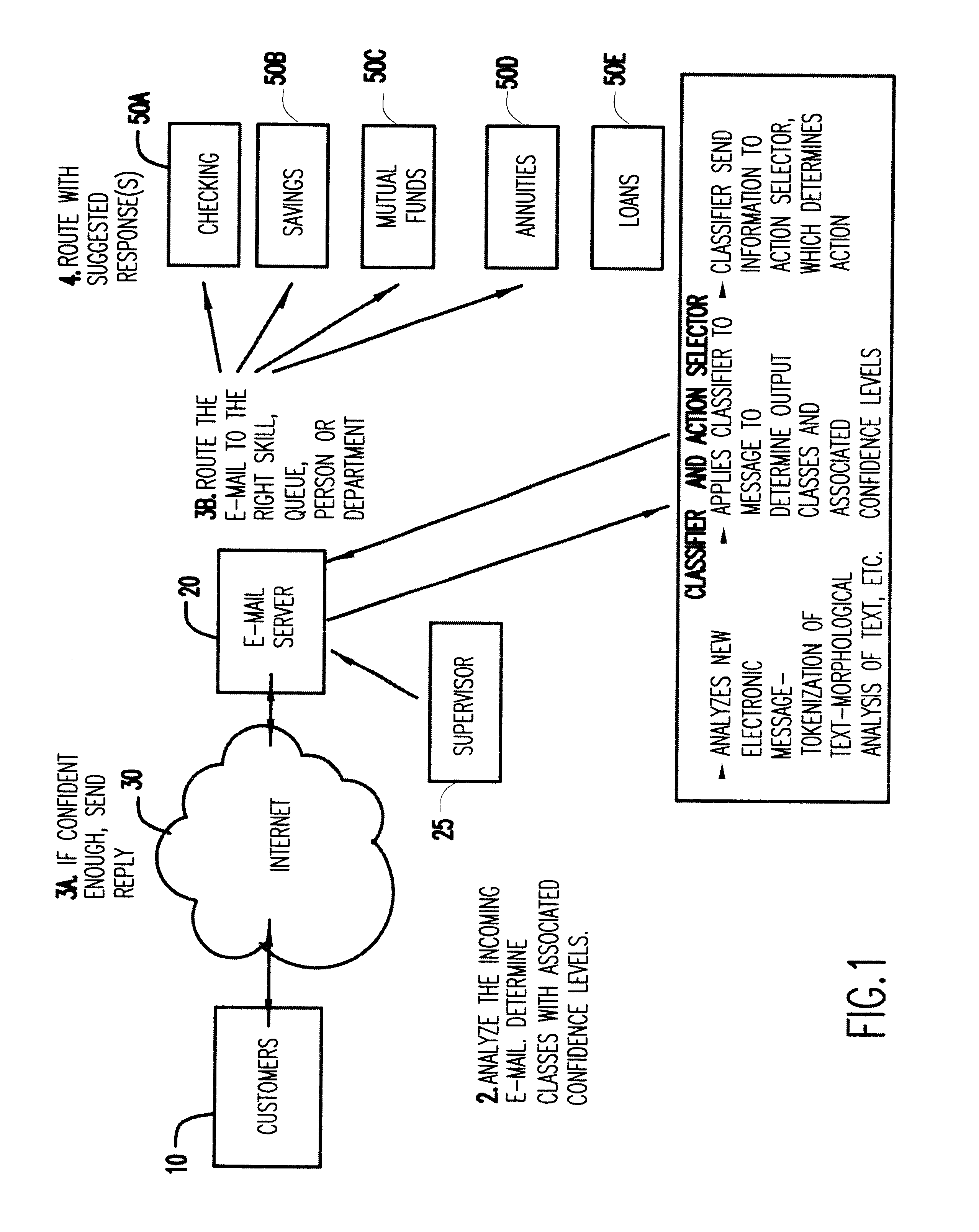

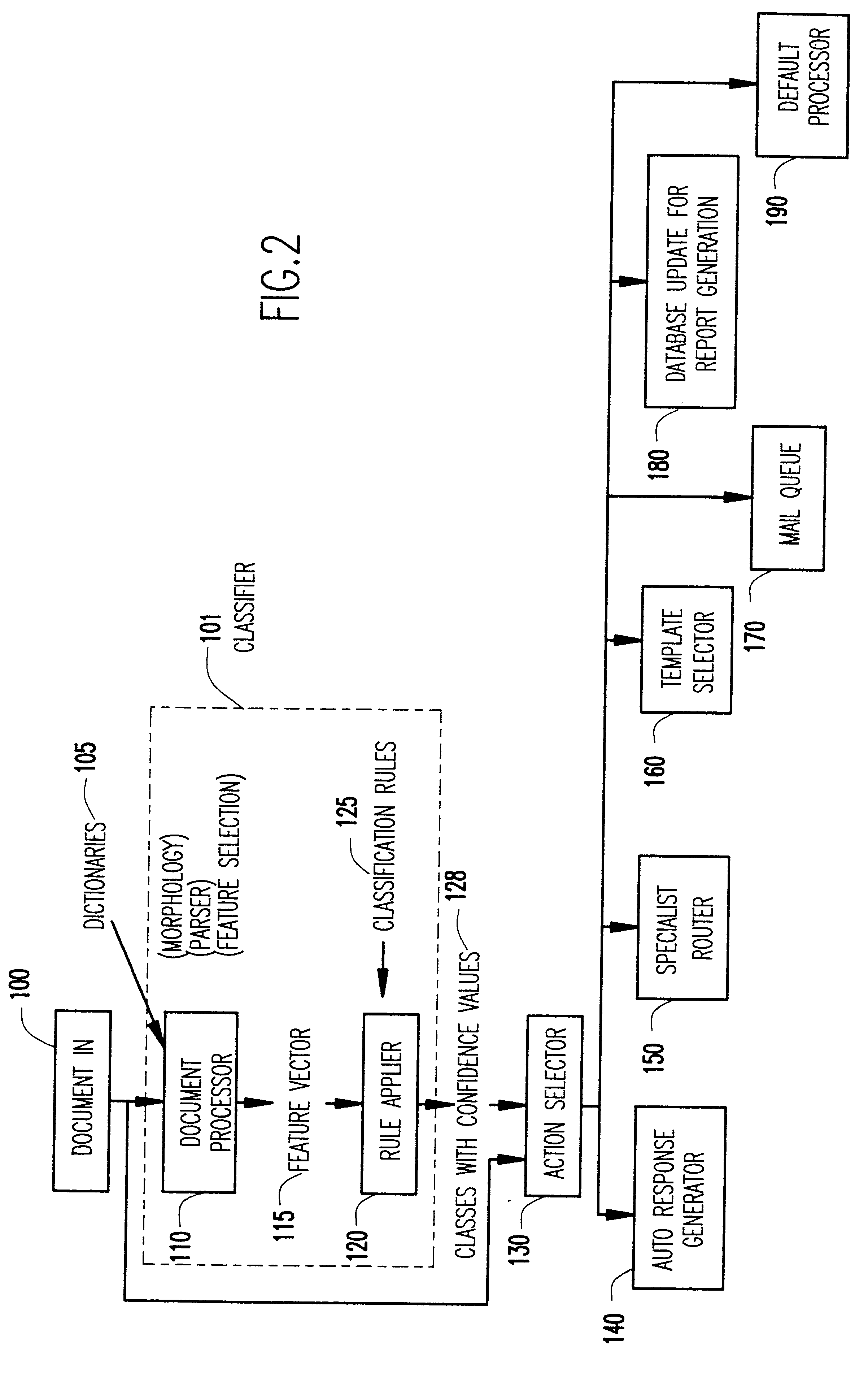

Machine learning based electronic messaging system

Inactive · US6424997B1 · Natural language data processing · Multiple digital computer combinations · Learning based · Computer module

A machine learning based electronic mail system. A classifier and action selection module analyzes each incoming message and classifies it with associated confidence levels; the analysis may include tokenization of the text, morphological analysis of the text, and other well-known processes. The classifier and action selection module then determines the appropriate action or actions to effect on the message.

Owner:LINKEDIN

Automatic driving system based on enhanced learning and multi-sensor fusion

Active · CN108196535A · Precise positioning · Accurate understanding · Position/course control in two dimensions · Learning network · Process information

The invention discloses an automatic driving system based on enhanced learning and multi-sensor fusion. The system comprises a perception system, a control system and an execution system. The perception system uses a deep learning network to efficiently process data from a laser radar, a camera and a GPS navigator, so as to identify and understand, in real time, the vehicles, pedestrians, lane lines, traffic signs and signal lamps surrounding a running vehicle. Through an enhanced learning technology, the laser radar data and a panorama image are matched and fused to form a real-time three-dimensional streetscape map and to determine the driving area. The GPS navigator is combined to realize real-time navigation. The control system adopts an enhanced learning network to process the information collected by the perception system and to predict the people, vehicles and objects surrounding the vehicle. According to vehicle body state data, the records of driver actions are paired, a current optimal action selection is made, and the execution system is used to complete the execution motion. In the invention, laser radar data and video are fused, and driving area identification and optimal planning of the path to the destination are performed.

Owner:清华大学苏州汽车研究院(吴江)

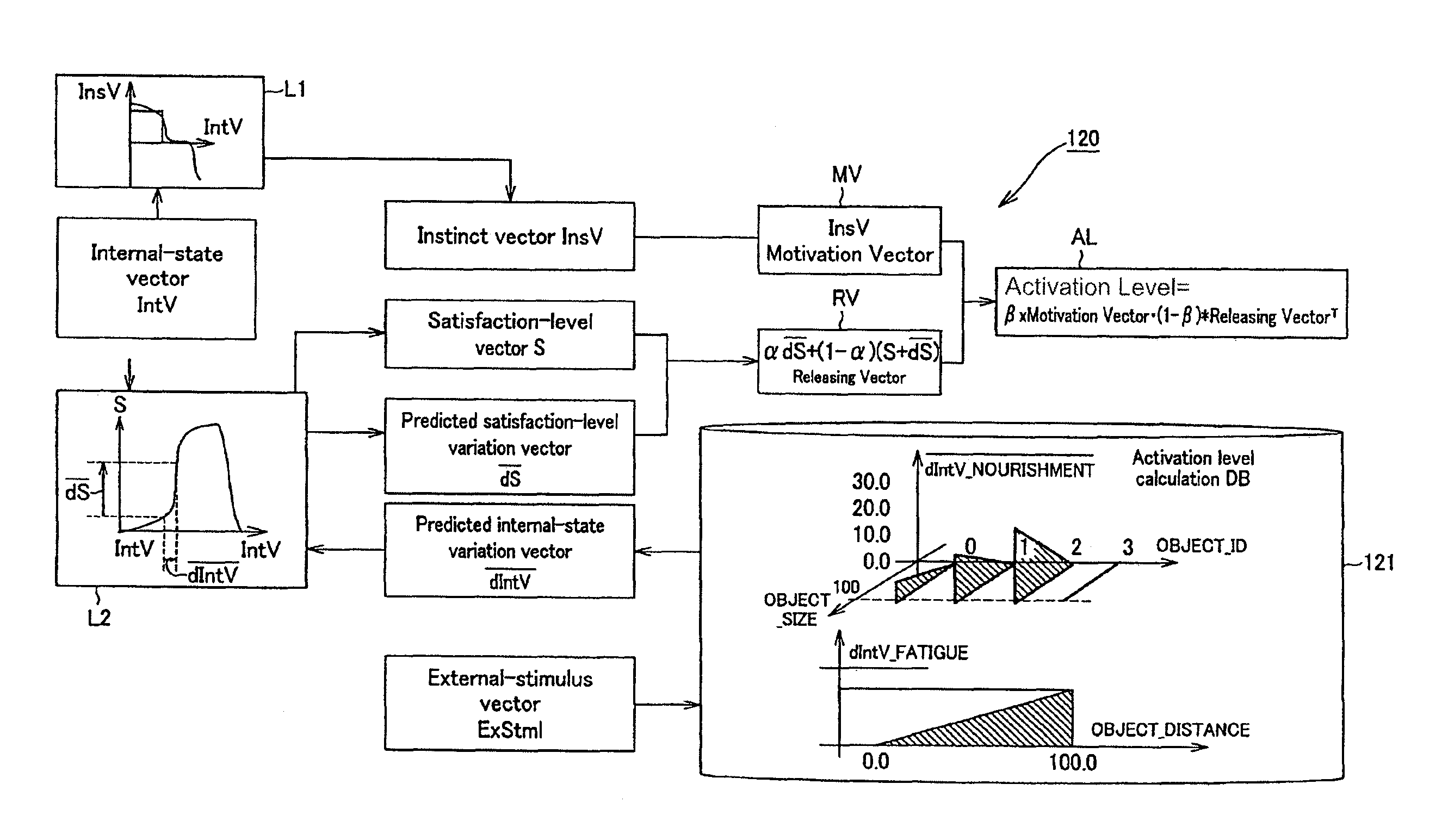

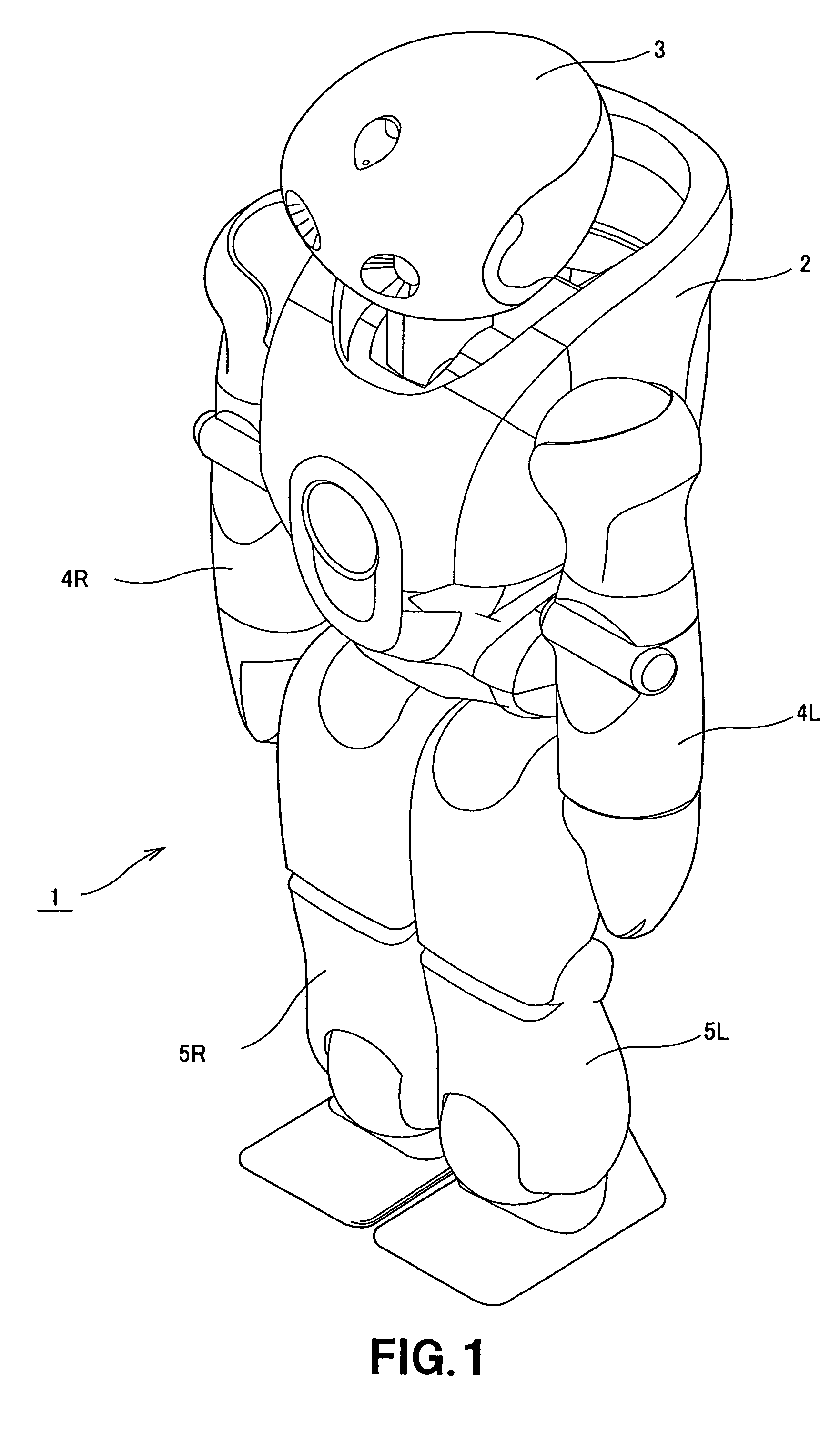

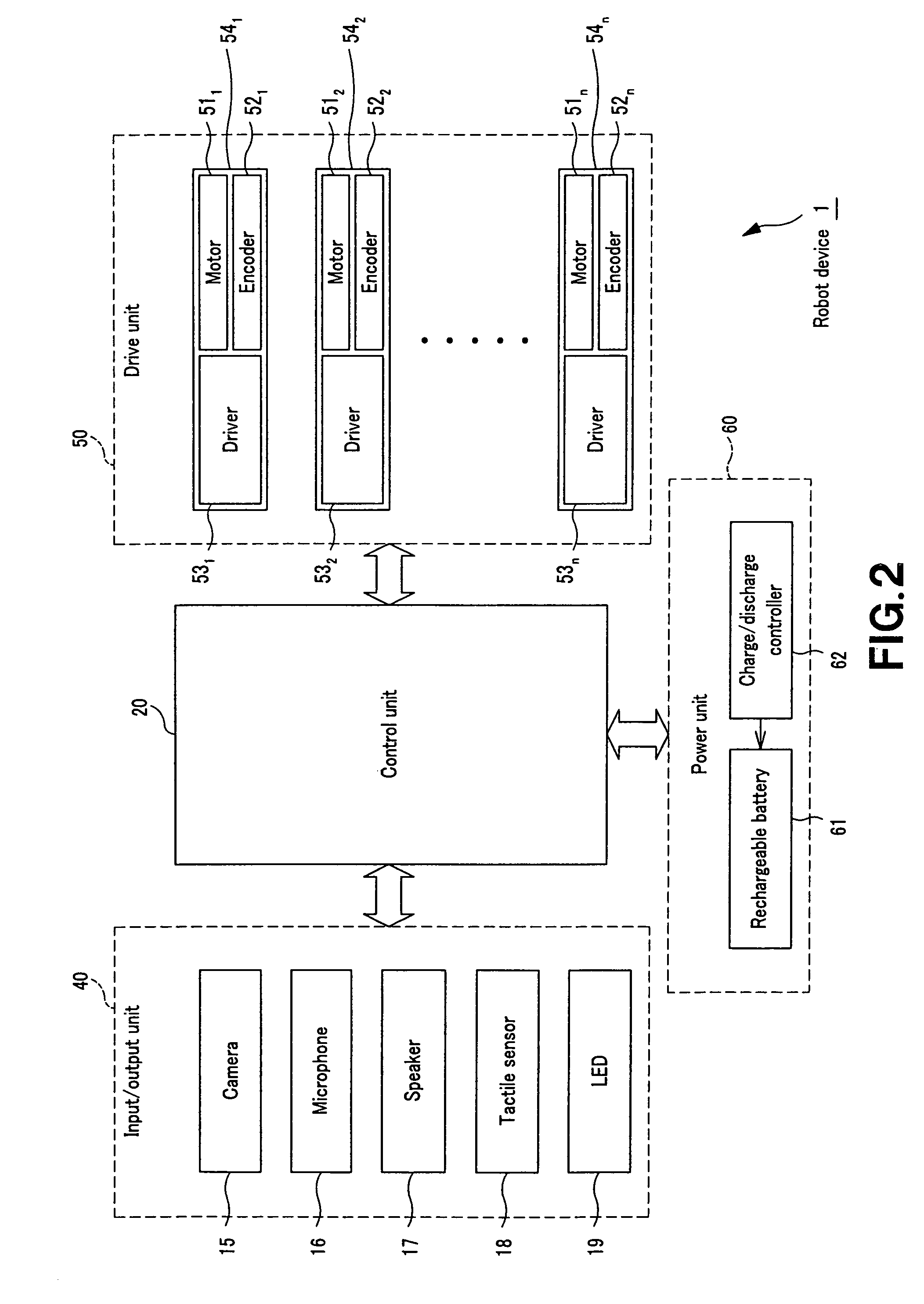

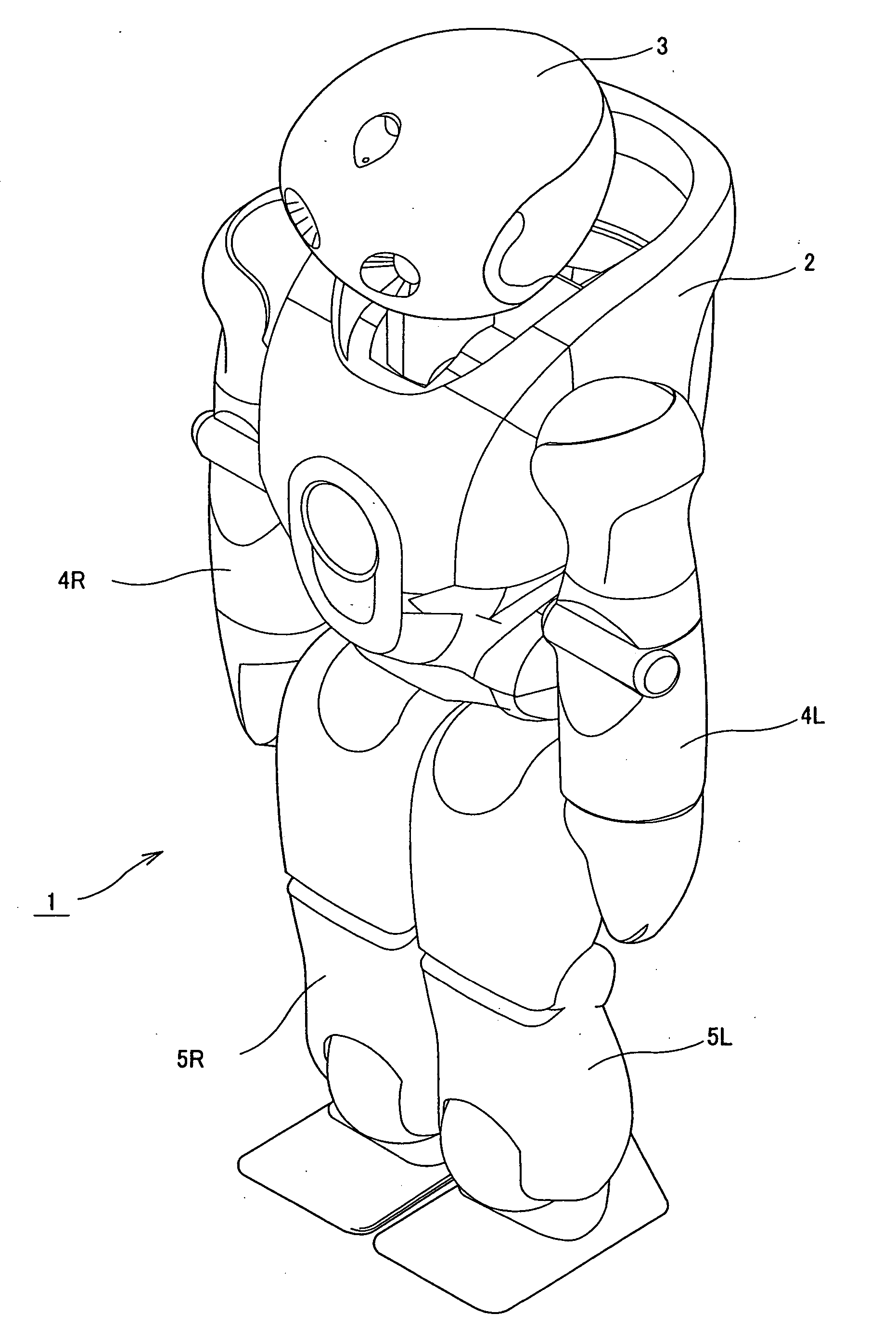

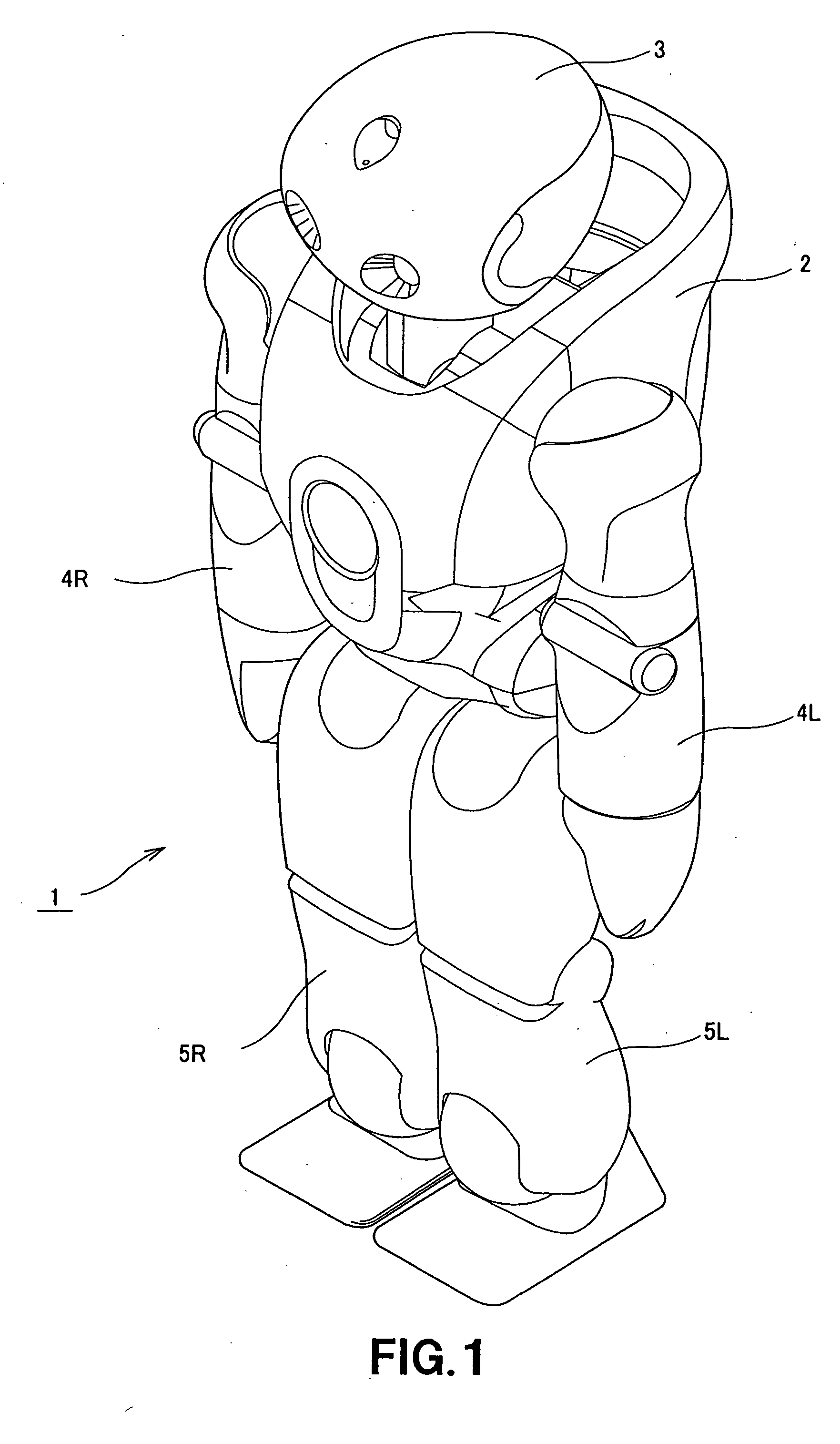

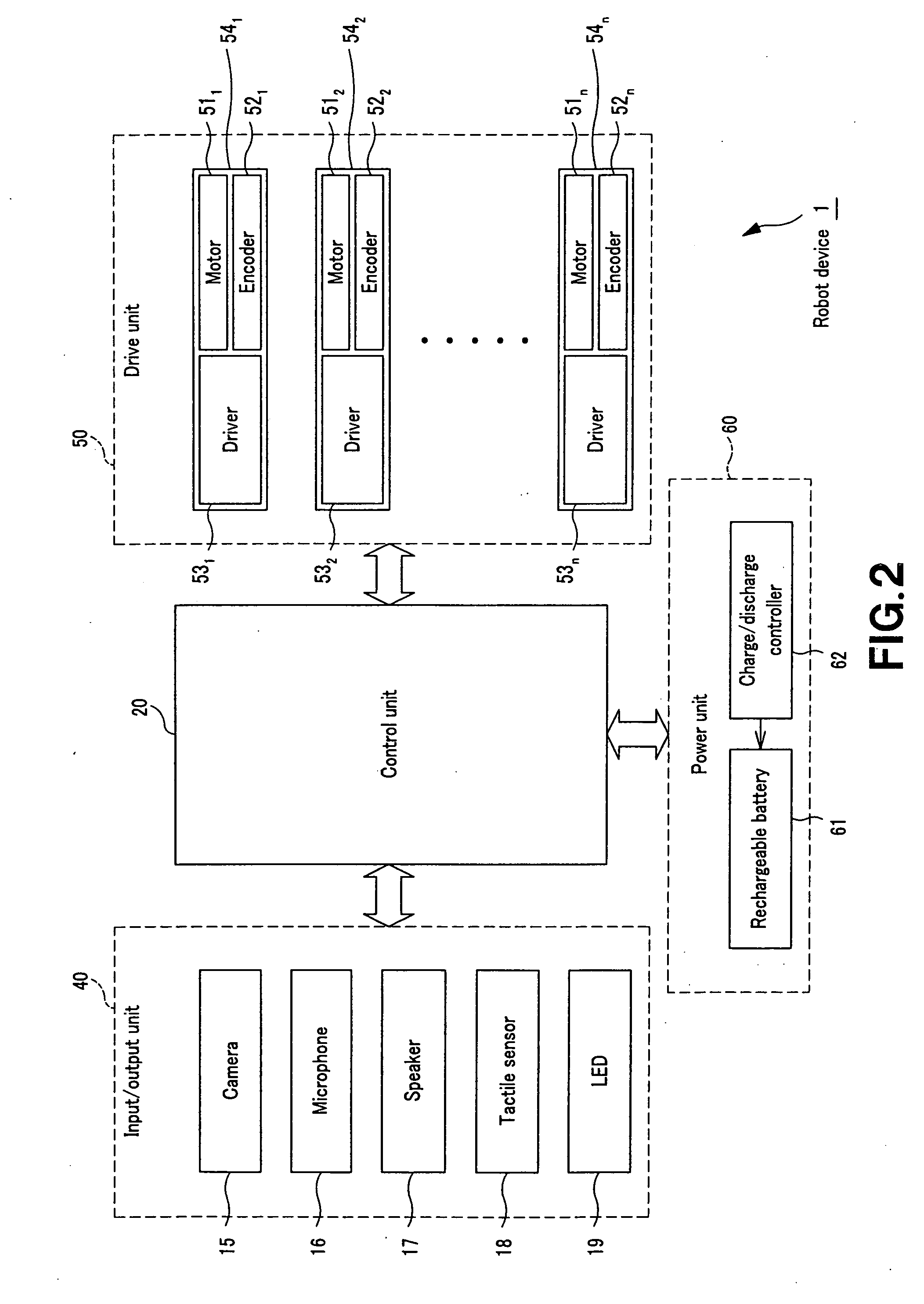

Robot behavior control based on current and predictive internal, external condition and states with levels of activations

Inactive · US7853357B2 · Reduce the amount required · Programme-controlled manipulator · Biological neural network models · Medicine · Control system

In a robot device, an action selecting/control system includes a plurality of elementary action modules each of which outputs an action when selected. An activation level calculation unit calculates an activation level AL of each elementary action on the basis of information from an internal-state manager and external-stimulus recognition unit and with reference to a database. An action selector selects an elementary action whose activation level AL is highest as an action to be implemented. Each action is associated with a predetermined internal state and external stimulus. The activation level calculation unit calculates an activation level AL of each action on the basis of a predicted satisfaction-level variation based on the level of an instinct for an action corresponding to an input internal state and a predicted internal-state variation predictable based on an input external stimulus.

Owner:SONY CORP

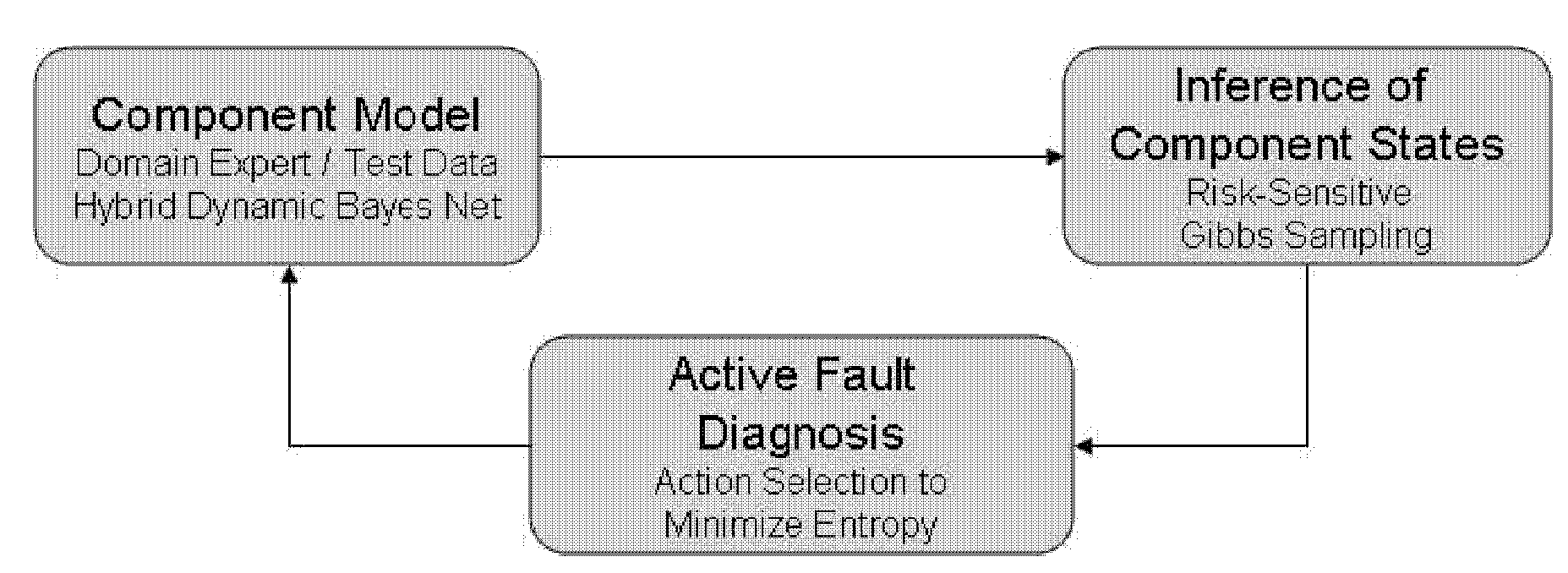

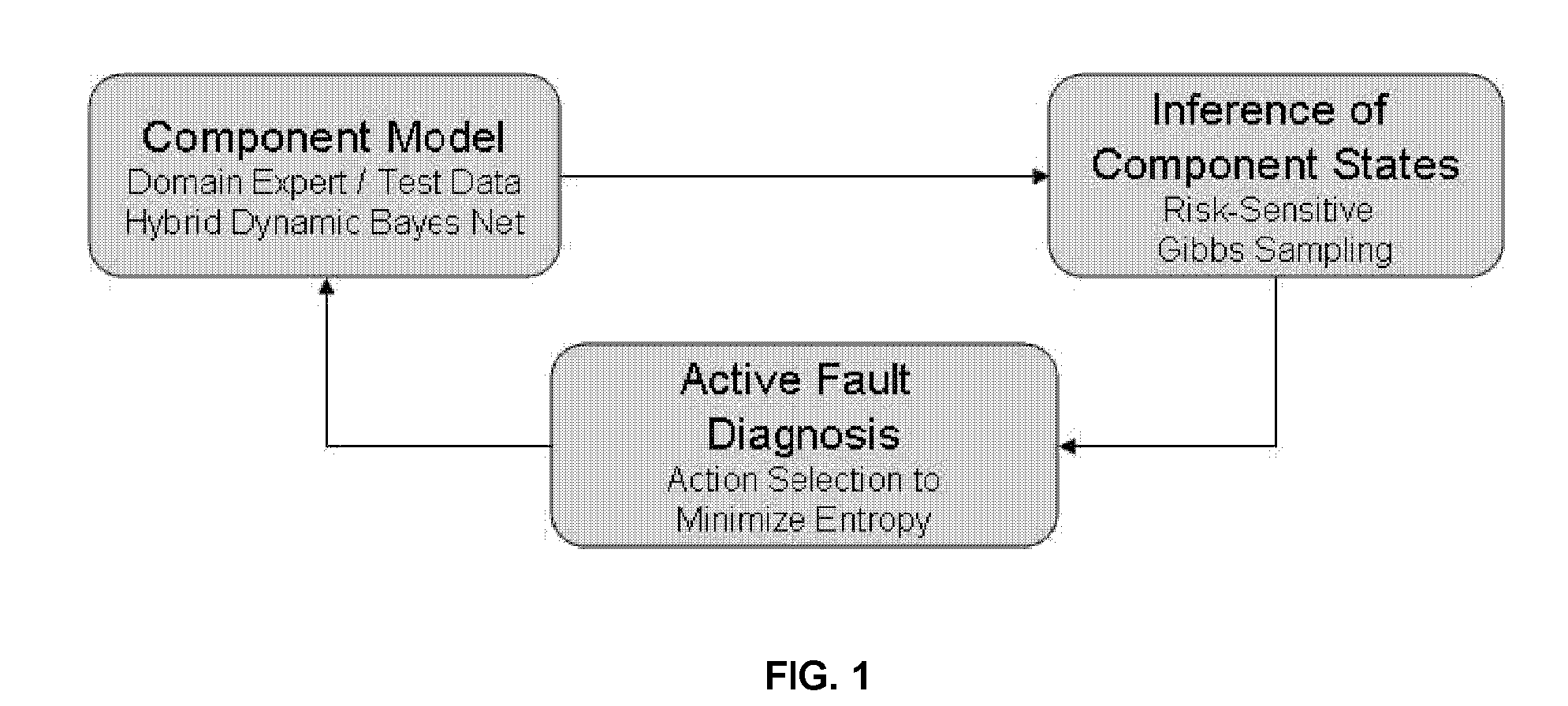

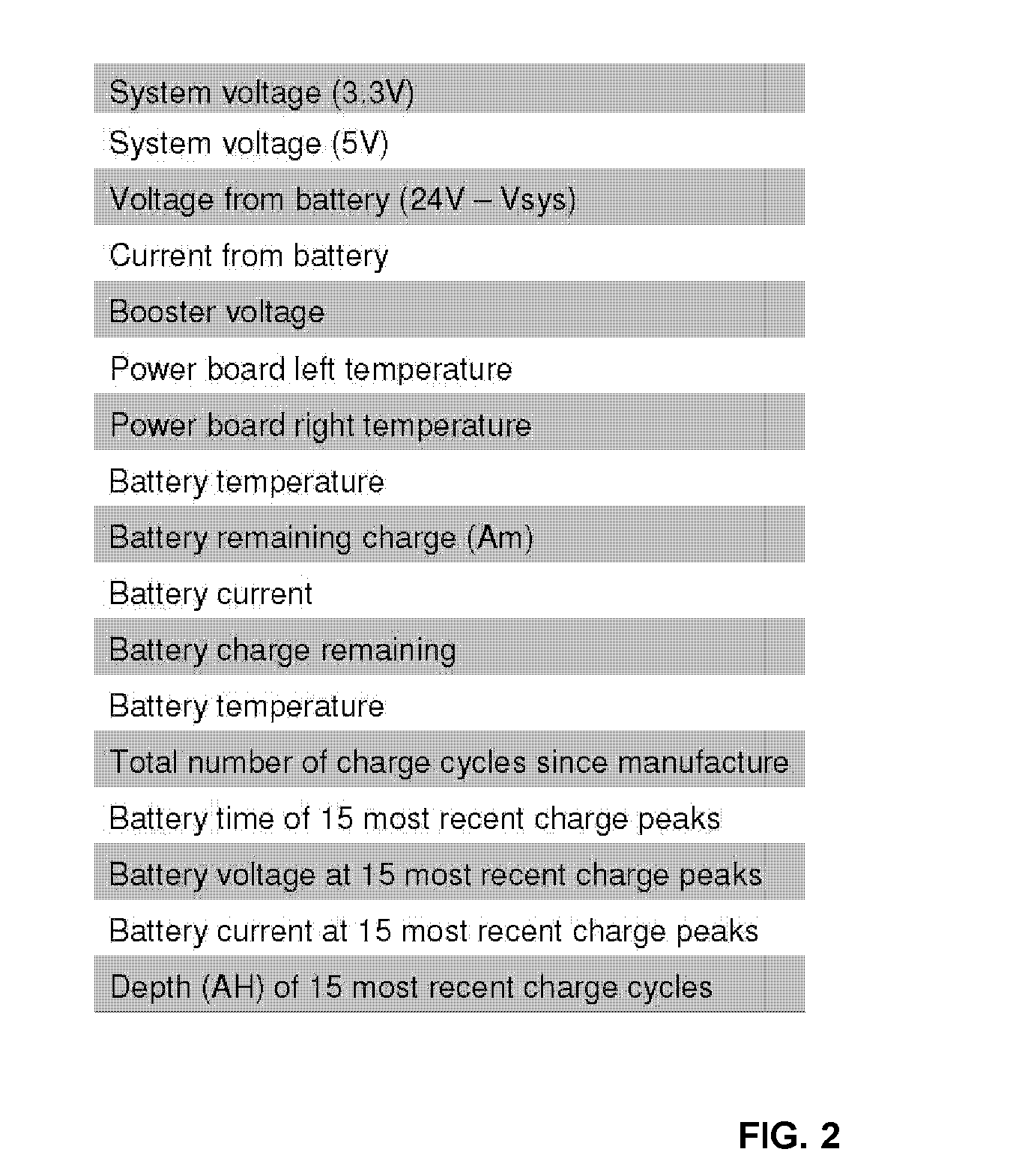

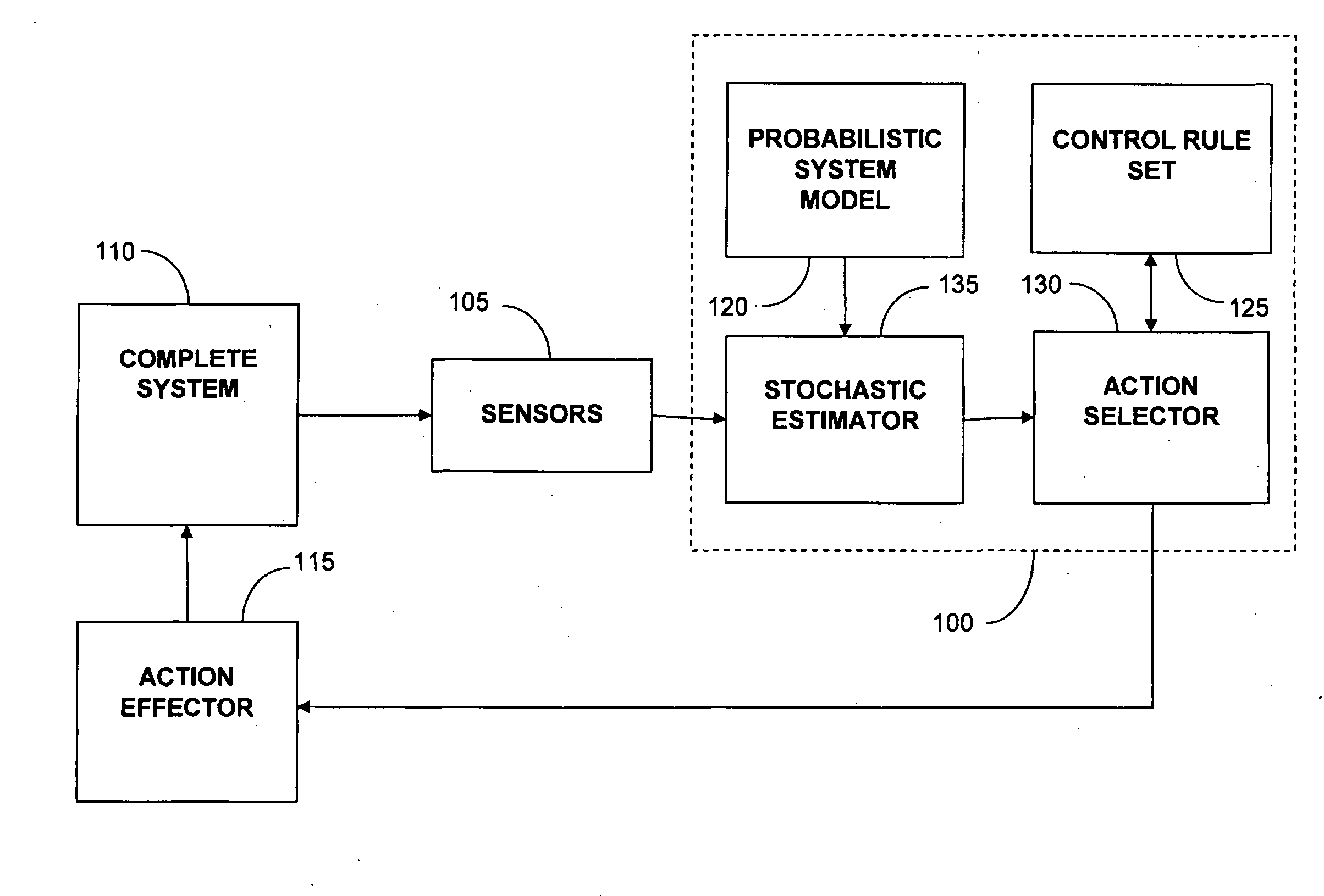

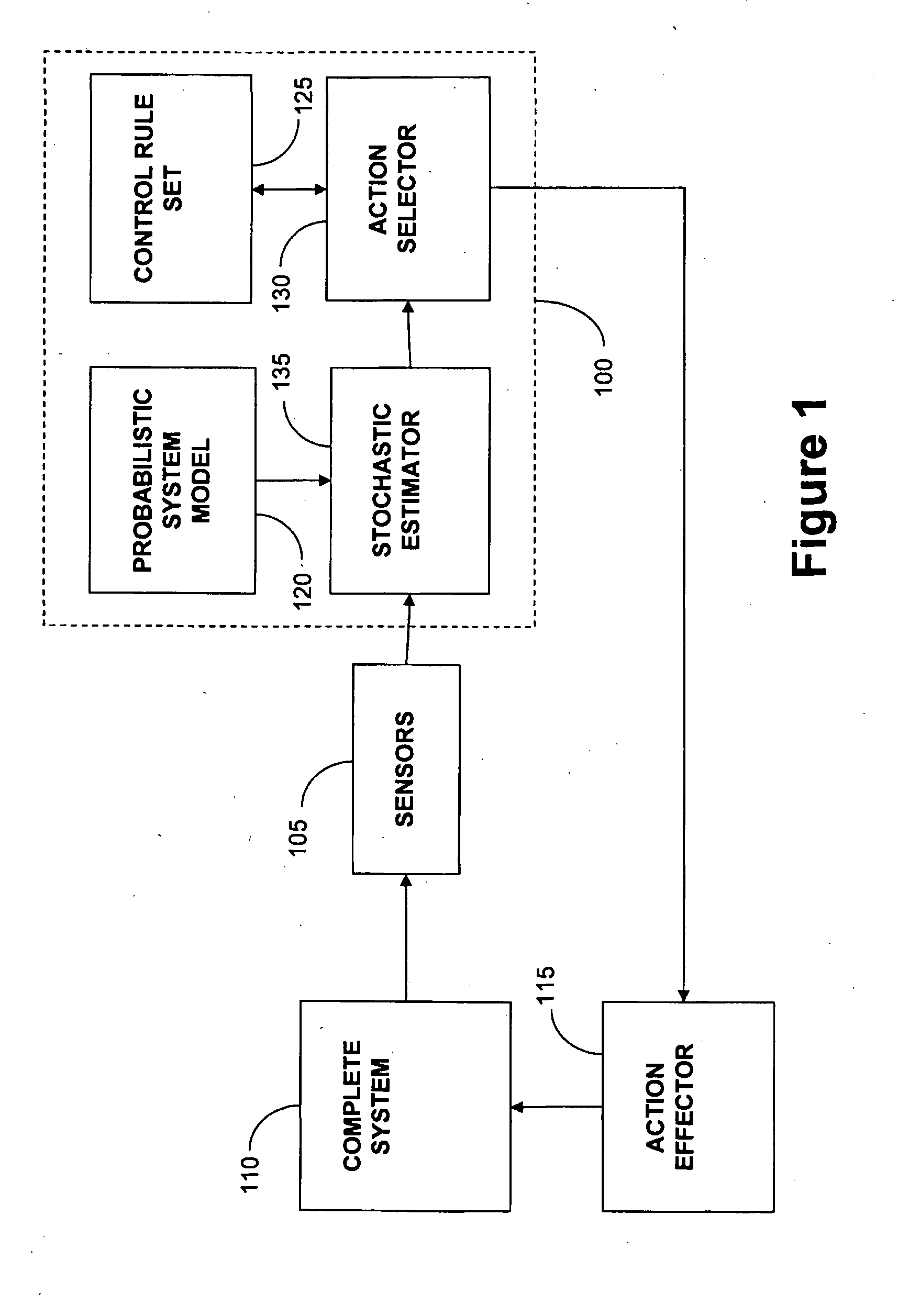

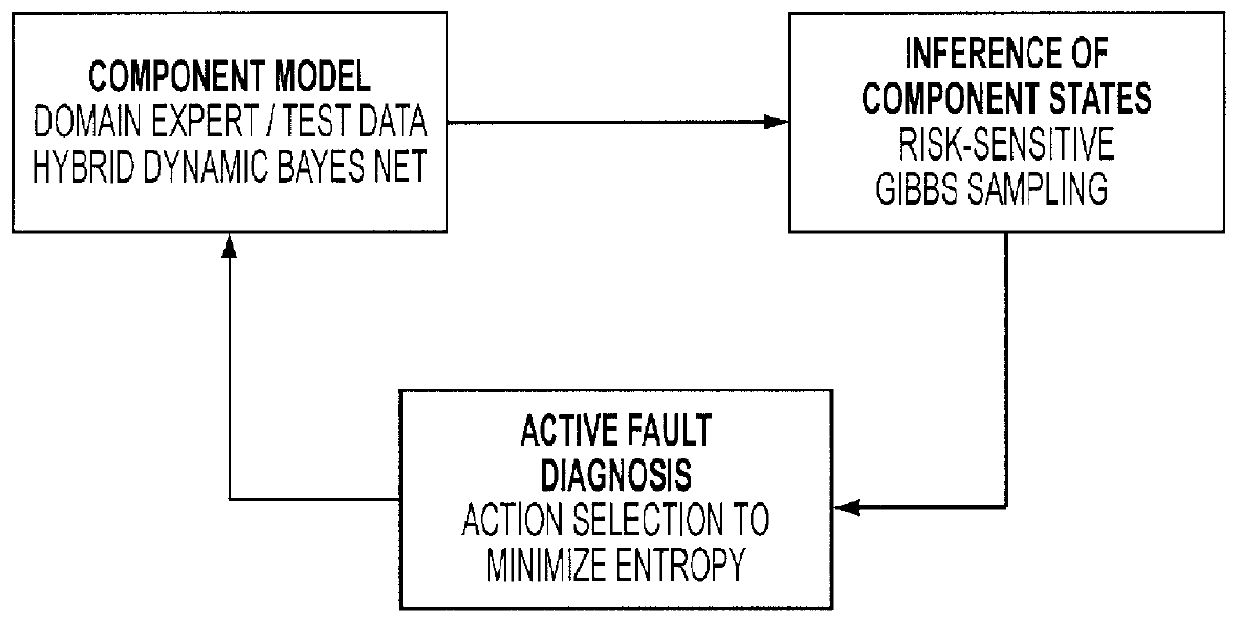

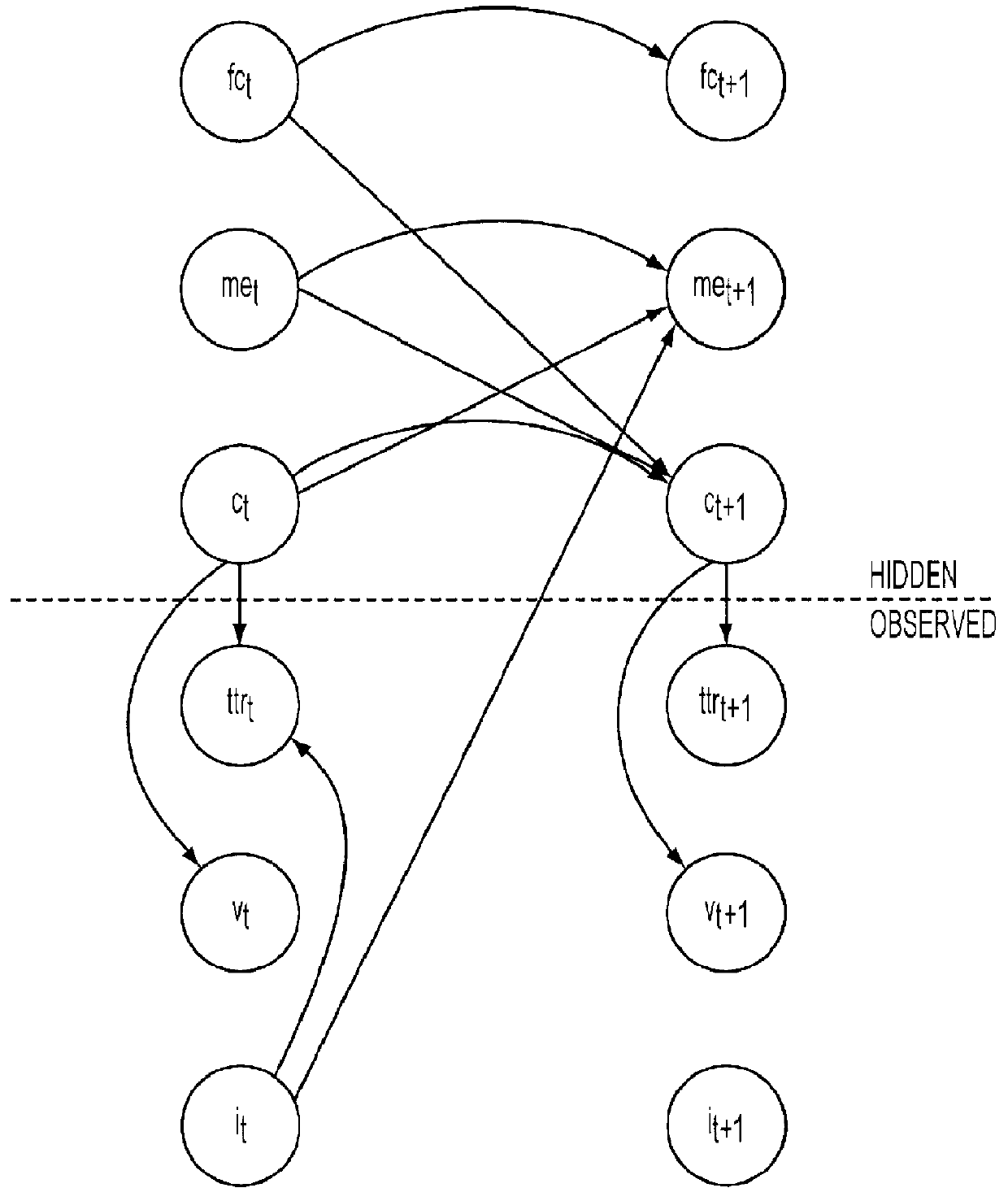

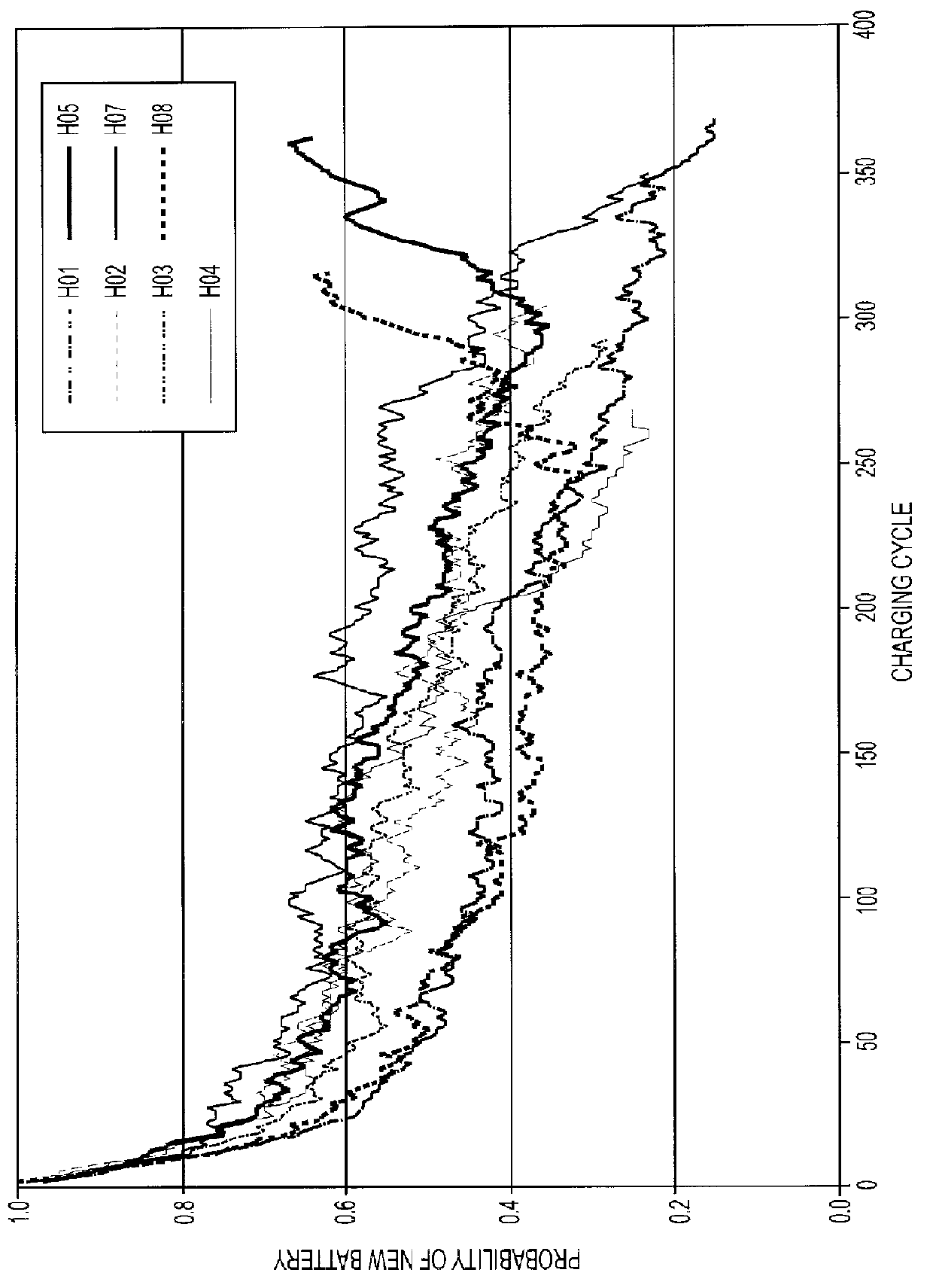
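
The selection rule in this abstract (compute an activation level AL per elementary action, then pick the highest) can be illustrated with a minimal sketch. The weighted sum used to combine the two predicted quantities, the `weight` parameter, and the action names are assumptions made for illustration; the abstract does not say how the two predictions are combined.

```python
from typing import Dict


def activation_level(satisfaction_change: float,
                     internal_state_change: float,
                     weight: float = 0.5) -> float:
    """Combine the two predicted quantities into one activation level (AL).

    The weighted sum is an assumption of this sketch; the abstract only
    states that AL depends on both predictions.
    """
    return weight * satisfaction_change + (1.0 - weight) * internal_state_change


def select_action(activation_levels: Dict[str, float]) -> str:
    """Return the elementary action whose activation level is highest."""
    if not activation_levels:
        raise ValueError("no elementary actions to choose from")
    return max(activation_levels, key=activation_levels.get)


# Hypothetical elementary actions with made-up predictions.
levels = {
    "eat": activation_level(0.8, 0.2),
    "sleep": activation_level(0.1, 0.3),
}
chosen = select_action(levels)
```

With these made-up numbers, `chosen` is `"eat"`, because its activation level (0.5) exceeds that of `"sleep"` (0.2).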

Electronic System Condition Monitoring and Prognostics

Inactive · US20080235172A1 · Improve forecast accuracy · Electrical testing · Probabilistic networks · Electronic systems · Prognostics

A system for monitoring and predicting the condition of an electronic system comprises a component model, an inference engine based on the component model, and an action selection component that selects an action based on an output of the inference engine.

Owner:MASSACHUSETTS INST OF TECH +1

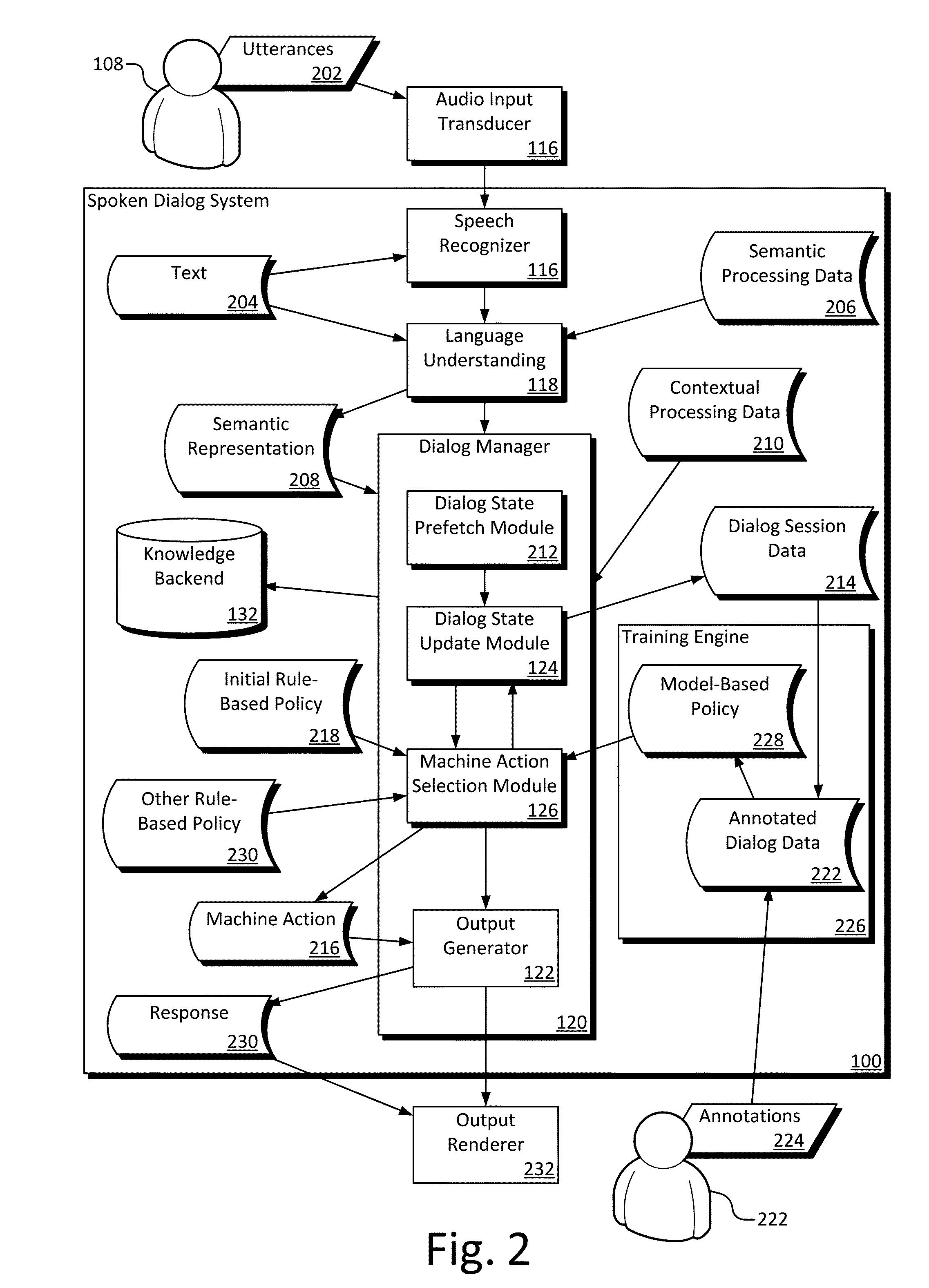

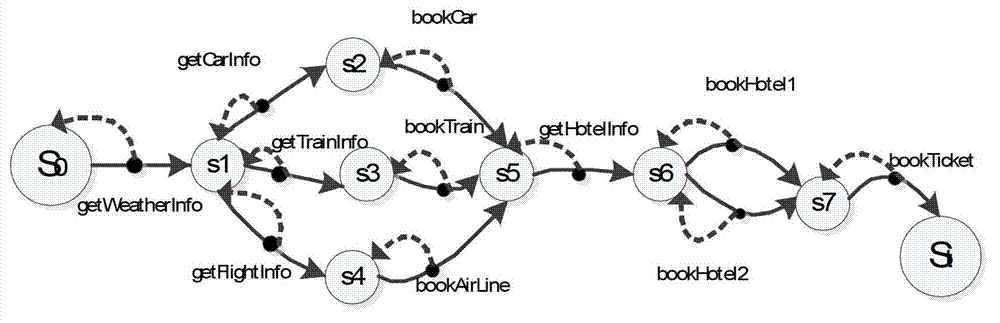

Discriminative Policy Training for Dialog Systems

Embodiments of a dialog system employing a discriminative action selection solution based on a trainable machine action model. The discriminative machine action selection solution includes a training stage that builds the discriminative model-based policy and a decoding stage that uses the discriminative model-based policy to predict the machine action that best matches the dialog state. Data from an existing dialog session is annotated with a dialog state and an action assigned to the dialog state. The labeled data is used to train the discriminative model-based policy. The discriminative model-based policy becomes the policy for the dialog system used to select the machine action for a given dialog state.

Owner:MICROSOFT TECH LICENSING LLC

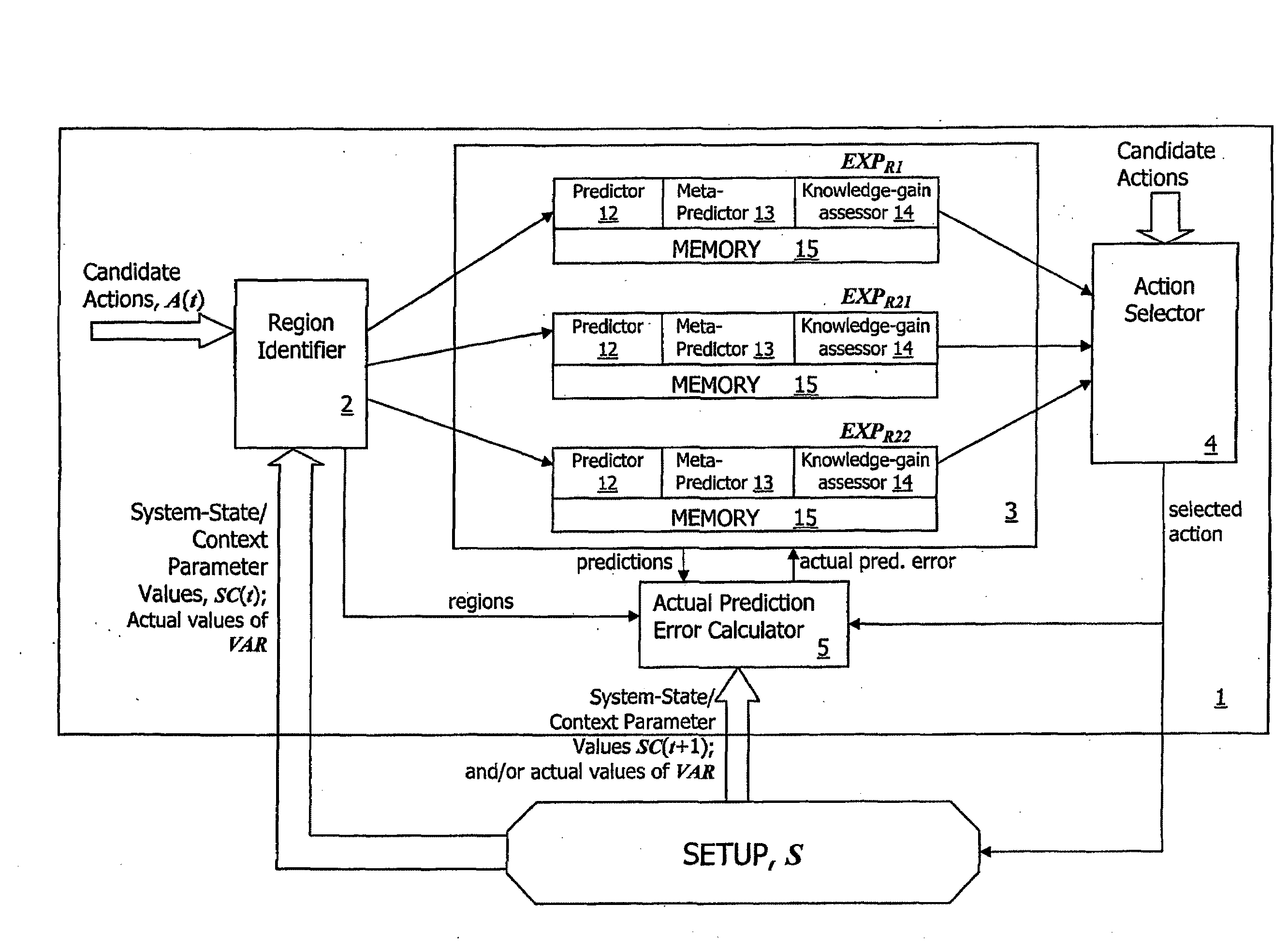

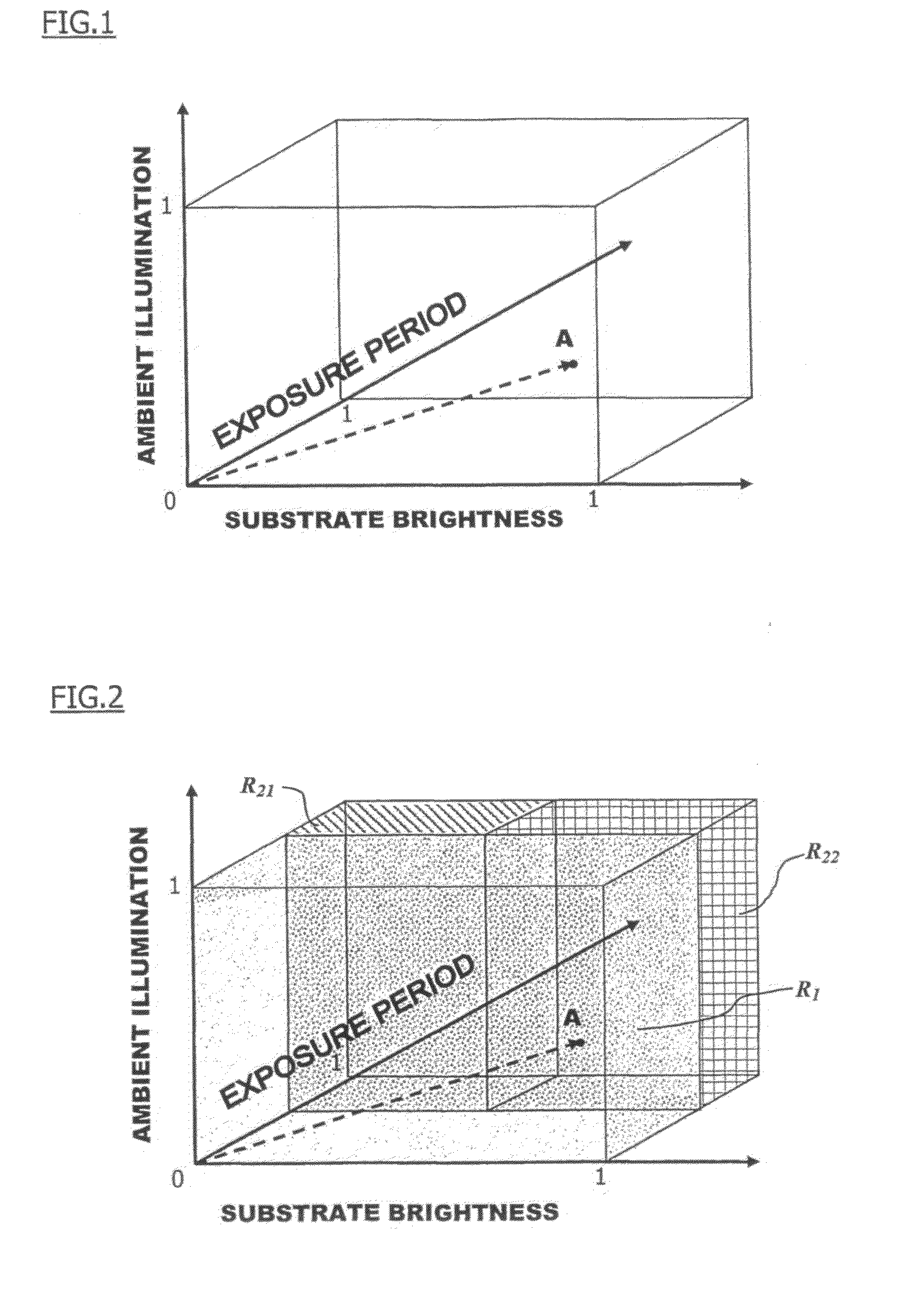

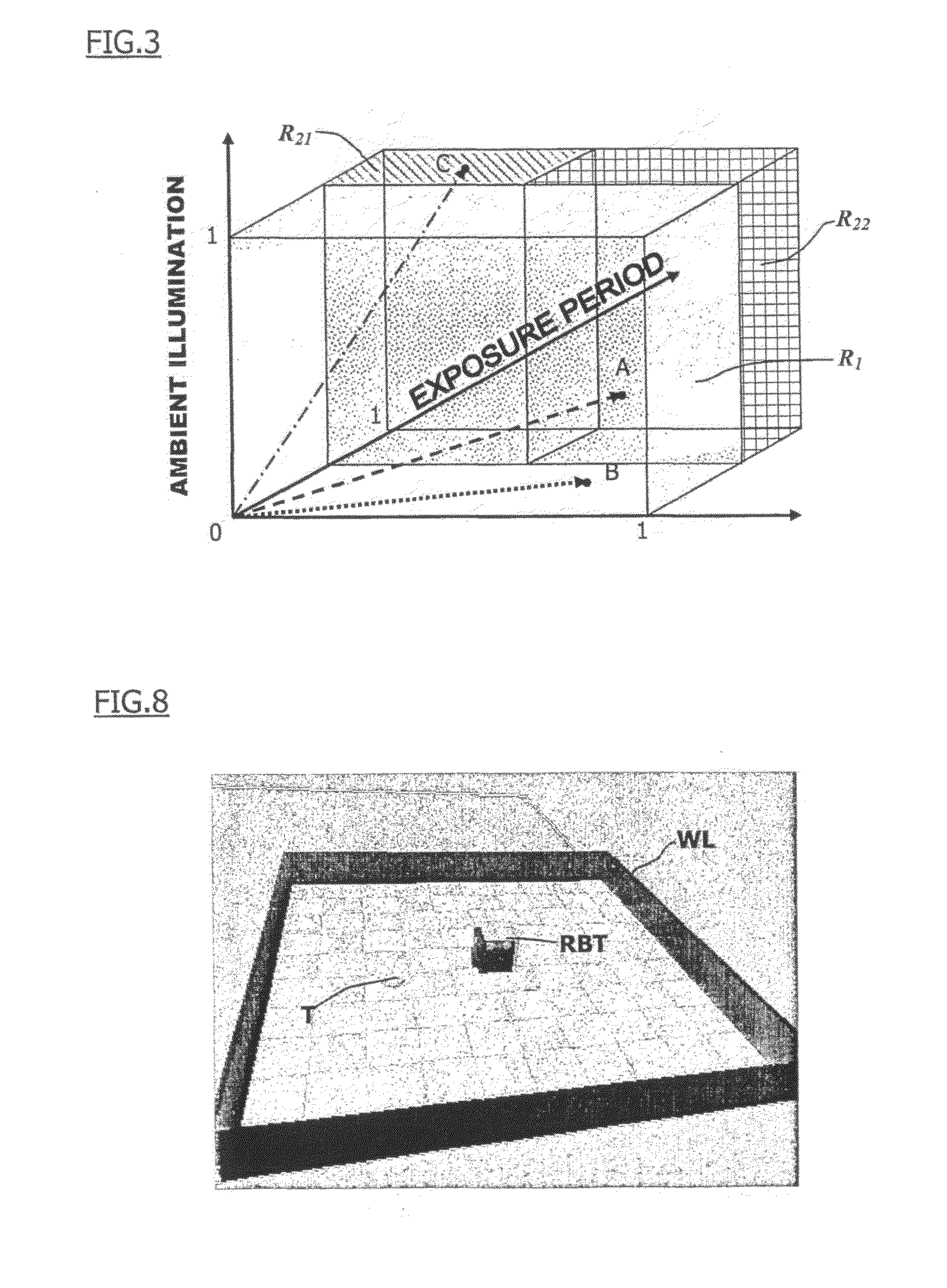

Automated Action-Selection System and Method, and Application Thereof to Training Prediction Machines and Driving the Development of Self-Developing Devices

Active · US20080319929A1 · Simple weighting of the different drives · Digital computer details · Artificial life · Knowledge gain · Selection system

In order to promote efficient learning of relationships inherent in a system or setup S described by system-state and context parameters, the next action to take, affecting the setup, is determined based on the knowledge gain expected to result from this action. Knowledge gain is assessed “locally” by comparing the value of a knowledge-indicator parameter after the action with the value of this indicator on one or more previous occasions when the system-state/context parameter(s) and action variable(s) had similar values to the current ones. Preferably the “level of knowledge” is assessed based on the accuracy of predictions made by a prediction module. This technique can be applied to train a prediction machine by causing it to participate in the selection of a sequence of actions. This technique can also be applied for managing development of a self-developing device or system, the self-developing device or system performing a sequence of actions selected according to the action-selection technique.

Owner:SONY EUROPE BV
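
One way to picture knowledge-gain-driven selection is the sketch below. It is a simplification under stated assumptions: prediction errors are grouped by action only (the abstract compares occasions with similar system-state, context and action values), knowledge gain is taken as the drop in mean prediction error between an older and a more recent window, and the function names and `window` parameter are hypothetical.

```python
from typing import Dict, List


def expected_knowledge_gain(errors: List[float], window: int = 2) -> float:
    """Estimate knowledge gain as the drop in mean prediction error.

    Compares the `window` most recent errors with the `window` errors before
    them. Returns 0.0 when there is not enough history to compare.
    """
    if len(errors) < 2 * window:
        return 0.0
    older = errors[-2 * window:-window]
    recent = errors[-window:]
    return sum(older) / window - sum(recent) / window


def select_action(error_history: Dict[str, List[float]], window: int = 2) -> str:
    """Pick the action whose recent history shows the largest knowledge gain."""
    return max(error_history,
               key=lambda a: expected_knowledge_gain(error_history[a], window))


# Hypothetical prediction-error histories per action.
history = {
    "push": [0.9, 0.8, 0.5, 0.4],   # errors are falling: still learning
    "look": [0.3, 0.3, 0.3, 0.3],   # errors are flat: nothing new to learn
}
next_action = select_action(history)
```

Here `"push"` is selected: its error is still dropping, so more is expected to be learned from repeating it than from `"look"`, whose error is low but no longer improving.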

Robot device, Behavior control method thereof, and program

Inactive · US20060184273A1 · Reduce the amount required · Programme-controlled manipulator · Biological neural network models · State variation · Reference database

In a robot device, an action selecting/control system (100) includes a plurality of elementary action modules each of which outputs an action when selected, an activation level calculation unit (120) to calculate an activation level AL of each elementary action on the basis of information from an internal-state manager (91) and external-stimulus recognition unit (80) and with reference to a database, and an action selector (130) to select an elementary action whose activation level AL is highest as an action to be implemented. Each action is associated with a predetermined internal state and external stimulus. The activation level calculation unit (120) calculates an activation level AL of each action on the basis of a predicted satisfaction-level variation based on the level of an instinct for an action corresponding to an input internal state and a predicted internal-state variation predictable based on an input external stimulus.

Owner:SONY CORP

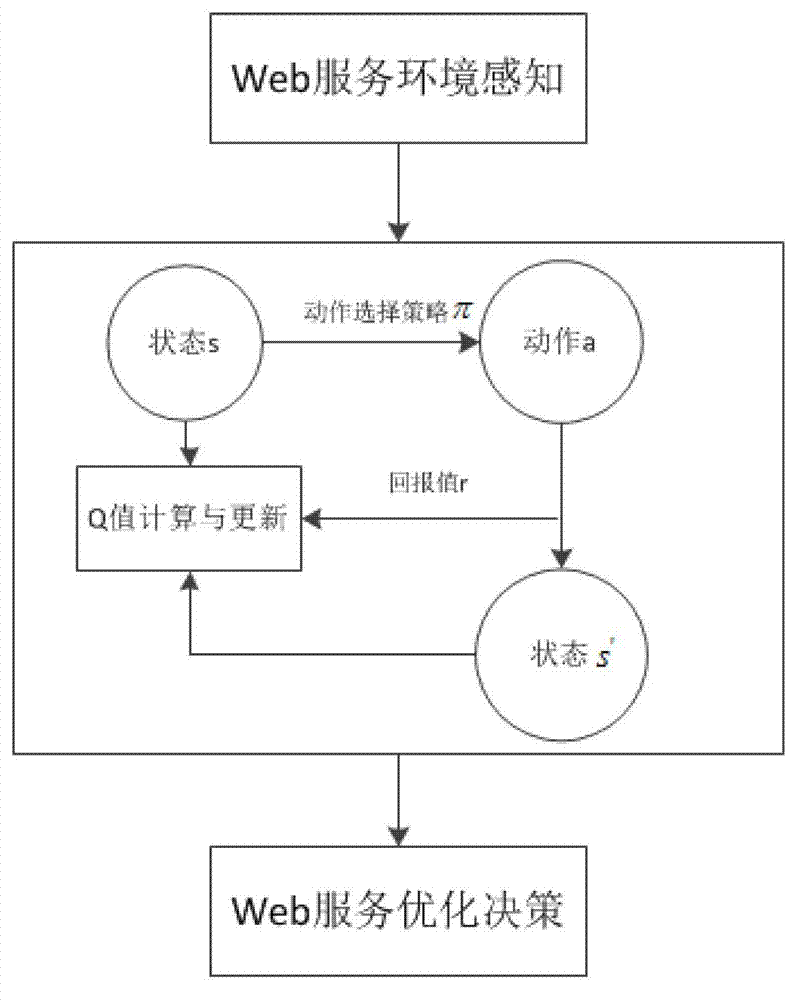

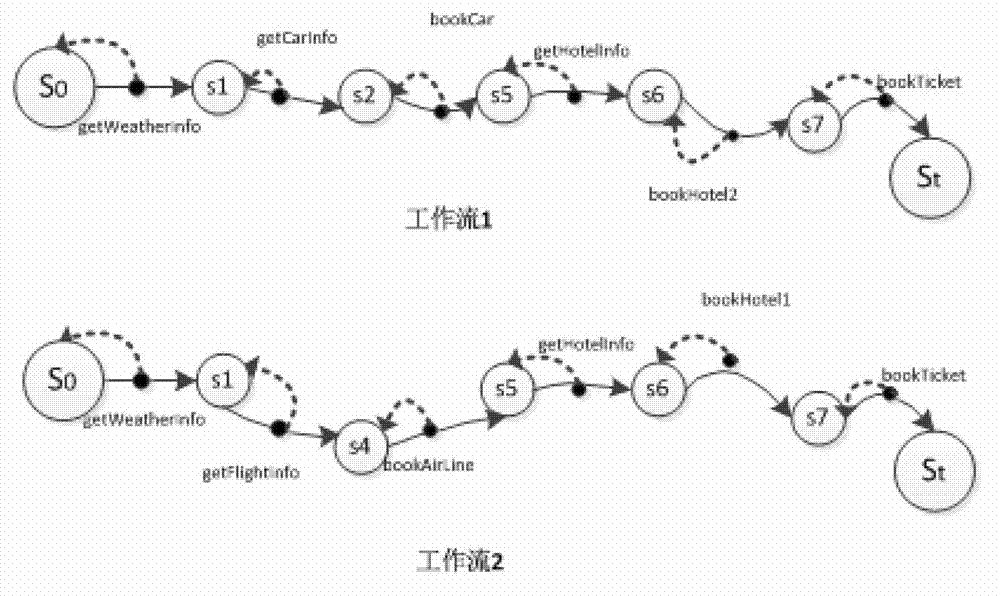

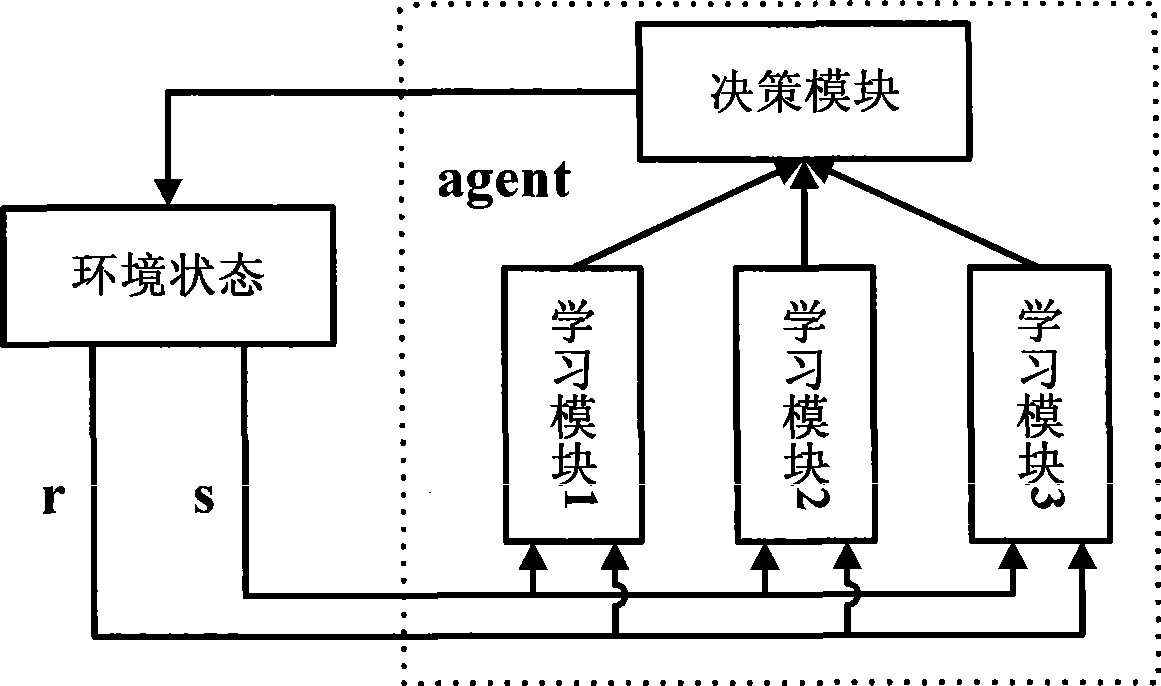

Large-scale self-adaptive composite service optimization method based on multi-agent reinforced learning

Inactive · CN103248693A · Add coordinator · Meet individual needs · Transmission · Composite services · Combinatorial optimization

The invention discloses a self-adaptive composite service optimization method based on multi-agent reinforced learning. The method combines the concepts of reinforced learning and agents, defining the state set of the reinforced learning as the preconditions and postconditions of the services and the action set as the Web services. The parameters for Q learning, including the learning rate, the discount factor and the Q values, are initialized. Each agent performs one composite optimization task, perceives the current state, and selects the optimal action in the current state according to the action selection strategy. The Q value is calculated and updated according to the Q learning algorithm. Until the Q value converges, a new learning round begins after each round finishes, and the optimal strategy is finally obtained. The method works out the corresponding self-adaptive action strategy online according to the environment changes at the time, giving it higher flexibility, self-adaptability and practical value.

Owner:SOUTHEAST UNIV
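
The Q value update named in this abstract can be sketched with the standard tabular Q-learning rule, Q(s, a) ← Q(s, a) + α [r + γ max Q(s', a') − Q(s, a)], where α is the learning rate and γ the discount factor. The state and service names below are hypothetical, and the patent's own state encoding, reward and selection strategy are not reproduced here.

```python
from collections import defaultdict


def greedy_action(q, state, actions):
    """Select the action with the highest Q value in the given state."""
    return max(actions, key=lambda a: q[(state, a)])


def q_update(q, state, action, reward, next_state, next_actions,
             alpha=0.1, gamma=0.9):
    """Apply one tabular Q-learning update and return the new Q value."""
    # Value of the best action available in the next state (0.0 if terminal).
    best_next = max((q[(next_state, a)] for a in next_actions), default=0.0)
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


# Q table initialised to zero; states and Web services are made-up names.
q = defaultdict(float)
q_update(q, "need_payment", "service_A", reward=1.0,
         next_state="paid", next_actions=["service_B", "service_C"])
best = greedy_action(q, "need_payment", ["service_B", "service_A"])
```

After this single update the entry for (`"need_payment"`, `"service_A"`) is 0.1, so the greedy choice in that state is `"service_A"`.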

Controller

Inactive · US20090299496A1 · Easy to integrate · Efficient implementation · Simulator control · Digital computer details · Control system · Engineering

A controller is provided, operable to control a system on the basis of measurement data received from a plurality of sensors indicative of a state of the system, with at least partial autonomy, but in environments in which it is not possible to fully determine the state of the system on the basis of such sensor measurement data. The controller comprises: a system model, defining at least a set of probabilities for the dynamical evolution of the system and corresponding measurement models for the plurality of sensors of the system; a stochastic estimator operable to receive measurement data from the sensors and, with reference to the system model, to generate a plurality of samples each representative of the state of the system; a rule set corresponding to the system model, defining, for each of a plurality of possible samples representing possible states of the system, information defining an action to be carried out in the system; and an action selector, operable to receive an output of the stochastic estimator and to select, with reference to the rule set, information defining one or more corresponding actions to be performed in the system.

Owner:BEAS SYST INC

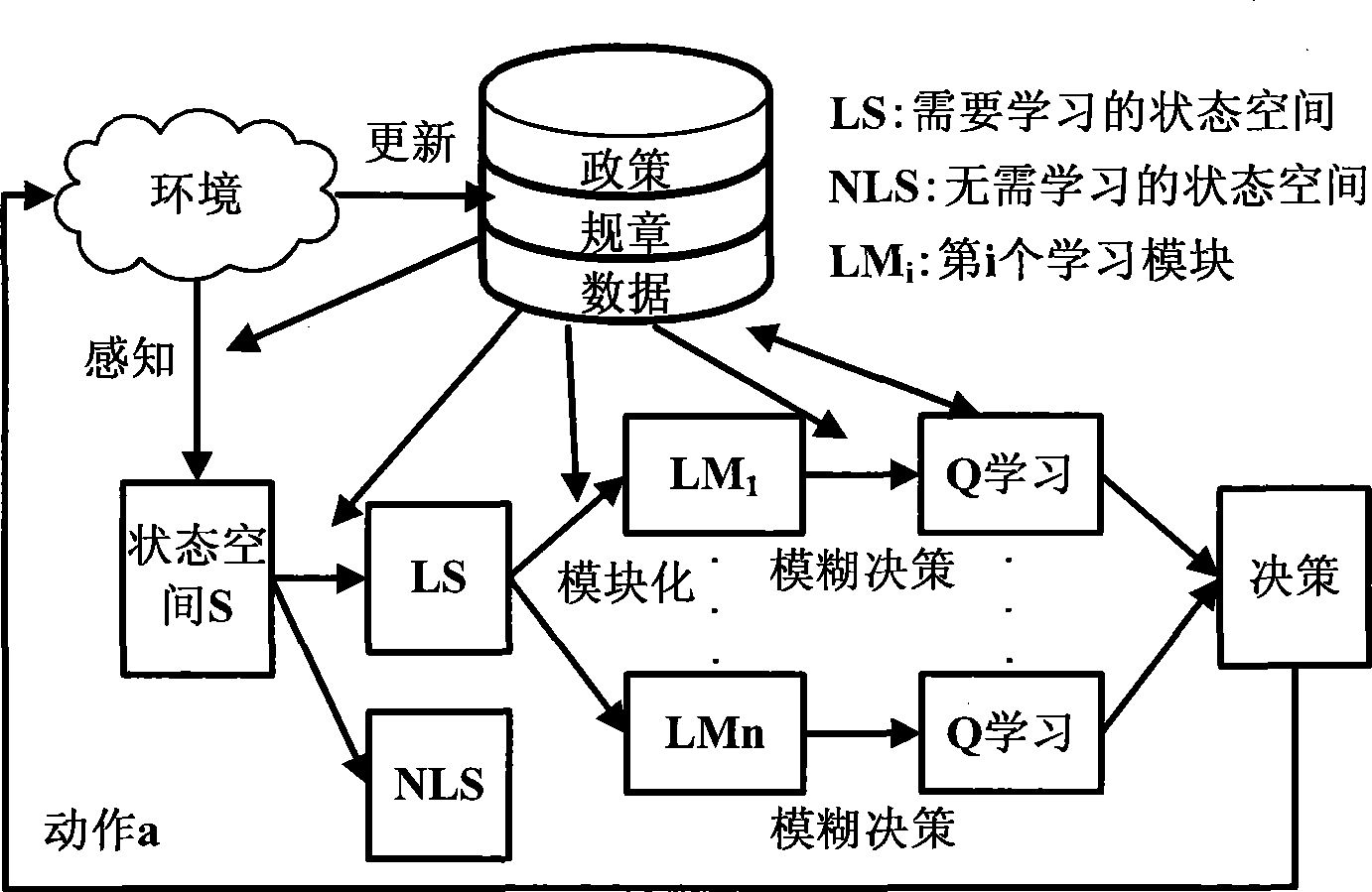

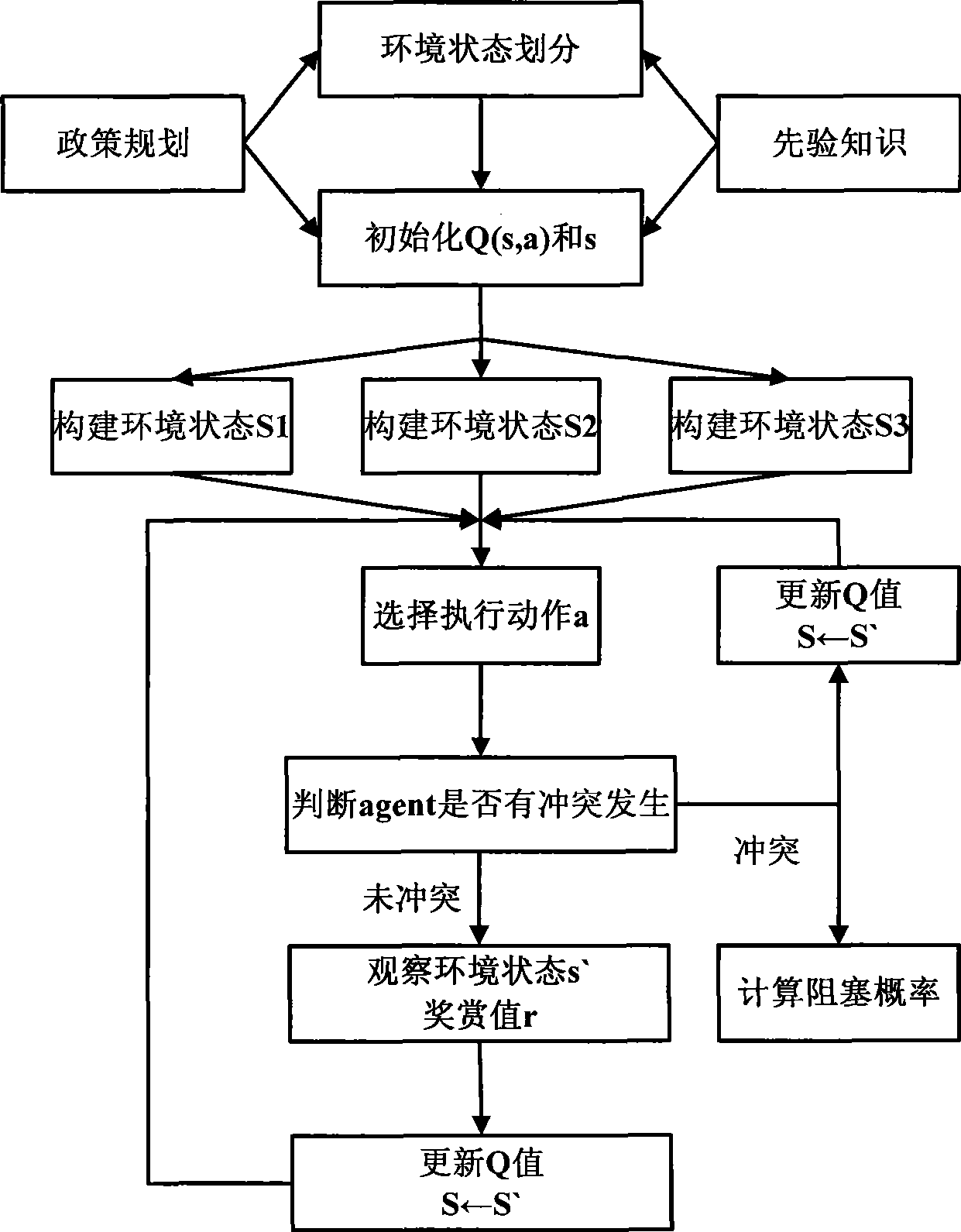
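
The interplay of state samples, rule set and action selector might look like the sketch below. The majority vote over samples is an assumption of this sketch, since the abstract does not say how the selector aggregates them; the state labels, action names and `default_action` are hypothetical.

```python
from collections import Counter


def select_action(samples, rule_set, default_action="hold"):
    """Map each state sample to an action via the rule set, then take a vote.

    `samples` stands in for the output of the stochastic estimator, and
    `rule_set` maps a sampled state to the action prescribed for it.
    """
    if not samples:
        return default_action
    votes = Counter(rule_set.get(sample, default_action) for sample in samples)
    return votes.most_common(1)[0][0]


# Hypothetical rule set and estimator output.
rules = {"nominal": "continue", "overheating": "reduce_power"}
samples = ["nominal", "overheating", "nominal", "nominal"]
action = select_action(samples, rules)
```

Three of the four samples map to `"continue"`, so that action is selected even though the true state is not fully determined.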

Dynamic spectrum access method based on policy planning constrain Q study

Inactive · CN101466111A · Avoid blindness · Improve learning efficiency · Wireless communication · Propagation channels monitoring · Cognitive user · Frequency spectrum

The invention provides a dynamic spectrum access method based on Q learning constrained by policy planning. The method comprises the following steps: cognitive users divide the frequency spectrum state space and select the reasonable and legal state space; the state space is ranked and modularized; each ranked module completes the Q table initialization before Q learning; each module individually executes the Q learning algorithm; the algorithm can be selected according to the learning rule and actions; the actions finally adopted by the cognitive users are obtained by making strategic decisions that comprehensively consider all the learning modules; whether the selected access frequency spectrum conflicts with the authorized users is determined; if so, the collision probability is worked out; otherwise, the next step is executed; whether the environmental policy planning knowledge base has changed is determined; if so, the knowledge base is updated and the learned Q value is adjusted; the above steps are repeated until the learning converges. The method can improve overall system performance, overcome the learning blindness of the intelligent agent, enhance learning efficiency, and speed up convergence.

Owner:COMM ENG COLLEGE SCI & ENGINEERING UNIV PLA

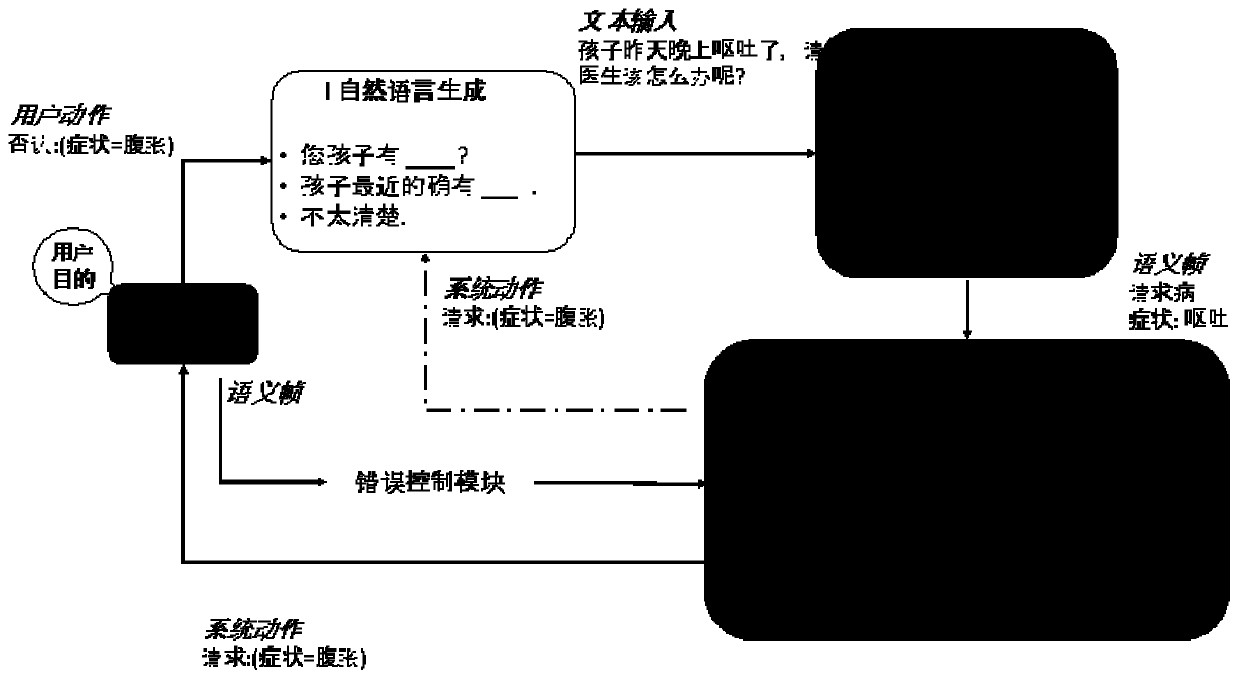

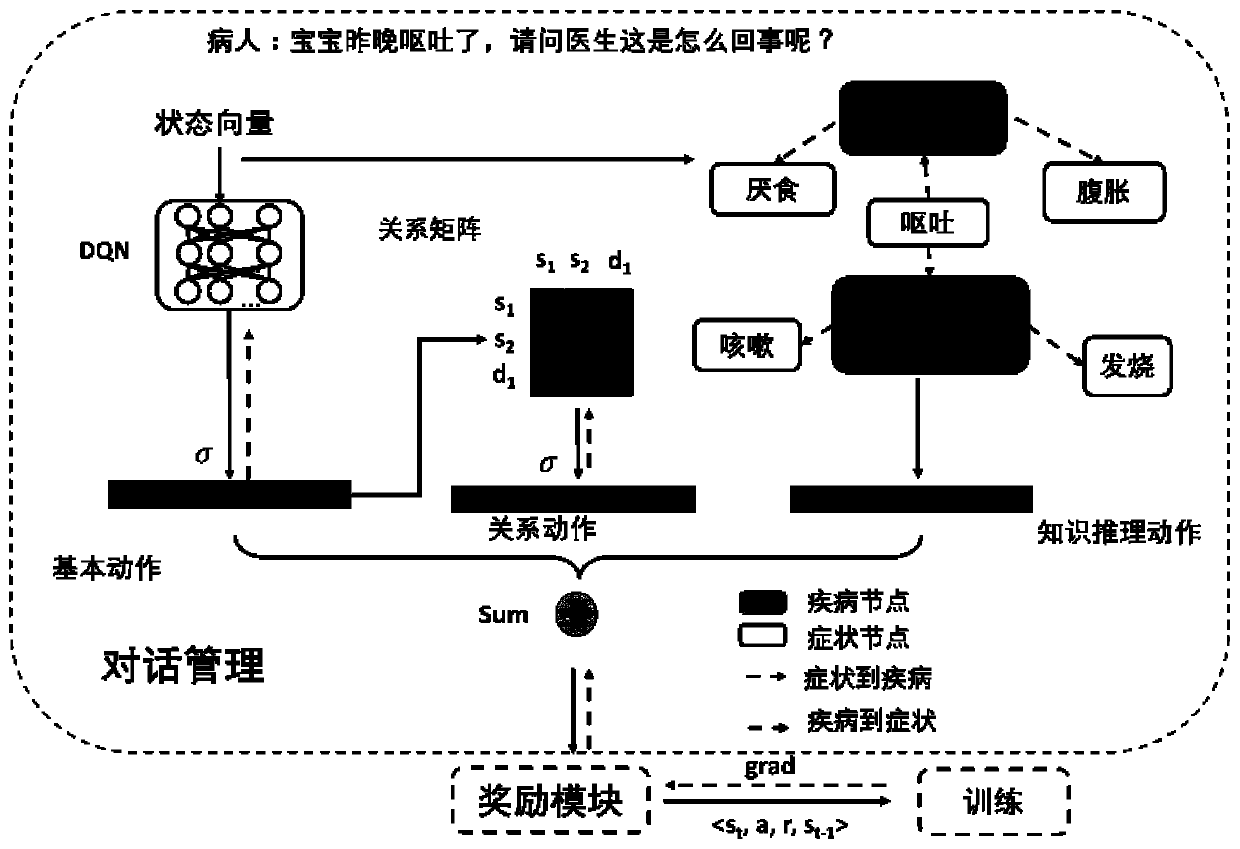

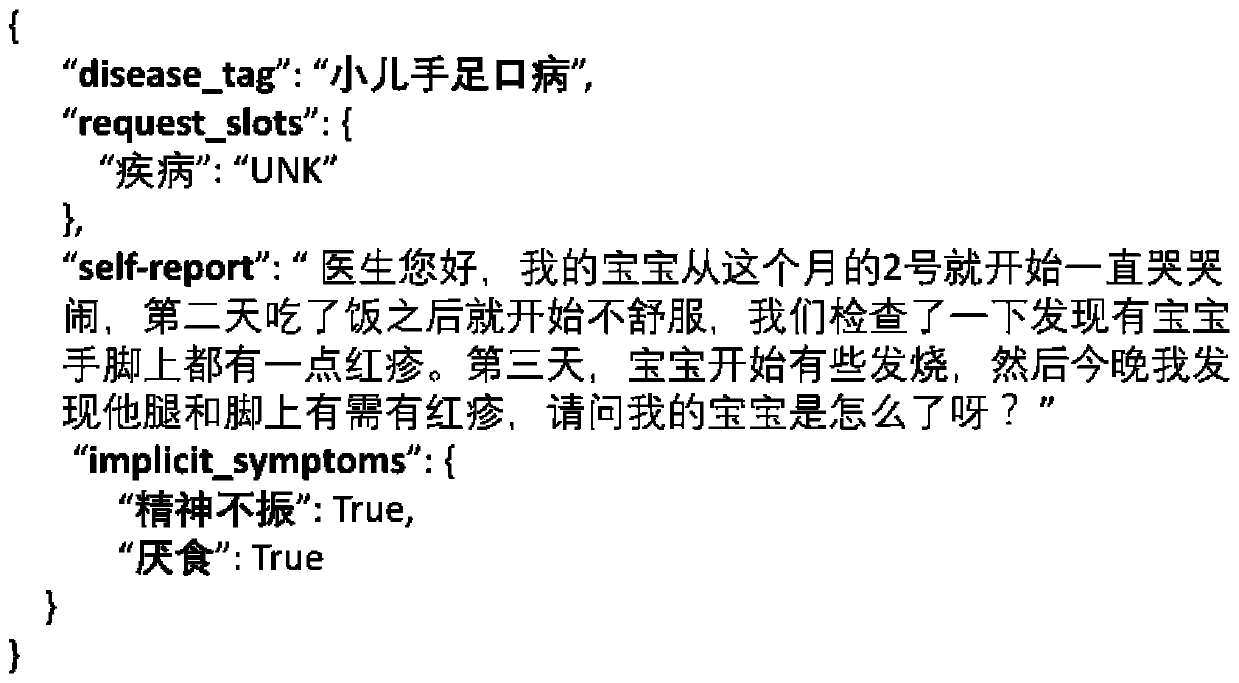

Medical interrogation dialogue system and reinforcement learning method applied to medical interrogation dialogue system

Active · CN109817329A · The result is reasonable · Enhance reasoning ability · Medical automated diagnosis · Special data processing applications · Disease · Natural language understanding

The invention discloses a medical interrogation dialogue system and a reinforcement learning method applied to the medical interrogation dialogue system, and relates to the technical field of medical information. The system comprises a natural language understanding module used for classifying the intentions of users and filling slot values to form structured semantic frames; a dialogue management module used for interacting with a user through a robot agent, inputting a dialogue state, performing action decision on the semantic frame through a decision network, and outputting the final system action selection; a user simulator used for carrying out natural language interaction with the dialogue management module and outputting the user action selection; and a natural language generation module used for receiving the system action selection and the user action selection and enabling the user to check the selection by generating sentences similar to human language using a template-based method. According to the invention, the medical knowledge information between diseases and symptoms is introduced as a guide, and the inquiry historical experience is enriched through continuous interaction with a simulated patient. The reasonability of inquiry symptoms and the accuracy of disease diagnosis are improved, and the diagnosis result is higher in credibility.

Owner:暗物智能科技(广州)有限公司

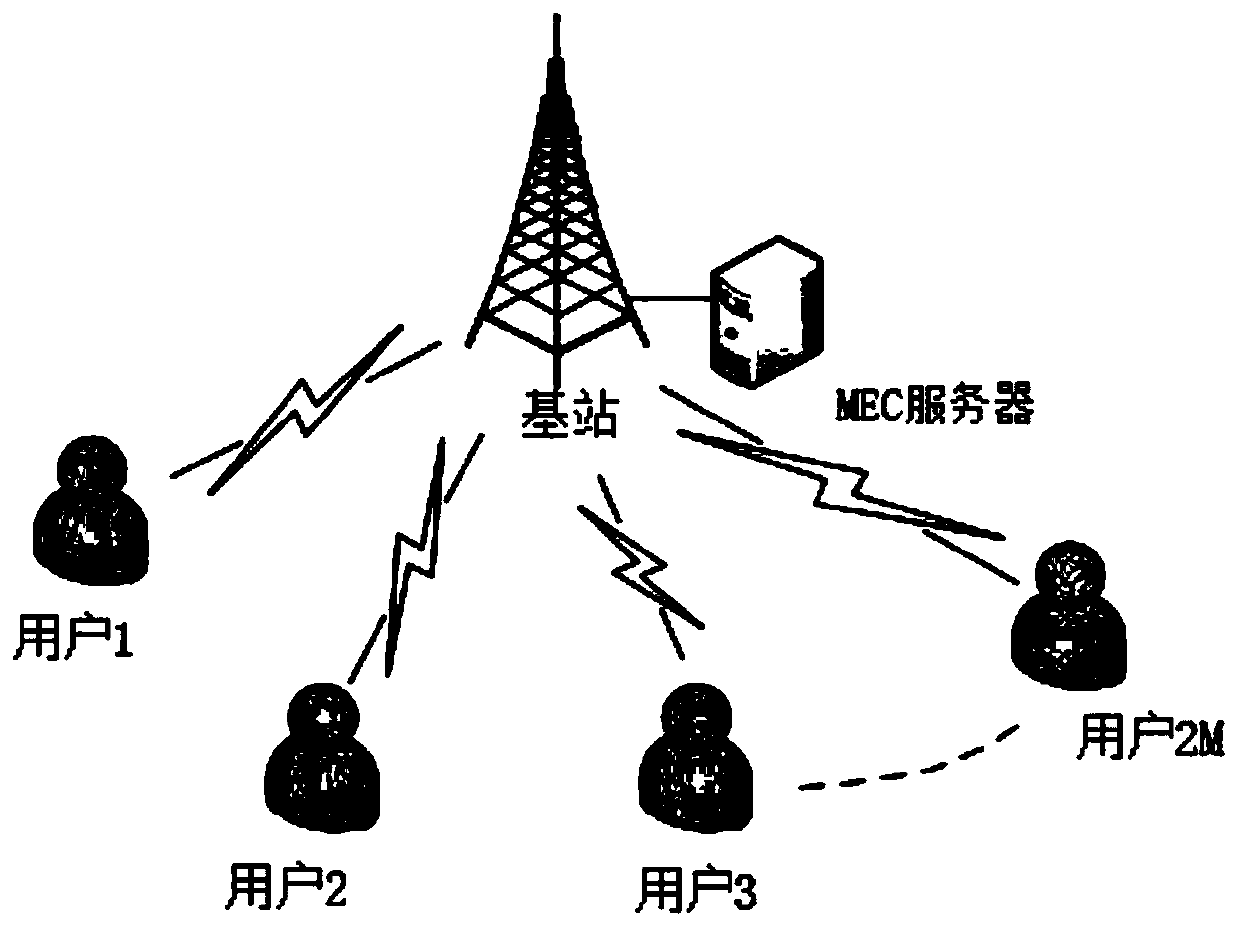

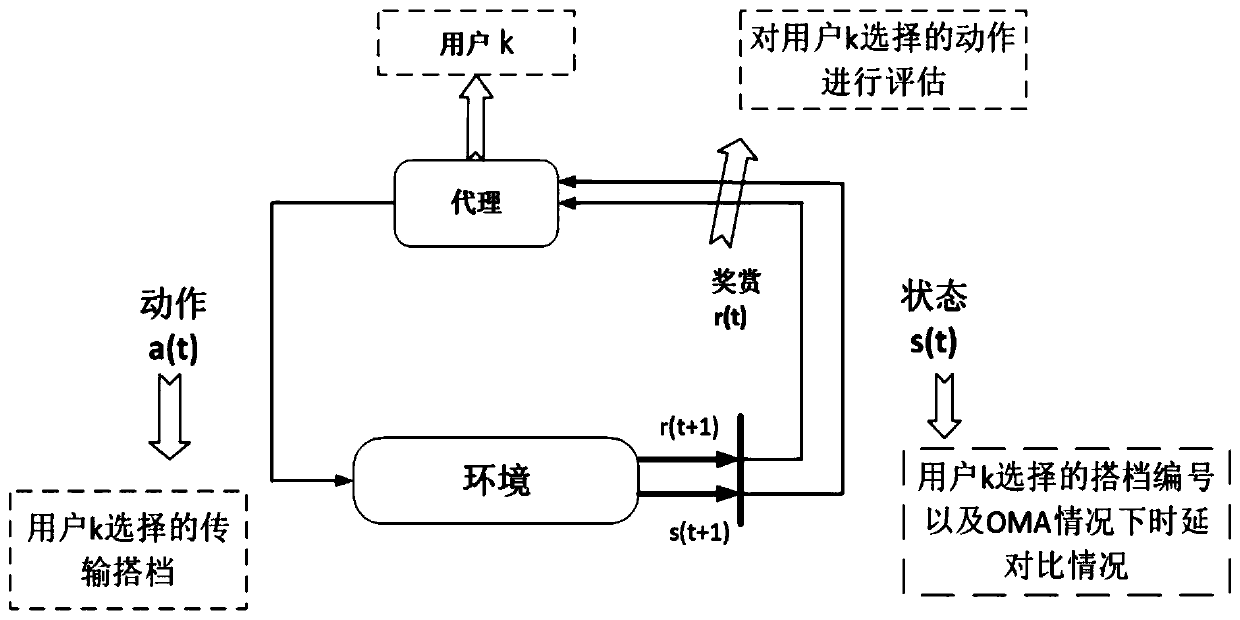

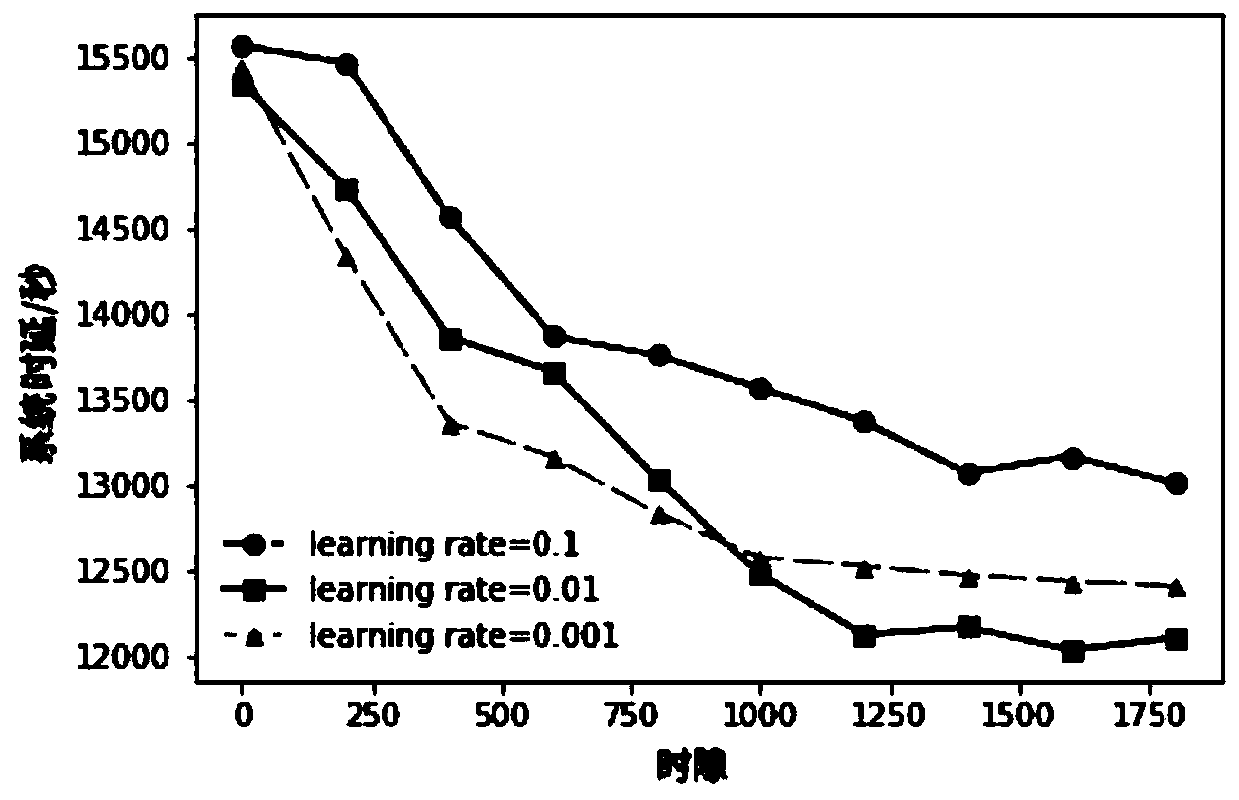

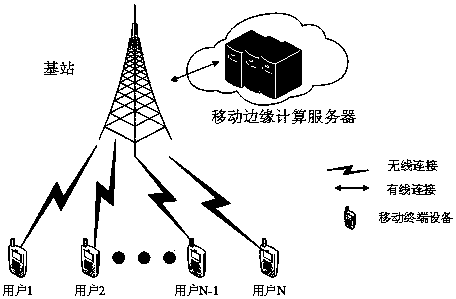

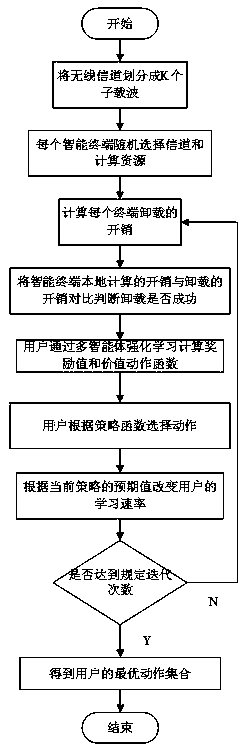

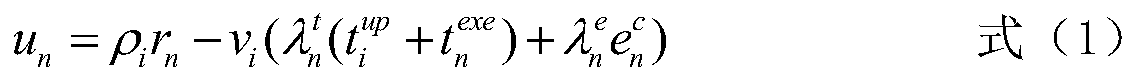

Unloading time delay optimization method in mobile edge computing scene

Active · CN110113190A · The total offload delay is continuously minimized · Neural architectures · Data switching networks · Algorithm · Time delays

The invention aims to provide an unloading delay optimization method in a mobile edge computing scene. The method comprises the following steps: step 1, constructing a system model, wherein the system model comprises 2M users and an MEC server, each user has L tasks and needs to be unloaded to the MEC server for calculation, and it is assumed that only two users are allowed to adopt a mixed NOMA strategy to unload at the same time; step 2, setting each user as an executor, and performing action selection by each executor according to a DQN algorithm, i.e., selecting one user from the remaining 2M-1 users as its own transmission partner and unloading with the transmission partner at the same time; step 3, performing system optimization by using a DQN algorithm: calculating the total unloading time delay of the system, updating a reward value, then training a neural network, and updating a Q function by using the neural network as a function approximator after the selection of all users is completed; and continuously carrying out the iterative optimization on the system until the optimal time delay is found. The problem of high time delay consumption in the existing multi-user MEC scene is solved.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

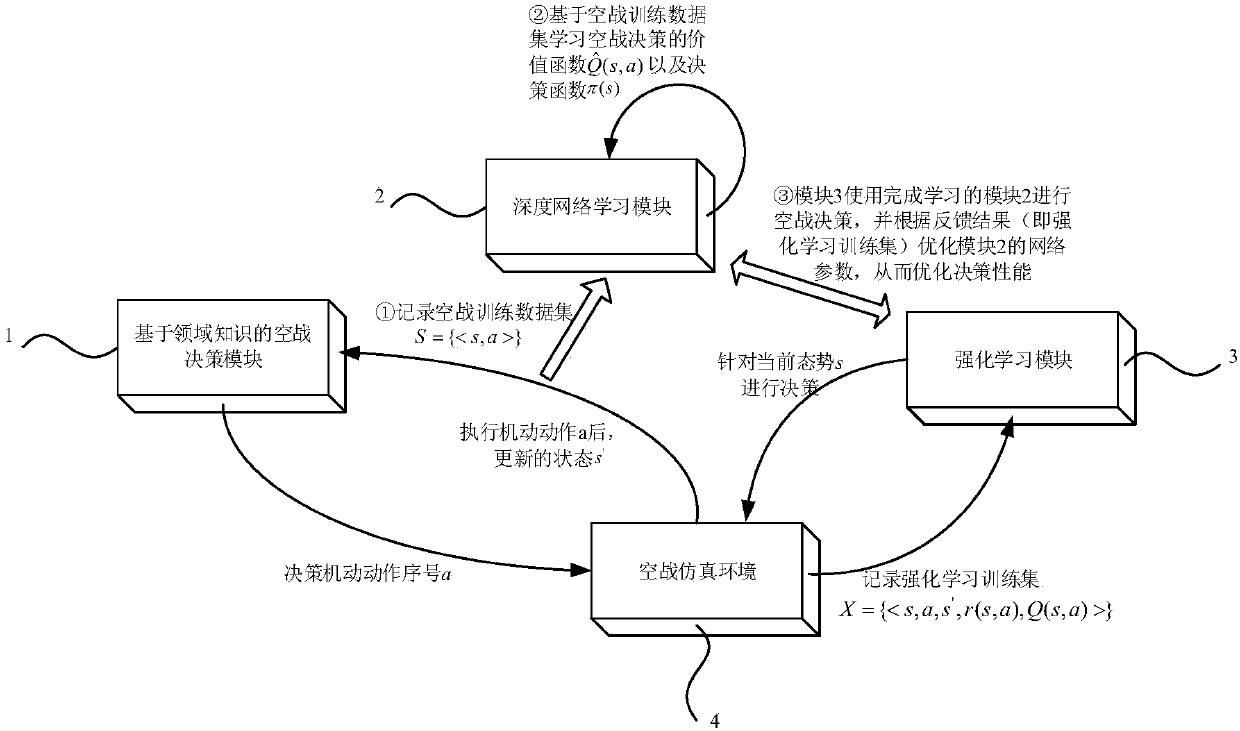

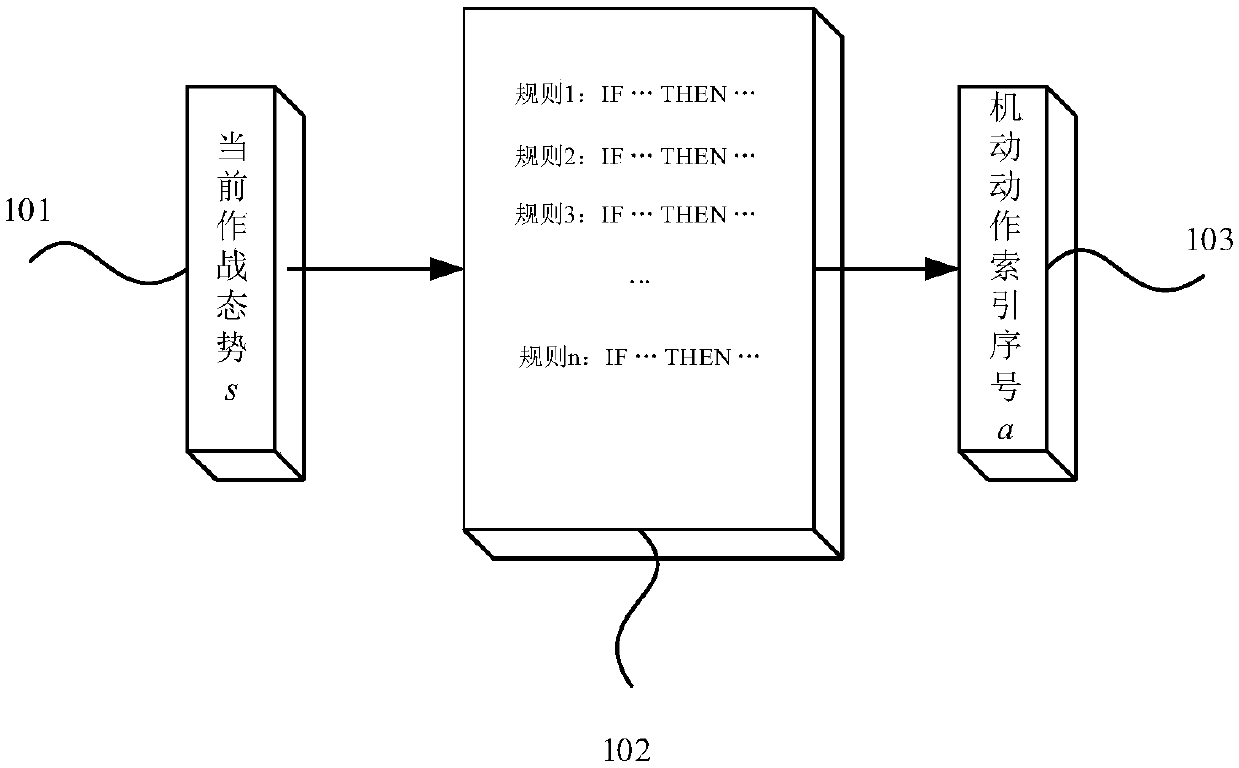

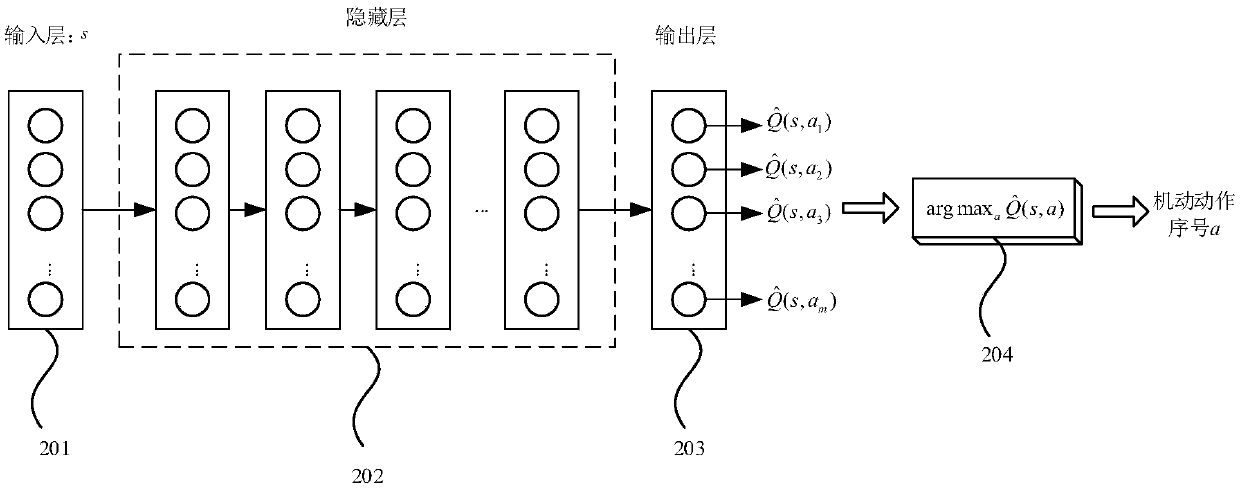

Unmanned aerial vehicle autonomous air combat decision framework and method

Inactive · CN108021754A · Solve the original data source problem · Enhance expressive ability · Design optimisation/simulation · Knowledge representation · Data set · Closed loop

The invention discloses an unmanned aerial vehicle autonomous air combat decision framework and method, and belongs to the field of computer simulation. The framework comprises an air combat decision module, a deep network learning module, an enhanced learning module and an air combat simulation environment which are based on domain knowledge. The air combat decision module generates an air combat training data set and outputs the air combat training data set to the deep network learning module, and a depth network, a Q value fitting function and a motion selection function are obtained through learning and output to the enhanced learning module; the air combat simulation environment uses the learned air combat decision function to carry out a self-air combat process, and records air combat process data to form an enhanced learning training set; the enhanced learning module is used for optimizing and improving the Q value fitting function by utilizing the enhanced learning training set, and an air combat strategy with better performance is obtained. According to the framework, a Q function which is complex in nature can be more accurately and quickly fitted, the learning effect is improved, the Q function is prevented from being converged to the local optimum value to the largest extent, an air combat decision optimization closed-loop process is constructed, and external intervention is not needed.

Owner:BEIHANG UNIV

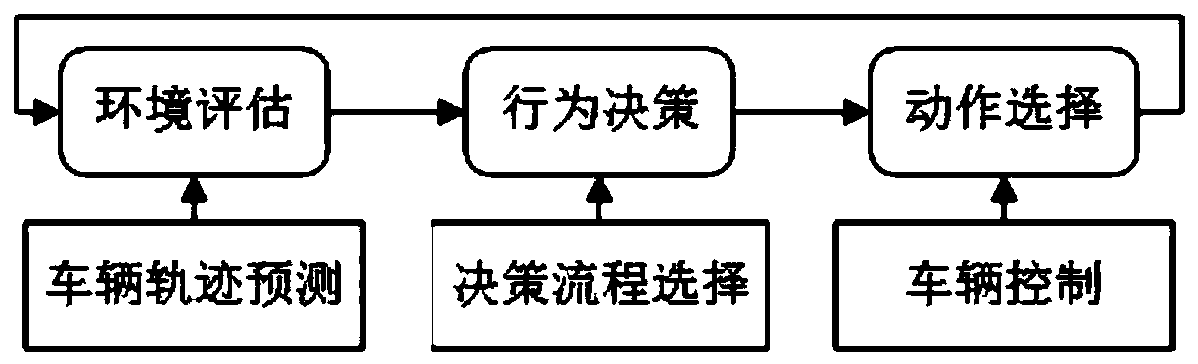

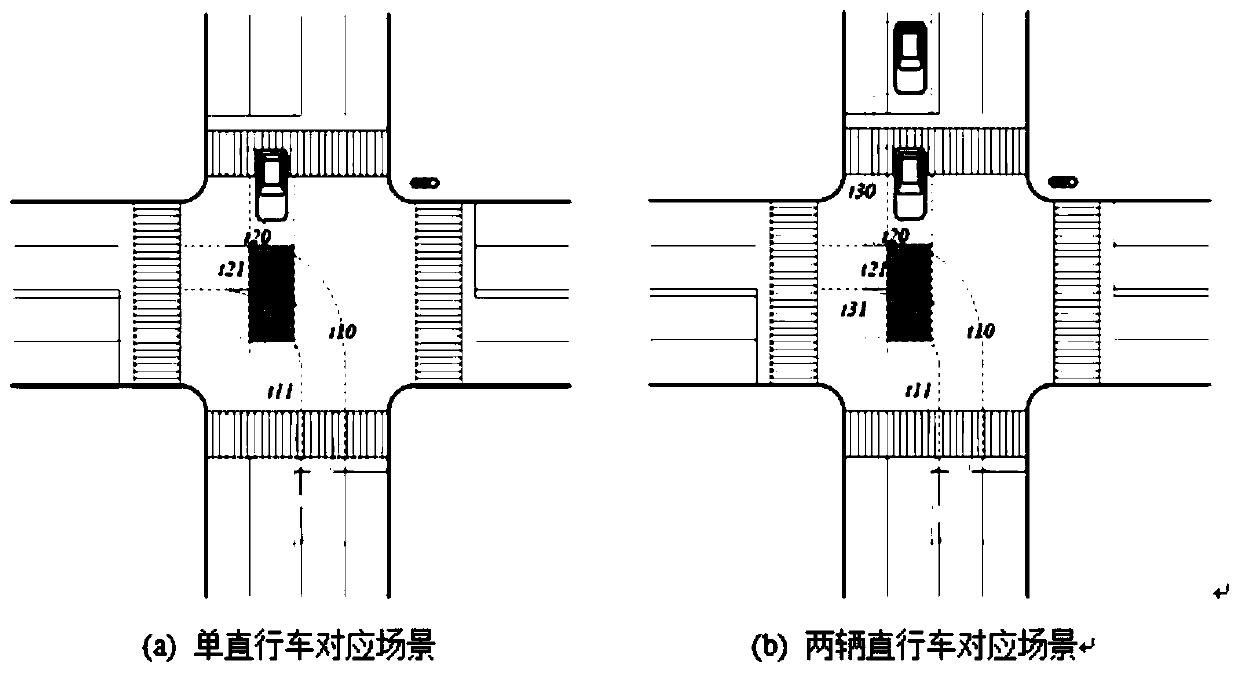

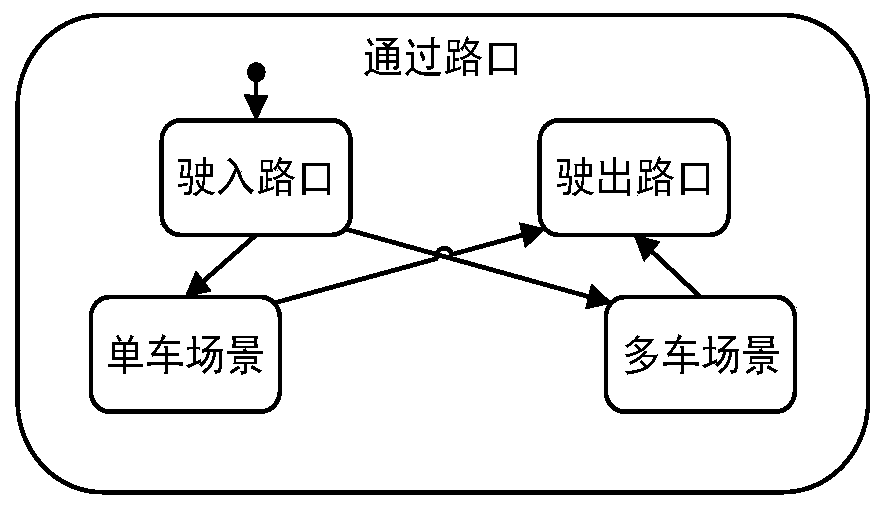

Unmanned vehicle urban intersection left turn decision-making method based on conflict resolution

Active · CN110298122A · Improve rationality · Improve adaptability · Controlling traffic signals · Forecasting · Decision model · Vehicle driving

The invention discloses an unmanned vehicle urban intersection left-turn decision-making method based on conflict resolution. The method comprises the steps of track prediction for straight vehicles at an intersection, decision-making process selection of a behavior decision-making module corresponding to different scenes, and vehicle control parameter selection corresponding to an action selection module. According to the invention, the decision-making framework of the left turn of the unmanned vehicle at the intersection is divided into environment assessment, behavior decision-making and action selection; prediction of intersection straight driving motion tracks is realized by using a Gaussian process regression model, decision-making processes under different left-turn scenes are formulated, and an unmanned vehicle driving action selection method considering multiple factors is provided; and the decision-making process of the left turn of the unmanned vehicle at the intersection is structured and clarified, so that the reasonability and the adaptability of the decision-making model are improved.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

Electronic system condition monitoring and prognostics

Inactive · US8200600B2 · Improve forecast accuracy · Electrical testing · Probabilistic networks · Electronic systems · Prognostics

A system for monitoring and predicting the condition of an electronic system comprises a component model, an inference engine based on the component model, and an action selection component that selects an action based on an output of the inference engine.

Owner:MASSACHUSETTS INST OF TECH +1

Resource allocation method based on multi-agent reinforcement learning in mobile edge computing system

Active · CN110418416A · Low cost · Reduce complexity · Transmission · High level techniques · Carrier signal · Reward value

The invention discloses a resource allocation method based on multi-agent reinforcement learning in a mobile edge computing system, which comprises the following steps: (1) dividing a wireless channel into a plurality of subcarriers, wherein each user can only select one subcarrier; (2) enabling each user to randomly select a channel and computing resources, and then calculating time delay and energy consumption generated by user unloading; (3) comparing the time delay energy consumption generated by the local calculation of the user with the time delay energy consumption unloaded to the edge cloud, and judging whether the unloading is successful or not; (4) obtaining a reward value of the current unloading action through multi-agent reinforcement learning, and calculating a value function; (5) enabling the user to perform action selection according to the strategy function; and (6) changing the learning rate of the user to update the strategy to obtain an optimal action set. Based on variable-rate multi-agent reinforcement learning, computing resources and wireless resources of the mobile edge server are fully utilized, and the maximum value of the utility function of each intelligent terminal is obtained while the necessity of user unloading is considered.

Owner:SOUTHEAST UNIV

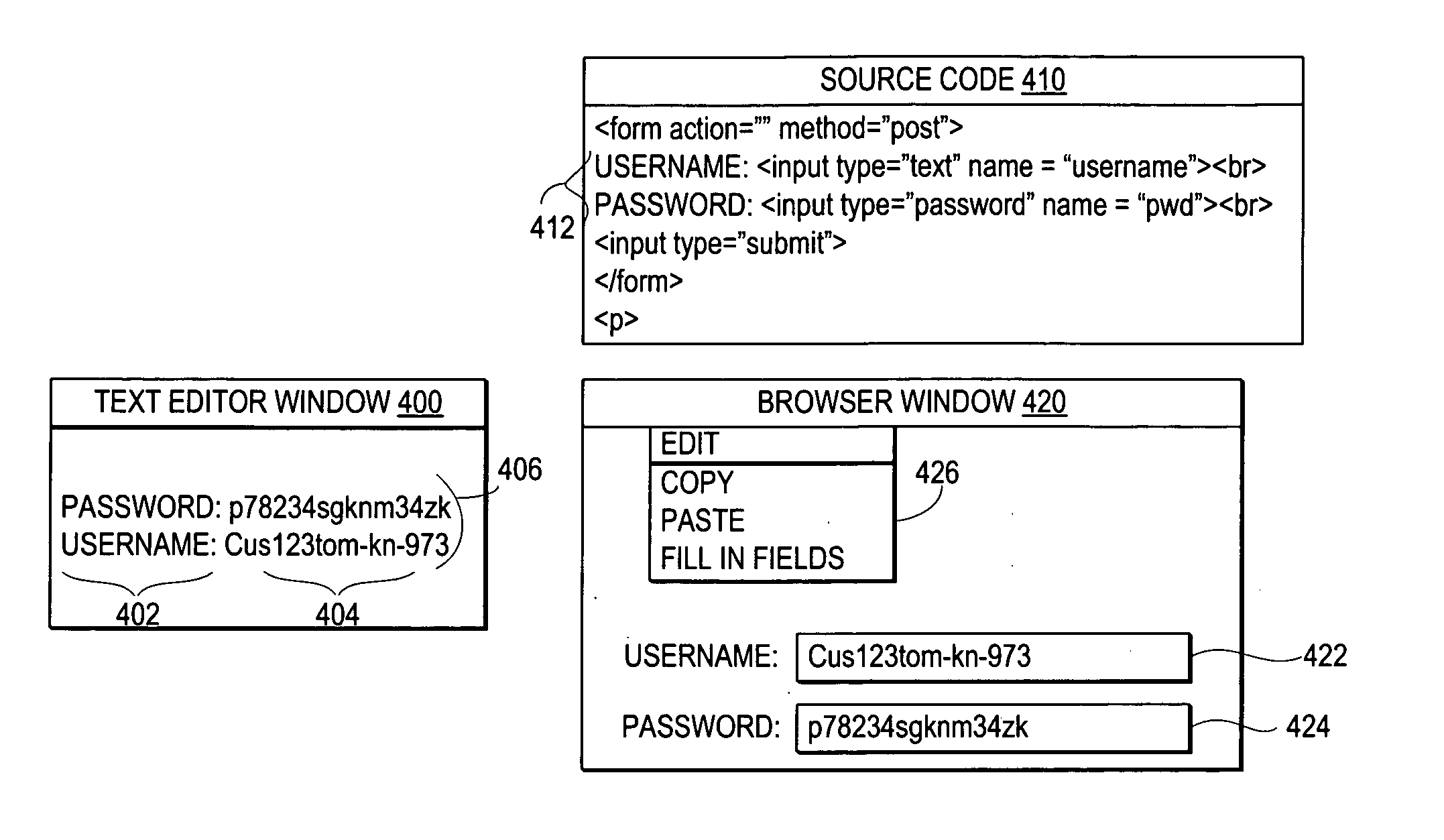

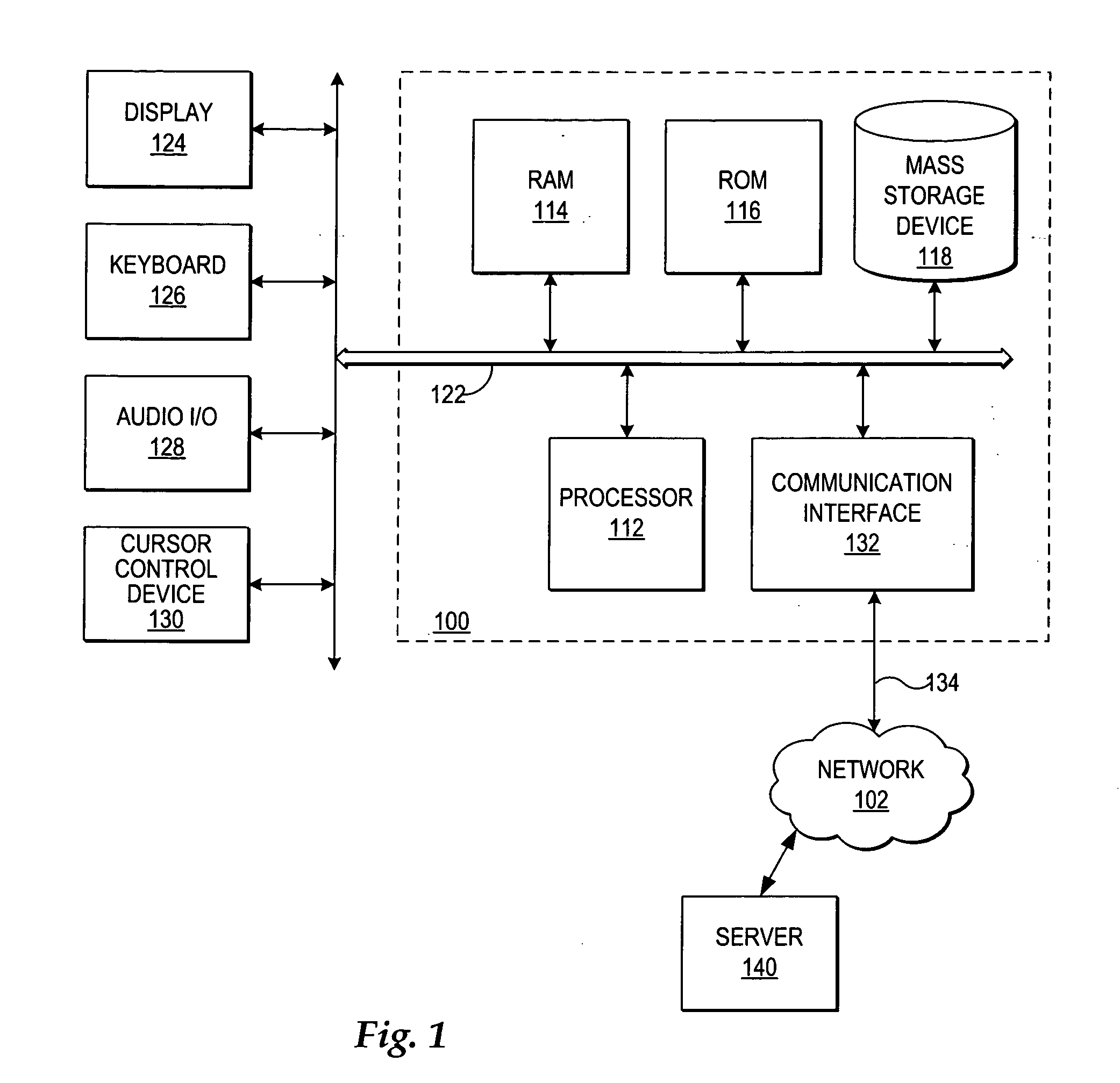

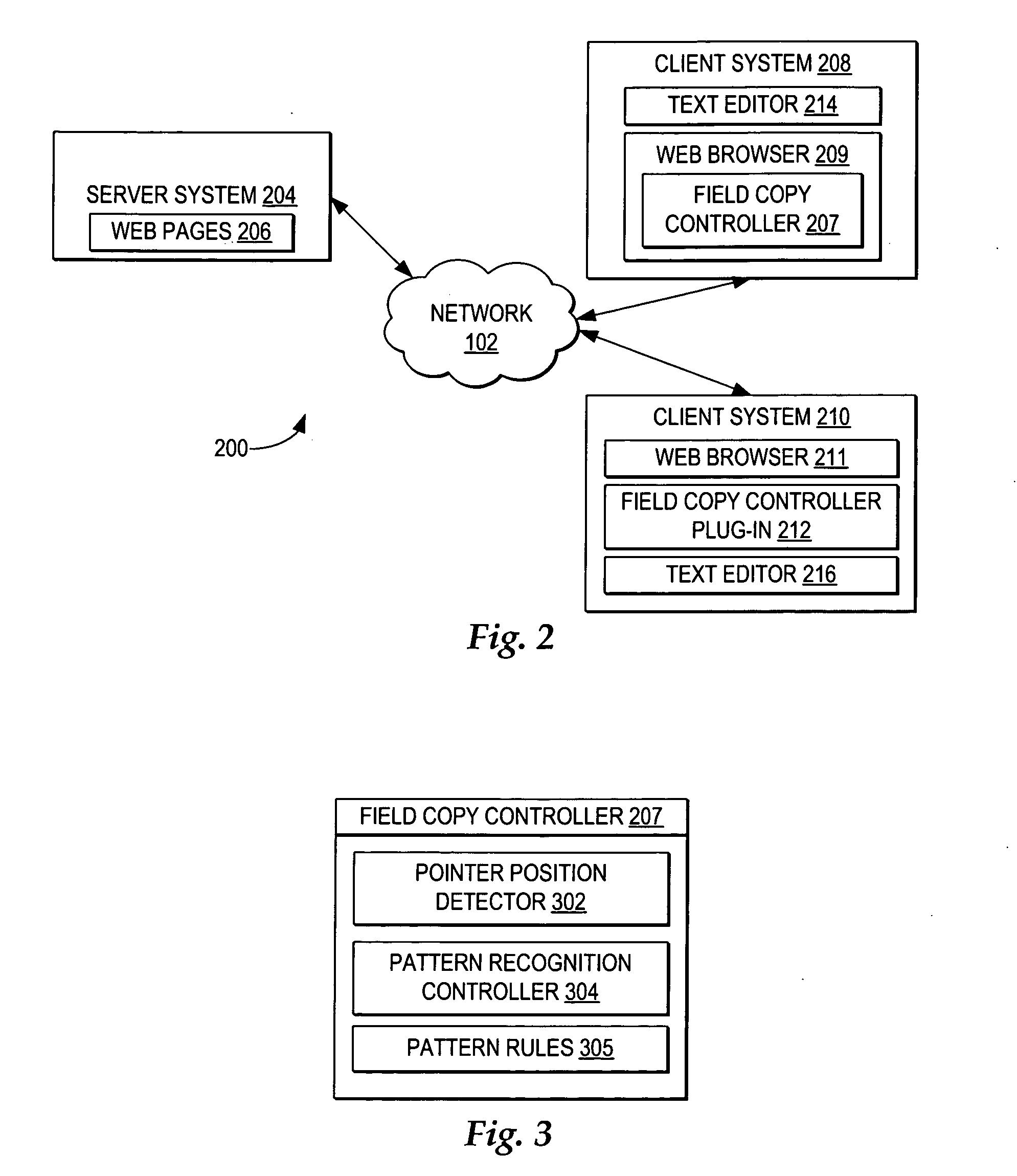

Automatic simultaneous entry of values in multiple web page fields

Inactive · US20060059247A1 · Improved copying · Improved pasting · Natural language data processing · Multiple digital computer combinations · Web browser · Segment descriptor

A method, system, and program for automatic simultaneous entry of values in multiple web page fields are provided. Responsive to detecting a single action selection by a user to fill multiple fields of a web page, wherein each of the fields is associated with one from among multiple field descriptors within a source code for the web page, a field copy controller scans multiple lines of text copied into a local memory to identify text tags that match the field descriptors. Then, the field copy controller automatically fills in the fields within the web browser with the values from the lines of text, wherein each of the values is associated with one from among multiple text tags matching the field descriptors associated with the fields, such that multiple fields of a web page are automatically filled in responsive to only a single action by a user.

Owner:IBM CORP

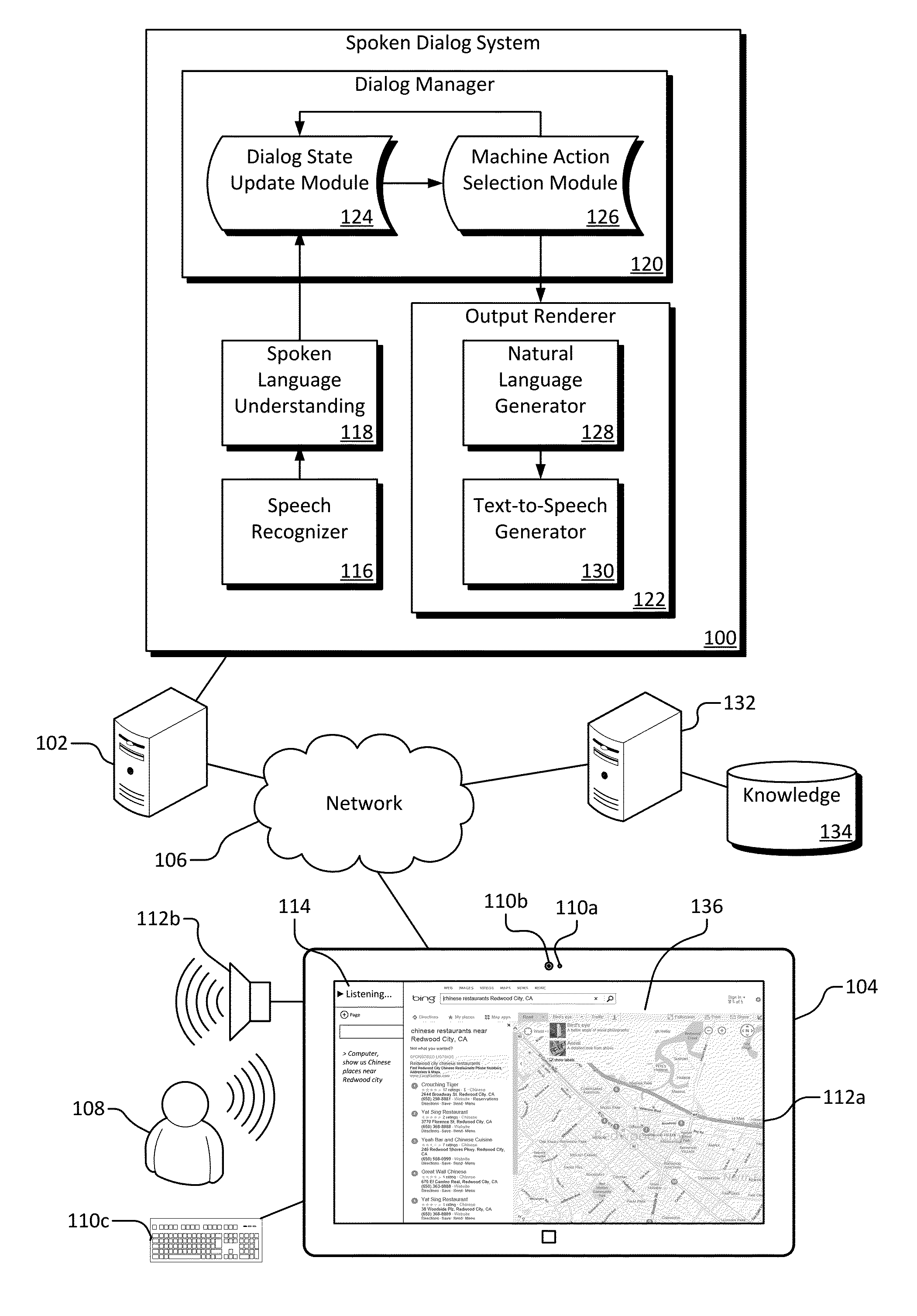

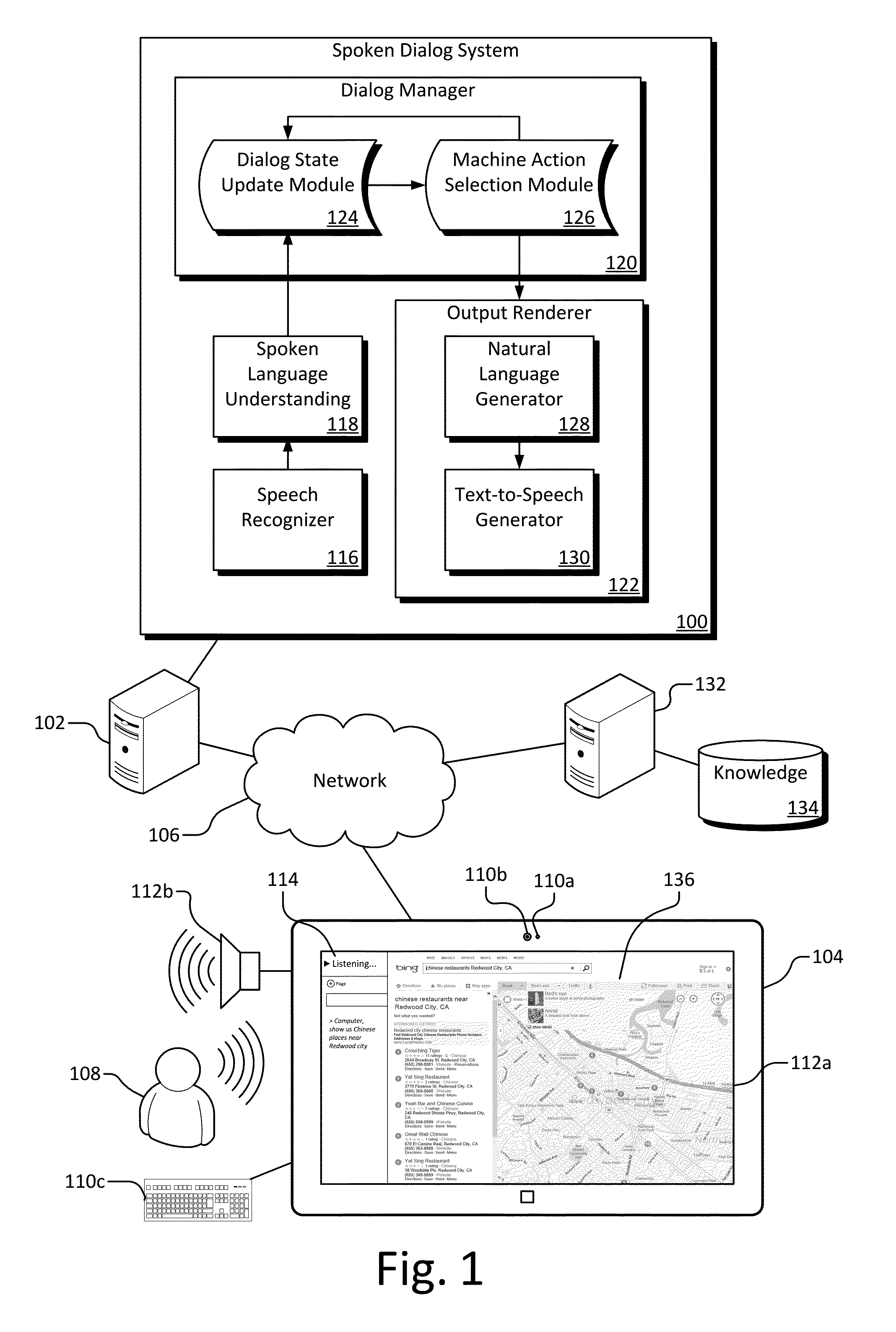

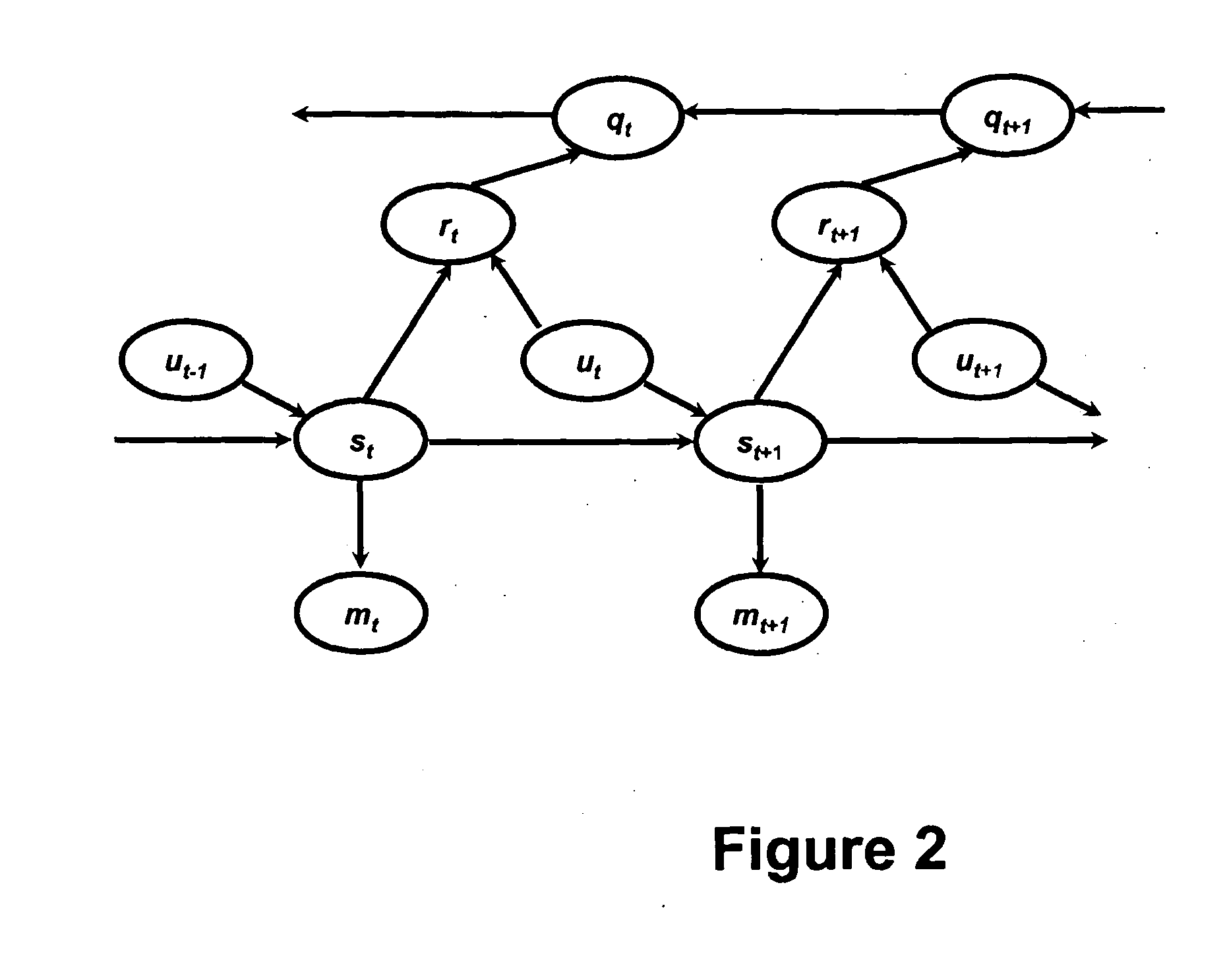

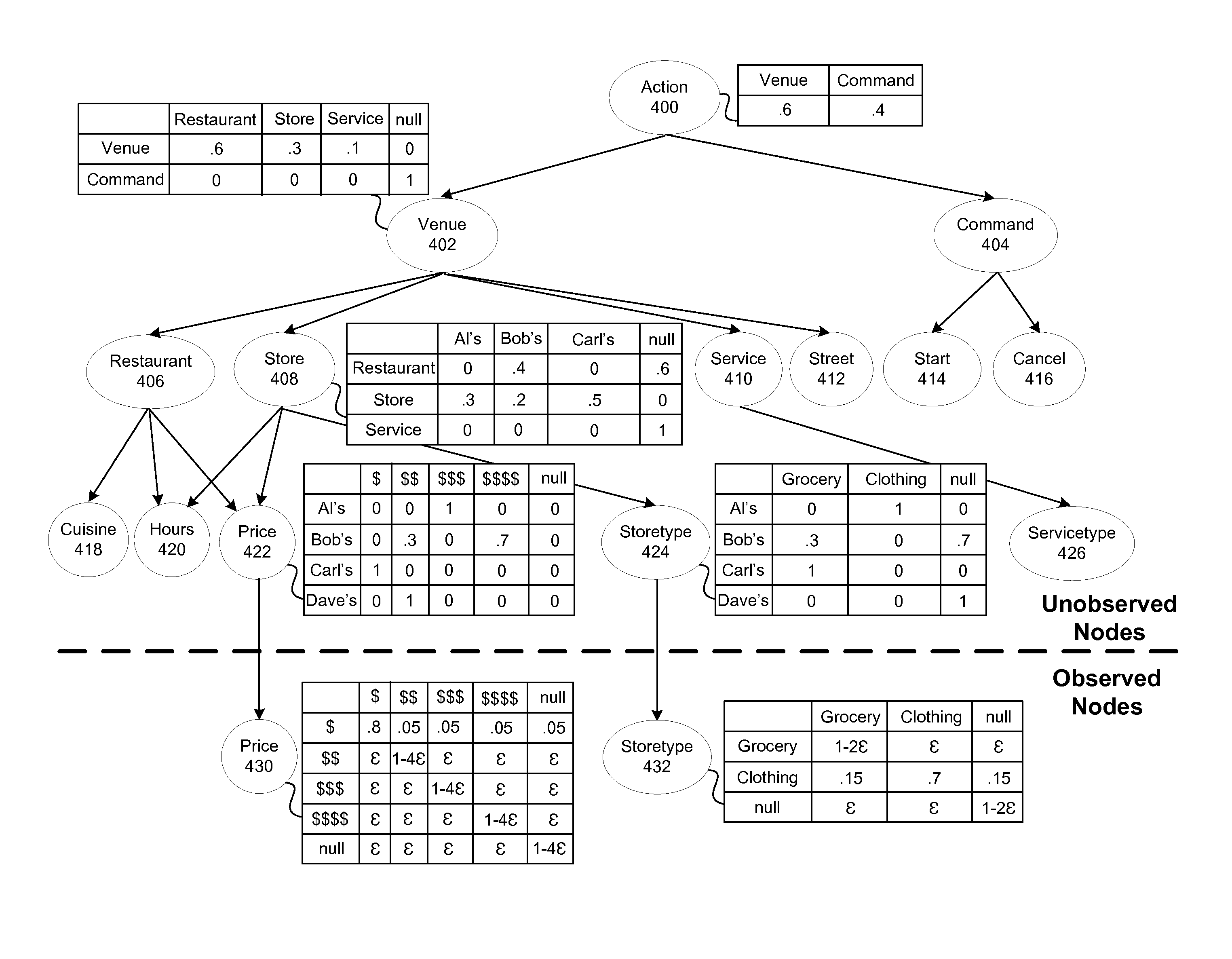

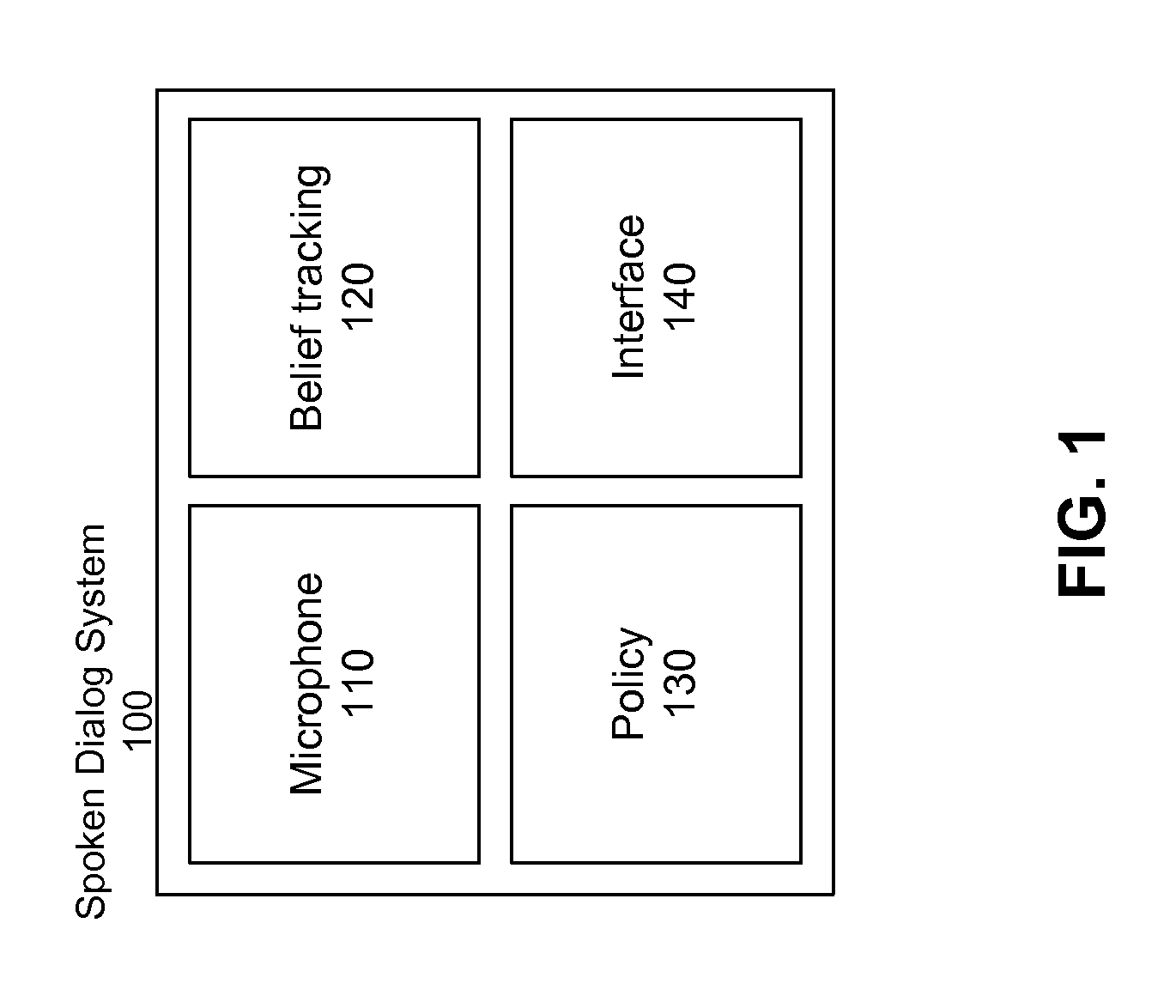

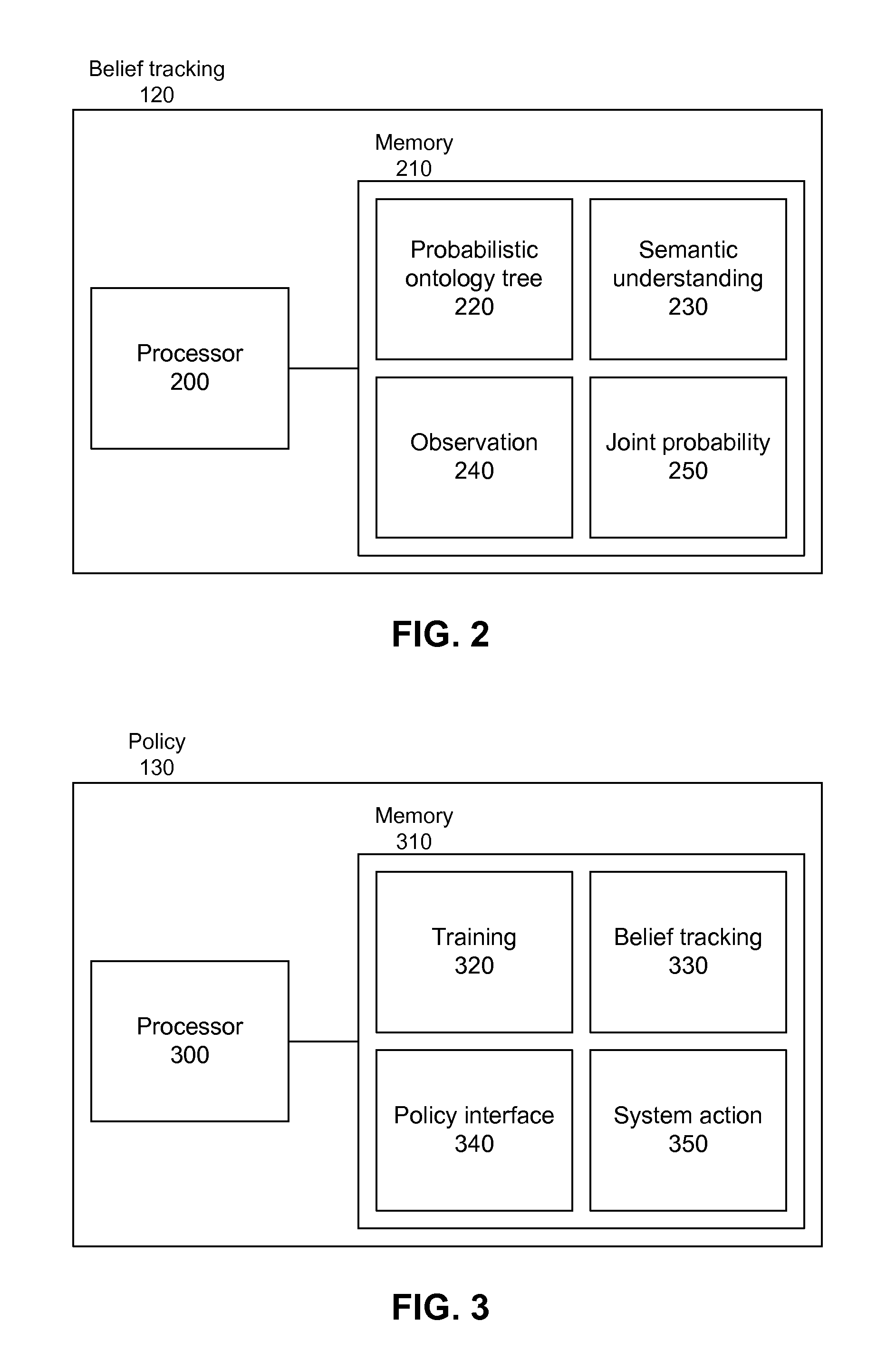
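
The matching step described in this abstract (scan copied lines for text tags that match the page's field descriptors, then fill the matching fields) can be sketched as follows. The `tag: value` line format and the function name are assumptions for illustration; the abstract does not specify how tags are delimited in the copied text.

```python
def fill_fields(field_descriptors, copied_text):
    """Return {field descriptor: value} for every copied line whose tag matches.

    Each line of `copied_text` is assumed to look like "tag: value"; lines
    without a colon or with an unknown tag are ignored.
    """
    wanted = set(field_descriptors)
    values = {}
    for line in copied_text.splitlines():
        tag, separator, value = line.partition(":")
        tag = tag.strip()
        if separator and tag in wanted:
            values[tag] = value.strip()
    return values


# Hypothetical clipboard contents and form fields.
copied = "name: Ada Lovelace\nemail: ada@example.com\nnote: ignore me"
filled = fill_fields(["name", "email", "phone"], copied)
```

Only `name` and `email` are filled: `phone` has no matching line in the copied text, and `note` is not a field on the page.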

Belief tracking and action selection in spoken dialog systems

An action is performed in a spoken dialog system in response to a user's spoken utterance. A policy which maps belief states of user intent to actions is retrieved or created. A belief state is determined based on the spoken utterance, and an action is selected based on the determined belief state and the policy. The action is performed, and in one embodiment, involves requesting clarification of the spoken utterance from the user. Creating a policy may involve simulating user inputs and spoken dialog system interactions, and modifying policy parameters iteratively until a policy threshold is satisfied. In one embodiment, a belief state is determined by converting the spoken utterance into text, assigning the text to one or more dialog slots associated with nodes in a probabilistic ontology tree (POT), and determining a joint probability based on probability distribution tables in the POT and on the dialog slot assignments.

Owner:HONDA MOTOR CO LTD

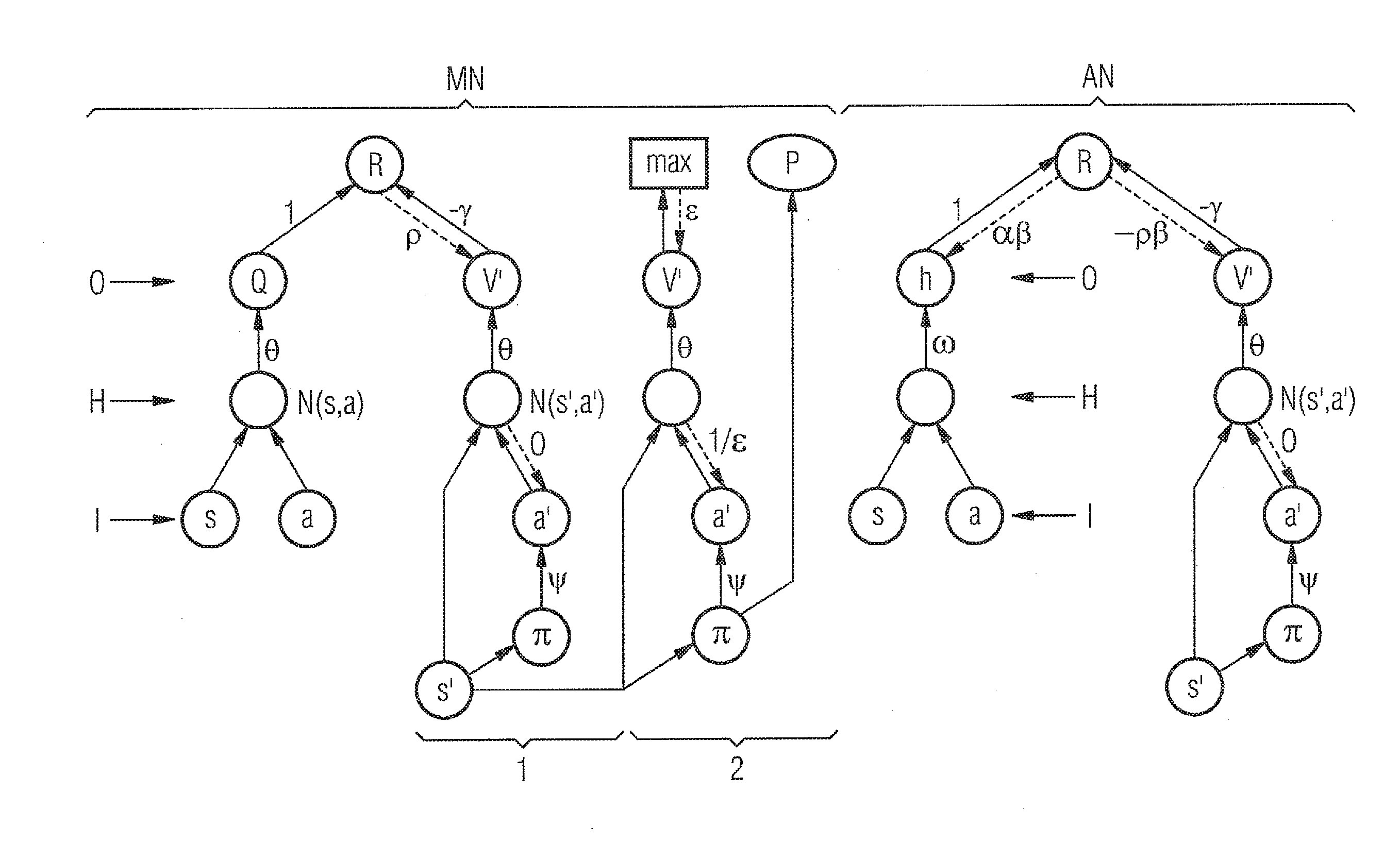

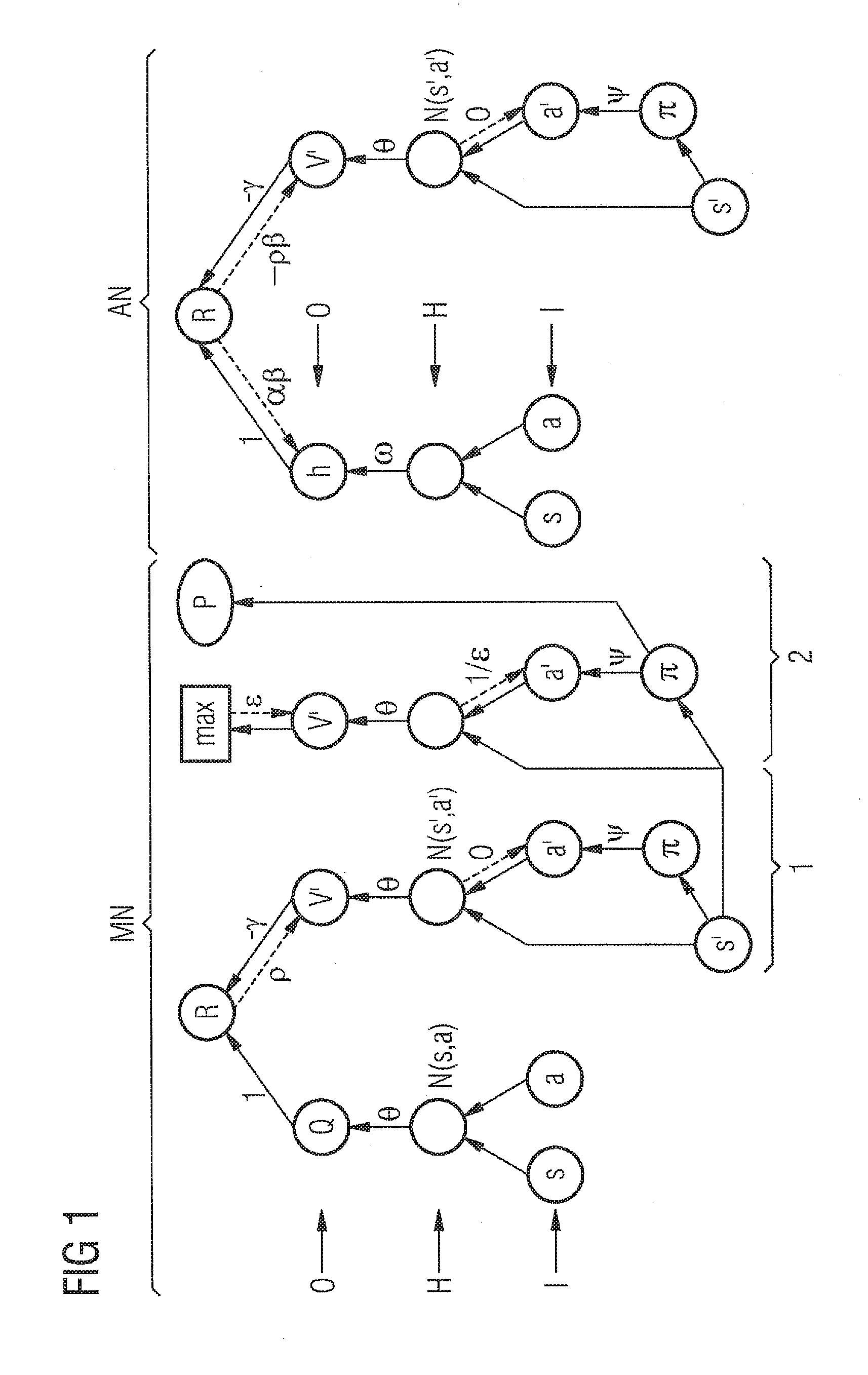

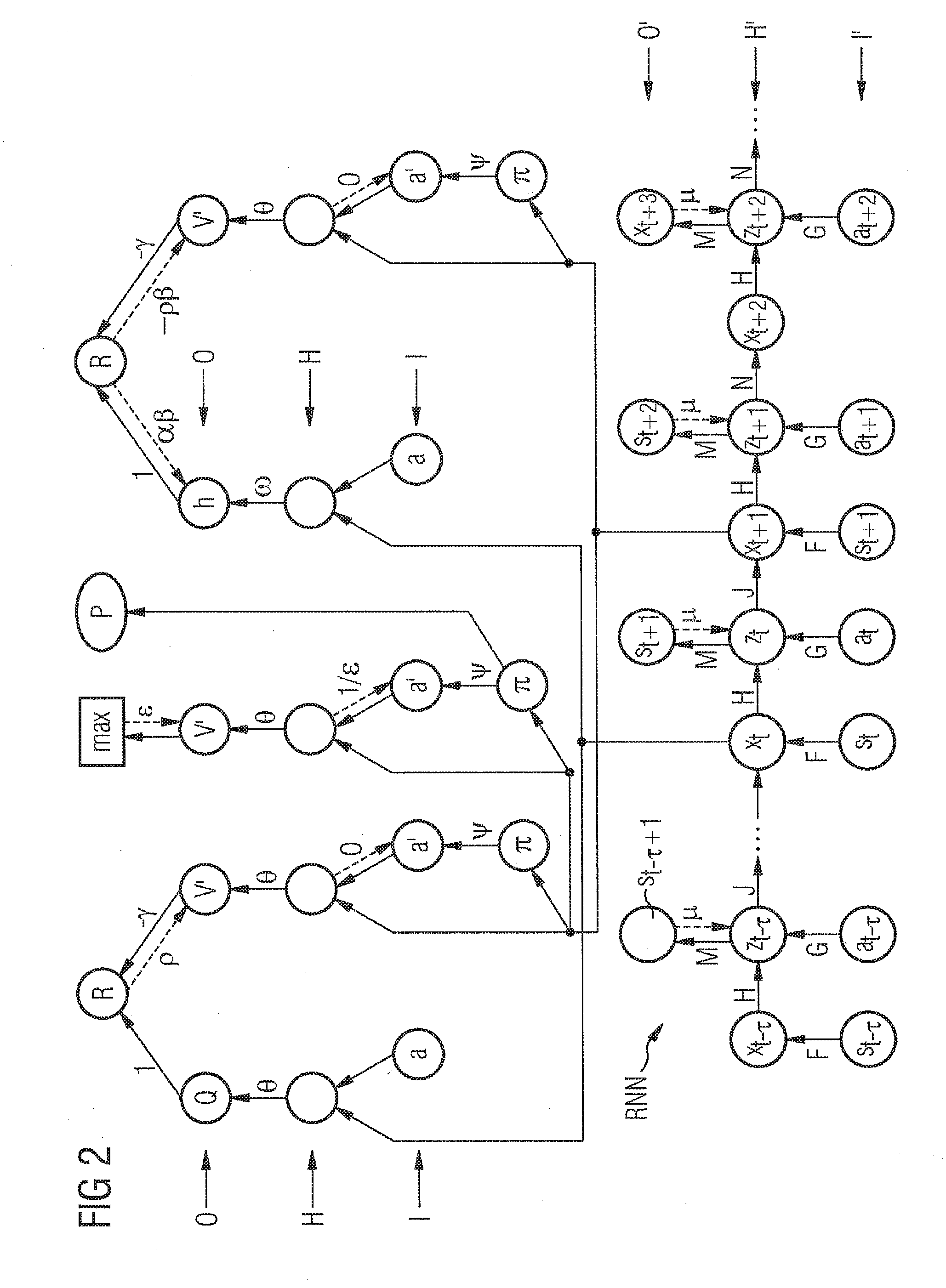

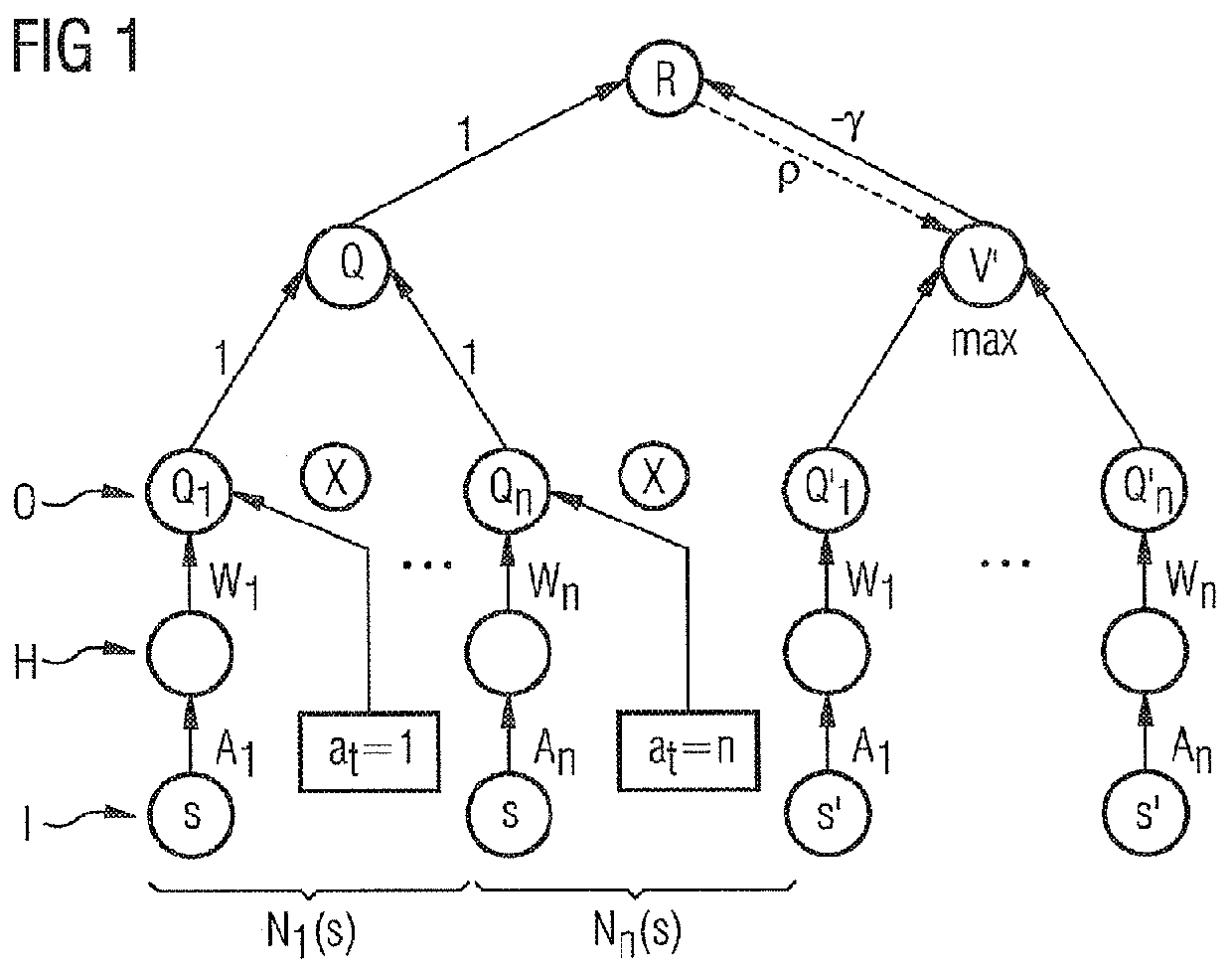

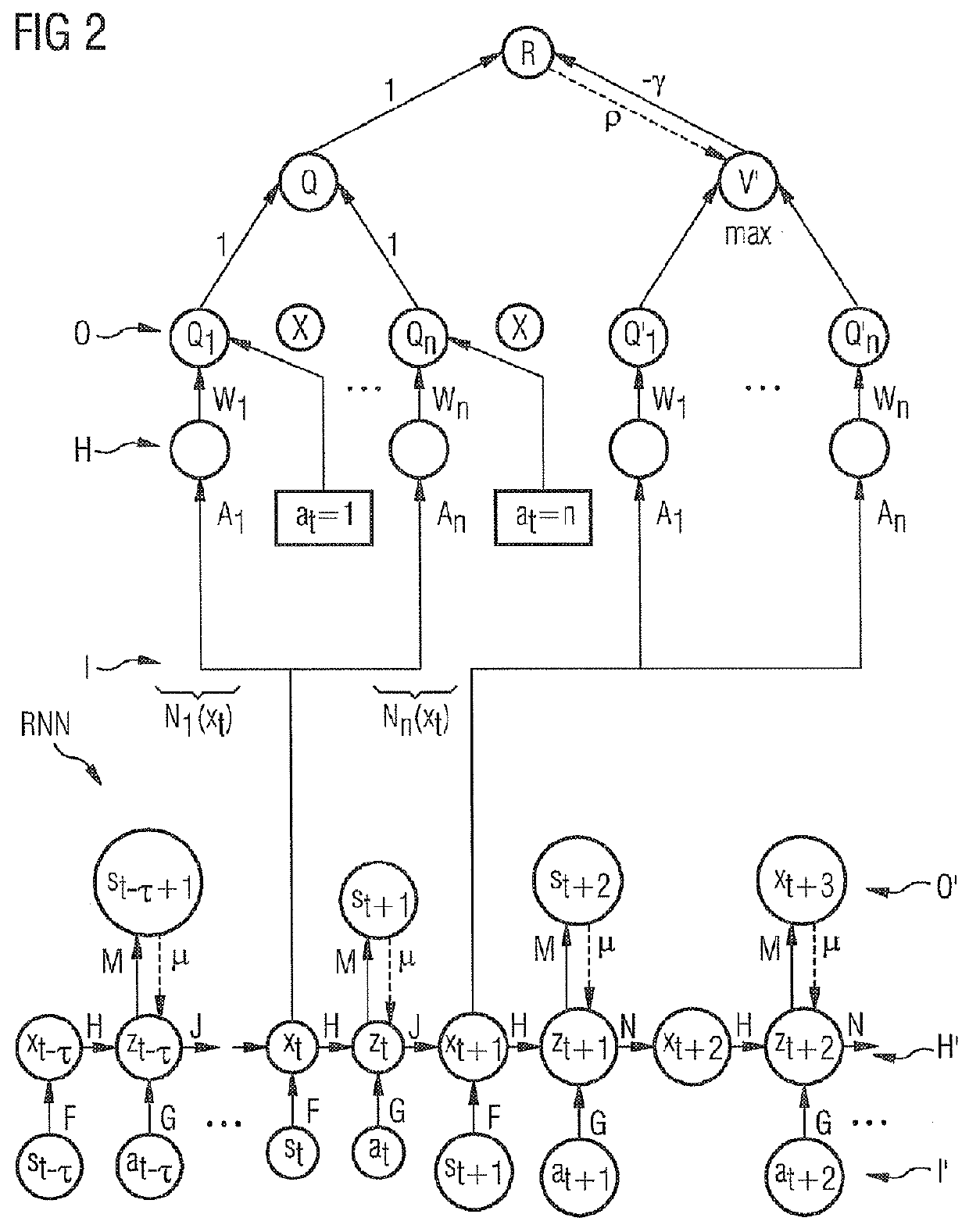
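
A policy that maps a belief state over user intents to an action could be as simple as the sketch below. The fixed confidence threshold and the two action types are assumptions made for illustration; the abstract describes a policy whose parameters are tuned iteratively through simulation, which is not reproduced here.

```python
def select_dialog_action(belief, threshold=0.7):
    """Map a belief state {intent: probability} to a (action, intent) pair.

    If the most probable intent is confident enough, act on it; otherwise
    ask the user to clarify the utterance.
    """
    if not belief:
        return ("clarify", None)
    intent, probability = max(belief.items(), key=lambda item: item[1])
    if probability >= threshold:
        return ("execute", intent)
    return ("clarify", intent)


# Hypothetical belief states after two different utterances.
confident = select_dialog_action({"play_music": 0.9, "navigate": 0.1})
uncertain = select_dialog_action({"play_music": 0.55, "navigate": 0.45})
```

The first call acts on `play_music`; the second falls below the threshold, so the system requests clarification instead.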

Method for computer-aided control and/or regulation using neural networks

Active · US20100205974A1 · Maximizing quality function · Promote results · Digital computer details · Gas turbine plants · Feed forward network · Computer-aided

A method for a computer-aided control of a technical system is provided. The method involves use of a cooperative learning method and artificial neural networks. In this context, feed-forward networks are linked to one another such that the architecture as a whole meets an optimality criterion. The network approximates the rewards observed to the expected rewards as an appraiser. In this way, exclusively observations which have actually been made are used in optimum fashion to determine a quality function. In the network, the optimum action in respect of the quality function is modeled by a neural network, the neural network supplying the optimum action selection rule for the given control problem. The method is specifically used to control a gas turbine.

Owner:SIEMENS AG

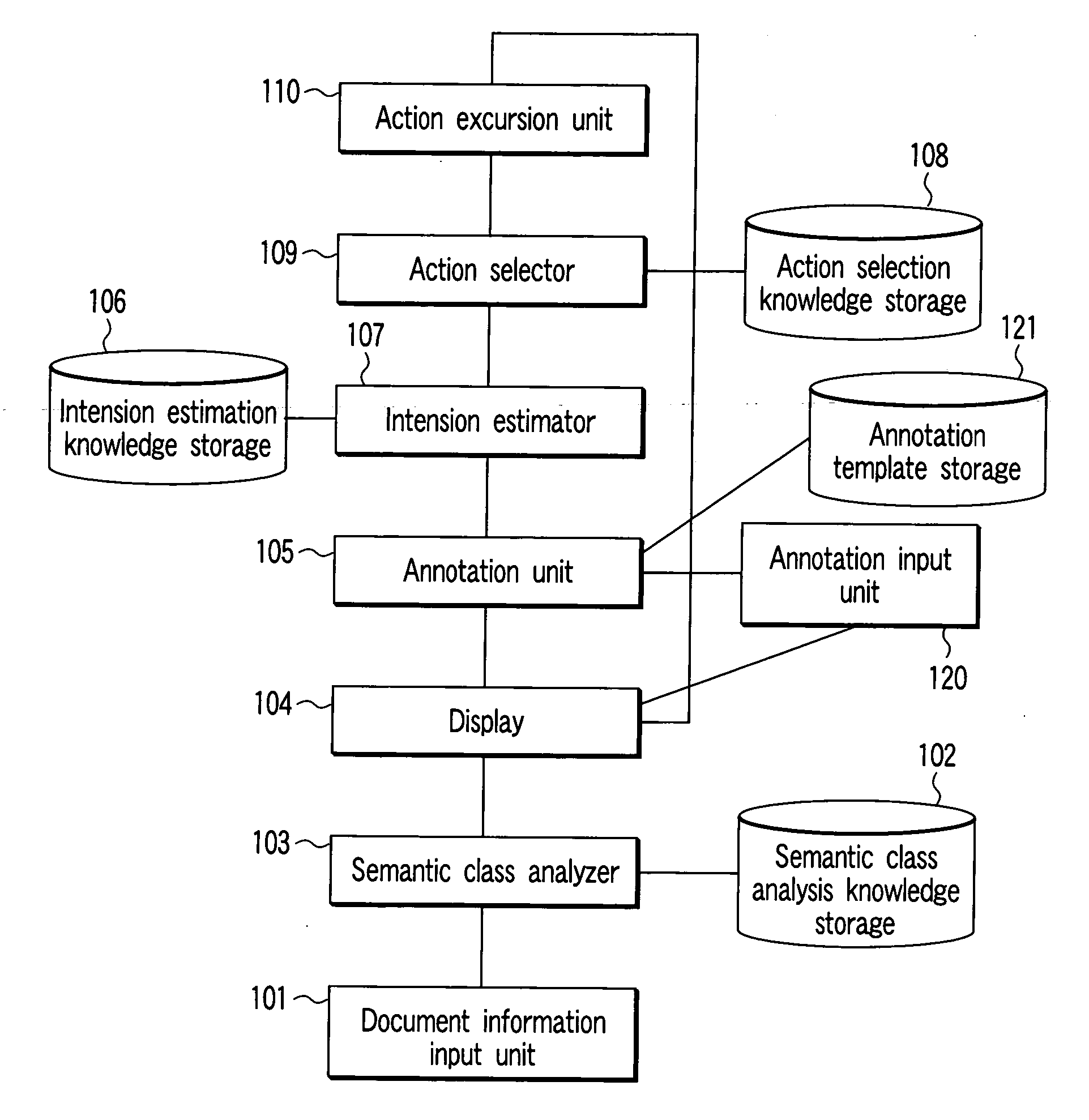

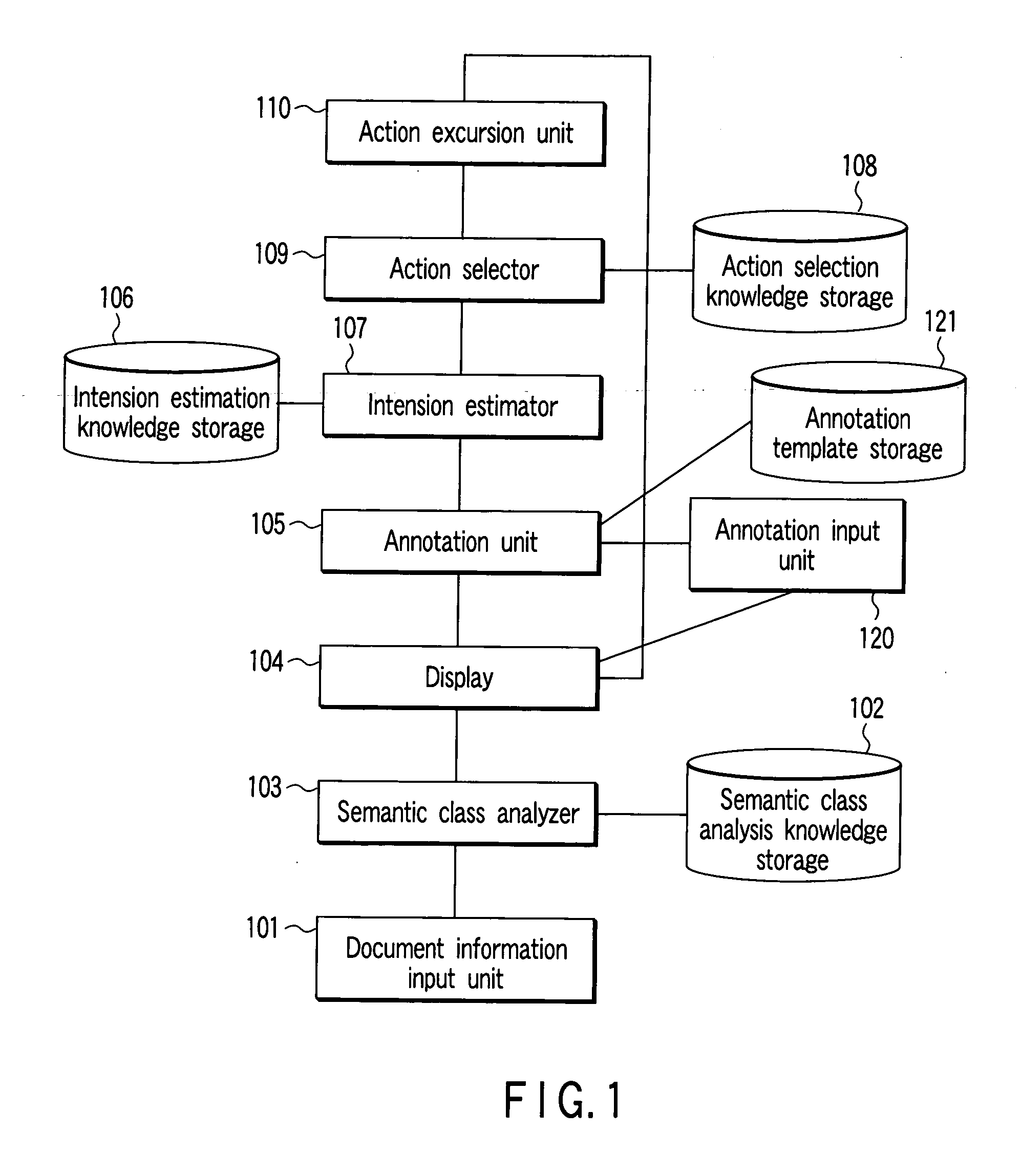

Information processing method and apparatus

Inactive · US20060080276A1 · Natural language data processing · Special data processing applications · Information processing · String sign

An information processing apparatus includes an input unit configured to input an annotation of at least one of an underline, a box, a character, a character string, a symbol and a symbol string to a displayed document, an annotation recognition unit configured to recognize a type of the annotation and a coverage of the annotation in the document, an intention estimation unit configured to estimate intention of a user based on the type of the annotation and information in the coverage, an action storage unit configured to store a plurality of actions, an action selection unit configured to select an action to be performed for the document from the action storage based on the intention estimated by the intention estimation unit, and an execution unit configured to execute the action selected by the action selection unit.

Owner:KK TOSHIBA

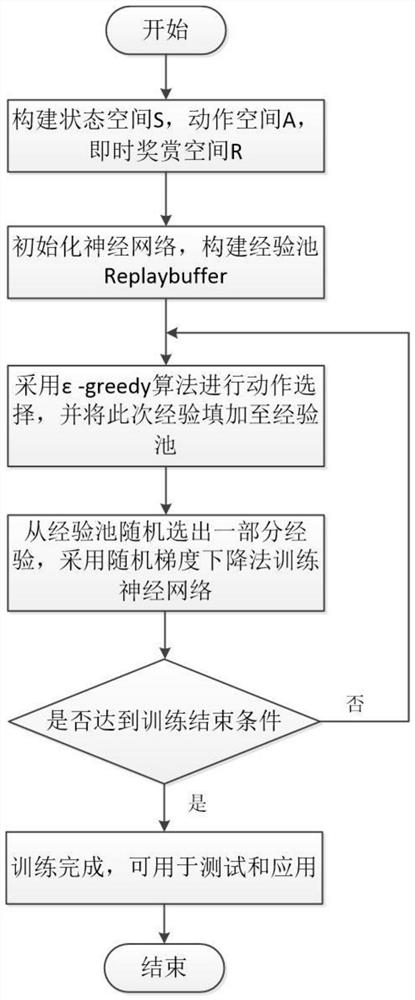

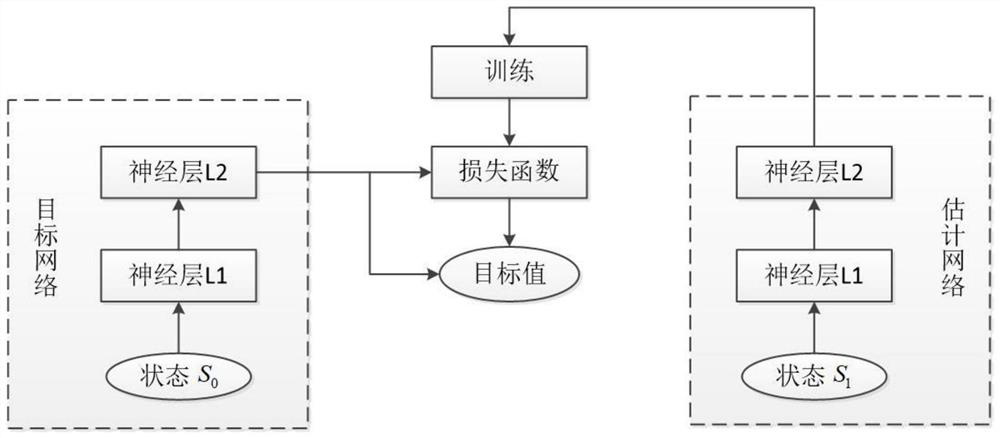

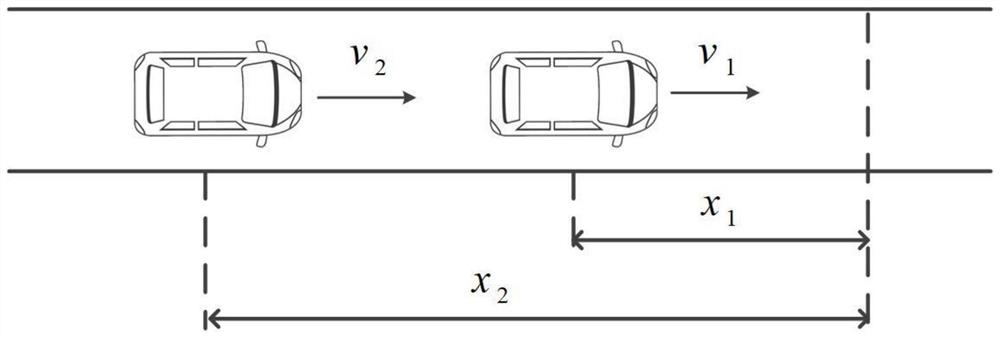

Intelligent vehicle speed decision-making method based on deep reinforcement learning and simulation method thereof

Active · CN111898211A · Effective decision-making · Low cost · Geometric CAD · Design optimisation/simulation · Stochastic gradient descent · Decision model

The invention discloses an intelligent vehicle speed decision-making method based on a deep reinforcement learning method. The method comprises the steps of constructing a state space S, an action space A and an instant rewarding space R of a Markov decision-making model of an intelligent vehicle passing through an intersection; initializing a neural network, and constructing an experience pool; performing action selection by adopting an epsilon-greedy algorithm, and filling the experience into the experience pool constructed in step 2; randomly selecting a part of experience from the experience pool, and training a neural network by adopting a stochastic gradient descent method; completing the speed decision of the intelligent vehicle at the current moment according to the latest neural network, adding the experience to the experience pool, randomly selecting a part of experience, and carrying out a new round of neural network training. The invention further discloses a simulation method of the intelligent vehicle speed decision-making method based on deep reinforcement learning. An advantage of the method is that simulation experiments are carried out on a deep reinforcement learning simulation system built with the MATLAB automatic driving toolbox.

Owner:JILIN UNIV

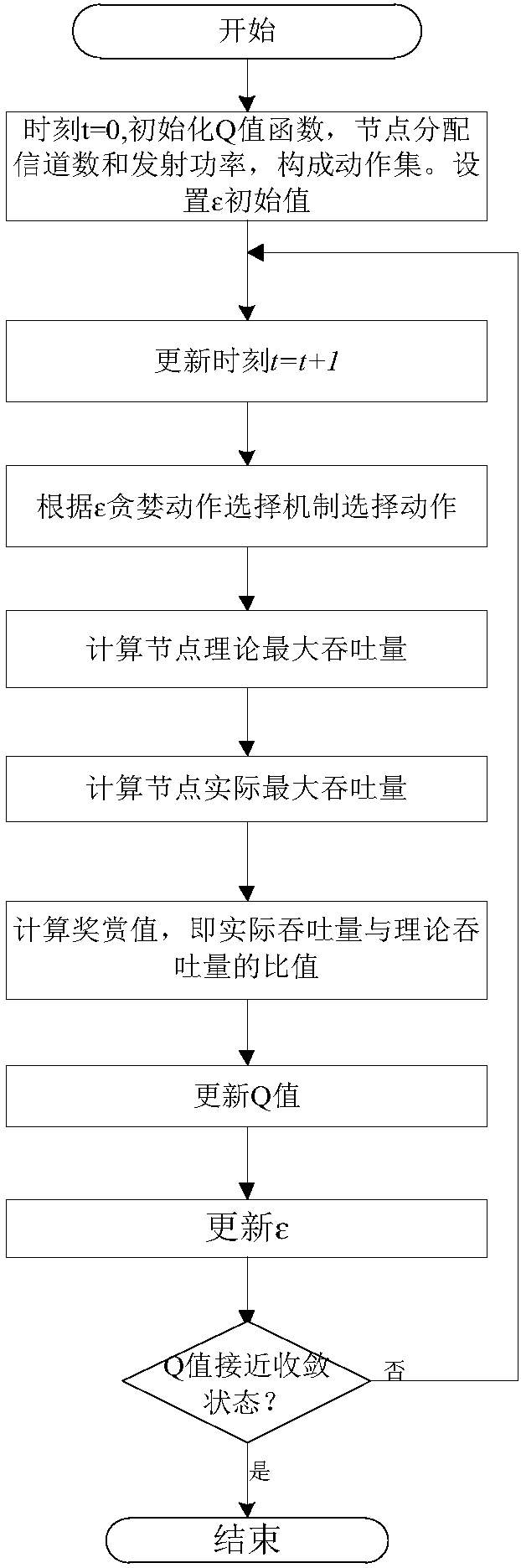

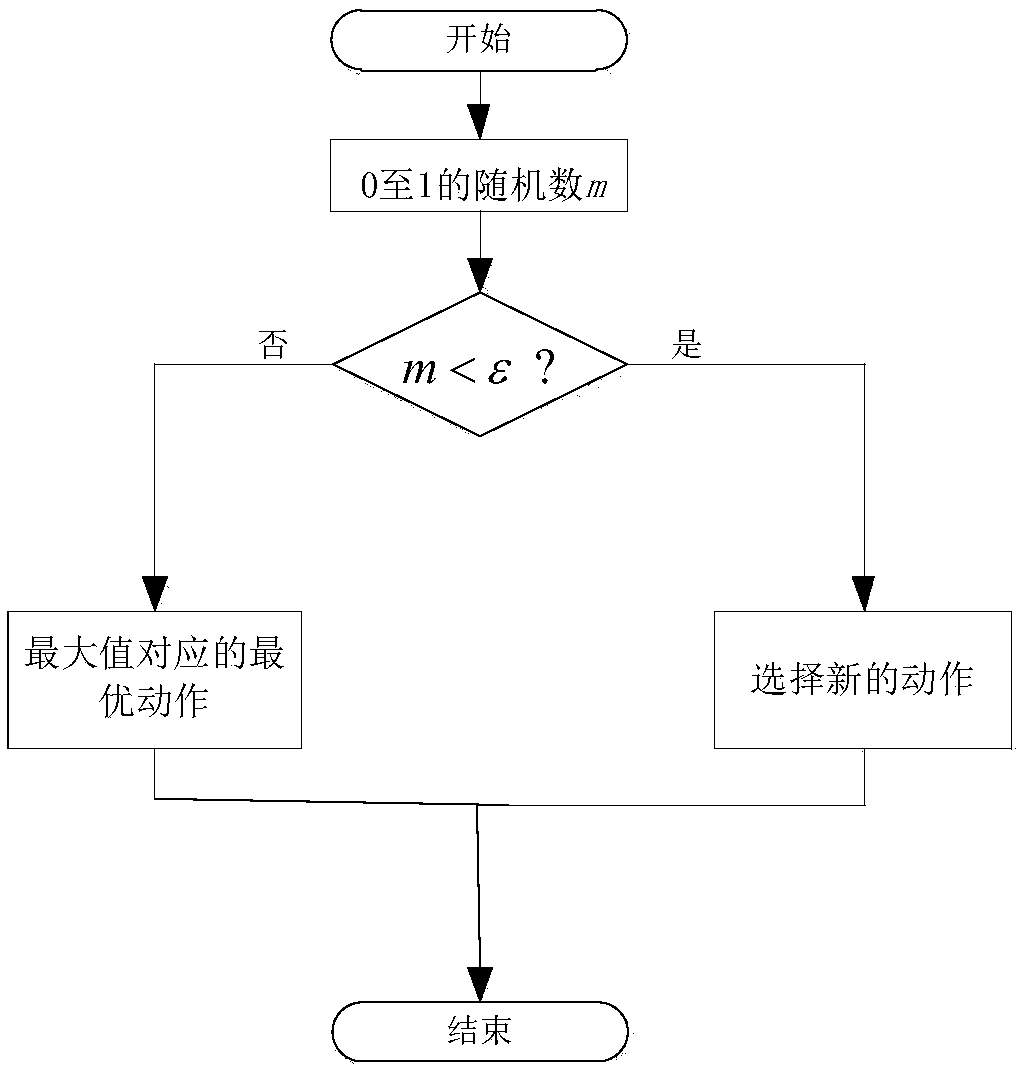

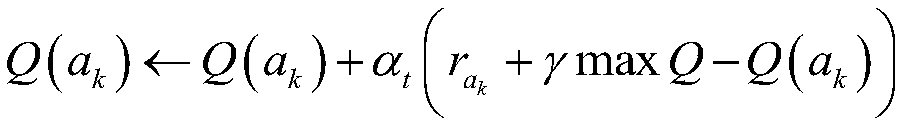
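
The epsilon-greedy selection and experience pool mentioned in this abstract can be sketched in plain Python. The neural network and its training by stochastic gradient descent are left out; `q_values` stands in for the network's output for the current state, and the class and function names are hypothetical.

```python
import random
from collections import deque


class ExperiencePool:
    """Fixed-capacity store of (state, action, reward, next_state) tuples."""

    def __init__(self, capacity):
        # A deque with maxlen drops the oldest experience when full.
        self.buffer = deque(maxlen=capacity)

    def add(self, state, action, reward, next_state):
        self.buffer.append((state, action, reward, next_state))

    def sample(self, batch_size):
        """Draw a random batch, or everything if fewer items are stored."""
        k = min(batch_size, len(self.buffer))
        return random.sample(list(self.buffer), k)


def epsilon_greedy(q_values, epsilon):
    """Explore with probability epsilon, otherwise pick the best action index."""
    if random.random() < epsilon:
        return random.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda i: q_values[i])


# Made-up Q values for three speed actions, e.g. slow down / hold / speed up.
pool = ExperiencePool(capacity=2)
action = epsilon_greedy([0.1, 0.9, 0.3], epsilon=0.0)
for step in range(3):
    pool.add(state=step, action=action, reward=1.0, next_state=step + 1)
batch = pool.sample(batch_size=5)
```

With `epsilon=0.0` the choice is purely greedy, so `action` is index 1. The pool holds only the two most recent experiences because its capacity is 2.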

Wireless network distributed autonomous resource allocation method based on stateless Q learning

Active · CN108112082A · Excellent throughput · High level techniques · Wireless communication · Wireless mesh network · Prior information

The invention discloses a wireless network distributed autonomous resource allocation method based on stateless Q learning. First, the number of channels and the transmitted power are taken as a set of actions, and a set of actions is randomly selected to calculate the actual network throughput; then the ratio of the actual network throughput and the theoretical throughput is taken as a reward after the action selection, and an action value function is updated according to the reward; and finally, the iterative adjustment of the actions can find the maximum solution of a cumulative reward value function, and the corresponding action thereof can reach the optimum performance of the wireless network. The method provided by the invention allows each node to autonomously perform channel allocation and transmitted power control to maximize the network throughput under the condition that prior information such as resource configuration of other nodes in the network is unknown.

Owner:BEIJING UNIV OF TECH

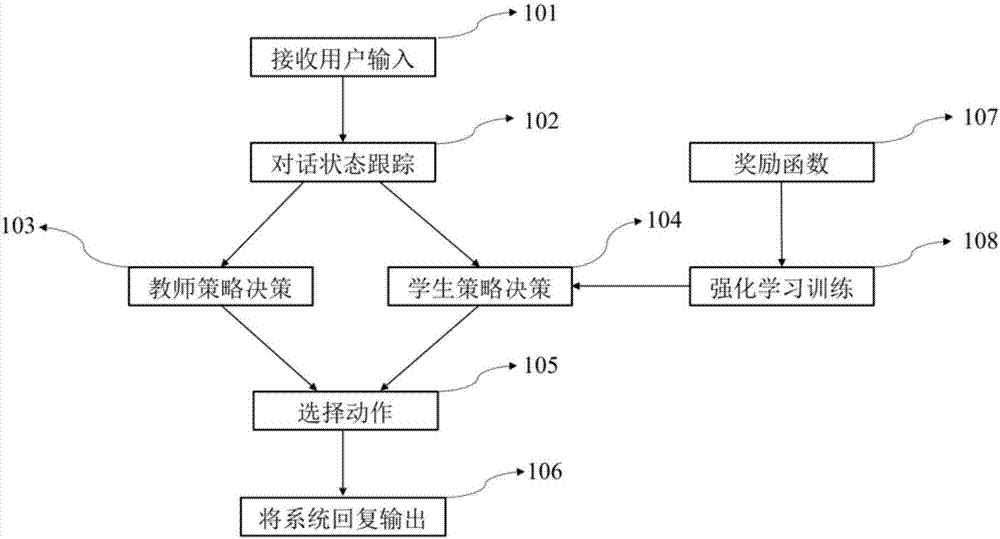

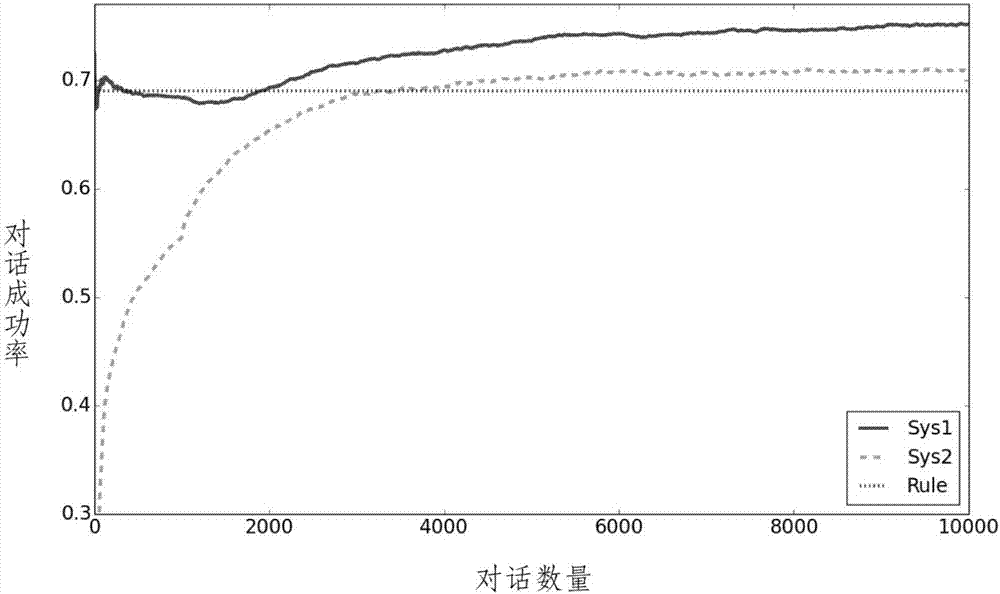
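
Stateless Q learning keeps one value per action rather than per state-action pair. A minimal sketch of the update described in this abstract follows, with the reward taken as the ratio of actual to theoretical throughput. The incremental update form, the `alpha` step size, and the (channel setting, transmit power) action tuples are assumptions for illustration.

```python
def stateless_q_update(q, action, actual_throughput, theoretical_throughput,
                       alpha=0.1):
    """Update the value of one action and return the reward that was used."""
    # Reward is the fraction of the theoretical throughput actually achieved.
    reward = actual_throughput / theoretical_throughput
    q[action] += alpha * (reward - q[action])
    return reward


def best_action(q):
    """Return the action with the highest learned value."""
    return max(q, key=q.get)


# Hypothetical action set: every (channel setting, transmit power) combination.
actions = [(channel, power) for channel in (1, 6, 11) for power in (10, 20)]
q = {a: 0.0 for a in actions}
stateless_q_update(q, (6, 20), actual_throughput=50.0,
                   theoretical_throughput=100.0, alpha=0.5)
choice = best_action(q)
```

After one update the value of `(6, 20)` is 0.25, which makes it the current best action; in practice the node would keep exploring other actions before settling.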

Dialogue strategy-optimized cold start system and method

The invention relates to a dialogue strategy-optimized cold start system and a method. The system comprises a user input module, a dialogue state tracking module, a teacher decision-making module, a student decision-making module, an action selection module, an output module, a strategy training module and a reward function module. The action selection module randomly selects one final reply action from all reply actions generated by the teacher decision-making module and the student decision-making module. The output module converts the final reply action into a more natural expression and displays it to the user. The strategy training module stores the dialogue experience (transition) in an experience pool, samples a fixed number of experiences, and updates network parameters according to the deep Q network (DQN) algorithm. The reward function module calculates the reward at each round of the dialogue and outputs it to the strategy training module. According to the invention, the performance of the dialogue strategy during the initial stage of online reinforcement learning training can be remarkably improved. The learning speed of the dialogue strategy is increased, and the number of dialogues needed to reach a given level of performance is reduced.

Owner:AISPEECH CO LTD

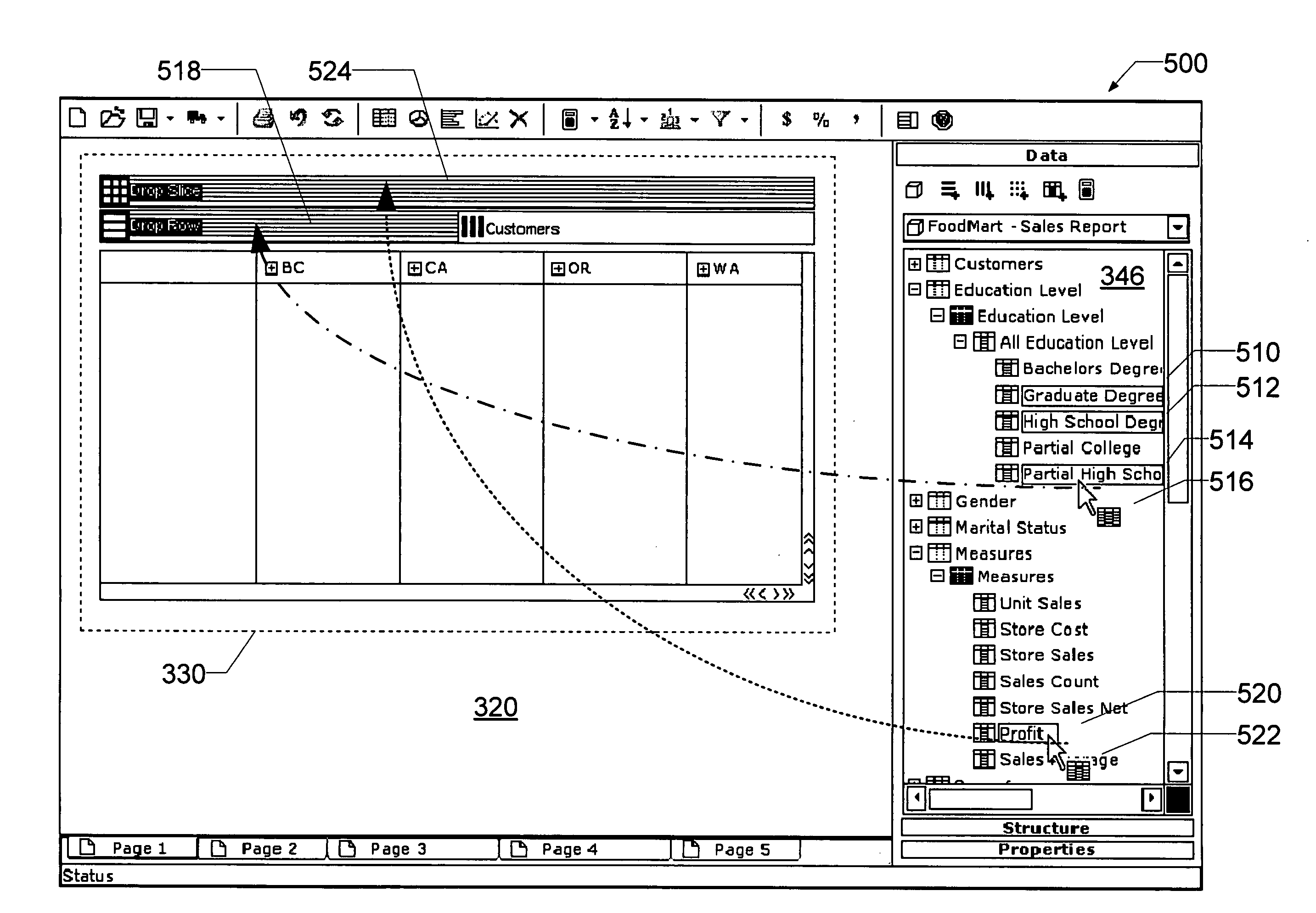

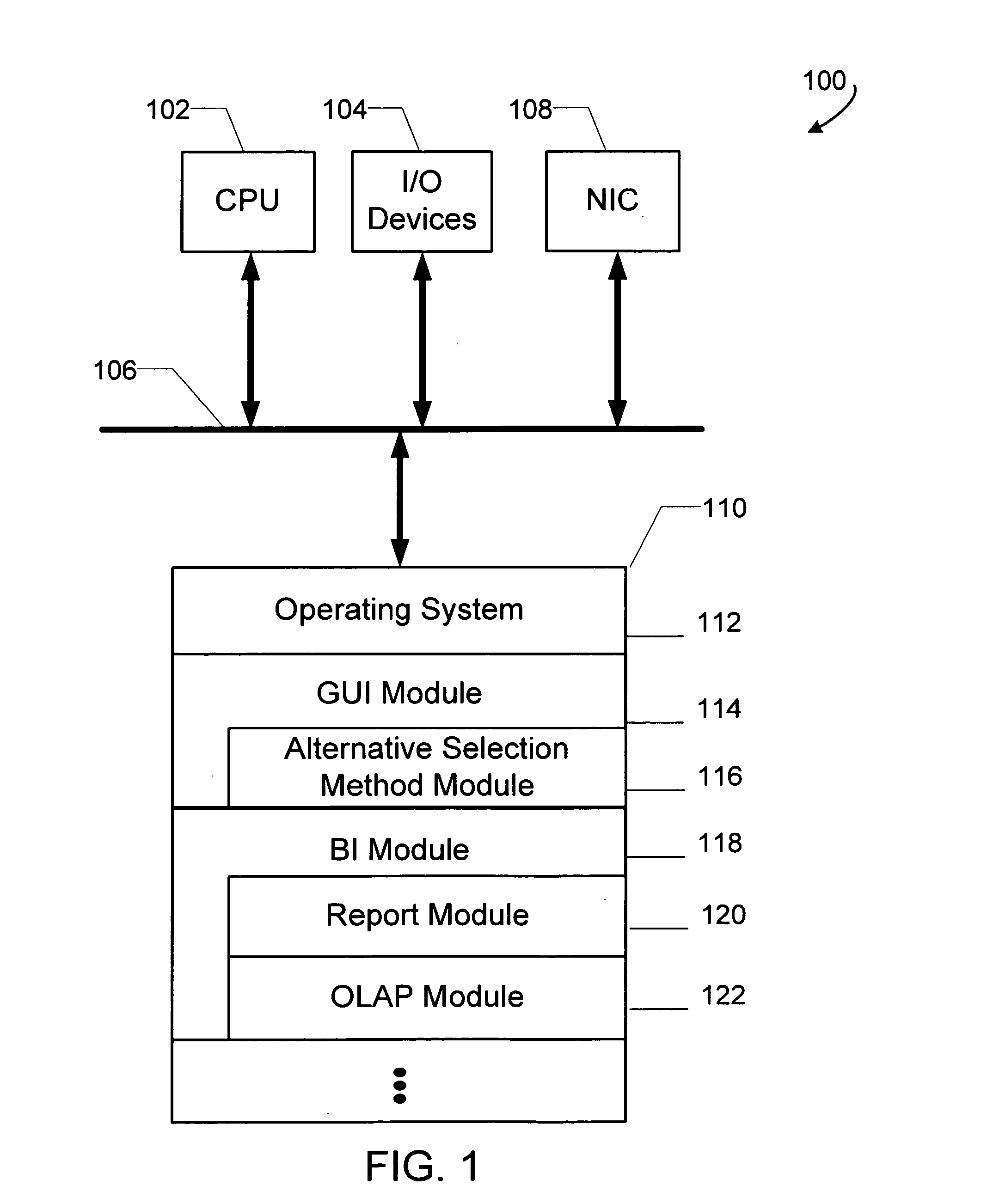

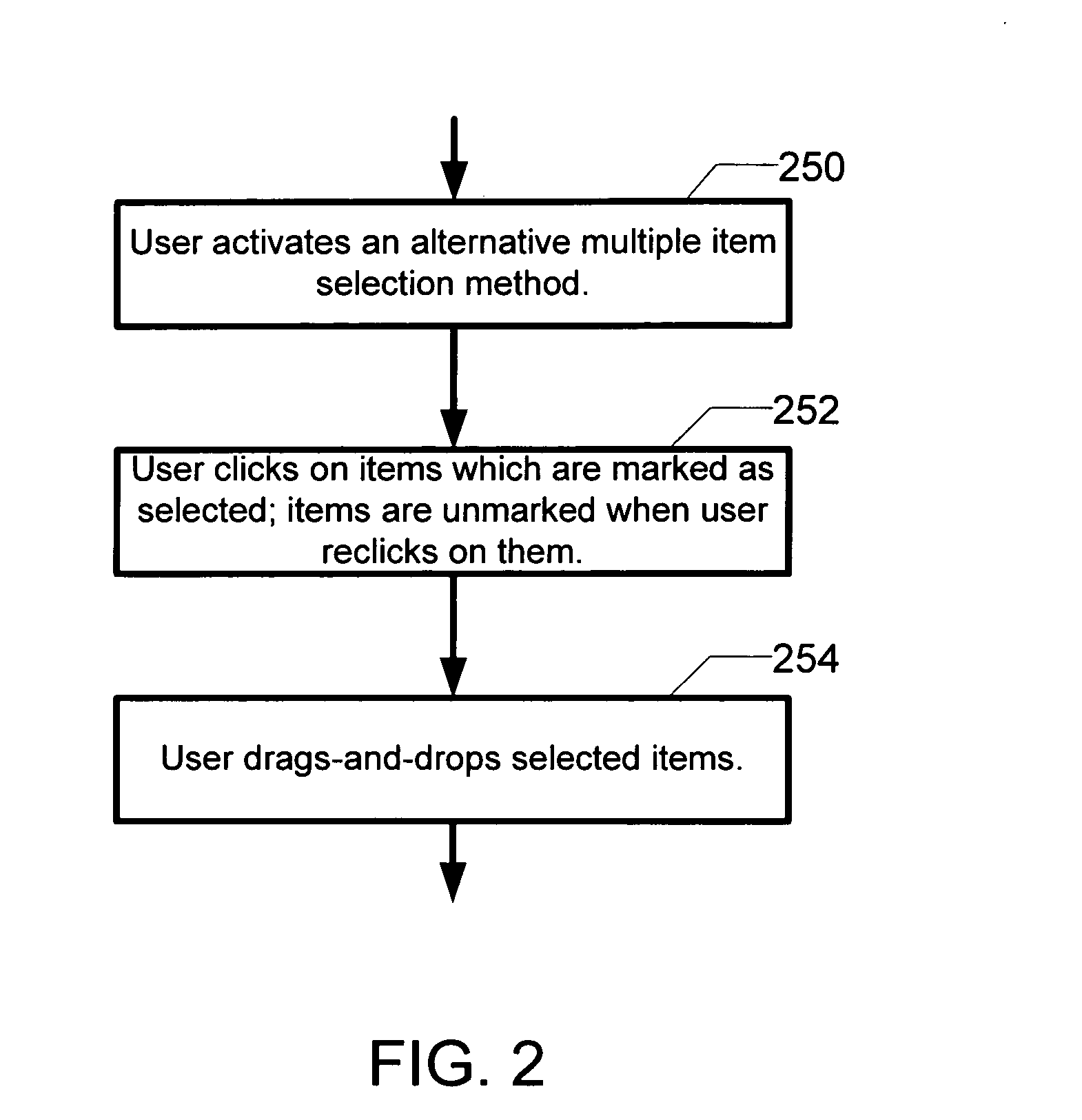

Apparatus and method for selecting multiple items in a graphical user interface

A computer readable medium includes executable instructions to identify an alternative selection mode within a graphical user interface. A set of selected items are linked during the alternative selection mode in response to single input action selection of each item.

Owner:BUSINESS OBJECTS SOFTWARE

Method for computer-supported control and/or regulation of a technical system

Active · US8260441B2 · Promote results · Digital computer details · Digital data · Feed forward network · Study methods

A method for computer-supported control and / or regulation of a technical system is provided. In the method a reinforcing learning method and an artificial neuronal network are used. In a preferred embodiment, parallel feed-forward networks are connected together such that the global architecture meets an optimal criterion. The network thus approximates the observed benefits as predictor for the expected benefits. In this manner, actual observations are used in an optimal manner to determine a quality function. The quality function obtained intrinsically from the network provides the optimal action selection rule for the given control problem. The method may be applied to any technical system for regulation or control. A preferred field of application is the regulation or control of turbines, in particular a gas turbine.

Owner:SIEMENS AG

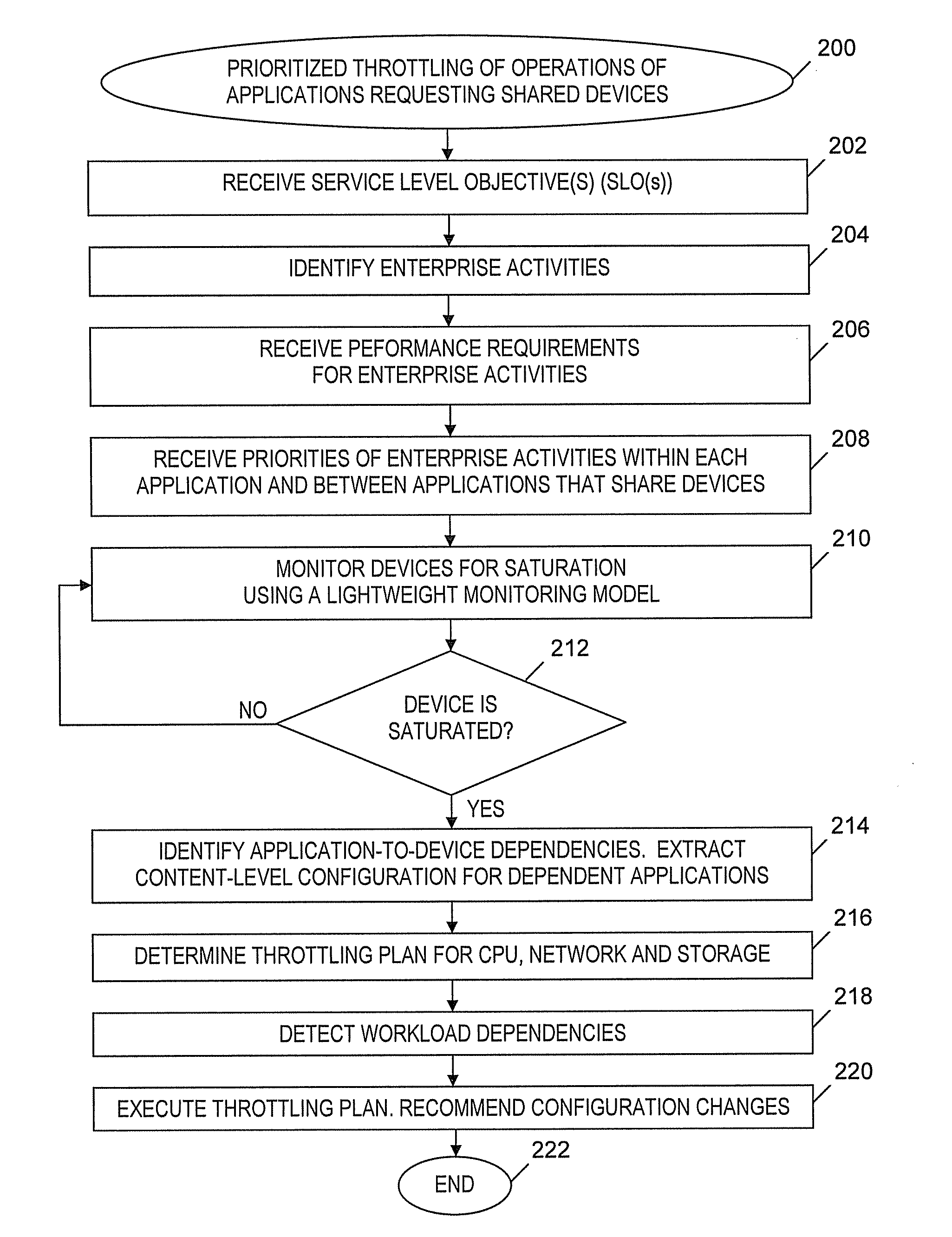

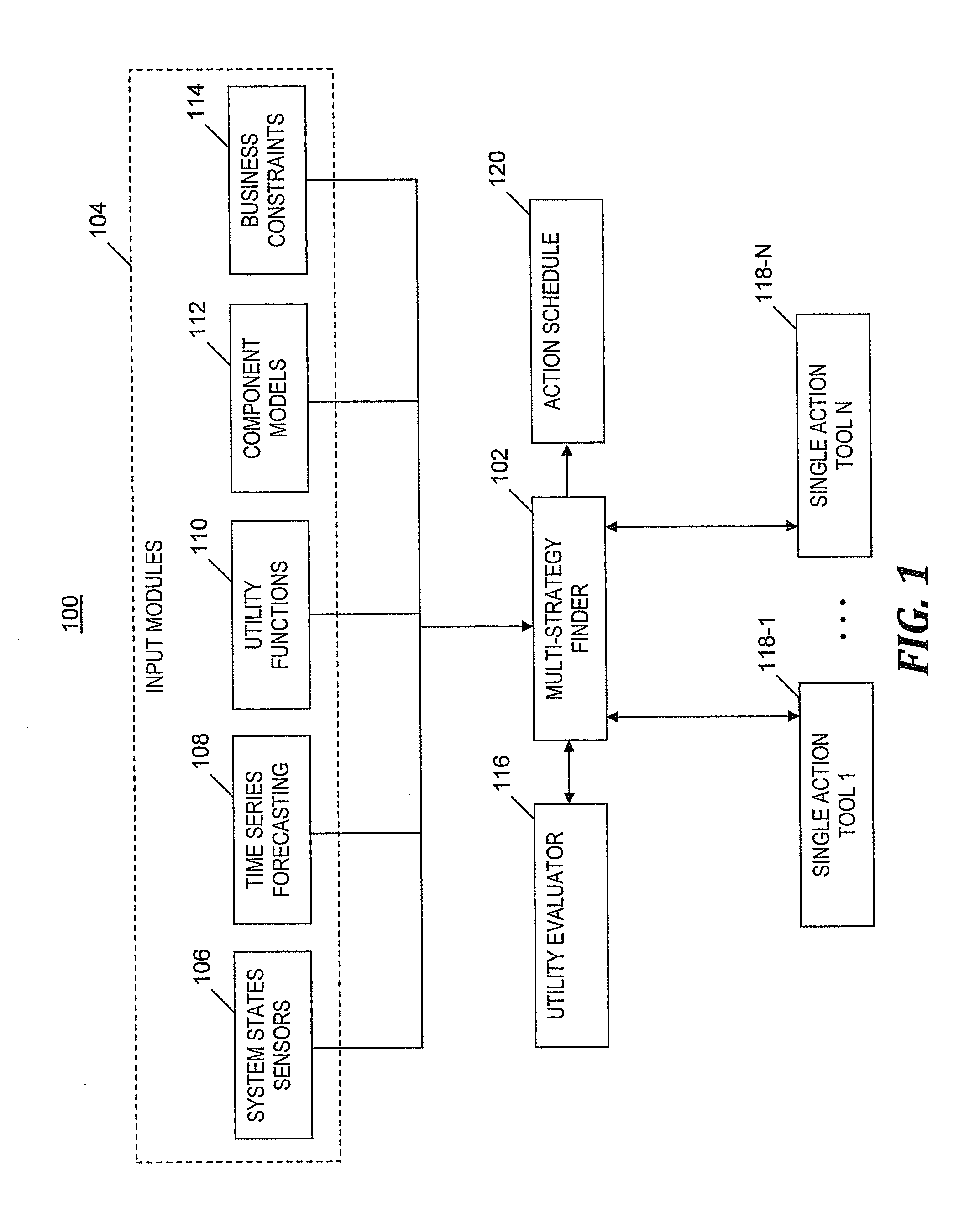

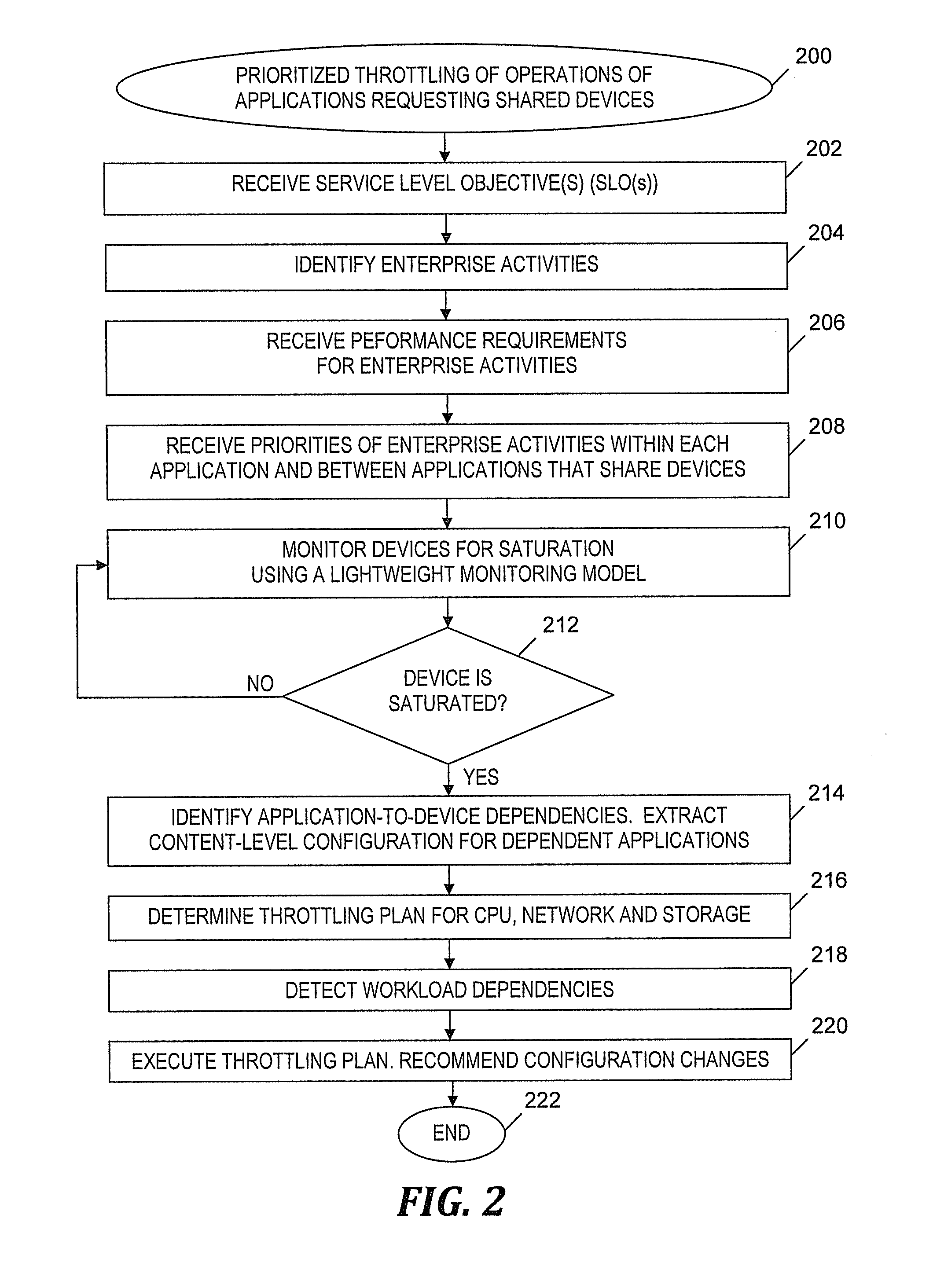

Integrated differentiated operation throttling

InactiveUS20110167424A1Increase profitDigital computer detailsMultiprogramming arrangementsNormal modeWorkload

A method and system for throttling a plurality of operations of a plurality of applications that share a plurality of resources. A difference between observed and predicted workloads is computed. If the difference does not exceed a threshold, a multi-strategy finder operates in normal mode and applies a recursive greedy pruning process with a look-back and look-forward optimization to select actions for a final schedule of actions that improve the utility of a data storage system. If the difference exceeds the threshold, the multi-strategy finder operates in unexpected mode and applies a defensive action selection process to select actions for the final schedule. The selected actions are performed according to the final schedule and include throttling of a CPU, network, and/or storage.

Owner:IBM CORP

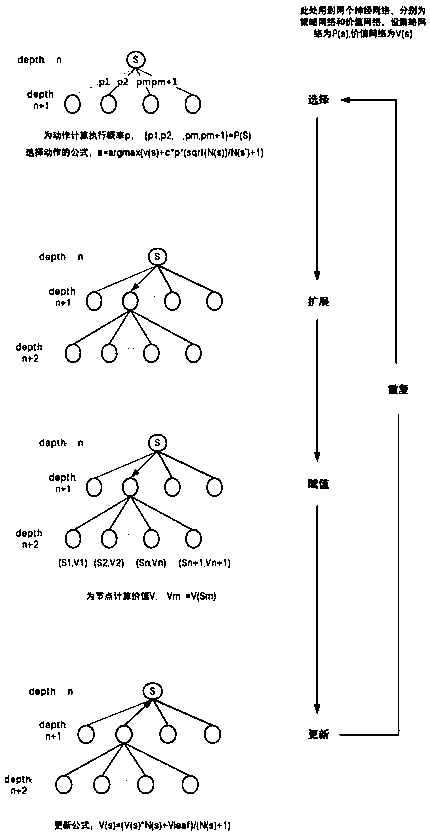

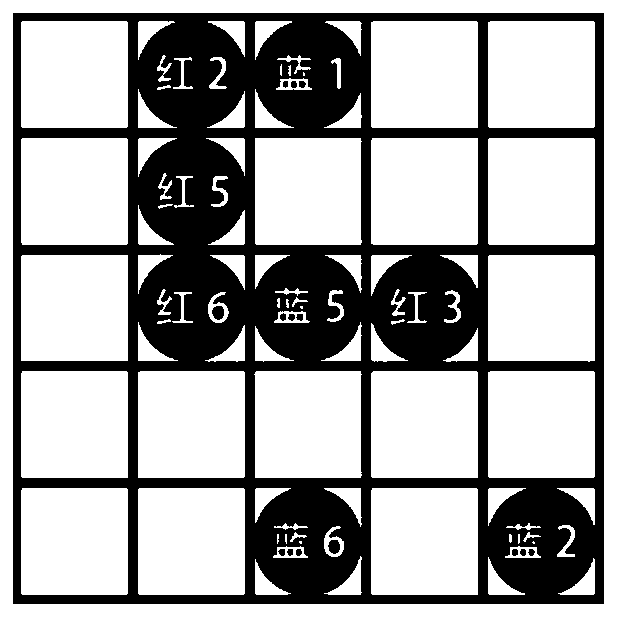
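The mode switch described in the throttling entry above can be sketched as a single threshold test. The function name `choose_mode`, the scalar workload values, and the use of an absolute difference are assumptions for illustration; the abstract only says that "a difference" is compared with a threshold.

```python
def choose_mode(observed_workload, predicted_workload, threshold):
    """Return 'normal' or 'unexpected' depending on the prediction error.

    Assumption: the difference is taken as an absolute value, so both
    over- and under-prediction can trigger the unexpected mode.
    """
    difference = abs(observed_workload - predicted_workload)
    # "Does not exceed" is read as <=, so a difference equal to the
    # threshold still counts as normal mode.
    return "normal" if difference <= threshold else "unexpected"
```

In normal mode the finder would then run its greedy pruning search, and in unexpected mode its defensive action selection; neither is modeled here.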

Einstein chess game algorithm based on reinforcement learning

Inactive · CN110119804A · Improve the level of · Improve execution efficiency · Indoor games · Neural architectures · Algorithm · Theoretical computer science

The invention discloses an Einstein chess game algorithm based on reinforcement learning. A BP neural network is applied to the board value evaluation method and to the action selection strategy of a Monte Carlo tree search algorithm. Through the self-play learning rule of reinforcement learning, the features of the board are learned and the network parameters are gradually adjusted, so that the BP neural network's value evaluation of the board and its strategy calculation for moves become progressively more accurate, and the performance of the whole game algorithm gradually improves. Two BP neural networks serve respectively as the value estimation function and the behavior strategy function of Einstein chess. The reinforcement learning algorithm serves as the evolution mechanism for adjusting the BP neural network parameters, which overcomes the limitation that existing Einstein chess training sets are bounded by human playing level and raises the upper limit of playing strength.

Owner:ANHUI UNIVERSITY

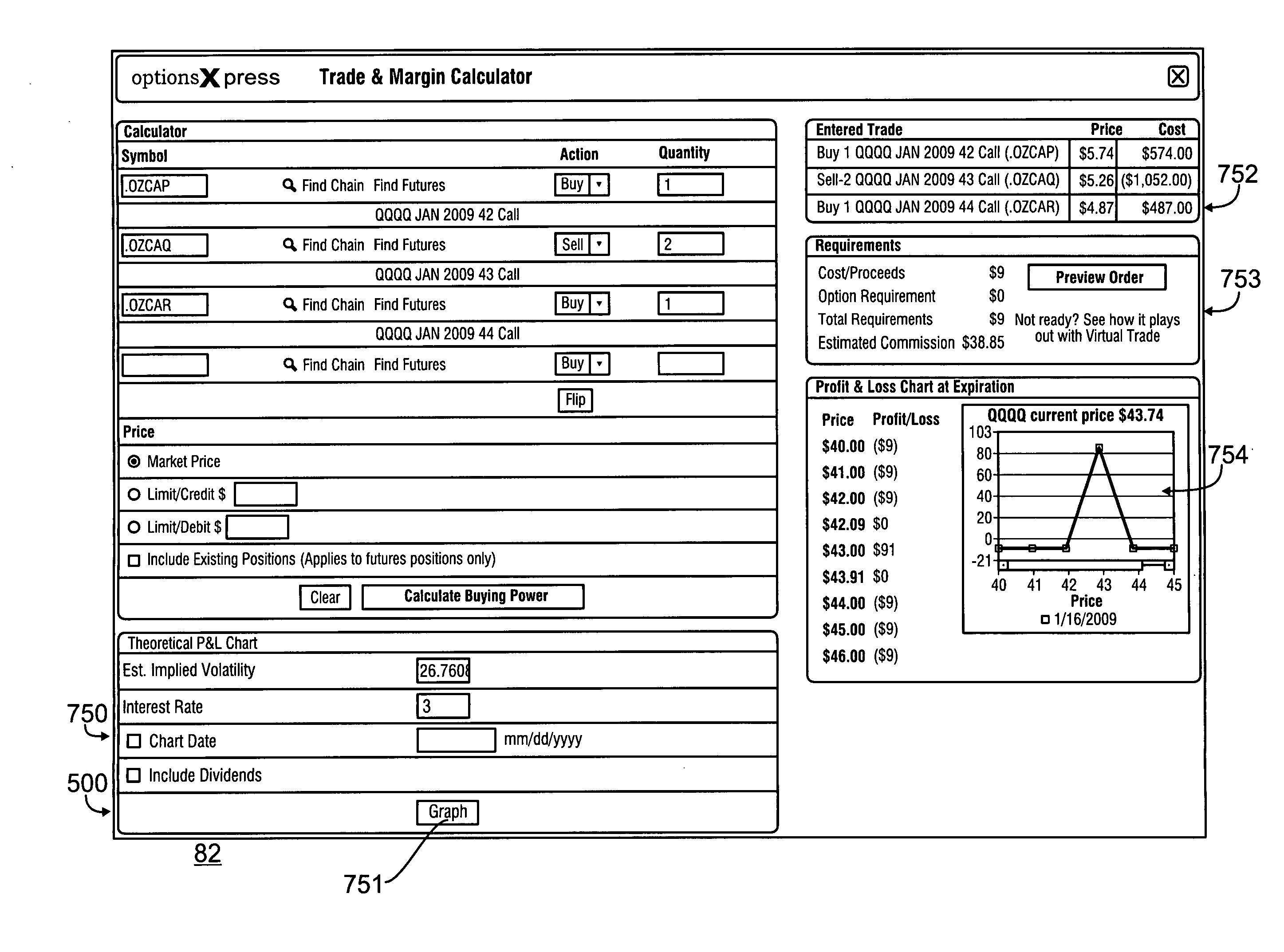

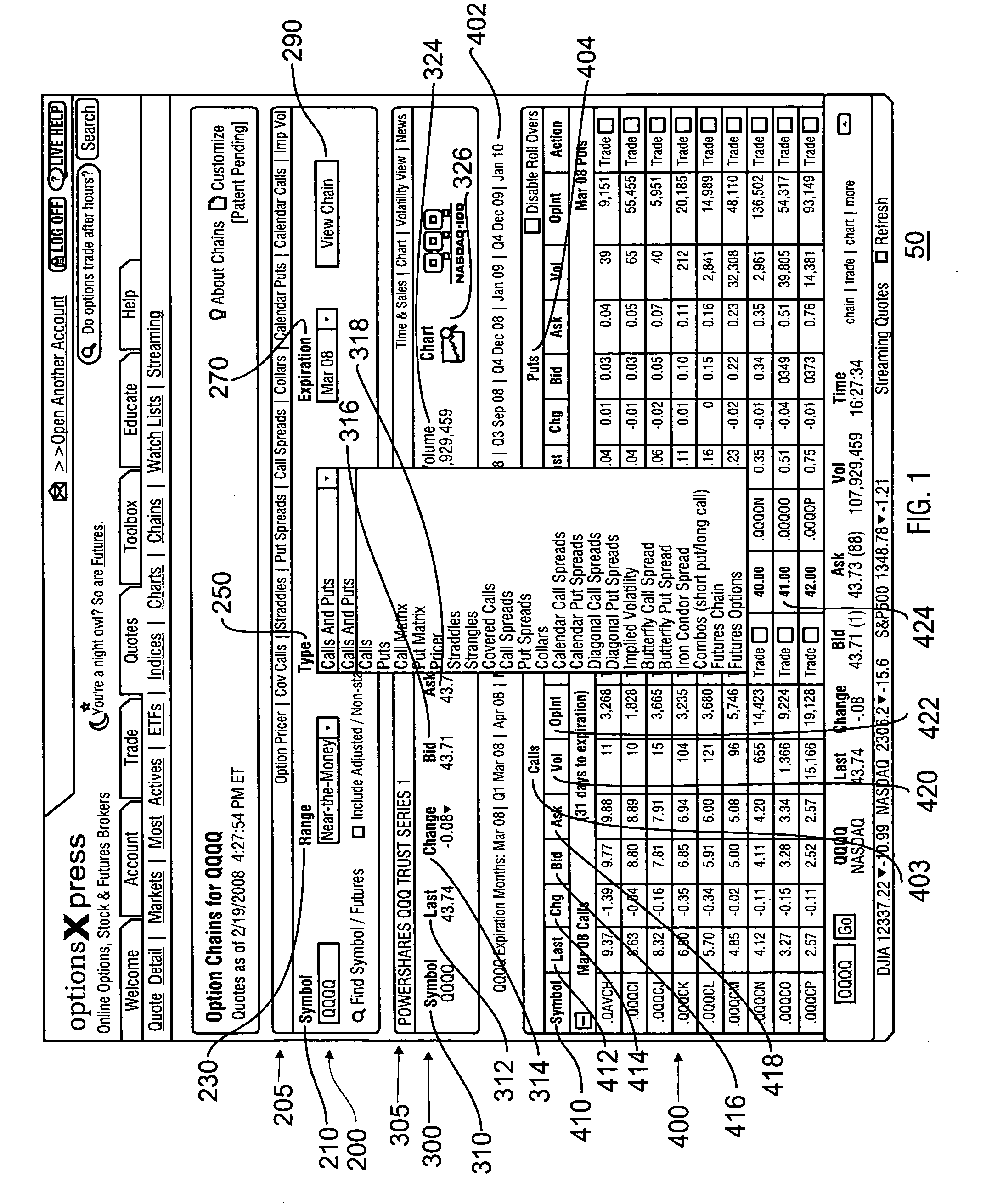

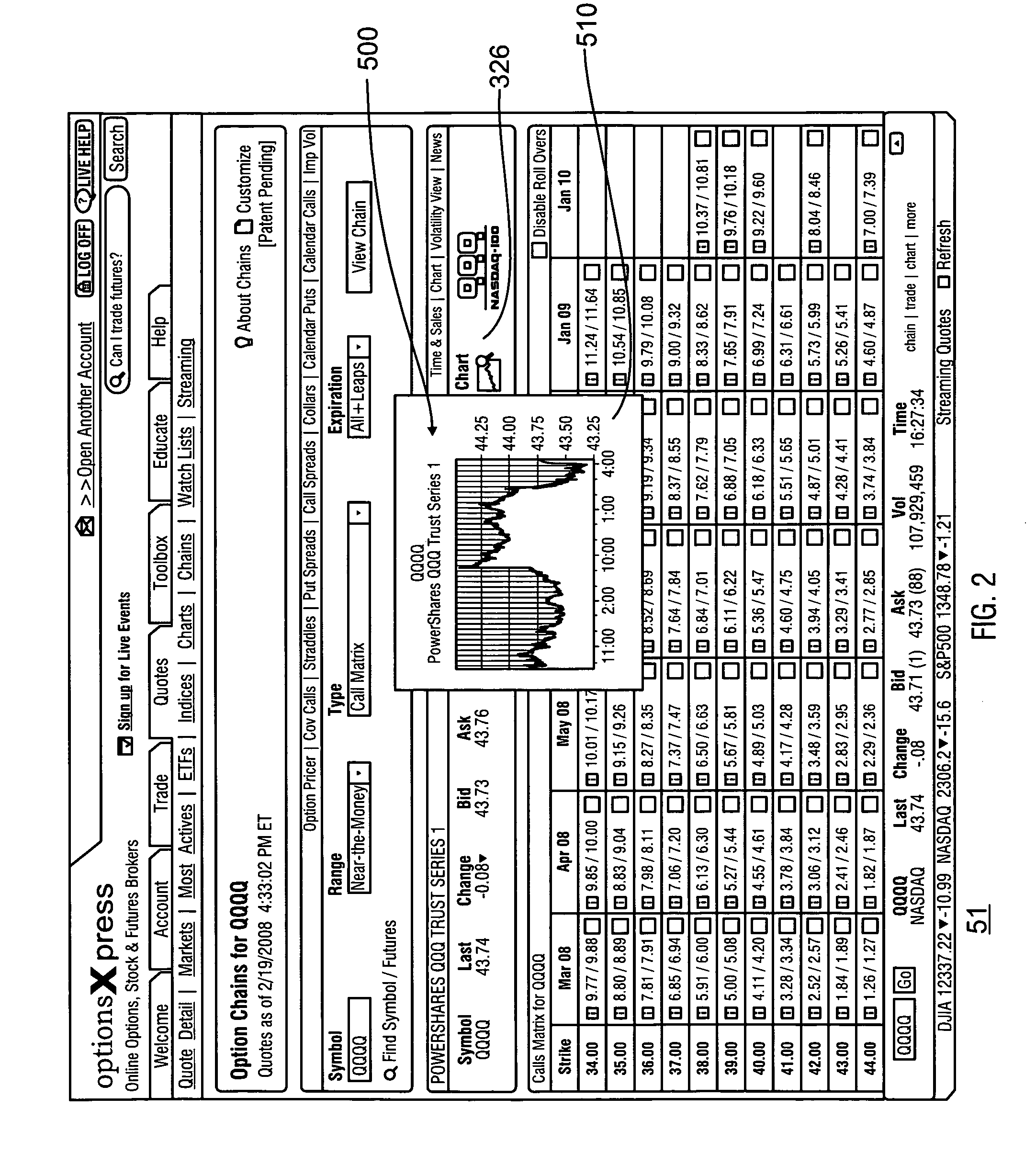
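The chess game algorithm entry above (Anhui University) combines a value network and a strategy network with Monte Carlo tree search, but the abstract does not give the selection formula. The sketch below assumes a PUCT-style rule of the kind commonly used when a policy prior guides tree search; the function name `puct_select`, the constant `c_puct`, and the per-child statistics layout are all hypothetical.

```python
import math


def puct_select(children, c_puct=1.5):
    """Pick the child action with the highest Q + U score.

    children maps action -> (prior, visit_count, total_value), where
    prior would come from a strategy network and total_value is the sum
    of value-network evaluations backed up through that child.
    """
    total_visits = sum(visits for _, visits, _ in children.values())

    def score(stats):
        prior, visits, total_value = stats
        # Mean backed-up value; unvisited children start at 0.
        q = total_value / visits if visits > 0 else 0.0
        # Exploration bonus shrinks as the child accumulates visits.
        u = c_puct * prior * math.sqrt(total_visits + 1) / (1 + visits)
        return q + u

    return max(children, key=lambda action: score(children[action]))
```

With equal priors, an unvisited move receives a large exploration bonus and is tried before a heavily visited move of modest value.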

Trading system and methods

The present invention is directed to a system and method that facilitates the more fully informed and efficient trading of items of value, including securities. According to the present invention, certain embodiments permit a customer to determine the merits of and to execute a trade from a single screen. One embodiment of the present invention provides a single option chain trading screen enabling a customer to view a matrix of all available options for a given security, including the various strike prices, expiration dates, and whether they are calls or puts. Another embodiment provides a customer with a single option chain trading screen allowing a customer to “hover” at or near various icons to obtain supplemental information without leaving the trading screen, and use a triple-action selection component to ultimately execute a trade.

Owner:CHARLES SCHWAB & CO INC

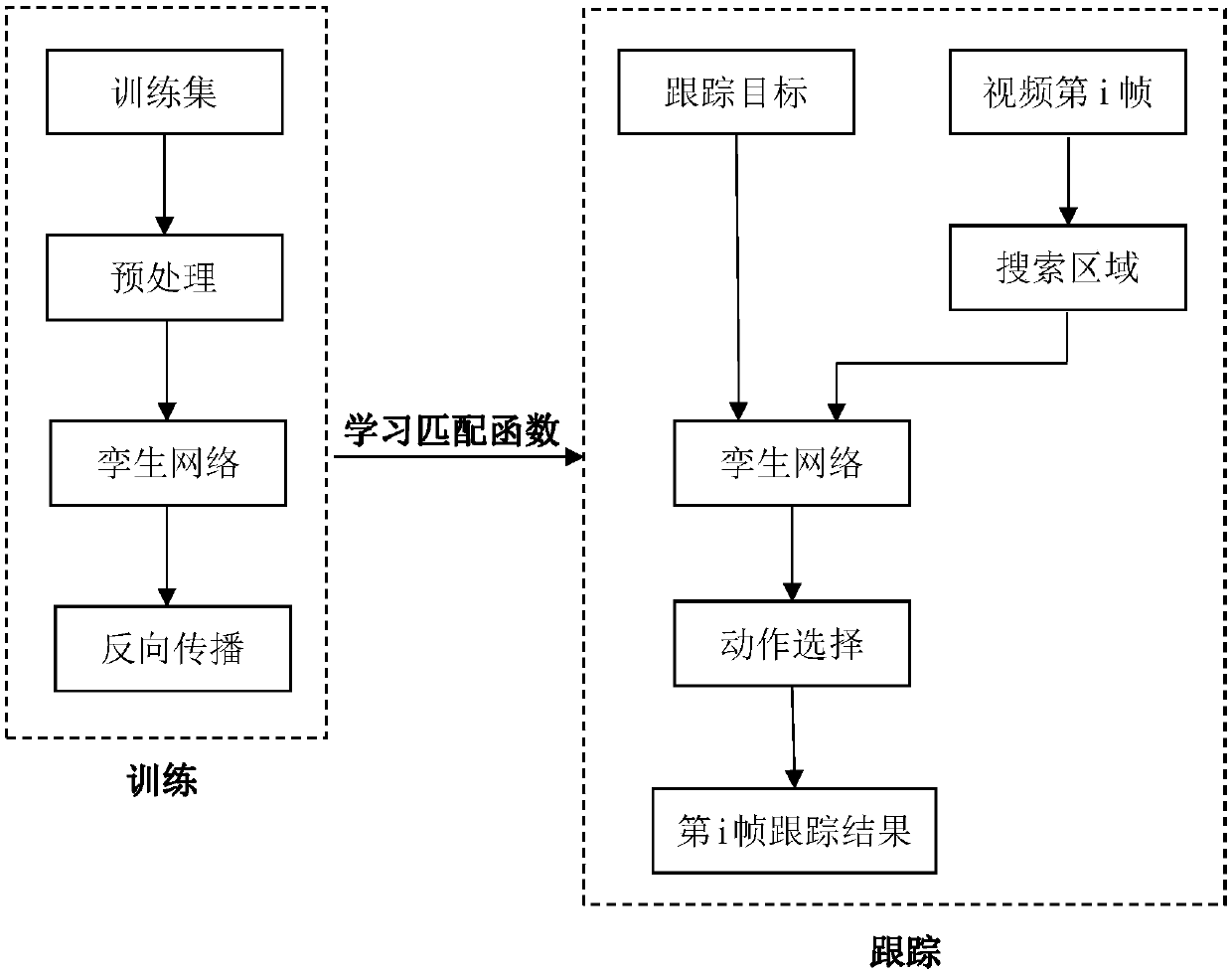

Target tracking method and system based on twin network and motion selection mechanism

Active · CN109543559A · Improve robustness · Improve sampling efficiency · Image analysis · Character and pattern recognition · Pattern recognition · Action selection

The invention discloses a target tracking method based on a twin (Siamese) network and an action selection mechanism. First, a large amount of external video data is used to train the network weights. After training, for any video and any specified tracking target, candidate regions are collected and input to the twin network; the feature of the candidate region most similar to the tracking target is selected according to the action selection mechanism, and the feature is mapped back to its position in the original image as a rectangular box, which serves as the tracking result for the current frame. The final rectangular box can have any aspect ratio and size. The invention also provides a target tracking system based on a twin network and an action selection mechanism. Compared with traditional methods, the invention uses the trained twin network and combines the outputs of different layers to match target features at different levels, making it more robust to changes in the target's appearance while offering real-time performance and high precision.

Owner:SOUTHEAST UNIV

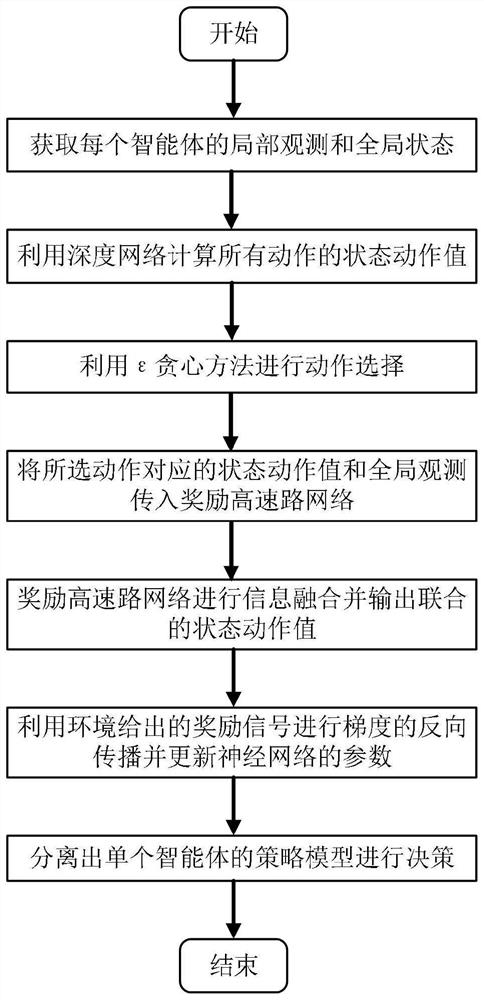

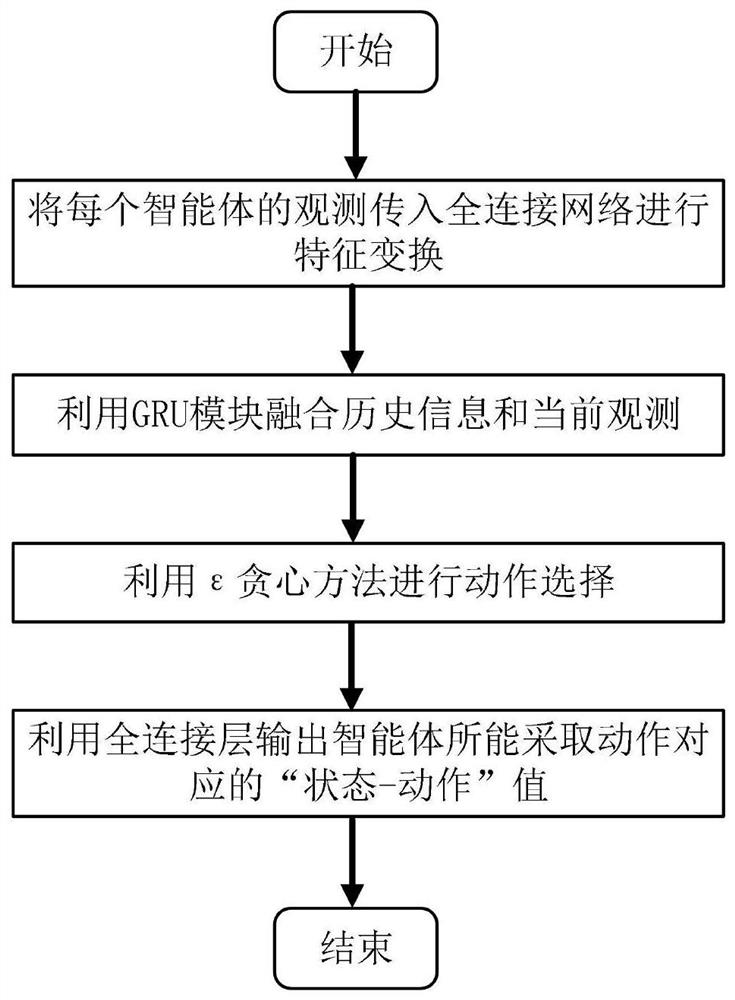

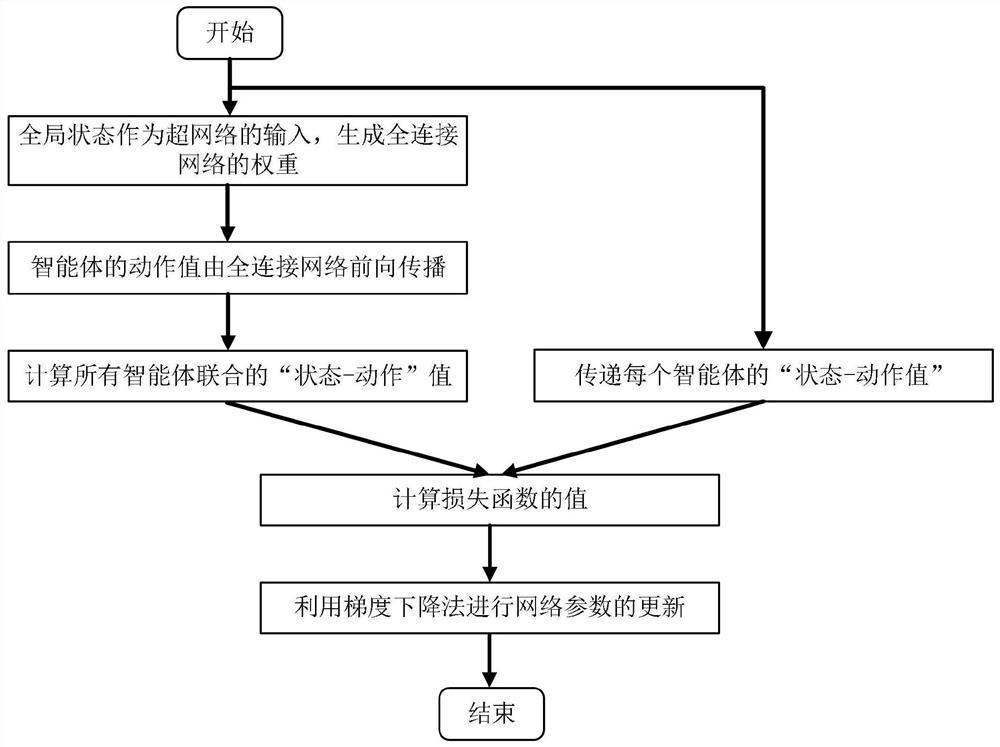
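The candidate selection step in the tracking entry above can be approximated by scoring each candidate region's feature against the target feature and keeping the best match. The patent's action selection mechanism is not described in the abstract, so this sketch substitutes a plain cosine-similarity argmax; `cosine_similarity`, `select_best_candidate`, and the `(box, feature)` pair layout are assumptions.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def select_best_candidate(target_feature, candidates):
    """Return the box whose feature is most similar to the target.

    candidates is a non-empty list of (box, feature) pairs, where box is
    an (x, y, width, height) tuple of any aspect ratio and size.
    """
    best_box, _ = max(
        candidates,
        key=lambda pair: cosine_similarity(target_feature, pair[1]),
    )
    return best_box
```

In a real tracker the feature vectors would be outputs of the trained twin network for the target template and each candidate region.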

Collaborative multi-agent reinforcement learning method

Pending · CN112364984A · Relaxation of unreasonable assumptions · Neural architectures · Neural learning methods · Multi-agent system · Engineering

The invention discloses a collaborative multi-agent reinforcement learning method. The method comprises the following steps: obtaining the observation information of each agent and the global state of the system; passing each agent's observation information into a deep neural network to compute the state-action values of all of that agent's actions; performing action selection using a greedy rule; passing the state-action value of the chosen action and the global observation information into a reward highway network; performing information fusion in the reward highway network to obtain a combined state-action value; and performing gradient backpropagation using the reward signal given by the environment and updating the parameters of the neural network to obtain a strategy model for each agent. The method reduces the amount of data required to train the multi-agent system and is suitable for extension to large-scale multi-agent systems.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS
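The greedy selection step of the multi-agent entry above can be sketched per agent as an argmax over that agent's state-action values. The function name `greedy_actions` and the list-of-lists input are assumptions; the reward highway network that fuses the chosen values is specific to the patent and is not modeled here.

```python
def greedy_actions(q_values_per_agent):
    """Choose each agent's highest-valued action.

    q_values_per_agent holds one list of state-action values per agent.
    Returns (actions, chosen_values): the selected action index and its
    value for every agent, in agent order. Ties go to the lowest index.
    """
    actions, chosen_values = [], []
    for q_values in q_values_per_agent:
        best = max(range(len(q_values)), key=q_values.__getitem__)
        actions.append(best)
        chosen_values.append(q_values[best])
    return actions, chosen_values
```

The `chosen_values` list is what a mixing or fusion network would receive alongside the global state to produce a joint value.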


© 2025 PatSnap. All rights reserved.