134 results about "Air combat" patented technology

Dynamic guidance for close-in maneuvering air combat

Inactive · US20060142903A1 · Improve performance · Direction controllers · Aircraft stabilisation · Control system · Flight vehicle

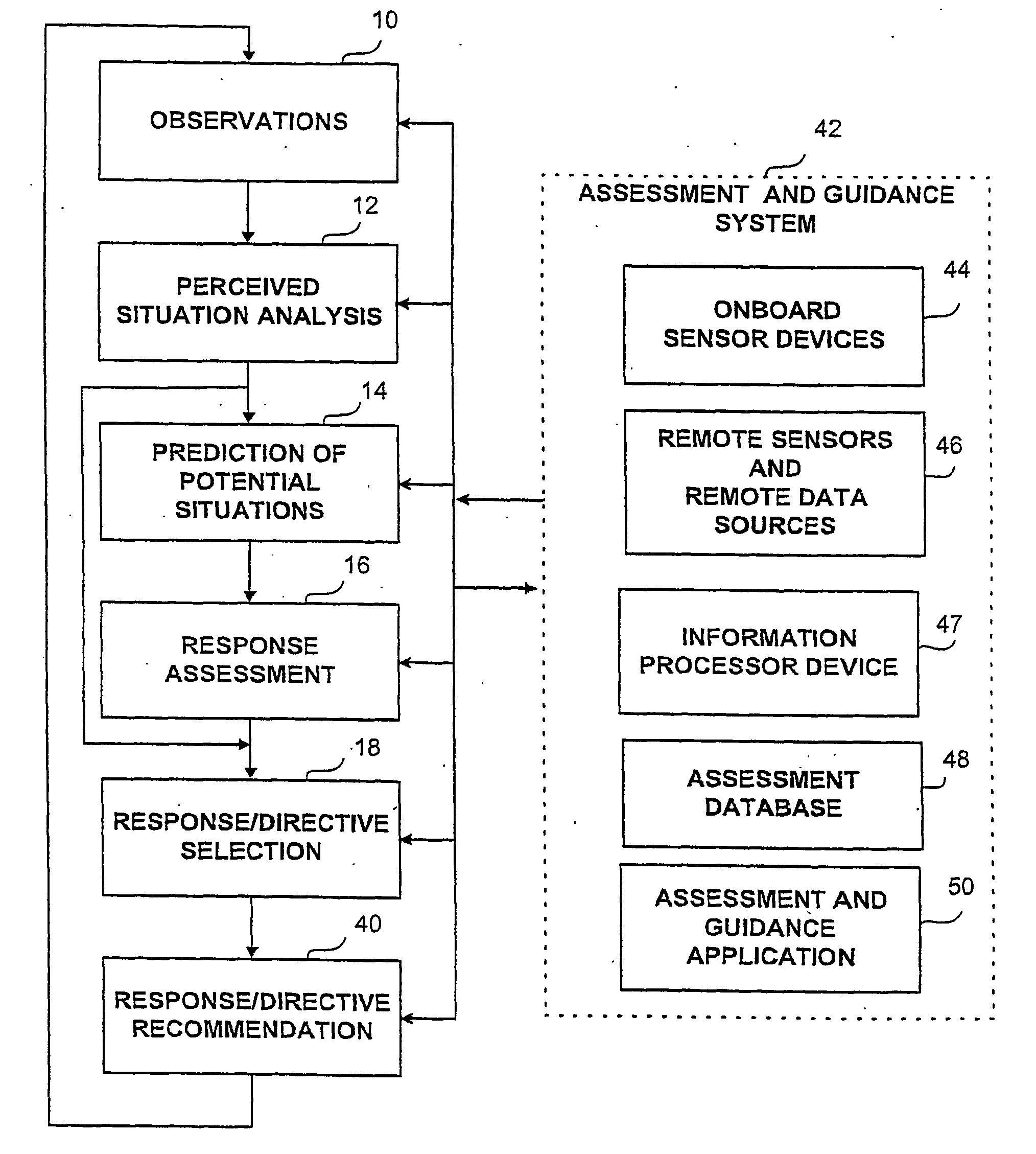

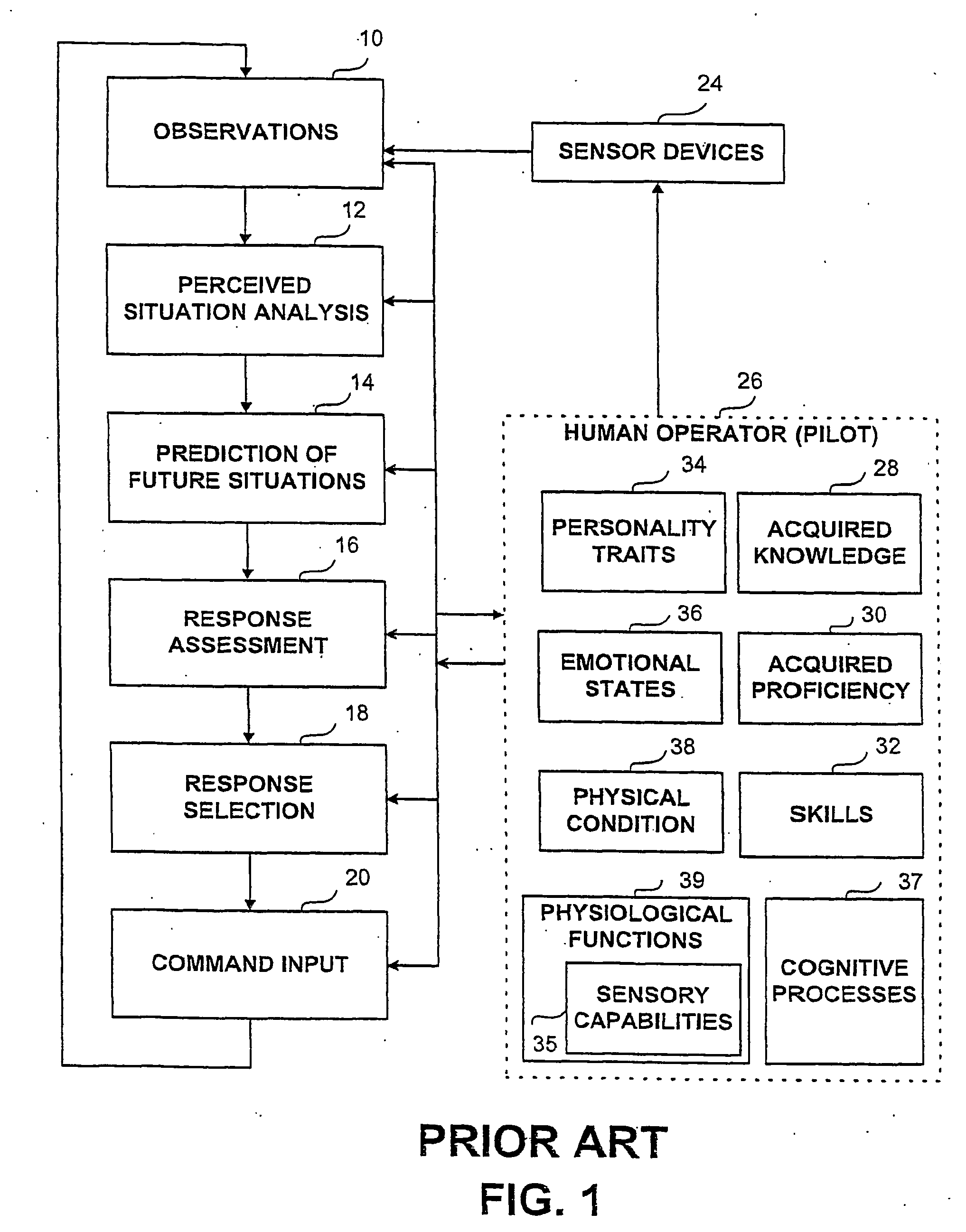

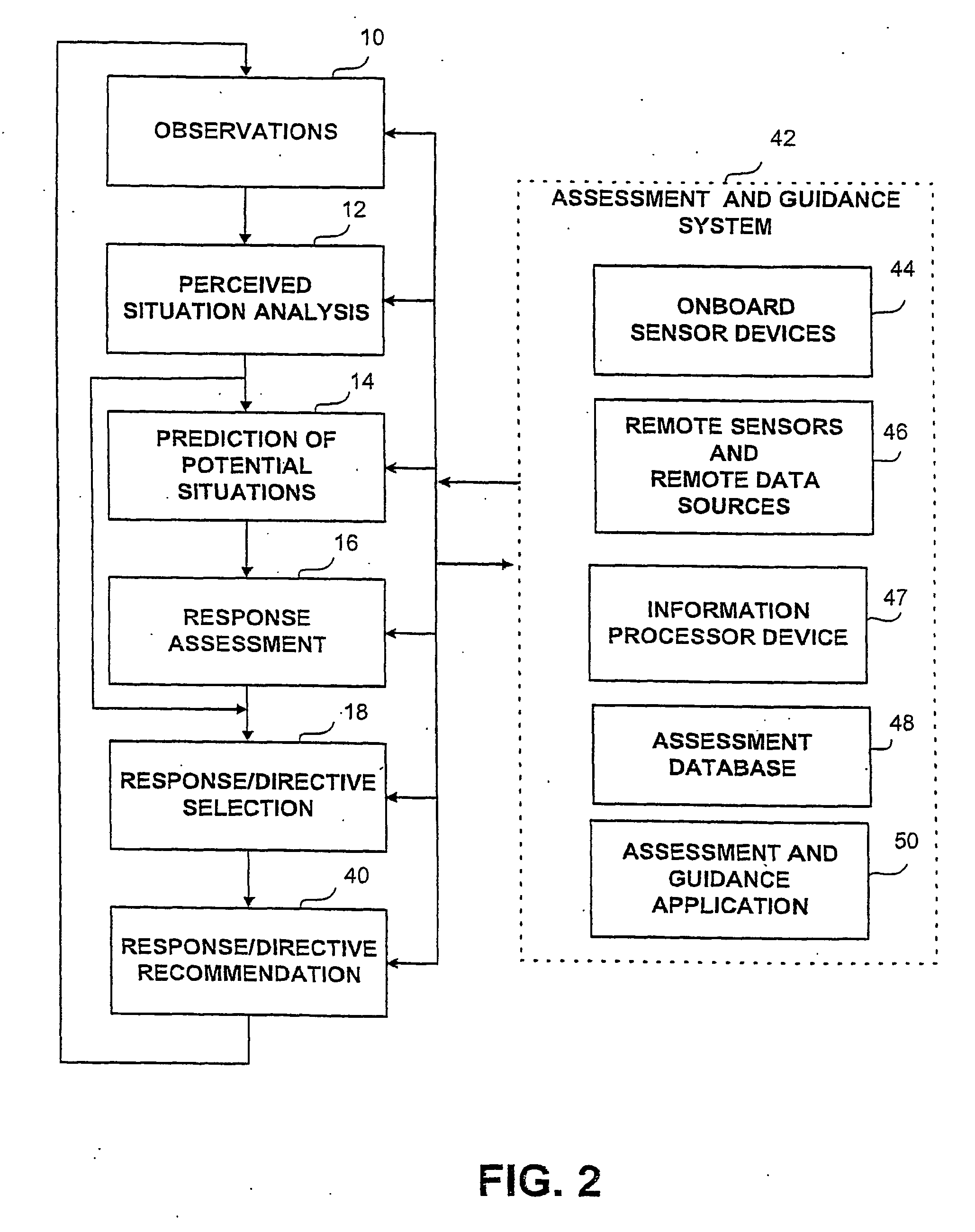

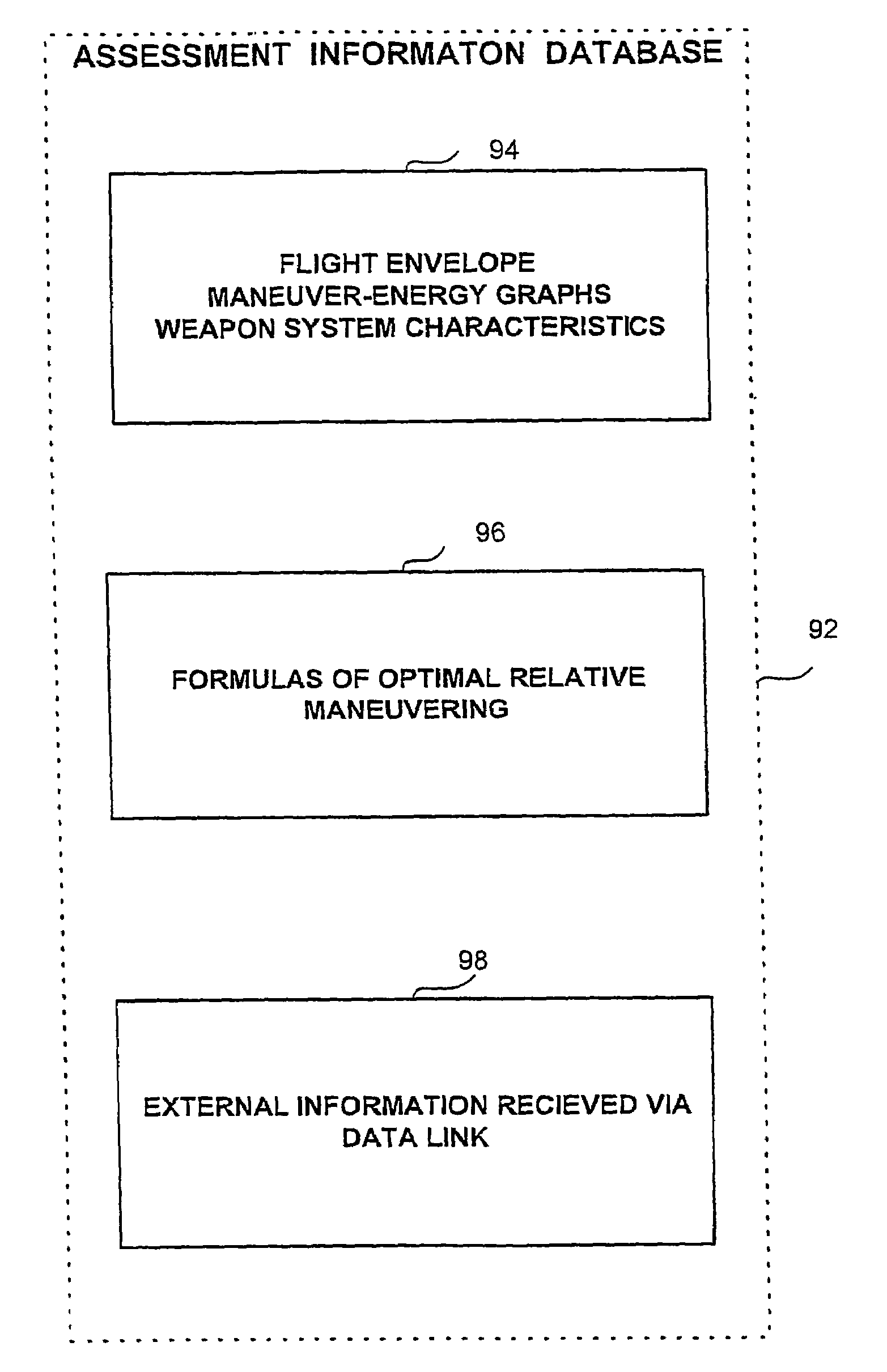

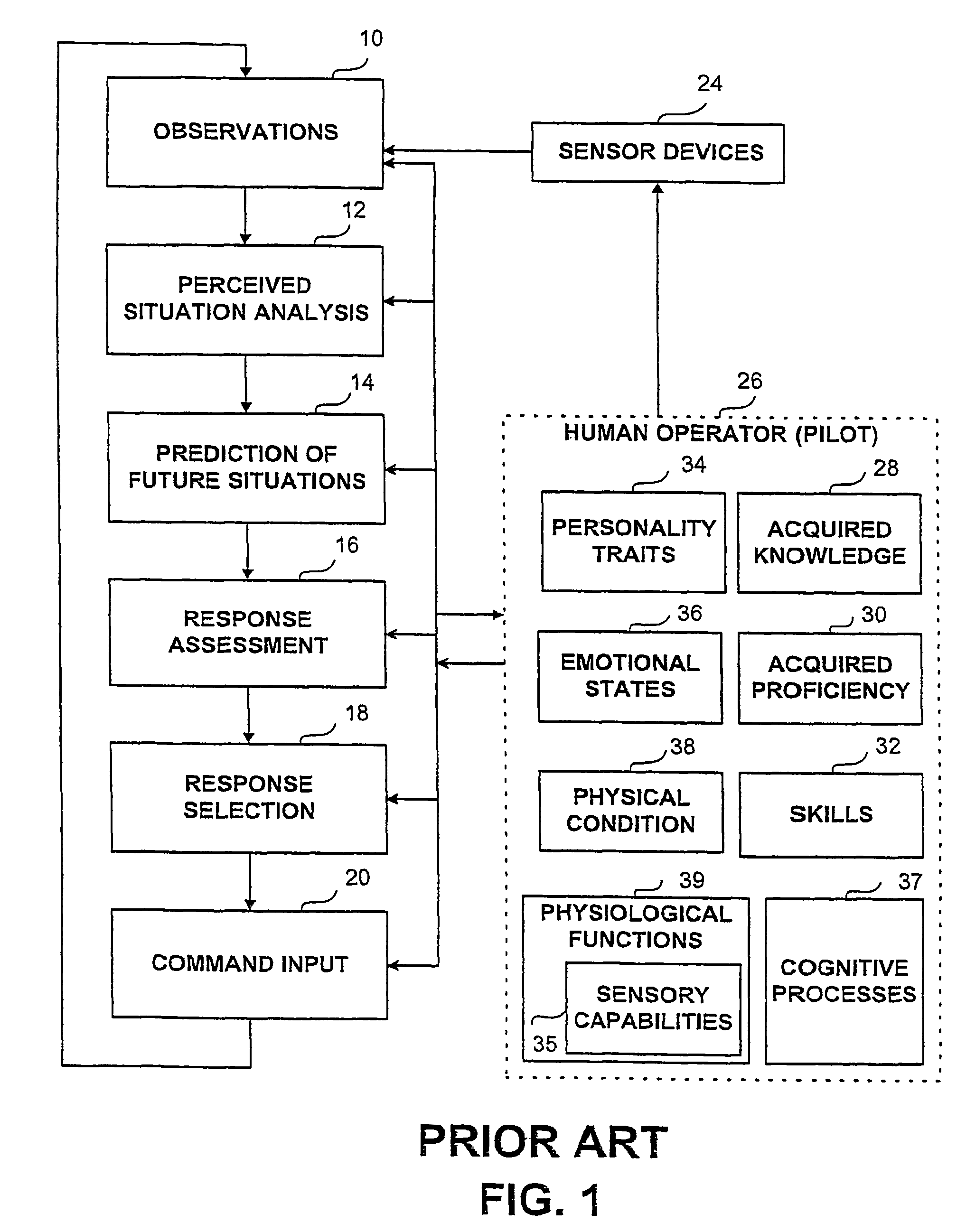

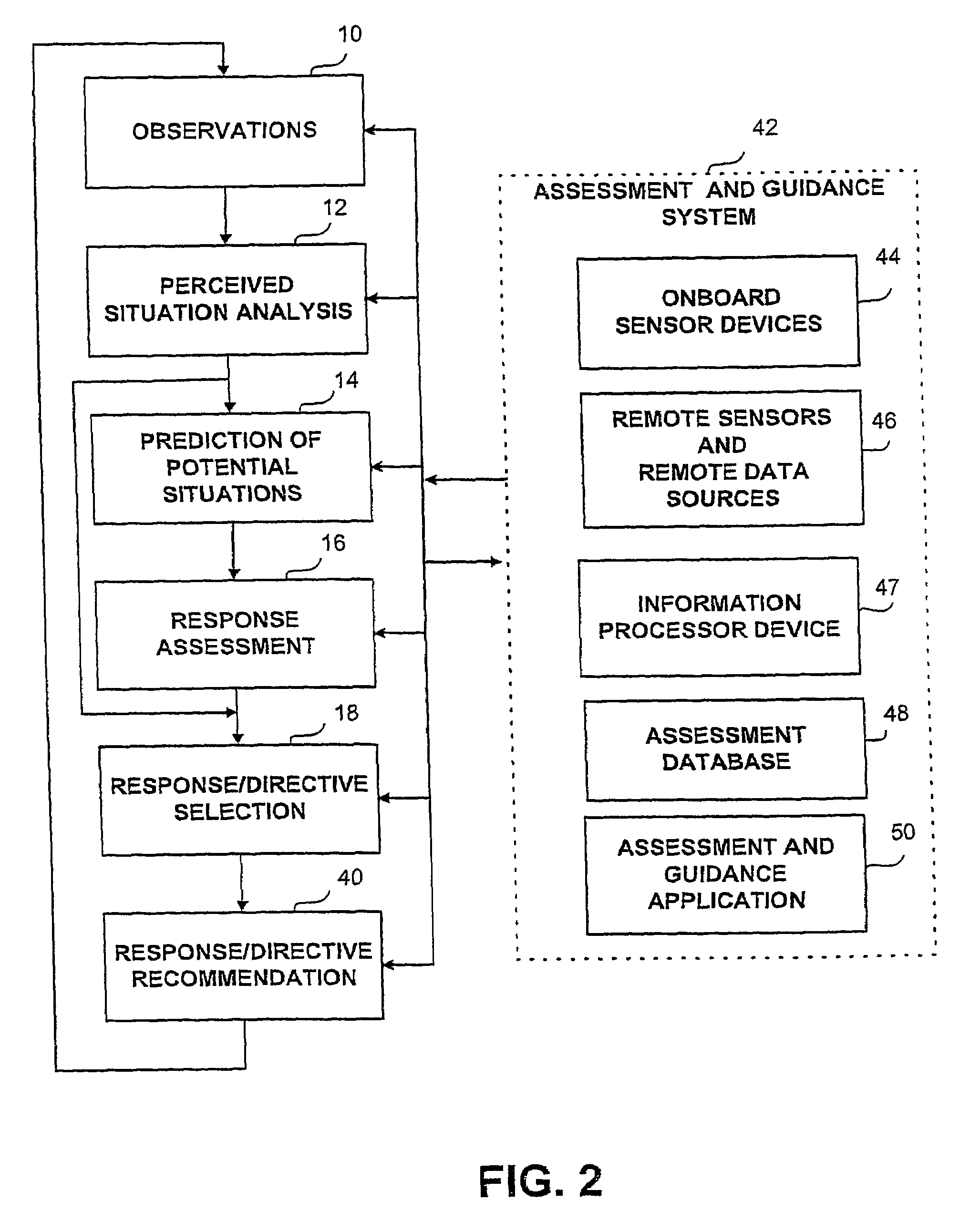

A system, apparatus and method for optimizing the conduct of close-in air combat are disclosed. A computing device analyzes the close-in air combat situation; it stores and uses aircraft-specific, weapon-system-specific and close-in-air-combat-situation-specific information. The computing device analyzes the current air combat situation (12) and generates a flight control recommendation (40). The recommendation is then converted into a visual or audio indication that is communicated to the crew of the aerial vehicle. The recommendation can further be converted into specific flight control and energy commands that are fed directly to the aircraft's control systems.

Owner:PADAN NIR

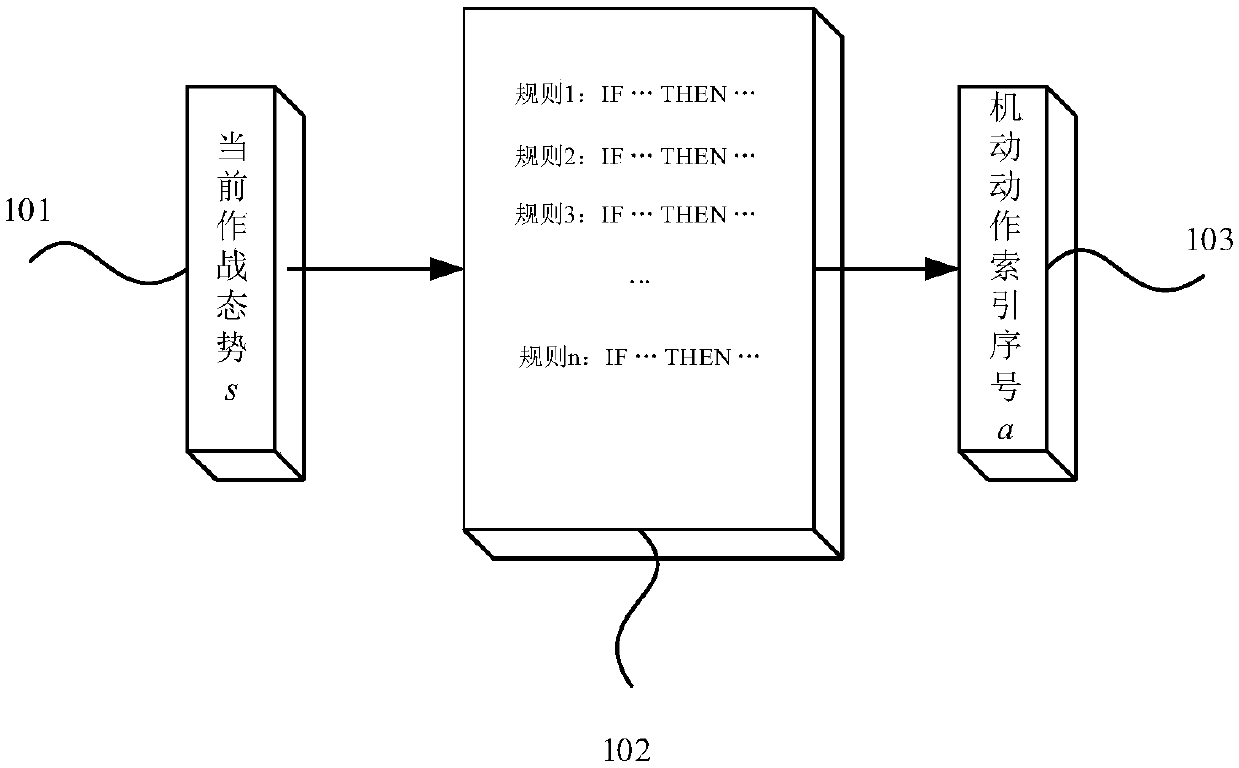

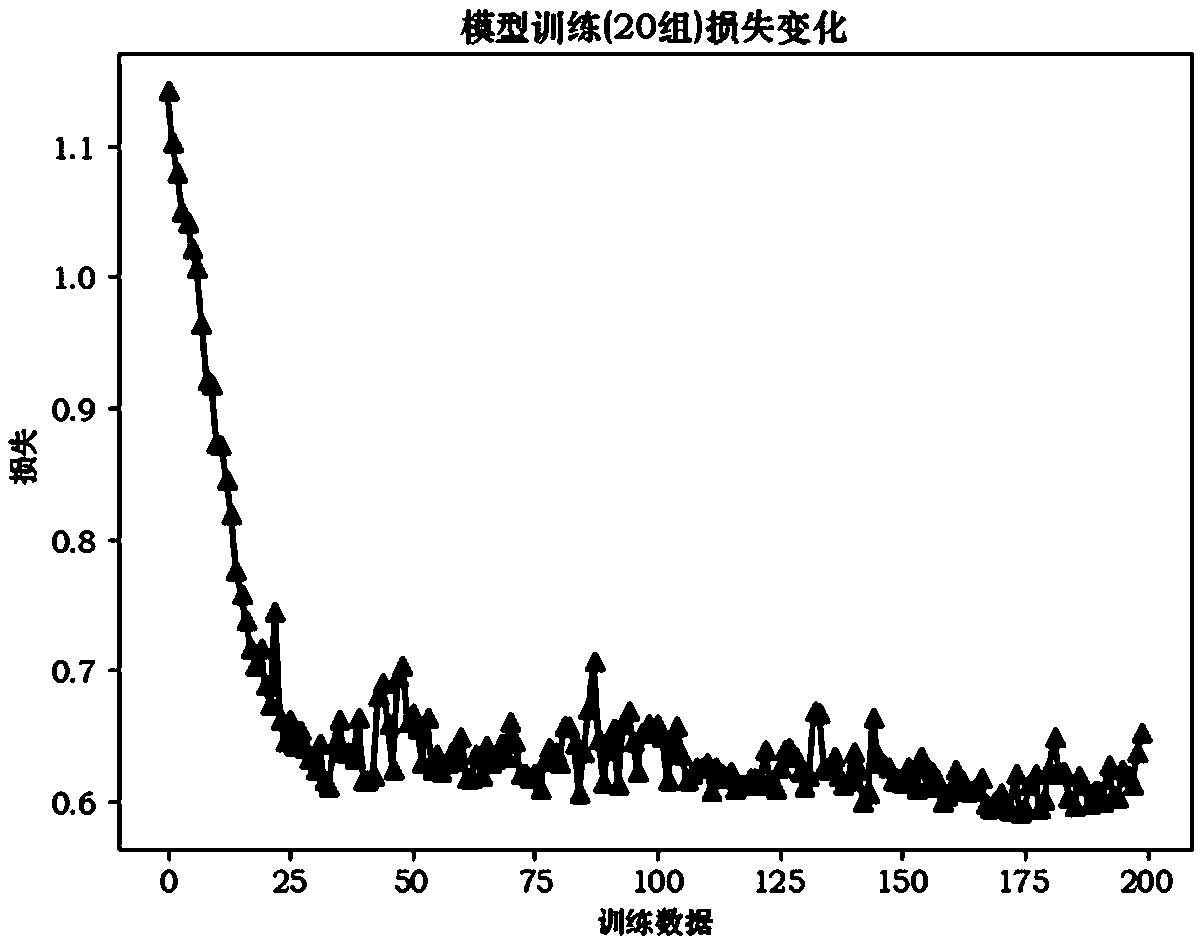

Reinforcement learning based air combat maneuver decision making method of unmanned aerial vehicle (UAV)

Inactive · CN108319286A · Enhance autonomous air combat capability · Avoid tedious and error-prone · Attitude control · Position/course control in three dimensions · Jet aeroplane · Fuzzy rule

Owner:NORTHWESTERN POLYTECHNICAL UNIV

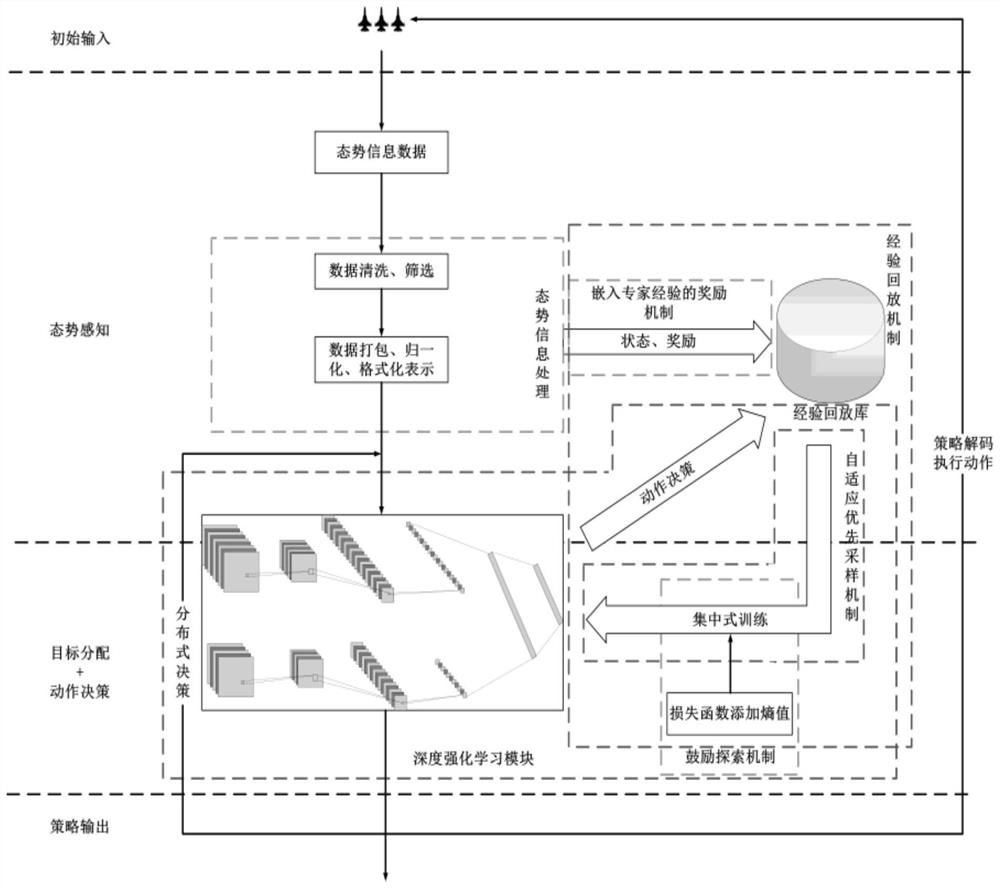

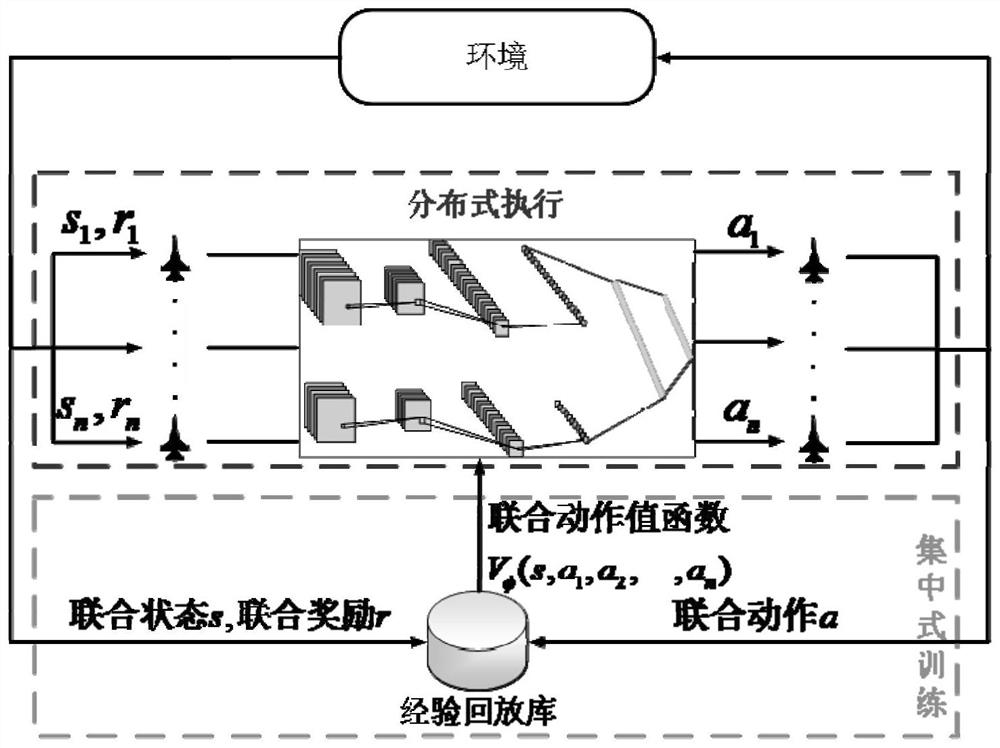

Multi-machine collaborative air combat planning method and system based on deep reinforcement learning

Active · CN112861442A · Solve hard-to-converge problems · Make up for the shortcomings of poor exploratory · Design optimisation/simulation · Neural architectures · Engineering · Network model

In the multi-aircraft cooperative air combat planning method and system based on deep reinforcement learning provided by the invention, each combat aircraft is treated as an agent, a reinforcement learning agent model is constructed, and the network model is trained with a centralized-training, decentralized-execution architecture. This overcomes the weak exploration of the network model caused by the low distinction between the actions of different entities during multi-aircraft cooperation. Embedding expert experience in the reward value removes the prior art's need for extensive expert-experience support. Through an experience-sharing mechanism, all agents share one set of network parameters and one experience replay buffer, which addresses the problem that a single agent's policy depends not only on its own policy and the environment's feedback but also on the behaviors and cooperative relationships of the other agents. Increasing the sampling probability of samples whose advantage values have large absolute values lets samples with extremely large or extremely small rewards influence neural network training and accelerates convergence. Adding a policy-entropy term improves the agents' exploration capability.

Owner:NAT UNIV OF DEFENSE TECH
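
The prioritized sampling and entropy bonus described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented implementation: the priority form (|A| + eps)^alpha, the values of `alpha` and `eps`, and the helper names `priority_weights`, `sample_batch` and `policy_entropy` are all hypothetical; the abstract states only that samples with large absolute advantage values are drawn more often from a shared buffer and that a policy-entropy term is added.

```python
import math
import random


def priority_weights(advantages, alpha=0.6, eps=1e-3):
    """Sampling probabilities proportional to (|A| + eps) ** alpha.

    alpha and eps are assumed hyperparameters (not from the patent);
    eps keeps zero-advantage samples reachable.
    """
    raw = [(abs(a) + eps) ** alpha for a in advantages]
    total = sum(raw)
    return [r / total for r in raw]


def sample_batch(shared_buffer, advantages, batch_size, rng, alpha=0.6):
    """Draw a batch, with replacement, from one replay buffer shared by all agents."""
    probs = priority_weights(advantages, alpha)
    idx = rng.choices(range(len(shared_buffer)), weights=probs, k=batch_size)
    return [shared_buffer[i] for i in idx], idx


def policy_entropy(action_probs):
    """H(pi) = -sum p log p; adding beta * H to the objective rewards exploration."""
    return -sum(p * math.log(p) for p in action_probs if p > 0.0)


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical transitions from three aircraft stored in a single shared buffer.
    buffer = [("uav0", "t0"), ("uav1", "t1"), ("uav0", "t2"), ("uav2", "t3")]
    advantages = [0.05, -2.0, 0.1, 1.5]
    batch, idx = sample_batch(buffer, advantages, batch_size=8, rng=rng)
    print([round(p, 3) for p in priority_weights(advantages)])
    print(idx, round(policy_entropy([0.5, 0.5]), 4))
```

Because the weight depends on |A|, a transition with a large negative advantage is sampled as often as one with an equally large positive advantage. The importance-sampling correction that prioritized replay usually adds is omitted here.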

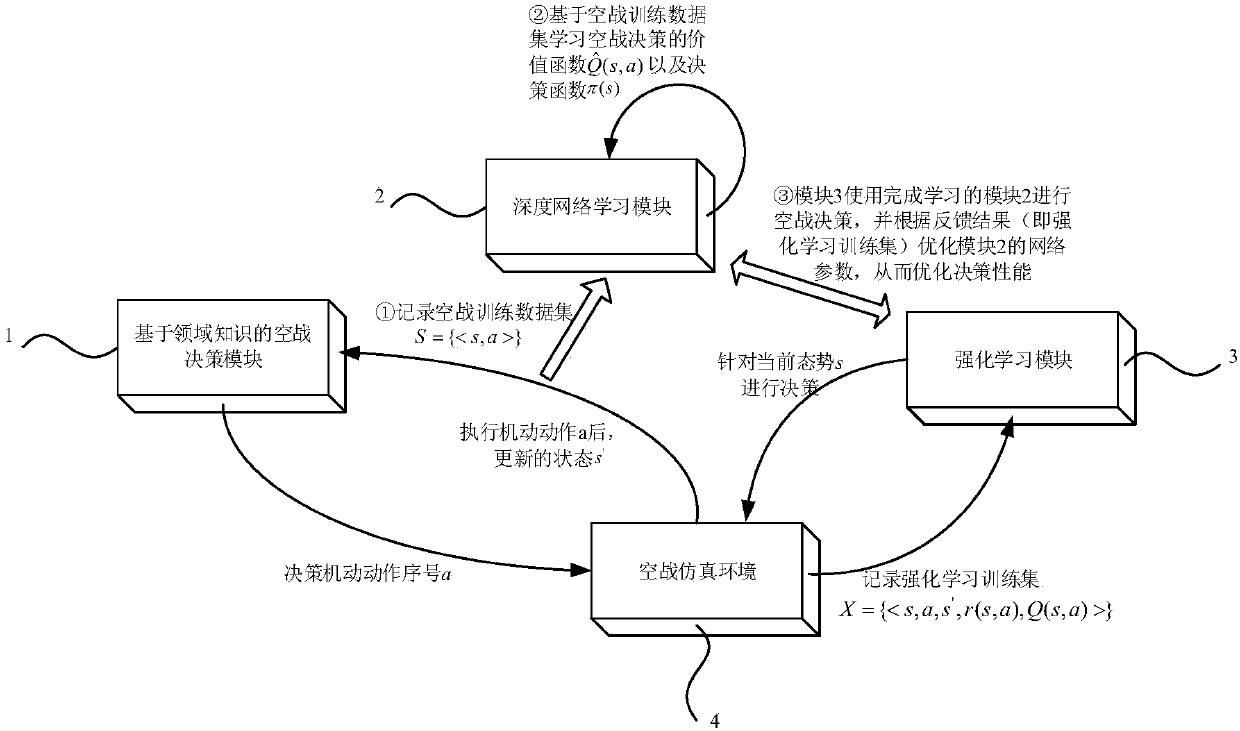

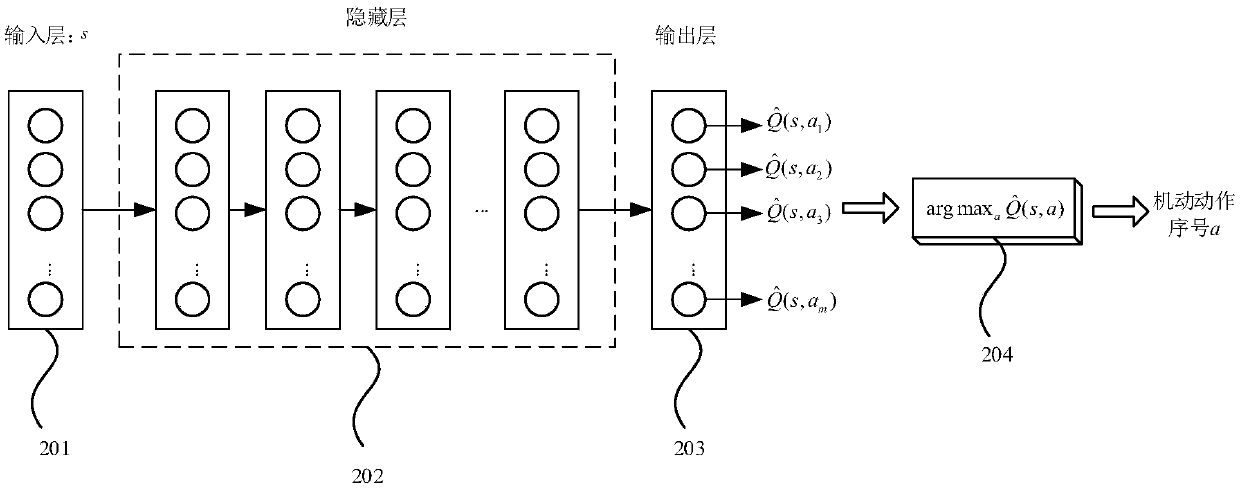

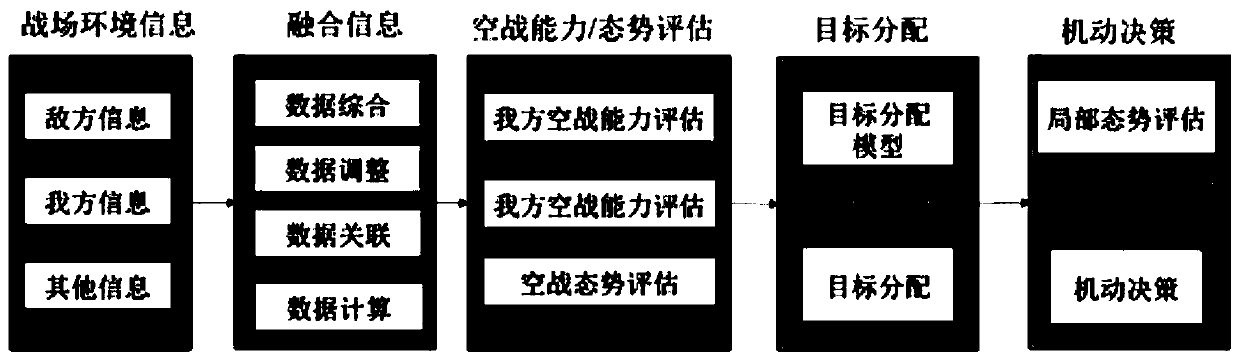

Unmanned aerial vehicle autonomous air combat decision framework and method

Inactive · CN108021754A · Solve the original data source problem · Enhance expressive ability · Design optimisation/simulation · Knowledge representation · Data set · Closed loop

The invention discloses an unmanned aerial vehicle autonomous air combat decision framework and method in the field of computer simulation. The framework comprises a domain-knowledge-based air combat decision module, a deep network learning module, a reinforcement learning module and an air combat simulation environment. The air combat decision module generates an air combat training data set and passes it to the deep network learning module, which learns a deep network, a Q-value fitting function and an action selection function and passes them to the reinforcement learning module. The air combat simulation environment uses the learned air combat decision function to run self-play air combat and records the process data to form a reinforcement learning training set. The reinforcement learning module uses this training set to optimize the Q-value fitting function, yielding a better-performing air combat strategy. The framework fits the inherently complex Q function more accurately and quickly, improves learning, largely prevents the Q function from converging to a local optimum, and forms a closed-loop air combat decision optimization process that needs no external intervention.

Owner:BEIHANG UNIV

Dynamic guidance for close-in maneuvering air combat

Inactive · US7599765B2 · Cosmonautic condition simulations · Direction controllers · Control system · Flight vehicle

A system, apparatus and method for optimizing the conduct of close-in air combat are disclosed. A computing device analyzes the close-in air combat situation; it stores and uses aircraft-specific, weapon-system-specific and close-in-air-combat-situation-specific information. The computing device analyzes the current air combat situation (12) and generates a flight control recommendation (40). The recommendation is then converted into a visual or audio indication that is communicated to the crew of the aerial vehicle. The recommendation can further be converted into specific flight control and energy commands that are fed directly to the aircraft's control systems.

Owner:PADAN NIR

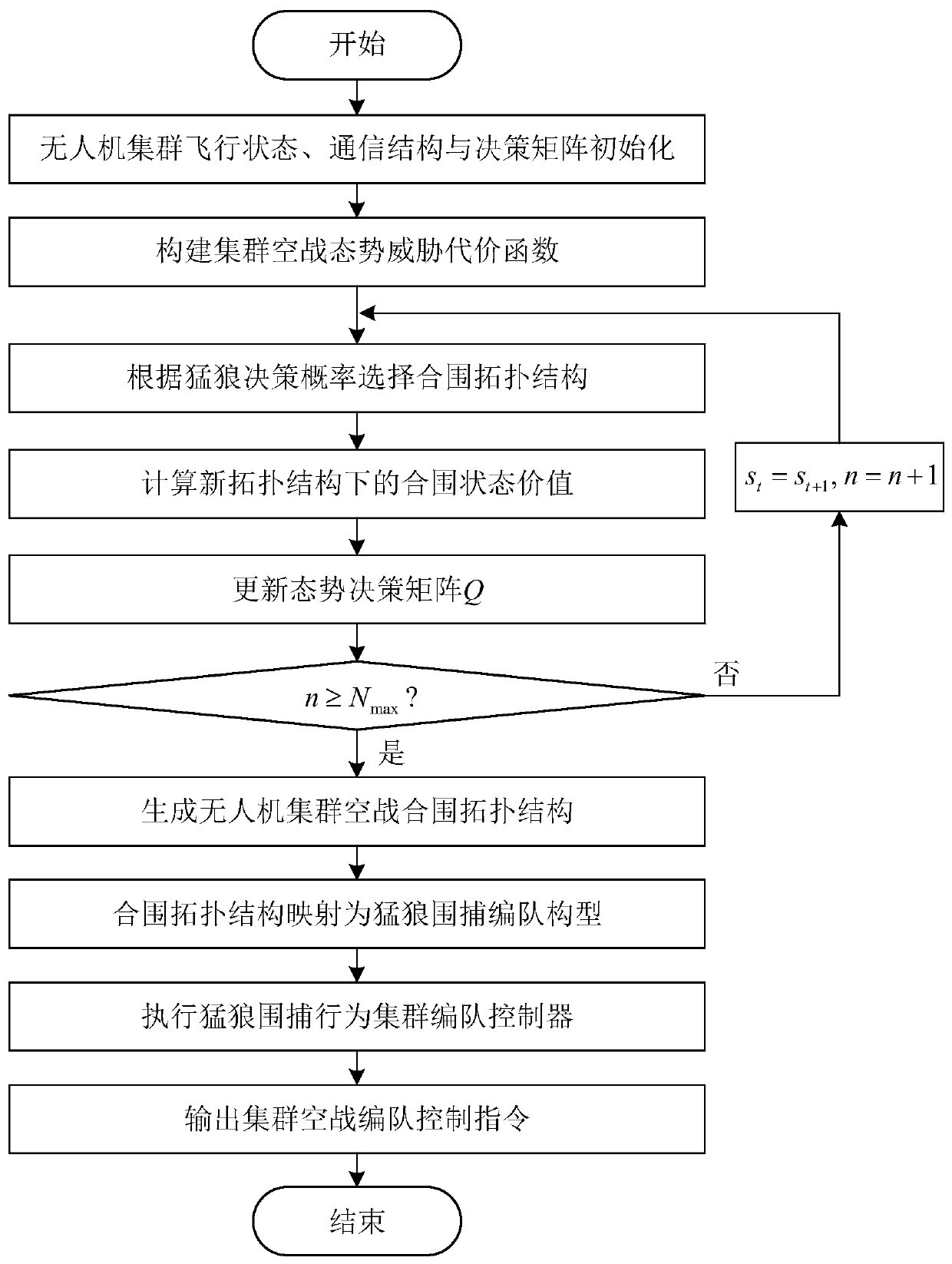

Unmanned aerial vehicle cluster air combat method based on wolf roundup behavior

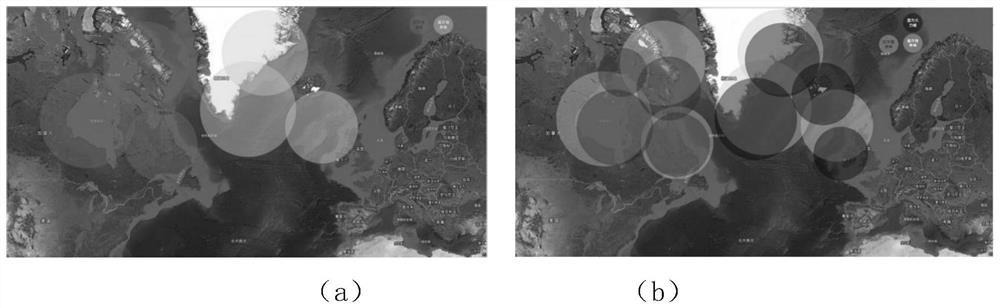

Active · CN110069076A · Reduce the risk of attack · Guaranteed stability · Position/course control in three dimensions · Consistency control · Uncrewed vehicle

The invention discloses an unmanned aerial vehicle cluster air combat method based on wolf-pack roundup behavior. The method comprises six steps: first, initializing the UAV cluster air combat; second, constructing the cluster air combat situation threat cost; third, determining the encirclement topology of the UAV cluster by wolf-pack situation learning; fourth, mapping the encirclement topology onto a wolf roundup formation configuration; fifth, performing UAV formation encirclement control based on that configuration; and sixth, outputting the cluster air combat formation control commands. The method supports a dynamically changing air combat environment, effectively lowers the attack risk to the UAV cluster and achieves optimal combat effectiveness; it also ensures a stable attack formation, consistent attack behavior and a high target kill probability, overcoming difficulties in cluster control such as dynamic decision-making and consistency control.

Owner:BEIHANG UNIV
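
The abstract does not disclose how the encirclement topology is turned into formation commands. As a hedged sketch only, the steps "map the encirclement onto a formation configuration" and "formation encirclement control" might look like this: slots evenly spaced on a circle around the target, a greedy nearest-UAV slot assignment, and a saturated proportional velocity command. The circle geometry, the greedy rule, the gains and all function names are assumptions, not the patented wolf-pack method.

```python
import math


def encirclement_slots(target, n_uavs, radius, phase=0.0):
    """Evenly spaced (x, y) slots on a circle of the given radius around the target."""
    tx, ty = target
    return [
        (tx + radius * math.cos(phase + 2.0 * math.pi * k / n_uavs),
         ty + radius * math.sin(phase + 2.0 * math.pi * k / n_uavs))
        for k in range(n_uavs)
    ]


def assign_slots_greedy(uav_positions, slots):
    """Give each slot, in order, the nearest UAV not yet assigned. Returns {uav: slot}."""
    free = set(range(len(uav_positions)))
    assignment = {}
    for s_idx, slot in enumerate(slots):
        best = min(free, key=lambda u: math.dist(uav_positions[u], slot))
        assignment[best] = s_idx
        free.remove(best)
    return assignment


def formation_command(pos, slot, gain=0.5, v_max=30.0):
    """Proportional velocity command toward the slot, saturated at v_max (assumed values)."""
    vx, vy = gain * (slot[0] - pos[0]), gain * (slot[1] - pos[1])
    speed = math.hypot(vx, vy)
    if speed > v_max:
        vx, vy = vx * v_max / speed, vy * v_max / speed
    return vx, vy


if __name__ == "__main__":
    target = (1000.0, 500.0)
    uavs = [(0.0, 0.0), (0.0, 1000.0), (2000.0, 0.0), (2000.0, 1000.0)]
    slots = encirclement_slots(target, len(uavs), radius=300.0)
    for u, s in sorted(assign_slots_greedy(uavs, slots).items()):
        print(u, s, formation_command(uavs[u], slots[s]))
```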

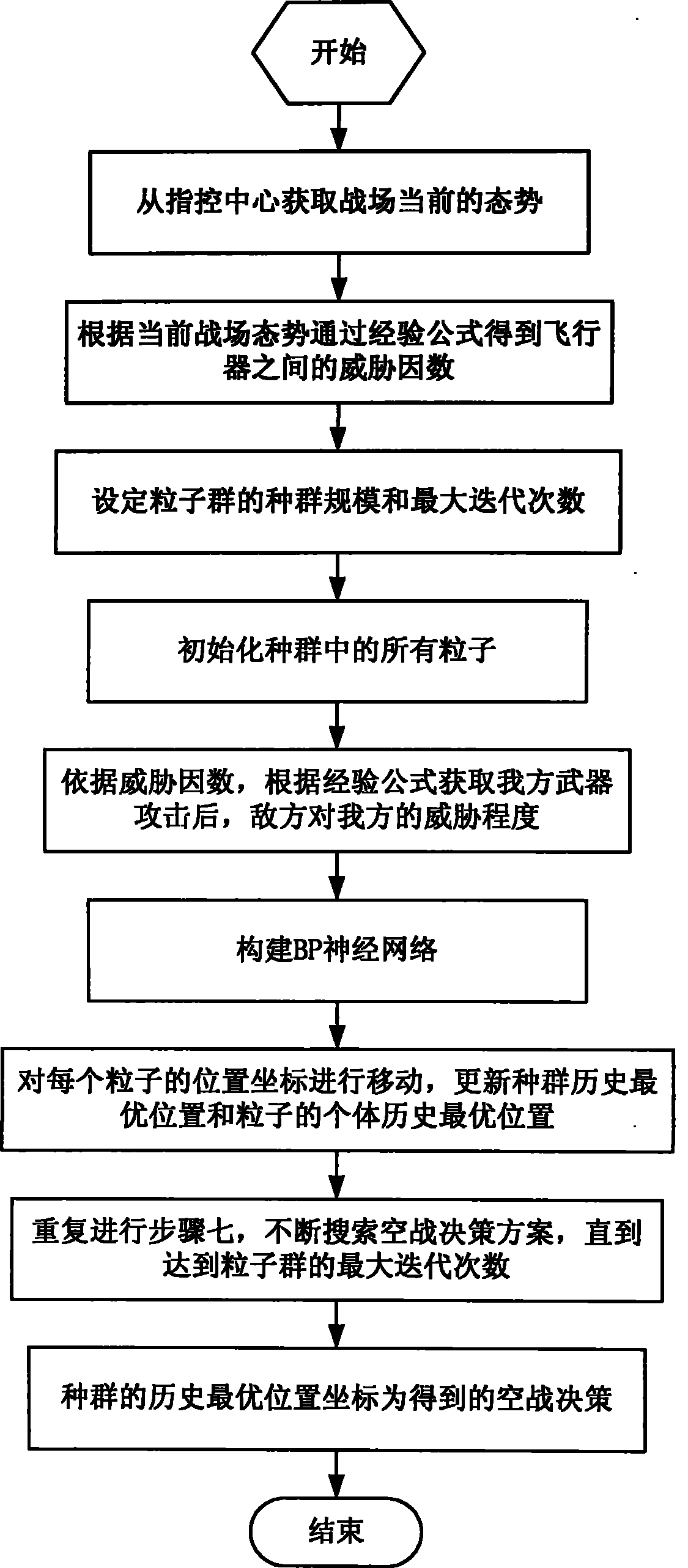

Particle swarm optimization method for air combat decision

Inactive · CN101908097A · Good air combat decisions · Address inherent shortcomings · Biological neural network models · Special data processing applications · Decision scheme · Empirical formula

The invention discloses a particle swarm optimization method for air combat decision-making, comprising the following steps: first, acquiring the current battlefield situation from a command and control center; second, computing the threat factors between aircraft from that situation; third, setting the particle swarm size and the maximum number of iterations; fourth, initializing all particles; fifth, computing, with an empirical formula, the threat the enemy poses to the friendly side after the friendly side's weapon attacks; sixth, constructing a BP (back-propagation) neural network; seventh, updating the swarm's global best position and each particle's personal best position; eighth, continuing the search for an air combat decision scheme until the maximum number of iterations is reached; and ninth, taking the swarm's global best position as the air combat decision. By processing the inputs and outputs of the BP neural network, the method keeps the search within a set solution space and searches effectively for the optimal solution.

Owner:BEIHANG UNIV
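
The search loop in steps three to nine can be sketched as a standard global-best particle swarm. This is an illustrative sketch under assumptions: the patent scores candidate decisions with a trained BP network, which is replaced here by a hypothetical quadratic `toy_threat` function, and the inertia and acceleration coefficients (`w`, `c1`, `c2`) are common textbook values rather than values from the patent.

```python
import random


def pso_minimize(cost, dim, bounds, n_particles=30, max_iter=100,
                 w=0.7, c1=1.5, c2=1.5, seed=0):
    """Global-best PSO; returns (best_position, best_cost)."""
    rng = random.Random(seed)
    lo, hi = bounds
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]                        # personal best positions
    pbest_cost = [cost(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_cost[i])
    gbest, gbest_cost = pbest[g][:], pbest_cost[g]     # swarm (global) best
    for _ in range(max_iter):                          # stop at the maximum iteration count
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                # Clamp so particles stay inside the allowed solution space.
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))
            c = cost(pos[i])
            if c < pbest_cost[i]:
                pbest[i], pbest_cost[i] = pos[i][:], c
                if c < gbest_cost:
                    gbest, gbest_cost = pos[i][:], c
    return gbest, gbest_cost


def toy_threat(x):
    """Hypothetical stand-in for the BP-network threat estimate (lower is better)."""
    return (x[0] - 0.3) ** 2 + (x[1] - 0.7) ** 2


if __name__ == "__main__":
    best, best_cost = pso_minimize(toy_threat, dim=2, bounds=(0.0, 1.0))
    print([round(v, 3) for v in best], best_cost)
```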

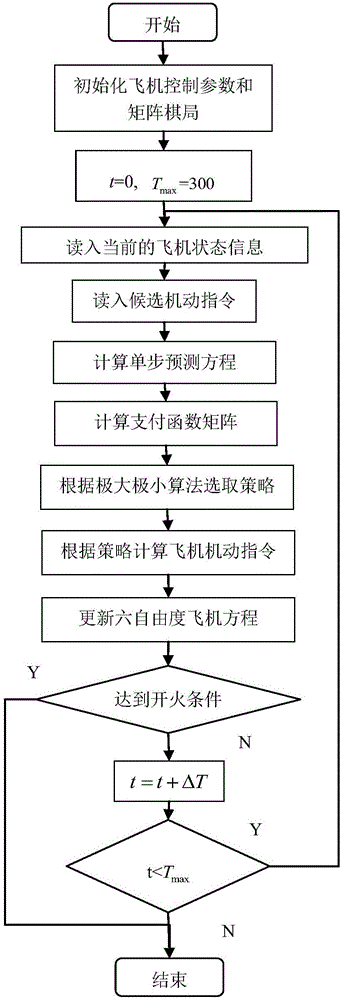

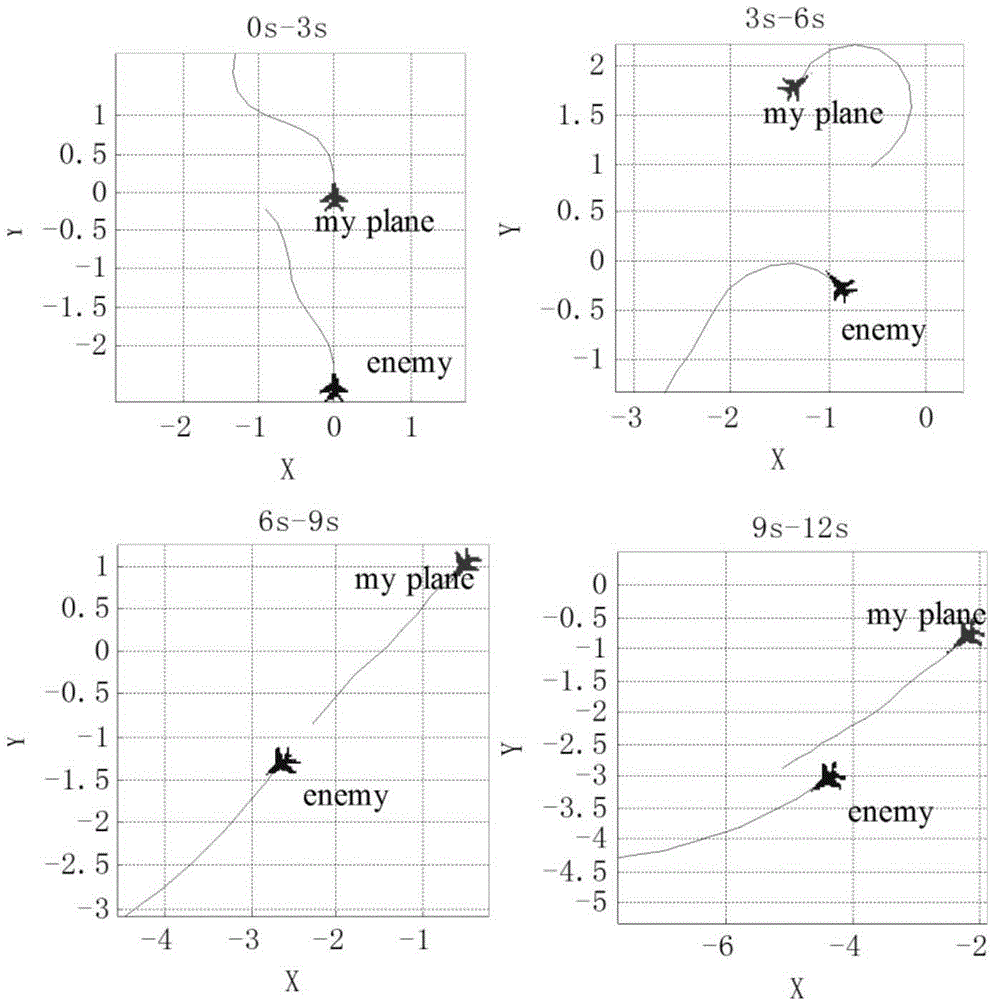

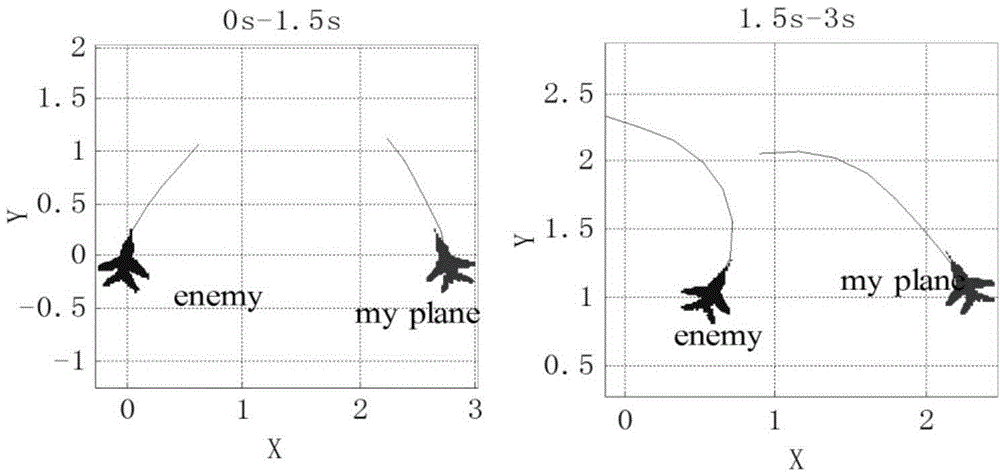

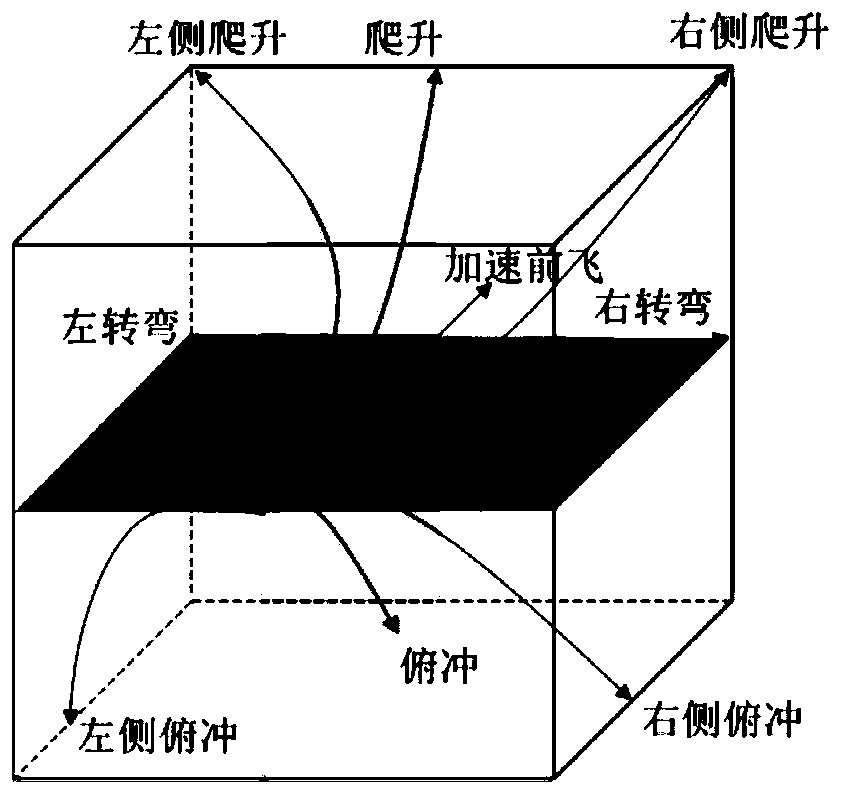

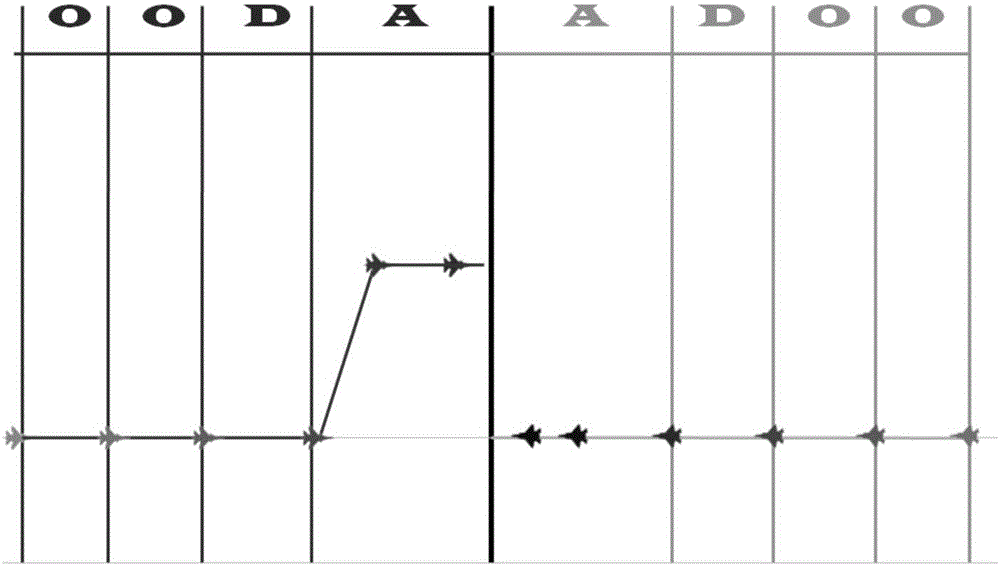

Near-distance air combat automatic decision-making method based on single-step prediction matrix gaming

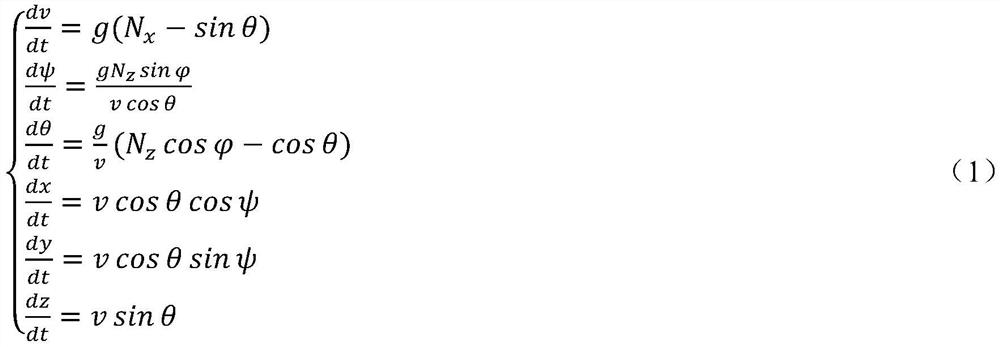

Active · CN106020215A · Improve combat capability · Meet real-time requirements · Attitude control · Position/course control in three dimensions · Dynamic equation · Simulation

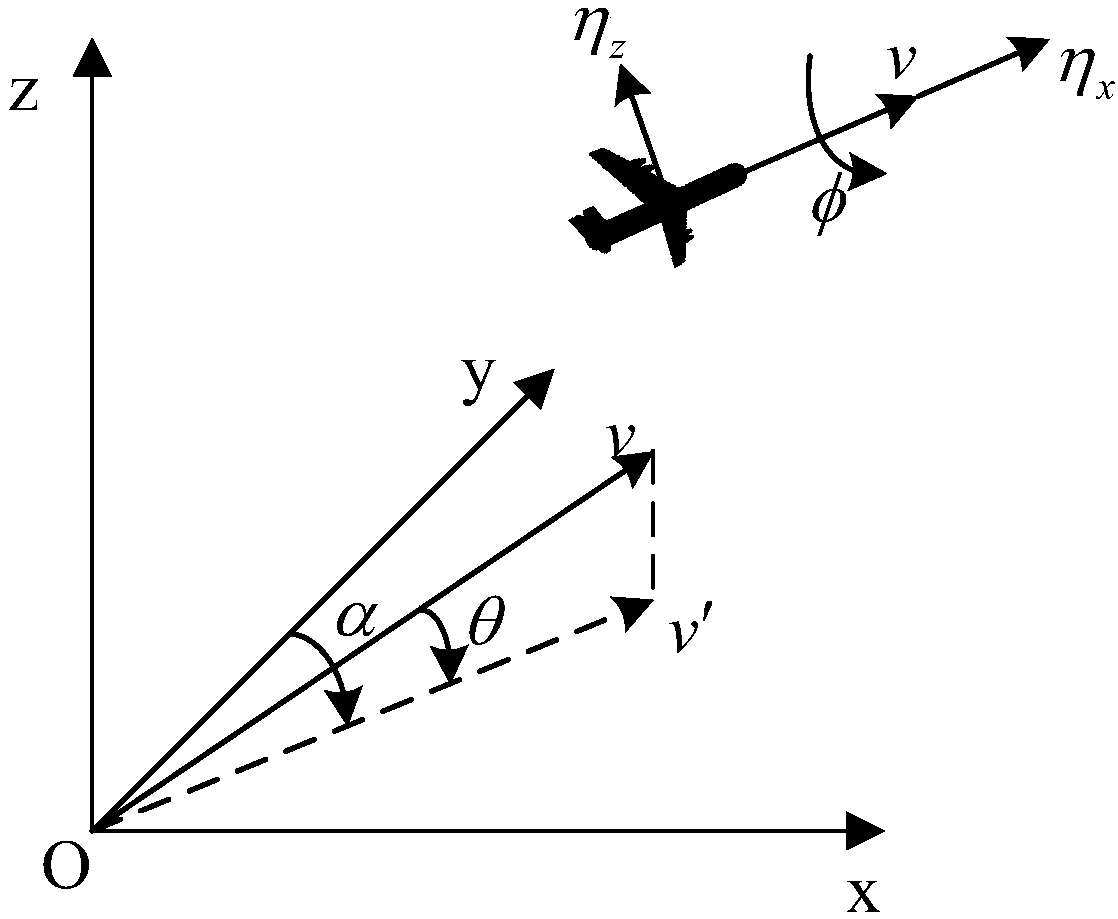

The invention discloses a close-range air combat automatic decision-making method based on single-step prediction matrix games. The method comprises the following steps: step 1, building a six-degree-of-freedom nonlinear control law structure for the unmanned combat air vehicle (UCAV); step 2, initializing the matrix game board; step 3, performing single-step prediction according to the game board; step 4, computing the payoff matrix; step 5, selecting a strategy with the minimax algorithm; step 6, updating the six-degree-of-freedom aircraft kinematic and dynamic equations; and step 7, checking whether an air combat termination condition has been reached. Compared with a three-degree-of-freedom point-mass model, the method has greater practical value. In addition, the conventional matrix game method based on a maneuver library is replaced by one based on a maneuver library of command models, so only a single step needs to be predicted; this effectively reduces decision time, meets the real-time requirements of air combat, adapts better to complex, dynamically changing battlefield environments, and improves the UCAV's close-range combat capability.

Owner:BEIHANG UNIV
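
Steps 3 to 5 (single-step prediction, payoff matrix, minimax selection) can be illustrated with a much simpler model than the patent's six-degree-of-freedom aircraft. In this hedged sketch both aircraft are 2-D point masses, the maneuver library is three hypothetical heading changes (`MANEUVERS_DEG`), and the payoff is an assumed antenna-train-angle advantage; only the overall structure (predict one step for every maneuver pair, fill the matrix, pick the maximin row) follows the abstract.

```python
import math

# Hypothetical one-step maneuver set: heading changes in degrees per decision step,
# standing in for the patent's command-model maneuver library.
MANEUVERS_DEG = (-15.0, 0.0, 15.0)


def wrap(angle):
    """Wrap an angle to [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


def predict(state, turn_deg, speed, dt):
    """Single-step 2-D point-mass prediction; state = (x, y, heading_rad)."""
    x, y, psi = state
    psi = wrap(psi + math.radians(turn_deg))
    return (x + speed * dt * math.cos(psi), y + speed * dt * math.sin(psi), psi)


def antenna_train_angle(shooter, target):
    """Angle between the shooter's heading and its line of sight to the target."""
    los = math.atan2(target[1] - shooter[1], target[0] - shooter[0])
    return abs(wrap(los - shooter[2]))


def advantage(own, enemy):
    """Payoff to us: positive when our nose points at the enemy more than theirs at us."""
    return (antenna_train_angle(enemy, own) - antenna_train_angle(own, enemy)) / math.pi


def minimax_decision(own, enemy, speed=200.0, dt=1.0):
    """Build the payoff matrix from single-step predictions, then pick the maximin row."""
    payoff = [[advantage(predict(own, a, speed, dt), predict(enemy, b, speed, dt))
               for b in MANEUVERS_DEG] for a in MANEUVERS_DEG]
    best_row = max(range(len(MANEUVERS_DEG)), key=lambda i: min(payoff[i]))
    return MANEUVERS_DEG[best_row], payoff


if __name__ == "__main__":
    # We fly east at the origin; the enemy is 1 km to our north, also flying east.
    choice, payoff = minimax_decision((0.0, 0.0, 0.0), (0.0, 1000.0, 0.0))
    print(choice)
```

In this scenario turning toward the enemy (+15 degrees) has the best worst case, because it reduces our angle off the line of sight whatever the enemy does.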

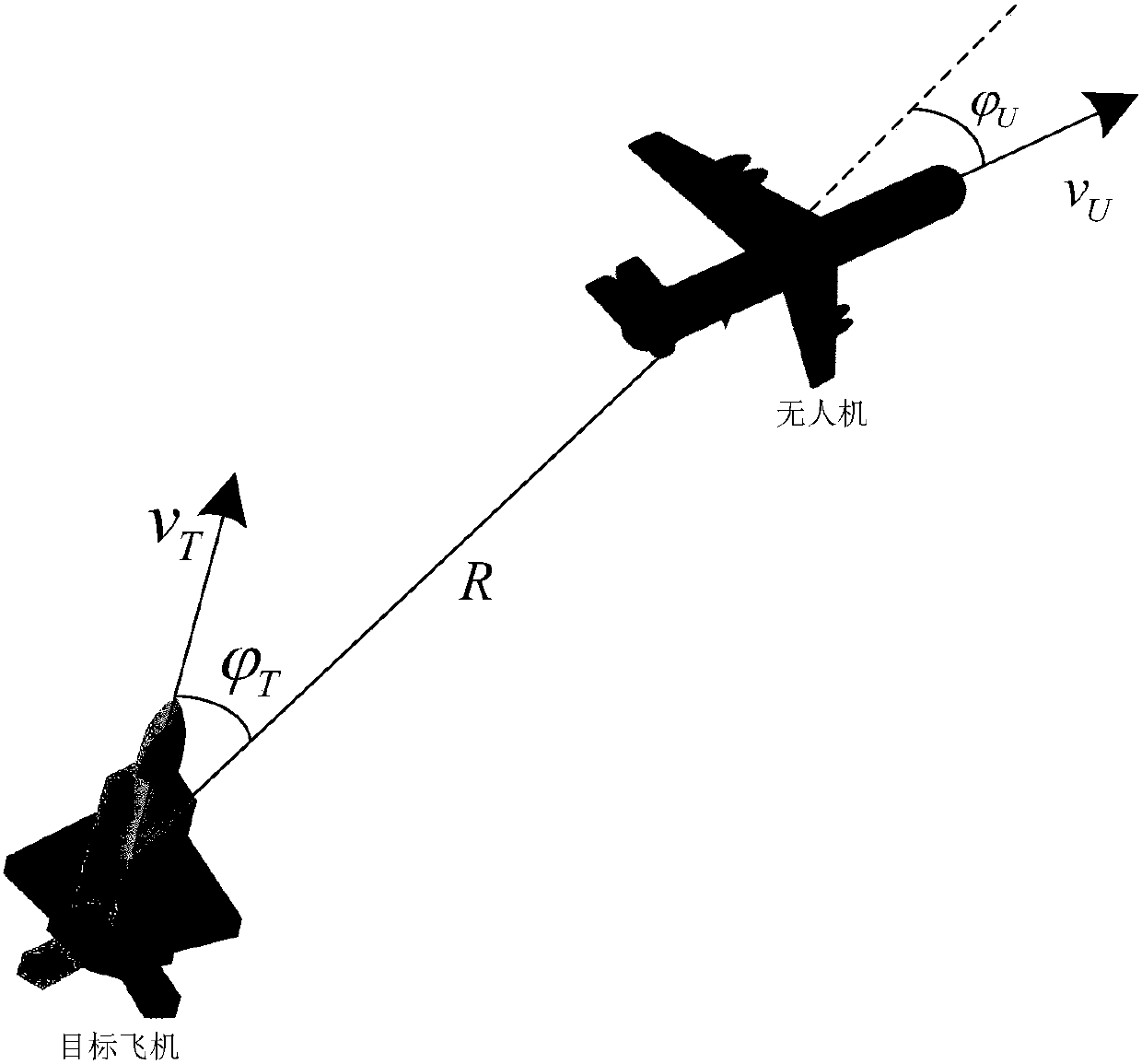

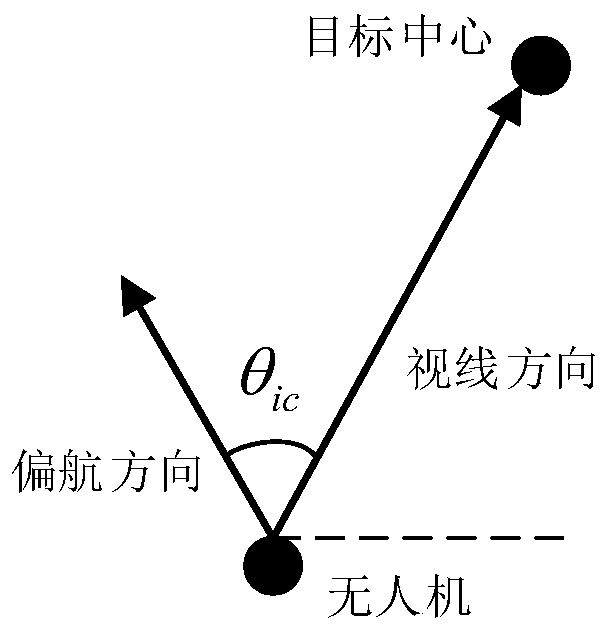

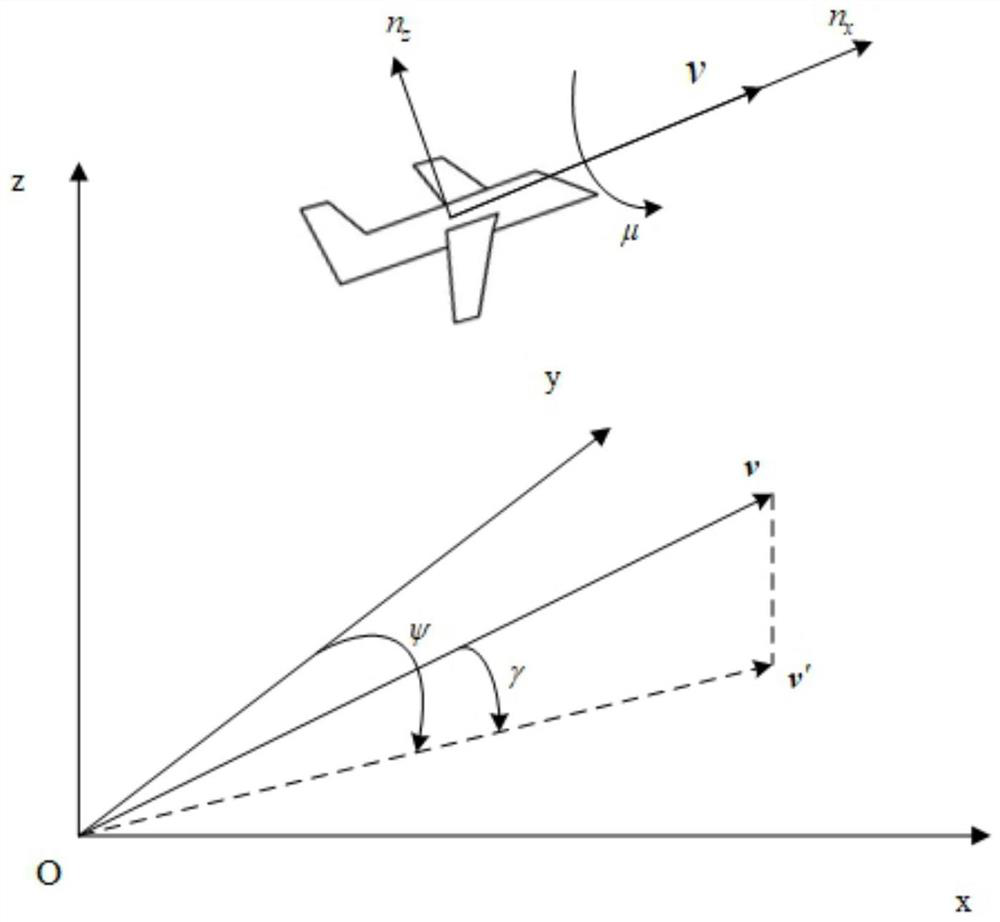

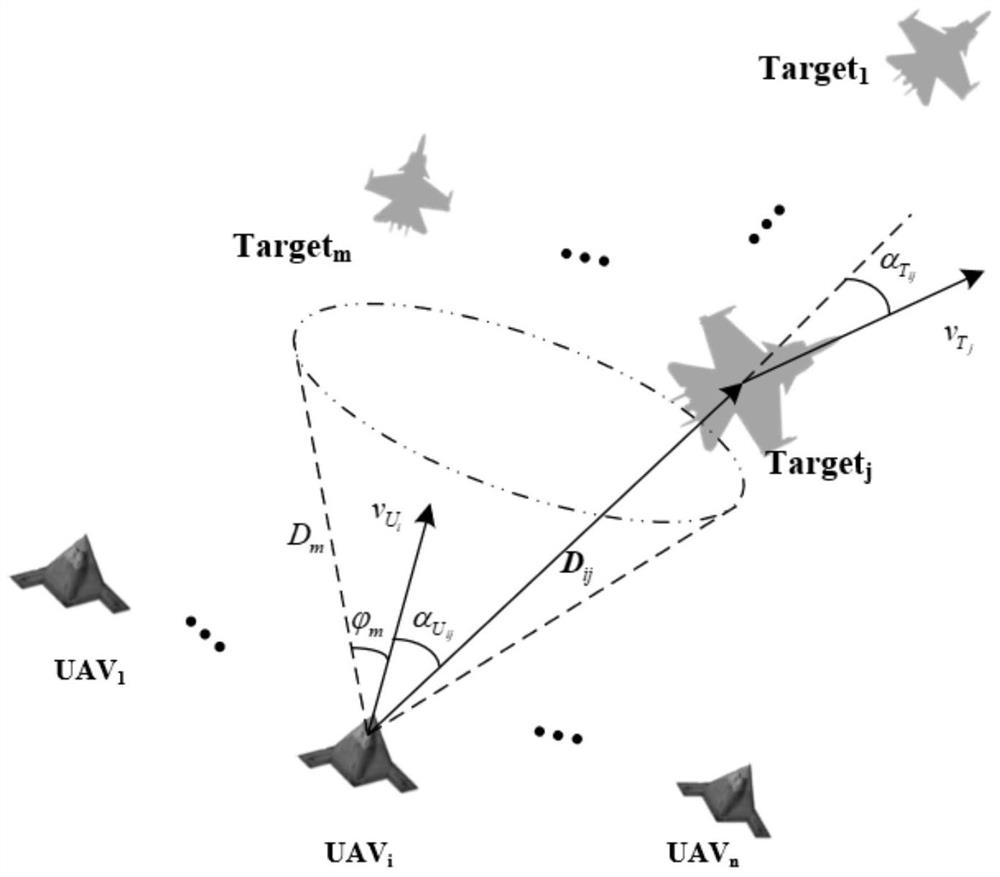

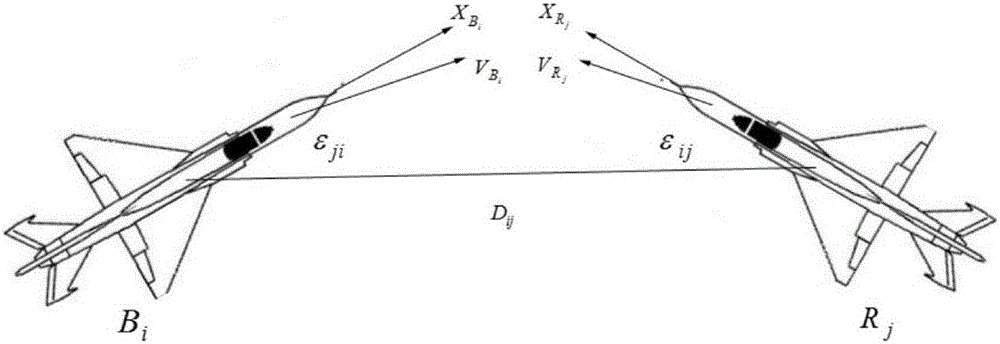

Multi-unmanned aerial vehicle cooperative air combat maneuver decision-making method based on multi-agent reinforcement learning

Active · CN112947581A · Improve unity · Unity of purpose · Internal combustion piston engines · Position/course control in three dimensions · Uncrewed vehicle · Reinforcement learning

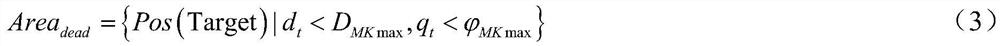

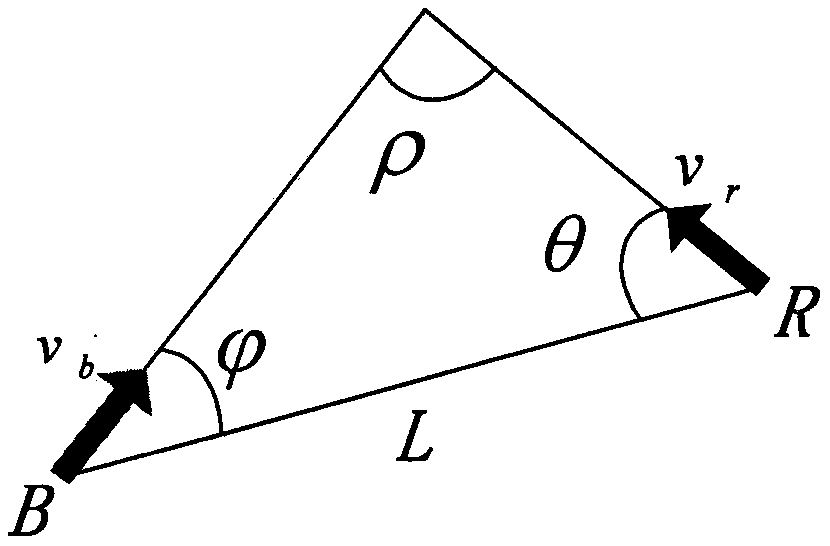

The invention discloses a multi-UAV cooperative air combat maneuver decision-making method based on multi-agent reinforcement learning, which solves the problem of autonomous maneuver decision-making for multiple UAVs in simulated many-to-many air combat. The method comprises the following steps: creating a motion model of the UAV platform; analyzing the state space, action space and reward values of multi-aircraft air combat maneuver decisions, based on a multi-aircraft situation assessment that considers attack zone, distance and angle factors; and designing a target assignment method and a strategy coordination mechanism for cooperative air combat, defining each UAV's behavioral feedback on target assignment, situational advantage and safe collision avoidance through the distribution of reward values, and achieving strategy coordination after training. The method effectively improves the ability of multiple UAVs to make autonomous cooperative maneuver decisions, achieves stronger cooperation and autonomous optimization, and continuously improves the decision-making level of the UAV formation through ongoing simulation and learning.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

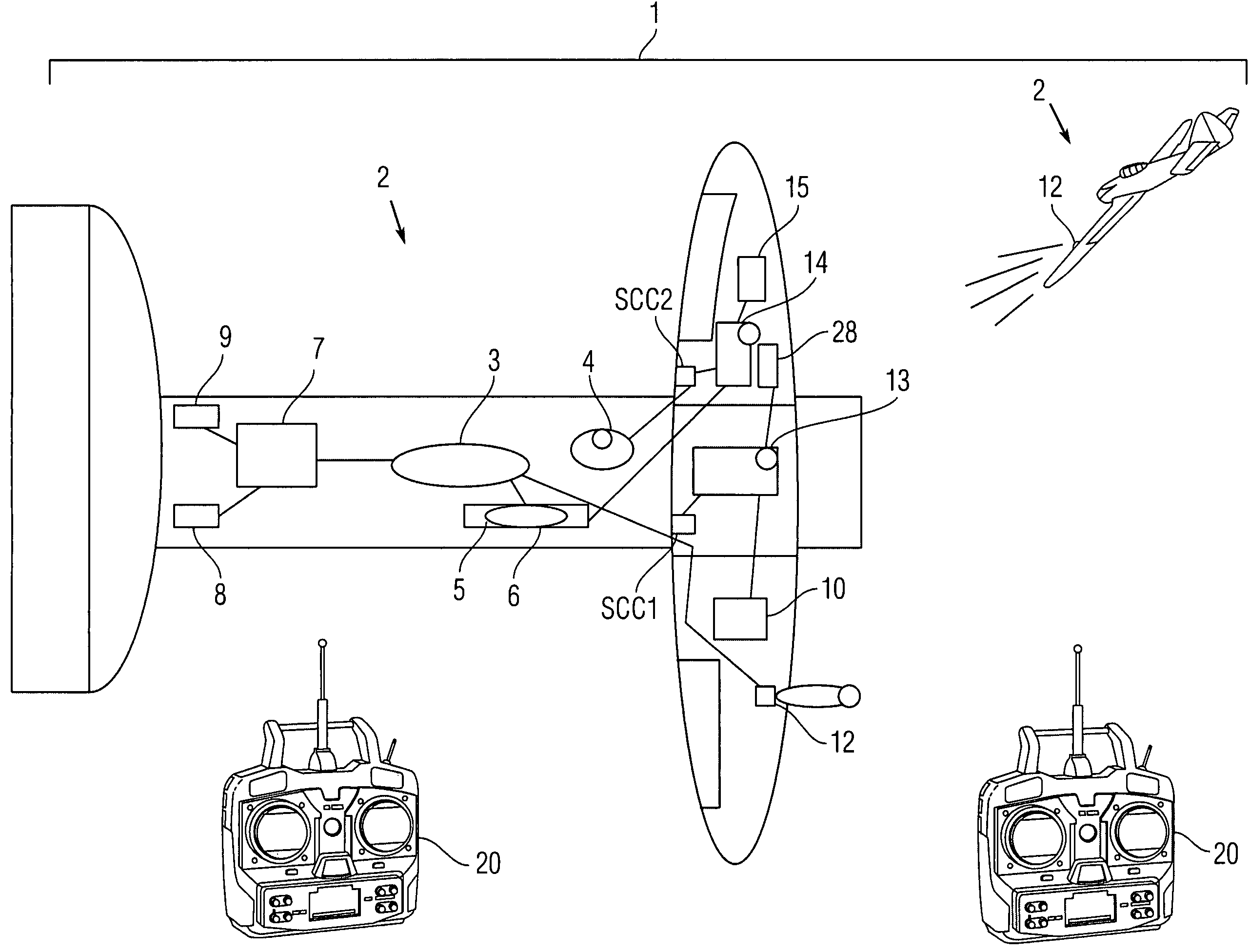

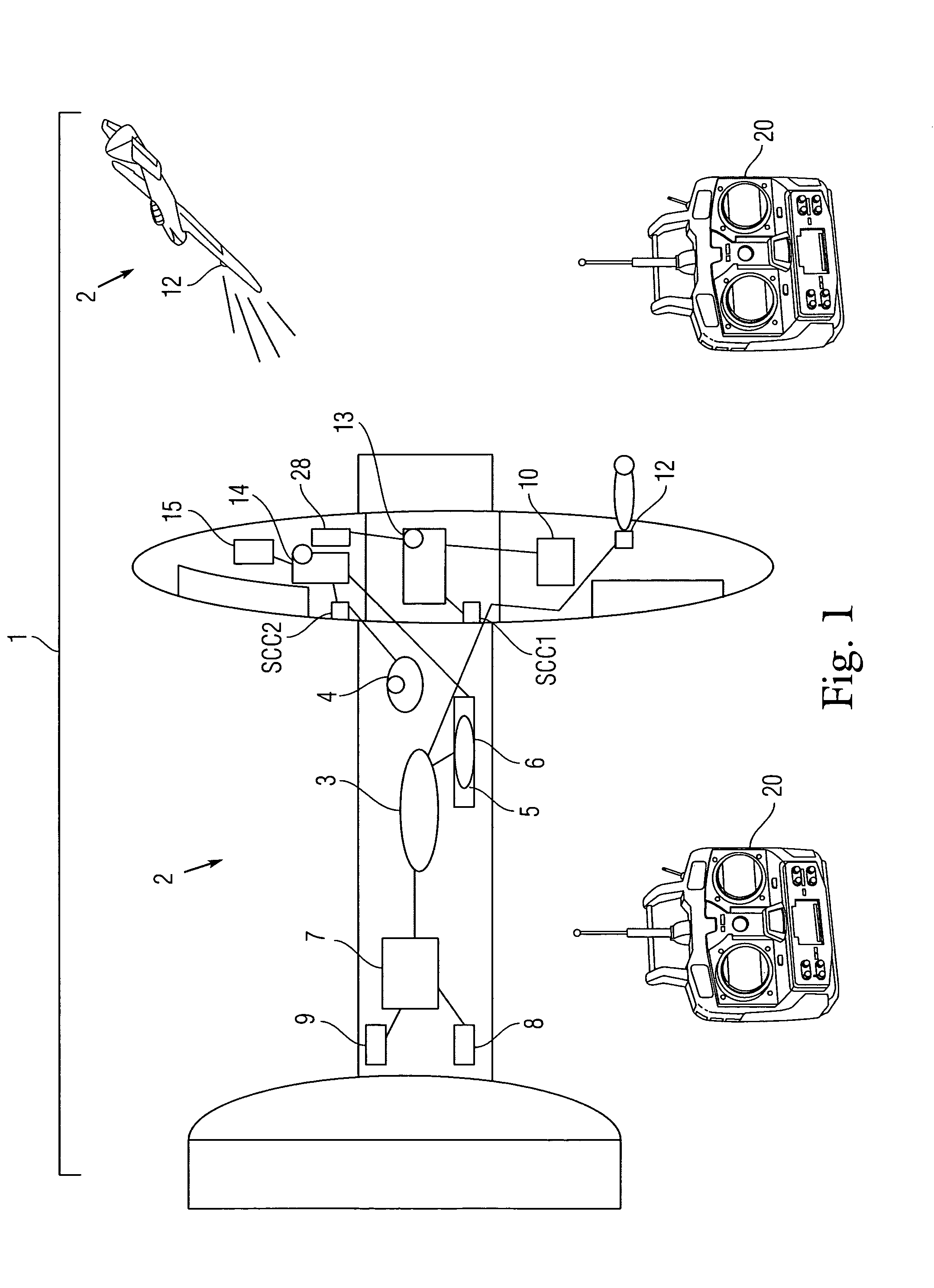

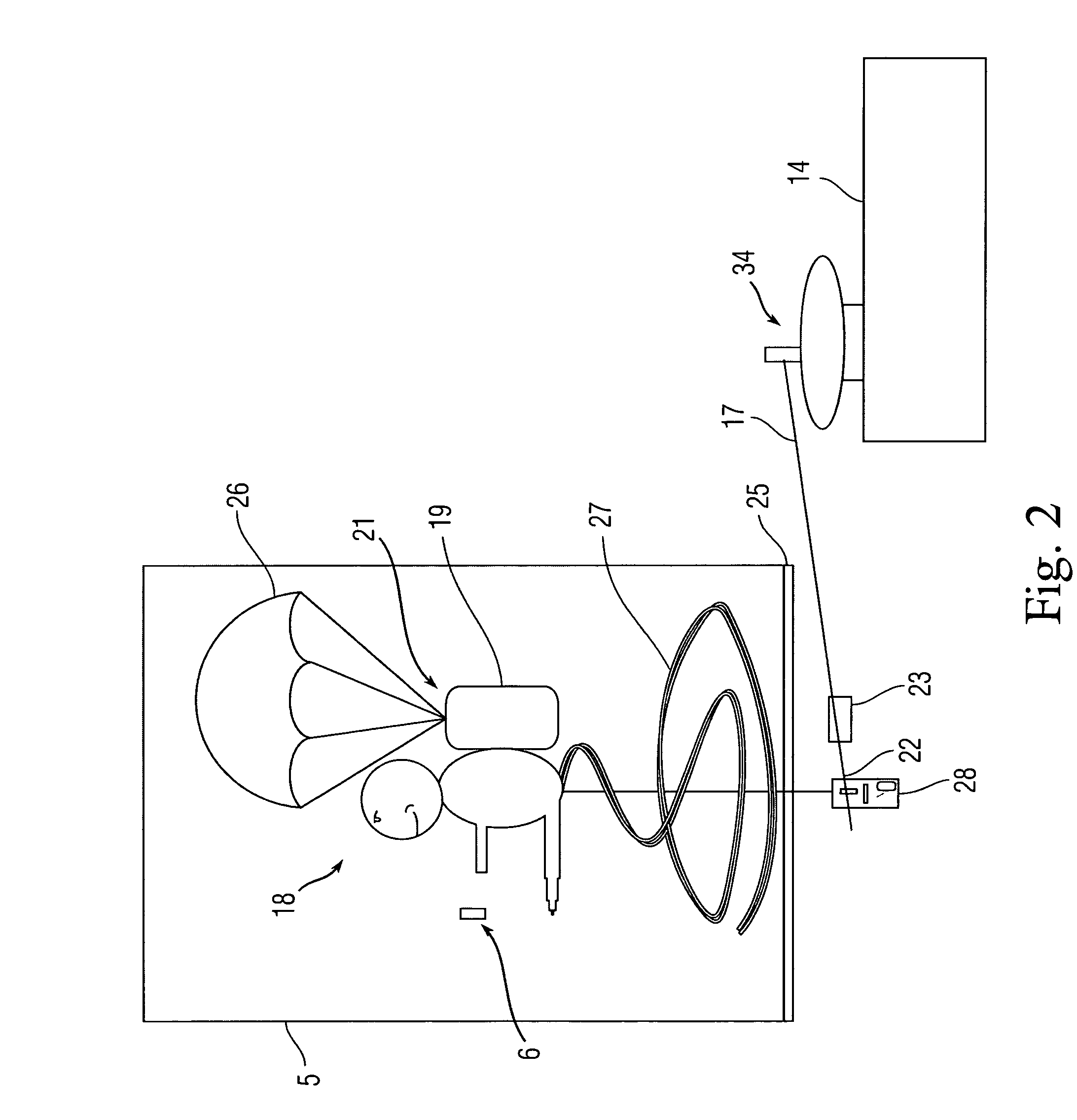

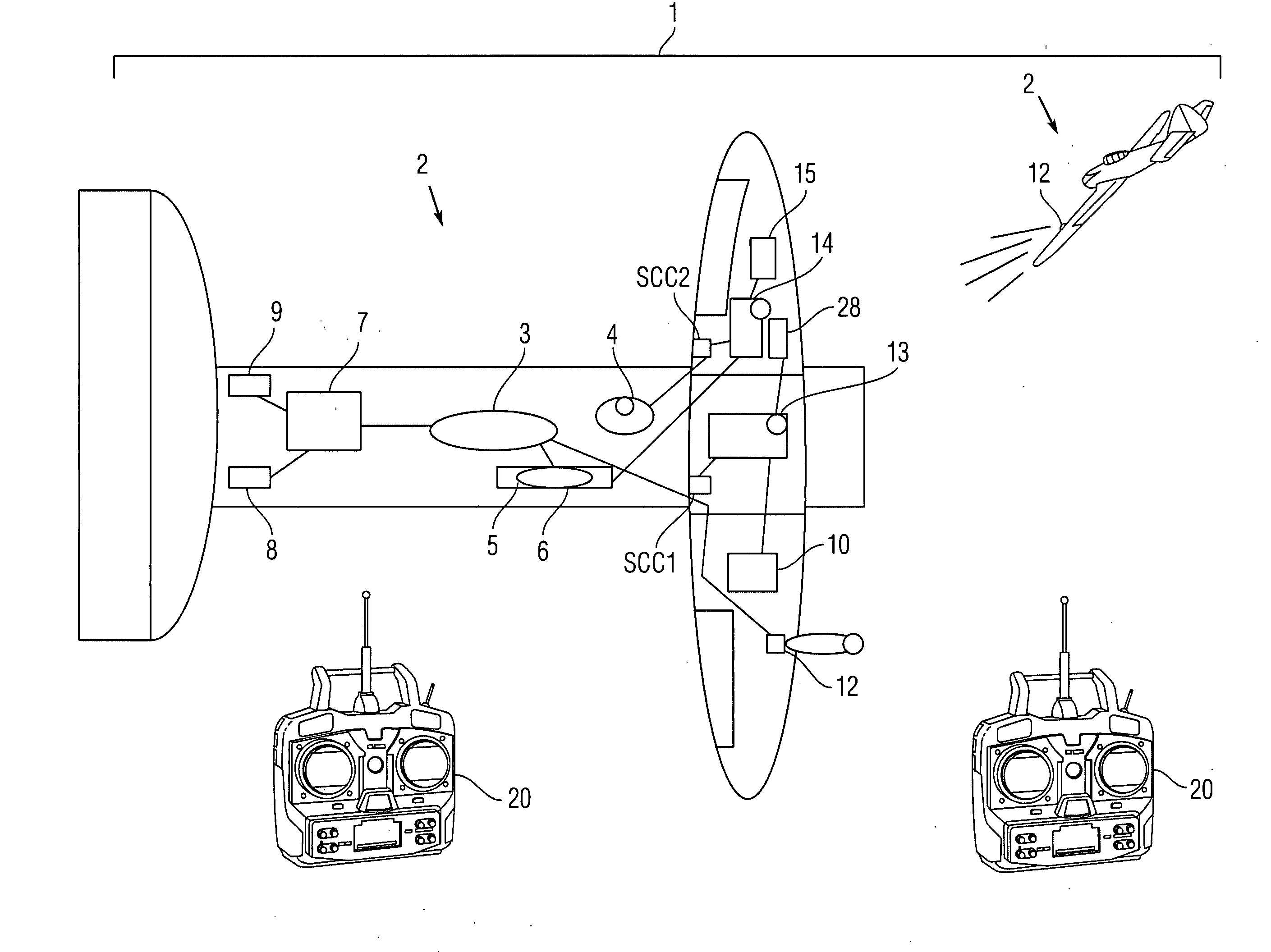

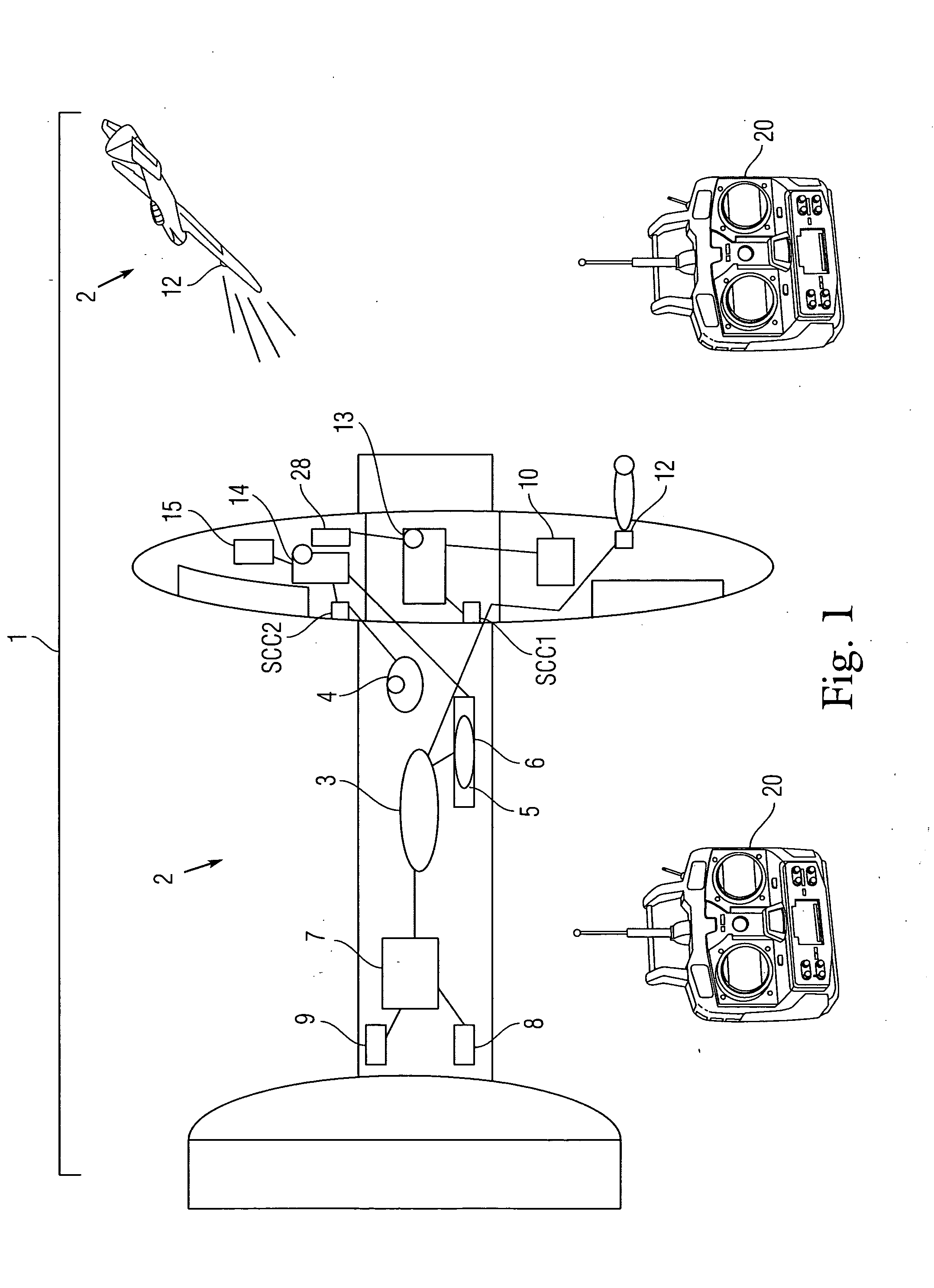

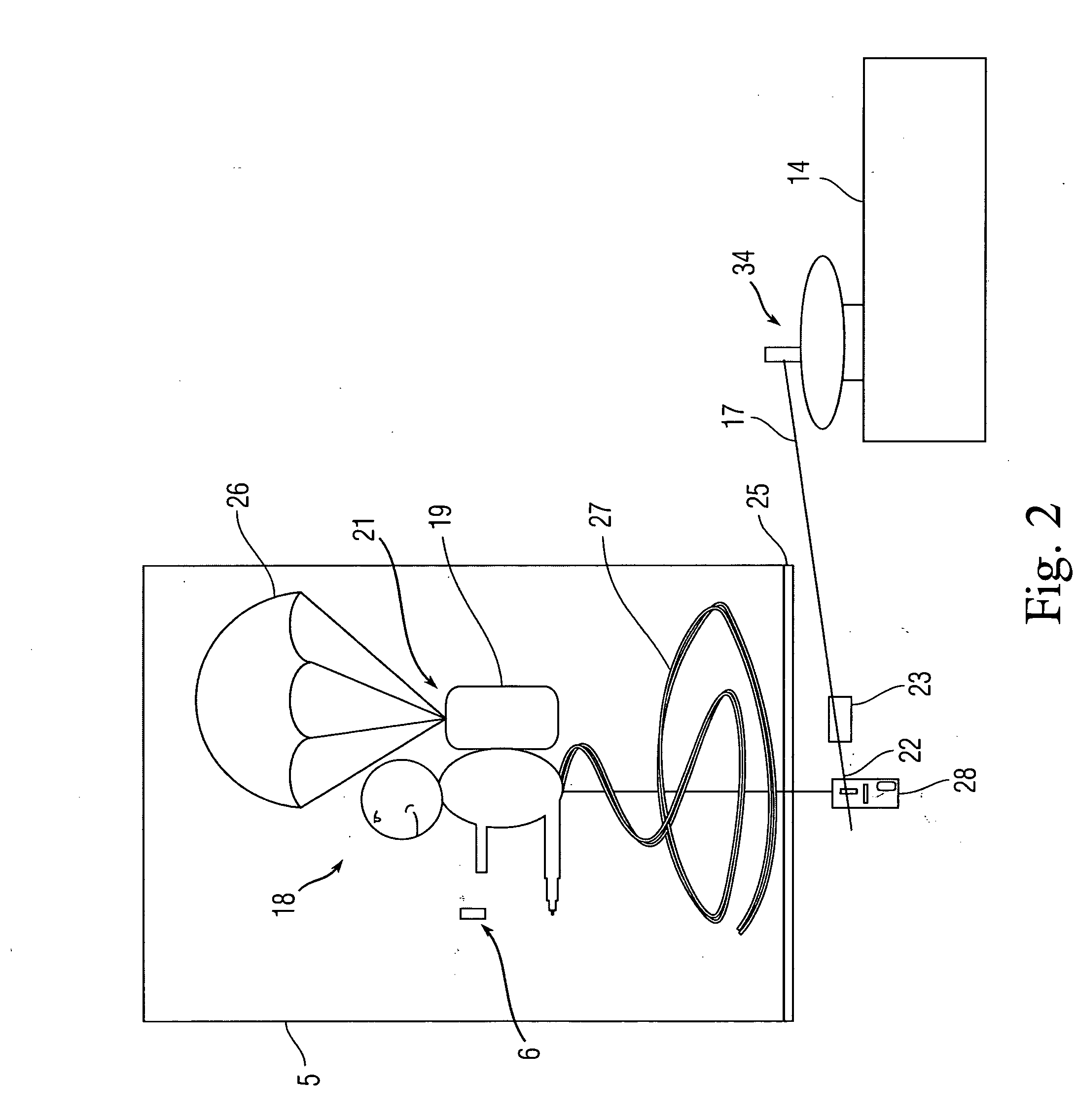

Remote control model aircraft with laser tag shooting action

The present invention is a radio-controlled (RC) model aircraft system with laser tag capabilities. A transmitter and a receiver are installed in each of at least two separate RC aircraft. The transmitter on one aircraft emits an infrared beam to the receiver on the other aircraft, which converts the infrared signal into a command for a first servo that moves an arm, releasing a model aircraft door behind which ribbons are stored. The ribbons stream out of the aircraft wings to show a hit. An optional second servo operates a smoke screen and ejects a pilot to simulate actual combat. The system may also include audio and lighting effects that simulate firing and hit sequences, accompanied by theatrical physical effects, including the release of smoke and ribbons and the ejection of the pilot, that realistically simulate air combat.

Owner:TROUTMAN S CLAYTON

Air combat behavior modeling method based on fitting reinforcement learning

Inactive · CN104484500A · Fit closely · Improve sampling efficiency · Special data processing applications · Simulation · State space

The invention provides an air combat behavior modeling method based on fitted reinforcement learning that makes intelligent tactical action decisions in virtual air combat simulation. The method includes: sampling trajectories of the aircraft combat process; fitting utility functions over the state space, approximating them by Bellman iteration and least-squares fitting; and making operational decisions, in which the fitted utility functions are used to make action decisions during prediction and the action finally executed is chosen from the predicted outcomes. The method effectively improves the fitting efficiency and sampling efficiency of the utility functions and obtains optimal action policies faster than traditional methods.

Owner:BEIHANG UNIV
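
The loop of Bellman backups followed by a least-squares fit, then decisions by one-step prediction with the fitted utility, can be sketched on a toy problem. Everything concrete here is an assumption for illustration: a 1-D state from 0 (worst) to 10 (best position), two actions that move one cell, a reward equal to the normalized next state, and a linear utility V(s) = a + b·s. The patent's air combat state space and features are not disclosed in the abstract.

```python
S_MAX = 10                 # hypothetical 1-D state: 0 (worst) .. 10 (best position)
ACTIONS = (-1, 1)          # hypothetical actions: fall back or press forward one cell


def step(s, a):
    return min(S_MAX, max(0, s + a))


def reward(s_next):
    return s_next / S_MAX


def fit_linear(xs, ys):
    """Ordinary least squares for V(s) = a + b*s; returns (a, b)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b


def fitted_value_iteration(samples, gamma=0.9, n_iter=60):
    a, b = 0.0, 0.0
    for _ in range(n_iter):
        # Bellman backup at each sampled state using the current fitted utility.
        targets = [max(reward(step(s, u)) + gamma * (a + b * step(s, u)) for u in ACTIONS)
                   for s in samples]
        a, b = fit_linear(samples, targets)      # least-squares projection
    return a, b


def decide(s, coeffs, gamma=0.9):
    """One-step prediction with the fitted utility: try each action, keep the best."""
    a, b = coeffs
    return max(ACTIONS, key=lambda u: reward(step(s, u)) + gamma * (a + b * step(s, u)))


if __name__ == "__main__":
    samples = list(range(S_MAX + 1))   # stands in for states sampled from combat trajectories
    coeffs = fitted_value_iteration(samples)
    print(coeffs, [decide(s, coeffs) for s in samples])
```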

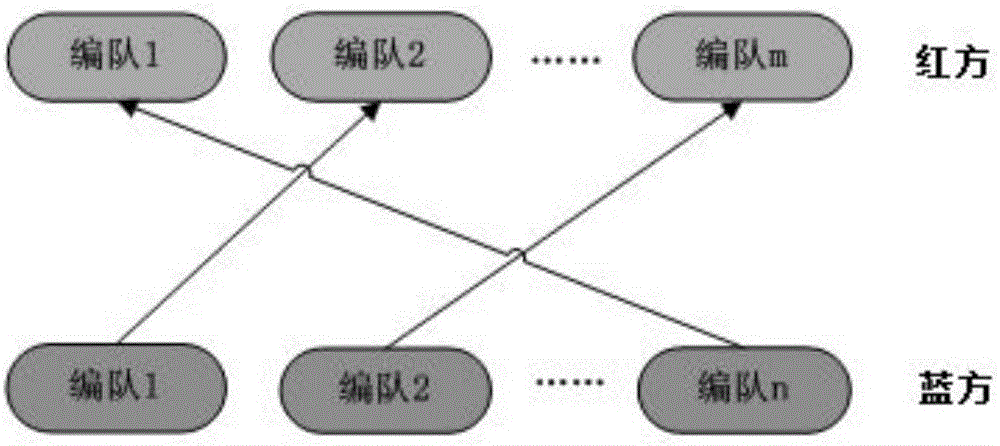

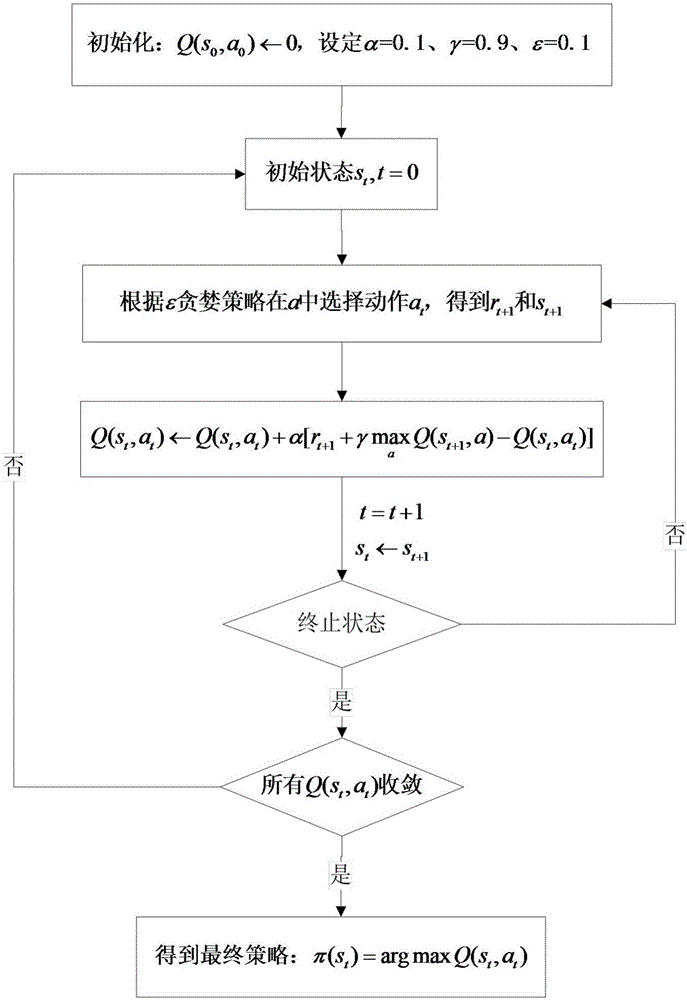

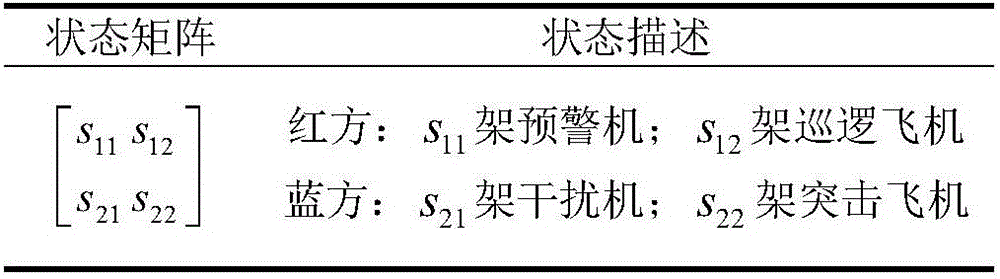

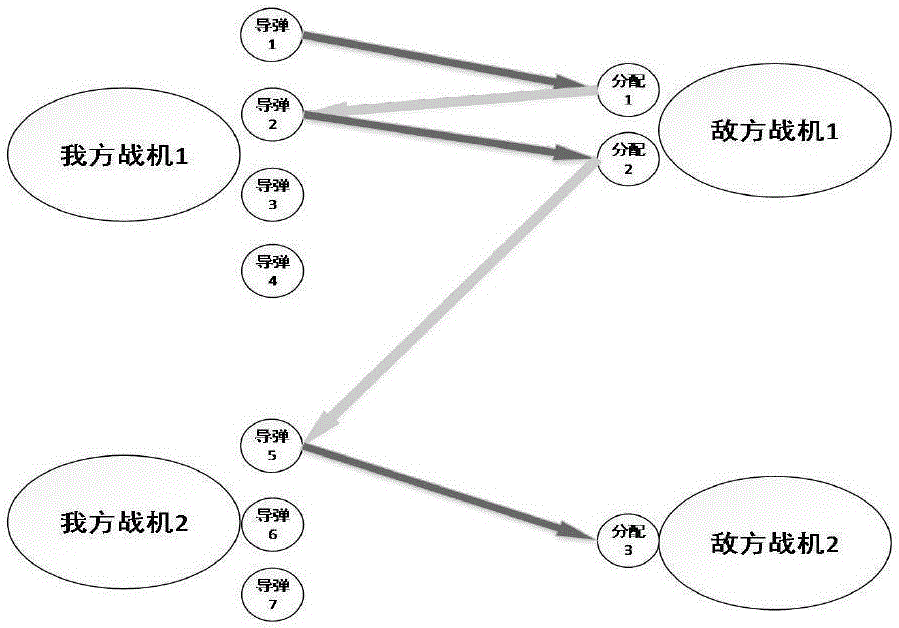

Distribution method oriented to simulation Q learning attack targets

Active · CN105844068A · Versatility · Avoid falling into · Special data processing applications · Local optimum · Distribution method

The invention discloses an attack target assignment method based on simulated Q-learning. The method comprises the following steps: (1) determining the initial state by acquiring the air combat situation information of both the red and blue sides, including the number of aircraft in each formation and their relevant parameters, which serves as input for the red side's target assignment and air combat model calculations; (2) determining the set of actions available to the red formation, strictly specifying the complete state-action pairs, choosing a suitable probability value ε, and selecting the red side's actions with an ε-greedy strategy; and (3) specifying the Q-learning reward function, terminal state and convergence condition, and iteratively assigning attack targets to the red side with the Q-learning algorithm until the convergence condition is met. The method removes the dependence on prior knowledge; the ε-greedy strategy avoids the local-optimum trap, and tuning ε balances algorithm efficiency against the local-optimum problem.

Owner:NAT UNIV OF DEFENSE TECH
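
A minimal tabular Q-learning sketch of steps (2) and (3), with ε-greedy action selection, is shown below. It rests on simplifying assumptions that are not in the patent: the state is just the index of the red aircraft being assigned next, the reward is a hypothetical kill probability that ignores what the other red aircraft chose, and a fixed episode budget stands in for the patent's convergence condition.

```python
import random


def train_q(kill_prob, episodes=3000, alpha=0.1, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning; state i = index of the red aircraft being assigned next,
    action j = blue target it attacks, reward = hypothetical kill probability."""
    rng = random.Random(seed)
    n_red, n_blue = len(kill_prob), len(kill_prob[0])
    q = [[0.0] * n_blue for _ in range(n_red)]
    for _ in range(episodes):                     # fixed budget stands in for a convergence test
        for i in range(n_red):
            if rng.random() < epsilon:            # epsilon-greedy: explore
                j = rng.randrange(n_blue)
            else:                                 # exploit current estimates
                j = max(range(n_blue), key=lambda t: q[i][t])
            r = kill_prob[i][j]
            future = 0.0 if i == n_red - 1 else max(q[i + 1])   # terminal after last aircraft
            q[i][j] += alpha * (r + gamma * future - q[i][j])
    return q


def greedy_assignment(q):
    return [max(range(len(row)), key=lambda t: row[t]) for row in q]


if __name__ == "__main__":
    kill_prob = [[0.7, 0.2, 0.4],   # red aircraft 0 vs blue targets 0..2 (illustrative numbers)
                 [0.3, 0.8, 0.5]]
    q = train_q(kill_prob)
    print(greedy_assignment(q))
```

A larger ε explores more state-action pairs at the cost of slower exploitation, which is the trade-off the abstract describes. A richer state that records already-engaged targets would let the reward account for coordination.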

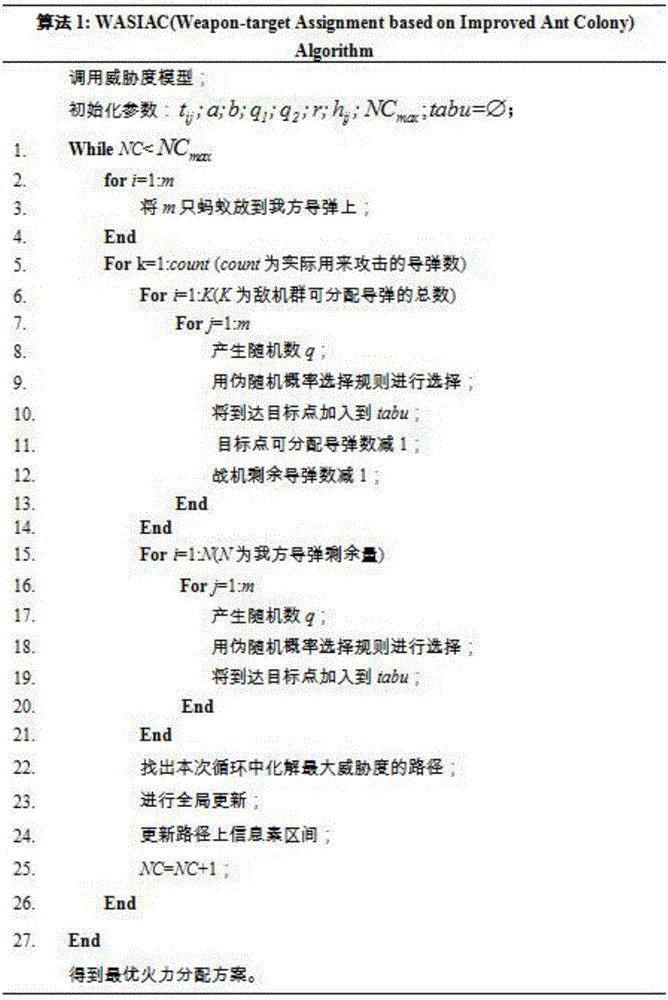

Ant colony algorithm-based firepower distribution method

Active · CN106779210A · Fast convergence · There is no "gene drift" phenomenon · Forecasting · Artificial life · Local optimum · Decision model

The invention discloses a firepower allocation method based on an ant colony algorithm. First, an air combat threat-degree model and a firepower allocation decision model are built from the engagement situation of both sides. Second, to solve the model, the typical ant colony algorithm is improved to address its shortcomings by combining ideas from the classic Ant Colony System and MAX-MIN Ant System algorithms, so that the improved algorithm has a more reasonable early evolution trend, converges faster, and is better protected against falling into local optima. Beyond air combat firepower allocation, the improved algorithm is expected to apply to other combinatorial optimization problems, such as firepower allocation in assaults by ground tank groups and in naval fleet engagements.

Owner:NAT UNIV OF DEFENSE TECH
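
The MAX-MIN idea mentioned above (only the best solution deposits pheromone, and pheromone is clamped between bounds) can be sketched for weapon-to-target assignment. This is not the patented improved algorithm: the expected-damage objective, the heuristic p·value, and all parameter values (`rho`, `tau_min`, `tau_max`, `beta`) are assumptions. A brute-force helper is included to check the result on a small instance.

```python
import itertools
import random


def expected_damage(assign, p, value):
    """assign[i] = target of weapon i; p[i][j] = kill probability; value[j] = target value."""
    survive = [1.0] * len(value)
    for i, j in enumerate(assign):
        survive[j] *= 1.0 - p[i][j]
    return sum(v * (1.0 - s) for v, s in zip(value, survive))


def aco_assign(p, value, n_ants=20, n_iter=50, rho=0.1,
               tau_min=0.1, tau_max=5.0, beta=1.0, seed=0):
    rng = random.Random(seed)
    n_w, n_t = len(p), len(value)
    tau = [[tau_max] * n_t for _ in range(n_w)]          # MMAS starts at the upper bound
    best, best_val = None, -1.0
    for _ in range(n_iter):
        for _ in range(n_ants):
            assign = []
            for i in range(n_w):
                weights = [tau[i][j] * (p[i][j] * value[j]) ** beta for j in range(n_t)]
                assign.append(rng.choices(range(n_t), weights=weights)[0])
            val = expected_damage(assign, p, value)
            if val > best_val:
                best, best_val = assign, val
        # Evaporate, reinforce only the best-so-far solution, then clamp to [tau_min, tau_max].
        for i in range(n_w):
            for j in range(n_t):
                tau[i][j] *= 1.0 - rho
            tau[i][best[i]] += best_val
            for j in range(n_t):
                tau[i][j] = min(tau_max, max(tau_min, tau[i][j]))
    return best, best_val


def brute_force(p, value):
    n_w, n_t = len(p), len(value)
    return max(expected_damage(list(a), p, value)
               for a in itertools.product(range(n_t), repeat=n_w))


if __name__ == "__main__":
    p = [[0.6, 0.3], [0.5, 0.5], [0.2, 0.7]]   # illustrative kill probabilities
    value = [1.0, 0.8]                         # illustrative target values
    print(aco_assign(p, value), brute_force(p, value))
```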

Unmanned aerial vehicle cooperative air combat decision-making method based on genetic fuzzy tree

Active · CN111240353A · Increased chance of damage · Improve survival rate · Autonomous decision making process · Position/course control in three dimensions · Simulation · Genetics algorithms

The invention discloses a UAV cooperative air combat decision-making method based on a genetic fuzzy tree, comprising the following steps: building a comprehensive advantage evaluation index system for UAV cooperative air combat, consisting of a UAV air combat capability evaluation model and a UAV air combat situation evaluation model; establishing a target allocation evaluation function, searching for the optimal target allocation with a genetic algorithm, and thereby constructing a genetic-algorithm-based UAV cooperative air combat target allocation model; constructing a UAV air combat motion model and refining and extending the UAV's basic maneuver library; and constructing a UAV cooperative air combat decision-making model based on the genetic fuzzy tree, identifying the fuzzy tree's parameters from sample data and its structure with a genetic algorithm, to obtain a decision-making model that meets the accuracy requirement with low complexity. The method obtains the optimal target allocation in cooperative air combat of a UAV group and realizes optimal UAV maneuvering in one-on-one air combat.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS
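
The genetic-algorithm target allocation step can be sketched as follows. The abstract does not give the evaluation function, so this sketch assumes a hypothetical advantage matrix `adv[i][j]` (advantage of UAV i against target j) and a penalty for each uncovered target; tournament selection, uniform crossover, per-gene mutation and single-elite retention are likewise illustrative choices. The fuzzy-tree part of the method is not shown.

```python
import itertools
import random


def fitness(chrom, adv, penalty=0.5):
    """Sum of advantages minus a penalty for each target left unassigned (assumed form)."""
    uncovered = len(adv[0]) - len(set(chrom))
    return sum(adv[i][t] for i, t in enumerate(chrom)) - penalty * uncovered


def ga_allocate(adv, pop_size=30, generations=60, p_mut=0.1, seed=0):
    rng = random.Random(seed)
    n_uav, n_tgt = len(adv), len(adv[0])
    # Chromosome: gene i is the target index allocated to UAV i.
    pop = [[rng.randrange(n_tgt) for _ in range(n_uav)] for _ in range(pop_size)]

    def tournament():
        a, b = rng.sample(pop, 2)
        return a if fitness(a, adv) >= fitness(b, adv) else b

    for _ in range(generations):
        elite = max(pop, key=lambda c: fitness(c, adv))
        children = [elite[:]]                              # elitism: keep the best unchanged
        while len(children) < pop_size:
            p1, p2 = tournament(), tournament()
            child = [g1 if rng.random() < 0.5 else g2 for g1, g2 in zip(p1, p2)]  # uniform crossover
            child = [rng.randrange(n_tgt) if rng.random() < p_mut else g for g in child]
            children.append(child)
        pop = children
    best = max(pop, key=lambda c: fitness(c, adv))
    return best, fitness(best, adv)


def brute_force(adv):
    n_uav, n_tgt = len(adv), len(adv[0])
    return max(fitness(list(c), adv) for c in itertools.product(range(n_tgt), repeat=n_uav))


if __name__ == "__main__":
    adv = [[0.9, 0.4, 0.3], [0.8, 0.7, 0.2], [0.5, 0.6, 0.4]]   # illustrative values
    print(ga_allocate(adv), brute_force(adv))
```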

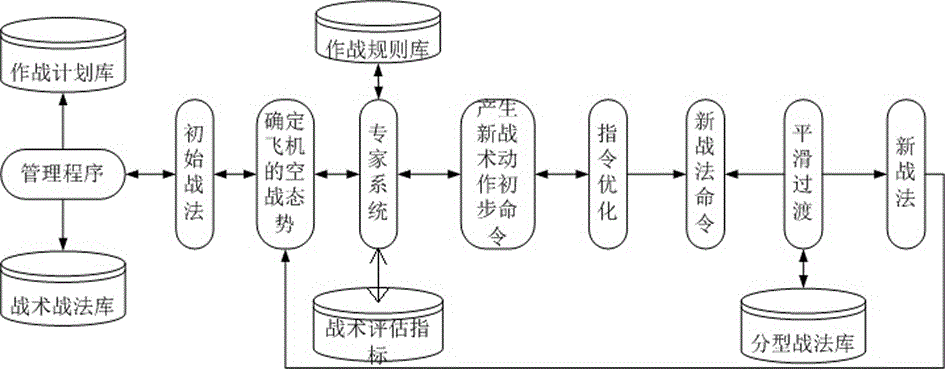

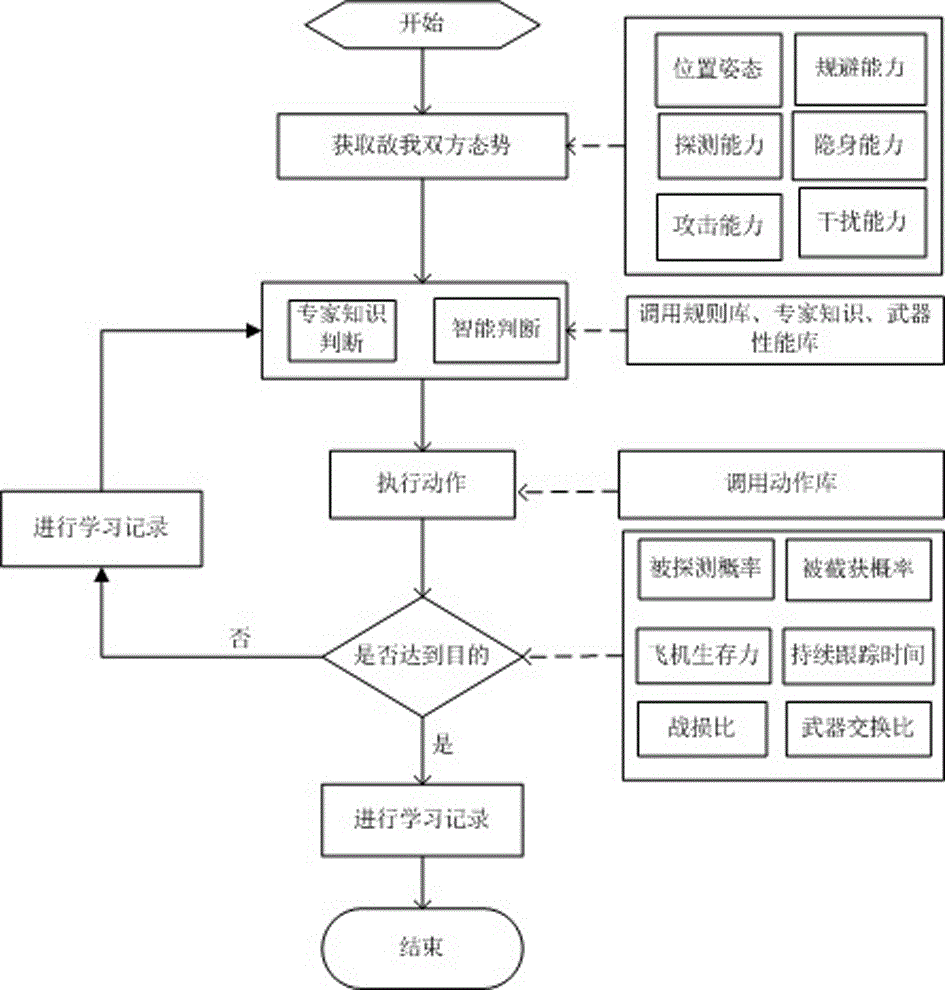

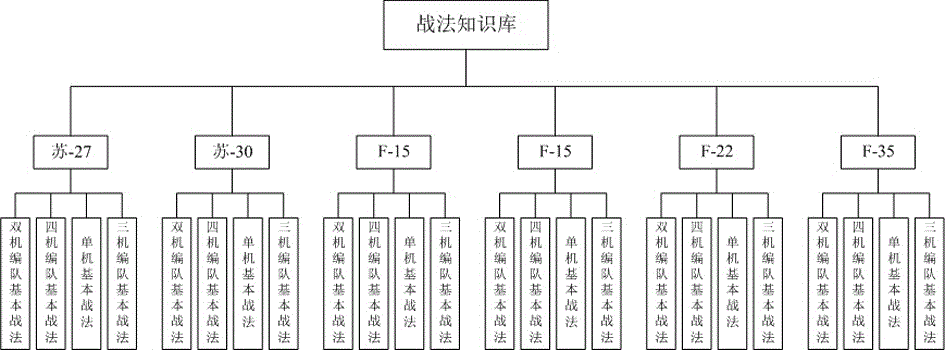

Air-combat tactic team simulating method based on expert system and tactic-military-strategy fractalization

Inactive · CN105678030A · Reduce hardware size · Increase training difficulty · Design optimisation/simulation · Special data processing applications · Data set · Simulation

The invention discloses an air-combat tactical team simulation method based on an expert system and the fractalization of tactics and military strategies. The method includes the following steps: simulation modeling is performed with mathematical methods for knowledge acquisition, knowledge representation and processing, and tactics and military strategies are converted into data sets that are stored and retrieved as libraries or sub-libraries; a fractal military-strategy library is constructed; a tactical index system for evaluating the combat effectiveness of a tactical team is built; an air-combat knowledge self-learning mechanism is built; a military-strategy inference mechanism is built with artificial intelligence techniques; and military-strategy reconstruction is performed, achieving smooth transitions between tactics and between military strategies. The method has been tested and verified in scientific research projects: an intelligent virtual blue-side tactical team built with it enables full-process, two-sided air combat simulation exercises in which red-side trainees only need to fly and command on the red side's flight simulation platform, which reduces the hardware scale and can increase training difficulty.

Owner:黄安祥 +4

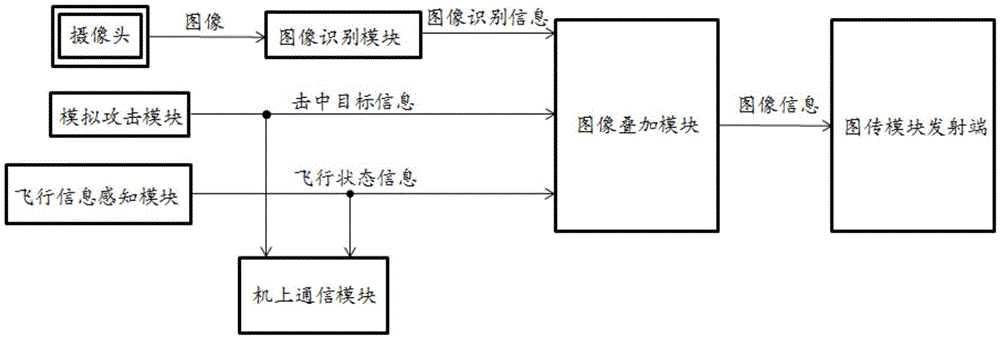

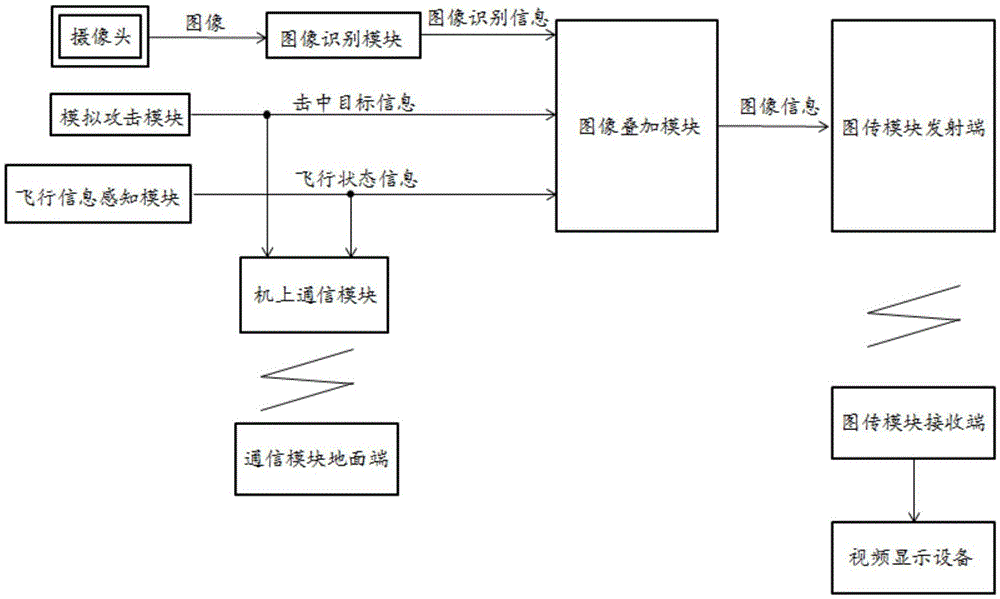

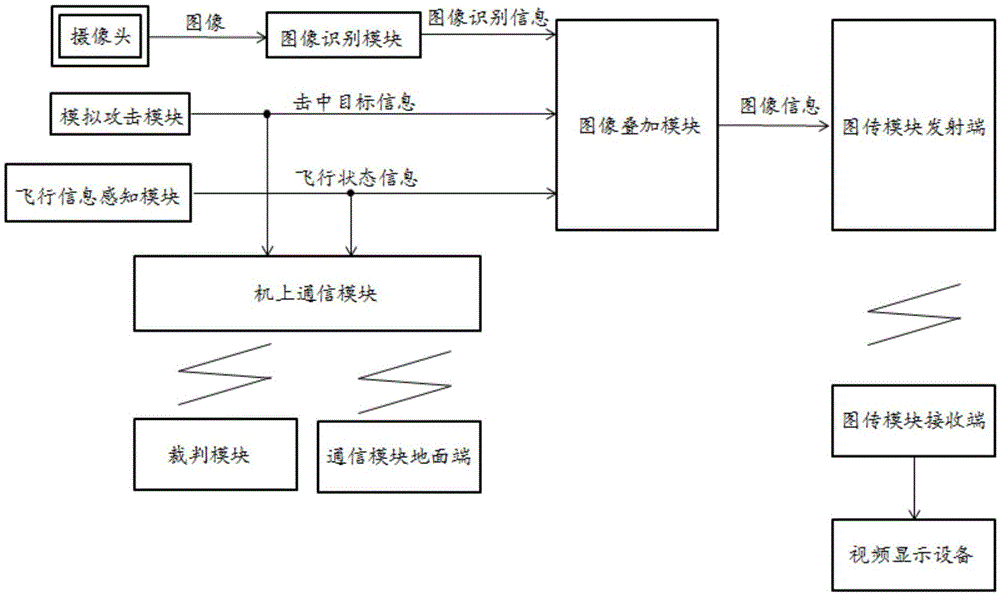

Unmanned plane, simulative air combat gaming device and simulative air combat gaming system

Active · CN105597308A · Solve the disadvantage that it is difficult to judge whether to hit the opponent · Reduce manufacturing cost · Video games · Simulation · Ground station

The invention discloses an unmanned aerial vehicle (UAV), a simulated air combat gaming device and a simulated air combat gaming system. The UAV comprises a camera mounted on it, an image recognition module that processes the images captured by the camera to produce image recognition information, and an image transmission module transmitter that sends this information. With these components, the UAV's flight status is transmitted to a ground station without relying on the operator's line of sight, which solves the prior-art difficulty of determining whether the opponent has been hit when UAVs are used in simulated air combat games.

Owner:上海圣尧智能科技有限公司

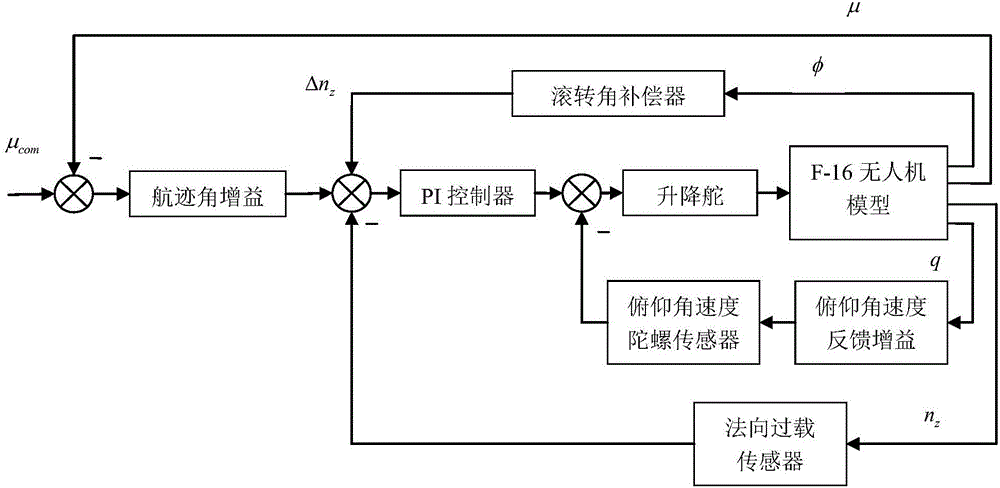

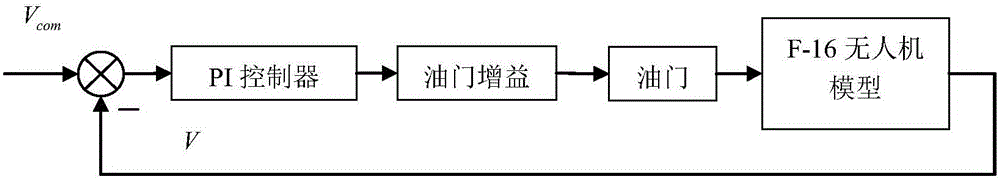

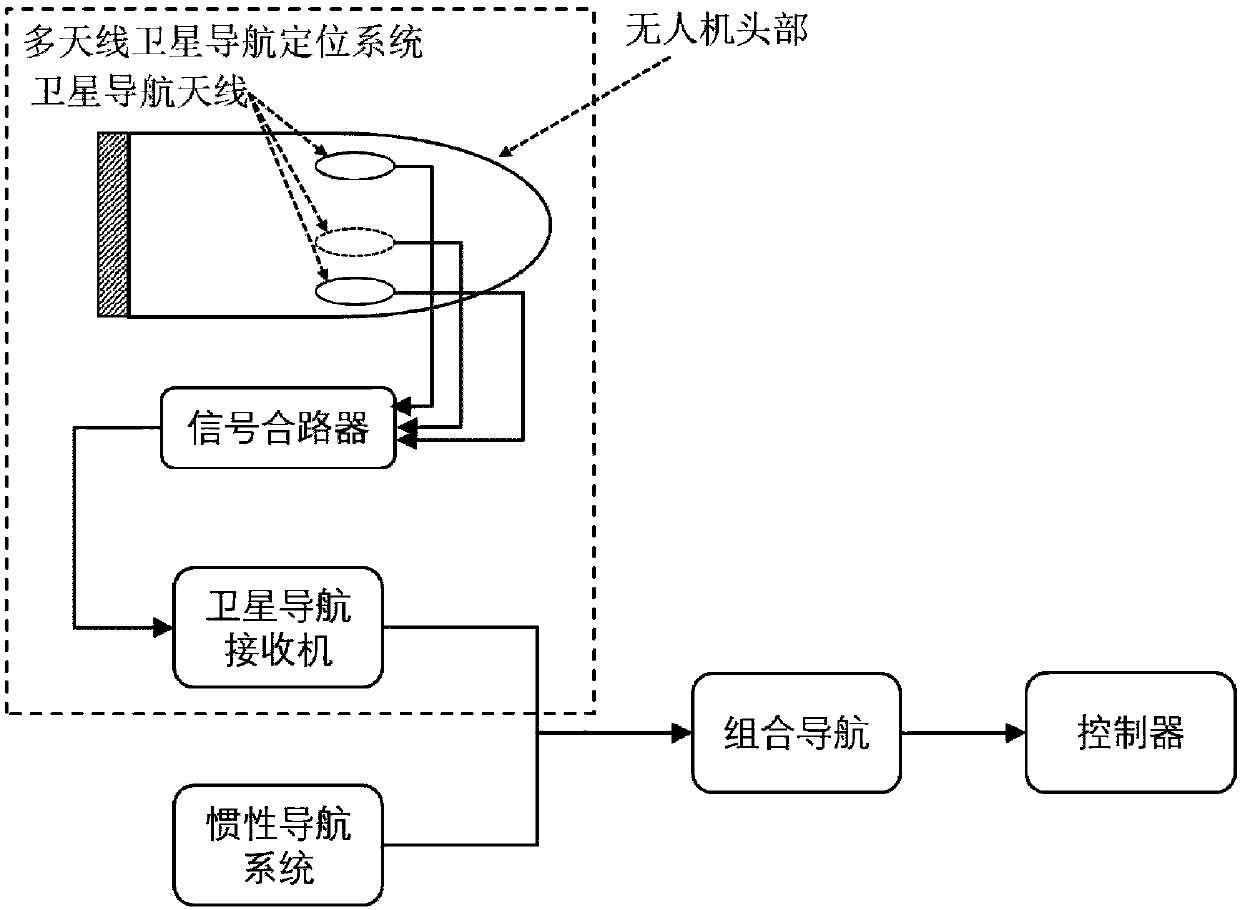

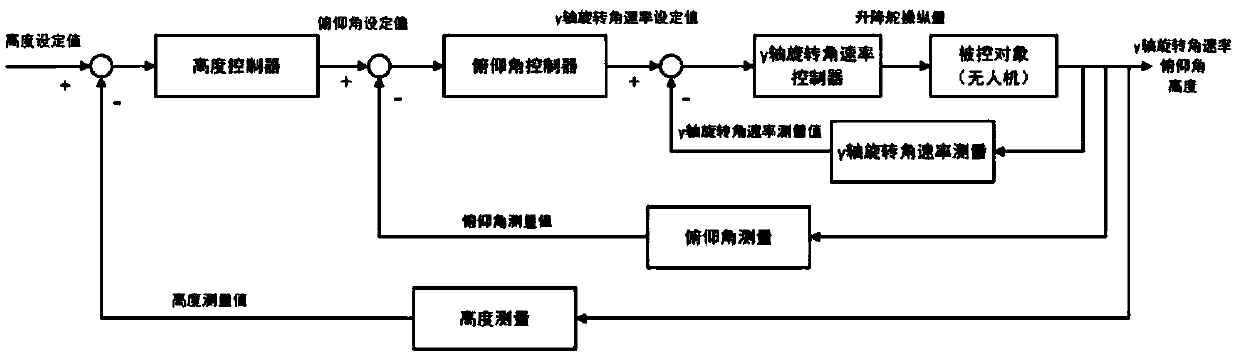

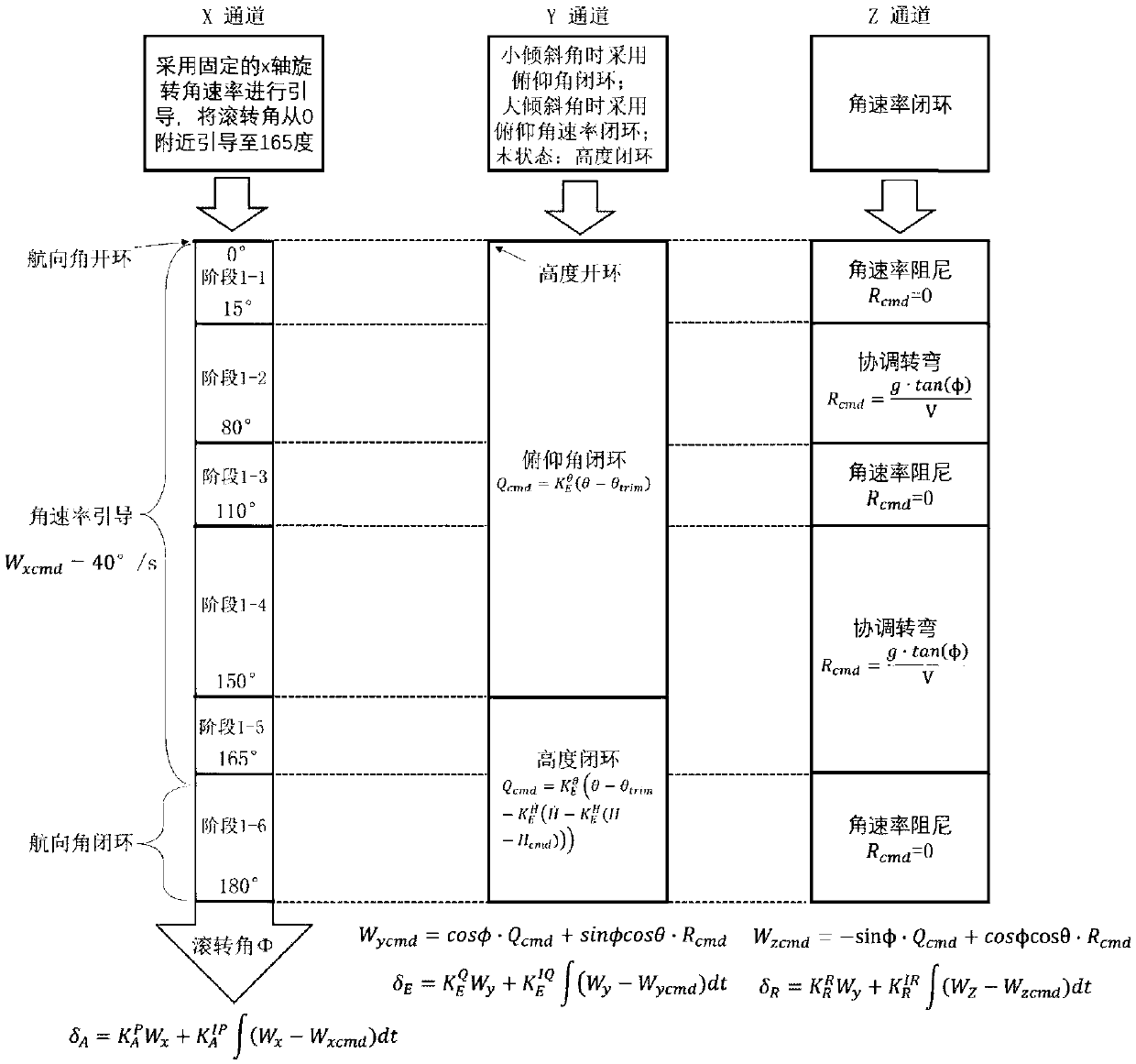

Fixed-wing unmanned aerial vehicle rolling maneuvering control method

The invention provides a rolling maneuver control method for fixed-wing unmanned aerial vehicles that provides automatic control for each control channel during the rolling maneuver. A full-attitude integrated navigation method achieves seamless navigation through 360 degrees of attitude; a roll control method lets the fixed-wing UAV switch autonomously between upright and inverted flight and quickly re-establish balance; and a pitch-roll control method makes appropriate use of gravitational potential energy to perform safe and effective pitch-roll maneuvers, so that the UAV can change its flight direction quickly while keeping a safe angle of attack and can rapidly escape dangerous situations in air combat. The method applies appropriate automatic control to the engine and control surfaces, enabling flexible rolling maneuvers so that the UAV can switch freely between upright and inverted flight and change its flight direction quickly.

Owner:ZHEJIANG UNIV

Aircraft effectiveness sensitivity analysis method

Inactive · CN106548000A · Simple design · Improve combat effectiveness · Geometric CAD · Design optimisation/simulation · Operational effectiveness · Simulation

The invention relates to aircraft design, and in particular to an aircraft effectiveness sensitivity analysis method comprising the following steps: 1. establishing a preset set of operational effectiveness requirements for different levels of air superiority; 2. establishing a set of combat tactics corresponding to the requirements in that set; 3. performing large-sample optimization of the aircraft tactical index values to obtain their optimized intervals, where step 3 comprises: 3.1 setting initial aircraft tactical index values as simulation inputs; 3.2 running simulation experiments with the combat tactics set as the simulation scheme; and 3.3 using the preset operational effectiveness requirement set as the criteria for evaluating simulation results. The tactical index effectiveness sensitivity analysis method guides optimized aircraft design, increases combat effectiveness, and reduces both development-stage costs and technical risk.

Owner:SHENYANG AIRCRAFT DESIGN INST AVIATION IND CORP OF CHINA

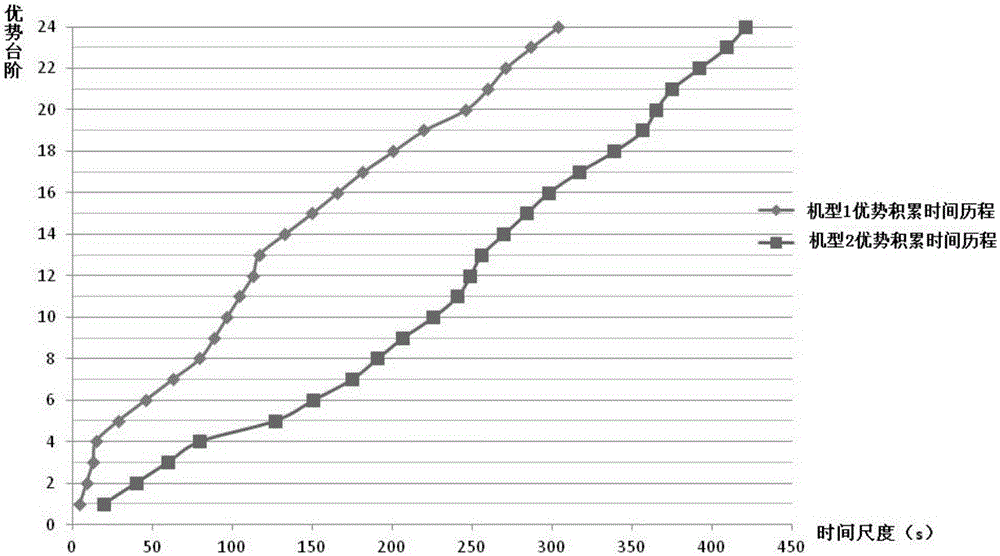

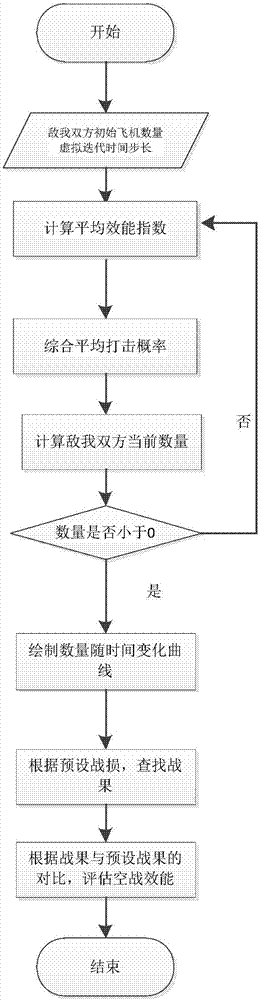

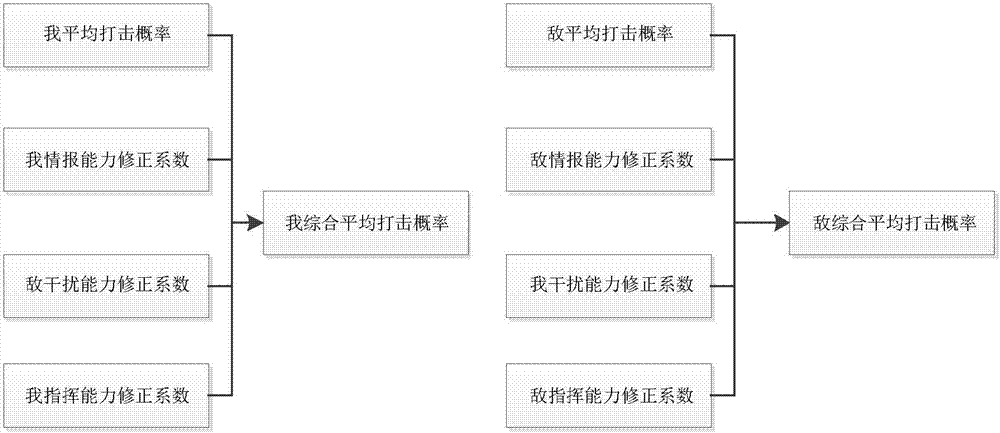

Large-scale air-to-air combat effectiveness evaluation method based on improved lanchester equation

Active · CN107247879A · Improve adaptability · Increase credibility · Informatics · Special data processing applications · Simulation · Virtual time

The invention discloses a large-scale air-to-air combat effectiveness evaluation method based on an improved Lanchester equation. The method comprises the following steps: first, determining the initial numbers of friendly and enemy combat aircraft and selecting a suitable virtual time step; calculating the average air combat capability index of the friendly aircraft and of the enemy aircraft; then calculating the average hit probability; calculating the friendly side's kills and losses; and finally evaluating the air-to-air combat effectiveness level against the combat requirements and the friendly kills and losses. Because kills and losses can be calculated at different virtual times, the effectiveness of large-scale air-to-air combat can be evaluated. The method can be used to evaluate air-to-air mission effectiveness during pre-war planning and preparation, providing a basis for force allocation, and to evaluate the combat execution stage in real time, providing a basis for commanders' decisions.

Owner:THE 28TH RES INST OF CHINA ELECTRONICS TECH GROUP CORP
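
The abstract does not say how the Lanchester equation is improved, so the sketch below uses the classic square law, stepped in virtual time with explicit Euler integration, to show how losses on each side can be read off at every time step. The `kill_rate` formula, which scales an average hit probability by the ratio of capability indices, is a hypothetical way to connect the abstract's inputs to the attrition coefficients.

```python
def kill_rate(own_capability, enemy_capability, hit_prob, shots_per_unit_time=1.0):
    """Hypothetical attrition coefficient: hit probability scaled by the capability ratio."""
    return hit_prob * shots_per_unit_time * own_capability / enemy_capability


def lanchester_square(red0, blue0, red_rate, blue_rate, dt=0.01, t_max=100.0):
    """Euler integration of Lanchester's square law.

    dR/dt = -blue_rate * B   (losses inflicted on red by blue)
    dB/dt = -red_rate * R    (losses inflicted on blue by red)
    Returns a list of (virtual_time, red, blue) tuples.
    """
    red, blue, t = float(red0), float(blue0), 0.0
    history = [(t, red, blue)]
    while red > 0.0 and blue > 0.0 and t < t_max:
        # Simultaneous update: both right-hand sides use the previous step's strengths.
        red, blue = red - blue_rate * blue * dt, blue - red_rate * red * dt
        red, blue = max(red, 0.0), max(blue, 0.0)
        t += dt
        history.append((t, red, blue))
    return history


if __name__ == "__main__":
    r = kill_rate(1.0, 1.0, 1.0)
    b = kill_rate(1.0, 1.0, 1.0)
    t_end, red_end, blue_end = lanchester_square(10, 8, r, b)[-1]
    print(round(t_end, 2), round(red_end, 2), blue_end)
```

With equal coefficients the square law predicts sqrt(10² − 8²) = 6 red survivors when blue is annihilated; the Euler integration lands close to that value for a small virtual time step.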

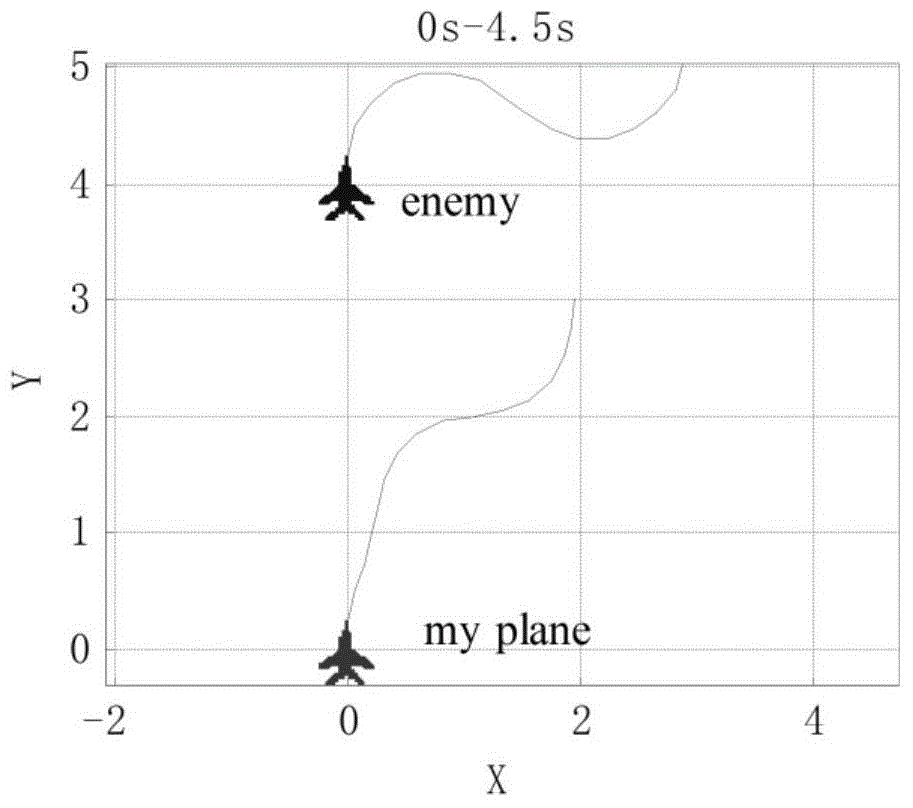

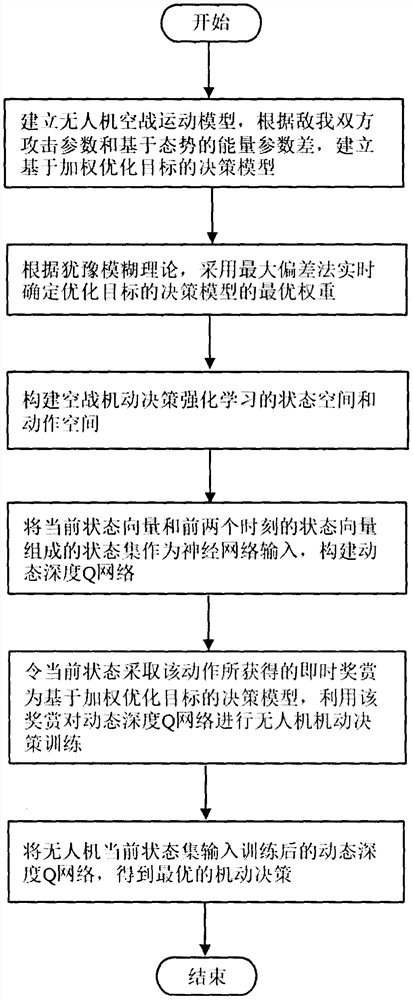

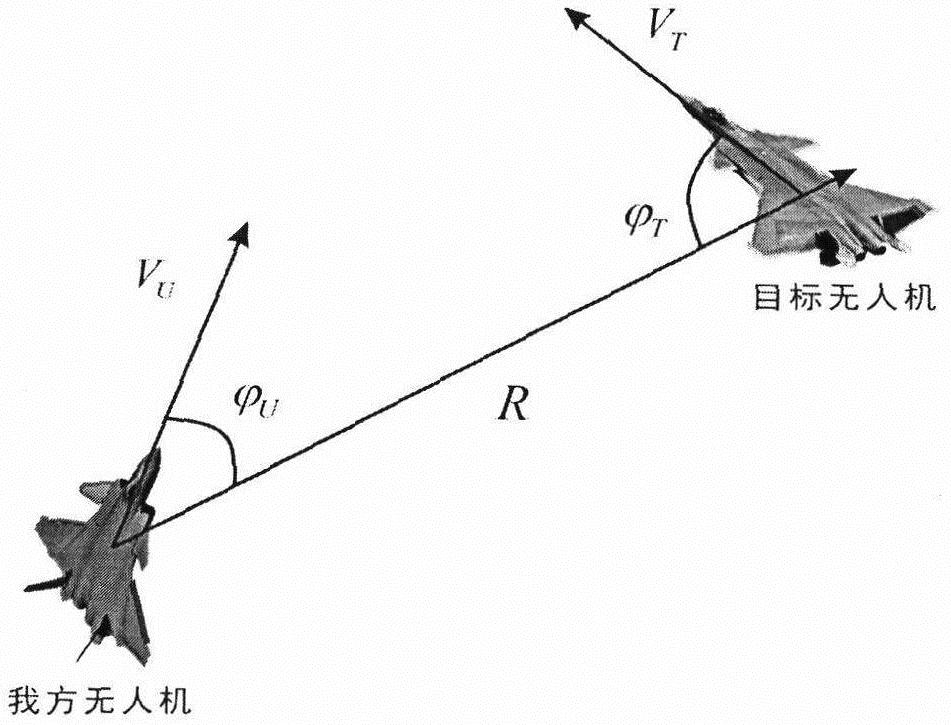

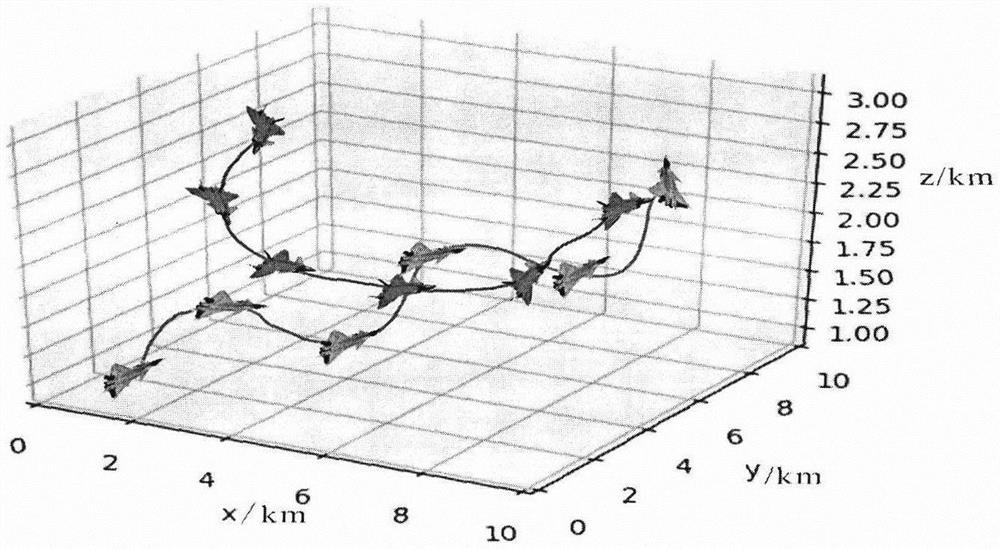

Hesitant fuzzy and dynamic deep reinforcement learning combined unmanned aerial vehicle maneuvering decision-making method

Pending · CN111666631A · Fix fixity · Solve rationality · Geometric CAD · Design optimisation/simulation · Decision model · Simulation

The invention discloses a UAV maneuvering decision-making method that combines hesitant fuzzy sets with dynamic deep reinforcement learning. First, a UAV air combat motion model is established, and a decision model with a weighted optimization objective is built from situation-based attack parameters and the energy-parameter difference between the friendly and enemy sides. Second, based on hesitant fuzzy theory, the optimal weights of the decision model's optimization objectives are determined in real time with the maximum deviation method. Next, the state space and action space for reinforcement learning of air combat maneuver decisions are constructed. Then the UAV states at multiple time steps are combined into a state set used as the neural network input, and a dynamic deep Q-network is constructed for UAV maneuver decision training. Finally, the optimal maneuver decision is obtained from the trained dynamic deep Q-network. The method mainly addresses UAV maneuver decision-making under incomplete environmental information, accounts for the influence of the air combat process during decision-making, and better meets the requirements of actual air combat.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS
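
The maximum deviation method gives more weight to objectives on which the candidate maneuvers differ most, since those objectives discriminate best between them. The sketch below applies it to crisp scores: the patent applies it to hesitant fuzzy evaluations, whose distance measure is replaced here by a plain absolute difference as a simplifying assumption, and `best_maneuver` is a hypothetical helper that ranks candidates by the weighted sum.

```python
def max_deviation_weights(scores):
    """Maximum deviation method on crisp scores.

    scores[i][j] is the normalized score of candidate maneuver i on objective j.
    w_j is proportional to the total pairwise deviation of column j, normalized to sum to 1.
    """
    n_alt, n_obj = len(scores), len(scores[0])
    dev = [sum(abs(scores[i][j] - scores[k][j]) for i in range(n_alt) for k in range(n_alt))
           for j in range(n_obj)]
    total = sum(dev)
    if total == 0.0:
        return [1.0 / n_obj] * n_obj      # no objective discriminates: fall back to equal weights
    return [d / total for d in dev]


def best_maneuver(scores):
    """Index of the candidate with the highest weighted score, plus the weights used."""
    w = max_deviation_weights(scores)
    totals = [sum(wj * sij for wj, sij in zip(w, row)) for row in scores]
    return max(range(len(scores)), key=lambda i: totals[i]), w


if __name__ == "__main__":
    # Rows: candidate maneuvers; columns: e.g. angle, range, energy advantage (illustrative).
    scores = [[0.2, 0.5, 0.9], [0.8, 0.5, 0.1], [0.5, 0.5, 0.4]]
    print(best_maneuver(scores))
```

In the example the middle objective scores every maneuver the same, so it receives zero weight.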

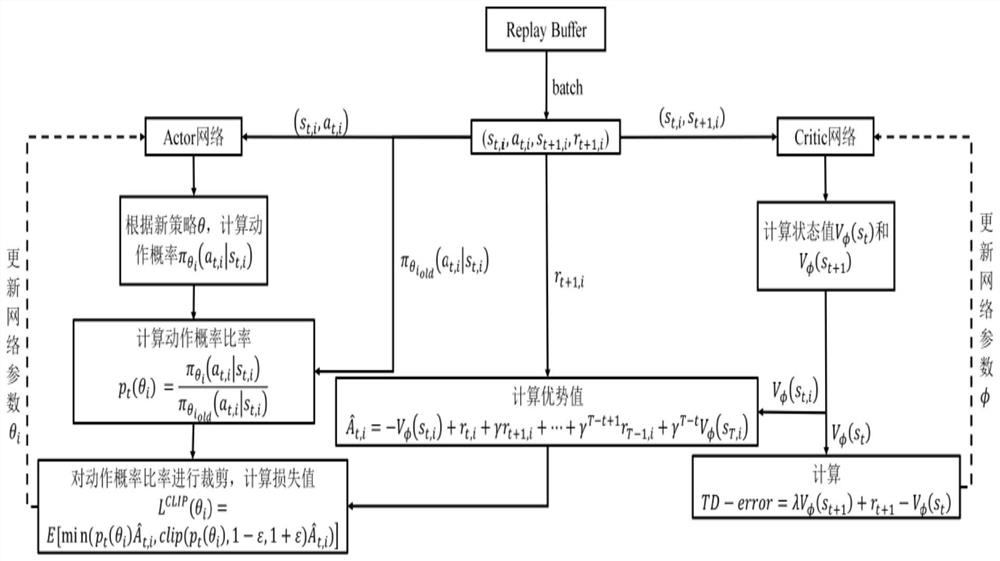

Multi-agent reinforcement learning-based multi-machine air combat decision-making method

Pending · CN113791634A · Easy input · Easy to modularize · Position/course control in three dimensions · Reinforcement learning algorithm · Uncrewed vehicle

The invention discloses a multi-aircraft air combat decision-making method based on multi-agent reinforcement learning. The method comprises the following steps: first, establishing the UAV's six-degree-of-freedom model, a missile model, a neural-network normalization model, a battlefield environment model and a situation assessment and target assignment model; then adopting MAPPO as the multi-agent reinforcement learning algorithm and designing a corresponding return function for the specific air combat environment; and finally, combining the UAV models with the multi-agent reinforcement learning algorithm to produce the multi-aircraft cooperative air combat decision-making method. The method effectively addresses the heavy computational load of traditional multi-agent cooperative air combat and its difficulty in handling battlefield situations that change instantaneously and require real-time resolution.

Owner:NORTHWESTERN POLYTECHNICAL UNIV
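
The abstract names MAPPO but not the patent's return function or network details. As a hedged illustration of what the algorithm optimizes, the sketch below computes the standard PPO clipped surrogate, min(r·A, clip(r, 1 − ε, 1 + ε)·A), averaged over samples pooled from all agents. The advantages are taken as given inputs (in MAPPO they typically come from a centralized critic), and the helper names are hypothetical.

```python
import math


def clipped_surrogate(logp_new, logp_old, advantage, clip_eps=0.2):
    """PPO clipped objective for one sample: min(r*A, clip(r, 1-eps, 1+eps)*A)."""
    ratio = math.exp(logp_new - logp_old)
    clipped_ratio = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
    return min(ratio * advantage, clipped_ratio * advantage)


def mappo_actor_objective(batch, clip_eps=0.2):
    """Average clipped objective over samples from all agents (to be maximized).

    batch: list of (agent_id, logp_new, logp_old, advantage) tuples; the advantages
    are assumed to be precomputed, e.g. from a centralized critic.
    """
    return sum(clipped_surrogate(new, old, adv, clip_eps) for _, new, old, adv in batch) / len(batch)


if __name__ == "__main__":
    batch = [("uav0", math.log(1.5), 0.0, 1.0),    # policy moved too far on a good action
             ("uav1", math.log(0.5), 0.0, -1.0)]   # policy moved too far away from a bad action
    print(mappo_actor_objective(batch))
```

The clip stops a single update from moving any agent's policy ratio far outside [1 − ε, 1 + ε], which keeps training stable when many agents learn at once.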

Aircraft soldier system intelligent behavior modeling method based on global situation information

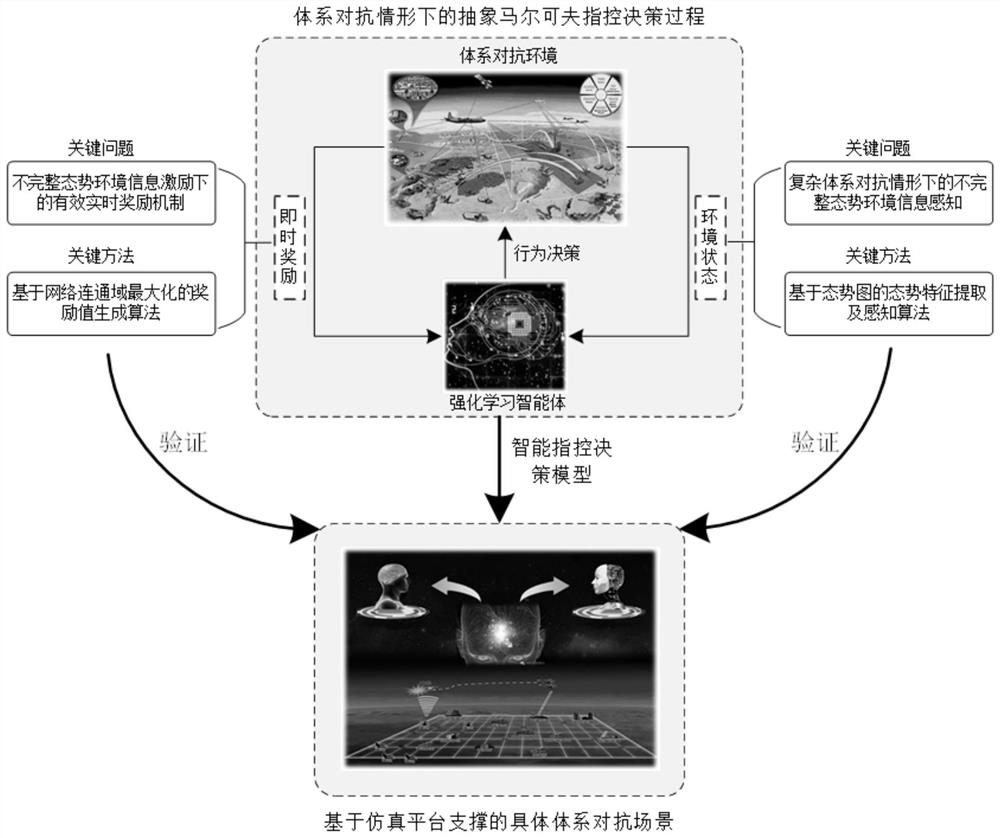

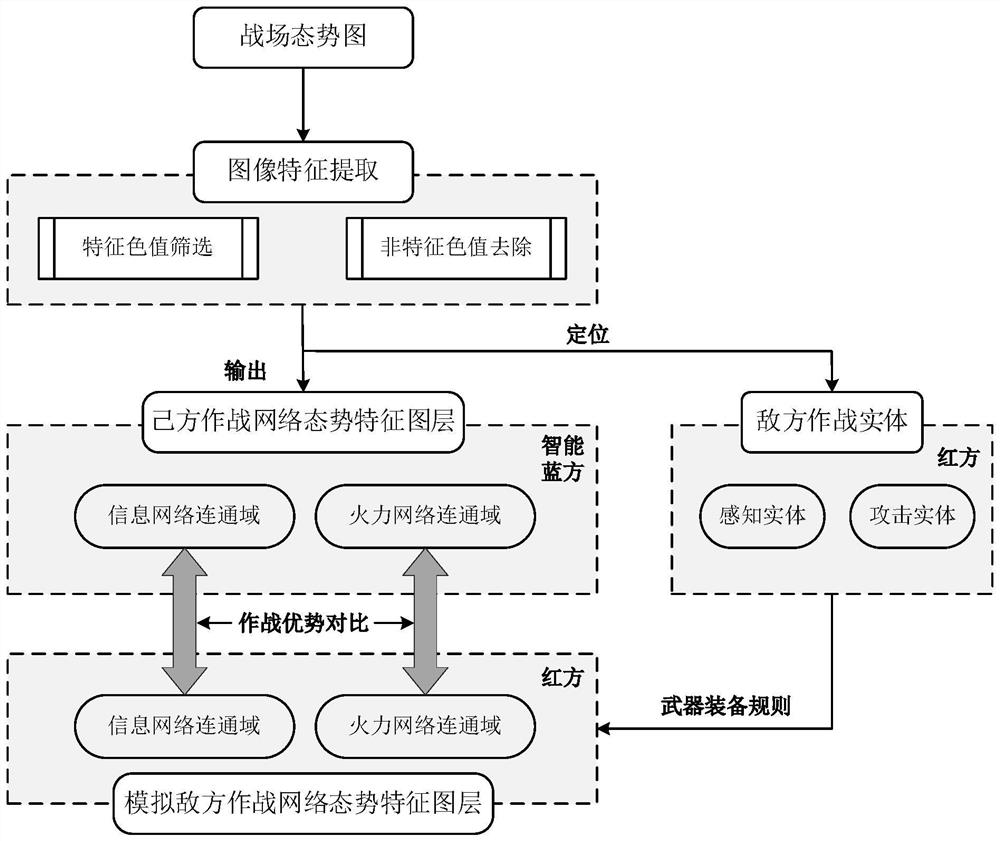

Active · CN112560332A · Improve adaptability · Knowledge acquisition takes less time · Character and pattern recognition · Design optimisation/simulation · Feature extraction · Command and control

The invention discloses an intelligent behavior modeling method for aircraft soldier systems based on global situation information. The method expresses the global situation mathematically as a state vector after a comprehensive assessment of the global battlefield situation in complex airspace; proposes a situation feature extraction and perception algorithm based on a two-dimensional GIS situation map, which obtains element information that cannot be read directly from the state vector and yields a global situation state space for the aircraft soldier intelligent behavior model to perceive; and proposes a reward generation algorithm based on maximizing the network's connected domain, which drives the intelligent behavior model to evolve iteratively toward high returns under incomplete global situation stimuli. The technical scheme can provide a theoretical basis and technical support for research on gaining greater combat information advantage under incomplete battlefield situation awareness, generating efficient air combat command-and-control decisions, analyzing, deducing and replaying air combat schemes, and improving the combat level of aircraft soldier systems.

Owner:BEIHANG UNIV

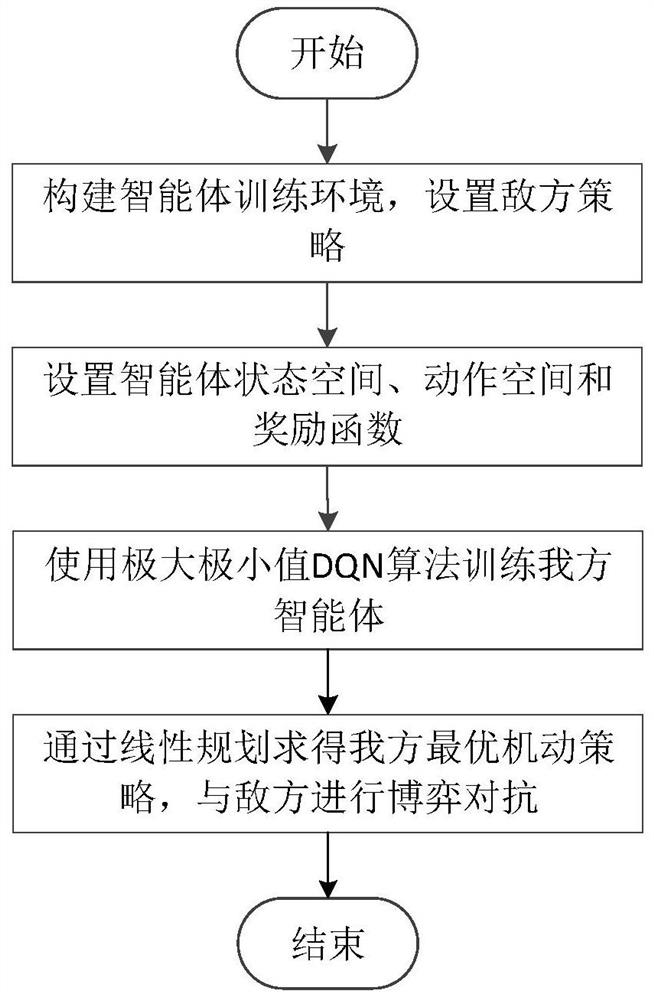

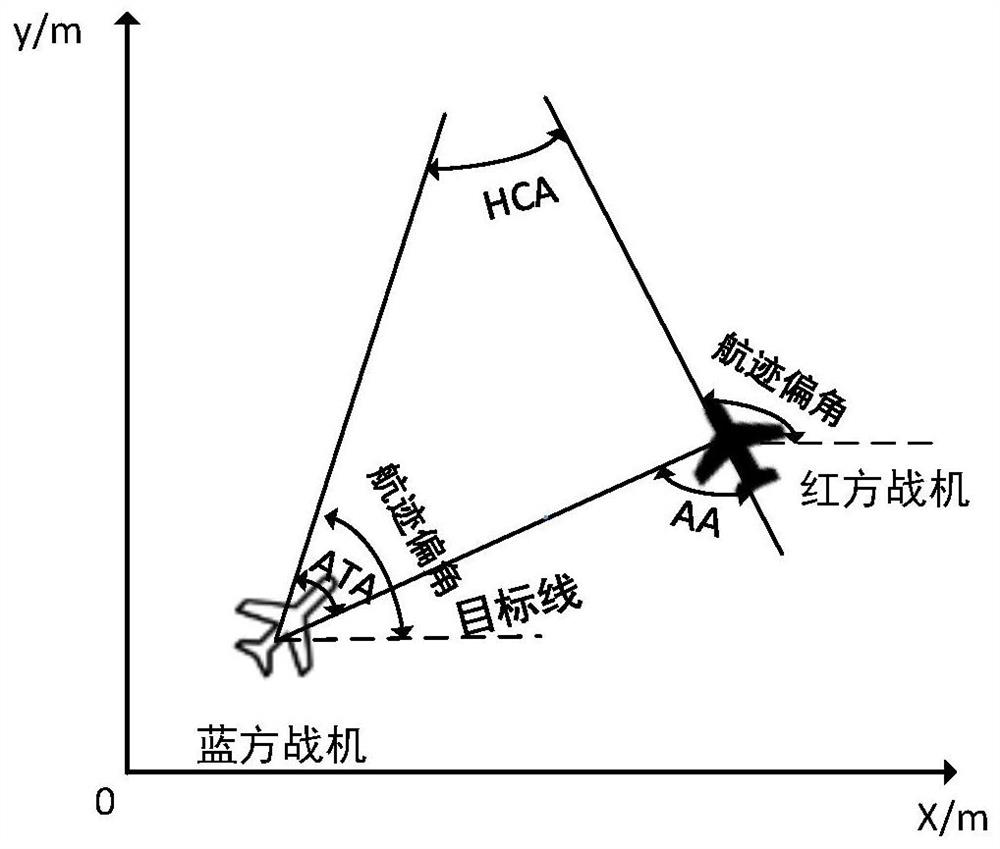

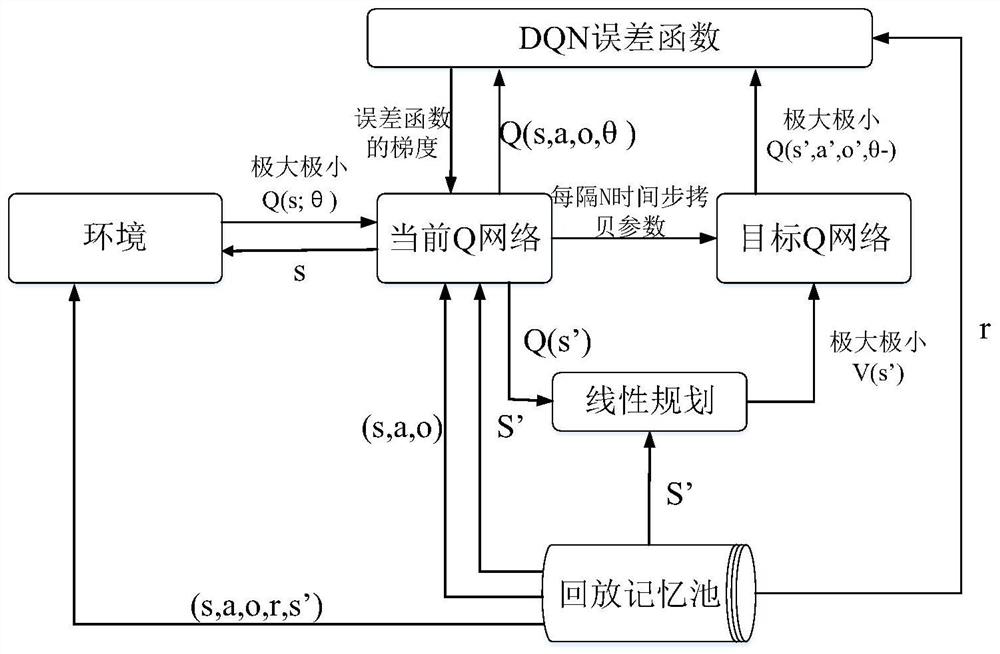

Air combat maneuvering strategy generation technology based on deep random game

Inactive | CN112052511A | Guaranteed real-time; Improve computing efficiency; Geometric CAD; Design optimisation/simulation; Simulation; Intelligent agent

The invention discloses a short-range air combat maneuvering strategy generation technology based on a deep random (stochastic) game. The technology comprises the following steps: first, a training environment for combat aircraft game confrontation is constructed according to the 1v1 short-range air combat process, and an enemy maneuvering strategy is set; second, taking the stochastic game as the framework, agents are constructed for both sides of the air combat confrontation, and the state space, action space and reward function of each agent are determined; third, a neural network is constructed using a minimax DQN algorithm that combines stochastic games with deep reinforcement learning, and our agent is trained; finally, based on the trained neural network, the optimal maneuvering strategy for the current air combat situation is obtained by linear programming and used in game confrontation against the enemy. By combining stochastic games with deep reinforcement learning, the proposed minimax DQN algorithm obtains an optimal air combat maneuvering strategy that can be applied to existing air combat maneuver guidance systems, making accurate, effective real-time decisions that guide a fighter aircraft into an advantageous position.
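The final step, extracting a strategy from trained Q-values "through a linear programming method", matches the standard minimax-Q construction for zero-sum stochastic games: for one state, choose the mixed strategy that maximizes the worst-case expected Q over opponent actions. The sketch below solves that LP with `scipy.optimize.linprog`. It assumes the network outputs a Q-value table `q[a][o]` over our action `a` and the opponent's action `o`; that layout and the helper names are assumptions, not the patent's published interface.

```python
from scipy.optimize import linprog


def maximin_policy(q):
    """Solve max_pi min_o sum_a pi[a] * q[a][o] for one zero-sum stage game.

    q[a][o] is our value when we choose action a and the opponent chooses o
    (e.g. one state's slice of a network's Q(s, a, o) output).
    Returns (pi, v): our mixed strategy over actions and the game value.
    """
    n_a, n_o = len(q), len(q[0])
    # Variables: pi_0 .. pi_{n_a-1}, v. linprog minimizes, so minimize -v.
    c = [0.0] * n_a + [-1.0]
    # For every opponent action o: v - sum_a pi_a * q[a][o] <= 0.
    a_ub = [[-q[a][o] for a in range(n_a)] + [1.0] for o in range(n_o)]
    b_ub = [0.0] * n_o
    # Probabilities sum to one; pi_a >= 0, v is unbounded.
    a_eq = [[1.0] * n_a + [0.0]]
    b_eq = [1.0]
    bounds = [(0.0, None)] * n_a + [(None, None)]
    res = linprog(c, A_ub=a_ub, b_ub=b_ub, A_eq=a_eq, b_eq=b_eq,
                  bounds=bounds, method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    return [float(p) for p in res.x[:n_a]], float(res.x[-1])


def minimax_q_target(reward, q_next, gamma=0.99, done=False):
    """Bootstrapped target r + gamma * V(s'), where V(s') is the maximin value of q_next."""
    if done:
        return reward
    return reward + gamma * maximin_policy(q_next)[1]
```

On matching pennies (`[[1, -1], [-1, 1]]`) the LP returns the uniform strategy with value 0. The same value function also supplies the bootstrap target used when training the network.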

Owner:CHENGDU RONGAO TECH

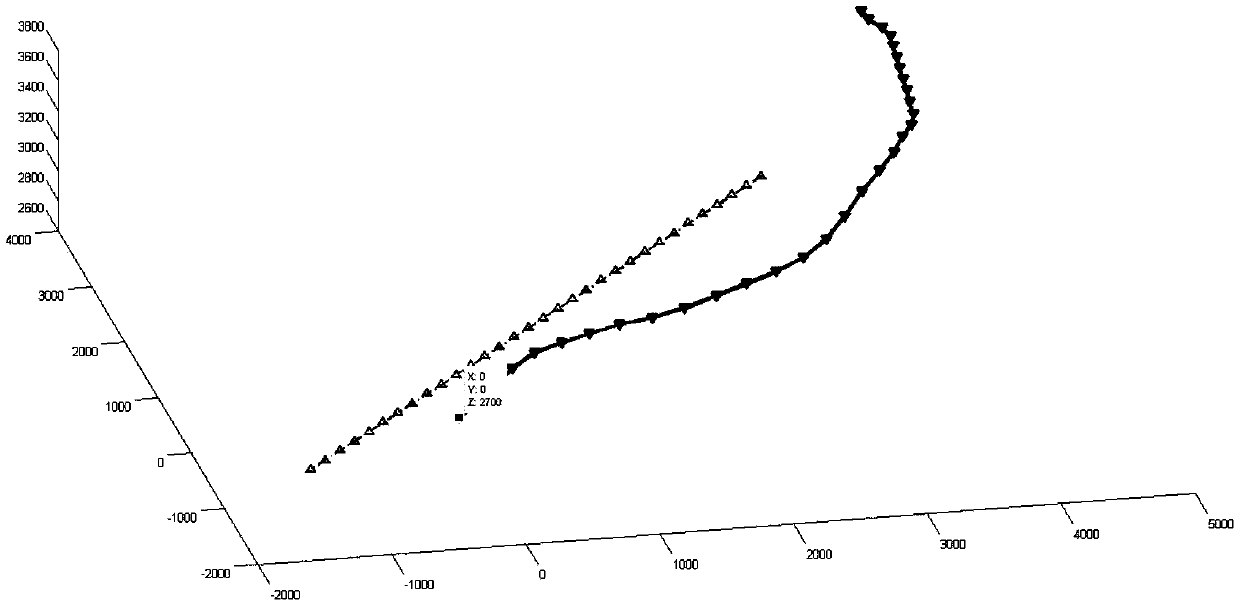

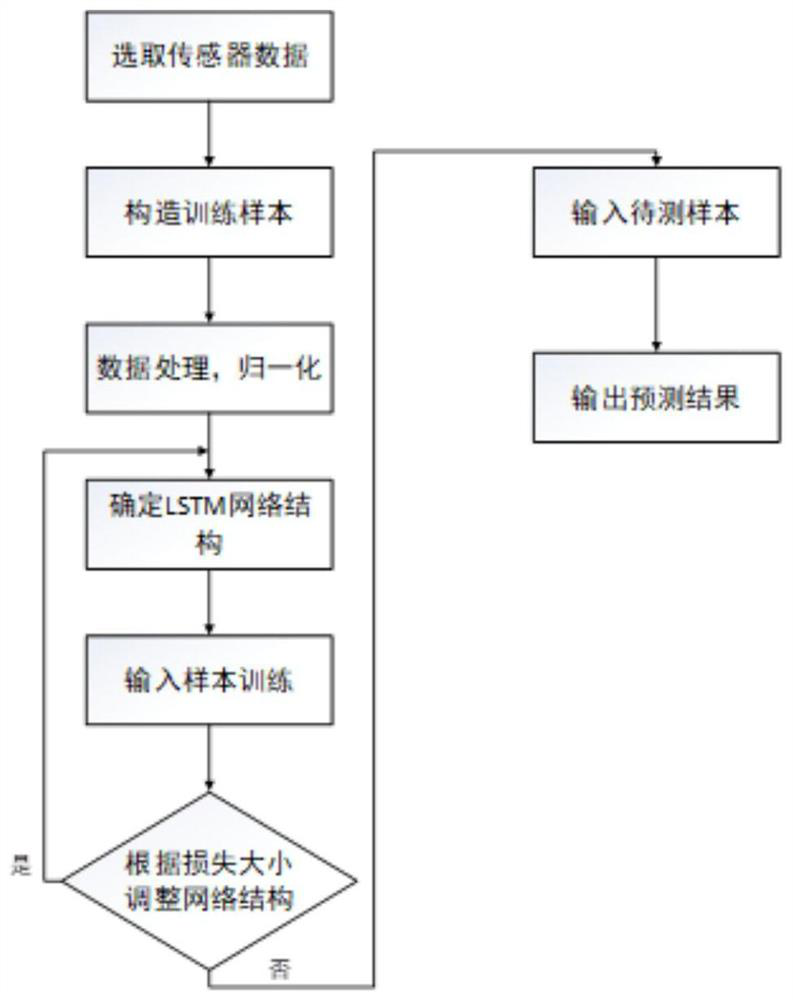

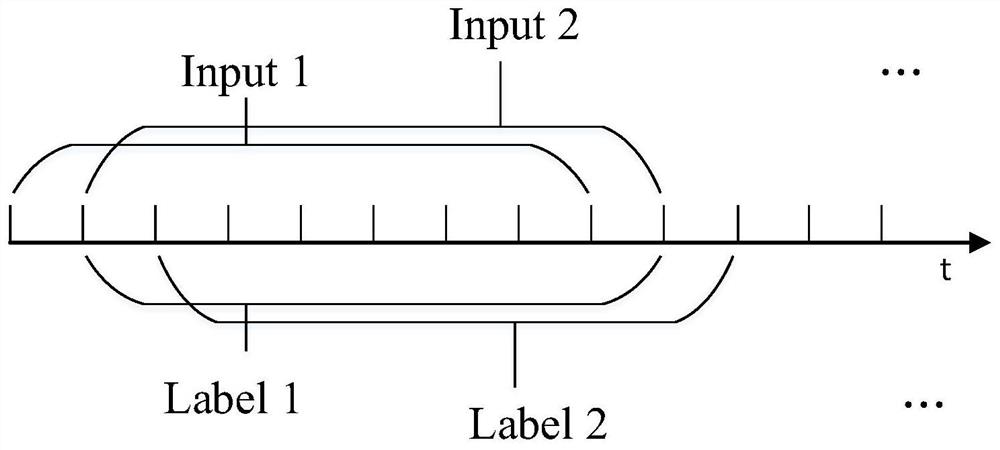

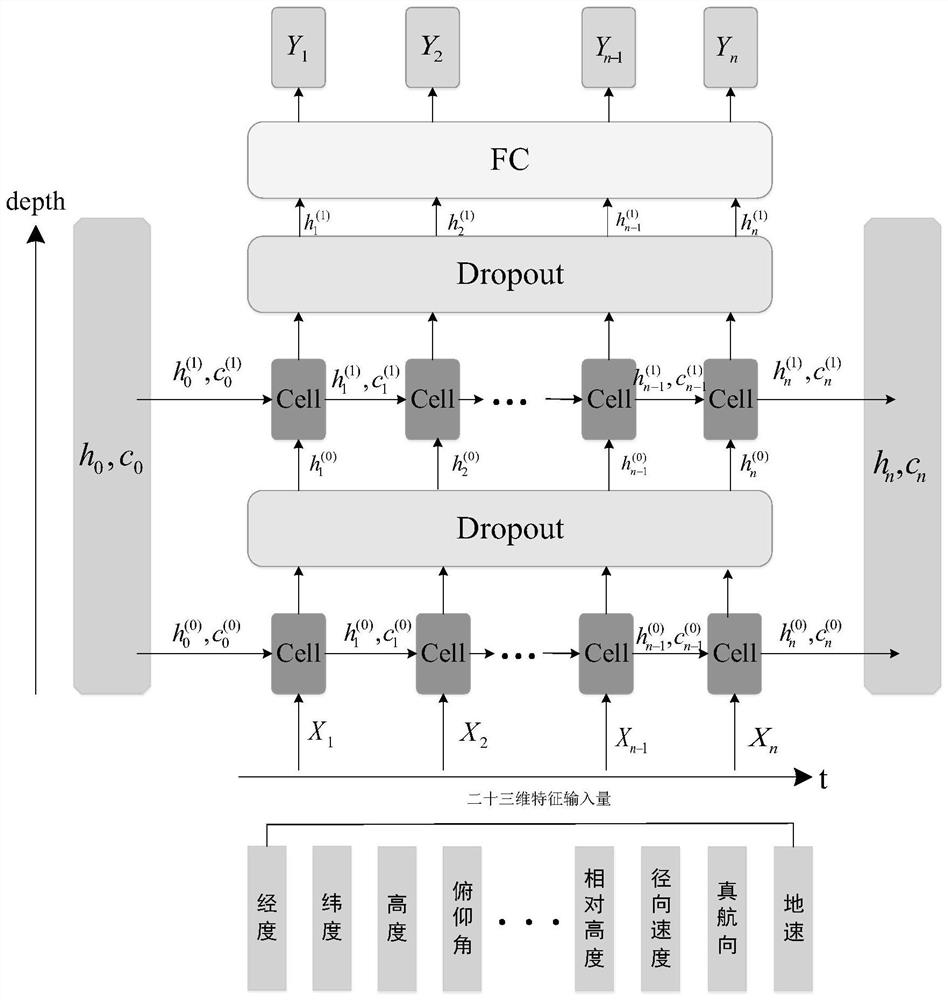

Aircraft trajectory prediction method based on long short-term memory network

Active | CN114048889A | Simplify complexity; Optimize network parameters; Sustainable transportation; Forecasting; Simulation; Term memory

The invention relates to the fields of air combat environments, data processing and deep learning, and provides a method for predicting aircraft trajectories with a long short-term memory (LSTM) network under uncertain sensing conditions. The method comprises the following steps: Kalman filtering is used to remove the noise carried by the sensor feature vectors; the directly acquired state parameters are preprocessed by downsampling, eliminating invalid values and filling in missing values, and, to improve numerical stability, the data are normalized so that all input values lie in the interval [0, 1]; and an LSTM-based trajectory prediction model is created, its inputs and outputs are defined, and the network is trained with supervision. The invention is mainly applied to predicting the flight paths of unmanned aerial vehicles.
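The preprocessing steps listed above can be sketched for a single scalar channel (say, one coordinate of a track) in plain Python. Everything here is an assumption for illustration: the patent does not publish its Kalman model, so a scalar random-walk filter is used; the valid range, noise variances, downsampling step and window length are placeholders. The cleaning steps run before the filter in this sketch, because a Kalman update needs a valid measurement.

```python
def mark_invalid(xs, lo, hi):
    """Replace readings outside the plausible range [lo, hi] with None."""
    return [x if x is not None and lo <= x <= hi else None for x in xs]


def fill_missing(xs):
    """Linearly interpolate interior gaps; hold the nearest valid value at the edges."""
    known = [i for i, x in enumerate(xs) if x is not None]
    if not known:
        raise ValueError("no valid samples")
    out = list(xs)
    for i, x in enumerate(xs):
        if x is not None:
            continue
        left = max((k for k in known if k < i), default=None)
        right = min((k for k in known if k > i), default=None)
        if left is None:
            out[i] = xs[right]
        elif right is None:
            out[i] = xs[left]
        else:
            t = (i - left) / (right - left)
            out[i] = xs[left] + t * (xs[right] - xs[left])
    return out


def kalman_1d(zs, q=1e-3, r=1e-1, p0=1.0):
    """Scalar Kalman filter assuming a random-walk state model x_k = x_{k-1} + w."""
    x, p, out = zs[0], p0, []
    for z in zs:
        p = p + q              # predict: uncertainty grows by process noise q
        k = p / (p + r)        # Kalman gain, with measurement noise variance r
        x = x + k * (z - x)    # correct toward the measurement
        p = (1.0 - k) * p
        out.append(x)
    return out


def downsample(xs, step):
    return xs[::step]


def minmax_normalize(xs):
    """Scale values into [0, 1]; a constant series maps to all zeros."""
    lo, hi = min(xs), max(xs)
    if hi == lo:
        return [0.0] * len(xs)
    return [(x - lo) / (hi - lo) for x in xs]


def make_windows(xs, n_in):
    """Supervised pairs for a sequence model: n_in past values -> next value."""
    return [(xs[i:i + n_in], xs[i + n_in]) for i in range(len(xs) - n_in)]


def preprocess(raw, lo, hi, step=2):
    return minmax_normalize(downsample(kalman_1d(fill_missing(mark_invalid(raw, lo, hi))), step))
```

The `(window, next value)` pairs from `make_windows` are the kind of input/output definition an LSTM would then be trained on; the LSTM itself is omitted because it depends on the chosen deep learning framework.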

Owner:TIANJIN UNIV

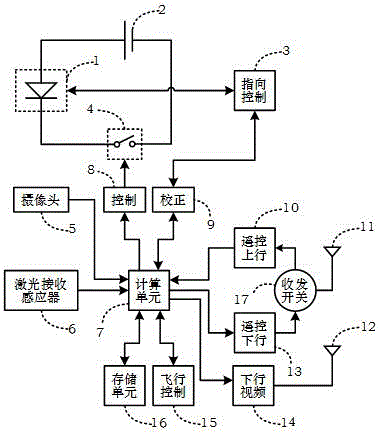

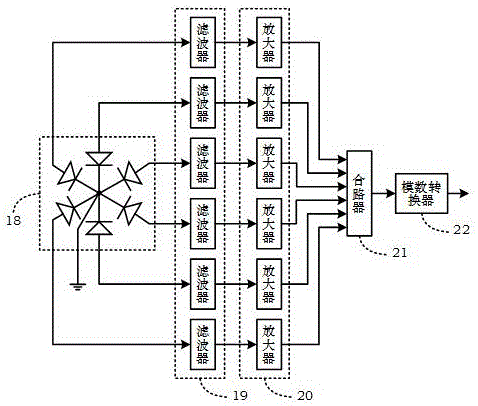

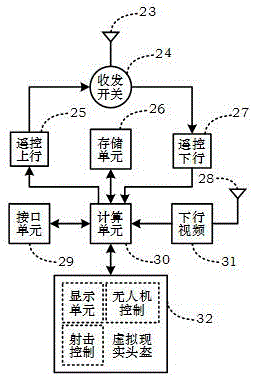

UAV (Unmanned Aerial Vehicle) air combat equipment capable of receiving laser beams emitted from multiple directions

Active | CN106075915A | Real experience the feeling of fighting; Dodge to avoid shooting; Toys; Video games; Remote control; Light beam

The invention discloses UAV (Unmanned Aerial Vehicle) air combat equipment capable of receiving laser beams emitted from multiple directions, comprising a UAV and ground remote control equipment. The UAV's laser receiving sensor can receive omnidirectionally or over only part of the surrounding directions, so it can receive laser beams arriving from multiple directions. The UAV may carry one or more laser receiving sensors, so that it can receive laser beams emitted by another UAV in any flight attitude. The laser receiving sensor has a filtering capability and receives only laser beams with a specified characteristic. The display or operating device of the ground equipment is a VR (virtual reality) helmet, through which the UAV's flight and shooting actions can be controlled.

Owner:Chengdu Dingwei Electronic Technology Co., Ltd. (成都定为电子技术有限公司)

Remote control model aircraft with laser tag shooting action

The present invention is a radio-controlled (RC) model aircraft system with laser tag capabilities. A transmitter and a receiver are installed in each of at least two separate RC aircraft. The transmitter on one aircraft emits an infrared light beam to the receiver on the other aircraft, which converts the infrared signal into a command for a first servo; the servo moves an arm that releases a door on the model aircraft, behind which ribbons are stored. The ribbons escape from the aircraft wings to show a hit. An optional second servo operates a smoke screen and ejects a pilot to simulate actual combat. The system may also include audio and lighting effects to simulate firing and hit sequences, with accompanying theatrical physical effects, including the release of smoke and ribbons and the ejection of the pilot, that realistically simulate air combat.

Owner:TROUTMAN S CLAYTON

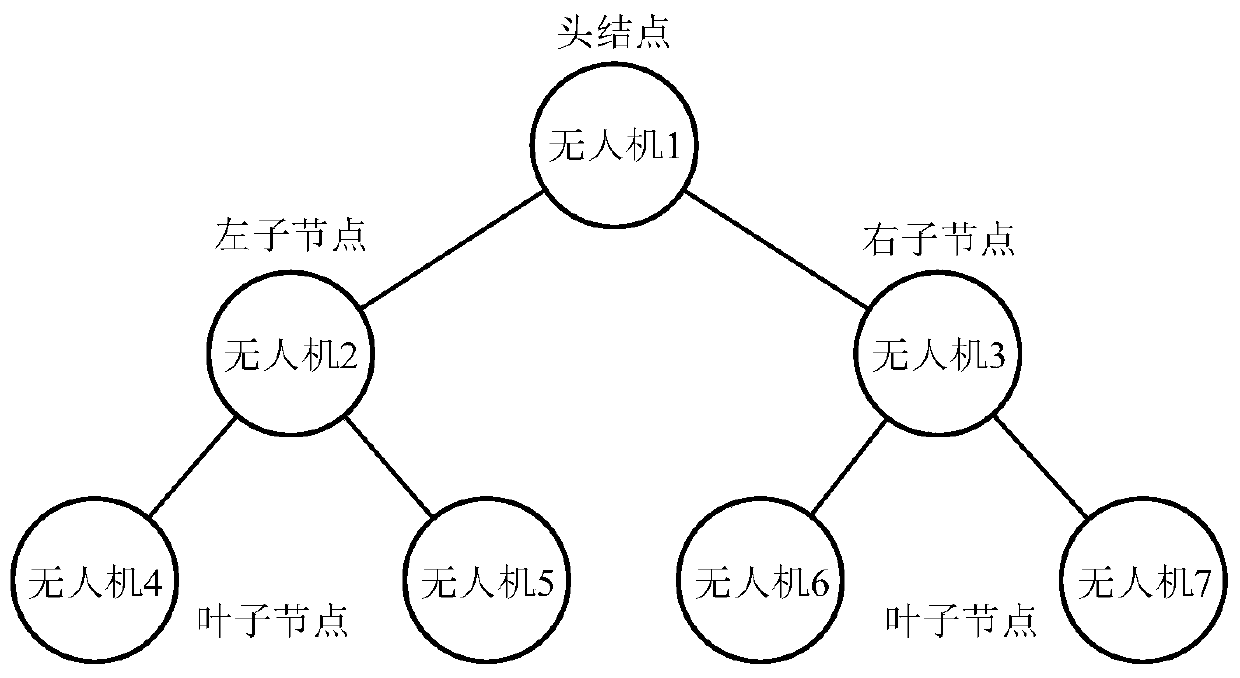

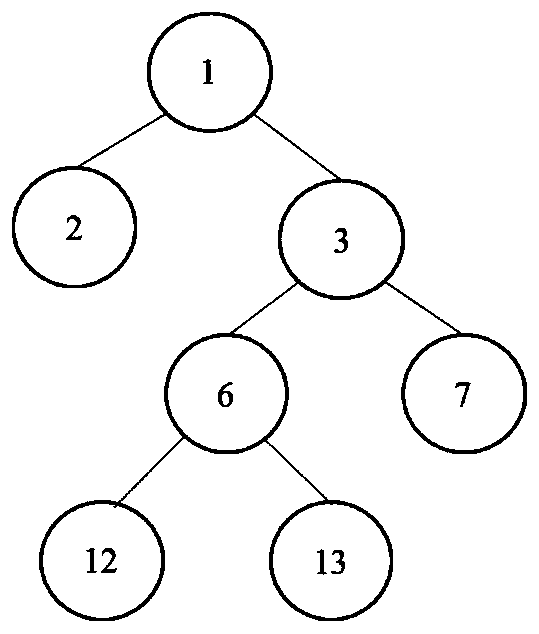

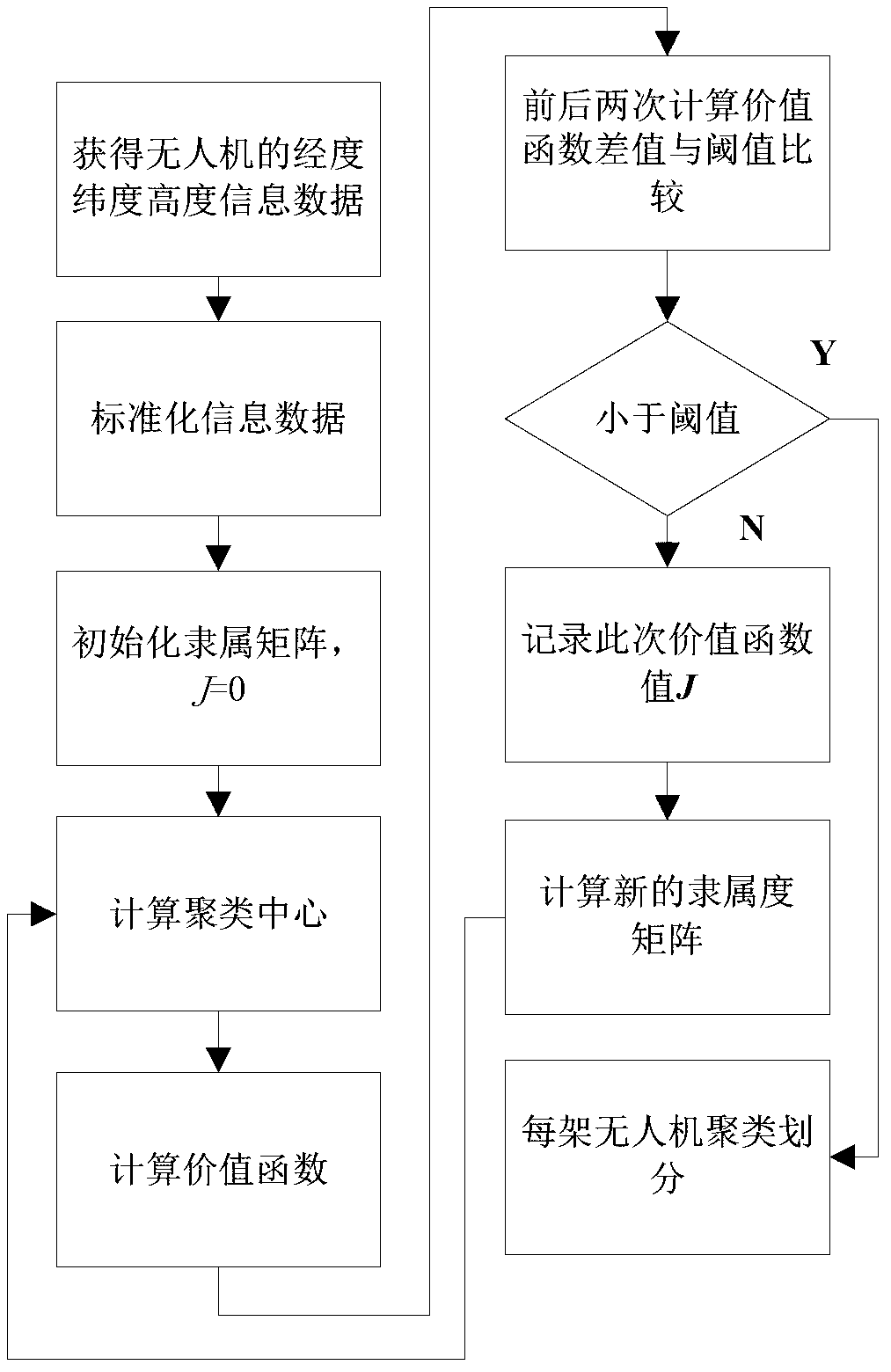

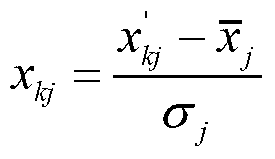

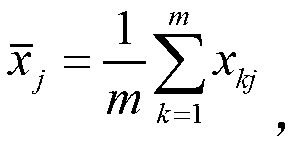

Multiple unmanned aerial vehicle formation partition method

Inactive | CN103324830A | Add dimension; Reduce computation; Special data processing applications; Algorithm; Data value

The invention provides a multiple unmanned aerial vehicle formation partition method. The method comprises: sequentially obtaining situation information data for every target in the air combat and standardizing it; initializing a membership matrix so that it satisfies the constraint conditions; calculating the cluster centers; calculating the objective function J and, if the termination condition is met, stopping the algorithm, otherwise calculating a new membership matrix and returning to the previous step to recalculate the cluster centers; obtaining the final membership matrix once the termination condition is met; and partitioning the multiple targets into clusters according to the values in the final membership matrix. The method reduces the dimensionality of the computation when tasks are allocated across multiple target formations, which reduces the computational load and increases calculation speed.
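The loop described above (initialize memberships, compute centers, evaluate J, update memberships until J stops changing) is the textbook fuzzy c-means algorithm. The sketch below is a plain-Python version under stated assumptions: z-score standardization, fuzzifier `m = 2`, a convergence tolerance on J, and hard labels taken as each row's largest membership. None of these parameter choices come from the patent, and the feature vectors are abstract points.

```python
import math
import random


def standardize(data):
    """Z-score each feature column; a zero-variance column maps to 0."""
    n, dim = len(data), len(data[0])
    means = [sum(row[d] for row in data) / n for d in range(dim)]
    stds = [math.sqrt(sum((row[d] - means[d]) ** 2 for row in data) / n) for d in range(dim)]
    return [[(row[d] - means[d]) / stds[d] if stds[d] > 0 else 0.0 for d in range(dim)]
            for row in data]


def fuzzy_c_means(data, c, m=2.0, eps=1e-5, max_iter=300, seed=0):
    rng = random.Random(seed)
    n, dim = len(data), len(data[0])
    # Random membership matrix u[i][j] whose rows sum to 1 (the constraint).
    u = []
    for _ in range(n):
        row = [rng.random() for _ in range(c)]
        s = sum(row)
        u.append([v / s for v in row])
    prev_obj = None
    for _ in range(max_iter):
        # Cluster centers: membership-weighted means with weights u^m.
        centers = []
        for j in range(c):
            w = [u[i][j] ** m for i in range(n)]
            sw = sum(w)
            centers.append([sum(w[i] * data[i][d] for i in range(n)) / sw for d in range(dim)])
        d2 = [[sum((data[i][d] - centers[j][d]) ** 2 for d in range(dim)) for j in range(c)]
              for i in range(n)]
        # Objective J = sum_ij u_ij^m * ||x_i - c_j||^2; stop when it settles.
        obj = sum(u[i][j] ** m * d2[i][j] for i in range(n) for j in range(c))
        if prev_obj is not None and abs(prev_obj - obj) < eps:
            break
        prev_obj = obj
        # Membership update: u_ij = 1 / sum_k (d_ij / d_ik)^(2 / (m - 1)).
        for i in range(n):
            zero = [j for j in range(c) if d2[i][j] == 0.0]
            if zero:  # point sits exactly on a center
                u[i] = [1.0 / len(zero) if j in zero else 0.0 for j in range(c)]
                continue
            u[i] = [1.0 / sum((d2[i][j] / d2[i][k]) ** (1.0 / (m - 1.0)) for k in range(c))
                    for j in range(c)]
    labels = [max(range(c), key=lambda j: u[i][j]) for i in range(n)]
    return u, centers, labels
```

Because the distances are squared, the exponent in the membership update becomes `1 / (m - 1)` instead of `2 / (m - 1)`. Clustering on a few features rather than on all raw targets is what cuts the dimensionality of the later allocation step.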

Owner:NORTHWESTERN POLYTECHNICAL UNIV

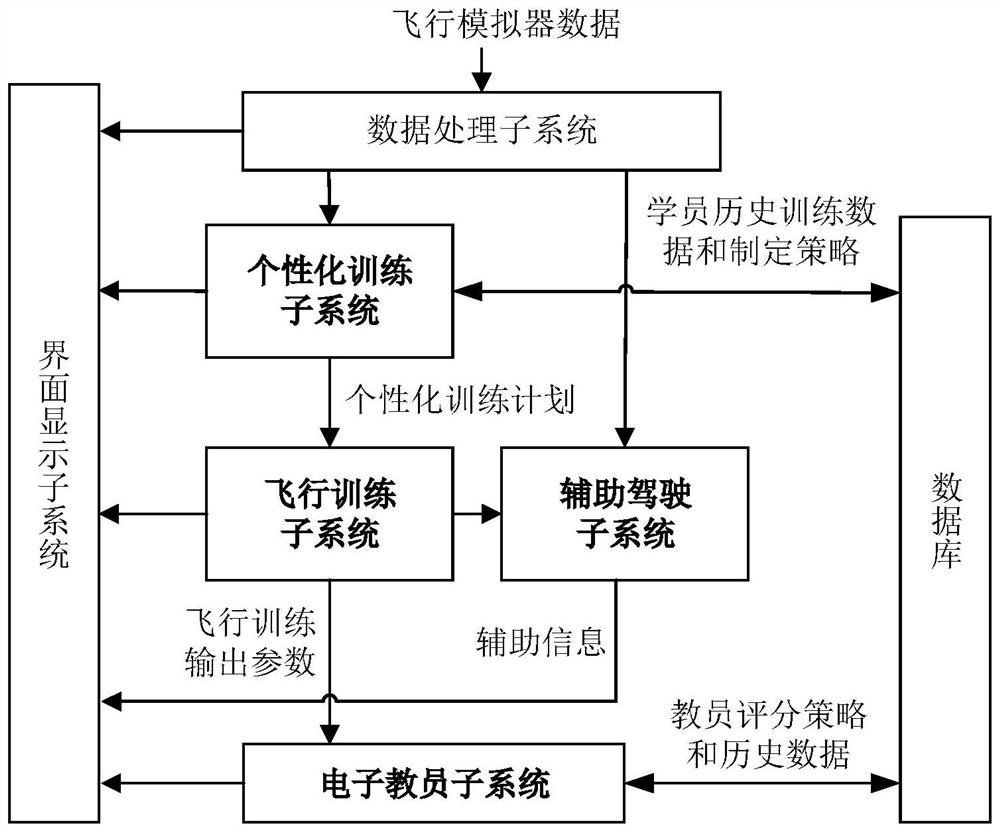

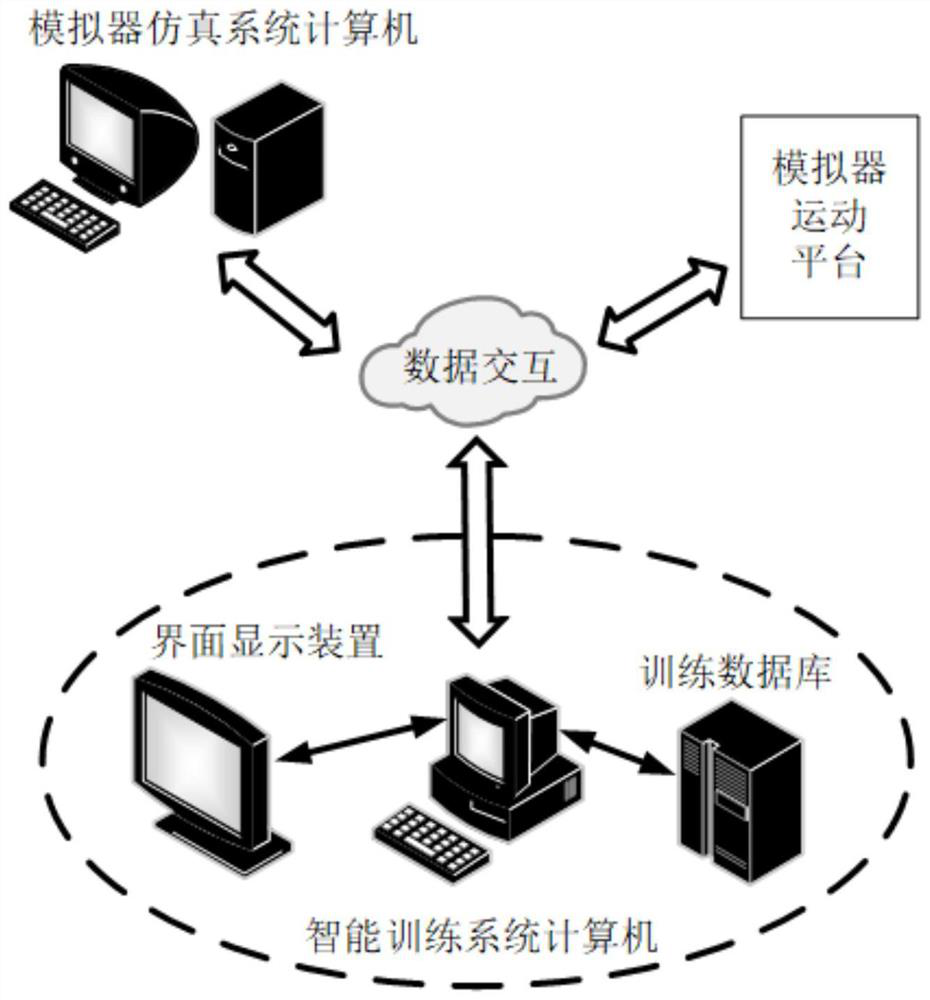

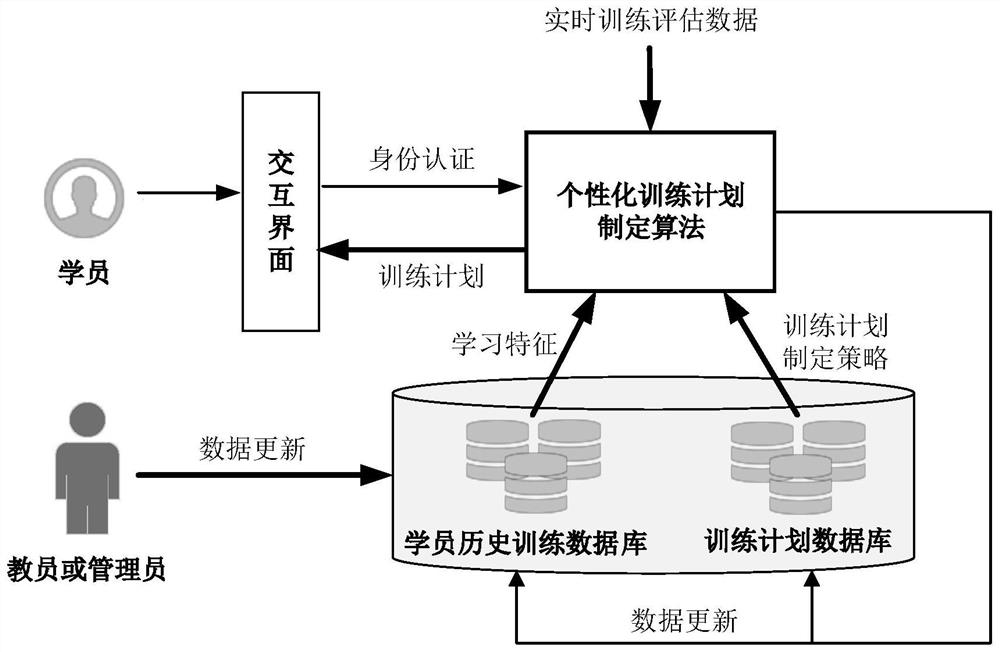

Flight simulator intelligent training system, method and device

Active | CN114373360A | Automate training; High precision; Cosmonautic condition simulations; Resources; Training plan; Control engineering

The invention provides a flight simulator intelligent training system, method and device. The system comprises a personalized training subsystem, a flight training subsystem, an auxiliary piloting subsystem and an electronic instructor subsystem. The personalized training subsystem customizes a training plan according to each trainee's learning characteristics; the flight training subsystem conducts air combat flight training for trainees based on the personalized training plan and outputs flight training parameters; the auxiliary piloting subsystem provides auxiliary information to trainees during air combat flight training; and the electronic instructor subsystem evaluates each trainee's flight training quality from the flight training output parameters using an intelligent electronic instructor model. The system addresses two shortcomings of existing flight training simulators: they cannot effectively formulate personalized training plans, and they require manual participation by personnel such as instructors, which seriously limits training efficiency.

Owner:TSINGHUA UNIV

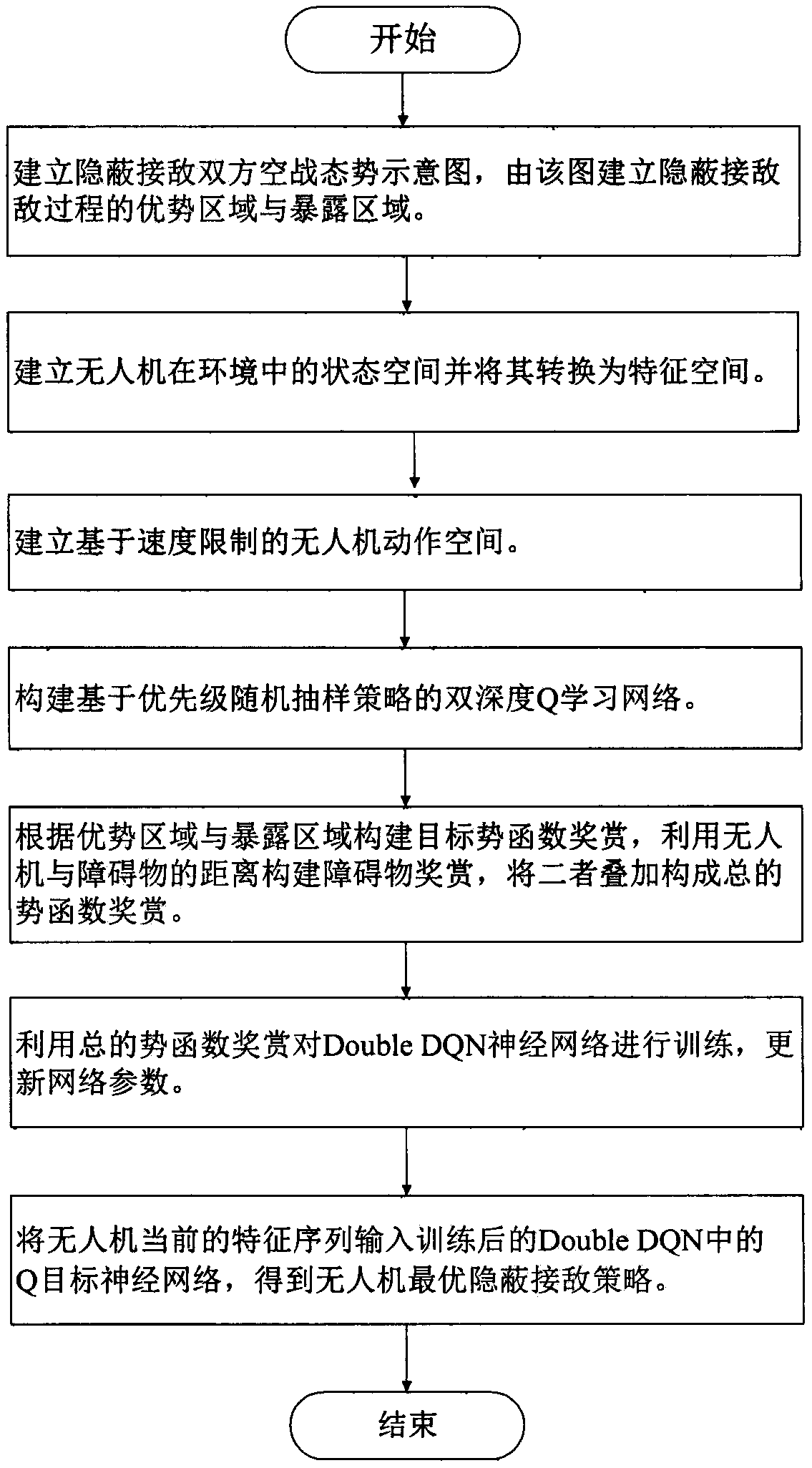

Unmanned aerial vehicle concealing approach method of employing priority random sampling strategy-based Double DQN

Inactive | CN110673488A | In line with the actual battlefield environment; Overcome the disadvantages of fitting; Adaptive control; Simulation; Uncrewed vehicle

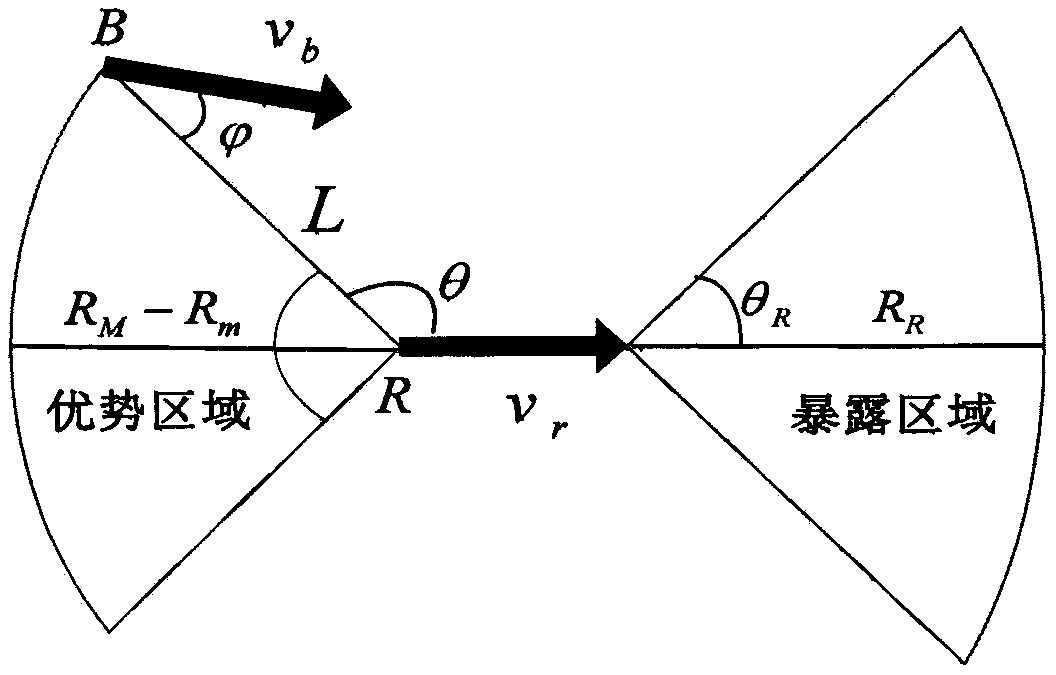

The invention discloses an unmanned aerial vehicle concealed approach method employing a Double DQN based on a prioritized random sampling strategy. The method comprises the following steps: first, a two-sided air combat situation diagram of the concealed approach is established, and the diagram is used to define a dominant area and an exposed area for the approach; second, the UAV's state space is established and converted into a feature space, and a speed-limit-based UAV action space is defined; third, a double deep Q-learning network based on the prioritized random sampling strategy is built; fourth, a target potential function reward is established from the relative positions of the friendly and enemy sides with respect to the dominant and exposed areas, an obstacle reward is established from the distance between the UAV and obstacles, and the two are summed to form the total reward for concealed approach training of the Double DQN neural network; finally, the UAV's current feature sequence is fed into the trained Q target neural network of the Double DQN to obtain the UAV's optimal concealed approach strategy. The method mainly solves the problem of model-free concealed approach for UAVs.
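Three pieces of the method above have standard textbook forms, sketched here in plain Python: proportional prioritized sampling, where P(i) is proportional to (|TD error| + eps)^alpha; the Double DQN target, where the online network picks the next action and the target network scores it; and a summed reward. The patent's own potential and obstacle terms are not published. The reward below therefore assumes potential-based shaping `gamma * phi(s') - phi(s)` plus a hypothetical linear obstacle penalty, and every parameter value is a placeholder.

```python
import random


def priority_probs(td_errors, alpha=0.6, eps=1e-6):
    """Proportional prioritization: P(i) = p_i^alpha / sum_k p_k^alpha, p_i = |delta_i| + eps."""
    ps = [(abs(d) + eps) ** alpha for d in td_errors]
    total = sum(ps)
    return [p / total for p in ps]


def sample_indices(probs, k, rng):
    """Draw k replay-buffer indices (with replacement) according to their priorities."""
    return rng.choices(range(len(probs)), weights=probs, k=k)


def double_dqn_target(reward, q_online_next, q_target_next, gamma=0.99, done=False):
    """y = r + gamma * Q_target(s', argmax_a Q_online(s', a)); y = r at terminal states."""
    if done:
        return reward
    a_star = max(range(len(q_online_next)), key=lambda a: q_online_next[a])
    return reward + gamma * q_target_next[a_star]


def total_reward(phi_s, phi_next, obstacle_dist, gamma=0.99, safe_dist=1.0, k_obs=1.0):
    """Hypothetical reward: potential-based shaping plus a linear penalty near obstacles."""
    shaping = gamma * phi_next - phi_s
    obstacle = -k_obs * (safe_dist - obstacle_dist) if obstacle_dist < safe_dist else 0.0
    return shaping + obstacle
```

With online Q-values `[0.1, 0.9]` and target Q-values `[5.0, 2.0]`, the Double DQN target uses 2.0, the target network's score for the online network's choice, rather than the maximum 5.0. That decoupling is what curbs the overestimation bias of plain DQN.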

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS

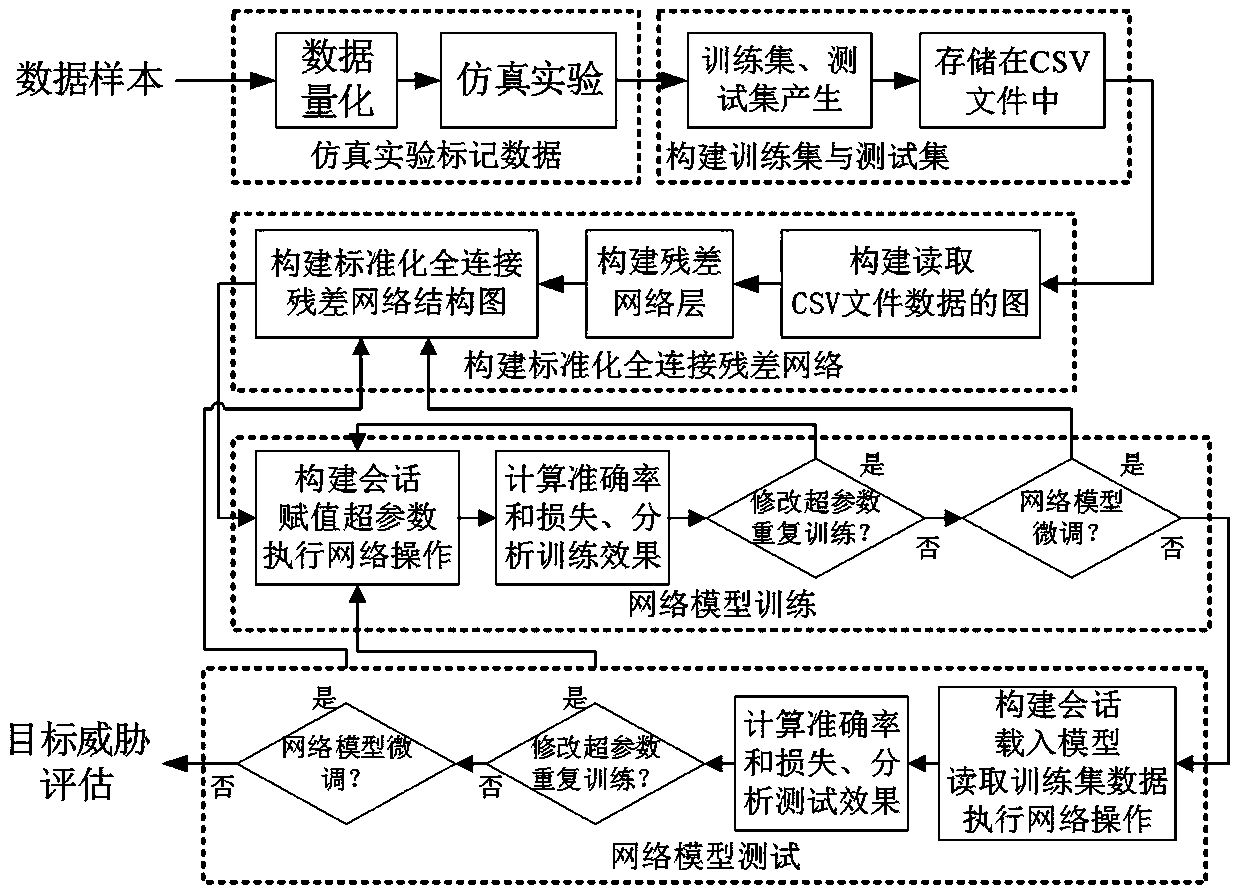

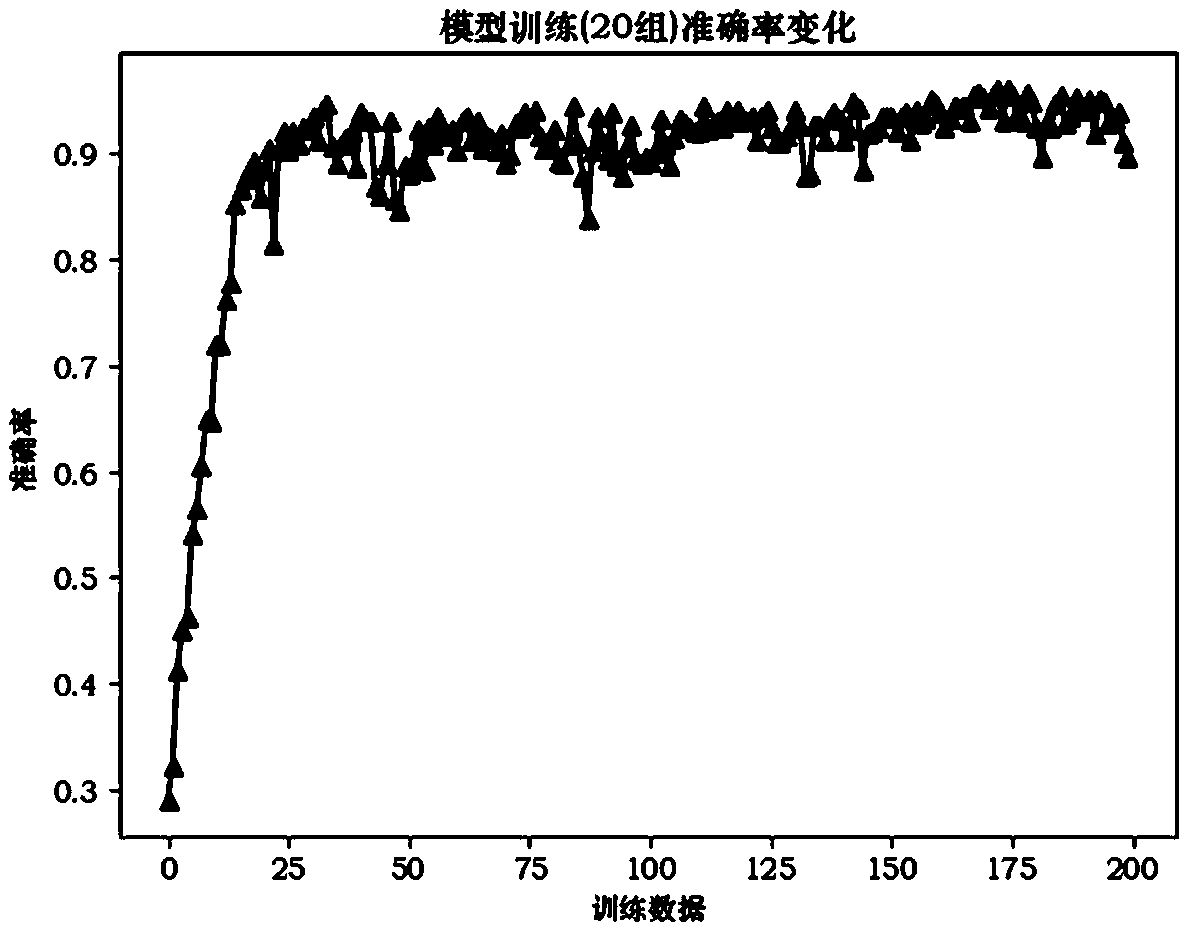

Air combat target threat assessment method based on standardized full-connection residual network

Active | CN110472296A | Solve the problem of inaccurate assessment results; Improve information processing ability; Character and pattern recognition; Neural architectures; Network model; Targeted threat

The invention discloses an air combat target threat assessment method based on a standardized fully connected residual network, belonging to the field of battlefield situation assessment. First, simulation experiments are carried out to label data, and a training set and a test set are constructed and stored in CSV files. Second, a standardized fully connected residual network is constructed in TensorFlow, including a graph for reading the CSV data, the residual network layers and the overall standardized fully connected residual network graph. Finally, a TensorFlow session is created to train the network model, test it, analyze network performance and verify the model. The method addresses the inaccurate evaluation results of other air combat target threat assessment methods, which lack self-learning and reasoning capability on large-sample data: it learns the distribution of the input data on its own and mines the rules hidden in the data, so the trained model can accurately assess air combat target threats. The method is mainly used for, but not limited to, battlefield situation assessment.
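The abstract does not describe the layer structure of its "standardized fully connected residual network". A common reading is a dense block with a normalization step and an identity skip connection. The framework-free forward pass below is a sketch under that assumption: it uses pre-activation layer normalization followed by dense, ReLU and dense layers, then adds the input back. The patent itself uses TensorFlow, and the weights here are arbitrary placeholders, not trained values.

```python
import math


def layer_norm(x, eps=1e-5):
    """Standardize one feature vector to zero mean and (near) unit variance."""
    mu = sum(x) / len(x)
    var = sum((v - mu) ** 2 for v in x) / len(x)
    return [(v - mu) / math.sqrt(var + eps) for v in x]


def dense(x, w, b):
    """Fully connected layer; w has one row per output unit: y_j = sum_i w[j][i] * x[i] + b[j]."""
    return [sum(wi * xi for wi, xi in zip(row, x)) + bj for row, bj in zip(w, b)]


def relu(x):
    return [max(0.0, v) for v in x]


def residual_block(x, w1, b1, w2, b2):
    """Pre-normalized fully connected residual block: x + W2 relu(W1 norm(x) + b1) + b2."""
    h = relu(dense(layer_norm(x), w1, b1))
    f = dense(h, w2, b2)
    return [xi + fi for xi, fi in zip(x, f)]  # identity skip connection
```

When the block's weights are all zero it reduces to the identity. That is why stacking residual blocks is easier to train than stacking plain dense layers: each block only has to learn a correction to its input.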

Owner:ZHONGBEI UNIV


© 2025 PatSnap. All rights reserved.