497 results about "Return function" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

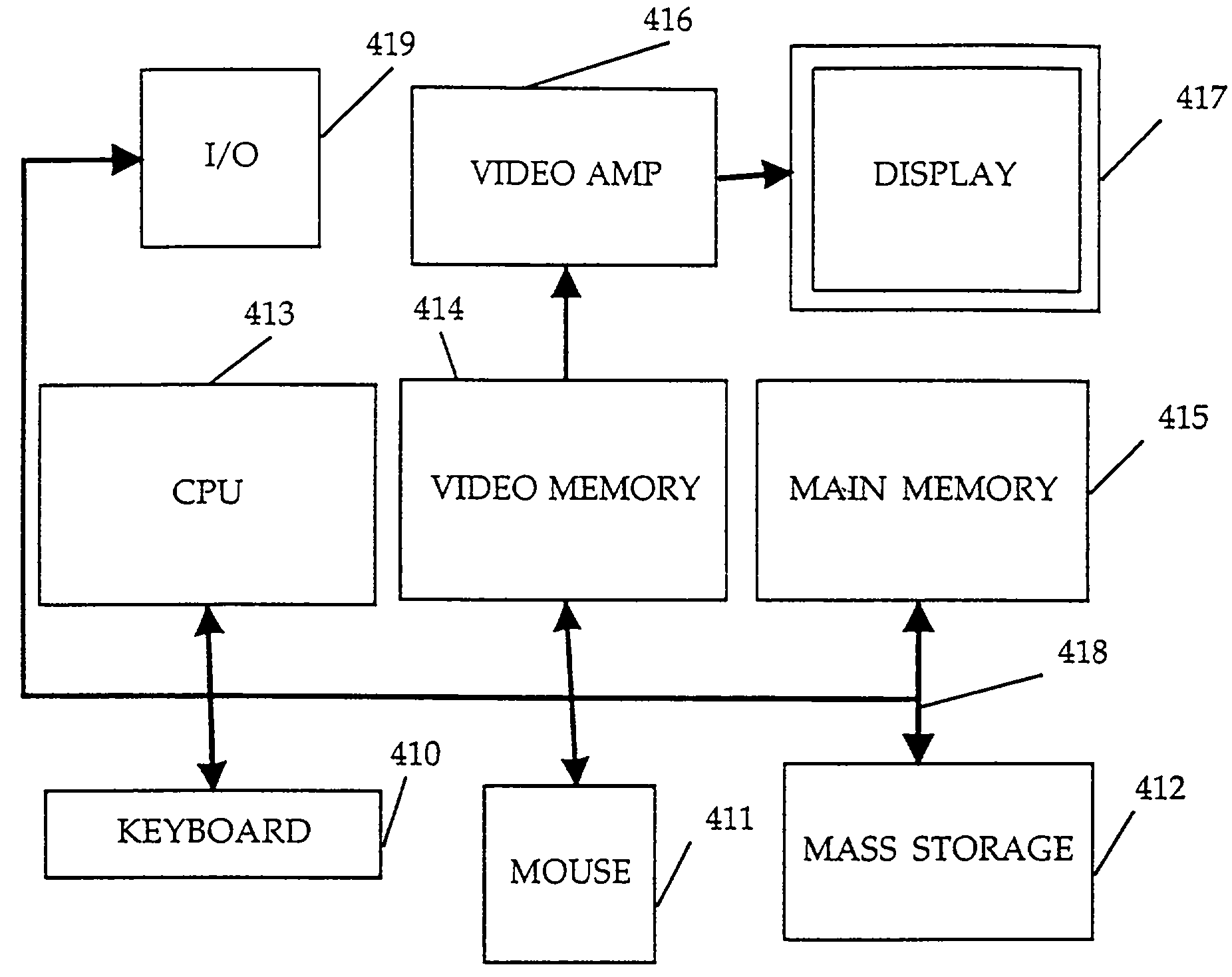

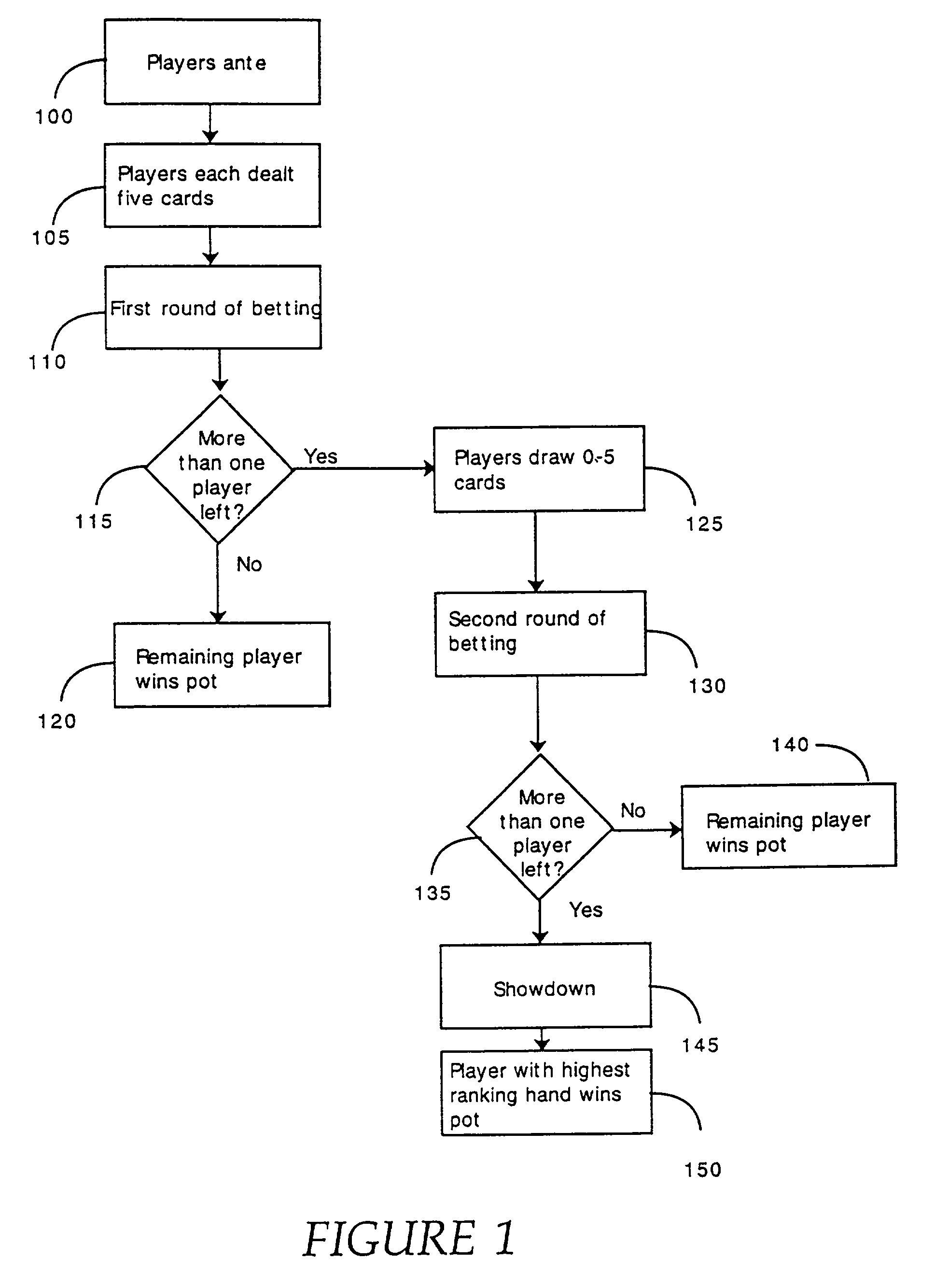

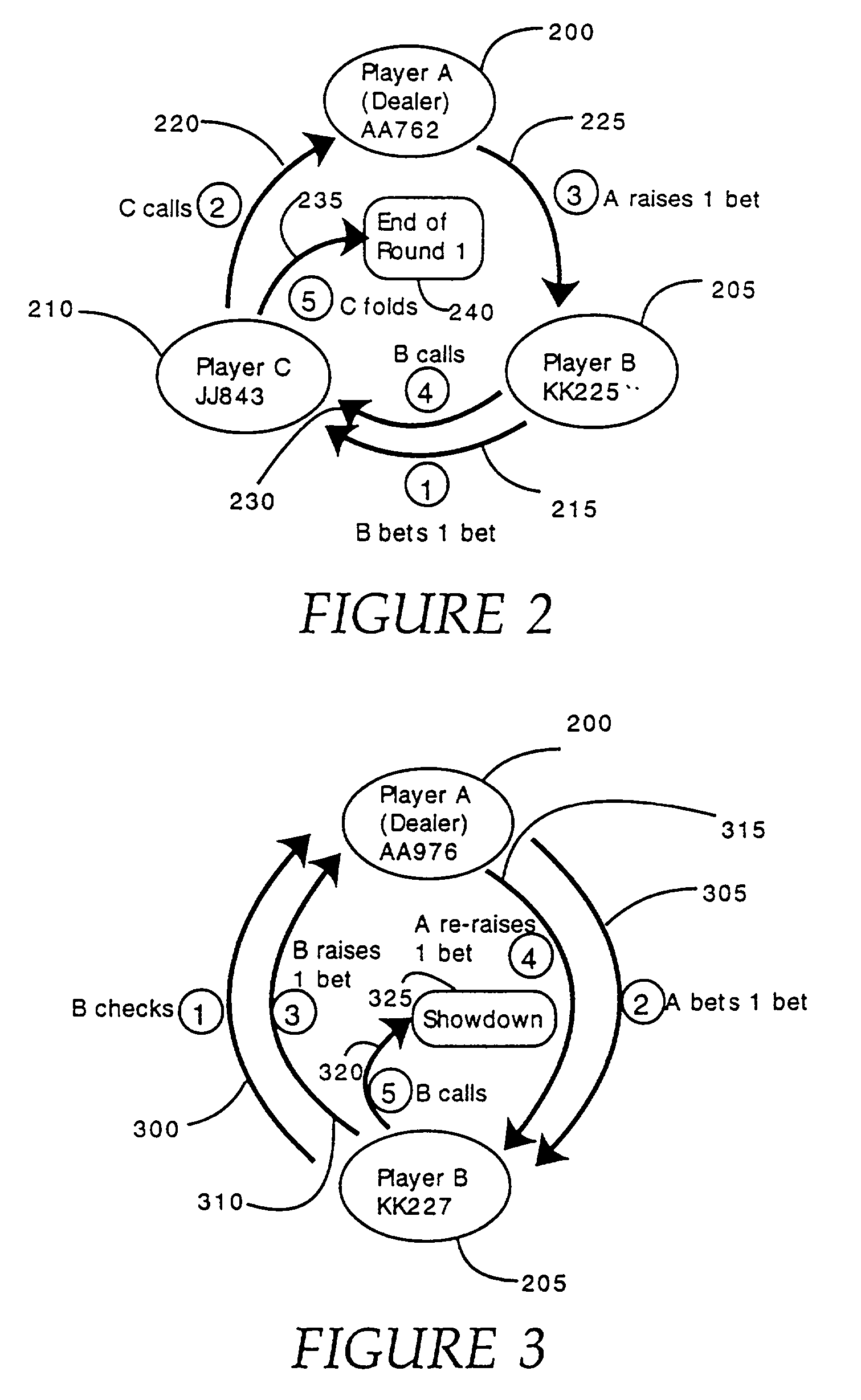

Computer gaming system

Inactive | US20020019253A1 | Card games | Apparatus for meter-controlled dispensing | S distribution | Return function

The present invention comprises an intelligent gaming system that includes a game engine, a simulation engine, and, in certain embodiments, a static evaluator. Embodiments of the invention include an intelligent, poker-playing slot machine that allows a user to play poker for money against one or more intelligent, simulated opponents. In one embodiment, the invention generates card playing strategies by analyzing the expected return to players of a game. In one embodiment, a multi-dimensional model is used to represent possible strategies that may be used by each player participating in a card game. Each axis (dimension) of the model represents a distribution of a player's possible hands. Points along a player's distribution axis divide each axis into a number of segments. Each segment has associated with it an action sequence to be undertaken by the player with hands that fall within the segment. The dividing points mark the boundaries between different action sequences. The model is divided into separate portions, each corresponding to an outcome determined by the action sequences and hand strengths for each player applicable to the portion. An expected return expression is generated by multiplying the outcome for each portion by the size of the portion, and adding together the resulting products. The locations of the dividing points that result in the maximum expected return are determined by taking partial derivatives of the expected return function with respect to each variable, and setting them equal to zero. The result is a set of simultaneous equations that are solved to obtain values for each dividing point. The values for the optimized dividing points define optimized card playing strategies.

Owner:GAMECRAFT
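
The expected-return construction in the abstract above can be illustrated with a deliberately small toy game. Everything specific here is an assumption made for illustration and is not taken from the patent: two players with hand strengths x and y uniform on [0, 1], a single dividing point `t` for player 1 (fold below `t`, bet otherwise), an opponent who always calls, and the hypothetical stakes `ante` and `bet`. The model is the unit square, each portion has a constant outcome, and the expected return is the sum of outcome times portion size.

```python
def expected_return(t, ante=1.0, bet=2.0):
    """Expected return to player 1 for dividing point t (toy model, see assumptions)."""
    fold_area = t                    # x < t: player 1 folds and loses the ante
    win_area = (1 - t ** 2) / 2      # x >= t and x > y: player 1 wins the bet
    lose_area = (1 - t) ** 2 / 2     # x >= t and x < y: player 1 loses the bet
    # Sum of (outcome for the portion) * (size of the portion).
    return -ante * fold_area + bet * win_area - bet * lose_area


def optimal_dividing_point(ante=1.0, bet=2.0):
    """Dividing point where the derivative of the expected return is zero."""
    # dE/dt = -ante + bet - 2 * bet * t = 0  =>  t = (bet - ante) / (2 * bet)
    return (bet - ante) / (2 * bet)


if __name__ == "__main__":
    t_star = optimal_dividing_point()
    print(t_star, expected_return(t_star))
```

With the default stakes the derivative vanishes at t = 0.25, where the expected return is 0.125. With several players and several dividing points, the same step yields one equation per dividing point, which is the set of simultaneous equations the abstract refers to.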

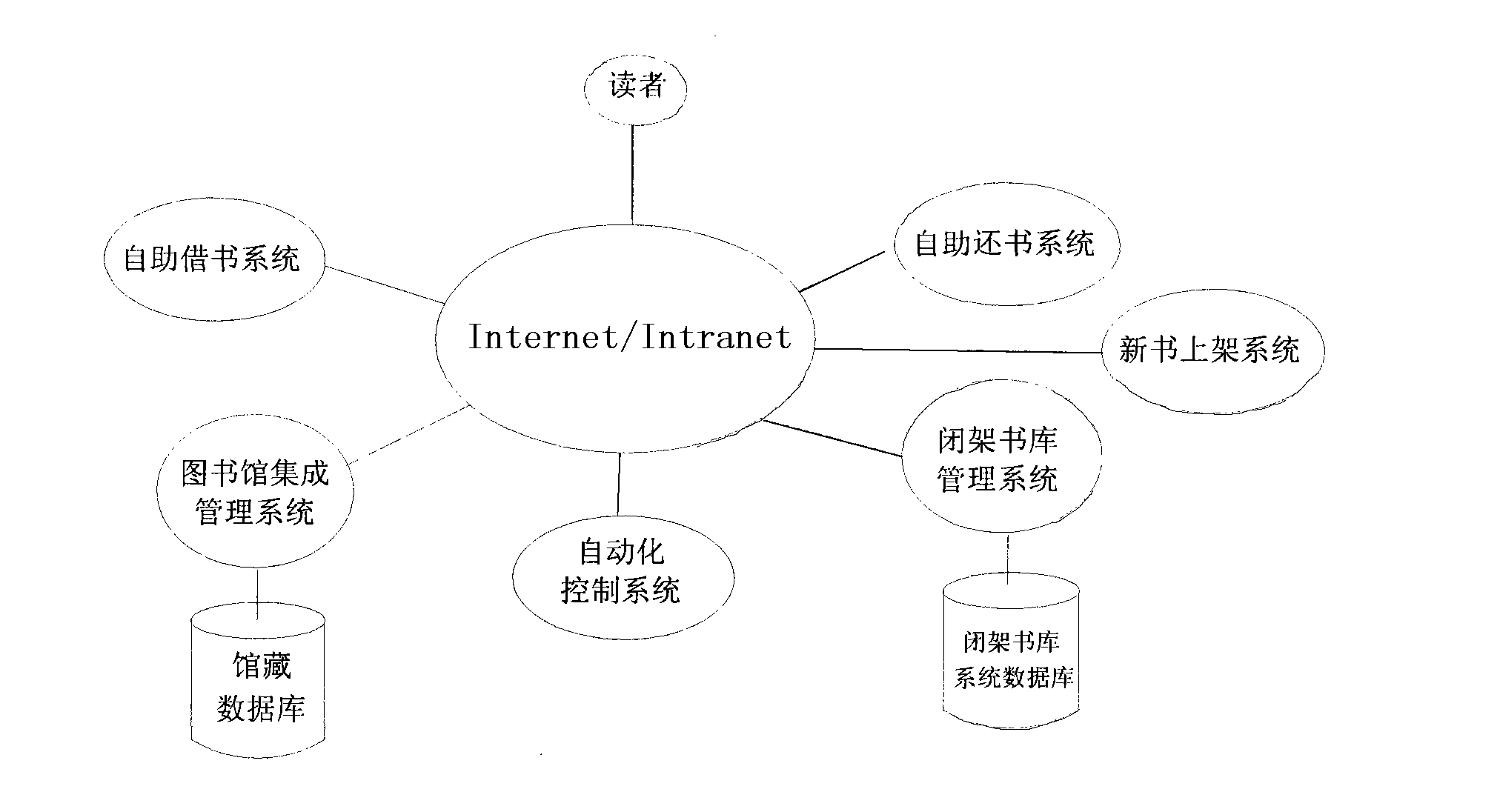

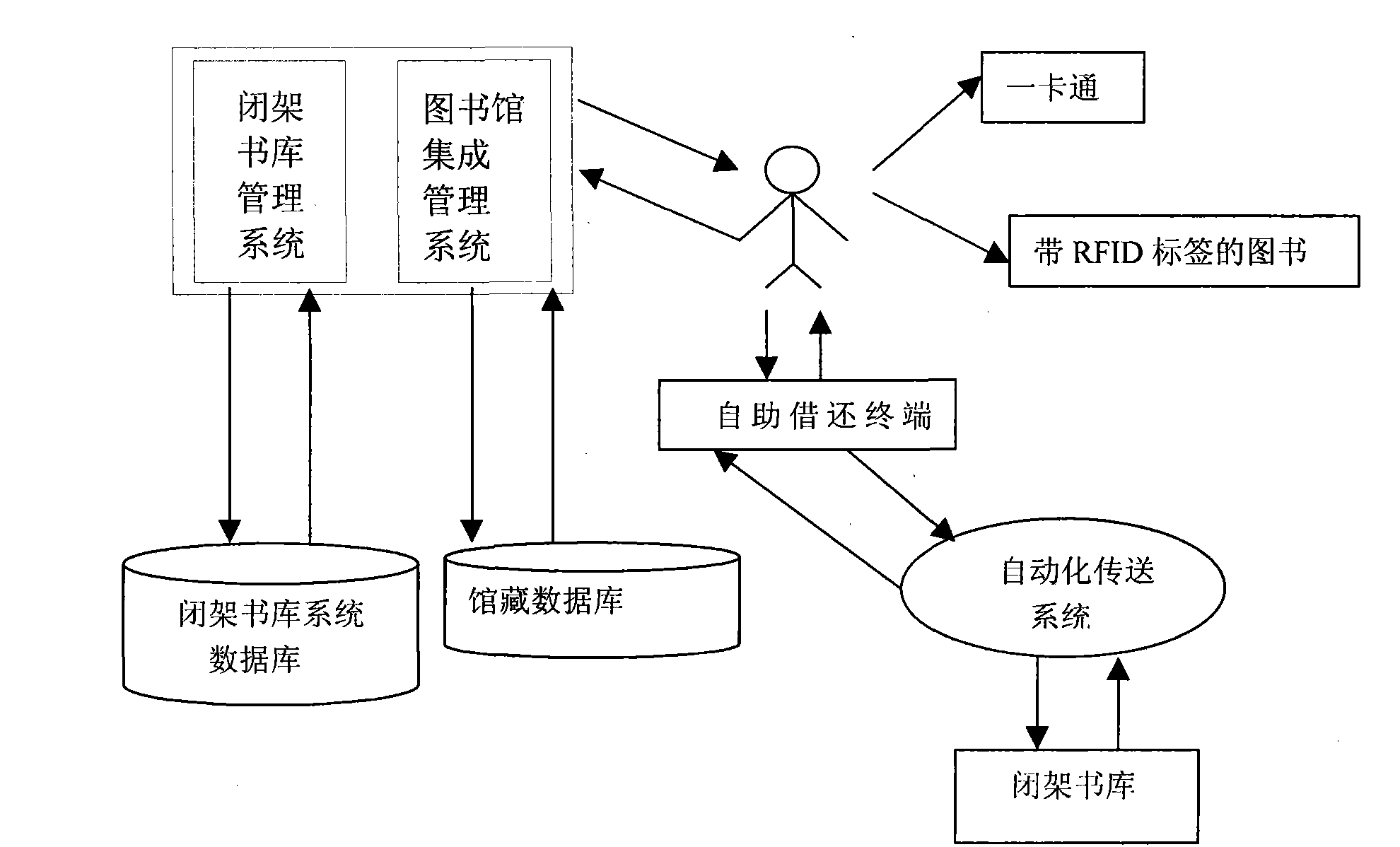

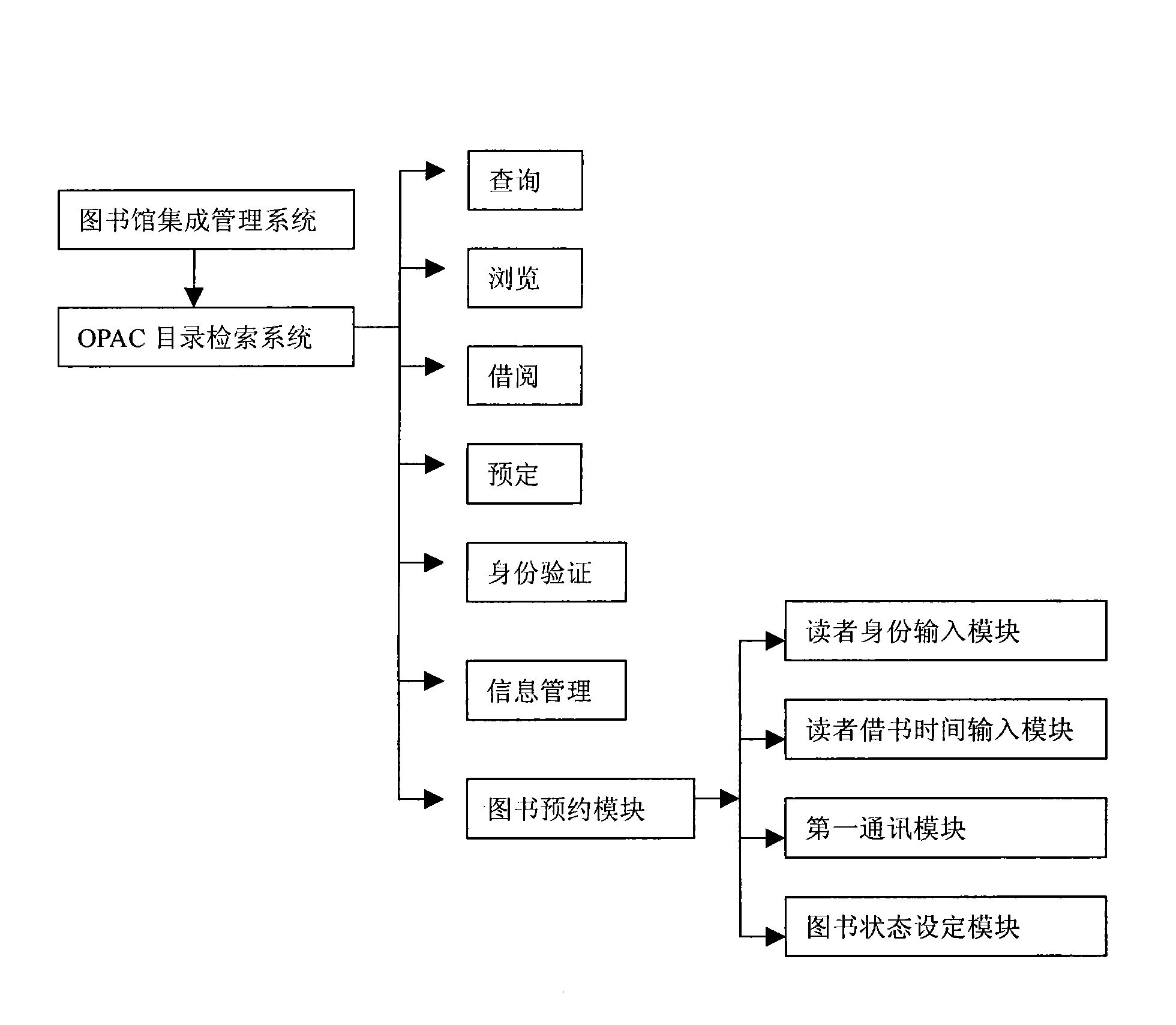

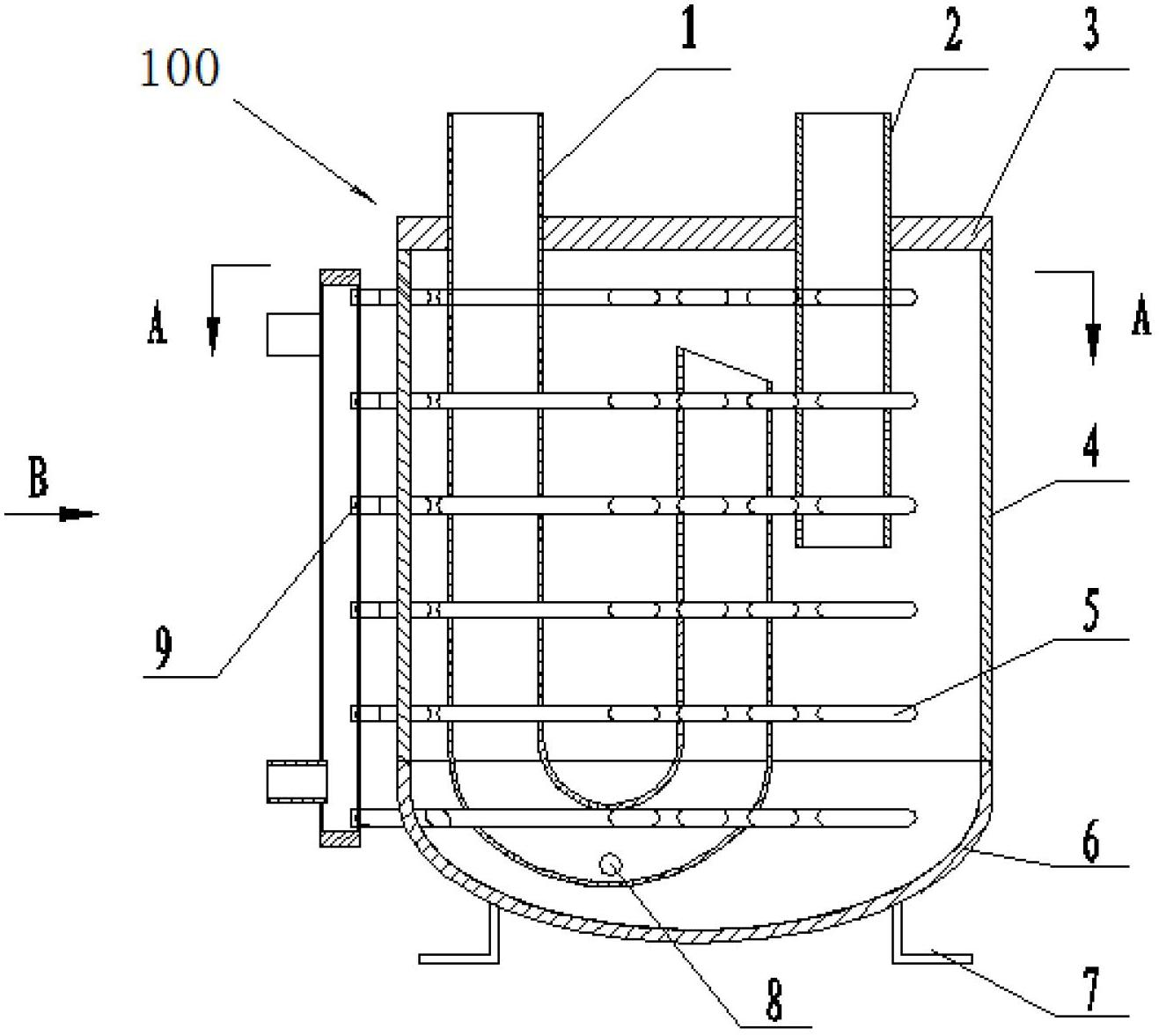

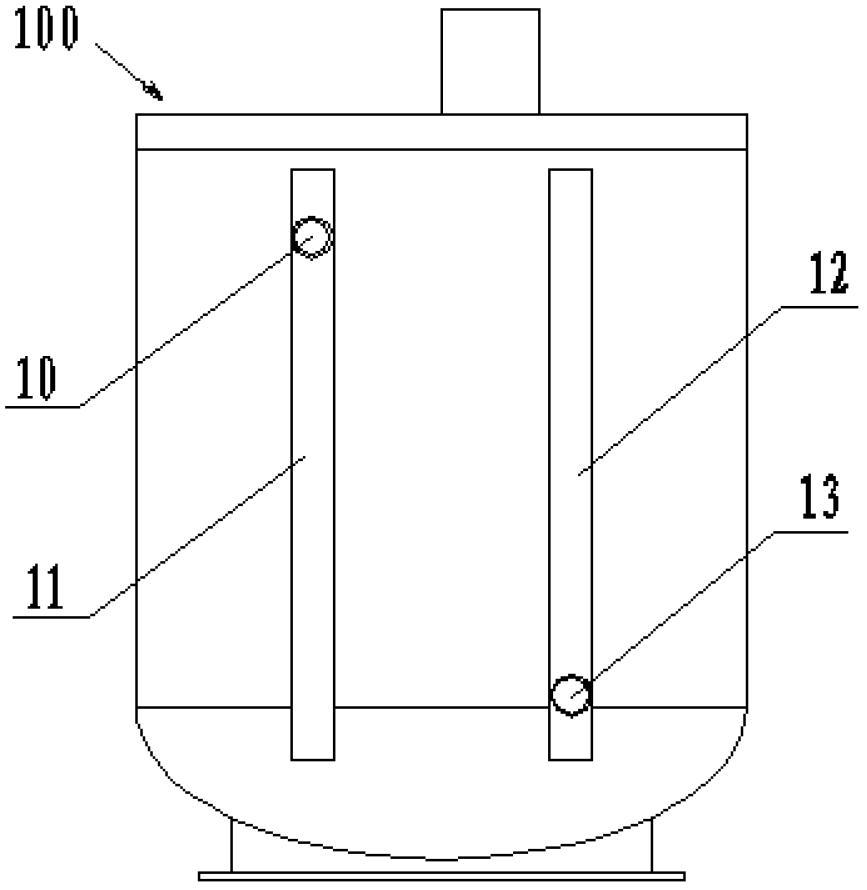

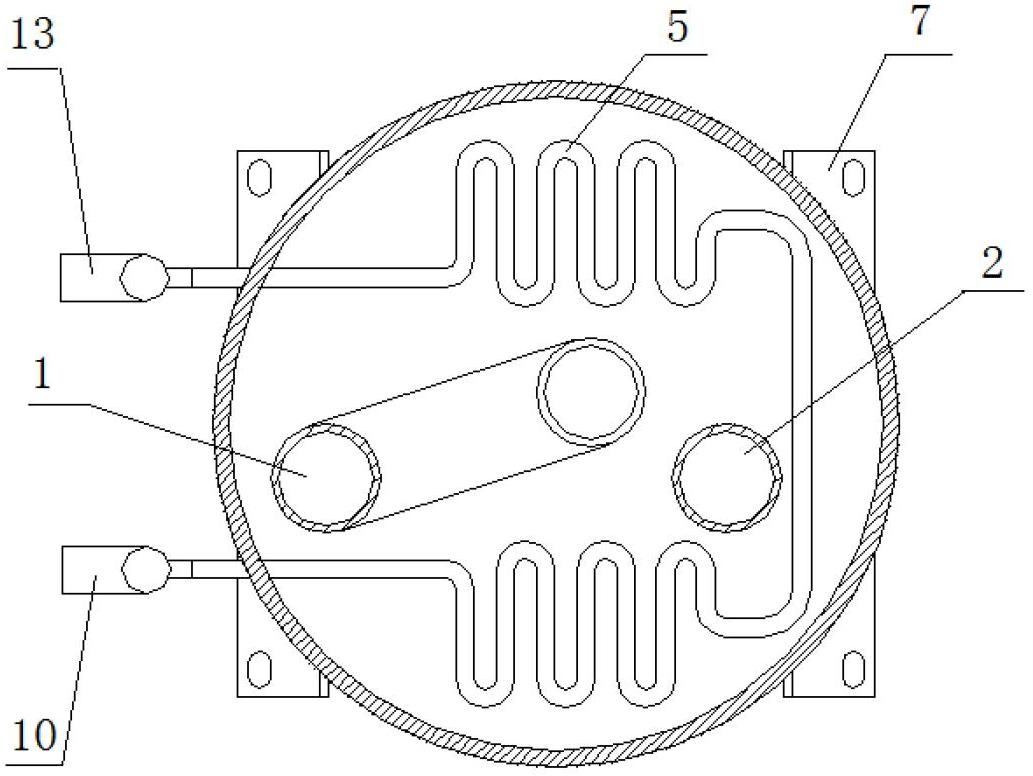

Automatic book management system based on closed stacks

Inactive | CN101515391A | Realize unmanned management function | Improve security | Data processing applications | Cash registers | Return function | Transfer system

The invention discloses an automatic book management system based on closed stacks, which combines the original library management systems, realizes automatic borrowing and returning functions of books, lowers the labor intensity of the workers in the library and effectively improves the quality of the library in serving readers. The automatic library system adopts RFID technology, mechanical automation, network and computer technologies. The book management system of the invention comprises shelves, a library integrated management system, books with RFID chips, a self-service borrowing and returning terminal, a management system of closed stacks, a self-service borrowing system, a self-service returning system and an automatic transfer system which realizes that books automatically go in and out of the closed stacks; wherein, the management system of closed stacks operates the information of the database of the closed shelf system to realize information interaction between the library integrated management system and the automatic transfer system, thus finally realizing the automatic management functions of books.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

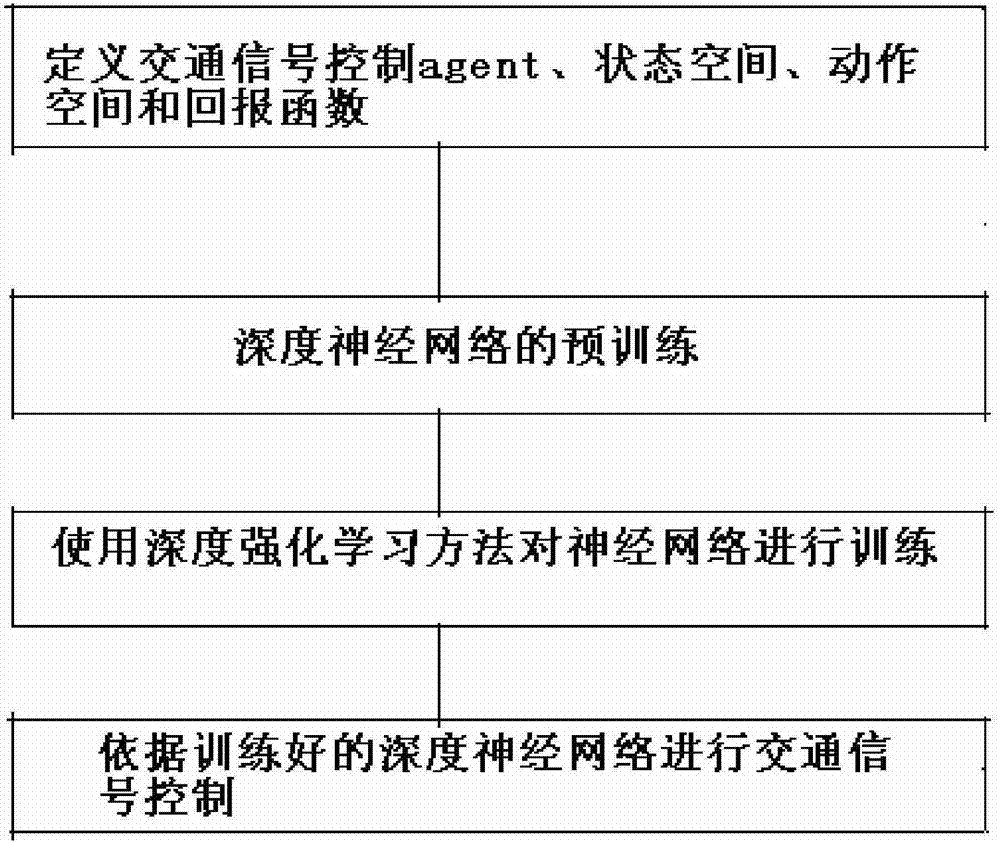

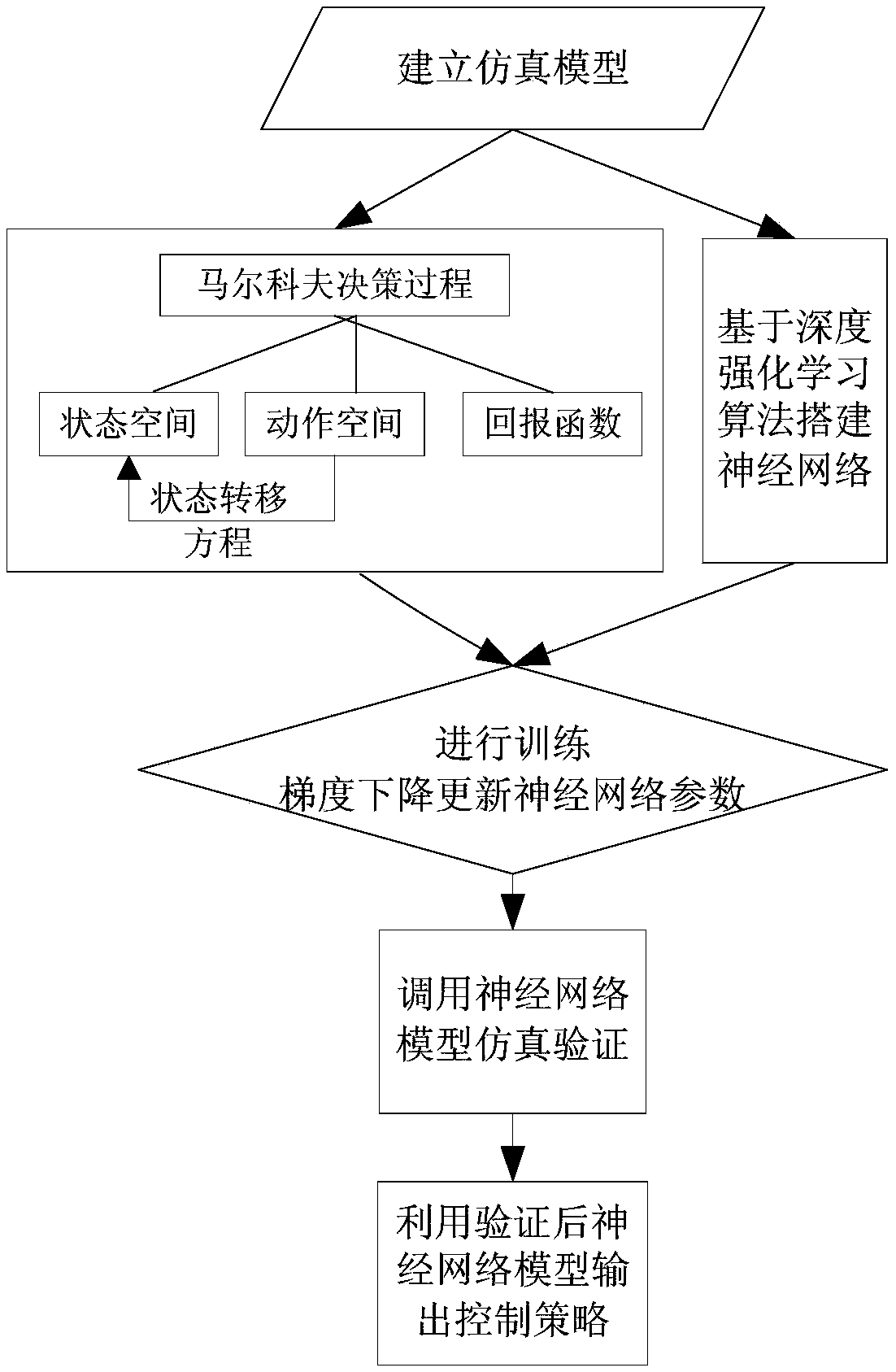

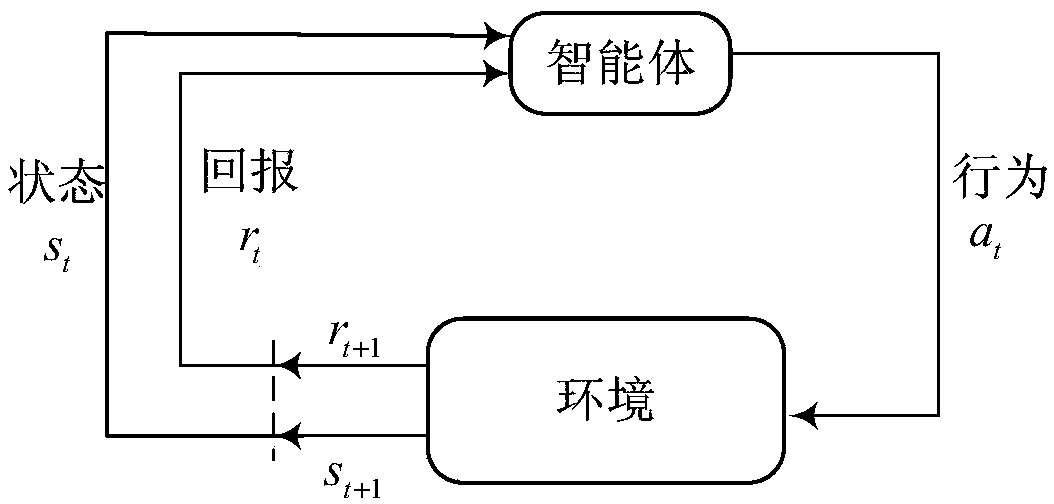

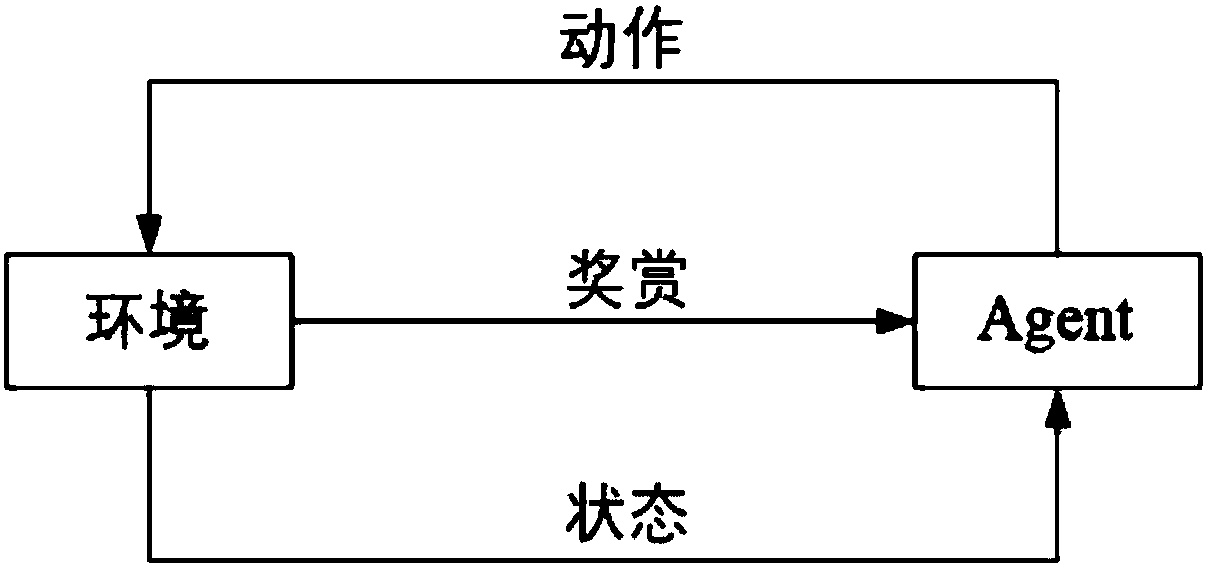

Traffic signal self-adaptive control method based on deep reinforcement learning

Inactive | CN106910351A | Realize precise perception | Solve the problem of inaccurate perception of traffic status | Controlling traffic signals | Neural architectures | Traffic signal | Return function

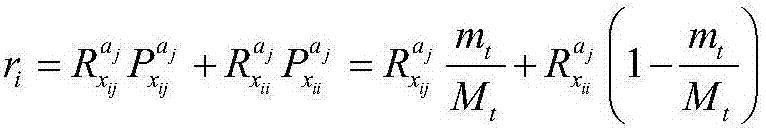

The invention relates to the technical field of traffic control and artificial intelligence and provides a traffic signal self-adaptive control method based on deep reinforcement learning. The method includes the following steps: (1) a traffic signal control agent, a state space S, an action space A and a return function r are defined; (2) a deep neural network is pre-trained; (3) the neural network is trained through a deep reinforcement learning method; (4) traffic signal control is carried out according to the trained deep neural network. By preprocessing traffic data acquired by magnetic induction, video, RFID, the Internet of Vehicles and the like, a low-level representation of the traffic state containing vehicle position information is obtained. The traffic state is then perceived through a multilayer perceptron, and high-level abstract features of the current traffic state are obtained. On this basis, a suitable timing plan is selected according to those features through the decision-making capacity of reinforcement learning, so that self-adaptive control of traffic signals is achieved, vehicle travel time is shortened, and safe, smooth, orderly and efficient operation of traffic is supported.

Owner:DALIAN UNIV OF TECH

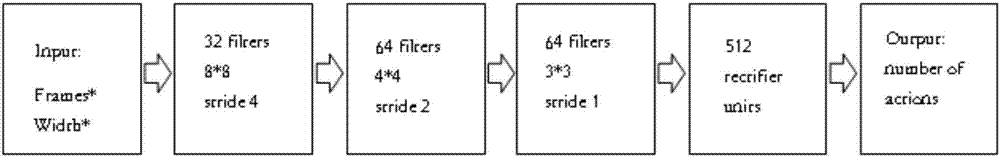

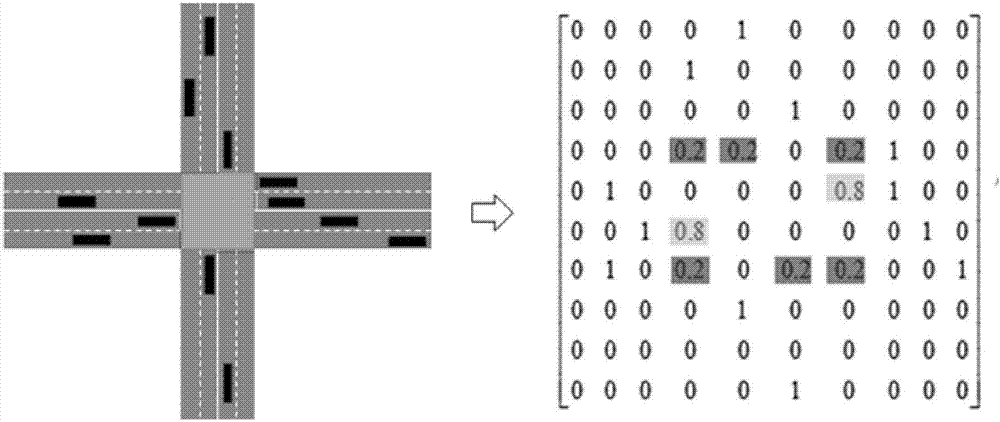

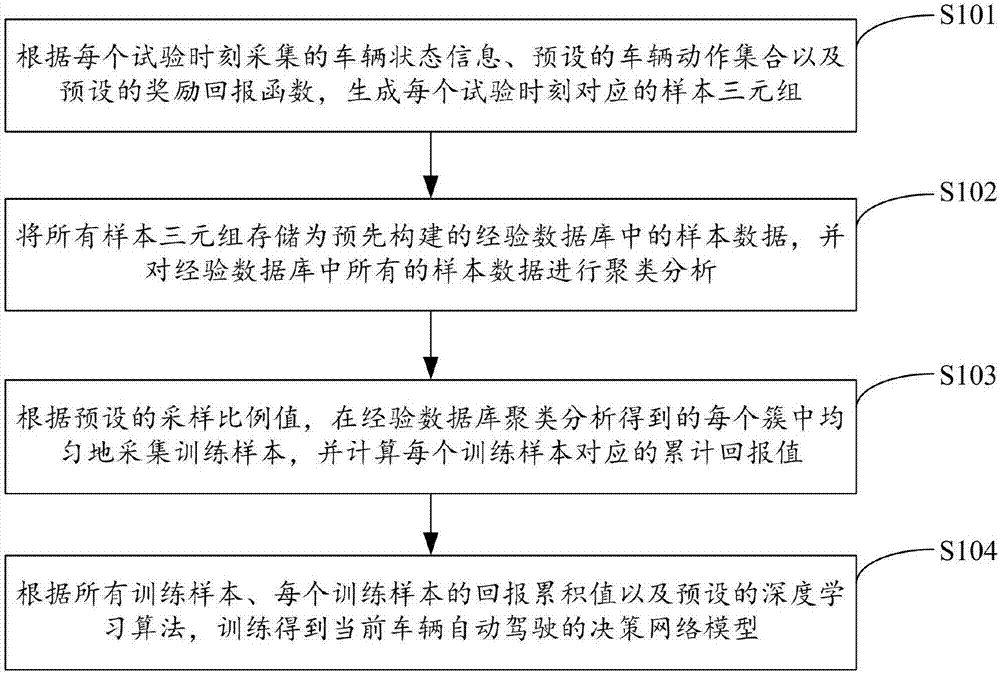

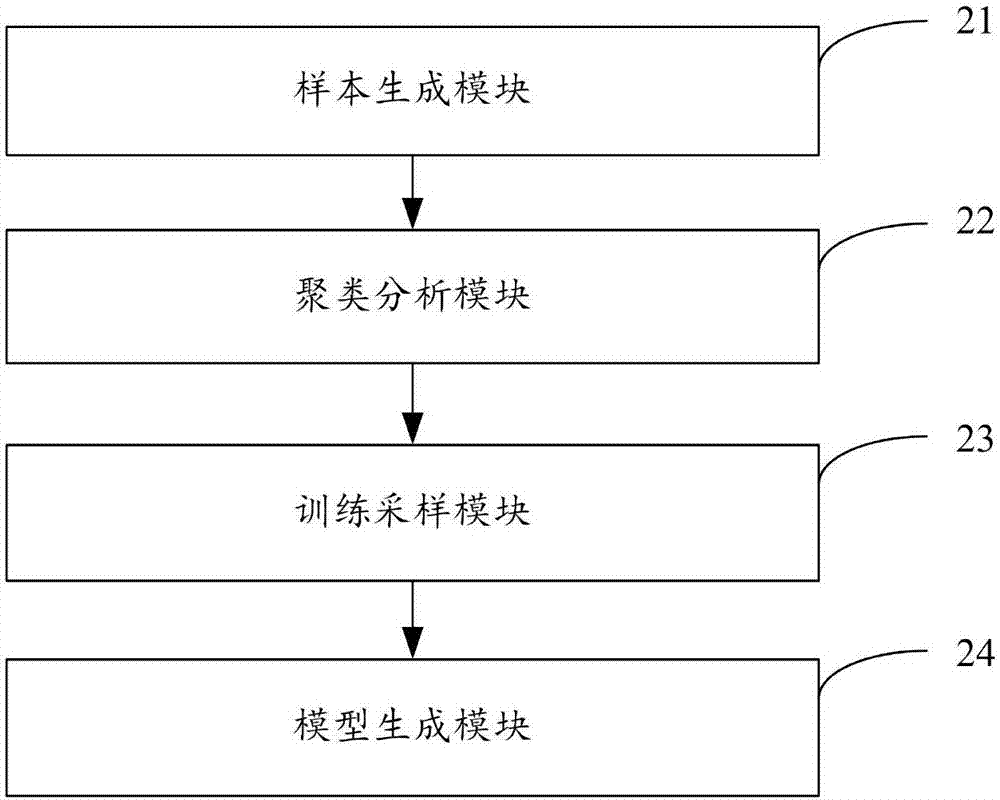

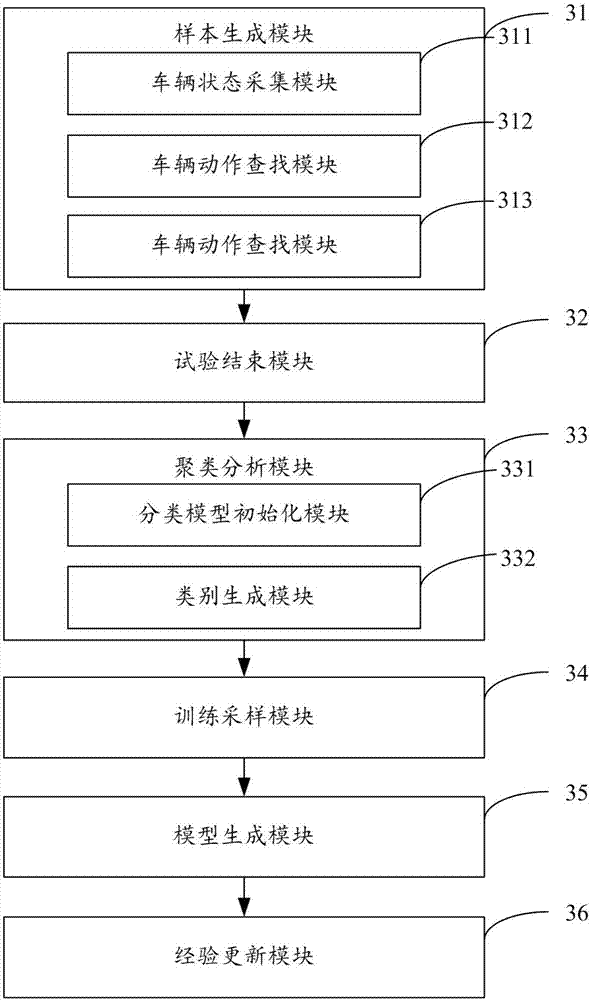

Generation method and device for decision network model of vehicle automatic driving

Active | CN107169567A | Fast training | Training samples, and finally training fast | Knowledge representation | Machine learning | Algorithm | Decision networks

The invention is applicable to the field of computer technology and provides a generation method and device for a decision network model of vehicle automatic driving. The method comprises the steps that a sample triad corresponding to each test moment is generated according to vehicle state information collected at each test moment, a preset vehicle movement set and a preset return function, all the sample triads are stored as sample data in a pre-established experience database, and all the sample data is subjected to clustering analysis; training samples are uniformly collected from each cluster obtained after clustering analysis of the experience database according to a preset sampling scale value, and a return accumulated value of each training sample is calculated; and according to all the training samples, the return accumulated value of each training sample and a preset deep learning algorithm, training is performed to obtain the decision network model of vehicle automatic driving. Therefore, the training efficiency of the decision network model and the generalization ability of the decision network model are effectively improved.

Owner:SHENZHEN INST OF ADVANCED TECH
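
Two steps of the abstract above lend themselves to a short sketch: drawing training samples evenly from each cluster, and computing an accumulated return per sample. The abstract does not say how either is computed, so the following is an assumption-laden illustration: `per_cluster` stands in for the "preset sampling scale value", the accumulated return is taken to be a discounted sum with a hypothetical discount factor `gamma`, and the clusters are assumed to have been produced already.

```python
import random


def sample_uniformly(clusters, per_cluster, seed=0):
    """Draw up to per_cluster samples from every cluster (assumed sampling rule)."""
    rng = random.Random(seed)
    batch = []
    for members in clusters.values():
        k = min(per_cluster, len(members))
        batch.extend(rng.sample(members, k))
    return batch


def accumulated_returns(returns, gamma=0.9):
    """Discounted accumulated return at each test moment of one trajectory."""
    accumulated = [0.0] * len(returns)
    running = 0.0
    # Walk backwards so each entry reuses the value of the following moment.
    for i in range(len(returns) - 1, -1, -1):
        running = returns[i] + gamma * running
        accumulated[i] = running
    return accumulated


if __name__ == "__main__":
    clusters = {0: ["s0", "s1", "s2"], 1: ["s3"]}
    print(sample_uniformly(clusters, per_cluster=2))
    print(accumulated_returns([1.0, 1.0, 1.0], gamma=0.5))
```

Sampling the same number of items from each cluster keeps rare driving situations from being swamped by common ones, which is one plausible reading of the training-efficiency claim.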

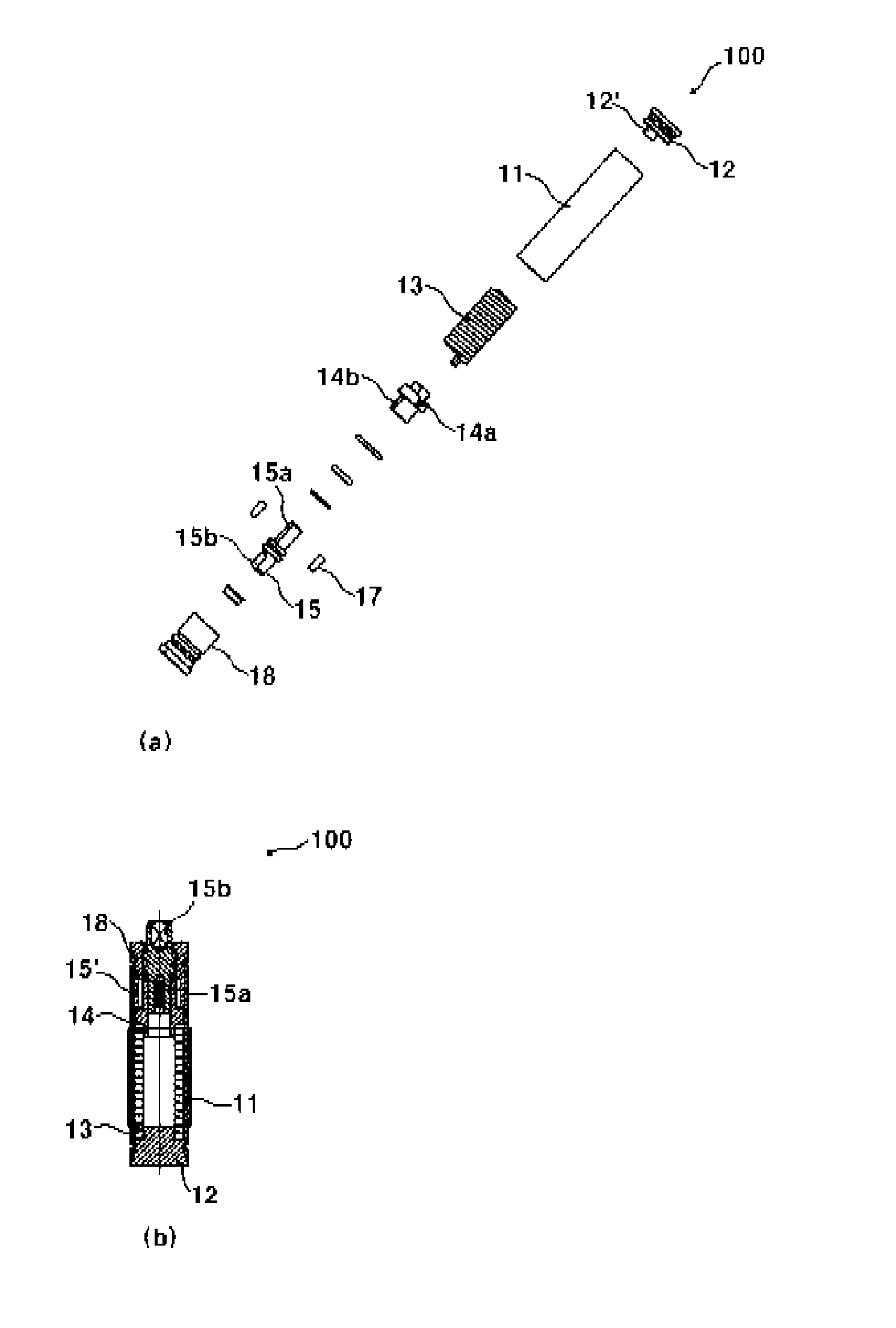

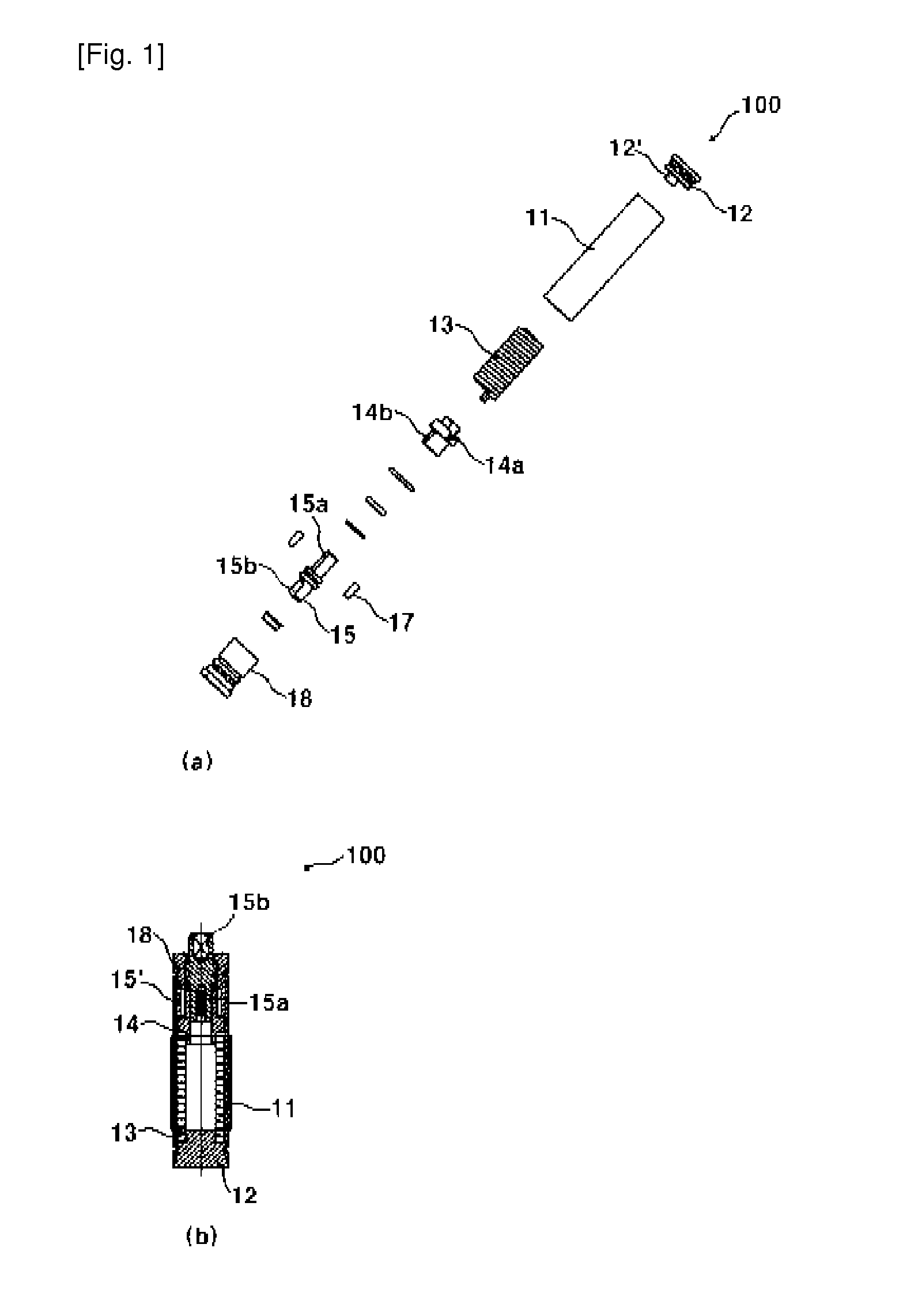

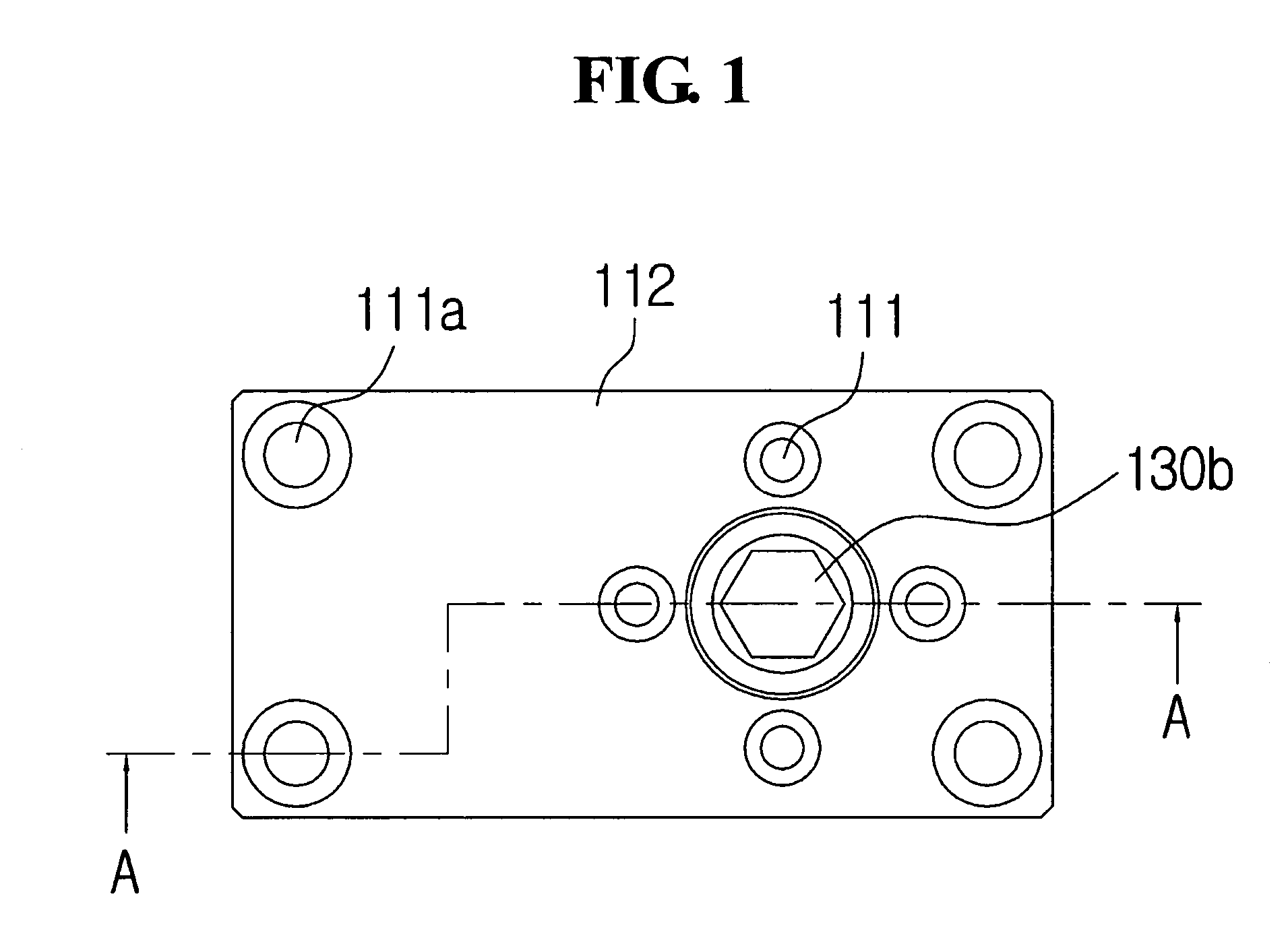

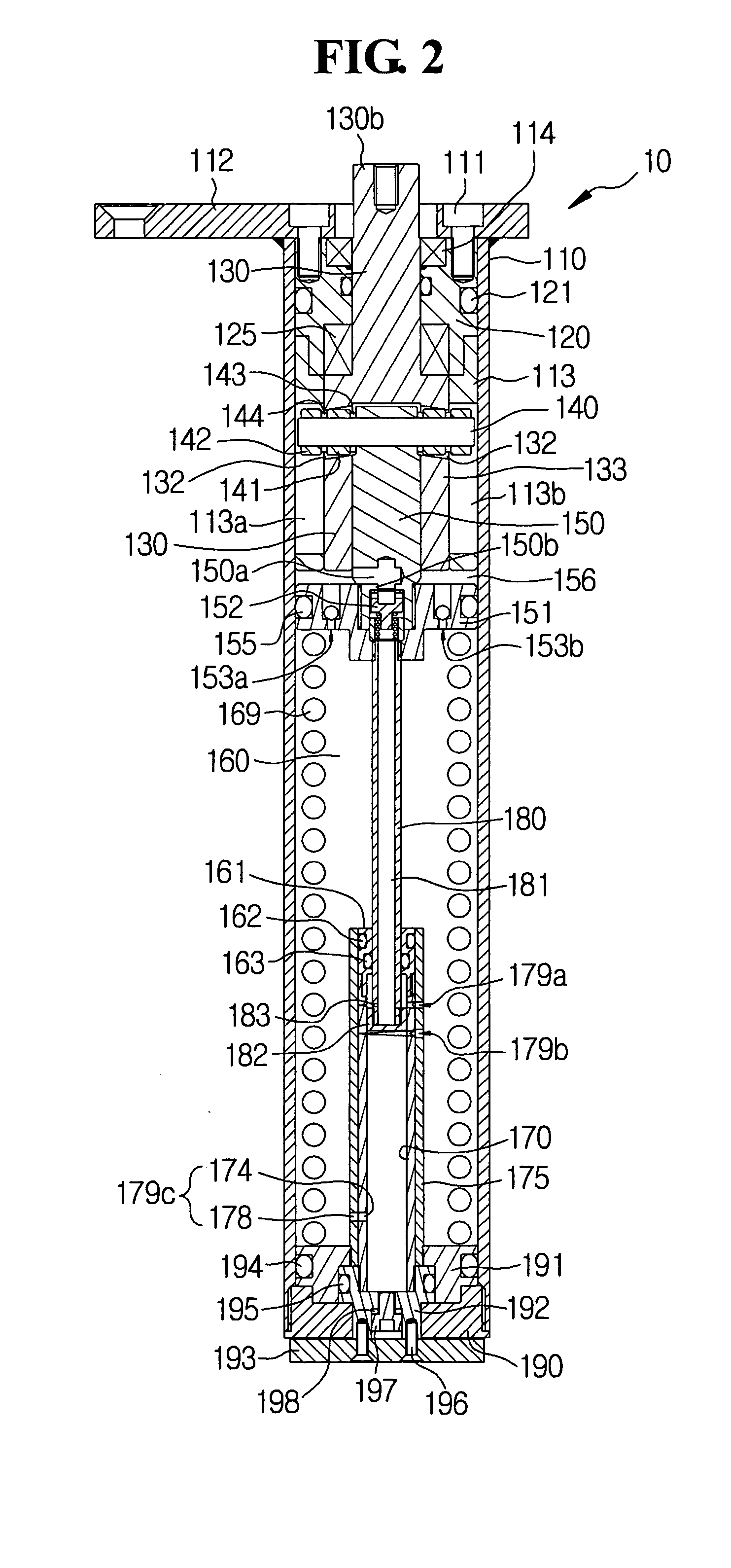

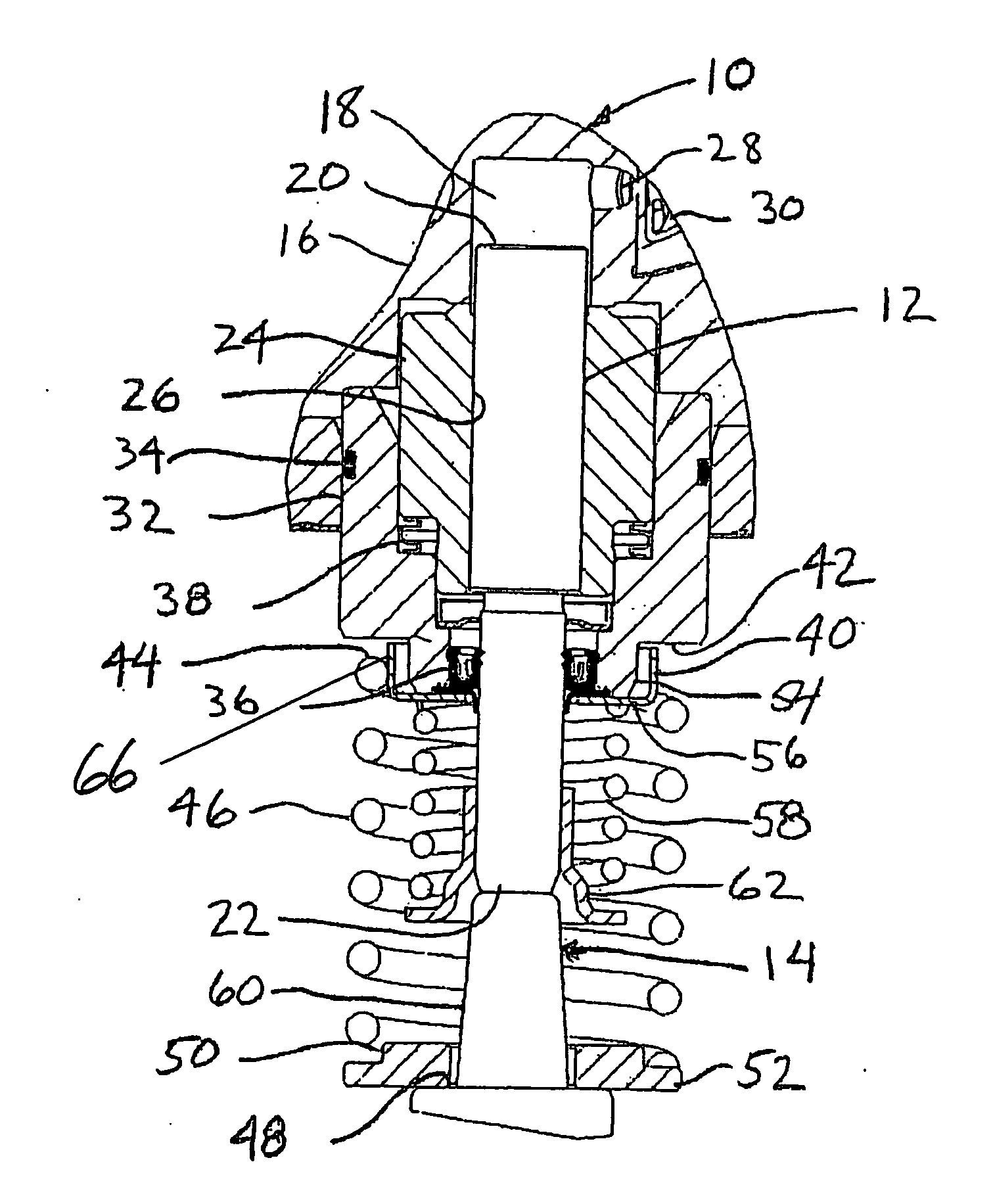

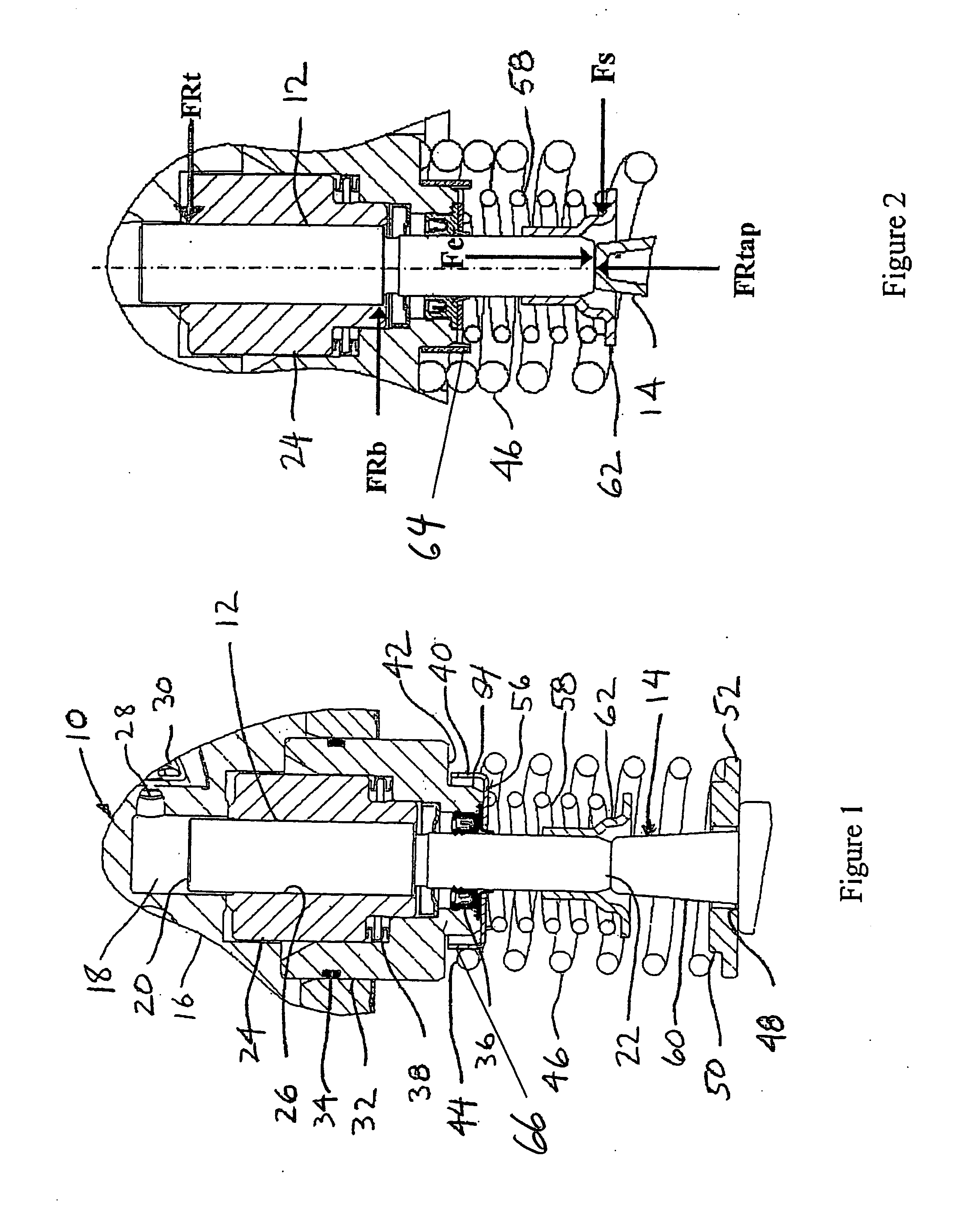

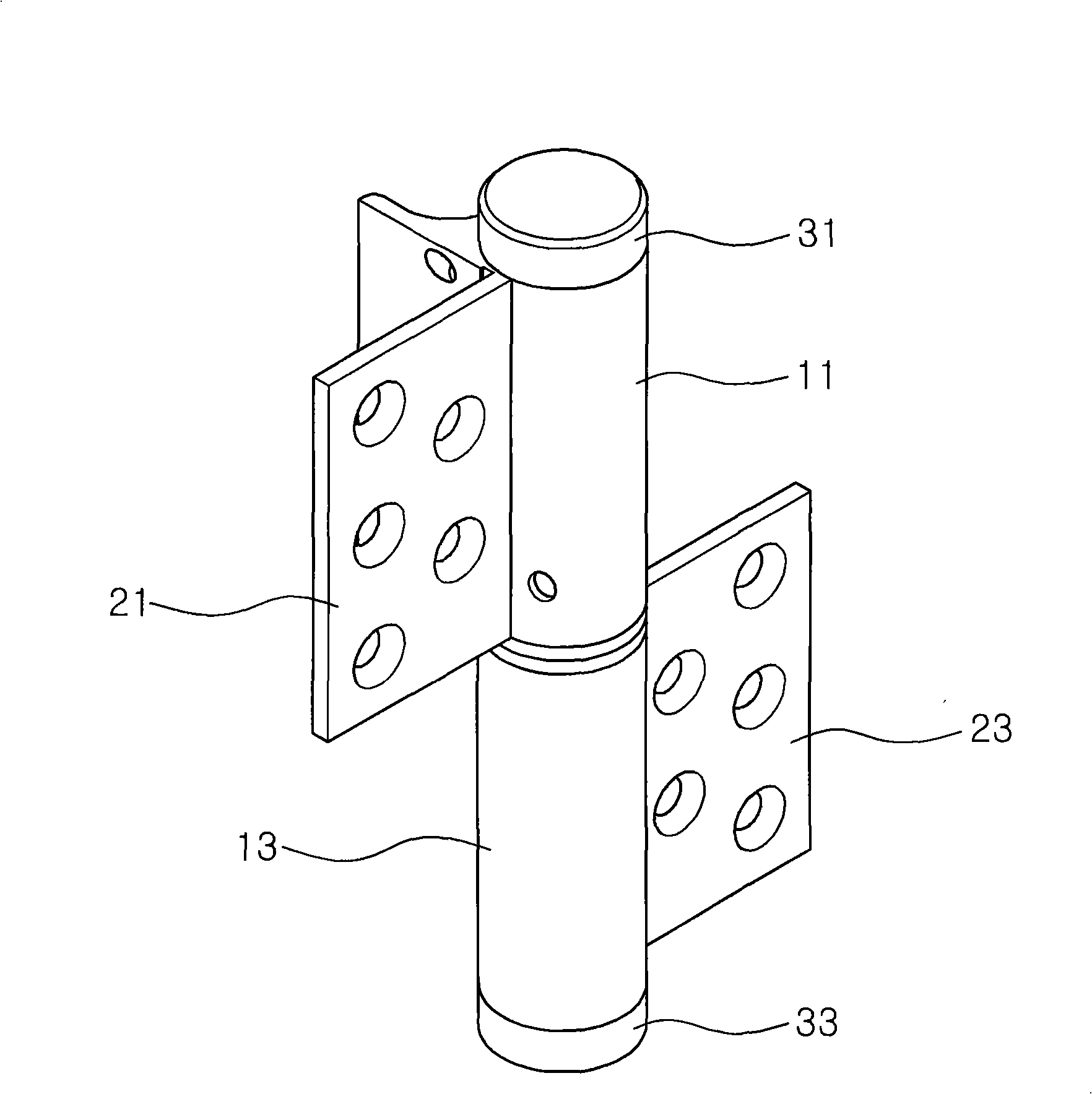

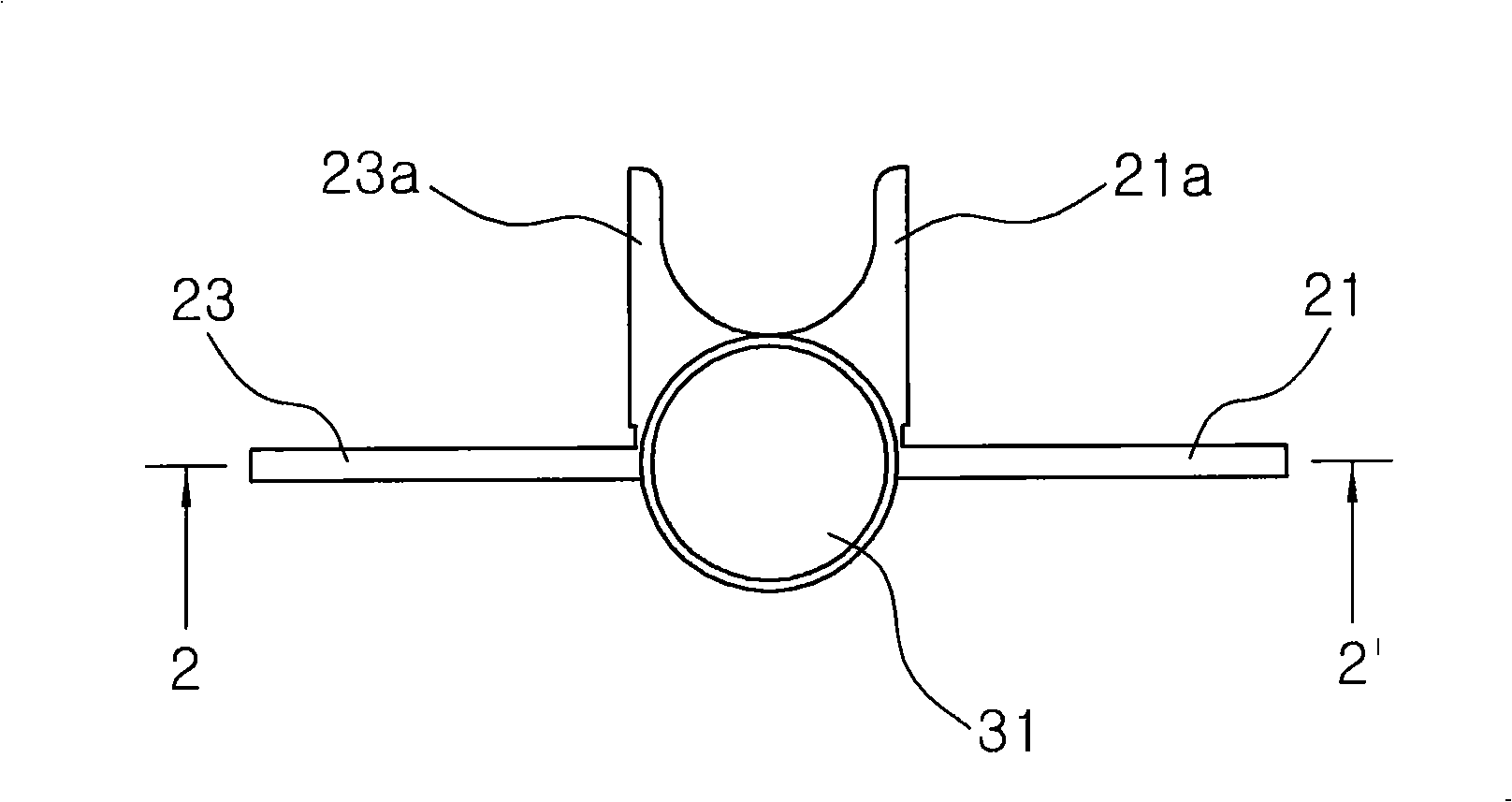

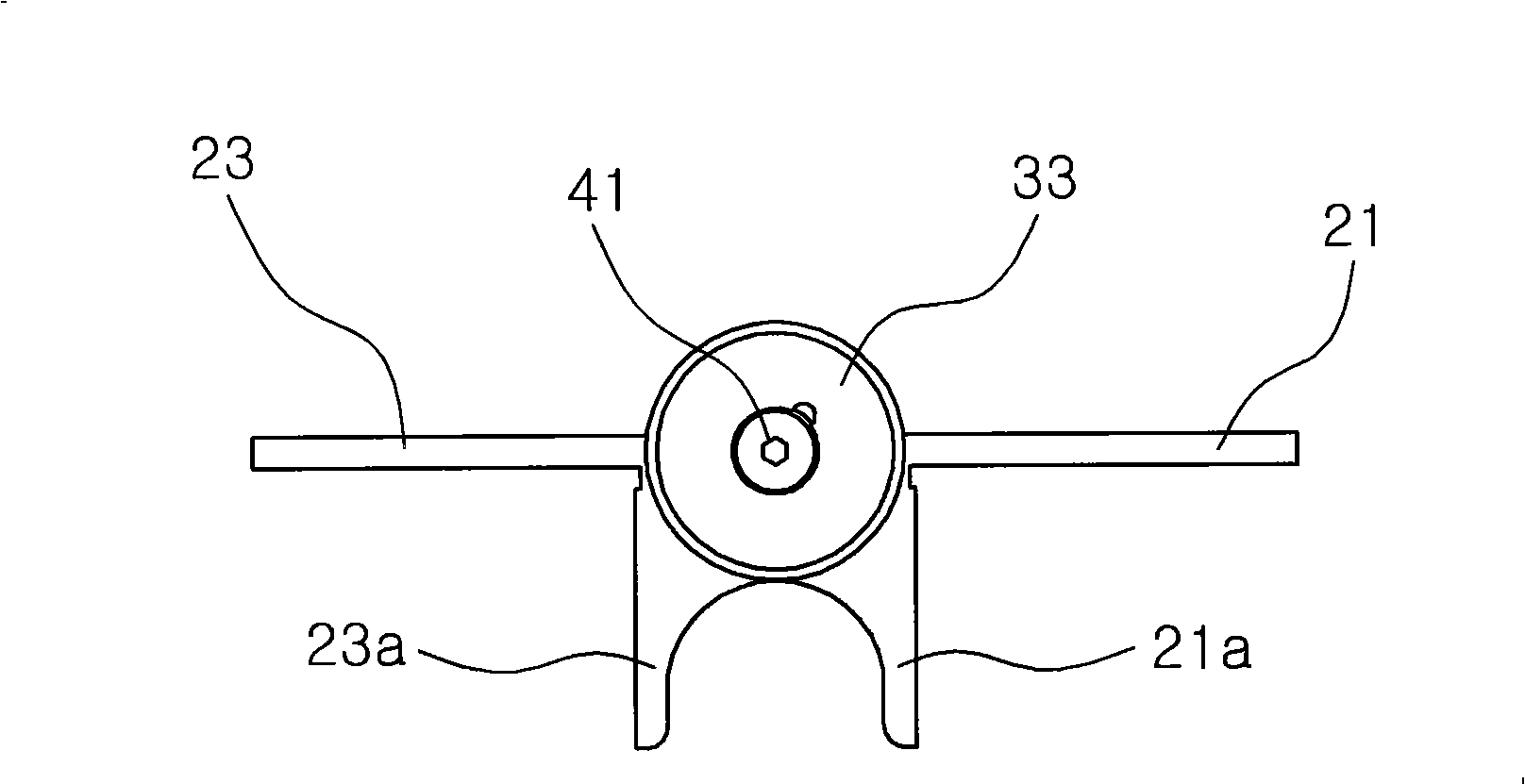

Separated type hinge apparatus with return function

Active | US20110041285A1 | Reduces need for space | Easy to adjust | Building braking devices | Hinges | Return function | Engineering

The present invention relates to a hinge apparatus for a door, and more particularly, to a separated type hinge apparatus with an automatic return function, in which a restoring device and a damping device are installed separately so that the overall length of the hinge apparatus is reduced, and thus the hinge apparatus may be buried less deeply. A separated type hinge apparatus comprises: an elastic casing; a first elastic member that provides a restoring force; a clutch device which is combined with one end of the first elastic member and controls the elastic force of the first elastic member to remain constant; a shaft penetratively combined through the clutch device in order to transmit a rotational force; and a spring fixing member which is coupled to the other end of the first elastic member and has a gear groove to control a spring elastic force.

Owner:HONG CHAN HO

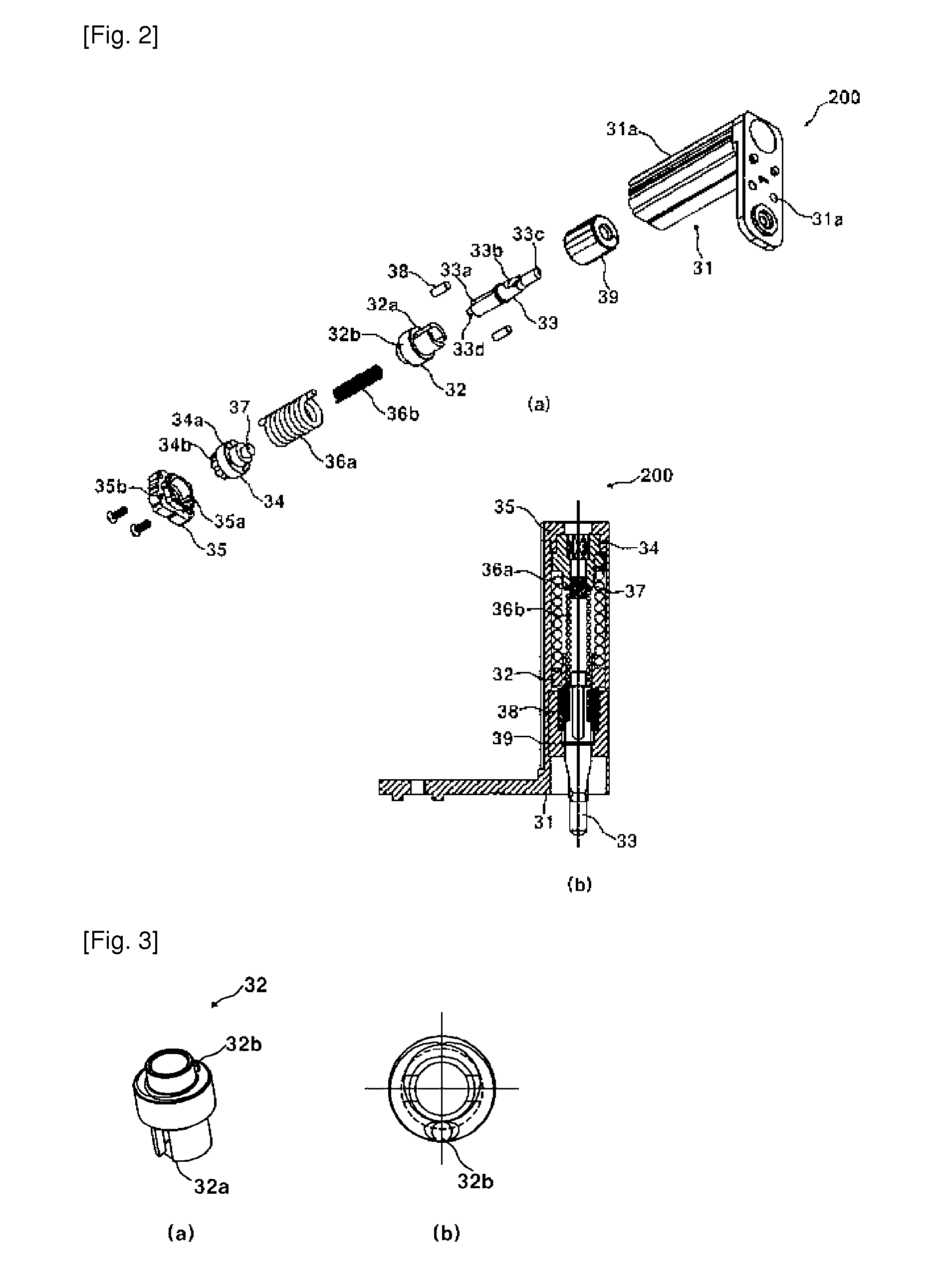

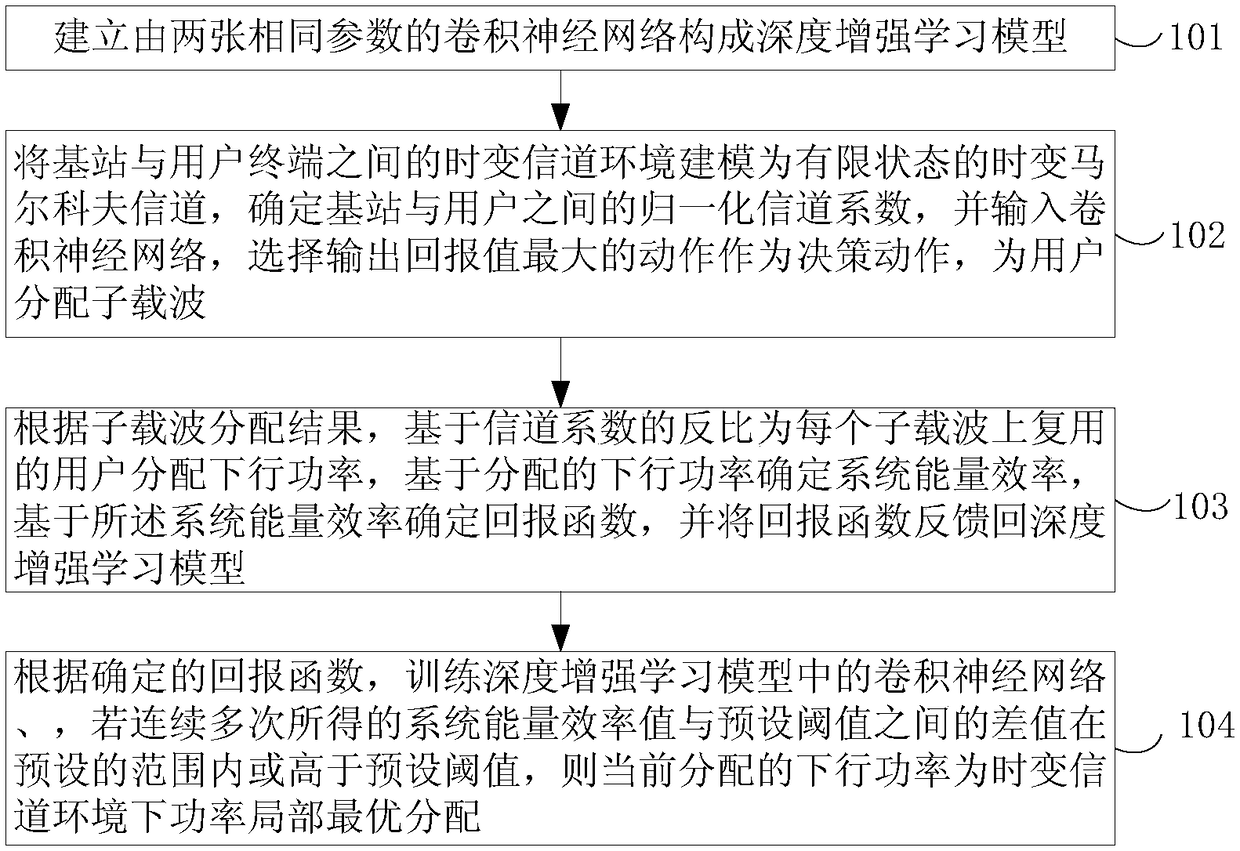

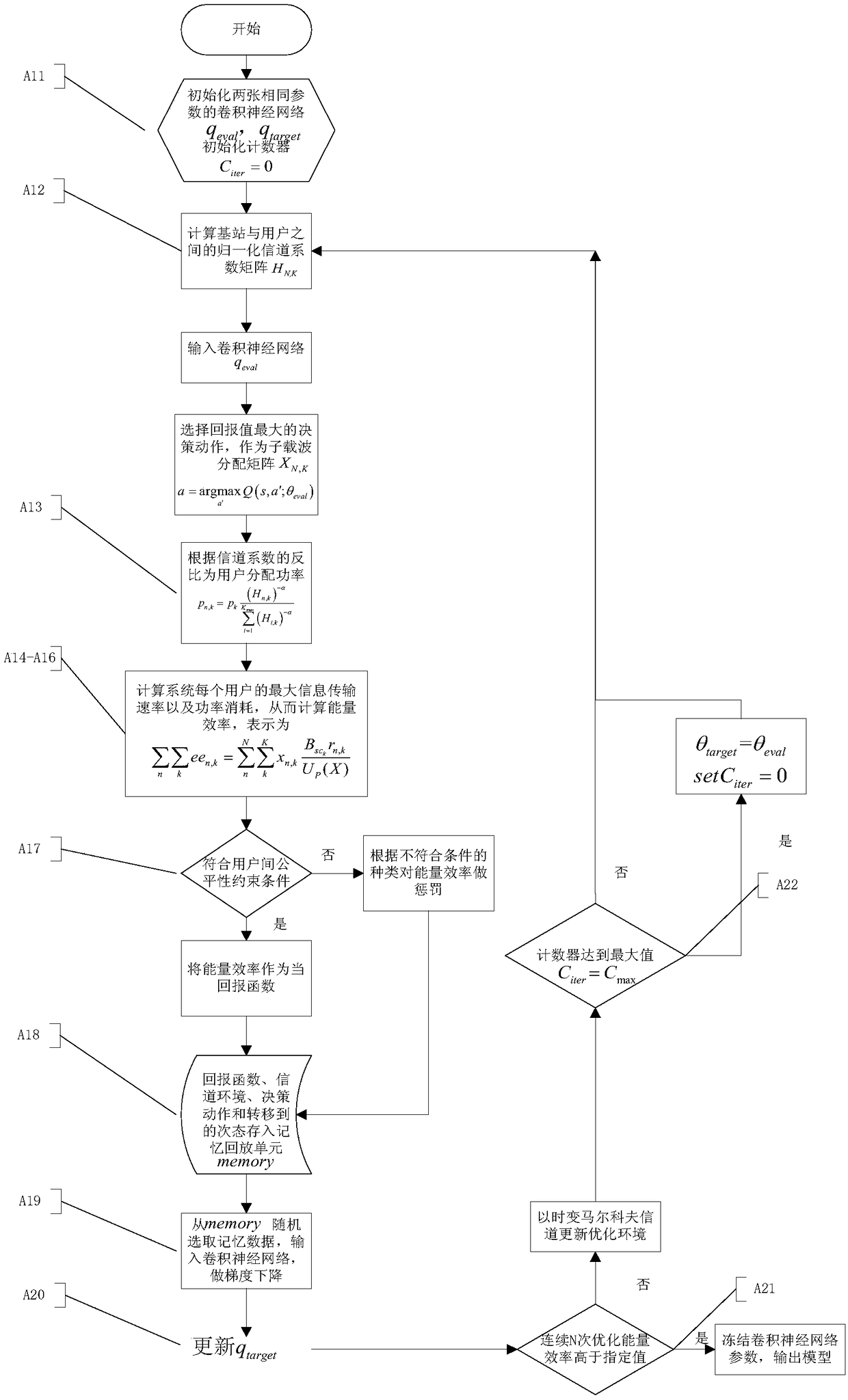

Wireless network resource allocation method based on deep reinforcement learning

Active | CN109474980A | Improve energy efficiency | Power management | Neural architectures | Return function | Carrier signal

The invention provides a wireless network resource allocation method based on deep reinforcement learning that can maximally improve energy efficiency in a time-varying channel environment with relatively low complexity. The method comprises the following steps: establishing a deep reinforcement learning model; modeling the time-varying channel environment between a base station and a user terminal as a finite-state time-varying Markov channel, determining a normalized channel coefficient, inputting it into a convolutional neural network qeval, selecting the action with the maximum output return value as the decision action, and allocating subcarriers to the users; allocating downlink power to the users sharing each subcarrier in inverse proportion to the channel coefficient according to the subcarrier allocation result, determining a return function based on the allocated downlink power, and feeding the return function back to the deep reinforcement learning model; and training the convolutional neural networks qeval and qtarget in the deep reinforcement learning model according to the determined return function, and determining the locally optimal power allocation under the time-varying channel environment. The method relates to the fields of wireless communication and artificial intelligence decision-making.

Owner:UNIV OF SCI & TECH BEIJING
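
The power allocation step in the abstract above (downlink power assigned in inverse proportion to the channel coefficient) can be sketched as follows. The function name, the per-subcarrier power budget `total_power`, and the normalization so that the shares sum to that budget are assumptions for illustration; the abstract states only the inverse-ratio rule.

```python
def allocate_power(channel_coefficients, total_power):
    """Split total_power among users in inverse proportion to their channel coefficient."""
    if any(h <= 0 for h in channel_coefficients):
        raise ValueError("channel coefficients must be positive")
    inverse = [1.0 / h for h in channel_coefficients]
    scale = total_power / sum(inverse)
    # A user with a weaker channel (smaller coefficient) receives more power.
    return [scale * w for w in inverse]


if __name__ == "__main__":
    # Two users on one subcarrier, normalized coefficients 1.0 and 2.0, budget 3.0.
    print(allocate_power([1.0, 2.0], total_power=3.0))
```

In this example the user with coefficient 1.0 receives twice the power of the user with coefficient 2.0, and the two shares add up to the budget.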

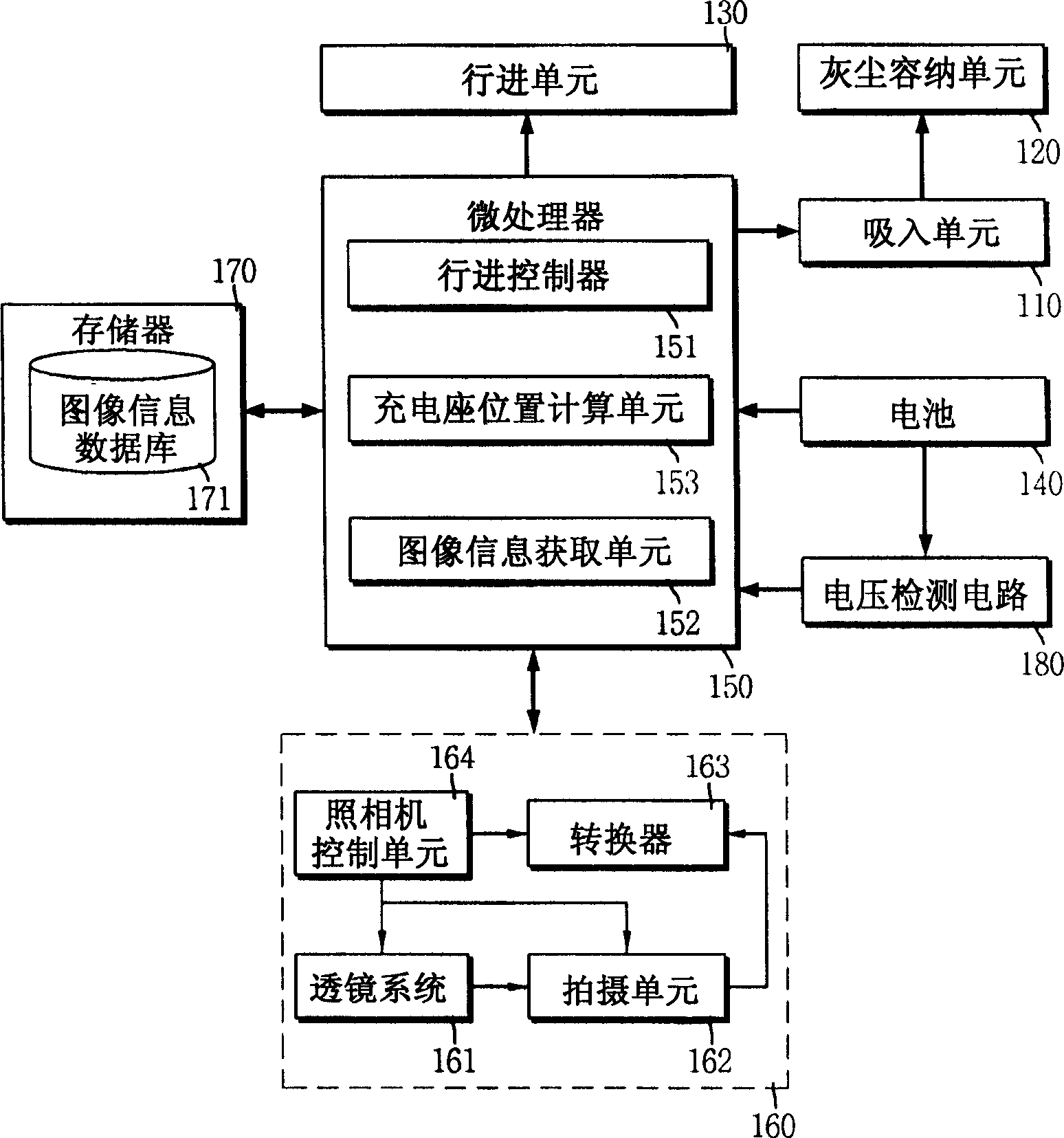

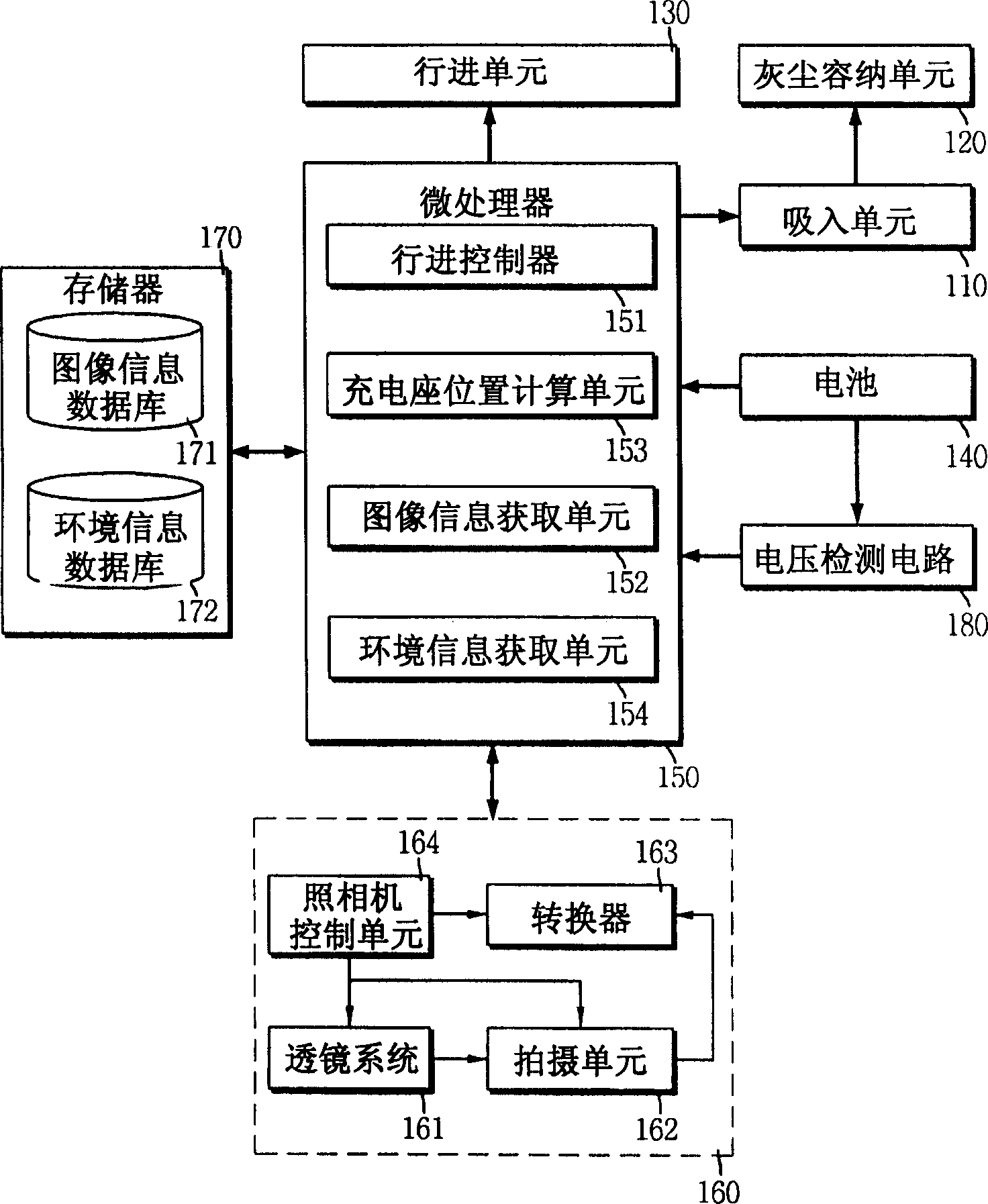

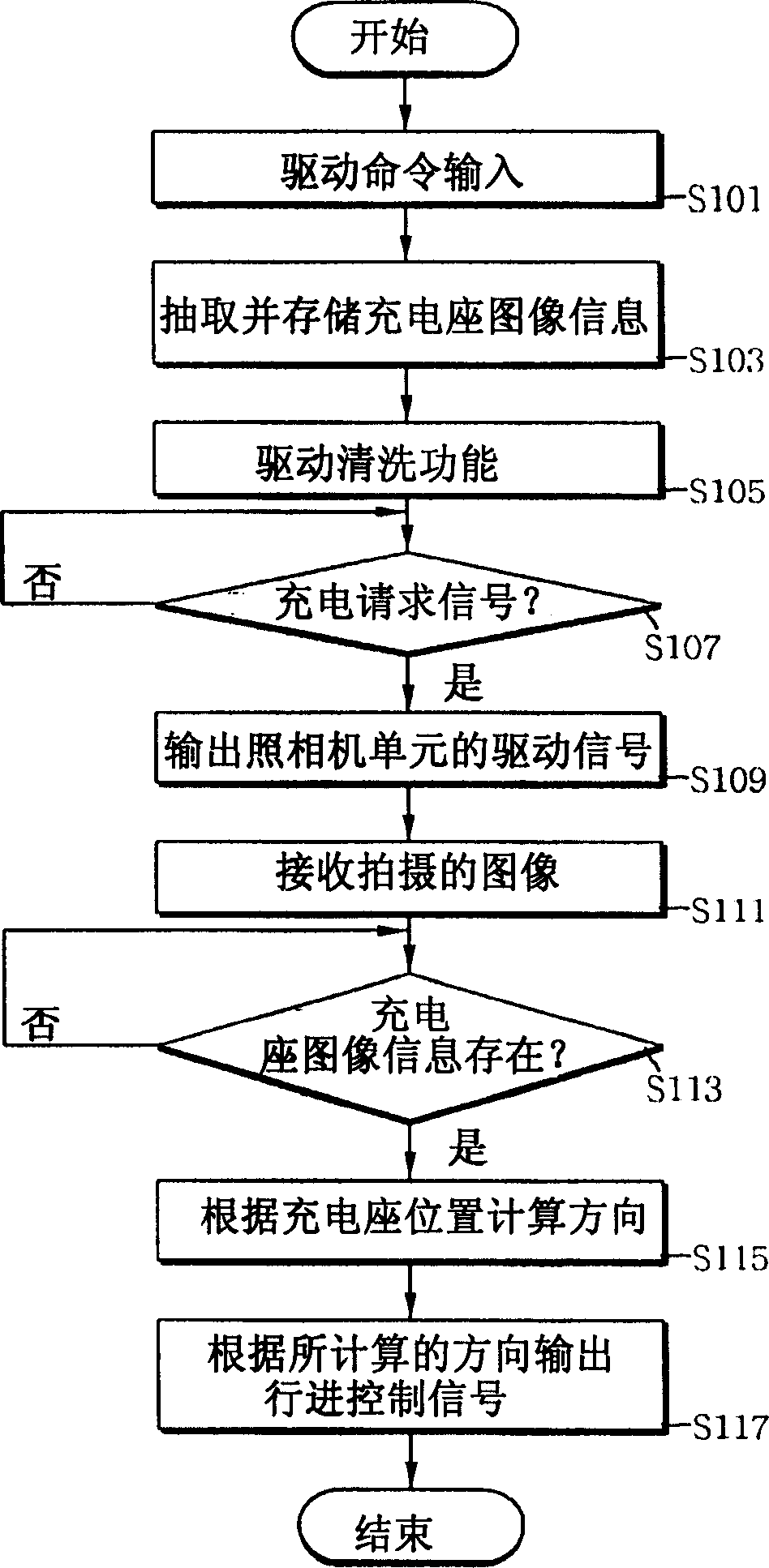

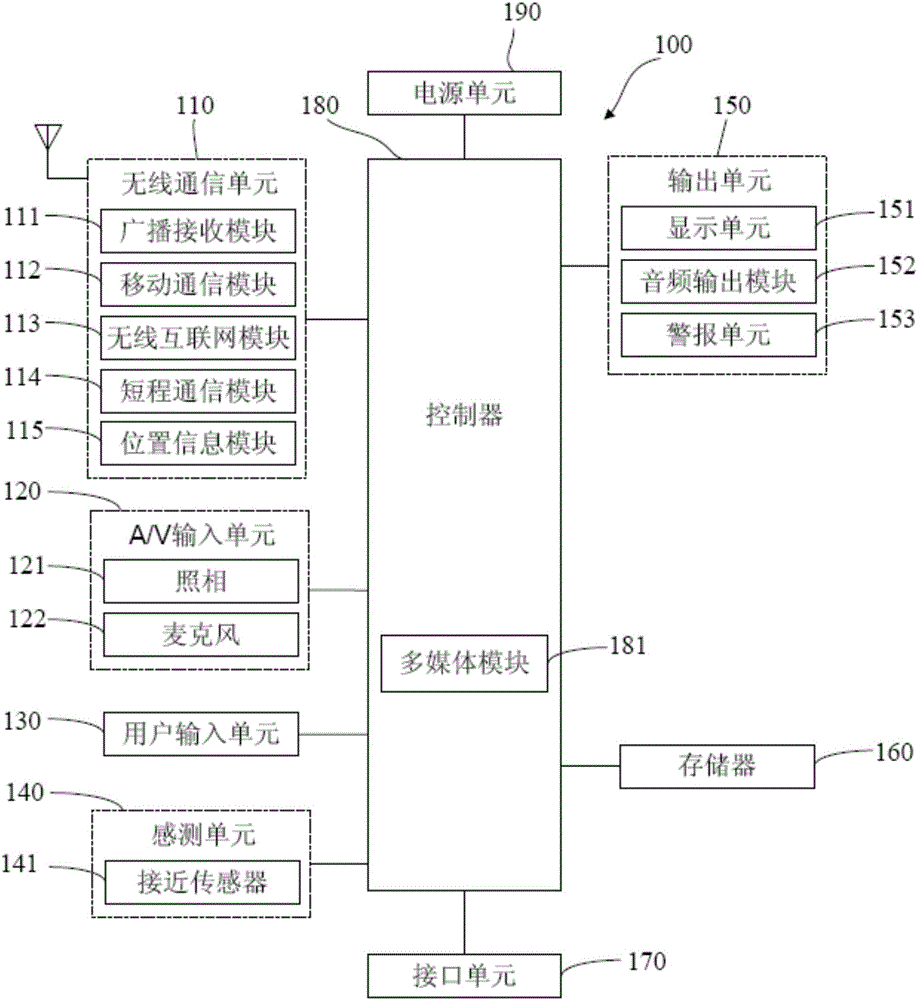

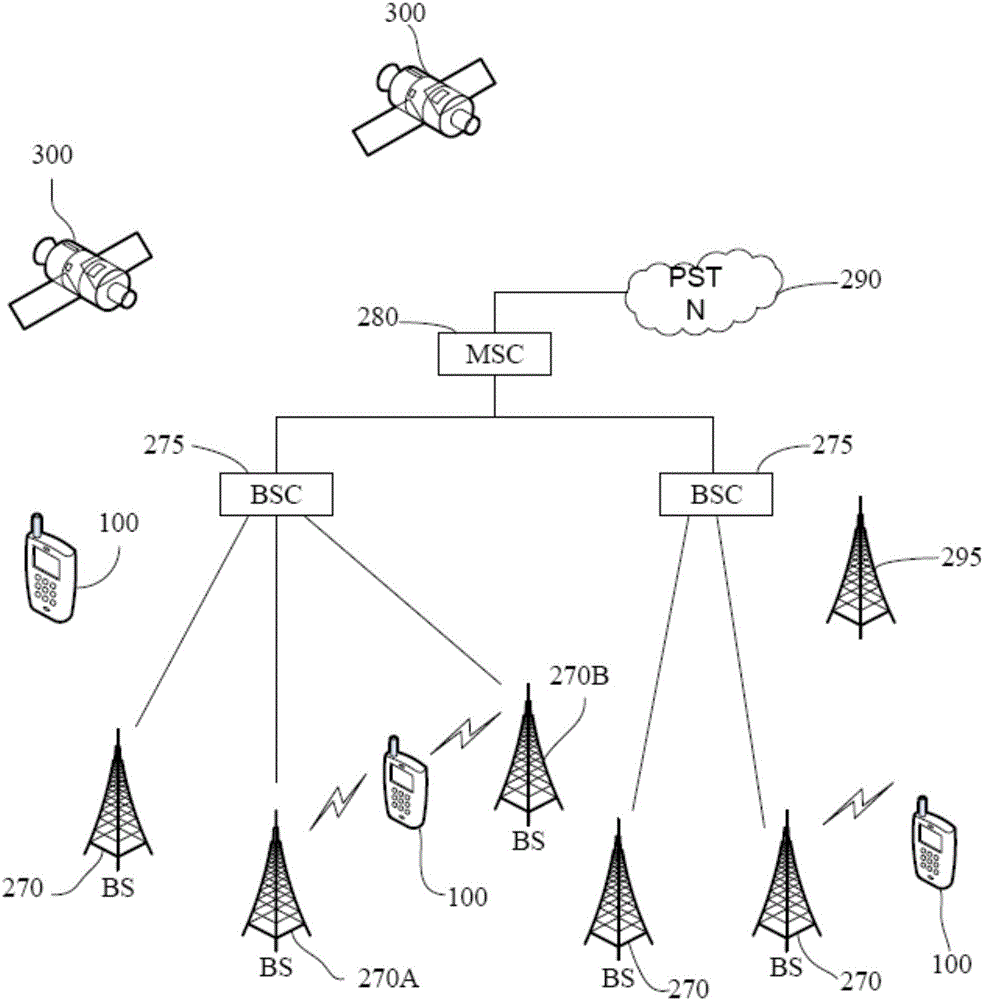

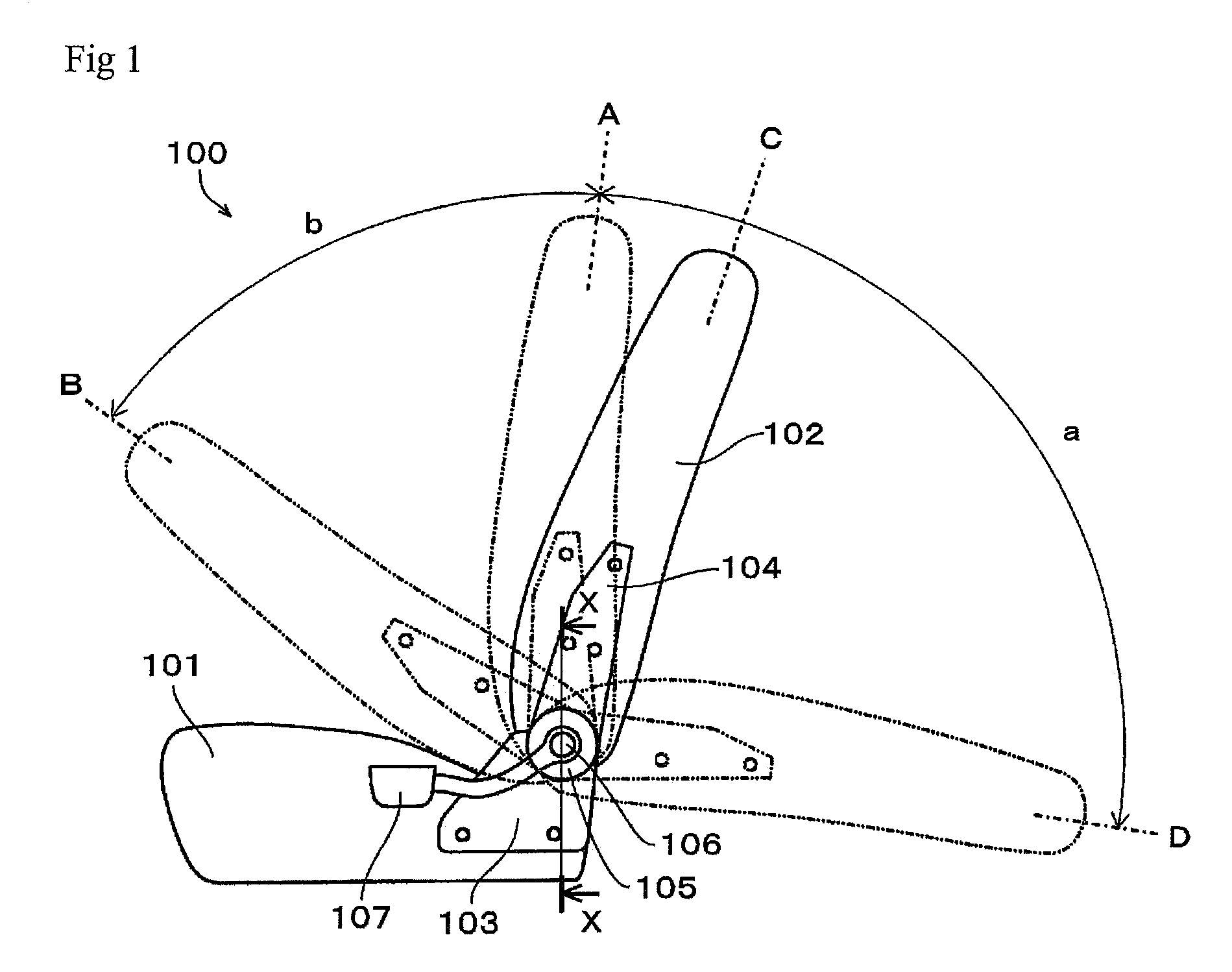

Cleaning robot having auto-return function to charging-stand and method using the same

A cleaning robot comprising: a camera unit that photographs an external image containing the charging-stand image, converts it into electrical image information, and outputs it; and a microprocessor that stores the image information input from the camera unit, acquires position information of the charging-stand from the external image on the basis of the image information of the charging-stand when a return signal is detected, and causes the cleaning robot to return to the charging-stand. The cleaning robot having an auto-return function to a charging-stand detects the direction and position of the charging-stand using image information of the charging-stand, and thereby returns to the charging-stand correctly and rapidly.

Owner:LG ELECTRONICS INC

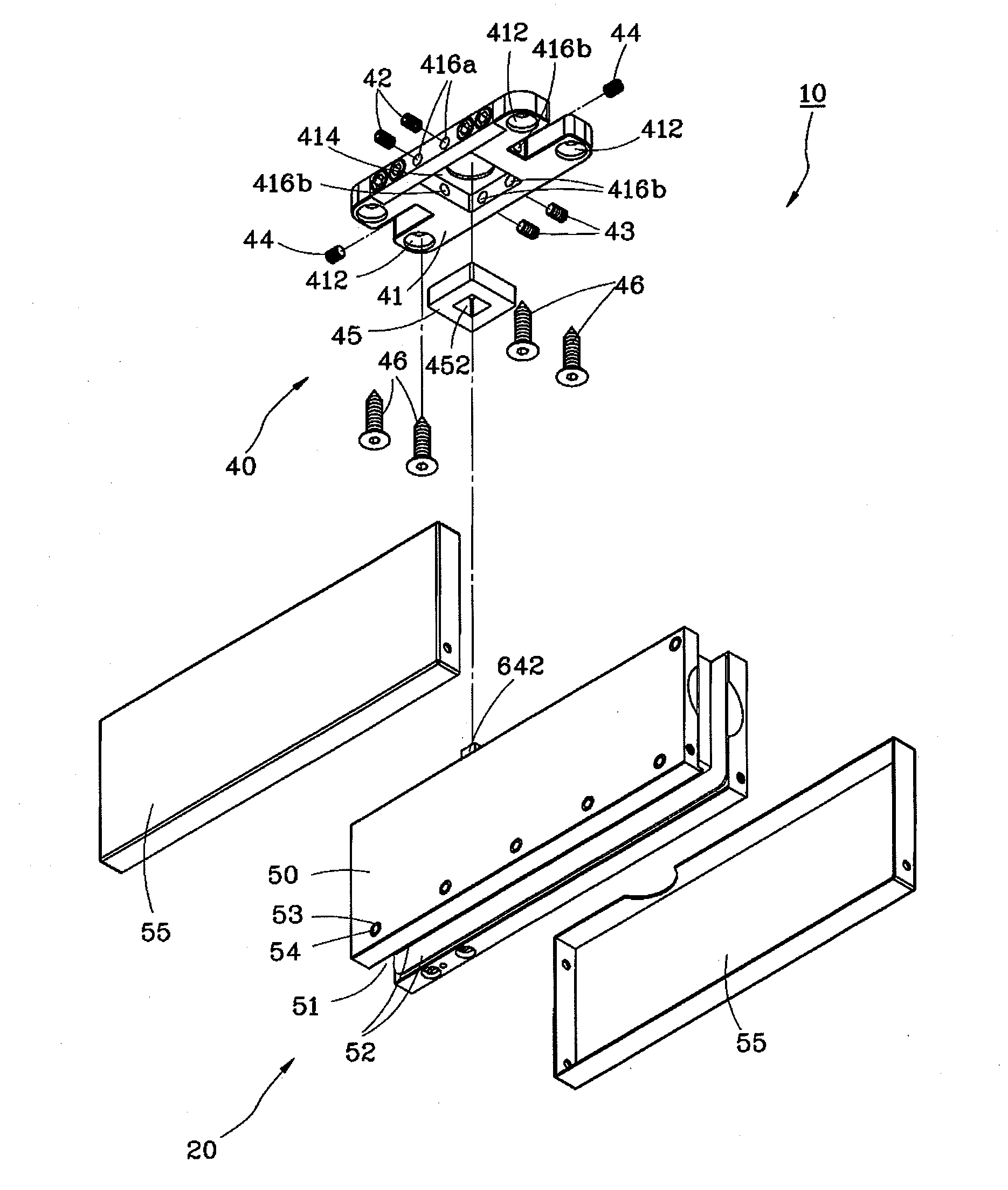

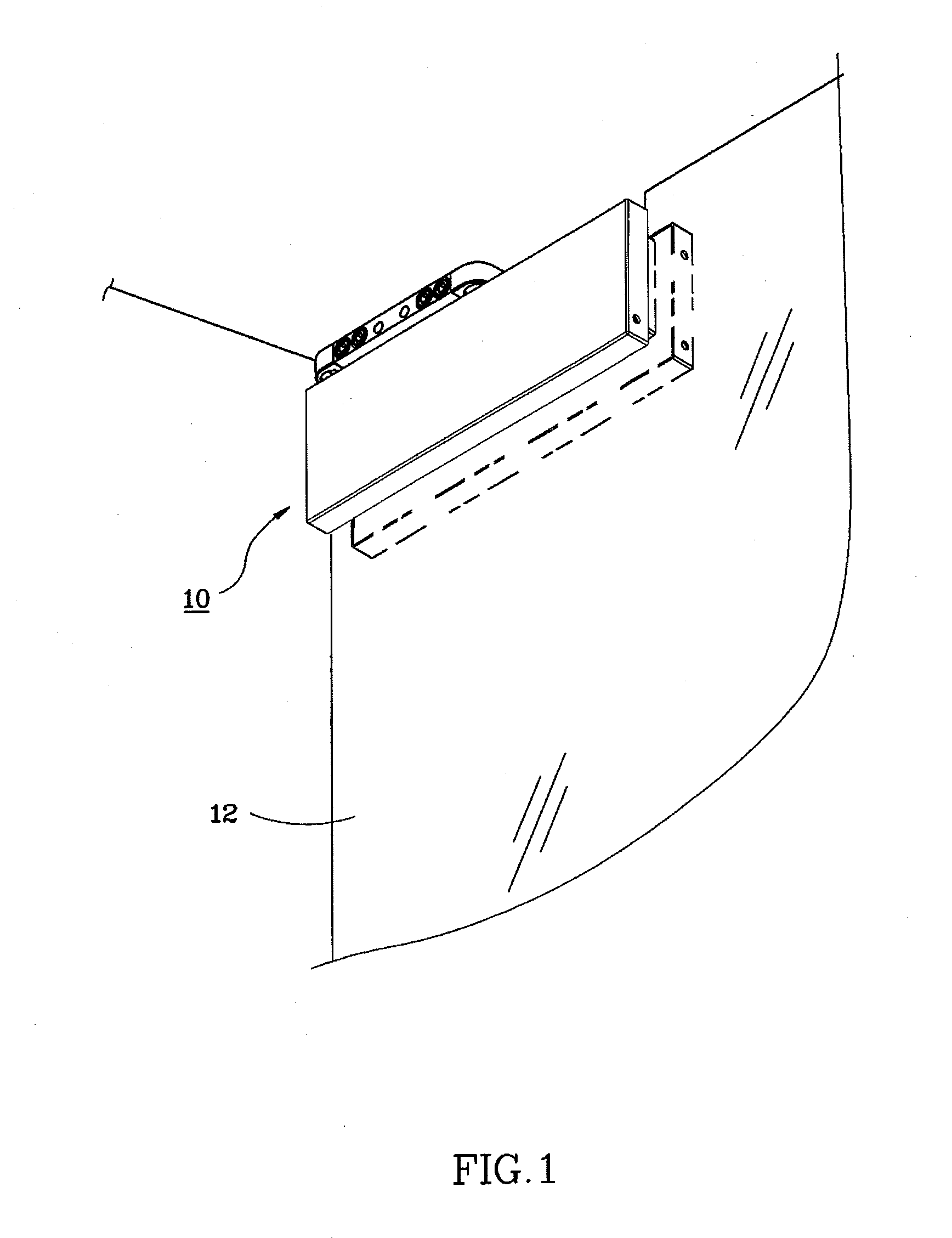

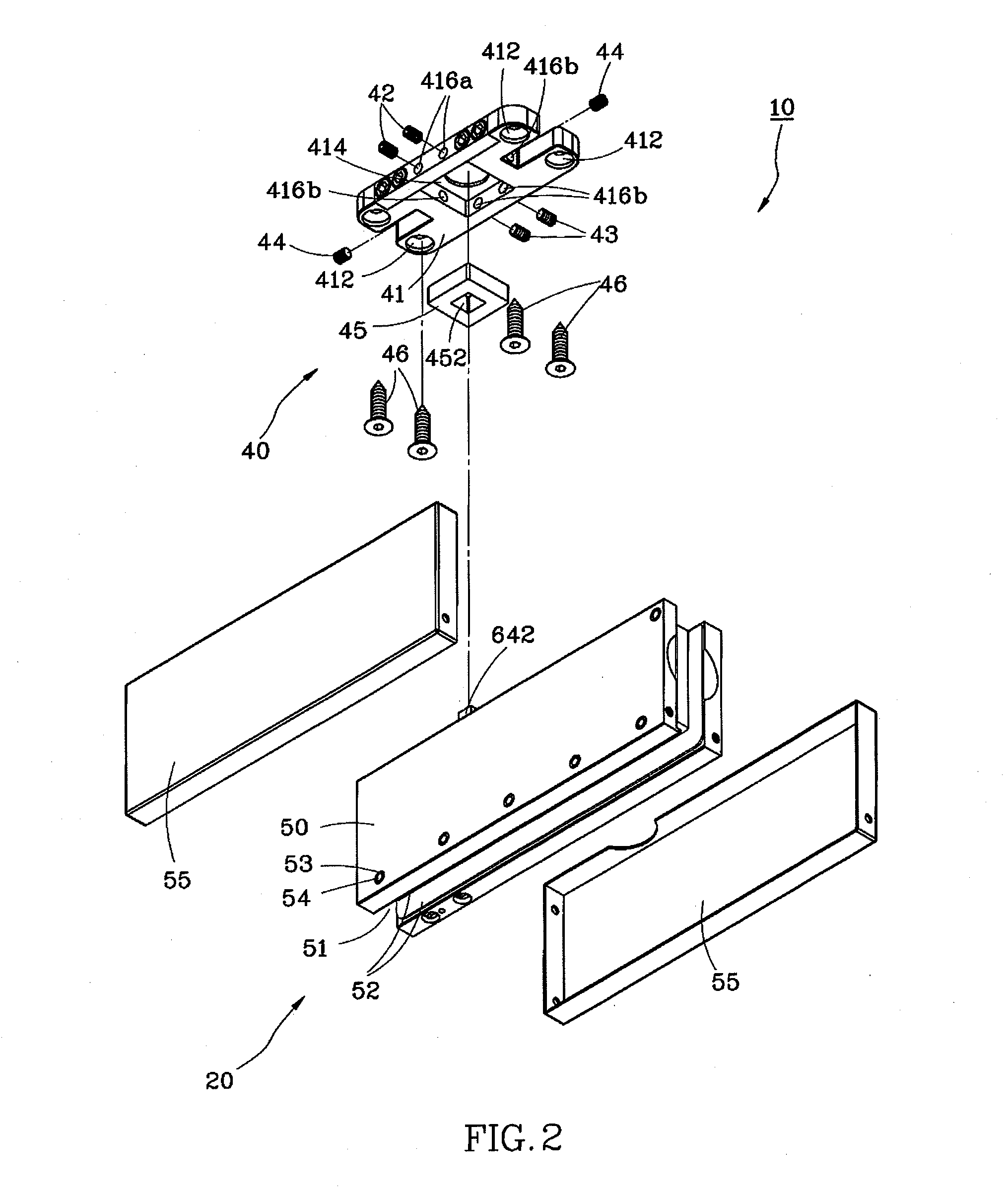

Patch fitting with auto-return function

Active | US8528169B1 | Easy to install | Small dimension | Building braking devices | Wing accessories | Return function | Engineering

A patch fitting with auto-return function for a glass door includes a clamping seat, a damper and a misalignment adjuster. The clamping seat is adapted for clamping and being mounted on the top edge of the glass door and comprises a shaft body and an eccentric cam connected with the shaft body. The damper is installed in the clamping seat and contacted with the eccentric cam. The misalignment adjuster comprises a mounting plate, a plurality of adjustment members and an adjustment plate. The mounting plate has a concave space and a plurality of adjustment holes communicated with the concave space. Each of the adjustment members is disposed in each of the adjustment holes. The adjustment plate is arranged in the concave space of the mounting plate, connected with the shaft body and stopped by at least one of the adjustment members.

Owner:LEADO DOOR CONTROLS

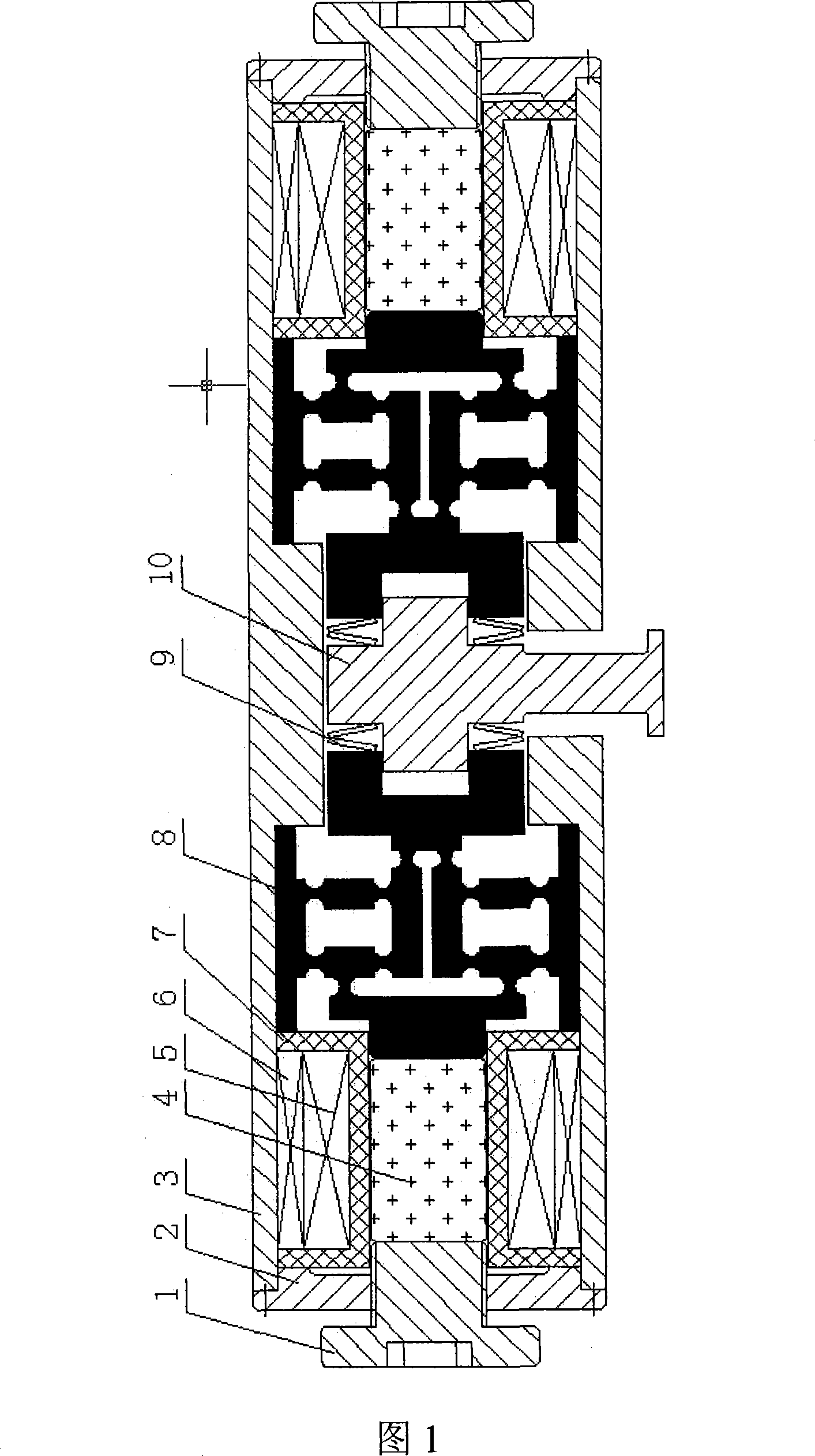

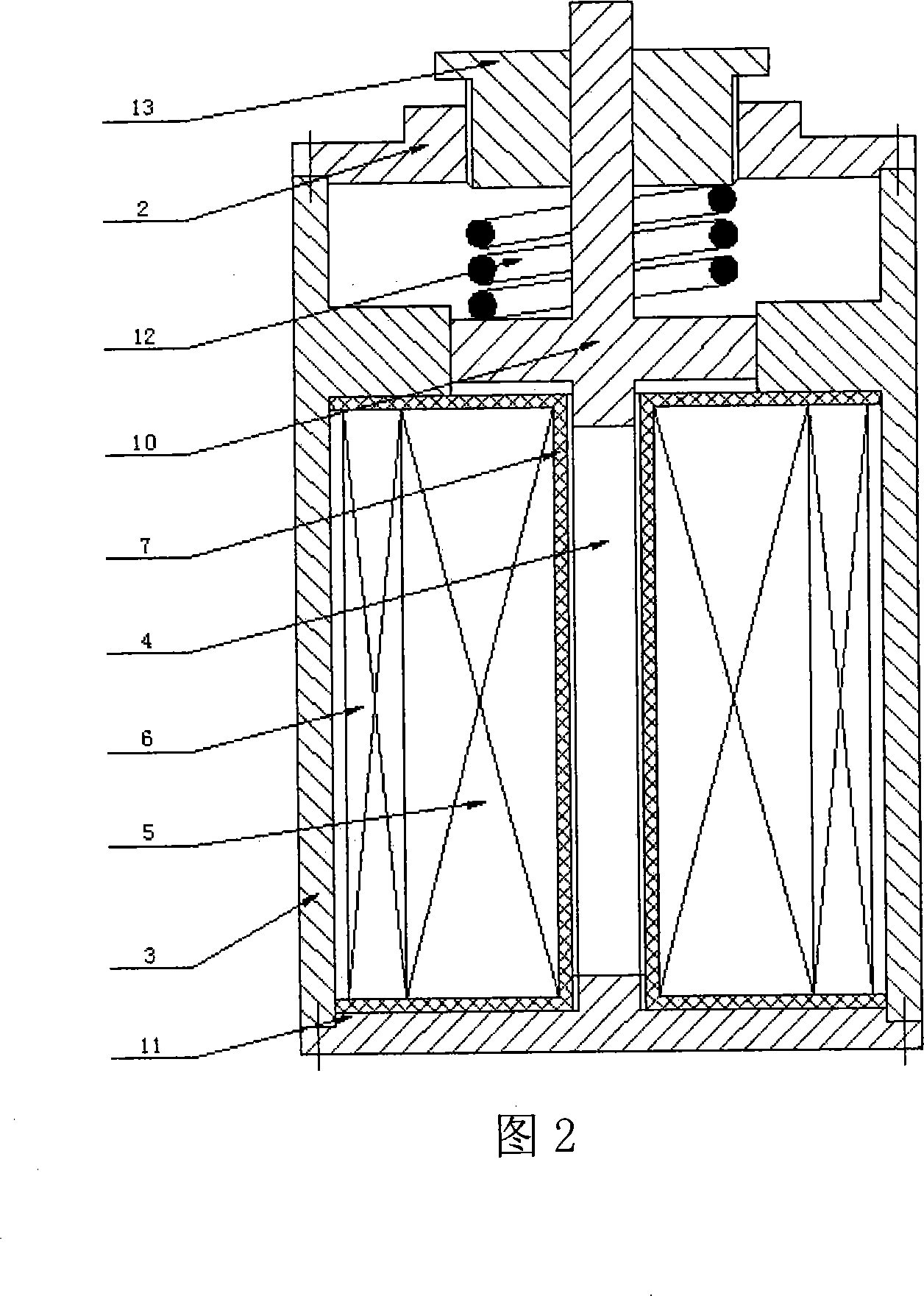

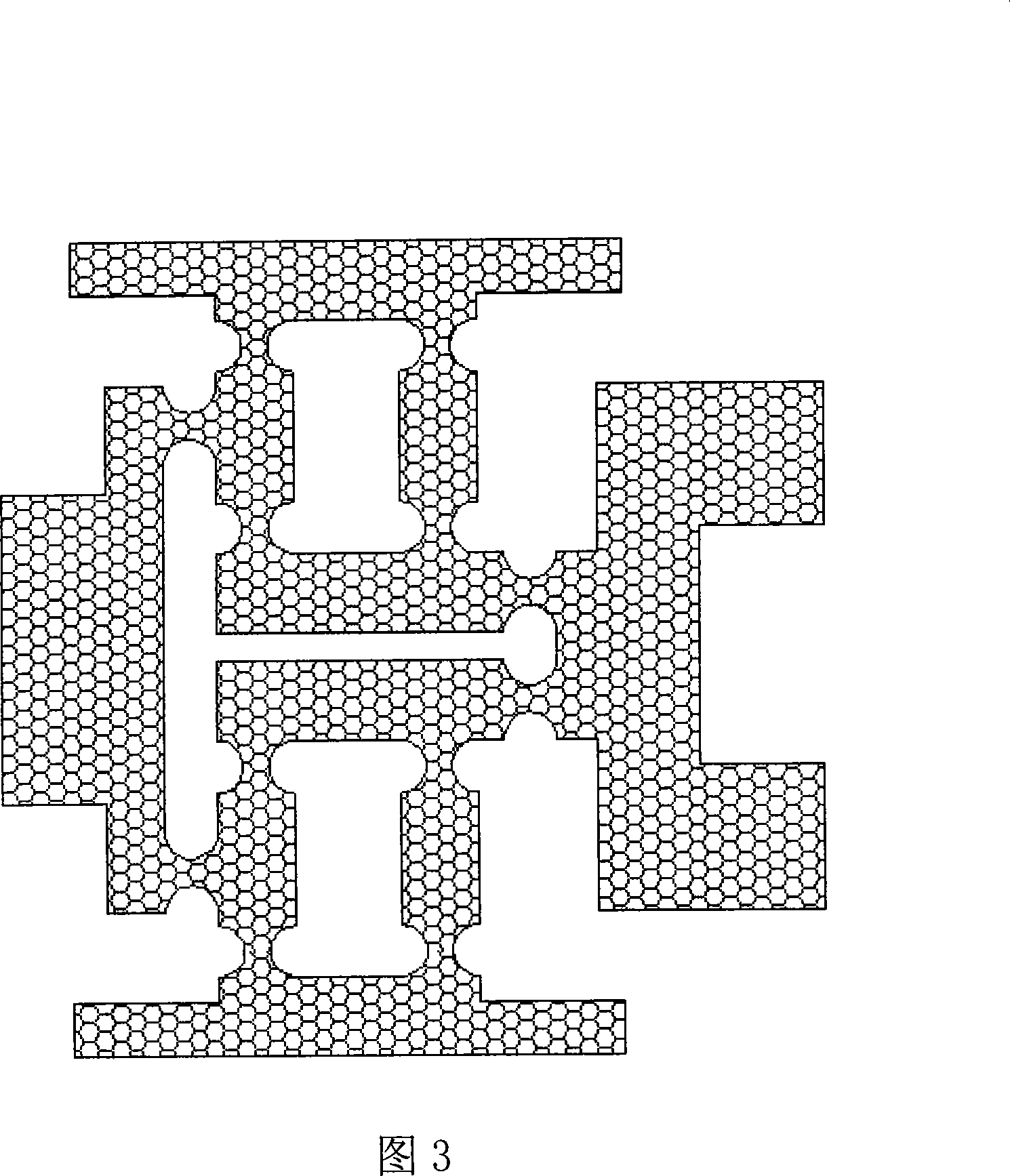

A dual-phase set ultra-magnetism flexible driver and its implementation method

Inactive | CN101145742A | Realize automatic centering and return function | High frequency response | Piezoelectric/electrostriction/magnetostriction machines | Differential pressure | Magnetostrictive actuator

The invention belongs to the field of micro-drive technology and proposes a new double-phase opposing giant magnetostrictive driver. A pair of disc springs is sleeved on the output assembly and placed in the middle of the housing. Two single giant magnetostrictive drivers with the same structure and size are placed symmetrically on both sides of the output assembly, acting through the disc springs and forming a double-phase structure. Dual sets of thermal compensation mechanisms are used to suppress thermal deformation, and single-degree-of-freedom flexible hinge mechanisms are used to amplify and transmit the output displacement. The driver works through differential pressure (or bias voltage), which realizes automatic centering and return, with high frequency response, high control precision, large output force and large output displacement. The structure is simple and easy to miniaturize, and it can be widely used in the development of micro drives and the production of sensors; it is especially suitable as the drive unit of electro-hydraulic servo control valves.

Owner:BEIJING UNIV OF TECH

Intelligent control method for vertical recovery of carrier rockets based on deep reinforcement learning

Active | CN109343341A | Fast autonomous planning | Increase autonomy | Attitude control | Adaptive control | Attitude control | Return function

An intelligent control method for vertical recovery of carrier rockets based on deep reinforcement learning is disclosed. A method of autonomous intelligent control for carrier rockets is studied. The invention mainly studies how to realize attitude control and path planning for vertical recovery of carrier rockets by using intelligent control. For the aerospace industry, making spacecraft autonomous and intelligent is of great significance both for saving labor cost and for reducing human error. A carrier rocket vertical recovery simulation model is established, together with a corresponding Markov decision process that includes a state space, an action space, a state transition equation and a return function. The mapping relationship between environment and agent behavior is fitted by using a neural network, and the neural network is trained so that a carrier rocket can be recovered autonomously and controllably by using the trained neural network. The project can provide technical support for spacecraft orbit intelligent planning technology, and can also provide a simulation and verification platform for attack-defense confrontation between spacecraft based on deep reinforcement learning.

Owner:BEIJING AEROSPACE AUTOMATIC CONTROL RES INST +1

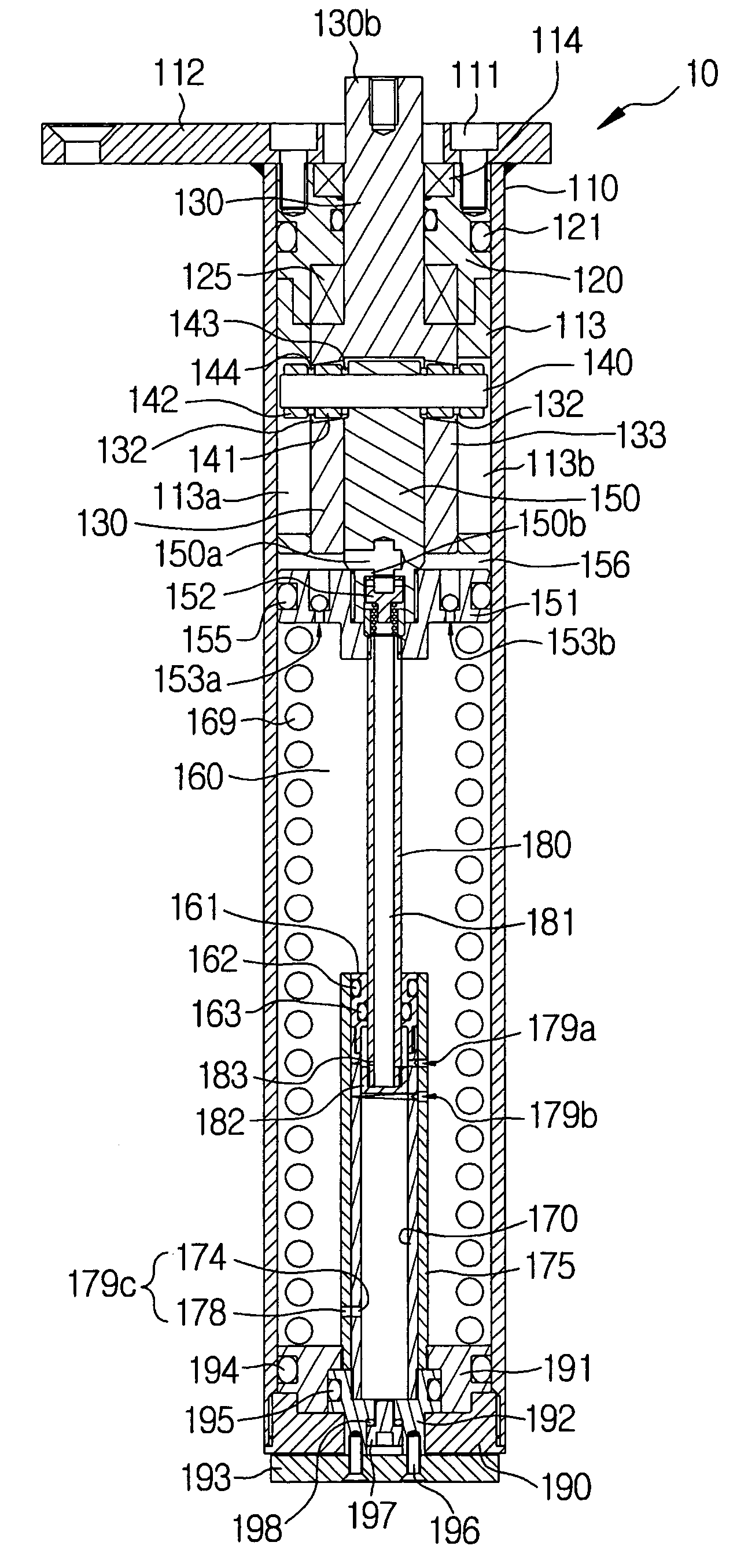

Multipurpose hinge apparatus having automatic return function

A multipurpose hinge apparatus having an automatic return function is provided in which the apparatus is installed between the door and a main body. The apparatus includes a driving mechanism for ascending and descending a piston rod according to opening and closing of the door which is installed in the upper portion of a cylindrical housing. A piston is connected with the piston rod, in which a one-direction check valve is installed in the piston. The piston partitions an upper chamber and a lower chamber and ascends and descends in association with the piston rod. A first oil path communicates with the upper and lower chambers via the lower portion of the piston rod in the central portion of the piston. A compression spring which makes the piston ascend is inserted into the lower chamber. Oil is filled in the chamber. Thus, the hinge apparatus is automatically returned to the initial position with return speed in multiple steps by controlling an amount of oil flowing from upper chamber to lower chamber in multiple steps when a door is closed.

Owner:I ONE INNOTECH

System for Cash, Expense And Withdrawal Allocation Across Assets and Liabilities to Maximize Net Worth Over a Specified Period

This invention constitutes a method for taking a set of input parameters specifying a person's or family's assets, liabilities, income and expenses and computing the optimal amount of cash to withdraw from each credit source or asset (including liquidation of physical assets, credit card balance transfers, and use of loan sources) and the allocation of expenses and cash flow across assets and liabilities so as to maximize net worth by a particular date. The method consists of computing marginal and average expense and return functions for each asset and liability to do a no-withdrawal monthly optimization from the start month to the target month on which to maximize net worth, then recursively computing optimal withdrawal amounts for each source of capital on specific months, ultimately finding the optimal withdrawal amount for each source on each of these months and returning the optimal allocation of expenses and the resulting income and cash flow across assets and liabilities. This invention is being applied to an online environment, where the user inputs the necessary details and optimization takes place on the server.

Owner:ROBERTSON MICHAEL PAUL

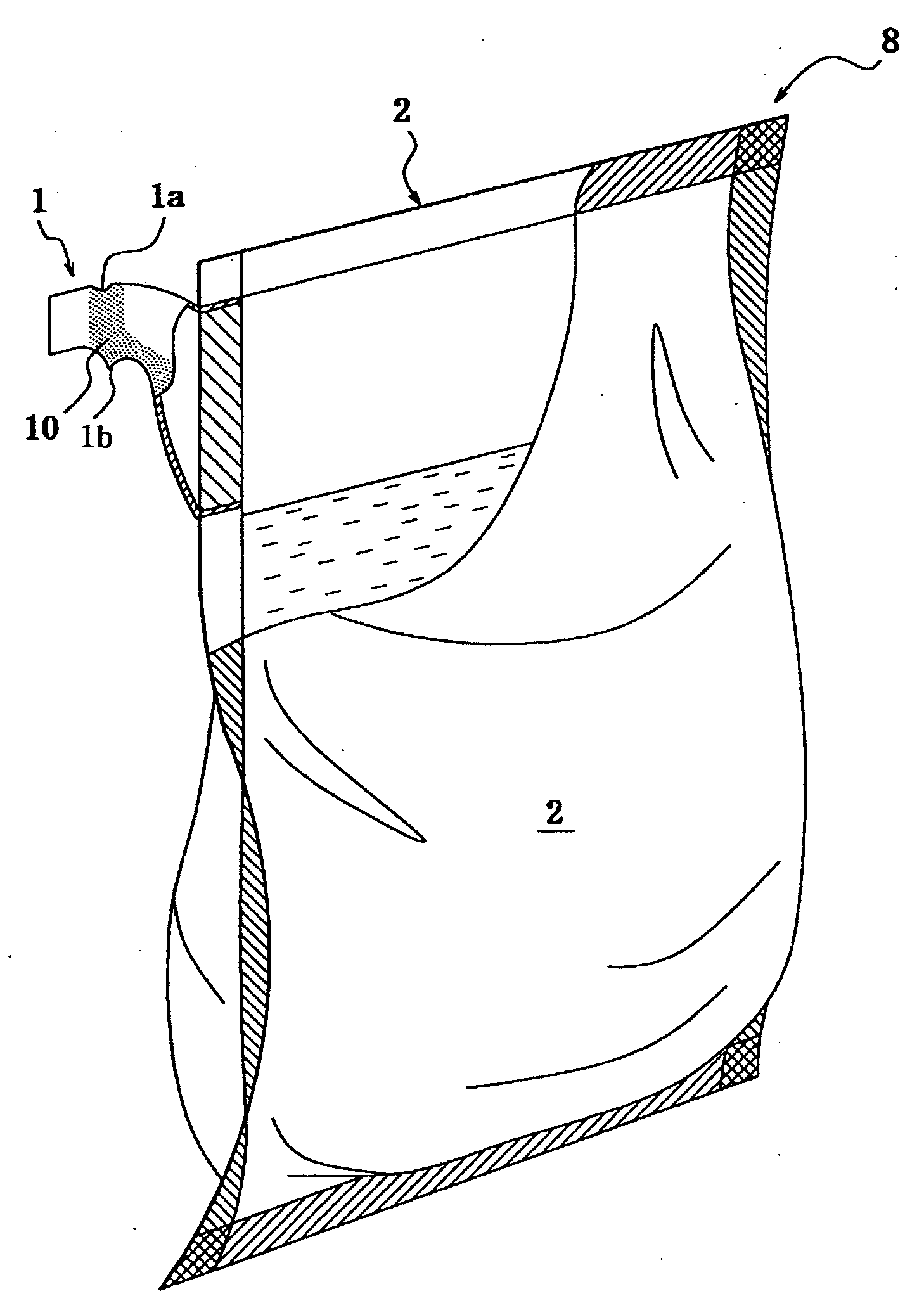

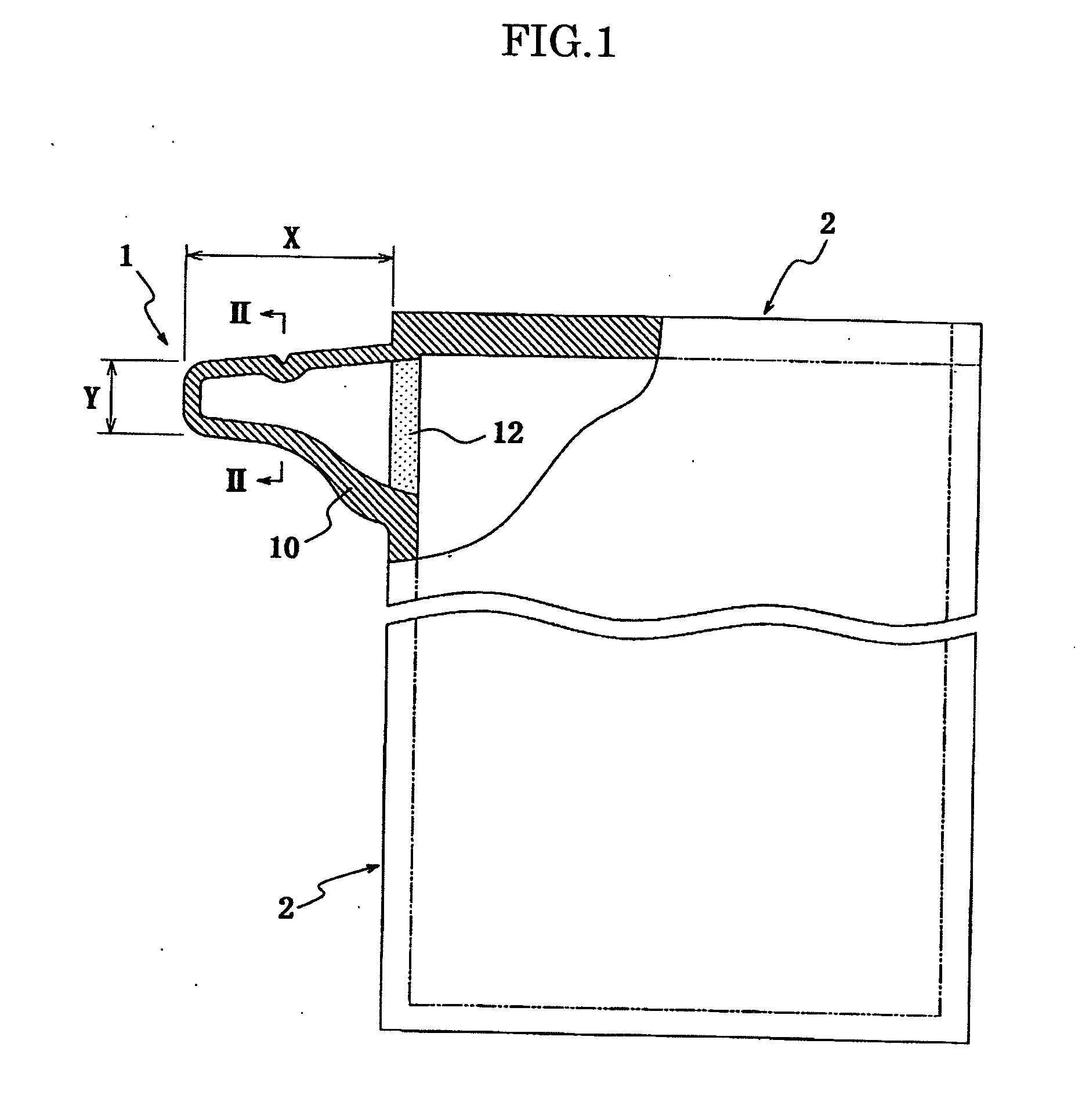

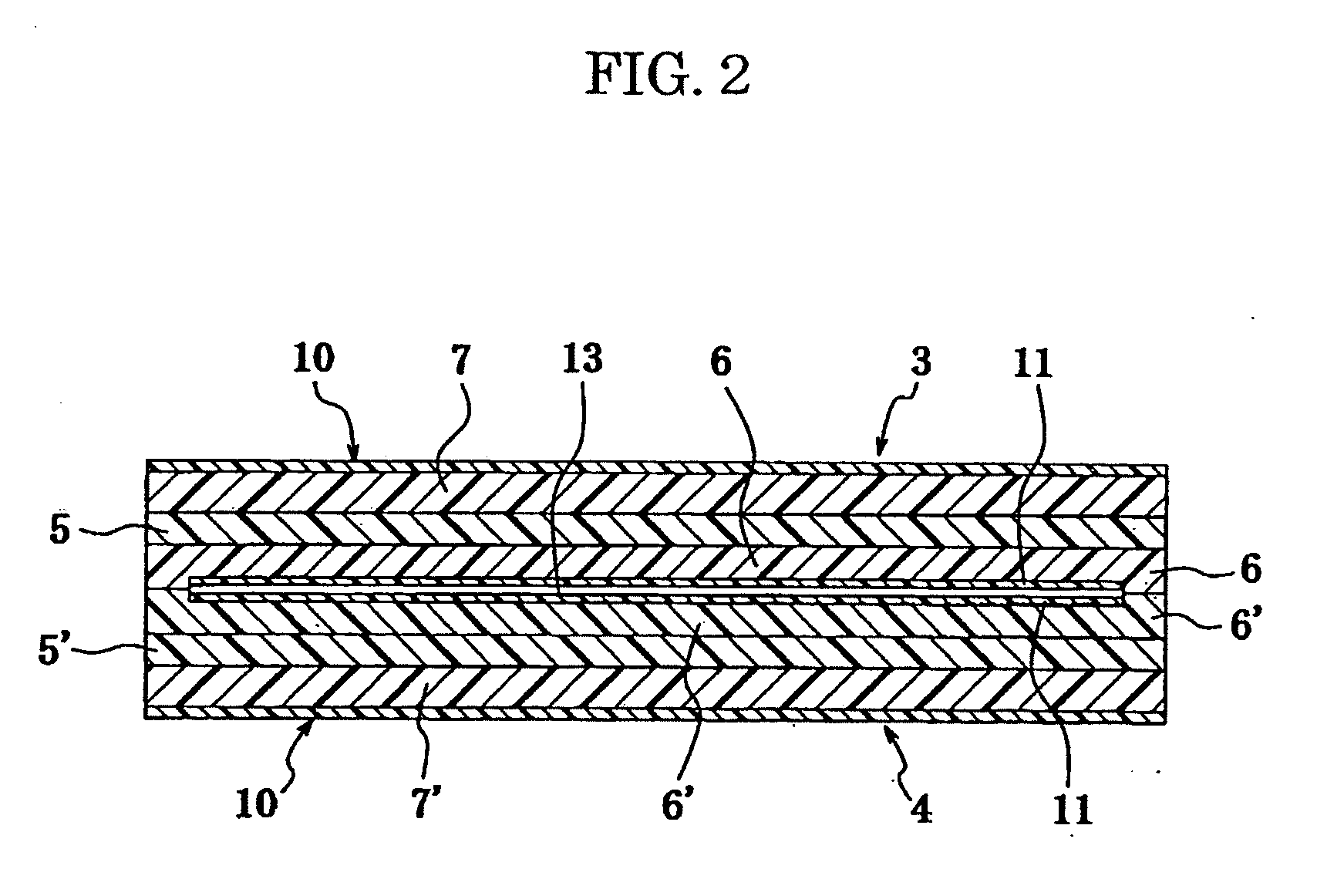

Flexible package bag provided with one-way functioning nozzle and packaging structure for liquid material

Inactive | US20100209025A1 | Prevent backflow | Improve waterproof performance | Bags | Sacks | Filling materials | Return function

There is proposed a non-self supporting type flexible package bag provided with a film-shaped one-way pouring nozzle, which is excellent in not only the non-return function and liquid cutting properties but also the pouring property of a liquid packed material as a filling material (packed material in the bag can be poured smoothly until the end). In the flexible package bag provided with the film-shaped one-way pouring nozzle protruding from a side portion, corner portion or top portion of the package bag body, a coating layer made from a water-repellent material or an oil-repellent material is provided on an outer surface of the film-shaped one-way pouring nozzle, while a wet-treated layer is provided on an inner face of a pouring path in the film-shaped one-way pouring nozzle.

Owner:YUSHIN

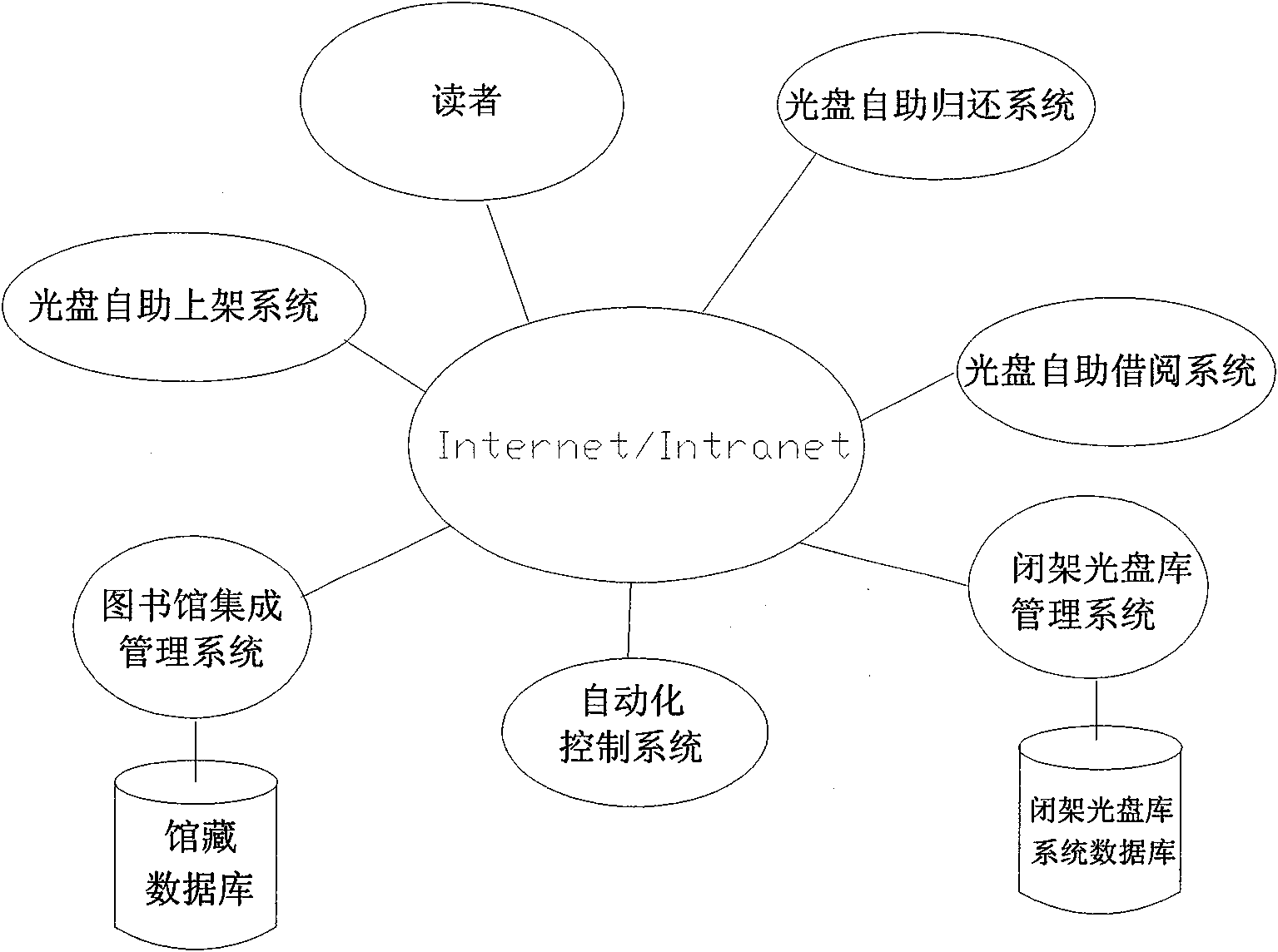

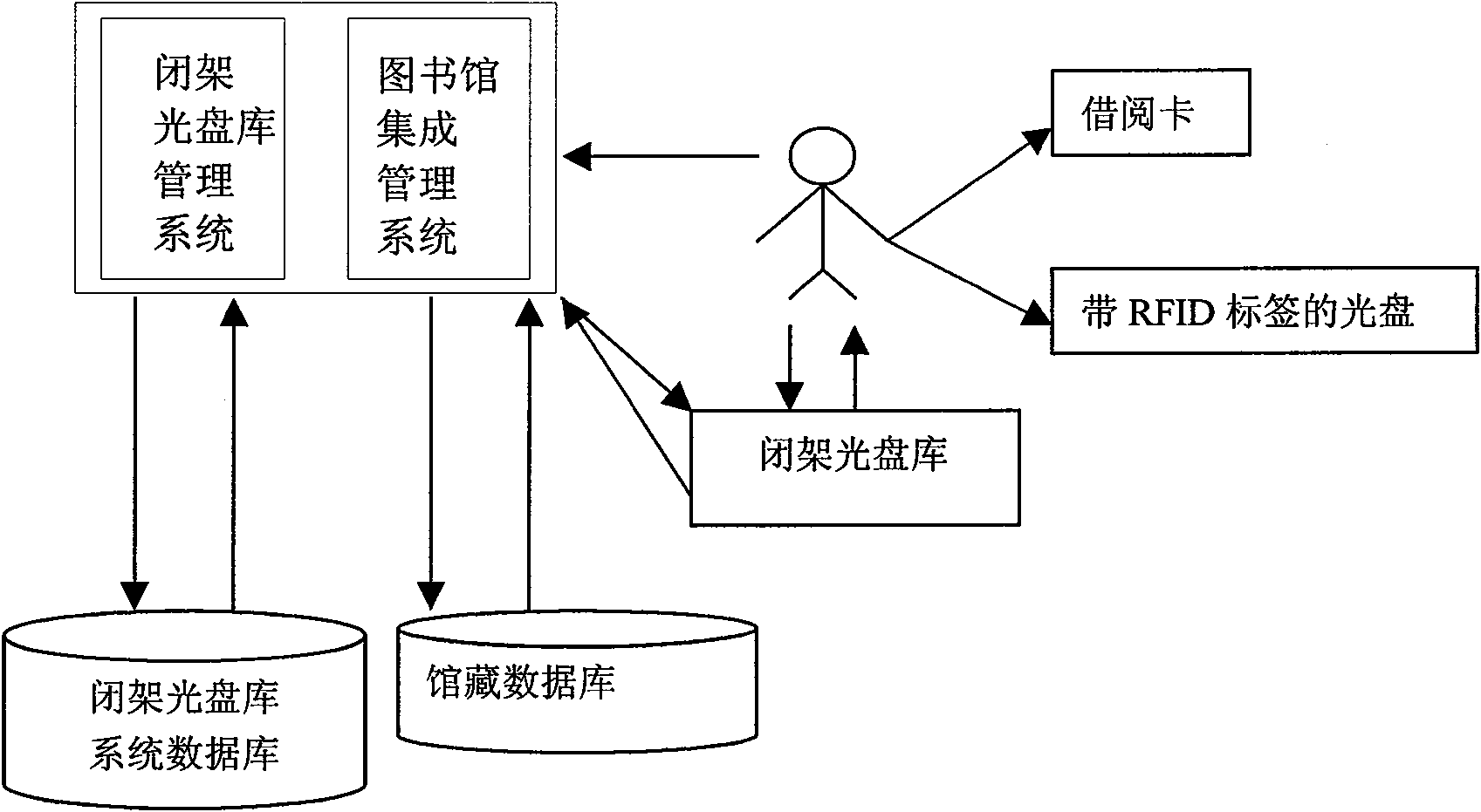

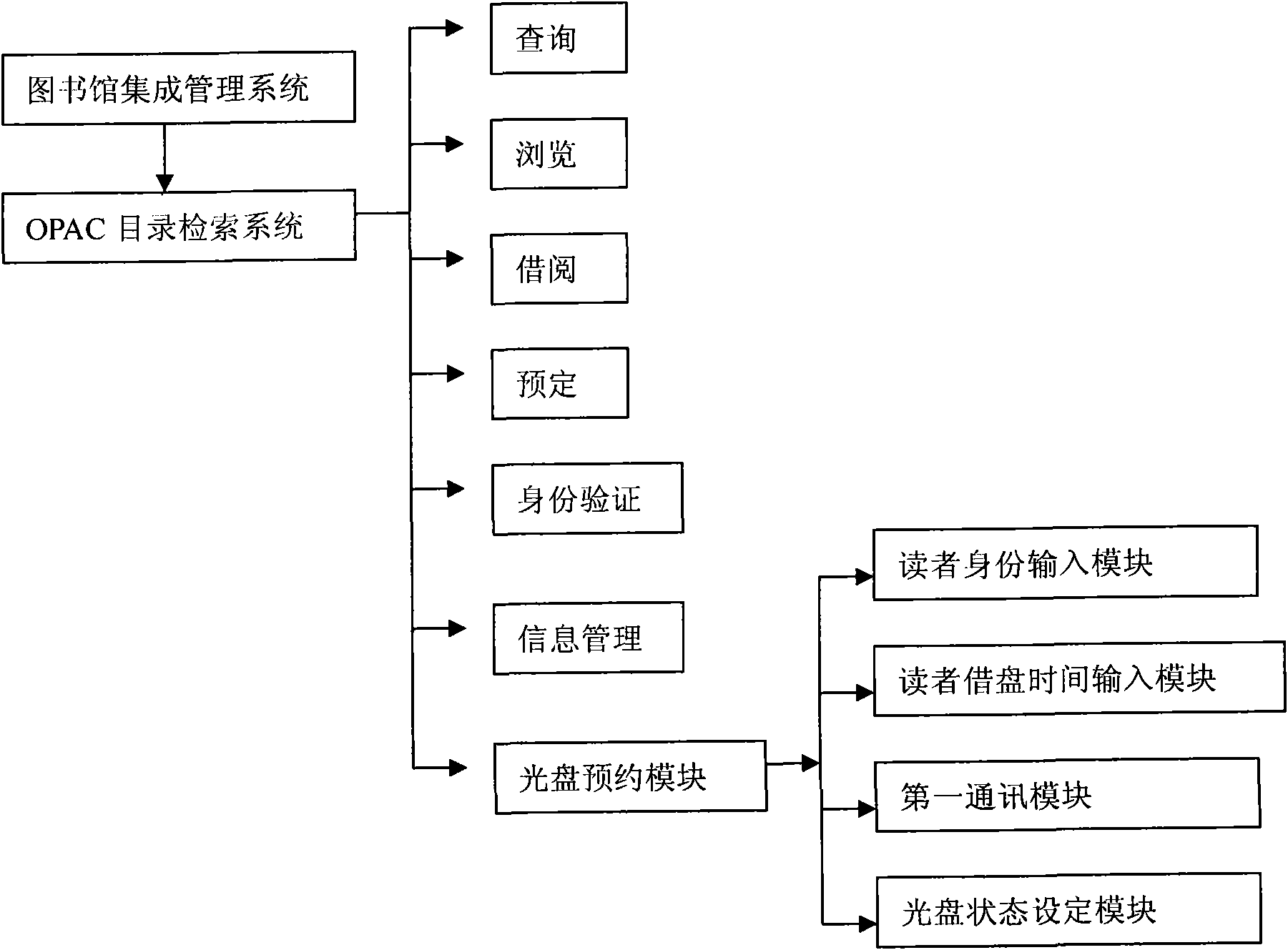

An automatic CD management system based on a closed-shelf jukebox

Inactive | CN101551665A | Realize centralized storage | Realize standardized management | Record information storage | Co-operative working arrangements | Return function | Engineering

The present invention is an automatic CD management system based on a closed-shelf jukebox. It realizes the centralized storage, standardized management and reader-specific automatic access of CD, extends the functions of libraries, enables libraries to not only perform automatic storage, borrowing and returning of CD but also realize the groundbreaking self-service borrowing and returning functions of CD and effectively improves library's reader service function. The automatic CD management system adopts RFID technique, mechanical automation, network and computer techniques and includes an integrated library management system, consisting of: a jukebox mechanical structure, a closed-shelf jukebox management system, a self-service CD borrowing system, a self-service CD return system and an automation system. It adopts an automatic CD management system based on a closed-shelf jukebox, completely sets free the work of CD administrator, raises work efficiency and extends the functions of automatic libraries.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

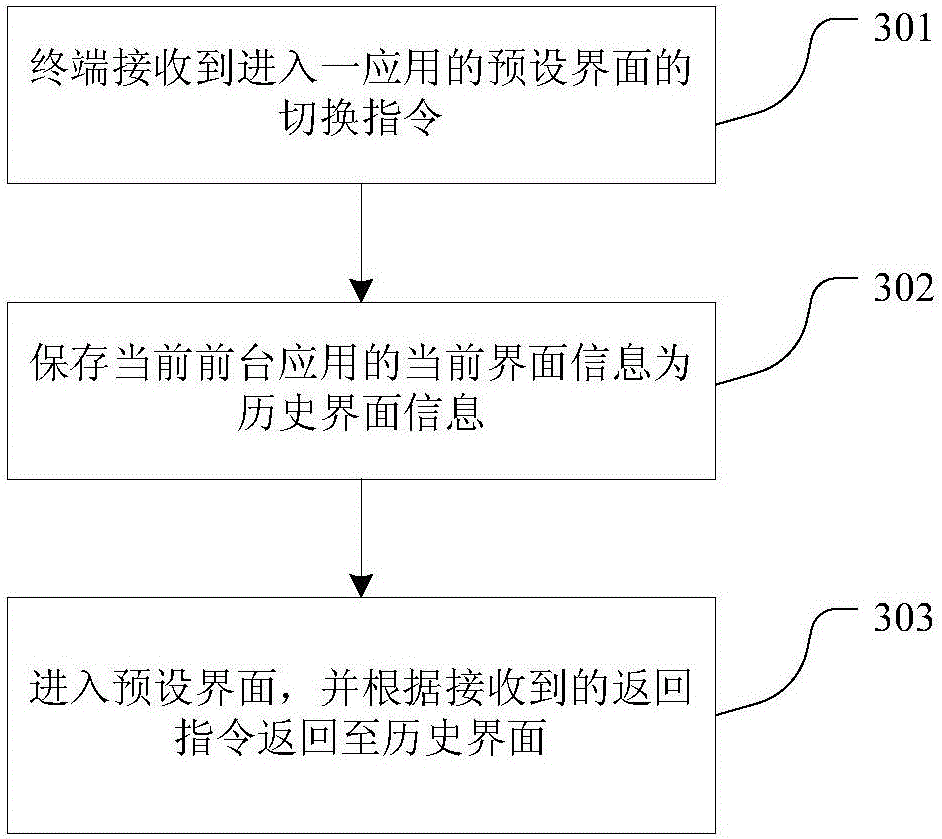

Method and device for implementing return to original interface after interface switching

Inactive | CN105100401A | Improve experience | Telephone sets with user guidance/features | Return function | Software engineering

The invention discloses a method and device for implementing return to an original interface after interface switching. The method comprises the following steps: when a switching command to enter a preset interface of an application to be switched is received, saving the current interface information of the current foreground application in a history interface information list; switching from the current interface to the preset interface; and returning to a history interface according to a return command received by the preset interface. According to the disclosed scheme, the history interface information from before the application was entered via the system notification bar is saved, and one or more return function items are set on the preset interface. A user can return to any one of the history interfaces by clicking the corresponding return function item, so that the user can return to the original interface rapidly and conveniently, which improves the user experience.

Owner:NUBIA TECHNOLOGY CO LTD
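
The history interface information list described above behaves much like a stack of saved screens. The sketch below is a minimal illustration under assumptions: the class and method names are hypothetical, interface information is represented as a plain string, and returning to an older entry is assumed to discard the entries saved after it (the abstract does not say what happens to them).

```python
class InterfaceHistory:
    """Hypothetical history interface information list with return items."""

    def __init__(self):
        self._entries = []

    def save_before_switch(self, interface_info):
        # Called when a switching command is received, before showing the preset interface.
        self._entries.append(interface_info)

    def return_items(self):
        # One return function item per saved history interface.
        return list(self._entries)

    def return_to(self, index=None):
        """Return to the chosen history interface; defaults to the most recent one."""
        if not self._entries:
            raise LookupError("no history interface saved")
        if index is None:
            index = len(self._entries) - 1
        target = self._entries[index]
        del self._entries[index:]  # assumed: later entries are dropped
        return target


if __name__ == "__main__":
    history = InterfaceHistory()
    history.save_before_switch("mail/inbox")
    history.save_before_switch("browser/tab1")
    print(history.return_to())      # most recent interface
    print(history.return_items())   # what is still available
```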

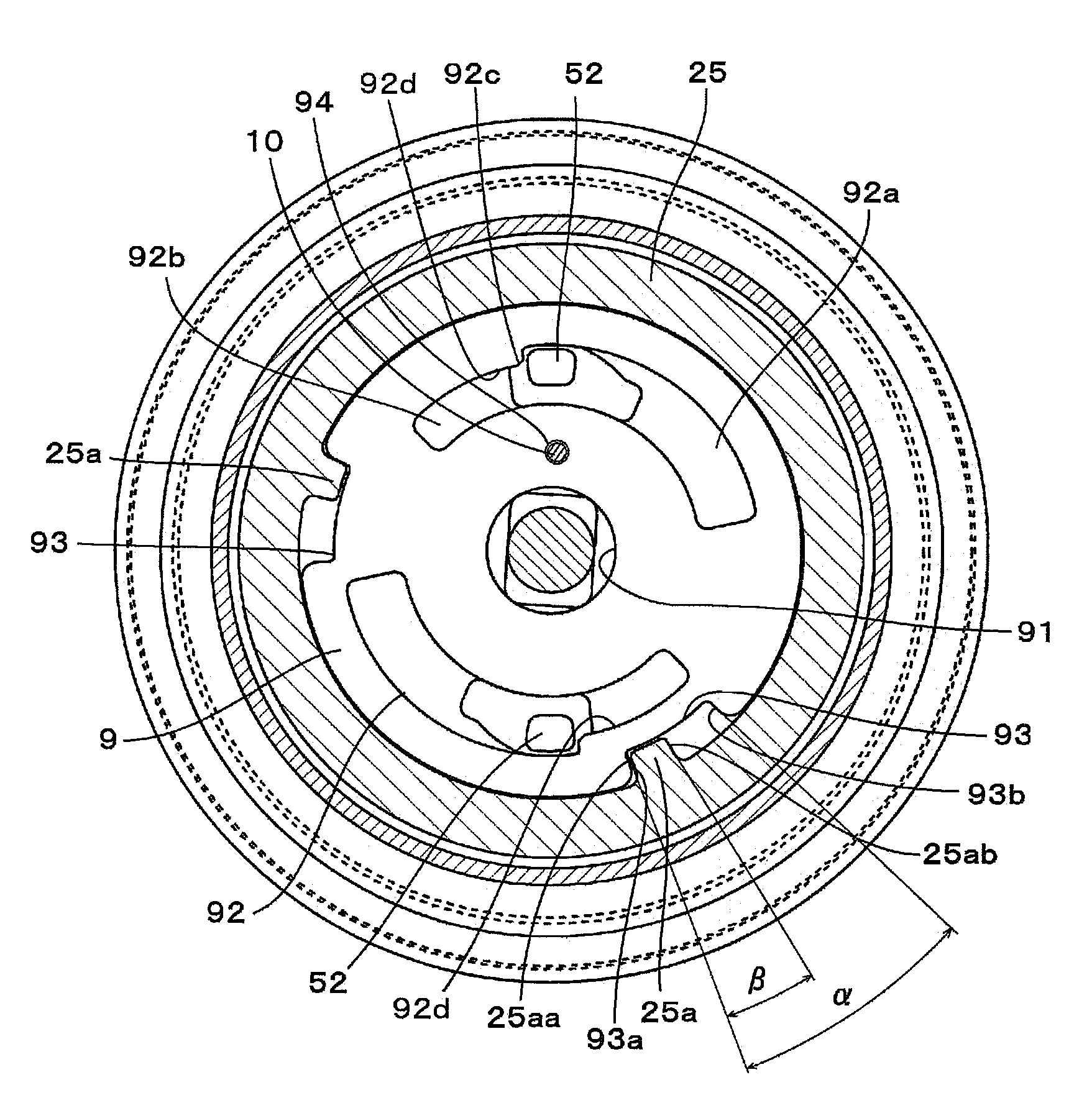

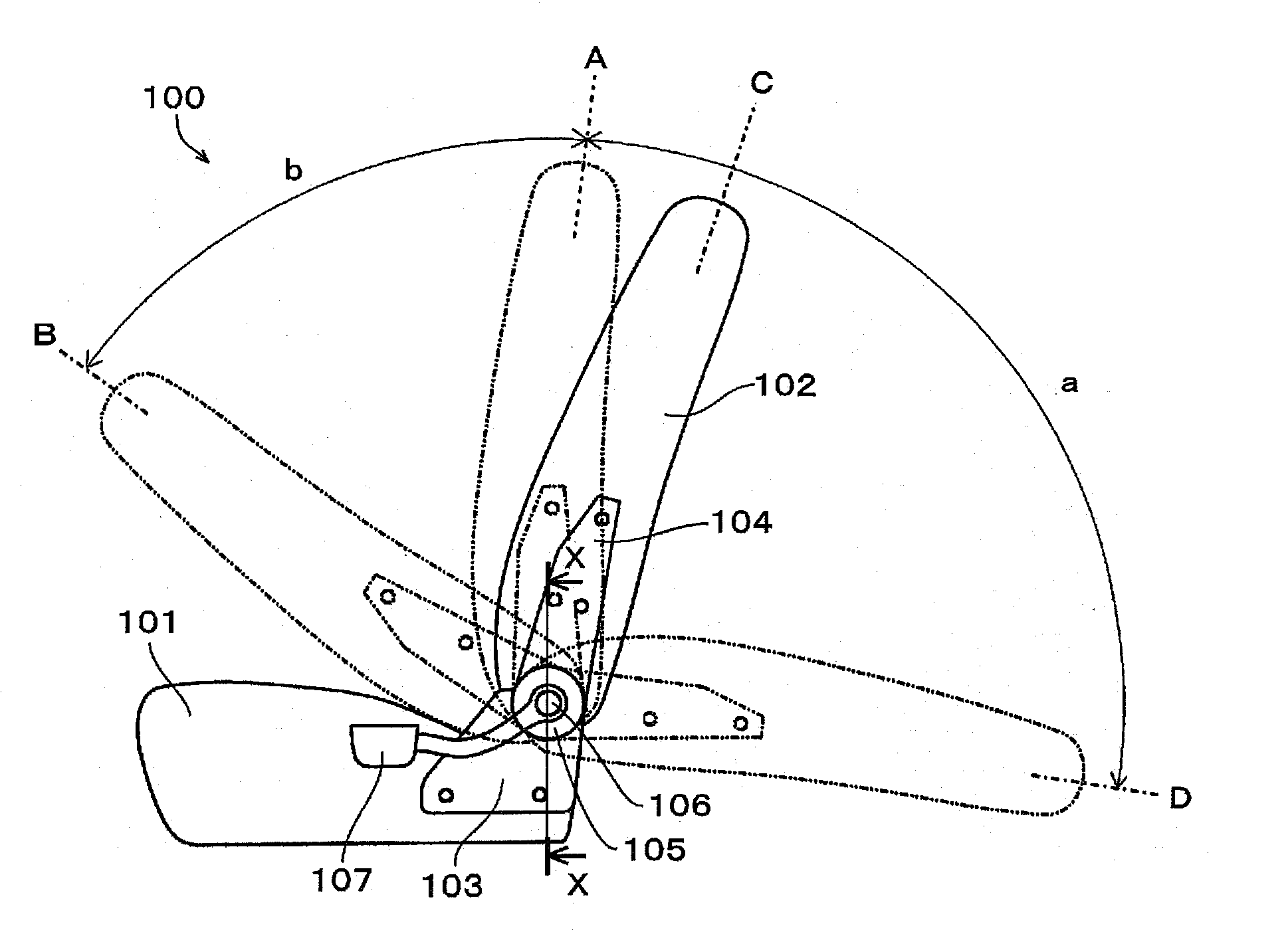

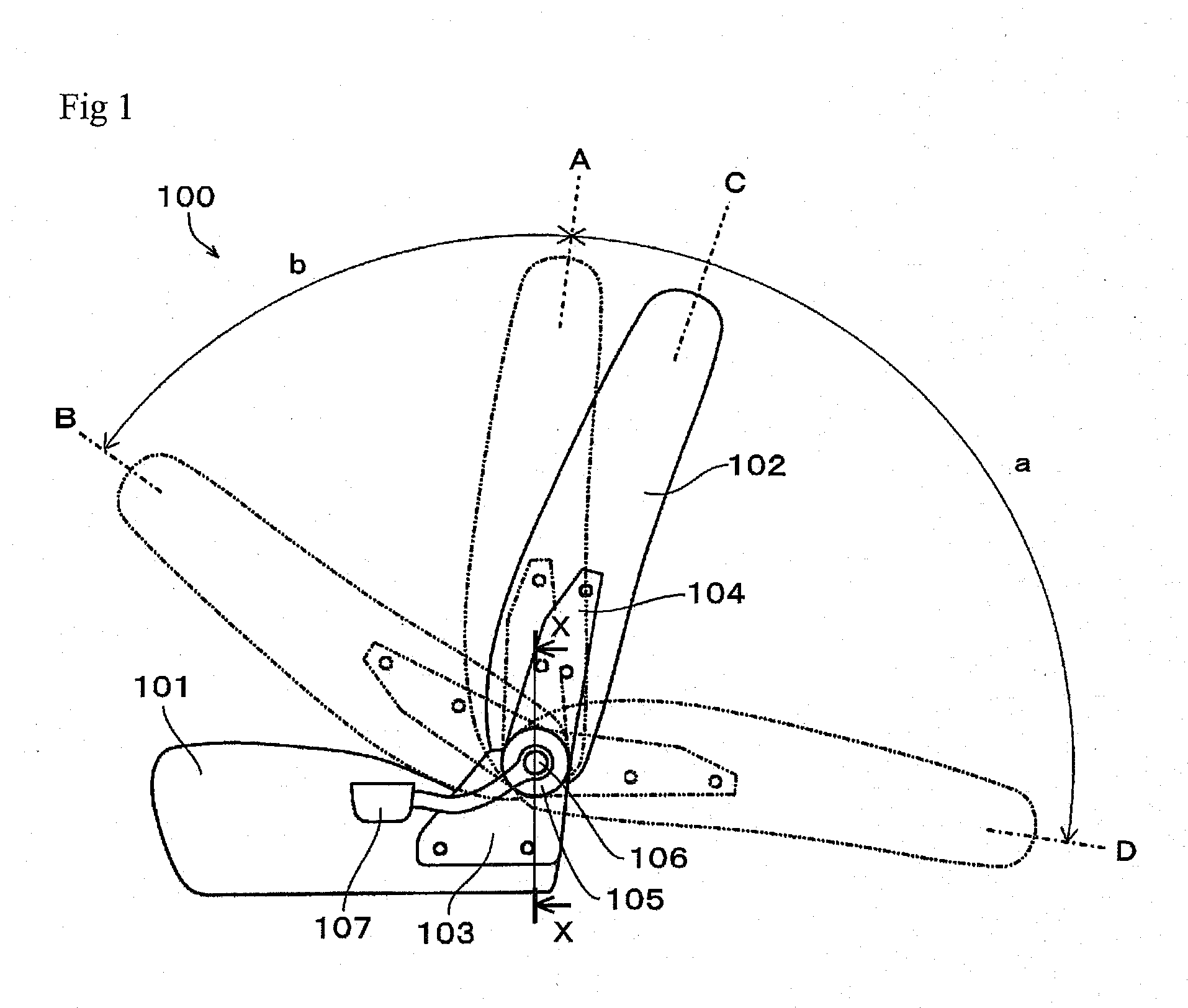

Reclining apparatus

A compact and lightweight round reclining apparatus having a neutral position return function of automatically returning and securing the backrest to the preset neutral position when the backrest that has been reclined forward is raised is provided. A memory plate in approximately disc form having a center shaft at its center and a spring member for pressing the memory plate so that the memory plate always rotates in one direction intervene between a base plate and a gear plate. A guide portion, which is a long hole in approximately arc form, for restricting the movement of the lock gear outward in the direction of the radius through contact with the protrusion of the above described lock gear is provided inside the disc of the memory plate, and at least one notch is provided in the outer periphery of the memory plate so that an engaging portion provided on the above described gear plate enters into the notch and the memory plate is rotatable relative to the gear plate within the range of the notch.

Owner:IMASEN ELECTRIC IND

Multipurpose hinge apparatus having automatic return function

Inactive | CN1573003A | Hysteresis auto reset speed | Prevent automatic reset | Springs | Lighting and heating apparatus | Return function | Closed chamber

A multipurpose hinge apparatus having an automatic return function is provided in which the apparatus is installed between the door and a main body. The apparatus includes a driving mechanism for ascending and descending a piston rod according to opening and closing of the door which is installed in the upper portion of a cylindrical housing. A piston is connected with the piston rod, in which a one-direction check valve is installed in the piston. The piston partitions an upper chamber and a lower chamber and ascends and descends in association with the piston rod. A first oil path communicates with the upper and lower chambers via the lower portion of the piston rod in the central portion of the piston. A compression spring which makes the piston ascend is inserted into the lower chamber. Oil is filled in the chamber. Thus, the hinge apparatus is automatically returned to the initial position with return speed in multiple steps by controlling an amount of oil flowing from upper chamber to lower chamber in multiple steps when a door is closed.

Owner:I ONE INNOTECH

Single piston pump with dual return springs

Active | US20110303195A1 | Eliminate pump piston seizures | Spring force can be minimized | Positive displacement pump components | Fuel injecting pumps | Return function | Spring force

Pump piston seizures caused by excessive side loads produced by the uneven loading of a large piston return spring are prevented by separating the tappet return function from the piston return function, thereby minimizing the spring force acting on the piston. Separate and distinct biasing means perform the respective functions. Preferably, a stronger, heavier load outer spring is mounted between the pump body and the tappet, such that it imparts no load and therefore no side loads to the pumping piston. A weaker, lighter load inner spring imparts less side load to the pumping piston than a conventional piston return spring, because the inner spring need not carry any tappet load. During both the pumping and charging strokes of the piston, the piston return spring can assist the tappet return spring, but the tappet return spring does not assist the piston return spring.

Owner:STANADYNE OPERATING CO LLC

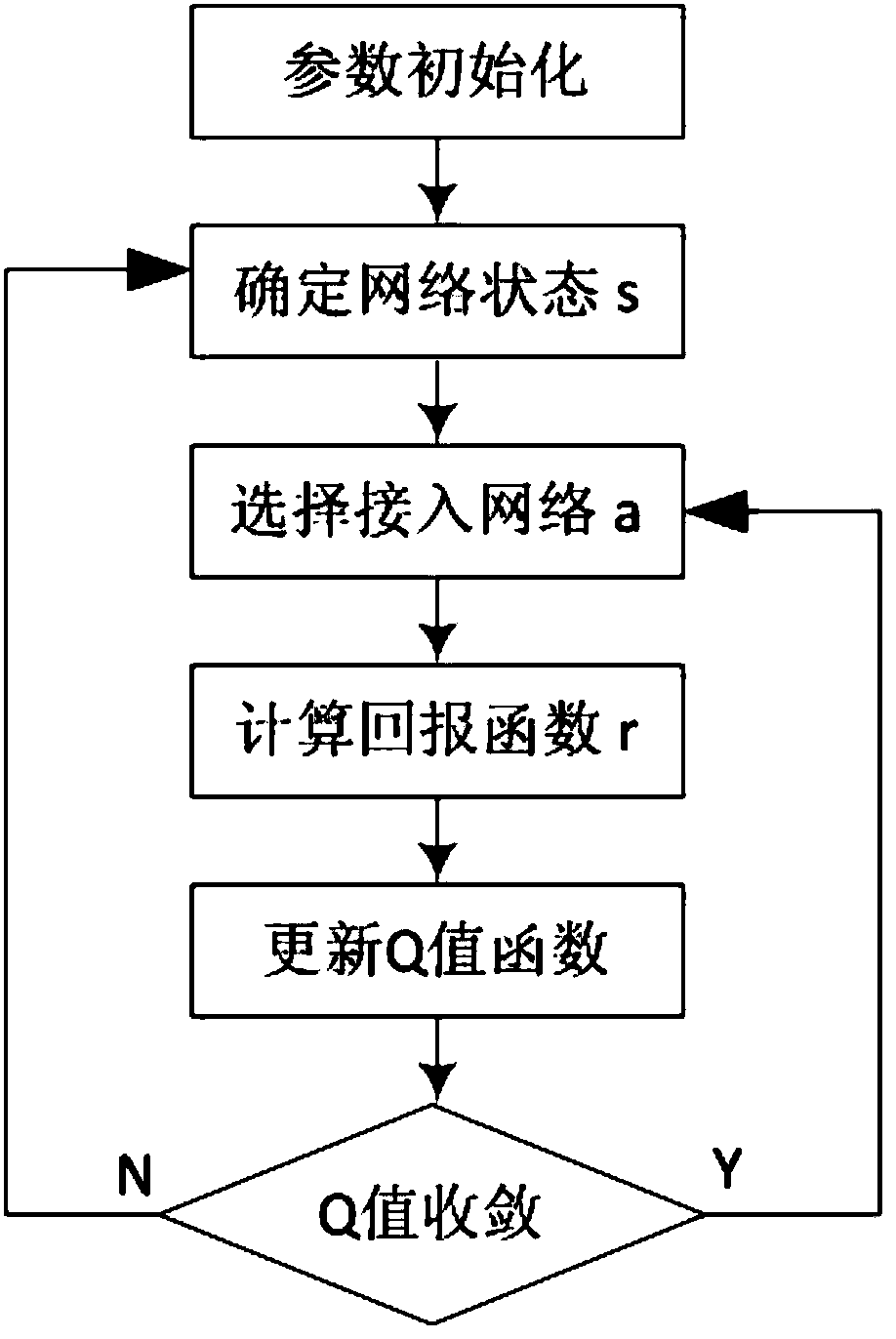

Network selection method based on Q-learning algorithm

Active | CN107690176A | Adapt to the choice problem | Improve throughput | Assess restriction | Return function | Packet loss rate

The invention discloses a network selection method based on a Q-learning algorithm. The network selection method includes the steps of: (1) initializing a Q value table and setting a discount factor gamma and a learning rate alpha; (2) when a set time is up, determining a business type k and the load rates of the two current networks so as to obtain a current state sn; (3) selecting a usable action from an action set A and recording the action as well as the next network state sn+1; (4) computing an immediate return function r according to the network state after the selected action is implemented; (5) updating the Q value function Qn(s, a), and gradually decreasing the learning rate alpha to 0 according to an inverse proportional function; (6) repeating steps (2)-(5) until the Q values converge, in other words, until the difference between the Q values before and after updating is smaller than a threshold value; (7) returning to step (3) to select the action and access the optimal network. The method is capable of decreasing the voice business blocking rate and the data service packet loss rate and of increasing average network throughput.

Owner:NANJING NARI GROUP CORP +1
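
Steps (5) and (6) of the abstract above correspond to the standard tabular Q-learning update. The sketch below shows that update together with one possible inverse-proportional learning-rate schedule. The schedule `alpha0 / (1 + step)`, the state and action labels, and the default values are assumptions; the abstract states only that alpha decays to 0 according to an inverse proportional function.

```python
from collections import defaultdict


def learning_rate(step, alpha0=0.5):
    """Assumed inverse-proportional decay: alpha0, alpha0/2, alpha0/3, ..."""
    return alpha0 / (1 + step)


def q_update(q, state, action, reward, next_state, actions, alpha, gamma):
    """One tabular Q-learning update; returns the absolute change in the Q value."""
    best_next = max(q[(next_state, a)] for a in actions)
    old = q[(state, action)]
    q[(state, action)] = old + alpha * (reward + gamma * best_next - old)
    # The caller can compare this change with a threshold to test convergence.
    return abs(q[(state, action)] - old)


if __name__ == "__main__":
    q = defaultdict(float)                 # Q value table initialized to zero
    actions = ["network_1", "network_2"]   # hypothetical action set A
    change = q_update(q, "s0", "network_1", reward=1.0, next_state="s1",
                      actions=actions, alpha=learning_rate(0), gamma=0.9)
    print(q[("s0", "network_1")], change)
```

Returning the size of the change from each update gives the training loop the quantity that step (6) compares against its threshold.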

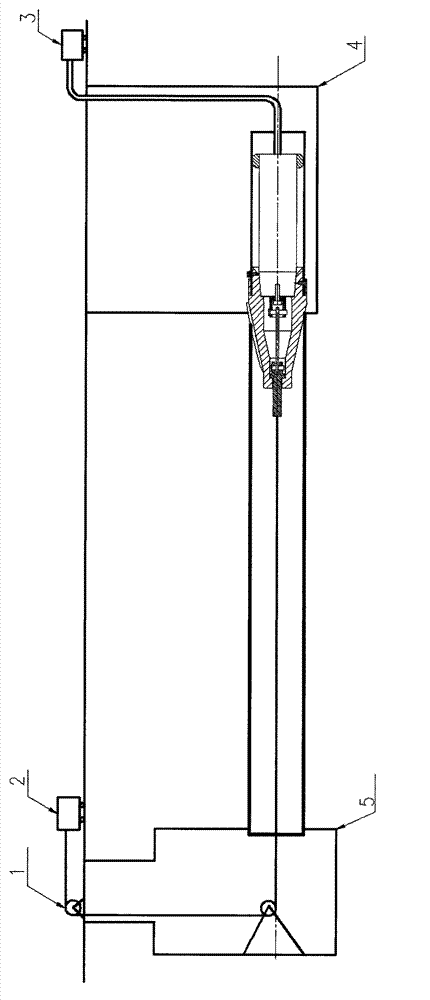

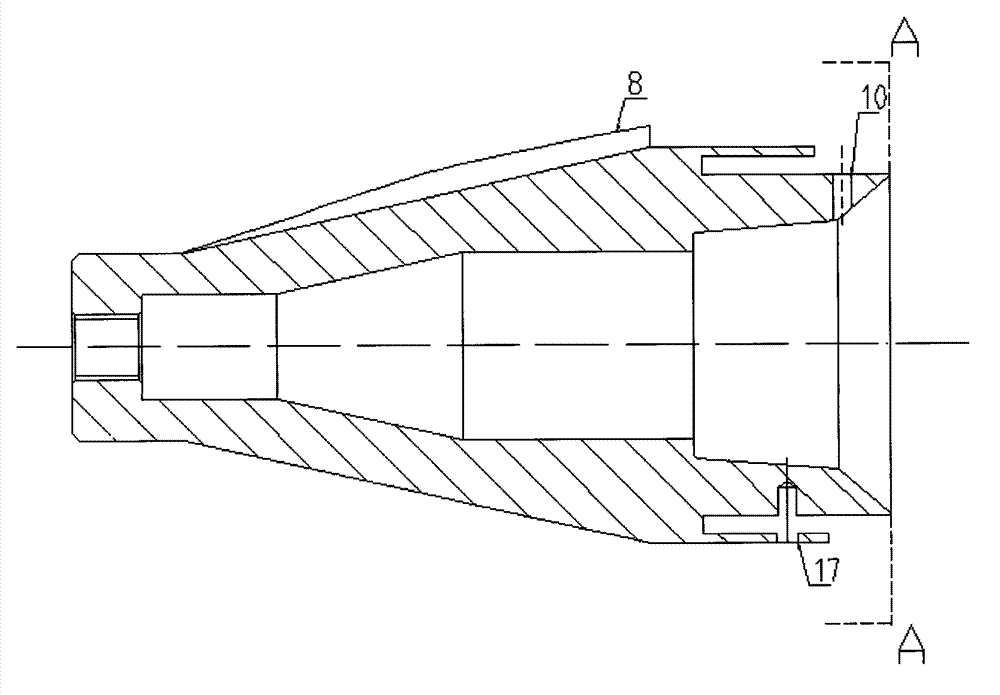

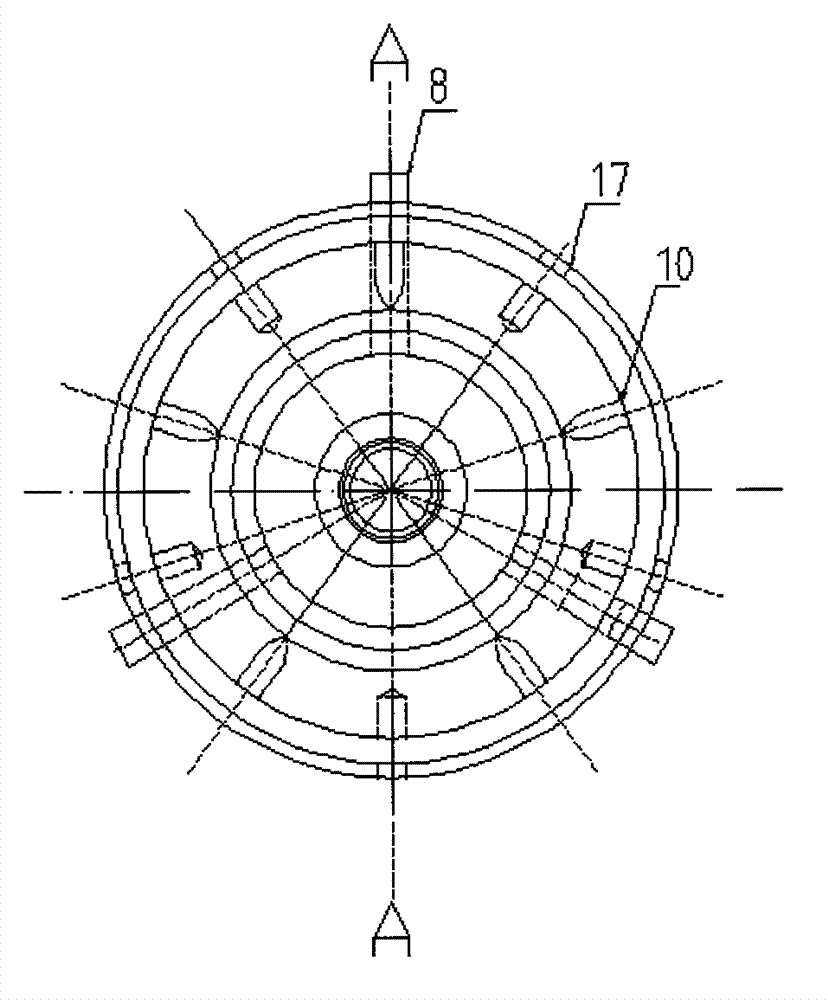

Device, system and method for repairing underground pipeline of trenchless pneumatic cracking pipe

Active | CN102889427A | Reduce excavation | Improve work efficiency | Pipe laying and repair | Sewer pipelines | Return function | Engineering

The embodiment of the invention provides a device, a system and a method for repairing an underground pipeline of a trenchless pneumatic cracking pipe. The system comprises an existing well and a working pit, wherein the existing well is provided with a pulley group and a winch; the winch is connected with a cracking pipe head through a traction device; the traction device is provided with front and back steering connectors, a pin, an adjusting device and a bolt; and the working pit is externally provided with an air compressor to drive a pipe tampering machine. The cracking pipe head is provided with a tapered head and a tail part for connecting with a replacing pipe, and is provided with a through hole; the middle line of the through hole is overlapped with the middle line of the tapered head; a front columnar through hole is used for mounting the front steering connector; a tail tapered through hole is used for connecting the pipe tampering machine; the cracking pipe head is provided with the tapered head and an expansion cutter is arranged on the outer surface; and the outer diameter of the cracking pipe head formed by the expansion cutter is greater than the inner pipe of a waste pipe to propel the expanded and cracked waste pipe. The whole process ingeniously utilizes the returning function of the pipe tampering machine under the condition that one pit corresponds to one well, and a receiving pit is replaced by the existing well. Therefore, the digging amount can be reduced so that the working efficiency is improved and the cost is saved.

Owner:北京隆科兴科技集团股份有限公司

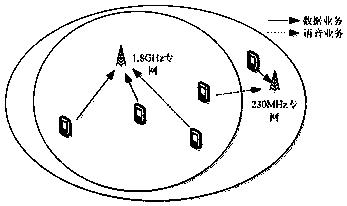

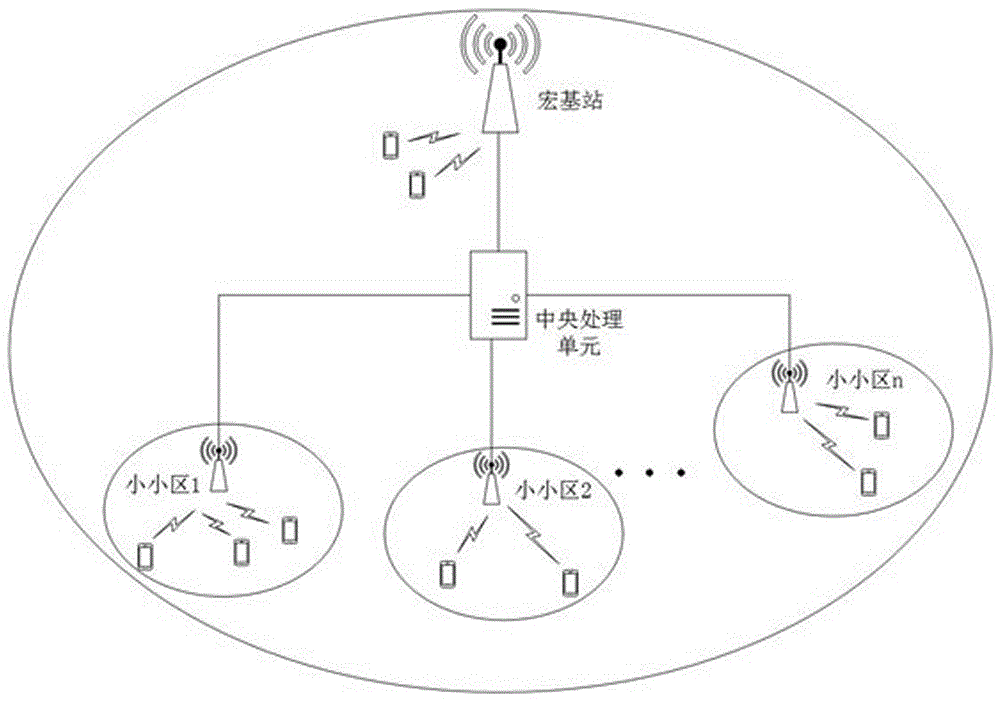

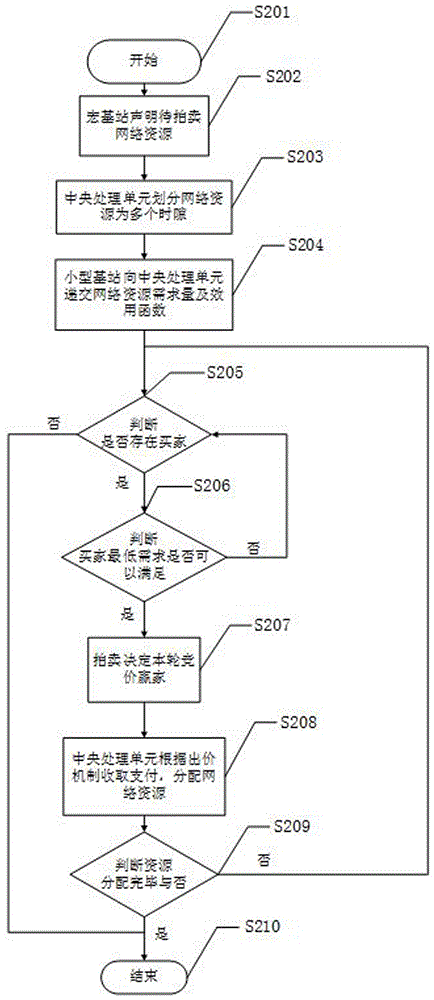

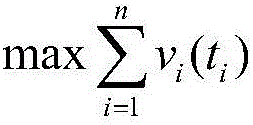

Frequency spectrum auction method of two-layer heterogeneous network containing small cells

Inactive | CN106550369A | Guaranteed communication quality | Improve spectrum utilization | Network planning | Frequency spectrum | Return function

The invention provides a frequency spectrum auction method for a two-layer heterogeneous network containing small cells. For a macro cellular network and the numerous small cells within its radiation range, a frequency spectrum allocation auction model is established in which the authorized frequency band provided by a macro base station is divided into a plurality of time slots that are auctioned as commodities. A user return function is set, an auction target function is established according to the auction bids, and a maximum-return slot allocation is obtained through optimization theory according to the bids. The frequency spectrum allocation auction model is an auction technique based on a VCG mechanism that performs allocation by dividing time slots. The method has the following technical effects: (1) an auction is used for resource allocation; (2) the frequency spectrum resource is divided into time slots; and (3) the user bids are associated with the gains obtained by the small cells from purchasing frequency band communication. The method realizes effective allocation of mobile communication resources, provides a frequency spectrum resource allocation mechanism, and improves the utilization efficiency of spectrum resources.

Owner:GUILIN UNIV OF ELECTRONIC TECH

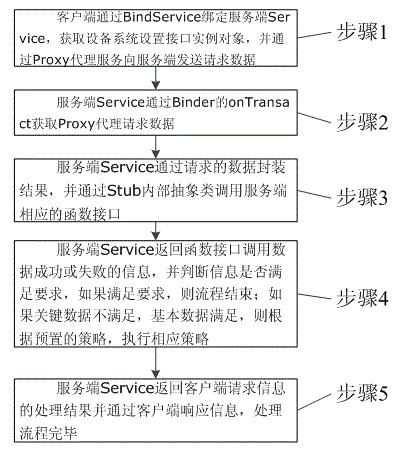

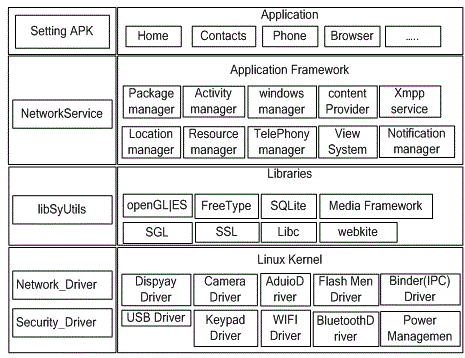

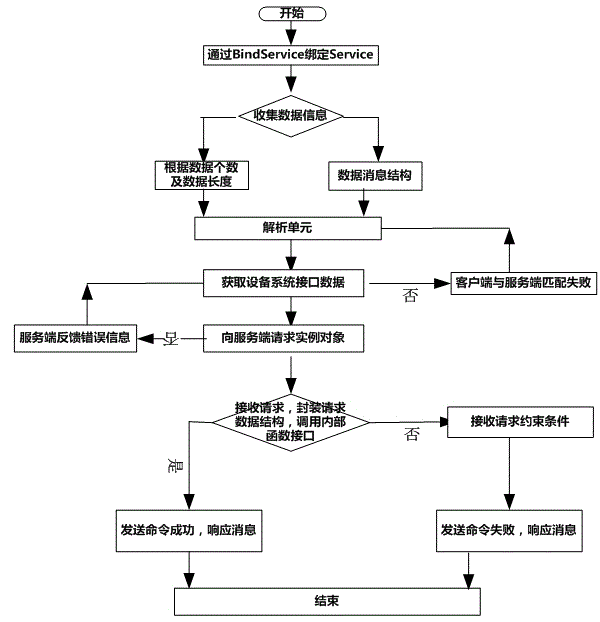

Adaptation method for android terminal device system setting interface

Active | CN104618437A | Solve the unification problem | Improve performance | Transmission | Extensibility | Information processing

The invention discloses an adaptation method for an Android terminal device system setting interface. The adaptation method includes the following steps: first, the client binds a Service through bindService, acquires a device system setting interface instance object, and transmits request data to the Service through a Proxy; second, the Service acquires the Proxy request data through the onTransact method of a Binder; third, the corresponding function interfaces of the Service are called through an internal abstract Stub class according to the request data package results; fourth, the Service returns the function interface calling results and judges whether the information meets the requirements, finishing the flow if it does and executing the corresponding preset strategies if it only partially does; fifth, the Service returns the request information processing results to the client, the client responds to the information, and the processing flow finishes. The upper-layer control modules of streaming devices in existing Android system settings are unified, and the performance and expandability of IPTV+OTT (internet protocol television, over-the-top) business settings are enhanced.

Owner:成都卓影科技股份有限公司

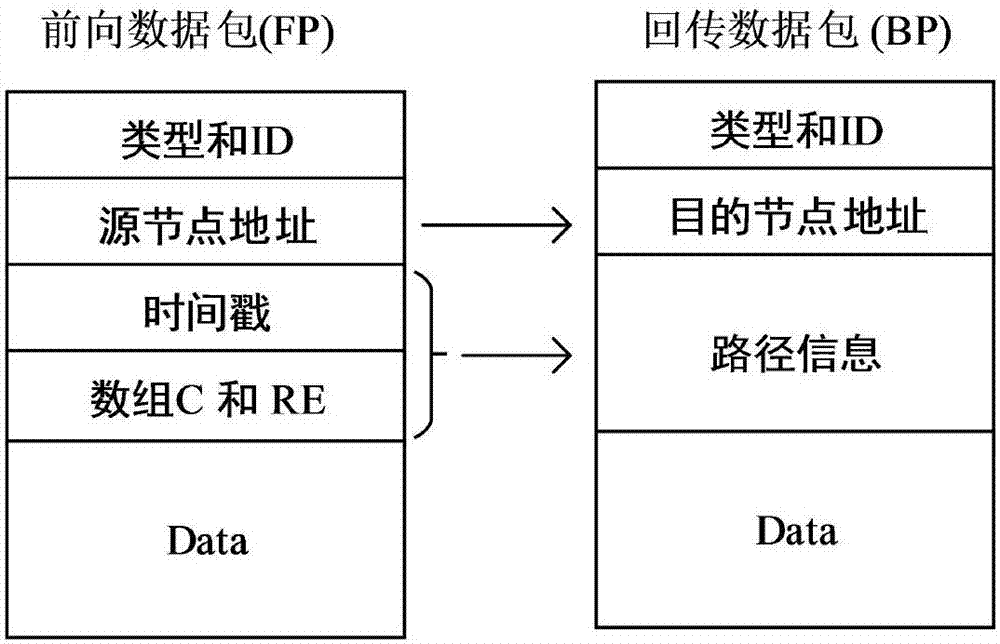

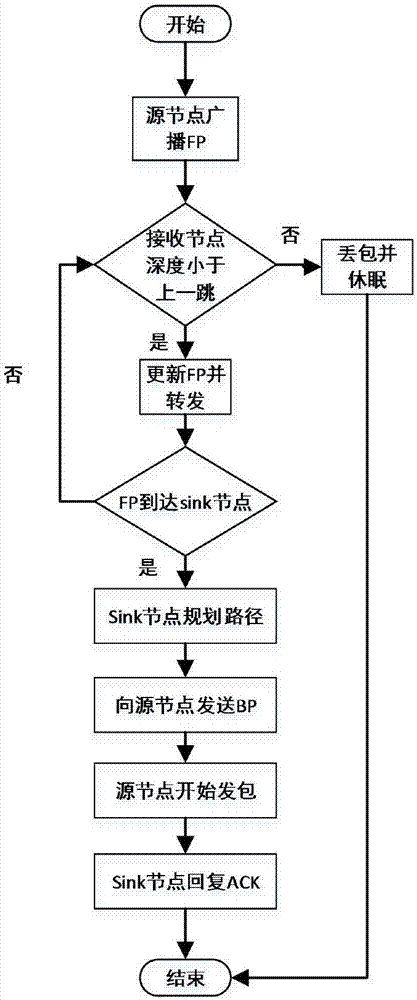

Energy consumption balanced underwater acoustic network route establishing method

Inactive | CN106992932A | Extend the life cycle | Data switching networks | Wireless communication | Telecommunications | Return function

The invention relates to a method for establishing energy-balanced routes in an underwater acoustic network. Each node is equipped with a pressure sensor, so the depth of each node can be acquired. A current return function and a future return function are defined for communication between nodes; both return functions take the end-to-end delay and the residual energy of the node into account. The two return functions together constitute an action utility function, and the next-hop node is determined by finding the maximum utility value. The communication process is divided into a forward transmission stage and a return stage. In the forward transmission stage, the source node sends a communication request to the sink node and collects information about the other nodes between the sink node and the source node. The sink node uses this information to plan a path; in the return stage, the planned path and a confirmation of the communication request are sent to the source node, and data packet transmission then starts.

Owner:TIANJIN UNIV
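
The abstract above does not give the form of the return functions, so the following is only a sketch of the selection step under assumed forms: the current return is a weighted sum of a normalized delay score and the residual energy, and the utility adds a discounted future return. The names `Neighbor`, `current_return`, `utility`, and `select_next_hop`, and all weights, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Neighbor:
    name: str
    delay: float            # estimated delay to reach this neighbor, in seconds
    residual_energy: float  # remaining energy as a fraction of the initial energy (0..1)
    future_return: float    # estimated return from this neighbor onward to the sink


def current_return(n, w_delay=0.5, w_energy=0.5, max_delay=1.0):
    """Assumed form: reward low delay and high residual energy."""
    delay_score = 1.0 - min(n.delay / max_delay, 1.0)
    return w_delay * delay_score + w_energy * n.residual_energy


def utility(n, gamma=0.9):
    """Action utility: current return plus discounted future return (assumed combination)."""
    return current_return(n) + gamma * n.future_return


def select_next_hop(neighbors):
    """Pick the neighbor with the maximum utility value."""
    return max(neighbors, key=utility)


if __name__ == "__main__":
    candidates = [
        Neighbor("A", delay=0.2, residual_energy=0.9, future_return=0.5),
        Neighbor("B", delay=0.1, residual_energy=0.2, future_return=0.5),
    ]
    print(select_next_hop(candidates).name)
```

Here node B is slightly faster but nearly drained, so the energy term steers traffic to node A, which is the balancing effect the abstract describes.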

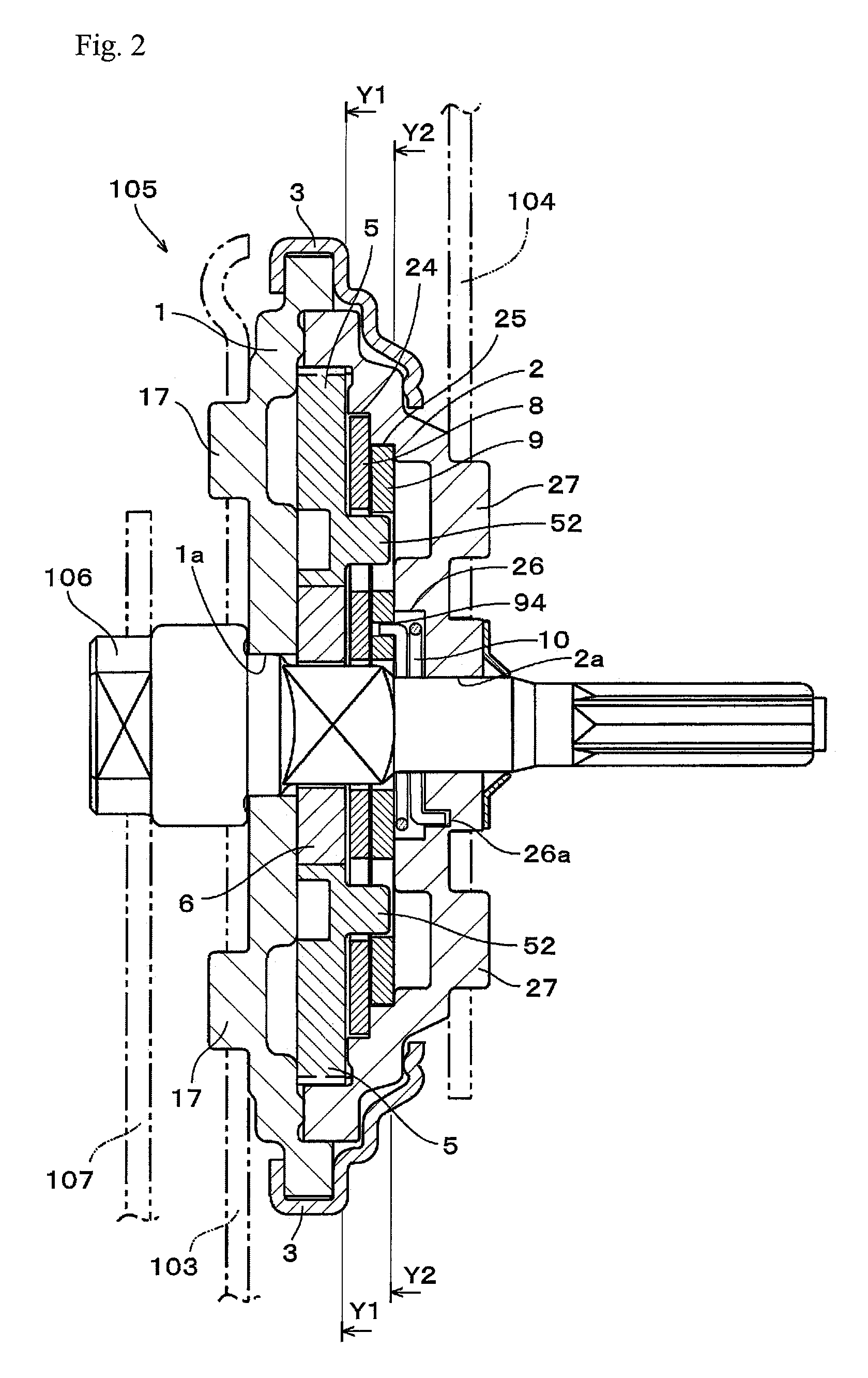

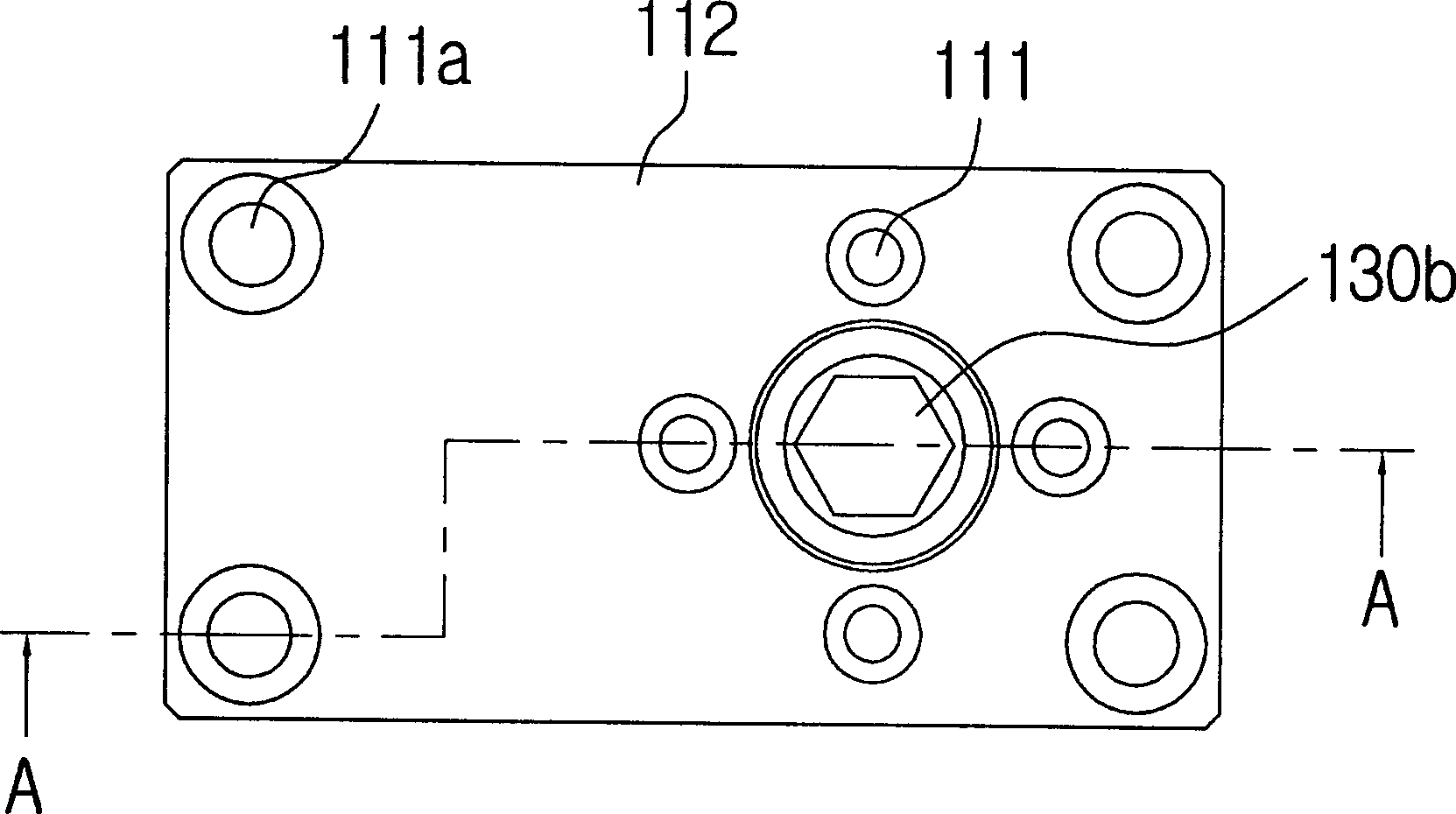

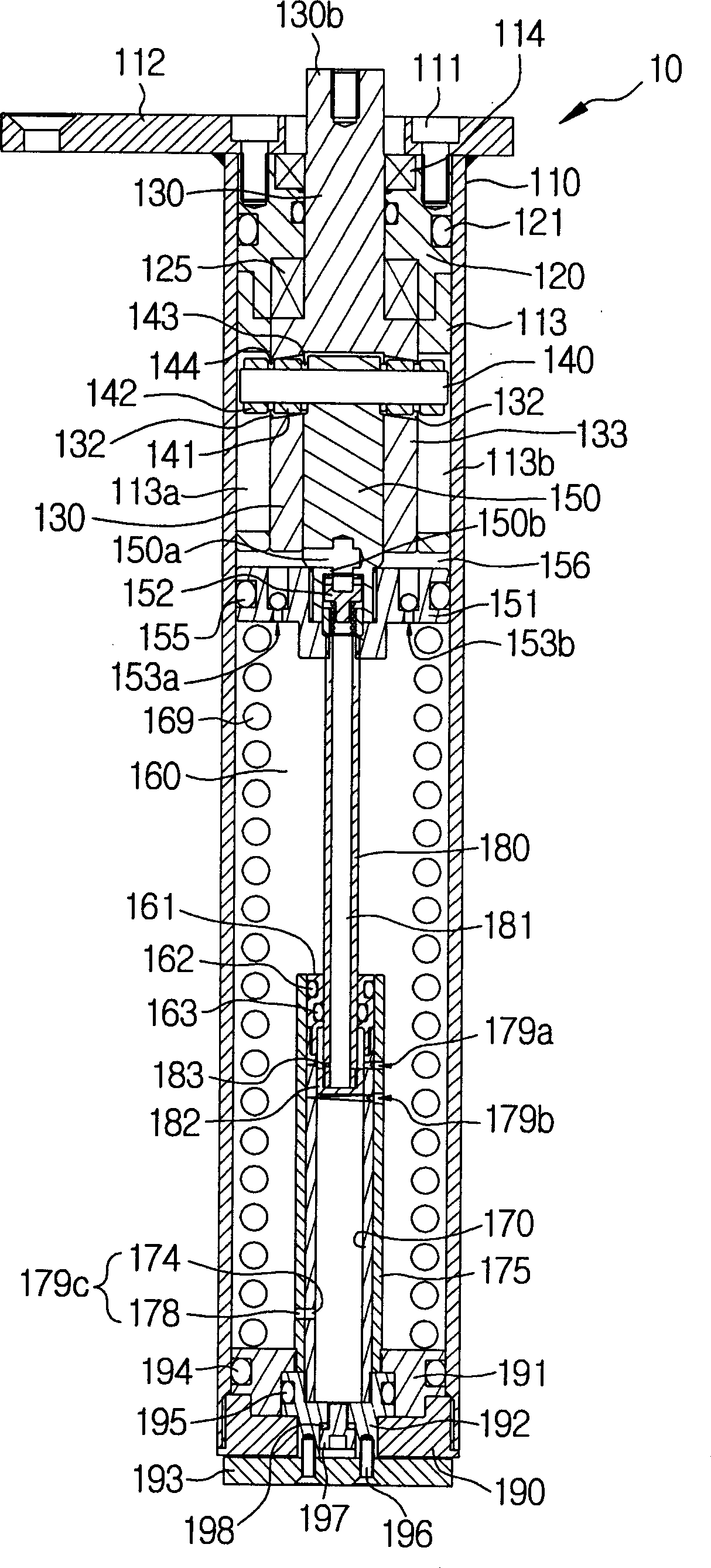

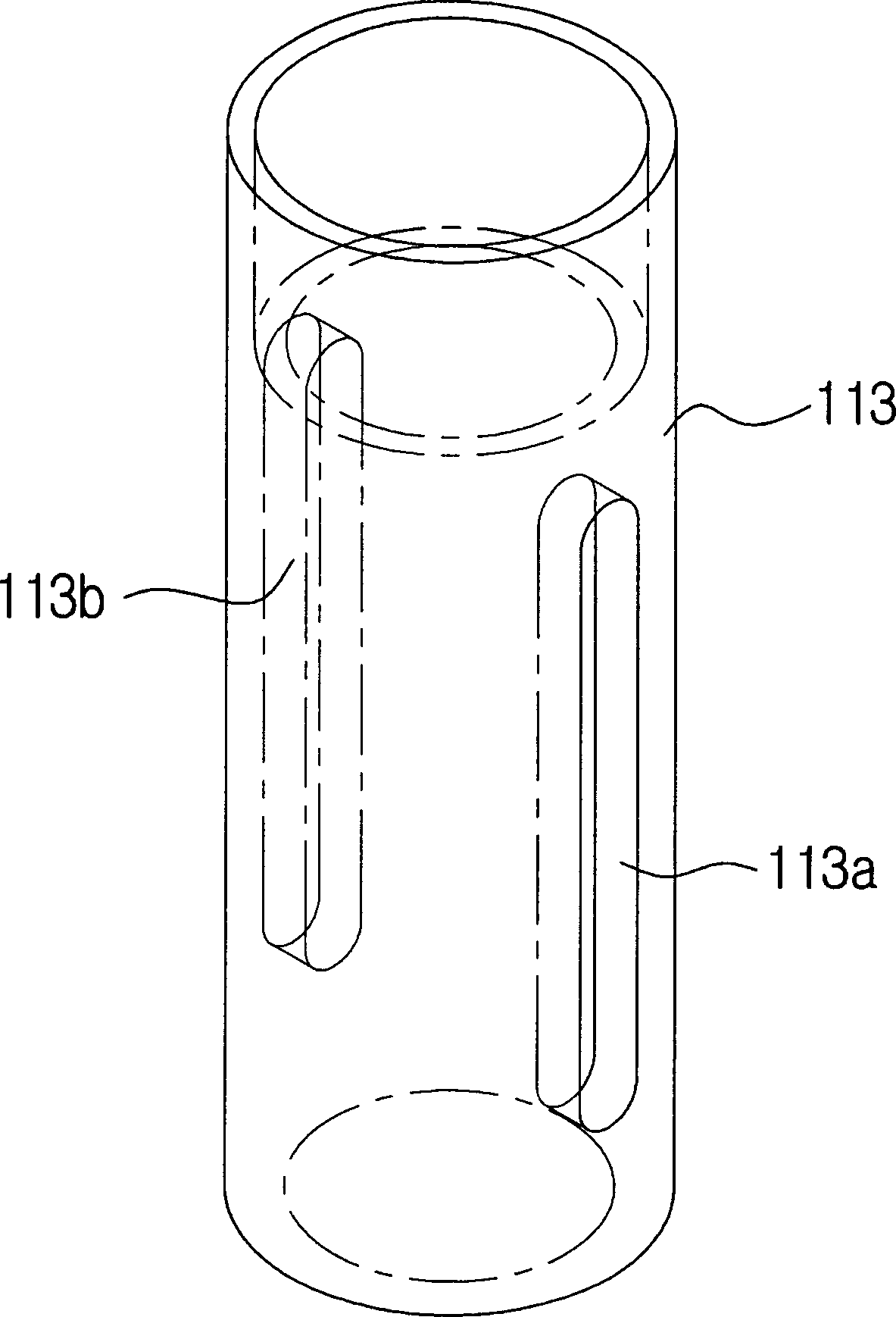

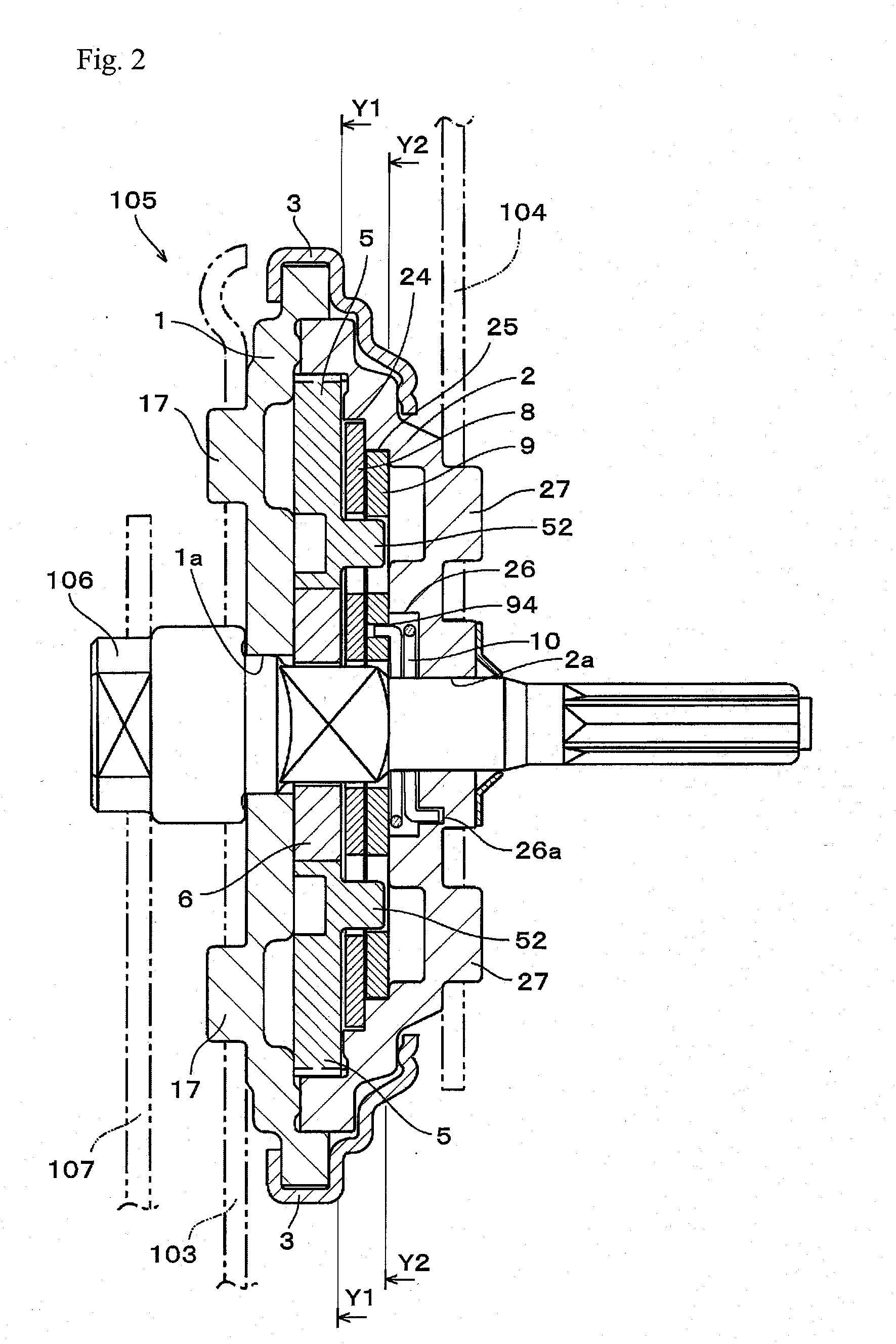

Hinge apparatus having automatic return function for use in building materials

ActiveCN101517184ARealization of automatic return functionClosing Force CompensationBuilding braking devicesPin hingesLinear motionReturn function

A hinge apparatus having an automatic return function for building materials is provided, in which the return spring is removed so that the closing force of each door is supplied by a torsion spring alone. The hinge apparatus includes an upper body; a lower body; a shaft whose lower end portion is rotatably installed in the lower body; a piston whose outer circumference is slidably installed along the inner surface of the lower body; a rotational / linear motion converter which converts a rotational motion of the shaft into an axial linear motion of the piston according to rotation of the door; a damping unit which selectively provides a damping function when the piston ascends as the door returns; a torsion spring which rotates the shaft in the direction that returns the door at closing time; and a clutch unit for stopping the increase in elastic force due to reverse twisting of the spring when the opening angle reaches a clutch stop start angle, or for restoring the elastic force of the spring.

Owner:I ONE INNOTECH

Reclining apparatus

A compact and lightweight round reclining apparatus is provided that has a neutral position return function, which automatically returns and secures the backrest to the preset neutral position when a backrest that has been reclined forward is raised. A memory plate in approximately disc form, having a center shaft at its center, and a spring member that presses the memory plate so that it always rotates in one direction are interposed between a base plate and a gear plate. A guide portion, which is a long hole in approximately arc form, is provided inside the disc of the memory plate to restrict outward radial movement of the lock gear through contact with the protrusion of the lock gear. At least one notch is provided in the outer periphery of the memory plate so that an engaging portion provided on the gear plate enters the notch and the memory plate is rotatable relative to the gear plate within the range of the notch.

Owner:IMASEN ELECTRIC IND

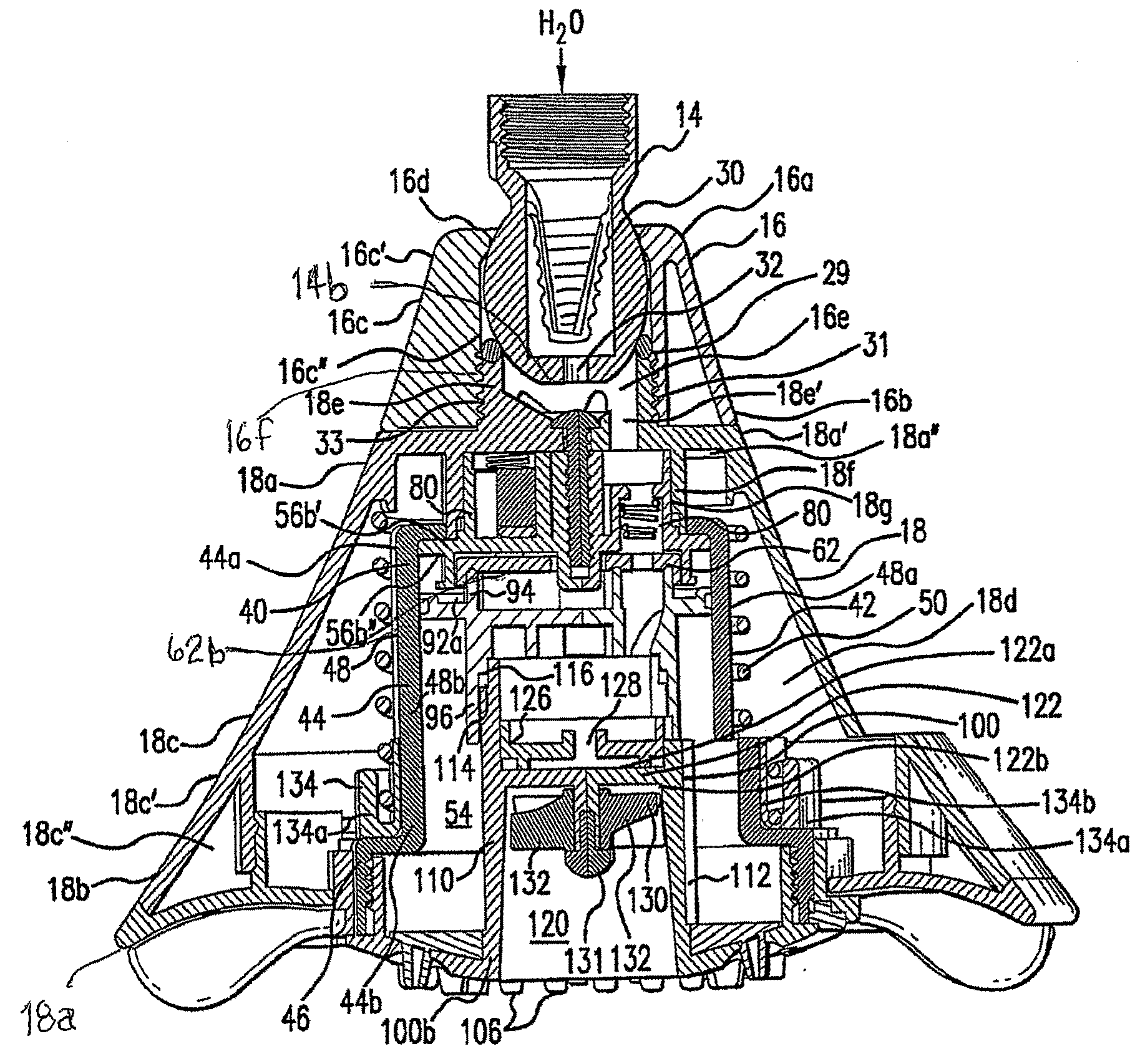

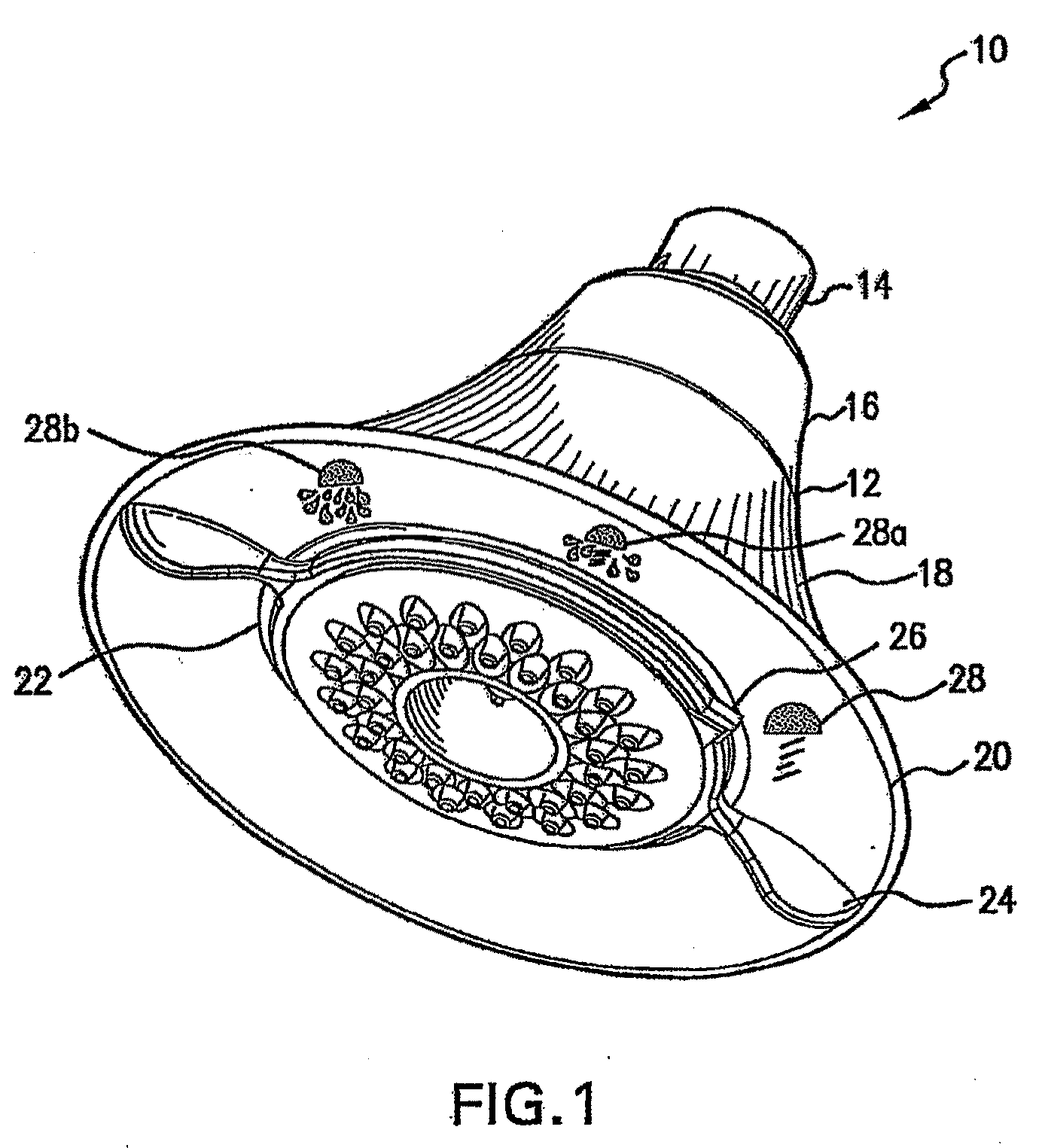

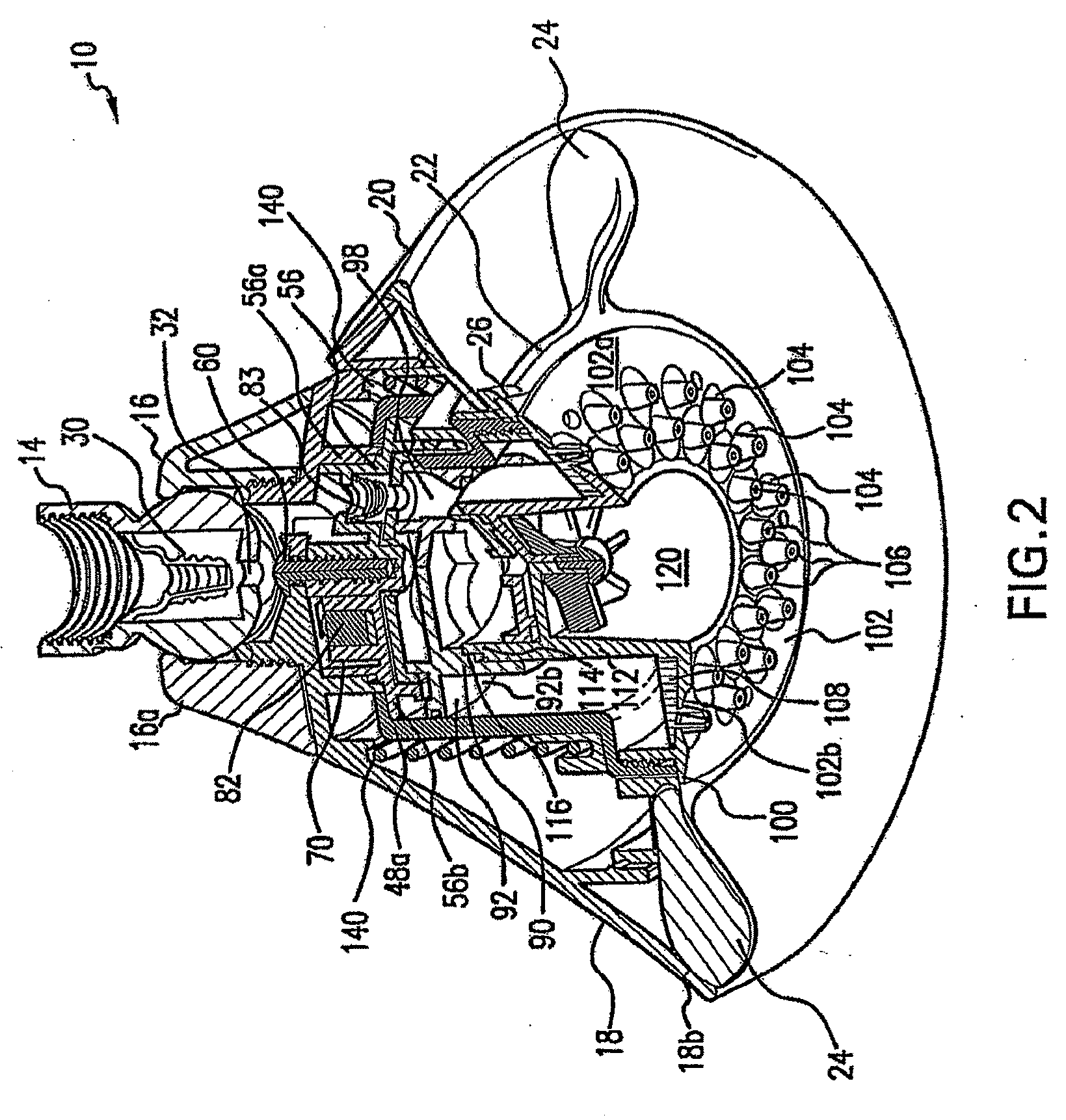

Multifunction Showerhead with Automatic Return Function for Enhanced Water Conservation

ActiveUS20090127353A1Good for conservationSacrificing the assembly's advantageous conservation featuresMovable spraying apparatusSpray nozzlesWater sourceReturn function

The present invention provides a showerhead that allows a bather to switch among at least three different water delivery functions. In the first function, the showerhead delivers a concentrated fluttering spray at a rate not to exceed 1.5 GPM (5.68 L/min). In the second function, the showerhead delivers a combined spray pattern, wherein the fluttering spray and a radially dispersed precision spray are simultaneously delivered to the bather at a rate not to exceed 2.0 GPM (7.57 L/min) for the combined water flow. In the third function, the showerhead delivers the precision spray pattern at a rate not to exceed 2.0 GPM (7.57 L/min). The combination spray pattern is effected without compromising either the desirable massaging and cleaning effect of water delivery or the inherent water conservation benefits. In addition, the showerhead provides an automatic return feature that returns the showerhead to the first function when water pressure to the showerhead falls below a predetermined lower threshold.

Owner:AS AMERICA

Gas-liquid separator with heat-returning function and application method thereof to air conditioning unit

InactiveCN102645061AAvoid aspiration of fluidImprove performanceRefrigeration componentsVapor–liquid separatorReturn function

The invention discloses a gas-liquid separator with a heat-returning function and a method of applying it to an air conditioning unit. The gas-liquid separator comprises a superheating passageway and a supercooling passageway. The superheating passageway is provided with an air inlet pipe for bringing refrigerant vapour into the gas-liquid separator and an air outlet pipe for exhausting the refrigerant vapour out of the gas-liquid separator. The supercooling passageway is provided with one or more supercooling pipes arranged in the gas-liquid separator; refrigerant liquid flows through the supercooling pipe(s) and exchanges heat with the refrigerant vapour brought into the gas-liquid separator, thus superheating the refrigerant vapour and supercooling the refrigerant liquid. The gas-liquid separator and the application method can effectively improve the performance and efficiency of the refrigerating / heating cycle and can be widely applied to air conditioning units.

Owner:四川同达博尔置业有限公司

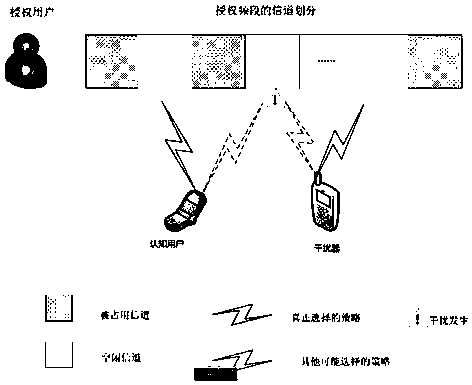

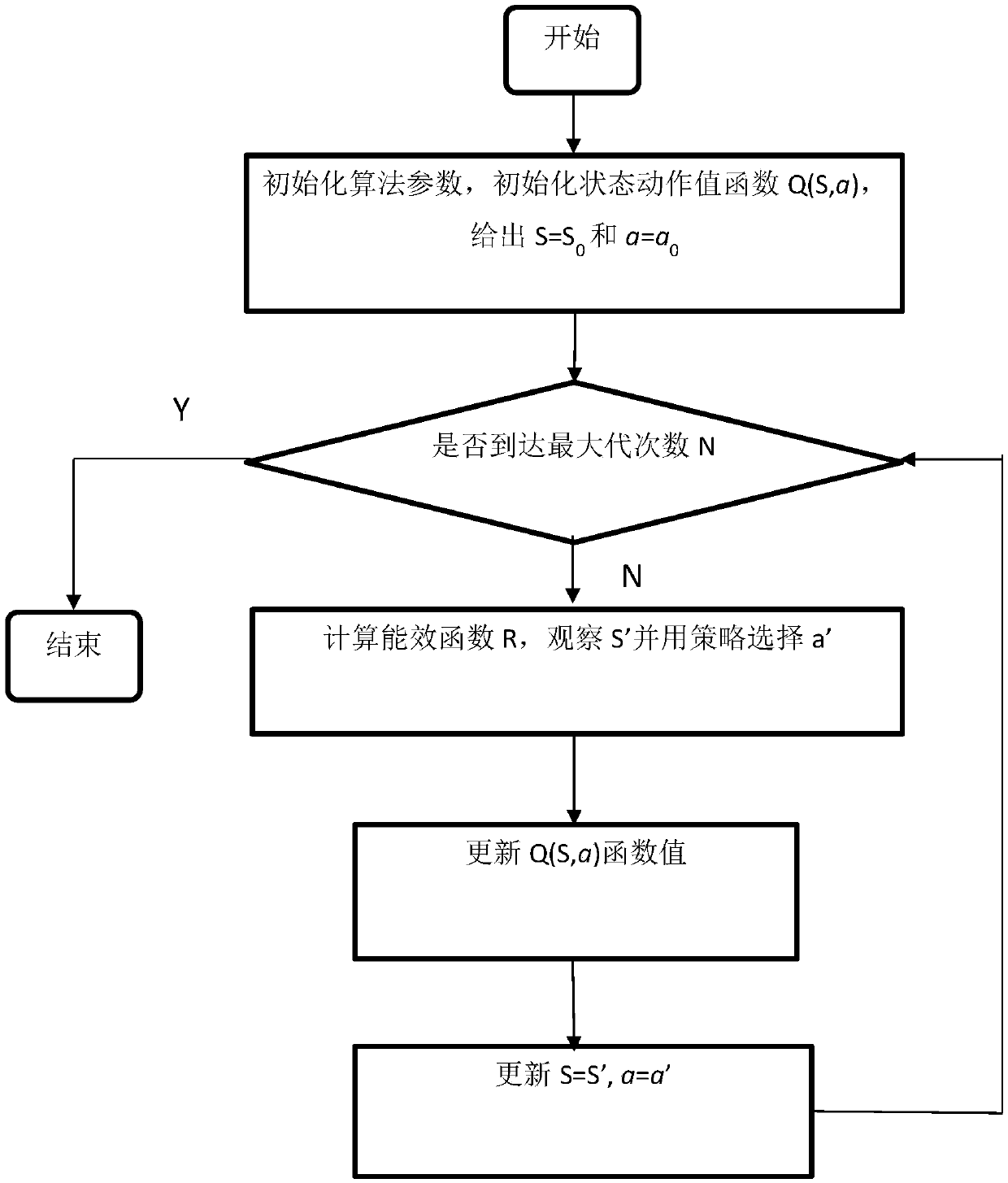

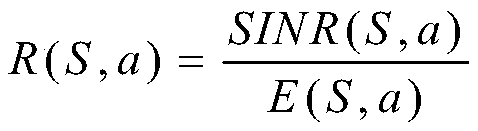

A method for cognitive radio anti-interference intelligent decision based on reinforcement learning

ActiveCN108712748ASolve the problem of selectivityImprove energy efficiencyNetwork planningCognitive userDecision model

The invention relates to a method for cognitive radio anti-interference intelligent decision based on reinforcement learning. The method includes the following steps: in a multichannel cognitive scene, a cognitive user takes the perceived channel information together with the transmission power and channel selection information of an interference unit as state information S, and actively selects its own transmission power and channel as action information a; the ratio of the signal to interference plus noise ratio (SINR) to the energy consumption E of the cognitive user is defined as a utility function R, which serves as the measure of the cognitive user's action selection performance; in the cognitive decision model, the state information serves as a known condition, the action selection is decided by the cognitive user as the agent, the utility function serves as the instant return function in the reinforcement learning, a Q-learning reinforcement learning model is established, and the optimized action decision of the cognitive user is obtained.

Owner:TIANJIN UNIV
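
The decision loop described above can be sketched as tabular Q-learning whose instant return is R = SINR / E. Everything concrete here is an assumption made for illustration and is not taken from the patent: a jammer that sweeps three channels in order, a state that holds only the jammer's current channel, an energy model of transmit power plus a fixed circuit cost, and all numeric constants. The names `reward`, `q_update`, `train`, and `greedy_action` are hypothetical.

```python
import random
from collections import defaultdict

CHANNELS = [0, 1, 2]
POWERS = [1.0, 2.0]
ACTIONS = [(c, p) for c in CHANNELS for p in POWERS]  # action a = (channel, power)


def reward(action, jam_channel, gain=1.0, noise=0.1, jam_power=5.0, circuit=0.5):
    """Instant return R = SINR / E under an assumed toy channel and energy model."""
    channel, power = action
    interference = jam_power if channel == jam_channel else 0.0
    sinr = gain * power / (noise + interference)
    energy = power + circuit  # assumed energy consumption per slot
    return sinr / energy


def q_update(Q, s, a, r, s_next, alpha=0.1, gamma=0.5):
    """Standard Q-learning update."""
    best_next = max(Q[(s_next, a2)] for a2 in ACTIONS)
    Q[(s, a)] += alpha * (r + gamma * best_next - Q[(s, a)])


def greedy_action(Q, s):
    return max(ACTIONS, key=lambda a: Q[(s, a)])


def train(steps=20000, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    Q = defaultdict(float)
    jam = 0  # state s: channel currently occupied by the jammer
    for _ in range(steps):
        # Epsilon-greedy action selection.
        a = rng.choice(ACTIONS) if rng.random() < epsilon else greedy_action(Q, jam)
        r = reward(a, jam)
        jam_next = (jam + 1) % len(CHANNELS)  # assumed sweeping jammer
        q_update(Q, jam, a, r, jam_next)
        jam = jam_next
    return Q


if __name__ == "__main__":
    Q = train()
    for s in CHANNELS:
        print("jammer on", s, "-> choose", greedy_action(Q, s))
```

In this toy setting the learned greedy policy moves off whichever channel the jammer currently occupies. A realistic state would also include the jammer's power and the sensed channel quality, as the abstract indicates.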

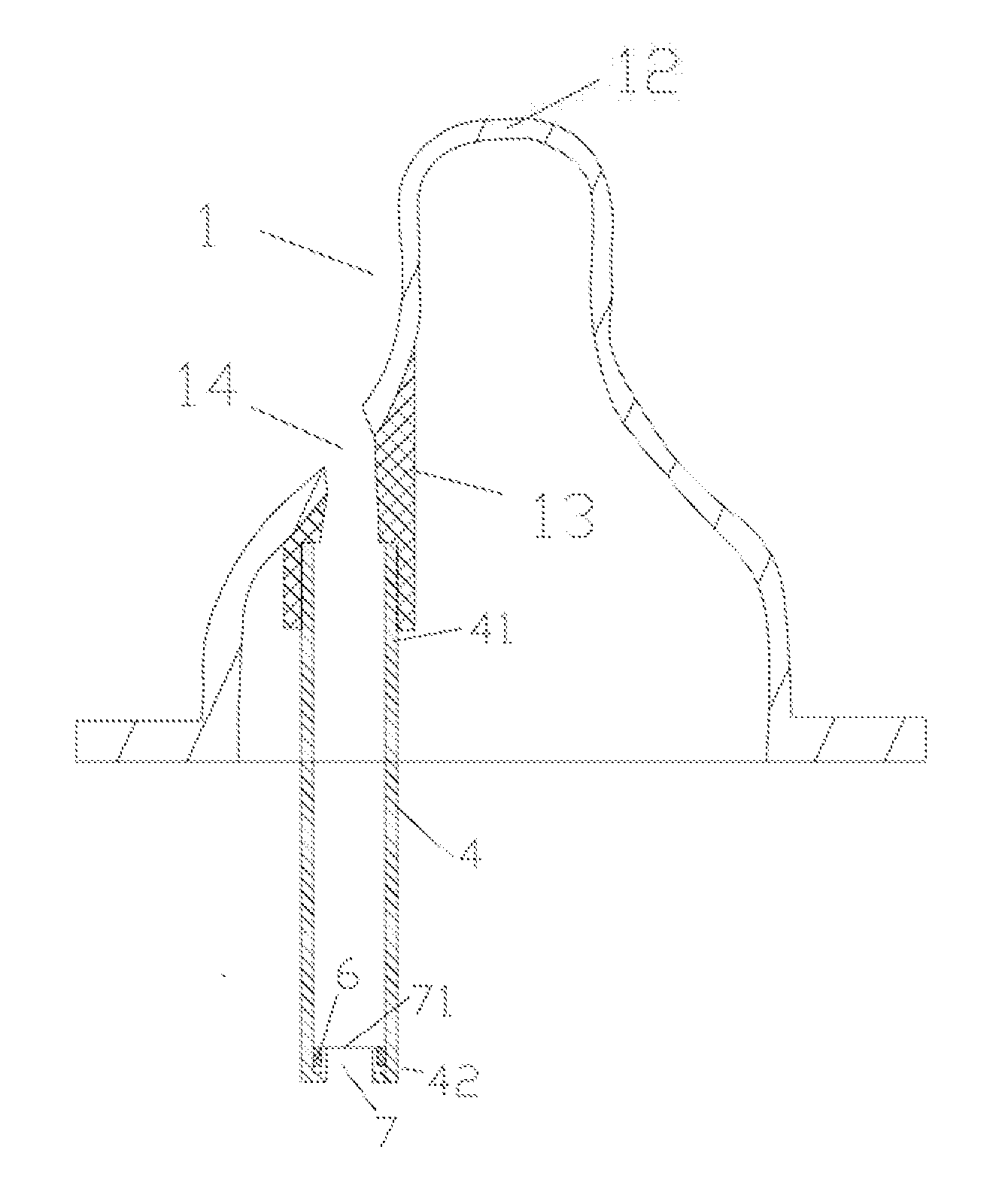

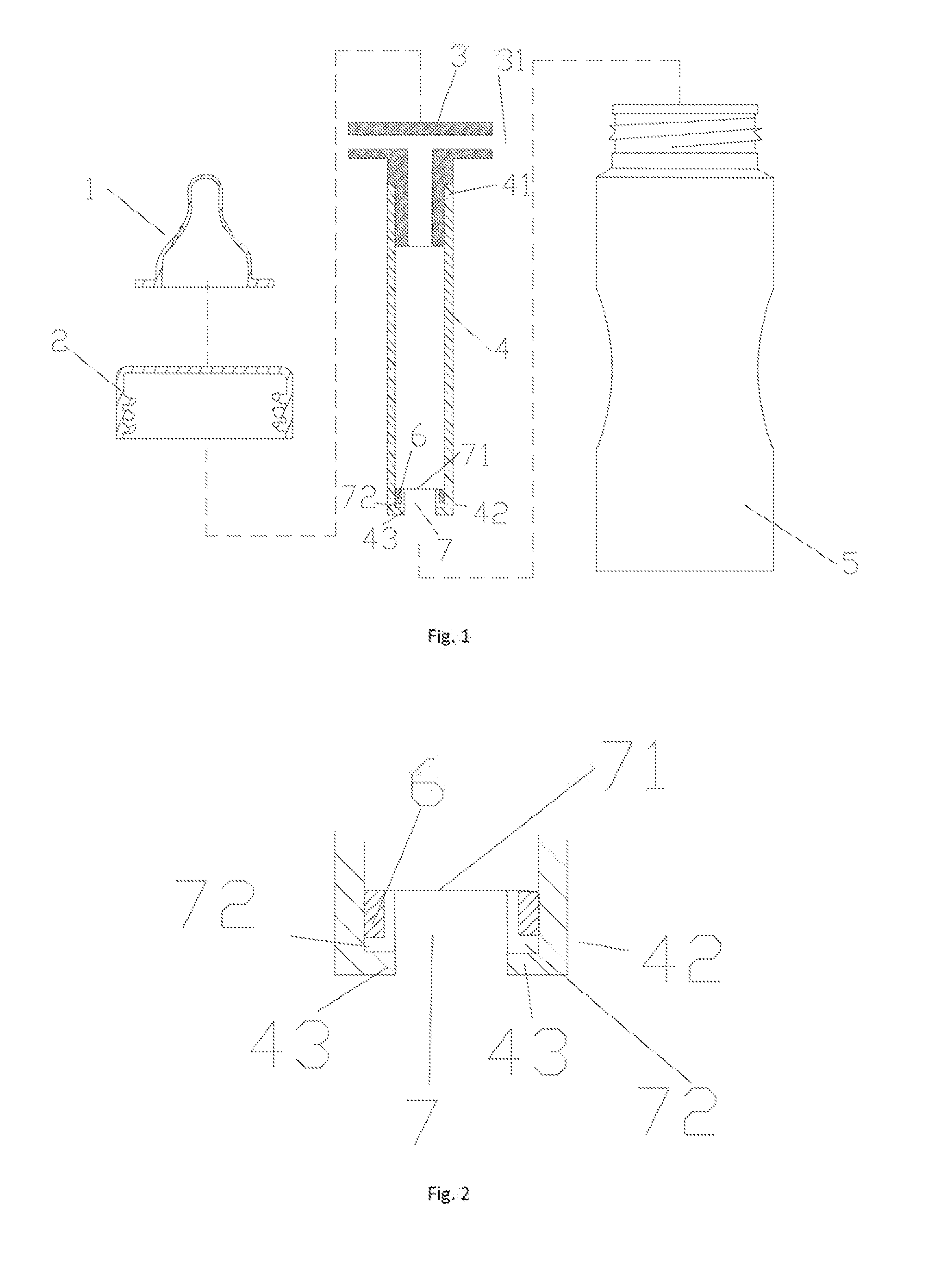

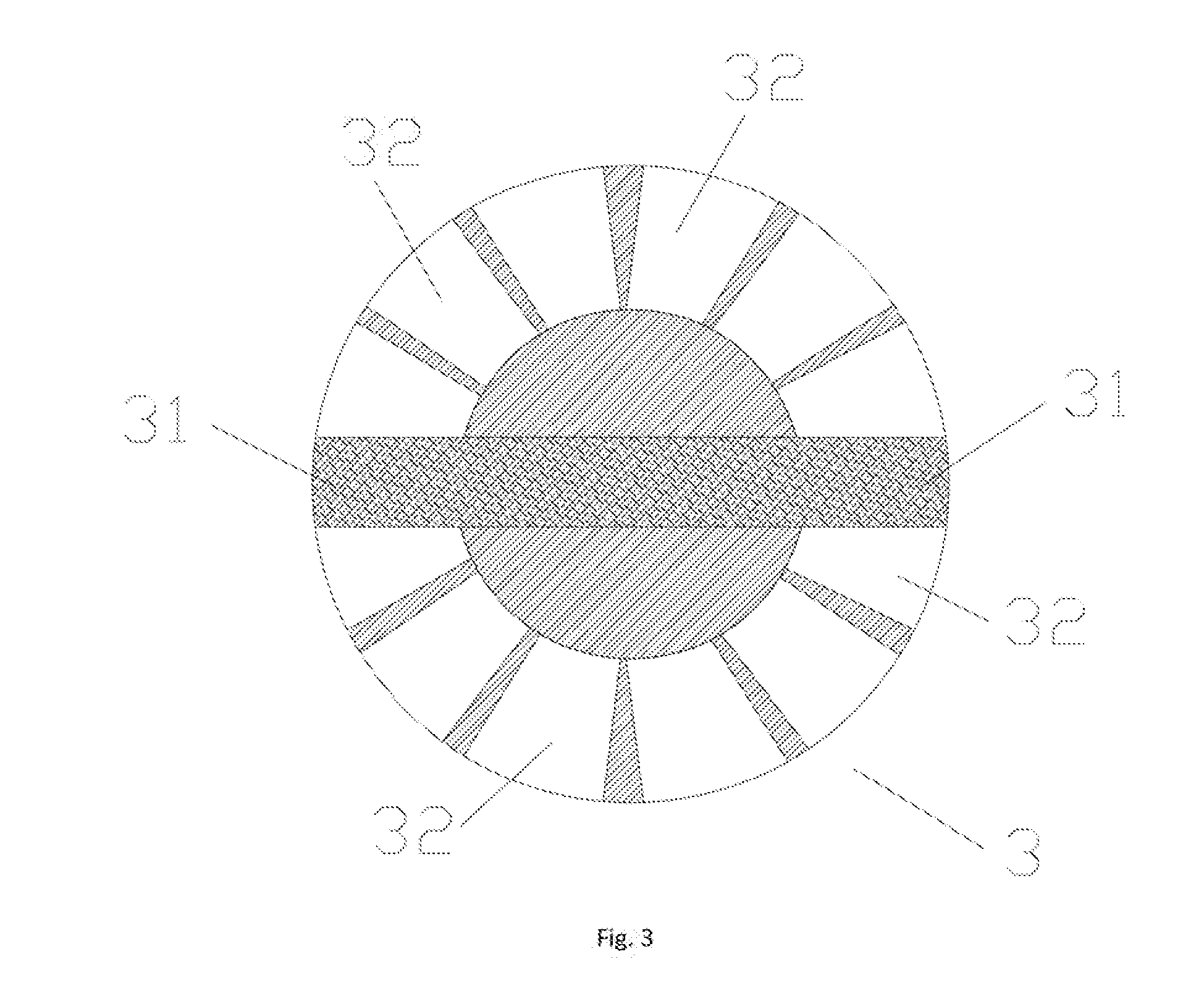

Nursing bottle having air returning function

InactiveUS20160346168A1Decrease phenomenonSimple manufacturing processFeeding-bottlesTeatsReturn functionEngineering

A nursing bottle having an air returning function comprises a bottle body, a fixing cover, a nipple, a ventilation component, an air conduit, an elastic one-way valve set at a second opening of the air conduit and located in the interior of the air conduit, and a mounting part located on the second opening of the air conduit to secure the elastic one-way valve. The ventilation component, the air conduit, the elastic one-way valve and the mounting part form a gas returning device and are located in the interior of the bottle. The present invention reduces the contact area between the air and the milk, lowering the loss of vitamins. Vomiting of milk, choking and flatulence are avoided since no air bubbles are generated.

Owner:SUN QINGYANG

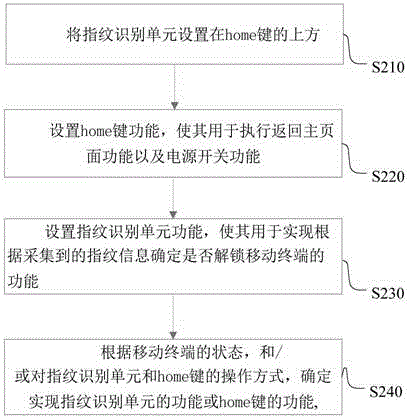

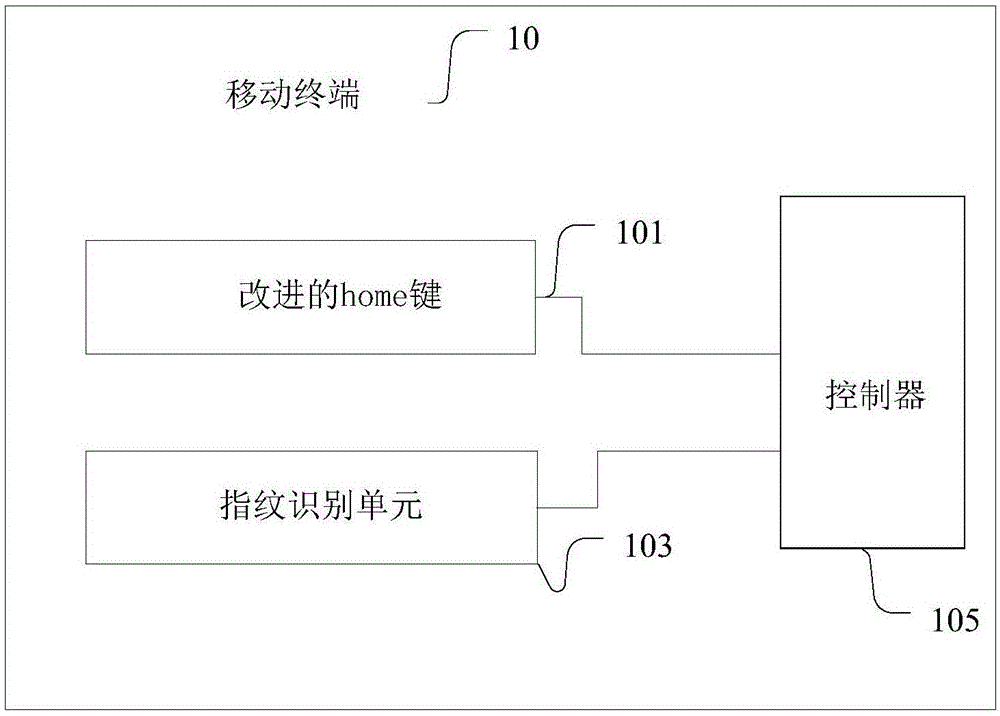

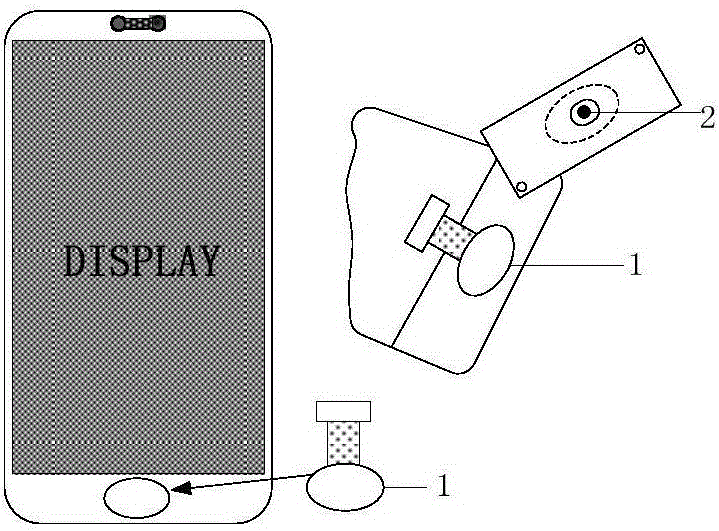

Mobile terminal and key control method of mobile terminal

InactiveCN106293422AReduce the number of keysImprove convenienceDigital data authenticationInput/output processes for data processingComputer hardwareReturn function

The invention provides a mobile terminal and a key control method of the mobile terminal. The mobile terminal comprises an improved home key, a fingerprint identification unit and a controller. The fingerprint identification unit is arranged above the improved home key. The improved home key is used for executing a homepage returning function and a power switch function. The fingerprint identification unit is used for determining, according to acquired fingerprint information, whether to unlock the functions of the mobile terminal. The controller is used for determining which function of the fingerprint identification unit and of the improved home key is performed, according to the state of the mobile terminal and / or the way in which the fingerprint identification unit and the improved home key are operated. With this scheme, the keys occupy less space on the terminal device and the user can operate the terminal device conveniently.

Owner:GREE ELECTRIC APPLIANCES INC
