172 results about "Decision networks" patented technology

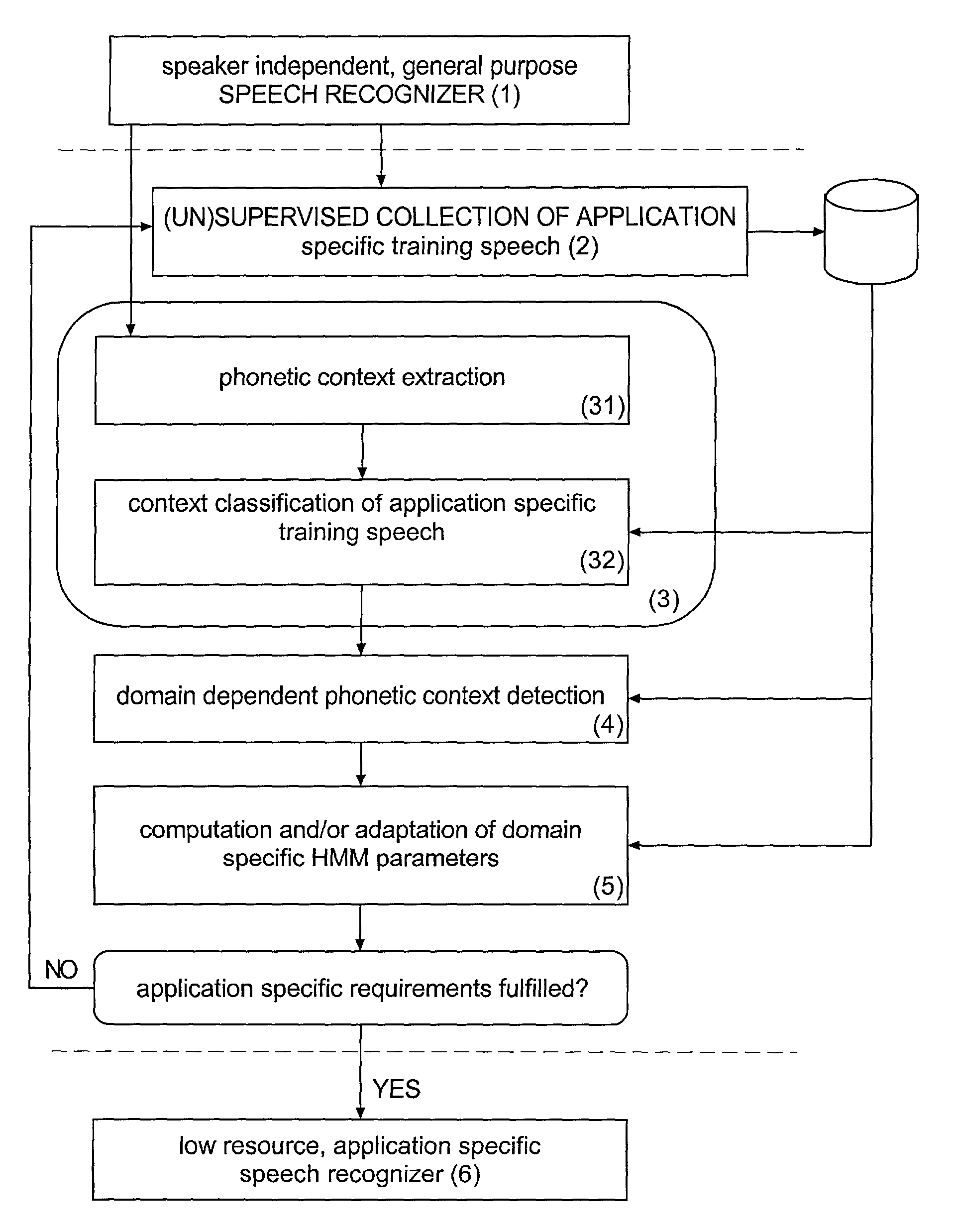

Method and apparatus for phonetic context adaptation for improved speech recognition

The present invention provides a computerized method and apparatus for automatically generating from a first speech recognizer a second speech recognizer which can be adapted to a specific domain. The first speech recognizer can include a first acoustic model with a first decision network and corresponding first phonetic contexts. The first acoustic model can be used as a starting point for the adaptation process. A second acoustic model with a second decision network and corresponding second phonetic contexts for the second speech recognizer can be generated by re-estimating the first decision network and the corresponding first phonetic contexts based on domain-specific training data.

Owner:NUANCE COMM INC

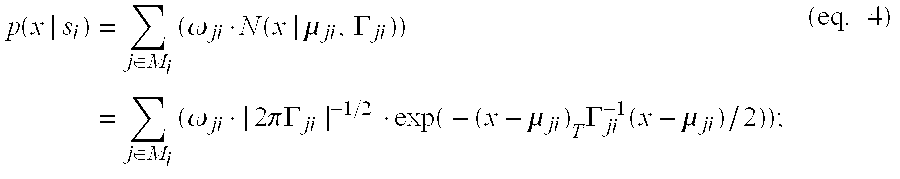

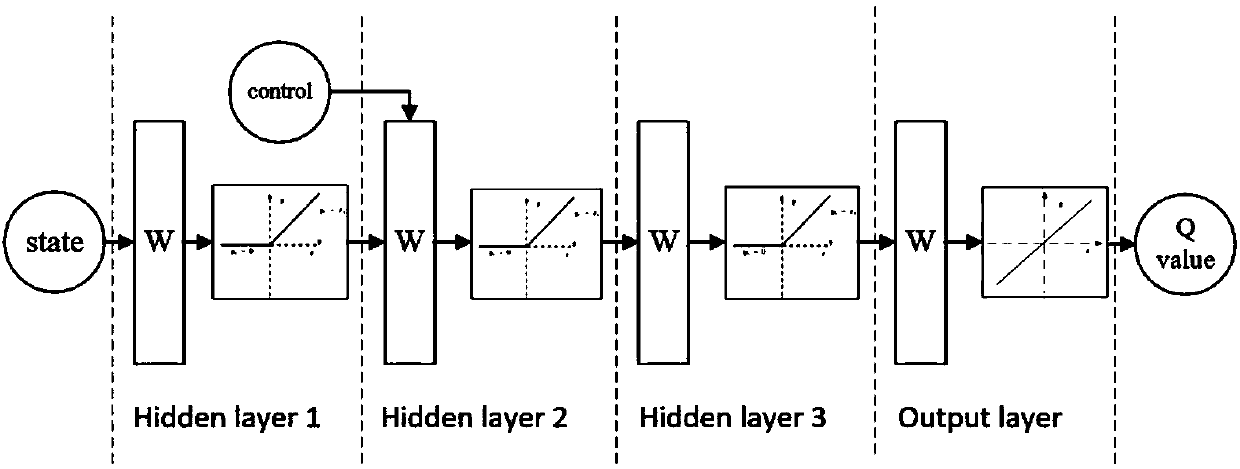

Deep Neural Network-Based Decision Network

Active | US20180268298A1 | Semantic analysis; General purpose stored program computer; Deep neural networks; Decision networks

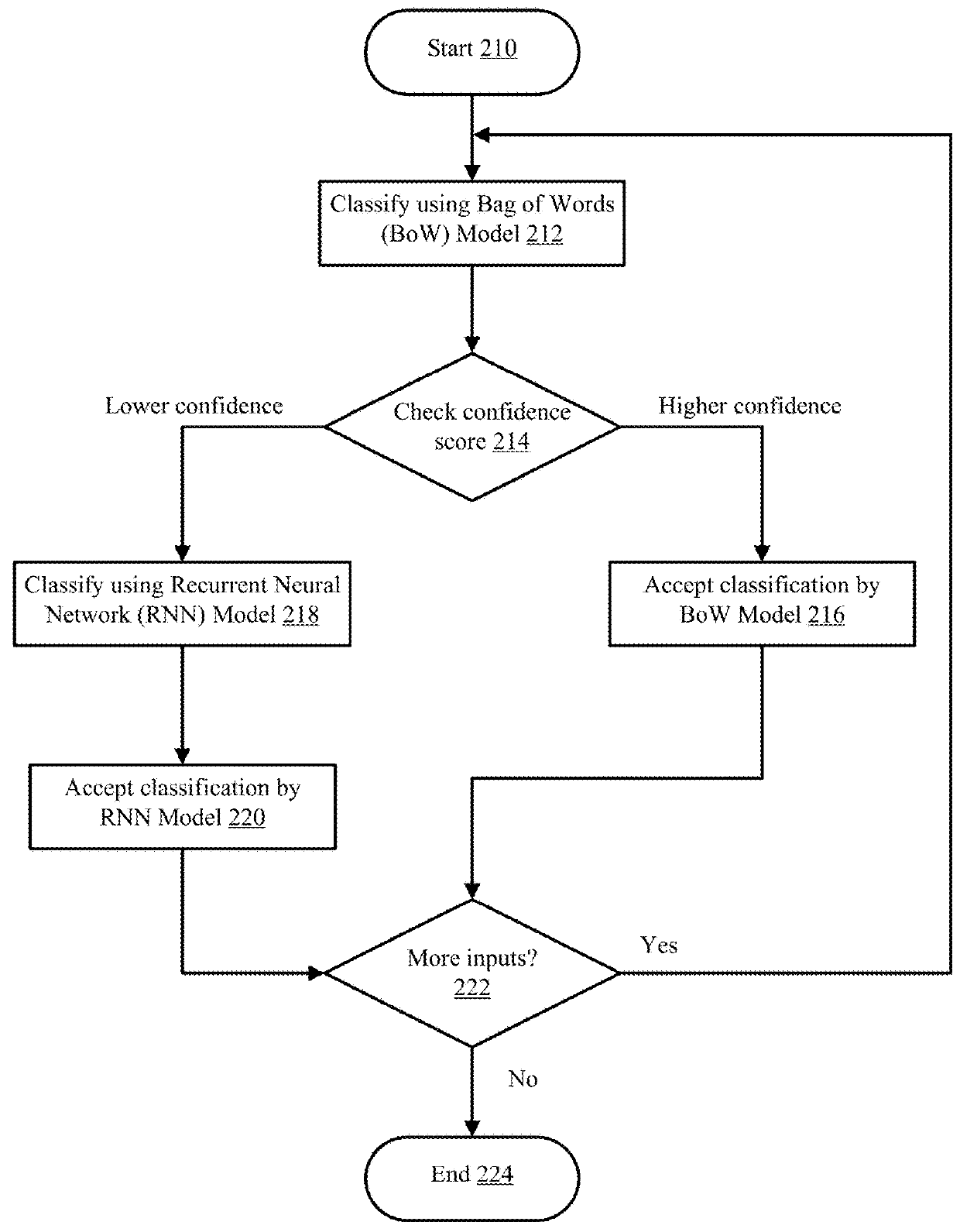

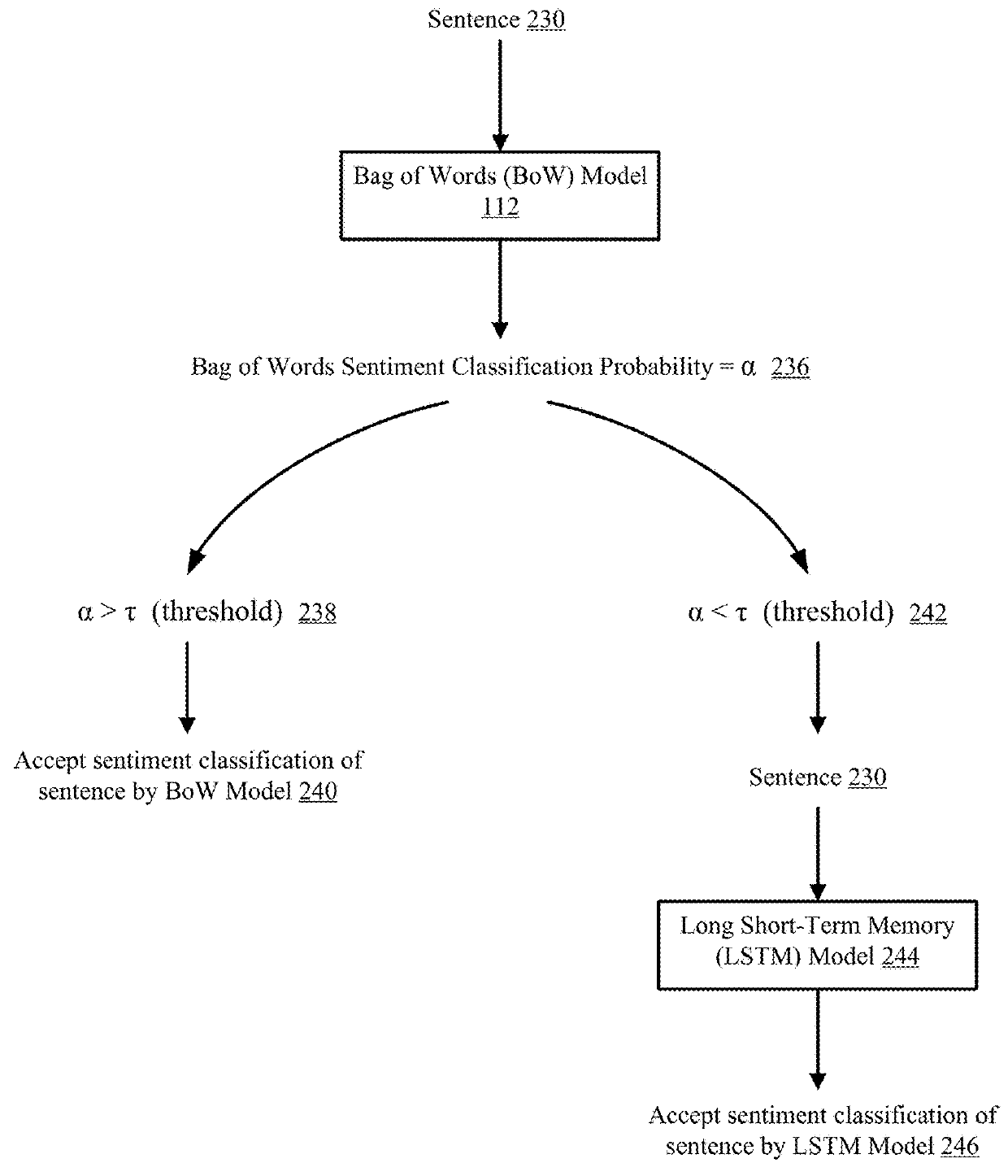

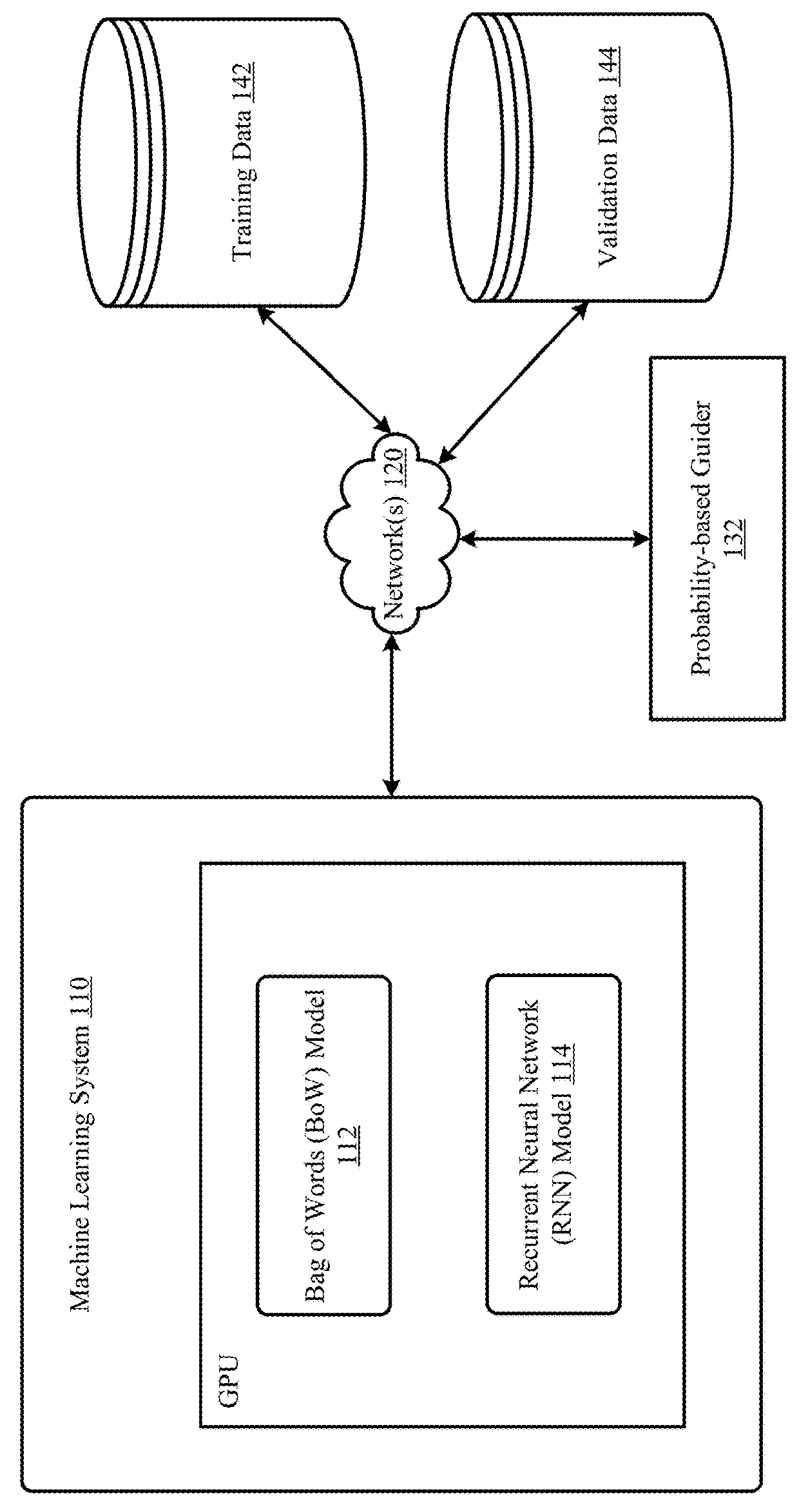

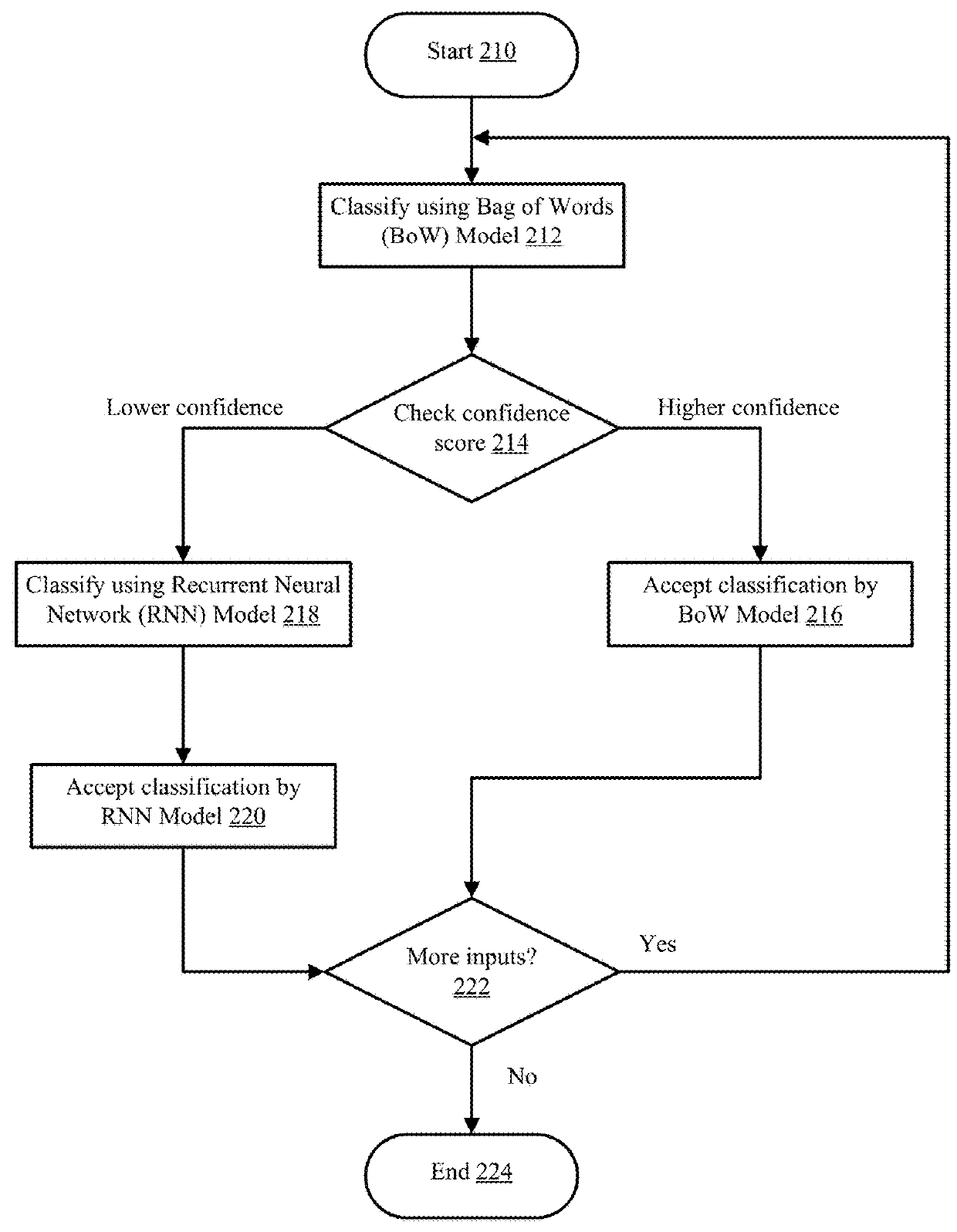

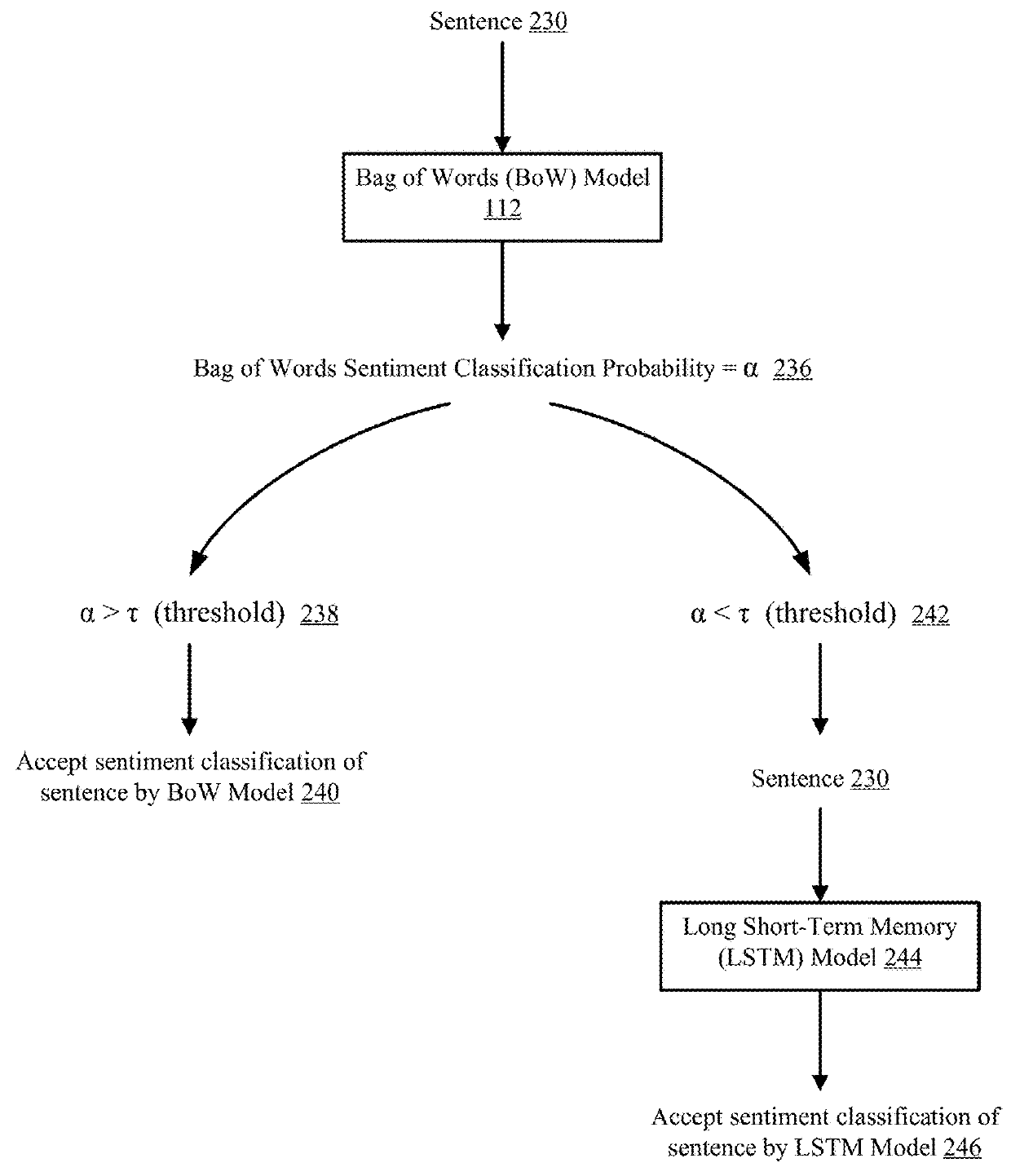

The technology disclosed proposes using a combination of a computationally cheap, less-accurate bag-of-words (BoW) model and a computationally expensive, more-accurate long short-term memory (LSTM) model to perform natural language processing tasks such as sentiment analysis. The use of the cheap, less-accurate BoW model is referred to herein as “skimming”. The use of the expensive, more-accurate LSTM model is referred to herein as “reading”. The technology disclosed presents a probability-based guider (PBG), which combines the BoW model and the LSTM model. The PBG uses a probability thresholding strategy to determine, based on the results of the BoW model, whether to invoke the LSTM model for reliably classifying a sentence as positive or negative. The technology disclosed also presents a deep neural network-based decision network (DDN) that is trained to learn the relationship between the BoW model and the LSTM model and to invoke only one of the two models.

Owner:SALESFORCE COM INC
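
The PBG's skim-or-read rule can be sketched as a simple confidence check. This is a minimal illustration, not the patented implementation: `bow_model` and `lstm_model` are hypothetical callables returning class probabilities, and the 0.9 threshold is an arbitrary assumption.

```python
from typing import Callable, List, Tuple

Probs = List[float]


def argmax(values: Probs) -> int:
    """Index of the largest probability."""
    return max(range(len(values)), key=values.__getitem__)


def probability_based_guider(
    sentence: str,
    bow_model: Callable[[str], Probs],
    lstm_model: Callable[[str], Probs],
    threshold: float = 0.9,
) -> Tuple[int, str]:
    """Skim with the cheap model; read with the expensive one only when unsure.

    bow_model and lstm_model are hypothetical callables that return class
    probabilities such as [p_negative, p_positive]; threshold is illustrative.
    """
    bow_probs = bow_model(sentence)
    if max(bow_probs) >= threshold:
        # "Skimming": trust the BoW prediction and never run the LSTM.
        return argmax(bow_probs), "skim"
    # "Reading": the BoW model is not confident enough, so run the LSTM.
    return argmax(lstm_model(sentence)), "read"
```

The LSTM is only called on the uncertain fraction of inputs, which is where the computational saving comes from; the DDN variant would replace the fixed threshold with a learned routing decision.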

Probability-Based Guider

Active | US20180268287A1 | Semantic analysis; General purpose stored program computer; Short-term memory; Decision networks

The technology disclosed proposes using a combination of a computationally cheap, less-accurate bag-of-words (BoW) model and a computationally expensive, more-accurate long short-term memory (LSTM) model to perform natural language processing tasks such as sentiment analysis. The use of the cheap, less-accurate BoW model is referred to herein as “skimming”. The use of the expensive, more-accurate LSTM model is referred to herein as “reading”. The technology disclosed presents a probability-based guider (PBG), which combines the BoW model and the LSTM model. The PBG uses a probability thresholding strategy to determine, based on the results of the BoW model, whether to invoke the LSTM model for reliably classifying a sentence as positive or negative. The technology disclosed also presents a deep neural network-based decision network (DDN) that is trained to learn the relationship between the BoW model and the LSTM model and to invoke only one of the two models.

Owner:SALESFORCE COM INC

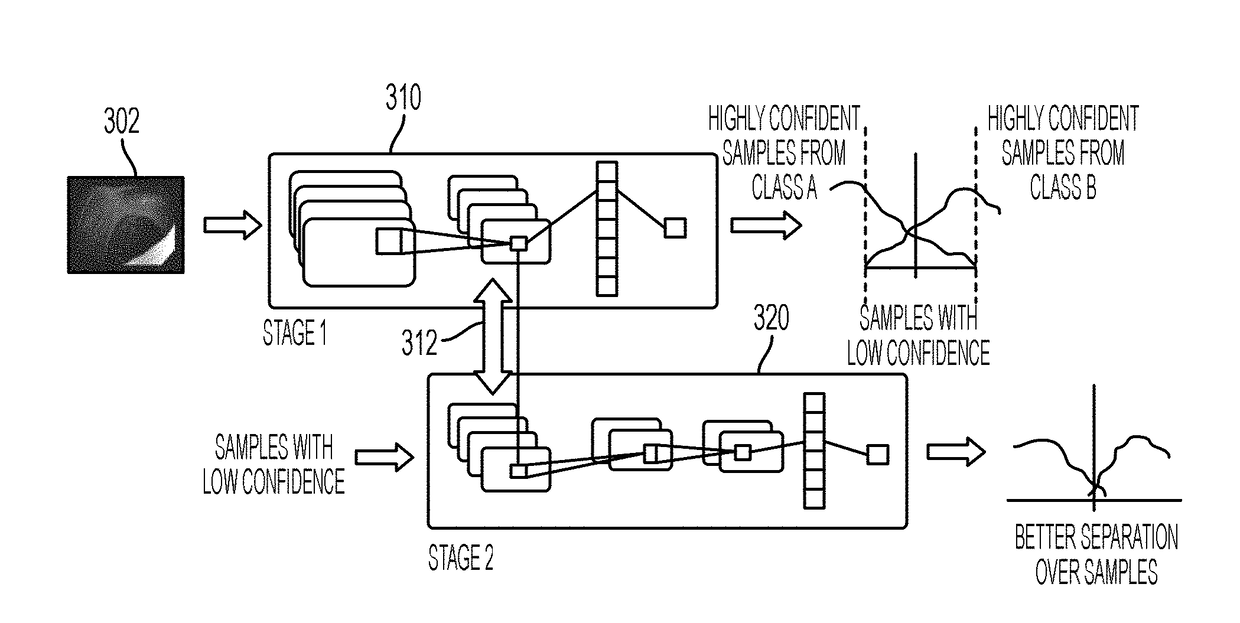

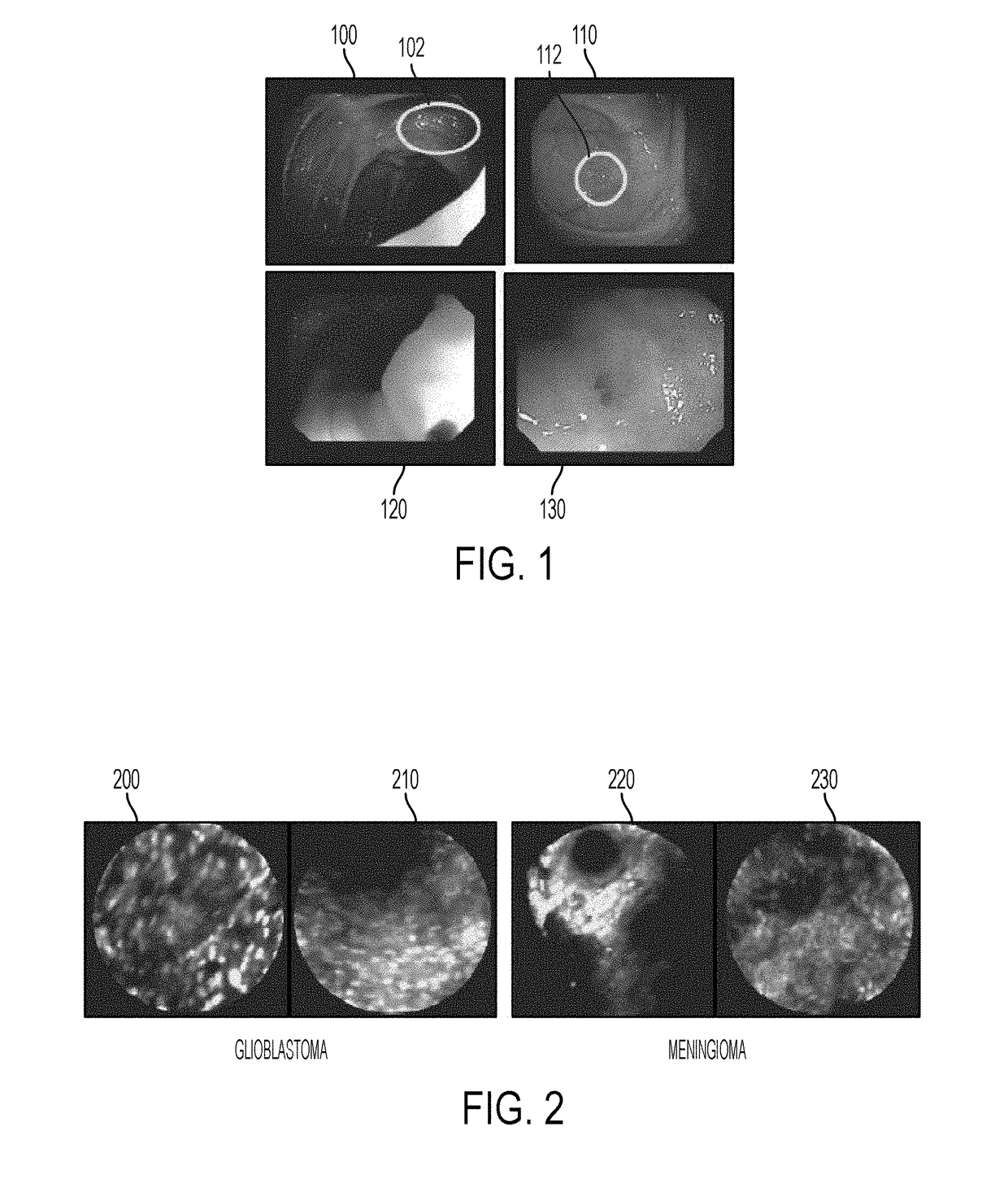

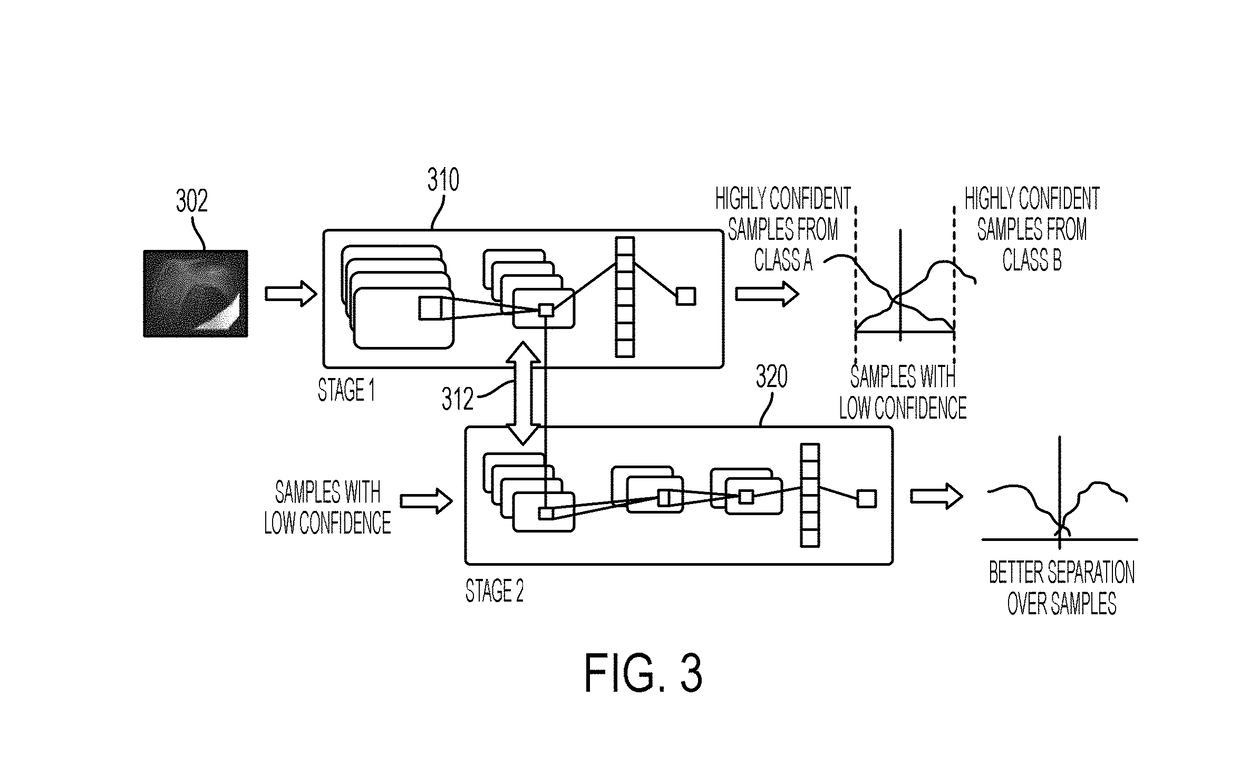

Method and system for classification of endoscopic images using deep decision networks

A method and system for classification of endoscopic images is disclosed. An initial trained deep network classifier is used to classify endoscopic images and determine confidence scores for the endoscopic images. The confidence score for each endoscopic image classified by the initial trained deep network classifier is compared to a learned confidence threshold. For endoscopic images with confidence scores higher than the learned threshold value, the classification result from the initial trained deep network classifier is output. Endoscopic images with confidence scores lower than the learned confidence threshold are classified using a first specialized network classifier built on a feature space of the initial trained deep network classifier.

Owner:SIEMENS HEALTHCARE GMBH

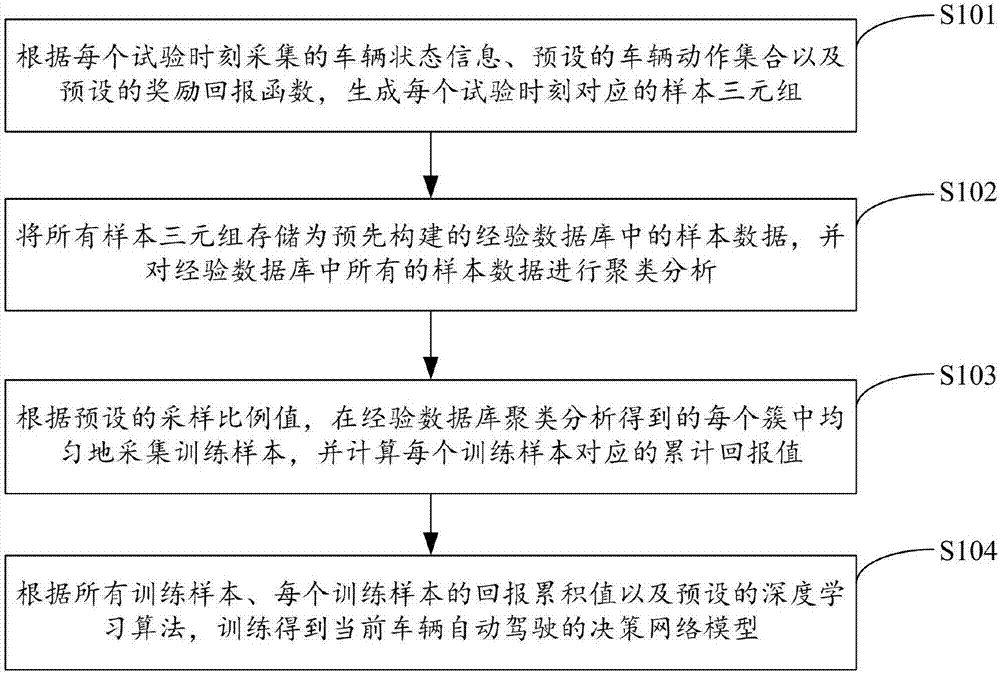

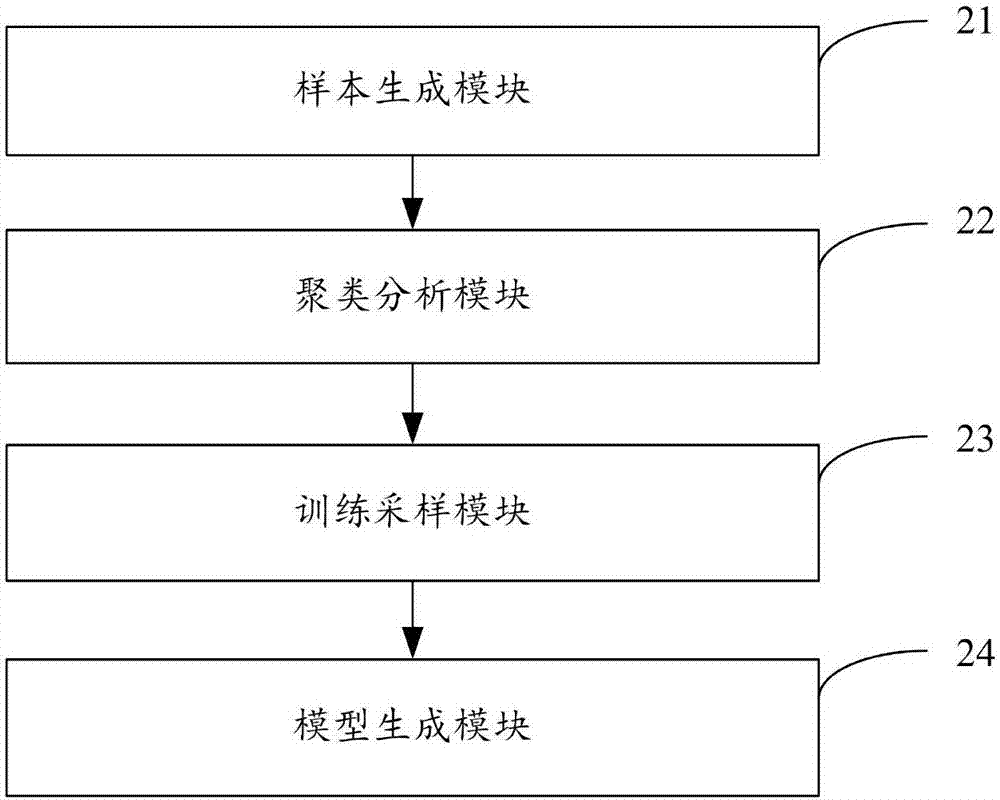

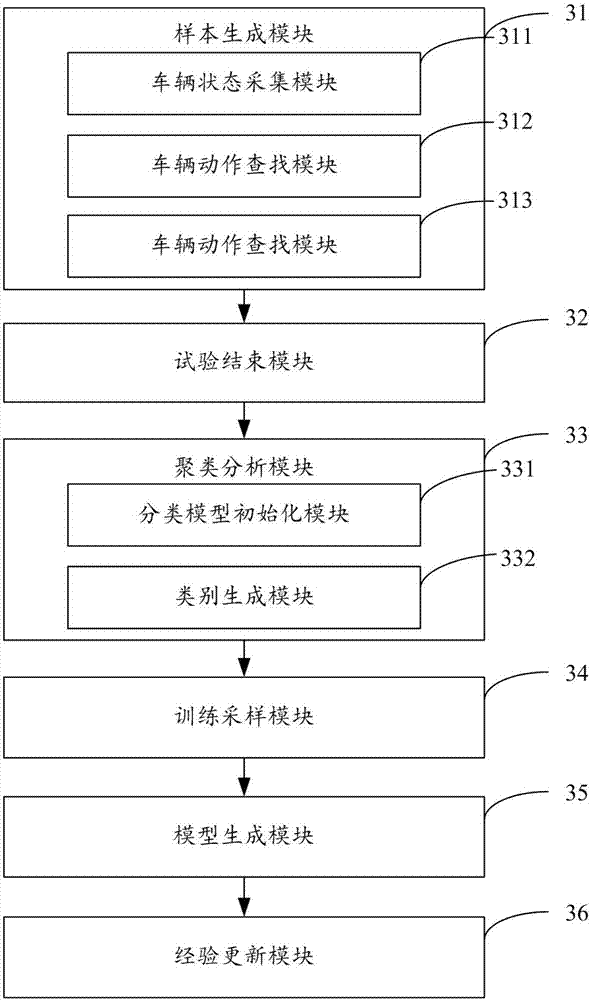

Generation method and device for decision network model of vehicle automatic driving

Active | CN107169567A | Fast training; Training samples, and finally training fast; Knowledge representation; Machine learning; Algorithm; Decision networks

The invention is applicable to the field of computer technology and provides a method and device for generating a decision network model for automatic vehicle driving. In the method, a sample triad is generated for each test moment from the vehicle state information collected at that moment, a preset vehicle movement set and a preset return function; all sample triads are stored as sample data in a pre-established experience database, and clustering analysis is performed on all the sample data. Training samples are then collected uniformly from each cluster produced by the clustering analysis according to a preset sampling scale value, and the accumulated return value of each training sample is calculated. Finally, the decision network model for automatic vehicle driving is trained from the training samples, their accumulated return values and a preset deep learning algorithm. This effectively improves both the training efficiency and the generalization ability of the decision network model.

Owner:SHENZHEN INST OF ADVANCED TECH
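
Two pieces of this pipeline, uniform per-cluster sampling and the accumulated return, can be sketched as follows. This assumes the clustering step has already assigned a label to every sample and that the "return accumulated value" is a discounted sum of future rewards; both the discount factor and the helper names are illustrative assumptions, not details from the abstract.

```python
import random
from collections import defaultdict


def discounted_returns(rewards, gamma=0.9):
    """Accumulated return G_t = r_t + gamma * G_{t+1} for every step (assumed form)."""
    returns = [0.0] * len(rewards)
    running = 0.0
    for t in range(len(rewards) - 1, -1, -1):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


def sample_per_cluster(samples, labels, per_cluster, seed=0):
    """Draw up to `per_cluster` samples uniformly at random from every cluster.

    `labels` are cluster assignments from an earlier clustering step; sampling
    each cluster separately keeps rare driving situations in the training set.
    """
    rng = random.Random(seed)
    clusters = defaultdict(list)
    for sample, label in zip(samples, labels):
        clusters[label].append(sample)
    batch = []
    for label in sorted(clusters):
        members = clusters[label]
        batch.extend(rng.sample(members, min(per_cluster, len(members))))
    return batch
```

Sampling per cluster rather than from the whole database is what prevents frequent, similar states from dominating the training batch.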

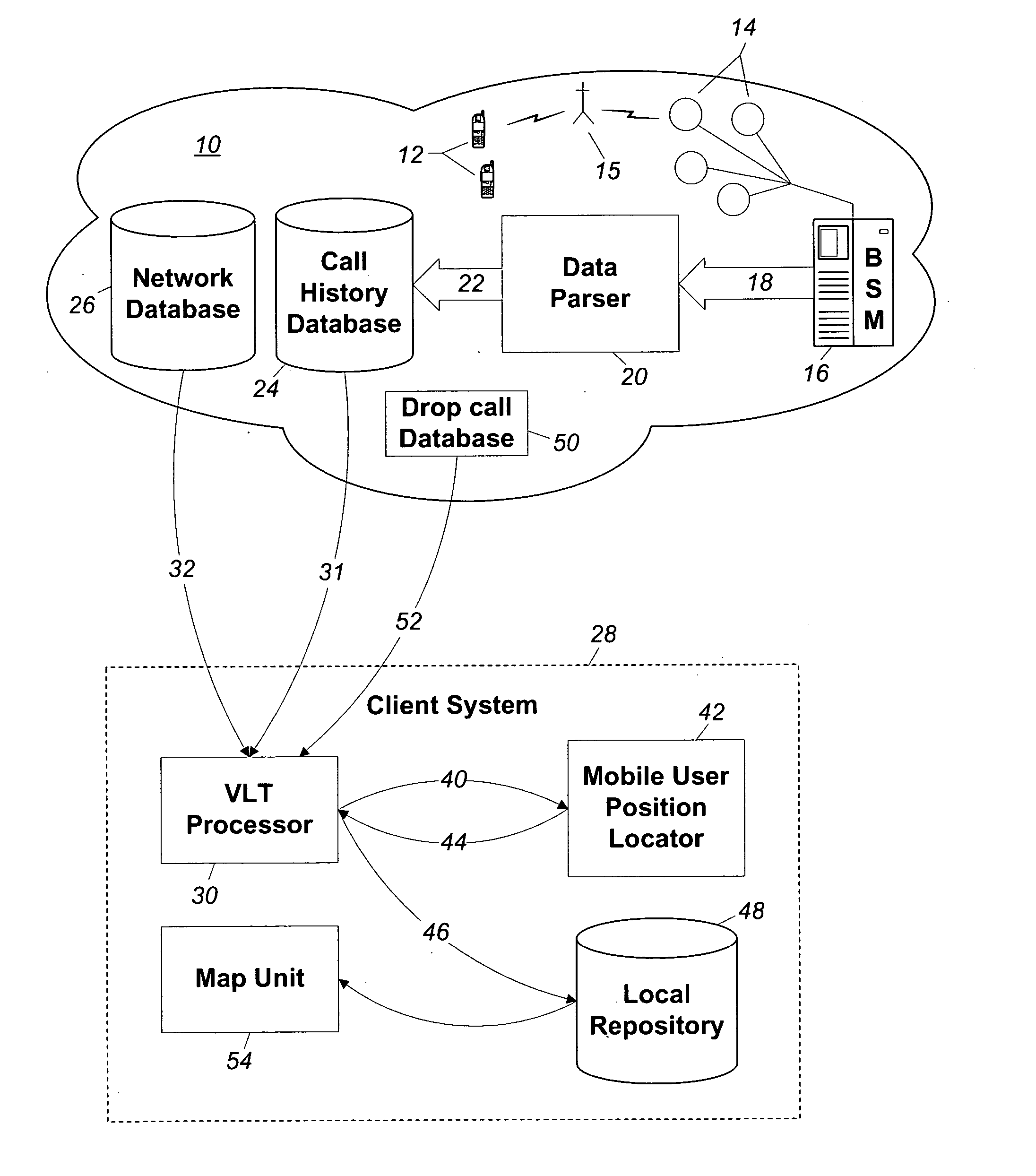

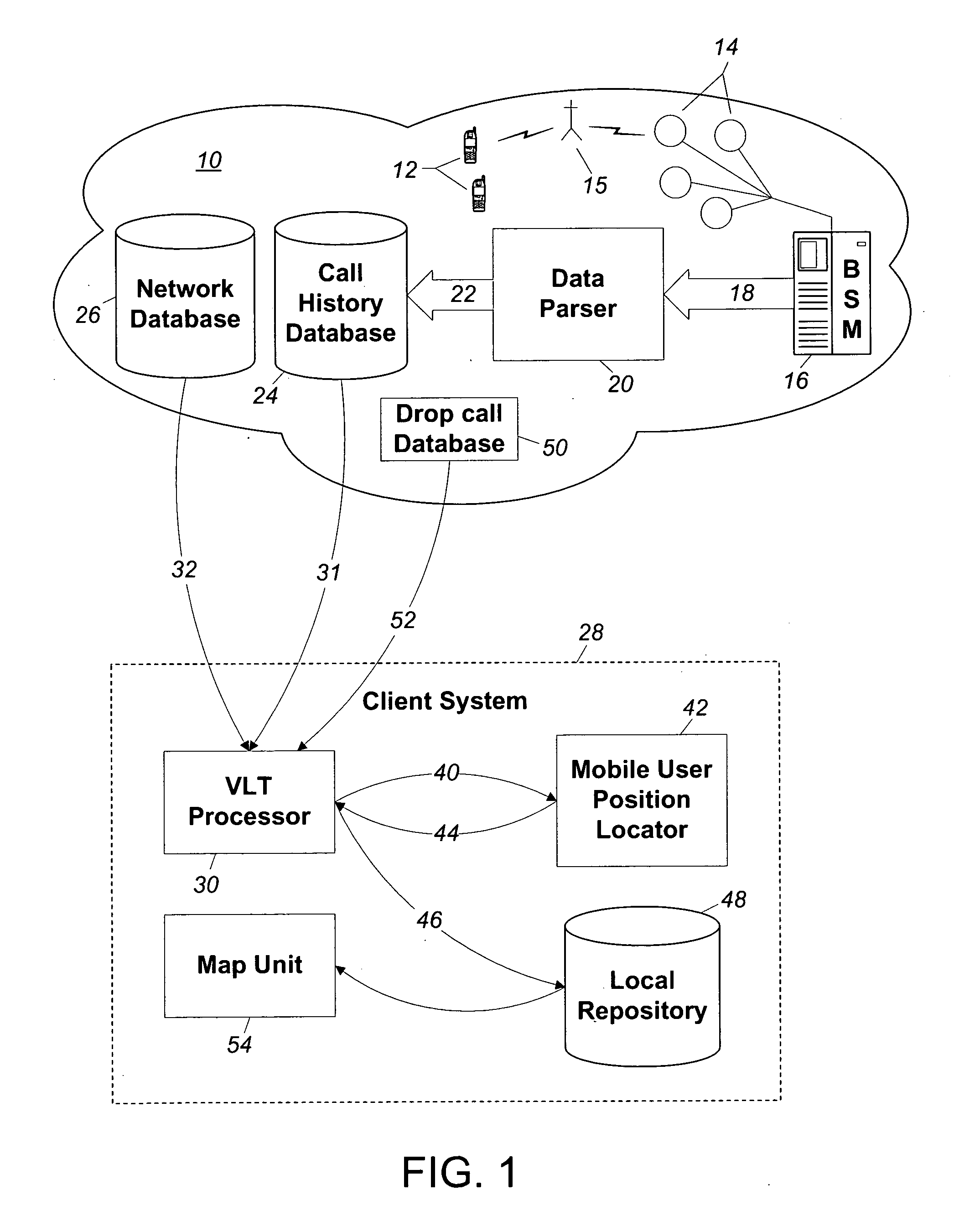

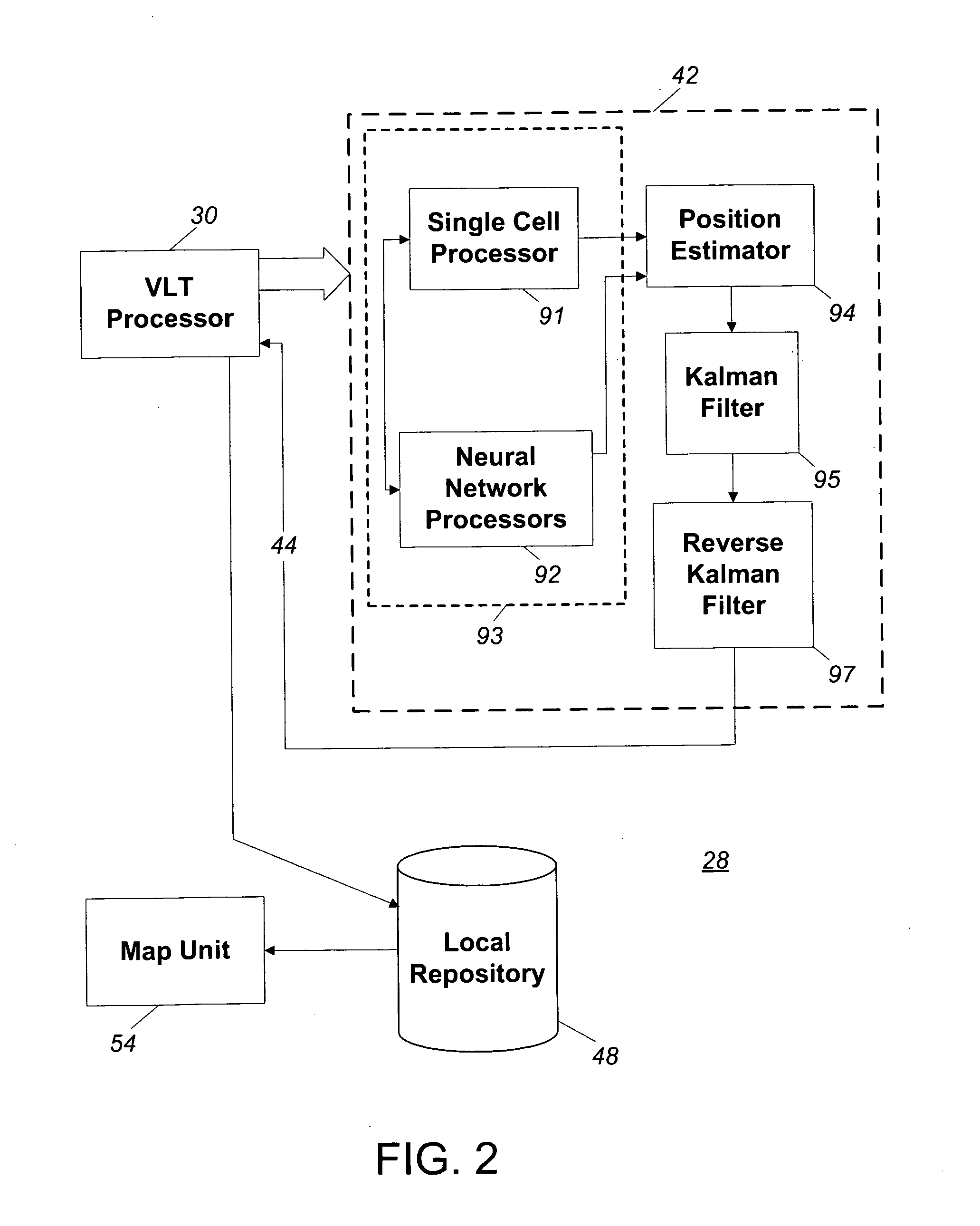

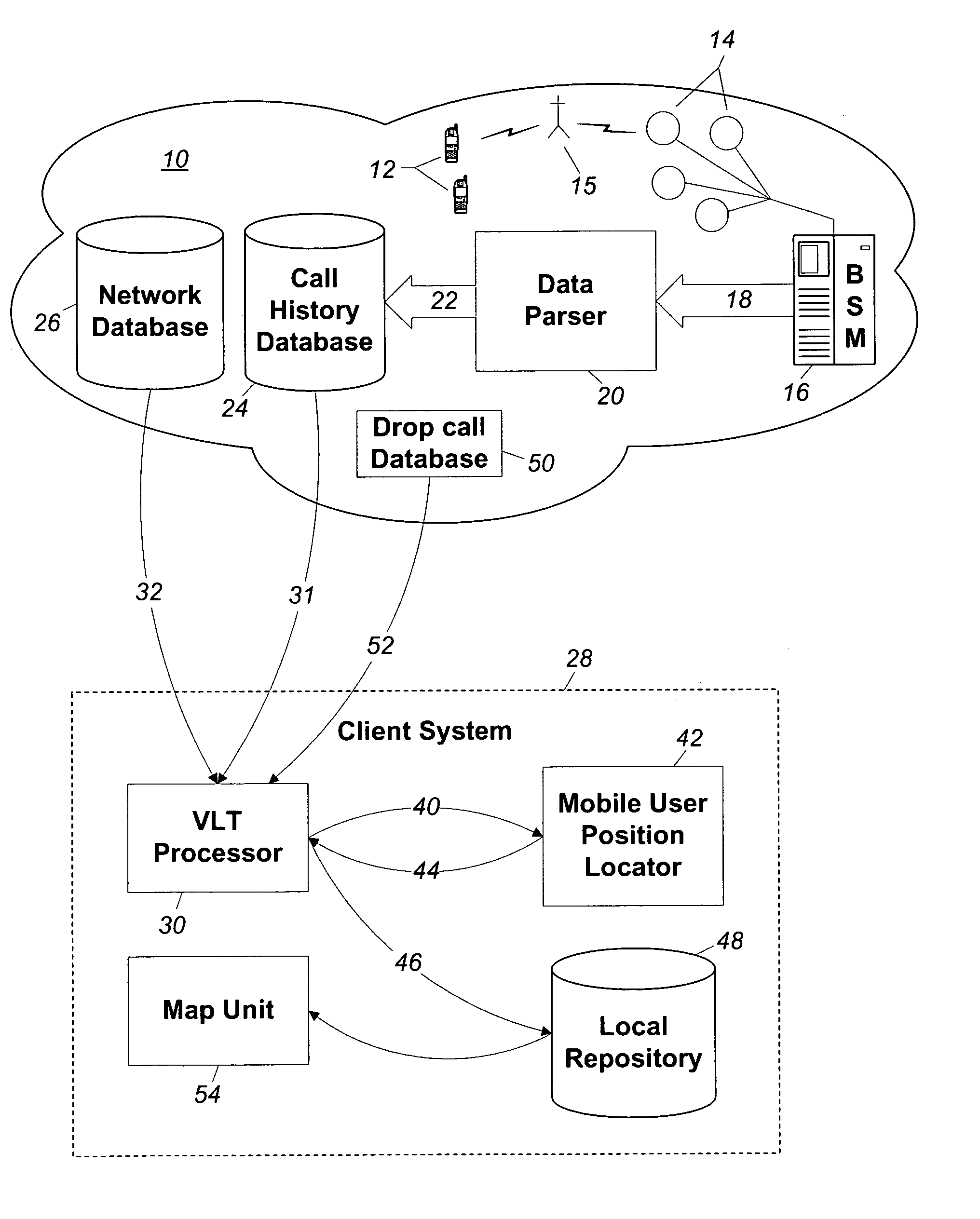

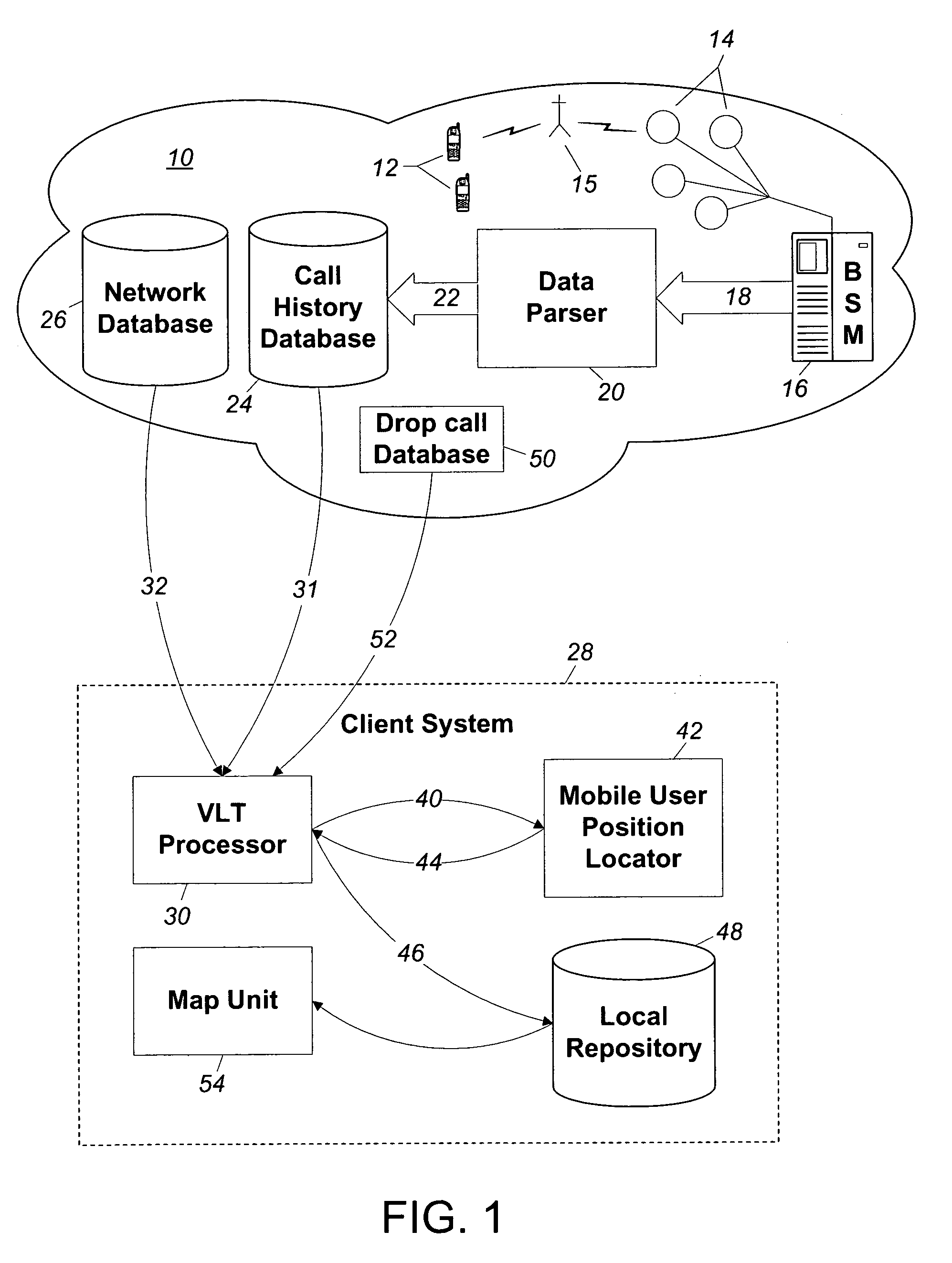

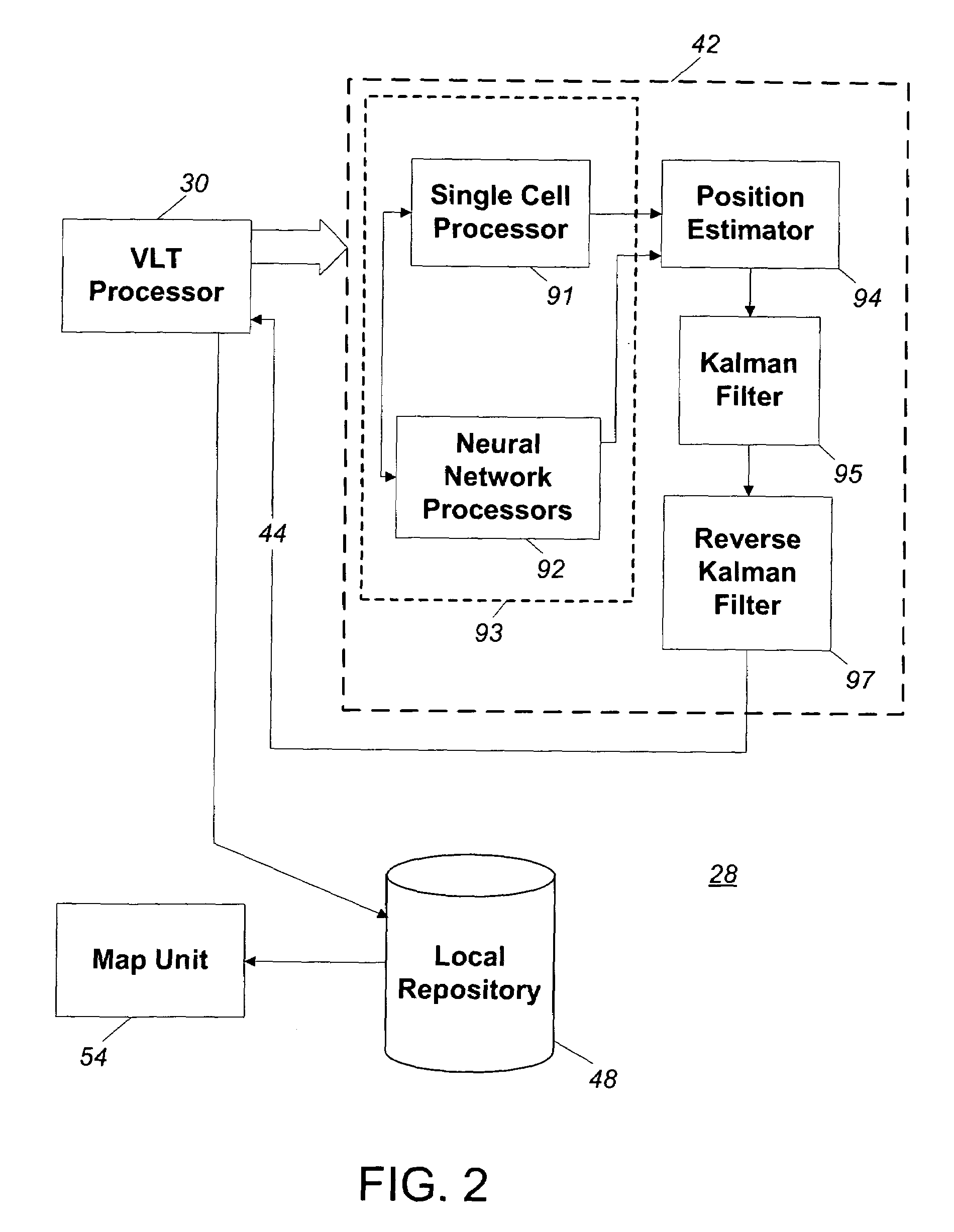

Mobile user position locating system

Inactive | US20050064879A1 | Improved position estimate; Position fixation; Radio/inductive link selection arrangements; Telecommunications network; Decision networks

A method and system for locating a mobile user of one or more mobile users while on a call in a wireless telecommunication network utilizes a decision network that determines if the call signal is propagating through a repeater station. When the call is propagating through a repeater station, the decision network alters the signal measurement parameters to correspond with the location of the mobile user relative to the repeater station coordinates. By revising signal measurement parameters to compensate for repeater stations, the present invention provides for improved position estimates of the location of the mobile user in the network. For multiple cell soft handoff conditions, the decision network utilizes trained neural networks.

Owner:BELL MOBILITY

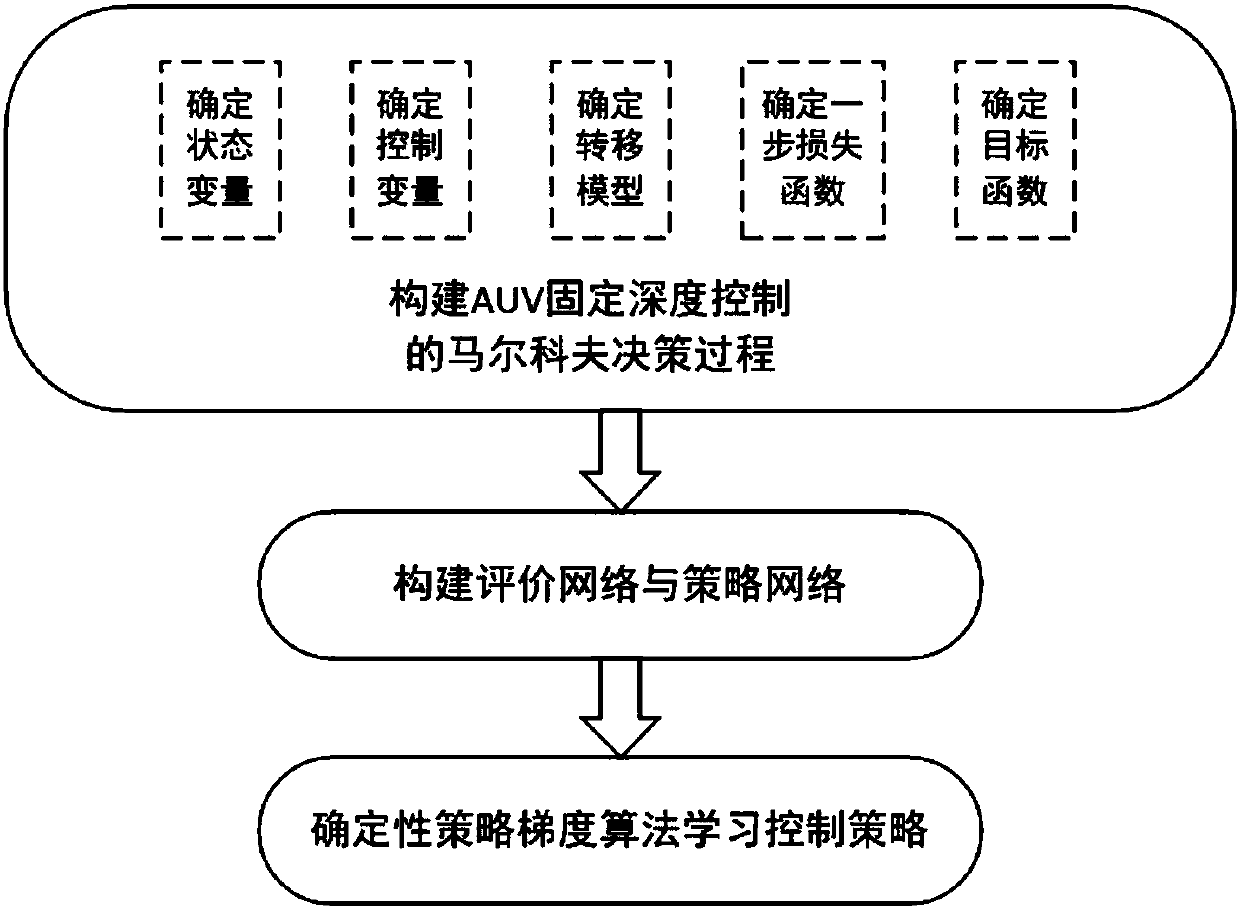

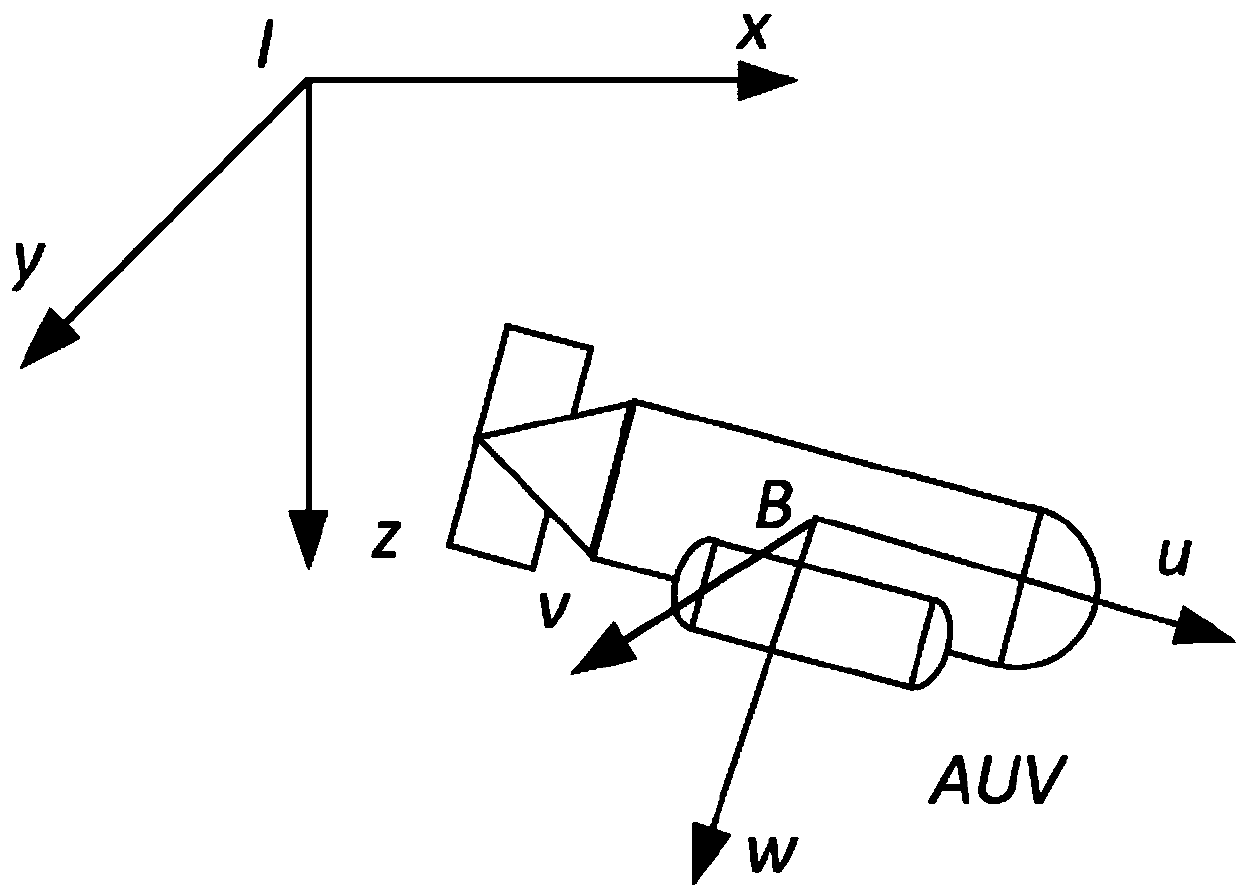

Underwater autonomous robot fixed depth control method based on reinforcement learning

Active | CN107748566A | Enhance expressive ability; Ideal control strategy; Adaptive control; Altitude or depth control; Transfer model; Dynamic models

The invention provides a fixed-depth control method for an autonomous underwater robot based on reinforcement learning and belongs to the field of underwater robot control. First, a Markov decision process model for fixed-depth control of the autonomous underwater robot is constructed, and expressions for the state variable, the control variable, the transfer model and the one-step loss function are obtained. A decision network and an evaluation network are then established. Through reinforcement learning, both networks are updated at every step the robot moves forward during fixed-depth control training until convergence, yielding the final decision network for fixed-depth control. The advantage of the method is that fixed-depth control of the autonomous underwater robot is achieved even when the robot's dynamic model is completely unknown, which gives the method high practical value.

Owner:TSINGHUA UNIV

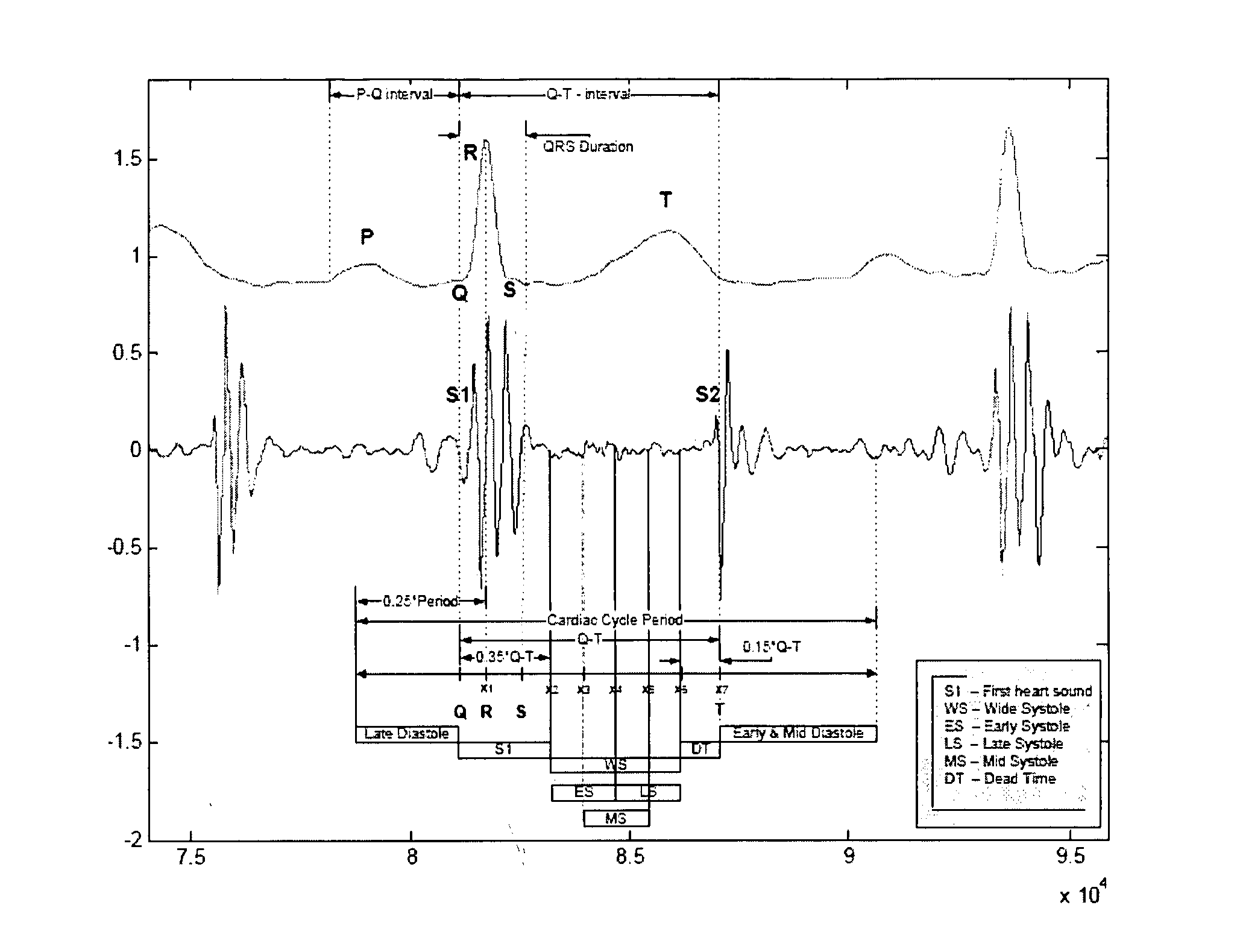

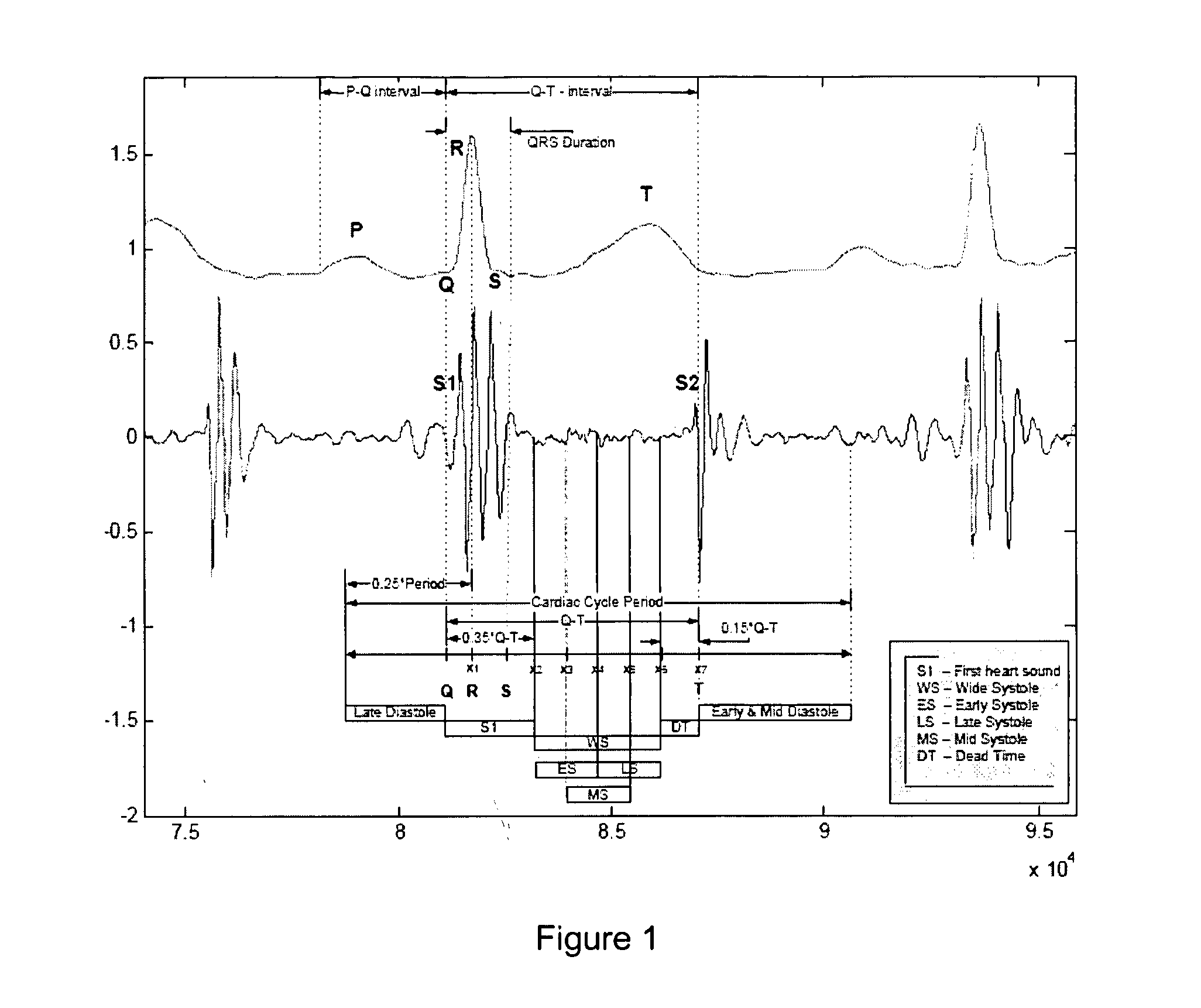

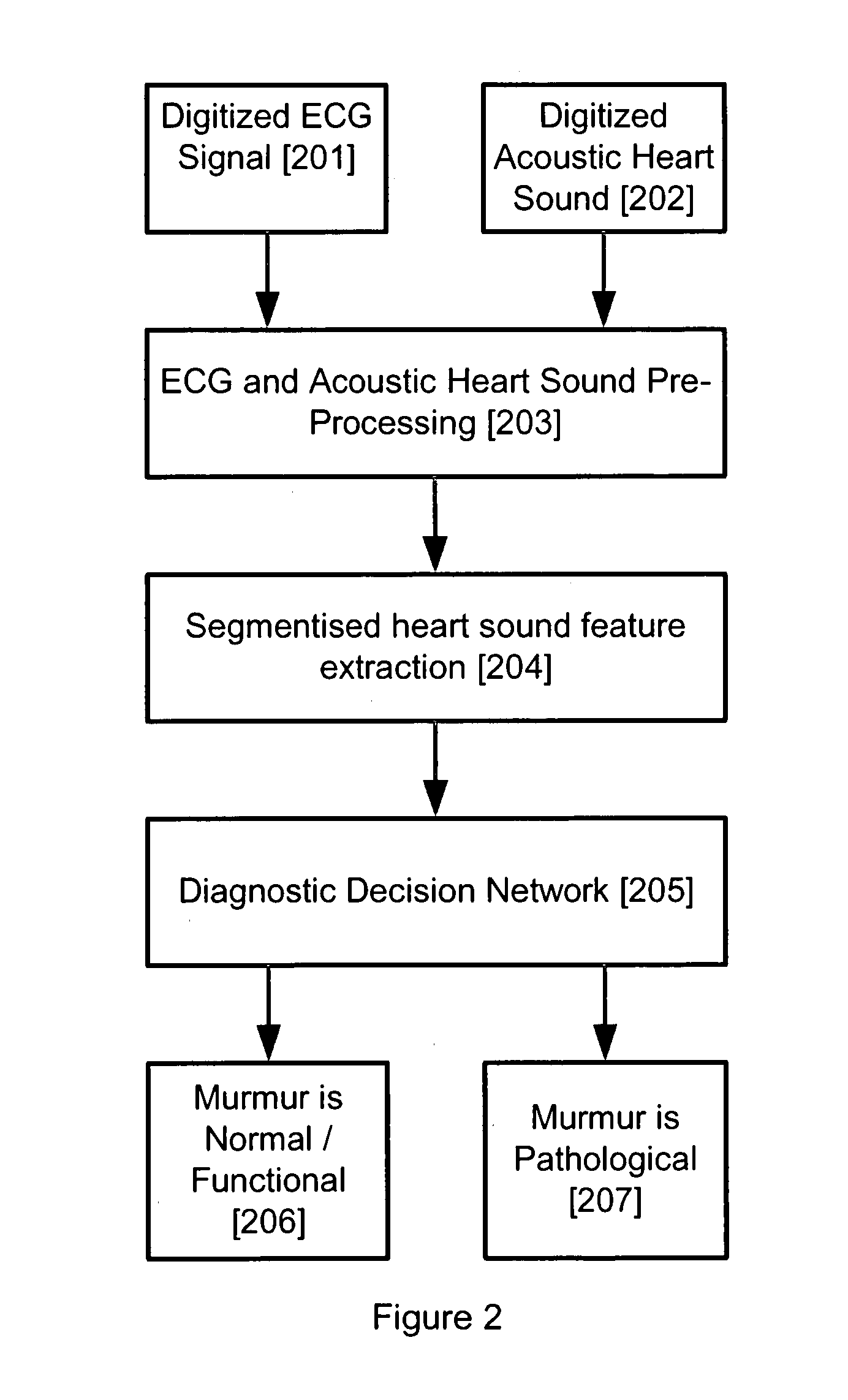

Medical decision support system

A process for pre-processing recorded and digitised heart sounds and corresponding digitised ECG signals is provided. The pre-processed signals (203) are suitable for input into an automatic decision support system implemented by means of a diagnostic decision network (205), used for diagnosing and differentiating between normal / functional (206) and pathological (207) heart murmurs, particularly in paediatric patients. The process includes identifying or predicting the locations of individual heart beats within the heart sound signal, identifying the positions of the S1 and S2 heart pulses within the respective heart beats, predicting and identifying the locations and durations of the systole and diastole segments of the heart beats (302), determining whether segmentation of the heart sound signal is possible based on the selected and isolated heart beats (305), and segmenting the respective heart beats to allow better feature extraction. The invention also provides a process for detecting the QRS complex of an ECG signal to optimize the number of heart beat cycles detected.

Owner:DIACOUSTIC MEDICAL DEVICES

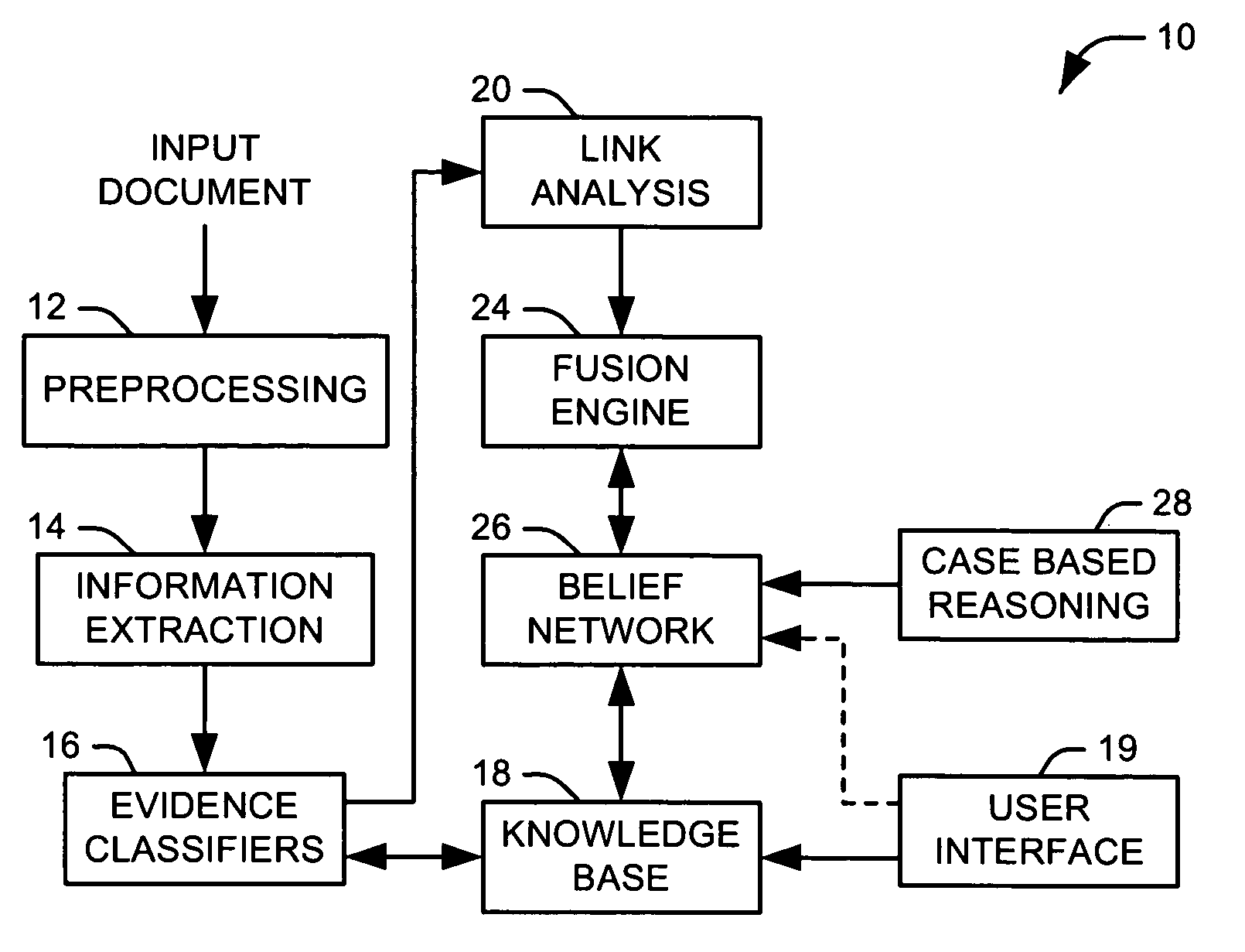

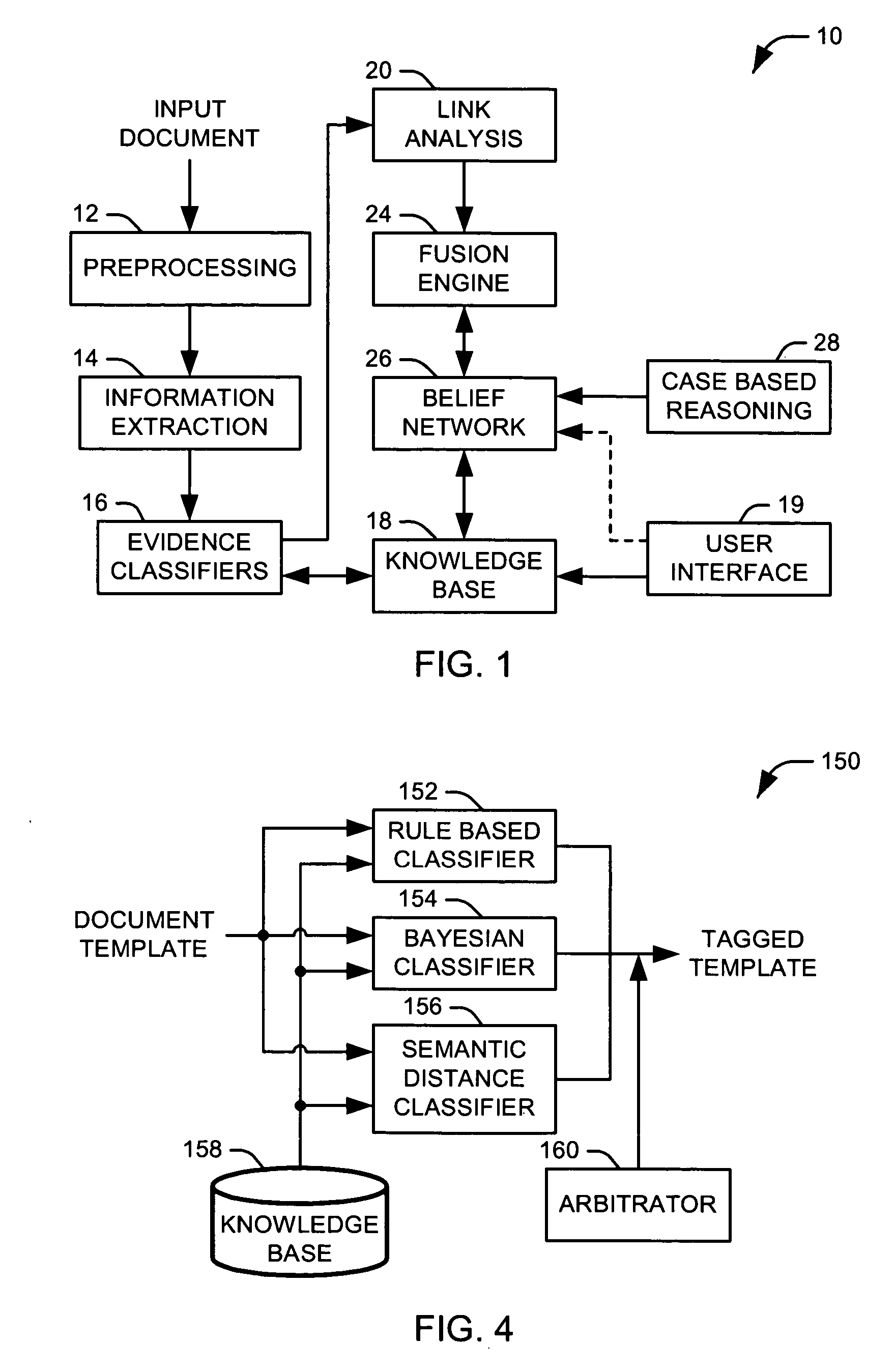

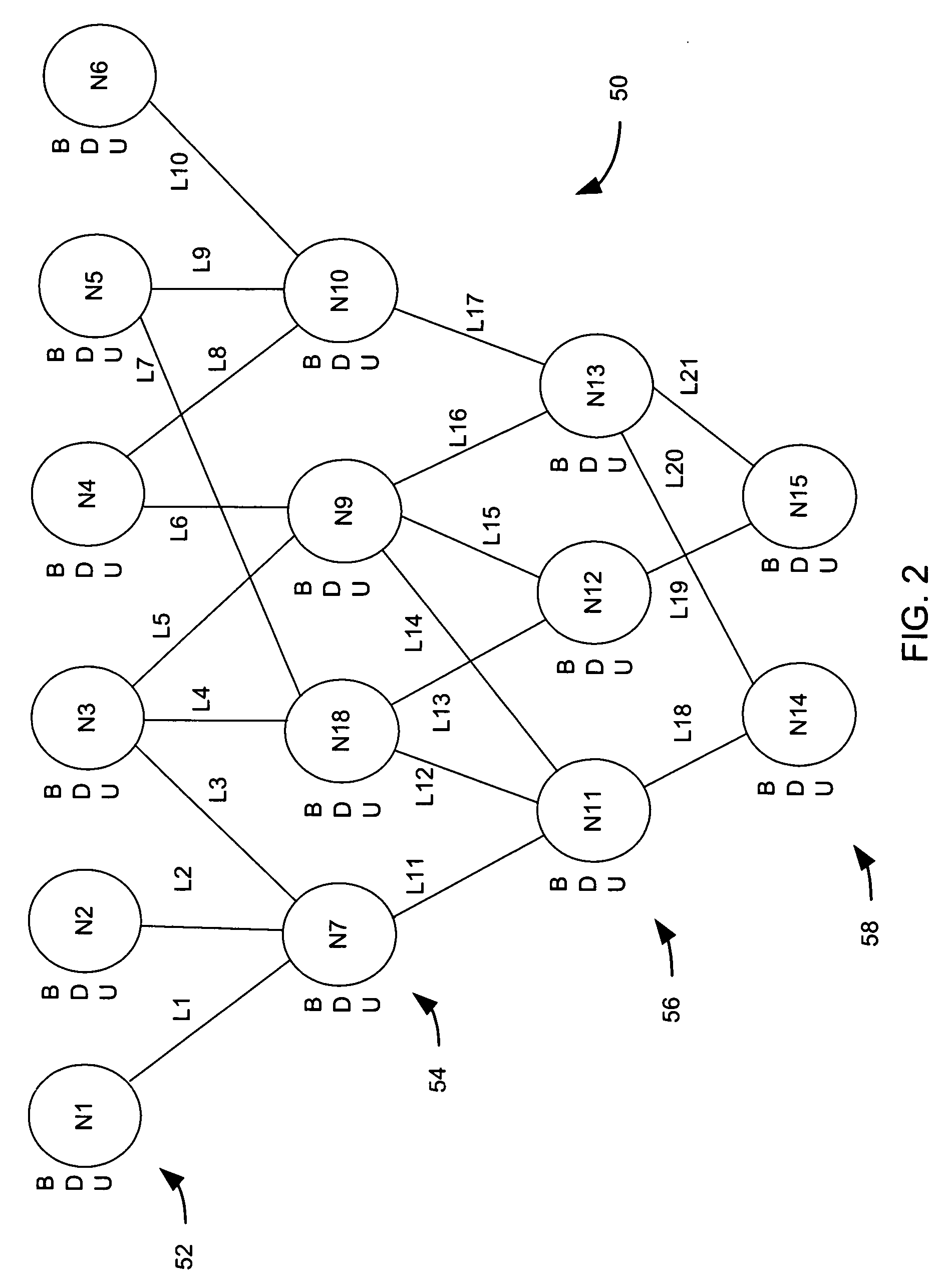

Systems and methods for generating a decision network from text

Systems and methods are provided for generating a decision network from text. An information extraction component extracts a quantum of evidence and an associated confidence value from a given text segment. An evidence classifier associates each quantum of evidence with one of a plurality of hypotheses. A fusion engine builds a decision network from the plurality of hypotheses, an associated base structure, the extracted quanta of evidence, and the confidence values.

Owner:NORTHROP GRUMMAN SYST CORP

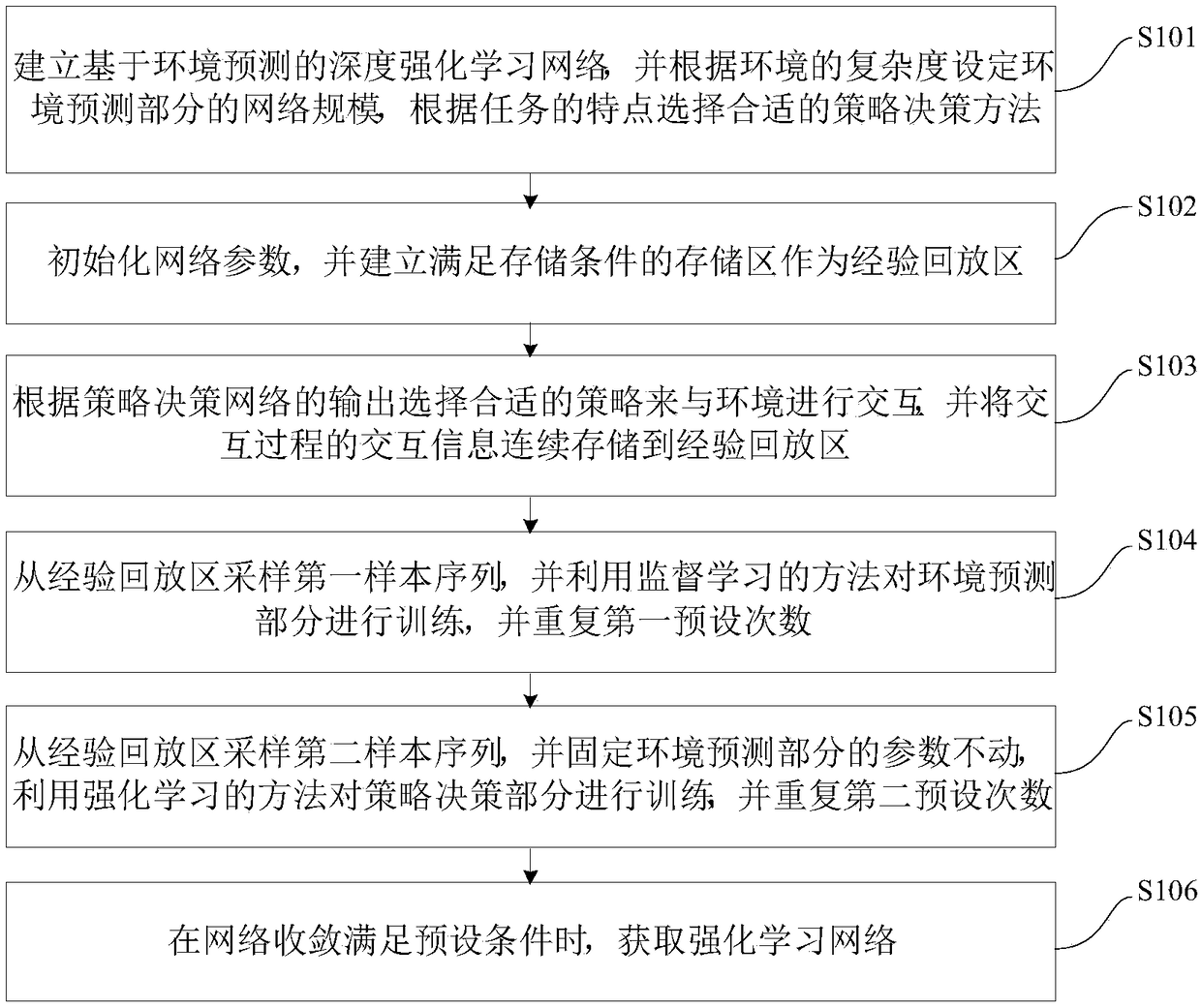

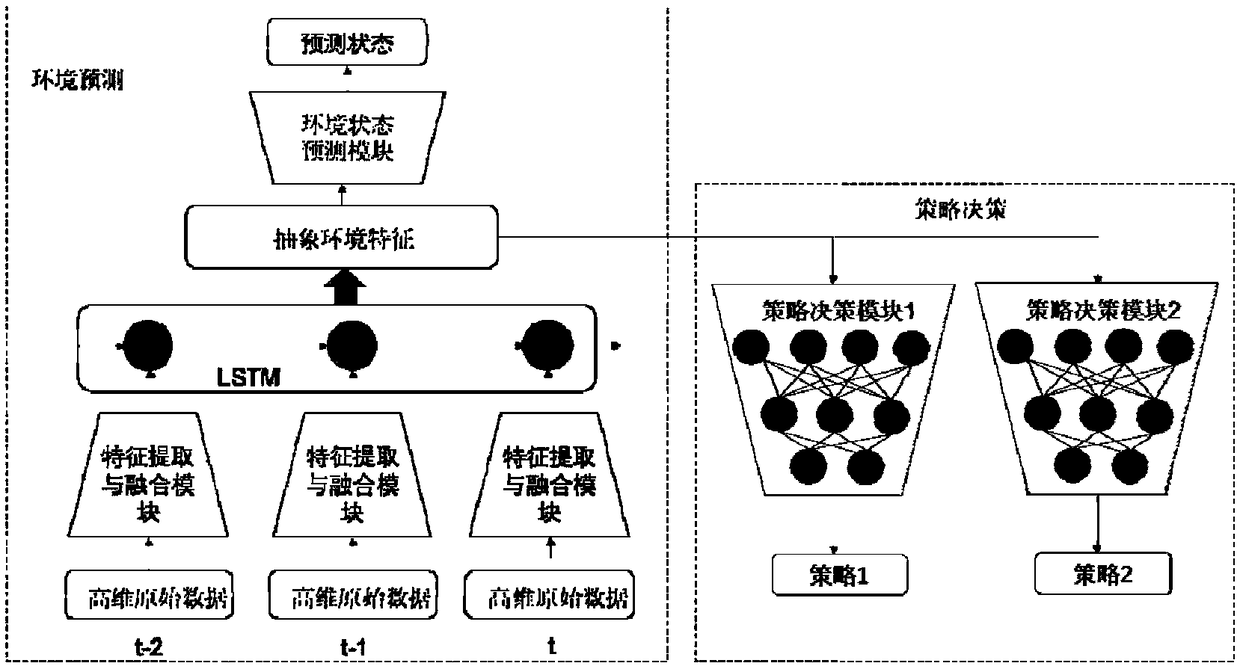

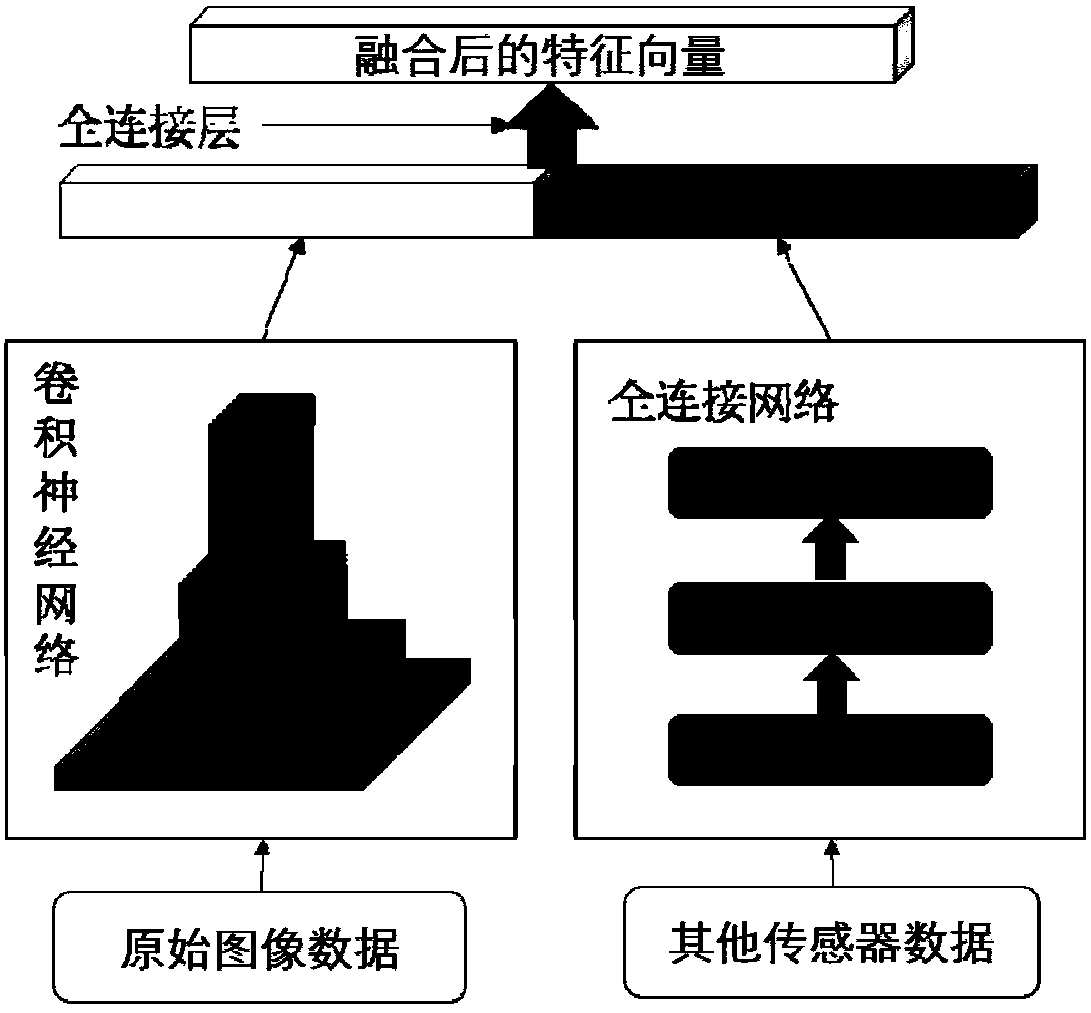

Deep reinforcement learning method and device based on environment state prediction

Active | CN108288094A | Solve the generality problem; Quick migration; Neural learning methods; Network Convergence; State prediction

The invention discloses a deep reinforcement learning method and device based on environment state prediction. The method comprises the following steps: establishing a deep reinforcement learning network based on environment prediction, and selecting a suitable policy decision method according to the characteristics of the task; initializing the network parameters, and establishing a storage area that meets a storage condition as the experience replay area; selecting a suitable policy to interact with the environment according to the output of the policy decision network, and continuously storing the interaction information into the experience replay area; sampling a first sample sequence from the experience replay area and training the environment prediction part with a supervised learning method, repeated a first preset number of times; sampling a second sample sequence from the experience replay area, keeping the parameters of the environment prediction part fixed, and training the policy decision part with a reinforcement learning method, repeated a second preset number of times; and obtaining the reinforcement learning network once network convergence meets a preset condition. The method can effectively improve learning efficiency.

Owner:TSINGHUA UNIV
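
The experience replay area described above can be sketched as a fixed-capacity buffer. Drawing each "sample sequence" as a run of consecutive transitions is an assumption made here because a state-prediction model usually needs temporally ordered data; note that such a run may cross an episode boundary unless the caller guards against it. The class and method names are hypothetical.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-capacity experience replay area; the oldest transitions are evicted."""

    def __init__(self, capacity, seed=0):
        self.buffer = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def store(self, state, action, reward, next_state, done):
        """Append one interaction with the environment."""
        self.buffer.append((state, action, reward, next_state, done))

    def sample_sequence(self, length):
        """Return `length` consecutive transitions starting at a random position."""
        start = self.rng.randrange(len(self.buffer) - length + 1)
        return [self.buffer[i] for i in range(start, start + length)]

    def __len__(self):
        return len(self.buffer)
```

The same buffer can serve both training phases: one set of draws for the supervised environment-prediction part, and another for the reinforcement-learning policy part.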

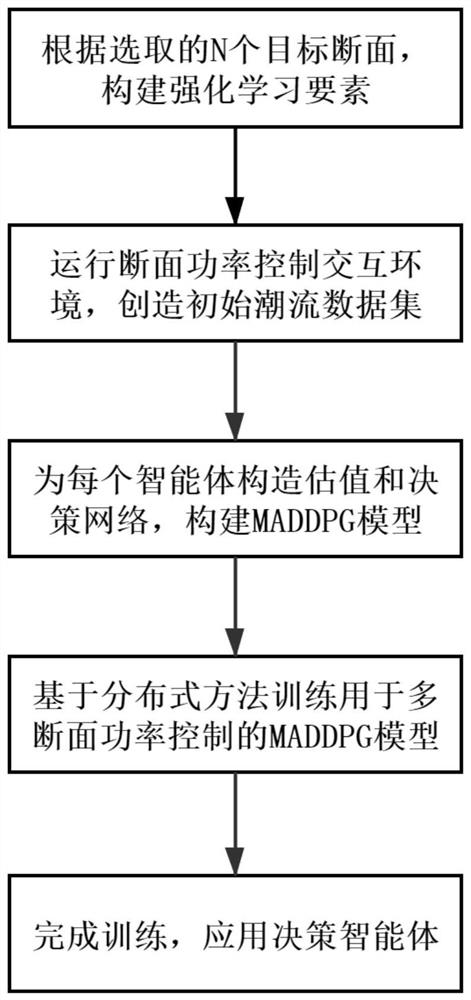

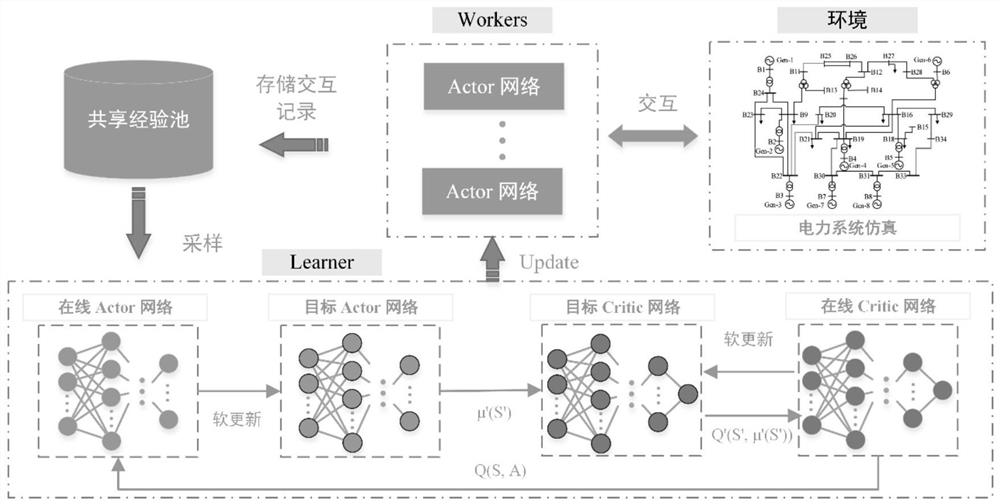

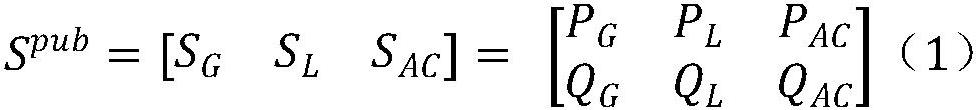

Power grid multi-section power automatic control method based on distributed multi-agent reinforcement learning

Active | CN112615379A | Improve training efficiency; Solving multi-section power control problems; Single network parallel feeding arrangements; Design optimisation/simulation; Automatic control; Data set

The invention discloses an automatic multi-section power control method for power grids based on distributed multi-agent reinforcement learning. Through the interaction of multiple agents with a power simulation environment, the method autonomously learns a suitable multi-section power control strategy for a complex power grid. The method comprises the following steps: first, N target sections are selected according to the power grid control requirements, and the basic elements of the reinforcement learning method, such as the environment, agents, observation state, actions and reward function, are constructed; second, a multi-section power control task interaction environment is run and an initial power flow data set is created; then, a decision network and an estimation network based on deep neural networks are constructed for each agent, an MADDPG (multi-agent deep deterministic policy gradient) model is built, and a distributed method is introduced to train the optimal control strategy through autonomous learning; finally, the trained policy network is applied to perform automatic section control. The method solves the complex multi-section power control problem of power grids through multi-agent reinforcement learning with a high control success rate and without requiring expert experience, while the distributed training method greatly improves agent training efficiency.

Owner:ZHEJIANG UNIV

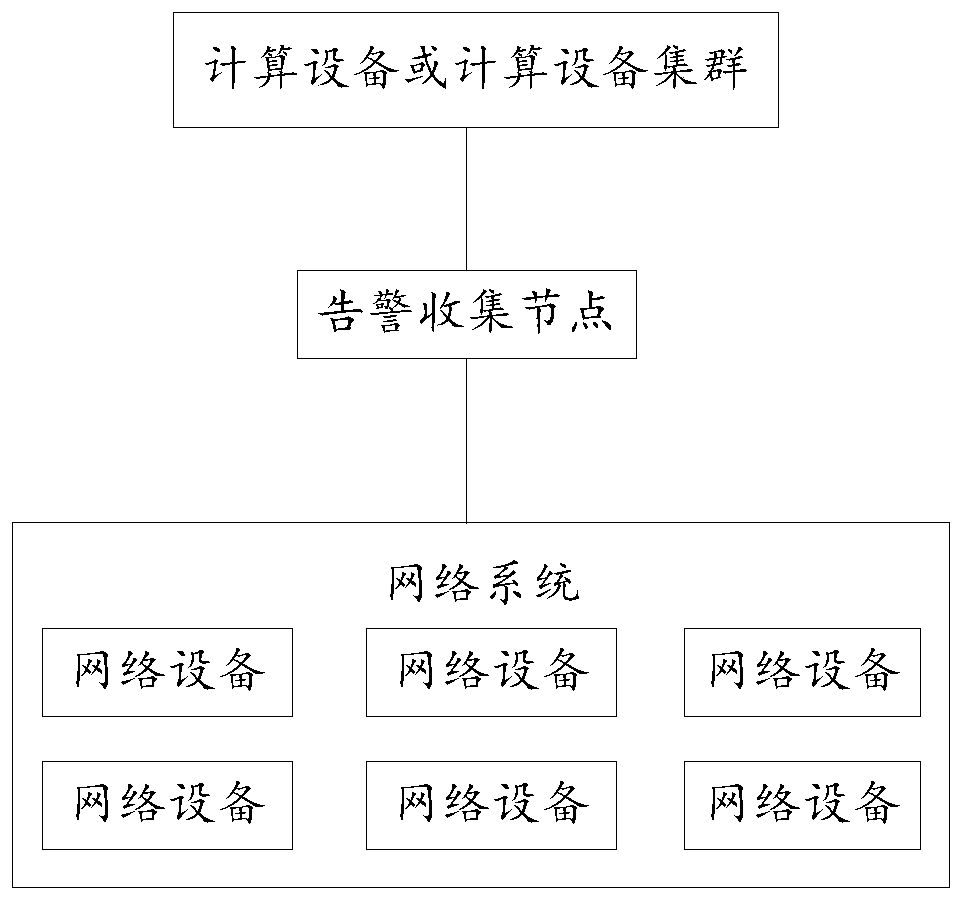

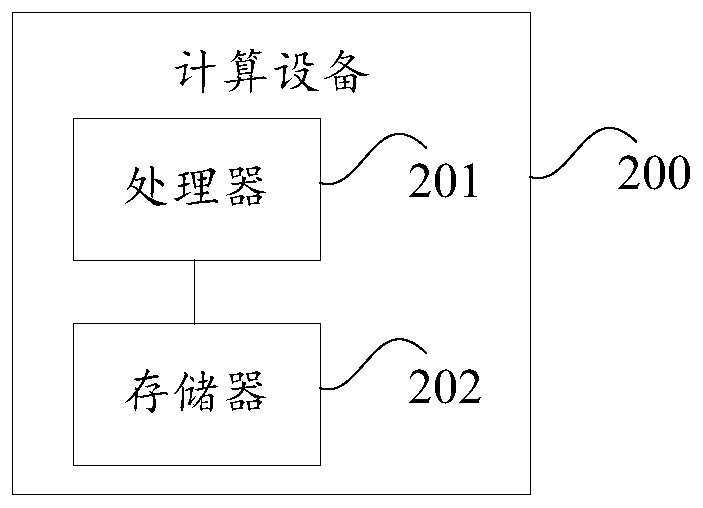

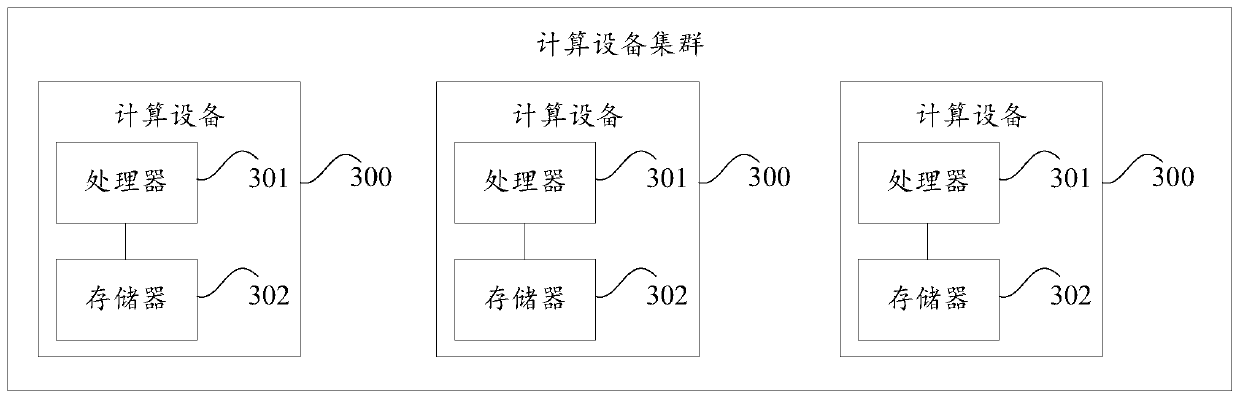

Root cause alarm decision network construction method and device, and storage medium

Active | CN110351118A | Improve build efficiency; High implementability; Data switching networks; Decision networks; Structure of Management Information

The invention provides a method and device for constructing a root cause alarm decision network, and a storage medium. The method comprises: obtaining multiple pieces of alarm data that appear in a network within a preset time period; determining the alarm type of each piece of alarm data to obtain a plurality of alarm types; constructing a topological structure of the decision network with each alarm type as a node, according to the time-sequence relationships among the alarm data; and determining a target weight for each edge of the topological structure according to the topology and the occurrence frequency of each alarm type, thereby generating the root cause alarm decision network. The method, device and storage medium improve the efficiency of constructing the root cause alarm decision network, and because the network topology does not need to be rebuilt when the alarm behavior patterns or the number of alarm types in the network system change, the root cause alarm decision network is easier to put into practice.

Owner:HUAWEI TECH CO LTD
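
A minimal sketch of the graph-building step, under stated assumptions: alarms arrive as hypothetical `(timestamp, alarm_type)` pairs, an edge A→B is created whenever an alarm of type B directly follows one of type A in time order, and an edge's weight is that transition count divided by how often A occurred. The abstract does not specify the weighting formula, so this normalization is illustrative only.

```python
from collections import Counter


def build_alarm_graph(alarms):
    """alarms: iterable of (timestamp, alarm_type) pairs within one time window.

    Returns {(src_type, dst_type): weight}. Nodes are alarm types; an edge
    records that dst_type directly followed src_type. The weight (transition
    count / frequency of src_type) is an assumed, illustrative choice.
    """
    ordered = [alarm_type for _, alarm_type in sorted(alarms)]
    frequency = Counter(ordered)
    transitions = Counter(
        (src, dst) for src, dst in zip(ordered, ordered[1:]) if src != dst
    )
    return {edge: count / frequency[edge[0]] for edge, count in transitions.items()}
```

Because nodes are alarm types rather than individual alarms, new alarm data only updates counts and weights; the structure adapts without a full rebuild, which matches the stated advantage.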

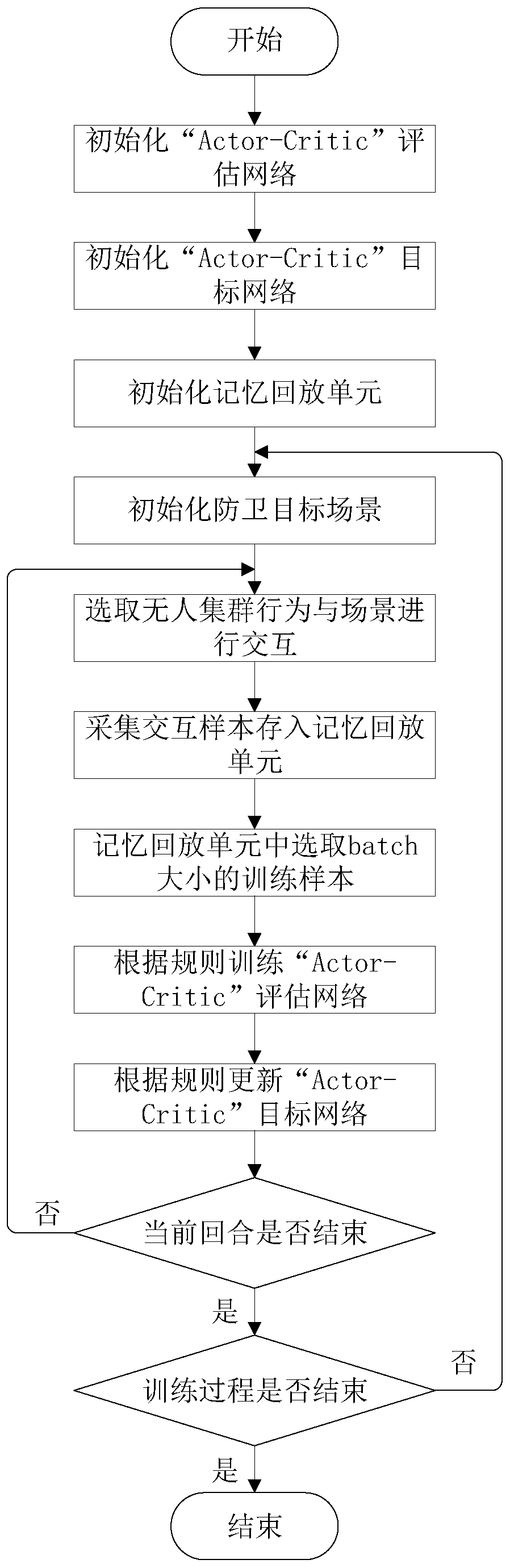

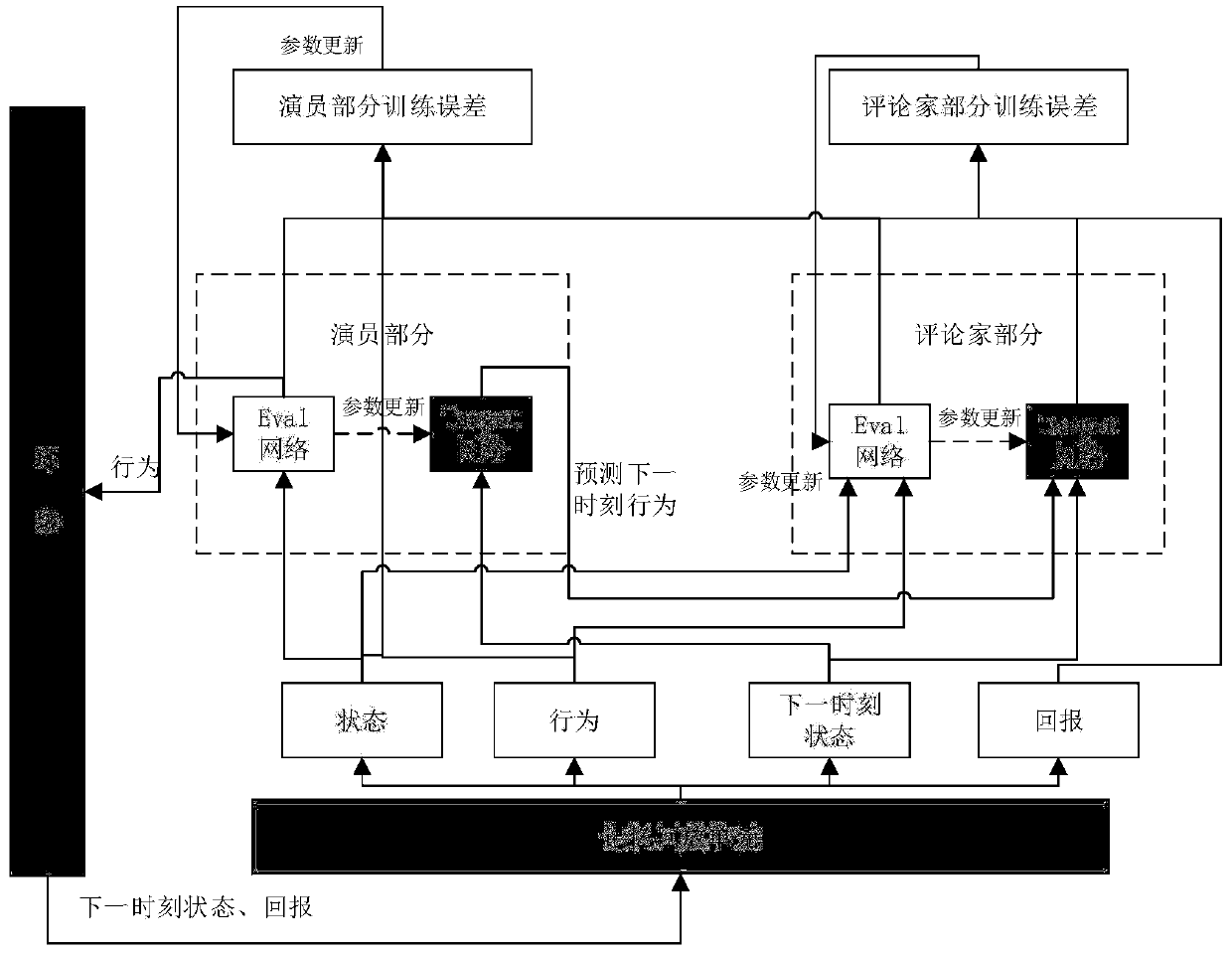

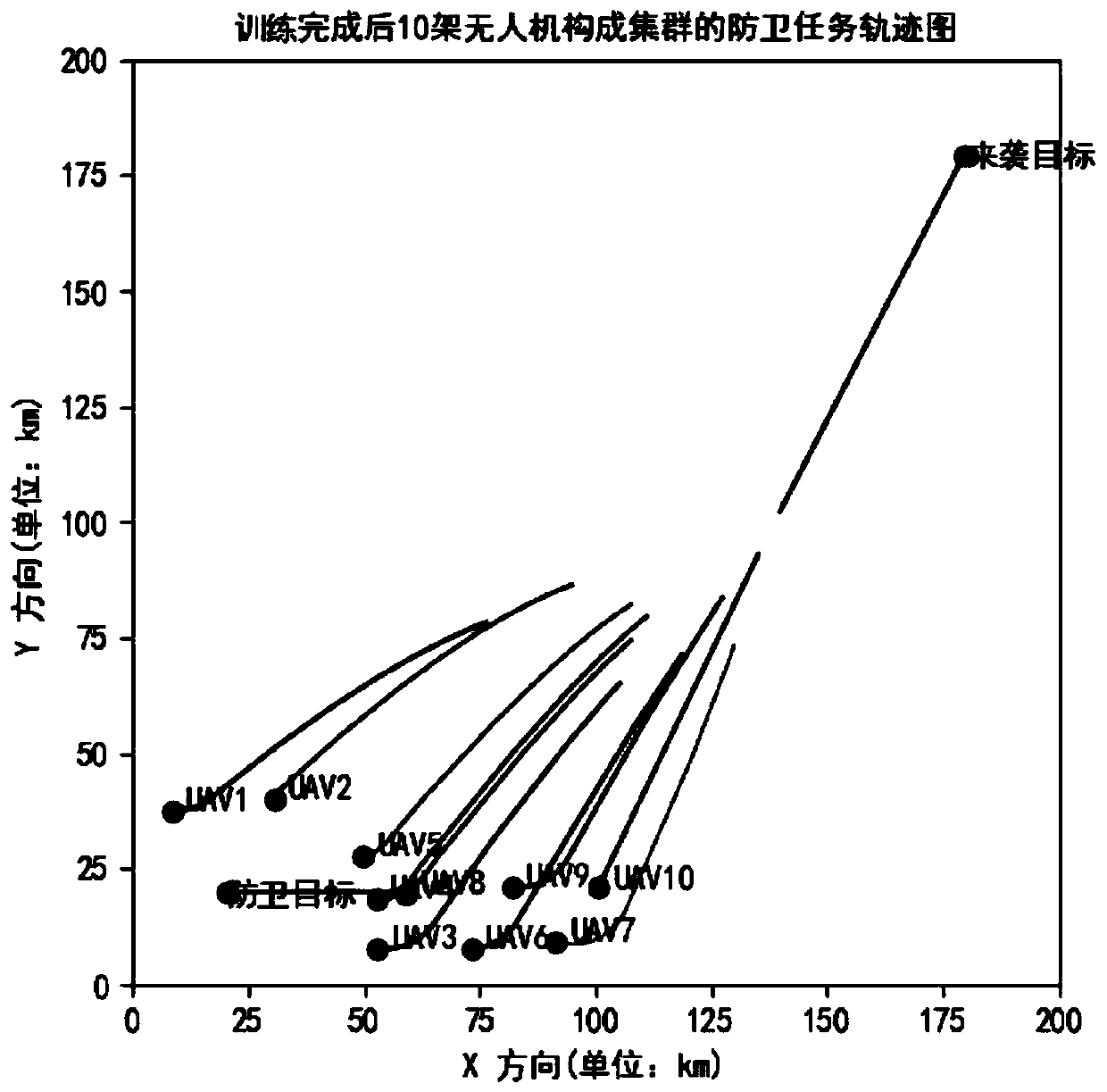

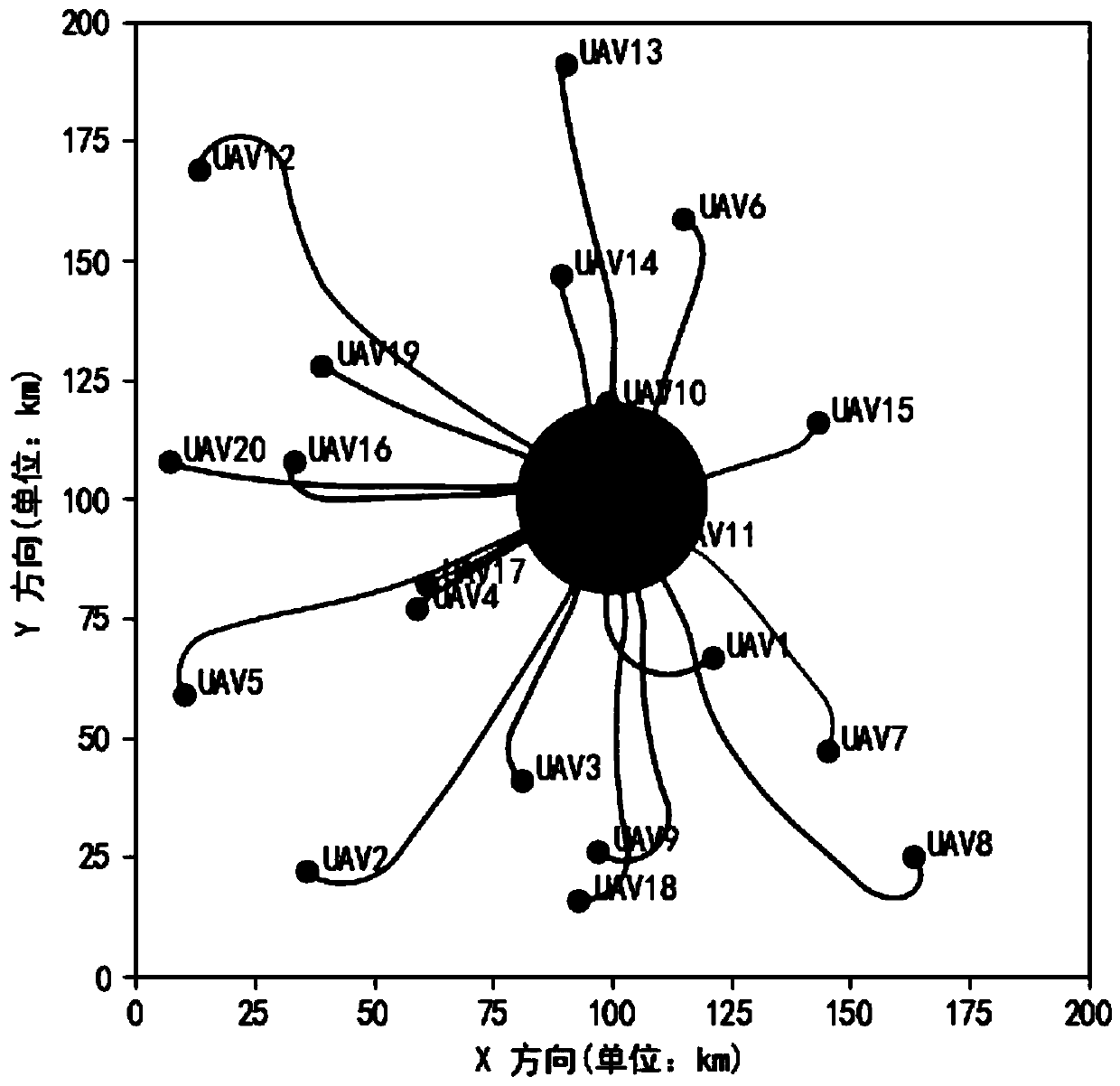

Unmanned aerial vehicle cluster target defense method based on deep reinforcement learning

Active | CN111260031A | Extended state space; Expand behavioral space; Cosmonautic condition simulations; Neural architectures; Pattern recognition; Decision networks

The invention provides an unmanned aerial vehicle (UAV) cluster target defense method based on deep reinforcement learning. In the training stage, the initial position information and behavior information of an attacking target are obtained, and a deep reinforcement learning neural network is established and trained. In the execution stage, the UAV cluster state and the target state are obtained and input into the trained deep neural network, and the output result is evaluated. The method expands the state space and behavior space of the UAV cluster task, constructs a task-oriented unified decision network for the UAV cluster, and realizes unified command and control of an uncertain number of UAVs by the decision network.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

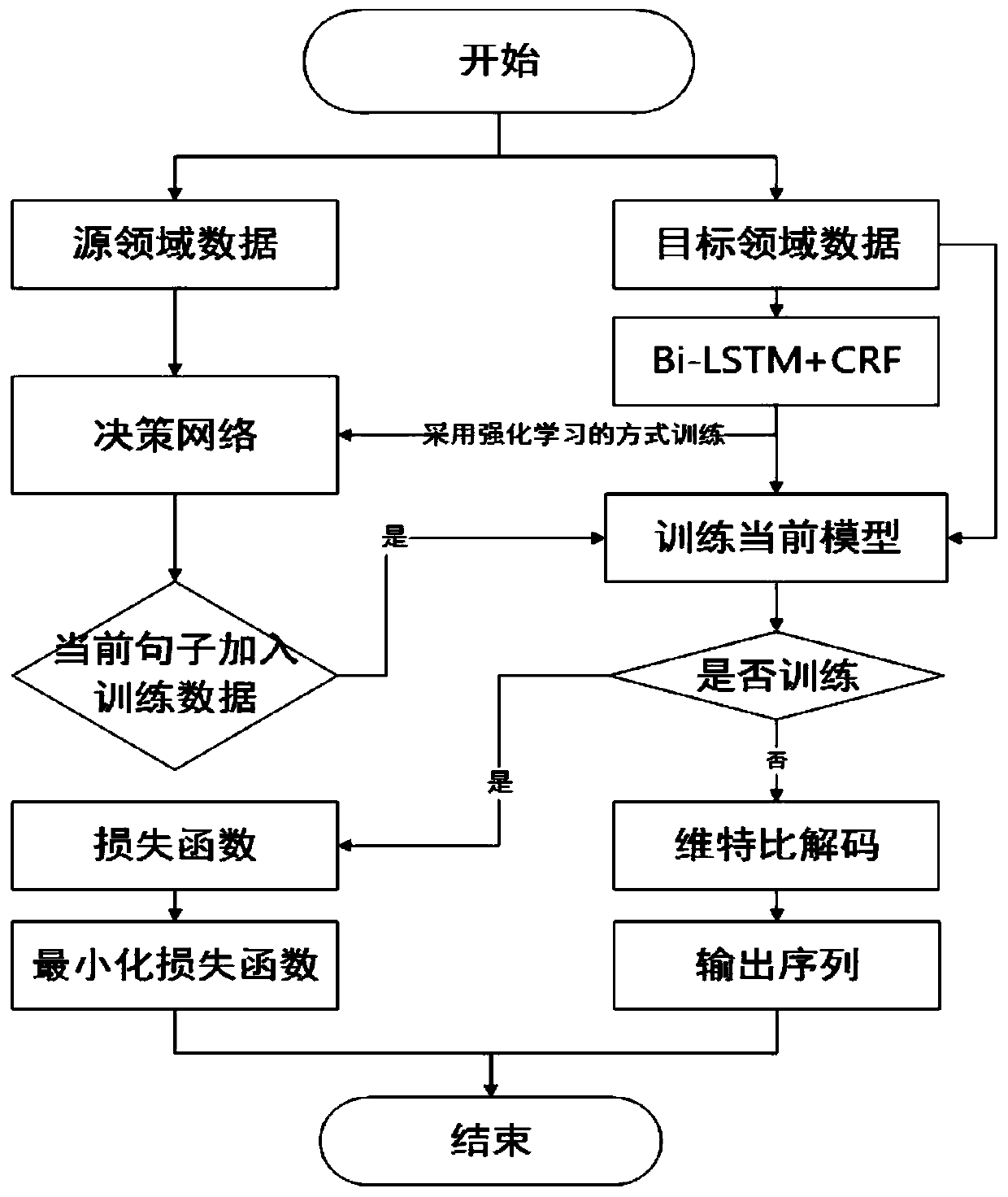

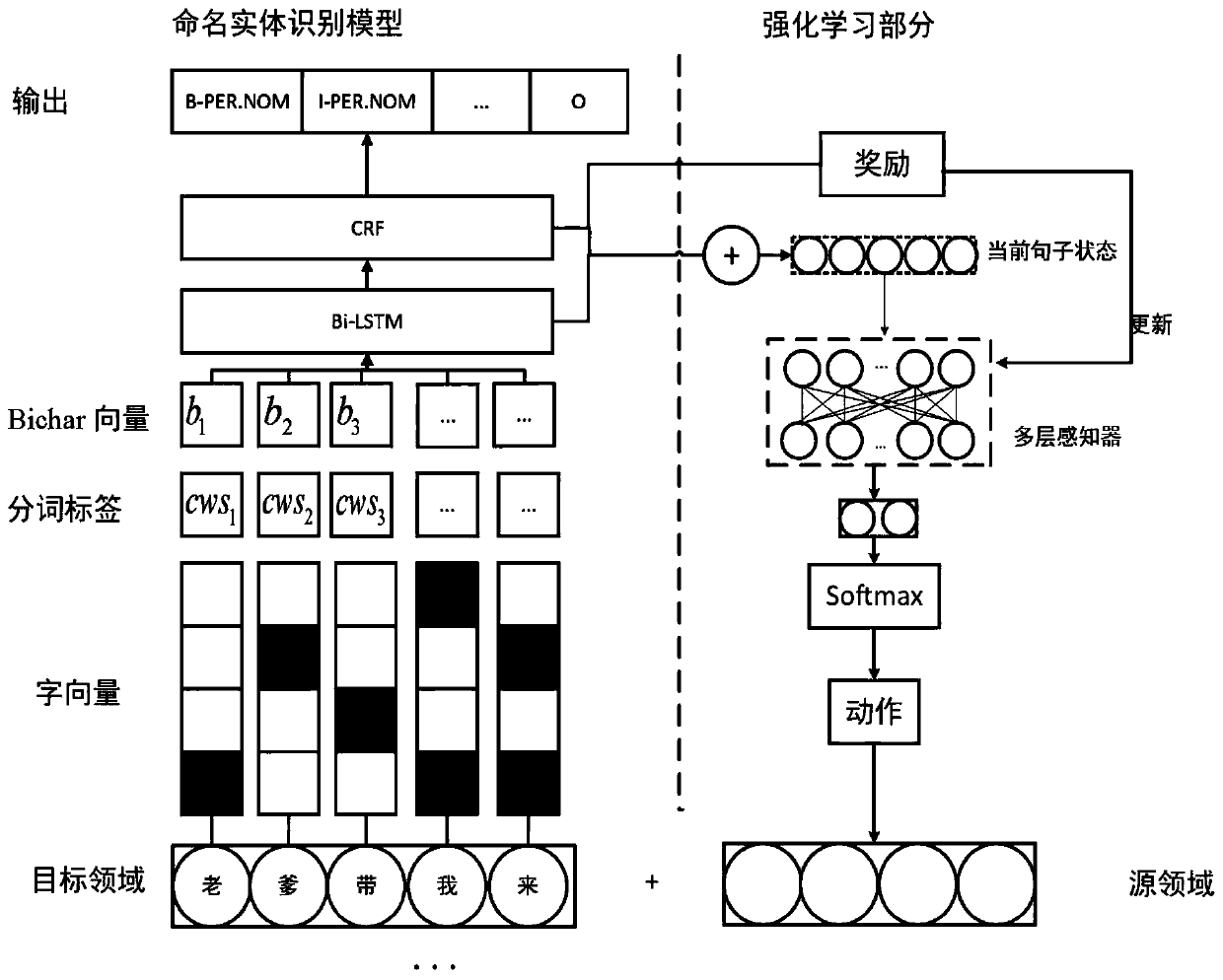

Semantic and label difference fused named entity identification field self-adaption method

Active | CN110765775A | Avoid the problem of infinite state space; Realization of Domain Migration; Semantic analysis; Neural architectures; Positive sample; Named-entity recognition

The invention provides a method that selects positive sample data from source-domain data to extend the training data of a target domain by fusing the semantic difference and the label difference between sentences in the source and target domains, thereby improving named entity recognition performance in the target domain. Building on a conventional Bi-LSTM+CRF model, the semantic difference and label difference between source-domain and target-domain sentences are introduced through the state representation and the reward setting in reinforcement learning. The trained decision network can therefore select source-domain sentences that have a positive influence on target-domain named entity recognition, expanding the target-domain training data, addressing the shortage of target-domain training data, and improving target-domain named entity recognition performance.

Owner:BEIJING UNIV OF POSTS & TELECOMM

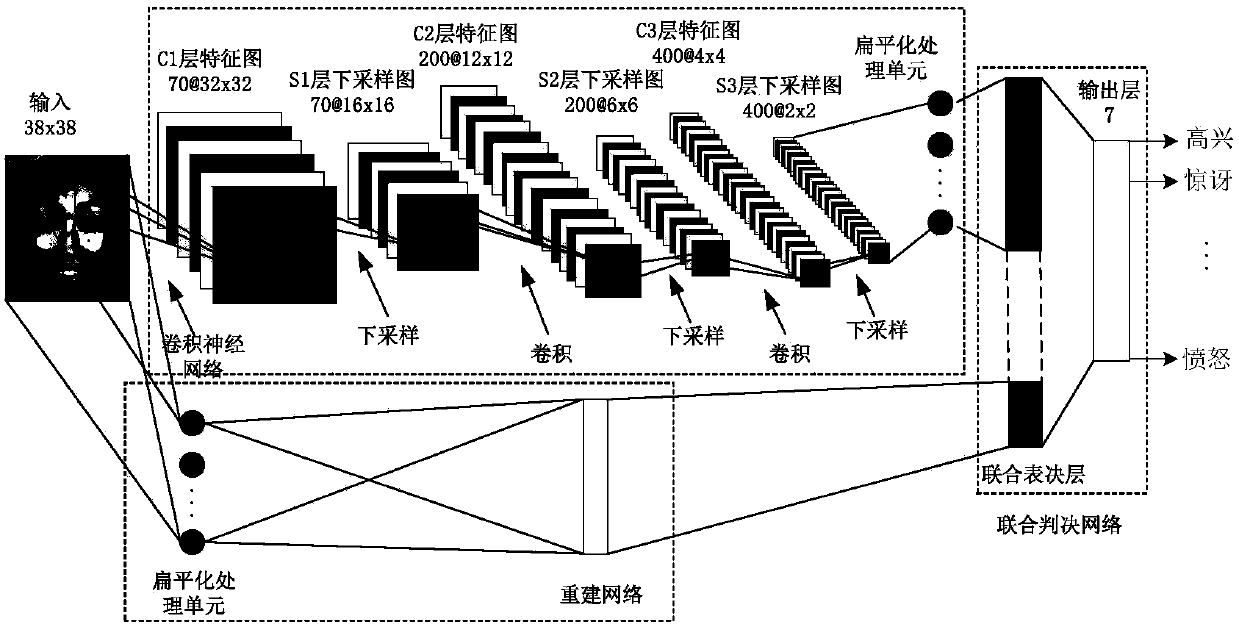

Deep network model constructing method, and facial expression identification method and system

Inactive | CN107657204A | Improve learning ability; Improve generalization ability; Neural architectures; Acquiring/recognising facial features; Decision networks; Algorithm

The invention discloses a deep network model construction method comprising the following steps: step S1) establishing a deep network model for facial expression recognition and initializing its parameters, wherein the model comprises a convolutional neural network for extracting high-level features of pictures, a reconstruction network for extracting low-level features of the pictures, and a joint decision network for recognizing facial expressions; step S2) dividing all training pictures into N groups; step S3) inputting each group of pictures into the deep network model in turn and training the model parameters with gradient descent; step S4) taking the parameters obtained in step S3) as the initial values of the model parameters, re-dividing all training pictures into N groups, and returning to step S3), repeating the process until the trained model parameters no longer change compared with their initial values. The invention further discloses a facial expression recognition method and system.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI

Method for analyzing and evaluating decision supporting capability of complex system

Inactive | CN101819661A | Standardize and facilitate assessment; Instruments; Decision networks; Electronic information

The invention discloses a method for analyzing and evaluating the decision support capability of a complex system, comprising the following steps: establishing a system capability requirement model, generating a decision network model, establishing a decision support capability evaluation model, and evaluating the networked decision support capability. The method goes beyond conventional evaluation methods based solely on an index system and draws on theoretical methods such as information entropy and architecture, making the analysis more scientific and more targeted. It can therefore guide the architecture design of military electronic information systems and the organization and operation of such systems in combat.

Owner:PLA UNIV OF SCI & TECH

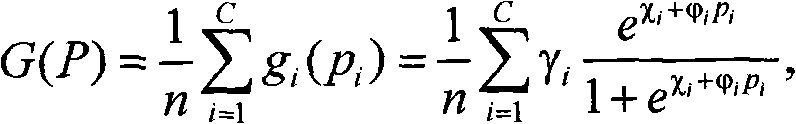

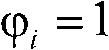

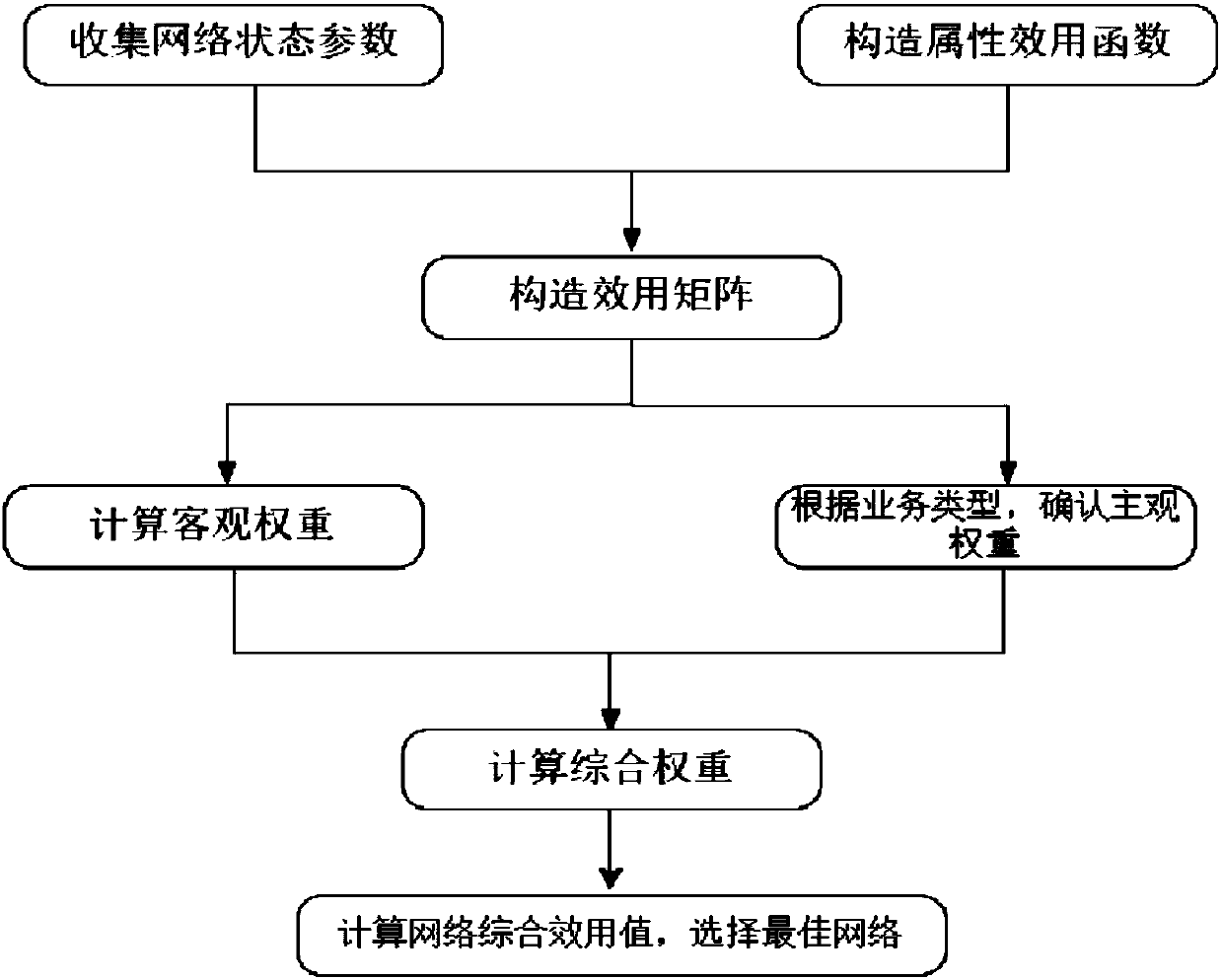

Heterogeneous wireless network selection method based on utility function

Active | CN108419274A | Reasonable decision value; Improve balance; Network traffic/resource management; Assess restriction; Decision networks; Computer science

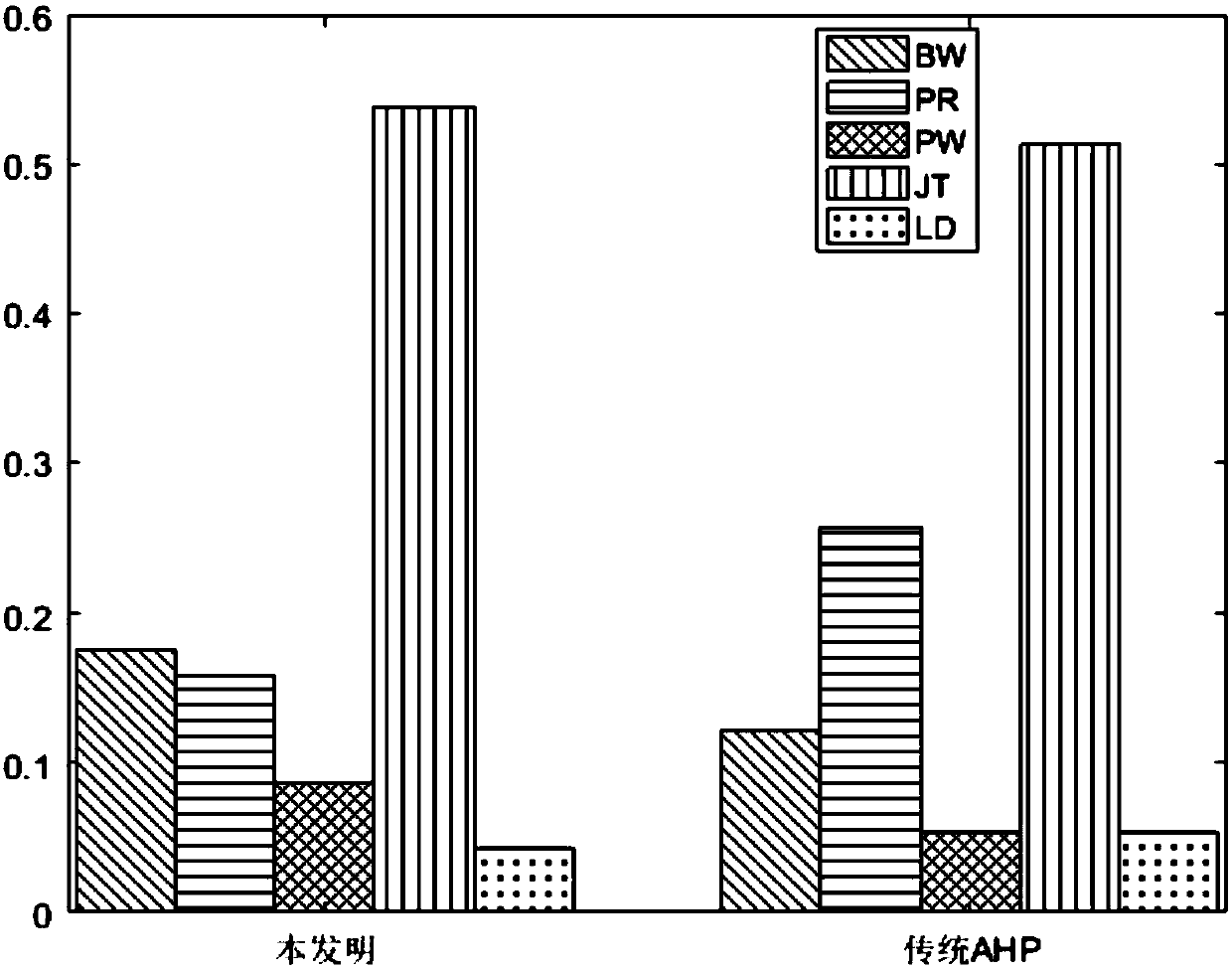

The invention discloses a heterogeneous wireless network selection method based on a utility function. The method includes the following steps: (1) acquiring network attribute data and the current user service type, wherein the network attribute data includes available bandwidth, network cost, energy consumption and network load, and the user service type is voice, data or video; (2) constructing a utility function for each network attribute used in the decision and computing the utility value of that attribute with it, wherein the relationship between the attribute value and user satisfaction determines which utility function is used; (3) constructing a utility matrix from the computed utility values of the attributes of each network; (4) constructing a comprehensive weight from a subjective weight and an objective weight; and (5) calculating the comprehensive utility of each network and selecting the optimal network for access. The scheme comprehensively considers user preferences, network states, device energy consumption, network costs and network load, so that the selected optimal network achieves the best balance between QoS and network load.

Owner:SOUTH CHINA UNIV OF TECH
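
Steps (2) to (5) can be sketched as below. The abstract does not give the utility functions or the objective weighting method, so this sketch assumes linear min-max utilities and the entropy weight method for the objective weights, mixed with caller-supplied subjective weights through a hypothetical `alpha` parameter.

```python
import math


def utility_matrix(attributes, benefit):
    """Linear min-max utilities, one column per attribute (an assumed utility shape).

    attributes[i][j] is the value of attribute j for candidate network i;
    benefit[j] is True when larger is better (bandwidth) and False for
    cost-type attributes (price, energy consumption, load).
    """
    m, n = len(attributes), len(attributes[0])
    u = [[0.0] * n for _ in range(m)]
    for j in range(n):
        col = [row[j] for row in attributes]
        lo, hi = min(col), max(col)
        for i in range(m):
            if hi == lo:
                u[i][j] = 1.0  # attribute cannot discriminate between networks
            elif benefit[j]:
                u[i][j] = (col[i] - lo) / (hi - lo)
            else:
                u[i][j] = (hi - col[i]) / (hi - lo)
    return u


def entropy_weights(u):
    """Objective weights via the entropy weight method (needs >= 2 networks).

    Min-max utilities guarantee every column has a positive sum.
    """
    m, n = len(u), len(u[0])
    divergence = []
    for j in range(n):
        total = sum(u[i][j] for i in range(m))
        entropy = -sum(
            (u[i][j] / total) * math.log(u[i][j] / total)
            for i in range(m) if u[i][j] > 0
        )
        divergence.append(1.0 - entropy / math.log(m))
    s = sum(divergence)
    return [d / s for d in divergence] if s > 0 else [1.0 / n] * n


def select_network(attributes, benefit, subjective, alpha=0.5):
    """Return (index of best network, comprehensive utility of every network)."""
    u = utility_matrix(attributes, benefit)
    objective = entropy_weights(u)
    weights = [alpha * s + (1 - alpha) * o for s, o in zip(subjective, objective)]
    scores = [sum(w * x for w, x in zip(weights, row)) for row in u]
    return max(range(len(scores)), key=scores.__getitem__), scores
```

The entropy method gives more weight to attributes whose utilities vary most across the candidate networks, while the subjective weights would normally encode the service type (voice, data, video); `alpha` sets the balance between the two.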

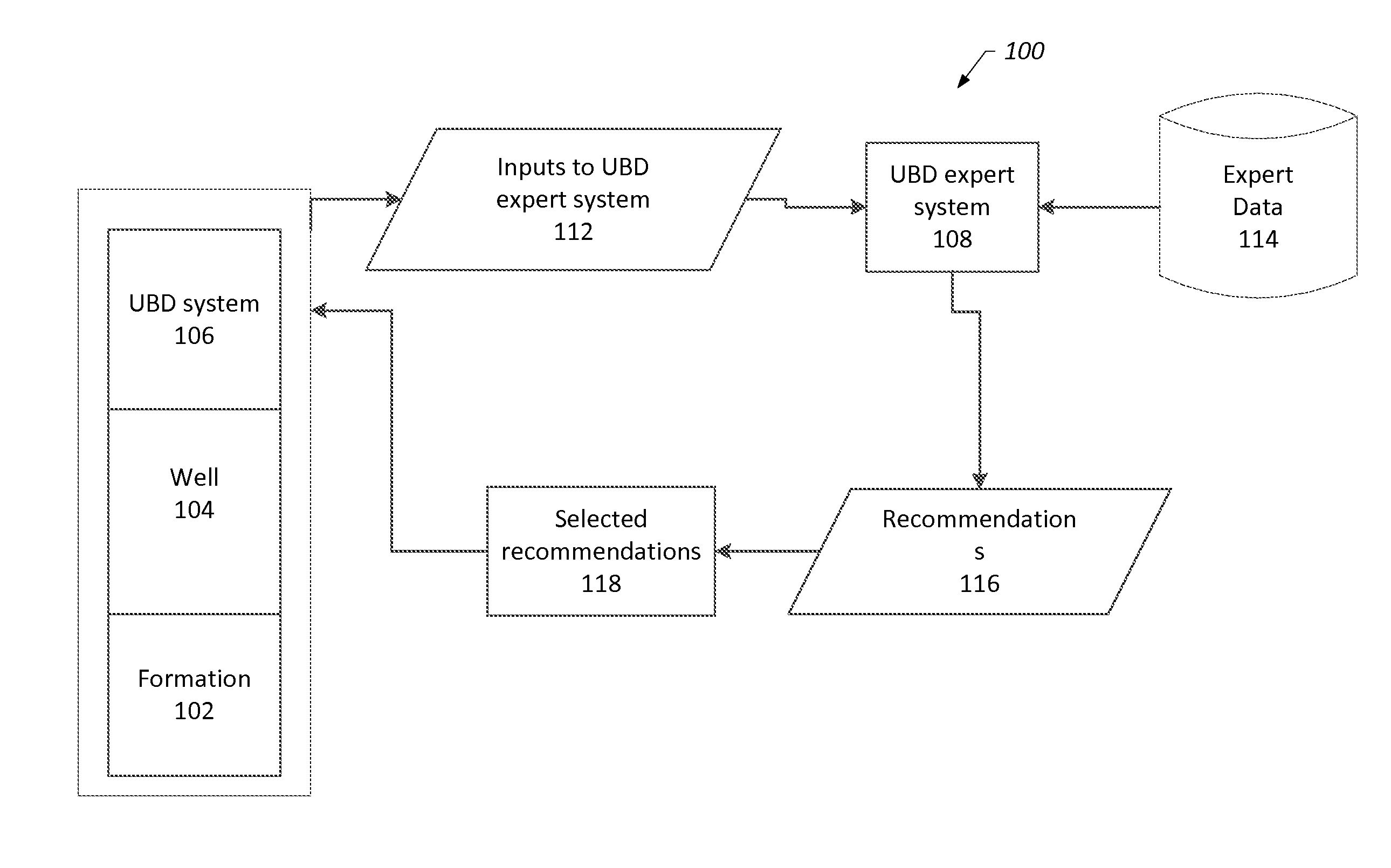

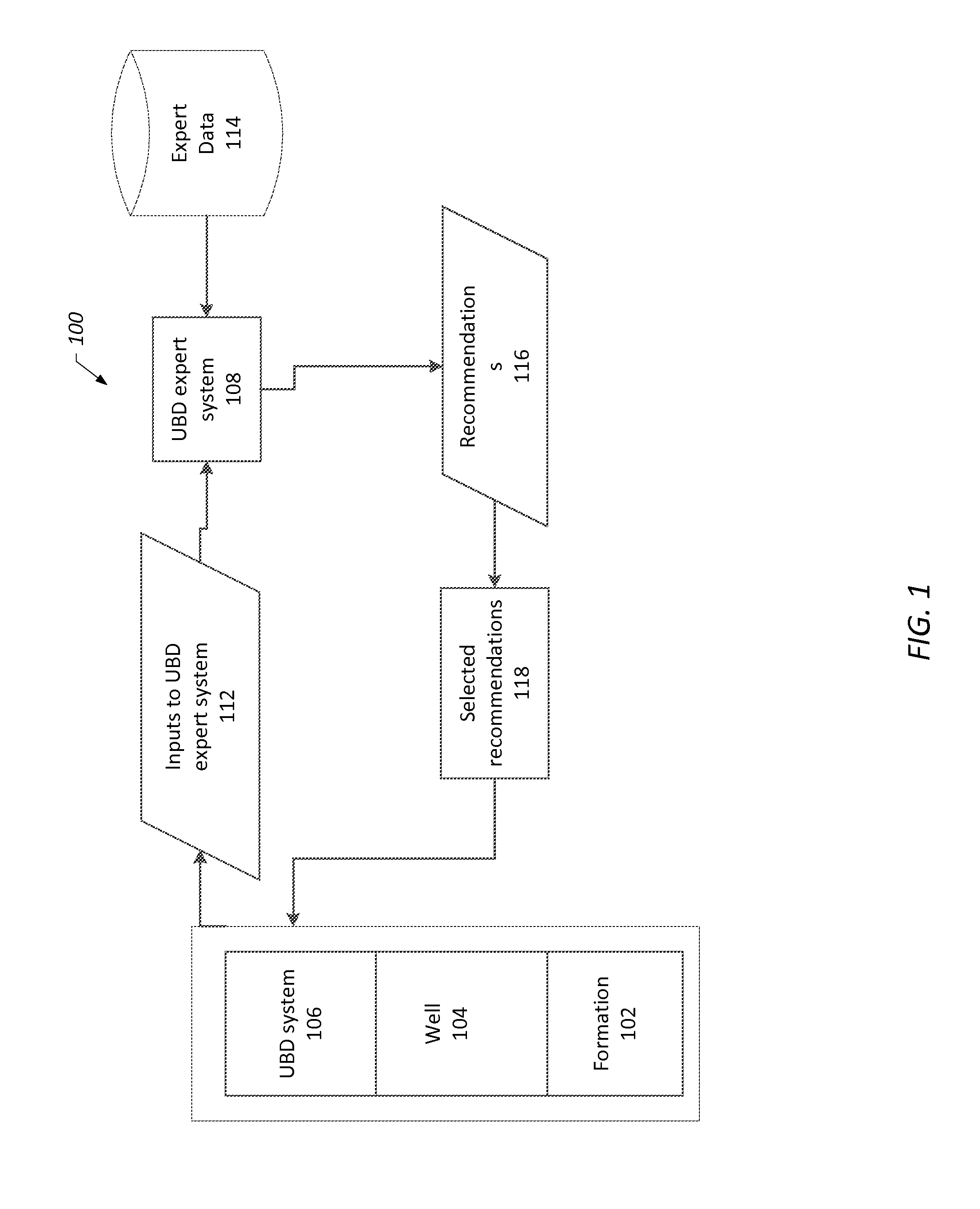

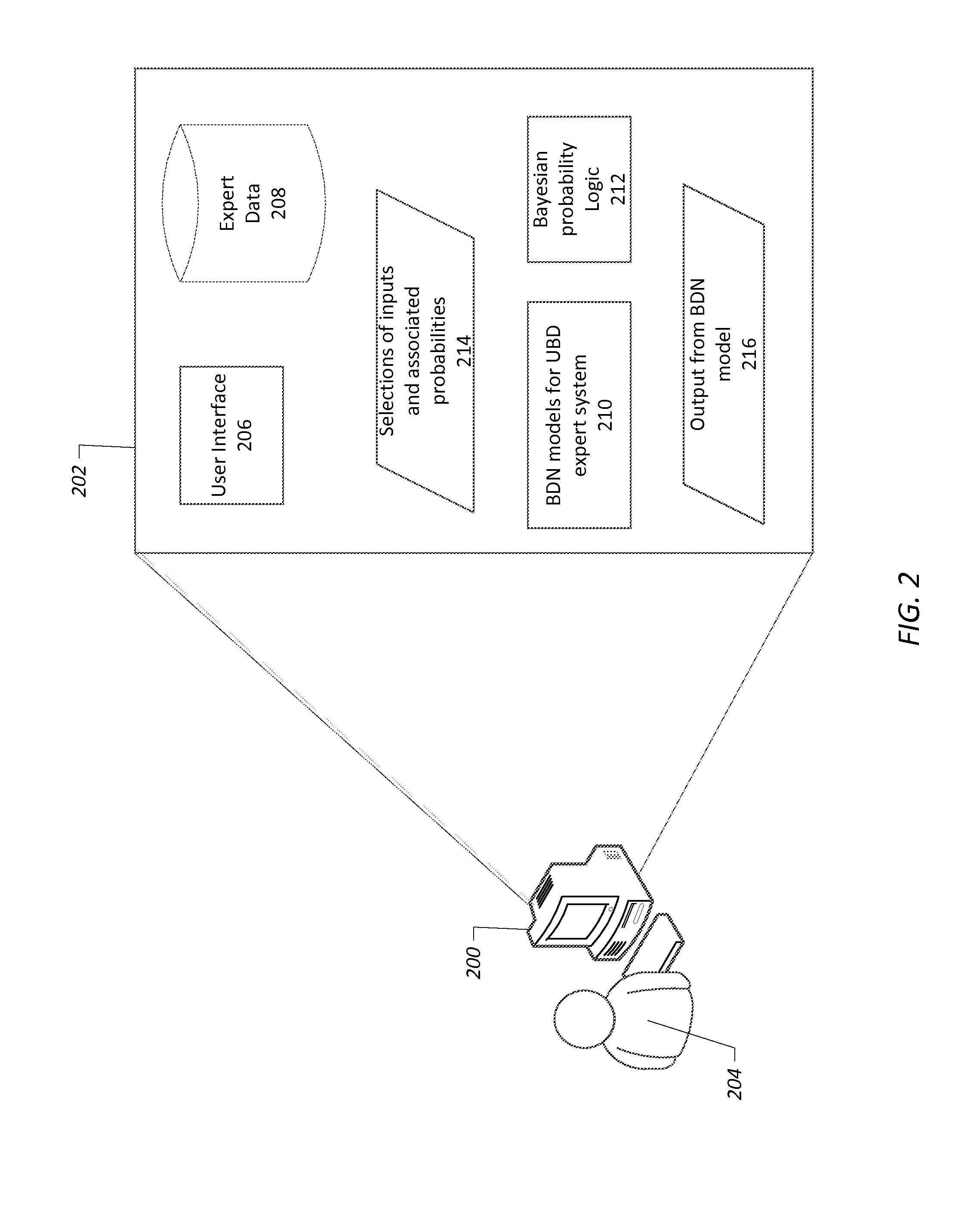

Systems and methods for expert systems for underbalanced drilling operations using bayesian decision networks

Inactive | US20140124265A1 | Mathematical models; Automatic control for drilling; Decision networks; Good practice

Systems and methods are provided for an underbalanced drilling (UBD) expert system that provides underbalanced drilling recommendations, such as best practices. The UBD expert system may include one or more Bayesian decision network (BDN) models that receive inputs and output recommendations based on Bayesian probability determinations. The BDN models may include: a general UBD BDN model, a flow UBD BDN model, a gaseated (i.e., aerated) UBD BDN model, a foam UBD BDN model, a gas (e.g., air or other gases) UBD BDN model, a mud cap UBD BDN model, an underbalanced liner drilling (UBLD) BDN model, an underbalanced coil tube (UBCT) BDN model, and a snubbing and stripping BDN model.

Owner:SAUDI ARABIAN OIL CO +1
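
The standard decision rule of a Bayesian decision network is to choose the decision with the highest expected utility under the posterior over hidden states. The sketch below shows that rule only; whether the patented BDN models use exactly this rule is not stated, and the state names, decisions and payoffs in any usage are hypothetical.

```python
def best_recommendation(posterior, utility):
    """Pick the decision with the highest expected utility.

    posterior: {state: probability}, e.g. produced by Bayesian inference on
               the observed drilling inputs.
    utility:   {decision: {state: payoff}}, a hypothetical payoff table.
    Returns (best_decision, {decision: expected_utility}).
    """
    expected = {
        decision: sum(posterior[s] * payoff[s] for s in posterior)
        for decision, payoff in utility.items()
    }
    return max(expected, key=expected.get), expected
```

For example, with a posterior of 0.3 on a hypothetical `kick_risk_high` state, a decision that pays off well only in the low-risk state can lose to a more conservative one once both are weighted by probability.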

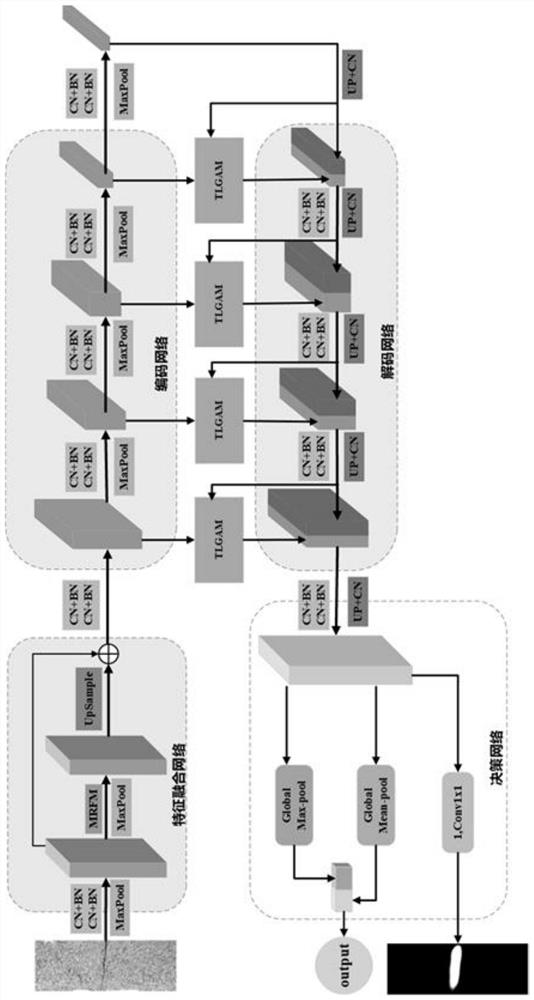

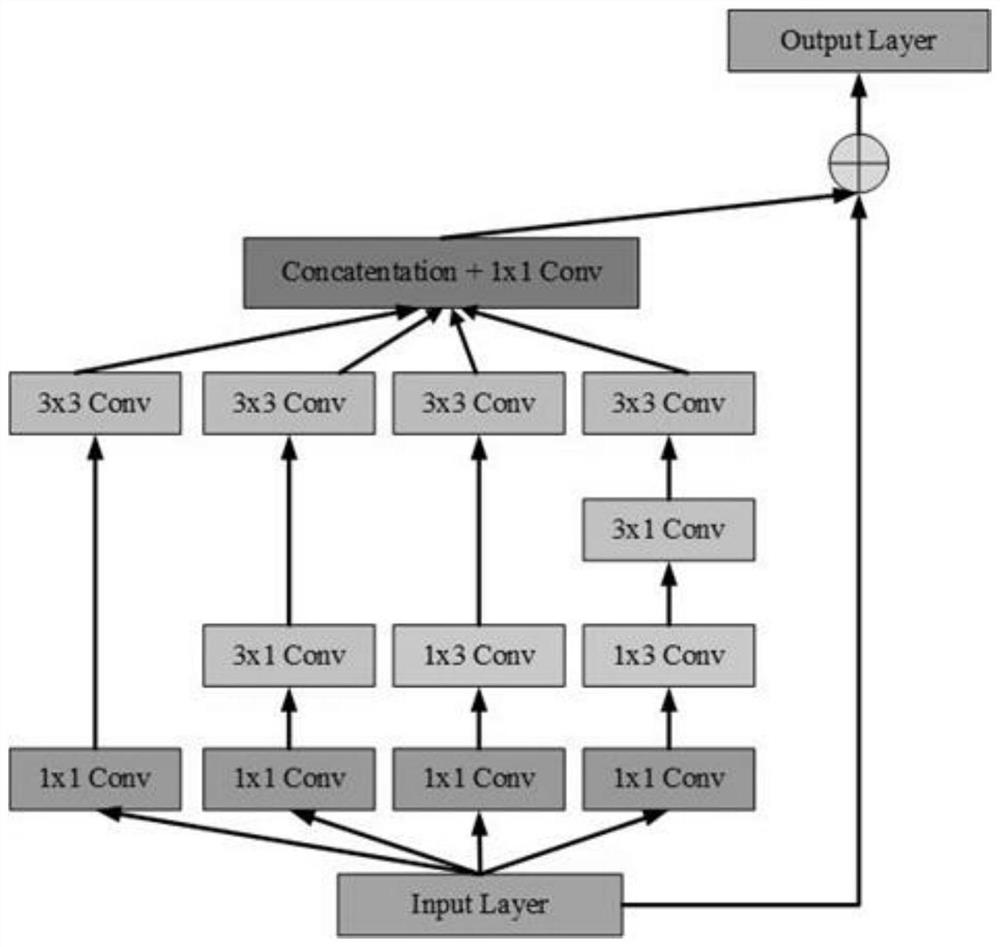

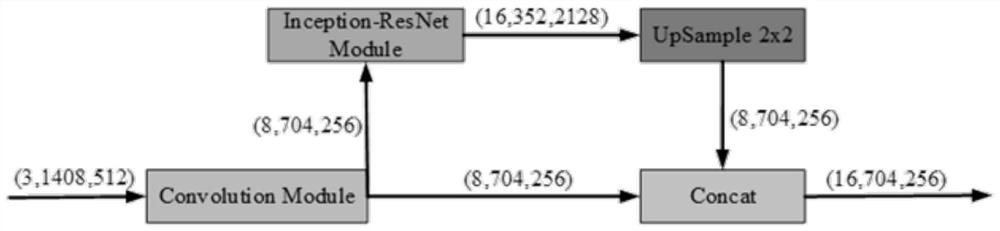

Surface defect detection method based on multi-scale convolution and trilinear global attention

Pending | CN112465790A | Alleviate the problem of imbalance; Annotated data is low; Image enhancement; Image analysis; Pattern recognition; Activation function

The invention discloses a surface defect detection method based on multi-scale convolution and trilinear global attention. In an encoding module, convolution and pooling are applied to the backbone features to extract shallow feature maps of the image at different scales; in a decoding module, a deep feature map is obtained through up-sampling and convolution operations, and the shallow and deep feature maps are fused through four concatenation operations in between. In the trilinear global attention module, a first branch converts the shallow feature map into a shallow attention map through a linear operation, and a second branch compresses and activates the deep feature map to obtain deep feature weights, which are then applied to the shallow feature map. In a decision network module, the output feature map of the decoding module is processed with global average pooling and global max pooling; an activation function outputs the probability that the surface image contains a defect, and a 1×1 convolution outputs a grayscale map of the potential defect location to visually explain the neural network.

Owner:TIANJIN UNIV
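
The classification head of the decision network module can be sketched in plain Python: per-channel global average and global max pooling are concatenated, passed through a linear layer, and squashed into a probability. A sigmoid is assumed as the activation function, and `weights`/`bias` stand in for hypothetical trained parameters; the 1×1-convolution localization branch is omitted.

```python
import math


def decision_head(feature_map, weights, bias):
    """feature_map: list of channels, each a 2-D list of activations.

    Concatenates per-channel global average pooling and global max pooling,
    applies a linear layer (weights needs 2 * num_channels entries), and uses
    a sigmoid (assumed activation) to return the defect probability.
    """
    pooled_avg = []
    pooled_max = []
    for channel in feature_map:
        values = [v for row in channel for v in row]
        pooled_avg.append(sum(values) / len(values))
        pooled_max.append(max(values))
    features = pooled_avg + pooled_max
    logit = sum(w * f for w, f in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-logit))
```

Combining both poolings lets the head respond to a defect that is small but intense (captured by max pooling) as well as to a diffuse one (captured by average pooling).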

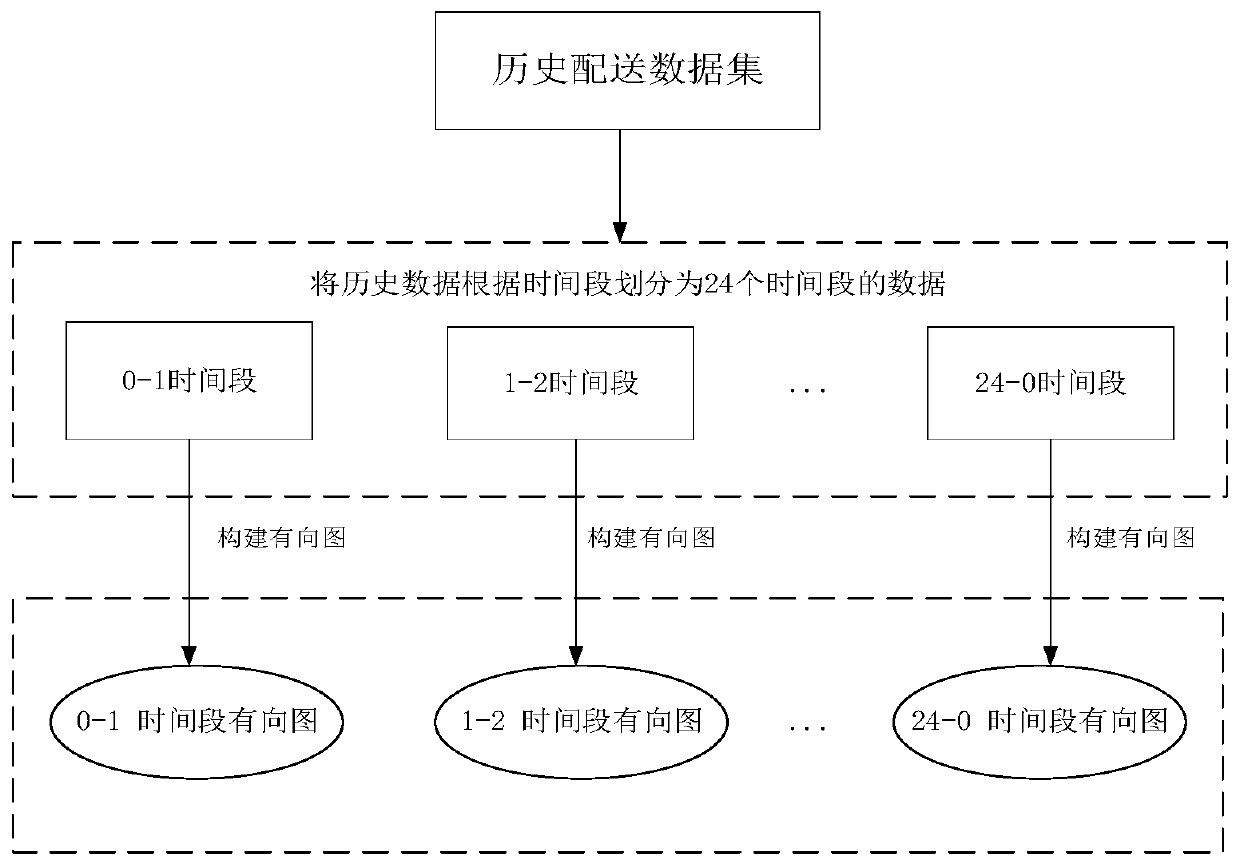

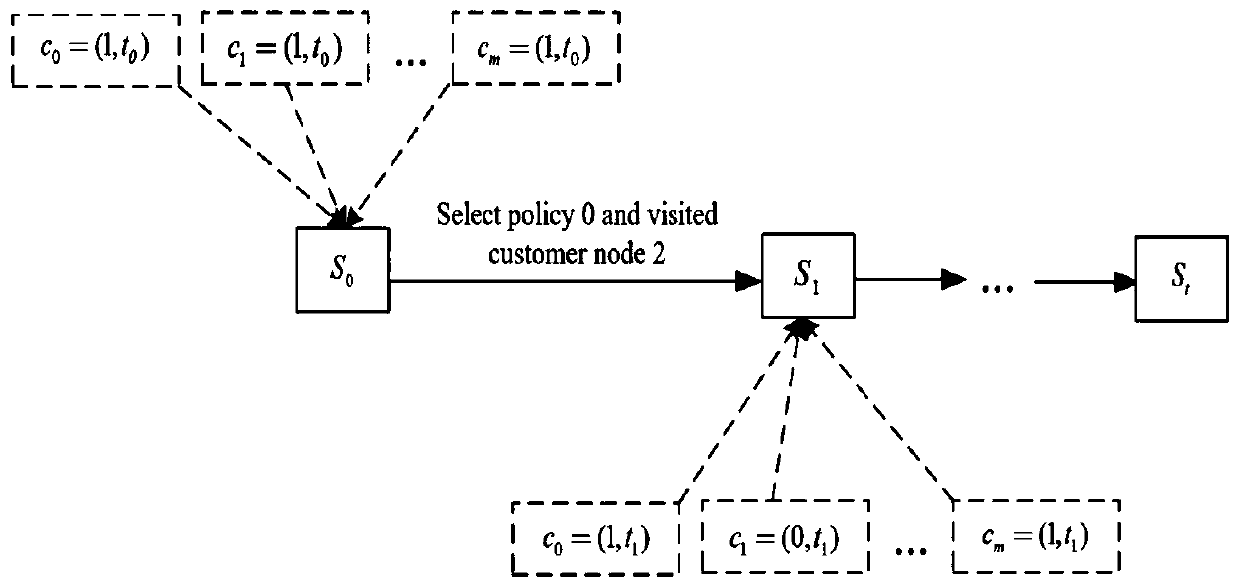

Vehicle path planning method based on reinforcement learning

Pending | CN111415048A | Improve transportation efficiency; Low cost; Forecasting; Machine learning; Decision networks; Simulation

The invention provides a vehicle path planning method based on reinforcement learning, which comprises the following steps: taking a state sequence of a client node as input information, sending the input information to a decision network, and selecting an action by the decision network according to an action value function and calculating and planning a vehicle traveling path. According to the method, the model is trained through the reinforcement learning algorithm based on historical distribution data, and therefore the purpose of dynamically planning the driving path under the condition that the road traffic condition and the number of distribution target nodes are changed is achieved. According to the method, complex and changeable road traffic conditions and delivery tasks with uncertain delivery target numbers in real life are considered, and the driving route is dynamically adjusted, so that the transportation efficiency is improved, and the cost is reduced.

Owner:DALIAN MARITIME UNIVERSITY

Mobile user position locating system

Inactive | US7177653B2 | Improved position estimate; Position fixation; Radio/inductive link selection arrangements; Telecommunications network; Decision networks

A method and system for locating a mobile user of one or more mobile users while on a call in a wireless telecommunication network utilizes a decision network that determines if the call signal is propagating through a repeater station. When the call is propagating through a repeater station, the decision network alters the signal measurement parameters to correspond with the location of the mobile user relative to the repeater station co-ordinates. By revising signal measurement parameters to compensate for repeater stations, the present invention provides for improved position estimates of the location of the mobile user in the network. For multiple cell soft handoff conditions, the decision network utilizes trained neural networks.

Owner:BELL MOBILITY

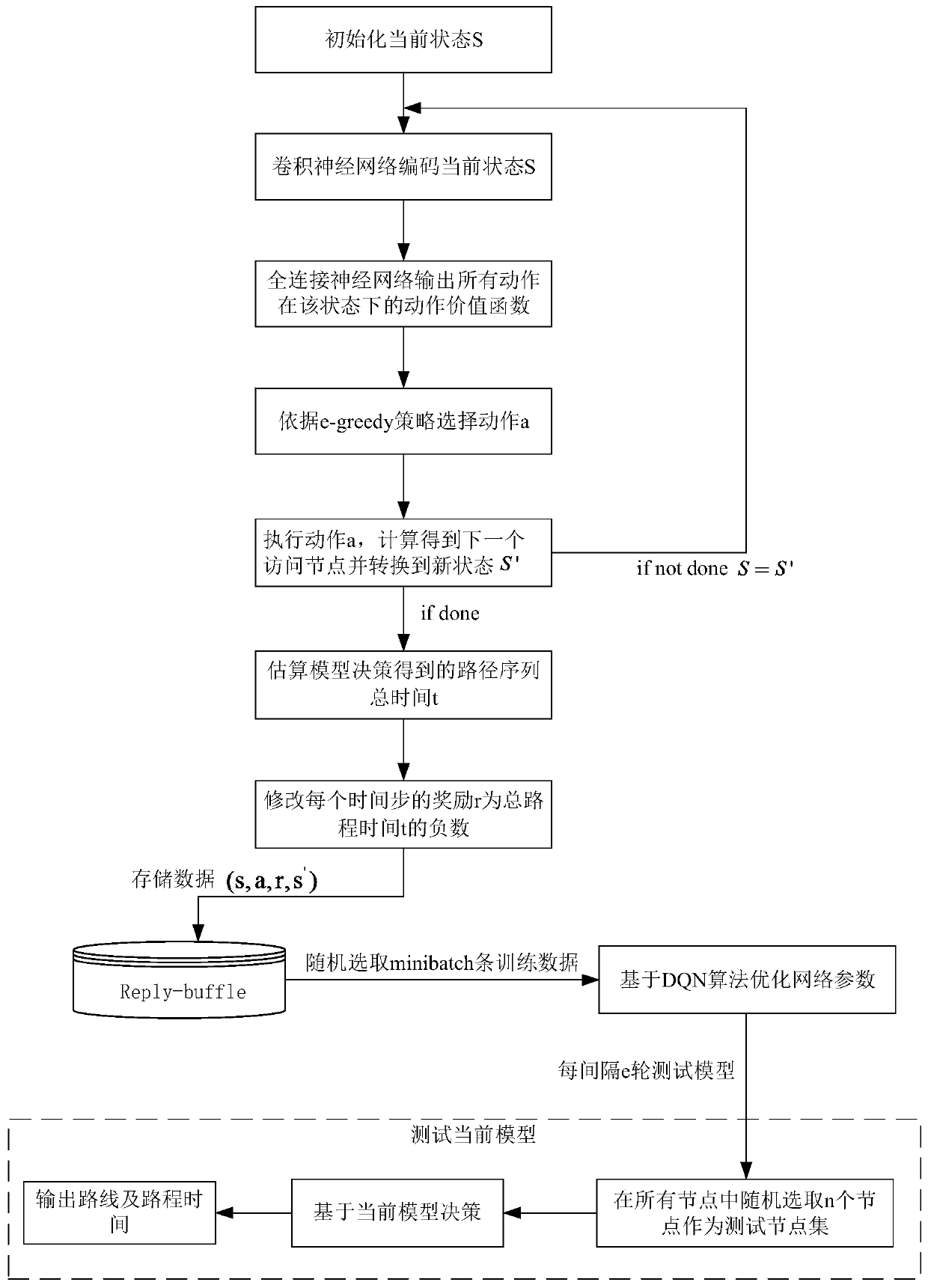

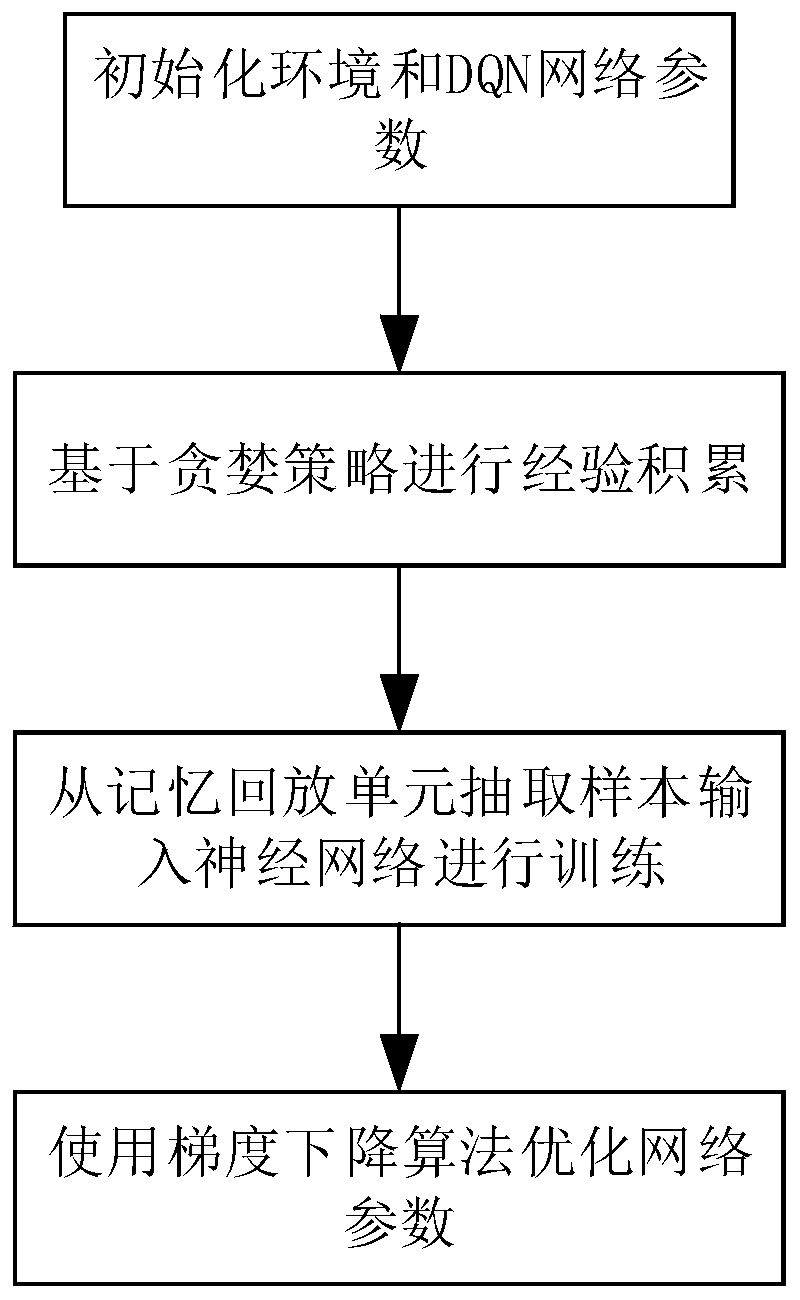

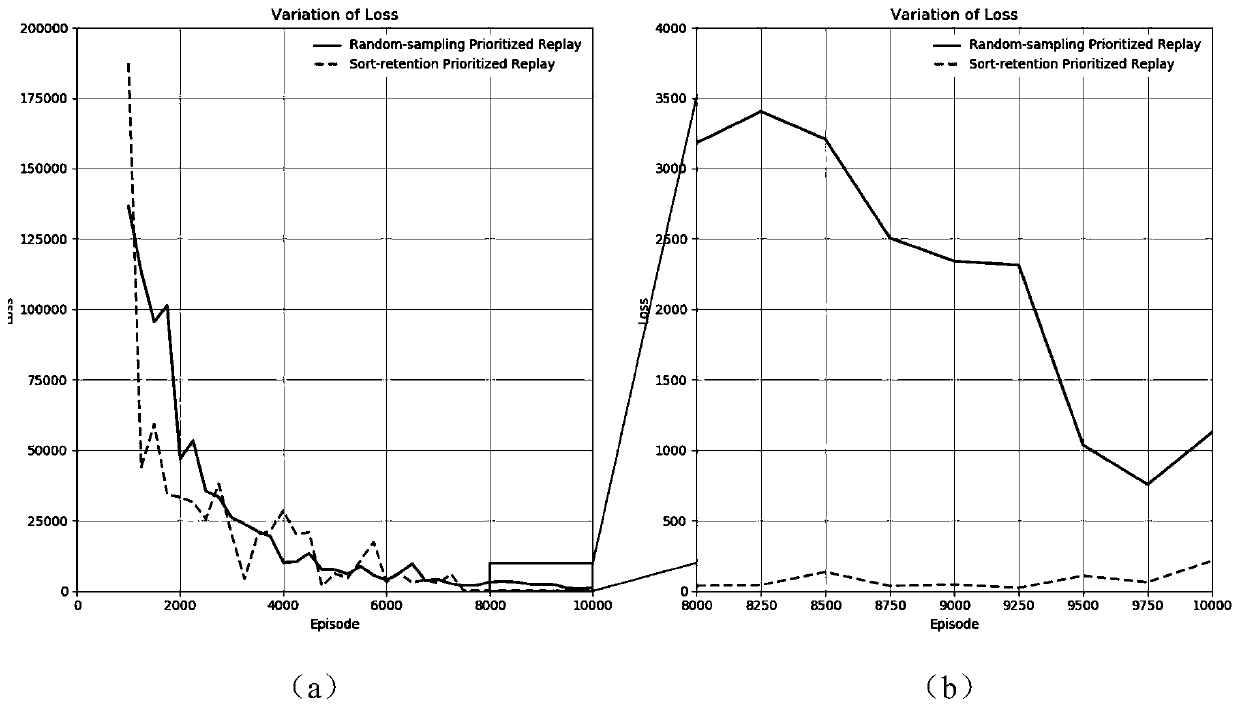

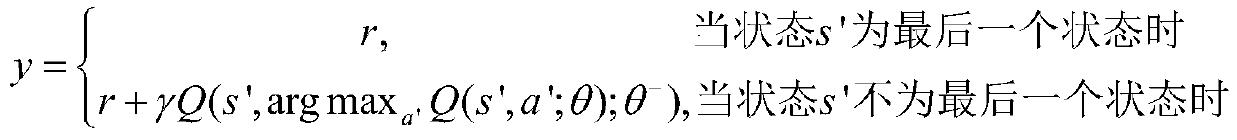

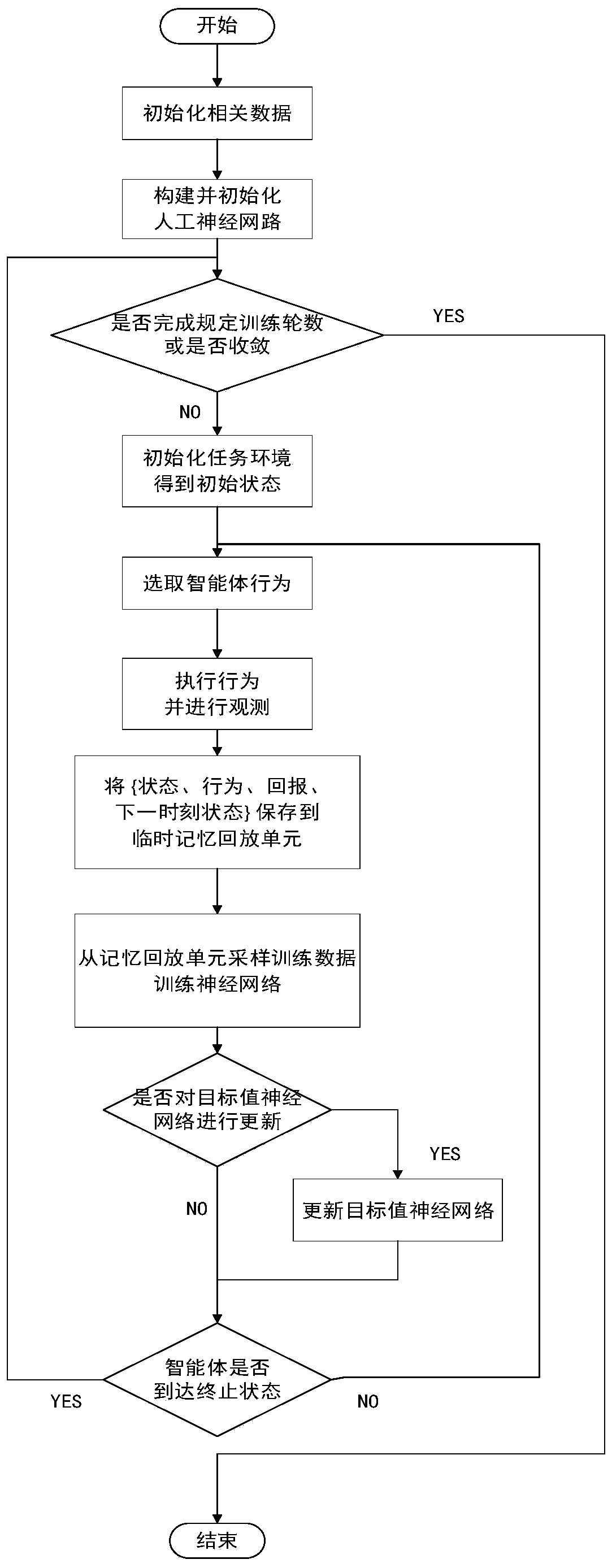

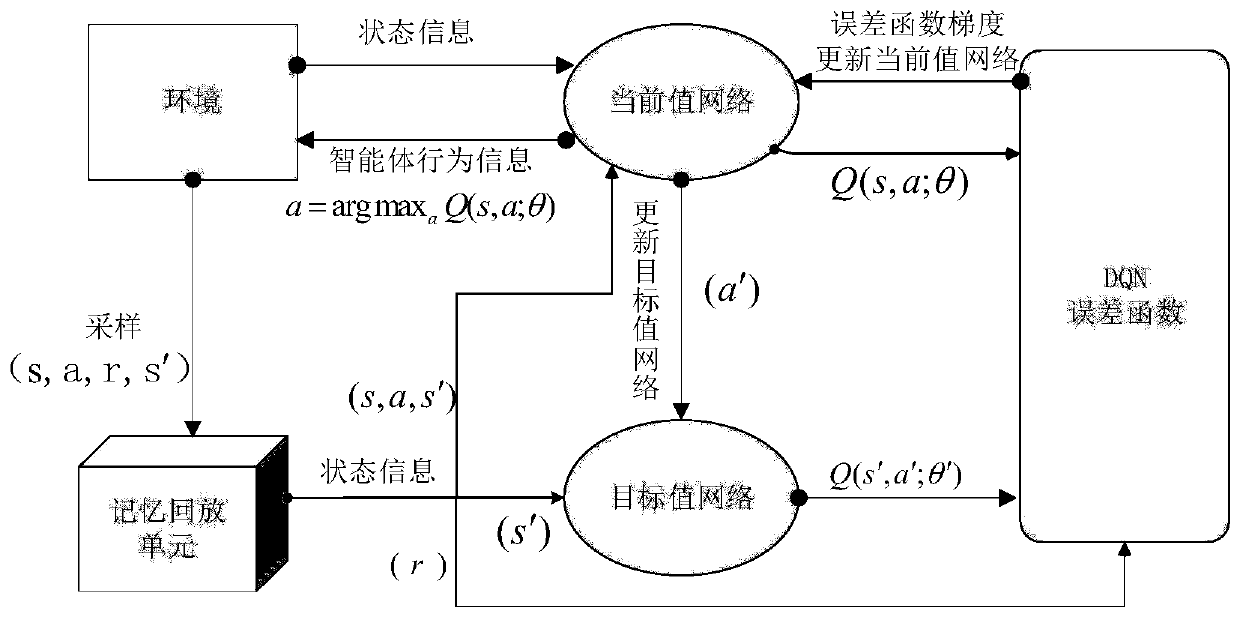

Improved deep reinforcement learning method and system based on Double DQN

Inactive | CN111461321A | Fast convergence; High precision; Neural architectures; Neural learning methods; Decision networks; Simulation

The invention discloses an improved deep reinforcement learning method and system based on Double DQN, belonging to the field of reinforcement learning. The method comprises the following steps: initializing the environment and the DQN network parameters; accumulating experience with an epsilon-greedy policy and storing it in a replay memory unit; and training and optimizing the DQN network with samples from the replay memory unit to obtain a decision network. The method can speed up the convergence of the Double Deep Q-Network, improve its final converged value, reduce the interference of noise on the DQN algorithm, improve the effectiveness of deep reinforcement learning in real-world production and daily life, and broaden its range of applications.

Owner:NANJING UNIV OF SCI & TECH
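
The two standard ingredients named above, epsilon-greedy action selection and the Double DQN target, can be sketched as follows. The networks are replaced by hypothetical callables that map a state to a list of action values; the patent's specific improvements over Double DQN are not described in the abstract and are not shown.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Explore with probability epsilon, otherwise take the greedy action."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=q_values.__getitem__)


def double_dqn_target(reward, next_state, done, online_q, target_q, gamma=0.99):
    """Double DQN target: the online network picks a', the target network scores it.

    online_q / target_q are stand-ins for the two networks: callables mapping a
    state to a list of action values.
    """
    if done:
        return reward
    next_online = online_q(next_state)
    best_action = max(range(len(next_online)), key=next_online.__getitem__)
    return reward + gamma * target_q(next_state)[best_action]
```

Decoupling action selection (online network) from action evaluation (target network) is what reduces the overestimation bias of plain DQN, which would instead use the maximum of the target network's values directly.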

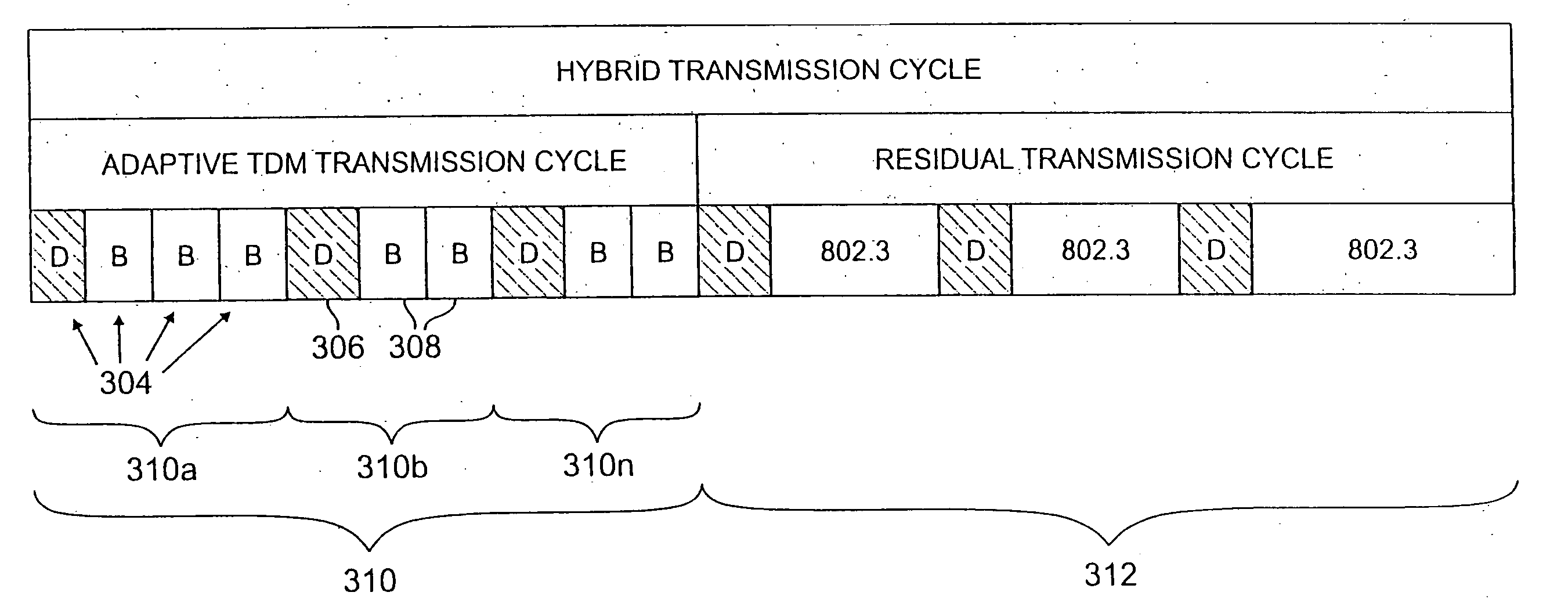

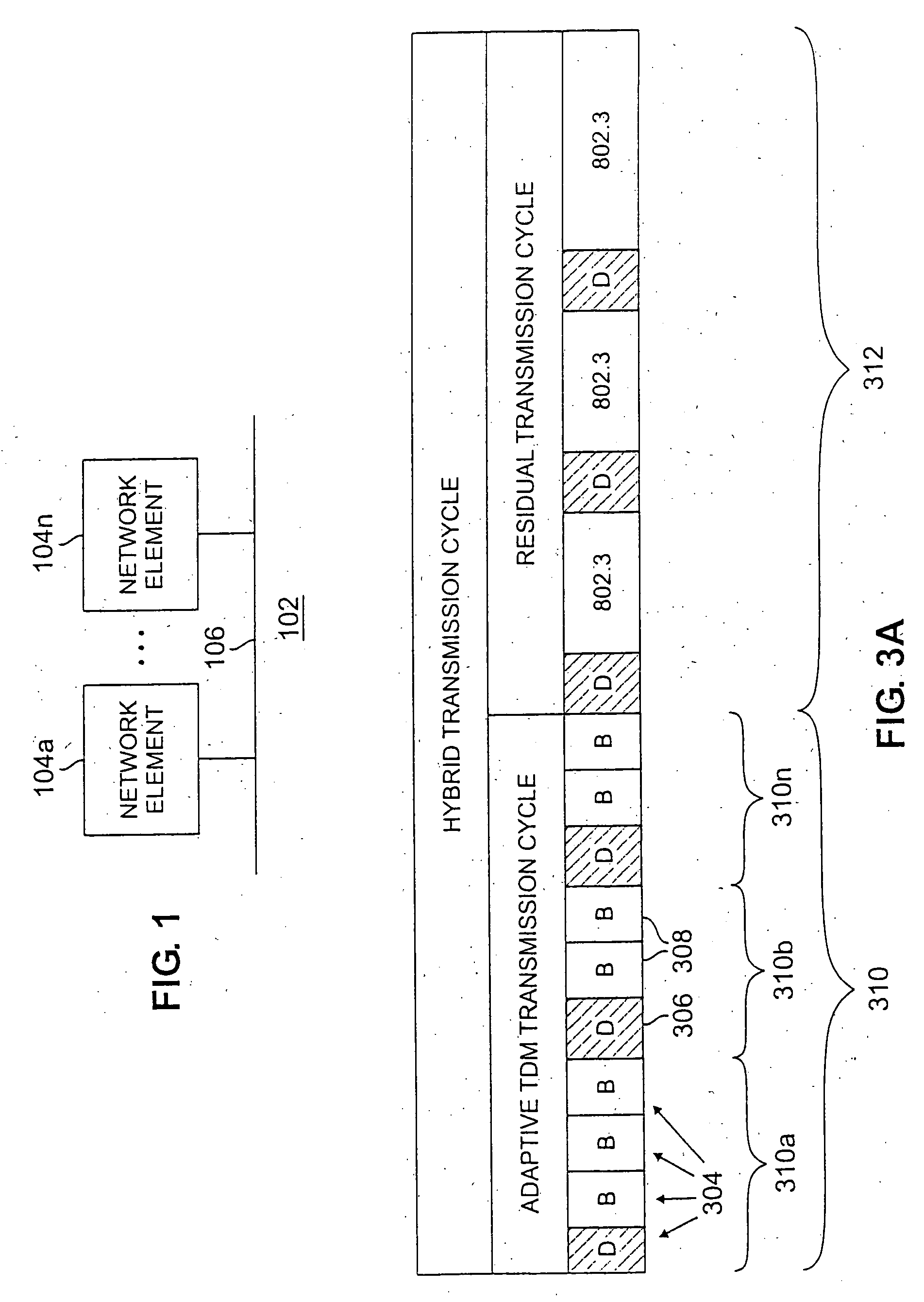

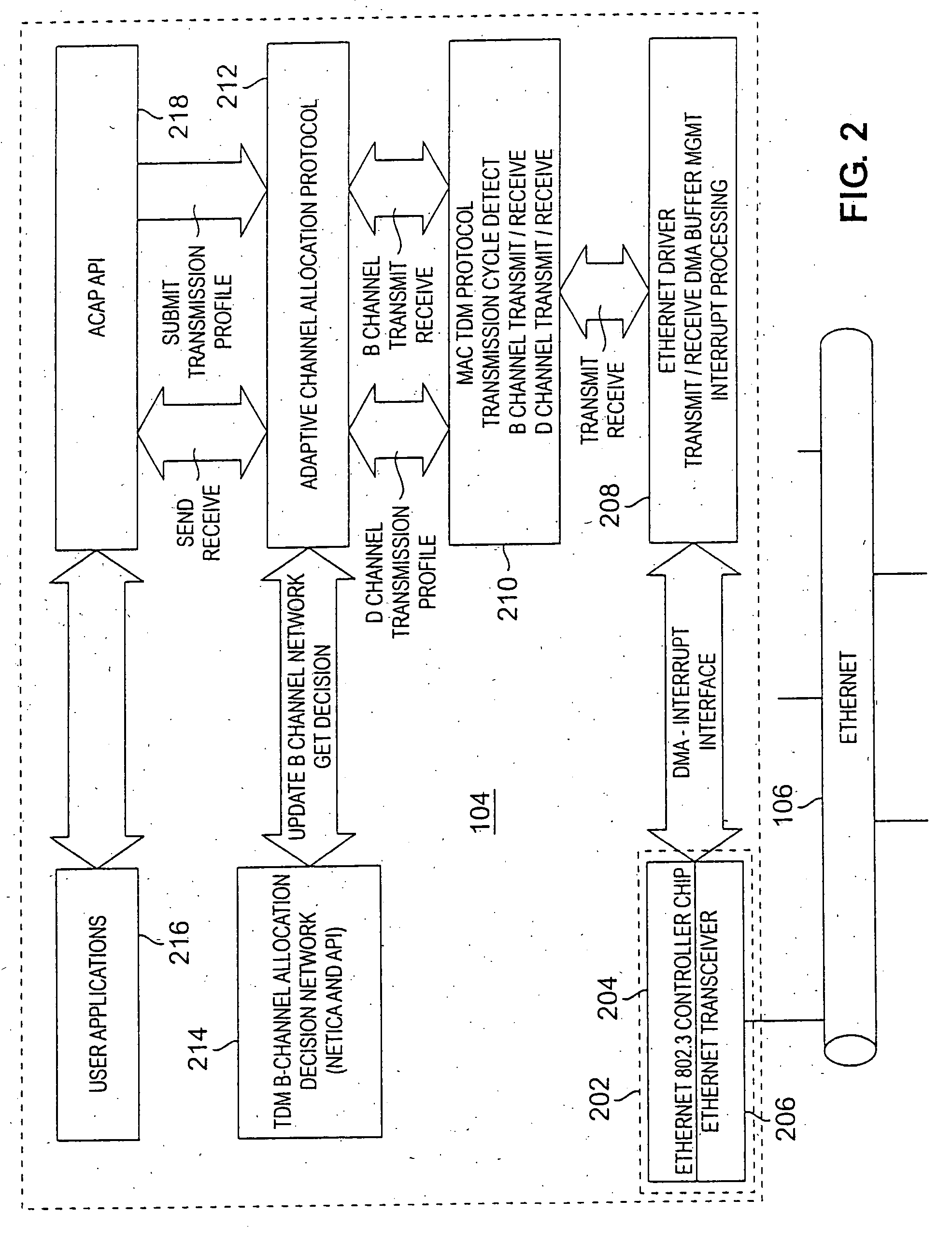

Adaptive transmission in multi-access asynchronous channels

Active | US20050111477A1 | Prevent unacceptable latency; Error prevention; Frequency-division multiplex details; Decision networks; Time-division multiplexing

A hybrid transmission cycle (HTC) unit of bandwidth on a shared transmission medium is defined to include an adaptive, time division multiplexing transmission cycle (ATTC), which is allocated in portions sequentially among all participating network entities, and a residual transmission cycle (RTC), which is allocated in portions, as available, to the first network entity requesting access to the shared medium during each particular portion. The ratio of logical link virtual channels, or D-Channels, to data payload virtual channels, or B-Channels, within the ATTC is adaptive depending on loading conditions. Based on transmission profiles transmitted on the D-Channels during the ATTC, each network entity determines how many B-Channels it will utilize within the current HTC. This calculation may be based on any decision network, such as a decision network modelling the transmission medium as a marketplace and employing microeconomic principles to determine utilization. The ratio of the duration of the ATTC segment to the duration of the RTC segment is also adaptive depending on loading conditions, to prevent unacceptable latency for legacy network entities employing the shared transmission medium. During the RTC, utilization of the shared medium preferably reverts to IEEE 802.3 compliant CSMA/CD transmission, including transmissions by HTC-compliant network entities.

Owner:GLOBAL COMM INVESTMENT LLC

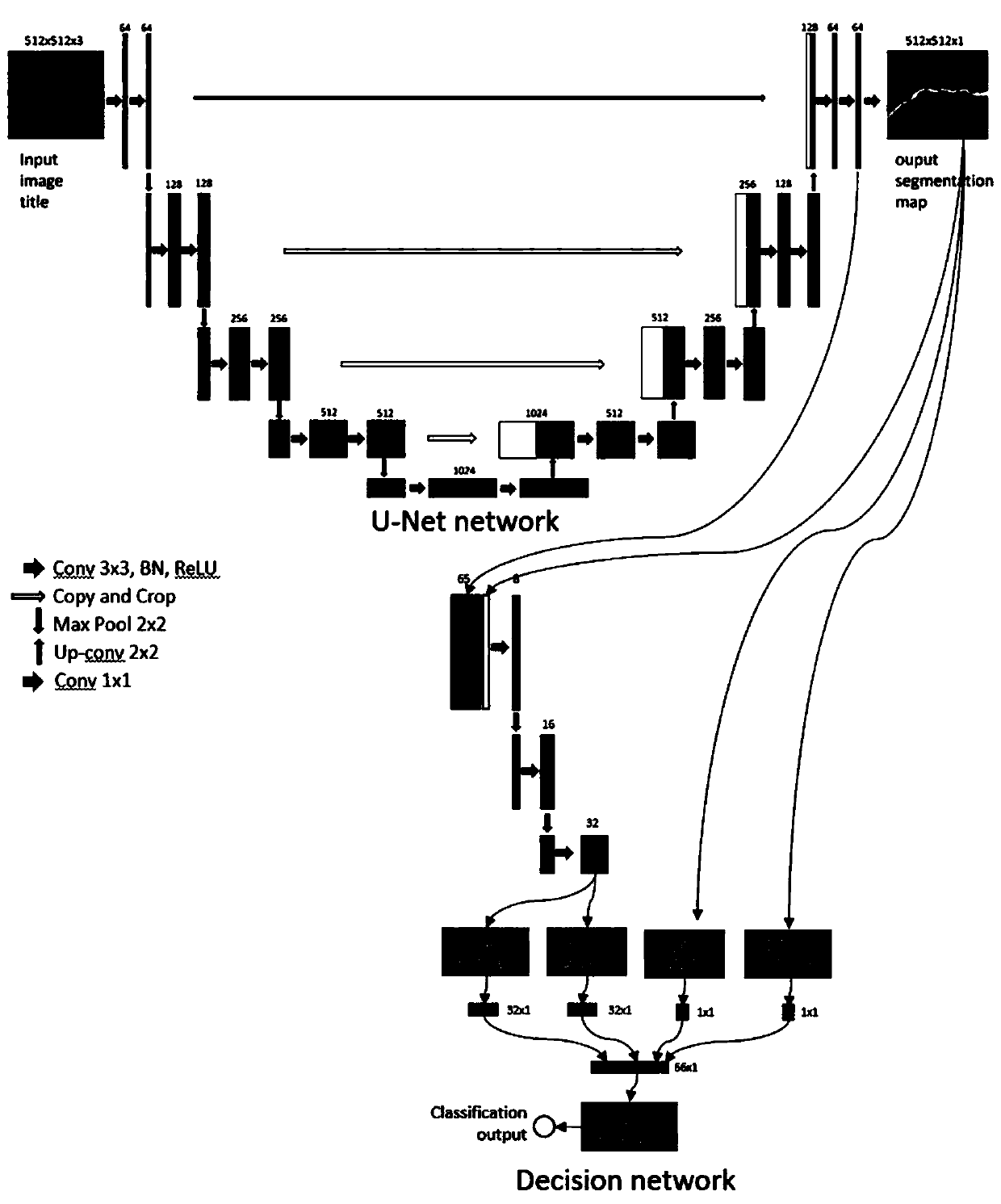

Pavement crack segmentation and recognition method based on deep learning

Inactive | CN110502972A | Improve generalization ability; Accurate segmentation; Character and pattern recognition; Neural learning methods; Decision networks; Slide window

The invention discloses a pavement crack segmentation and recognition method based on deep learning. The pavement crack segmentation and recognition method comprises the following steps: firstly, manually labeling an acquired color crack sample image to obtain a crack label image; performing sub-image segmentation of the same size and position on the two types of images, marking whether the sub-images contain cracks, training a U-Net neural network by using the marked sub-images, and training the decision network by using the results of the last two layers of the U-Net neural network as the input of the decision network; and finally, obtaining a trained network model, and performing non-overlapping sliding window detection and classification on the to-be-identified image so as to obtain the segmentation and identification result of the image. According to the pavement crack segmentation and recognition method, the crack image is segmented, and whether the image contains cracks can be accurately identified, so that the purposes of efficient, accurate and low-cost pavement crack segmentation and identification can be achieved.

Owner:GUANGDONG UNIV OF TECH
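
Non-overlapping sliding-window detection amounts to tiling the image with a stride equal to the window size. The sketch below assumes a square window and skips edge strips narrower than the window (padding the image is an equally valid choice); `has_crack` is a hypothetical stand-in for the trained U-Net plus decision network.

```python
def non_overlapping_windows(height, width, window):
    """Yield (top, left) corners of non-overlapping window x window tiles.

    Stride equals the window size; edge strips narrower than the window are skipped.
    """
    for top in range(0, height - window + 1, window):
        for left in range(0, width - window + 1, window):
            yield top, left


def classify_tiles(image, window, has_crack):
    """Label each tile of a 2-D image (list of rows) with a crack classifier.

    has_crack is a hypothetical callable taking a tile (list of rows) and
    returning True or False.
    """
    h, w = len(image), len(image[0])
    results = {}
    for top, left in non_overlapping_windows(h, w, window):
        tile = [row[left:left + window] for row in image[top:top + window]]
        results[(top, left)] = has_crack(tile)
    return results
```

Using the same tile size at inference as the sub-images used in training keeps the classifier's input distribution consistent.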

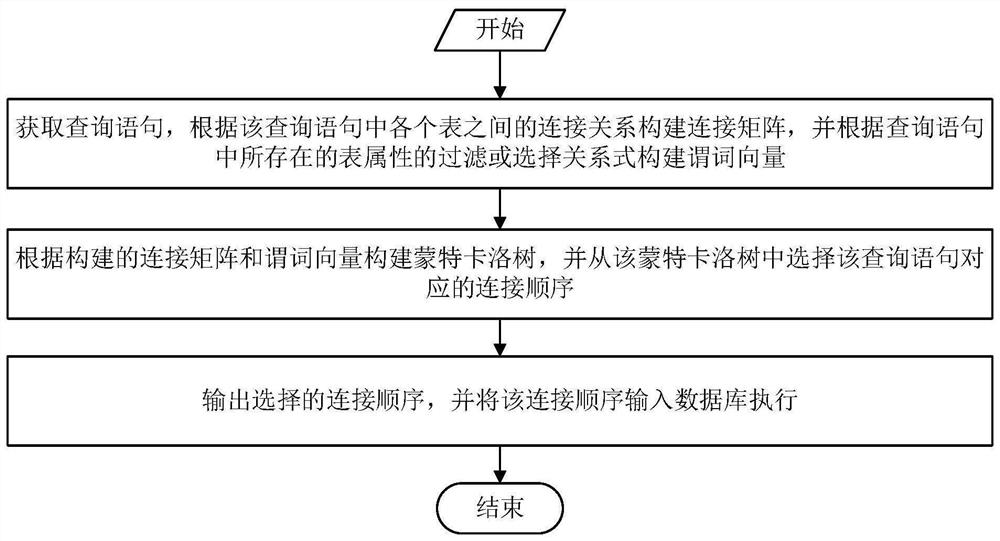

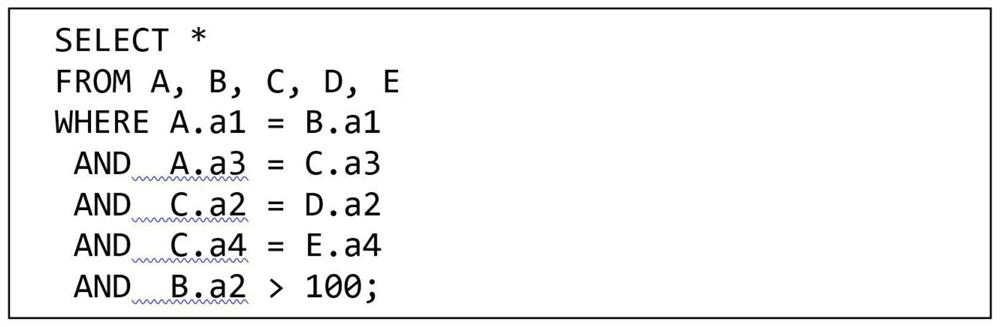

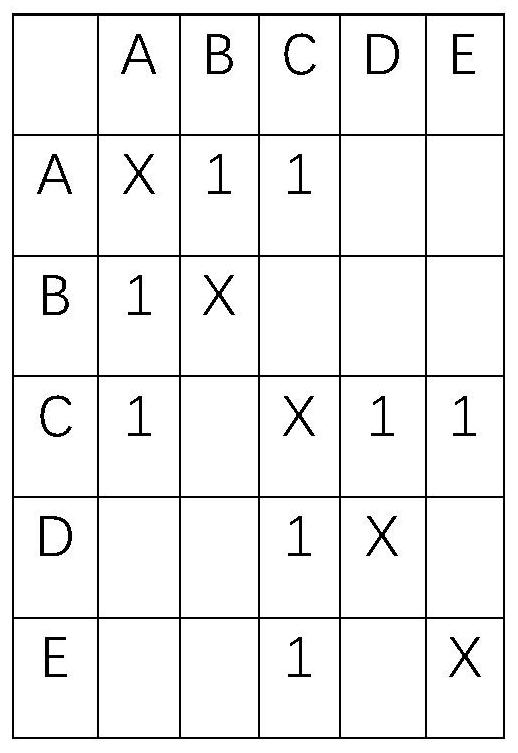

Database query optimization method and system

Pending | CN111611274A | Solve the tilt; Resolve dependencies; Digital data information retrieval; Neural architectures; Database query; Query plan

The invention discloses a database query optimization method and system comprising a join order selector and an adaptive decision network. The join order selector chooses the optimal join order in a query plan and comprises: a new query plan encoding scheme in which codes correspond one-to-one with join orders; a value network that predicts the execution time of a query plan, is trained on query plans and their actual execution times, and supplies reward feedback for Monte Carlo tree search; and a Monte Carlo tree search method that simulates and generates multiple different join orders, evaluates their quality with the value network, and returns a recommended join order after a preset number of explorations. The adaptive decision network decides whether a query statement should use the join order selector, improving the overall performance of the optimization system. The method and system effectively avoid the limitations of traditional query optimizers and improve database query efficiency.

Owner:HUAZHONG UNIV OF SCI & TECH +1
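
The join-order search can be sketched as a plain UCT Monte Carlo tree search over left-deep join orders. This is a minimal sketch under stated assumptions: `toy_cost` is a hypothetical cost model standing in for the learned value network (it sizes intermediate results as cross products), the reward mapping is arbitrary, and the adaptive decision network that gates whether the selector runs at all is omitted.

```python
import math
import random
from typing import Callable, Dict, List, Optional, Sequence, Tuple


def toy_cost(order: Sequence[str], card: Dict[str, int]) -> float:
    """Hypothetical stand-in for the value network: sums the sizes of the
    intermediate results of a left-deep join, assuming cross-product sizes."""
    total, size = 0.0, 1.0
    for table in order:
        size *= card[table]
        total += size
    return total


class Node:
    """A partial join order in the search tree."""

    def __init__(self, order: Tuple[str, ...], parent: Optional["Node"]):
        self.order = order
        self.parent = parent
        self.children: List["Node"] = []
        self.visits = 0
        self.value = 0.0  # sum of rewards backed up through this node

    def untried(self, tables: Sequence[str]) -> List[str]:
        used = set(self.order) | {child.order[-1] for child in self.children}
        return [t for t in tables if t not in used]


def mcts_join_order(tables: Sequence[str], cost: Callable[[Sequence[str]], float],
                    iterations: int = 300, c: float = 1.4,
                    seed: int = 0) -> Tuple[List[str], float]:
    rng = random.Random(seed)
    root = Node((), None)
    best_order: Tuple[str, ...] = tuple(tables)
    best_cost = math.inf
    for _ in range(iterations):
        node = root
        # 1. Selection: descend through fully expanded nodes using UCT.
        while not node.untried(tables) and node.children:
            parent = node
            node = max(parent.children, key=lambda ch: ch.value / ch.visits
                       + c * math.sqrt(math.log(parent.visits) / ch.visits))
        # 2. Expansion: extend the partial order with one untried table.
        untried = node.untried(tables)
        if untried:
            child = Node(node.order + (rng.choice(untried),), node)
            node.children.append(child)
            node = child
        # 3. Simulation: complete the order randomly and score it.
        rest = [t for t in tables if t not in node.order]
        rng.shuffle(rest)
        order = node.order + tuple(rest)
        k = cost(order)
        if k < best_cost:
            best_order, best_cost = order, k
        reward = 1.0 / (1.0 + math.log1p(k))  # cheaper plan -> higher reward
        # 4. Backpropagation.
        while node is not None:
            node.visits += 1
            node.value += reward
            node = node.parent
    return list(best_order), best_cost


if __name__ == "__main__":
    card = {"a": 1000, "b": 10, "c": 100}
    print(mcts_join_order(["a", "b", "c"], lambda o: toy_cost(o, card)))
```

Under this toy cost model the search should recommend joining the smallest tables first (`b`, `c`, `a`). The sketch returns the cheapest order seen during the preset number of iterations rather than the most-visited path, which matches "returning a recommended join order after preset exploration times" but is only one possible reading.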

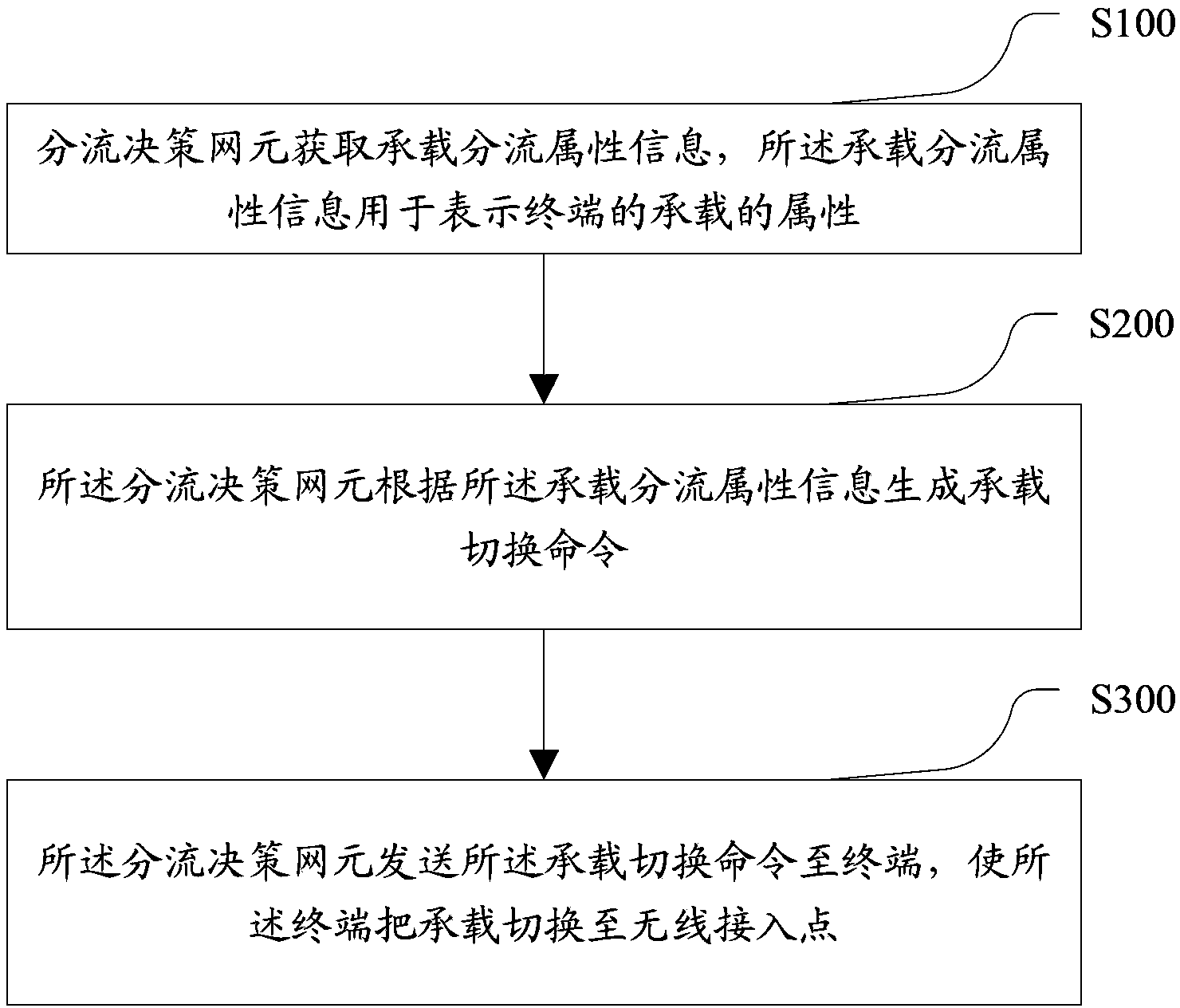

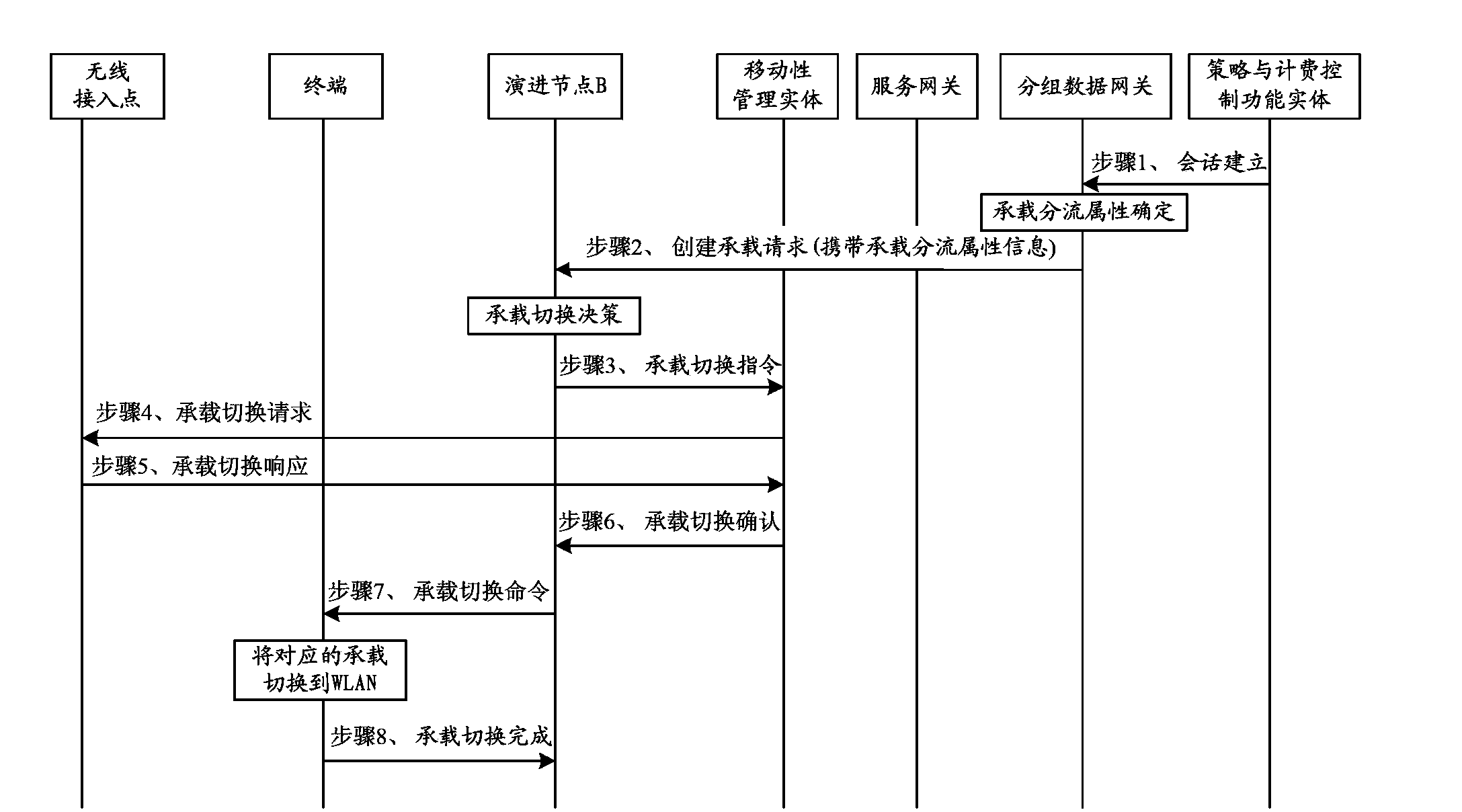

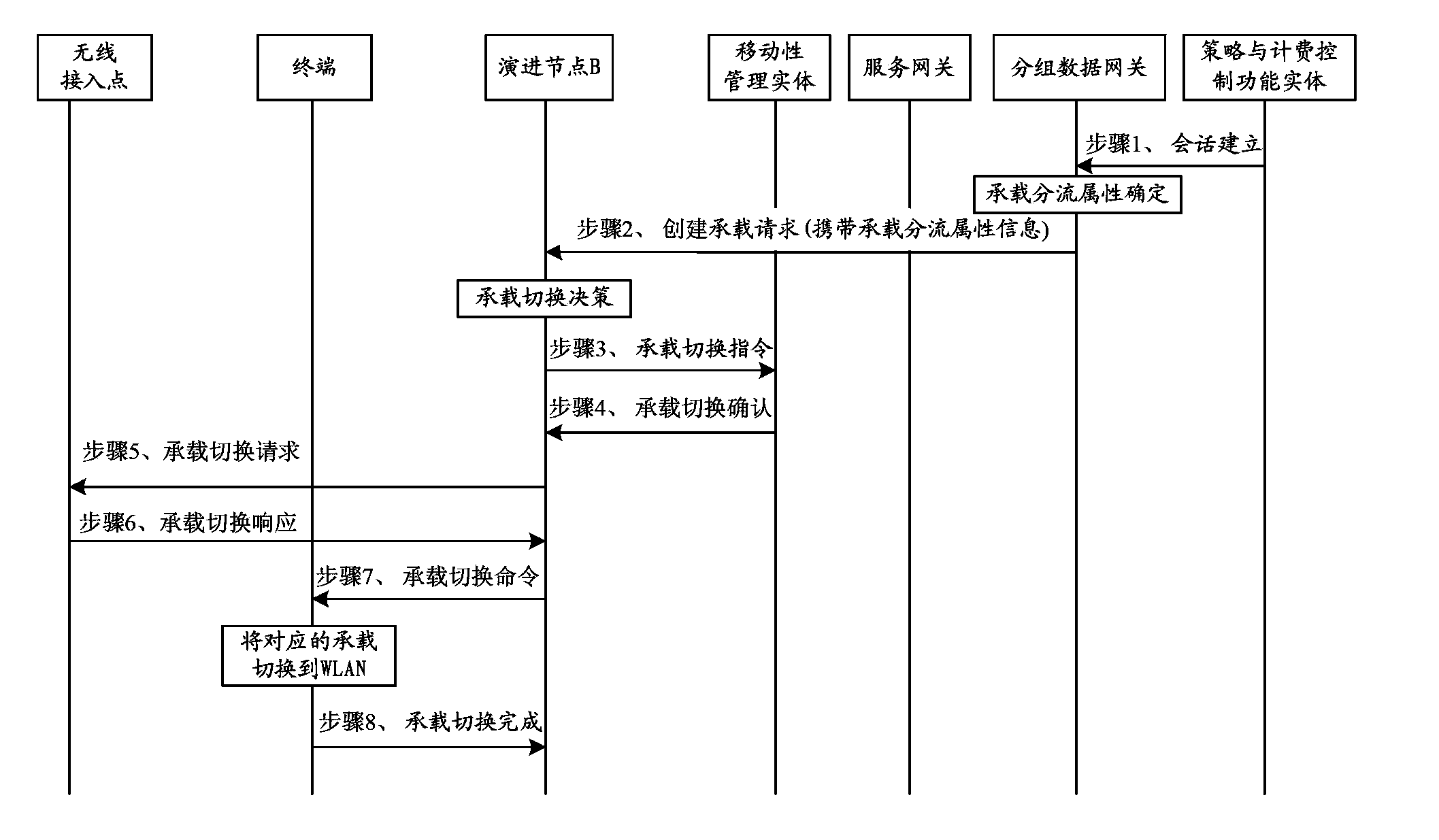

Network shunt control method, system and network equipment

Active · CN104125608A · Solve the problem of degraded experience · Improve experience · Error prevention/detection by using return channel · Network traffic/resource management · Access network · Decision networks

Disclosed is a network-controlled shunting (traffic offloading) method comprising: a shunting decision network element acquires shunting attribute information for a bearer, which indicates the attributes of a terminal's bearer; the shunting decision network element generates a bearer switching command according to that information; and the shunting decision network element sends the bearer switching command to the terminal, so that the terminal switches the bearer to a wireless access point (AP). In the network-controlled shunting method, system and network device of an embodiment of the present invention, the decision is made according to the bearer's shunting attribute information to produce a bearer switching command, and the terminal then switches the bearer to a wireless AP according to that command, so that it offloads data over a wireless local area network (WLAN). This addresses a problem in the prior art, where a possible conflict between the shunting decision made by a network element in the access network and the service's routing attributes could degrade user experience.

Owner:HUAWEI TECH CO LTD
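
The network-side decision and the terminal's reaction can be outlined as follows. The attribute fields (`wlan_offload_allowed`, `wlan_ap_available`), the `"WLAN_AP"` target label and both function names are hypothetical; the abstract does not specify what the shunting attribute information contains or how the command is encoded.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class BearerAttributes:
    """Hypothetical 'shunting attribute information' for one bearer."""
    bearer_id: int
    wlan_offload_allowed: bool  # service routing attributes permit WLAN
    wlan_ap_available: bool     # the terminal can currently reach a WLAN AP


@dataclass
class BearerSwitchCommand:
    bearer_id: int
    target: str  # "WLAN_AP" in this sketch


def shunting_decision(attrs: BearerAttributes) -> Optional[BearerSwitchCommand]:
    """Decision network element: issue a switch command only when the
    bearer's own attributes allow offloading, so the decision cannot
    contradict the service's routing attributes."""
    if attrs.wlan_offload_allowed and attrs.wlan_ap_available:
        return BearerSwitchCommand(attrs.bearer_id, "WLAN_AP")
    return None


def terminal_apply(routes: Dict[int, str],
                   cmd: Optional[BearerSwitchCommand]) -> Dict[int, str]:
    """Terminal side: move the bearer to the commanded access, if any."""
    if cmd is not None:
        routes = {**routes, cmd.bearer_id: cmd.target}
    return routes


if __name__ == "__main__":
    routes = {5: "CELLULAR", 6: "CELLULAR"}
    routes = terminal_apply(routes, shunting_decision(BearerAttributes(5, True, True)))
    routes = terminal_apply(routes, shunting_decision(BearerAttributes(6, False, True)))
    print(routes)
```

Keeping the decision keyed on per-bearer attributes, rather than on access-network conditions alone, is what lets the network avoid offloading a bearer whose service routing forbids it.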

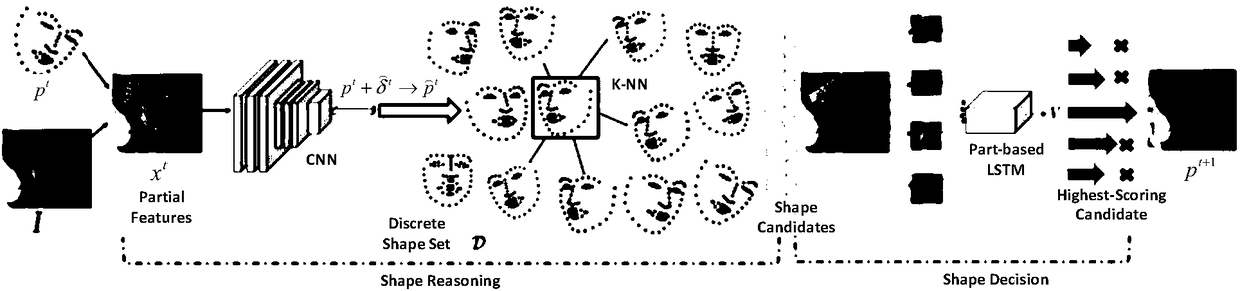

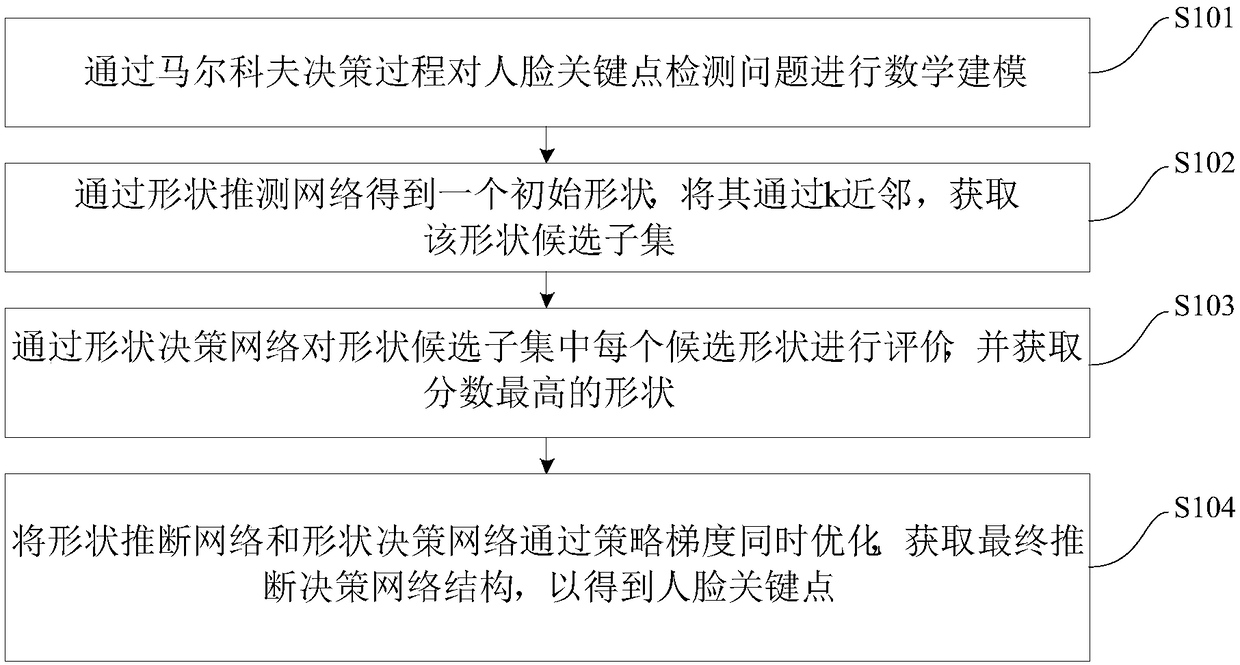

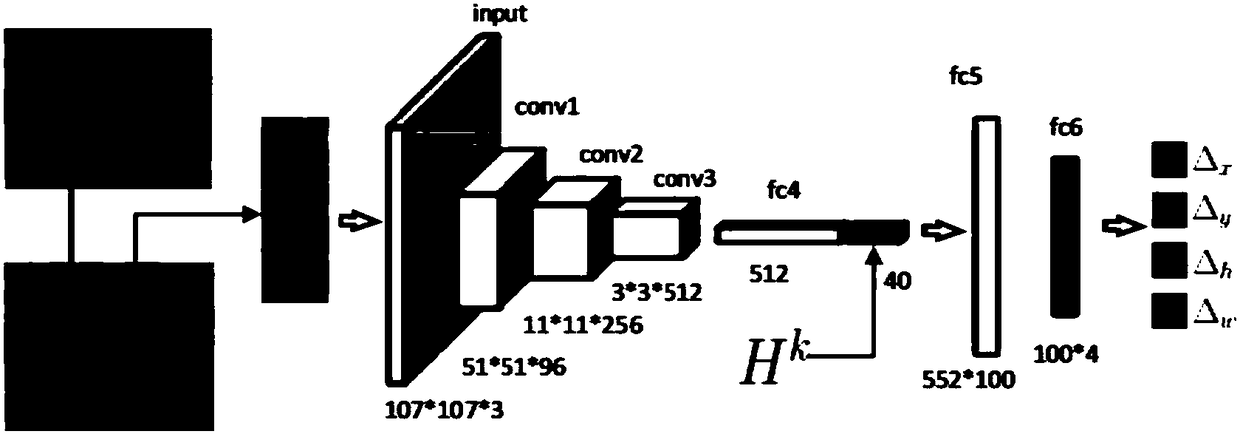

Face key point detection method and device based on deep reinforcement learning

Active · CN108446619A · Improve accuracy · Improve reliability · Character and pattern recognition · Pattern recognition · Decision networks

The invention discloses a face key point detection method and device based on deep reinforcement learning. The method comprises the following steps: modeling face key point detection mathematically as a Markov decision process; obtaining an initial shape with a shape prediction network, and applying k-nearest-neighbor search to the initial shape to obtain a subset of candidate shapes; evaluating each candidate shape in the subset with a shape decision network and selecting the shape with the highest score; and jointly optimizing the shape prediction network and the shape decision network by policy gradient, yielding the final prediction-decision network structure that outputs the face key points. Through this prediction-decision framework, the method can find an optimal shape search path in the continuous shape space that maximizes the shape evaluation score, effectively improving the accuracy and reliability of face key point detection.

Owner:TSINGHUA UNIV
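
The inference path (k-nearest-neighbor candidates around the predicted shape, then scoring by the decision network) can be sketched as below. Shapes are flattened coordinate tuples; the `score` callable is a hypothetical stand-in for the shape decision network, and the policy-gradient training of both networks is not shown.

```python
import math
from typing import Callable, List, Sequence, Tuple

Shape = Tuple[float, ...]  # flattened key points: (x1, y1, x2, y2, ...)


def knn_candidates(initial: Shape, library: Sequence[Shape], k: int) -> List[Shape]:
    """Return the k library shapes closest (Euclidean) to the initial shape."""
    return sorted(library, key=lambda s: math.dist(s, initial))[:k]


def select_shape(initial: Shape, library: Sequence[Shape], k: int,
                 score: Callable[[Shape], float]) -> Shape:
    """Score every candidate and keep the highest-scoring one.

    `score` is a hypothetical stand-in for the shape decision network."""
    return max(knn_candidates(initial, library, k), key=score)


if __name__ == "__main__":
    library = [(0.0, 0.0), (1.0, 1.0), (5.0, 5.0), (10.0, 10.0)]
    target = (0.2, 0.2)  # pretend ground truth, used only by the toy scorer
    print(select_shape((0.9, 0.9), library, k=2,
                       score=lambda s: -math.dist(s, target)))
```

The kNN step narrows the continuous shape space to a small discrete action set, which is what lets a decision network pick among candidates as in a Markov decision process.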

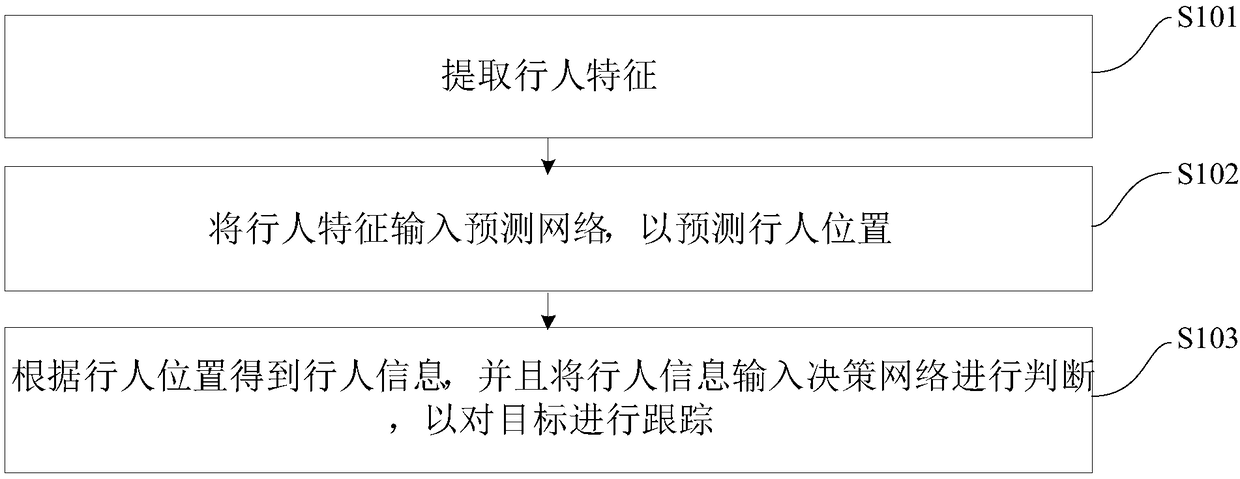

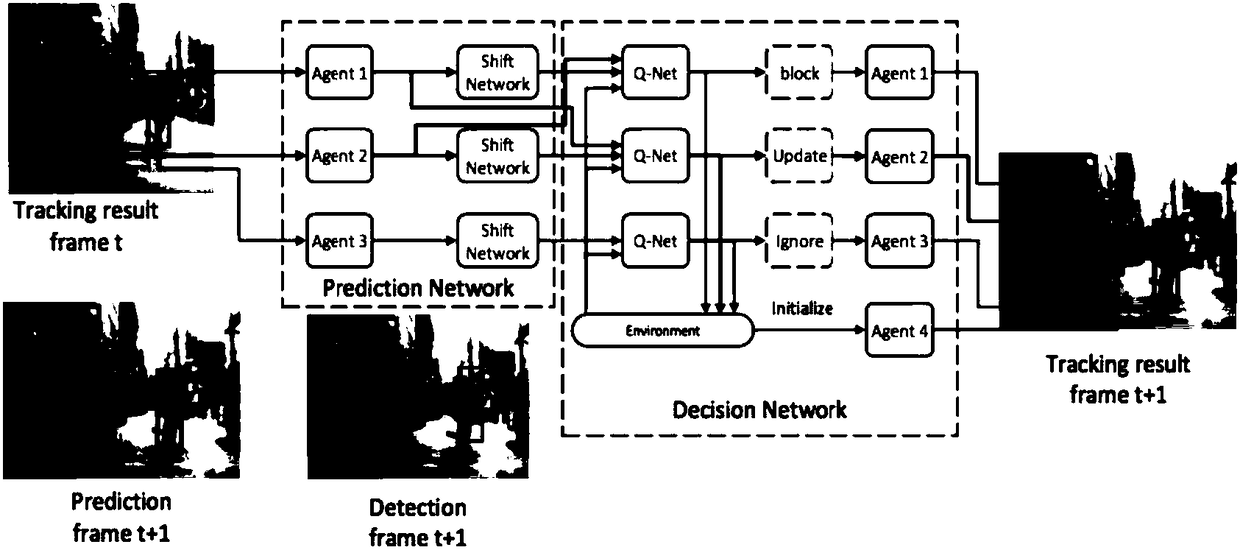

Multi-object tracking method based on deep reinforcement learning

Active · CN108447076A · Improve performance · Efficient use of · Image enhancement · Image analysis · Decision networks · Multi target tracking

The invention discloses a multi-object tracking method and device based on deep reinforcement learning. The method comprises the following steps: extracting pedestrian features; inputting the pedestrian features to a prediction network to predict pedestrian positions; and obtaining pedestrian information according to the predicted positions and inputting it to a decision network, whose judgment is used to track the objects. The method exploits the interaction between different objects and between the objects and the environment, and substantially improves tracking precision and performance.

Owner:TSINGHUA UNIV
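
A minimal sketch of the predict-then-decide loop for a single track, assuming a constant-velocity predictor in place of the prediction network and a distance gate in place of the learned decision network (both hypothetical stand-ins; feature extraction is omitted).

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class Track:
    track_id: int
    position: Point
    velocity: Point  # displacement per frame


def predict(track: Track) -> Point:
    """Constant-velocity stand-in for the prediction network."""
    return (track.position[0] + track.velocity[0],
            track.position[1] + track.velocity[1])


def decide(track: Track, detections: List[Point], gate: float) -> str:
    """Distance-gate stand-in for the decision network.

    'update': a detection lies within `gate` of the prediction, so the track
    follows it; 'lost': nothing matched, so the track coasts on the prediction.
    """
    predicted = predict(track)
    if detections:
        nearest = min(detections, key=lambda d: math.dist(d, predicted))
        if math.dist(nearest, predicted) <= gate:
            track.velocity = (nearest[0] - track.position[0],
                              nearest[1] - track.position[1])
            track.position = nearest
            return "update"
    track.position = predicted
    return "lost"


if __name__ == "__main__":
    t = Track(1, (0.0, 0.0), (1.0, 0.0))
    print(decide(t, [(1.0, 0.25), (5.0, 5.0)], gate=0.5), t.position)
    print(decide(t, [(10.0, 10.0)], gate=0.5), t.position)
```

This sketch handles each track greedily and independently; a full multi-object tracker would also resolve conflicts when two tracks claim the same detection.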

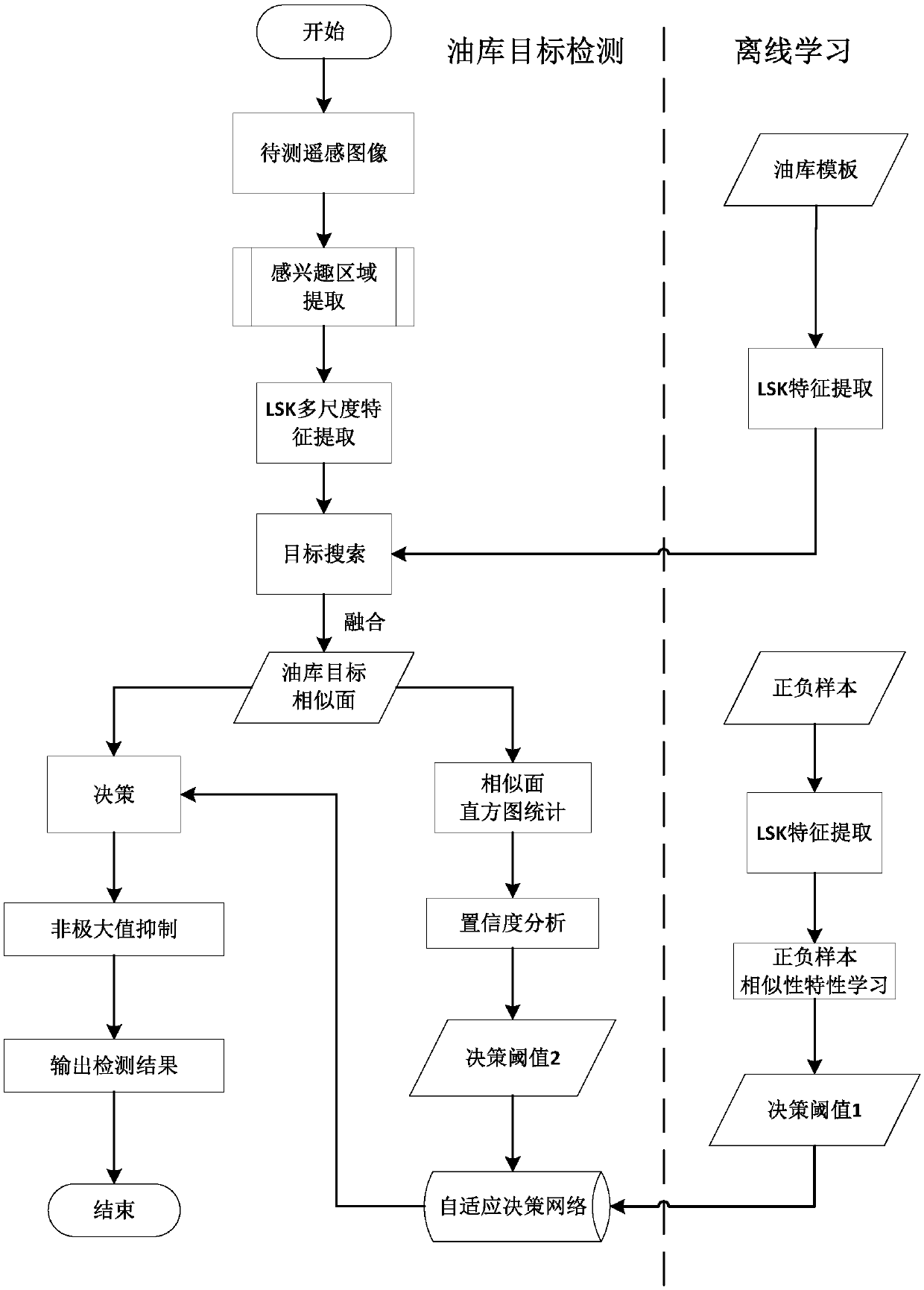

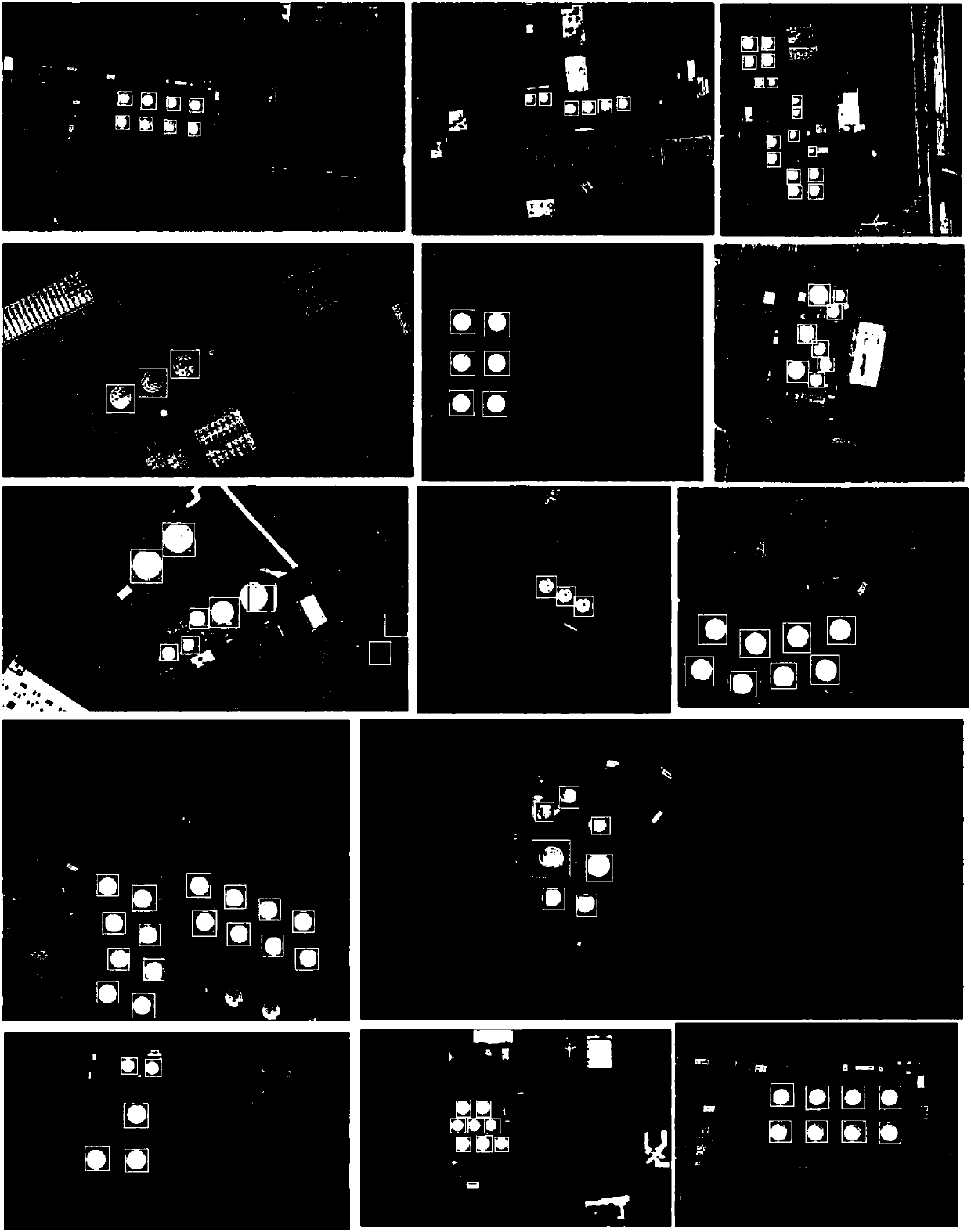

Identification method of oil depot target in remote sensing image

Active · CN106557740A · Good positive and negative samples · Narrow search · Character and pattern recognition · Decision networks · Goal recognition

The present invention discloses a method for identifying oil depot targets in remote sensing images. The method first computes the phase spectrum saliency of the whole scene and, based on it, extracts all regions of interest that may contain a target. For feature extraction, a local regression kernel model computes the local structural features of each region of interest point by point, producing a feature descriptor that captures the target's structure. In the target detection stage, cosine similarity is used as the similarity measure between each region of interest and an oil depot sample image; the descriptor's ability to discriminate positive from negative samples, together with the characteristics of the resulting similarity surfaces, is used to construct an adaptive decision network. The decision network produces preliminary detection results, and redundant preliminary results are removed by non-maximum suppression to obtain the final detection result. The general-purpose detection method provided by the invention performs well at identifying oil depot targets across multiple scales and viewing angles.

Owner:HUAZHONG UNIV OF SCI & TECH
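
The similarity-scoring and non-maximum suppression stages can be sketched as follows. Feature descriptors are plain vectors here, boxes are `(x1, y1, x2, y2)` tuples, and a fixed `min_similarity` threshold stands in for the adaptive decision network described in the abstract (an assumption, not the patented rule).

```python
import math
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = w * h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float],
        iou_threshold: float = 0.5) -> List[int]:
    """Greedy non-maximum suppression; returns indices of the kept boxes."""
    keep: List[int] = []
    for i in sorted(range(len(boxes)), key=lambda idx: scores[idx], reverse=True):
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


def detect(boxes: Sequence[Box], descriptors: Sequence[Sequence[float]],
           template: Sequence[float], min_similarity: float = 0.5) -> List[int]:
    """Score each region against the oil-depot template, drop weak regions
    (fixed threshold standing in for the decision network), then apply NMS."""
    scores = [cosine_similarity(d, template) for d in descriptors]
    candidates = [i for i, s in enumerate(scores) if s >= min_similarity]
    kept = nms([boxes[i] for i in candidates], [scores[i] for i in candidates])
    return [candidates[k] for k in kept]


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    descriptors = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
    print(detect(boxes, descriptors, template=[1.0, 0.0]))
```

In the usage example the two heavily overlapping boxes both resemble the template, so NMS keeps only the higher-scoring one, while the third box is rejected by the similarity threshold.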

Unmanned aerial vehicle cluster meeting method based on deep reinforcement learning

Active · CN111240356A · Achieve decentralization · Achieve autonomy · Internal combustion piston engines · Position/course control in three dimensions · Pattern recognition · Decision networks

The invention provides an unmanned aerial vehicle (UAV) cluster rendezvous (meeting) method based on deep reinforcement learning. In the training stage, a fixed area in the rendezvous task scene is designated as the meeting area for the UAV cluster and the position of its center point is obtained; a deep neural network that decides the movement of the UAV cluster is built and trained, and the final deep neural network is obtained once training is complete. In the execution stage, input data are fed to the trained deep neural network, which makes the movement decisions. The method expands the state space and action space of the UAV cluster task, remains practical when scene information is incomplete, and builds a unified, task-oriented decision network for the UAV cluster, so that a single decision network provides unified command and control for an uncertain number of UAVs.

Owner:NORTHWESTERN POLYTECHNICAL UNIV
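
One way to read "a unified decision network for an uncertain number of UAVs" is parameter sharing: the same policy is applied to each UAV's own observation, so the swarm size can change without retraining. The sketch below assumes that reading and replaces the trained deep network with a hypothetical hand-written proportional policy (`shared_policy`, with made-up `gain` and `max_step` parameters) that steers each UAV toward the meeting-area center.

```python
import math
from typing import List, Tuple

Vec = Tuple[float, float]


def shared_policy(own: Vec, center: Vec, gain: float = 0.5,
                  max_step: float = 1.0) -> Vec:
    """Hypothetical stand-in for the trained decision network: one set of
    parameters (gain, max_step) shared by every UAV, acting on that UAV's
    own observation (its offset from the meeting-area center)."""
    step = (gain * (center[0] - own[0]), gain * (center[1] - own[1]))
    length = math.hypot(*step)
    if length > max_step:  # respect a maximum displacement per time step
        step = (step[0] * max_step / length, step[1] * max_step / length)
    return step


def simulate(positions: List[Vec], center: Vec, steps: int) -> List[Vec]:
    """Apply the same policy to however many UAVs are present."""
    for _ in range(steps):
        moves = [shared_policy(p, center) for p in positions]
        positions = [(p[0] + m[0], p[1] + m[1]) for p, m in zip(positions, moves)]
    return positions


if __name__ == "__main__":
    print(simulate([(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)], (5.0, 5.0), 30))
```

Because nothing in `simulate` depends on the list length, adding or removing a UAV needs no change to the policy, which is the property the abstract attributes to its unified decision network.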


© 2025 PatSnap. All rights reserved.