233 patent results for "Q learning algorithm"

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

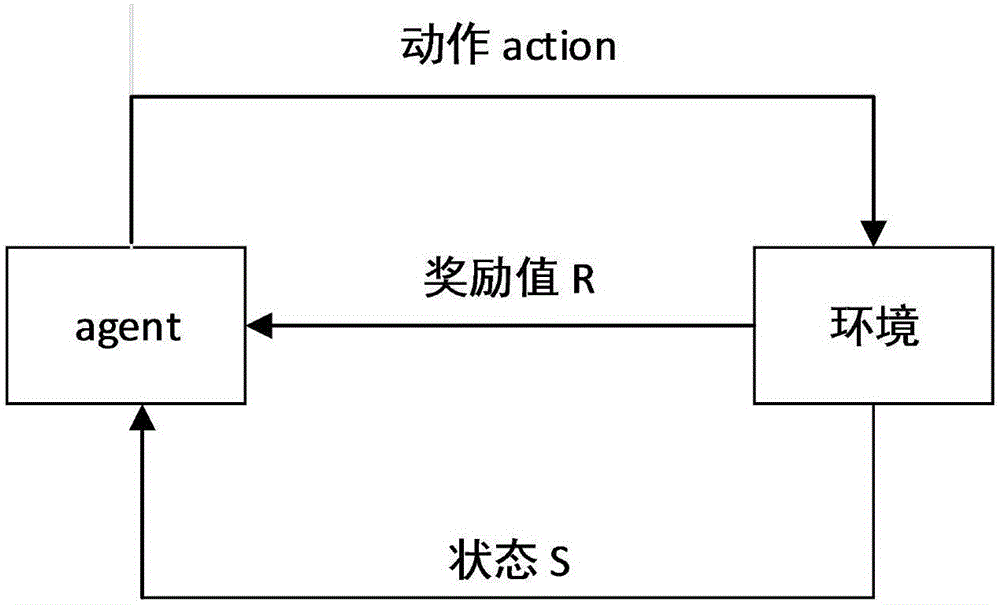

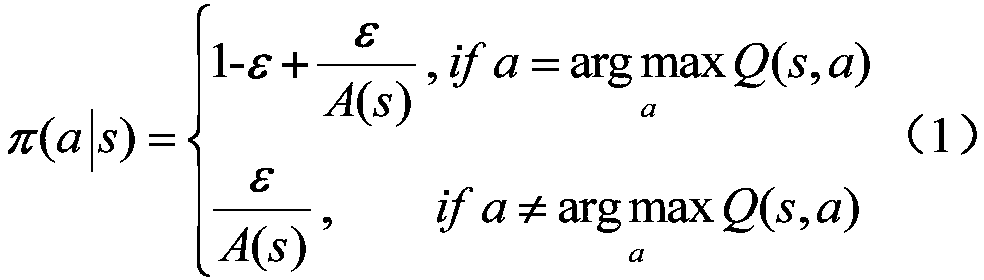

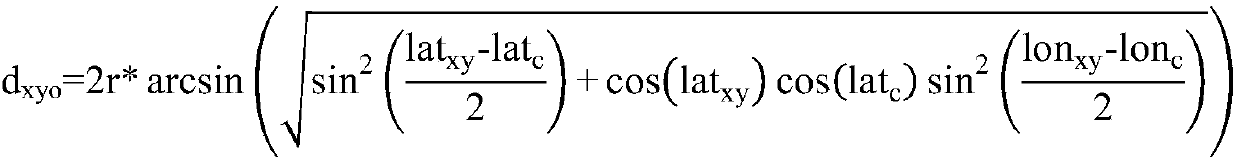

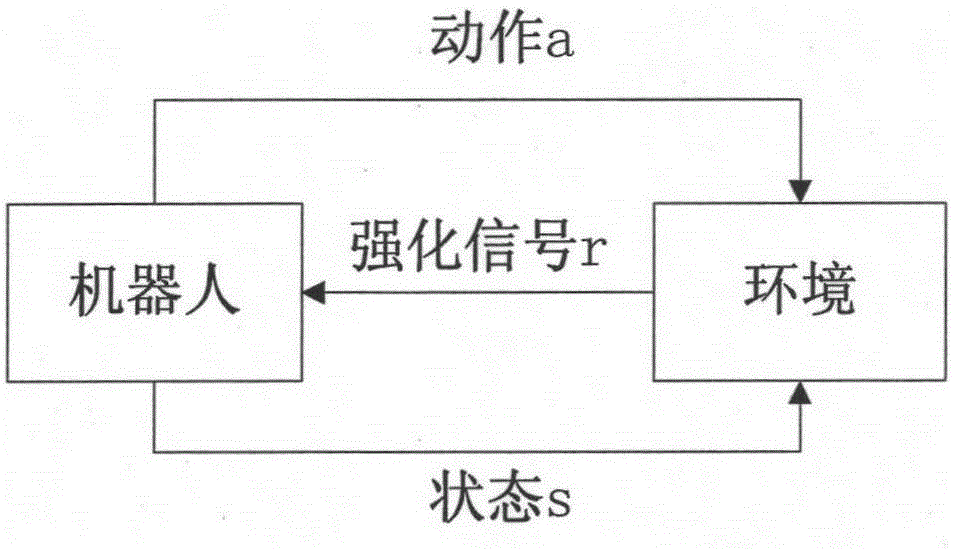

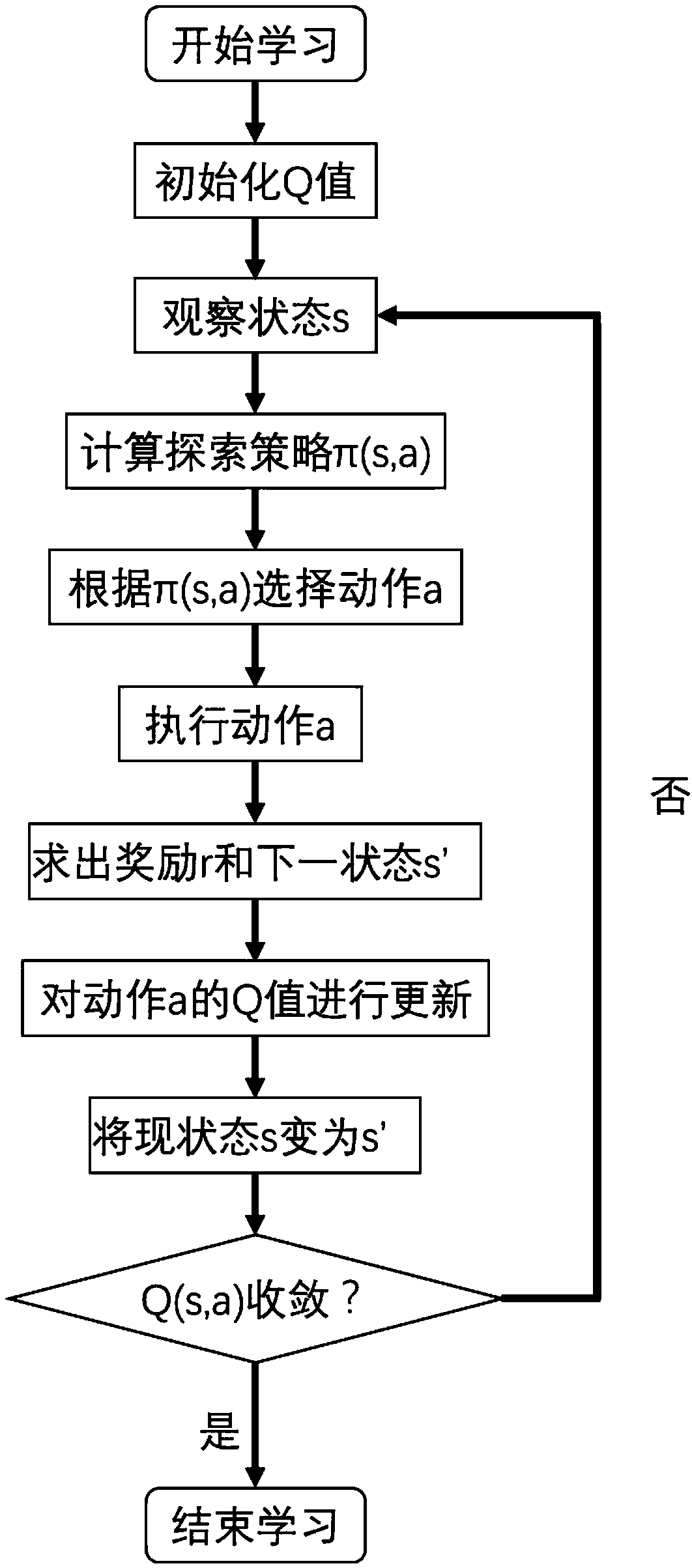

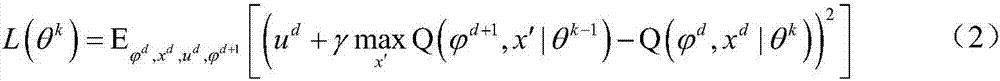

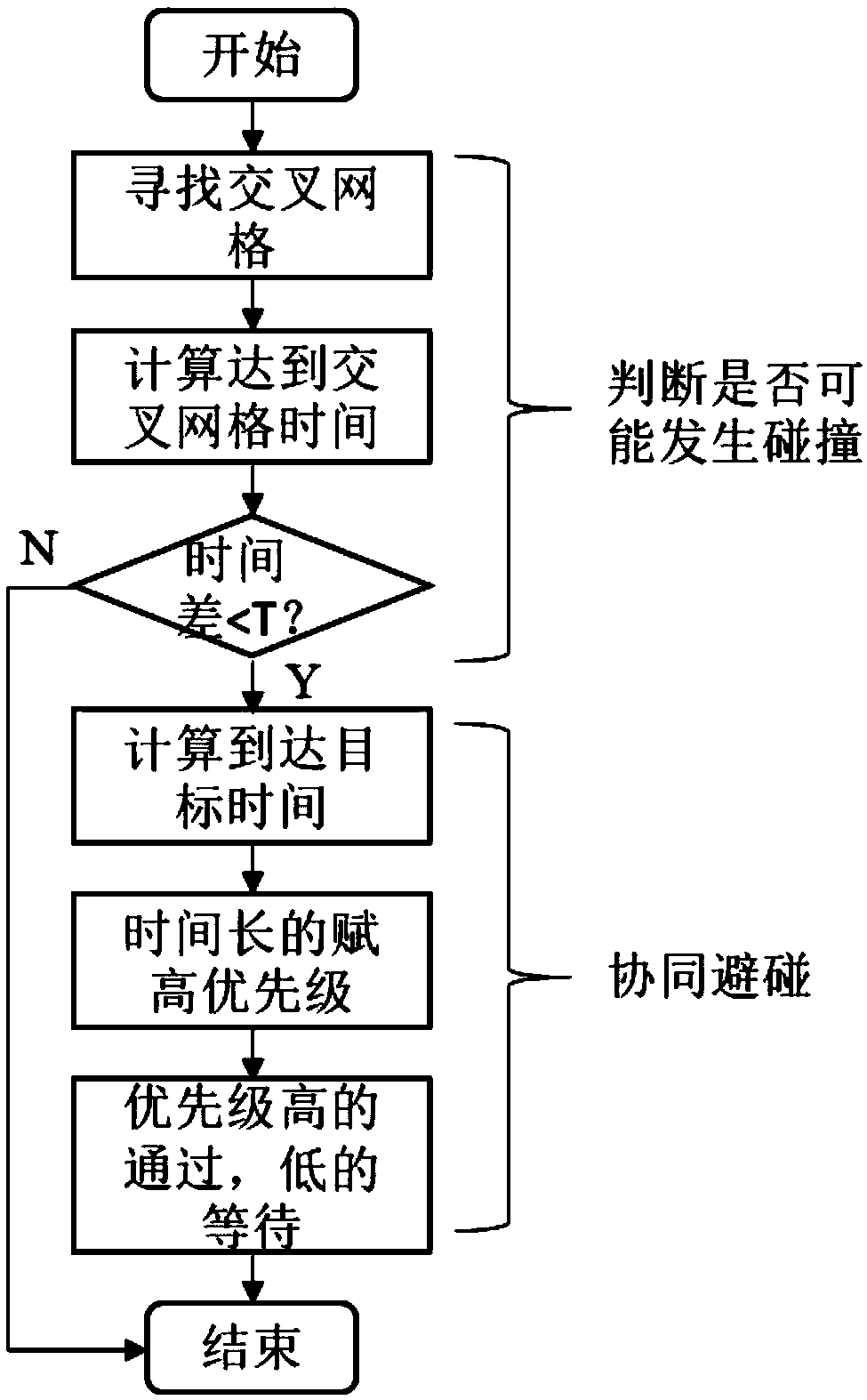

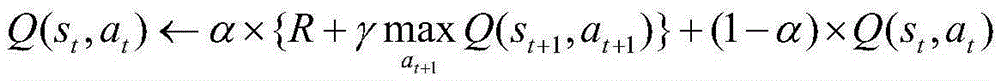

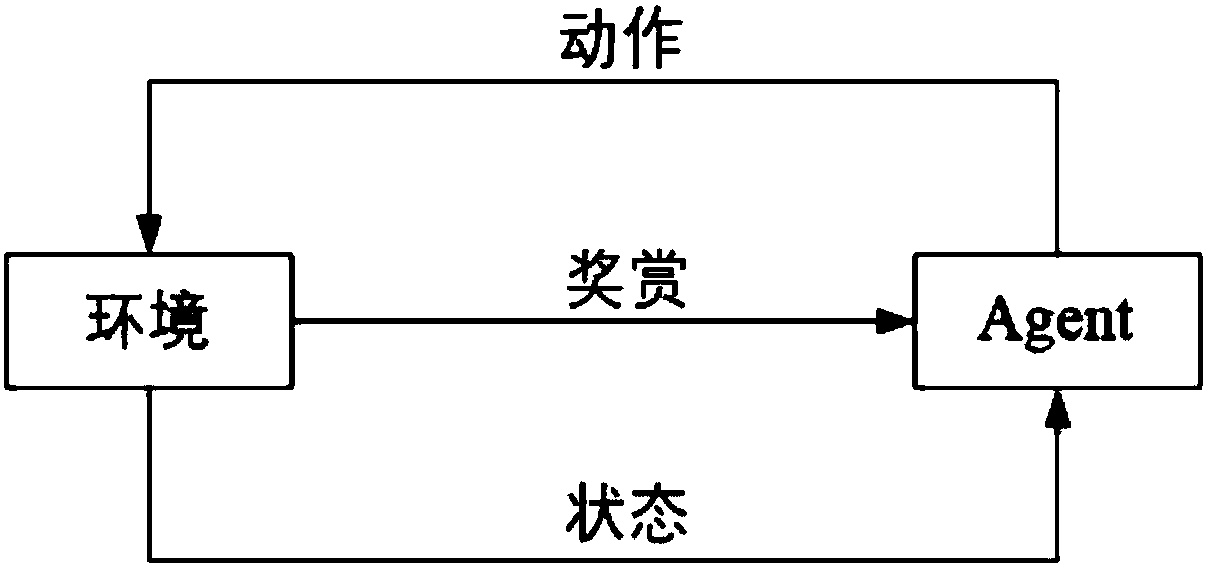

Q-learning is a simple incremental algorithm, developed from the theory of dynamic programming [Ross, 1983], for delayed reinforcement learning. In Q-learning, the policy and the value function are represented by a two-dimensional lookup table indexed by state-action pairs, which holds one value Q(s, a) for each state s and action a.
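
The one-step update that fills this table can be made concrete with a short Python sketch. It implements the standard rule Q(s, a) <- Q(s, a) + alpha * (r + gamma * max_a' Q(s', a') - Q(s, a)) with epsilon-greedy exploration; the corridor environment, the reward of 1 at the goal, and every parameter value are assumptions for illustration, not details taken from the patents listed below.

```python
import random

def q_learning_chain(n_states=5, episodes=200, alpha=0.5, gamma=0.9,
                     epsilon=0.1, seed=0):
    """Tabular Q-learning on a toy corridor (hypothetical environment).

    States 0..n_states-1; action 0 moves left, action 1 moves right.
    Entering the rightmost state gives reward 1 and ends the episode.
    """
    rng = random.Random(seed)
    goal = n_states - 1
    # Two-dimensional lookup table: Q[state][action]
    Q = [[0.0, 0.0] for _ in range(n_states)]
    for _ in range(episodes):
        s = 0
        while s != goal:
            # Epsilon-greedy selection; ties are broken at random
            if rng.random() < epsilon or Q[s][0] == Q[s][1]:
                a = rng.randrange(2)
            else:
                a = 0 if Q[s][0] > Q[s][1] else 1
            s_next = max(0, s - 1) if a == 0 else s + 1
            r = 1.0 if s_next == goal else 0.0
            # One-step Q-learning target; the terminal state has no future value
            target = r if s_next == goal else r + gamma * max(Q[s_next])
            Q[s][a] += alpha * (target - Q[s][a])
            s = s_next
    return Q

if __name__ == "__main__":
    for s, (left, right) in enumerate(q_learning_chain()):
        print(s, round(left, 3), round(right, 3))
```

After training, the right-moving action should hold the higher value in every non-goal state, so the greedy policy walks straight to the goal.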

Path planning Q-learning initial method of mobile robot

Inactive | CN102819264A | Improve learning effect; Fast convergence; Position/course control in two dimensions; Potential field; Working environment

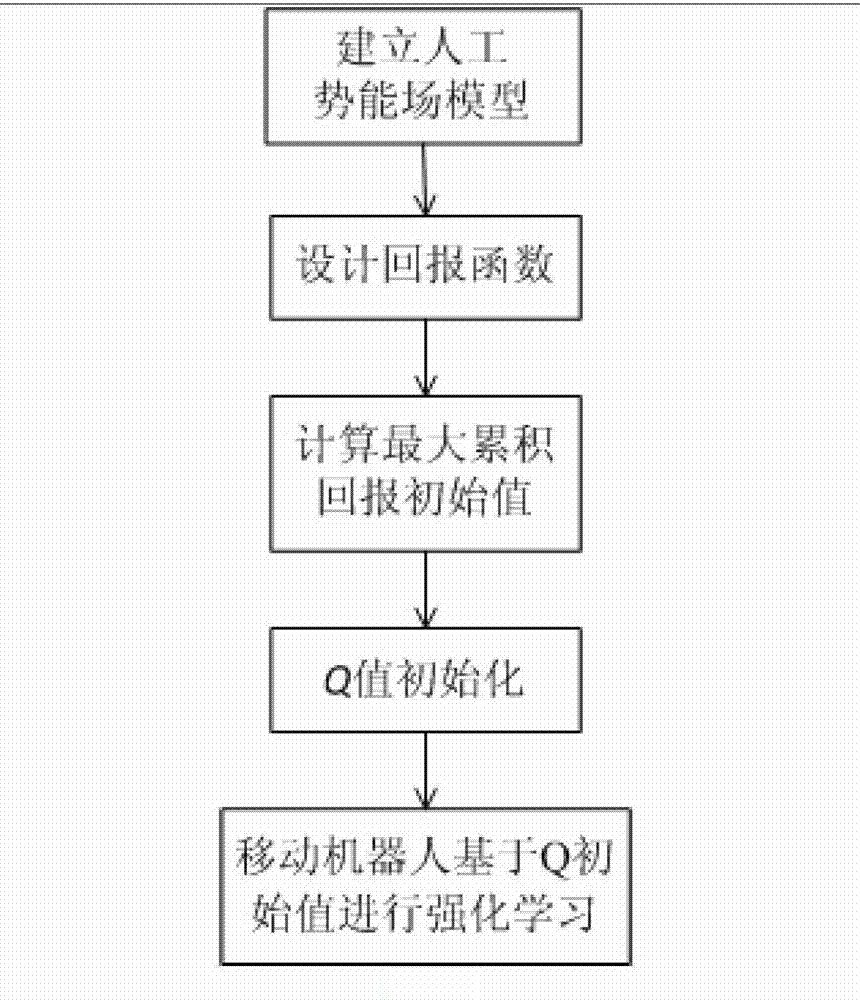

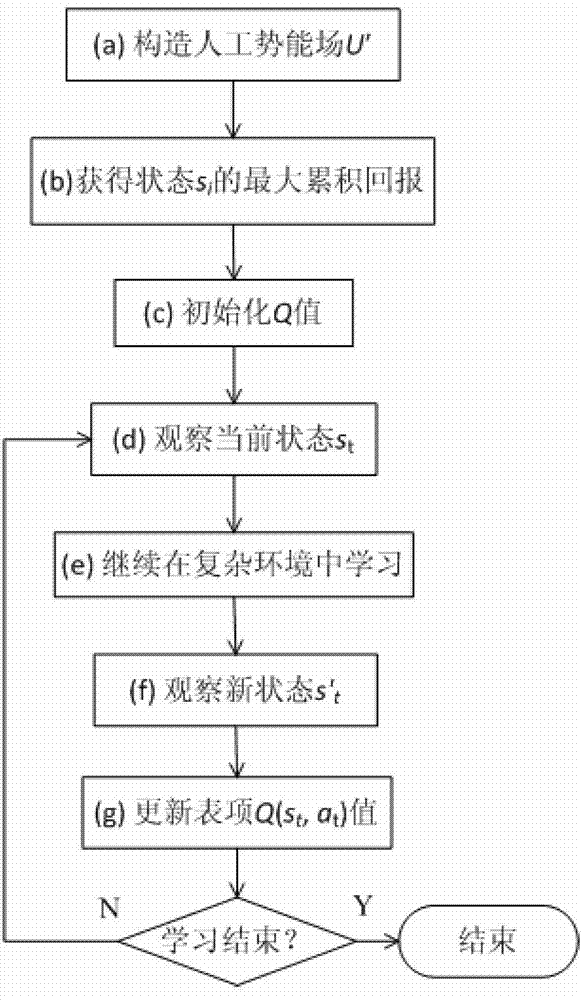

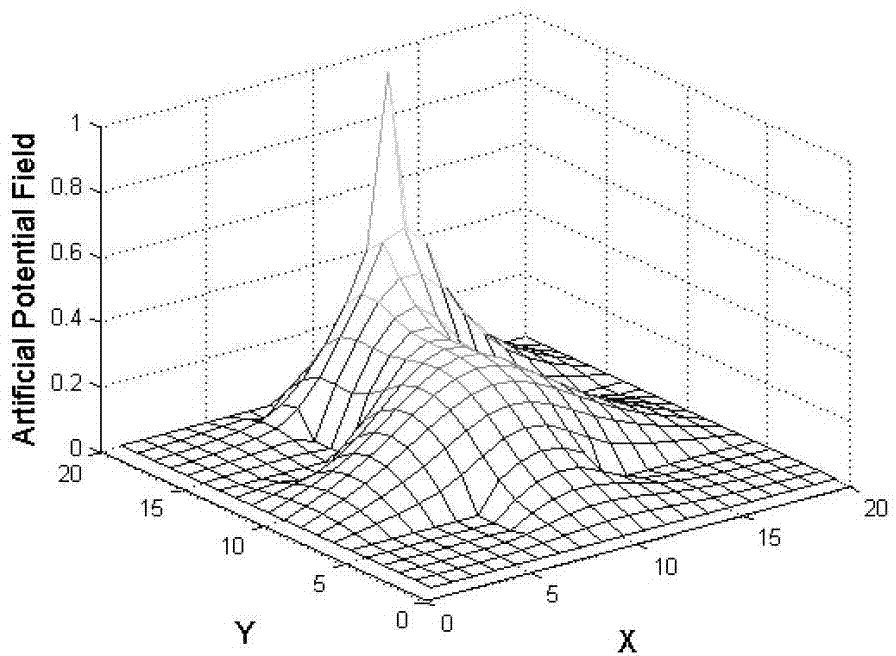

The invention discloses a reinforcement learning initialization method for a mobile robot based on an artificial potential field, and relates to a Q-learning initialization method for mobile robot path planning. The robot's working environment is modeled as a virtual artificial potential field. The potential value of every state is determined from prior knowledge, so that obstacle regions have a potential of zero and the target point has the largest potential in the whole field; the potential value of each state then represents the maximum cumulative return obtained by following the optimal policy from that state. The initial Q value is defined as the sum of the immediate return of the current state and the maximum equivalent cumulative return of the next state. The artificial potential field thus maps known environmental information to initial values of the Q function, integrating prior knowledge into the robot's learning system and improving its learning ability in the initial stage of reinforcement learning. Compared with the traditional Q-learning algorithm, the method improves learning efficiency in the initial stage, speeds up convergence, and makes the convergence process more stable.

Owner:Shandong University (Weihai)
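
A minimal Python sketch of the initialization idea in the Shandong University abstract, assuming a 4-connected grid. The abstract does not give the potential function, so the one below (the maximum value at the target, discounted by gamma per Manhattan step, zero on obstacles) is a hypothetical stand-in that ignores obstacles when measuring distance. The initial value follows the abstract: immediate return plus the discounted potential of the next state.

```python
GAMMA = 0.9
# Grid moves; a move off the grid leaves the robot in place (assumption)
ACTIONS = {"up": (0, 1), "down": (0, -1), "left": (-1, 0), "right": (1, 0)}

def potential_field(width, height, goal, obstacles, v_max=1.0, gamma=GAMMA):
    """Hypothetical potential: v_max at the goal, discounted by gamma per
    Manhattan step away from it, and zero on obstacle cells."""
    V = {}
    for x in range(width):
        for y in range(height):
            if (x, y) in obstacles:
                V[(x, y)] = 0.0
            else:
                d = abs(x - goal[0]) + abs(y - goal[1])
                V[(x, y)] = v_max * gamma ** d
    return V

def initial_q(width, height, goal, obstacles, step_reward=0.0, gamma=GAMMA):
    """Q0(s, a) = immediate reward + gamma * potential of the next state."""
    V = potential_field(width, height, goal, obstacles, gamma=gamma)
    Q0 = {}
    for (x, y) in V:
        for name, (dx, dy) in ACTIONS.items():
            nxt = (x + dx, y + dy)
            if nxt not in V:  # off the grid: stay in place
                nxt = (x, y)
            Q0[((x, y), name)] = step_reward + gamma * V[nxt]
    return Q0
```

Actions that lead toward the target start with higher values, and moves into obstacles start at zero, so early greedy choices already avoid obvious detours.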

Mobile robot path planning algorithm based on single-chain sequential backtracking Q-learning

Inactive | CN102799179A | Improve learning efficiency; Short learning time; Position/course control in two dimensions; Mobile robots path planning; Path plan

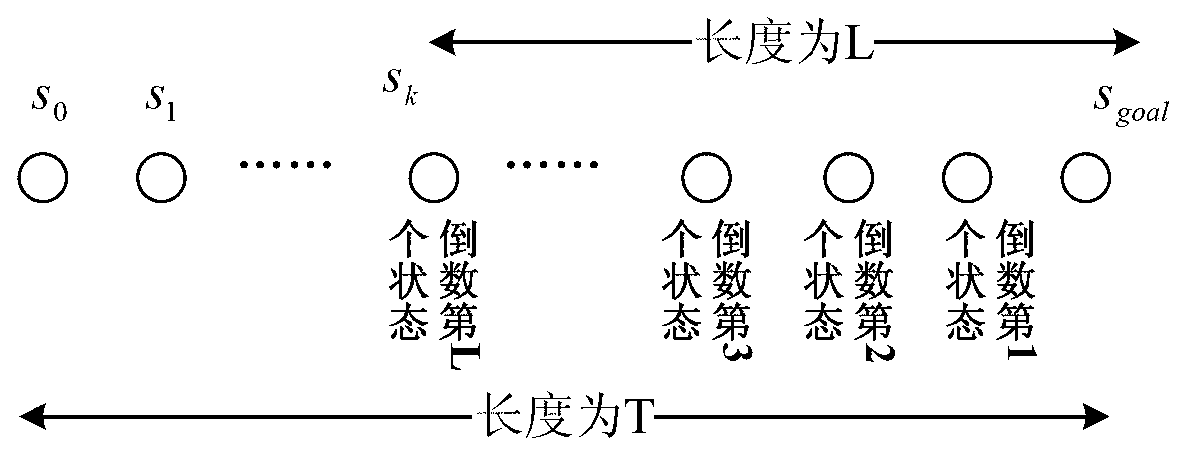

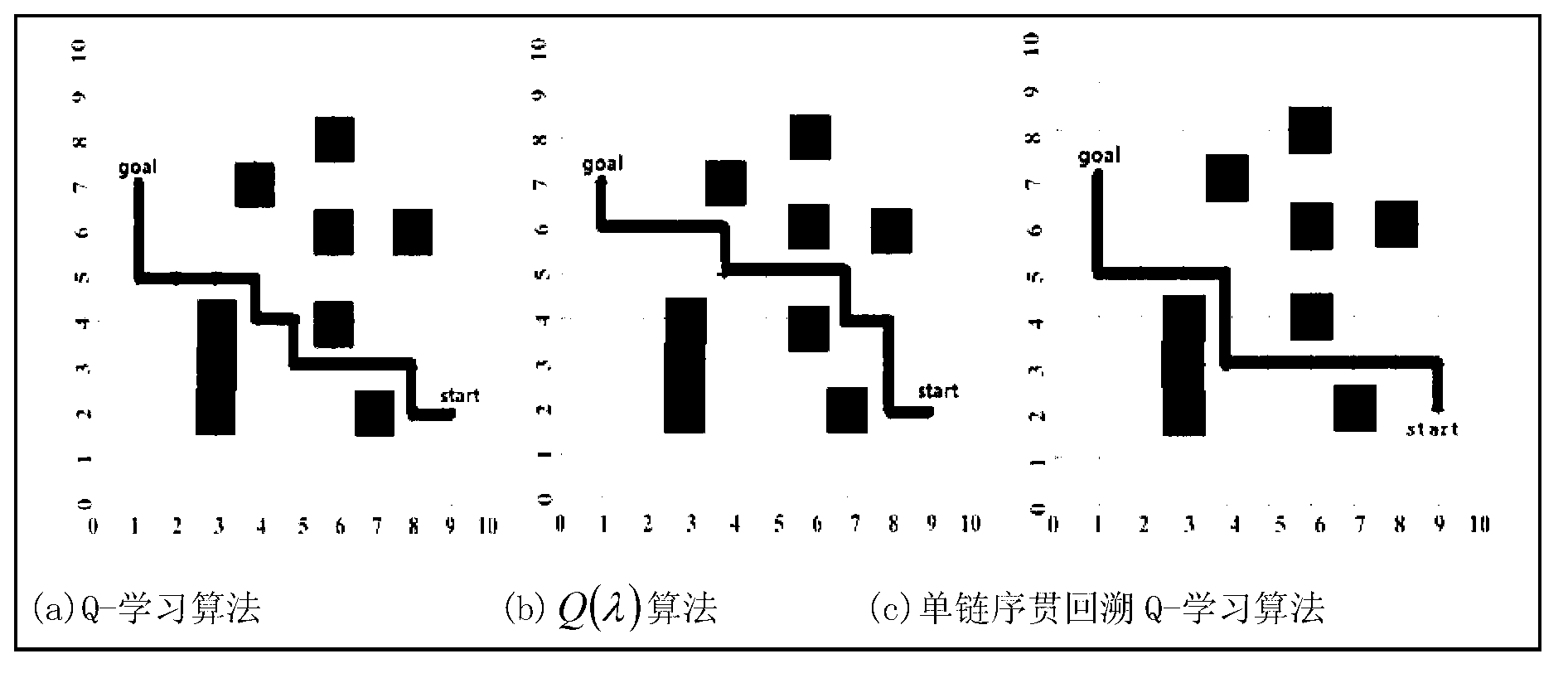

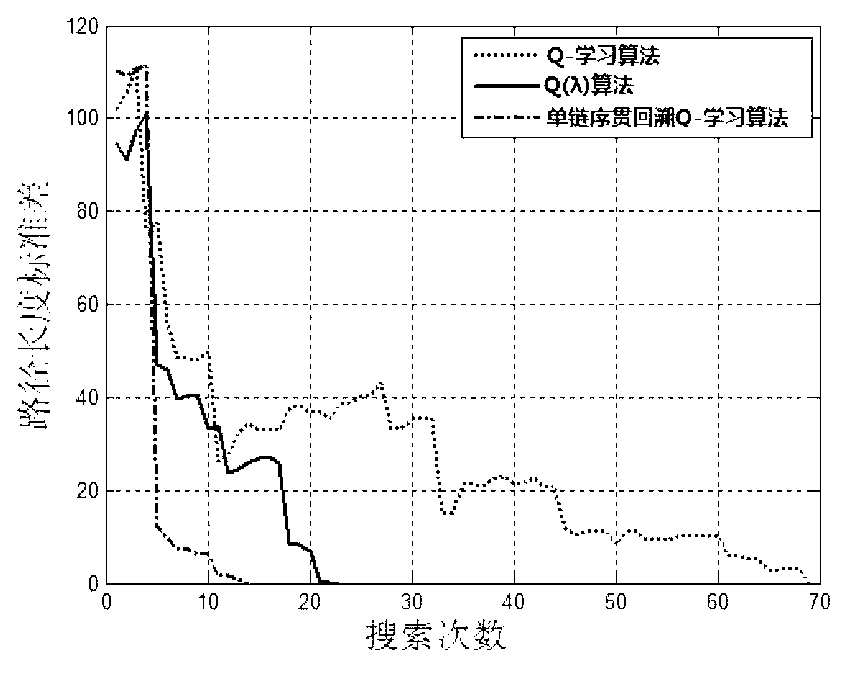

The invention provides a mobile robot path planning algorithm based on single-chain sequential backtracking Q-learning. The two-dimensional environment is represented with a grid method: each area block corresponds to a discrete location, and the state of the mobile robot at any moment is the location it occupies. Each search step of the robot is based on the Q-learning iterative formula for a nondeterministic Markov decision process, and Q values are backtracked sequentially from the tail of the single chain (the current state) to its head until the target state is reached. The robot repeatedly searches for paths from the initial state to the target state, performing each step in this way, and the Q values of the states are iterated and optimized until they converge. Compared with the classic Q-learning algorithm and the Q(lambda) algorithm, the method needs far fewer steps to find the optimal path, so learning time is shorter and learning efficiency is higher; the advantage is especially pronounced in large environments.

Owner:SHANDONG UNIV
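
The single-chain backtracking step can be sketched in Python as a reverse sweep over the transitions recorded so far, which lets a reward found at the tail of the chain reach its head in a single pass. The function name, the tuple layout, and alpha = 1.0 are assumptions made for the illustration; the patent's exact iteration formula for the nondeterministic case may differ.

```python
def backtrack_update(Q, chain, alpha=1.0, gamma=0.9):
    """Sweep Q-learning updates over a recorded chain of transitions,
    from the tail (most recent step) back to the head (first step).

    Q: list of per-state action-value lists; chain: list of
    (state, action, reward, next_state, done) tuples in visit order.
    """
    for s, a, r, s_next, done in reversed(chain):
        target = r if done else r + gamma * max(Q[s_next])
        Q[s][a] += alpha * (target - Q[s][a])
    return Q

if __name__ == "__main__":
    # Corridor 0 -> 1 -> 2 -> 3 (goal); action 1 = "right"
    Q = [[0.0, 0.0] for _ in range(4)]
    chain = [(0, 1, 0.0, 1, False), (1, 1, 0.0, 2, False), (2, 1, 1.0, 3, True)]
    backtrack_update(Q, chain)
    print([round(row[1], 3) for row in Q])  # [0.81, 0.9, 1.0, 0.0]
```

A forward sweep over the same chain would change only Q[2][1], because the earlier targets would still see zero-valued successors.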

Intensive learning based urban intersection passing method for driverless vehicle

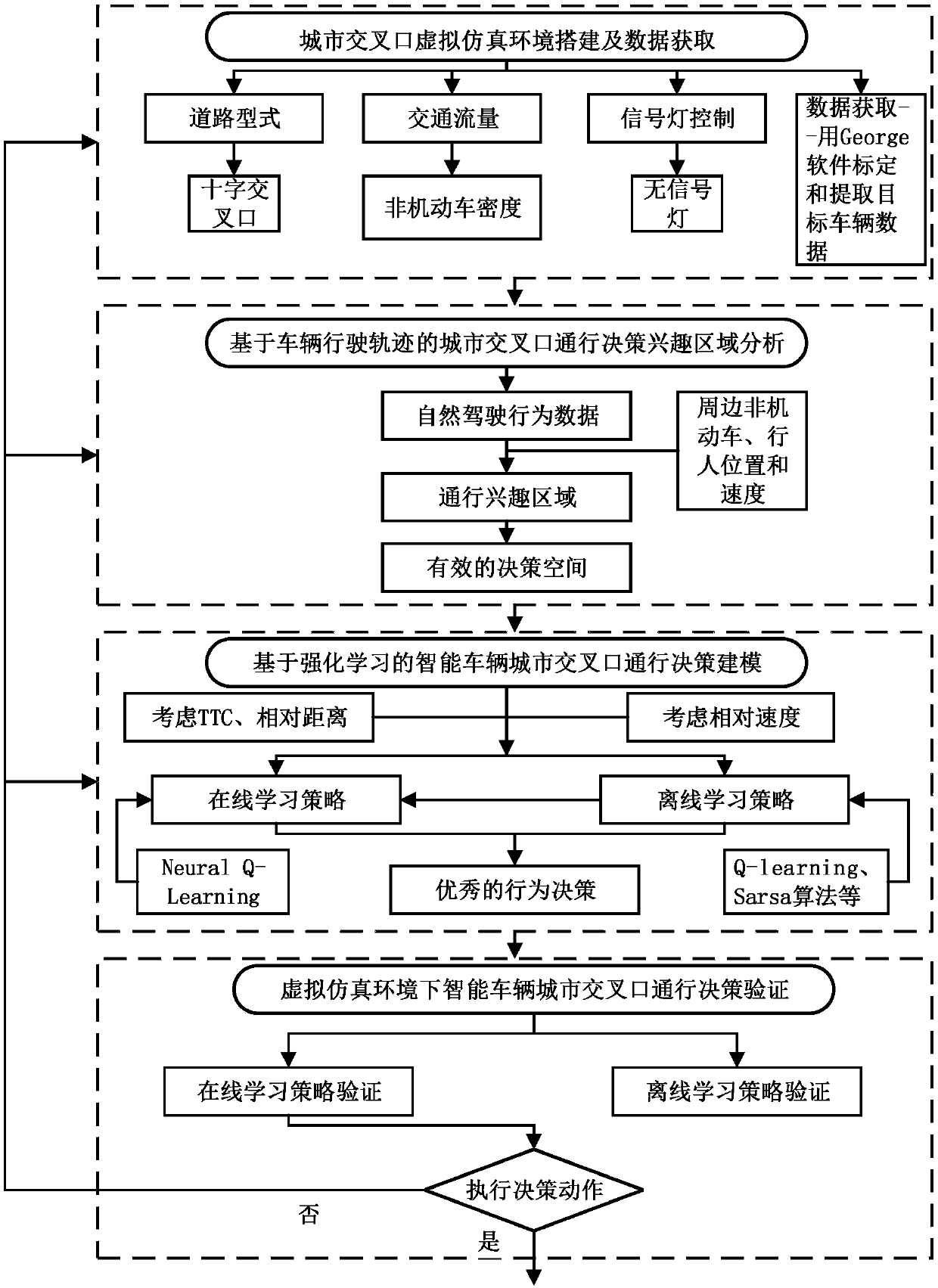

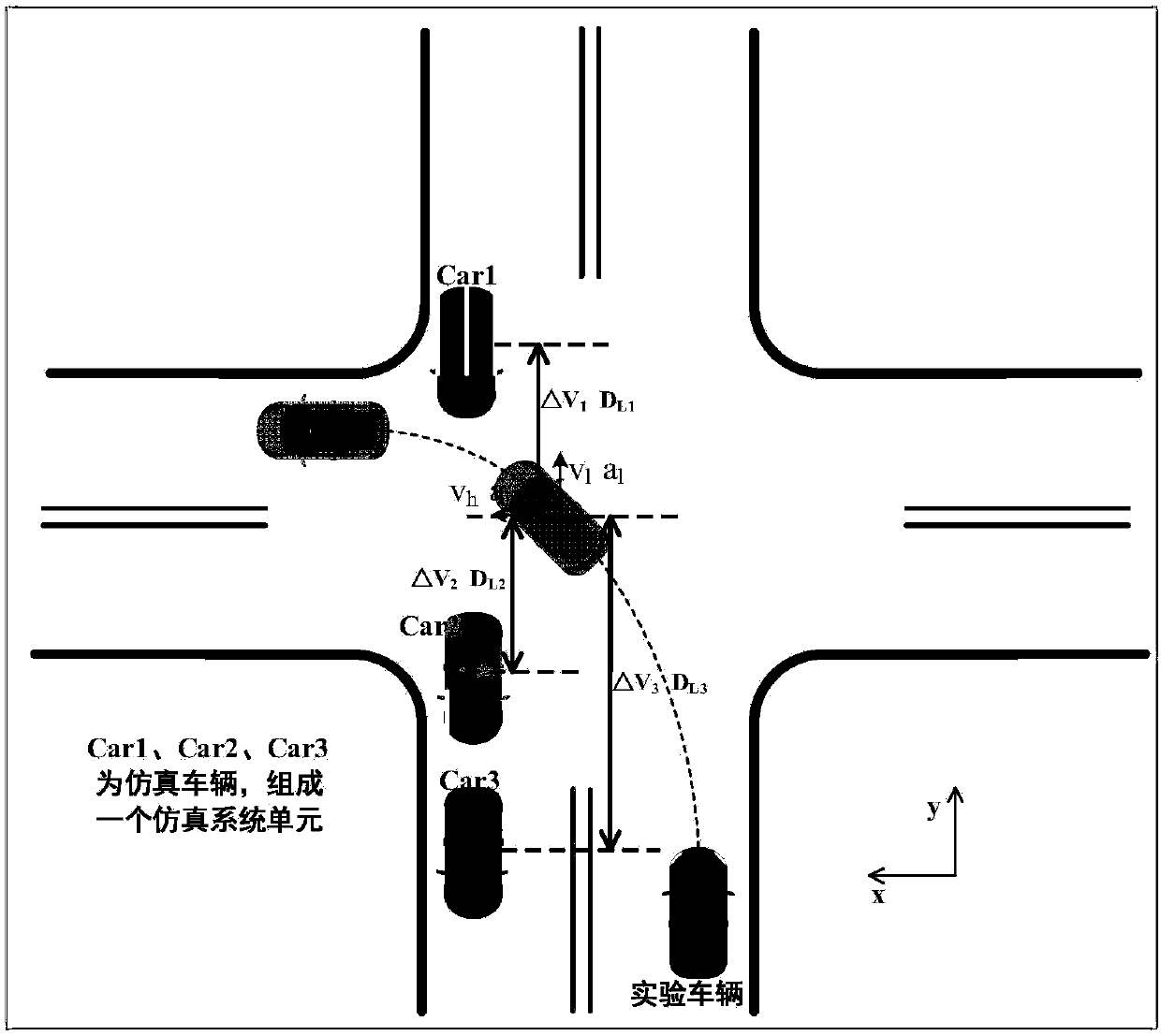

Active | CN108932840A | Improve real-time performance; Reduce the dimensionality of behavioral decision-making state space; Controlling traffic signals; Detection of traffic movement; Moving average; Learning based

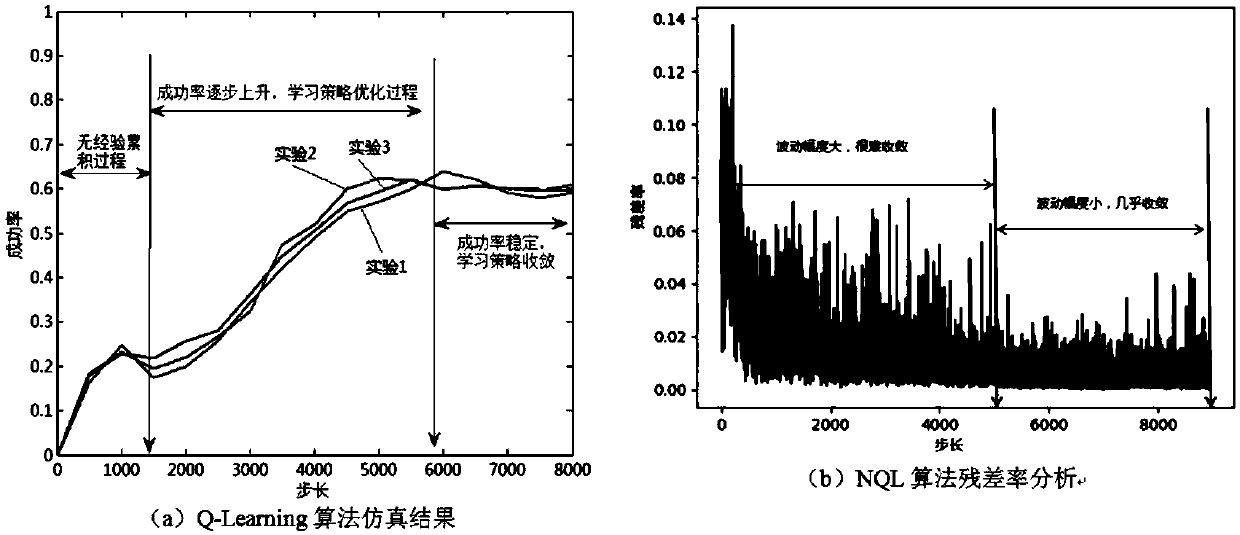

The invention discloses a reinforcement learning based method for driverless vehicles to pass through urban intersections. The method includes: step 1, collecting continuous vehicle running-state information and position information by photography, including speed, lateral speed and acceleration, longitudinal speed and acceleration, trajectory curvature, accelerator opening, and brake pedal pressure; step 2, obtaining characteristic motion trajectories and speed values of the actual data by clustering; step 3, processing the raw data with an exponentially weighted moving average; and step 4, implementing the interactive passing method with an NQL algorithm. When handling complex intersection scenes, the NQL algorithm learns markedly better than the Q-learning algorithm and achieves a better training effect in shorter training time with less training data.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
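
Step 3 of the intersection method (exponentially weighted moving-average smoothing) is simple enough to show directly. This Python sketch assumes the common recursive form with the first sample as the starting value; the smoothing factor beta and the sample data are illustrative, since the abstract gives neither.

```python
def ewma(values, beta=0.9):
    """Exponentially weighted moving average:
    m_0 = x_0, m_t = beta * m_{t-1} + (1 - beta) * x_t.
    A larger beta gives smoother output that lags the raw signal more."""
    smoothed = []
    for x in values:
        if not smoothed:
            smoothed.append(float(x))
        else:
            smoothed.append(beta * smoothed[-1] + (1 - beta) * x)
    return smoothed

if __name__ == "__main__":
    speeds = [10.0, 12.0, 30.0, 11.0, 13.0]  # hypothetical speeds (m/s) with one spike
    print([round(v, 2) for v in ewma(speeds, beta=0.8)])
```

The spike at the third sample is damped rather than passed through, which is the point of smoothing noisy trajectory data before learning.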

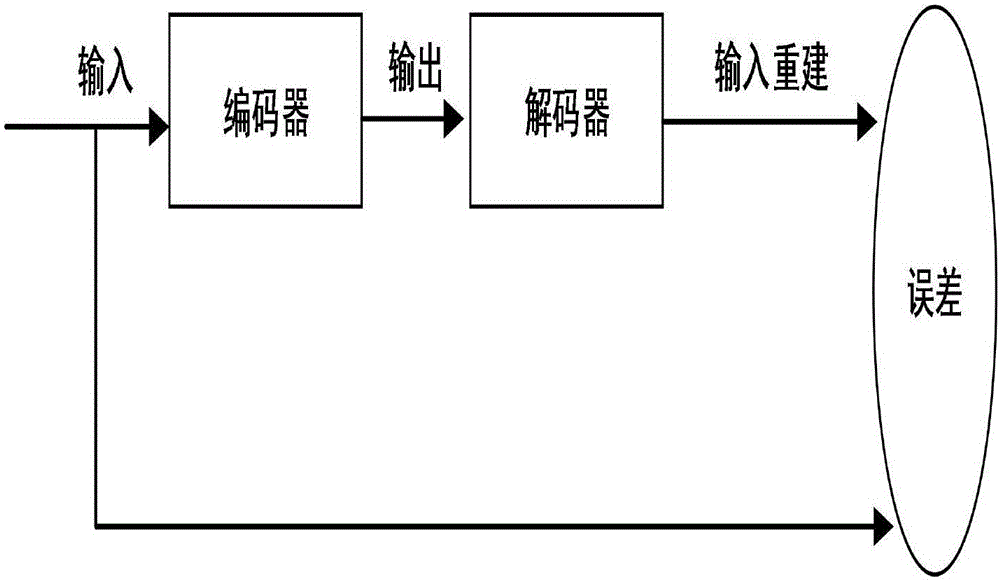

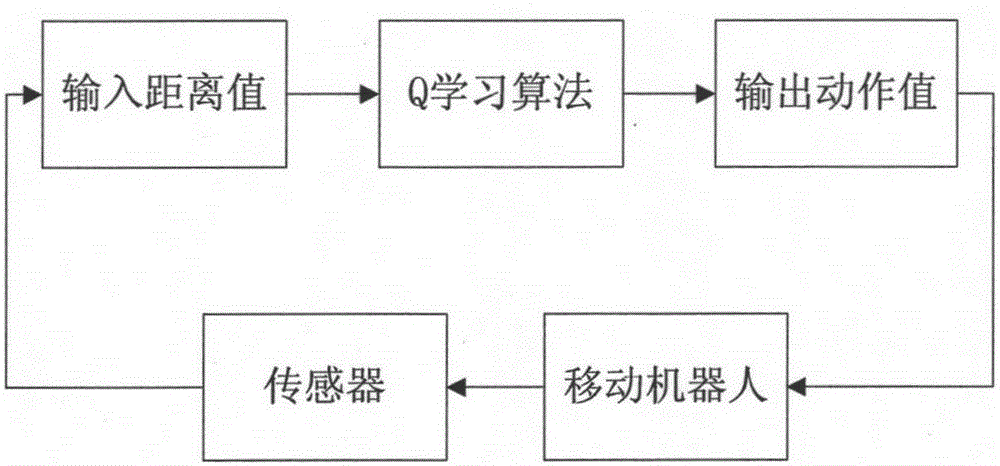

Mobile robot path planning method with combination of depth automatic encoder and Q-learning algorithm

Active | CN105137967A | Achieve cognition; Improve the ability to process images; Biological neural network models; Position/course control in two dimensions; Algorithm; Reward value

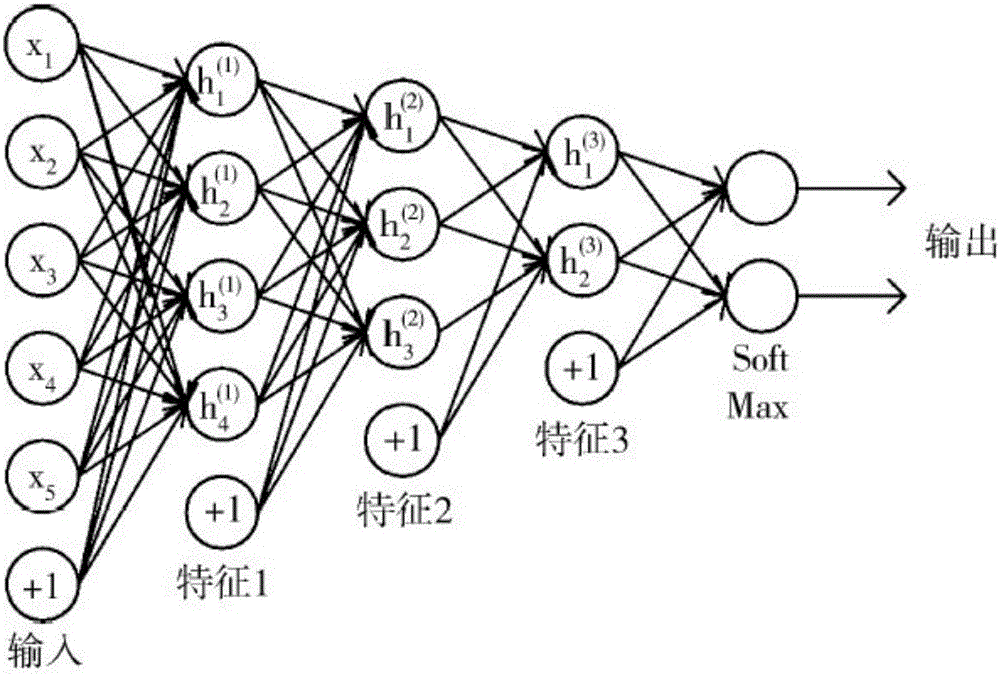

The invention provides a mobile robot path planning method that combines a deep autoencoder with the Q-learning algorithm. The method has three parts: a deep autoencoder, a BP neural network, and reinforcement learning. The deep autoencoder processes images of the robot's environment to extract features of the image data, laying the foundation for subsequent environment cognition. The BP neural network fits reward values to the image feature data, which links the deep autoencoder with reinforcement learning. With the Q-learning algorithm, the robot acquires knowledge in an action-evaluation environment through interactive learning and improves its action scheme to suit the environment and achieve the desired goal. By interacting with the environment, the robot learns autonomously and finally finds a feasible path from the start point to the end point. Combining the deep autoencoder with the BP neural network strengthens the system's image processing capability and enables environment cognition.

Owner:BEIJING UNIV OF TECH

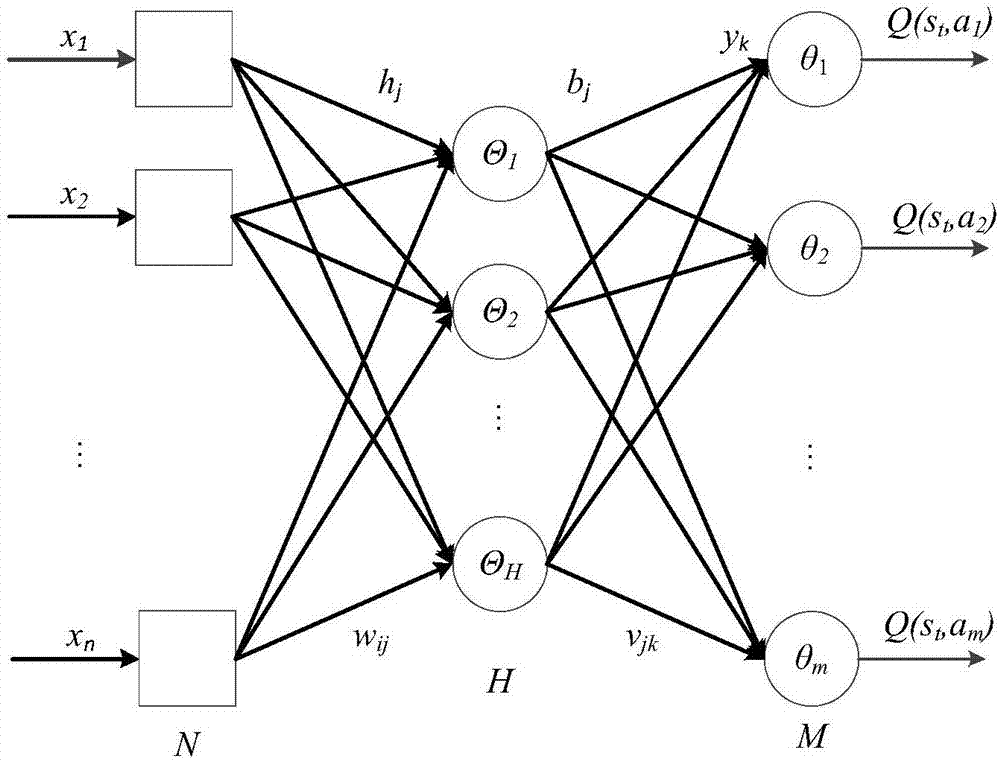

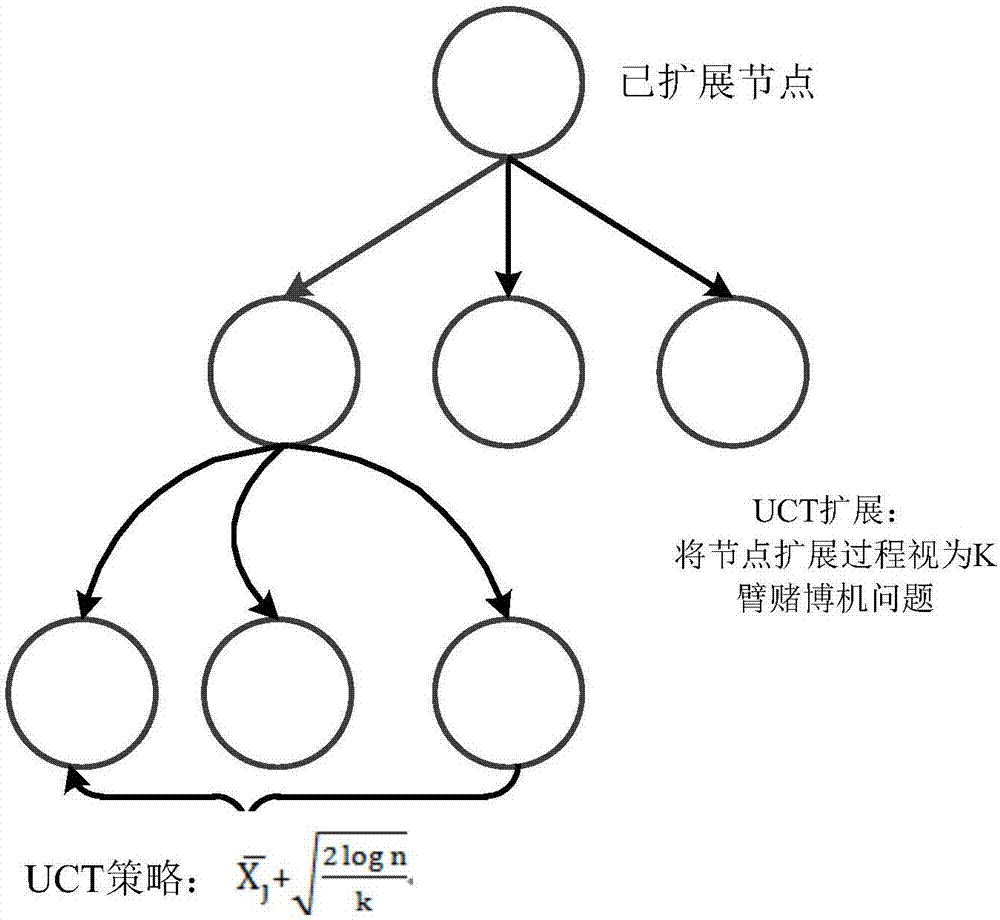

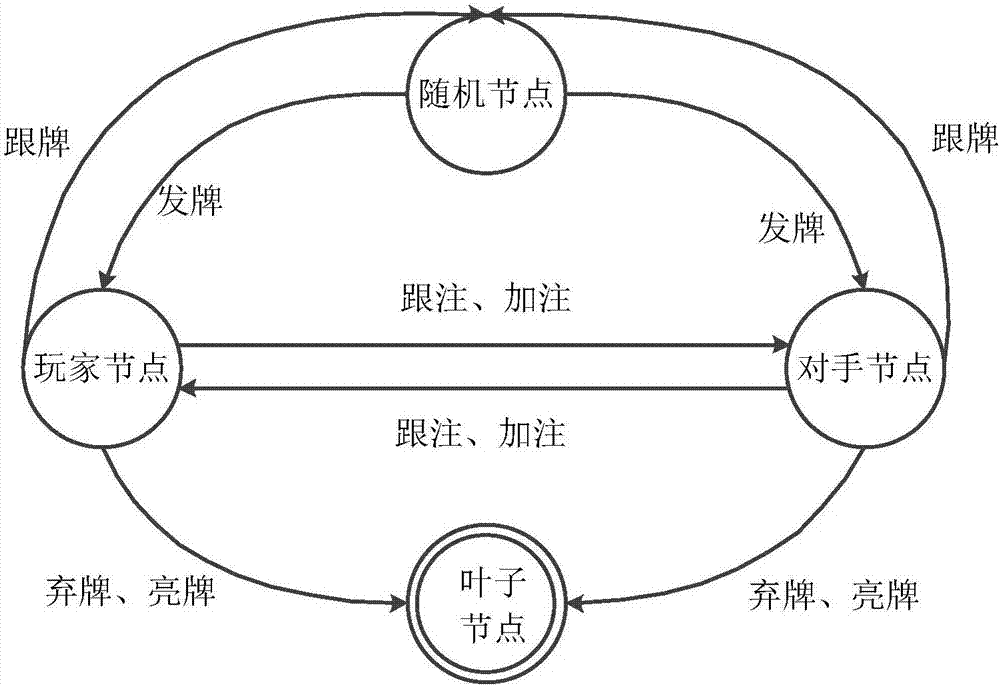

Neural network and Q learning combined estimation method under non-complete information

Inactive | CN107038477A | Improve the game level; Reasonable strategy; Inference methods; Neural learning methods; Decision model; Nerve network

The invention provides an estimation method combining a neural network and Q-learning under incomplete information. The method comprises: 1, modeling the incomplete-information problem as a partially observable Markov decision process; 2, converting the incomplete-information game into complete-information games by Monte Carlo sampling; 3, computing the delayed Q-learning return with three algorithms: a Q-learning algorithm based on the previous n steps, an algorithm combining a neural network with Q-learning, and UCT (Upper Confidence bounds applied to Trees); 4, fusing the Q values obtained in the previous step to obtain the final result. The method can be applied to various incomplete-information games, such as Chinese poker and Texas Hold'em, and raises the playing level of the agent. Compared with conventional related research, the method greatly improves precision.

Owner:HARBIN INST OF TECH SHENZHEN GRADUATE SCHOOL

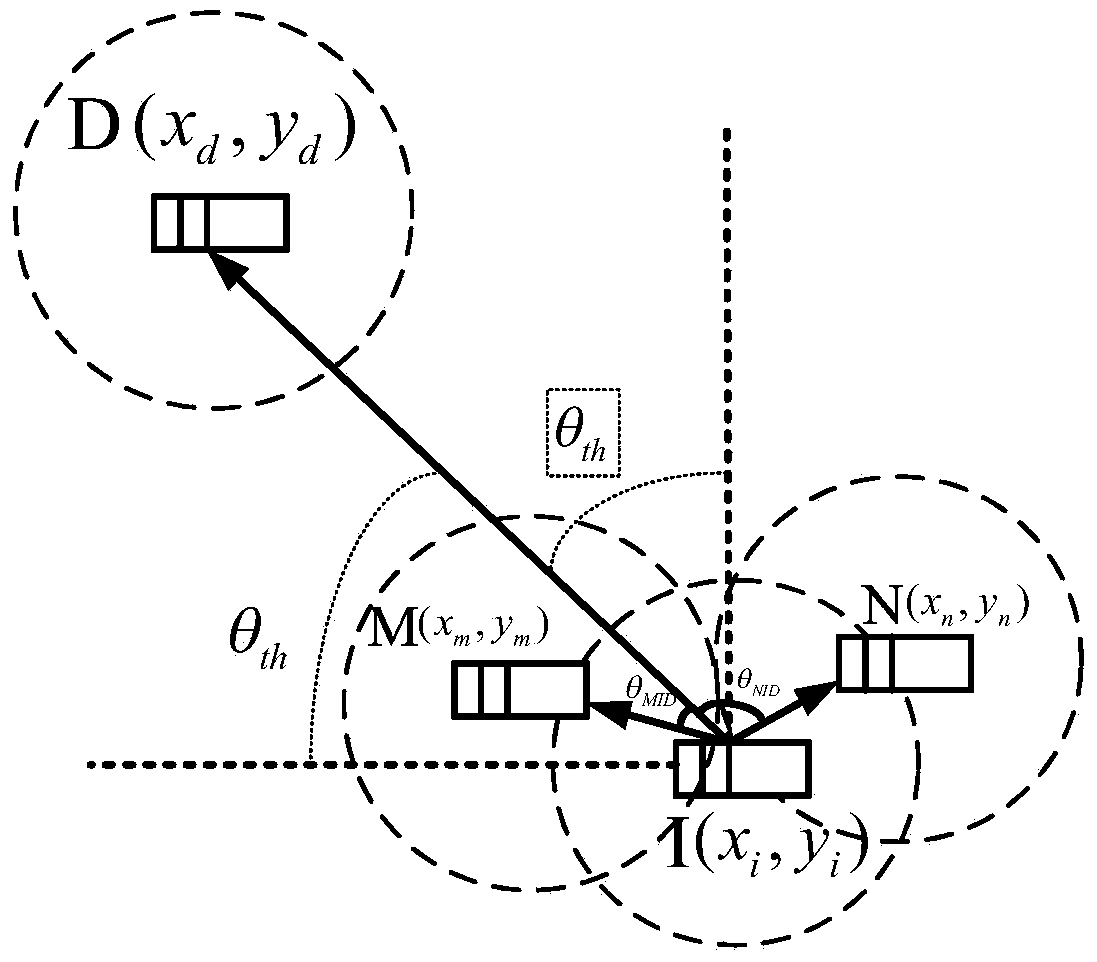

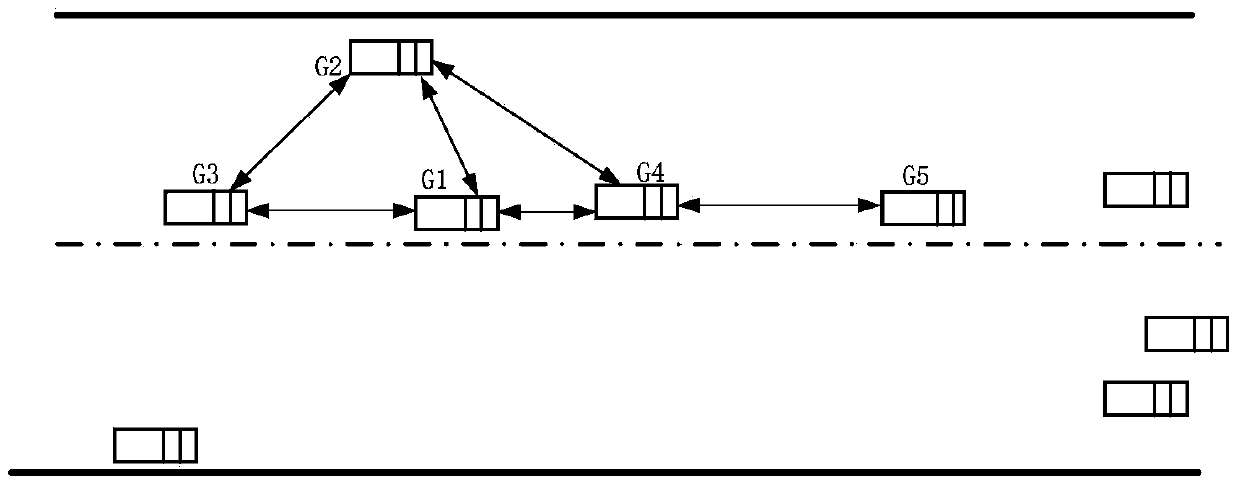

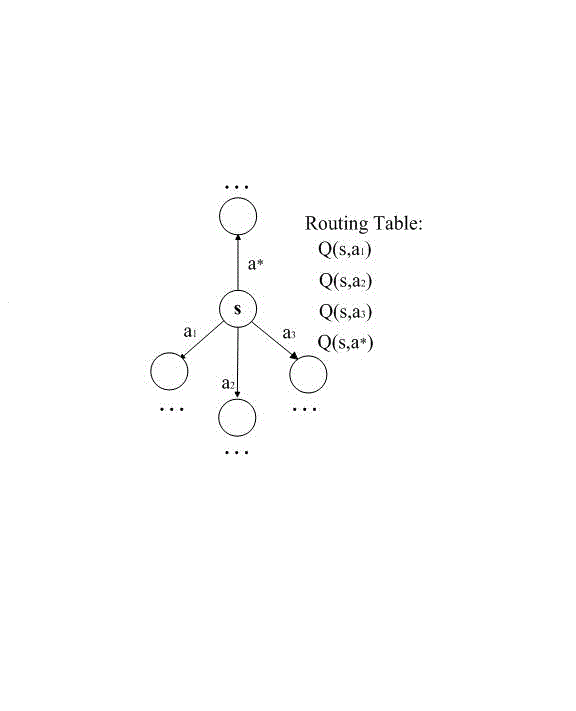

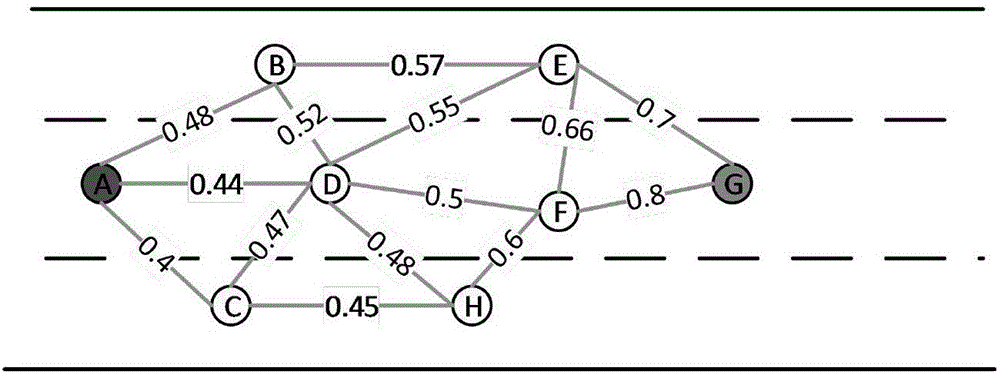

Social network-based vehicle-mounted self-organization network routing method

Active | CN103702387A | Reduce the number of retweets; Arrive quickly; Wireless communication; NODAL; Social network

The invention discloses a social network-based routing method for vehicular ad hoc networks and belongs to the technical field of vehicular wireless networks. The method comprises: (1) using neighbor node information to calculate the direction angles and effective values of nodes; (2) applying a greedy algorithm with a cache mechanism to nodes on road segments, while a node at an intersection selects as next-hop relay the neighbor, within an angle threshold, whose effective value is the largest and exceeds that of the current node; (3) letting vehicle nodes learn from their own historical forwarding actions with a Q-learning algorithm that assists the routing algorithm, each node selecting as next-hop forwarder the neighbor for which the reward function reaches the maximum converged value. The method lowers the complexity of the routing algorithm and the system cost; because Q-learning assists route selection, data packets travel along the path with the fewest hops, which reduces delay. The packet delivery rate increases, while end-to-end delay and system resource consumption decrease.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
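
The intersection rule in step (2) of the routing method can be sketched in Python as follows. How the patent computes direction angles and effective values is not stated in the abstract, so the effective values are taken as given inputs, the angle is measured between the node-to-neighbor and node-to-destination vectors, and the 45-degree threshold is an assumption. Returning None marks the case where no neighbor qualifies, where the caller would need a fallback such as holding the packet.

```python
import math

def angle_between(origin, a, b):
    """Angle in degrees between the vectors origin->a and origin->b."""
    ax, ay = a[0] - origin[0], a[1] - origin[1]
    bx, by = b[0] - origin[0], b[1] - origin[1]
    na, nb = math.hypot(ax, ay), math.hypot(bx, by)
    if na == 0 or nb == 0:
        return 0.0
    cos_theta = (ax * bx + ay * by) / (na * nb)
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_theta))))

def next_hop(current, current_value, neighbors, destination, angle_threshold=45.0):
    """Pick the neighbor with the largest effective value that exceeds the current
    node's value and lies within angle_threshold of the destination direction.

    neighbors: list of (node_id, position, effective_value) tuples.
    Returns the chosen node_id, or None if no neighbor qualifies.
    """
    best_id, best_value = None, None
    for node_id, pos, value in neighbors:
        if value <= current_value:
            continue  # must improve on the current node
        if angle_between(current, pos, destination) > angle_threshold:
            continue  # outside the angle threshold
        if best_value is None or value > best_value:
            best_id, best_value = node_id, value
    return best_id
```

For example, with the current node at (0, 0) heading to (10, 0), a neighbor at (0, 5) is rejected even with a high effective value because it lies 90 degrees off the destination direction.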

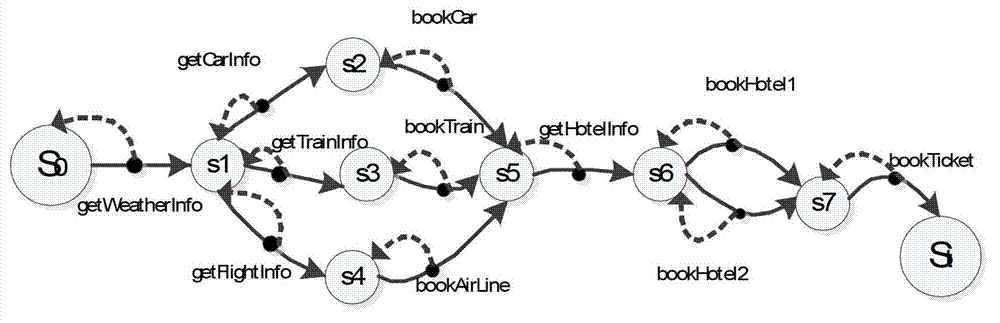

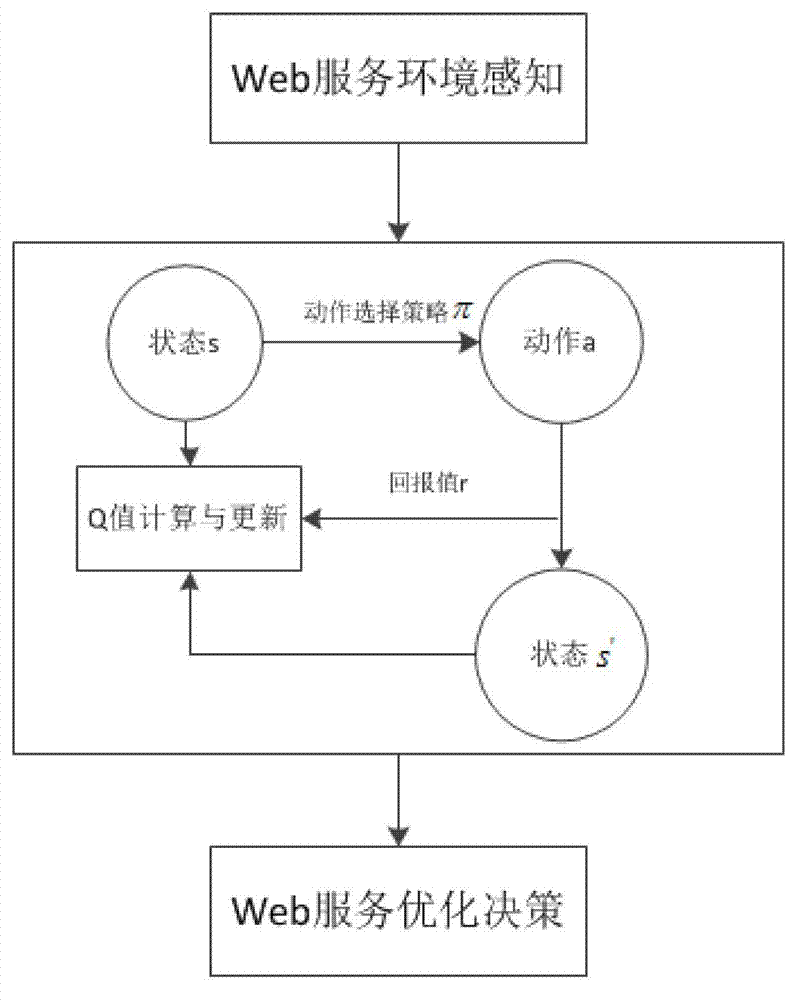

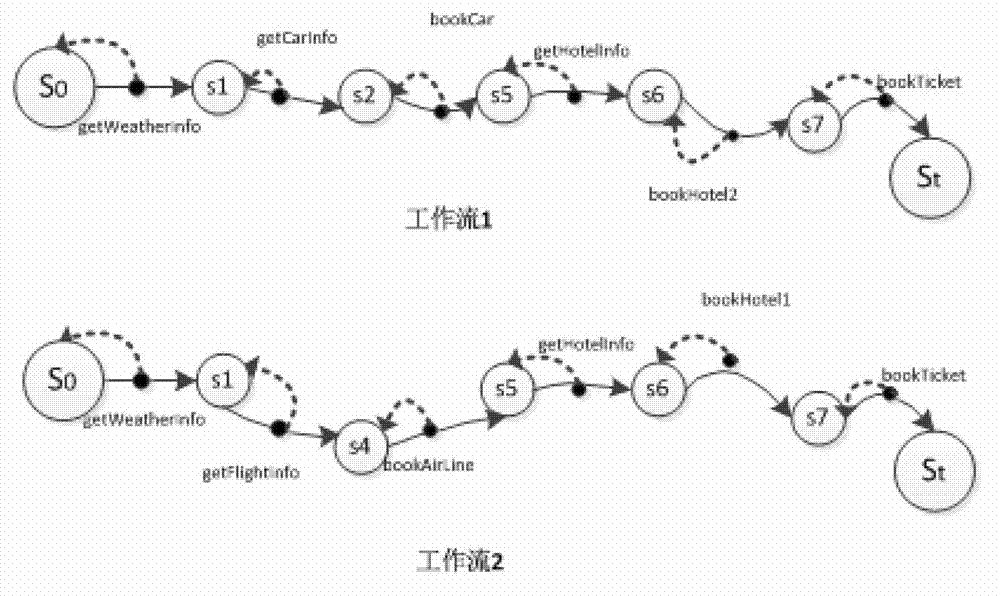

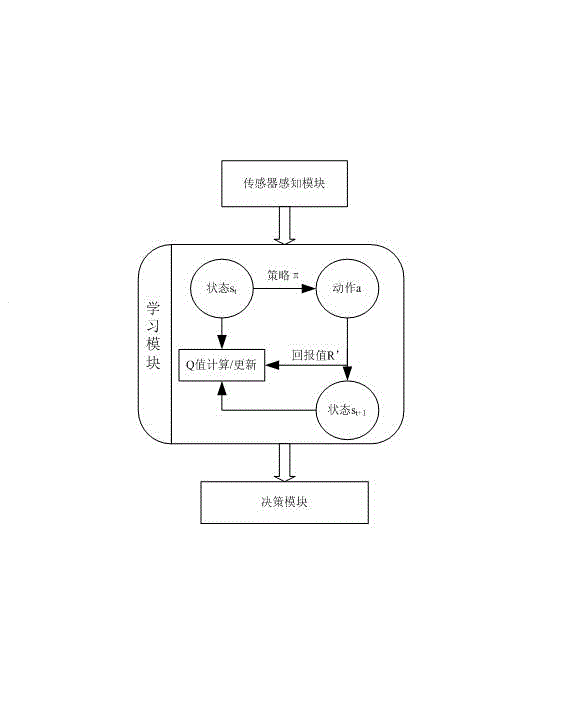

Large-scale self-adaptive composite service optimization method based on multi-agent reinforced learning

Inactive | CN103248693A | Add coordinator; Meet individual needs; Transmission; Composite services; Combinatorial optimization

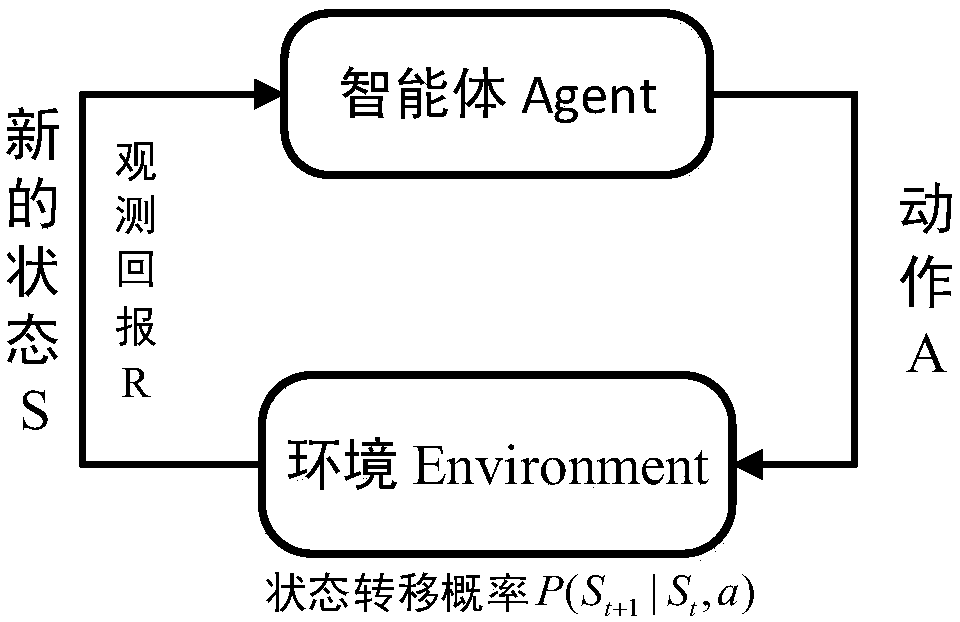

The invention discloses an adaptive composite service optimization method based on multi-agent reinforcement learning. The method combines the concepts of reinforcement learning and agents: the state set is defined as the preconditions and postconditions of services, and the action set as the Web services. The Q-learning parameters, namely the learning rate, the discount factor, and the Q values, are initialized. Each agent performs one composite optimization task; it perceives the current state and selects the best action in that state according to the action selection policy. Q values are computed and updated according to the Q-learning algorithm; until the Q values converge, a new learning round starts after each round ends, and the optimal policy is finally obtained. Because the method works out the corresponding adaptive action policy online as the environment changes, it offers greater flexibility, adaptability, and practical value.

Owner:SOUTHEAST UNIV

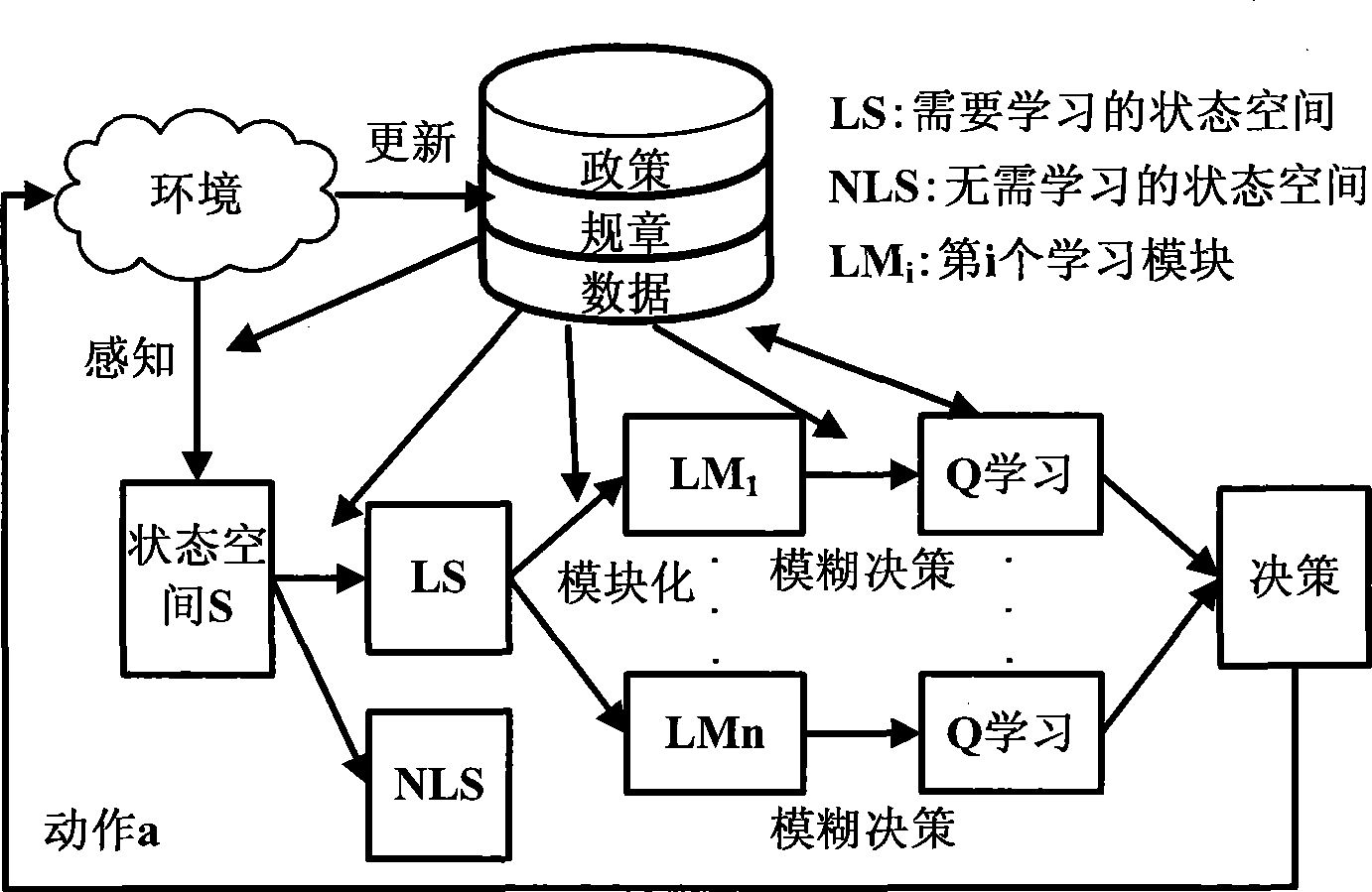

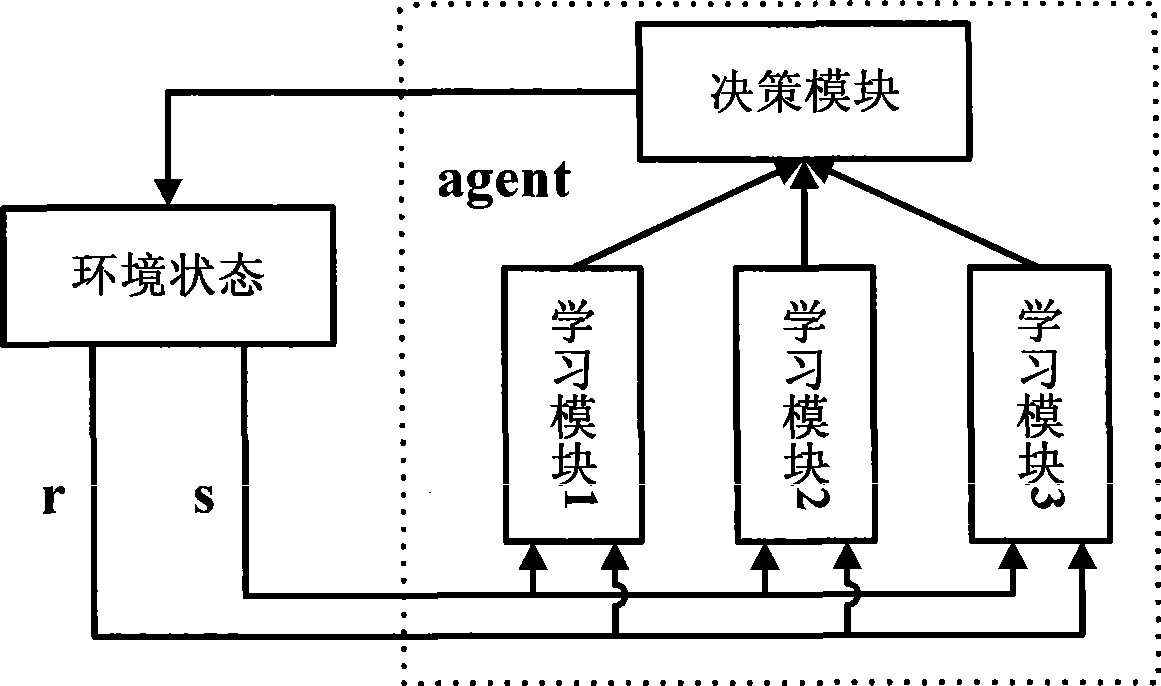

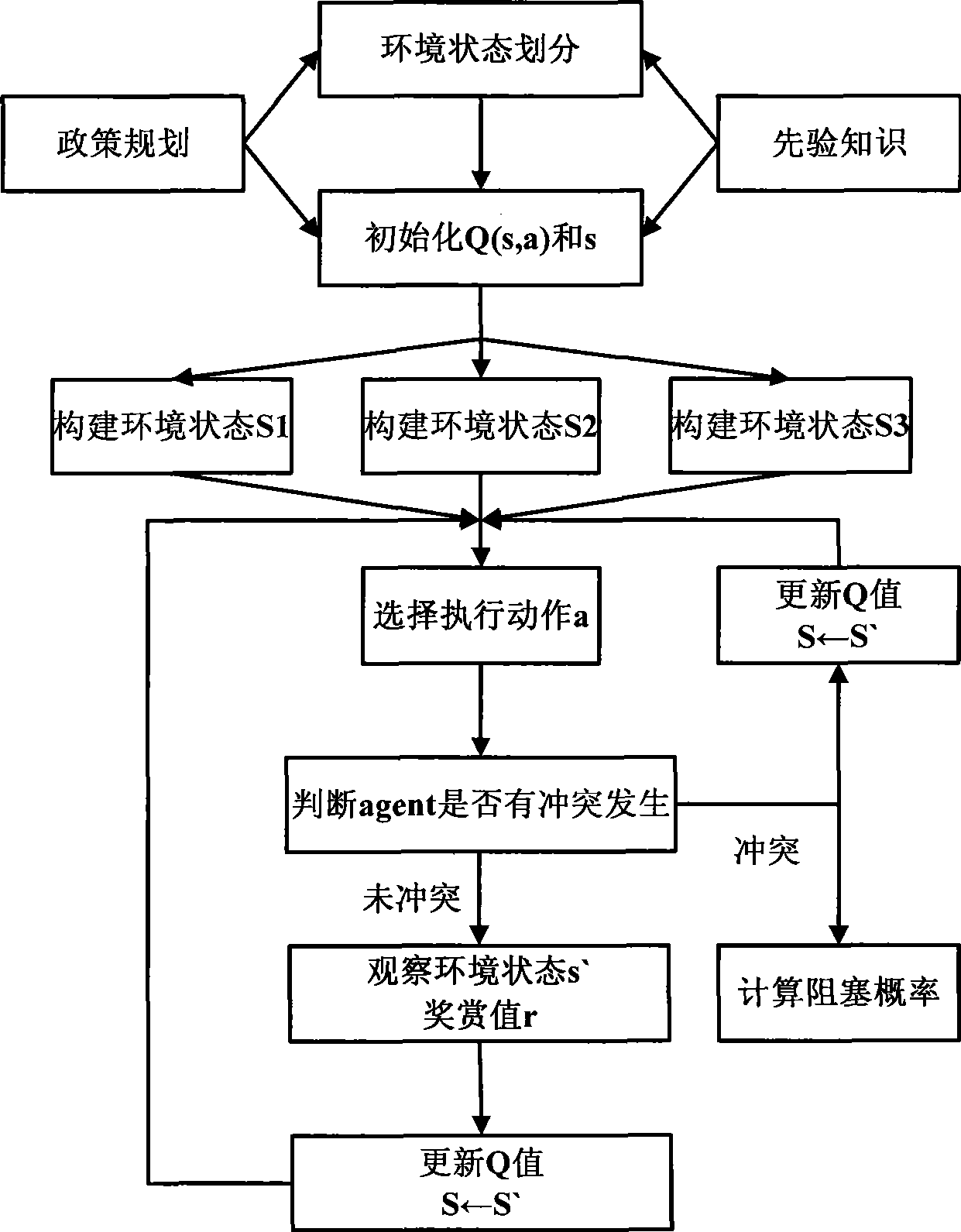

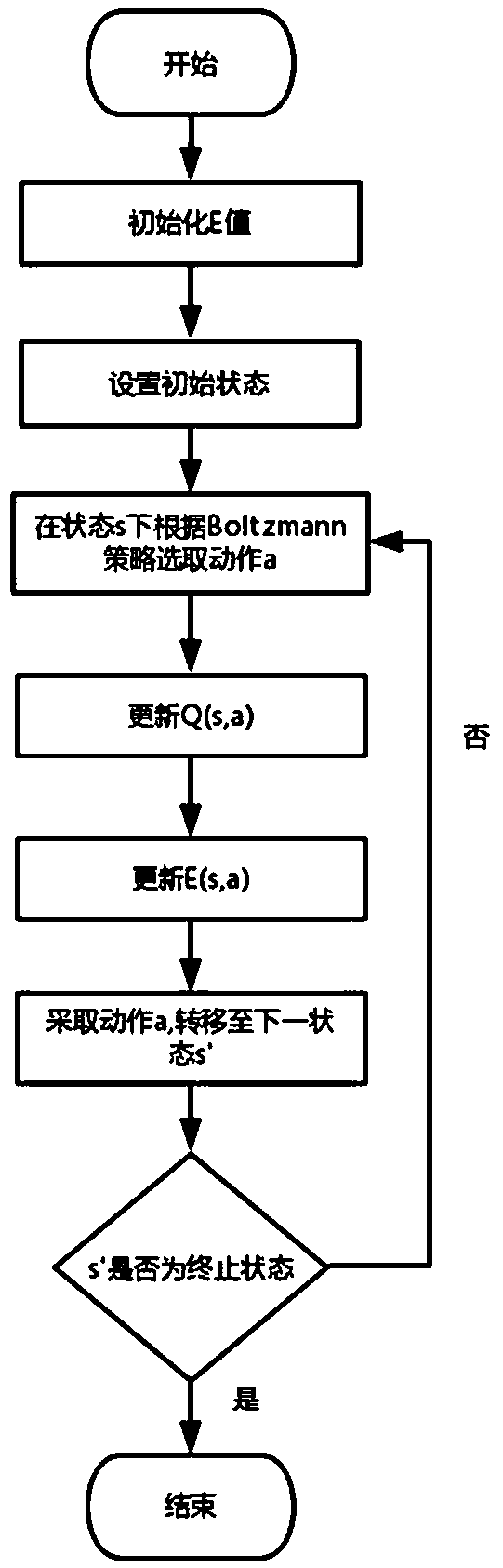

Dynamic spectrum access method based on policy planning constrain Q study

Inactive | CN101466111A | Avoid blindness; Improve learning efficiency; Wireless communication; Propagation channels monitoring; Cognitive user; Frequency spectrum

The invention provides a dynamic spectrum access method based on Q-learning constrained by policy planning, with the following steps: the cognitive user partitions the spectrum state space and selects its reasonable and legal part; the state space is ranked and modularized; each ranked module initializes its Q table before Q-learning starts; each module runs the Q-learning algorithm independently, selecting actions according to the learning rule; the action finally taken by the cognitive user is decided by jointly considering all learning modules; the method checks whether the selected spectrum conflicts with licensed users, and if so computes the collision probability, otherwise proceeds to the next step; it then checks whether the environmental policy-planning knowledge base has changed, and if so updates the knowledge base and adjusts the learned Q values; these steps repeat until learning converges. The method improves overall system performance, overcomes the agent's blind learning, raises learning efficiency, and speeds up convergence.

Owner:COMM ENG COLLEGE SCI & ENGINEERING UNIV PLA
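
The abstract leaves open how the learning modules are combined into the cognitive user's final action. One common choice in modular Q-learning is greatest-mass arbitration, sketched below in Python purely as an assumption: each module's Q values for the candidate actions (here, hypothetical channels) are summed, and the action with the largest total is taken.

```python
def combine_modules(module_q, actions):
    """Greatest-mass arbitration (an assumed choice): sum each action's Q value
    over all learning modules and return the action with the largest total.

    module_q: list of dicts mapping action -> Q value, one dict per module.
    A module that has no entry for an action contributes 0 for it.
    """
    totals = {a: sum(q.get(a, 0.0) for q in module_q) for a in actions}
    best = max(actions, key=lambda a: totals[a])
    return best, totals

if __name__ == "__main__":
    modules = [{"ch1": 0.2, "ch2": 0.5}, {"ch1": 0.6, "ch2": 0.1}]
    print(combine_modules(modules, ["ch1", "ch2"]))
```

Summing lets a module with a strong preference outweigh one that is nearly indifferent; other arbitration rules (for example, letting the most confident module decide) would be equally plausible readings of the abstract.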

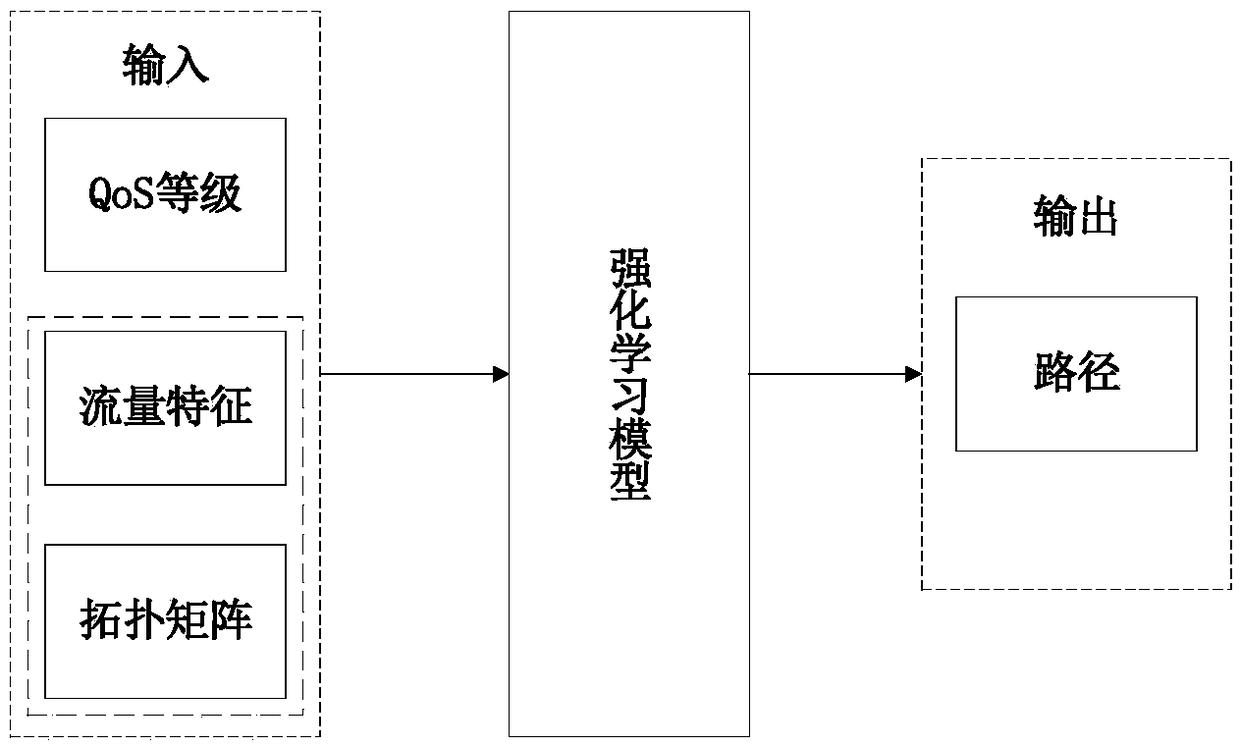

SDN route planning method based on reinforcement learning

Active | CN109361601A | Increase profit; Reduce congestion; Data switching networks; Traffic characteristic; Reward value

The invention discloses an SDN route planning method based on reinforcement learning. The method comprises: building, on the SDN control plane, a reinforcement learning model that generates routes with Q-learning; designing the reward function of the Q-learning algorithm so that traffic of different QoS levels yields different reward values; and feeding the current network topology matrix, the traffic characteristics, and the traffic QoS levels into the model for training, so that route planning is differentiated by traffic and the shortest forwarding path that meets the QoS requirements is found for each flow. By exploiting continuous learning, interaction with the environment, and policy adjustment, the method achieves higher link utilization and reduces network congestion more effectively than the Dijkstra algorithm commonly used in traditional route planning.

Owner:ZHEJIANG GONGSHANG UNIVERSITY

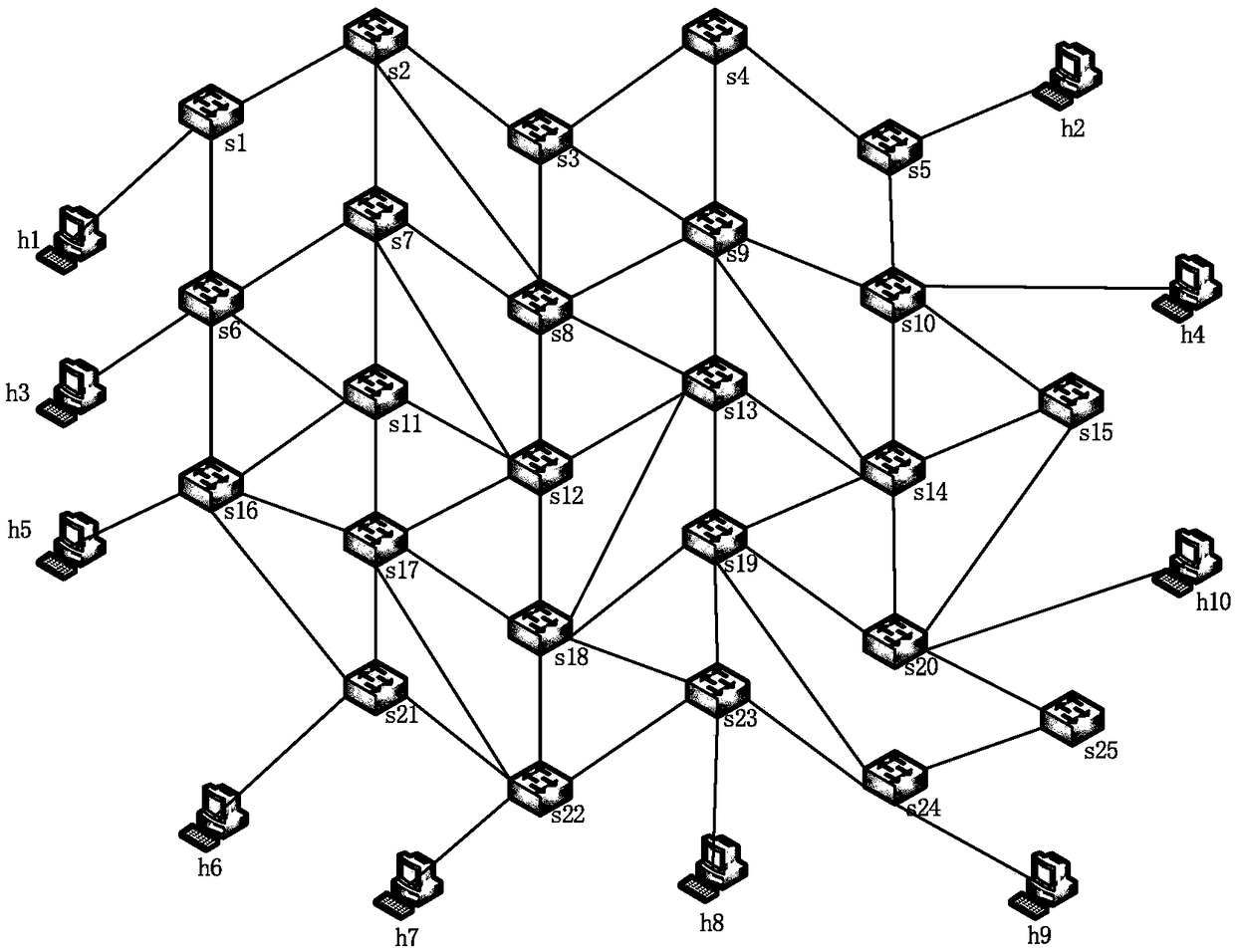

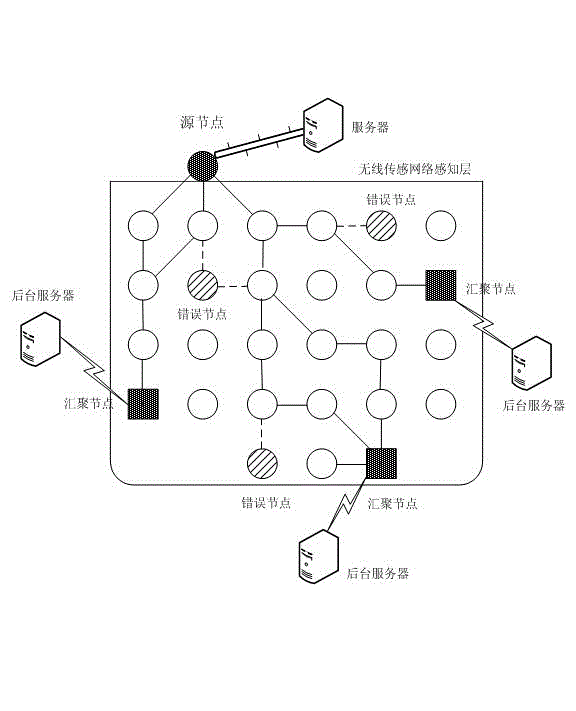

Internet of things (IoT) error sensor node location method based on improved Q learning algorithm

Active | CN102868972A | Reduce distance; Even consumption; Energy efficient ICT; Network topologies; Sensor node; Self adaptive

The invention discloses an Internet of Things (IoT) faulty sensor node localization method based on an improved Q-learning algorithm. The traditional Q-learning method is modified so that the computed Q values adapt to characteristic information such as the sensor node's residual energy, routing, and transmission hop count, and a routing path is built along the maximal Q values. Meanwhile, a background server derives the network topology. When a node is attacked or produces erroneous data, an error range is set by comparison with the node's Q value in the next period; if the range is exceeded, the node is judged to be faulty and is located. The method consumes no extra sensor-node energy and remains robust when the topology of the wireless sensor network changes. It is intelligent, energy-efficient, and highly adaptive; it can be used not only for routing, localization, and energy-consumption evaluation of sensor nodes but also for accurately locating unknown faulty nodes, so it has broad application value.

Owner:Jiangsu Nanrui Technology Co., Ltd.
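
The fault-detection rule can be sketched as a comparison between consecutive periods. The abstract does not define the error range, so this Python sketch assumes a relative-change threshold on each node's Q value; the node names and numbers are hypothetical.

```python
def find_error_nodes(q_prev, q_next, tolerance=0.2):
    """Flag nodes whose Q value moved outside an error range between two
    periods. The range here is a relative change of `tolerance` (assumed)."""
    flagged = []
    for node, old in q_prev.items():
        new = q_next.get(node)
        if new is None:
            continue  # node not reported in the next period
        scale = max(abs(old), 1e-9)  # avoid division by zero
        if abs(new - old) / scale > tolerance:
            flagged.append(node)
    return sorted(flagged)

if __name__ == "__main__":
    prev = {"n1": 1.0, "n2": 0.5, "n3": 0.8}
    nxt = {"n1": 1.05, "n2": 0.9, "n3": 0.8}
    print(find_error_nodes(prev, nxt))
```

A relative threshold keeps the test meaningful for nodes whose Q values differ in scale, for example nodes at different hop distances from the sink.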

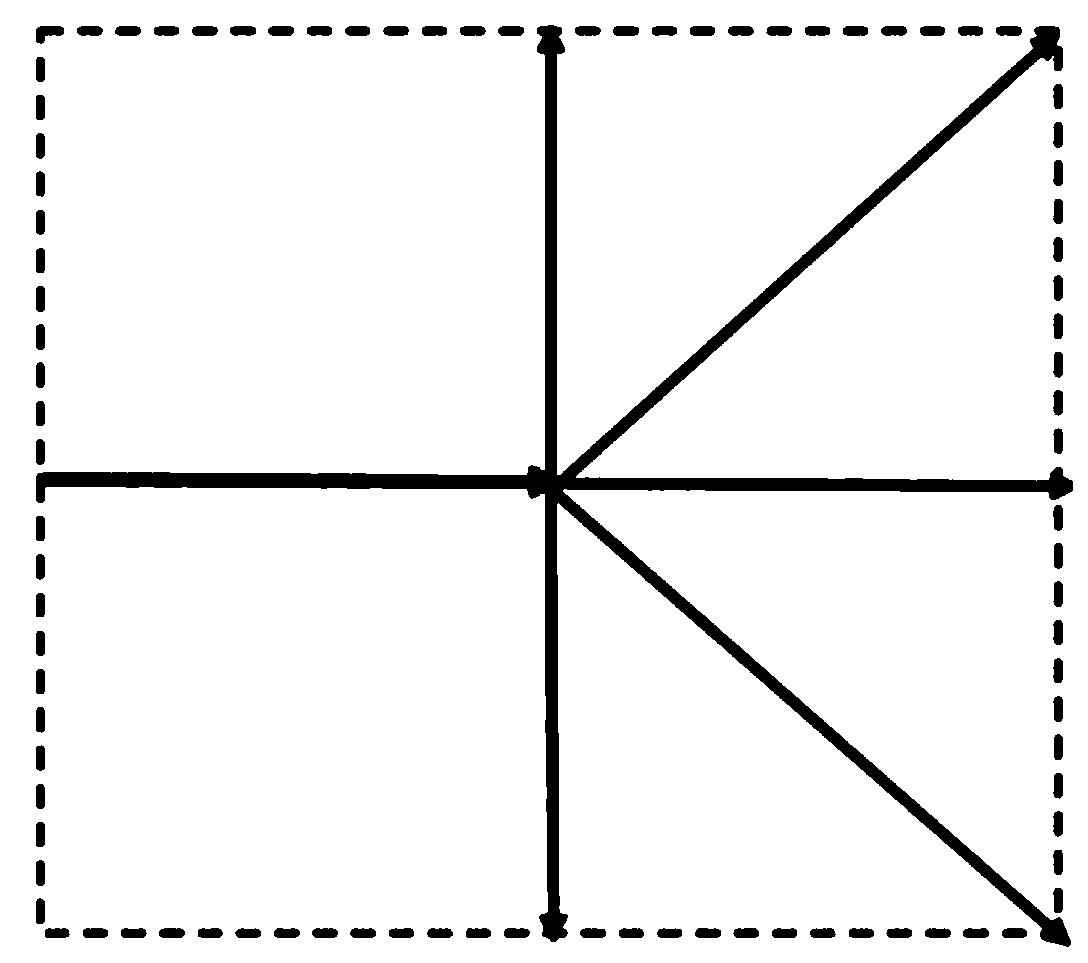

Method for planning paths of unmanned aerial vehicles on basis of Q(lambda) algorithms

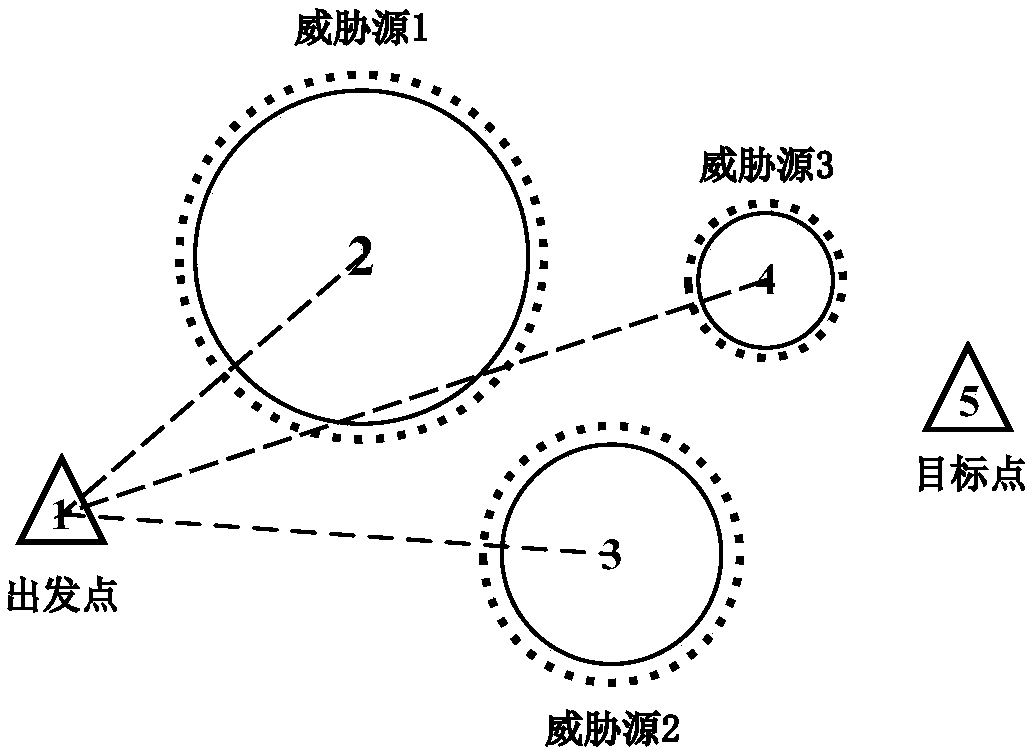

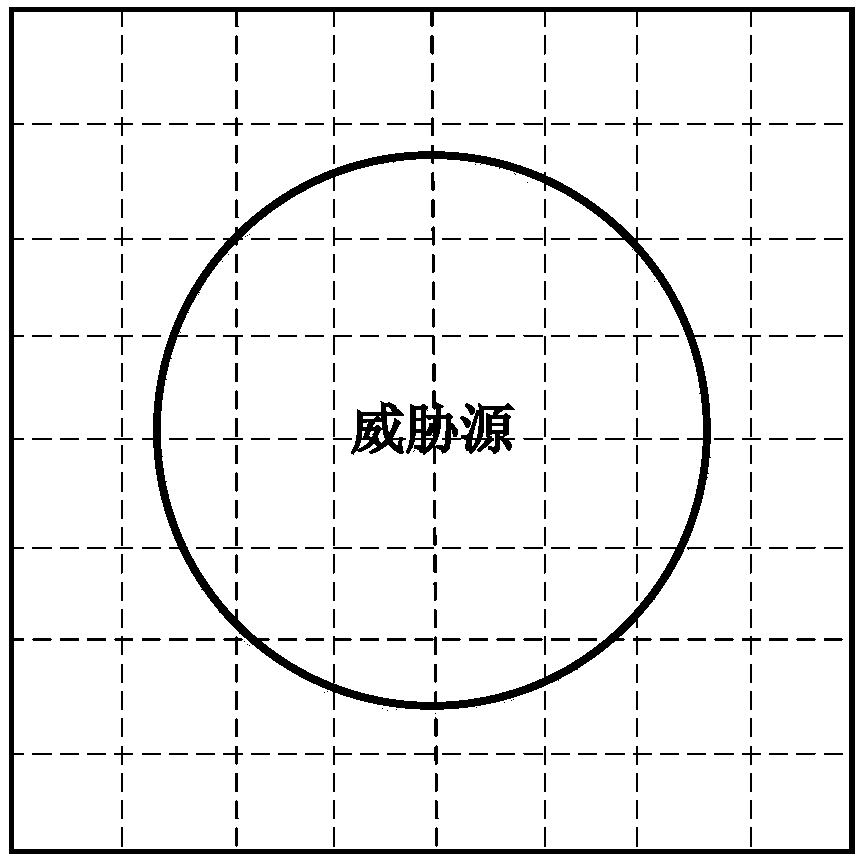

Active | CN109655066A | Give full play to the flying ability; Solve the shortcomings of the lack of basis in the discretization process; Navigational calculation instruments; Position/course control in three dimensions; Decision model; Environmental modelling

The invention provides a method for planning paths of unmanned aerial vehicles (UAVs) based on the Q(lambda) algorithm. The method includes environment modeling, initialization of a Markov decision process model, iterative computation with the Q(lambda) algorithm, and computation of the optimal paths from the state value functions. Specifically, a grid space is initialized according to the UAV's minimum flight-path segment length, grid coordinates are mapped to waypoints, and circular and polygonal threat regions are represented; a Markov decision model is built by representing the UAV's flight action space, designing the state transition probabilities, and constructing the reward function; the Q(lambda) algorithm is iterated on the constructed model; and each UAV's optimal path is computed from the final converged state value function. Following these optimal paths, the UAVs can safely avoid the threat regions. By combining the traditional Q-learning algorithm with eligibility traces, the method increases the convergence speed and precision of the value function and guides the UAVs to avoid threat regions and plan paths autonomously.

Owner:NANJING UNIV OF POSTS & TELECOMM
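
Combining Q-learning with eligibility traces, as this UAV method does, is commonly done with Watkins's Q(lambda). The Python sketch below applies it to a toy corridor rather than the patent's UAV grid and threat model; replacing traces, the parameter values, and the environment are all assumptions. Traces decay by gamma * lambda after greedy actions and are cut after exploratory ones, which keeps the backed-up returns consistent with the greedy policy.

```python
import random

def watkins_q_lambda(n_states=6, episodes=100, alpha=0.3, gamma=0.9,
                     lam=0.8, epsilon=0.1, seed=1):
    """Watkins's Q(lambda) with replacing eligibility traces on a toy corridor:
    action 0 moves left, 1 moves right, reward 1 on entering the last state."""
    rng = random.Random(seed)
    goal = n_states - 1
    Q = [[0.0, 0.0] for _ in range(n_states)]

    def policy(s):
        # Epsilon-greedy with random tie-breaking
        if rng.random() < epsilon or Q[s][0] == Q[s][1]:
            return rng.randrange(2)
        return 0 if Q[s][0] > Q[s][1] else 1

    for _ in range(episodes):
        E = [[0.0, 0.0] for _ in range(n_states)]  # eligibility traces
        s, a = 0, policy(0)
        while s != goal:
            s_next = max(0, s - 1) if a == 0 else s + 1
            r = 1.0 if s_next == goal else 0.0
            if s_next == goal:
                a_next, greedy = None, False
                delta = r - Q[s][a]
            else:
                a_next = policy(s_next)
                # Is the next action greedy w.r.t. Q before this update?
                greedy = Q[s_next][a_next] == max(Q[s_next])
                delta = r + gamma * max(Q[s_next]) - Q[s][a]
            E[s][a] = 1.0  # replacing trace for the visited pair
            for i in range(n_states):
                for j in range(2):
                    Q[i][j] += alpha * delta * E[i][j]
            # Decay traces after a greedy action; cut them after an exploratory one
            decay = gamma * lam if greedy else 0.0
            for row in E:
                row[0] *= decay
                row[1] *= decay
            s, a = s_next, a_next
    return Q
```

Each TD error updates every recently visited pair at once, which is how the traces speed up value-function convergence compared with one-step Q-learning.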

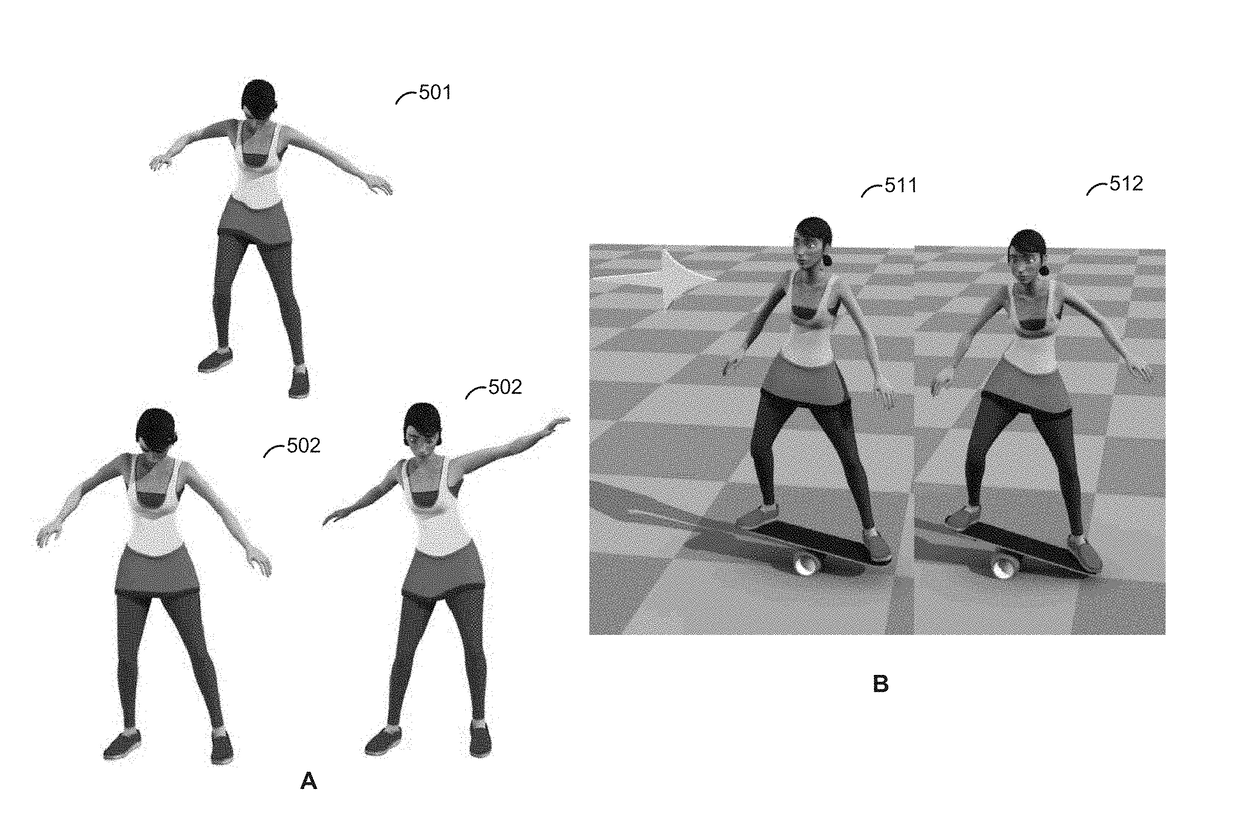

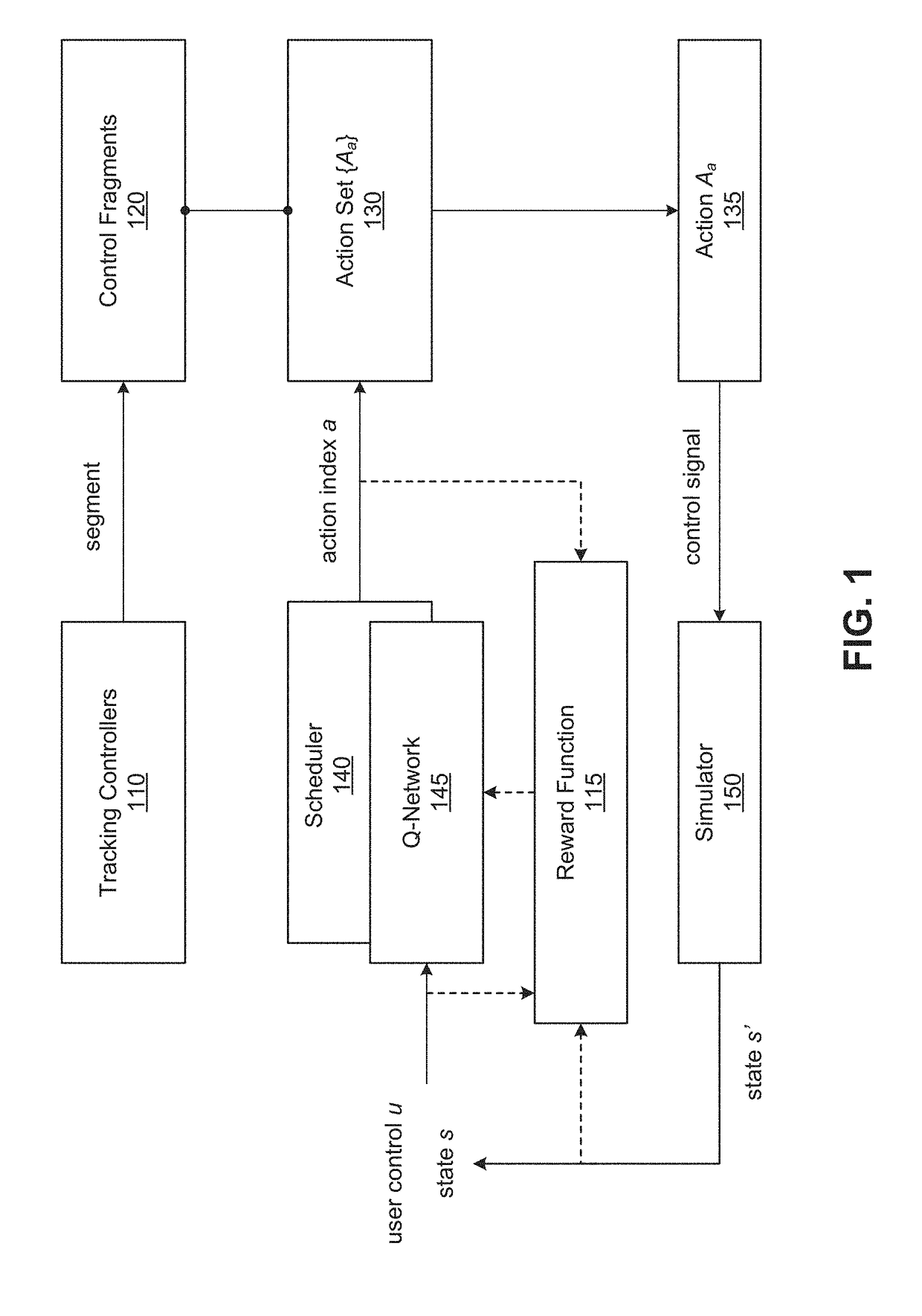

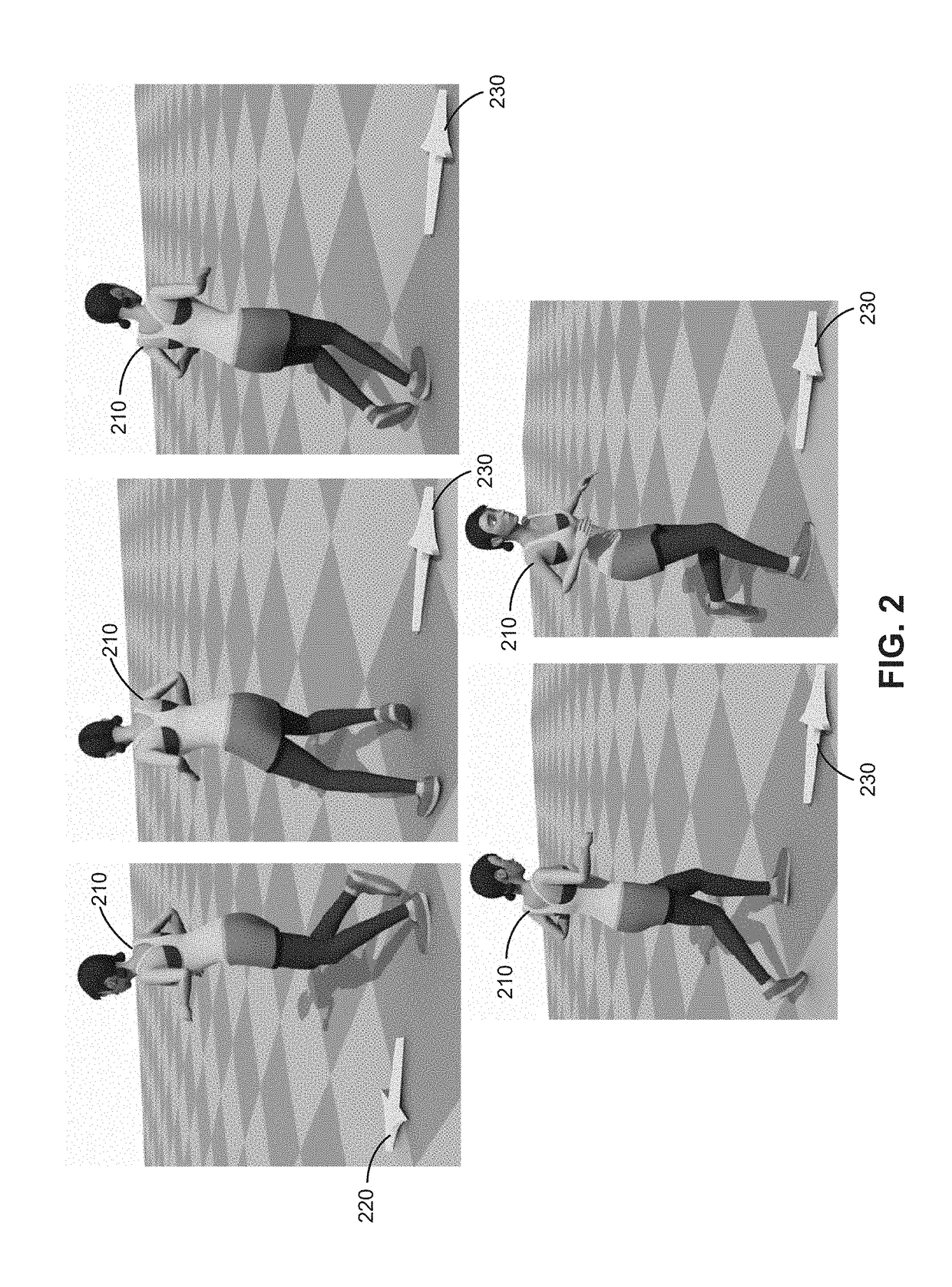

Learning to schedule control fragments for physics-based character simulation and robots using deep q-learning

The disclosure provides an approach for learning to schedule control fragments for physics-based virtual character simulations and physical robot control. Given precomputed tracking controllers, a simulation application segments the controllers into control fragments and learns a scheduler that selects control fragments at runtime to accomplish a task. In one embodiment, each scheduler may be modeled with a Q-network that maps a high-level representation of the state of the simulation to a control fragment for execution. In such a case, the deep Q-learning algorithm applied to learn the Q-network schedulers may be adapted to use a reward function that prefers the original controller sequence and an exploration strategy that gives more chance to in-sequence control fragments than to out-of-sequence control fragments. Such a modified Q-learning algorithm learns schedulers that are capable of following the original controller sequence most of the time while selecting out-of-sequence control fragments when necessary.

Owner:DISNEY ENTERPRISES INC
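
The two modifications described for the Disney scheduler (a reward that prefers the original controller sequence and exploration that favors in-sequence fragments) can be sketched independently of the Q-network itself. In this Python sketch the fragment indexing, the bonus size, and the probability p_in_sequence are hypothetical; the Q values would come from whatever network is being trained.

```python
import random

def choose_fragment(q_values, prev_fragment, epsilon=0.1, p_in_sequence=0.5,
                    rng=random):
    """Epsilon-greedy choice of the next control fragment, with exploration
    biased toward the in-sequence successor of the previous fragment.

    Assumption: fragments are indexed in original controller order, so the
    in-sequence successor of fragment k is (k + 1) % n.
    """
    n = len(q_values)
    if rng.random() < epsilon:
        if rng.random() < p_in_sequence:
            return (prev_fragment + 1) % n   # explore along the original sequence
        return rng.randrange(n)              # explore any fragment uniformly
    return max(range(n), key=lambda k: q_values[k])  # exploit

def shaped_reward(task_reward, prev_fragment, fragment, n, bonus=0.1):
    """Task reward plus a small bonus for staying in the original sequence."""
    in_sequence = fragment == (prev_fragment + 1) % n
    return task_reward + (bonus if in_sequence else 0.0)
```

With these settings an exploratory step picks the in-sequence fragment with probability p_in_sequence + (1 - p_in_sequence) / n, so out-of-sequence fragments are still tried, only less often.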

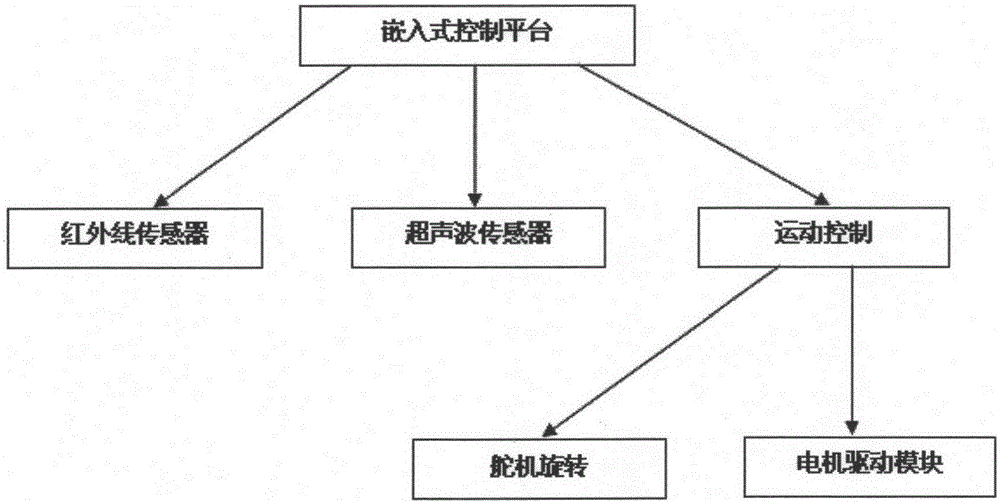

Reinforcement learning algorithm applied to non-tracking intelligent trolley barrier-avoiding system

The invention discloses a reinforcement learning algorithm that includes a new Q-learning algorithm, implemented as follows: the collected data are fed into a BP neural network, and the input and output of each unit of the hidden and output layers are computed for the current state; the maximum output value m in state t is computed, and based on the output it is determined whether a collision with an obstacle occurs. If a collision occurs, the threshold of each unit and each connection weight of the BP neural network are recorded; otherwise, the method moves to time t+1, collects and normalizes data, computes the input and output of each hidden- and output-layer unit in state t+1, computes the expected output value for state t, and adjusts the outputs and thresholds of the hidden-layer units. It then checks whether the error is below a given threshold or the number of learning iterations exceeds a given value; if neither condition holds, learning is repeated, otherwise the threshold of each unit and each connection weight are recorded and learning ends. The algorithm offers good real-time performance and speed, and allows relearning at a later stage.

Owner:DONGHUA UNIV

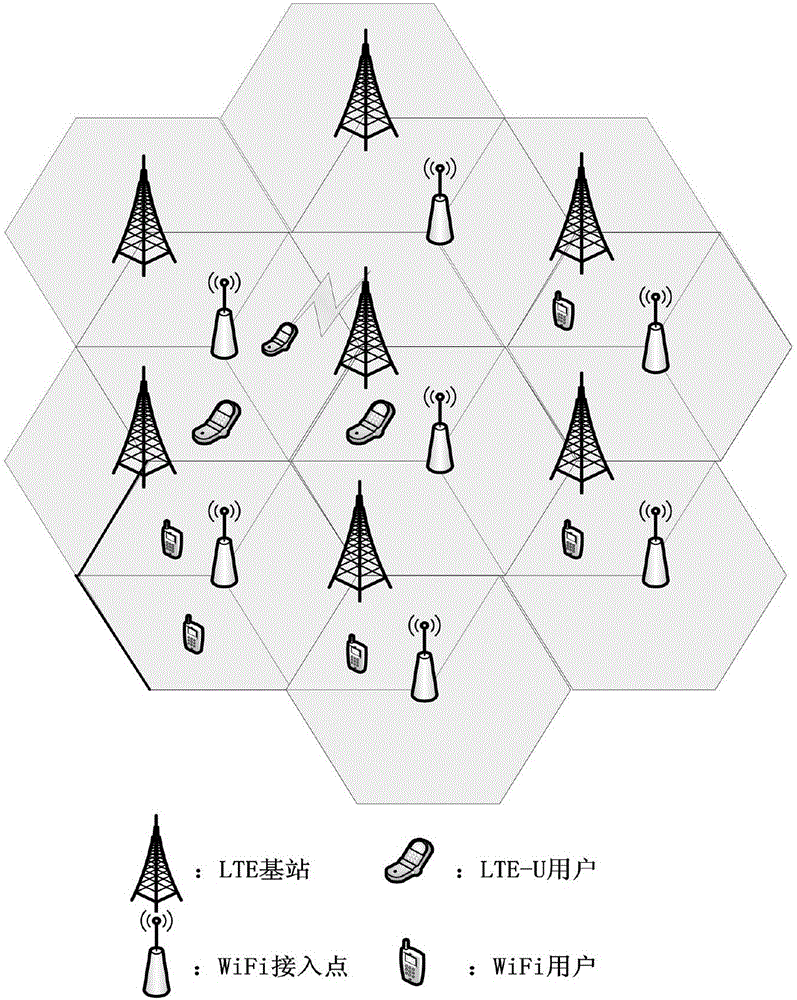

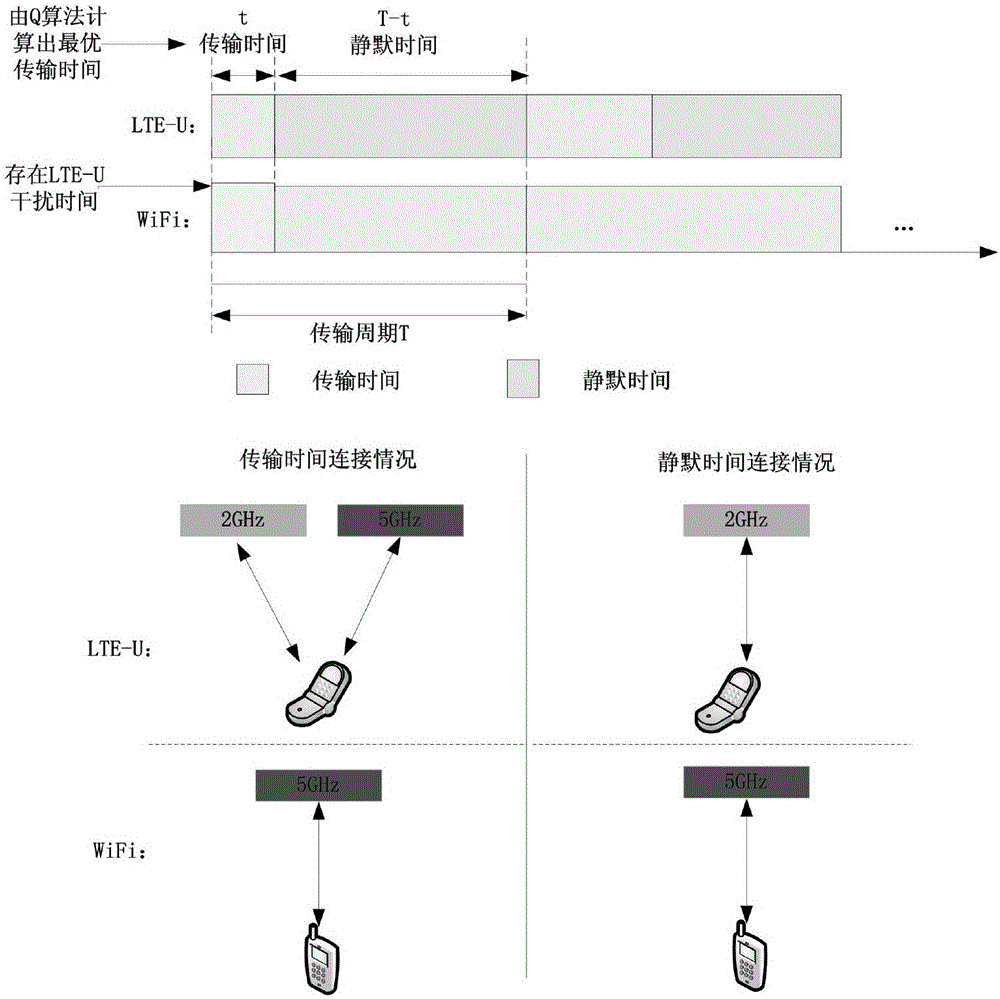

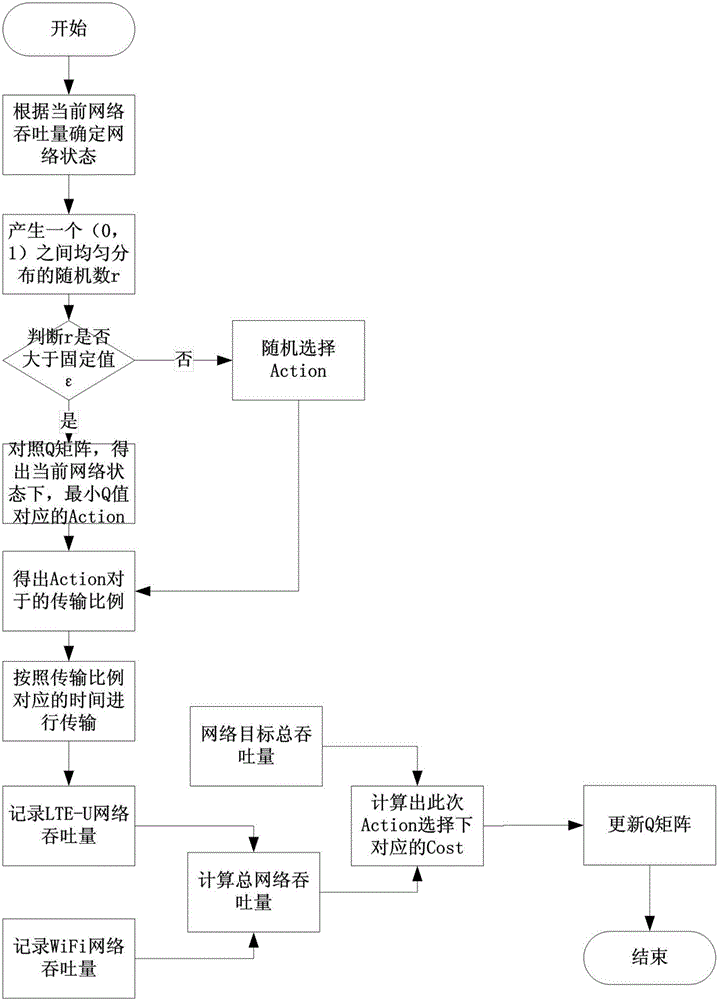

Q algorithm-based dynamic duty ratio coexistence method for LTE-U and Wi-Fi systems in unauthorized frequency band

The invention relates to a Q-learning-based dynamic duty-cycle coexistence method for LTE-U and Wi-Fi systems in unlicensed bands, and belongs to the technical field of wireless communication. Using a Q-learning algorithm, the transmission time of the LTE-U system in the next transmission period T is derived from the total throughput of the LTE-U and Wi-Fi systems in the current period T, so that the expected network throughput is achieved and the coexistence performance of the two systems improves. Aiming to improve overall network throughput, the method assumes that the Wi-Fi system transmits data throughout T. The LTE-U system sets its transmission-time ratio within T according to the result of the Q-learning algorithm, then records the overall network throughput in the current T under that ratio as the basis for choosing the ratio in the next round of Q-learning. The method fully accounts for the sharp drop in Wi-Fi throughput caused by LTE-U interference when the two systems coexist, and can be applied to scenarios with moving users.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

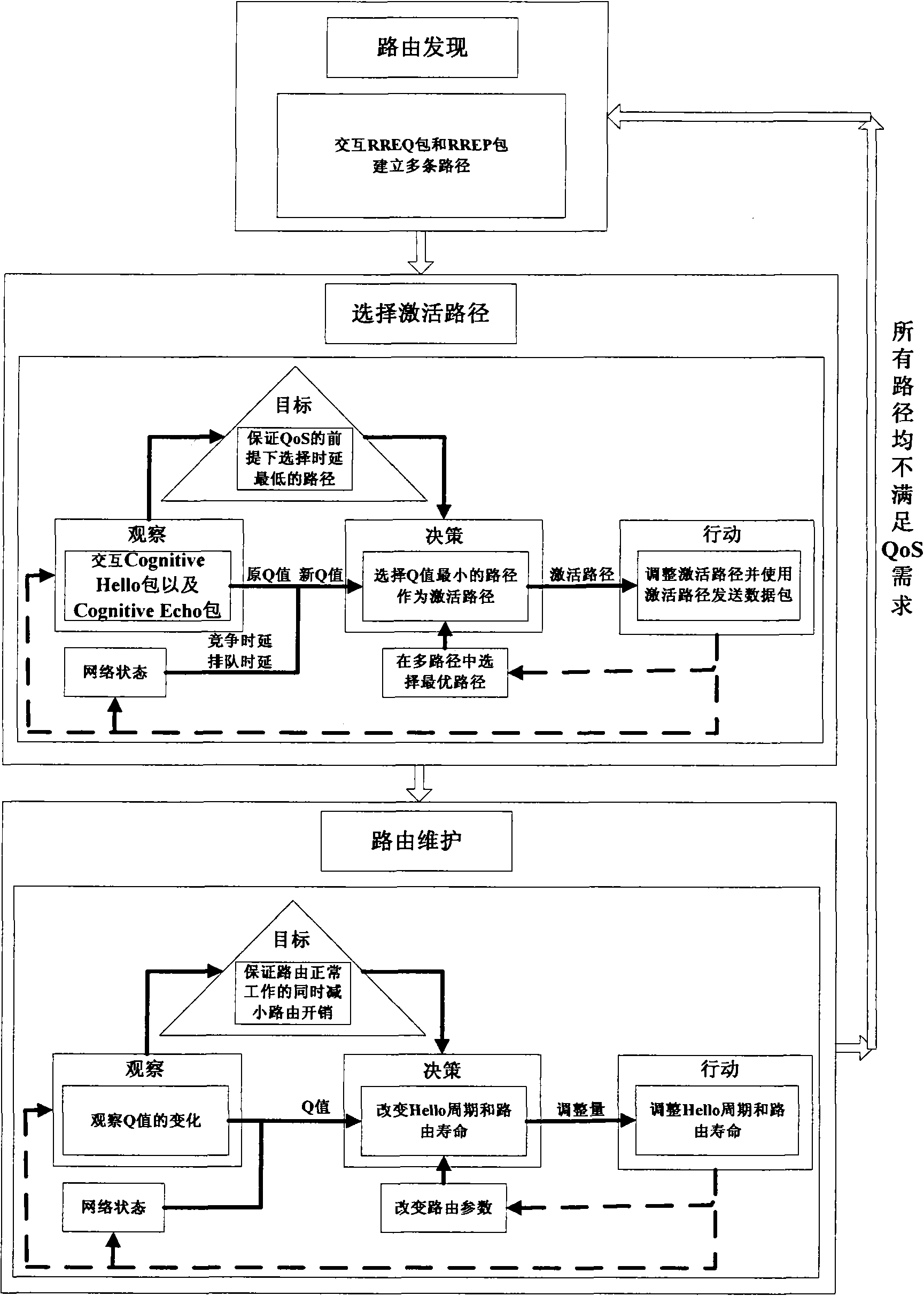

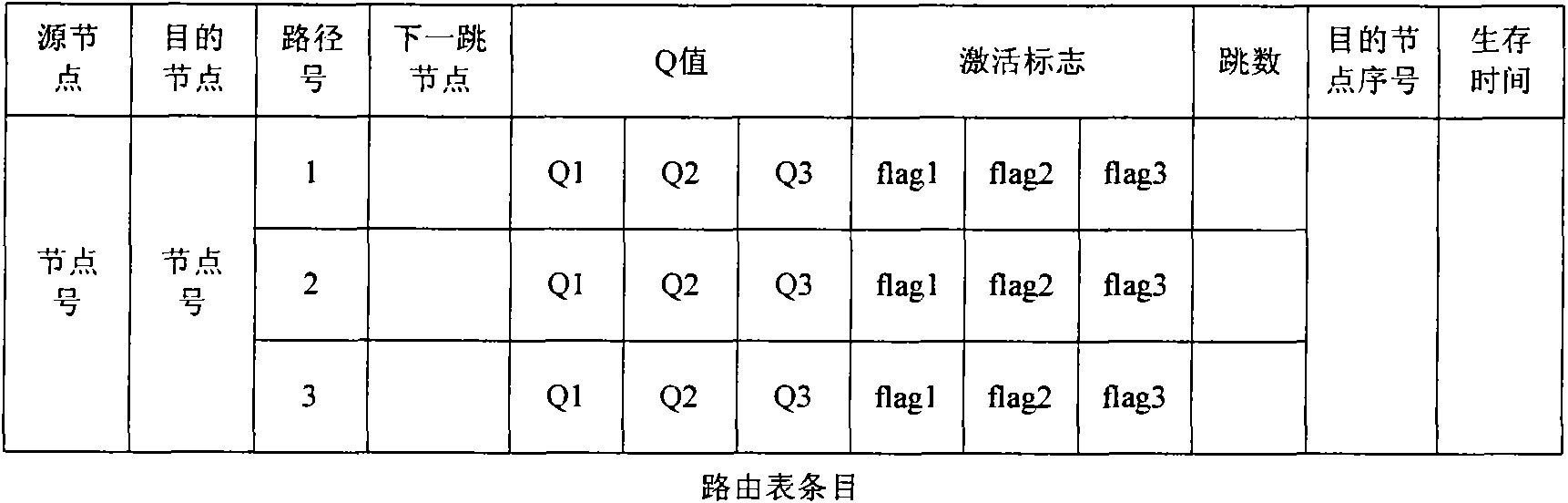

Multi-path delay sensing optimal route selecting method for cognitive network

Inactive | CN101835239A | Reduce latency; Load balancing; High level techniques; Wireless communication; Multi path; End-to-end delay

The invention discloses a multi-path, delay-aware optimal route selection method for cognitive networks, comprising: dividing services into classes; establishing multiple paths through route discovery; using the end-to-end delay recorded during route discovery as the initial Q value; updating each path's Q value with a Q-learning algorithm, incorporating estimates of node queuing delay and channel contention delay into the update; selecting an active path according to the Q values to send data packets; using the Q-learning algorithm to reduce route-control packet overhead; and restarting the process when none of the paths can meet the service's QoS requirement. The method transmits high-priority services quickly, with short path delay, high routing efficiency, and high network load capacity, and is suitable for cognitive wireless networks.

Owner:XIDIAN UNIV
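
A simplified Python reading of the delay-aware path selection, treating each path's Q value as an estimated end-to-end delay (lower is better) that starts from the route-discovery measurement and moves toward each new delay sample. The class name, the additive delay sample, the learning rate, and the QoS deadline check are assumptions; the abstract does not give the exact update formula.

```python
class DelayAwarePaths:
    """Per-path delay estimates updated with a Q-learning-style rule
    (a simplified, single-state reading of the method; names are hypothetical)."""

    def __init__(self, discovered_delays, alpha=0.5):
        # Q value of each path = estimated end-to-end delay (lower is better),
        # initialized from the delay recorded during route discovery
        self.q = dict(discovered_delays)
        self.alpha = alpha

    def update(self, path, measured_delay, queue_delay_est, contention_delay_est):
        # New delay sample includes the queueing and contention estimates
        sample = measured_delay + queue_delay_est + contention_delay_est
        self.q[path] += self.alpha * (sample - self.q[path])

    def select(self, qos_deadline):
        # Active path = lowest estimated delay among paths meeting the QoS bound
        feasible = {p: d for p, d in self.q.items() if d <= qos_deadline}
        if not feasible:
            return None  # no path meets QoS: route discovery would restart
        return min(feasible, key=feasible.get)
```

For example, starting from estimates of 40 and 55 for paths P1 and P2, one congested sample of 90 on P1 raises its estimate to 65, and traffic shifts to P2.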

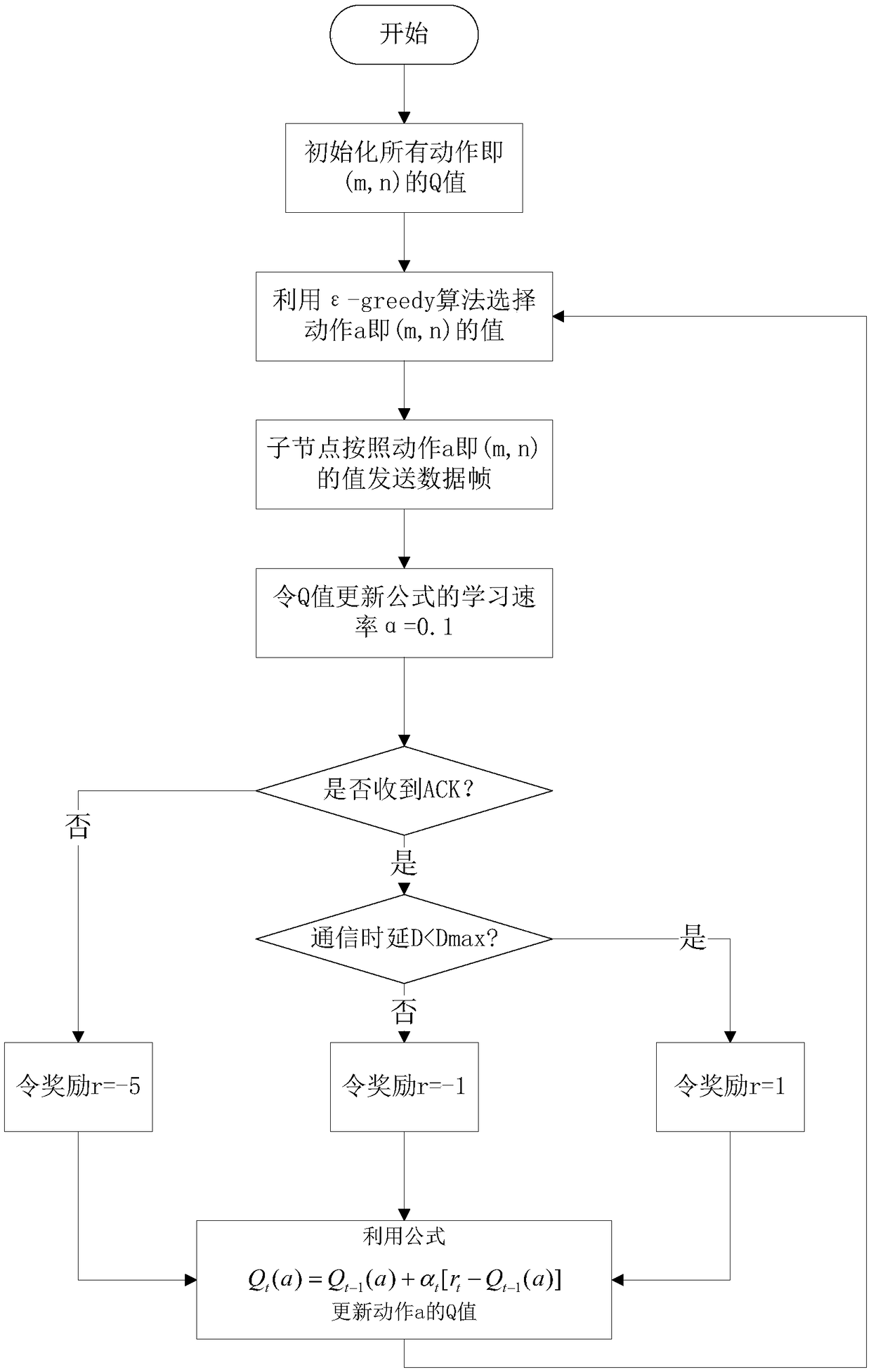

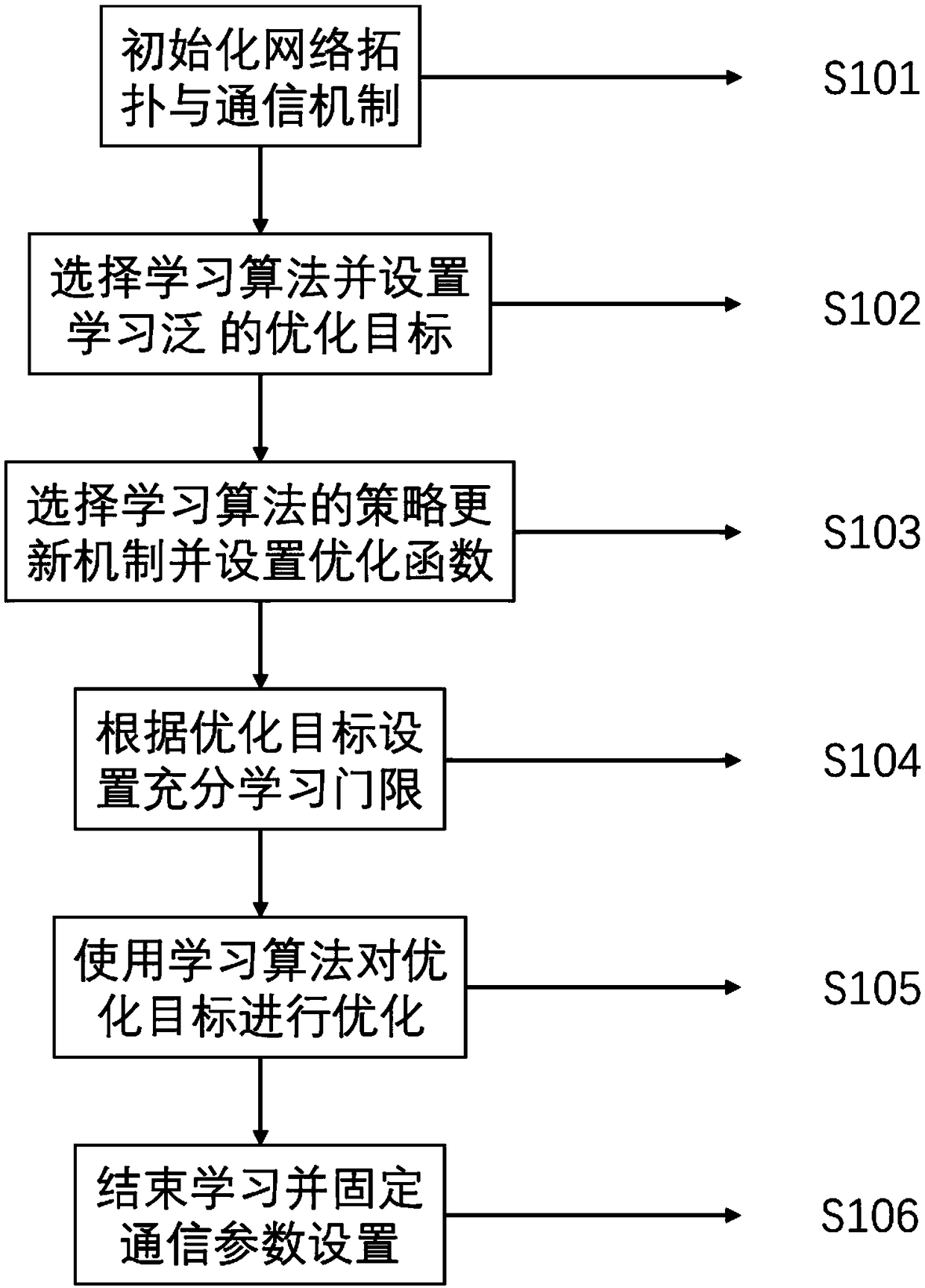

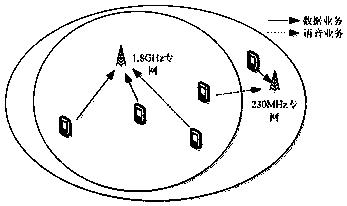

Parameter adaptive adjustment method of wireless sensor network

Inactive | CN109462858A | Satisfy validity; Satisfy latency requirements; Network topologies; Quality of service; Wireless mesh network

The invention discloses a parameter adaptive adjustment method for a wireless sensor network. A star topology is used: the network consists of sensor nodes and a coordinator, where the nodes collect sensor data and the coordinator, acting as the sink of the whole network, gathers the data uploaded by the nodes. Communication uses the IEEE 802.15.4 protocol based on slotted CSMA/CA, and a Q-learning algorithm dynamically optimizes the protocol's parameter settings, which effectively addresses the limited adaptability of 802.15.4 to its network environment. Network overhead is reduced while quality of service improves, making the method a highly adaptive, timely optimization for wireless sensor networks with good quality of service.

Owner:BEIJING UNIV OF POSTS & TELECOMM

Cognitive radio space-frequency two-dimensional anti-hostility jamming method based on deep reinforcement learning

Inactive | CN106961684A | Improve communication efficiency; Fast learning; Machine learning; Neural learning methods; Anti jamming; Nerve network

The invention discloses a cognitive radio anti-jamming method in the space and frequency domains based on deep reinforcement learning. Without knowing the jammer's attack pattern or the wireless channel environment, a cognitive radio secondary user observes the access state of the primary user and the signal-to-jamming ratio of the received signal, and uses a deep reinforcement learning mechanism to decide whether to leave the jammed region or to select a suitable frequency on which to transmit. A deep convolutional neural network is combined with Q-learning: Q-learning learns the optimal anti-jamming strategy in the dynamic wireless game, and the observed states and obtained payoffs are fed to the convolutional network as a training set to accelerate learning. This improves the communication efficiency of cognitive radio against hostile jammers in dynamically changing wireless network environments. It also overcomes the sharp drop in learning speed that arises because an artificial neural network must first classify the data during training and because the state and action sets of the Q-learning algorithm are high-dimensional.

Owner:XIAMEN UNIV

Path planning method based on multi-agent enhanced learning

Active | CN109059931A | Improve survival rate; Improve task completion rate; Navigational calculation instruments; Task completion; Greedy algorithm

The invention discloses a path planning method based on multi-agent reinforcement learning and belongs to the technical field of aircraft. First, a global state partition model of the air flight environment is built and a global state transition table, Q-Table 1, is initialized; the global state of a randomly chosen row is taken as the initial state s1. Among all columns of the current state s1, an epsilon-greedy algorithm selects one column, recorded as behavior a1. Based on a1, the next state of s1 (given as a formula in the specification) is obtained from Q-Table 1, and the element corresponding to s1 and a1 is updated according to the transfer rule of the Q-learning algorithm. The state is then updated (formula in the specification) and an inner loop begins, in which the Q-learning algorithm yields the local planning path for the updated state s1. The outer-loop iteration count is incremented by 1 until it reaches N1, completing the global path planning of the aircraft. The aircraft can thus meet the requirements of different environments, its survival rate and task completion rate improve, and the convergence speed of reinforcement learning increases.

Owner:BEIHANG UNIV

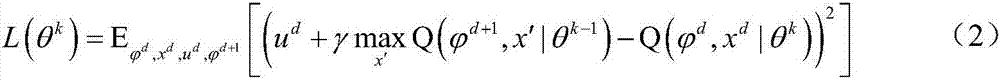

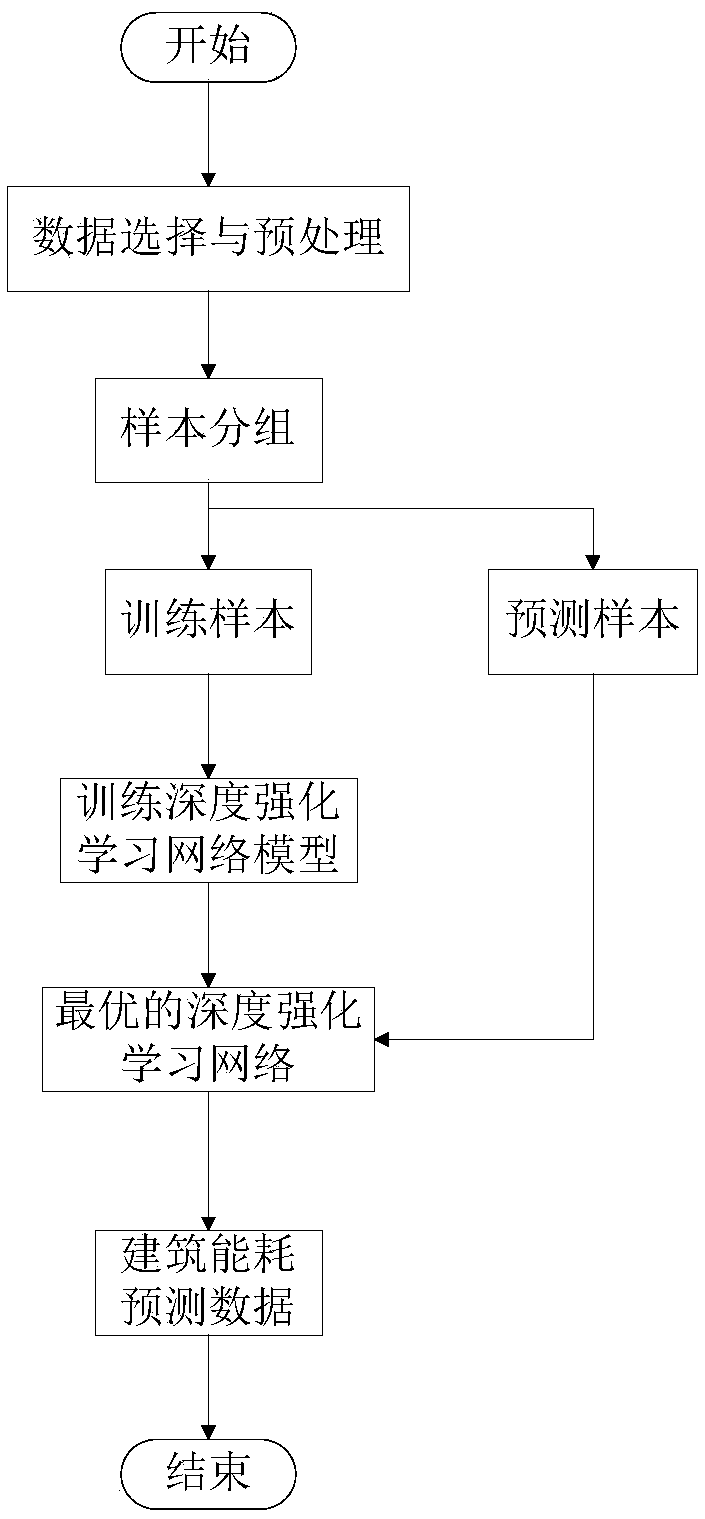

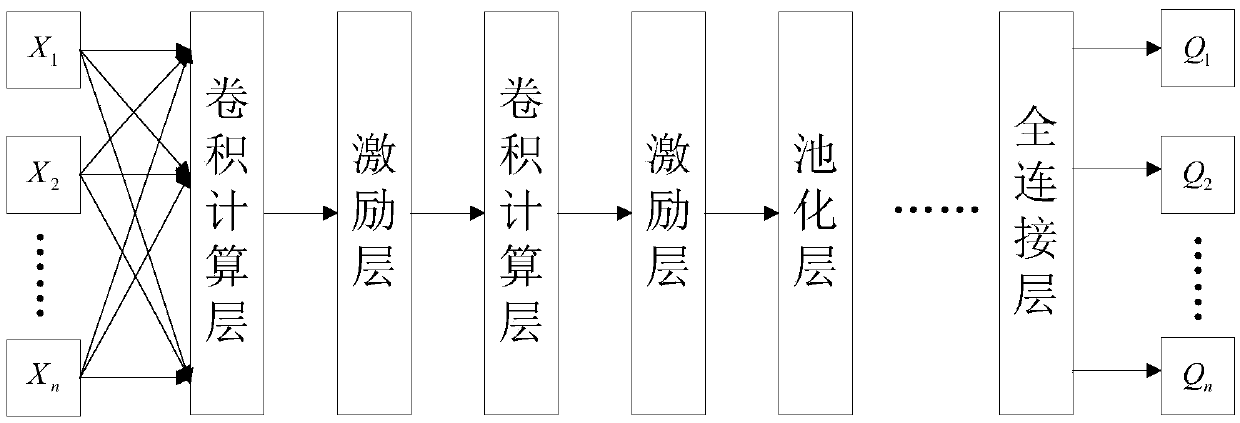

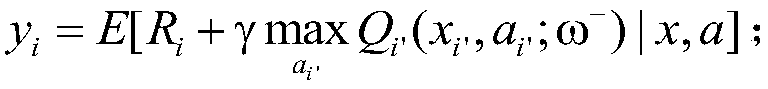

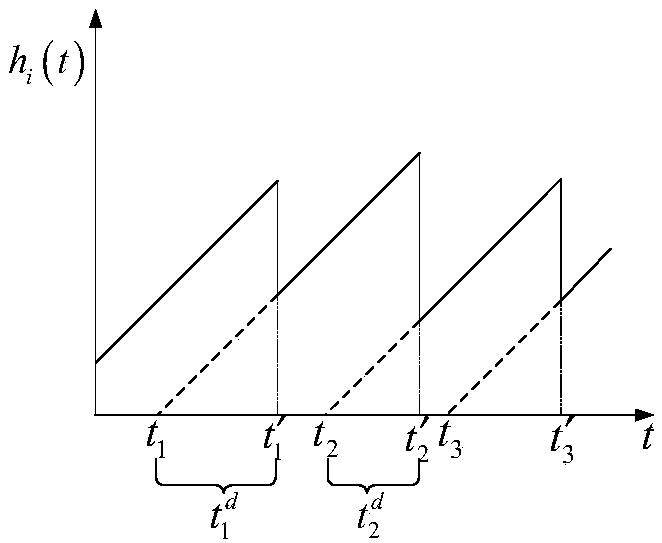

Method and system for predicting building energy consumption based on depth reinforcement learning

Active | CN109063903A | Reduce storage requirements; Improve efficiency; Forecasting; Neural architectures; Building energy; Network model

The invention discloses a building energy consumption prediction method and system based on deep reinforcement learning. Historical building energy consumption data are collected, together with the building area, the size of the building's permanent population, the consumption level of that population, and weather data for the building's location. The collected samples are grouped and, as training samples, fed into the deep reinforcement learning network model, which is trained and saved to optimize the state-action value function. Finally, prediction samples are fed into the model to predict building energy consumption. The invention combines a convolutional neural network from deep learning with Q-learning from reinforcement learning to predict building energy consumption. Compared with traditional prediction methods, the deep reinforcement learning network based on a convolutional neural network and the Q-learning algorithm reduces the amount of data and its storage requirements, improves the efficiency of data use, and speeds up data processing.

Owner:SHANDONG JIANZHU UNIV

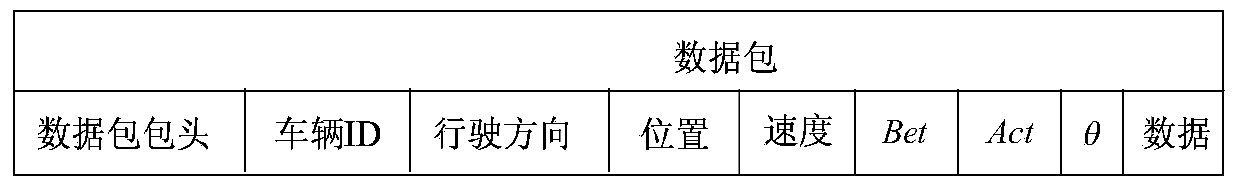

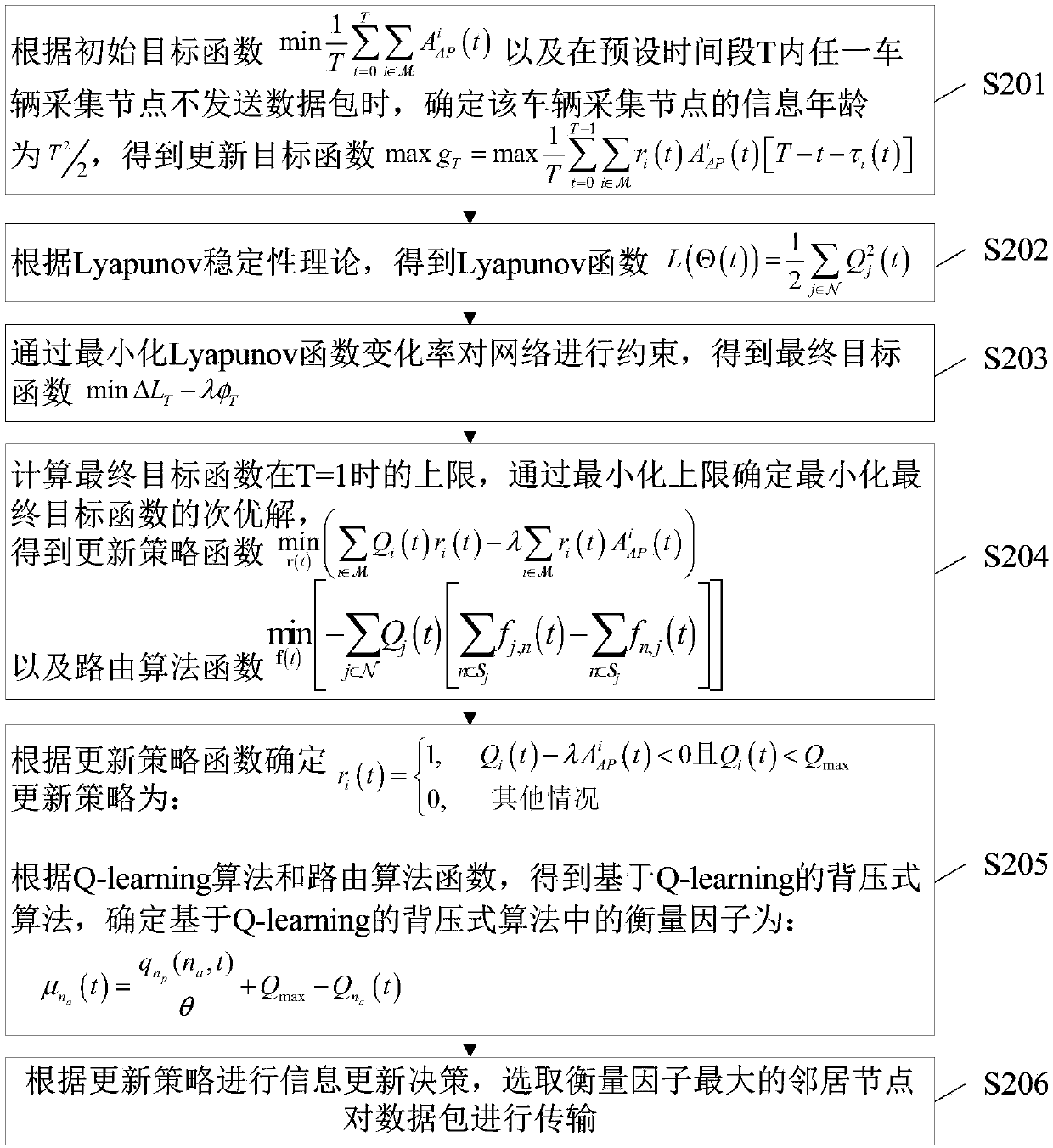

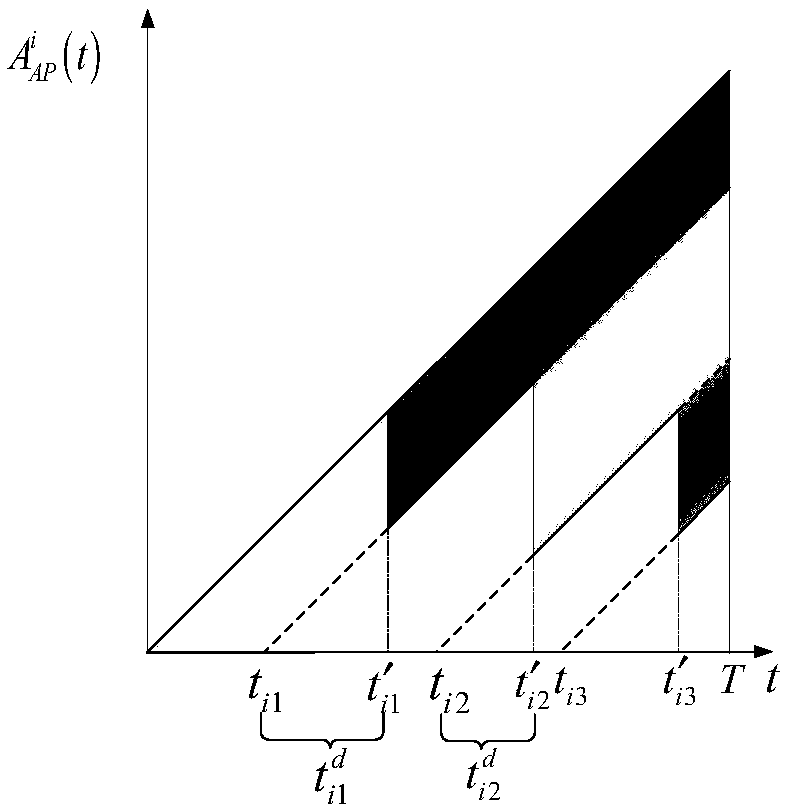

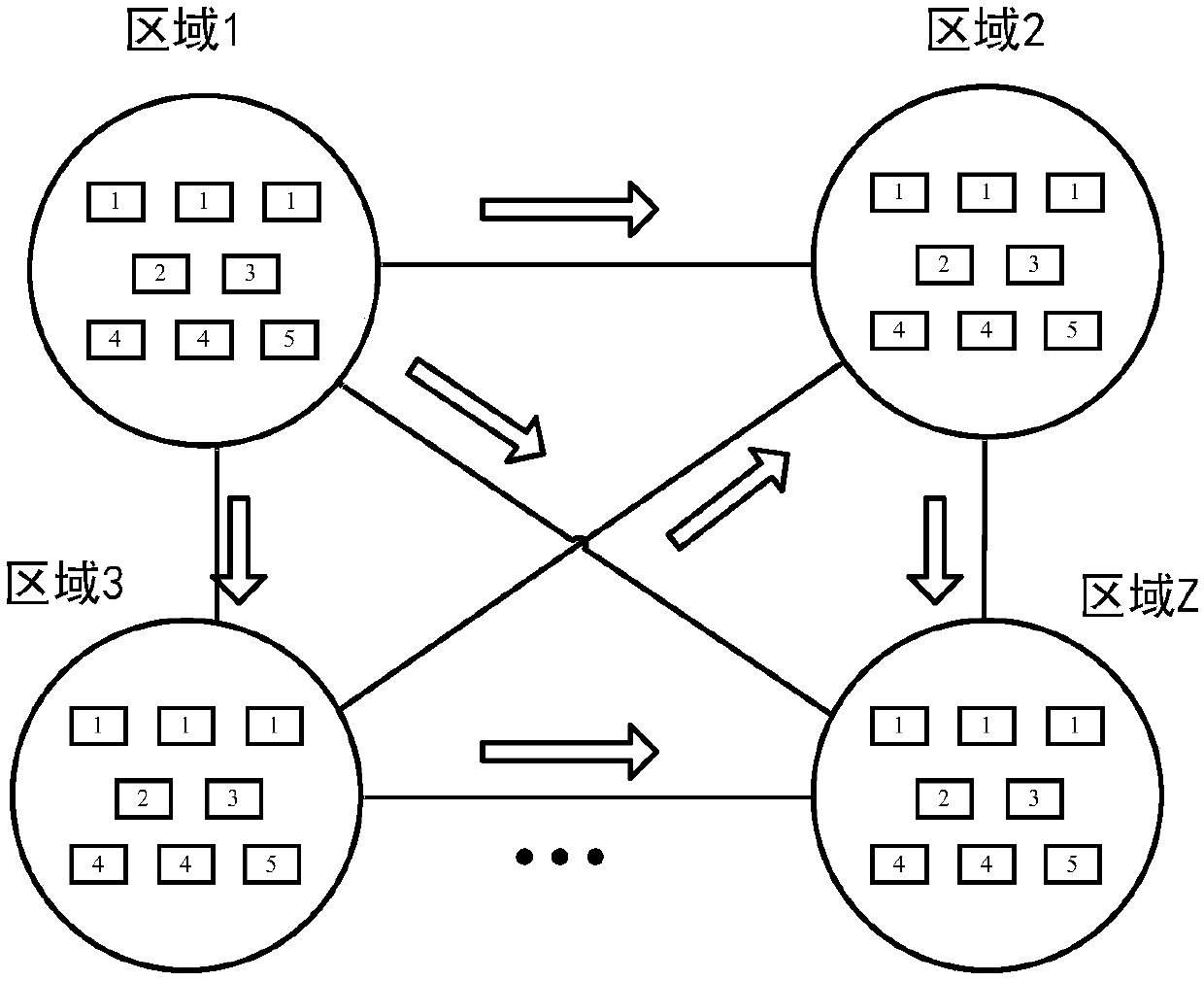

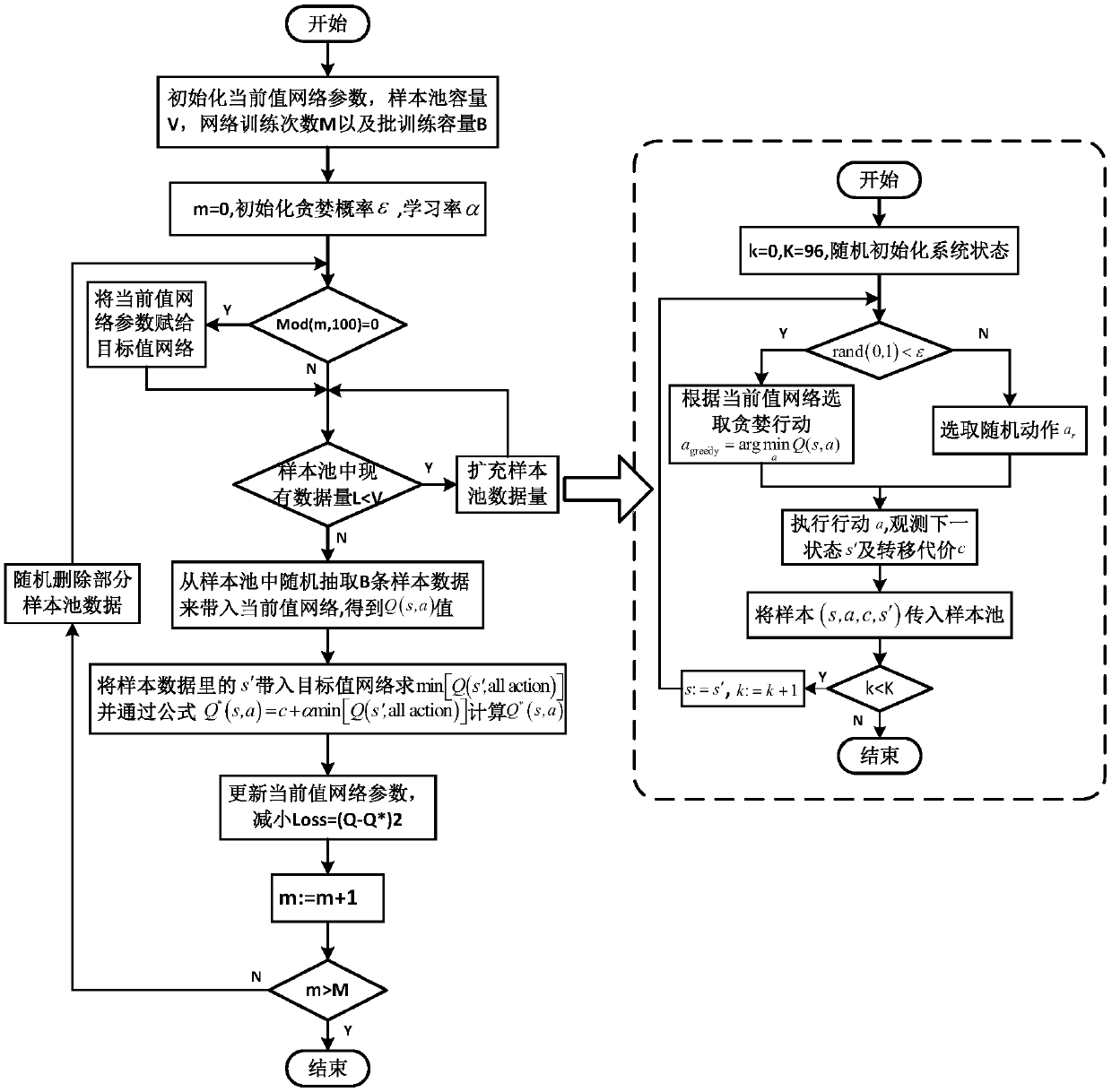

Internet of Vehicles transmission method and device based on information freshness

Active | CN109640370A | Lower average age of information; Reduce computational complexity; Particular environment based services; Vehicle-to-vehicle communication; Computation complexity; Network packet

An embodiment of the invention provides an Internet of Vehicles transmission method and device based on information freshness, in the technical field of the Internet of Vehicles. The method comprises: obtaining an updated objective function from an initial objective function and the fact that the information age of a vehicle collection node is fixed when the node sends no data packet within a preset time period T; constraining the network by minimizing the rate of change (drift) of a Lyapunov function to obtain a final objective function; computing an upper bound of the final objective function at T=1, and determining a suboptimal solution that minimizes the final objective function by minimizing this upper bound, which yields an update-policy function and a routing-algorithm function; determining the update policy from the update-policy function, and a metric factor from the Q-learning algorithm and the routing-algorithm function; and having the vehicle collection node make information-update decisions according to the update policy and transmit the data packet to the neighbor node with the largest metric factor. The method and device reduce both computational complexity and information age.

Owner:BEIJING UNIV OF POSTS & TELECOMM
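
The neighbor-selection step can be sketched as a small Q-learning table kept by each vehicle collection node. This is a minimal illustration under stated assumptions, not the patented method. The class `CollectionNode`, the learning-rate and discount values, and the reward (for example, a reduction in information age) are hypothetical. The measurement factor is taken to be the learned Q-value alone, because the abstract does not give the routing algorithm function it is combined with.

```python
from dataclasses import dataclass, field


@dataclass
class CollectionNode:
    """Hypothetical vehicle collection node keeping one Q-value per neighbor."""
    alpha: float = 0.5   # learning rate (assumed value)
    gamma: float = 0.8   # discount factor (assumed value)
    q: dict = field(default_factory=dict)  # neighbor id -> Q-value

    def measurement_factor(self, neighbor: str) -> float:
        # Assumption: the factor is just the learned Q-value; the patent also
        # folds in a routing algorithm function that is not specified here.
        return self.q.get(neighbor, 0.0)

    def select_neighbor(self, neighbors):
        # Forward the packet to the neighbor with the largest factor.
        return max(neighbors, key=self.measurement_factor)

    def update(self, neighbor: str, reward: float, neighbor_best_q: float) -> None:
        # Standard Q-learning step toward reward + gamma * (neighbor's best Q).
        old = self.q.get(neighbor, 0.0)
        self.q[neighbor] = old + self.alpha * (reward + self.gamma * neighbor_best_q - old)
```

For example, after `update("v2", reward=1.0, neighbor_best_q=0.0)` and `update("v3", reward=0.4, neighbor_best_q=0.0)`, the node prefers `"v2"` among `["v1", "v2", "v3"]`. A neighbor that has never been tried keeps a factor of 0.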

A method for dynamic dispatch optimization of generation and transmission system of cross-region interconnected power network

Active · CN109066805A · Conducive to safe and economical operation · Promote consumption of new energy · Single network parallel feeding arrangements · Mathematical model · New energy

A method for optimizing the dynamic dispatch of a power generation and transmission system comprises the following steps:

1. Establish a multi-area interconnected power network structure, with a physical model of each unit. The structure includes conventional generator sets, photovoltaic generator sets, wind generator sets, rigid loads, flexible loads and DC tie lines.
2. Formulate the dynamic dispatch problem of the interconnected power network as a Markov decision process (MDP) model.
3. Solve the MDP model with a deep Q-learning algorithm.

Under the resulting policy, the dispatching authority selects a reasonable action plan at each dispatch time, based on the grid's actual operating state. This realizes dynamic dispatch of the interconnected grid's generation and transmission system. The invention copes effectively with the randomness of new-energy output and load demand in a cross-region interconnected power network. It promotes the consumption of new energy and supports safe and economical operation of the network. It also improves the operating stability of the power system.

Owner:HEFEI UNIV OF TECH

Multi-unmanned boat collaborative path planning method

Inactive · CN109540136A · Flexible to complete · Navigational calculation instruments · Simulation · Planning approach

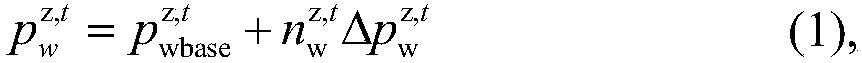

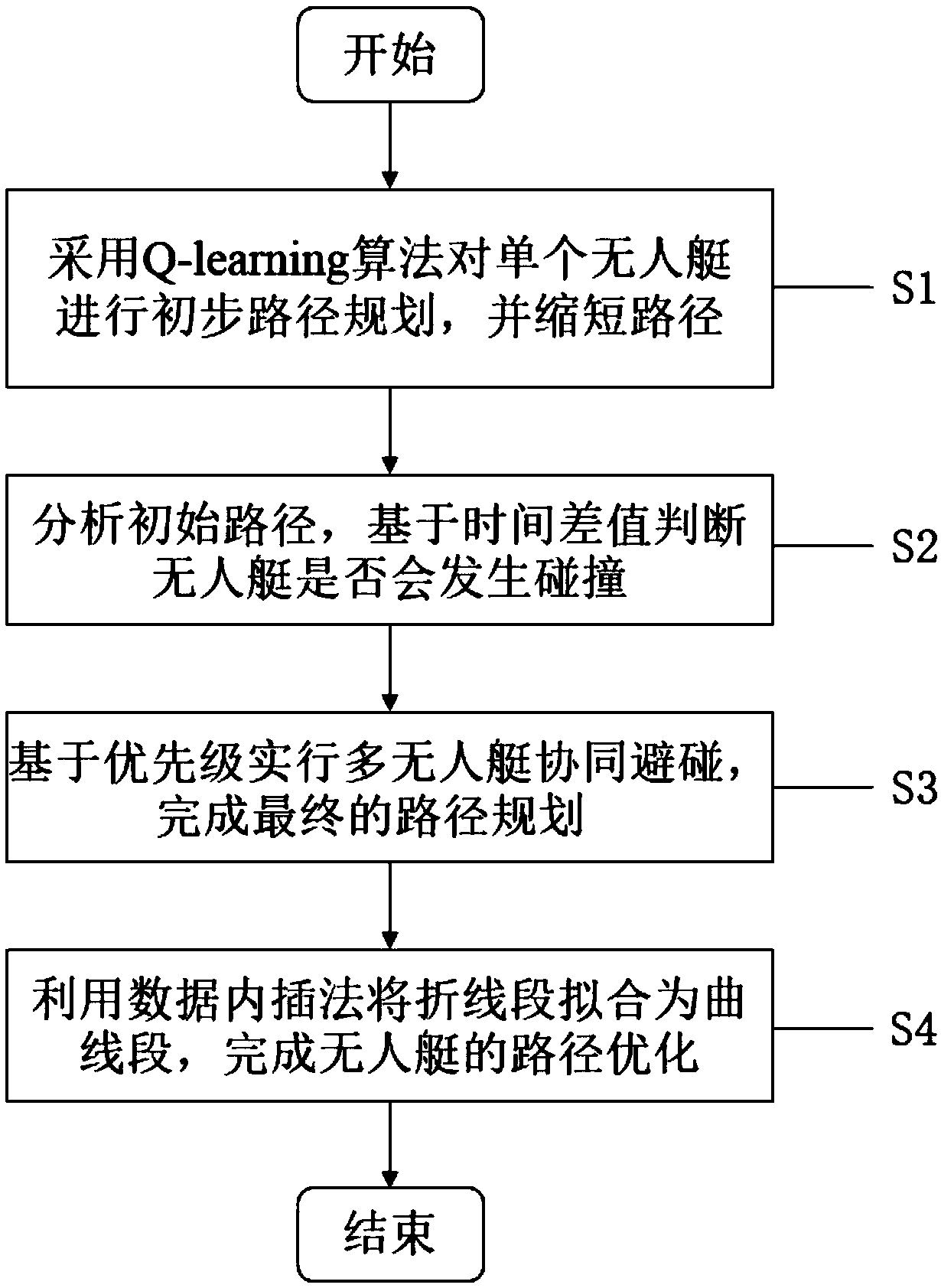

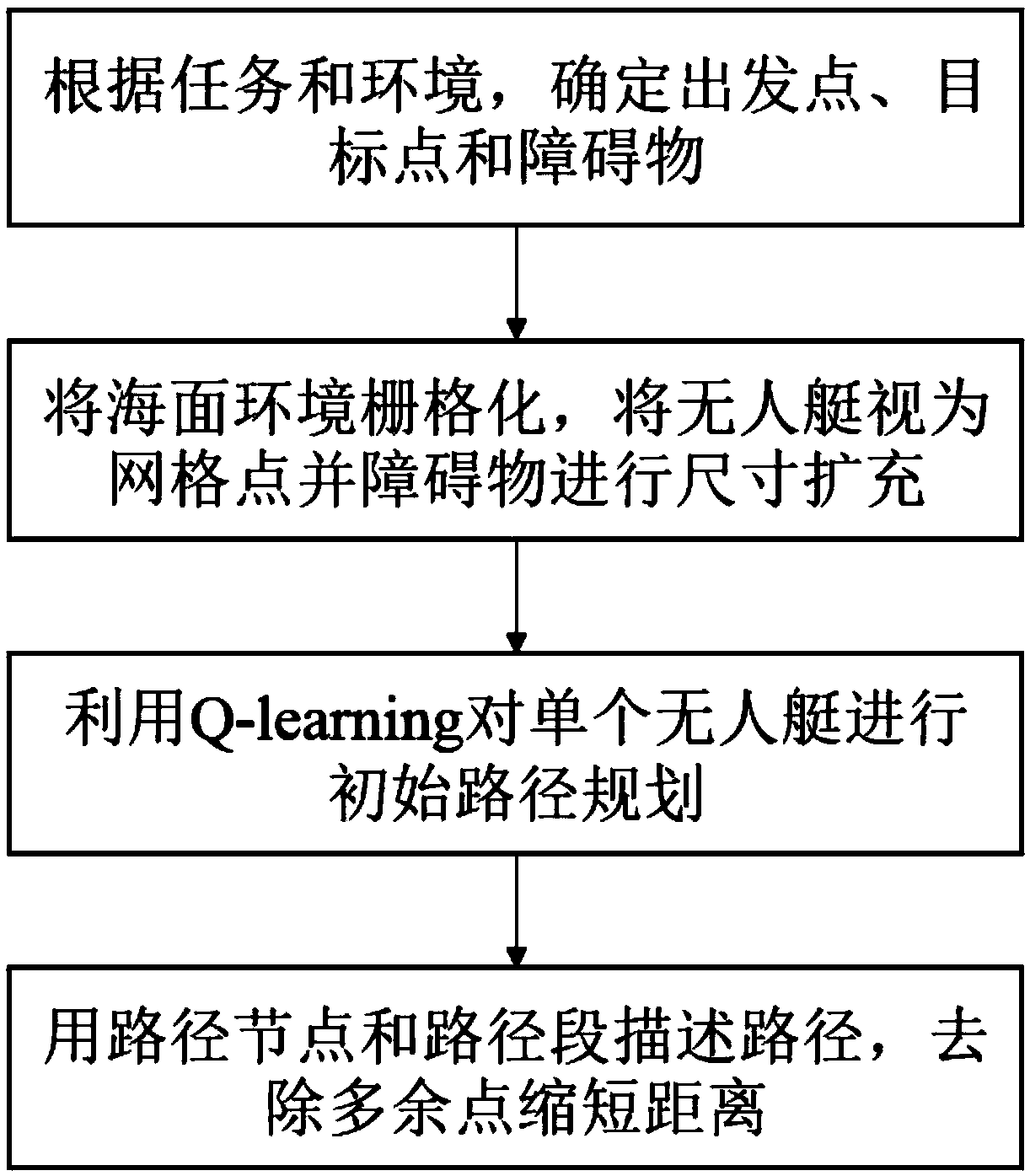

The invention discloses a multi-unmanned-boat collaborative path planning method. The method comprises the following steps:

- S1: According to the task requirements and the navigation environment, use the Q-learning algorithm to plan an initial path for each unmanned boat, then shorten that path.
- S2: Analyze each boat's initial path and use the time difference to judge whether boats will collide with one another.
- S3: For boats that may collide, assign movement-time priorities and carry out collaborative collision avoidance to obtain the final paths.
- S4: Fit the polyline segments into curve segments by data interpolation to smooth the path, then end the process.

The invention lets multiple unmanned boats complete obstacle avoidance, path finding, mutual collision avoidance and similar tasks efficiently and flexibly. The method is also safe, stable and reliable.

Owner:GUANGDONG HUST IND TECH RES INST
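
Steps S2 and S3 can be sketched on a grid. This is a hedged illustration built on several assumptions. Each boat's S1 path is a list of grid cells visited one per time step, so a cell's list index is its arrival time. The time-difference test flags two boats that reach the same cell fewer than `min_gap` steps apart. The function names are hypothetical, as is the priority rule that a boat later in the list waits at its start cell. S4 smoothing is not shown.

```python
from itertools import combinations


def conflicts(path_a, path_b, min_gap=1):
    """List (cell, time_a, time_b) where both boats reach a cell < min_gap steps apart."""
    arrivals_b = {}
    for t, cell in enumerate(path_b):
        arrivals_b.setdefault(cell, []).append(t)
    return [
        (cell, ta, tb)
        for ta, cell in enumerate(path_a)
        for tb in arrivals_b.get(cell, [])
        if abs(ta - tb) < min_gap
    ]


def resolve(paths, min_gap=1, max_rounds=100):
    """Delay lower-priority boats (later in the list) until no pair conflicts."""
    paths = [list(p) for p in paths]
    for _ in range(max_rounds):
        clash = next(
            ((i, j) for i, j in combinations(range(len(paths)), 2)
             if conflicts(paths[i], paths[j], min_gap)),
            None,
        )
        if clash is None:
            return paths
        _, j = clash
        paths[j].insert(0, paths[j][0])  # boat j waits one step at its start cell
    raise RuntimeError("conflicts not resolved within max_rounds")
```

For example, boat A on `[(0, 0), (1, 0), (2, 0)]` and boat B on `[(1, 1), (1, 0), (1, -1)]` both reach `(1, 0)` at t = 1. `resolve` delays B by one step. The sketch ignores boats that stay parked at their goal and head-on swaps between adjacent cells, which a fuller S2 check would need to handle.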

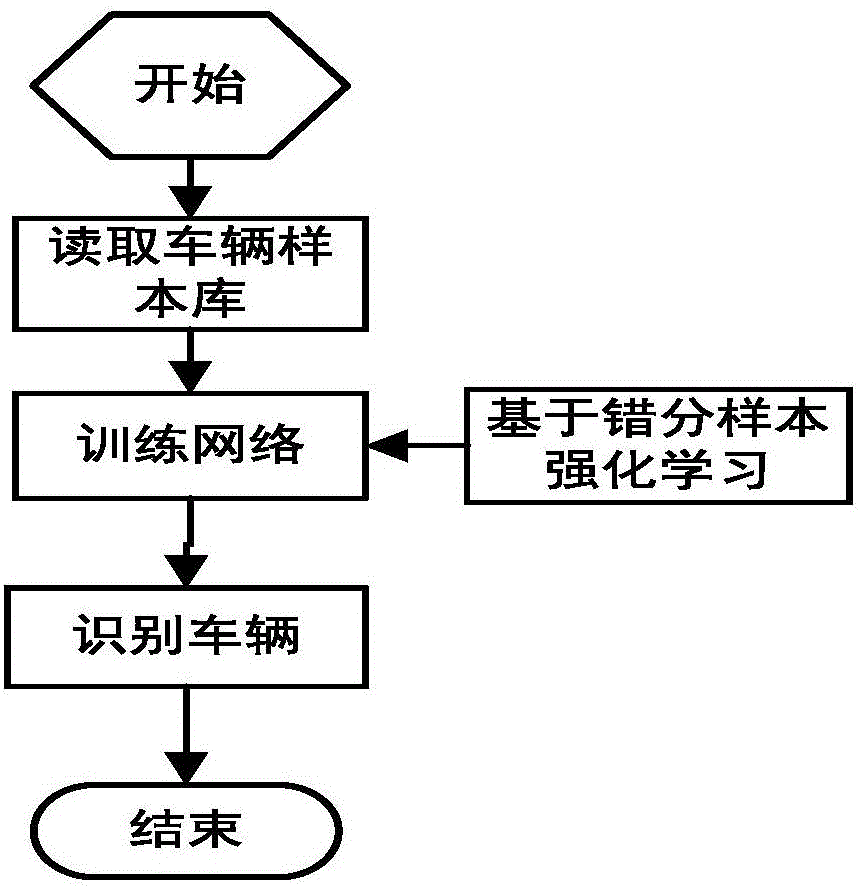

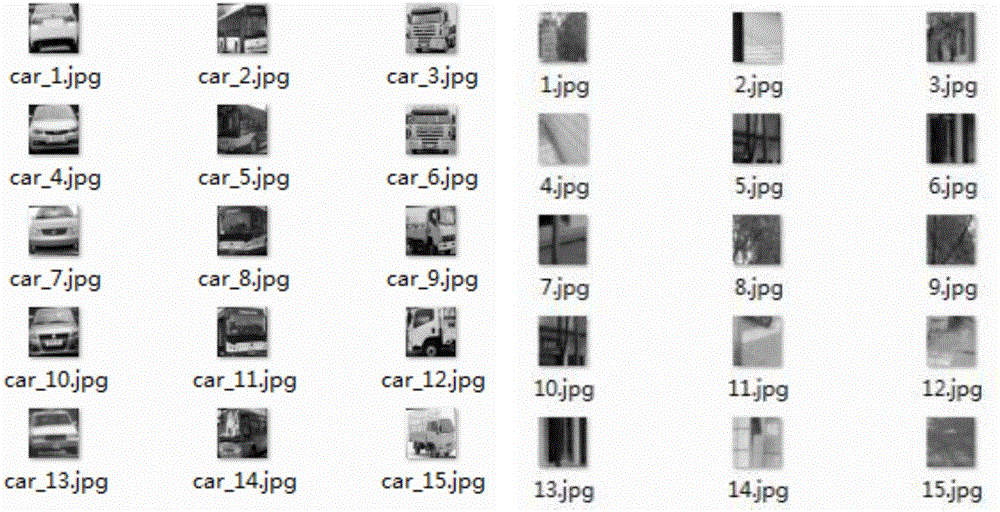

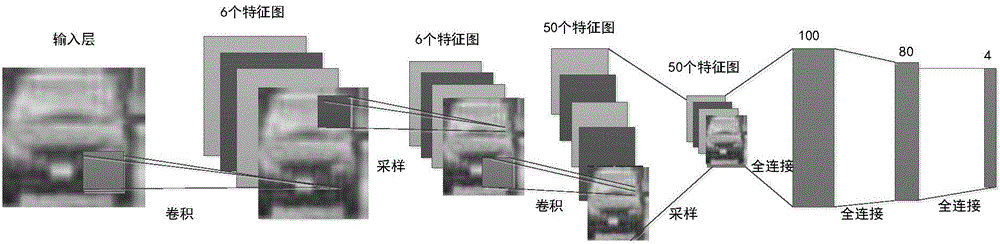

Vehicle identification method based on deep learning and reinforcement learning

Active · CN106295637A · Easy to identify · Improve training efficiency · Character and pattern recognition · Neural architectures · Stochastic gradient descent · Algorithm

The present invention discloses a vehicle identification method based on deep learning and reinforcement learning. Building on the structural features of deep networks, it combines deep learning with reinforcement learning. The Q-learning algorithm from reinforcement learning is applied to the deep learning network, while training still uses stochastic gradient descent, to improve the deep network's vehicle identification capability. A reinforcement learning technique based on learning from misclassified samples is also added. This addresses current shortcomings of deep learning networks in vehicle identification and improves network training efficiency as well as identification performance.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

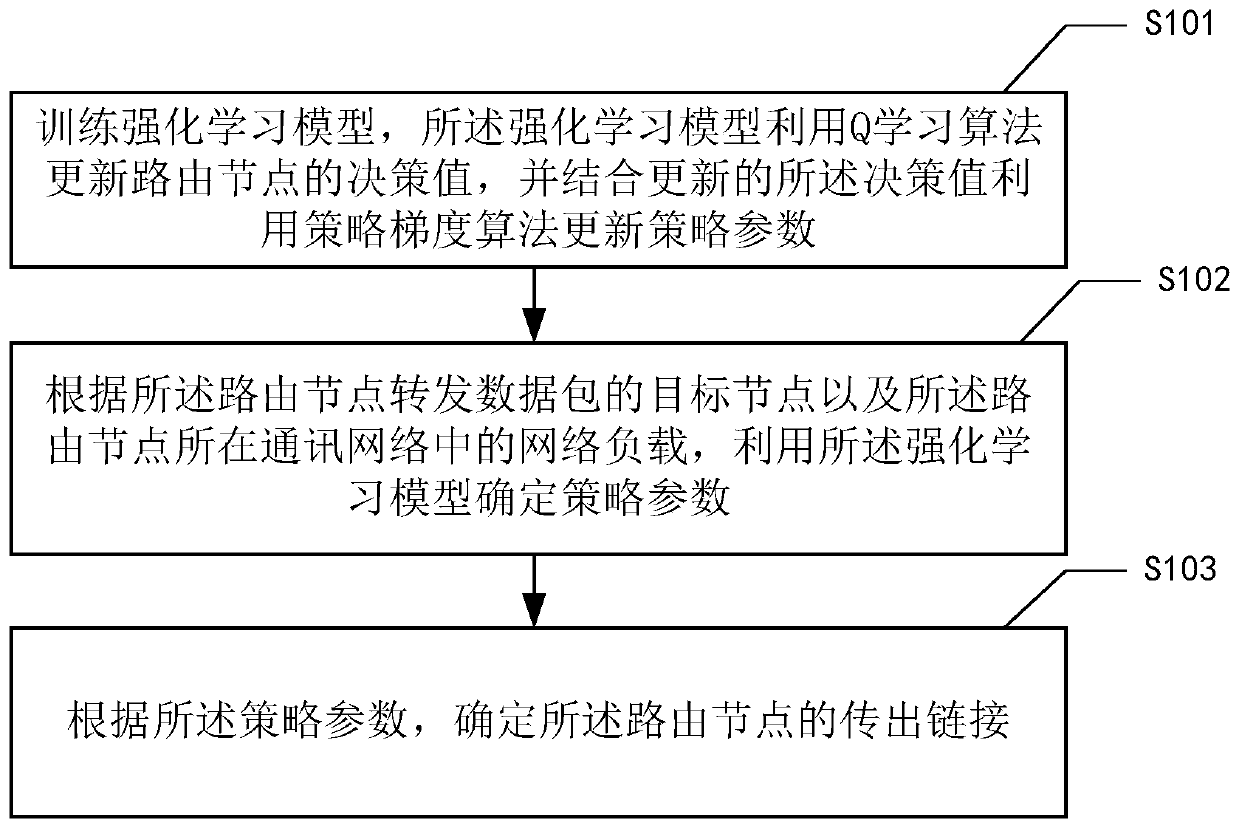

Method for controlling routing actions based on multi-agent reinforcement learning routing strategy

The invention relates to the field of information technology and discloses a method for controlling routing actions based on a multi-agent reinforcement learning routing strategy. The method comprises the following steps:

1. Train a reinforcement learning model. The model updates the routing nodes' decision values with a Q-learning algorithm, then updates the strategy parameters with a policy gradient algorithm that uses those decision values.
2. Use the model to determine the strategy parameters from two inputs: the destination node to which a routing node forwards data packets, and the network load of the communication network containing the routing nodes.
3. Determine each routing node's outgoing link from the strategy parameters.

With this method, routing strategies adapt promptly as network connection patterns, network loads and routing nodes change. An appropriate shortest path is selected for each packet's destination node, which greatly shortens the average delivery time of data packets.

Owner:SHENZHEN RES INST OF BIG DATA +1
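
The per-node Q-learning update can be illustrated with the classic Q-routing rule of Boyan and Littman. It is swapped in here because the abstract does not state the exact update. The policy-gradient step that turns decision values into strategy parameters is not shown. In this minimal sketch, each Q-value estimates the time to deliver a packet to a destination via a given neighbor. `QRoutingNode`, the learning rate `eta`, and the initial estimate are hypothetical.

```python
from collections import defaultdict


class QRoutingNode:
    """Q-routing table: q[dest][neighbor] ~ estimated delivery time via neighbor."""

    def __init__(self, neighbors, eta=0.5, init=10.0):
        self.neighbors = list(neighbors)
        self.eta = eta  # learning rate (assumed value)
        # Unseen destinations start with the same estimate for every neighbor.
        self.q = defaultdict(lambda: {n: init for n in self.neighbors})

    def best_estimate(self, dest):
        # Value this node reports upstream: min over its neighbors z of Q(dest, z).
        return min(self.q[dest].values())

    def choose(self, dest):
        # Greedy routing action: neighbor with the smallest estimated delivery time.
        return min(self.q[dest], key=self.q[dest].get)

    def update(self, dest, neighbor, queue_delay, link_delay, neighbor_estimate):
        # Move Q(dest, neighbor) toward local queueing time + link time
        # + the neighbor's own best remaining-time estimate.
        old = self.q[dest][neighbor]
        target = queue_delay + link_delay + neighbor_estimate
        self.q[dest][neighbor] = old + self.eta * (target - old)
```

Suppose node x has neighbors `"a"` and `"b"`, and `"a"` reports a remaining time of 2.0 after a hop costing 1.0 in the queue and 1.0 on the link. The estimate via `"a"` then drops from 10.0 to 7.0, so x starts routing toward `"a"`.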

Adaptive reliable routing method for VANETs

Inactive · CN106713143A · Improve delivery rate · Reduce transmission delay · Data switching networks · Dependability · Broadcasting

The invention provides an adaptive reliable routing method for VANETs. A study of vehicle movement characteristics shows that link durations follow a log-normal distribution. On that basis, a reliability model for the links between nodes is established. The estimated link reliability values are used as a parameter of the Q-learning algorithm, yielding the proposed RSAR routing algorithm. Route discovery works as follows:

1. The source node broadcasts an R_req packet to the whole network and starts a path request timer. The R_req records the IDs of all nodes it passes through.
2. An R_rep packet is generated by reversing the path recorded in the R_req.
3. After waiting one time slot, the R_rep is sent by single-hop broadcast to the nodes on the reversed path. The packet's next-hop address is modified and each node's Q_Table is updated.

Experiments show that the method achieves a relatively high packet delivery rate and shortens transmission delay.

Owner:TIANJIN UNIVERSITY OF TECHNOLOGY
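
The link-reliability parameter can be sketched as a conditional survival probability under the log-normal link-duration model the abstract describes. Suppose a link has already lasted `age` seconds. The probability that it lasts another `horizon` seconds is (1 - F(age + horizon)) / (1 - F(age)), where F is the log-normal CDF. The abstract does not state how RSAR feeds this value into Q-learning. This sketch therefore assumes, hypothetically, that reliability scales the discount factor. `mu`, `sigma`, `alpha` and `gamma` are illustrative.

```python
import math


def lognormal_cdf(x, mu, sigma):
    """CDF of a log-normal distribution with log-mean mu and log-std sigma."""
    if x <= 0:
        return 0.0
    return 0.5 * (1.0 + math.erf((math.log(x) - mu) / (sigma * math.sqrt(2.0))))


def link_reliability(age, horizon, mu, sigma):
    """P(link survives `horizon` more seconds | it has already lasted `age` seconds)."""
    survived = 1.0 - lognormal_cdf(age, mu, sigma)
    if survived <= 0.0:
        return 0.0
    return (1.0 - lognormal_cdf(age + horizon, mu, sigma)) / survived


def q_update(q_old, reward, next_best_q, reliability, alpha=0.5, gamma=0.9):
    # Assumption: reliability scales the discount factor, so downstream value
    # reached over an unreliable link counts for less.
    return (1 - alpha) * q_old + alpha * (reward + gamma * reliability * next_best_q)
```

With `mu = 0` and `sigma = 1`, a fresh link has a 0.5 chance of lasting one more second, since the median duration is e^0 = 1. A reliability of 0 makes the update ignore the next hop's value entirely.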

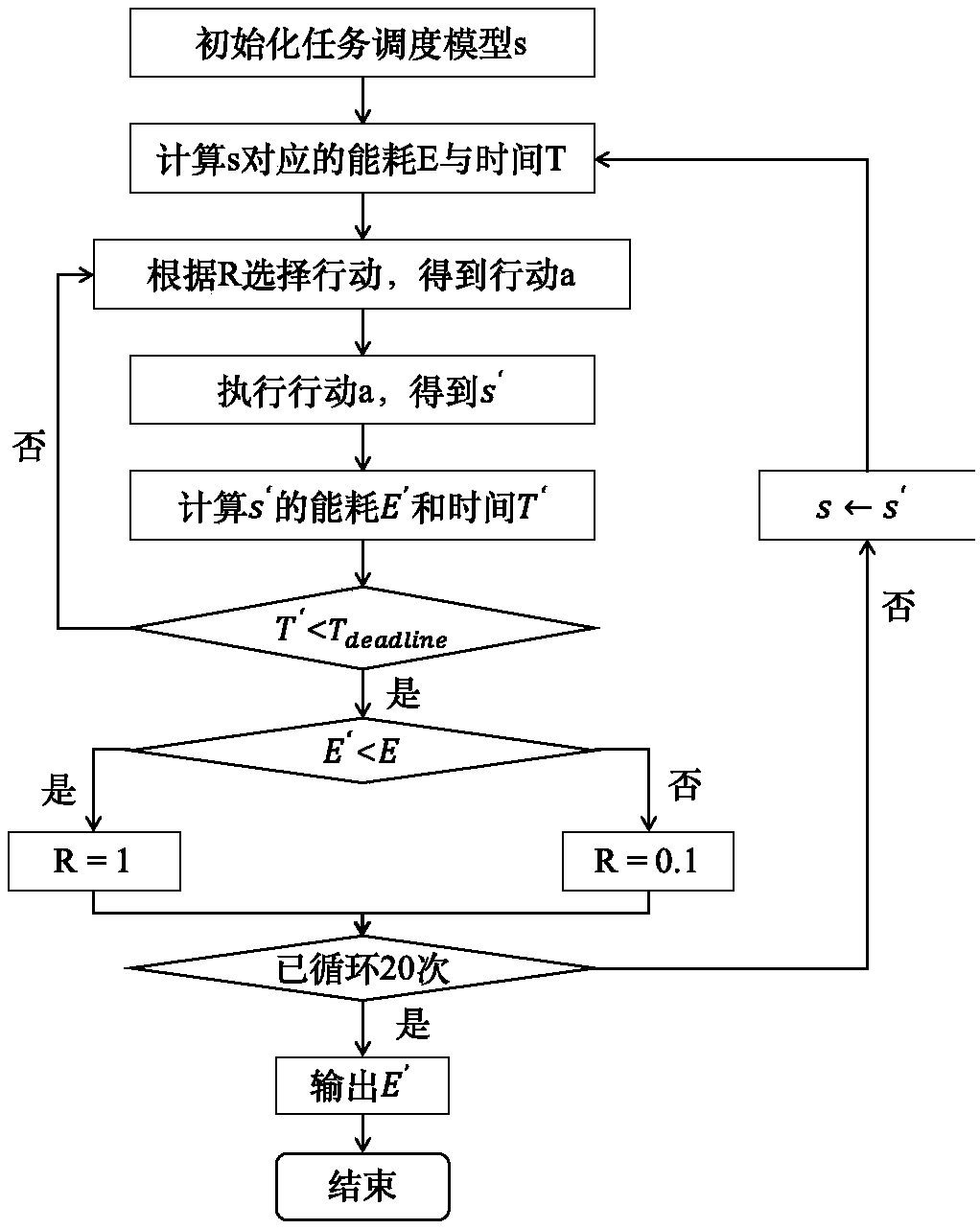

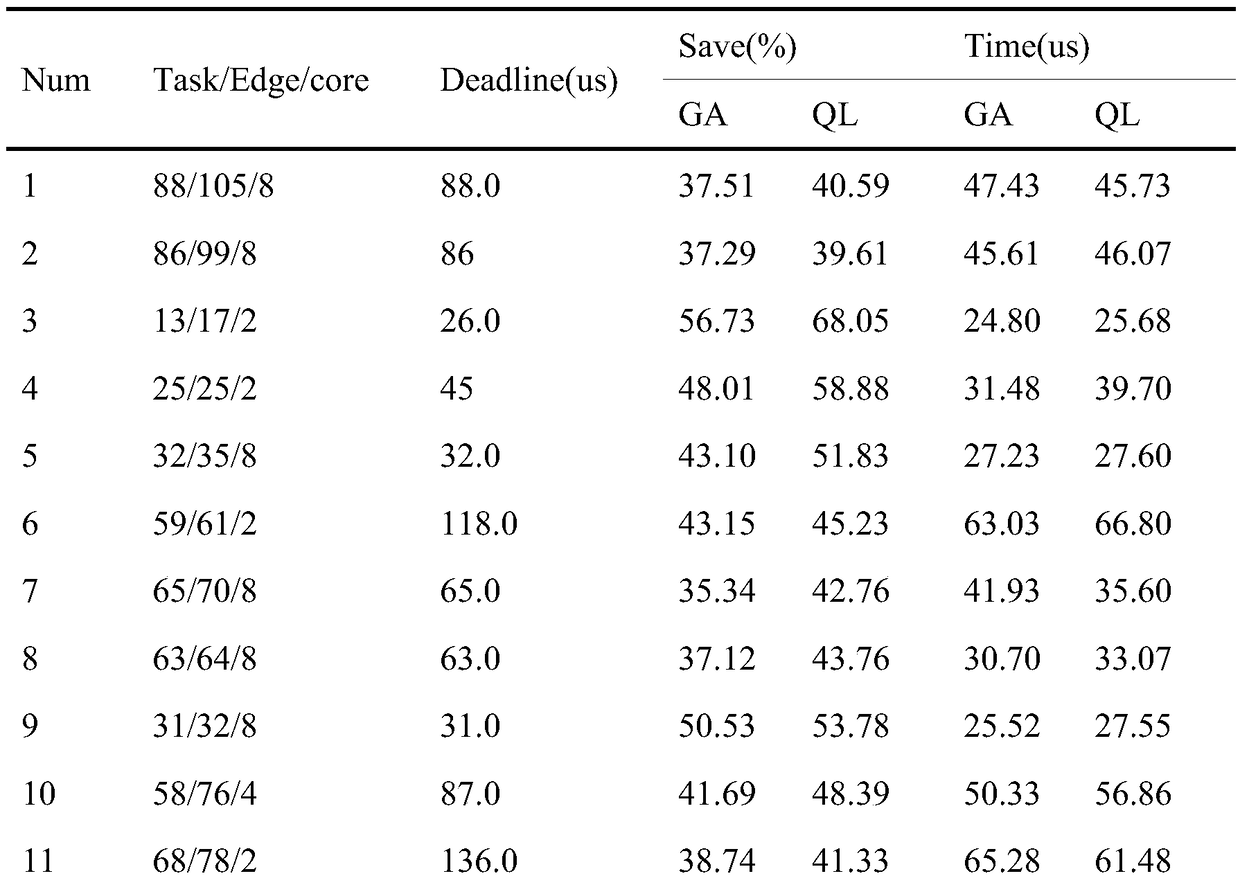

Energy consumption optimization scheduling method for heterogeneous multi-core embedded systems based on reinforcement learning

Active · CN109117255A · Improve execution efficiency · Guaranteed Execution Deadline · Program initiation/switching · Resource allocation · Reduction rate · Algorithm

The invention discloses an energy consumption optimization scheduling method for heterogeneous multi-core embedded systems based on a reinforcement learning algorithm. On the hardware side, a DVFS regulator is attached to each processor. Adjusting each processor's working voltage changes its hardware characteristics, so a hardware platform matching the software characteristics is constructed dynamically. On the software side, traditional heuristic algorithms (genetic algorithms, simulated annealing, etc.) suffer from weak local or global search ability. To address this, the invention makes an exploratory application of the Q-Learning algorithm to find the energy-optimal scheduling solution. Through trial and error and interactive feedback with the environment, Q-Learning balances global and local search and achieves better results than traditional heuristic algorithms. Thousands of experiments show that the Q-learning algorithm reduces energy consumption by 6% to 32% compared with the traditional genetic algorithm (GA).

Owner:WUHAN UNIV OF TECH

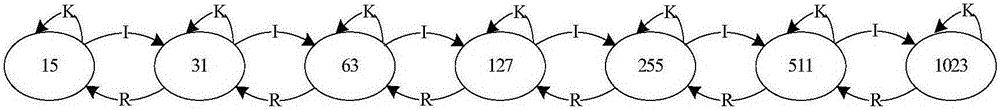

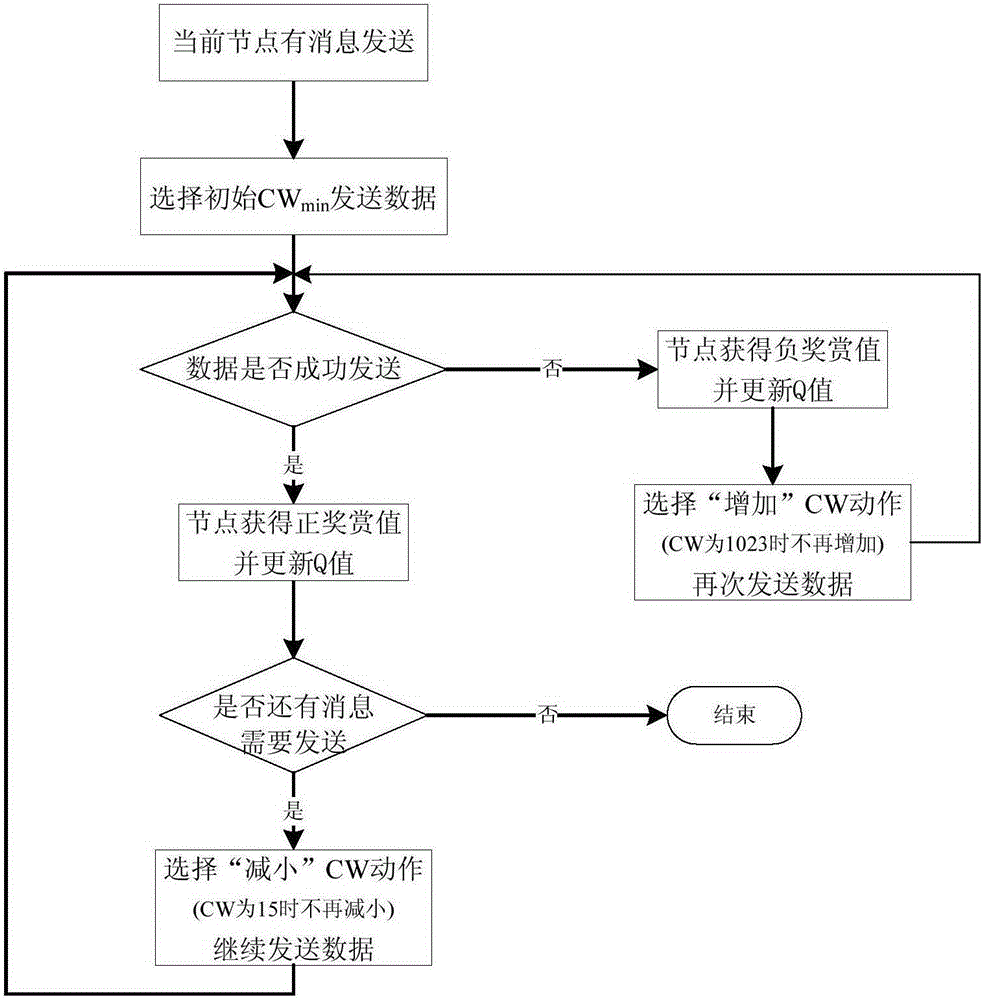

Realization method for Q learning based vehicle-mounted network media access control (MAC) protocol

Active · CN105306176A · Increase the probability of successful delivery · Reduce the number of dodges · Error prevention/detection by using return channel · Network traffic/resource management · Learning based · Reward value

The invention discloses a method for implementing a Q-learning-based media access control (MAC) protocol for vehicular networks. A vehicle node in a VANET environment uses a Q-learning algorithm to interact continually with the environment through repeated trial and error. It dynamically adjusts its contention window (CW) according to the feedback signal (reward value) returned by the VANET environment. The node therefore always accesses the channel with the best CW, meaning the CW that earns the largest reward from the surrounding environment. The method lowers the data frame collision rate and transmission delay and makes channel access fairer among nodes.

Owner:NANJING NANYOU INST OF INFORMATION TECHNOVATION CO LTD
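
The CW adjustment loop can be sketched as tabular Q-learning over a ladder of window sizes. This is a hedged illustration, not the patented protocol. The CW levels, the three actions (shrink, keep, grow), and the learning parameters are assumptions. In particular, `toy_reward` is a hypothetical stand-in for the VANET feedback signal that simply peaks at CW = 63; a real node would derive its reward from transmission outcomes.

```python
import random

CW_LEVELS = [15, 31, 63, 127, 255, 511, 1023]  # assumed 802.11-style CW sizes
ACTIONS = (-1, 0, 1)  # shrink, keep, or grow the contention window by one level


def toy_reward(cw_index):
    # Hypothetical feedback signal: best at CW = 63, worse the further away.
    return -abs(cw_index - 2)


def step(s, a):
    # Apply an action, clamping the CW index to the available levels.
    return min(max(s + ACTIONS[a], 0), len(CW_LEVELS) - 1)


def greedy(q, s):
    return max(range(len(ACTIONS)), key=lambda i: q[s][i])


def train(episodes=3000, steps=20, alpha=0.5, gamma=0.9, epsilon=0.3, seed=0):
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in CW_LEVELS]
    for _ in range(episodes):
        s = rng.randrange(len(CW_LEVELS))  # random initial CW each episode
        for _ in range(steps):
            # Epsilon-greedy trial and error.
            a = rng.randrange(len(ACTIONS)) if rng.random() < epsilon else greedy(q, s)
            s2 = step(s, a)
            r = toy_reward(s2)
            q[s][a] += alpha * (r + gamma * max(q[s2]) - q[s][a])
            s = s2
    return q


def greedy_cw(q, start, steps=10):
    """Follow the learned greedy policy from a starting CW index."""
    s = start
    for _ in range(steps):
        s = step(s, greedy(q, s))
    return CW_LEVELS[s]
```

After `q = train()`, following the greedy policy from either the smallest or the largest window settles on CW = 63. Random episode starts are used so that rarely reached CW levels are still visited during learning.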

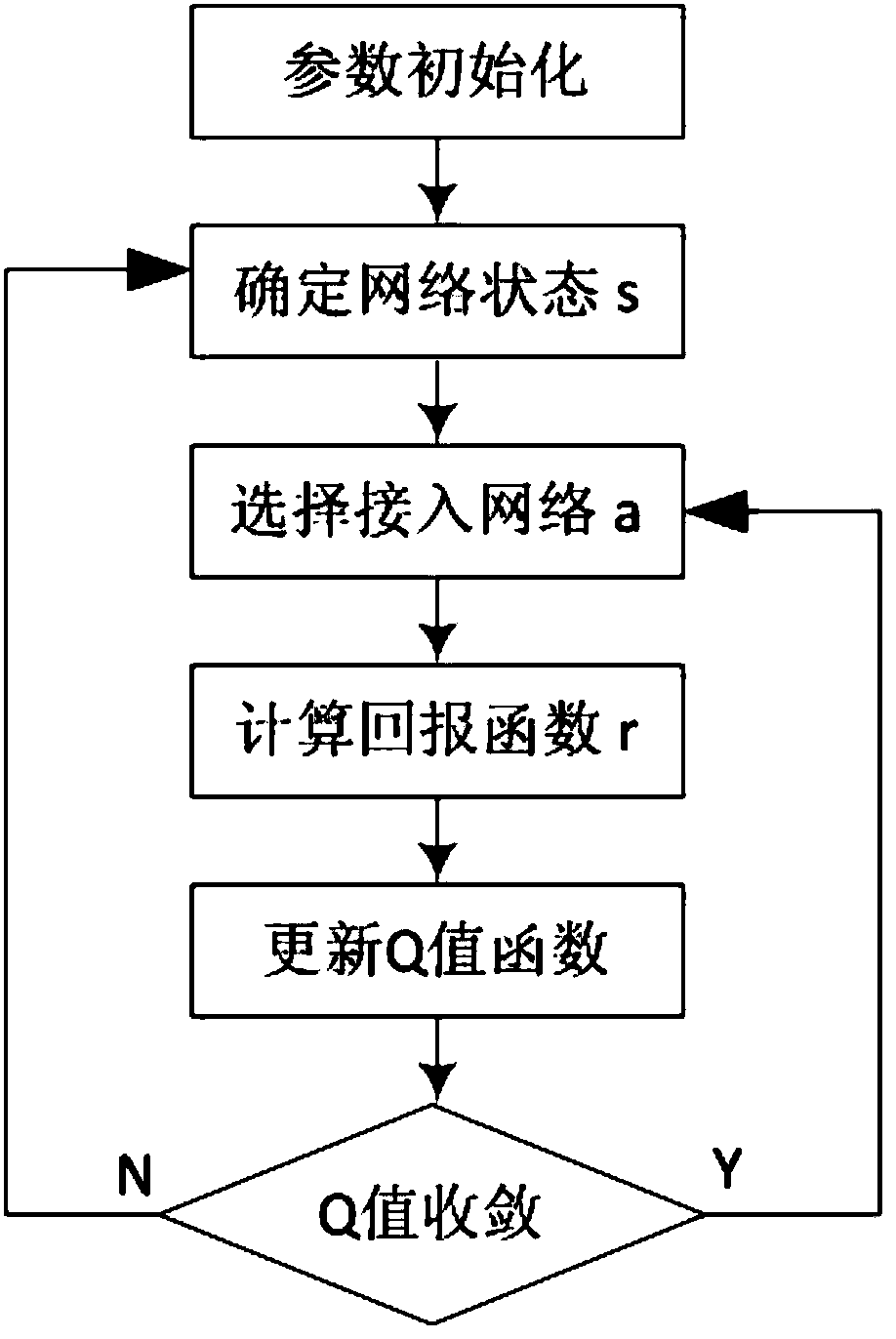

Network selection method based on Q-learning algorithm

Active · CN107690176A · Adapt to the choice problem · Improve throughput · Assess restriction · Return function · Packet loss rate

The invention discloses a network selection method based on a Q-learning algorithm. The method comprises the following steps:

1. Initialize a Q-value table and set a discount factor gamma and a learning rate alpha.
2. When a set time is reached, determine the service type k and the load rates of the two current networks to obtain the current state s_n.
3. Select an available action from the action set A, and record the action and the next network state s_{n+1}.
4. Compute the immediate return function r from the network state after the selected action is executed.
5. Update the Q-value function Q_n(s, a), and decrease the learning rate alpha gradually to 0 following an inverse-proportional rule.
6. Repeat steps (2) to (5) until the Q values converge, i.e., until the difference between the Q values before and after an update is smaller than a threshold.
7. Return to step (3) to select the action and access the optimal network.

The method reduces the blocking rate of voice services and the packet loss rate of data services, and increases the average network throughput.

Owner:NANJING NARI GROUP CORP +1
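
Steps (1) to (7) can be sketched as tabular Q-learning. This is a hedged illustration, not the patented implementation. The two-network setting comes from the abstract, but several parts are assumptions: the discretized load levels, the service-to-network preference, the immediate return in `immediate_return`, the random state transitions, and gamma = 0.3. The `patience` counter, which makes the step (6) convergence test robust, is also an addition. The learning rate follows an inverse-proportional rule, alpha = 1/n, where n counts visits to the state-action pair.

```python
import random
from collections import defaultdict

SERVICES = ("voice", "data")
LOAD_LEVELS = (0, 1, 2)              # low / medium / high load (assumed discretization)
PREFERRED = {"voice": 0, "data": 1}  # hypothetical best-suited network per service


def observe(rng):
    # Step (2): service type k and both networks' load levels form the state.
    return (rng.choice(SERVICES), rng.choice(LOAD_LEVELS), rng.choice(LOAD_LEVELS))


def immediate_return(state, action):
    # Step (4): hypothetical return favouring a lightly loaded, suitable network.
    service, load0, load1 = state
    load = (load0, load1)[action]
    return (2 - load) + (1 if action == PREFERRED[service] else 0)


def train(gamma=0.3, epsilon=0.2, tol=1e-2, patience=200, max_steps=200_000, seed=1):
    rng = random.Random(seed)
    q = defaultdict(lambda: [0.0, 0.0])  # Step (1): Q table over two networks
    visits = defaultdict(int)
    state, calm = observe(rng), 0
    for _ in range(max_steps):
        # Step (3): epsilon-greedy choice of a network.
        if rng.random() < epsilon:
            action = rng.randrange(2)
        else:
            action = max((0, 1), key=lambda a: q[state][a])
        next_state = observe(rng)
        r = immediate_return(state, action)
        # Step (5): alpha = 1/n decays toward 0 as the pair is revisited.
        visits[state, action] += 1
        alpha = 1.0 / visits[state, action]
        old = q[state][action]
        q[state][action] += alpha * (r + gamma * max(q[next_state]) - old)
        # Step (6): stop after `patience` consecutive updates smaller than tol.
        calm = calm + 1 if abs(q[state][action] - old) < tol else 0
        if calm >= patience:
            break
        state = next_state
    return q


def select_network(q, state):
    # Step (7): access the network with the highest learned Q value.
    return max((0, 1), key=lambda a: q[state][a])
```

With these toy rewards, a voice request sees network 0 lightly loaded and network 1 heavily loaded, so it is sent to network 0. A data request in the mirrored situation goes to network 1. The next state here does not depend on the chosen network, so the learned choice reduces to maximizing the immediate return. A real deployment would feed measured loads back in step (2).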

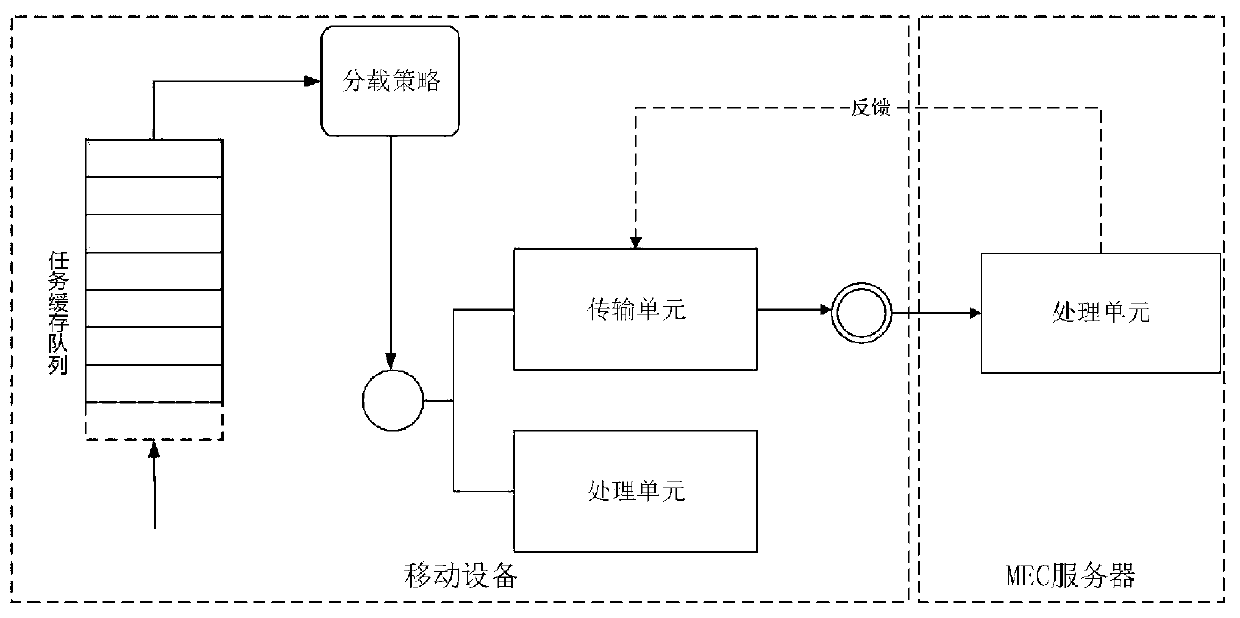

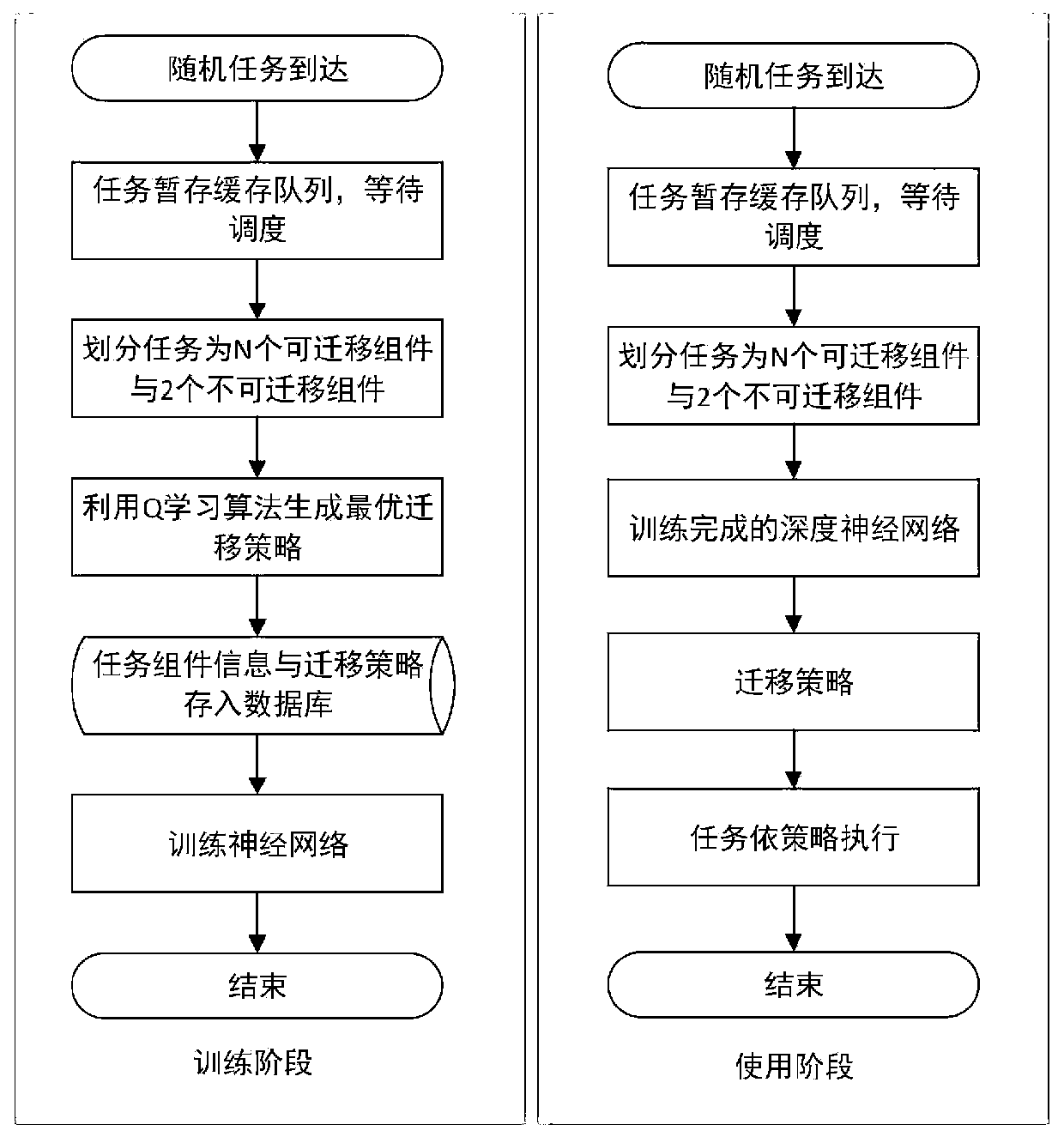

MEC random task migration method based on machine learning

Active · CN109753751A · Solve build problems · Enable online learning · Transmission · Energy efficient computing · Neural network · Algorithm

The invention discloses a random task migration method based on machine learning. A single task is divided into N migratable, device-independent components and two non-migratable, device-dependent components. The system is modeled as a Markov decision process. The Q-learning algorithm from reinforcement learning then generates the optimal migration strategy for the task components. The task component data and the optimal strategy are recorded as training samples for a deep neural network, whose learning capacity grows as training samples accumulate. Once the network is accurate enough, a single forward pass yields an approximately optimal migration strategy for a random task. The method thus generates optimal migration strategies when device-dependent and device-independent tasks arrive randomly, and it also supports online learning.

Owner:BEIJING UNIV OF TECH

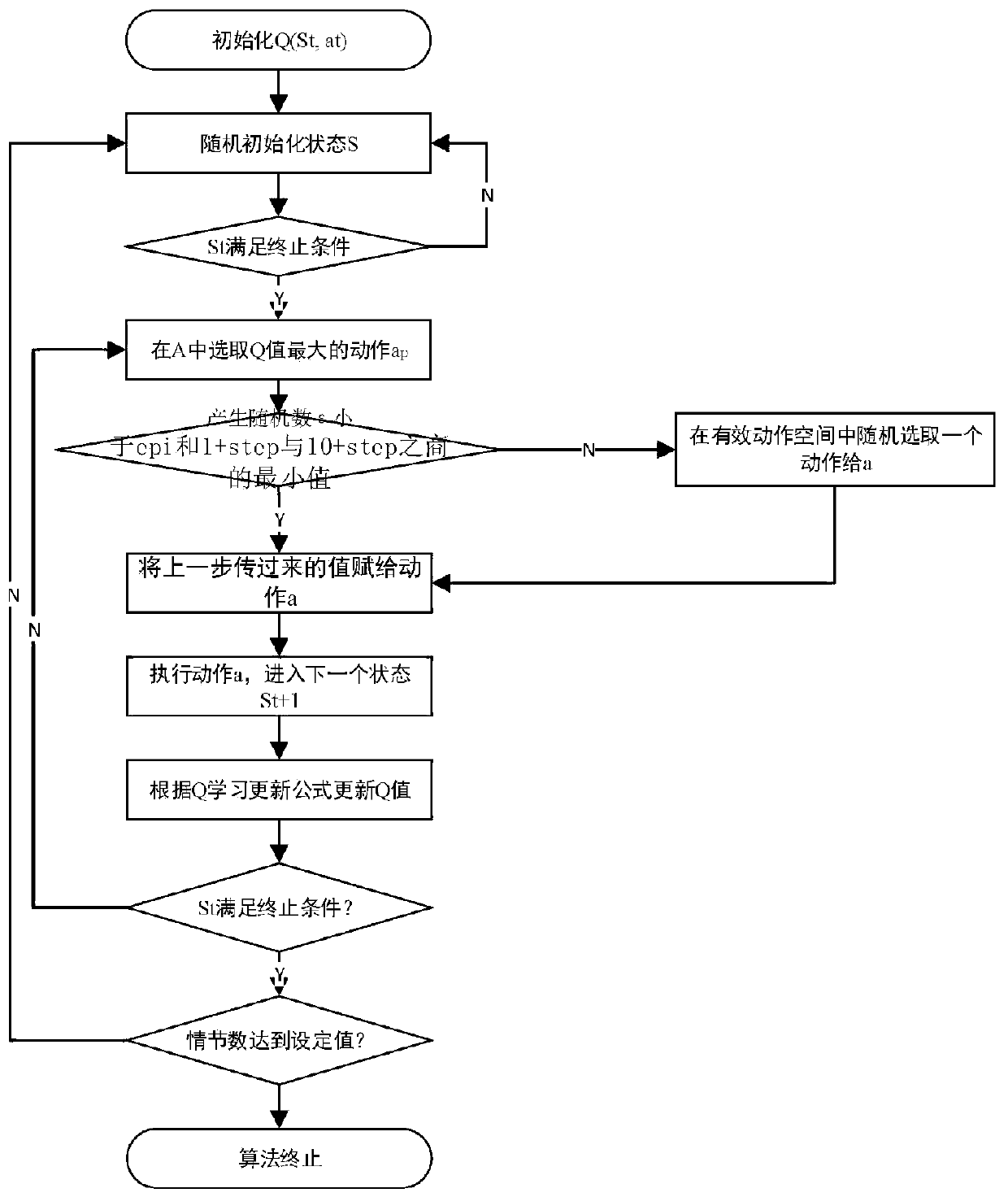

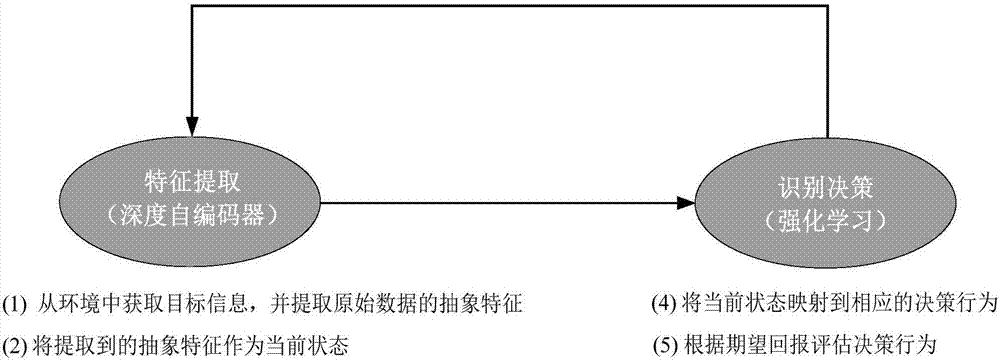

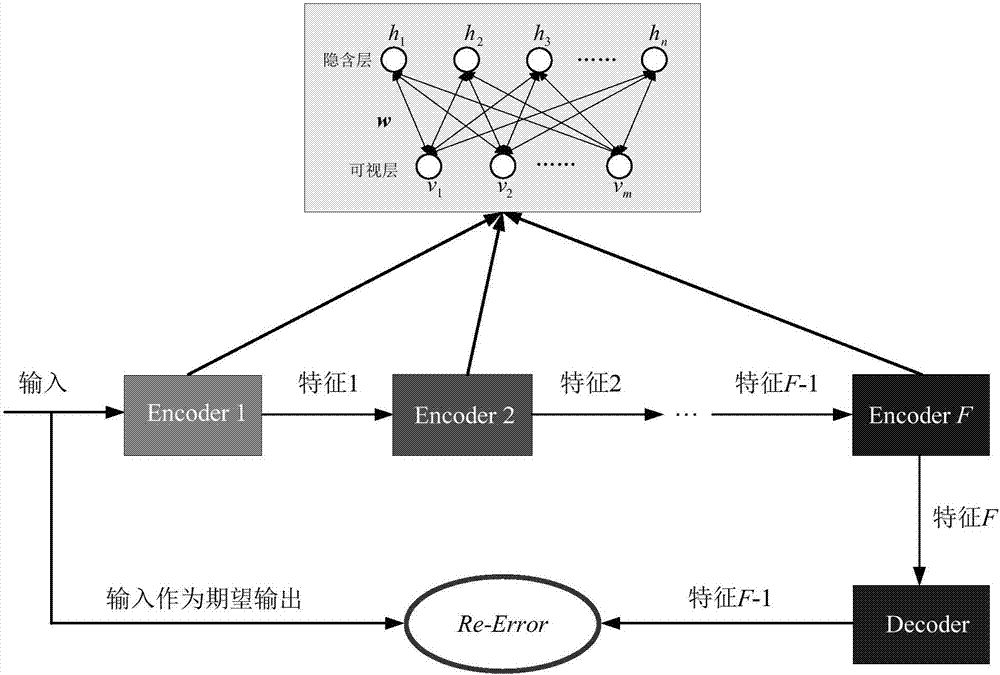

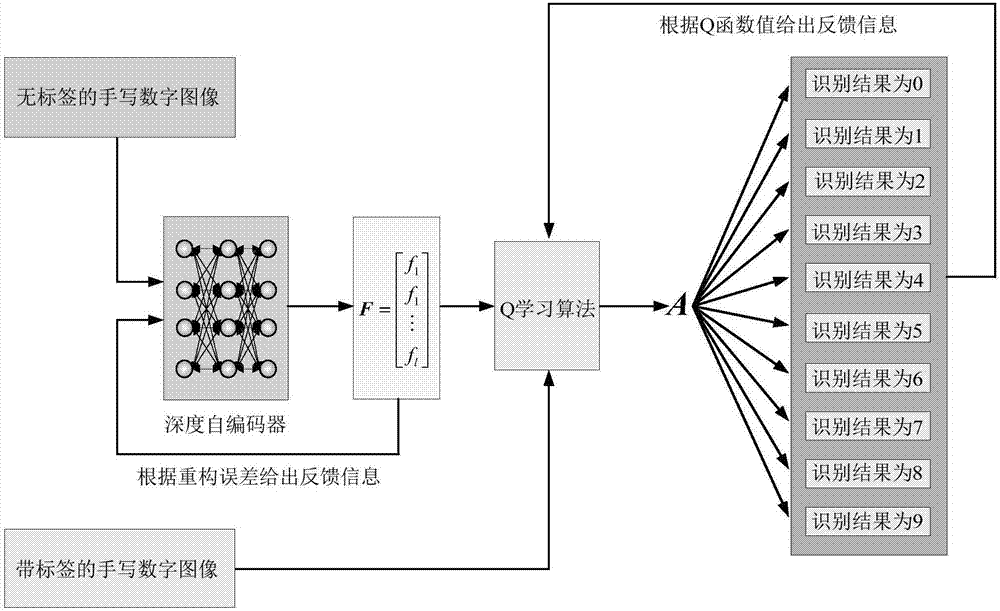

Handwritten numeral recognition method based on deep Q learning strategy

Active · CN107229914A · Strong decision-making ability · High precision · Digital ink recognition · Deep belief network · Feature extraction

The invention provides a handwritten numeral recognition method based on a deep Q-learning strategy. It belongs to the fields of artificial intelligence and pattern recognition and addresses low recognition precision on the MNIST handwritten digit benchmark database. First, a deep auto-encoder (DAE) extracts abstract features from the original signal. The Q-learning algorithm uses the DAE's encoding of that signal as its current state. Next, the current state is classified to obtain a reward value, which is returned to the Q-learning algorithm for iterative updating. Maximizing this reward yields high-precision recognition of handwritten digits. The method combines the perception capability of deep learning with the decision-making ability of reinforcement learning. Joining the deep auto-encoder with the Q-learning algorithm forms a Q deep belief network (Q-DBN), which improves recognition precision while reducing recognition time.

Owner:BEIJING UNIV OF TECH

© 2025 PatSnap. All rights reserved.