125 results about "Robot learning" patented technology

Robot learning is a research field at the intersection of machine learning and robotics. It studies techniques that allow a robot to acquire novel skills or adapt to its environment through learning algorithms. The embodiment of the robot, situated in a physical embedding, brings both specific difficulties (e.g. high dimensionality, real-time constraints on collecting data and learning) and opportunities for guiding the learning process (e.g. sensorimotor synergies, motor primitives).

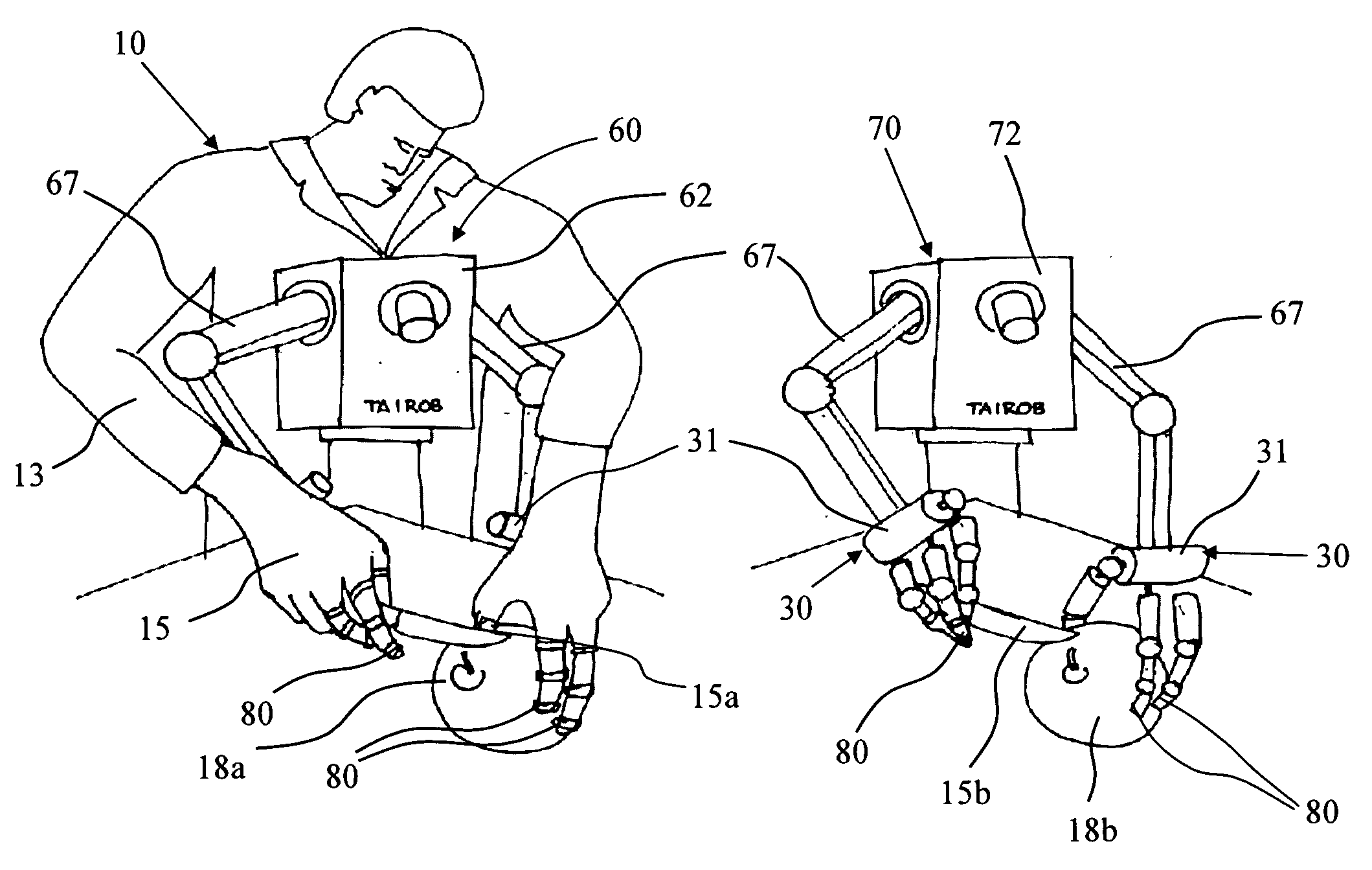

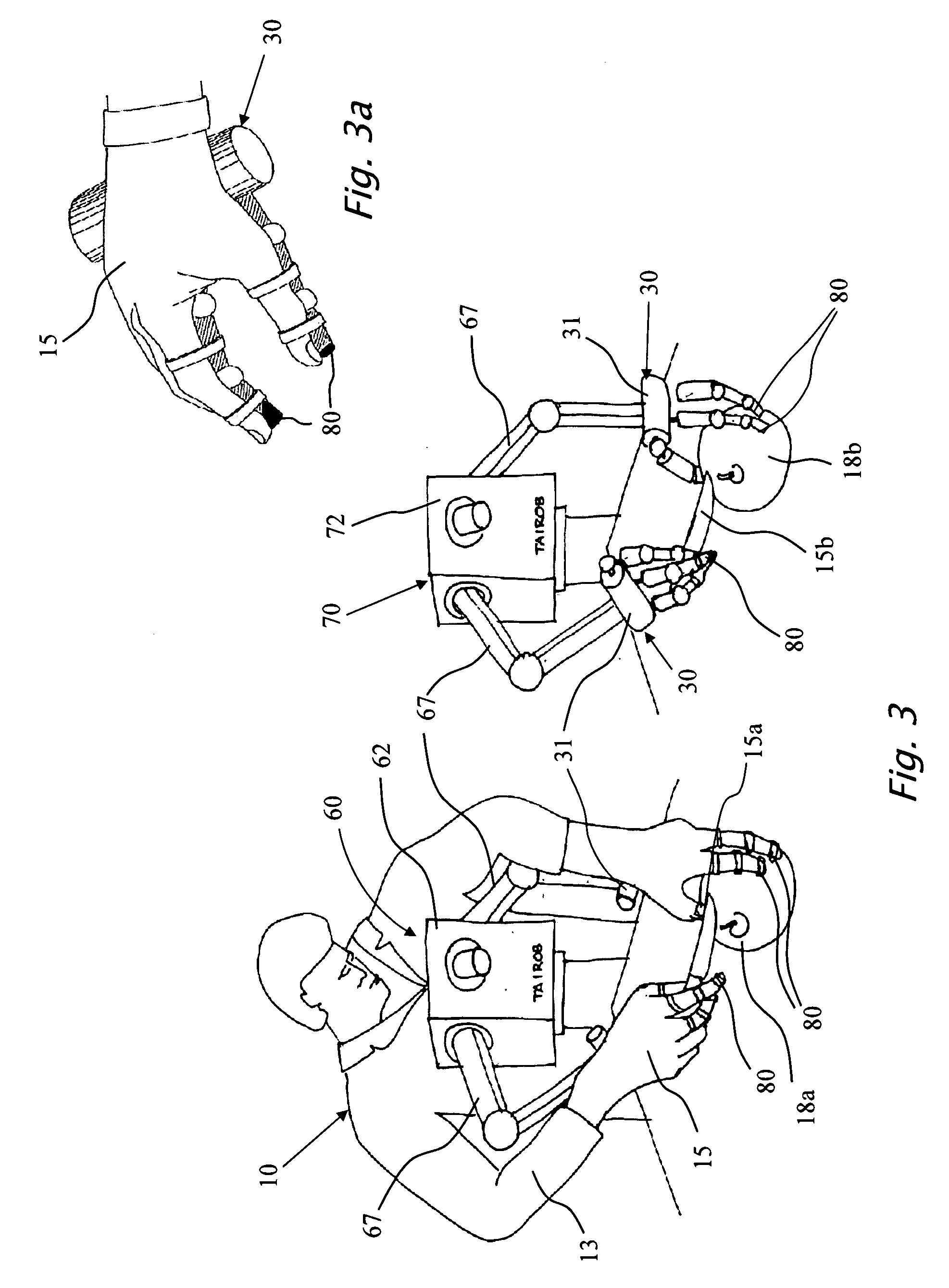

Transfer of knowledge from a human skilled worker to an expert machine - the learning process

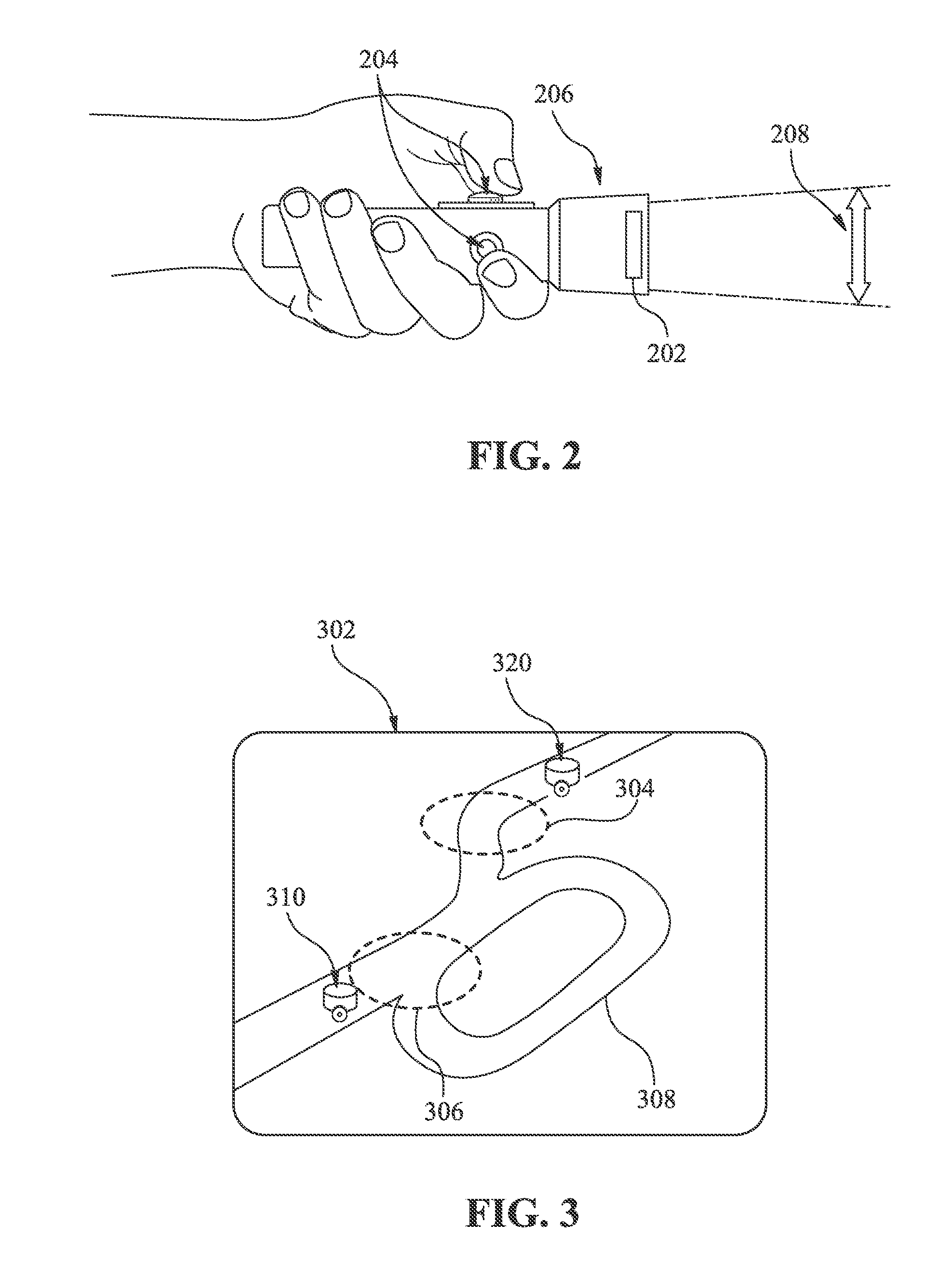

Inactive · US20090132088A1 · Improve operational sensitivity · Computer control · Simulator control · Software engineering · Learning methods

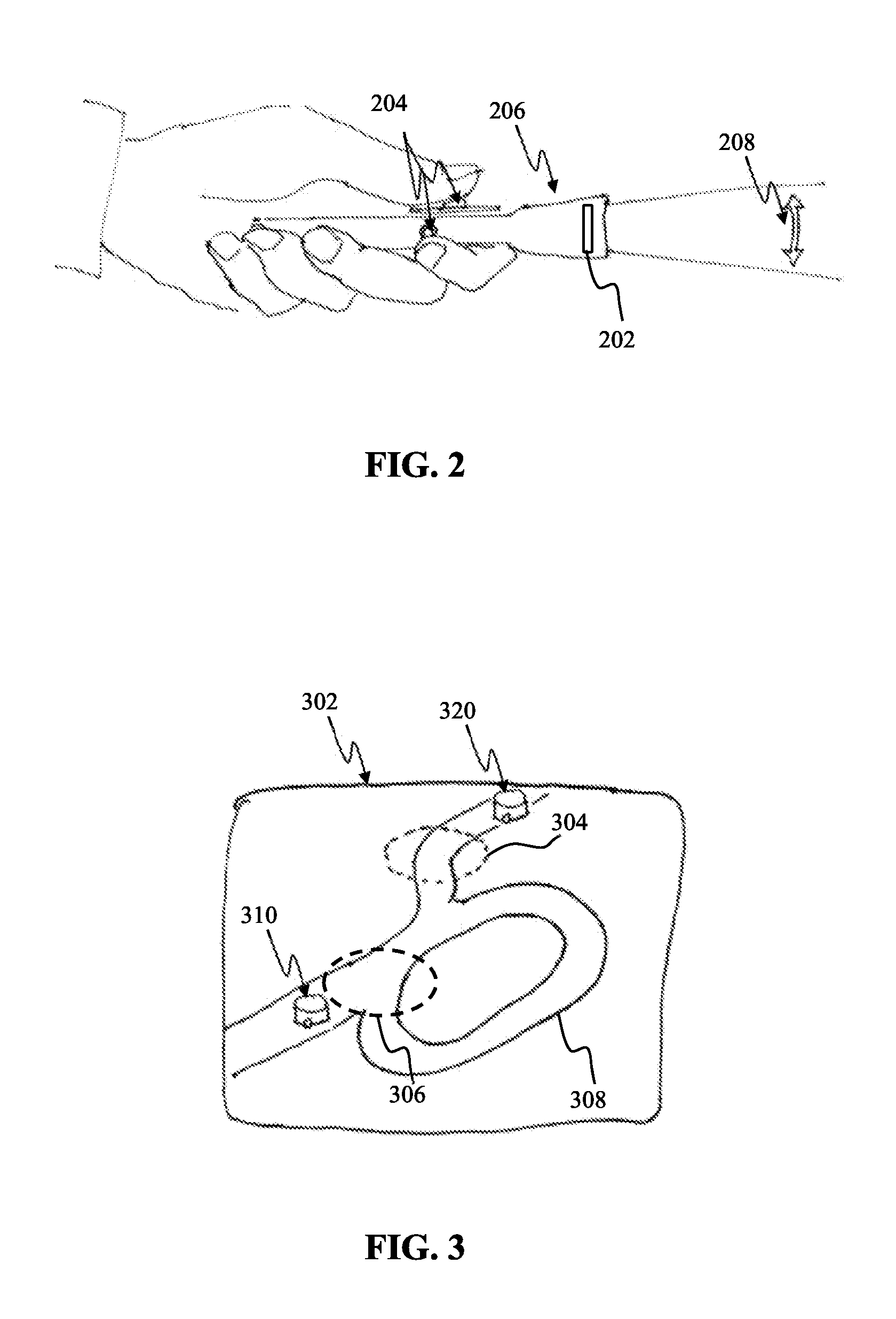

A learning environment and method that serve as a first milestone toward an expert machine implementing the master-slave robotic concept. The present invention is a learning environment and method in which a skilled worker teaches the master expert machine by transferring his professional knowledge to it in the form of elementary motions and subdivided tasks. The present invention further provides a stand-alone learning environment, in which a human wearing one or two innovative gloves equipped with 3D feeling sensors transfers task-performing knowledge to a robot through a learning process different from the master-slave learning concept. The 3D force/torque, displacement, velocity/acceleration and joint forces are recorded during the knowledge transfer by a computerized processing unit, which prepares the acquired data for the mathematical transformations needed to transmit commands to the motors of a robot. The objective of the new robotic learning method is a learning process that will pave the way to a robot with “human-like” tactile sensitivity, to be applied to material handling or man/machine interaction.

Owner:TAIROB

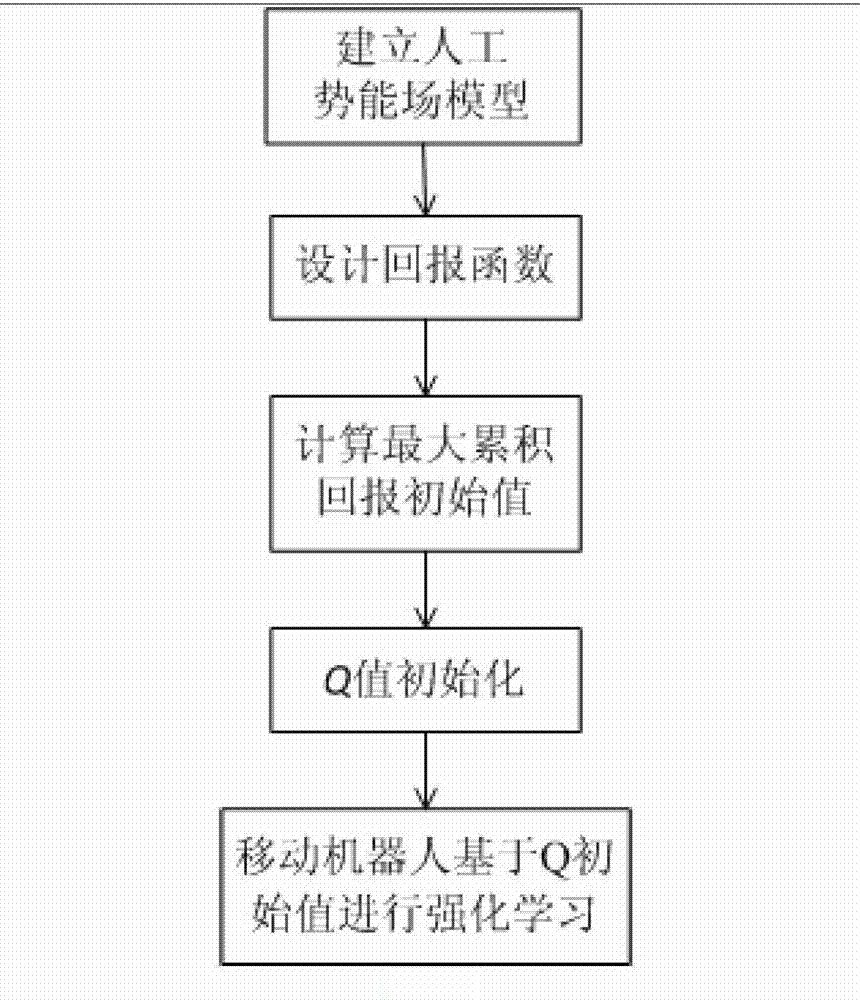

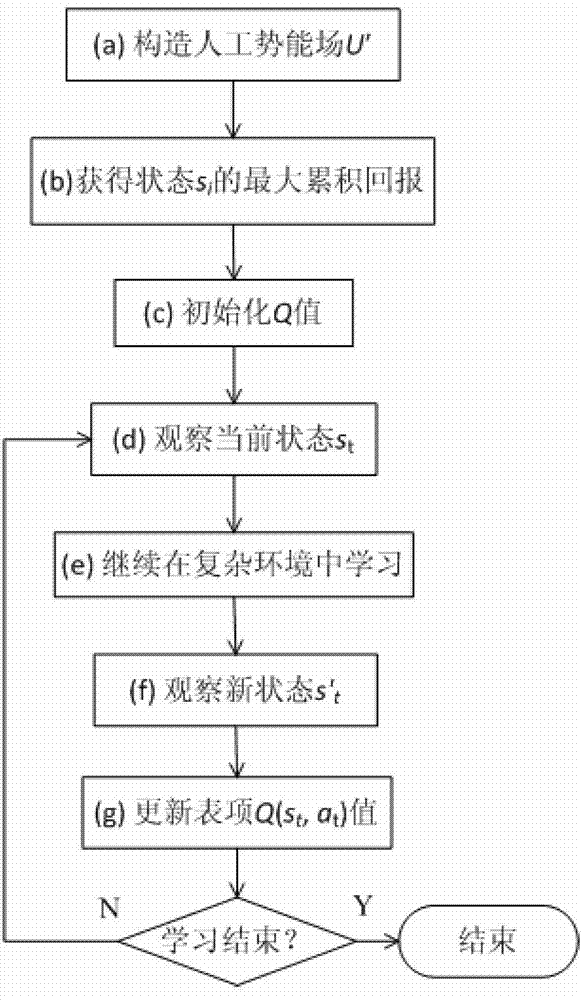

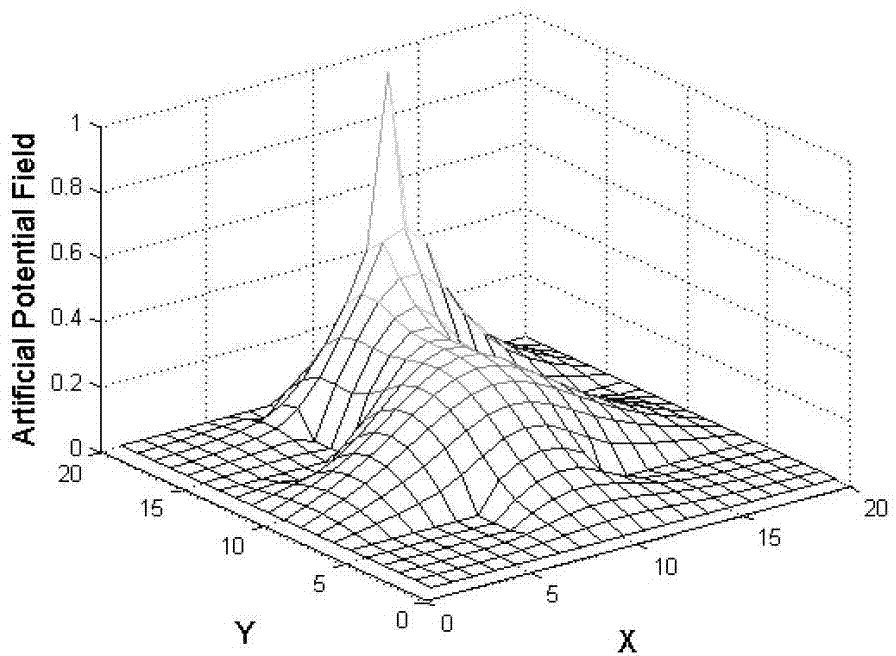

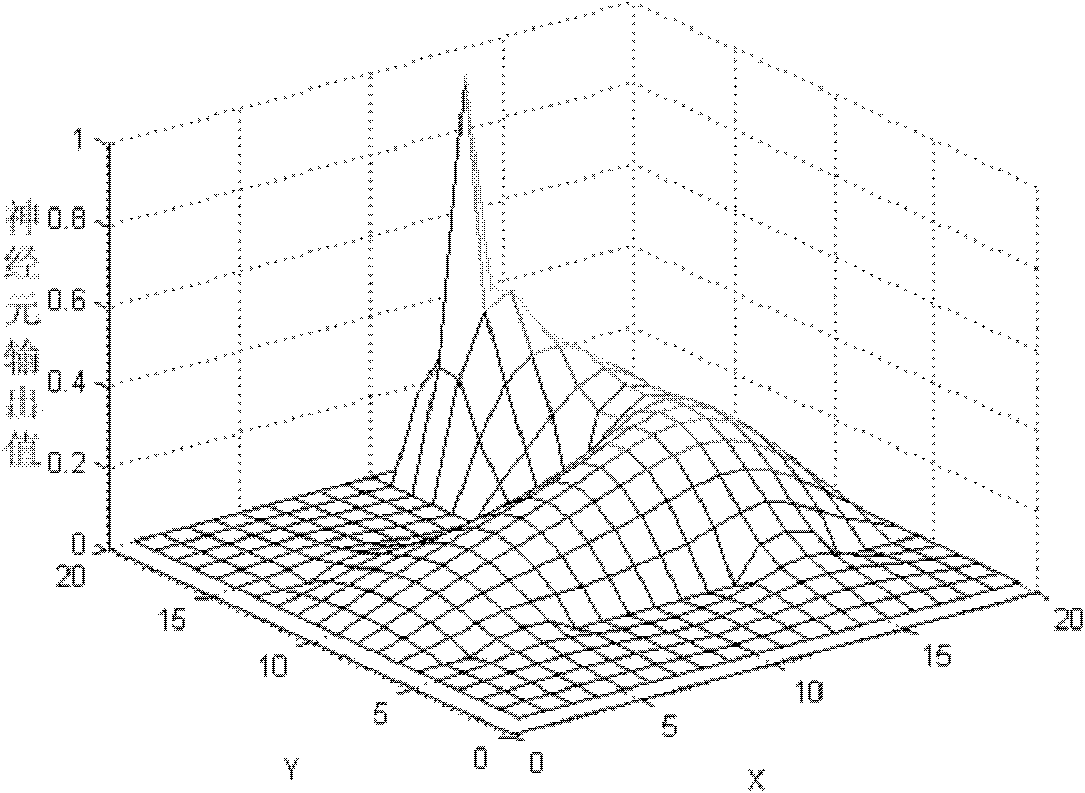

Path planning Q-learning initial method of mobile robot

Inactive · CN102819264A · Improve learning effect · Fast convergence · Position/course control in two dimensions · Potential field · Working environment

The invention discloses a reinforcement learning initialization method for a mobile robot based on an artificial potential field, and relates to a Q-learning initialization method for mobile robot path planning. The working environment of the robot is modeled as an artificial potential field. The potential values of all states are determined using prior knowledge, so that the potential of an obstacle area is zero and the target point has the highest potential in the whole field; the potential value of each state then represents the maximum cumulative return obtainable by following the optimal policy from that state. The initial Q value is then defined as the sum of the immediate return of the current state and the maximum discounted cumulative return of the successor state. Through the artificial potential field, known environmental information is mapped to the initial value of the Q function, so that prior knowledge is integrated into the robot's learning system and the learning ability of the robot is improved in the initial stage of reinforcement learning. Compared with the traditional Q-learning algorithm, the method effectively improves learning efficiency in the initial stage, speeds up convergence, and makes the convergence process more stable.

Owner:山东大学(威海)
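
The initialization described in this abstract can be illustrated with a minimal sketch. The abstract does not say how the potential values are computed, so the `potential_field` function below is purely an assumption (potential decays as `gamma**d` with the shortest free-cell distance `d` to the goal); the grid, the function names and the zero step reward are likewise hypothetical. Only the rule "initial Q = immediate return + discounted potential of the successor state" is taken from the text.

```python
from collections import deque

ACTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right


def potential_field(grid, goal, gamma=0.9):
    """Assumed potential: gamma**d, d = shortest free-cell distance to the goal.

    Obstacles (grid value 1) keep potential 0; the goal gets the maximum, 1.0.
    """
    rows, cols = len(grid), len(grid[0])
    V = [[0.0] * cols for _ in range(rows)]
    V[goal[0]][goal[1]] = 1.0
    queue, seen = deque([goal]), {goal}
    while queue:  # breadth-first sweep outward from the goal
        r, c = queue.popleft()
        for dr, dc in ACTIONS:
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] == 0 and (nr, nc) not in seen):
                seen.add((nr, nc))
                V[nr][nc] = gamma * V[r][c]
                queue.append((nr, nc))
    return V


def init_q(grid, V, gamma=0.9, reward=0.0):
    """Q0(s, a) = immediate reward + gamma * potential of the successor state."""
    rows, cols = len(grid), len(grid[0])
    Q = {}
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] == 1:
                continue  # no actions are taken from obstacle cells
            for a, (dr, dc) in enumerate(ACTIONS):
                nr, nc = r + dr, c + dc
                inside = 0 <= nr < rows and 0 <= nc < cols
                # Moves that leave the grid only receive the immediate reward.
                Q[(r, c), a] = reward + gamma * V[nr][nc] if inside else reward
    return Q


grid = [[0, 0, 0],
        [0, 1, 0],  # 1 marks an obstacle
        [0, 0, 0]]
V = potential_field(grid, goal=(0, 2))
Q = init_q(grid, V)
```

With this table as a starting point, a standard Q-learning loop would already prefer moves toward the goal before any experience is collected, which is the effect the abstract attributes to the method.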

Robotic learning and evolution apparatus

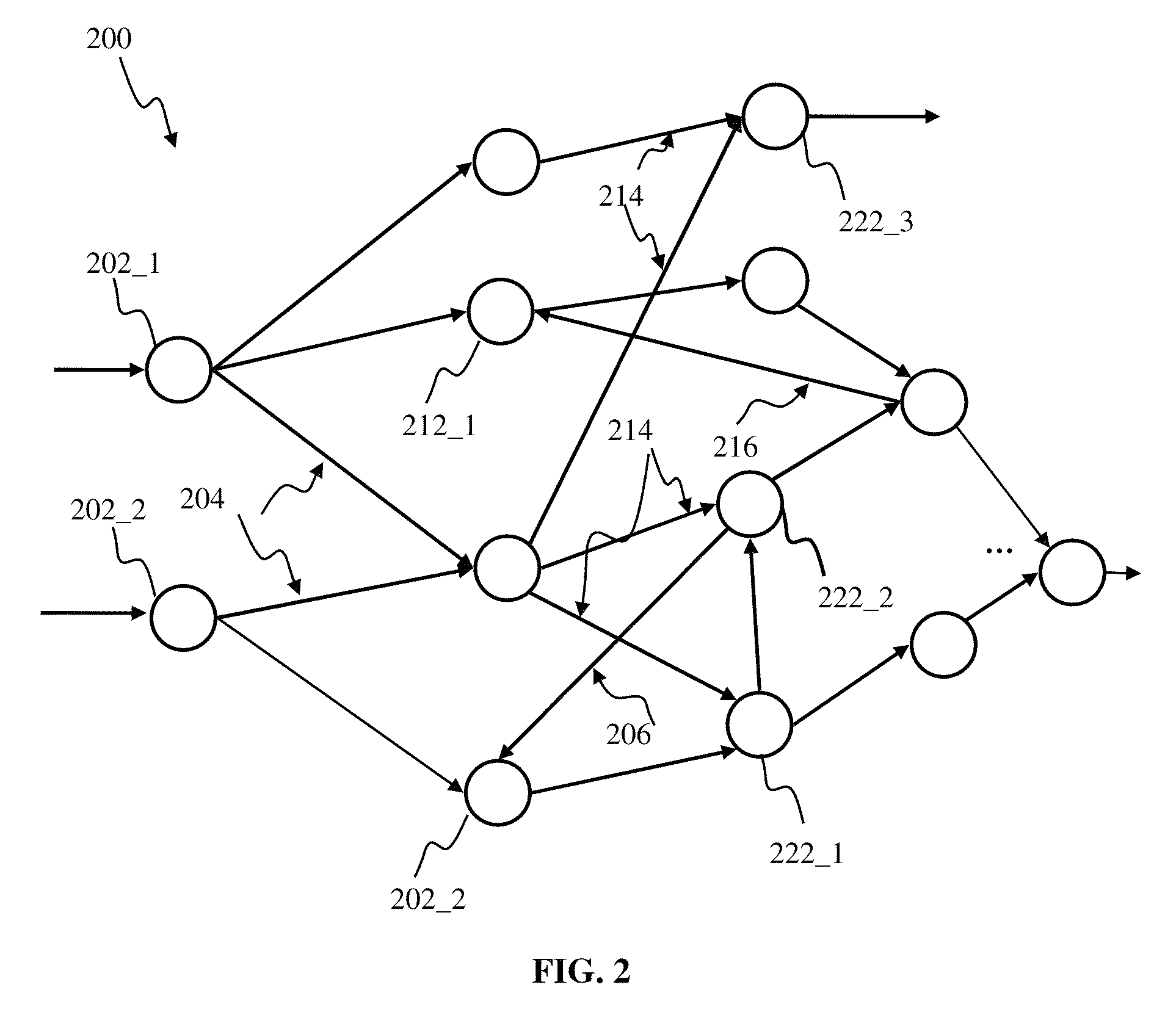

Active · US8793205B1 · Inhibition of reproduction · Easy to optimize · Digital computer details · Artificial life · Artificial neuron · Human–computer interaction

Apparatus and methods for implementing robotic learning and evolution. An ecosystem of robots may comprise robotic devices of one or more types utilizing artificial neuron networks for implementing learning of new traits. A number of robots of one or more species may be contained in an enclosed environment. The robots may interact with objects within the environment and with one another, while being observed by a human audience. In one or more implementations, the robots may be configured to ‘reproduce’ via duplication, copy, merge, and/or modification of robotic. The replication process may employ mutations. The probability of reproduction of an individual robot may be determined based on the robot's success in whatever function, trait, or behavior is desired. User-driven evolution of robotic species may enable development of a wide variety of new and/or improved functionality and provide entertainment and educational value for users.

Owner:BRAIN CORP
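
As a rough illustration of success-weighted reproduction with mutation, the sketch below uses fitness-proportional selection and Gaussian mutation. This is a generic evolutionary scheme chosen for illustration, not the patented apparatus: the genome representation (a flat list of weights), the `fitness` function and `mutation_scale` are all assumptions.

```python
import random


def reproduce(population, fitness, mutation_scale=0.1, rng=None):
    """Produce a same-size next generation.

    Parents are drawn with probability proportional to fitness (duplication),
    and every copied parameter receives Gaussian noise (mutation).
    Fitness values are assumed to be non-negative with a positive total.
    """
    rng = rng or random.Random()
    scores = [fitness(genome) for genome in population]
    parents = rng.choices(population, weights=scores, k=len(population))
    return [[w + rng.gauss(0.0, mutation_scale) for w in parent]
            for parent in parents]


# Hypothetical task: genomes closer to `target` count as more successful.
target = [1.0, -1.0]


def closeness(genome):
    return 1.0 / (1.0 + sum((w - t) ** 2 for w, t in zip(genome, target)))


rng = random.Random(0)
population = [[rng.uniform(-2, 2) for _ in target] for _ in range(20)]
for _ in range(30):
    population = reproduce(population, closeness, rng=rng)
```

In a user-driven setting such as the one the abstract describes, `fitness` could be replaced by scores assigned by the audience rather than a computed measure.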

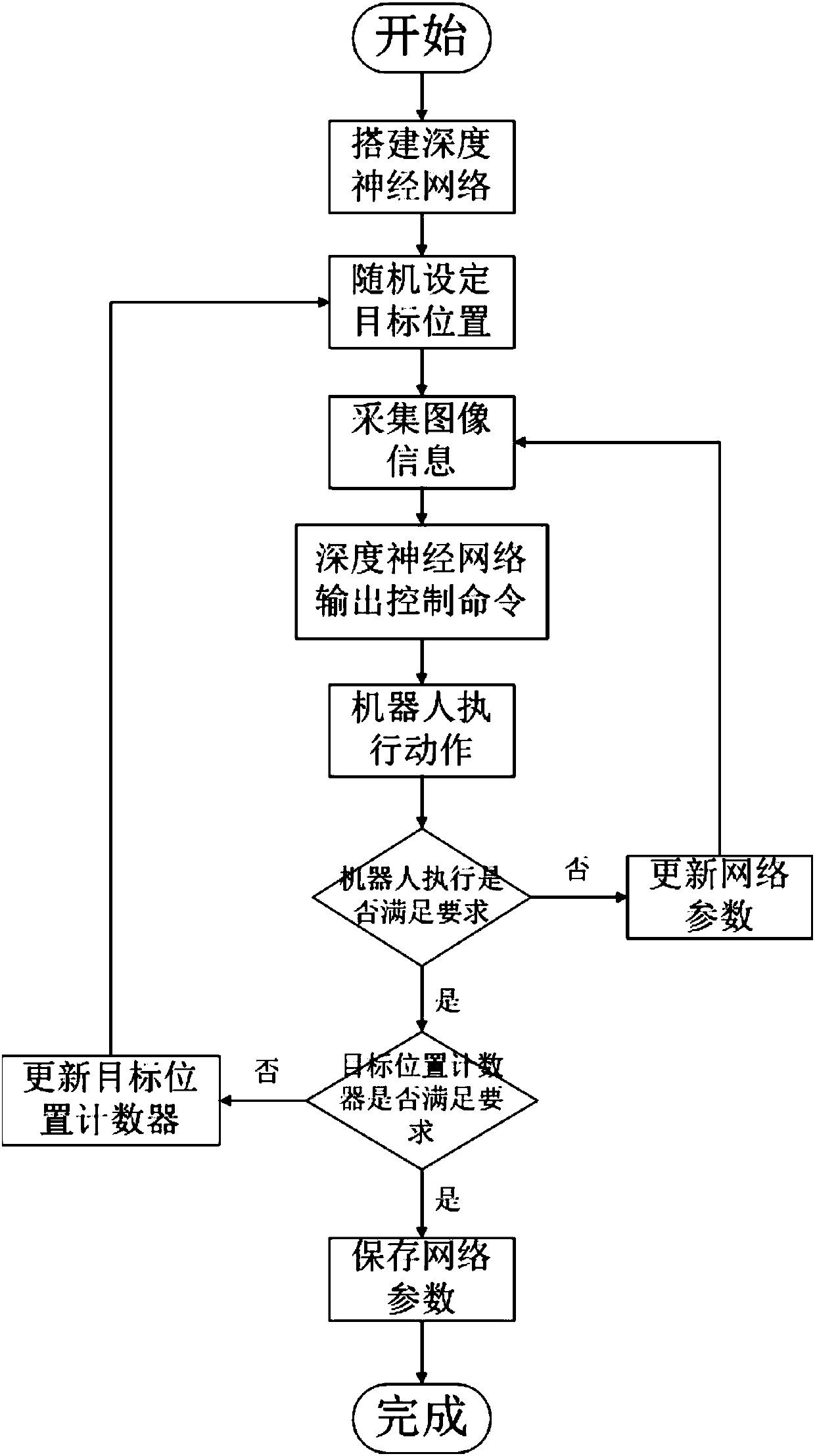

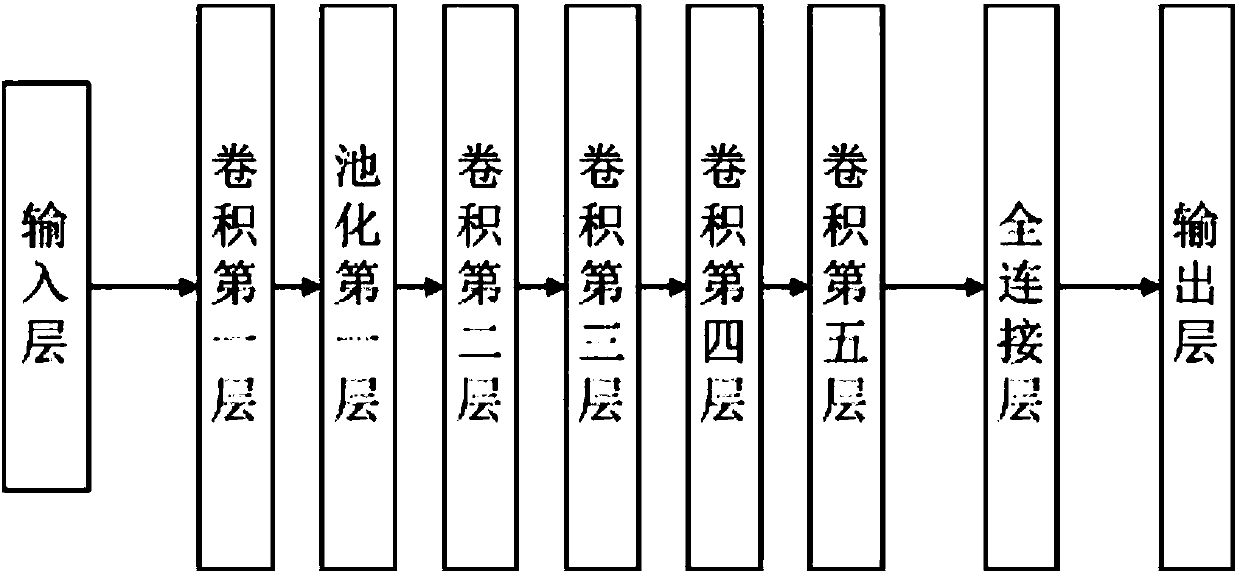

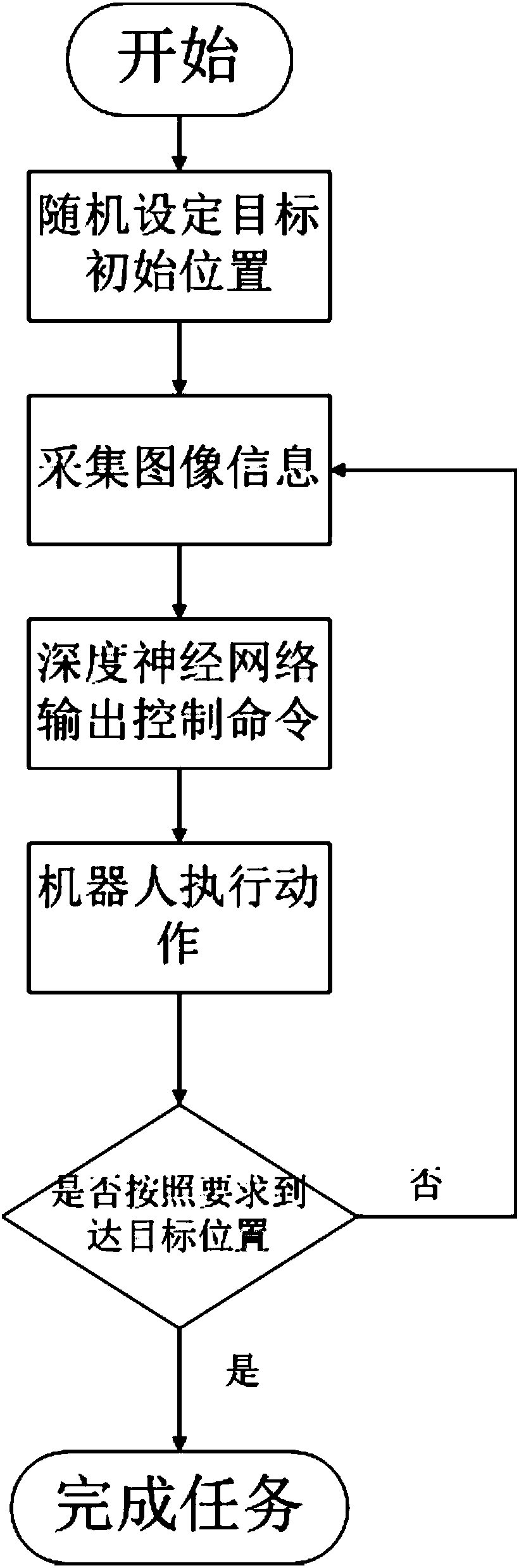

Robot global path planning method based on deeply enhanced learning

Active · CN107065881A · Make up for deficiencies · Strong real-time · Position/course control in two dimensions · Nerve network · View camera

The invention proposes a robot global path planning method based on deep reinforcement learning, belonging to the fields of robot learning and global path planning. In the training stage, a top-view camera is first installed in the scenario and a deep neural network is constructed. After a training path is created, the deep neural network outputs actions to execute according to the images captured by the camera, and its parameters are optimized according to the effect of executing those actions. The position of the target is then updated and training is repeated for different path planning tasks to obtain the final deep neural network. In the execution stage, the final deep neural network outputs actions for the robot according to the images captured by the camera, and the robot executes them; once the robot reaches the position of the target, global path planning is complete. The method is practical, requires neither manual participation nor entering the scenario in advance to build an environment map, and can be applied to multiple scenarios at low cost.

Owner:TSINGHUA UNIV

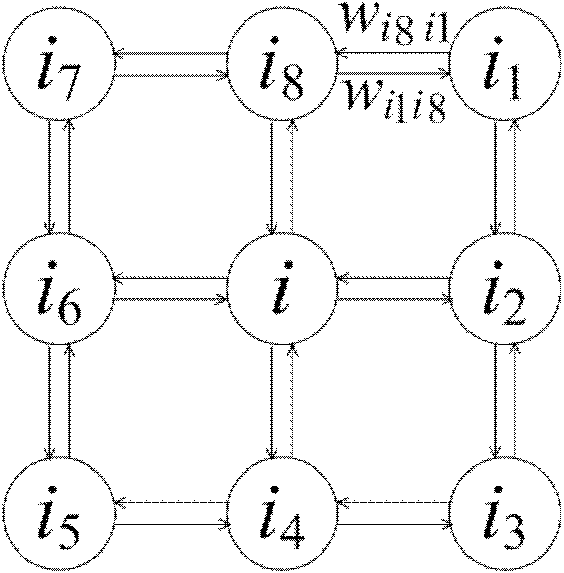

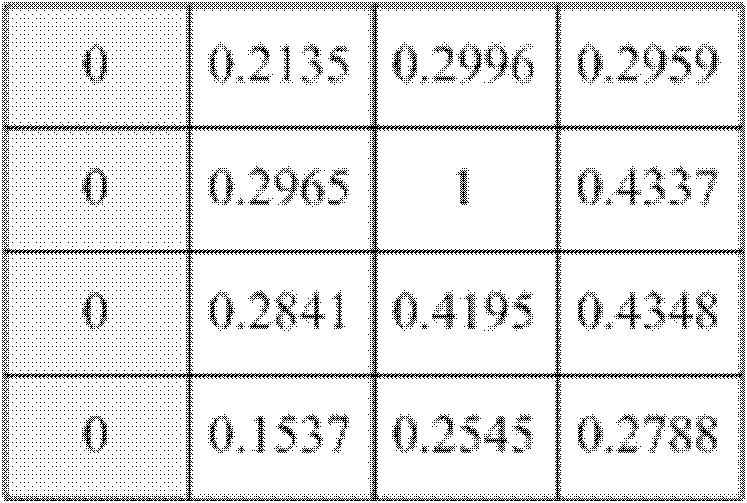

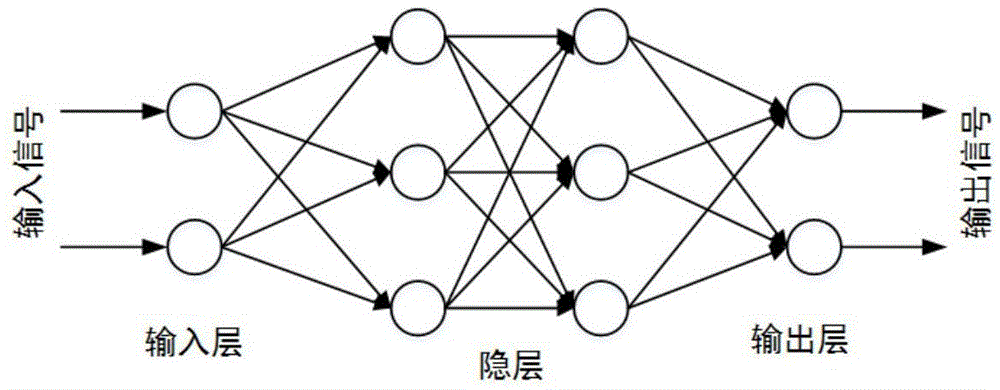

Robot reinforced learning initialization method based on neural network

Inactive · CN102402712A · Improve learning effect · Fast convergence · Neural learning methods · Robot learning · Learning abilities

The invention provides a neural-network-based initialization method for robot reinforcement learning. The neural network has the same topological structure as the robot's working space, and each neuron corresponds to one discrete state of the state space. The method comprises the following steps: evolving the neural network according to the known partial environmental information until it reaches an equilibrium state, at which point the output value of each neuron represents the maximum cumulative return obtainable by following the optimal policy from the corresponding state; defining the initial value of the Q function as the sum of the immediate return of the current state and the maximum discounted cumulative return obtained when the successor state follows the optimal policy; and mapping the known environmental information into the initial value of the Q function through the neural network. In this way prior knowledge is fused into the robot learning system and the learning capacity of the robot in the initial stage of reinforcement learning is improved; compared with the conventional Q-learning algorithm, the method effectively improves learning efficiency in the initial stage and increases the convergence speed of the algorithm.

Owner:SHANDONG UNIV

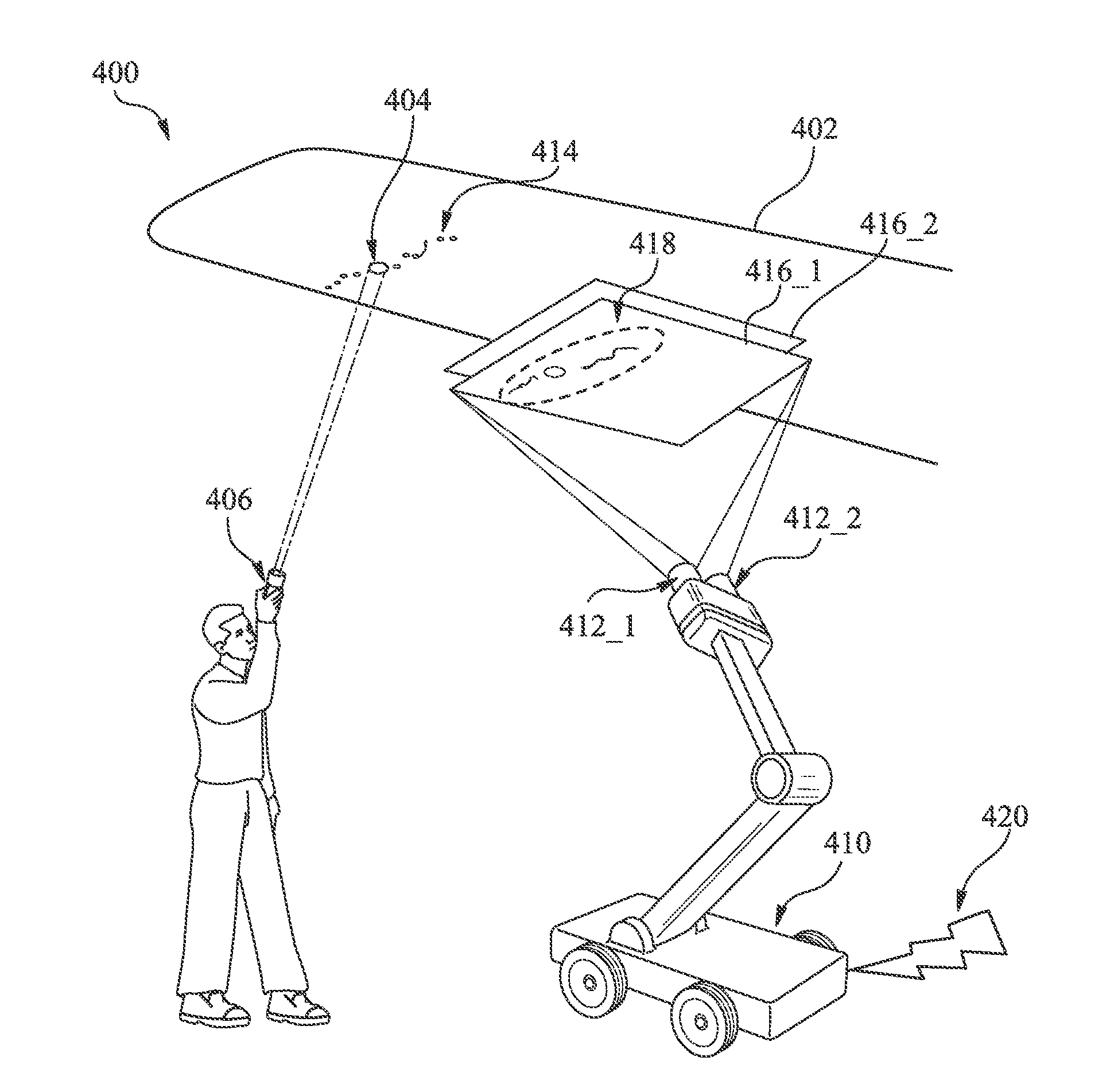

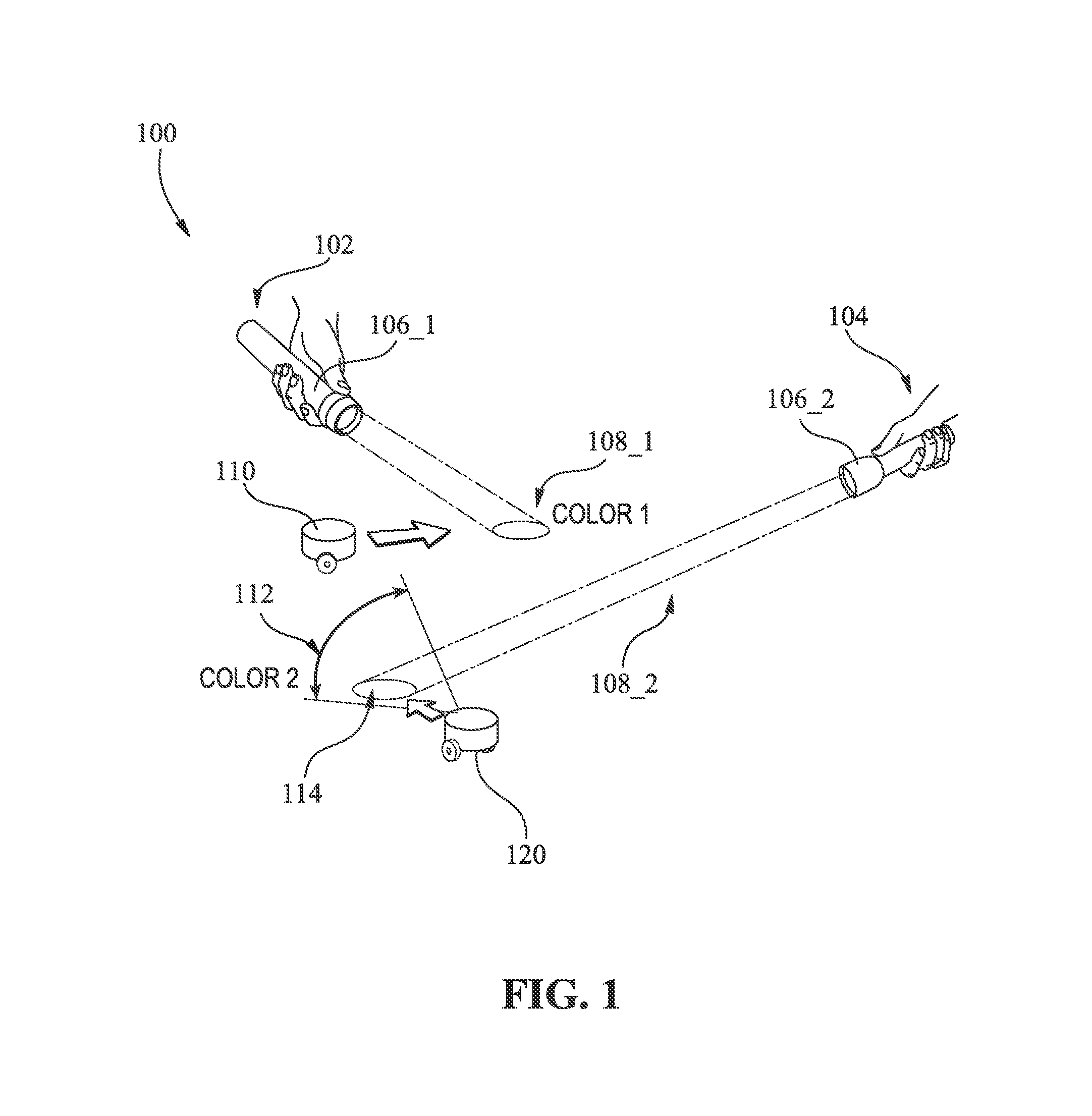

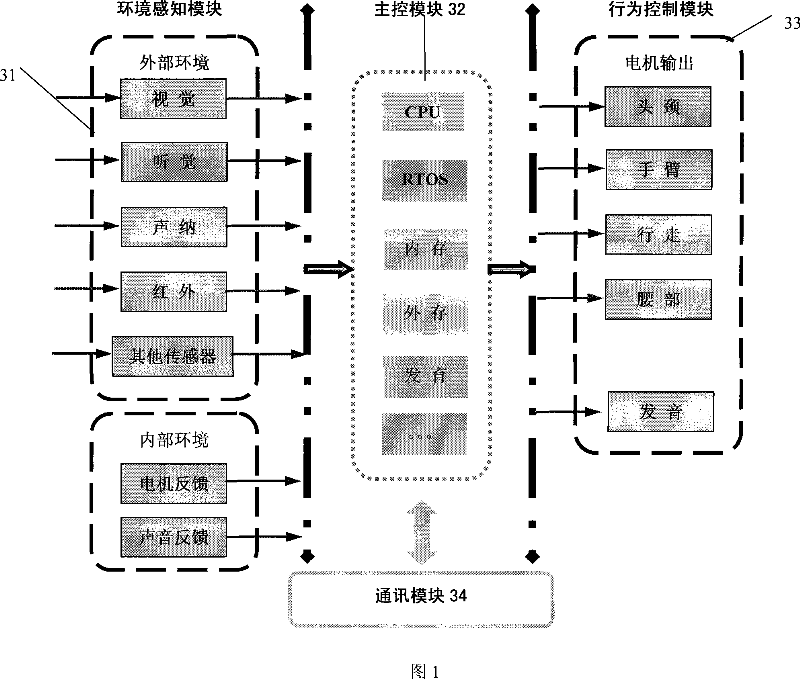

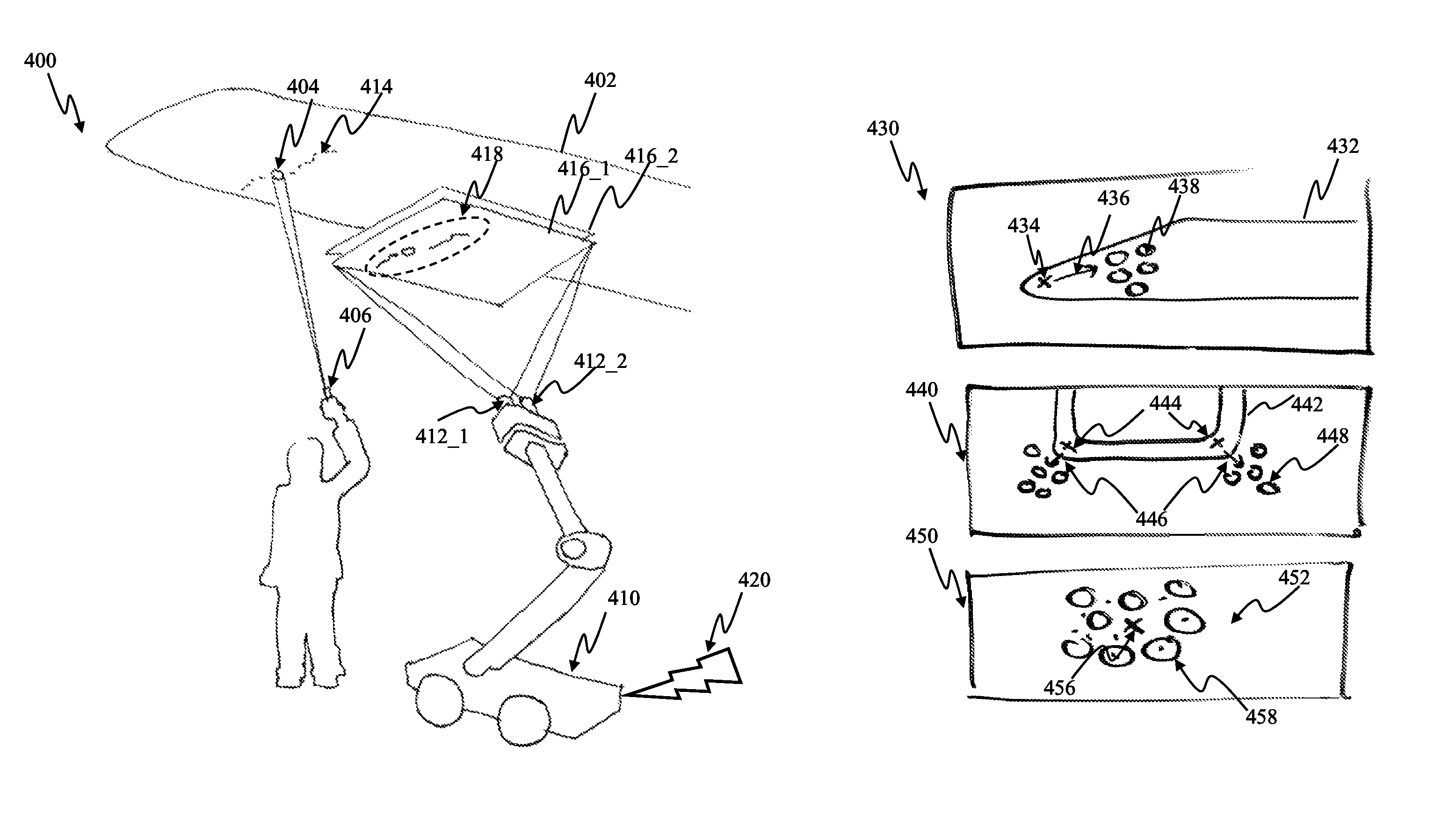

Apparatus and methods for controlling attention of a robot

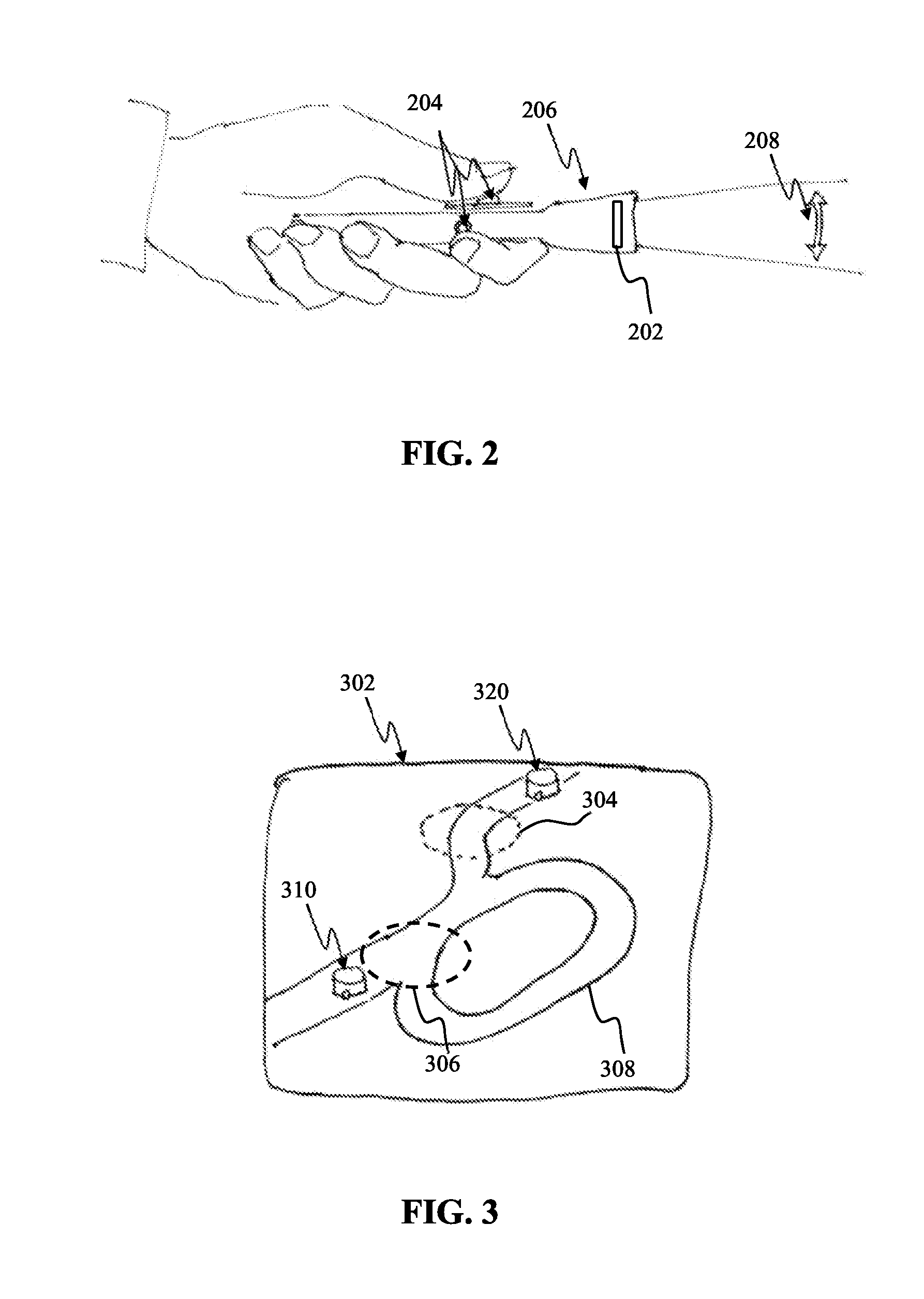

Apparatus and methods for controlling attention and training of autonomous robotic devices. In one approach, attention of the robot may be manipulated by use of a spot-light device illuminating a portion of the aircraft undergoing inspection in order to indicate to the inspection robot the target areas requiring more detailed inspection. The robot guidance may be aided by way of an additional signal transmitted by the agent to the robot indicating that the object has been illuminated and that an attention switch may be required. Responsive to receiving the additional signal, the robot may initiate a search for the signal reflected by the illuminated area requiring its attention. Responsive to detecting the illuminated object and receipt of the additional signal, the robot may develop an association between the two events and the inspection task. The light-guided attention system may influence the robot learning for subsequent actions.

Owner:GOPRO

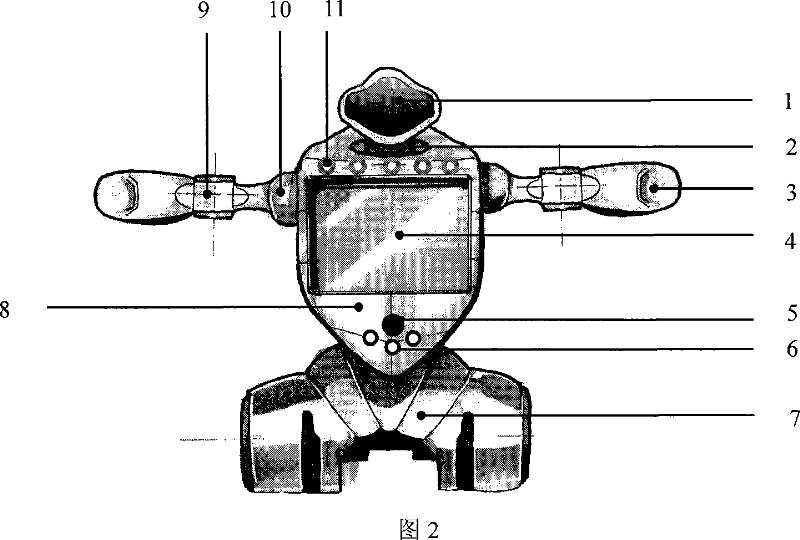

Intelligent robot friend for study and entertainment

Inactive · CN101036838A · Good study habits · Make progress · Self-moving toy figures · Remote-control toys · Voice communication · Electric machinery

The invention belongs to the field of robot technology, and specifically concerns an intelligently controlled robot with many degrees of freedom, which serves as a novel study and entertainment platform tightly integrated with the intelligent robot. The robot body comprises a head and neck, an arm, a body, a base and so on, with nine independent degrees of freedom. The system adopts PID feedback control to accurately control the speed of the servo motors, thereby controlling the speed, direction and position of each joint. It is a standard interactive platform for studying and playing with the robot, and runs different application programs compatible with the standard interface of the control desk, mainly including learning programs about study, games and the robot. The invention combines a mobile anthropomorphic robot with study and entertainment, and users can communicate with the robot through video and audio information. During study and entertainment, the robot can perform limb actions and speech communication.

Owner:FUDAN UNIV
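
The PID feedback loop mentioned in this abstract can be sketched as follows. The gains, the time step and the first-order motor model (`tau`) are illustrative assumptions; the abstract gives no controller parameters or motor dynamics.

```python
class PID:
    """Textbook discrete PID controller (gains here are illustrative)."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measured, dt):
        error = setpoint - measured
        self.integral += error * dt
        # No derivative term on the first call, when there is no previous error.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(target_speed=1.0, steps=2000, dt=0.01, tau=0.5):
    """Drive an assumed first-order motor model, d(speed)/dt = (u - speed) / tau."""
    pid = PID(kp=2.0, ki=1.0, kd=0.0)
    speed = 0.0
    for _ in range(steps):
        u = pid.update(target_speed, speed, dt)  # control signal from feedback
        speed += dt * (u - speed) / tau          # explicit Euler step of the motor
    return speed


final_speed = simulate()
```

The integral term is what removes the steady-state speed error in this model; with proportional control alone the simulated motor would settle below the target speed.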

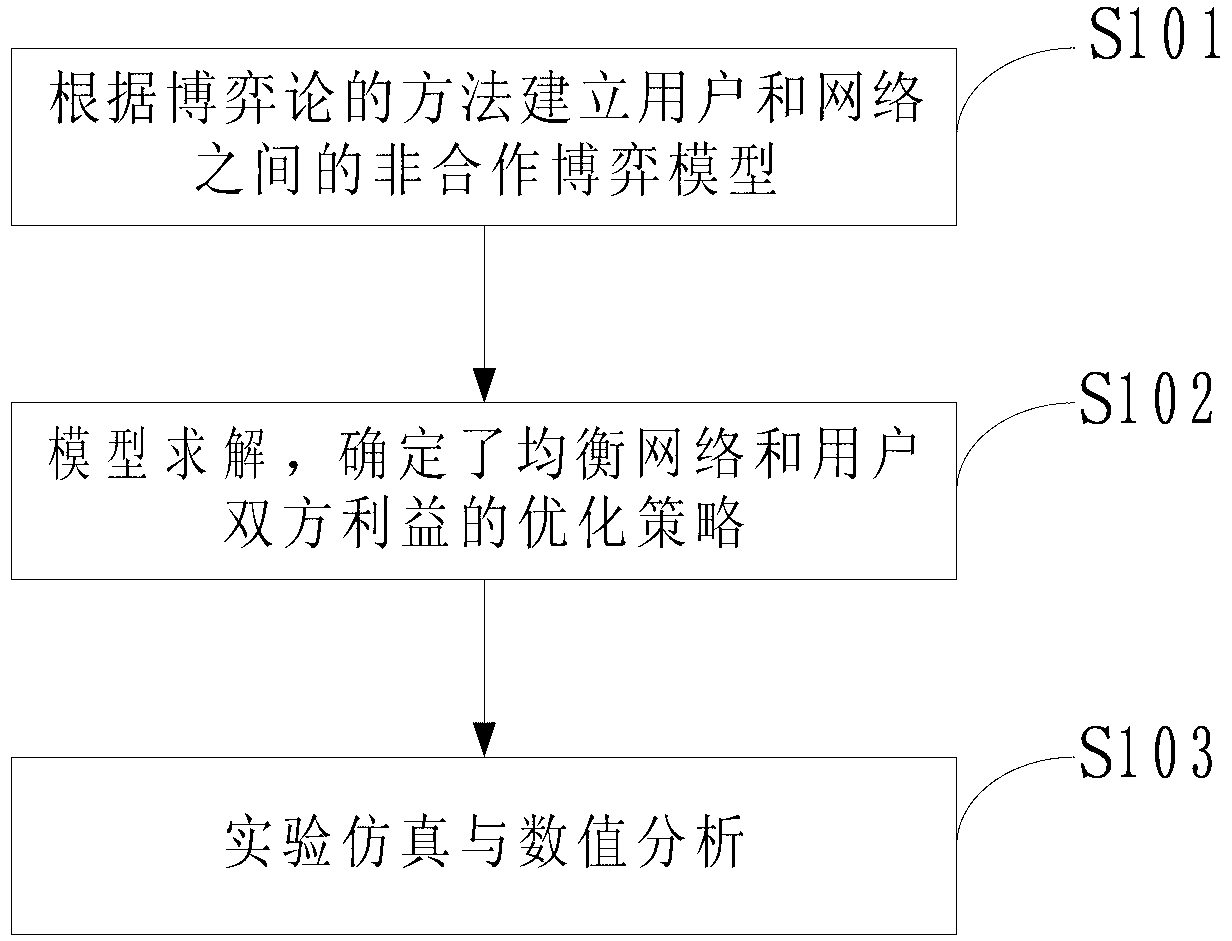

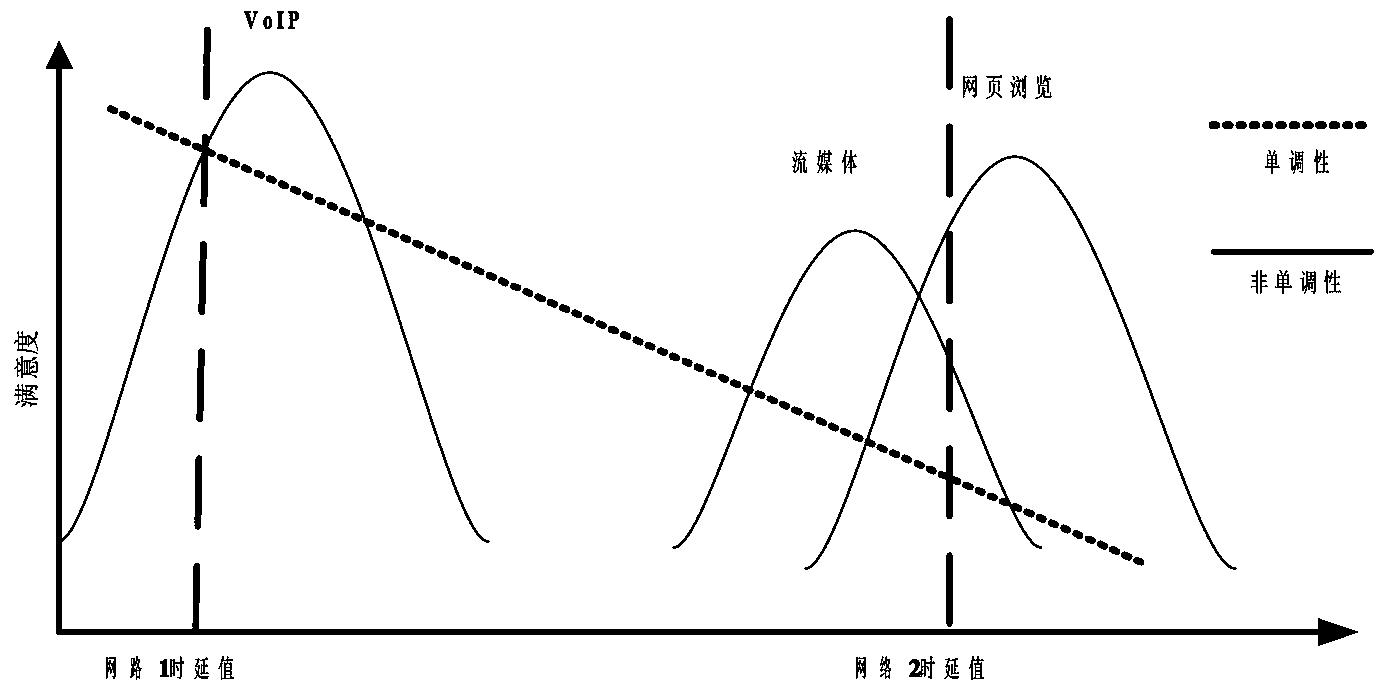

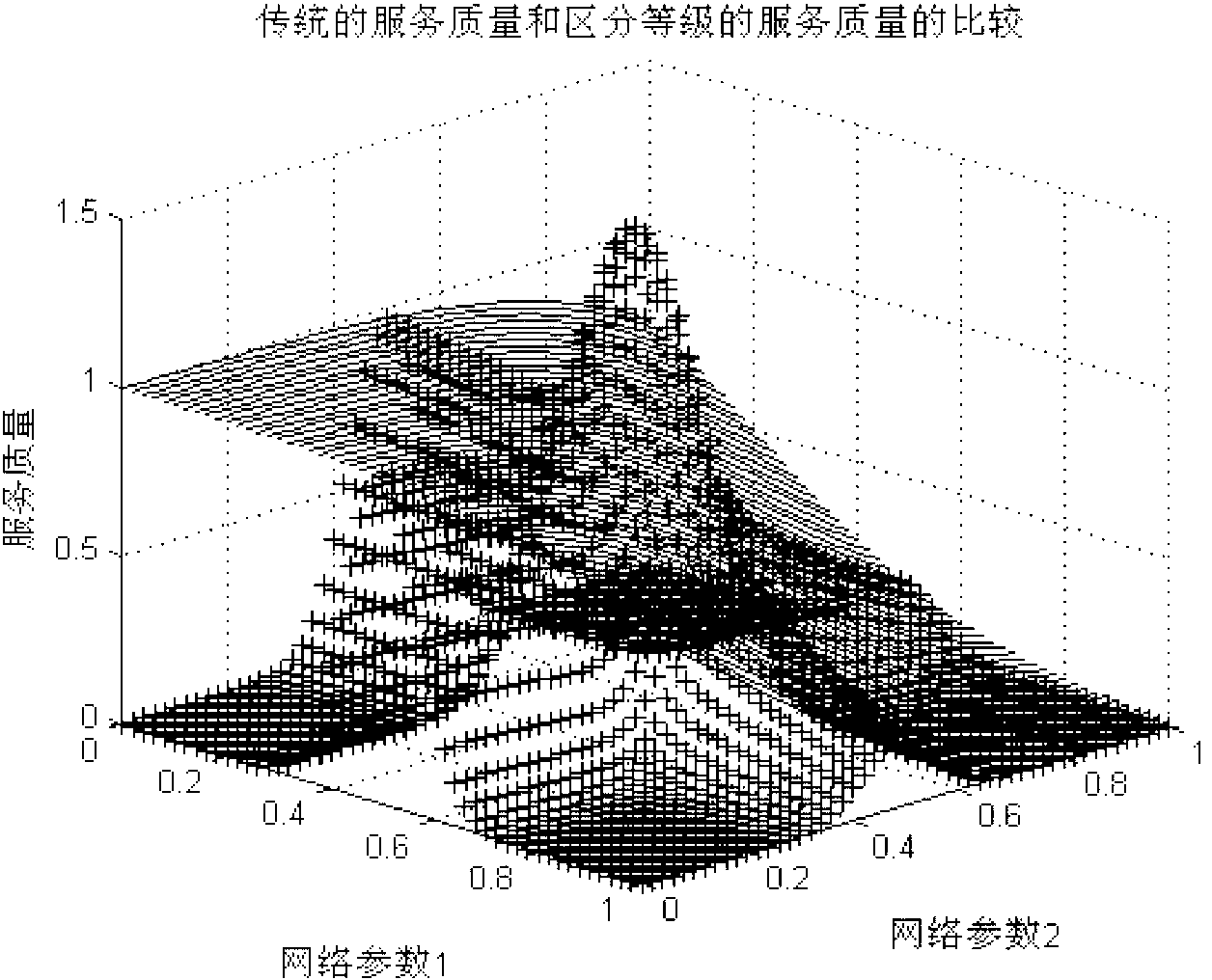

Method for selecting access network in heterogeneous network

Inactive · CN103298076A · Meet different levels of service needs · Balance interests · Assess restriction · Special data processing applications · Access network · Gray level

The invention discloses a method for selecting an access network in a heterogeneous network. The method includes the following steps: building a non-cooperative game model between a user and a network using game theory; solving the model to determine an optimal strategy that balances the interests of the network and the user; and experimental simulation and numerical analysis. A non-monotonic network quality-of-service quantization model is built by combining the idea of grey relational analysis with the sigmoid function used in robot learning. Simulation shows that the model fits an actual heterogeneous converged network scenario; based on the non-cooperative game model between the user and the network, a strategy balancing the interests of both is adopted, and an optimal network pricing strategy is determined by solving the model. In addition, the method helps the user select the most suitable access network, provides a basis for formulating a QoS standard for the heterogeneous network convergence system, and meets the user's service requirements at different levels in different scenarios.

Owner:XIAN UNIV OF POSTS & TELECOMM

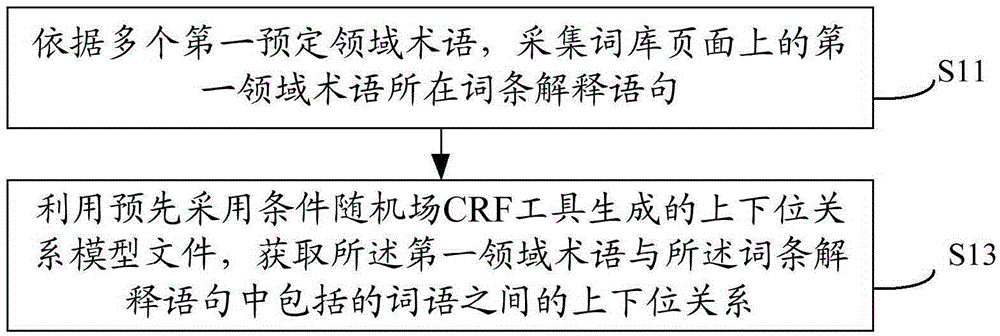

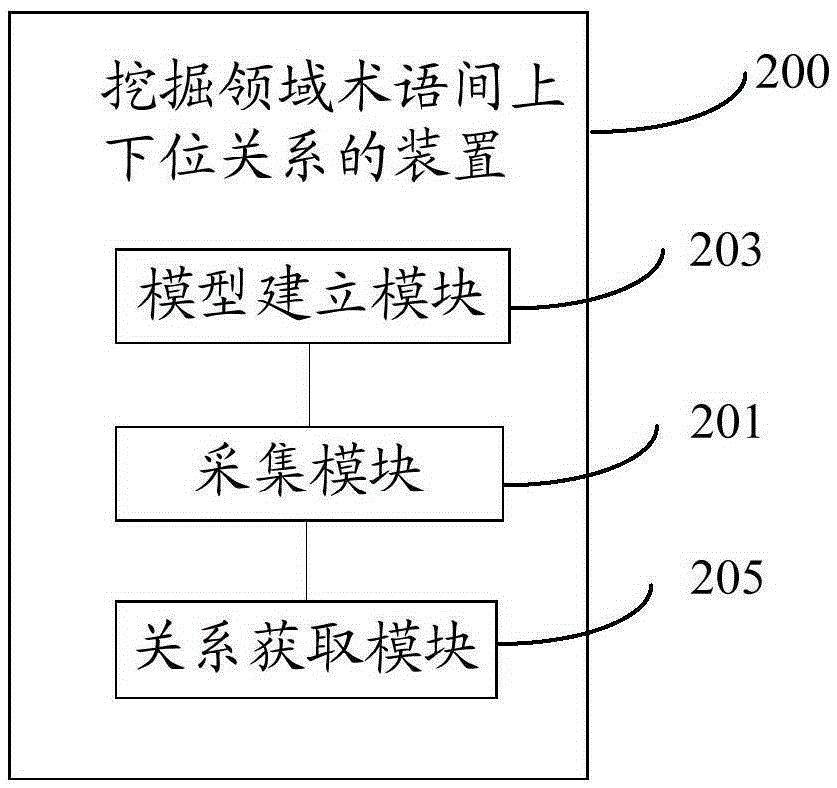

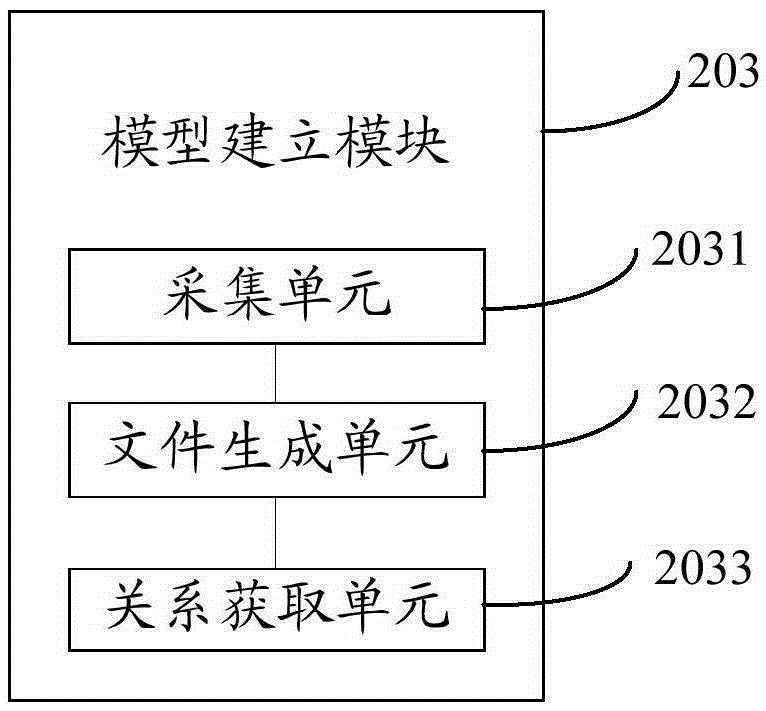

Method and device for mining hypernym-hyponym relation between domain-specific terms

The invention provides a method and device for mining a hypernym-hyponym relation between domain-specific terms. The method includes: acquiring, according to a plurality of first preset domain-specific terms, the entry explanation statement on a word bank page where the first domain-specific terms are located, wherein the first preset domain-specific terms are associated with a first preset domain-specific term meaning; and acquiring a hypernym-hyponym relation between the first domain-specific terms and the words included in the entry explanation statement by using a hypernym-hyponym relation model file generated in advance by a conditional random field (CRF) tool. According to the scheme of the invention, the entry explanation statement on the word bank page where the first domain-specific terms are located is used, training and learning are performed using CRF robot learning technology, and the model file is finally established; the hypernym-hyponym relation between the first domain-specific terms and the words included in the entry explanation statement is then acquired using the model file, which improves the accuracy of acquiring the hypernym-hyponym relation.

Owner:CHINA MOBILE COMM GRP CO LTD

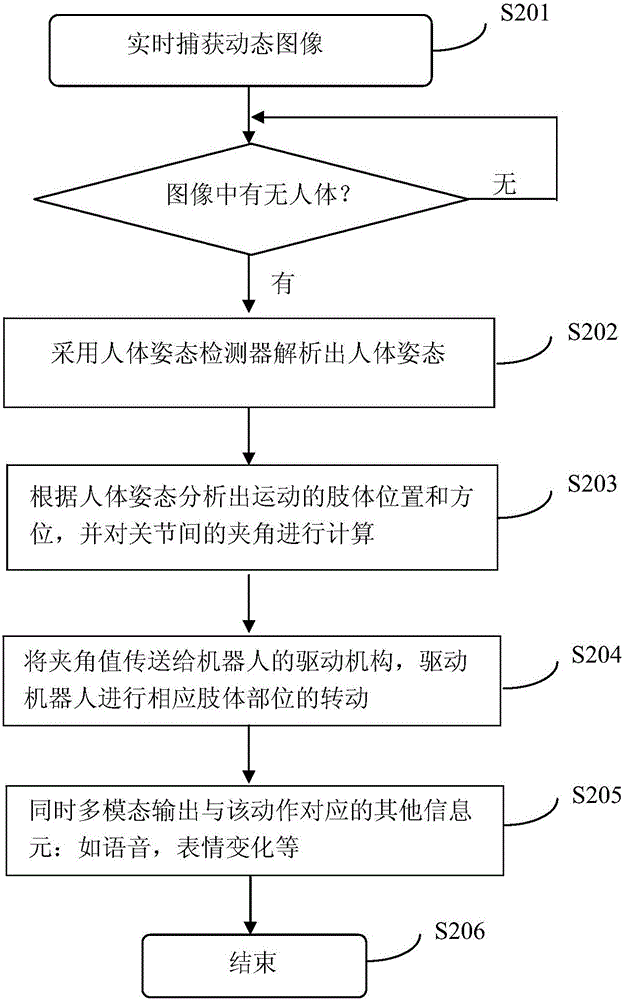

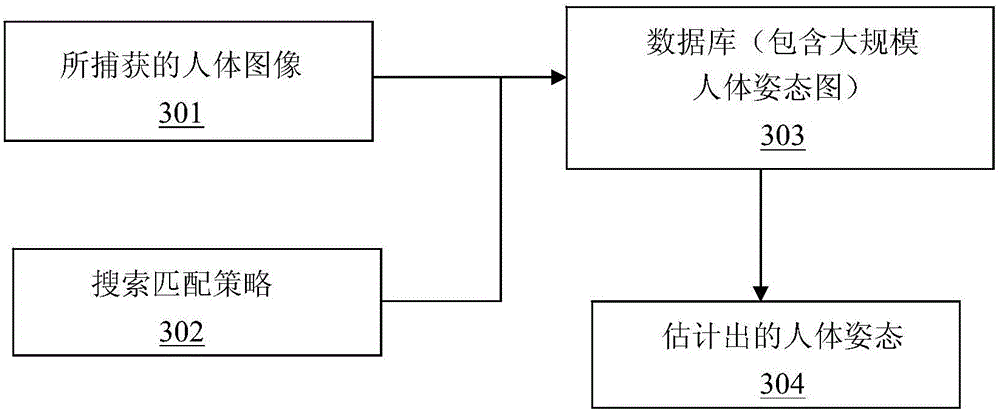

Method and system for data processing for robot action expression learning

Active · CN105825268A · Interactive nature · Various forms of communication · Artificial life · Memory bank · Human–computer interaction

The invention provides a data processing method for robot action expression learning. The method comprises the following steps: a series of actions made by a target over a period of time is captured and recorded; information sets correlated with the series of actions, each composed of information elements, are recognized and recorded synchronously; the recorded actions and the correlated information sets are sorted and stored in a memory bank of the robot according to their correspondence; and when the robot receives an action output instruction, the stored information set that matches the content to be expressed is retrieved and the corresponding action is performed, simulating a human action expression. Because action expressions are correlated with other information related to language expressions, after simulation training the robot can produce diverse output, its forms of communication are richer and more humanlike, and its degree of intelligence is greatly enhanced.

Owner:BEIJING GUANGNIAN WUXIAN SCI & TECH

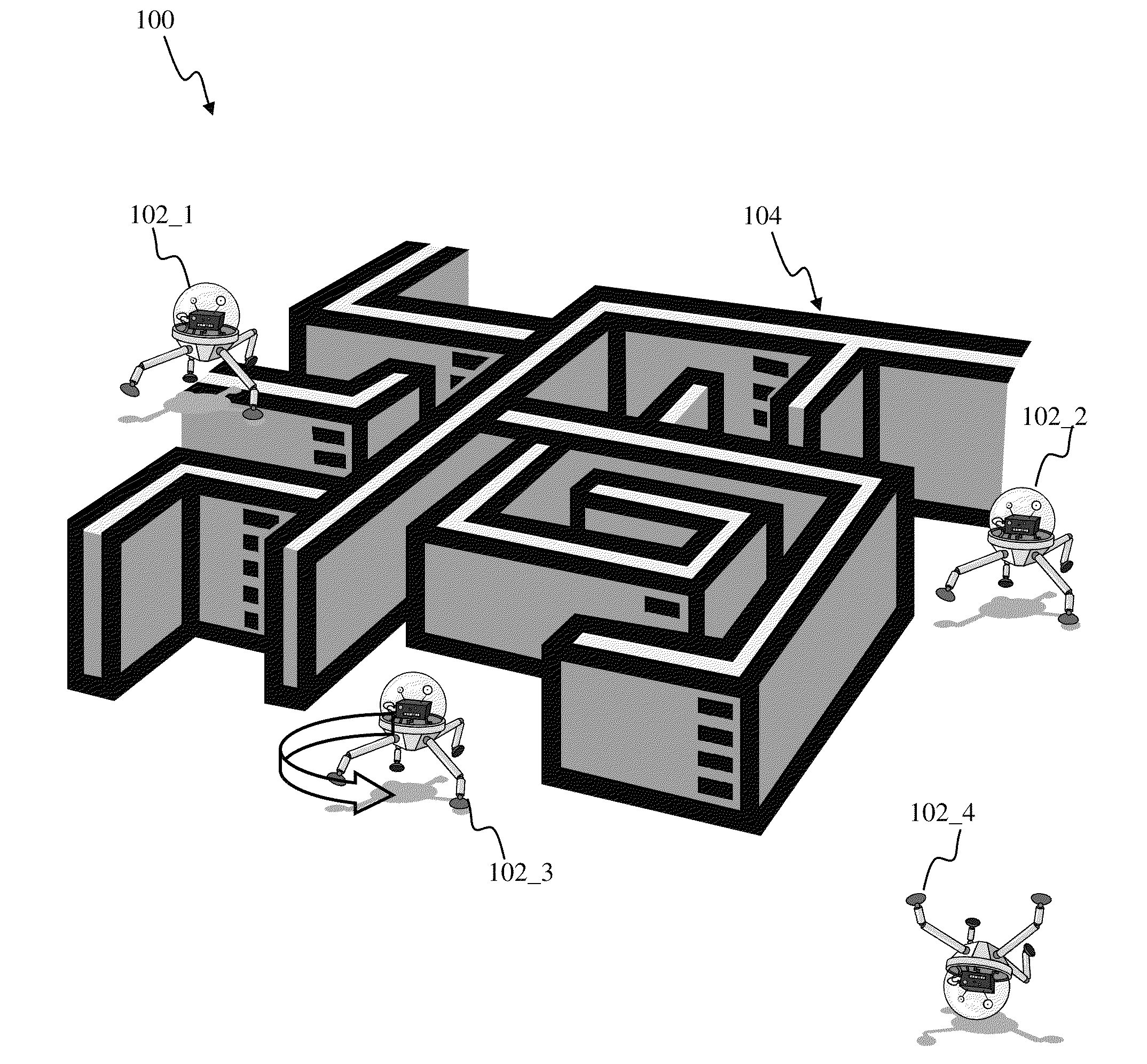

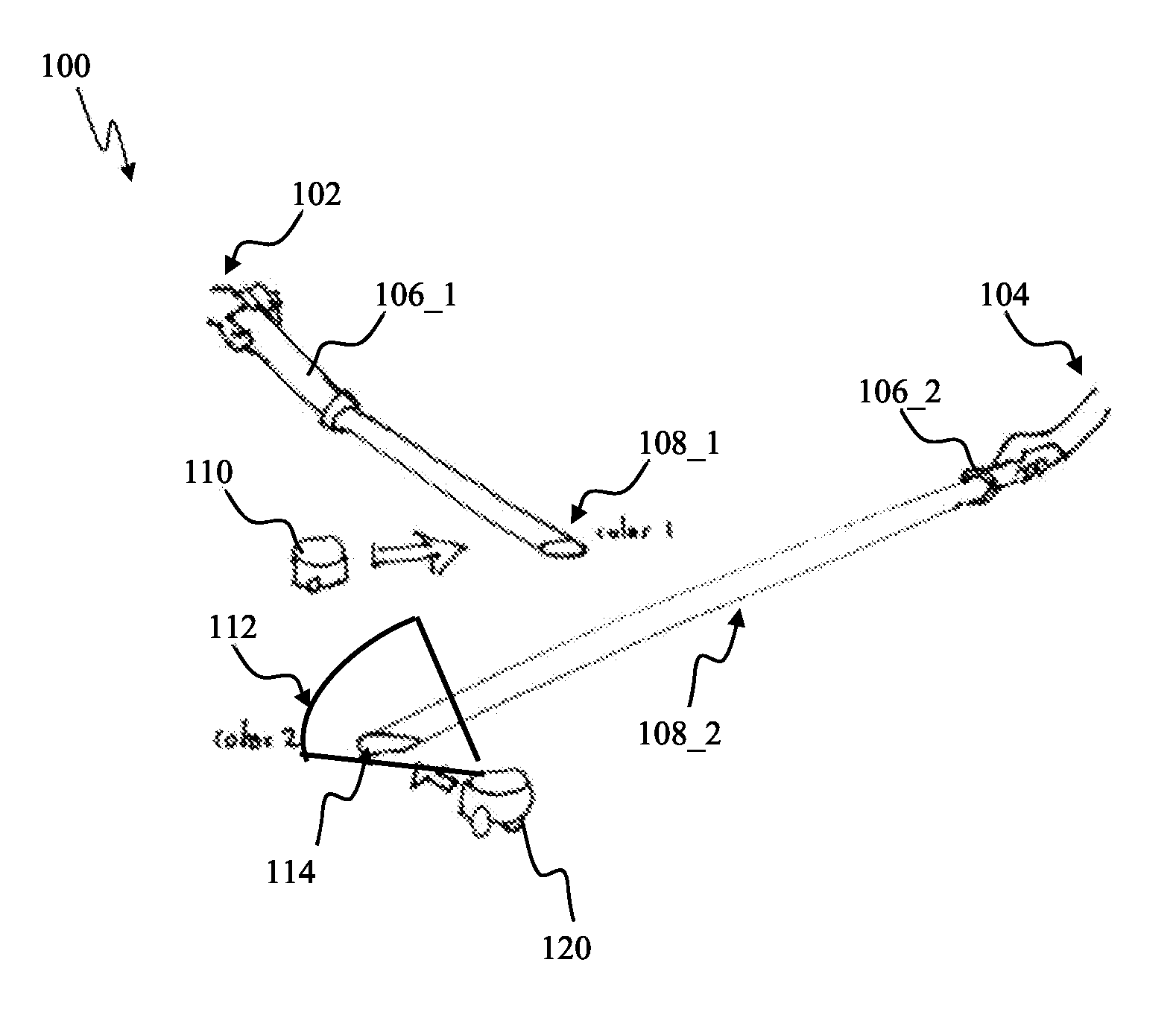

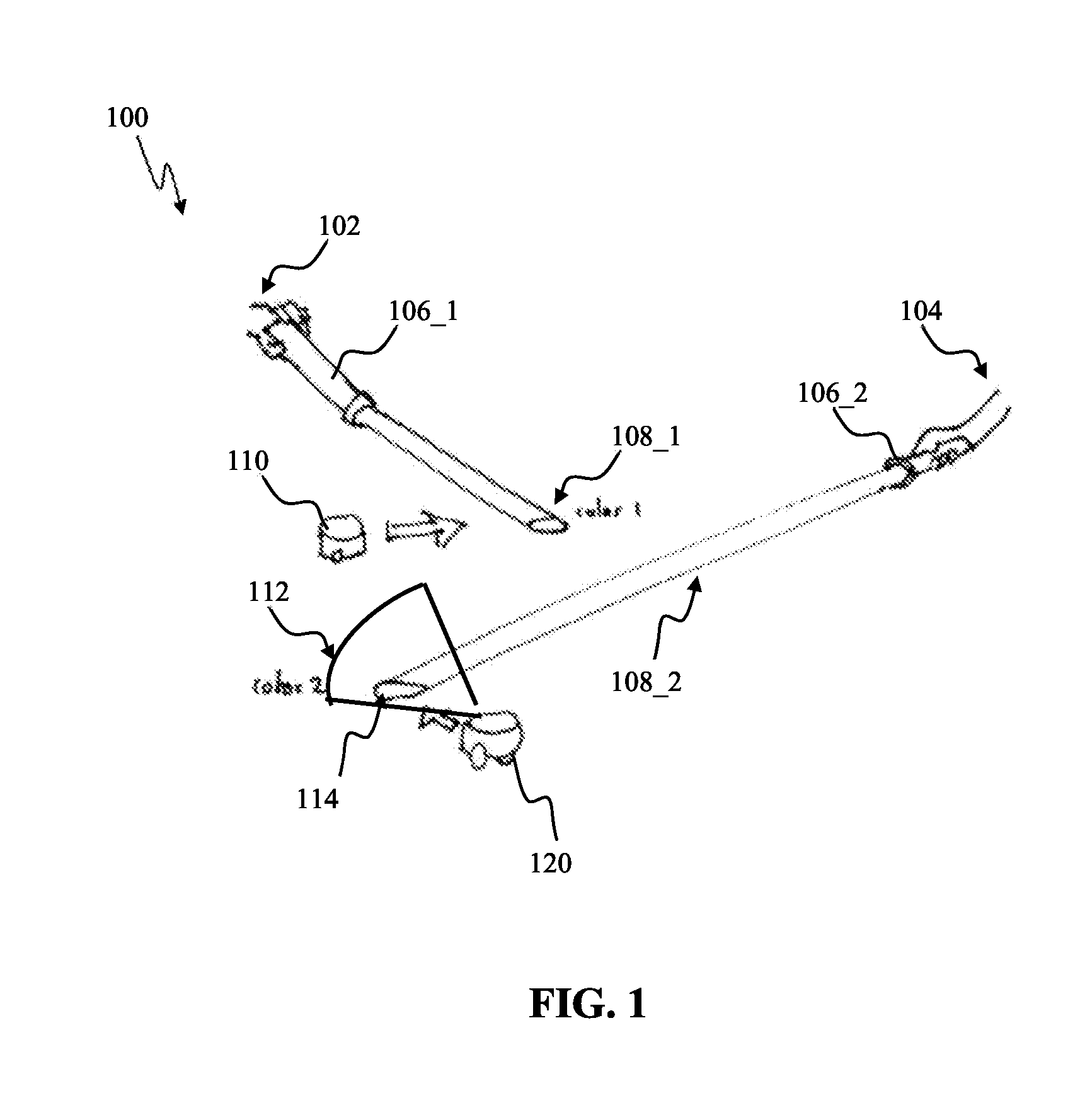

Apparatus and methods for robotic learning

Apparatus and methods for implementing learning by robotic devices. Attention of the robot may be manipulated by use of a spot-light device illuminating a portion of the aircraft undergoing inspection in order to indicate to the inspection robot the target areas requiring more detailed inspection. The robot guidance may be aided by way of an additional signal transmitted by the agent to the robot indicating that the object has been illuminated and that an attention switch may be required. The robot may initiate a search for the signal reflected by the illuminated area requiring its attention. Responsive to detecting the illuminated object and receipt of the additional signal, the robot may develop an association between the two events and the inspection task, thereby storing a robotic context. The context of one robot may be shared with other devices in lieu of training so as to enable other devices to perform the task.

Owner:QUALCOMM INC

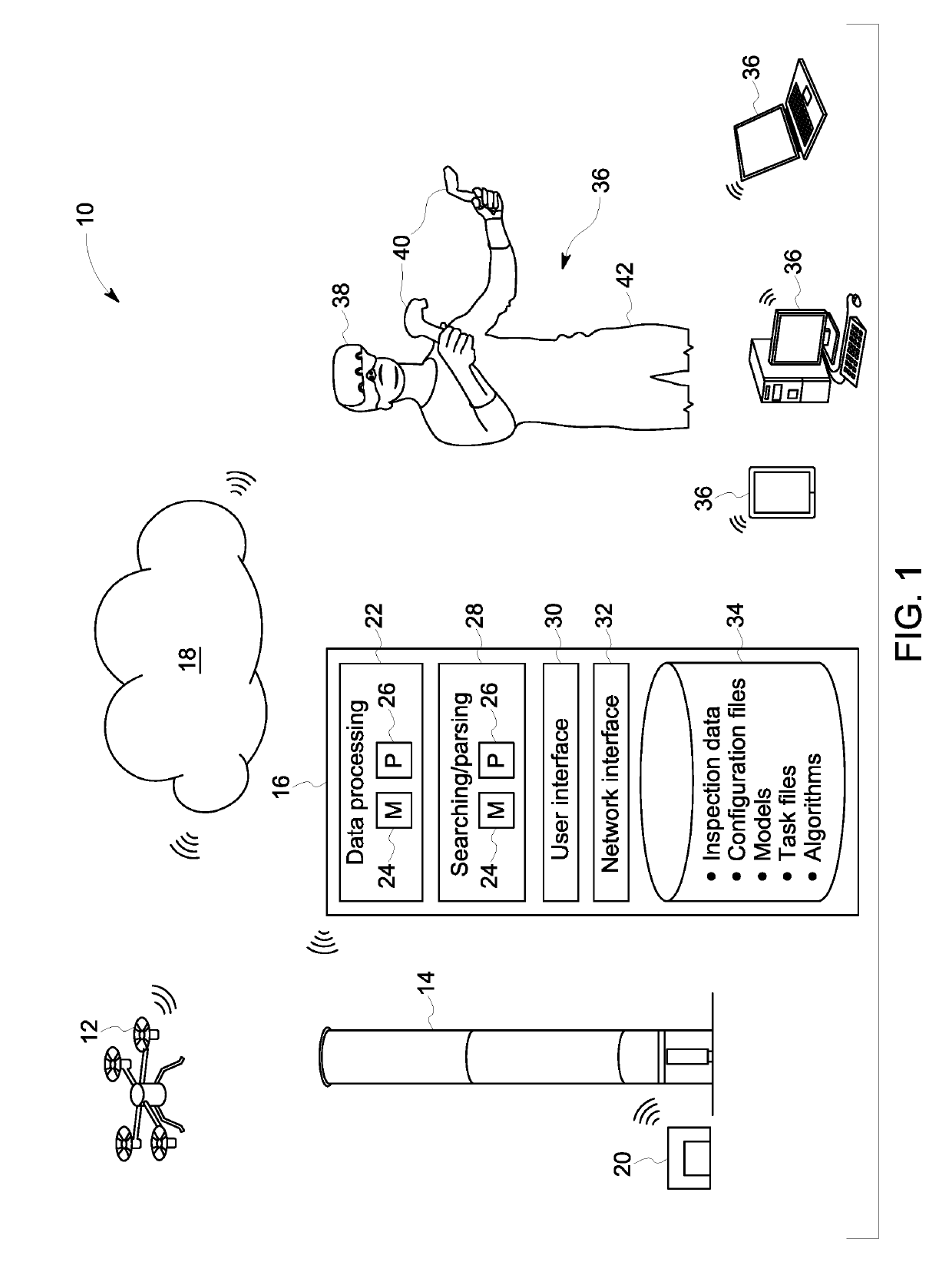

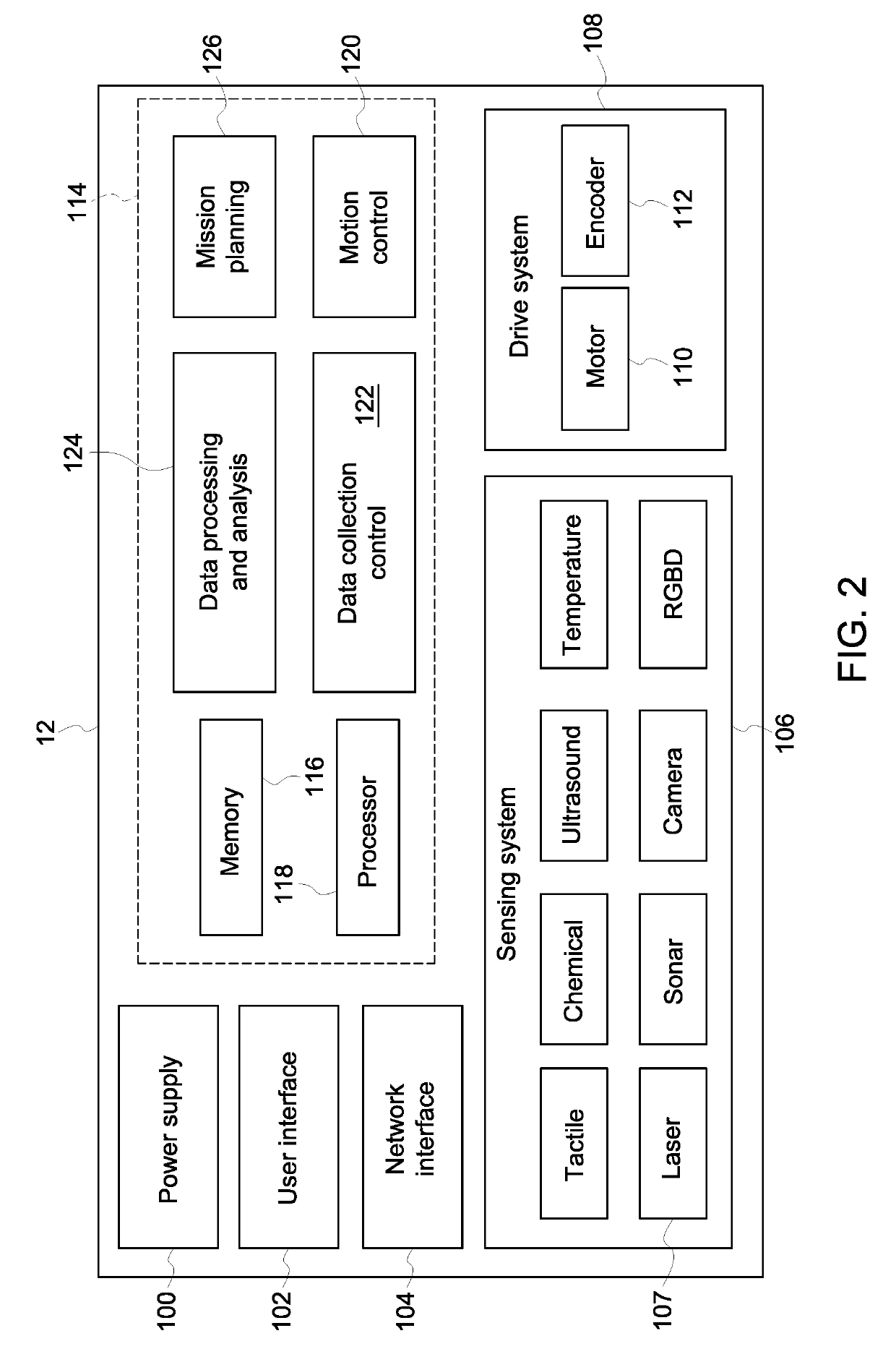

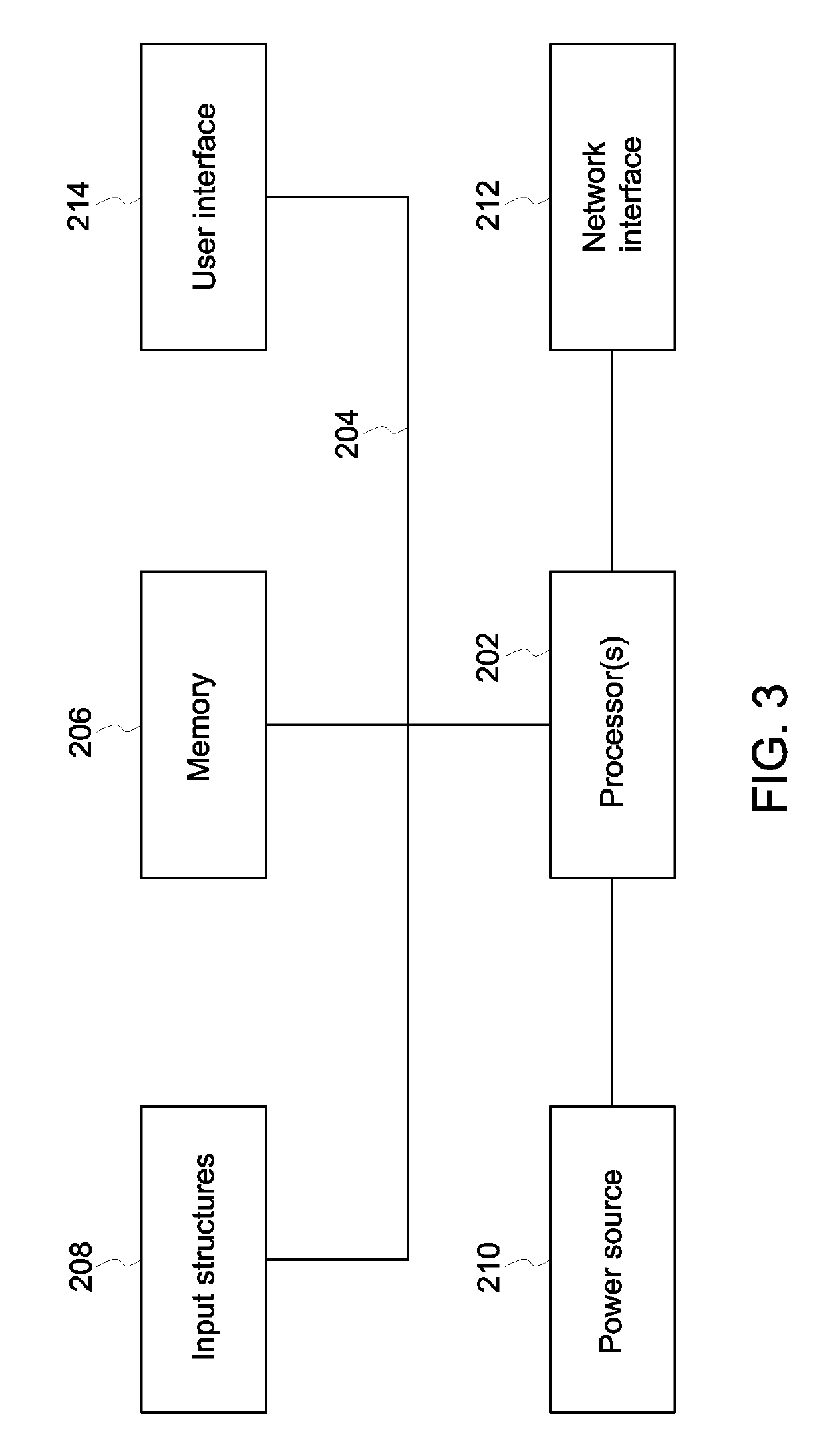

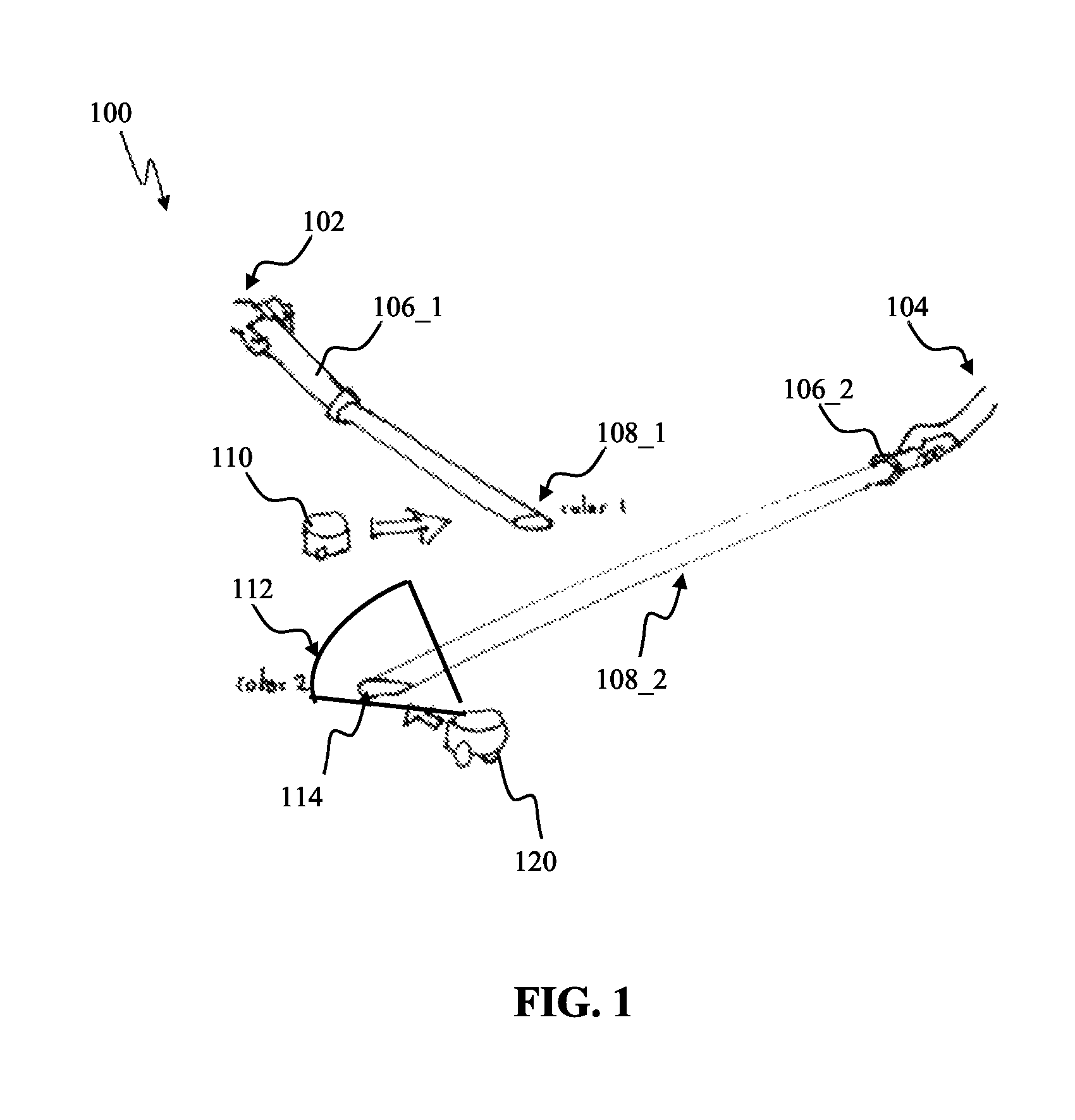

Systems and method for robotic learning of industrial tasks based on human demonstration

A system for performing industrial tasks includes a robot and a computing device. The robot includes one or more sensors that collect data corresponding to the robot and an environment surrounding the robot. The computing device includes a user interface, a processor, and a memory. The memory includes instructions that, when executed by the processor, cause the processor to receive the collected data from the robot, generate a virtual recreation of the robot and the environment surrounding the robot, and receive inputs from a human operator controlling the robot to demonstrate an industrial task. The system is configured to learn how to perform the industrial task based on the human operator's demonstration of the task, and perform, via the robot, the industrial task autonomously or semi-autonomously.

Owner:GENERAL ELECTRIC CO

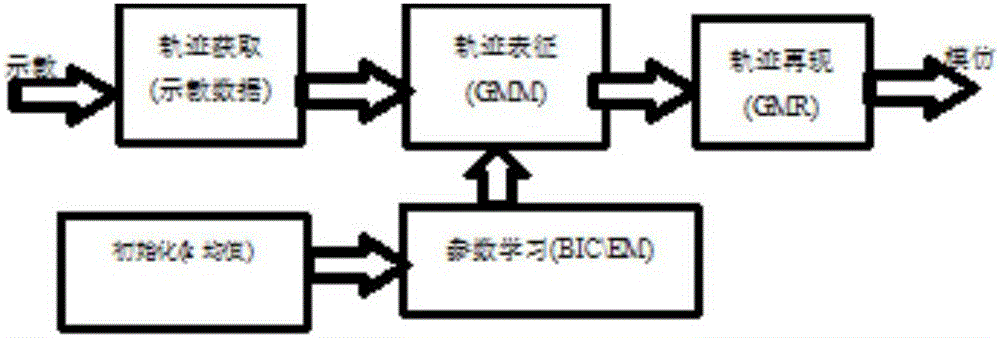

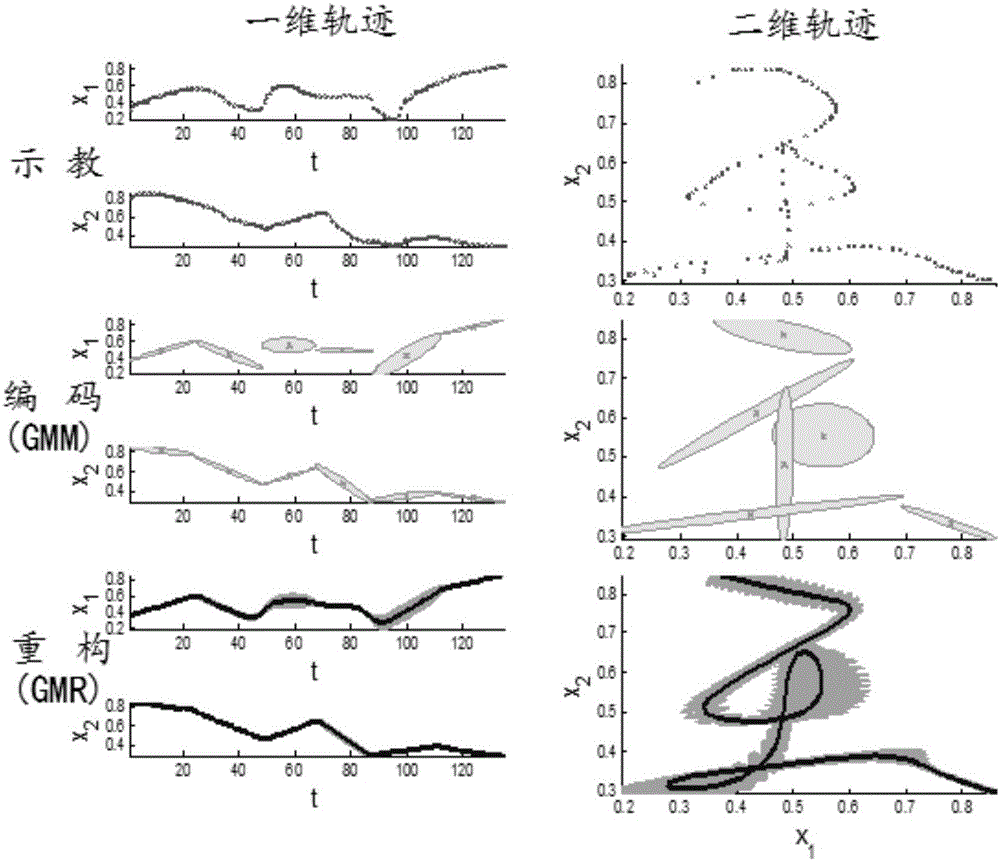

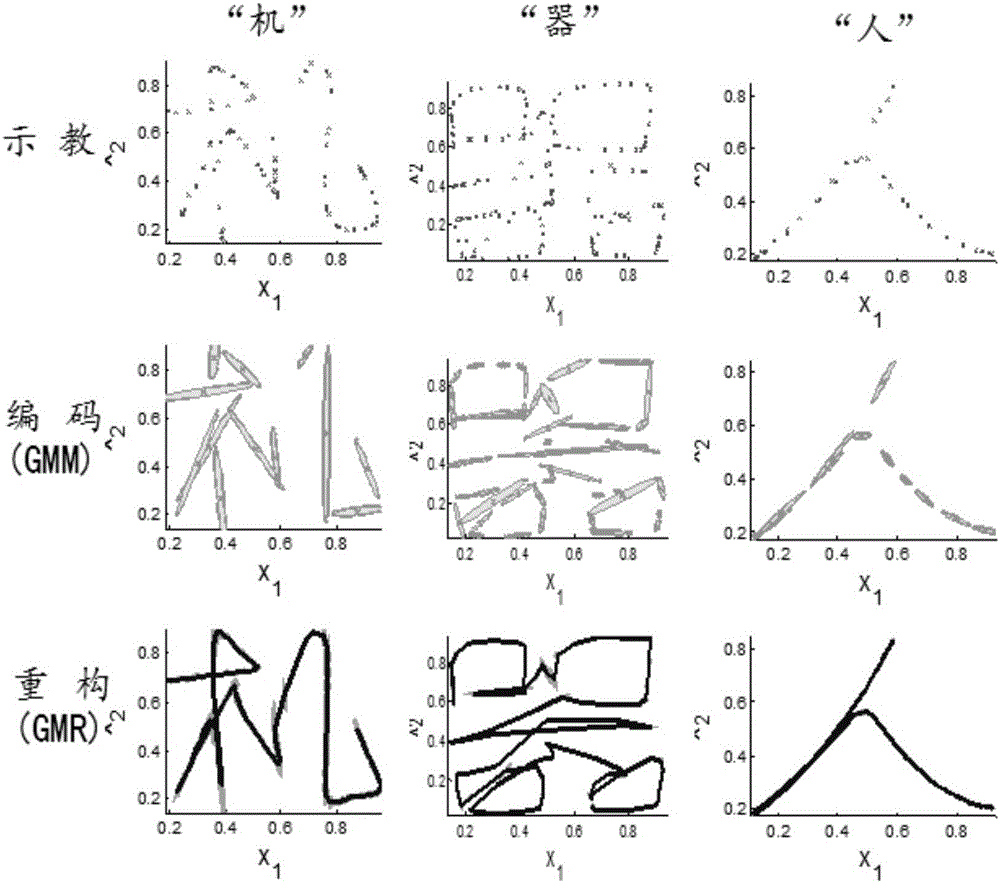

Robot Chinese character writing learning method based on track imitation

The invention relates to a Chinese character writing learning method based on track imitation, which belongs to the fields of artificial intelligence and robot learning. In the method, imitation learning based on track matching is introduced into the learning of a robot writing skill: demonstration data are encoded with a Gaussian mixture model, track characteristics are extracted, data reconstruction is performed through Gaussian mixture regression, and a generalized output of the track is obtained, so that writing skills for track-continuous Chinese characters can be learned. Interference during writing is handled by using multiple demonstrations, which improves the noise tolerance of the method. A multitask extension is built on the basic Gaussian mixture model: a complicated Chinese character is divided into a plurality of parts, track coding and reconstruction are performed on each part, and the method is applied to generate discrete tracks, thereby realizing the writing of track-discontinuous Chinese characters. The method achieves a high generalization effect in Chinese character writing.

Owner:BEIJING UNIV OF TECH

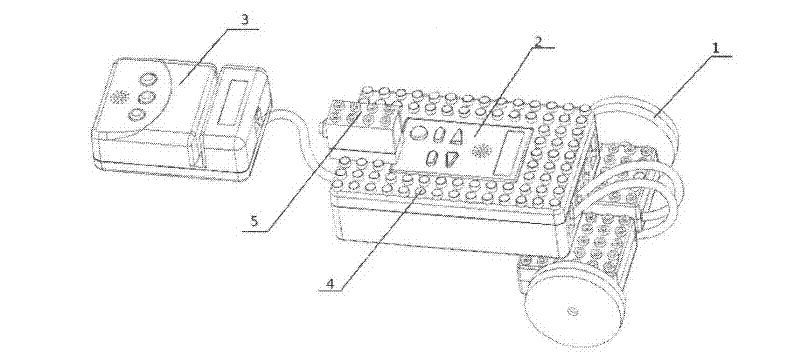

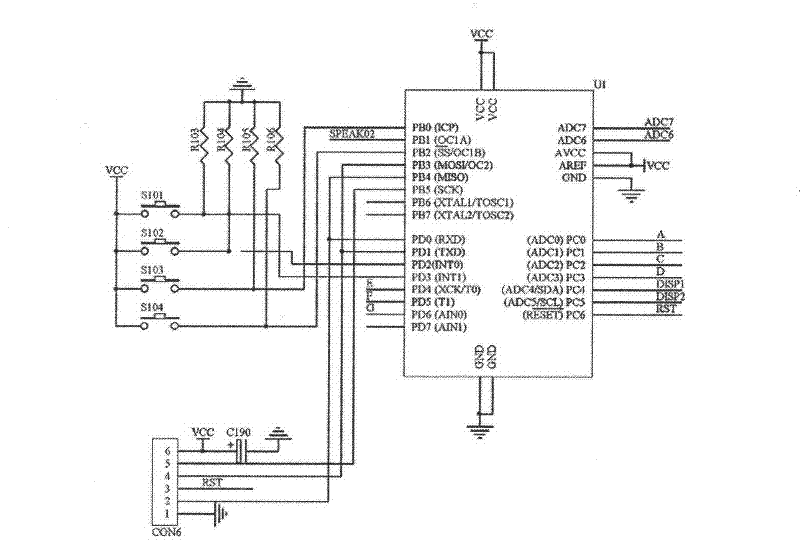

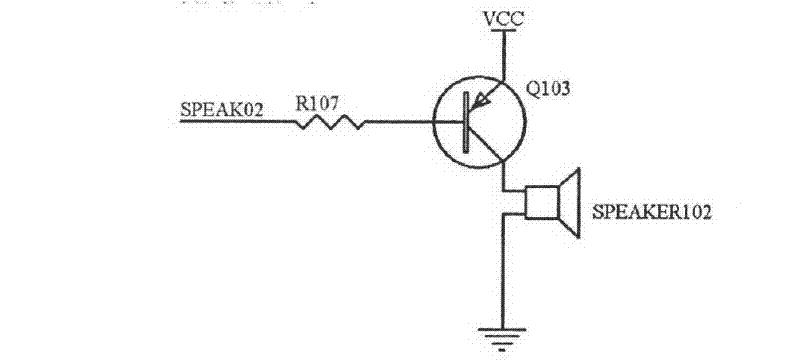

A programmable learning robot

Inactive · CN102289981A · Meet needs · Intellectual development and growth · Educational models · Toy vehicles · Infrared · Remote control

The invention is a programmable learning robot, which includes a mechanical device, a card reading module, a mainboard module, a remote controller, a power supply, an infrared sensing module, a touch module, a sound sensing module and a motor. The mechanical device is composed of more than one plastic component. The mainboard assembly includes a mainboard module containing a mainboard chip and a mainboard shell. The surfaces of the plastic components and the mainboard shell have circular grooves or protrusions matching the circular grooves. The motor is placed on a plastic component and is connected with the mainboard module; the card reading module is connected with the mainboard module; and the remote controller can transmit control signals to the mainboard through infrared rays. The beneficial effect of the present invention is that it can be assembled into different shapes and structures and can be programmed by the user through card input; the robot can respond with the corresponding action according to the sensing of external signals, and can also be moved under remote control.

Owner:朱鼎新

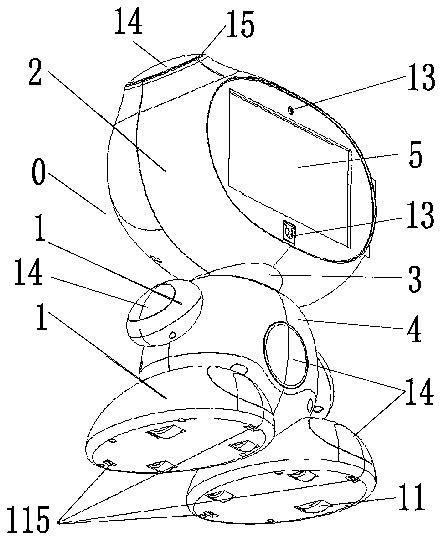

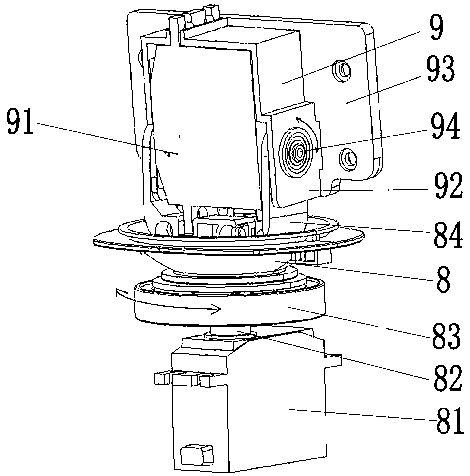

Intelligent robot learning toy

Inactive · CN105498228A · Easy to expand · Fully mobilize the fun · Self-moving toy figures · Teaching apparatus · Engineering · Head nodding

The invention discloses an intelligent robot learning toy. A multi-degree-of-freedom concealed joint is arranged on the neck of the toy; a bowl-shaped shell assembly of the concealed joint is sleeved over a head rotating mechanism and is connected with the connecting part that joins the head and body of the toy to the neck; and a head nodding mechanism is arranged in the head, connected with the upper end of the head rotating mechanism, and driven by the head rotating mechanism. The toy has the following advantages: the structure is scientific and compact; the concealed joint on the neck keeps the neck connected during horizontal rotation and nodding and protects the multi-degree-of-freedom joint mechanism from being exposed; and the modular limb structure allows different functions of the toy to be expanded conveniently. Multimedia is matched with action and expression, so things can be expressed vividly and good interaction with children can be achieved; learning and play are combined, the robot helps parents achieve their educational goals, and the creativity of the children is stimulated.

Owner:胡文杰

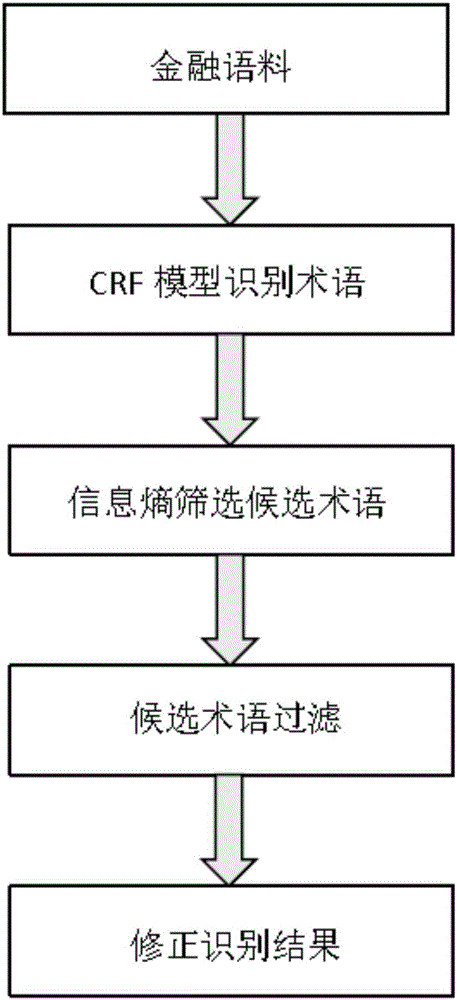

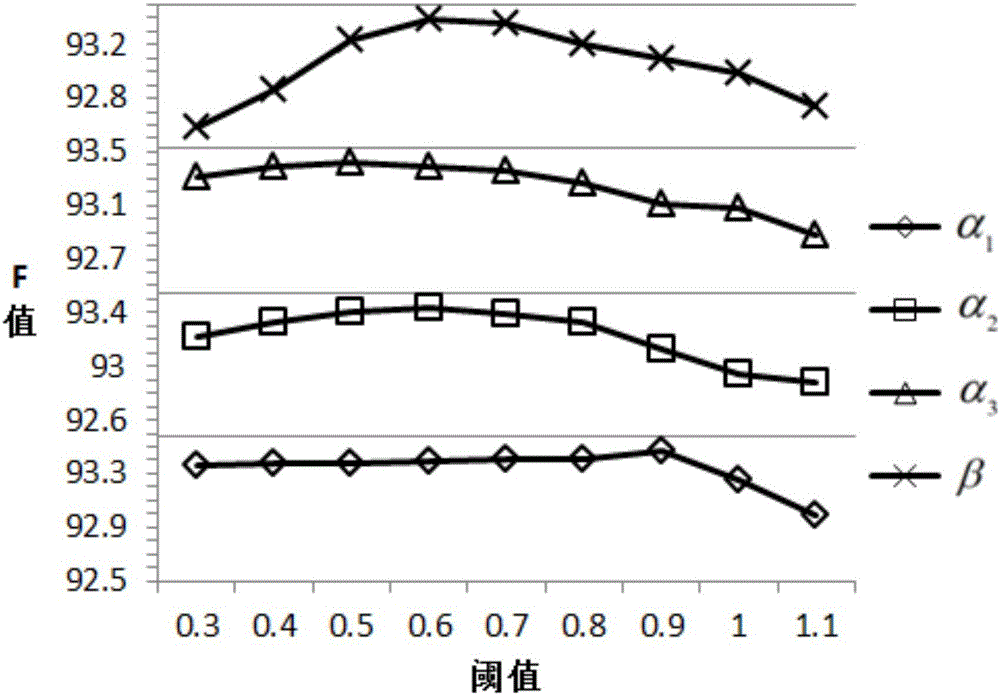

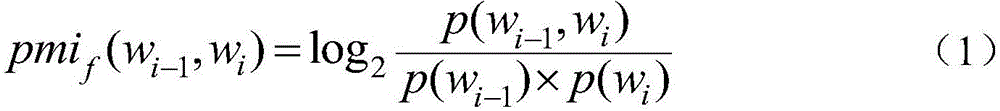

Financial field term recognizing method based on information entropy and term credibility

Inactive · CN106095753A · Avoid tedious feature selection process · Improve integrity · Natural language data processing · Special data processing applications · Edge based · Probit

The invention provides a financial field term recognizing method based on information entropy and term credibility. Only simple features are selected, and financial terms are recognized with a CRF model. In the recognition result, candidate terms belonging to a specific error type are screened out by applying a threshold to an information entropy formula based on marginal probability, so that they can be processed in a more targeted way. When the candidate terms are filtered, words are converted into word vectors with rich semantic information, and because the similarity calculation method and the traditional mutual information method complement each other, a large number of financial field terms can be obtained through filtering. The overly complicated feature selection process of existing robot learning models is effectively avoided, the post-processing part is flexible and not limited to specific linguistic data, the recall rate can be easily increased, the structural integrity of terms is improved, and the method can be used as a universal term recognizing method.

Owner:DALIAN UNIV OF TECH

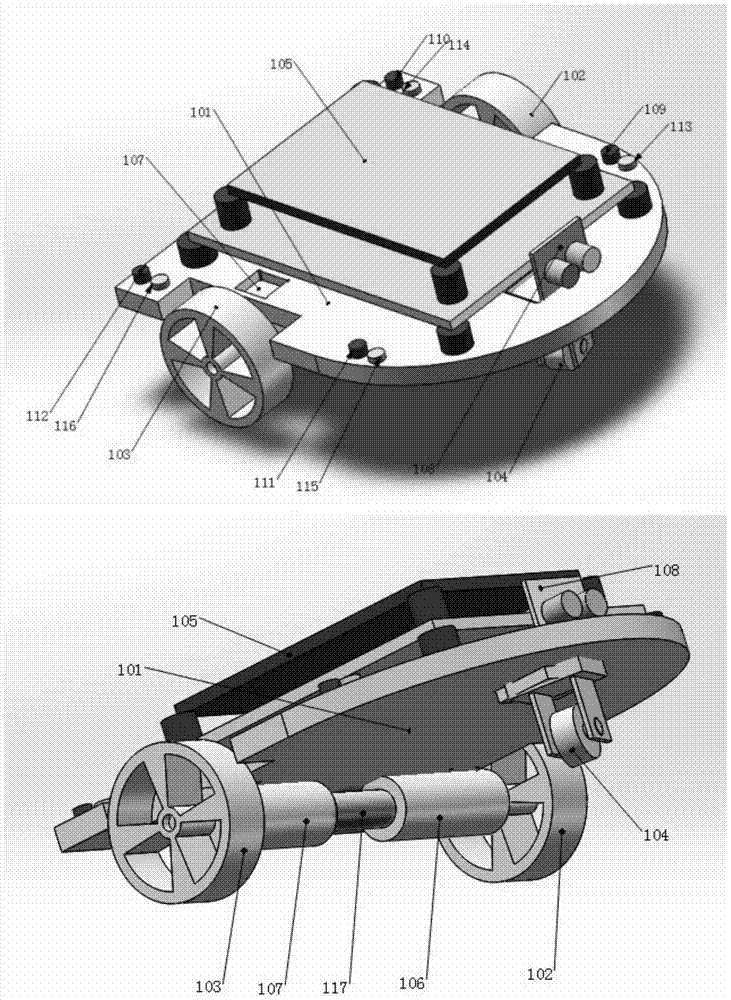

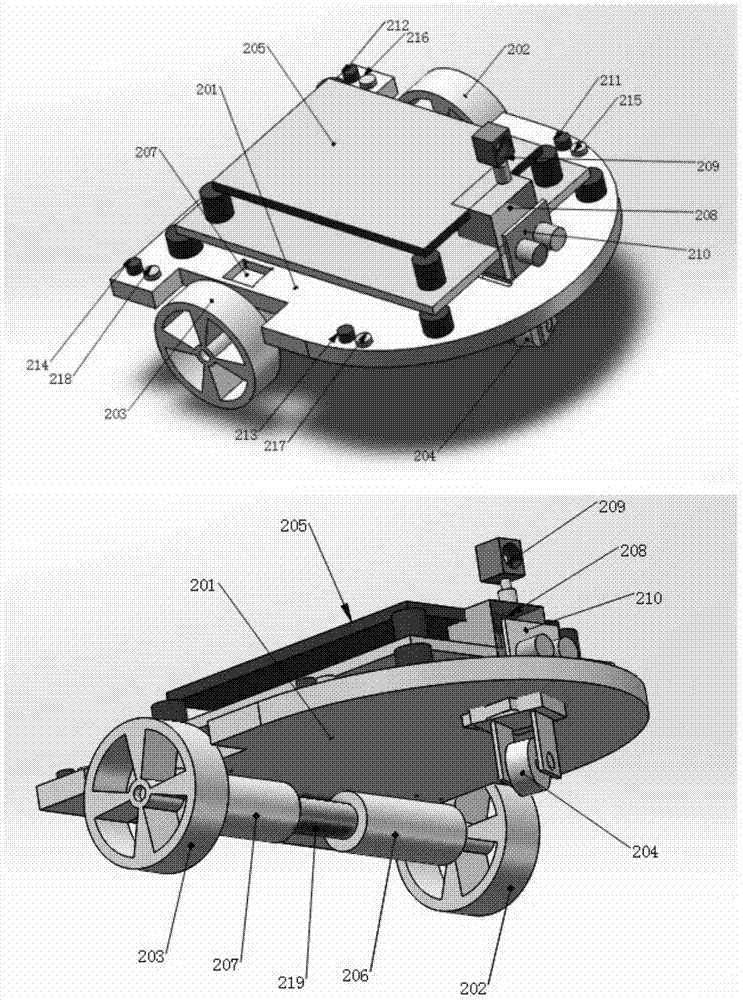

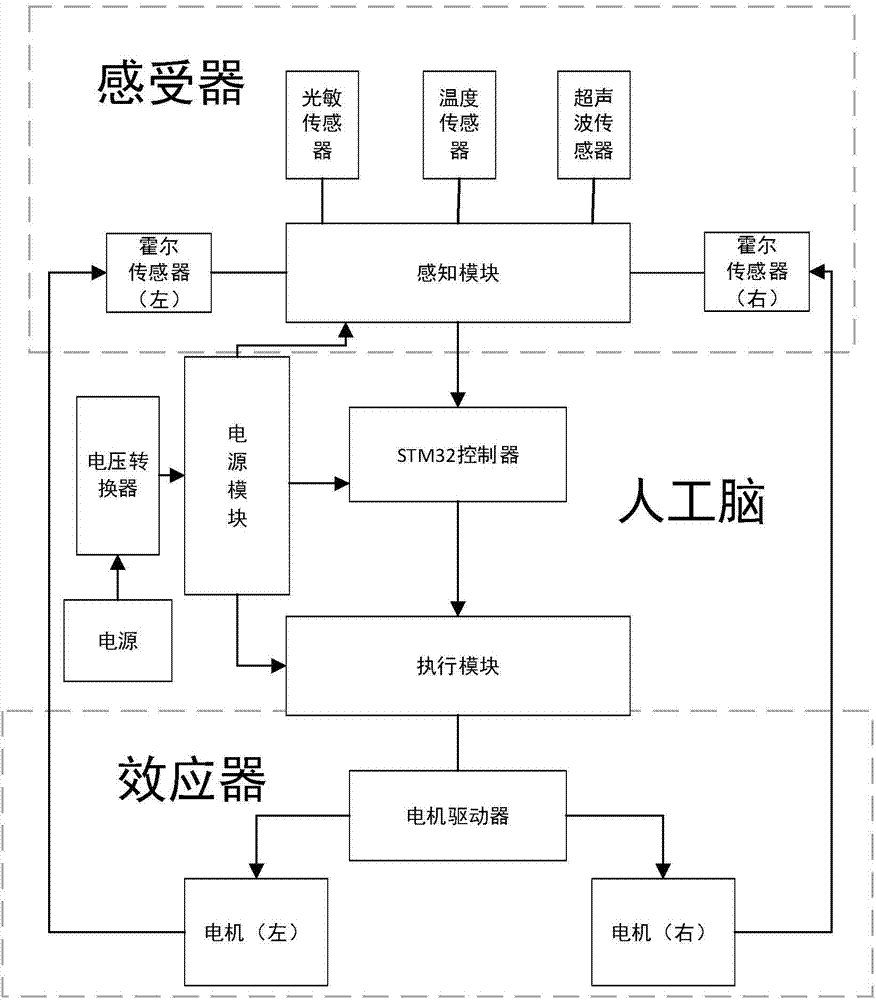

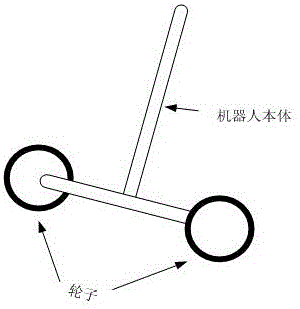

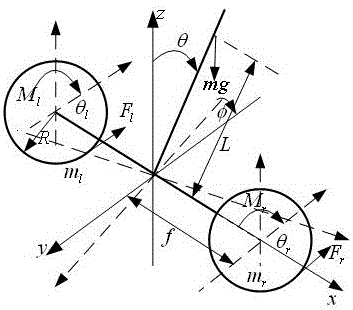

Biomorphic wheeled robot system with simulation learning mechanism and method

Inactive · CN103878772A · Low cost · Improve the efficiency of imitation learning · Programme-controlled manipulator · Robotic systems · Imaging processing

The invention relates to a biomorphic wheeled robot system with a simulation learning mechanism and a method. The system comprises a teaching robot A and a simulation robot B. When the robot system works, the teaching robot A first demonstrates teaching behaviors, and the simulation robot B then observes and imitates them. A rotary device assembled from a servo and an infrared sensor is carried on the simulation robot; through a behavior capturing method based on rotary detection, action information is collected at scattered teaching observation points and then used, via a simulation and learning algorithm, to instruct the simulation robot in learning the teaching behaviors. The cost of sensors is greatly reduced, the tedious image processing required in the prior art when teaching behaviors are captured by camera is avoided, the simulation and learning efficiency of the robot is improved, and the learning time of the robot is shortened.

Owner:BEIJING UNIV OF TECH

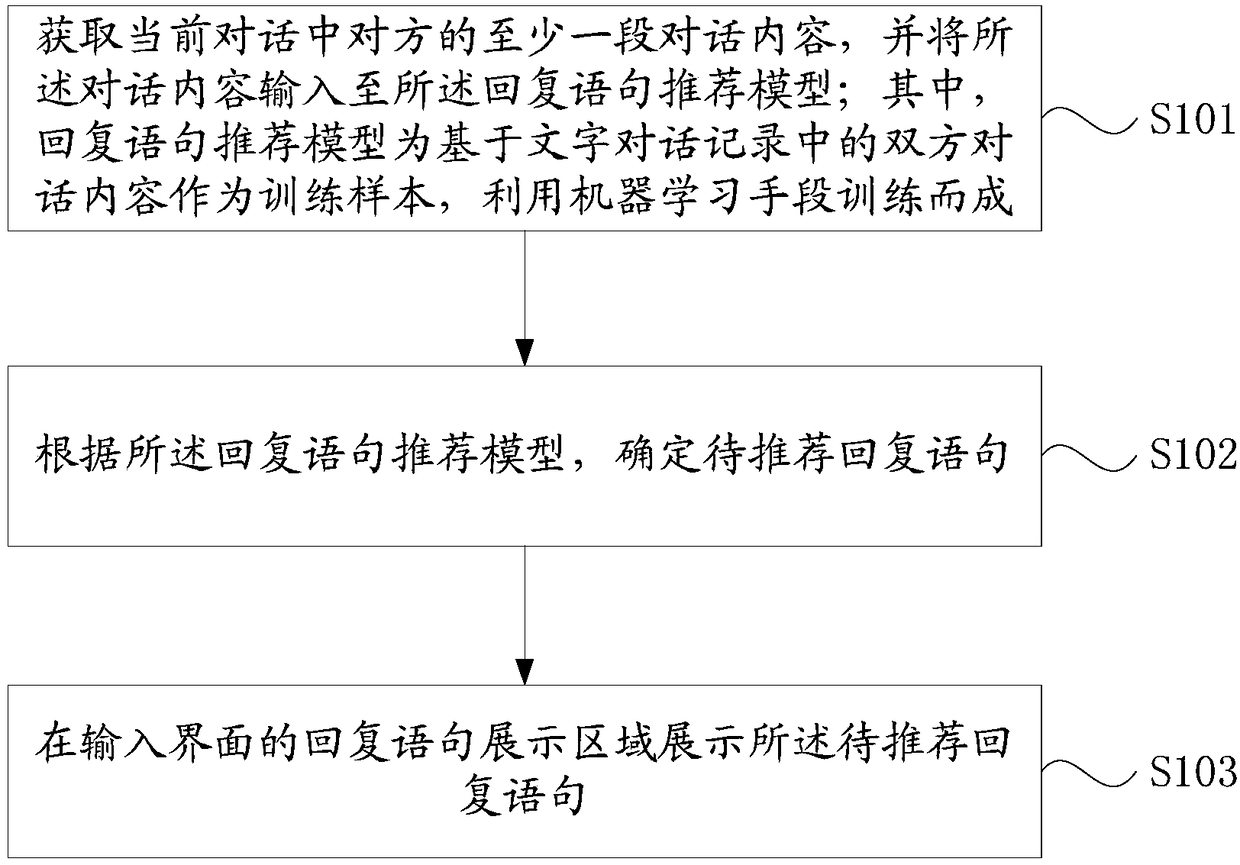

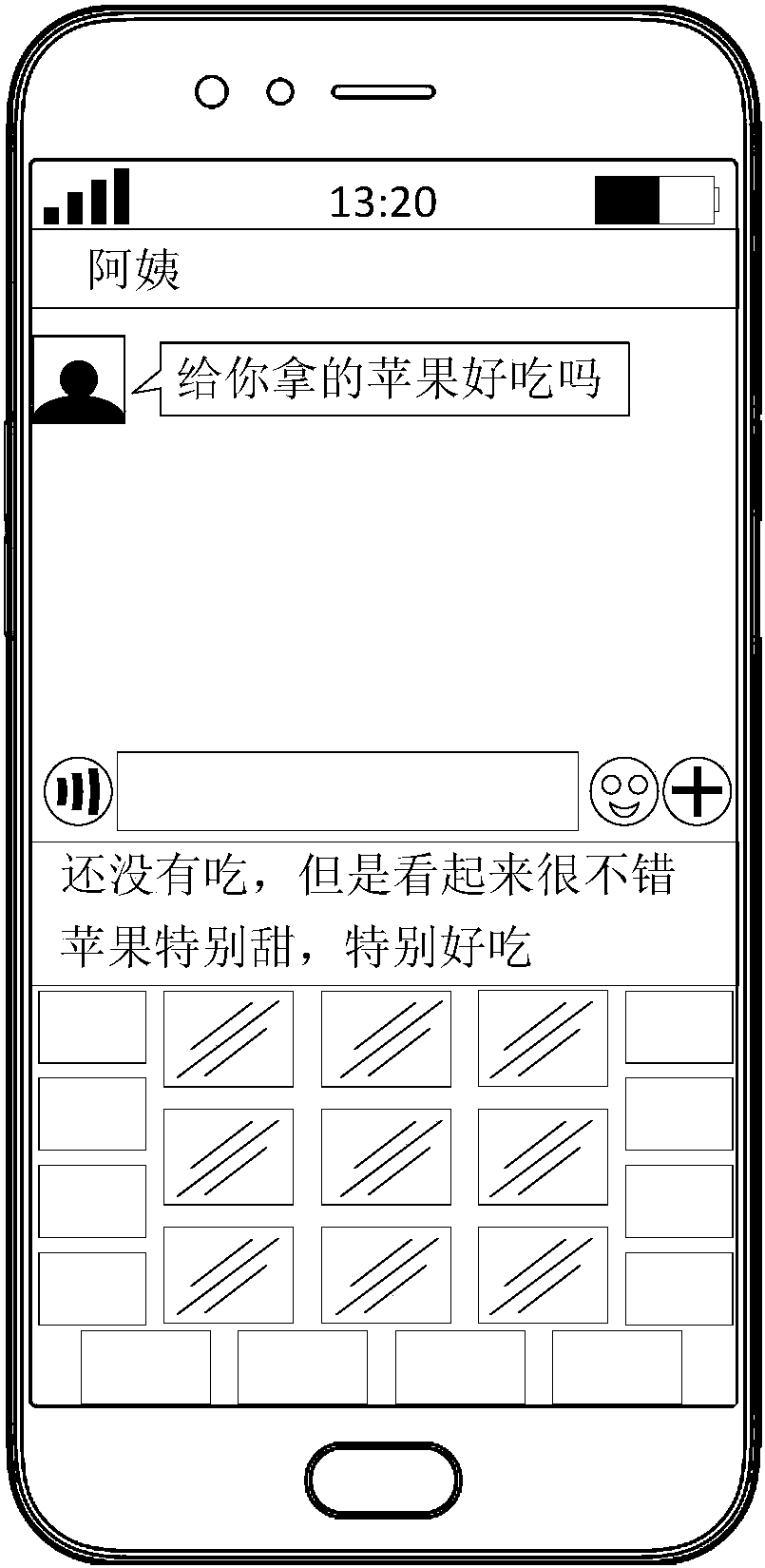

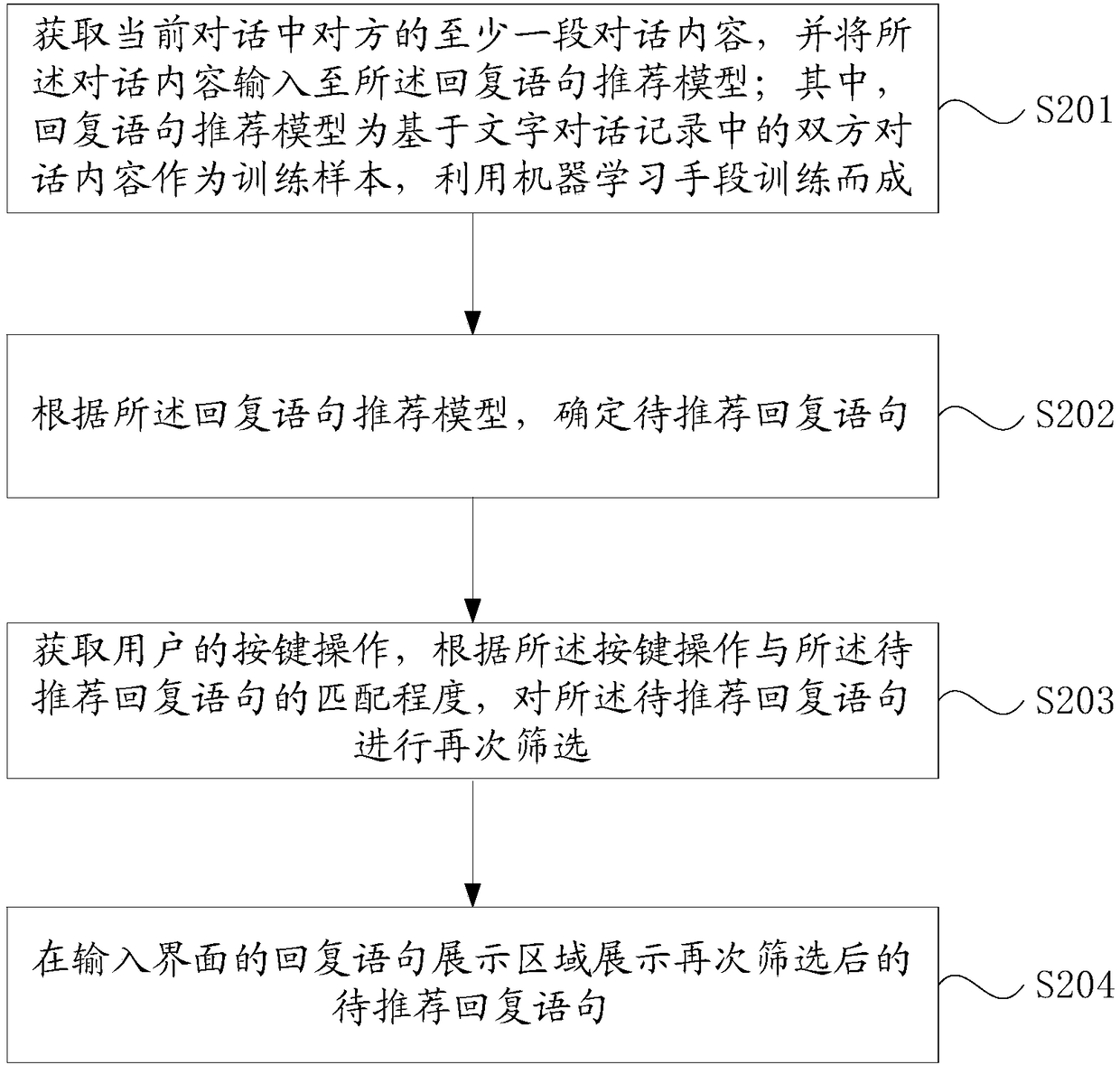

Response statement recommending method and device, storage medium and mobile terminal

An embodiment of the application discloses a response statement recommending method and device, a storage medium and a mobile terminal. The response statement recommending method comprises: acquiring at least one conversation content of the opposite party in a current conversation, and inputting the conversation content to a response statement recommending model, wherein the model is trained by means of robot learning with two-party conversation contents from text conversation records as training samples; determining a response statement to be recommended according to the response statement recommending model; and displaying the response statement to be recommended in a response statement display area of an input interface. The technical scheme can provide optimized recommendation modes for information interaction on mobile terminals.

Owner:GUANGDONG OPPO MOBILE TELECOMM CORP LTD

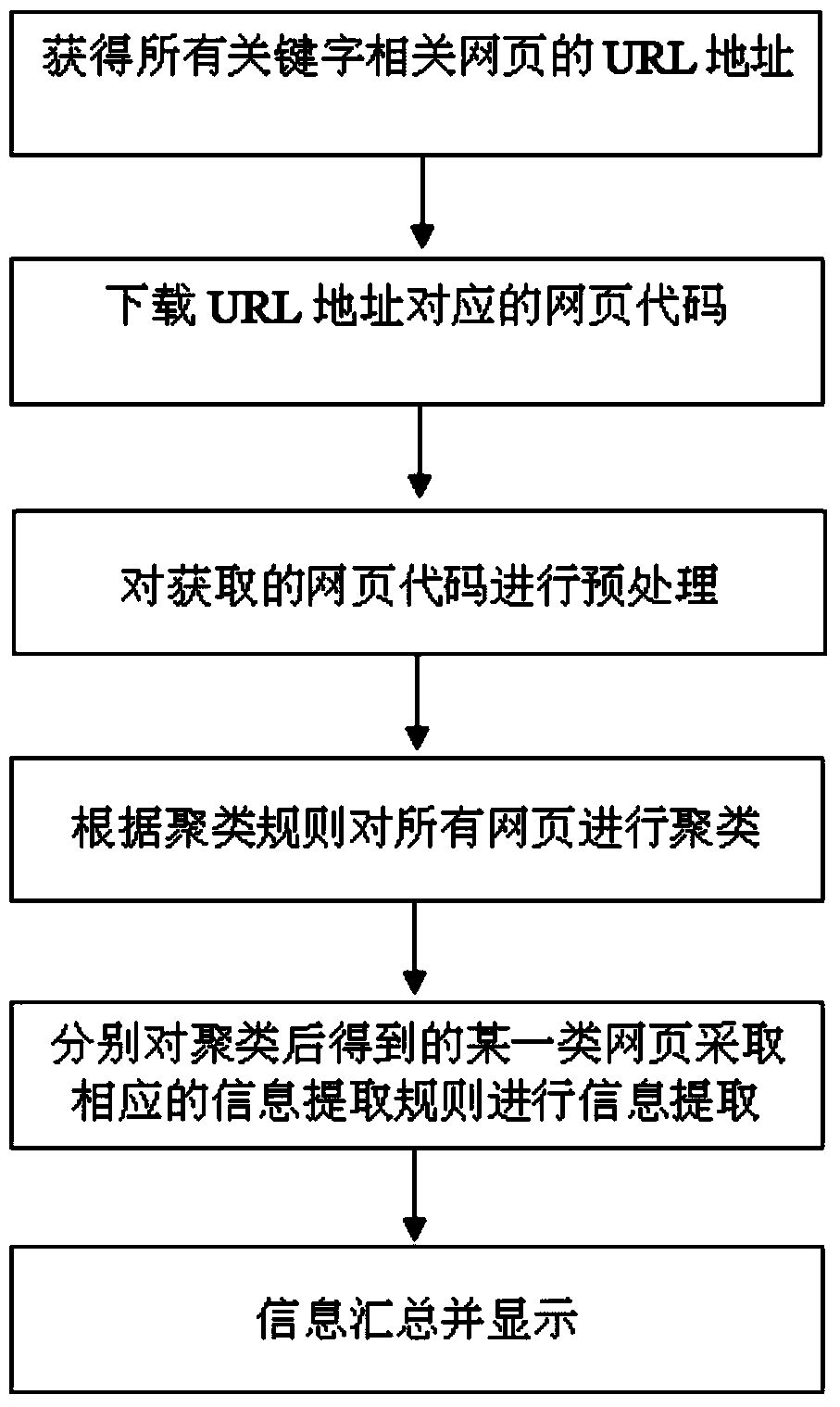

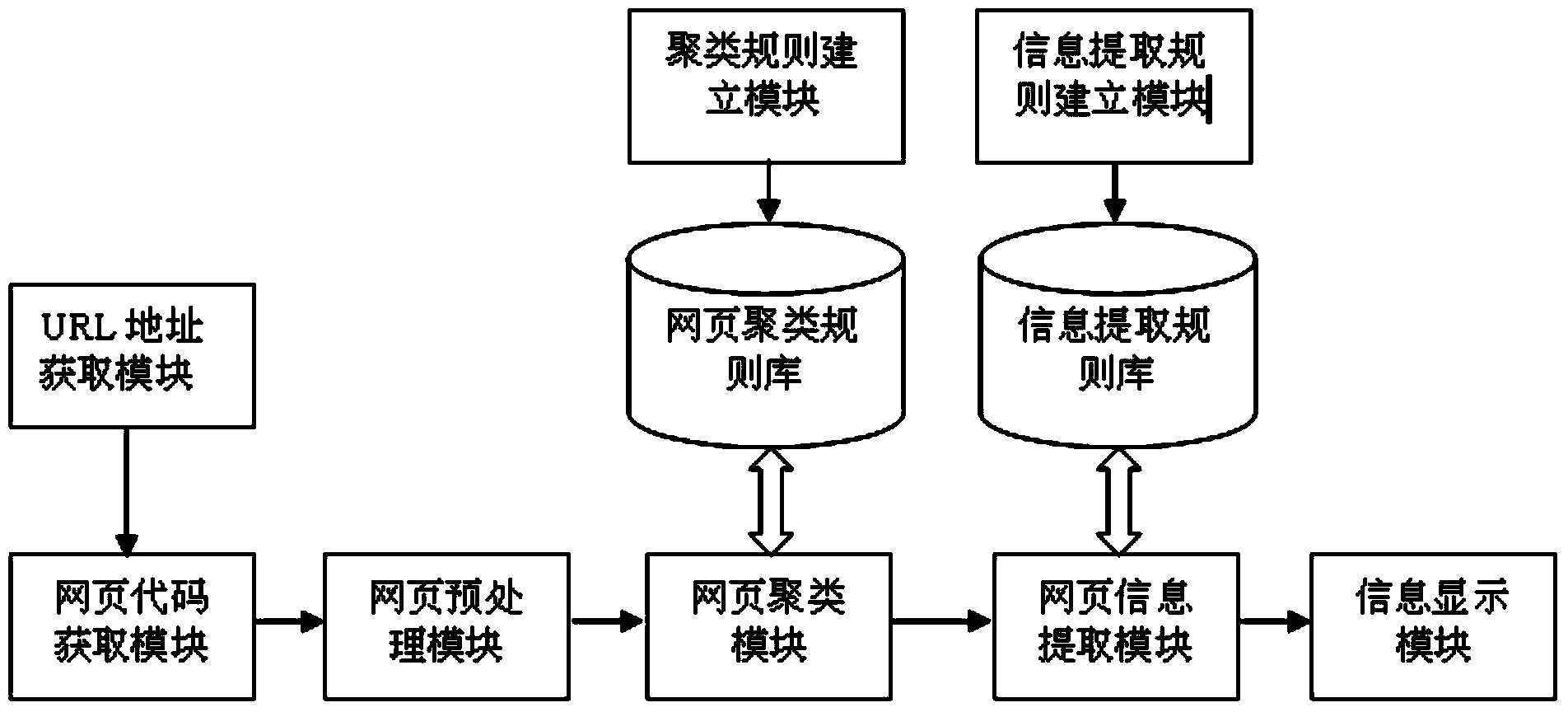

Method and device for extracting information based on multistage rule base

Inactive · CN103970898A · Improve recall · Guaranteed recall · Special data processing applications · Web page clustering · Structure chart

A method for extracting information based on a multistage rule base comprises the steps that (1) a URL address of web pages is obtained; (2) the web pages corresponding to the URL address are downloaded; (3) a web page tree-type structure chart is obtained; (4) web page clustering is conducted, web pages are selected from the web pages to be clustered to serve as a training set, and a clustering rule of the web pages is defined according to a robot learning method; (5) a searching result is extracted; (6) information is collected and displayed. After the web page tree-type structure chart is obtained in the step (3) and the web pages are clustered in the step (4), the recall ratio of the retrieved information can be effectively increased, the clustering rule is automatically generated by means of robot learning in a training set mode, manual clustering is not needed, the automation degree of searching is effectively increased, and the condition of large-area use is achieved on the premise that the recall ratio is guaranteed. According to a device for extracting the information based on the multistage rule base, a hardware foundation is provided for an information extraction process, cost is low, and the device is suitable for large-scale use.

Owner:CHONGQING UNIV

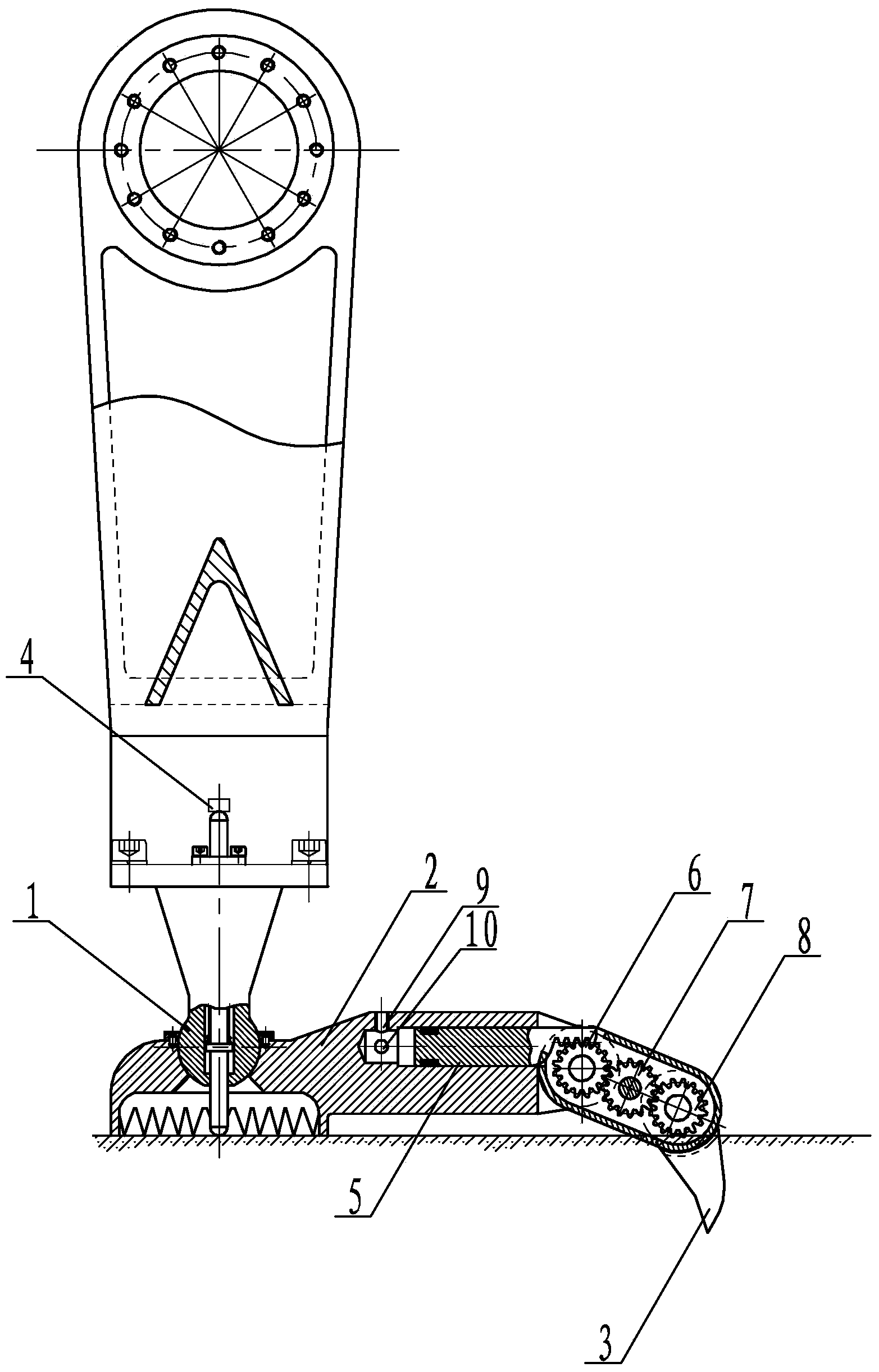

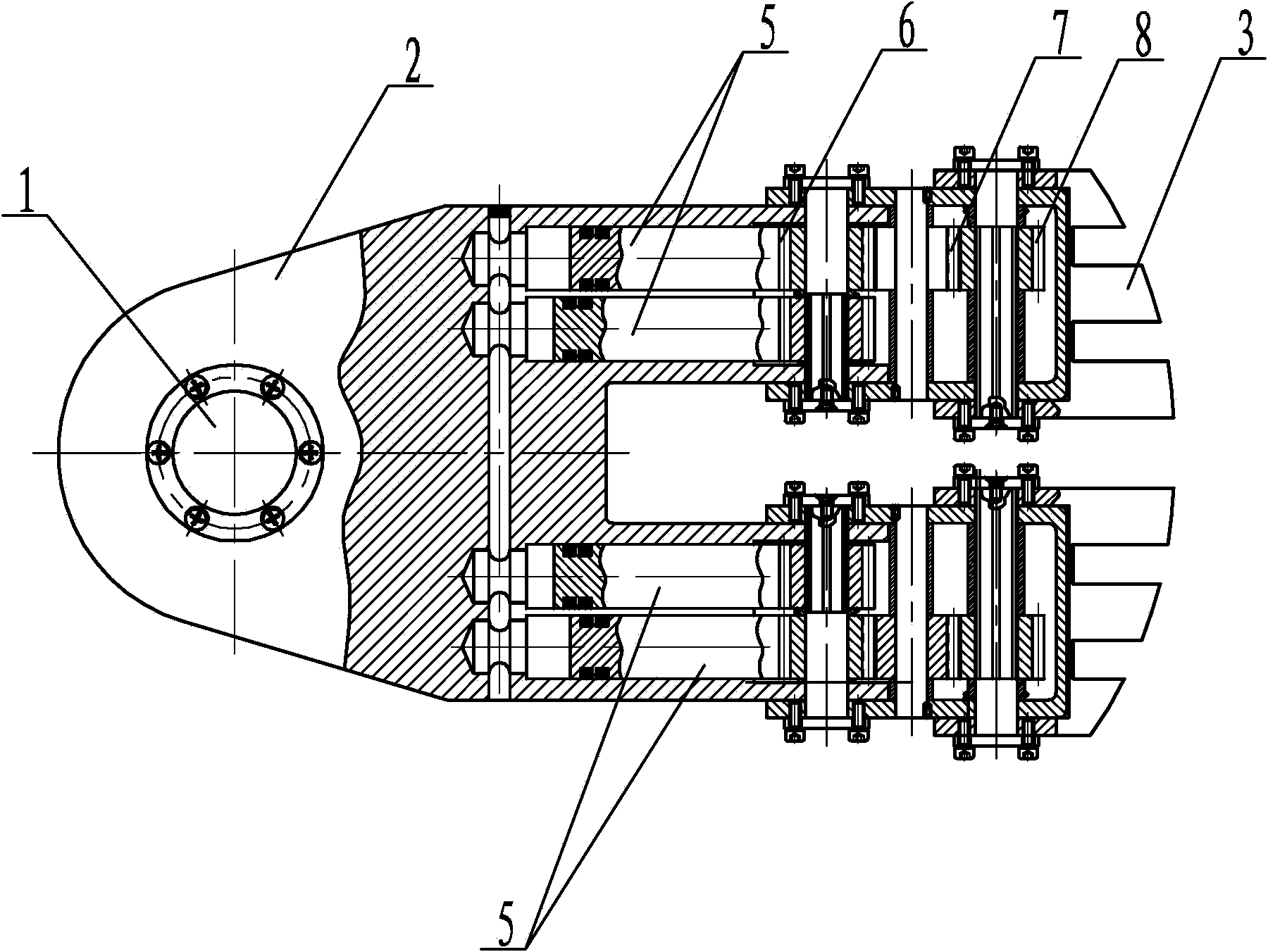

Leg robot large-gradient walking leg oriented to hard mountain environment

Active · CN104260801A · Improve exercise adaptability · Improve the equivalent adhesion coefficient · Vehicles · Adhesion coefficient · Terrain

A leg robot large-gradient walking leg oriented to hard mountain environment relates to robot large-gradient walking legs. The leg robot large-gradient walking leg oriented to the hard mountain environment solves the problem that the legs of existing joint type multi-legged walking robots are poor in motion performance, low in equivalent adhesion coefficient and incapable of making full use of large-gradient terrains. According to the leg robot large-gradient walking leg oriented to the hard mountain environment, a limit switch (4) is mounted on a spherical hinge ankle joint (1), the spherical hinge ankle joint (1) is mounted at the root of a sole (2), a rack group (5) is slidingly mounted on the sole (2), and a first transmission gear group (6), a second transmission gear group (7) and a third transmission gear group (8) are sequentially engaged and mounted on the right of the rack group (5) from left to right, wherein the first transmission gear group is engaged with the rack group (5), and toes (3) are fixedly connected through the third transmission gear group (8). The leg robot large-gradient walking leg oriented to the hard mountain environment is applicable to large-gradient walking.

Owner:HARBIN INST OF TECH

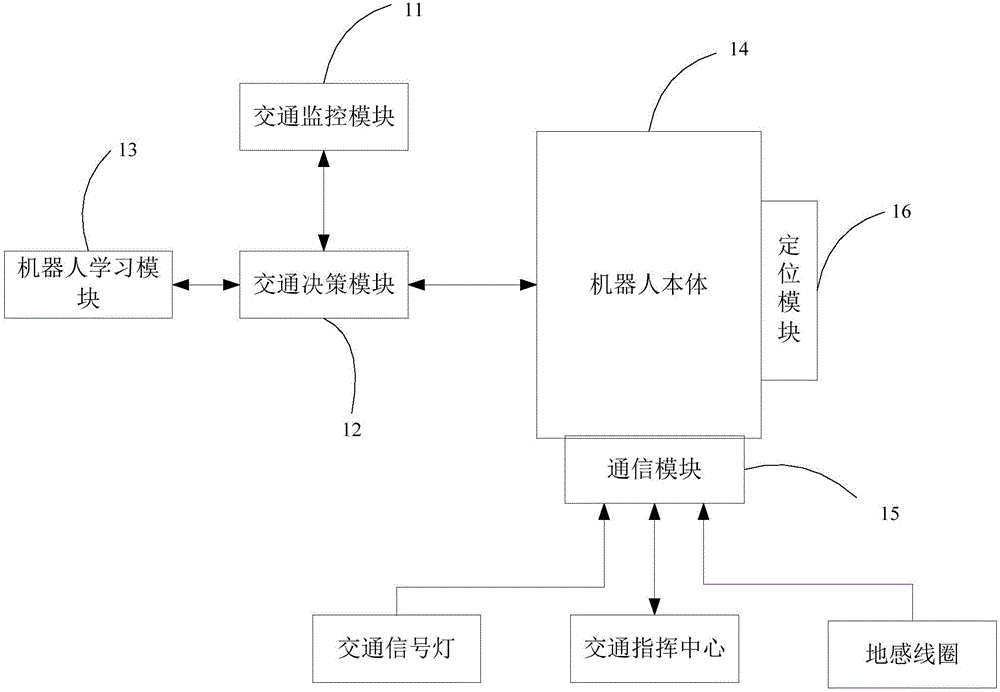

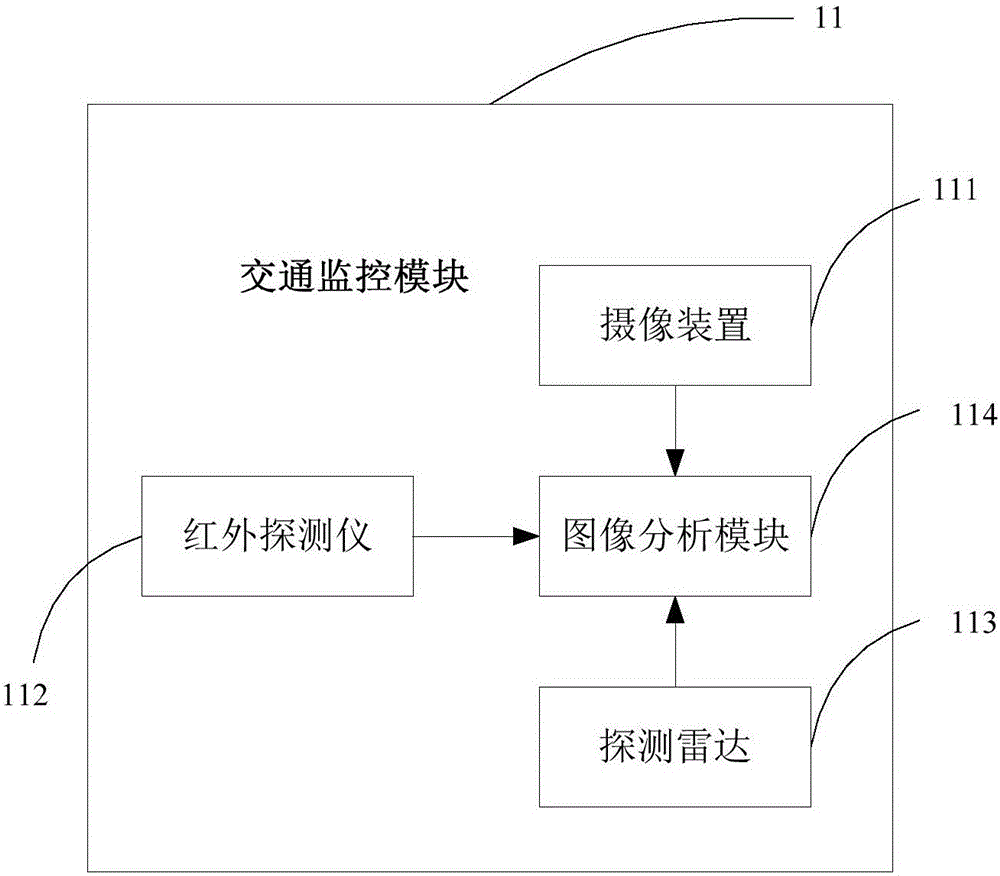

Intelligent traffic robot

Inactive · CN106251663A · Quick grooming · Arrangements for variable traffic instructions · Decision model · Self adaptive

The invention discloses an intelligent traffic robot. The intelligent traffic robot includes a traffic monitoring module, a traffic decision-making module, a robot learning module and a robot body. The traffic monitoring module monitors the traffic condition of a traffic intersection in real time and obtains traffic information parameters. The traffic decision-making module obtains the traffic information parameters provided by the traffic monitoring module and issues traffic commands according to them. The robot learning module learns from traffic command samples and modifies the traffic information parameters and the traffic commands in the traffic decision-making module accordingly, or adaptively builds a new traffic decision-making model. The robot body carries out the corresponding traffic command behaviors according to the traffic commands issued by the traffic decision-making module. The intelligent traffic robot of the invention can replace traffic police in traffic command tasks such as on-site duty.

Owner:SHENZHEN XIYUE ZHIHUI DATA CO LTD

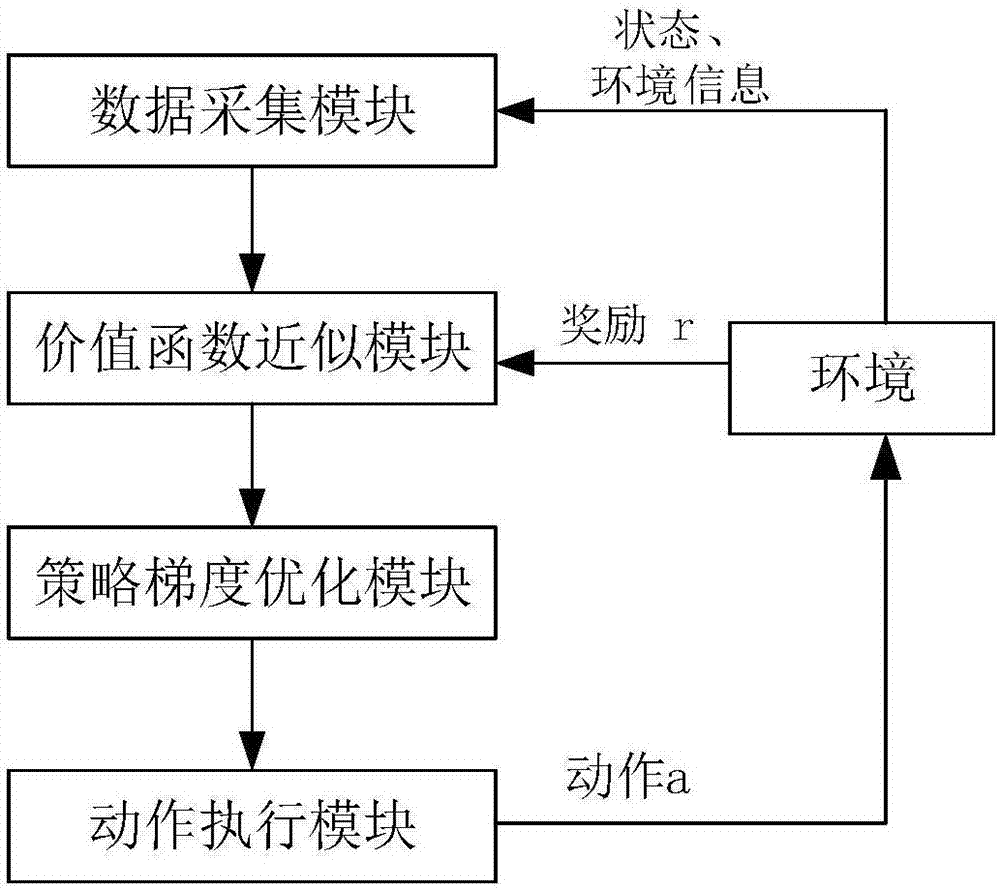

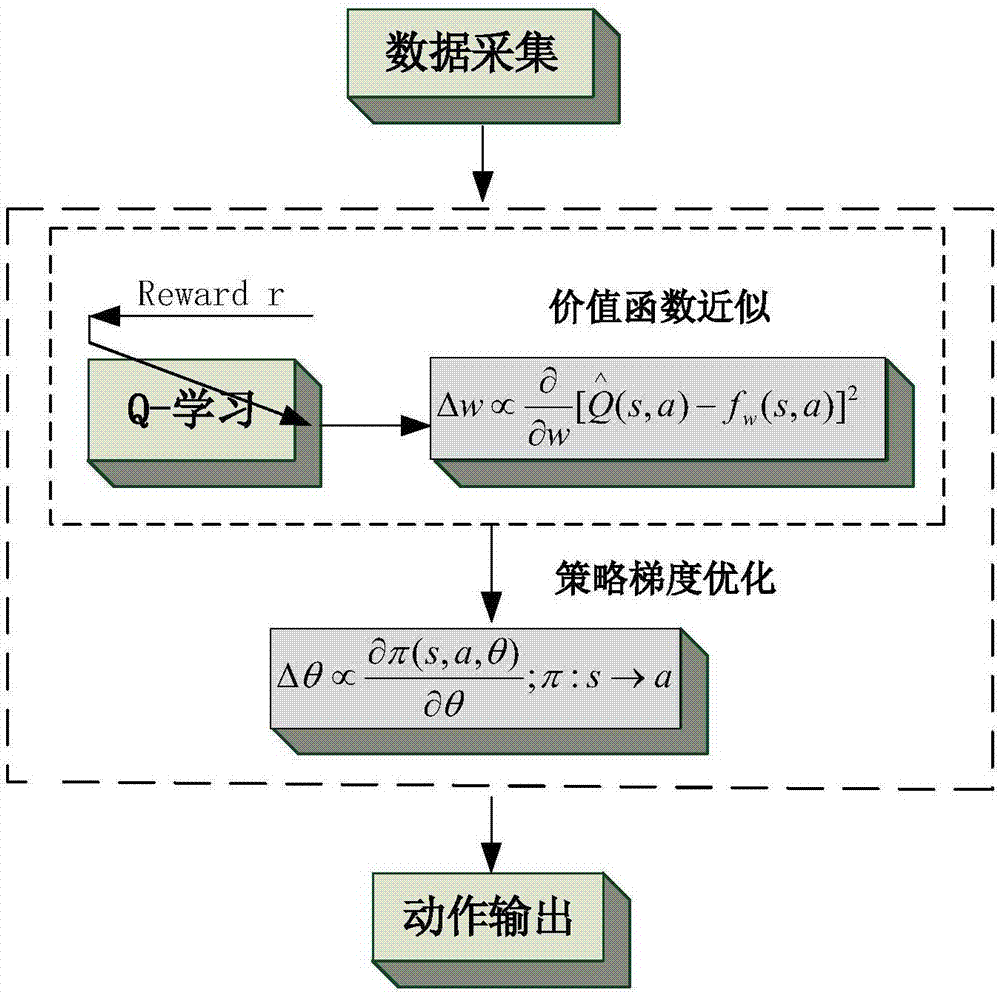

Robot learning control method based on policy gradient

Inactive · CN107020636A · Improve learning effect · Improve intelligence · Programme-controlled manipulator · State parameter · Simulation

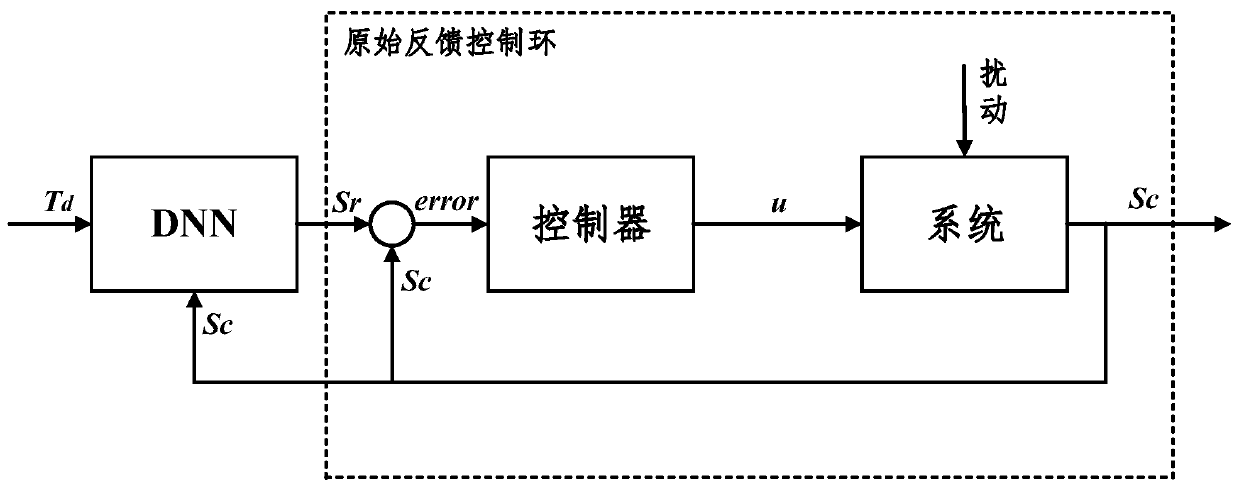

The invention discloses a policy gradient method suitable for robot learning control, and relates to robot learning control technology. It includes: a data acquisition module, which acquires information data during the operation of the robot; a value function approximation module, which takes the observed state information and the immediate reward obtained as input and produces an approximate estimation model of the value function; a policy gradient optimization module, which parameterizes the robot learning control policy and adjusts and optimizes the parameters so that the robot reaches an ideal operating state; and an action execution module, which maps the action output by the controller to the action command actually executed by the robot. The proposed method can be used for different types of robots, especially multi-degree-of-freedom robots; it is able to learn complex actions and solve for stochastic policies, thereby improving the intelligence of the robot, reducing danger during learning, shortening the robot's learning time and simplifying controller design.

Owner:CHONGQING UNIV
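The module structure described in this abstract can be illustrated with a minimal sketch. It is not the patented method: it assumes a toy single-state task with simulated rewards, a softmax policy, and a running-average baseline standing in for the value function approximation module. The function names, the `reward_means` parameter and the learning rates are all hypothetical.

```python
import math
import random


def softmax(prefs):
    """Turn action preferences into a probability distribution."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def train_policy_gradient(reward_means, episodes=2000, lr=0.1,
                          baseline_lr=0.05, seed=0):
    """REINFORCE-style update with a baseline on a toy one-state task.

    reward_means is an assumed stand-in for the robot's environment:
    action i yields a noisy reward centred on reward_means[i].
    """
    rng = random.Random(seed)
    prefs = [0.0] * len(reward_means)  # policy parameters
    baseline = 0.0                     # crude value estimate for the single state
    for _ in range(episodes):
        probs = softmax(prefs)
        # "Action execution": sample an action from the stochastic policy.
        action = rng.choices(range(len(prefs)), weights=probs)[0]
        # "Data acquisition": observe the immediate reward (simulated here).
        reward = rng.gauss(reward_means[action], 0.1)
        advantage = reward - baseline
        # "Policy gradient optimization": d/d pref_i of log pi(action)
        # equals 1[i == action] - probs[i] for a softmax policy.
        for i in range(len(prefs)):
            grad = (1.0 if i == action else 0.0) - probs[i]
            prefs[i] += lr * advantage * grad
        # "Value function approximation": move the baseline toward the reward.
        baseline += baseline_lr * (reward - baseline)
    return softmax(prefs), baseline
```

Calling `train_policy_gradient([0.2, 1.0])` should leave most of the probability mass on the second action. A real robot controller would replace the scalar baseline with a state-dependent value model and the simulated reward with measured data.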

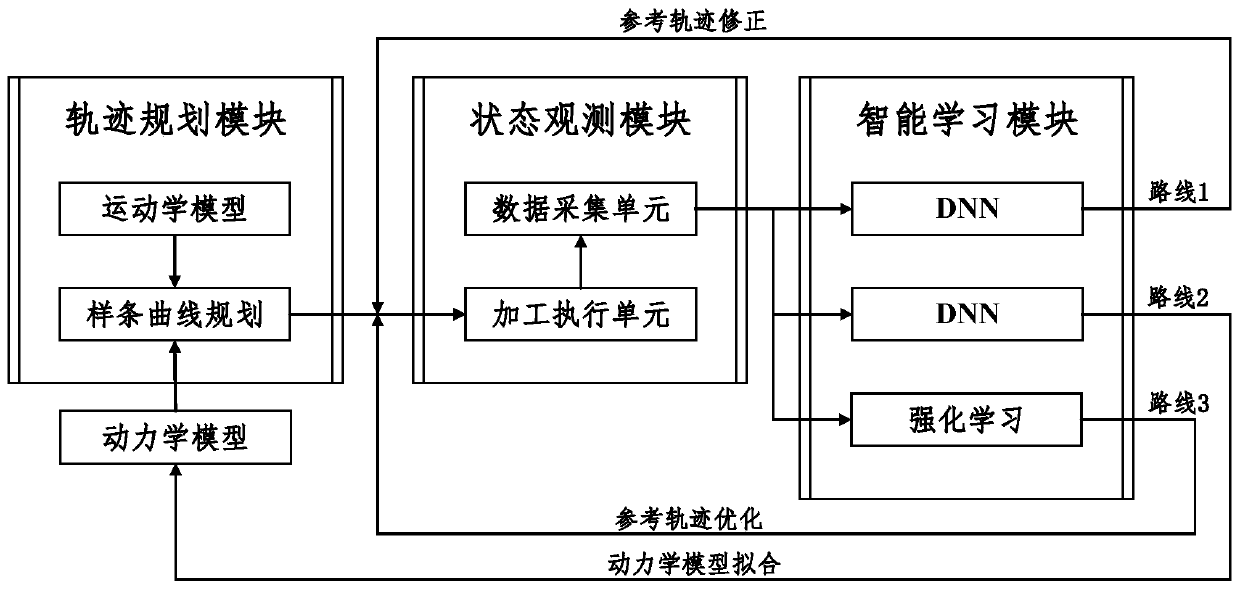

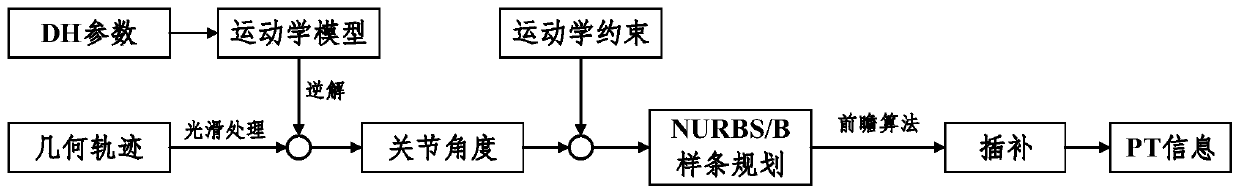

Robot trajectory planning method based on deep learning

Active | CN110083160A | High running precision | Improve stability | Position/course control in two dimensions | Time information | Dynamic models

The invention discloses a robot trajectory planning method based on deep learning, which comprises the following steps: first, establishing a kinematics model of a robot and providing a basic planned trajectory for the robot; moving the robot and acquiring real-time information of the robot, including position and moment; establishing a dynamics model of the robot; and then obtaining an optimal planned trajectory by Q-learning reinforcement learning. The modeling and learning are based on actually acquired data, which avoids modeling under an idealized environment. The method can be applied to industrial robots in various complex environments because it gives the industrial robots the capability of parameter self-learning and self-adjustment. Provided that the robots are sufficiently consistent with one another, the models learned by one robot can be shared with robot platforms of the same type. The robot trajectory planning method based on deep learning has wide application prospects in industrial production.

Owner:HARBIN INST OF TECH SHENZHEN GRADUATE SCHOOL
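The Q-learning step named in this abstract can be sketched on a toy problem. The example below assumes a 4x4 grid as a stand-in for the robot's workspace, with a step cost of -1 and a goal reward of +10. It does not model the kinematics or dynamics described in the patent, and every name and parameter is hypothetical.

```python
import random

# Moves on the grid: right, down, left, up (row, column offsets).
ACTIONS = [(0, 1), (1, 0), (0, -1), (-1, 0)]


def step(state, action, size, goal):
    """Apply a move, clamping to the grid; return (next_state, reward)."""
    r = min(max(state[0] + action[0], 0), size - 1)
    c = min(max(state[1] + action[1], 0), size - 1)
    nxt = (r, c)
    return nxt, (10.0 if nxt == goal else -1.0)


def q_learning(size=4, goal=(3, 3), episodes=2000, alpha=0.5,
               gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    n = len(ACTIONS)
    q = {((r, c), a): 0.0
         for r in range(size) for c in range(size) for a in range(n)}
    for _ in range(episodes):
        state = (0, 0)
        for _ in range(100):  # cap the episode length
            if rng.random() < epsilon:
                a = rng.randrange(n)
            else:
                a = max(range(n), key=lambda i: q[(state, i)])
            nxt, reward = step(state, ACTIONS[a], size, goal)
            best_next = 0.0 if nxt == goal else max(q[(nxt, i)] for i in range(n))
            # Standard Q-learning temporal-difference update.
            q[(state, a)] += alpha * (reward + gamma * best_next - q[(state, a)])
            state = nxt
            if state == goal:
                break
    return q


def greedy_path(q, size=4, goal=(3, 3), start=(0, 0)):
    """Follow the highest-valued action from start until the goal is reached."""
    path, state = [start], start
    while state != goal and len(path) <= size * size:
        a = max(range(len(ACTIONS)), key=lambda i: q[(state, i)])
        state, _ = step(state, ACTIONS[a], size, goal)
        path.append(state)
    return path
```

After training, `greedy_path(q_learning())` traces a route from the start cell to the goal cell. In the setting the abstract describes, the states, actions and rewards would come from the measured robot data rather than a grid.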

Abnormal sound detection method and abnormal sound detection system for automobile seat slide rail

Active | CN109946055A | Remove noise signal | Achieving identifiability | Machine part testing | Subsonic/sonic/ultrasonic wave measurement | Time domain | Sound detection

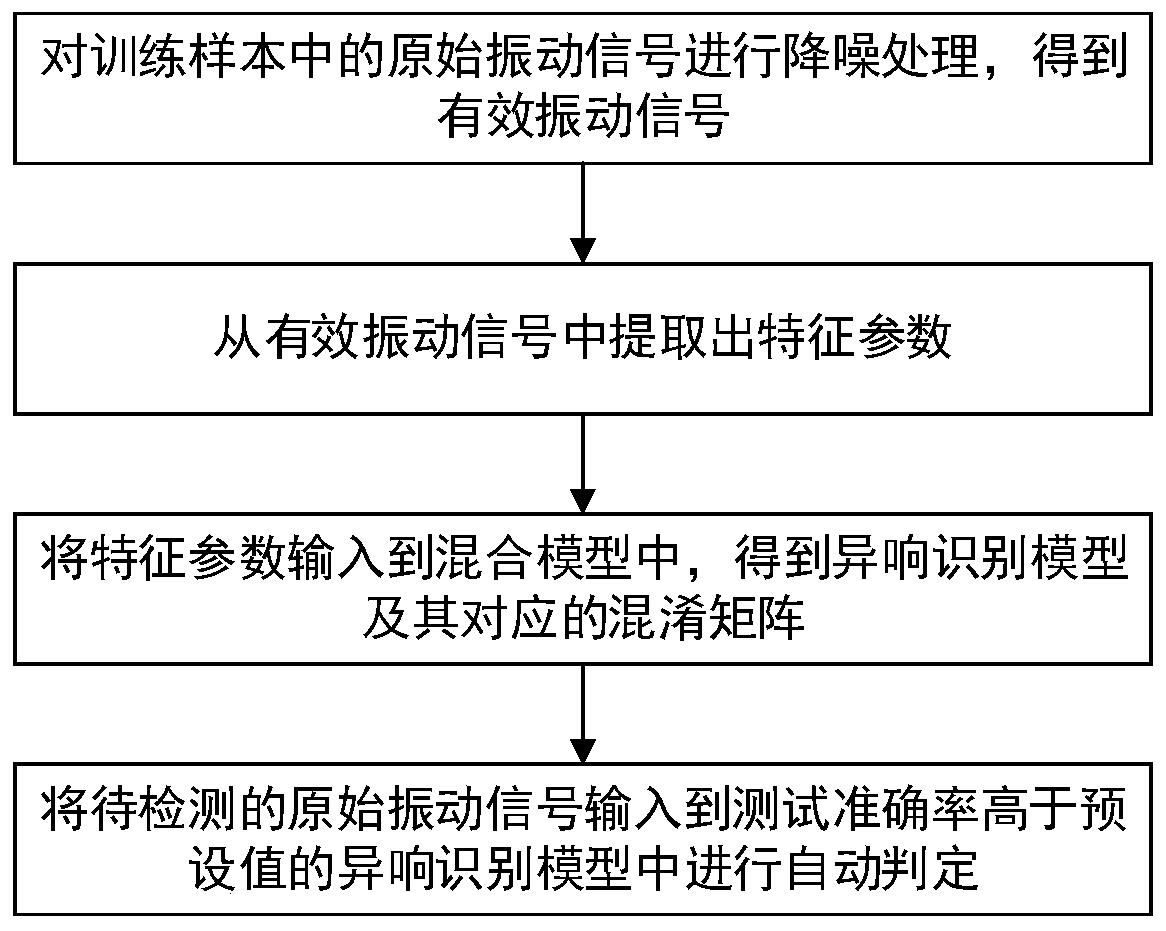

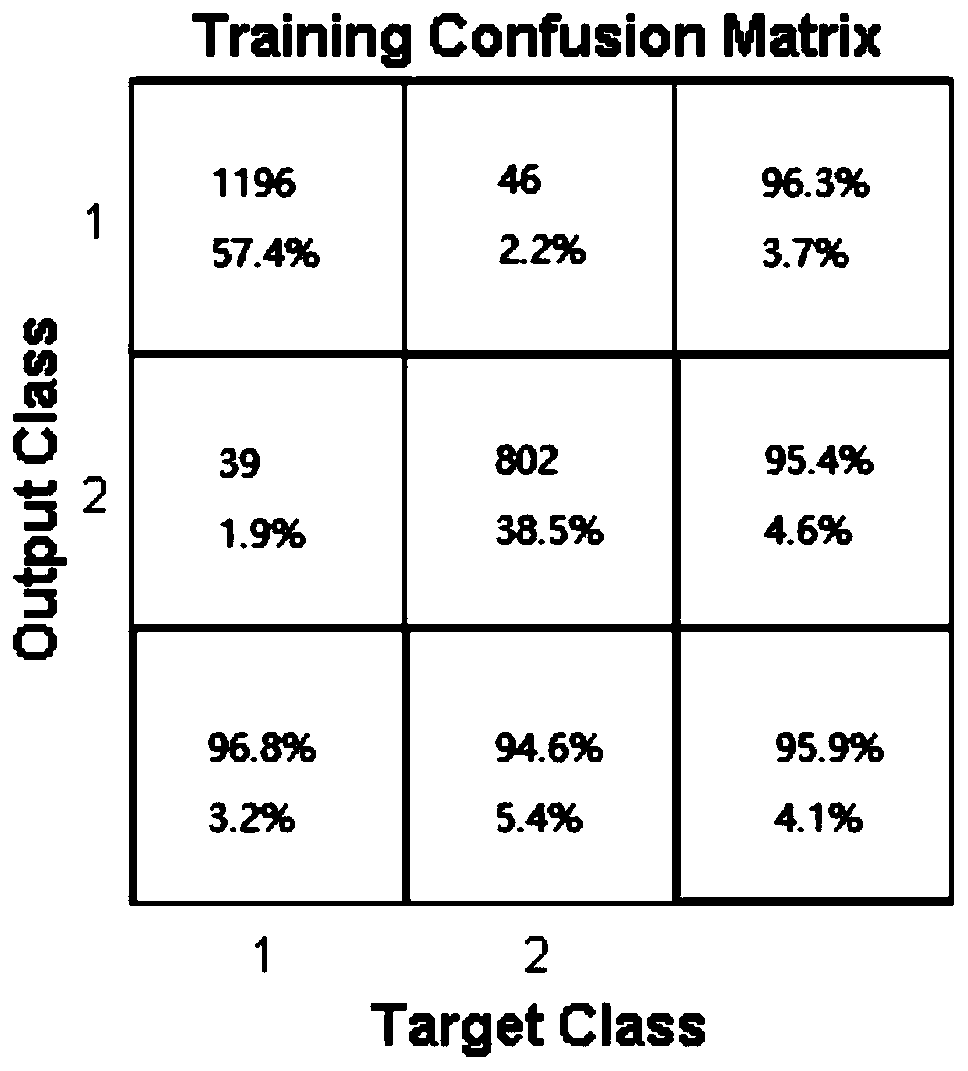

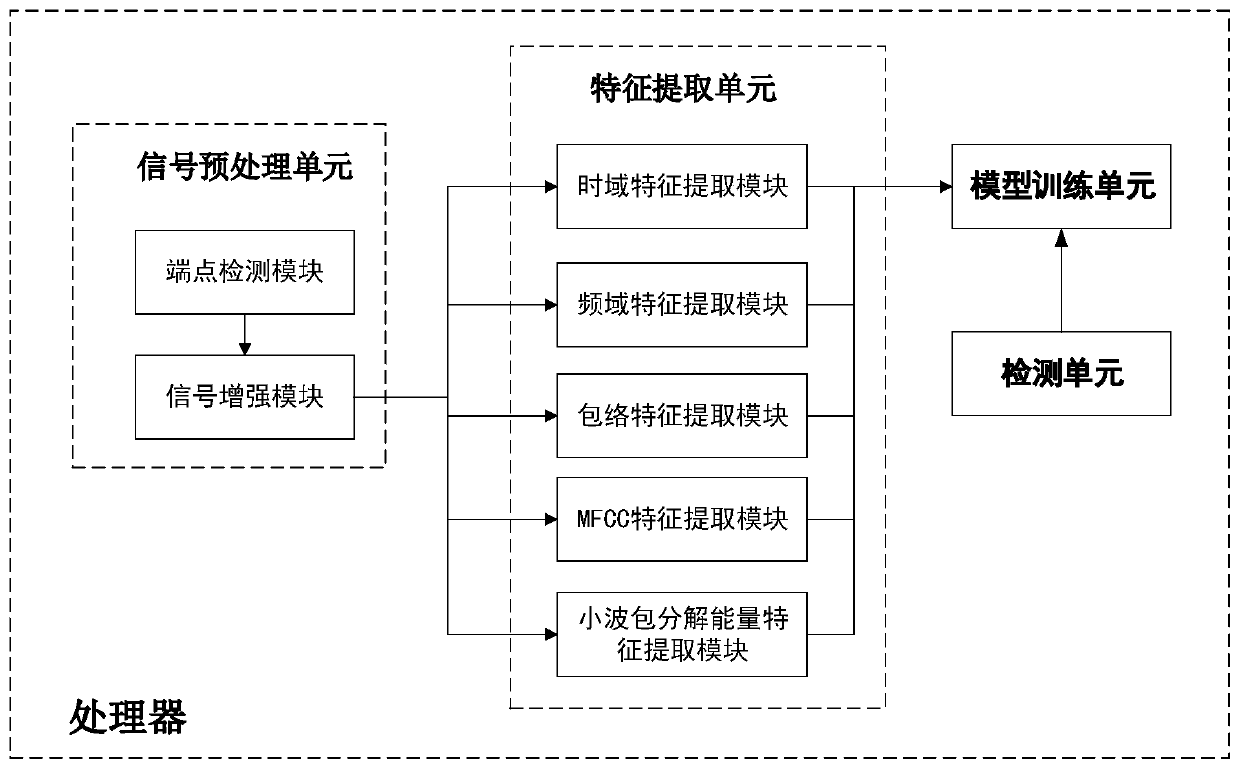

The invention discloses an abnormal sound detection method and an abnormal sound detection system for an automobile seat slide rail. The method comprises the following steps: S1, acquiring an original vibrating signal as a training sample and denoising the original vibrating signal to obtain an effective vibrating signal; S2, extracting characteristic parameters such as a time domain characteristic, a frequency domain characteristic and an envelope characteristic from the effective vibrating signal; S3, inputting the characteristic parameters to a mixed model to train the model to obtain an abnormal sound recognizing module and a mixing matrix corresponding to the abnormal sound recognizing module, wherein the mixing matrix comprises a test accuracy of the abnormal sound recognizing module; and S4, inputting the to-be-detected original vibrating signal to the corresponding abnormal sound recognizing model, the testing accuracy of which is higher than a preset value, to judge the to-be-detected original vibrating signal automatically. With detection based on industrial big data and a robot learning model, automatic recognition can be achieved; the method is high in detection efficiency, excludes unstable manual factors and improves the detection accuracy.

Owner:宁波慧声智创科技有限公司
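Step S2 of this abstract (time domain, frequency domain and envelope characteristics) can be sketched as follows. The specific features chosen here (RMS, peak, crest factor, dominant frequency, and a rectify-and-average envelope) are assumptions made for illustration; the abstract does not say which features or which envelope method the patent uses.

```python
import cmath
import math


def time_features(signal):
    """Basic time domain characteristics of a vibration signal."""
    n = len(signal)
    rms = math.sqrt(sum(x * x for x in signal) / n)
    peak = max(abs(x) for x in signal)
    return {
        "mean": sum(signal) / n,
        "rms": rms,
        "peak": peak,
        "crest_factor": peak / rms if rms else 0.0,
    }


def dominant_frequency(signal, sample_rate):
    """Frequency (Hz) of the strongest non-DC bin of a direct DFT.

    A direct O(n^2) DFT keeps the sketch dependency-free; an FFT
    library would normally be used for real signals.
    """
    n = len(signal)
    best_bin, best_mag = 0, 0.0
    for k in range(1, n // 2):
        coeff = sum(signal[t] * cmath.exp(-2j * math.pi * k * t / n)
                    for t in range(n))
        if abs(coeff) > best_mag:
            best_bin, best_mag = k, abs(coeff)
    return best_bin * sample_rate / n


def envelope(signal, window=8):
    """Crude envelope: rectify, then take a trailing moving average."""
    rectified = [abs(x) for x in signal]
    out = []
    for i in range(len(rectified)):
        chunk = rectified[max(0, i - window + 1):i + 1]
        out.append(sum(chunk) / len(chunk))
    return out
```

For a pure 50 Hz sine sampled at 1000 Hz, `dominant_frequency` returns 50 Hz and the RMS is close to 0.707. The resulting feature values would then be fed to whatever classifier plays the role of the recognizing model.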

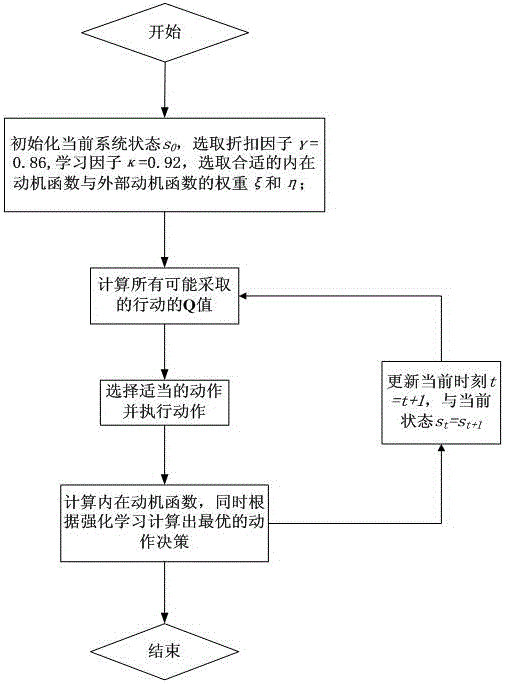

Intrinsically motivated extreme learning machine autonomous development system and operating method thereof

Inactive | CN106598058A | Improve learning initiative | Improve the speed of adaptation to the environment | Neural architectures | Attitude control | Learning machine | Orientation function

The invention belongs to the technical field of intelligent robots, and concretely relates to an intrinsically motivated extreme learning machine autonomous development system and an operating method thereof. The autonomous development system comprises an inner state set, a motion set, a state transition function, an intrinsic motivation orientation function, a reward signal, a reinforcement learning update iteration formula, an evaluation function and a motion selection probability. According to the invention, an intrinsic motivation signal is used to simulate the orientation cognitive mechanism behind people's interest in things, so that a robot can finish relevant tasks voluntarily, thereby solving the problem that the robot is poor at self-learning. Furthermore, an extreme learning machine network is used to practice learning and to store knowledge and experience, so that the robot, if an experience fails, can use the stored knowledge and experience to keep exploring instead of learning from the beginning. In this way, the learning speed of the robot is increased, and the low efficiency of single-step reinforcement learning is addressed.

Owner:NORTH CHINA UNIVERSITY OF SCIENCE AND TECHNOLOGY

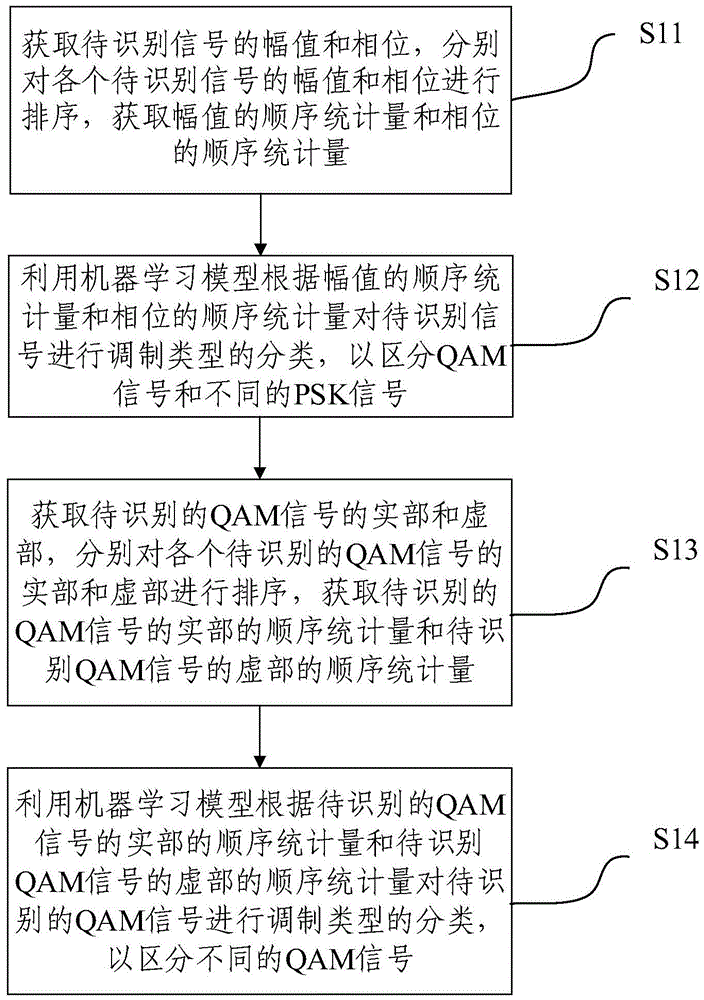

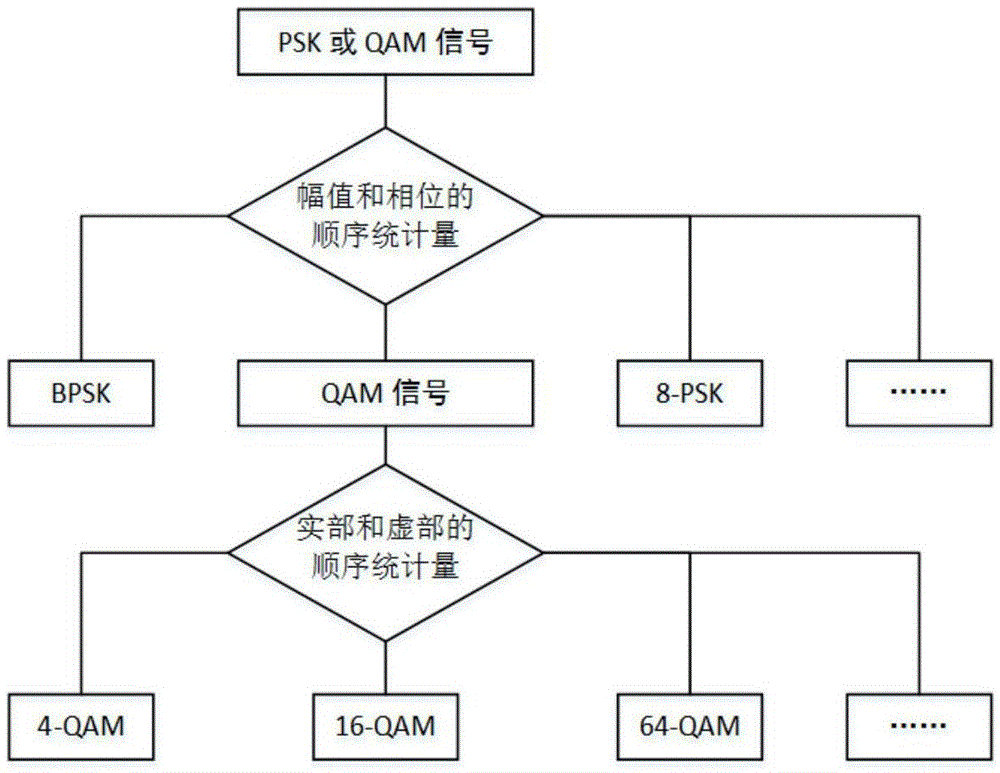

Modulation recognizing method and system based on order statistics and machine learning

Active | CN105656826A | Easy to classify | Modulation type identification | Pattern recognition | Computation complexity

The invention relates to a modulation recognizing method and system based on order statistics and machine learning. The method includes the steps of obtaining the amplitude and phase of a to-be-recognized signal, obtaining the order statistics of the amplitude and the order statistics of the phase, classifying modulation types of the to-be-recognized signal through a robot learning model according to the order statistics of the amplitude and the order statistics of the phase to distinguish a QAM signal and different PSK signals, obtaining the real part and the imaginary part of the to-be-recognized QAM signal, obtaining the order statistics of the real part of the to-be-recognized QAM signal and the order statistics of the imaginary part of the to-be-recognized QAM signal, and classifying the modulation types of the to-be-recognized QAM signal through the robot learning model according to the order statistics of the real part and the order statistics of the imaginary part to distinguish different QAM signals. Good signal classifying performance is maintained at low computational complexity.

Owner:TSINGHUA UNIV +1
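The feature construction in this abstract can be sketched briefly: order statistics are simply the samples sorted in ascending order. The code below builds those features for amplitude, phase, real part and imaginary part. The `amplitude_spread` helper is a hypothetical toy cue added only to show why amplitude order statistics separate PSK from QAM; it is not the learned classifier the patent describes.

```python
import cmath


def order_statistics(values):
    """Order statistics of a sample: the values sorted ascending."""
    return sorted(values)


def amplitude_phase_features(samples):
    """First stage features: sorted amplitudes and sorted phases."""
    amplitudes = [abs(s) for s in samples]
    phases = [cmath.phase(s) for s in samples]
    return order_statistics(amplitudes), order_statistics(phases)


def real_imag_features(samples):
    """Second stage features for QAM signals: sorted real and imaginary parts."""
    reals = [s.real for s in samples]
    imags = [s.imag for s in samples]
    return order_statistics(reals), order_statistics(imags)


def amplitude_spread(samples):
    """Toy cue (an assumption): range of the amplitude order statistics.

    Ideal PSK symbols share one amplitude, so the spread is near zero;
    QAM constellations above 4 points use several amplitudes.
    """
    amplitudes, _ = amplitude_phase_features(samples)
    return amplitudes[-1] - amplitudes[0]
```

With noiseless QPSK symbols the spread is essentially zero, while a 16-QAM constellation with levels -3, -1, 1, 3 gives a spread of roughly 2.8. A trained model would consume the full sorted vectors rather than this single number.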

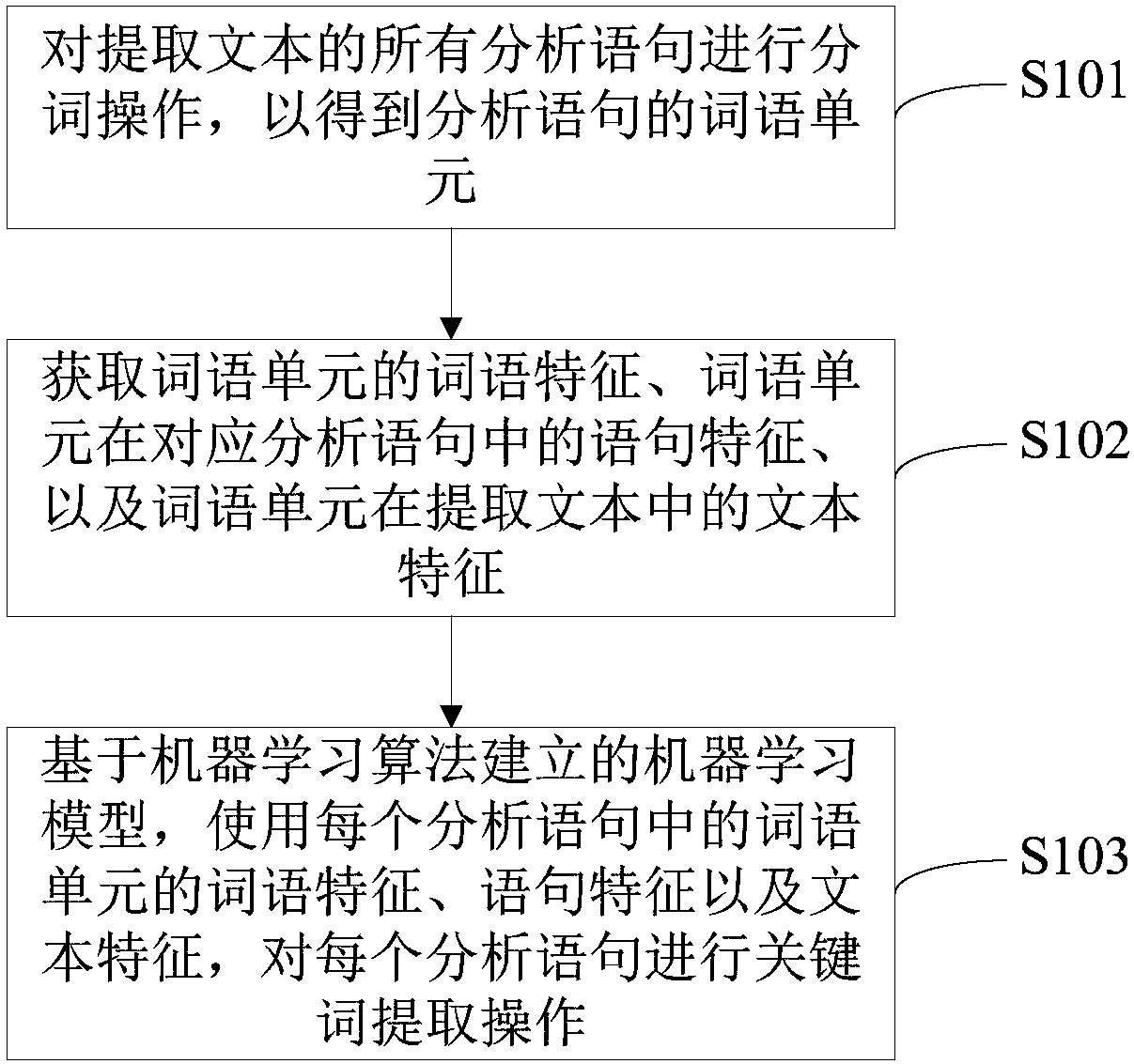

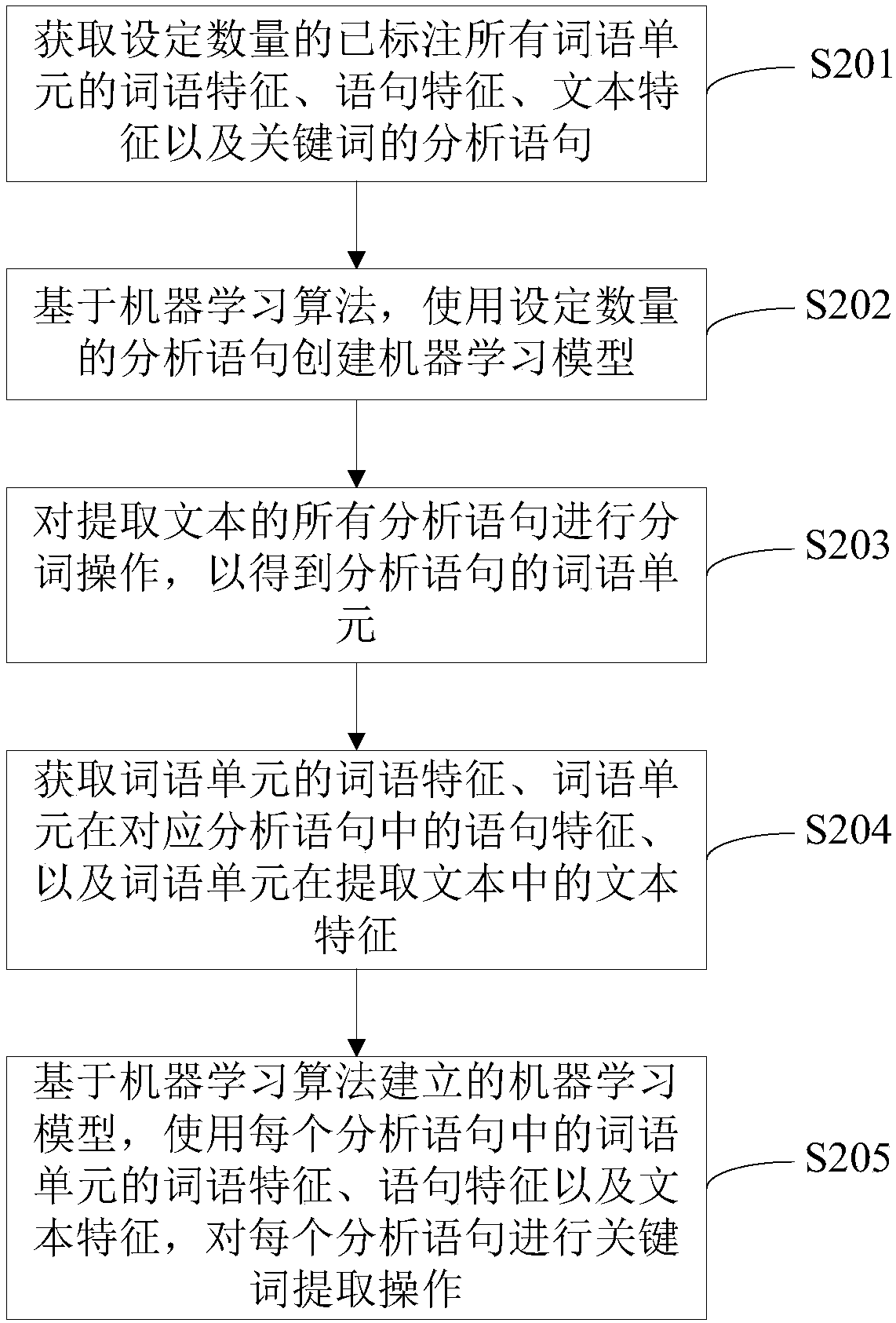

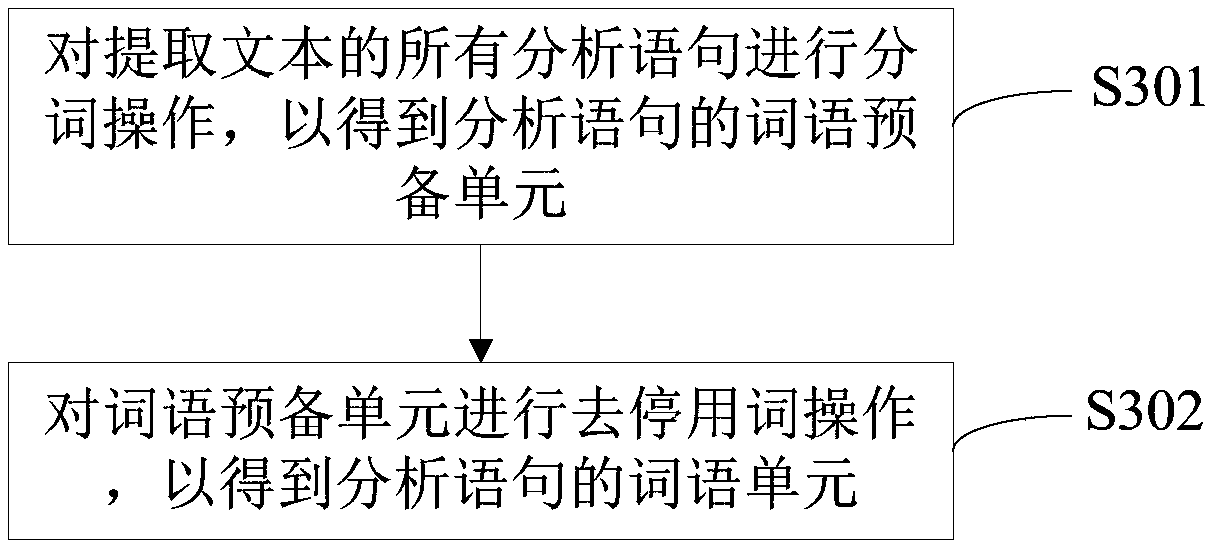

Method and device for extracting keywords

Active | CN108334490A | Improve accuracy | Solve the technical problem of low extraction accuracy | Natural language data processing | Special data processing applications | Pattern recognition | Correspondence analysis

The invention provides a method for extracting keywords. The method includes the following steps: a word segmentation operation is performed on all analysis sentences of an extracted text to obtain the word units of the analysis sentences; the word features of the word units, the sentence features of the word units in the corresponding analysis sentences and the text features of the word units in the extracted text are obtained; and, based on a robot learning model established through a robot learning algorithm, a keyword extraction operation is performed on each analysis sentence using the word features, the sentence features and the text features of the word units in all the analysis sentences. The invention further provides a device for extracting keywords. Because the robot learning model is established from the word features, sentence features and text features of the word units before the keyword extraction operation is performed on each analysis sentence, the accuracy of keyword extraction is improved.

Owner:TENCENT TECH (SHENZHEN) CO LTD
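The three feature levels named in this abstract (word, sentence and text) can be sketched as a feature table. The concrete features below are assumptions chosen for illustration, and whitespace tokenization stands in for a real word segmentation step; the abstract does not list the features the patent actually uses.

```python
from collections import Counter

PUNCTUATION = ".,;:!?"


def segment(sentence):
    """Stand-in word segmentation: split on whitespace, strip punctuation."""
    words = (w.strip(PUNCTUATION).lower() for w in sentence.split())
    return [w for w in words if w]


def extract_features(sentences):
    """Build one feature row per word unit.

    word_length          -> word level feature
    position_in_sentence -> sentence level feature (0.0 first, 1.0 last)
    text_frequency       -> text level feature (share of all word units)
    """
    tokenized = [segment(s) for s in sentences]
    counts = Counter(w for words in tokenized for w in words)
    total = sum(counts.values())
    rows = []
    for s_idx, words in enumerate(tokenized):
        for w_idx, word in enumerate(words):
            rows.append({
                "word": word,
                "sentence_index": s_idx,
                "word_length": len(word),
                "position_in_sentence": w_idx / max(len(words) - 1, 1),
                "text_frequency": counts[word] / total,
            })
    return rows
```

Each row could then be scored by a trained model to decide whether the word unit is a keyword of its sentence.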

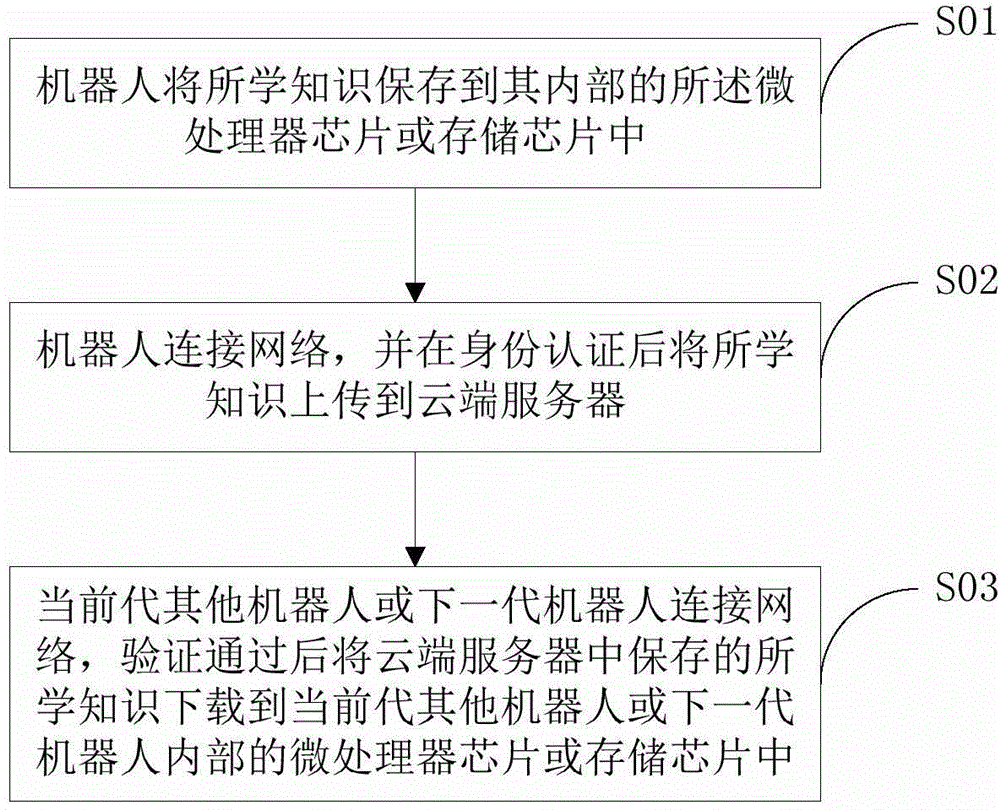

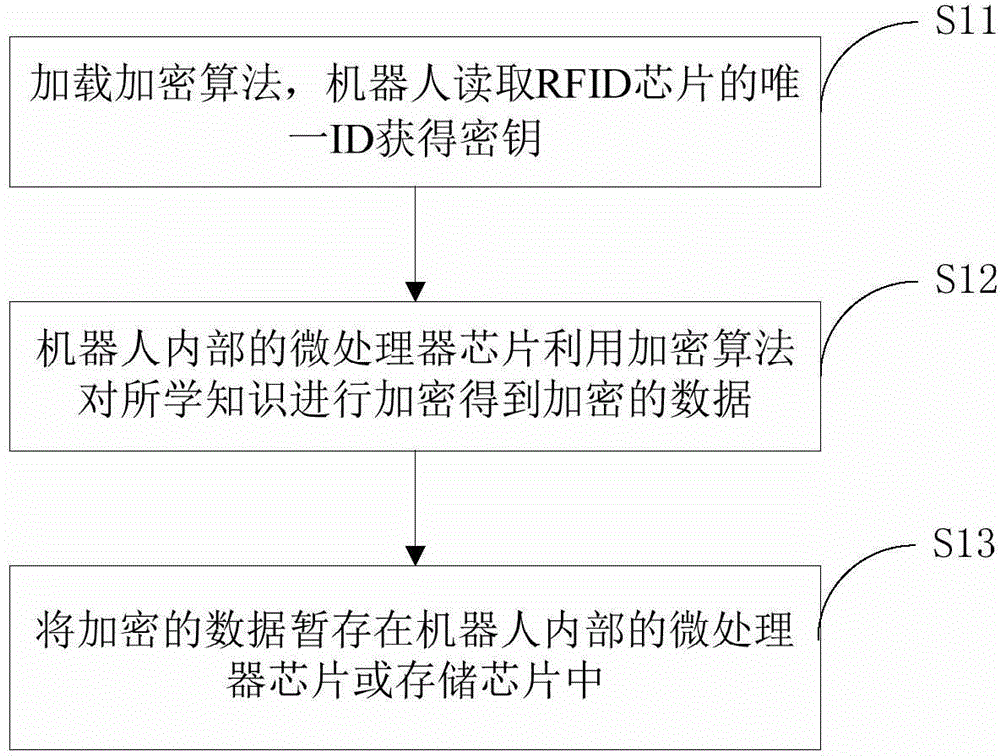

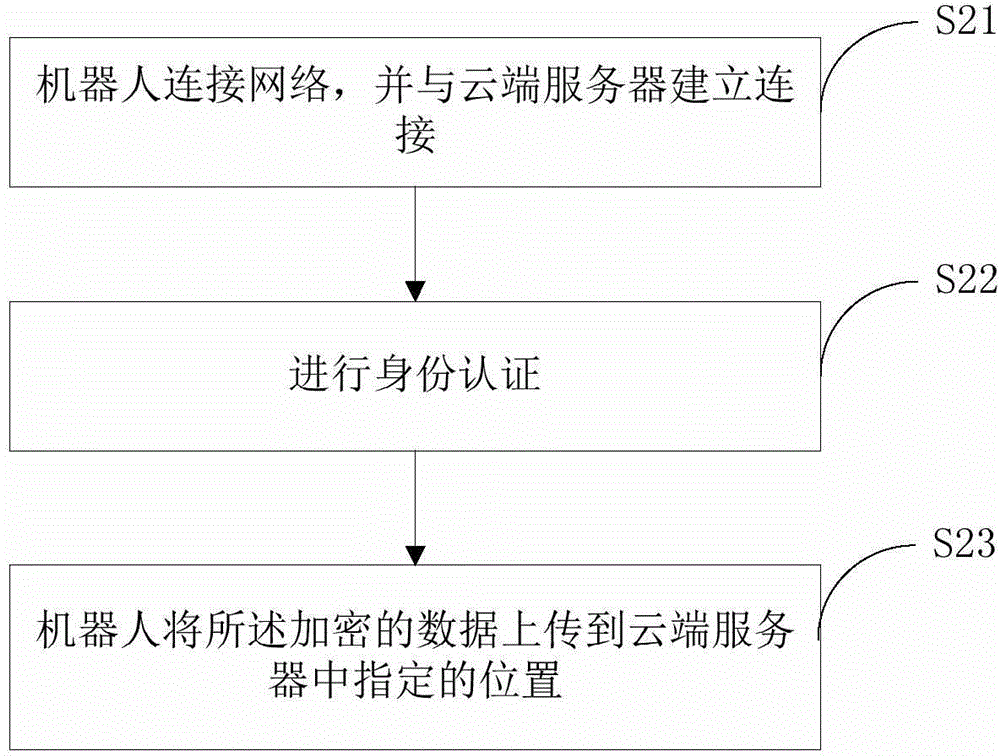

Method for backing up and learning knowledge learned by robot

Active | CN104408519A | Easy to operate | Shorten the time | Sensing record carriers | Knowledge based models | Time efficient | Machine learning

The invention provides a method for backing up and learning knowledge learned by a robot, wherein the method is applied to a robot learning system. The robot learning system is composed of a robot, a next-generation robot and a cloud server; microprocessor chips and/or storage chips are arranged inside the robot and the next-generation robot; and the robot and the next-generation robot communicate with the cloud server over a network. The method comprises the following steps: the robot stores the learned knowledge in its internal microprocessor chip or storage chip; the robot connects to the network and, after identity authentication, uploads the learned knowledge to the cloud server; and when another previous-generation robot or the next-generation robot connects to the network, the learned knowledge stored on the cloud server is downloaded, after successful verification, to the microprocessor chip and/or storage chip of that robot. The method has the following beneficial effects: the usage and operation flows become simple, and time is saved.

Owner:广州市小罗机器人有限公司
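The backup and restore flow in this abstract can be sketched with in-memory stand-ins. The `CloudServer` and `Robot` classes, the shared-secret check and the dictionary storage are all hypothetical simplifications: the abstract does not specify the authentication scheme, the network protocol or the storage format.

```python
class CloudServer:
    """In-memory stand-in for the cloud server holding learned knowledge."""

    def __init__(self, credentials):
        self._credentials = dict(credentials)  # robot_id -> shared secret
        self._knowledge = {}

    def _verify(self, robot_id, secret):
        return self._credentials.get(robot_id) == secret

    def upload(self, robot_id, secret, knowledge):
        """Accept knowledge only after identity authentication succeeds."""
        if not self._verify(robot_id, secret):
            raise PermissionError("identity authentication failed")
        self._knowledge.update(knowledge)

    def download(self, robot_id, secret):
        """Hand out a copy of the stored knowledge after verification."""
        if not self._verify(robot_id, secret):
            raise PermissionError("verification failed")
        return dict(self._knowledge)


class Robot:
    """A robot whose dict stands in for the on-board storage chip."""

    def __init__(self, robot_id, secret):
        self.robot_id = robot_id
        self.secret = secret
        self.storage = {}

    def learn(self, key, value):
        self.storage[key] = value

    def back_up(self, server):
        server.upload(self.robot_id, self.secret, self.storage)

    def restore(self, server):
        self.storage.update(server.download(self.robot_id, self.secret))
```

In this sketch a first robot calls `learn` and `back_up`, and a next-generation robot registered with the same server calls `restore` to receive the knowledge without relearning it.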

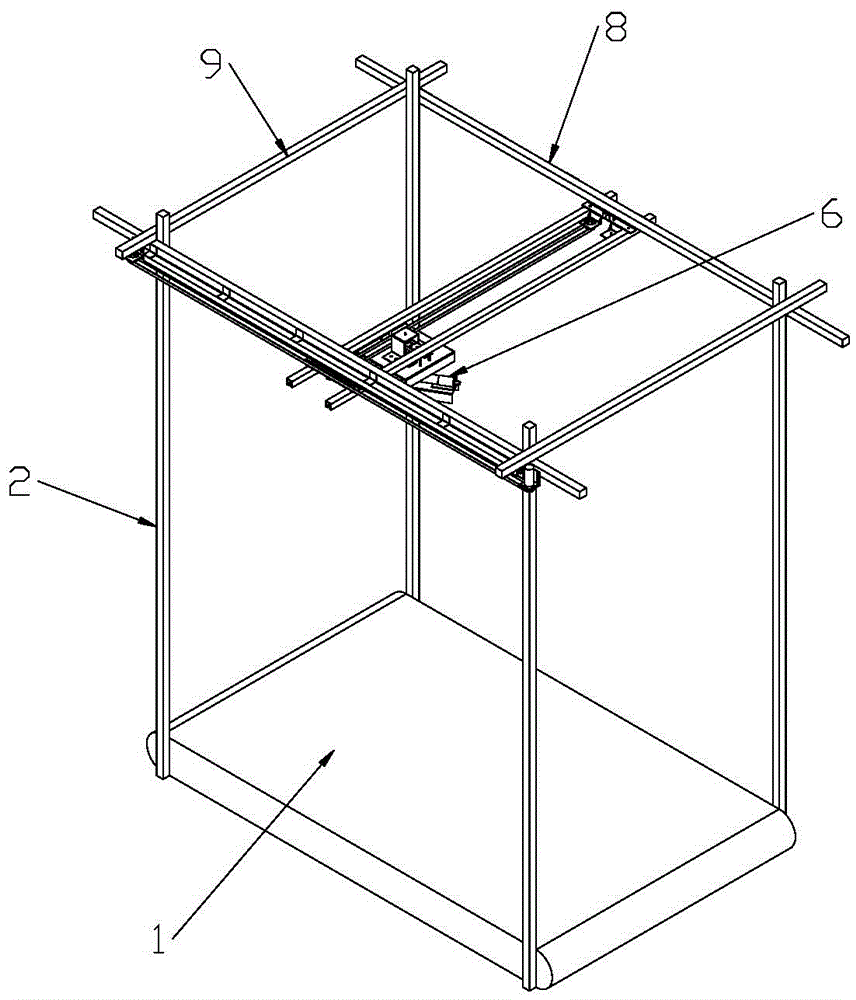

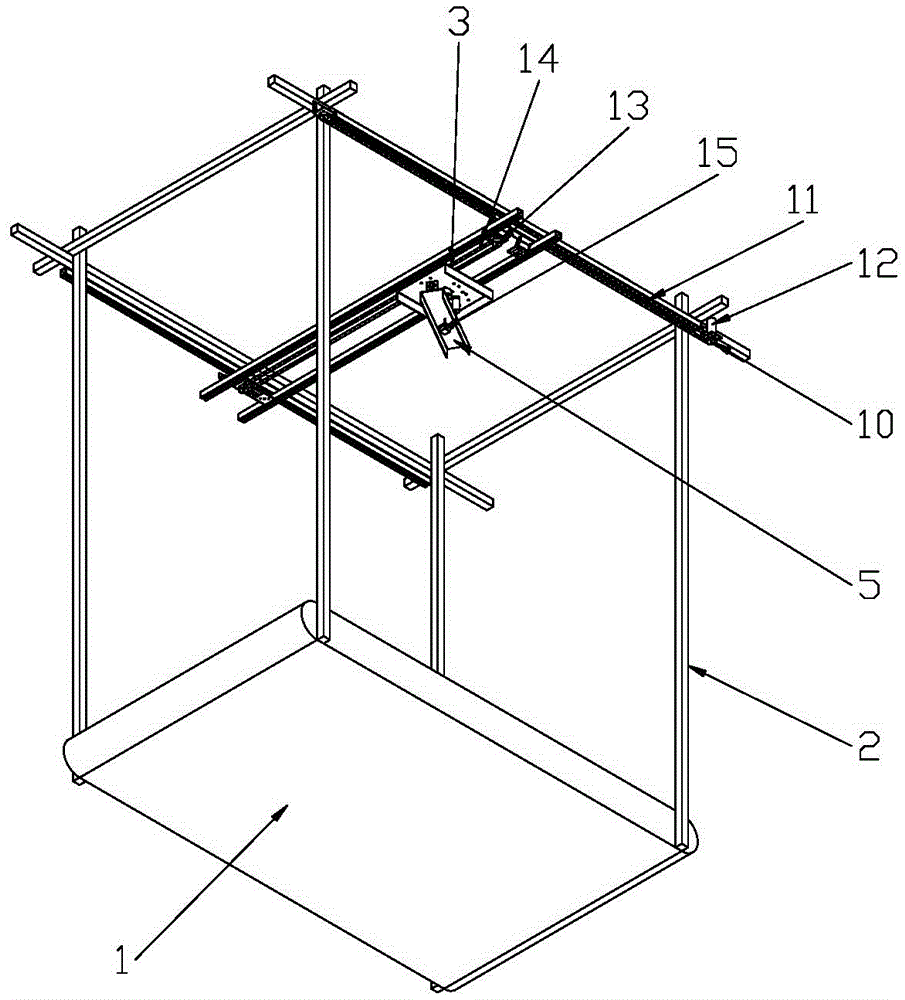

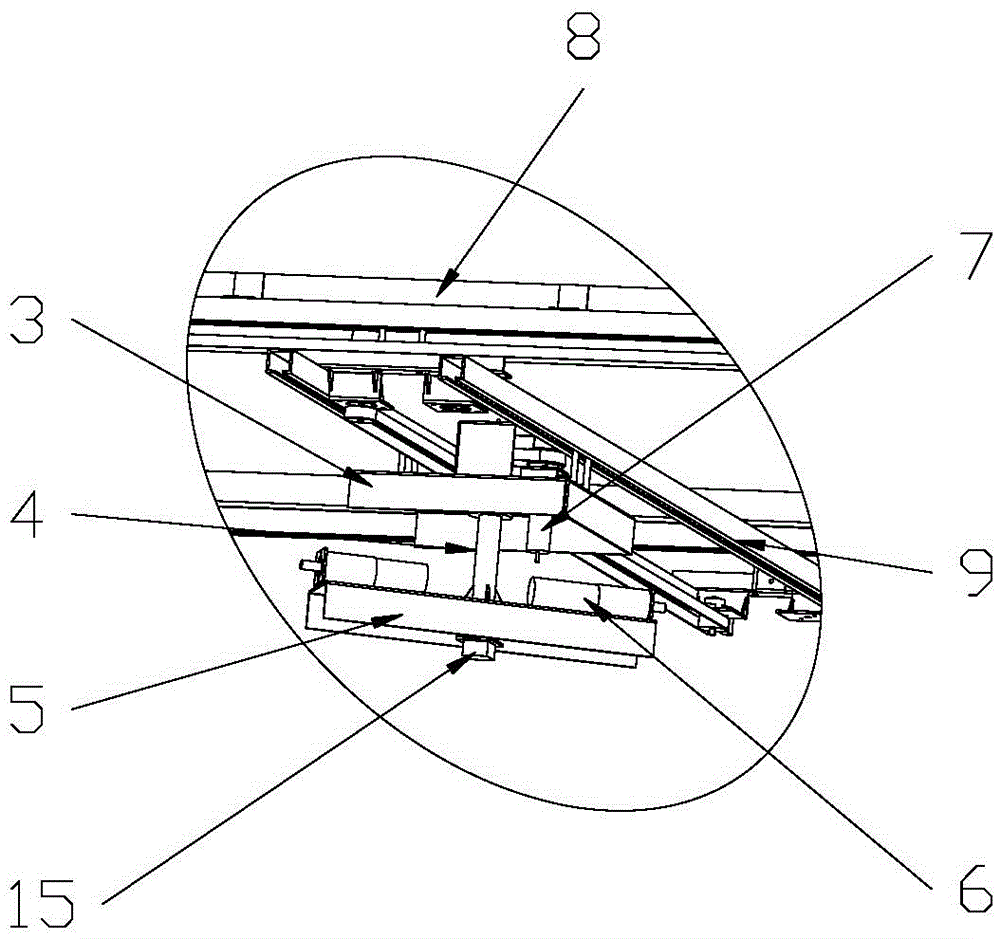

Walking robot learning platform

The invention discloses a walking robot learning platform which comprises a treadmill and a support frame, wherein a robot follow-up lifting mechanism is arranged on the support frame; the robot follow-up lifting mechanism comprises a robot lifting bracket and a follow-up driving mechanism for driving the robot lifting bracket to move in two mutually perpendicular directions; the robot lifting bracket comprises a lifting plate; a rotating shaft rotatably matched with the lifting plate is arranged on the lifting plate; a rotating part that rotates synchronously with the rotating shaft is arranged at the lower end of the rotating shaft; at least one traction mechanism is mounted on the rotating part; the traction mechanism comprises a traction rope tied to a walking robot to prevent the walking robot from falling down and a traction motor for taking up and paying out the traction rope; and a rotation motor for driving the rotating part to rotate is arranged on the lifting plate. The walking robot learning platform provided by the invention not only serves the technical purpose of letting a walking robot learn to walk, but also effectively prevents the walking robot from being damaged during walking learning and avoids threats to the personal safety of research, development and testing personnel.

Owner:深圳市行者机器人技术有限公司


© 2025 PatSnap. All rights reserved.