Suicide emotion perception method based on multi-modal fusion of voice and micro-expressions

An emotion perception technology using voice and micro-expressions, applied in the field of emotion perception. It addresses the problem of insufficiently reliable research results and achieves convenient operation and high performance.

Embodiment

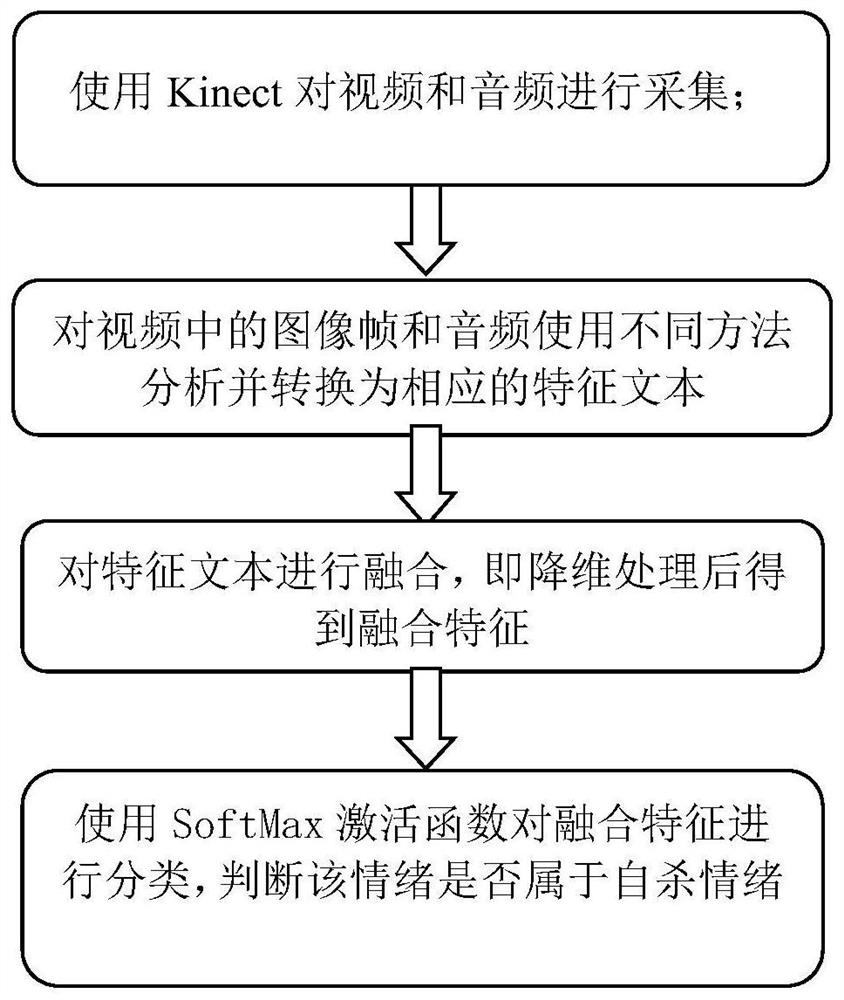

[0042] A suicide emotion perception method based on the multi-modal fusion of speech and micro-expressions, as shown in Figure 1, includes the following steps:

[0043] S1. Use a Kinect with an infrared camera to collect video and audio;

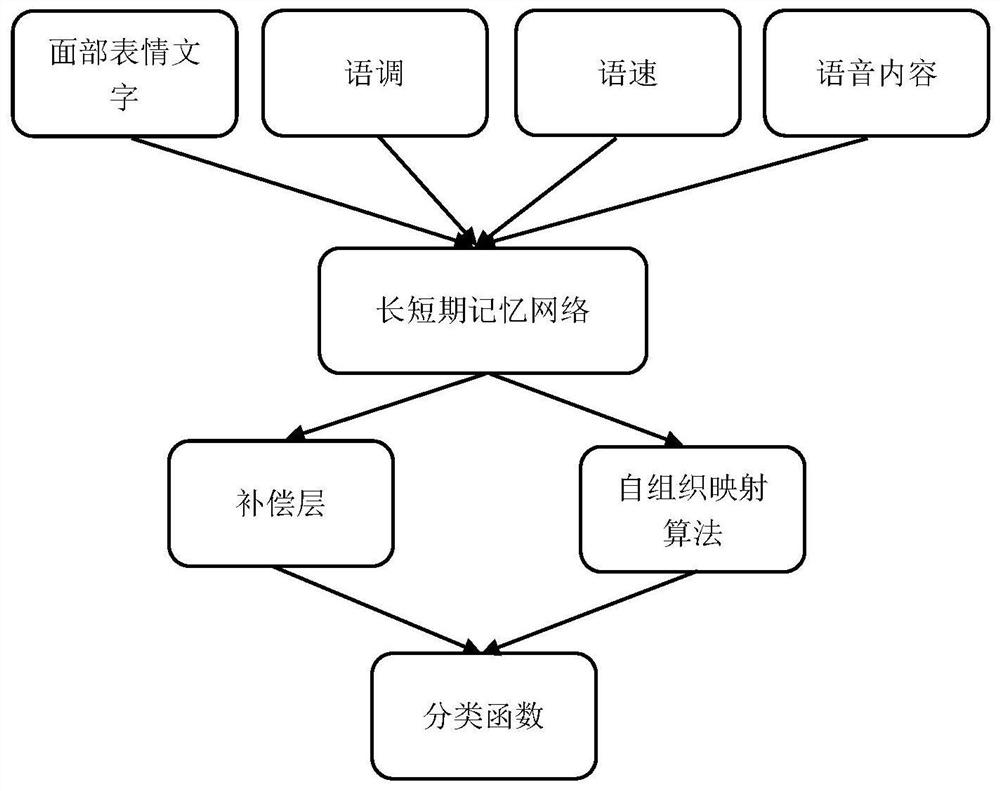

[0044] S2. Analyze the image frames and the audio in the video using different methods, and convert them into corresponding feature texts;

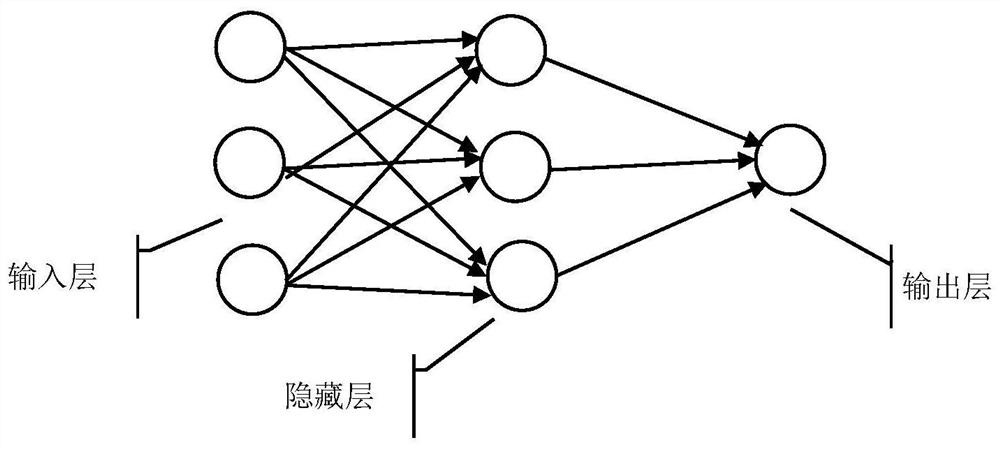

[0045] For the acquired audio, features are extracted along three dimensions (voice content, intonation, and speech rate) and converted into three corresponding sets of feature texts. For the acquired image frames, facial expressions are captured, their features are extracted and reduced in dimensionality, and a neural network classifies them into corresponding expression text descriptions.
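As a rough illustration of the output of step S2, the sketch below assembles the four feature texts for one clip: three derived from the audio (content, intonation, speech rate) and one from the expression classifier. It is a minimal sketch under stated assumptions, not the patented procedure. The names `FeatureTexts`, `speech_rate_text`, `intonation_text`, and `build_feature_texts` are hypothetical, as are the words-per-minute and pitch-spread thresholds; the source does not specify how each dimension is mapped to text. The transcript, pitch track, and expression label are assumed to come from upstream components that are not shown.

```python
from dataclasses import dataclass
from statistics import pstdev
from typing import Sequence


@dataclass
class FeatureTexts:
    """The four feature texts produced for one clip (hypothetical container)."""
    content: str      # transcript of what was said
    intonation: str   # description of pitch variation
    speech_rate: str  # description of speaking speed
    expression: str   # label emitted by the expression classifier


def speech_rate_text(word_count: int, duration_s: float) -> str:
    """Map words per minute to a text label (thresholds are assumptions)."""
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")
    wpm = word_count / duration_s * 60.0
    if wpm < 100:
        return "slow speech"
    if wpm > 160:
        return "fast speech"
    return "normal speech"


def intonation_text(pitch_hz: Sequence[float]) -> str:
    """Map pitch spread in Hz to a text label (threshold is an assumption)."""
    if not pitch_hz:
        raise ValueError("pitch_hz must not be empty")
    spread = pstdev(pitch_hz)  # population standard deviation
    return "flat intonation" if spread < 15.0 else "varied intonation"


def build_feature_texts(
    transcript: str,
    pitch_hz: Sequence[float],
    duration_s: float,
    expression_label: str,
) -> FeatureTexts:
    """Combine the three audio-derived texts with the expression text."""
    word_count = len(transcript.split())
    return FeatureTexts(
        content=transcript,
        intonation=intonation_text(pitch_hz),
        speech_rate=speech_rate_text(word_count, duration_s),
        expression=expression_label,
    )


if __name__ == "__main__":
    texts = build_feature_texts(
        transcript="I am so tired of everything",
        pitch_hz=[118.0, 120.0, 121.0, 119.0],
        duration_s=6.0,
        expression_label="sadness",
    )
    print(texts)
```

Keeping each modality as a separate text field means a later stage can weigh or combine them independently.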

[0046] Step S2 specifically includes the following steps:

[0047] S2.1. After the audio signal is denoised, the speech is converted into three corresponding feature text descriptions in turn according to the ...
