306 results about "Speech rate" patented technology

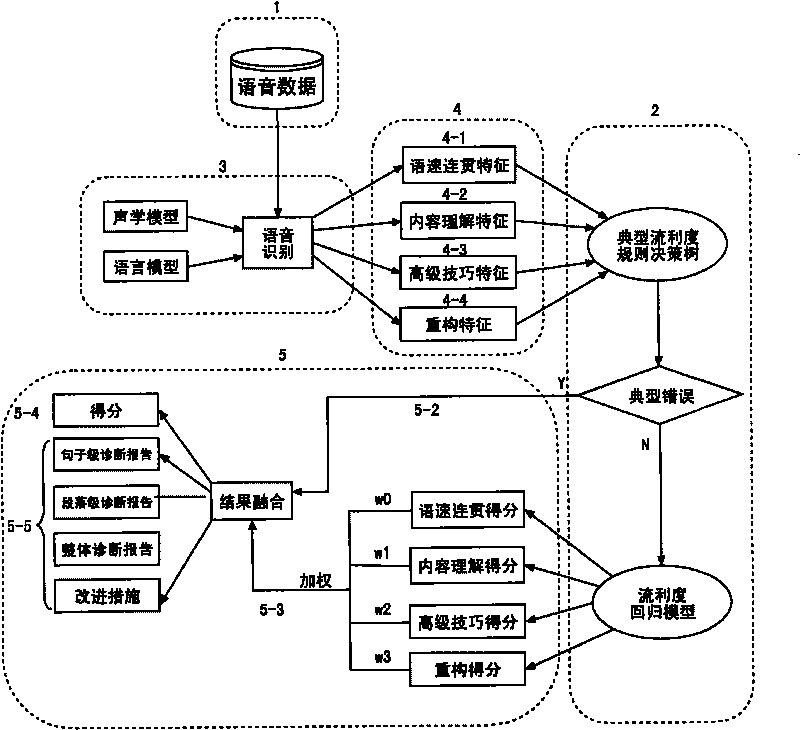

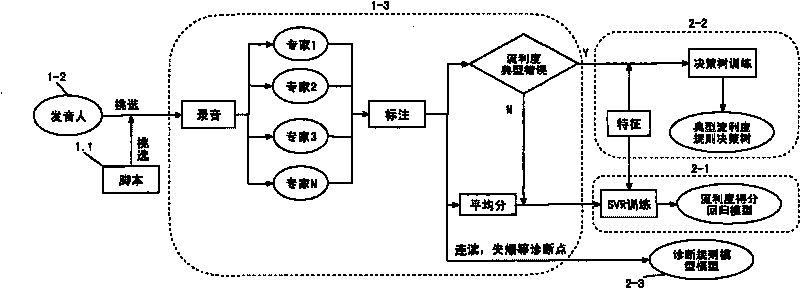

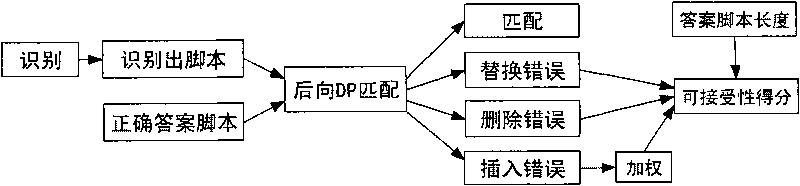

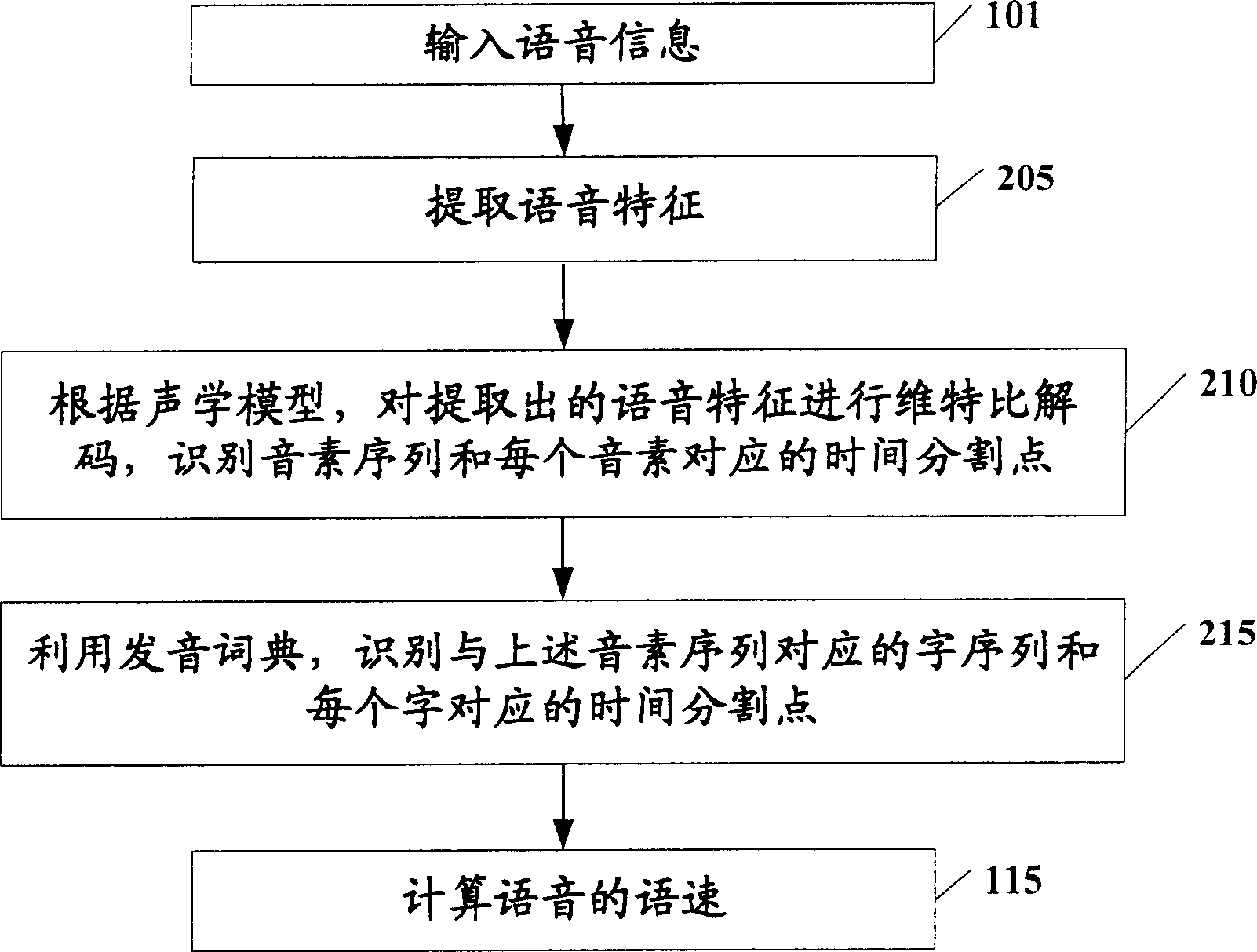

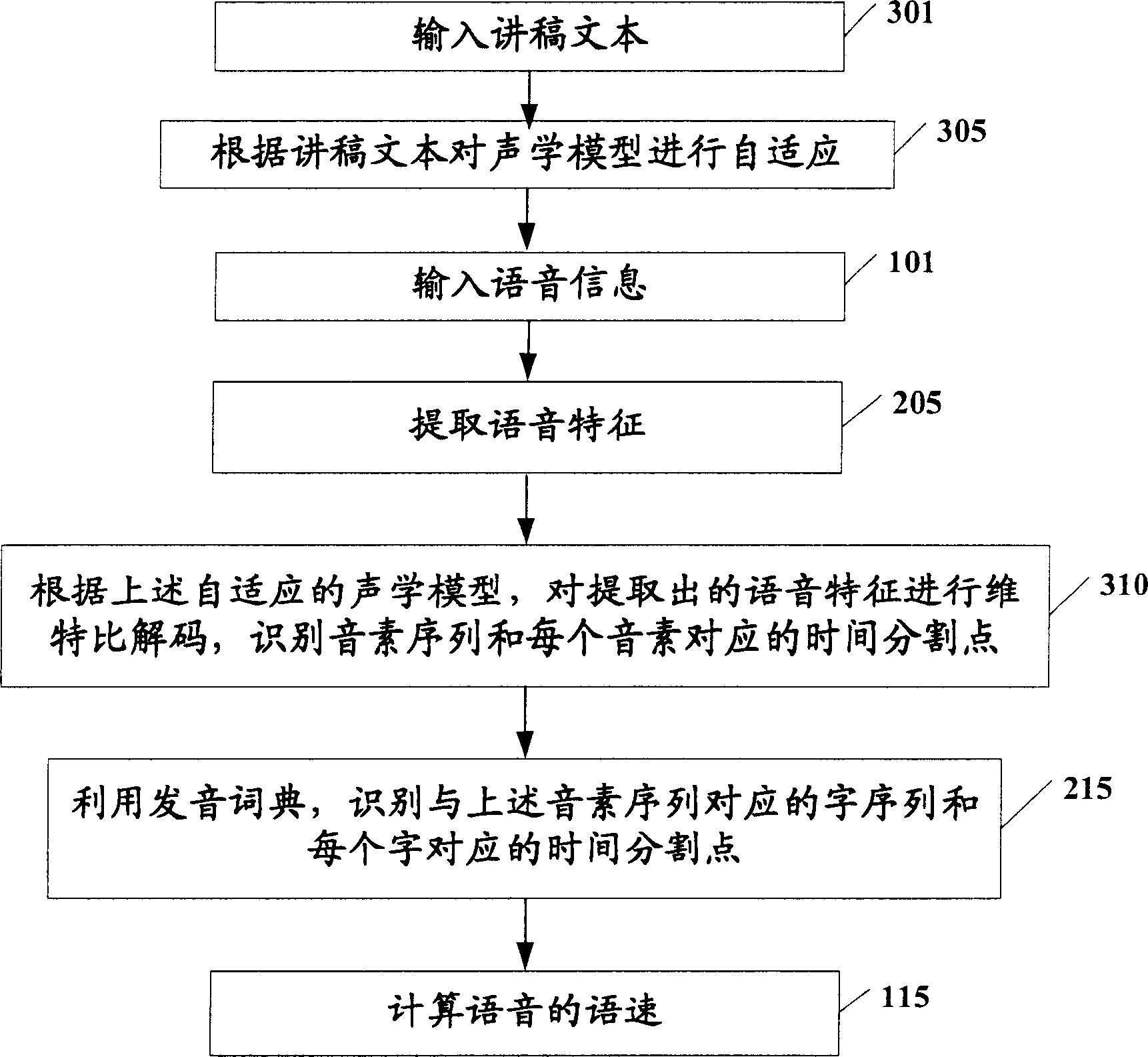

Method for automatic evaluation based on generalized fluent spoken language fluency

Active | CN101740024A | Troubleshoot automated assessment issues; Fast scoring; Speech recognition; Data dredging; Spoken language

The invention relates to a method for automatically evaluating spoken-language fluency based on generalized fluency, comprising the following steps: acquiring speech data from speakers of different ages and spoken-language levels using a speech input device; adopting an evaluation model based on generalized-fluency features and machine-learning-trained fluency; configuring a speech recognition system with parameters matched to the scripts of the different subjects and to the genders of the speakers in the speech data; quantifying speech-rate coherence, content comprehension, advanced skills and reconstruction-related features in the speech data, so that fluency features are extracted comprehensively from the perspective of expert assessment; and using regression fitting and a decision-tree data-mining method to detect abnormal fluency and to grade and diagnose fluency. The machine fluency score approaches the level of human graders, and its correlation index exceeds that of 2 to 3 out of 5 typical experts. The method is also fast and can be embedded in an automatic spoken-language evaluation system as the module that evaluates the fluency indicators of pronunciation quality.

Owner:IFLYTEK CO LTD
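
The abstract lists speech-rate coherence among the quantified fluency features without defining them. As a minimal sketch, assuming the recognizer returns word-level timings and syllable counts (the `Word` record and `fluency_features` function are hypothetical), common temporal fluency measures can be computed as follows; the patent's full feature set and its decision-tree grading are not reproduced.

```python
from dataclasses import dataclass


@dataclass
class Word:
    """One recognized word with its syllable count and timing in seconds (hypothetical format)."""
    text: str
    syllables: int
    start: float
    end: float


def fluency_features(words: list[Word], min_pause: float = 0.25) -> dict[str, float]:
    """Temporal fluency measures computed from time-aligned recognizer output."""
    if not words:
        raise ValueError("need at least one word")
    total_time = words[-1].end - words[0].start
    if total_time <= 0:
        raise ValueError("utterance must have positive duration")
    syllables = sum(w.syllables for w in words)
    # Gaps between consecutive words that are long enough to count as silent pauses.
    pauses = [nxt.start - cur.end for cur, nxt in zip(words, words[1:])
              if nxt.start - cur.end >= min_pause]
    pause_time = sum(pauses)
    return {
        # Syllables per second over the whole utterance, pauses included.
        "speech_rate": syllables / total_time,
        # Syllables per second with the silent pauses removed.
        "articulation_rate": syllables / (total_time - pause_time),
        "pause_count": float(len(pauses)),
        "mean_pause": pause_time / len(pauses) if pauses else 0.0,
    }
```

The 0.25 s pause threshold is an illustrative choice; shorter gaps are treated as part of continuous speech.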

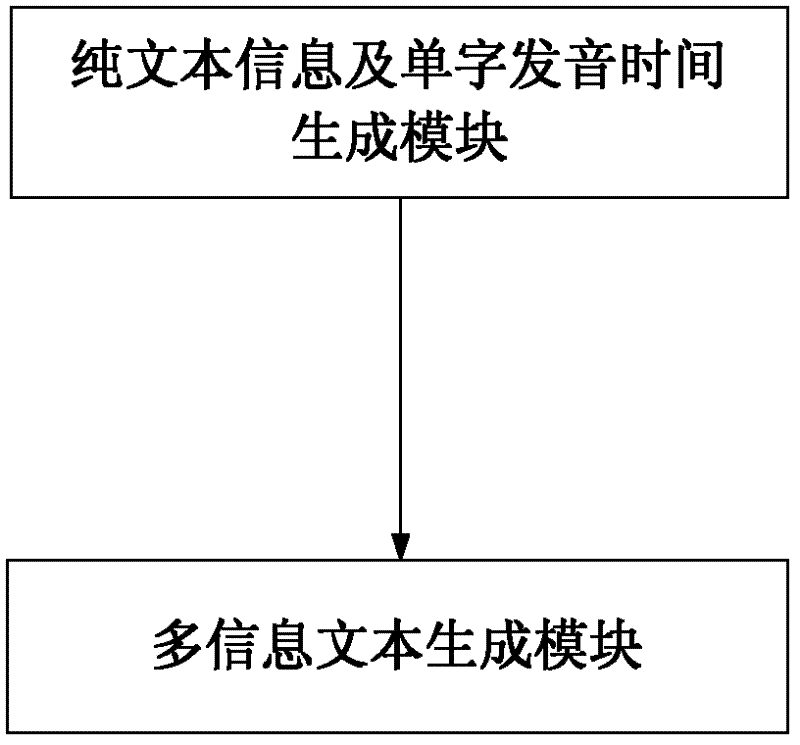

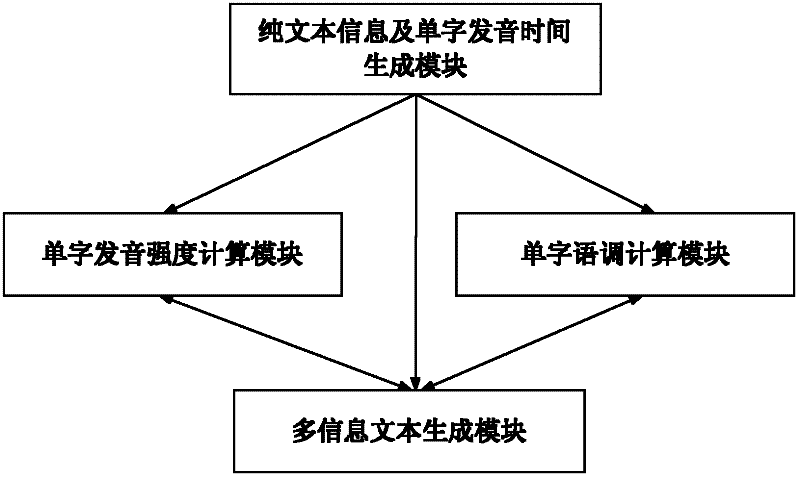

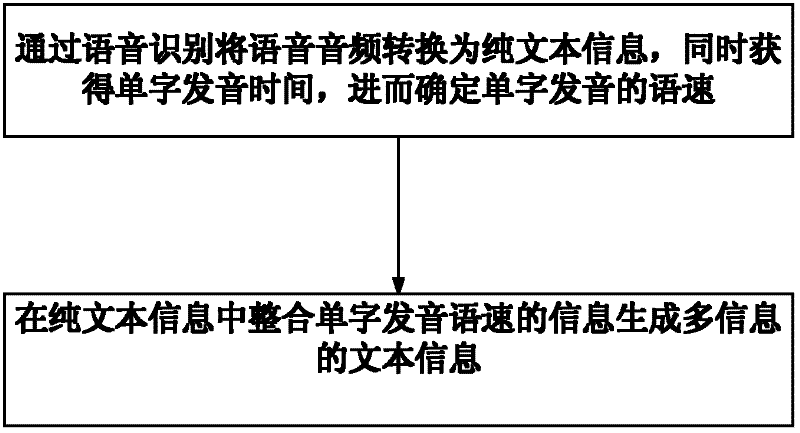

Device and method for acquiring speech recognition multi-information text

The invention provides a device and a method for acquiring a speech recognition multi-information text. After speech audio is converted into plain text by speech recognition, the pronunciation speed, pronunciation strength and intonation of each individual character in the audio are integrated into the initially generated plain text using a defined notation, producing multi-information text. The device and method can be widely used on information-publishing platforms such as microblogs, short messages and signature files.

Owner:SHANGHAI GUOKE ELECTRONICS

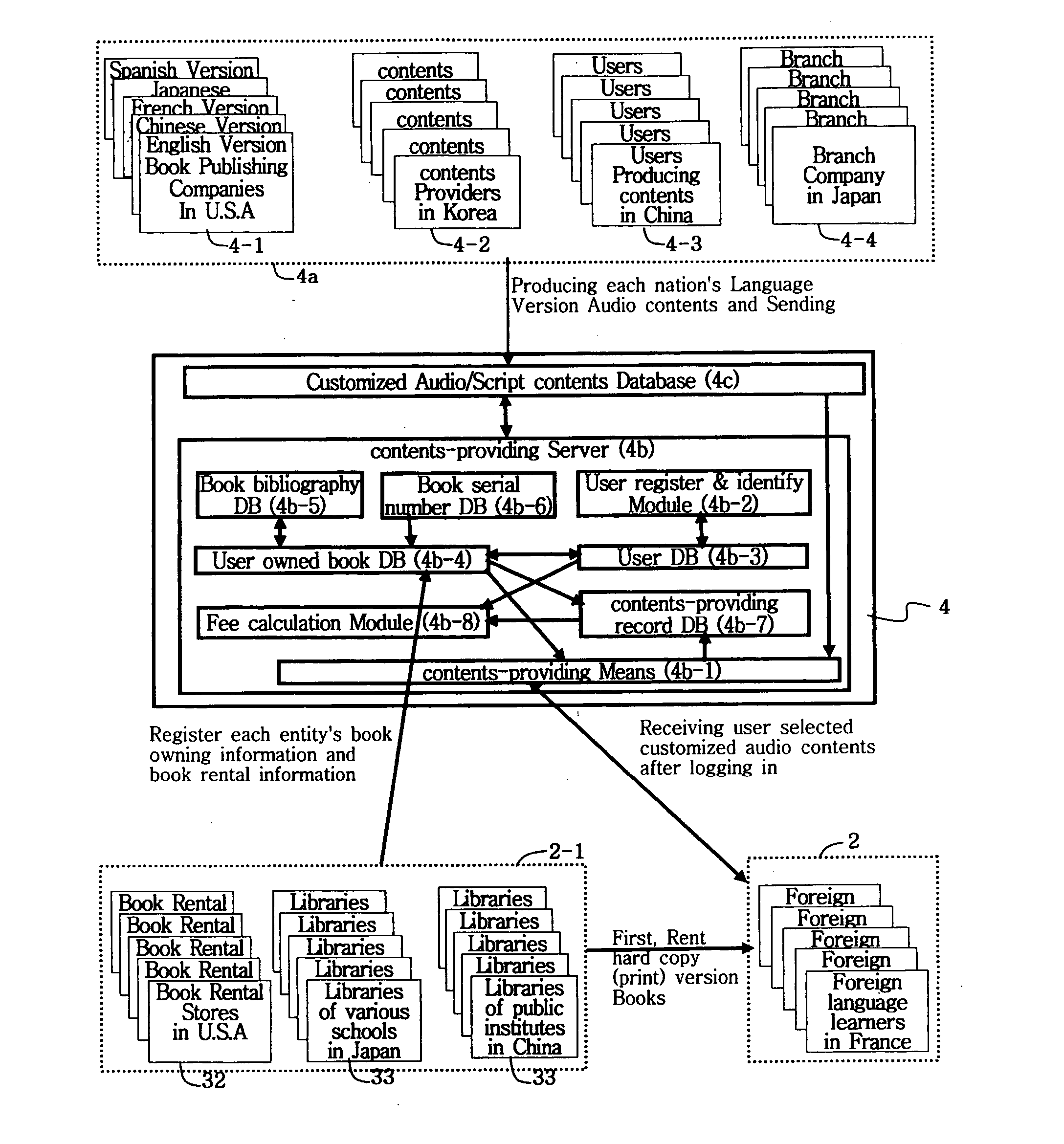

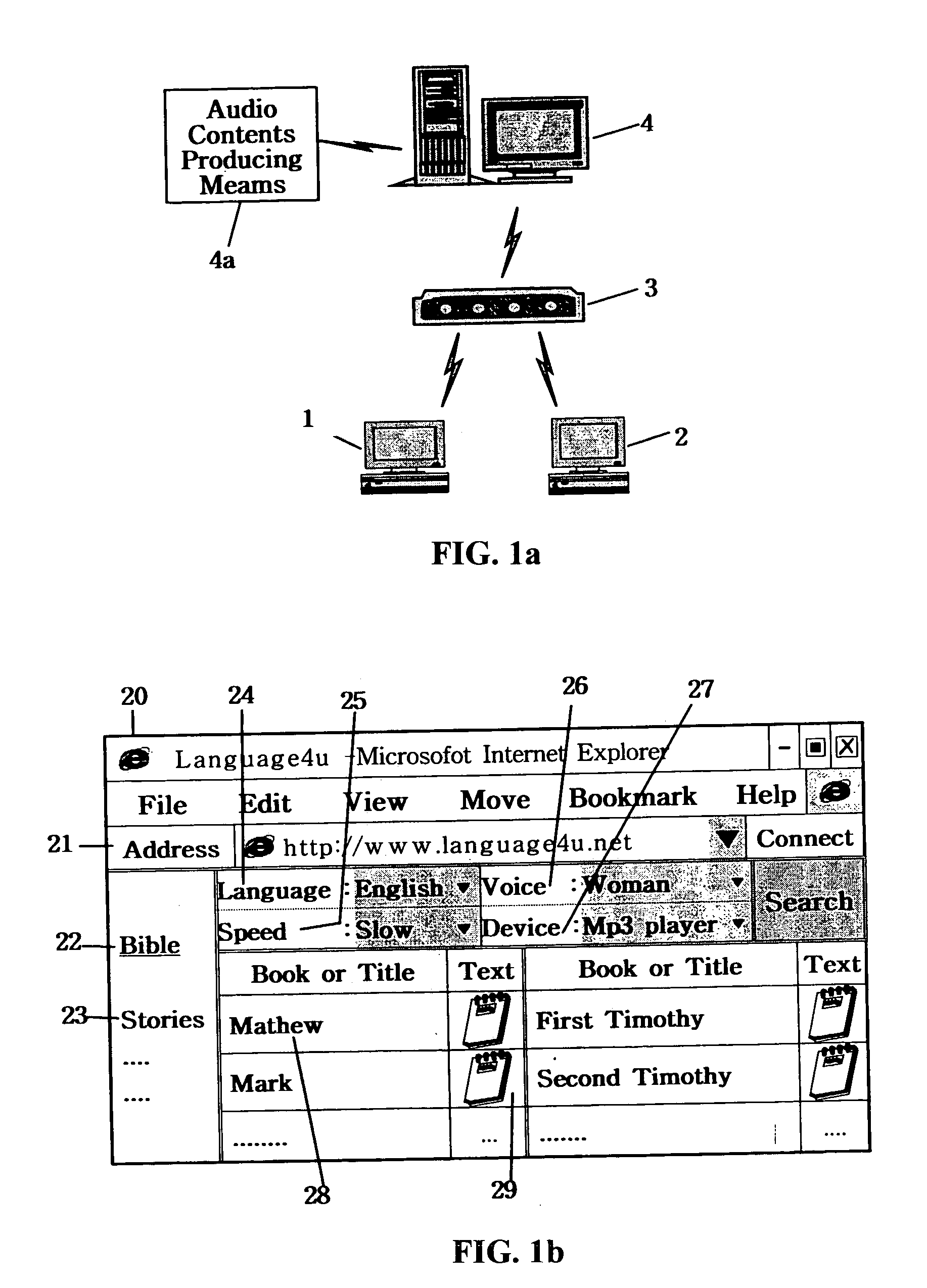

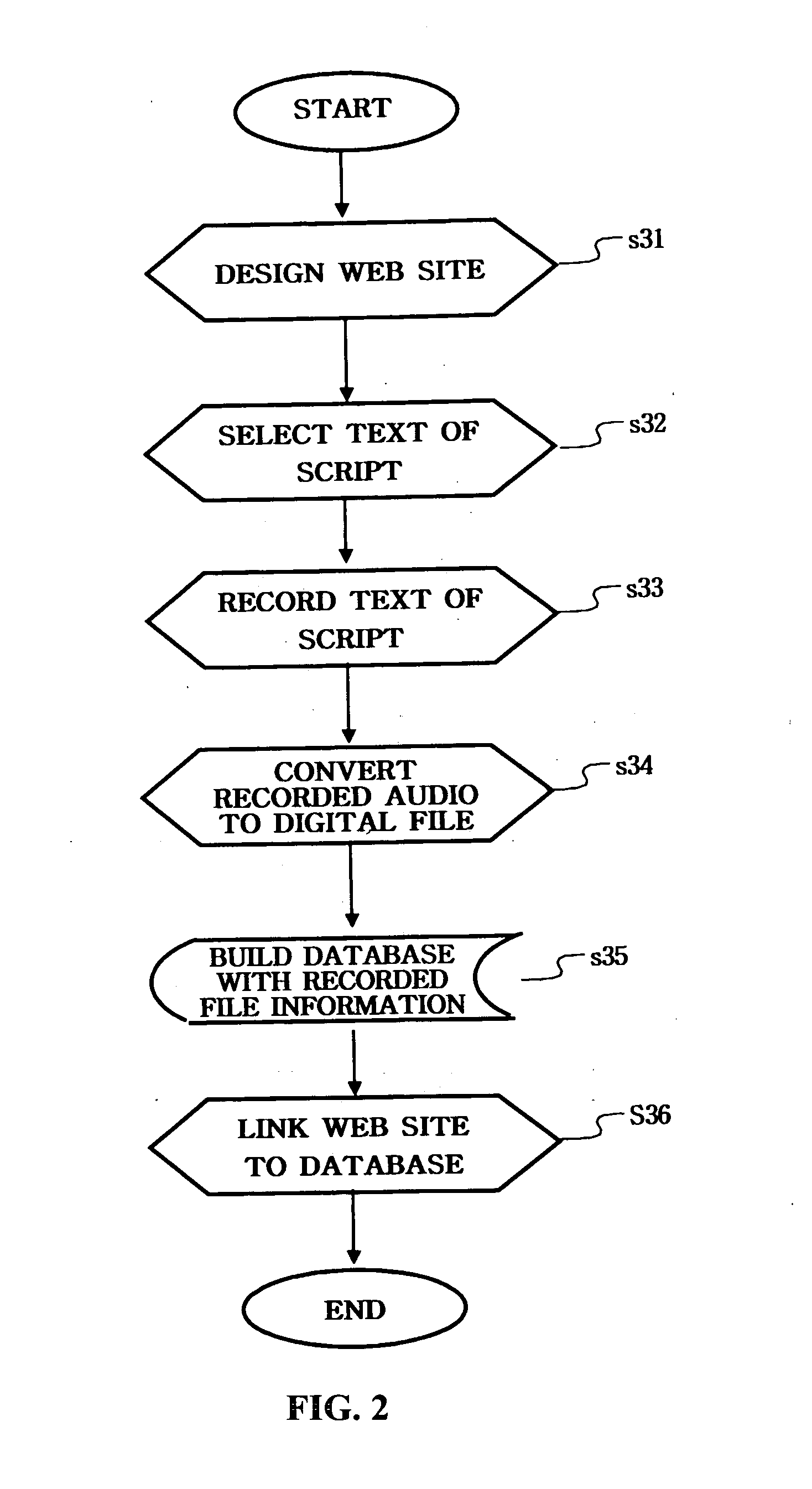

System and method for providing customized contents

The present invention provides a digital voice-book library system that delivers, over the Internet, a customized digital audio version of a book. The audio book can be customized to a learner's target language, learning level, learning condition, learning preferences and the like: the learner selects or varies the audio attributes of the audio book (a digital audio file) associated with the equivalent printed (hardcopy) book that the learner owns or has borrowed from a conventional library or a book rental store. The audio attributes of the customized audio book (language, voice, speech speed or playback device) can be varied at every repetition according to the user's learning language, learning level, learning condition and the like, while the content still corresponds to the same printed book. Using the invention, a learner can listen repeatedly to the same audio book content without getting bored and can therefore acquire good language ability in a short time.

Owner:LEEM YOUNG HIE

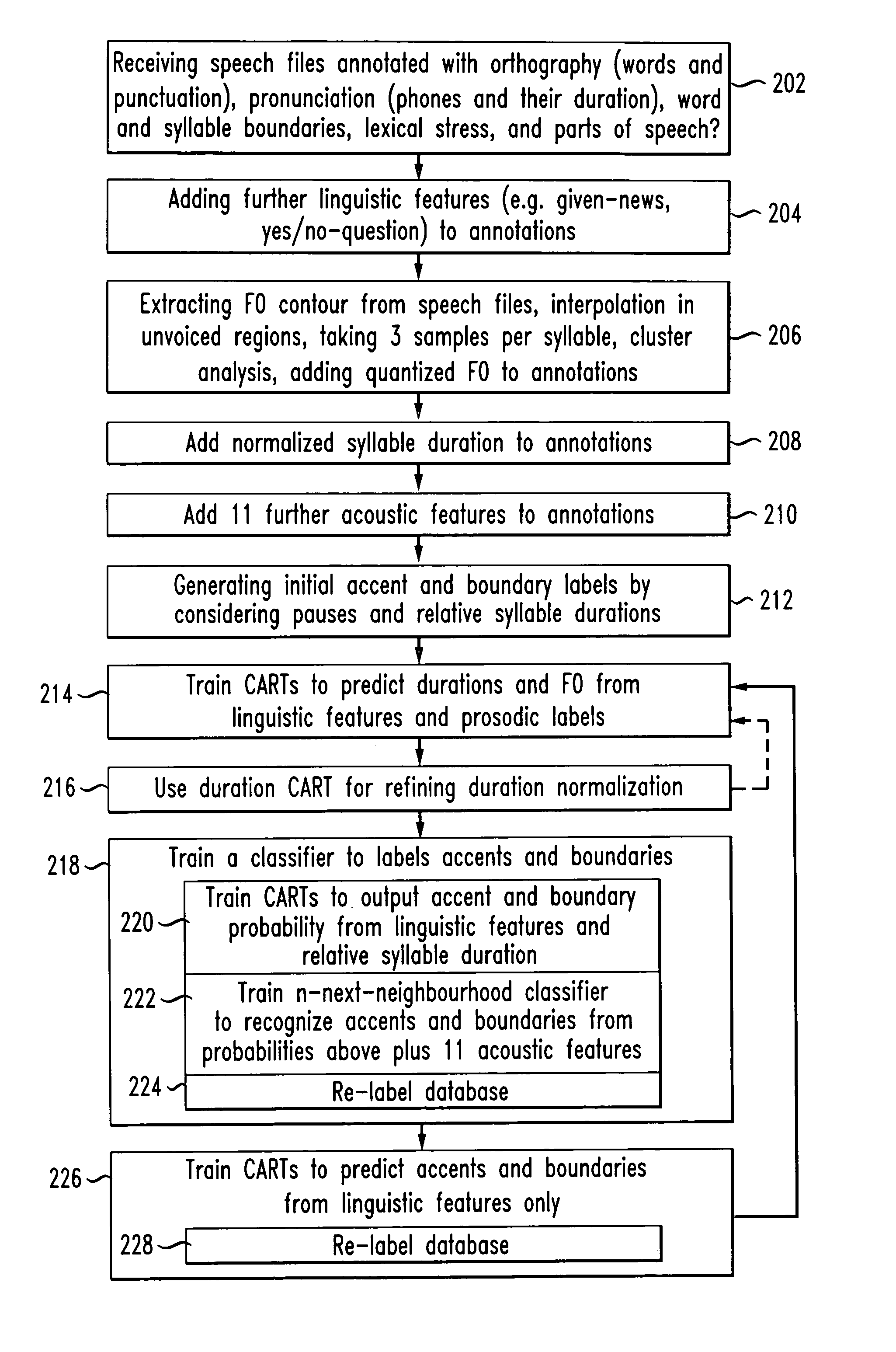

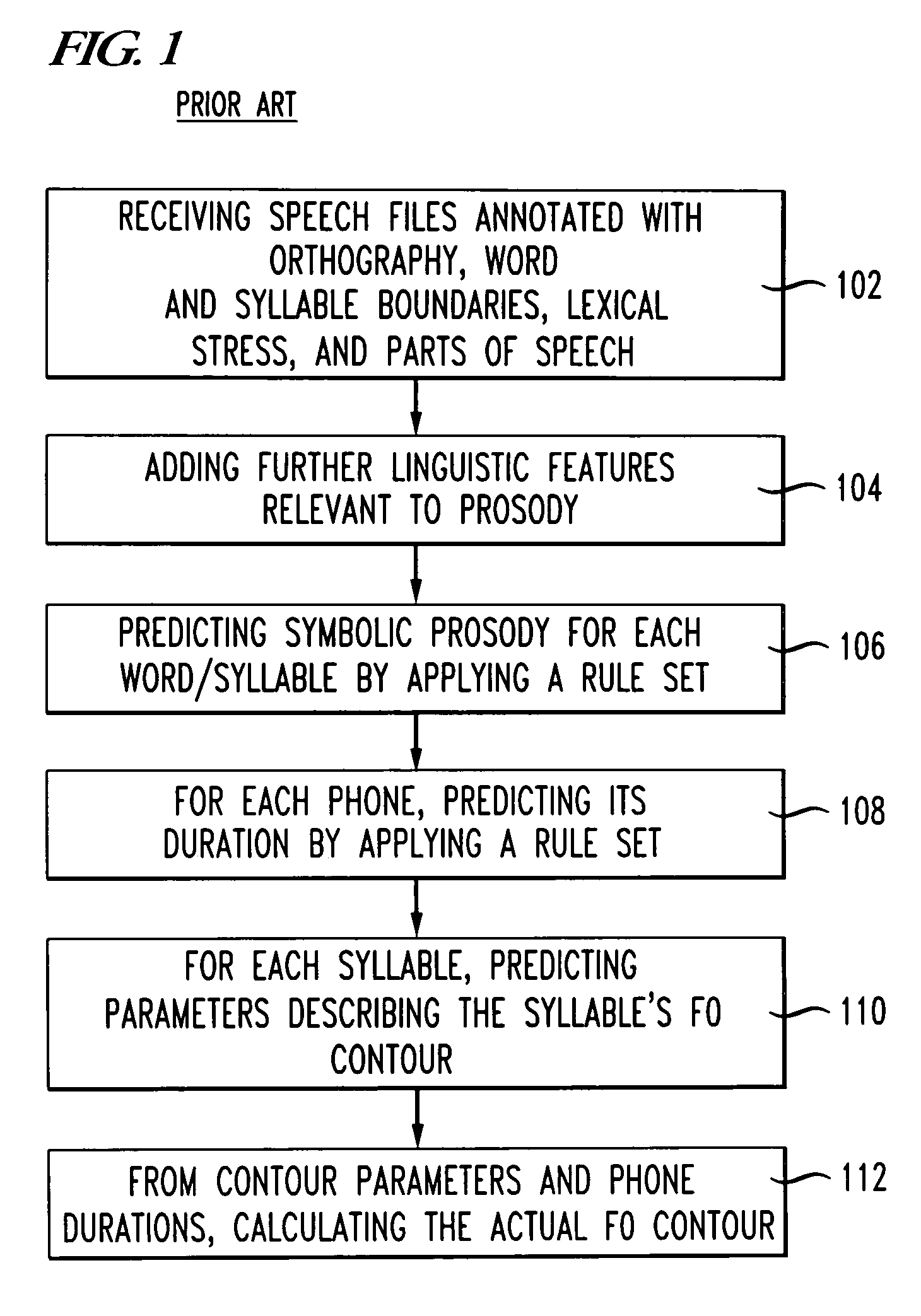

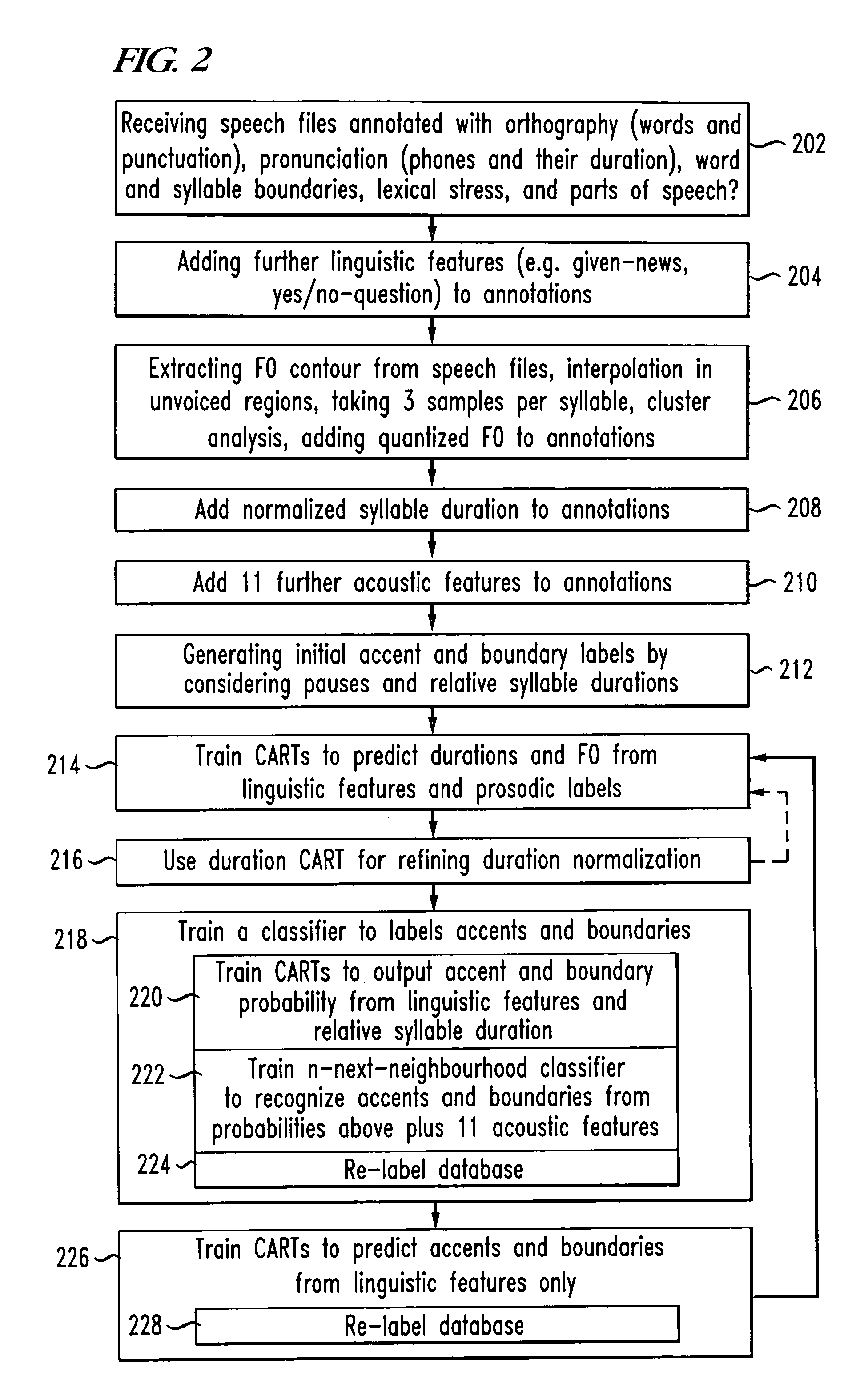

System and method for predicting prosodic parameters

A method for generating a prosody model that predicts prosodic parameters is disclosed. Upon receiving text annotated with acoustic features, the method comprises generating first classification and regression trees (CARTs) that predict durations and F0 from text by generating initial boundary labels by considering pauses, generating initial accent labels by applying a simple rule on text-derived features only, adding the predicted accent and boundary labels to feature vectors, and using the feature vectors to generate the first CARTs. The first CARTs are used to predict accent and boundary labels. Next, the first CARTs are used to generate second CARTs that predict durations and F0 from text and acoustic features by using lengthened accented syllables and phrase-final syllables, refining accent and boundary models simultaneously, comparing actual and predicted duration of a whole prosodic phrase to normalize speaking rate, and generating the second CARTs that predict the normalized speaking rate.

Owner:CERENCE OPERATING CO
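
The speaking-rate normalization step compares the actual and predicted duration of a whole prosodic phrase. A minimal sketch of that comparison, assuming per-syllable durations predicted by the first CARTs are already available (both function names are hypothetical):

```python
def phrase_rate_factor(actual: list[float], predicted: list[float]) -> float:
    """Ratio of actual to predicted duration for a whole prosodic phrase.

    A value above 1 means the speaker was slower than the model predicts,
    below 1 faster. (Illustrative helper, not taken from the patent.)
    """
    if not actual or len(actual) != len(predicted):
        raise ValueError("need matching, non-empty duration lists")
    return sum(actual) / sum(predicted)


def normalize_durations(actual: list[float], predicted: list[float]) -> list[float]:
    """Divide out the phrase-level tempo so durations become comparable across speaking rates."""
    factor = phrase_rate_factor(actual, predicted)
    return [d / factor for d in actual]
```

Dividing by one phrase-level factor keeps the relative timing of syllables while removing the speaker's overall tempo; this illustrates the idea rather than the claimed procedure.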

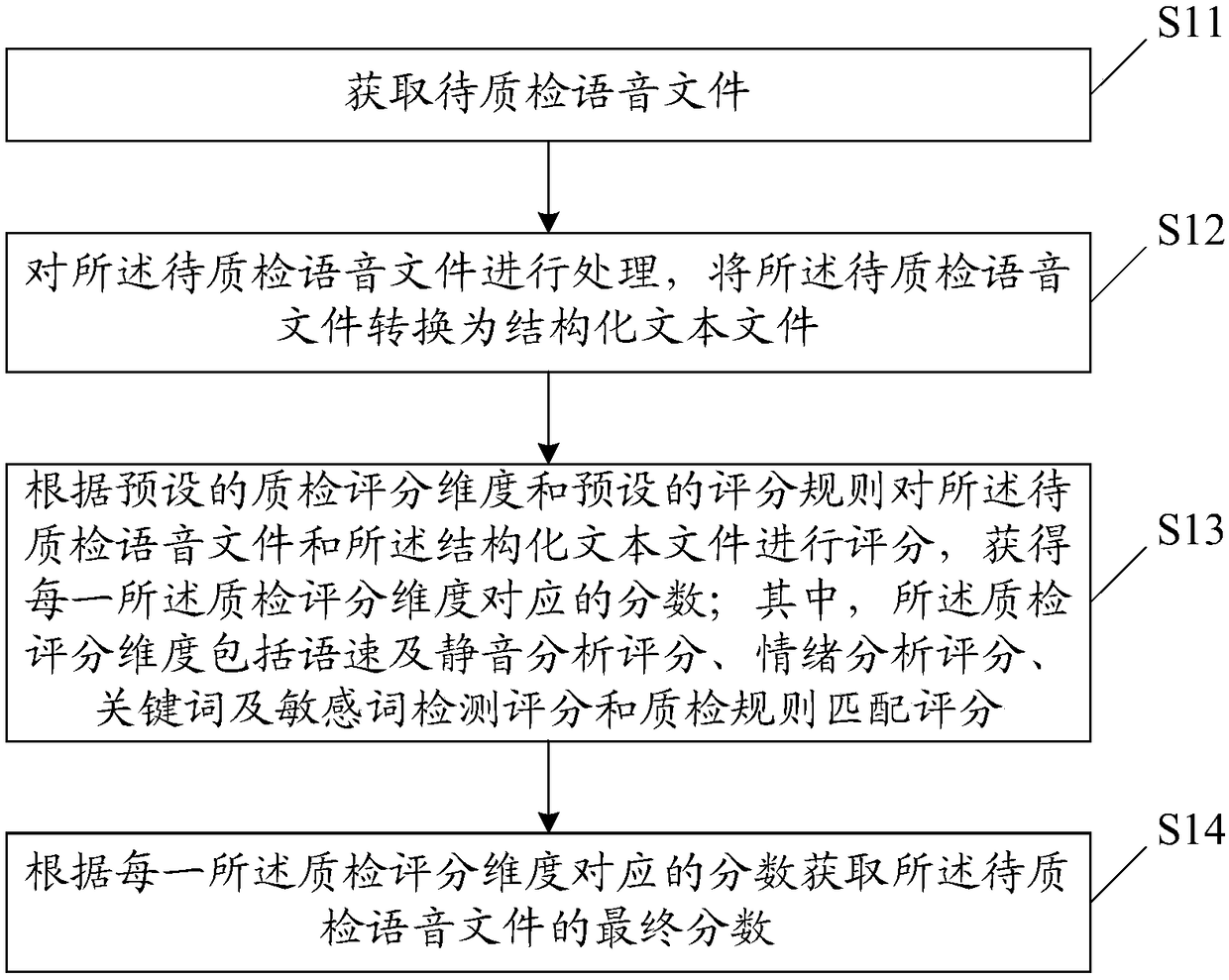

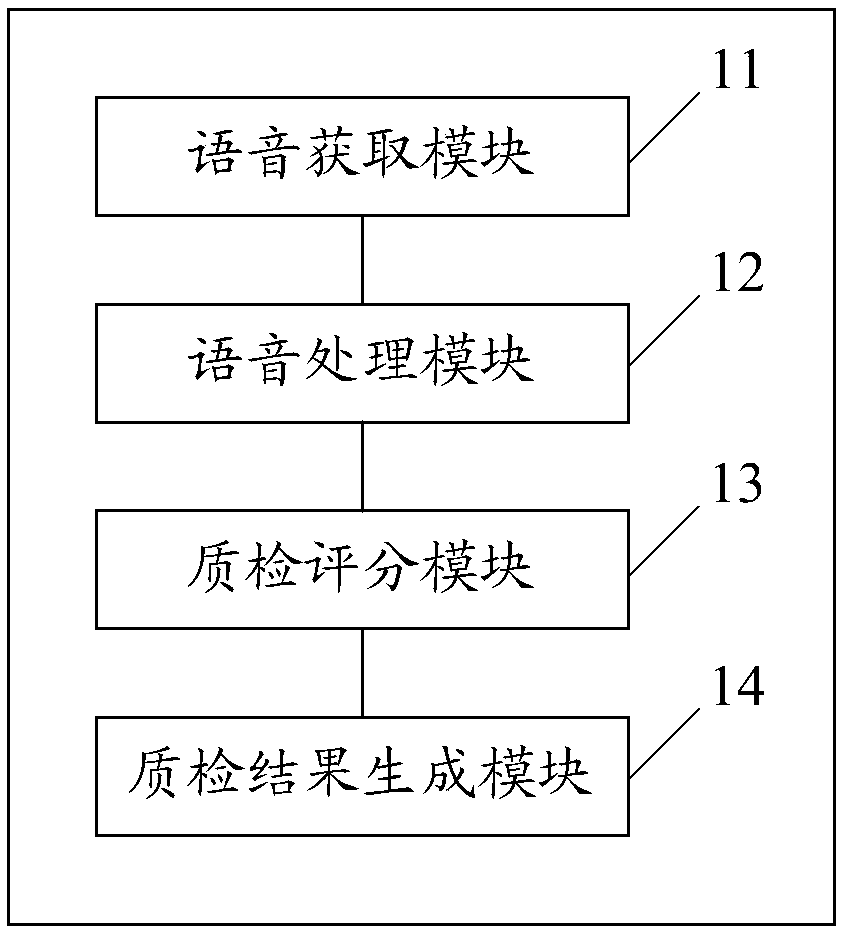

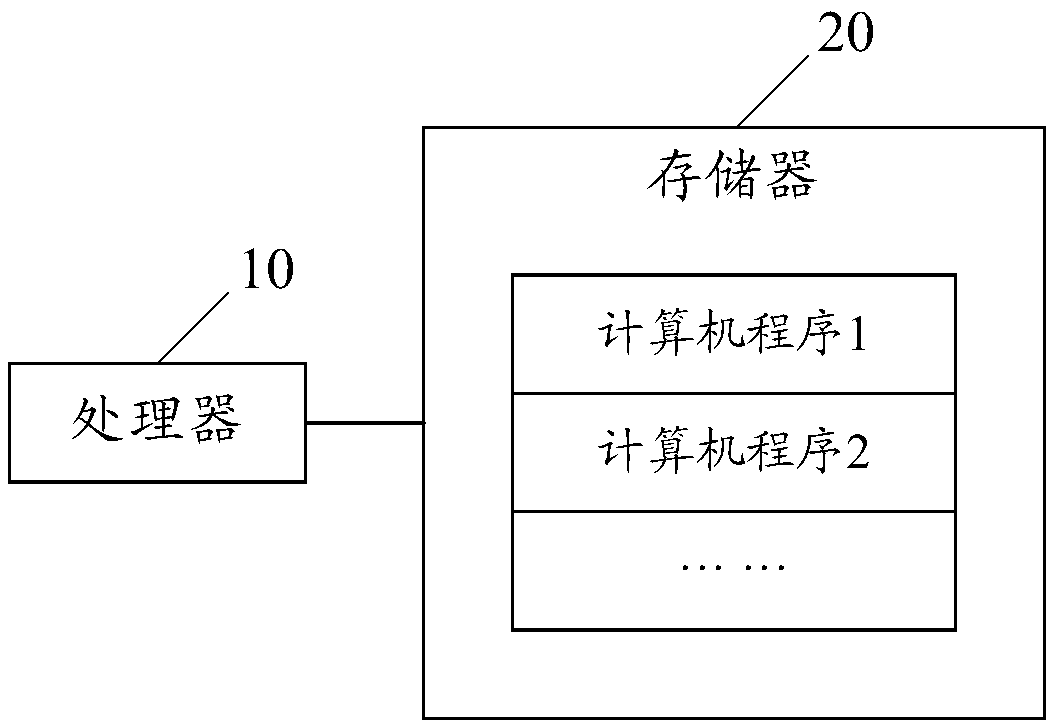

Automatic voice quality inspection method, system thereof, device and storage medium

Inactive | CN109448730A | Objectively reflect service quality; Realization of automatic voice quality inspection; Speech recognition; Speech rate; Speech sound

The invention discloses an automatic voice quality inspection method, a system thereof, a device and a storage medium. The method includes the following steps: obtaining a voice file to be inspected; processing the voice file and converting it into a structured text file; scoring the voice file and the structured text file according to preset quality inspection scoring dimensions and a preset score rule, to obtain a score for each quality inspection scoring dimension, where the dimensions include speech rate and silence analysis scoring, emotion analysis scoring, keyword and sensitive-word detection scoring, and quality inspection rule matching scoring; and obtaining the final score of the voice file from the scores of the individual dimensions. The method realizes automatic voice quality inspection and improves inspection efficiency and accuracy.

Owner:GRG BANKING EQUIP CO LTD
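
The abstract does not disclose the preset score rule. One plausible shape, assuming a weighted average over the four named dimensions and a simple band-based speech-rate score in characters per second (all weights, bands and names are hypothetical):

```python
# Hypothetical equal weights for the four dimensions named in the abstract.
DEFAULT_WEIGHTS = {
    "speech_rate_and_silence": 0.25,
    "emotion": 0.25,
    "keywords_and_sensitive_words": 0.25,
    "rule_matching": 0.25,
}


def speech_rate_score(chars: int, seconds: float, lo: float = 3.0, hi: float = 5.0) -> float:
    """0-100 score: full marks inside [lo, hi] characters/s, minus 20 points per char/s outside."""
    rate = chars / seconds
    if lo <= rate <= hi:
        return 100.0
    gap = lo - rate if rate < lo else rate - hi
    return max(0.0, 100.0 - 20.0 * gap)


def final_score(scores: dict[str, float], weights: dict[str, float] = DEFAULT_WEIGHTS) -> float:
    """Weighted average of the per-dimension scores."""
    missing = weights.keys() - scores.keys()
    if missing:
        raise KeyError(f"missing dimension scores: {sorted(missing)}")
    return sum(weights[d] * scores[d] for d in weights) / sum(weights.values())
```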

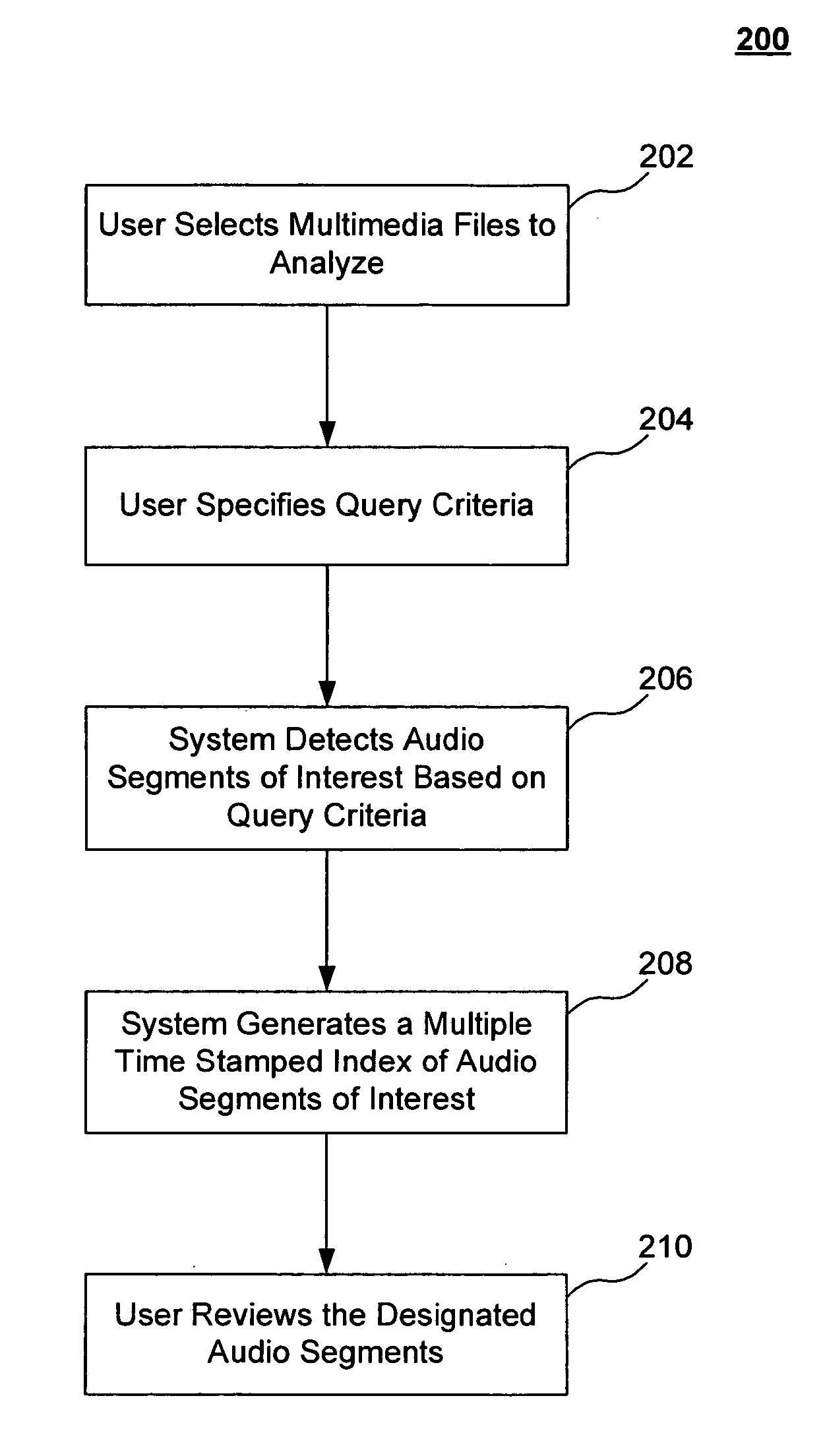

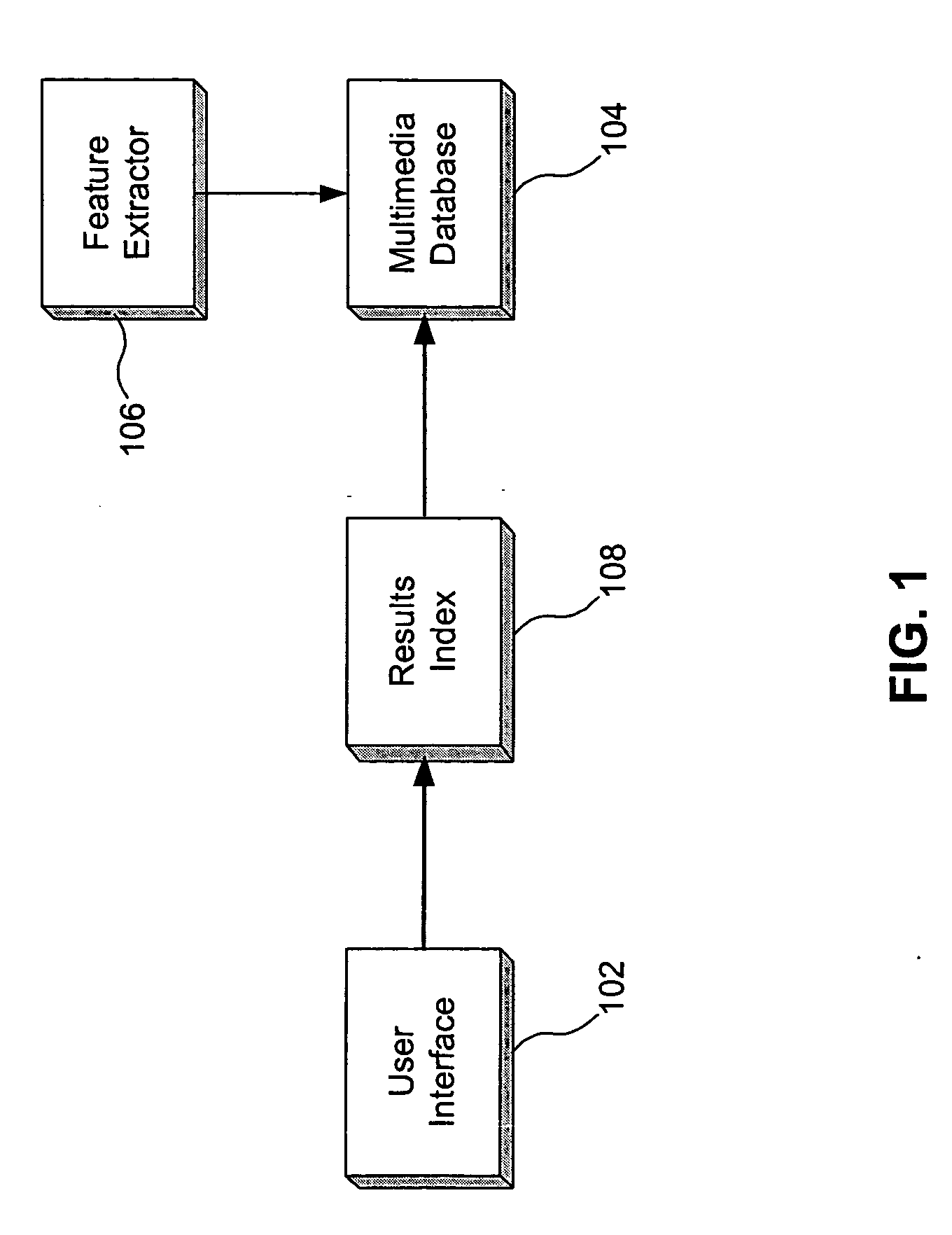

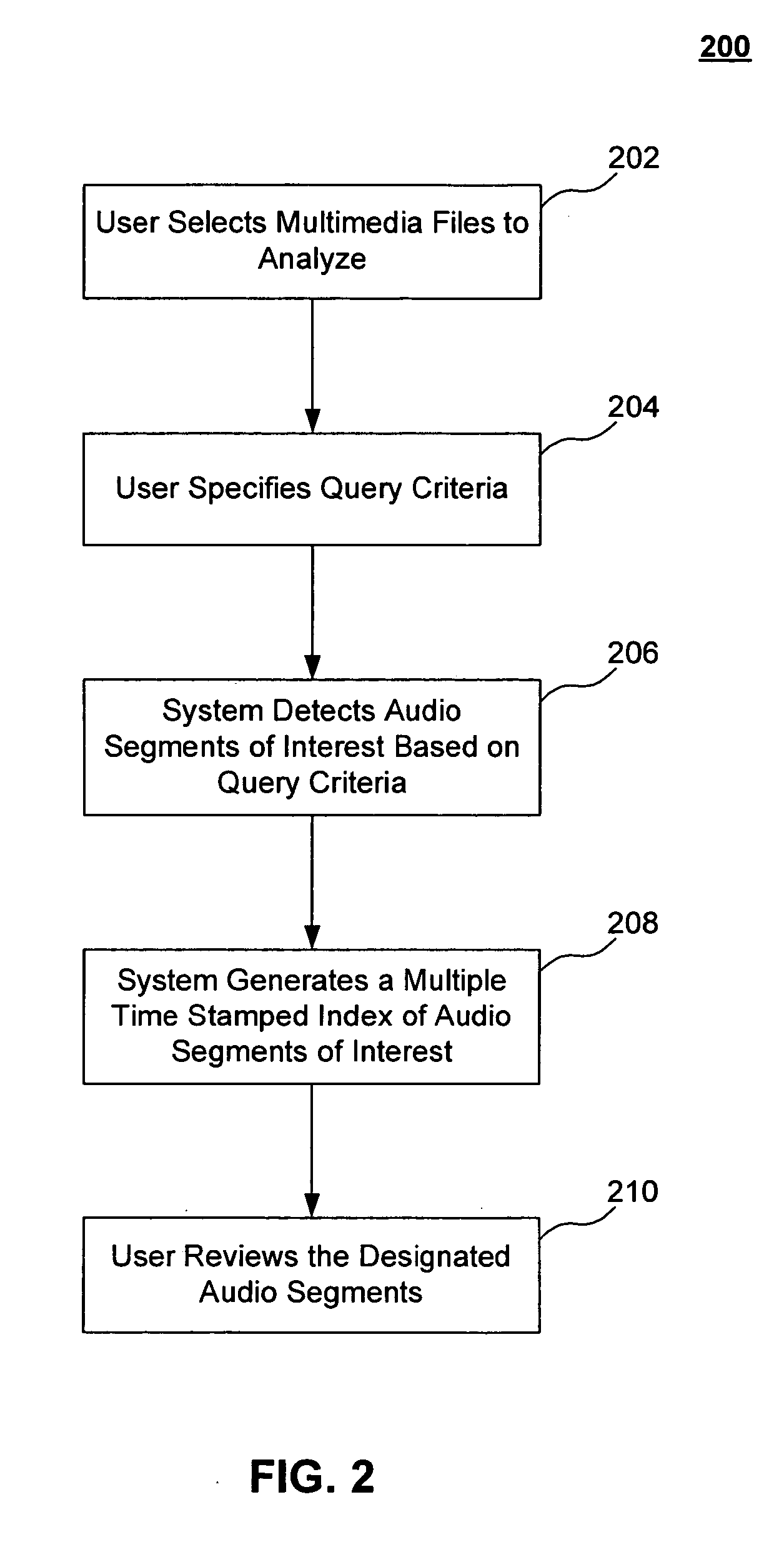

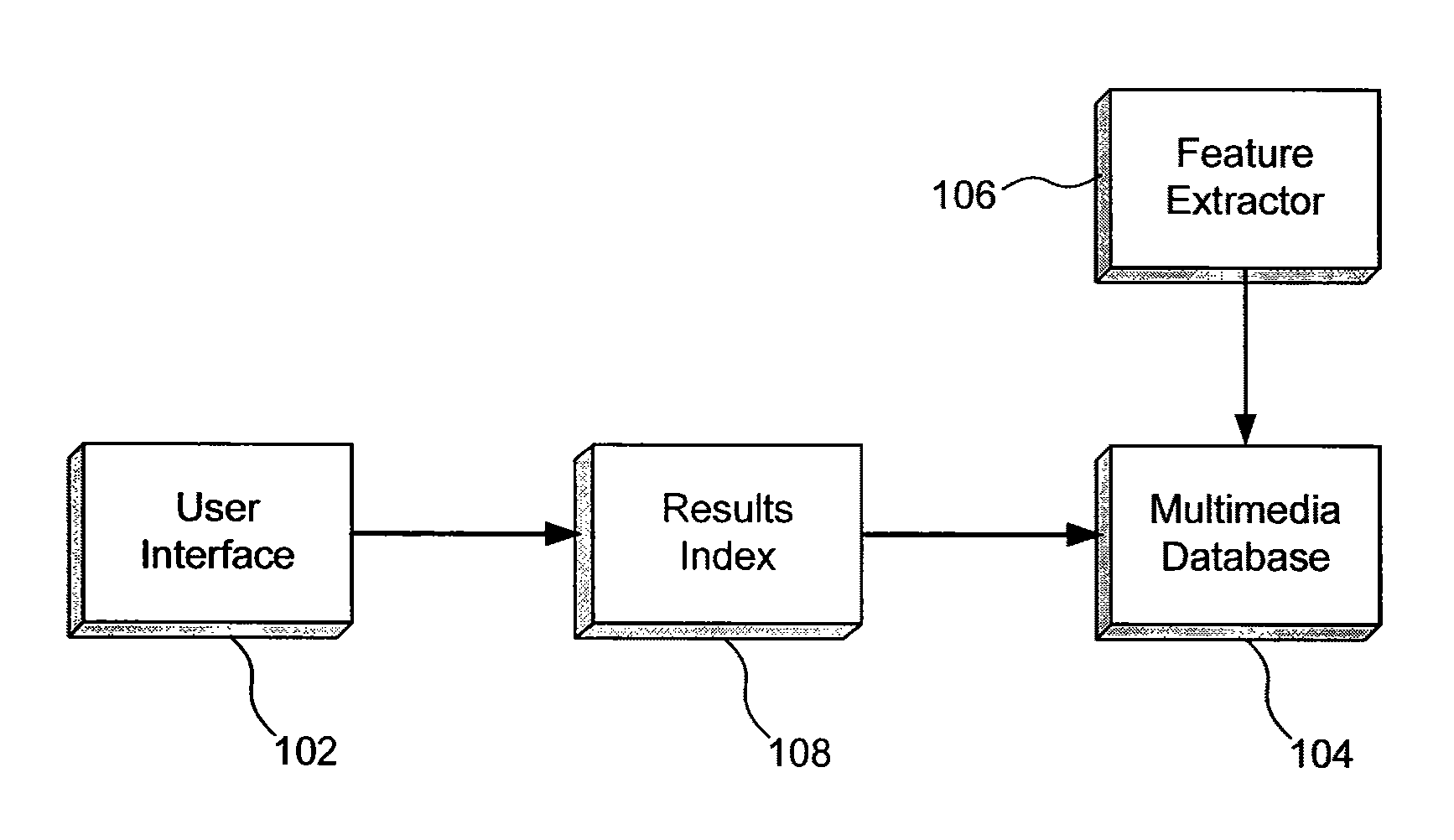

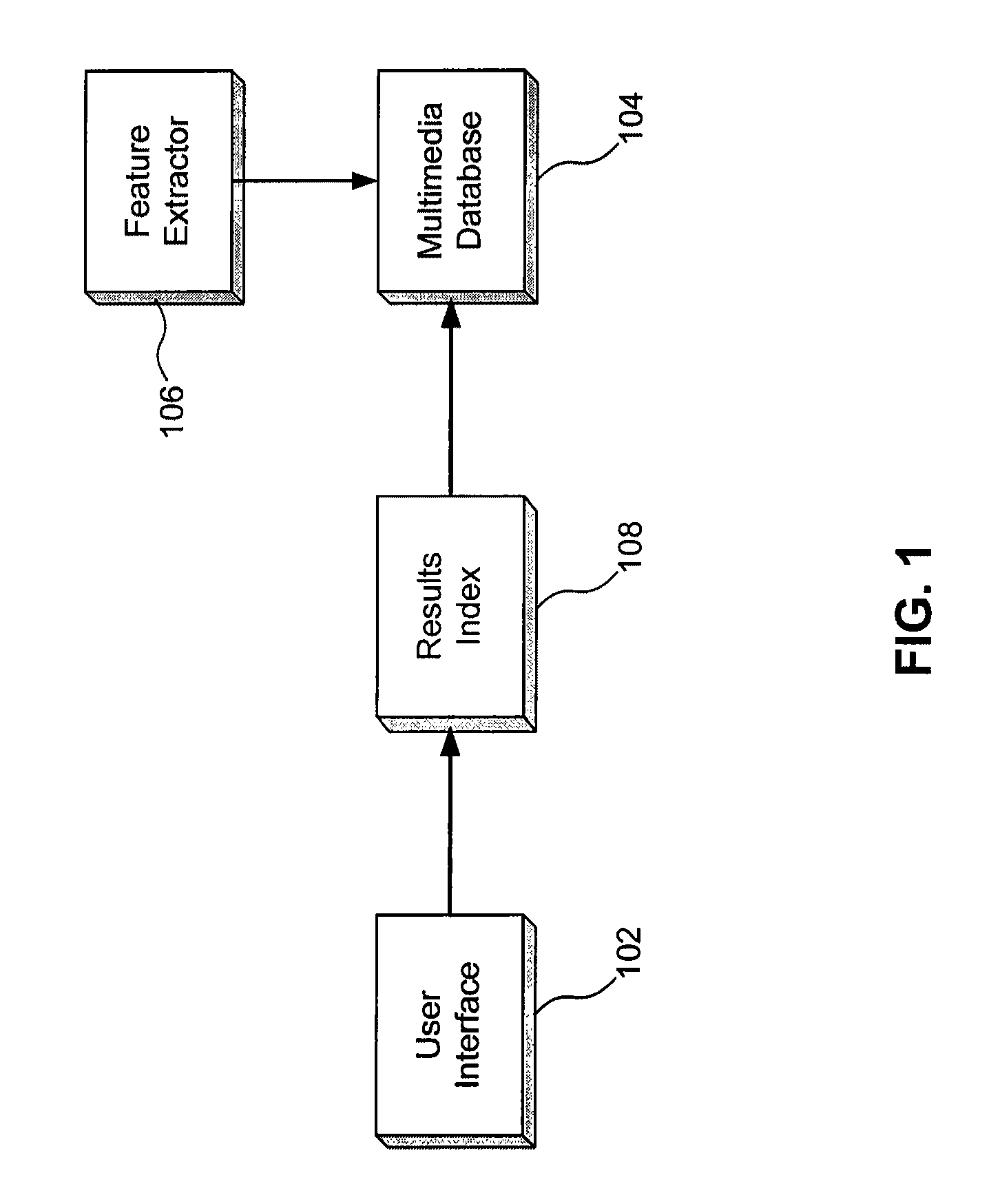

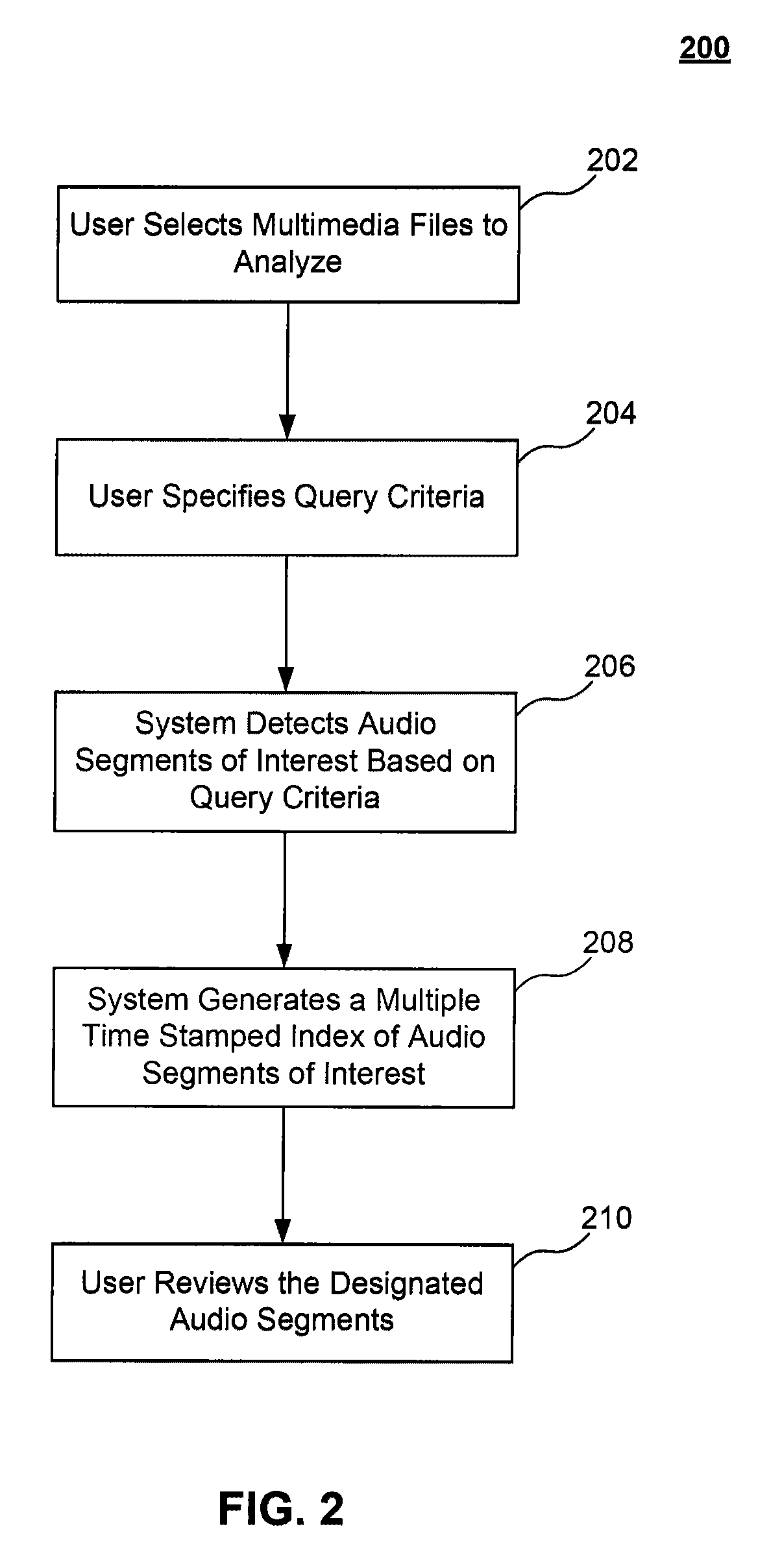

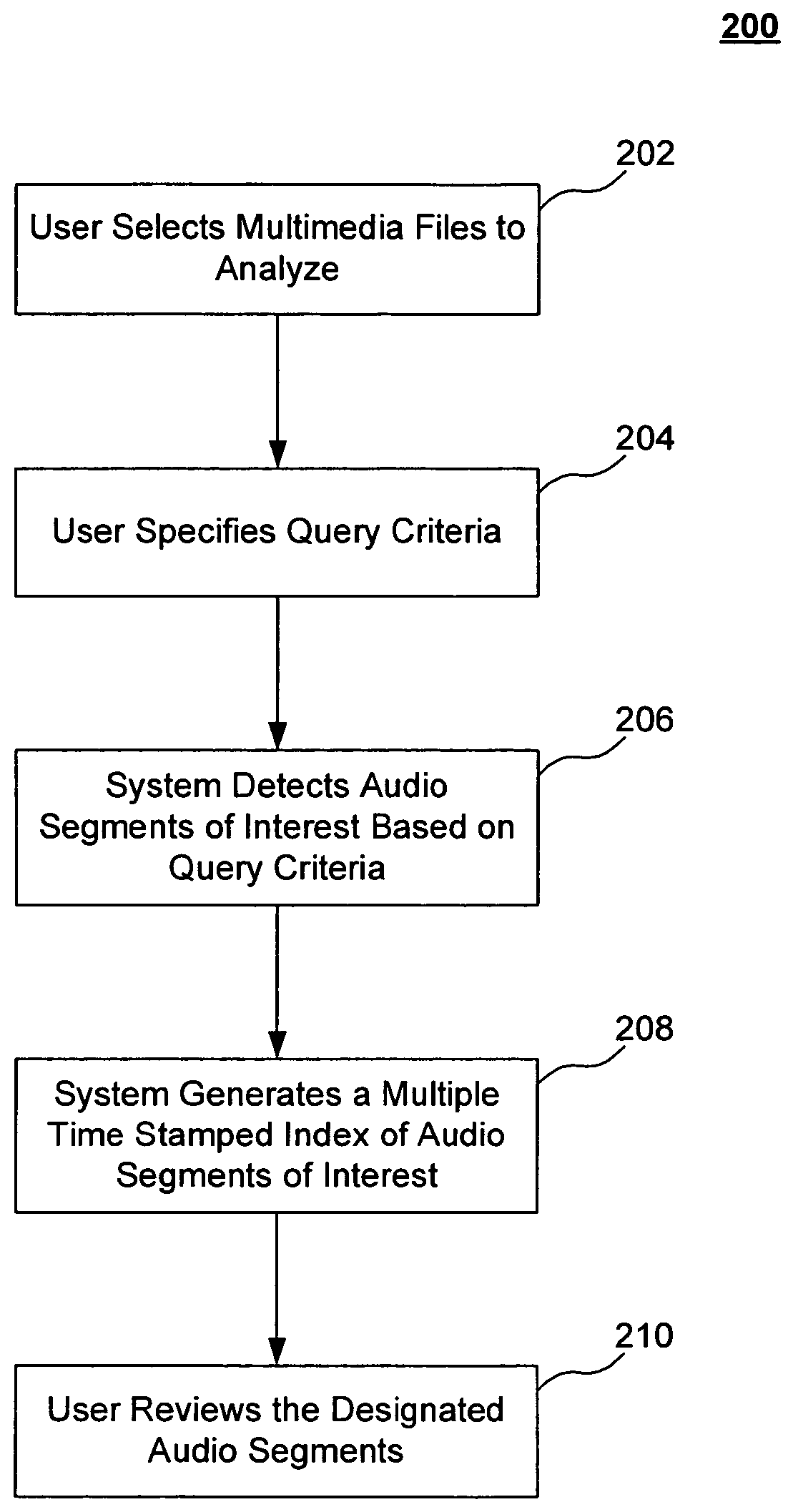

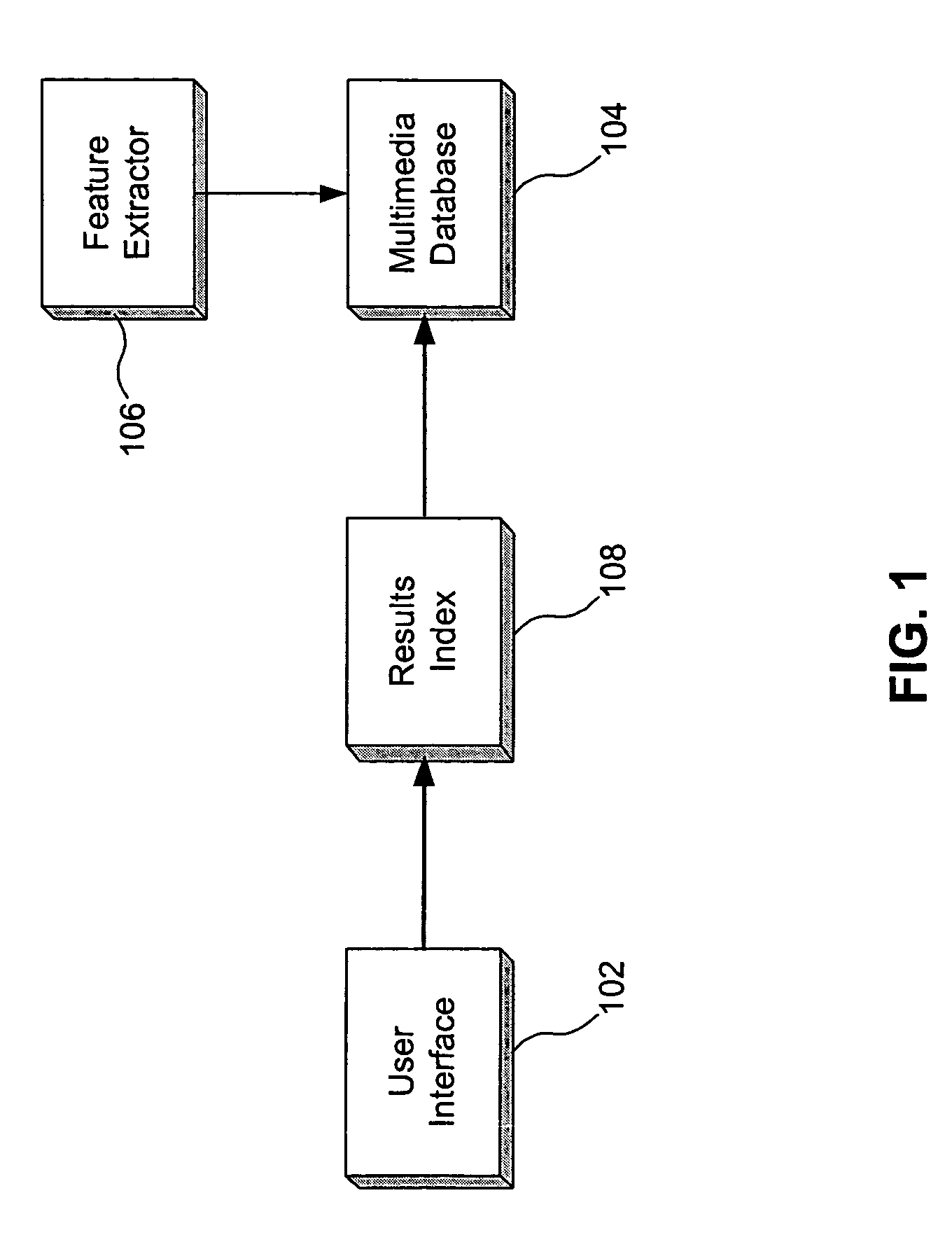

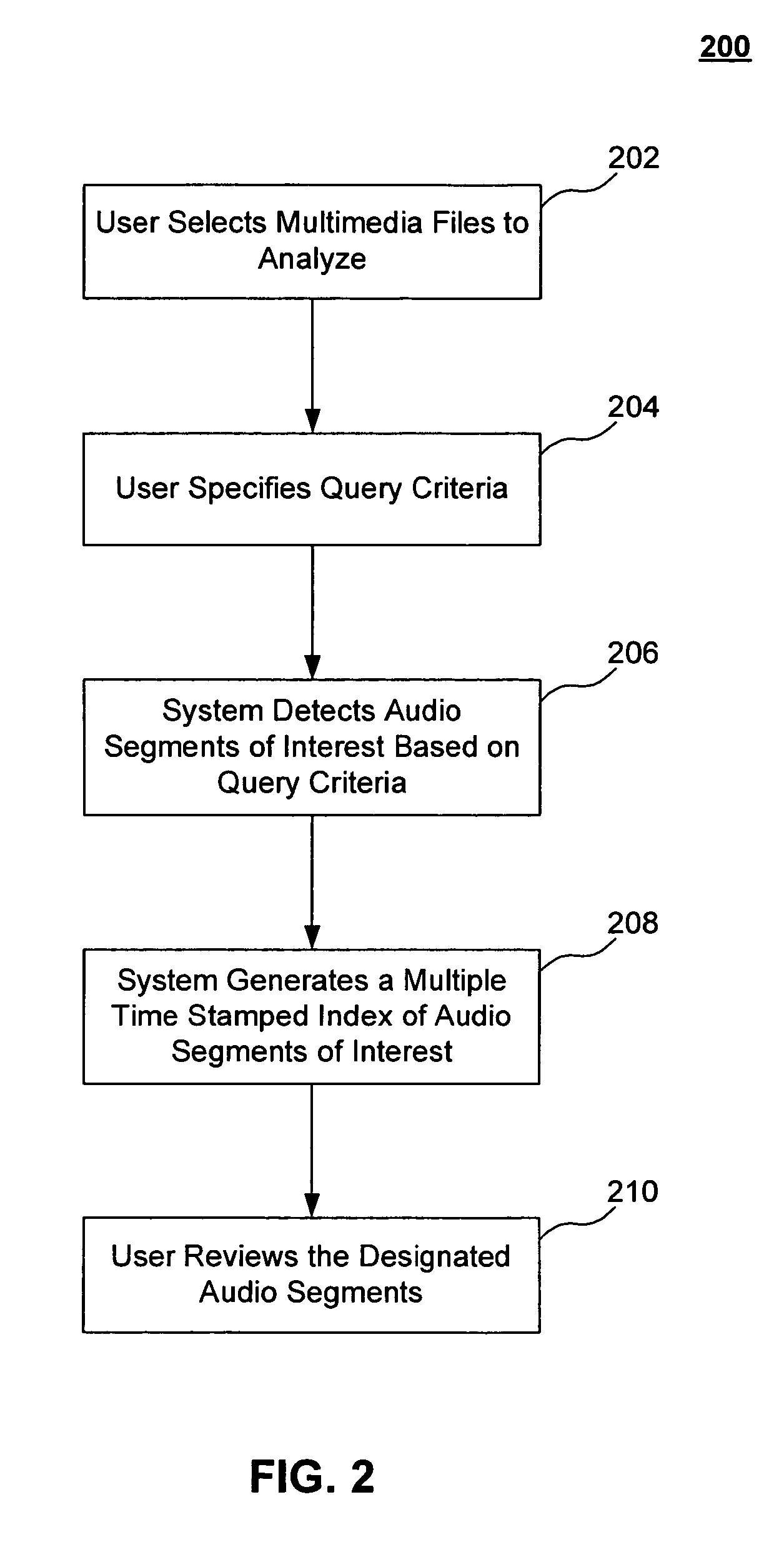

System and method for audio hot spotting

Active | US20060217966A1 | Eliminate disadvantages; Data processing applications; Digital data information retrieval; Speech rate; Vocal effort

Audio hot spotting is accomplished by specifying a query criterion that includes a non-lexical audio cue. The non-lexical audio cue can be, e.g., speech rate, laughter, applause, vocal effort, speaker change or any combination thereof. The query criterion is retrieved from an audio portion of a file. A segment of the file containing the query criterion can be provided to a user. The duration of the provided segment can be specified by the user, along with the files to be searched. A list of detections of the query criterion within the file can also be provided to the user. Searches can be refined by having the query criterion additionally include a lexical audio cue. A keyword index of topic terms contained in the file can also be provided to the user.

Owner:MITRE SPORTS INT LTD
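
A minimal sketch of a non-lexical query on speech rate, assuming an upstream estimator has already produced per-segment rates; the `Detection` record, the threshold and the clip-centering rule are illustrative assumptions rather than the patented method:

```python
from dataclasses import dataclass


@dataclass
class Detection:
    start: float        # seconds
    end: float
    speech_rate: float  # e.g. syllables/s from an upstream estimator (assumed)


def hot_spots(detections: list[Detection], min_rate: float,
              clip_duration: float, file_duration: float) -> list[tuple[float, float]]:
    """Return (start, end) clips of the user-chosen duration around fast-speech detections."""
    clips = []
    for d in detections:
        if d.speech_rate < min_rate:
            continue
        centre = (d.start + d.end) / 2
        end = min(file_duration, max(0.0, centre - clip_duration / 2) + clip_duration)
        start = max(0.0, end - clip_duration)  # shift left if the clip hit the end of the file
        clips.append((start, end))
    return clips
```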

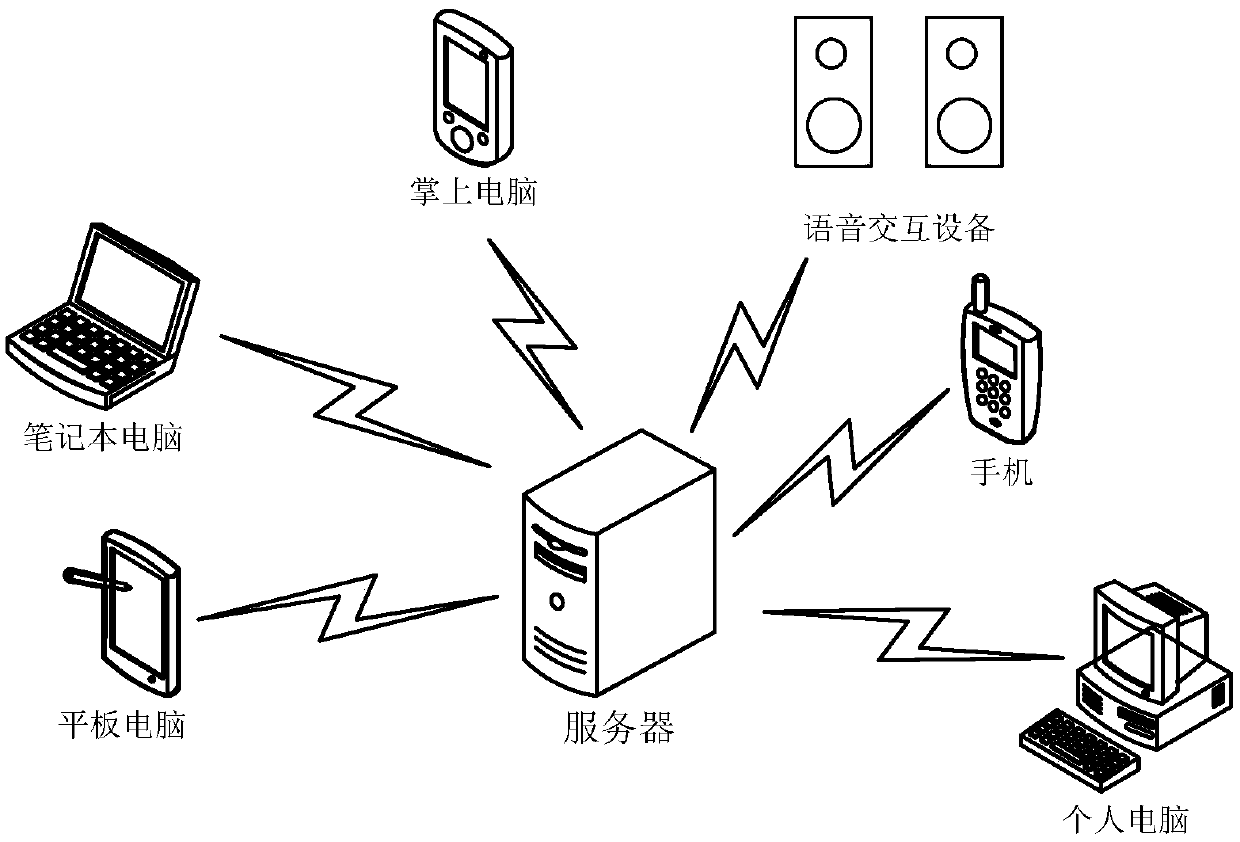

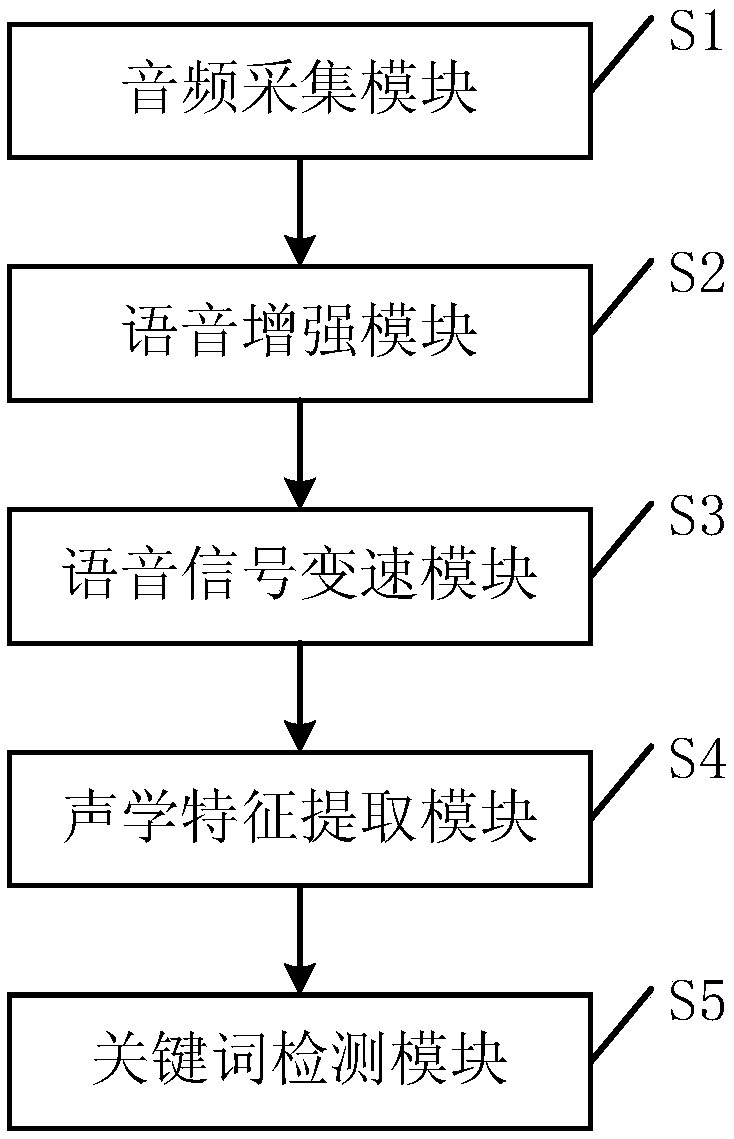

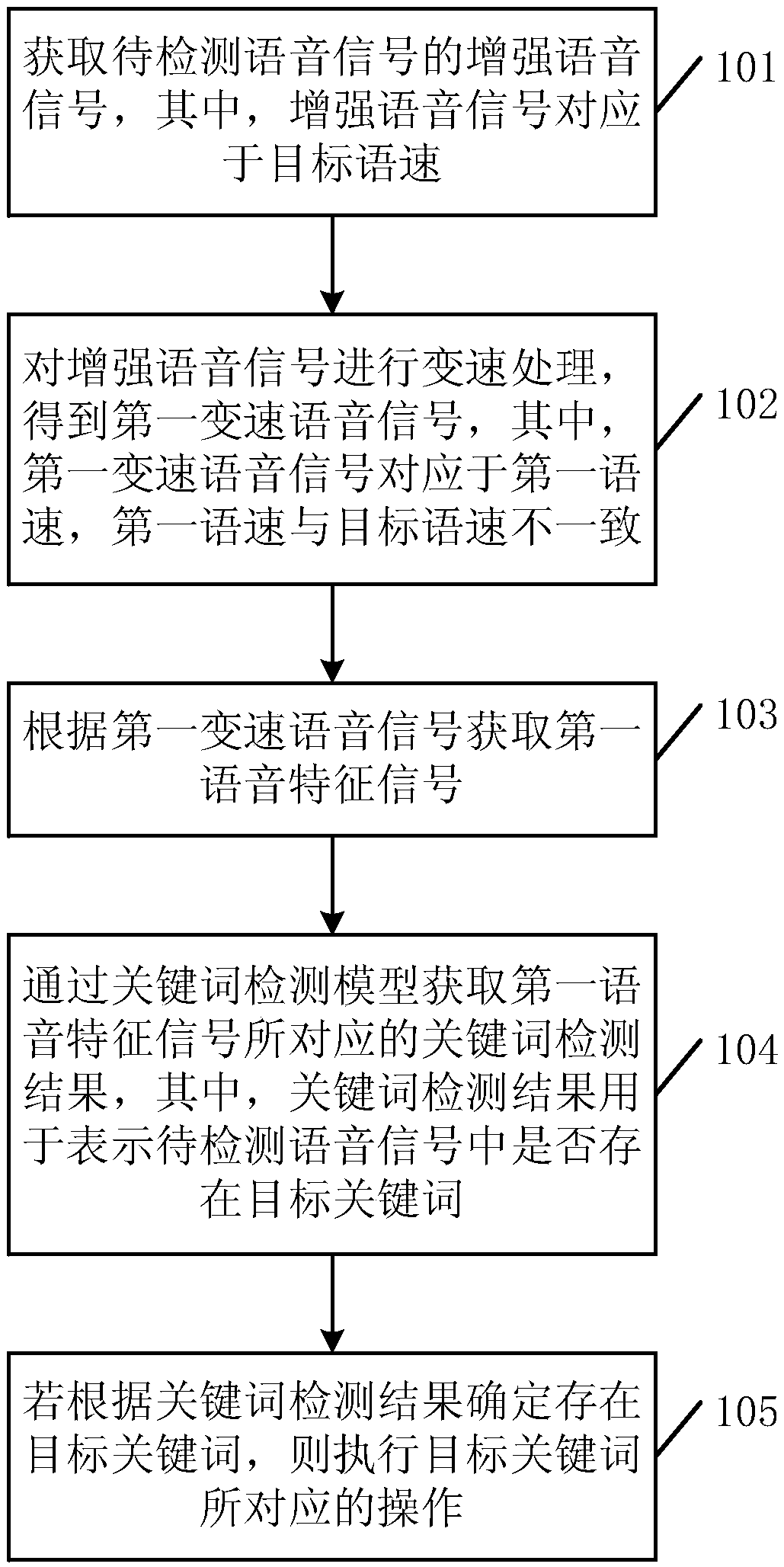

Keyword detection method and related device

Active | CN109671433A | Improve the detection rate; Improve speech recognition quality; Signal processing; Microphones signal combination; Speech rate; Speech sound

The invention discloses a keyword detection method comprising the following steps: acquiring an enhanced speech signal of a speech signal to be detected, the enhanced signal corresponding to a target speech speed; applying speed change to the enhanced signal to obtain a first speed-changed signal corresponding to a first speech speed; acquiring a first speech feature signal from the first speed-changed signal; obtaining, through a keyword detection model, a keyword detection result for the first speech feature signal, the result indicating whether a target keyword exists in the speech signal to be detected; and, if the result indicates that the target keyword exists, executing the operation corresponding to the target keyword. The invention further discloses a keyword detection device. Because the enhanced signal is speed-changed again, the method increases the detection rate for keywords in fast or slow speech.

Owner:TENCENT TECH (SHENZHEN) CO LTD
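
The abstract does not say how the speed-change factor is chosen. Assuming an upstream estimate of the input speech rate, this sketch derives the factor that brings the speech toward a target rate, plus a few neighbouring factors for running the detector on several speed-changed copies (the function name and the `spread` parameter are hypothetical):

```python
def speed_factors(estimated_rate: float, target_rate: float,
                  spread: float = 0.1, n: int = 3) -> list[float]:
    """Playback-speed factors that move speech at estimated_rate toward target_rate.

    A factor above 1 speeds the audio up, below 1 slows it down. Besides the exact
    factor, n - 1 neighbours spaced by `spread` (relative) are returned.
    """
    if estimated_rate <= 0 or target_rate <= 0:
        raise ValueError("rates must be positive")
    base = target_rate / estimated_rate  # new rate = estimated_rate * factor
    half = (n - 1) // 2
    return [round(base * (1 + spread * k), 6) for k in range(-half, n - half)]
```

For fast input at 8 syllables/s and a 4 syllables/s target, the single factor is 0.5, i.e. the audio is played at half speed before feature extraction.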

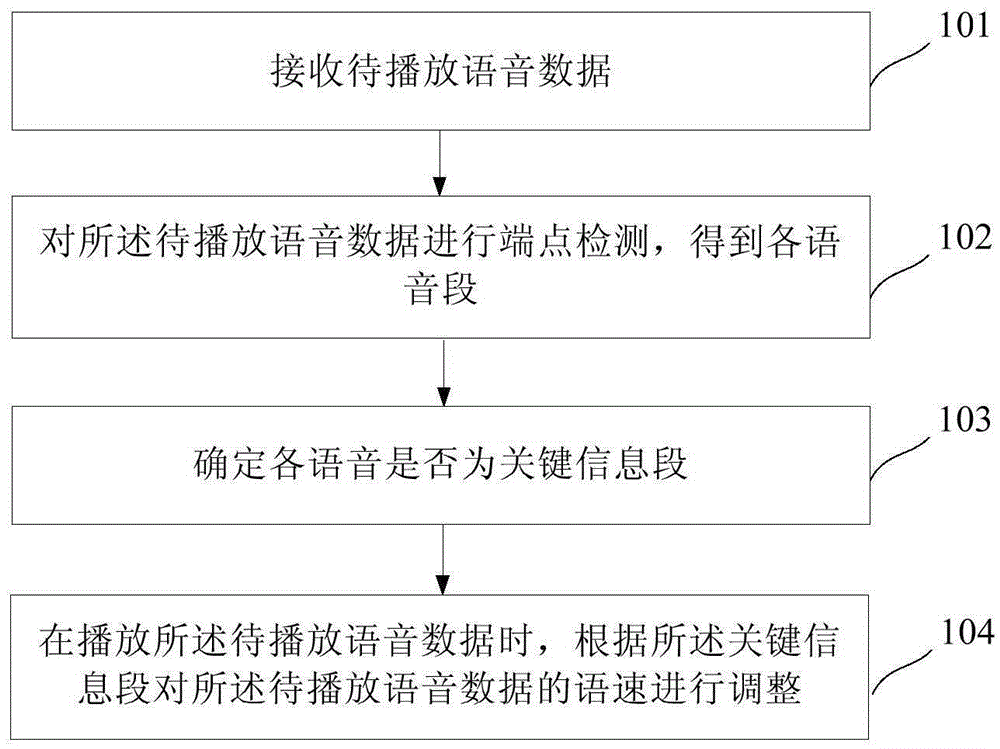

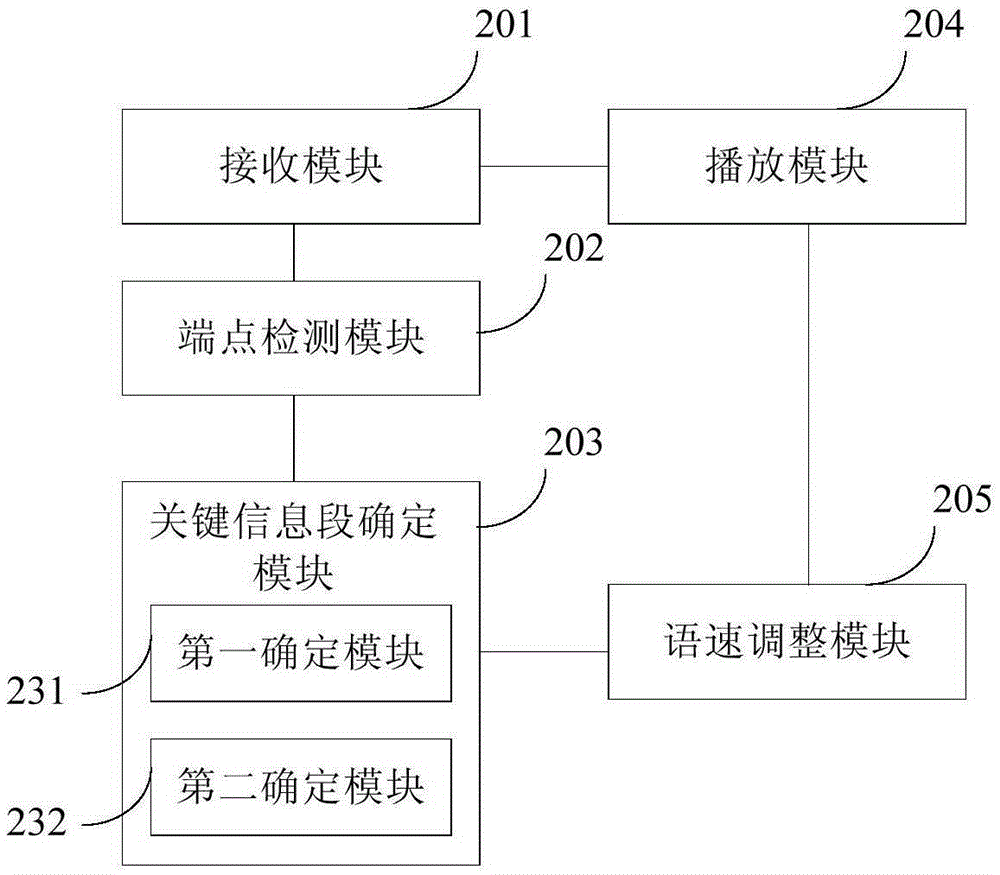

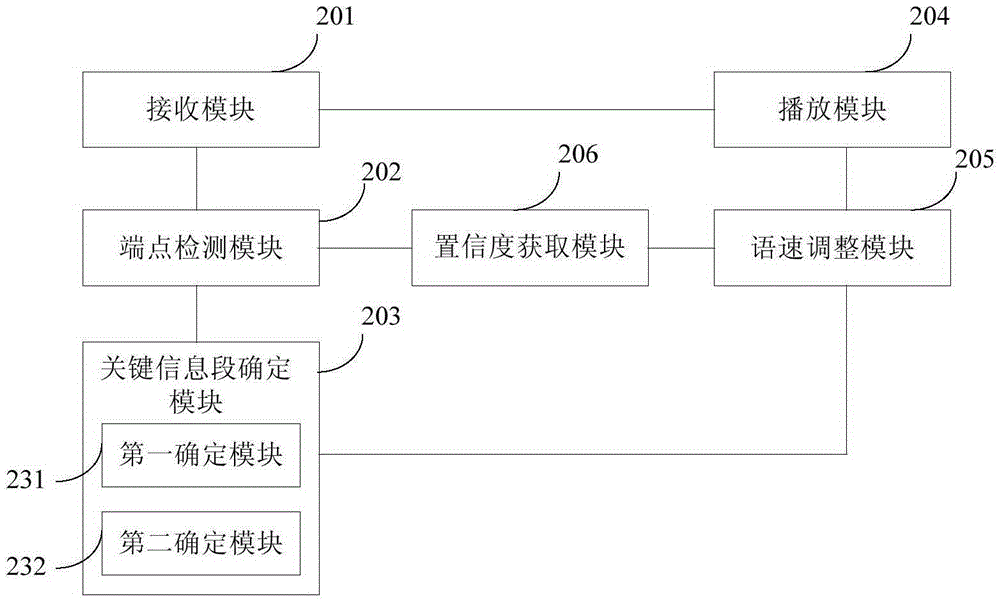

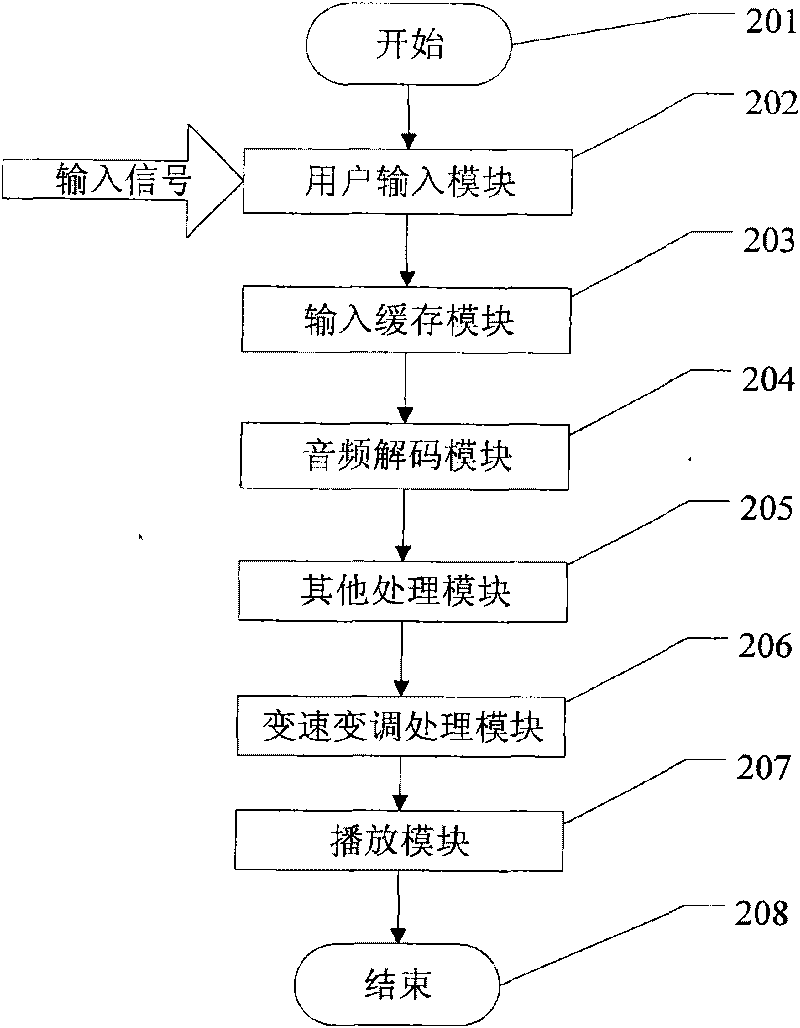

Voice playing method and device

The invention discloses a voice playing method and device. The method comprises the steps of: receiving voice data to be played; performing endpoint detection on the voice data to obtain all voice segments; determining whether each voice segment is a key-information segment; and, when the voice data are played, adjusting the playback speed according to the key-information segments. The method and device help users find the voice segments they care about quickly and accurately.

Owner:IFLYTEK CO LTD
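
A sketch of the playback step, assuming endpoint detection and a key-information classifier have already labelled each segment: key segments play at normal speed and the rest are sped up. The speeds and the `Segment` record are hypothetical:

```python
from dataclasses import dataclass


@dataclass
class Segment:
    start: float   # seconds, from endpoint detection
    end: float
    is_key: bool   # from a key-information classifier (assumed to exist)


def playback_plan(segments: list[Segment], key_speed: float = 1.0,
                  other_speed: float = 2.0) -> tuple[list[tuple[Segment, float]], float]:
    """Assign a playback speed to each segment; also return the resulting listening time in seconds."""
    plan = [(seg, key_speed if seg.is_key else other_speed) for seg in segments]
    total = sum((seg.end - seg.start) / speed for seg, speed in plan)
    return plan, total
```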

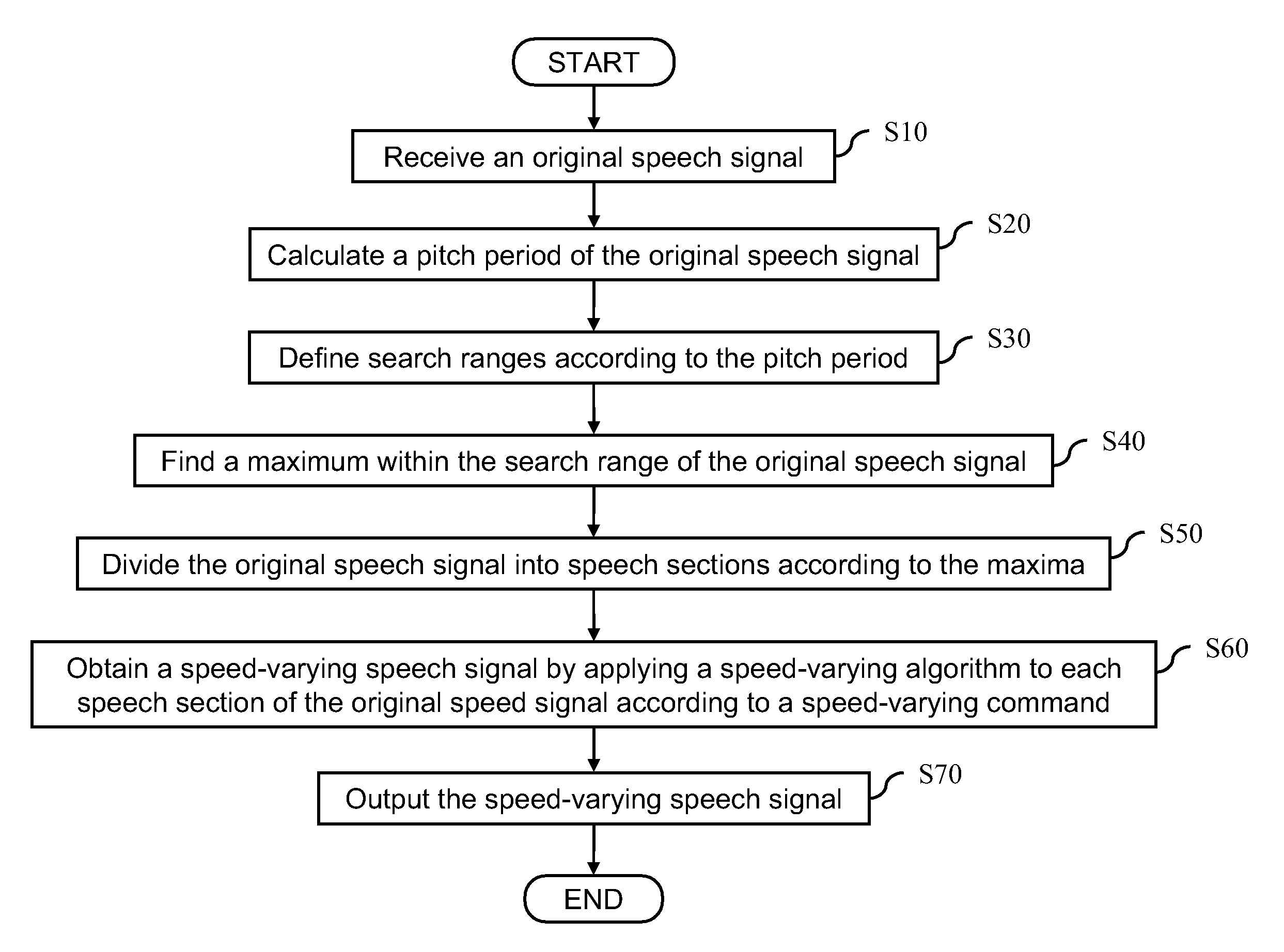

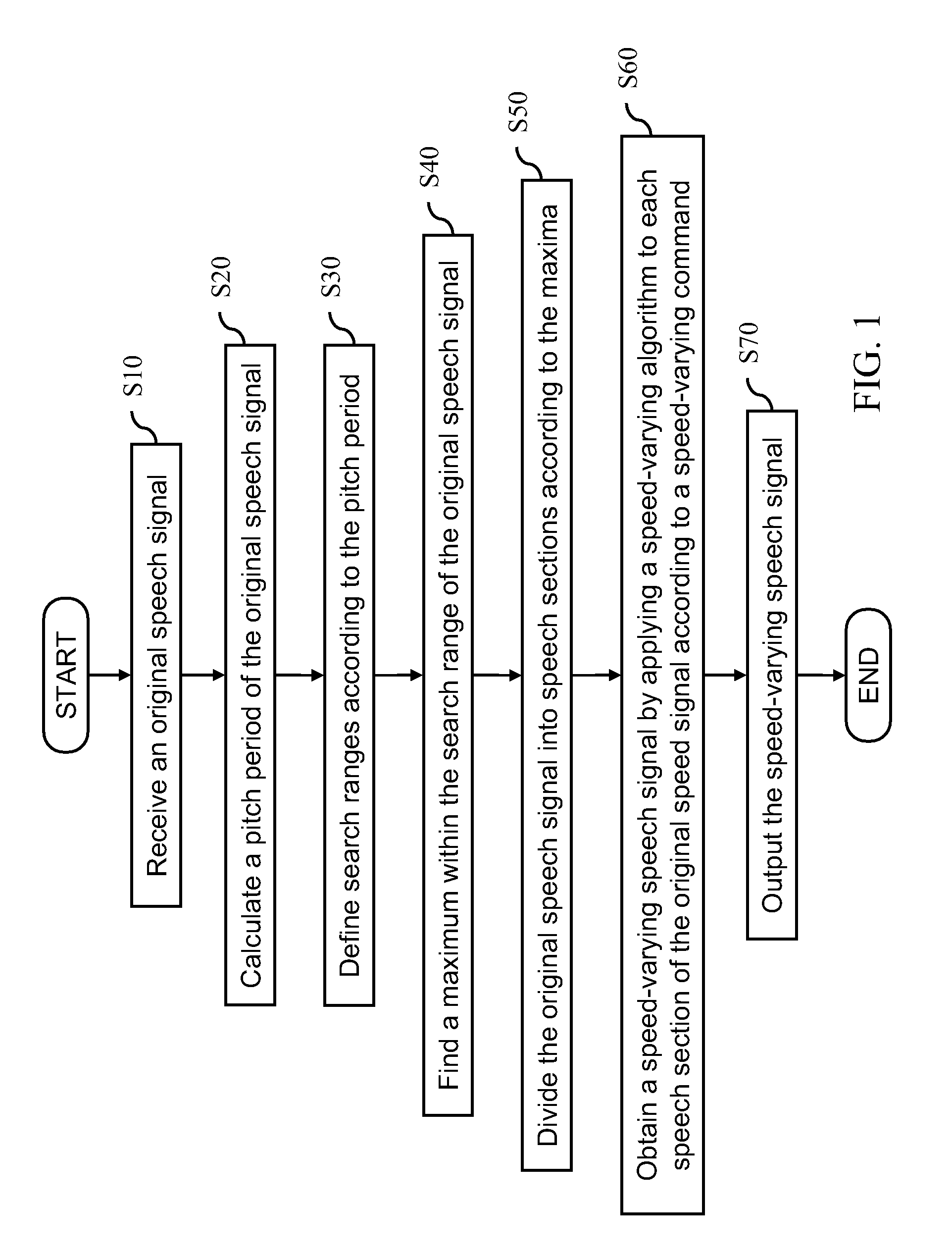

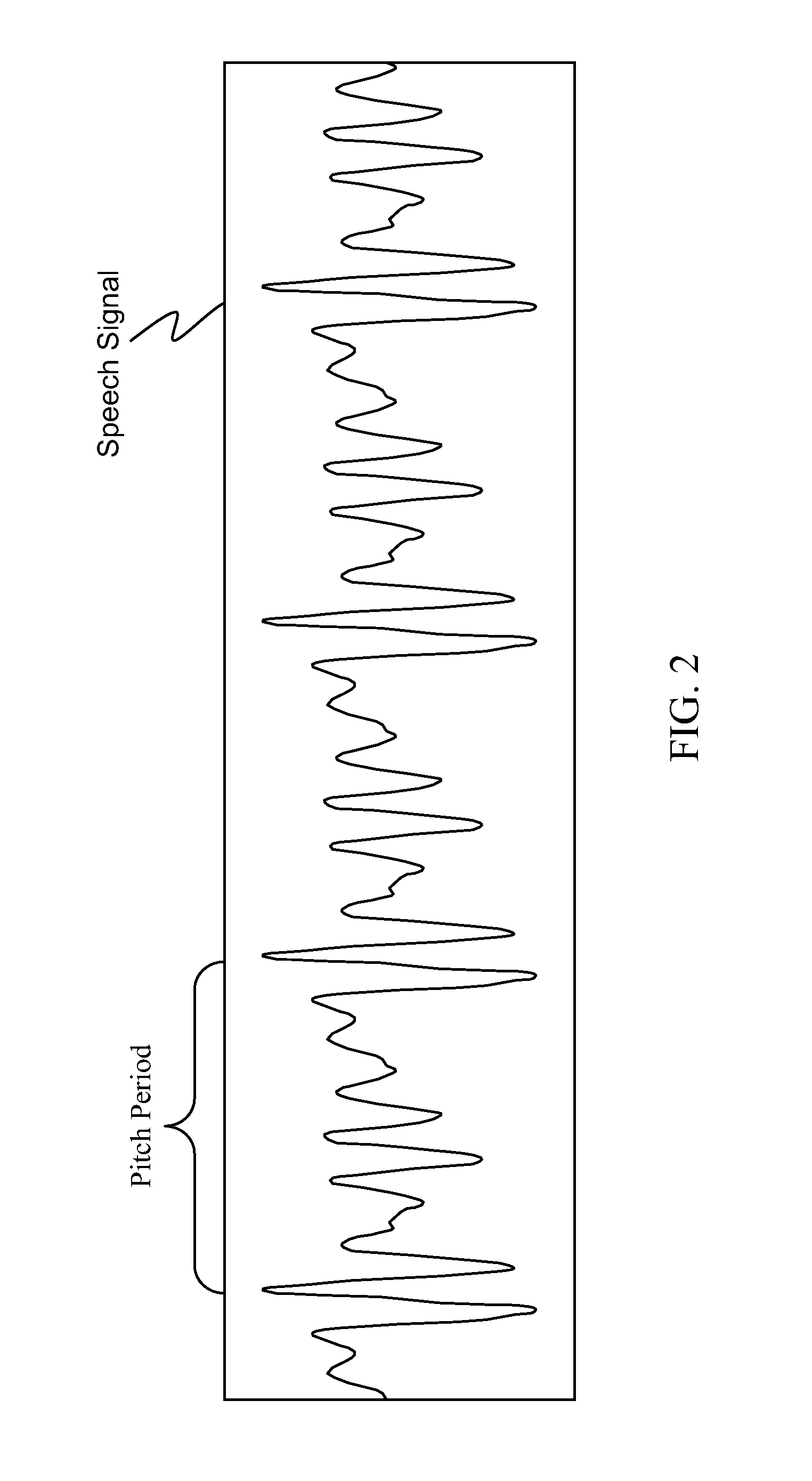

Method for Varying Speech Speed

Active | US20080140391A1 | Facilitate deceleration; Facilitate acceleration; Ear treatment; Digital computer details; Speech rate; Speech sound

A method for varying speech speed is provided. The method includes the following steps: receive an original speech signal; calculate a pitch period of the original speech signal; define search ranges according to the pitch period; find a maximum within each search range of the original speech signal; divide the original speech signal into speech sections according to the maxima; obtain a speed-varied speech signal by applying a speed-varying algorithm to each speech section of the original speech signal according to a speed-varying command; and finally, output the speed-varied speech signal.

Owner:MICRO-STAR INTERNATIONAL
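
The steps of this method can be sketched in NumPy, assuming autocorrelation for the pitch-period estimate and, as the speed-varying algorithm, simply skipping or repeating whole pitch-synchronous sections. The patent does not name its speed-varying algorithm; practical systems usually overlap-add neighbouring sections to hide the joins. All function names are hypothetical.

```python
import numpy as np


def pitch_period(x: np.ndarray, fs: int, fmin: float = 60.0, fmax: float = 400.0) -> int:
    """Estimate the pitch period in samples as the strongest autocorrelation lag in [fs/fmax, fs/fmin]."""
    x = x - x.mean()
    ac = np.correlate(x, x, mode="full")[len(x) - 1:]  # keep lags 0, 1, 2, ...
    lo, hi = int(fs / fmax), int(fs / fmin)
    return lo + int(np.argmax(ac[lo:hi + 1]))


def split_at_maxima(x: np.ndarray, period: int) -> list[np.ndarray]:
    """Locate one maximum in each period-long search range, then cut the signal at the maxima."""
    marks = sorted({start + int(np.argmax(x[start:start + period]))
                    for start in range(0, len(x) - period + 1, period)})
    edges = [0] + [m for m in marks if 0 < m < len(x)] + [len(x)]
    return [x[a:b] for a, b in zip(edges, edges[1:])]


def vary_speed(x: np.ndarray, fs: int, speed: float) -> np.ndarray:
    """Speed up (speed > 1) by skipping sections or slow down (speed < 1) by repeating them."""
    if speed <= 0:
        raise ValueError("speed must be positive")
    sections = split_at_maxima(x, pitch_period(x, fs))
    out, credit = [], 0.0
    for section in sections:
        credit += 1.0 / speed  # copies of this section owed to the output so far
        while credit >= 1.0:
            out.append(section)
            credit -= 1.0
    return np.concatenate(out) if out else x[:0]
```

Because whole pitch periods are dropped or repeated, the pitch of voiced speech is preserved while its duration changes by roughly `1 / speed`.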

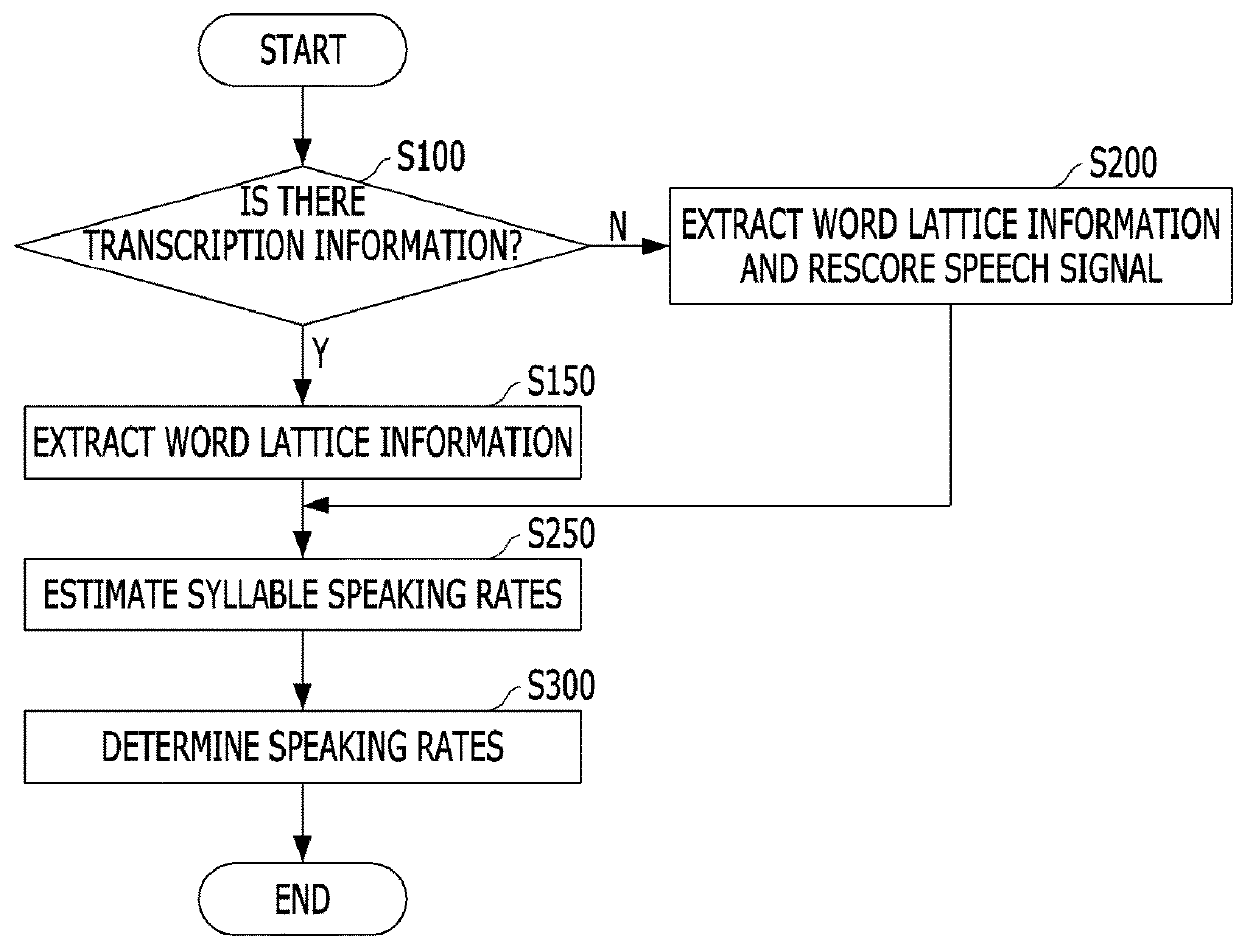

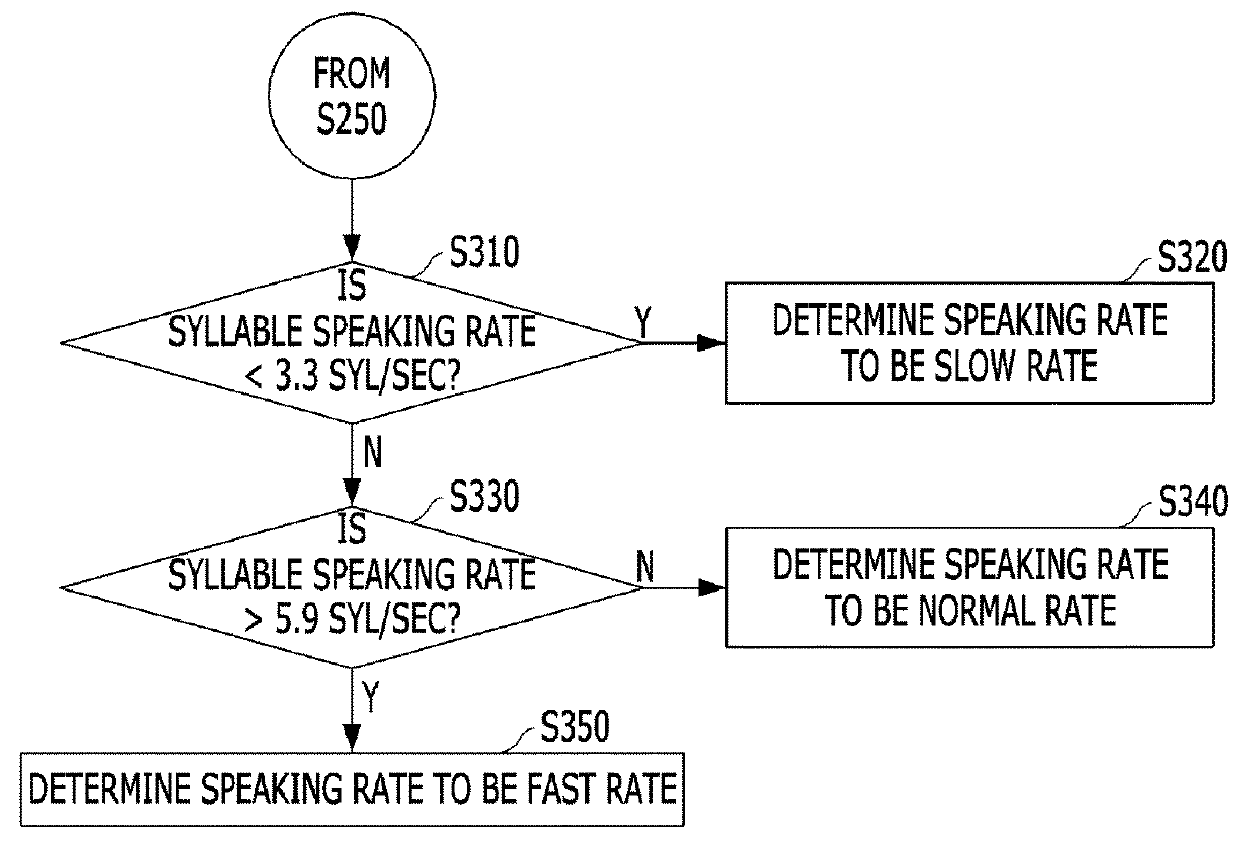

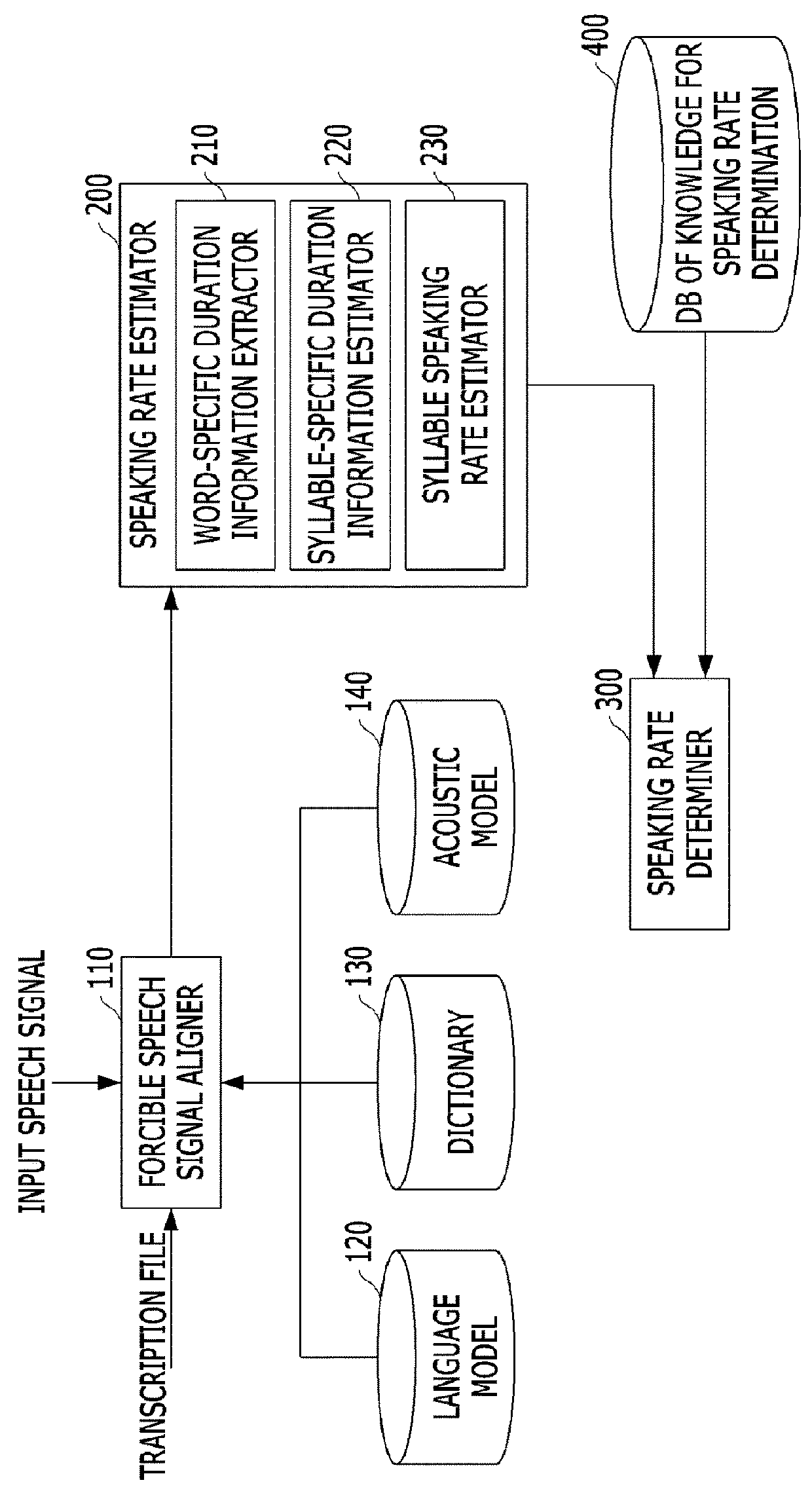

Method of automatically classifying speaking rate and speech recognition system using the same

Inactive | US20180166071A1 | Improve speech recognition performance; Speech recognition; Speech rate; Speech identification

Provided are a method of automatically classifying a speaking rate and a speech recognition system using the method. The speech recognition system using automatic speaking rate classification includes a speech recognizer configured to extract word lattice information by performing speech recognition on an input speech signal, a speaking rate estimator configured to estimate word-specific speaking rates using the word lattice information, a speaking rate normalizer configured to normalize a word-specific speaking rate into a normal speaking rate when the word-specific speaking rate deviates from a preset range, and a rescoring section configured to rescore the speech signal whose speaking rate has been normalized.

Owner:ELECTRONICS & TELECOMM RES INST
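
A sketch of the estimator and normalizer stages, assuming the word lattice supplies start/end times and a phone count per word; the `LatticeWord` record, the function names and the 8 to 14 phones/s range are illustrative assumptions, not values from the patent:

```python
from dataclasses import dataclass


@dataclass
class LatticeWord:
    word: str
    start: float  # seconds
    end: float
    phones: int   # phones in the recognized pronunciation (assumed available from the lattice)


def word_rates(words: list[LatticeWord]) -> list[float]:
    """Word-specific speaking rate in phones per second."""
    return [w.phones / (w.end - w.start) for w in words]


def stretch_factors(words: list[LatticeWord], lo: float = 8.0, hi: float = 14.0) -> list[float]:
    """Duration scale per word: 1.0 inside [lo, hi] phones/s; otherwise the factor that moves
    the word's rate onto the nearest boundary (> 1 lengthens a fast word, < 1 shortens a slow one)."""
    factors = []
    for rate in word_rates(words):
        target = min(max(rate, lo), hi)
        factors.append(rate / target)
    return factors
```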

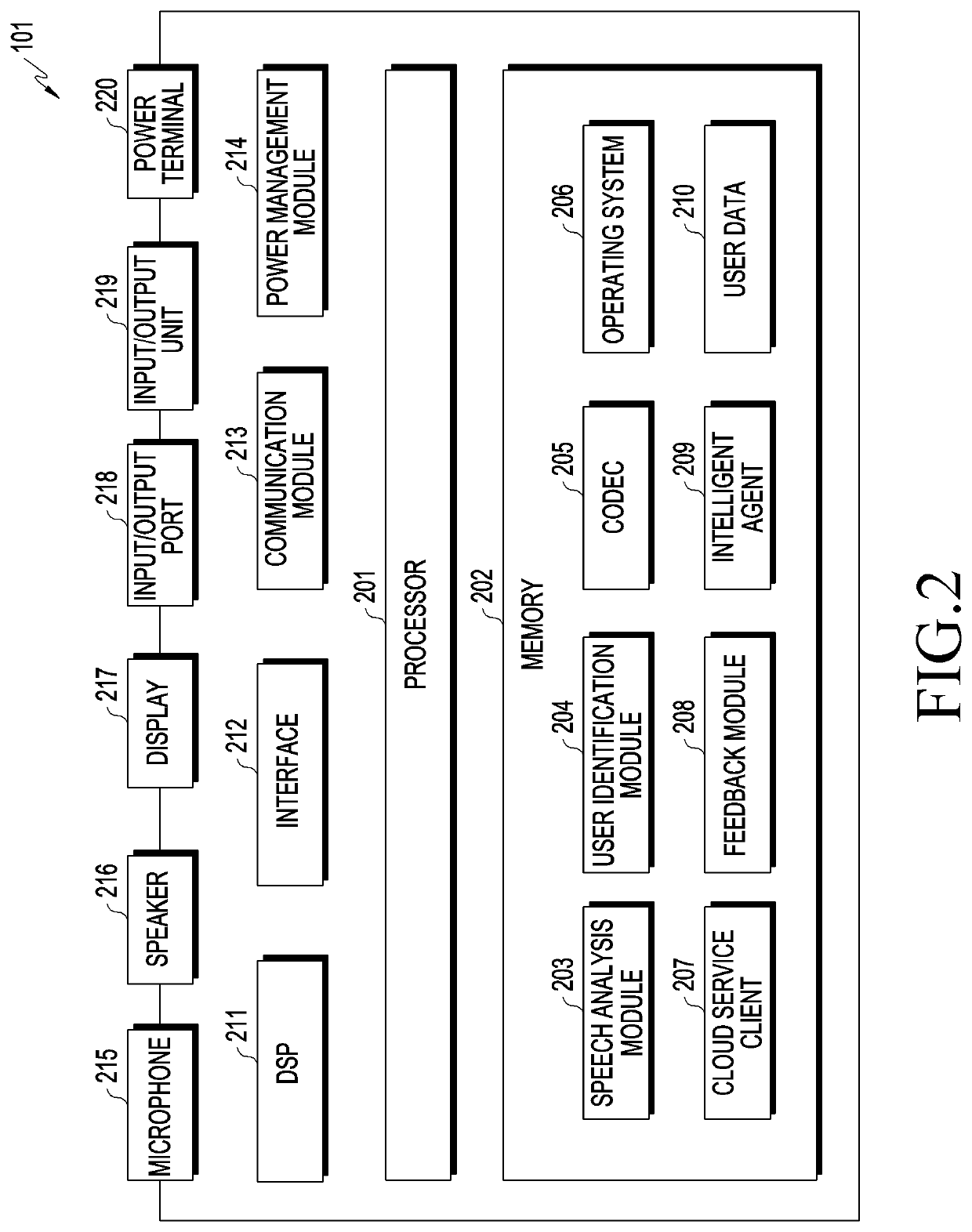

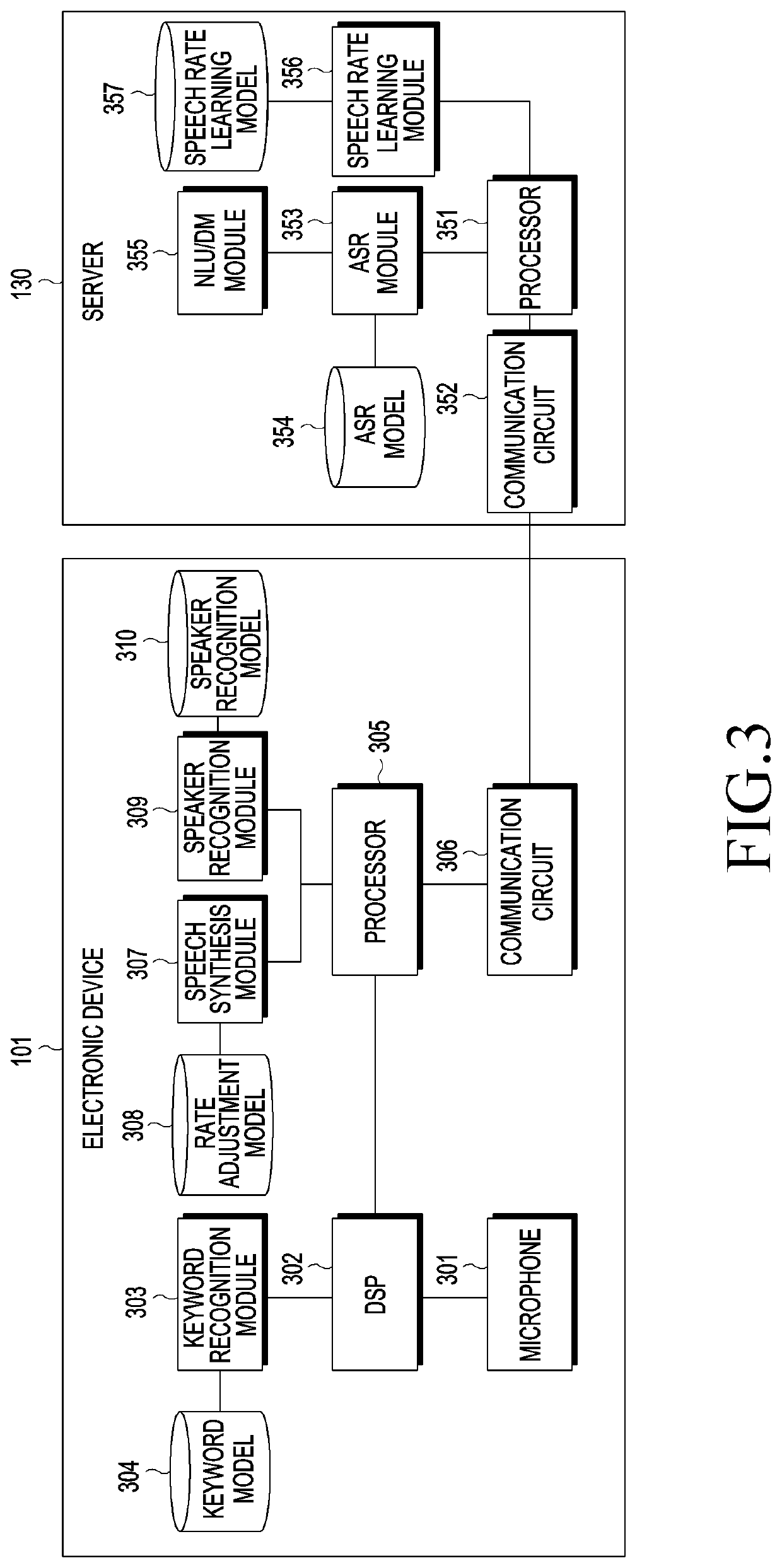

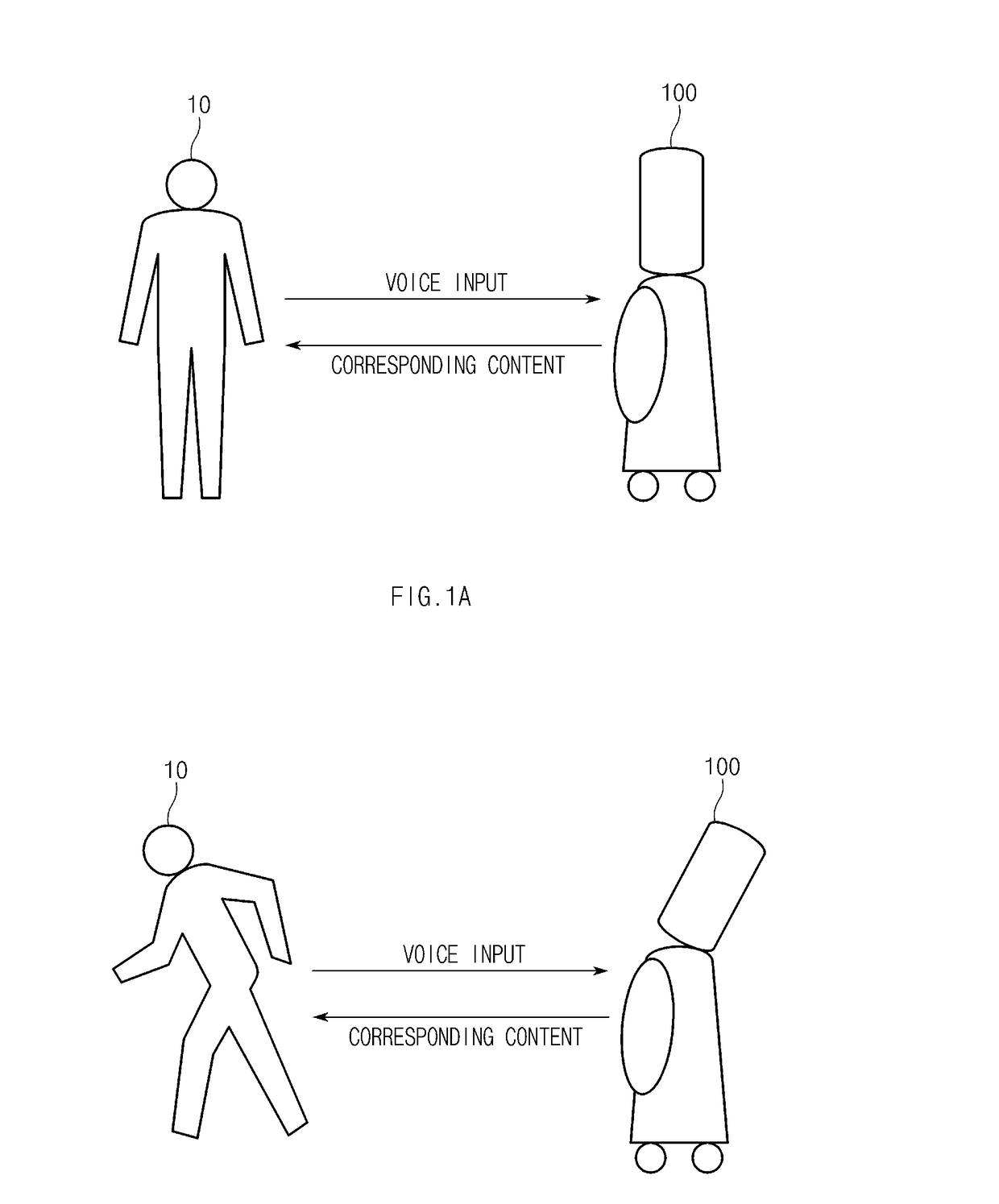

Electronic device and method of controlling speech recognition by electronic device

Active | US20200302913A1 | Easy to understand; Easy to remember; Natural language translation; Speech recognition; Speech rate; Acoustics

Owner:SAMSUNG ELECTRONICS CO LTD

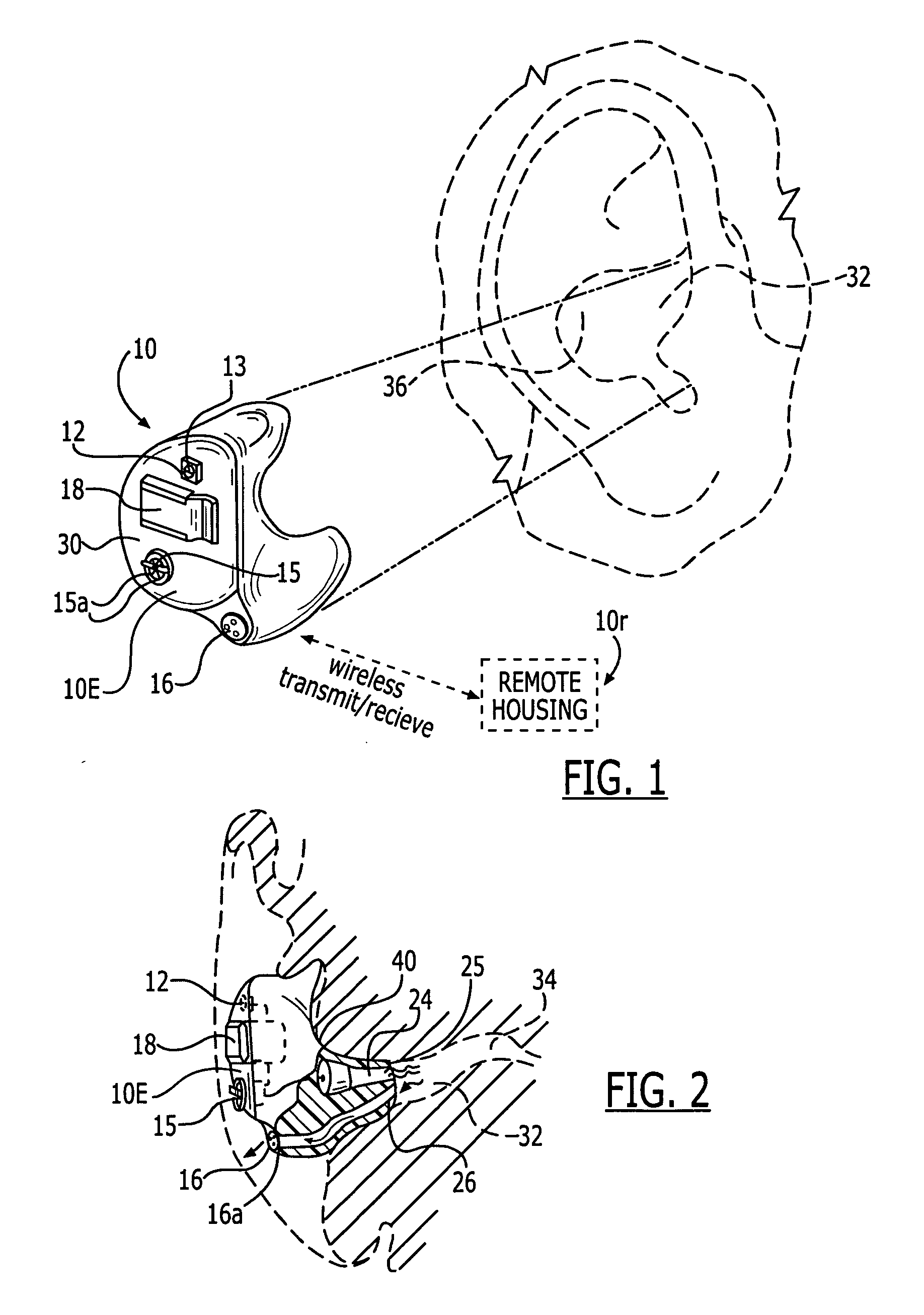

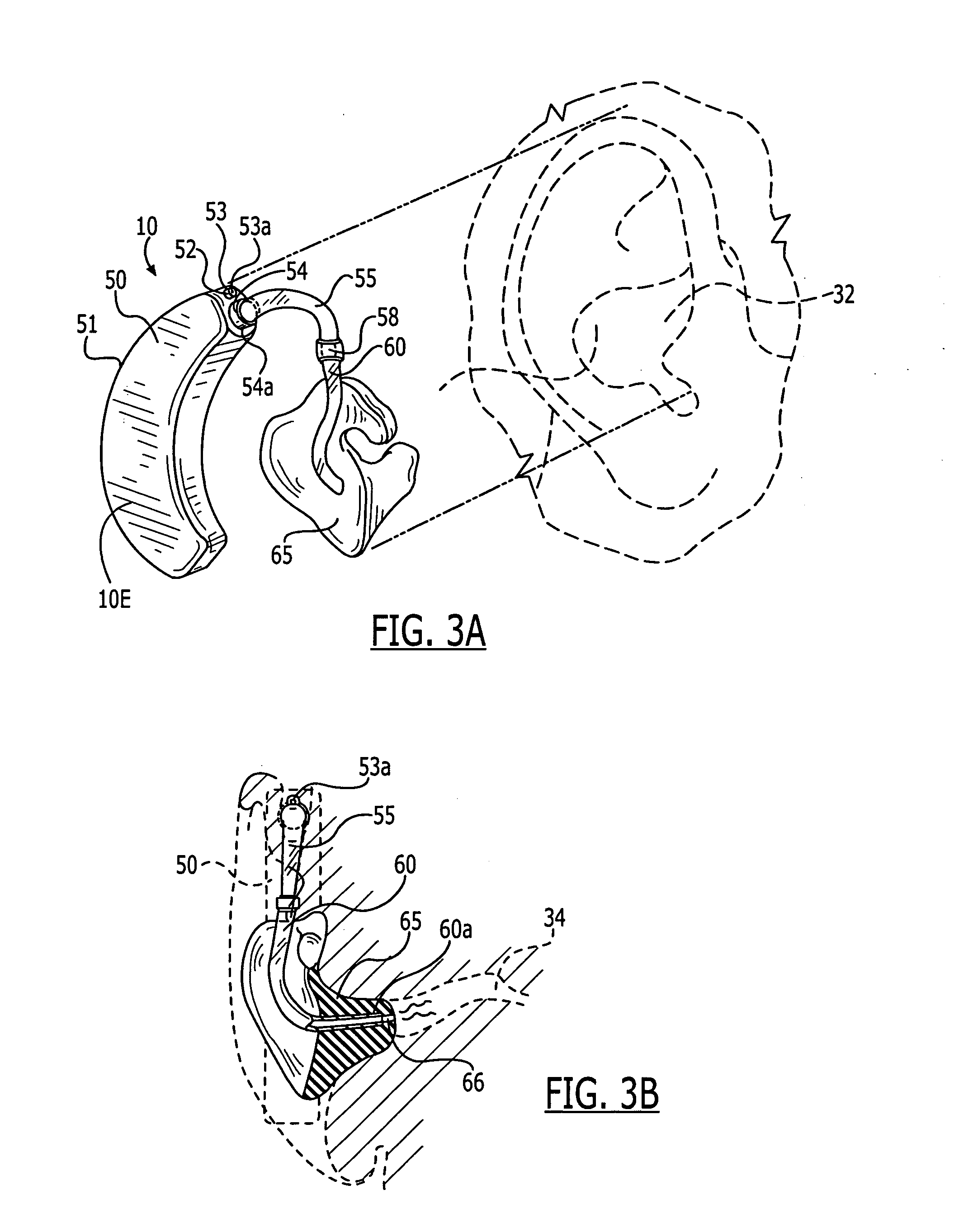

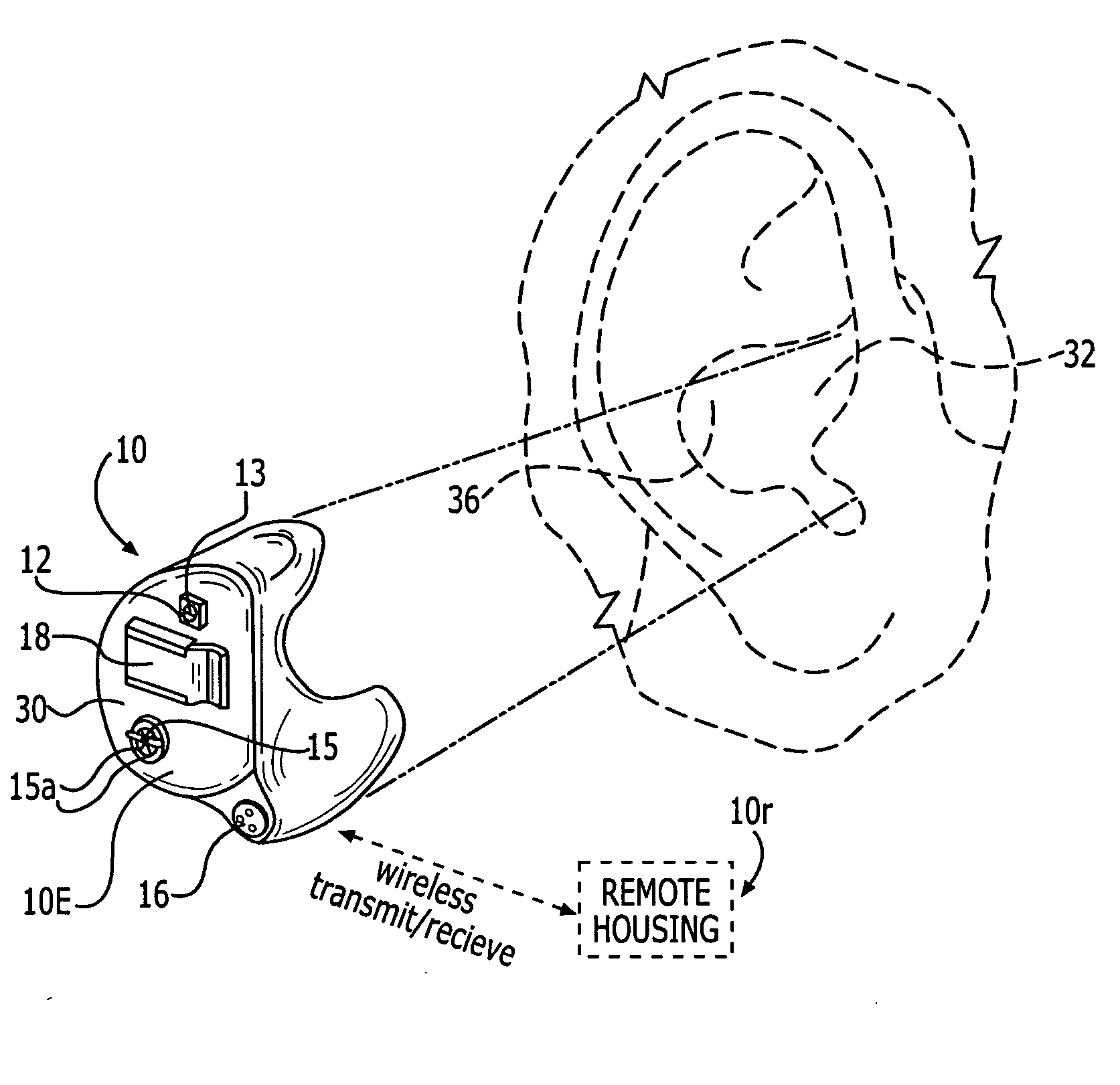

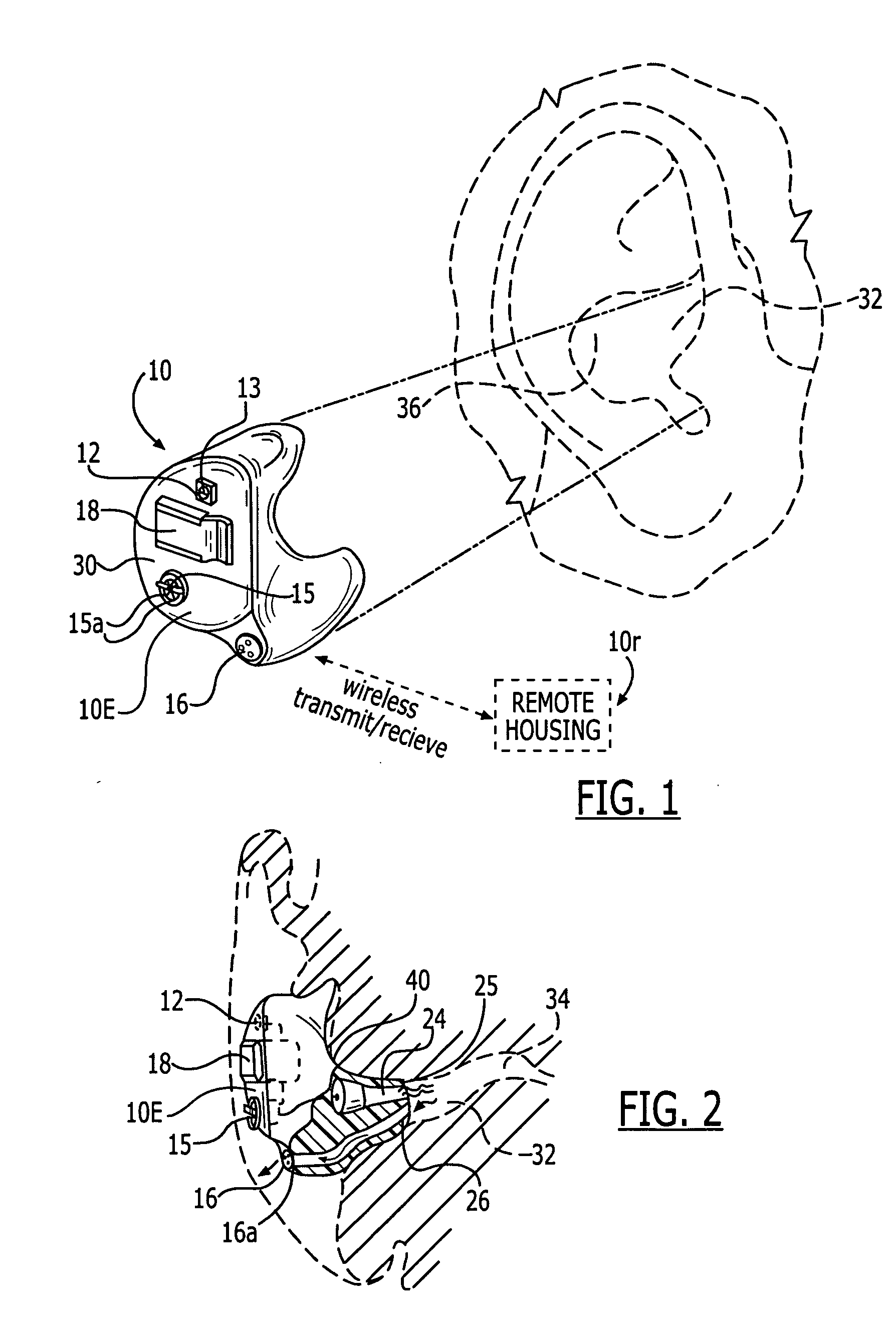

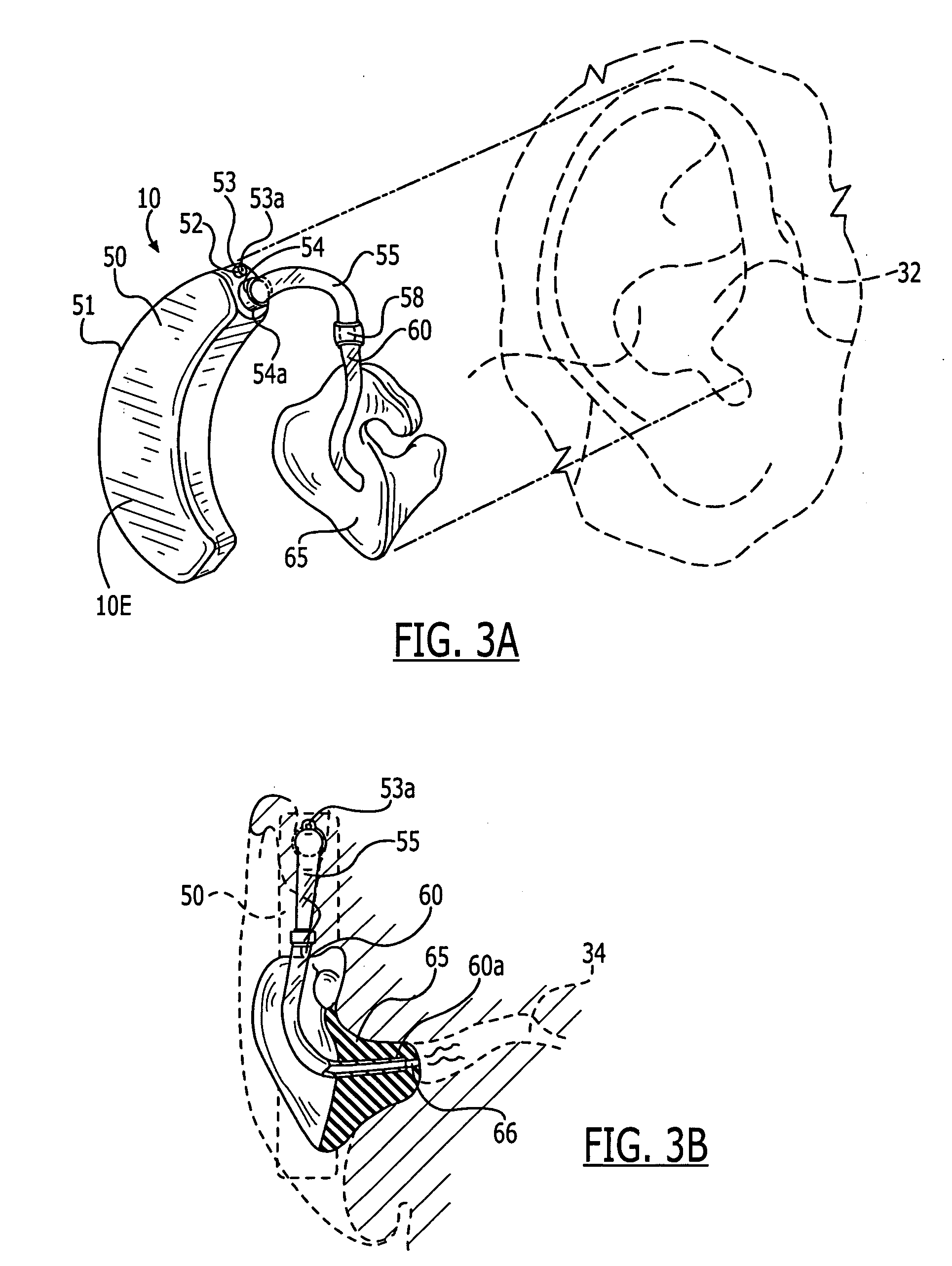

Methods and devices for treating non-stuttering speech-language disorders using delayed auditory feedback

Inactive | US20050095564A1 | Facilitate communication; Useful for promotion; Stammering correction; Ear treatment; Speech rate; Speech language disorders

Methods, devices and systems treat non-stuttering speech and / or language related disorders by administering a delayed auditory feedback signal having a delay of under about 200 ms via a portable device. The DAF treatment may be delivered on a chronic basis. For certain disorders, such as Parkinson's disease, the delay is set to be under about 100 ms, and may be set to be even shorter such as about 50 ms or less. Certain methods treat cluttering (an abnormally fast speech rate) by exposing the individual to a DAF signal having a sufficient delay that automatically causes the individual to slow his or her speech rate.

Owner:EAST CAROLINA UNIVERSITY
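
Delivering DAF amounts to playing the wearer's own voice back through a delay line. A minimal streaming sketch, assuming mono floating-point samples at sampling rate `fs` (the class name is hypothetical); at 16 kHz, a 50 ms delay corresponds to 800 samples:

```python
from collections import deque


class DelayedAuditoryFeedback:
    """Streaming delay line: every output sample is the input sample from delay_ms earlier."""

    def __init__(self, fs: int, delay_ms: float):
        self.delay = round(fs * delay_ms / 1000)
        if self.delay < 1:
            raise ValueError("delay must be at least one sample")
        # Pre-filled with silence so the first delay_ms of output is quiet.
        self.buffer = deque([0.0] * self.delay, maxlen=self.delay)

    def process(self, block):
        out = []
        for sample in block:
            out.append(self.buffer[0])  # oldest sample leaves the delay line
            self.buffer.append(sample)  # maxlen discards buffer[0] automatically
        return out
```

Keeping state between `process` calls lets the device handle audio in small blocks, which keeps the total latency close to the configured delay.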

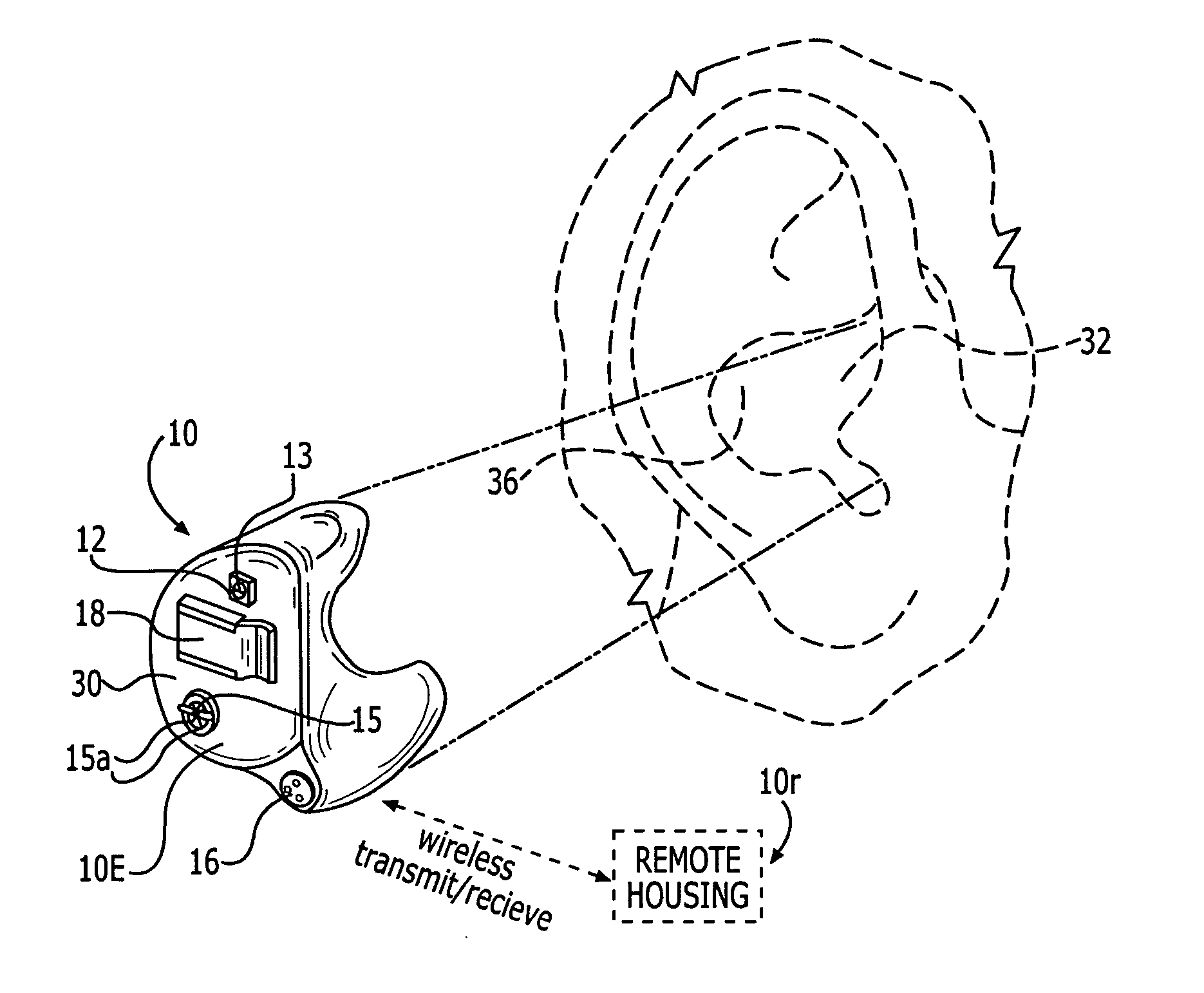

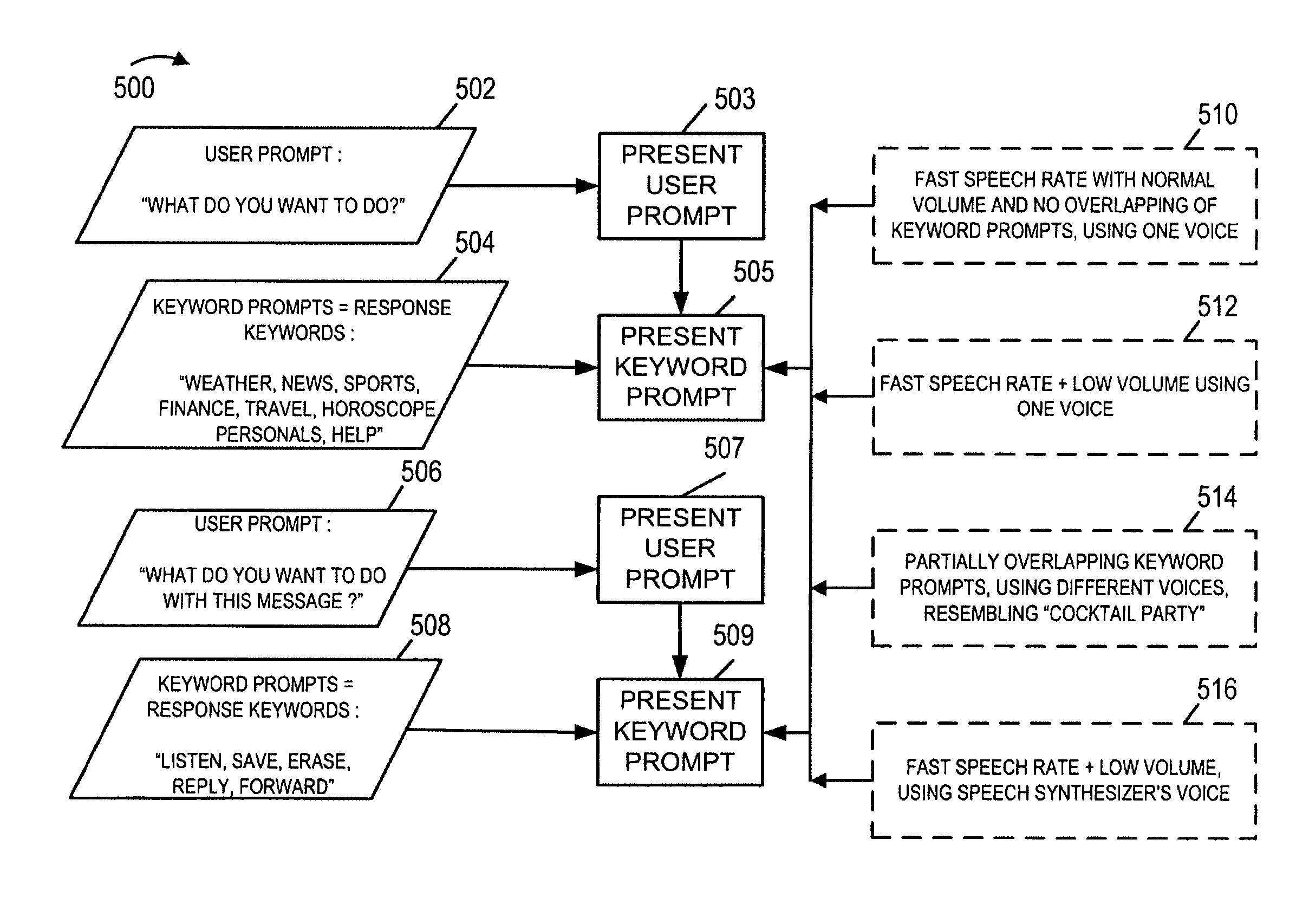

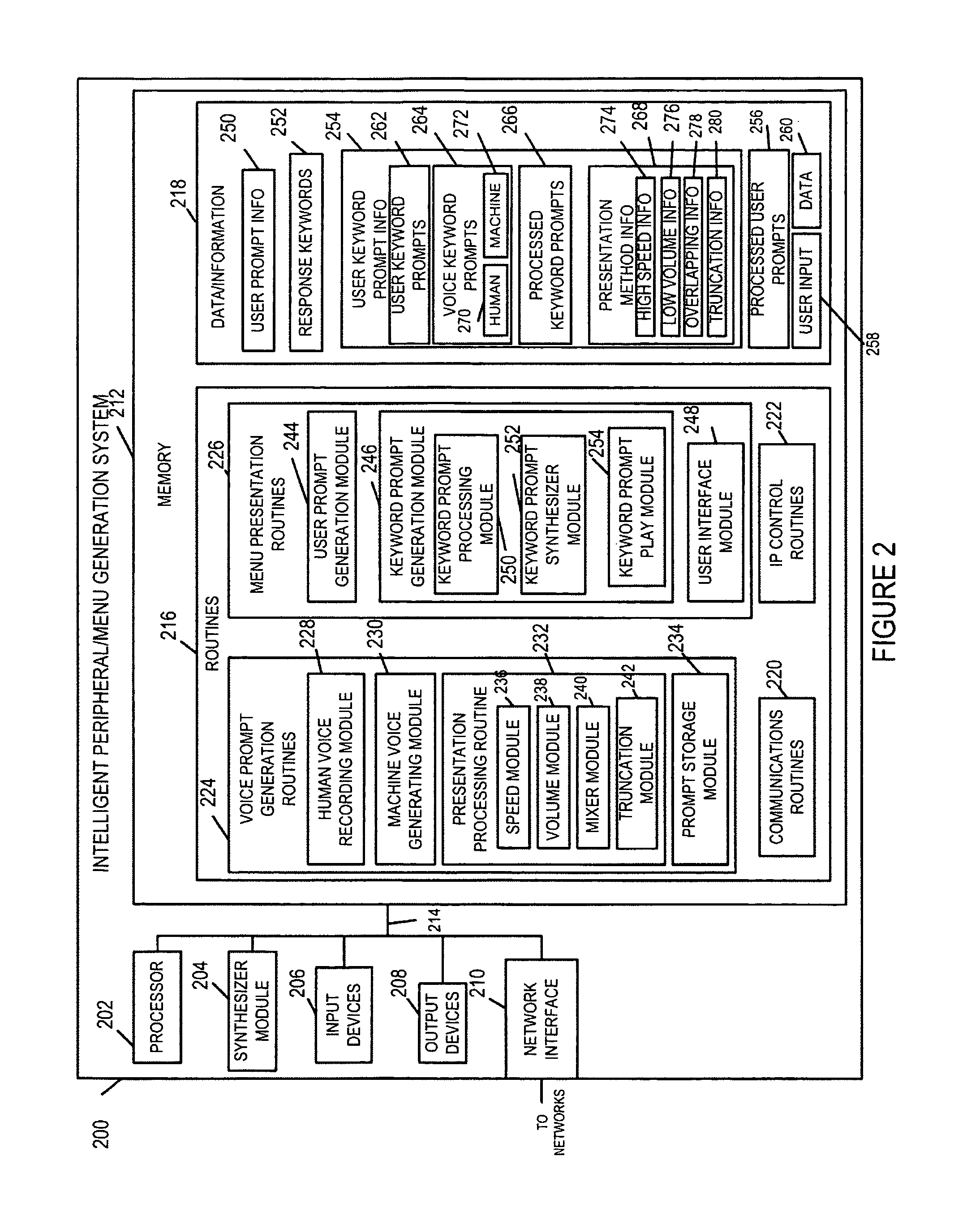

Enhanced interface for use with speech recognition

Active | US8583439B1 | Improve usability; Good user interface; Automatic call-answering/message-recording/conversation-recording; Digital computer details; Speech rate; Speech identification

Improved methods of presenting speech prompts to a user as part of an automated system that employs speech recognition or other voice input are described. The invention improves the user interface by providing, in combination with at least one user prompt seeking a voice response, an enhanced user keyword prompt intended to help the user select a keyword to speak in response to the user prompt. The enhanced keyword prompts may be the same words a user can speak in reply to the user prompt, but presented using a different audio presentation method (e.g., speech rate, audio level or speaker voice) than the one used for the user prompt. In some cases, the user keyword prompts are different words from the expected user response keywords, or portions of words, e.g., truncated versions of keywords.

Owner:VERIZON PATENT & LICENSING INC

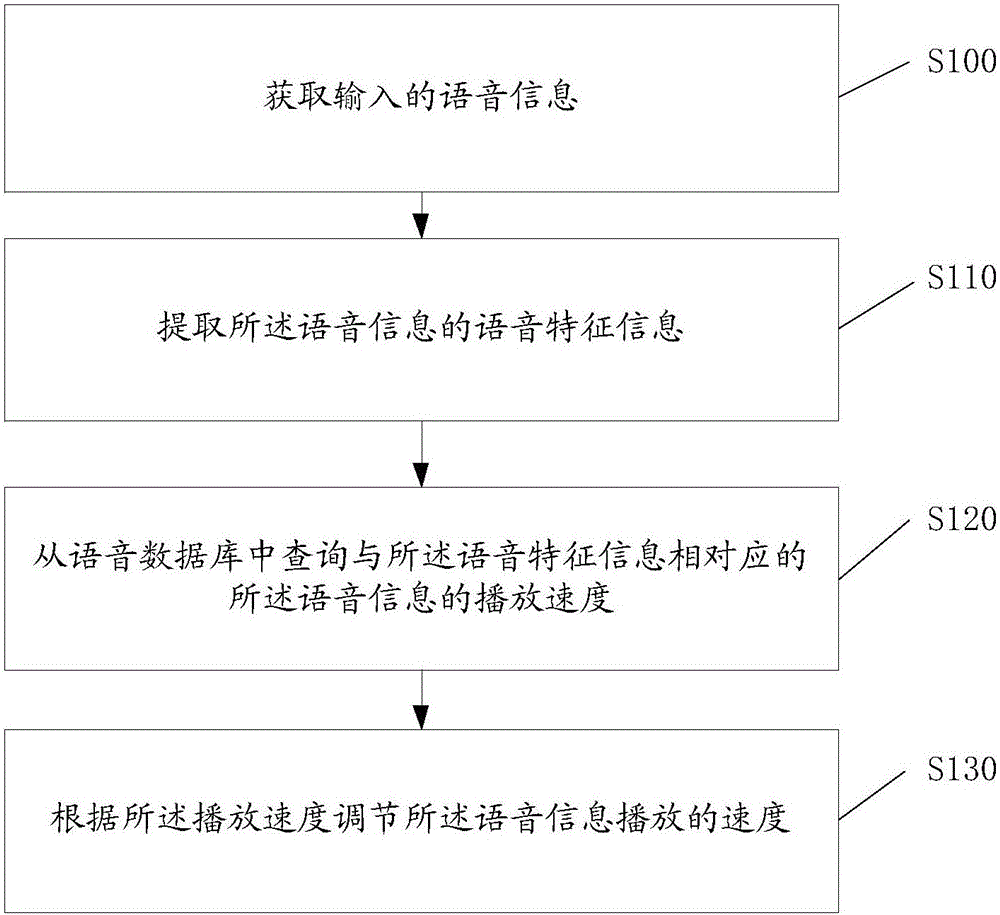

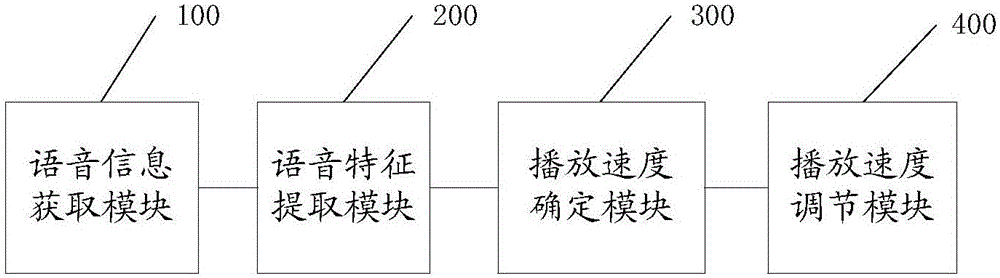

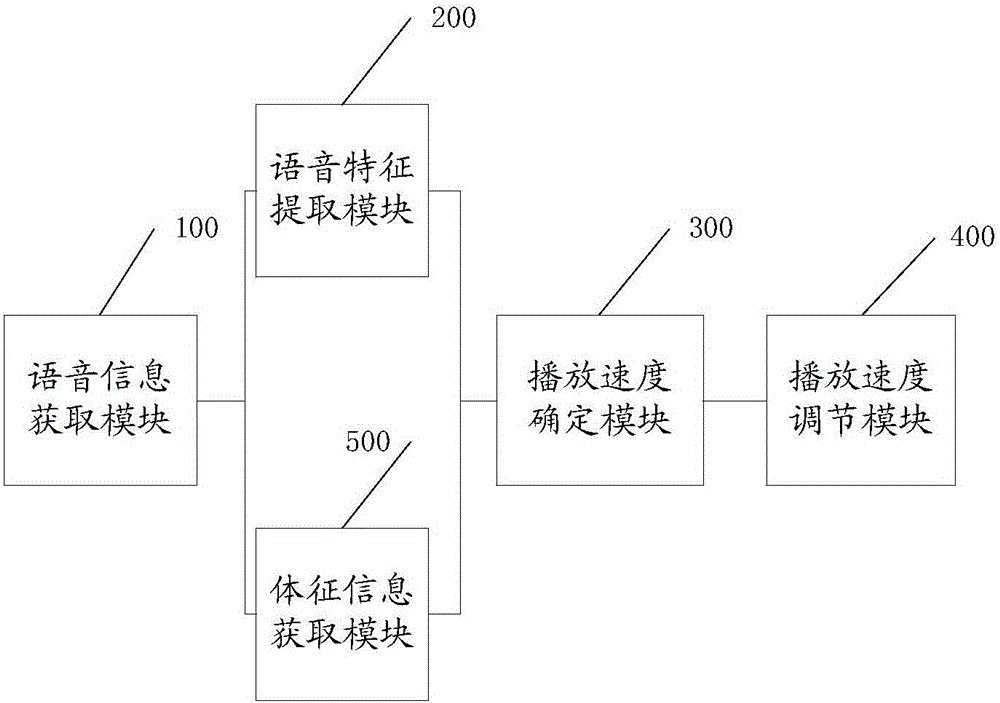

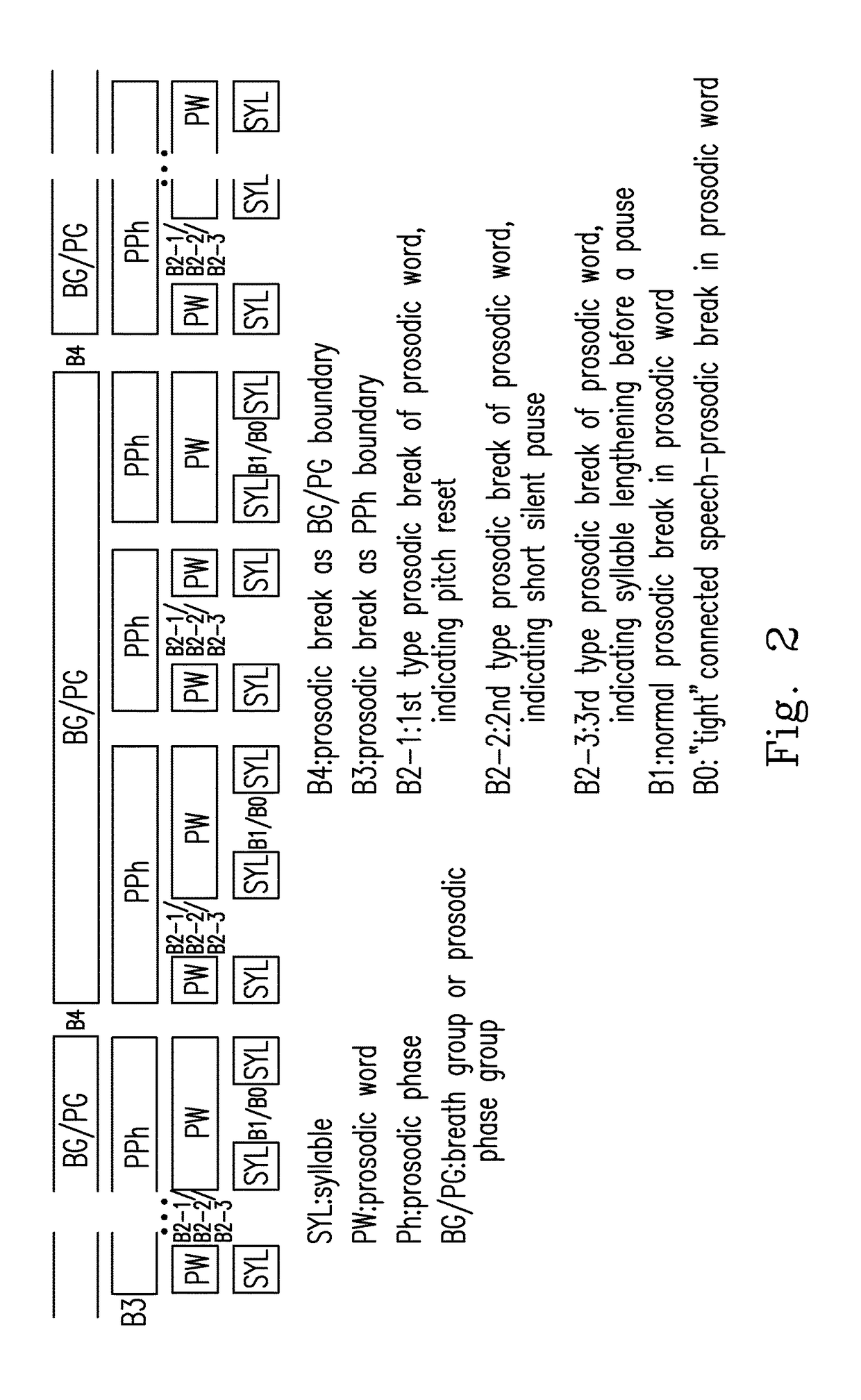

Automatic speech rate adjusting method and terminal

Active | CN105869626A | Improve adaptability; Substation equipment; Audio data retrieval; Speech rate; Computer terminal

The invention discloses an automatic speech rate adjusting method comprising the steps of: acquiring input speech information; extracting speech feature information from it; querying a speech database for the playing rate corresponding to the speech feature information; and adjusting the playing rate of the speech information to that rate. The method determines the preset playing rate corresponding to the feature information of speech input in real time and adjusts the speech rate accordingly to meet the requirements of various users. It adapts the playing rate to the content of the speech information and is readily applicable to scenarios such as communication and program playback. The invention further discloses a terminal that adaptively adjusts the playing rate according to the content of the speech information.

Owner:YULONG COMPUTER TELECOMM SCI (SHENZHEN) CO LTD

Methods and devices for treating non-stuttering speech-language disorders using delayed auditory feedback

Inactive | US20060177799A9 | Useful for promotion; Reduce speech rate; Stammering correction; Ear treatment; Speech rate; Speech language disorders

Methods, devices and systems treat non-stuttering speech and / or language related disorders by administering a delayed auditory feedback signal having a delay of under about 200 ms via a portable device. The DAF treatment may be delivered on a chronic basis. For certain disorders, such as Parkinson's disease, the delay is set to be under about 100 ms, and may be set to be even shorter such as about 50 ms or less. Certain methods treat cluttering (an abnormally fast speech rate) by exposing the individual to a DAF signal having a sufficient delay that automatically causes the individual to slow his or her speech rate.

Owner:EAST CAROLINA UNIVERSITY

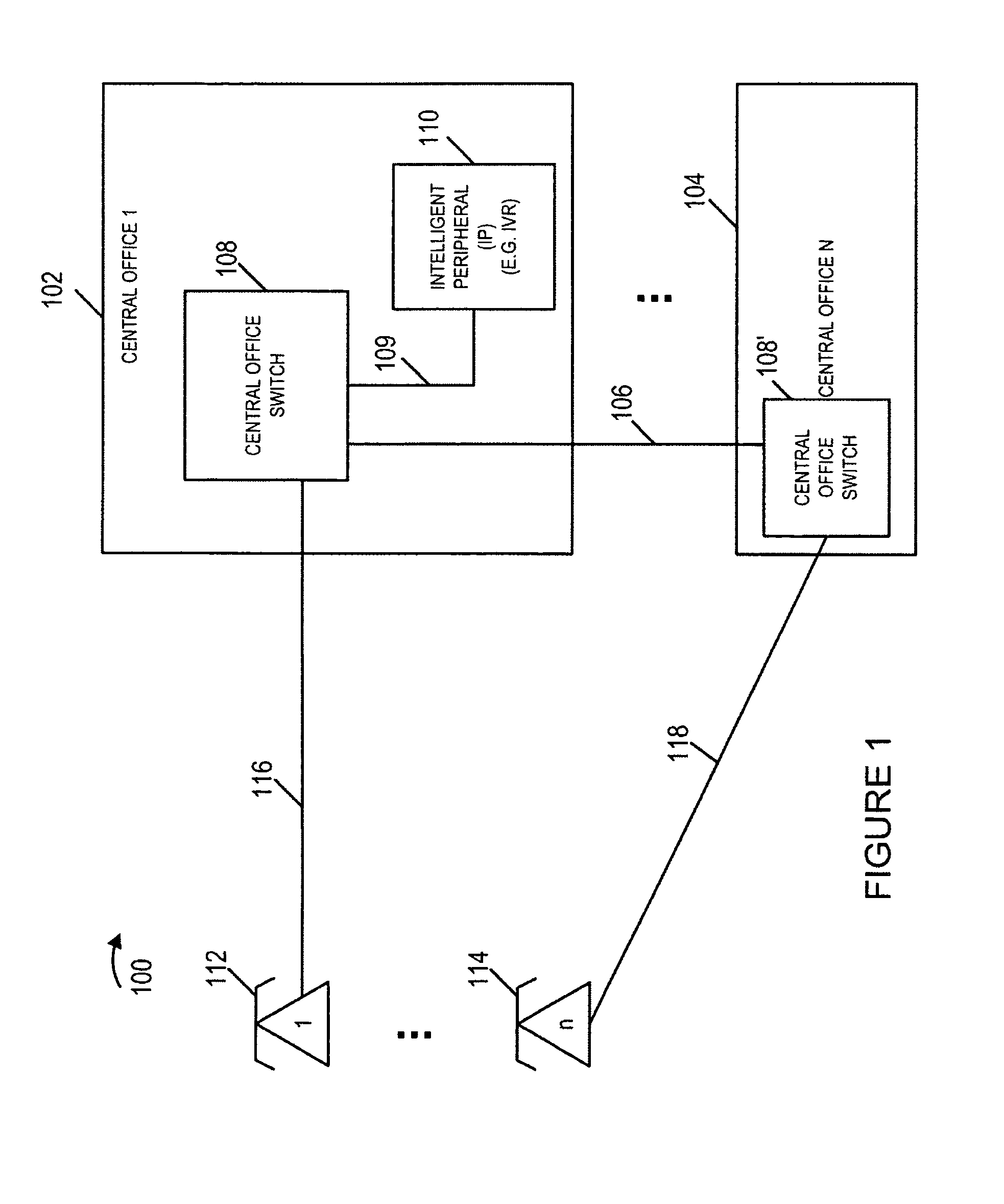

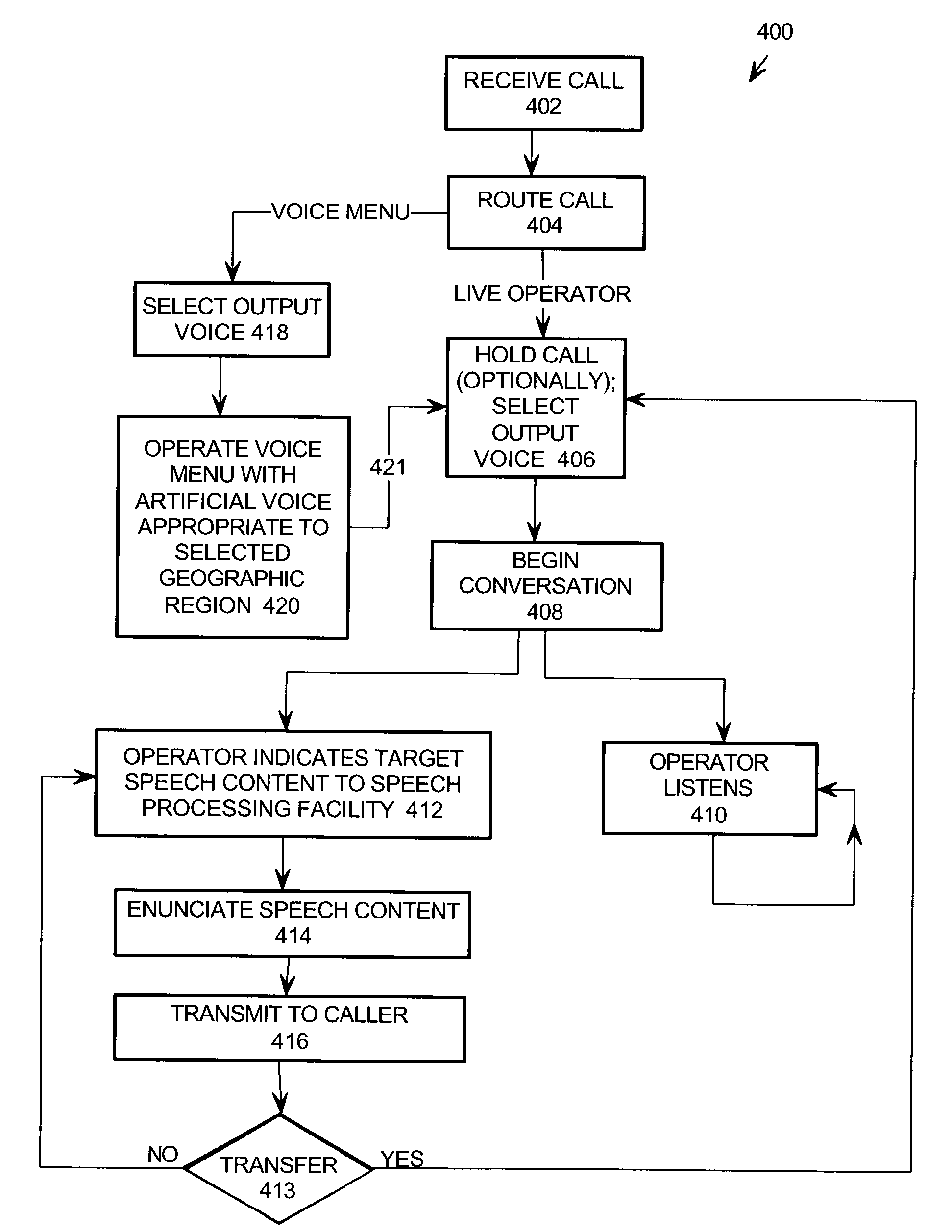

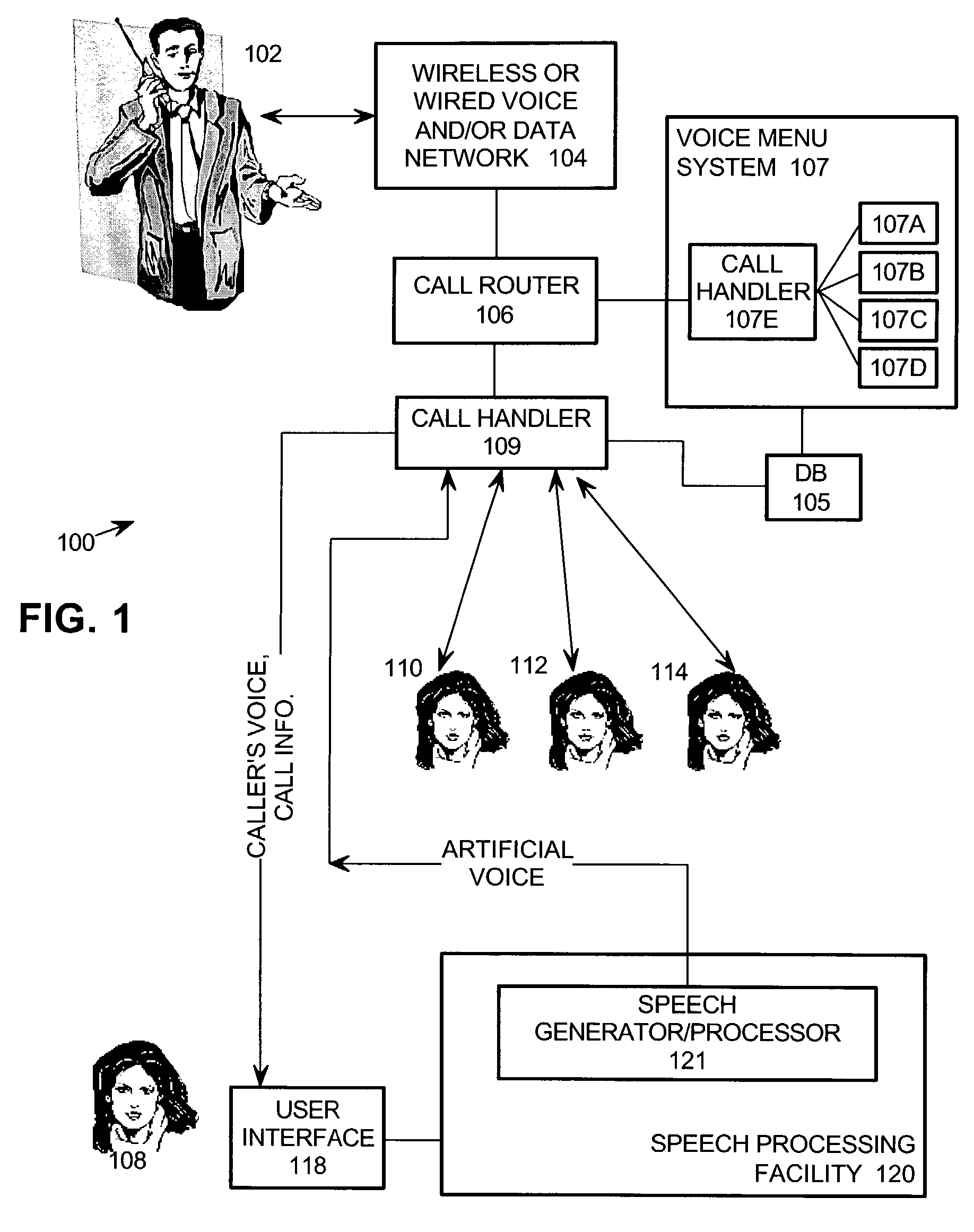

Telephone call handling center where operators utilize synthesized voices generated or modified to exhibit or omit prescribed speech characteristics

Inactive | US7275032B2 | Automatic call-answering/message-recording/conversation-recording; Speech recognition; Speech rate; Geographic regions

A human operator's voice is artificially varied prior to transmission to a remote caller. In one example, the operator indicates target speech content (e.g., actual speech, pre-prepared text, manually entered text) to a speech processing facility, which enunciates the target speech content with an output voice that exhibits prescribed speech characteristics (e.g., accent, dialect, speed, vocabulary, word choice, male / female, timbre, speaker age, fictional character, speech particular to people of a particular geographic region or socioeconomic status or other grouping). Another example is an automated call processing system, where an output voice is selected for each incoming call and information is interactively presented to callers using the output voice selected for their respective calls. In another example, a speech processing facility intercepts the operator's speech, manipulates the waveform representing the operator's intercepted speech to add or remove prescribed characteristics, and transmits an enunciated output of the manipulated waveform to the caller.

Owner:ABACUS COMM LC

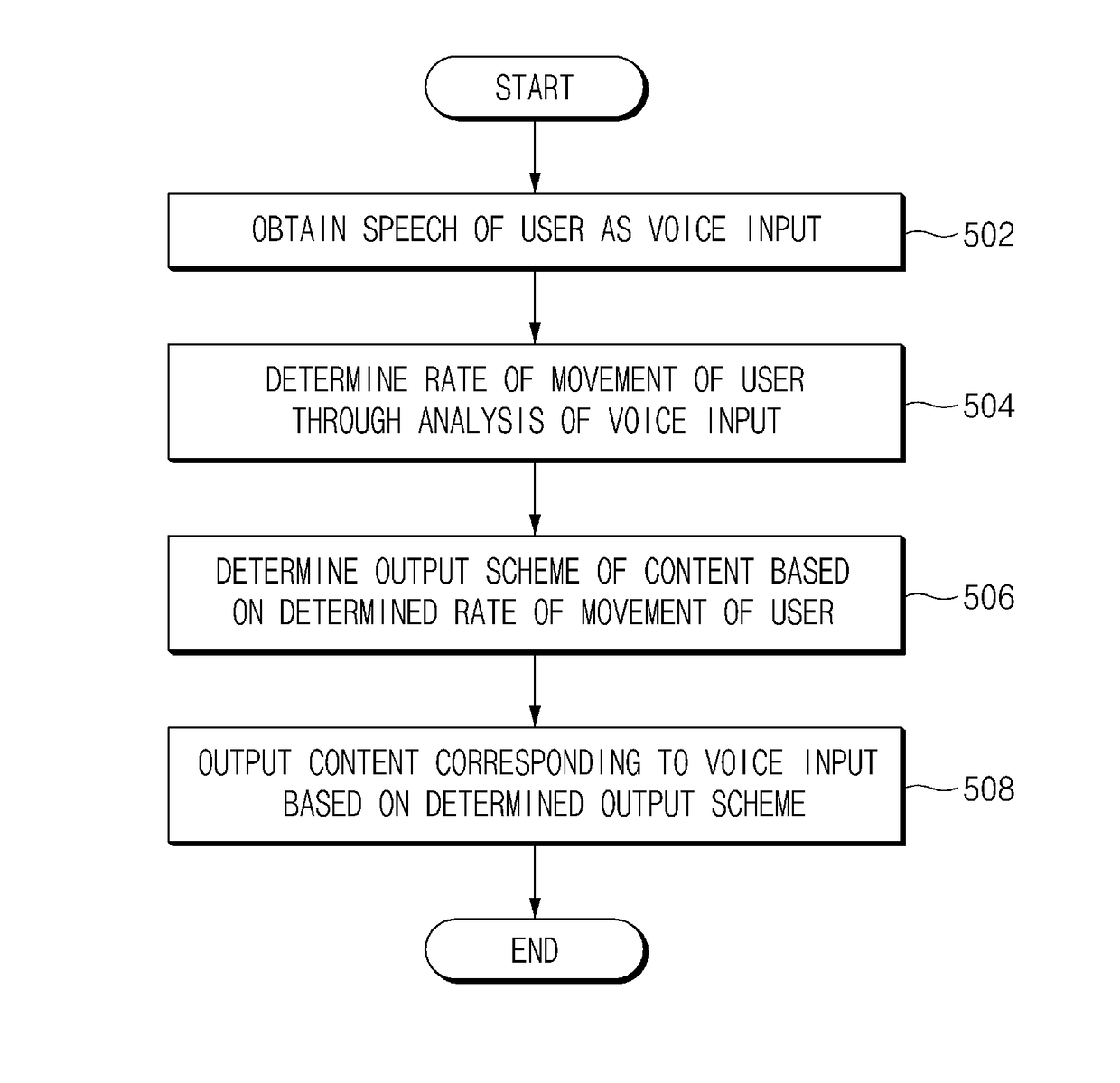

Method and electronic device for providing content

An electronic device and a method are provided. The electronic device includes an audio input module configured to receive a speech of a user as a voice input, an audio output module configured to output content corresponding to the voice input, and a processor configured to determine an output scheme of the content based on at least one of a speech rate of the speech, a volume of the speech, and a keyword included in the speech, which is obtained from an analysis of the voice input.

Owner:SAMSUNG ELECTRONICS CO LTD
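
A rule-based sketch of choosing an output scheme from the three cues the abstract names (speech rate, volume, keyword); the thresholds, the trigger keyword and the `OutputScheme` fields are hypothetical:

```python
from dataclasses import dataclass


@dataclass
class OutputScheme:
    detail: str           # "brief" or "full"
    playback_rate: float  # 1.0 = normal TTS speed
    volume: float         # 0.0 to 1.0


def choose_output_scheme(rate_sps: float, volume_db: float, keywords: set[str]) -> OutputScheme:
    """Hypothetical rules: hurried speech gets a short, faster answer; quiet speech a quieter one."""
    hurried = rate_sps > 5.5 or "quickly" in keywords
    quiet = volume_db < 45.0
    return OutputScheme(
        detail="brief" if hurried else "full",
        playback_rate=1.2 if hurried else 1.0,
        volume=0.3 if quiet else 0.7,
    )
```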

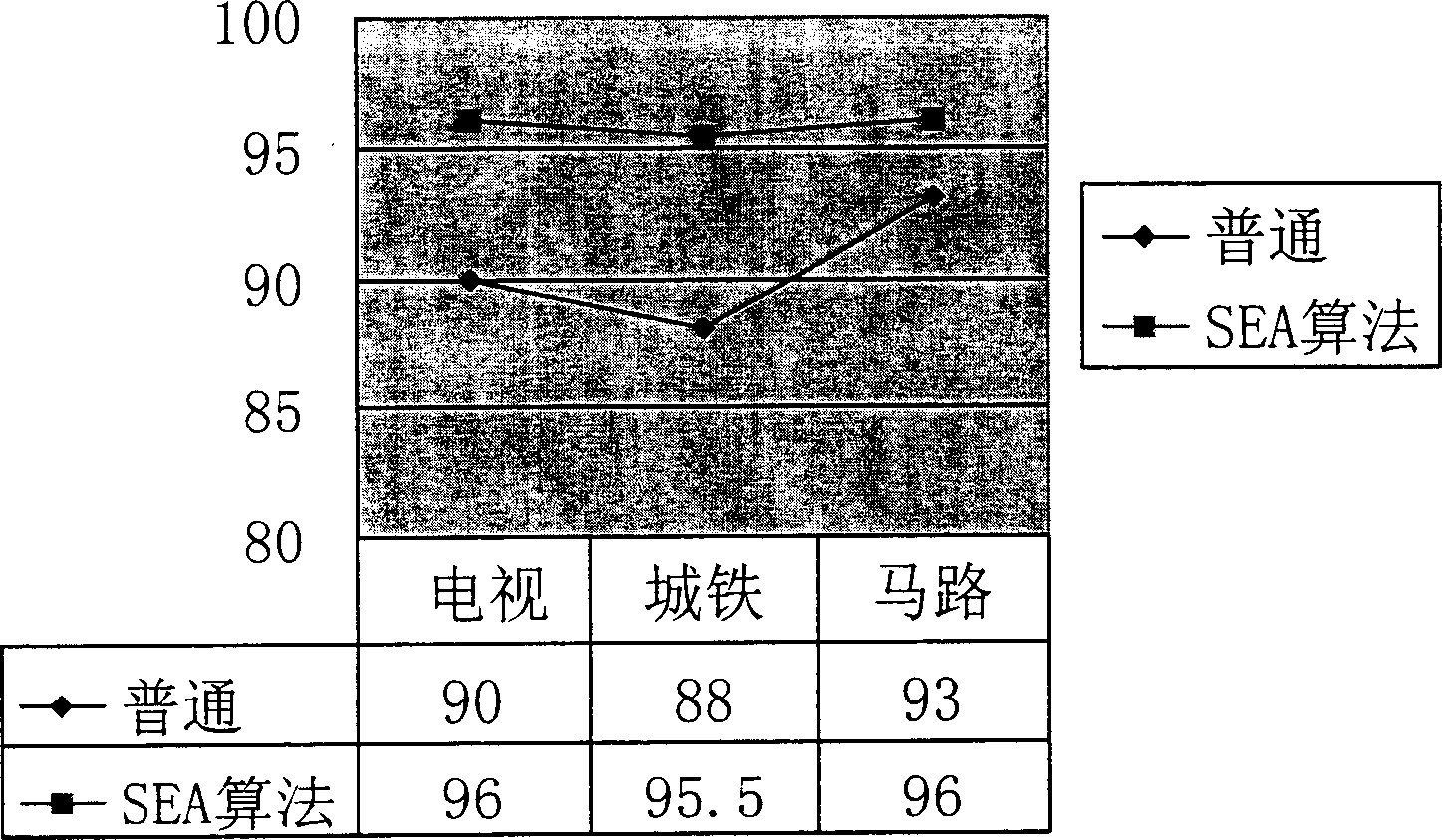

Method for adaptively improving speech recognition rate by means of gain

Active | CN1801326A | Solve a series of problems encountered using speech recognition; Easy to identify; Two-way loud-speaking telephone systems; Speech recognition; Speech rate; Speech identification

The invention relates to a method for improving the speech recognition rate through adaptive gain. It evaluates the background noise, adjusts the recording gain and adjusts the endpoint detection parameters to improve the recognition rate. The method comprises the steps of: (1) evaluating the background noise; (2) adjusting the recording gain according to the background noise evaluated in step 1; and (3) performing endpoint detection and speech recognition on the basis of steps 1 and 2.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
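
The abstract gives the order of operations but not the adaptation rule. A minimal sketch, assuming the first frames contain only background noise, that the gain maps the noise floor to a fixed target level, and that the endpoint-detection energy threshold is derived from the amplified noise (every constant and name here is an assumption):

```python
import math


def rms(frame: list[float]) -> float:
    """Root-mean-square level of one frame of samples."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def adapt_gain(noise_frames: list[list[float]], target_noise_rms: float = 0.01,
               min_gain: float = 0.25, max_gain: float = 8.0,
               vad_margin: float = 3.0) -> tuple[float, float]:
    """Return (recording gain, endpoint-detection RMS threshold) from noise-only frames."""
    noise = sum(rms(f) for f in noise_frames) / len(noise_frames)
    # Step 2 (hypothetical rule): scale so the noise floor lands on the target level, within limits.
    gain = max_gain if noise == 0 else min(max_gain, max(min_gain, target_noise_rms / noise))
    # Step 3: speech must exceed the amplified noise floor by vad_margin to count as an endpoint.
    threshold = vad_margin * noise * gain
    return gain, threshold
```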

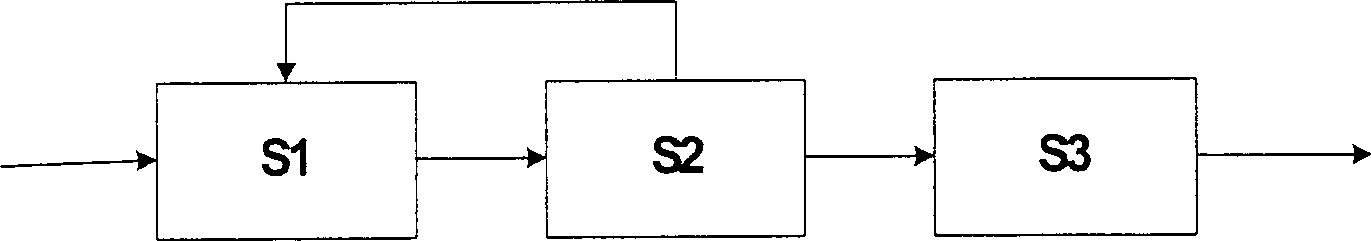

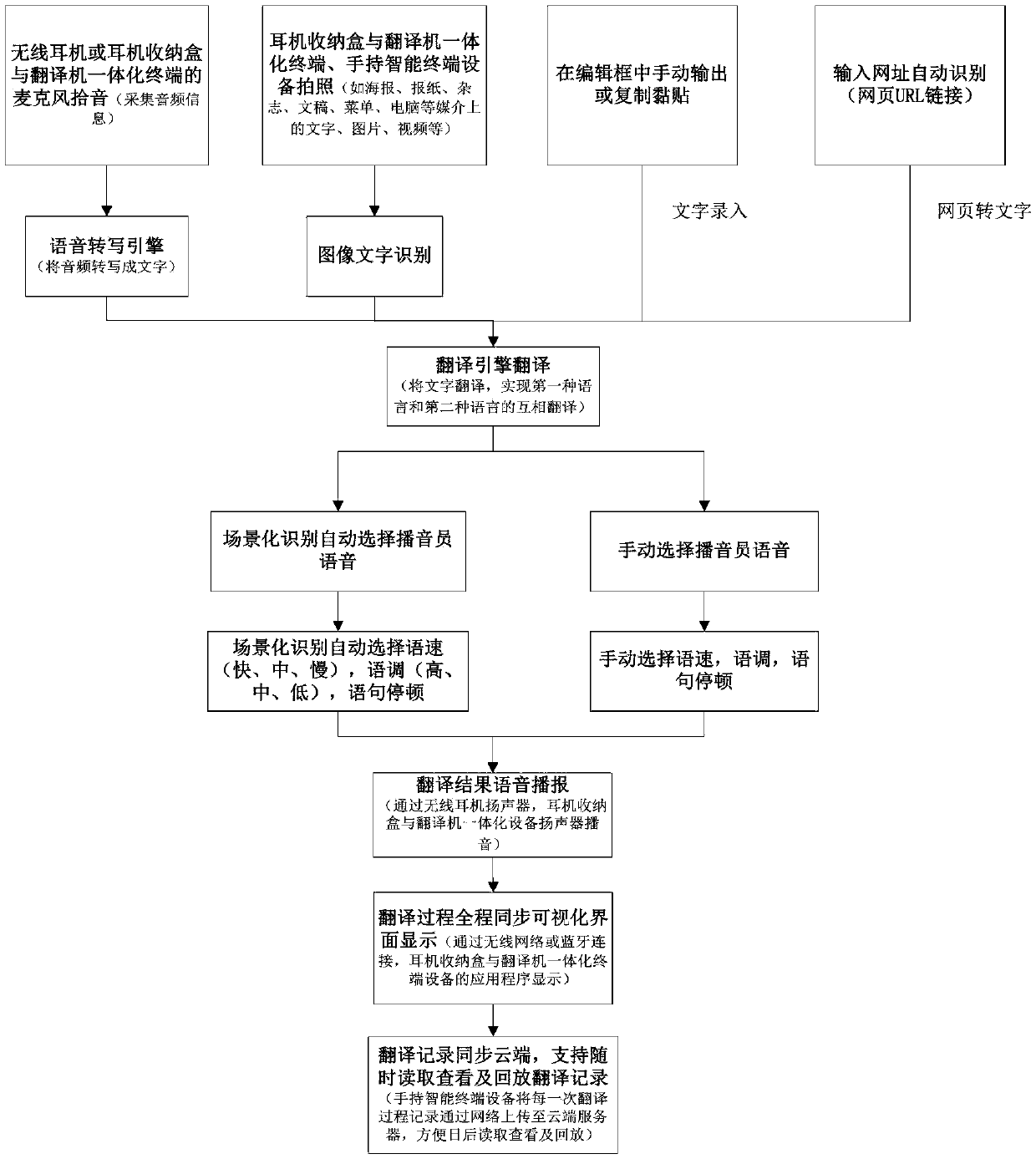

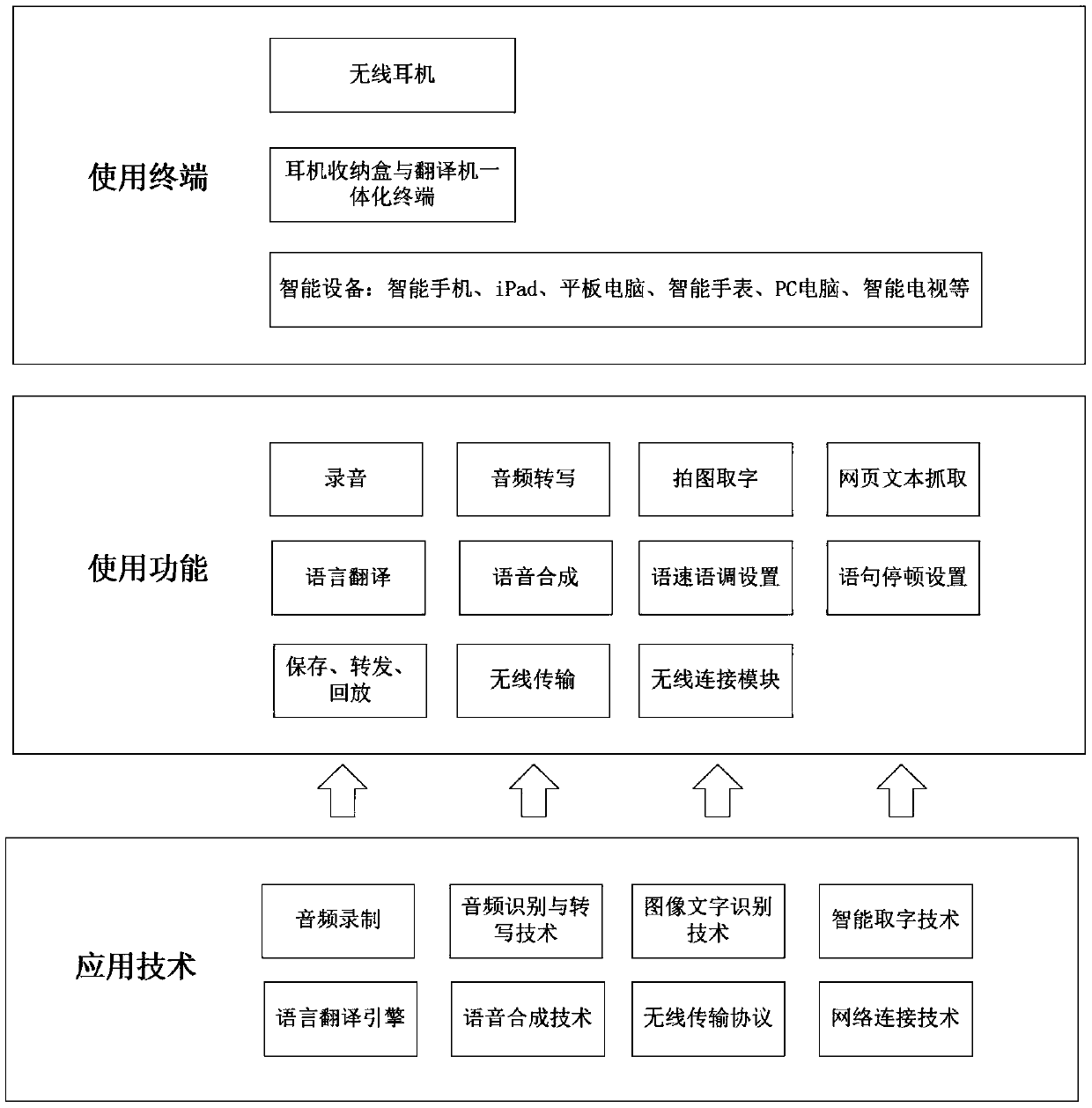

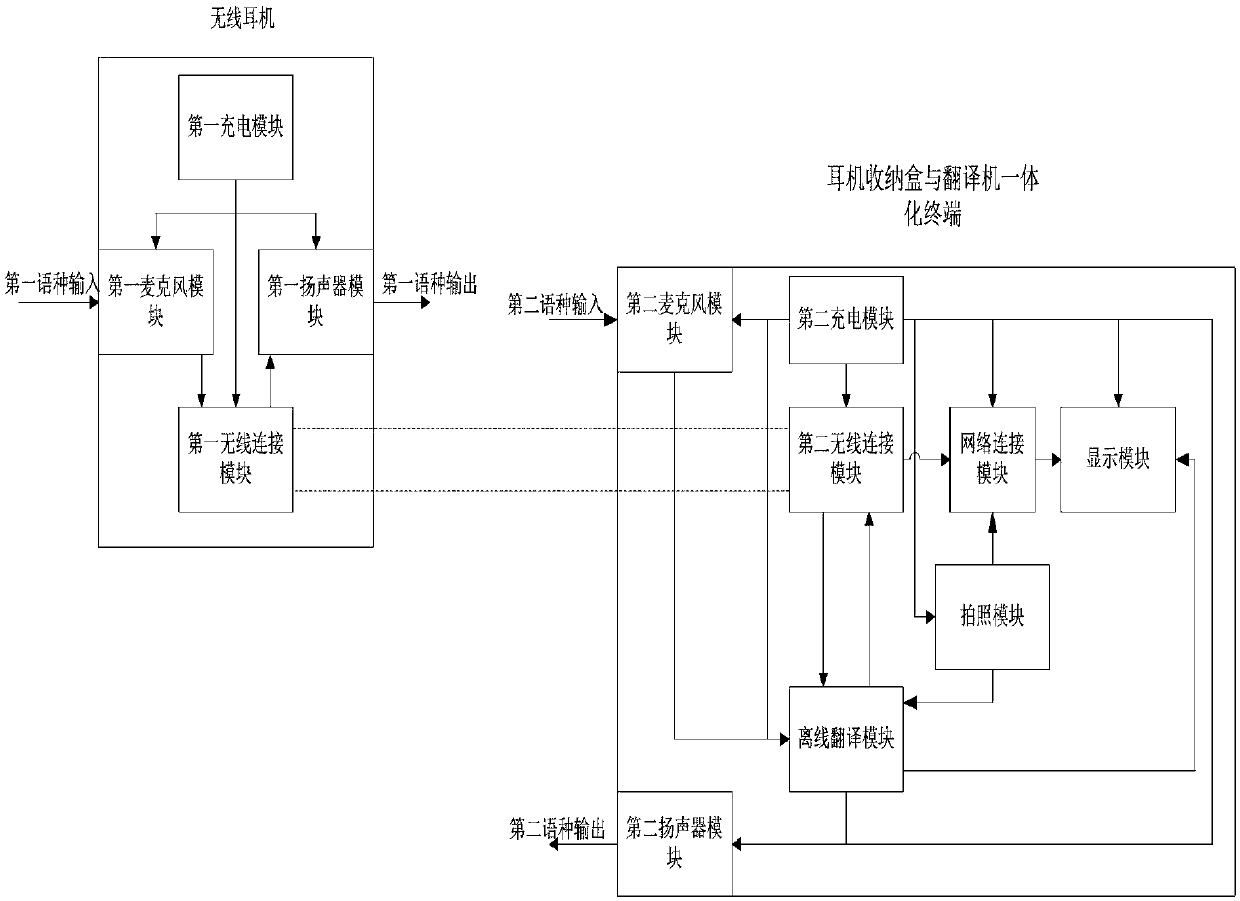

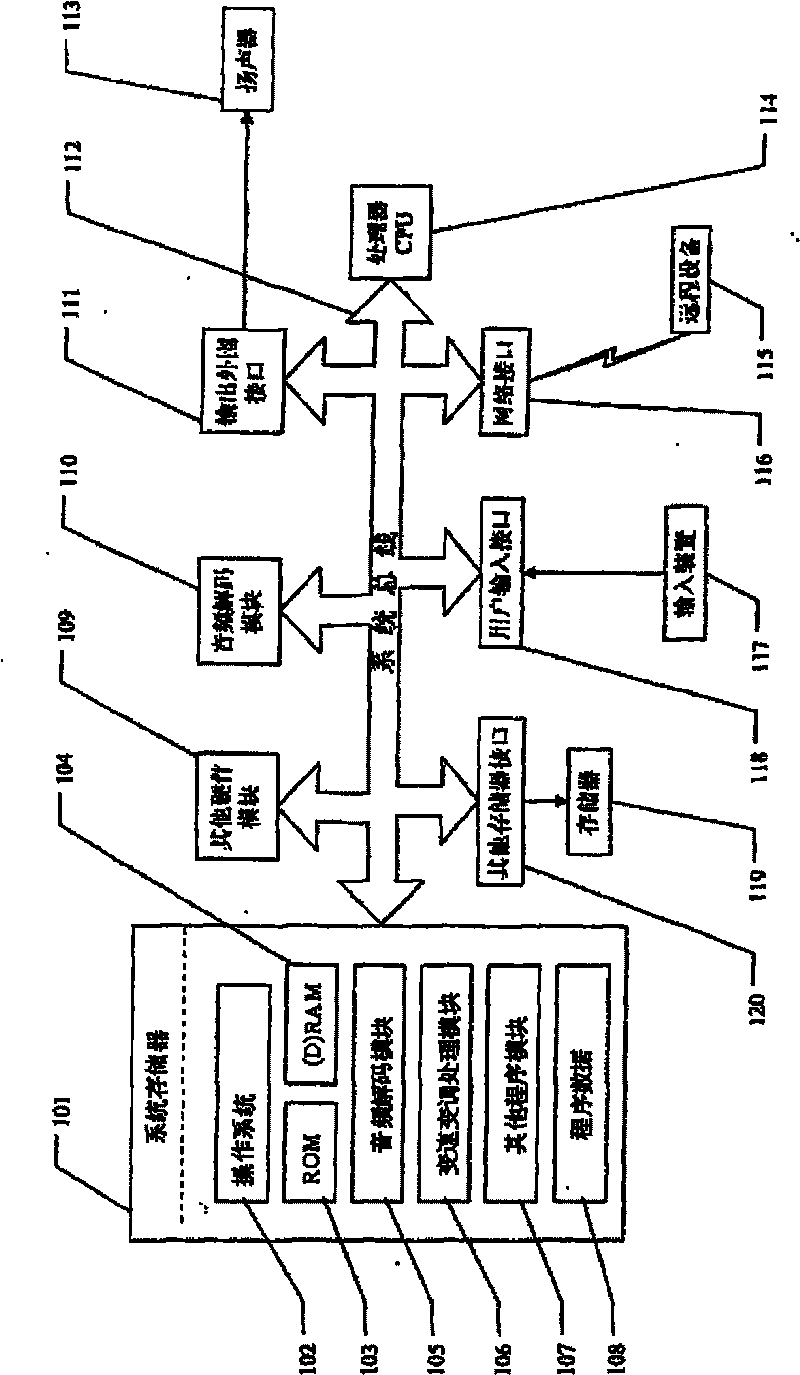

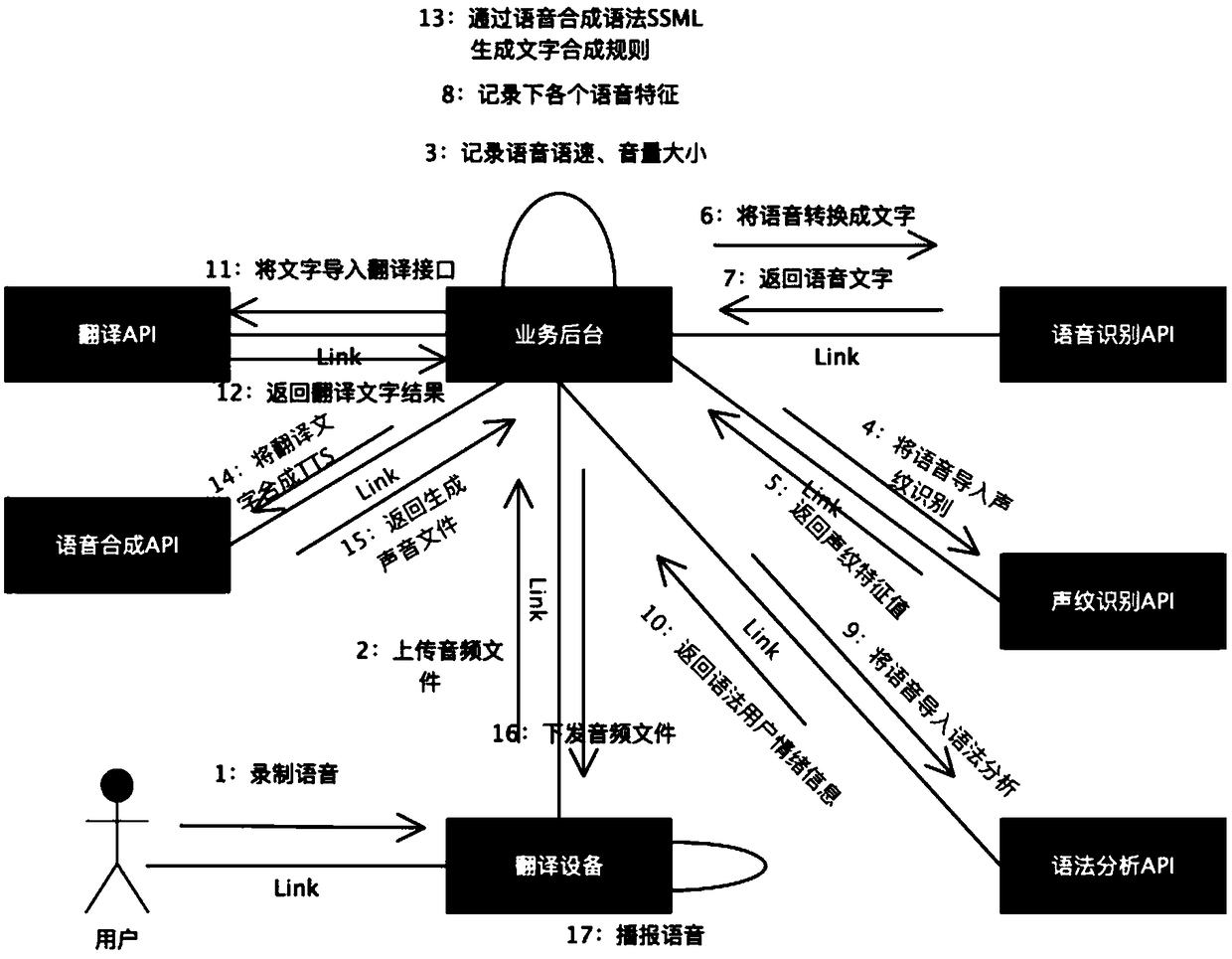

Translation method and translation system based on intelligent hardware

Pending | CN109614628A | Lower barriers to use; Easy translation process; Natural language translation; Semantic analysis; Speech rate; Wireless transmission

The invention discloses a translation method based on intelligent hardware, comprising the following steps: S1, obtaining audio, image, video or text information, and converting the audio, image and video into text content; S2, translating the obtained text information or text content into text in a second language through an online or offline translation engine; S3, automatically identifying knowledge-base knowledge points from the text information, keywords or semantics of the text before and after translation, and intelligently predicting the usage scenario; S4, automatically or manually selecting a voice tone from the voice library and adjusting the speed and tone according to the predicted usage scenario; S5, broadcasting the translation result as speech. Information is transmitted using wireless transmission technology, and translation is completed by combining technologies such as speech-to-text, image text recognition and translation engines. Storage, playback and sharing functions are also provided for the user, extending the user's usage scenarios and enabling continuous product optimization.

Owner:广州市讯飞樽鸿信息技术有限公司

Method for realizing sound speed-variation without tone variation and system for realizing speed variation and tone variation

Inactive | CN101740034A | Realize function; Improve sound quality; Speech analysis; Record information storage; State variation; Sound quality

The invention discloses a system for realizing sound speed variation and tone (pitch) variation, comprising an input cache module, a tone variation processing module, a speed-variation-without-tone-variation processing module and a data output module. The input cache module reads the sound signal data to be processed into the cache; the tone variation processing module applies tone variation to the sound signal to change its pitch; the speed-variation-without-tone-variation processing module changes the sound speed without changing the tone; and the data output module outputs the speed- and tone-varied signal. The speed-variation-without-tone-variation processing module comprises a segmentation data module and a connection data module: the segmentation data module uses a window function to extract a sequence of signal sub-segments (short sections of sound) from the original speech signal according to the speed-change coefficient, and the connection data module connects the sub-segments in time order to obtain the speed-varied, tone-preserved signal. The invention realizes both speed variation without tone variation and combined speed and tone variation with very low algorithmic complexity and without introducing noise, thereby improving the quality of the processed sound.

Owner:刘盛举 +1
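
The segment-and-connect scheme described above resembles overlap-add (OLA) time-scale modification. A minimal NumPy sketch of plain OLA with a Hann window, offered as an illustration rather than the patented algorithm (the function name, frame length and hop size are assumptions); frames are read every `speed * synthesis_hop` samples and written every `synthesis_hop` samples:

```python
import numpy as np


def ola_time_stretch(x: np.ndarray, speed: float, frame: int = 1024,
                     synthesis_hop: int = 256) -> np.ndarray:
    """Change playback speed without resampling, so pitch is approximately preserved."""
    if speed <= 0:
        raise ValueError("speed must be positive")
    analysis_hop = speed * synthesis_hop
    window = np.hanning(frame)
    n_frames = max(1, int((len(x) - frame) / analysis_hop) + 1)
    out = np.zeros((n_frames - 1) * synthesis_hop + frame)
    norm = np.zeros_like(out)
    for i in range(n_frames):
        a = int(round(i * analysis_hop))           # read position in the input
        seg = x[a:a + frame]
        seg = np.pad(seg, (0, frame - len(seg)))   # zero-pad a short final frame
        s = i * synthesis_hop                      # write position in the output
        out[s:s + frame] += seg * window
        norm[s:s + frame] += window
    norm[norm < 1e-8] = 1.0  # avoid dividing by ~0 where the window vanishes at the edges
    return out / norm
```

Plain OLA can smear periodic waveforms when frames overlap out of phase; WSOLA and phase-vocoder variants align or re-phase the frames to reduce such artifacts.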

System and method for audio hot spotting

Active | US20100076996A1 | Data processing applications; Digital data information retrieval; Speech rate; Vocal effort

Owner:THE MITRE CORPORATION

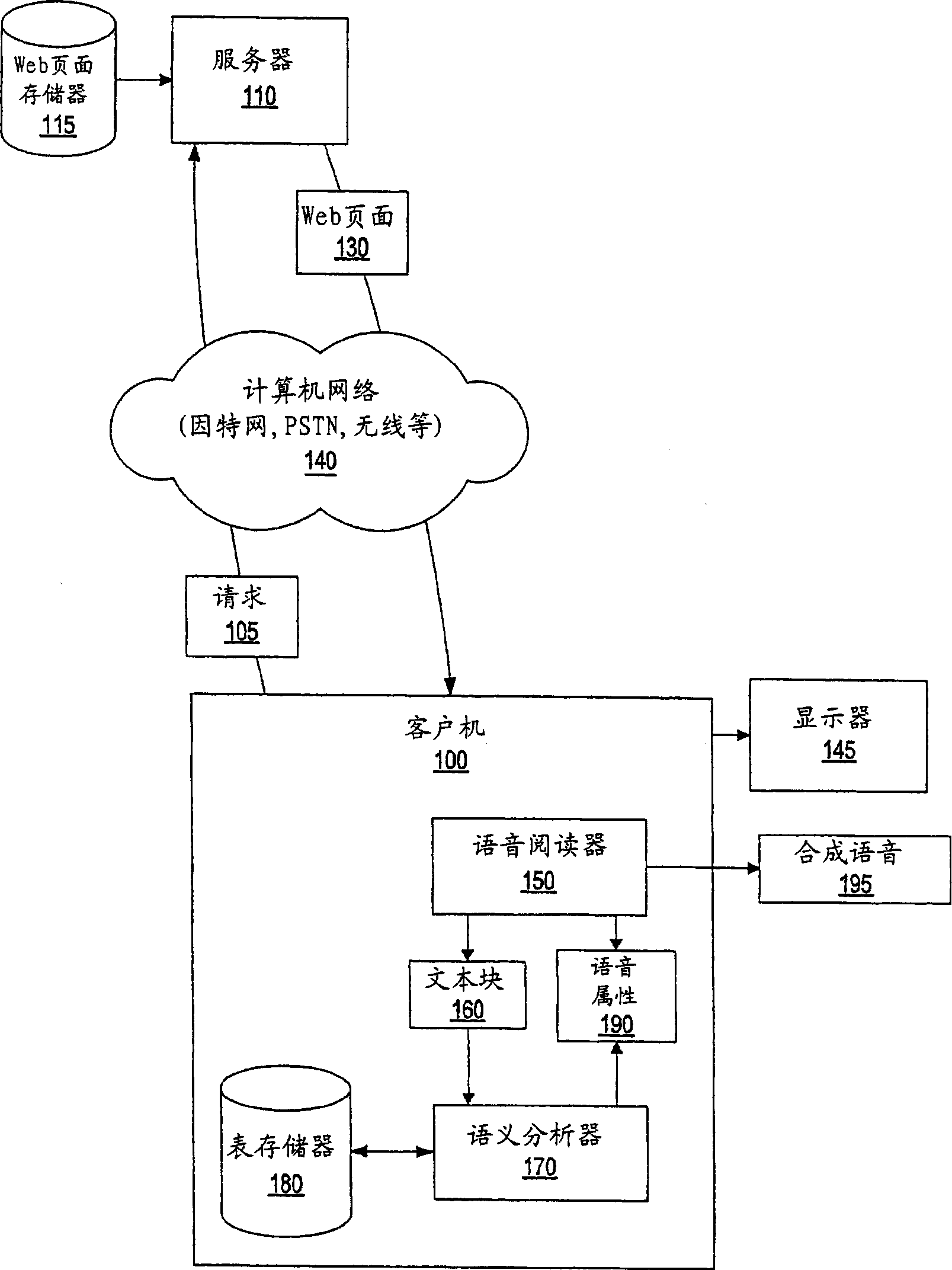

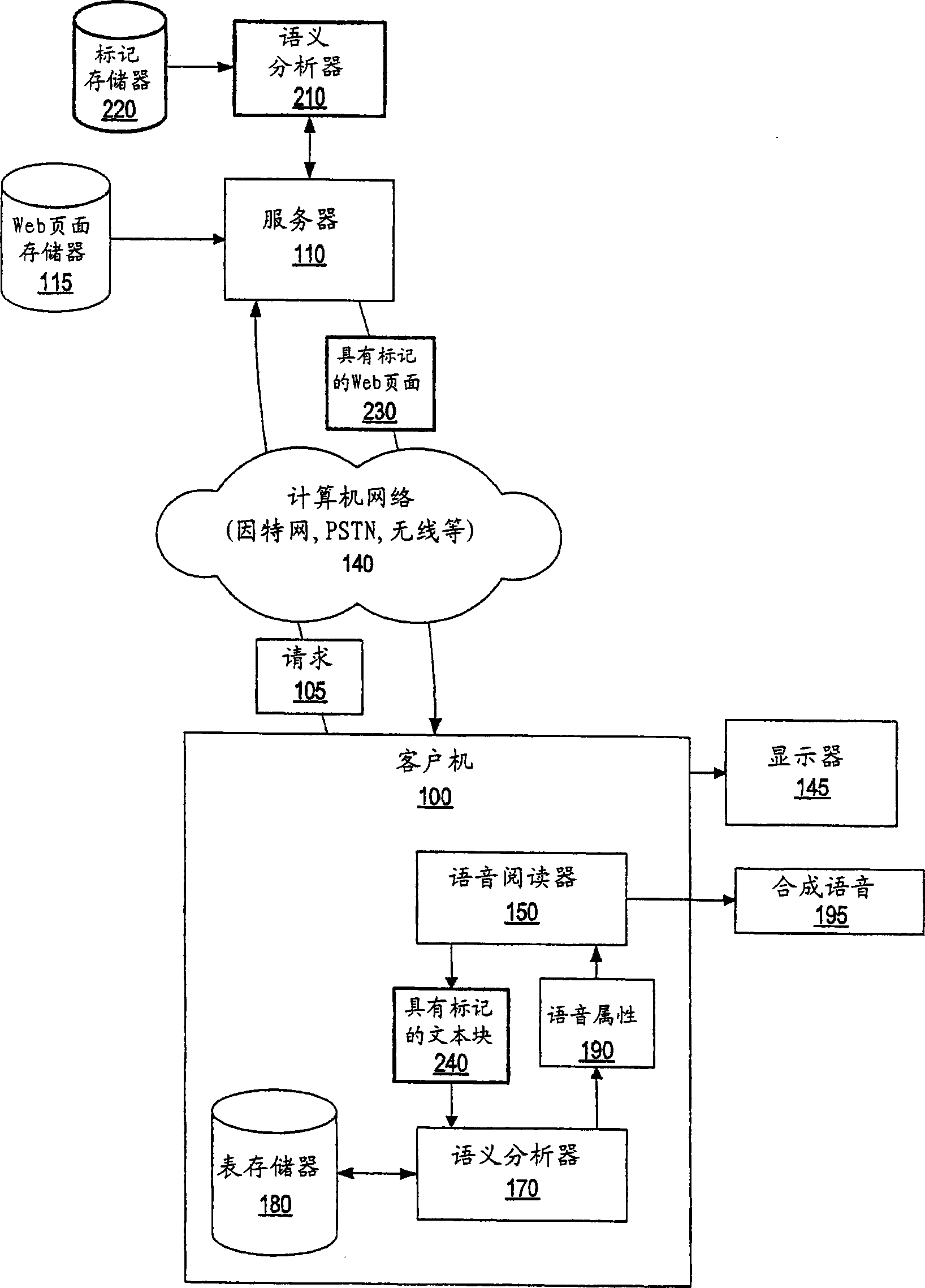

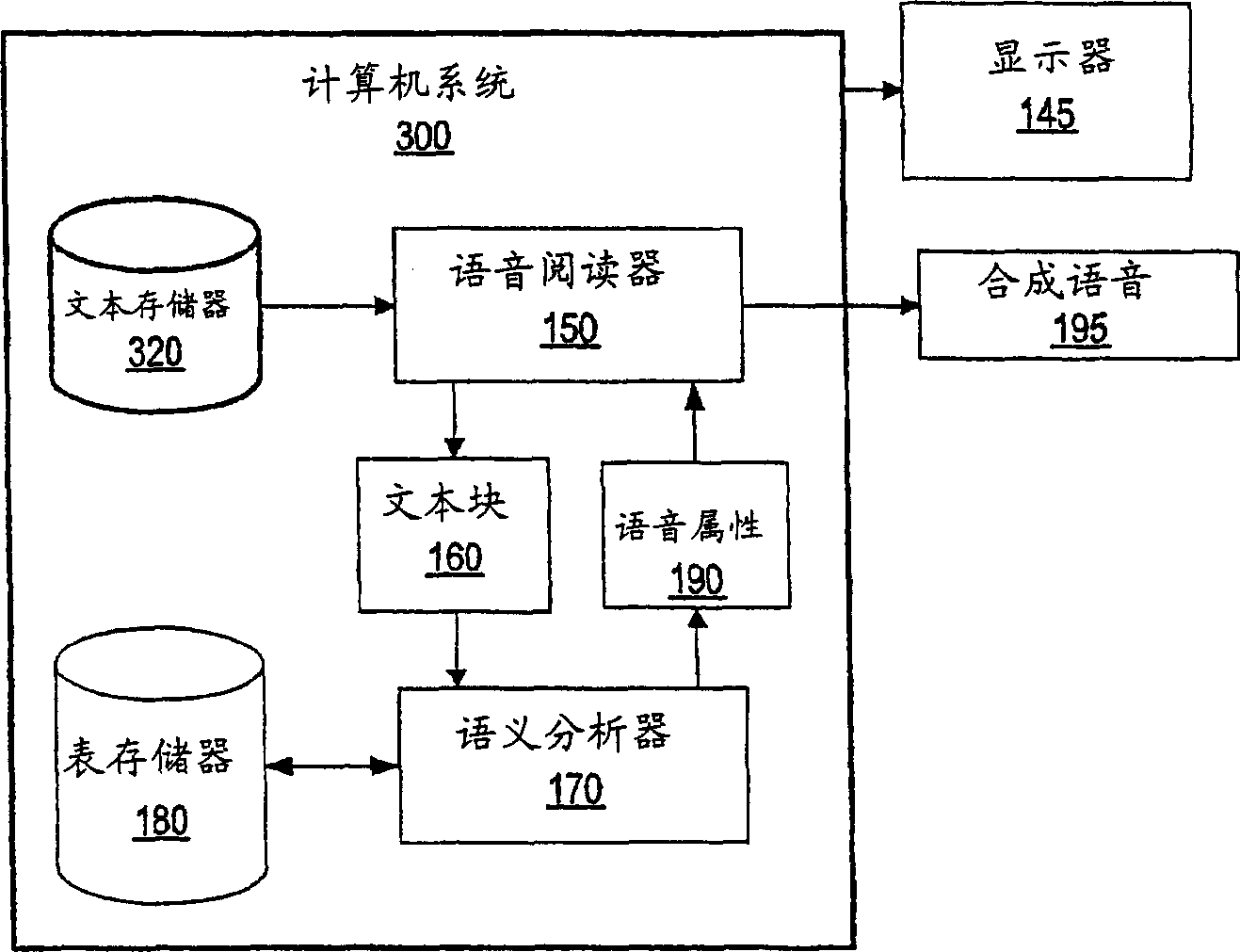

System and method for configuring voice readers using semantic analysis

A system and method for using semantic analysis to configure a voice reader is presented. A text file includes a plurality of text blocks, such as paragraphs. Processing performs semantic analysis on each text block in order to match the text block's semantic content with a semantic identifier. Once processing matches a semantic identifier with the text block, processing retrieves voice attributes that correspond to the semantic identifier (i.e. pitch value, loudness value, and pace value) and provides the voice attributes to a voice reader. The voice reader uses the text block to produce a synthesized voice signal with properties that correspond to the voice attributes. The text block may include semantic tags whereby processing performs latent semantic indexing on the semantic tags in order to match semantic identifiers to the semantic tags.

Owner:INT BUSINESS MASCH CORP
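
A simplified sketch of the text-block-to-voice-attribute mapping, assuming keyword cues as a stand-in for the semantic analysis (latent semantic indexing is not reproduced) and SSML `prosody` attributes as the interface to the voice reader; the identifiers, cue words and attribute values are hypothetical:

```python
from dataclasses import dataclass
from xml.sax.saxutils import escape


@dataclass(frozen=True)
class VoiceAttributes:
    pitch: str     # SSML prosody values
    loudness: str
    pace: str


# Hypothetical semantic identifiers, cue words and attribute table.
ATTRIBUTES = {
    "warning": VoiceAttributes(pitch="+10%", loudness="loud", pace="slow"),
    "narrative": VoiceAttributes(pitch="medium", loudness="medium", pace="medium"),
}
CUES = {"warning": ("danger", "caution", "warning", "do not")}


def semantic_identifier(block: str) -> str:
    """Keyword stand-in for semantic analysis: first identifier whose cue appears, else 'narrative'."""
    text = block.lower()
    for ident, cues in CUES.items():
        if any(cue in text for cue in cues):
            return ident
    return "narrative"


def to_ssml(block: str) -> str:
    """Wrap a text block in an SSML prosody element carrying its voice attributes."""
    a = ATTRIBUTES[semantic_identifier(block)]
    return (f'<prosody pitch="{a.pitch}" volume="{a.loudness}" rate="{a.pace}">'
            f"{escape(block)}</prosody>")
```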

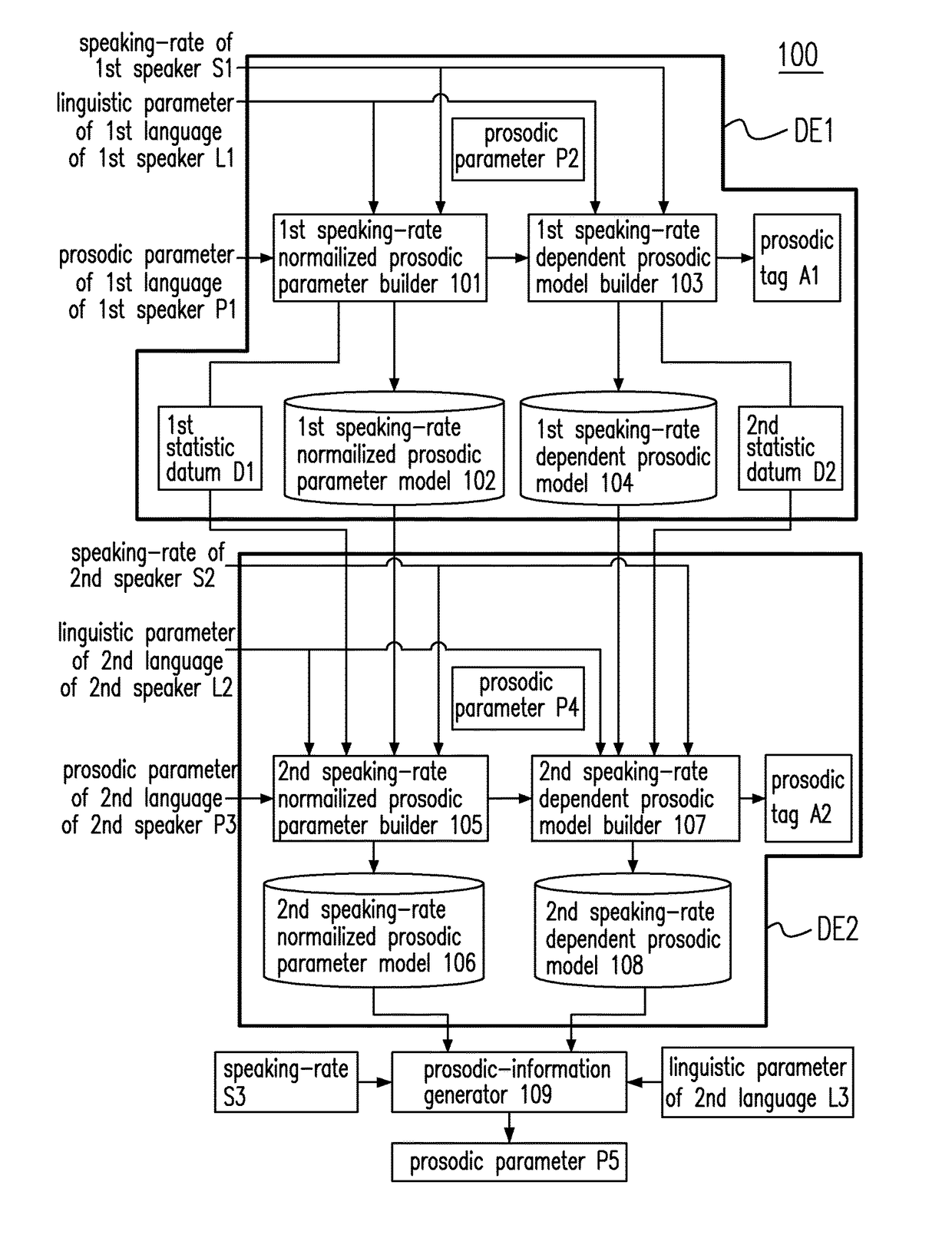

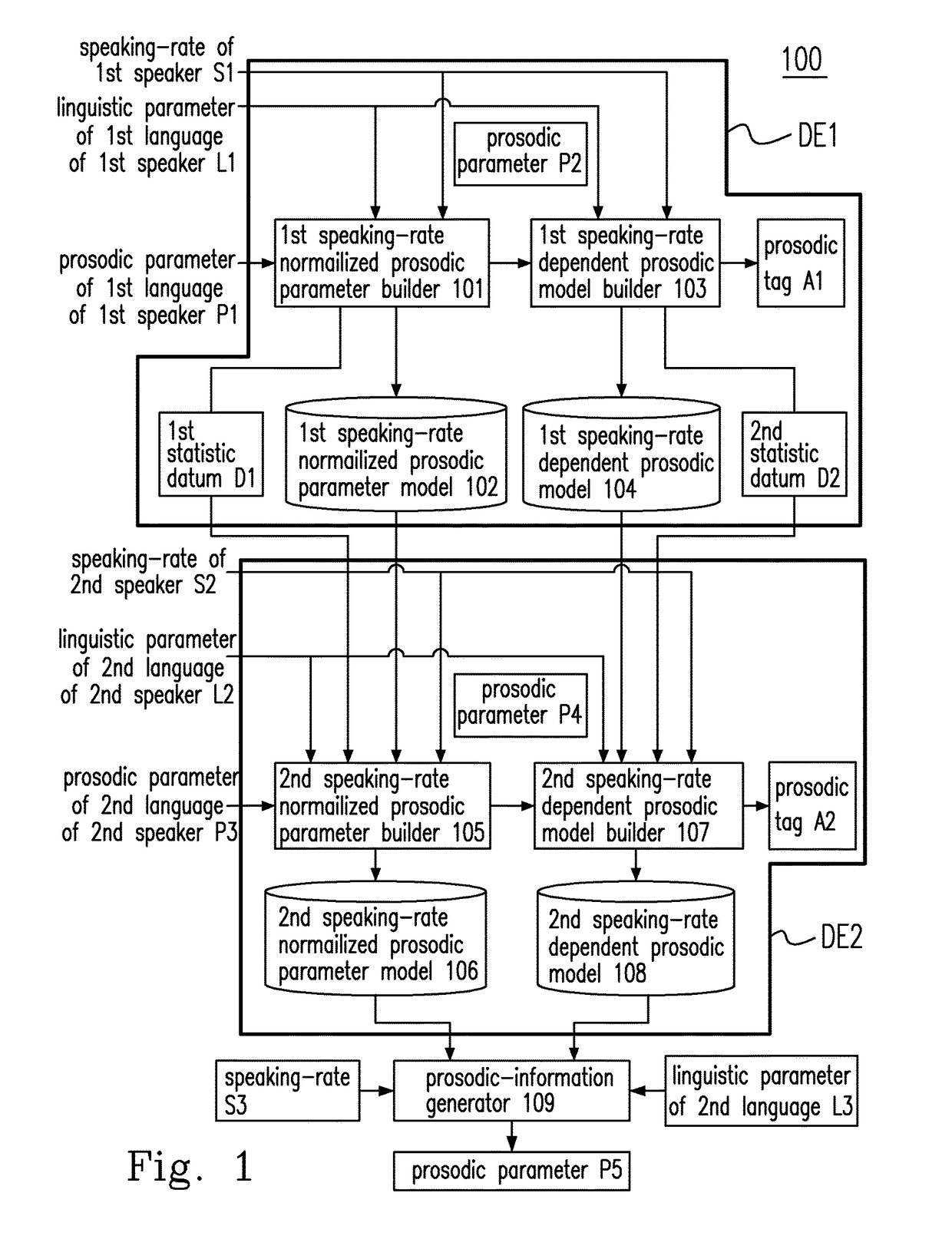

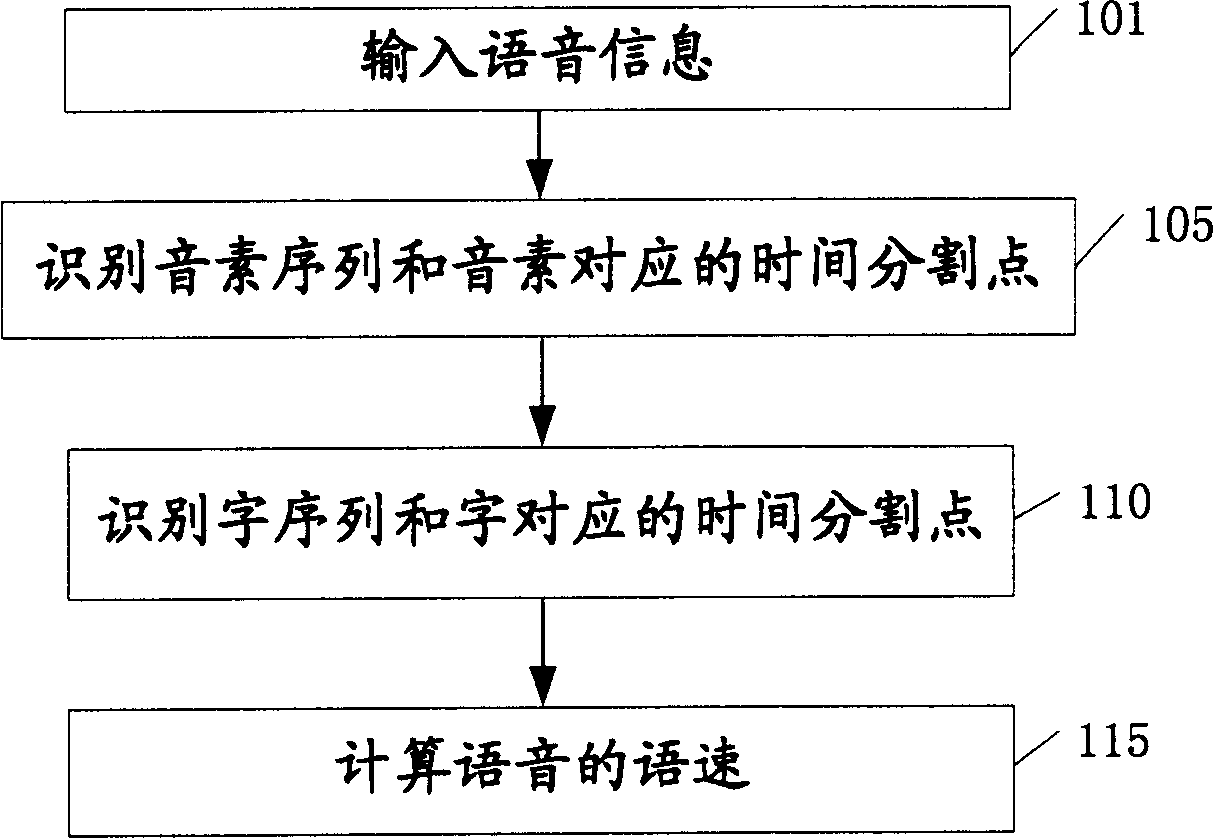

Speaking-rate normalized prosodic parameter builder, speaking-rate dependent prosodic model builder, speaking-rate controlled prosodic-information generation device and prosodic-information generation method able to learn different languages and mimic various speakers' speaking styles

A speaking-rate dependent prosodic model builder and a related method are disclosed. The proposed builder includes a first input terminal for receiving a first information of a first language spoken by a first speaker, a second input terminal for receiving a second information of a second language spoken by a second speaker and a functional information unit having a function, wherein the function includes a first plurality of parameters simultaneously relevant to the first language and the second language or a plurality of sub-parameters in a second plurality of parameters relevant to the second language alone, and the functional information unit under a maximum a posteriori condition and based on the first information, the second information and the first plurality of parameters or the plurality of sub-parameters produces speaking-rate dependent reference information and constructs a speaking-rate dependent prosodic model of the second language.

Owner:NATIONAL TAIPEI UNIVERSITY

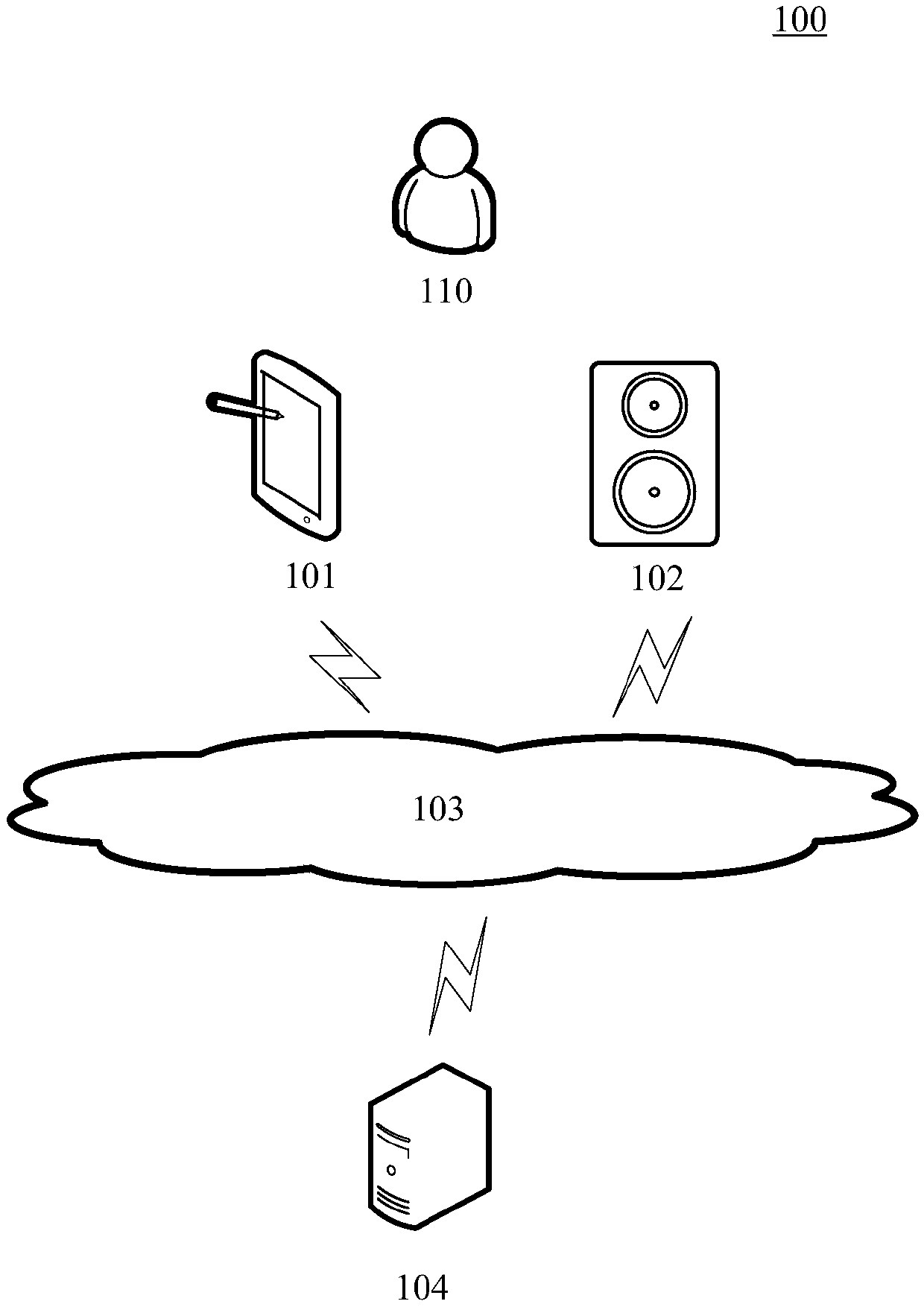

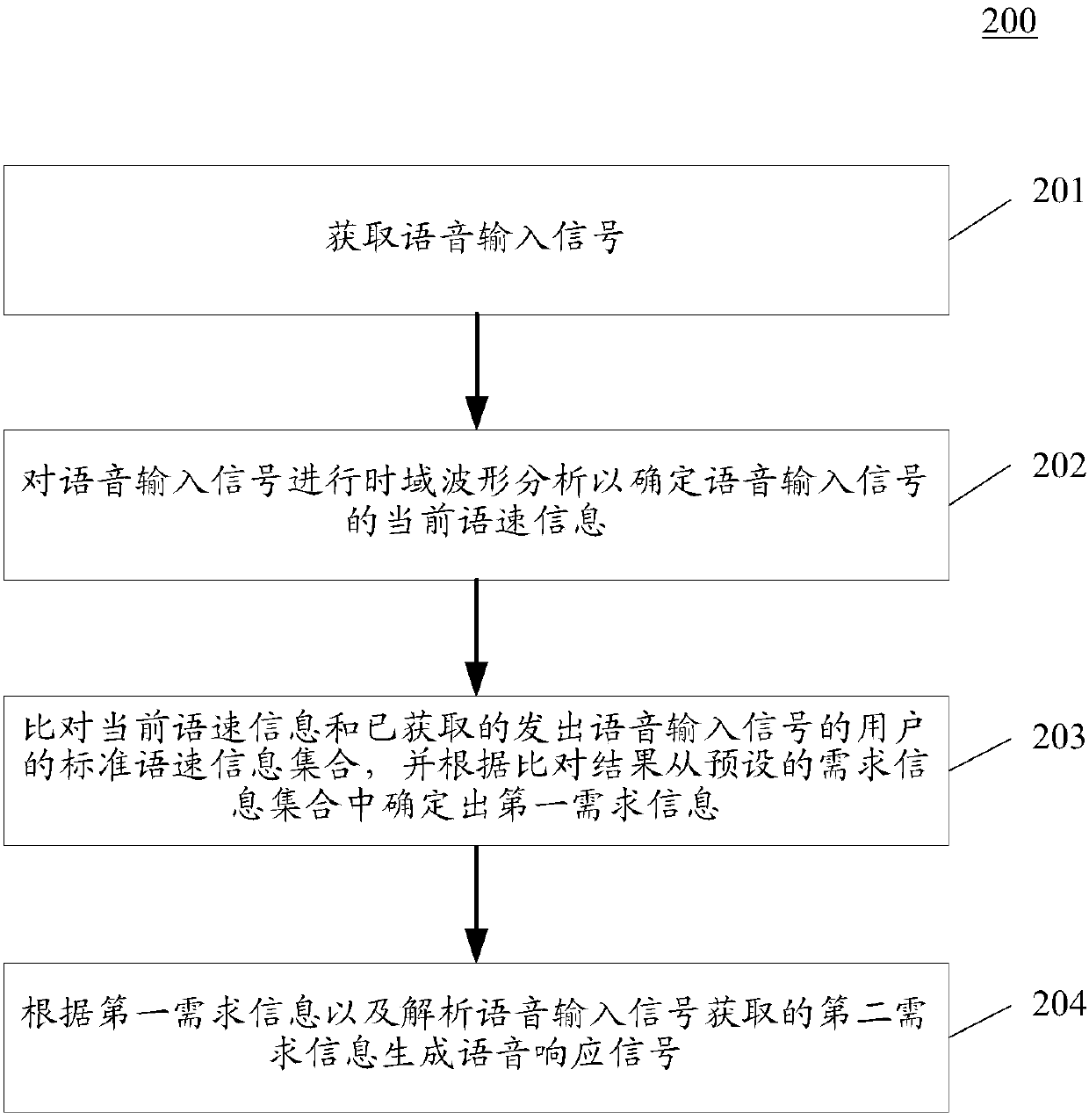

Method and device for providing voice service

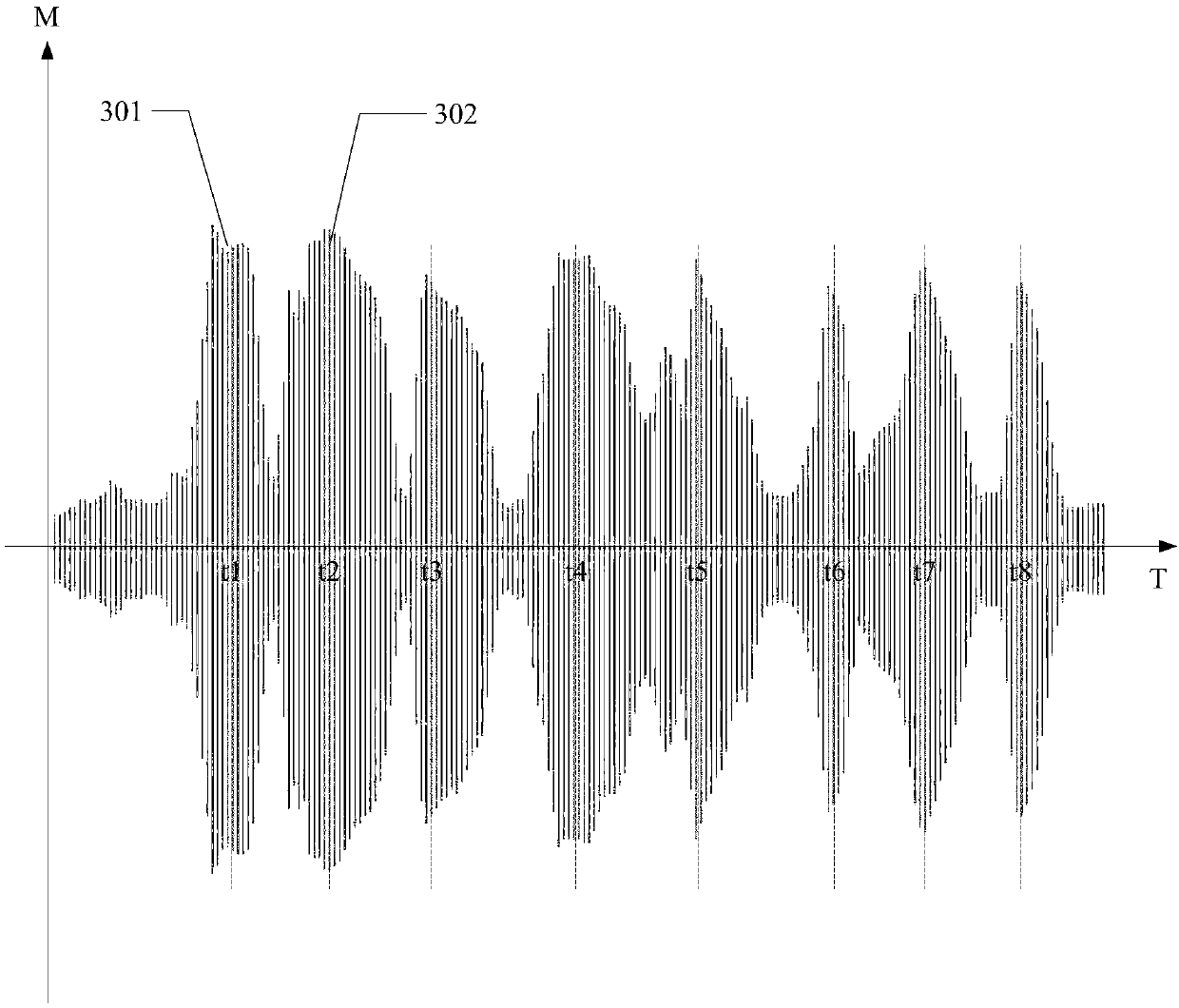

Active | CN107767869A | Improve match; Flexible voice service; Speech recognition; Speech rate; Time domain waveforms

The application discloses a method and device for providing a voice service. In one specific implementation, the method comprises: acquiring a voice input signal; analyzing the time-domain waveform of the voice input signal to determine its current speech rate information; comparing the current speech rate information with an obtained standard speech rate information set of the user who produced the voice input signal, and determining first demand information from a preset demand information set according to the comparison result, where the standard speech rate information set includes at least one piece of standard speech rate information and the preset demand information set includes demand information corresponding to each piece of standard speech rate information in that set; and generating a voice response signal according to the first demand information and second demand information obtained by analyzing the voice input signal. The embodiment improves the match between the voice service and the user's latent needs and provides a more flexible and accurate voice service.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD
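
A sketch of the two analysis steps, assuming a crude count of energy bursts in the time-domain waveform as the speech-rate estimate and a nearest-neighbour comparison against the user's standard rates; the thresholds, labels, demand strings and function names are hypothetical:

```python
import numpy as np


def estimate_rate(x: np.ndarray, fs: int, frame_ms: float = 20.0,
                  rel_threshold: float = 0.3) -> float:
    """Rough syllables per second: count bursts of frame energy above a relative threshold."""
    n = int(fs * frame_ms / 1000)
    frames = x[: len(x) // n * n].reshape(-1, n)
    energy = np.mean(frames ** 2, axis=1)
    active = energy > rel_threshold * energy.max()
    # A burst starts wherever an inactive frame is followed by an active one (or at frame 0).
    onsets = int(active[0]) + int(np.count_nonzero(active[1:] & ~active[:-1]))
    return onsets / (len(x) / fs)


def match_demand(current_rate: float, standard_rates: dict[str, float],
                 demands: dict[str, str]) -> str:
    """Pick the demand attached to the user's standard speech rate closest to the current one."""
    label = min(standard_rates, key=lambda k: abs(standard_rates[k] - current_rate))
    return demands[label]
```

Usage: with `standard_rates = {"relaxed": 3.0, "normal": 4.5, "hurried": 6.0}`, an estimated 5.8 syllables/s selects whatever demand is stored under `"hurried"`, for example a shorter answer.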

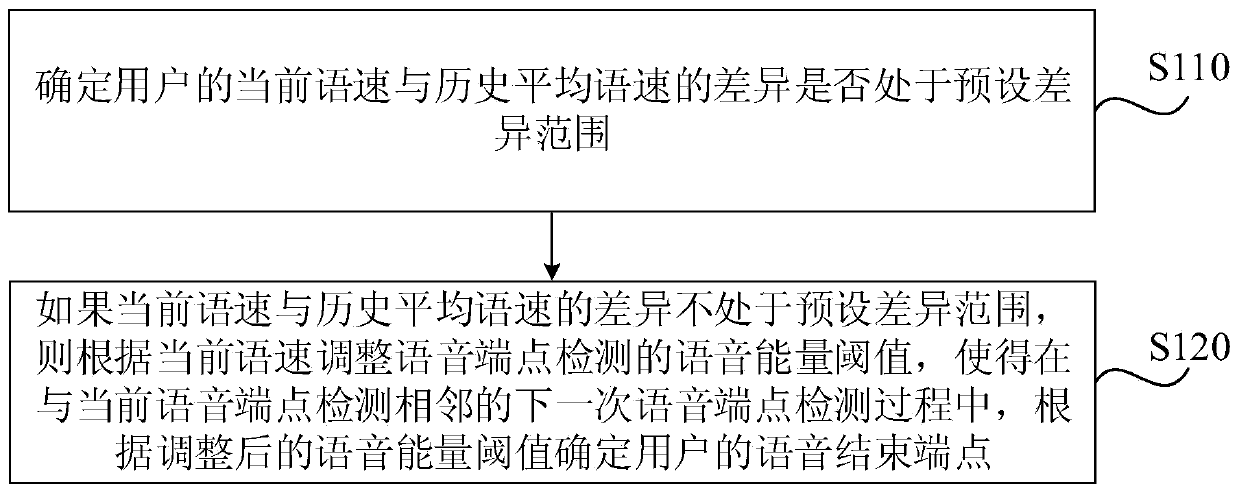

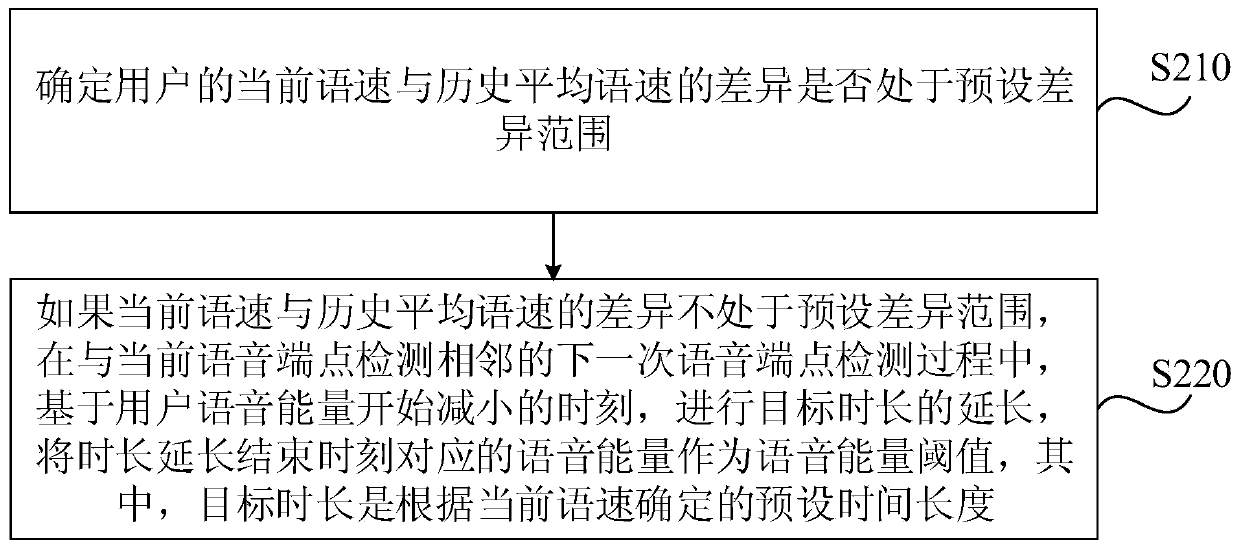

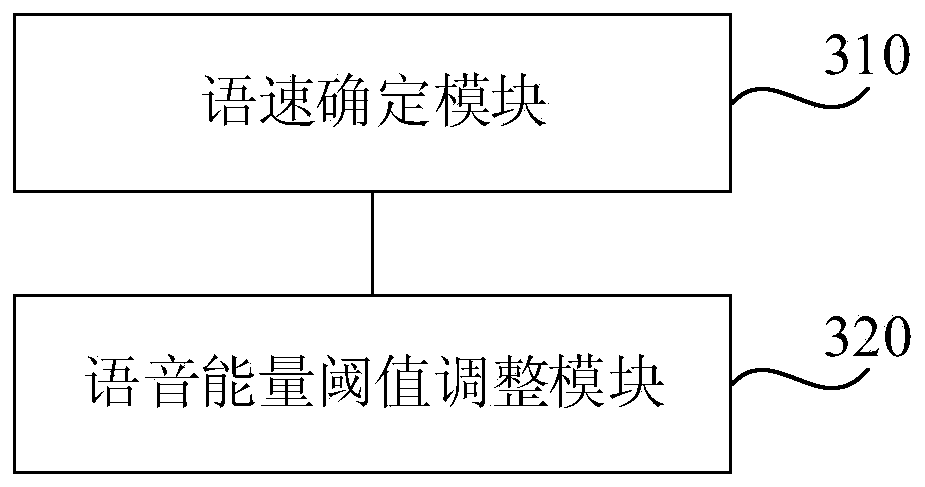

Voice end detection method, device, terminal and storage medium

Active | CN109767792A | Improve accuracy; Realize adaptive dynamic adjustment; Speech analysis; Personalization; Speech rate

Owner:APOLLO INTELLIGENT CONNECTIVITY (BEIJING) TECH CO LTD

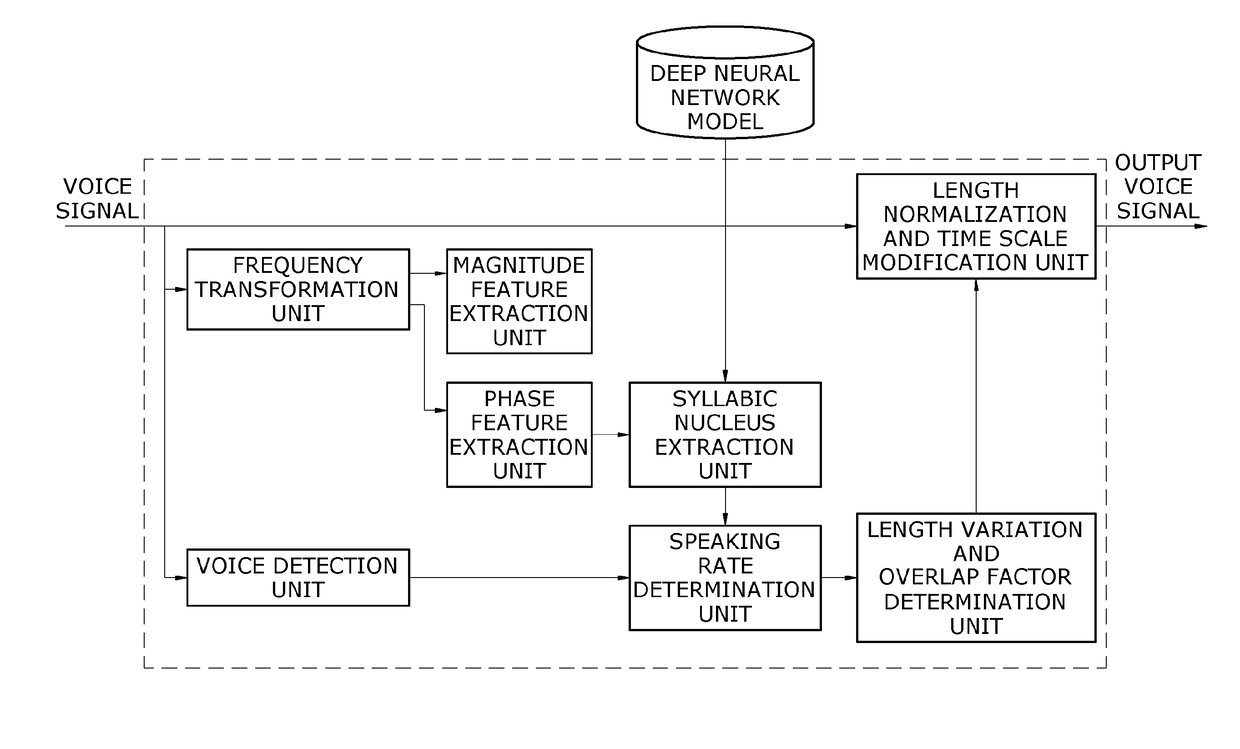

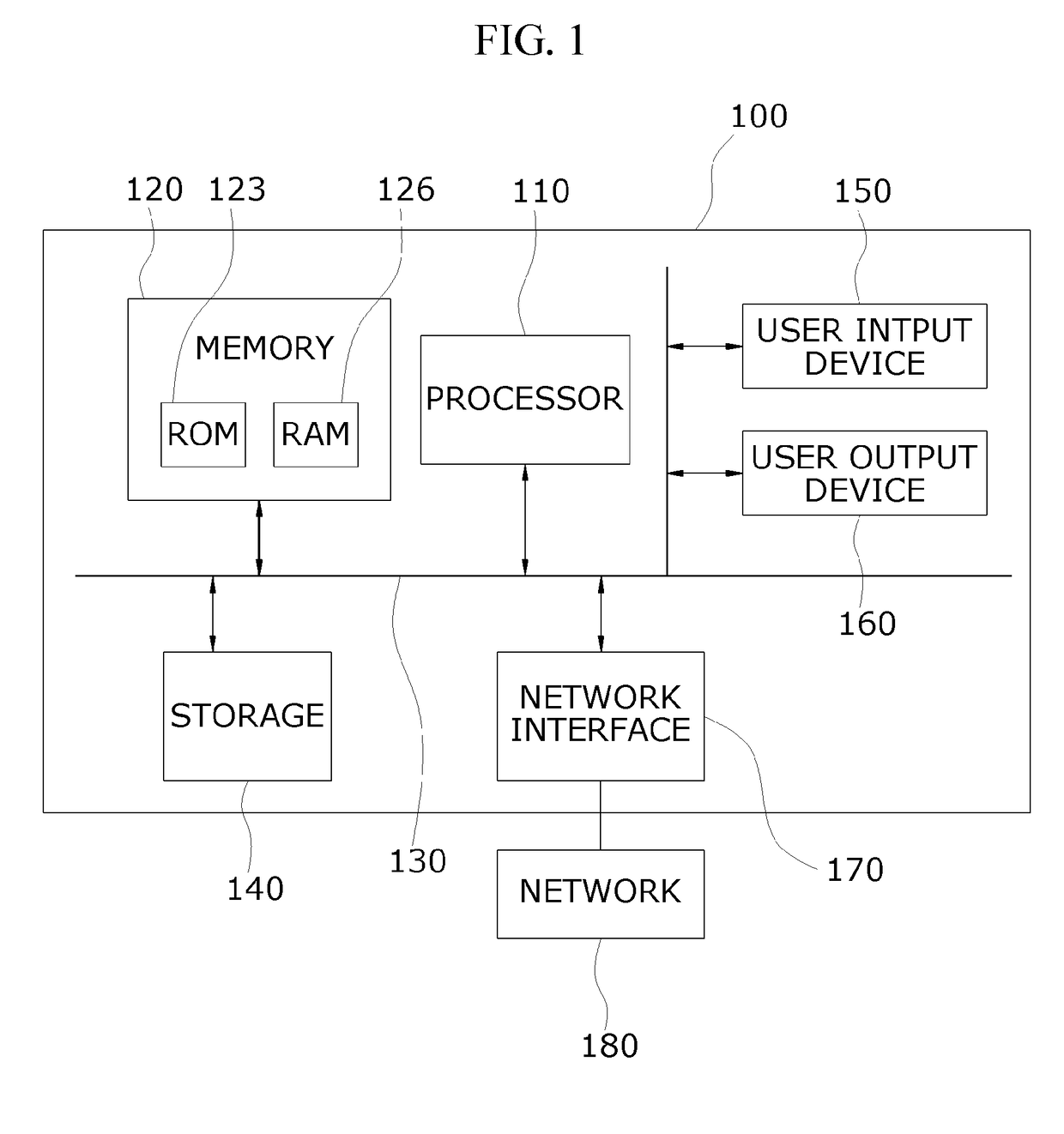

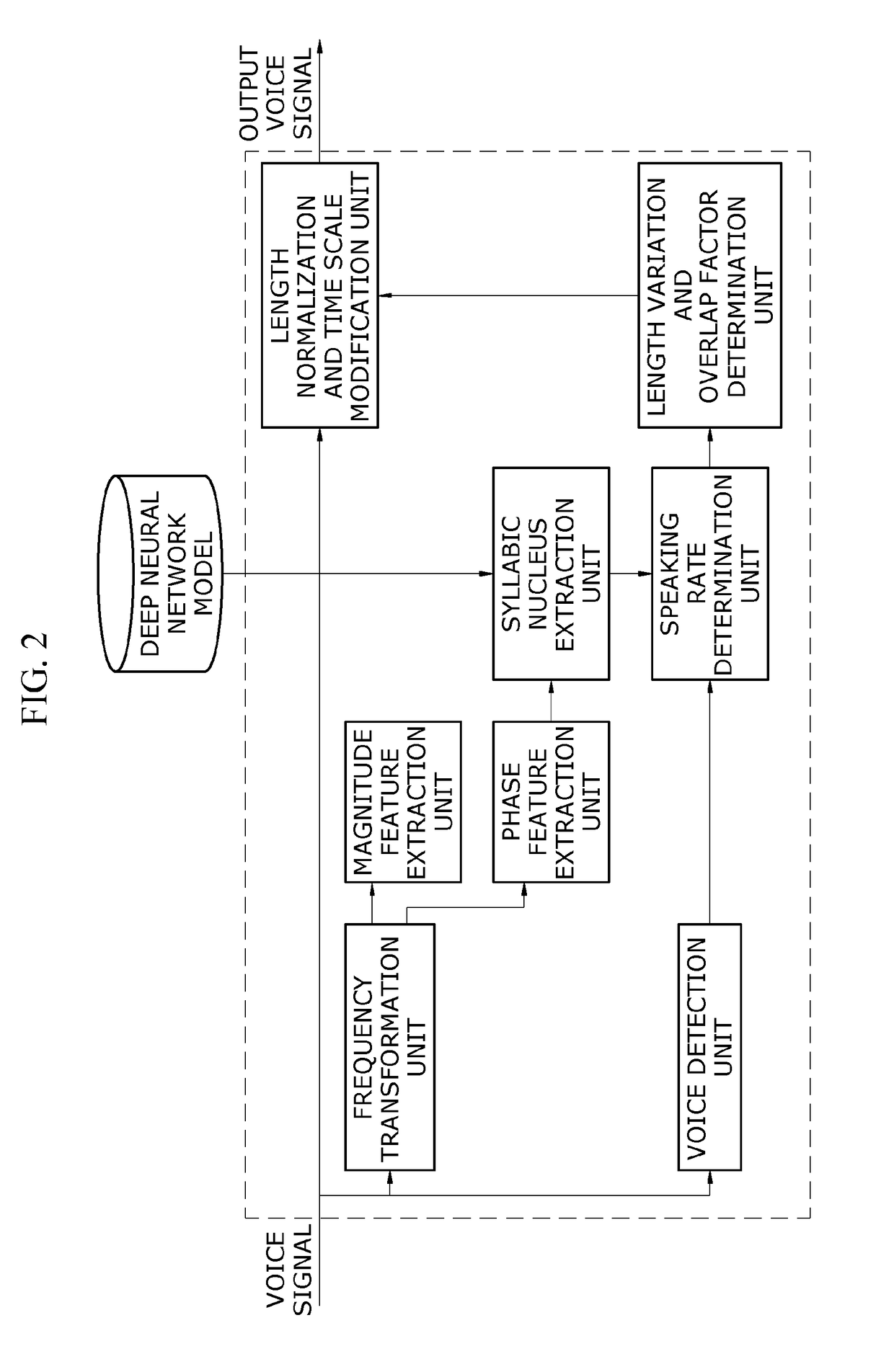

Method and apparatus for improving spontaneous speech recognition performance

Active | US20180247642A1 | Improving spontaneous speech recognition performance; Improve speech recognition performance; Speech recognition; Neural learning methods; Speech rate; Length variation

The present invention relates to a method and apparatus for improving spontaneous speech recognition performance. The present invention is directed to providing a method and apparatus for improving spontaneous speech recognition performance by extracting a phase feature as well as a magnitude feature of a voice signal transformed to the frequency domain, detecting a syllabic nucleus on the basis of a deep neural network using a multi-frame output, determining a speaking rate by dividing the number of syllabic nuclei by a voice section interval detected by a voice detector, calculating a length variation or an overlap factor according to the speaking rate, and performing cepstrum length normalization or time scale modification with a voice length appropriate for an acoustic model.

Owner:ELECTRONICS & TELECOMM RES INST
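
The rate computation in this abstract is a single division. A sketch of it together with one possible length-variation rule, assuming a 4 syllables/s reference rate for the acoustic model and clamping limits chosen for illustration (both function names are hypothetical):

```python
def speaking_rate(num_nuclei: int, voiced_seconds: float) -> float:
    """Syllables per second: detected syllabic nuclei divided by the detected voice interval."""
    if voiced_seconds <= 0:
        raise ValueError("voice interval must be positive")
    return num_nuclei / voiced_seconds


def length_variation(rate: float, reference_rate: float = 4.0,
                     lo: float = 0.7, hi: float = 1.4) -> float:
    """Factor to lengthen (> 1) or shorten (< 1) the utterance toward the reference rate.

    The reference rate and clamping limits are illustrative assumptions.
    """
    return min(hi, max(lo, rate / reference_rate))
```

For 12 nuclei in 2 s of detected voice, the rate is 6 syllables/s and the unclamped factor 1.5 is limited to 1.4, so the utterance is lengthened by 40% before cepstrum length normalization or time-scale modification.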

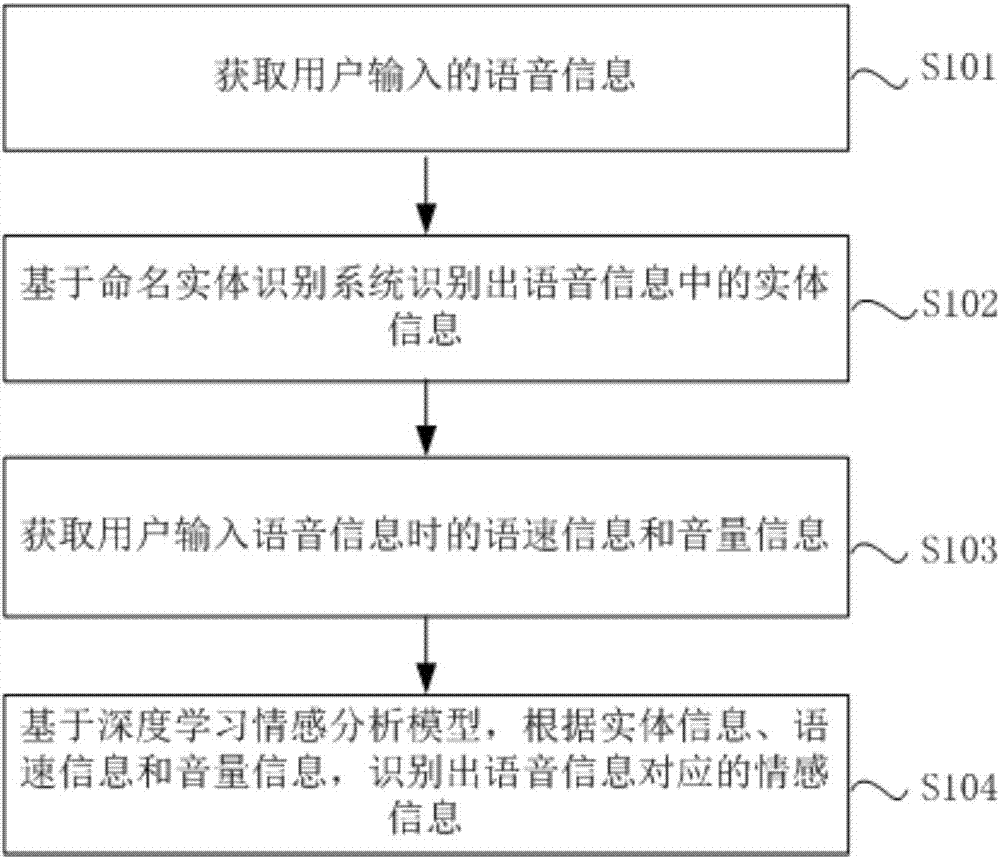

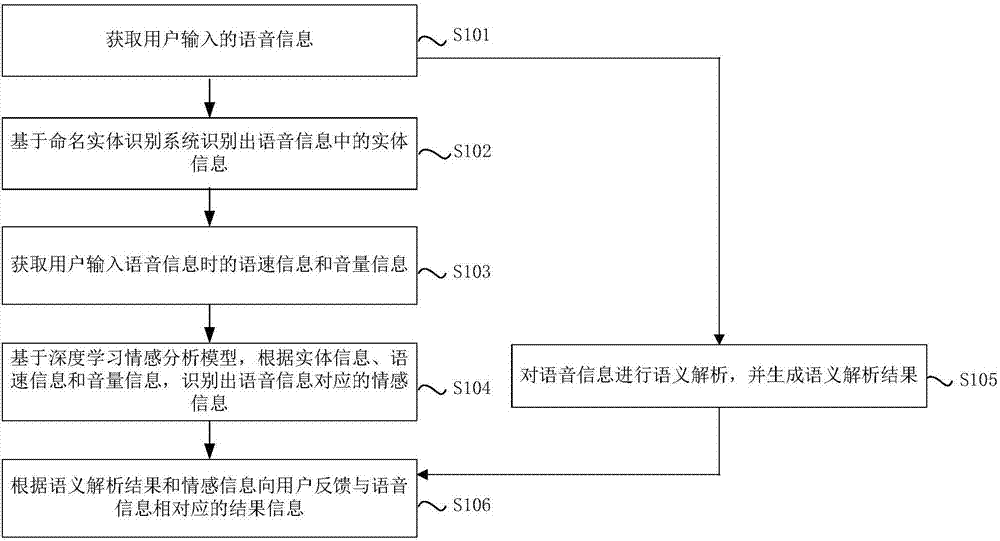

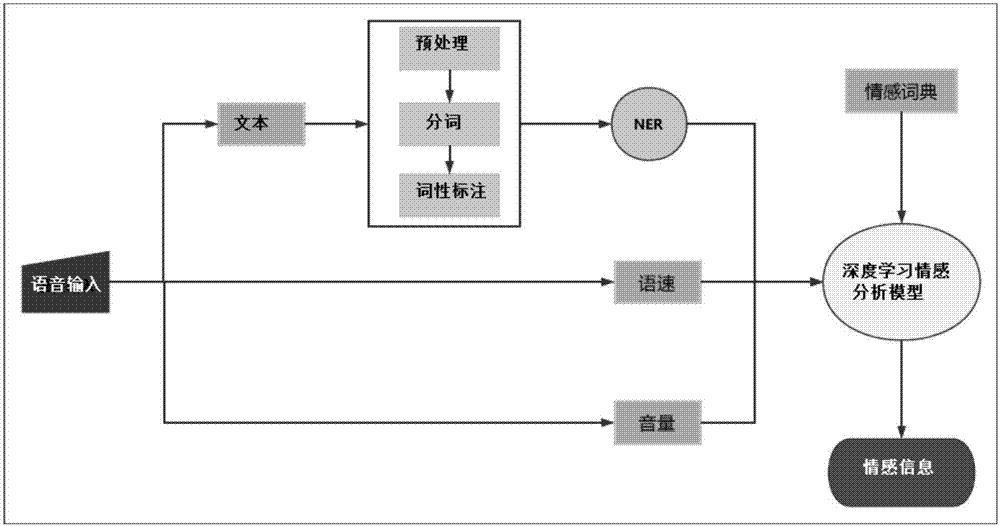

Voice recognition method and device

Inactive | CN107464566A | Meet real needs; Improve accuracy; Speech recognition; Speech rate; Named-entity recognition

The invention discloses a voice recognition method and device. The method comprises the following steps: acquiring voice information input by a user; recognizing entity information in the voice information using a named entity recognition system; acquiring the user's speech speed and volume while the voice information was input; and recognizing the emotion information corresponding to the voice information from the entity, speed and volume information using a deep-learning emotion analysis model. Because the recognized emotion information is used as an important factor in voice recognition, the method increases recognition accuracy and better meets the user's real needs.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

System and method for audio hot spotting

Active | US7617188B2 | Data processing applications; Digital data information retrieval; Speech rate; Vocal effort

Audio hot spotting is accomplished by specifying a query criterion that includes a non-lexical audio cue. The non-lexical audio cue can be, e.g., speech rate, laughter, applause, vocal effort, speaker change or any combination thereof. The query criterion is retrieved from an audio portion of a file. A segment of the file containing the query criterion can be provided to a user. The duration of the provided segment can be specified by the user, along with the files to be searched. A list of detections of the query criterion within the file can also be provided to the user. Searches can be refined by having the query criterion additionally include a lexical audio cue. A keyword index of topic terms contained in the file can also be provided to the user.

Owner:MITRE SPORTS INT LTD

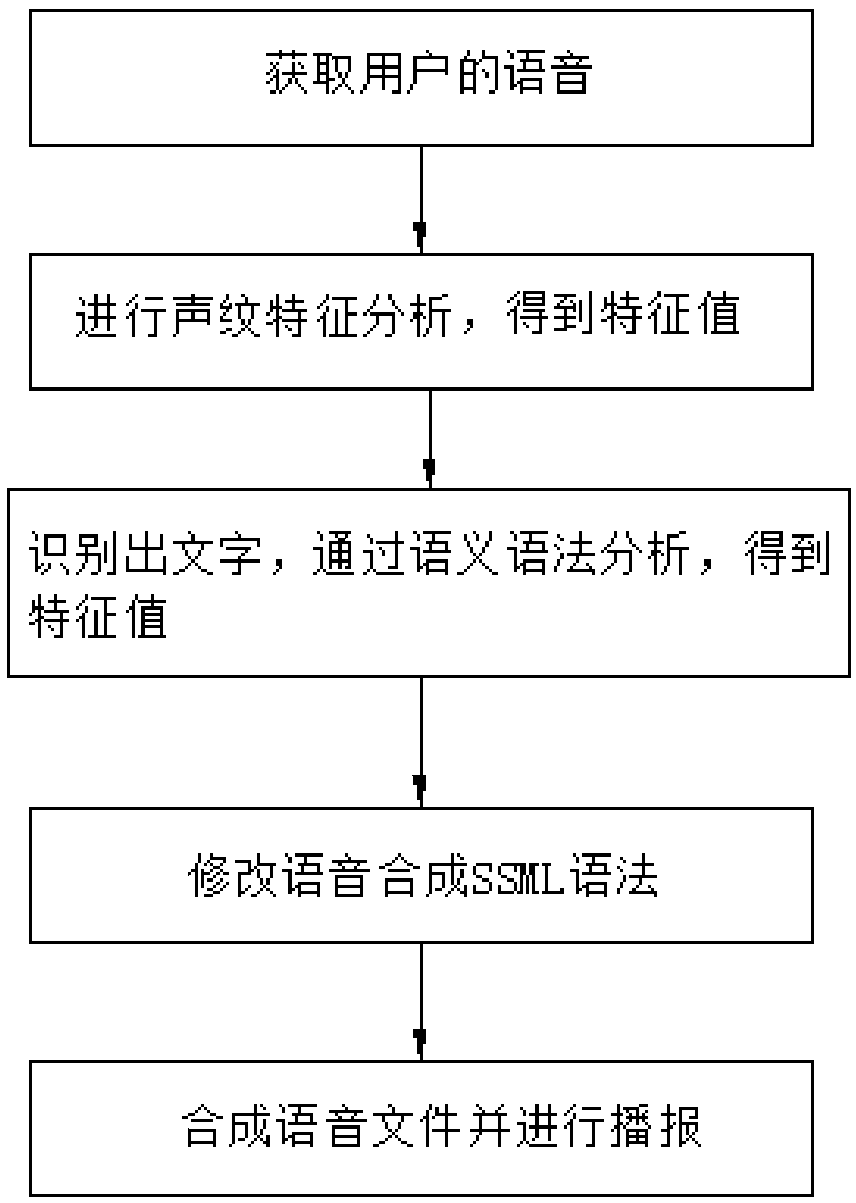

Text speech synthesis method after speaker emotion simulated optimization translation

The invention discloses a text-to-speech synthesis method that simulates the speaker's emotion to optimize translated speech. Voice information of a user is obtained; the back end analyzes the audio file to obtain frequency and speech-speed parameters; the audio is passed to a voiceprint identification system to obtain gender and age parameters; the speech is recognized to obtain text; an emotion parameter is obtained by analyzing the grammar, vocabulary and sentences of the text; the frequency, speech-speed, gender, age and emotion features are combined, and a characteristic value is set for each feature; and the characteristic values are combined with the SSML (Speech Synthesis Markup Language) grammar to set the broadcast speed, volume and word pauses. The synthesized broadcast in another language can thus reflect the emotional features of the speaker's native-language speech. By identifying the speaker's mood, tone, vocabulary and grammar, the translated synthesized broadcast reflects the speaker's current emotion.

Owner:LANGOGO TECH CO LTD
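
A sketch of the final SSML step, assuming the speaker's speech speed is expressed relative to a reference rate and the emotion label maps to an SSML volume; the function name, the label set, the 200 ms pause and the naive sentence split on periods are hypothetical choices:

```python
from xml.sax.saxutils import escape


def build_ssml(text: str, speaker_rate: float, reference_rate: float,
               emotion: str, pause_ms: int = 200) -> str:
    """Wrap translated text in SSML whose speed, volume and pauses follow the source speaker."""
    rate_pct = round(100 * speaker_rate / reference_rate)
    # Hypothetical emotion-to-volume table; unknown labels fall back to "medium".
    volume = {"angry": "loud", "excited": "loud", "sad": "soft"}.get(emotion, "medium")
    sentences = [s.strip() for s in text.split(".") if s.strip()]
    body = f'<break time="{pause_ms}ms"/>'.join(escape(s) + "." for s in sentences)
    return ('<speak version="1.1" xmlns="http://www.w3.org/2001/10/synthesis" xml:lang="en-US">'
            f'<prosody rate="{rate_pct}%" volume="{volume}">{body}</prosody></speak>')
```

A speaker measured at 5 syllables/s against a 4 syllables/s reference yields `rate="125%"`, so the translated broadcast keeps the faster tempo of the original speech.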

© 2025 PatSnap. All rights reserved.