71 results about "Microexpression" patented technology

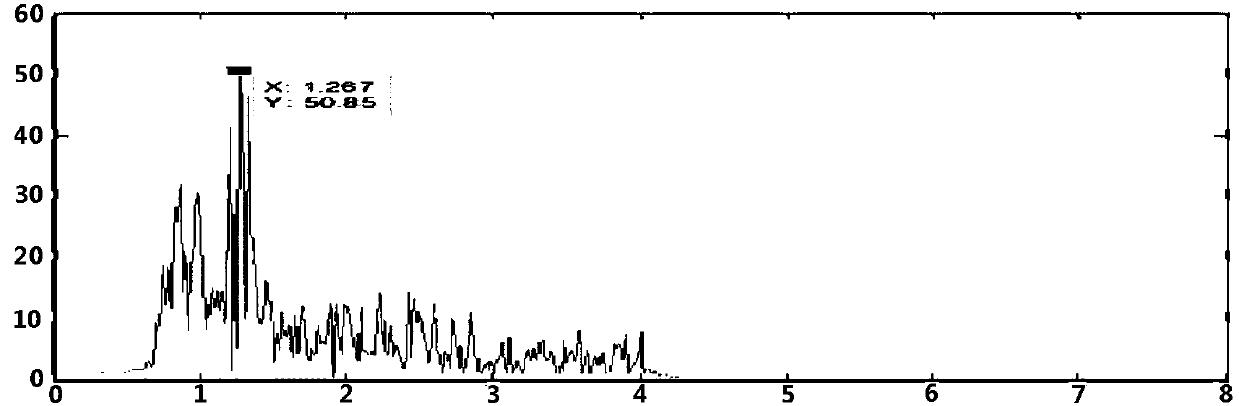

A microexpression is the innate result of a voluntary and an involuntary emotional response occurring simultaneously and conflicting with one another. This occurs when the amygdala (the emotion center of the brain) responds appropriately to the stimuli that the individual experiences and the individual wishes to conceal this specific emotion. The individual then very briefly displays their true emotion, followed by a false emotional reaction. Human emotions are an unconscious bio-psycho-social reaction that derives from the amygdala, and they typically last 0.5–4.0 seconds, whereas a microexpression typically lasts less than half a second. Unlike regular facial expressions, microexpressions are very difficult or virtually impossible to hide. They cannot be controlled because they happen in a fraction of a second, but it is possible to capture someone's expressions with a high-speed camera and replay them at much slower speeds. Microexpressions express the seven universal emotions: disgust, anger, fear, sadness, happiness, contempt, and surprise. In the 1990s, however, Paul Ekman expanded his list of emotions to include a range of positive and negative emotions, not all of which are encoded in facial muscles. These emotions are amusement, embarrassment, anxiety, guilt, pride, relief, contentment, pleasure, and shame.

Method of micro facial expression detection based on facial action coding system (FACS)

Inactive | CN107194347A | Improve recognition rate | Accurate identification | Character and pattern recognition | Network architecture | Base function

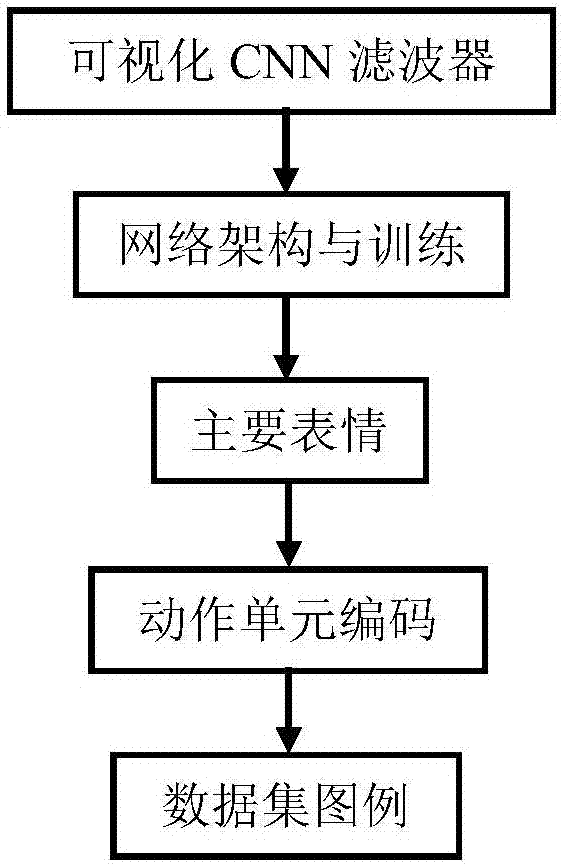

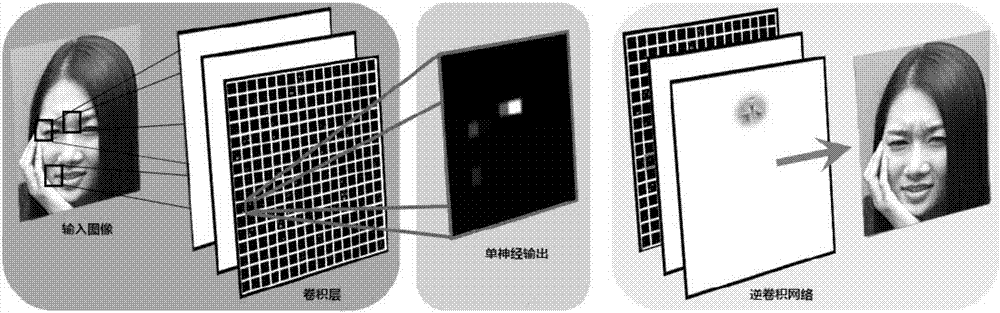

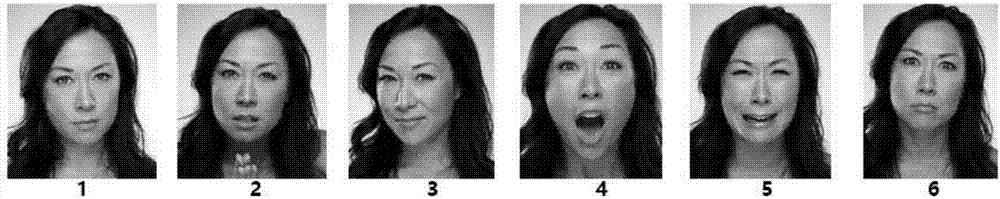

The invention provides a method of micro facial expression detection based on the facial action coding system (FACS). Its main components are CNN filter visualization, network architecture and training, transfer learning, and micro facial expression detection. The method comprises the following steps: first, a robust emotion classification framework is built; the learned network model is analyzed; the filters trained by the network are visualized across different emotion classification tasks; and the model is applied to micro facial expression detection. The method improves on the recognition rate of existing micro facial expression detection methods, shows a strong correlation between the features produced by an unsupervised learning process and the action units used in facial expression analysis, and verifies the generalization ability of the FACS-based features, which score with high accuracy across datasets and across tasks. As a result, facial expressions can be recognized more accurately and the emotional state inferred, improving the effectiveness and accuracy of applications in various fields and advancing the development of artificial intelligence.

Owner:SHENZHEN WEITESHI TECH

Face microexpression recognition method

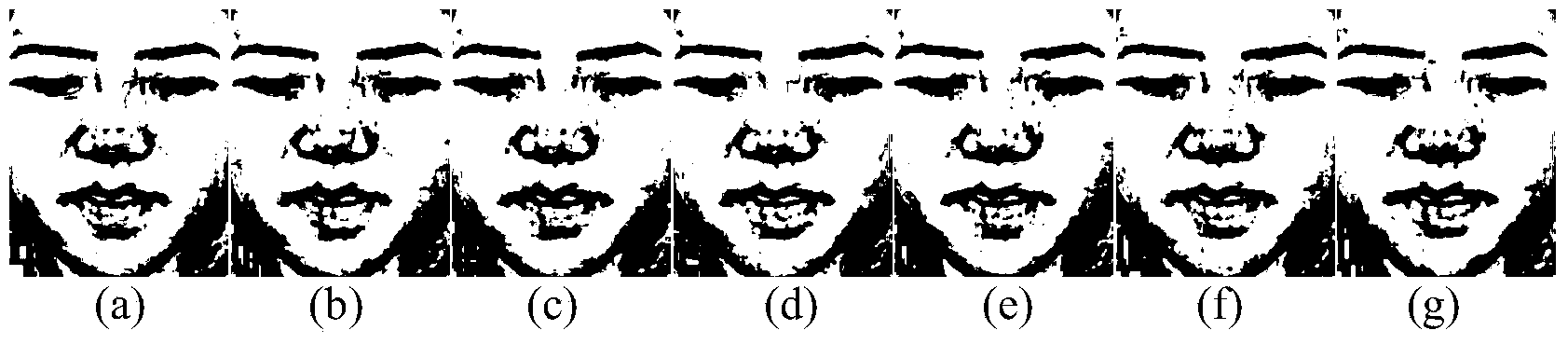

Inactive | CN104298981A | Outstanding Features | Highlight significant progress | Acquiring/recognising facial features | Graphics | Pattern recognition

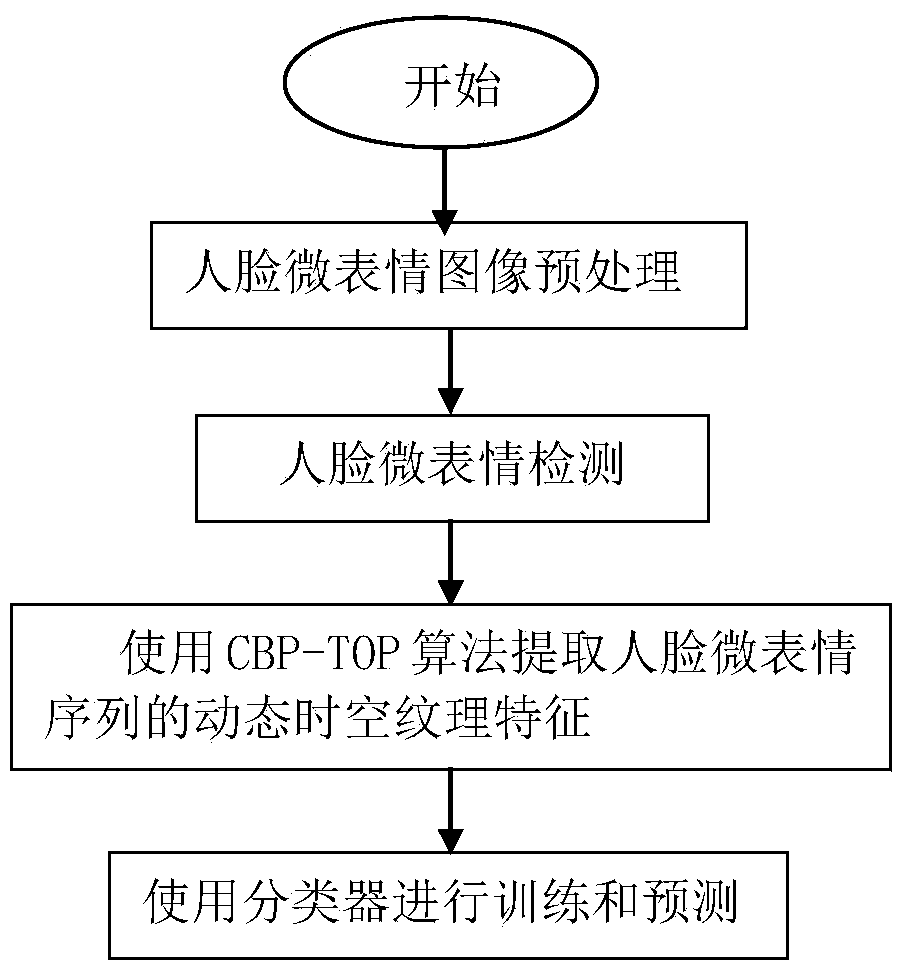

The invention discloses a face microexpression recognition method and relates to methods for recognizing graphics with electronic equipment. After a face microexpression image sequence is preprocessed, a Birnbaum-Saunders distribution curve is used to build a regression model, the CBP-TOP algorithm is then used to extract dynamic spatio-temporal texture features of the sequence, and finally a classifier is used for training and prediction. The method overcomes the defect that existing face microexpression recognition methods are sensitive to small features of the image, such as bright points, edges and white noise, and therefore have low recognition performance.

Owner:HEBEI UNIV OF TECH

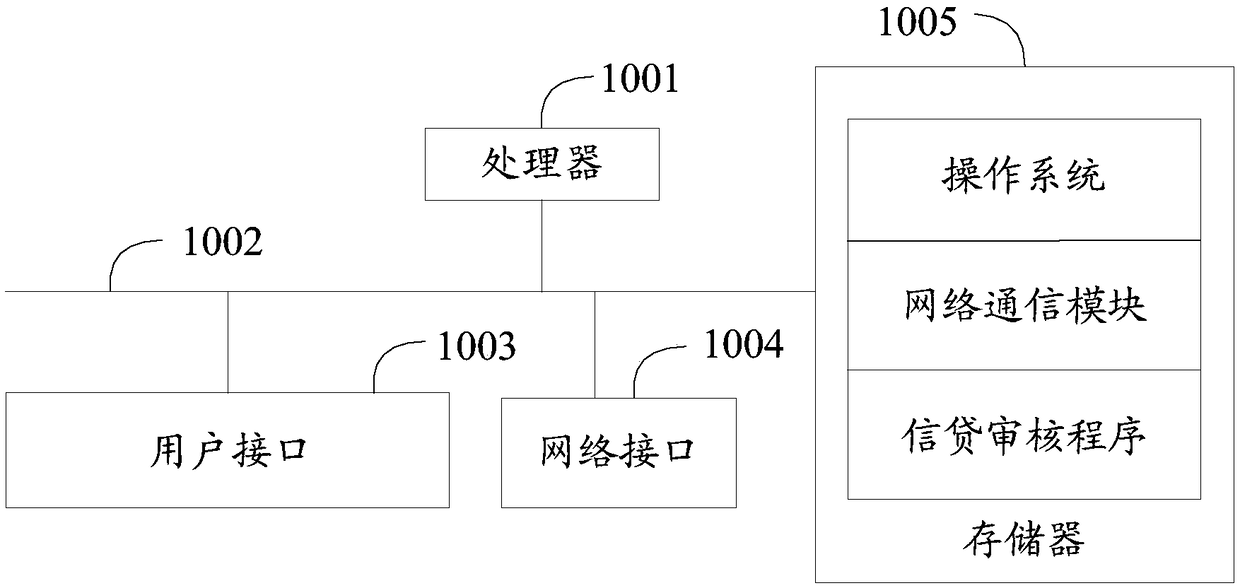

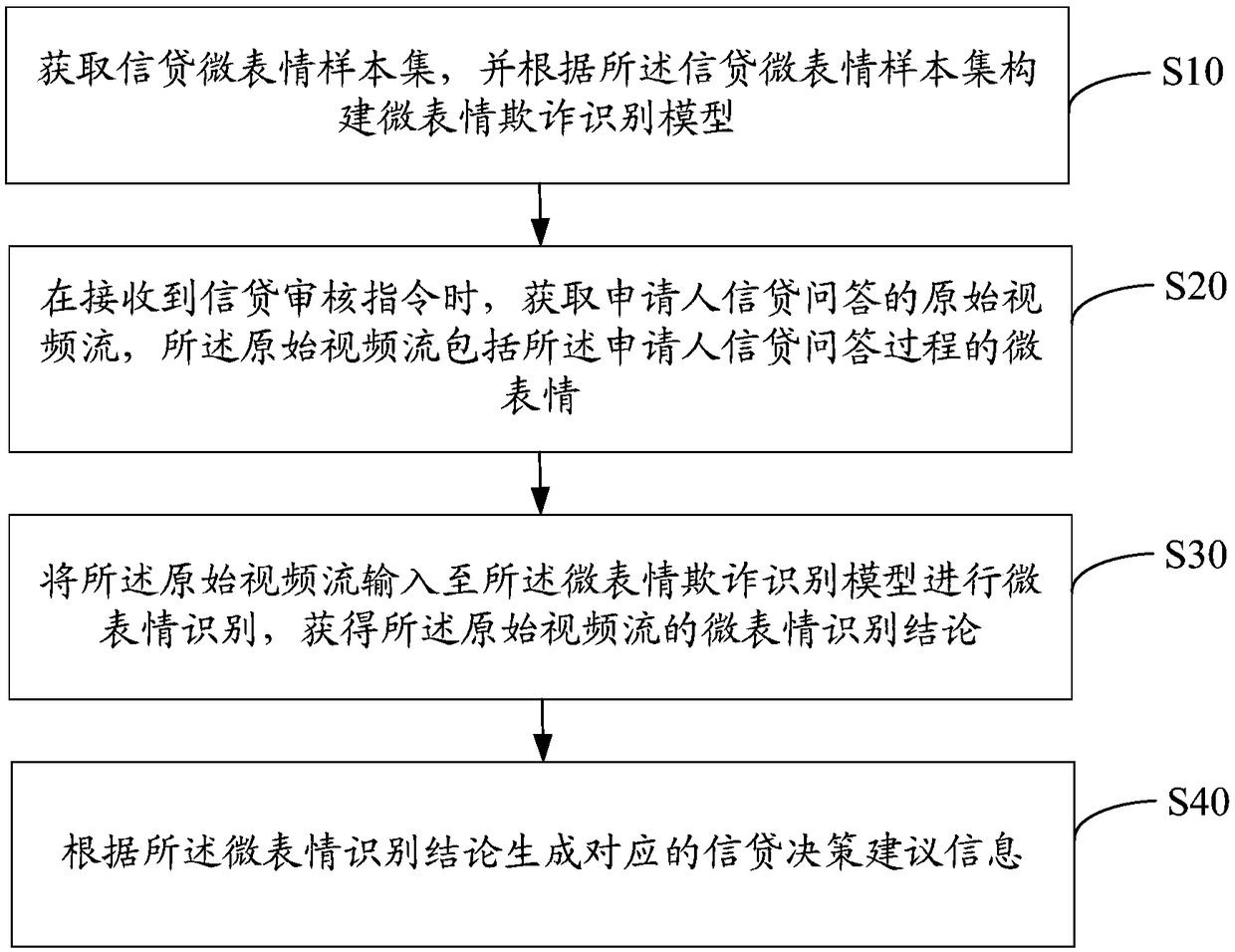

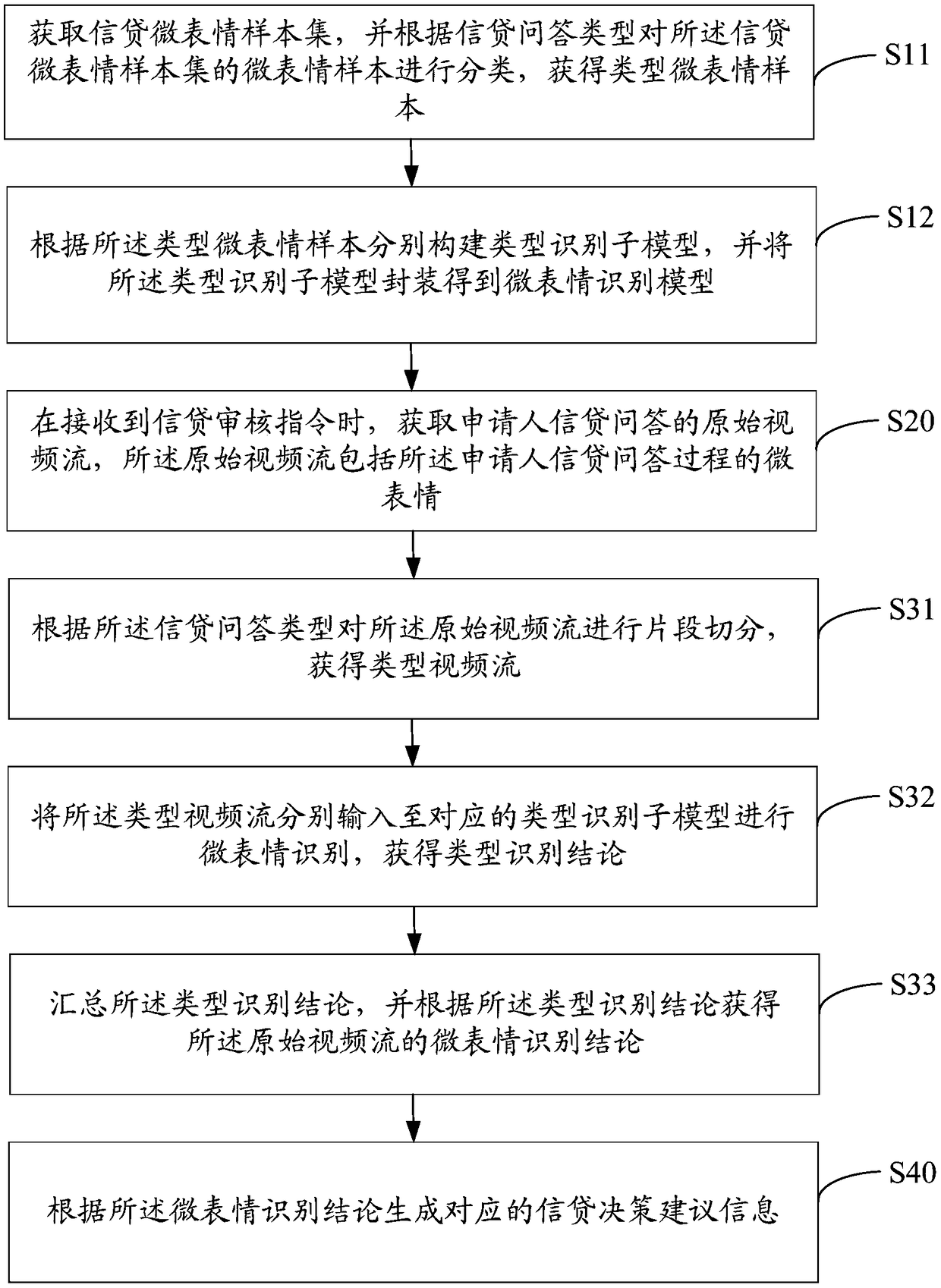

Microexpression-based credit authorization method and device, terminal and readable storage medium

Active | CN108765131A | Improve efficiency | Improve accuracy | Finance | Character and pattern recognition | Microexpression | Questions and answers

The invention provides a microexpression-based credit authorization method comprising the steps of: acquiring a credit microexpression sample set and constructing a microexpression fraud identification model from it; on receiving a credit authorization instruction, acquiring the original video stream of the applicant's credit Q&A (questions and answers), which includes the applicant's microexpressions during the Q&A process; inputting the original video stream into the microexpression fraud identification model to perform microexpression identification and obtain an identification result; and generating corresponding credit decision-making suggestion information according to that result. The invention also provides a microexpression-based credit authorization device, equipment and a readable storage medium. The method uses the microexpression fraud identification model to analyze a credit applicant's microexpressions, infer the applicant's real feelings and determine whether the applicant is lying, so as to detect fraud. This reduces the workload of manual authorization and is conducive to improving the efficiency and accuracy of credit authorization.

Owner:ONE CONNECT SMART TECH CO LTD SHENZHEN

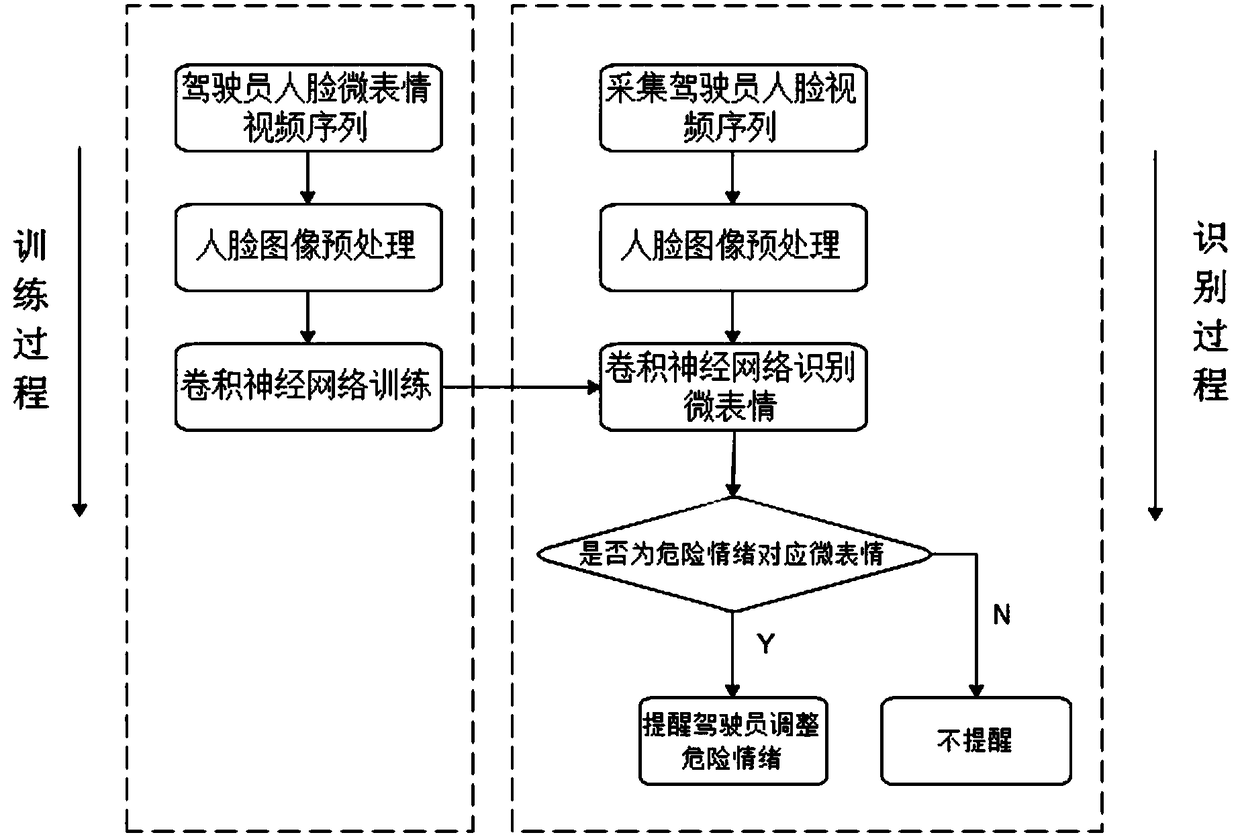

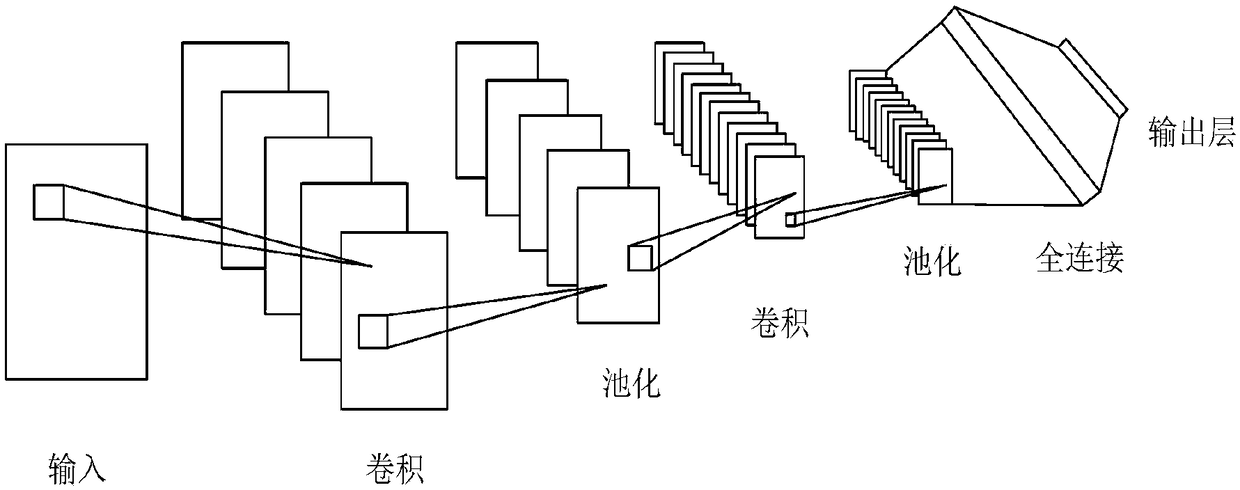

Driving dangerous mood alert method, terminal apparatus, and storage medium

Active | CN109426765A | Driving safety | Reduce accident rate | Character and pattern recognition | Neural architectures | Microexpression | Terminal equipment

Disclosed is a driving dangerous mood alert method comprising the following steps. S1: acquire a micro-expression training sample set. S2: preprocess the micro-expression images. S3: train an improved convolutional neural network on the preprocessed micro-expression images in the training sample set. S4: micro-expression image recognition, in which the driver's face video sequence is captured in real time. S5: judge whether the micro-expression type of the micro-expression image from S4 corresponds to a dangerous driving emotion; if so, proceed to S6; if not, proceed to S7. S6: give a voice prompt naming the mood that corresponds to the current micro-expression, prompting the driver to adjust their mood and drive steadily. S7: give no reminder.

Owner:XIAMEN YAXON NETWORKS CO LTD
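
The branch in steps S5 to S7 of the abstract above can be sketched in a few lines of Python. This is only an illustration: the abstract does not say which emotions count as dangerous, so the `DANGEROUS_EMOTIONS` set, the function name `mood_alert` and the prompt wording are all assumptions, and the classifier that produces the emotion label (S1 to S4) is not shown.

```python
# Hypothetical set of emotion labels treated as dangerous for driving;
# the abstract does not list them.
DANGEROUS_EMOTIONS = {"anger", "fear", "sadness"}


def mood_alert(predicted_emotion):
    """Return a voice-prompt message (S6), or None for no reminder (S7)."""
    if predicted_emotion in DANGEROUS_EMOTIONS:  # S5: dangerous emotion?
        return (
            f"You seem to be showing {predicted_emotion}. "
            "Please adjust your mood and drive steadily."
        )
    return None  # S7: no reminder


print(mood_alert("anger"))
print(mood_alert("happiness"))
```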

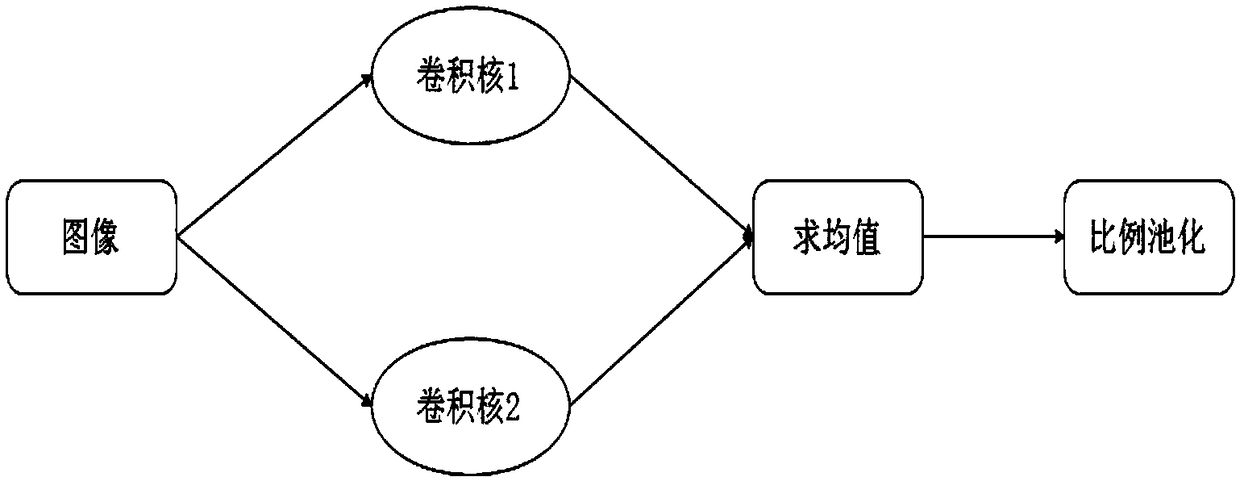

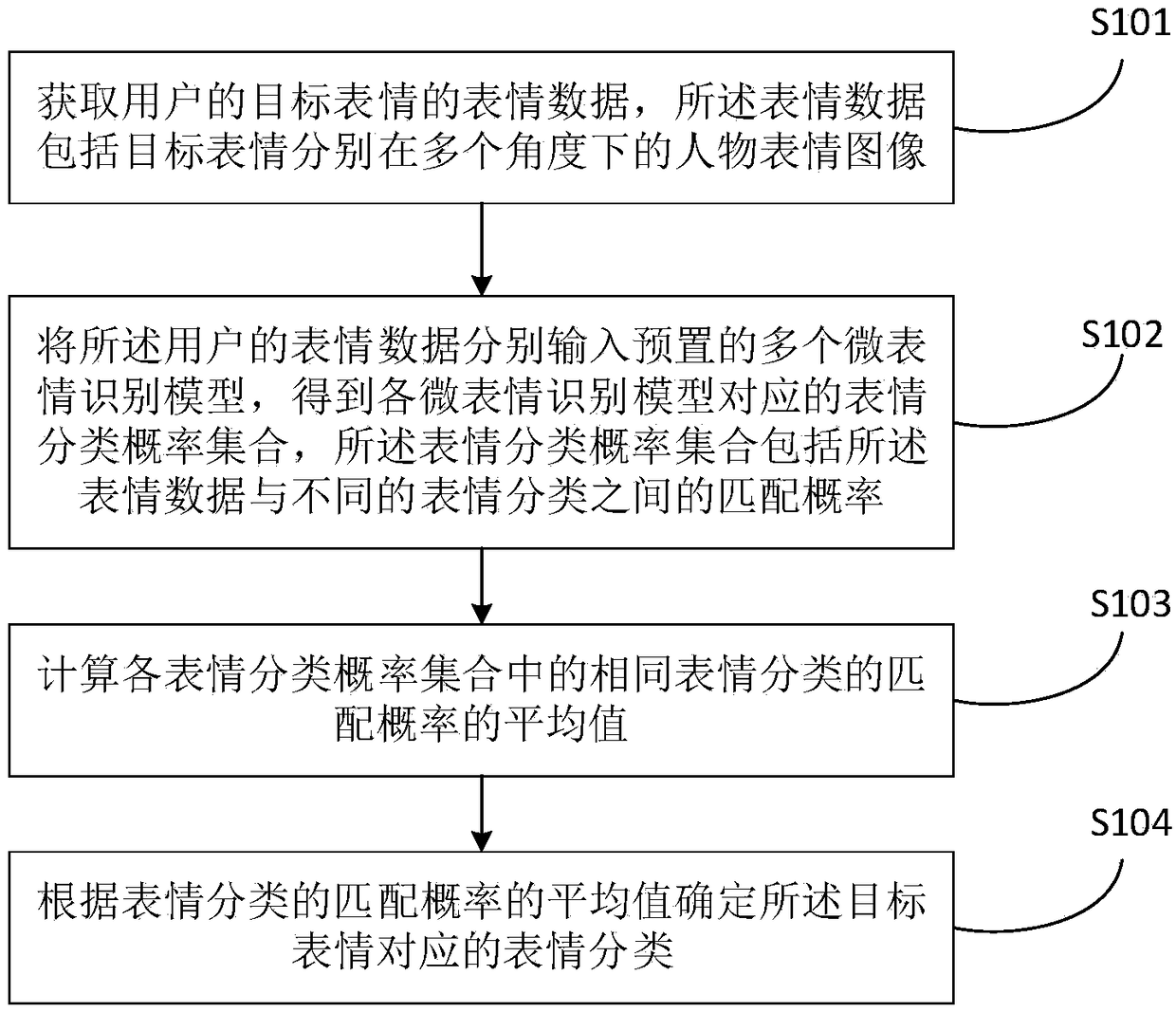

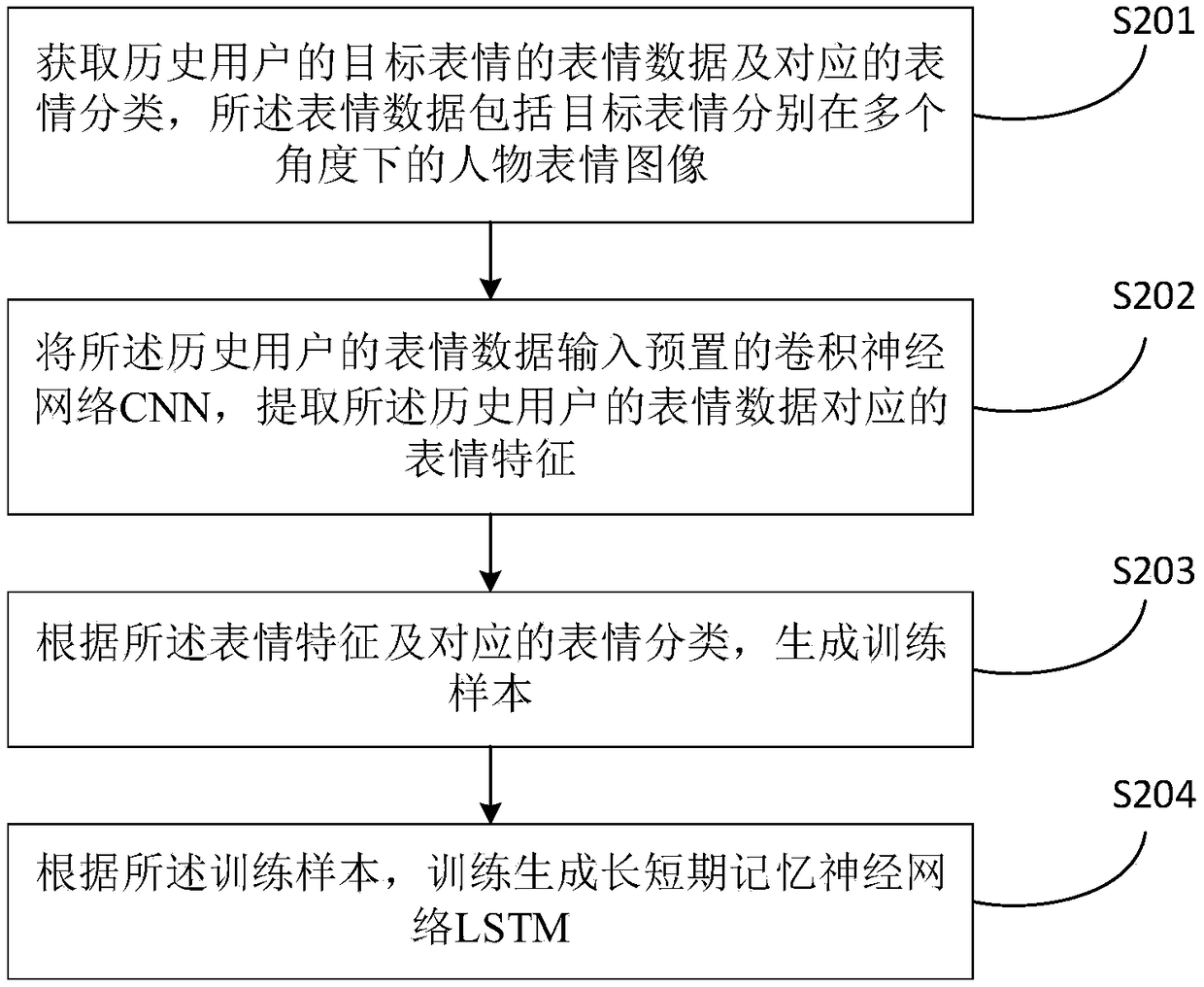

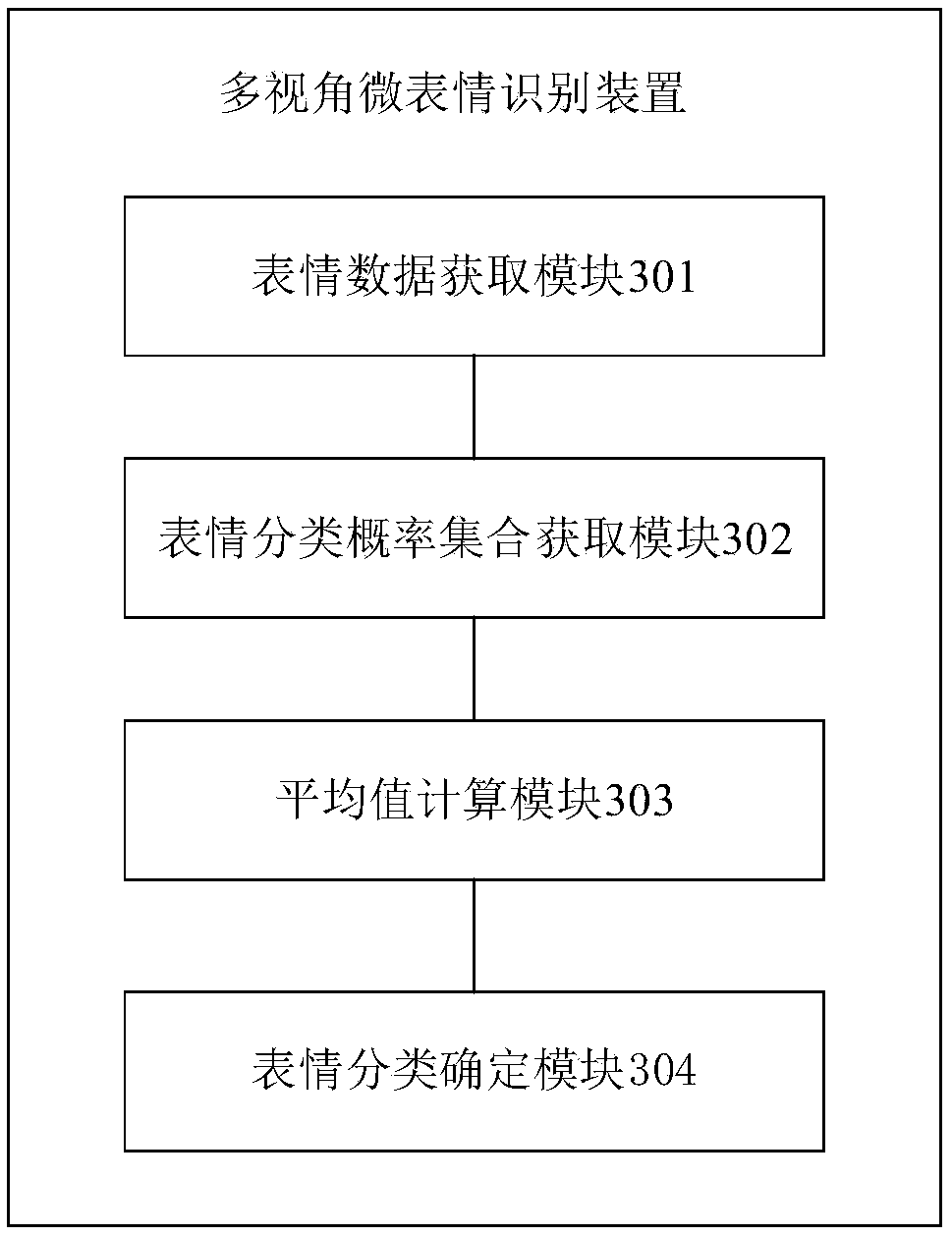

Multi-view microexpression recognition method and device, storage medium and computer device

Pending | CN109165608A | Realize multi-pose micro-expression recognition | Fast Multi-Pose Micro-expression Recognition | Acquiring/recognising facial features | Computer device | Microexpression

The invention provides a multi-view micro-expression recognition method and device, a storage medium and a computer device. The method comprises the following steps: obtaining facial expression data of a target expression of a user, wherein the facial expression data includes figure expression images of a target expression at multiple angles; inputting the expression data of the user into a plurality of preset microexpression recognition models to obtain a set of expression classification probabilities corresponding to each microexpression recognition model, wherein the set of expression classification probabilities comprises a matching probability between the expression data and different expression classifications; calculating an average value of matching probabilities of the same expression classification in each expression classification probability set; determining the expression classification corresponding to the target expression according to the average value of the matching probability of the expression classification. This method can quickly and accurately realize multi-angle multi-pose micro-expression recognition, and meet the needs of multi-angle multi-pose micro-expression recognition.

Owner:ONE CONNECT SMART TECH CO LTD SHENZHEN
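
The fusion step described in the abstract above (averaging the matching probabilities of each expression class over several recognition models, then choosing the class by that average) might look roughly like the following. The function name `fuse_predictions`, the class labels and the probability values are made up for illustration; the recognition models themselves are not shown.

```python
from collections import defaultdict


def fuse_predictions(prob_sets):
    """Average per-class probabilities over several models; return the best class."""
    totals = defaultdict(float)
    for probs in prob_sets:
        for label, p in probs.items():
            totals[label] += p
    averages = {label: total / len(prob_sets) for label, total in totals.items()}
    best = max(averages, key=averages.get)
    return best, averages


# Hypothetical outputs of three view-specific models for one target expression.
views = [
    {"happiness": 0.6, "surprise": 0.3, "disgust": 0.1},
    {"happiness": 0.5, "surprise": 0.4, "disgust": 0.1},
    {"happiness": 0.2, "surprise": 0.7, "disgust": 0.1},
]
label, averages = fuse_predictions(views)
print(label)  # surprise
```

Although two of the three hypothetical models rank "happiness" first, the averaged probability favors "surprise", which shows how averaging differs from a simple majority vote.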

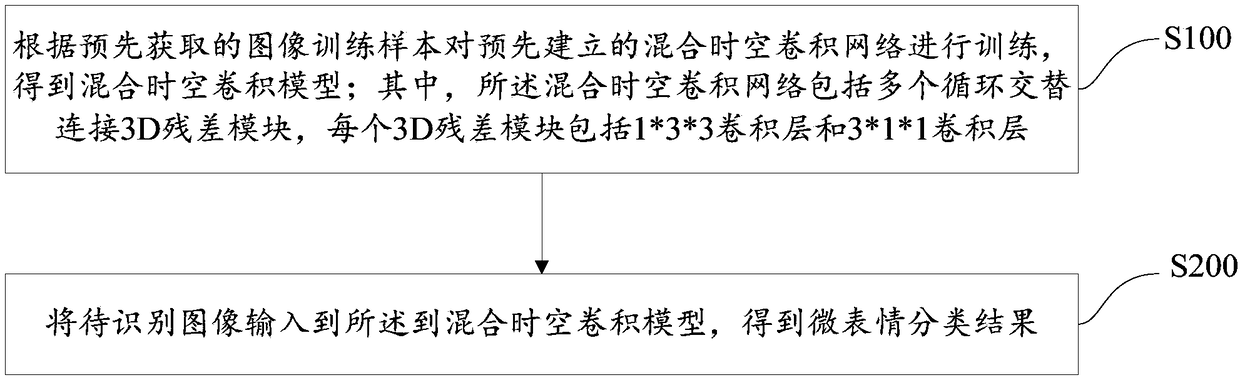

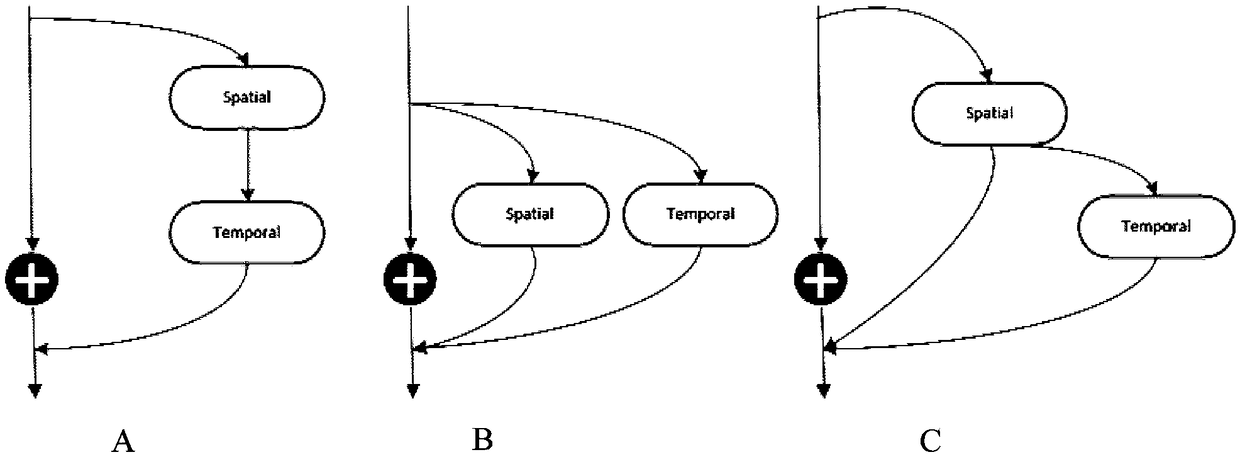

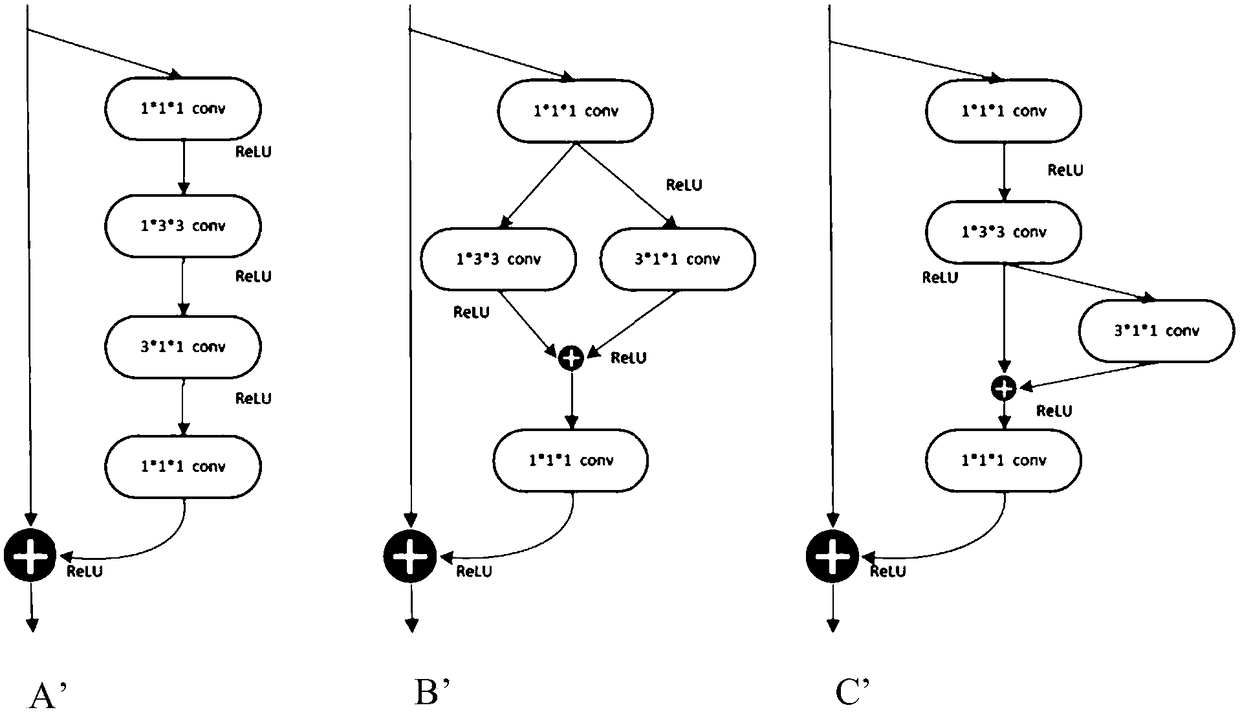

Method and apparatus for micro-expression recognition based on hybrid spatio-temporal convolution model

Active | CN109389045A | Rich and authentic inner emotions | Add category | Acquiring/recognising facial features | Computation complexity | Microexpression

The invention provides a micro-expression recognition method and device based on a hybrid spatio-temporal convolution model. The method comprises the following steps: a hybrid spatio-temporal convolution network established in advance is trained on pre-acquired image training samples to obtain a hybrid spatio-temporal convolution model, wherein the network comprises a plurality of cyclically and alternately connected 3D residual modules, each comprising a 1*3*3 convolution layer and a 3*1*1 convolution layer; an image to be recognized is then input to the model to obtain a microexpression classification result. Performing the convolution as a mixed 1*3*3 convolution (2D) plus 3*1*1 convolution (1D) on the one hand maintains the accuracy that a 3D CNN requires for micro-expression recognition, and on the other hand greatly reduces the computational complexity and the demands on computer hardware, which makes the method better suited to production use.

Owner:GCI SCI & TECH +1
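
To give a feel for the complexity claim in the abstract above, the following sketch counts the parameters of a 1*3*3 layer followed by a 3*1*1 layer and compares them with a single full 3*3*3 layer. The comparison baseline (a 3*3*3 kernel), the equal input and output channel count of 64, and the inclusion of bias terms are assumptions for illustration; the abstract gives no layer sizes.

```python
def conv3d_params(in_channels, out_channels, kernel):
    """Parameter count of one 3D convolution layer (weights plus biases)."""
    kt, kh, kw = kernel
    return in_channels * out_channels * kt * kh * kw + out_channels


def full_vs_factorized(channels):
    """Compare one 3*3*3 layer with a 1*3*3 layer followed by a 3*1*1 layer."""
    full = conv3d_params(channels, channels, (3, 3, 3))
    factorized = (
        conv3d_params(channels, channels, (1, 3, 3))    # spatial (2D) part
        + conv3d_params(channels, channels, (3, 1, 1))  # temporal (1D) part
    )
    return full, factorized


full, factorized = full_vs_factorized(64)
print(full, factorized)  # 110656 49280
```

Under these assumptions the factorized pair uses a little under half the parameters of the full kernel.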

Micro-expression recognition method based on active migration learning

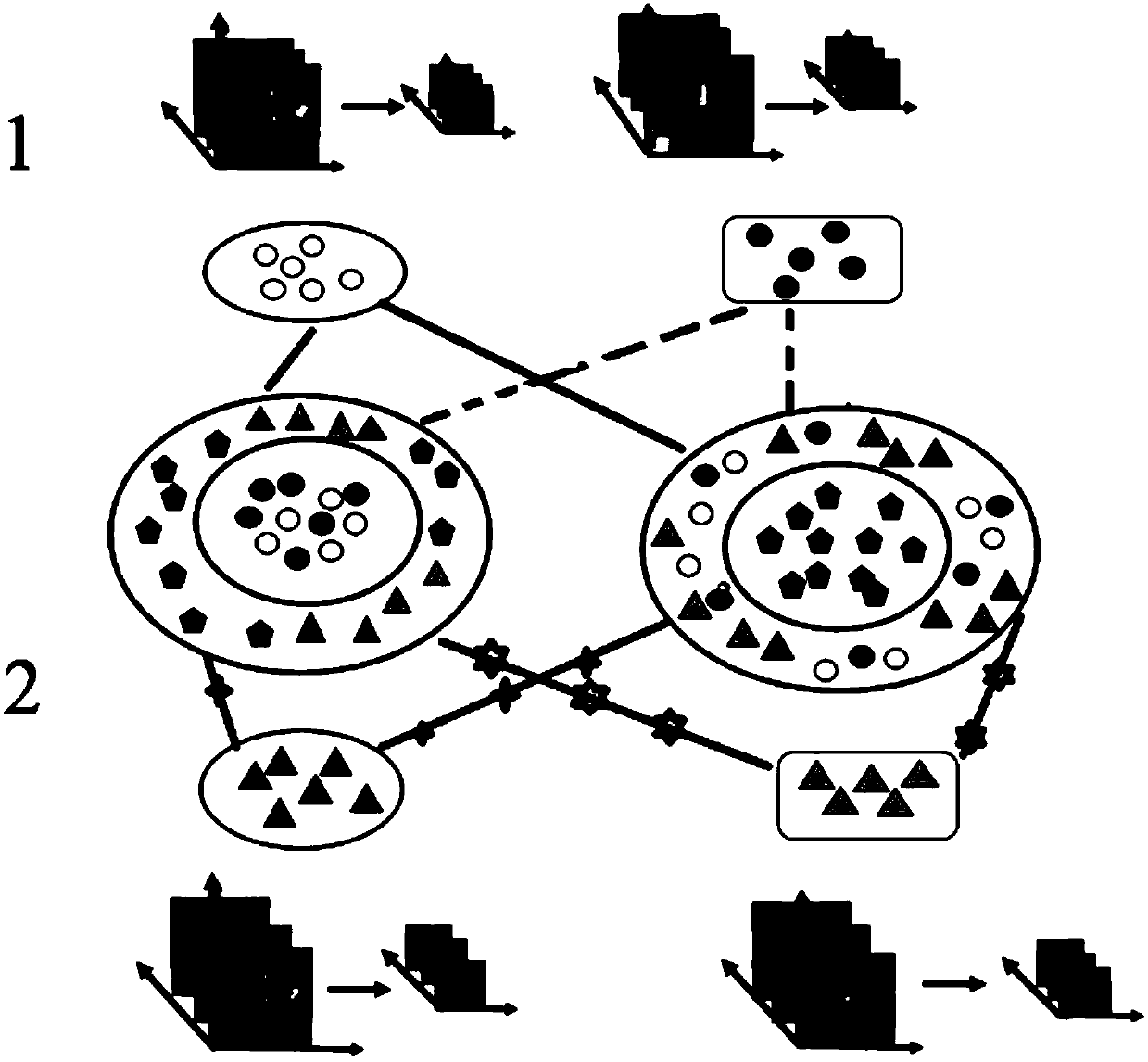

Active | CN108629314A | Effective training | Effective classifier | Character and pattern recognition | Microexpression | Proactive learning

The invention relates to a micro-expression recognition method based on active migration learning. The method comprises the following steps: (1) extracting macro-expression and micro-expression features; (2) establishing and solving a micro-expression active migration learning problem; (3) recognizing the micro-expression. Based on the internal relation between macro-expressions and micro-expressions, a bridge between the two is constructed through an asymmetric linear translator. Fewer labeled samples are needed during the initial stage of active learning. By means of the translator, the supervision information of the macro-expression domain can be used by micro-expressions in the transformation domain, so that high-quality samples can be selected for active learning in the micro-expression domain. After a sample is manually labeled, it is added to the existing training set, and a more effective classifier is obtained through training.

Owner:SHANDONG UNIV

A micro-expression recognition method based on macro-expression knowledge transfer

Active | CN109543603A | Improve relevance | Nearby | Acquiring/recognising facial features | Crucial point | Microexpression

The invention relates to a micro-expression recognition method based on macro-expression knowledge transfer, comprising the following steps: (1) partitioning the expression and the micro-expression; (2) extracting features of the facial expression and the micro-expression, namely LBP features and optical flow features; (3) constructing a micro-expression recognition model with macro-expression knowledge transfer, that is, learning class-specific mappings for expressions and micro-expressions and projecting both onto multiple common discriminant subspaces; (4) classifying and recognizing the micro-expression with a nearest neighbor classifier based on Euclidean distance. On the one hand, multi-feature learning can combine the characteristics of different features to achieve the best recognition results. On the other hand, multi-task learning takes the feature points as the center to reduce the influence of other, irrelevant regions of the face on the experimental results. Feature points here mainly refer to the key points related to facial expression and micro-expression recognition.

Owner:SHANDONG UNIV
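
Step (4) of the abstract above, a nearest neighbor classifier based on Euclidean distance, can be illustrated as follows. The two-dimensional feature vectors and labels are invented for the example; in the patent the vectors would be the projections onto the common discriminant subspaces, which are not reproduced here.

```python
import math


def nearest_neighbor(query, gallery):
    """Return the label of the gallery sample closest to `query` (Euclidean distance)."""
    best_label, best_dist = None, math.inf
    for features, label in gallery:
        dist = math.dist(query, features)  # Euclidean distance (Python 3.8+)
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label, best_dist


# Hypothetical projected training samples: (feature vector, emotion label).
gallery = [
    ((0.0, 0.0), "happiness"),
    ((1.0, 1.0), "surprise"),
    ((4.0, 3.0), "disgust"),
]
label, dist = nearest_neighbor((0.9, 0.8), gallery)
print(label)  # surprise
```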

Real-time video emotion analysis method and system based on deep learning

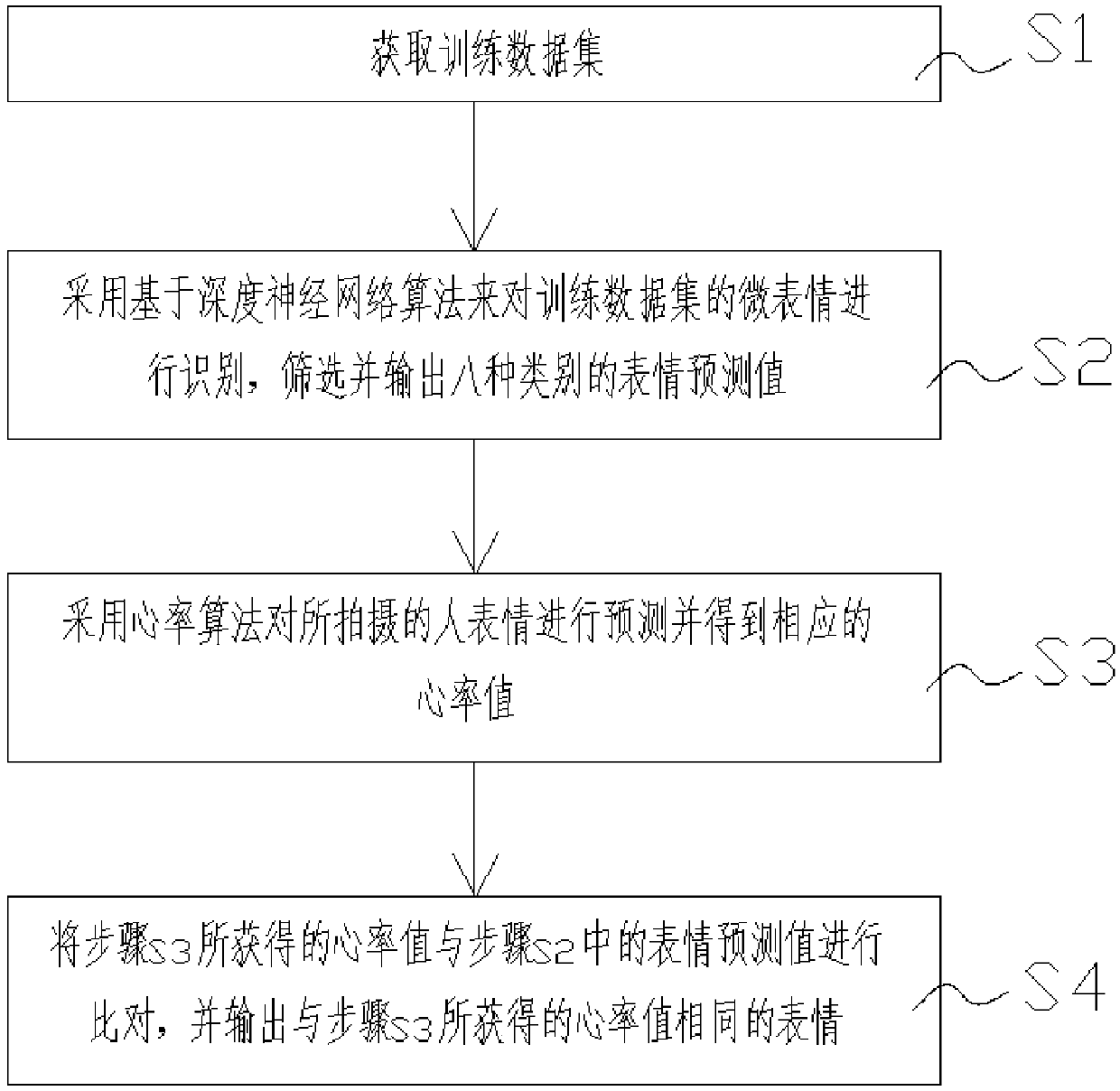

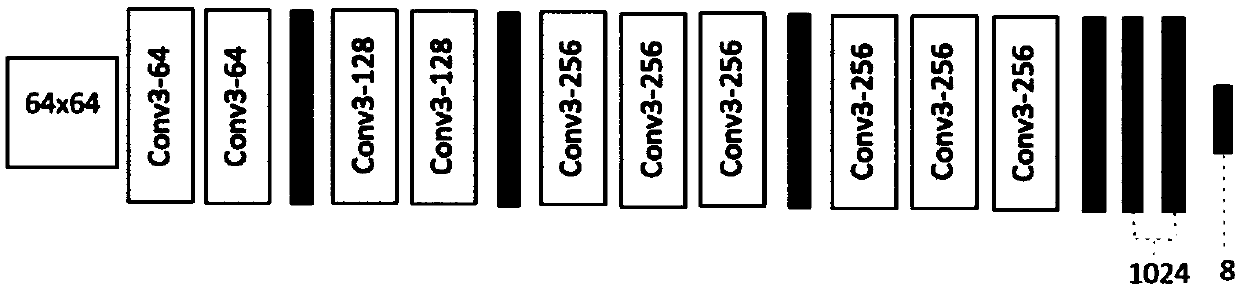

Inactive | CN109549624A | Realize identification | Sensors | Psychotechnic devices | Pattern recognition | Machine vision

The invention discloses a real-time video emotion analysis method and system based on deep learning. The analysis method comprises the following steps: S1, obtaining a training data set; S2, recognizing the microexpressions in the training data set through an algorithm based on a deep neural network, performing screening, and outputting prediction values for 8 kinds of expressions, namely neutral, happy, surprised, sad, angry, disgusted, fearful and contemptuous; S3, analyzing the captured human expressions with a heart rate algorithm to obtain the corresponding heart rate values; and S4, comparing the heart rate values obtained in step S3 with the expression prediction values obtained in step S2, and outputting the expressions that agree with the heart rate values obtained in step S3. The disclosed method and system apply face recognition from machine vision and an image classification algorithm to the detection of microexpressions and heart rate, recognize microexpressions through the deep learning algorithm, and can be applied in the clinical, judicial and security fields.

Owner:南京云思创智信息科技有限公司

Microexpression recognition method based on the principal direction of optical flow

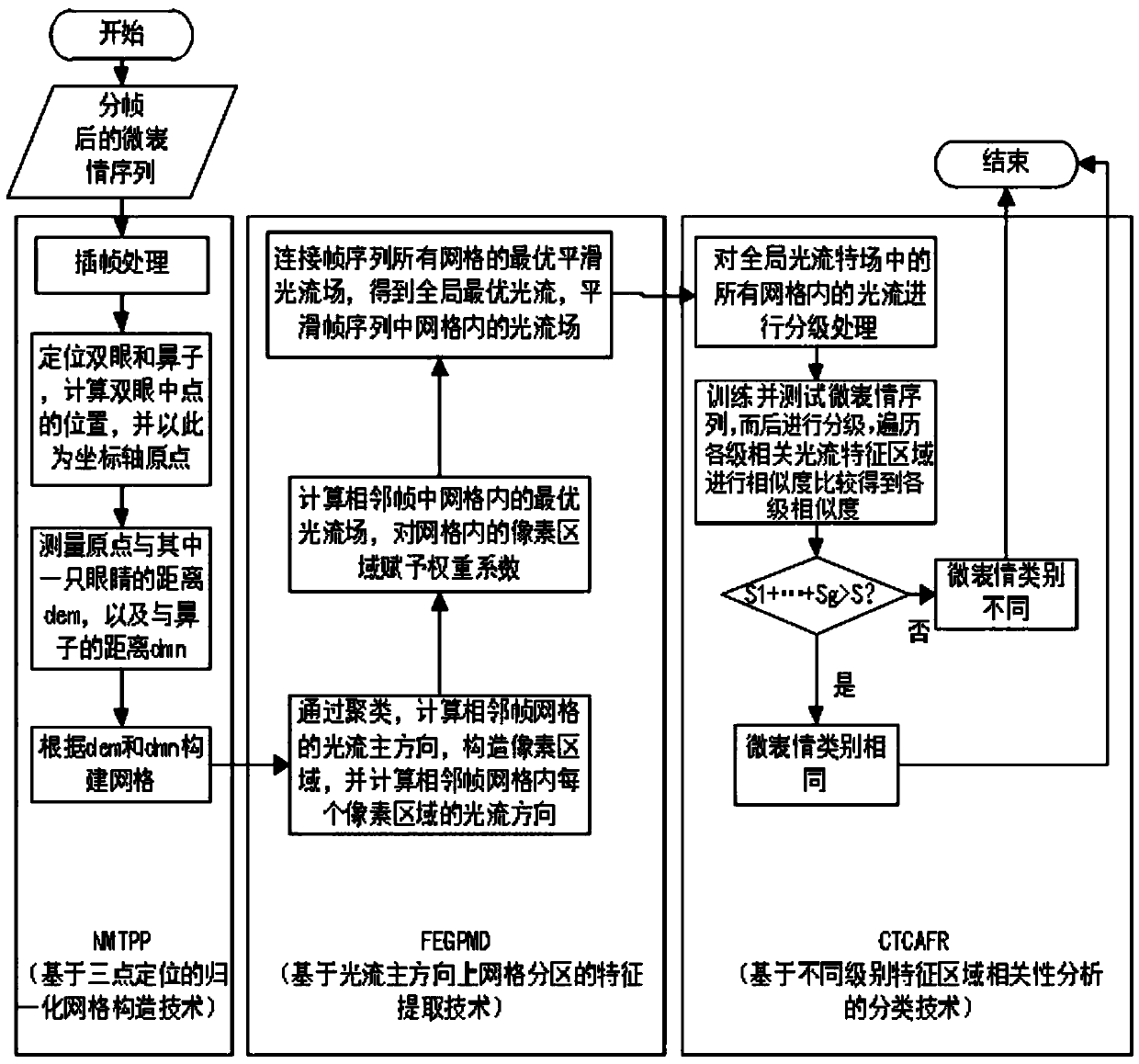

Active | CN109034126A | Guaranteed recognition efficiency | Reduce the number of traversals | Acquiring/recognising facial features | Microexpression | Nose

The invention provides a micro-expression recognition method based on the main direction of optical flow, comprising the following steps: interpolating the micro-expression sequence formed by splitting a micro-expression video into frames; locating the eyes and nose in each frame and taking the midpoint between the eyes as the origin of the coordinate axes; calculating, for each frame, the distances dem and dmn from the origin to the center of each eye and to the nose; constructing a normalized grid according to dem and dmn; calculating the optical flow direction of each pixel region in the grids of all adjacent frames and clustering these directions to obtain the main direction of optical flow; forming a rectangular feature region along the main direction and assigning weight coefficients to the pixel regions in that direction to obtain the optical flow field optimized over adjacent-frame grids; and smoothing the curves formed by connecting the plotted data points to obtain the global optical flow field. Each optical flow feature region is input to a trained classifier, and the sum of similarities between the traversed microexpression sequence and the trained microexpression sequences is compared with S to obtain the classification result.

Owner:UNIV OF SHANGHAI FOR SCI & TECH
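
As a rough illustration of how a main direction could be derived from per-region flow vectors, the sketch below quantizes flow angles into equal bins and returns the center of the most populated bin. This is a deliberately simplified stand-in: the abstract says the directions are clustered but does not name the clustering method, so the histogram binning, the bin count of 8, the function name `main_direction` and the sample vectors are all assumptions.

```python
import math
from collections import Counter


def main_direction(flow_vectors, bins=8):
    """Return the center angle (radians) of the most common direction bin, or None."""
    width = 2 * math.pi / bins
    counts = Counter()
    for dx, dy in flow_vectors:
        if dx == 0 and dy == 0:
            continue  # a zero vector has no direction
        angle = math.atan2(dy, dx) % (2 * math.pi)
        counts[int(angle // width) % bins] += 1
    if not counts:
        return None
    bin_index, _ = counts.most_common(1)[0]
    return (bin_index + 0.5) * width


# Hypothetical flow vectors (dx, dy) for the pixel regions of one grid.
flows = [(1.0, 0.1), (1.0, 0.2), (0.9, 0.1), (-1.0, 0.0), (0.0, 1.0)]
direction = main_direction(flows)
```

Here three of the five vectors point roughly along the positive x axis, so the returned angle is the center of the first bin.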

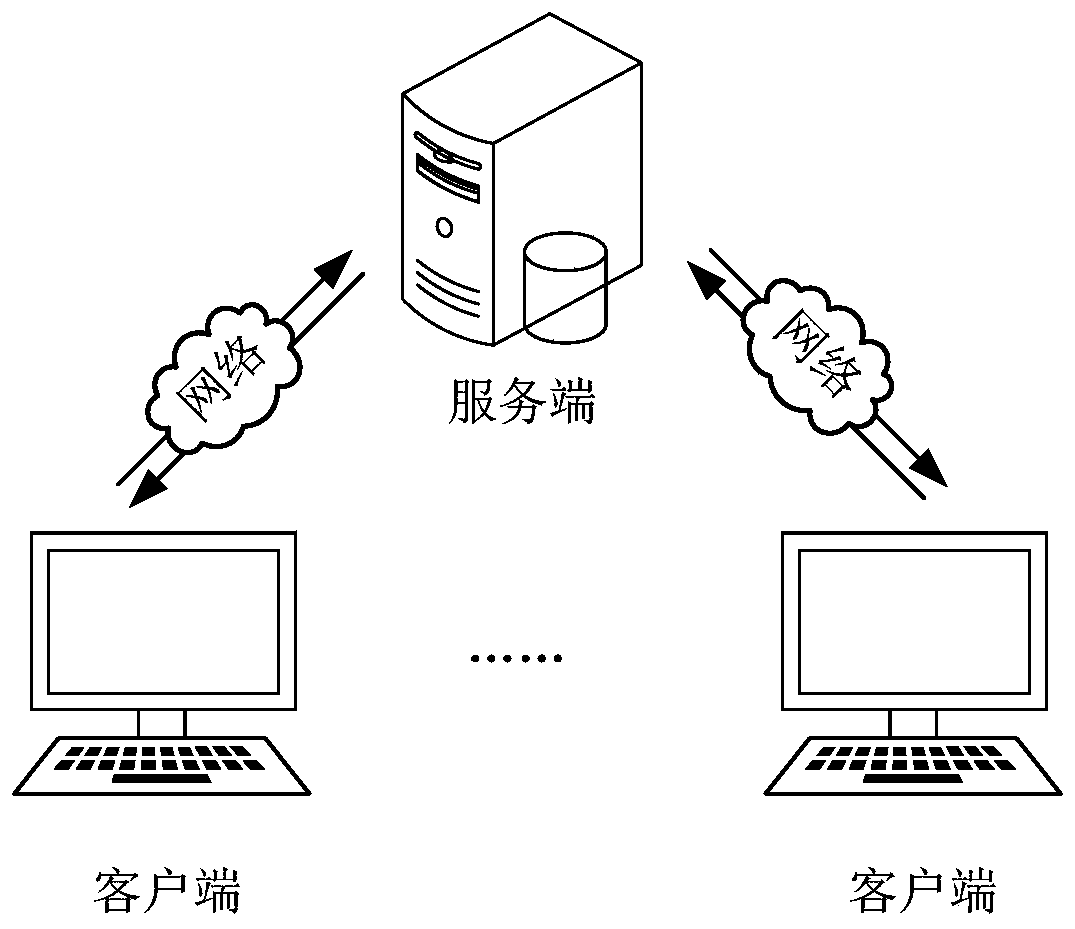

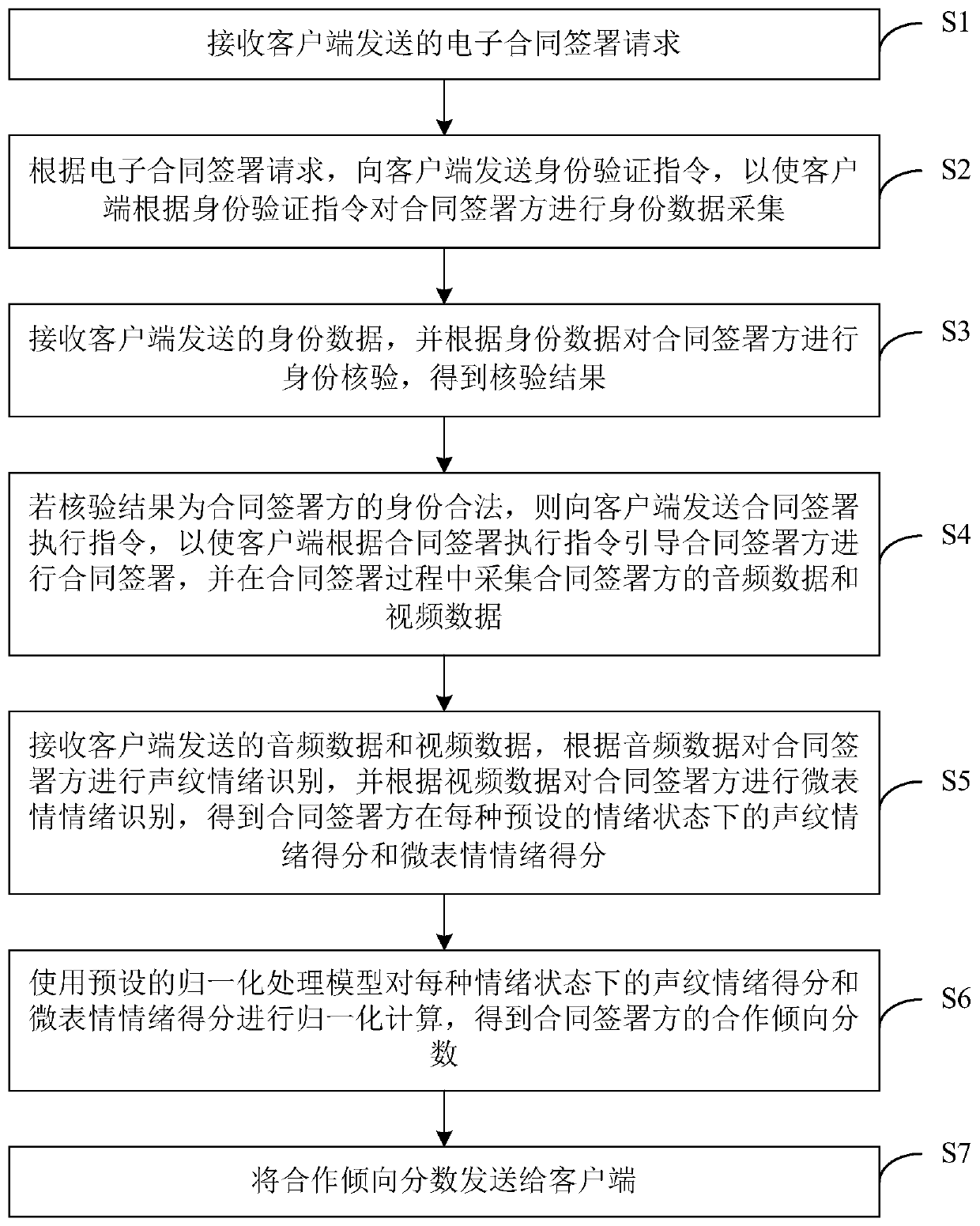

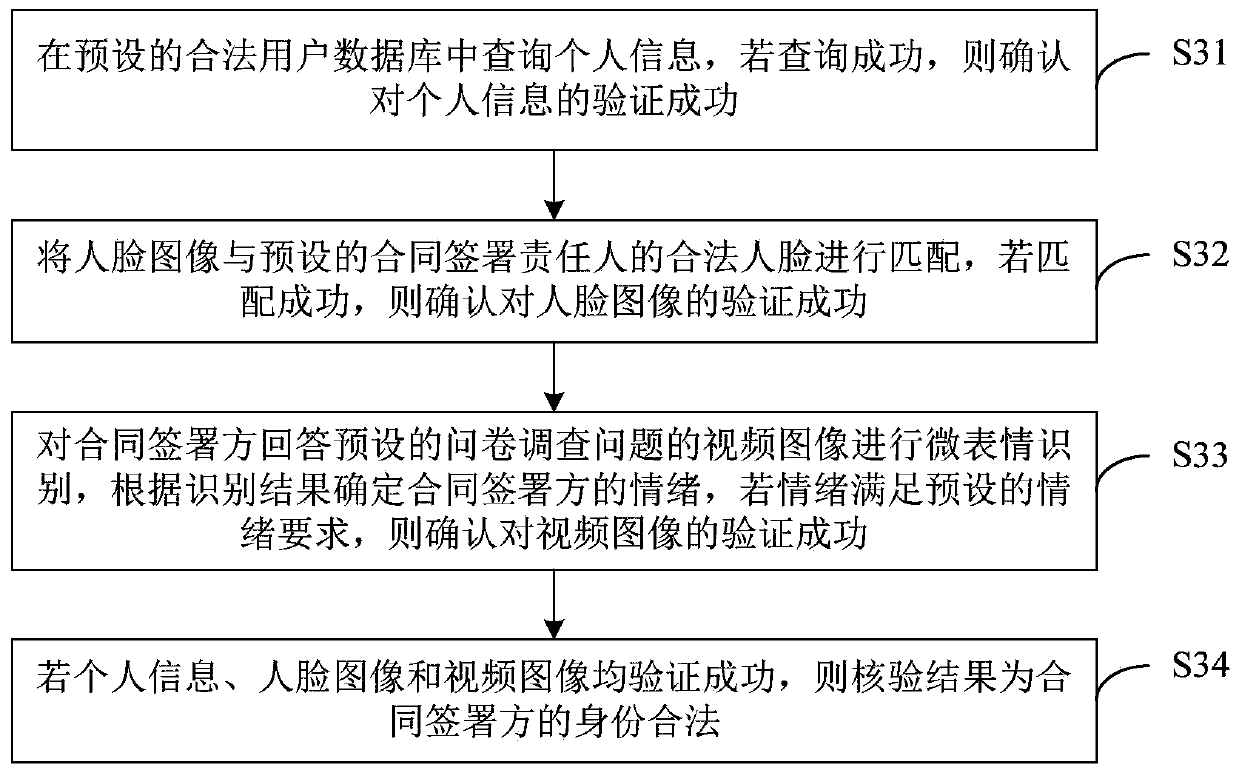

Double-recording method and device for electronic contract signing, computer equipment and storage medium

Pending | CN111401826A | Improve the level of intelligence | Easy to adjust | Multimedia data indexing | Database querying | Microexpression | Electronic contracts

The invention discloses a double-recording method and device for electronic contract signing, computer equipment and a storage medium. The method comprises the following steps: receiving an electronic contract signing request sent by a client; performing identity verification on the contract signer; if the identity of the contract signer is legal, sending a contract signing execution instruction to the client; receiving audio data and video data sent by the client, performing voiceprint emotion recognition on the contract signer according to the audio data, and performing micro-expression emotion recognition on the contract signer according to the video data to obtain the voiceprint emotion score and the micro-expression emotion score of the contract signer in each preset emotion state; performing normalization calculation on the voiceprint emotion score and the micro-expression emotion score in each emotion state by using a preset normalization processing model to obtain a cooperation tendency score; and sending the cooperation tendency score to the client. According to the technical scheme, efficient contract signing is guaranteed, and the intelligence level of double recording is effectively improved.

Owner:PING AN TECH (SHENZHEN) CO LTD

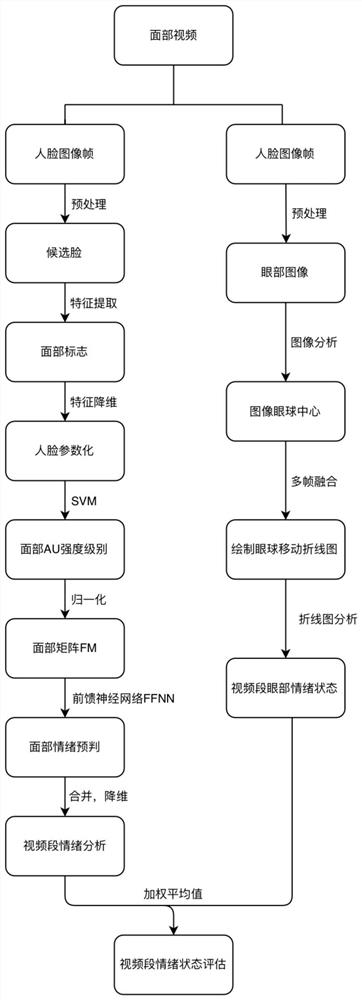

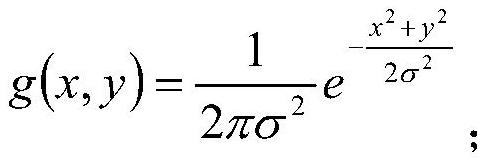

Emotion prediction method based on micro-expression recognition and eye movement tracking

Active | CN111967363A | Avoid psychological problems | Neural architectures | Neural learning methods | Microexpression | Medicine

The invention discloses an emotion prediction method based on micro-expression recognition and eye movement tracking. The method comprises the following steps: (1) inputting a face video of an observed person recorded after the person is stimulated by a certain signal, and carrying out micro-expression recognition; (2) inputting a face video of the observed person recorded after the person is stimulated by a certain signal, and carrying out eye movement tracking; and (3) fusing the micro-expression recognition result from step (1) with the eye movement tracking result from step (2), and judging the depression, anxiety and stress emotion states of the observed person. By combining the emotional state ratio recognized from the micro-expressions with the emotional state ratio obtained from eye movement tracking, the negative emotional states of depression, anxiety and stress of an observed person facing a certain psychological stimulation signal can be predicted more accurately.

Owner:HOHAI UNIV

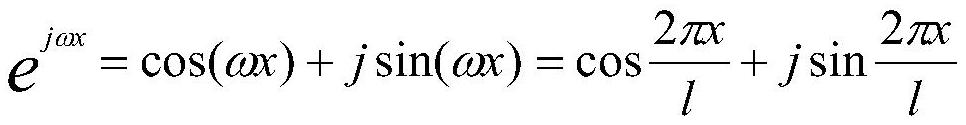

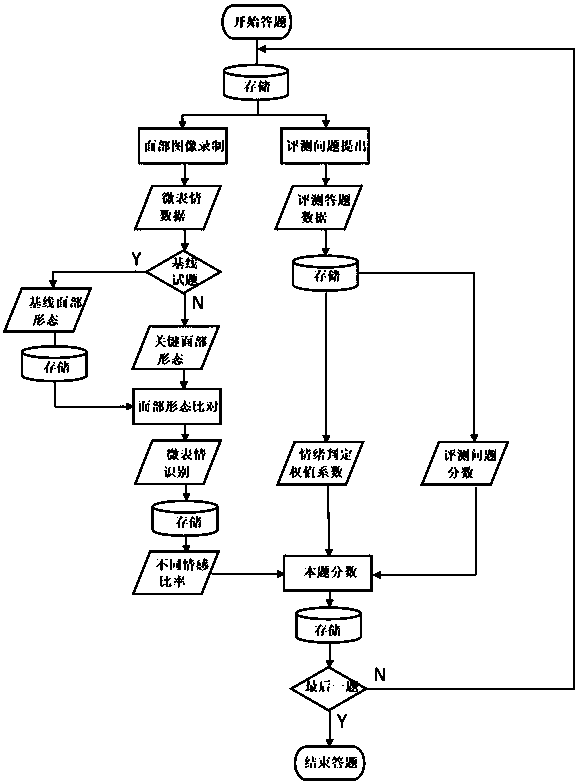

Subtle expression recognition based intelligent evaluation system and method

Inactive | CN109805943A | Accurately Target Overt Behavior | Eliminate the effects of | Character and pattern recognition | Psychotechnic devices | Feature extraction | Computer module

The invention discloses a subtle expression recognition based intelligent evaluation system and method. The system comprises a facial image acquisition module, a feature extraction module, a user interaction module, an evaluation question bank and a grading module. The system provides reliable evaluation data by capturing and analyzing the subtle expressions of a tested person while the person answers different evaluation questions, evaluating the person's emotions in response to different questions, and correcting the evaluation result according to the person's emotional state during the test. The scope of the next question can be judged from the emotional state acquired while the person is answering, and the evaluation questions can be adjusted through the evaluation question bank, so as to supply specific evaluation content.

Owner:徐熠

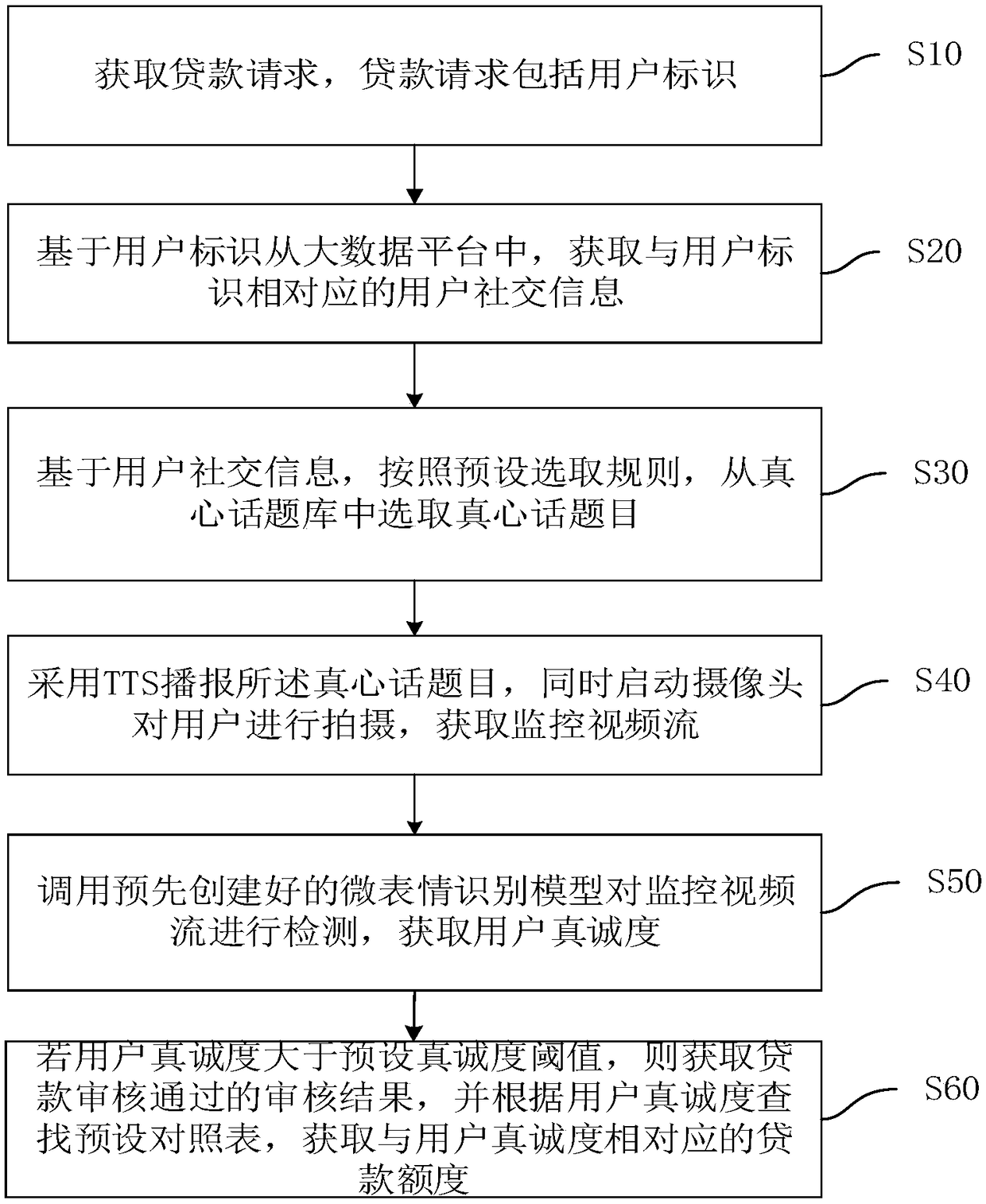

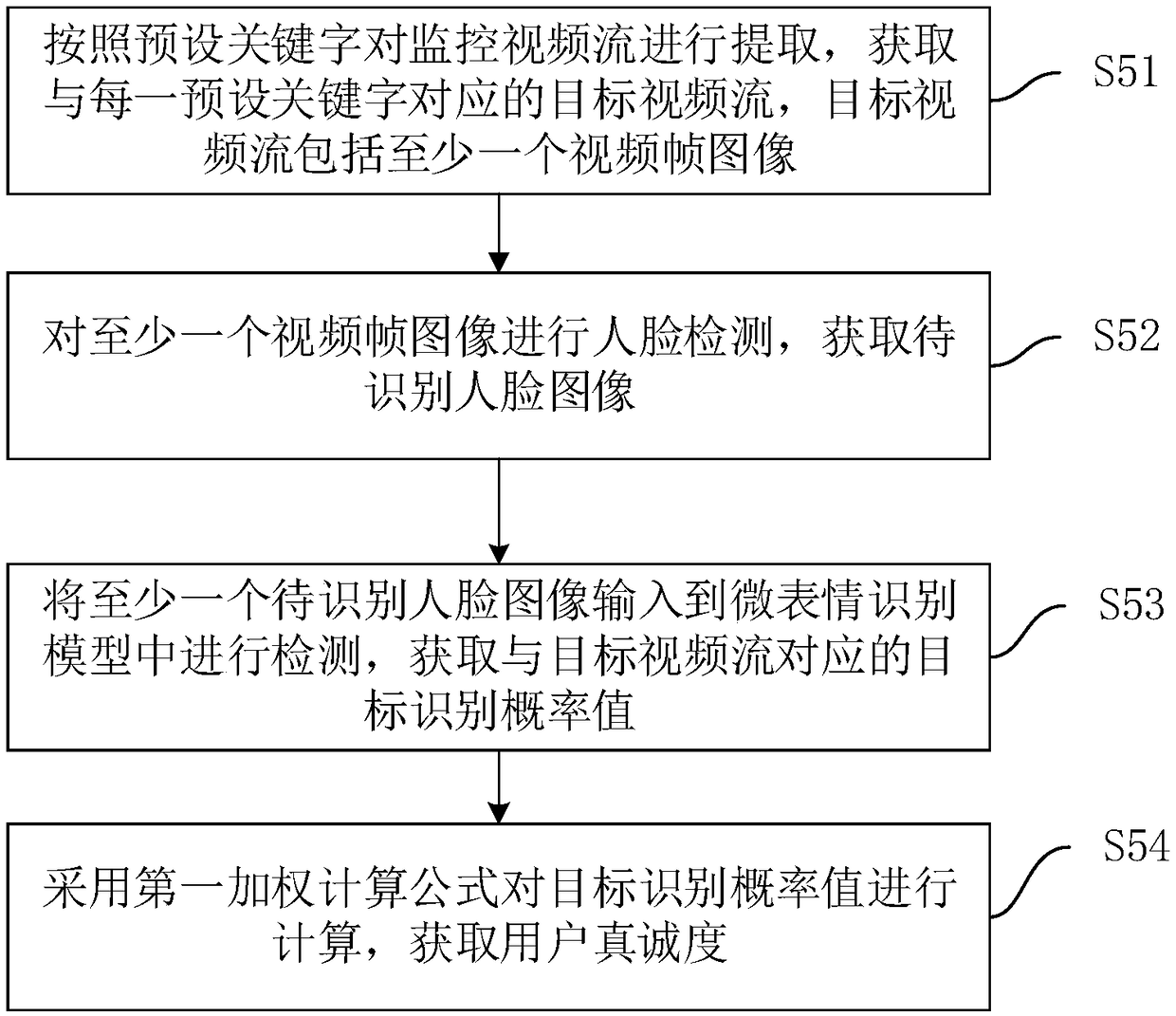

Loan verification method, apparatus, device and medium based on microexpression recognition

Pending | CN109509088A | Intelligent acquisition of sincerity | Improve reliability | Finance | Acquiring/recognising facial features | Microexpression | Data platform

The invention discloses a loan verification method, apparatus, device and medium based on micro-expression recognition, comprising the following steps: obtaining a loan request that includes a user identification; acquiring the user's social information corresponding to the user identification from a big data platform; selecting a true-hearted topic from a true-hearted topic bank based on the user's social information and according to a preset selection rule; at the same time, starting a camera to film the user and obtain a monitoring video stream; calling a pre-created micro-expression recognition model to analyze the monitoring video stream and obtain the user's sincerity degree; and, if the sincerity degree is greater than a preset sincerity threshold, obtaining a result of loan approval, looking up a preset comparison table by the sincerity degree, and obtaining the corresponding loan amount. The method requires no manual intervention, enables intelligent auditing, and improves the efficiency of loan auditing.

Owner:ONE CONNECT SMART TECH CO LTD SHENZHEN
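
The final step of the abstract above (approve only if the sincerity degree exceeds a preset threshold, then look up the loan amount in a preset comparison table) could be sketched as below. The threshold of 0.6, the table values and the function name `loan_decision` are hypothetical; the abstract specifies neither the scale of the sincerity degree nor the contents of the table.

```python
import bisect

# Hypothetical comparison table: (minimum sincerity degree, loan amount),
# sorted by ascending sincerity degree.
AMOUNT_TABLE = [(0.6, 10_000), (0.75, 50_000), (0.9, 100_000)]


def loan_decision(sincerity, threshold=0.6, amount_table=AMOUNT_TABLE):
    """Return (approved, amount) for a given sincerity degree."""
    if sincerity <= threshold:
        return False, 0  # not above the preset threshold: no approval
    lower_bounds = [bound for bound, _ in amount_table]
    # Index of the last table row whose lower bound does not exceed the score.
    idx = bisect.bisect_right(lower_bounds, sincerity) - 1
    amount = amount_table[idx][1] if idx >= 0 else 0
    return True, amount


print(loan_decision(0.8))  # (True, 50000)
print(loan_decision(0.5))  # (False, 0)
```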

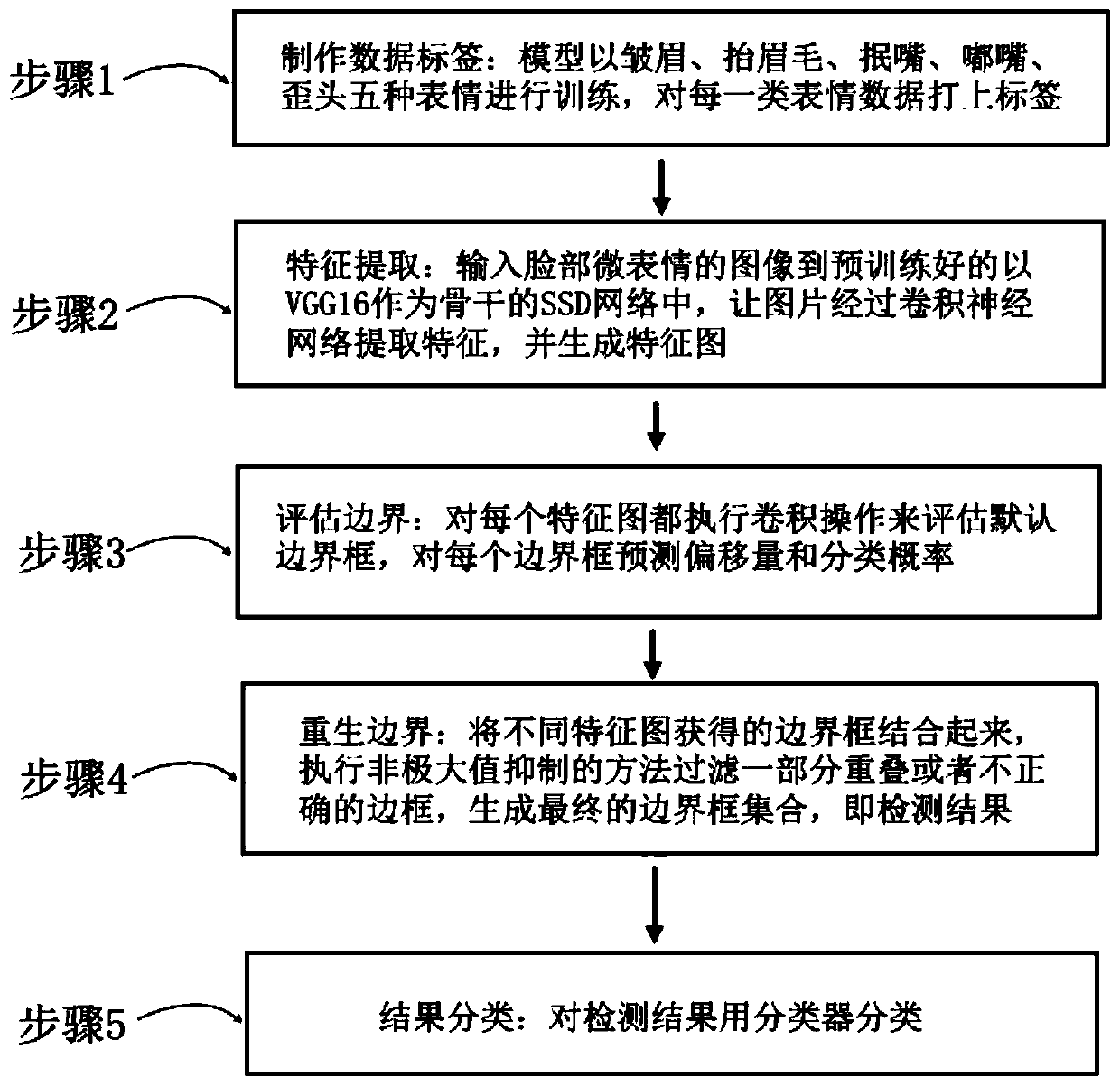

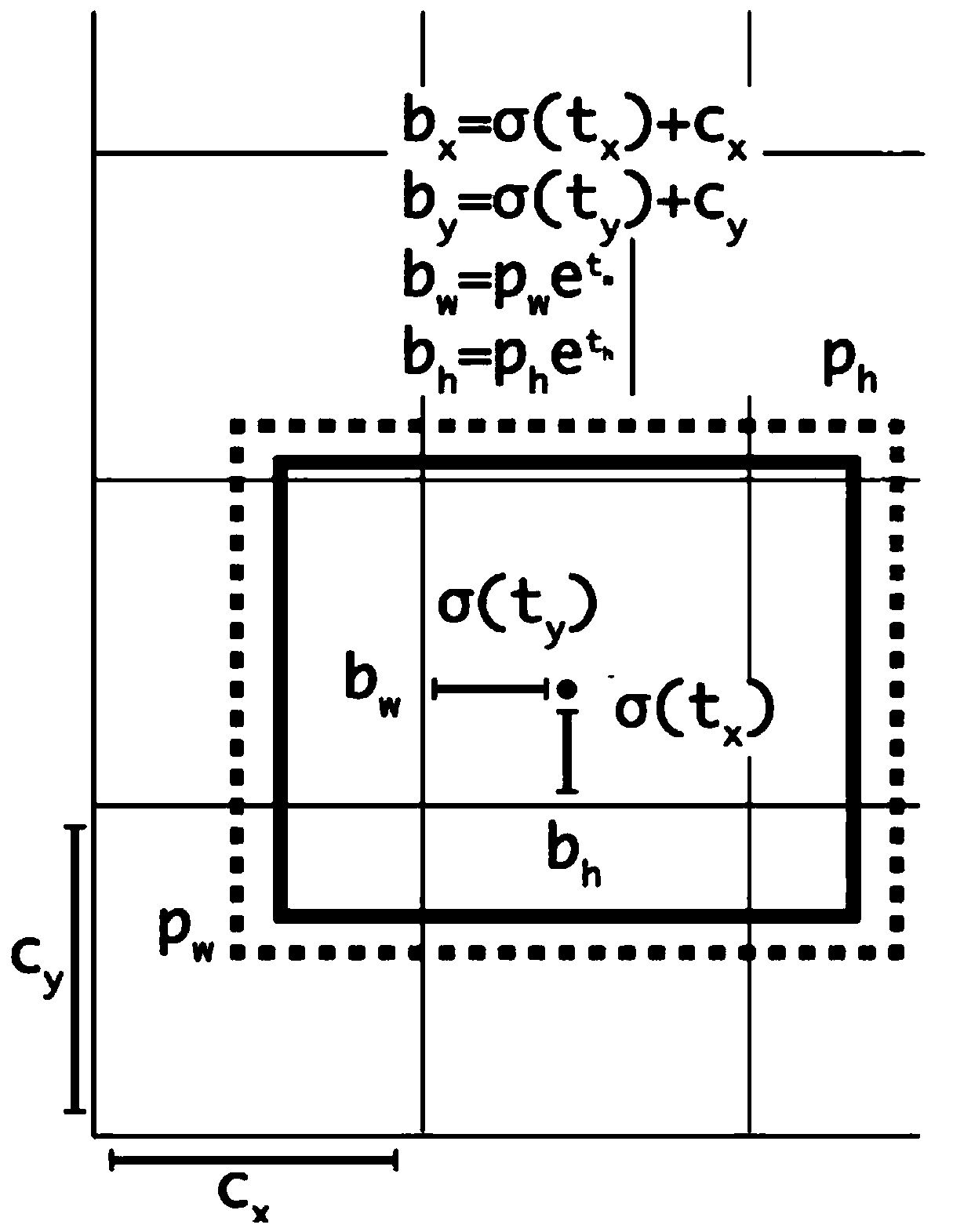

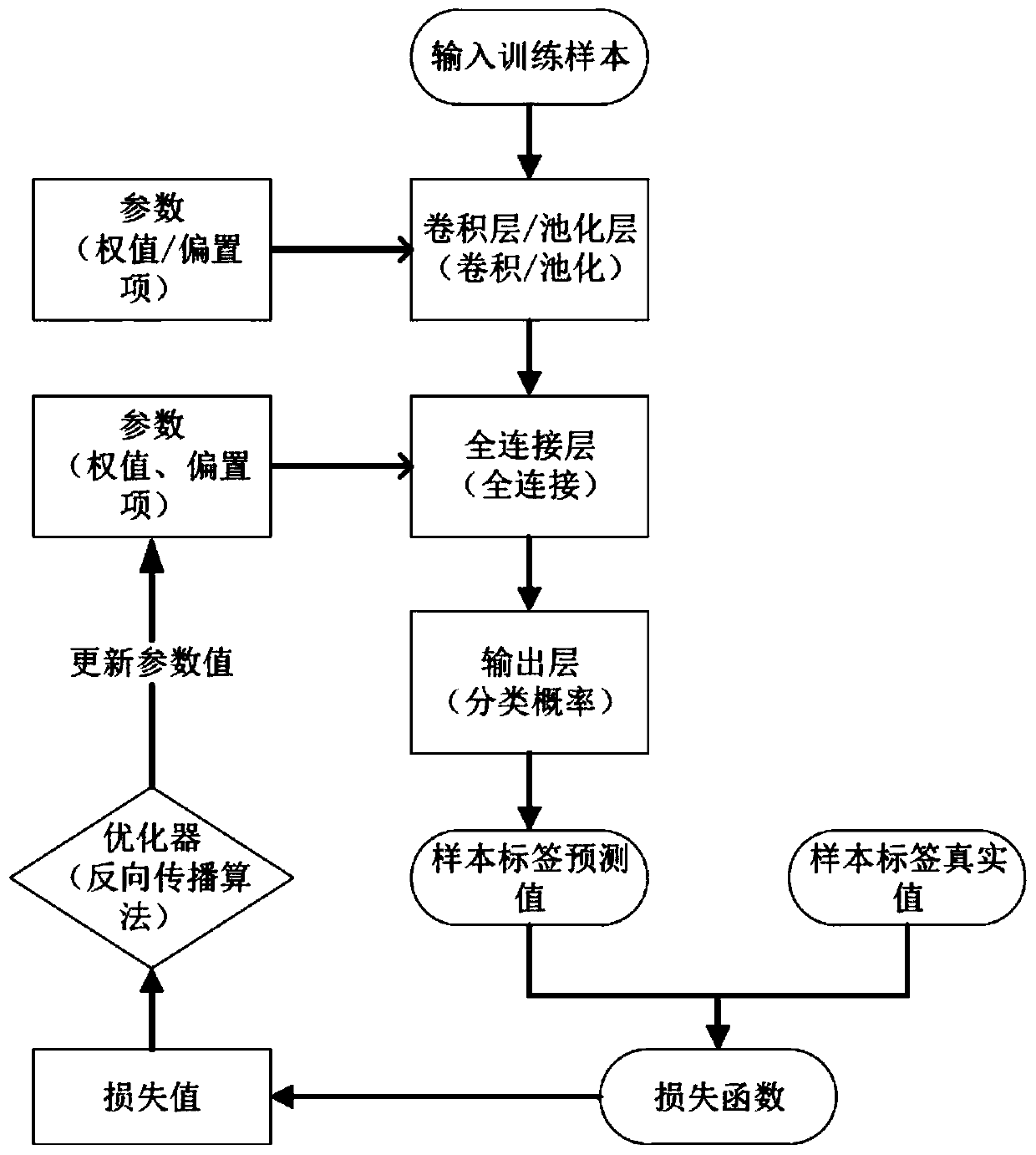

Lie-telling detection method based on micro-expressions in interview

Pending | CN110889332A | High technical precision | Prediction cheating good | Office automation | Neural architectures | Microexpression | Algorithm

The invention relates to a lie-telling detection method based on micro-expressions in an interview, which comprises the following steps: first, training a model on five expressions, namely eyebrow wrinkling, eyebrow lifting, mouth closing, pouting and head tilting, and labeling the data for each type of expression; second, inputting an image of the facial micro-expression into a pre-trained SSD network with VGG16 as the backbone, passing the image through the convolutional neural network to extract features, and generating feature maps; performing a convolution operation on each feature map to evaluate default bounding boxes, and predicting an offset and a classification probability for each bounding box; combining the bounding boxes obtained from the different feature maps and applying non-maximum suppression to filter out overlapping or incorrect boxes, generating the final set of bounding boxes; and finally, classifying the detection results with a classifier. The method uses high-level and low-level visual features at the same time; it predicts cheating markedly better than humans do, and it is both faster and more accurate than human judgment by eye.

Owner:中科南京人工智能创新研究院 +1
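
The non-maximum suppression step mentioned in the abstract above can be illustrated with a small greedy implementation. This is a generic textbook version, not the patent's code: the box format `(x1, y1, x2, y2)`, the IoU threshold of 0.5 and the sample boxes and scores are assumptions.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    x1, y1 = max(a[0], b[0]), max(a[1], b[1])
    x2, y2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the highest-scoring boxes, drop those overlapping a kept box."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


# Hypothetical detections: the first two boxes overlap heavily.
boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.9, 0.8, 0.7]
kept = non_max_suppression(boxes, scores)
print(kept)  # [0, 2]
```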

Patient monitoring method, apparatus, computer device, and storage medium

Pending | CN109460749A | Enables real-time monitoring | Improve quality of care | Medical communication | Acquiring/recognising facial features | Microexpression | Computer engineering

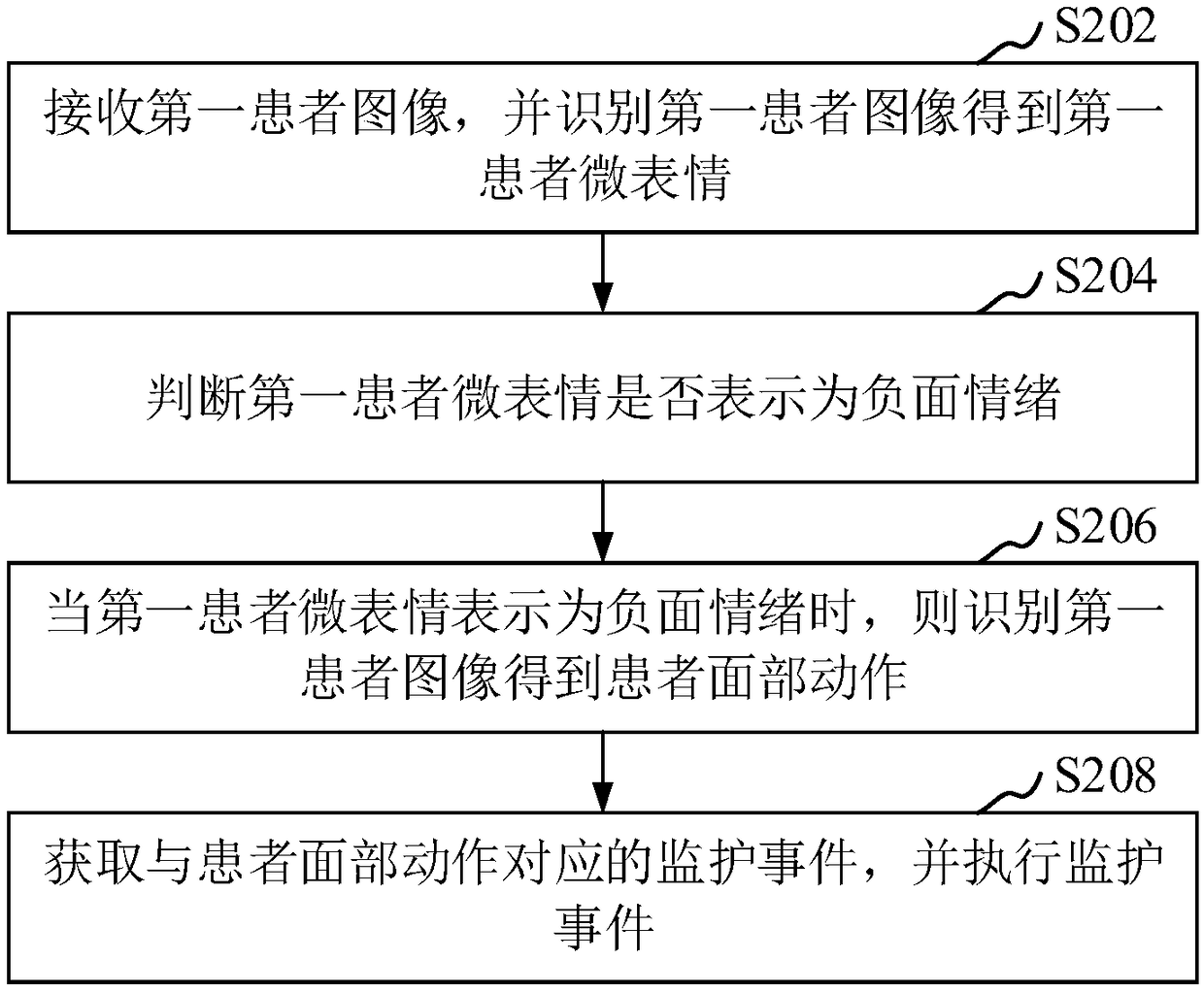

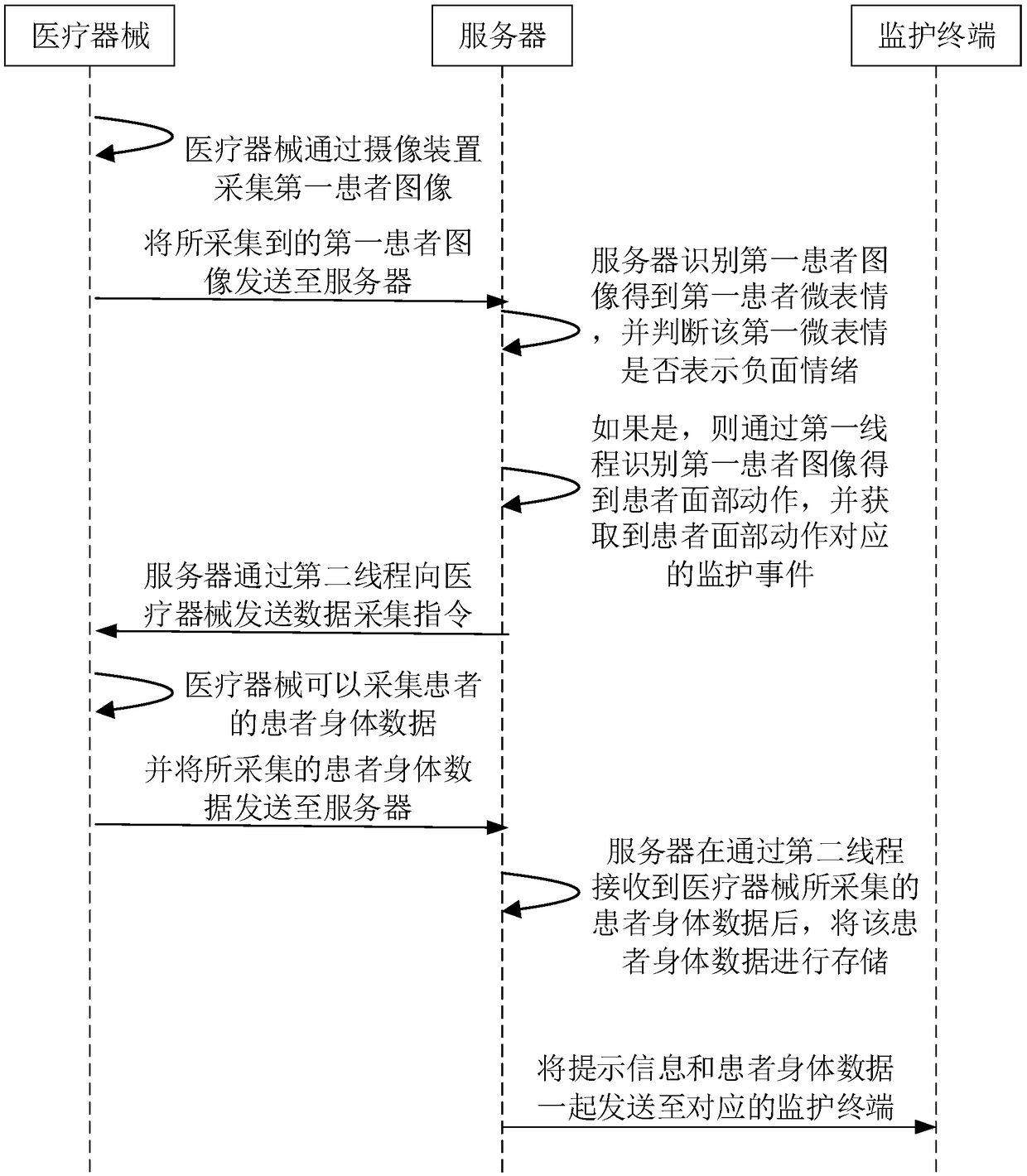

The present application relates to the field of artificial intelligence, in particular to a patient monitoring method, an apparatus, a computer device and a storage medium. The method comprises: receiving a first patient image and analyzing it to obtain a first patient microexpression; judging whether the first patient microexpression expresses a negative emotion; when it does, analyzing the first patient image to obtain a patient facial action; and acquiring the monitoring event corresponding to the patient's facial action and executing it. The method can improve monitoring quality.

Owner:ONE CONNECT SMART TECH CO LTD SHENZHEN

Minimum-error-based feature extraction method for face microexpression sequence

Inactive | CN105608440A | Avoid the requirement of uniform frame rate | Fast extraction | Acquiring/recognising facial features | Microexpression | Feature extraction

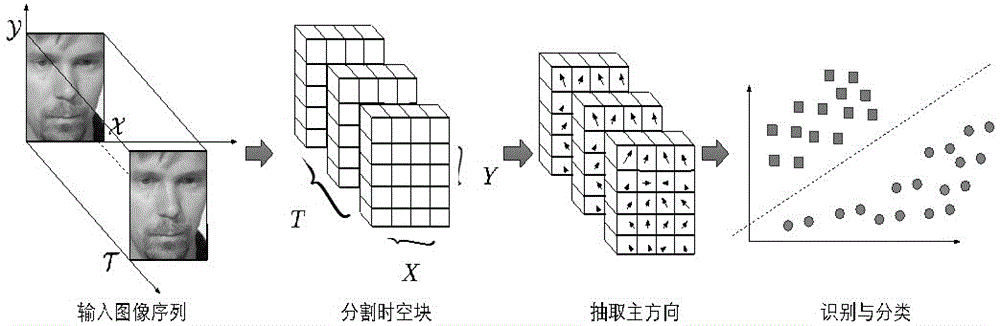

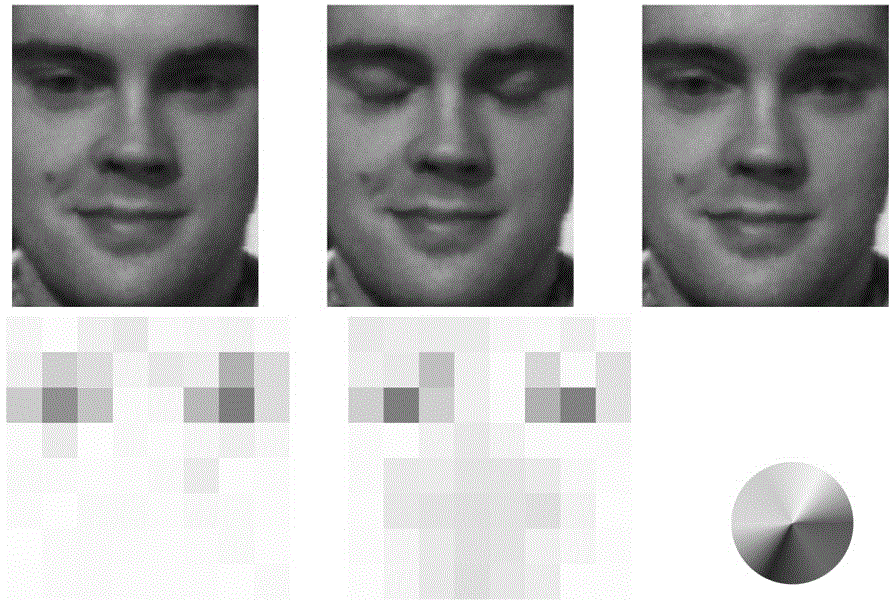

The invention belongs to the technical field of computer vision and particularly relates to a minimum-error-based feature extraction method for face microexpression sequences. A microexpression sequence is segmented into small space-time blocks, and a two-dimensional principal direction vector is found in each block according to a minimum-error rule; the principal directions of all blocks are then concatenated into a vector whose dimension is twice the number of blocks, which represents the whole microexpression sequence. The method avoids the uniform frame number required by traditional algorithms, so no interpolation algorithm needs to be introduced. The extraction is also fast and makes high-precision microexpression detection possible.

Owner:FUDAN UNIV

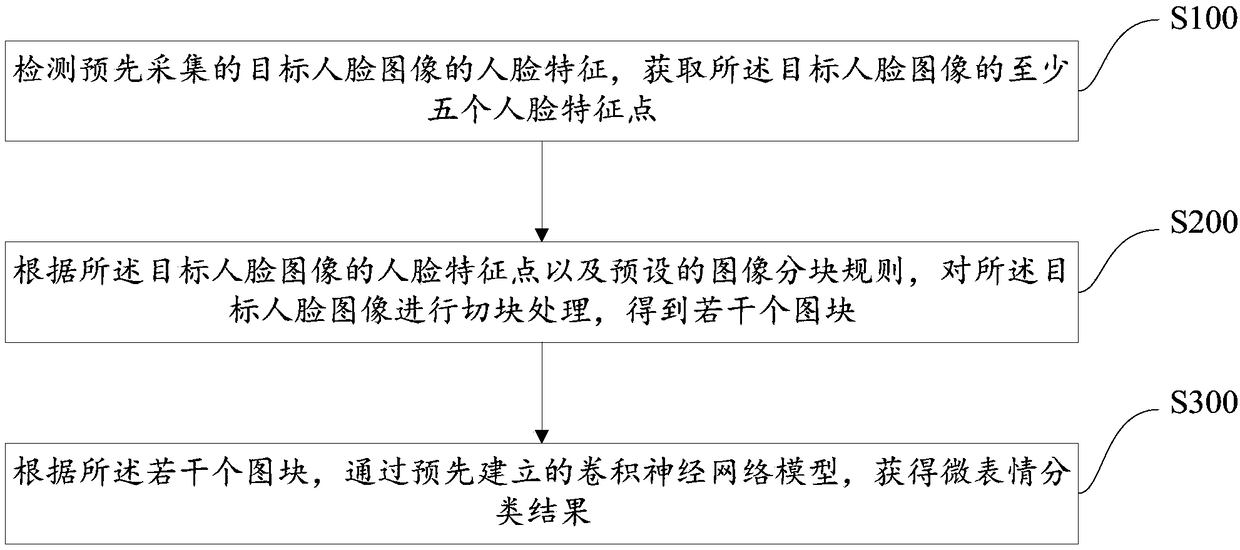

Micro-expression recognition method, device, and storage medium

Active | CN109271930A | High speed | High precision | Character and pattern recognition | Microexpression | Network model

The invention provides a micro-expression recognition method, a device and a storage medium. The method comprises the following steps: detecting the facial features of a target face image collected in advance and obtaining at least five facial feature points of the image; cutting the target face image into a plurality of image blocks according to the facial feature points and preset image partitioning rules; and obtaining the microexpression classification result from the image blocks through a convolutional neural network model established in advance. Because the target face image is sliced according to the obtained facial feature points and the preset partitioning rules, and the resulting image blocks are recognized and classified by a convolutional neural network, the speed and precision of micro-expression recognition can be effectively improved and its working efficiency greatly increased.

Owner:GCI SCI & TECH +1

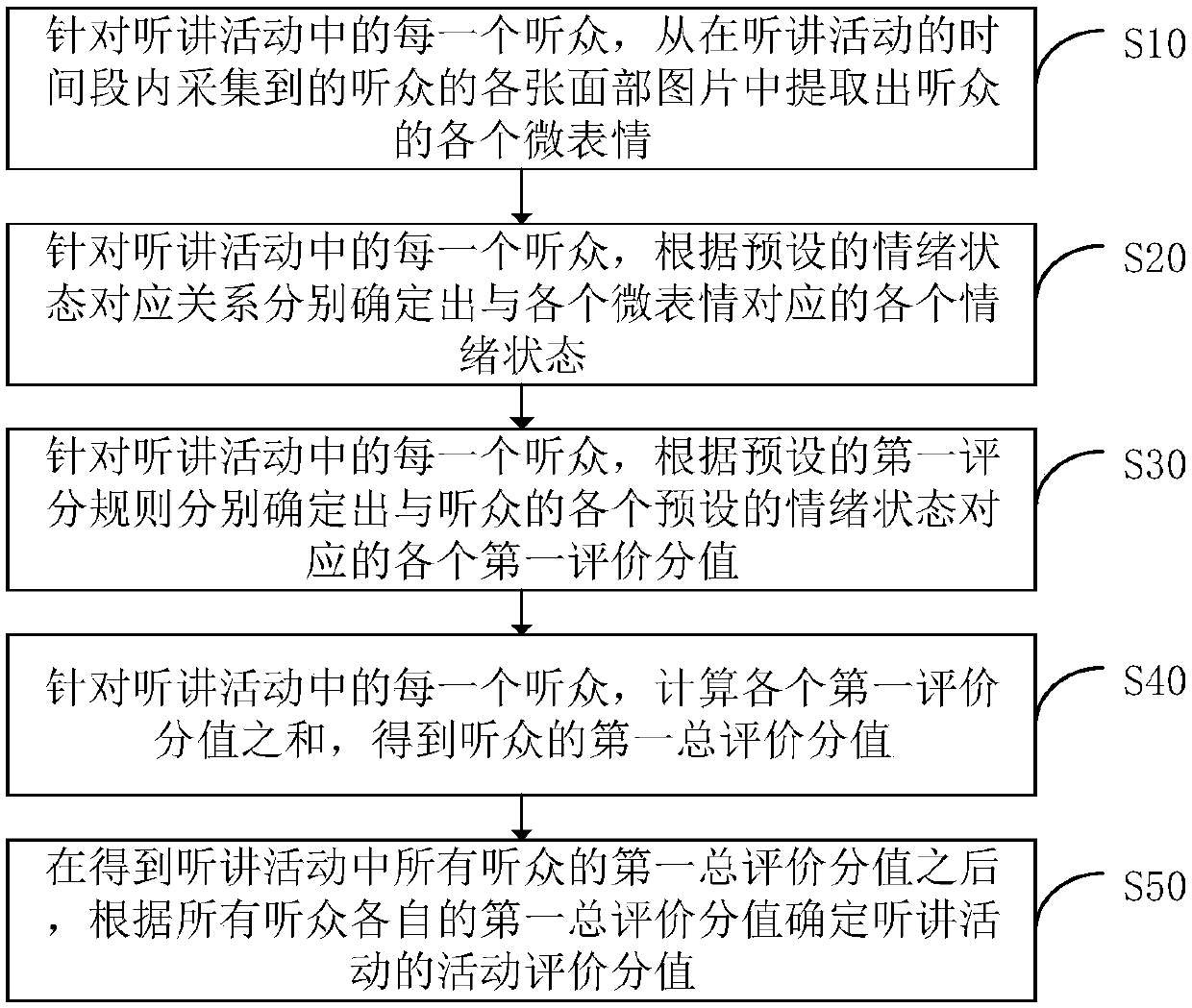

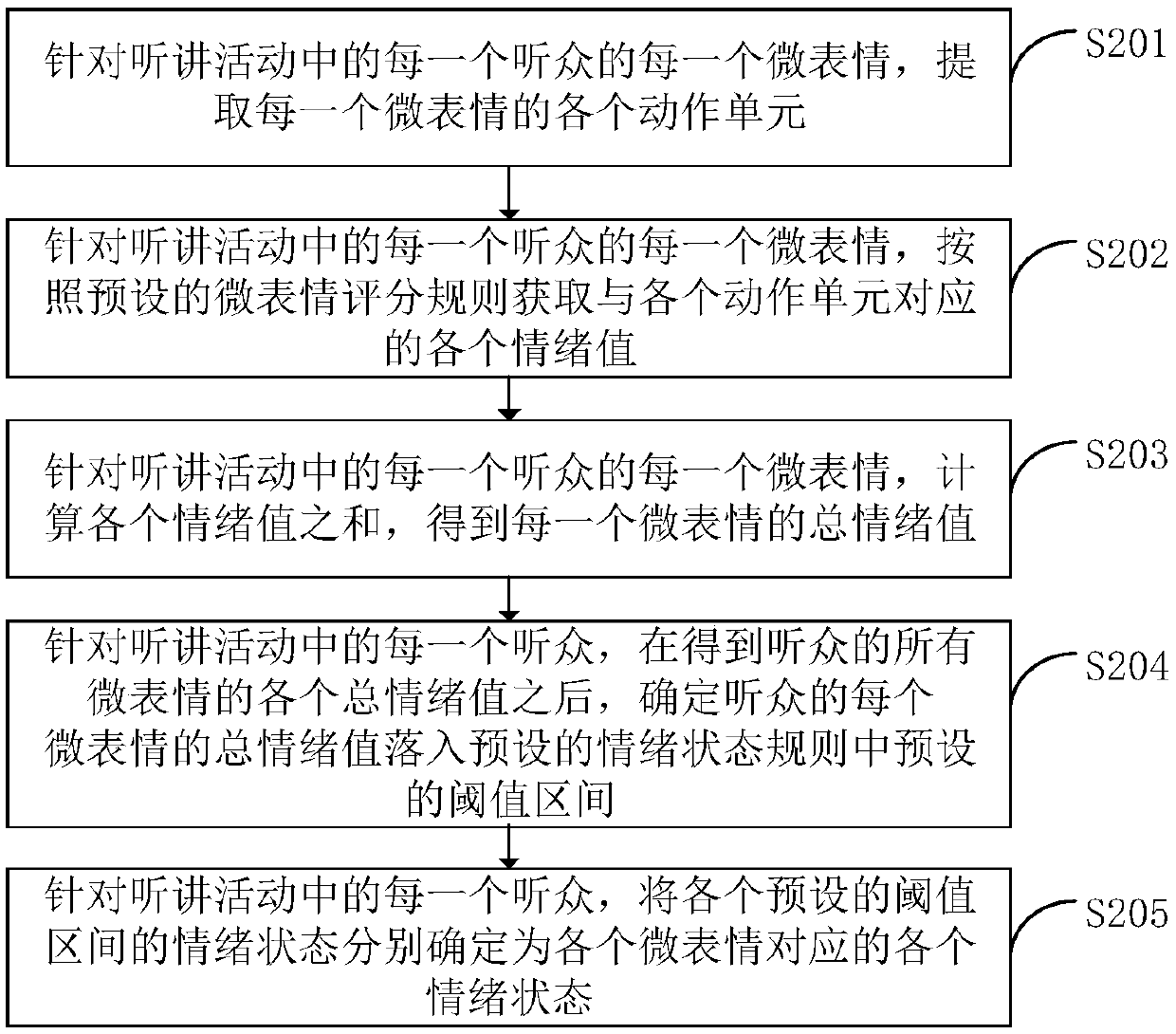

Listening evaluation method based on listener micro-expression, device, computer device and storage medium

Pending | CN109523290A | Good effect | Improve accuracy | Acquiring/recognising facial features | Marketing | Microexpression | Rating score

The invention discloses a listening evaluation method based on listener micro-expressions, a device, a computer device and a storage medium. For every listener at a lecture, the micro-expressions extracted from the collected facial images reflect the listener's true inner state, so the preset emotional state corresponding to each micro-expression is taken to be the listener's true emotional state. Each first evaluation score corresponding to the listener's emotional states is then determined, the listener's first total evaluation score is calculated, and finally the activity evaluation score of the listening activity is determined from the first total evaluation scores of all listeners. This scoring method better reflects the audience's true evaluation of the listening activity, is not affected by audience subjectivity or similar factors, gives a better understanding of the activity's effect, and improves the accuracy of statistics on audience evaluations.

Owner:PING AN TECH (SHENZHEN) CO LTD
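
The scoring chain in the abstract above (emotional state, then a per-state evaluation score, then a per-listener total, then an activity score over all listeners) might be sketched like this. The abstract does not say how the scores are combined, so the use of a plain mean at both levels, the `EMOTION_SCORES` mapping and the sample audience are assumptions made purely for illustration.

```python
from statistics import mean

# Hypothetical mapping from a preset emotional state to its evaluation score.
EMOTION_SCORES = {
    "happiness": 90,
    "surprise": 80,
    "neutral": 60,
    "contempt": 30,
    "disgust": 20,
}


def listener_total(emotions):
    """Total evaluation score of one listener: mean score of observed states."""
    return mean(EMOTION_SCORES[e] for e in emotions)


def activity_score(listeners):
    """Activity evaluation score: mean of all listeners' total scores."""
    return mean(listener_total(emotions) for emotions in listeners.values())


# Hypothetical emotional states recognized for two listeners during a lecture.
audience = {
    "listener_a": ["happiness", "neutral"],
    "listener_b": ["surprise", "contempt", "neutral"],
}
score = activity_score(audience)
```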

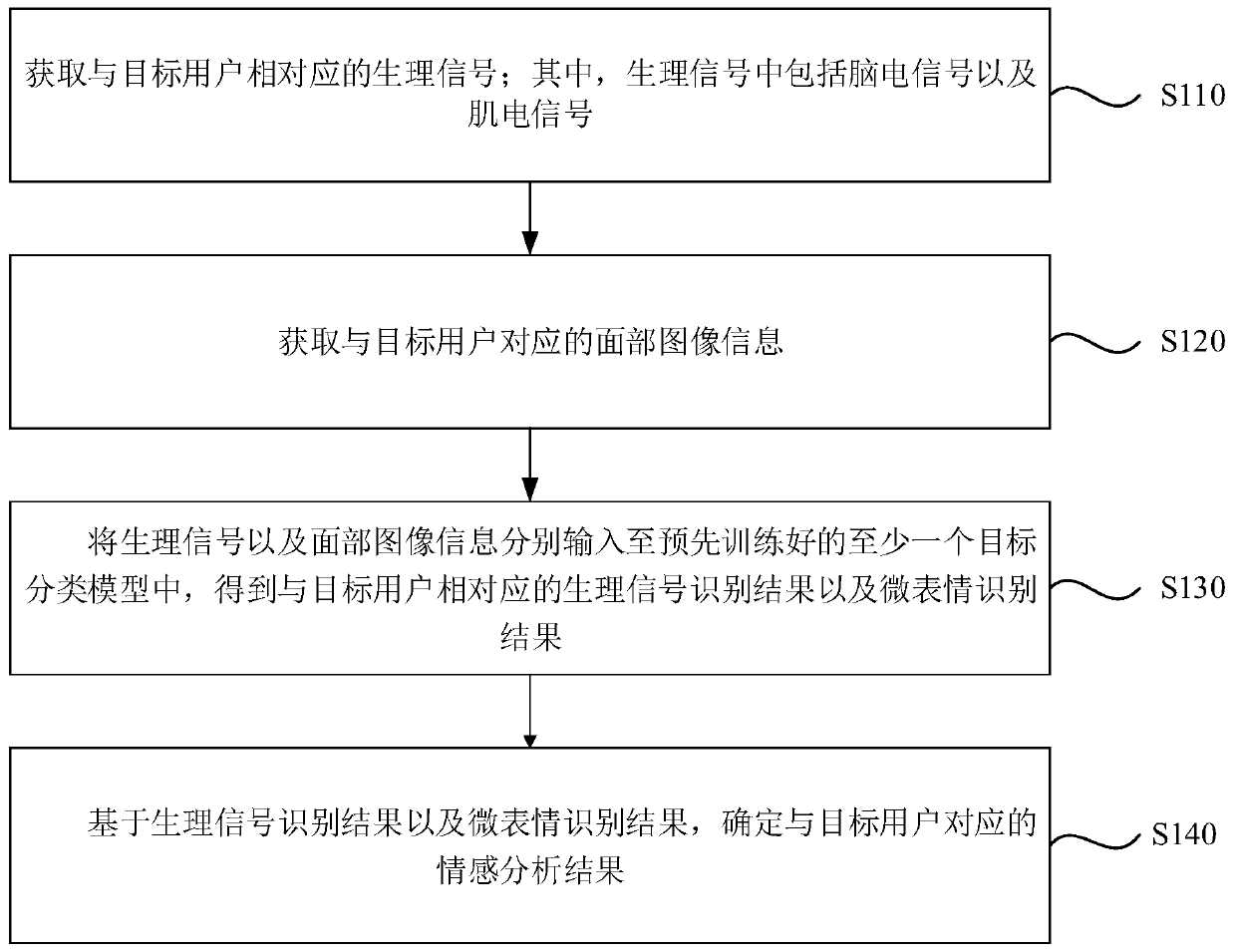

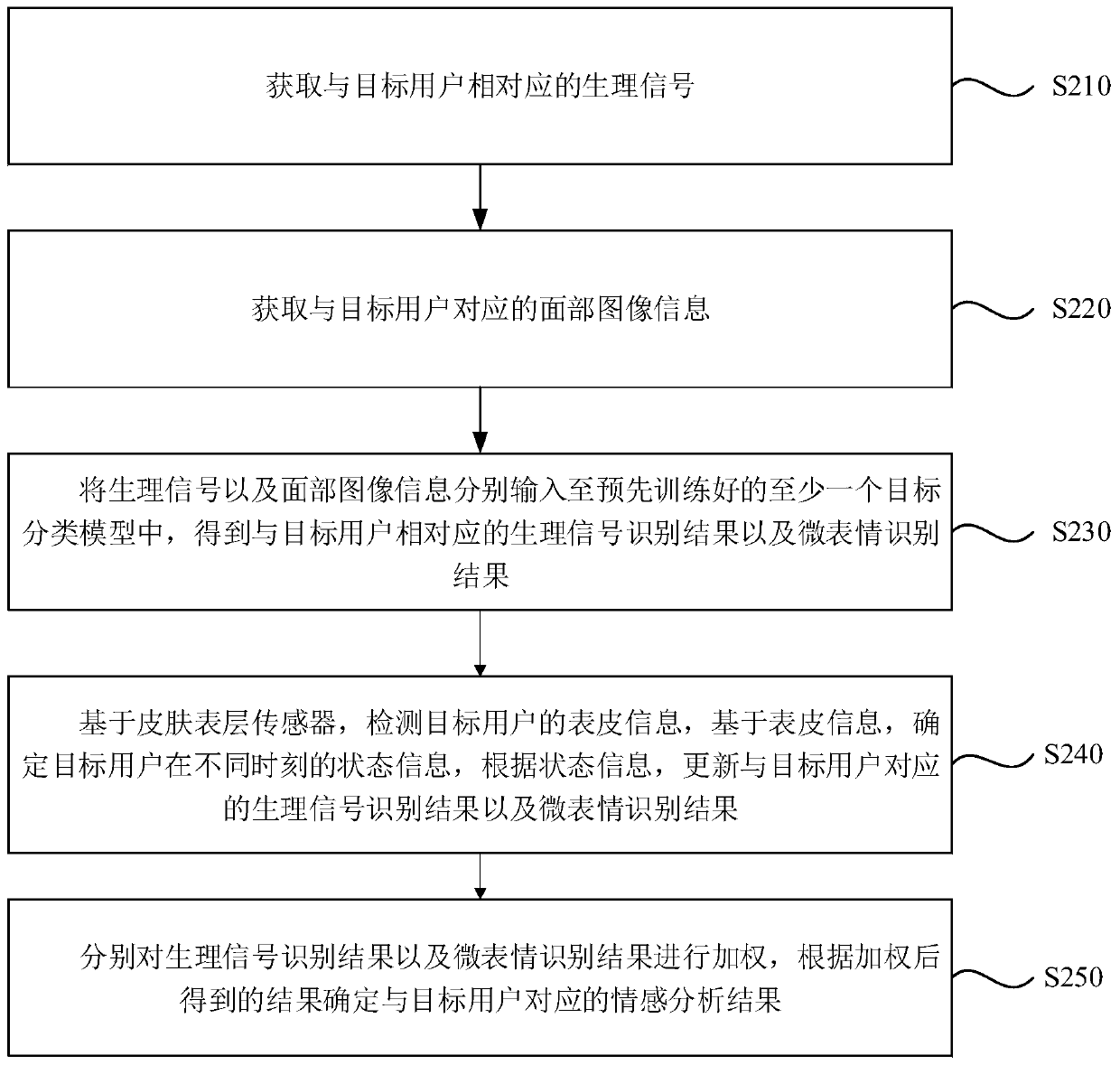

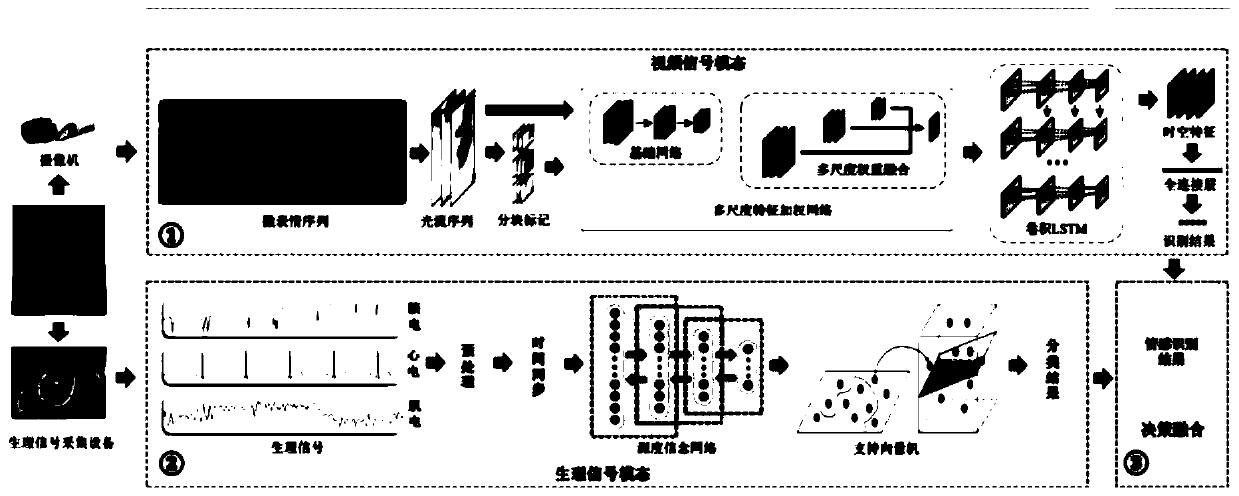

Emotion analysis method and system

Pending | CN111222464A | Improve accuracy | Improve convenience | Neural architectures | Acquiring/recognising facial features | Pattern recognition | Microexpression

The invention discloses an emotion analysis method and system. The method comprises the steps of: obtaining physiological signals corresponding to a target user, the physiological signals comprising electroencephalogram signals and electromyogram signals; acquiring facial image information corresponding to the target user; inputting the physiological signals and the facial image information respectively into at least one pre-trained target classification model to obtain a physiological signal recognition result and a micro-expression recognition result for the target user; and determining an emotion analysis result for the target user based on the physiological signal recognition result and the micro-expression recognition result. The technical scheme provided by the embodiment of the invention solves the prior-art problems of error and relatively high labor cost caused by manually determining the current state of the target user, and achieves the technical effects of determining the user's current state quickly and accurately and reducing labor cost.

Owner:INST OF BIOMEDICAL ENG CHINESE ACAD OF MEDICAL SCI

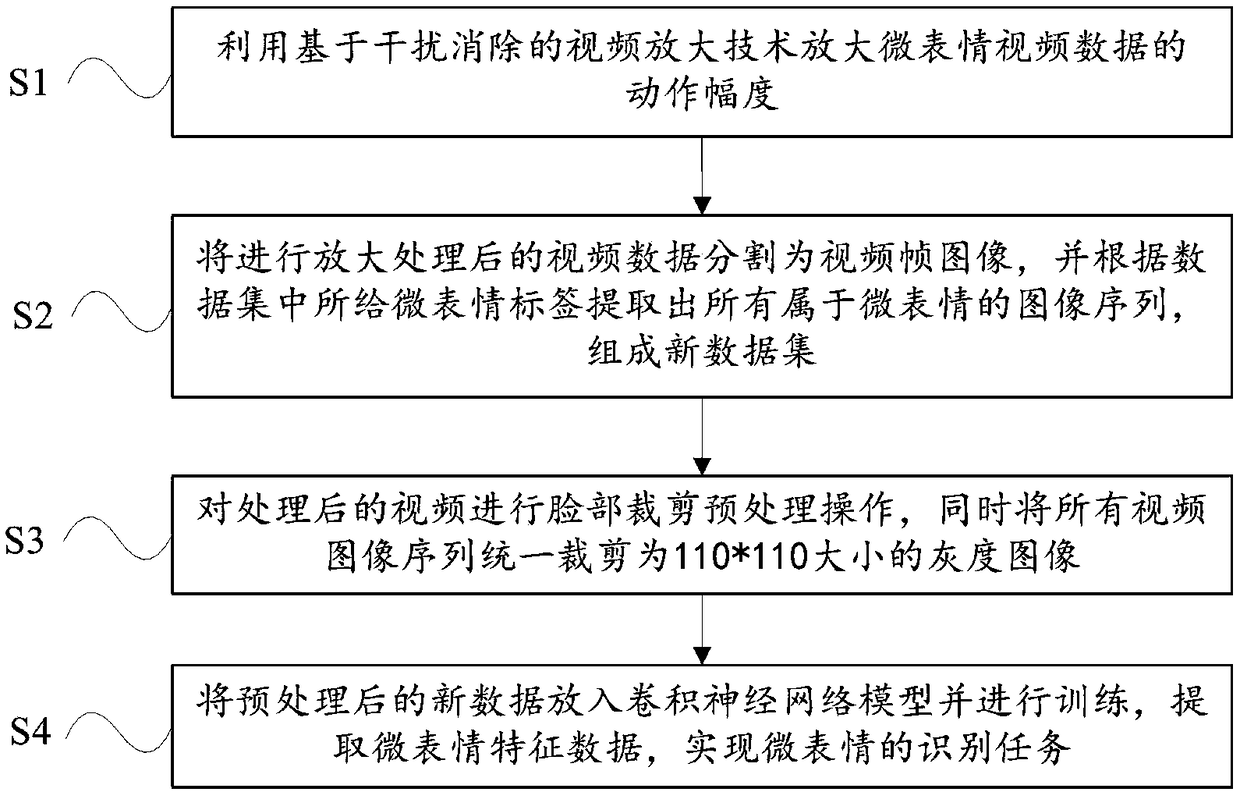

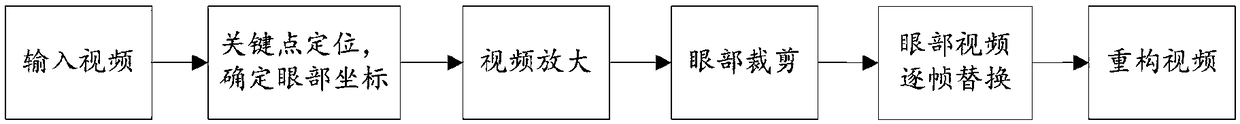

Face microexpression recognition method based on video magnification and deep learning

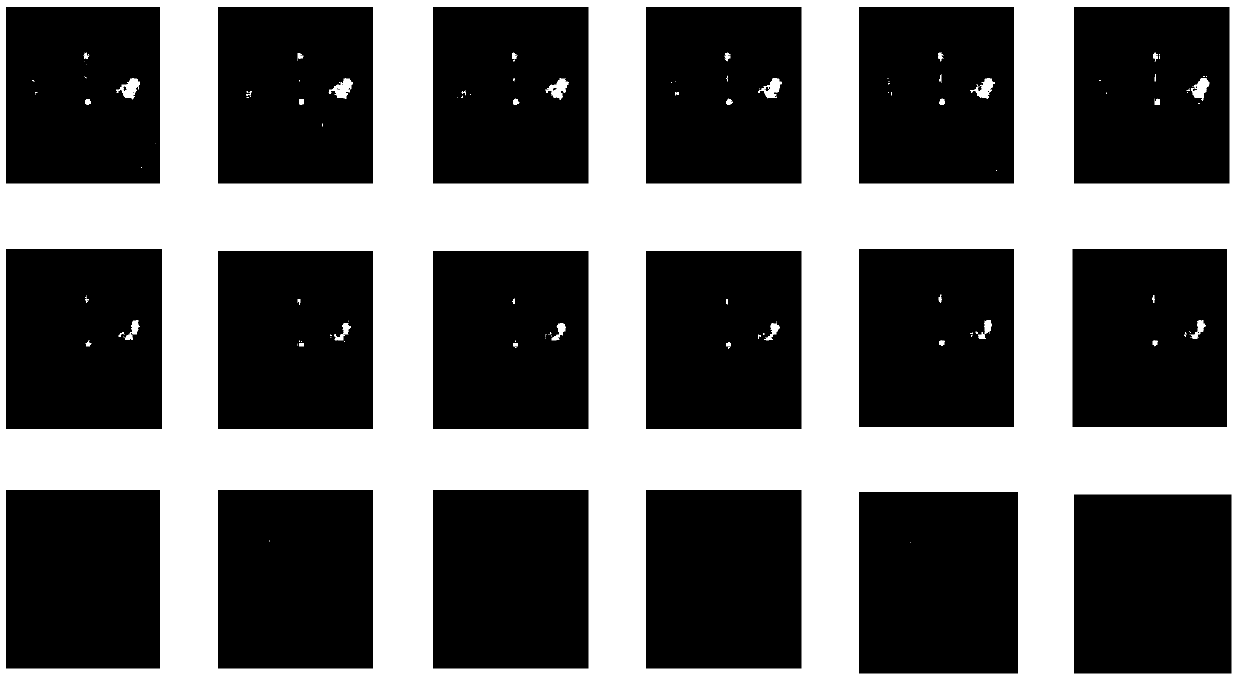

Inactive | CN109034143A | Improve accuracy | Increase the range of facial expressions | Acquiring/recognising eyes | Neural architectures | Data set | Microexpression

The invention provides a method for recognizing facial micro-expressions based on video magnification and deep learning. The method comprises the following steps: using a video magnification technique based on interference cancellation to amplify the motion amplitude in the micro-expression video data; dividing the magnified video data into video frame images and extracting all image sequences that belong to micro-expressions, according to the micro-expression labels in the data set, to form a new data set; applying face-cropping preprocessing to the processed video so that all video image sequences are uniformly cropped into 110*110 grayscale images; and feeding the preprocessed data into a convolutional neural network model, which is trained to extract micro-expression features and perform micro-expression recognition. The technical proposal magnifies the amplitude of the expression movements by applying interference-cancelling video magnification to the complete data set, and introduces a neural network model for training, thereby effectively improving the accuracy of micro-expression recognition over the full set of emotion labels.

Owner:YUNNAN UNIV

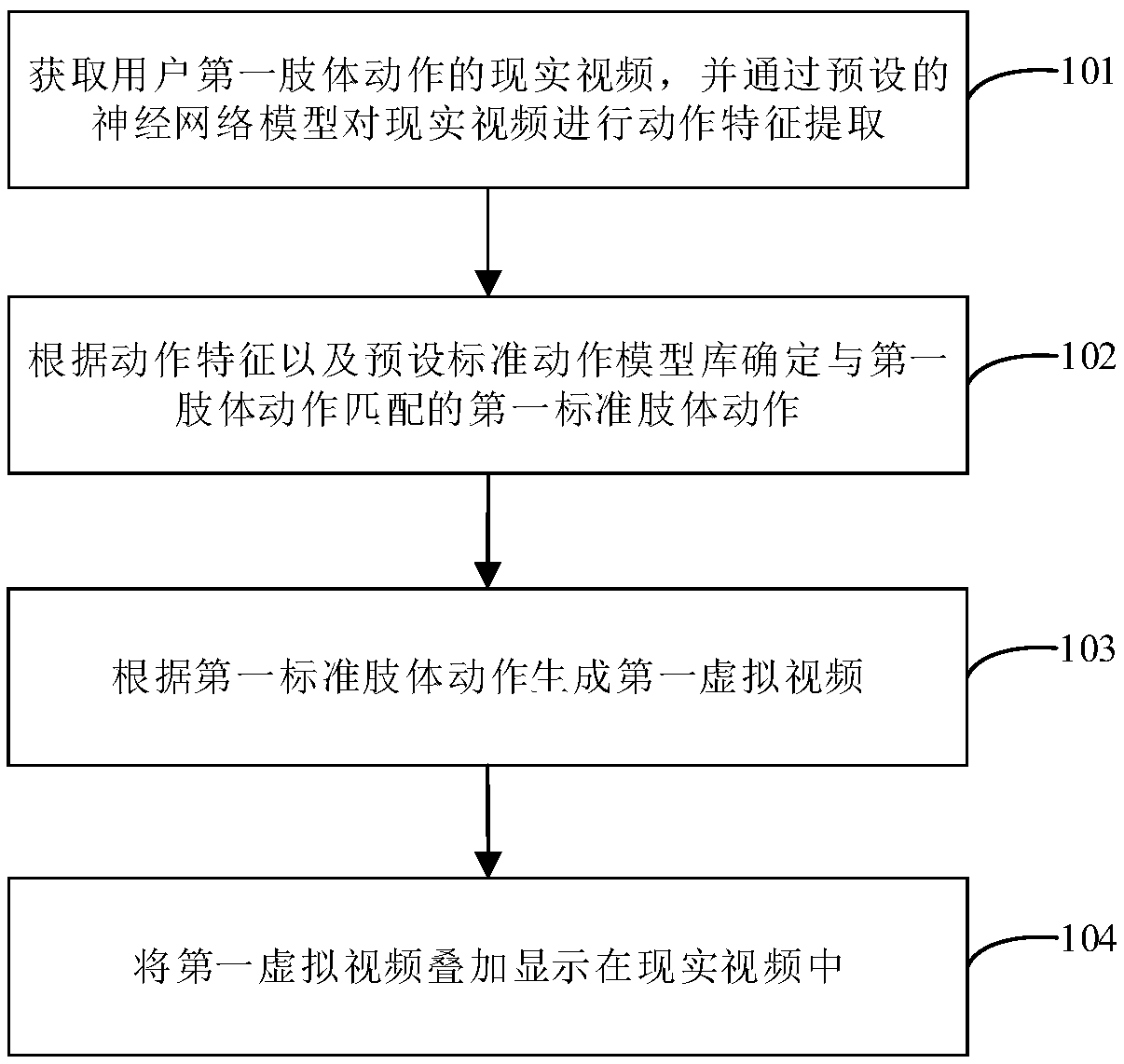

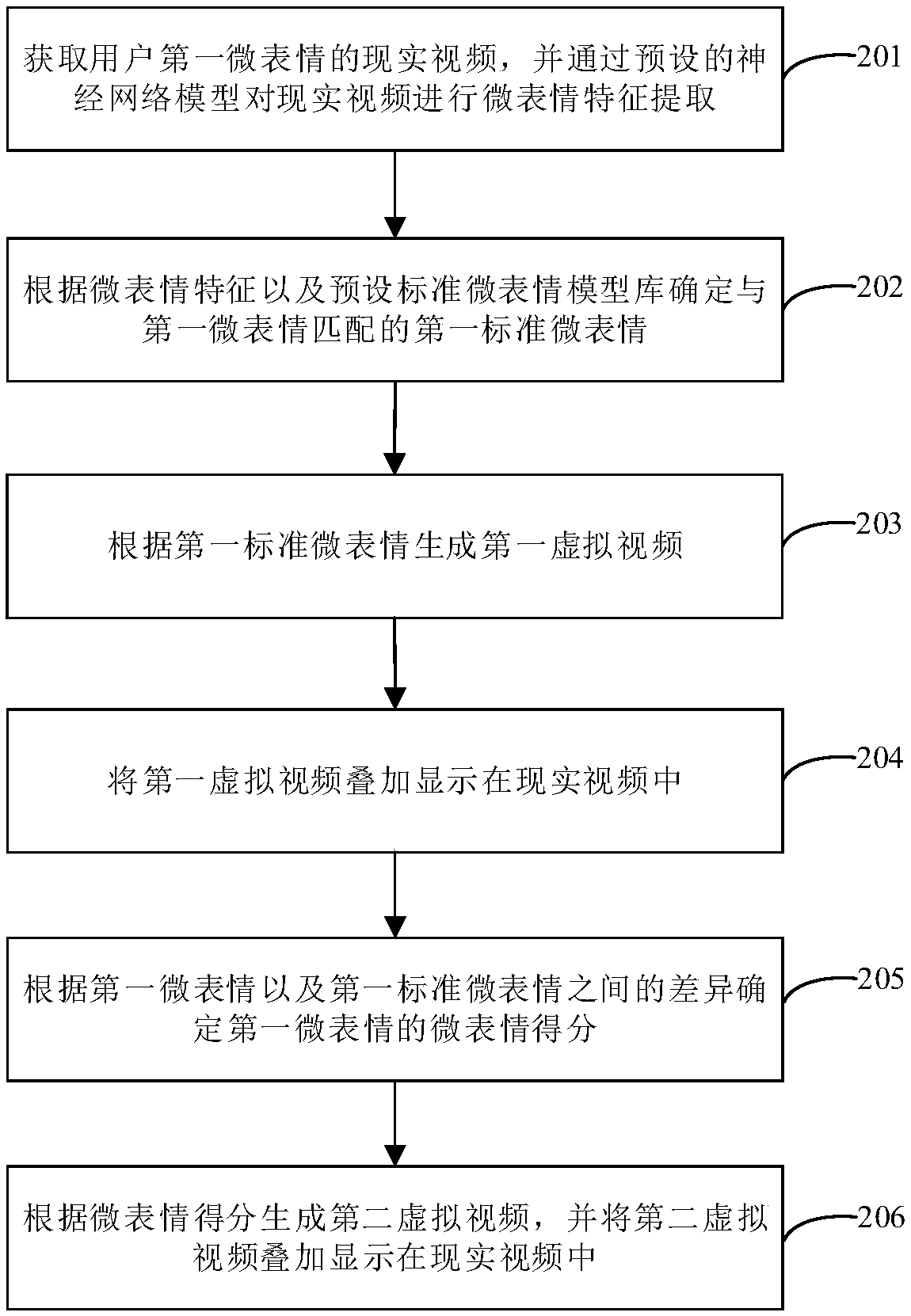

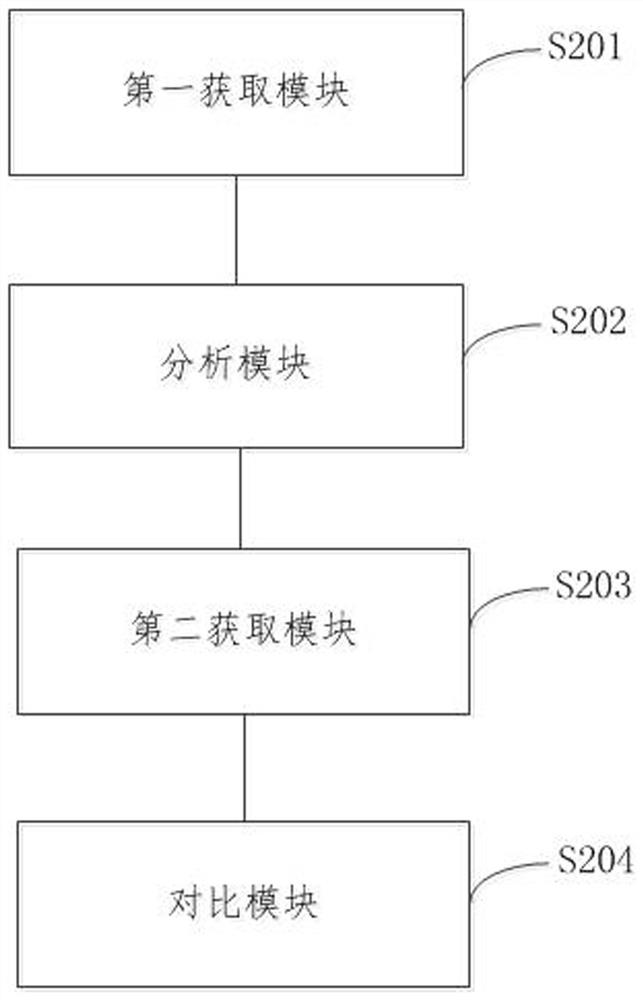

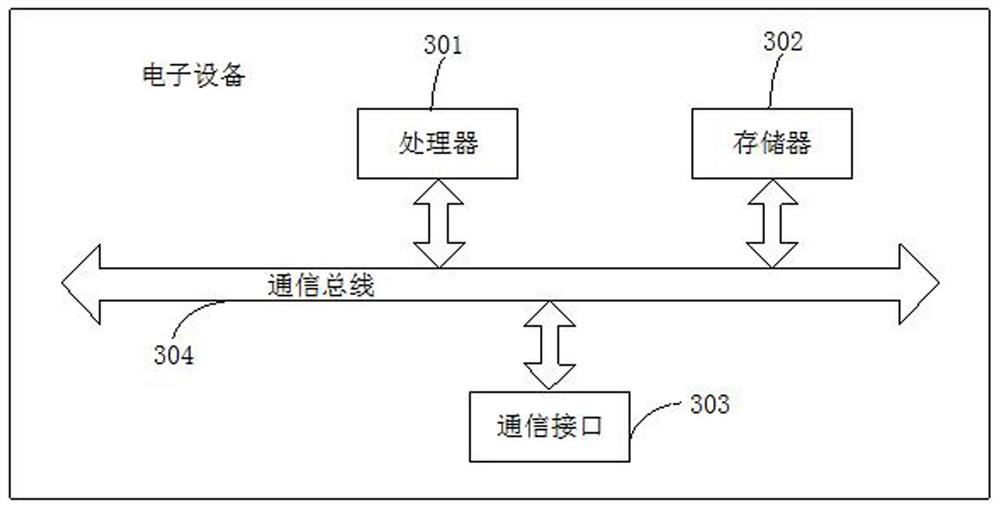

Microexpression training method, device, storage medium and electronic device

Active | CN109409199A | Improve the ability to manage micro-expressions | Neural architectures | Acquiring/recognising facial features | Microexpression | Network model

The invention provides a microexpression training method, a device, a storage medium and an electronic device. The method first obtains a real video of a user's first microexpression and extracts microexpression features from the real video through a preset neural network model, obtaining the microexpression feature corresponding to the first microexpression. It then determines a first standard microexpression matching the first microexpression according to the microexpression feature and a preset standard microexpression model library, generates a first virtual video according to the first standard microexpression, and finally superimposes and displays the first virtual video on the real video. The method uses augmented reality to display the user's microexpression together with the corresponding standard microexpression, so that the user can adjust the microexpression according to the difference between the two, thereby improving the user's ability to manage microexpressions.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

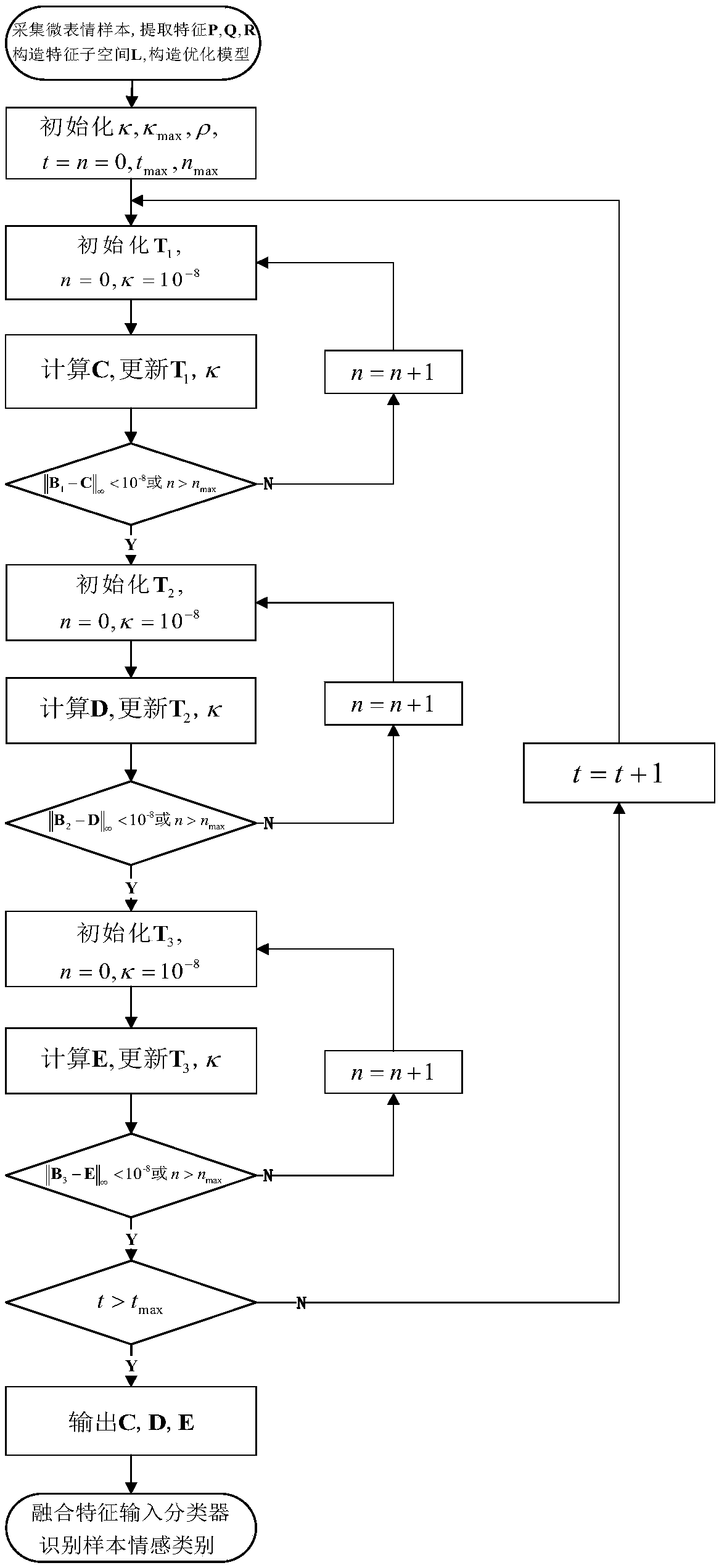

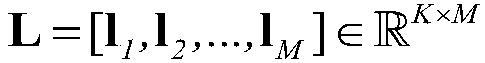

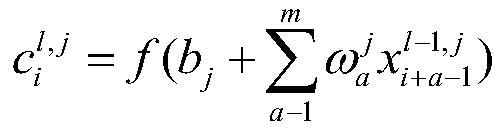

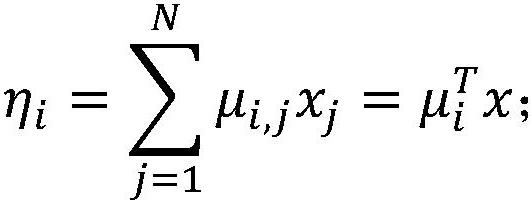

A microexpression recognition method based on sparse projection learning

Active | CN109033941A | Easy to implement | Reduce computational complexity | Acquiring/recognising facial features | Pattern recognition | Microexpression

The invention discloses a micro-expression recognition method based on sparse projection learning. The method comprises the following steps: step 1, collecting micro-expression samples, extracting the LBP features P, Q, R of the three orthogonal planes of the micro-expression, defining C, D, E as the feature optimization variables of the three orthogonal planes XY, XT, YT respectively, and constructing an optimization model; step 2, setting the initial and maximum values of the iteration counters t and n, and initializing the regularization parameters kappa and kappa max and the scale parameter rho; step 3, initializing the expression (shown in the description), calculating C, and updating T1 and kappa; if the expression (shown in the description) converges or n > nmax, proceeding to step 4; step 4, initializing the expression (shown in the description), calculating D, and updating T2 and kappa; if the expression (shown in the description) converges or n > nmax, proceeding to step 5; step 5, initializing the expression (shown in the description), calculating E, and updating T3 and kappa; if the expression (shown in the description) converges or n > nmax, proceeding to step 6; step 6, setting t = t + 1; if t <= tmax, returning to step 3, otherwise outputting C, D, E; step 7, using the optimized variables C, D and E to optimize the LBP features of the three orthogonal planes and obtain a new fusion feature Ftest, and predicting the emotion category of the test sample by applying the trained SVM classifier to the fusion feature Ftest.

Owner:JIANGSU UNIV
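
Step 1 of the abstract above relies on LBP features taken from the three orthogonal planes (XY, XT, YT) of a video volume. The following is a minimal sketch of that feature extraction only, assuming a basic 8-neighbour LBP with no uniform-pattern mapping or block partitioning. The function names are hypothetical, and the sparse projection optimization over C, D, E and the SVM stage are not reproduced.

```python
import numpy as np


def lbp_codes(image: np.ndarray) -> np.ndarray:
    """Basic 8-neighbour LBP codes for the interior pixels of a 2-D array."""
    h, w = image.shape
    center = image[1:-1, 1:-1]
    # Neighbour offsets in clockwise order, starting at the top-left pixel.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros(center.shape, dtype=np.int64)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = image[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        codes |= (neighbour >= center).astype(np.int64) << bit
    return codes


def plane_histogram(slices) -> np.ndarray:
    """Normalized 256-bin LBP histogram accumulated over a set of 2-D slices."""
    hist = np.zeros(256)
    for plane in slices:
        hist += np.bincount(lbp_codes(plane).ravel(), minlength=256)
    return hist / hist.sum()


def lbp_top(volume: np.ndarray) -> np.ndarray:
    """Concatenate LBP histograms of the XY, XT and YT planes of a (T, H, W) volume."""
    t, h, w = volume.shape  # each dimension must be at least 3
    xy = (volume[i] for i in range(t))
    xt = (volume[:, j, :] for j in range(h))
    yt = (volume[:, :, k] for k in range(w))
    return np.concatenate([plane_histogram(p) for p in (xy, xt, yt)])


# Synthetic stand-in for a cropped grayscale micro-expression clip.
rng = np.random.default_rng(0)
volume = rng.random((6, 8, 10))
feature = lbp_top(volume)
print(feature.shape)  # (768,)
```

The three 256-bin blocks of `feature` play the role of the per-plane features that the abstract calls P, Q and R.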

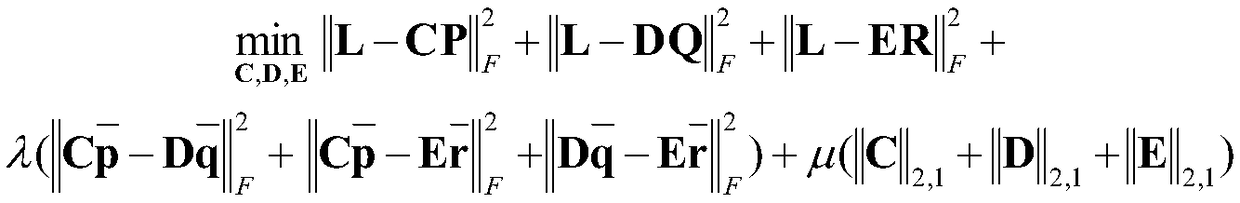

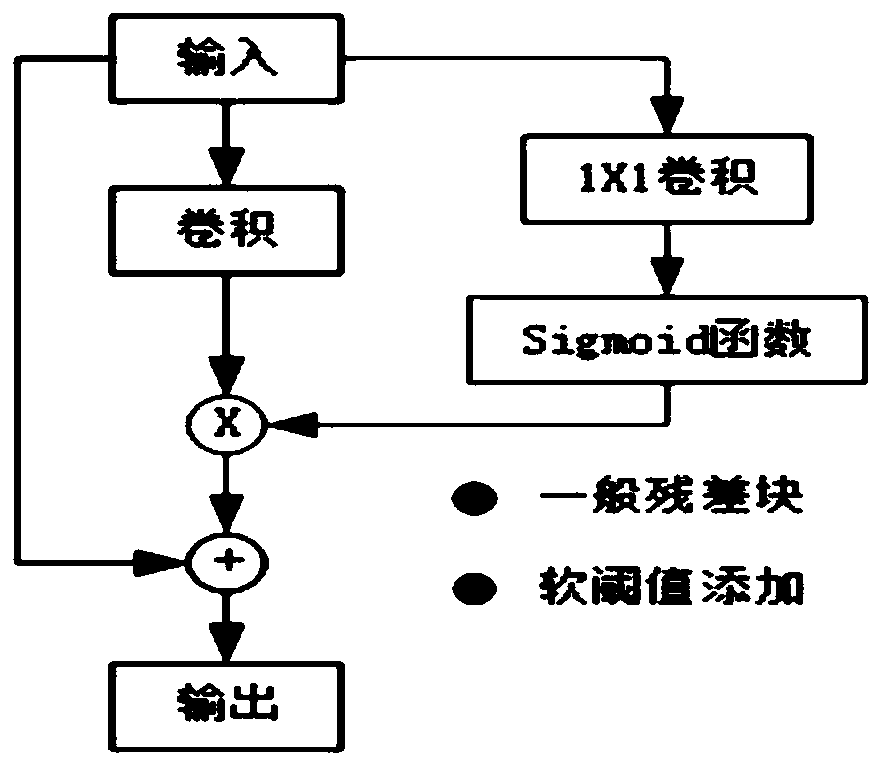

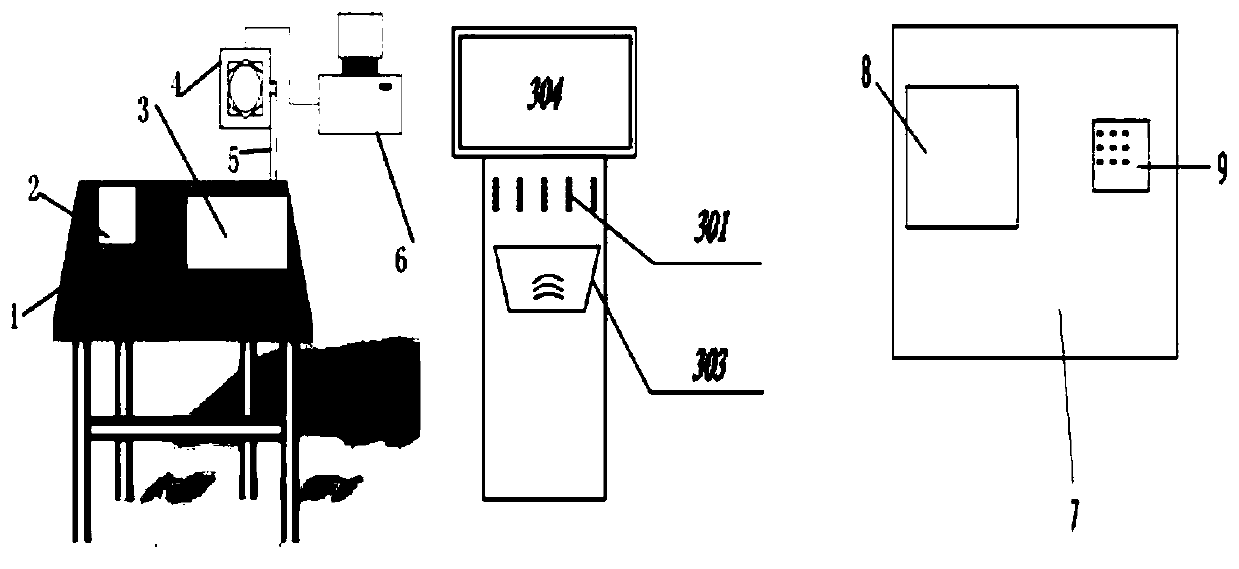

Examination behavior detection method based on improved Openpose model and facial micro-expressions

Active | CN111523445A | Achieve innovation | Less distracting factors | Biometric pattern recognition | Resources | Machine vision | Microexpression

The invention discloses an examination behavior detection method based on an improved Openpose model and facial micro-expressions. A camera is arranged in front of a desk to detect the examination behavior of students in real time. An artificial intelligence model recognizes facial information and upper-body skeleton information; whether key points can be recognized and the distance between key points serve as the main judgment conditions, and changes in micro-expressions serve as auxiliary judgment conditions. If a student fails to meet the conditions for a period of time, the examination behavior of that student is judged to be abnormal. In addition, the video stream of a class is used to find and analyze the stages at which abnormal student behavior is likely to occur, supporting innovation and reform in teaching. Machine vision recognition reduces interference factors and simplifies the equipment, and the network model is further optimized by means of a residual network, weight trimming and the like. Compared with the traditional approach, self-service examination behavior detection and feedback are achieved, the test efficiency is high, the accuracy can reach 95%, and the method can be applied to general examination detection.

Owner:NANTONG UNIVERSITY
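
The judgment logic in the abstract above (key point visibility and key point distance as main conditions, micro-expression changes as an auxiliary condition, abnormal only after the conditions fail for a period of time) can be sketched as a small rule. Everything concrete here is an assumption for illustration: the choice of key points, the distance ratio bounds, the frame-count thresholds, and the way a micro-expression change shortens the tolerated period. The abstract does not specify any of these.

```python
from dataclasses import dataclass
from math import hypot
from typing import Optional, Sequence, Tuple

Point = Optional[Tuple[float, float]]  # None means the key point was not detected


@dataclass
class Frame:
    nose: Point
    left_shoulder: Point
    right_shoulder: Point
    micro_expression_changed: bool = False


def posture_ok(frame: Frame, min_ratio: float = 0.4, max_ratio: float = 1.2) -> bool:
    """Main conditions: all key points visible and a plausible nose-to-shoulder distance."""
    points = (frame.nose, frame.left_shoulder, frame.right_shoulder)
    if any(p is None for p in points):
        return False
    (nx, ny), (lx, ly), (rx, ry) = points
    shoulder_width = hypot(rx - lx, ry - ly)
    if shoulder_width == 0:
        return False
    mid_x, mid_y = (lx + rx) / 2, (ly + ry) / 2
    ratio = hypot(nx - mid_x, ny - mid_y) / shoulder_width
    return min_ratio <= ratio <= max_ratio


def is_abnormal(frames: Sequence[Frame], max_bad_frames: int = 30,
                max_bad_frames_with_expression: int = 15) -> bool:
    """Flag behavior once the main conditions fail for long enough in a row.

    Auxiliary condition (hypothetical): a micro-expression change during the
    failing run lowers the number of frames tolerated.
    """
    run, expression_seen = 0, False
    for frame in frames:
        if posture_ok(frame):
            run, expression_seen = 0, False
            continue
        run += 1
        expression_seen = expression_seen or frame.micro_expression_changed
        limit = max_bad_frames_with_expression if expression_seen else max_bad_frames
        if run >= limit:
            return True
    return False


seated = Frame((0.0, -0.6), (-0.5, 0.0), (0.5, 0.0))
turned_away = Frame(None, (-0.5, 0.0), (0.5, 0.0))
print(is_abnormal([seated] * 100))       # False
print(is_abnormal([turned_away] * 30))   # True
```

Counting consecutive frames is one simple reading of "for a period of time"; a time-based window over timestamps would work equally well.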

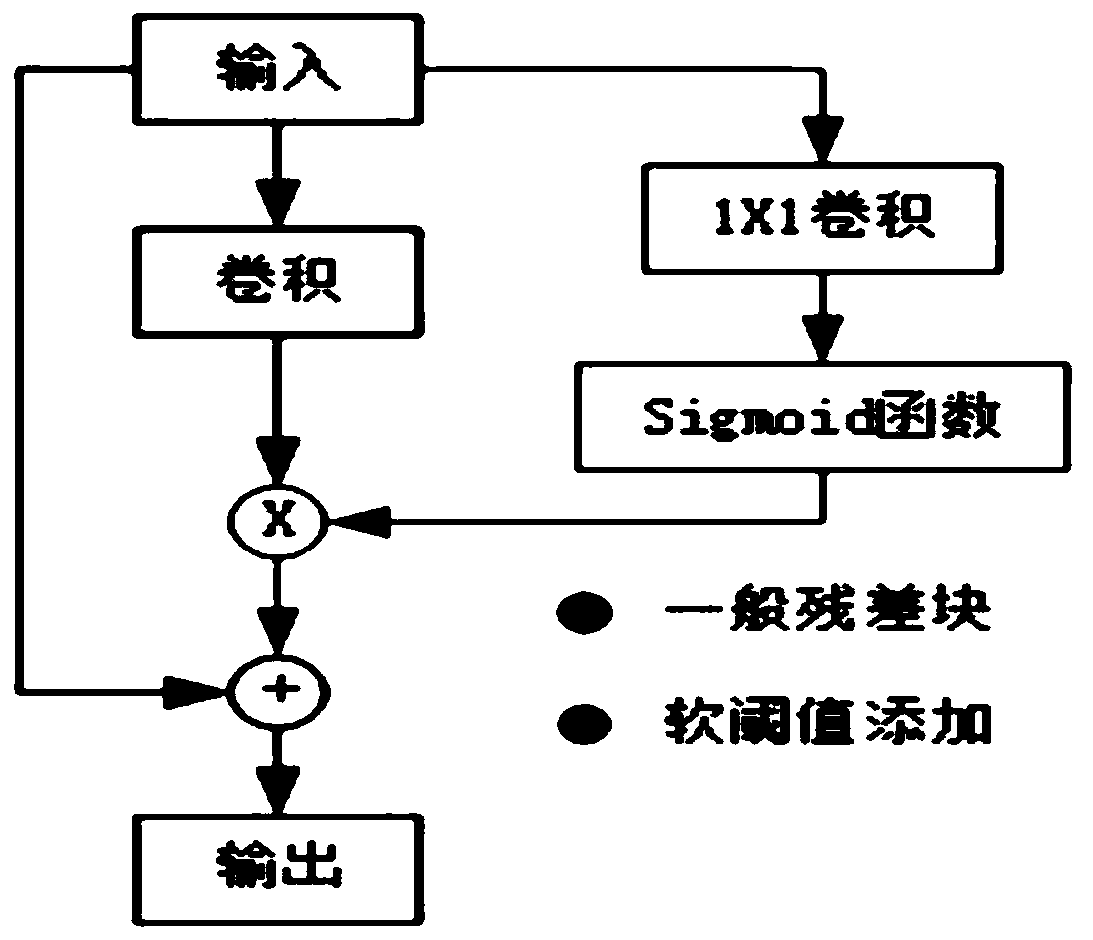

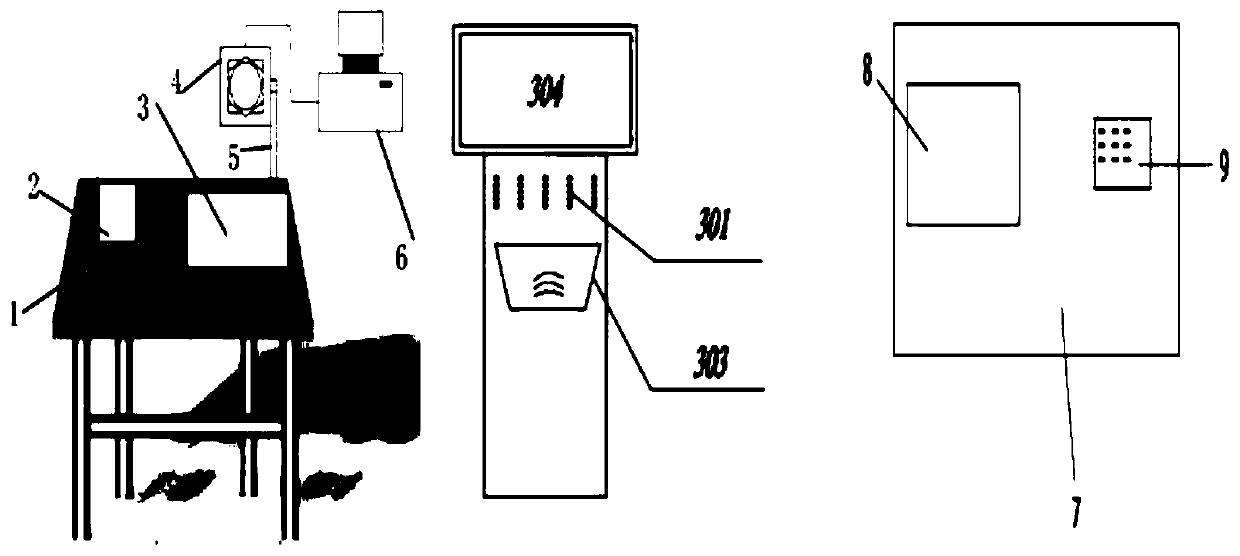

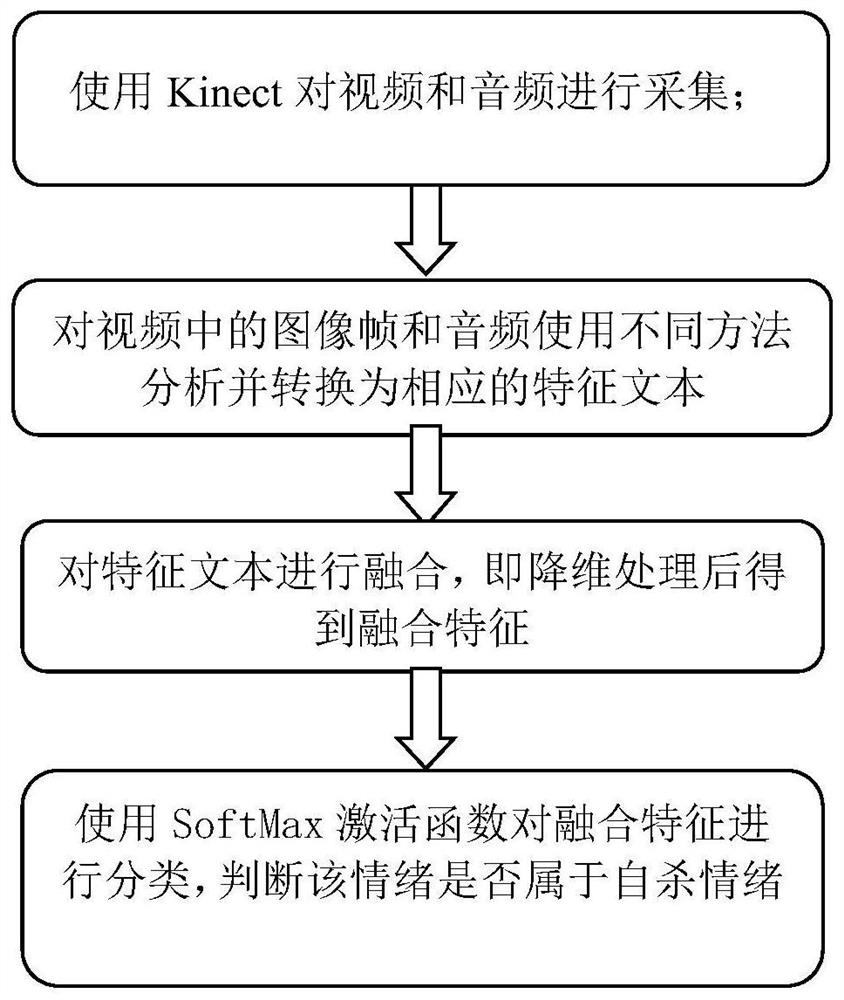

Suicide emotion perception method based on multi-modal fusion of voice and micro-expressions

Pending | CN112101096A | Reduce dimensionality | Improve performance | Neural architectures | Acquiring/recognising facial features | Emotion perception | Microexpression

The invention discloses a suicide emotion perception method based on multi-modal fusion of voice and micro-expressions. The method comprises the following steps: collecting video and audio using a Kinect with an infrared camera; analyzing the image frames and the audio in the video by different methods and converting them into corresponding feature texts; fusing the feature texts, namely performing dimensionality reduction to obtain fused features; and classifying the fused features using a SoftMax activation function to judge whether the emotion belongs to the suicidal emotions. The multi-modal data are aligned at the text level, and the intermediate text representation together with the proposed fusion method forms a framework that fuses speech and facial expressions. The dimensionality of the voice and facial expression data is reduced, and the two sources of information are unified into one component. The Kinect is used for data acquisition, and the invention has the advantages of being non-invasive, high in performance and convenient to operate.

Owner:SOUTH CHINA UNIV OF TECH

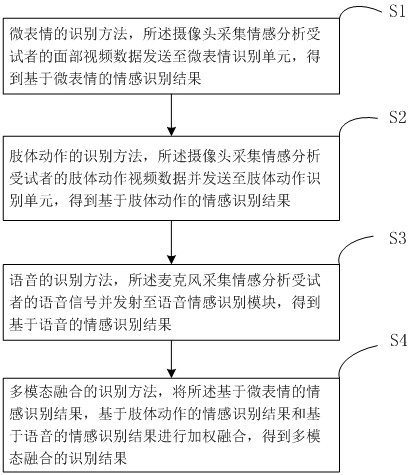

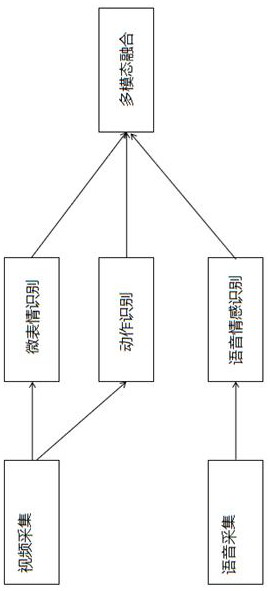

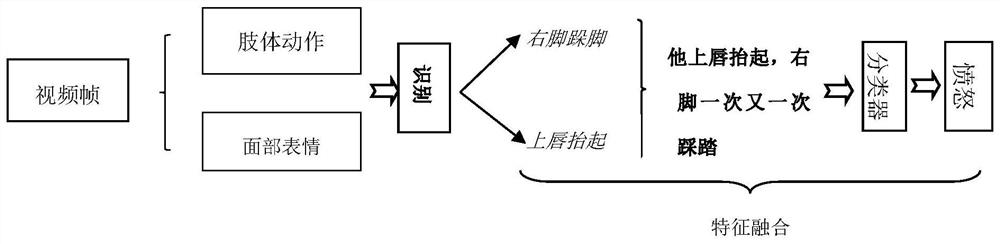

Multi-modal emotion recognition method based on micro-expressions, body movements and voices

Active | CN113469153A | Easy to identify | Efficient identification | Speech analysis | Character and pattern recognition | Microexpression | Body movement

The invention provides a multi-modal emotion recognition method based on micro-expressions, limb actions and voice, which comprises the following steps: step 1, inputting a facial video of a subject exposed to a given stimulus signal, and recognizing the micro-expressions; step 2, inputting a body video of the subject exposed to the stimulus signal, and recognizing the limb actions; and step 3, inputting an audio signal of the subject exposed to the stimulus signal, and recognizing the voice emotion. The micro-expression recognition result of step 1, the limb action recognition result of step 2 and the voice emotion recognition result of step 3 are fused, and the continuous emotional state of the subject is judged. Because the emotion recognized from micro-expressions is combined with the emotions recognized from limb actions and speech, the emotional state of the subject can be predicted more accurately. Compared with the prior art, the method identifies a person's real emotion more accurately.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
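
The abstract above fuses three per-modality recognition results but does not say how. One common option is weighted late fusion of class probabilities, sketched below. The label set, the weights and the function name are assumptions for illustration, not the method claimed in the patent.

```python
import numpy as np

# Hypothetical label set; the abstract does not list the emotion categories.
EMOTIONS = ("anger", "fear", "happiness")


def fuse_predictions(face, body, voice, weights=(0.4, 0.3, 0.3)) -> np.ndarray:
    """Weighted average of three probability vectors over the same labels."""
    stacked = np.stack([np.asarray(p, dtype=np.float64) for p in (face, body, voice)])
    w = np.asarray(weights, dtype=np.float64)
    return (w[:, None] * stacked).sum(axis=0) / w.sum()


face_probs = [0.6, 0.3, 0.1]    # from micro-expression recognition
body_probs = [0.2, 0.5, 0.3]    # from limb action recognition
voice_probs = [0.5, 0.4, 0.1]   # from voice emotion recognition

fused = fuse_predictions(face_probs, body_probs, voice_probs)
label = EMOTIONS[int(np.argmax(fused))]
print(label)  # anger
```

Applying the same fusion to each time window of a recording would give a sequence of fused estimates, which is one way to approximate the continuous emotional state the abstract refers to.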

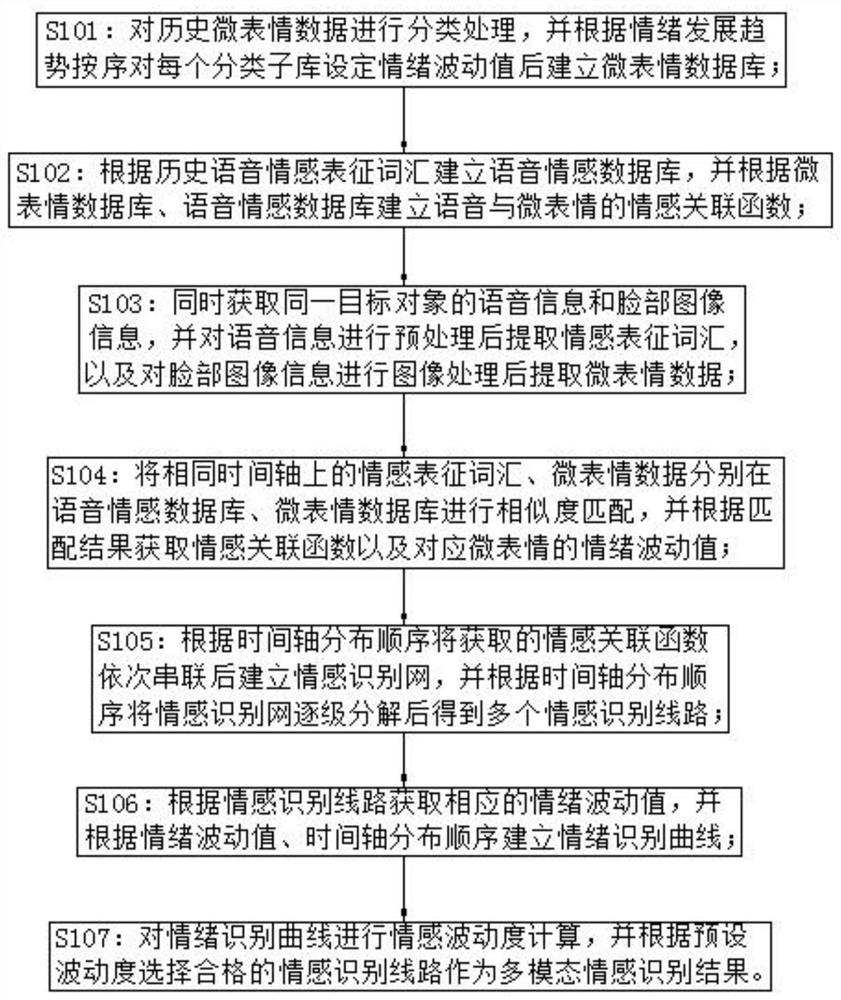

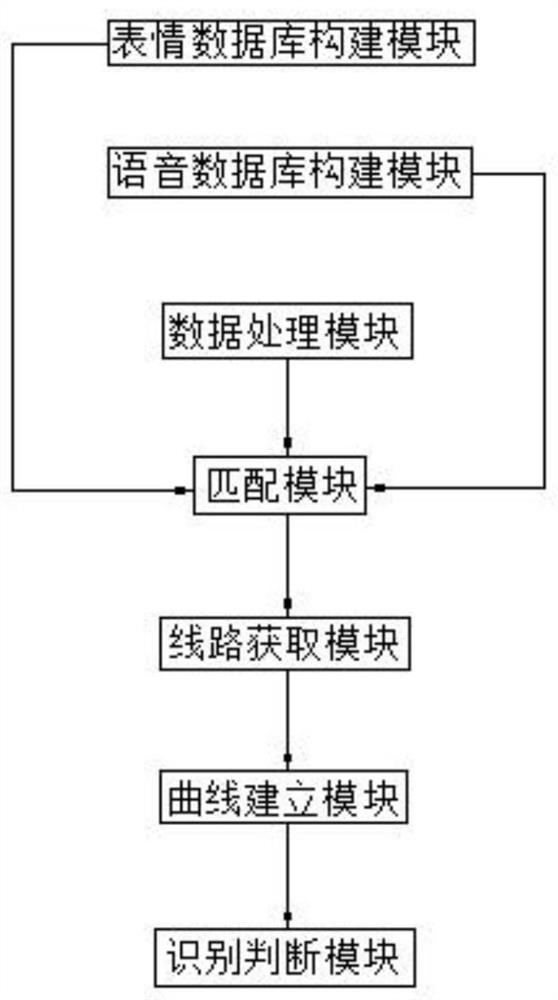

Multi-modal emotion recognition method and system fusing voice and micro-expressions

Pending | CN112307975A | Reduce mistakes | Improve accuracy | Semantic analysis | Speech analysis | Pattern recognition | Microexpression

The invention discloses a multi-modal emotion recognition method and system fusing voice and micro-expressions, and relates to the technical field of situation recognition. The method comprises the steps of: establishing a voice emotion database and an emotion association function; acquiring voice information and face image information of the same target object at the same time, and extracting emotion representation vocabulary and micro-expression data; obtaining an emotion correlation function and an emotion fluctuation value corresponding to the micro-expression according to a matching result; establishing an emotion recognition network, and decomposing it step by step to obtain a plurality of emotion recognition lines; obtaining the corresponding emotion fluctuation values, and establishing an emotion recognition curve; and, after the emotion fluctuation degree is calculated, selecting a qualified emotion recognition line according to a preset fluctuation degree. The invention enhances the authenticity of the target object's real-time emotion as represented by the voice information and the face image information, reduces the probability that the same situation reflects different situations, improves the accuracy of the emotion recognition result, and reduces its error.

Owner:JIANGXI UNIV OF SCI & TECH

Classroom behavior detection method based on improved Openpose model and facial micro-expressions

Active | CN111523444A | Improve analysis efficiency | Avoid distractions | Resources | Neural architectures | Microexpression | Machine vision

The invention discloses a classroom behavior detection method based on an improved Openpose model and facial micro-expressions. A camera is arranged in front of a desk to detect the classroom behavior of students in real time. An artificial intelligence model recognizes facial information and upper-body skeleton information; whether key points can be recognized and the distance between key points serve as the main judgment conditions, and changes in micro-expressions serve as auxiliary judgment conditions. If a student fails to meet the conditions for a period of time, the examination behavior of that student is judged to be abnormal. In addition, the video stream of a class is used to find and analyze the stages at which abnormal student behavior is likely to occur, supporting innovation and reform in teaching. Machine vision recognition reduces interference factors and simplifies the equipment, and the invention also provides a corresponding data analysis and processing system. The network model is further optimized by methods such as a residual network and weight trimming. Self-service classroom behavior detection and feedback are realized, the test efficiency is high, and the accuracy can reach 95%.

Owner:NANTONG UNIVERSITY

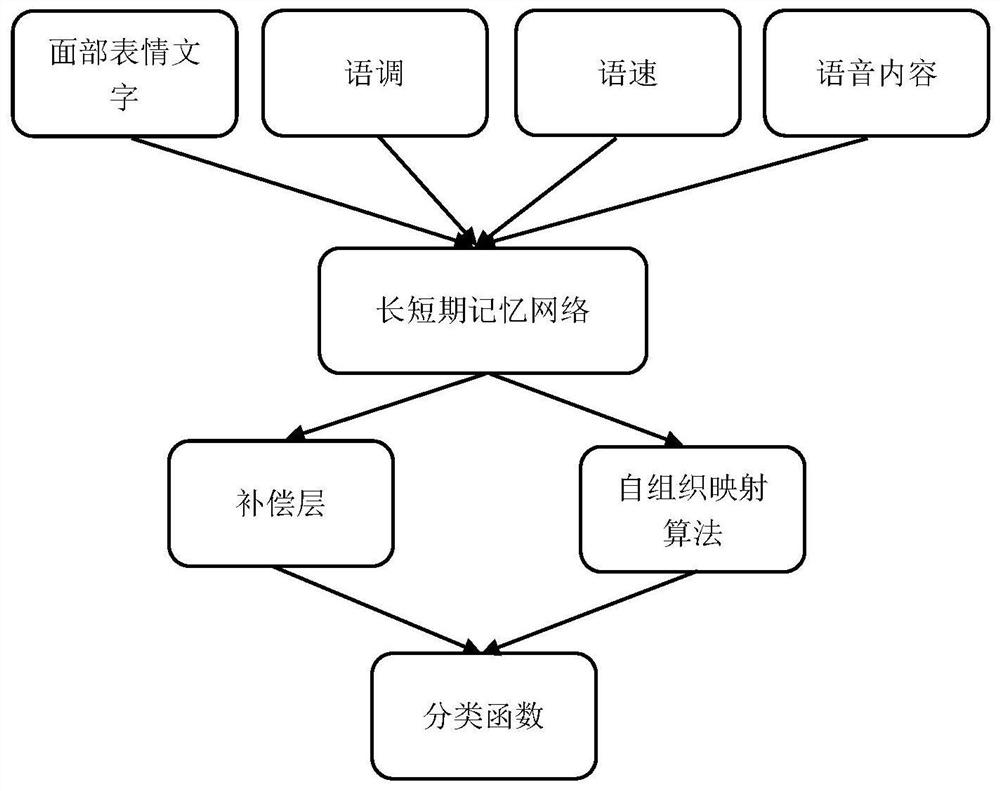

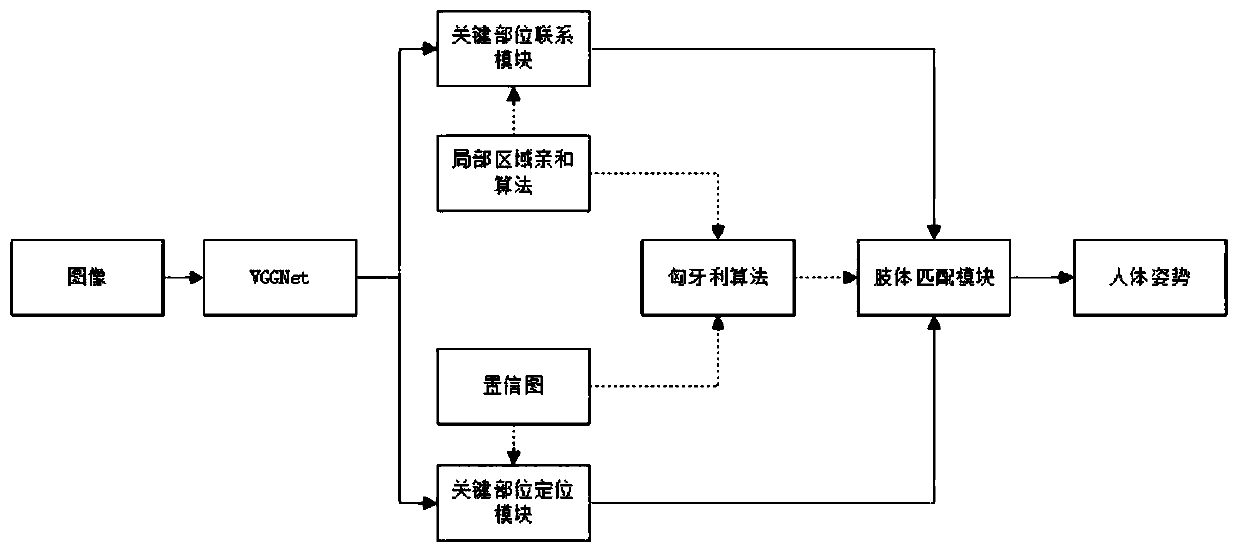

Depression tendency recognition method based on multi-modal characteristics of limbs and micro-expressions

Active | CN111967354A | Reduce dimensionality | Improve robustness | Neural architectures | Acquiring/recognising facial features | Human body | Nerve network

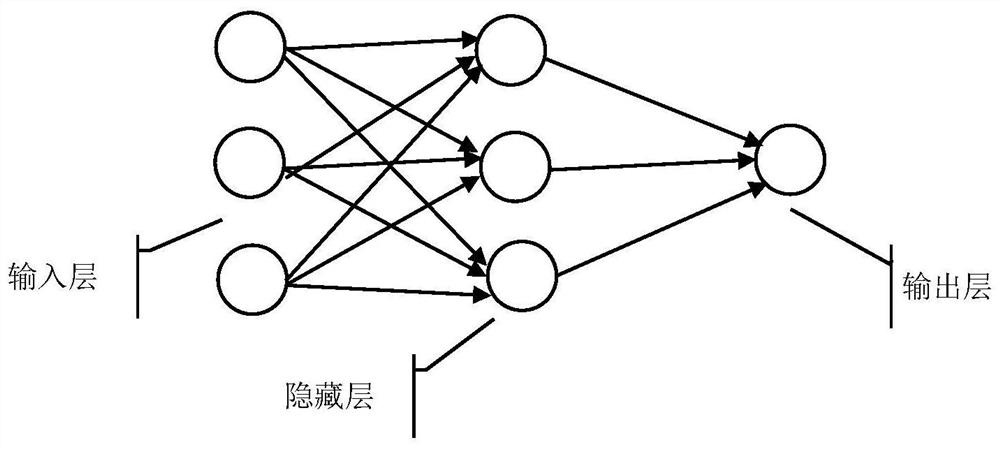

The invention discloses a depression tendency recognition method based on multi-modal characteristics of limbs and micro-expressions. The method comprises the following steps: detecting human motion by means of a non-contact Kinect measurement sensor, and generating a text description of the motion; capturing face image frames with the Kinect sensor, applying Gabor wavelets and linear discriminant analysis to the facial region of interest for feature extraction and dimensionality reduction, and then classifying the facial expression with a three-layer neural network to generate a text description of the expression; fusing the extracted text descriptions through a fusion neural network with a self-organizing mapping layer to generate information carrying emotion features; and S4, using a Softmax classifier to assign the feature information generated in S3 to emotion categories, the classification result being used to evaluate whether the patient has a depression tendency. Both static and dynamic body movement are considered, which yields higher efficiency. Body movement helps to identify the emotion of a depression patient.

Owner:SOUTH CHINA UNIV OF TECH
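
The face branch of the abstract above starts with Gabor wavelet feature extraction on the facial region of interest. The sketch below builds a real-valued Gabor kernel from the standard formula and summarizes the filter response at a few orientations. The parameter values, the function names and the mean-absolute-response summary are assumptions for illustration; the linear discriminant analysis and the three-layer network that follow in the abstract are not shown.

```python
import numpy as np


def gabor_kernel(size: int = 21, wavelength: float = 8.0, theta: float = 0.0,
                 sigma: float = 4.0, gamma: float = 0.5, psi: float = 0.0) -> np.ndarray:
    """Real part of a Gabor filter: Gaussian envelope times a cosine carrier.

    `size` should be odd so that the kernel has a single center pixel.
    """
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(np.float64)
    x_rot = x * np.cos(theta) + y * np.sin(theta)
    y_rot = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_rot ** 2 + (gamma * y_rot) ** 2) / (2 * sigma ** 2))
    carrier = np.cos(2 * np.pi * x_rot / wavelength + psi)
    return envelope * carrier


def gabor_features(image: np.ndarray, orientations: int = 4) -> np.ndarray:
    """Mean absolute response per orientation, using FFT-based circular convolution."""
    spectrum = np.fft.fft2(image)
    features = []
    for k in range(orientations):
        kernel = gabor_kernel(theta=k * np.pi / orientations)
        # Zero-pad the kernel to the image size before multiplying spectra.
        response = np.fft.ifft2(spectrum * np.fft.fft2(kernel, s=image.shape)).real
        features.append(np.abs(response).mean())
    return np.array(features)


# Synthetic stand-in for a grayscale facial region of interest.
rng = np.random.default_rng(0)
roi = rng.random((64, 64))
print(gabor_features(roi).shape)  # (4,)
```

A fuller feature vector would typically keep responses at several wavelengths and spatial positions rather than one scalar per orientation, which is where a dimensionality reduction step becomes useful.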

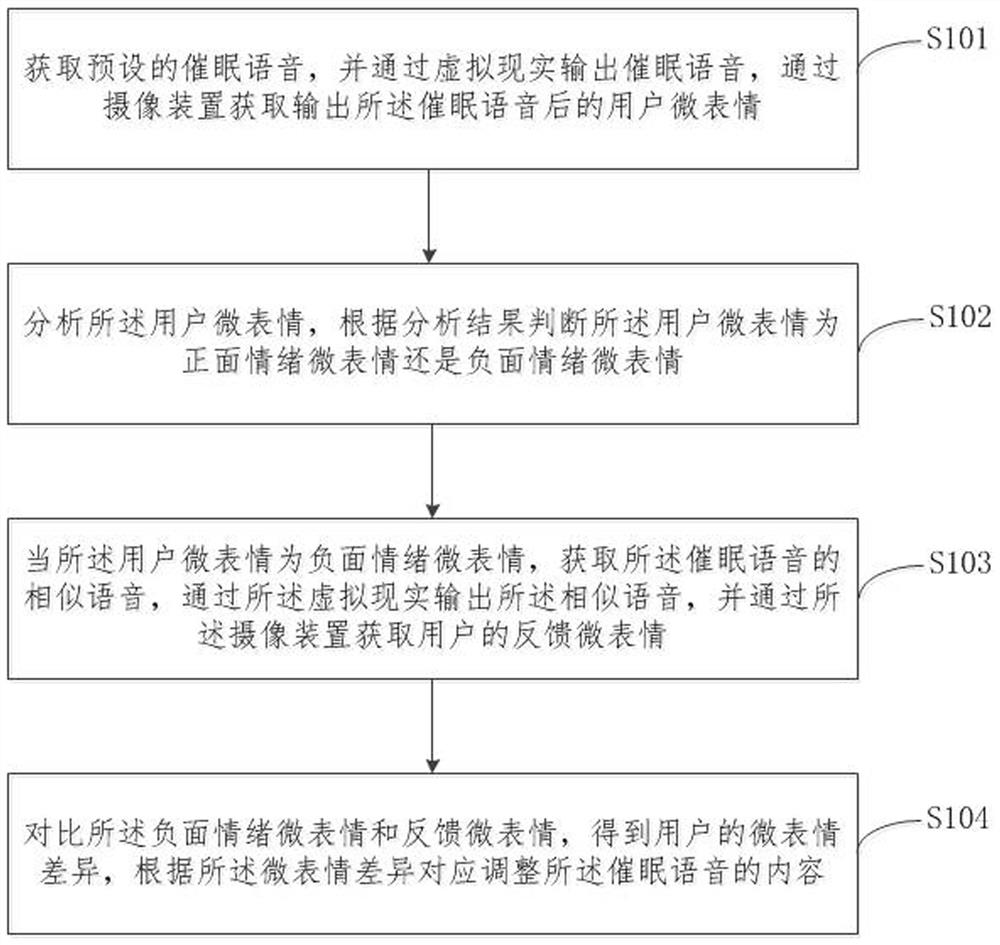

Virtual reality sleep promoting method and device

Pending | CN113545781A | Does not affect sleep experience | Input/output for user-computer interaction | Medical devices | Physical medicine and rehabilitation | Microexpression

The embodiment of the invention provides a virtual reality sleep promoting method and device. The method comprises the steps of: obtaining a preset hypnotic voice, outputting the hypnotic voice through virtual reality, and using a camera device to obtain the user's micro-expression after the hypnotic voice is output; analyzing the user's micro-expression, and judging from the analysis result whether it is a positive-emotion or a negative-emotion micro-expression; when it is a negative-emotion micro-expression, obtaining a voice similar to the hypnotic voice, outputting the similar voice through virtual reality, and obtaining the user's feedback micro-expression through the camera device; and comparing the negative-emotion micro-expression with the feedback micro-expression to obtain the user's micro-expression difference, and adjusting the content of the hypnotic voice accordingly. With this method the hypnotic voice can be adjusted specifically for different users; because the adjustment is driven by changes in the user's micro-expression while the user is falling asleep, the experience of falling asleep is not affected.

Owner:ZHEJIANG BUSINESS TECH INST

© 2025 PatSnap. All rights reserved.