64 results about "Expression - action" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

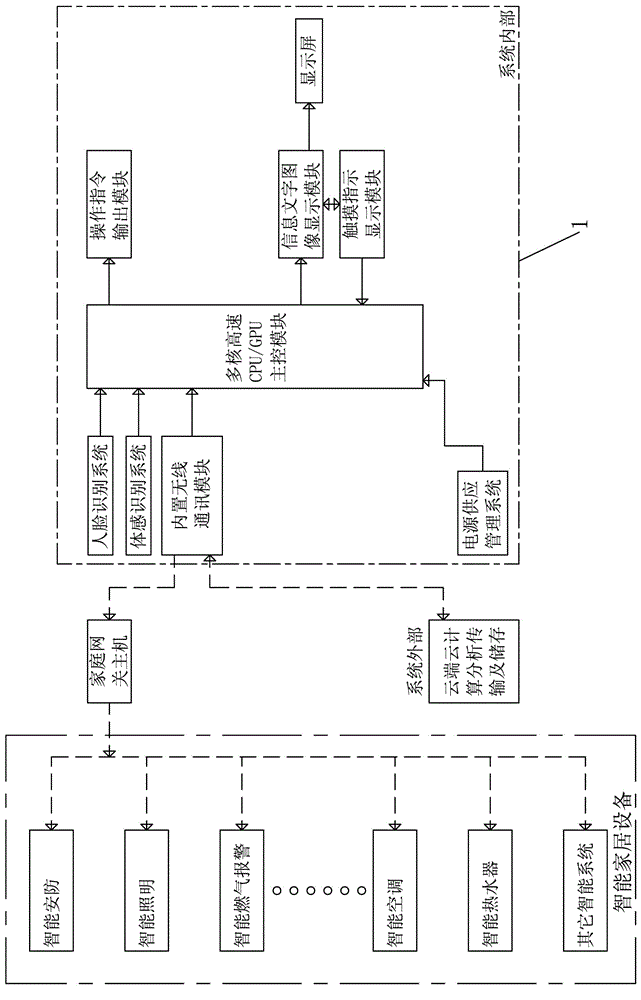

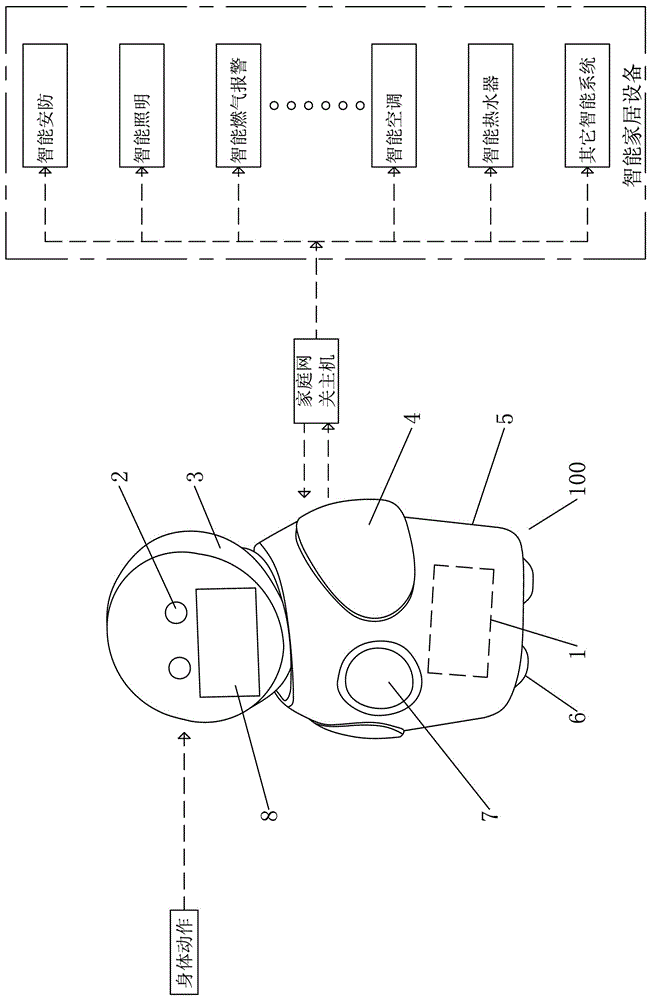

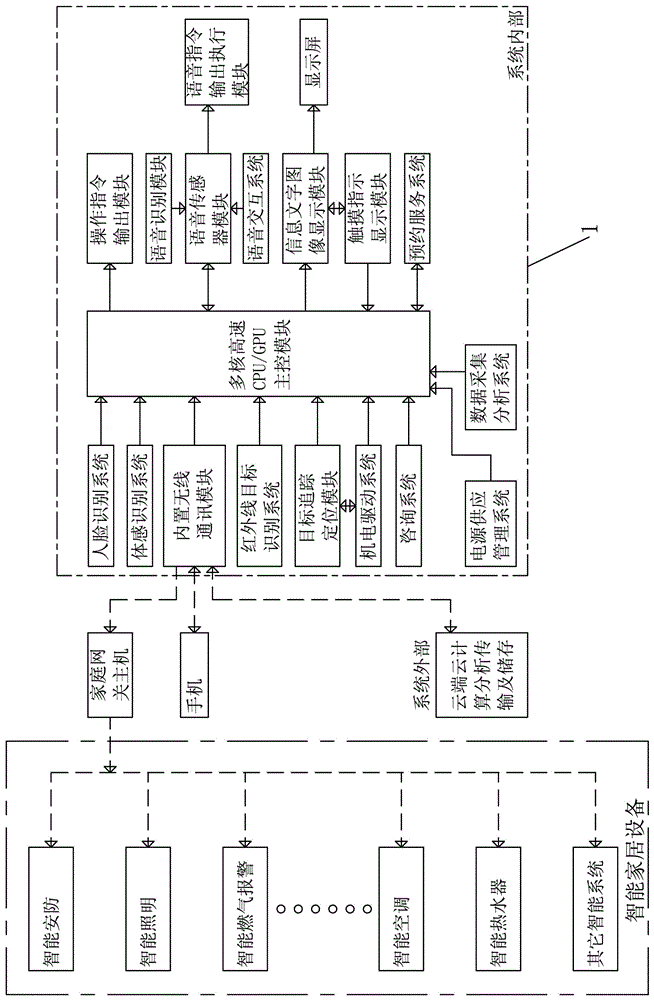

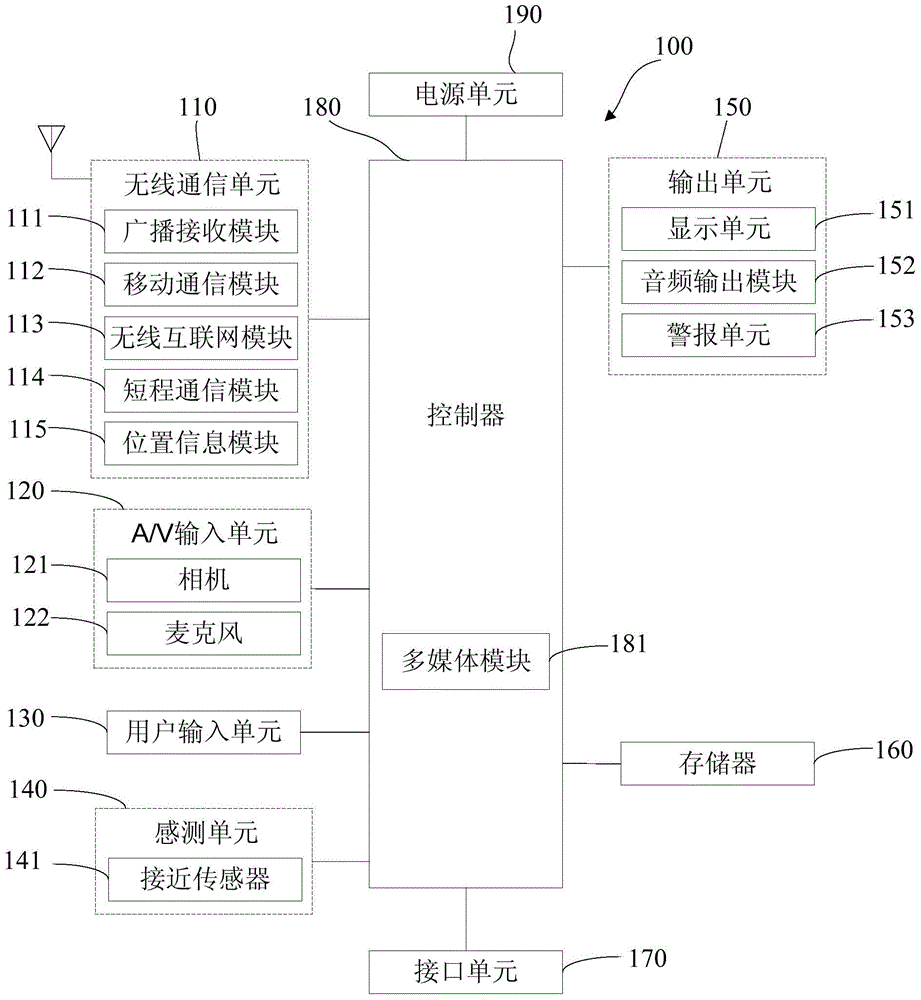

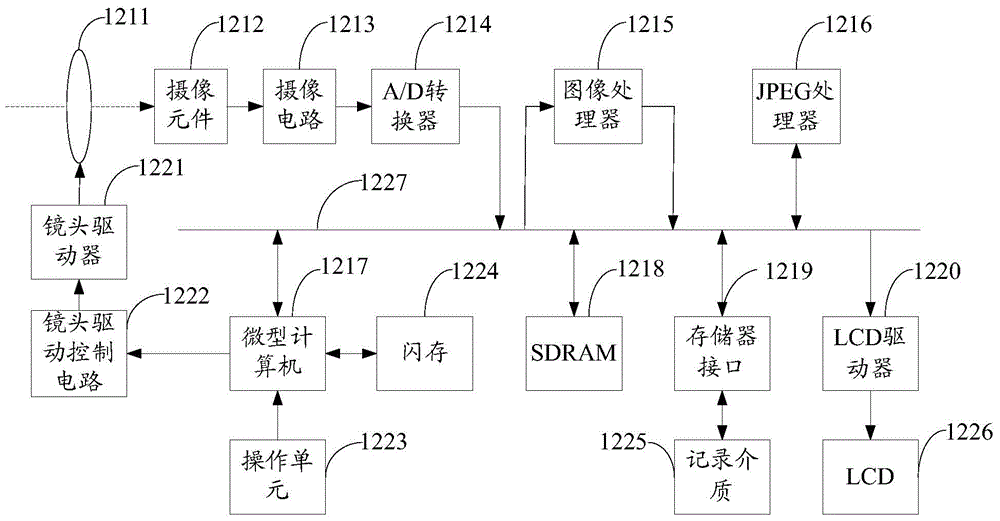

Intelligent home steward central control system with somatosensory function, and control method thereof

Pending | CN106790628A | Efficient operating experience; Improve operating experience; Computer control; Transmission; Human body; Supply management

The invention relates to an intelligent home steward central control system with a somatosensory function, and to a control method for the system. The system comprises a wireless communication module, a face recognition system and a somatosensory recognition system. The face recognition system acquires face information, and the somatosensory recognition system acquires human body action information. The somatosensory recognition system, a power supply management system, an operation instruction output module and a human-machine interaction interface are each electrically connected to a master control module. The master control module is also connected over a remote network to an external cloud terminal through the wireless communication module. The operation instruction output module controls smart home devices over the wireless network through the same module. The user no longer has to launch smart home device software manually: the system performs the corresponding control after receiving a limb or expression action from a designated person. This removes the tedious operations of the prior art and realizes a "human-like" home steward function. It also gives the user an efficient, convenient and comfortable operating experience.

Owner:ELITE ARCHITECTURAL CO LTD

Method for interactive editing and extended expression of network video link information

Inactive | CN101141622A | Increase expression; Increase content; Two-way working systems; Transmission; Video player; Client-side scripting

The present invention relates to a method for interactively editing and extending the expression of information linked to network video. The method comprises the following steps:
- Collect network videos and establish a system network video library.
- Establish a system network video player, a system editing platform and a playback system.
- Interactively link videos in the library with related information through the editing platform. Collect the edited related information and store it in the library's related-information database.
- While a video plays, use the related information obtained by a client-side script program to collect and check the video's content in real time. When information corresponding to a linked field is detected, the player notifies the client-side script program to present the related information accordingly.

The invention solves a problem in the background art: network video could not be linked to existing information on the network or to user-defined information. Its way of linking network video to information supports every information expression action and presentation method that can appear on web pages.

Owner:张伟华 +2

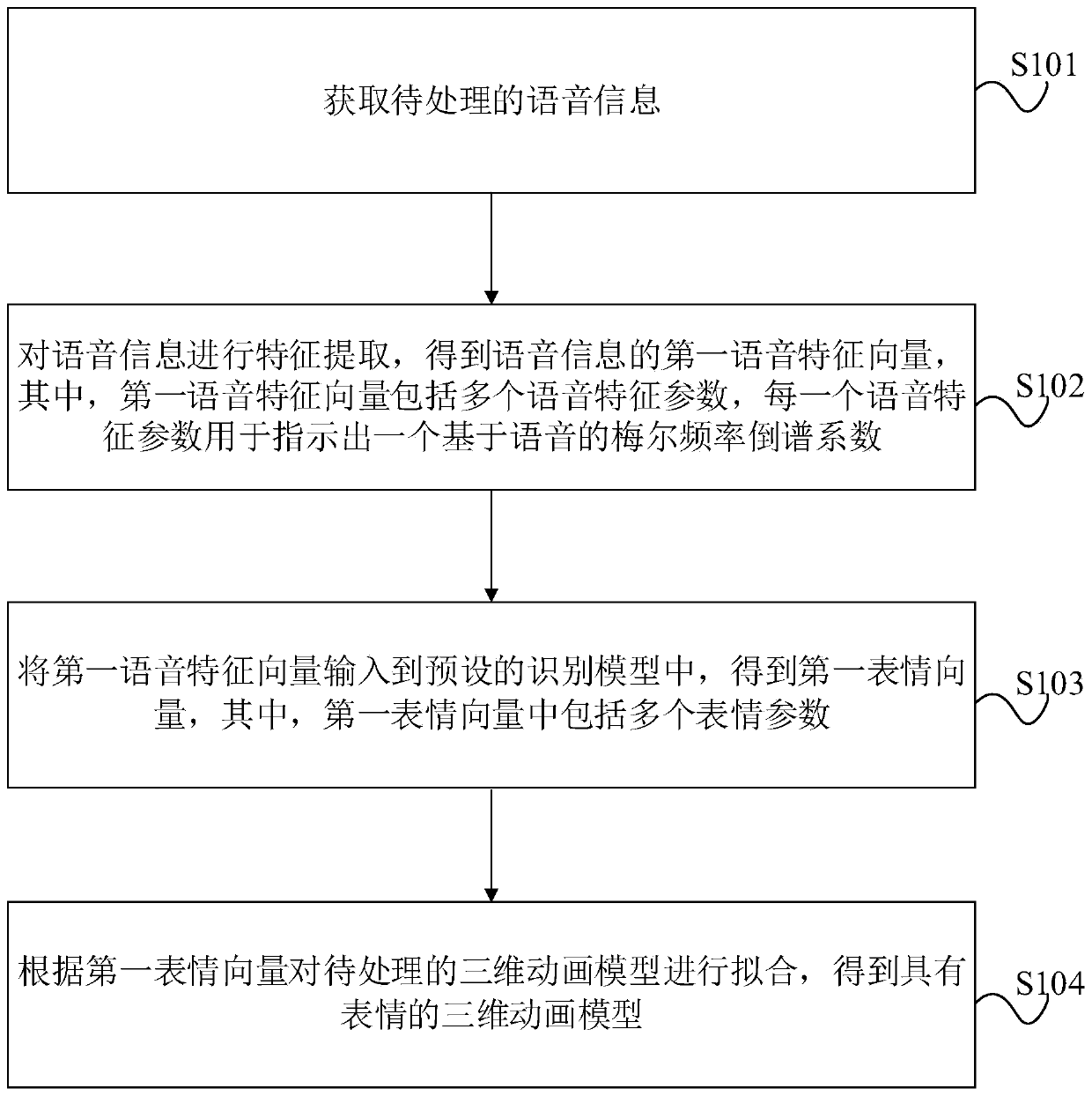

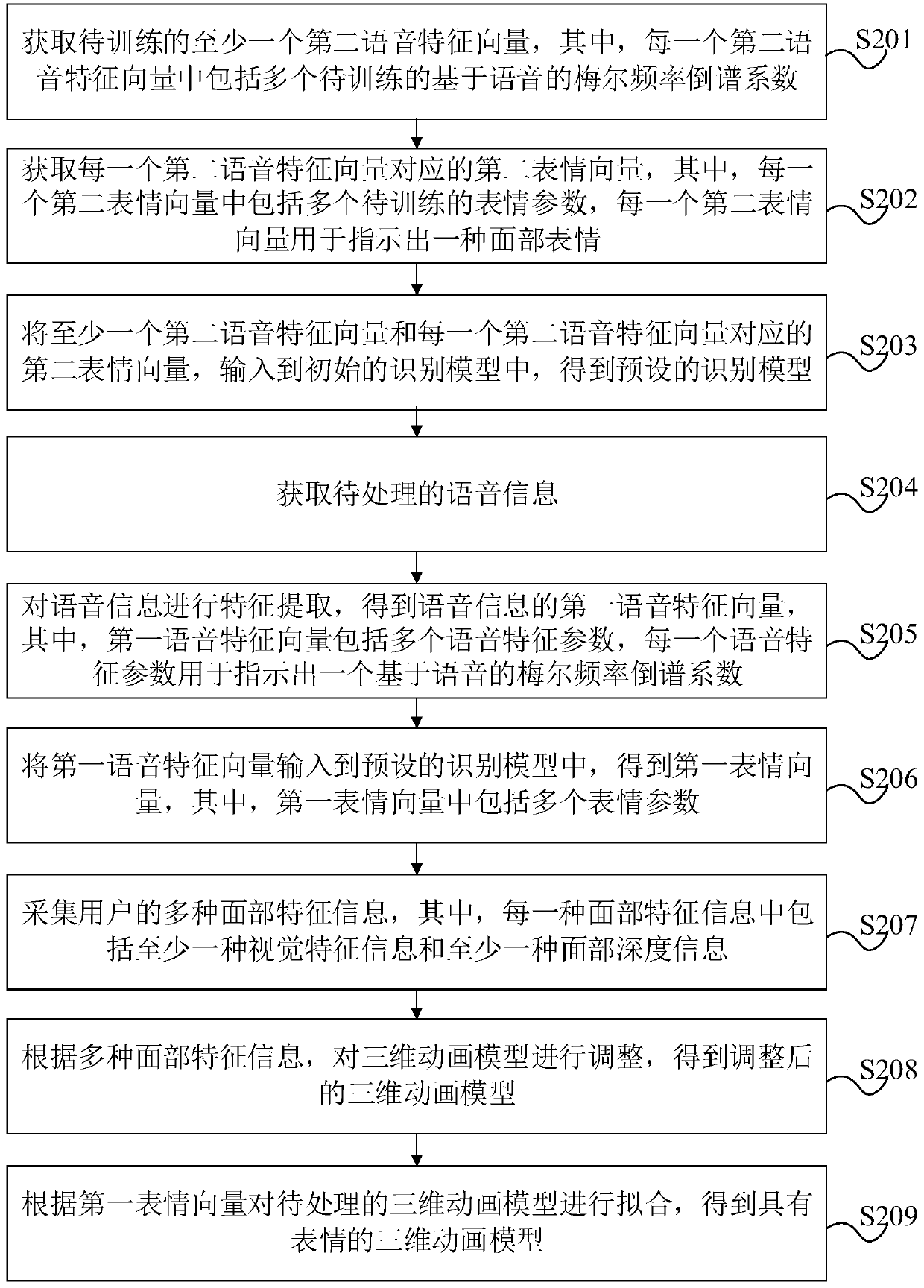

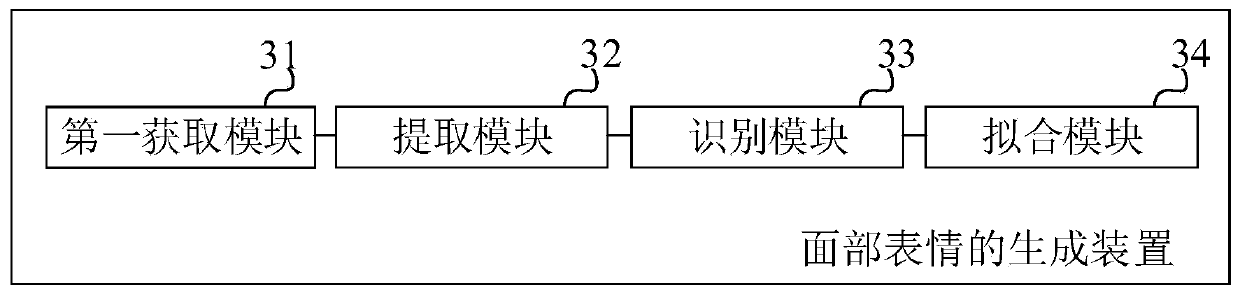

Facial expression generation method and device, electronic device and storage medium

Pending | CN110009716A | Pitch guarantee; Guaranteed sound; Animation; Speech recognition; Mel-frequency cepstrum; Feature vector

The invention provides a facial expression generation method and device, an electronic device and a storage medium. The method comprises the following steps:
- Perform feature extraction on acquired voice information to obtain a first voice feature vector. The vector comprises a plurality of voice feature parameters, each of which is a Mel-frequency cepstral coefficient (MFCC) of the speech.
- Input the first voice feature vector into a preset recognition model to obtain a first expression vector comprising a plurality of expression parameters.
- Fit a three-dimensional animation model according to the first expression vector to obtain a three-dimensional animation model with expressions.

The MFCCs retain the content, pitch, tone and other characteristics of the speech, so they allow the expression actions to be reconstructed more faithfully. As a result, the facial expressions of the three-dimensional animation model are more accurate and better simulated. The resulting expression actions are also consistent with the voice information and voice data.

Owner:NETEASE (HANGZHOU) NETWORK CO LTD
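
The MFCC features mentioned above are computed on the mel frequency scale. The following minimal sketch is not the applicant's implementation. It shows the common HTK-style Hz/mel conversion and how triangular filter centres are spaced evenly in mels before the cepstral step. The filter count and frequency range in the usage block are assumptions for illustration only.

```python
import math


def hz_to_mel(f_hz: float) -> float:
    """Convert a frequency in Hz to mels (common HTK-style formula)."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def mel_to_hz(mel: float) -> float:
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_filter_centres(n_filters: int, f_min: float, f_max: float) -> list[float]:
    """Centre frequencies (Hz) of n_filters triangular filters spaced evenly in mels.

    n_filters + 2 points are spread evenly over [f_min, f_max] in mels; the two
    outer points are filter-bank edges, so only the interior ones are returned.
    """
    lo, hi = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (hi - lo) / (n_filters + 1)
    return [mel_to_hz(lo + step * (i + 1)) for i in range(n_filters)]


if __name__ == "__main__":
    # Hypothetical configuration: 26 filters over 0-8000 Hz (16 kHz audio).
    centres = mel_filter_centres(26, 0.0, 8000.0)
    print([round(c) for c in centres[:5]])
```

Because the mel scale is compressive, the filters get wider towards high frequencies. That is one reason MFCCs emphasize the perceptually important low-frequency structure of speech.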

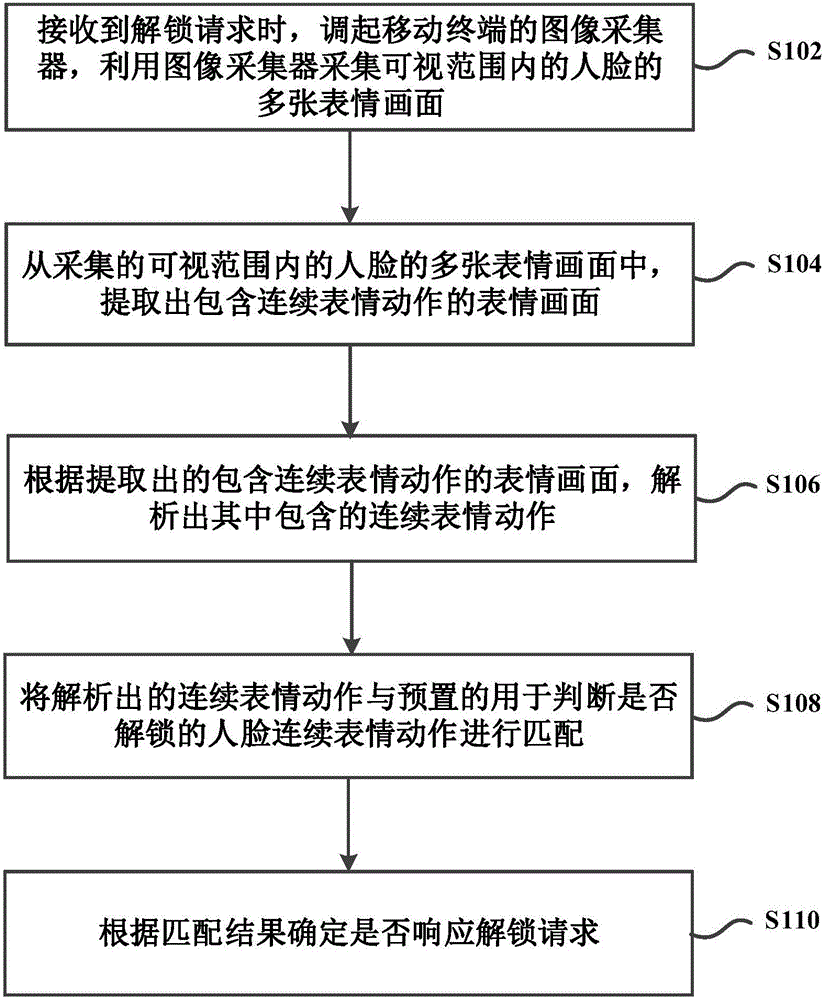

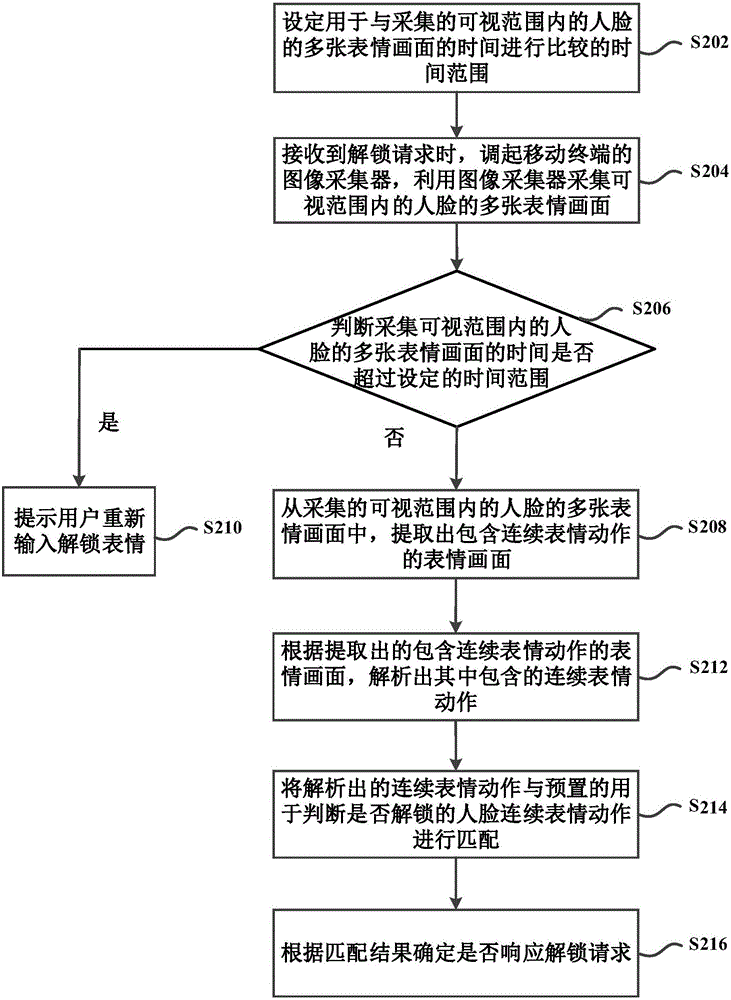

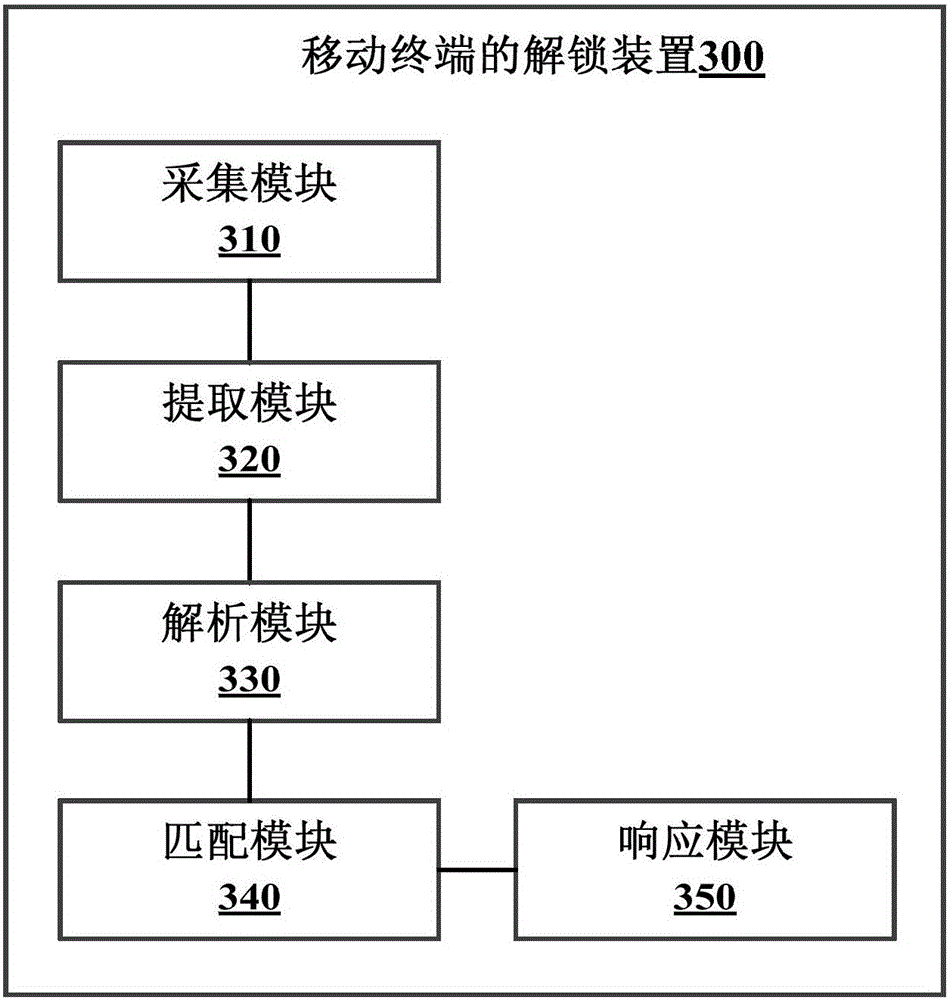

Mobile terminal unlocking method and device

Inactive | CN105825112A | Improve security; Unlock flexible; Digital data authentication; Expression - action; Computer terminal

The invention provides a mobile terminal unlocking method and device. The method comprises the following steps:
- When an unlocking request is received, invoke the mobile terminal's image collector.
- Use the image collector to capture a plurality of expression images of a human face within its field of view.
- Extract, from the captured images, those that contain continuous expression actions.
- Parse the continuous expression actions contained in the extracted images.
- Match the parsed actions against preset continuous facial expression actions used to decide whether to unlock.
- Decide whether to respond to the unlocking request according to the matching result.

Because unlocking is performed with a sequence of continuous facial expression actions, this is a dynamic unlocking mode. Compared with the static unlocking modes of the prior art, it improves unlocking security.

Owner:BEIJING QIHOO TECH CO LTD +1
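
The matching step above can be pictured with a small sketch. It assumes that some upstream classifier has already labelled every captured frame with an expression; the abstract does not describe that classifier. The labels and the "neutral" separator are hypothetical. The sketch collapses runs of identical frame labels into discrete actions and compares the ordered result with the enrolled sequence.

```python
from itertools import groupby
from typing import Sequence

NEUTRAL = "neutral"  # hypothetical label for frames with no deliberate expression


def collapse_to_actions(frame_labels: Sequence[str]) -> list[str]:
    """Turn per-frame expression labels into an ordered list of distinct actions.

    Consecutive frames with the same label form one action; neutral frames only
    separate actions and are not actions themselves.
    """
    return [label for label, _run in groupby(frame_labels) if label != NEUTRAL]


def should_unlock(frame_labels: Sequence[str], preset_actions: Sequence[str]) -> bool:
    """Unlock only if the observed actions match the preset sequence exactly, in order."""
    return collapse_to_actions(frame_labels) == list(preset_actions)


if __name__ == "__main__":
    preset = ["smile", "blink", "open_mouth"]  # hypothetical enrolled sequence
    observed = ["neutral", "smile", "smile", "neutral", "blink", "blink", "open_mouth"]
    print(should_unlock(observed, preset))  # True
```

Order matters here, which is what makes the mode "dynamic". The same three expressions shown in a different order do not unlock.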

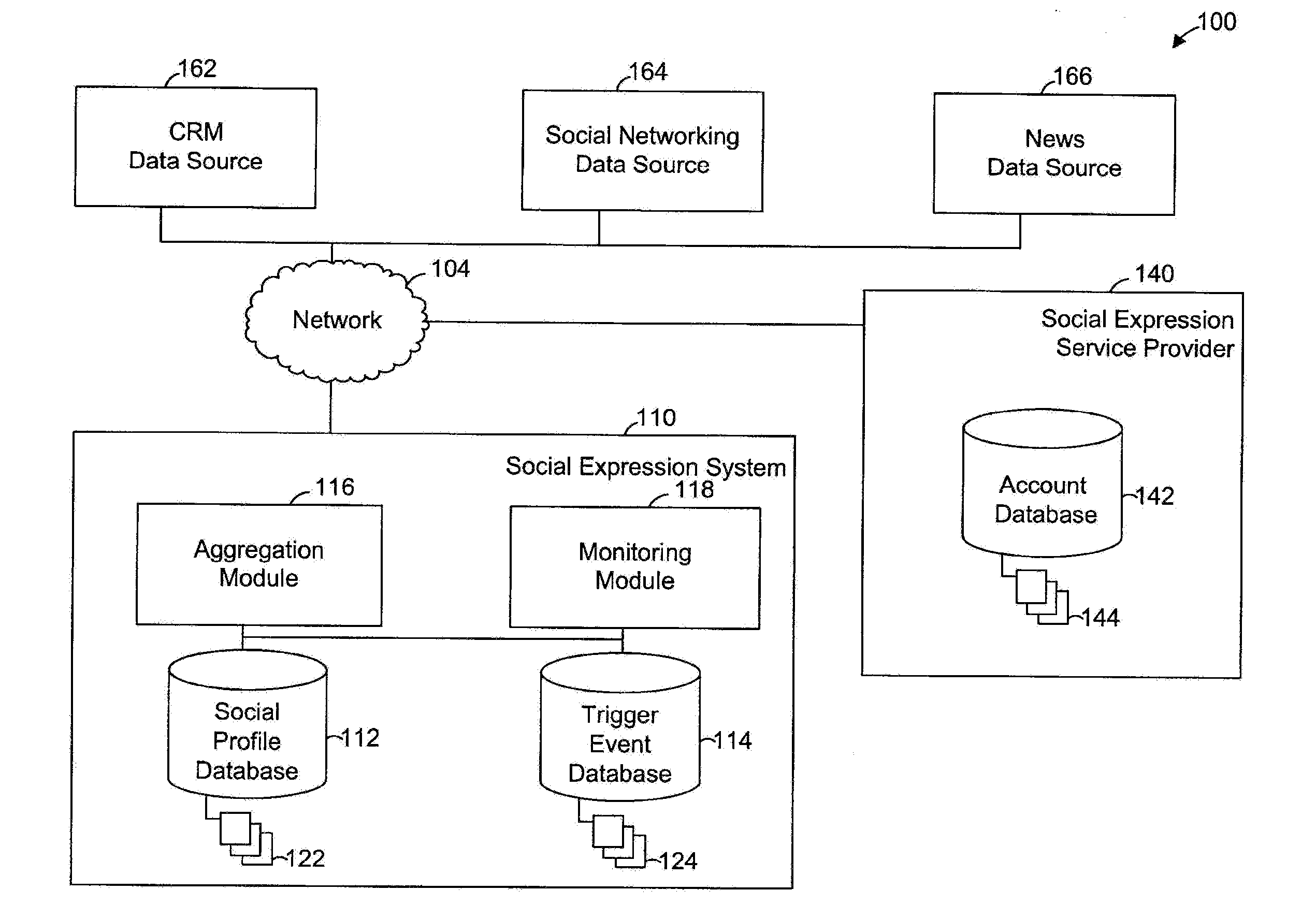

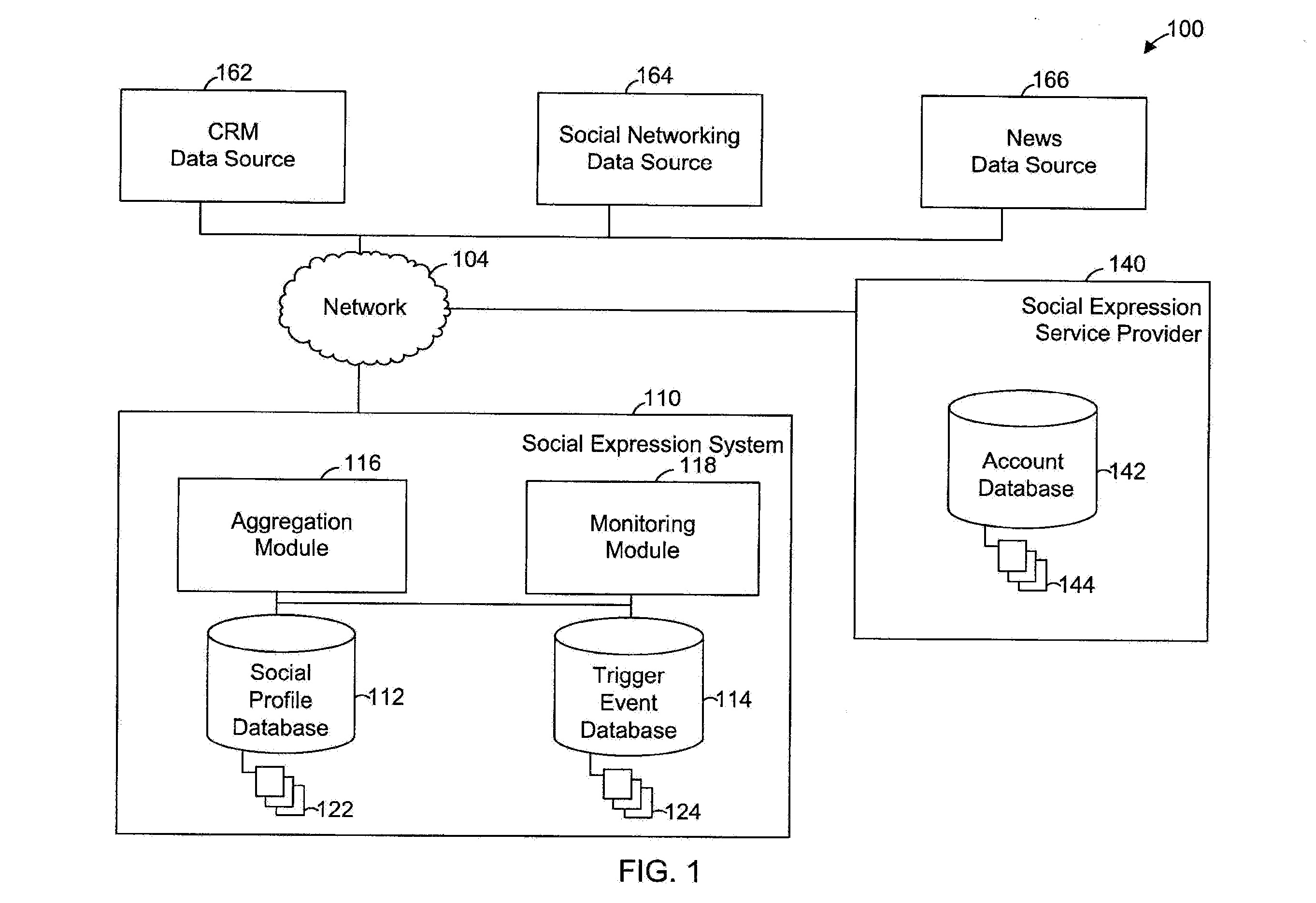

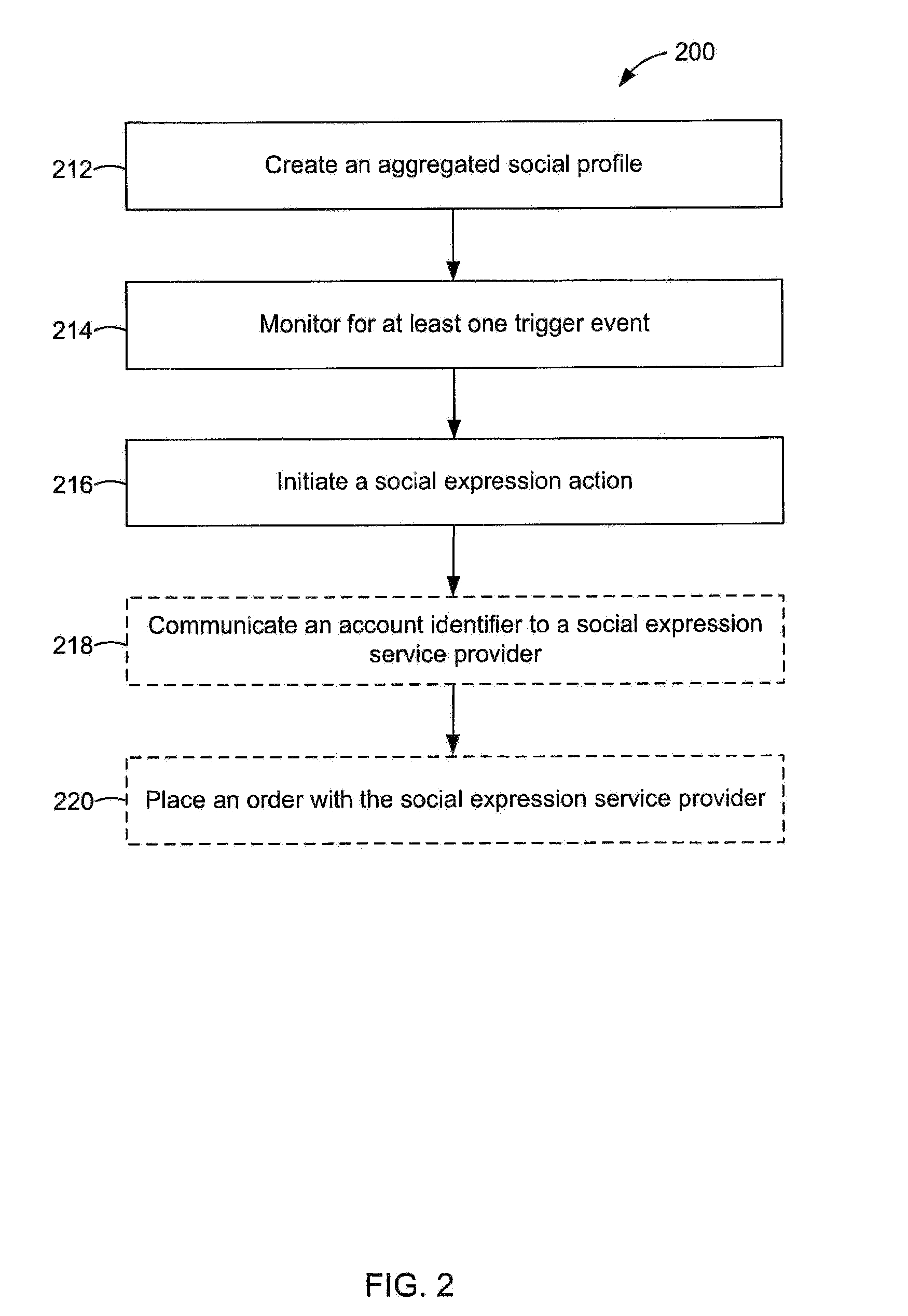

Methods and Systems for Communicating Social Expression

Inactive | US20120179573A1 | Multiple digital computer combinations; Buying/selling/leasing transactions; Expression - action; Order form

In one aspect, a method for communicating social expression to a contact comprises the following steps:
- Create an aggregated social profile comprising a plurality of informational items about the contact, collected from a first plurality of data sources.
- Monitor a second plurality of data sources for at least one trigger event related to at least one of those informational items.
- Initiate a social expression action in response to the trigger event.

Embodiments may also generate at least one keyword from the at least one informational item and scan the second plurality of data sources for that keyword. In further aspects, the method may analyze the trigger event to determine the appropriate social expression action and/or place an order with a social expression service provider.

Owner:INFLUITIVE CORP
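
The keyword-scanning and trigger steps described above can be illustrated with a minimal sketch. It assumes that monitored data sources arrive as simple (source, text) pairs. It also assumes that the profile's informational items have already been reduced to keywords. The keyword-to-action table is entirely hypothetical and is not the applicant's design.

```python
import re
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class TriggerEvent:
    source: str   # which monitored data source produced the match
    keyword: str  # keyword derived from the aggregated profile
    text: str     # the matching post or record


# Hypothetical mapping from profile keyword to social expression action.
ACTION_FOR_KEYWORD = {
    "birthday": "send_greeting_card",
    "promotion": "send_congratulations",
}


def scan_for_triggers(keywords: Iterable[str],
                      posts: Iterable[tuple[str, str]]) -> list[TriggerEvent]:
    """Scan (source, text) pairs for whole-word, case-insensitive keyword hits."""
    kws = sorted(set(keywords))
    events = []
    for source, text in posts:
        for kw in kws:
            if re.search(rf"\b{re.escape(kw)}\b", text, re.IGNORECASE):
                events.append(TriggerEvent(source, kw, text))
    return events


def choose_actions(events: Iterable[TriggerEvent]) -> list[tuple[str, str]]:
    """Pick an action per trigger event; unknown keywords map to 'no_action'."""
    return [(e.source, ACTION_FOR_KEYWORD.get(e.keyword, "no_action")) for e in events]


if __name__ == "__main__":
    events = scan_for_triggers({"birthday", "promotion"},
                               [("feed_a", "Happy Birthday to me!"),
                                ("feed_b", "Lunch was great")])
    print(choose_actions(events))  # [('feed_a', 'send_greeting_card')]
```

Whole-word matching (`\b`) keeps "birthday" from firing on unrelated words that merely contain it. A real system would likely need richer event analysis than a lookup table.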

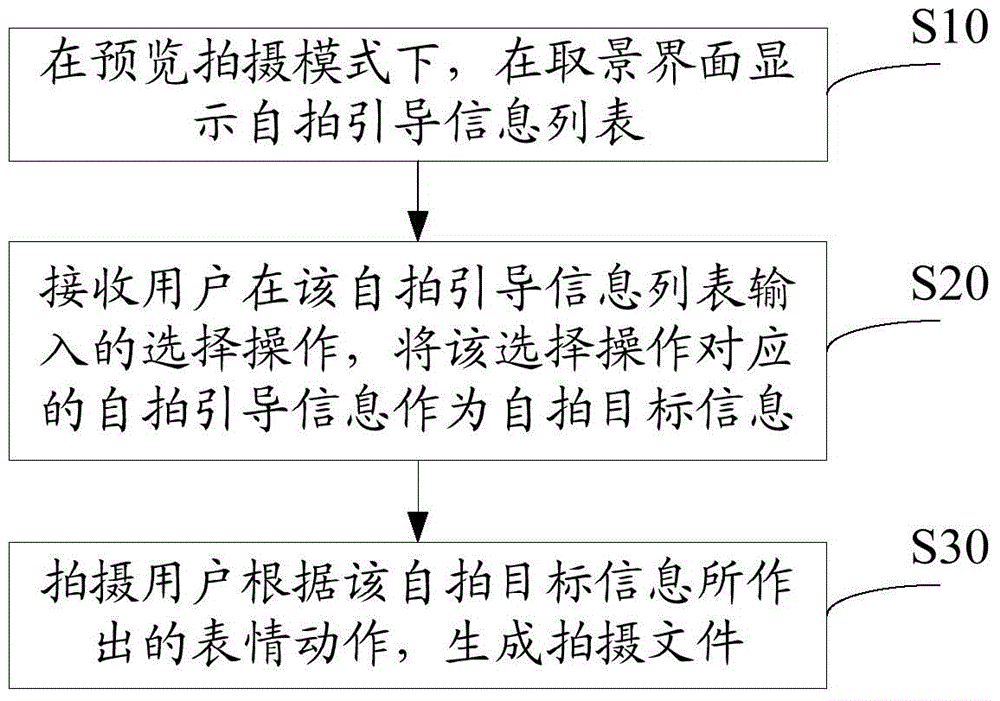

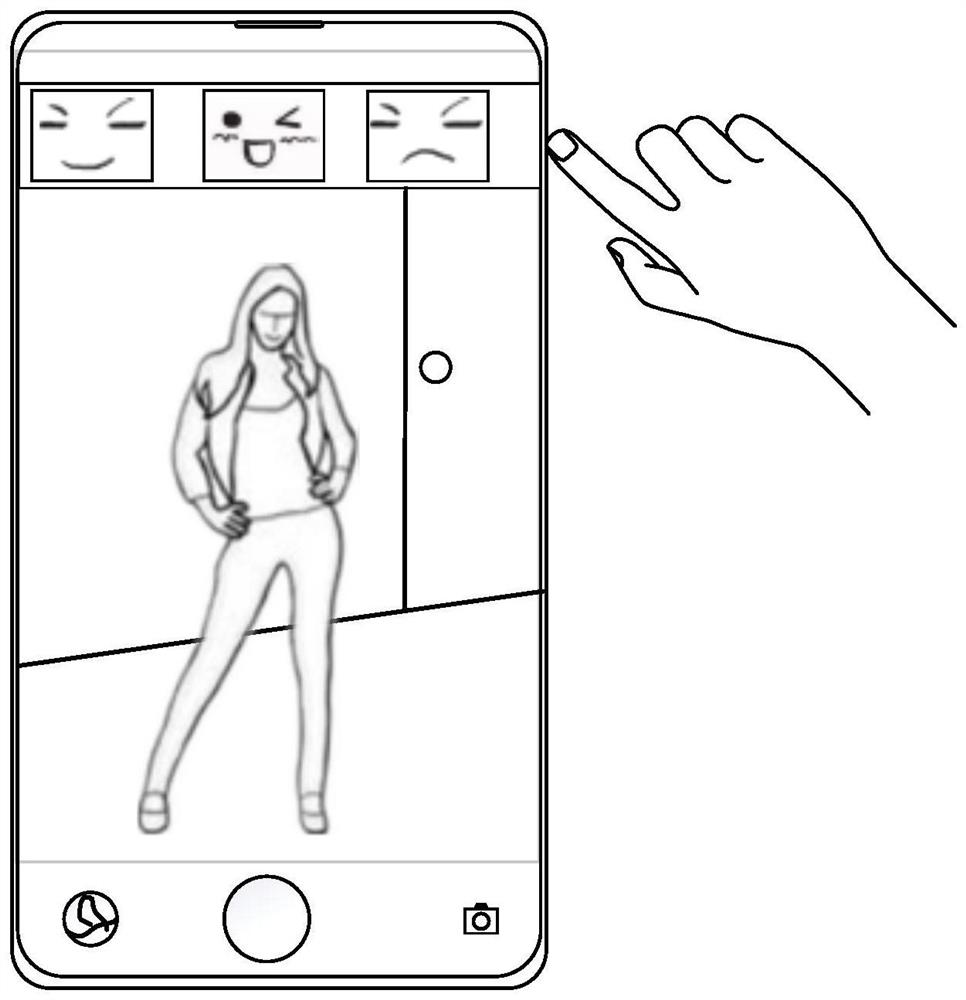

Shooting method and shooting device

Active | CN104902185A | Television system details; Color television details; Expression - action; Shooting method

The invention discloses a shooting method comprising the following steps:
- In preview shooting mode, display a selfie guidance information list on the framing interface.
- Receive a selection operation that the user inputs on the list, and take the corresponding selfie guidance information as the selfie target information.
- Capture the expression action the user makes according to the selfie target information, and generate a shooting file.

The invention also discloses a shooting device. By guiding the user while taking selfies, the method and device make it easy to shoot a satisfactory picture or video.

Owner:NUBIA TECHNOLOGY CO LTD

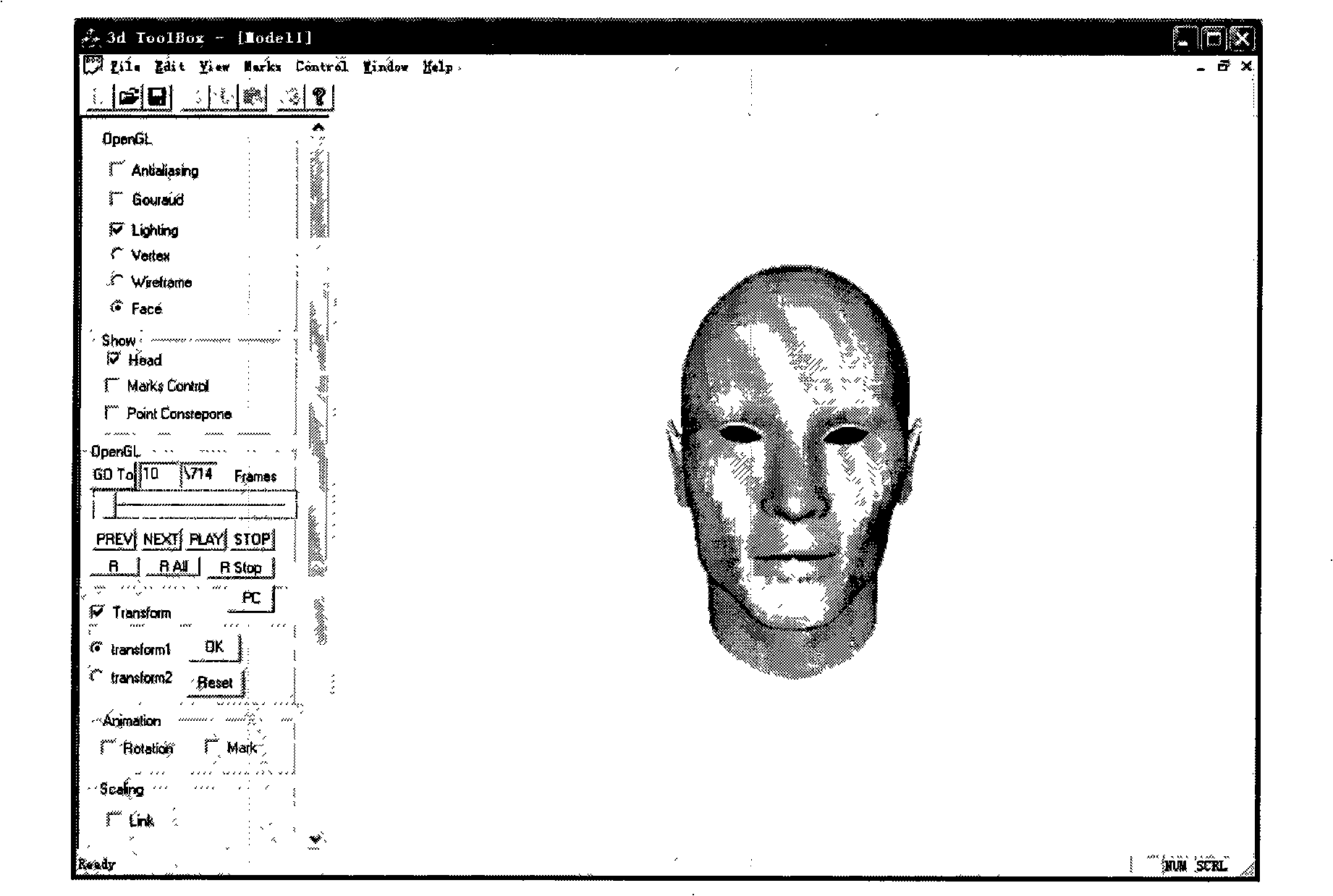

Three-dimensional face animation editing and synthesis based on motion propagation and Isomap analysis

Inactive | CN101311966A | Easy to edit; Improve production efficiency; Animation; Modifying/creating image using manual input; Human anatomy; Synthesis methods

The invention discloses a method for editing and synthesizing 3D face animation based on motion propagation and Isomap analysis. The method works as follows:
- The user selects control points on the 3D face model and specifies constraints on the expression action in a two-dimensional plane.
- The system trains a prior probability model on face animation data. It propagates the small number of user constraints to the other parts of the face mesh, generating a complete, lifelike facial expression.
- Knowledge of 3D face animation is modeled with the Isomap learning algorithm. The key frames specified by the user are combined, a smooth geodesic on the high-dimensional surface is fitted, and a new face animation sequence is generated automatically.

Because facial expressions are edited entirely in the two-dimensional projection space, editing is easier. The prior probability model constrains the result, so the 3D facial expressions remain consistent with human anatomy. New face animation sequences can be produced from user-specified key frames, which simplifies the production of facial expression animation.

Owner:ZHEJIANG UNIV
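
The first stage of textbook Isomap, which the method above builds on, approximates geodesic distances on a manifold. It does this by computing shortest paths through a k-nearest-neighbour graph of the samples. The sketch below shows only that generic stage, using Floyd-Warshall. It omits the subsequent multidimensional-scaling embedding and the patent's key-frame geodesic fitting. The sample points and `k` are illustrative assumptions.

```python
import math
from typing import Sequence

Point = Sequence[float]


def knn_graph(points: Sequence[Point], k: int) -> list[list[float]]:
    """Adjacency matrix of the symmetrised k-nearest-neighbour graph.

    Missing edges are math.inf; edge weights are Euclidean distances.
    """
    n = len(points)
    dist = [[math.inf] * n for _ in range(n)]
    for i in range(n):
        dist[i][i] = 0.0
        others = sorted((j for j in range(n) if j != i),
                        key=lambda j: math.dist(points[i], points[j]))
        for j in others[:k]:
            w = math.dist(points[i], points[j])
            dist[i][j] = dist[j][i] = w  # keep the graph undirected
    return dist


def geodesic_distances(points: Sequence[Point], k: int) -> list[list[float]]:
    """Approximate geodesic distances as shortest paths in the k-NN graph (Floyd-Warshall)."""
    d = knn_graph(points, k)
    n = len(d)
    for m in range(n):
        for i in range(n):
            for j in range(n):
                if d[i][m] + d[m][j] < d[i][j]:
                    d[i][j] = d[i][m] + d[m][j]
    return d


if __name__ == "__main__":
    # Seven samples on a half circle: the geodesic follows the arc, not the chord.
    arc = [(math.cos(t / 2), math.sin(t / 2)) for t in range(7)]
    g = geodesic_distances(arc, k=2)
    print(g[0][6], math.dist(arc[0], arc[6]))
```

Floyd-Warshall is O(n³), which is fine for a sketch. Larger data sets would normally use Dijkstra from each node on the sparse graph.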

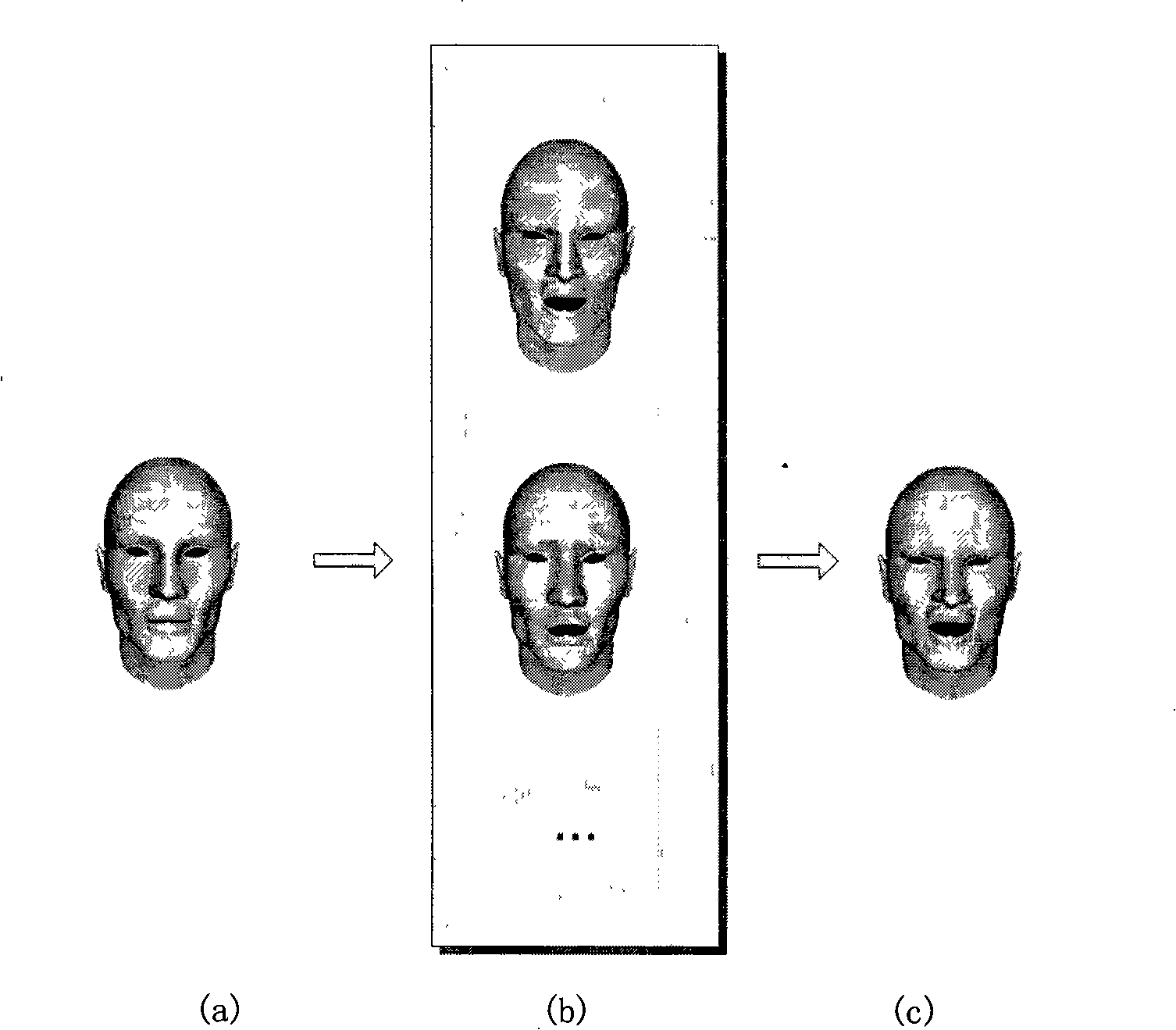

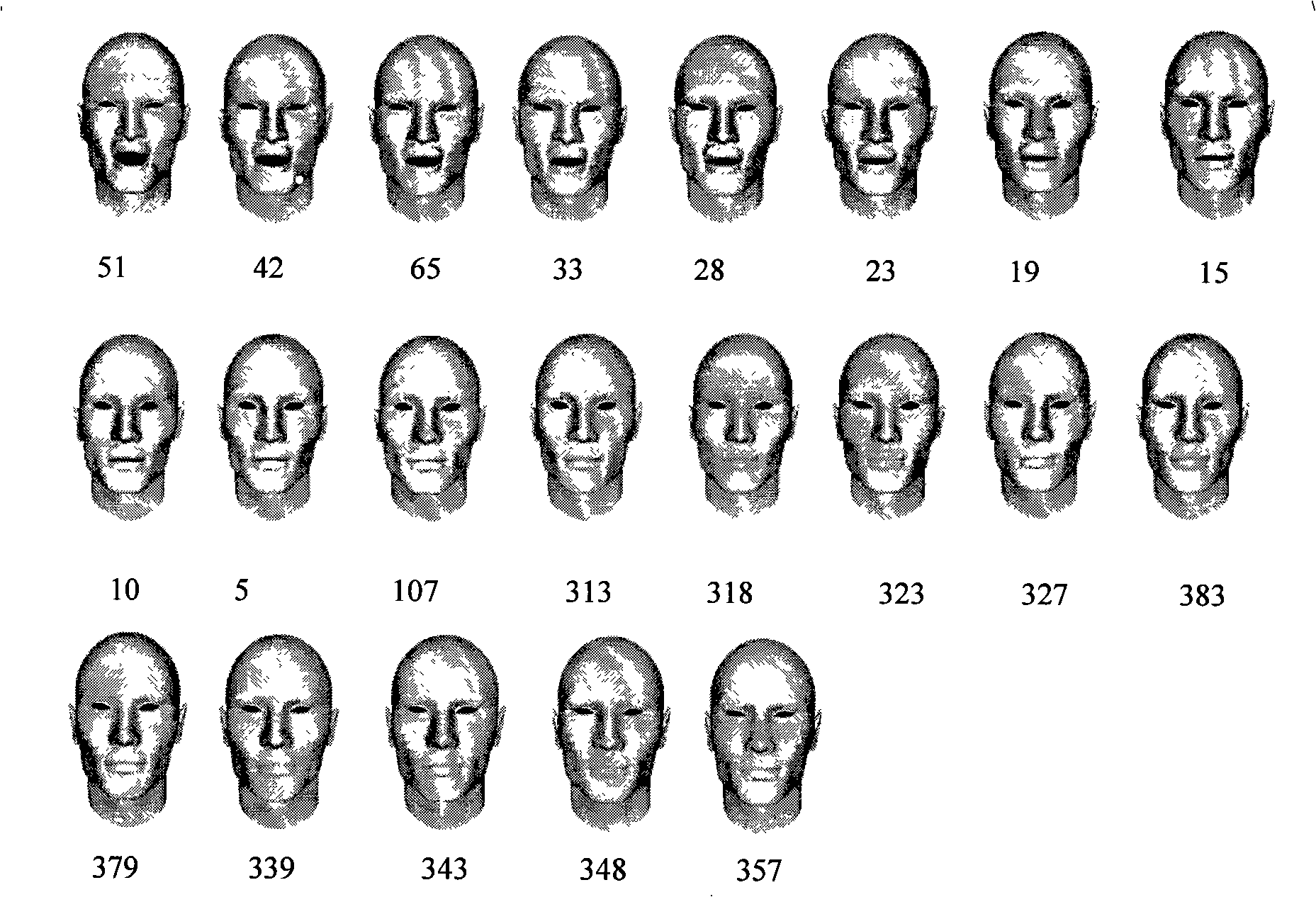

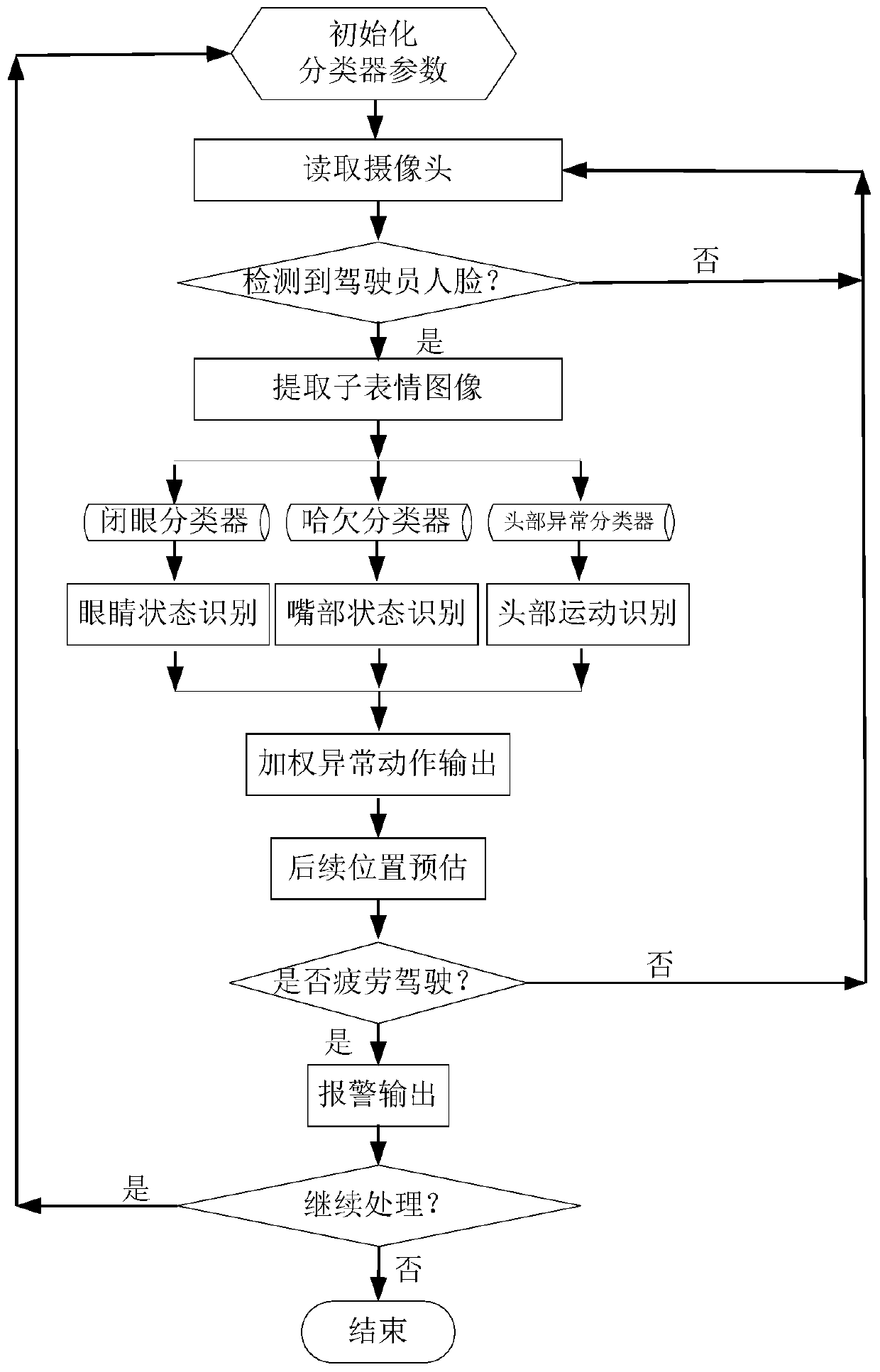

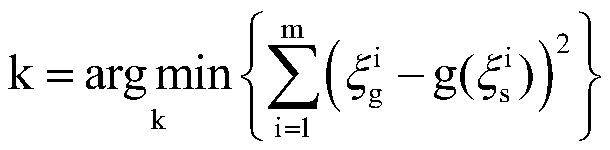

Multi-feature fusion driver abnormal expression recognition method

Inactive | CN110334600A | Improve detection efficiency; High precision; Character and pattern recognition; Abnormal expression; Head movements

The invention discloses a multi-feature fusion method for recognizing a driver's abnormal expressions. The method comprises the following steps:
- S1: track and monitor the driver's expression actions in real time through a camera installed on the driver's side.
- S2: precisely identify expression details in the real-time driver video.
- S3: detect the position of the eyes and judge whether they show fatigue.
- S4: locate the edge contour of the mouth and judge whether a yawn occurs.
- S5: detect head actions and judge whether fatigue occurs.
- S6: weight the eye-state, mouth-state and head-motion detection results, make the final fatigue judgment, and output the result.
- S7: use the recognition result of the current frame as the estimated position for recognition in subsequent frames, and detect actions in those frames. This gives continuous detection and recognition of the driver's abnormal behavior.

The method supports real-time monitoring and alarm triggering, warning and reminding the driver. This helps prevent traffic accidents and keeps the driving process safe.

Owner:WUHAN INSTITUTE OF TECHNOLOGY
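
Step S6 (weighted fusion of eye, mouth and head cues) combined with the frame-to-frame continuity of S7 can be sketched as follows. The code assumes each detector already outputs a score in [0, 1]. The weights, threshold and window length are hypothetical values, not taken from the patent. A short moving average stands in for the patent's continuous multi-frame detection.

```python
from collections import deque


class FatigueMonitor:
    """Weighted fusion of per-frame eye, mouth and head cues, smoothed over a window.

    Each cue is a score in [0, 1] (e.g. eye-closure ratio, yawn confidence,
    head-nod confidence). Weights, threshold and window size are hypothetical.
    """

    def __init__(self, weights=(0.5, 0.3, 0.2), threshold=0.6, window=3):
        if abs(sum(weights) - 1.0) > 1e-9:
            raise ValueError("weights should sum to 1")
        self.weights = weights
        self.threshold = threshold
        self.history = deque(maxlen=window)  # most recent fused scores

    def update(self, eye: float, mouth: float, head: float) -> bool:
        """Add one frame's cues; return True if the smoothed score signals fatigue."""
        w_eye, w_mouth, w_head = self.weights
        score = w_eye * eye + w_mouth * mouth + w_head * head
        self.history.append(score)
        return sum(self.history) / len(self.history) >= self.threshold


if __name__ == "__main__":
    monitor = FatigueMonitor()
    for cues in [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0), (1.0, 1.0, 1.0)]:
        print(monitor.update(*cues))
```

Smoothing over several frames means a single blink or a brief glance down does not trigger an alarm on its own.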

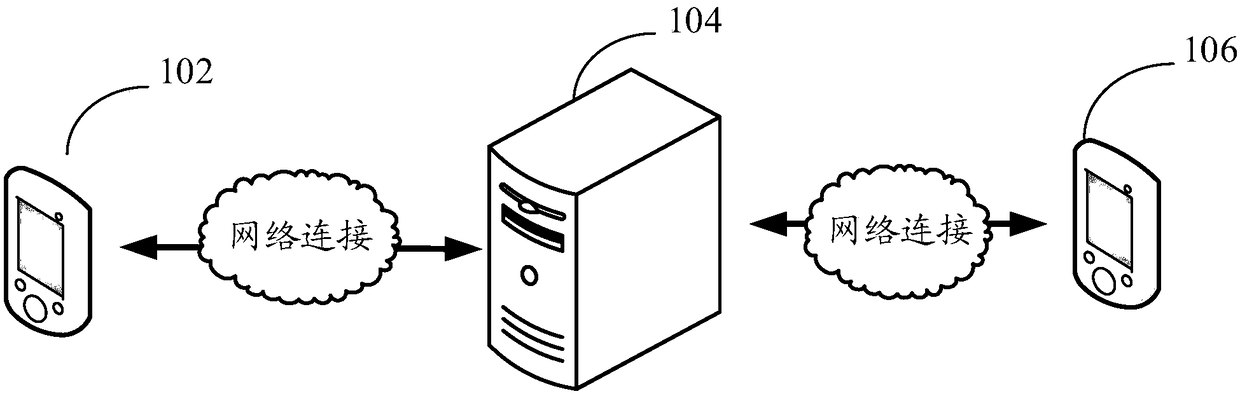

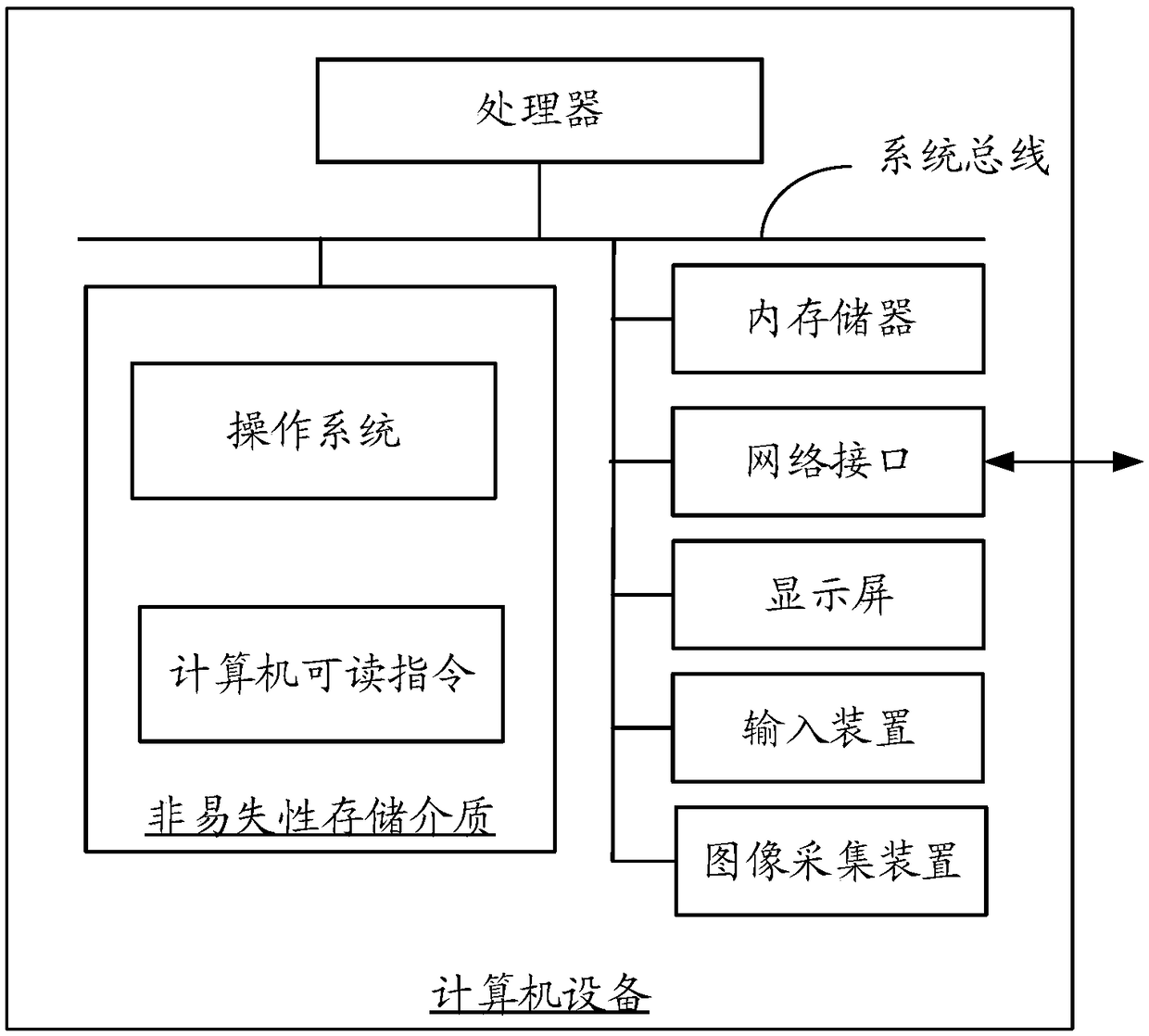

Method and device for realizing emotion expression of virtual object, computer equipment and storage medium

Active | CN108874114A | Add fun; Input/output for user-computer interaction; Animation; Pattern recognition; Expression - action

The invention provides a method for realizing emotion expression of a virtual object. The method comprises the following steps:
- Receive expression data and extract the user identifier corresponding to it. The expression data is obtained by recognizing a face image corresponding to that user identifier.
- Determine the expression type corresponding to the expression data.
- Look up the action driving file corresponding to the expression type.
- According to the expression data and the action driving file, control the three-dimensional virtual object corresponding to the user identifier to perform the corresponding expression action.

The method can express the user's real emotion visually. A device, computer equipment and a storage medium for realizing emotion expression of a virtual object are also provided.

Owner:TENCENT TECH (SHENZHEN) CO LTD
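
The type-then-lookup flow described above can be sketched as follows. Everything concrete here is a hypothetical assumption, not Tencent's format: the feature names (`smile`, `mouth_open`, `brow_raise`), the classification rules, and the file paths. The sketch only shows how expression data, an expression type and an action driving file might be tied to a user identifier.

```python
from dataclasses import dataclass

# Hypothetical action driving files, one per expression type.
ACTION_DRIVING_FILES = {
    "happy": "actions/happy.json",
    "surprised": "actions/surprised.json",
    "neutral": "actions/idle.json",
}


def expression_type(expression_data: dict[str, float]) -> str:
    """Classify expression data (hypothetical feature intensities in [0, 1]) into a type."""
    if expression_data.get("mouth_open", 0.0) > 0.6 and expression_data.get("brow_raise", 0.0) > 0.5:
        return "surprised"
    if expression_data.get("smile", 0.0) > 0.5:
        return "happy"
    return "neutral"


@dataclass
class DriveCommand:
    user_id: str                        # identifies which 3D virtual object to drive
    action_file: str                    # action driving file for the detected type
    expression_data: dict[str, float]   # raw data, kept for fine-grained animation


def build_drive_command(user_id: str, expression_data: dict[str, float]) -> DriveCommand:
    """Bundle the looked-up action driving file with the raw expression data."""
    kind = expression_type(expression_data)
    return DriveCommand(user_id, ACTION_DRIVING_FILES[kind], expression_data)


if __name__ == "__main__":
    print(build_drive_command("user-1", {"smile": 0.8}))
```

Keeping the raw expression data next to the coarse type lets a renderer play the canned action while still modulating its intensity.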

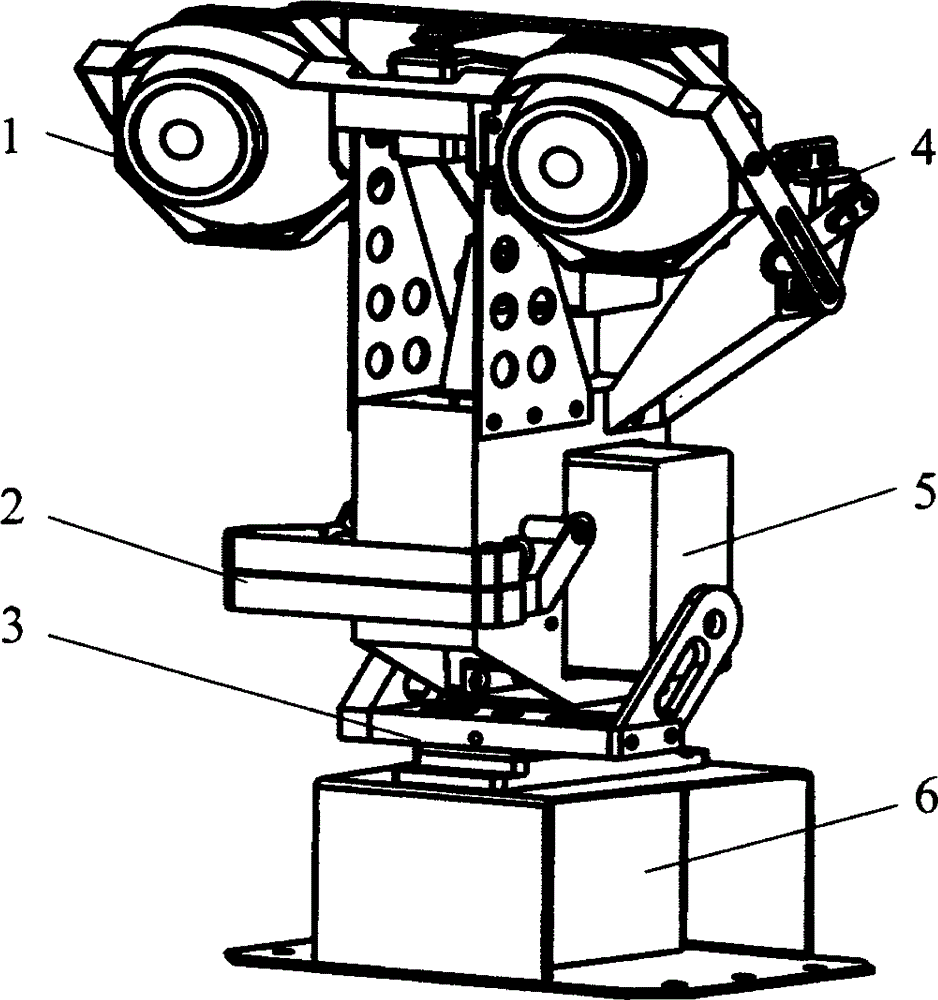

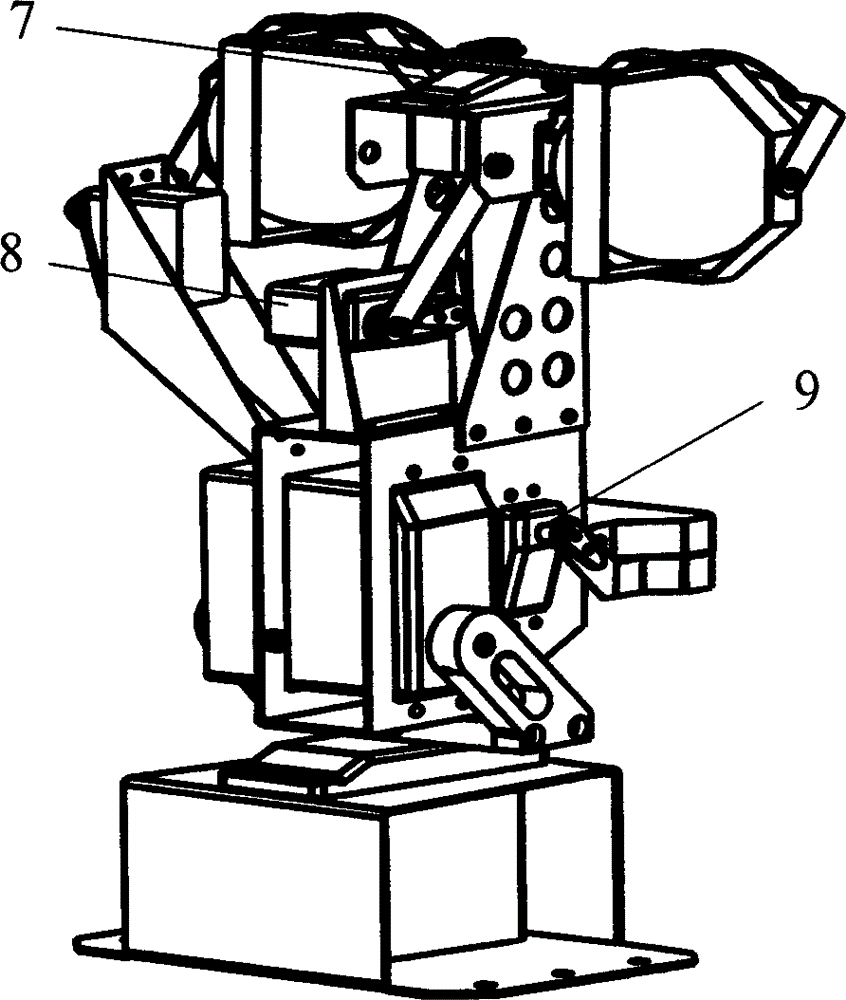

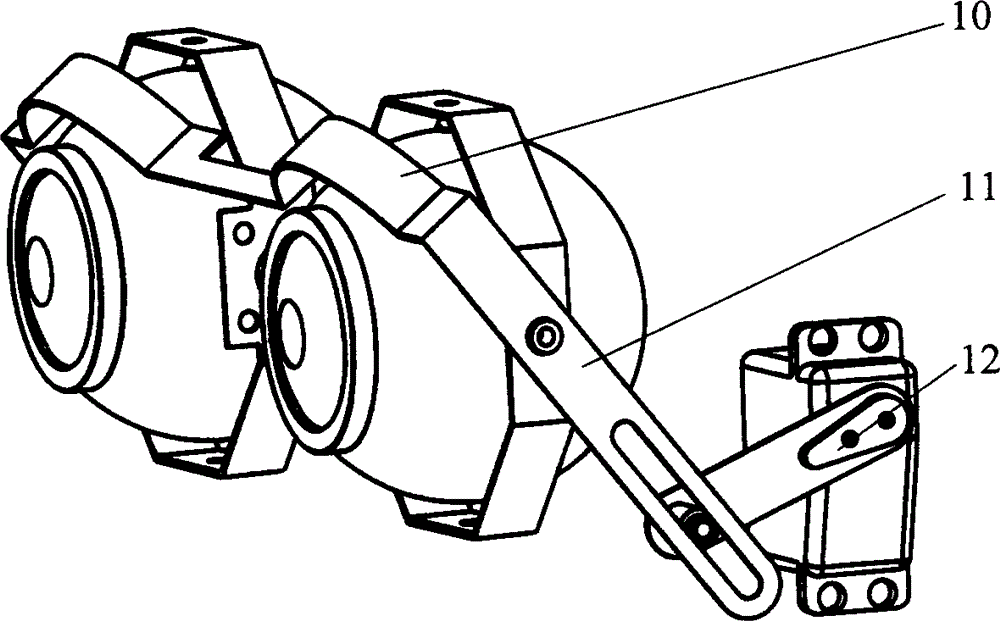

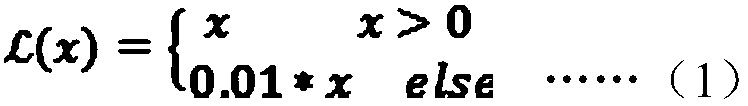

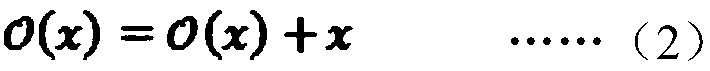

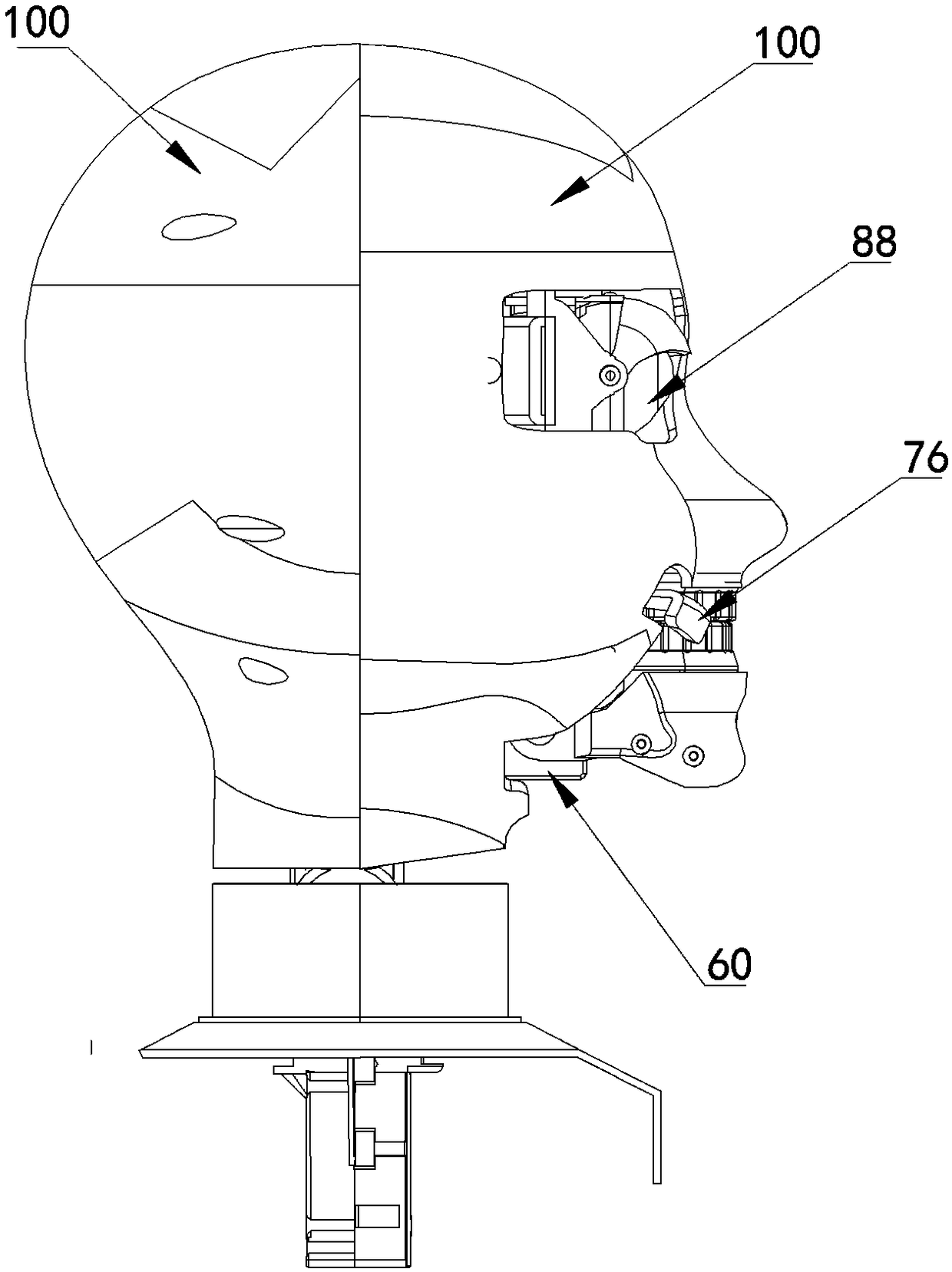

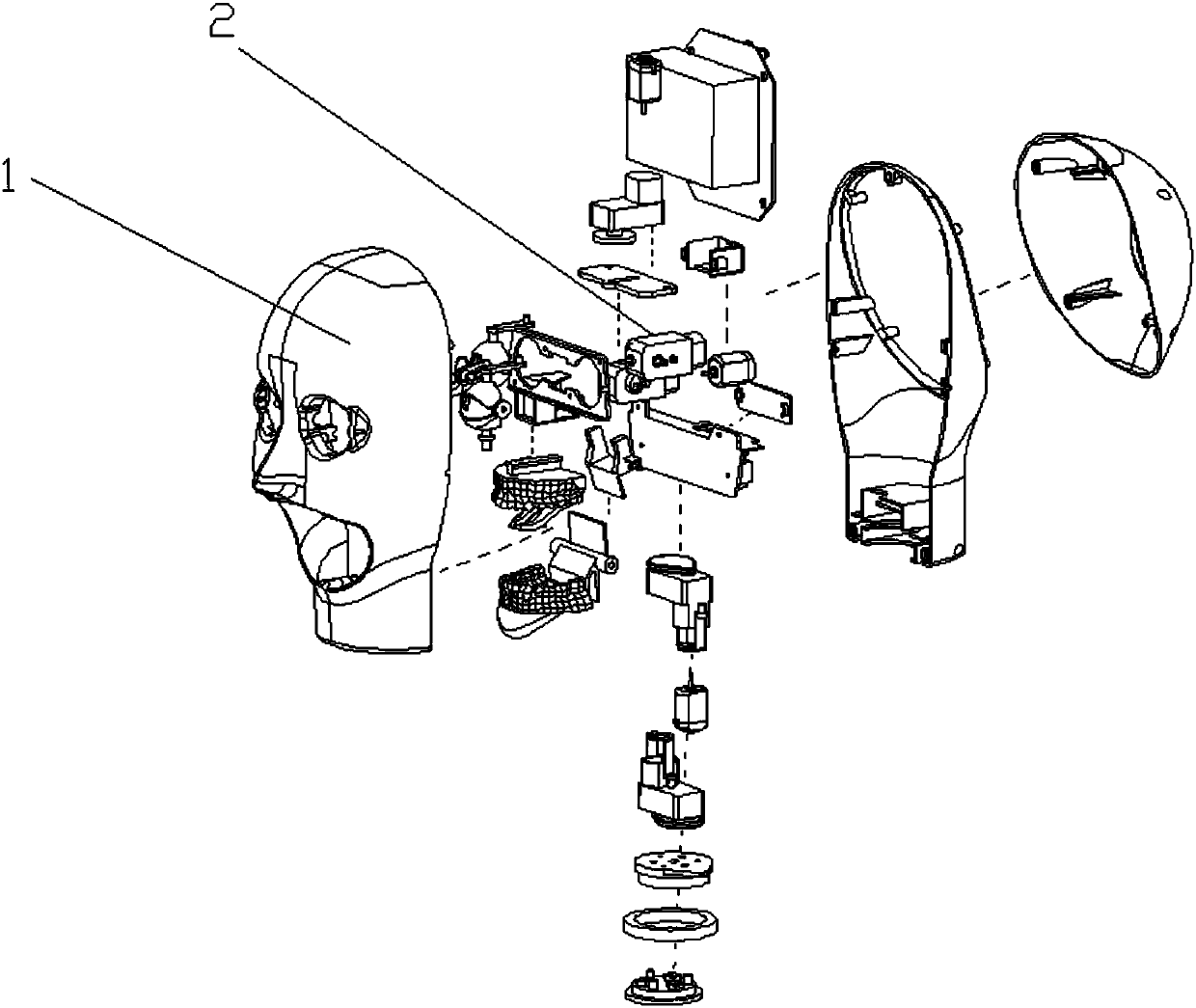

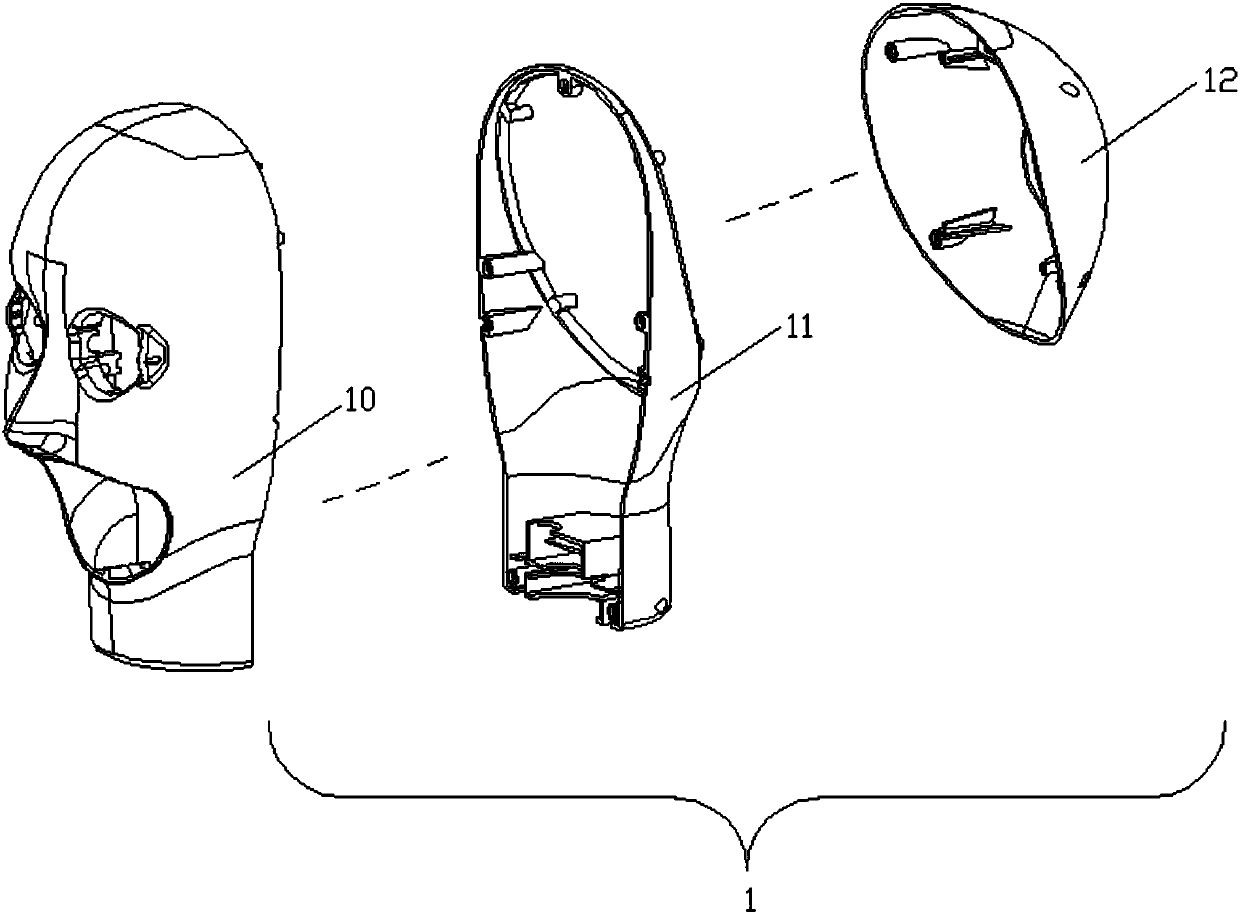

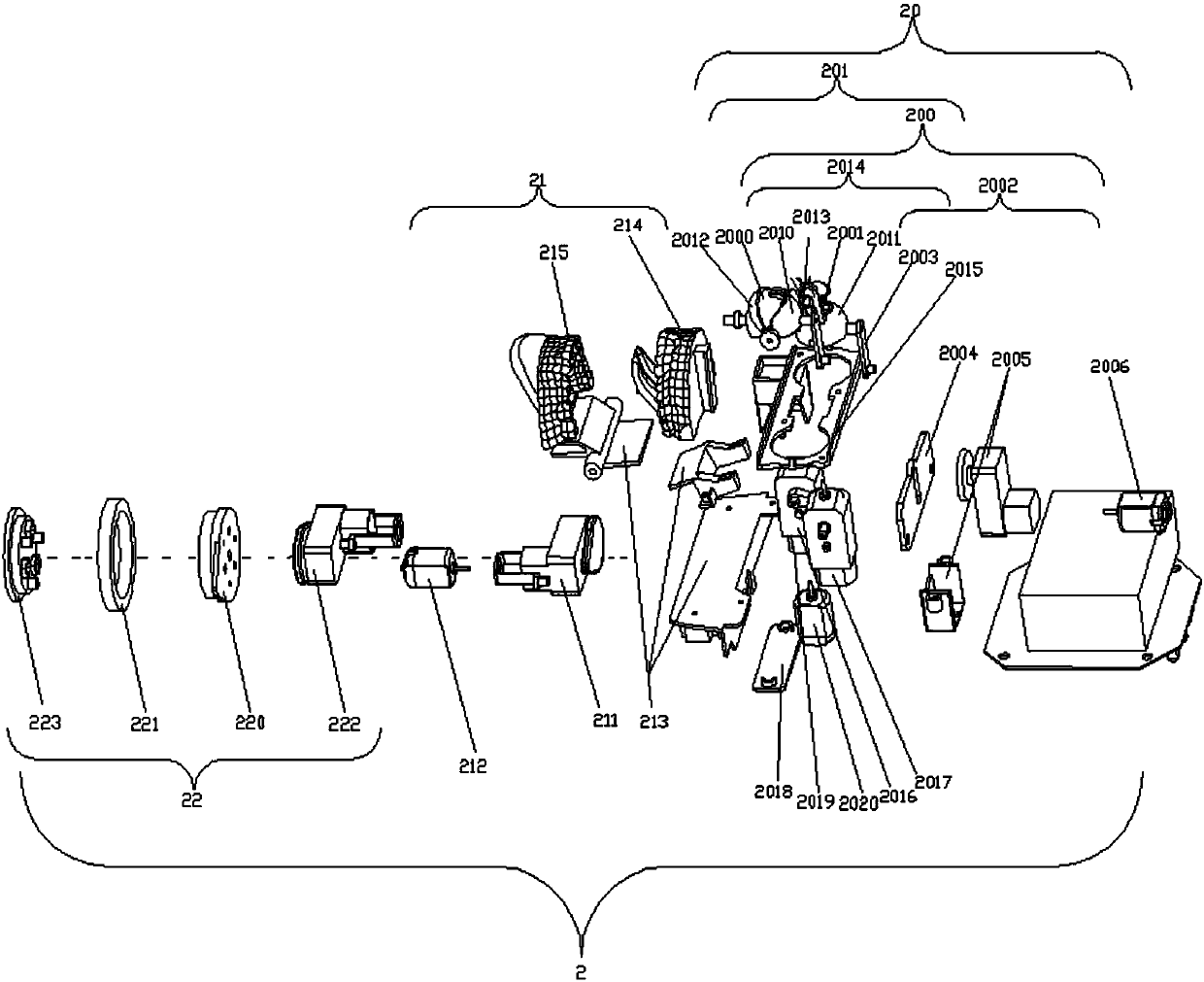

Head structure of humanoid service robot

The invention provides a head structure for a humanoid service robot. It can blink the eyelids, move the eyeballs up-down and left-right, open and close the mouth, and nod and shake the head left-right. The structure mainly comprises the eye parts 1, the mouth part 2 and the neck part 3. The eye actions are achieved by a parallel linkage mechanism 4, a crank and guide-rod mechanism 5, and a crank-rocker mechanism, respectively. The mouth is opened and closed by direct motor drive. The nodding and head-shaking actions of the neck are achieved by direct drive of motors 6 and 7. Through these linkages, the motors synchronously drive blinking of both eyelids and the up-down and left-right movement of the eyeballs. The head structure is simple and compact, and it can perform the basic facial expression actions.

Owner:HARBIN INST OF TECH SHENZHEN GRADUATE SCHOOL

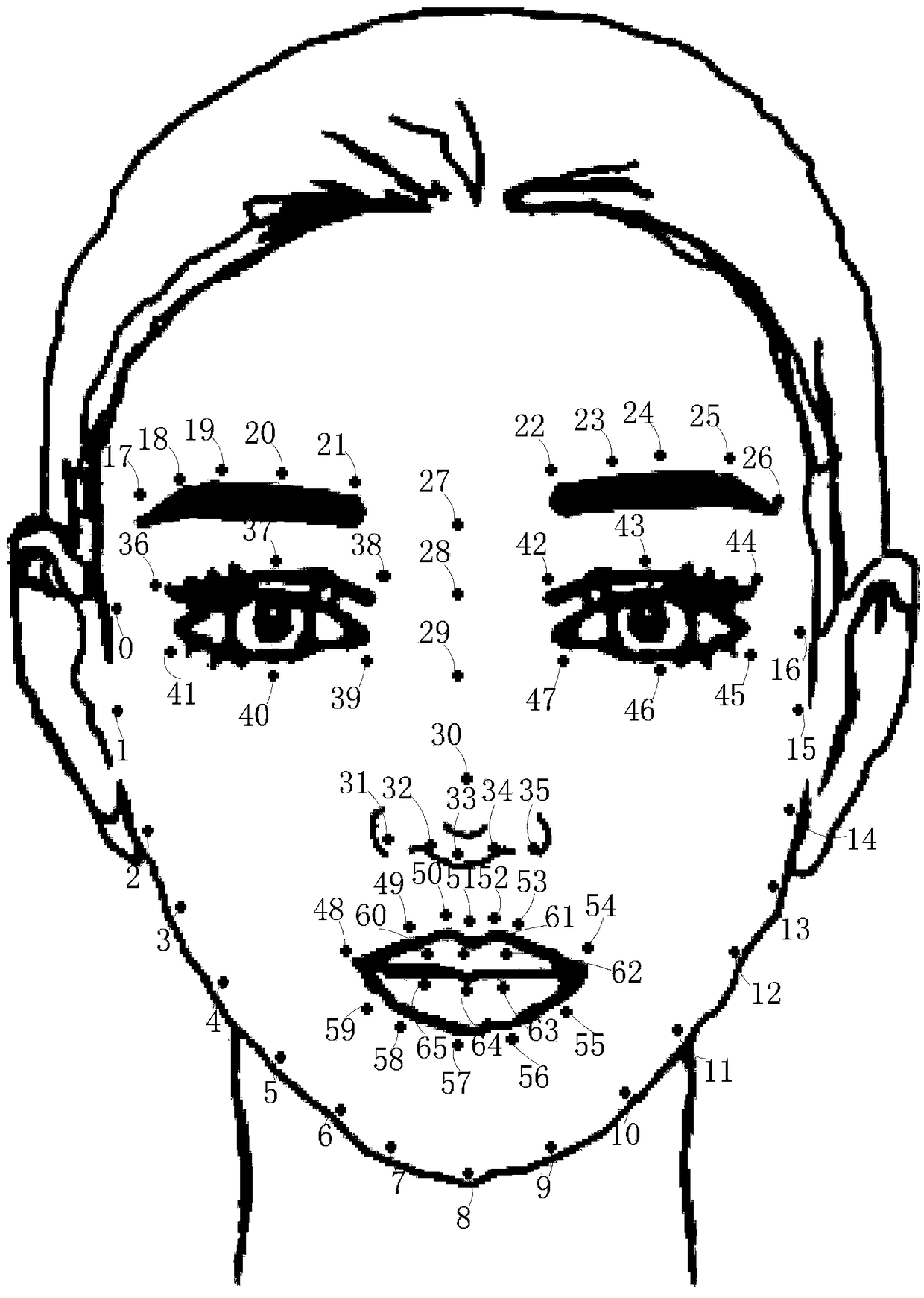

Method for detecting facial micro-expression action units based on a deep convolutional neural network

Active | CN109344744A | Avoid missing; Improve detection accuracy; Character and pattern recognition; Neural network; Data set

The invention discloses a method for detecting facial micro-expression action units based on a deep convolutional neural network. The method comprises the following steps:
- Step 1: design the deep convolutional neural network.
  - Step 1.1: annotate the face and the rectangular regions of the different action units within it.
  - Step 1.2: design and implement the network, which comprises convolutional layers, shortcut layers and an action unit detection layer. The network learns the information of the face and of the action unit regions for the face's different expressions, and the network's forward propagation parameters are obtained.
  - Step 1.3: use the samples in a face sample data set as the network's input data.
- Step 2: detect facial expression action units using the network parameters learned in Step 1.
- Step 3: visualize the output according to the action units detected in Step 2.

The method relies on a deep convolutional neural network to detect and recognize action units in face images. This improves both detection accuracy and detection speed.

Owner:BEIJING NORMAL UNIVERSITY

Robot head structure with tongue expression

Pending | CN108081245A | Expressive; Compact structure; Programme-controlled manipulator; Eyelid; Expression - action

The invention discloses a robot head structure capable of tongue expressions. The head is formed by a head shell, a scalp and a head fixing base. It is provided with a tongue assembly, a mouth assembly and an eye assembly, each with its own driving servo and action driving components:
- The tongue assembly comprises a tongue extension assembly, a tongue up-down tilting assembly and a tongue left-right swing assembly. It performs the tongue's extending-retracting, up-down tilting and left-right swinging expressions.
- The mouth assembly performs a mouth opening-closing expression and/or a mouth-corner smile expression.
- The eye assembly performs a left-right eyeball movement expression and/or an eyelid blinking expression.

The structure gives a humanoid robot rich expression actions, including rich tongue expressions. Beyond serving people, this adds an expressive and cheerful experience to communication.

Owner:欢乐飞(上海)机器人股份有限公司

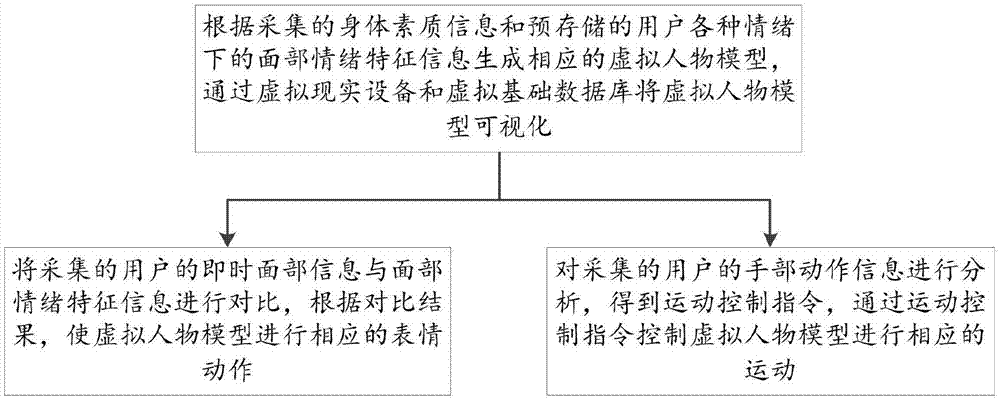

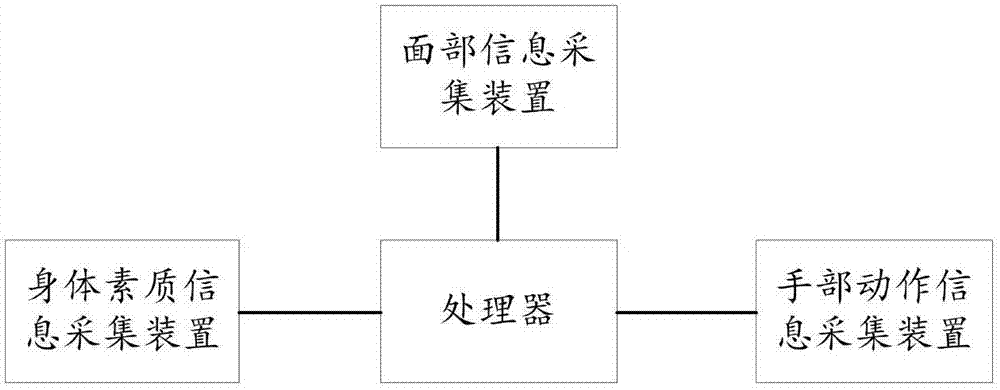

Control method based on virtual reality technology and control system thereof

Inactive | CN107272884A | Achieve interaction; Less design work; Input/output for user-computer interaction; Graph reading; Expression - action; Movement control

The invention relates to a control method based on virtual reality technology and a corresponding control system. The control system comprises a processor and three collection devices: one for physical quality information, one for instant facial information and one for hand action information. The processor performs two tasks:
- It compares the user's collected instant facial information with facial emotion feature information. According to the comparison result, it makes a virtual character model perform the corresponding expression action.
- It analyzes the user's collected hand action information to obtain a motion control instruction. It then uses that instruction to make the virtual character model perform the corresponding motion.

By collecting, comparing and analyzing the user's instant facial information and hand action information, the method and system obtain control instructions for the virtual character model. The model then performs the corresponding actions, which realizes interaction between the user and a virtual character.

Owner:聂懋远

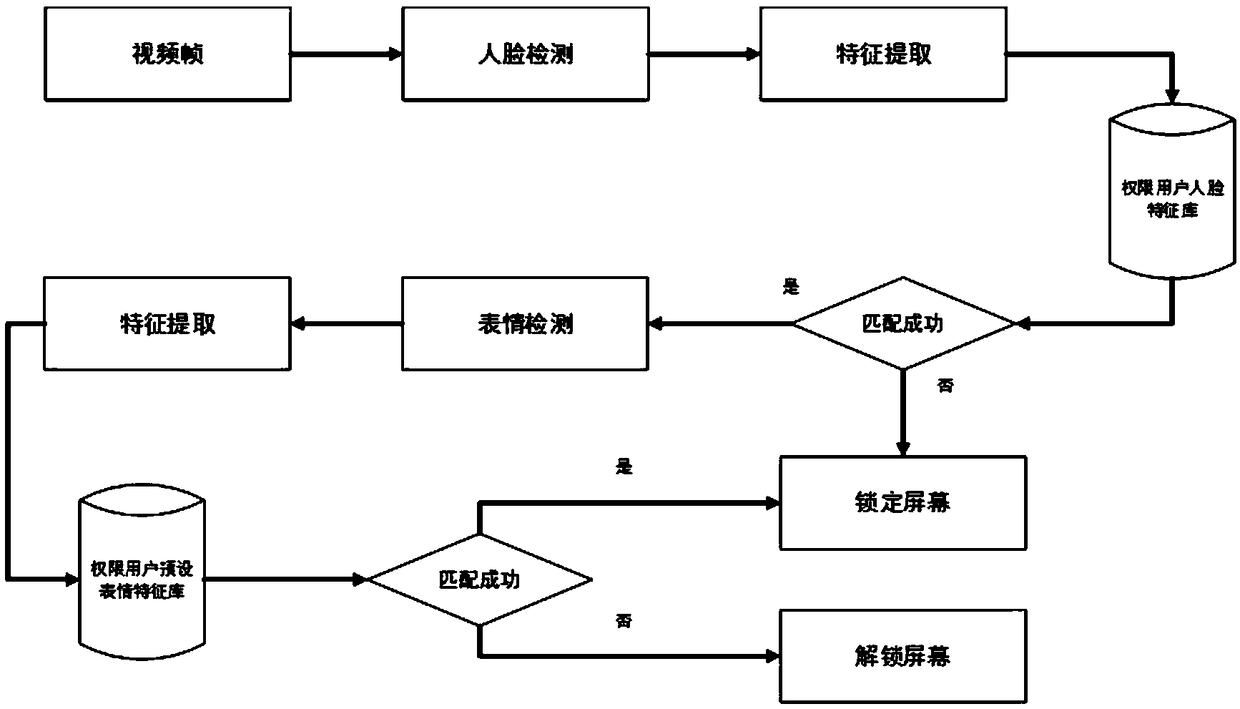

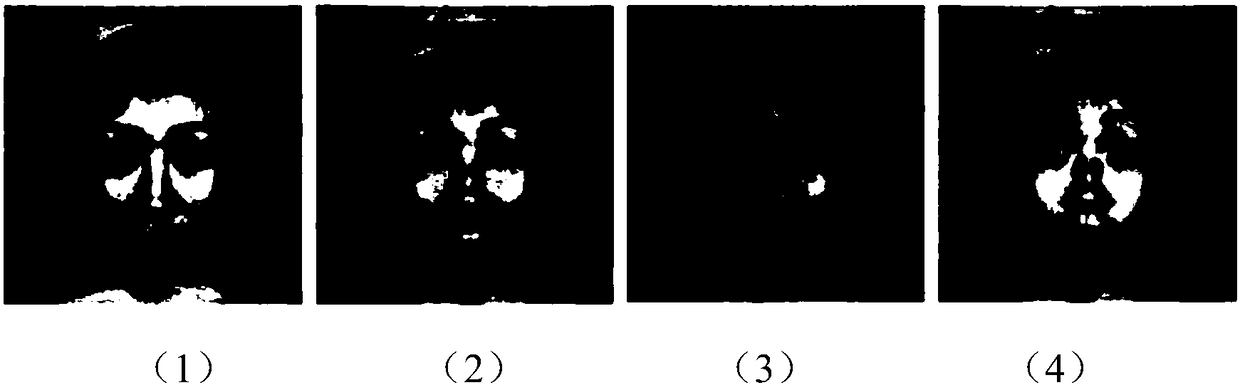

Intelligent terminal face unlocking method combined with gesture recognition

Inactive | CN109086589A | Increased complexity; Lower success rate; Input/output for user-computer interaction; Character and pattern recognition; Feature dimension; Dimensionality reduction

The invention provides an intelligent terminal face unlocking method combined with gesture recognition. The method works as follows:
- Capture and preprocess the video images used for unlocking.
- Process the images with an AdaBoost algorithm based on Haar features. After filtering, feature extraction and feature dimensionality reduction, classify the features with a support vector machine (SVM) for face recognition.
- After face recognition succeeds, build a saliency map of the gesture region. Extract and fuse Hu moment features and HOG features to recognize a preset gesture.
- If recognition succeeds, unlock the screen. Count recognition failures, and lock the screen when the count reaches a set number.

By combining advanced visual recognition techniques such as face and gesture recognition, the invention makes face unlocking more complex. It greatly reduces the success rate of cracking the terminal with video recordings or animated pictures: an attacker who does not know the specific expression action cannot unlock the screen.

Owner:NORTHEASTERN UNIV
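
The control flow described above can be sketched as a small state machine: face check first, then the second factor, with a lock after repeated failures. The same flow applies to the expression-recognition variant that follows. The actual recognizers (Haar/AdaBoost, SVM, Hu/HOG) are deliberately abstracted as callables, and `max_failures` is a hypothetical default. Passing callables makes the second stage run only after the face has been recognized.

```python
from typing import Callable


class TwoStageUnlock:
    """Face check first, then a second factor (gesture or expression); lock after repeated failures."""

    def __init__(self, max_failures: int = 5):
        self.max_failures = max_failures  # hypothetical failure limit
        self.failures = 0
        self.locked = False

    def attempt(self, face_check: Callable[[], bool],
                second_check: Callable[[], bool]) -> str:
        """Return 'unlocked', 'retry' or 'locked' for one unlocking attempt."""
        if self.locked:
            return "locked"
        # `and` short-circuits: the second factor is never evaluated without a face match.
        if face_check() and second_check():
            self.failures = 0
            return "unlocked"
        self.failures += 1
        if self.failures >= self.max_failures:
            self.locked = True
            return "locked"
        return "retry"


if __name__ == "__main__":
    ctrl = TwoStageUnlock(max_failures=2)
    print(ctrl.attempt(lambda: True, lambda: True))   # unlocked
    print(ctrl.attempt(lambda: False, lambda: True))  # retry
    print(ctrl.attempt(lambda: True, lambda: False))  # locked
```

Resetting the counter on success means the lock only triggers on consecutive failures. That is one reasonable reading of "counted to reach the set number", but the abstract does not say so explicitly.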

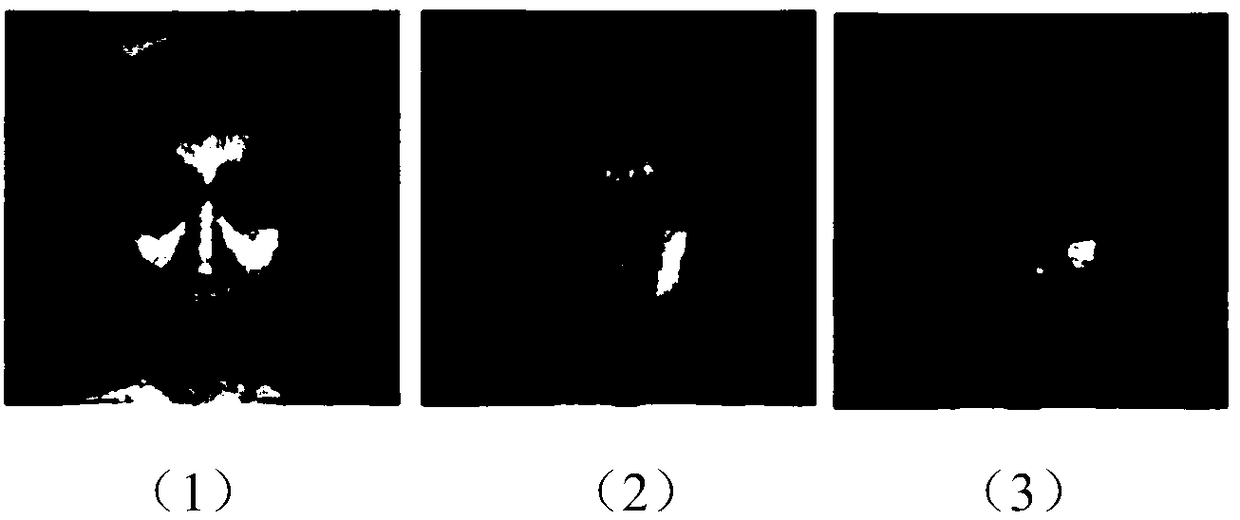

Intelligent terminal face unlocking method combined with expression recognition

Inactive | CN109145559A | Increased complexity; Lower success rate; Digital data authentication; Acquiring/recognising facial features; Feature dimension; Dimensionality reduction

The invention provides an intelligent terminal face unlocking method combined with expression recognition. The method works as follows:
- Capture and preprocess the face image.
- Process the image with an AdaBoost algorithm based on Haar features. After filtering, feature extraction and feature dimensionality reduction, classify and recognize the features with a support vector machine (SVM) to perform face recognition.
- Extract the expression key frames and perform expression recognition.
- If recognition succeeds, unlock the screen. Count recognition failures, and lock the screen when the count reaches a set number.

By combining advanced visual recognition techniques such as face and expression recognition, the invention makes face unlocking more complex. It greatly reduces the success rate of cracking the terminal with video recordings or animated pictures: an attacker who does not know the specific expression action cannot unlock the screen.

Owner:NORTHEASTERN UNIV

High-simulation robot head structure and motion control method thereof

Pending | CN107718014A | Real and vivid service experience; Natural movement; Programme-controlled manipulator; Joints; Simulation; Control engineering

The invention discloses a high-simulation robot head structure and a motion control method for it. The head structure comprises a shell assembly, a face motion assembly and an internal control assembly. The face motion assembly comprises an eye assembly, a mouth assembly and a neck assembly. Each part connects to the internal control assembly through its own motors:
- the eyeball assembly through a first motor;
- the eyelid assembly through a second motor and a third motor;
- the mouth assembly through a fourth motor.

An expression control and management module for realizing expression actions, developed in-house by the applicant, can be added to the internal control assembly. It works with the face assembly beneath the external silicone skin to produce changes of expression. The head structure and its motion control method address several problems of prior-art service-industry robots that imitate human facial expressions:
- Their expressions are stiff because traditional actuators supply the driving force.
- They lack the ability to interact with real people.
- They cannot offer service-industry consumers a new and interesting experience.

Owner:深圳市小村机器人智能科技有限公司

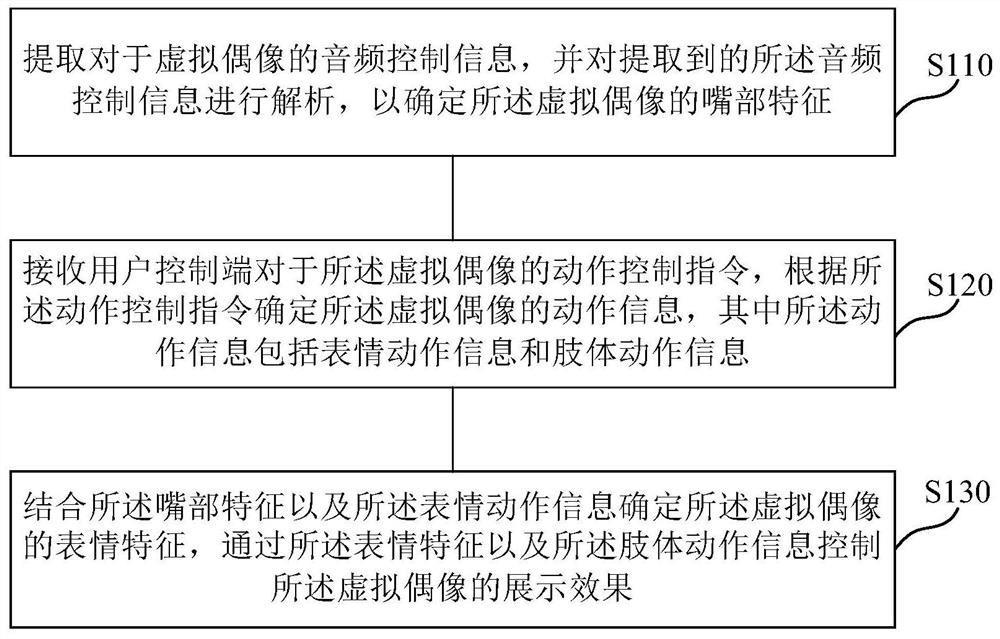

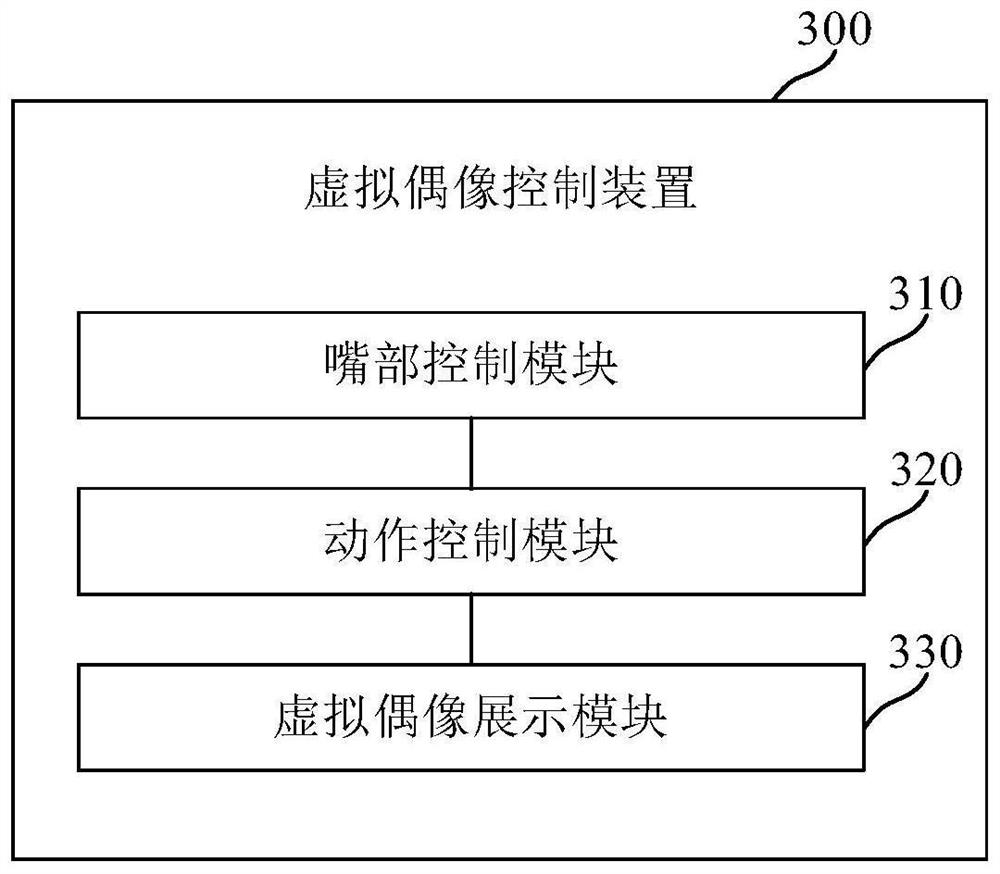

Virtual character control method and system, medium and electronic equipment

Pending | CN111862280A | Easy to control; Increase authenticity; Speech analysis; Animation; Information control; Control system

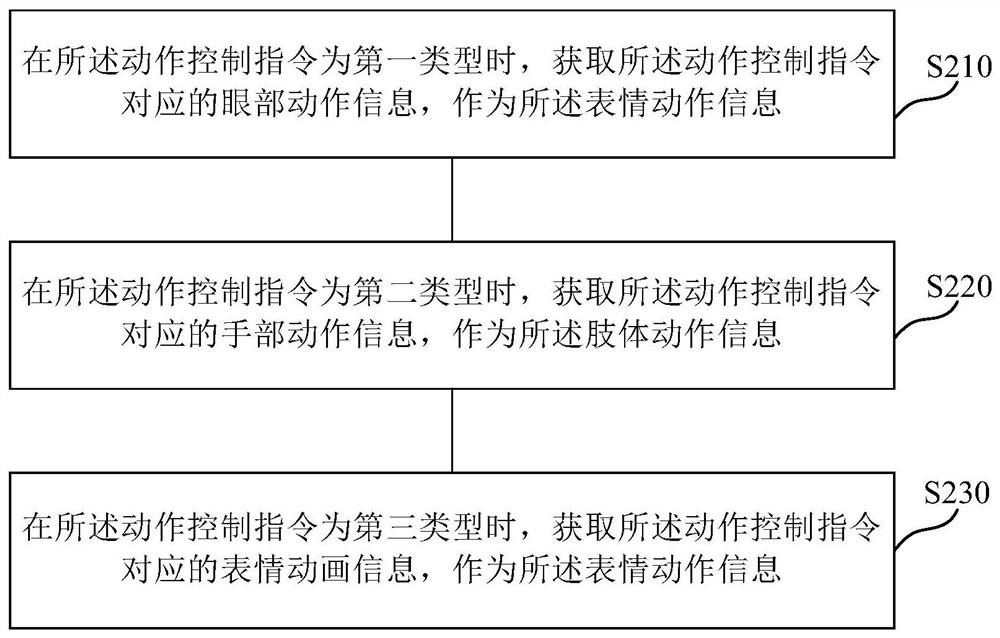

The embodiments of the invention provide a virtual character control method and system, a computer-readable medium and electronic equipment, in the technical field of virtual reality and augmented reality. The control method comprises the following steps:
- Extract audio control information for a virtual character and analyze it to determine the character's mouth features.
- Receive an action control instruction for the virtual character from a user control terminal. Determine the character's action information according to the instruction's type; this information includes expression action information and limb action information.
- Determine the character's expression features by combining the mouth features with the expression action information. Then control the character's display effect through the expression features and the limb action information.

This gives finer-grained control of the virtual character and improves its realism.

Owner:NETEASE (HANGZHOU) NETWORK CO LTD

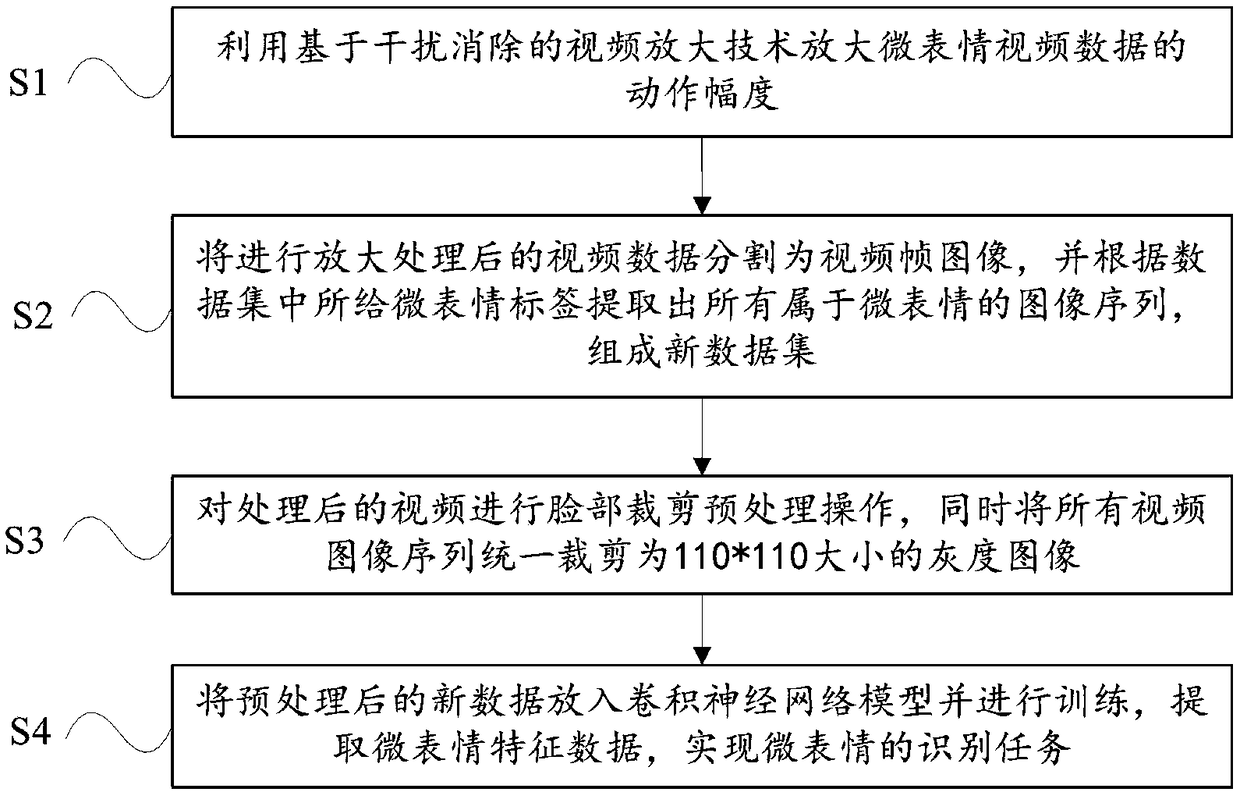

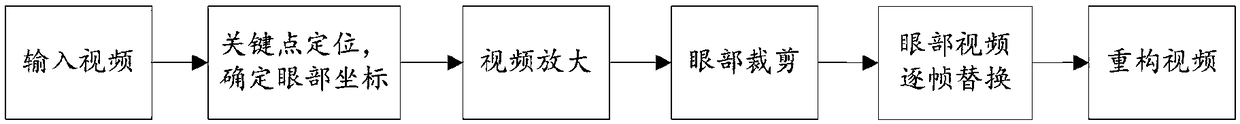

Facial micro-expression recognition method based on video magnification and deep learning

Inactive | CN109034143A | Improve accuracy; Increase the range of facial expressions; Acquiring/recognising eyes; Neural architectures; Data set; Microexpression

The invention provides a method for recognizing facial micro-expressions based on video magnification and deep learning. The method comprises the following steps:
- Amplify the motion amplitude of micro-expression video data with a video magnification technique based on interference cancellation.
- Split the magnified video into frame images. According to the micro-expression labels in the data set, extract all image sequences belonging to micro-expressions to form a new data set.
- Apply face-cropping preprocessing so that all image sequences are uniformly cropped into 110×110 grayscale images.
- Feed the preprocessed data into a convolutional neural network model and train it to extract micro-expression features and perform micro-expression recognition.

Applying interference-cancelling video magnification to the complete data set enlarges the amplitude of expression actions. Training a neural network model on the result then effectively improves micro-expression recognition accuracy across the full set of emotion labels.

Owner:YUNNAN UNIV
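
The preprocessing pipeline above (magnify, convert to grayscale, bring every frame to 110×110) can be sketched in pure Python. The magnification shown is a deliberately simplified stand-in and not the interference-cancelling technique the patent describes. It linearly scales each pixel's change relative to the onset frame by a factor `alpha`. Face detection and cropping are assumed to have happened already, and the frame representation (nested lists) is an illustrative assumption.

```python
from typing import Sequence

Frame = list[list[float]]  # grayscale image as rows of pixel intensities


def to_gray(rgb: Sequence[Sequence[tuple[int, int, int]]]) -> Frame:
    """ITU-R BT.601 luma conversion of an RGB frame."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in rgb]


def magnify(frames: Sequence[Frame], alpha: float) -> list[Frame]:
    """Naive linear magnification: scale each pixel's change from the onset (first) frame.

    A simplified stand-in, NOT the interference-cancelling magnification of the patent.
    Results are clipped to [0, 255].
    """
    onset = frames[0]
    return [
        [[min(255.0, max(0.0, o + alpha * (p - o))) for o, p in zip(orow, prow)]
         for orow, prow in zip(onset, frame)]
        for frame in frames
    ]


def resize_nearest(img: Frame, size: int = 110) -> Frame:
    """Nearest-neighbour resize of an already face-cropped image to size x size."""
    h, w = len(img), len(img[0])
    return [[img[y * h // size][x * w // size] for x in range(size)] for y in range(size)]


if __name__ == "__main__":
    clip = [[[10.0, 10.0], [10.0, 10.0]], [[12.0, 10.0], [10.0, 250.0]]]
    print(magnify(clip, alpha=2.0)[1])
```

Clipping matters in practice: amplified differences quickly saturate bright pixels, which is one reason more careful magnification methods exist.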

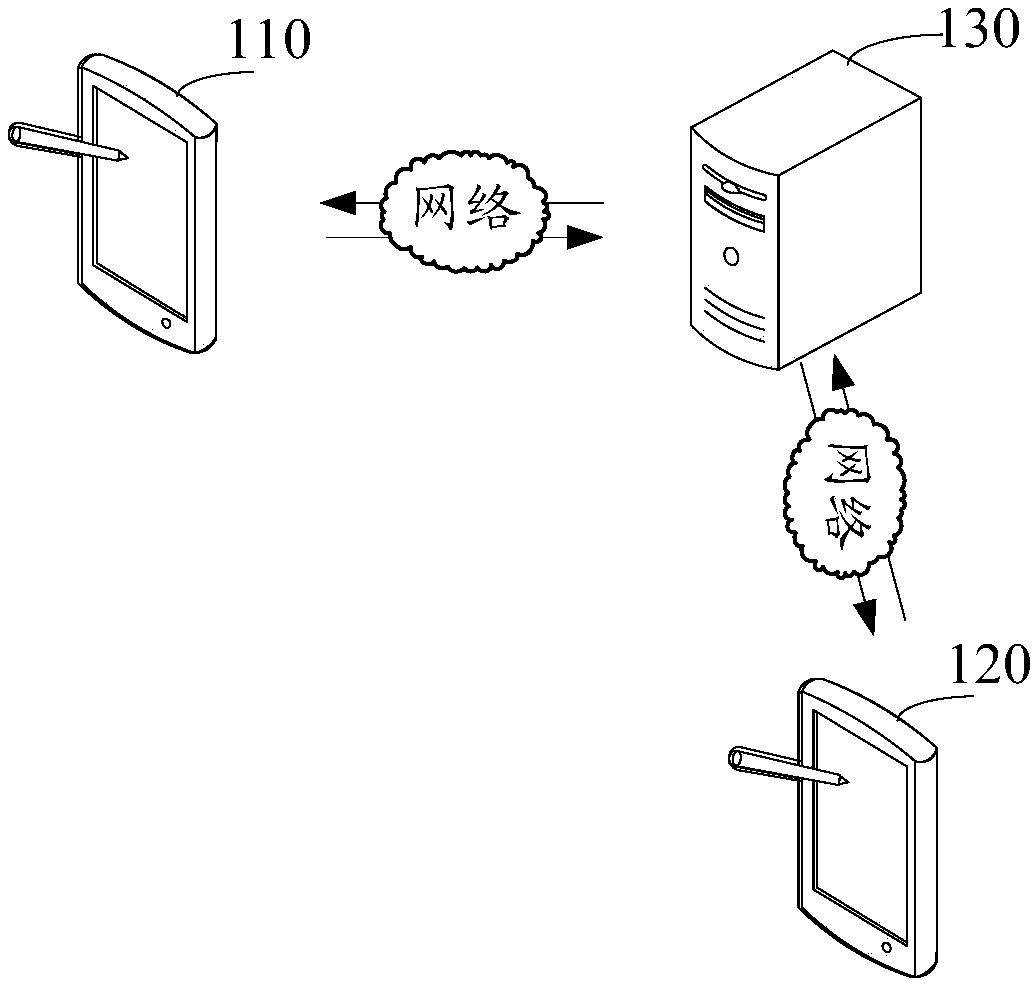

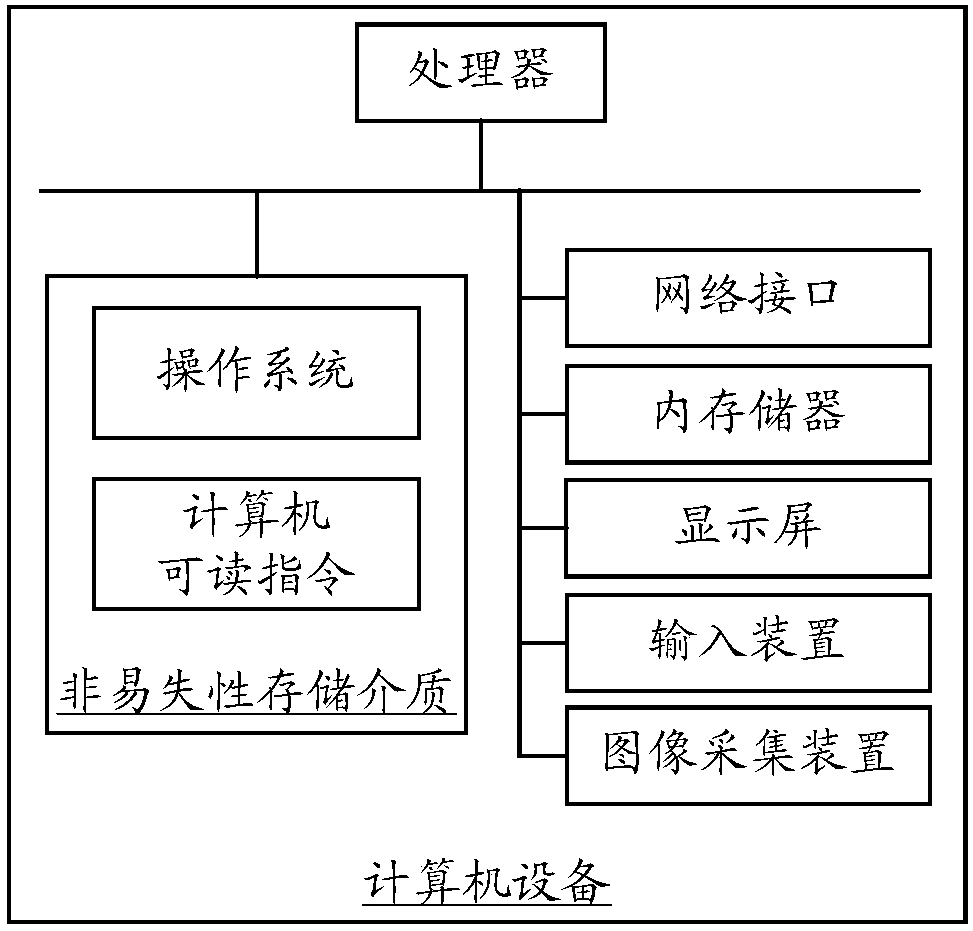

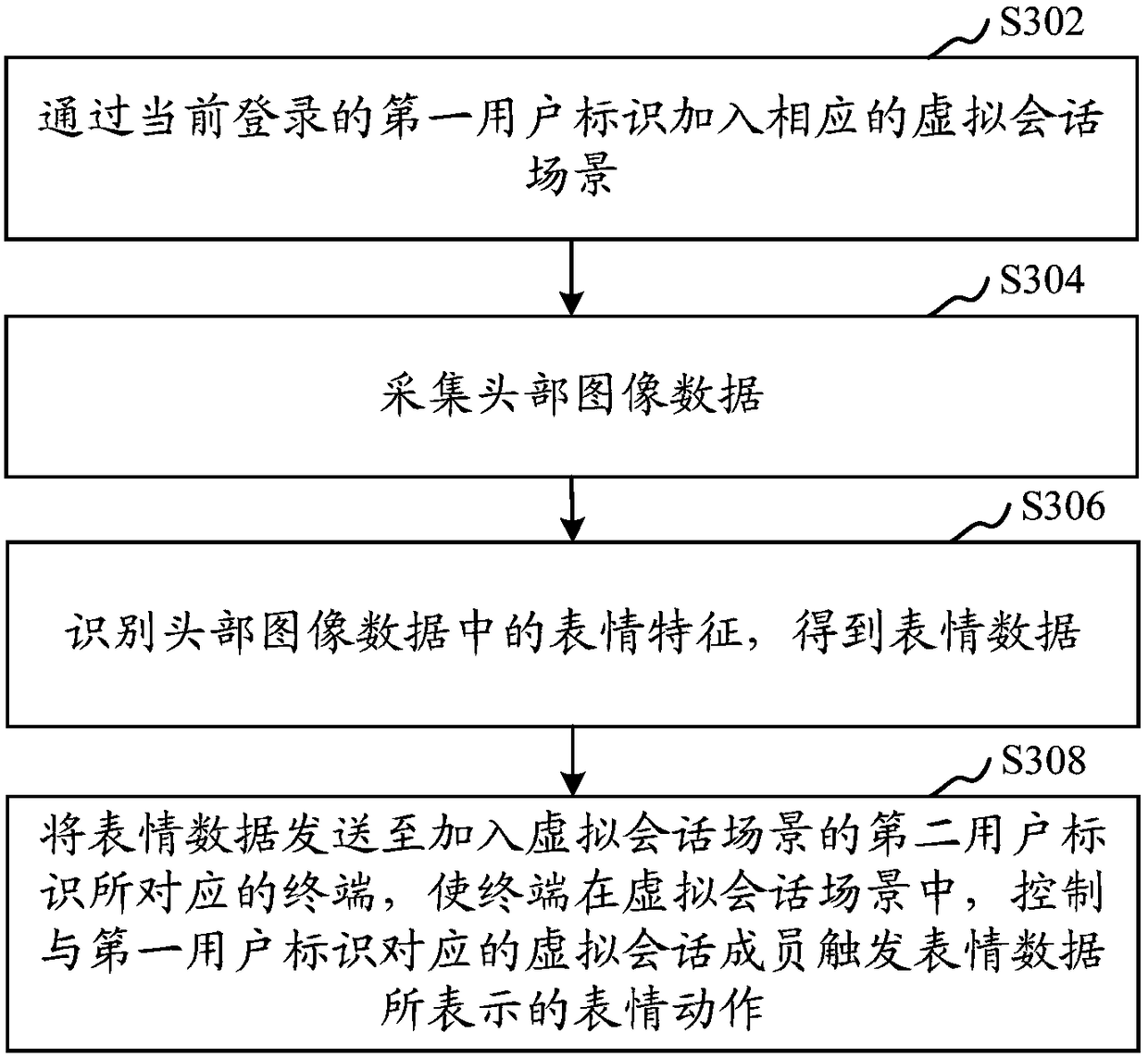

Interactive data processing method, device, computer device and storage medium

Active | CN109150690A | Improve privacy and security; Image data processing; Data switching networks; Expression - action; Computer terminal

The present invention relates to an interactive data processing method and apparatus, a computer device and a storage medium. The method comprises the following steps:
- A currently logged-in first user identifier joins a corresponding virtual session scenario.
- Head image data is acquired.
- Expression features in the head image data are recognized to obtain expression data.
- The expression data is sent to the terminal of a second user identifier that has joined the virtual session scenario. That terminal then makes the virtual session member corresponding to the first user identifier trigger the expression action represented by the expression data.

Controlling the virtual session member in this way enables interactive communication. Compared with interactive communication based on the user's real image, it improves privacy and security to some extent.

Owner:TENCENT TECH (SHENZHEN) CO LTD

Multi-channel information emotional expression mapping method for facial expression robot

The invention discloses a multi-channel information emotional expression mapping method for a facial expression robot. The method comprises the following steps:
- S1: pre-establish an expression library, a voice library, a gesture library, an expression output library, a voice output library and a gesture output library.
- S2: acquire the interlocutor's voice and identify a sound expression by comparing it with the voice library. Acquire the interlocutor's expression and identify an emotional expression by comparing it with the expression library. Acquire the interlocutor's gesture and identify a gesture expression by comparing it with the gesture library. Fuse the sound, emotional and gesture expressions into a combined expression instruction.
- S3: according to the combined expression instruction, the facial expression robot selects voice stream data from the voice output library and outputs it. It also selects an expression action instruction from the expression output library to produce the facial expression.

The method realizes multi-channel emotional expression for a facial expression robot. It is simple, convenient to use and low in cost.

Owner:ANHUI SEMXUM INFORMATION TECH CO LTD
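
The abstract does not say how the three channels are fused in S2. One simple possibility is a confidence-weighted vote, sketched below. The channel weights, emotion labels and output-library entries are all hypothetical. The sketch shows how a single combined instruction can select both a voice output and an expression action instruction (S3).

```python
from collections import defaultdict

# Hypothetical channel weights; the abstract does not specify a fusion rule.
CHANNEL_WEIGHTS = {"voice": 0.3, "face": 0.5, "gesture": 0.2}

# Hypothetical output libraries keyed by the fused emotion.
VOICE_OUTPUT = {"happy": "voice/cheerful_reply.wav", "sad": "voice/comforting_reply.wav"}
EXPRESSION_OUTPUT = {"happy": "EXPR_SMILE", "sad": "EXPR_CONCERN"}


def fuse_channels(results: dict[str, tuple[str, float]]) -> str:
    """Weighted vote: each channel adds weight * confidence to the emotion it recognised."""
    scores: dict[str, float] = defaultdict(float)
    for channel, (emotion, confidence) in results.items():
        scores[emotion] += CHANNEL_WEIGHTS[channel] * confidence
    return max(scores, key=scores.get)


def select_outputs(results: dict[str, tuple[str, float]]) -> tuple[str, str]:
    """Map the fused emotion to a voice clip and an expression action instruction."""
    emotion = fuse_channels(results)
    return VOICE_OUTPUT[emotion], EXPRESSION_OUTPUT[emotion]


if __name__ == "__main__":
    observed = {"voice": ("happy", 0.9), "face": ("sad", 0.4), "gesture": ("happy", 0.8)}
    print(select_outputs(observed))
```

With these weights, two moderately confident channels agreeing on "happy" outweigh a weak "sad" reading from the face channel.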

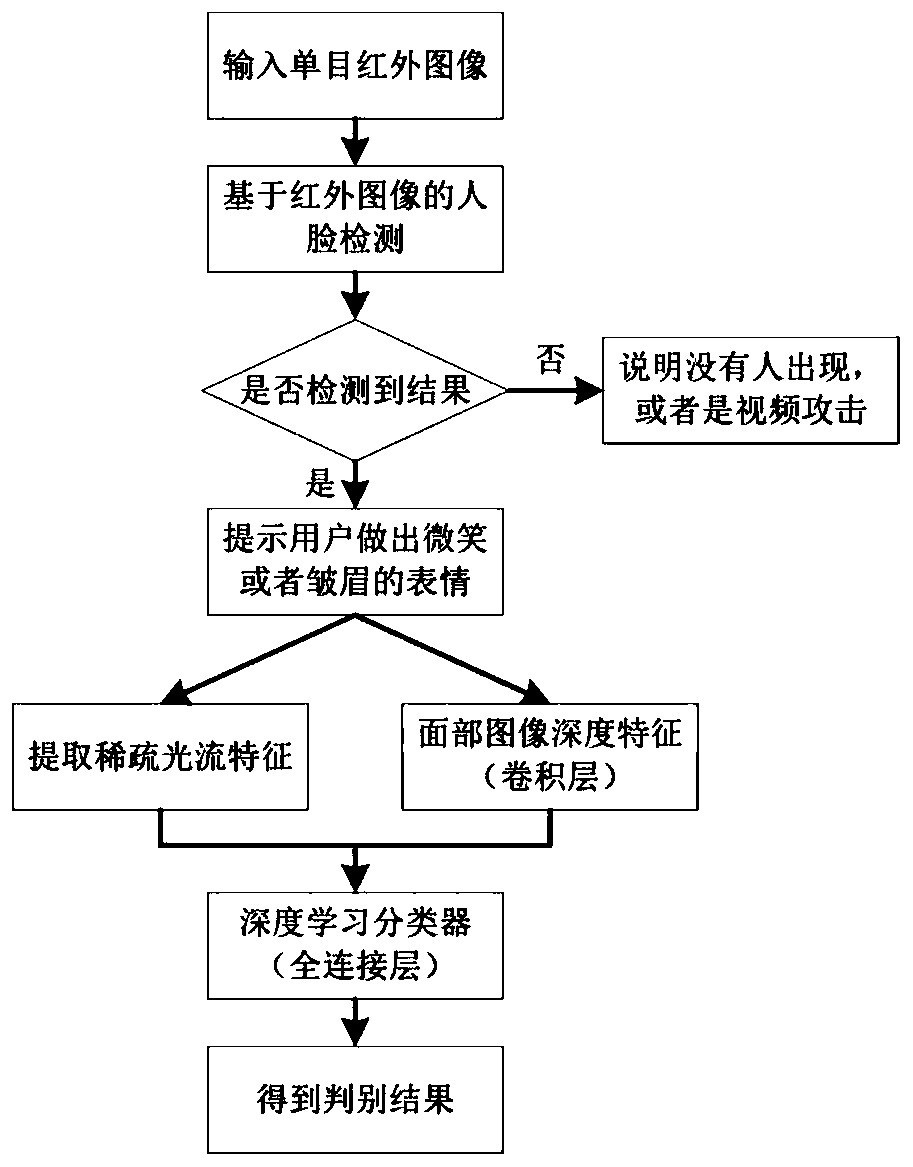

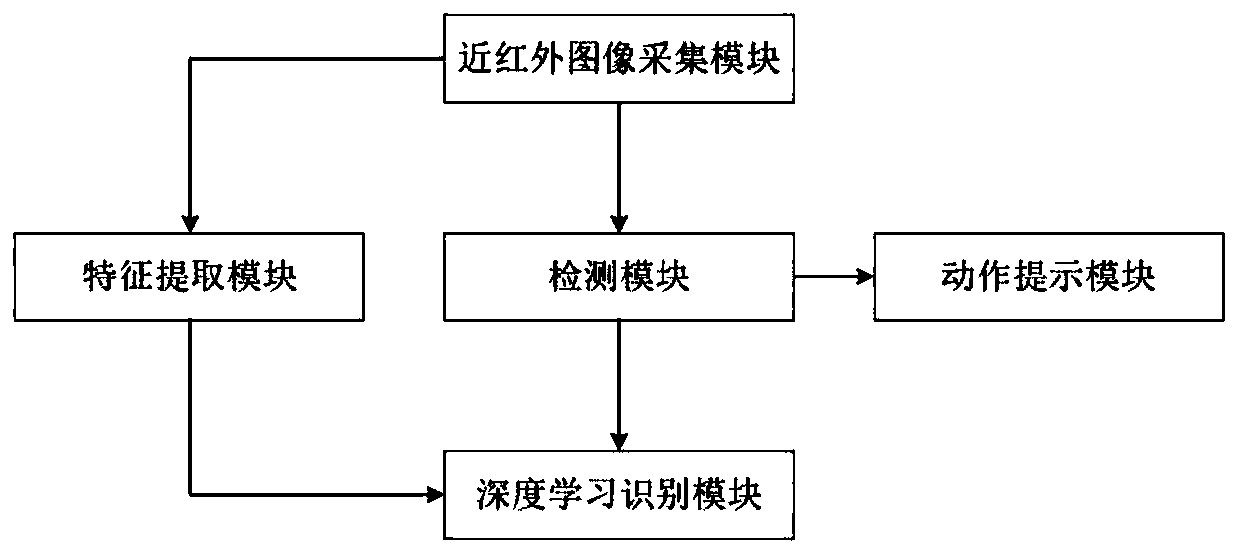

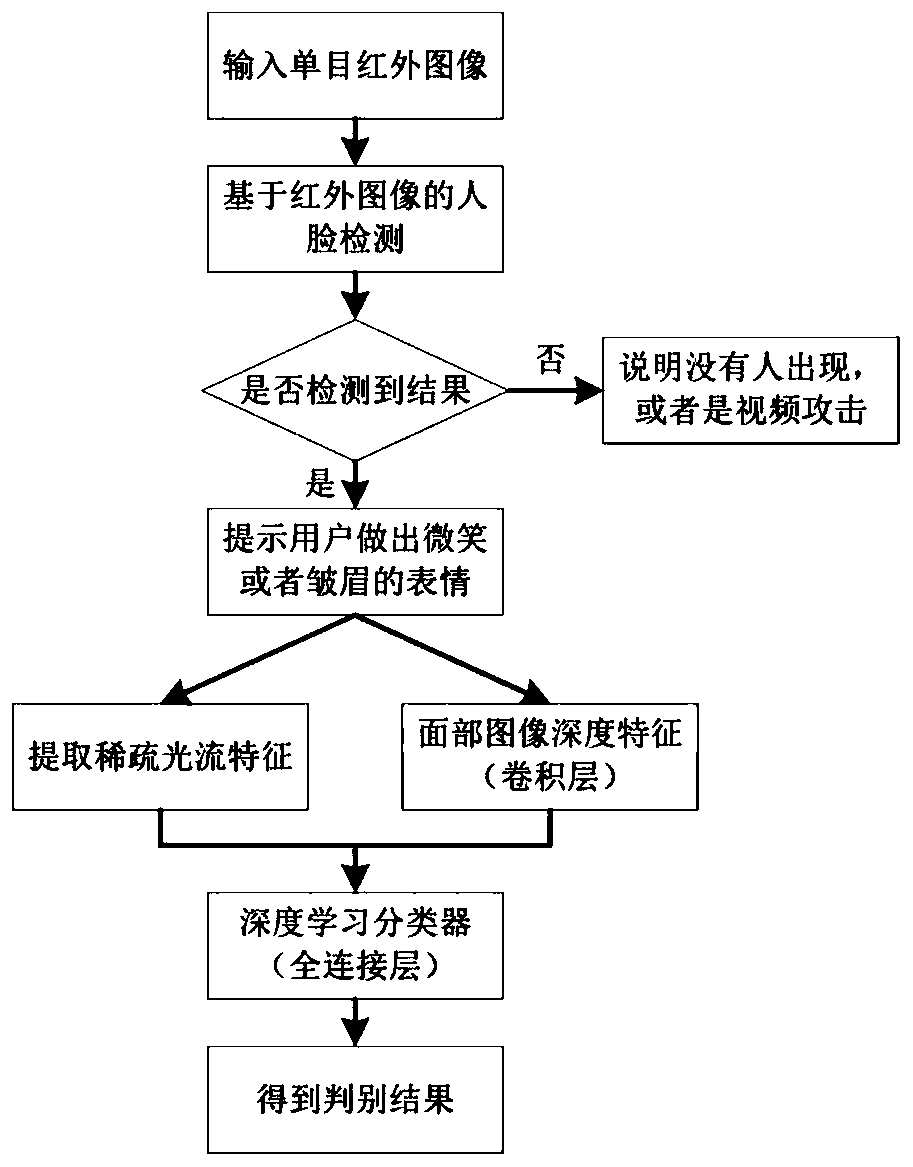

Living body detection method and system based on near-infrared monocular camera shooting

Active | CN109977846A | Effectively filter out interference; Filter out noise; Acquiring/recognising facial features; Spoof detection; Pattern recognition; Expression - action

The invention provides a living body detection method based on near-infrared monocular camera shooting. The method comprises the following steps:
- Acquire near-infrared image information.
- Detect whether the near-infrared image contains a human face. If no face is detected, judge that the recognition object is not a real person.
- If a face is detected, prompt the user to make a specified expression action.
- Extract optical flow features of the expression action, and at the same time extract deep features of the face image from the near-infrared image.
- Input the optical flow features and the face image deep features into a deep learning classifier.
- Obtain the face recognition result.

The method effectively prevents video and three-dimensional mask attacks and improves liveness detection accuracy.

Owner:CHONGQING INST OF GREEN & INTELLIGENT TECH CHINESE ACADEMY OF SCI +1

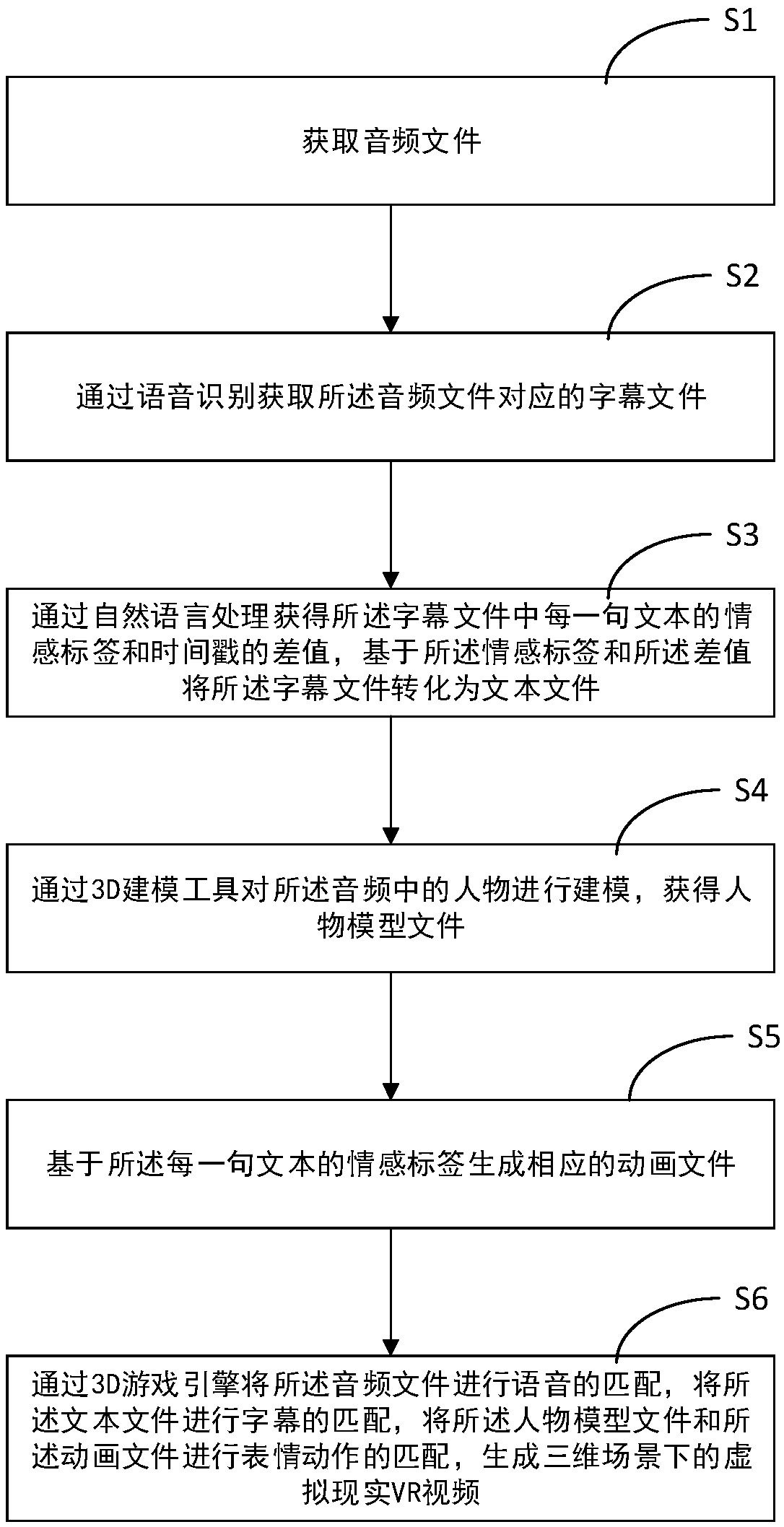

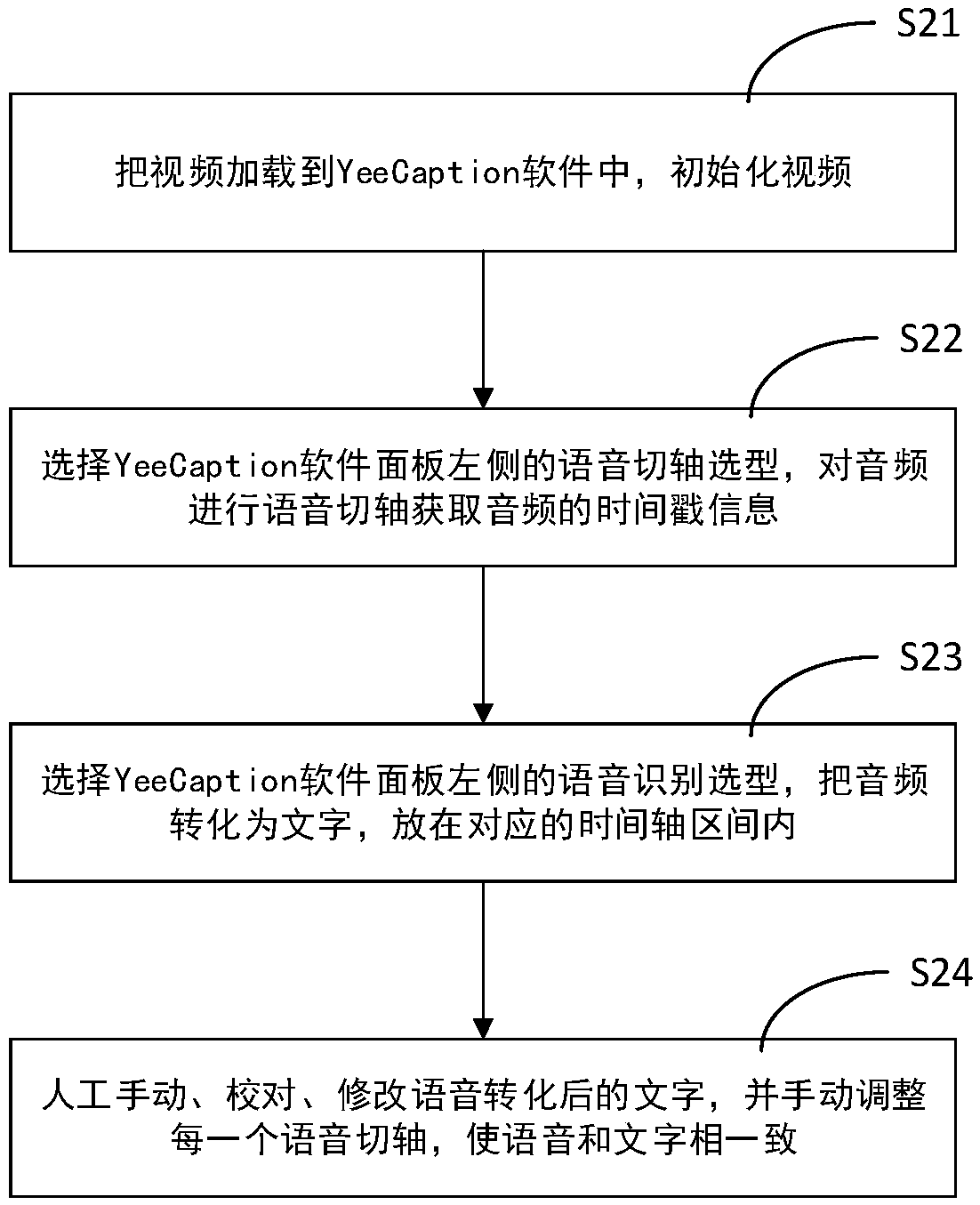

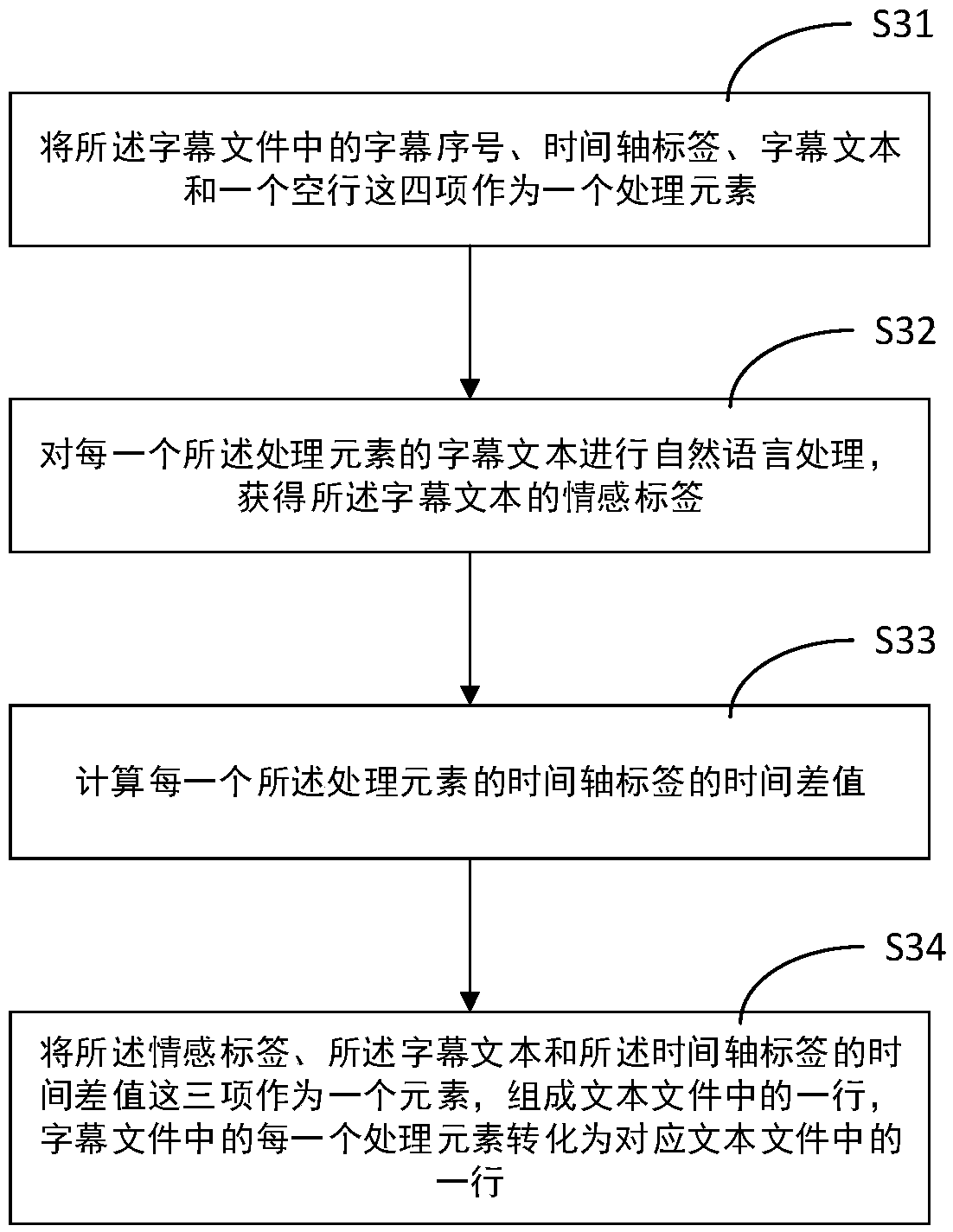

Generation method and device for VR video

The invention relates to a method for converting a video or audio into a VR video. The method comprises the following steps:
- Acquire an audio file, or extract the audio file from a video.
- Obtain a subtitle file corresponding to the audio file through speech recognition.
- Use natural language processing to obtain the emotion label of each text sentence in the subtitle file and the differences between timestamps. Convert the subtitle file into a text file.
- Model the characters in the video or audio with a 3D modeling tool to obtain a character model file.
- For the emotion label of each text sentence, record an animation file with recording equipment or make one with the 3D modeling tool.
- With a 3D game engine, match voice to the audio file, subtitles to the text file, and expression actions to the character model file and animation files. This generates a VR video in a three-dimensional scene.

Owner:CAPITAL NORMAL UNIVERSITY
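The subtitle-to-animation step can be sketched as building a timeline that pairs each sentence with an emotion label, a duration and an animation clip. This is a minimal illustration under stated assumptions: a small keyword lexicon stands in for the natural language processing stage, "difference values of timestamps" is read as each sentence's duration (end minus start), and the clip file names are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class SubtitleLine:
    start: float  # seconds
    end: float    # seconds
    text: str


# Hypothetical keyword lexicon standing in for a real NLP emotion classifier.
EMOTION_KEYWORDS: Dict[str, Tuple[str, ...]] = {
    "happy": ("glad", "great", "happy"),
    "sad": ("sorry", "sad", "miss"),
}


def label_emotion(text: str) -> str:
    """Return an emotion label for one subtitle sentence (keyword match, else 'neutral')."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    for label, keywords in EMOTION_KEYWORDS.items():
        if words.intersection(keywords):
            return label
    return "neutral"


def build_timeline(lines: List[SubtitleLine], animations: Dict[str, str]) -> List[dict]:
    """Pair each sentence with its emotion label, duration and animation clip."""
    timeline = []
    for line in lines:
        emotion = label_emotion(line.text)
        timeline.append({
            "start": line.start,
            "duration": line.end - line.start,  # timestamp difference (assumed meaning)
            "text": line.text,
            "emotion": emotion,
            # Fall back to the neutral clip if no animation exists for this label.
            "animation": animations.get(emotion, animations["neutral"]),
        })
    return timeline
```

A game engine would then schedule each entry's clip on the character model at `start` for `duration` seconds, alongside the audio and subtitle tracks.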

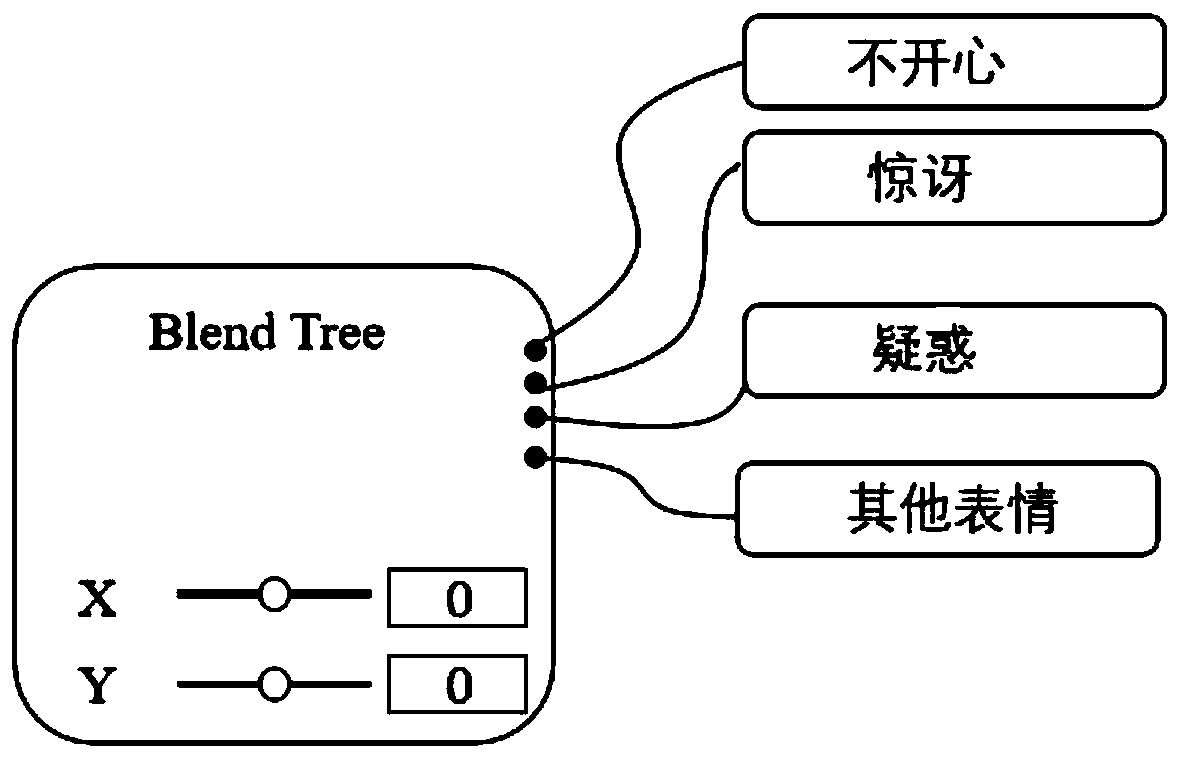

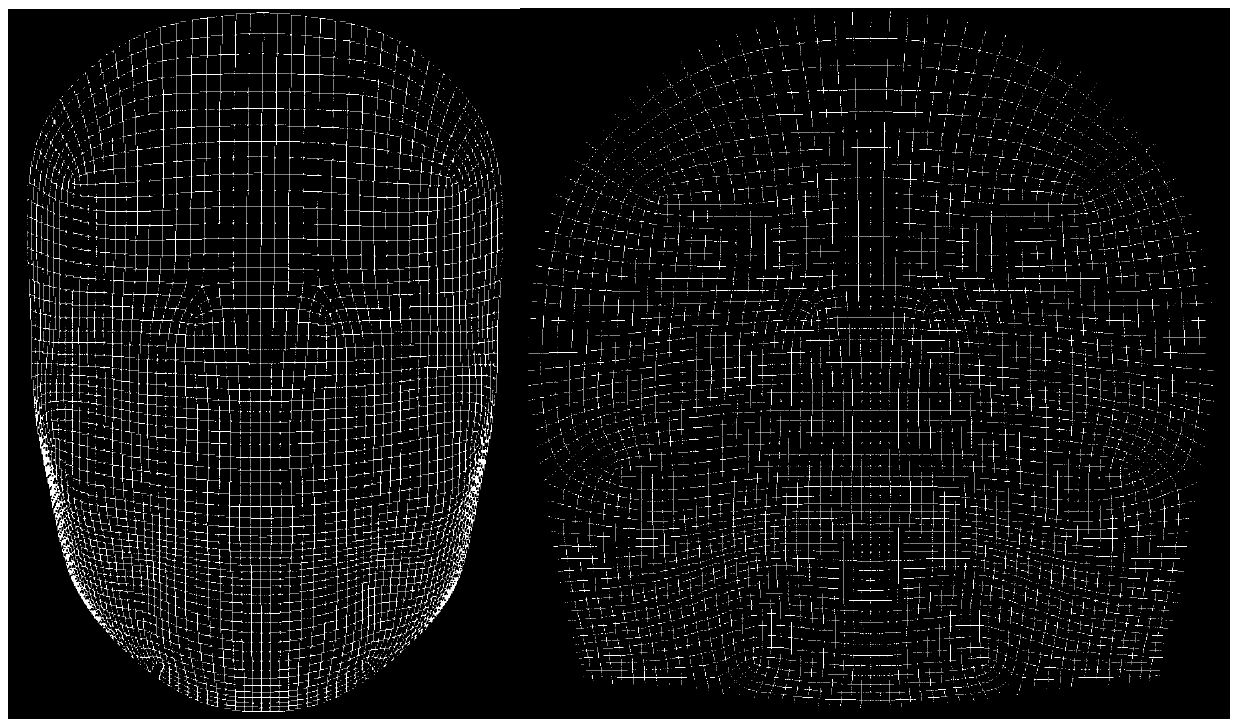

Virtual face modeling method based on real face image

Active · CN110189404A · High degree of acquaintance · Modeling takes less time · Acquiring/recognising facial features · 3D modelling · Animation · Expression - action

The invention discloses a virtual face modeling method based on a real face image. The method comprises the following steps: 1) making a head model; 2) making a set of facial expression action texture maps (chartlets); 3) in Unity 3D, using its built-in frame animation tool to turn the obtained expression action texture sets into corresponding expression animations; 4) making an expression animation controller; 5) adding the hair accessory to the 3D head model to decorate the face; 6) writing a script that moves the parts of the 3D head model other than the face so that they follow the displacement of the face. Because the face of the 3D virtual head model is textured with facial expression images of a real person, the face of the resulting virtual character head model very closely resembles the real person's face. The modeling process does not require the complex spatial design of conventional 3D software modeling, so modeling takes less time and costs less.

Owner:CHONGQING UNIV

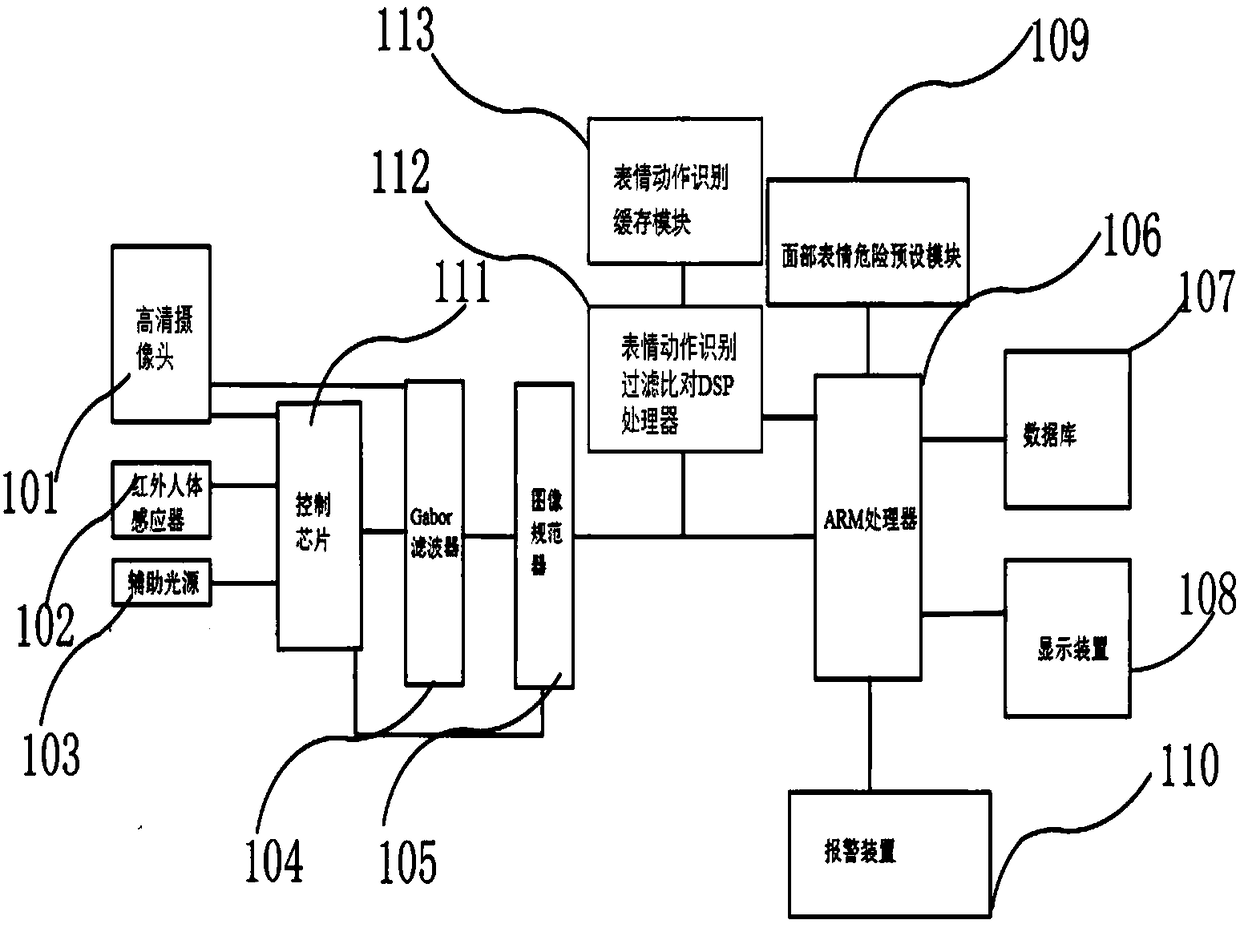

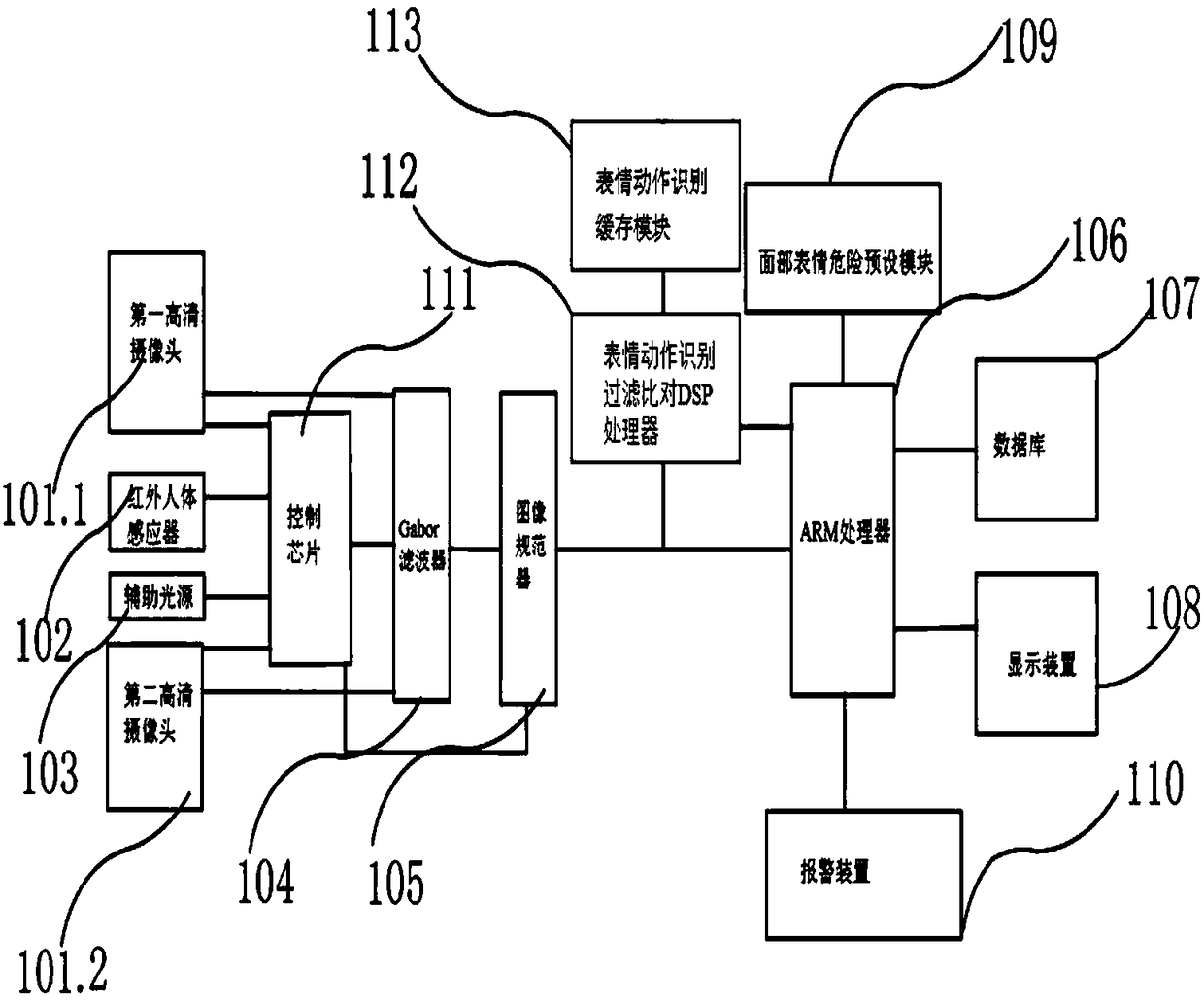

Precise face recognition system

Pending · CN108197609A · Prevent theft · Easily solve facial recognition problems · Character and pattern recognition · Protocol authorisation · Payment · Display device

The invention belongs to the technical field of face recognition and relates in particular to a novel precise face recognition system. The system includes a high-definition camera, an infrared human body sensor, an auxiliary light source, a control chip, a Gabor filter, an image normalizer, an ARM processor, a database and a display device. An expression action recognition filtering and comparison DSP processor is also connected to an expression action recognition buffer module. With this DSP processor and buffer module, a series of expressions or actions performed by a person can serve as identity verification information, so that credit card fraud can be prevented when the system is used for payment, and the problem of distinguishing twins by face recognition can be solved easily.

Owner:梁纳星

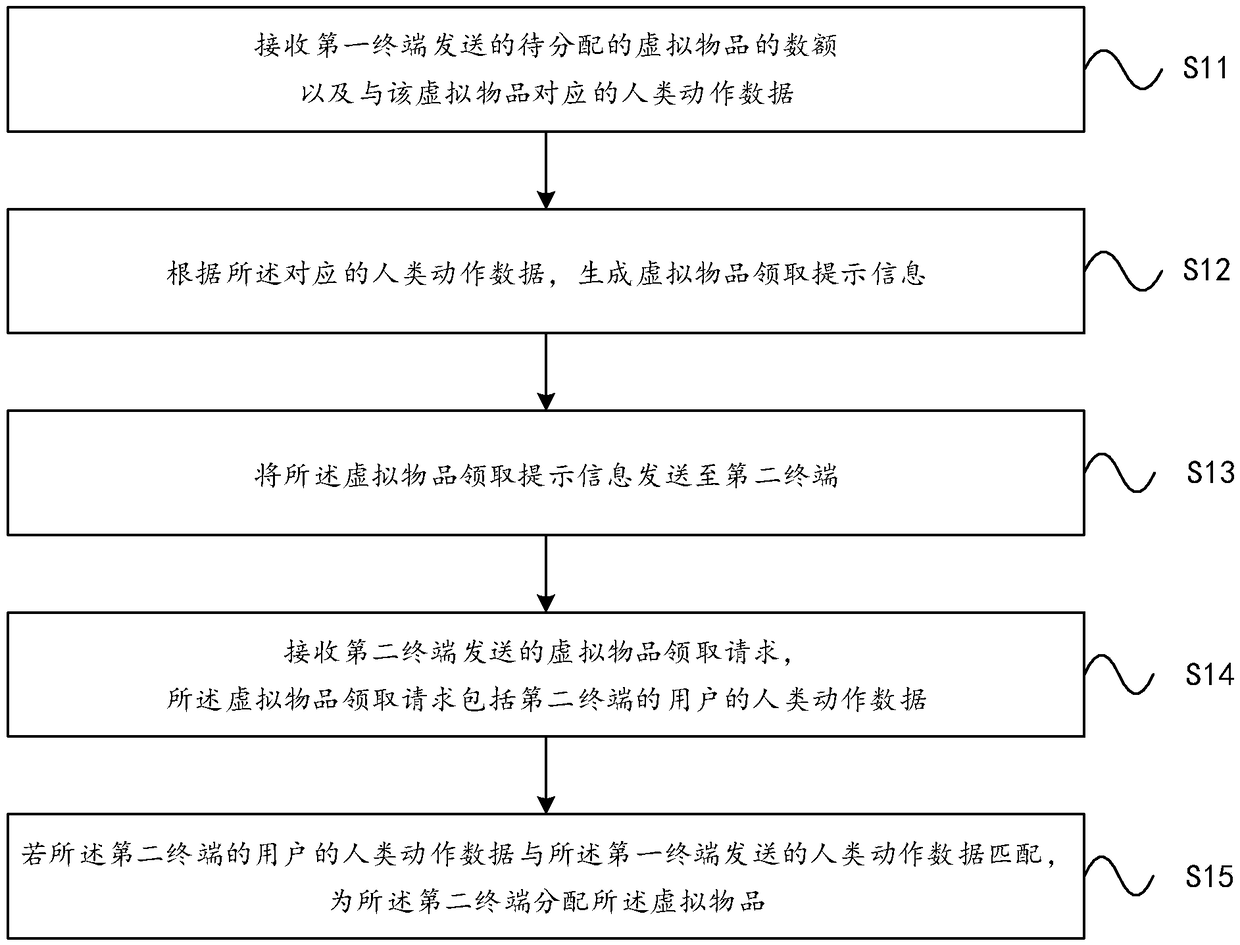

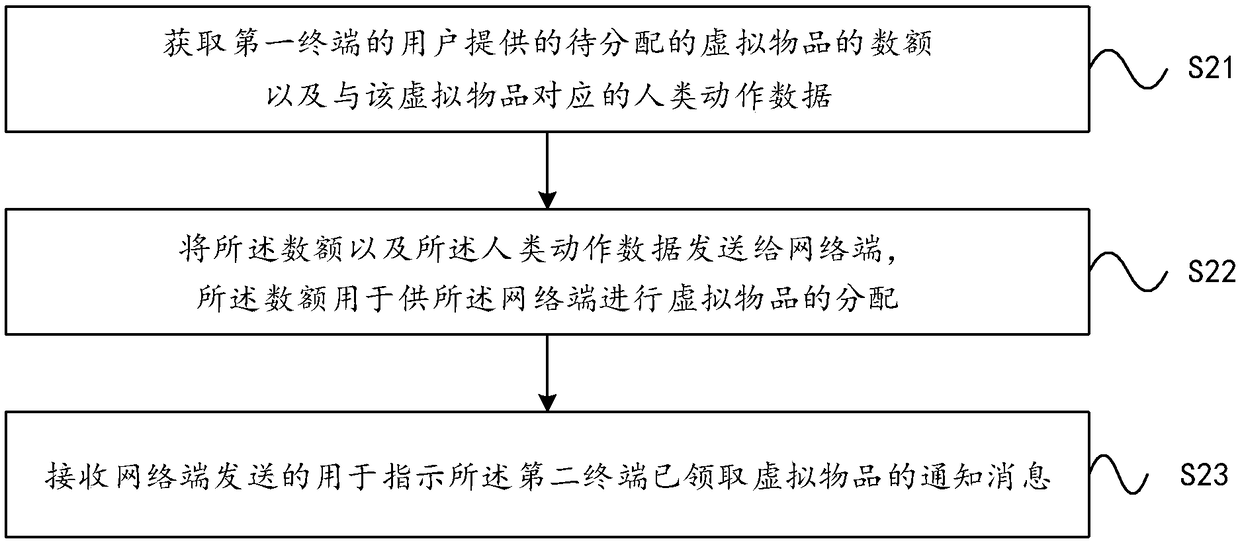

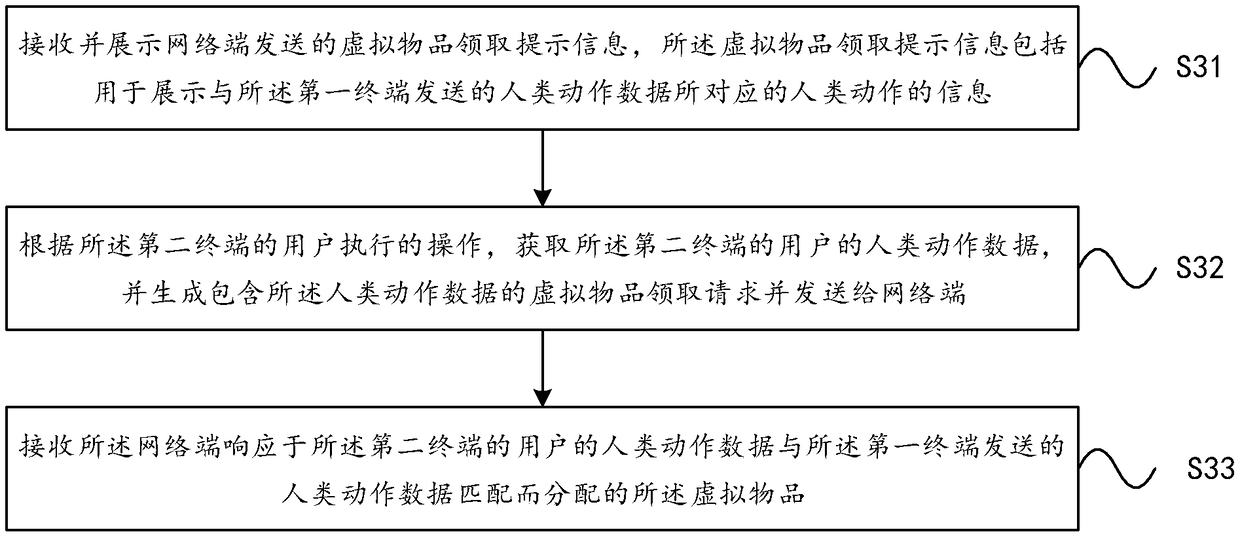

A method of distributing, dispensing, and collecting virtual items

Pending · CN109284714A · High precision · Payment circuits · Acquiring/recognising facial features · Distribution method · Expression - action

The present application provides methods for distributing, dispensing and collecting virtual items. The virtual item distribution method includes: receiving, from a first terminal, the amount of the virtual item to be distributed and the human action data corresponding to the virtual item, the human action data including at least one of limb action data and expression action data; generating virtual item collection prompt information according to the received human action data; sending the collection prompt information to a second terminal; receiving a virtual item collection request sent by the second terminal, the request including human action data of the user of the second terminal; and assigning the virtual item to the second terminal if the human action data of that user matches the human action data sent by the first terminal. The invention makes distributing, dispensing and collecting virtual items more entertaining.

Owner:SHANGHAI ZHANGMEN TECH
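The server-side distribution flow described in this abstract can be sketched as follows. It is an illustration under our own assumptions, not the applicant's design: action data is encoded as hypothetical sets of limb and expression labels, a claim "matches" when the claimant performed every required action (the abstract does not define the matching criterion), and each packet goes to the first successful claimant.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Optional, Tuple


@dataclass(frozen=True)
class ActionData:
    """Recognized human actions, split into limb and expression labels (hypothetical encoding)."""
    limb: FrozenSet[str] = frozenset()
    expression: FrozenSet[str] = frozenset()

    def covers(self, required: "ActionData") -> bool:
        # Assumed matching rule: every required action was performed.
        return required.limb <= self.limb and required.expression <= self.expression


@dataclass
class Packet:
    amount: int
    required: ActionData
    claimed_by: Optional[str] = None


class Distributor:
    """Server side: stores packets from the first terminal and checks claims from others."""

    def __init__(self) -> None:
        self._packets: Dict[int, Packet] = {}
        self._next_id = 1

    def distribute(self, amount: int, required: ActionData) -> Tuple[int, str]:
        if amount <= 0:
            raise ValueError("amount must be positive")
        if not (required.limb or required.expression):
            raise ValueError("at least one limb or expression action is required")
        packet_id = self._next_id
        self._next_id += 1
        self._packets[packet_id] = Packet(amount, required)
        # The prompt sent to the second terminal lists the actions to perform.
        actions = sorted(required.limb) + sorted(required.expression)
        return packet_id, "Perform: " + ", ".join(actions)

    def claim(self, packet_id: int, terminal_id: str, performed: ActionData) -> int:
        """Return the amount assigned to the claimant, or 0 if the claim fails."""
        packet = self._packets[packet_id]
        if packet.claimed_by is None and performed.covers(packet.required):
            packet.claimed_by = terminal_id
            return packet.amount
        return 0
```

A subset test tolerates extra recognized actions (for example an unintended wink); an exact-equality test would be stricter and is equally consistent with the abstract.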

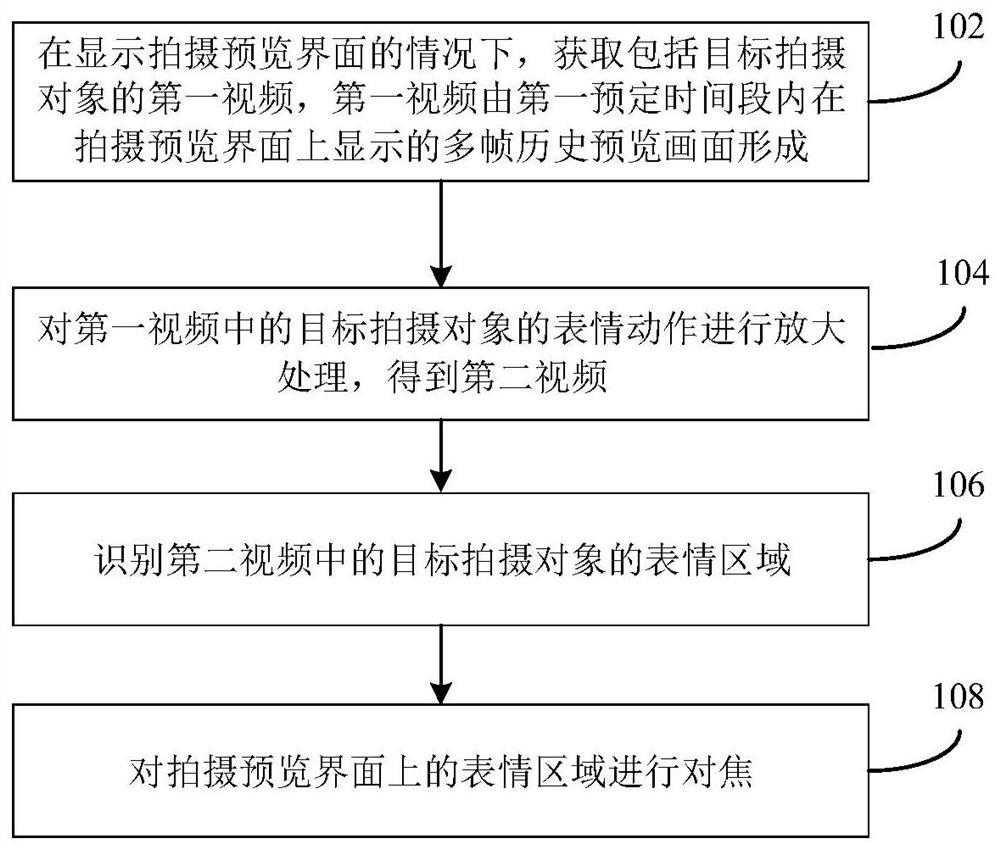

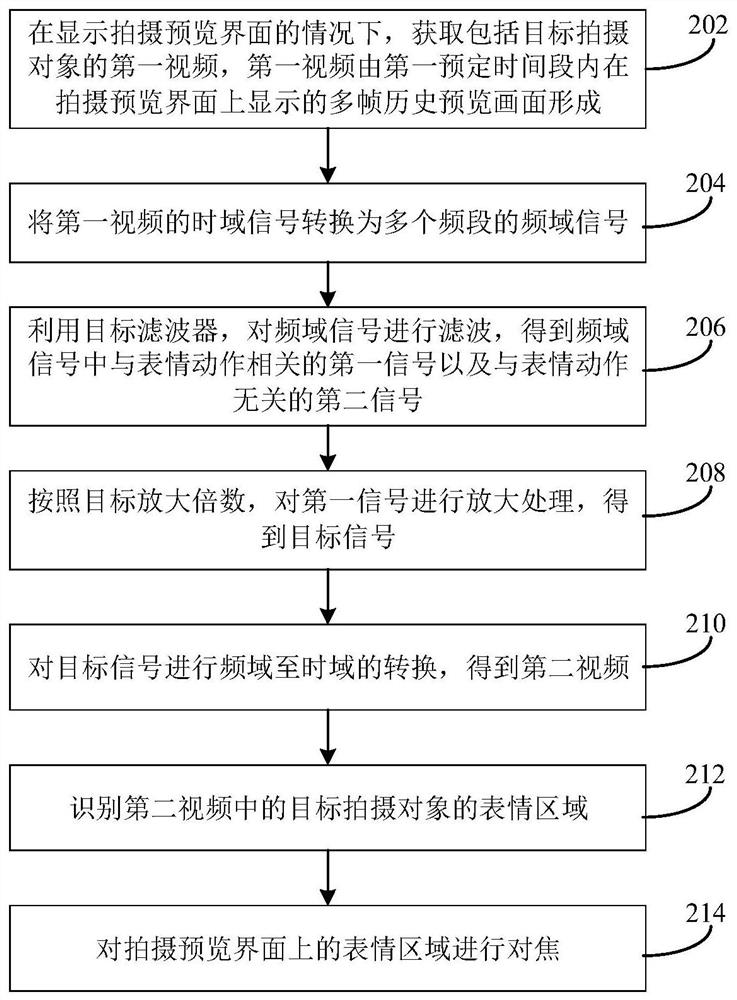

Shooting focusing method and device, electronic equipment and storage medium

Active · CN111654622A · Accurate identification · Guaranteed accuracy · Television system details · Color television details · Computer graphics (images) · Multiple frame

The invention discloses a shooting focusing method and device, electronic equipment and a storage medium, belonging to the field of electronic equipment. The method comprises: while a shooting preview interface is displayed, acquiring a first video containing a target shooting object, the first video being formed from multiple frames of historical preview images displayed on the shooting preview interface within a first preset time period; magnifying the expression action of the target shooting object in the first video to obtain a second video; identifying the expression area of the target shooting object in the second video; and focusing on that expression area in the shooting preview interface. The embodiments ensure the accuracy of the focusing area and spare the user from focusing manually, which makes shooting more convenient.

Owner:VIVO MOBILE COMM CO LTD

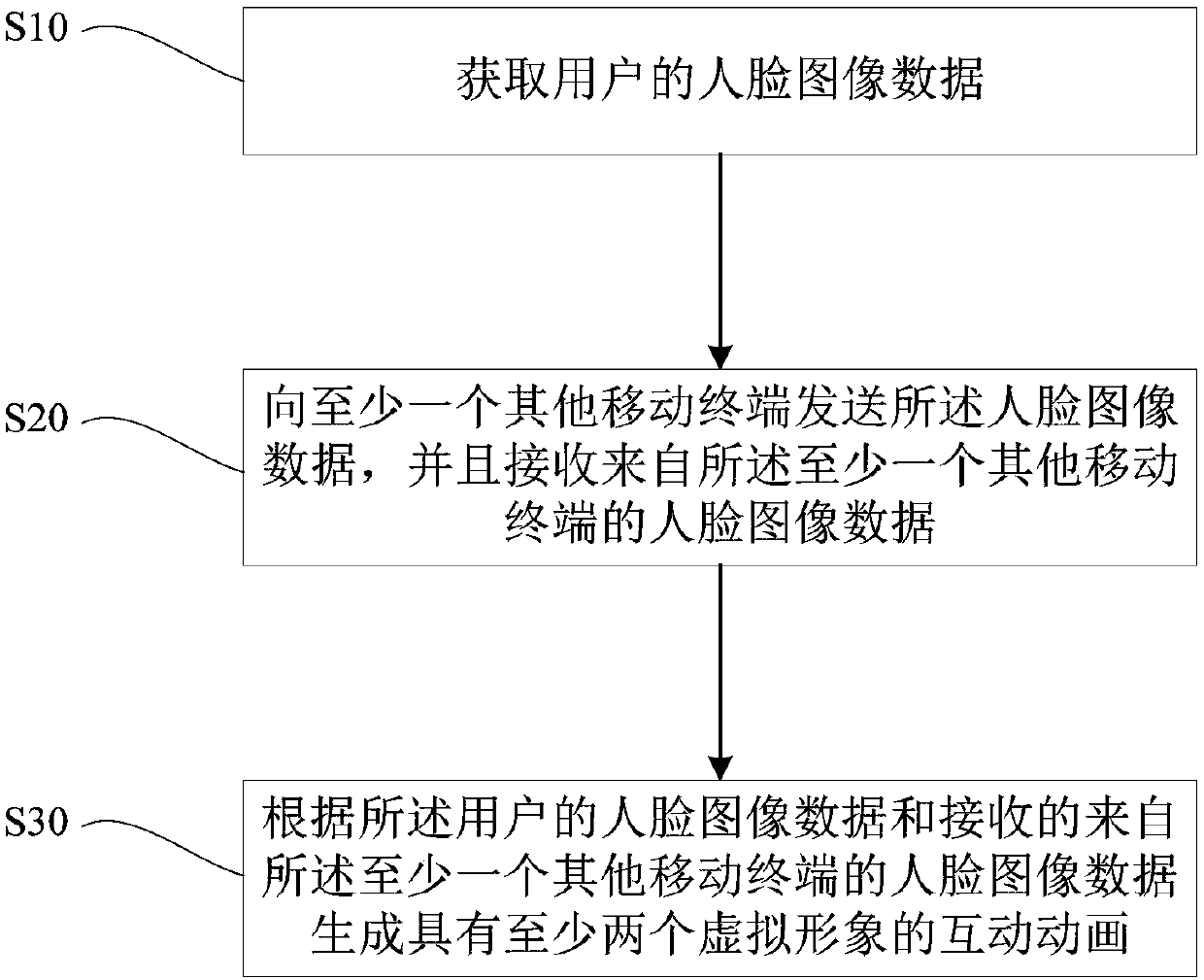

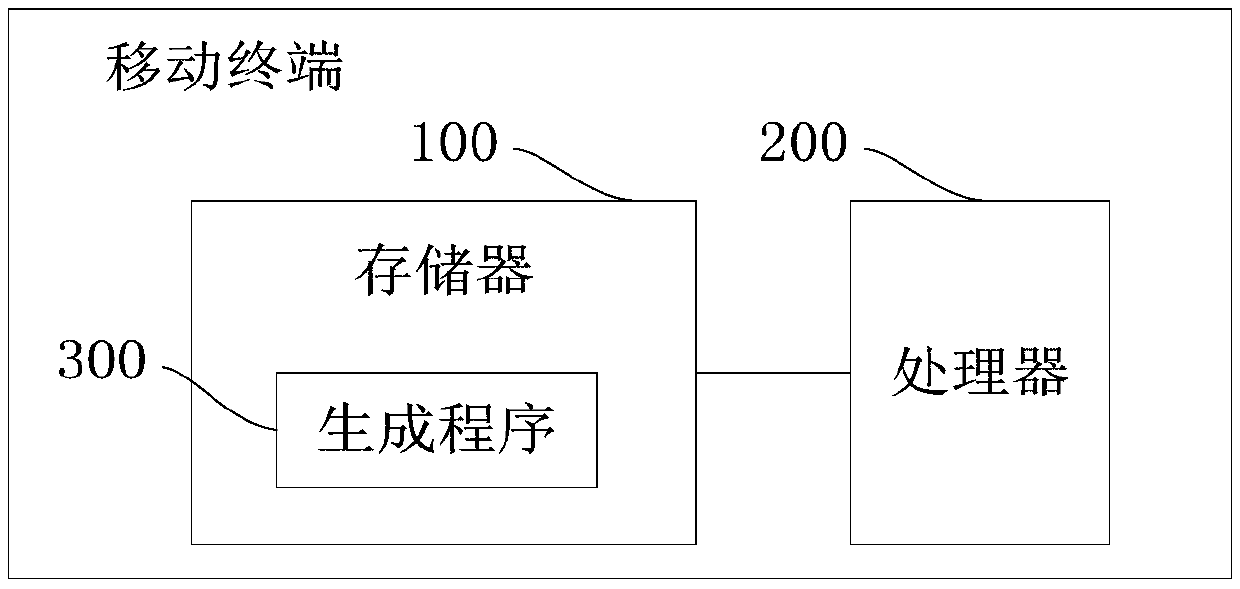

Mobile terminal, generation method of interactive animation thereof and computer readable storage medium

Inactive · CN109525483A · Improve interactive experience · Character and pattern recognition · Animation · Expression - action

The invention discloses a mobile terminal, a method for generating an interactive animation on it, and a computer-readable storage medium. The method comprises: acquiring face image data of a user; sending the face image data to at least one other mobile terminal and receiving face image data from the at least one other mobile terminal; and generating an interactive animation with at least two avatars according to the user's face image data and the face image data received from the other terminal(s). Because each mobile terminal captures its user's face image data and transmits it to the other terminals, the interactive animation can be generated on every terminal, with an avatar that matches each user's expression actions, which improves the multi-party interactive experience.

Owner:HUIZHOU TCL MOBILE COMM CO LTD

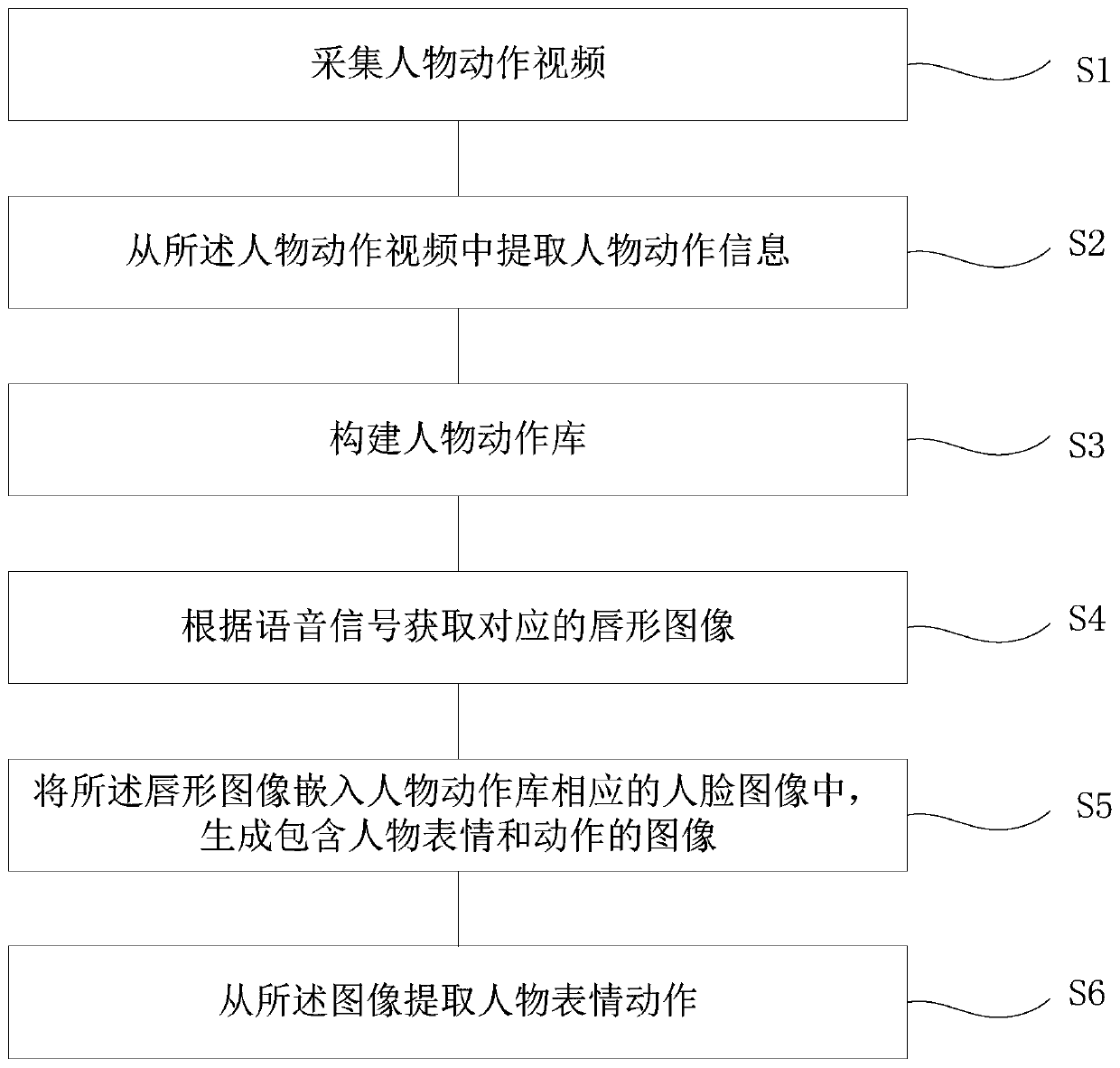

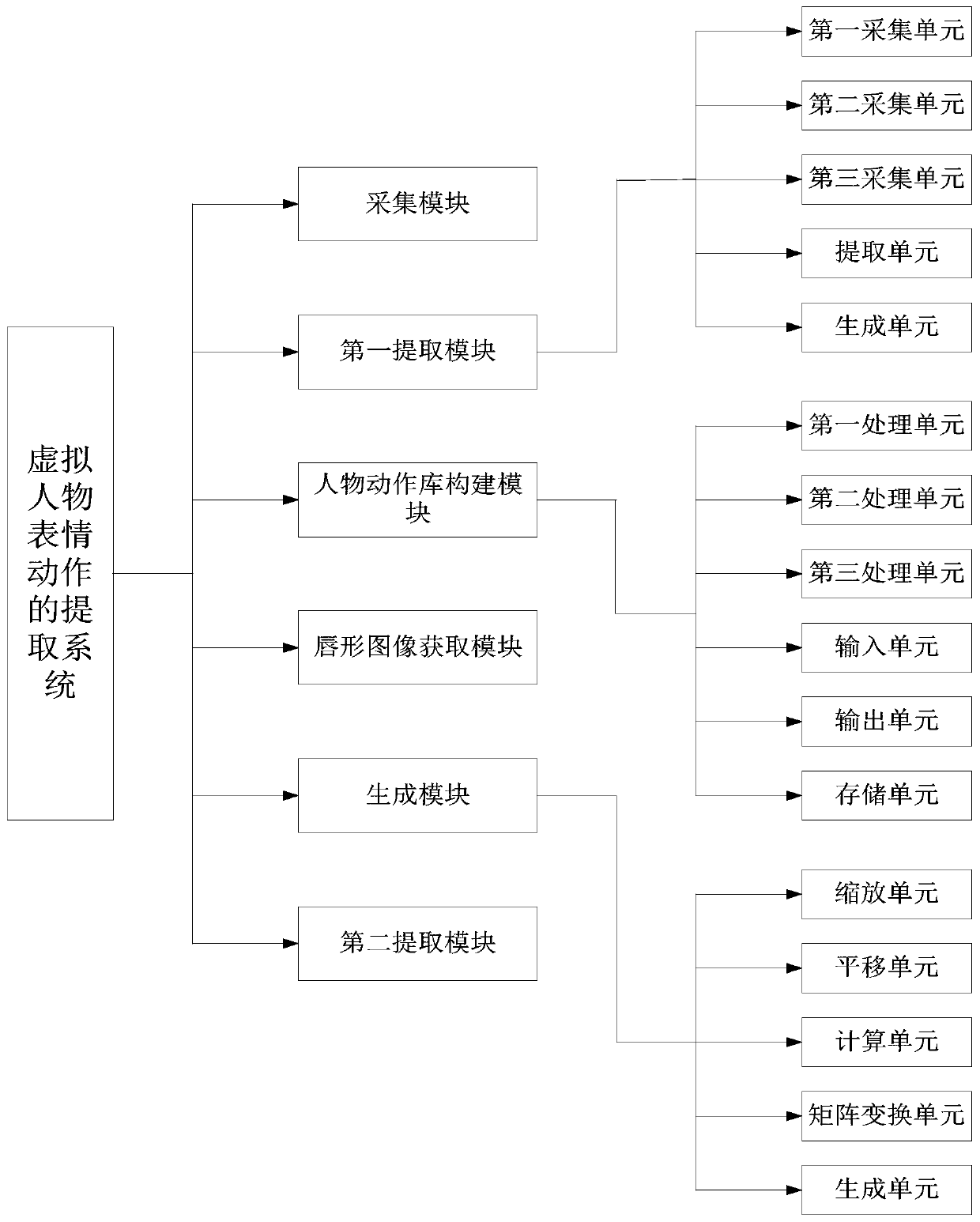

Extracting method, system and device for virtual character expressions and actions and medium

Active · CN111291674A · Increase content · Easy to join · Neural architectures · Acquiring/recognising facial features · Image extraction · Imaging processing

The invention discloses a virtual character expression action extraction method, system, device and storage medium. The method comprises the following steps: acquiring a character action video; extracting character action information from the video and constructing a character action library; obtaining a corresponding lip image from a voice signal and embedding the lip image into the face image corresponding to the character action library to generate an image containing character expressions and actions; and extracting the character expression actions from that image. Different second label information can be generated simply by modifying two-dimensional point coordinates or the shape of a two-dimensional mask, which enriches the content of the character action library. The method simplifies the extraction of character expression actions, allows different expression actions to be extracted at any time, provides a rich character action library to which new actions can easily be added, and improves working efficiency. It is widely applicable in the field of image processing.

Owner:RES INST OF TSINGHUA PEARL RIVER DELTA +1

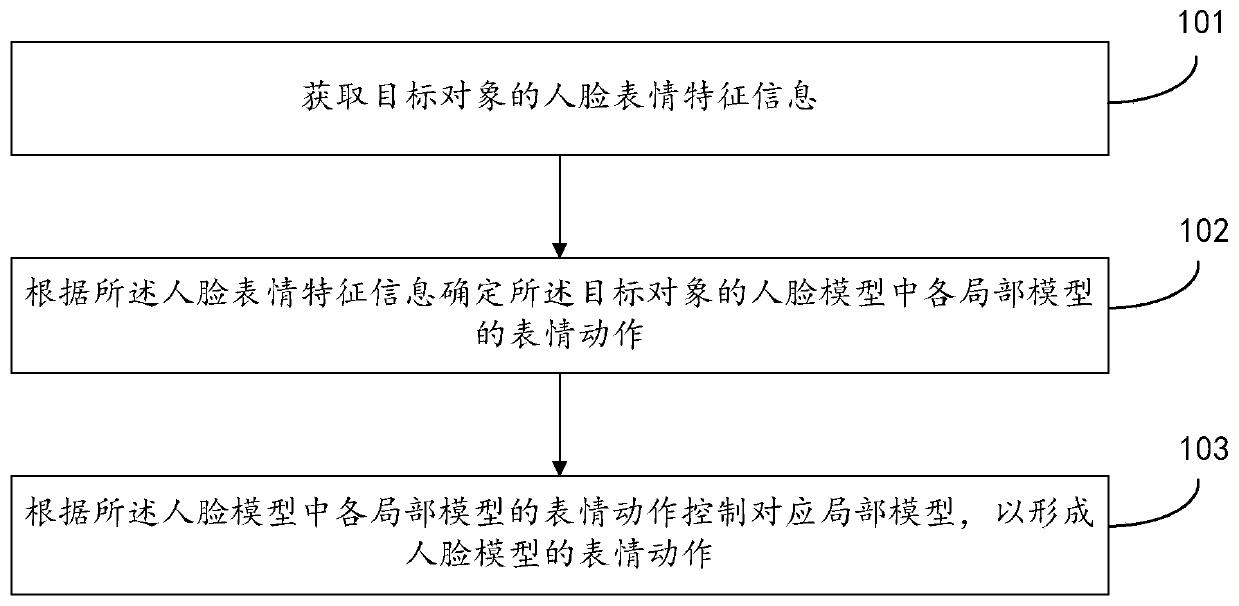

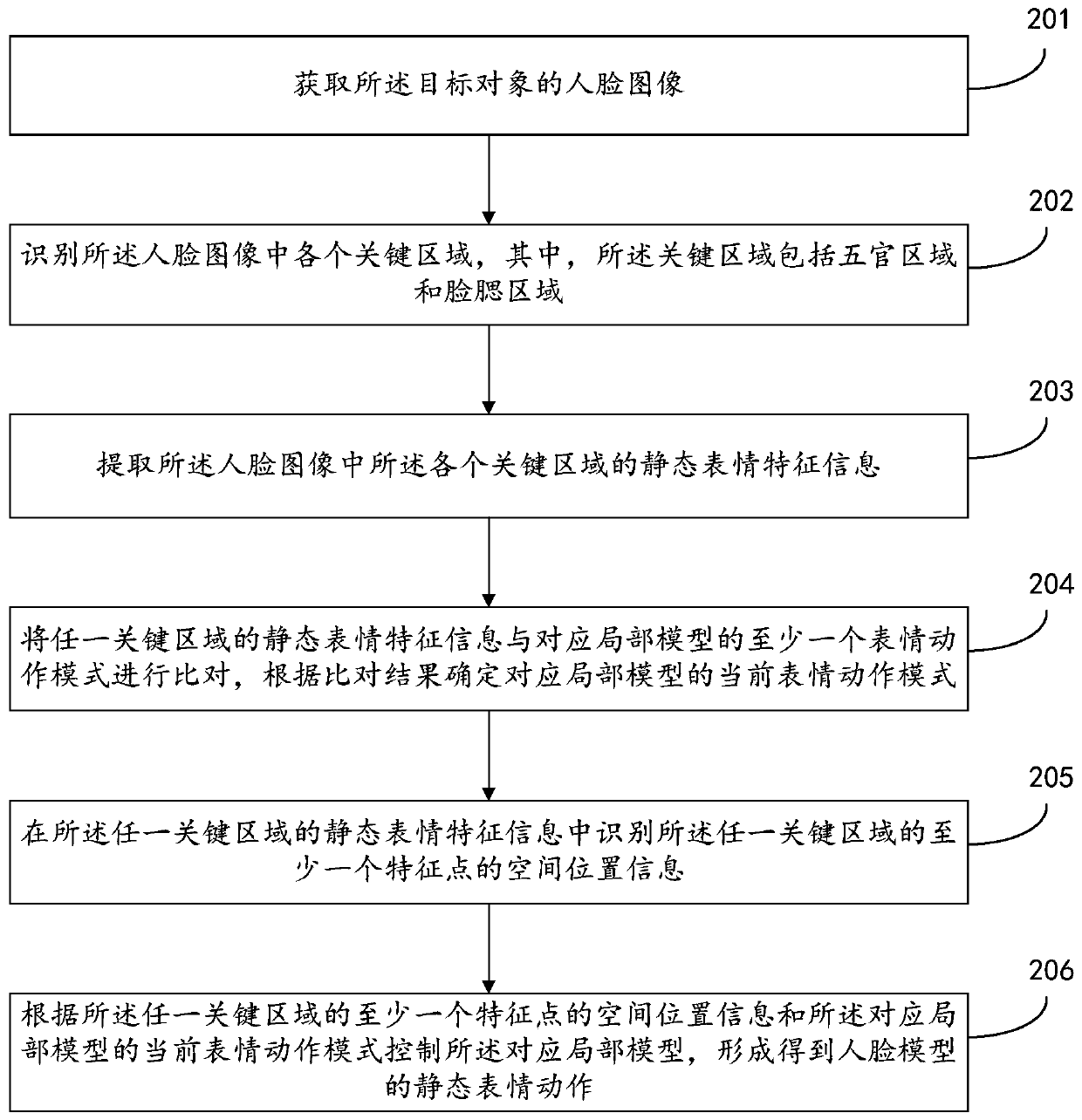

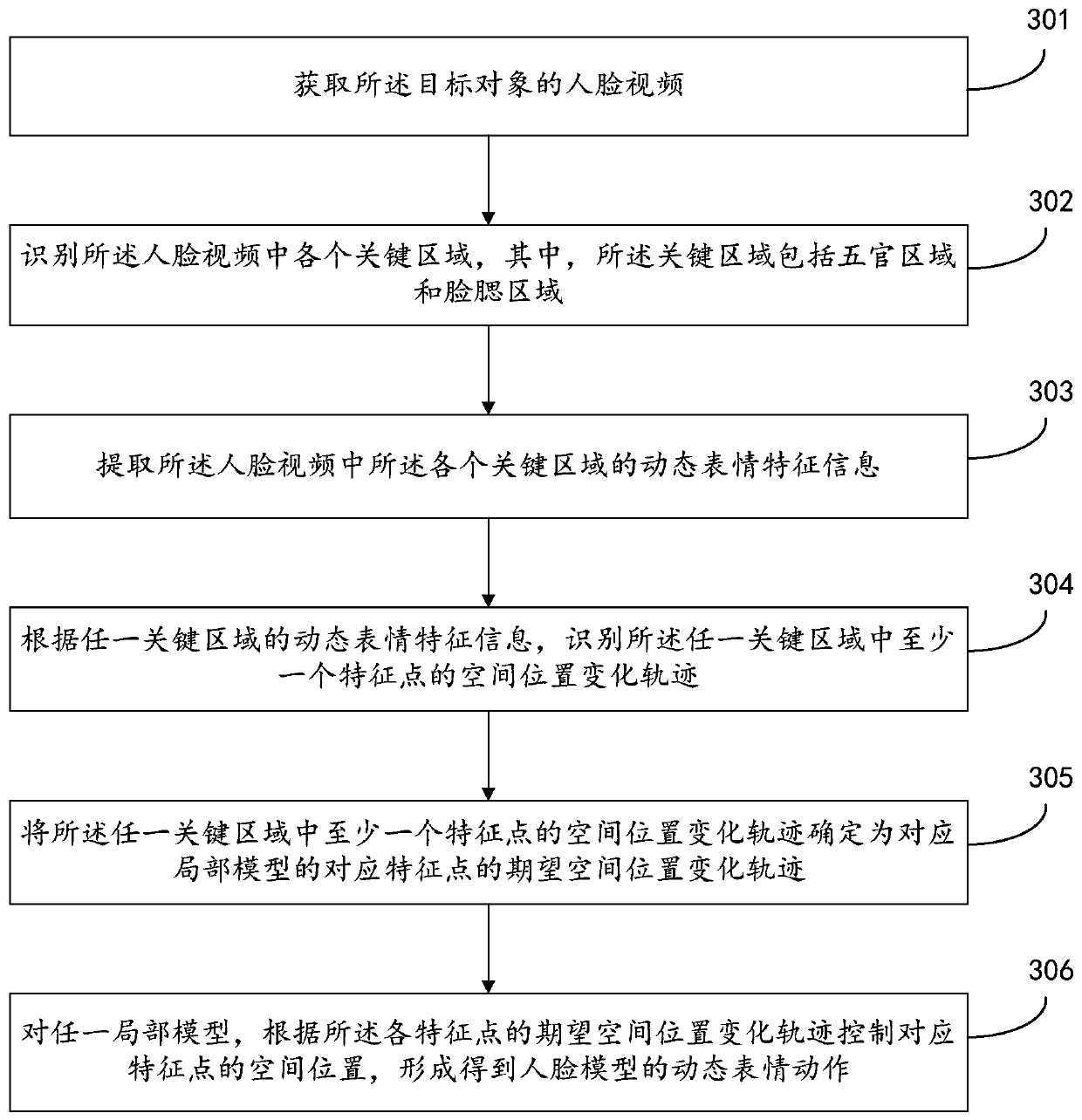

Action processing method and device of face model, storage medium and electronic equipment

Active · CN109829965A · Expressive · Improve personalization · Character and pattern recognition · 3D-image rendering · Personalization · Pattern recognition

The embodiments of the invention disclose an action processing method and device for a face model, a storage medium and electronic equipment. The method comprises: obtaining facial expression feature information of a target object; determining the expression action of each local model in the target object's face model according to the facial expression feature information; and controlling each local model according to its expression action so as to form the expression action of the whole face model. By collecting the target object's facial expression feature information and controlling the expression actions of all local models in the face model, the face model reproduces the same expression actions as the target object, which enriches its expressions and makes them more personalized and distinctive.

Owner:GUANGDONG OPPO MOBILE TELECOMM CORP LTD
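The per-local-model control described in this abstract can be sketched as a mapping from expression features to actions on individual face parts. This is a minimal illustration, not the applicant's method: the feature names, the feature-to-part table, the clamping to [0, 1] and the 0.1 cut-off are all hypothetical choices, and `LocalModel` simply stores the weights a renderer would consume.

```python
from typing import Dict, Tuple

# Hypothetical mapping from an expression feature to (local model, action name).
FEATURE_TO_LOCAL: Dict[str, Tuple[str, str]] = {
    "mouth_open": ("mouth", "open"),
    "smile": ("mouth", "smile"),
    "brow_raise": ("brows", "raise"),
    "eye_blink_left": ("left_eye", "blink"),
    "eye_blink_right": ("right_eye", "blink"),
}


def local_actions(features: Dict[str, float], min_weight: float = 0.1) -> Dict[str, Dict[str, float]]:
    """Determine the expression action (action name -> weight) of each local model."""
    actions: Dict[str, Dict[str, float]] = {}
    for feature, value in features.items():
        if feature not in FEATURE_TO_LOCAL:
            continue  # feature not used by this face model
        weight = min(max(value, 0.0), 1.0)  # clamp to [0, 1]
        if weight < min_weight:
            continue  # ignore near-zero activations
        part, action = FEATURE_TO_LOCAL[feature]
        actions.setdefault(part, {})[action] = weight
    return actions


class LocalModel:
    """One controllable part of the face model; stores its current action weights."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.pose: Dict[str, float] = {}

    def apply(self, weights: Dict[str, float]) -> None:
        self.pose = dict(weights)


def drive_face_model(models: Dict[str, LocalModel], features: Dict[str, float]) -> None:
    """Control every local model; parts with no active feature return to neutral."""
    actions = local_actions(features)
    for name, model in models.items():
        model.apply(actions.get(name, {}))
```

Driving each part separately is what lets the combined face reproduce asymmetric expressions, such as a one-eyed blink, that a single whole-face preset would miss.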

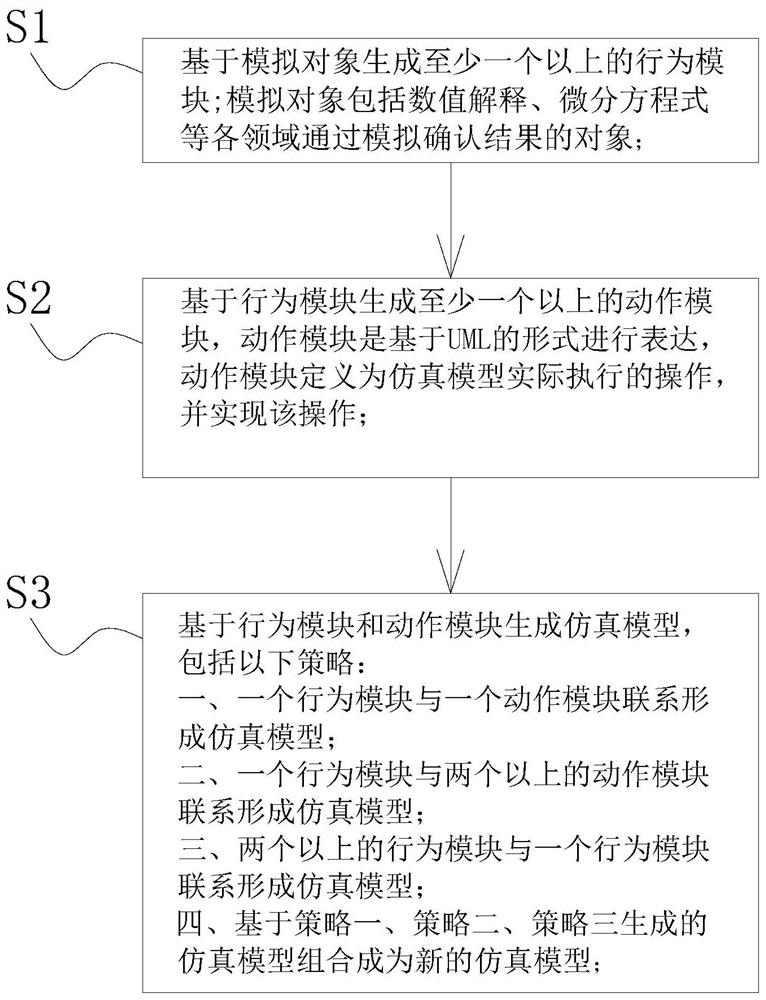

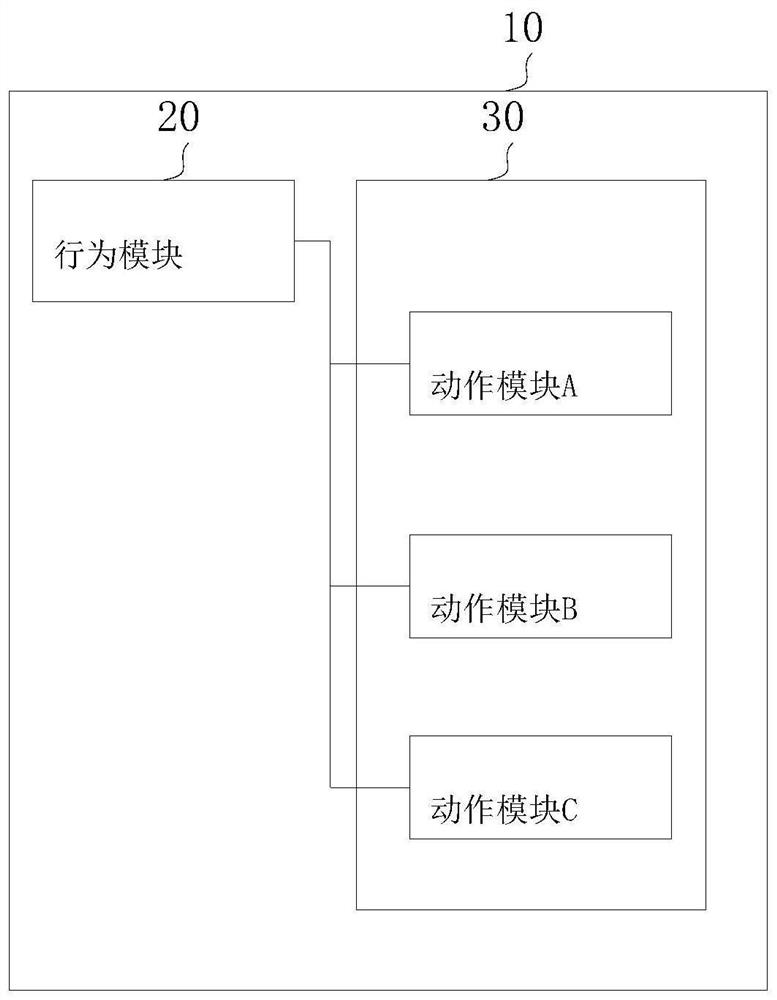

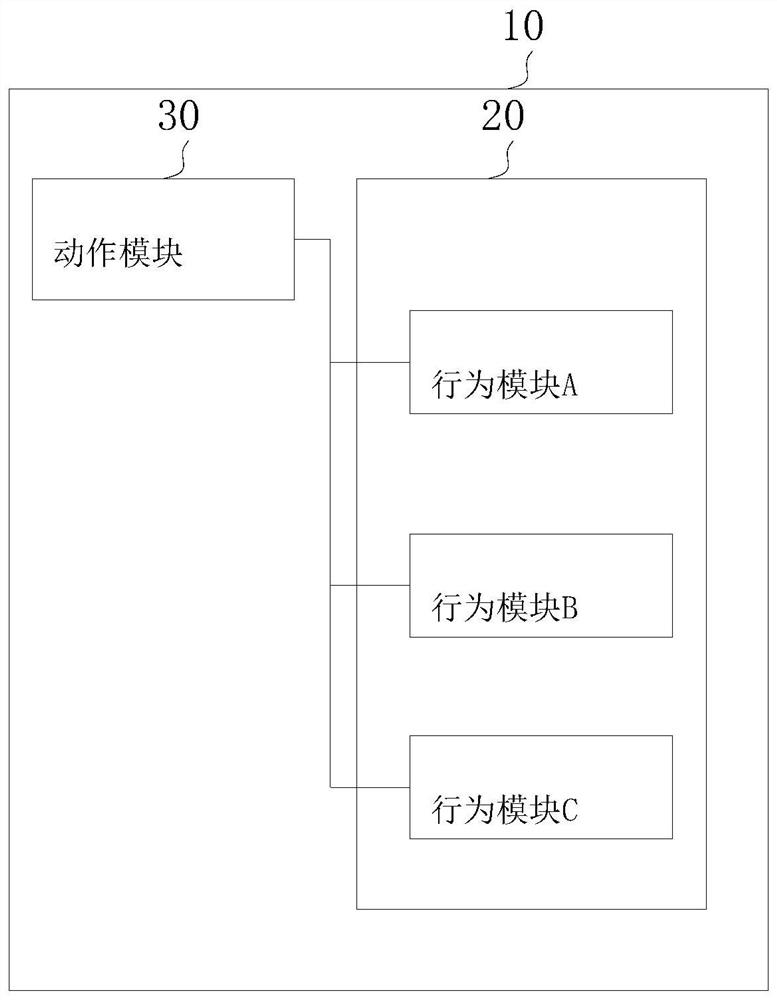

Cross-platform simulation model development method

Pending · CN112988147A · Improve reuse rate · Program code adaption · Design optimisation/simulation · Software engineering · Simulation based

The invention discloses a cross-platform simulation model development method comprising: S1, generating one or more behavior modules based on a simulation object; S2, generating one or more action modules based on each behavior module, expressing the action modules in XML, defining each action module as an operation actually executed by the simulation model, and implementing that operation; and S3, generating the simulation model from the behavior modules and action modules. Because simulation models are developed under a predetermined unified strategy, simulation systems on different platforms are mutually compatible and their simulation models can be interconnected, which improves the reuse rate of simulation models. The method is suited to developing cross-platform simulation models.

Owner:南京仁谷系统集成有限公司
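Steps S1 to S3 can be illustrated by describing behaviors and their actions in XML and binding each action name to an executable operation on the platform at hand. The sketch below is an assumption-laden illustration, not the applicant's schema: the `<model>`, `<behavior>` and `<action>` element names, the `param` attribute, and the toy `accelerate`/`advance` operations are all hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Callable, Dict, List, Tuple

State = Dict[str, float]
ActionFn = Callable[[State, float], None]

# Hypothetical XML description: one behavior module made of two action modules.
MODEL_XML = """
<model name="vehicle">
  <behavior name="move">
    <action name="accelerate" param="2.0"/>
    <action name="advance" param="1.0"/>
  </behavior>
</model>
"""


def accelerate(state: State, dv: float) -> None:
    state["speed"] += dv


def advance(state: State, dt: float) -> None:
    state["position"] += state["speed"] * dt


# Platform-specific implementations of the operations named in the XML.
ACTION_REGISTRY: Dict[str, ActionFn] = {"accelerate": accelerate, "advance": advance}


def load_model(xml_text: str, registry: Dict[str, ActionFn]) -> Tuple[str, Dict[str, List[Tuple[ActionFn, float]]]]:
    """Build a simulation model: behavior name -> ordered list of (operation, parameter)."""
    root = ET.fromstring(xml_text.strip())
    behaviors: Dict[str, List[Tuple[ActionFn, float]]] = {}
    for behavior in root.findall("behavior"):
        steps = []
        for action in behavior.findall("action"):
            name = action.get("name")
            if name not in registry:
                raise KeyError(f"no implementation registered for action {name!r}")
            steps.append((registry[name], float(action.get("param", "0"))))
        behaviors[behavior.get("name")] = steps
    return root.get("name"), behaviors


def run_behavior(behaviors: Dict[str, List[Tuple[ActionFn, float]]], name: str, state: State) -> State:
    """Execute a behavior's actions in order against the model state."""
    for fn, param in behaviors[name]:
        fn(state, param)
    return state
```

Keeping the XML platform-neutral and swapping only the registry is one way the same model description could be reused across platforms.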

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com