Virtual face modeling method based on real face image

A virtual face modeling technique based on real face images. It addresses the problems of large facial differences and long modeling time, and achieves low cost and short modeling time.

Embodiment Construction

[0032] The present invention will be further described below in conjunction with the accompanying drawings and embodiments.

[0033] The virtual face modeling method based on real face images according to this embodiment comprises the following steps:

[0034] 1) Making the head model, which in turn comprises the following steps:

[0035] a) Collect frontal photos of real faces, and use Insight 3D to convert these frontal photos into 3D head models;

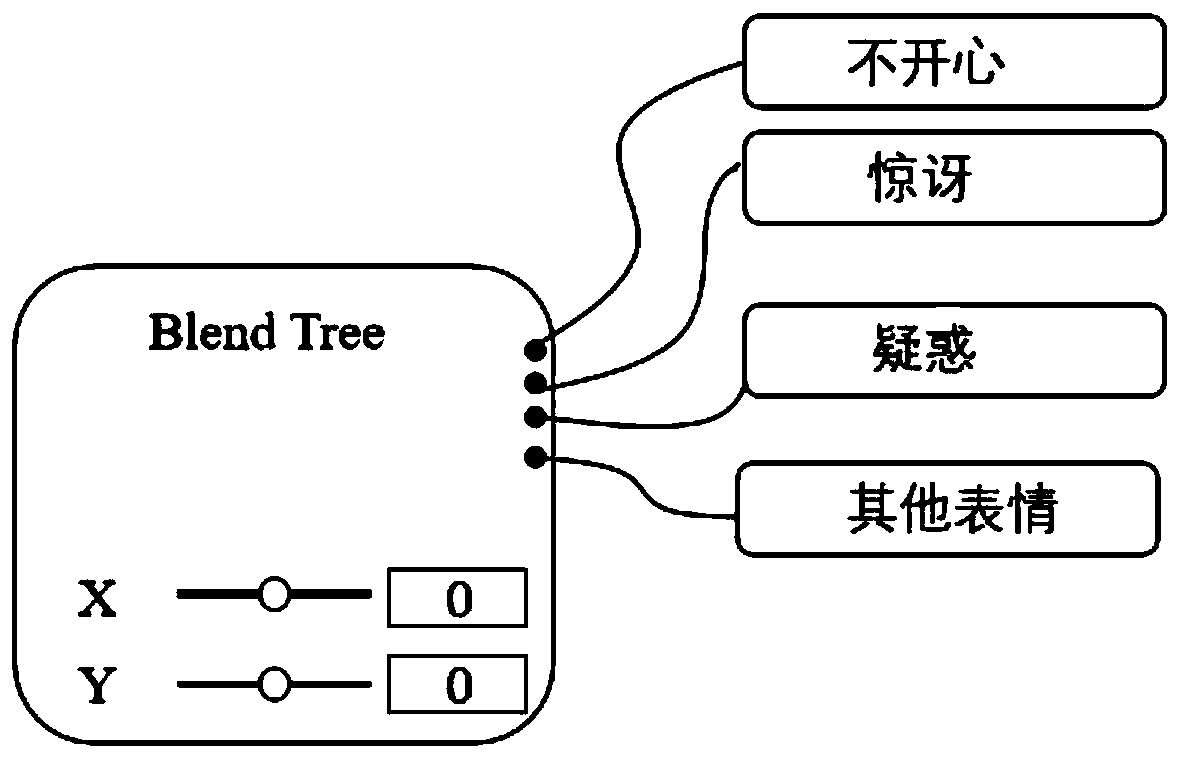

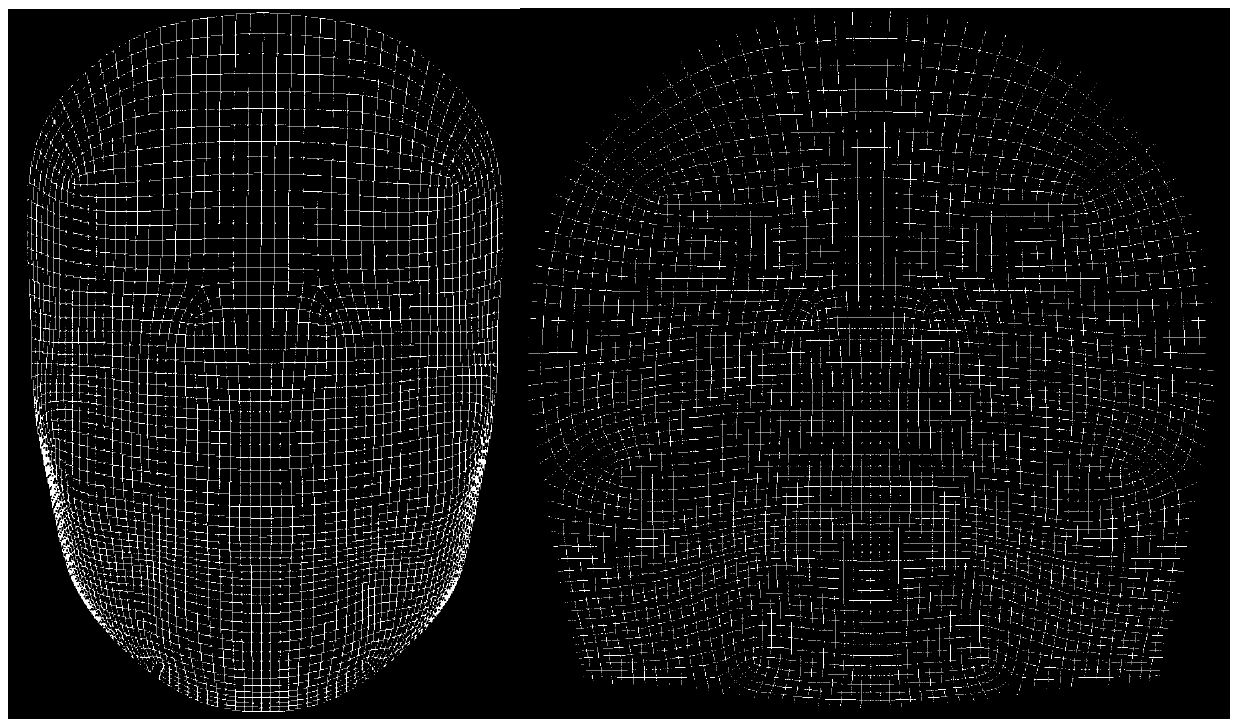

[0036] b) In Insight 3D, separate the face part of the 3D head model from the rest of the model, and smooth the separated face part into an ellipsoid. The specific operation process for this step in Insight 3D is as follows: first select the head model and convert it into an editable polygon, then select the face part of the head model and choose the separation option among the Edit Geometry options, so that the face can be easily separated from the rest of th...
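The separate-then-smooth operation in step b) can be illustrated outside any modeling tool. The following is a minimal sketch, not Insight 3D's own implementation or API. It assumes the head model is a plain triangle mesh (a vertex list plus index triples) and that the face part is given as a set of selected triangle indices. It substitutes simple Laplacian smoothing for whatever smoothing Insight 3D applies. The names `detach_faces` and `laplacian_smooth` are hypothetical.

```python
from typing import Dict, List, Sequence, Set, Tuple

Vertex = Tuple[float, float, float]
Triangle = Tuple[int, int, int]
Mesh = Tuple[List[Vertex], List[Triangle]]


def _extract(vertices: Sequence[Vertex], triangles: Sequence[Triangle],
             tri_ids: Sequence[int]) -> Mesh:
    """Copy the given triangles into a new mesh with its own vertex list."""
    remap: Dict[int, int] = {}          # old vertex index -> new vertex index
    new_vertices: List[Vertex] = []
    new_triangles: List[Triangle] = []
    for t in tri_ids:
        new_tri = []
        for v in triangles[t]:
            if v not in remap:
                remap[v] = len(new_vertices)
                new_vertices.append(tuple(vertices[v]))
            new_tri.append(remap[v])
        new_triangles.append(tuple(new_tri))
    return new_vertices, new_triangles


def detach_faces(vertices: Sequence[Vertex], triangles: Sequence[Triangle],
                 selected: Set[int]) -> Tuple[Mesh, Mesh]:
    """Split a mesh into (selected part, remainder) by triangle index.

    Vertices on the boundary are duplicated so the two parts are independent.
    """
    chosen = [i for i in range(len(triangles)) if i in selected]
    rest = [i for i in range(len(triangles)) if i not in selected]
    return _extract(vertices, triangles, chosen), _extract(vertices, triangles, rest)


def laplacian_smooth(vertices: Sequence[Vertex], triangles: Sequence[Triangle],
                     iterations: int = 1, strength: float = 0.5) -> List[Vertex]:
    """Move every vertex toward the average of its edge-connected neighbours.

    This is an assumed stand-in for the tool's smoothing, not the patent's method.
    """
    neighbours: List[Set[int]] = [set() for _ in vertices]
    for a, b, c in triangles:
        neighbours[a].update((b, c))
        neighbours[b].update((a, c))
        neighbours[c].update((a, b))

    current = [tuple(v) for v in vertices]
    for _ in range(iterations):
        updated = []
        for i, v in enumerate(current):
            if not neighbours[i]:       # isolated vertex: leave it in place
                updated.append(v)
                continue
            n = len(neighbours[i])
            avg = tuple(sum(current[j][k] for j in neighbours[i]) / n
                        for k in range(3))
            updated.append(tuple(v[k] + strength * (avg[k] - v[k])
                                 for k in range(3)))
        current = updated
    return current
```

Calling `detach_faces(vertices, triangles, selected)` returns the face part and the remainder as two independent meshes, and passing the face part through `laplacian_smooth` with more iterations removes detail progressively. Laplacian smoothing only rounds the surface off; it does not by itself fit an ellipsoid.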
