239 results about "Context vector" patented technology

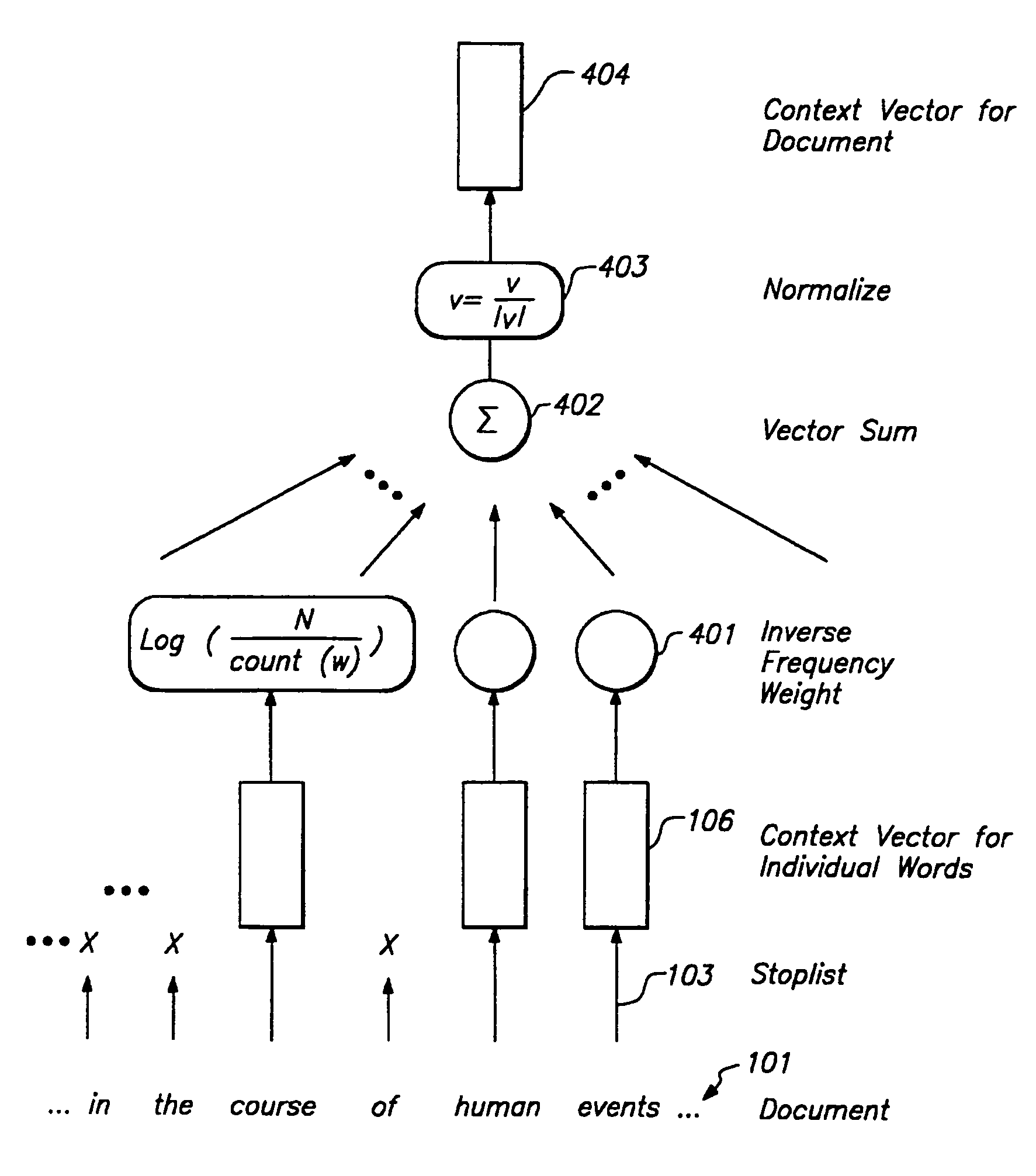

Context Vectors are created for the context of the target word and also for the glosses of each sense of the target word. Each gloss is considered as a bag of words, where each word has a corresponding Word Vector. These vectors for the words in a gloss are averaged to get a Context Vector corresponding to the gloss.
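The averaging step described above can be sketched in a few lines of Python. This is a minimal illustration, not any patent's implementation: the toy two-dimensional word vectors, the sense labels, and the helper names (`average`, `gloss_vector`, `pick_sense`) are all hypothetical, and the final step of comparing the context to each gloss by cosine similarity is an assumption about how the vectors would typically be used.

```python
import math

def average(vectors):
    """Element-wise mean of equal-length vectors (empty input gives [])."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def cosine(u, v):
    """Cosine similarity; returns 0.0 when either vector has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def gloss_vector(words, word_vectors):
    """Treat the words as a bag and average the vectors of the known ones."""
    known = [word_vectors[w] for w in words if w in word_vectors]
    return average(known)

# Hypothetical toy word vectors, chosen only to make the example readable.
WORD_VECTORS = {
    "money": [1.0, 0.0],
    "deposit": [0.9, 0.1],
    "river": [0.0, 1.0],
    "water": [0.1, 0.9],
}

# Hypothetical glosses for two senses of "bank".
GLOSSES = {
    "bank/finance": ["money", "deposit"],
    "bank/river": ["river", "water"],
}

def pick_sense(context_words, glosses, word_vectors=WORD_VECTORS):
    """Assumed usage: choose the sense whose gloss vector is closest to the context."""
    ctx = gloss_vector(context_words, word_vectors)
    scores = {s: cosine(ctx, gloss_vector(g, word_vectors)) for s, g in glosses.items()}
    return max(scores, key=scores.get)

best = pick_sense(["water"], GLOSSES)
```

With these toy values, a context containing only "water" lies closest to the river gloss, so `best` is `"bank/river"`.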

Context vector generation and retrieval

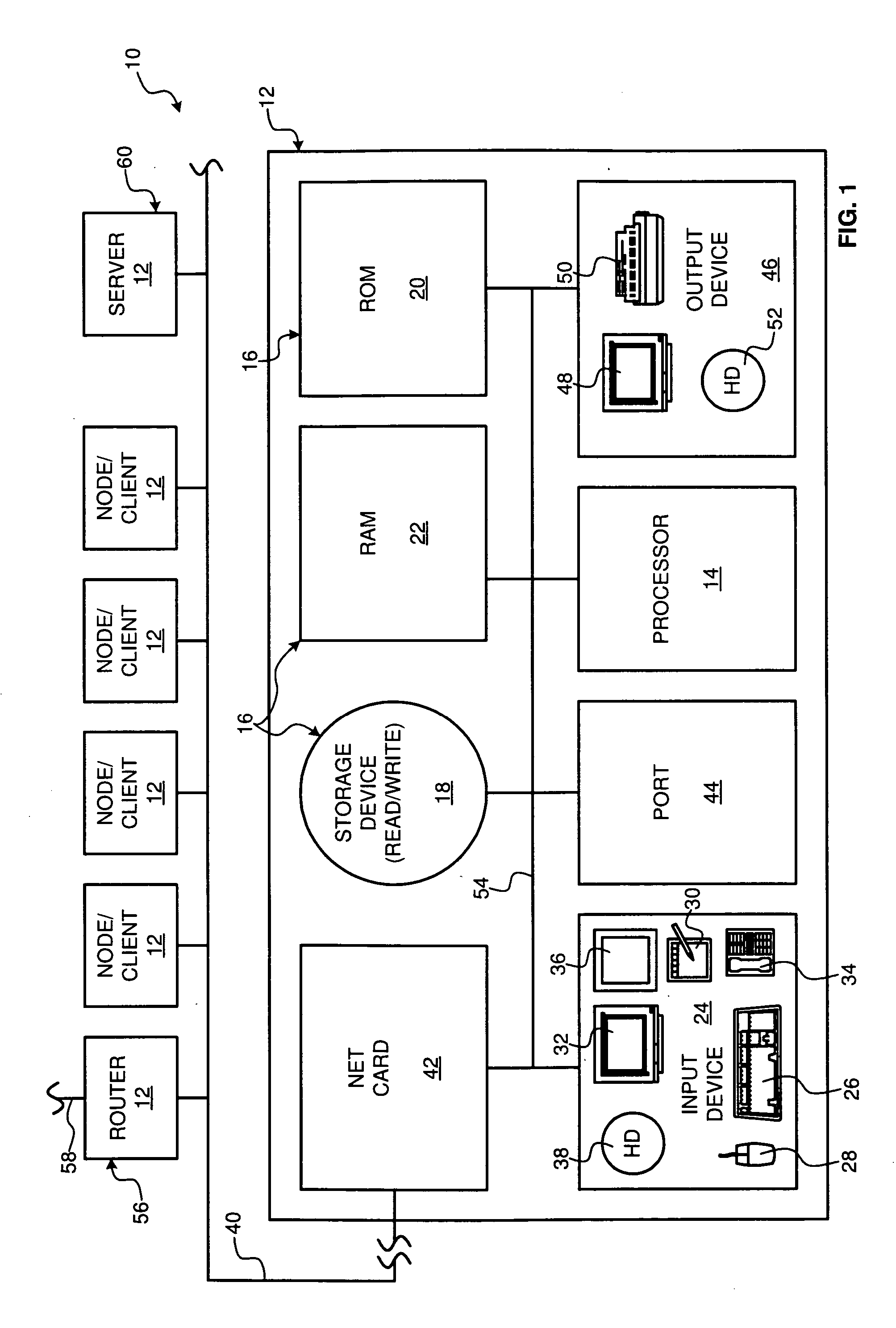

Inactive | US7251637B1 | Reduce search time | Rapid positioning | Digital computer details | Biological neural network models | Co-occurrence | Document preparation

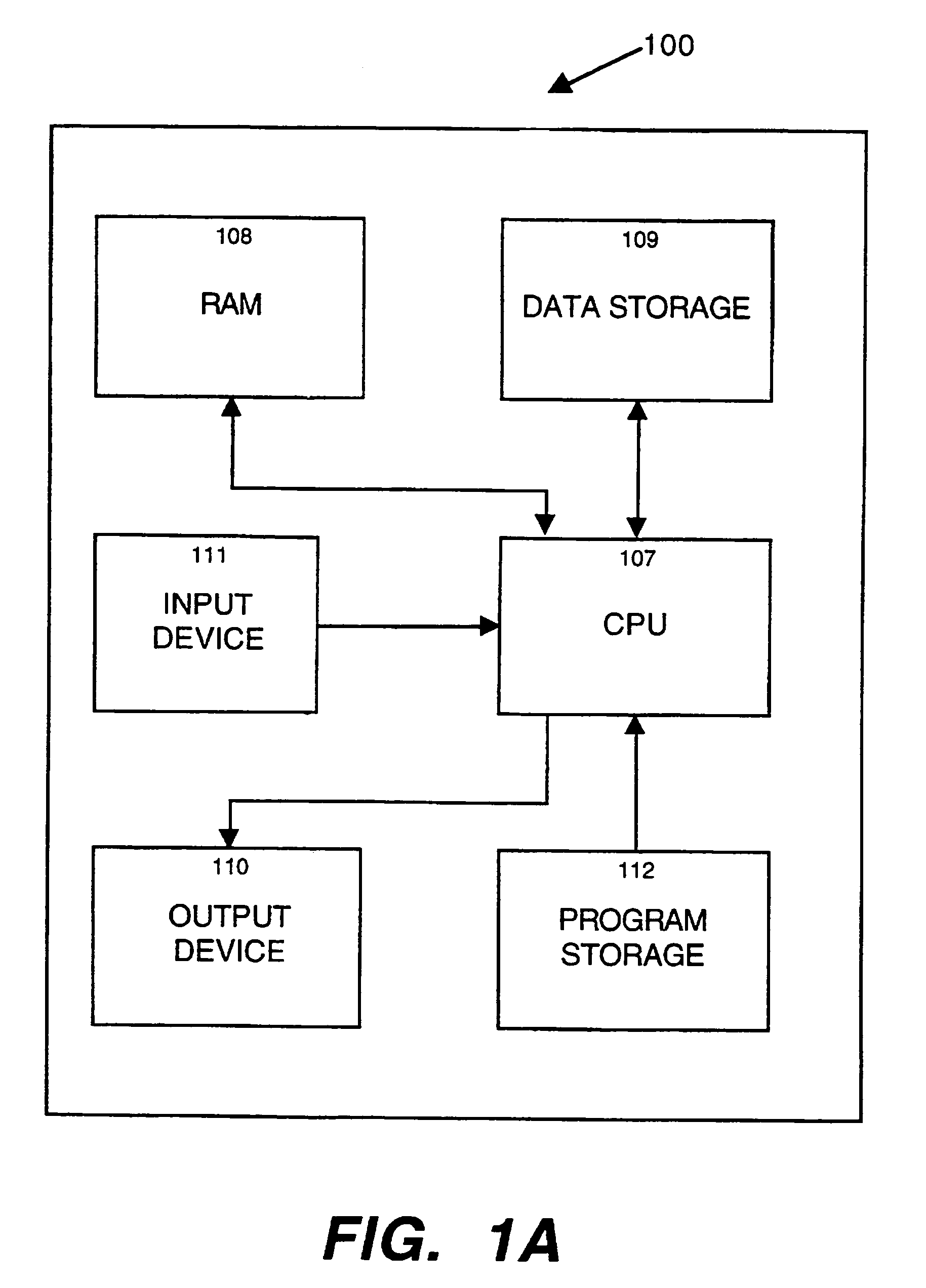

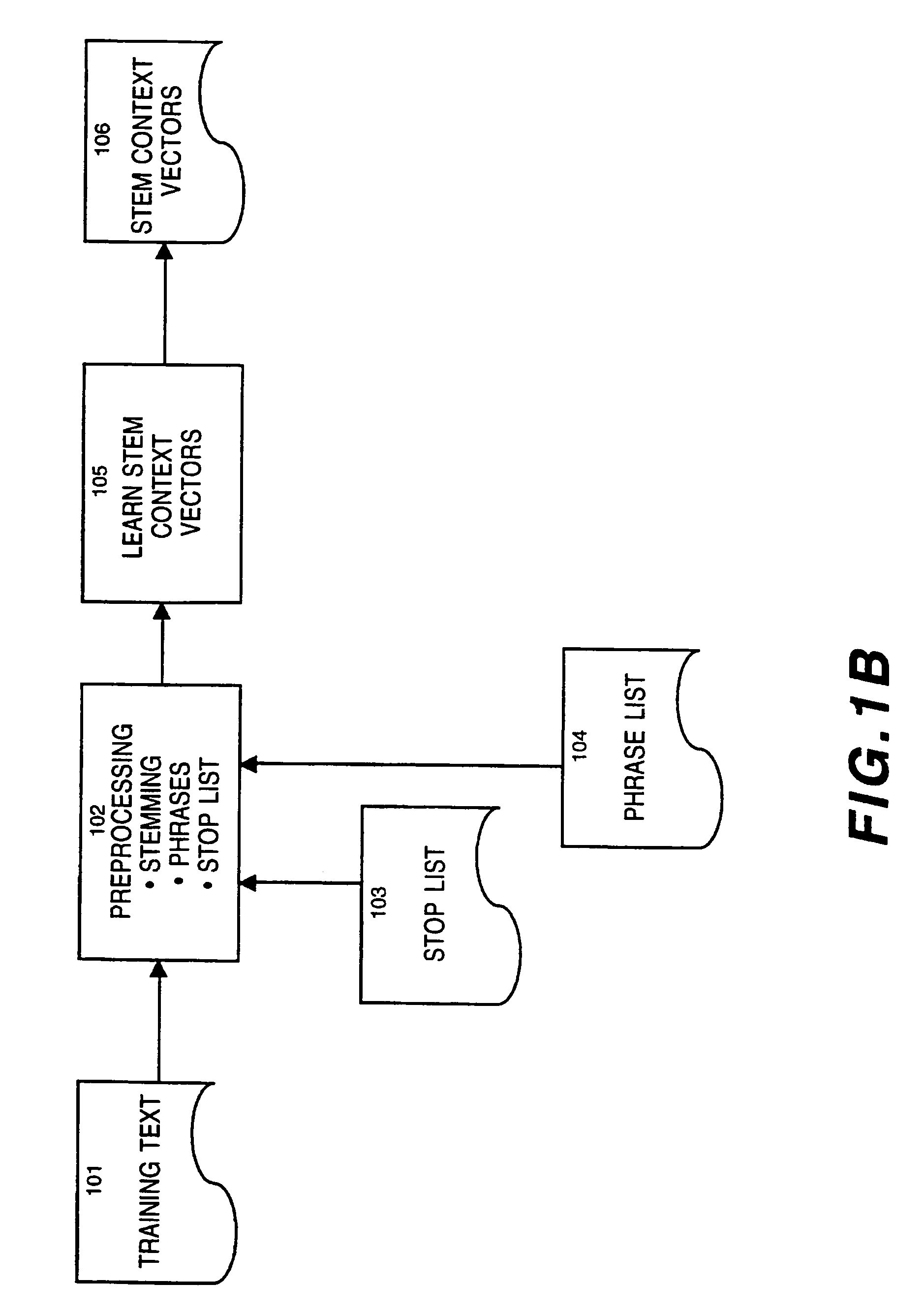

A system and method for generating context vectors for use in storage and retrieval of documents and other information items. Context vectors represent conceptual relationships among information items by quantitative means. A neural network operates on a training corpus of records to develop relationship-based context vectors based on word proximity and co-importance using a technique of “windowed co-occurrence”. Relationships among context vectors are deterministic, so that a context vector set has one logical solution, although it may have a plurality of physical solutions. No human knowledge, thesaurus, synonym list, knowledge base, or conceptual hierarchy is required. Summary vectors of records may be clustered to reduce searching time by forming a tree of clustered nodes. Once the context vectors are determined, records may be retrieved using a query interface that allows a user to specify content terms, Boolean terms, and/or document feedback. The present invention further facilitates visualization of textual information by translating context vectors into visual and graphical representations. Thus, a user can explore visual representations of meaning, and can apply human visual pattern recognition skills to document searches.

Owner:FAIR ISAAC & CO INC
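To make the idea of "windowed co-occurrence" in the abstract above concrete, here is a small Python sketch that only counts which words appear within a fixed window of each other. It is a deliberate simplification: the patent describes a neural network trained on such proximity information, whereas this sketch stops at raw counts. The function names, the window size, and the example sentence are assumptions made for illustration.

```python
from collections import Counter, defaultdict

def windowed_cooccurrence(tokens, window=2):
    """For each word, count the words found within `window` positions of it."""
    counts = defaultdict(Counter)
    for i, word in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # a token does not co-occur with itself
                counts[word][tokens[j]] += 1
    return counts

def to_vector(counts, word, vocabulary):
    """Lay out one word's co-occurrence counts in a fixed vocabulary order."""
    return [counts[word][v] for v in vocabulary]

tokens = "the cat sat on the mat".split()
counts = windowed_cooccurrence(tokens, window=1)
vocab = sorted(set(tokens))  # ['cat', 'mat', 'on', 'sat', 'the']
cat_vec = to_vector(counts, "cat", vocab)
```

Count vectors like `cat_vec` are sparse and as wide as the vocabulary; a trained model would typically map the same proximity signal into a much smaller dense vector.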

Systems and methods for contextual transaction proposals

Active | US20060167857A1 | Digital data information retrieval | Digital data processing details | Context specific | Web page

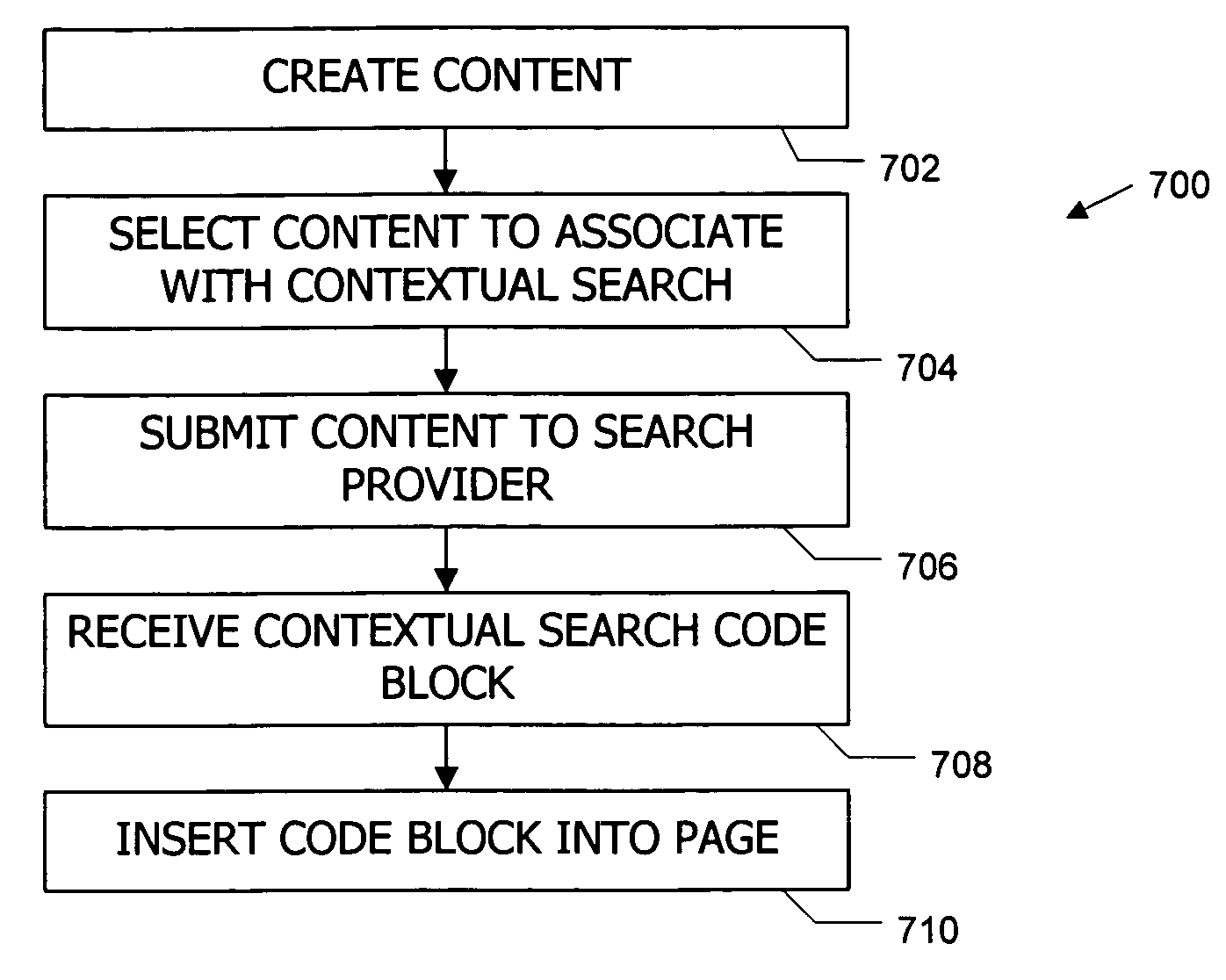

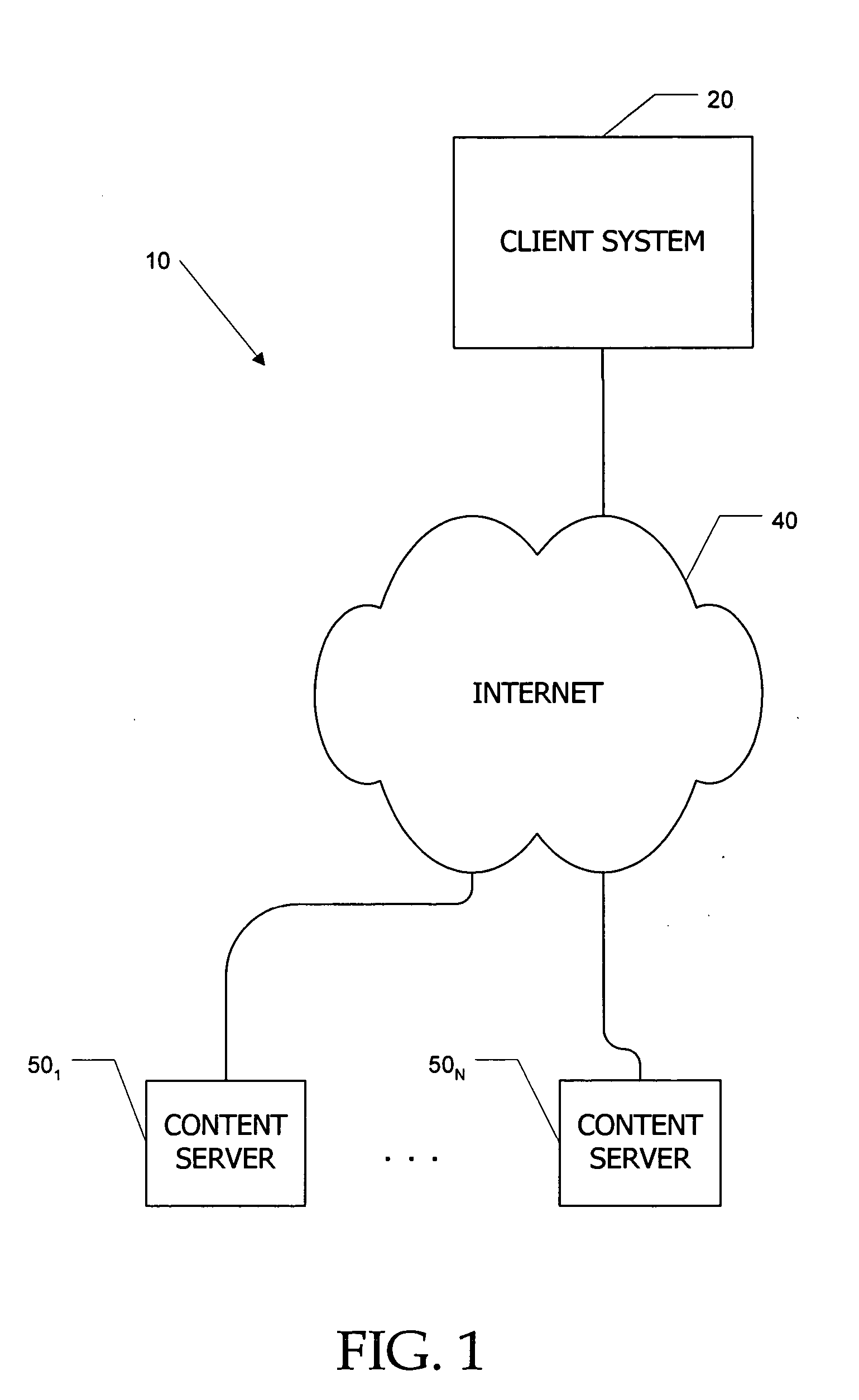

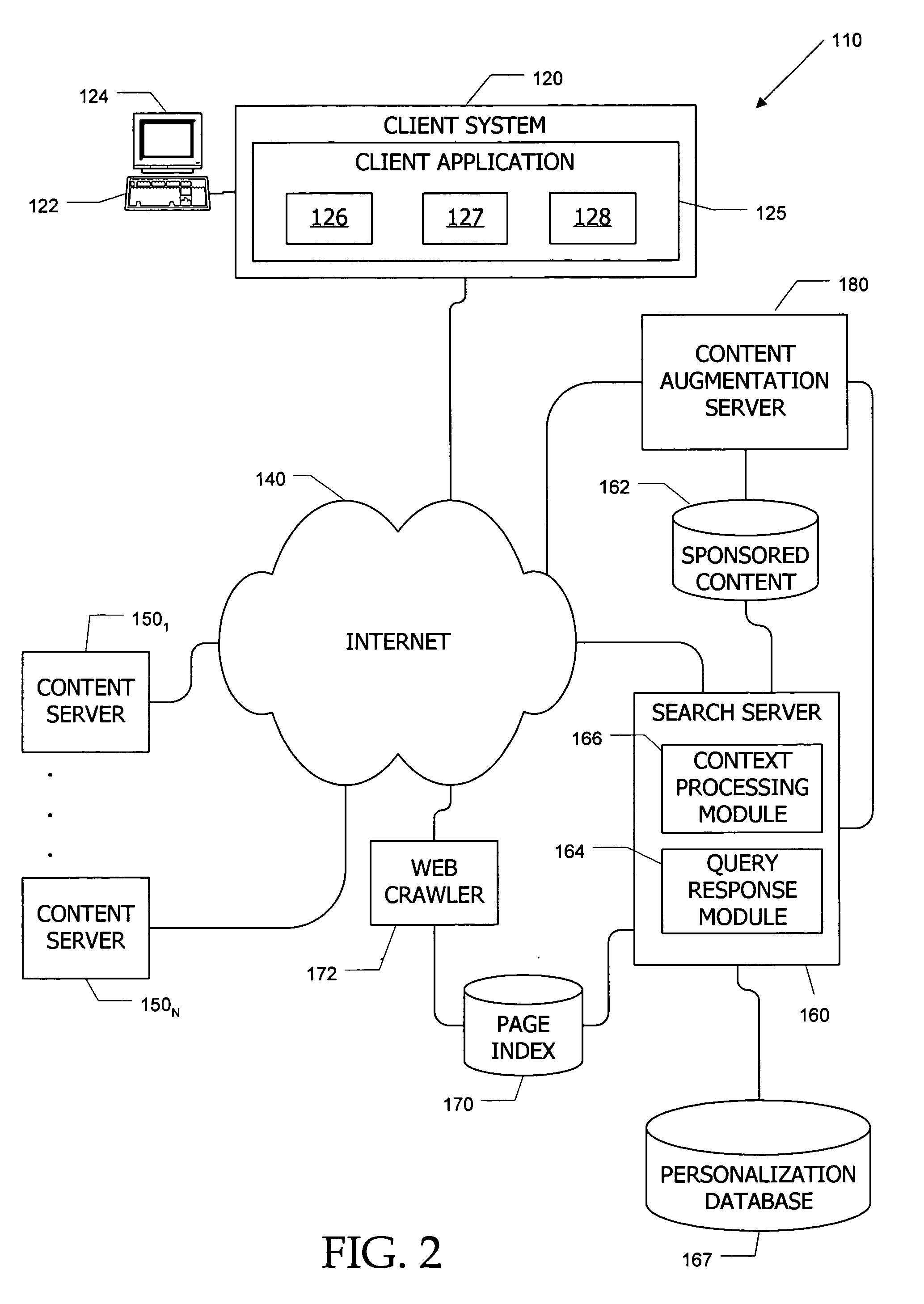

Context-specific transaction proposals are automatically generated and presented to a user who expresses interest in a particular topic. A user viewing a World Wide Web page or other content item activates an interface to indicate that he or she is interested in additional information related to the subject of the page. A context vector or other representation of the content of the page being viewed is transmitted to an information server, which identifies possible transactions related to the content and proposes one or more of these transactions to the user. Transaction proposals can be presented together with a contextual search interface that allows the user to submit zero or more search terms together with the context vector as a search query.

Owner:R2 SOLUTIONS

Term synonym acquisition method and term synonym acquisition apparatus

Inactive | US20150006157A1 | Reduce the impact | Improve accuracy | Natural language translation | Digital data information retrieval | Synonym | Auxiliary system

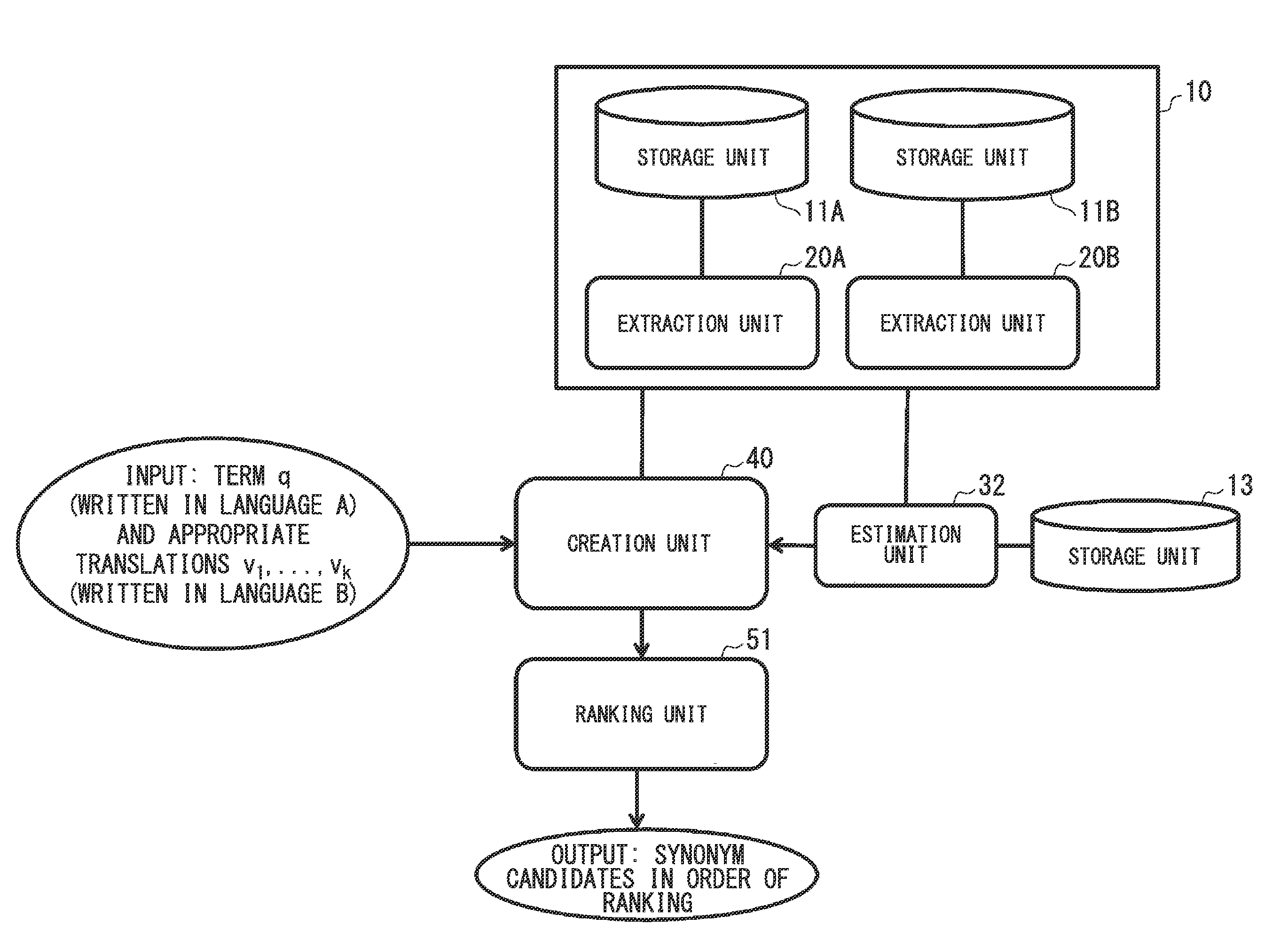

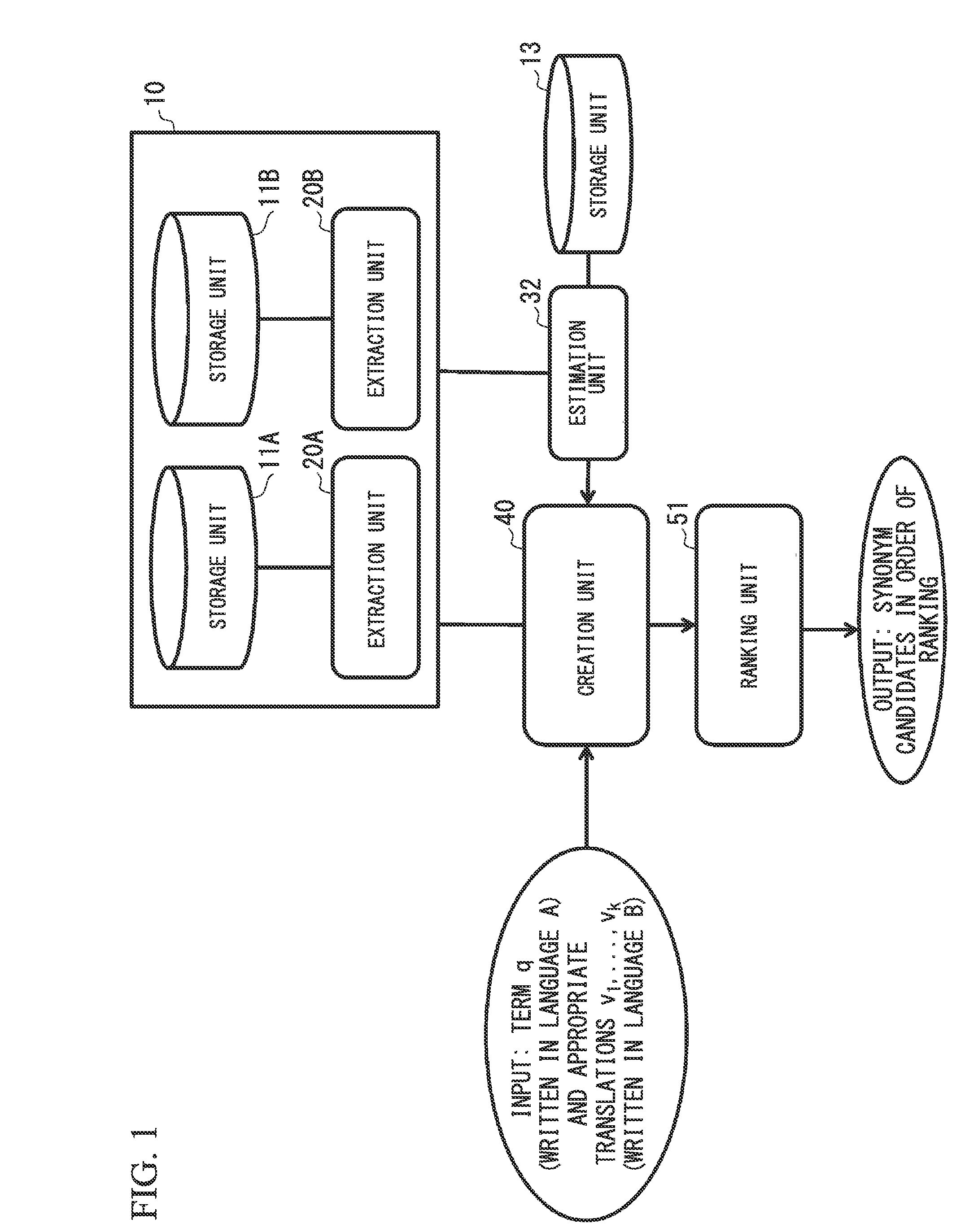

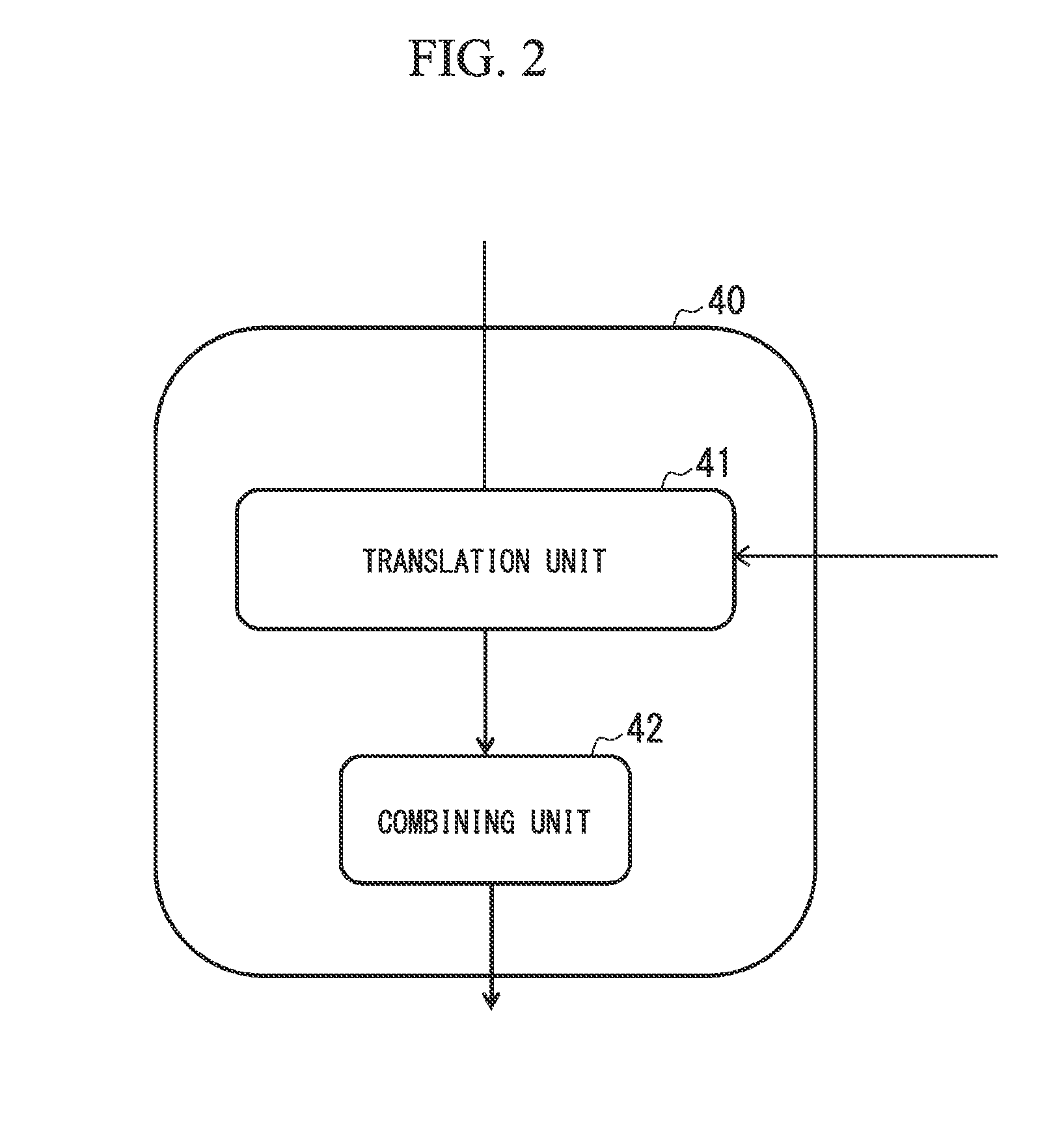

A term synonym acquisition apparatus includes: a first generating unit which generates a context vector of an input term in an original language and a context vector of each synonym candidate in the original language; a second generating unit which generates a context vector of an auxiliary term in an auxiliary language that is different from the original language, where the auxiliary term specifies a sense of the input term; a combining unit which generates a combined context vector based on the context vector of the input term and the context vector of the auxiliary term; and a ranking unit which compares the combined context vector with the context vector of each synonym candidate to generate ranked synonym candidates in the original language.

Owner:NEC CORP
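The combine-then-rank flow in the synonym acquisition abstract above can be illustrated with a short Python sketch. Several things here are assumptions rather than details from the abstract: the vectors are taken to live in one shared space already (the abstract does not say how the two languages are aligned), the combination is a simple weighted average, ranking uses cosine similarity, and all terms and values are hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity; returns 0.0 when either vector has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def combine(input_vec, auxiliary_vec, weight=0.5):
    """Assumed combination rule: weighted average of the two context vectors."""
    return [(1 - weight) * a + weight * b for a, b in zip(input_vec, auxiliary_vec)]

def rank_candidates(combined, candidates):
    """Order candidate names by similarity to the combined vector, best first."""
    return sorted(candidates, key=lambda name: cosine(combined, candidates[name]), reverse=True)

# Hypothetical vectors: an ambiguous input term and an auxiliary term that
# pins down the intended sense.
input_vec = [0.5, 0.5]
auxiliary_vec = [0.0, 1.0]
candidates = {"lender": [0.9, 0.1], "shore": [0.1, 0.9]}

combined = combine(input_vec, auxiliary_vec)
ranked = rank_candidates(combined, candidates)
```

The auxiliary vector pulls the combined vector toward the second dimension, so the candidate aligned with that sense is ranked first.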

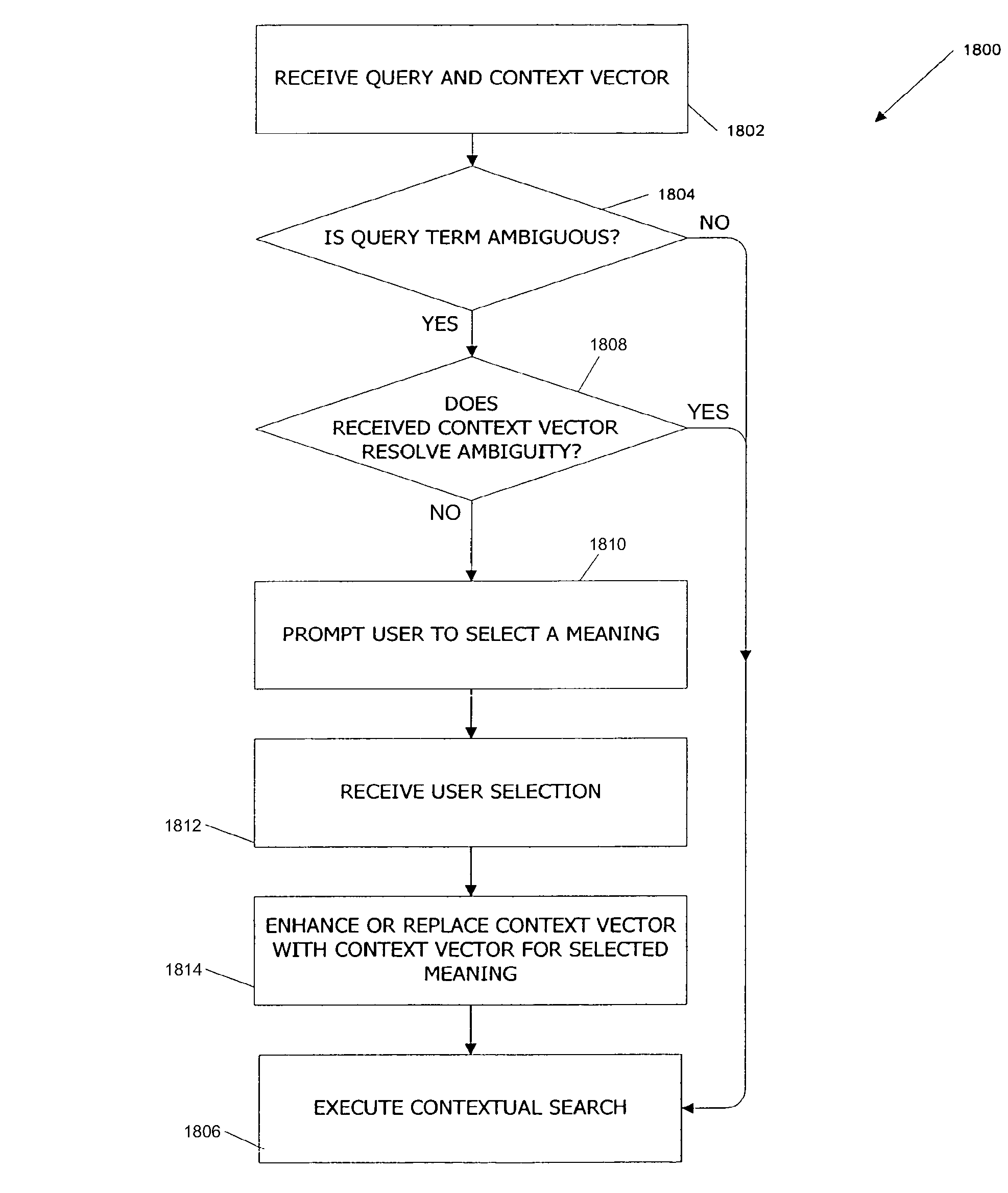

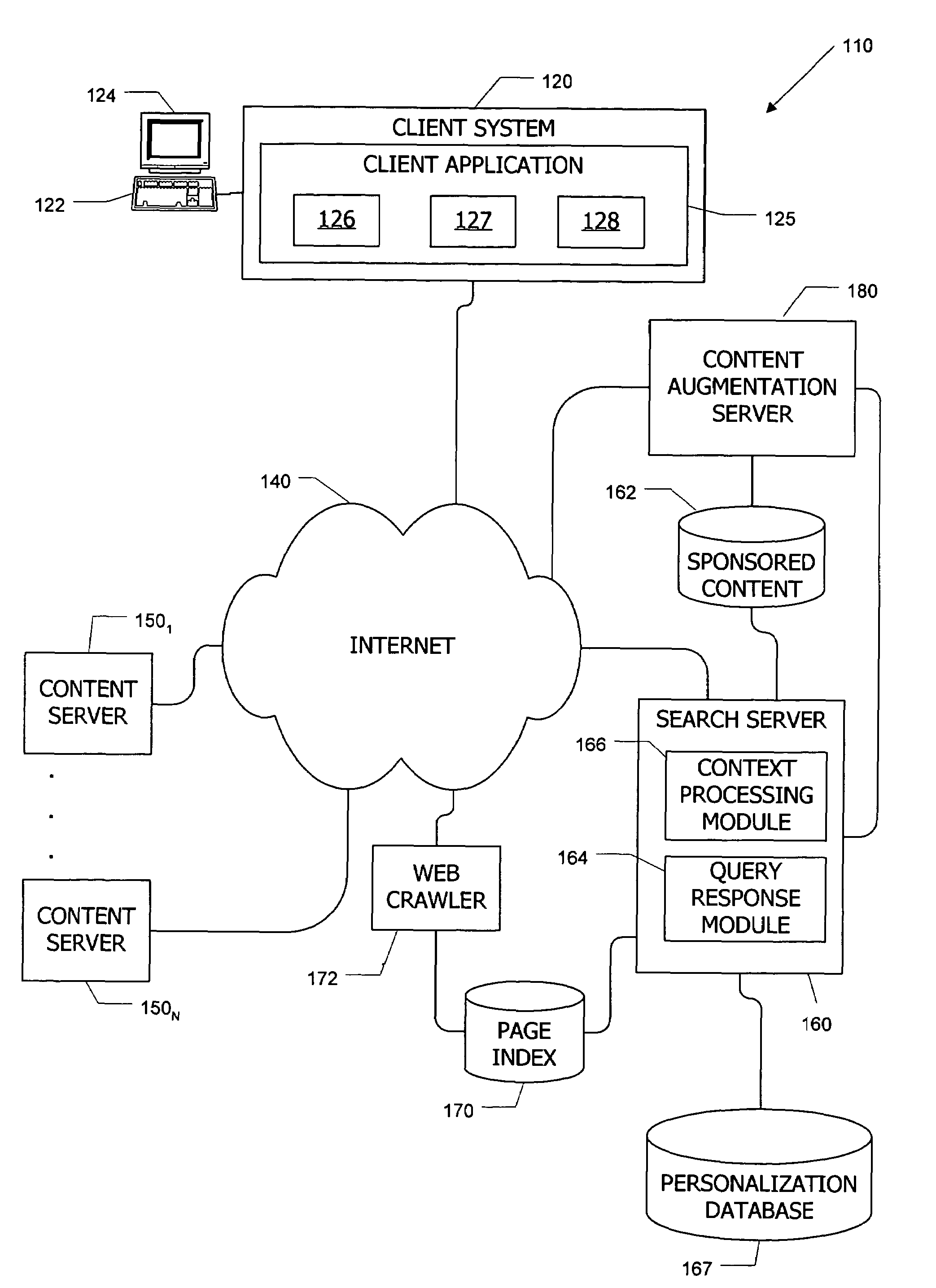

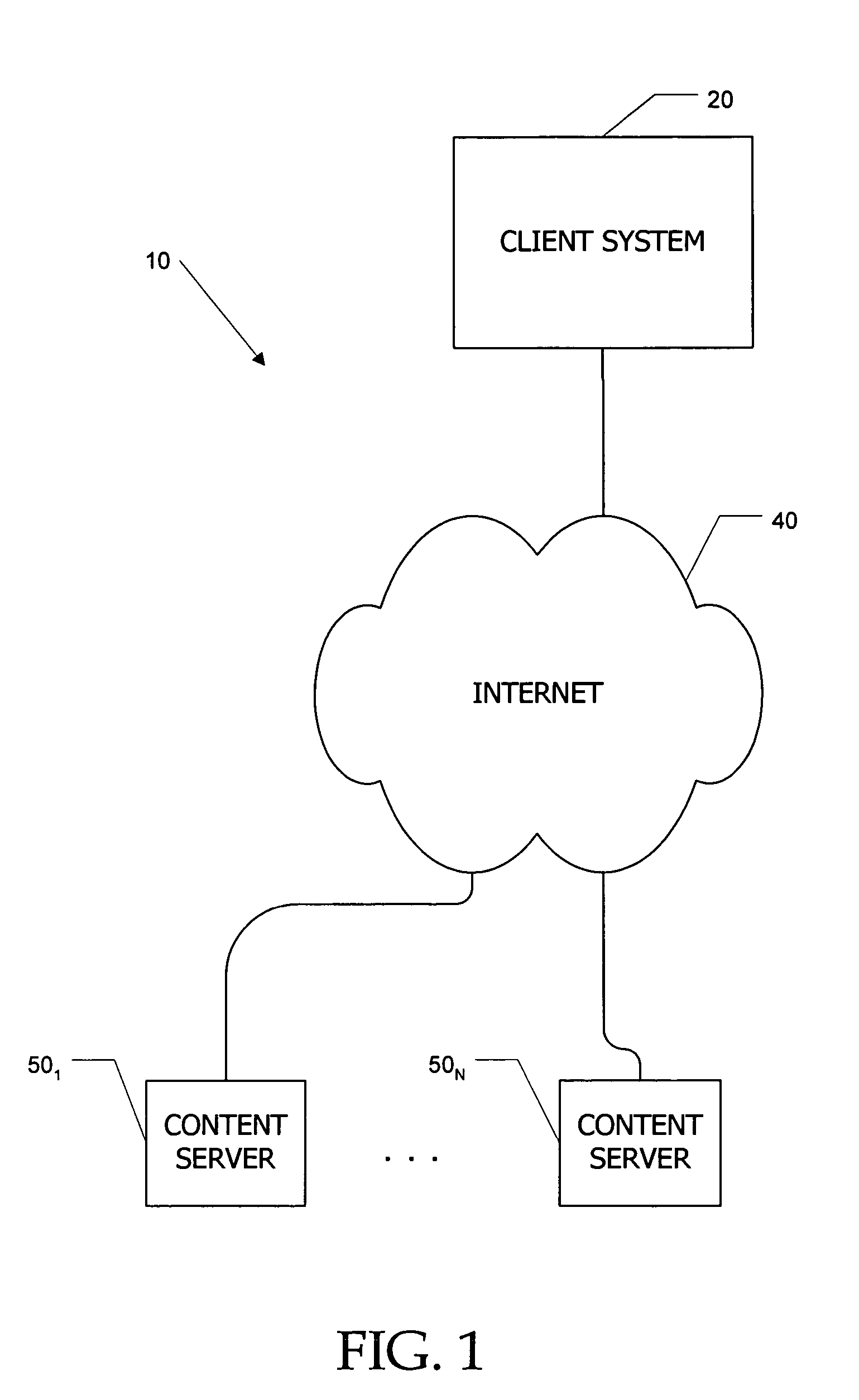

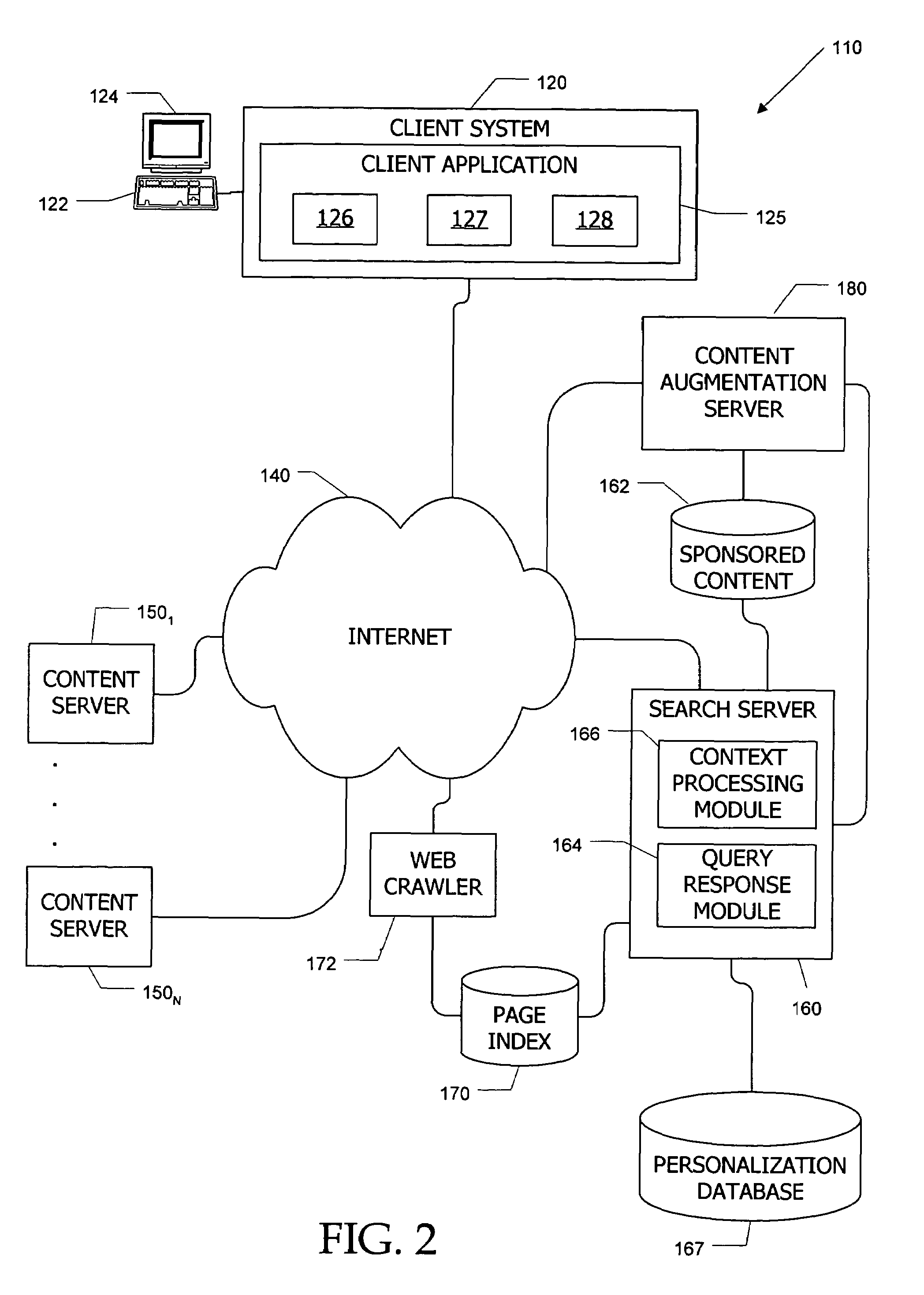

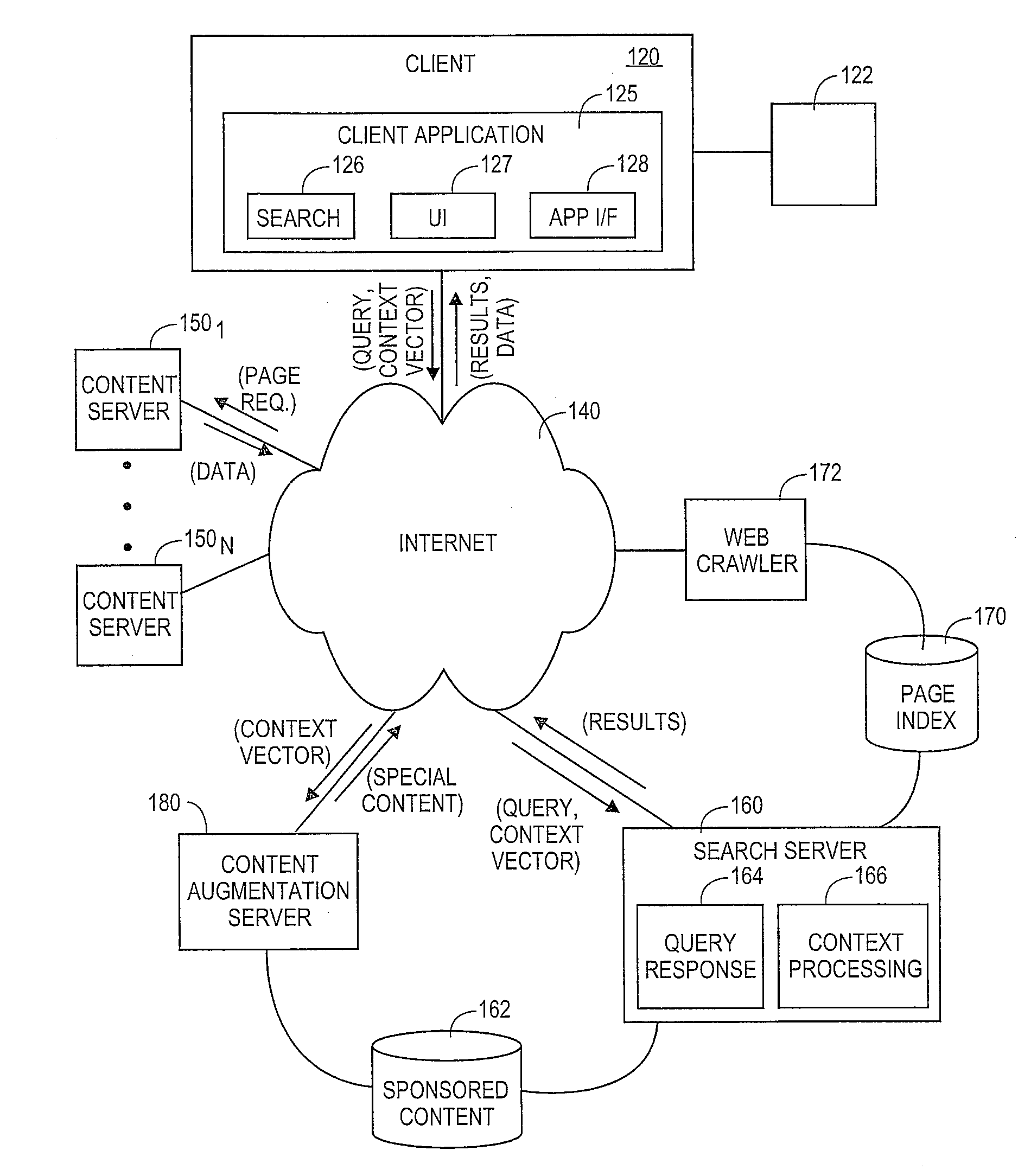

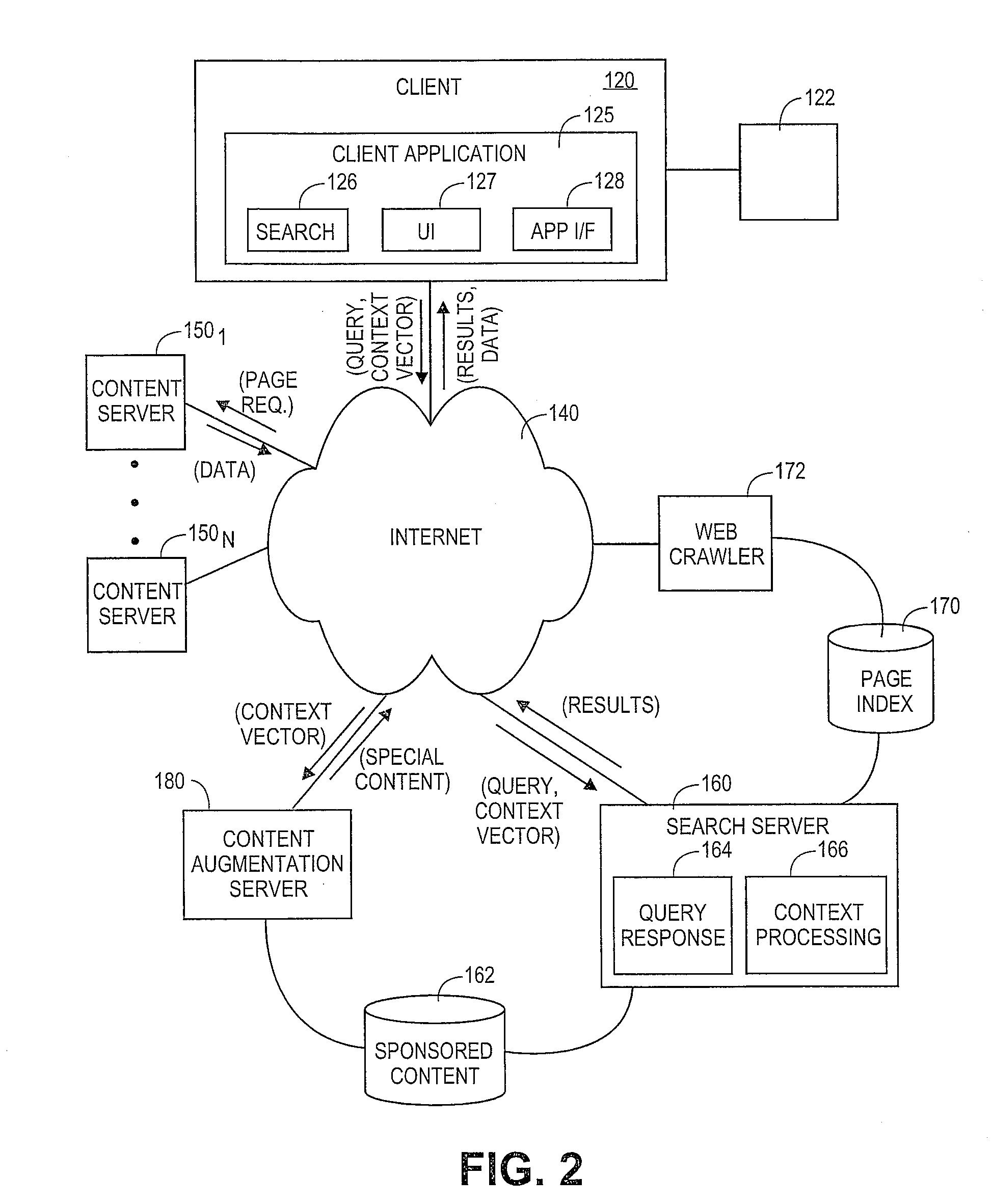

Search systems and methods using enhanced contextual queries

Active | US7856441B1 | Web data indexing | Digital data processing details | Contextual inquiry | Document preparation

Systems and methods are provided for implementing searches using contextual information associated with a Web page (or other document) that a user is viewing when a query is entered. The page includes a contextual search interface that has an associated context vector representing content of the page. When the user submits a search query via the contextual search interface, the query and the context vector are both provided to the query processor and used in responding to the query. The context vector can be enhanced by the addition of terms related to one or more terms appearing in the context vector or the query.

Owner:R2 SOLUTIONS

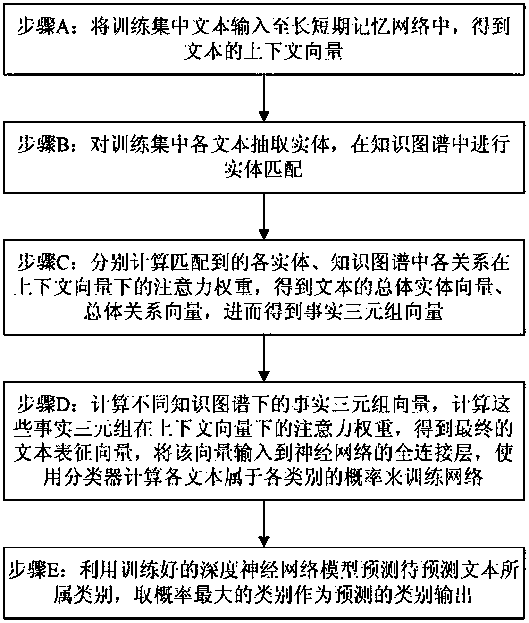

A neural network text classification method based on a multi-knowledge map

Active | CN108984745A | Improve understanding | Reliable classification | Character and pattern recognition | Special data processing applications | Nerve network | Graph spectra

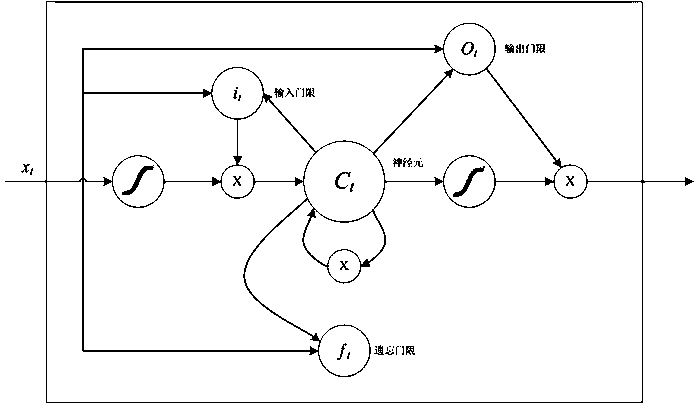

The invention relates to a neural network text classification method based on a multi-knowledge map. The method comprises the following steps: inputting each text in a training set into a long short-term memory (LSTM) network to obtain a context vector of the text; extracting entities from each text in the training set and performing entity matching in the knowledge map; calculating the attention weights of the matched entities and relationships in the knowledge map under the context vector to obtain the overall entity vector, the overall relationship vector and the fact triple vector of the text; computing the fact triple vectors under different knowledge maps, calculating the attention weights of these fact triples, obtaining the text representation vector and inputting it to the fully connected layer of the neural network, and using a classifier to calculate the probability of each text belonging to each category in order to train the network; and using the trained deep neural network model to predict the category of the text to be predicted. This method improves the model's understanding of text semantics and can classify text content more reliably, accurately and robustly.

Owner:FUZHOU UNIV

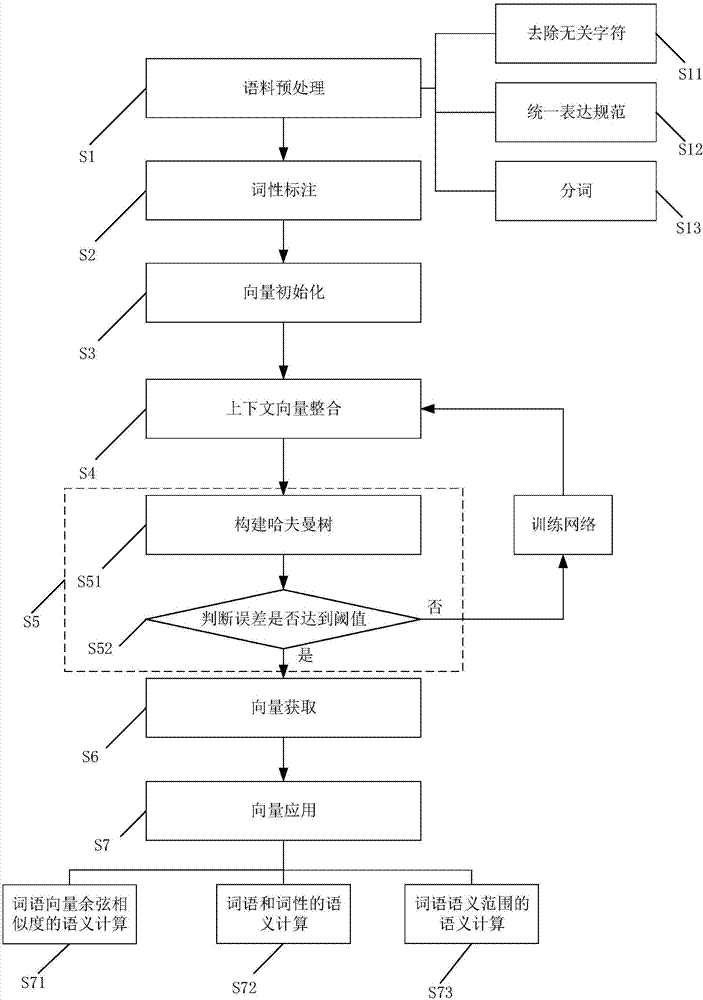

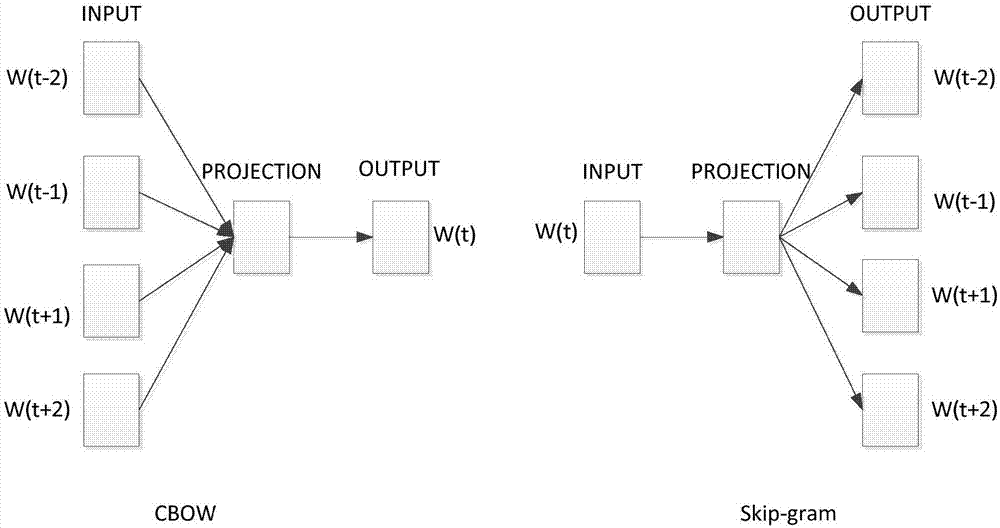

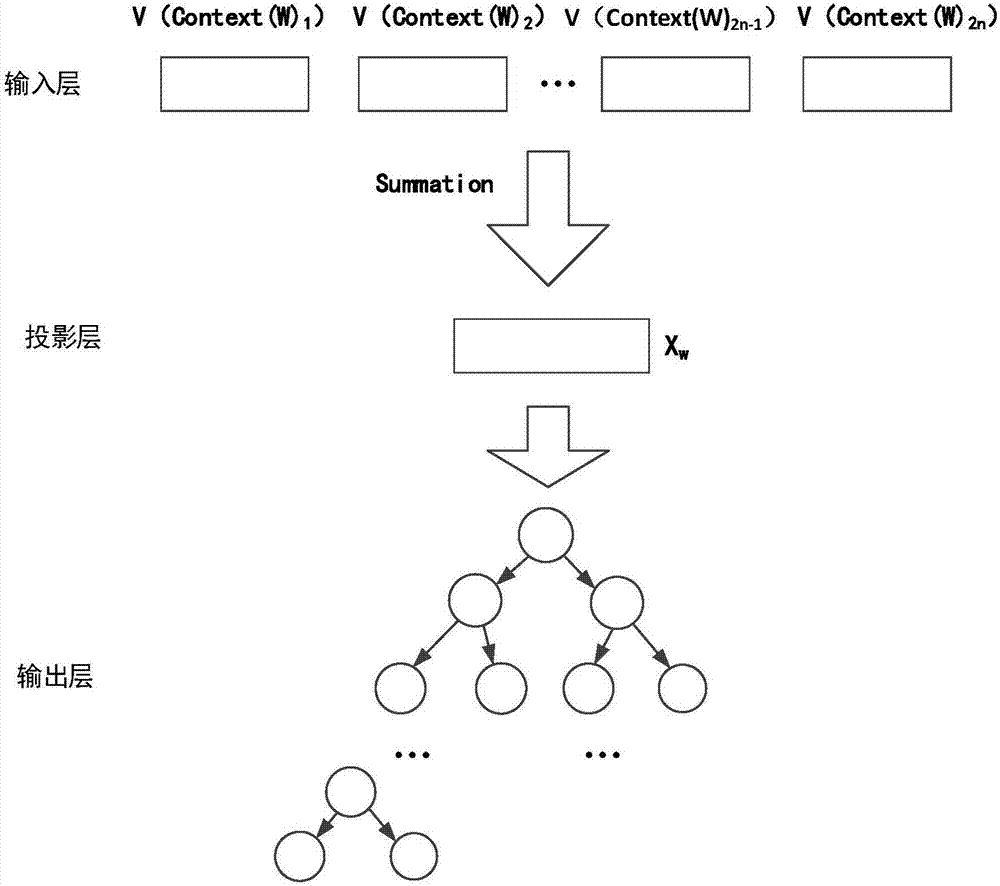

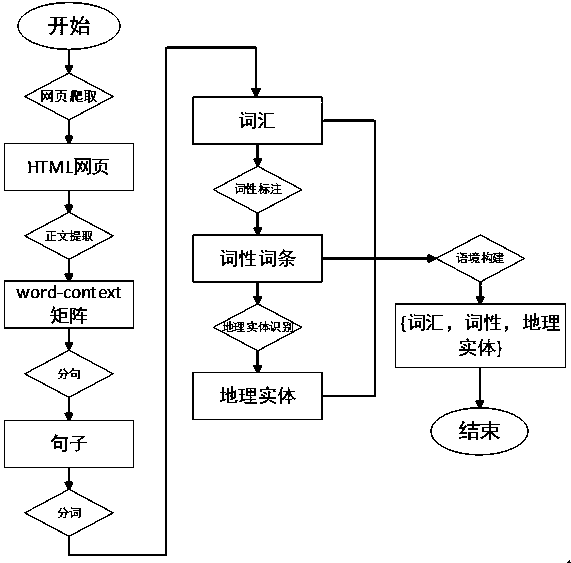

Semantic computing method for improving word vector model

Active | CN107291693A | Extending the capabilities of semantic computing | Semantic analysis | Unstructured textual data retrieval | Part of speech | Object function

The invention provides a semantic computing method for improving a word vector model. The method comprises the following steps: S1, preprocessing a corpus; S2, tagging the parts of speech of the words obtained through preprocessing of the corpus; S3, initializing vectors by vectorizing the tagged words and their parts of speech; S4, integrating context vectors by computing and integrating the context word vectors and part-of-speech vectors of the words; S5, establishing a Huffman tree, training a network, optimizing a target function and judging whether an error reaches a threshold value; S6, obtaining the word vectors and the part-of-speech vectors; and S7, carrying out semantic computing through application of the word vectors and the part-of-speech vectors. Compared with the prior art, the method adds a part-of-speech factor to the vectors, improves the existing Word2vec model, applies the improved model in new ways, and expands the semantic computing capability of Word2vec.

Owner:GUANGZHOU HEYAN BIG DATA TECH CO LTD

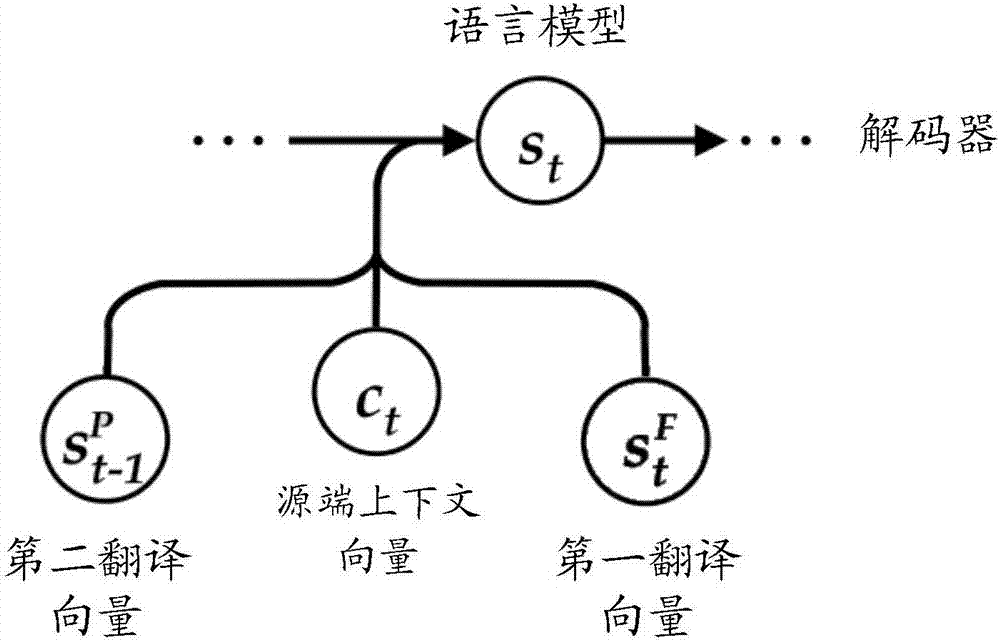

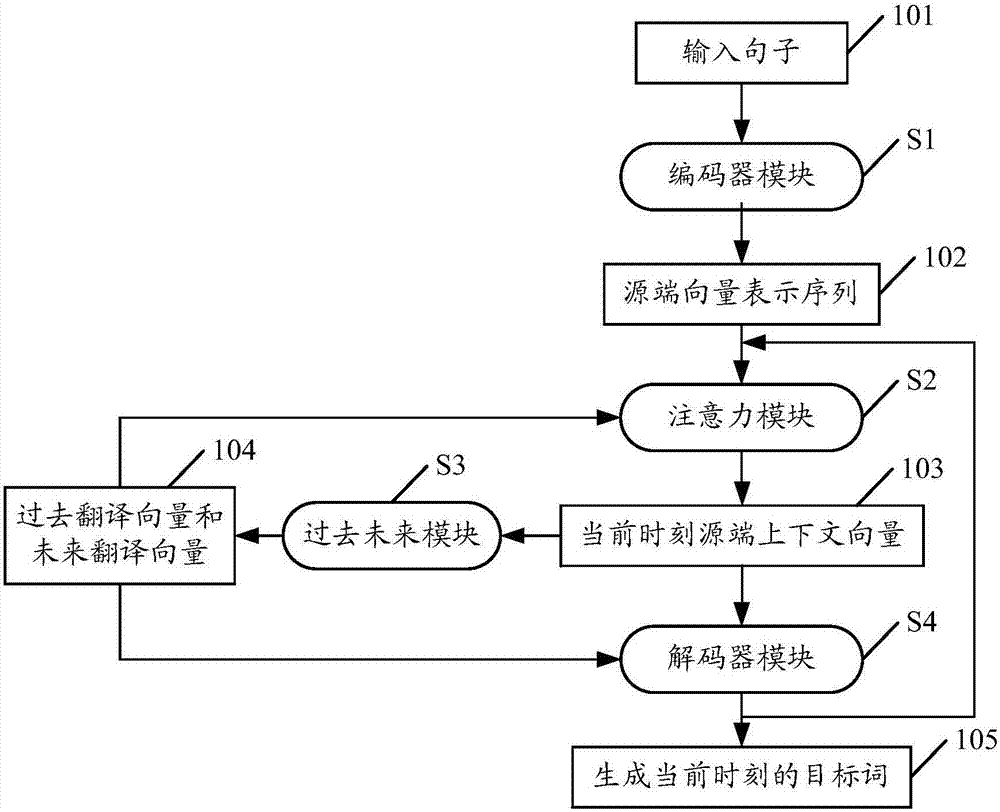

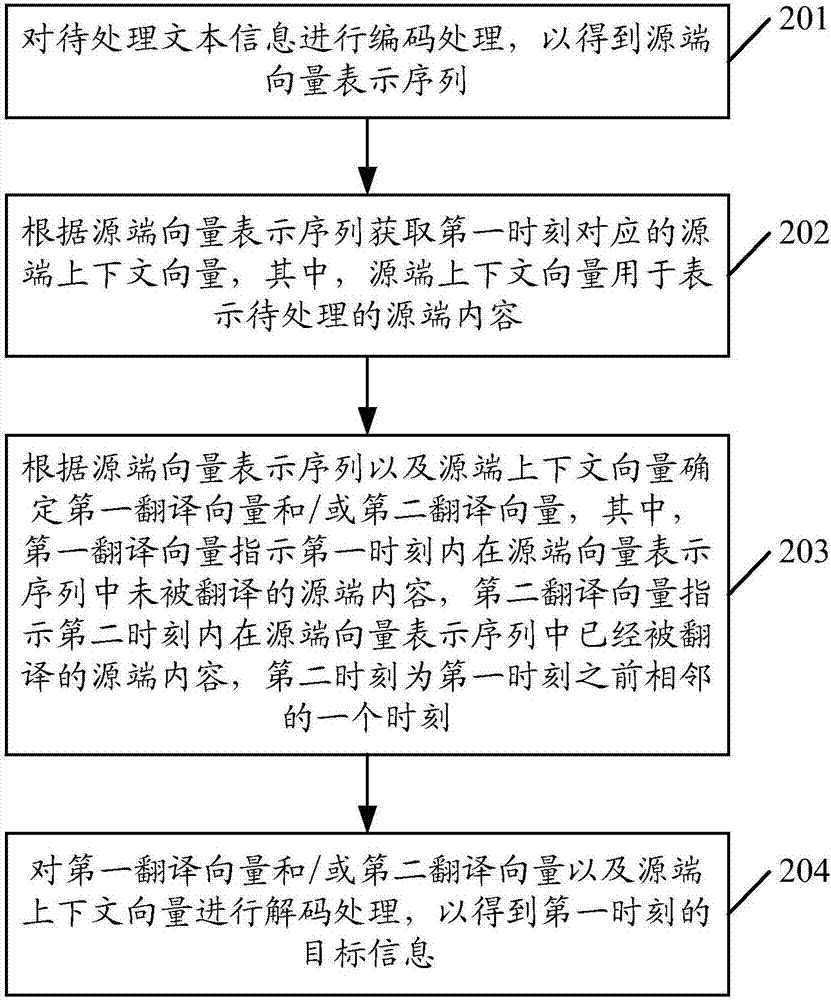

Method of translation, method for determining target information and related devices

Active | CN107368476A | Reduce training difficulty | Improve translation performance | Natural language translation | Neural architectures | Computer science | Translation system

The invention discloses a method for determining target information. The method includes the following steps: encoding to-be-processed text information to obtain a source-end vector expression sequence; obtaining, according to the source-end vector expression sequence, a source-end context vector corresponding to the first moment, wherein the source-end context vector is used for expressing to-be-processed source-end content; determining, according to the source-end vector expression sequence and the source-end context vector, a first translation vector and/or a second translation vector, wherein the first translation vector indicates the source-end content which is not translated in the source-end vector expression sequence within the first moment, and the second translation vector indicates the source-end content which is translated in the source-end vector expression sequence within the second moment; and decoding the first translation vector and/or the second translation vector together with the source-end context vector so as to obtain the target information of the first moment. The invention further provides a method of translation and a device for determining the target information. According to the method for determining the target information, the model training difficulty of a decoder can be reduced, and the translation effect of a translation system is improved.

Owner:SHENZHEN TENCENT COMP SYST CO LTD

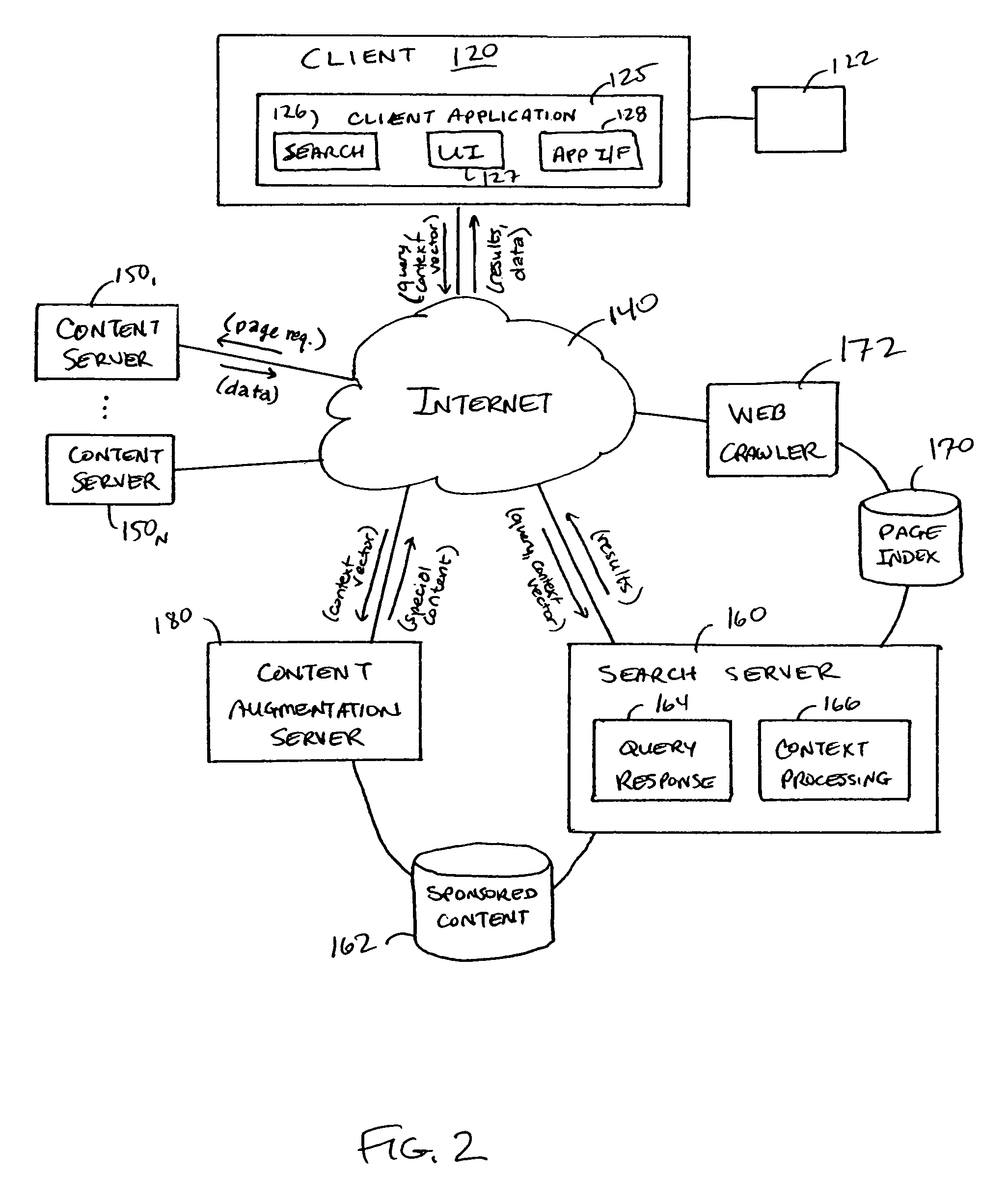

Search systems and methods using in-line contextual queries

Active | US20090070326A1 | Digital data information retrieval | Office automation | Contextual inquiry | Document preparation

Systems and methods are provided for implementing searches using contextual information associated with a Web page (or other document) that a user is viewing when a query is entered. The page includes a contextual search interface that has an associated context vector representing content of the page. When the user submits a search query via the contextual search interface, the query and the context vector are both provided to the query processor and used in responding to the query.

Owner:R2 SOLUTIONS

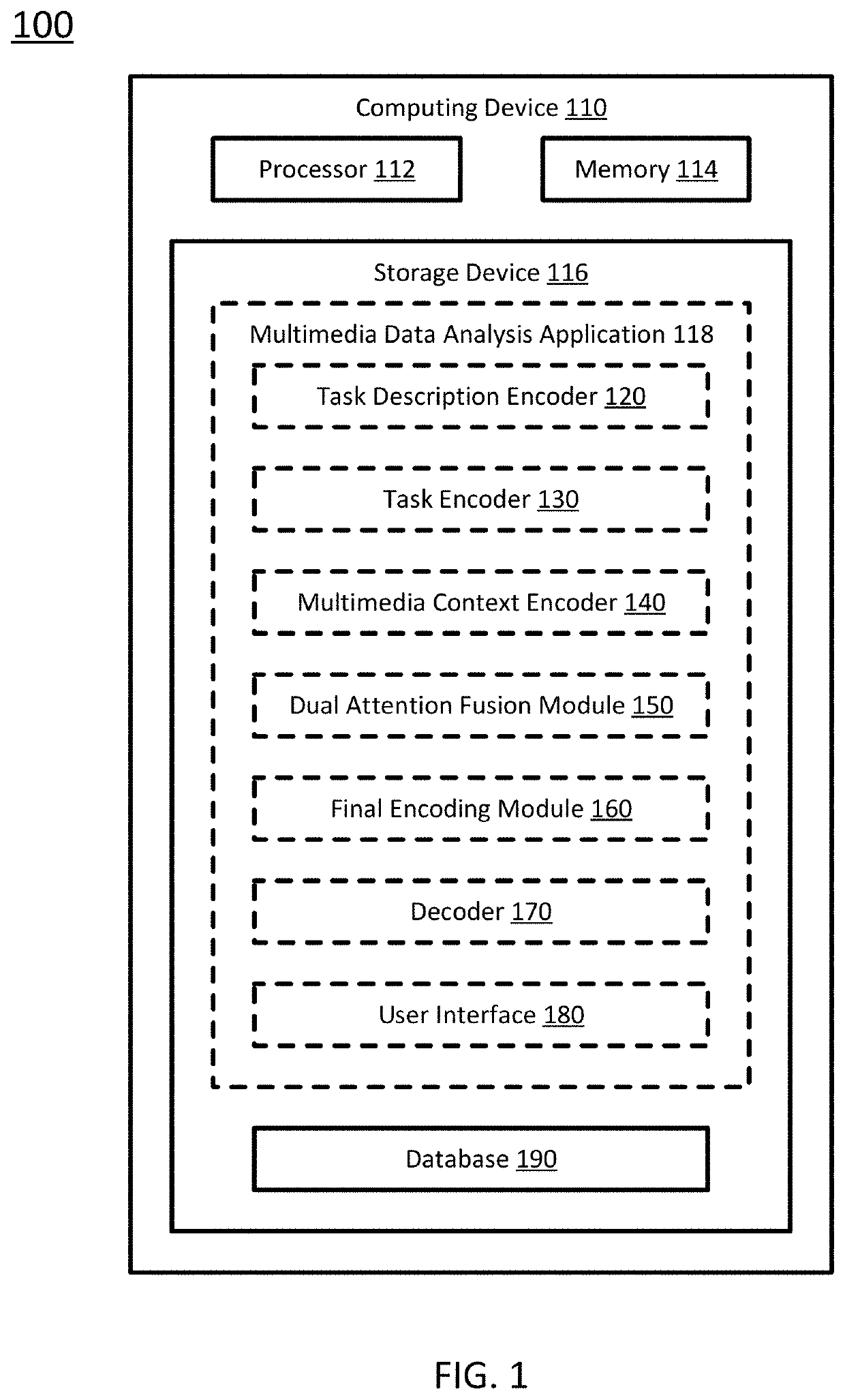

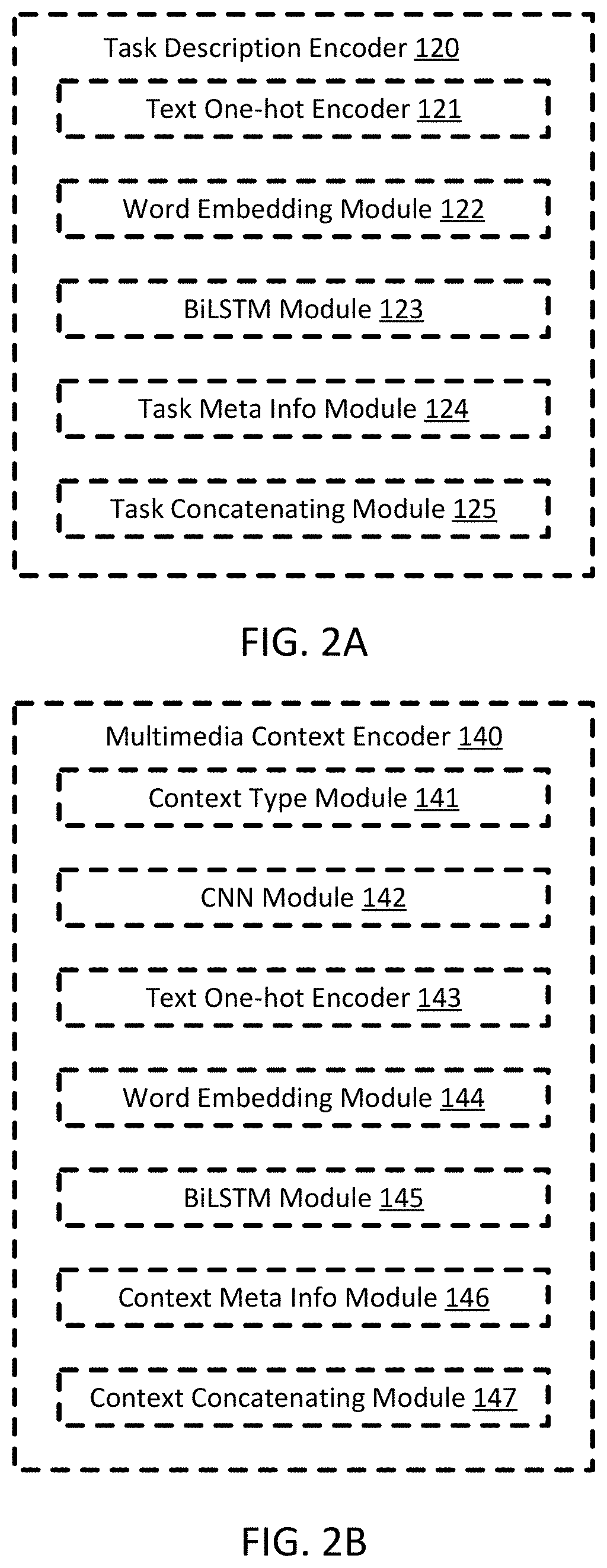

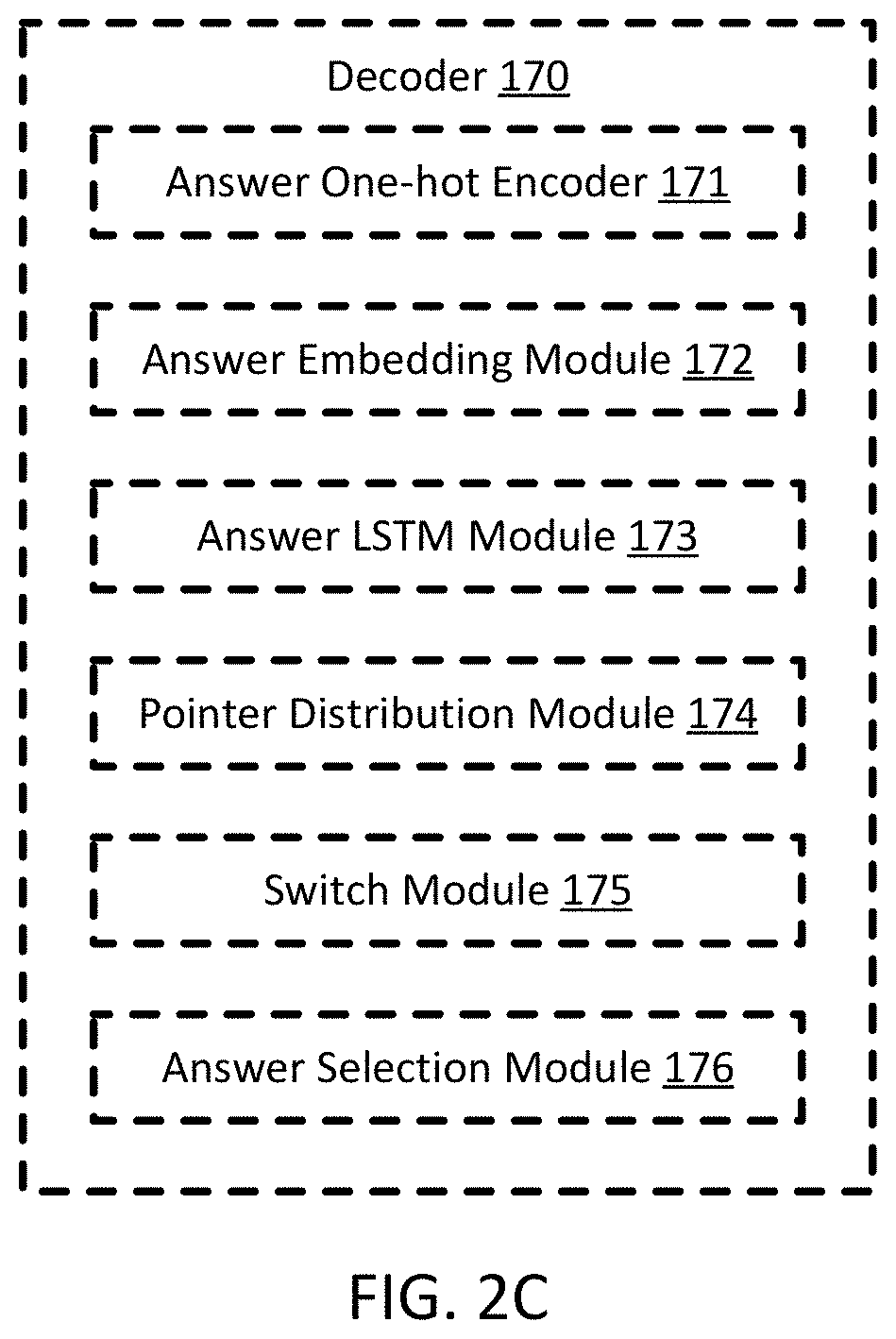

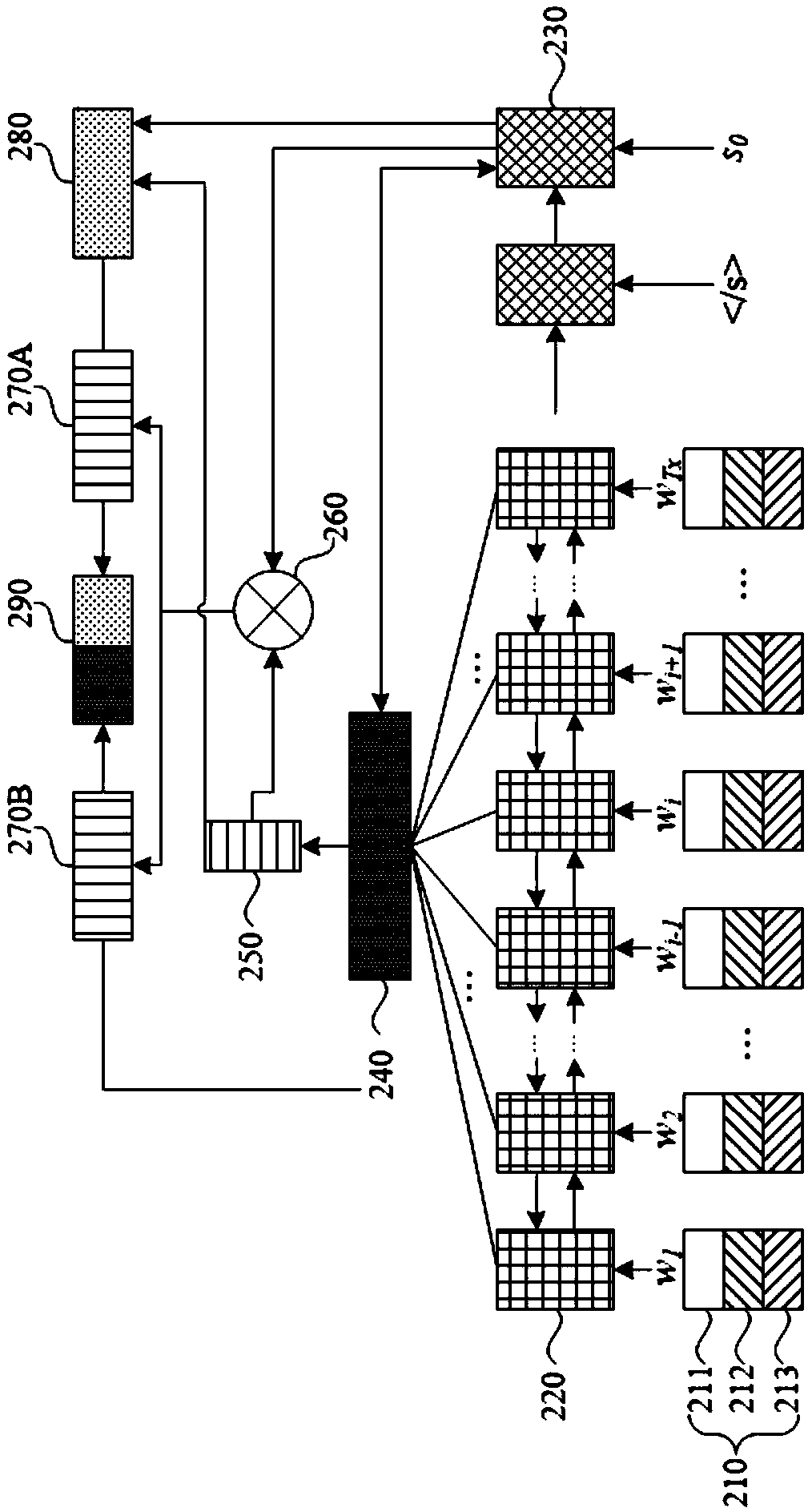

System and method for semantic analysis of multimedia data using attention-based fusion network

Active | US20210216862A1 | Character and pattern recognition | Neural architectures | Theoretical computer science | Equipment computers

A system and a method for multimedia data analysis. The system includes a computing device. The computing device includes a processor and a storage device storing computer executable code. The computer executable code, when executed at the processor, is configured to: encode task description and task meta info into concatenated task vectors; encode context text and context meta info into concatenated context text vectors; encode context image and the context meta info into concatenated context image vectors; perform dual coattention on the concatenated task vectors and the concatenated context text and image vectors to obtain attended task vectors and attended context vectors; perform BiLSTM on the attended task vectors and the attended context vectors to obtain task encoding and context encoding; and decode the task encoding and the context encoding to obtain an answer to the task.

Owner:BEIJING JINGDONG SHANGKE INFORMATION TECH CO LTD +1

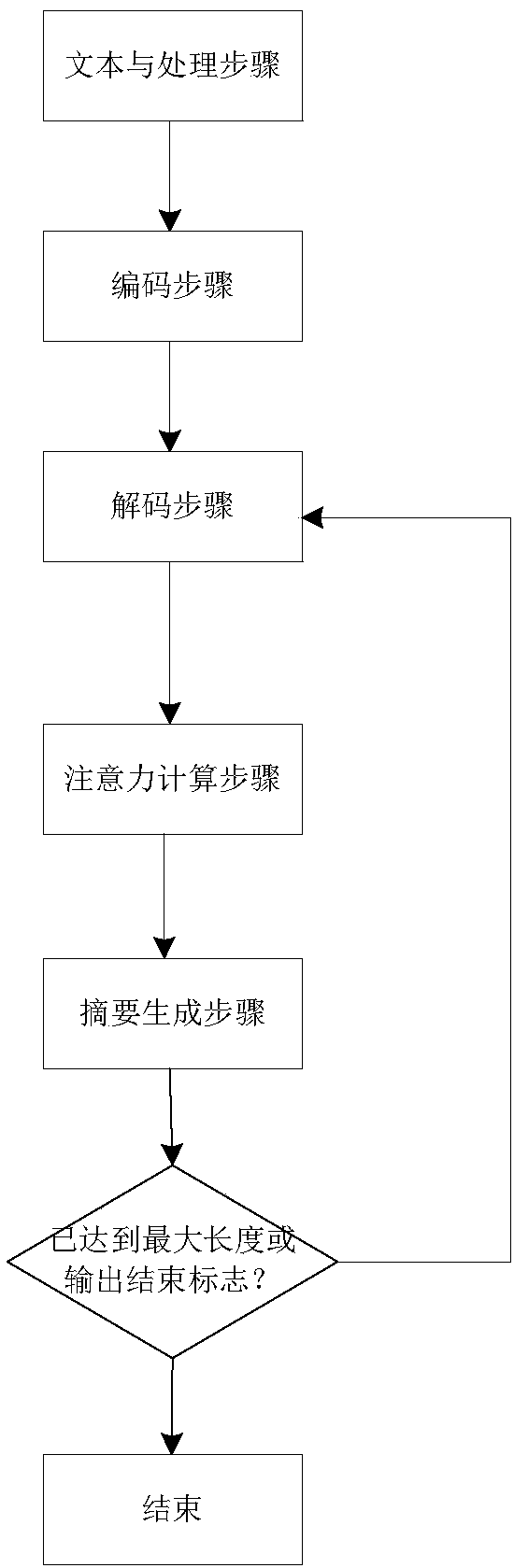

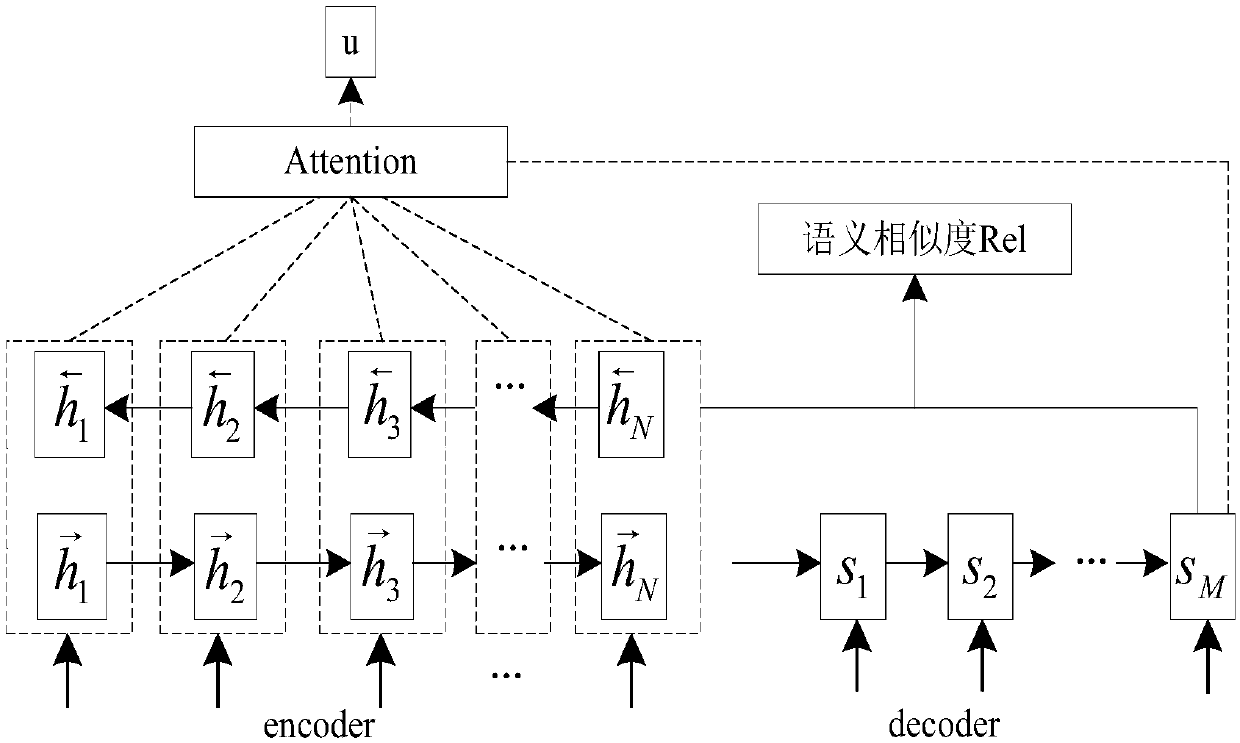

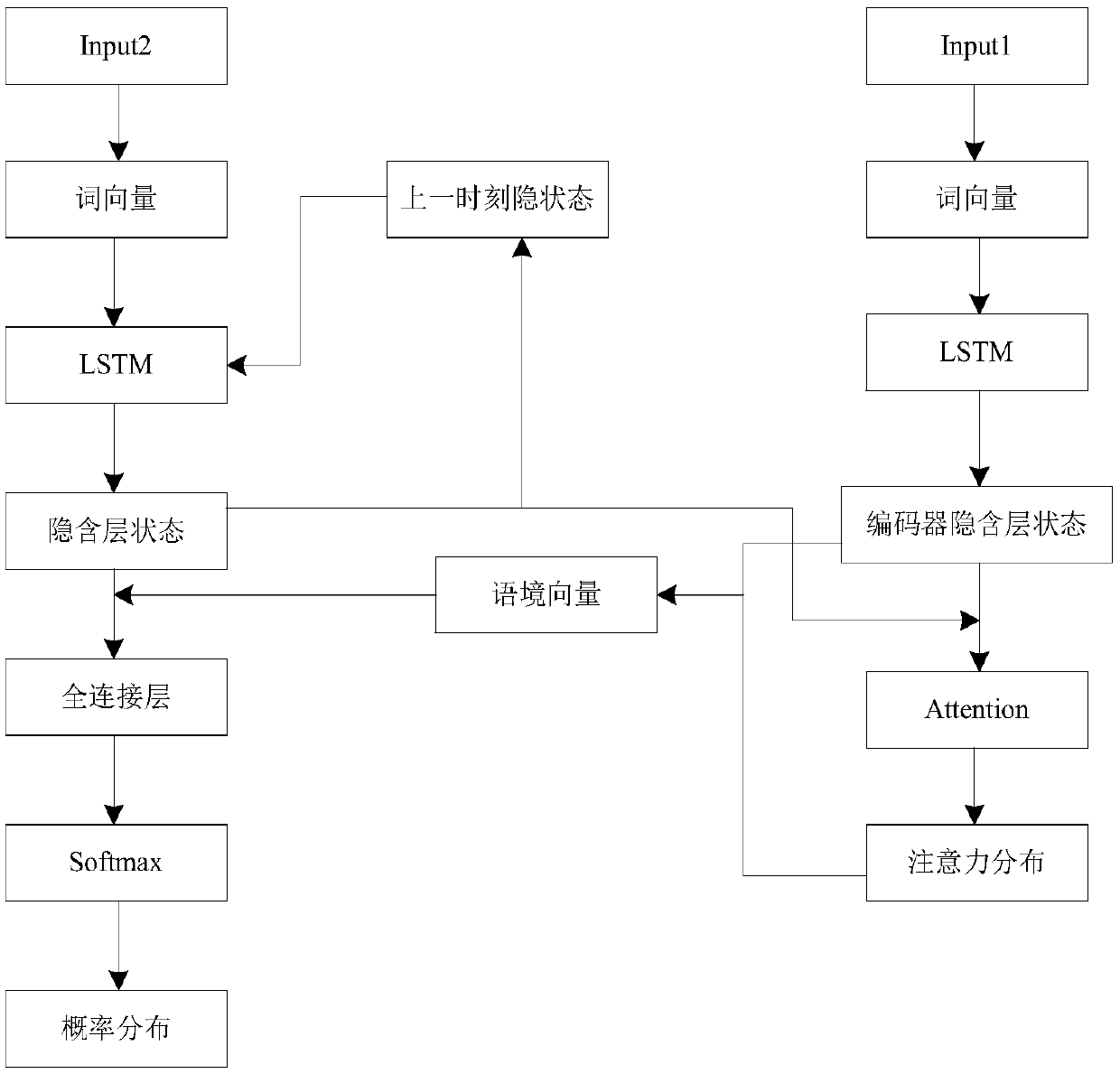

Automatic text summarization method based on enhanced semantics

Inactive | CN108804495A | Prevent deviation | Quality improvement | Natural language data processing | Special data processing applications | Semantic vector | Word list

The invention discloses an automatic text summarization method based on enhanced semantics. The method comprises the following steps: preprocessing a text, arranging words from high to low according to word frequency, and converting the words to ids; using a single-layer bidirectional LSTM to encode the input sequence and extract text information features; using a single-layer unidirectional LSTM to decode the encoded text semantic vector to obtain the hidden layer state; calculating a context vector to extract, from the input sequence, the information most useful for the current output; after decoding, obtaining a probability distribution over the word list and adopting a strategy to select summary words; and, in the training phase, fusing the semantic similarity between the generated summary and the source text into the loss, so as to improve the semantic similarity between the summary and the source text. The invention uses the LSTM deep learning model to characterize the text, integrates the semantic relation of the context, enhances the semantic relation between the summary and the source text, generates a summary that better fits the main idea of the text, and has a wide application prospect.

Owner:SOUTH CHINA UNIV OF TECH
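Several of the abstracts in this listing, including the summarization method above, compute a context vector as an attention-weighted sum of encoder hidden states. The Python sketch below shows one common form of that computation. The dot-product scoring function is an assumption (the abstracts do not say which scoring function is used), and the function names and toy values are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_context(decoder_state, encoder_states):
    """Return (context_vector, attention_weights).

    Scores are dot products between the decoder state and each encoder
    state (an assumed scoring choice); the context vector is the weighted
    sum of the encoder states.
    """
    scores = [sum(d * h for d, h in zip(decoder_state, hs)) for hs in encoder_states]
    weights = softmax(scores)
    dim = len(encoder_states[0])
    context = [sum(w * hs[k] for w, hs in zip(weights, encoder_states)) for k in range(dim)]
    return context, weights

# Two toy encoder hidden states, one per input position.
encoder_states = [[1.0, 0.0], [0.0, 1.0]]

# A decoder state with no preference attends to both positions equally.
uniform_context, uniform_weights = attention_context([0.0, 0.0], encoder_states)

# A decoder state aligned with the first position puts nearly all weight there.
focused_context, focused_weights = attention_context([10.0, 0.0], encoder_states)
```

The decoder would recompute this context vector at every output step, which is what lets it draw on different parts of the input sequence as generation proceeds.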

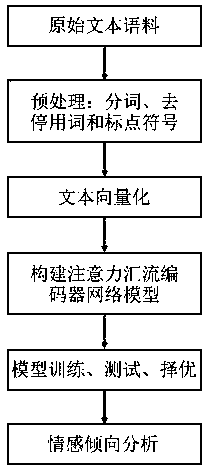

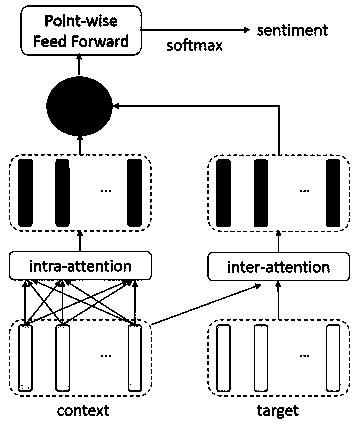

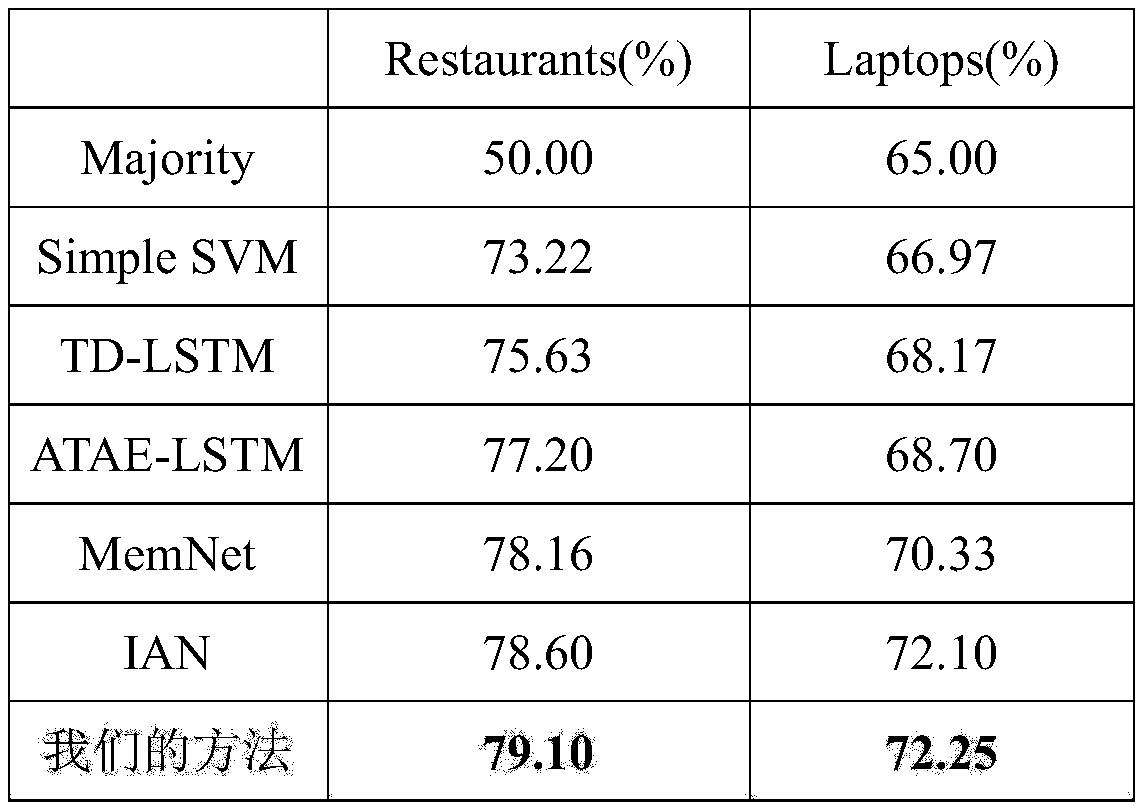

A text emotion analysis method based on attention mechanism

Active | CN109543180A | Reduce model parameters | Fast training | Semantic analysis | Special data processing applications | Algorithm | Model parameters

The invention discloses a text emotion analysis method based on an attention mechanism, which comprises the following steps: 1, preprocessing text data; 2, constructing a vocabulary and constructing word vectors by using a GloVe model; 3, encoding the sentence vector by intrinsic attention and the target word vector by interactive attention, fusing the two encoded vectors by a GRU, and obtaining the fused representation after average pooling; 4, according to the fused representation, obtaining the abstract feature of the context vector by a point-wise feed-forward network (FFN), then calculating the probability distribution of the affective classification labels by a fully connected layer and a Softmax function to obtain the classification result; 5, dividing the preprocessed corpus into a training set and a test set, training the parameters of the model many times, and selecting the model with the highest classification accuracy to classify affective tendencies. The method of the invention uses only the attention mechanism to model the text and strengthens the understanding of the target word, so that the user can understand the emotional tendency held toward the specific target word in the text.

Owner:SUN YAT SEN UNIV

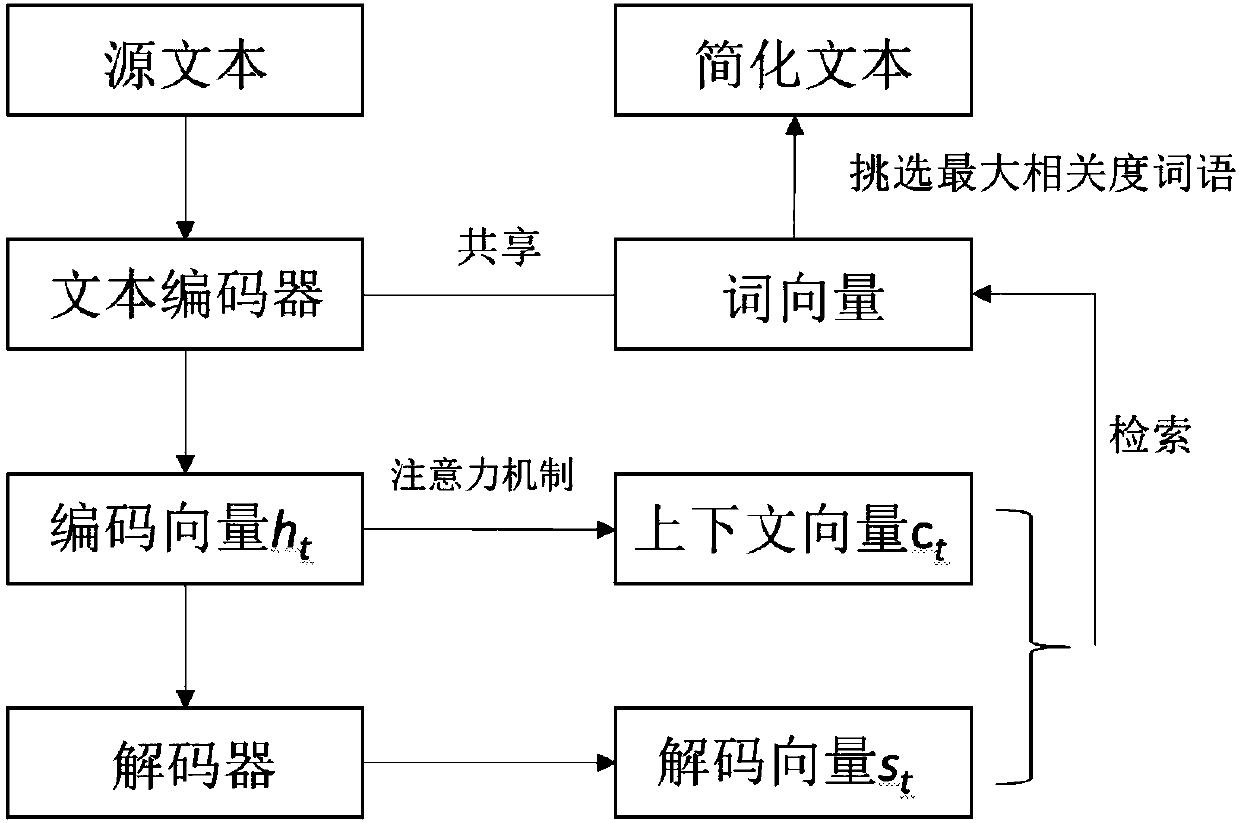

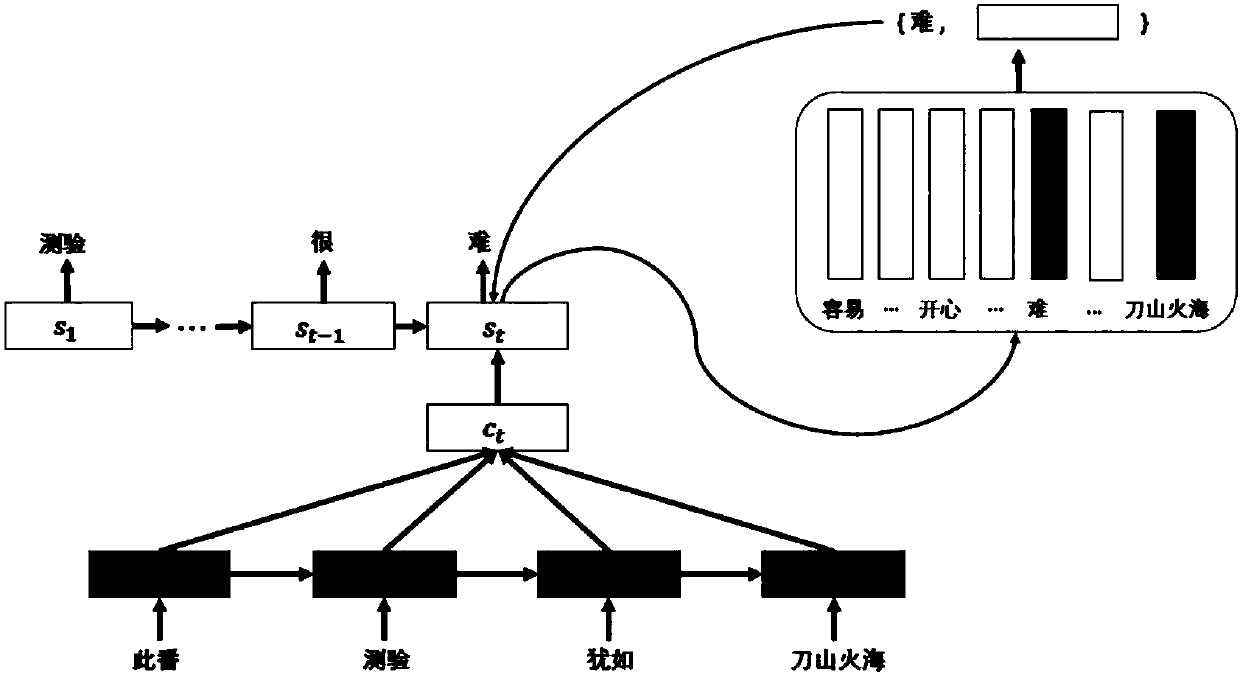

Text simplification method based on word vector query model

Active | CN107844469A | Quality improvement | Improve accuracy | Natural language data processing | Neural architectures | Hidden layer | Word list

The invention provides a text simplification method based on a word vector query model. Based on a sequence-to-sequence model, during decoding the correlation between the hidden state of the decoder and the word vectors of all vocabulary words is obtained through an attention mechanism and serves as a measure of how likely each word is to be generated in the next step. The method includes the following steps: a text encoder is designed, and an original text is compressed; a text simplification decoding generator is designed, and the current hidden layer vector and the context vector at every moment are calculated recurrently; the retrieval correlation of each word in a word list is obtained, the predicted words at the current moment are output, and a complete simplified text is obtained; a model for generating the simplified text is trained by minimizing the negative log-likelihood of the actual target words under the model's predictions; after training, the complete simplified text is generated. The method can improve the quality and accuracy of the generated text, greatly reduce the number of parameters relative to the existing method, and reduce the training time and the memory usage.

Owner:PEKING UNIV

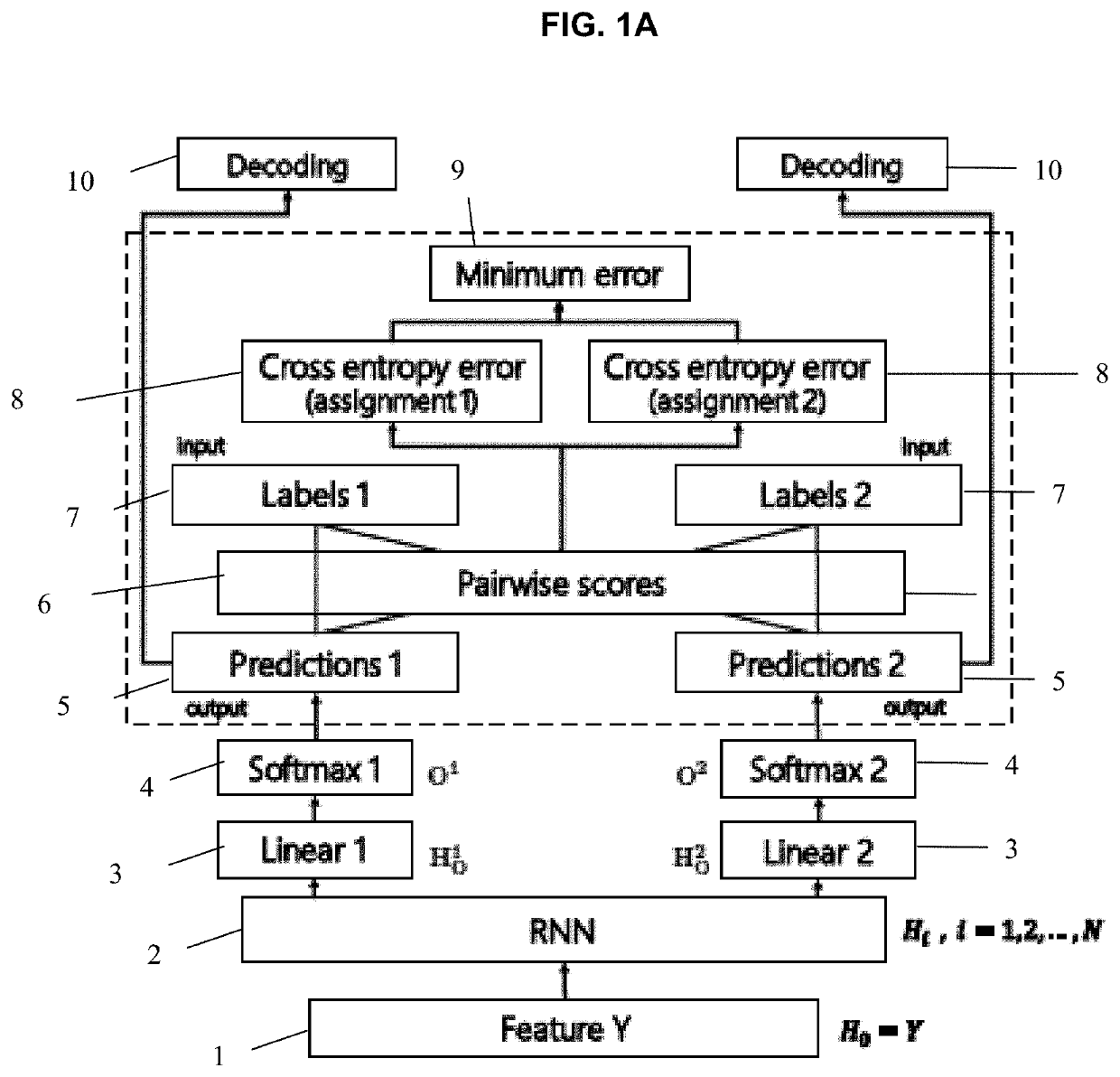

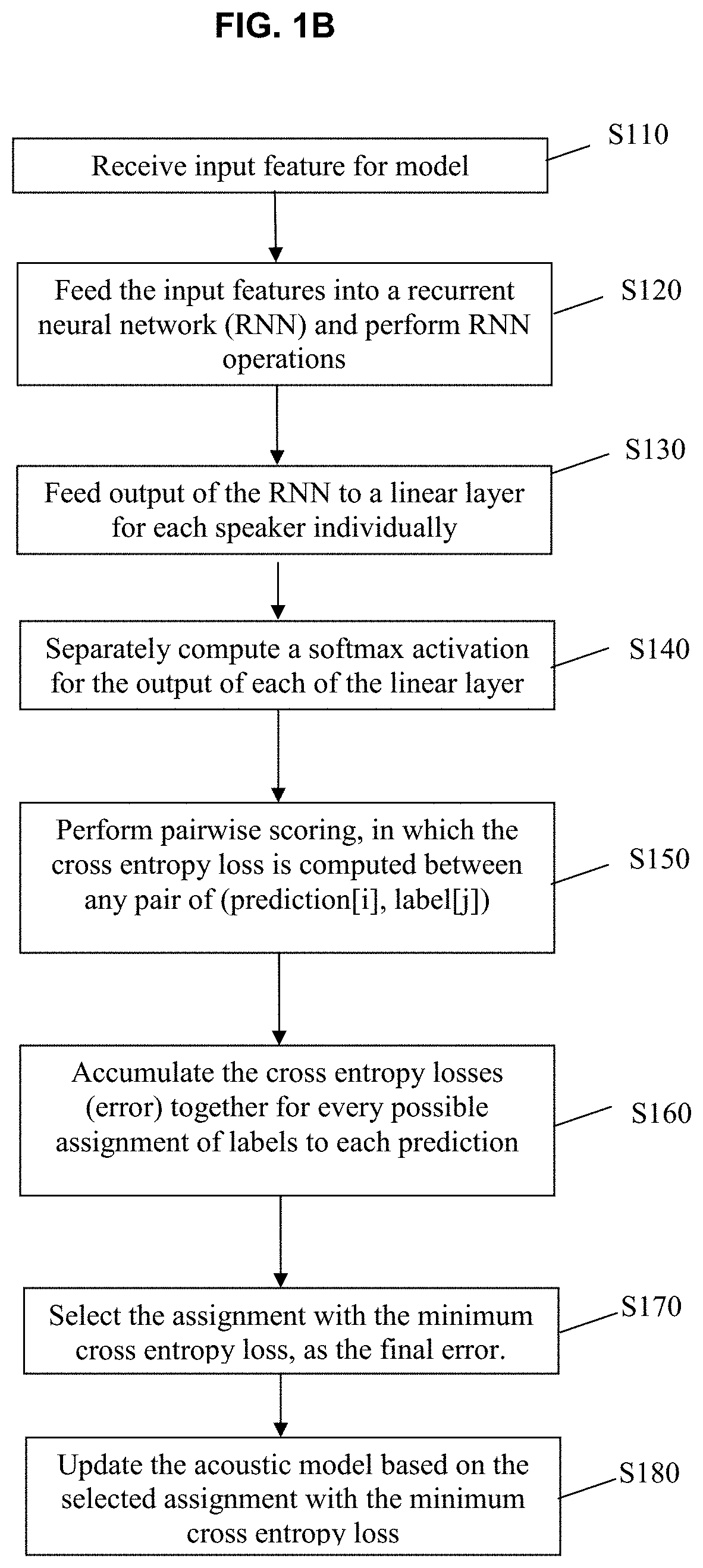

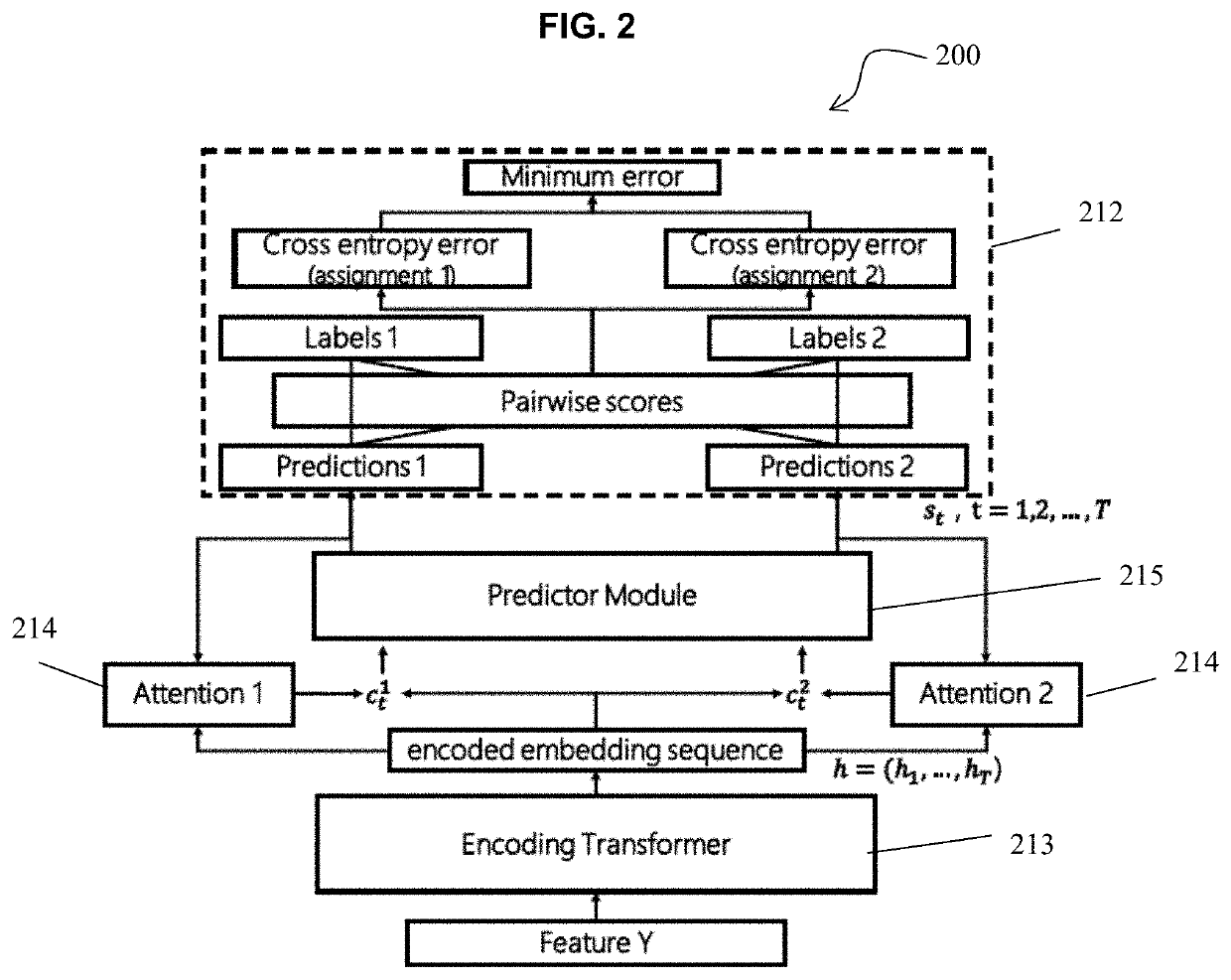

Monaural multi-talker speech recognition with attention mechanism and gated convolutional networks

Provided are a speech recognition training processing method and an apparatus including the same. The speech recognition training processing method includes acquiring multi-talker mixed speech sequence data corresponding to a plurality of speakers, encoding the multi-talker mixed speech sequence data into embedded sequence data, generating speaker-specific context vectors at each frame based on the embedded sequence, generating senone posteriors for each speaker based on the speaker-specific context vectors, and updating an acoustic model by performing permutation invariant training (PIT) based on the senone posteriors.

Owner:TENCENT TECH (SHENZHEN) CO LTD

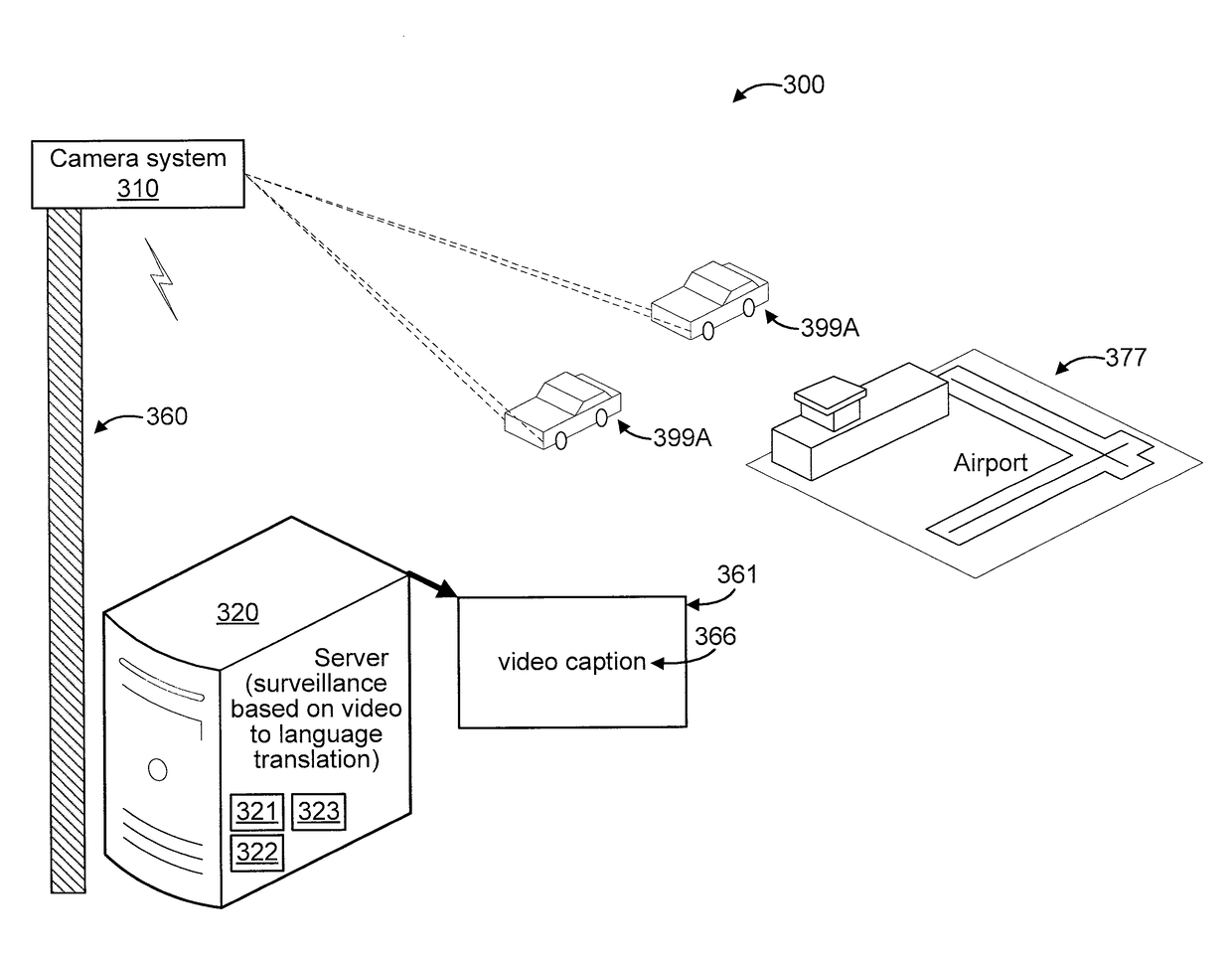

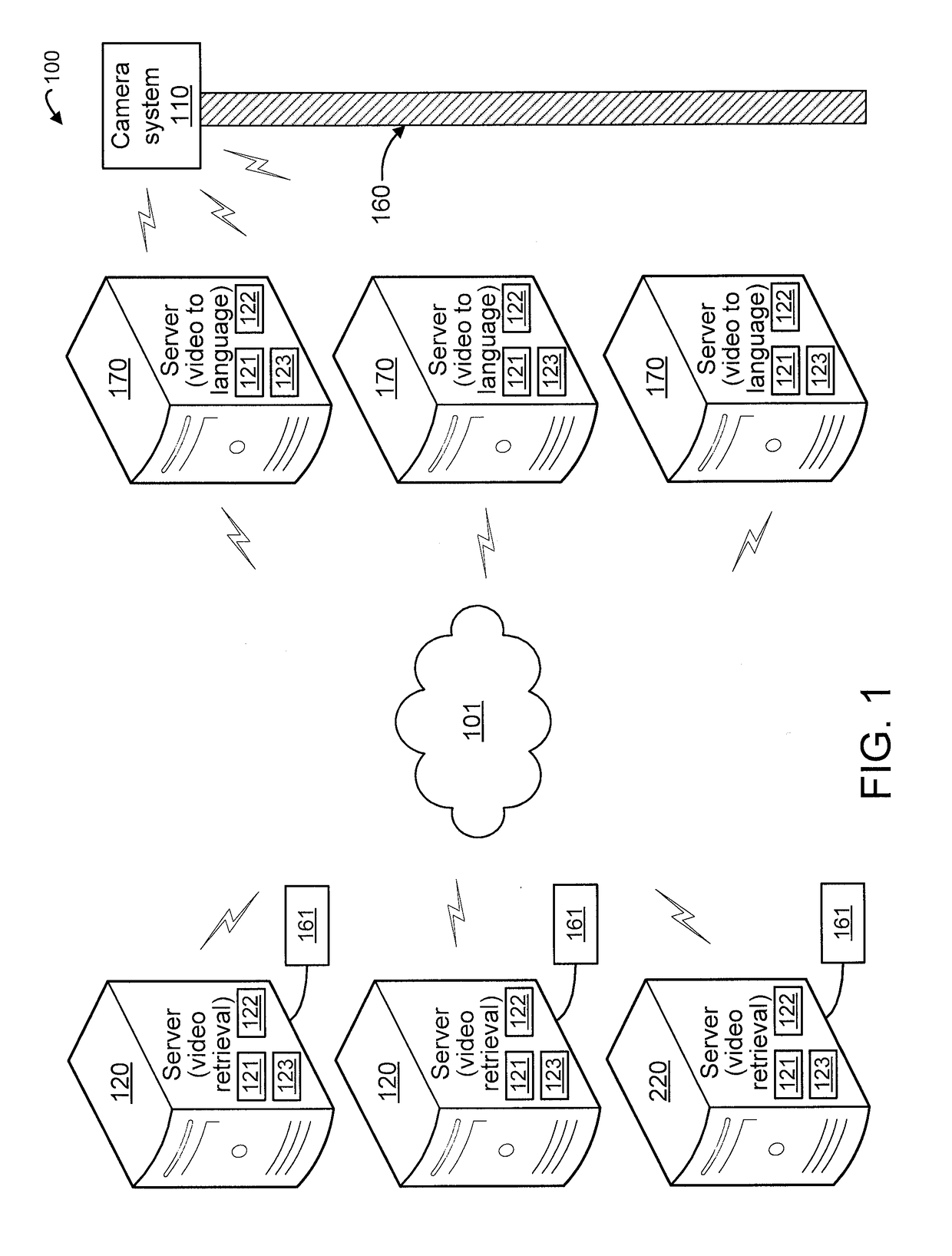

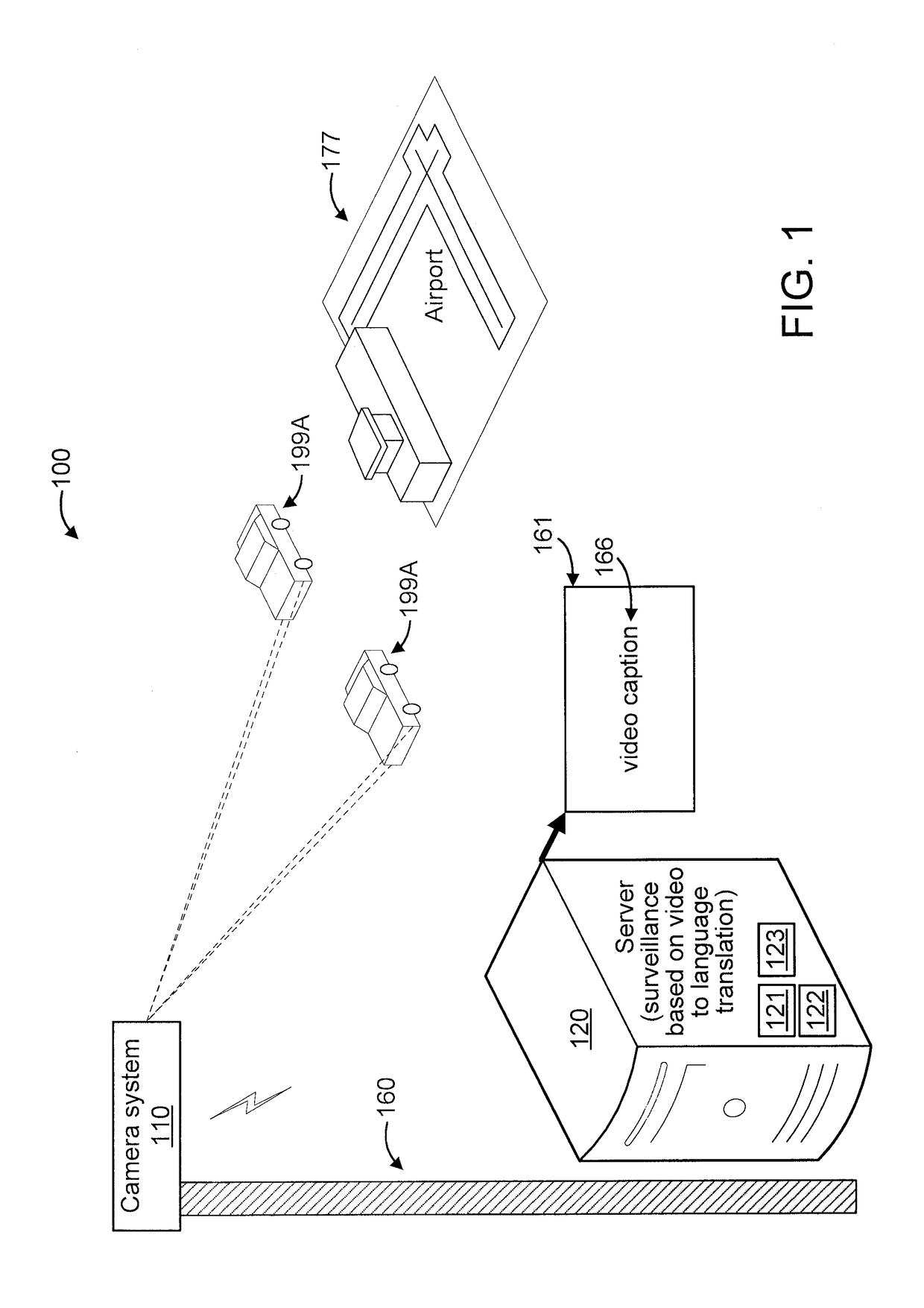

Video retrieval system using adaptive spatiotemporal convolution feature representation with dynamic abstraction for video to language translation

Active | US20180124331A1 | Television system details | Character and pattern recognition | Pattern recognition | Video retrieval

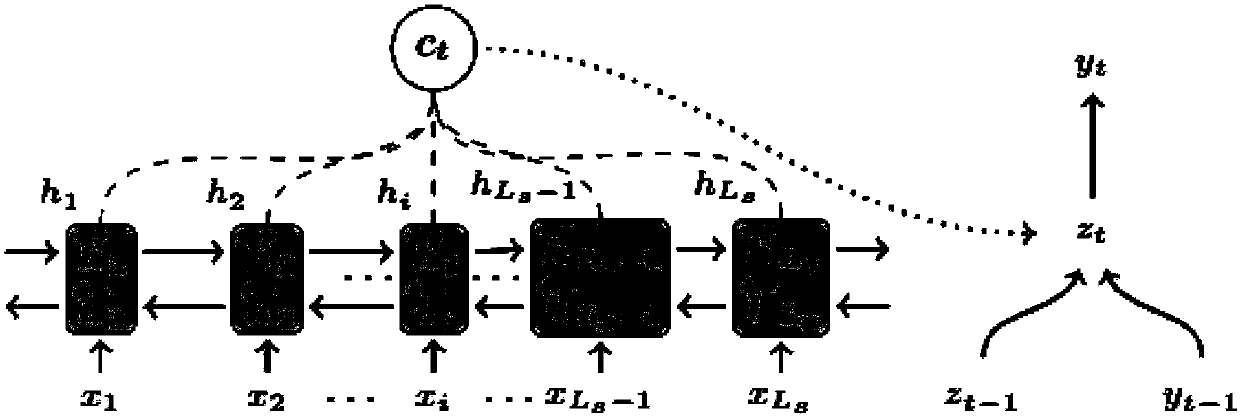

A video retrieval system is provided that includes a set of servers configured to retrieve a video sequence from a database and forward it to a requesting device responsive to a match between an input text and a caption for the video sequence. The servers are further configured to translate the video sequence into the caption by (A) applying a C3D to image frames of the video sequence to obtain therefor (i) intermediate feature representations across L convolutional layers and (ii) top-layer features, (B) producing a first word of the caption for the video sequence by applying the top-layer features to an LSTM, and (C) producing subsequent words of the caption by (i) dynamically performing spatiotemporal attention and layer attention using the representations to form a context vector, and (ii) applying the LSTM to the context vector, a previous word of the caption, and a hidden state of the LSTM.

Owner:NEC CORP

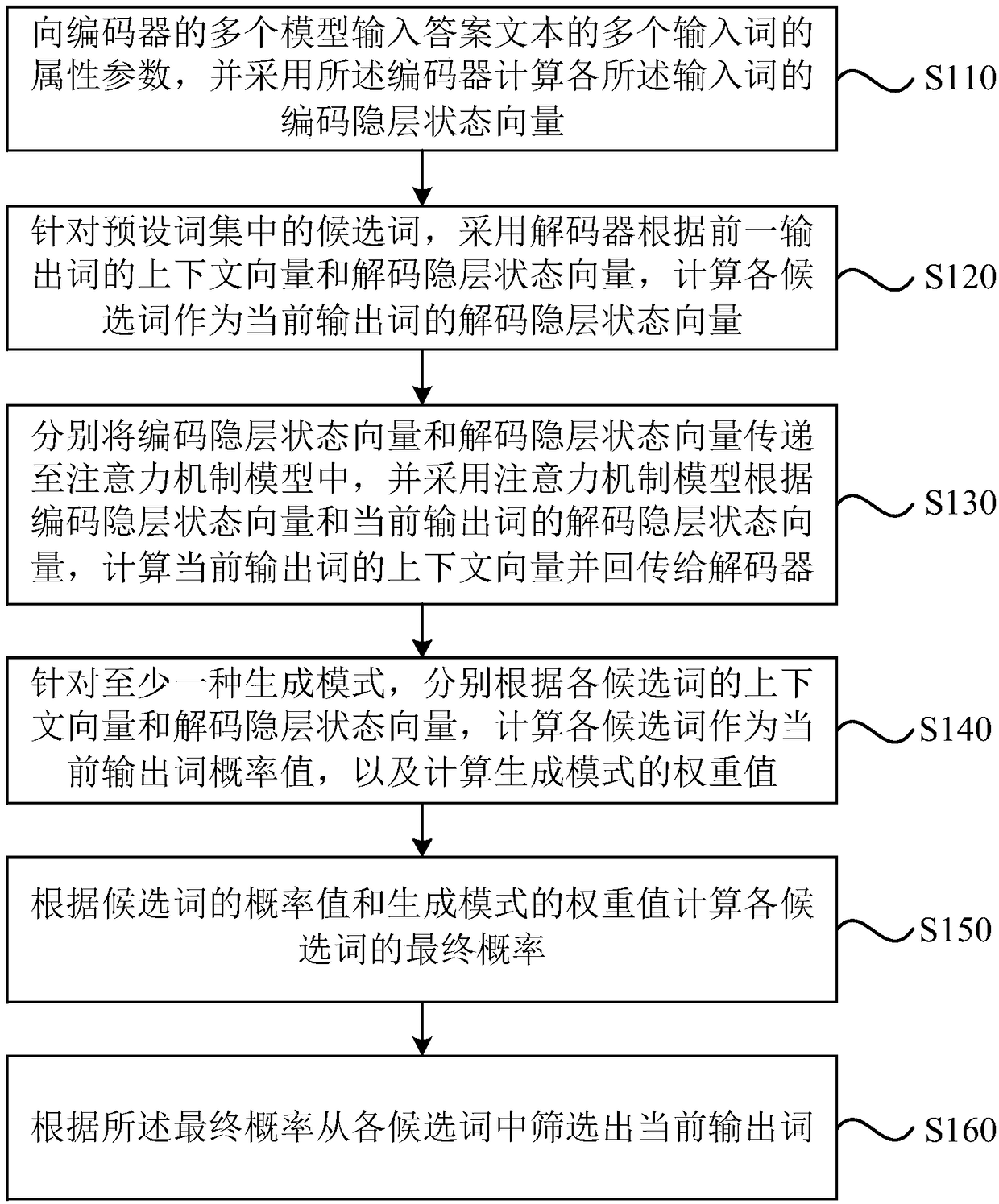

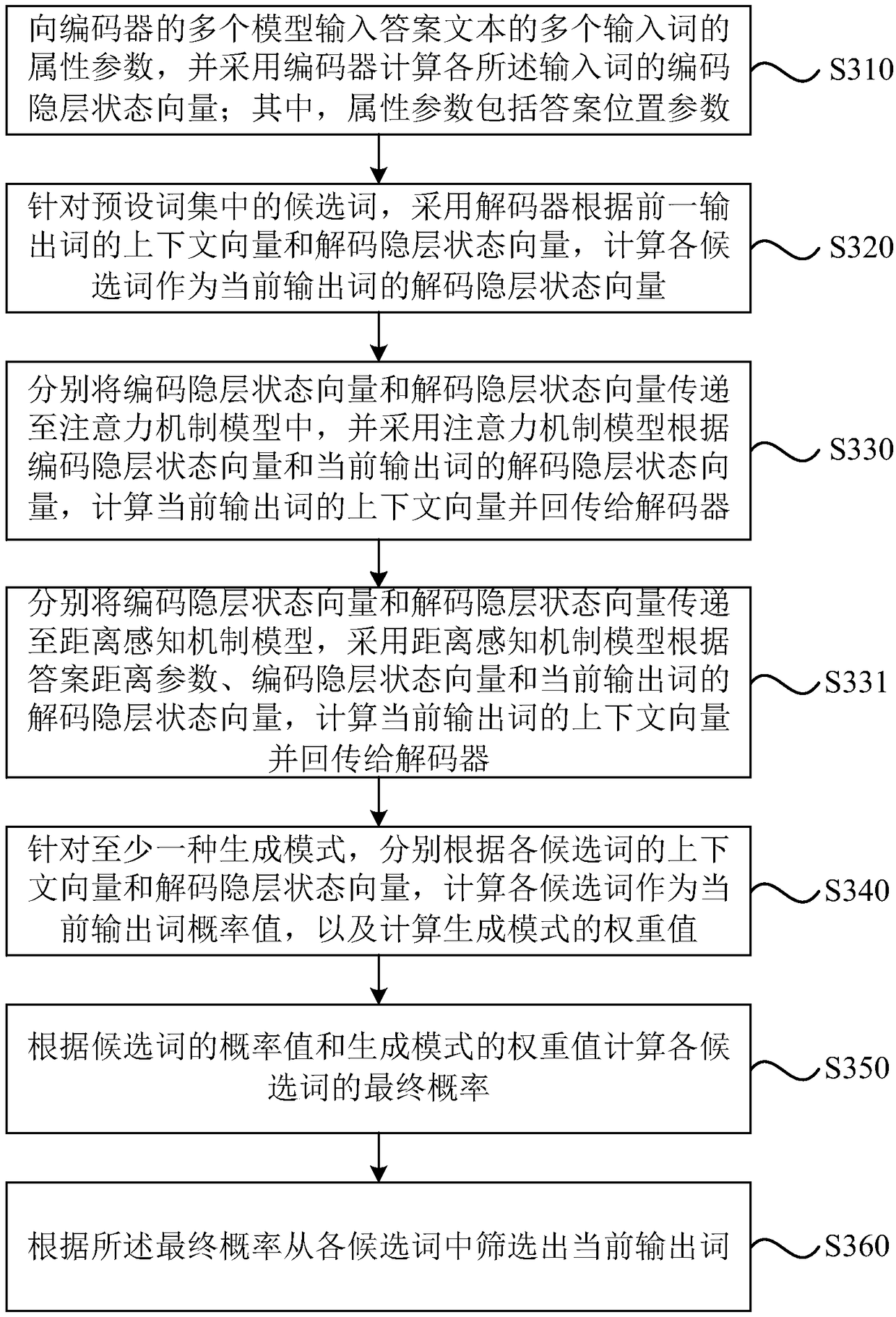

Problem text generation method and device, equipment and medium

Active | CN108846130A | Improve accuracy | Increase diversity | Natural language data processing | Neural architectures | Hidden layer | Algorithm

An embodiment of the invention discloses a problem text generation method and device, equipment and a medium. The method comprises the following steps: determining the encoded hidden layer state vector of each input word by adopting an encoder based on the attribute parameters of each input word; for each candidate word, using the decoder and an attention mechanism model to determine the context vector and decoded hidden layer state vector of the candidate word as the current output word; and for at least one generation mode, calculating the probability value of each candidate word as the current output word and the weight value of the generation mode according to the context vector and the decoded hidden layer state vector, and further determining the final probability so as to select the current output word among the candidate words based on the final probability. The technical scheme improves the accuracy and diversity of generating the question text based on the answer text.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD

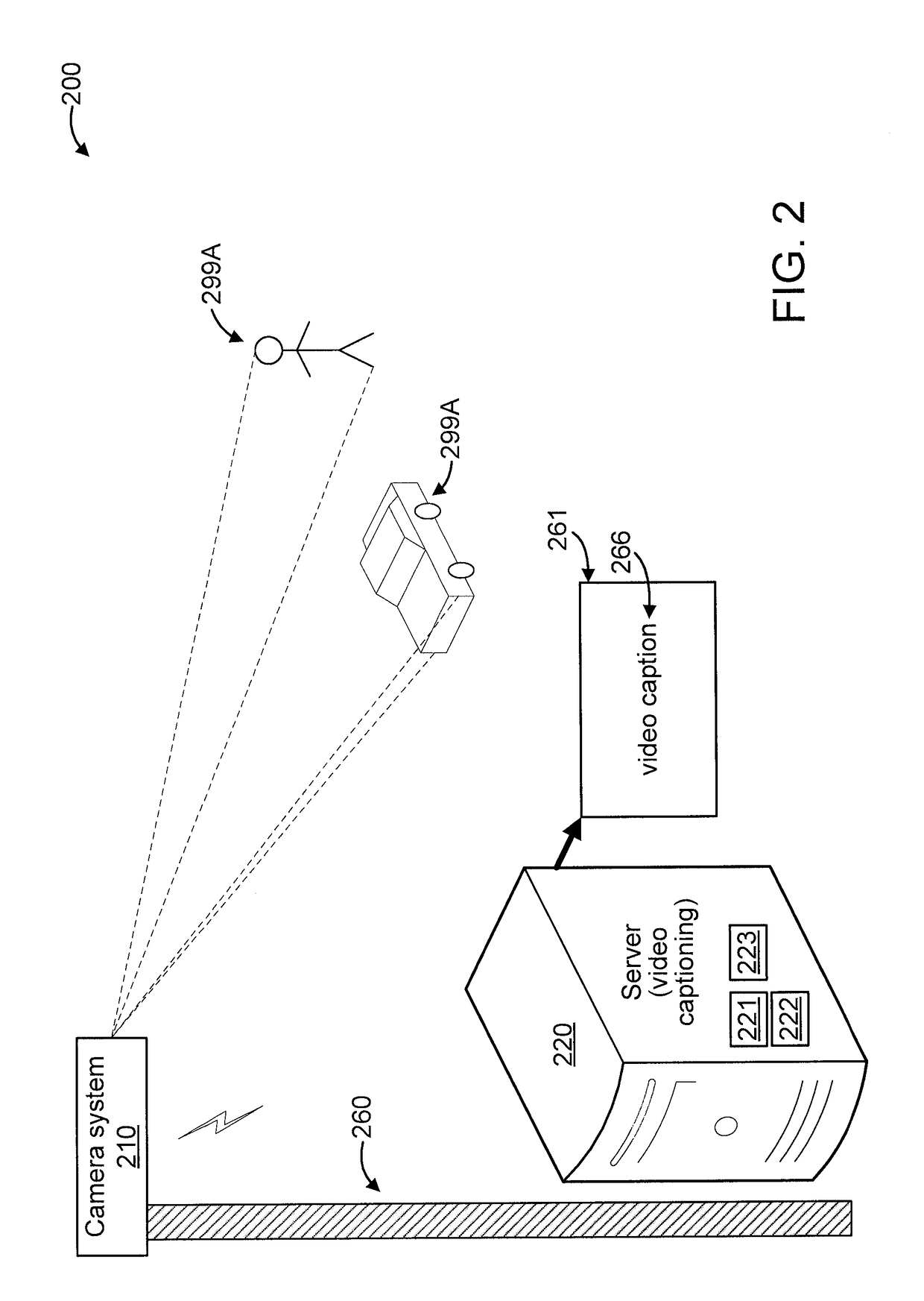

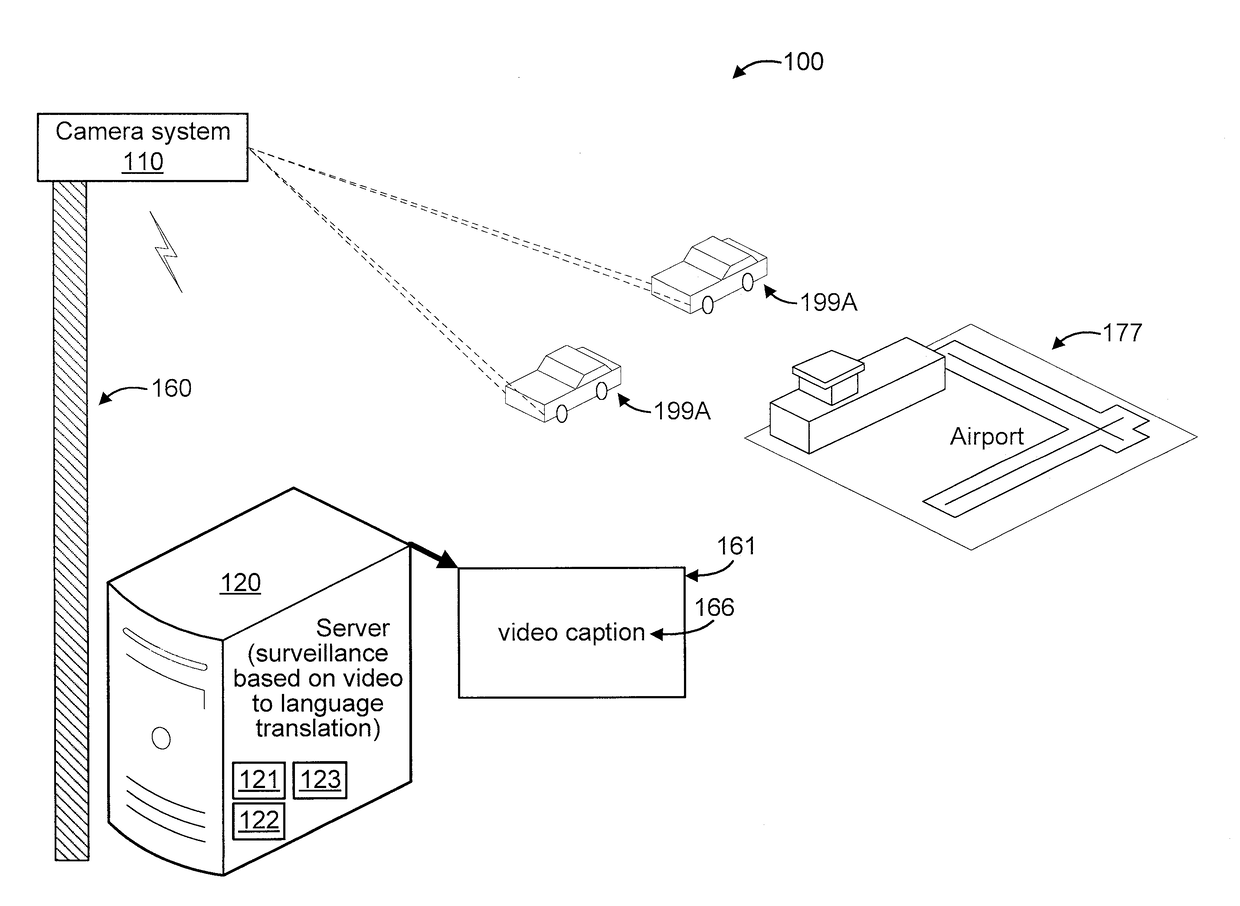

Surveillance system using adaptive spatiotemporal convolution feature representation with dynamic abstraction for video to language translation

Active | US20180121731A1 | Television system details | Character and pattern recognition | Pattern recognition | Display device

A surveillance system is provided that includes an image capture device configured to capture a video sequence of a target area that includes objects and is formed from a set of image frames. The system further includes a processor configured to apply a C3D to the image frames to obtain therefor (i) intermediate feature representations across L convolutional layers and (ii) top-layer features. The processor is further configured to produce a first word of a caption for the sequence by applying the top-layer features to an LSTM. The processor is further configured to produce subsequent words of the caption by (i) dynamically performing spatiotemporal attention and layer attention using the intermediate feature representations to form a context vector, and (ii) applying the LSTM to the context vector, a previous word of the caption, and a hidden state of the LSTM. The system includes a display device for displaying the caption.

Owner:NEC CORP

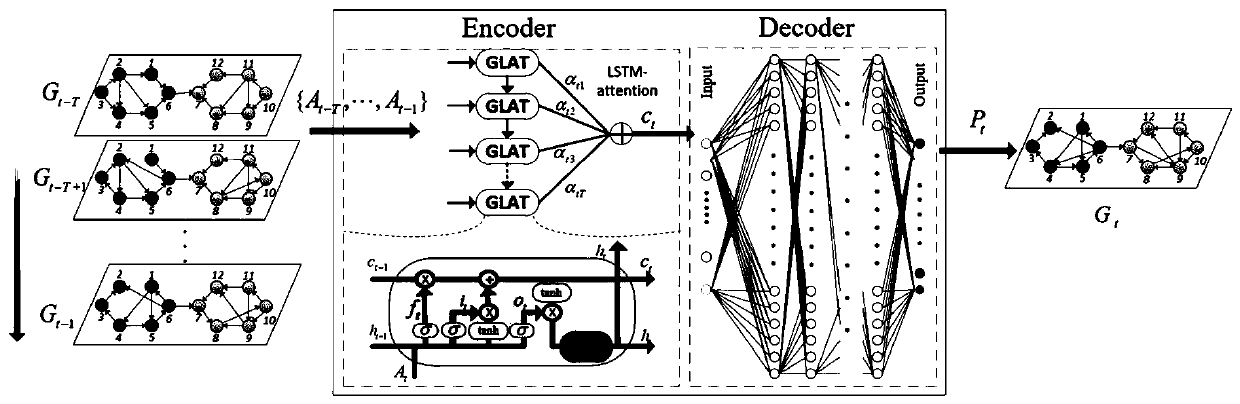

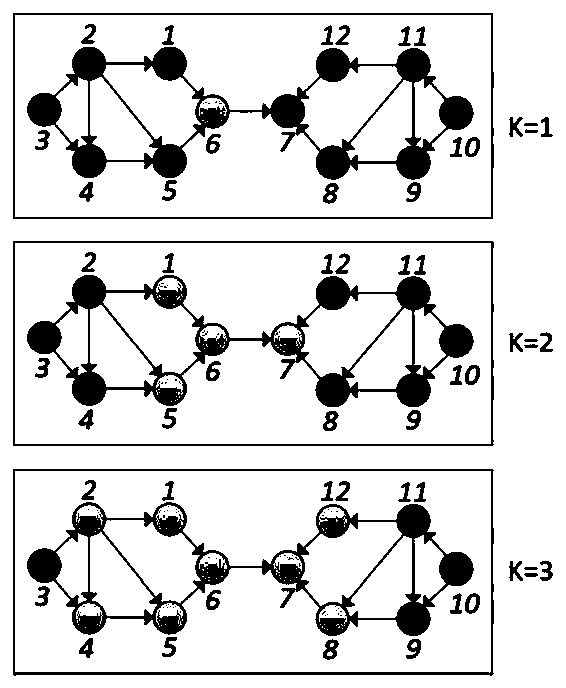

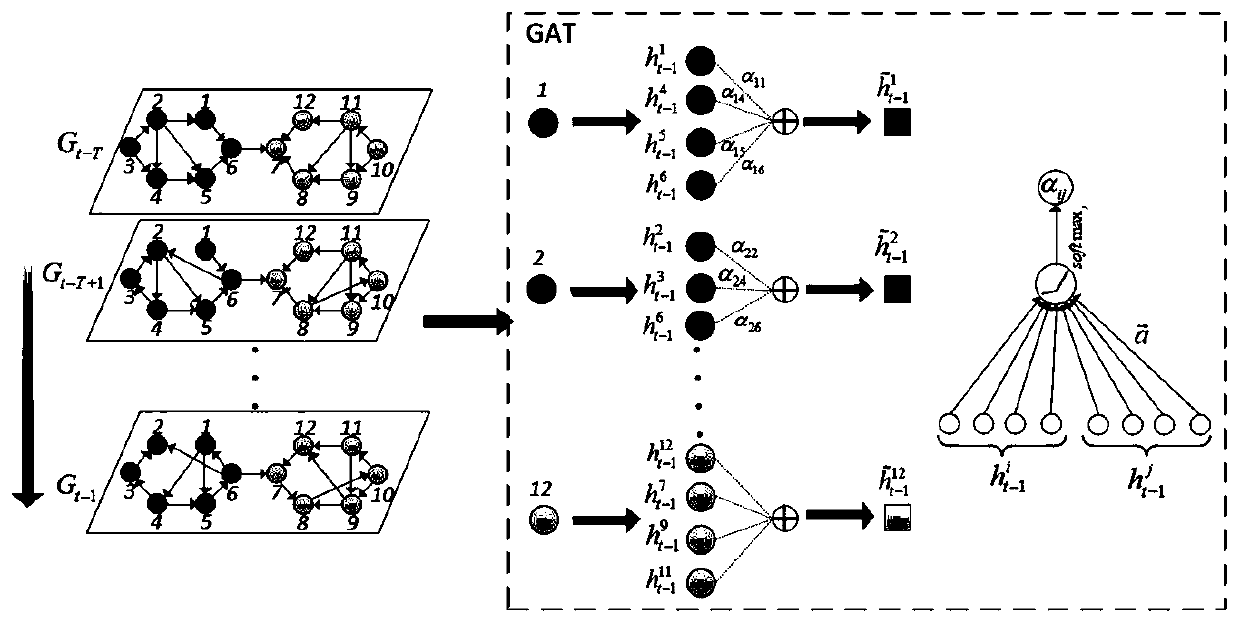

Dynamic link prediction method based on space-time attention deep model

The invention discloses a dynamic link prediction method based on a space-time attention deep model. The method comprises the following steps: taking the adjacency matrix A corresponding to a dynamic network as input, where the dynamic network is a social network, a communication network, a scientific cooperation network or a social security network; extracting the hidden layer vectors {h_{t-T}, ..., h_{t-1}} by means of an LSTM-attention model, calculating a context vector a_t from the hidden layer vectors {h_{t-T}, ..., h_{t-1}} at the T moments, and inputting the context vector a_t into a decoder as a space-time feature vector; and decoding the input space-time feature vector a_t with the decoder and outputting a probability matrix that represents whether a link exists between each pair of nodes, thereby realizing dynamic link prediction. By extracting the spatial and temporal characteristics of the dynamic network, the method realizes end-to-end link prediction for dynamic networks.

Owner:ZHEJIANG UNIV OF TECH

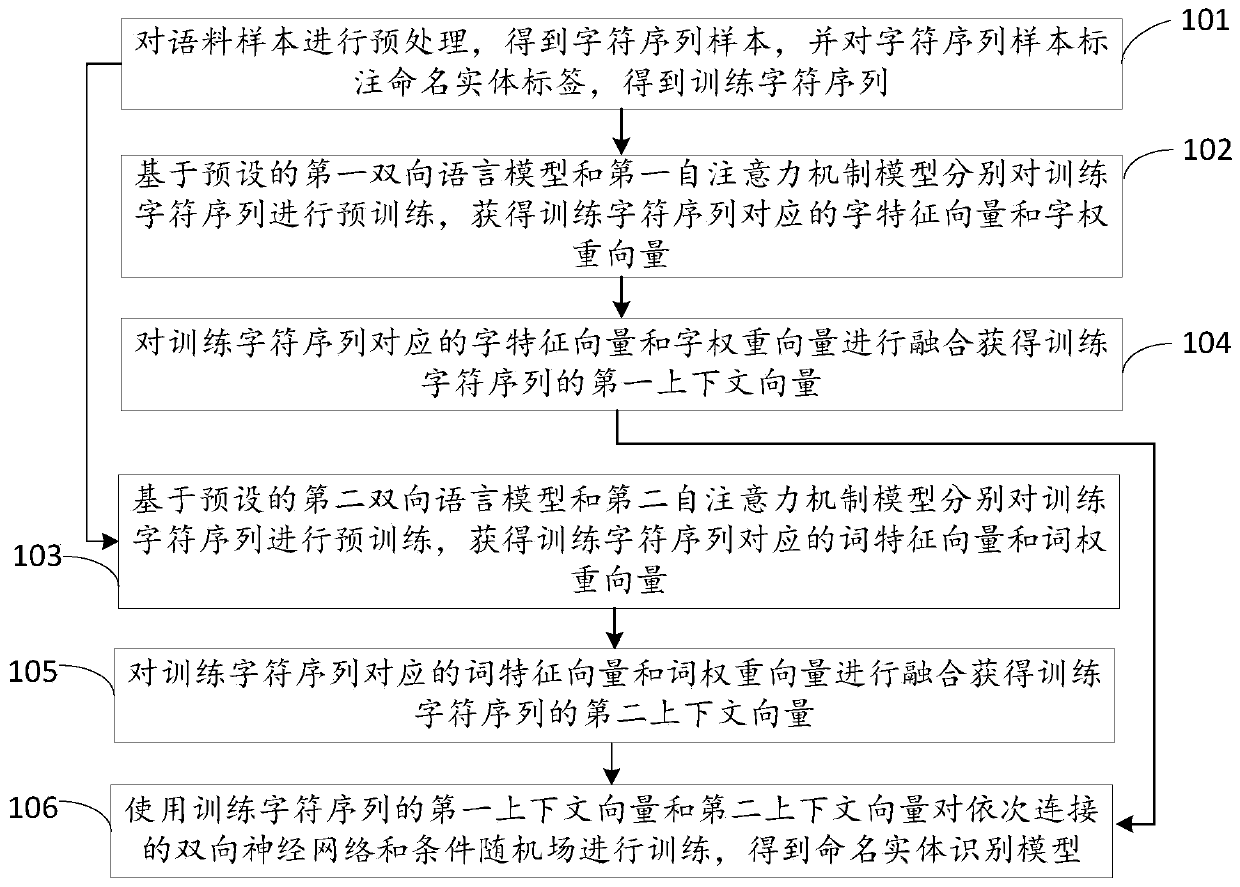

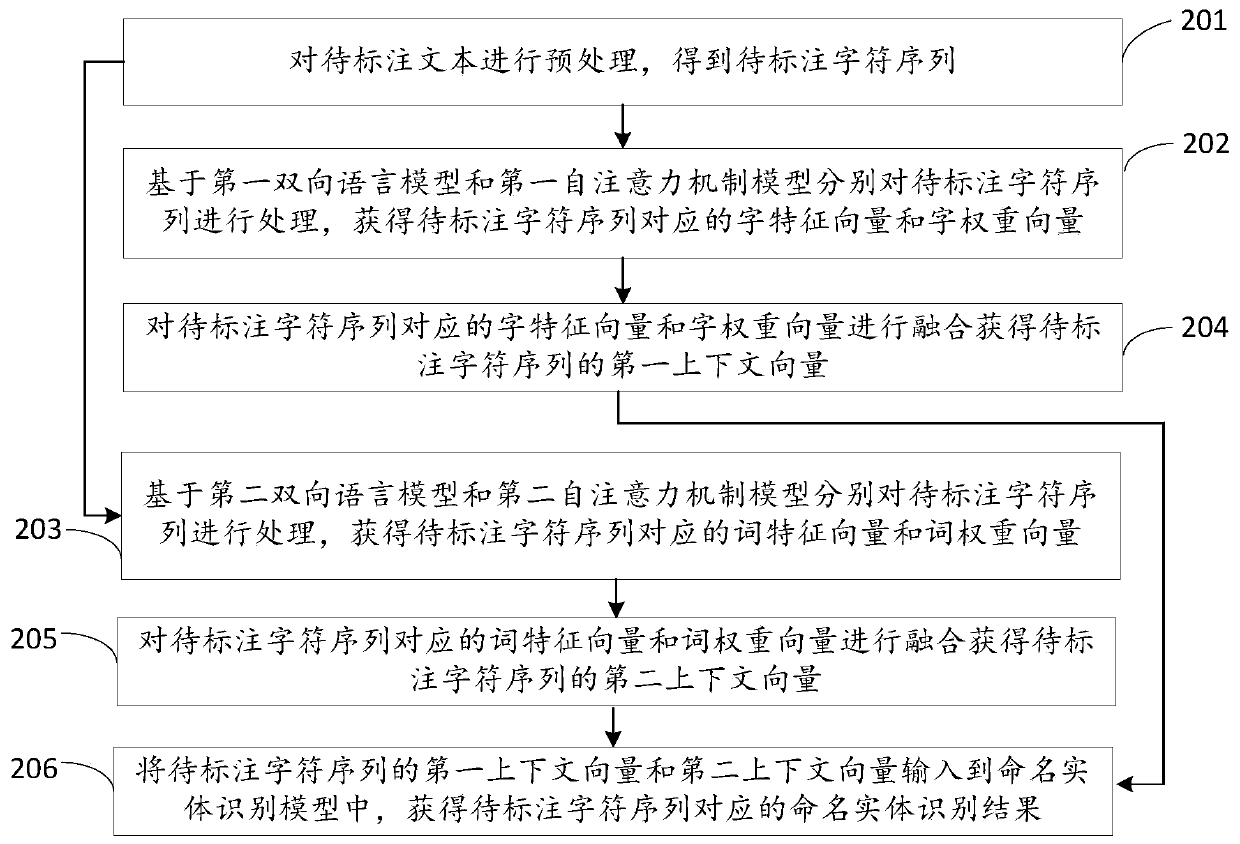

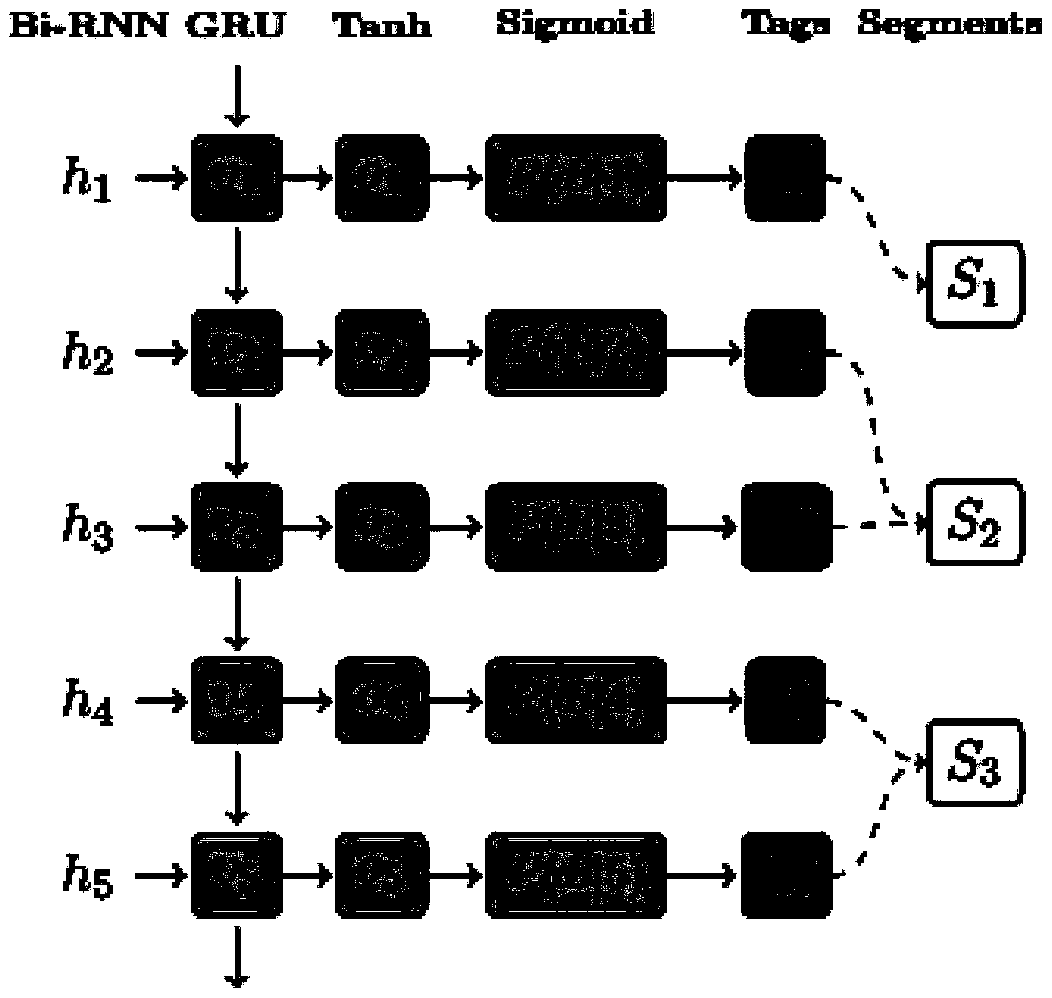

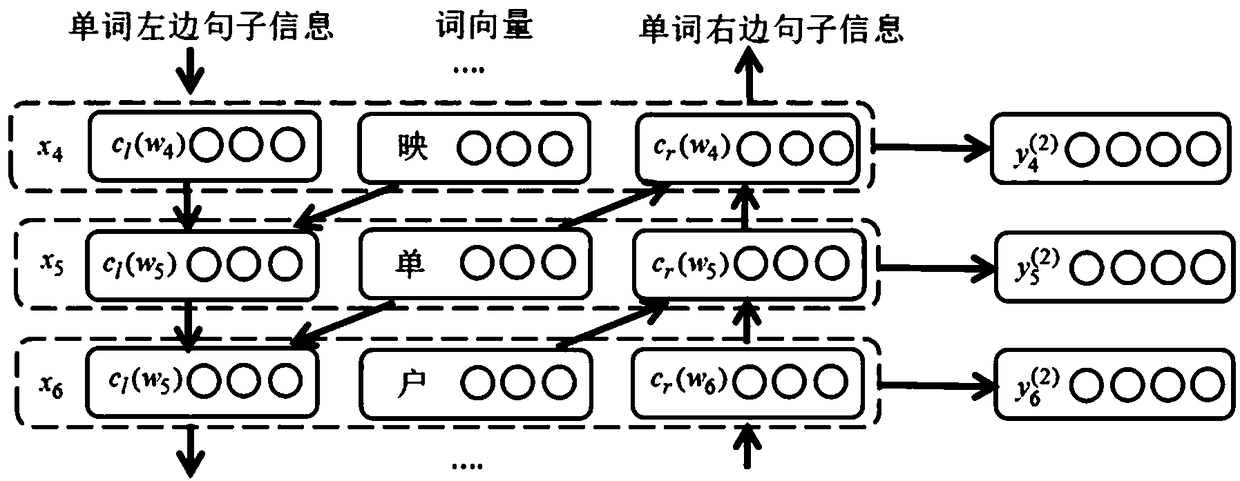

Named entity recognition model training method and named entity recognition method and device

Active | CN110705294A | Accelerated training | Improve applicability | Internal combustion piston engines | Natural language data processing | Conditional random field | Feature vector

The invention discloses a named entity recognition model training method, a named entity recognition method and a named entity recognition device. The training method comprises the following steps: preprocessing a corpus sample to obtain a character sequence sample, and labeling the character sequence sample with named entity labels to obtain a training character sequence; pre-training on the training character sequence based on a first bidirectional language model and a first self-attention mechanism model to obtain a character feature vector and a character weight vector, and fusing the character feature vector and the character weight vector to obtain a first context vector; pre-training on the training character sequence based on a second bidirectional language model and a second self-attention mechanism model to obtain a word feature vector and a word weight vector, and fusing the word feature vector and the word weight vector to obtain a second context vector; and training the bidirectional neural network and the conditional random field, which are connected in sequence, by using the first context vector and the second context vector to obtain a named entity recognition model. According to the method, the training effect of the named entity recognition model is effectively improved, and the named entity recognition accuracy is improved.

Owner:SUNING CLOUD COMPUTING CO LTD

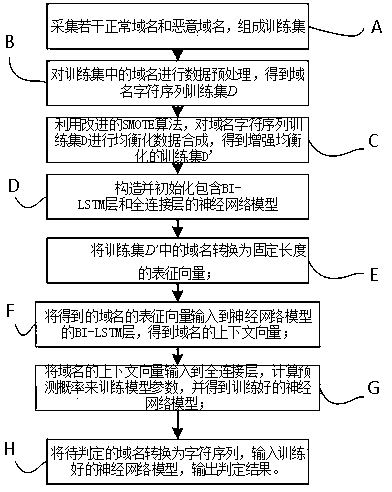

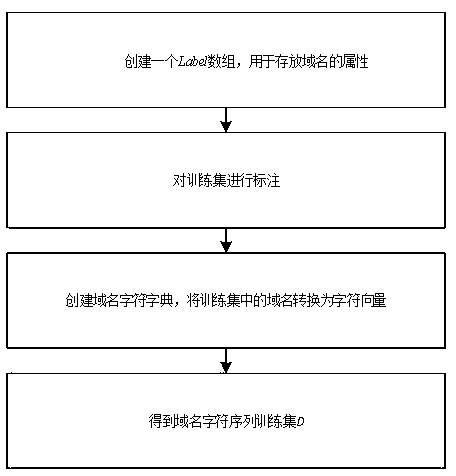

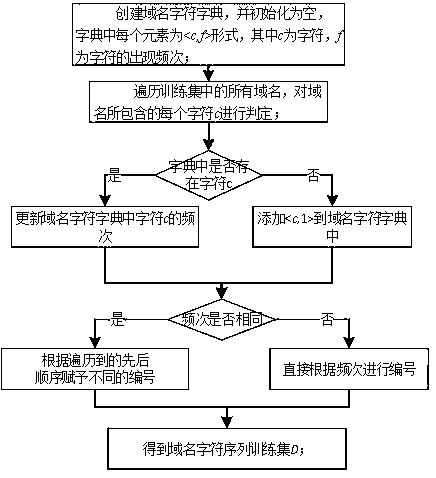

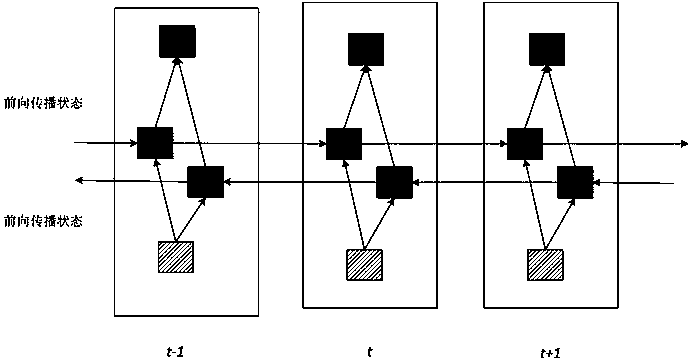

Malicious domain name detection method based on SMOTE and BI-LSTM network

Inactive | CN109617909A | Increase the number of samples | Avoid easily circumvented deficiencies | Character and pattern recognition | Transmission | Domain name | Network model

The invention relates to a malicious domain name detection method based on SMOTE and BI-LSTM. The method comprises the following steps: carrying out data preprocessing on the domain names in a training set in order to obtain a domain name character sequence training set D; carrying out equalized data synthesis on the domain name character sequence training set D by utilizing an improved SMOTE algorithm in order to obtain an enhanced and equalized training set D'; constructing and initializing a neural network model comprising a BI-LSTM layer and a fully connected layer; converting the domain names in the training set D' into representation vectors with a fixed length; inputting the representation vectors of the domain names obtained in the previous step into the BI-LSTM layer of the neural network model in order to obtain context vectors of the domain names; inputting the context vectors of the domain names into the fully connected layer of the neural network model in order to obtain a trained neural network model; and converting the domain names to be judged into character sequences, inputting them into the trained neural network model, and outputting a judgment result.

Owner:FUZHOU UNIV

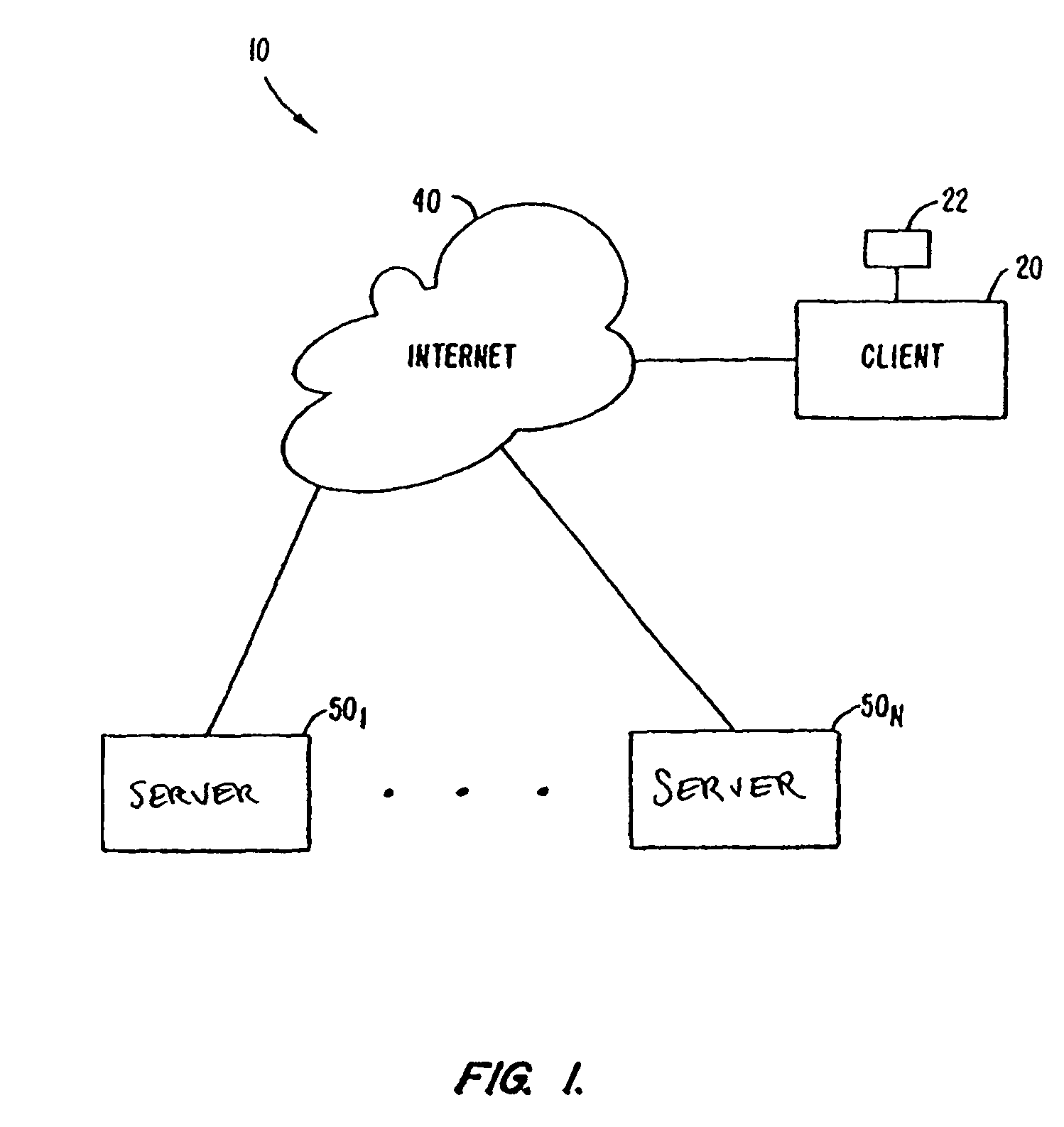

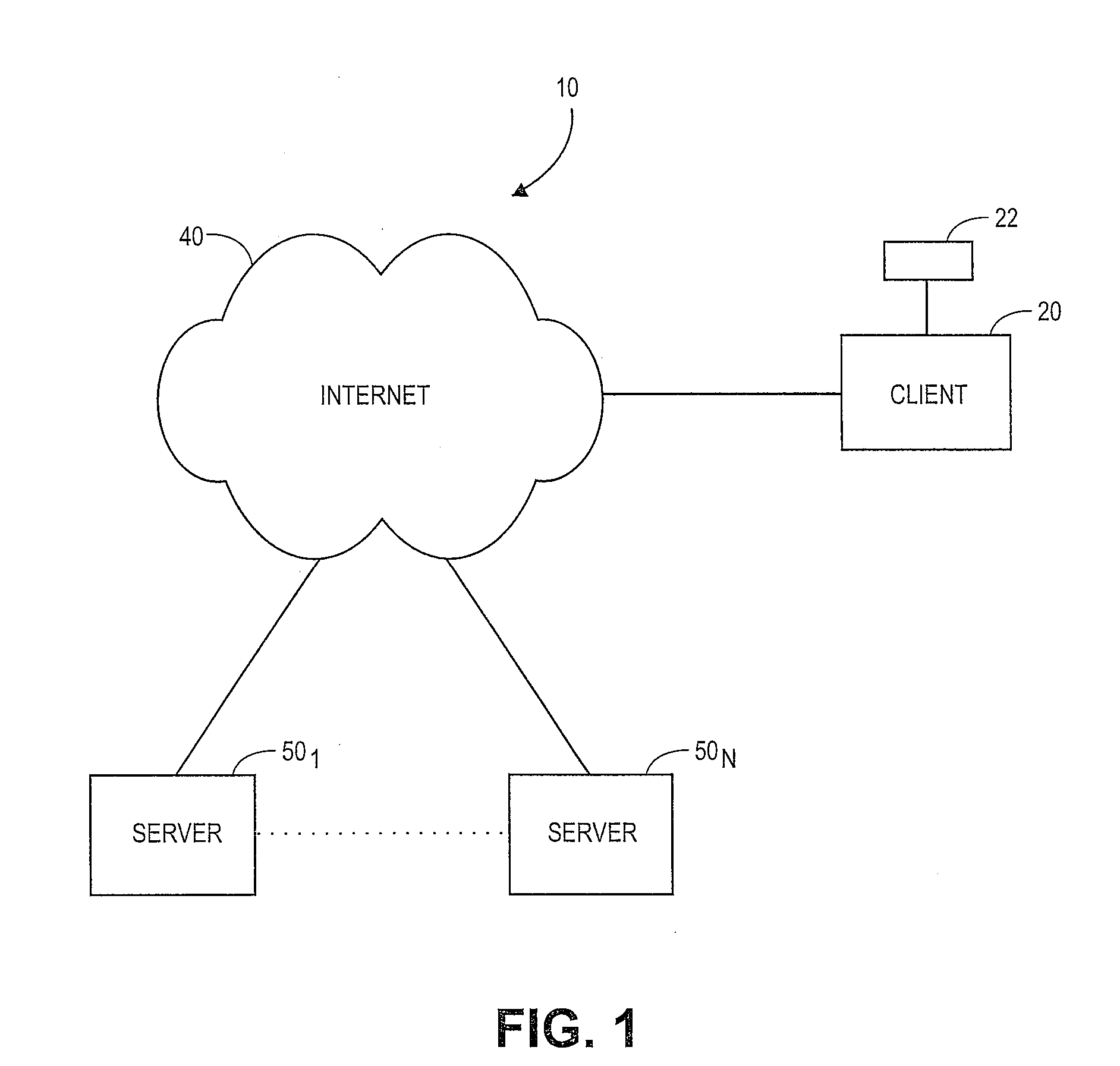

User-context-based search engine

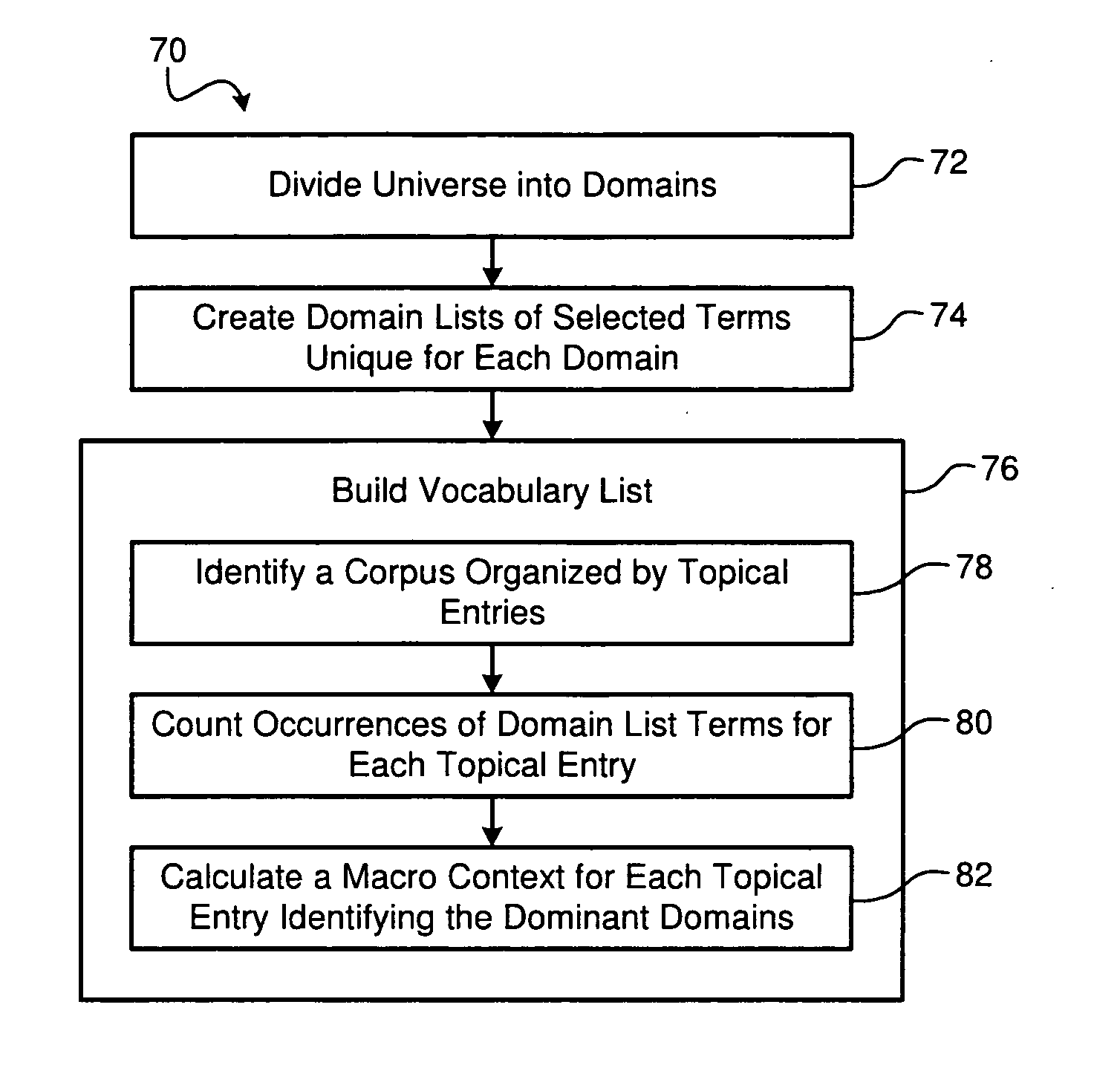

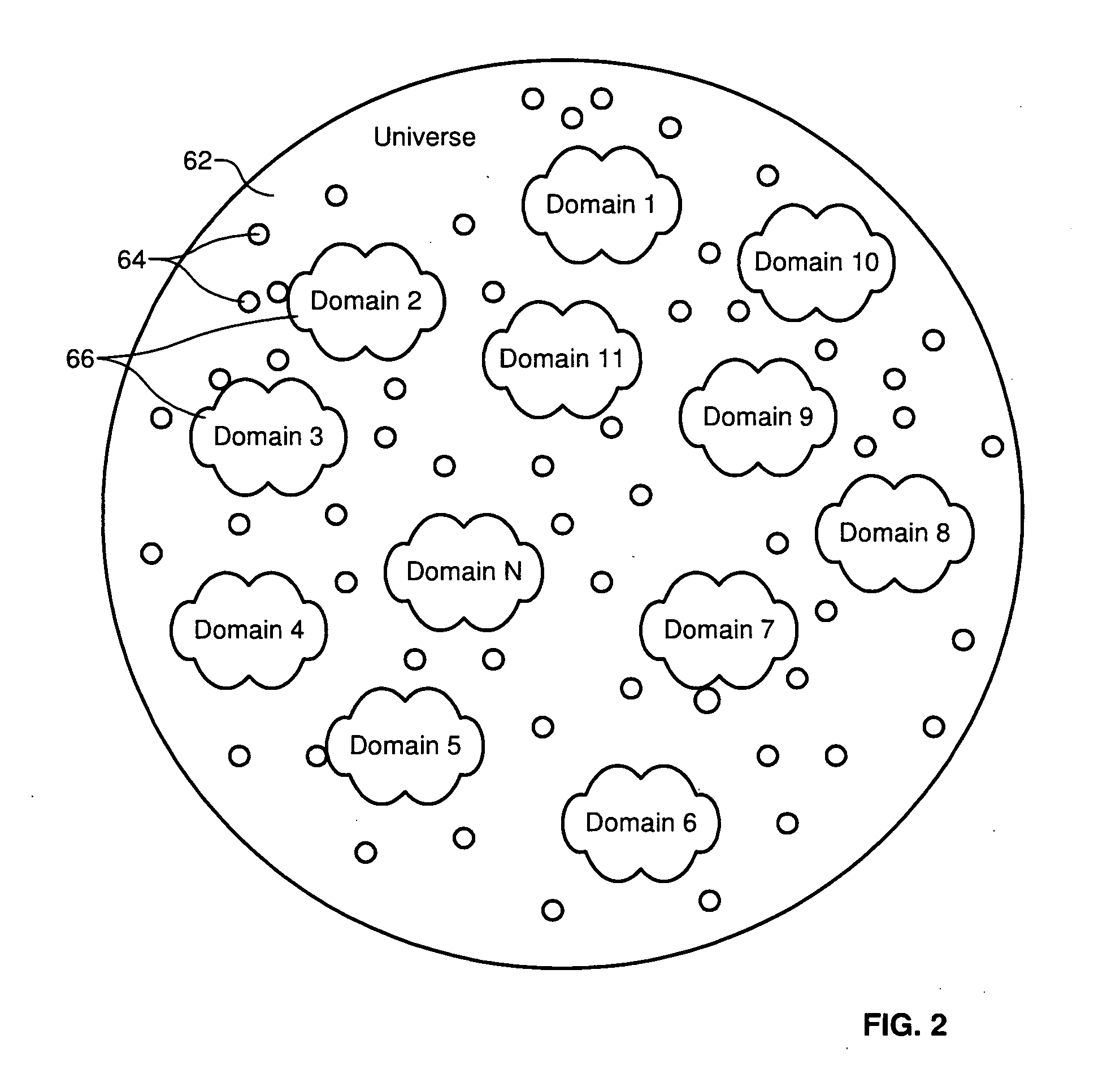

A method and apparatus for determining contexts of information analyzed. Contexts may be determined for words, expressions, and other combinations of words in bodies of knowledge such as encyclopedias. Analysis of use provides a division of the universe of communication or information into domains, and selects words or expressions unique to those domains of subject matter as an aid in classifying information. A vocabulary list is created with a macro-context (context vector) for each entry, dependent upon the number of occurrences of unique terms from a domain, over each of the domains. This system may be used to find information or classify information by subsequent inputs of text, in calculation of macro-contexts, with ultimate determination of lists of micro-contexts including terms closely aligned with the subject matter.

Owner:GOOGLE LLC

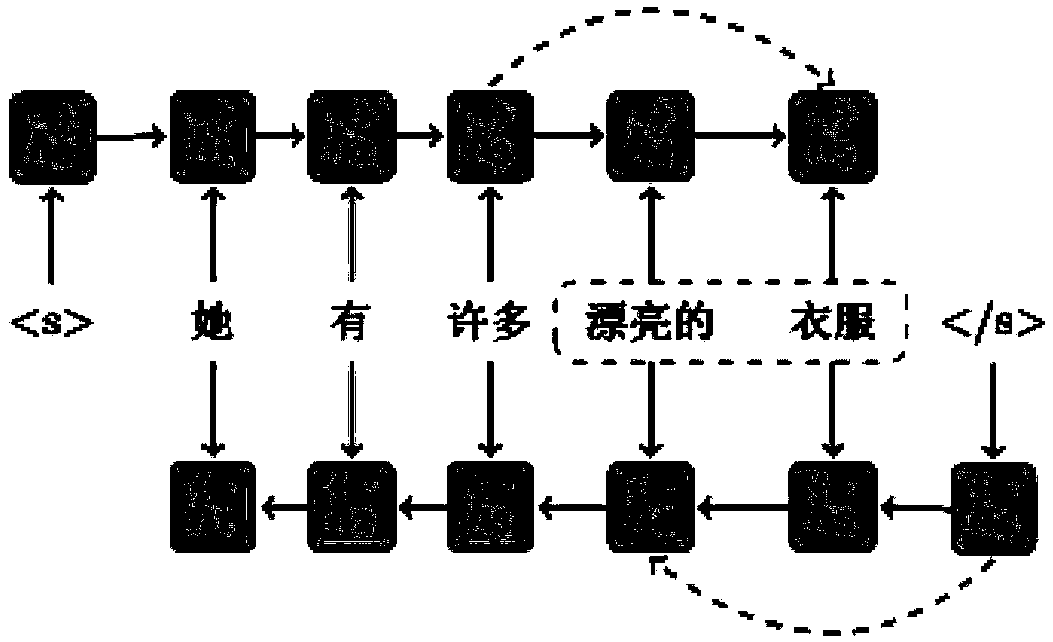

Neural machine translation method by introducing source language block information to encode

The present invention relates to a neural machine translation method for introducing source language block information to encode. The method comprises: inputting bilingual sentence-level parallel data, and carrying out word segmentation on the source language and the target language respectively to obtain word-segmented bilingual parallel sentence pairs; encoding the source sentence of each word-segmented bilingual parallel sentence pair in time-step order, obtaining the state of each time step on the hidden layer of the last layer, and segmenting the input source sentence into blocks; obtaining the block encoding information of the source sentence according to the state of each time step of the source sentence and the segmentation information of the source sentence; combining the time-step encoding information with the block encoding information to obtain the final source sentence memory information; and, by dynamically querying the source sentence memory information, using an attention mechanism to generate a context vector at each moment through a decoder network, and extracting feature vectors for word prediction. According to the method provided by the present invention, block segmentation is automatically carried out on the source sentence without the need for any pre-divided sentence to participate in the training, and the method can capture the latest and the best block segmentation manner of the source sentence.

Owner:沈阳雅译网络技术有限公司

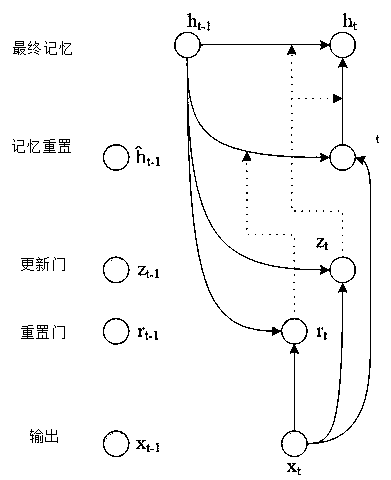

Long text sentiment analysis method fusing Attention mechanism

Inactive | CN108595601A | Ignore or reduce impact | Fully consider the impact | Natural language translation | Character and pattern recognition | Attention model | Semantics

The invention relates to a long text sentiment analysis method fusing an Attention mechanism. A text sentiment classification model (Bi-Attention model) is built by adopting a bidirectional gated recurrent neural network in combination with the Attention mechanism. Attention enables a neural network to pay attention to important information in a text and to ignore or reduce the influence of secondary information in the text, thereby lowering the complexity of long text processing. In addition, a context vector is generated through a bidirectional gated recurrent unit (GRU), and a memory state is updated, thereby fully considering the influence of historical information and future information on semantics.

Owner:FUZHOU UNIV

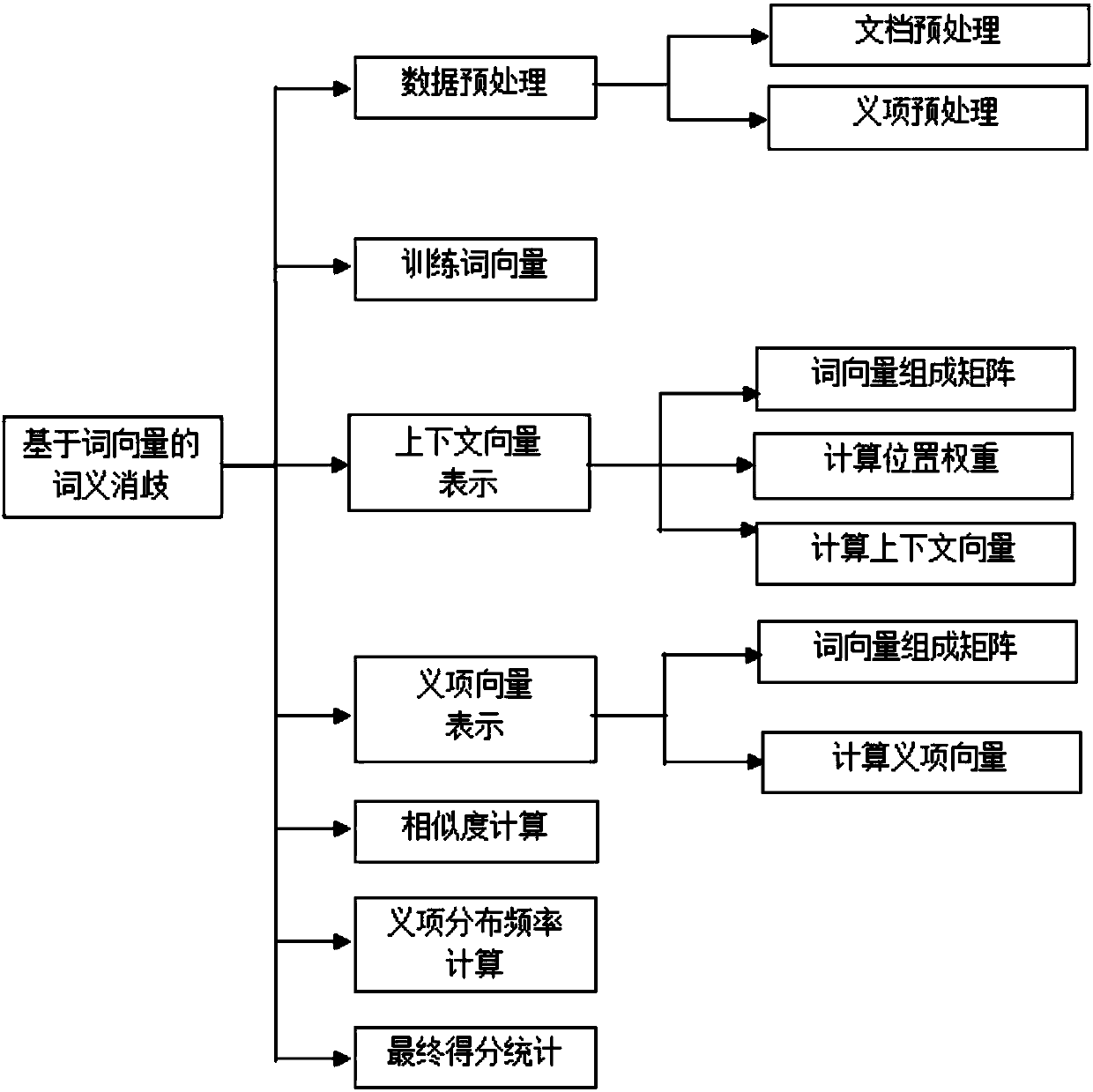

Word sense disambiguation method and device based on word vector

Active | CN108446269A | Efficient use of | Natural language translation | Special data processing applications | Cosine similarity | Word-sense disambiguation

The invention relates to a word sense disambiguation method and device based on word vectors. The method comprises the following steps: data preprocessing: processing the document and the semantic items, including punctuation removal, word segmentation and the like; word vector training: using a word vector training tool to train the word vectors; context vector representation: obtaining the word vectors and adopting a local weighting method to calculate the context vector; semantic item vector representation: obtaining the word vector of each word of the semantic item and calculating a semantic item vector; similarity calculation: calculating the cosine similarity between the context vector and each semantic item vector; semantic item distribution frequency calculation: counting the distribution frequency of each semantic item of an ambiguous term in a dataset; and final score statistics: calculating a comprehensive score from the cosine similarity between the context and each semantic item and the frequency of each semantic item, wherein the semantic item with the highest score is the optimal word meaning.

Owner:KUNMING UNIV OF SCI & TECH
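The scoring pipeline in the word sense disambiguation abstract above can be sketched as follows. The abstract names a "local weighting method" and a "comprehensive score" without defining either, so this sketch fills both with labeled assumptions: context words are weighted by the inverse of their distance to the ambiguous word, and the final score is a linear mix of cosine similarity and sense frequency controlled by a hypothetical parameter `alpha`. All vectors and frequencies are toy values.

```python
import math

def cosine(u, v):
    """Cosine similarity; returns 0.0 when either vector has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def local_weighted_context(tokens, target_index, word_vectors, window=3):
    """Weighted mean of nearby word vectors; weight = 1 / distance (assumed)."""
    dim = len(next(iter(word_vectors.values())))
    total = [0.0] * dim
    weight_sum = 0.0
    for i, tok in enumerate(tokens):
        dist = abs(i - target_index)
        if dist == 0 or dist > window or tok not in word_vectors:
            continue  # skip the target itself, far words, and unknown words
        w = 1.0 / dist
        weight_sum += w
        total = [t + w * x for t, x in zip(total, word_vectors[tok])]
    return [t / weight_sum for t in total] if weight_sum else total

def best_sense(context_vec, sense_vectors, sense_freq, alpha=0.7):
    """Pick the sense with the highest assumed mix of similarity and frequency."""
    def score(sense):
        similarity = cosine(context_vec, sense_vectors[sense])
        return alpha * similarity + (1 - alpha) * sense_freq.get(sense, 0.0)
    return max(sense_vectors, key=score)

# Hypothetical toy data for the ambiguous word "bank".
word_vectors = {"river": [0.0, 1.0], "loan": [1.0, 0.0]}
sense_vectors = {"finance": [1.0, 0.0], "shore": [0.0, 1.0]}
sense_freq = {"finance": 0.8, "shore": 0.2}

ctx = local_weighted_context(["river", "bank", "loan"], 1, word_vectors)
tie_broken = best_sense(ctx, sense_vectors, sense_freq)
clear_case = best_sense([0.0, 1.0], sense_vectors, sense_freq)
```

In the first call the context is equally close to both senses, so the frequency term decides in favor of the more common sense; in the second call the similarity term dominates and the rarer sense wins.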

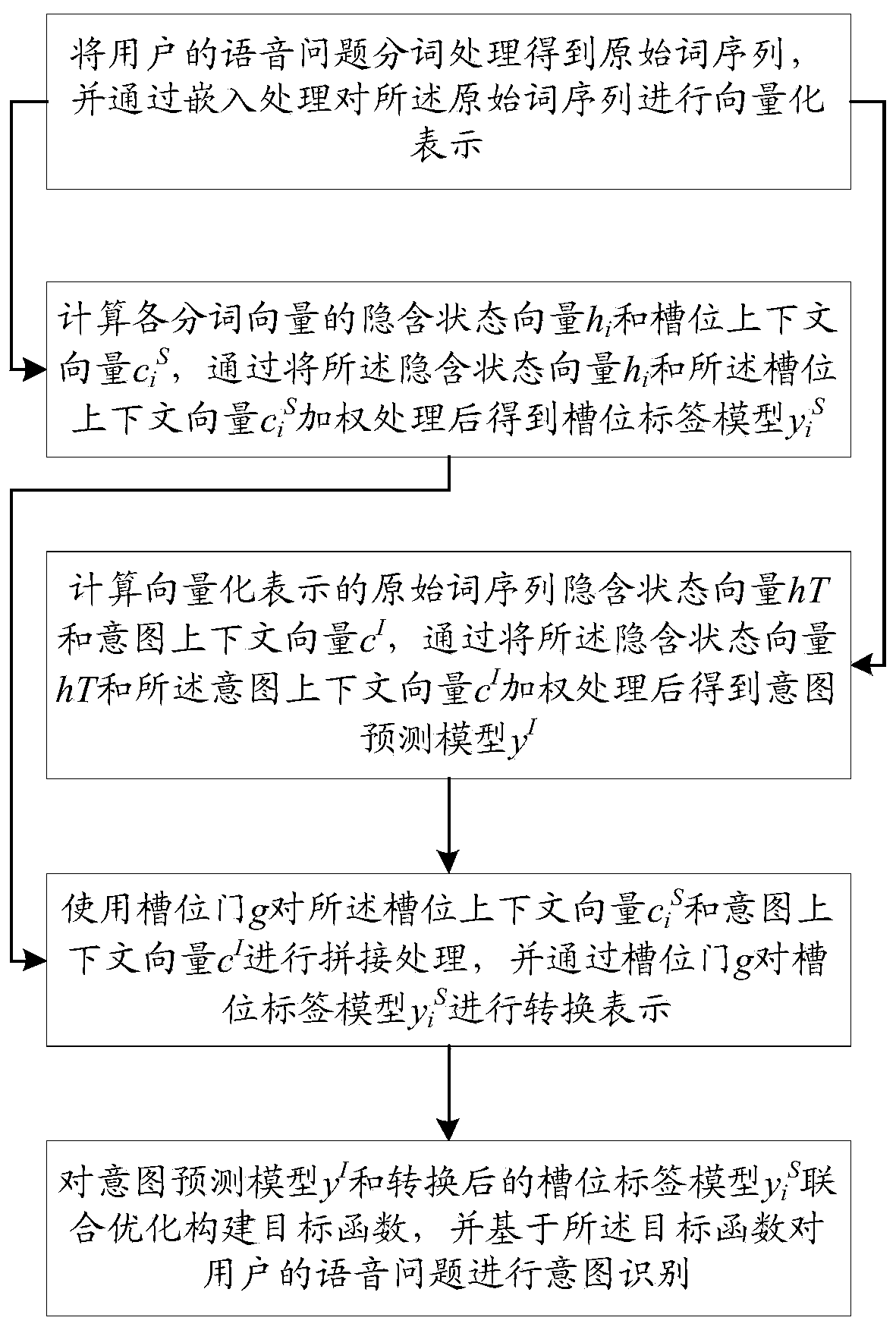

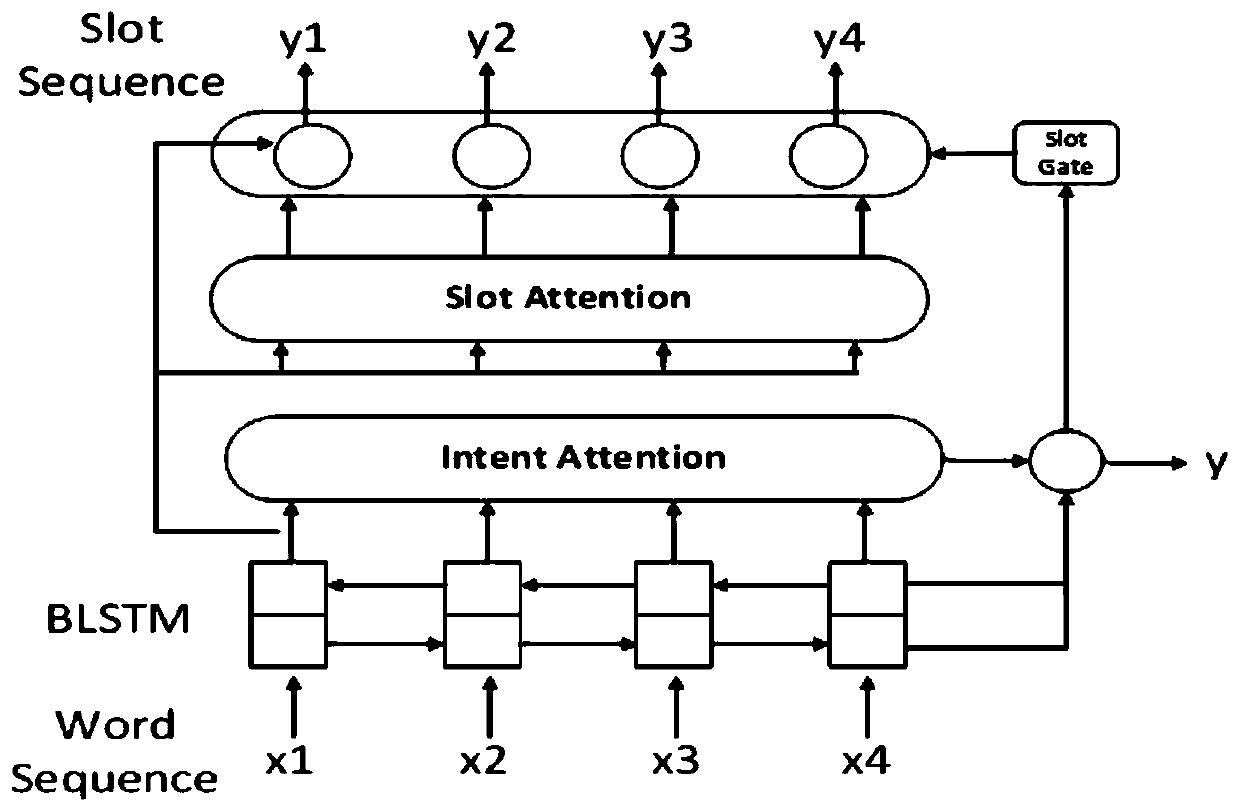

Human-computer interactive speech recognition method and system used for intelligent equipment

Inactive | CN109785833A | Guaranteed accuracy | Speech recognition | Speech identification | Human–robot interaction

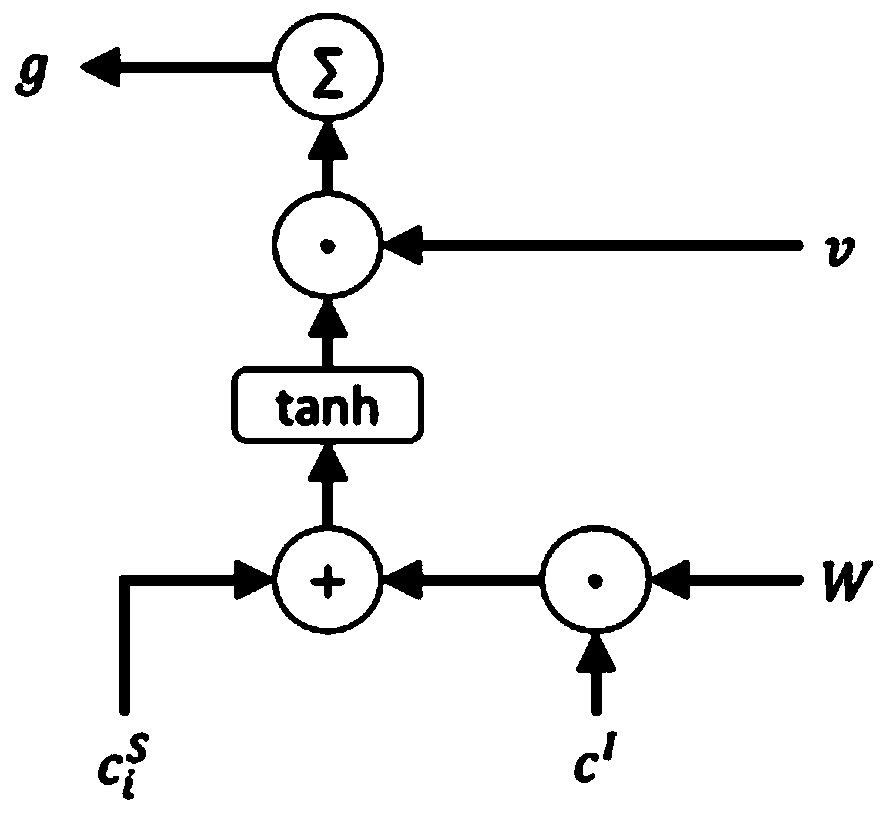

The invention discloses a human-computer interactive speech recognition method and system used for intelligent equipment, and belongs to the technical field of speech recognition. The accuracy of speech recognition is improved through joint optimization training of intent recognition and slot filling. The method includes the following steps: the user's spoken question is segmented into words to obtain an original word sequence, and the original word sequence is vectorized through embedding processing; a slot label model y_i^S is obtained by weighting an implicit state vector h_i and a slot context vector c_i^S; an intent prediction model y^I is obtained by weighting an implicit state vector h_T and an intent context vector c^I; a slot gate g is used for combining the slot context vector c_i^S and the intent context vector c^I, and the slot gate g is used for converting the slot label model y_i^S; a target function is constructed through joint optimization of the intent prediction model y^I and the slot label model y_i^S obtained after conversion, and intent recognition is performed on the user's spoken question based on the target function.

Owner:SUNING COM CO LTD

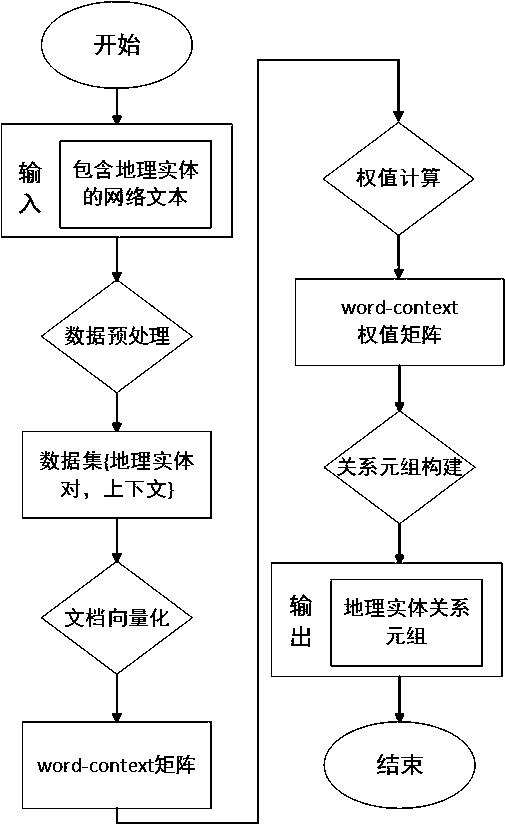

Method for extracting relationship among geographic entities contained in internet text

Active | CN107180045A | Rich search methods | Quick extraction | Relational databases | Special data processing applications | The Internet | Document preparation

The invention discloses a method for extracting a relationship among geographic entities contained in an internet text. The method comprises the following steps: data preprocessing, document vectorization, weight calculation, keyword extraction and relational tuple construction. Specifically, network text containing geographic entities is taken as input, and data preprocessing yields the plain text of the webpage and candidate keywords describing the spatial or semantic relationships among the geographic entities; the text is vectorized with a word-level vector space model, and a word-context matrix is established; a novel weight calculation method is designed to calculate weights for the geographic entities; and the word with the maximum weight in a context vector is selected as the keyword, a relational tuple is constructed, and the extraction of geographic entity relationships is completed. The method provides a semantic-based retrieval mode, in contrast to conventional search technology that depends on keywords; and even without large-scale tagged corpora or a geographic knowledge base, words describing geographic relationships can be extracted quickly, so that operating efficiency is improved and labor cost is greatly reduced.
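The pipeline of word-context matrix, weighting and maximum-weight keyword selection can be sketched as follows. The patent's own weight calculation is not described in the abstract, so raw windowed co-occurrence counts are used here as an explicit stand-in; the function names, the window size and the example sentence are hypothetical.

```python
from collections import Counter, defaultdict

def word_context_matrix(tokens, window=2):
    """Map each word to a Counter of the words seen within `window` positions."""
    matrix = defaultdict(Counter)
    for i, word in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                matrix[word][tokens[j]] += 1
    return matrix

def relation_tuple(matrix, entity_a, entity_b, candidates):
    """Pick the candidate keyword with the largest combined weight.

    Assumption: the weight is the summed co-occurrence count with both
    entities; the patent designs its own (unspecified) weighting scheme.
    """
    combined = matrix[entity_a] + matrix[entity_b]
    scored = {w: combined[w] for w in candidates if combined[w] > 0}
    if not scored:
        return None
    keyword = max(scored, key=scored.get)
    return (entity_a, keyword, entity_b)

# Toy preprocessed text; candidate keywords would come from preprocessing.
tokens = "the yangtze flows through wuhan and the yangtze flows past nanjing".split()
matrix = word_context_matrix(tokens, window=2)
result = relation_tuple(matrix, "yangtze", "wuhan", ["flows", "through", "past"])
print(result)
```

Swapping the count-based weight for a TF-IDF or PMI-style weight only requires changing how `combined` is computed; the tuple construction stays the same.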

Owner:INST OF GEOGRAPHICAL SCI & NATURAL RESOURCE RES CAS

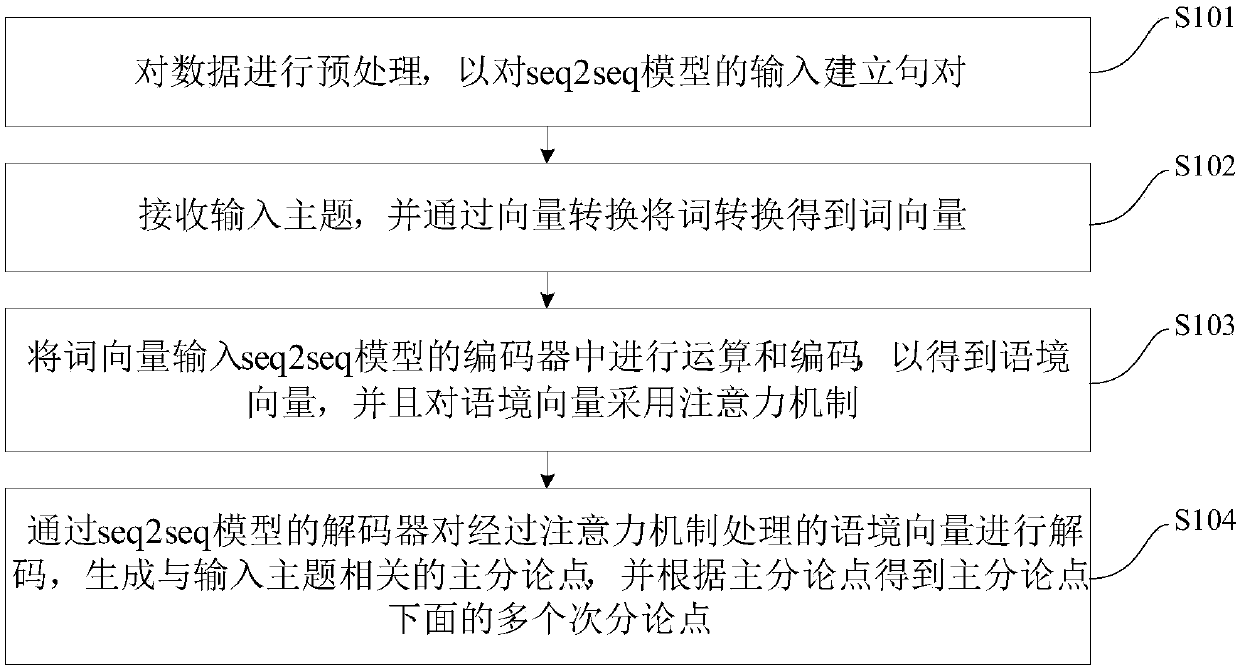

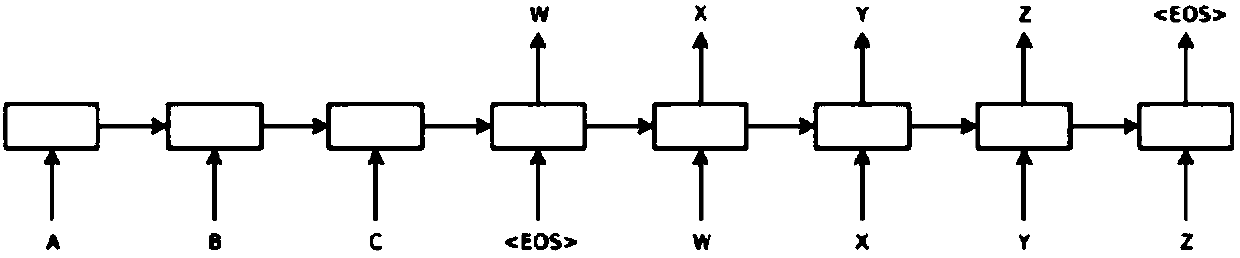

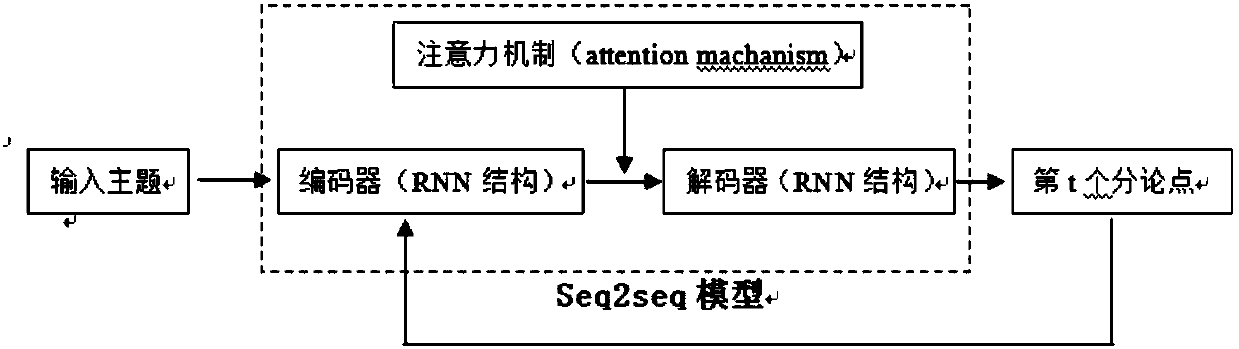

Seq2seq model-based structured argument generation method and system

Inactive | CN107832310A | Improve reliability | Improve accuracy | Natural language translation | Special data processing applications | Sentence pair | Algorithm

The invention discloses a seq2seq model-based structured argument generation method and system. The method comprises the following steps: preprocessing data to build sentence pairs as the input of a seq2seq model; receiving an input topic and converting its words into word vectors; inputting the word vectors to the encoder of the seq2seq model, which performs calculation and encoding to obtain a context vector, and applying an attention mechanism to the context vector; and decoding the attention-processed context vector with the decoder of the seq2seq model to generate main and sub arguments related to the input topic, and, from the main and sub arguments, obtaining multiple sub-sub arguments. According to the method, the randomly initialized word vectors of the original model can be replaced with pre-trained word vectors, and the attention mechanism is added to the seq2seq model, so that model reliability is improved and model accuracy and consistency are effectively ensured.
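As a rough illustration of how an attention mechanism produces a context vector from encoder outputs, the sketch below uses plain dot-product scoring. The abstract does not say which scoring function the patent uses, so the dot-product choice, the function name and the toy vectors are assumptions.

```python
import math

def attention_context(decoder_state, encoder_states):
    """Return (attention weights, context vector) for one decoding step.

    Assumption: scores are dot products between the decoder state and each
    encoder state; additive or bilinear scoring are equally plausible.
    """
    scores = [sum(d * h for d, h in zip(decoder_state, state))
              for state in encoder_states]
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]   # stable softmax
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(encoder_states[0])
    context = [sum(w * state[k] for w, state in zip(weights, encoder_states))
               for k in range(dim)]
    return weights, context

# Two encoder states; the decoder state is aligned with the first one.
weights, context = attention_context([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]])
print(weights, context)
```

The decoder would recompute this context vector at every output step, so each generated word can attend to a different part of the input topic.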

Owner:CAPITAL NORMAL UNIVERSITY

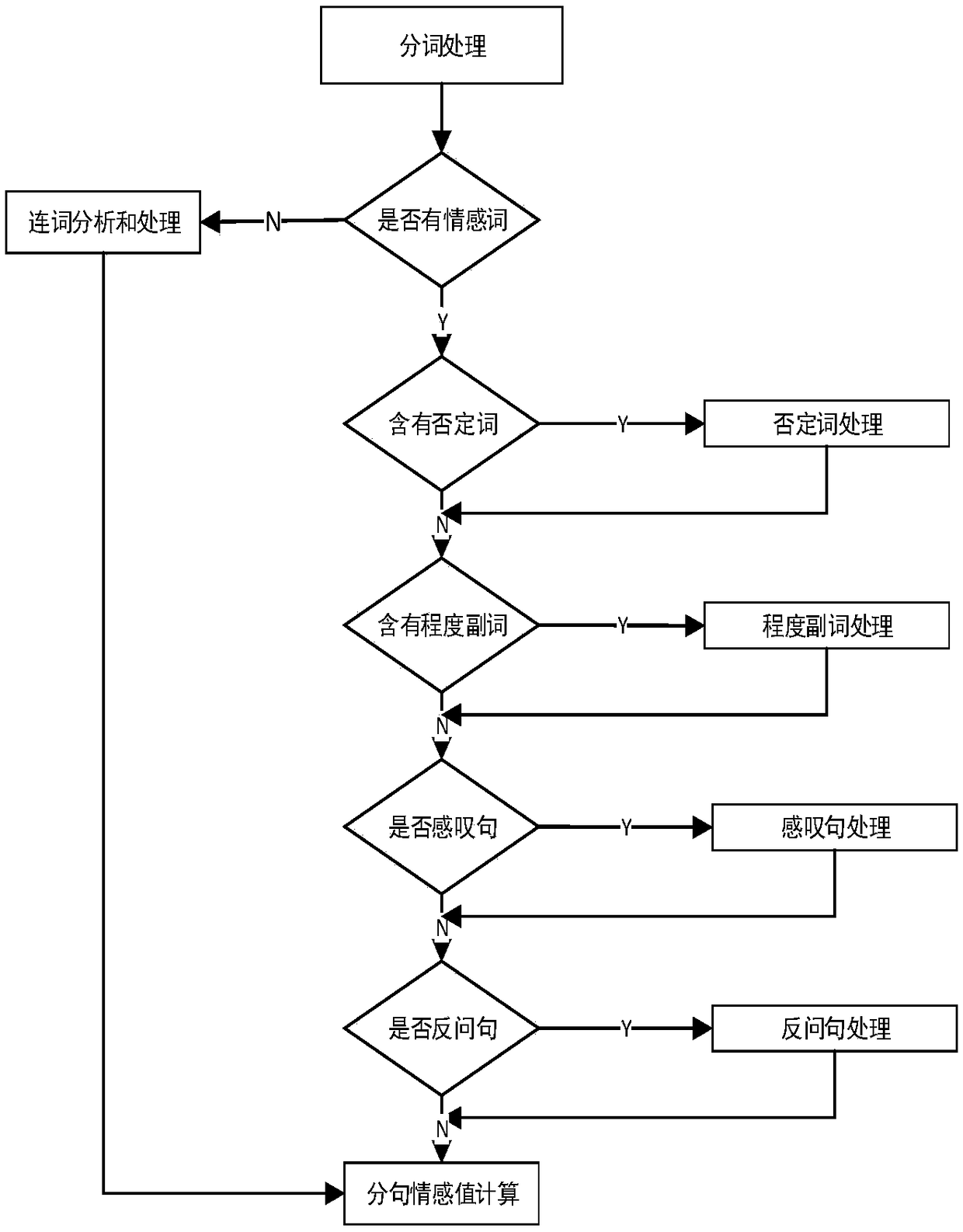

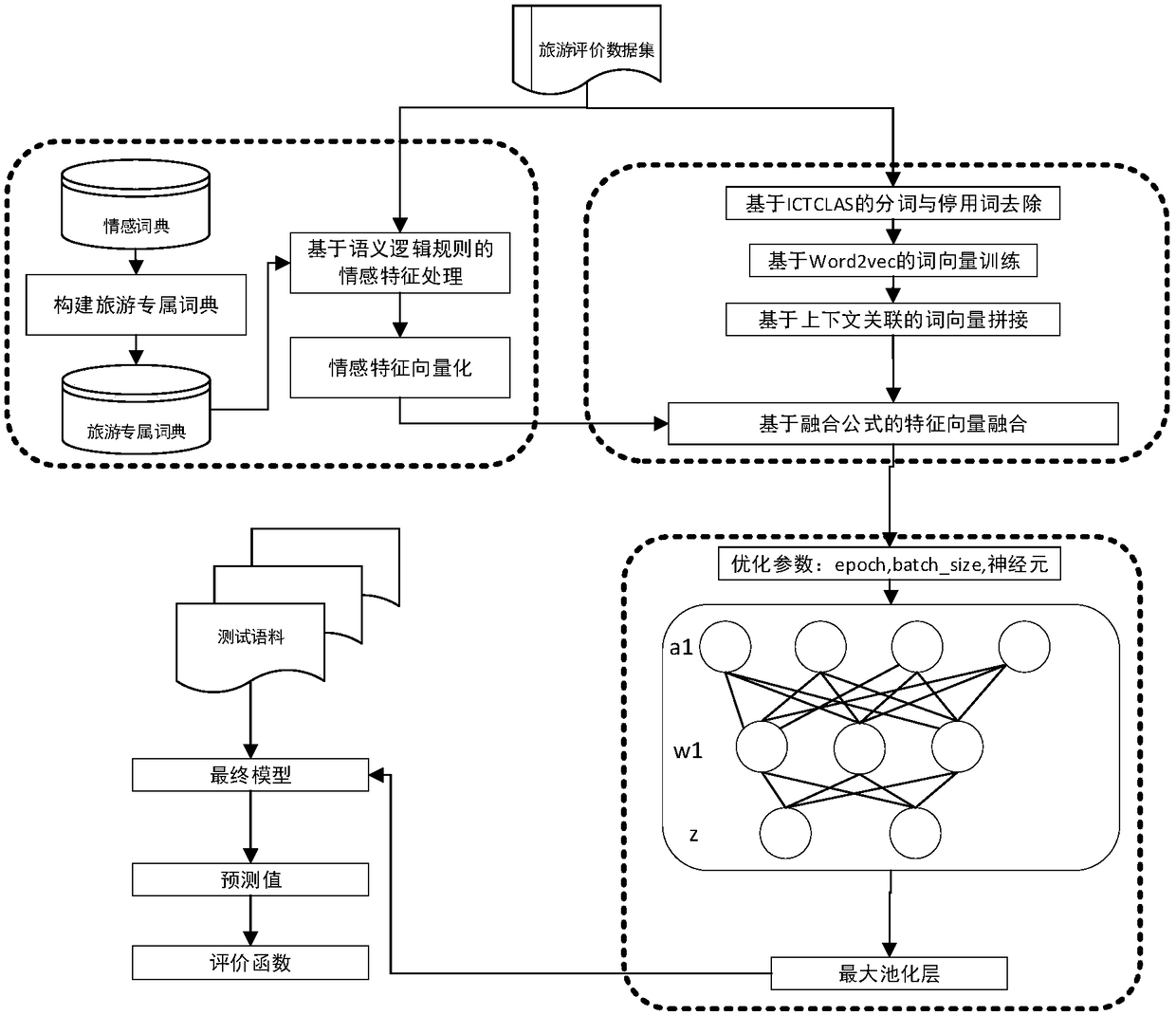

Tourism evaluation emotion classification method combining At_GRU neural network and emotion dictionary

Active | CN109213861A | Improve classification accuracy | Accurate | Biological neural network models | Text database clustering/classification | Classification methods | Emotion classification

The invention relates to a tourism evaluation emotion classification method combining an At_GRU neural network and an emotion dictionary. The method classifies tourists' overall evaluation of a trip by semantics, based on the evaluation text covering the whole journey, and comprises the following steps: 1) emotion feature processing: vectorizing the emotion features in the tourism reviews by constructing a compound tourism-specific emotion dictionary; 2) data preprocessing: training word vectors on the original comment text, splicing them with the context vector, and fusing the spliced vector with the vectorized emotion features as the input of a bidirectional GRU neural network; 3) bidirectional GRU text semantic classification: training the bidirectional GRU neural network and classifying the emotion of the tourism evaluations. Compared with the prior art, the invention has the advantages of high accuracy and of combining the precision of the emotion dictionary with the robustness of machine learning.

Owner:SHANGHAI UNIVERSITY OF ELECTRIC POWER

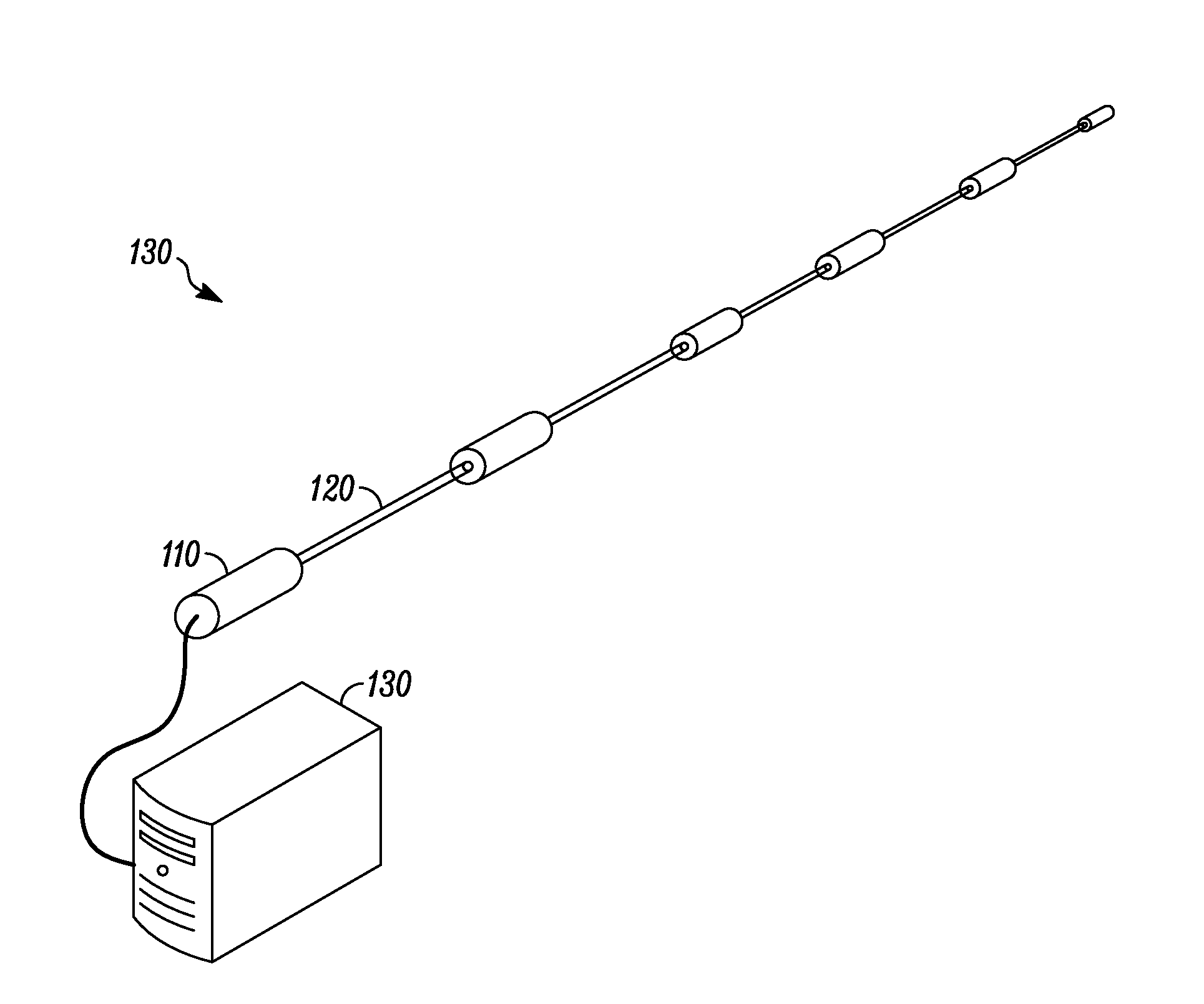

Context aware multiple-input and multiple-output antenna systems and methods

A method, a system, and a server provide context-aware multiple-input multiple-output (MIMO) antenna systems and methods. Specifically, the systems and methods provide, in a system with multiple MIMO antennas or nodes, techniques for antenna / beam selection, calibration, and periodic refresh based on environmental and mission context. The systems and methods can define a context vector, built by cooperative use of the nodes on the backhaul, to direct antennas for the best user experience, as well as mechanisms that use the context vector in a 3D deployment to point the antennas on a cooperative basis among the nodes. The systems and methods utilize sensors in the nodes to provide tailored context sensing rather than motion sensing, in conjunction with BER (Bit Error Rate) measurements on test signals, to position an antenna beam selected from several “independent” antenna subsystems operating within a single node as well as within its optically connected neighbor.

Owner:EXTREME NETWORKS INC

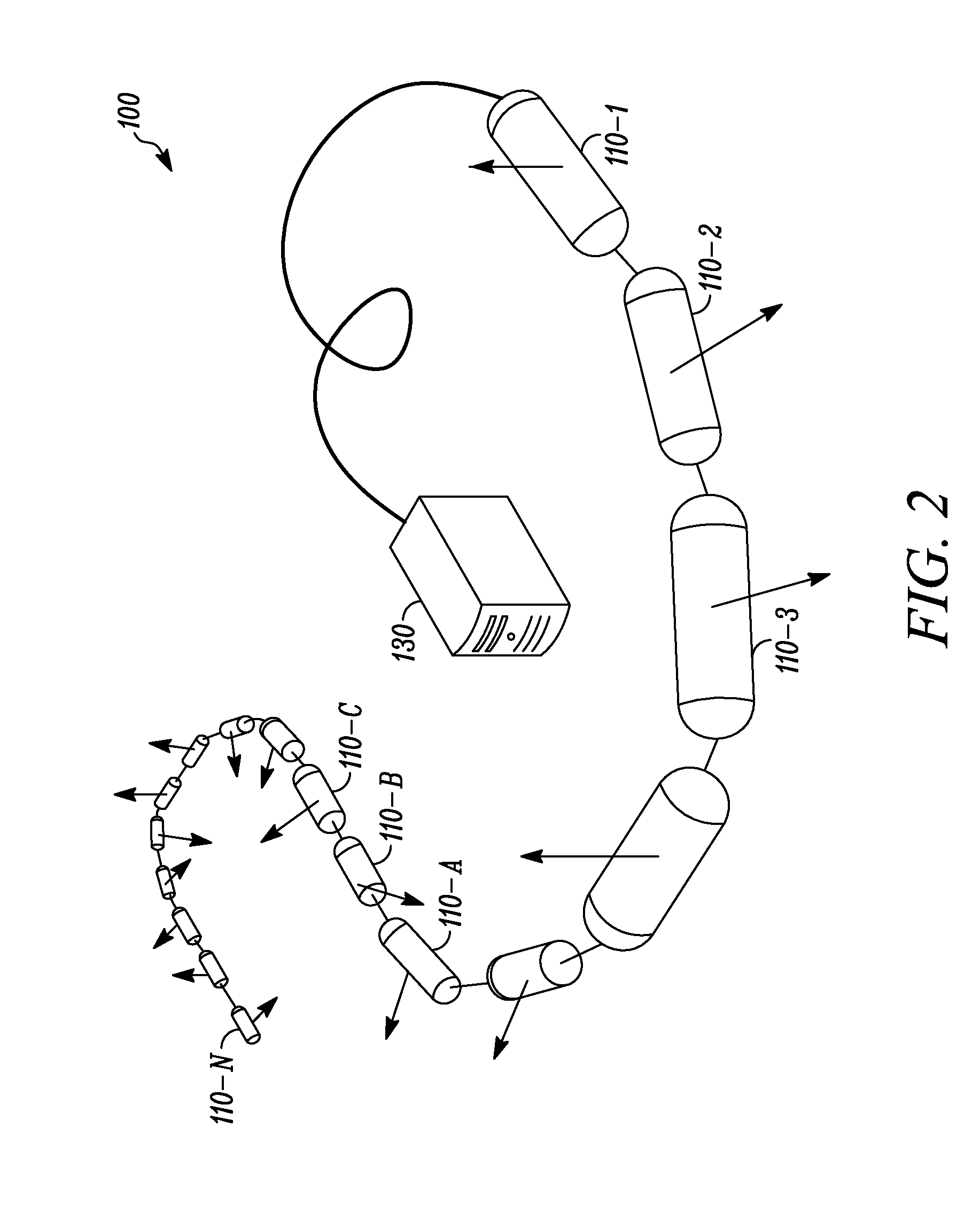

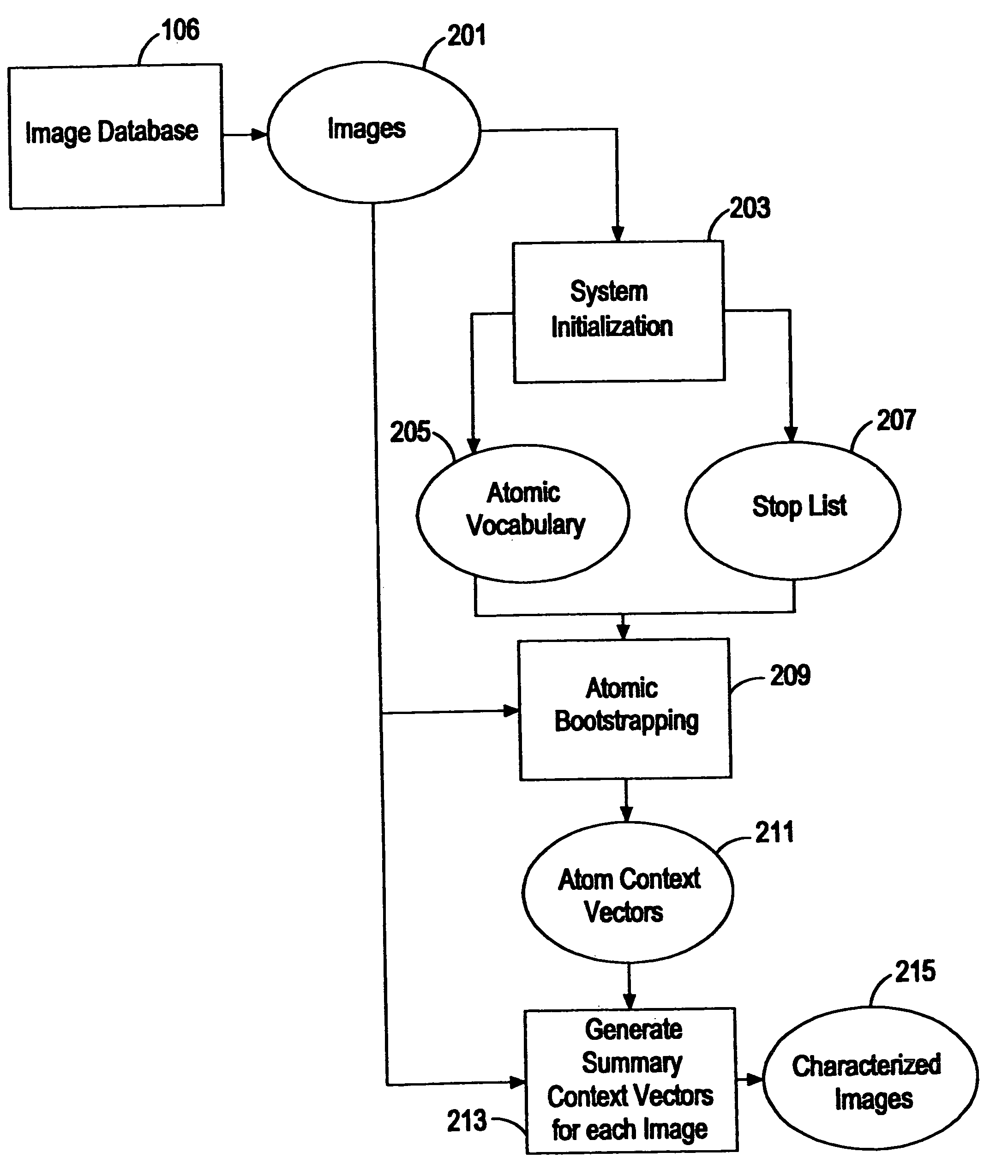

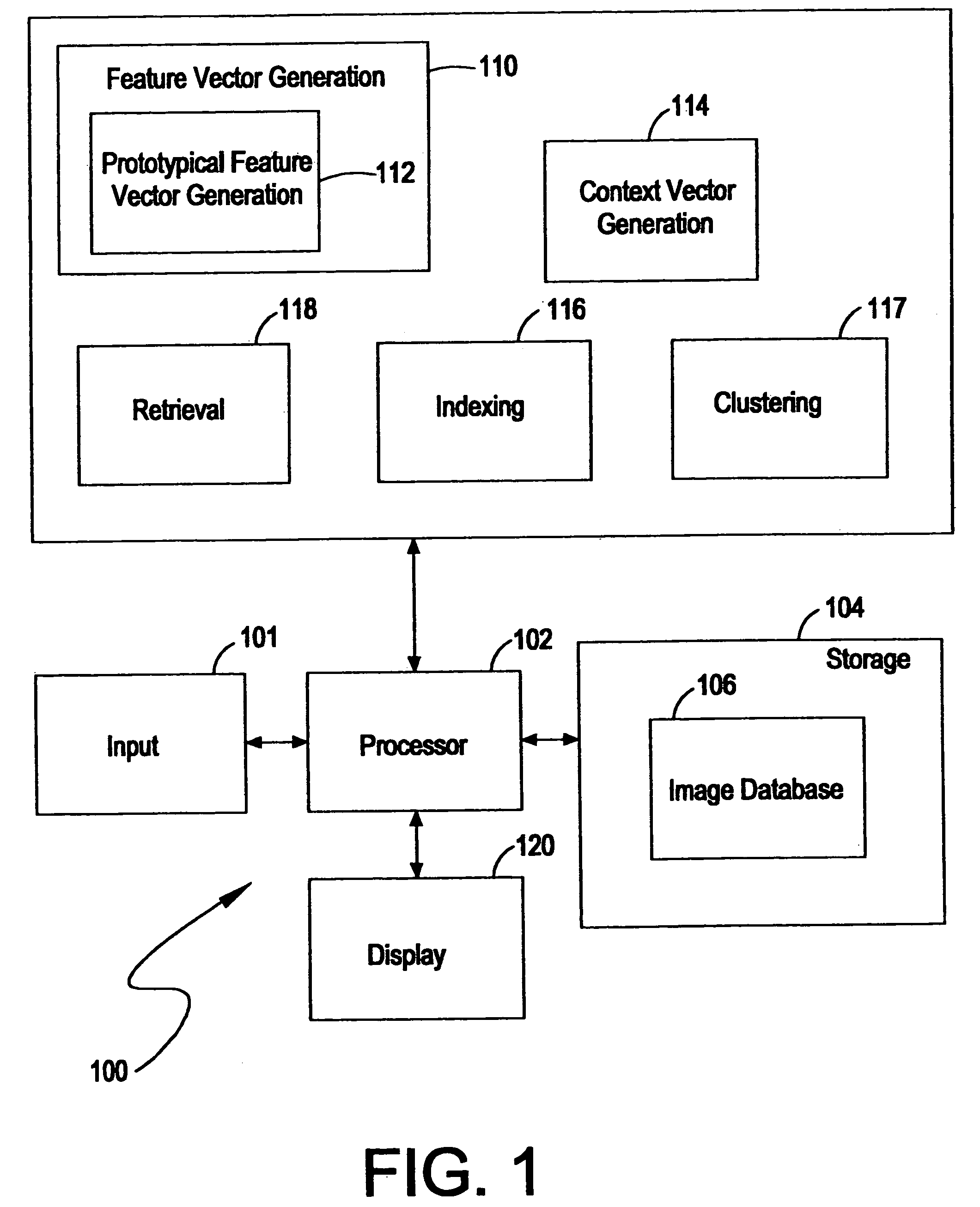

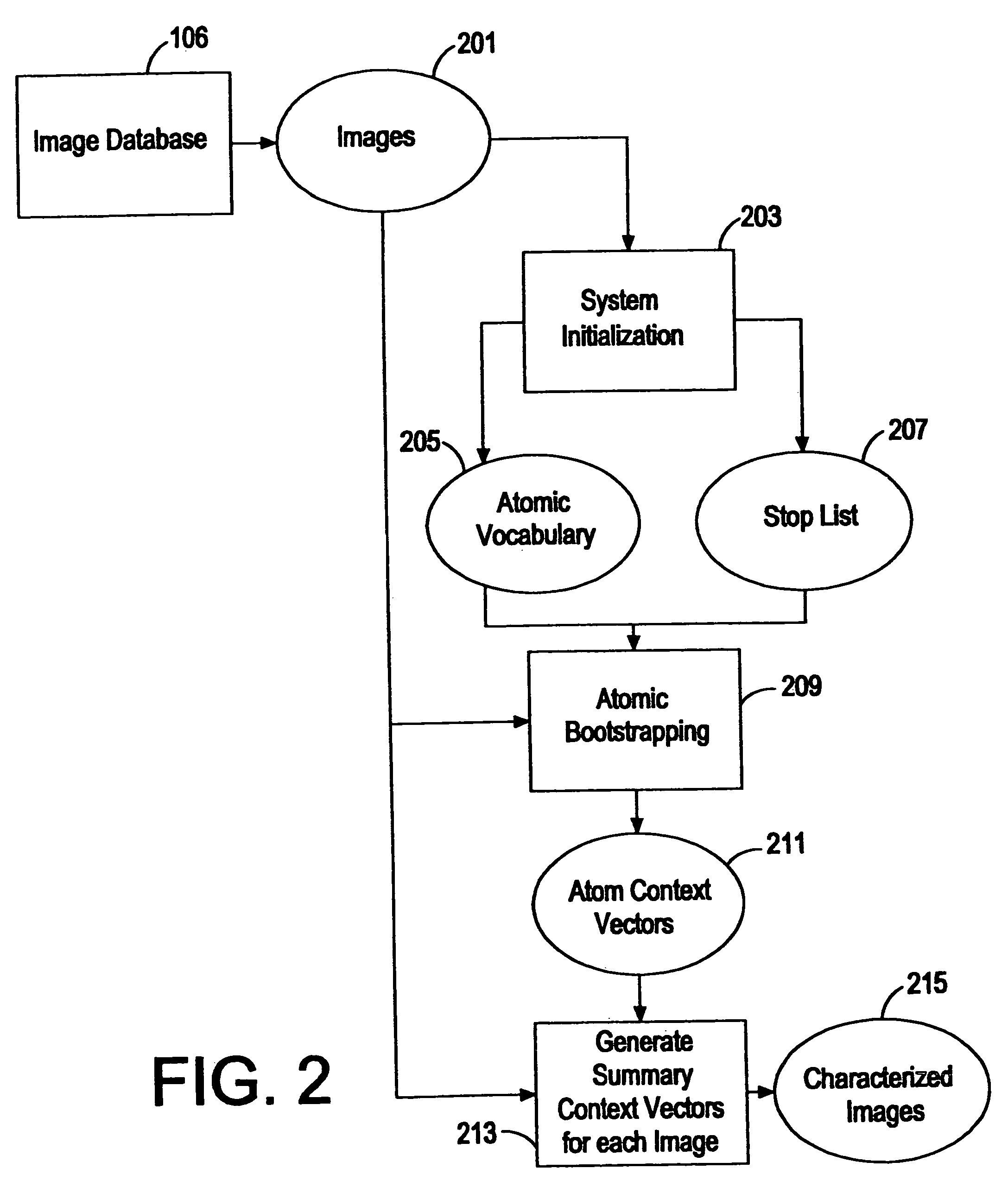

Representation and retrieval of images using context vectors derived from image information elements

Inactive | US7072872B2 | Reduce search time | Digital computer details | Unstructured textual data retrieval | Feature vector | Co-occurrence

Image features are generated by performing wavelet transformations at sample points on images stored in electronic form. Multiple wavelet transformations at a point are combined to form an image feature vector. A prototypical set of feature vectors, or atoms, is derived from the set of feature vectors to form an “atomic vocabulary.” The prototypical feature vectors are derived using a vector quantization method, e.g., using neural network self-organization techniques, in which a vector quantization network is also generated. The atomic vocabulary is used to define new images. Meaning is established between atoms in the atomic vocabulary. High-dimensional context vectors are assigned to each atom. The context vectors are then trained as a function of the proximity and co-occurrence of each atom to other atoms in the image. After training, the context vectors associated with the atoms that comprise an image are combined to form a summary vector for the image. Images are retrieved using a number of query methods, e.g., images, image portions, vocabulary atoms, index terms. The user's query is converted into a query context vector. A dot product is calculated between the query vector and the summary vectors to locate images having the closest meaning. The invention is also applicable to video or temporally related images, and can also be used in conjunction with other context vector data domains such as text or audio, thereby linking images to such data domains.
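The retrieval step at the end of this abstract (summary vectors compared to a query context vector by dot product) might look like the sketch below. The abstract only says atom context vectors are "combined", so summing and length-normalizing is an assumption, and the atom names, vectors and image names are invented for illustration.

```python
import math

def normalize(vec):
    """Scale a vector to unit length; all-zero vectors are returned unchanged."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else list(vec)

def summary_vector(atoms, context_vectors):
    """Assumed combination: sum the atoms' context vectors, then normalize."""
    dim = len(next(iter(context_vectors.values())))
    total = [0.0] * dim
    for atom in atoms:
        for k, x in enumerate(context_vectors[atom]):
            total[k] += x
    return normalize(total)

def rank_images(query_vec, summaries):
    """Order image names by descending dot product with the query vector."""
    scores = {name: sum(q * s for q, s in zip(query_vec, vec))
              for name, vec in summaries.items()}
    return sorted(scores, key=scores.get, reverse=True)

# Hypothetical trained context vectors for three vocabulary atoms.
context_vectors = {"sky": [1.0, 0.0, 0.0],
                   "water": [0.8, 0.6, 0.0],
                   "brick": [0.0, 0.0, 1.0]}

summaries = {
    "beach": summary_vector(["sky", "water", "water"], context_vectors),
    "wall": summary_vector(["brick", "brick", "sky"], context_vectors),
}

# Query by vocabulary atom: the query context vector is built the same way.
query = summary_vector(["water"], context_vectors)
ranking = rank_images(query, summaries)
print(ranking)
```

Because both summaries and the query are unit length here, the dot product equals cosine similarity, which keeps images with many atoms from dominating the ranking.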

Owner:FAIR ISAAC & CO INC
