400 results about how to "reduce training difficulty": patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

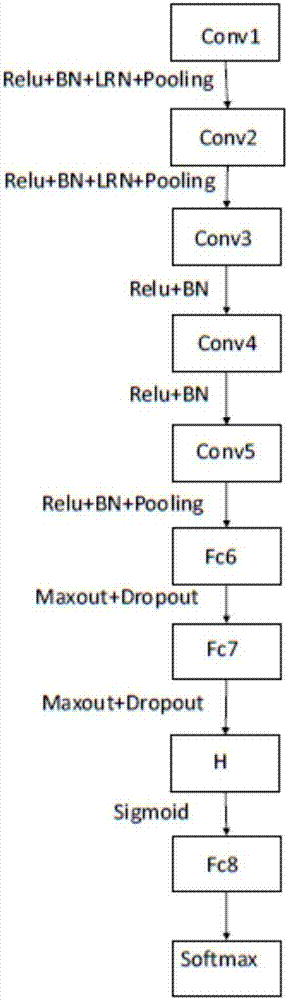

Human body gesture identification method based on depth convolution neural network

Inactive | CN105069413A | Overcome limitations, Easy to train, Biometric pattern recognition, Human body, Information processing

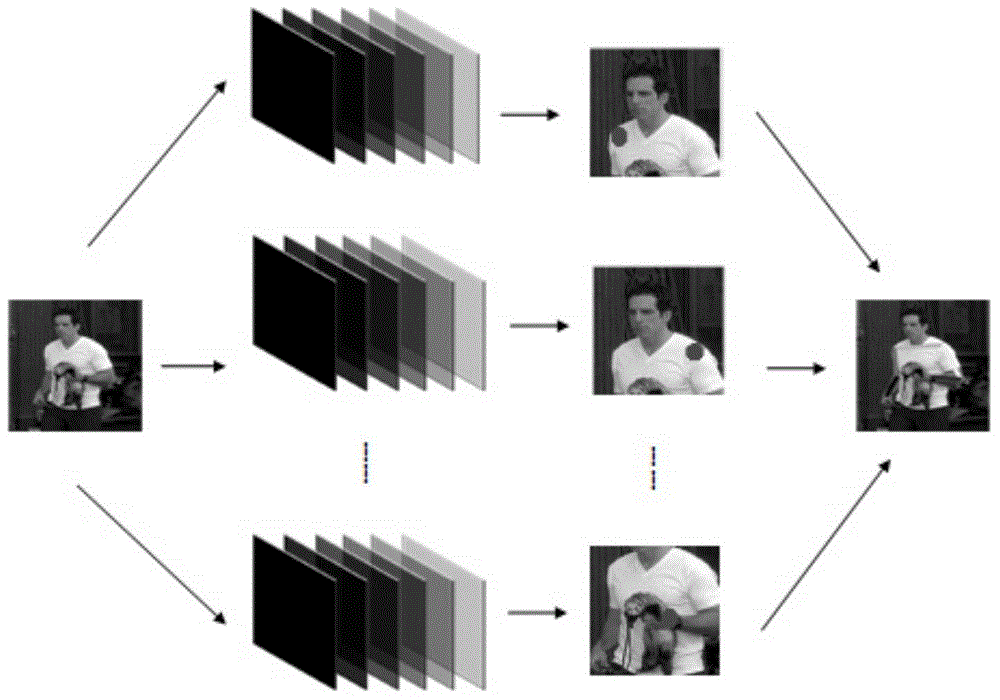

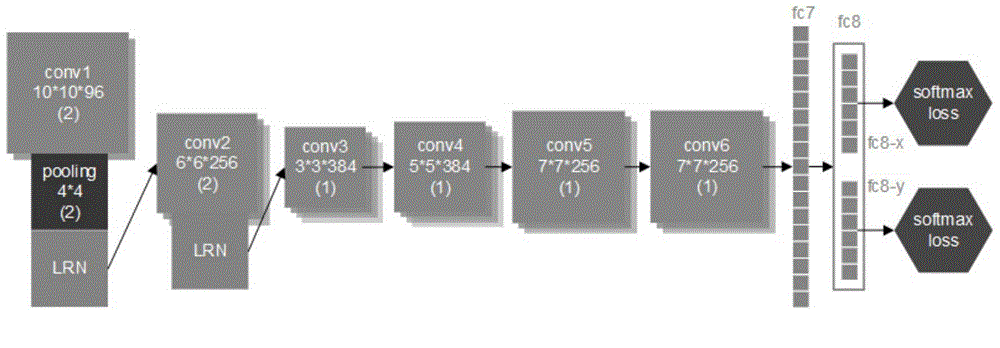

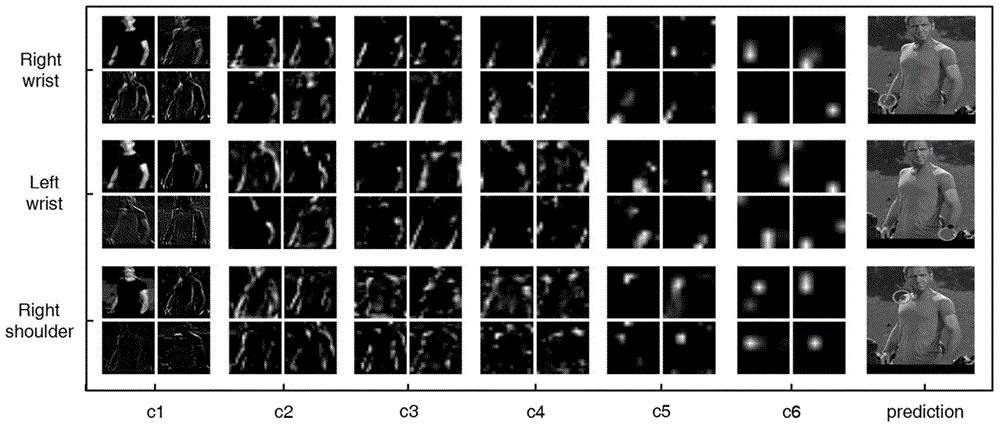

The invention discloses a human body gesture identification method based on a deep convolutional neural network. It belongs to the technical field of pattern recognition and information processing, concerns behavior identification tasks in computer vision, and in particular relates to the research and implementation of a human body gesture estimation system based on a deep convolutional neural network. The neural network has independent output layers and independent loss functions designed for locating human body joints. The network, ILPN, consists of an input layer, seven hidden layers and two independent output layers. Hidden layers one through six are convolutional layers used for feature extraction; the seventh hidden layer (fc7) is a fully connected layer. The output layer consists of two independent parts, fc8-x and fc8-y, which predict the x and y coordinates of a joint, respectively. During model training, each output has its own softmax loss function to guide learning. The method is simple and fast to train, requires little computation, and is highly accurate.

Owner: UNIV OF ELECTRONICS SCI & TECH OF CHINA
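The ILPN abstract states only that fc8-x and fc8-y each have an independent softmax loss. A softmax loss needs class targets, so the minimal sketch below assumes that each joint coordinate is quantized into equal-width bins (one bin per logit) and that the two per-head losses are simply summed; the binning, the summation and the helper names `quantize` and `ilpn_loss` are illustrative assumptions, not details from the patent.

```python
import math
from typing import Sequence, Tuple


def softmax_cross_entropy(logits: Sequence[float], target: int) -> float:
    """Softmax cross-entropy of one output head against a class index."""
    m = max(logits)  # subtract the max logit for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def quantize(coord: float, size: int, bins: int) -> int:
    """Assumed target encoding: map a pixel coordinate in [0, size) to a bin."""
    return min(int(coord * bins / size), bins - 1)


def ilpn_loss(logits_x: Sequence[float], logits_y: Sequence[float],
              joint_xy: Tuple[float, float], image_size: Tuple[int, int]) -> float:
    """Independent softmax losses for the fc8-x and fc8-y heads, summed."""
    x, y = joint_xy
    width, height = image_size
    loss_x = softmax_cross_entropy(logits_x, quantize(x, width, len(logits_x)))
    loss_y = softmax_cross_entropy(logits_y, quantize(y, height, len(logits_y)))
    return loss_x + loss_y
```

Because each head sees only its own coordinate, the two losses can be monitored or weighted separately; with B bins per axis, the coordinate resolution is the image width (or height) divided by B.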

Quick image classification method based on deep learning

Active | CN107292333A | Improve network convergence speed, Reduce the difficulty of network training, Character and pattern recognition, Network model, Network layer

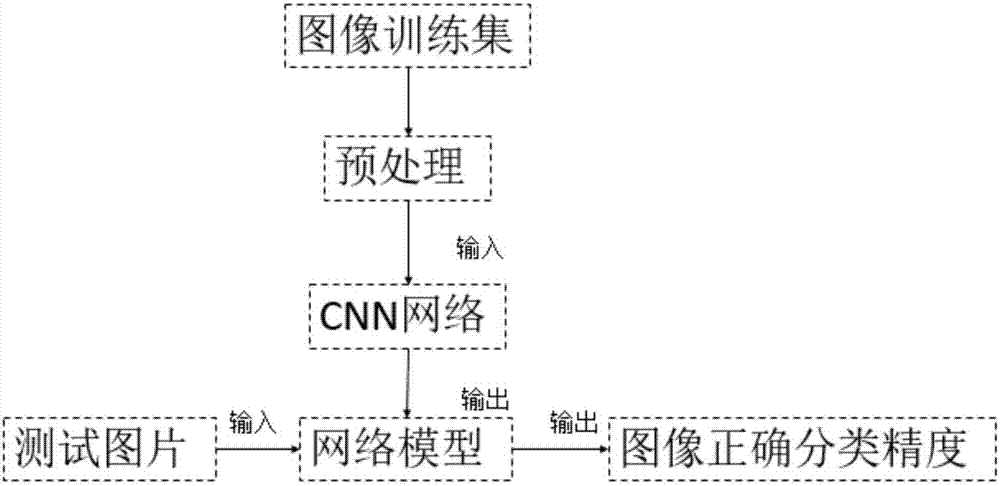

The invention discloses a quick image classification method based on deep learning. The method comprises the following steps: 1, network building; 2, data set preprocessing; 3, network training; and 4, image classification, which comprises the following substeps: 4.1, inputting the preprocessed test data set into the trained network model and extracting multi-scale features of each test image; 4.2, inputting the extracted multi-scale features into a Softmax classifier, which outputs the probability that the test image belongs to each class; 4.3, inputting these class probabilities and the label of the image into an Accuracy network layer, which outputs the probability that the image is classified correctly. Through these steps the method achieves quick classification of test images. Training is short and convenient, and classification precision is high.

Owner: ZHEJIANG UNIV OF TECH
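Steps 4.2 and 4.3 can be sketched in plain Python as follows. The sketch assumes that the Accuracy layer behaves like the batch accuracy layers found in common frameworks (for example Caffe's `Accuracy` layer), reporting the fraction of samples whose most probable class equals the label; the function names are illustrative.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Step 4.2: turn classifier scores into class probabilities."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def accuracy(batch_logits: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Step 4.3: fraction of test images whose top-1 class matches the label."""
    correct = 0
    for logits, label in zip(batch_logits, labels):
        probs = softmax(logits)
        predicted = max(range(len(probs)), key=lambda k: probs[k])
        correct += int(predicted == label)
    return correct / len(labels)


if __name__ == "__main__":
    scores = [[2.0, 1.0, 0.1], [0.1, 0.2, 3.0], [1.0, 3.0, 0.0]]
    print(accuracy(scores, [0, 2, 0]))  # two of the three predictions are correct
```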

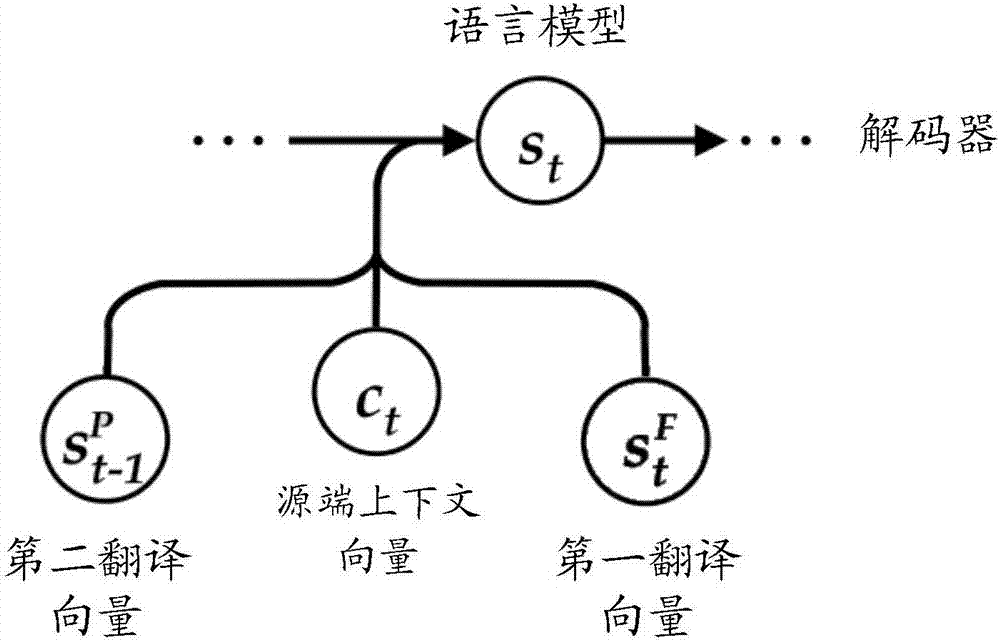

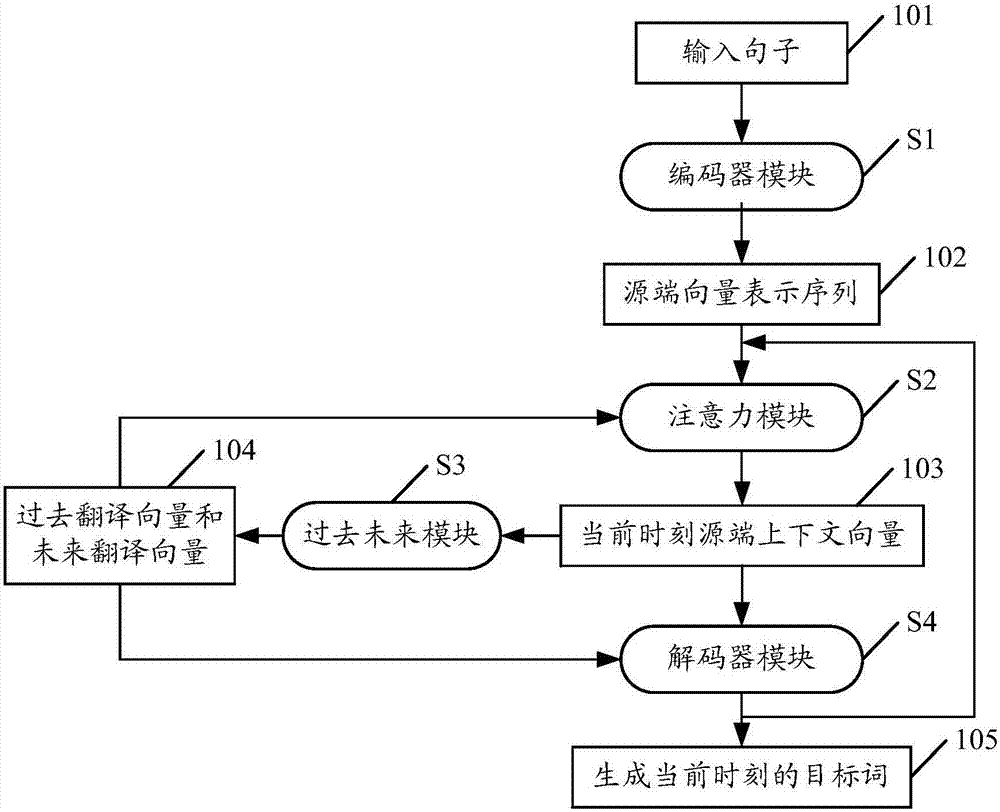

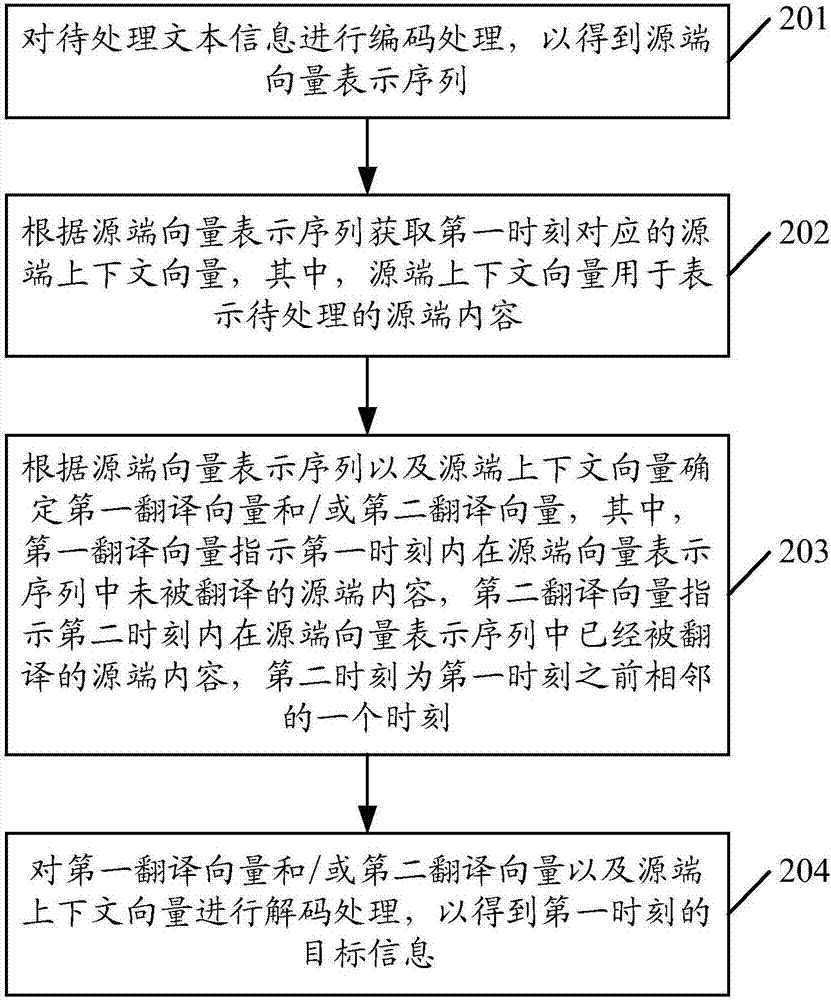

Method of translation, method for determining target information and related devices

Active | CN107368476A | Reduce training difficulty, Improve translation performance, Natural language translation, Neural architectures, Computer science, Translation system

The invention discloses a method for determining target information. The method includes the following steps: encoding the to-be-processed text information to obtain a source-end vector expression sequence; obtaining, from the source-end vector expression sequence, a source-end context vector corresponding to a first moment, where the source-end context vector expresses the to-be-processed source-end content; determining a first translation vector and/or a second translation vector from the source-end vector expression sequence and the source-end context vector, where the first translation vector indicates the source-end content in the sequence that has not yet been translated at the first moment, and the second translation vector indicates the source-end content in the sequence that has been translated at a second moment; and decoding the first and/or second translation vector together with the source-end context vector to obtain the target information for the first moment. The invention further provides a translation method and a device for determining the target information. The method reduces the difficulty of training the decoder model and improves the translation quality of the translation system.

Owner: SHENZHEN TENCENT COMP SYST CO LTD

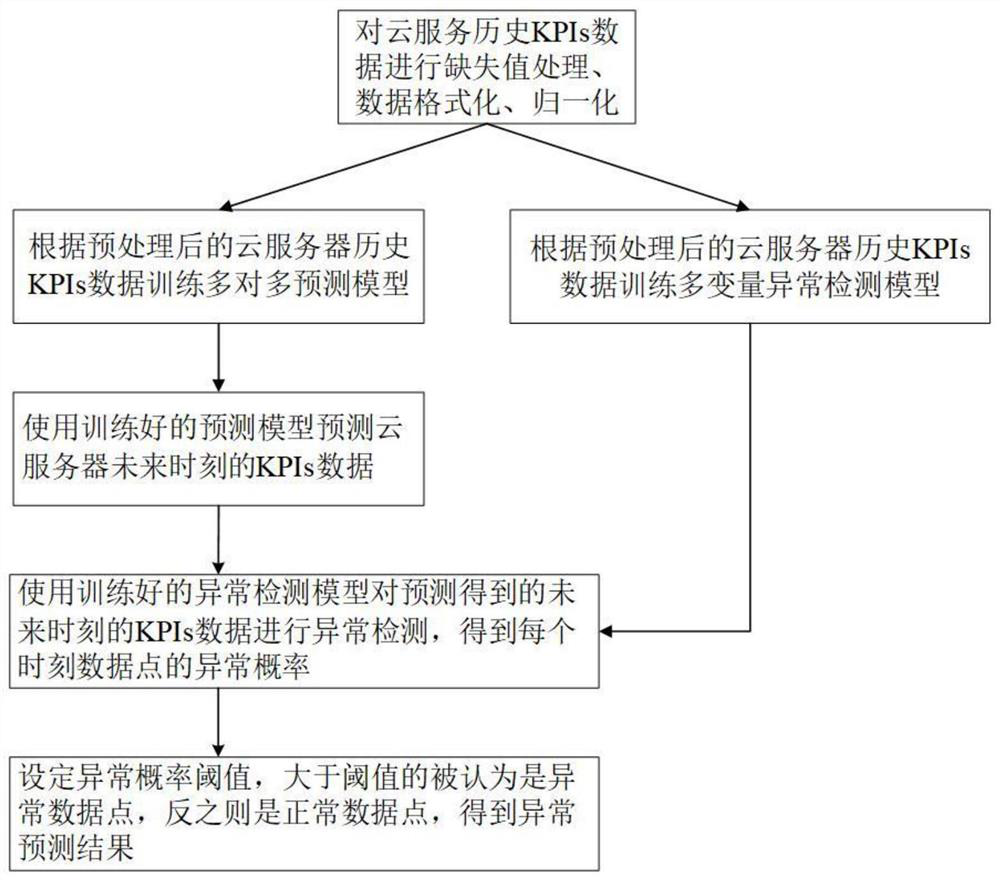

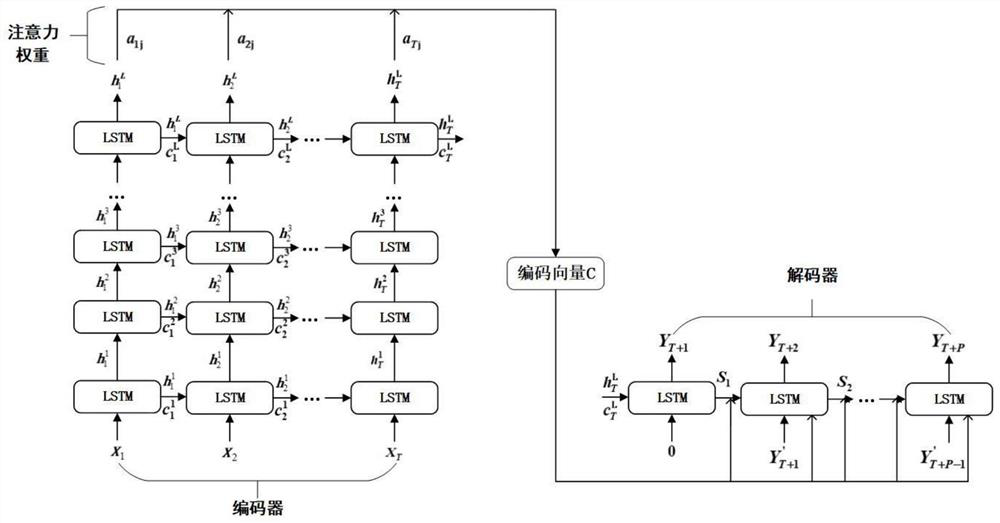

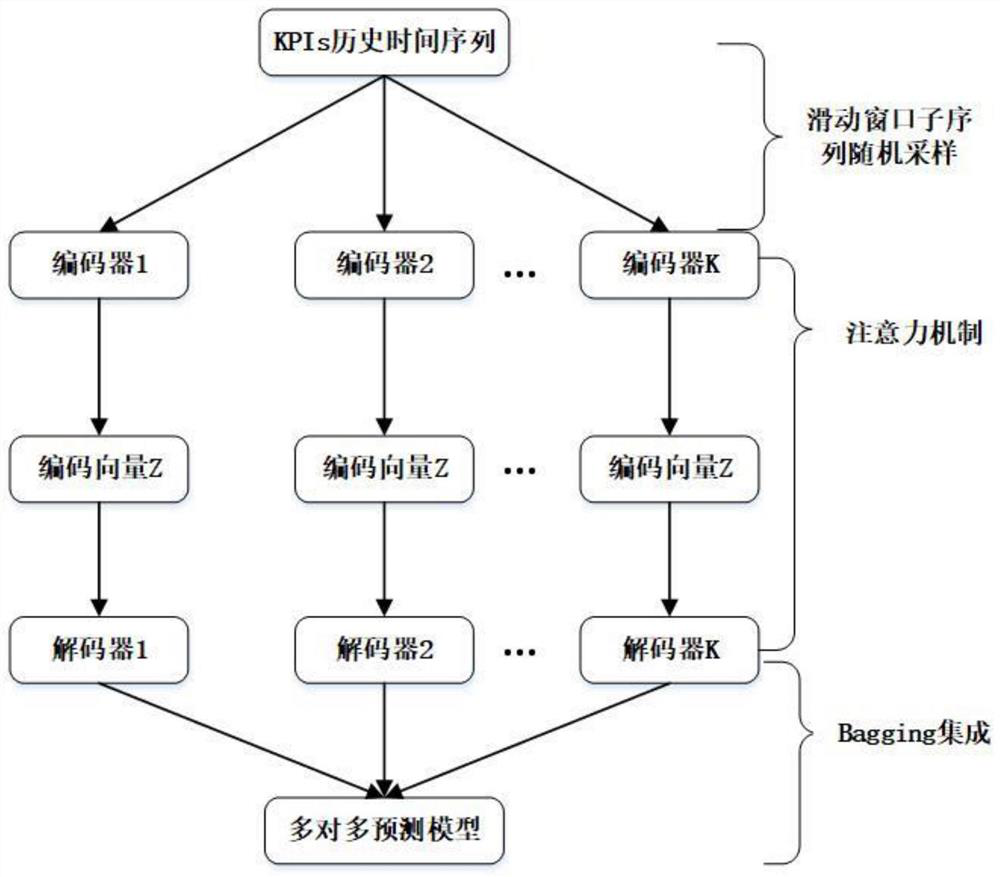

Unsupervised anomaly prediction method for two-stage cloud server

Pending | CN111914873A | High precision, Improve detection accuracy, Character and pattern recognition, Neural architectures, Anomaly detection, Labeled data

The invention discloses a two-stage unsupervised anomaly prediction method for cloud servers, which addresses anomaly prediction in a cloud server environment. The method comprises a prediction stage and an anomaly detection stage. In the prediction stage, a many-to-many time series prediction model is trained on preprocessed historical cloud server key performance indicator (KPI) data and is used to predict KPI data at future moments. In the anomaly detection stage, a multivariable anomaly detection model is trained on the preprocessed historical KPI data and applied to the predicted future KPI data to obtain the anomaly probability of each future data point. Finally, an anomaly probability threshold is set: data points whose anomaly probability exceeds the threshold are regarded as abnormal, and all other data points as normal, which yields the anomaly prediction result. The invention does not depend on labeled data, has wider applicability, and performs well.

Owner: SOUTH CHINA UNIV OF TECH
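The final decision rule of this two-stage method is a threshold on per-point anomaly probabilities. In the sketch below, the trained many-to-many predictor and the multivariable detector are passed in as callables (`forecast` and `anomaly_prob`); both are hypothetical stand-ins, since the abstract does not specify the models.

```python
from typing import Callable, List, Sequence

KpiRow = Sequence[float]  # one time step of multivariate KPI values


def predict_anomalies(
    history: Sequence[KpiRow],
    forecast: Callable[[Sequence[KpiRow]], List[KpiRow]],
    anomaly_prob: Callable[[KpiRow], float],
    threshold: float,
) -> List[bool]:
    """Flag future time steps whose anomaly probability exceeds the threshold."""
    future = forecast(history)                     # stage 1: predict future KPIs
    probs = [anomaly_prob(row) for row in future]  # stage 2: score predicted points
    return [p > threshold for p in probs]          # above threshold -> abnormal


if __name__ == "__main__":
    cpu_history = [[0.20], [0.35], [0.60]]

    def extrapolate(h: Sequence[KpiRow]) -> List[KpiRow]:
        # Hypothetical predictor: continue the last observed change for two steps.
        step = h[-1][0] - h[-2][0]
        return [[h[-1][0] + k * step] for k in (1, 2)]

    # Hypothetical detector: treat the CPU load itself as the anomaly probability.
    print(predict_anomalies(cpu_history, extrapolate, lambda row: row[0], 0.9))
```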

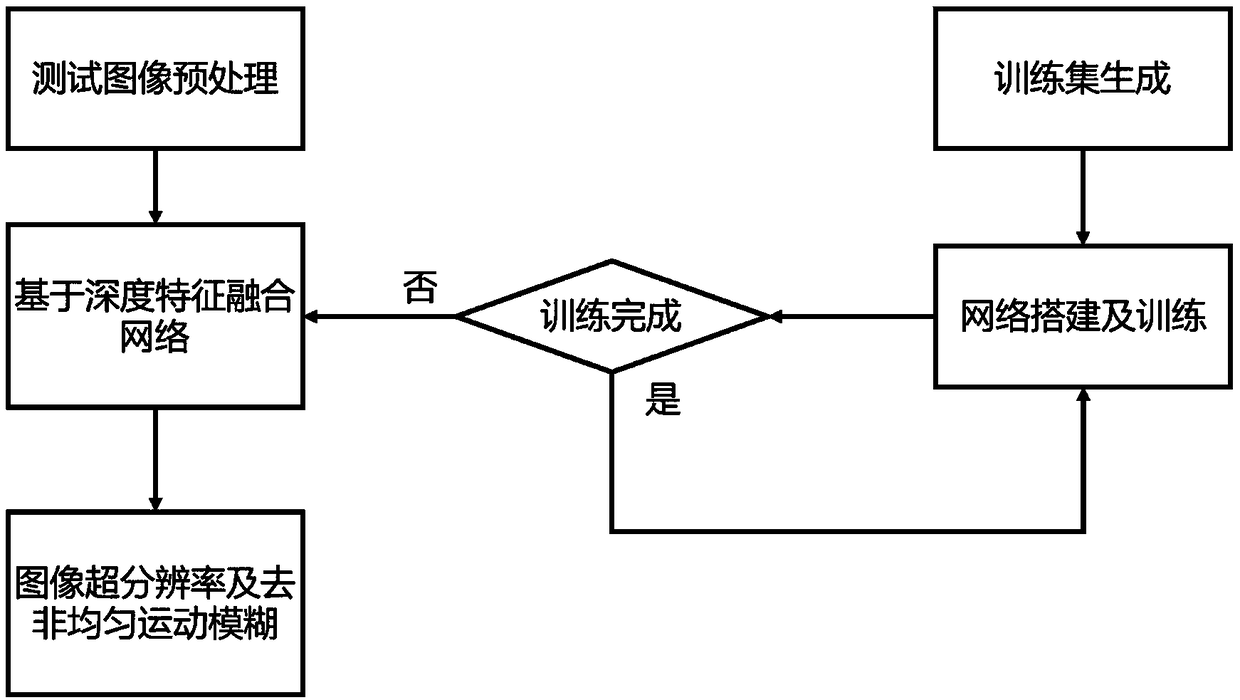

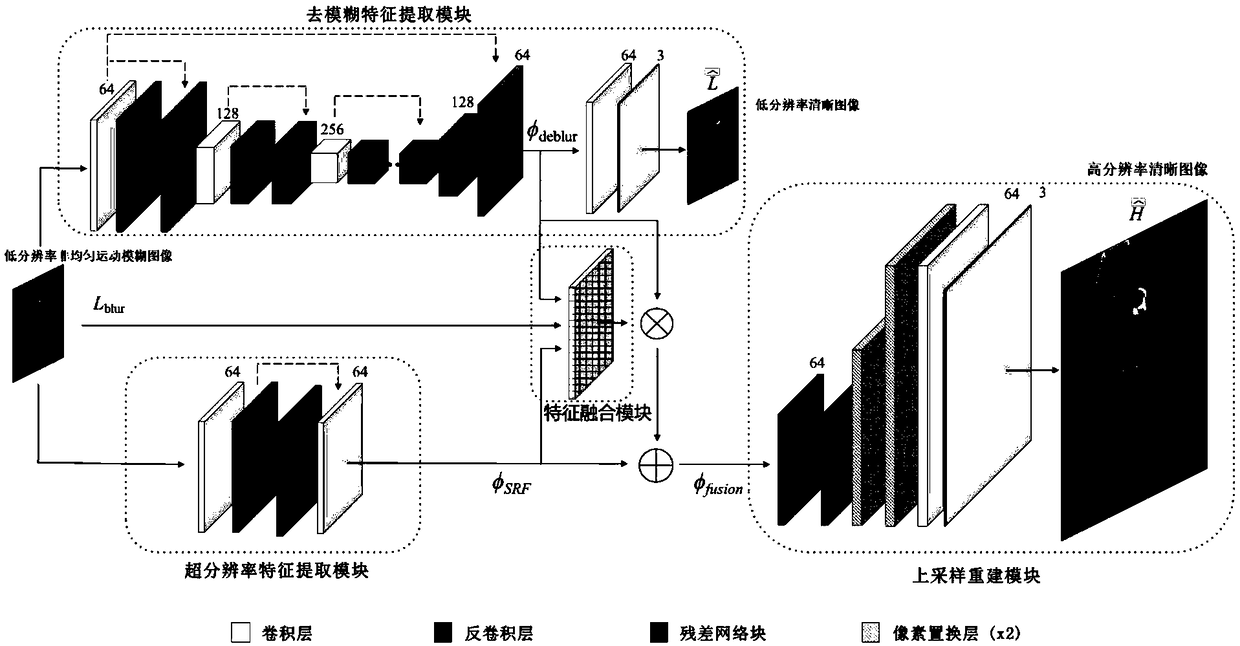

An image super-resolution and non-uniform blur removal method based on fusion network

Active | CN109345449A | Reduce training difficulty, Good effect, Image enhancement, Image analysis, Data set, Image resolution

The invention discloses a natural image super-resolution and non-uniform motion blur removal method based on a deep feature fusion network, which first realizes the restoration of low-resolution, non-uniformly motion-blurred images based on a deep neural network. The network uses two branch modules to extract features for image super-resolution and for non-uniform blur removal, respectively, and adaptively fuses the outputs of the two feature extraction branches through a trainable feature fusion module. Finally, an upsampling reconstruction module performs the combined blur removal and super-resolution. The network is trained offline on a self-generated training data set, so it can restore low-resolution, non-uniformly motion-blurred images of any input size. The method has low training difficulty, good results and high computational efficiency, and is well suited to image restoration and enhancement on mobile devices and surveillance equipment.

Owner: XI AN JIAOTONG UNIV

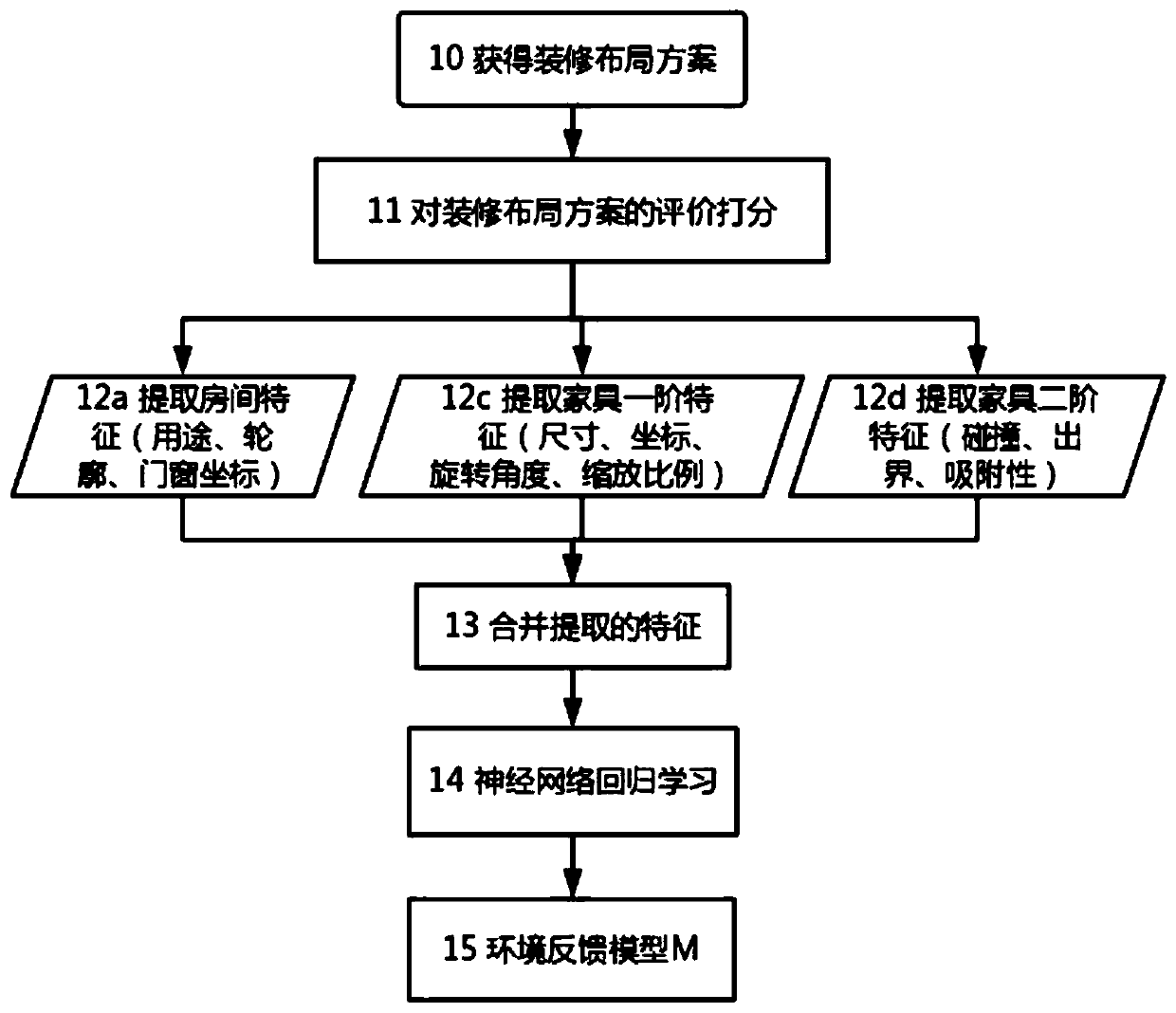

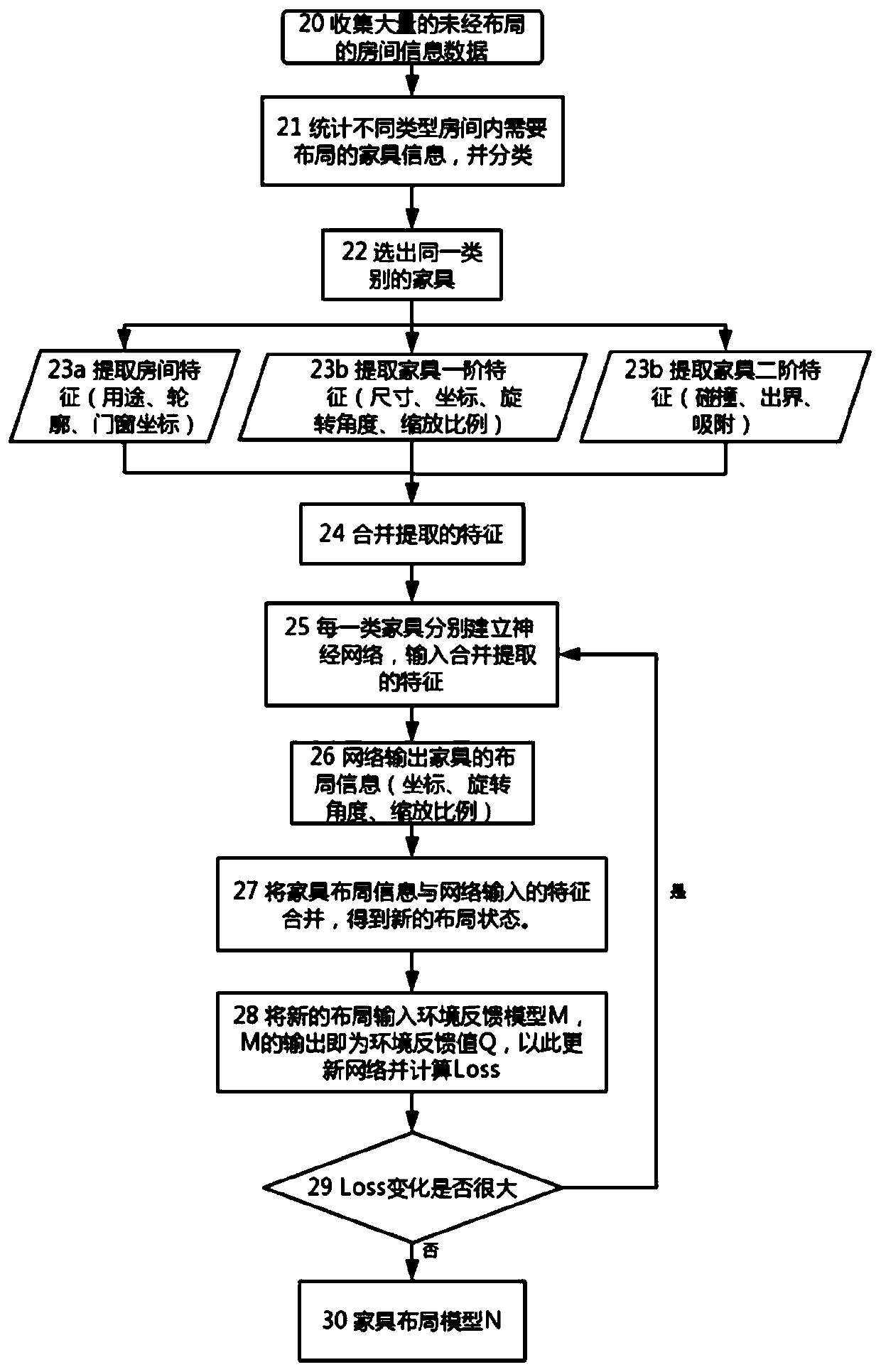

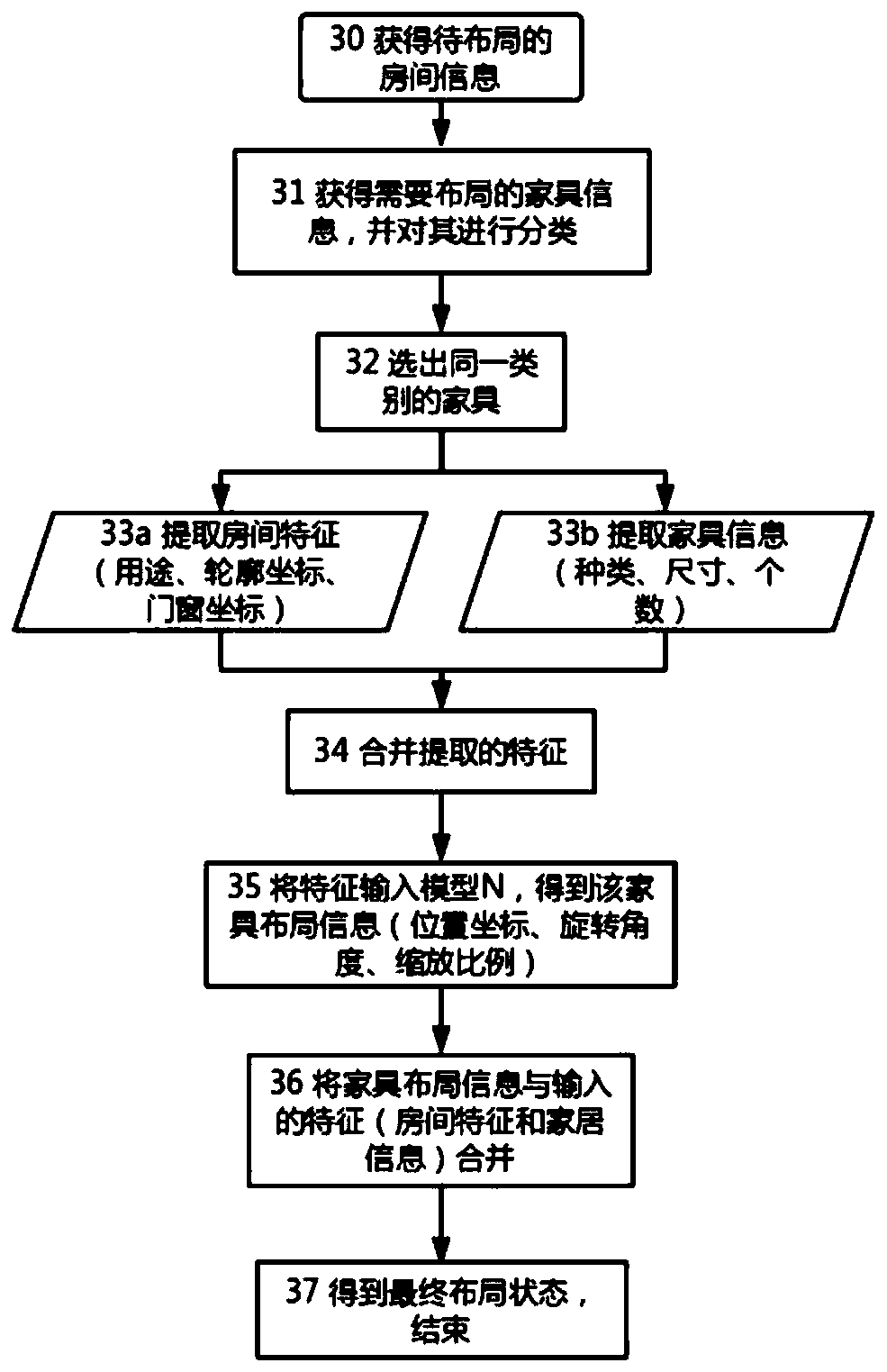

A furniture layout method and system based on a part-by-part reinforcement learning technology

Active | CN109740243A | Fully automated, Conform to the layout, Neural architectures, Neural learning methods, Feature extraction, State space

The invention discloses a furniture layout method based on a part-by-part reinforcement learning technology. The method comprises a furniture layout environment building step, a reinforcement learning training step, and a furniture layout generation step that uses the trained reinforcement learning model. First, specific furniture layout schemes are evaluated and scored manually, and features are extracted from the data. Second, regression learning is performed with a neural network, and the trained network is used to simulate designers' scoring. Third, a reinforcement learning technique learns the specific state space and action space, guided by feedback from the environment. Finally, in actual use, the trained reinforcement learning model lays out the specific furniture. The method is highly applicable in implementation, automates furniture layout, reduces design cost, and greatly improves design efficiency.

Owner: 江苏艾佳家居用品有限公司

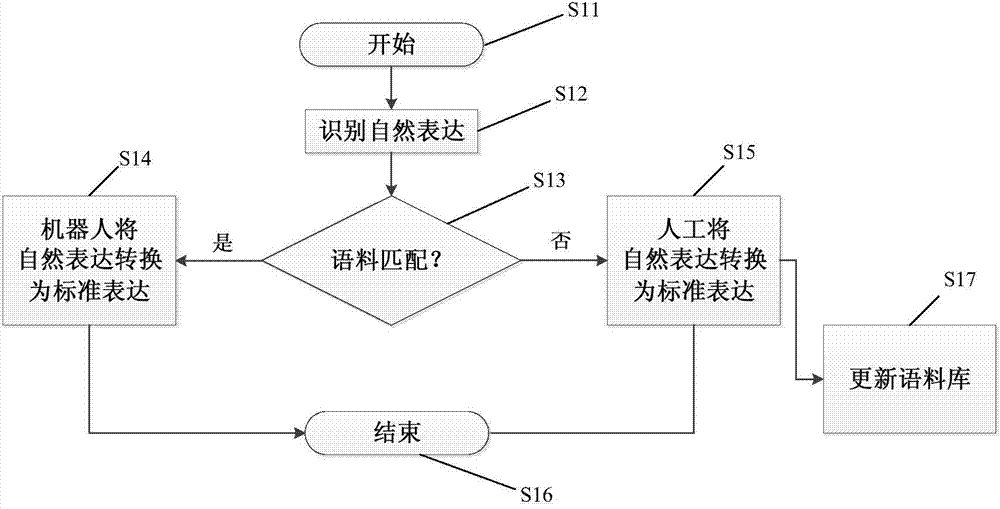

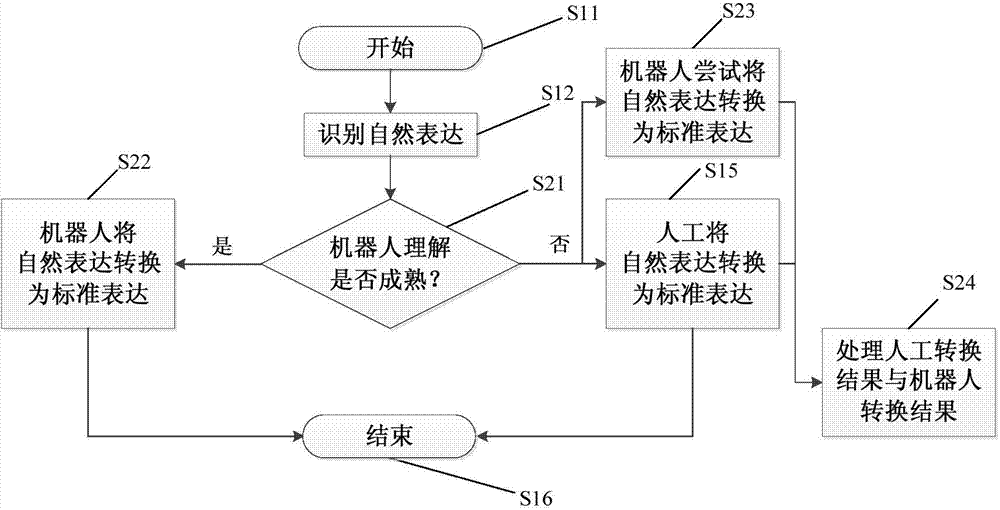

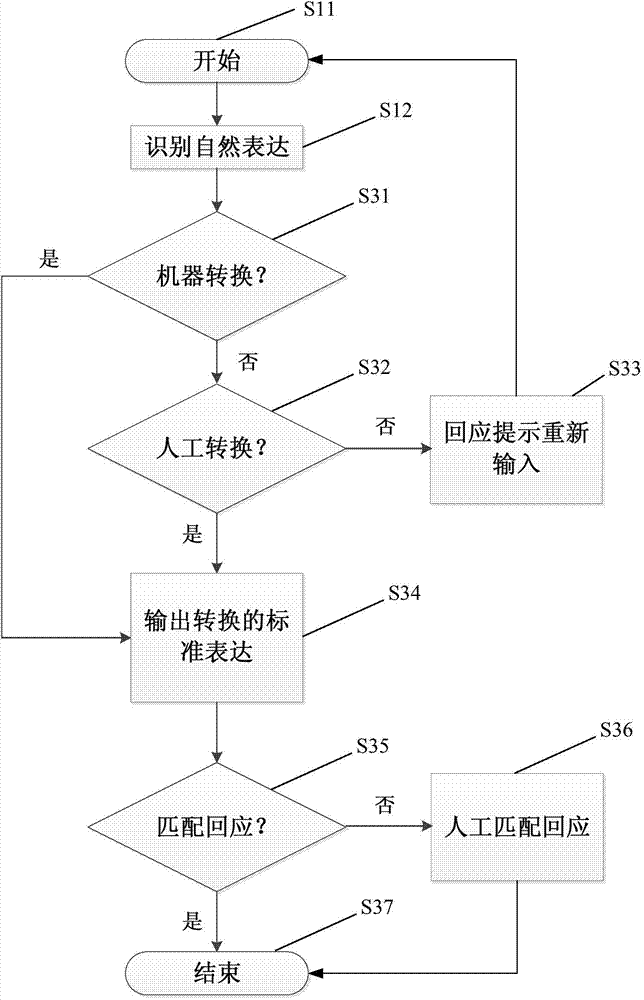

Natural expression information processing method, natural expression information processing and responding method, equipment and system

Inactive | CN103593340A | Reduced precision requirements, Reduce complexity, Natural language translation, Mathematical models, Information processing, Semantic translation

The invention provides a natural expression information processing method, which includes recognizing natural expression information from users to obtain the natural expression in text form, and transforming the recognized natural expression into a standard expression in the form of codes. With this method, irregular natural expression information can be transformed into an encoded standard expression. The transformation maps the semantics of the natural expression to codes and does not require an exact language translation, so the accuracy requirements on machine translation are reduced, the complexity of the databases used for expression transformation (machine translation) is lowered, data query and update are faster, and the performance of intelligent processing is improved. In addition, the simple encoded expression reduces the workload of human-assisted intervention.

Owner: ICONTEK CORP

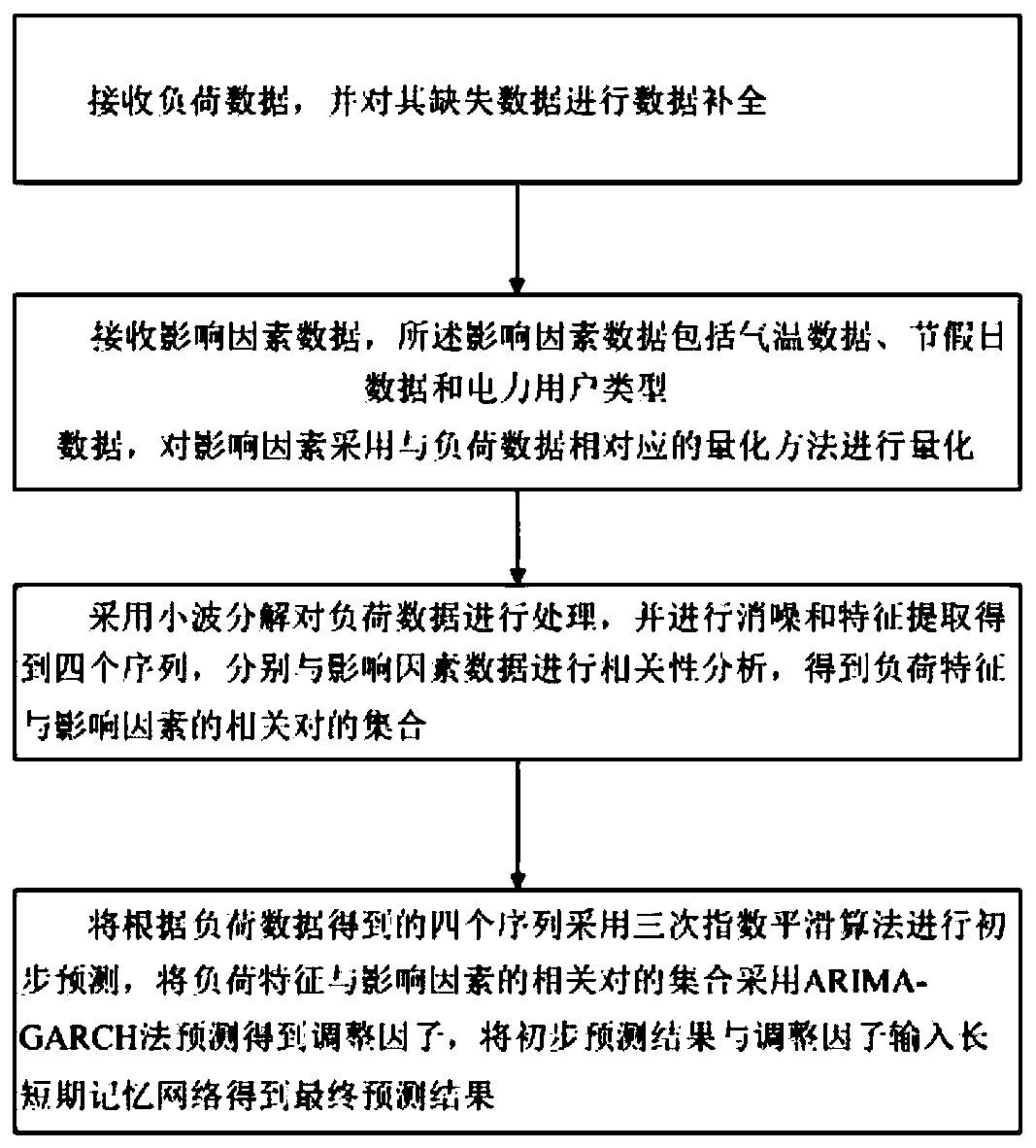

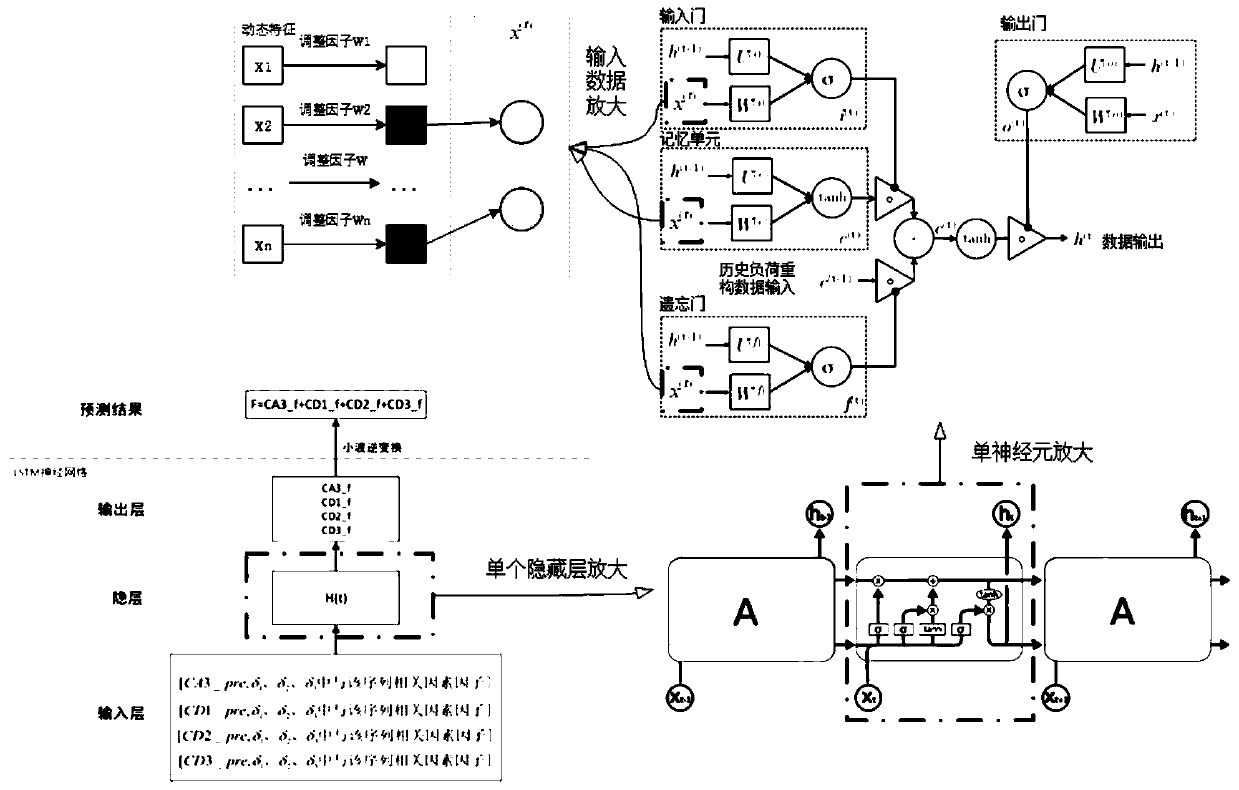

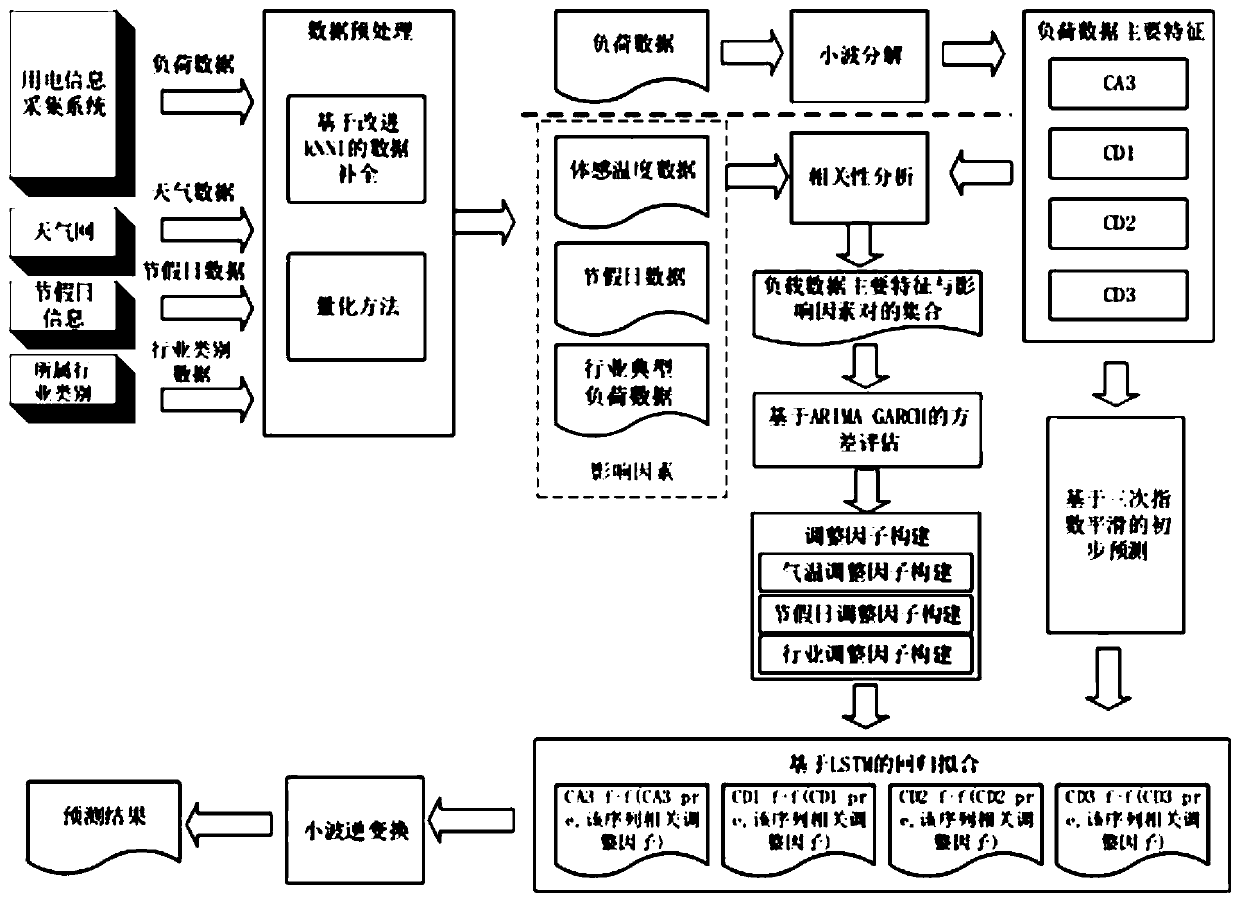

Power load short-term prediction method, model, device and system

Active | CN110610280A | Improve accuracy, Improve usability, Forecasting, Character and pattern recognition, Multiscale decomposition, Missing data

The invention discloses a short-term power load prediction method, model, device and system. The method comprises: receiving load data and filling in its missing values; receiving influence factor data, which comprise air temperature data, holiday data, and the industry category of each power consumer, and quantifying the influence factors with a quantification method matched to the load data; applying multi-scale wavelet decomposition to the historical load data to obtain four reconstructed historical load sequences, and measuring the correlation between each of the four sequences and the influence factor data to obtain a correlation feature data set for each reconstructed load component and influence factor; and making a preliminary prediction for each of the four sequences with a cubic exponential smoothing algorithm, further optimizing the preliminary predictions, and finally obtaining a short-term power load prediction value that serves as reference data for power load scheduling.

Owner: SHANDONG UNIV
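The preliminary prediction relies on cubic exponential smoothing. Below is a minimal sketch of Brown's cubic (triple) exponential smoothing, one standard formulation; initializing all three smoothing levels with the first observation, the choice of `alpha`, and the function name are assumptions, and the wavelet decomposition and the later optimization step are not shown.

```python
from typing import Sequence


def cubic_exponential_forecast(series: Sequence[float], alpha: float, steps: int) -> float:
    """Brown's cubic exponential smoothing, forecasting `steps` periods ahead."""
    if not 0 < alpha < 1:
        raise ValueError("alpha must be in (0, 1)")
    # Assumed initialization: all three smoothing levels start at the first value.
    s1 = s2 = s3 = series[0]
    for x in series:
        s1 = alpha * x + (1 - alpha) * s1   # single smoothing
        s2 = alpha * s1 + (1 - alpha) * s2  # double smoothing
        s3 = alpha * s2 + (1 - alpha) * s3  # triple smoothing
    k = alpha / (2 * (1 - alpha) ** 2)
    a = 3 * s1 - 3 * s2 + s3
    b = k * ((6 - 5 * alpha) * s1 - 2 * (5 - 4 * alpha) * s2 + (4 - 3 * alpha) * s3)
    c = k * alpha * (s1 - 2 * s2 + s3)
    # Quadratic forecast: F(t + T) = a + b*T + c*T^2
    return a + b * steps + c * steps ** 2
```

For an exactly linear series, the steady-state coefficients reduce to a = current value, b = slope and c = 0, so the forecast continues the line; in practice, `alpha` could be tuned separately for each of the four reconstructed sequences.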

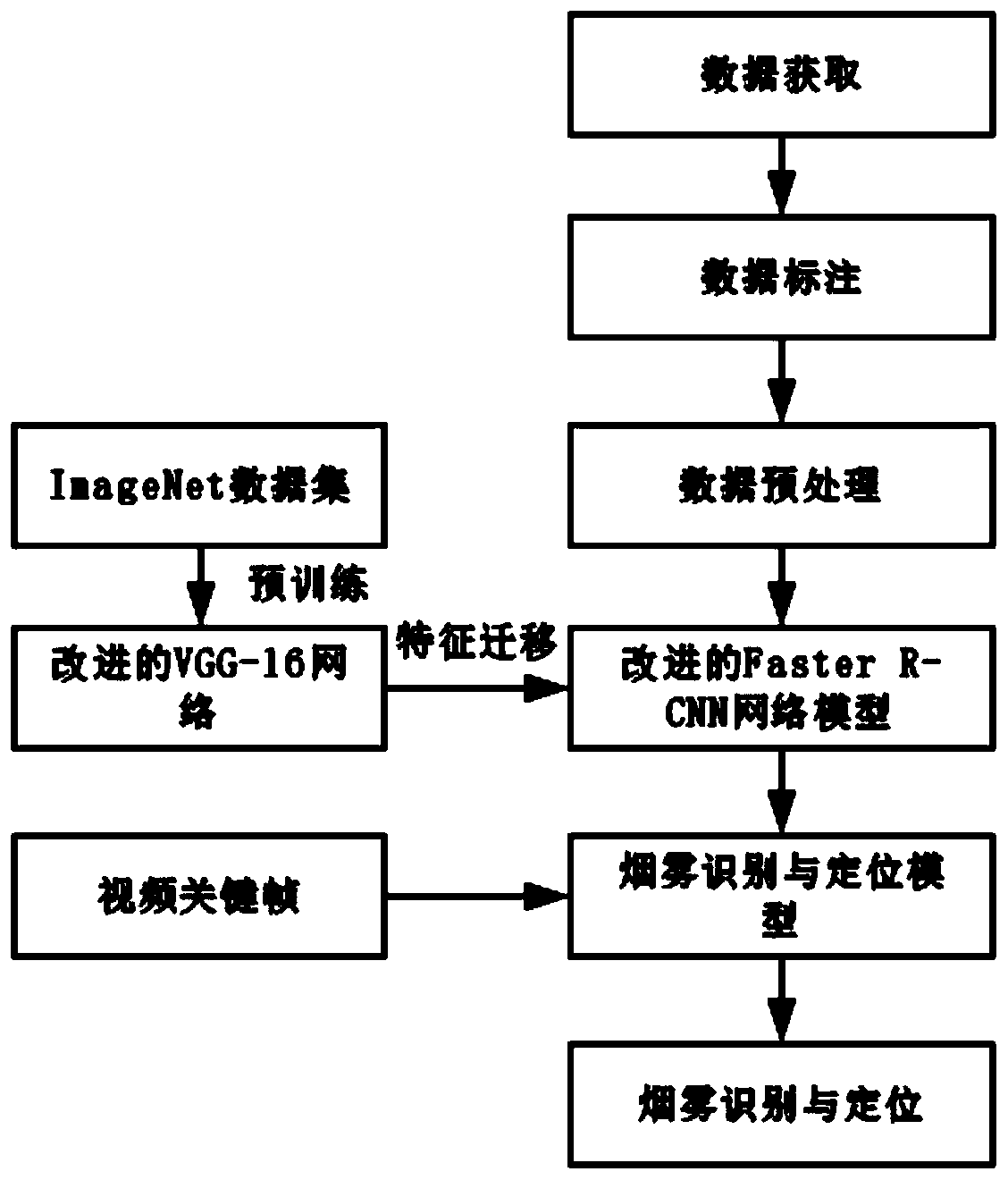

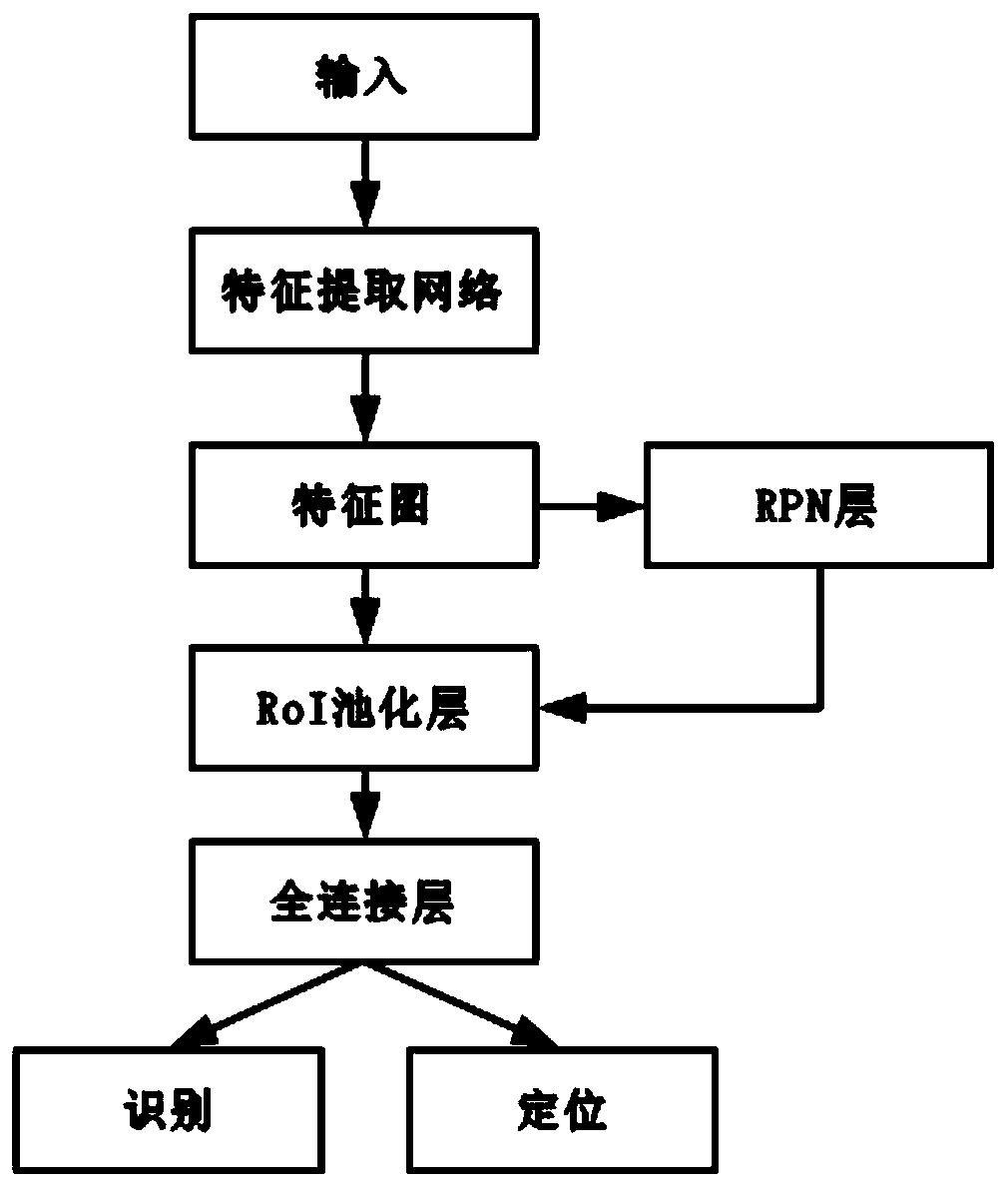

Video smoke detection and recognition method based on transfer learning

Inactive | CN109977790A | Reduce complexity, Improve recognition efficiency, Character and pattern recognition, Neural architectures, Data set, Feature extraction

The invention discloses a video smoke detection and recognition method based on transfer learning. The method comprises: generating simulated data to enlarge the number of smoke image samples; preprocessing the image data set; constructing a target detection network; pre-training an improved VGG-16 network on the ImageNet image data set; training the target detection network on the labeled smoke data set by transfer learning, where the feature extraction part of the network is initialized with the pre-trained weights of the improved VGG-16 network; and extracting key frames from the video and feeding them into the model for recognition and detection, returning coordinate information and locating the smoke region in the video image if smoke is found. Transfer learning improves model performance when smoke data are limited, and smoke regions in video images can be recognized and located automatically.

Owner: ZHEJIANG UNIV OF TECH

Structure adaptive CNN (Convolutional Neural Network)-based face recognition method

Active | CN104778448A | Reduce distractions, Reduce human intervention, Character and pattern recognition, Identity recognition, Network structure

The invention discloses a face recognition method based on a structure-adaptive CNN (convolutional neural network) and belongs to the field of identity recognition. The method keeps the advantage of a conventional CNN, which extracts features directly from a two-dimensional image for recognition, and additionally constructs the network structure adaptively to overcome the conventional CNN's excessive dependence on human experience. The network is extended as required, which makes its structure controllable and adjustable, avoids ineffective training, lowers the difficulty of training for face recognition, and yields an optimal face recognition network structure. Thanks to adaptive network extension, newly added face samples can be learned while preserving earlier recognition results, which reduces retraining overhead and implements incremental learning. The resulting automatic, intelligent face recognition method has low training difficulty and high accuracy on large data sets.

Owner: 孙建德

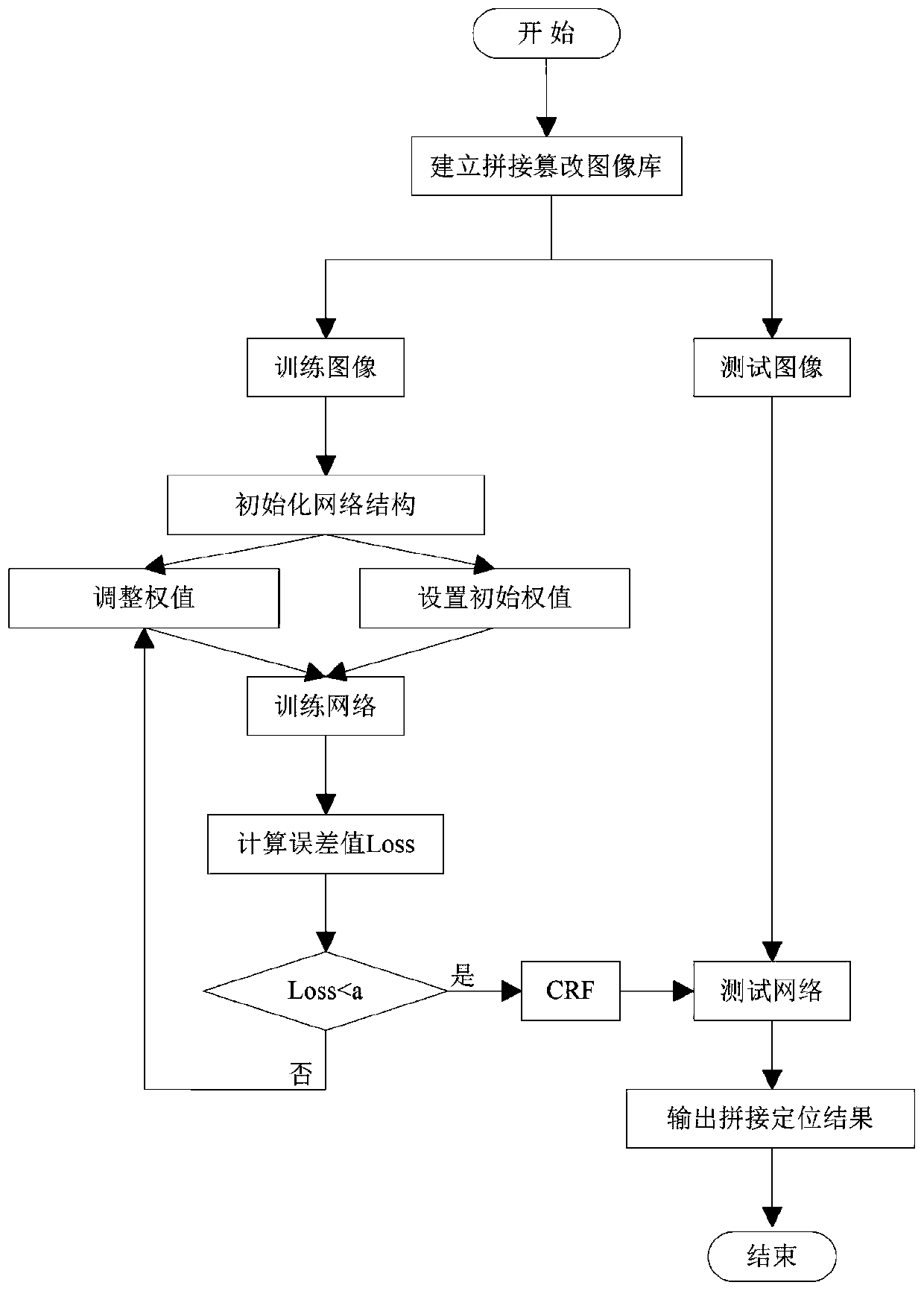

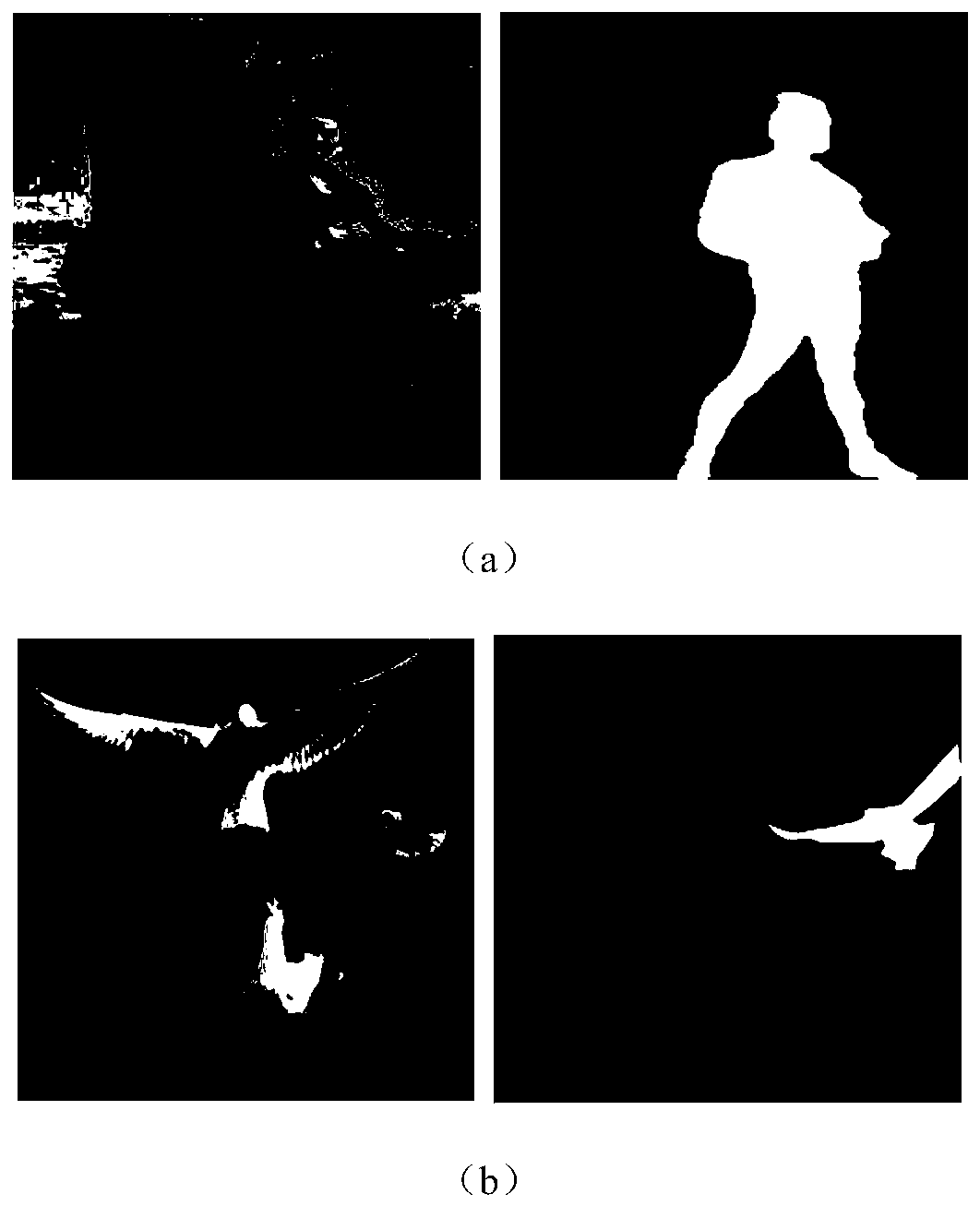

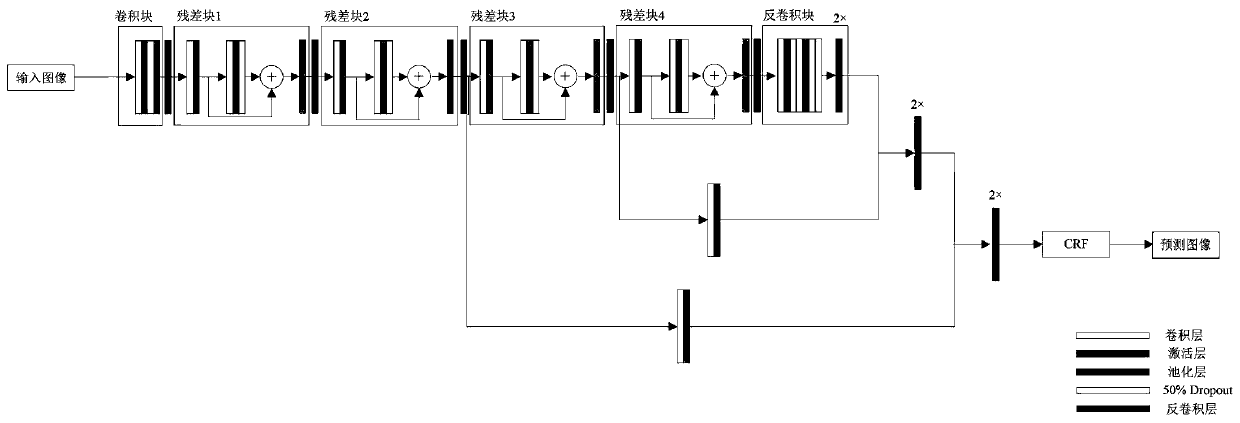

Image splicing tampering positioning method based on full convolutional neural network

Active | CN110414670A | High positioning accuracy, Fast convergence, Image analysis, Neural architectures, Conditional random field, Network model

The invention discloses an image splicing tampering localization method based on a fully convolutional neural network. The method includes: establishing a library of splice-tampered images; initializing an image splicing tampering localization network based on a fully convolutional neural network and setting up its training process; initializing the network parameters; reading a training image, running the training operation on it, and outputting its splicing localization prediction; calculating the error between the prediction and the ground-truth label and adjusting the network parameters until the error meets the precision requirement; post-processing the predictions that meet the requirement with a conditional random field, adjusting the network parameters, and outputting the final prediction for the training image; and reading a test image, predicting it with the trained network, post-processing the prediction with a conditional random field, and outputting the final prediction for the test image. The method localizes splicing tampering with high precision, the network is easy to train, and the model converges easily.

Owner: NANJING UNIV OF INFORMATION SCI & TECH

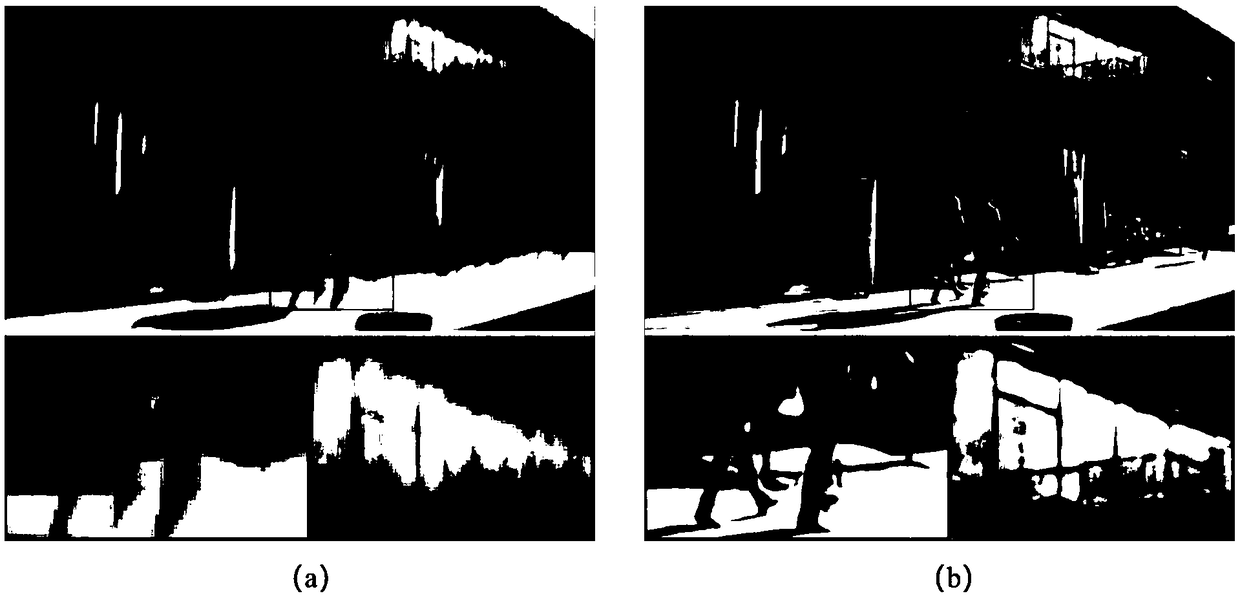

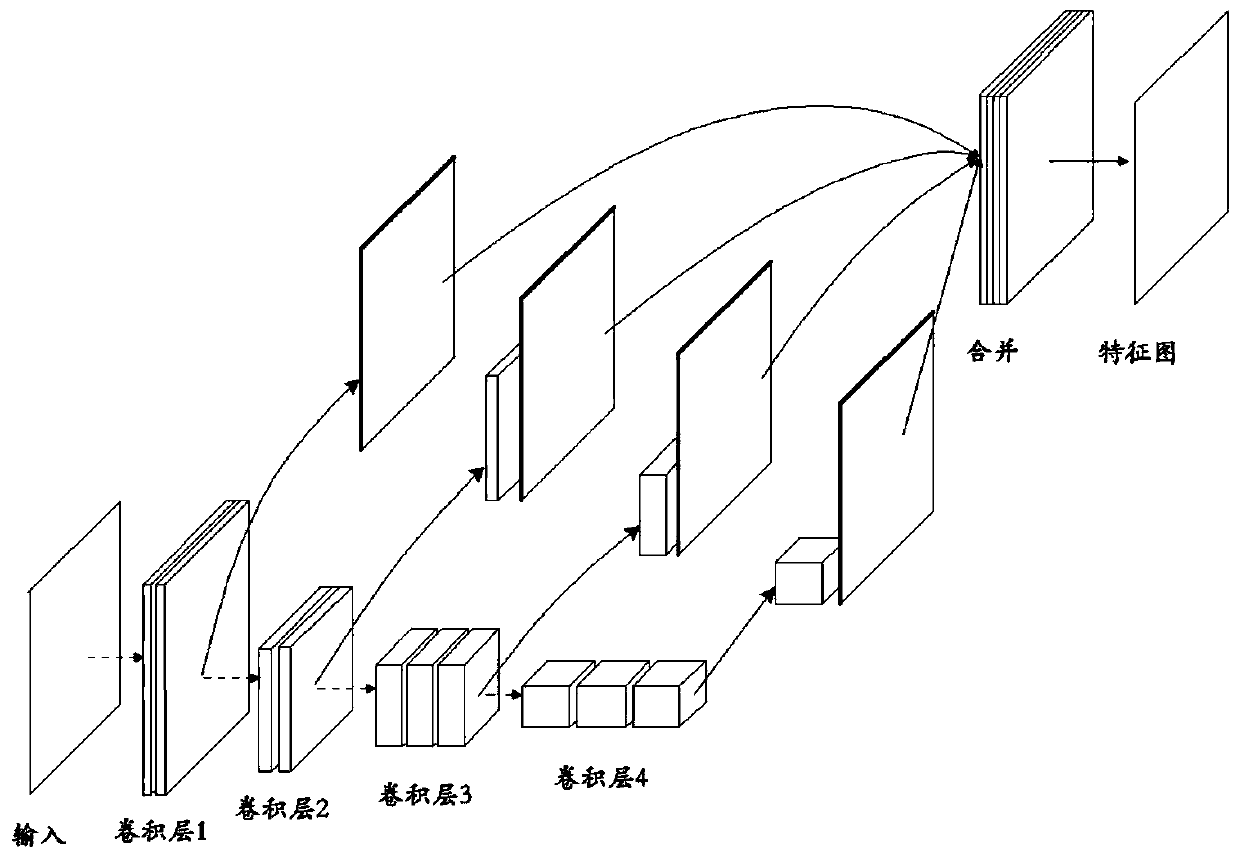

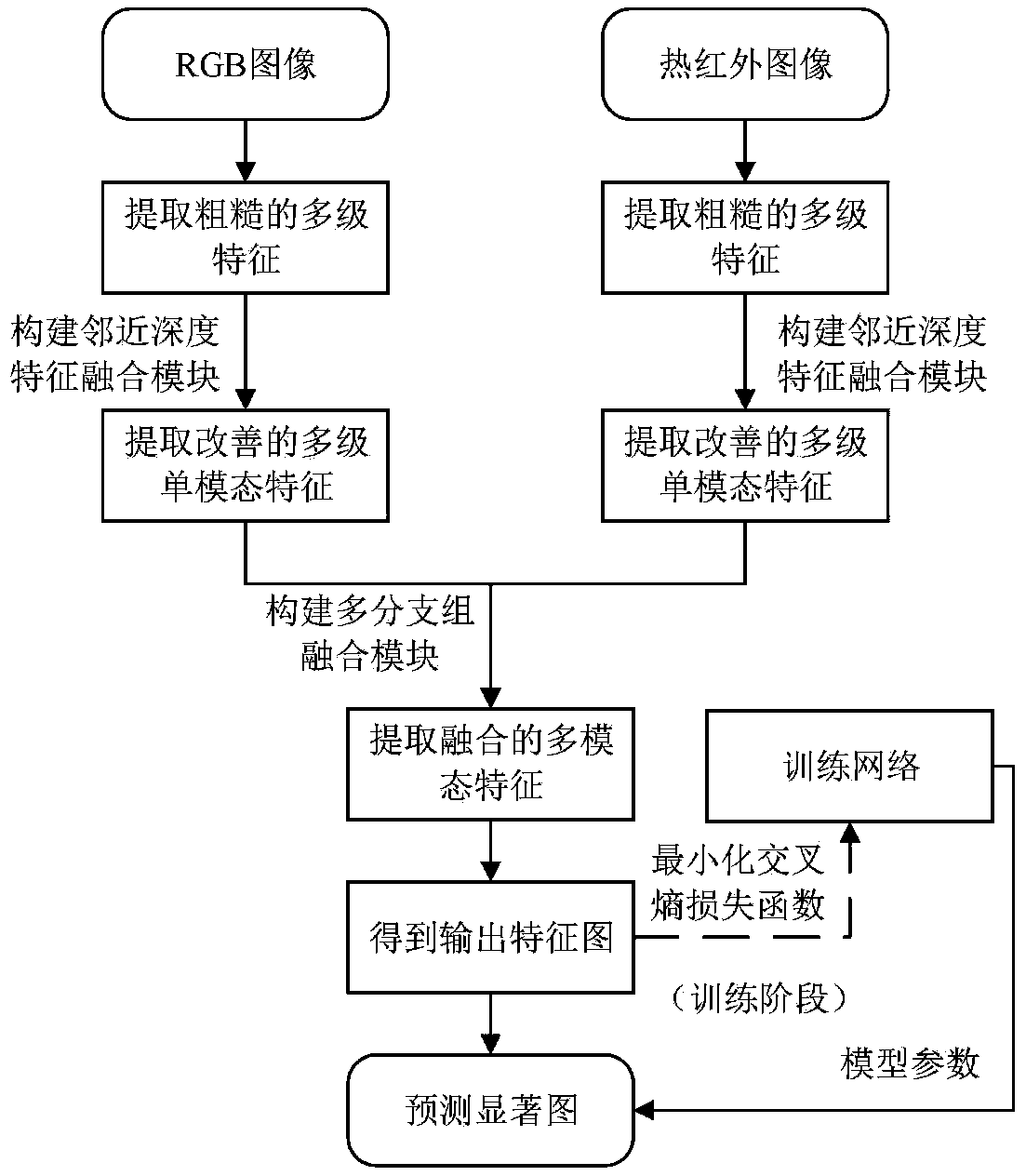

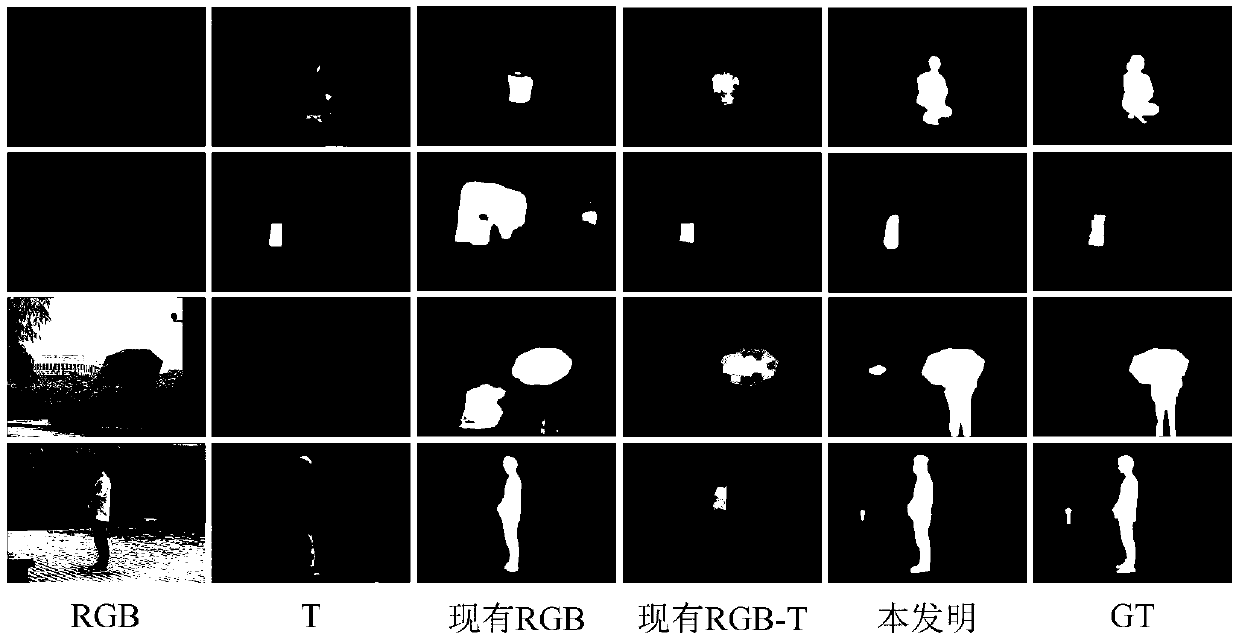

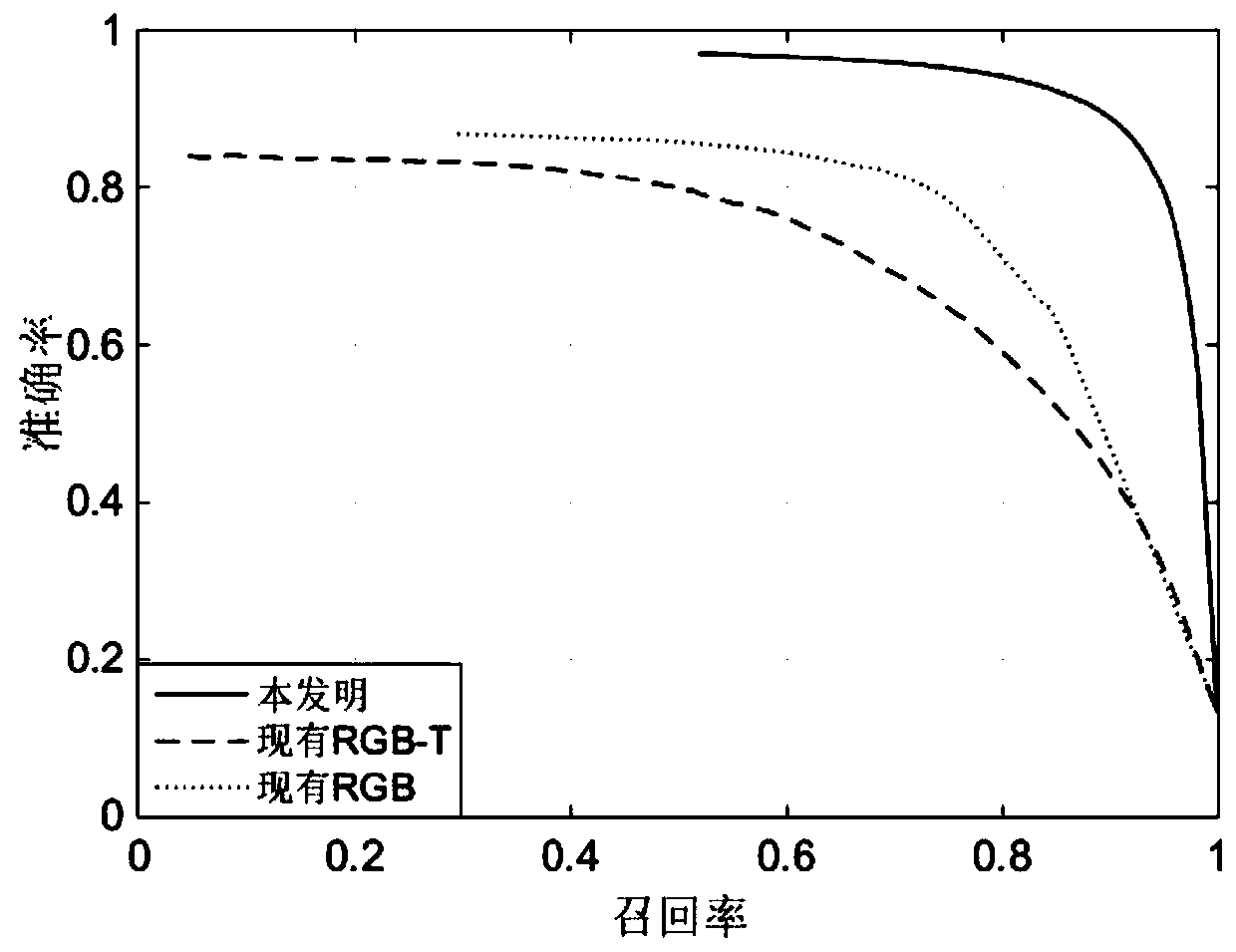

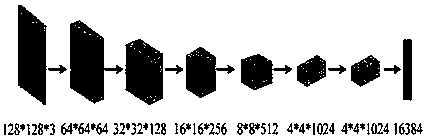

RGB-T image significance target detection method based on multi-level depth feature fusion

Active | CN110210539A | Achieving pixel-level detection, Has a full consistency effect, Character and pattern recognition, Neural architectures, Pattern recognition, Image extraction

The invention discloses an RGB-T image salient object detection method based on multi-level deep feature fusion, which mainly solves the problem that prior methods cannot detect salient objects completely and consistently in complex, changeable scenes. The implementation comprises the following steps: 1, extracting coarse multi-level features from the input image; 2, constructing an adjacent depth feature fusion module to improve the single-modality features; 3, constructing a multi-branch group fusion module to fuse the multi-modality features; 4, obtaining the fused output feature map; 5, training the network; 6, predicting a pixel-level saliency map of the RGB-T image. The method effectively fuses complementary information from images of different modalities, detects salient objects completely and consistently in complex, changeable scenes, and can be used for image preprocessing in computer vision.

Owner: XIDIAN UNIV

Bridge crack image barrier detection and removal method based on generative adversarial network

Inactive | CN108492281A | Reduce training difficulty, Accurate detection and removal, Image enhancement, Image analysis, Signal-to-noise ratio (imaging), Generative adversarial network

The invention relates to a method for detecting and removing barriers in bridge crack images based on a generative adversarial network. The method comprises the following steps. First, multiple barrier pictures are collected and labeled, and the labeled pictures are used to train a Faster R-CNN; multiple crack pictures containing barriers are then collected, and the barrier positions are marked with the Faster R-CNN. Second, multiple barrier-free crack pictures are collected and flipped to augment the data set. Third, the augmented data set is fed into the generative adversarial network to train a crack generation model. Fourth, the information at the barrier positions in the barrier-containing crack pictures is erased to obtain damaged images. Fifth, the damaged images are fed into a cyclic discrimination restoration model and iterated to obtain restored crack images. The method accurately detects and removes barrier information in crack pictures and increases the peak signal-to-noise ratio of the restored crack images by 0.6 to 0.9 dB over the earlier result, so that a large number of well-restored crack images can be generated from a limited crack data set.

Owner: SHAANXI NORMAL UNIV
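Two quantities in this abstract are easy to make concrete: the flip-based augmentation in step two and the peak signal-to-noise ratio (PSNR) used to report the restoration gain. The sketch treats a grayscale image as a list of rows with 8-bit values (`max_value=255`); whether the patent flips horizontally, vertically or both is not stated, so the horizontal flip is an assumption.

```python
import math
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel values


def flip_horizontal(img: Image) -> Image:
    """Mirror an image left to right, e.g. to enlarge a small crack data set."""
    return [list(reversed(row)) for row in img]


def psnr(reference: Image, restored: Image, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    diffs = [
        (r - s) ** 2
        for ref_row, res_row in zip(reference, restored)
        for r, s in zip(ref_row, res_row)
    ]
    mse = sum(diffs) / len(diffs)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(max_value ** 2 / mse)
```

Because PSNR is logarithmic in the mean squared error, a gain of 0.6 to 0.9 dB at a fixed peak value corresponds to roughly 13% to 19% lower MSE.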

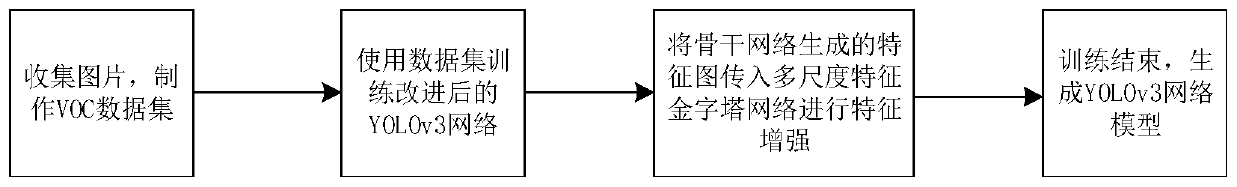

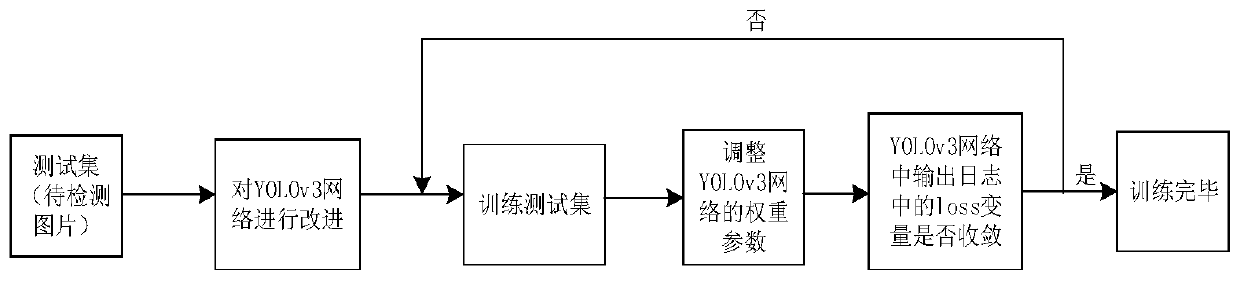

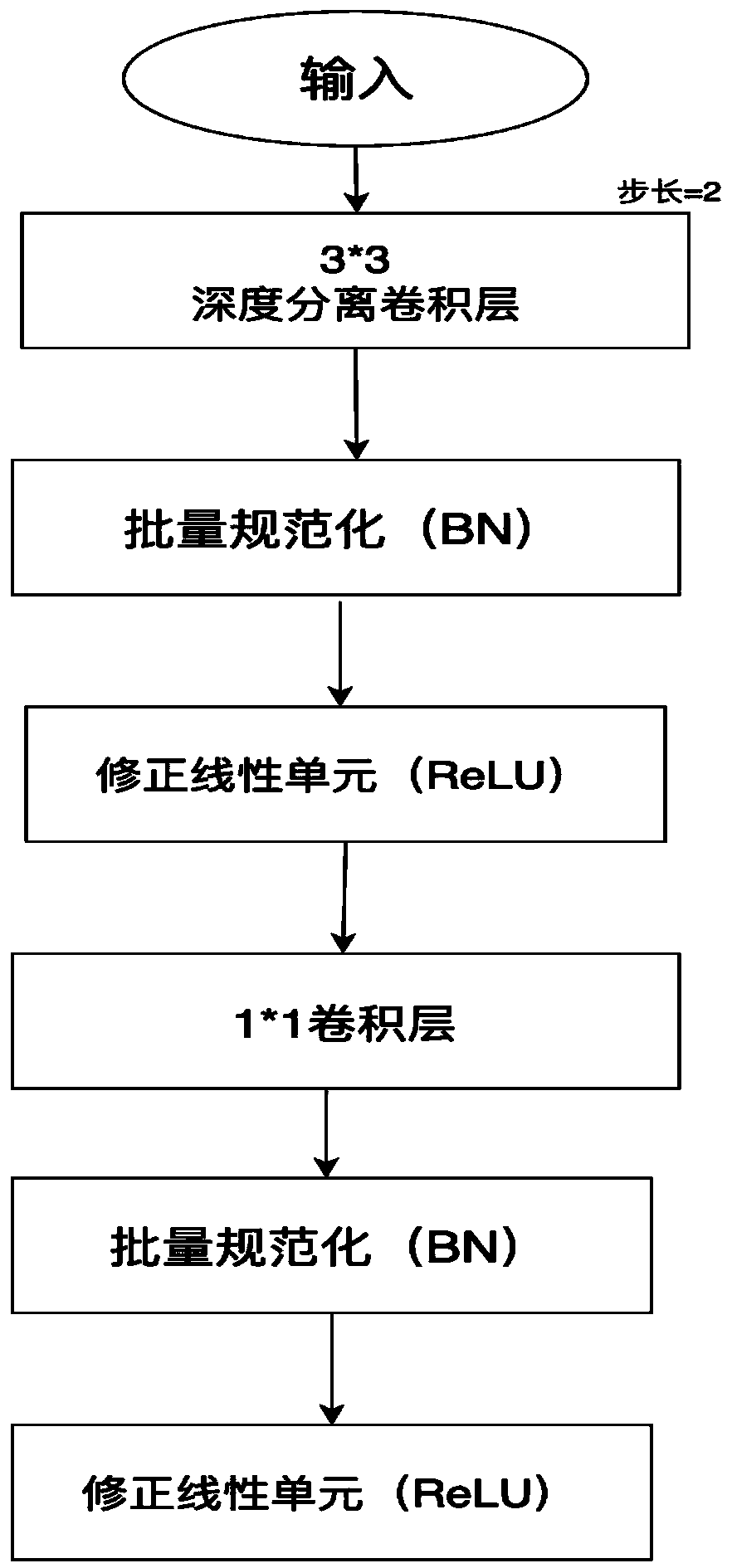

License plate detection model based on improved YOLOv3 network and construction method

Pending | CN111209921A | Guaranteed accuracy, Reduce calculation, Character and pattern recognition, Neural architectures, Engineering, Feature aggregation

The invention discloses a license plate detection model based on an improved YOLOv3 network, and a method for constructing it. The improved YOLOv3 network takes a license plate image as input and extracts three feature maps of different scales. The three feature maps are upsampled so that the deep features are scaled to the same size, then downsampled and decoded by a constructed convolutional layer to produce feature-enhanced feature maps. The three feature-enhanced maps are aggregated with the three feature maps of different scales extracted by the YOLOv3 feature extraction network to generate a feature pyramid, which gives the improved YOLOv3 license plate detection model; the model is then trained to obtain the final model. The method greatly improves detection speed; the introduced pyramid multi-scale feature network enhances the backbone features and produces a more effective multi-scale feature pyramid, so features are extracted from the input image more effectively.

Owner: NANJING UNIV OF POSTS & TELECOMM

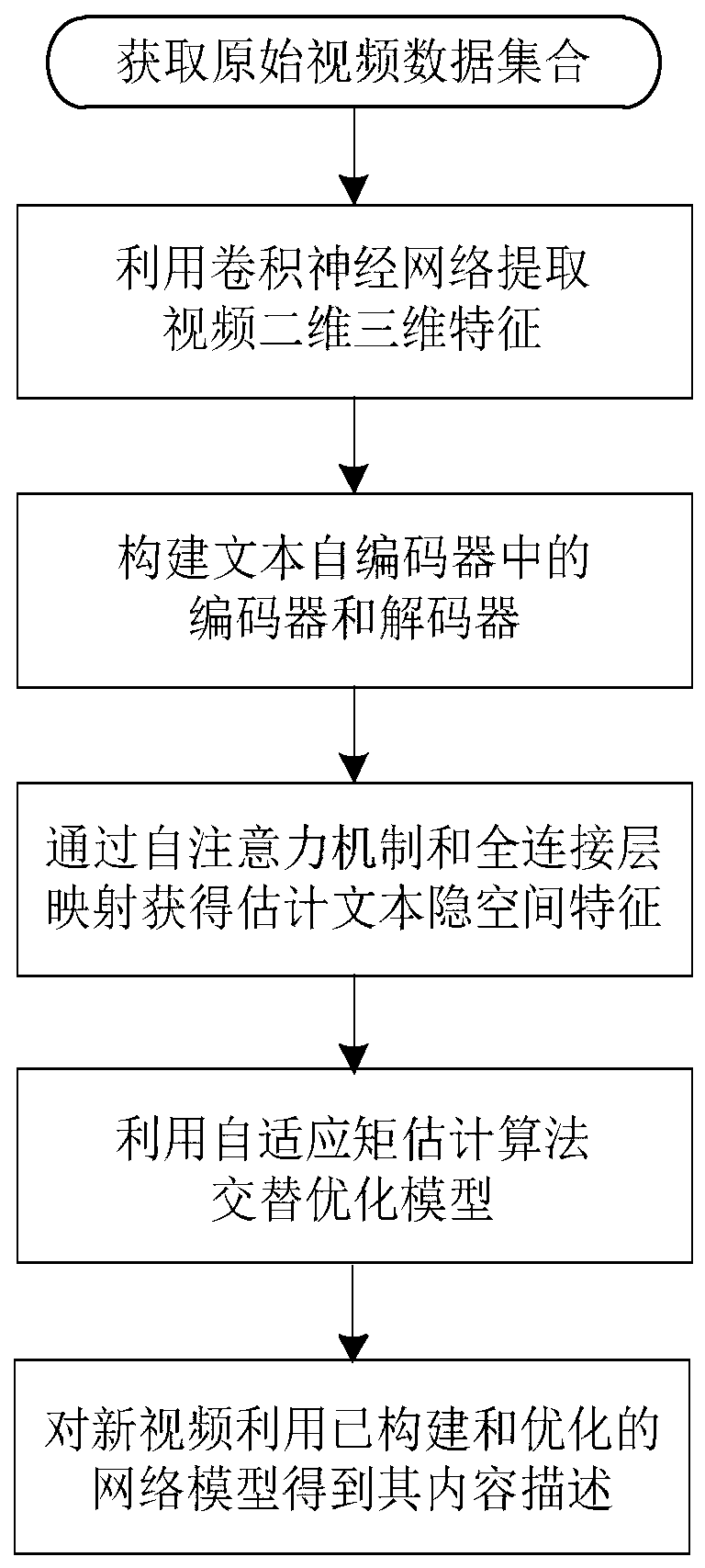

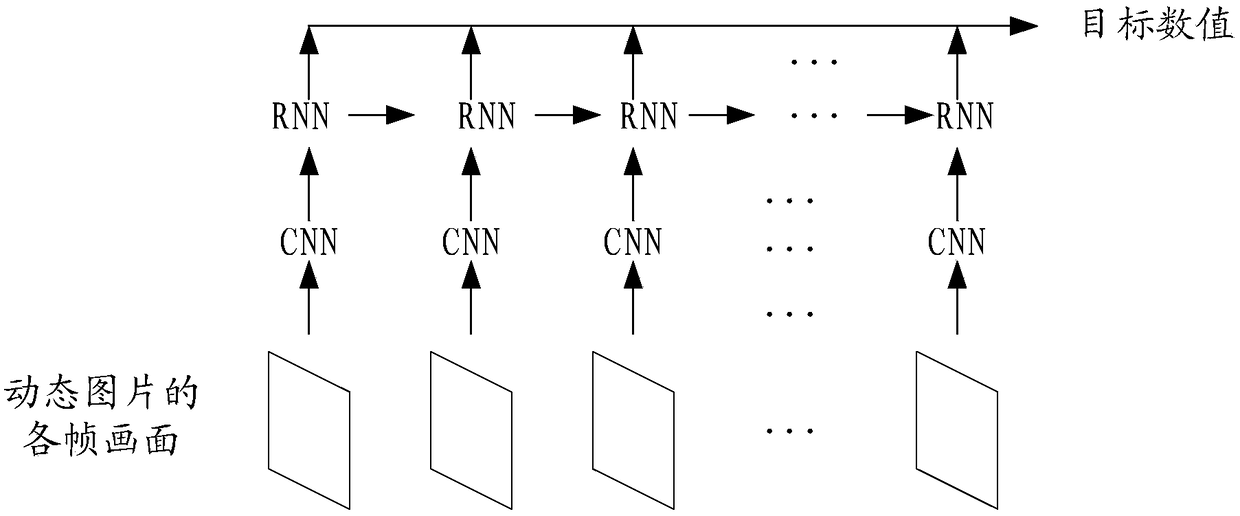

Video content description method based on text auto-encoder

Active | CN111079532A | Improve content description quality, Improve computing efficiency, Character and pattern recognition, Neural architectures, Self adaptive, Autoencoder

The invention discloses a video content description method based on a text auto-encoder. The method comprises the following steps: first, constructing a convolutional neural network to extract two-dimensional and three-dimensional features of a video; second, constructing a text auto-encoder, in which an encoder (a text convolutional network) extracts text latent-space features and a decoder (a multi-head attention residual network) reconstructs the text; third, estimating the text latent-space features through a self-attention mechanism and a fully connected mapping; and finally, alternately optimizing the model with the adaptive moment estimation (Adam) algorithm, and using the constructed text auto-encoder and convolutional neural network to obtain content descriptions for new videos. Training the text auto-encoder fully mines the latent relationship between video content semantics and video text descriptions, and the self-attention mechanism captures the temporal action information of a video over a long time span, which improves the computational efficiency of the model and generates text descriptions that better match the actual video content.

Owner: HANGZHOU DIANZI UNIV
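The final step optimizes the model with adaptive moment estimation (Adam). The sketch below shows the standard bias-corrected Adam update on a plain list of parameters, with the usual defaults for `beta1`, `beta2` and `eps` but a larger learning rate than the common 0.001 so the toy example converges quickly; the alternating schedule between model parts described in the abstract is not shown, and the function name is illustrative.

```python
import math
from typing import Callable, List, Sequence


def adam_minimize(grad: Callable[[List[float]], List[float]], params: Sequence[float],
                  lr: float = 0.1, beta1: float = 0.9, beta2: float = 0.999,
                  eps: float = 1e-8, steps: int = 1000) -> List[float]:
    """Minimize a function given its gradient, using bias-corrected Adam updates."""
    params = list(params)      # work on a copy
    m = [0.0] * len(params)    # first-moment estimate (mean of gradients)
    v = [0.0] * len(params)    # second-moment estimate (uncentered variance)
    for t in range(1, steps + 1):
        g = grad(params)
        for i in range(len(params)):
            m[i] = beta1 * m[i] + (1 - beta1) * g[i]
            v[i] = beta2 * v[i] + (1 - beta2) * g[i] ** 2
            m_hat = m[i] / (1 - beta1 ** t)  # correct the bias toward zero
            v_hat = v[i] / (1 - beta2 ** t)
            params[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return params


if __name__ == "__main__":
    # Toy objective (x - 3)^2 + (y + 1)^2, whose minimum is at (3, -1).
    print(adam_minimize(lambda p: [2 * (p[0] - 3), 2 * (p[1] + 1)], [0.0, 0.0]))
```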

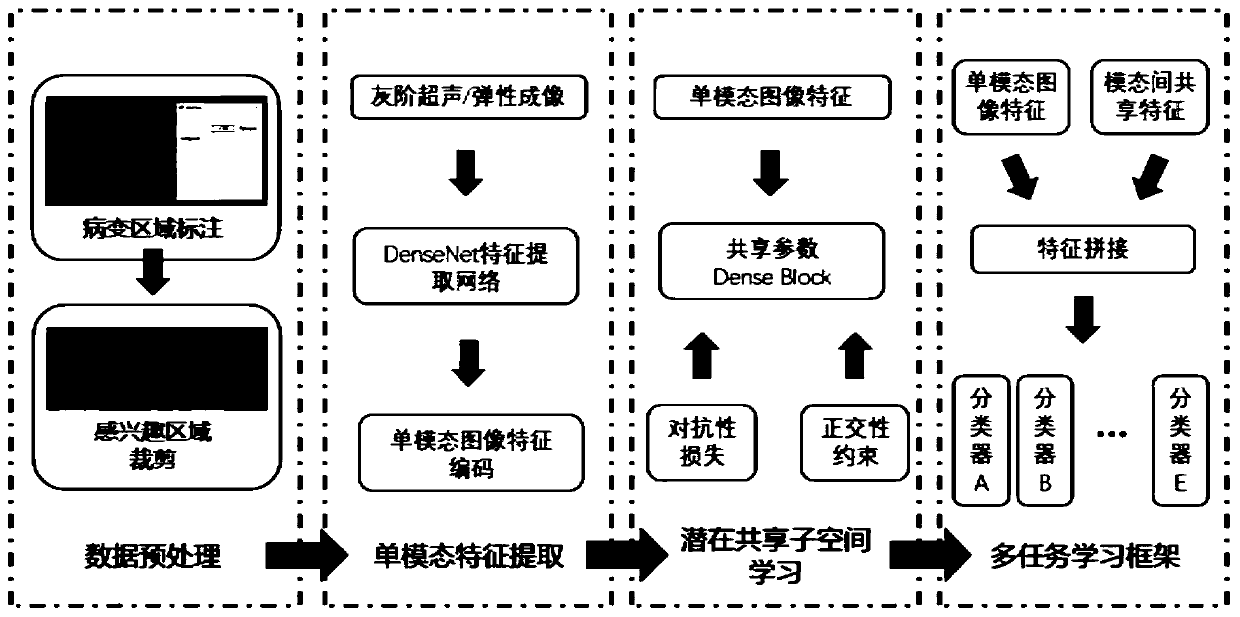

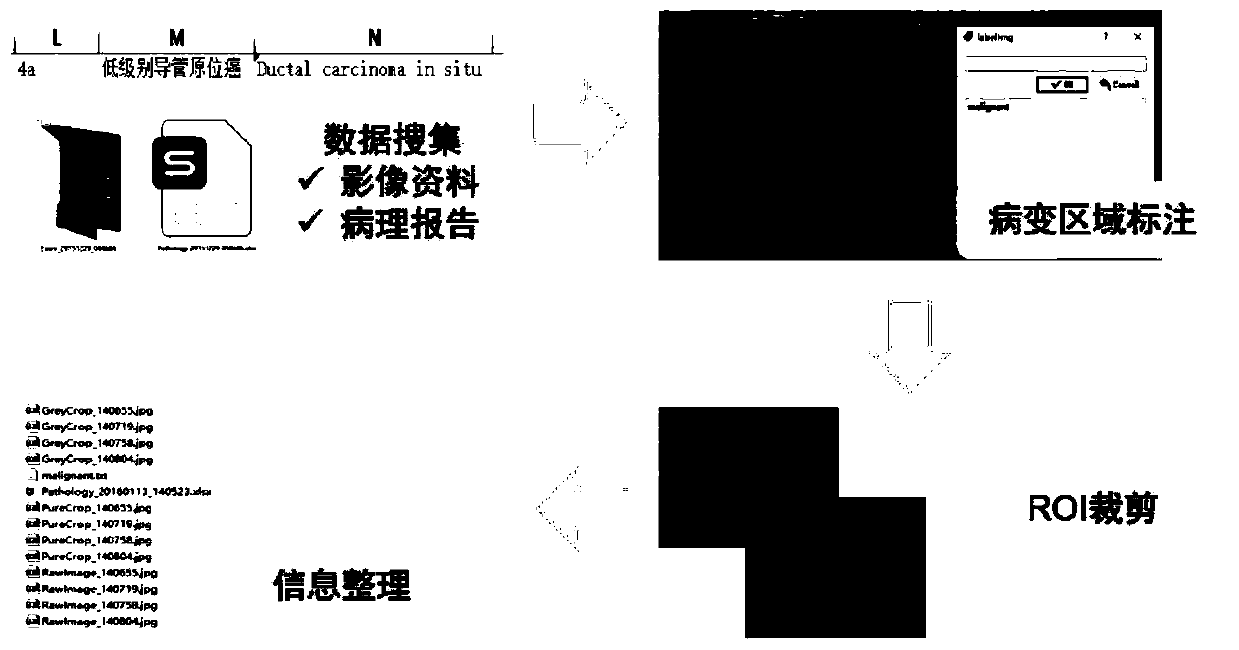

Multi-mode ultrasonic image classification method and breast cancer diagnosis device

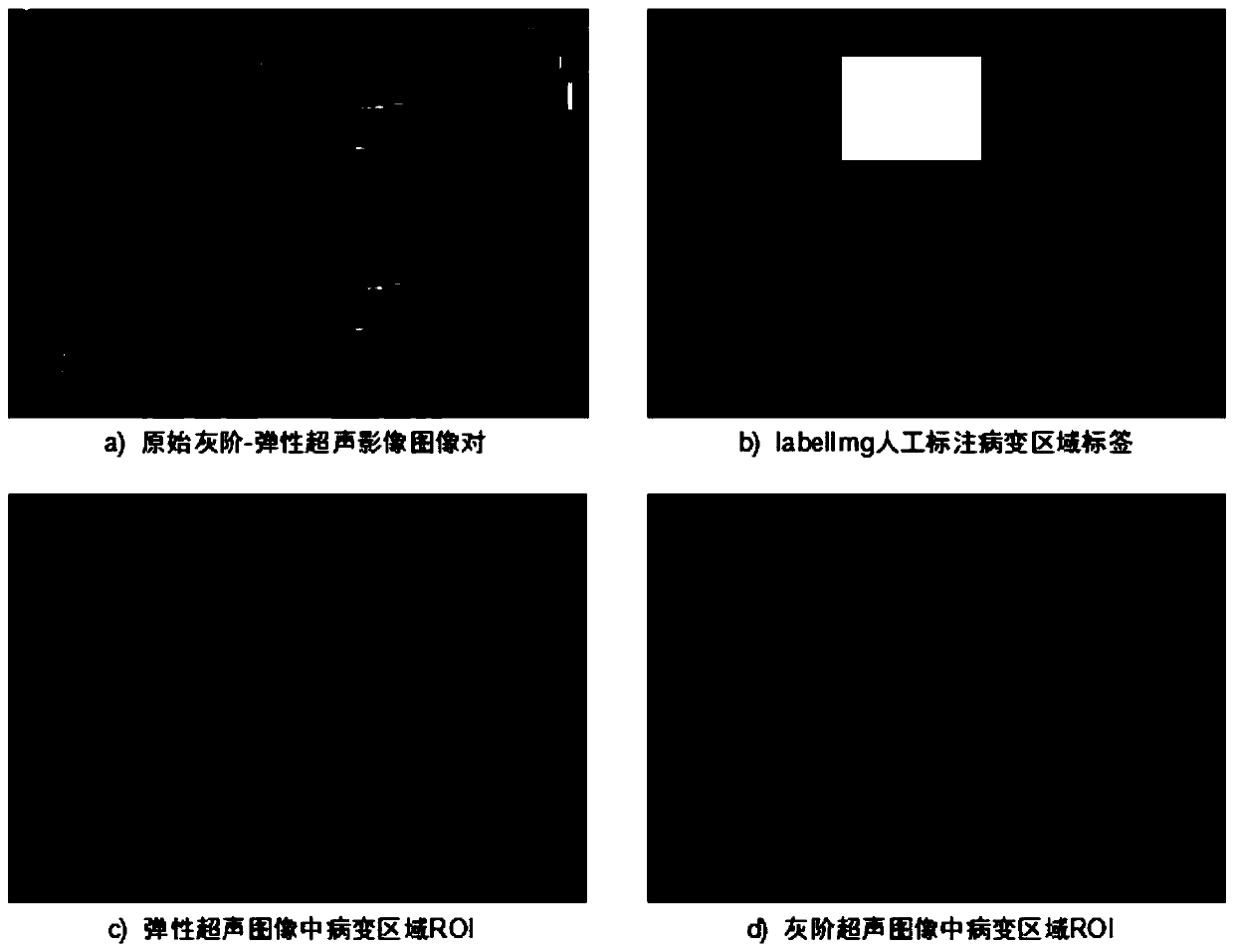

Active | CN110930367A | Add depth, Reduce training difficulty, Image enhancement, Image analysis, Grey level, Imaging Feature

The invention provides a multi-modal ultrasonic image classification method and a breast cancer diagnosis device. The method comprises: S1, segmenting a region-of-interest image from an original pair of gray-scale ultrasound and elastography images, and obtaining a pure elastography image from the segmented region of interest; S2, extracting single-modality features of the gray-scale ultrasound image and the elastography image with a DenseNet network; S3, constructing an adversarial loss function and an orthogonality constraint function to extract the features shared between the gray-scale ultrasound image and the elastography image; and S4, constructing a multi-task learning framework, concatenating the inter-modal shared features obtained in S3 with the single-modality features obtained in S2, and feeding them together into several classifiers that each perform benign/malignant classification. The method can classify the gray-scale ultrasound image, the elastography image, and the two modalities combined as benign or malignant at the same time, with high accuracy and a wide range of application.

Owner: SHANGHAI JIAO TONG UNIV
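The abstract does not give the orthogonality constraint function. A common choice in shared/private feature learning is the squared Frobenius norm of the product of the two batch feature matrices, ||S^T P||_F^2, which is zero when the shared features S and the modality-specific features P are orthogonal over the batch. The sketch below assumes that form and omits the adversarial loss.

```python
from typing import List

Matrix = List[List[float]]  # rows = samples in the batch, columns = feature dimensions


def orthogonality_loss(shared: Matrix, private: Matrix) -> float:
    """Assumed constraint: squared Frobenius norm of shared^T @ private."""
    d_shared, d_private = len(shared[0]), len(private[0])
    total = 0.0
    for i in range(d_shared):
        for j in range(d_private):
            # (shared^T @ private)[i][j] sums s[n][i] * p[n][j] over samples n
            dot = sum(s_row[i] * p_row[j] for s_row, p_row in zip(shared, private))
            total += dot ** 2
    return total
```

Adding this term to the training loss pushes the two feature sets toward carrying non-overlapping information, which is the usual motivation for such a constraint.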

Single convolutional neural network-based facial multi-feature point locating method

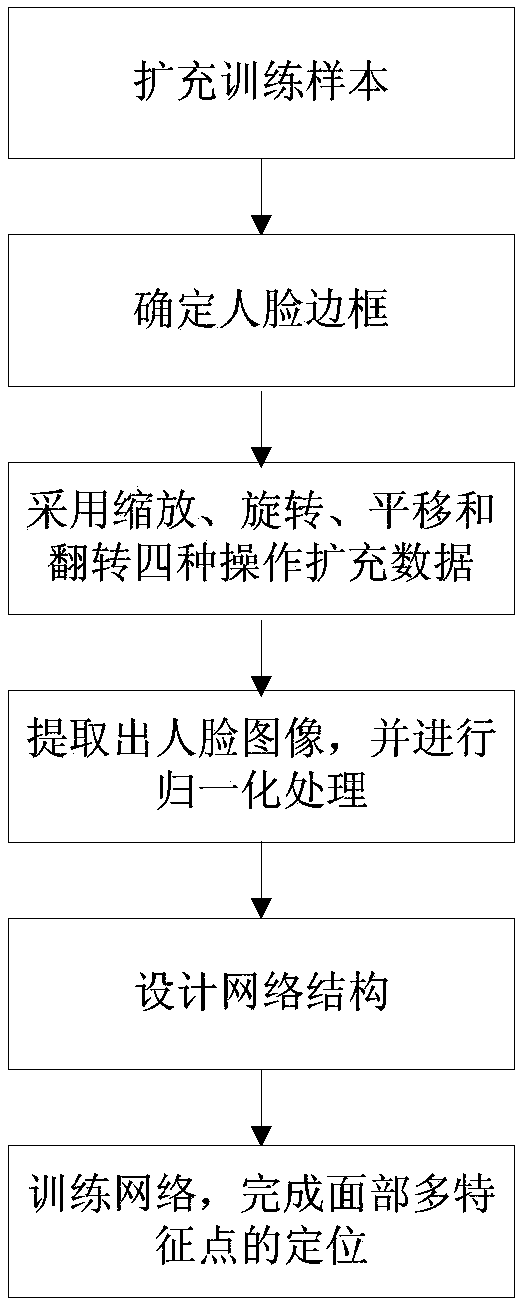

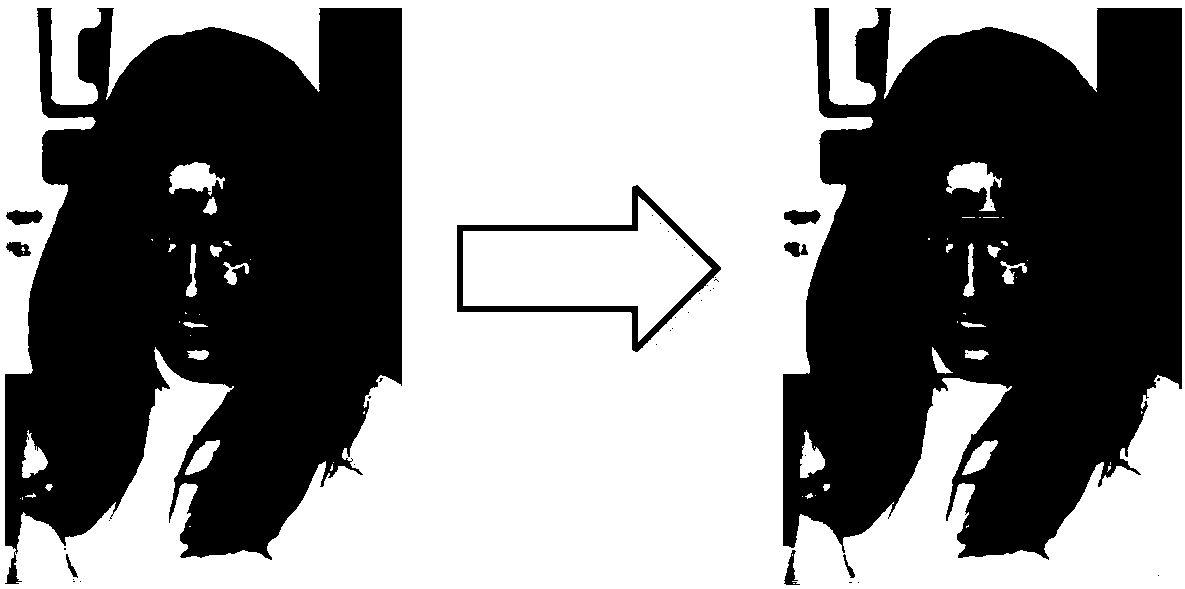

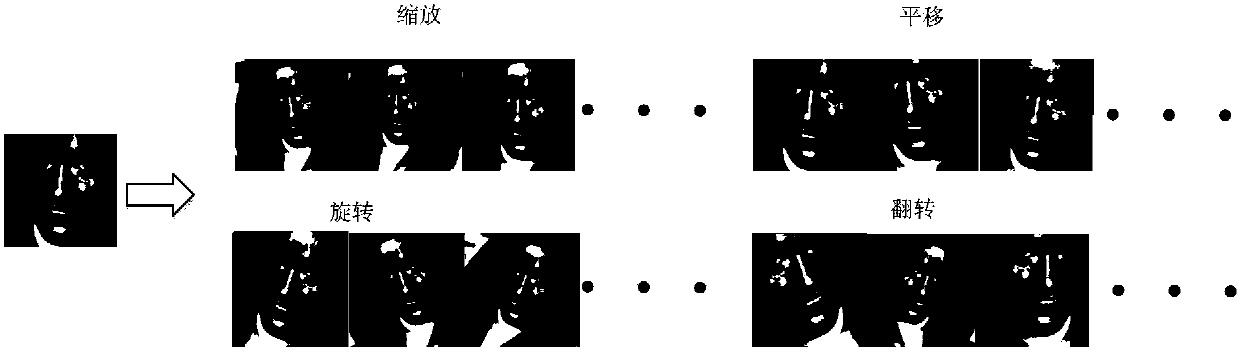

Active | CN107808129A | Simple structure, Accurate extraction, Character and pattern recognition, Neural architectures, Data processing, Data set

The invention discloses a facial multi-feature-point locating method based on a single convolutional neural network. The method comprises: expanding the training samples; determining a face bounding box from the facial feature point coordinates that the data set provides for each sample; augmenting the data with four operations (zooming, rotation, translation and flipping) to make up for the shortage of feature-point-labeled training images; extracting the face image according to the bounding box and normalizing it; and finally designing the network structure, training the network, and setting the learning rate and the amount of data processed in each iteration to complete facial multi-feature-point locating. The method simplifies the network structure and lowers the training difficulty; the network extracts more global high-level features and expresses facial feature points more accurately, and it locates facial feature points well under varied, complex conditions.

Owner: NANJING UNIV OF SCI & TECH
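When images are zoomed, rotated, translated or flipped for augmentation, the labeled feature point coordinates must be transformed the same way. The sketch below applies those four operations to a list of (x, y) points; the order of operations, the rotation center and the parameter names are assumptions, and the matching image warp is not shown.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float]


def transform_landmarks(points: List[Point], center: Point, scale: float = 1.0,
                        angle_deg: float = 0.0, shift: Point = (0.0, 0.0),
                        flip_width: Optional[float] = None) -> List[Point]:
    """Flip, zoom, rotate and translate facial feature point coordinates."""
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    cx, cy = center
    result = []
    for x, y in points:
        if flip_width is not None:
            x = flip_width - x                       # mirror across the vertical axis
        dx, dy = (x - cx) * scale, (y - cy) * scale  # zoom about the center
        rx = cx + dx * cos_t - dy * sin_t            # rotate by angle_deg about the center
        ry = cy + dx * sin_t + dy * cos_t
        result.append((rx + shift[0], ry + shift[1]))  # translate
    return result
```

After a horizontal flip, left and right landmarks (for example the two eye corners) also swap roles, so a real pipeline would reorder the point indices as well.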

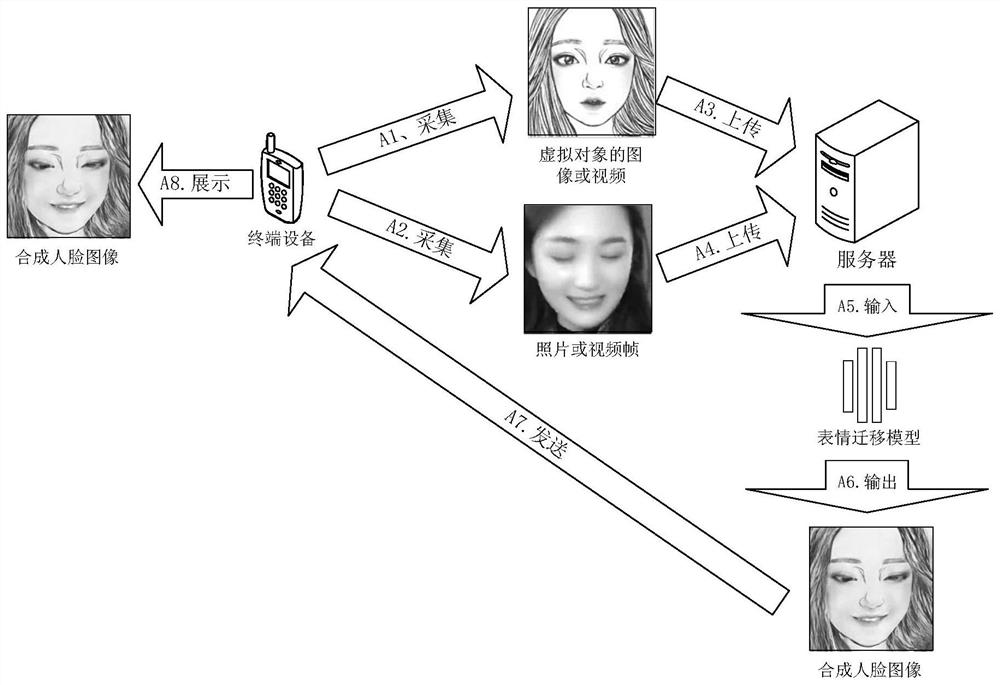

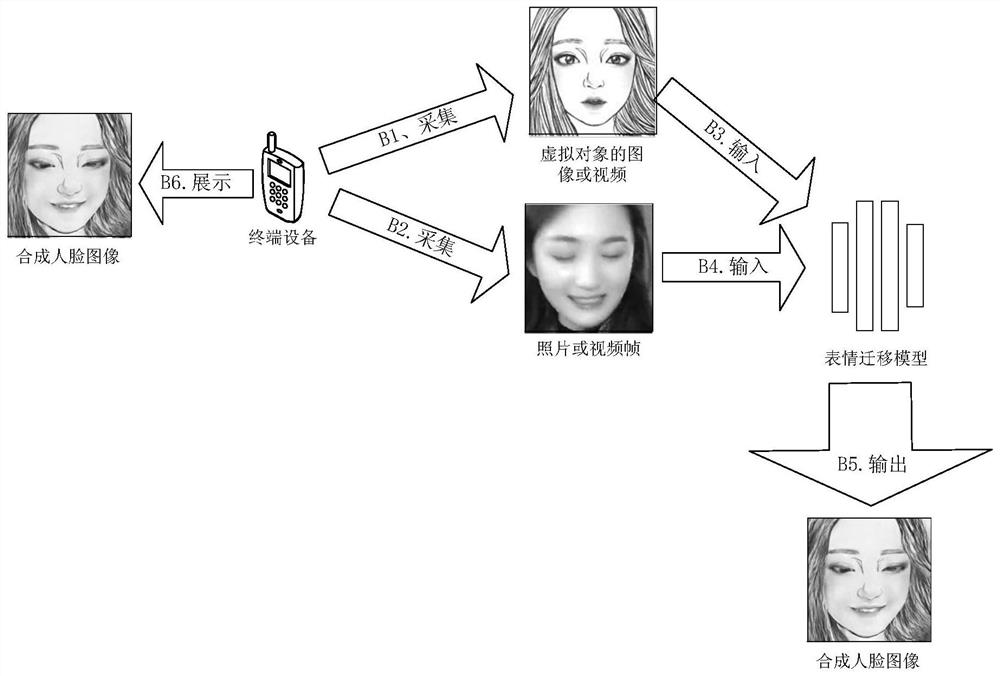

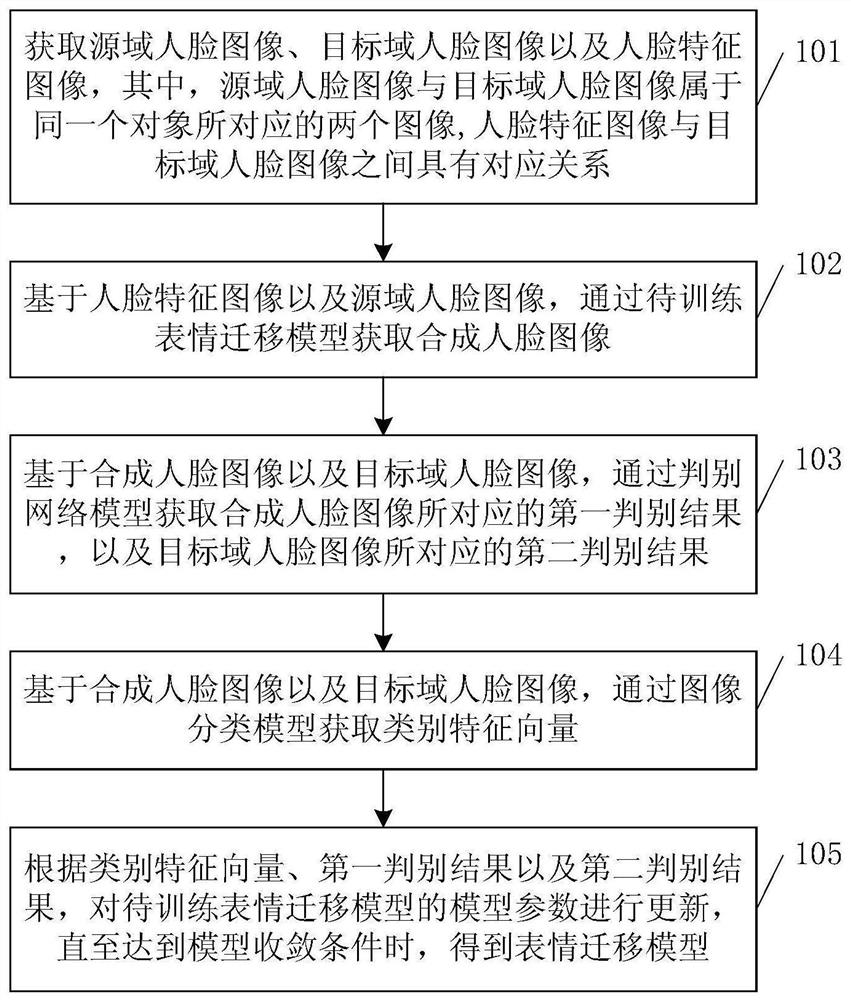

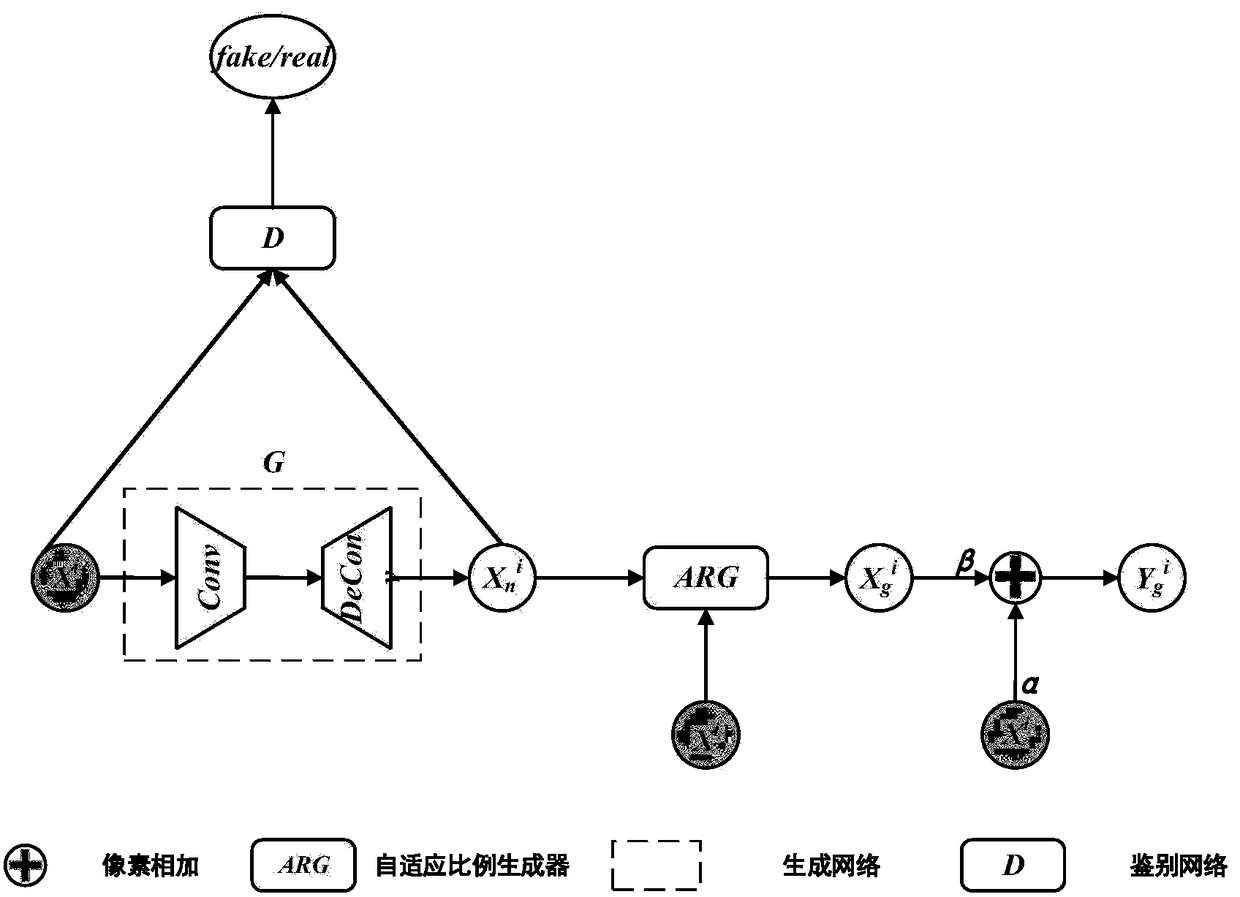

Expression migration model training method, expression migration method and device

Pending | CN111652121A | Improve performance, High output, Texturing/coloring, Acquiring/recognising facial features, Feature vector, Imaging processing

The invention discloses a method for training an expression migration model, applied in the field of artificial intelligence. The method comprises: obtaining a source domain face image, a target domain face image and a face feature image; obtaining a synthesized face image through the expression migration model to be trained; obtaining a first discrimination result and a second discrimination result through a discrimination network model; obtaining a category feature vector through an image classification model; and updating the parameters of the expression migration model being trained according to the category feature vector and the first and second discrimination results until a model convergence condition is met, thereby obtaining the expression migration model. The invention further discloses an expression migration method and device. No complex image processing of the face image is needed, training difficulty and cost are reduced, and the expression migration model outputs more realistic face images.

Owner: TENCENT TECH (SHENZHEN) CO LTD

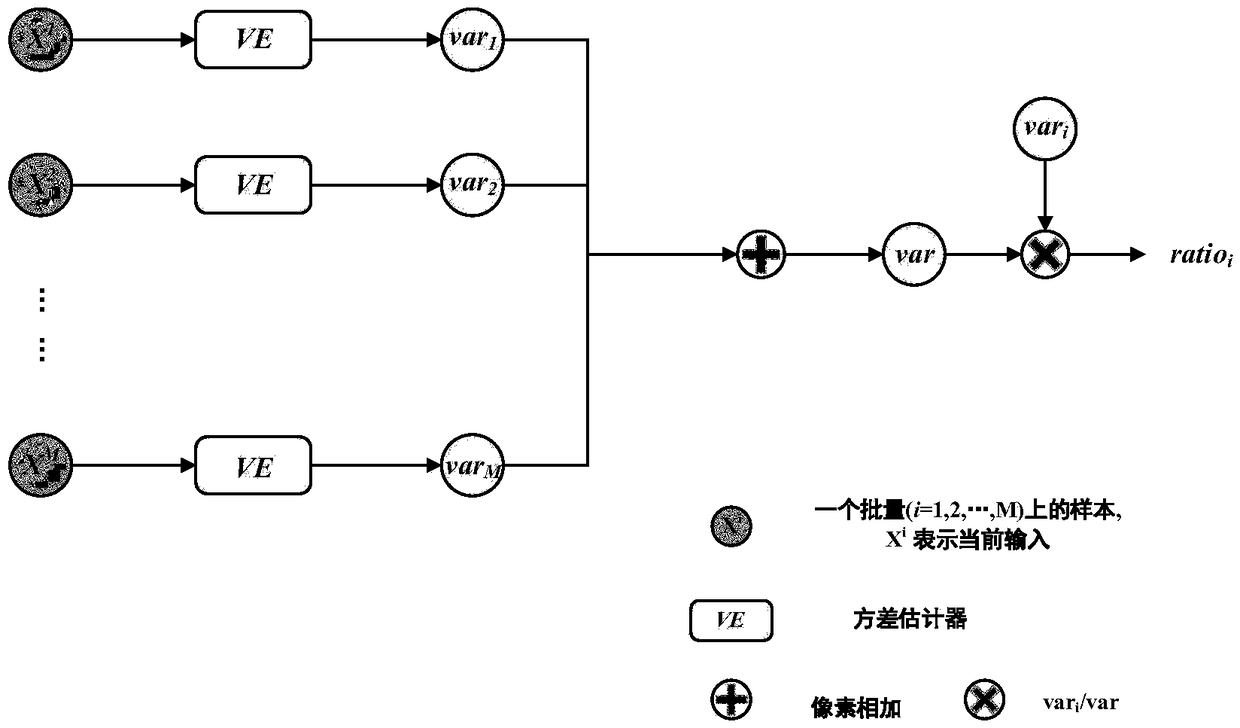

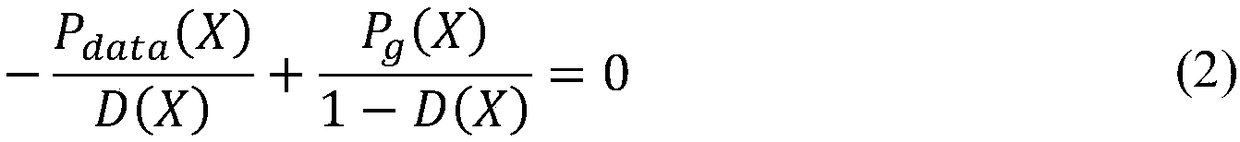

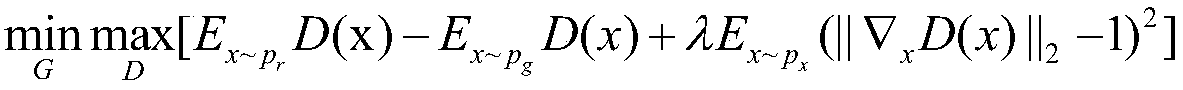

A method for generating a new sample based on a generative adversarial network and an adaptive proportion

Active | CN109165735A | Fast convergence, Improve accuracy, Neural learning methods, Algorithm, Generative adversarial network

The invention discloses a method for generating new samples based on a generative adversarial network and an adaptive proportion. The method comprises the following steps: S1, adding noise generated by the generative adversarial network, whose distribution is close to that of the input sample, directly to the input sample; S2, constructing an adaptive proportion from the variance of the sample, fusing the input sample with the GAN-generated noise to produce a new sample, and adjusting the proportion of noise to input sample according to the adaptive proportion; S3, supplementing the new sample with the original sample information through pixel-wise addition to produce a final sample that helps improve the DNN detection rate. The method improves DNN accuracy at relatively small cost and with low complexity.

Owner: HANGZHOU DIANZI UNIV

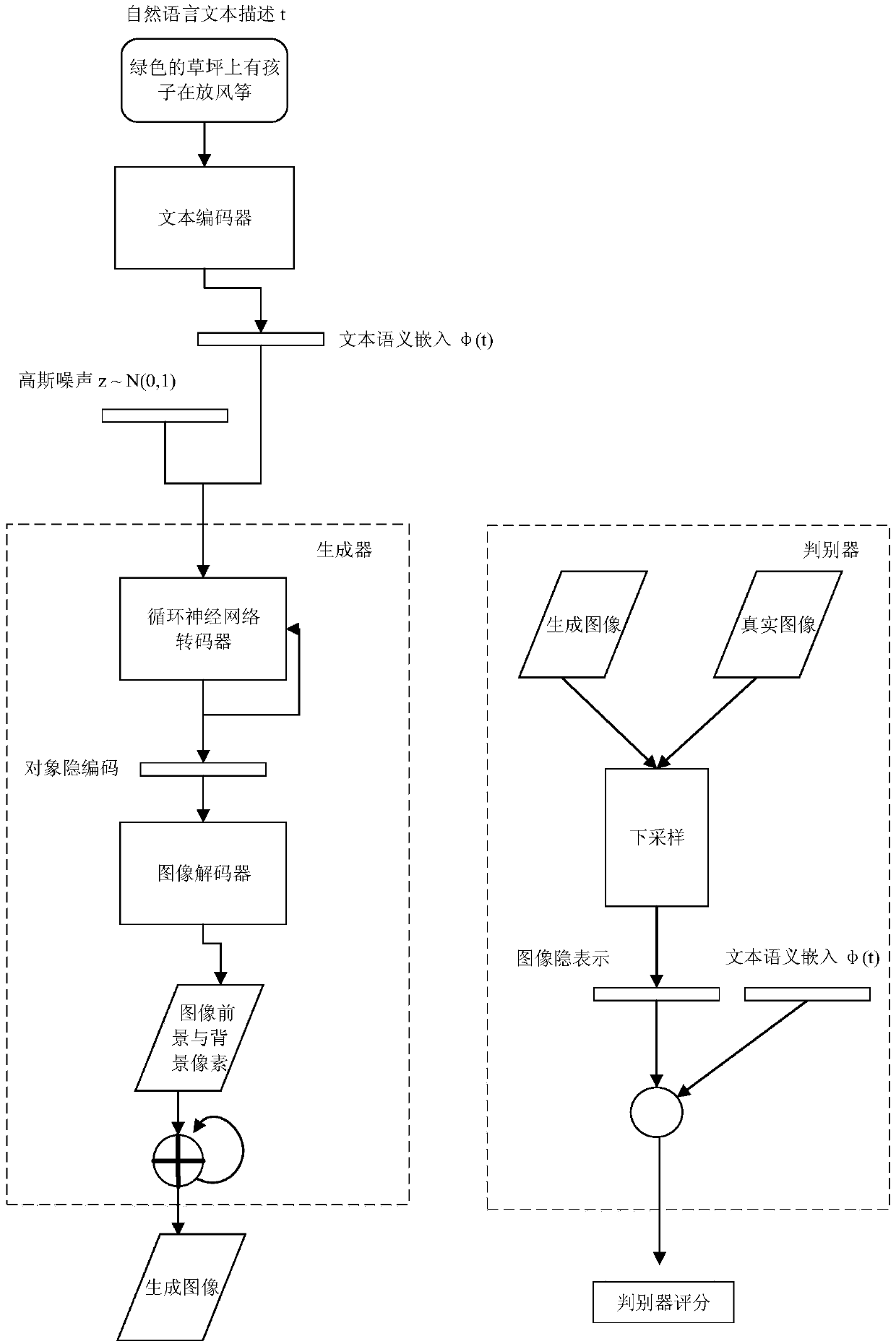

A text generation image method and device

Active | CN109543159A | Reduce training difficulty, Complete content, Image coding, Natural language data processing, Natural language, Transcoding

The invention discloses a method and device for generating images from text. The method comprises the following steps: 1, encoding the natural language text that describes the image to obtain a text semantic embedding; 2, mixing the text semantic embedding obtained in step 1 with random noise, reading it with a recurrent neural network transcoder, and outputting the latent object code of each step from the random noise and the hidden state of the transcoder; 3, decoding the latent object code output at each step of step 2 to generate step images, and finally fusing all step images into the generated image; 4, performing adversarial training on the generated image and real images. Through multi-step transcoding, the generator decodes the latent object codes into the foreground and background pixel sets of an image and fuses them into a high-quality image, which reduces the training difficulty compared with generating the image directly.

Owner: 南京德磐信息科技有限公司

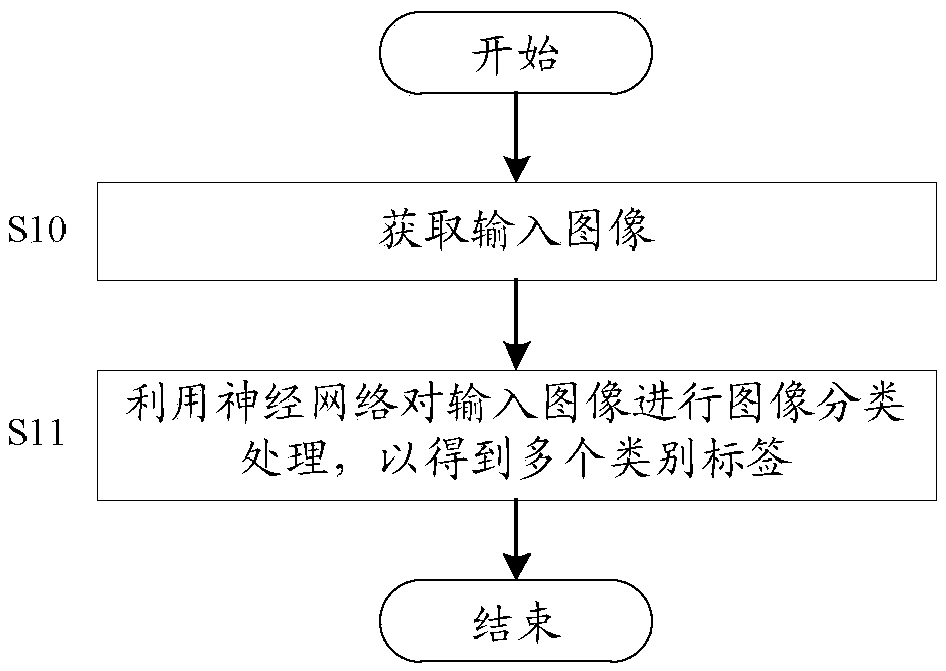

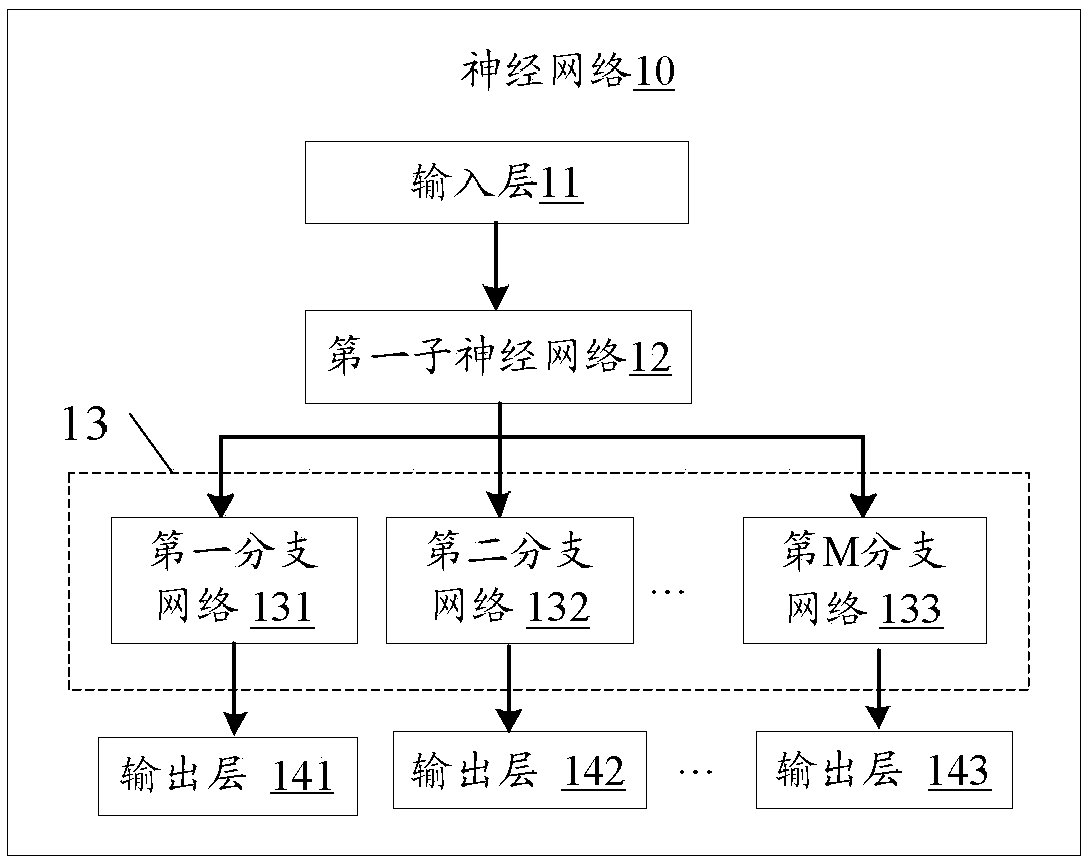

Image processing method, image processing apparatus, computer readable storage medium

Active | CN109241880A | Reduce training difficulty, Improvement effect, Character and pattern recognition, Neural architectures, Engineering, Multiple category

An image processing method based on a neural network, an image processing apparatus, and a computer-readable storage medium are provided. The image processing method comprises: obtaining an input image; and classifying the input image with the neural network to obtain multiple category labels. The neural network comprises a first sub-neural network and a second sub-neural network; the second sub-neural network comprises a plurality of branch networks, at least two of which are arranged in parallel. Classifying the input image to obtain the category labels comprises: extracting basic feature information of the input image with the first sub-neural network to obtain a plurality of first feature maps; dividing the first feature maps into a plurality of first feature map groups in one-to-one correspondence with the branch networks; and processing the groups with the respective branch networks to obtain the category labels.

Owner: BEIJING KUANGSHI TECH
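The grouping step can be sketched as follows. The abstract only requires a one-to-one correspondence between feature map groups and branch networks; splitting the maps into equal, contiguous groups is an assumption, and the branch networks are represented by arbitrary hypothetical callables.

```python
from typing import Callable, List, Sequence, TypeVar

F = TypeVar("F")  # one feature map (any representation)
L = TypeVar("L")  # one category label


def split_into_groups(feature_maps: Sequence[F], num_branches: int) -> List[List[F]]:
    """Divide the first feature maps into equal, contiguous groups, one per branch."""
    if num_branches <= 0 or len(feature_maps) % num_branches:
        raise ValueError("feature maps must split evenly across the branches")
    size = len(feature_maps) // num_branches
    return [list(feature_maps[i * size:(i + 1) * size]) for i in range(num_branches)]


def classify_multi_label(feature_maps: Sequence[F],
                         branches: Sequence[Callable[[List[F]], L]]) -> List[L]:
    """Run each parallel branch on its own group and collect one label per branch."""
    groups = split_into_groups(feature_maps, len(branches))
    return [branch(group) for branch, group in zip(branches, groups)]
```

Because each branch sees only its share of the feature maps, the branches are smaller than a single network operating on all of them, which is one plausible reading of the training-difficulty benefit listed for this patent.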

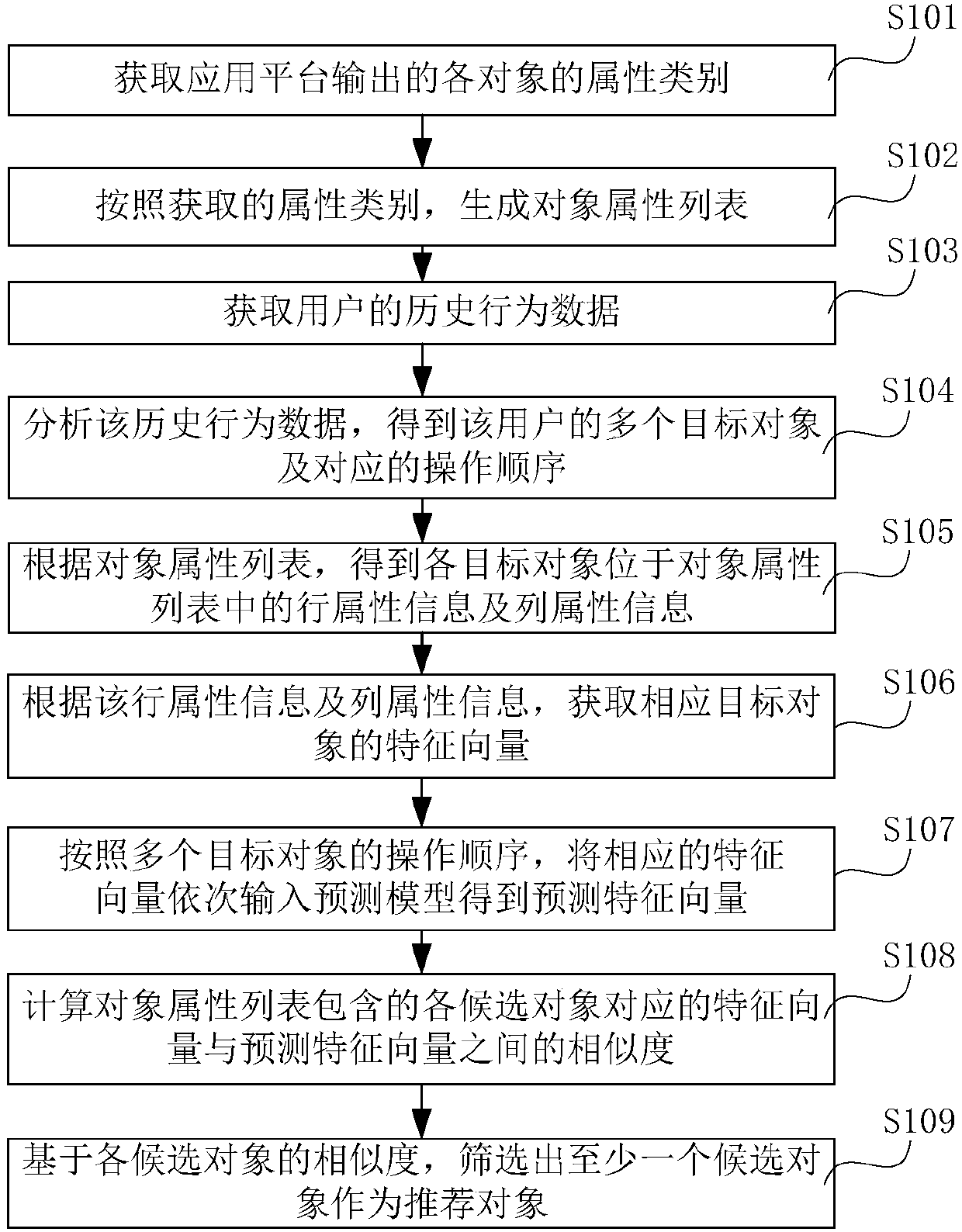

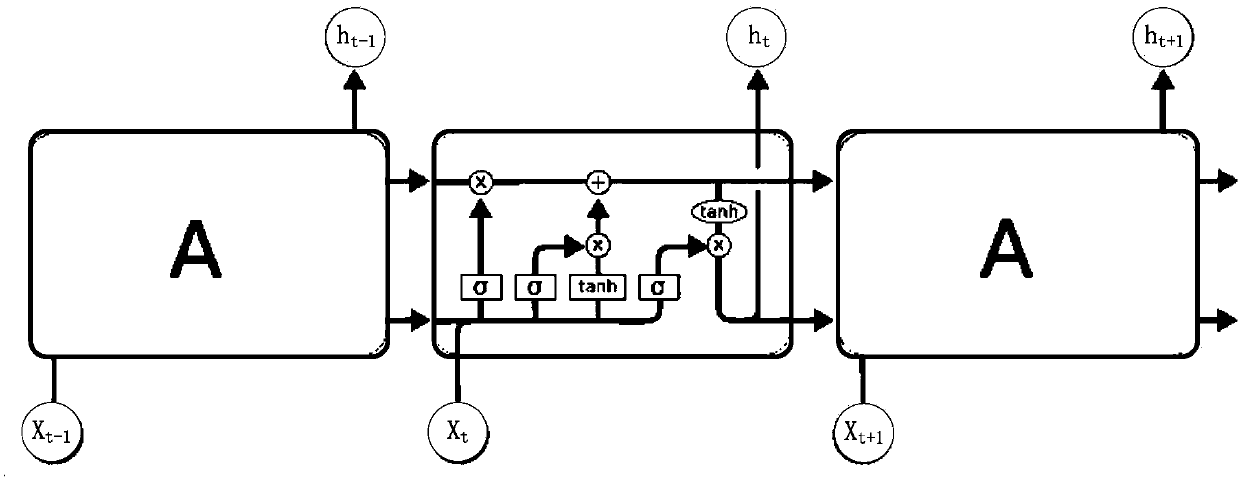

Object recommendation method and device, storage medium and computer equipment

Active | CN110209922A | Reduce training difficulty, Improve accuracy, Digital data information retrieval, Special data processing applications, Feature vector, Computer module

Embodiments of the invention provide an object recommendation method and device, a storage medium and computer equipment, and the method comprises the steps: obtaining various attribute information ofa target object, constructing a feature vector of the target object, and inputting the feature vector into a prediction module according to an operation sequence of each target object to obtain a prediction feature vector.Thus, the expression content of the feature vectors is enriched, the operation sequence of all the target objects is considered in the model calculation process, and the accuracy of the prediction result is improved; moreover, since the plurality of objects output by the application platform share one piece of attribute information, the number of parameters for training theprediction model is greatly reduced, the model training difficulty is reduced, and the method can be suitable for a large number of application scenarios.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI +1
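
The parameter saving from shared attribute information can be sketched as follows. This is an illustrative interpretation, not the patented model: it assumes one embedding table per attribute type (the class `SharedAttributeEmbeddings` and the attribute names are hypothetical), so every object with the same attribute value reuses the same vector, and an object's feature vector is the concatenation of its attribute embeddings.

```python
import random
from typing import Dict, List


class SharedAttributeEmbeddings:
    """Hypothetical: one embedding table per attribute type, shared by all objects."""

    def __init__(self, vocab: Dict[str, List[str]], dim: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.dim = dim
        self.attr_order = sorted(vocab)  # fixed attribute order for concatenation
        self.tables: Dict[str, Dict[str, List[float]]] = {
            attr: {value: [rng.uniform(-0.1, 0.1) for _ in range(dim)] for value in vocab[attr]}
            for attr in self.attr_order
        }

    def num_parameters(self) -> int:
        # Grows with the number of distinct attribute values, not with the number of objects.
        return sum(len(table) * self.dim for table in self.tables.values())

    def feature_vector(self, attributes: Dict[str, str]) -> List[float]:
        """Concatenate the shared embeddings of an object's attribute values."""
        vec: List[float] = []
        for attr in self.attr_order:
            vec.extend(self.tables[attr][attributes[attr]])
        return vec


def sequence_features(emb: SharedAttributeEmbeddings, operated_objects: List[Dict[str, str]]) -> List[List[float]]:
    """Feature vectors listed in the order the user operated on the objects (oldest first)."""
    return [emb.feature_vector(obj) for obj in operated_objects]
```

With two attributes of two values each and `dim=4`, the tables hold 16 parameters however many objects exist, whereas a separate 4-dimensional embedding per object would need 4,000 parameters for 1,000 objects.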

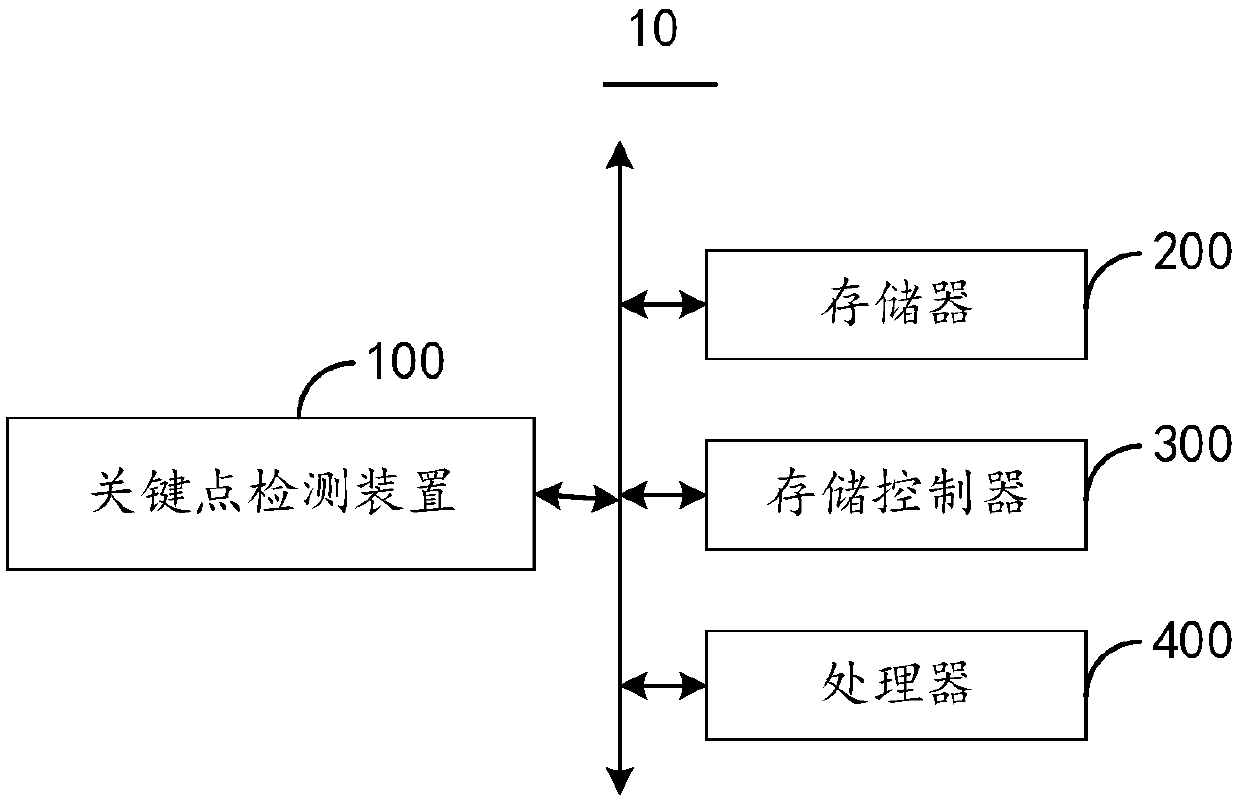

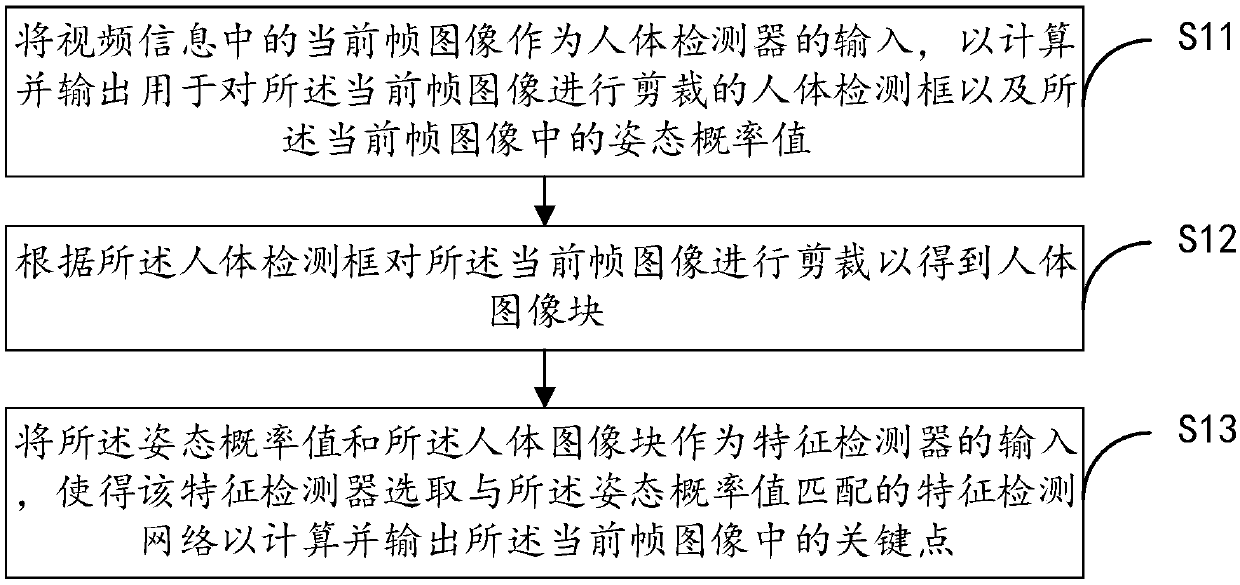

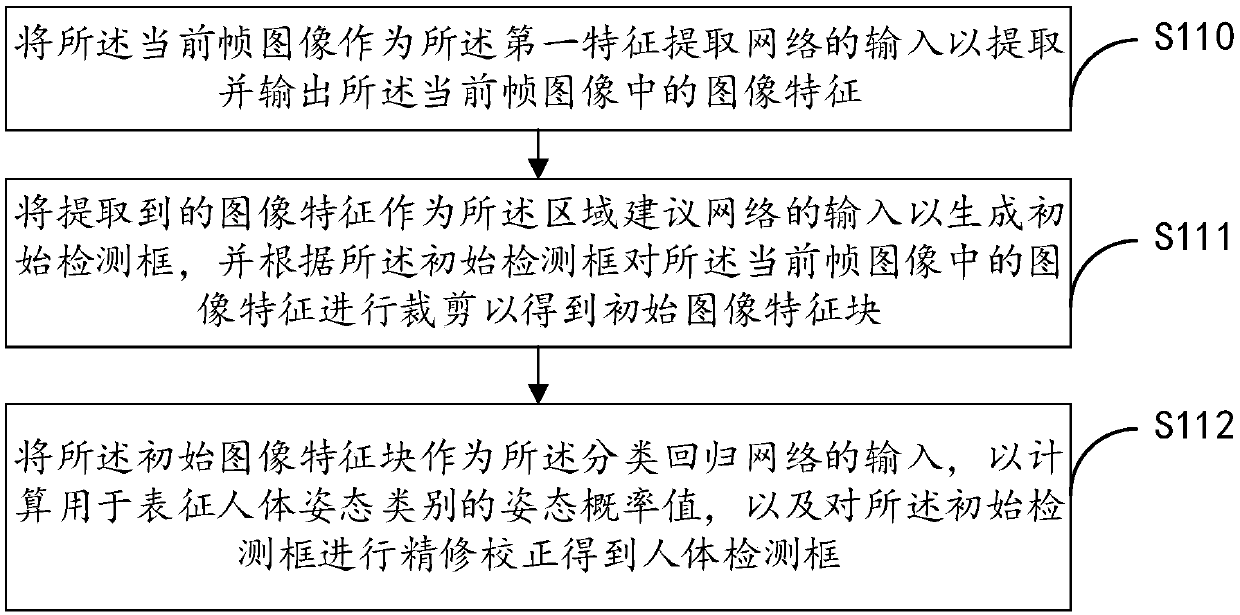

Key point detection method and device

Active · CN109598234A · Reduce the difficulty of network training · Fast data processing · Character and pattern recognition · Network complexity · Feature detection

The embodiment of the invention provides a key point detection method and device. The method comprises the following steps: using a current frame image in video information as the input of a human body detector, which calculates and outputs a human body detection frame vector (used to crop the current frame image) and an attitude probability value for the current frame image; cropping the current frame image according to the human body detection frame to obtain a human body image block; and using the attitude probability value and the human body image block as the input of a feature detector, which calculates and outputs the key points in the current frame image. The method addresses the difficulty of running human body feature detection in real time on mobile terminals, reduces the network complexity of the key point detection process, and improves detection precision.

Owner:Shenzhen Meitu Innovation Technology Co., Ltd. (深圳美图创新科技有限公司)
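
The detect-crop-detect pipeline can be sketched in plain Python. The box convention `(x, y, width, height)`, the frame representation (a list of pixel rows), and `toy_feature_detector` (which just returns the brightest pixel when the attitude probability is high enough) are assumptions for illustration; the real detectors are neural networks.

```python
from typing import Callable, List, Tuple

Image = List[List[int]]           # rows of pixel values
Box = Tuple[int, int, int, int]   # (x, y, width, height), assumed convention
Point = Tuple[int, int]           # (x, y)


def crop(image: Image, box: Box) -> Image:
    """Cut the human body image block out of the frame, clipping the box to the frame bounds."""
    x, y, w, h = box
    height, width = len(image), len(image[0])
    x0, y0 = max(0, x), max(0, y)
    x1, y1 = min(width, x + w), min(height, y + h)
    return [row[x0:x1] for row in image[y0:y1]]


def detect_keypoints(frame: Image,
                     body_detector: Callable[[Image], Tuple[Box, float]],
                     feature_detector: Callable[[Image, float], List[Point]]) -> List[Point]:
    """Body detector -> crop -> feature detector; key points are mapped back to frame coordinates."""
    box, pose_prob = body_detector(frame)
    patch = crop(frame, box)
    ox, oy = max(0, box[0]), max(0, box[1])
    return [(px + ox, py + oy) for px, py in feature_detector(patch, pose_prob)]


def toy_feature_detector(patch: Image, pose_prob: float) -> List[Point]:
    """Hypothetical stand-in: one key point at the brightest pixel, only if the pose is likely."""
    if pose_prob < 0.5 or not patch or not patch[0]:
        return []
    _, best = max((v, (c, r)) for r, row in enumerate(patch) for c, v in enumerate(row))
    return [best]
```

Because the feature detector only ever sees the cropped block, its input is much smaller than the full frame, which is the intuition behind the reduced network complexity claimed above.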

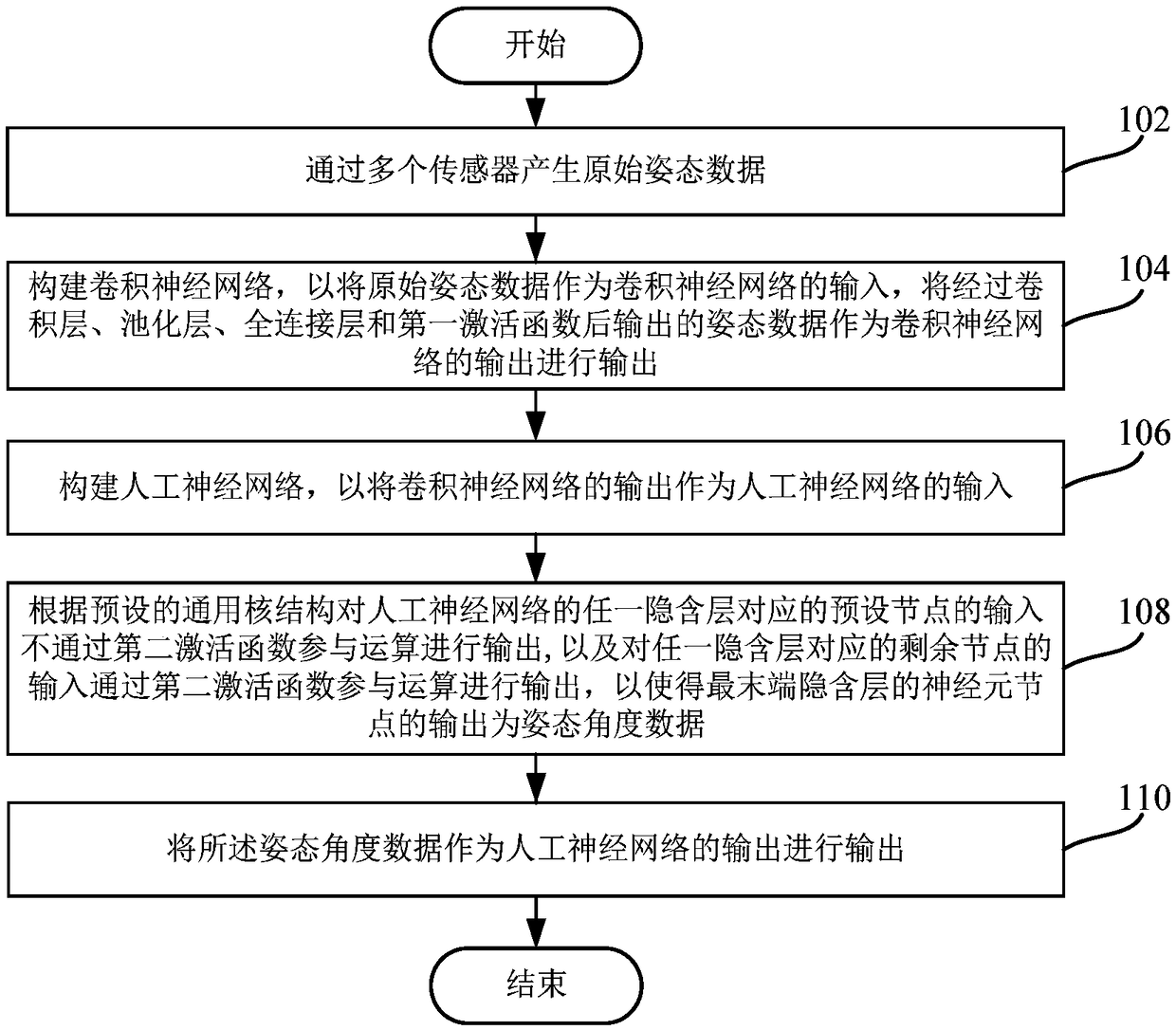

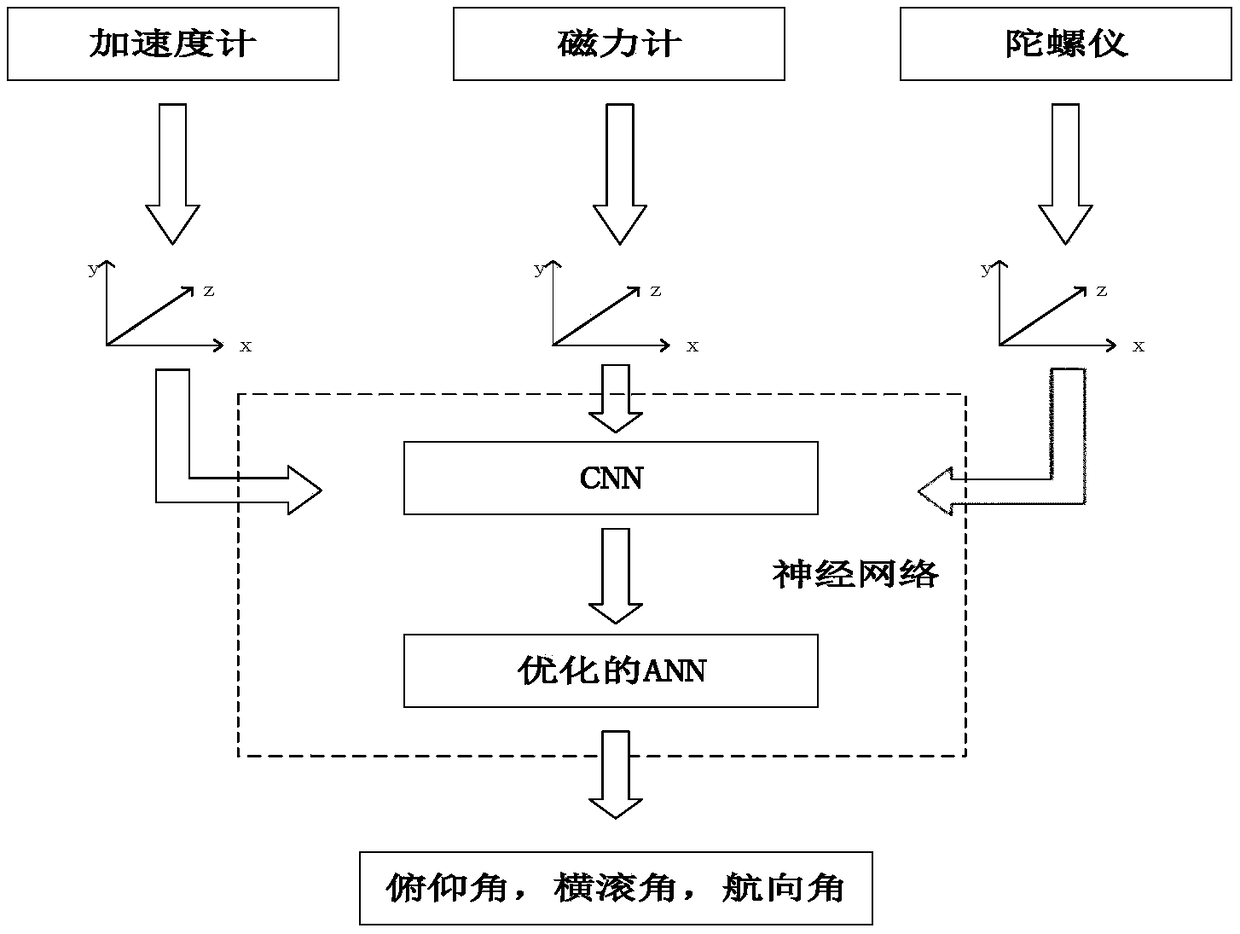

A multi-sensor attitude data fusion method and system based on a neural network

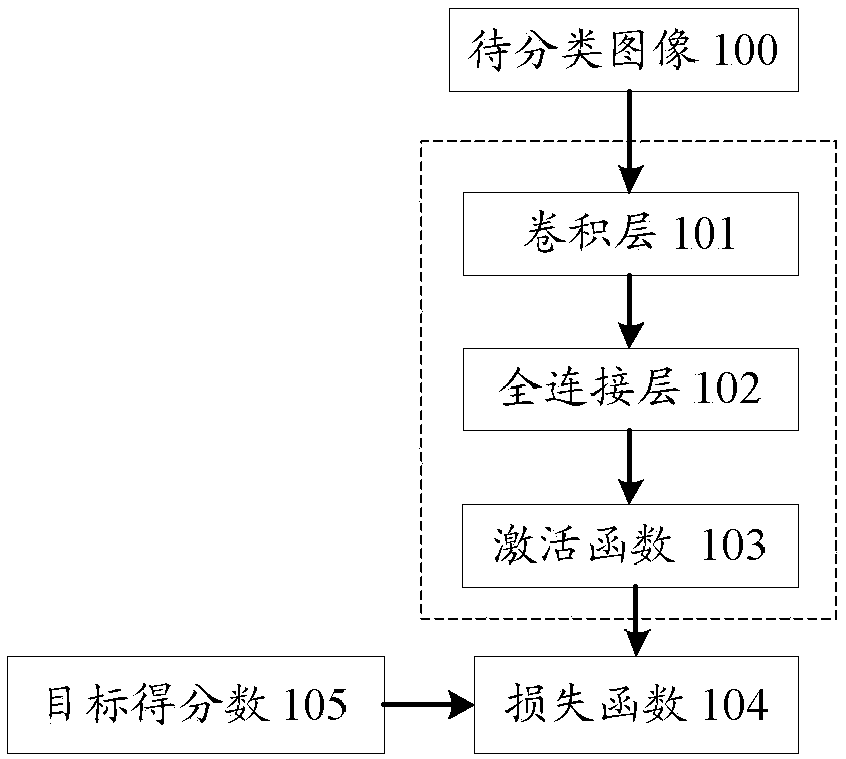

Active · CN109409431A · High measurement accuracy · Achieve integration · Character and pattern recognition · Neural architectures · Hidden layer · Activation function

The invention discloses a multi-sensor attitude data fusion method and system based on a neural network. The method comprises the following steps: generating original attitude data by a plurality of sensors; taking the original attitude data as the input of a convolutional neural network, and taking the attitude data produced after the convolution layer, the pooling layer, the fully connected layer and the first activation function as the output of the convolutional neural network; taking the output of the convolutional neural network as the input of an artificial neural network; and, according to a preset general kernel structure, not passing the input of preset nodes in each hidden layer of the artificial neural network through a second activation function (so those nodes produce no output), while passing the input of the remaining nodes of each hidden layer through the second activation function, and taking the attitude angle data output by the neuron nodes of the last hidden layer as the output of the artificial neural network. By effectively combining the convolutional neural network with the optimized artificial neural network, the fusion method improves the measurement precision of the attitude angle data.

Owner:JILIN UNIV
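
The hidden-layer behavior described above can be sketched as a dense layer with a fixed keep mask. This is an assumed reading of the "preset general kernel structure": nodes with `keep=False` produce no output (represented here as 0.0), much like a fixed dropout mask, while the other nodes pass through the second activation function (`tanh` is an assumed choice; the abstract does not name it).

```python
import math
from typing import Callable, List, Sequence, Tuple

Layer = Tuple[List[List[float]], List[float], List[bool]]  # (weights, biases, keep_mask)


def masked_dense_layer(inputs: Sequence[float],
                       weights: Sequence[Sequence[float]],
                       biases: Sequence[float],
                       keep_mask: Sequence[bool],
                       activation: Callable[[float], float] = math.tanh) -> List[float]:
    """Weighted sum per node; preset (masked) nodes skip the activation and output 0.0."""
    outputs = []
    for w_row, b, keep in zip(weights, biases, keep_mask):
        z = sum(w * x for w, x in zip(w_row, inputs)) + b
        outputs.append(activation(z) if keep else 0.0)
    return outputs


def forward(x: Sequence[float], layers: Sequence[Layer]) -> List[float]:
    """Run the CNN output through each masked hidden layer; the last layer's output is the attitude estimate."""
    out = list(x)
    for weights, biases, mask in layers:
        out = masked_dense_layer(out, weights, biases, mask)
    return out
```

For instance, inputs `[1.0, 2.0]` through a three-node layer whose middle node is masked yield `[1.5, 0.0, 2.0]` when an identity activation is used.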

Biological ink and preparation method thereof

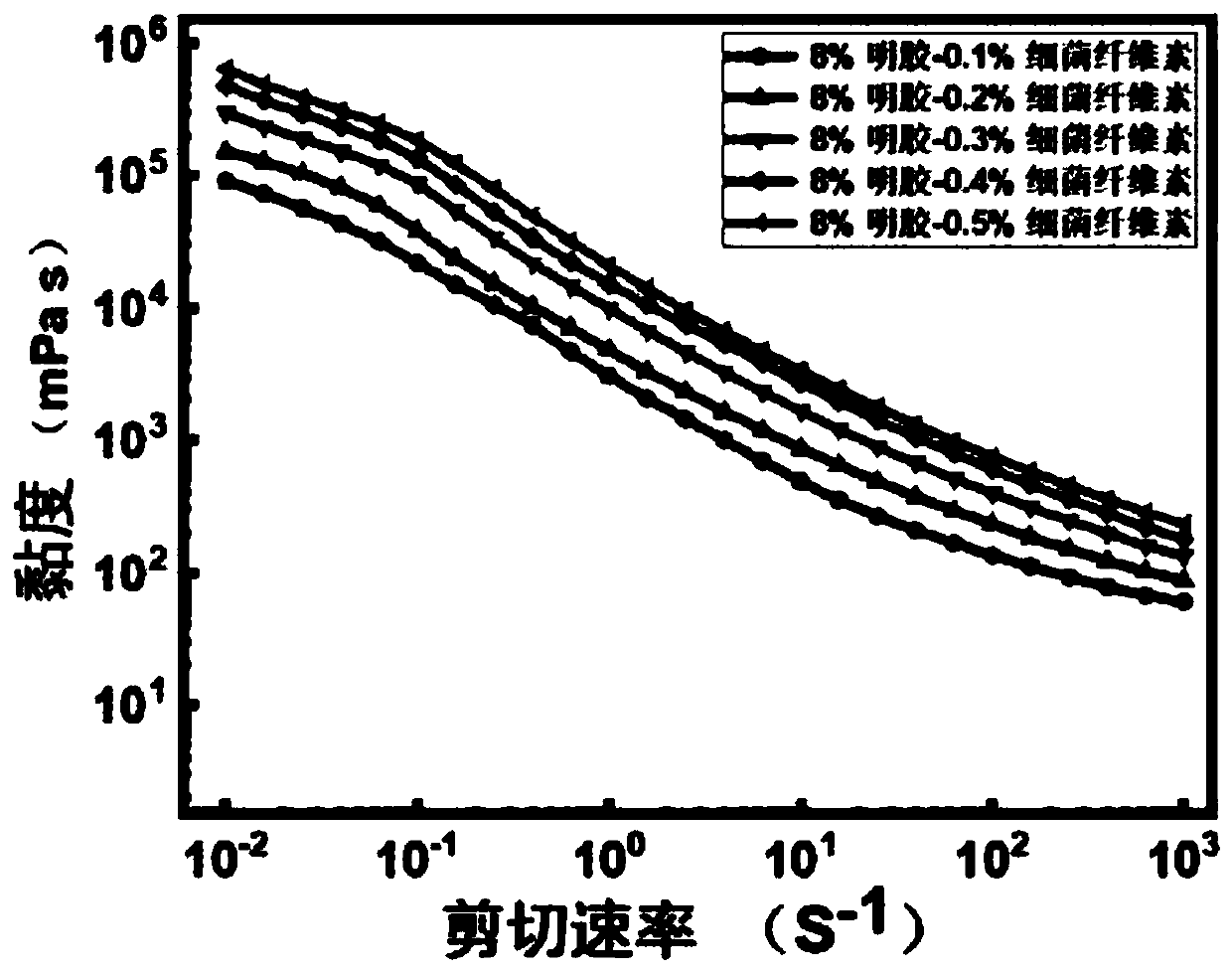

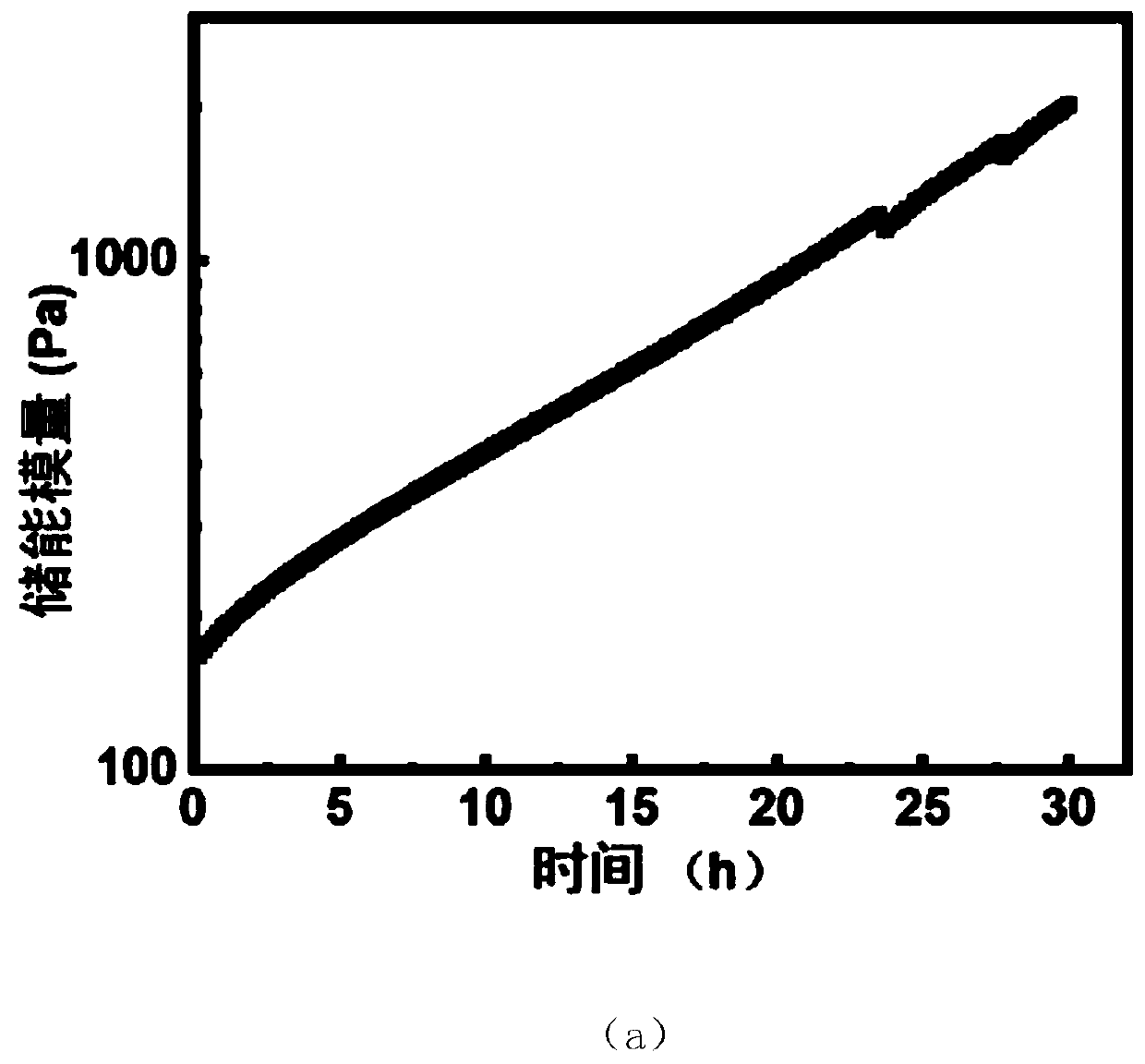

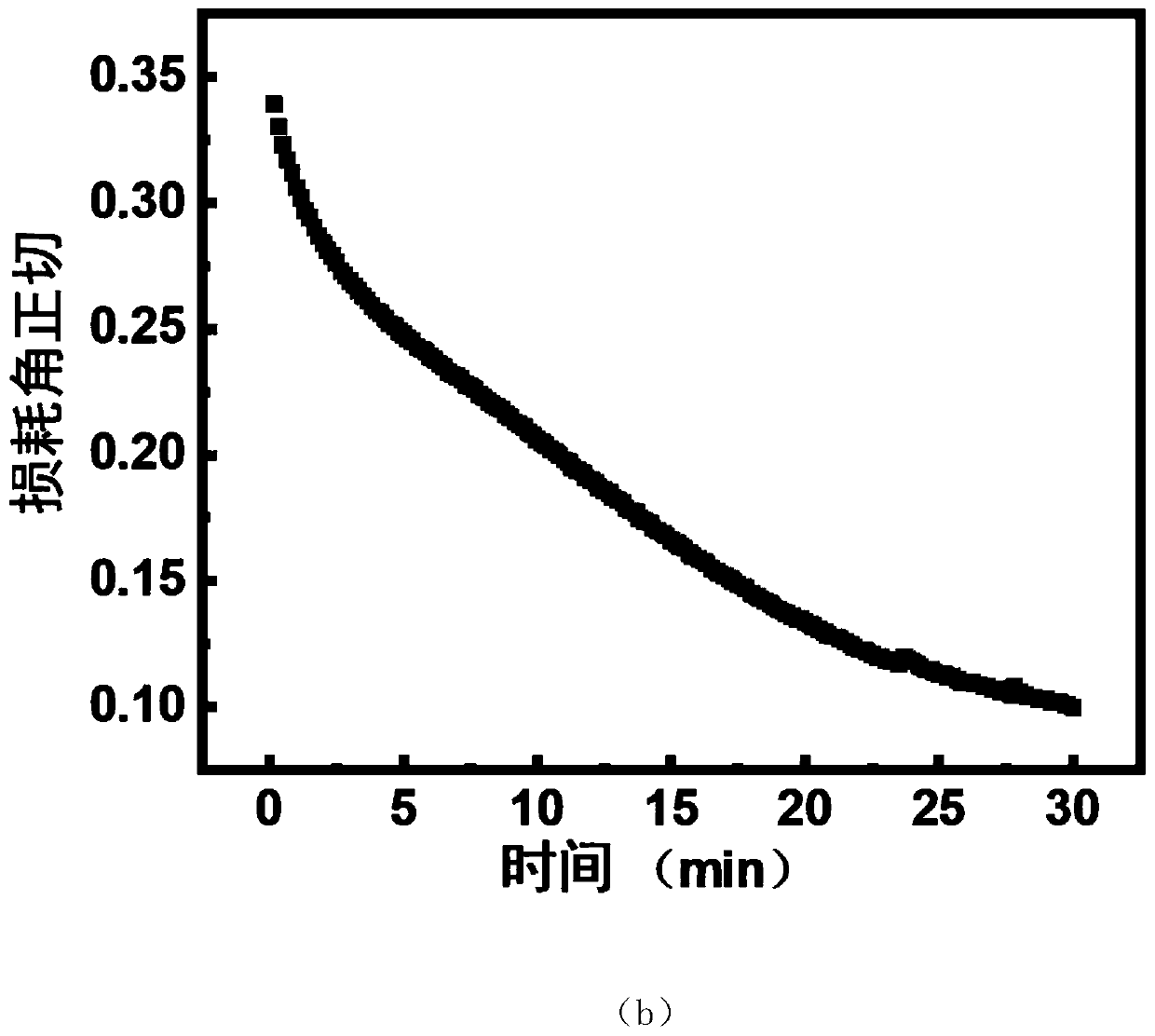

Inactive · CN110801532A · Avoid harm · Mild reaction conditions · Additive manufacturing apparatus · Prosthesis · Cross linker · Hydrogel scaffold

The invention discloses a biological ink for 3D bioprinting and a preparation method thereof. The biological ink comprises the following components by mass: 8%-20% of a hydrophilic polymer with a crosslinking function, 10%-20% of a water-soluble enzyme with a crosslinking function, 0.1%-1% of bacterial cellulose, and the balance a sterile PBS solution. The biological ink can be used for 3D printing after being mixed with cells, and the shear-thinning behavior of the materials makes the ink self-supporting after extrusion. The materials have good cell compatibility; the enzyme crosslinking agent forms a hydrogel at physiological temperature (about 37 °C) under mild reaction conditions that do not damage the cells; and the hydrogel scaffold remains relatively stable in culture medium, so it can support cell proliferation.

Owner:RENMIN UNIVERSITY OF CHINA
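
The mass-percentage formulation translates directly into batch masses. A minimal sketch, assuming the percentages are by mass of the finished ink and that PBS makes up whatever remains; the component keys are hypothetical labels, and the ranges are the ones stated in the abstract.

```python
from typing import Dict

# Allowed mass-percentage ranges from the abstract; sterile PBS is the balance.
RANGES: Dict[str, tuple] = {
    "polymer": (8.0, 20.0),               # hydrophilic polymer with crosslinking function
    "enzyme": (10.0, 20.0),               # water-soluble crosslinking enzyme
    "bacterial_cellulose": (0.1, 1.0),
}


def bioink_batch(total_mass_g: float, percentages: Dict[str, float]) -> Dict[str, float]:
    """Return the mass in grams of each component for one batch, with PBS as the balance."""
    for name, (lo, hi) in RANGES.items():
        p = percentages[name]
        if not lo <= p <= hi:
            raise ValueError(f"{name} = {p}% is outside the {lo}-{hi}% range")
    solids = sum(percentages[name] for name in RANGES)
    masses = {name: total_mass_g * percentages[name] / 100 for name in RANGES}
    masses["pbs"] = total_mass_g * (100 - solids) / 100
    return masses
```

For a 100 g batch at 10% polymer, 10% enzyme and 0.5% bacterial cellulose, PBS makes up the remaining 79.5 g.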

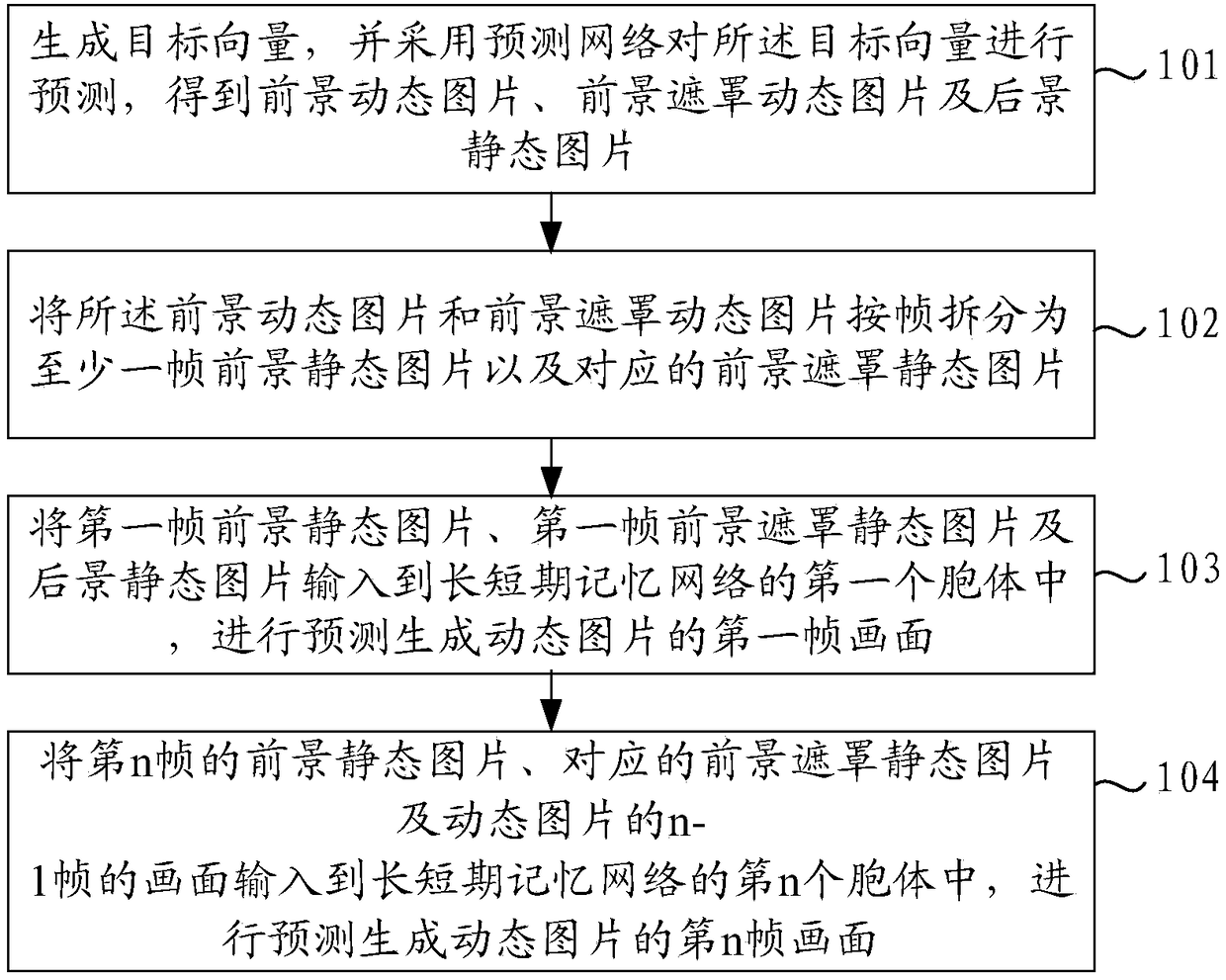

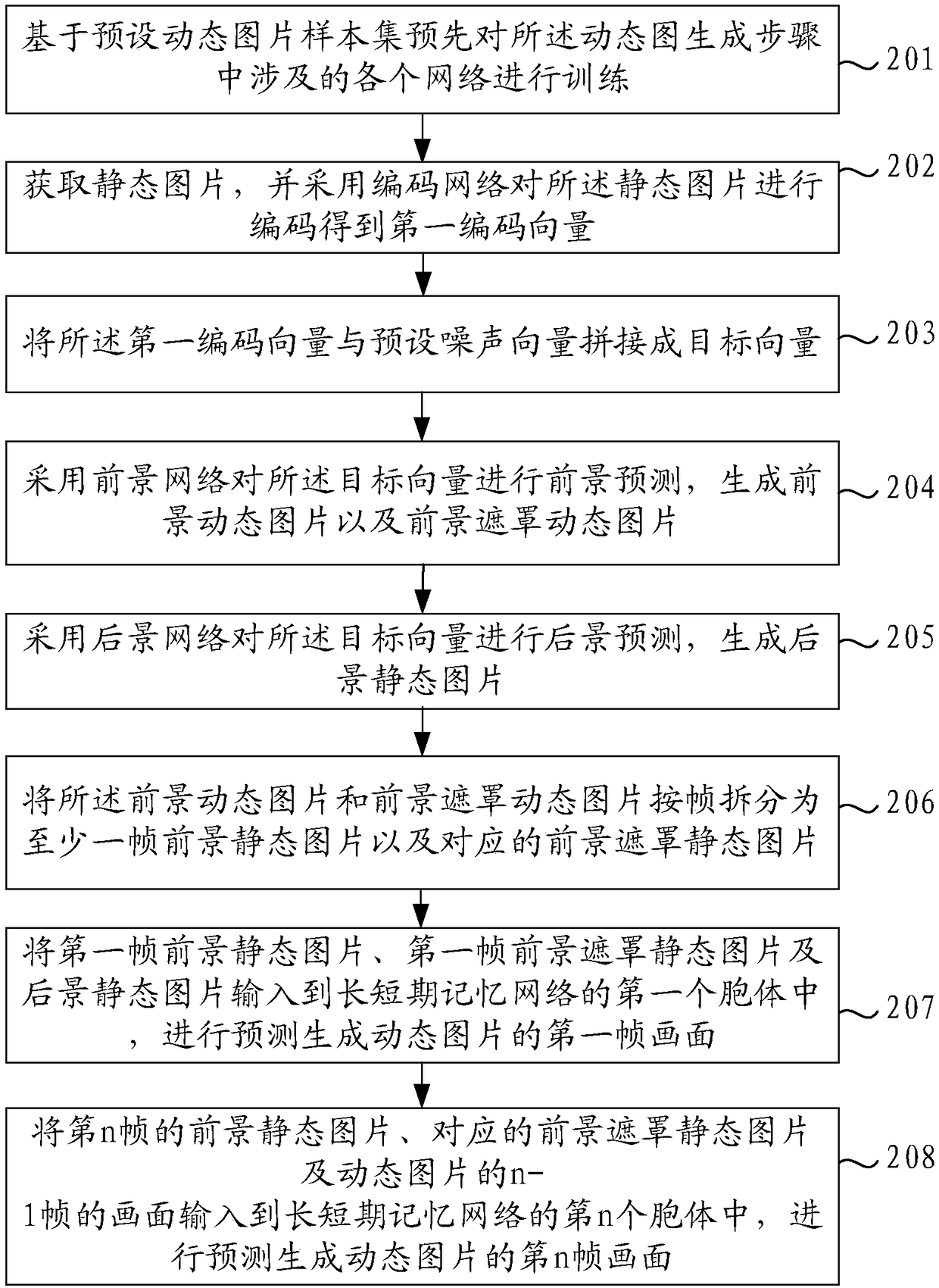

Generation method and device for dynamic picture

Active · CN108648253A · Improve accuracy · Reduce training difficulty · Image enhancement · Image analysis · Pattern recognition · Computer graphics (images)

The embodiment of the invention provides a generation method and device for a dynamic picture. The method comprises the following steps: generating a target vector and predicting from it with a prediction network to obtain a foreground dynamic picture, a foreground mask dynamic picture and a background static picture; splitting the foreground dynamic picture and the foreground mask dynamic picture into at least one foreground static picture frame and the corresponding foreground mask static picture frames; inputting the first foreground static picture frame, the first foreground mask static picture frame and the background static picture into the first cell of a long short-term memory (LSTM) network to predictively generate the first picture frame of the dynamic picture; and inputting the n-th foreground static picture frame, the corresponding foreground mask static picture frame and the (n-1)-th picture frame of the dynamic picture into the n-th cell of the LSTM network to predictively generate the n-th picture frame of the dynamic picture. This addresses the low accuracy of dynamic picture generation and increases the generation accuracy.

Owner:BEIJING SANKUAI ONLINE TECH CO LTD
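
The frame-by-frame recurrence can be sketched as a loop in which each step sees the current foreground frame, its mask, and the previous output (the background for the first step). The patent uses LSTM cells; the `composite_cell` below is a deliberately simple swapped-in stand-in (per-pixel alpha compositing), not an LSTM, and frames are flattened to lists of floats for brevity.

```python
from typing import Callable, List, Sequence

Frame = List[float]  # flattened pixel values
Cell = Callable[[Sequence[float], Sequence[float], Sequence[float]], Frame]


def composite_cell(fg: Sequence[float], mask: Sequence[float], context: Sequence[float]) -> Frame:
    """Stand-in cell: use the foreground where mask=1, keep the context frame where mask=0."""
    return [m * f + (1 - m) * c for f, m, c in zip(fg, mask, context)]


def generate_frames(fg_frames: Sequence[Sequence[float]],
                    mask_frames: Sequence[Sequence[float]],
                    background: Sequence[float],
                    cell: Cell = composite_cell) -> List[Frame]:
    """Cell 1 conditions on the background; cell n conditions on the (n-1)-th generated frame."""
    frames: List[Frame] = []
    context: Frame = list(background)
    for fg, mask in zip(fg_frames, mask_frames):
        frame = cell(fg, mask, context)
        frames.append(frame)
        context = frame
    return frames
```

Swapping `composite_cell` for a trained recurrent cell keeps the same wiring; the point of the sketch is only how each frame depends on the previous one.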

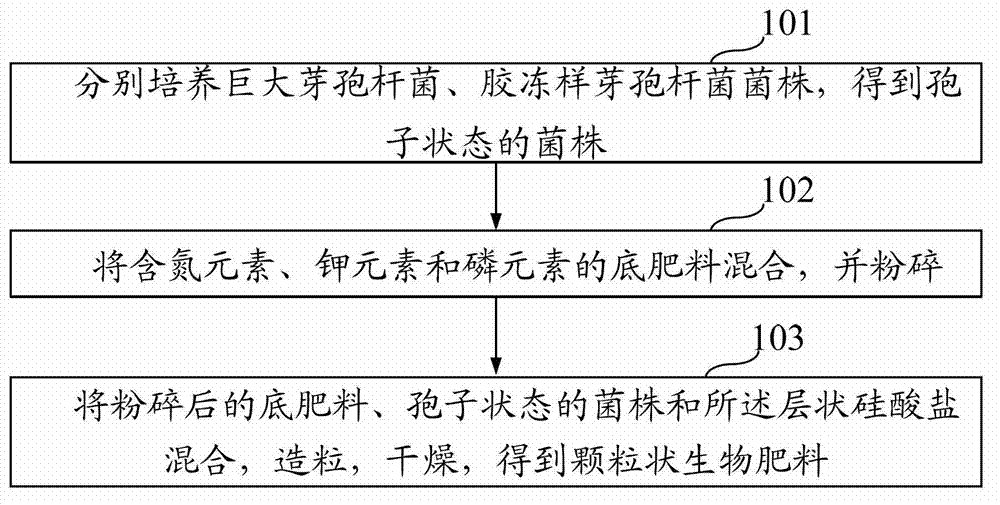

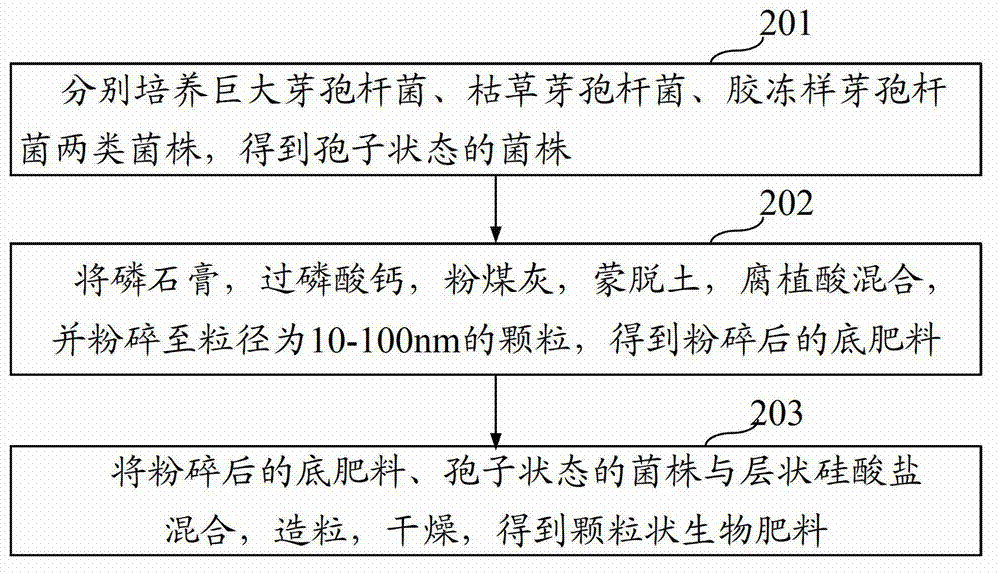

Nano microorganism organic and inorganic compound fertilizer and production method thereof

Active · CN103030471A · Promote growth · To achieve the purpose of disease resistance · Fertilizer mixtures · Bacillus megaterium · Potassium

The invention relates to the agricultural and environmental protection fields, in particular to a nano microorganism organic-inorganic compound fertilizer and a production method thereof. The fertilizer comprises the following components in parts by weight: 5 to 40 parts of layered silicate, 0.1 to 0.25 part of Bacillus megaterium, 0.1 to 0.25 part of Bacillus mucilaginosus, 0.1 to 0.25 part of Bacillus subtilis, and 0.1 to 0.25 part of a base fertilizer containing nitrogen, potassium and phosphorus, wherein nitrogen, potassium and phosphorus account for 6-18% of the total weight of the base fertilizer. The production method comprises the following steps: culturing strains of Bacillus megaterium, Bacillus mucilaginosus and Bacillus subtilis separately to obtain strains in the spore state; mixing and crushing the base fertilizer; and mixing the crushed base fertilizer, the spore-state strains and the layered silicate, then pelletizing and drying. The fertilizer provides the nutrients needed by crops and can break down nitrogen, potassium and phosphorus fertilizer that has been adsorbed and fixed in the soil, thereby avoiding soil damage.

Owner:ZIGONG HUAQI ZHENGGUANG ECOLOGY AGRICULTURE FORESTRY +1
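
Parts by weight convert to weight percentages by dividing each part by the total. A minimal sketch using the ranges from the abstract; the component keys are hypothetical labels, and the 6-18% nutrient check assumes the limit applies to the combined N, P and K share of the base fertilizer.

```python
from typing import Dict, Tuple

# Parts-by-weight ranges stated in the abstract.
PART_RANGES: Dict[str, Tuple[float, float]] = {
    "layered_silicate": (5, 40),
    "b_megaterium": (0.1, 0.25),
    "b_mucilaginosus": (0.1, 0.25),
    "b_subtilis": (0.1, 0.25),
    "base_fertilizer": (0.1, 0.25),
}


def weight_percentages(parts: Dict[str, float]) -> Dict[str, float]:
    """Validate a formulation against the stated ranges and convert parts to weight %."""
    for name, (lo, hi) in PART_RANGES.items():
        if not lo <= parts[name] <= hi:
            raise ValueError(f"{name} = {parts[name]} parts is outside {lo}-{hi}")
    total = sum(parts[name] for name in PART_RANGES)
    return {name: 100 * parts[name] / total for name in PART_RANGES}


def npk_within_spec(n_kg: float, p_kg: float, k_kg: float, base_kg: float) -> bool:
    """Assumed reading: combined N + P + K must be 6-18% of the base fertilizer's weight."""
    share = 100 * (n_kg + p_kg + k_kg) / base_kg
    return 6 <= share <= 18
```

For example, 19.2 parts of silicate plus 0.2 part of each other component totals 20 parts, so the silicate is 96% of the fertilizer by weight and each minor component 1%.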

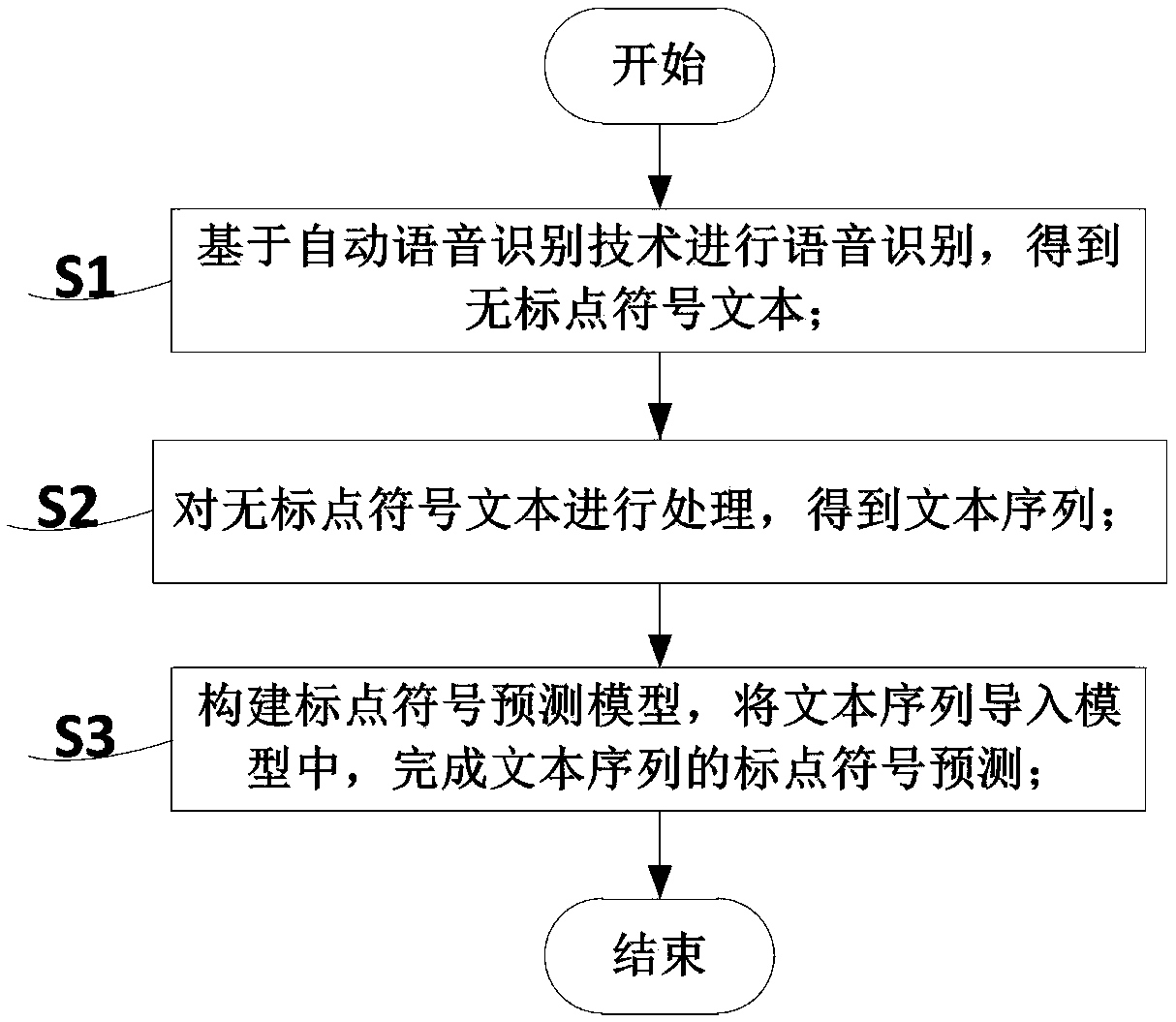

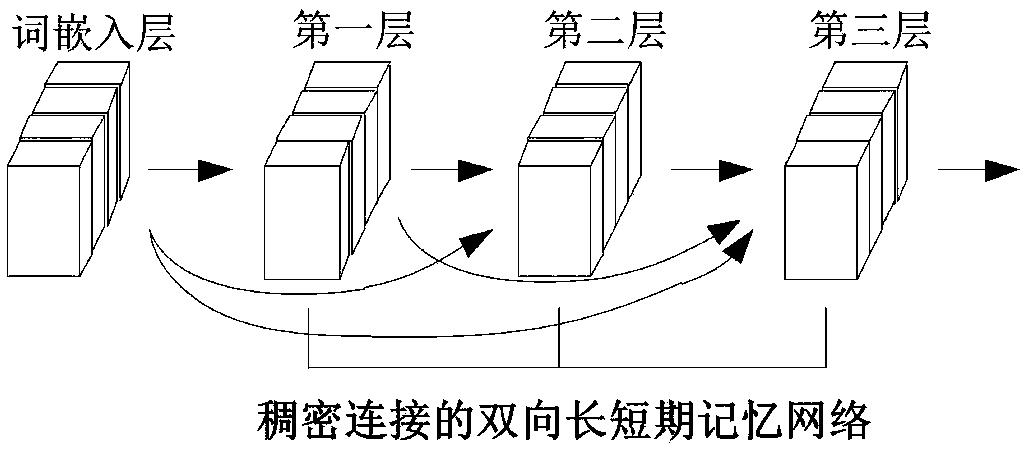

A punctuation mark prediction method based on a self-attention mechanism

Active · CN109558576A · Build long-term dependent relationships · Reduce training difficulty · Natural language data processing · Speech recognition · Self attention · Algorithm

The invention provides a punctuation mark prediction method based on a self-attention mechanism, comprising the following steps: performing speech recognition with automatic speech recognition technology to obtain a text without punctuation; processing the punctuation-free text to obtain a text sequence; and constructing a punctuation mark prediction model and feeding the text sequence into the model to predict the punctuation marks of the text sequence. By constructing this punctuation prediction model, the method realizes punctuation prediction for speech recognition text, effectively alleviates the vanishing gradient problem, enhances feature transfer, and effectively captures long-term dependencies in the text. Moreover, compared with previous models it requires no additional parameters, which effectively reduces the amount of data transmitted and lowers the difficulty of training the parameters.

Owner:SUN YAT SEN UNIV
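
The core of a self-attention model is scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V, where every token attends to every other token; this is what lets such a model relate distant words. The pure-Python sketch below shows that operation only; the projection matrices `wq`, `wk`, `wv` are placeholders, and the abstract does not specify the patented model's layers or the punctuation classifier that would sit on top of each output row.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def matmul(a: Sequence[Sequence[float]], b: Sequence[Sequence[float]]) -> Matrix:
    """Plain matrix product of an (n x k) and a (k x m) matrix."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row: Sequence[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, wq: Matrix, wk: Matrix, wv: Matrix) -> Matrix:
    """Scaled dot-product self-attention over a sequence of token vectors x (n x d)."""
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    weights = [softmax(row) for row in scores]  # each row sums to 1
    return matmul(weights, v)
```

Each output row is a weighted average of all value vectors, so information from any position can reach any other in one step rather than through a long recurrent chain.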

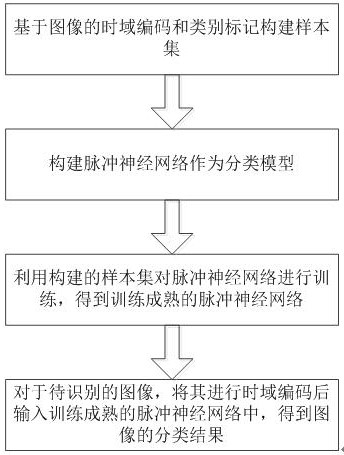

Image classification method based on time domain coding and pulse neural network

Pending · CN112906828A · Reduce training difficulty · Reduce power consumption · Character and pattern recognition · Neural architectures · Time domain · Classification methods

The invention discloses an image classification method based on time-domain coding and a spiking (pulse) neural network. The method comprises the following steps: S1, constructing a sample set based on time-domain coding and category labeling of images; S2, constructing a spiking neural network as the classification model; S3, training the spiking neural network with the constructed sample set to obtain a fully trained spiking neural network; and S4, time-domain coding an image to be identified and inputting it into the fully trained spiking neural network to obtain its classification result. Through a direct training framework that does not need to compute neuron membrane potentials, the invention reduces the training difficulty of the spiking neural network and thereby enables real-time, low-power image recognition and classification.

Owner:Zhou Shibo (周士博)
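
Time-domain coding turns pixel intensities into spike times. The abstract does not give the exact scheme, so the sketch below assumes one common choice, latency (time-to-first-spike) coding: brighter pixels fire earlier within a window of `t_max` steps, and zero-intensity pixels never fire. `t_max` and `max_value` are assumed parameters.

```python
from typing import List, Optional, Sequence


def latency_encode(pixels: Sequence[float], t_max: int = 10, max_value: float = 255) -> List[Optional[int]]:
    """Assumed latency coding: spike time in [0, t_max - 1], earlier for brighter pixels; None = no spike."""
    times: List[Optional[int]] = []
    for p in pixels:
        if p <= 0:
            times.append(None)  # black pixels emit no spike
        else:
            times.append(round((1 - min(p, max_value) / max_value) * (t_max - 1)))
    return times


def to_spike_trains(times: Sequence[Optional[int]], t_max: int = 10) -> List[List[int]]:
    """Binary spike train per pixel: a single 1 at its spike time (all zeros if it never fires)."""
    return [[1 if t == step else 0 for step in range(t_max)] for t in times]
```

With the defaults, pixels `[255, 0, 128]` encode to spike times `[0, None, 4]`: the brightest pixel fires at the first step and the black pixel stays silent. Each pixel emits at most one spike, which is where the low-power appeal of this coding comes from.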

Low-oxygen culture method for Akkermansia muciniphila

Inactive · CN110551658A · Reduce incubation time · Break thinking · Bacteria · Microorganism based processes · Promotion effect · Biology

The invention discloses a low-oxygen culture method for Akkermansia muciniphila. The method mainly creates a low-oxygen gas environment in which the mixed gas has the following volume ratios: 70-94% N2, 1-5% O2 and 5-25% CO2; the culture time is 24-36 h. The invention provides a brand-new low-oxygen mixed-gas atmosphere with a relatively high oxygen concentration that is better suited to the growth of Akk bacteria. Compared with the traditional strictly anaerobic environment and existing micro-oxygen environments, the method greatly shortens the time for Akk cultures to reach the stationary phase, and the low-oxygen environment shows a similar promoting effect across different culture media.

Owner:Junweian (Wuhan) Life Technology Co., Ltd. (君维安(武汉)生命科技有限公司)
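
The gas recipe is a small set of constraints that can be checked before setting up a culture. A minimal sketch, assuming the three gases are the only components and must total 100% by volume; the ranges and the 24-36 h culture window come from the abstract, while the function names are hypothetical.

```python
from typing import Dict, Tuple

# Volume-percentage ranges from the abstract.
GAS_RANGES: Dict[str, Tuple[float, float]] = {"N2": (70, 94), "O2": (1, 5), "CO2": (5, 25)}


def check_gas_mix(mix: Dict[str, float]) -> bool:
    """True if the mix has exactly N2/O2/CO2, sums to 100 vol%, and each gas is in range."""
    if set(mix) != set(GAS_RANGES):
        return False
    if abs(sum(mix.values()) - 100) > 1e-6:
        return False
    return all(lo <= mix[gas] <= hi for gas, (lo, hi) in GAS_RANGES.items())


def check_culture_time(hours: float) -> bool:
    """True if the culture time falls in the stated 24-36 h window."""
    return 24 <= hours <= 36
```

For example, 85% N2, 5% O2 and 10% CO2 passes, while 80% N2, 10% O2 and 10% CO2 fails because the oxygen exceeds 5%.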

© 2025 PatSnap. All rights reserved.