RGB-T image salient object detection method based on multi-level deep feature fusion

A technique based on RGB-T images and deep features, applied in the field of image processing, that addresses the problem of salient objects failing to be detected

Embodiment Construction

[0063] Specific embodiments of the present invention will be described in detail below.

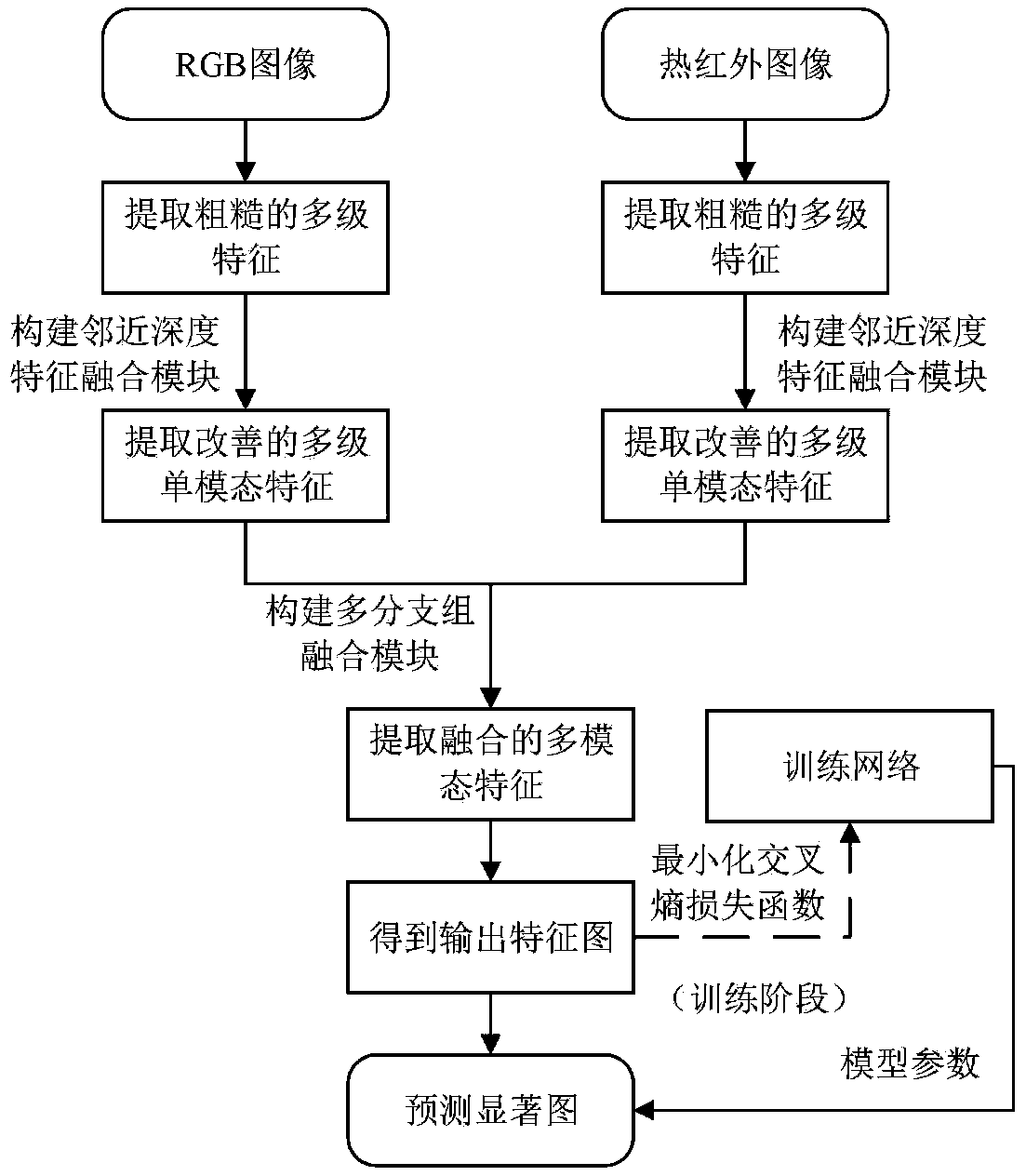

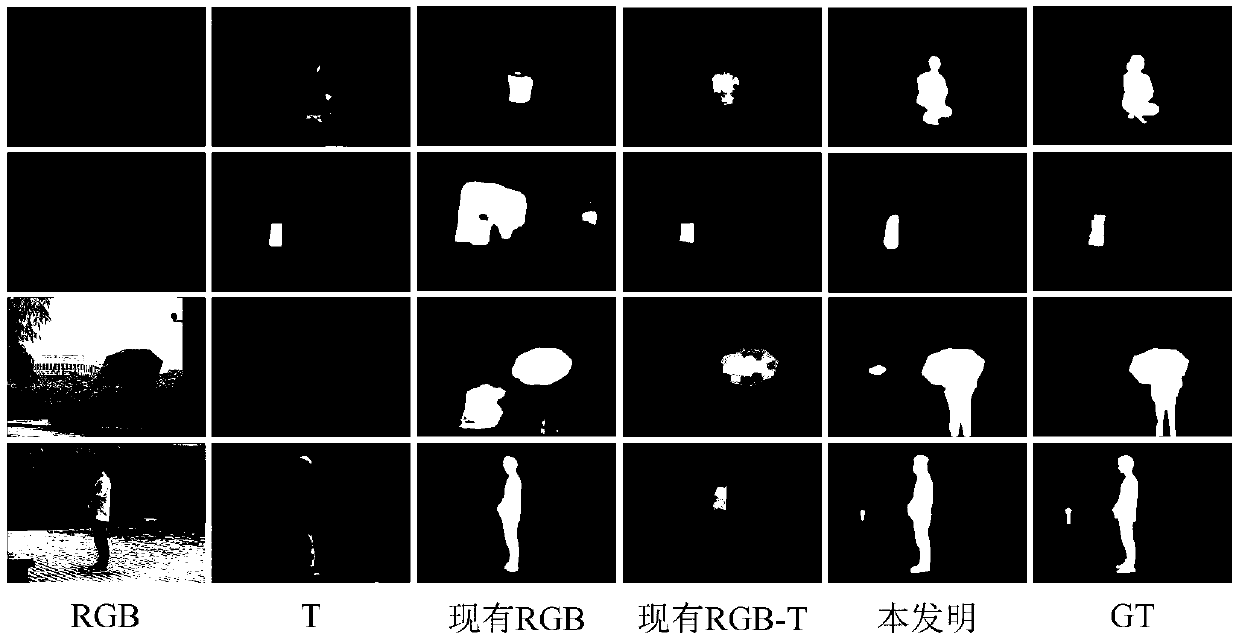

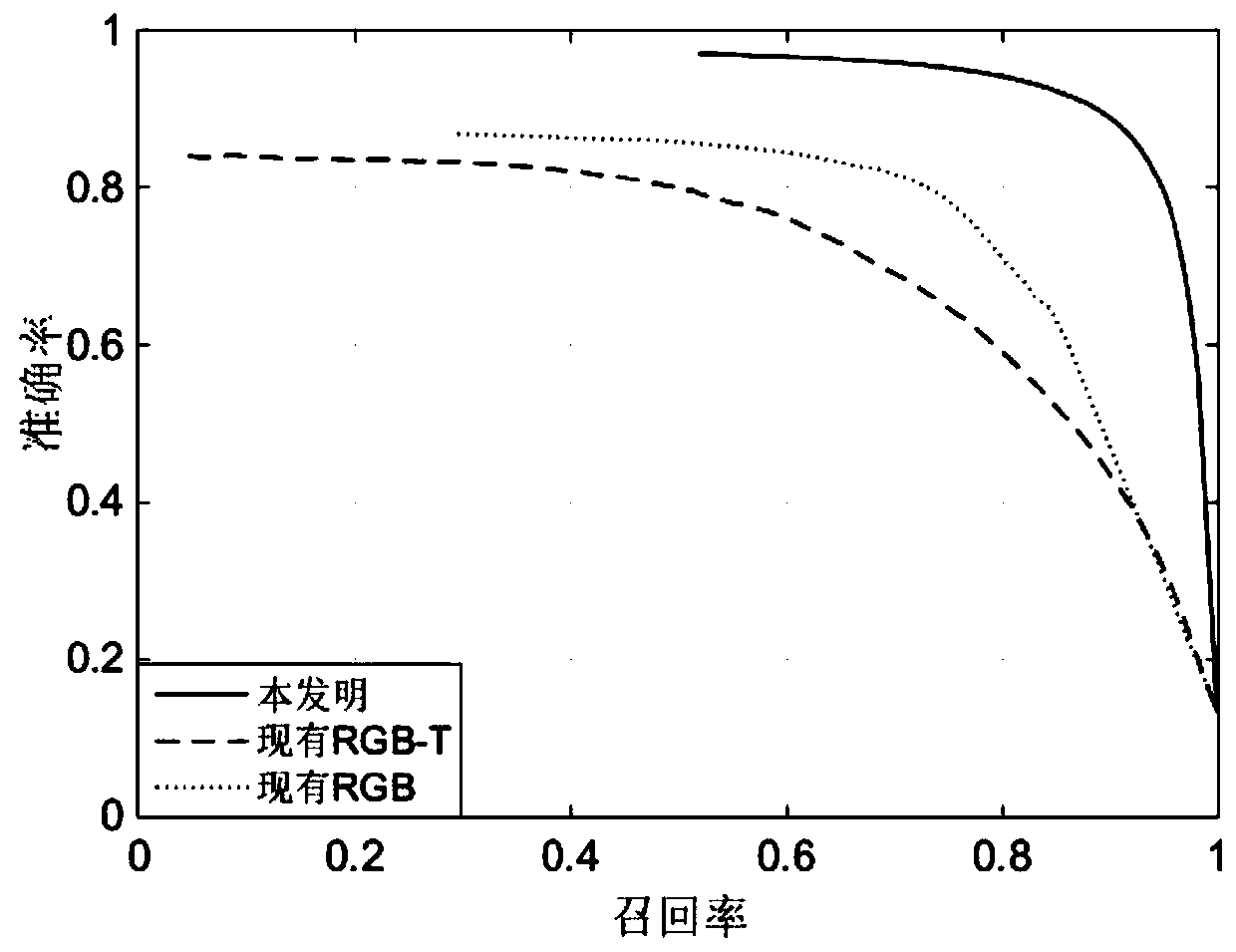

[0064] Referring to Figure 1, an RGB-T image salient object detection method based on multi-level deep feature fusion includes the following steps:

[0065] Step 1) Extract coarse multi-level features from the input image:

[0066] For the RGB image and for the thermal infrared image, features at five different depths of the VGG16 network are extracted as coarse single-modal features, namely:

[0067] Conv1-2 (symbol not reproduced in this text; contains 64 feature maps of size 256×256);

[0068] Conv2-2 (symbol not reproduced in this text; contains 128 feature maps of size 128×128);

[0069] Conv3-3 (symbol not reproduced in this text; contains 256 feature maps of size 64×64);

[0070] Conv4-3 (symbol not reproduced in this text; contains 512 feature maps of size 32×32);

[0071] Conv5-3 (symbol not reproduced in this text; contains 512 feature maps of size 16×16);

[0072] Wherein: n=...
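The five feature sizes listed in Step 1 can be checked with a short calculation. The sketch below is illustrative only and is not taken from the patent: it assumes a 256×256 input, the standard VGG16 layout (3×3 convolutions with stride 1 and padding 1, and a 2×2 max pooling with stride 2 between stages), and the helper names `conv_out`, `pool_out` and `vgg16_feature_shapes` are hypothetical.

```python
# Assumed standard VGG16 layout: (name of the last conv in the stage,
# output channels, number of 3x3 convolutions in the stage).
VGG16_STAGES = [
    ("Conv1-2", 64, 2),
    ("Conv2-2", 128, 2),
    ("Conv3-3", 256, 3),
    ("Conv4-3", 512, 3),
    ("Conv5-3", 512, 3),
]


def conv_out(size, kernel=3, stride=1, padding=1):
    """Spatial size after a convolution (3x3, stride 1, padding 1 keeps the size)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel=2, stride=2):
    """Spatial size after max pooling (2x2, stride 2 halves the size)."""
    return (size - kernel) // stride + 1


def vgg16_feature_shapes(input_size=256):
    """Return {stage name: (channels, height, width)} for the five tapped layers."""
    shapes = {}
    size = input_size
    for index, (name, channels, num_convs) in enumerate(VGG16_STAGES):
        if index > 0:
            # Every stage after the first is preceded by a pooling layer.
            size = pool_out(size)
        for _ in range(num_convs):
            size = conv_out(size)
        shapes[name] = (channels, size, size)
    return shapes


if __name__ == "__main__":
    for name, shape in vgg16_feature_shapes(256).items():
        print(name, shape)
```

Under these assumptions the computed shapes match the channel counts and spatial sizes given above for Conv1-2 through Conv5-3. The same shapes would apply to the RGB branch and the thermal infrared branch, since both pass through the same five levels.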
