137 patent results for "Salient object detection"

Salient object detection is a task based on a visual attention mechanism, in which algorithms aim to find the objects or regions of a scene or image that attract more attention than their surroundings.

Deep learning-based weakly supervised salient object detection method and system

Status: Active | Publication: CN108399406A | Tags: Mining and Correcting Ambiguity, Character and pattern recognition, Neural architectures, Conditional random field, Data set

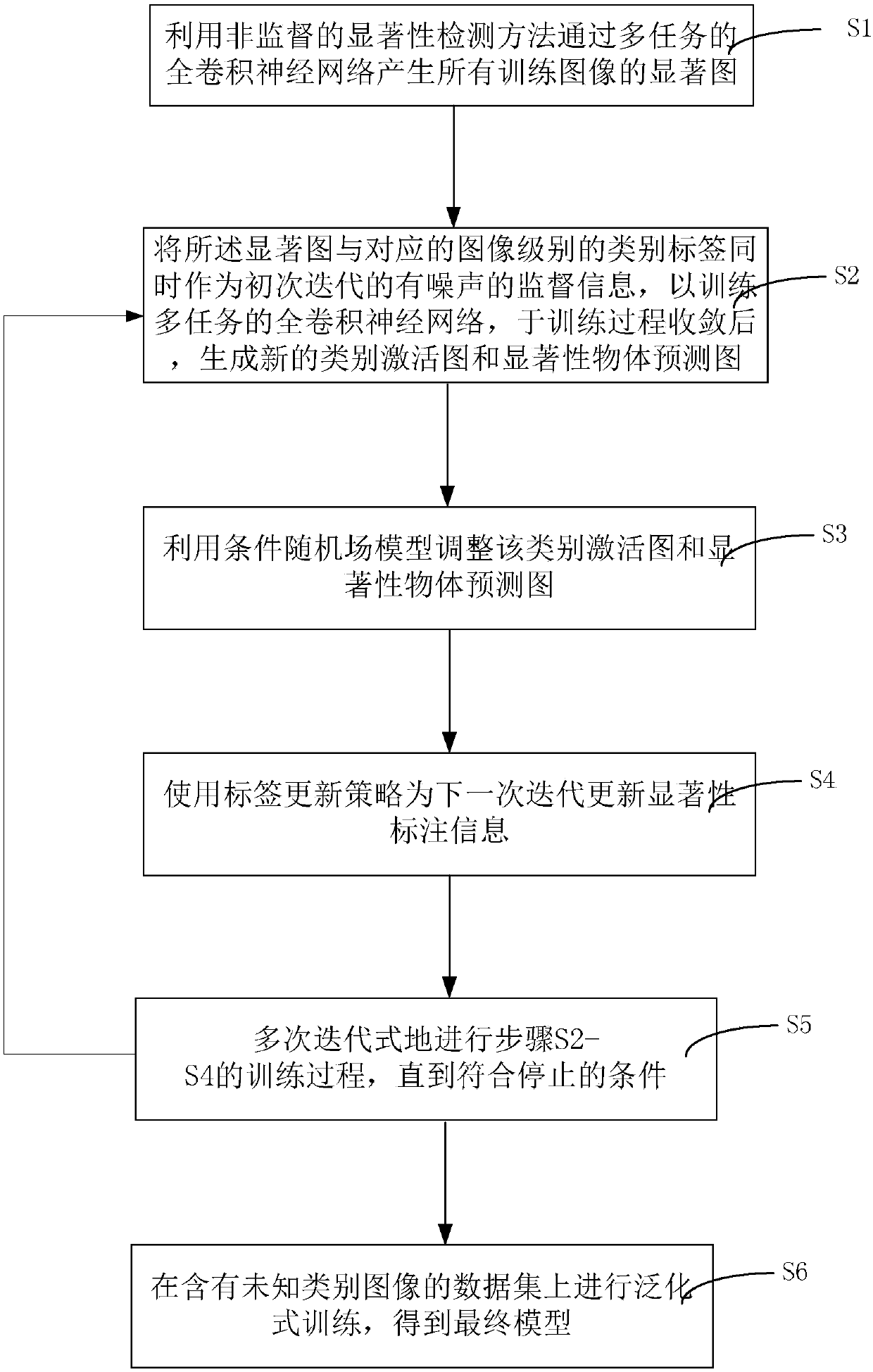

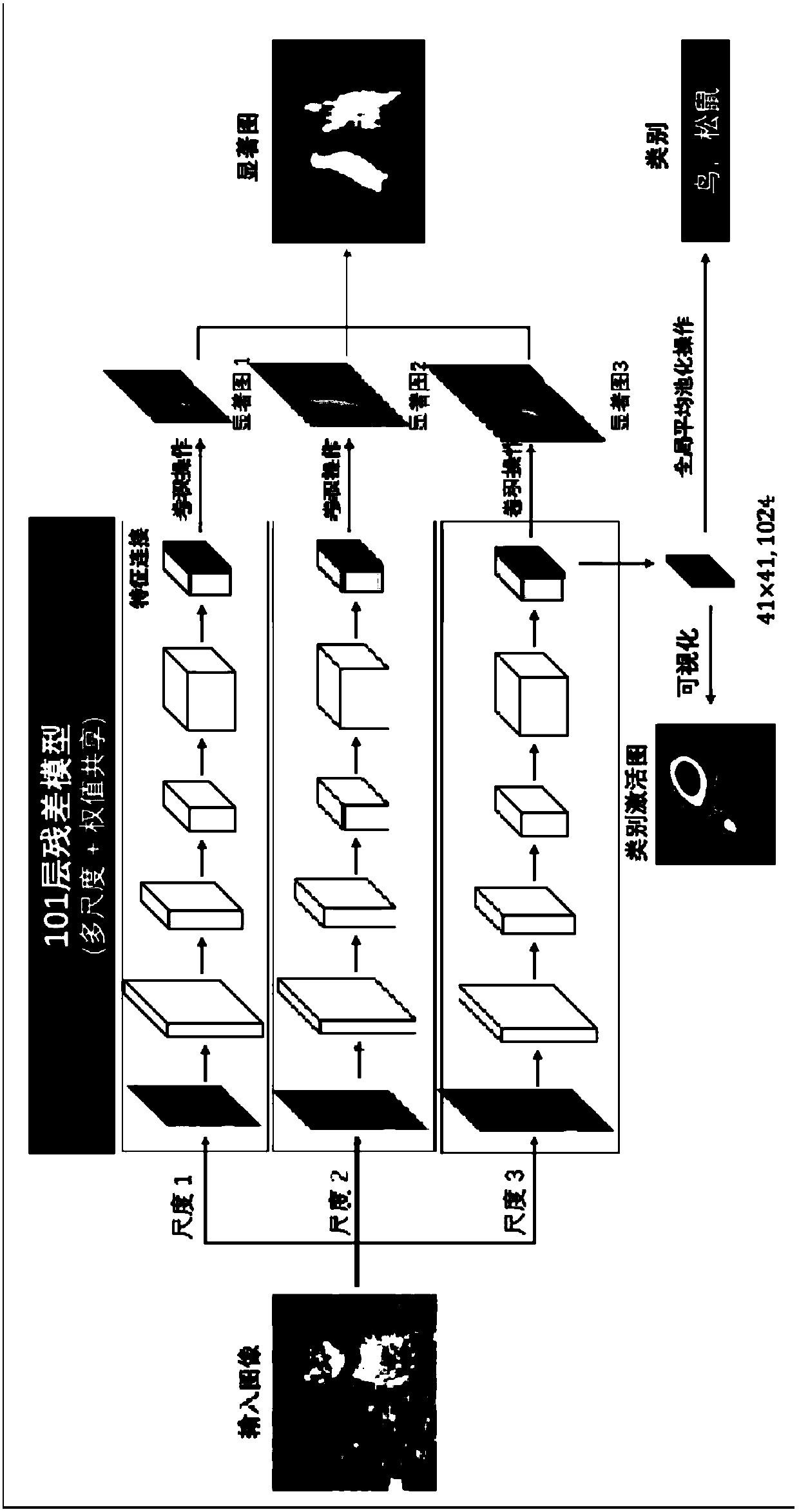

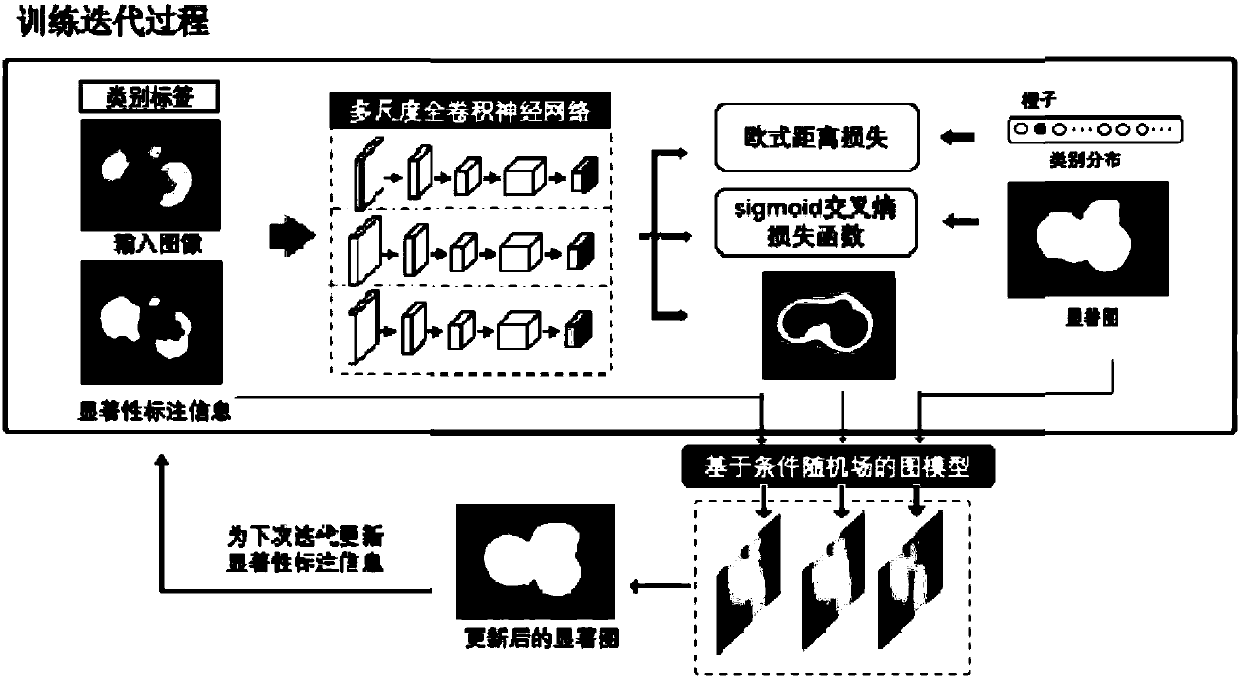

The invention discloses a deep learning-based weakly supervised salient object detection method and system. The method comprises the steps of: generating saliency maps for all training images with an unsupervised saliency detection method; using these saliency maps and the corresponding image-level class labels as noisy supervision for the initial iteration to train a multi-task fully convolutional neural network and, once training has converged, generating a new class activation map and a salient object prediction map; refining the class activation map and the salient object prediction map with a conditional random field model; updating the saliency annotations for the next iteration with a label update policy; repeating the training over multiple iterations until a stop condition is met; and finally training on a data set that includes images of unknown classes to obtain the final model. With this method and system, noise is eliminated automatically during optimization, and good predictions can be achieved using only image-level annotations, avoiding a complex and time-consuming pixel-level manual annotation process.

Owner:SUN YAT SEN UNIV

RGB-D salient object detection method based on foreground and background optimization

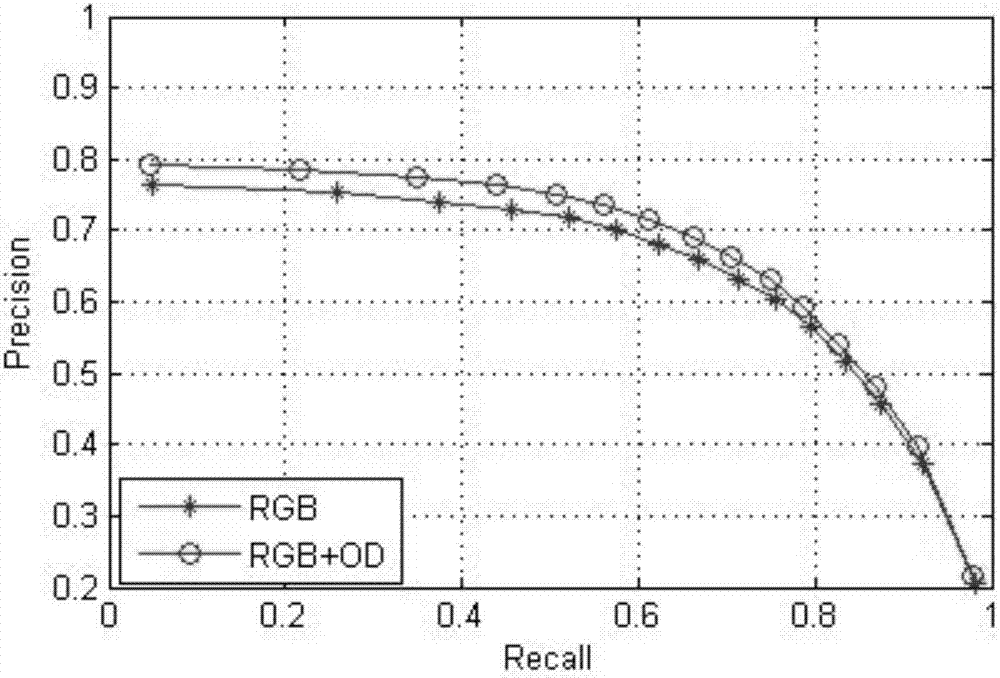

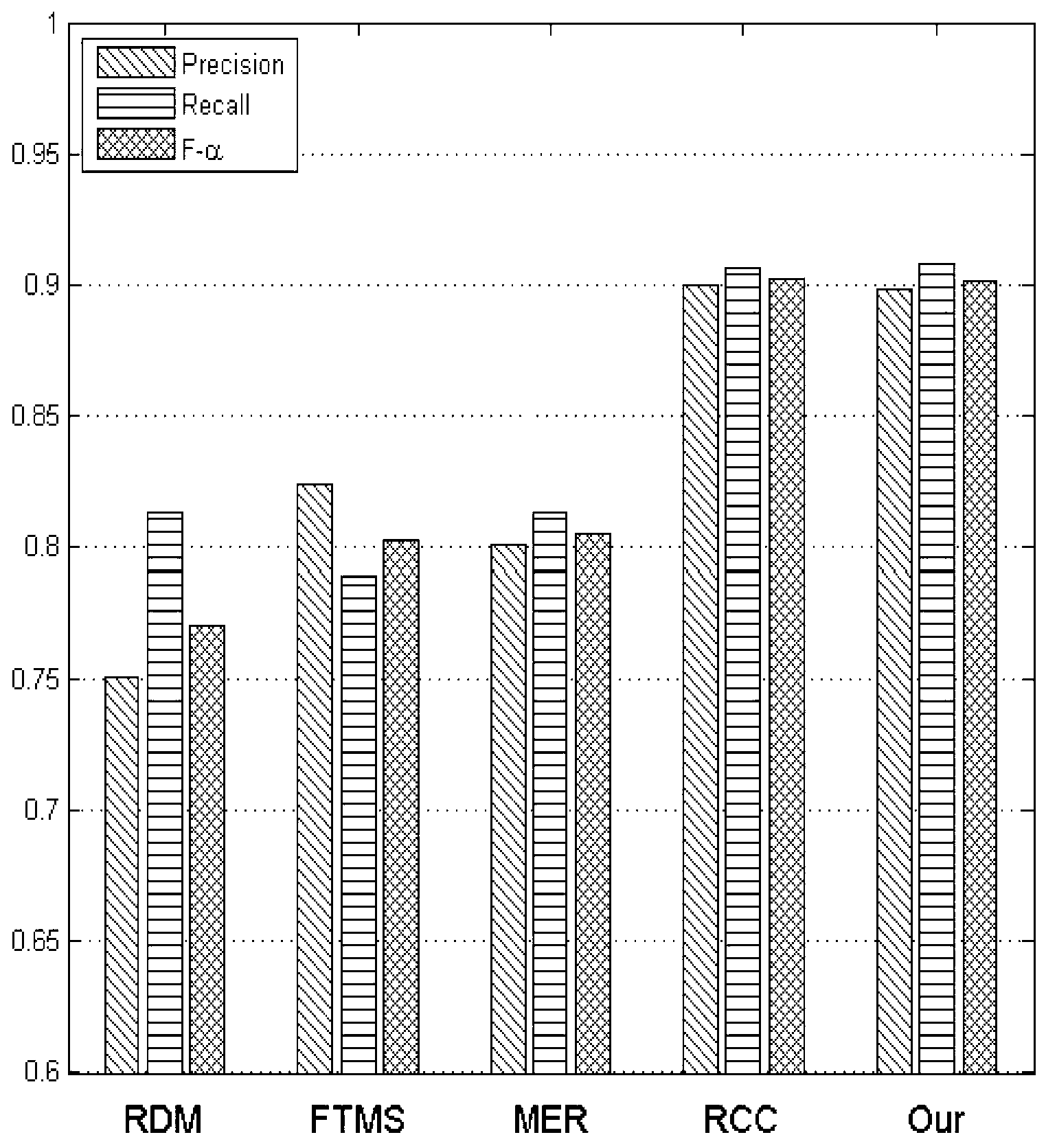

Status: Inactive | Publication: CN105513070A | Tags: Precise positioning, Improve recall, Image analysis, Pattern recognition, Salient objects

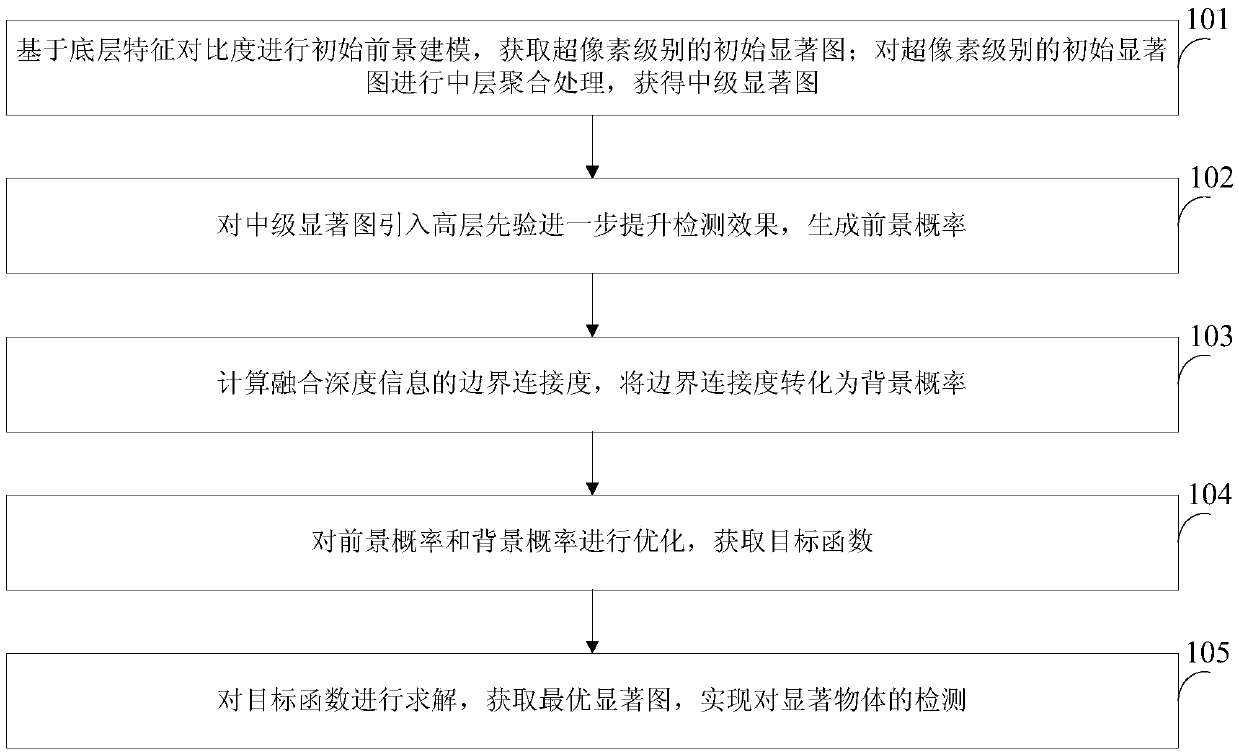

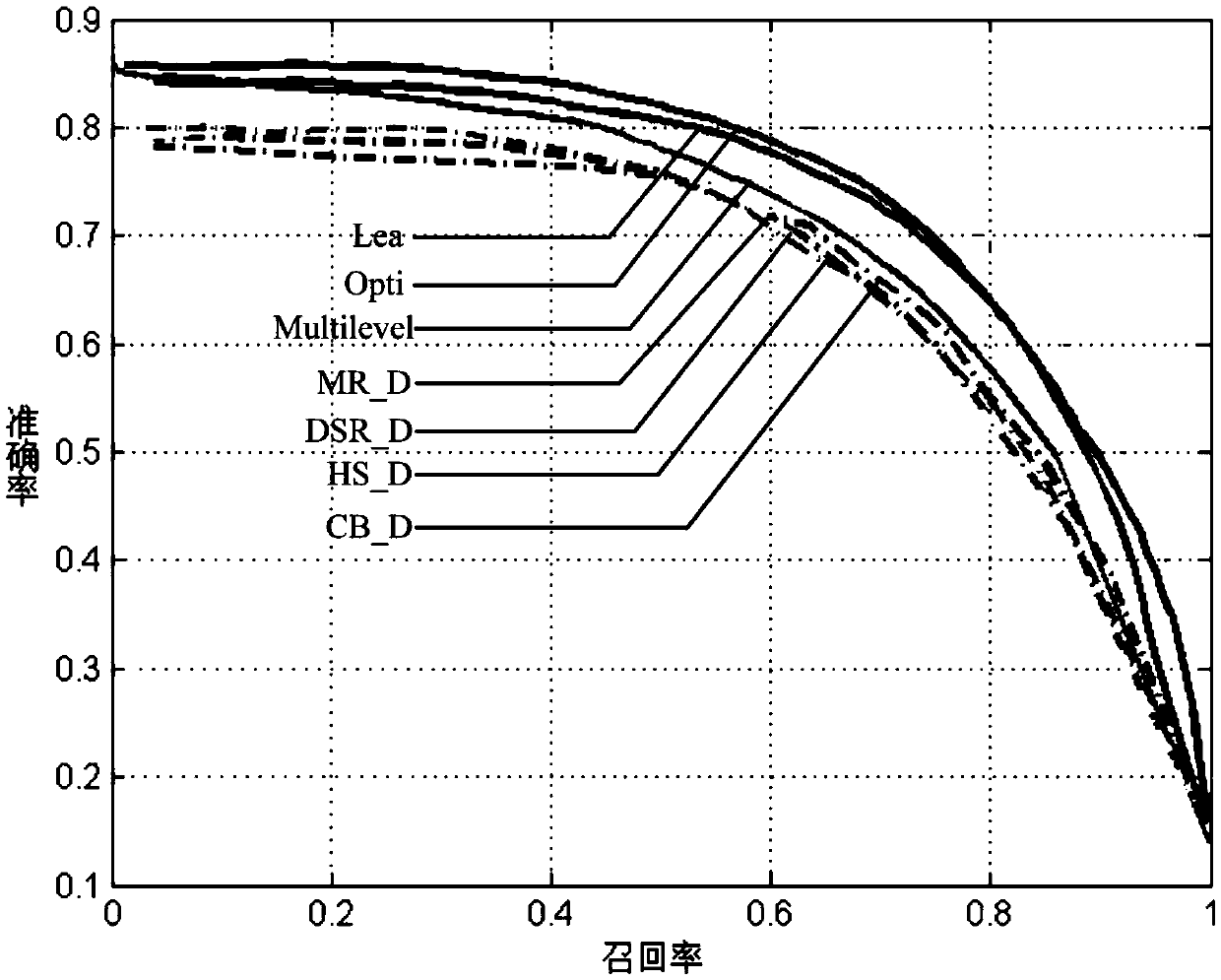

The invention discloses an RGB-D salient object detection method based on foreground and background optimization. The method comprises the following steps: initial foreground modeling is performed based on low-level feature contrast to obtain a superpixel-level initial saliency map; mid-level aggregation is applied to this initial saliency map to obtain a mid-level saliency map; a high-level prior is introduced into the mid-level saliency map to improve detection, producing a foreground probability; edge connectivity incorporating depth information is computed and converted into a background probability; the foreground and background probabilities are combined into an objective function; and the objective function is solved to obtain the optimal saliency map, realizing salient object detection. By fully exploiting an optimization framework built on foreground and background measures together with the scene's depth information, the invention achieves a high recall rate with high accuracy; it can accurately locate salient objects across different scenes and object sizes, and assigns nearly uniform saliency values within the target object.

Owner:TIANJIN UNIV
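
This abstract does not publish its objective function. As a minimal sketch, assuming the quadratic energy used by Zhu et al. (CVPR 2014) for foreground/background saliency optimization, each superpixel saliency s_i minimizes a background term bg_i * s_i^2, a foreground term fg_i * (s_i - 1)^2 and a smoothness term w_ij * (s_i - s_j)^2 between neighbours; setting the gradient to zero gives one linear system. The function names and toy inputs are hypothetical, not taken from the patent.

```python
def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def optimize_saliency(bg, fg, w):
    """Minimise sum bg*s^2 + sum fg*(s-1)^2 + sum_{i<j} w_ij*(s_i-s_j)^2.

    The minimiser solves (diag(bg + fg) + L) s = fg, where L is the graph
    Laplacian of the symmetric neighbour weights w (diagonal ignored).
    """
    n = len(bg)
    a = [[0.0] * n for _ in range(n)]
    for i in range(n):
        degree = sum(w[i][j] for j in range(n) if j != i)
        a[i][i] = bg[i] + fg[i] + degree  # data terms plus Laplacian degree
        for j in range(n):
            if j != i:
                a[i][j] = -w[i][j]  # off-diagonal Laplacian entries
    return solve_linear(a, fg)
```

With `bg = [0.9, 0.5, 0.1]`, `fg = [0.1, 0.5, 0.9]` and a chain of unit weights, the solution is approximately `[0.3, 0.5, 0.7]`: the smoothness term pulls neighbours together compared with the per-node ratio fg/(bg + fg) = `[0.1, 0.5, 0.9]`.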

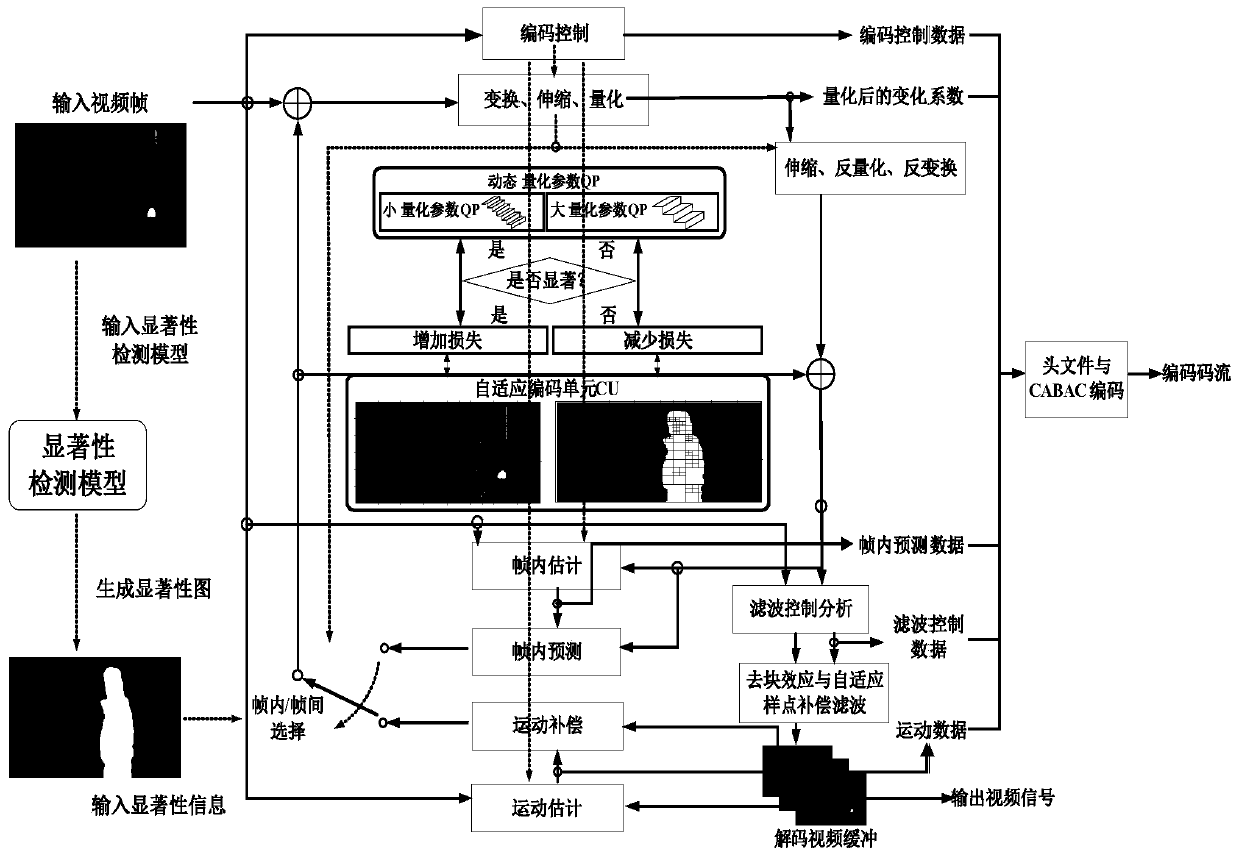

Perceptual high-definition video coding method based on salient target detection and saliency guidance

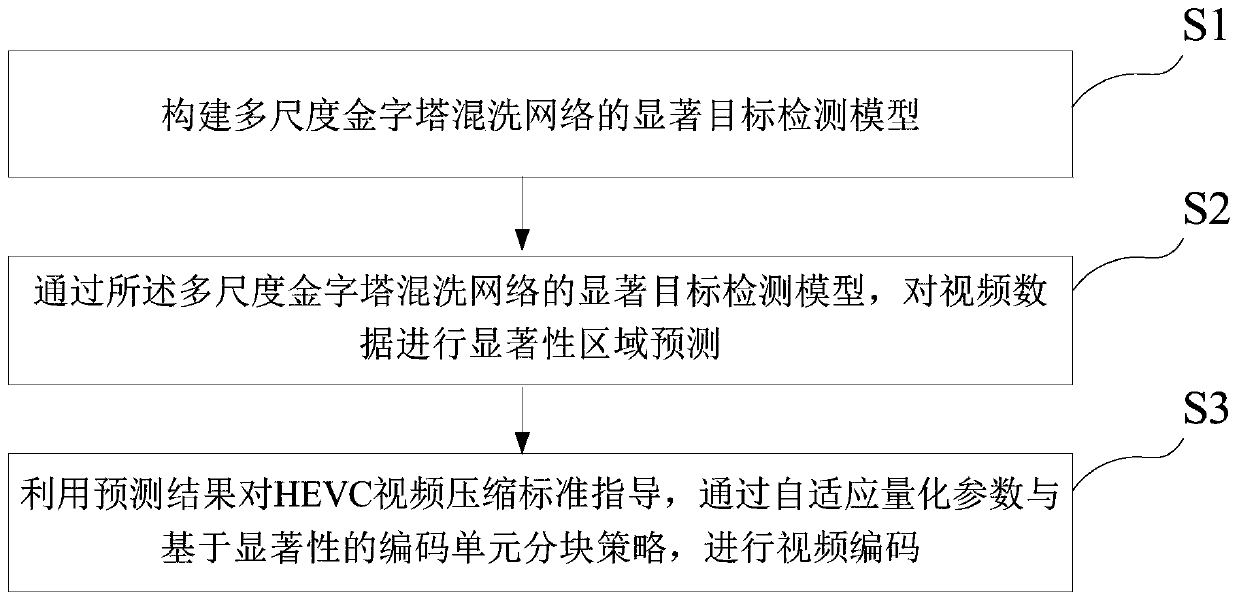

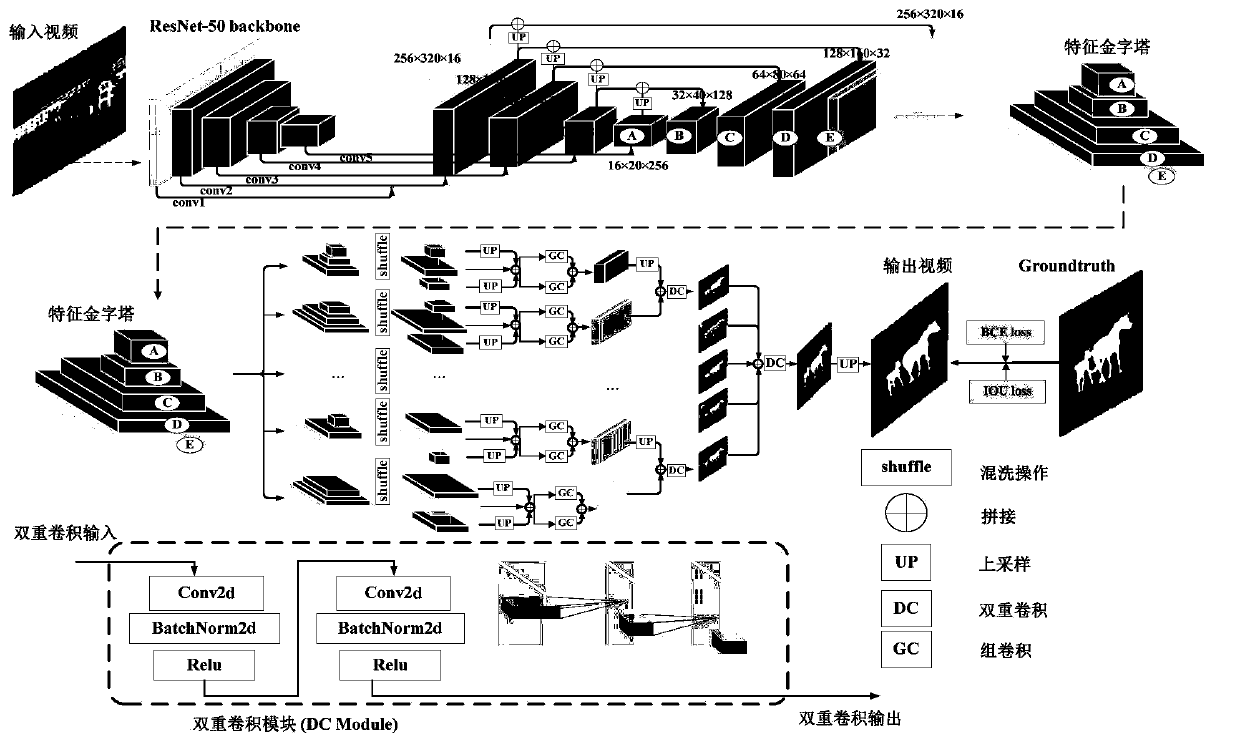

Status: Active | Publication: CN111432207A | Tags: Improve accuracy, Reduce bit rate, Digital video signal modification, Pattern recognition, Video encoding

The invention discloses a perceptual high-definition video coding method based on salient target detection and saliency guidance. The method comprises the following steps: constructing a salient target detection model based on a multi-scale pyramid shuffling network; predicting salient regions in the video data with this model; and using the prediction results to guide HEVC video compression, encoding the video with adaptive quantization parameters and a saliency-based coding unit partitioning strategy. The salient target detection model of the multi-scale pyramid shuffling network generalizes better and outputs more accurate salient target segmentation maps. Guided by these prediction maps, the HEVC encoder divides each video frame into salient and non-salient regions and dynamically adjusts rate-distortion optimization and quantization parameter selection, yielding better coding metrics: a smaller bitstream with better image quality.

Owner:Shenzhen Beichen Xingtu Technology Co., Ltd. (深圳市北辰星途科技有限公司)
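
The abstract says quantization parameters are adapted by saliency but gives no mapping. A minimal sketch, assuming a hypothetical linear offset around a base QP (a lower QP, i.e. finer quantization, for salient coding units), clamped to the 0 to 51 QP range of 8-bit HEVC:

```python
def saliency_to_qp(saliency, base_qp=32, max_offset=6):
    """Map a coding unit's mean saliency in [0, 1] to an HEVC QP.

    Hypothetical linear rule: saliency 1.0 lowers the QP by max_offset,
    saliency 0.0 raises it by max_offset, and 0.5 keeps base_qp.
    The result is clamped to the 8-bit HEVC range 0..51.
    """
    if not 0.0 <= saliency <= 1.0:
        raise ValueError("saliency must lie in [0, 1]")
    offset = round(max_offset * (1.0 - 2.0 * saliency))
    return max(0, min(51, base_qp + offset))


# Per-CU QPs for a few illustrative mean-saliency values.
cu_saliency = [0.0, 0.5, 1.0]
print([saliency_to_qp(s) for s in cu_saliency])
```

With the defaults this prints `[38, 32, 26]`; `base_qp` and `max_offset` are illustrative values, not parameters from the patent.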

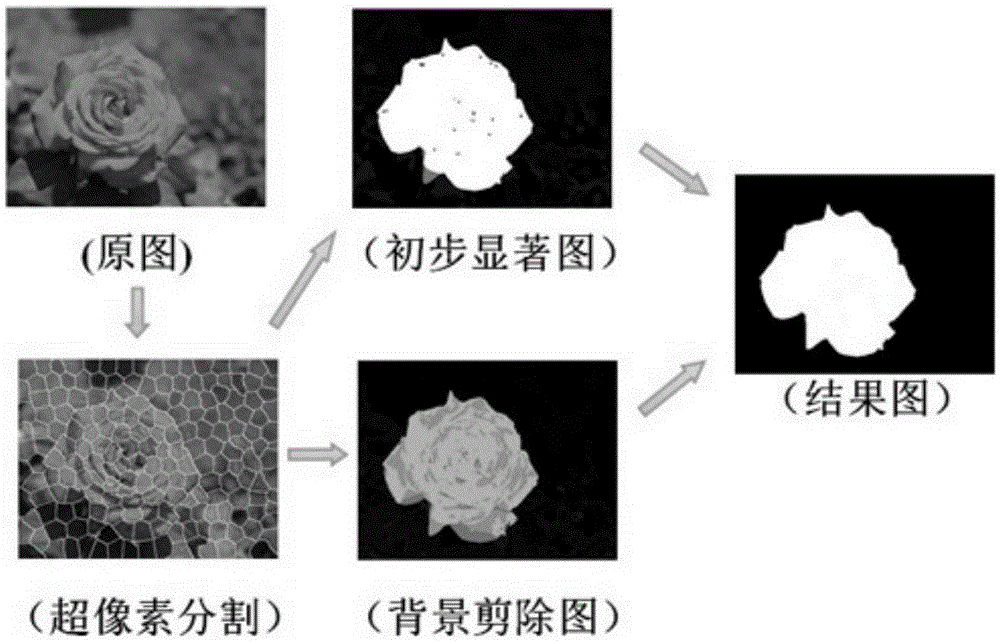

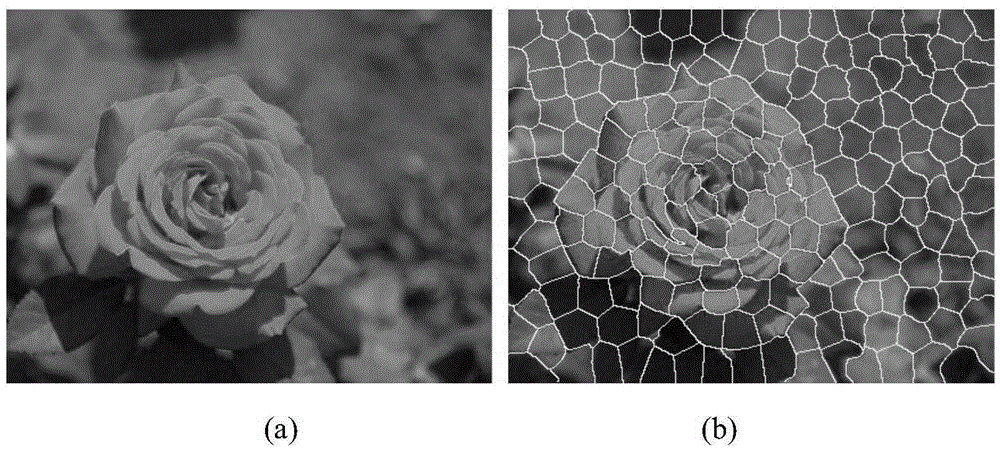

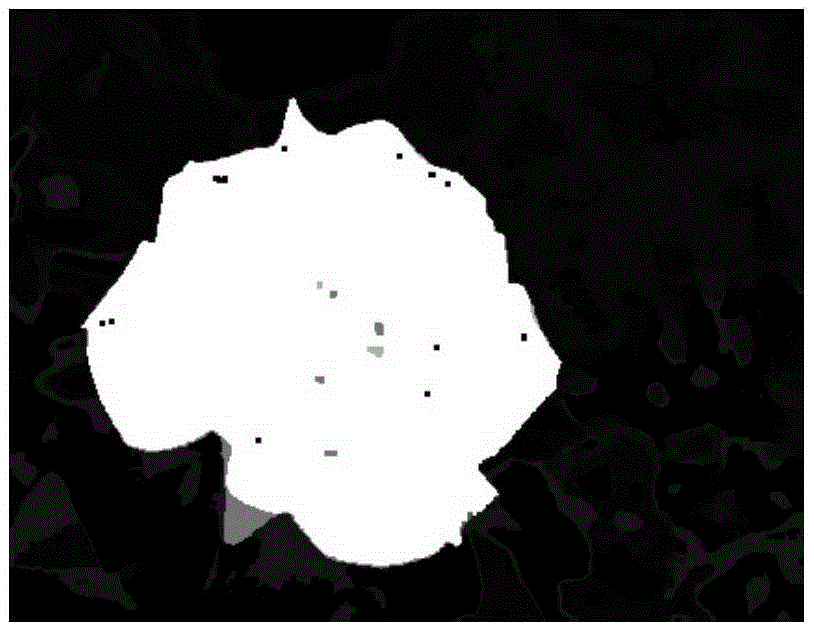

Salient object detection method based on video

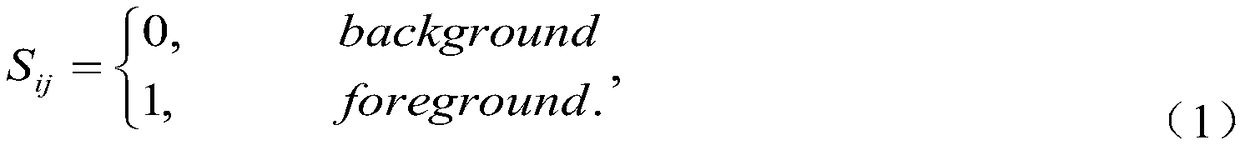

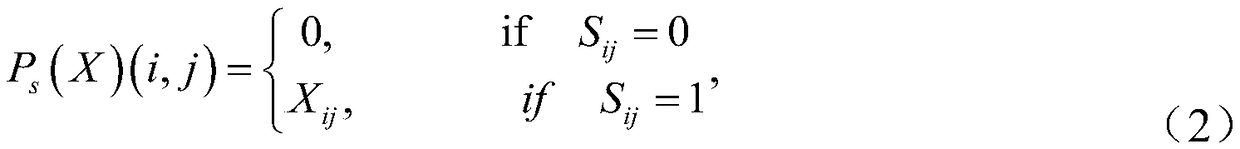

Status: Inactive | Publication: CN105427292A | Tags: To achieve the purpose of detecting salient targets, High speed, Image enhancement, Image analysis, Salient objects, Background information

The invention discloses a video-based salient object detection method. The method comprises the following steps: performing superpixel segmentation on a frame, and using optical flow and color information to obtain a preliminary salient object region; obtaining the background information of the frame from gradient information; and fusing the preliminary salient object region with this background information, pruning the background to obtain the final salient object region. The method measures pixel similarity by combining motion information (the optical flow) with static information (color and gradient) at the pixel level, fuses foreground and background cues in the result, and thereby detects salient objects in video.

Owner:NANJING UNIV OF POSTS & TELECOMM

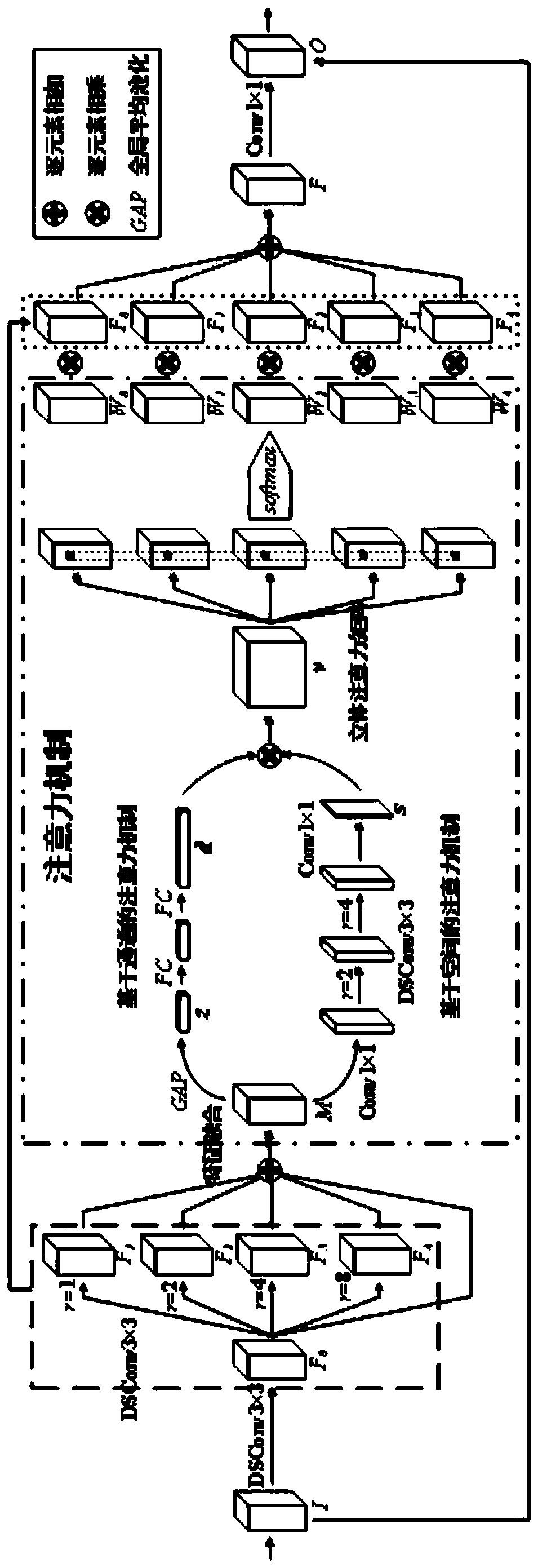

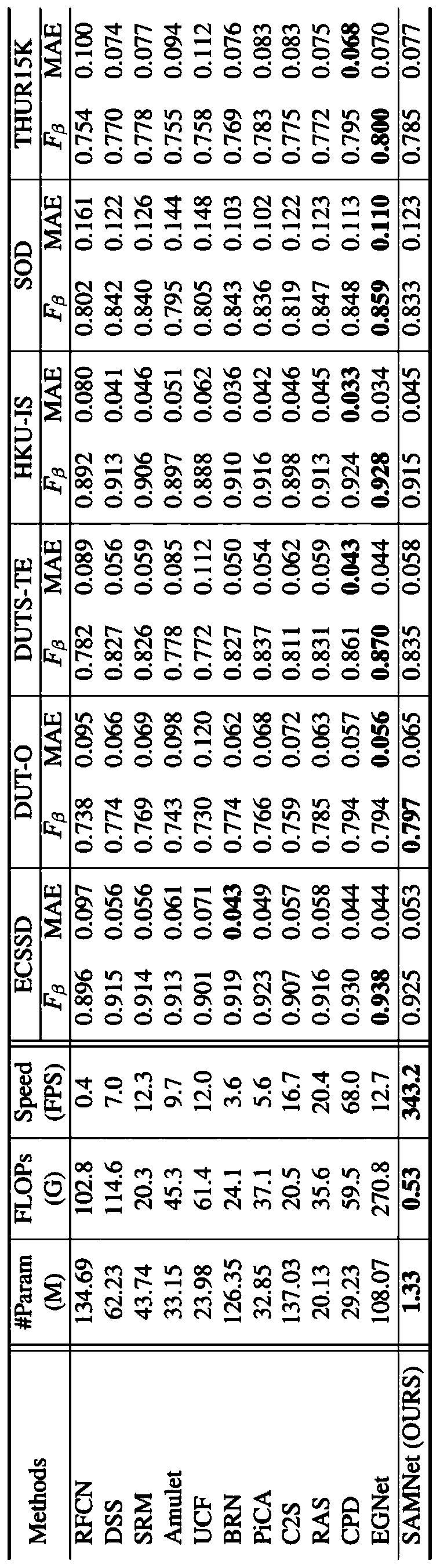

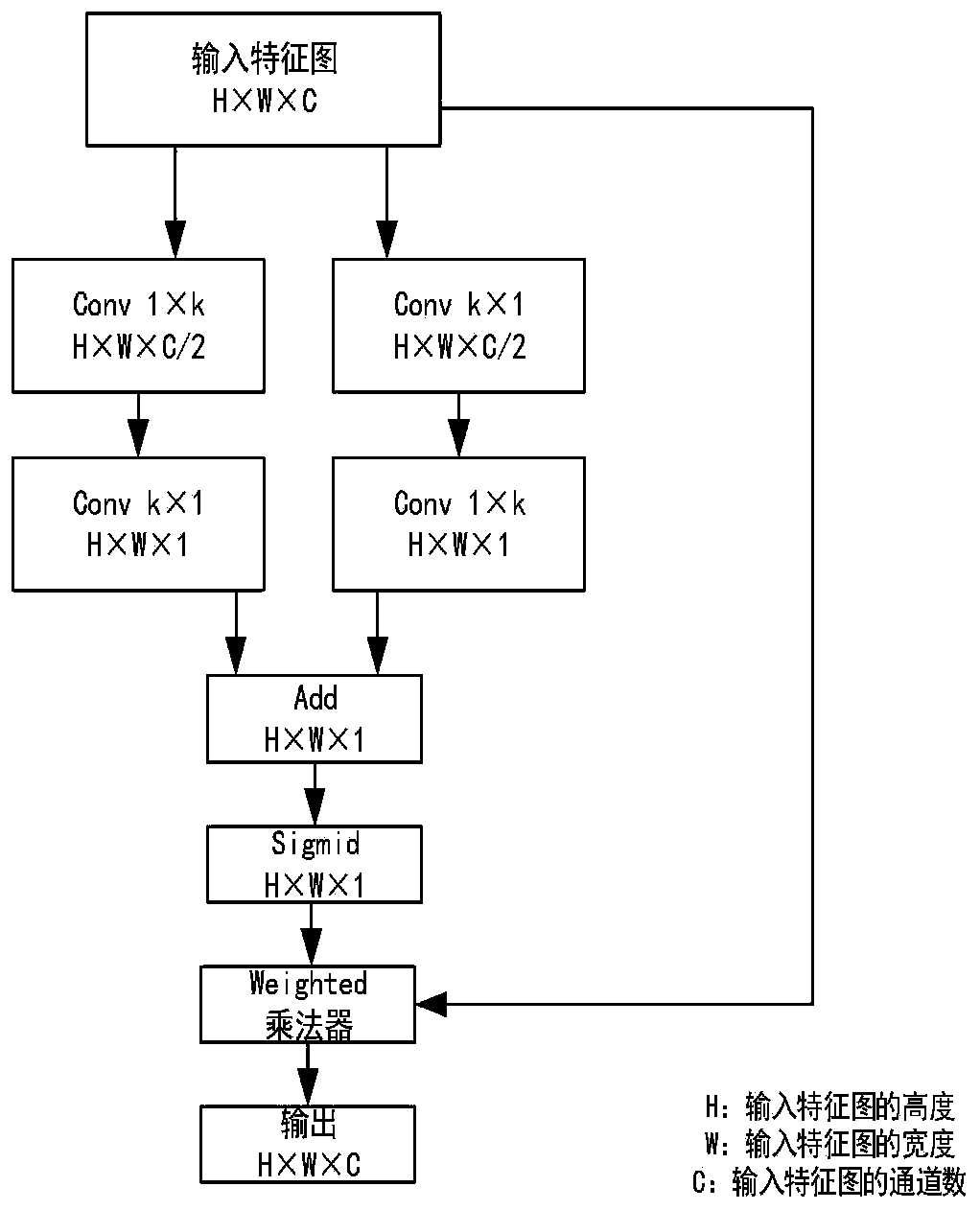

Rapid saliency object detection method of multi-scale neural network based on stereo attention control

Status: Inactive | Publication: CN111598108A | Tags: Accurate detection, Efficient learning process, Character and pattern recognition, Neural architectures, Neural network nn, Salient object detection

The invention discloses a rapid salient object detection method using a multi-scale neural network based on stereo (three-dimensional) attention control. Its objective is a lightweight convolutional neural network for salient object detection. The method includes: extracting multi-scale convolutional features through a multi-branch structure, in which each branch is a depthwise separable convolution with a different dilation rate; summing the convolutional features of all branches and computing an attention map for each branch with a three-dimensional attention unit; multiplying each branch's features by its attention map, summing the weighted results of all branches, and adding a residual connection to form a multi-scale convolution module controlled by three-dimensional attention; and finally stacking these multi-scale modules into a deep convolutional neural network that performs salient object detection on natural images. Experiments show that, compared with existing methods, the method is faster, uses fewer parameters and less computation, and achieves similar accuracy.

Owner:NANKAI UNIV
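
The lightweight design rests on two standard facts: a depthwise separable convolution needs far fewer weights than a standard one, and dilation enlarges a kernel's receptive field without adding weights. The helpers below are a hypothetical sketch of that arithmetic; the 64-channel width and the dilation rates 1, 2 and 4 are assumptions, since the abstract does not list them.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights of a k x k standard convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """k x k depthwise conv (one filter per input channel) + 1 x 1 pointwise conv."""
    return k * k * c_in + c_in * c_out


def effective_kernel_size(k, dilation):
    """Spatial extent covered by a k x k kernel at the given dilation rate."""
    return k + (k - 1) * (dilation - 1)


# Hypothetical branch configuration: 3 x 3 kernels, 64 -> 64 channels.
for d in (1, 2, 4):
    print(d, effective_kernel_size(3, d), depthwise_separable_params(3, 64, 64))
print(standard_conv_params(3, 64, 64))
```

For this configuration each separable branch needs 4,672 weights versus 36,864 for a standard 3 x 3 convolution (about 7.9 times fewer), while dilation rates 1, 2 and 4 give effective kernel sizes of 3, 5 and 9.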

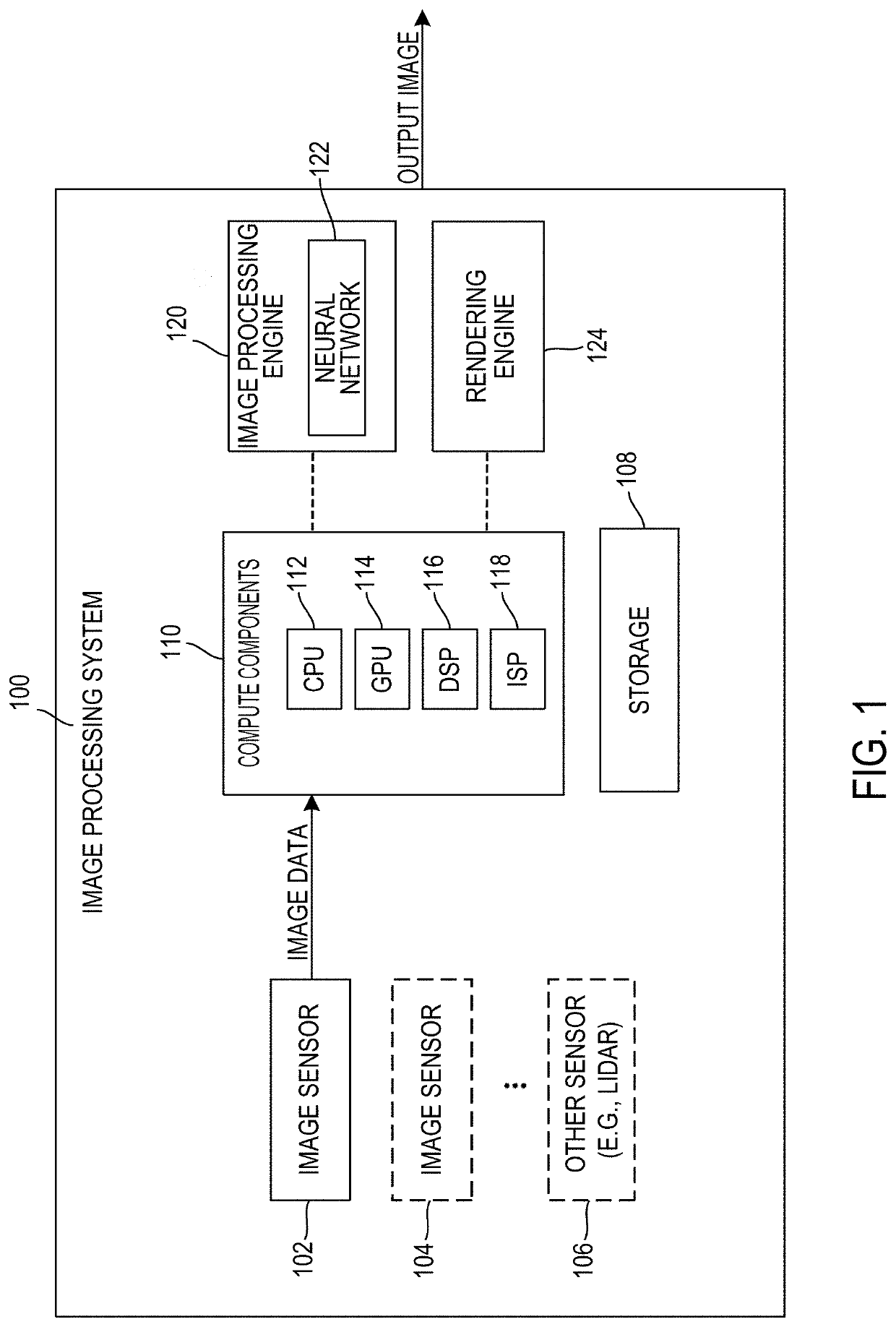

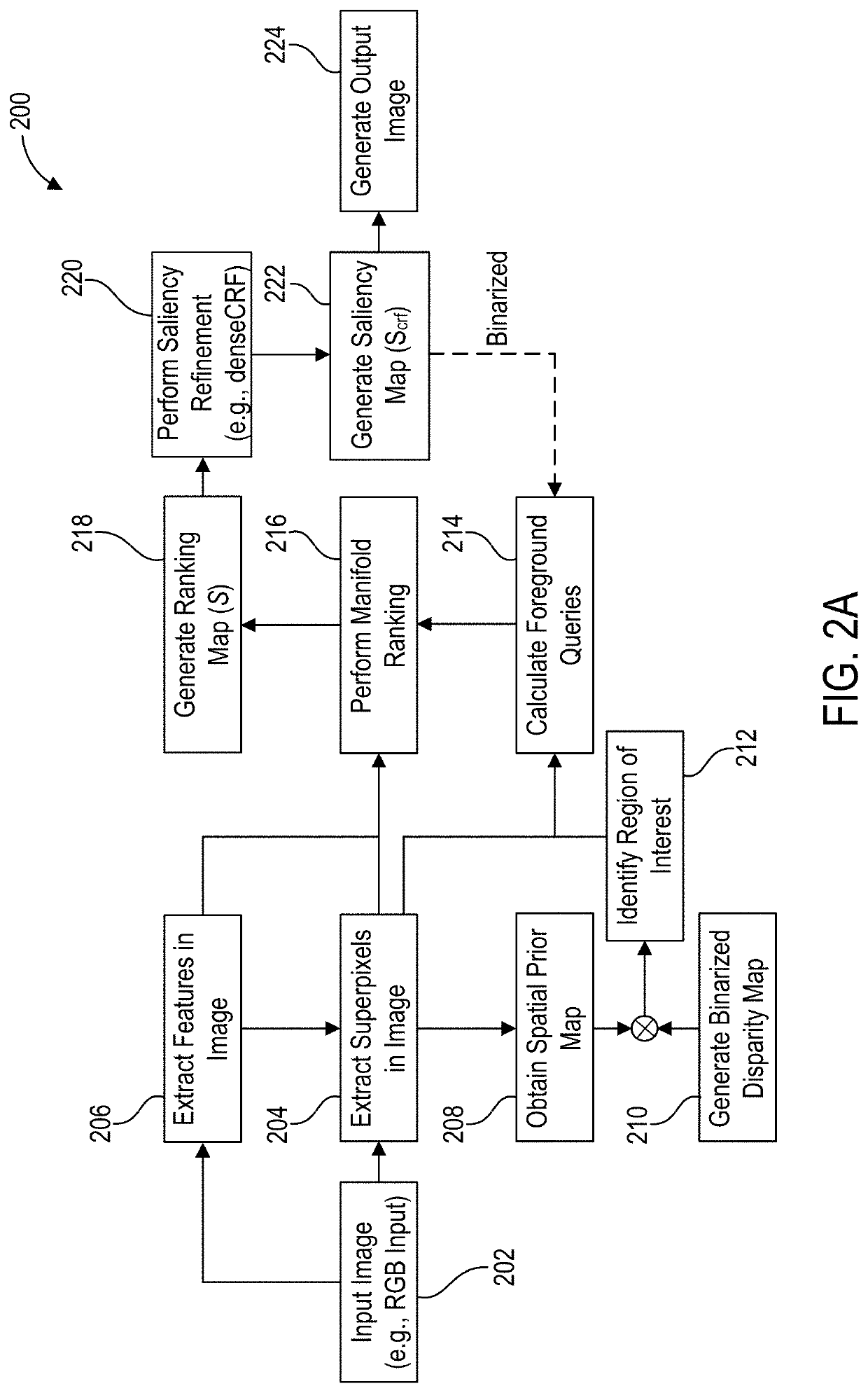

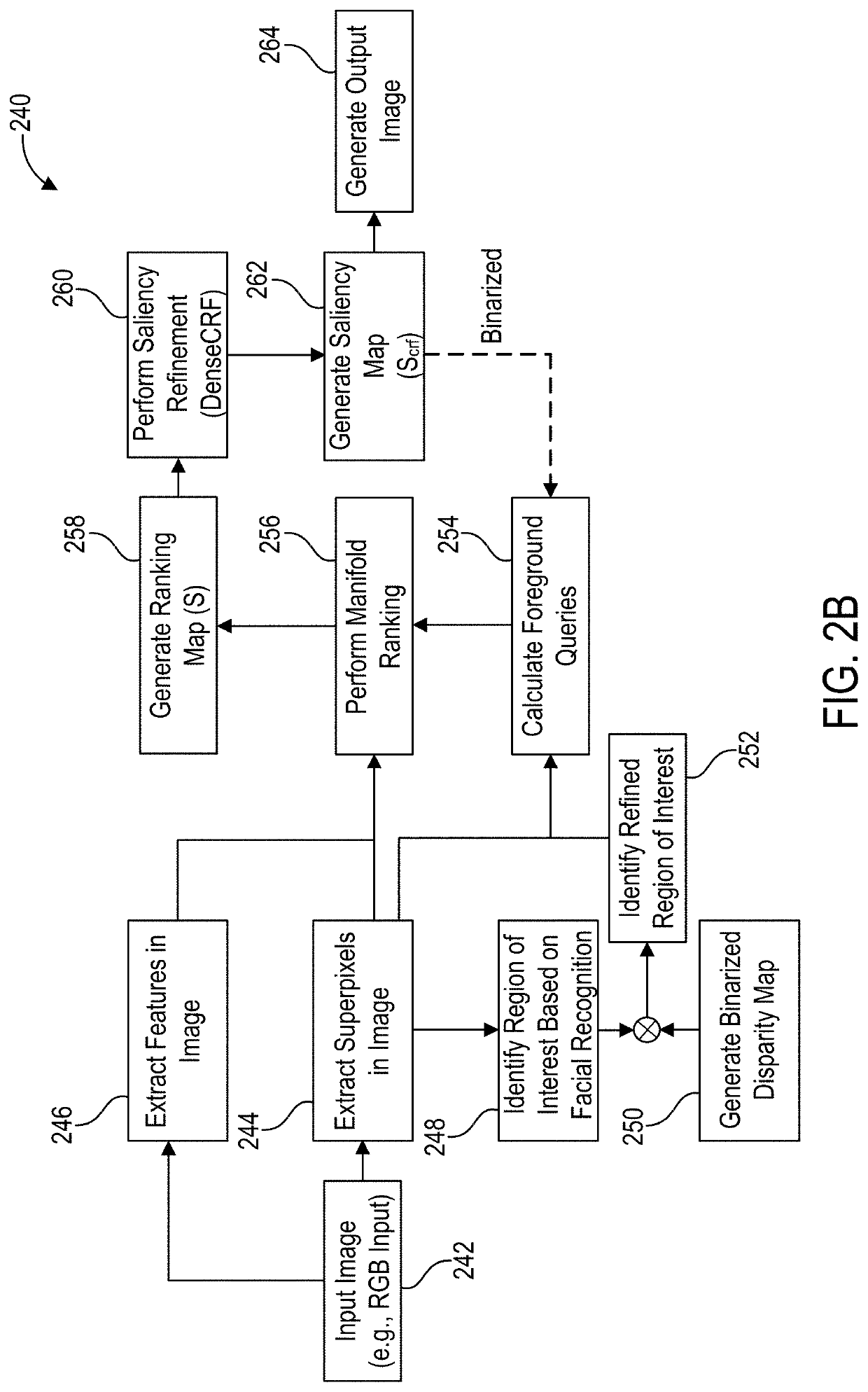

Generating effects on images using disparity guided salient object detection

Systems, methods, and computer-readable media are provided for generating an image processing effect via disparity-guided salient object detection. In some examples, a system can detect a set of superpixels in an image; identify, based on a disparity map generated for the image, an image region containing at least a portion of a foreground of the image; calculate foreground queries identifying superpixels in the image region having higher saliency values than other superpixels in the image region; rank a relevance between each superpixel and one or more foreground queries; generate a saliency map for the image based on the ranking of the relevance between each superpixel and the one or more foreground queries; and generate, based on the saliency map, an output image having an effect applied to a portion of the output image.

Owner:QUALCOMM INC
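
The abstract ranks the relevance of each superpixel to foreground queries without naming the ranking method. A common choice is graph-based manifold ranking (Yang et al., CVPR 2013), which solves (D - alpha*W) f = y, where W holds superpixel adjacency weights, D is the degree matrix and y marks the queries. The sketch below assumes that formulation and solves it by fixed-point iteration; `manifold_ranking` and its parameters are hypothetical.

```python
def manifold_ranking(w, queries, alpha=0.5, iters=200):
    """Rank graph nodes by relevance to a set of query nodes.

    Solves (D - alpha * W) f = y with the fixed-point iteration
    f <- alpha * D^-1 W f + D^-1 y, which converges for 0 < alpha < 1
    provided every node has at least one neighbour (non-zero degree).
    """
    n = len(w)
    deg = [sum(row) for row in w]
    y = [1.0 if i in queries else 0.0 for i in range(n)]
    f = [0.0] * n
    for _ in range(iters):
        f = [
            (alpha * sum(w[i][j] * f[j] for j in range(n)) + y[i]) / deg[i]
            for i in range(n)
        ]
    return f


# Toy chain of three superpixels 0 - 1 - 2, with superpixel 0 as the query.
chain = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
print(manifold_ranking(chain, {0}))
```

On this chain the scores converge to 7/6, 1/3 and 1/6, so relevance decays with graph distance from the query; normalizing the scores to [0, 1] would give a superpixel saliency map.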

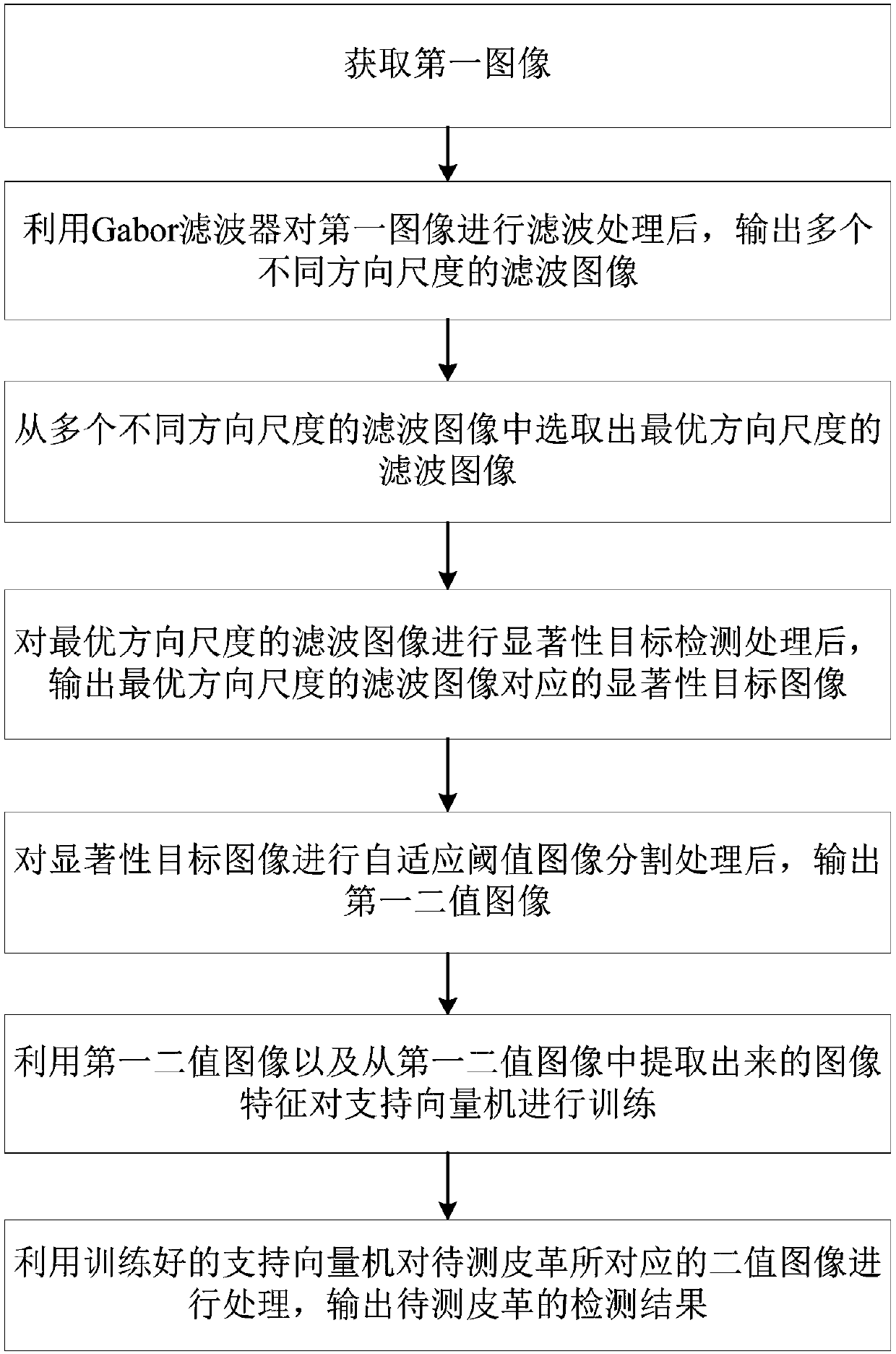

Leather surface salient defect detection method, system and apparatus

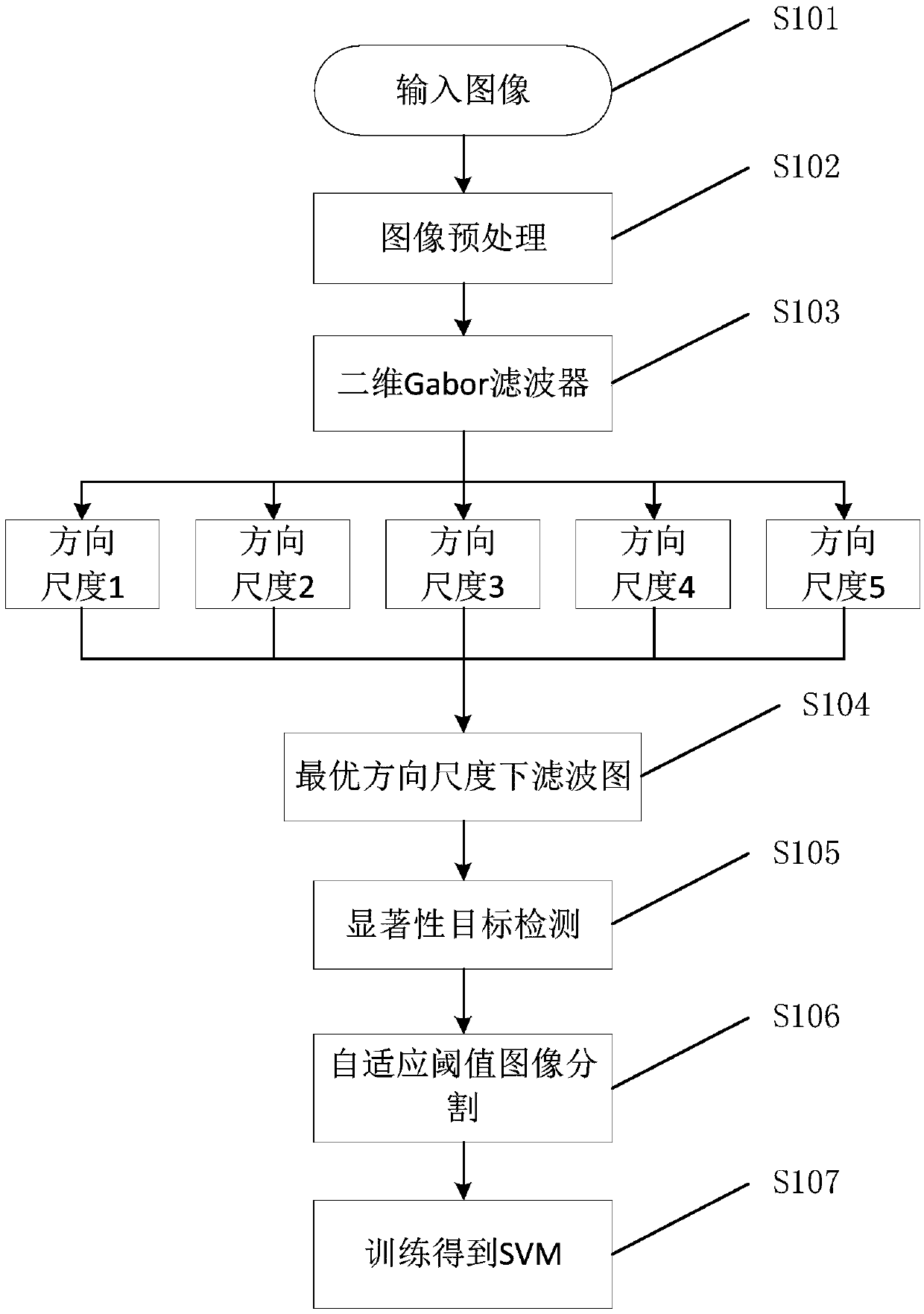

Status: Active | Publication: CN107845086A | Tags: Reduce running time, Improve processing efficiency, Image enhancement, Image analysis, Process module, Image segmentation

The invention discloses a leather surface salient defect detection method, system and apparatus. The system comprises a first obtaining module and first to sixth processing modules. The method comprises the steps of: selecting, from the filtered images output by a Gabor filter, the one with the optimal orientation and scale; performing salient object detection and then adaptive-threshold image segmentation on that filtered image to obtain a first binary image; training an SVM with the first binary image; and using the SVM to process the binary image of the leather to be inspected. The apparatus comprises a memory and a processor. The method, system and apparatus improve the generality and practicality of leather defect detection while also improving processing efficiency, and can be widely applied in the field of leather inspection.

Owner:Foshan Dile Vision Technology Co., Ltd. (佛山缔乐视觉科技有限公司)
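
The abstract applies "adaptive threshold image segmentation" to the saliency result without naming the thresholding rule. Otsu's method, which picks the threshold that maximizes between-class variance of the gray-level histogram, is one common adaptive choice; the sketch below assumes it and covers only this binarization step, not the Gabor filtering or the SVM.

```python
def otsu_threshold(values):
    """Return Otsu's threshold t for 8-bit values; pixels > t are foreground."""
    hist = [0] * 256
    for v in values:
        hist[v] += 1
    total = len(values)
    sum_all = sum(i * h for i, h in enumerate(hist))
    best_t, best_var = 0, -1.0
    w0, sum0 = 0, 0
    for t in range(256):
        w0 += hist[t]          # number of pixels with value <= t
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        between = w0 * w1 * (mu0 - mu1) ** 2  # unnormalised between-class variance
        if between > best_var:
            best_t, best_var = t, between
    return best_t


def binarize(values, t):
    """Turn a flattened saliency map into a 0/1 mask."""
    return [1 if v > t else 0 for v in values]


saliency = [10] * 50 + [200] * 50  # toy bimodal saliency values
mask = binarize(saliency, otsu_threshold(saliency))
```

On this toy bimodal input the threshold separates the two modes, so `mask` is fifty 0s followed by fifty 1s.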

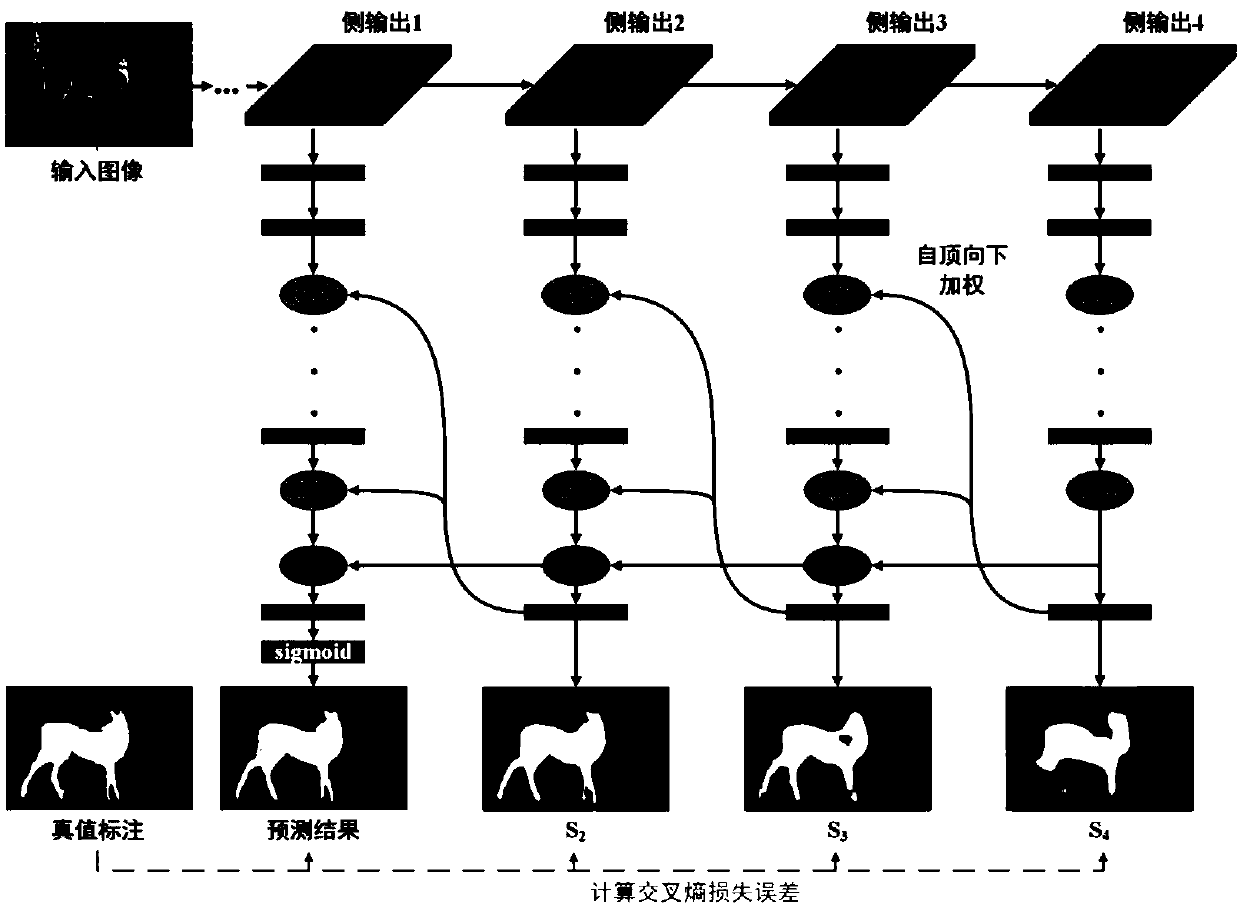

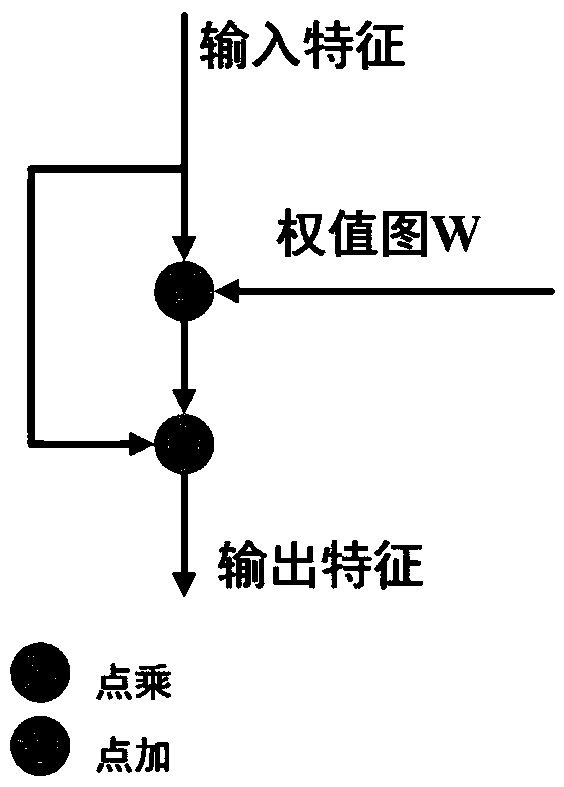

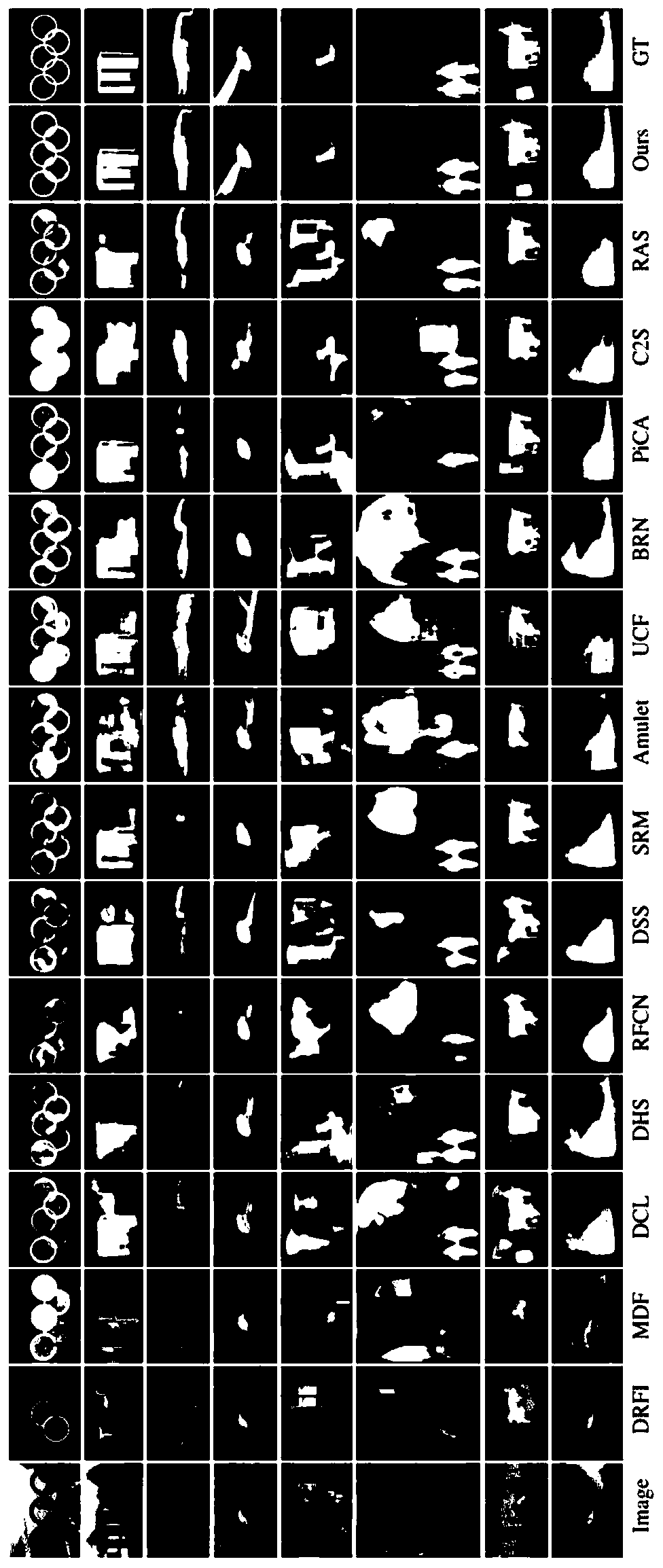

Attention mechanism based salient object detection method

Status: Active | Publication: CN108960261A | Tags: Improve accuracy, Effectively filter out interference, Character and pattern recognition, Color image, Pattern recognition

An attention mechanism based salient object detection method relates to the fields of computer vision and digital image processing. In the method, a top-down attention network is designed to purify the convolutional features of different layers, and a fusion network built on a second-order residual design is used to better preserve residual features. The network takes any static color image as input and outputs a saliency map of the same size, in which white marks salient object regions and black marks background regions. As a result, high-resolution saliency maps can be obtained and small salient objects can be detected more effectively.

Owner:YANGZHOU WANFANG ELECTRONICS TECH

Apparent sight line estimation method based on human body posture analysis

Status: Pending | Publication: CN110795982A | Tags: Improve accuracy, Improve robustness, Biometric pattern recognition, Neural architectures, Human body, Fixation point

The invention relates to a method for estimating the sight line (gaze direction) by combining human body posture information. A deep convolutional neural network with a salient target detection branch, a head pose estimation branch and a human body pose estimation branch is designed to estimate the gaze direction. The fixation point position is predicted by point-wise multiplication of the feature maps of the three branches, and the line connecting the fixation point to the head center is taken as the gaze direction. Combining body posture information in this way improves the accuracy and robustness of the gaze estimation algorithm.

Owner:HARBIN INST OF TECH SHENZHEN GRADUATE SCHOOL
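
The final prediction step can be sketched as follows, assuming each branch yields a single-channel heatmap of the same size (the abstract speaks of "feature maps" and does not give their shape). The heatmaps are multiplied point-wise, the maximum is taken as the fixation point, and the gaze direction is the unit vector from the head center to that point. Function names and toy values are hypothetical.

```python
import math


def fixation_point(saliency, head_pose, body_pose):
    """Multiply three equally sized heatmaps element-wise and return the
    (row, col) of the strongest combined response."""
    best, best_rc = -math.inf, (0, 0)
    for r, (s_row, h_row, b_row) in enumerate(zip(saliency, head_pose, body_pose)):
        for c, (s, h, b) in enumerate(zip(s_row, h_row, b_row)):
            score = s * h * b
            if score > best:
                best, best_rc = score, (r, c)
    return best_rc


def gaze_direction(head_center, fixation):
    """Unit vector (d_row, d_col) from the head centre towards the fixation point."""
    dr = fixation[0] - head_center[0]
    dc = fixation[1] - head_center[1]
    norm = math.hypot(dr, dc)
    if norm == 0:
        raise ValueError("fixation point coincides with the head centre")
    return dr / norm, dc / norm
```

The multiplication acts like an AND of the three cues: a location wins only if it is salient and consistent with both the head pose and the body pose.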

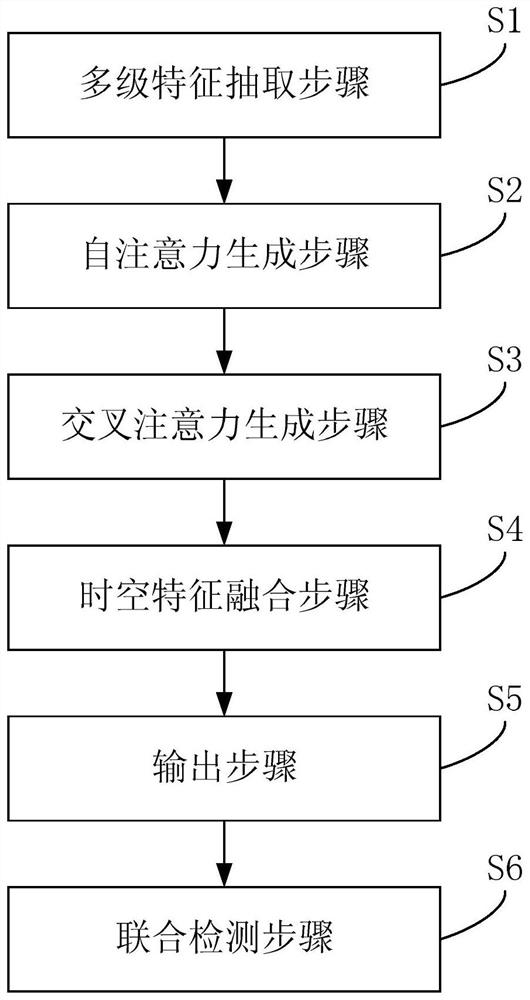

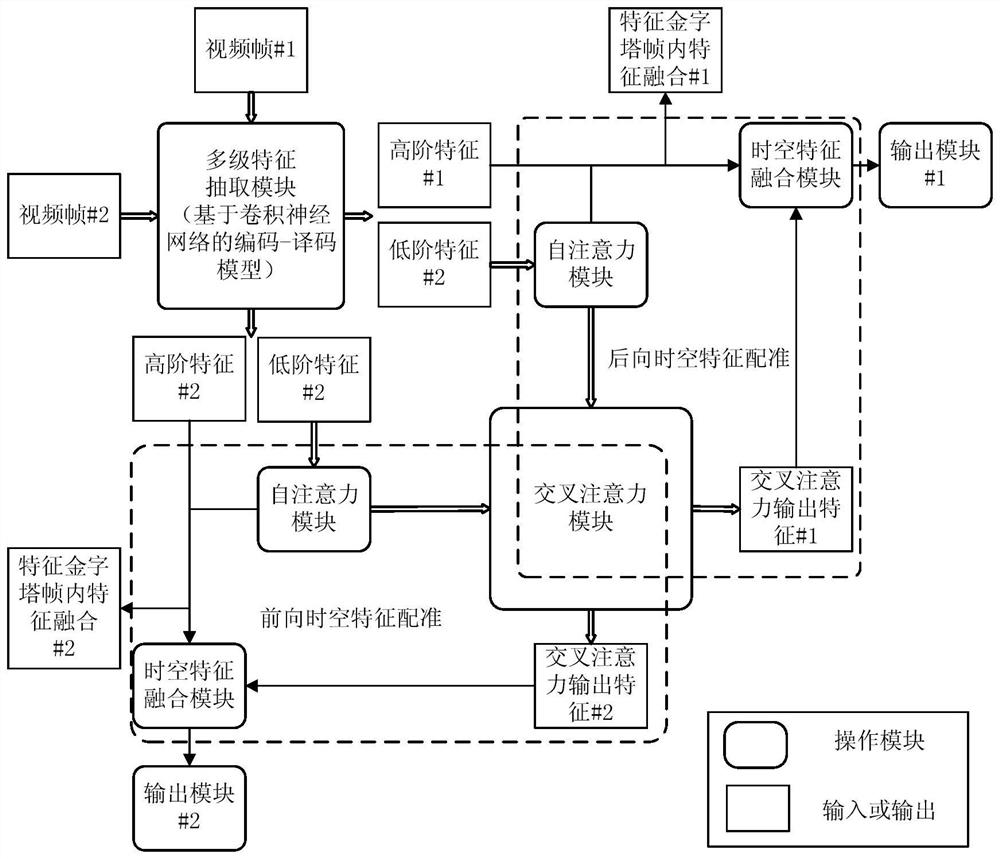

Video saliency object detection model and system based on cross attention mechanism

Status: Active | Publication: CN112149459A | Tags: High-speed training and testing process, Internal combustion piston engines, Character and pattern recognition, Salient objects, Feature Dimension

The invention relates to a video salient object detection method and system based on a cross attention mechanism. The method comprises the following steps: A, feeding adjacent input frames into parameter-sharing networks of identical structure and extracting high-level and low-level features; B, re-registering and aligning the saliency features within each single frame with a self-attention module; C, using an inter-frame cross attention mechanism to capture how the position of the salient object depends across frames in space and time, and applying this dependency as a weight on the high-level features so that salient object detection stays consistent over space and time; D, fusing the intra-frame high-level and low-level features of the adjacent frames with the spatio-temporal features that carry the inter-frame dependency; E, reducing the dimensionality of the fused features and outputting a pixel-level classification result with a classifier; and F, building a deep video salient object detection model based on the cross attention mechanism and accelerating its training with GPU parallel computing.

Owner:HARBIN INST OF TECH SHENZHEN GRADUATE SCHOOL
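
The abstract does not specify the form of its inter-frame cross attention. As a stand-in, the sketch below uses standard scaled dot-product attention: positions in frame t act as queries against keys and values from the adjacent frame, so each output vector is a weighted mix of the other frame's features. The function names and the list-of-vectors layout are assumptions.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_frame_attention(queries, keys, values):
    """Scaled dot-product attention from one frame's positions to another's.

    queries: feature vectors (length d) for positions in frame t.
    keys, values: feature vectors for positions in the adjacent frame.
    Returns (attended, weights): one attended vector and one weight list
    per query position.
    """
    d = len(queries[0])
    dim = len(values[0])
    attended, weights = [], []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        attended.append([sum(wi * v[c] for wi, v in zip(w, values)) for c in range(dim)])
        weights.append(w)
    return attended, weights
```

Each row of `weights` sums to 1, and a query attends most strongly to the adjacent-frame position whose key it best matches, which is how attention can track a salient object that moves between frames.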

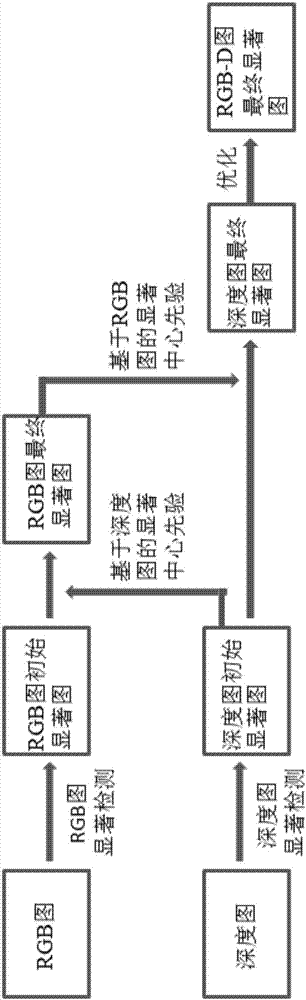

RGB-D image salient object detection method based on salient center prior

Status: Active | Publication: CN106997478A | Tags: Enhance significant detection results, Significant improvement in test results, Character and pattern recognition, Salient objects, Rgb image

The invention discloses an RGB-D image salient object detection method based on a salient center prior. The method provides one salient center prior based on the depth map and another based on the RGB image. For the depth-map-based prior, the Euclidean distances between the depth features of the other superpixels and those of the central superpixel of the salient object in the depth map are calculated and used as saliency weights to strengthen the RGB image saliency detection result; the depth features thereby guide RGB saliency detection and improve its results. For the RGB-image-based prior, the Euclidean distances between the CIELab color features of the other superpixels and those of the central superpixel of the salient object in the RGB image are calculated and used as saliency weights to strengthen the depth map saliency detection result; the RGB features thereby guide depth map saliency detection and improve its results.

Owner:ANHUI UNIVERSITY
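
A minimal sketch of the center-prior weighting, assuming per-superpixel feature vectors (mean depth for the depth prior, or CIELab color for the RGB prior). The abstract says the Euclidean distance to the central superpixel's feature is turned into a saliency weight but not how; the Gaussian mapping exp(-d^2 / (2 sigma^2)), which gives superpixels resembling the center the largest weights, is an assumption, as are the function names.

```python
import math


def center_prior_weights(features, center_index, sigma=1.0):
    """Weight each superpixel by its feature similarity to the central superpixel.

    features: one feature vector per superpixel (e.g. [depth] or [L, a, b]).
    The Euclidean distance d to the centre feature is mapped to
    exp(-d^2 / (2 * sigma^2)); this Gaussian mapping is an assumption.
    """
    center = features[center_index]
    weights = []
    for f in features:
        d2 = sum((a - b) ** 2 for a, b in zip(f, center))
        weights.append(math.exp(-d2 / (2 * sigma ** 2)))
    return weights


def reweight(saliency, weights):
    """Strengthen one modality's superpixel saliency with the other's prior."""
    return [s * w for s, w in zip(saliency, weights)]


# Toy depth features: superpixel 0 is the salient object's centre.
w = center_prior_weights([[0.0], [0.1], [2.0]], center_index=0)
print(reweight([0.5, 0.5, 0.5], w))
```

Here superpixel 1, whose depth is close to the center's, keeps most of its saliency, while superpixel 2, at a very different depth, is strongly suppressed.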

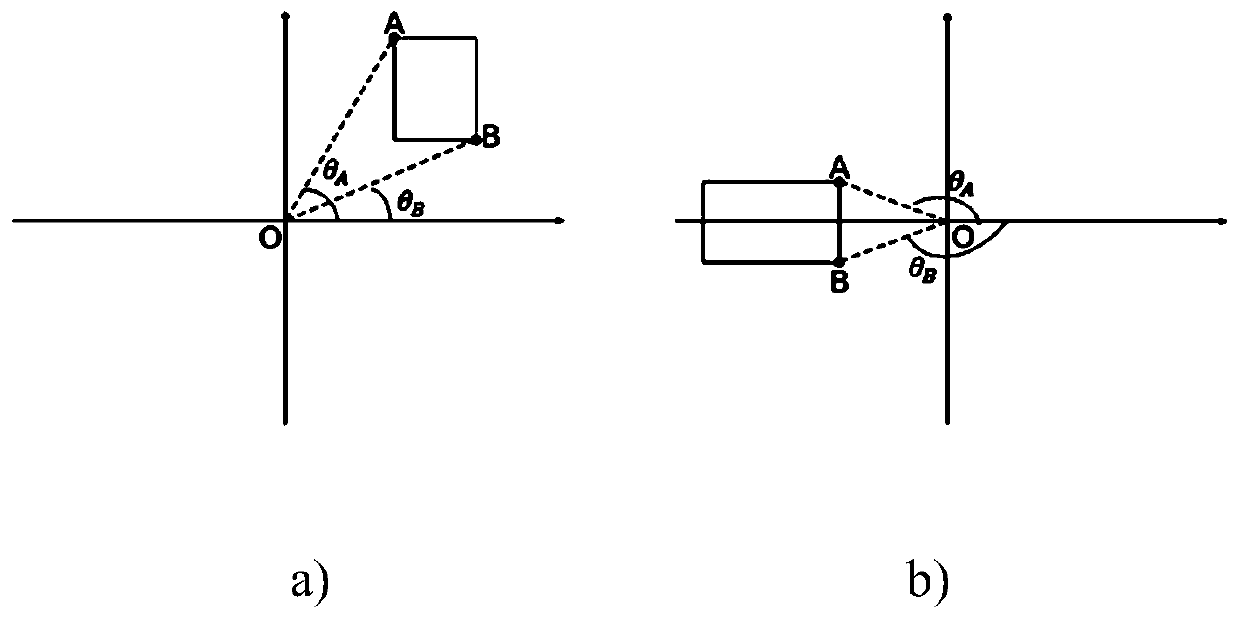

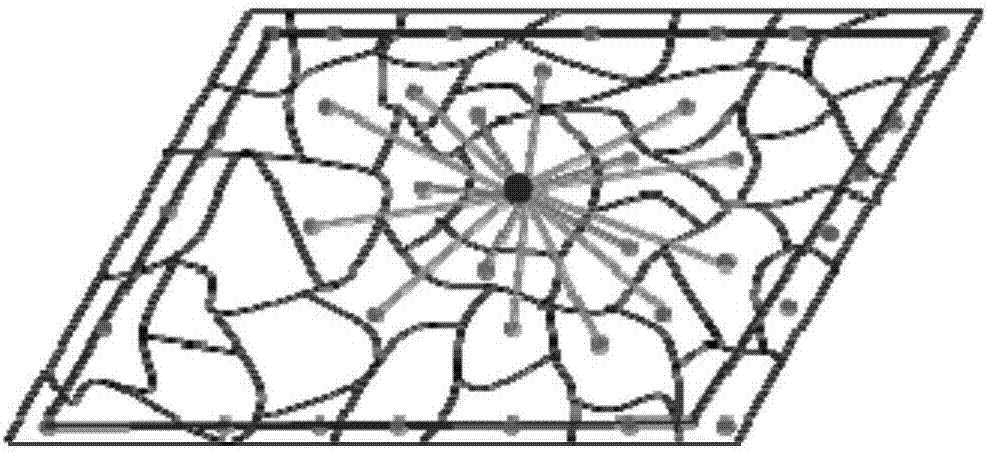

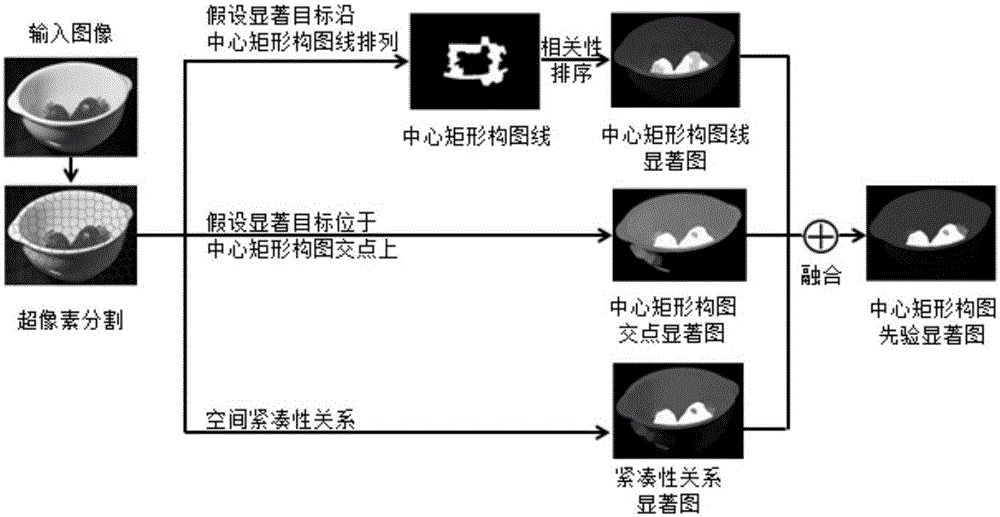

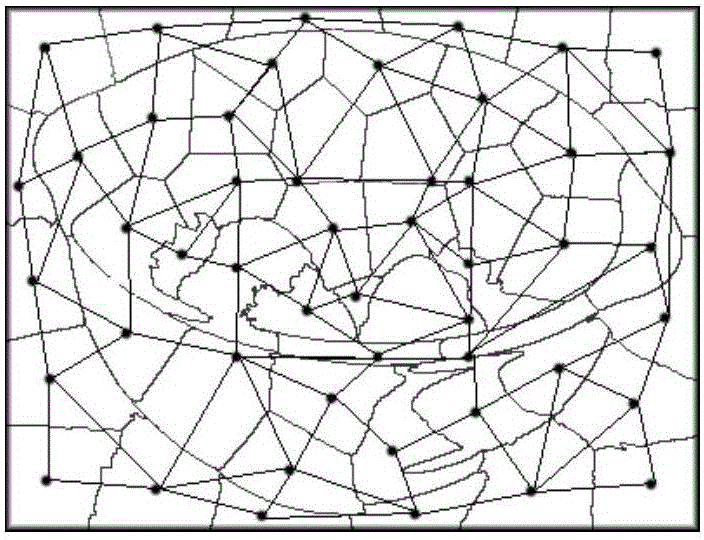

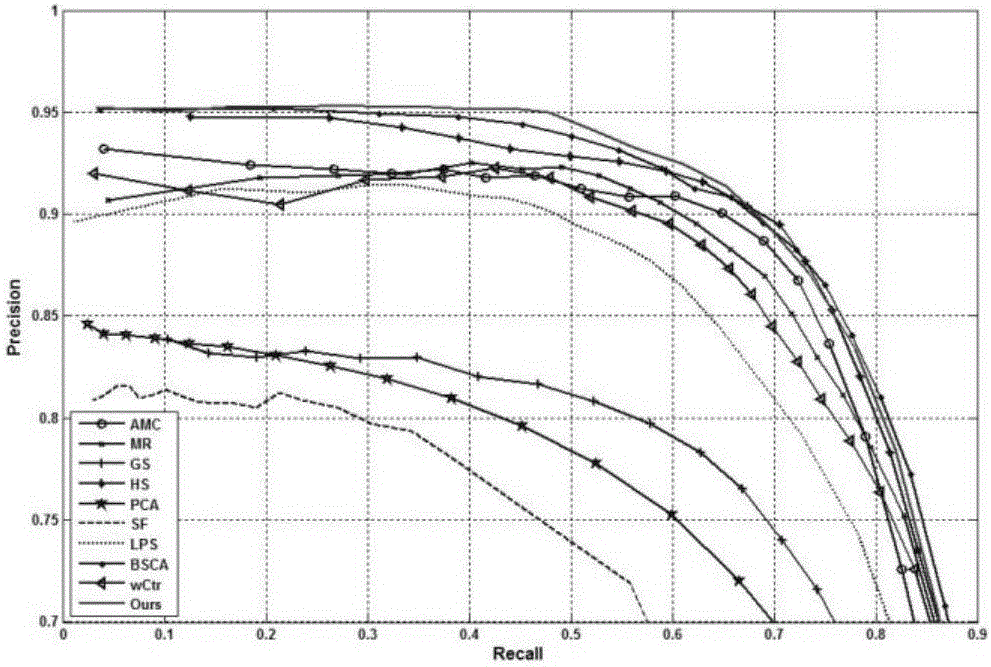

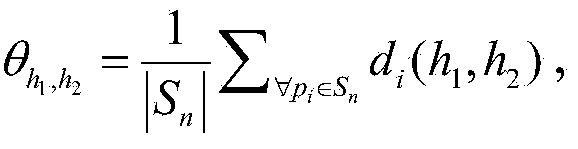

Salient object detection method based on central rectangular composition prior

Status: Active | Publication: CN106204615A | Tags: Mechanism of visual attention, Conforms to human visual attention mechanism, Image enhancement, Image analysis, Pattern recognition, Salient objects

The invention provides a salient object detection method based on a central rectangular composition prior. The central rectangle is the rectangle whose corners are the four intersections of the rule-of-thirds composition lines. The method comprises the steps of: assuming that the salient object lies along the composition lines of the central rectangle, ranking the superpixels on the four sides of the central rectangle by relevance to obtain a central-rectangle composition-line saliency map; assuming that the salient object lies at an intersection of the central rectangle, discarding intersections unlikely to belong to the salient object according to the composition-line saliency map, taking each remaining intersection as a center node, computing the spatial distance between every superpixel node in the image and that center node to form a corresponding saliency map, and adding these maps together to form a central-rectangle intersection saliency map; obtaining a compactness saliency map from a compactness relation; and finally fusing the three maps to obtain the final saliency map. The method follows the principles of photographic composition and is consistent with the human visual attention mechanism.

Owner:ANHUI UNIVERSITY
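
The central rectangle itself is simple geometry: its corners are where the vertical lines at 1/3 and 2/3 of the width cross the horizontal lines at 1/3 and 2/3 of the height. The sketch below computes those corners and a distance-based saliency around one chosen intersection; the exponential decay exp(-d / scale) and the function names are illustrative assumptions, since the abstract only says spatial distance to the center node is used.

```python
import math


def thirds_intersections(width, height):
    """Corners of the central rectangle: crossings of the lines at 1/3 and 2/3."""
    xs = (width / 3, 2 * width / 3)
    ys = (height / 3, 2 * height / 3)
    return [(x, y) for y in ys for x in xs]


def distance_saliency(centroids, center, scale):
    """Saliency that decays with each superpixel centroid's distance to `center`.

    The exponential decay exp(-d / scale) is an illustrative assumption.
    """
    return [math.exp(-math.dist(c, center) / scale) for c in centroids]


corners = thirds_intersections(300, 600)
print(corners)
```

For a 300 x 600 image the corners are (100, 200), (200, 200), (100, 400) and (200, 400); summing `distance_saliency` maps over the intersections that survive pruning would give the intersection saliency map described above.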

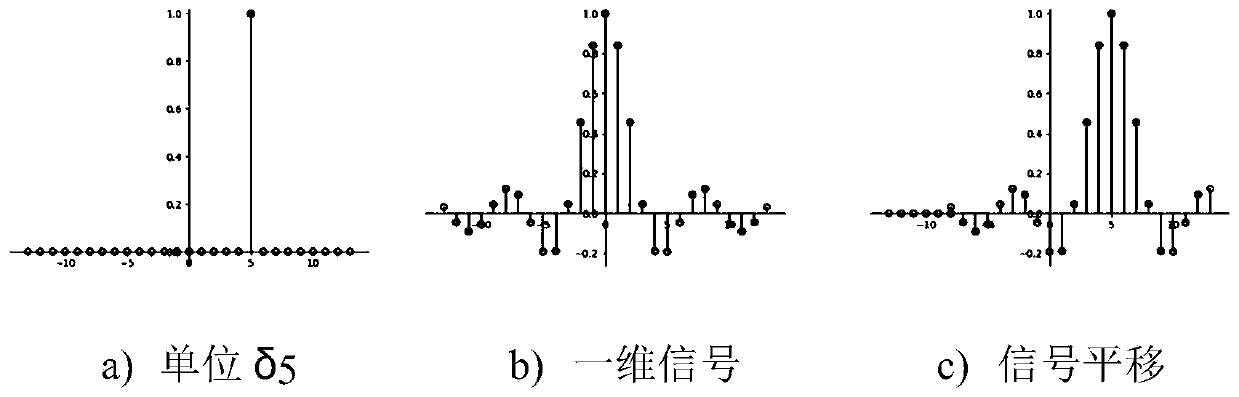

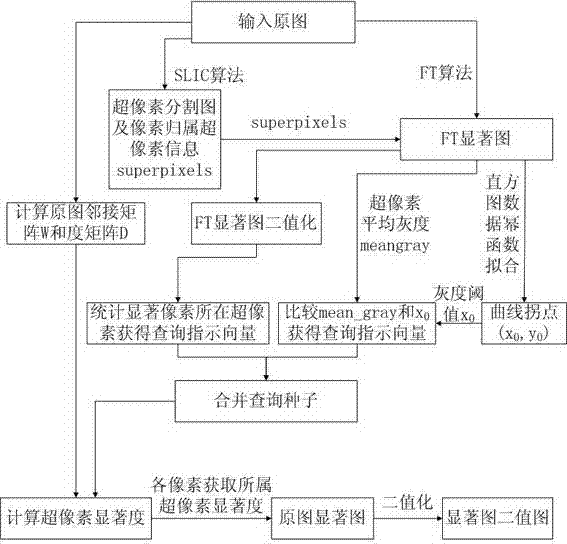

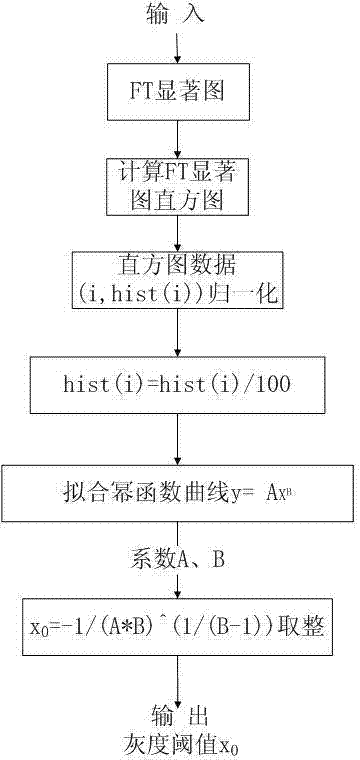

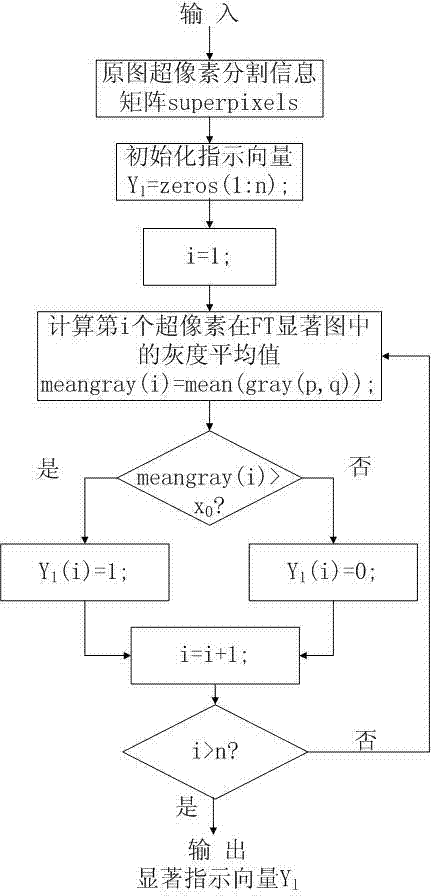

Salient object detection method based on histogram power function fitting

A salient object detection method based on histogram power function fitting comprises four steps: histogram power function fitting, superpixel classification, salient region determination and salient object detection. The method obtains a gray threshold by combining an FT saliency map, graph-based manifold ranking, SLIC superpixel classification and histogram power function fitting. For images with multiple targets and complex scenes, it detects efficiently with good performance and precision; it runs fast with low algorithmic complexity while still maintaining high detection precision.

Owner:Zhengzhou Xinma Technology Co., Ltd. (郑州新马科技有限公司)
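
The abstract does not say how the power function is fitted or how the gray threshold is then derived. One standard way to fit y = a * x^b to histogram points is ordinary least squares on (log x, log y), since log y = log a + b log x is linear; the sketch below assumes that approach and shows only the fitting step.

```python
import math


def fit_power_function(xs, ys):
    """Fit y = a * x**b by linear least squares on (log x, log y).

    Points with x <= 0 or y <= 0 (e.g. empty histogram bins) are skipped,
    because their logarithm is undefined.
    """
    pts = [(math.log(x), math.log(y)) for x, y in zip(xs, ys) if x > 0 and y > 0]
    n = len(pts)
    if n < 2:
        raise ValueError("need at least two positive points")
    mean_u = sum(u for u, _ in pts) / n
    mean_v = sum(v for _, v in pts) / n
    sxx = sum((u - mean_u) ** 2 for u, _ in pts)
    sxy = sum((u - mean_u) * (v - mean_v) for u, v in pts)
    b = sxy / sxx
    a = math.exp(mean_v - b * mean_u)
    return a, b


# Synthetic histogram following 2 * x**1.5 exactly.
bins = list(range(1, 20))
counts = [2.0 * x ** 1.5 for x in bins]
print(fit_power_function(bins, counts))
```

On this exact power-law data the fit recovers a = 2 and b = 1.5 up to floating-point error. Note that log-space least squares weights small counts more heavily than a direct nonlinear fit would.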

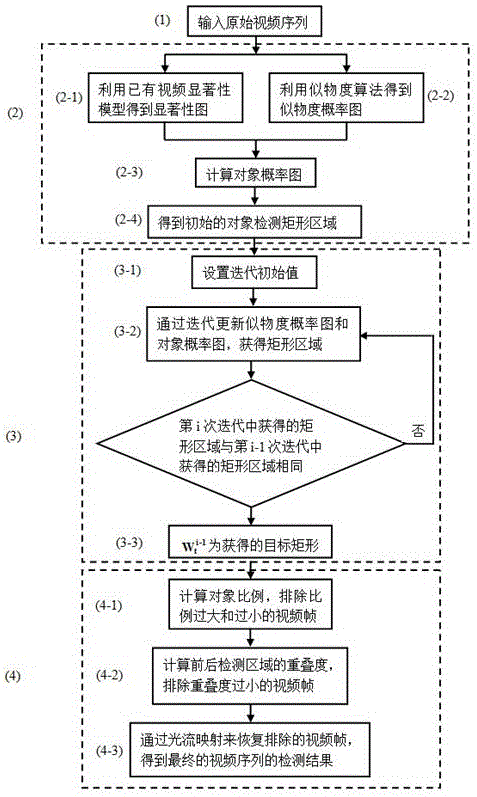

Unconstrained in-video salient object detection method combined with objectness degree

Status: Active | Publication: CN106407978A | Tags: Improve accuracy, Accurate detection, Character and pattern recognition, Salient objects, Video sequence

The invention discloses an unconstrained in-video salient object detection method combined with an objectness measure. The method specifically comprises the steps of: (1) inputting an original video sequence F = {F_1, F_2, ..., F_M}, where the t-th frame of the sequence is denoted F_t; (2) applying a video saliency model and an objectness detection algorithm to frame F_t to obtain an initial rectangular region for salient object detection; (3) iteratively updating an objectness probability map and an object probability map for frame F_t while continuously adjusting the size of the rectangular region, to obtain a single-frame salient object detection result; and (4) using a dense optical flow algorithm to obtain the motion vector field of the pixels of frame F_t and computing the overlap between the detection rectangles of adjacent frames, to obtain the final salient object detection result. Iteratively updating the objectness and object probability maps improves the precision of the spatial-domain detection results, and sequence-level refinement improves temporal consistency, so that salient objects in video are detected more accurately and completely.

Owner:SHANGHAI UNIV
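
Step (4) compares detection rectangles of adjacent frames after accounting for motion. A minimal sketch, assuming the "overlapping degree" is intersection over union (IoU) and that a box is motion-compensated by shifting it with the mean optical-flow displacement inside it; both choices and the function names are assumptions, not details from the patent.

```python
def box_iou(a, b):
    """Intersection over union of two boxes given as (x0, y0, x1, y1)."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix1 - ix0) * max(0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def shift_box(box, dx, dy):
    """Move a box by a (mean) optical-flow displacement (dx, dy)."""
    return (box[0] + dx, box[1] + dy, box[2] + dx, box[3] + dy)


prev_box, next_box = (0, 0, 2, 2), (1, 1, 3, 3)
print(box_iou(prev_box, next_box))                   # raw overlap
print(box_iou(shift_box(prev_box, 1, 1), next_box))  # after motion compensation
```

The raw overlap of these two boxes is 1/7, but after shifting the earlier box by the flow vector (1, 1) they coincide (IoU 1.0), so a low raw IoU caused purely by motion is not mistaken for an inconsistent detection.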

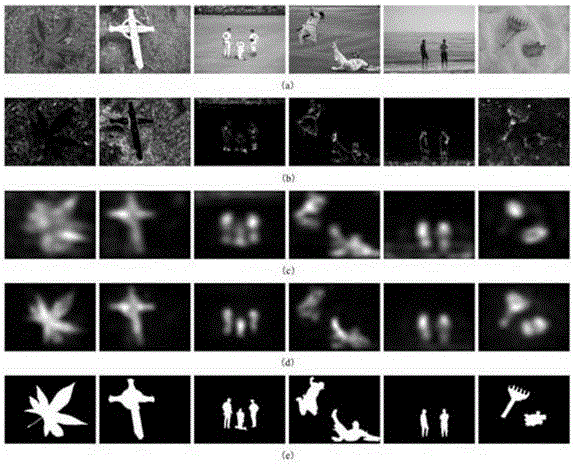

Image saliency target detection method

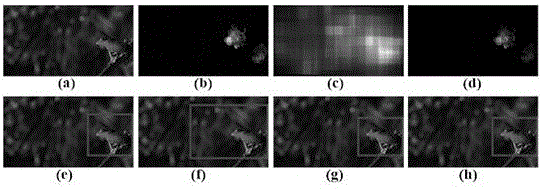

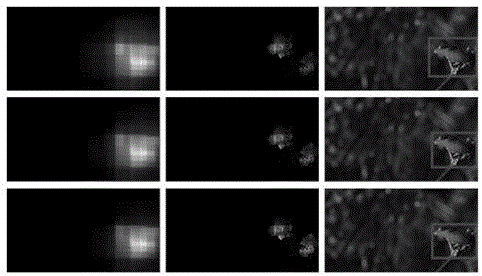

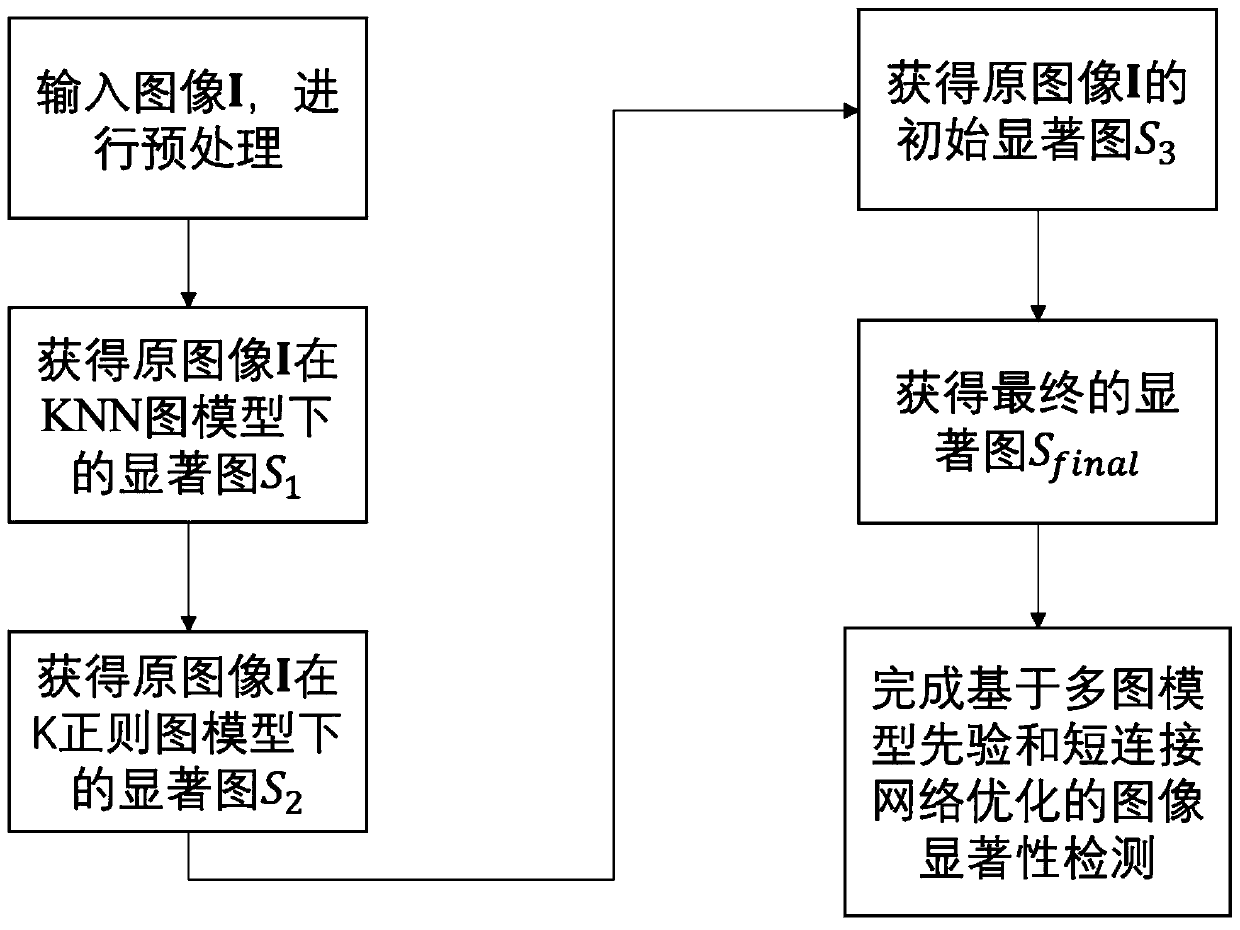

Status: Active | Publication: CN111209918A | Tags: Consistent highlighting of salient targets, Rich semantic information, Geometric image transformation, Character and pattern recognition, Pattern recognition, Saliency map

The invention relates to an image salient target detection method in the area of region segmentation for image analysis. It is an image saliency detection method based on a multi-graph model prior and short-connection network optimization. The method comprises the following steps: using the color and position information of each input image to build a KNN graph model and a K-regular graph model; obtaining a saliency map S1 under the KNN graph model and a saliency map S2 under the K-regular graph model; fusing the two at the pixel level to obtain an initial saliency map S3 of the original image; and optimizing S3 with a short-connection network to obtain the final saliency map Sfinal of the original image, completing salient target detection. This overcomes the incomplete detection of salient targets, and the inaccurate detection when foreground and background colors are similar, found in prior image salient target detection methods.

Owner:HEBEI UNIV OF TECH
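
The first step builds a KNN graph from color and position information. A minimal sketch, assuming one feature vector per region (for example [L, a, b, x, y]) and an undirected graph in which two nodes are linked if either is among the other's k nearest neighbours by Euclidean distance; the K-regular graph and the ranking on the graphs are not shown, and `knn_graph` is a hypothetical name.

```python
import math


def knn_graph(features, k):
    """Undirected k-nearest-neighbour graph over per-region feature vectors.

    Returns a set of edges (i, j) with i < j; an edge exists if j is among
    the k nearest neighbours of i, or vice versa.
    """
    n = len(features)
    if not 0 < k < n:
        raise ValueError("k must satisfy 0 < k < number of nodes")
    edges = set()
    for i in range(n):
        others = sorted(
            (j for j in range(n) if j != i),
            key=lambda j: math.dist(features[i], features[j]),
        )
        for j in others[:k]:
            edges.add((min(i, j), max(i, j)))
    return edges


# Toy 2-D features: two tight pairs far apart.
print(knn_graph([[0, 0], [1, 0], [10, 0], [11, 0]], k=1))
```

With k = 1 the two nearby pairs link to each other and the distant groups stay disconnected. Because the symmetrised graph can give some nodes more than k edges, a separate K-regular construction is needed when every node must have exactly K neighbours.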

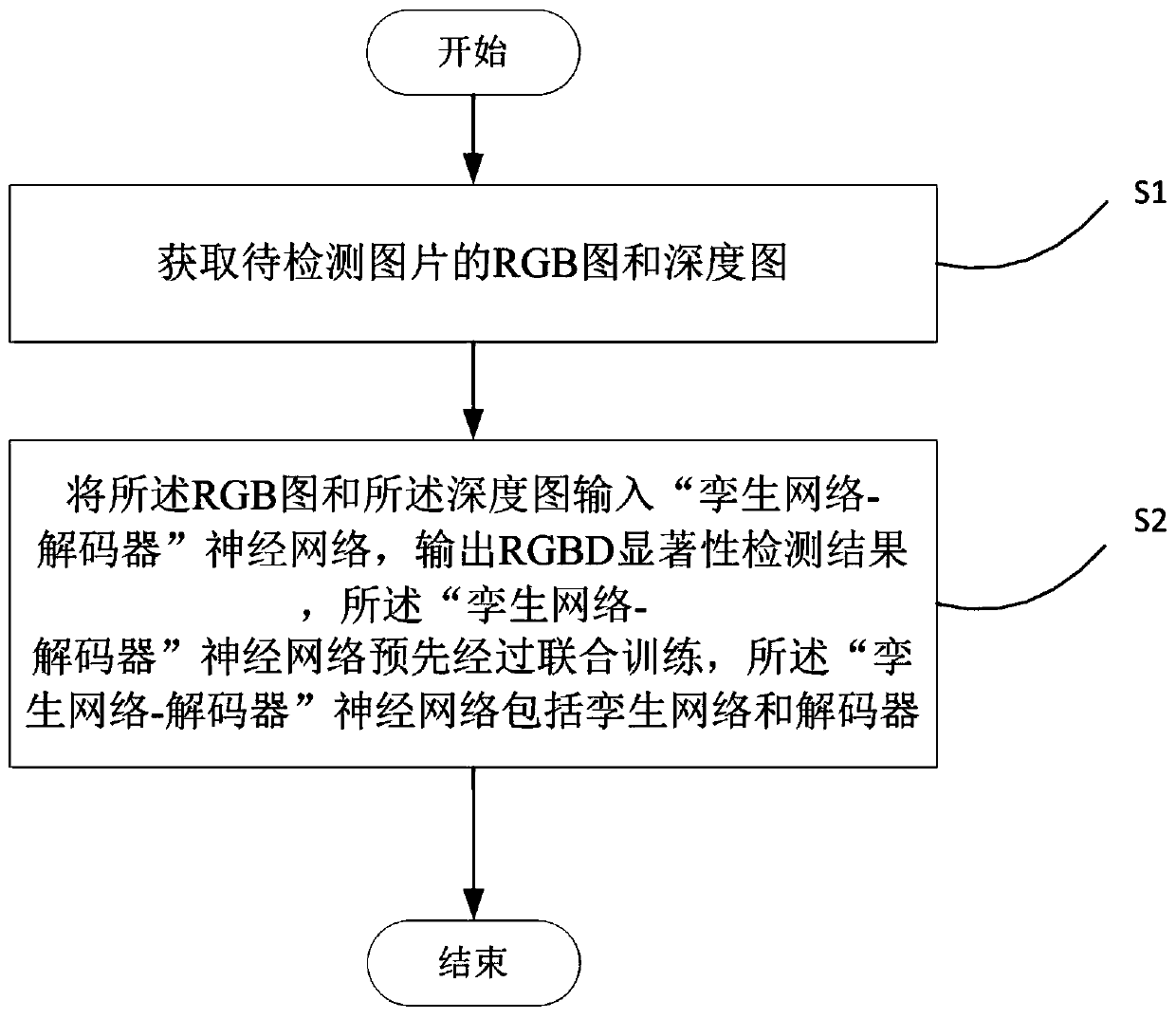

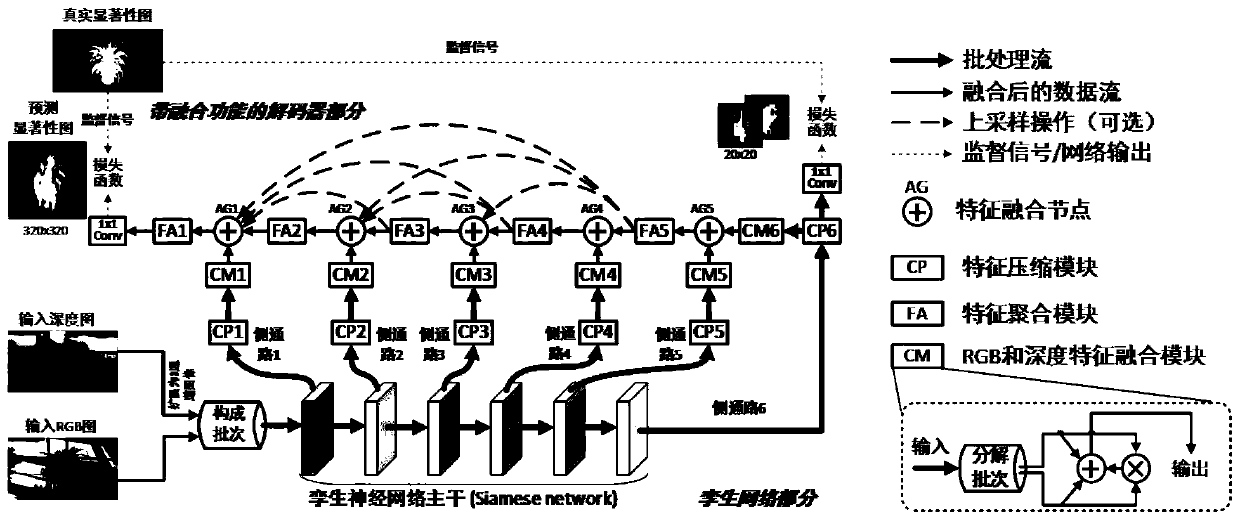

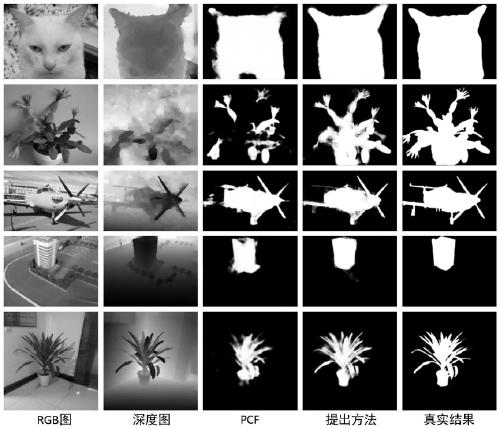

RGBD salient object detection method based on twin network

Status: Active | Publication: CN111242173A | Tags: The test result is fine, Easy to detect, Character and pattern recognition, Neural architectures, Pattern recognition, Imaging processing

The invention discloses an RGBD salient object detection method based on a twin network, in the technical field of image processing and computer vision. The method comprises the steps of: 1, obtaining an RGB image and a depth image of the picture to be detected; and 2, feeding the RGB image and the depth image into a 'twin network-decoder' neural network, whose twin network and decoder have been trained jointly in advance, and outputting the RGBD saliency detection result. Within step 2, the RGB image and the depth image are fed into the twin network, which outputs RGB and depth hierarchical features from its side paths; these hierarchical features are then fed into the decoder, which outputs the RGBD saliency detection result. By combining a twin network with a decoder that performs feature fusion, the hierarchical features are fused before decoding, so that RGB and depth information complement each other, detection performance improves, and a refined RGBD detection result is obtained.

Owner:SICHUAN UNIV

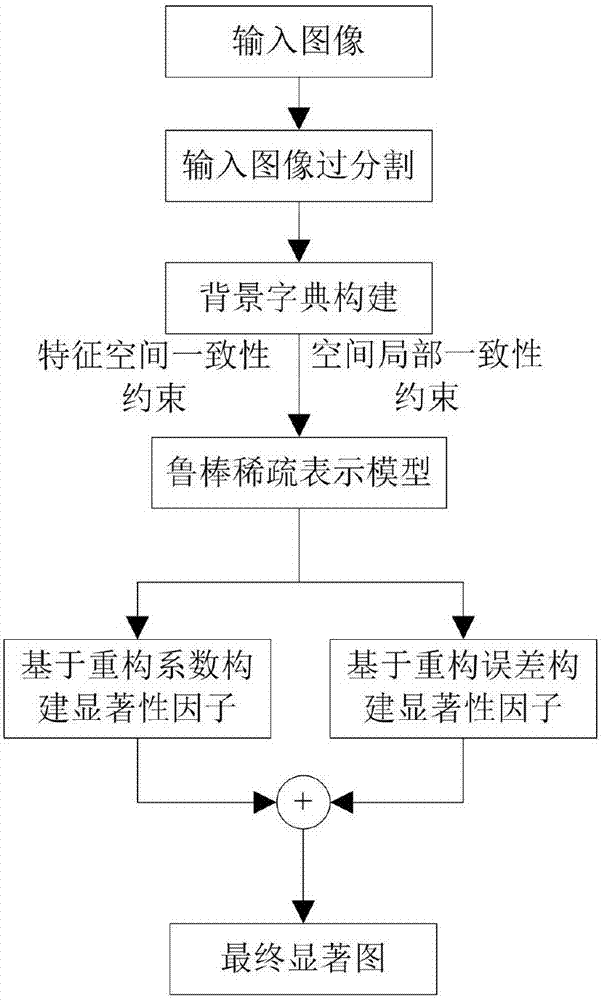

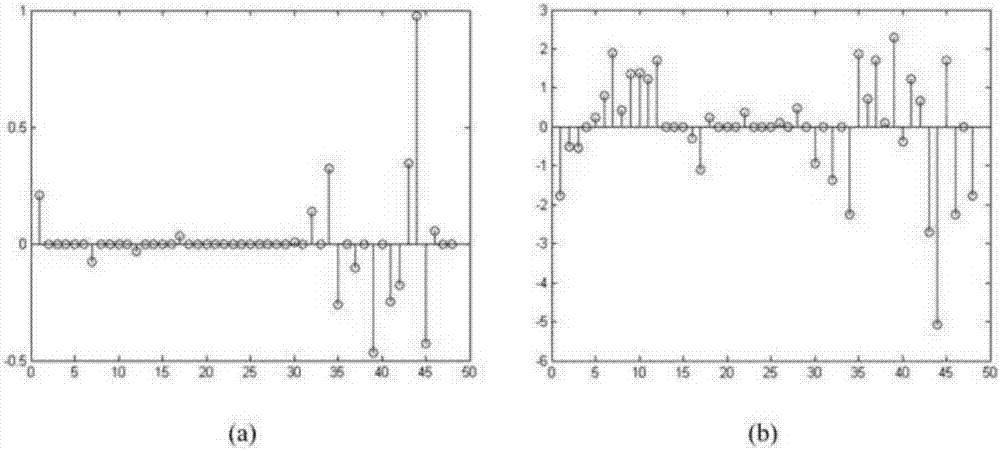

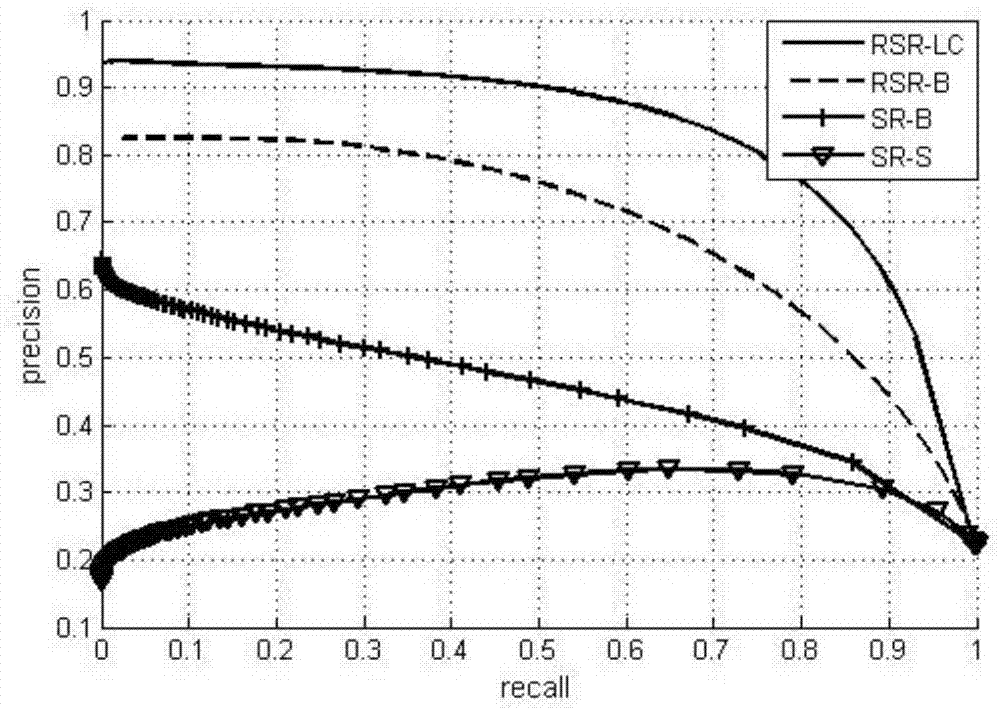

Robust sparse representation and Laplace regular term-based salient object detection method

Status: Inactive | Publication: CN107301643A | Tags: Enhanced inhibitory effect, Complete detection, Image analysis, Character and pattern recognition, Salient objects, Saliency map

The invention discloses a salient object detection method based on robust sparse representation and Laplace regularization terms, aimed mainly at the problem that existing methods cannot detect salient objects in complicated images completely and consistently. The method comprises the following steps: 1, segmenting the input image into a set of superpixels; 2, constructing a background dictionary from the superpixels in the image boundary region; 3, using two Laplace regularization terms to constrain, respectively, the consistency of the representation coefficients and of the reconstruction errors in a robust sparse representation model, and solving the model with the background dictionary to obtain a representation coefficient matrix and a reconstruction error matrix; 5, constructing saliency factors from the representation coefficient matrix and the reconstruction error matrix to obtain a superpixel-level saliency map; and 6, mapping the superpixel-level saliency map to a pixel-level saliency map. Experiments indicate that the method suppresses the background well, detects salient objects completely, and can be used for salient object detection in complicated scene images.

Owner:Chongqing Jiangxue Technology Co., Ltd. (重庆江雪科技有限公司)

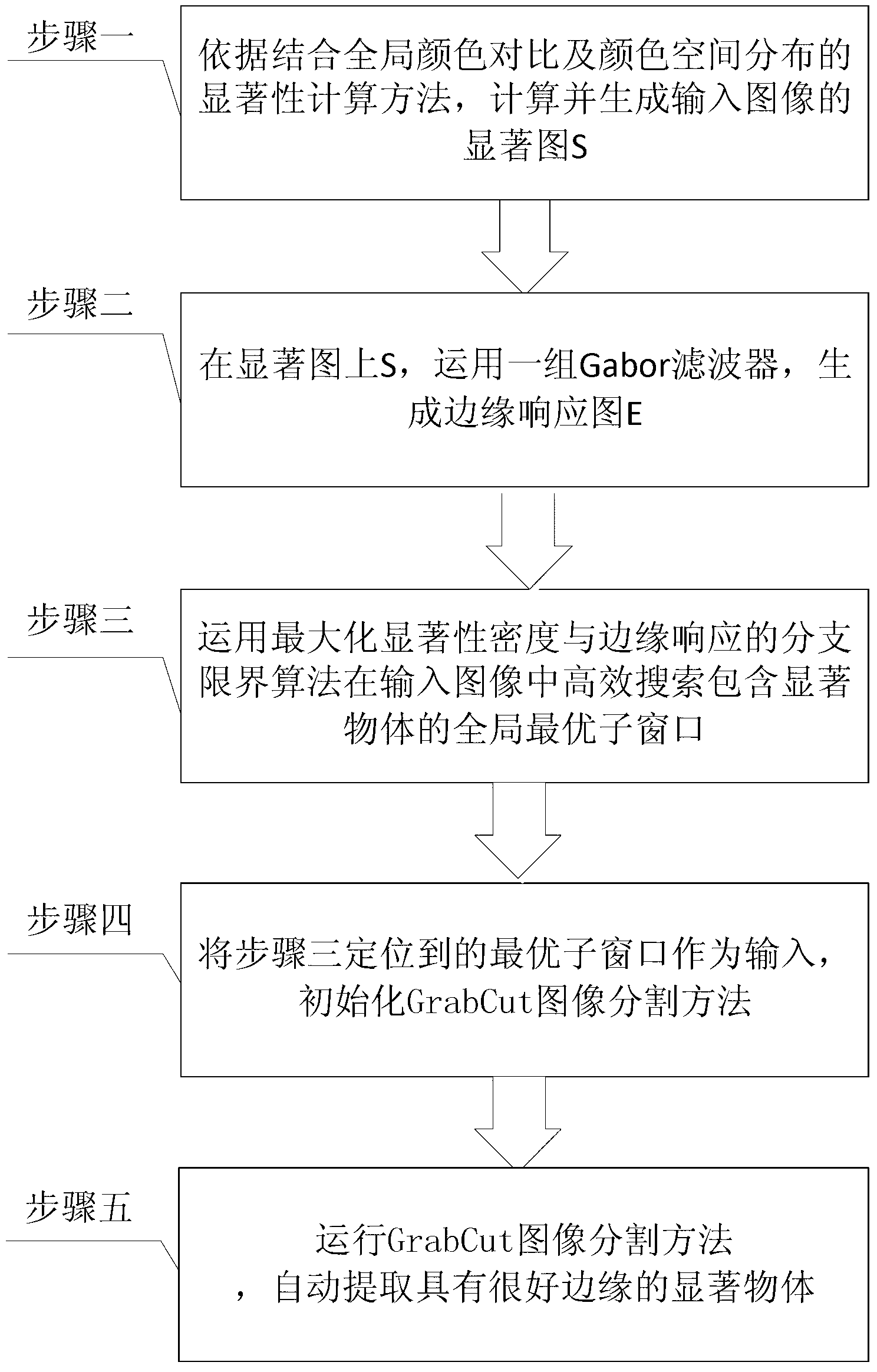

Automatic detection method of salient object based on salience density and edge response

Status: Active | Publication: CN103198489A | Tags: Implement automatic detection, Improve accuracy, Image analysis, Imaging processing, Salient objects

The invention provides an automatic salient object detection method based on salience density and edge response. It addresses a shortcoming of conventional salient object detection methods, which use only one attribute, salience, and ignore the edge attribute of the salient object, so that detection accuracy is relatively low. The method comprises the following steps: computing a saliency map S of the input image with a region-based salience method that combines global color contrast and spatial color distribution; generating an edge response map E from S with a bank of Gabor filters; efficiently searching the input image for the globally optimal sub-window containing the salient object with a branch-and-bound algorithm that maximizes salience density and edge response; using the resulting optimal sub-window to initialize the GrabCut graph-cut method; running GrabCut; and automatically extracting the salient object with well-defined edges. The method is applicable to the field of image processing.

Owner:Zhongshu (Shenzhen) Shidai Technology Co., Ltd. (中数(深圳)时代科技有限公司)
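
The sub-window search can be illustrated with a simplified objective. The sketch below assumes the score of a window is the sum of (saliency - threshold) over its pixels, so weak pixels count against it, and finds the best window by exhaustive search over all rectangles using an integral image. This is a stand-in: the patent uses branch-and-bound, which reaches the same optimum of such a bound-friendly objective much faster, and its objective also includes the edge response, which is omitted here. Function names are hypothetical.

```python
def integral_image(grid):
    """Summed-area table with a zero row and column prepended."""
    h, w = len(grid), len(grid[0])
    ii = [[0.0] * (w + 1) for _ in range(h + 1)]
    for r in range(h):
        for c in range(w):
            ii[r + 1][c + 1] = grid[r][c] + ii[r][c + 1] + ii[r + 1][c] - ii[r][c]
    return ii


def best_window(saliency, threshold):
    """Exhaustively find the rectangle maximising sum(saliency - threshold).

    Returns (top, left, bottom, right) with bottom/right exclusive.
    O(h^2 * w^2) window evaluations, each O(1) via the integral image.
    """
    h, w = len(saliency), len(saliency[0])
    shifted = [[v - threshold for v in row] for row in saliency]
    ii = integral_image(shifted)
    best, best_box = float("-inf"), None
    for top in range(h):
        for bottom in range(top + 1, h + 1):
            for left in range(w):
                for right in range(left + 1, w + 1):
                    s = ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]
                    if s > best:
                        best, best_box = s, (top, left, bottom, right)
    return best_box


grid = [[0, 0, 0, 0], [0, 1, 1, 0], [0, 1, 1, 0], [0, 0, 0, 0]]
print(best_window(grid, 0.5))
```

On this toy map the best window is the 2 x 2 salient block, `(1, 1, 3, 3)`; such a box is what would then initialize GrabCut.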

Light field saliency target detection method based on generative adversarial convolutional neural network

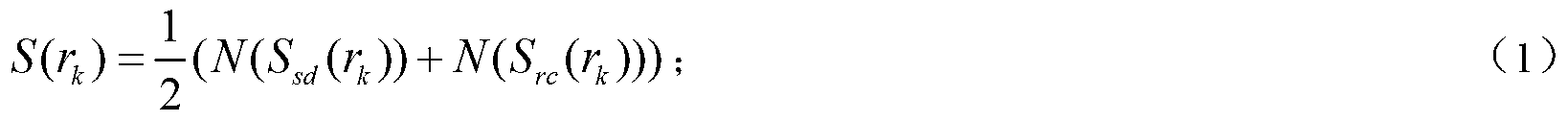

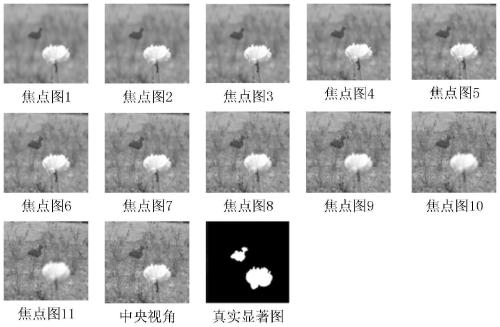

Status: Active | Publication: CN111369522A | Tags: Solve the defect that high-level semantic features cannot be extracted, High precision, Image analysis, Neural architectures, Data set, Network structure

The invention discloses a light field salient target detection method based on a generative adversarial convolutional neural network. The method comprises the following steps: 1, converting light field data into a refocusing sequence; 2, performing data augmentation on the refocusing sequence; 3, constructing a generative adversarial convolutional neural network based on the U-Net and GAN network structures, taking the refocusing sequence as network input, and training it on a light field data set; and 4, using the trained network to detect salient targets in the light field data to be processed. The method makes full use of deep learning and light field refocusing information, effectively improving the accuracy of salient target detection in complex scene images.

Owner:HEFEI UNIV OF TECH

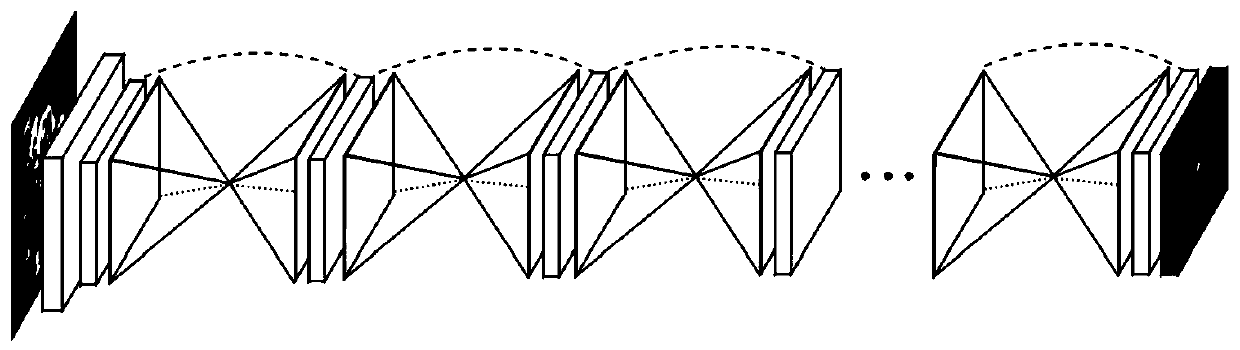

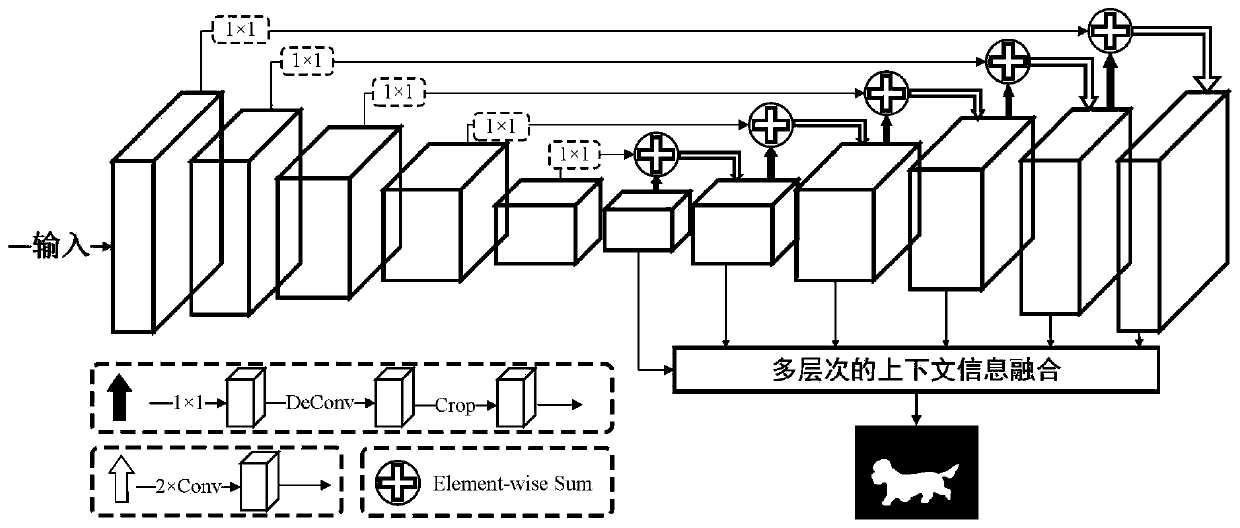

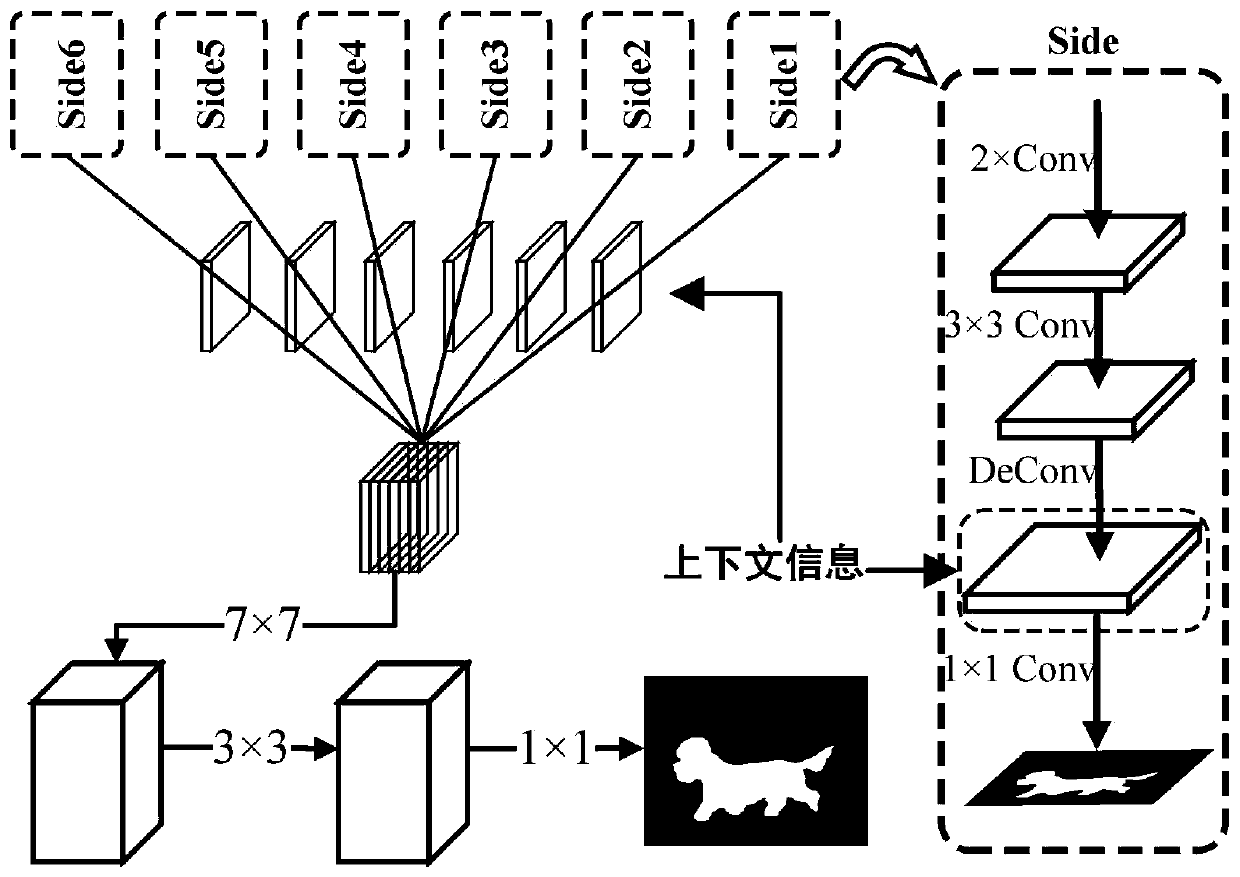

A salient object detection method based on multi-level context information fusion

Status: Active | Publication: CN109766918A | Tags: Accurate Salient Object Detection, Character and pattern recognition, Neural architectures, Saliency map, Visual perception

The invention discloses a salient object detection method based on multi-level context information fusion. Its objective is to construct and use multi-level context features for image saliency detection. The method designs a new convolutional neural network architecture that is optimized top-down, from high layers to low layers, so that context information is extracted from the image at different scales and then fused to obtain a high-quality image saliency map. The salient regions detected by the method can be used to assist other visual tasks.

Owner:NANKAI UNIV

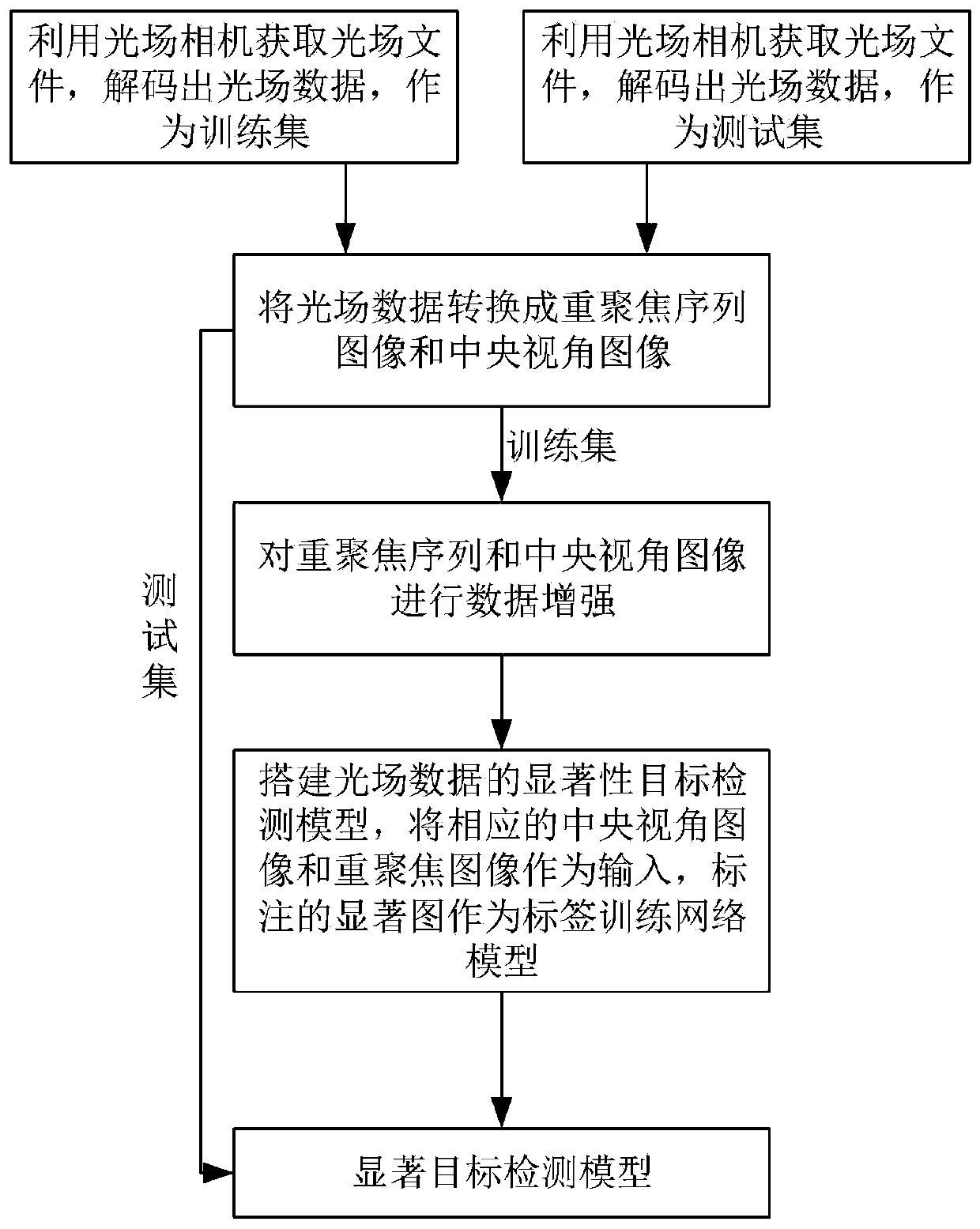

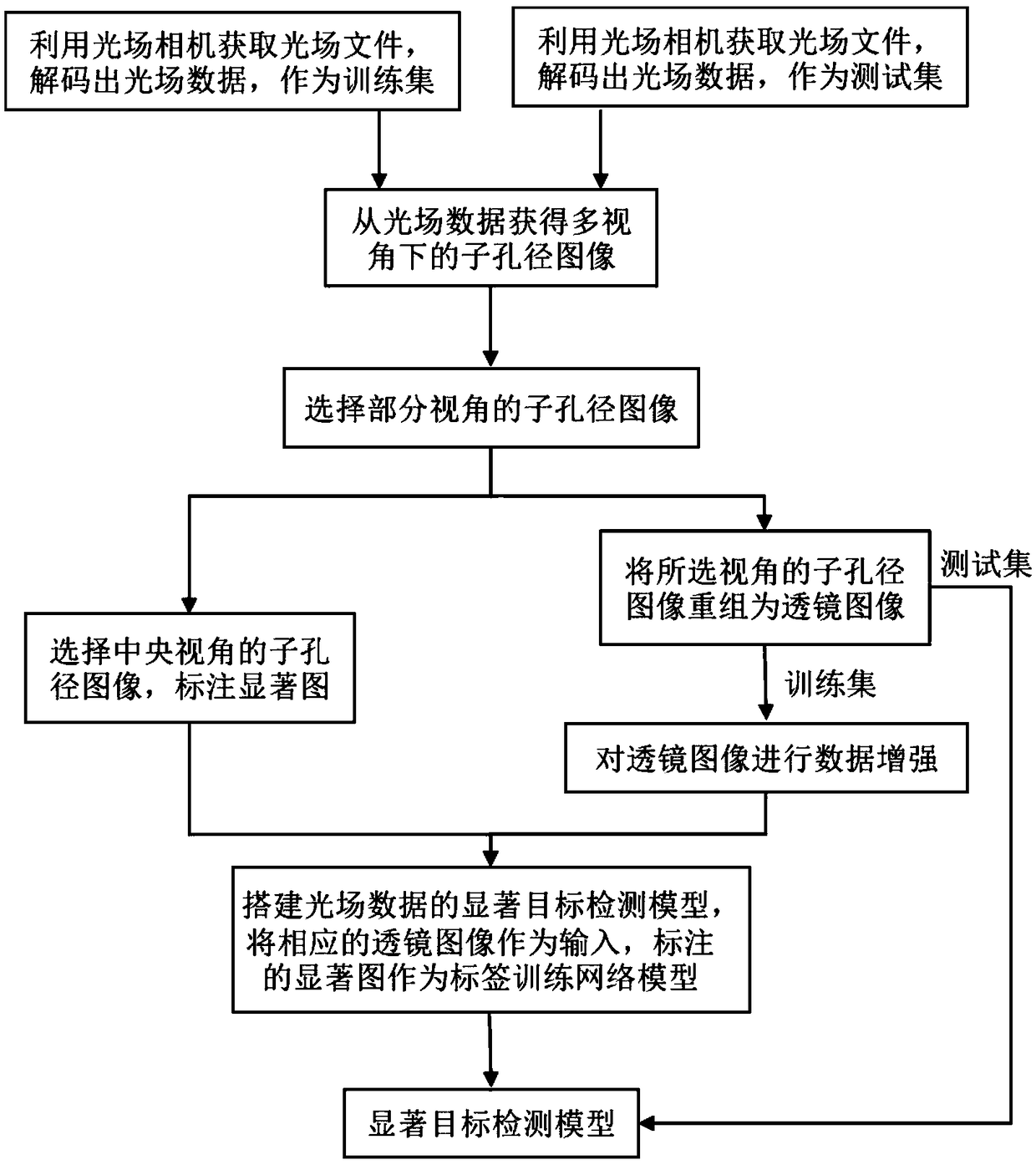

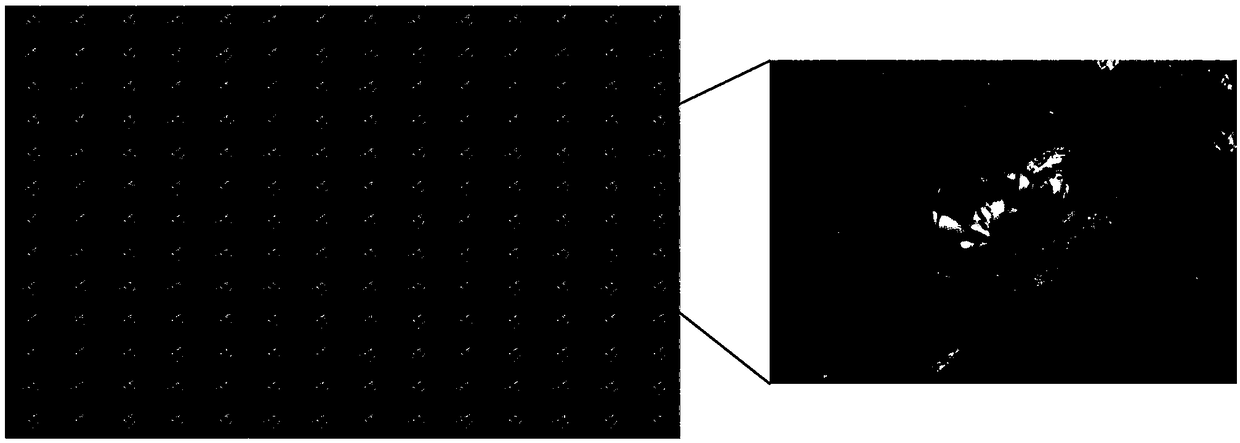

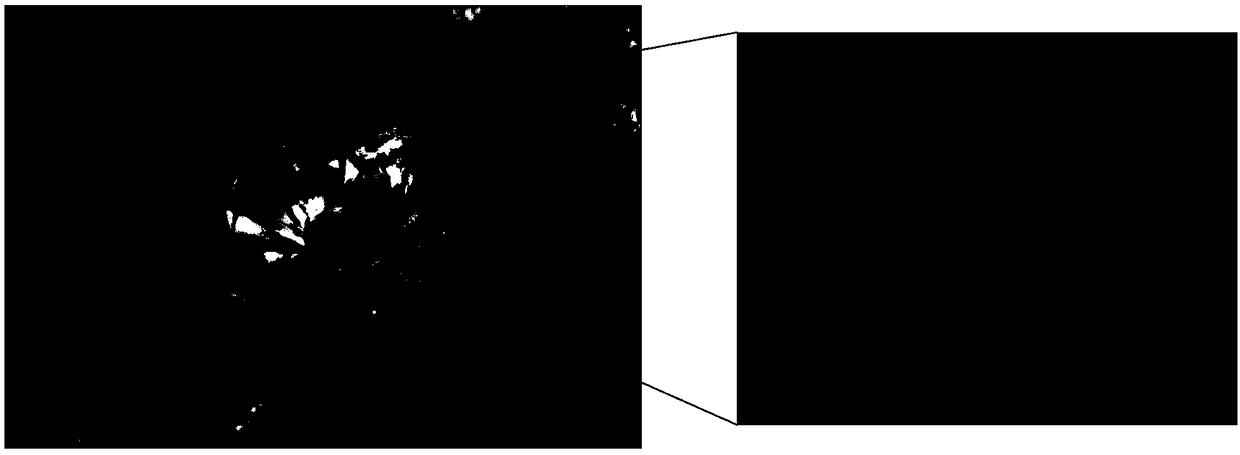

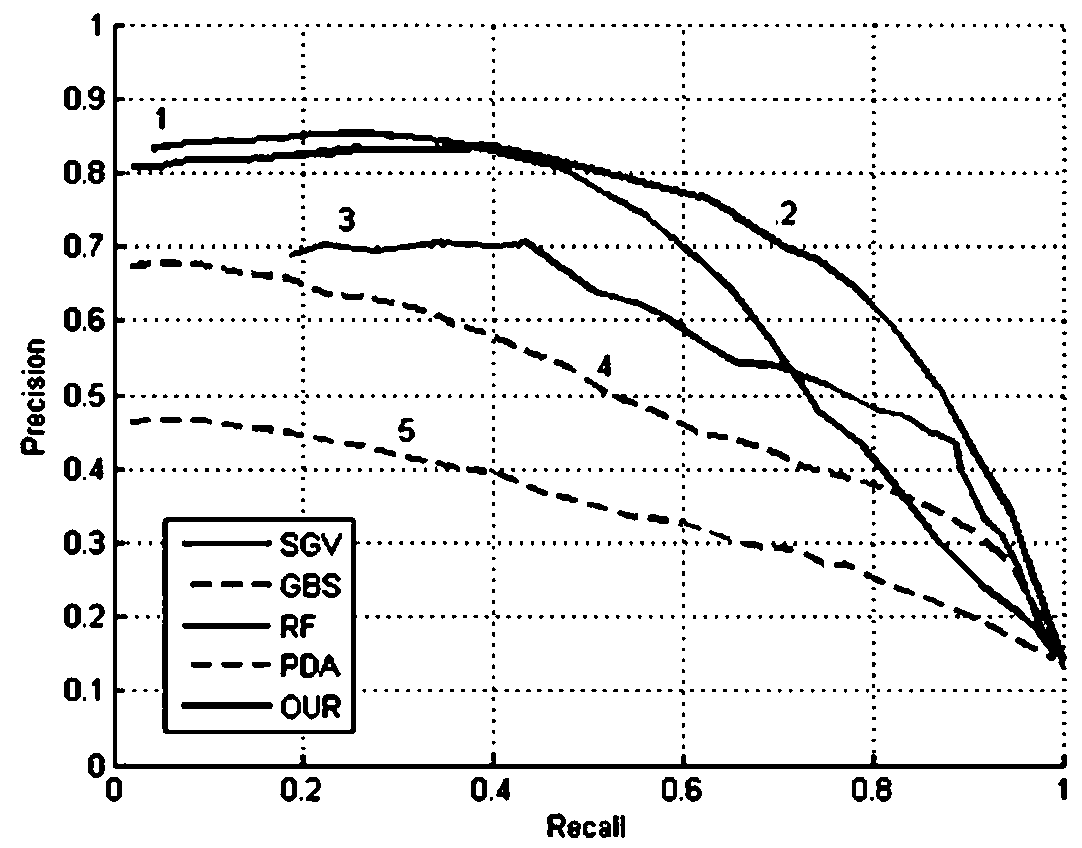

Salient object detection method in light field based on deep convolutional network

Status: Active | Publication: CN109344818A | Tags: Quality improvement, Solve the bug that the angle of view information cannot be used, Character and pattern recognition, Neural architectures, Data set, Salient objects

The invention discloses a light field salient target detection method based on a deep convolutional network. The method comprises the following steps: 1, extracting the sub-aperture images of all viewing angles from light field data captured with a light field acquisition device; 2, reconstructing the sub-aperture images into microlens images from different viewing angles; 3, performing data augmentation on the microlens images; 4, constructing a salient object detection model that incorporates the microlens images, starting from the pre-trained weights of the DeepLab-V2 network, and training it on a data set; and 5, using the trained model to detect salient objects. The method can effectively improve the accuracy of salient object detection in complex scene images.

Owner:HEFEI UNIV OF TECH
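
Step 2 rearranges sub-aperture views into a microlens (lenslet) image. A minimal sketch, assuming views indexed as `views[u][v][y][x]` for angular position (u, v) and spatial pixel (y, x): each spatial pixel becomes a U x V block holding that pixel as seen from every view. The indexing convention and the function name are assumptions; real light field toolkits may order axes differently.

```python
def subaperture_to_microlens(views):
    """Rearrange sub-aperture views into one microlens (lenslet) image.

    views[u][v] is the H x W image seen from angular position (u, v).
    Output pixel out[y*U + u][x*V + v] equals views[u][v][y][x], so every
    U x V block of the output gathers one scene point from all views.
    """
    U, V = len(views), len(views[0])
    H, W = len(views[0][0]), len(views[0][0][0])
    out = [[None] * (W * V) for _ in range(H * U)]
    for u in range(U):
        for v in range(V):
            for y in range(H):
                for x in range(W):
                    out[y * U + u][x * V + v] = views[u][v][y][x]
    return out


# 2 x 2 views, each a 1 x 2 image; labels show view (u, v) and pixel a/b.
views = [[[[f"{u}{v}a", f"{u}{v}b"]] for v in range(2)] for u in range(2)]
for row in subaperture_to_microlens(views):
    print(row)
```

The output rows are `['00a', '01a', '00b', '01b']` and `['10a', '11a', '10b', '11b']`: pixel a from all four views forms the left 2 x 2 block and pixel b the right one, so angular information sits next to each spatial location where a CNN can see it.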

A method for salient object detection in dynamic scene

Status: Active | Publication: CN109146925A | Tags: Solving the salient object detection problem, Meeting the needs of detecting saliency targets, Image analysis, Character and pattern recognition, Feature vector, Fixation point

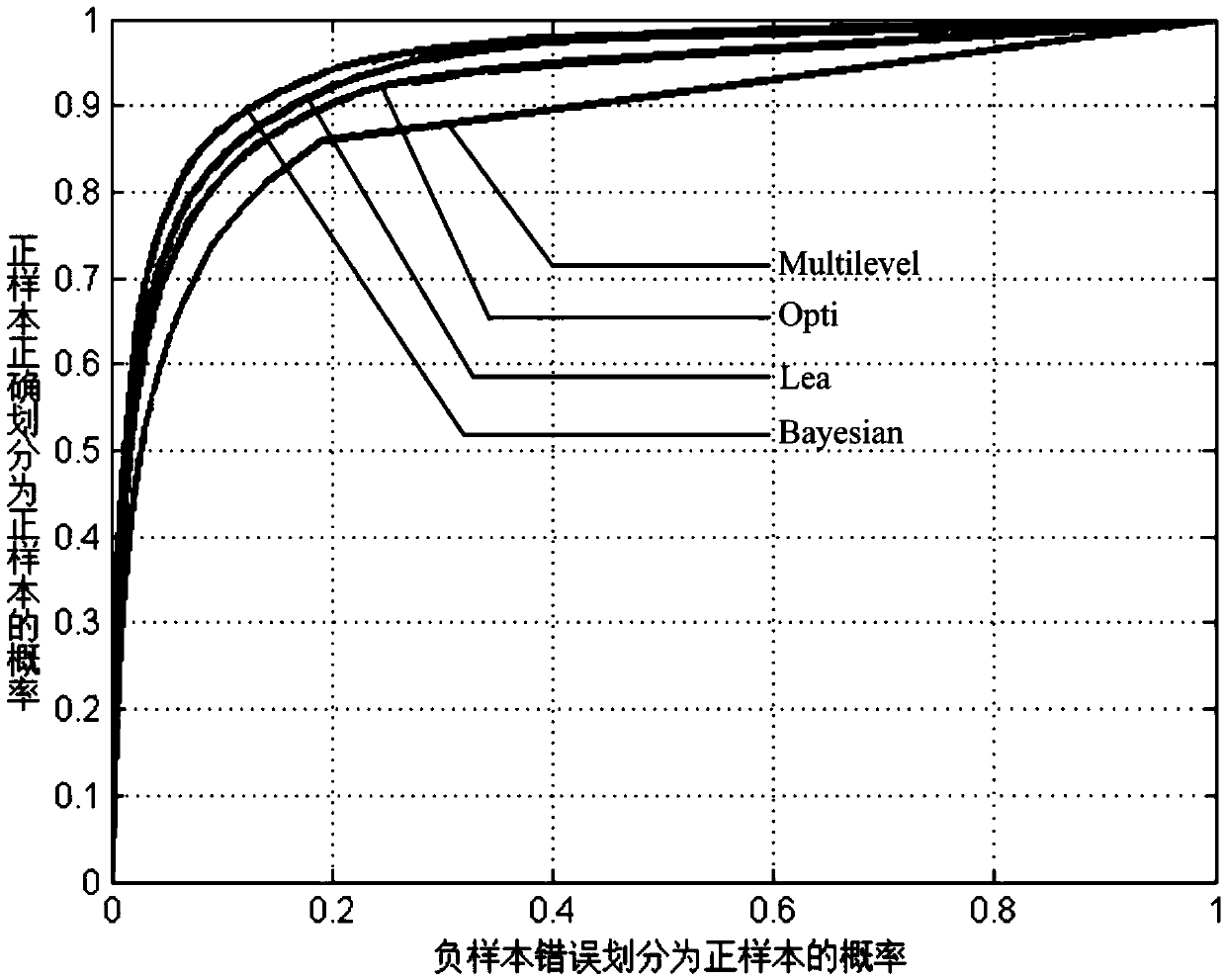

A method for detecting salient objects in a dynamic scene is disclosed. Salient object detection in a dynamic scene determines saliency by analyzing the motion state of objects in moving video. The method mainly includes three steps: first, moving object detection is performed in the dynamic scene to obtain all moving object regions; then, image features are extracted from the dynamic scene and fused at the feature level into an image fusion feature vector, which is used to detect visual fixation points based on Bayesian inference; finally, combining the fixation detection, the saliency of each detected moving object is estimated and a dynamic saliency map based on moving objects is generated. The invention solves salient object detection for a moving camera, meets the need of machine vision systems to detect salient objects in dynamic scenes, and adapts well to environmental changes.

Owner:ZHENGZHOU UNIVERSITY OF AERONAUTICS

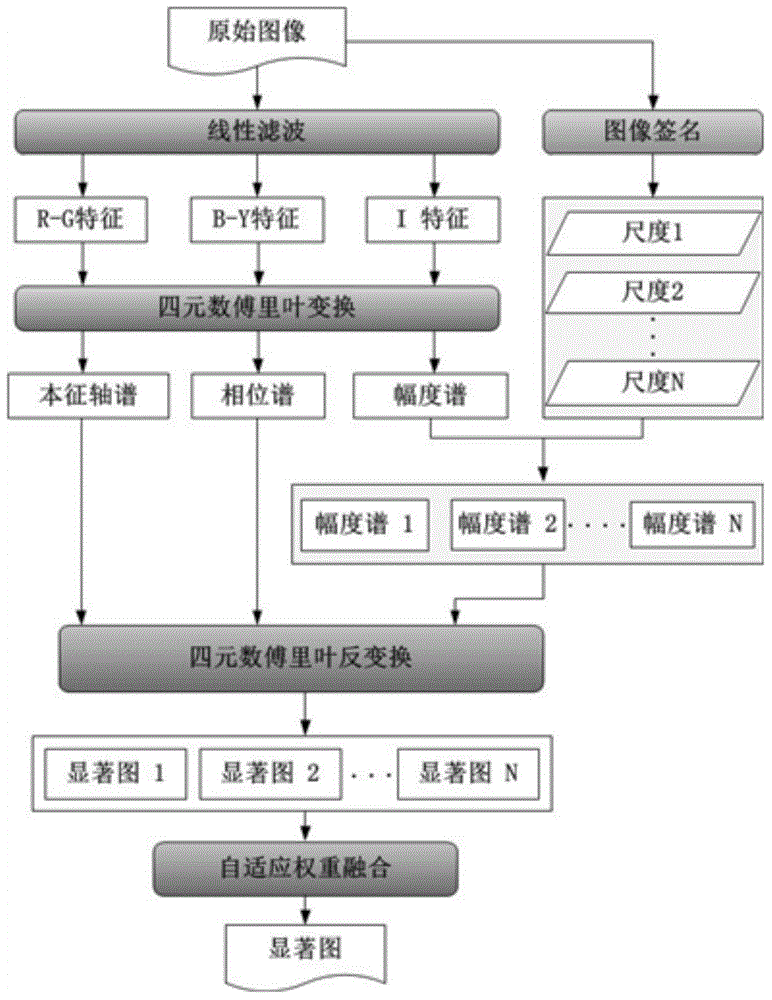

Amplitude spectrum analysis based salient object detection method

Status: Active | Publication: CN106296632A | Tags: Even detection, Image enhancement, Image analysis, Pattern recognition, Quaternion fourier transform

The invention discloses an amplitude spectrum analysis based salient object detection method, which comprises the steps of: extracting a brightness feature I and two color-opponent features RG and BY from an acquired image; transforming the extracted features into the frequency domain with a quaternion Fourier transform to obtain the amplitude spectrum, phase spectrum and eigenaxis spectrum of the image; detecting the size and center position of each salient object in the image with an image signature operator; obtaining the optimal filtering scale for each salient object from a specific relation between the optimal filtering scale of the amplitude spectrum and the salient object size, and applying Gaussian filtering at these different scales to the image's amplitude spectrum; determining the weight of the optimal saliency map for each salient object from a center-biased Gaussian distribution and the salient object locations, and performing adaptive Gaussian-weighted fusion of the resulting saliency maps to compute a fused saliency map; applying Gaussian filtering to the fused saliency map; and normalizing the saliency values to obtain the final saliency map. The method suppresses the background quickly and effectively, highlights salient objects uniformly, and preserves more of the image's salient information.

Owner:OCEAN UNIV OF CHINA
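The weighting-and-fusion step can be illustrated with a small sketch under these assumptions:

- the per-object saliency maps have already been produced by the scale-specific amplitude-spectrum filtering;
- the center bias is an isotropic Gaussian with a hypothetical width `sigma`;
- the final Gaussian smoothing is omitted.

The exact weighting formula in the patent may differ.

```python
import math

Grid = list[list[float]]


def center_bias_weight(cx: float, cy: float, width: int, height: int, sigma: float) -> float:
    """Isotropic Gaussian centred on the image centre, evaluated at an object's centre (cx, cy)."""
    dx, dy = cx - width / 2, cy - height / 2
    return math.exp(-(dx * dx + dy * dy) / (2 * sigma * sigma))


def fuse_saliency_maps(maps: list[Grid], centers: list[tuple[float, float]], sigma: float):
    """Weight each object's map by its centre bias, sum, then min-max normalise to [0, 1].

    Returns (weights, fused_map); weights are normalised to sum to 1.
    """
    height, width = len(maps[0]), len(maps[0][0])
    raw = [center_bias_weight(cx, cy, width, height, sigma) for cx, cy in centers]
    total = sum(raw)
    weights = [r / total for r in raw]
    fused = [[sum(w * m[y][x] for w, m in zip(weights, maps)) for x in range(width)]
             for y in range(height)]
    lo = min(min(row) for row in fused)
    hi = max(max(row) for row in fused)
    span = hi - lo or 1.0  # avoid division by zero on a constant map
    return weights, [[(v - lo) / span for v in row] for row in fused]
```

Objects near the image centre dominate the fused map, and peripheral objects still contribute with a smaller weight.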

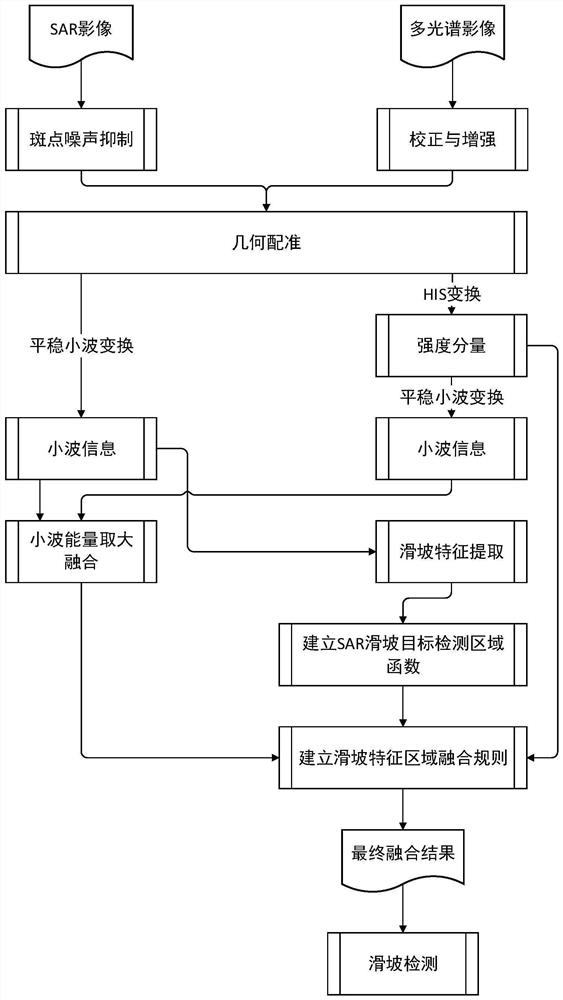

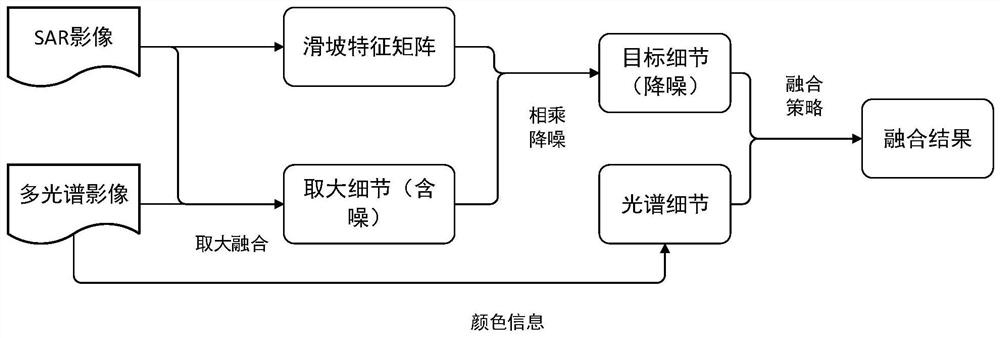

SAR and optical image fusion method and system for landslide detection

Pending · CN112307901A · Accurate judgment · Accurate analysis · Scene recognition · Noise removal · Landslide detection

The invention discloses an SAR and optical image fusion method for landslide detection. The method comprises the following steps:

- step 1, preprocessing the SAR image and the optical image;
- step 2, applying an HIS transform to the optical image to obtain the three components I, H and S;
- step 3, fusing the SAR image with the I component of the optical image by stationary wavelet transform, using an energy-maximization rule for the high-frequency components;
- step 4, performing saliency detection on the low-frequency and high-frequency components of the SAR image and on its grayscale information, establishing a guidance function for the SAR salient-target detection area, and partitioning the SAR image;
- step 5, establishing a salient-region fusion rule and fusing the images according to a region-based fusion strategy; and
- step 6, identifying and extracting landslide disaster information from the fused image.

The method adapts well to SAR and optical image fusion for landslide detection. It includes measures for structure preservation, noise removal and spectrum preservation, and achieves excellent results.

Owner:ELECTRIC POWER RES INST OF STATE GRID ZHEJIANG ELECTRIC POWER COMPANY +3
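Step 3 fuses high-frequency wavelet coefficients by energy maximization. A common form of that rule picks, at each position, the coefficient whose neighborhood energy is larger. The sketch below makes these assumptions:

- the stationary wavelet subbands have already been computed elsewhere;
- the neighborhood is a square window of hypothetical `radius`.

The patent's actual window and energy definition are not given.

```python
Grid = list[list[float]]


def local_energy(coeffs: Grid, y: int, x: int, radius: int = 1) -> float:
    """Sum of squared coefficients in a (2*radius+1)^2 window, clipped at the borders."""
    h, w = len(coeffs), len(coeffs[0])
    return sum(coeffs[j][i] ** 2
               for j in range(max(0, y - radius), min(h, y + radius + 1))
               for i in range(max(0, x - radius), min(w, x + radius + 1)))


def fuse_high_frequency(sar: Grid, opt: Grid, radius: int = 1) -> Grid:
    """At each position keep the coefficient from the subband with the larger local energy.

    `sar` and `opt` are same-sized high-frequency subbands (assumed already computed).
    """
    h, w = len(sar), len(sar[0])
    return [[sar[y][x] if local_energy(sar, y, x, radius) >= local_energy(opt, y, x, radius)
             else opt[y][x]
             for x in range(w)]
            for y in range(h)]
```

With `radius=0` the rule reduces to choosing the coefficient with the larger magnitude. A larger window makes the choice more robust to isolated speckle in the SAR band.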

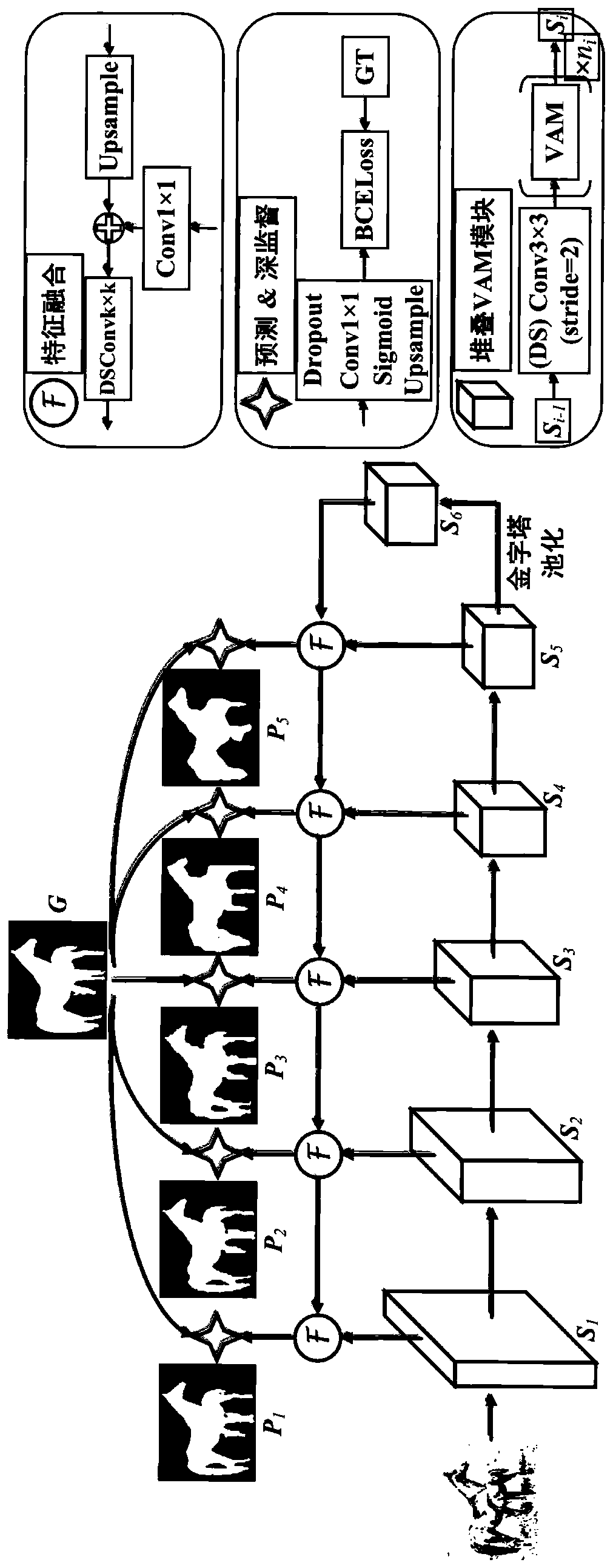

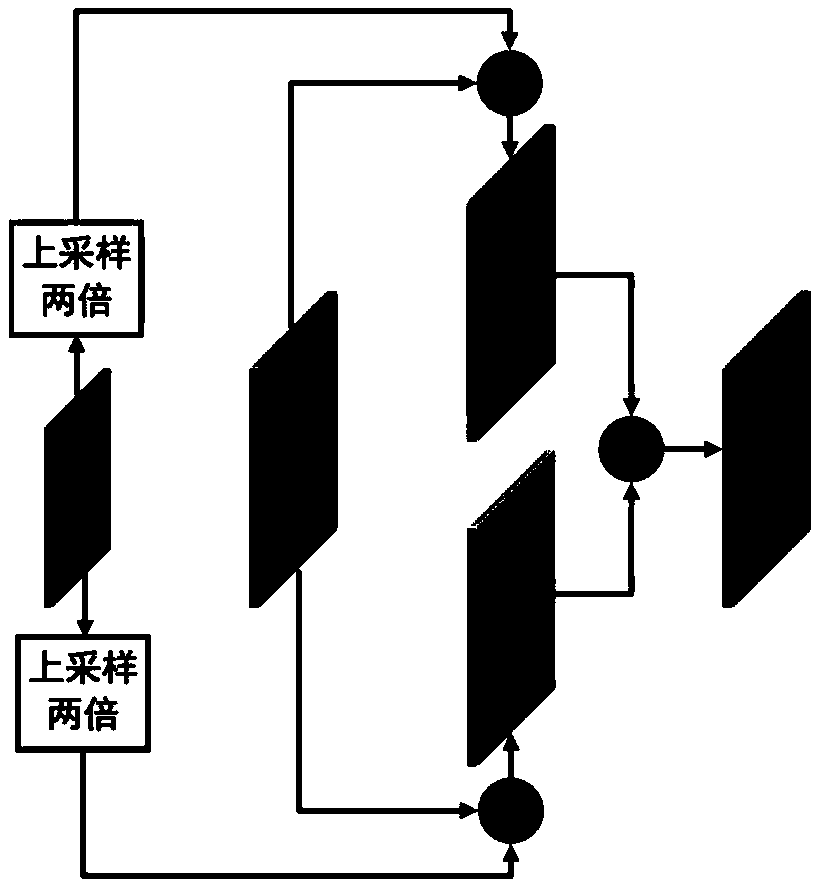

Salient object detection method based on cascade improved network

The invention discloses an RGB-D salient object detection method based on a cascade improved network and belongs to the technical field of image processing. Most existing RGB-D models directly aggregate features from different levels of a CNN, which easily introduces the noise and interference contained in low-level features. The invention proposes a cascade improvement structure: a saliency map generated from the high-level features is used as a mask to refine the low-level features, and the final saliency map is then generated by aggregating the refined low-level features. In addition, to eliminate interference from the depth map, the invention provides a depth enhancement module that preprocesses the depth features before they are mixed with the RGB features. Experiments on seven data sets with four evaluation metrics show that the method outperforms all current state-of-the-art RGB-D salient object detection methods.

Owner:NANKAI UNIV
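The cascade idea, a coarse high-level saliency map gating finer low-level features, can be sketched without a deep learning framework. The following choices are all assumptions made for illustration:

- element-wise multiplication is the masking operation;
- the mask is upsampled by nearest neighbor;
- channels are averaged as the aggregation step.

The depth enhancement module is not shown.

```python
Grid = list[list[float]]


def upsample_nearest(mask: Grid, factor: int) -> Grid:
    """Nearest-neighbour upsampling so a coarse saliency map matches a finer feature map."""
    return [[v for v in row for _ in range(factor)] for row in mask for _ in range(factor)]


def refine_features(low_feats: list[Grid], high_map: Grid, factor: int) -> list[Grid]:
    """Multiply every low-level channel element-wise by the upsampled high-level saliency map."""
    mask = upsample_nearest(high_map, factor)
    return [[[f * m for f, m in zip(frow, mrow)] for frow, mrow in zip(channel, mask)]
            for channel in low_feats]


def aggregate(refined: list[Grid]) -> Grid:
    """Toy aggregation (assumption): average the refined channels into one map."""
    n = len(refined)
    h, w = len(refined[0]), len(refined[0][0])
    return [[sum(ch[y][x] for ch in refined) / n for x in range(w)] for y in range(h)]
```

Low-level activations outside the region the high-level map deems salient are zeroed before aggregation. This is how the masking keeps low-level noise out of the final map.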

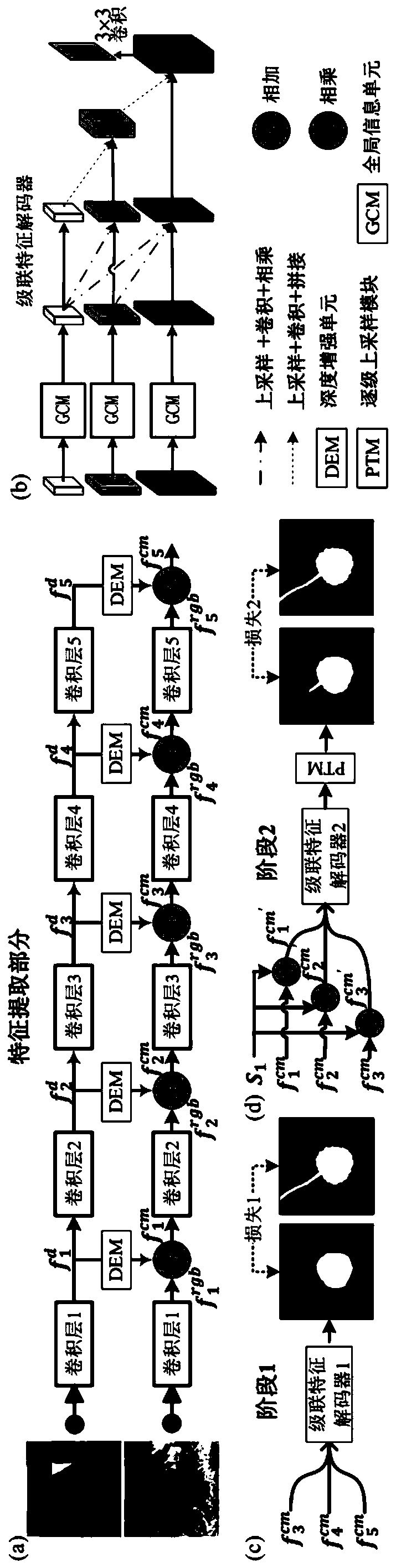

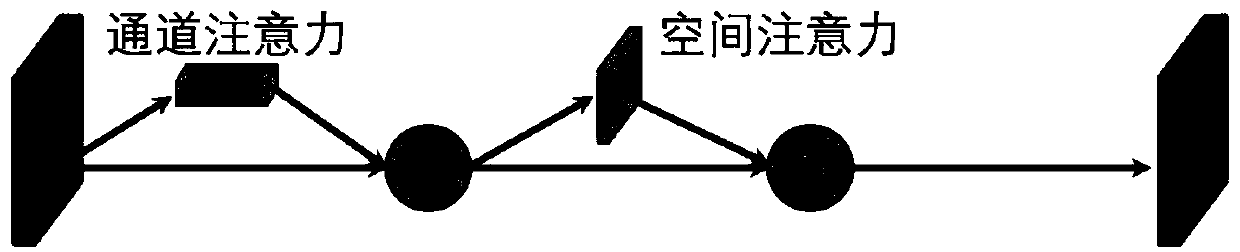

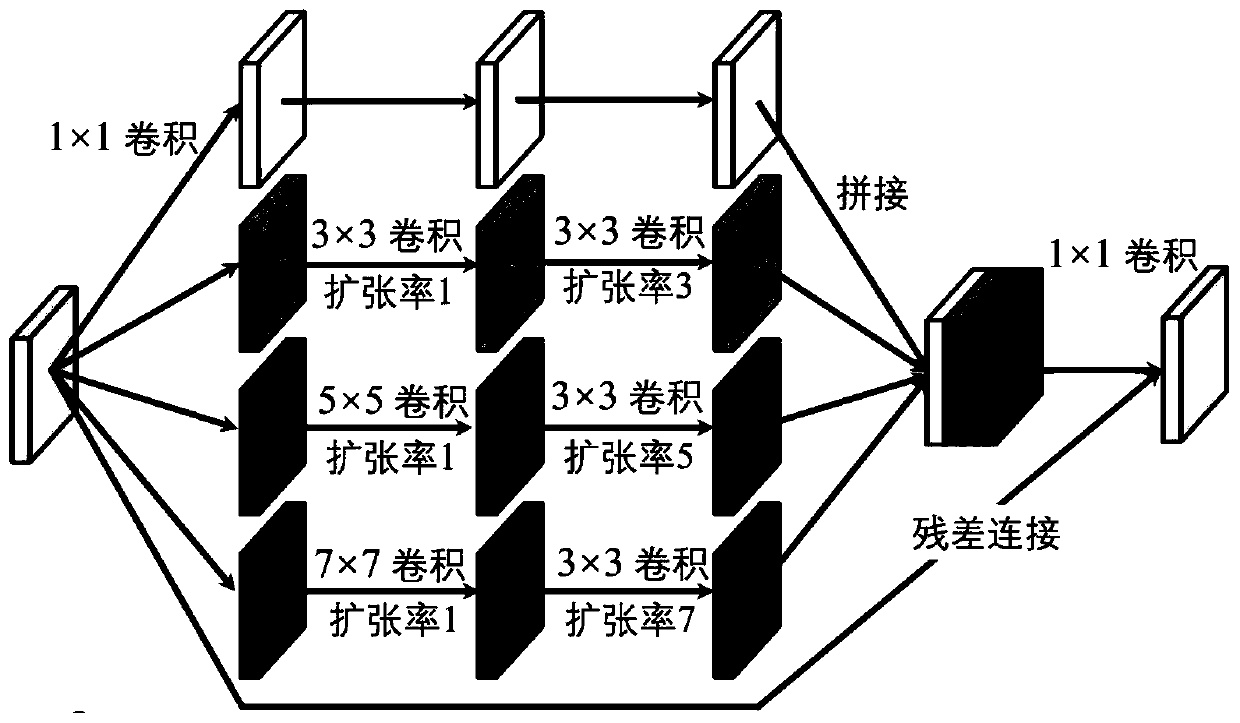

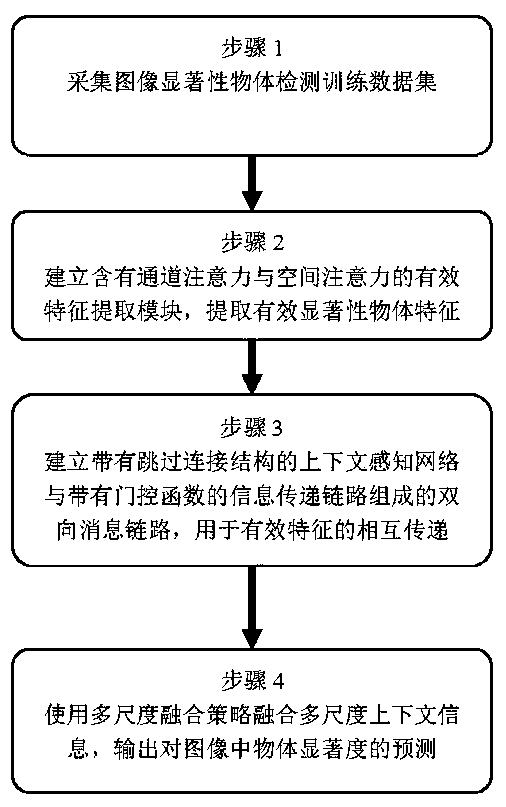

Salient object detection method based on bidirectional message link convolutional network

Inactive · CN110490189A · Reduce the impact of forecasts · Control impact · Character and pattern recognition · Neural architectures · Saliency map · Feature extraction

The invention provides a salient object detection method based on a bidirectional message link convolutional network. First, an attention mechanism guides the feature extraction module to extract effective entity features, and contextual information across multiple levels is selected and integrated progressively. Next, high-level semantic information is fused with shallow contour information through a bidirectional message link consisting of a network with skip connections and a gated message-passing link. Finally, a multi-scale fusion strategy encodes the effective multi-layer convolutional features to generate the final saliency map. Qualitative and quantitative experiments on six data sets show that the method achieves better performance under different evaluation metrics.

Owner:SHANGHAI MARITIME UNIVERSITY
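A gated message link can be pictured as a per-position gate that decides how much deep semantic information versus shallow contour information passes through. The sketch below makes these assumptions:

- the gate is a scalar sigmoid with hypothetical parameters `w_high`, `w_low` and `bias`;
- all feature levels share the same resolution.

The patent's gate is learned and its exact form is not given.

```python
import math

Grid = list[list[float]]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def gated_fuse(high: Grid, low: Grid, w_high: float, w_low: float, bias: float) -> Grid:
    """Per-position gate g in (0, 1): output = g * high + (1 - g) * low."""
    out = []
    for hrow, lrow in zip(high, low):
        row = []
        for hv, lv in zip(hrow, lrow):
            g = sigmoid(w_high * hv + w_low * lv + bias)
            row.append(g * hv + (1.0 - g) * lv)
        out.append(row)
    return out


def bidirectional(levels: list[Grid], params: tuple[float, float, float]) -> list[Grid]:
    """Top-down then bottom-up gated passes over levels ordered shallow -> deep."""
    top_down = [levels[-1]]
    for feat in reversed(levels[:-1]):
        # deep semantics flow toward shallower levels
        top_down.append(gated_fuse(top_down[-1], feat, *params))
    top_down.reverse()  # restore shallow -> deep order
    bottom_up = [top_down[0]]
    for feat in top_down[1:]:
        # shallow contour detail flows back toward deeper levels
        bottom_up.append(gated_fuse(feat, bottom_up[-1], *params))
    return bottom_up
```

With all parameters at zero the gate is 0.5 everywhere, so each fusion is a plain average. A trained gate would instead let the deep or shallow signal dominate where it is more reliable.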

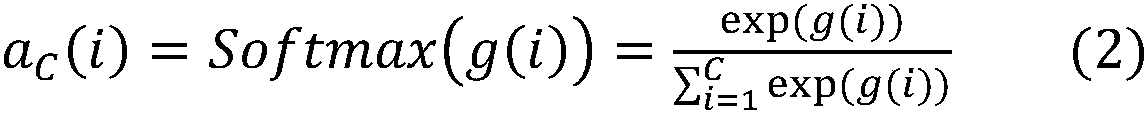

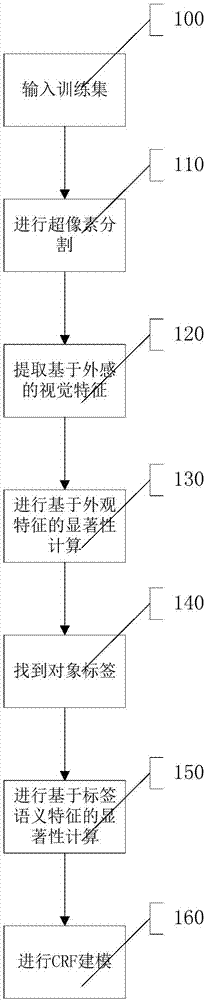

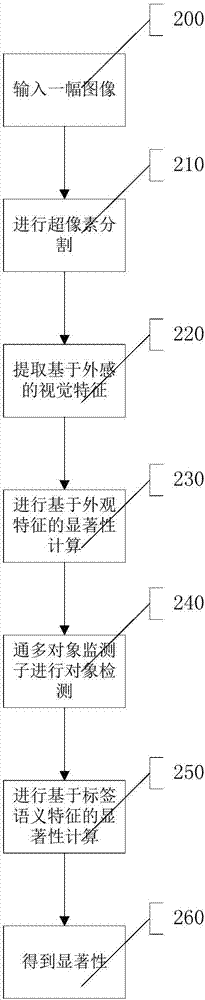

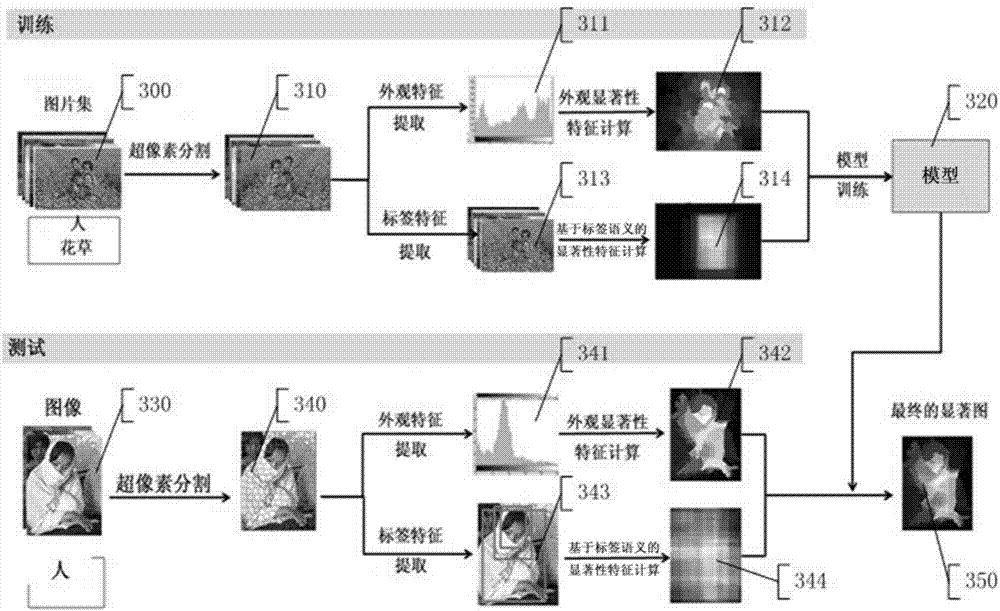

Salient object extraction method based on label semantic meaning

The invention provides a salient object extraction method based on label semantics, which comprises a training step, a testing step, and a step of obtaining the final saliency map. The training step comprises the sub-steps of inputting a training set and performing superpixel segmentation on an image I. First, an object label is selected from the image's labels, and detection is performed with the object detector corresponding to that label to obtain salient features based on the label's semantics. The label-semantic features and the appearance-based salient features are then integrated to detect the salient object. Because label semantic information is high-level semantic information, it can improve on traditional salient object detection methods.

Owner:BEIJING UNION UNIVERSITY
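One way to integrate label semantics with appearance saliency at the superpixel level is sketched below. It assumes:

- the detector for the selected label returns scored bounding boxes;
- a superpixel inherits the best score of any such box containing its center;
- the two cues are blended linearly with a hypothetical weight `alpha`.

None of these choices is specified in the abstract, and the `Detection` type is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical detector output."""
    label: str
    score: float
    box: tuple[int, int, int, int]  # (x0, y0, x1, y1); x1 and y1 are exclusive


def semantic_saliency(centers: list[tuple[int, int]], detections: list[Detection],
                      target_label: str) -> list[float]:
    """Per-superpixel score: best confidence among target-label boxes that contain its centre."""
    scores = []
    for cx, cy in centers:
        best = 0.0
        for d in detections:
            x0, y0, x1, y1 = d.box
            if d.label == target_label and x0 <= cx < x1 and y0 <= cy < y1:
                best = max(best, d.score)
        scores.append(best)
    return scores


def combine(appearance: list[float], semantic: list[float], alpha: float = 0.5) -> list[float]:
    """Linear blend of appearance-based and label-semantic saliency per superpixel."""
    return [alpha * a + (1 - alpha) * s for a, s in zip(appearance, semantic)]
```

Superpixels that the selected label's detector covers gain saliency even when their appearance contrast is weak. That is the benefit the abstract attributes to high-level label semantics.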

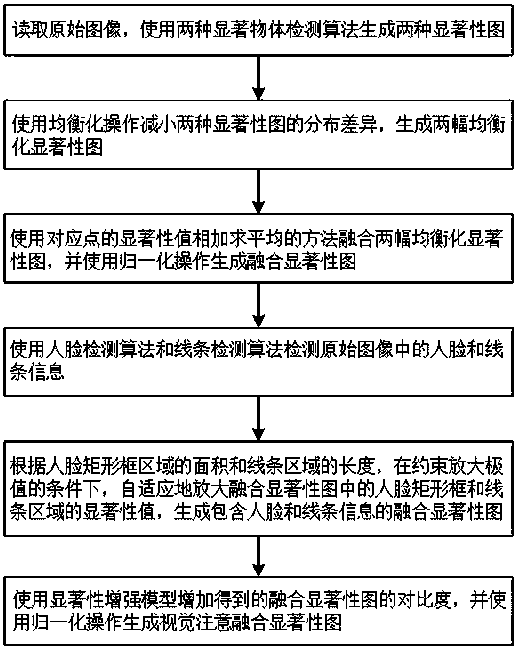

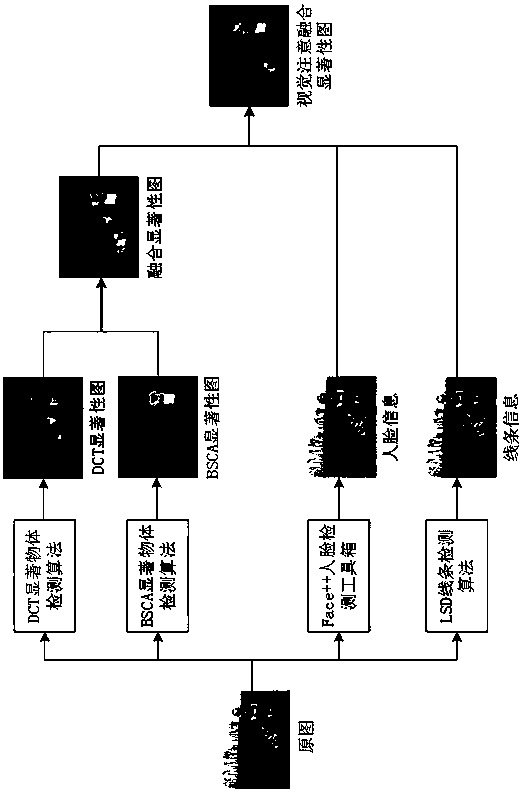

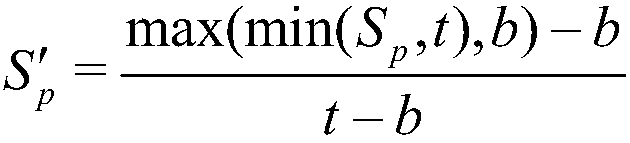

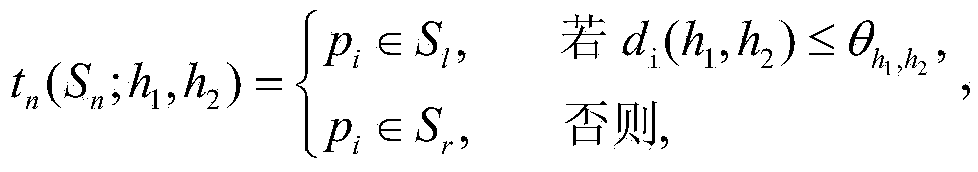

Visual attention fusion method for redirected image quality evaluation

Active · CN108549872A · Increase contrast · Reduce limitations · Character and pattern recognition · Pattern recognition · Objective quality

The invention relates to a visual attention fusion method for redirected image quality evaluation. The method comprises:

- step one, reading an original image and generating two saliency maps with two different salient object detection algorithms;
- step two, equalizing the two saliency maps to reduce the difference between their distributions, producing two equalized saliency maps;
- step three, fusing the equalized saliency maps by averaging the saliency values of corresponding points, then normalizing to generate a fused saliency map;
- step four, detecting face and line information in the original image;
- step five, adaptively magnifying the saliency values of the face bounding rectangle and the line regions in the fused saliency map, subject to a limit on the maximum magnification, to generate a fused saliency map containing face and line information; and
- step six, enhancing the contrast of the fused saliency map with a saliency enhancement model and then normalizing it to generate the visual attention fusion saliency map.

The method thereby improves the consistency between objective quality assessment results and subjective perception.

Owner:FUZHOU UNIV
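Steps two, three and five can be sketched as follows. The abstract does not name the equalization method, so this sketch assumes:

- histogram equalization that maps each integer level in 0..255 to its cumulative frequency;
- min-max normalization;
- a fixed magnification factor capped at a hypothetical maximum.

Face and line detection and the contrast enhancement model are not shown.

```python
Grid = list[list[float]]


def equalize(sal: list[list[int]], levels: int = 256) -> Grid:
    """Histogram equalisation: map each grey level to its cumulative frequency in (0, 1]."""
    flat = [v for row in sal for v in row]
    hist = [0] * levels
    for v in flat:
        hist[v] += 1
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running / len(flat))
    return [[cdf[v] for v in row] for row in sal]


def normalize(grid: Grid) -> Grid:
    """Min-max normalisation to [0, 1]; a constant map becomes all zeros."""
    lo = min(min(r) for r in grid)
    hi = max(max(r) for r in grid)
    span = hi - lo or 1.0
    return [[(v - lo) / span for v in r] for r in grid]


def fuse(map_a: list[list[int]], map_b: list[list[int]]) -> Grid:
    """Equalise both maps, average corresponding points, then normalise."""
    eq_a, eq_b = equalize(map_a), equalize(map_b)
    avg = [[(a + b) / 2 for a, b in zip(ra, rb)] for ra, rb in zip(eq_a, eq_b)]
    return normalize(avg)


def magnify_region(grid: Grid, box: tuple[int, int, int, int], factor: float, cap: float) -> Grid:
    """Scale saliency inside box (x0, y0, x1, y1; x1/y1 exclusive) by factor, never above cap."""
    x0, y0, x1, y1 = box
    return [[min(cap, v * factor) if x0 <= x < x1 and y0 <= y < y1 else v
             for x, v in enumerate(row)]
            for y, row in enumerate(grid)]
```

Equalizing before averaging keeps one algorithm's map from dominating merely because its values are spread over a wider range.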

Image salient object detection method and device

Active · CN103793710A · Realize complete and accurate detection · Image analysis · Character and pattern recognition · Pattern recognition · Salient objects

The invention discloses an image salient object detection method and device in the field of computer vision. The method comprises the following steps:

- a random forest is built from the image;
- based on the random forest, global image-patch rarity is used to capture the approximate shape of the salient object;
- the image is divided into a part inside the shape and a part outside it;
- by measuring the contrast between inner and outer image patches, inner patches similar to the outer part are suppressed and outer patches similar to the inner part are highlighted;
- finally, an image segmentation method is applied to refine local regions of the result.

The method can detect objects of any size in the image, detects them completely and accurately, and can also detect multiple salient objects in a single image.

Owner:SHENZHEN INST OF ADVANCED TECH CHINESE ACAD OF SCI
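The inner/outer contrast step can be sketched under these assumptions:

- the random-forest rarity stage has already produced a boolean inside/outside label for each patch;
- patch similarity is a Gaussian of feature distance with a hypothetical width `sigma`;
- outer patches are promoted in proportion to their similarity to the inner patches that survived suppression.

The scoring formulas are illustrative, not the patent's.

```python
import math


def similarity(a: tuple[float, ...], b: tuple[float, ...], sigma: float) -> float:
    """Gaussian similarity between two patch feature vectors (1.0 when identical)."""
    d2 = sum((p - q) ** 2 for p, q in zip(a, b))
    return math.exp(-d2 / (sigma * sigma))


def inner_outer_saliency(features: list[tuple[float, ...]], inside: list[bool],
                         sigma: float = 1.0) -> list[float]:
    """Suppress inner patches that resemble the outside, then promote outer patches
    in proportion to their resemblance to the remaining (unsuppressed) inner patches."""
    inner_idx = [k for k, ins in enumerate(inside) if ins]
    outer_idx = [k for k, ins in enumerate(inside) if not ins]
    scores = [0.0] * len(features)
    for k in inner_idx:
        closest_outer = max((similarity(features[k], features[o], sigma) for o in outer_idx),
                            default=0.0)
        scores[k] = 1.0 - closest_outer
    for k in outer_idx:
        scores[k] = max((scores[i] * similarity(features[k], features[i], sigma)
                         for i in inner_idx), default=0.0)
    return scores
```

Weighting outer patches by the already-suppressed inner scores keeps background patches from being promoted merely because they resemble background-like patches that fell inside the rough shape.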

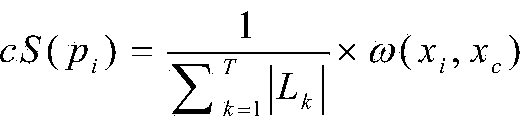

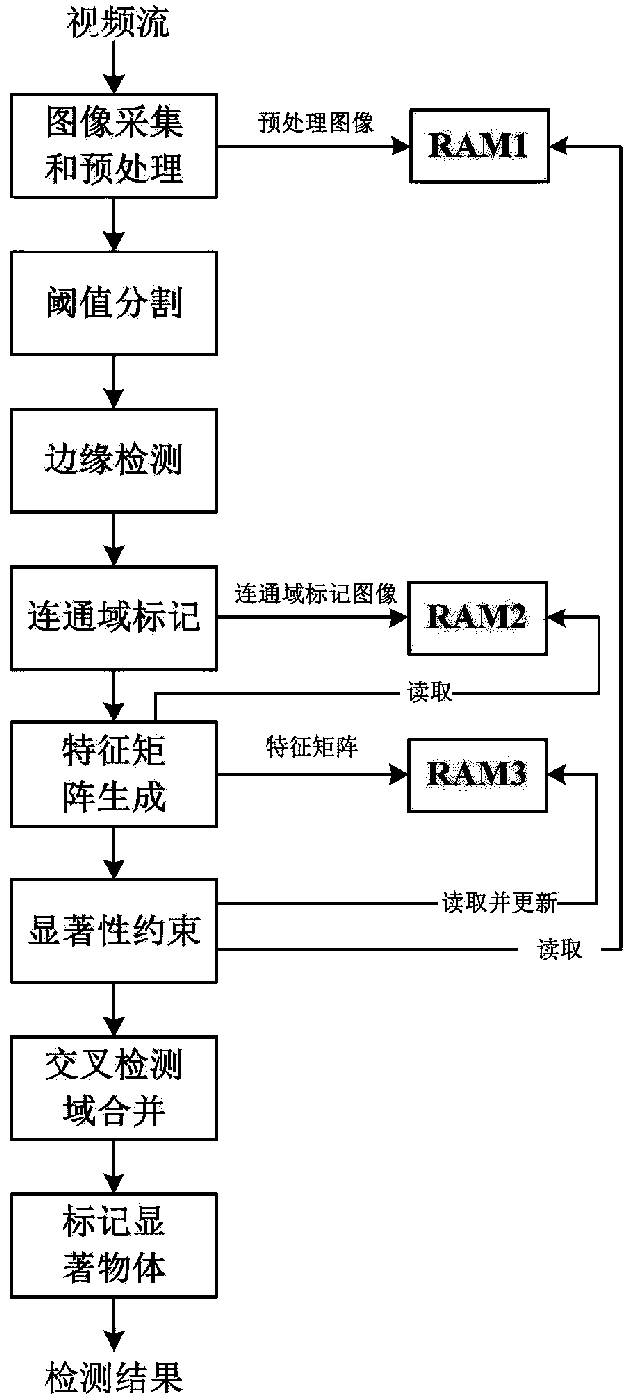

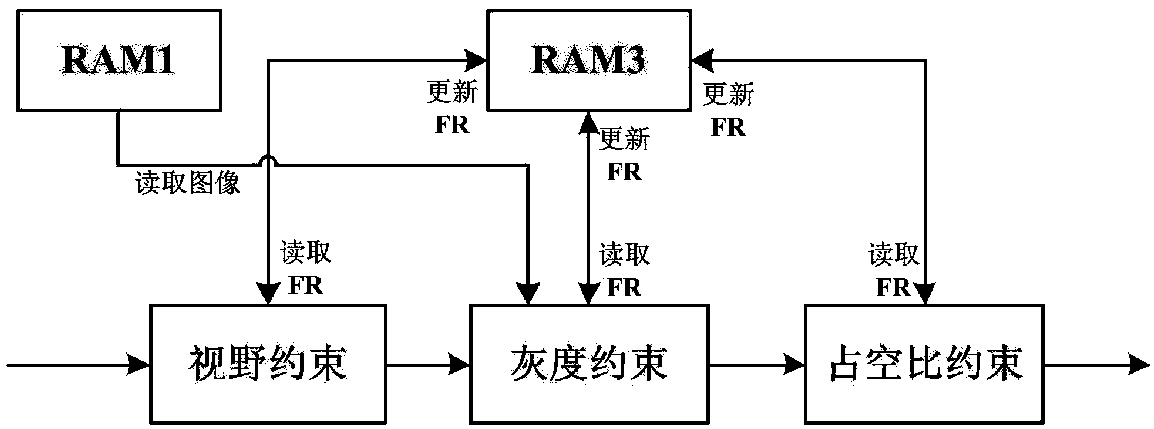

FPGA-based infrared salient object detection method

Active · CN107194946A · Improve portability · Reduce volume · Image enhancement · Image analysis · Pattern recognition · Salient objects

The invention provides an FPGA-based infrared salient object detection method. The method comprises the following steps:

1. acquiring an image A1;
2. performing threshold segmentation on the acquired image to obtain an image A2;
3. performing edge detection on A2 to obtain an image A3;
4. labeling the connected domains in A3 to obtain an image A4;
5. for each connected domain in A4, obtaining a feature matrix whose features are the borders of the connected domain;
6. judging, through a set of constraints, whether the connected domain corresponding to each feature matrix is a salient object, where the constraints comprise a field-of-view constraint, a grayscale constraint and a duty-cycle constraint;
7. cross-merging the connected domains that pass the constraints to obtain a new connected-domain feature matrix; and
8. outputting the image within the borders given by the cross-merged connected-domain feature matrix.

The method can detect salient objects in complex scenes, improving detection precision while ensuring real-time performance and stability.

Owner:KUNMING INST OF PHYSICS
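A software sketch of steps 2 and 4 through 8 is shown below. The FPGA implementation itself is not reproduced, and edge detection (step 3) is skipped. The three constraints are interpreted as follows, as an assumption:

- field of view: a maximum box side;
- grayscale: a minimum mean intensity;
- duty cycle: a minimum ratio of component pixels to box area.

"Cross merging" is taken to mean merging boxes that intersect. All thresholds are hypothetical.

```python
from collections import deque

Box = tuple[int, int, int, int]  # (x0, y0, x1, y1), inclusive


def components(binary: list[list[bool]]) -> list[list[tuple[int, int]]]:
    """8-connected components of foreground pixels, each a list of (y, x)."""
    h, w = len(binary), len(binary[0])
    seen = [[False] * w for _ in range(h)]
    comps = []
    for y in range(h):
        for x in range(w):
            if binary[y][x] and not seen[y][x]:
                seen[y][x] = True
                queue, pix = deque([(y, x)]), []
                while queue:
                    cy, cx = queue.popleft()
                    pix.append((cy, cx))
                    for dy in (-1, 0, 1):
                        for dx in (-1, 0, 1):
                            ny, nx = cy + dy, cx + dx
                            if 0 <= ny < h and 0 <= nx < w and binary[ny][nx] and not seen[ny][nx]:
                                seen[ny][nx] = True
                                queue.append((ny, nx))
                comps.append(pix)
    return comps


def bbox(pix: list[tuple[int, int]]) -> Box:
    ys = [p[0] for p in pix]
    xs = [p[1] for p in pix]
    return (min(xs), min(ys), max(xs), max(ys))


def passes(img, pix, box: Box, max_side: int, min_gray: float, min_fill: float) -> bool:
    """Assumed constraints: box size (field of view), mean grey level, fill ratio (duty cycle)."""
    x0, y0, x1, y1 = box
    w, h = x1 - x0 + 1, y1 - y0 + 1
    mean_gray = sum(img[y][x] for y, x in pix) / len(pix)
    fill = len(pix) / (w * h)
    return max(w, h) <= max_side and mean_gray >= min_gray and fill >= min_fill


def overlaps(a: Box, b: Box) -> bool:
    return a[0] <= b[2] and b[0] <= a[2] and a[1] <= b[3] and b[1] <= a[3]


def merge_boxes(boxes: list[Box]) -> list[Box]:
    """Repeatedly replace any two intersecting boxes by their union until none intersect."""
    boxes = list(boxes)
    merged = True
    while merged:
        merged = False
        for i in range(len(boxes)):
            for j in range(i + 1, len(boxes)):
                if overlaps(boxes[i], boxes[j]):
                    a, b = boxes[i], boxes[j]
                    boxes[i] = (min(a[0], b[0]), min(a[1], b[1]), max(a[2], b[2]), max(a[3], b[3]))
                    del boxes[j]
                    merged = True
                    break
            if merged:
                break
    return boxes


def detect(img, thresh: float, max_side: int = 50, min_gray: float = 0, min_fill: float = 0.3) -> list[Box]:
    binary = [[v > thresh for v in row] for row in img]  # step 2: threshold segmentation
    boxes = []
    for pix in components(binary):  # steps 4-5: connected domains and their borders
        box = bbox(pix)
        if passes(img, pix, box, max_side, min_gray, min_fill):  # step 6: constraints
            boxes.append(box)
    return merge_boxes(boxes)  # step 7: merge intersecting boxes
```

Every stage uses only comparisons, counters and a queue, which is roughly what makes this kind of pipeline a reasonable fit for fixed-function FPGA logic.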

© 2025 PatSnap. All rights reserved.