Salient object extraction method based on label semantics

A salient object extraction technology, applied in the fields of instruments, character and pattern recognition, computer components, etc., that can solve the problems of not considering label context and dependencies and of being difficult to generalize.

Examples

Embodiment 1

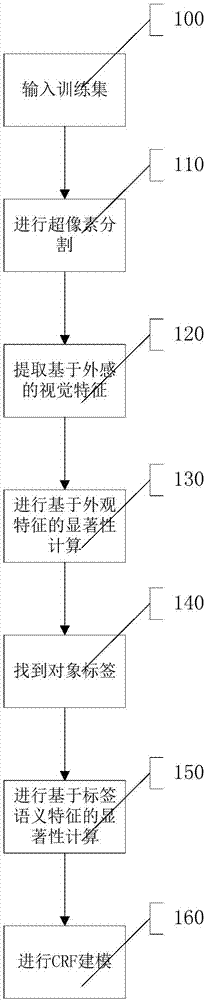

[0068] As shown in Figure 1, the training process is as follows:

[0069] Execute step 100: input the training set and perform the following operations on each image in it.

[0070] Execute step 110: perform superpixel segmentation on image I.

[0071] Image I is segmented into $M$ superpixels, and each superpixel is denoted $R_i$, $1 \le i \le M$.
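This excerpt does not name a segmentation algorithm. The sketch below is a minimal, hypothetical stand-in; real systems would typically use an algorithm such as SLIC. It tiles the image into square blocks and returns a label map in which each pixel carries the index of its region $R_i$ (0-based here), together with the count $M$. The function name `grid_superpixels` and the `cell` parameter are illustrative assumptions.

```python
def grid_superpixels(height, width, cell):
    """Assign each pixel (y, x) a region index in [0, M).

    Hypothetical stand-in for a real superpixel algorithm (e.g. SLIC):
    the image is tiled into cell x cell blocks, and each block is one R_i.
    """
    cols = (width + cell - 1) // cell   # blocks per row (ceiling division)
    rows = (height + cell - 1) // cell  # blocks per column
    labels = [[(y // cell) * cols + (x // cell) for x in range(width)]
              for y in range(height)]
    return labels, rows * cols  # label map and number of regions M
```

For example, `grid_superpixels(4, 6, 2)` yields a 4×6 label map with $M = 6$ regions.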

[0072] Execute step 120: extract appearance-based visual features of the image.

[0073] The appearance feature of the $i$-th superpixel is $v_i$; its value on the $k$-th feature channel is denoted $v_i^k$.
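The excerpt does not specify which appearance features make up $v_i$. Assuming, for illustration only, that each channel $k$ is a colour component and that $v_i^k$ is its mean over the pixels of $R_i$, the feature vectors could be computed from a label map as follows. `superpixel_features` is a hypothetical name.

```python
def superpixel_features(image, labels, num_superpixels):
    """Return v[i][k]: the mean of channel k over the pixels of superpixel R_i.

    image[y][x] is a sequence of K channel values; labels[y][x] is the
    superpixel index of that pixel. Mean channel values are an assumed
    choice of appearance feature.
    """
    num_channels = len(image[0][0])
    sums = [[0.0] * num_channels for _ in range(num_superpixels)]
    counts = [0] * num_superpixels
    for row_pixels, row_labels in zip(image, labels):
        for pixel, i in zip(row_pixels, row_labels):
            counts[i] += 1
            for k in range(num_channels):
                sums[i][k] += pixel[k]
    # Empty regions (no pixels) get an all-zero feature vector.
    return [[s / counts[i] if counts[i] else 0.0 for s in sums[i]]
            for i in range(num_superpixels)]
```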

[0074] Execute step 130: compute saliency based on the image appearance features.

[0075] The saliency of the $i$-th superpixel on the $k$-th feature channel is calculated as follows:

[0076]

[0077] Here, $D(v_i^k, v_j^k)$ denotes the difference between superpixels $R_i$ and $R_j$ on the $k$-th feature channel. w...
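The equation in [0076] is not reproduced in this excerpt. A form common in contrast-based saliency, and consistent with the symbols $D(v_i^k, v_j^k)$ and $w_{ij}$, is $S_i^k = \sum_{j \ne i} w_{ij}\, D(v_i^k, v_j^k)$. The sketch below implements that assumed form with $D$ taken as the absolute difference; both choices are assumptions rather than the patent's stated formula, and `channel_saliency` is a hypothetical name.

```python
def channel_saliency(features, weights, diff=lambda a, b: abs(a - b)):
    """Return S[i][k] = sum over j != i of w_ij * D(v_i^k, v_j^k).

    features[i][k] is v_i^k and weights[i][j] is w_ij. The summation form
    and the default D (absolute difference) are assumptions.
    """
    m = len(features)
    num_channels = len(features[0])
    return [[sum(weights[i][j] * diff(features[i][k], features[j][k])
                 for j in range(m) if j != i)
             for k in range(num_channels)]
            for i in range(m)]
```

With one channel, features `[[0.0], [1.0], [3.0]]` and all weights equal to 1, the result is `[[4.0], [3.0], [5.0]]`: the superpixel whose value differs most from the others scores highest.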

Embodiment 2

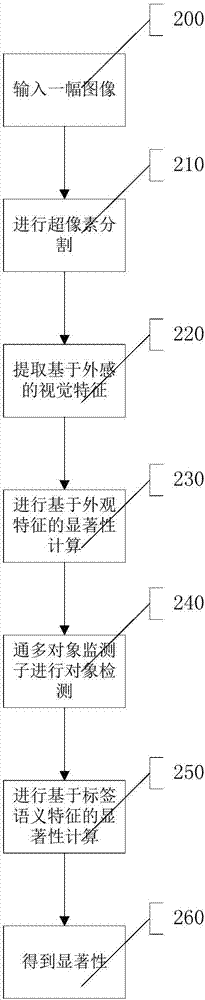

[0098] As shown in Figure 2, the test process is as follows:

[0099] Execute step 200: input an image I.

[0100] Execute step 210: perform superpixel segmentation on image I.

[0101] Image I is segmented into $M$ superpixels, and each superpixel is denoted $R_i$, $1 \le i \le M$.

[0102] Execute step 220: extract the appearance-based features of the image.

[0103] The appearance feature of the $i$-th superpixel is $v_i$; its value on the $k$-th feature channel is denoted $v_i^k$.

[0104] Execute step 230: compute saliency based on the image appearance features.

[0105] The saliency of the $i$-th superpixel on the $k$-th feature channel is calculated as follows:

[0106]

[0107] Here, $D(v_i^k, v_j^k)$ denotes the difference between superpixels $R_i$ and $R_j$ on the $k$-th feature channel, and $w_{ij}$ denotes the spatial distance weight, calculated as

[0108]

[0109] p i s...
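The formula for $w_{ij}$ in [0108] is missing from this excerpt. One common choice, assumed here purely for illustration, is a Gaussian of the distance between superpixel positions $p_i$ and $p_j$, so that nearby superpixels contribute more to each other's contrast: $w_{ij} = \exp\!\left(-\lVert p_i - p_j \rVert^2 / 2\sigma^2\right)$. The bandwidth `sigma`, its default value, and the name `spatial_weights` are hypothetical.

```python
import math


def spatial_weights(centroids, sigma=0.25):
    """Return w[i][j] = exp(-||p_i - p_j||^2 / (2 * sigma^2)).

    centroids[i] is the (x, y) position p_i of superpixel R_i, e.g. its
    centroid normalised to [0, 1]. The Gaussian kernel and sigma are
    assumptions, not taken from the patent.
    """
    two_sigma_sq = 2.0 * sigma * sigma
    return [[math.exp(-((pi[0] - pj[0]) ** 2 + (pi[1] - pj[1]) ** 2) / two_sigma_sq)
             for pj in centroids]
            for pi in centroids]
```

The resulting matrix is symmetric, equals 1 on the diagonal, and decays toward 0 as superpixels move apart; a smaller `sigma` makes the contrast more local.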

Embodiment 3

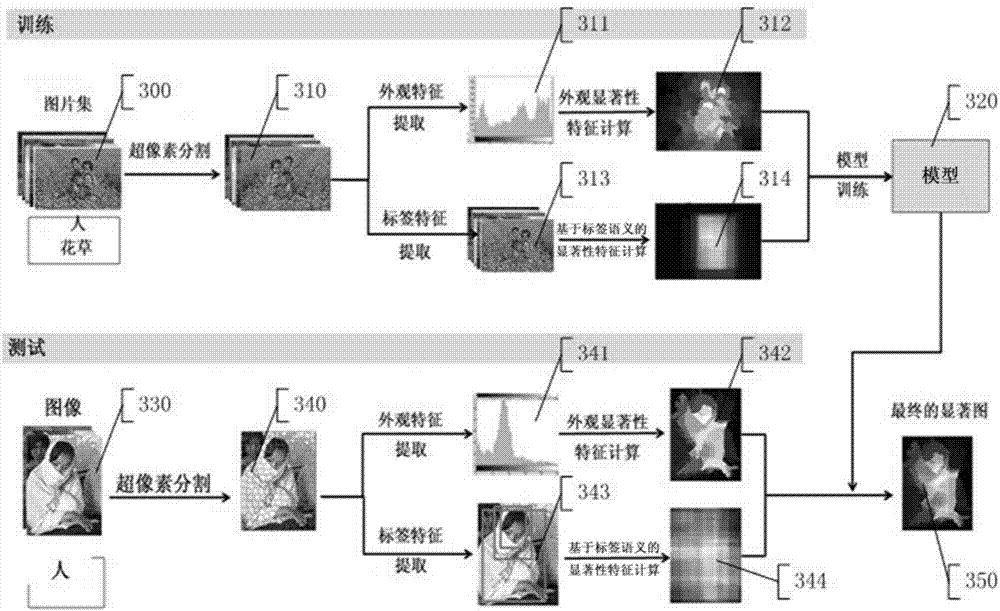

[0116] Figure 3 illustrates how the final saliency map is obtained.

[0117] First, the training process is performed. Image 300 of people and flowers from the image collection undergoes superpixel segmentation to obtain image 310. Appearance features are extracted from image 310 to obtain image 311, and appearance-based saliency is computed from image 311 to obtain image 312. Tag features are also extracted from image 310 to obtain image 313, and tag-semantics-based saliency is computed from image 313 to obtain image 314. Images 312 and 314 are then used together for training to obtain weight vector 320.
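Paragraph [0117] states only that the appearance saliency (image 312) and the tag-semantic saliency (image 314) are trained together to produce weight vector 320. Assuming a linear combination of the two cues fitted to ground-truth saliency by least squares (an illustrative choice, not necessarily the patent's training rule), the two weights follow from the 2×2 normal equations. `fit_weight_vector` is a hypothetical name.

```python
def fit_weight_vector(appearance, tag, target):
    """Least-squares fit of (w_a, w_t) so that w_a*a_i + w_t*t_i ~ target_i.

    appearance[i], tag[i]: saliency of superpixel i from the two cues.
    target[i]: ground-truth saliency, e.g. 1 inside the annotated object
    and 0 outside. Linear least squares is an assumed training rule.
    """
    # Entries of the normal-equation matrix A^T A and the vector A^T y.
    saa = sum(a * a for a in appearance)
    stt = sum(t * t for t in tag)
    sat = sum(a * t for a, t in zip(appearance, tag))
    say = sum(a * y for a, y in zip(appearance, target))
    sty = sum(t * y for t, y in zip(tag, target))
    det = saa * stt - sat * sat
    if det == 0:
        raise ValueError("appearance and tag cues are collinear")
    # Cramer's rule for [[saa, sat], [sat, stt]] @ [w_a, w_t] = [say, sty].
    w_a = (say * stt - sat * sty) / det
    w_t = (saa * sty - sat * say) / det
    return w_a, w_t
```

For instance, with appearance cues `[1, 0, 1]`, tag cues `[0, 1, 1]` and targets `[0.3, 0.7, 1.0]`, the fit recovers weights of approximately `(0.3, 0.7)`. In practice the training data would pool superpixels from every image in the training set.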

[0118] Second, the testing process is performed. Superpixel segmentation is applied to person image 330 to obtain image 340. Appearance features are extracted from image 340 to obtain image 341, and then...
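The test procedure in [0118] is truncated. Assuming it mirrors training, so that appearance saliency and tag-semantic saliency are computed for the test image and combined with the learned weight vector 320, the final per-pixel saliency map could be assembled as in this sketch. The linear fusion, the min-max normalisation to [0, 1], and the name `fuse_saliency` are assumptions.

```python
def fuse_saliency(appearance, tag, weights, labels):
    """Combine per-superpixel cues with a learned weight vector, then paint
    every pixel with the fused saliency of its superpixel.

    appearance[i], tag[i]: cue values for superpixel i; weights: (w_a, w_t);
    labels[y][x]: superpixel index of each pixel. Linear fusion followed by
    min-max normalisation is an assumed design, not the patent's.
    """
    w_a, w_t = weights
    fused = [w_a * a + w_t * t for a, t in zip(appearance, tag)]
    lo, hi = min(fused), max(fused)
    span = hi - lo
    # A constant map has no contrast, so every superpixel maps to 0.
    norm = [(s - lo) / span if span > 0 else 0.0 for s in fused]
    return [[norm[i] for i in row] for row in labels]
```

Painting at superpixel granularity keeps object boundaries aligned with the segmentation produced in the first step of the test process.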
