876 patent results for "Superpixel segmentation"

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

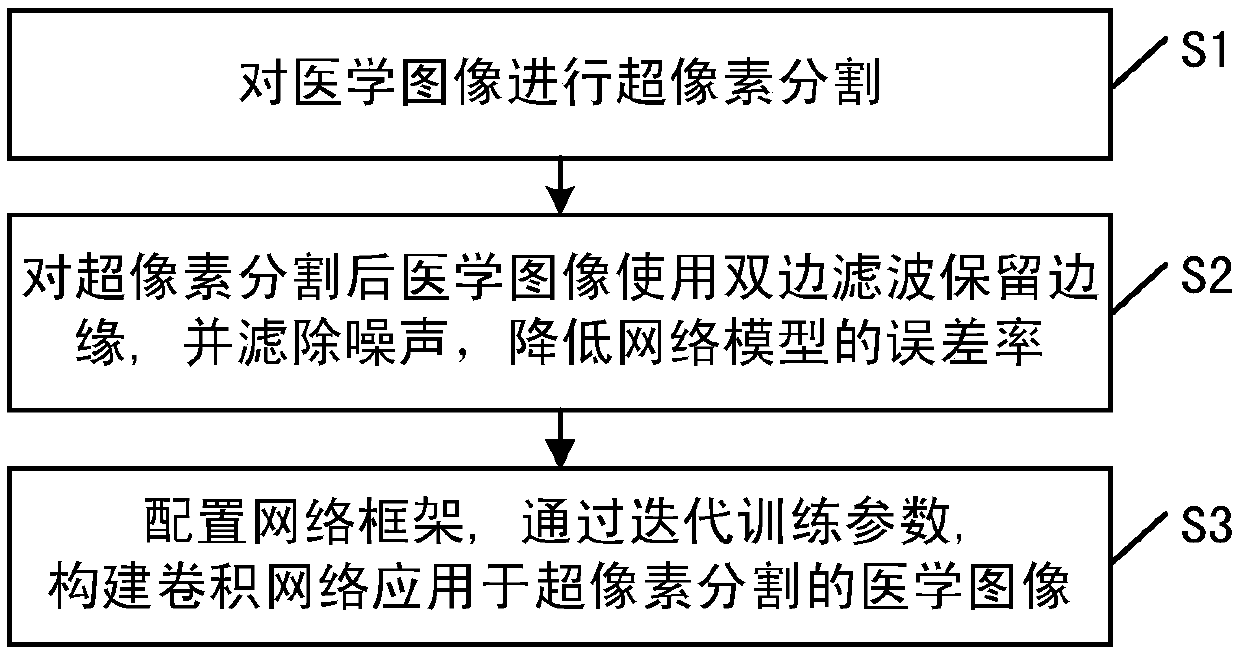

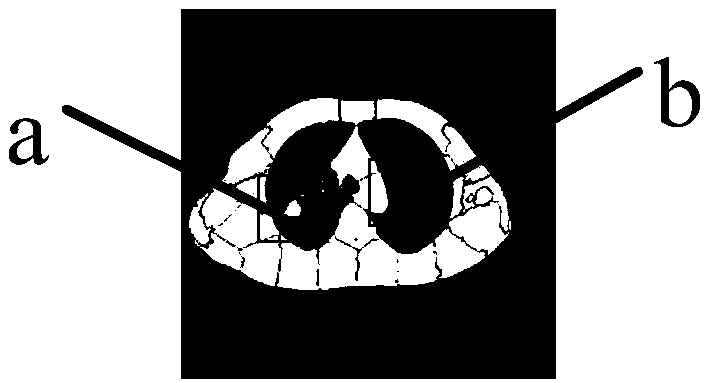

A superpixel method for medical image segmentation

Status: Active | Publication: CN109035252A | Tags: Make up for the defect of inaccurate edge segmentation; Solve the fuzzy classification problem; Image analysis; Anatomical structures; Pattern recognition

The invention provides a superpixel method for medical image segmentation. The method comprises the steps of: segmenting a medical image into superpixels; applying bilateral filtering to the superpixel-segmented image to preserve edges while suppressing noise, which reduces the error rate of the network model; and configuring a network framework and constructing a convolutional network for the superpixel-segmented images by iteratively training its parameters. Building on the simple linear iterative clustering (SLIC) segmentation method, the invention applies the idea of the U-Net network to the post-optimization of superpixels, which compensates for inaccurate edge segmentation within superpixels; adds a normalization (standardization) layer to improve the weight sensitivity of each network layer and the convergence of the network; and brings the segmentation result closer to the ground truth. Because anatomical structures and pathological tissue are clearly delineated in medical images, SLIC produces comprehensive superpixels on them, and the convolutional network further improves the edge accuracy of those superpixels.
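
The abstract names SLIC but none of its parameters. As a hedged illustration, the sketch below shows the standard SLIC distance, which combines the CIELAB color distance d_c and the spatial distance d_s as D = sqrt(d_c² + (d_s / S)² · m²), where S is the grid interval and m is the compactness weight, together with the restricted 2S × 2S search window used in SLIC's assignment step. The function names and the default m = 10 are assumptions for illustration, not details from the patent.

```python
import math

def slic_distance(pixel, center, S, m=10.0):
    """SLIC-style distance between a pixel and a cluster center.

    pixel, center: (l, a, b, x, y) tuples, CIELAB color plus image coordinates.
    S: grid interval, roughly sqrt(N / K) for N pixels and K superpixels.
    m: compactness weight (assumed default) trading color against proximity.
    """
    l1, a1, b1, x1, y1 = pixel
    l2, a2, b2, x2, y2 = center
    d_c = math.sqrt((l1 - l2) ** 2 + (a1 - a2) ** 2 + (b1 - b2) ** 2)
    d_s = math.sqrt((x1 - x2) ** 2 + (y1 - y2) ** 2)
    return math.sqrt(d_c ** 2 + (d_s / S) ** 2 * m ** 2)

def assign_pixel(pixel, centers, S, m=10.0):
    """Index of the nearest center, considering only centers within a 2S x 2S window."""
    best, best_d = None, float("inf")
    x, y = pixel[3], pixel[4]
    for k, c in enumerate(centers):
        if abs(c[3] - x) > S or abs(c[4] - y) > S:
            continue  # outside the local search window, as in SLIC
        d = slic_distance(pixel, c, S, m)
        if d < best_d:
            best, best_d = k, d
    return best
```

Restricting the search to a local window is what keeps SLIC's assignment step linear in the number of pixels rather than proportional to pixels times superpixels.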

Owner:SHANDONG UNIV OF FINANCE & ECONOMICS

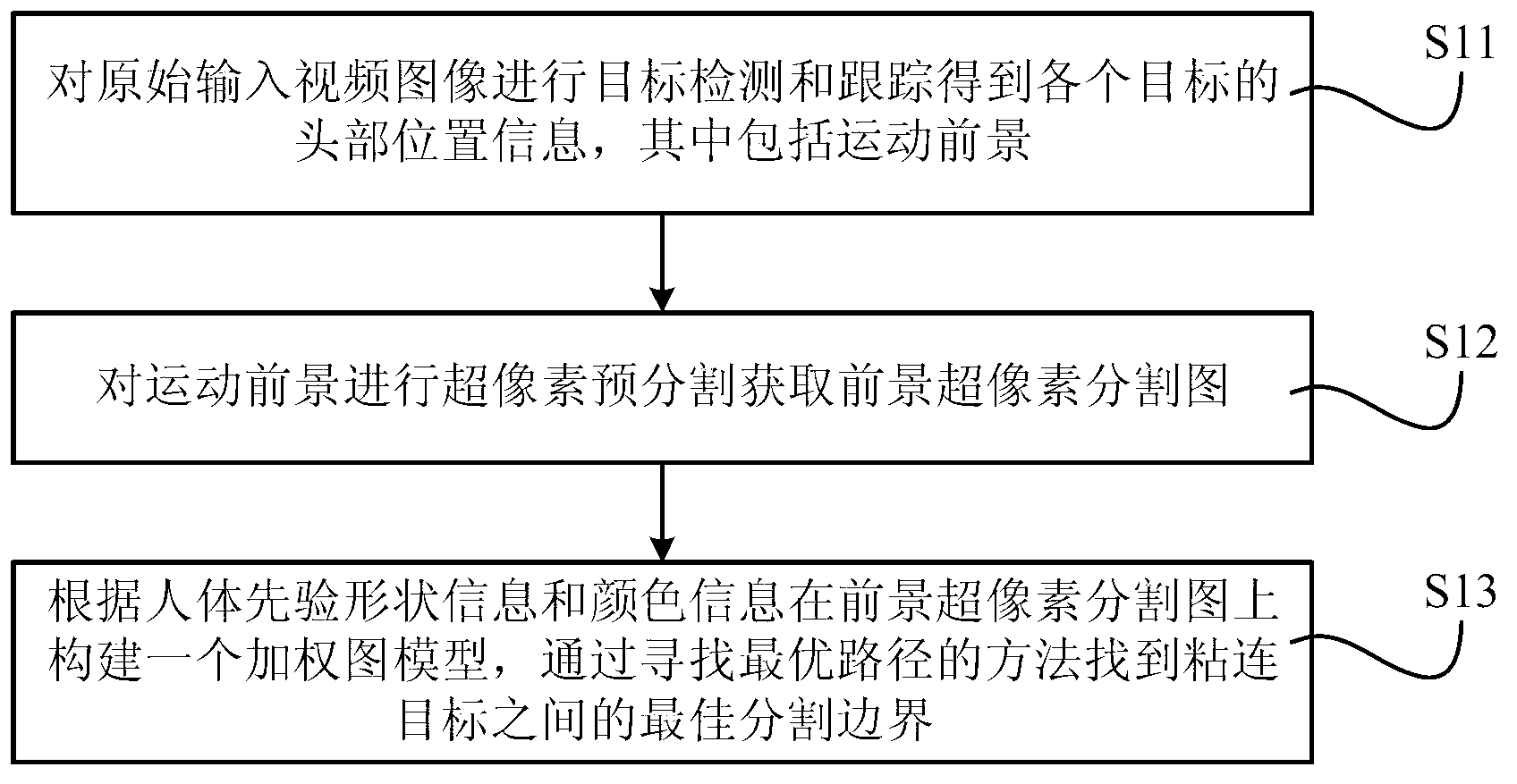

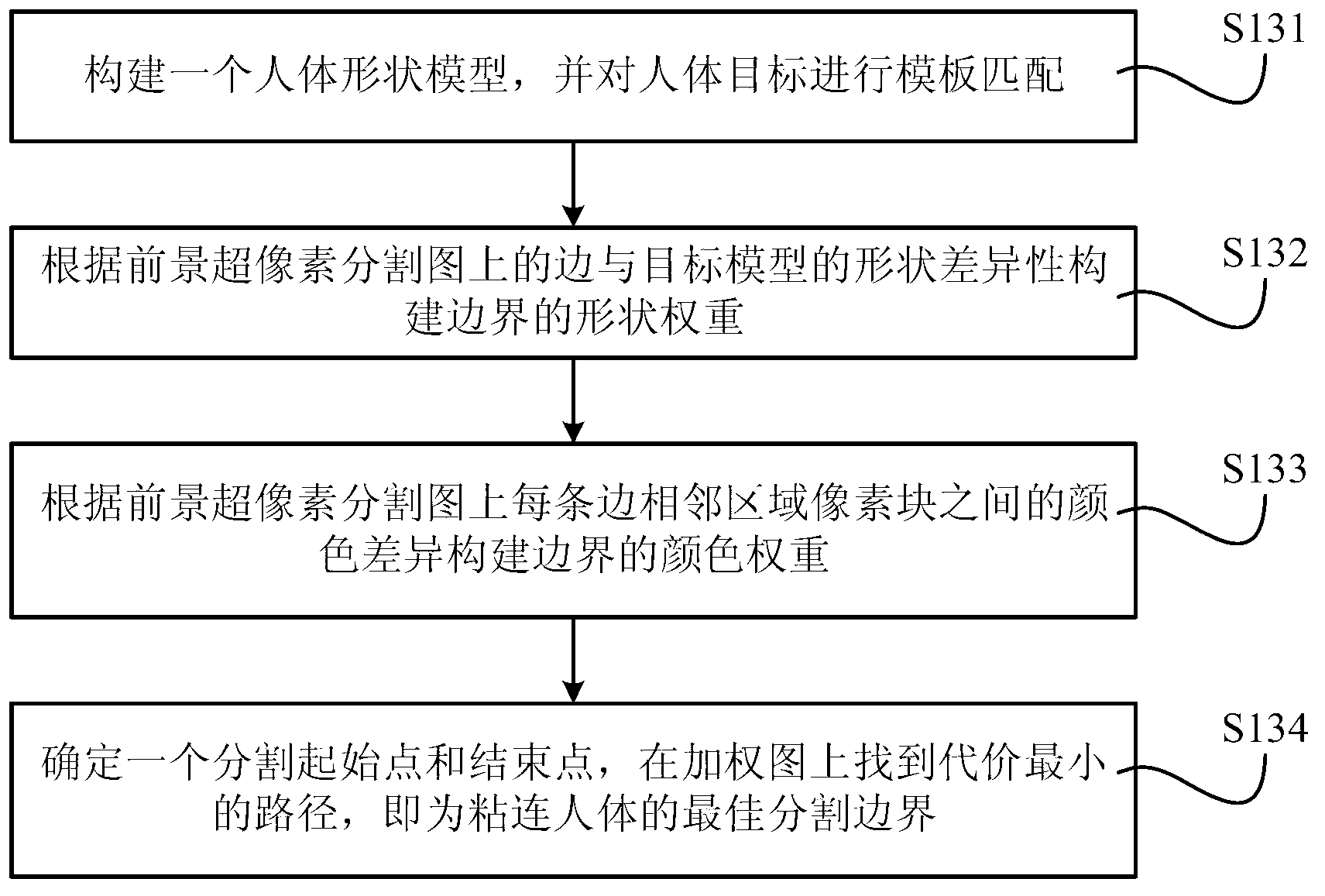

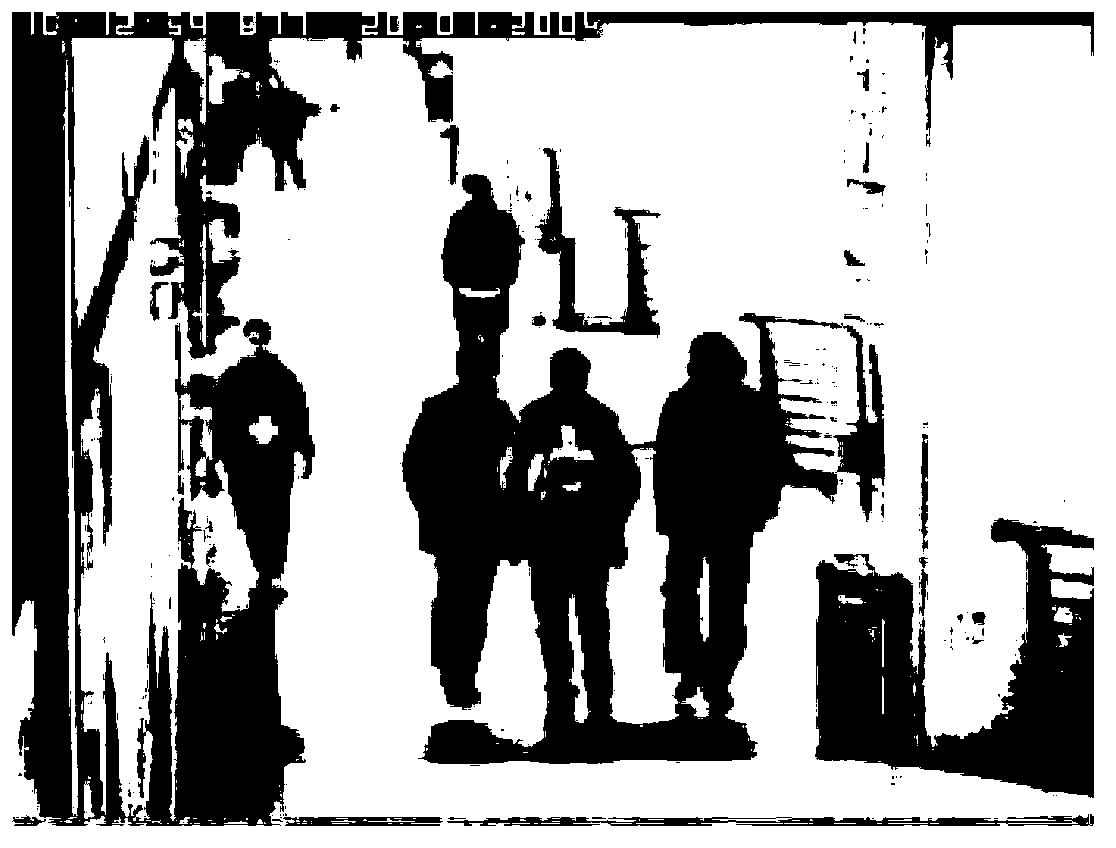

Adhered crowd segmentation and tracking methods based on superpixels and a graph model

Status: Inactive | Publication: CN103164858A | Tags: Improve accuracy; Achieve precise positioning; Image analysis; Human body; Pattern recognition

The embodiment of the invention discloses methods for segmenting and tracking adhered crowds based on superpixels and a graph model. The methods segment and track individual targets in a crowd with high robustness and adaptability, accurately extract the outline of each target, and provide clean data for subsequent processing. The methods comprise the following steps: performing target detection and tracking on the input video to obtain the motion foreground and the head position of each target; performing superpixel pre-segmentation on the motion foreground to obtain a foreground superpixel segmentation image; and constructing a weighted graph model on that image from prior human-body shape information and color information, then finding the optimal segmentation borders between adhered targets by searching for the optimal path.
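
The last step finds the border between adhered targets as an optimal path on a weighted graph. The abstract does not say how edge weights are derived from the shape prior and color information, so the sketch below assumes they are already given as non-negative costs on a superpixel adjacency graph and uses Dijkstra's algorithm, one standard way to find a minimum-cost path. The graph format and the function name are hypothetical.

```python
import heapq

def shortest_path(graph, source, target):
    """Dijkstra's algorithm on a weighted superpixel adjacency graph.

    graph: dict mapping node -> list of (neighbor, weight), weights >= 0
           (hypothetical costs, low along likely boundaries between people).
    Returns (total_cost, path), or (inf, []) if target is unreachable.
    """
    dist = {source: 0.0}
    prev = {}
    heap = [(0.0, source)]
    visited = set()
    while heap:
        d, u = heapq.heappop(heap)
        if u in visited:
            continue  # stale queue entry
        visited.add(u)
        if u == target:
            break
        for v, w in graph.get(u, []):
            nd = d + w
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                prev[v] = u
                heapq.heappush(heap, (nd, v))
    if target not in dist:
        return float("inf"), []
    # Walk predecessor links back from the target to rebuild the path
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return dist[target], path[::-1]
```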

Owner:ZHEJIANG UNIV

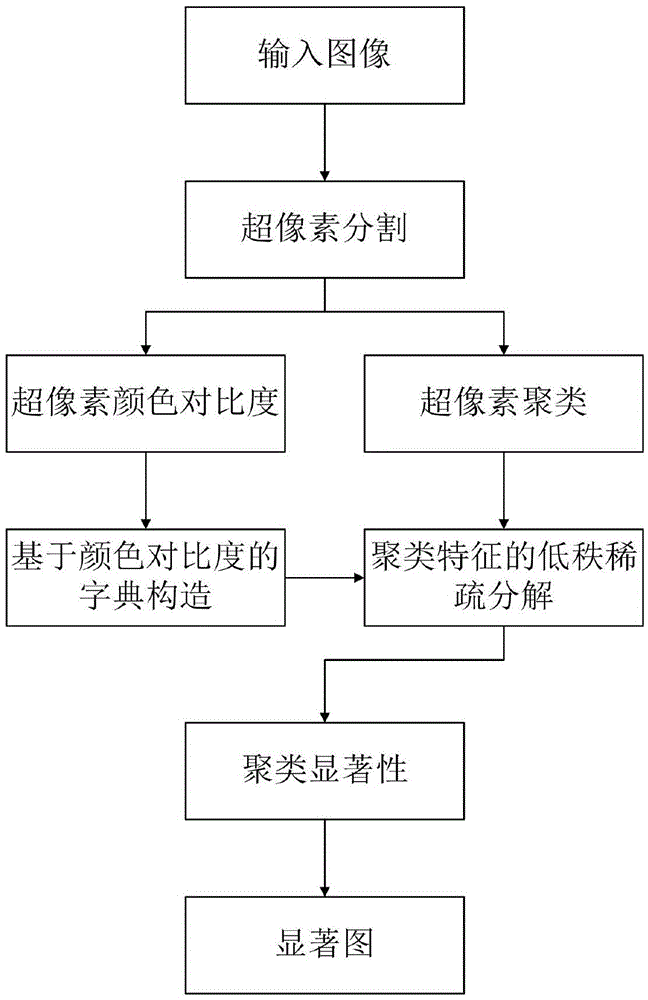

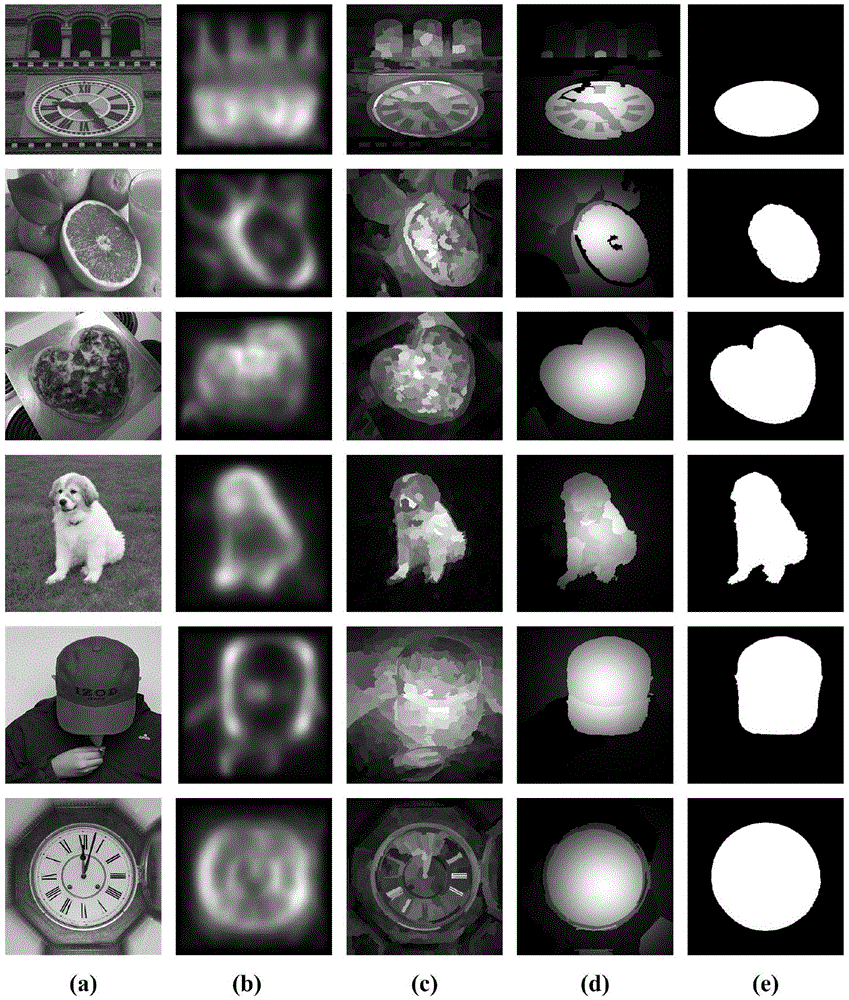

Salient object detection method based on sparse subspace clustering and low-rank representation

Status: Active | Publication: CN105574534A | Tags: Solve the problem that it is difficult to detect large-scale salient objects; Overcome the difficulty of detecting large-scale saliency objects completely and consistently; Image enhancement; Image analysis; Goal recognition; Image compression

The invention discloses a salient object detection method based on sparse subspace clustering and low-rank representation. The method comprises the steps of: 1, performing superpixel segmentation on an input image and clustering the superpixels; 2, extracting the color, texture and edge features of each superpixel in each cluster and constructing a feature matrix for each cluster; 3, ranking all superpixel features by the magnitude of their color contrast and constructing a dictionary; 4, constructing a joint low-rank representation model from the dictionary, solving the model to decompose each cluster's feature matrix into low-rank representation coefficients, and computing a saliency factor for each cluster; and 5, mapping the saliency value of each cluster back to the input image according to its spatial position to obtain the saliency map of the input image. The invention detects relatively large salient objects completely and consistently, suppresses background noise, and improves the robustness of salient object detection in images with complex backgrounds. The method is applicable to image segmentation, object recognition, image restoration and adaptive image compression.

Owner:XIDIAN UNIV

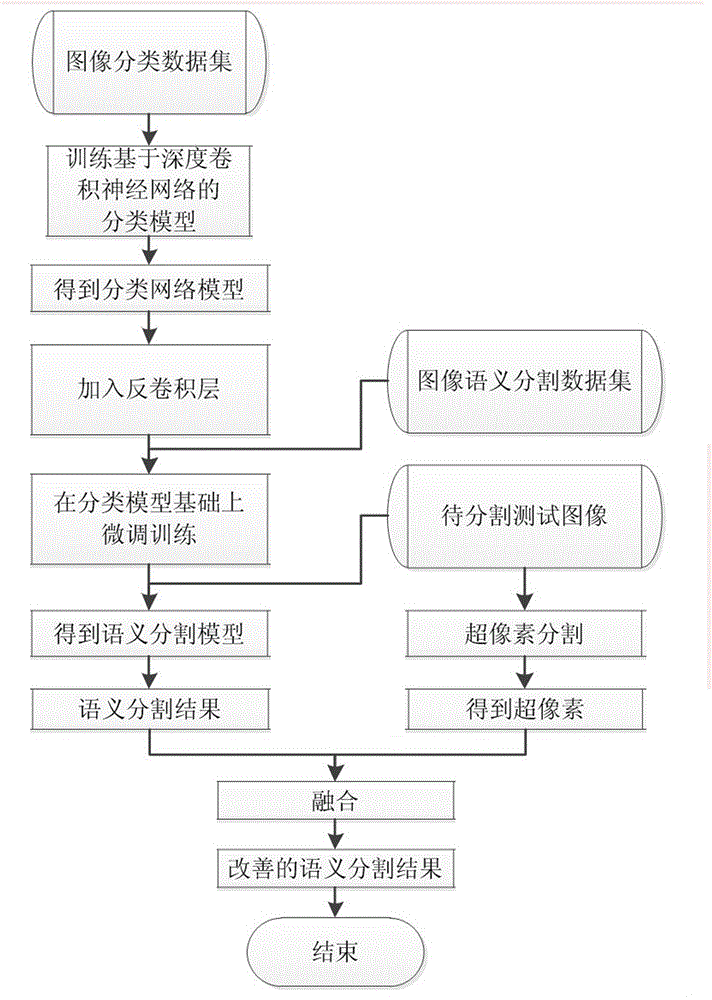

Deep convolutional neural network and superpixel-based image semantic segmentation method

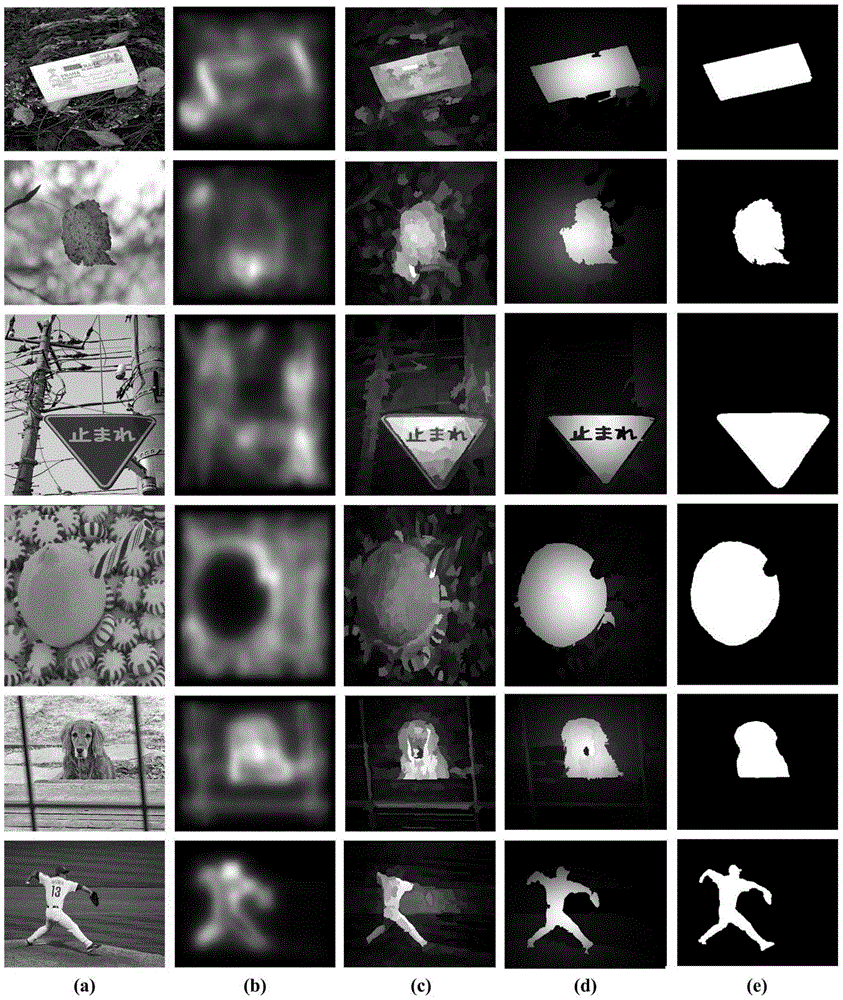

Status: Active | Publication: CN106709924A | Tags: Good fit to target edge; High precision; Image analysis; Character and pattern recognition; Data set; Network classification

The invention discloses an image semantic segmentation method based on a deep convolutional neural network and superpixels, addressing the limited precision of existing semantic segmentation methods. The method comprises the following steps: 1, training, on an image classification data set, a deep convolutional neural network classification model that maps images to category labels; 2, adding a deconvolution layer to the classification model and fine-tuning it on an image semantic segmentation data set, so that it maps images to semantic segmentation results; 3, feeding a test image into the resulting semantic segmentation network to obtain a semantic label for each pixel, and feeding the same test image into a superpixel segmentation algorithm to obtain a set of superpixel regions; and 4, fusing the superpixels with the semantic labels to obtain the final, refined semantic segmentation result. The method improves the precision of existing semantic segmentation methods and is significant for image recognition and its applications.
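
Step 4 does not say how the superpixels and semantic labels are fused. One common rule, assumed here rather than taken from the patent, is a majority vote: every pixel in a superpixel receives the label that occurs most often inside that superpixel. The sketch uses plain nested lists so it runs without third-party libraries; `fuse_superpixel_labels` is a hypothetical name.

```python
from collections import Counter

def fuse_superpixel_labels(semantic, superpixels):
    """Replace each pixel's network label with the majority label of its superpixel.

    semantic, superpixels: equally sized 2-D lists (rows of ints) holding the
    per-pixel class label and the per-pixel superpixel id, respectively.
    """
    votes = {}
    for sem_row, sp_row in zip(semantic, superpixels):
        for label, sp in zip(sem_row, sp_row):
            votes.setdefault(sp, Counter())[label] += 1
    # Most frequent label per superpixel
    majority = {sp: c.most_common(1)[0][0] for sp, c in votes.items()}
    return [[majority[sp] for sp in sp_row] for sp_row in superpixels]
```

Because every pixel in a superpixel ends up with the same label, the refined labels inherit the superpixel boundaries, which is the usual motivation for this kind of fusion.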

Owner:THE PLA INFORMATION ENG UNIV

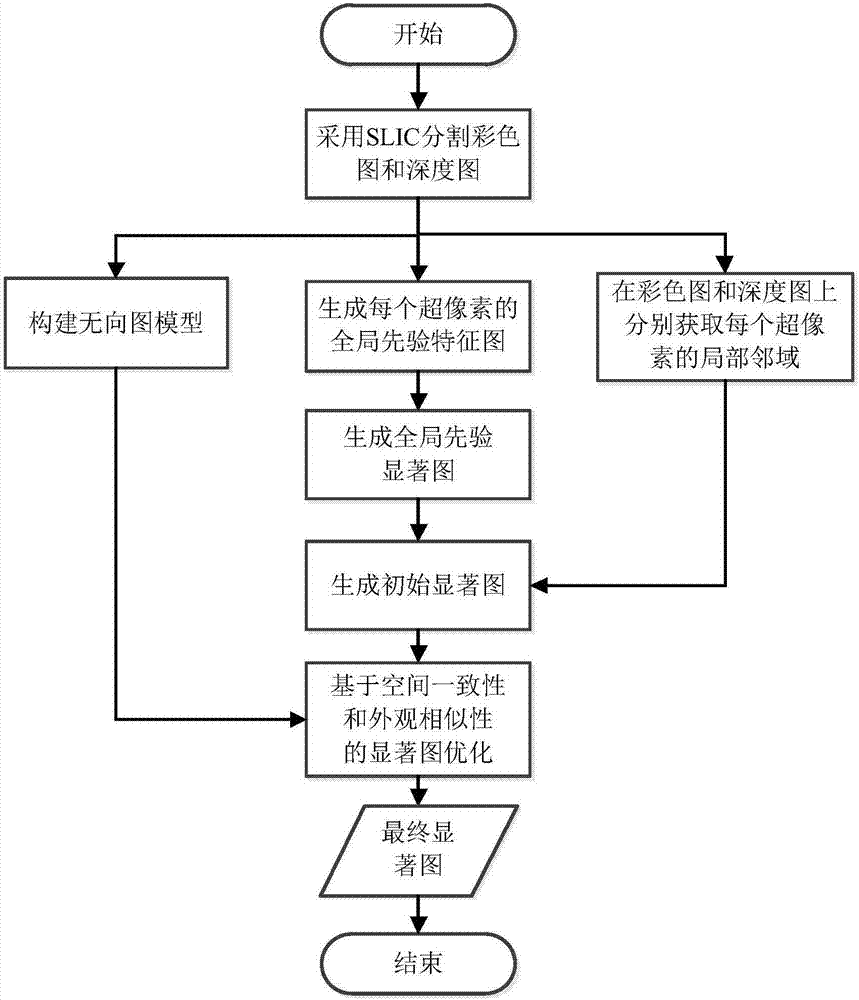

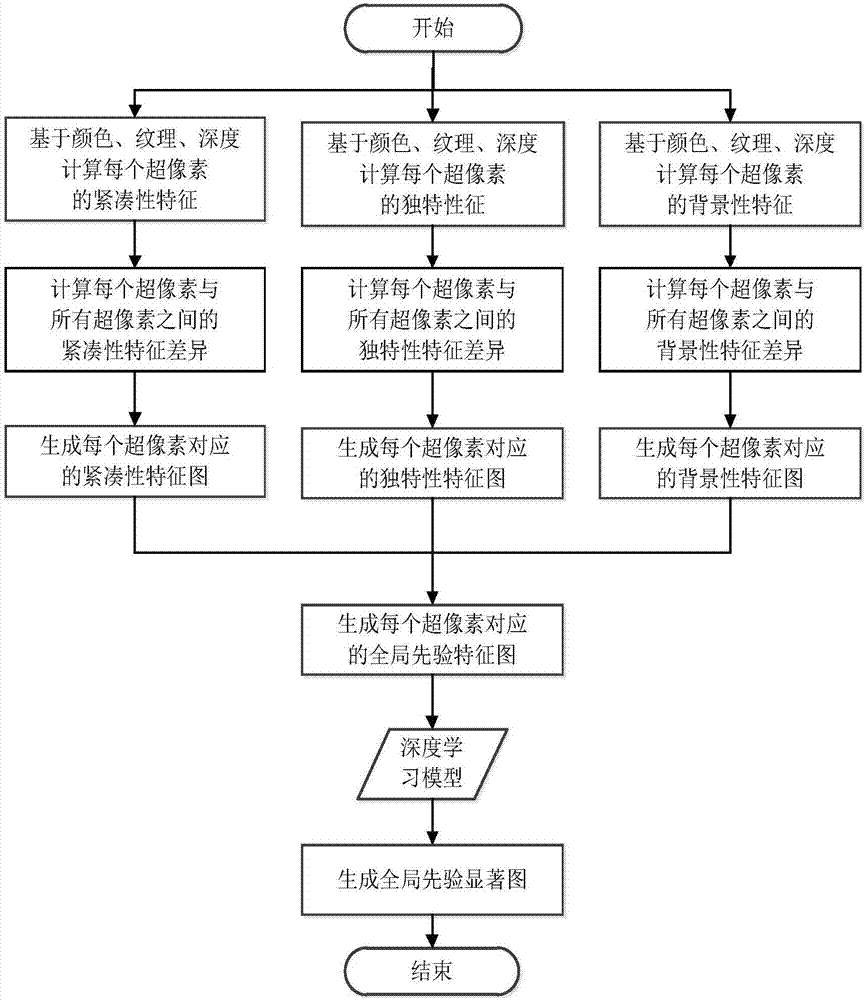

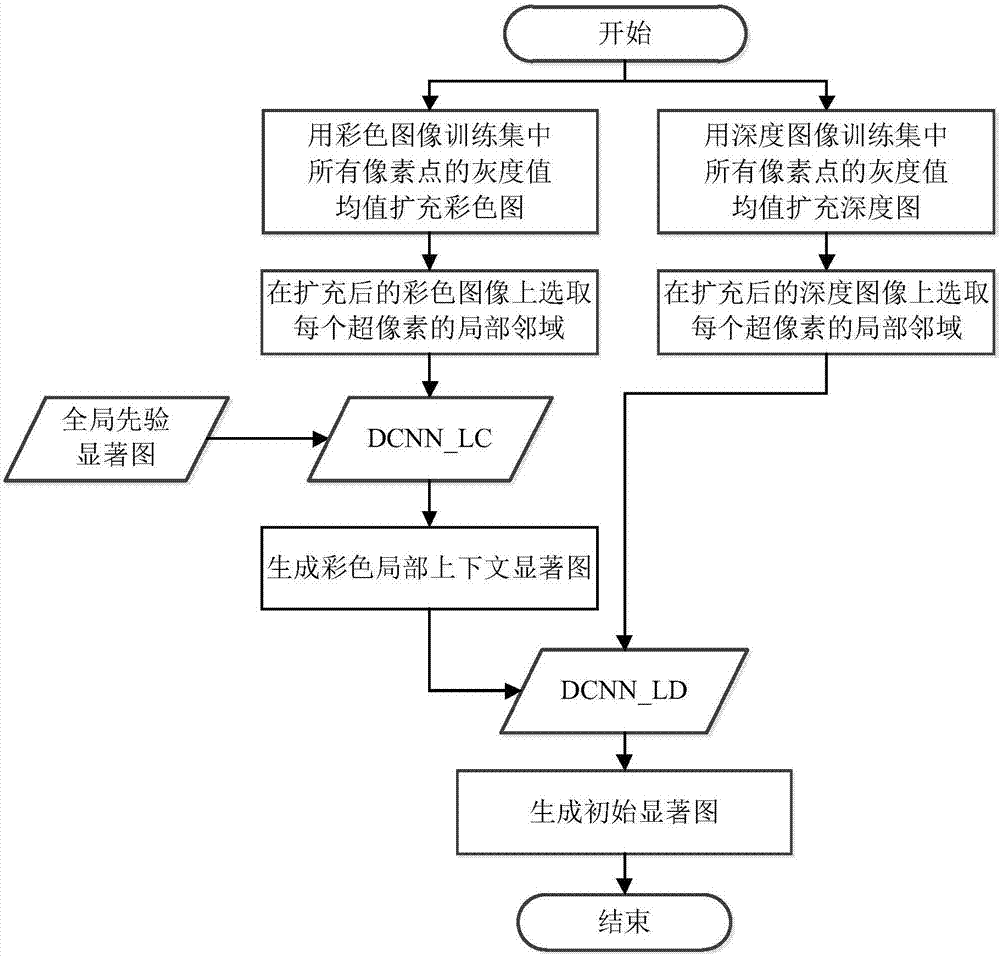

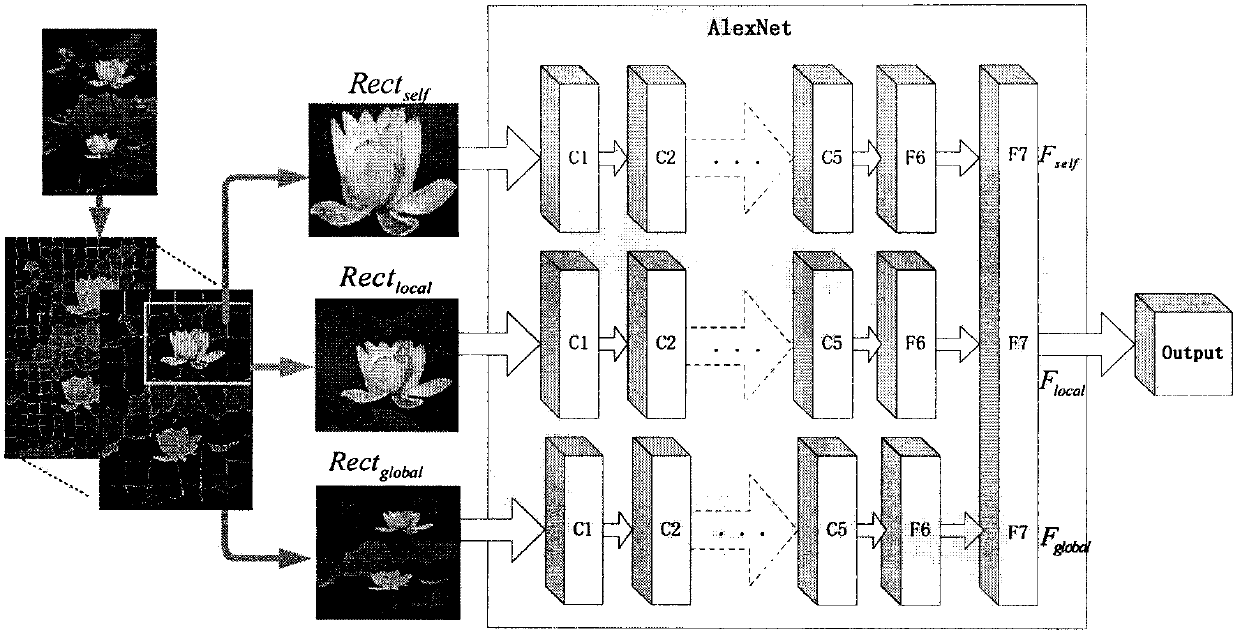

Deep learning saliency detection method based on global prior and local context

Status: Active | Publication: CN107274419A | Tags: Solve the problem of false detection; Improve robustness; Image enhancement; Image analysis; Color image; Saliency map

The invention discloses a deep learning saliency detection method based on a global prior and local context. First, superpixel segmentation is performed on a color image and its depth image; a global prior feature map is obtained for each superpixel from mid-level features such as its compactness, uniqueness and backgroundness, and a global prior saliency map is then produced by a deep learning model. Next, the global prior saliency map is combined with local context information from the color and depth images, and an initial saliency map is obtained through the deep learning model. Finally, the initial saliency map is optimized based on spatial consistency and appearance similarity to obtain the final saliency map. The method addresses two problems: traditional saliency detection methods cannot effectively detect salient objects against complex backgrounds, and conventional deep-learning-based saliency detection methods produce false detections because the extracted high-level features contain noise.

Owner:BEIJING UNIV OF TECH

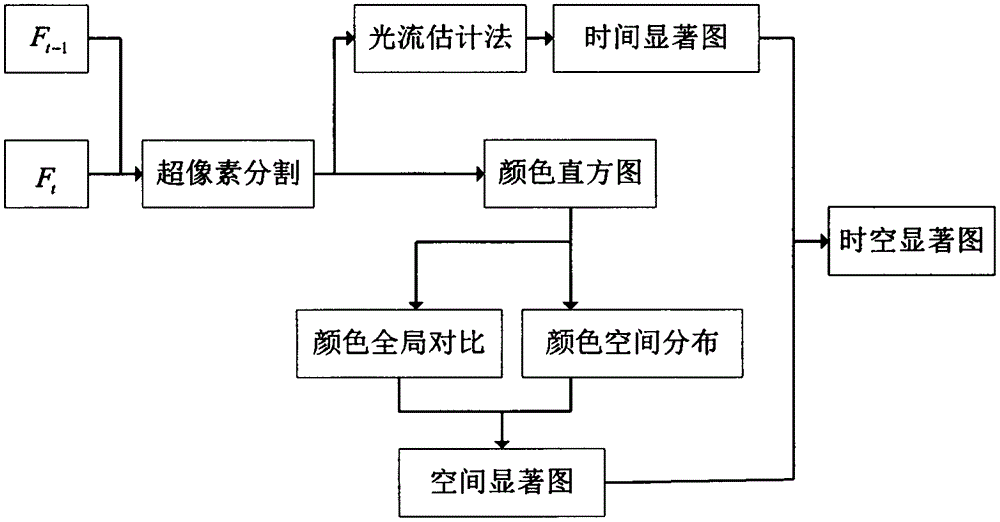

Spatiotemporal saliency detection method fusing motion features

The invention belongs to the field of image and video processing and relates in particular to a spatiotemporal saliency detection method that fuses spatial saliency with motion features. The method comprises the following steps: first, a superpixel segmentation algorithm represents each frame as a set of superpixels, and a superpixel-level color histogram is extracted as the feature; second, a spatial saliency map is computed from the global contrast and the spatial distribution of colors; third, a temporal saliency map is obtained through optical flow estimation and block matching; and finally, a dynamic fusion strategy combines the spatial and temporal saliency maps into the final spatiotemporal saliency map. Because it fuses spatial saliency with motion features, the algorithm can be applied to saliency detection in both dynamic and static scenes.

Owner:JIANGNAN UNIV

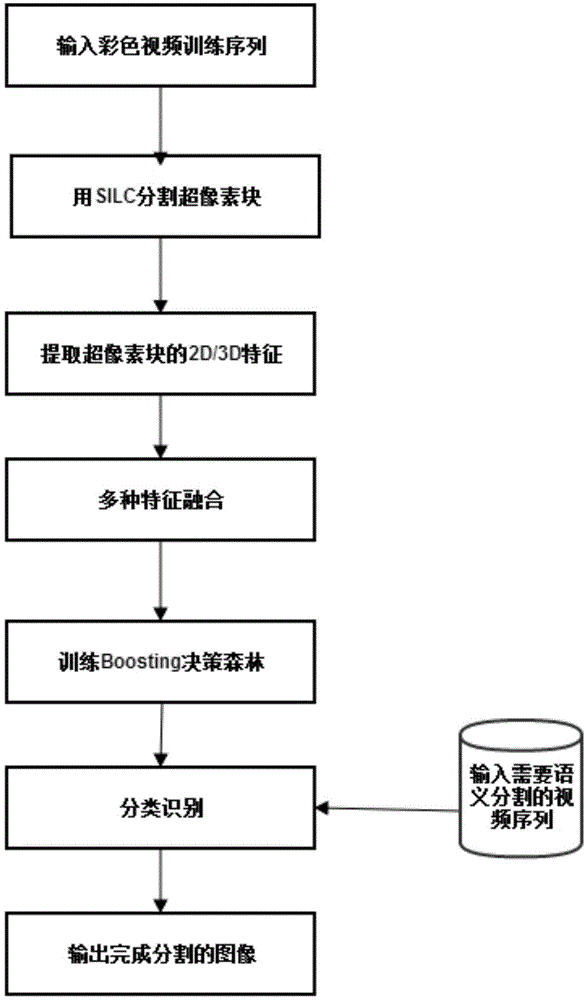

Cityscape image semantic segmentation method based on multi-feature fusion and Boosting decision forest

Status: Inactive | Publication: CN103984953A | Tags: Improve recognition rate; Improve recognition efficiency; Character and pattern recognition; Forest classification; Superpixel segmentation

A cityscape image semantic segmentation method based on multi-feature fusion and a Boosting decision forest comprises the following steps: superpixel segmentation of the image, multi-feature extraction, feature fusion, and training followed by classification and recognition. The method effectively integrates 2D and 3D features and markedly improves target recognition rates. Compared with the prior art, the segmentation results are consistent, connectivity is good and edges are accurately localized, and the Boosting decision forest classifier ensures stable target classification.

Owner:ZHEJIANG GONGSHANG UNIVERSITY

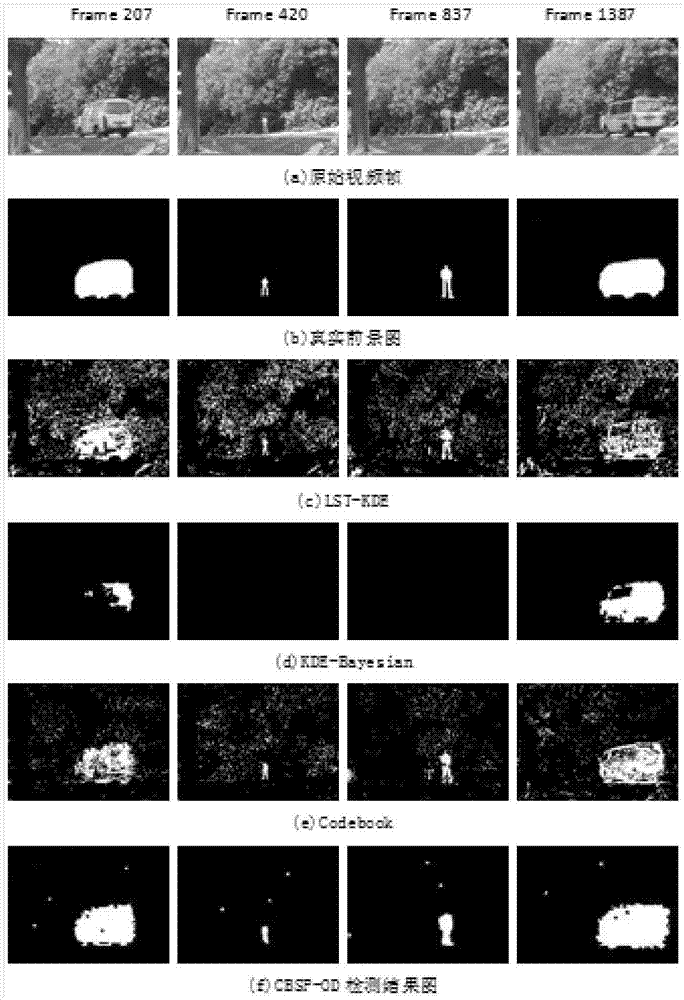

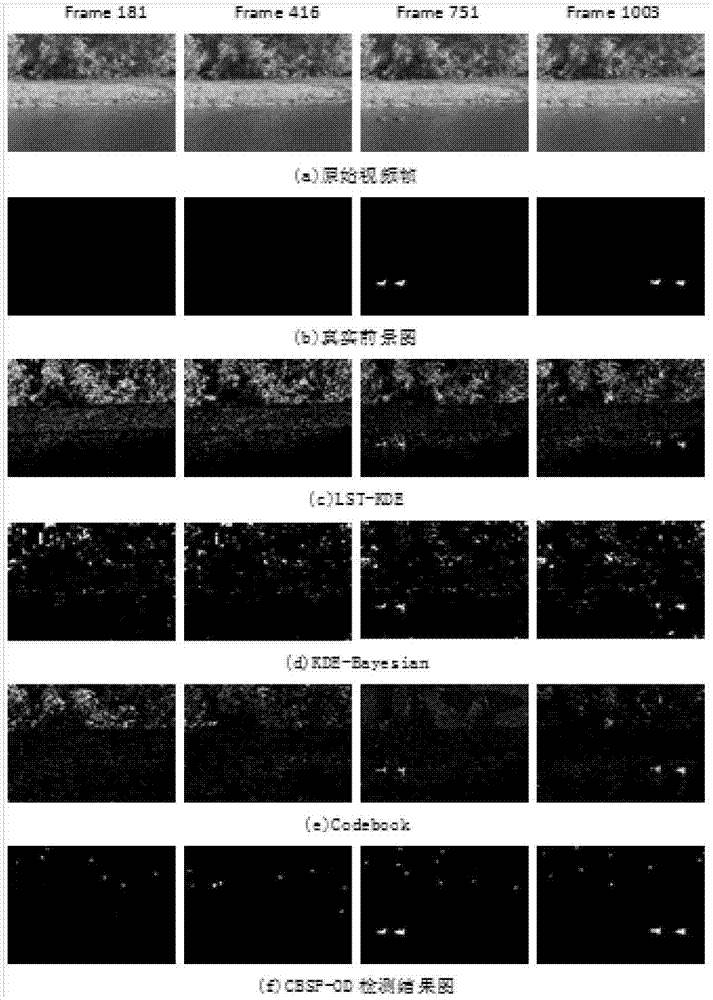

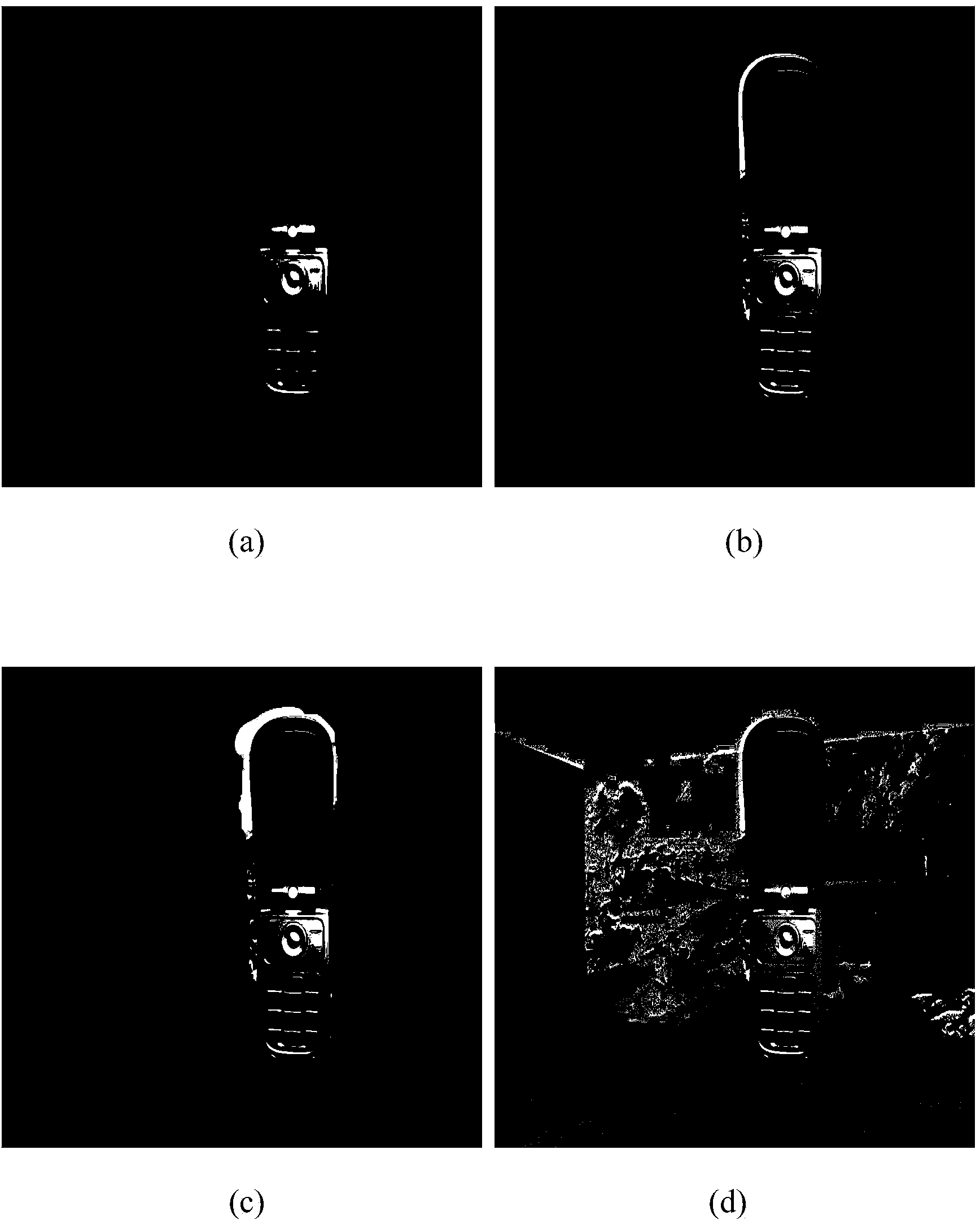

Superpixel-based target detection method for dynamic scenes using a Codebook model

Status: Active | Publication: CN103578119A | Tags: Reduced stability; Reduce false detection rate; Image analysis; Superpixel segmentation; In real life

The invention discloses a superpixel-based target detection method for dynamic scenes using a Codebook model. The method comprises the following steps: (1) partitioning each video frame into K superpixels with a superpixel segmentation method; (2) building a Codebook background model for each superpixel obtained in step (1), where each Codebook contains one or more codewords and each codeword records maximum and minimum threshold values learned during training; background modeling is complete once these thresholds have been learned; (3) after background modeling, performing target detection on each incoming video frame: if a pixel value in the current frame fits the distribution of background pixel values, it is marked as background, otherwise it is marked as foreground; finally, the current frame is used to update the background model. The method solves the heavy computation and high memory requirements of the traditional Codebook background modeling algorithm and the inaccuracy of the codewords it builds, improves target detection accuracy and speed, and meets the real-time accuracy requirements of practical intelligent surveillance.
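
Steps (2) and (3) build a Codebook per superpixel and classify incoming values against it. The classic Codebook model matches pixels using color distortion and brightness bounds; the sketch below is a simplified, assumed variant in which each superpixel is summarized by its mean intensity and each codeword keeps the minimum and maximum values it has absorbed. The `tolerance` margin and the class name are hypothetical.

```python
class SuperpixelCodebook:
    """Simplified per-superpixel Codebook background model (assumed design).

    Each codeword is a [low, high] range of mean intensities seen in training.
    """

    def __init__(self, tolerance=10.0):
        self.tolerance = tolerance  # hypothetical matching margin
        self.codewords = []         # list of [low, high] pairs

    def _match(self, value):
        # First codeword whose widened range contains the value, if any
        for cw in self.codewords:
            if cw[0] - self.tolerance <= value <= cw[1] + self.tolerance:
                return cw
        return None

    def learn(self, value):
        cw = self._match(value)
        if cw is None:
            self.codewords.append([value, value])  # start a new codeword
        else:
            cw[0] = min(cw[0], value)  # widen the matched codeword's range
            cw[1] = max(cw[1], value)

    def is_background(self, value):
        return self._match(value) is not None
```

In use, one such object would be kept per superpixel, trained on the superpixel's mean intensity over the training frames, then queried for each new frame; updating the model afterwards can reuse `learn`.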

Owner:苏州华创智城科技有限公司 (Suzhou Huachuang Zhicheng Technology Co., Ltd.)

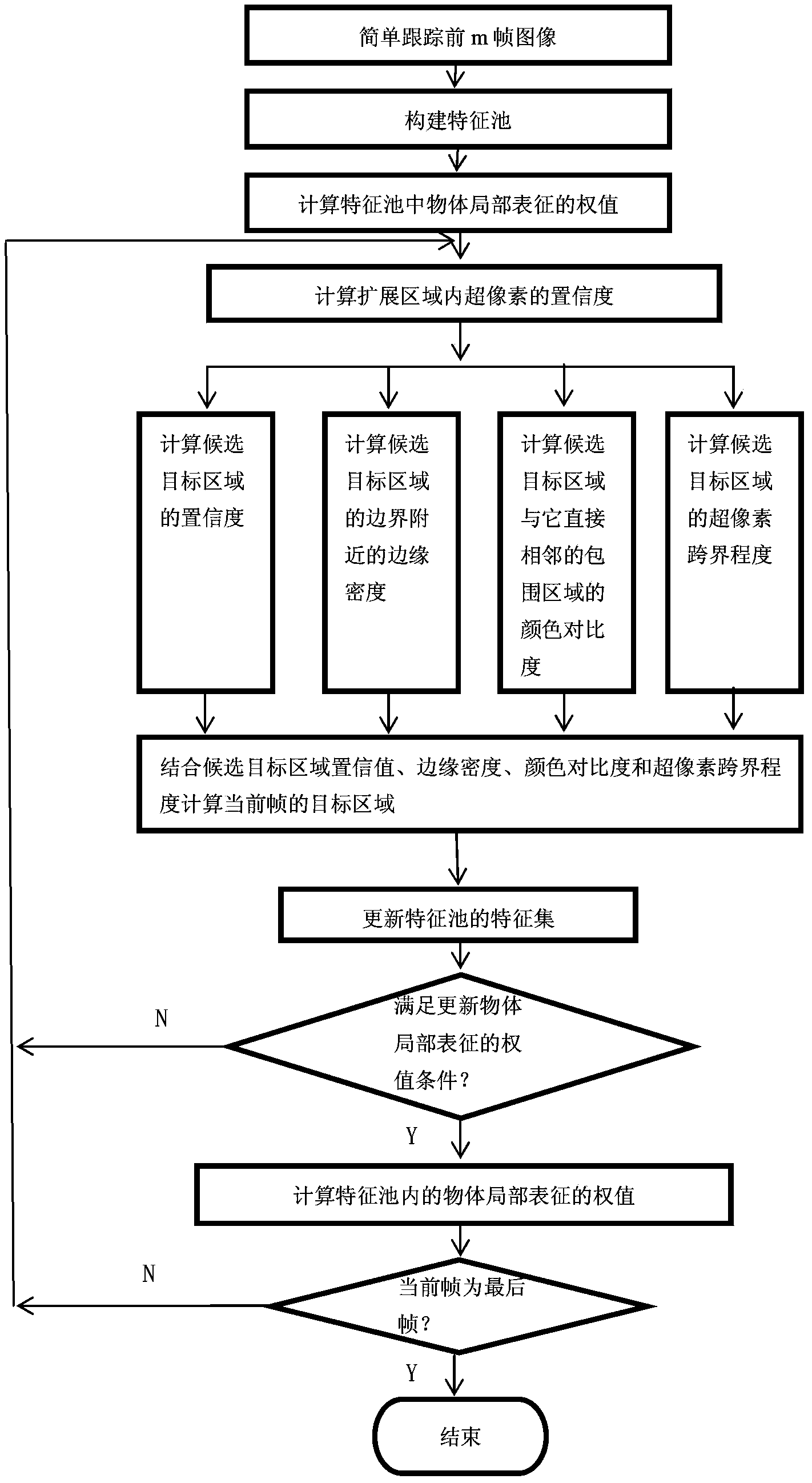

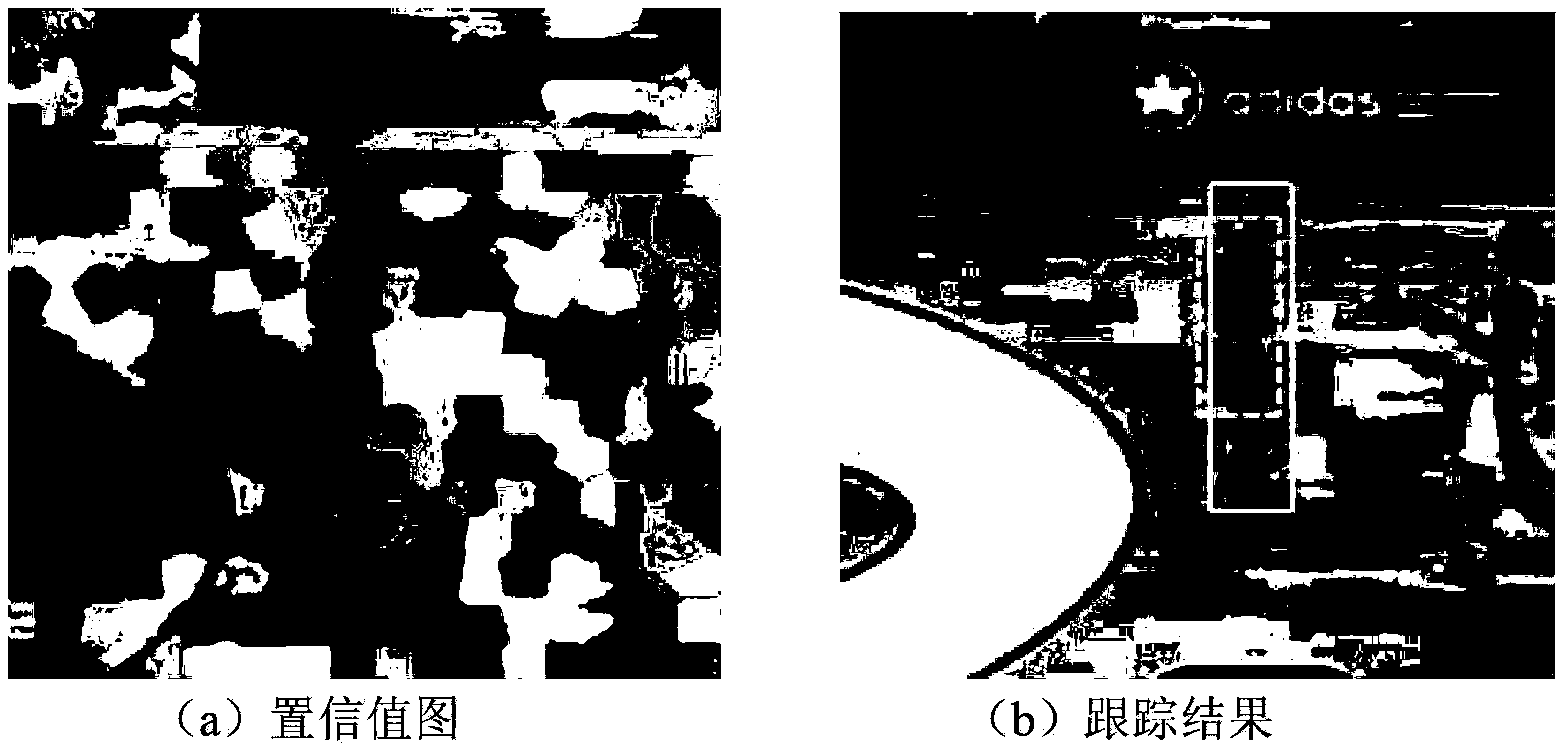

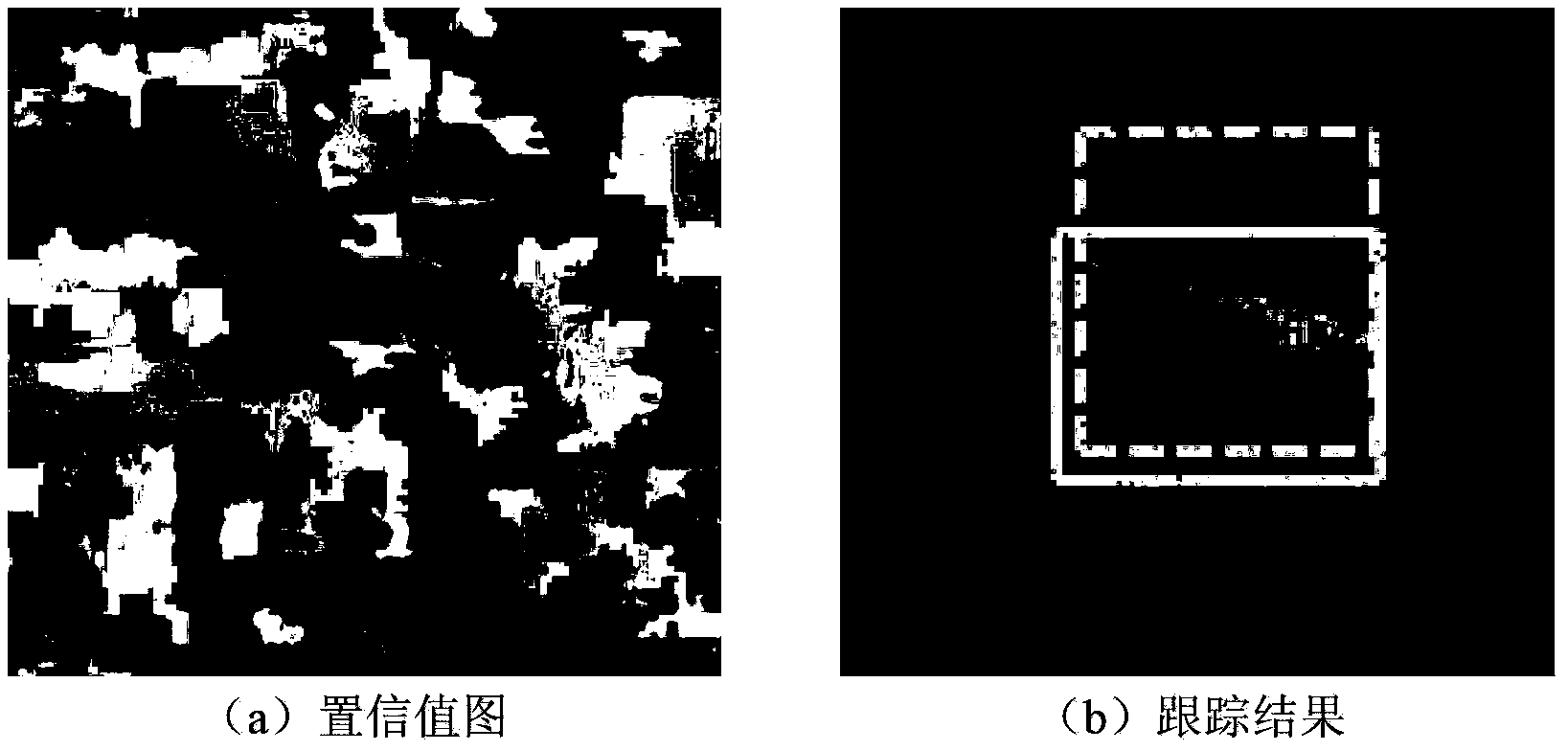

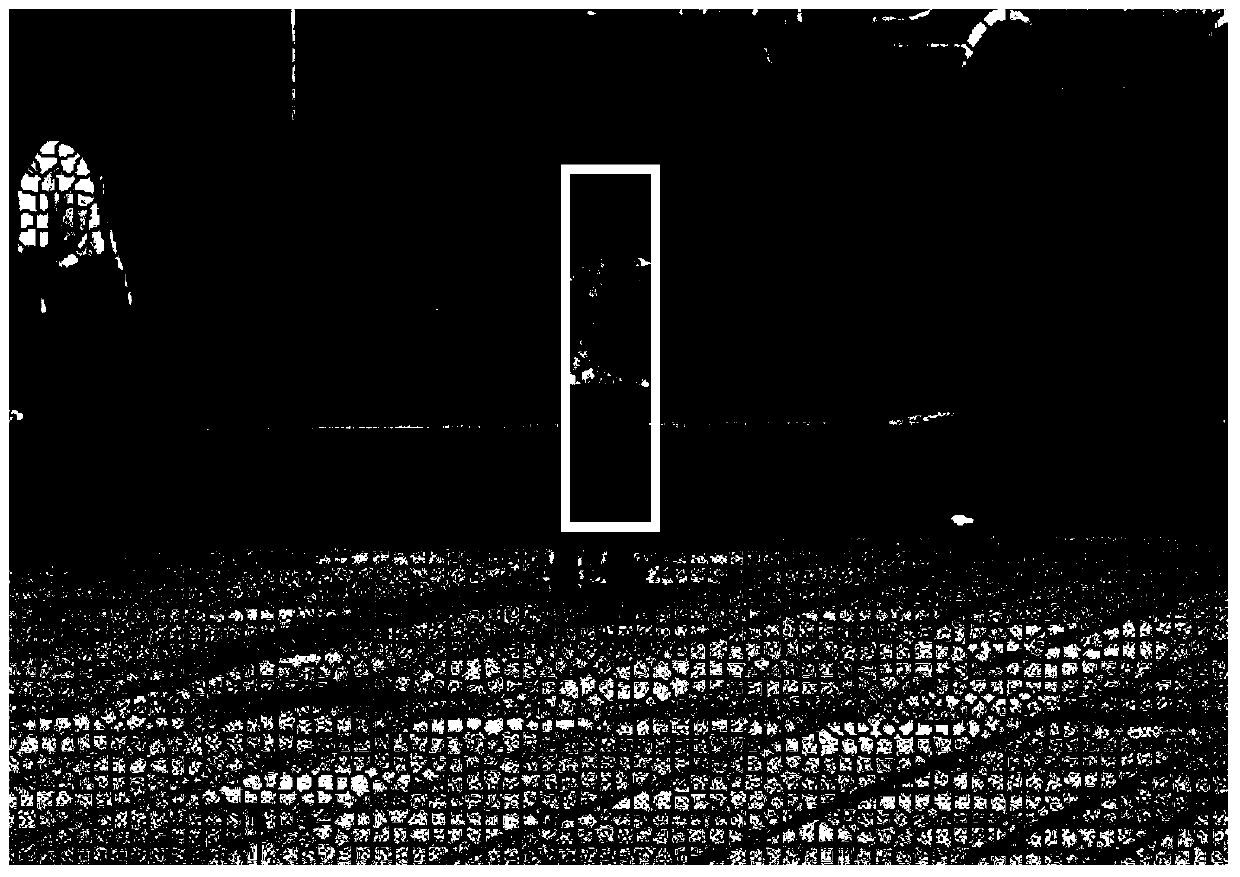

Tracking method based on holistic and partial recognition of an object

Status: Active | Publication: CN103413120A | Tags: Valid representation; Flexible representation; Character and pattern recognition; Partial representation; Superpixel segmentation

The invention discloses a tracking method based on holistic and partial recognition of an object. For recognition based on partial information, superpixel segmentation is performed on all candidate regions, each superpixel is weighted according to how it represents part of the object, a weighted similarity measure is proposed, and a confidence score is computed for each candidate target region. For holistic recognition, an objectness measure is introduced into target detection in the current frame: color, edges and superpixels serve as three objectness cues, a scoring rule is defined for each cue, and the confidence is computed by combining the three cues with the partial-information recognition in order to score all candidate target regions in the extended search area; the target region is then selected according to these scores. The method describes the target better in dynamically changing tracking scenes; combining the objectness measure makes the target region converge more tightly on the object, reducing the chance that background falls inside the target region and improving tracking accuracy and stability.

Owner:SOUTH CHINA AGRI UNIV

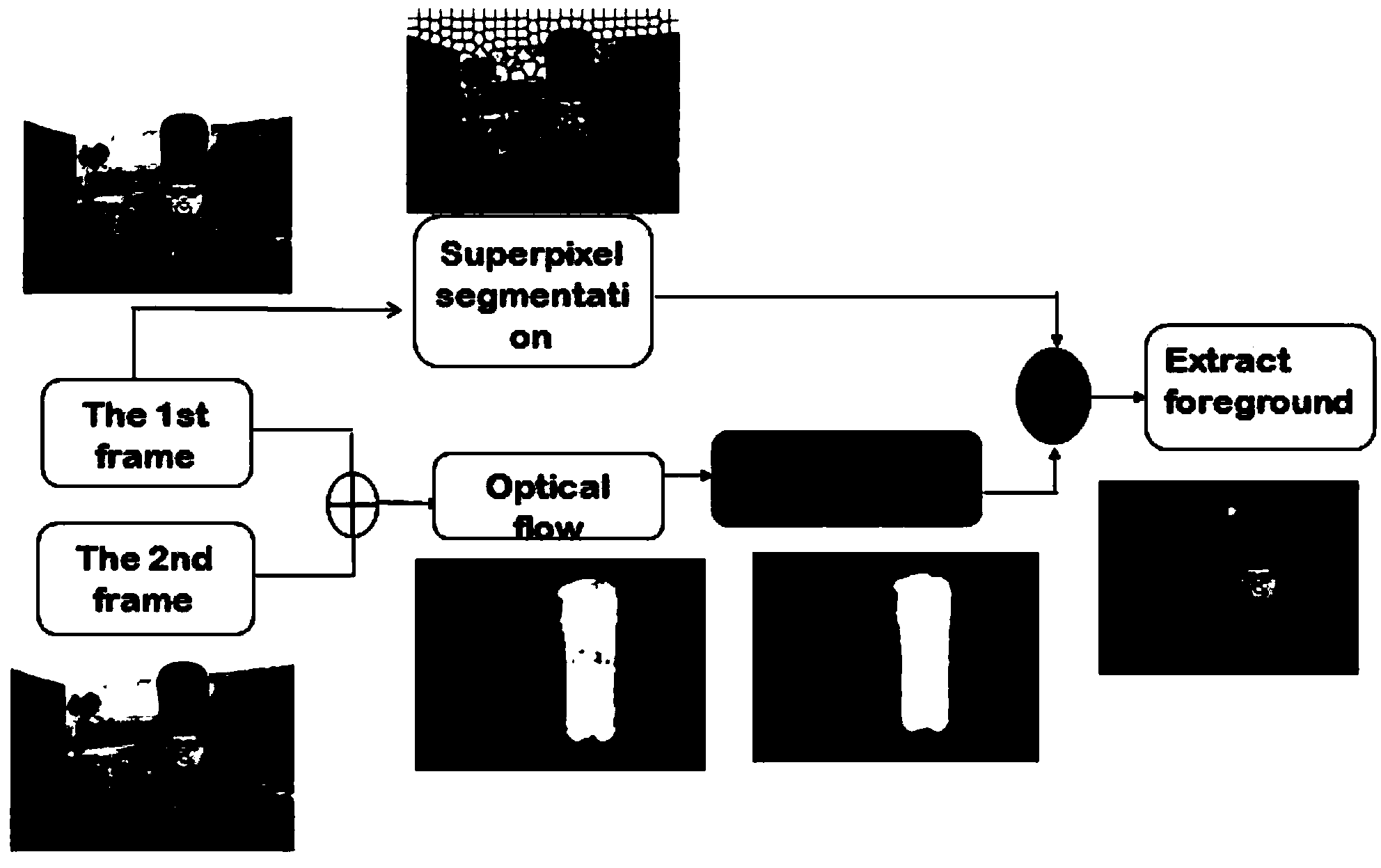

Moving object extraction method based on optical flow method and superpixel division

The invention discloses a moving object extraction method based on superpixel segmentation and optical flow, mainly addressing the heavy noise, loss of high-frequency information and inaccurate boundaries of existing moving object extraction methods. The implementation steps are: (1) input an image and pre-segment it into a superpixel set S to obtain a label map I2; (2) take two adjacent frames of a video sequence and determine the rough position of the moving object with the Horn-Schunck optical flow method; (3) use the optical flow to obtain the horizontal velocity u, the vertical velocity v and the flow magnitude V; (4) apply median filtering, Gaussian filtering, binarization, and morphological opening and closing to the optical flow result V to obtain V4; (5) use the superpixel segmentation result to further correct the optical flow result and extract the moving object accurately. In this way the superpixels belonging to the moving region are extracted accurately. Simulation experiments show that, compared with the prior art, the method is simple to operate, produces little noise and yields clear boundaries, and it can be used to extract moving objects from video sequences.
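
Step (3) computes the flow magnitude V = sqrt(u² + v²), and step (5) corrects the filtered result with superpixels. The abstract does not give the correction rule; the sketch below assumes that a superpixel is kept as moving when at least a fraction `min_fraction` of its pixels are set in the binarized flow mask (V4). The filtering and morphology of step (4) are omitted, and both function names are hypothetical.

```python
import math

def flow_magnitude(u, v):
    """Per-pixel optical-flow speed V = sqrt(u^2 + v^2) for 2-D lists u, v."""
    return [[math.hypot(a, b) for a, b in zip(u_row, v_row)]
            for u_row, v_row in zip(u, v)]

def moving_superpixels(mask, superpixels, min_fraction=0.5):
    """Superpixel ids whose pixels are mostly set in a binary motion mask.

    mask: 2-D list of truthy/falsy values (e.g. a binarized flow map).
    superpixels: 2-D list of superpixel ids with the same shape.
    min_fraction: assumed voting threshold, not specified in the source.
    """
    total, moving = {}, {}
    for m_row, s_row in zip(mask, superpixels):
        for m, s in zip(m_row, s_row):
            total[s] = total.get(s, 0) + 1
            moving[s] = moving.get(s, 0) + (1 if m else 0)
    return {s for s in total if moving[s] / total[s] >= min_fraction}
```

Voting over whole superpixels snaps the noisy flow mask to superpixel boundaries, which matches the abstract's stated goal of clearer object boundaries.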

Owner:XIDIAN UNIV

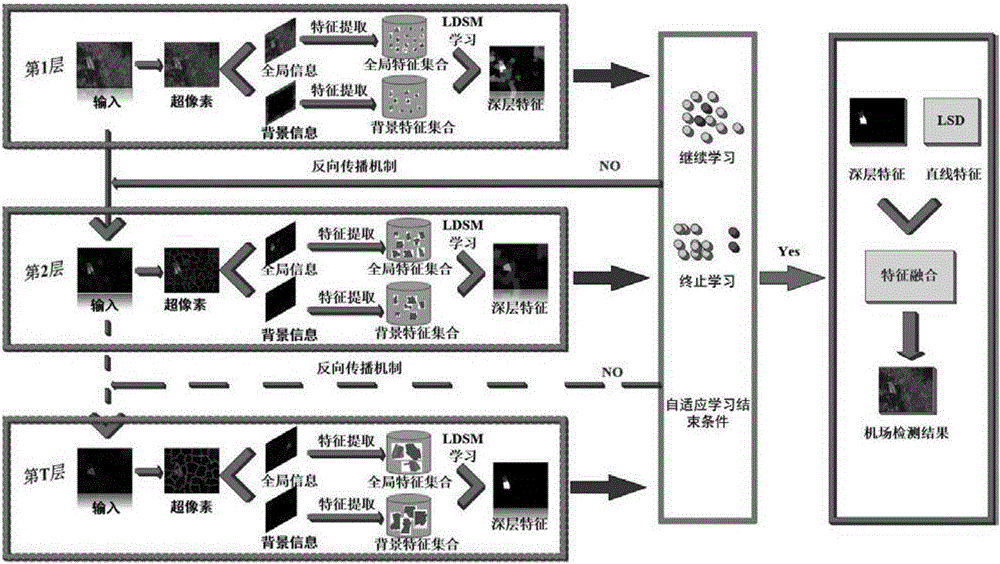

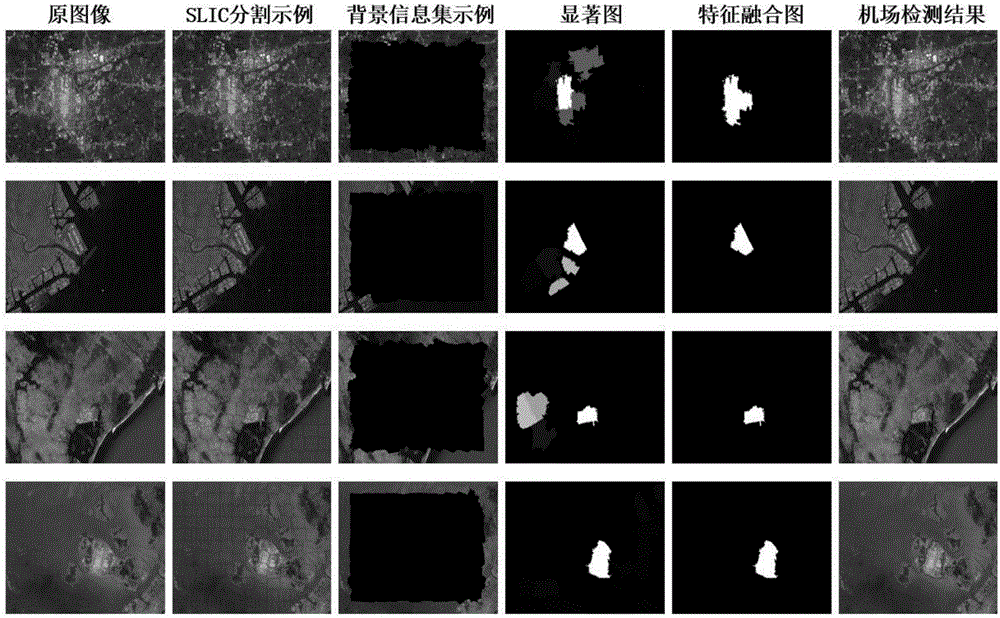

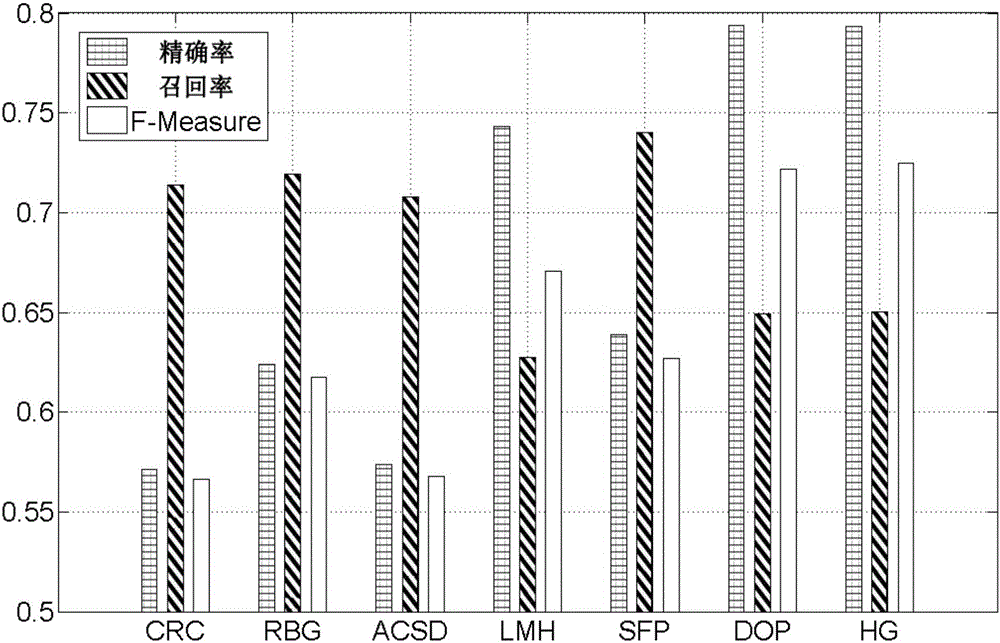

Low-resolution airport target detection method based on hierarchical reinforcement learning

Status: Active | Publication: CN105930868A | Tags: Reduce manual intervention; Improve adaptability; Image analysis; Character and pattern recognition; Pattern recognition; Image resolution

The invention provides a low-resolution airport target detection method based on hierarchical reinforcement learning. The method comprises the steps of: (1) performing superpixel segmentation on an input remote sensing image; (2) extracting the superpixels on the image boundary to construct a background information set; (3) learning the feature similarity between each superpixel and the background information set through a minimum-distance similarity measurement operator, and extracting a deep-layer feature; (4) defining a termination condition for the learning process and checking whether step (3) satisfies it: if so, go to step (6), otherwise go to step (5); (5) using back-propagation, apply the deep-layer feature from step (3) to the current layer's input image as a reinforcement factor, take the reinforced image as the input image of the next layer's learning process, and return to step (1) to continue learning at the next layer; (6) stop learning, take the deep-layer feature learned at the current layer in step (3) as the saliency feature of each superpixel, and obtain the final saliency map; and (7) generate a linear feature map of the original image and fuse it with the saliency map; the airport target region is then determined through salient region localization and region merging, completing target detection.
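
Steps (2) and (3) measure each superpixel against a background set built from boundary superpixels. The sketch below assumes Euclidean distance between feature vectors as the minimum-distance operator and scales the result to [0, 1]; the hierarchical learning loop and deep features of steps (4) to (6) are not modeled. The function name and the feature format are hypothetical, and `math.dist` requires Python 3.8 or later.

```python
import math

def boundary_contrast_saliency(features, boundary_ids):
    """Saliency of each superpixel as its minimum feature distance to the
    background set formed by image-boundary superpixels, scaled to [0, 1].

    features: dict superpixel id -> feature vector (tuple of floats).
    boundary_ids: ids of superpixels touching the image border.
    """
    background = [features[b] for b in boundary_ids]
    raw = {sp: min(math.dist(f, g) for g in background)
           for sp, f in features.items()}
    hi = max(raw.values()) or 1.0  # avoid dividing by zero if all are background-like
    return {sp: d / hi for sp, d in raw.items()}
```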

Owner:BEIHANG UNIV

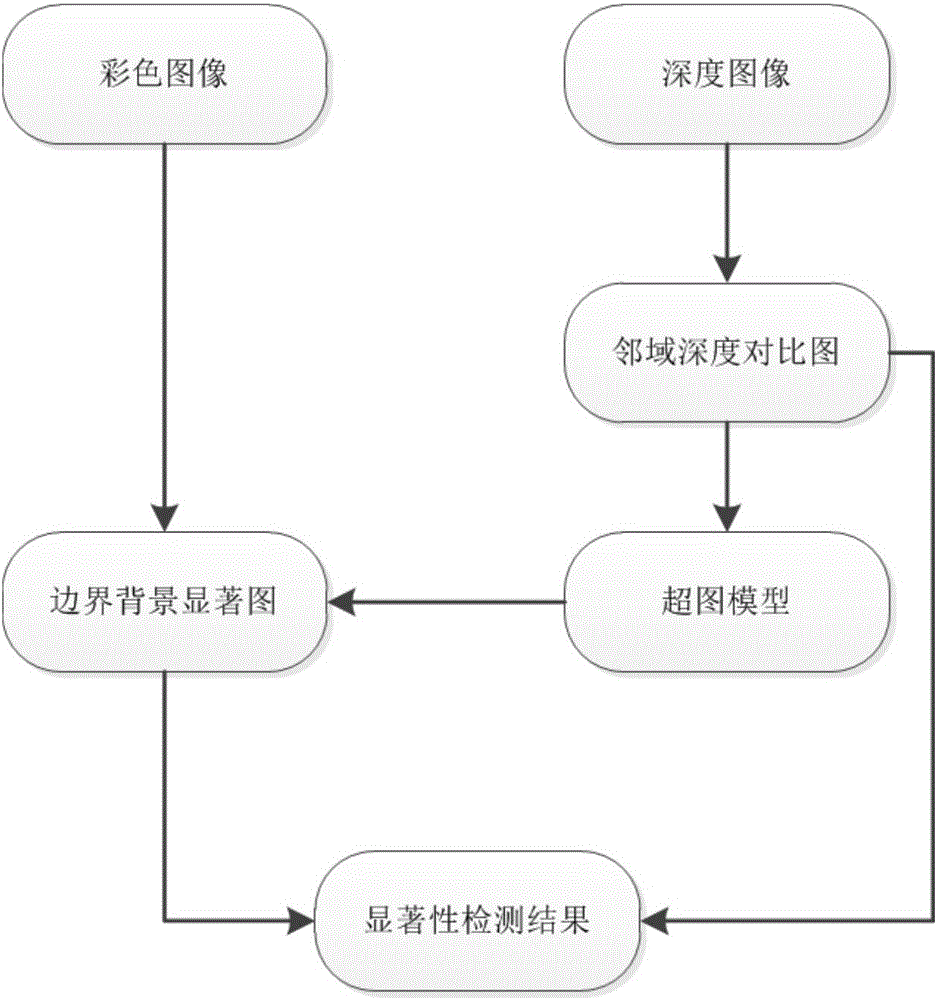

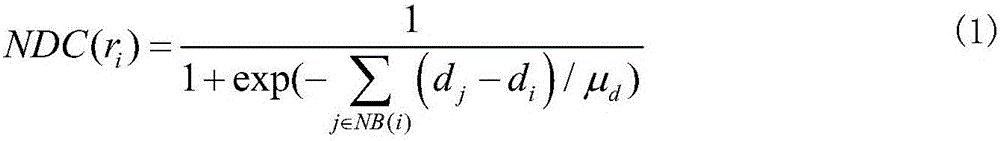

RGB-D image saliency detection method based on a hypergraph model

The invention discloses an RGB-D image saliency detection method based on a hypergraph model. The method comprises: performing superpixel segmentation on the color image to be detected and on its depth image; computing a neighborhood depth contrast map for each superpixel region of the depth image and constructing a depth background hyperedge from the neighborhood depth contrast; extracting the superpixel regions on the image boundary to construct a boundary background hyperedge; computing the weights of the two hyperedges and expanding them according to a hypergraph learning algorithm to build an induced graph; computing a boundary background saliency map from boundary connectivity, based on the spatial adjacency and edge weights of the induced graph; and obtaining the final saliency map with a cellular-automaton-based saliency updating algorithm and a fusion algorithm that incorporates a depth prior. This overcomes the shortcomings of the conventional 2D boundary background prior. Building on depth information and a hypergraph model, the method achieves better results than conventional saliency detection methods that combine color and depth information.

Owner:ZHEJIANG UNIV

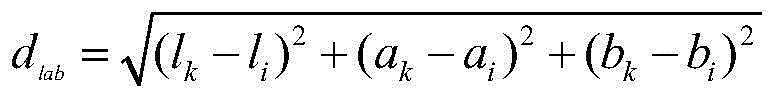

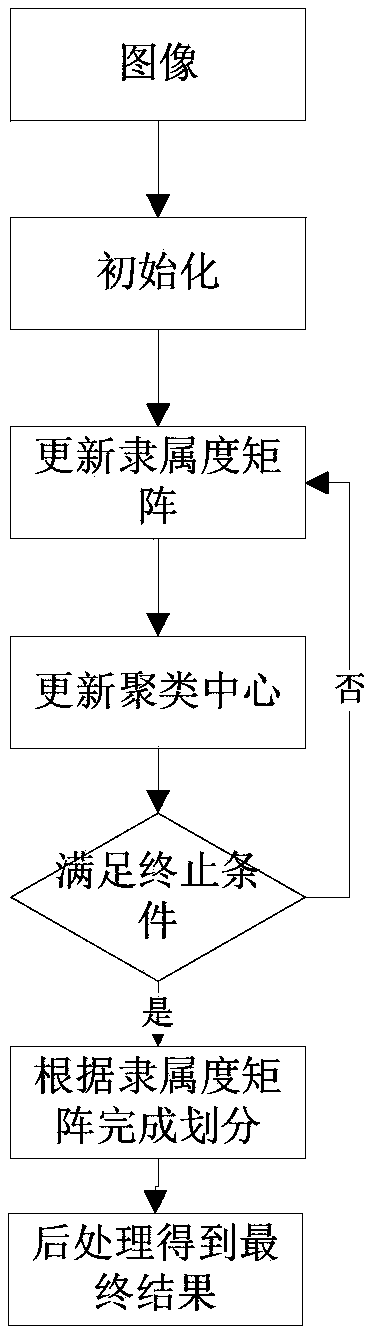

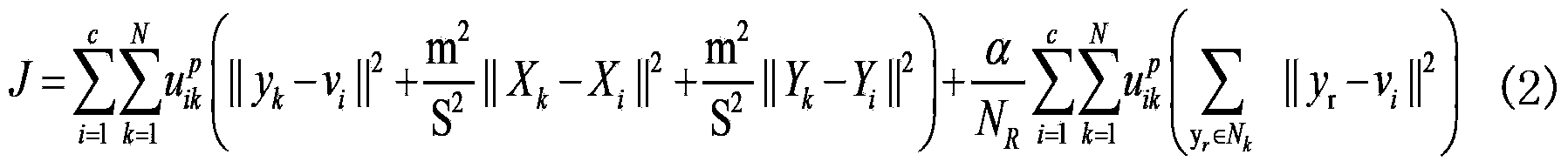

Superpixel segmentation method based on fuzzy theory

Status: Active | Publication: CN103353987A | Tags: Good welt performance; Improve robustness; Image analysis; Cluster algorithm; Pattern recognition

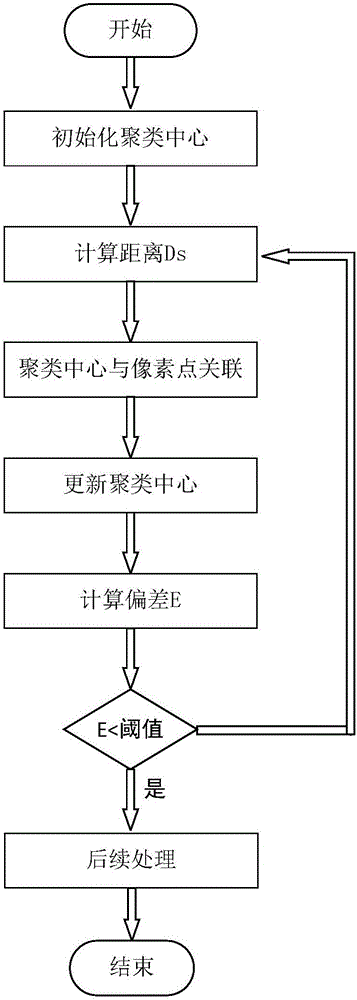

The invention relates to a superpixel segmentation method based on fuzzy theory. The method improves how well superpixel edges fit the edges of the original image, and the resulting superpixels have a homogeneous internal structure and uniform gray levels. The method comprises the steps of: 1) preprocessing the image and initializing the cluster centers; 2) adding the coordinate distance between pixels to the fuzzy C-means clustering algorithm and establishing an objective function; 3) updating the membership matrix; 4) updating the cluster centers, including both their gray values and their coordinates; 5) repeating steps 3) and 4) until a termination condition is met, namely that the change in cluster-center gray values falls below a manually set threshold or the number of iterations exceeds a manually set limit; 6) completing the initial superpixel segmentation from the final membership matrix; and 7) post-processing to complete the final superpixel segmentation by merging the isolated point sets, which inevitably remain in the superpixels produced by the previous six steps, into the adjacent superpixels with the greatest similarity.
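
Steps 2) to 5) describe fuzzy C-means with a spatial term. A minimal sketch, assuming the squared distance between pixel i and center j is (g_i - g_j)² + λ·‖p_i - p_j‖² (gray difference plus a weighted coordinate distance, with λ a hypothetical weight) and a fuzzifier m = 2, uses the standard FCM updates: membership u_ij = 1 / Σ_k (d_ij / d_ik)^(2/(m-1)), and centers as u^m-weighted means of gray value and coordinates. Iteration stops under the two conditions in step 5). The isolated-point merging of step 7) is not shown, and the function name is hypothetical.

```python
def fcm_superpixels(pixels, centers, m=2.0, lam=0.5, tol=1e-3, max_iter=100):
    """Fuzzy C-means on (gray, x, y) triples with a spatial distance term.

    pixels, centers: lists of (gray, x, y). lam (assumed) weights coordinates.
    Returns (labels, centers); labels come from the maximum membership.
    """
    eps = 1e-12  # keeps distances positive so the ratios below are defined
    centers = [tuple(c) for c in centers]
    for _ in range(max_iter):
        # Membership update: u_ij = 1 / sum_k (d_ij / d_ik) ** (2 / (m - 1))
        u = []
        for g, x, y in pixels:
            d = [max(((g - cg) ** 2 + lam * ((x - cx) ** 2 + (y - cy) ** 2)) ** 0.5, eps)
                 for cg, cx, cy in centers]
            u.append([1.0 / sum((dj / dk) ** (2.0 / (m - 1.0)) for dk in d) for dj in d])
        # Center update: u^m-weighted mean of gray value and coordinates
        new_centers = []
        for j in range(len(centers)):
            w = [row[j] ** m for row in u]
            s = sum(w)
            new_centers.append(tuple(sum(wi * p[k] for wi, p in zip(w, pixels)) / s
                                     for k in range(3)))
        # Stop when center gray values barely change (or max_iter is reached)
        shift = max(abs(a[0] - b[0]) for a, b in zip(centers, new_centers))
        centers = new_centers
        if shift < tol:
            break
    labels = [max(range(len(centers)), key=lambda j: row[j]) for row in u]
    return labels, centers
```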

Owner:山东幻科信息科技股份有限公司 (Shandong Huanke Information Technology Co., Ltd.)

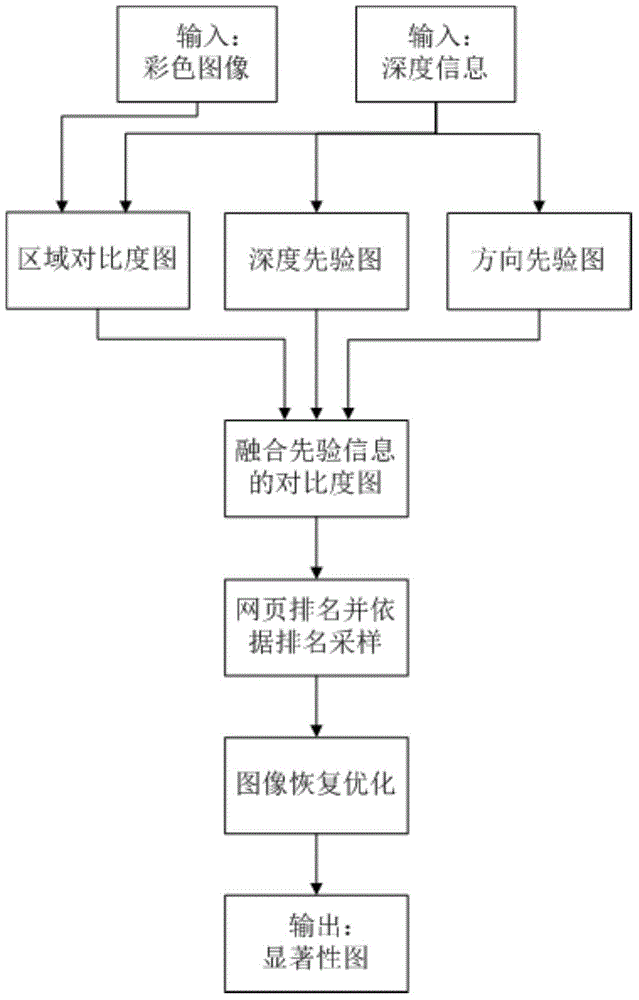

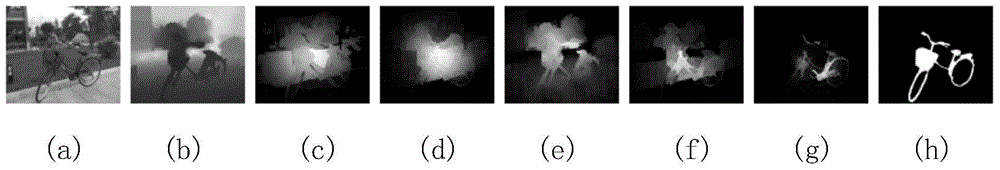

Image saliency detection method combining color and depth information

The invention discloses an image saliency detection method combining color and depth information. The method comprises: performing superpixel segmentation on the color image to be detected, computing a region contrast map for each segmented region by combining depth and color features, and using the depth information to obtain a depth prior map and a direction prior map; integrating the region contrast map, the depth prior map and the direction prior map to compute a contrast map that incorporates the prior information; and globally optimizing this contrast map by running a PageRank (webpage ranking) algorithm weighted by normal inner products, selecting high-confidence regions as sampling regions, formulating an image restoration problem based on a Markov random field model, and solving it to obtain the final saliency map. The invention explores the influence of depth and direction information on saliency and achieves better results than existing image saliency detection methods that combine color and depth information.

Owner:ZHEJIANG UNIV

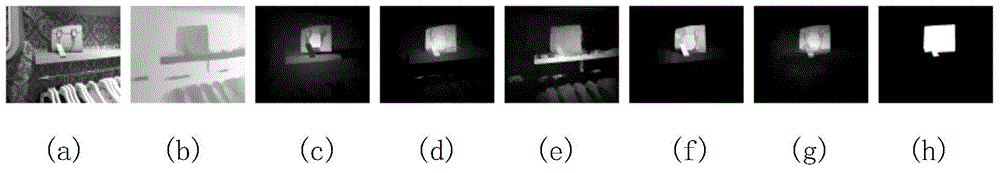

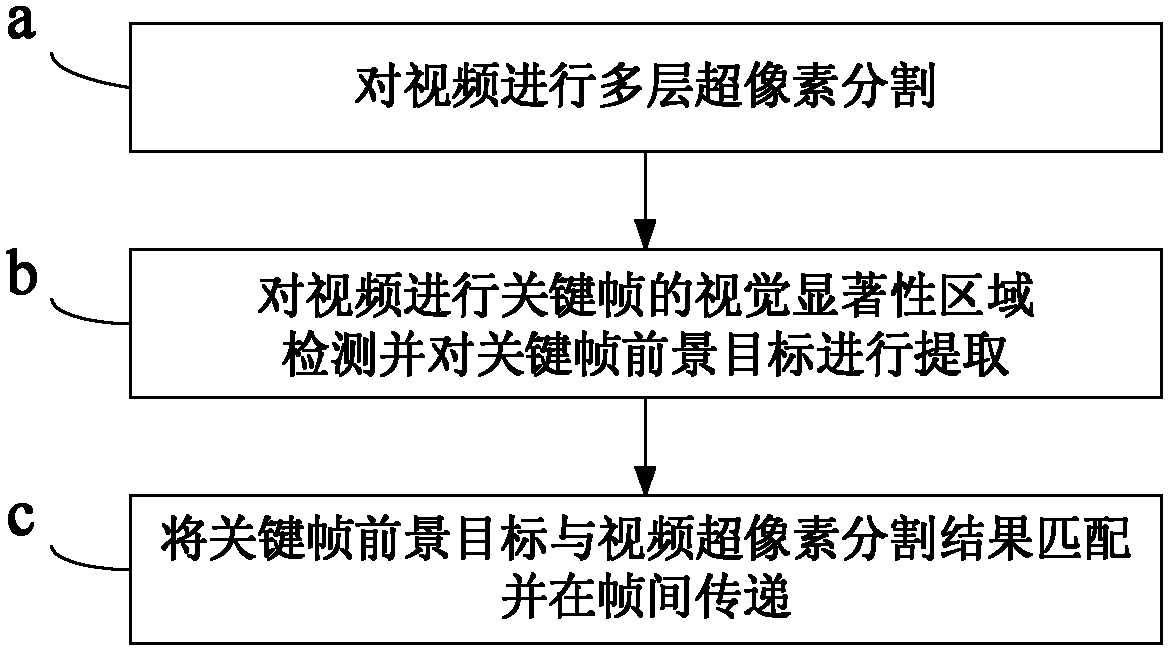

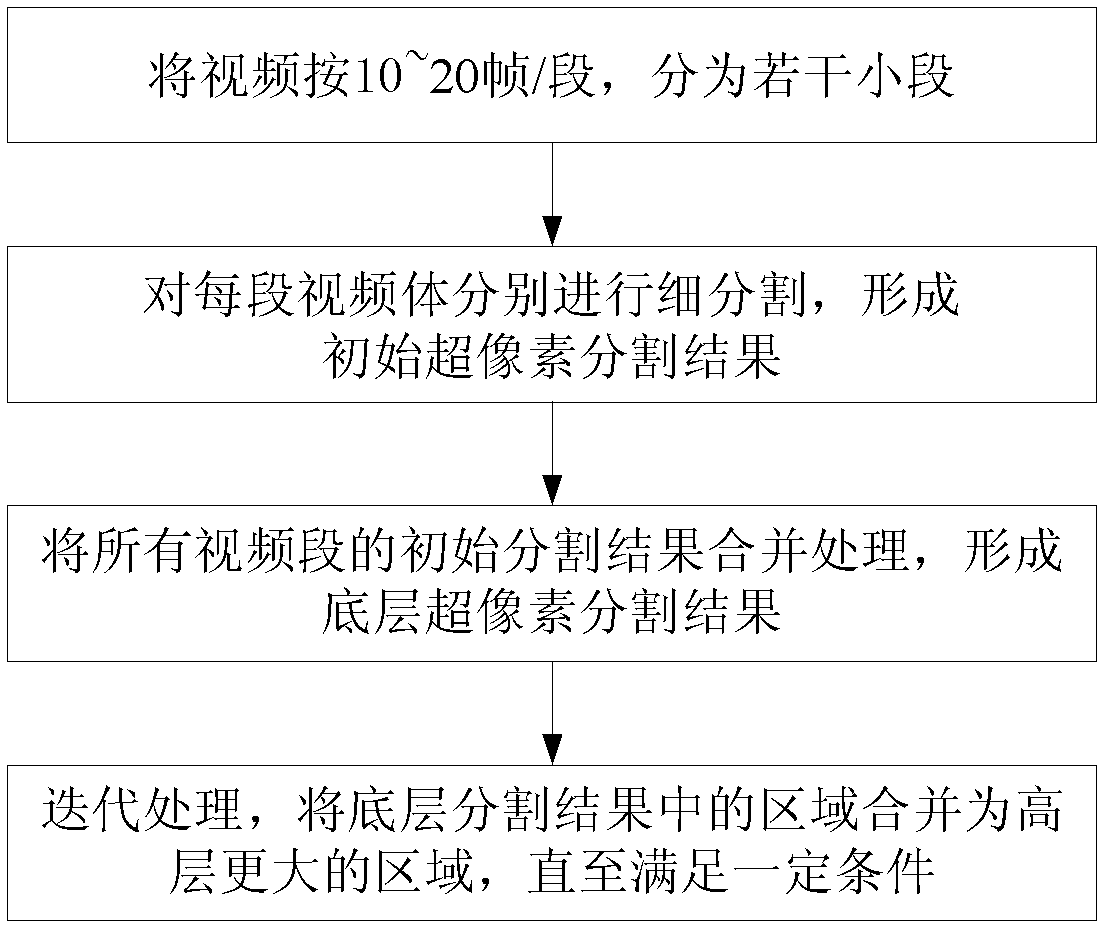

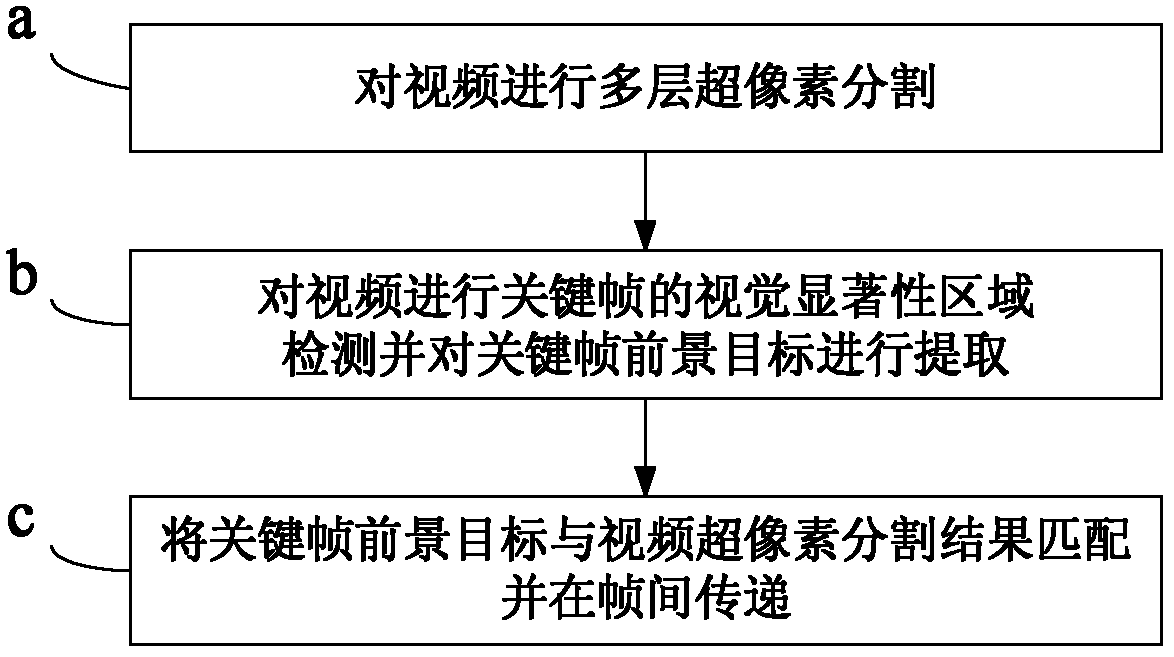

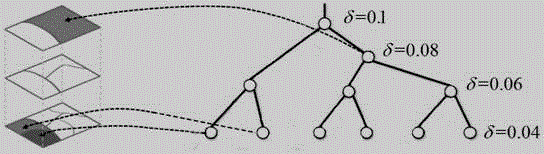

Video foreground object extracting method based on visual saliency and superpixel division

Status: Active | Publication: CN102637253A | Tags: Improve efficiency; The result is accurate; Character and pattern recognition; Pattern recognition; Visual saliency

The invention discloses a video foreground object extraction method based on visual saliency and superpixel segmentation. The method comprises the steps of: a, multi-level superpixel segmentation of the video: treating the video as a three-dimensional volume, segmenting it into superpixels, and grouping the elements of the volume into volumetric regions; b, detecting visually salient regions in the key frames and extracting their foreground objects: analyzing the salient regions of each key frame with a visual saliency detection method, then using these regions as initial values for an image foreground extraction method to obtain the key-frame foreground objects; and c, matching the key-frame foreground objects against the video superpixel segmentation and propagating the key-frame extraction results between frames: the volumetric regions covered by the key-frame foreground objects are diffused, so that the extraction results are propagated continuously from frame to frame. The method is efficient, accurate and robust, and requires little manual intervention.

Owner:TSINGHUA UNIV +1

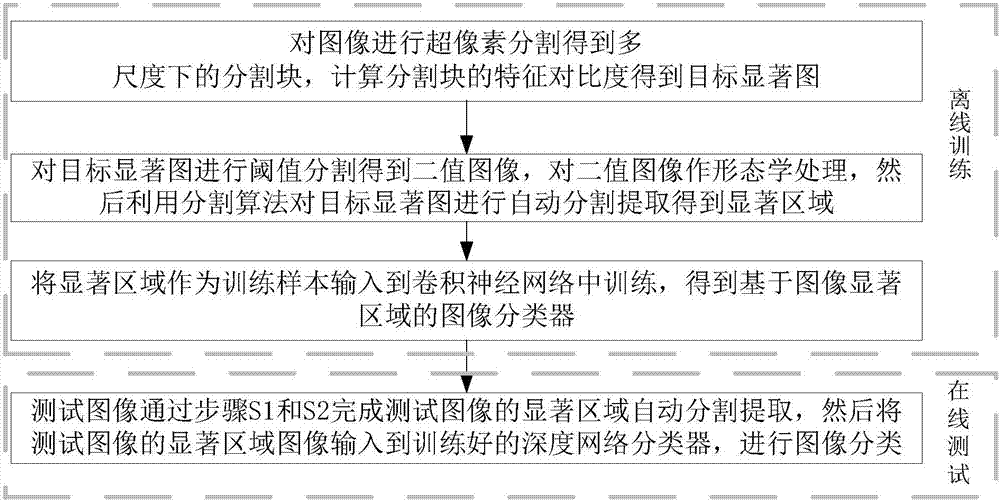

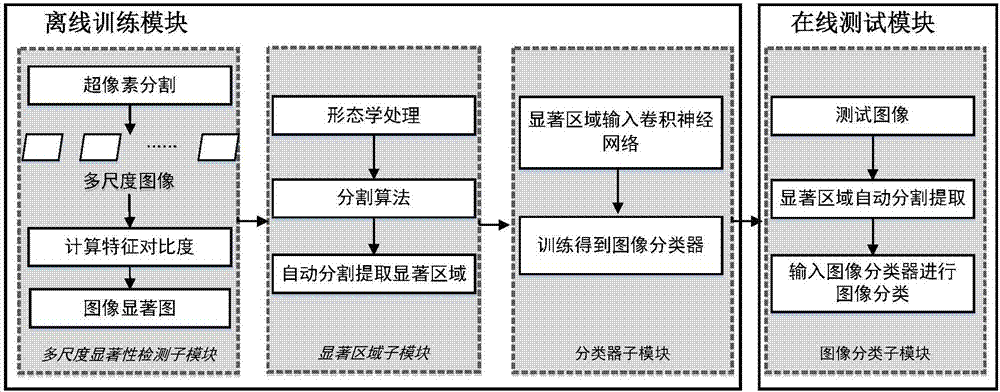

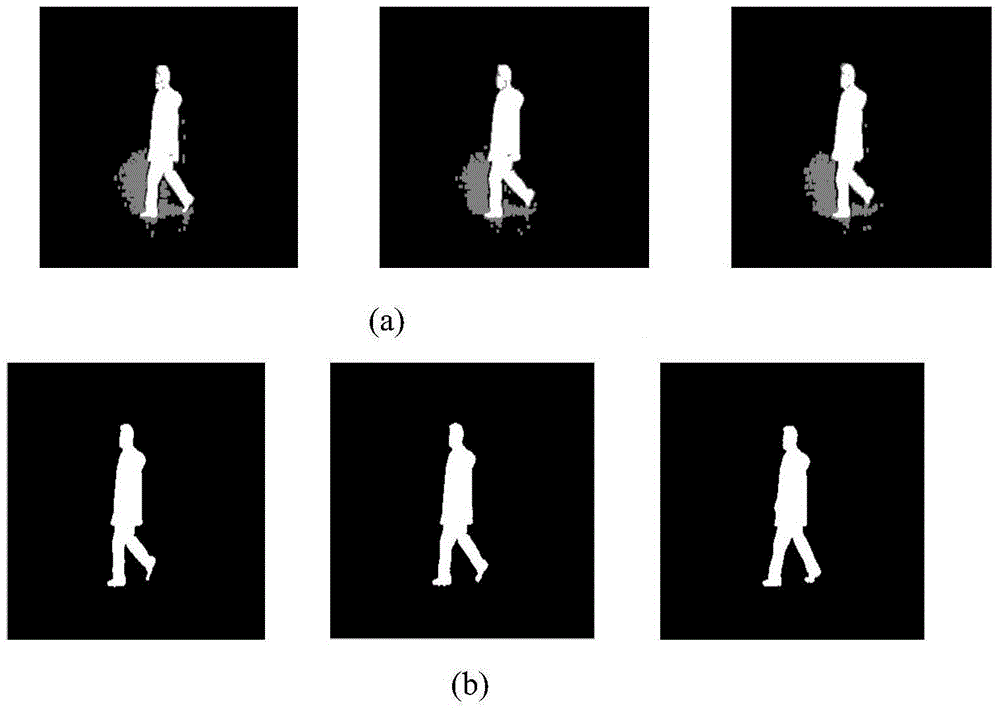

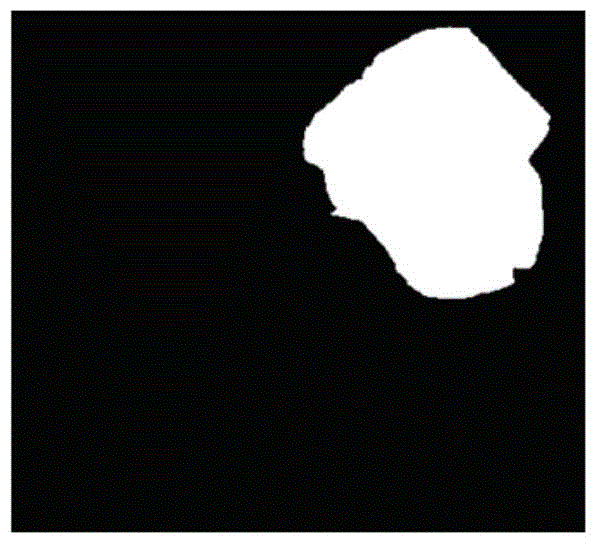

Image classification method and system based on image salient region

Status: Inactive | Publication: CN107016409A | Tags: Reduce workload; Improve accuracy; Image analysis; Character and pattern recognition; Margin classifier; Classification methods

The invention discloses an image classification method and system based on the salient region of an image. The method comprises offline training and online testing. Offline training comprises: performing superpixel segmentation on an image to obtain multidimensional segmentation blocks, and computing the feature contrast of the blocks to obtain a target saliency map; thresholding the saliency map to obtain a binary image, applying morphological processing to the binary image, and automatically segmenting and extracting the salient region from the saliency map with a segmentation algorithm; and feeding the salient region into a convolutional neural network for training, yielding an image classifier based on the salient region. Online testing comprises: automatically segmenting and extracting the salient region of a test image, feeding the salient-region image into the trained classifier, and classifying it to obtain a class label. The method and system ensure reliable segmentation results, reduce manual interaction, and improve image classification accuracy.

Owner:HUAZHONG UNIV OF SCI & TECH

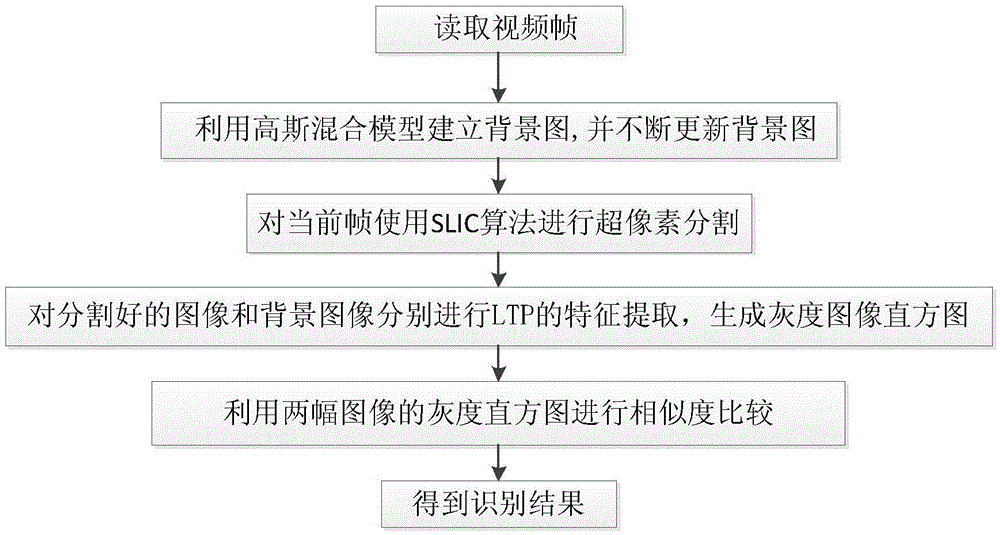

Moving object detection method based on Gaussian mixture model and superpixel segmentation

Status: Inactive | Publication: CN105528794A | Tags: Eliminate distractions; The detection method is accurate; Image enhancement; Image analysis; Object-class detection; Background image

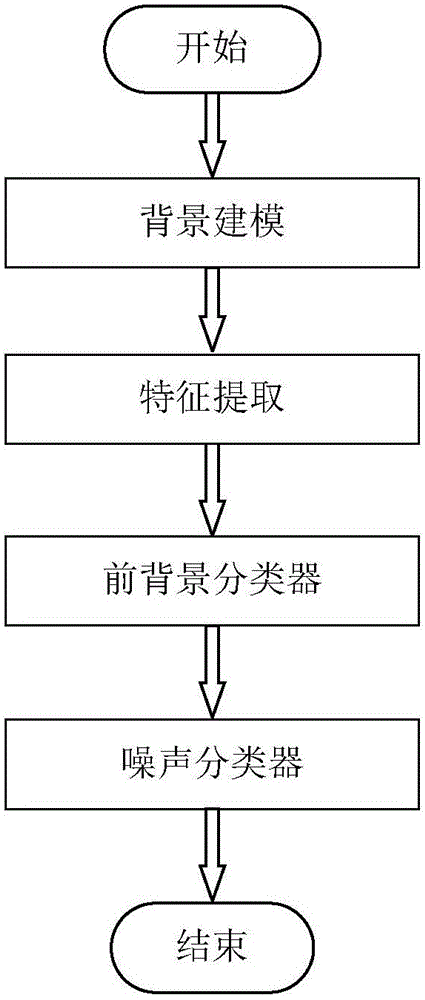

The invention discloses a moving object detection method based on a Gaussian mixture model and superpixel segmentation. Moving object detection aims to extract as many objects of interest as possible from a complex scene and present the result as a binary image. The method first performs background modeling with the Gaussian mixture model to obtain a background image for the current frame; then performs superpixel segmentation on the current frame with the SLIC (simple linear iterative clustering) algorithm; and finally extracts LTP (local ternary pattern) texture features from the segmented image and the background image and compares them to obtain the moving object. The method can detect moving objects in real time from video captured by cameras, and detection is efficient and accurate.
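
The LTP features in the last step can be illustrated for a single 3×3 neighborhood. In the usual definition, a neighbor at least t above the center codes as +1, at least t below as -1, and anything in between as 0; the ternary code is then split into an upper and a lower binary pattern. The threshold t = 5, the neighbor ordering, and the Hamming-distance comparison against the background image's codes are assumptions, since the abstract does not specify how the comparison is made. Both function names are hypothetical.

```python
def ltp_codes(patch, t=5):
    """Local ternary pattern of the center pixel of a 3x3 patch (2-D list).

    Neighbors >= center + t map to +1 (upper bit), <= center - t map to -1
    (lower bit), and everything else maps to 0 (neither bit set).
    """
    c = patch[1][1]
    # Neighbors in clockwise order starting at the top-left corner
    order = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0), (1, 0)]
    upper = lower = 0
    for bit, (r, col) in enumerate(order):
        v = patch[r][col]
        if v >= c + t:
            upper |= 1 << bit
        elif v <= c - t:
            lower |= 1 << bit
    return upper, lower

def ltp_distance(code_a, code_b):
    """Hamming distance between two (upper, lower) LTP code pairs."""
    return bin(code_a[0] ^ code_b[0]).count("1") + bin(code_a[1] ^ code_b[1]).count("1")
```

A pixel (or superpixel, after aggregating) whose codes differ strongly from those of the background image at the same location would then be a candidate for the moving object.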

Owner:SHANGHAI INST OF TECH

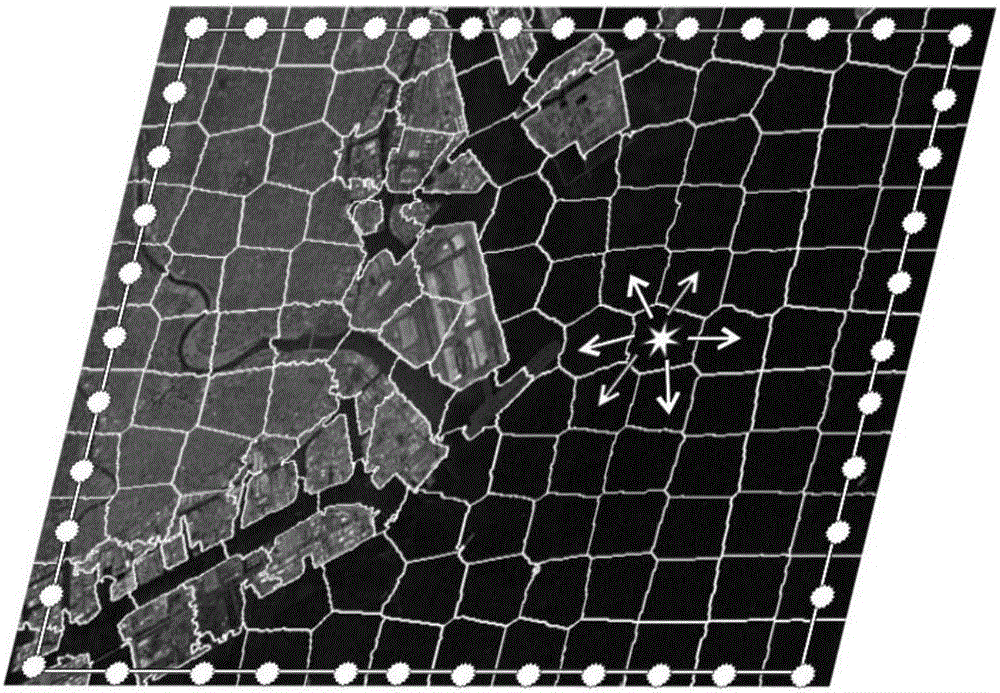

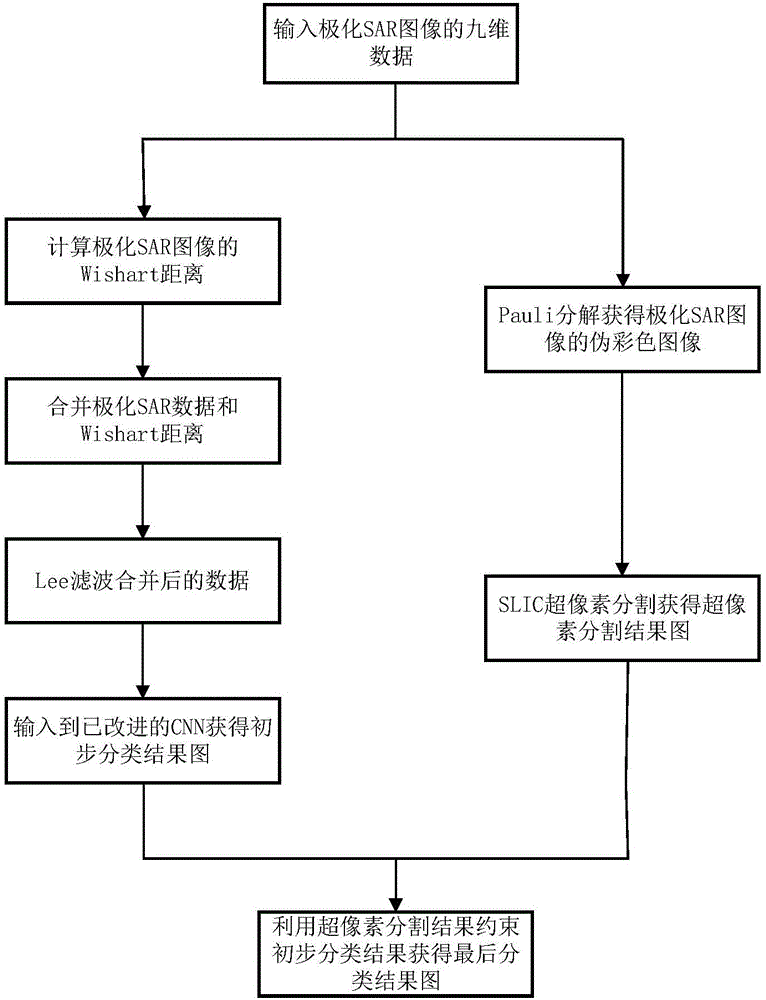

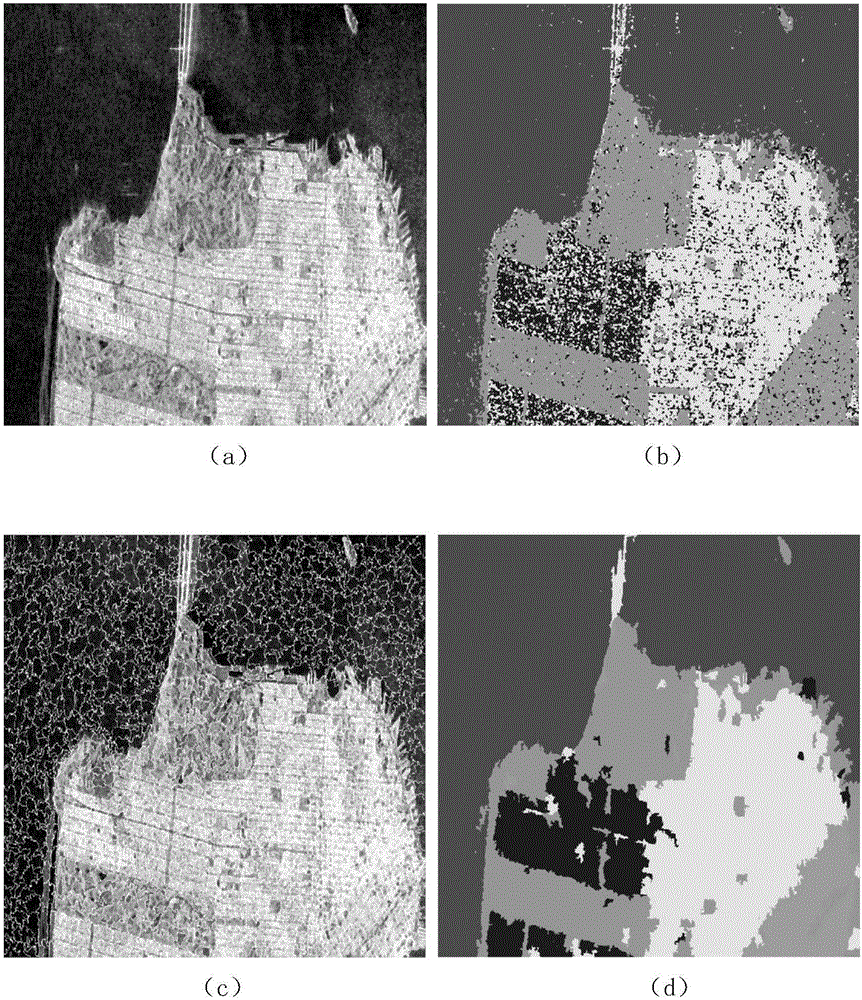

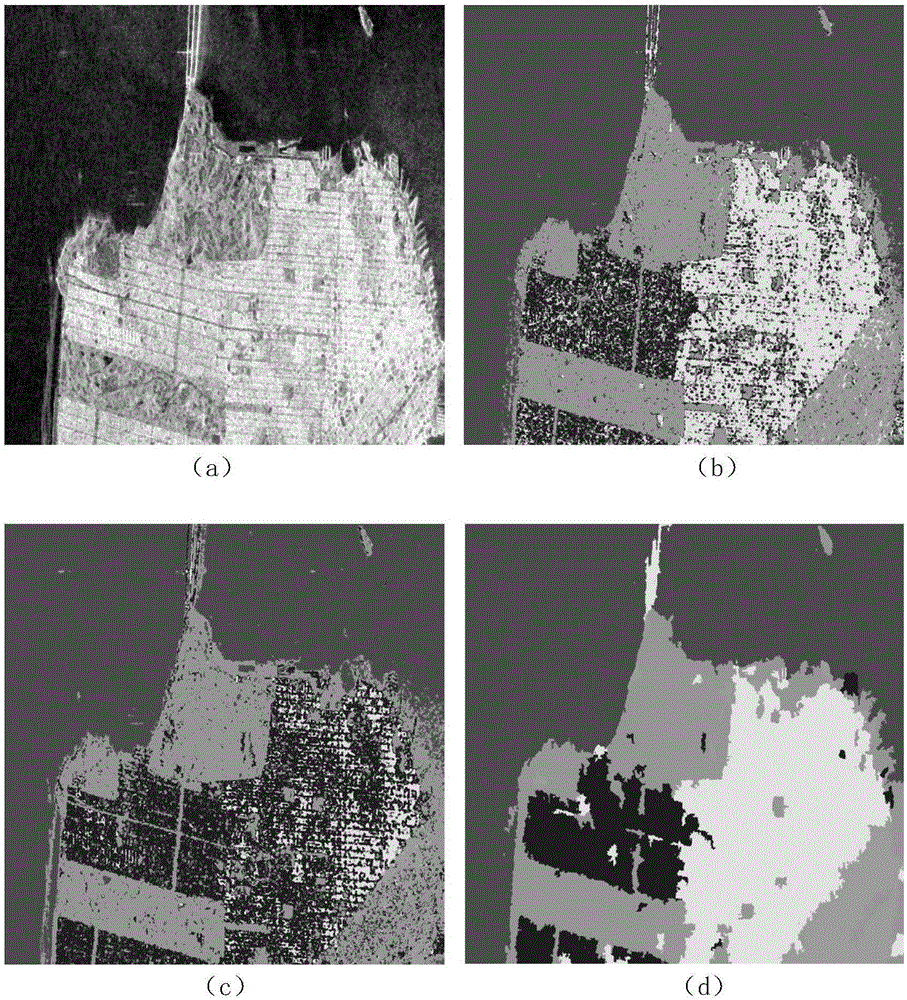

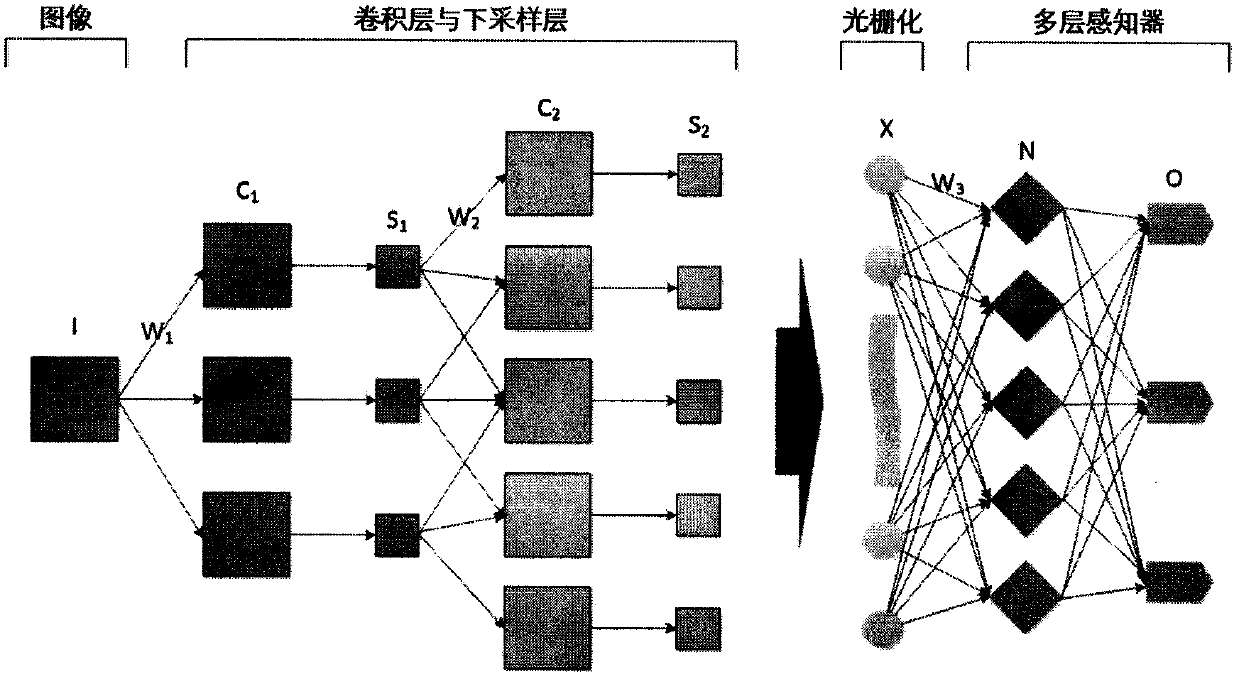

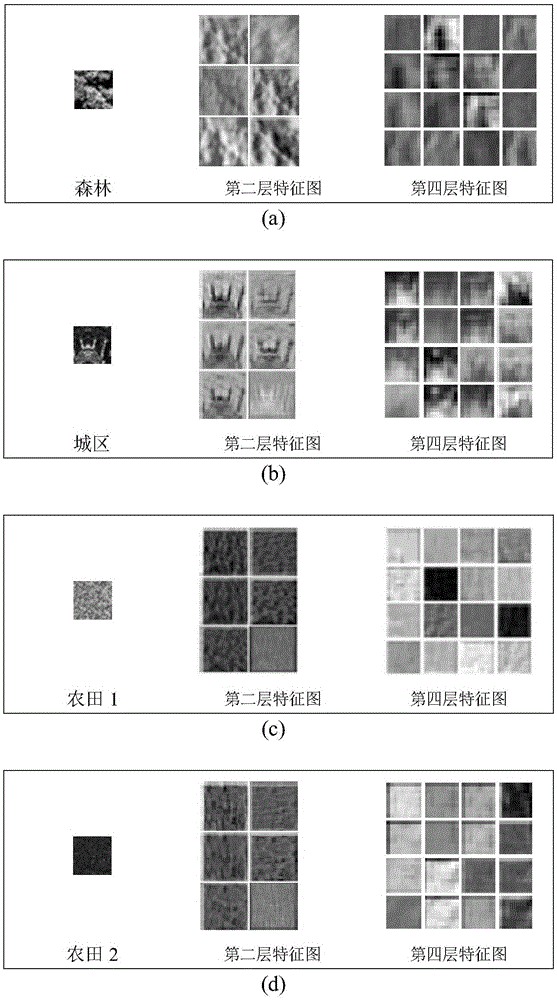

Polarized SAR (Synthetic Aperture Radar) image classification method based on SLIC (Simple Linear Iterative Clustering) and improved CNN (Convolutional Neural Network)

Status: Active | Publication: CN106778821A | Tags: Avoid repeated operations; Fast operation; Character and pattern recognition; Color image; Synthetic aperture radar

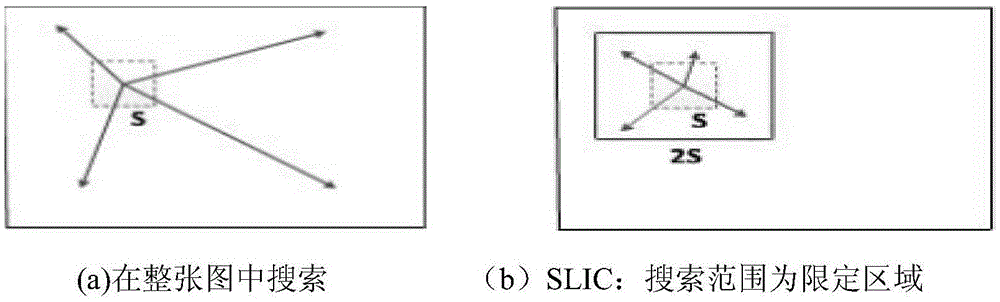

The invention proposes a polarized SAR (Synthetic Aperture Radar) image classification method based on SLIC (Simple Linear Iterative Clustering) and an improved CNN (Convolutional Neural Network), addressing the low classification speed and accuracy of existing supervised polarized SAR image classification methods. The method proceeds as follows: first, the Wishart distance and the polarization features of the polarized SAR image are taken as new data, Lee filtering is applied to this data, and it is fed into the improved CNN for classification to obtain a preliminary classification result; then, SLIC superpixel segmentation is performed on the pseudo-color image of the polarized SAR image to obtain a superpixel segmentation result; finally, the superpixel segmentation result is used as a constraint to post-process the preliminary classification result and obtain the final classification result. The method is fast and accurate and can be applied to polarized SAR terrain classification and related fields.

Owner:XIDIAN UNIV

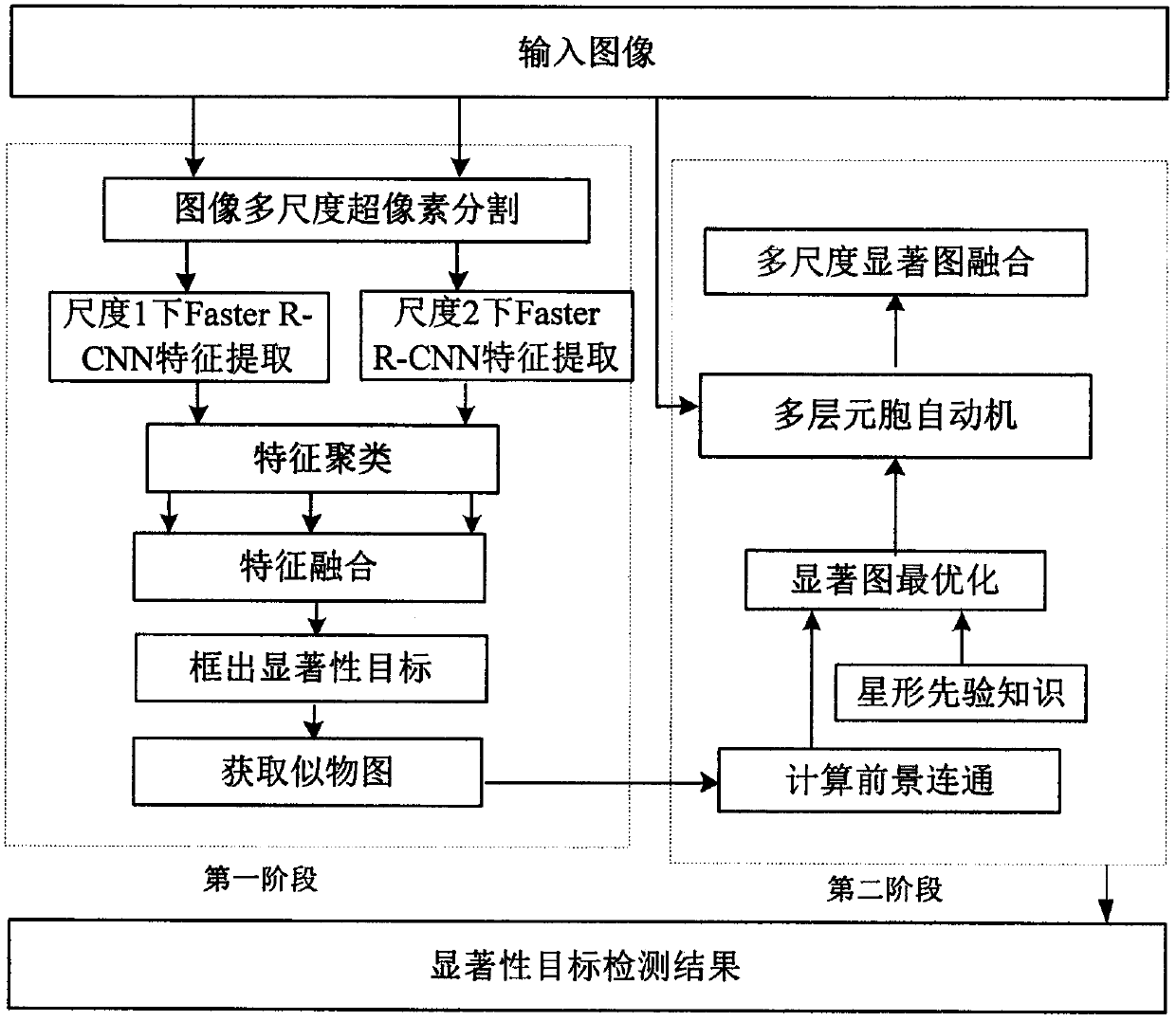

Saliency object detection method based on Faster R-CNN

Status: Inactive | Publication: CN107680106A | Tags: Solve the problem that the effect of saliency detection is not ideal; Image enhancement; Image analysis; Saliency map; Round complexity

The invention discloses a salient object detection method based on Faster R-CNN. The method first segments the image at multiple scales, then uses Faster R-CNN to outline candidate salient objects and builds an objectness map; it then assigns each superpixel a foreground weight via foreground connectivity, obtains smooth saliency maps by combining the foreground and background weights with a saliency optimization technique, and finally fuses the results with MCA (Multi-layer Cellular Automata) to obtain the final saliency map. The input image is segmented at three scales by a superpixel segmentation algorithm, which aggregates adjacent, similar pixels into image regions of different sizes according to low-level features such as color, texture and brightness; this effectively reduces the complexity of saliency detection. By treating the segmented image at each scale as one layer of cells and fusing the superpixel segmentations at different scales with MCA, the method ensures a consistent saliency detection result.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS

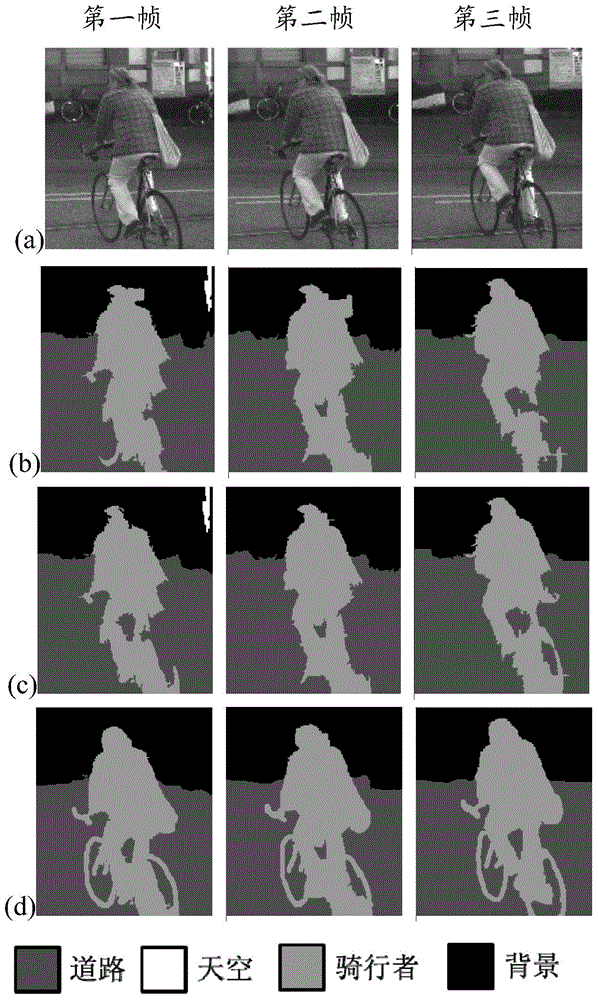

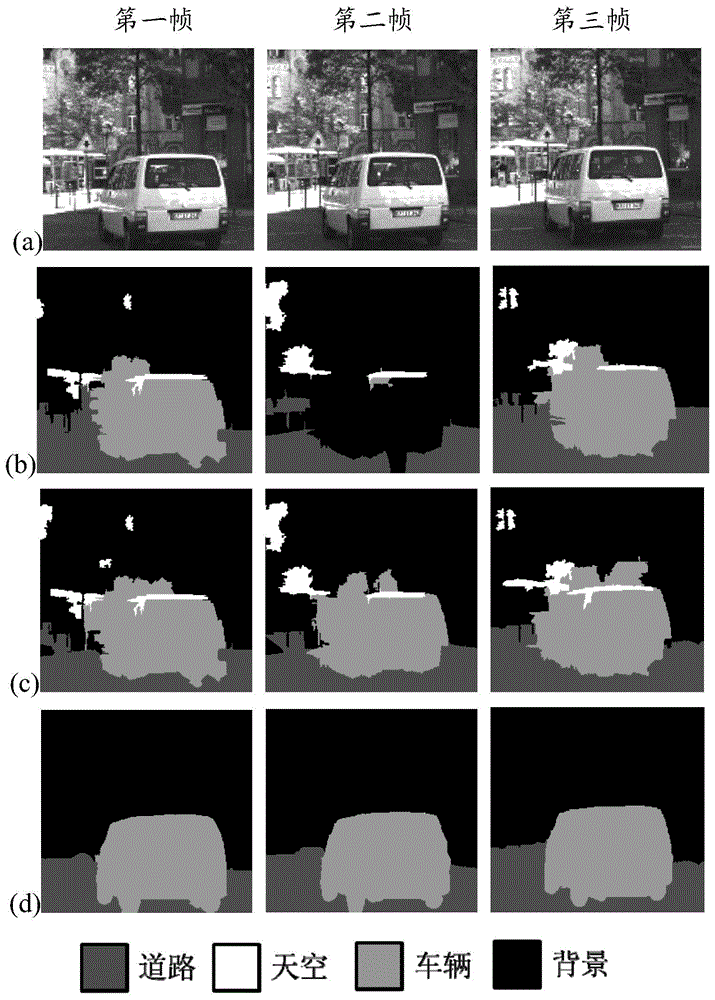

Image sequence category labeling method based on mixed graph model

Status: Inactive | Publication: CN104881681A | Tags: Improve accuracy; Improve consistency; Character and pattern recognition; Non symmetric; Near neighbor

The invention discloses an image sequence category labeling method based on a mixed graph model. The method comprises: performing superpixel segmentation on an image sequence and describing the features of each superpixel; performing nearest-neighbor matching of superpixels between two consecutive frames; using the mixed graph model to build a global optimization model for image sequence category labeling, based on the spatial adjacency among superpixels within a single frame and the temporal matching among superpixels across frames; and solving the global optimization problem with a linear method to obtain category labels for the superpixels of consecutive frames. Compared with previous graph models, the mixed graph model describes both the first-order, symmetric relations among superpixels within a single frame and the higher-order, non-symmetric relations among superpixels in two consecutive frames. Solved with a linear method, it effectively assigns each superpixel of an image sequence a category label with better temporal consistency and higher accuracy.
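
The inter-frame nearest-neighbor matching step can be sketched as follows, assuming each superpixel is described by a numeric feature vector and matched by Euclidean distance. Several superpixels in the current frame may match the same superpixel in the previous frame, so the relation this matching produces is not symmetric. The feature choice and the function name are hypothetical, and `math.dist` requires Python 3.8 or later.

```python
import math

def match_superpixels(prev_feats, curr_feats):
    """Match each current-frame superpixel to its nearest neighbor in the
    previous frame by Euclidean distance in feature space.

    prev_feats, curr_feats: dict superpixel id -> feature tuple.
    Returns dict current id -> (previous id, distance).
    """
    matches = {}
    for cid, cf in curr_feats.items():
        pid = min(prev_feats, key=lambda p: math.dist(cf, prev_feats[p]))
        matches[cid] = (pid, math.dist(cf, prev_feats[pid]))
    return matches
```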

Owner:ZHEJIANG UNIV

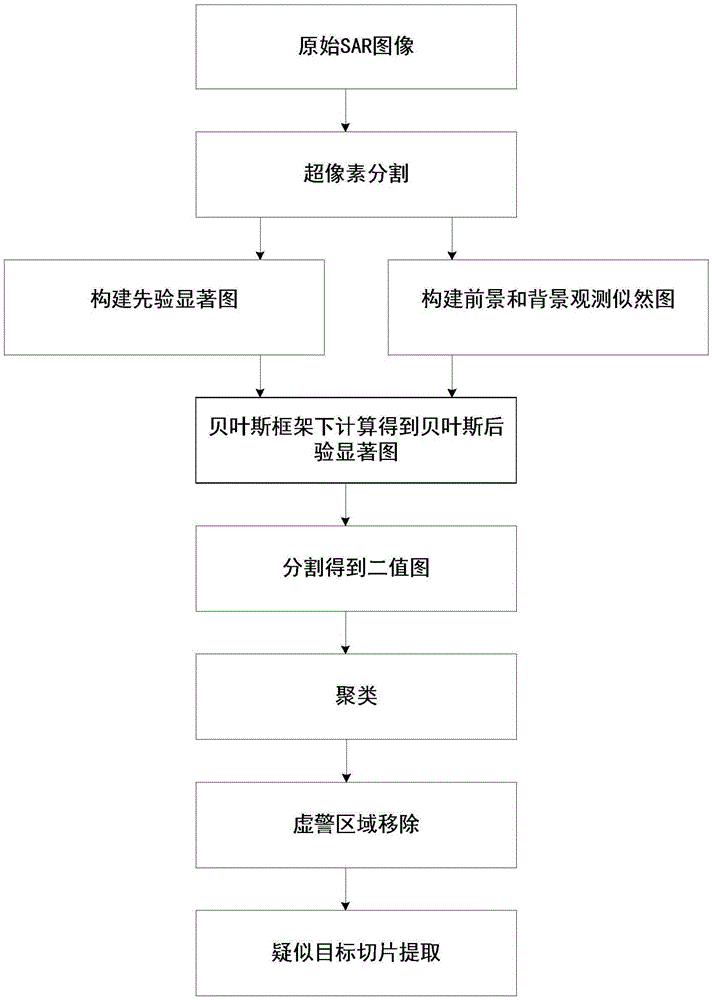

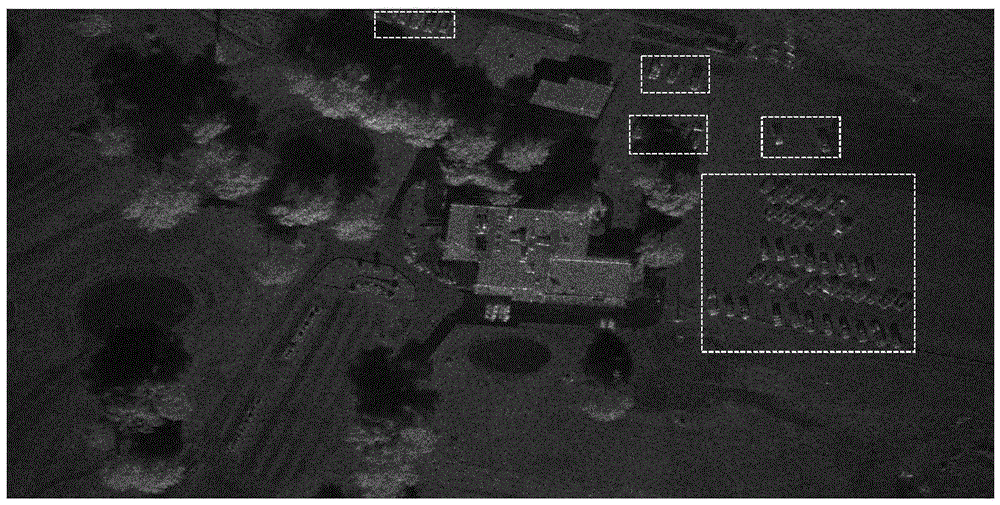

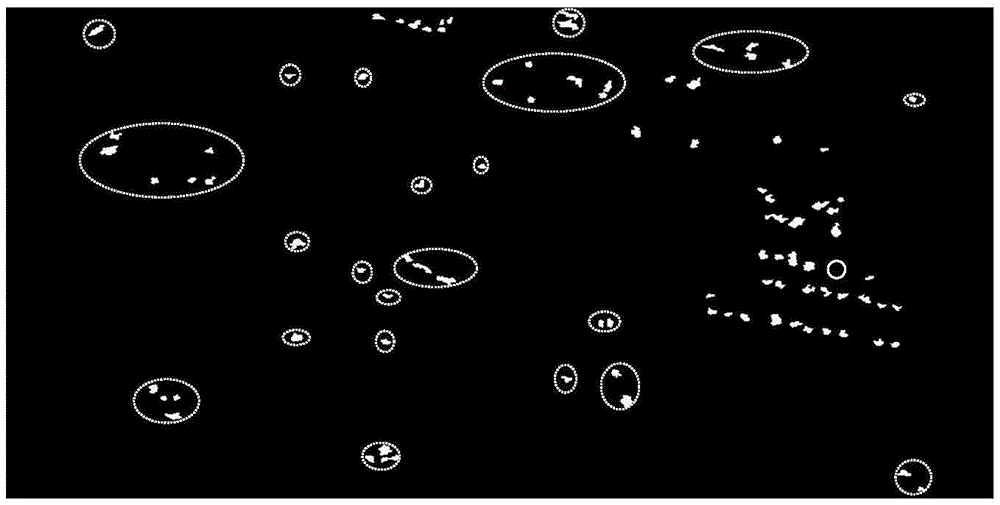

Bayesian saliency based SAR image target detection method

Status: Active | Publication: CN105427314A | Tags: Improve detection accuracy; Avoid missing detection; Image enhancement; Image analysis; Pattern recognition; Saliency map

The invention discloses a Bayesian-saliency-based SAR image target detection method that mainly addresses the low detection accuracy of existing SAR image target detection techniques and the incomplete structure of detected targets in the binary image. The method performs superpixel segmentation on the original SAR image; constructs a prior saliency map, a foreground likelihood map and a background likelihood map from the superpixel segmentation result; fuses the three in a Bayesian framework to obtain a Bayesian posterior saliency map; segments the posterior saliency map to obtain a binary image containing suspected target regions; and performs clustering and false-alarm region removal on the binary image, then extracts suspected target chips from the original SAR image, completing target detection. Compared with two-parameter CFAR detection, the method achieves higher detection accuracy and a more complete target structure in the binary image, making it suitable for SAR image target detection in complex scenes.
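
The abstract fuses the prior saliency map with foreground and background likelihoods in a Bayesian framework but gives no formula. The standard two-class form of Bayes' rule, assumed here, computes the posterior for each superpixel as S = p·L_f / (p·L_f + (1 - p)·L_b), where p is the prior saliency and L_f, L_b are the foreground and background likelihoods. The sketch applies it element-wise to per-superpixel lists; the function name is hypothetical.

```python
def bayesian_saliency(prior, fg_likelihood, bg_likelihood, eps=1e-12):
    """Posterior saliency per superpixel from the two-class Bayes rule.

    prior: prior saliency values in [0, 1].
    fg_likelihood, bg_likelihood: p(feature | salient), p(feature | background).
    """
    post = []
    for p, lf, lb in zip(prior, fg_likelihood, bg_likelihood):
        num = p * lf
        den = num + (1.0 - p) * lb
        post.append(num / den if den > eps else 0.0)  # guard against 0/0
    return post
```

Thresholding the resulting posterior values would give the binary suspected-target image described in the abstract; the threshold itself is not specified there.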

Owner:XIDIAN UNIV

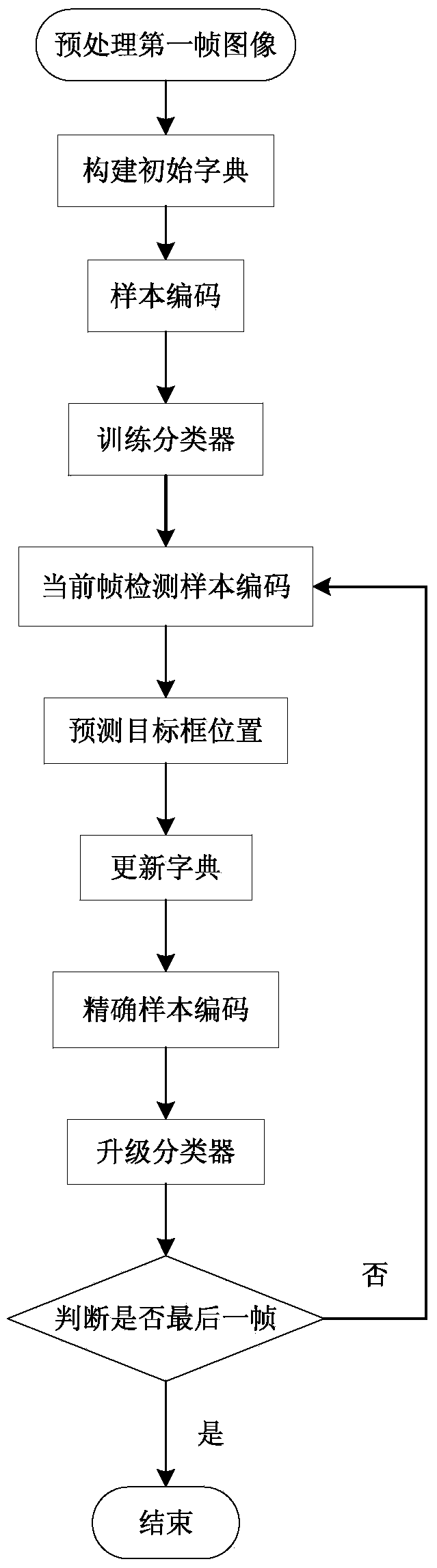

Target tracking method based on inter-frame constraint super-pixel encoding

Status: Inactive | Publication: CN103810723A | Tags: Reduce redundancy; Reduce complexity; Image analysis; Character and pattern recognition; Pattern recognition; Imaging processing

The invention discloses a target tracking method based on inter-frame constrained superpixel encoding, mainly intended to solve tracking failures caused by occlusion of the target, rapid motion, and appearance deformation. The method comprises the following steps: (1) preprocessing the first frame; (2) constructing an initial dictionary; (3) encoding samples; (4) training a classifier; (5) encoding the detection samples of the current frame; (6) predicting the location of the target box; (7) updating the dictionary; (8) accurately encoding the samples; (9) updating the classifier; (10) checking whether the current frame is the last frame; if so, ending, otherwise returning to step (5) to process the next frame. By adopting superpixel segmentation and constrained encoding, the method lowers the complexity of subsequent image processing, preserves the consistency of image spatial information, keeps the local boundary information consistent with the texture information of the image, and tracks the target stably and accurately.

Owner:XIDIAN UNIV
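Steps (2) to (6) and (9) revolve around coding samples against a dictionary and scoring candidates with a classifier. The sketch below swaps in ridge-regularized least-squares coding and a ridge-regression linear classifier, both simple stand-ins chosen for illustration; the patent's inter-frame constrained coding is not reproduced, and all function names and the `lam` parameter are hypothetical.

```python
import numpy as np


def encode(dictionary, samples, lam=0.1):
    """Ridge-regularized codes: argmin_C ||X - D C||^2 + lam ||C||^2.

    dictionary: (d, k) matrix whose columns are atoms.
    samples:    (d, n) matrix whose columns are sample descriptors.
    Returns the (k, n) code matrix.
    """
    k = dictionary.shape[1]
    gram = dictionary.T @ dictionary + lam * np.eye(k)
    return np.linalg.solve(gram, dictionary.T @ samples)


def train_classifier(codes, labels, lam=0.1):
    """Ridge-regression linear classifier on codes (k, n); labels are +1 (target) / -1 (background)."""
    k = codes.shape[0]
    return np.linalg.solve(codes @ codes.T + lam * np.eye(k), codes @ labels)


def best_candidate(weights, candidate_codes):
    """Index of the candidate (column) with the highest classifier score."""
    return int(np.argmax(weights @ candidate_codes))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    D = rng.normal(size=(8, 4))  # hypothetical dictionary of 4 atoms
    X = rng.normal(size=(8, 6))  # hypothetical training samples
    y = np.array([1.0, 1.0, 1.0, -1.0, -1.0, -1.0])
    w = train_classifier(encode(D, X), y)
    candidates = encode(D, rng.normal(size=(8, 3)))
    print(best_candidate(w, candidates))
```

In a tracking loop, the highest-scoring candidate would become the predicted target box, and the dictionary and classifier would then be refreshed from the new frame.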

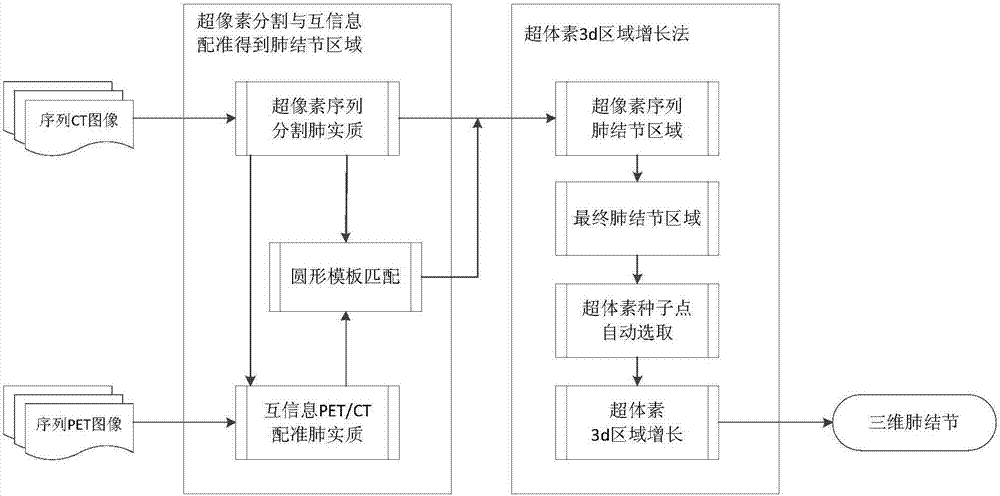

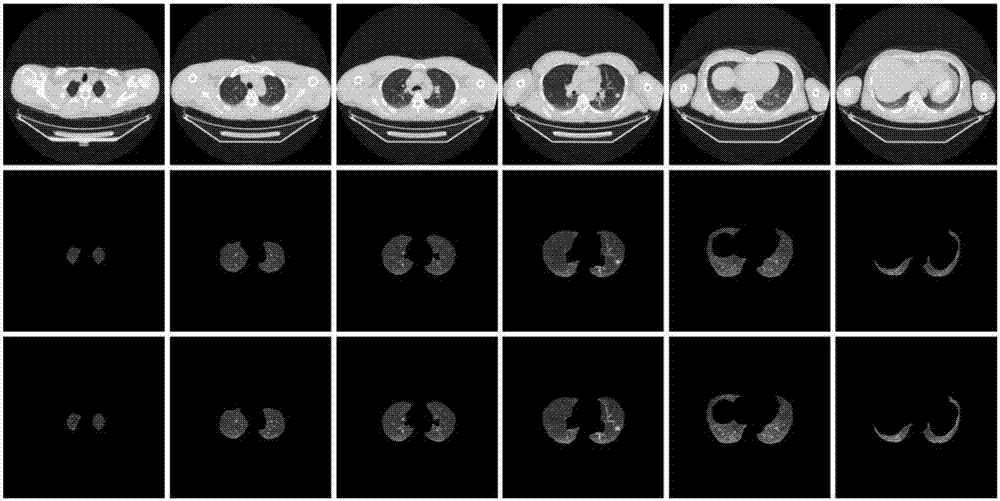

Supervoxel sequence lung image 3D pulmonary nodule segmentation method based on multimodal data

Active | CN107230206A | Understand intuitive, Improve the quality of surgery, Image enhancement, Image analysis, Pulmonary nodule, Diagnostic Radiology Modality

The invention discloses a supervoxel-based 3D segmentation method for pulmonary nodules in sequential lung images using multimodal data. The method comprises the following steps: A) extracting sequential lung parenchyma images through superpixel segmentation and self-generating neural forest clustering; B) registering the sequential lung parenchyma images using mutual-information-based registration of PET/CT multimodal data; C) marking and extracting accurate sequential pulmonary nodule regions with a multi-scale variable circular template matching algorithm; and D) performing three-dimensional reconstruction of the sequential pulmonary nodule images with a supervoxel 3D region growing algorithm to obtain the final three-dimensional shape of the pulmonary nodules. Because the supervoxel 3D region growing algorithm produces a 3D reconstructed region of the nodules, the method can reflect the dynamic relationship between pulmonary lesions and surrounding tissue, so that the shape, size and appearance of the nodules, as well as their adhesion to the surrounding pleura or blood vessels, can be seen intuitively.

Owner:TAIYUAN UNIV OF TECH
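Step D grows the nodule in 3D. As a simplified illustration, the sketch below grows a 6-connected region voxel by voxel from a seed, accepting neighbors whose intensity lies within a tolerance of the seed; it works on raw voxels rather than supervoxels, and the function name and the `tol` criterion are assumptions, not the patent's growth rule.

```python
from collections import deque

import numpy as np


def region_grow_3d(volume, seed, tol):
    """Grow a 6-connected region from `seed` (a (z, y, x) tuple), accepting
    voxels whose intensity differs from the seed intensity by at most `tol`."""
    volume = np.asarray(volume)
    mask = np.zeros(volume.shape, dtype=bool)
    ref = float(volume[seed])
    mask[seed] = True
    queue = deque([seed])
    offsets = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    while queue:  # breadth-first traversal of accepted voxels
        z, y, x = queue.popleft()
        for dz, dy, dx in offsets:
            nb = (z + dz, y + dy, x + dx)
            # Bounds check first so negative indices never wrap around.
            inside = all(0 <= nb[k] < volume.shape[k] for k in range(3))
            if inside and not mask[nb] and abs(float(volume[nb]) - ref) <= tol:
                mask[nb] = True
                queue.append(nb)
    return mask


if __name__ == "__main__":
    vol = np.zeros((5, 5, 5))
    vol[1:3, 1:3, 1:3] = 100.0  # a small bright "nodule"
    print(region_grow_3d(vol, (1, 1, 1), tol=10.0).sum())  # 8
```

A supervoxel version would apply the same traversal to a supervoxel adjacency graph, comparing region statistics instead of single voxel intensities.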

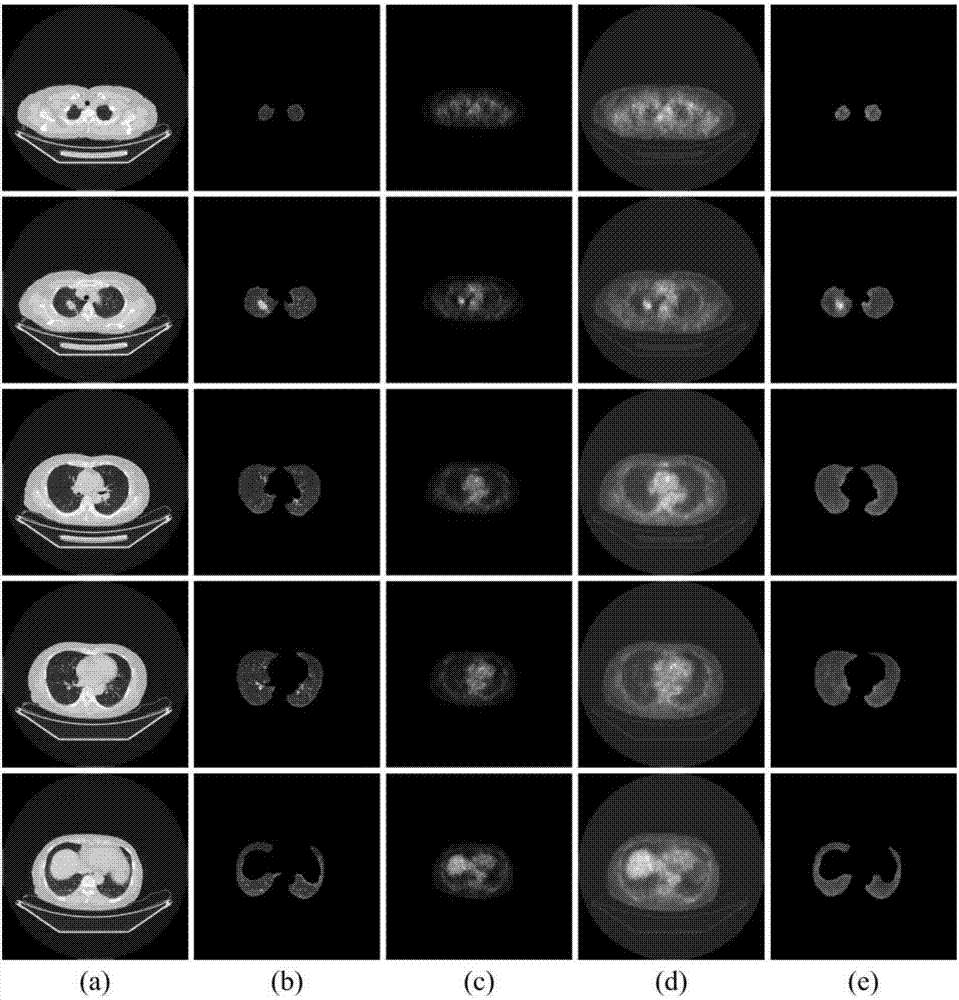

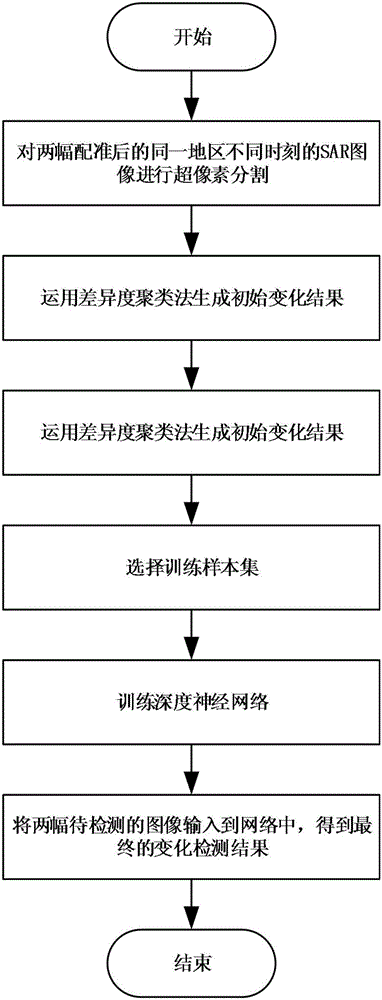

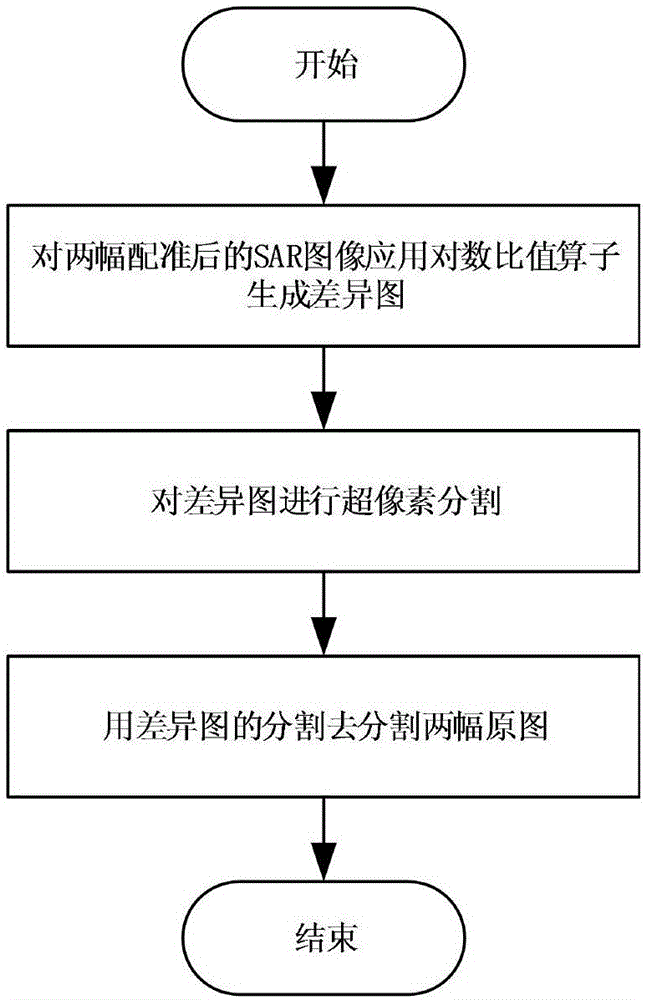

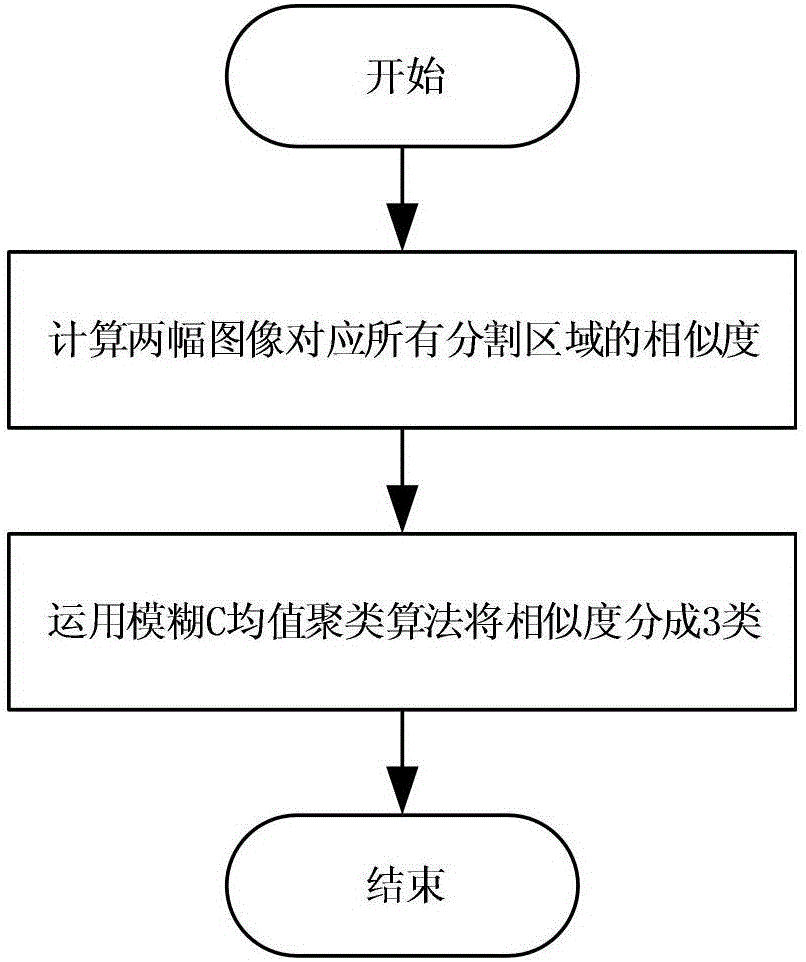

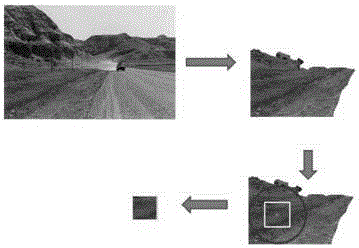

SAR image change detection method based on superpixel segmentation and feature learning

Active | CN106780485A | Avoid loss, Improve robustness, Image enhancement, Image analysis, Pattern recognition, Processing element

The invention discloses an SAR image change detection algorithm based on superpixel segmentation and feature learning. The algorithm comprises the following steps: (1) start; (2) performing superpixel segmentation on two rectified SAR images of the same area acquired at different times; (3) generating an initial change result with a difference-degree clustering method; (4) selecting equal numbers of training samples from the changed and unchanged classes according to the initial change result; (5) feeding the training samples into a designed deep neural network for training; (6) inputting the two images to be analyzed into the trained deep neural network to obtain the final change detection result; (7) end. Because superpixel blocks are used as the basic processing unit, the algorithm shortens data processing time to some degree, greatly reduces sensitivity to noise, and noticeably improves both the detection result and its accuracy.

Owner:XIDIAN UNIV
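Step (3) produces an initial change result by clustering per-superpixel differences. A minimal sketch follows, assuming the two co-registered images share one superpixel label map and using the log-ratio operator (a common choice for SAR, swapped in here) with 1-D two-class k-means; the function names and the `+ 1.0` offset are hypothetical.

```python
import numpy as np


def superpixel_means(image, labels):
    """Mean intensity of each superpixel; labels are non-negative integer ids."""
    n = labels.max() + 1
    sums = np.bincount(labels.ravel(), weights=image.ravel(), minlength=n)
    counts = np.bincount(labels.ravel(), minlength=n)
    return sums / np.maximum(counts, 1)


def initial_change_map(img1, img2, labels, iters=20):
    """Boolean array with one entry per superpixel: True where the superpixel
    falls into the 'changed' cluster."""
    m1 = superpixel_means(np.asarray(img1, dtype=float), labels)
    m2 = superpixel_means(np.asarray(img2, dtype=float), labels)
    # Log-ratio difference; the +1 offset avoids log(0) on dark pixels.
    diff = np.abs(np.log((m2 + 1.0) / (m1 + 1.0)))
    centers = np.array([diff.min(), diff.max()])  # [unchanged, changed]
    assign = np.zeros(diff.shape, dtype=int)
    for _ in range(iters):  # 1-D k-means with k = 2
        assign = np.abs(diff[:, None] - centers[None, :]).argmin(axis=1)
        for k in range(2):
            if np.any(assign == k):
                centers[k] = diff[assign == k].mean()
    return assign == 1
```

Equal-sized sets of superpixels from the two clusters could then serve as the training samples of step (4).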

Image salient region detection method

Inactive | CN104463870A | Improve accuracy, Solve the problem that some colors appear in large numbers in the background, Image analysis, Saliency map, Background noise

The invention provides an image salient region detection method. The method comprises: estimating the background of an image that has undergone superpixel segmentation; computing the contrast between each superpixel and the background from the color difference between that superpixel and the superpixels in the background estimate; and obtaining a superpixel saliency map from these contrasts. The method is robust to background noise and similar disturbances in the image, and the computation is simple and fast.

Owner:UNIVERSITY OF CHINESE ACADEMY OF SCIENCES
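A minimal sketch of the contrast-to-background idea follows. It assumes the superpixels touching the image border form the background estimate and measures saliency as the mean color distance to those superpixels; both choices and the function names are assumptions for illustration, since the patent text does not state how the background is estimated.

```python
import numpy as np


def boundary_superpixels(labels):
    """Ids of superpixels touching the image border (the assumed background estimate)."""
    border = np.concatenate([labels[0, :], labels[-1, :], labels[:, 0], labels[:, -1]])
    return np.unique(border)


def background_contrast_saliency(colors, labels):
    """colors: (n, 3) mean color of each superpixel; labels: (H, W) superpixel ids.

    Returns per-superpixel saliency in [0, 1]: the mean color distance to the
    background superpixels, min-max normalized."""
    colors = np.asarray(colors, dtype=float)
    bg = boundary_superpixels(labels)
    # (n, n_bg) Euclidean color distances to every background superpixel.
    dist = np.linalg.norm(colors[:, None, :] - colors[bg][None, :, :], axis=2)
    sal = dist.mean(axis=1)
    span = sal.max() - sal.min()
    return (sal - sal.min()) / span if span > 0 else np.zeros_like(sal)
```

Averaging over all background superpixels, rather than taking the nearest one, keeps a color that occurs in a single background region from suppressing a whole object.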

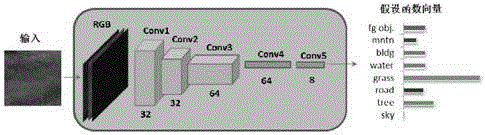

Image semantic annotation method based on super pixel segmentation

Active | CN106022353A | Simplify complexity, Improve computing efficiency, Character and pattern recognition, Conditional random field, Feature vector

The invention provides an image semantic annotation method based on superpixel segmentation. The method comprises: inputting feature blocks extracted via superpixel segmentation of the image into a convolutional neural network; expanding and weighting the feature vectors produced by the trained convolutional neural network; and finally constructing a conditional random field model to predict semantic class annotations. Because the method takes superpixel blocks as the unit of analysis, it reduces the complexity of the extracted feature blocks and improves the computational efficiency of semantic annotation. In addition, multi-layer superpixel blocks are used for semantic analysis and their annotation results are integrated, which improves the accuracy and robustness of semantic annotation.

Owner:ZHEJIANG UNIV
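The final CRF step assigns a class to each superpixel by trading off per-superpixel evidence against agreement with neighbors. The sketch below uses iterated conditional modes (ICM) with a Potts pairwise term, a simple swapped-in inference routine rather than the patent's CRF formulation; the unary costs (for example, negative log class probabilities from the CNN), the `weight` parameter, and the function name are assumptions.

```python
import numpy as np


def icm_labels(unary, edges, weight=1.0, iters=10):
    """Approximate MAP labeling of a superpixel graph by iterated conditional modes.

    unary:  (n, L) cost of assigning each of L classes to each of n superpixels.
    edges:  iterable of (i, j) pairs of adjacent superpixels.
    weight: Potts penalty paid for each adjacent pair with different labels.
    """
    n, n_classes = unary.shape
    neighbors = [[] for _ in range(n)]
    for i, j in edges:
        neighbors[i].append(j)
        neighbors[j].append(i)
    labels = unary.argmin(axis=1)  # start from the unary-only decision
    classes = np.arange(n_classes)
    for _ in range(iters):
        changed = False
        for i in range(n):
            cost = unary[i].astype(float)  # copy, so unary is not modified
            for j in neighbors[i]:
                cost += weight * (classes != labels[j])
            best = int(cost.argmin())
            if best != labels[i]:
                labels[i] = best
                changed = True
        if not changed:  # reached a local minimum of the energy
            break
    return labels
```

With `weight=0` the result reduces to the per-superpixel argmin; larger weights pull isolated, weakly supported labels toward their neighbors' classes.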

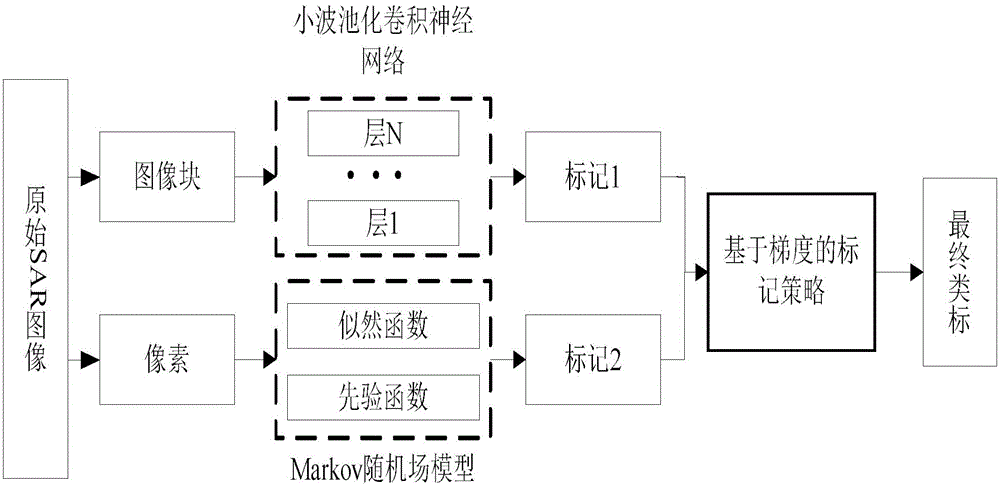

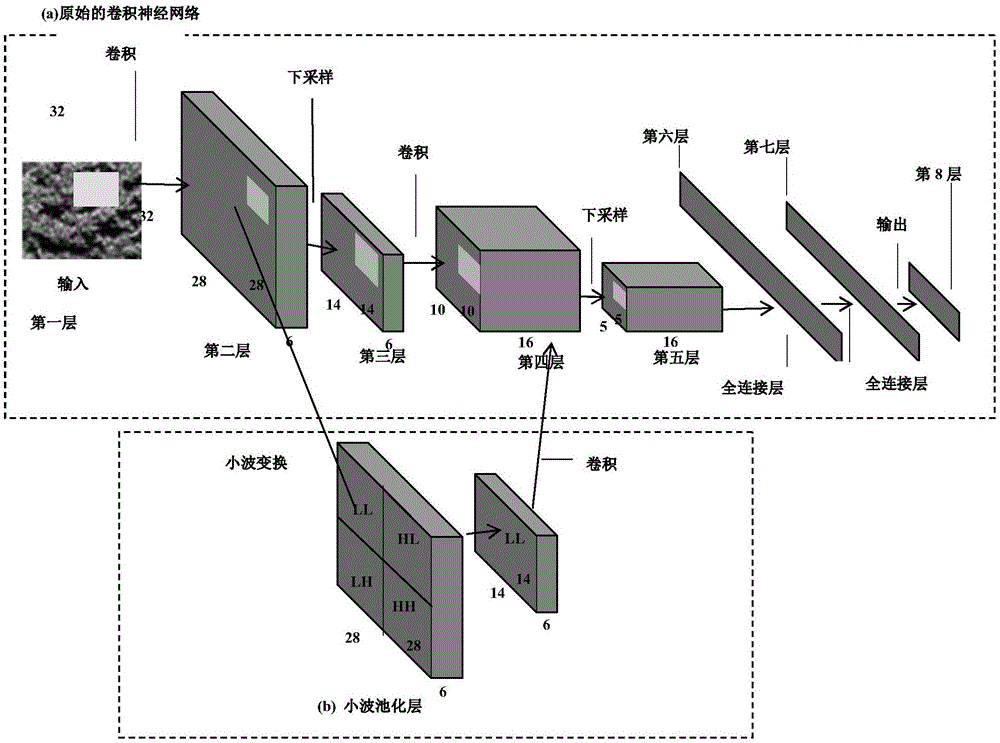

SAR image segmentation method based on wavelet pooling convolutional neural networks

Active | CN105139395A | With noise reduction function, Maintain structural features, Image analysis, Sar image segmentation, Image gradient

The invention discloses an SAR image segmentation method based on wavelet pooling convolutional neural networks. The method comprises: 1. constructing a wavelet pooling layer to form a wavelet pooling convolutional neural network; 2. selecting image blocks and inputting them into the network for training; 3. inputting all image blocks into the trained network for testing to obtain a first class label map of the SAR image; 4. performing superpixel segmentation of the SAR image and fusing the superpixel segmentation result with the first class label map to obtain a second class label map; 5. obtaining a third class label map with a Markov random field model and fusing it with the superpixel segmentation result to obtain a fourth class label map; and 6. fusing the second and fourth class label maps according to the SAR image gradient map to obtain the final segmentation result. The method improves SAR image segmentation and can be used for target detection and recognition.

Owner:XIDIAN UNIV
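Step 1 replaces ordinary pooling with a wavelet pooling layer. One plausible reading, sketched below as an assumption, is a one-level orthonormal 2-D Haar decomposition that keeps only the low-frequency (LL) sub-band, halving each spatial dimension; the patent's actual wavelet, decomposition level, and sub-band choice are not stated here, and the function name is hypothetical.

```python
import numpy as np


def haar_wavelet_pool(x):
    """Downsample a (H, W) feature map with even H and W by keeping the LL
    sub-band of a one-level orthonormal 2-D Haar transform."""
    x = np.asarray(x, dtype=float)
    if x.shape[0] % 2 or x.shape[1] % 2:
        raise ValueError("height and width must be even")
    a = x[0::2, 0::2]  # top-left of each 2x2 block
    b = x[0::2, 1::2]  # top-right
    c = x[1::2, 0::2]  # bottom-left
    d = x[1::2, 1::2]  # bottom-right
    # Orthonormal Haar low-pass applied along both axes: (a + b + c + d) / 2.
    return (a + b + c + d) / 2.0
```

Unlike max pooling, the LL sub-band is a low-pass filter, which is consistent with the noise-reduction effect listed for this patent; the discarded high-frequency sub-bands are where speckle-like detail would go.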

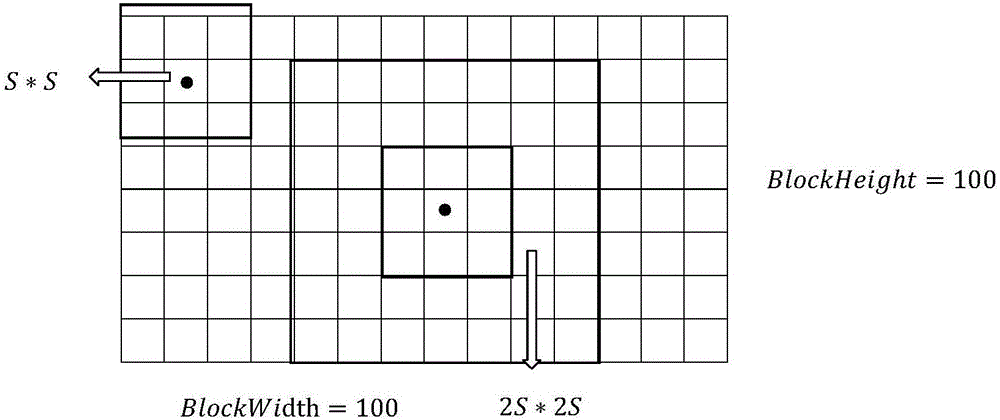

Quick binocular stereo matching method based on superpixel segmentation

Inactive | CN106709948A | Reduce the number, Improve time and efficiency, Image enhancement, Image analysis, Cluster algorithm, Parallax

The invention discloses a fast binocular stereo matching method based on superpixel segmentation. The method comprises the following steps: (1) using SLIC (Simple Linear Iterative Clustering) superpixel segmentation to partition the original reference image and target image into regions; (2) computing an initial disparity space image with a local matching algorithm based on adaptive weighting; (3) fitting a disparity plane to each region based on confident points; (4) merging adjacent regional disparity planes with a clustering algorithm; and (5) constructing an energy cost function over the superpixel regions to perform stereo matching. Because the stereo matching unit is a superpixel region merged according to the edge information of the image, the algorithm is suitable for large textureless regions and locates depth boundaries accurately. It obtains a better matching cost than traditional stereo matching methods and, combined with disparity post-processing, effectively produces a high-accuracy disparity map with good real-time performance.

Owner:ZHEJIANG UNIV
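Step (3) fits a disparity plane to each region using its confident points. A minimal least-squares sketch follows, assuming the plane model d = a*x + b*y + c and a boolean confidence mask supplied by an earlier check (for example, a left-right consistency test); the function name and the mask are hypothetical.

```python
import numpy as np


def fit_disparity_plane(xs, ys, ds, confident=None):
    """Least-squares fit of d = a*x + b*y + c to the pixels of one superpixel.

    xs, ys, ds: 1-D arrays of column, row and disparity values.
    confident:  optional boolean mask selecting the confident pixels.
    Returns (a, b, c).
    """
    xs, ys, ds = (np.asarray(v, dtype=float) for v in (xs, ys, ds))
    if confident is not None:
        m = np.asarray(confident, dtype=bool)
        xs, ys, ds = xs[m], ys[m], ds[m]
    # Design matrix with one row [x, y, 1] per confident pixel.
    A = np.column_stack([xs, ys, np.ones_like(xs)])
    coeffs, *_ = np.linalg.lstsq(A, ds, rcond=None)
    return tuple(coeffs)
```

Excluding unreliable pixels before the fit matters because a single gross outlier can tilt an ordinary least-squares plane noticeably.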

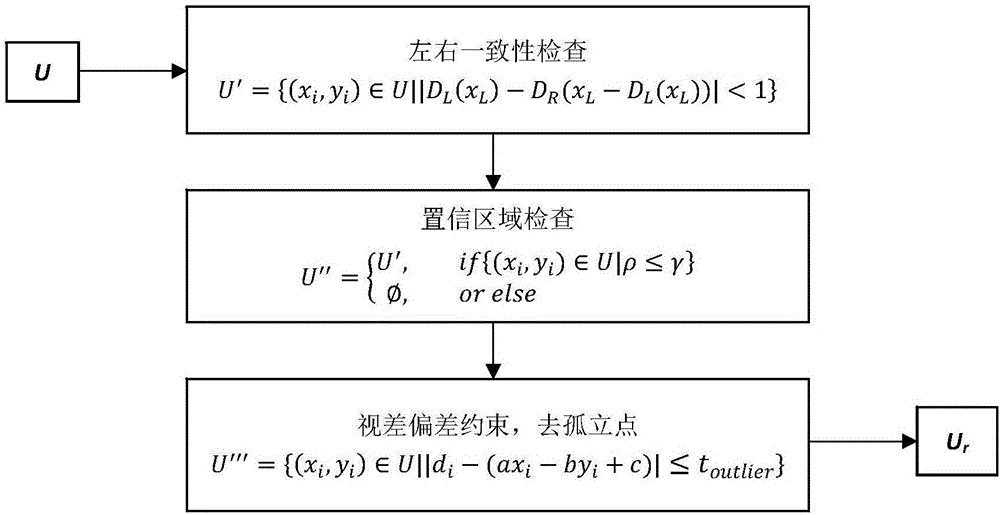

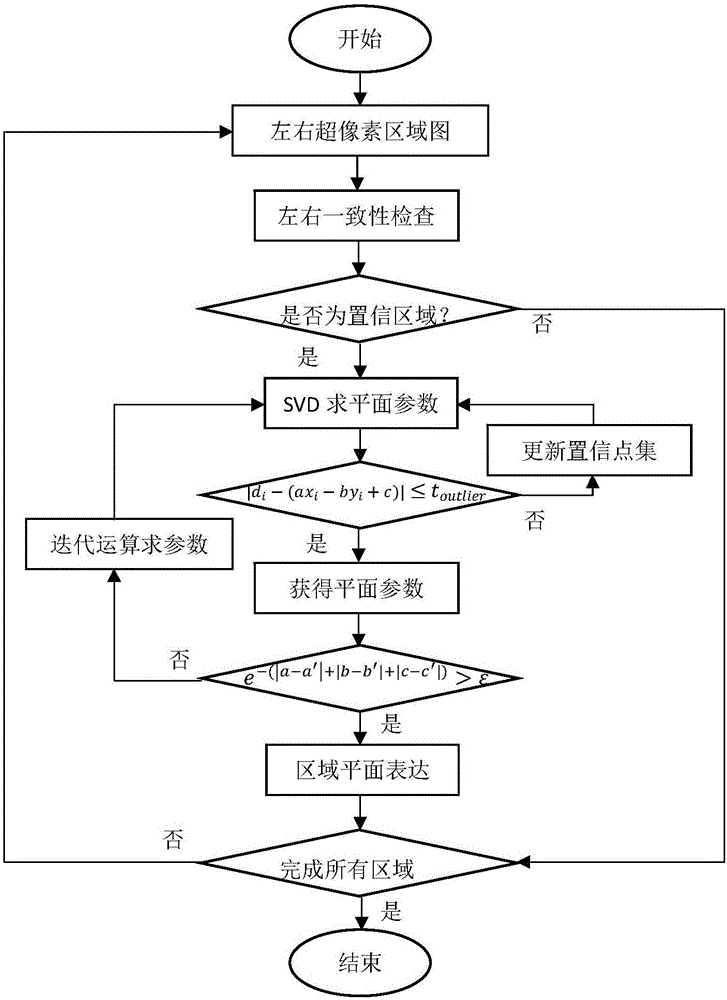

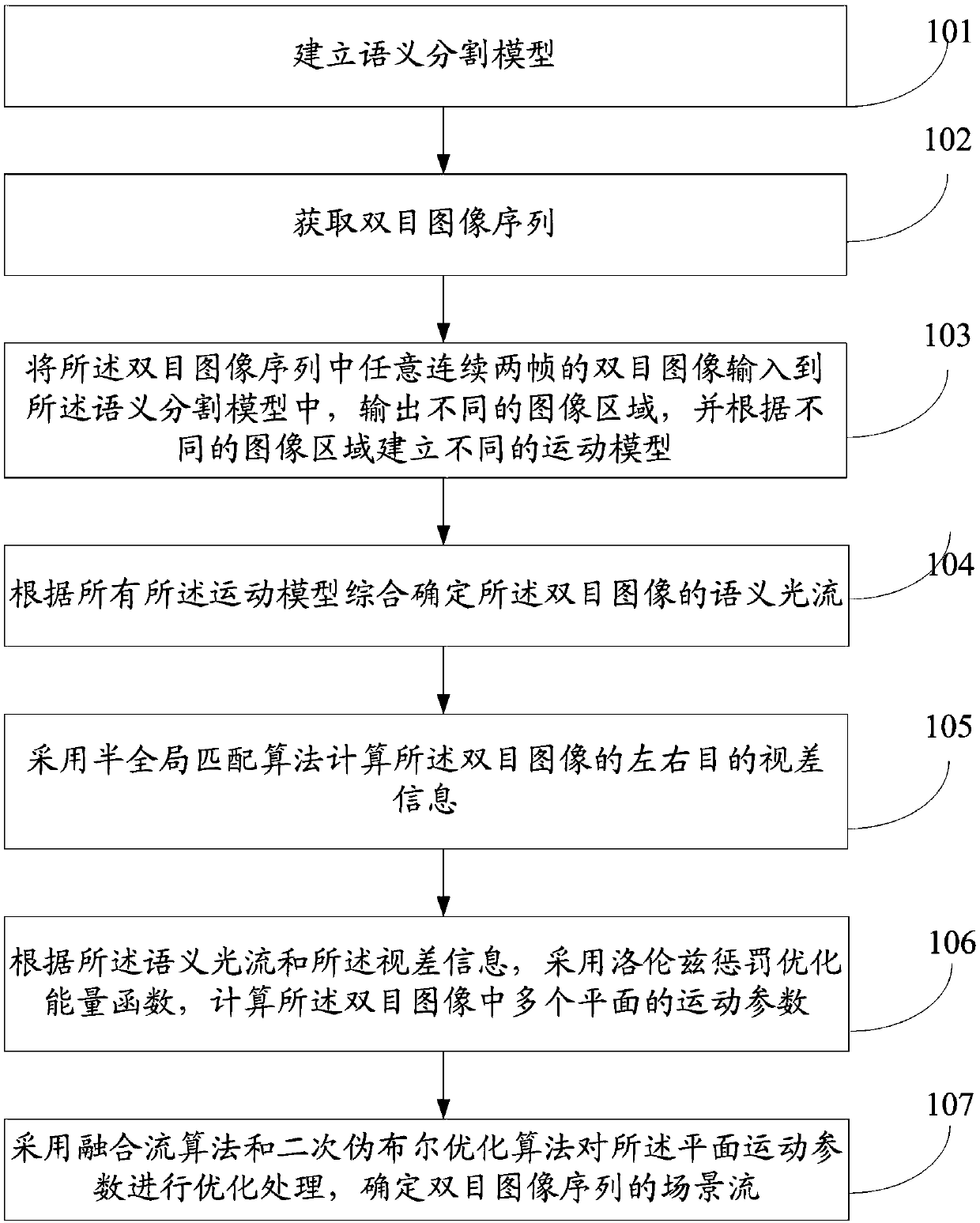

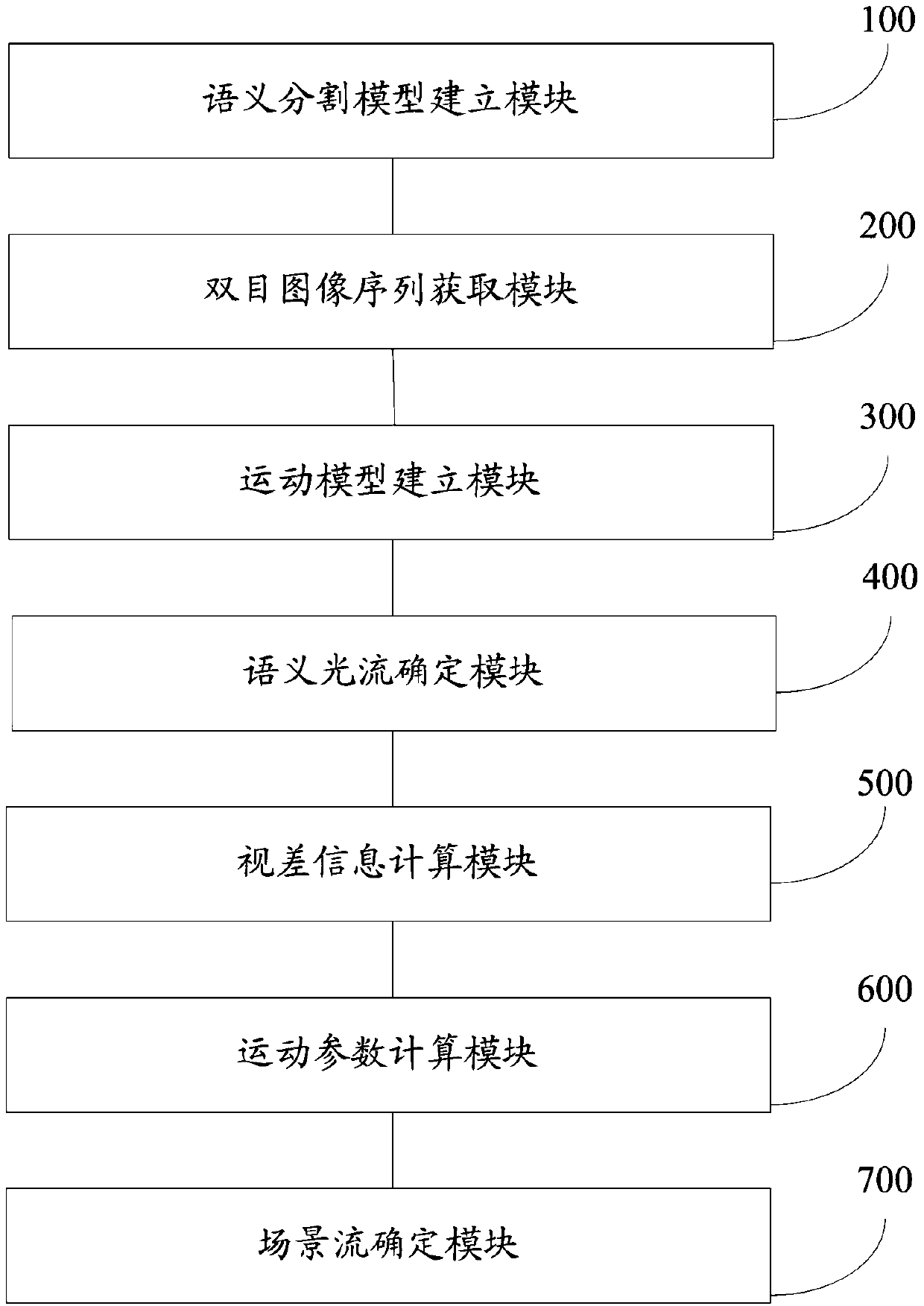

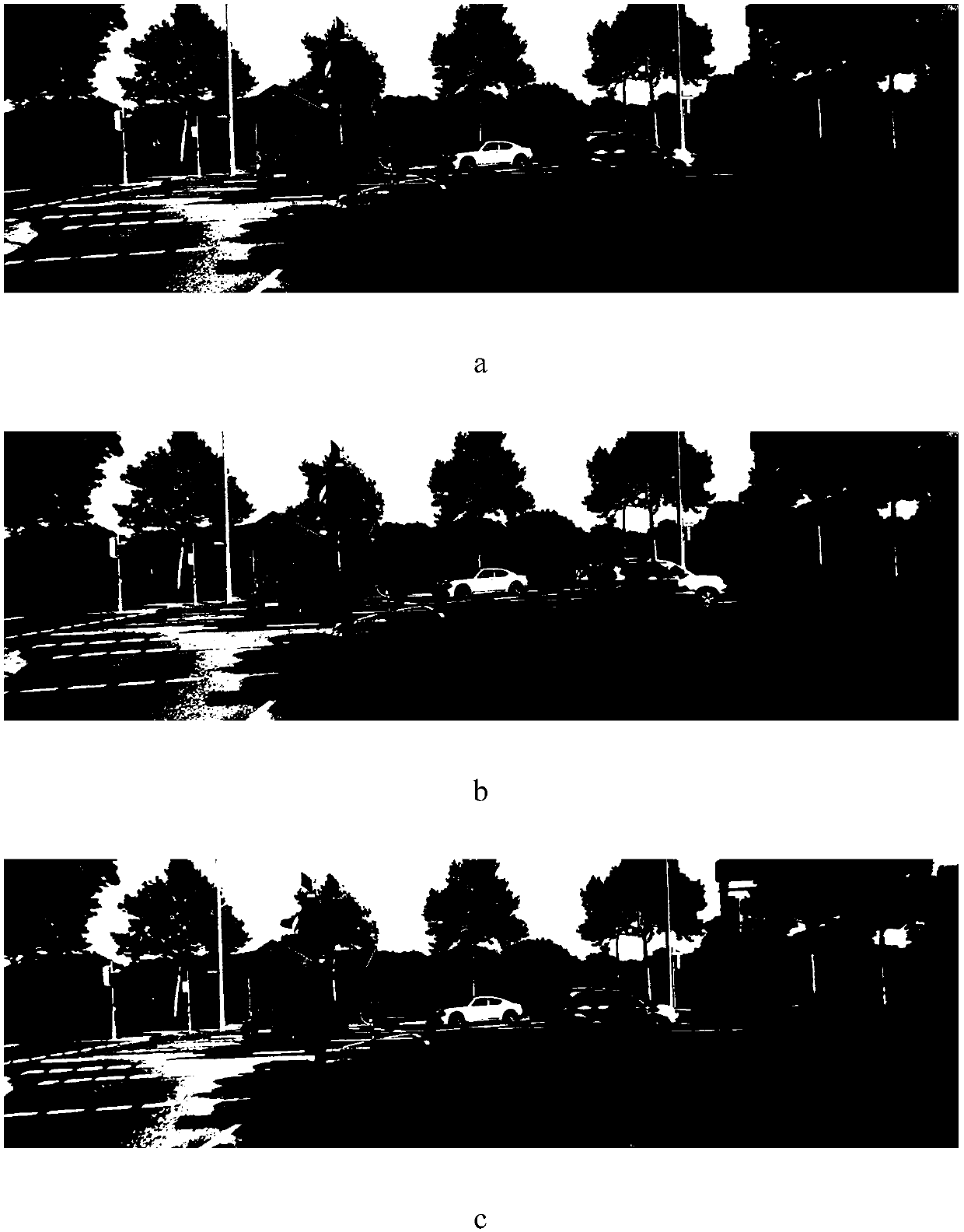

A method and a system for determining binocular scene flow based on semantic segmentation

Active | CN108986136A | Accurate estimate, Improve accuracy, Image enhancement, Image analysis, Parallax, Motion parameter

The invention discloses a method and system for determining binocular scene flow based on semantic segmentation. First, the scene in the binocular images is semantically segmented and the semantic optical flow is computed using the semantic segmentation labels; the disparity is then computed with a semi-global matching algorithm; finally, the motion parameters of numerous planar facet regions are fitted from the semantic optical flow and disparity and then optimized. During optimization, an initial scene flow is obtained through superpixel segmentation, and the motion of the superpixel blocks within each semantic label is then optimized so that it is consistent and the edges of moving objects are well preserved. Adding semantic information to the optical flow preserves object edges and greatly simplifies reasoning about occlusion. In addition, motion inference at the semantic-label level makes the scene flow of pixels on the surface of the same moving object approximately consistent, which ultimately optimizes the scene flow.

Owner:NANCHANG HANGKONG UNIVERSITY
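The optimization encourages superpixels to move coherently. As a much simplified stand-in for the patent's facet motion fitting, the sketch below replaces each pixel's 2-D flow vector with the median flow of its superpixel; the function name and the use of the median are assumptions for illustration.

```python
import numpy as np


def superpixel_median_flow(flow, labels):
    """flow: (H, W, 2) per-pixel motion; labels: (H, W) superpixel ids.

    Returns a new array in which every pixel carries its superpixel's median flow."""
    flow = np.asarray(flow, dtype=float)
    labels = np.asarray(labels)
    out = np.empty_like(flow)
    for sp in np.unique(labels):
        m = labels == sp
        # flow[m] has shape (k, 2); the (2,) median broadcasts back over the k pixels.
        out[m] = np.median(flow[m], axis=0)
    return out
```

The median is used here because it ignores a few outlier flow vectors near object edges, which a mean would smear across the whole superpixel.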

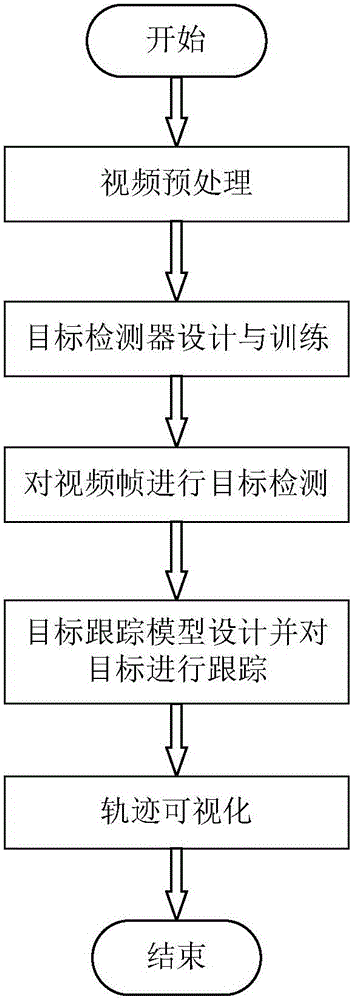

Real-time multi-class and multi-target tracking method of video

Inactive | CN106127807A | Reduce complexity, Narrow the match, Image enhancement, Image analysis, Graphics, Imaging processing

The invention belongs to the field of computer graphics and image processing and specifically discloses a real-time multi-class, multi-target tracking method for video. The method comprises the following steps: s1: preprocessing video frames, for example with superpixel segmentation; s2: designing a target detector based on superpixel blocks and training it offline, making full use of motion features so as to detect all moving targets in the video; s3: using the trained detector to detect targets in a given video; s4: designing a target tracking model and tracking the targets in the video; and s5: visualizing the trajectories. The method has the following beneficial effects: 1) because target detection is performed on superpixel blocks, the complexity and running time of the algorithm are greatly reduced; and 2) moving targets of all classes in the video can be detected, so all moving objects can be tracked with a single camera, which greatly lowers hardware cost.

Owner:CHINA UNIV OF PETROLEUM (EAST CHINA)
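Step s4 leaves the tracking model open. One common and simple choice, sketched here purely as an assumption, links existing tracks to new detections by greedy intersection-over-union (IoU) matching; boxes are (x1, y1, x2, y2), and the function names and the 0.3 threshold are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def associate(tracks, detections, thresh=0.3):
    """Greedily match tracks to detections by descending IoU.

    Returns a dict {track_index: detection_index}; unmatched items are omitted."""
    pairs = sorted(
        ((iou(t, d), ti, di) for ti, t in enumerate(tracks) for di, d in enumerate(detections)),
        reverse=True,
    )
    used_t, used_d, result = set(), set(), {}
    for score, ti, di in pairs:
        if score < thresh:
            break  # remaining pairs overlap too little
        if ti in used_t or di in used_d:
            continue
        result[ti] = di
        used_t.add(ti)
        used_d.add(di)
    return result


if __name__ == "__main__":
    tracks = [(0, 0, 10, 10), (20, 20, 30, 30)]
    dets = [(21, 21, 31, 31), (1, 1, 11, 11)]
    print(associate(tracks, dets))
```

For multi-class tracking, the same routine can be run separately on the tracks and detections of each class so that objects are never linked across classes.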

© 2025 PatSnap. All rights reserved.