Question text generation method, device, equipment and medium

A question text generation technology, applied in the field of computer data processing, which addresses problems such as limited question types and cumbersome, time-consuming operation.

Examples

Embodiment 1

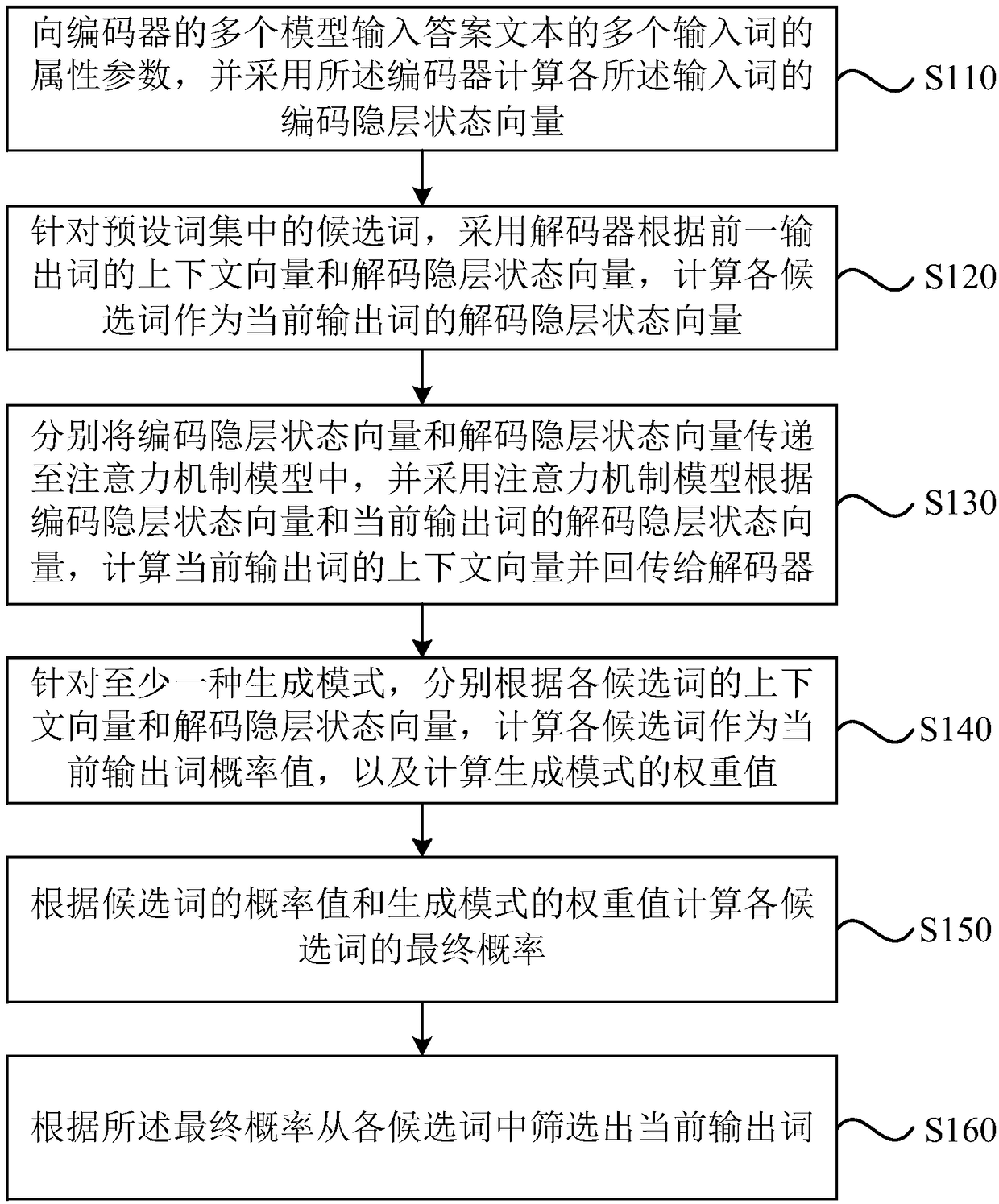

[0041] Figure 1 is a schematic flowchart of a method for generating question text provided in Embodiment 1 of the present invention. This embodiment is applicable where a neural network is used to generate a corresponding question text based on an answer text. The method is executed by a question text generating device, which is implemented in software and/or hardware and is configured in an electronic device for generating question text; the electronic device may be a server or a terminal device. The question text generation method includes:

[0042] S110. Input the attribute parameters of multiple input words of the answer text to multiple models of the encoder, and use the encoder to calculate the encoding hidden layer state vector of each input word.

[0043] Here, an input word is a vocabulary item in the answer text sentence; it may be a single character or a word composed of multiple characters, that is, a vocabulary with independent...

Embodiment 2

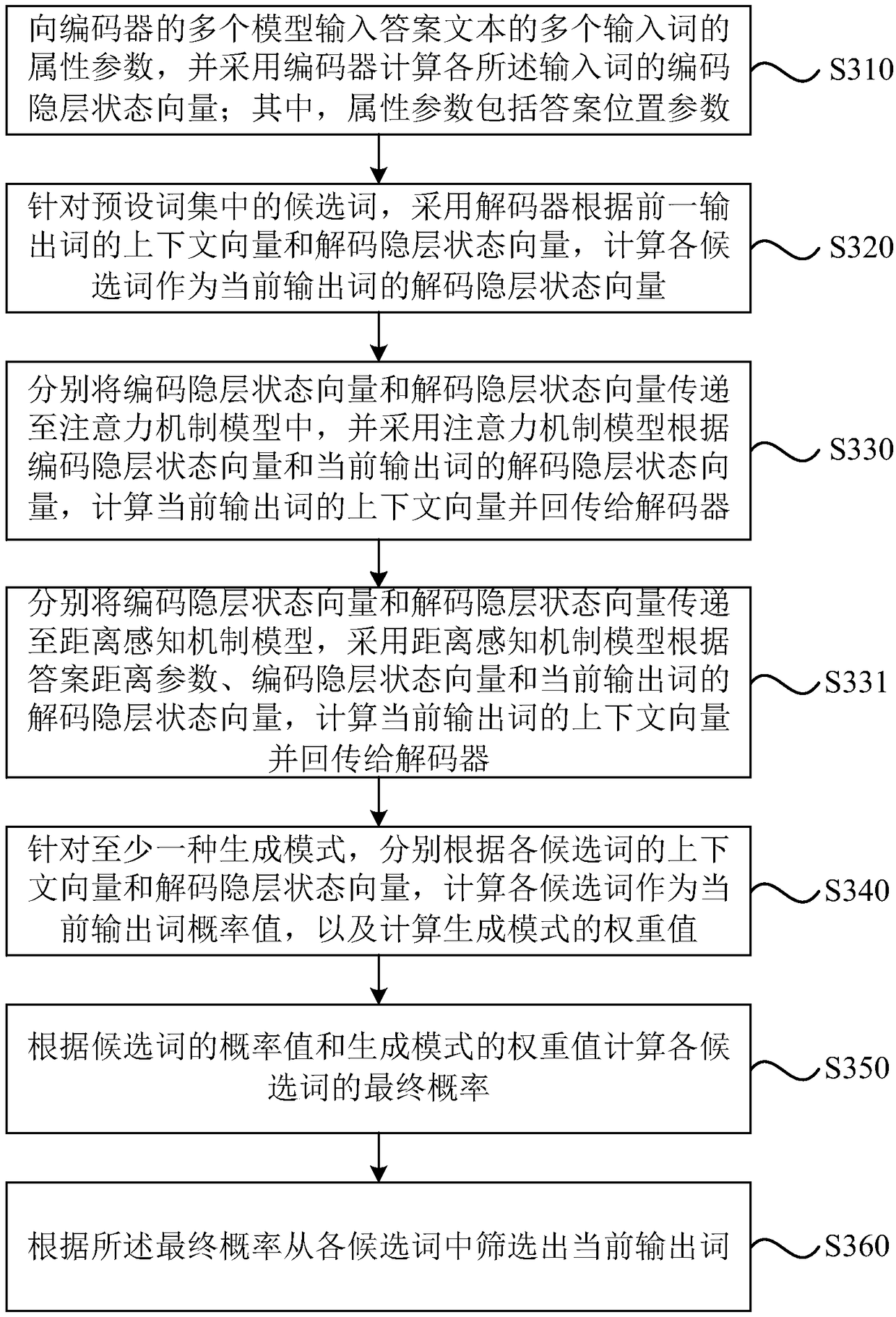

[0074] Figure 3 is a schematic flowchart of a method for generating question text provided by Embodiment 2 of the present invention. This embodiment is further optimized on the basis of the technical solutions of the foregoing embodiments.

[0075] Further, before the operation "using the attention mechanism model, calculate the context vector of the current output word according to the answer position parameter, the encoding hidden layer state vector and the decoding hidden layer state vector of the current output word", the operation "according to the answer position parameters of the answer input word and the non-answer input word, determine the distance between the non-answer input word and the answer input word as the answer distance parameter" is added; According to the context vector and the decoding hidden layer state vector of the previous output word, the device calculates each candidate word as the decoding hidden layer state vector of the current o...
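The answer distance parameter described above can be illustrated with a minimal sketch. It assumes, purely for illustration, that the answer position parameter is a 0/1 flag per input word (1 for an answer input word, 0 for a non-answer input word) and that the distance is measured in word positions to the nearest answer input word; the function name `answer_distance` and this encoding are hypothetical and are not taken from the patent text.

```python
from typing import List


def answer_distance(answer_flags: List[int]) -> List[int]:
    """Return, for each input word, its distance to the nearest answer word.

    answer_flags[i] is 1 if word i is an answer input word, otherwise 0.
    This 0/1 encoding of the answer position parameter is an assumption.
    Answer input words themselves get distance 0.
    """
    answer_positions = [i for i, flag in enumerate(answer_flags) if flag == 1]
    if not answer_positions:
        raise ValueError("at least one answer input word is required")
    return [
        min(abs(i - p) for p in answer_positions)
        for i in range(len(answer_flags))
    ]


# Seven input words; words 2 and 3 form the answer span.
flags = [0, 0, 1, 1, 0, 0, 0]
print(answer_distance(flags))  # [2, 1, 0, 0, 1, 2, 3]
```

How the resulting distances enter the attention mechanism model is not shown in this excerpt, so the sketch stops at computing them.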

Embodiment 3

[0102] Figure 5 is a schematic flowchart of a method for generating question text provided by Embodiment 3 of the present invention. This embodiment is further optimized on the basis of the technical solutions of the foregoing embodiments.

[0103] Further, the operation "input the attribute parameters of multiple input words of the answer text to multiple models of the encoder, and use the encoder to calculate the encoding hidden layer state vector of each of the input words" is refined into "input the attribute parameters of each input word in the answer text into each bidirectional LSTM model in the encoder, and use each bidirectional LSTM model to calculate the encoding hidden layer state vector of each input word". The encoder is thus built from bidirectional LSTM models, which distinguish between forward word order and reverse word order, so that the encoder can avoid long-range dependency problems in complex scenarios, better captur...
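A bidirectional LSTM encoder of the kind described above can be sketched in plain Python as follows. This is an illustrative sketch under stated assumptions, not the patent's implementation: the weights are random and untrained, only one bidirectional model is shown rather than the multiple models the text mentions, each input word is represented by a hypothetical two-value attribute vector, and the names `LSTMCell`, `run_lstm` and `bilstm_encode` are invented for this example.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


class LSTMCell:
    """Single LSTM cell with random, untrained weights (illustration only)."""

    def __init__(self, input_size: int, hidden_size: int, seed: int) -> None:
        rng = random.Random(seed)
        self.hidden_size = hidden_size
        cols = input_size + hidden_size
        # One weight matrix and bias per gate:
        # i = input gate, f = forget gate, g = cell candidate, o = output gate.
        self.weights = {
            gate: [[rng.uniform(-0.1, 0.1) for _ in range(cols)]
                   for _ in range(hidden_size)]
            for gate in "ifgo"
        }
        self.biases = {gate: [0.0] * hidden_size for gate in "ifgo"}

    def _affine(self, gate: str, xh: Vector) -> Vector:
        return [
            sum(w * v for w, v in zip(row, xh)) + b
            for row, b in zip(self.weights[gate], self.biases[gate])
        ]

    def step(self, x: Vector, h: Vector, c: Vector) -> Tuple[Vector, Vector]:
        xh = x + h  # concatenate the input with the previous hidden state
        i = [sigmoid(v) for v in self._affine("i", xh)]
        f = [sigmoid(v) for v in self._affine("f", xh)]
        g = [math.tanh(v) for v in self._affine("g", xh)]
        o = [sigmoid(v) for v in self._affine("o", xh)]
        c_new = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c, i, g)]
        h_new = [ov * math.tanh(cv) for ov, cv in zip(o, c_new)]
        return h_new, c_new


def run_lstm(cell: LSTMCell, inputs: List[Vector]) -> List[Vector]:
    """Run the cell over the sequence and collect one hidden state per word."""
    h = [0.0] * cell.hidden_size
    c = [0.0] * cell.hidden_size
    states = []
    for x in inputs:
        h, c = cell.step(x, h, c)
        states.append(h)
    return states


def bilstm_encode(inputs: List[Vector], forward_cell: LSTMCell,
                  backward_cell: LSTMCell) -> List[Vector]:
    """Concatenate forward-order and reverse-order hidden states per word."""
    forward_states = run_lstm(forward_cell, inputs)
    # Process the words in reverse order, then restore the original order.
    backward_states = run_lstm(backward_cell, inputs[::-1])[::-1]
    return [f + b for f, b in zip(forward_states, backward_states)]


# Three input words, each with a hypothetical 2-value attribute vector.
words = [[0.1, 0.0], [0.5, 1.0], [0.3, 0.0]]
encoder_fw = LSTMCell(input_size=2, hidden_size=4, seed=0)
encoder_bw = LSTMCell(input_size=2, hidden_size=4, seed=1)
encoded = bilstm_encode(words, encoder_fw, encoder_bw)
print(len(encoded), len(encoded[0]))  # 3 8
```

Each word's encoding hidden layer state vector here is the concatenation of a forward-order state, which depends only on the words up to and including it, and a reverse-order state, which depends on the words from it to the end of the sentence. In practice a trained model from a deep learning library would replace the hand-written cell.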
