Text simplification method based on word vector query model

A text simplification technique based on word vectors, applied in fields such as biological neural network models, instruments, and computing. It addresses long training time, low text simplification efficiency, and slow model training and convergence. It improves the quality and accuracy of the simplified text while reducing training time, memory usage, and the number of parameters.

Embodiment Construction

[0033] The invention is further described below through embodiments, with reference to the accompanying drawings, without limiting its scope in any way.

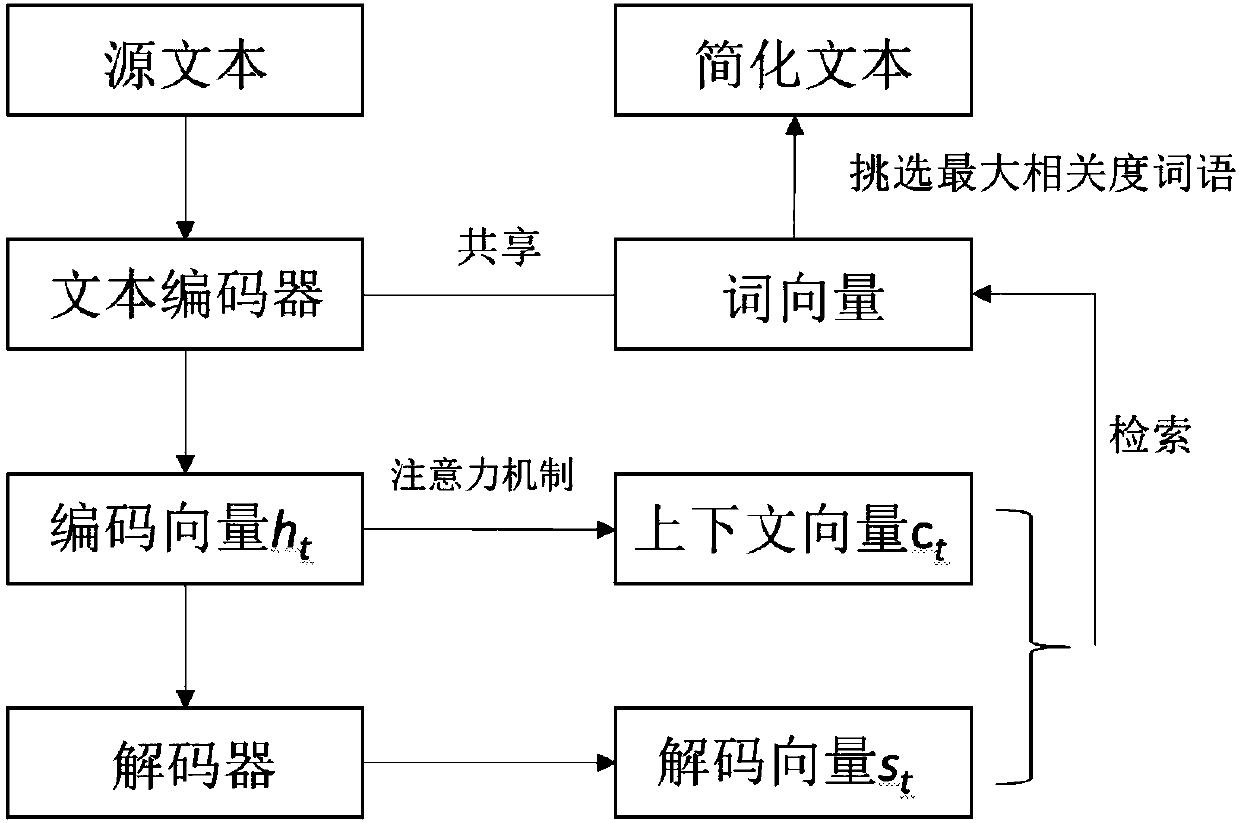

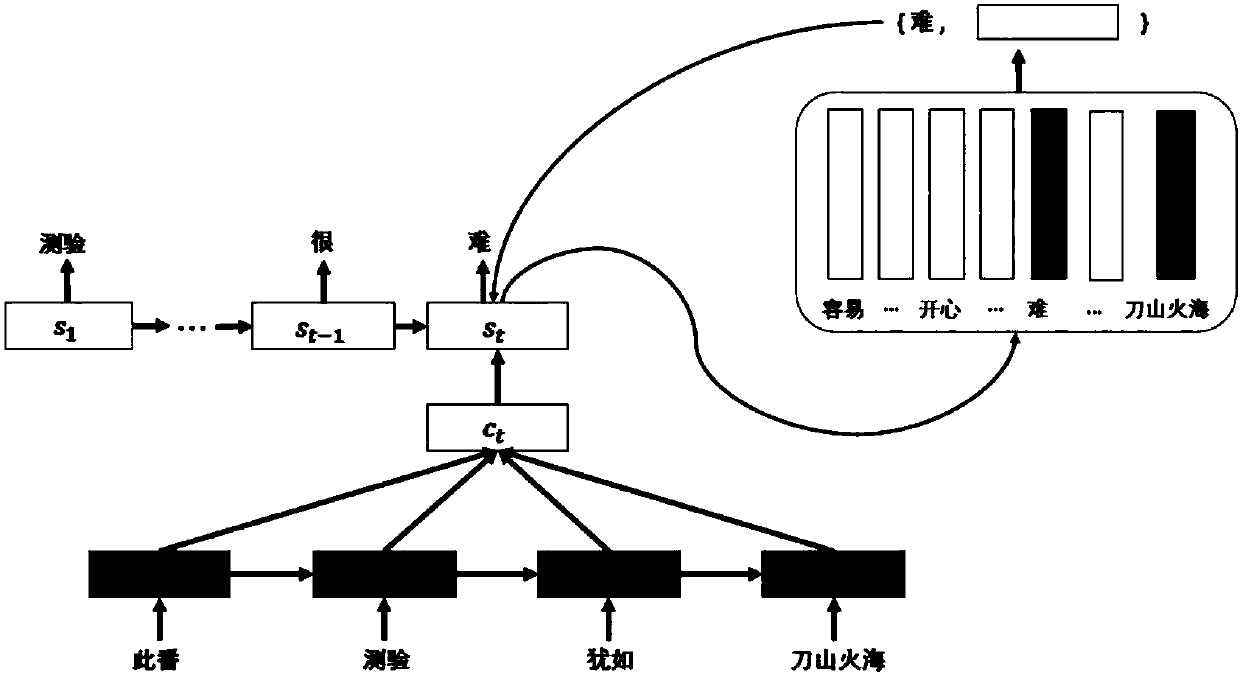

[0034] The invention provides a method for generating simplified text based on a word vector retrieval model. Figure 1 is a flow block diagram of the method provided by the invention, and Figure 2 is a schematic diagram of a specific implementation. The method modifies the generation step of the classic sequence-to-sequence algorithm so that the target output is produced by retrieving word vectors. The model is then trained by minimizing the negative log-likelihood of the reference (standard answer) words under the predicted word distribution, and the trained model generates the complete simplified text.
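The retrieval-style generation step and its training loss can be illustrated with a minimal sketch. This is not the patent's implementation: the dot-product scoring, the softmax normalization, the toy 2-dimensional embeddings, and the names `retrieve_word` and `negative_log_likelihood` are all assumptions made for illustration. The query vector stands in for whatever the decoder produces at one time step.

```python
import math

def retrieve_word(query, embeddings):
    """Score each vocabulary word against the query vector and return
    (best_word, probabilities). Dot-product scoring is an assumption."""
    words = list(embeddings)
    scores = [sum(q * e for q, e in zip(query, embeddings[w])) for w in words]
    # Numerically stable softmax over the retrieval scores.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    probs = {w: x / total for w, x in zip(words, exps)}
    best = max(probs, key=probs.get)
    return best, probs

def negative_log_likelihood(probs, target):
    """Loss for one step: -log p(target). Training minimizes this value."""
    return -math.log(probs[target])

# Hypothetical toy embeddings; real word vectors would be learned.
embeddings = {
    "component": [1.0, 0.0],
    "part": [0.0, 1.0],
    "the": [-1.0, -1.0],
}
query = [0.2, 2.0]  # stand-in for a decoder-produced query vector

word, probs = retrieve_word(query, embeddings)
loss = negative_log_likelihood(probs, "part")
print(word, round(loss, 4))
```

In this sketch the output layer reuses the word embedding table for scoring instead of a separate vocabulary-sized projection matrix, which is one plausible way a retrieval formulation could reduce the parameter count.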

[0035] The following embodiment uses simplified text from Wikipedia as an example. The original text is as follows:

[0036] “Depending on the context, another closely-related meaning of constituent ...

