Multimodal fusion song emotion recognition method based on deep learning

A deep learning emotion recognition technique, applied in speech recognition, speech analysis, instruments, and related fields, that addresses the problems of difficult extraction, difficult training and prediction, and low accuracy, and achieves good emotional discrimination.



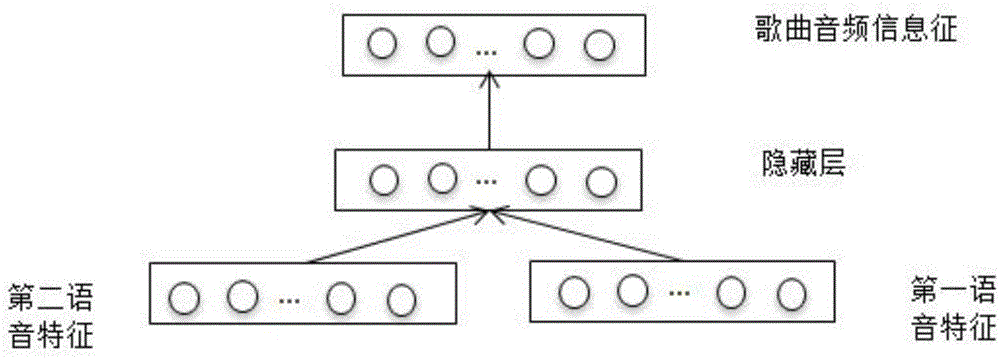

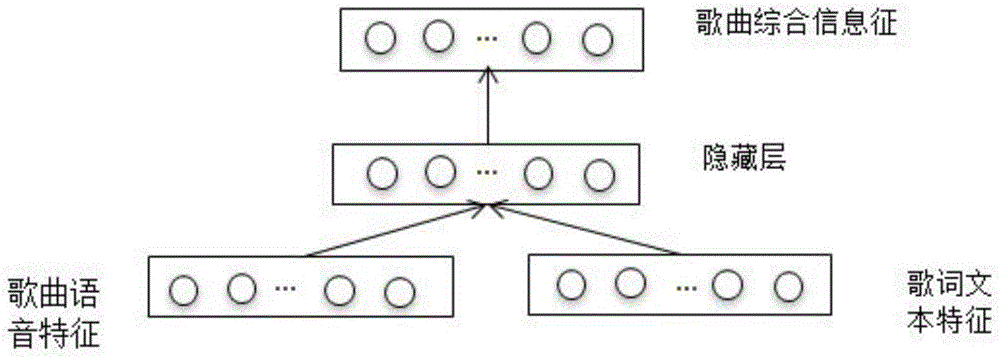

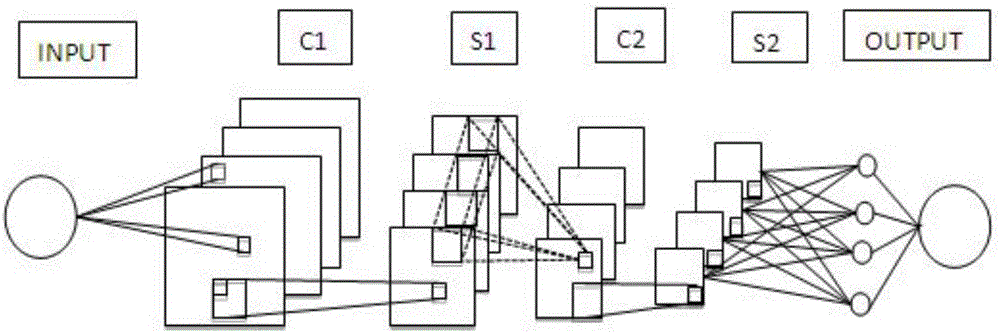


Embodiment Construction

[0029] In this embodiment, a deep learning-based multimodal fusion song emotion category recognition method includes the following steps:

[0030] Step 1: collect a lyric text database and a song audio database, in which each song's lyric text carries the same number as its song audio. Classify the collected songs by emotion into four categories, miss, abreact, happy, and sad, labeled 1, 2, 3, and 4 respectively. The comprehensive emotional features of each song can be represented by a 4-tuple Y.

[0031] $Y = (E, V_T, V_{V1}, V_{V2})$ ...
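As a rough illustration of Step 1, the labeling scheme and the 4-tuple Y could be held in a small data structure like the one below. This is a minimal sketch, not the patent's implementation. The names `Emotion`, `SongFeatures`, and `pair_by_number` are hypothetical, and because the visible text does not define the components of Y, reading `V_T` as a lyric-text feature vector and `V_V1`, `V_V2` as two audio feature vectors is an assumption.

```python
from enum import IntEnum
from typing import Dict, NamedTuple, Sequence, Tuple


class Emotion(IntEnum):
    """Emotion labels from Step 1, numbered 1 to 4 as in the text."""
    MISS = 1
    ABREACT = 2
    HAPPY = 3
    SAD = 4


class SongFeatures(NamedTuple):
    """The 4-tuple Y = (E, V_T, V_V1, V_V2) for one song."""
    E: Emotion
    V_T: Sequence[float]   # assumption: lyric-text feature vector
    V_V1: Sequence[float]  # assumption: first audio feature vector
    V_V2: Sequence[float]  # assumption: second audio feature vector


def pair_by_number(
    lyrics: Dict[int, str], audio: Dict[int, str]
) -> Dict[int, Tuple[str, str]]:
    """Pair lyric text and audio entries that share the same song number."""
    shared = sorted(lyrics.keys() & audio.keys())
    return {n: (lyrics[n], audio[n]) for n in shared}
```

Keying both databases by song number keeps each lyric aligned with its audio, and songs missing from either database are simply left out of the pairing.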
