301 results about "Lyrics" patented technology

Lyrics are words that make up a song, usually consisting of verses and choruses. The writer of lyrics is a lyricist. The words to an extended musical composition such as an opera are, however, usually known as a "libretto" and their writer, as a "librettist". The meaning of lyrics can either be explicit or implicit. Some lyrics are abstract, almost unintelligible, and, in such cases, their explication emphasizes form, articulation, meter, and symmetry of expression. Rappers can also create lyrics (often with a variation of rhyming words) that are meant to be spoken rhythmically rather than sung.

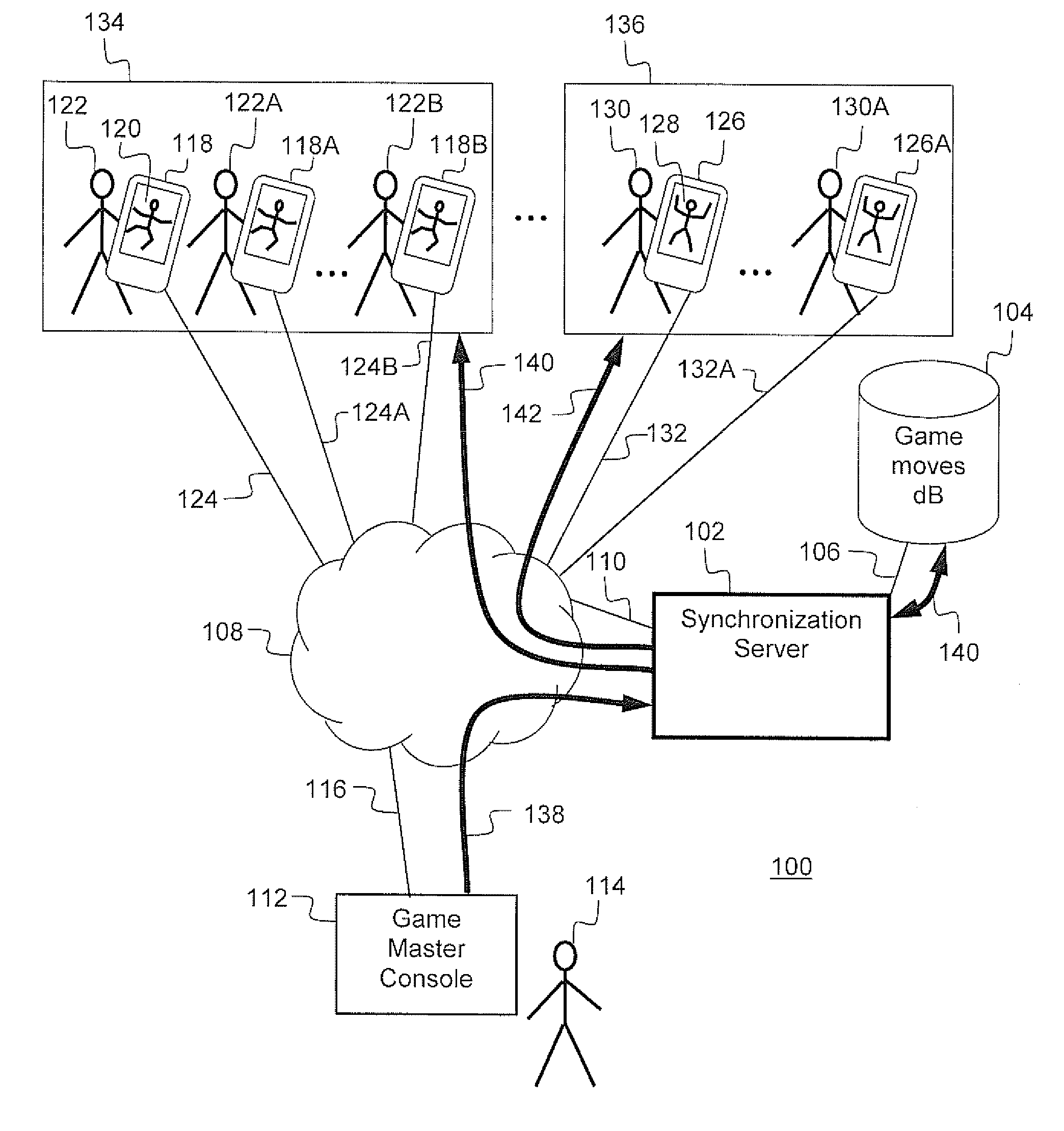

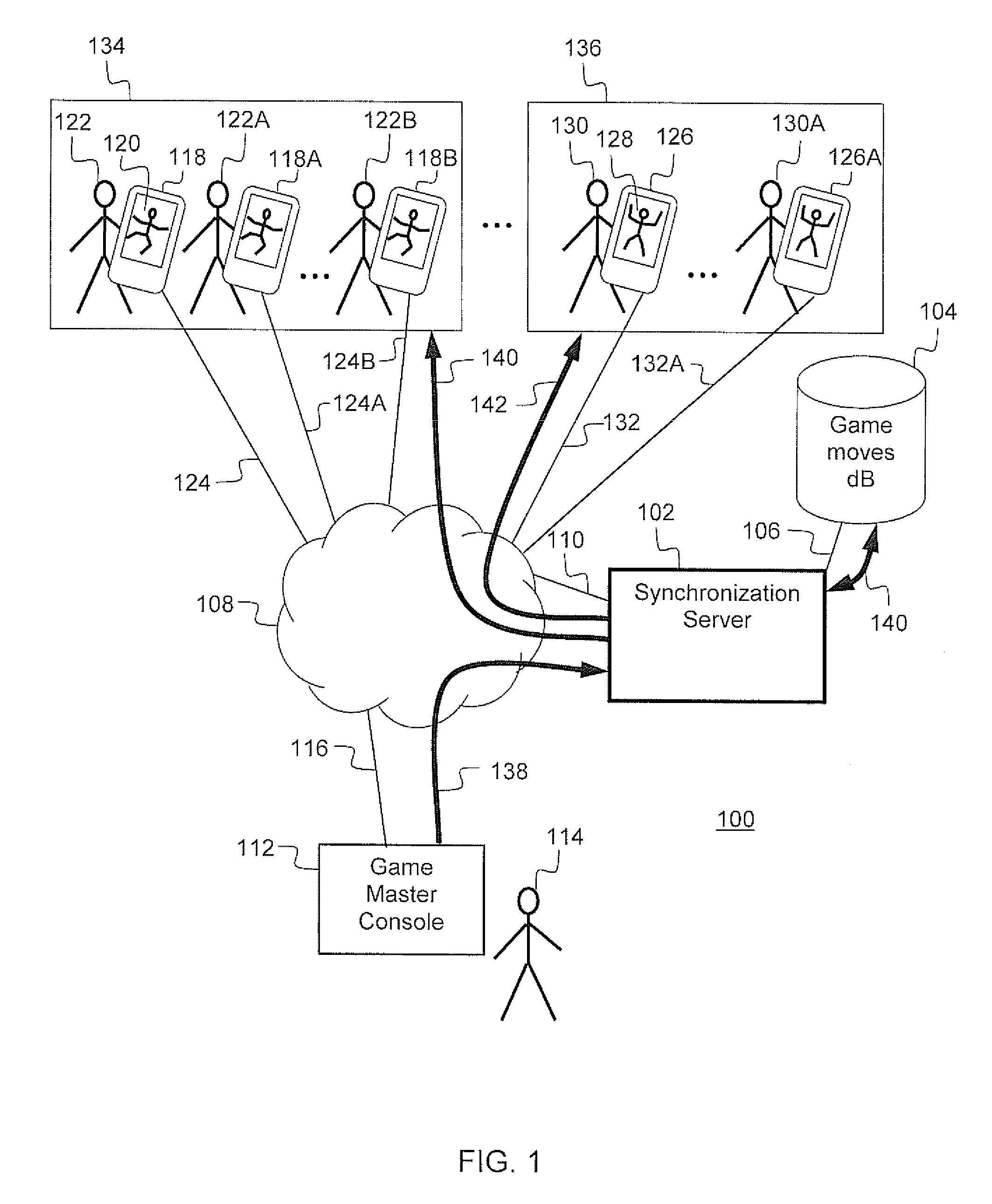

Crowd mobile synchronization

The invention is directed to a system and method for providing a synchronized game display to a plurality of mobile communications devices which can be grouped according to one or more attributes. Game display messages are specific to each grouping and comprise dance moves, song lyrics, or other instructions to users of the mobile communication devices. A synchronization mechanism is provided to synchronize the displays of the groups of mobile devices.

Owner:ALCATEL LUCENT SAS

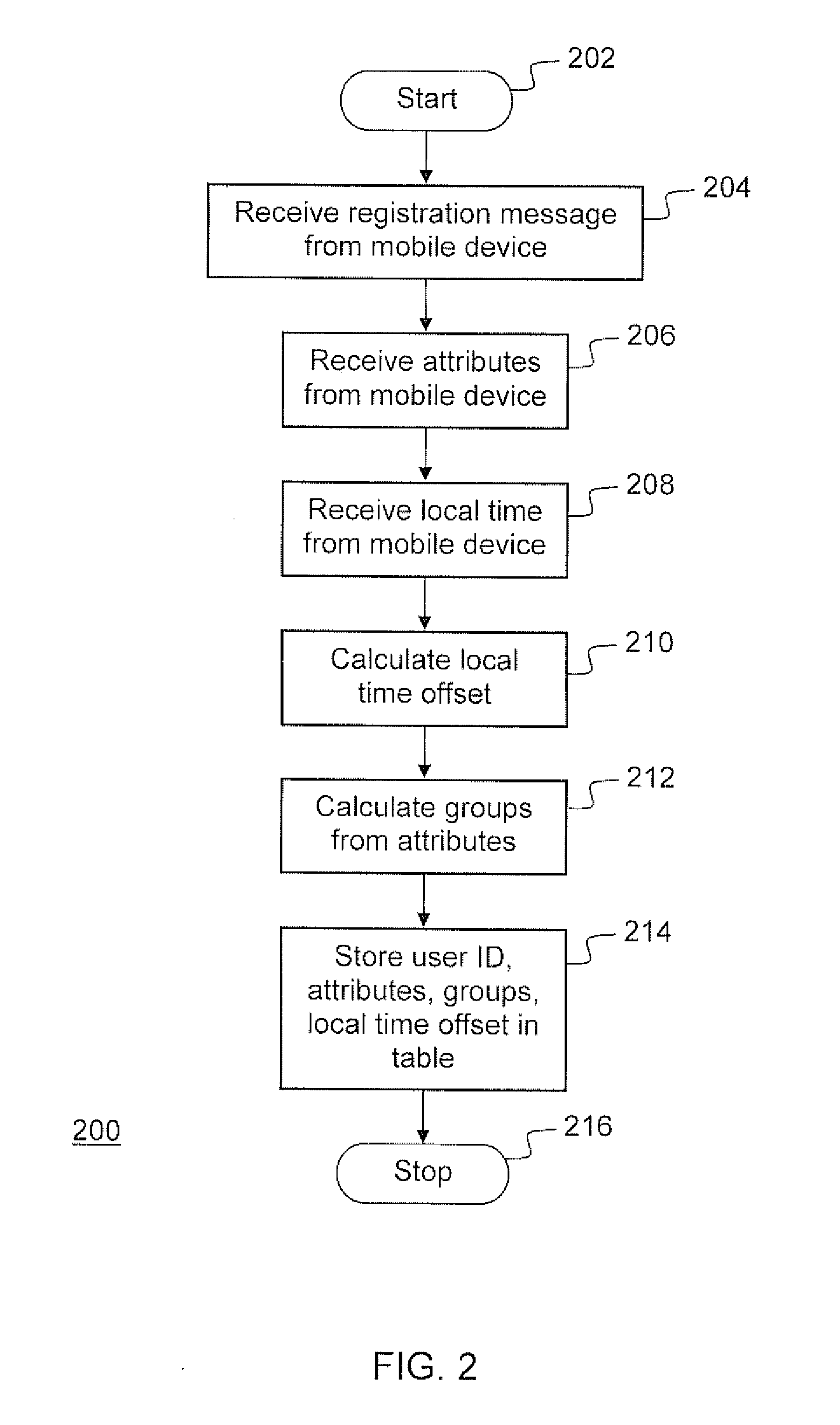

Using speech recognition to determine advertisements relevant to audio content and/or audio content relevant to advertisements

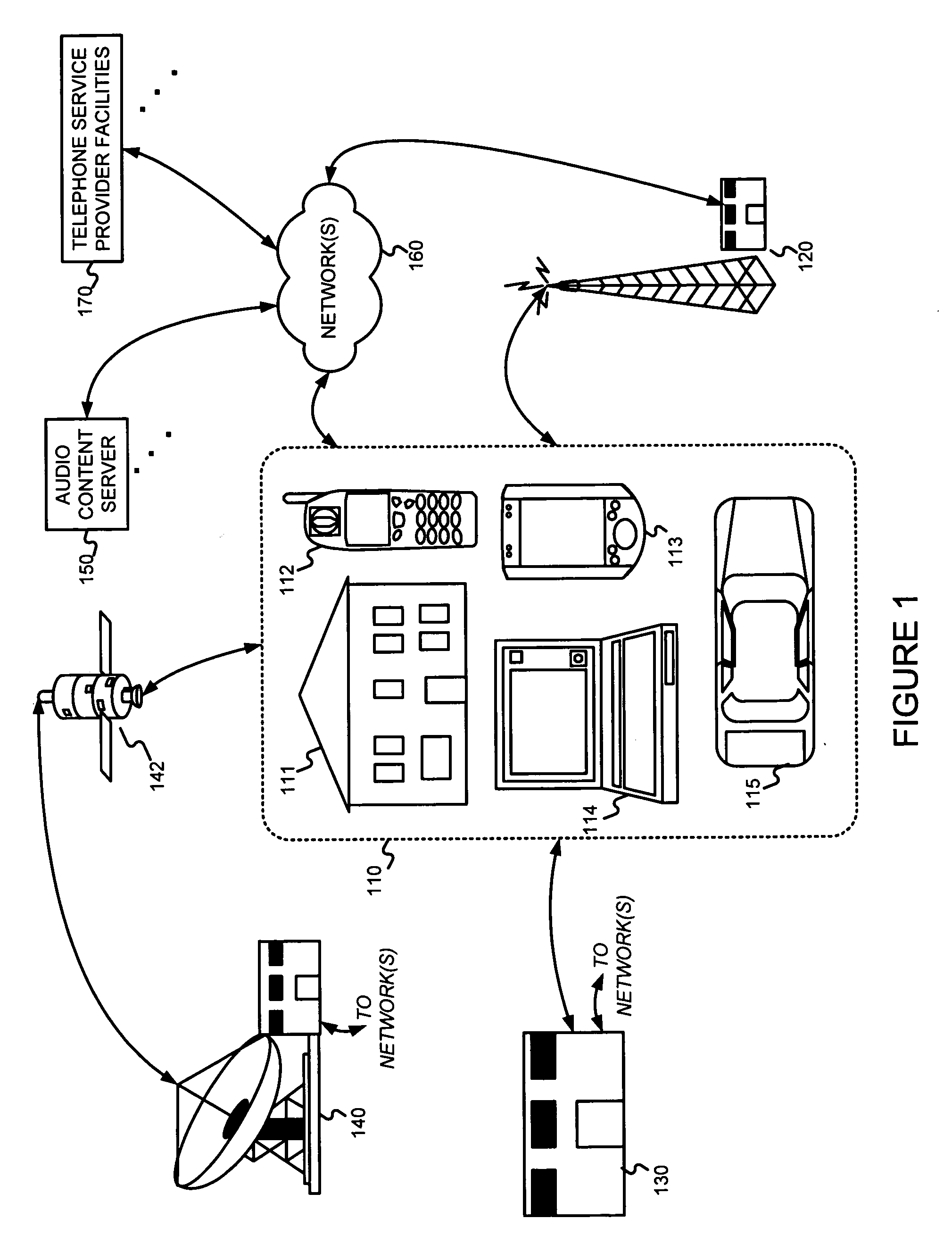

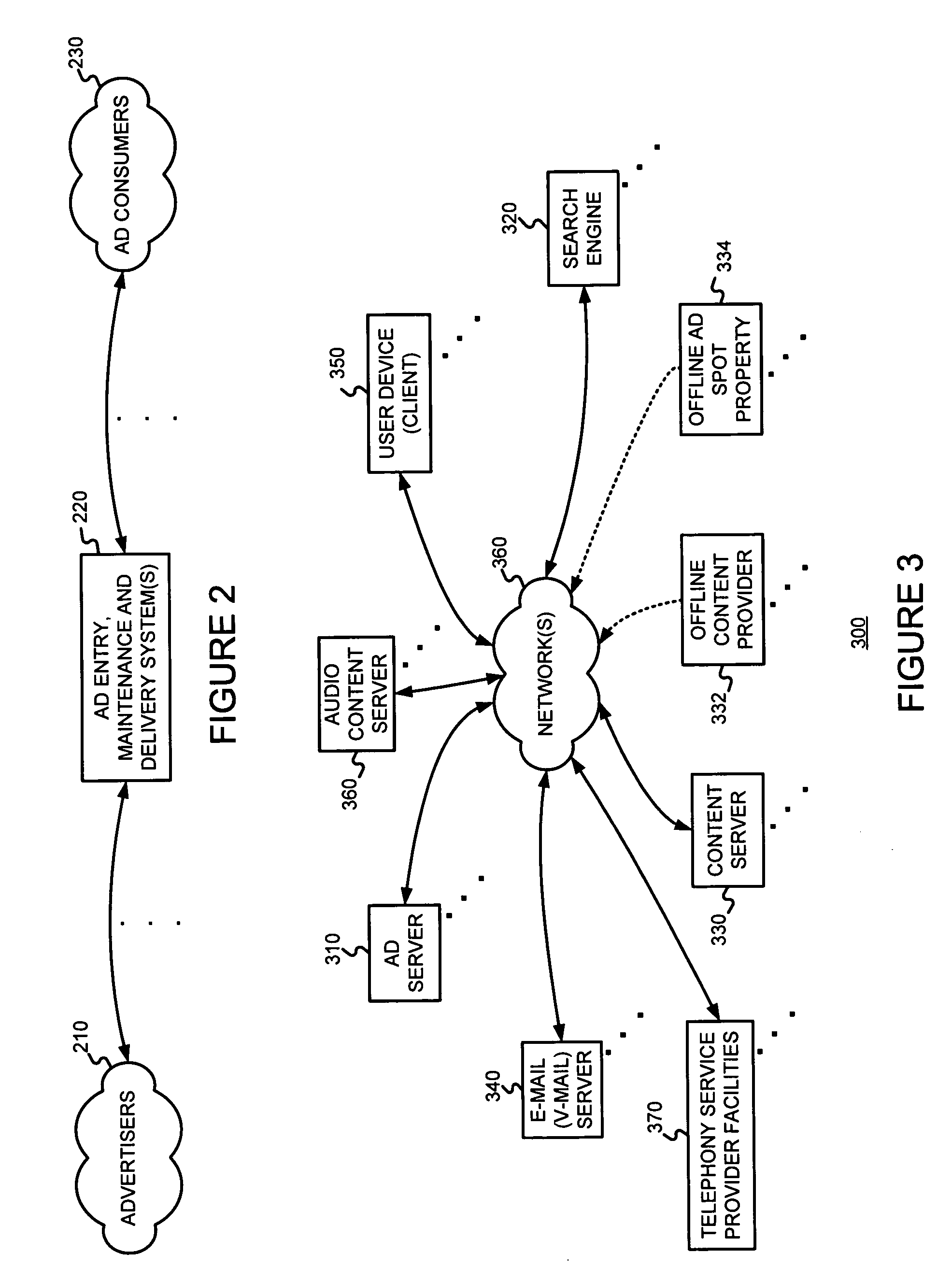

Serving advertisements with (e.g., in) audio documents may be improved by (a) accepting at least a portion of a document including audio content, (b) analyzing the audio content to determine relevancy information for the document, and (c) determining at least one advertisement relevant to the document using at least the relevancy information and serving constraints associated with advertisements. The advertisements may be scored if more than one advertisement was determined to be relevant to the document. Then, at least one of the advertisements to be served with an ad spot for the document may be determined using at least the scores. Examples of documents include radio programs, live or recorded musical works with lyrics, live or recorded dramatic works with dialog or a monolog, live or recorded talk shows, voice mail, segments of an audio conversation, etc. The audio content may be analyzed to determine relevancy information for the document by converting the audio content to textual information using speech recognition. Then, relevancy information may be determined from the textual information.

Owner:GOOGLE LLC
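The relevancy step can be sketched in a few lines of Python, assuming speech recognition has already turned the audio into a transcript. The ad inventory (`ADS`), the keyword-count score, and the function names are hypothetical illustrations rather than Google's method, and serving constraints are not modeled.

```python
import re
from collections import Counter

# Hypothetical ad inventory: ad id -> targeting keywords (illustrative only).
ADS = {
    "ad_guitars": {"guitar", "strings", "amp"},
    "ad_travel": {"beach", "flight", "holiday"},
    "ad_coffee": {"coffee", "morning", "espresso"},
}

def tokenize(text):
    """Lower-case the transcript and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())

def score_ads(transcript, ads):
    """Score each ad by how often its keywords occur; return (ad, score), best first."""
    counts = Counter(tokenize(transcript))
    scores = {ad: sum(counts[k] for k in keywords) for ad, keywords in ads.items()}
    # Drop ads with no keyword hits; sort by score, then by id for stable ties.
    return sorted(((ad, s) for ad, s in scores.items() if s > 0),
                  key=lambda pair: (-pair[1], pair[0]))

def pick_ad(transcript, ads):
    """Return the highest-scoring ad id, or None if nothing is relevant."""
    ranked = score_ads(transcript, ads)
    return ranked[0][0] if ranked else None
```

For a transcript such as "Morning coffee on the beach, then a flight home for the holiday", the travel ad scores 3 and the coffee ad 2, so the travel ad is chosen. A production system would use richer relevance signals than raw keyword counts.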

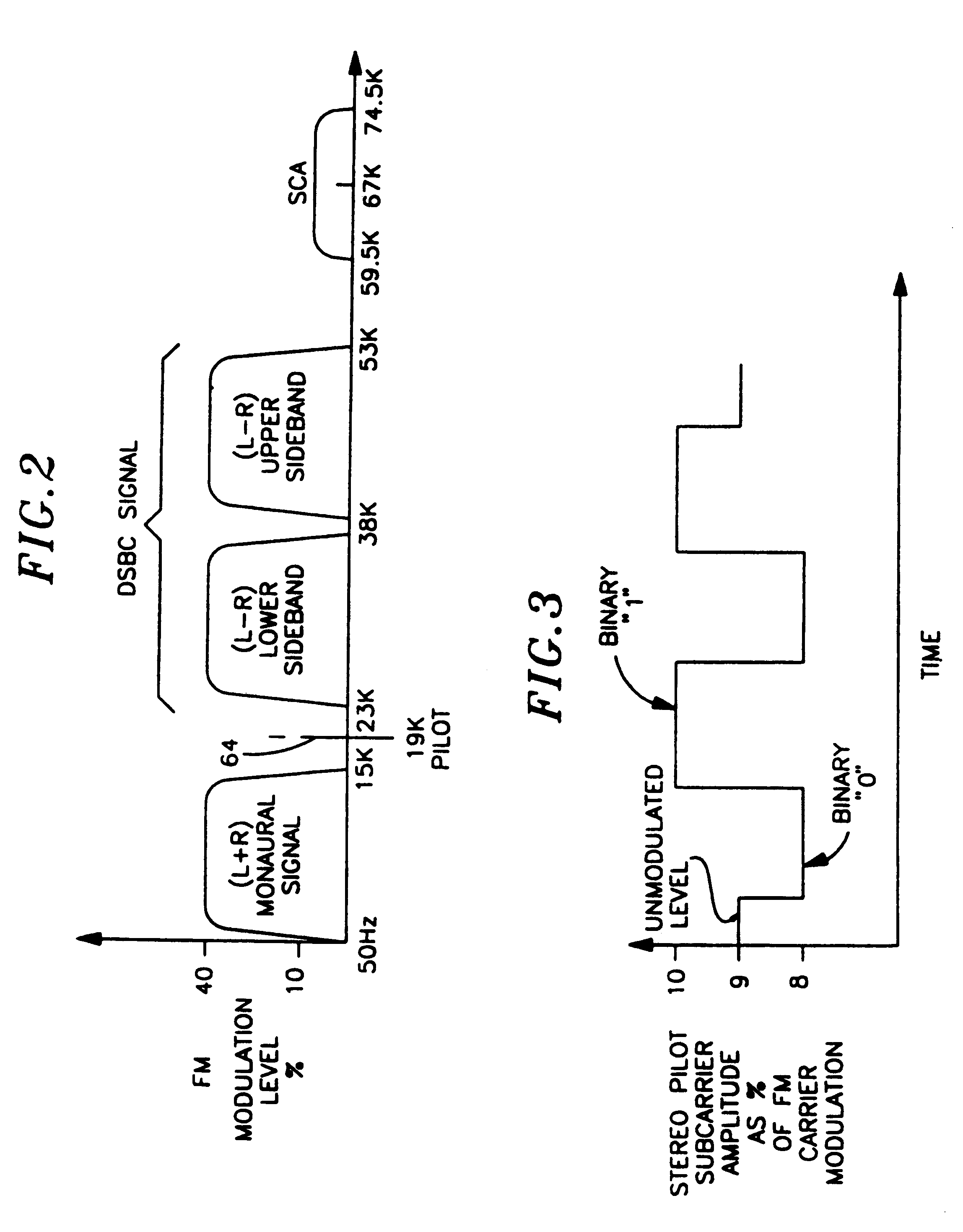

Apparatus and methods for music and lyrics broadcasting

Status: Inactive; Publication: USRE37131E1; Tags: Television system details, Broadcast information characterisation, Radio broadcasting, Subcarrier

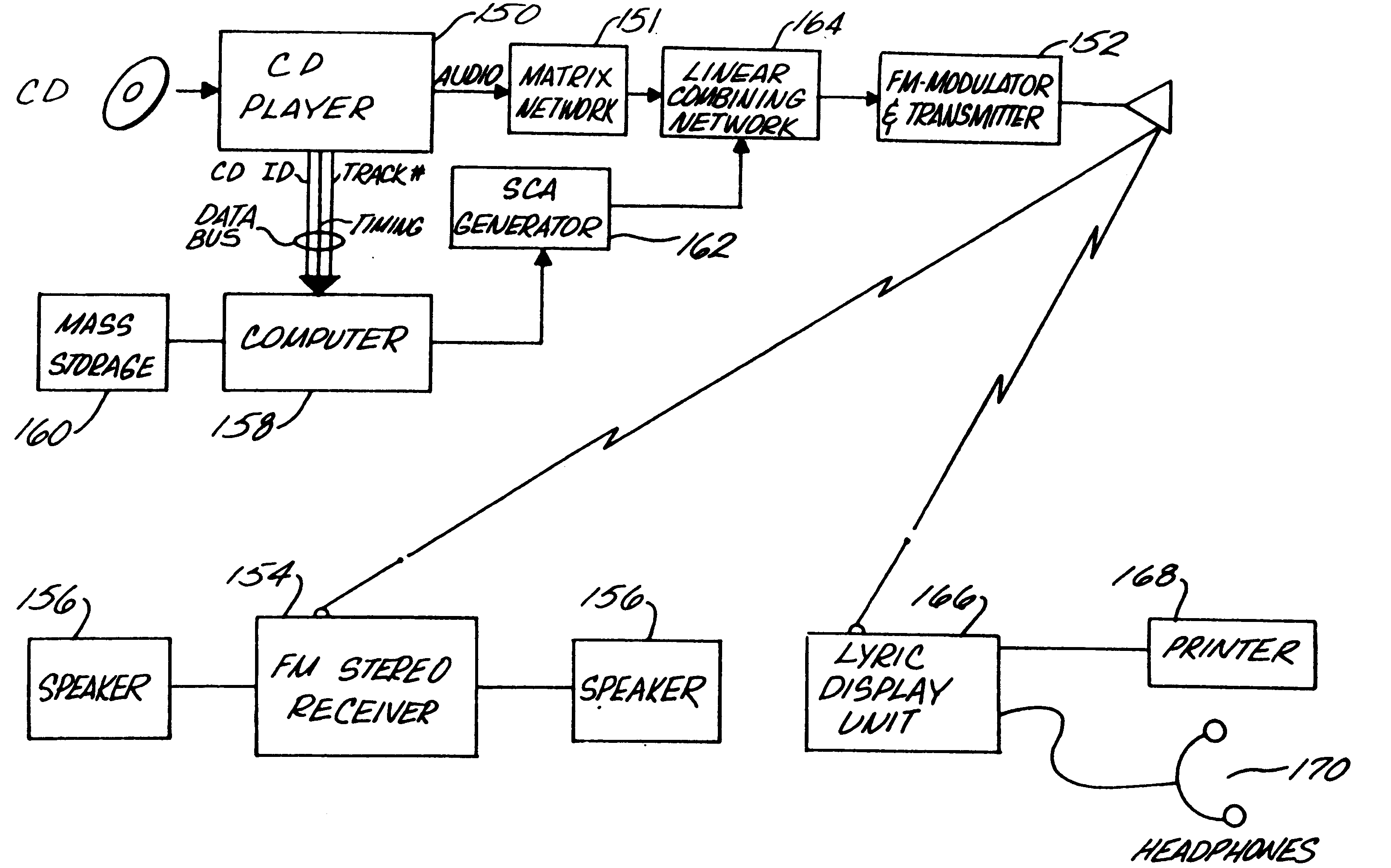

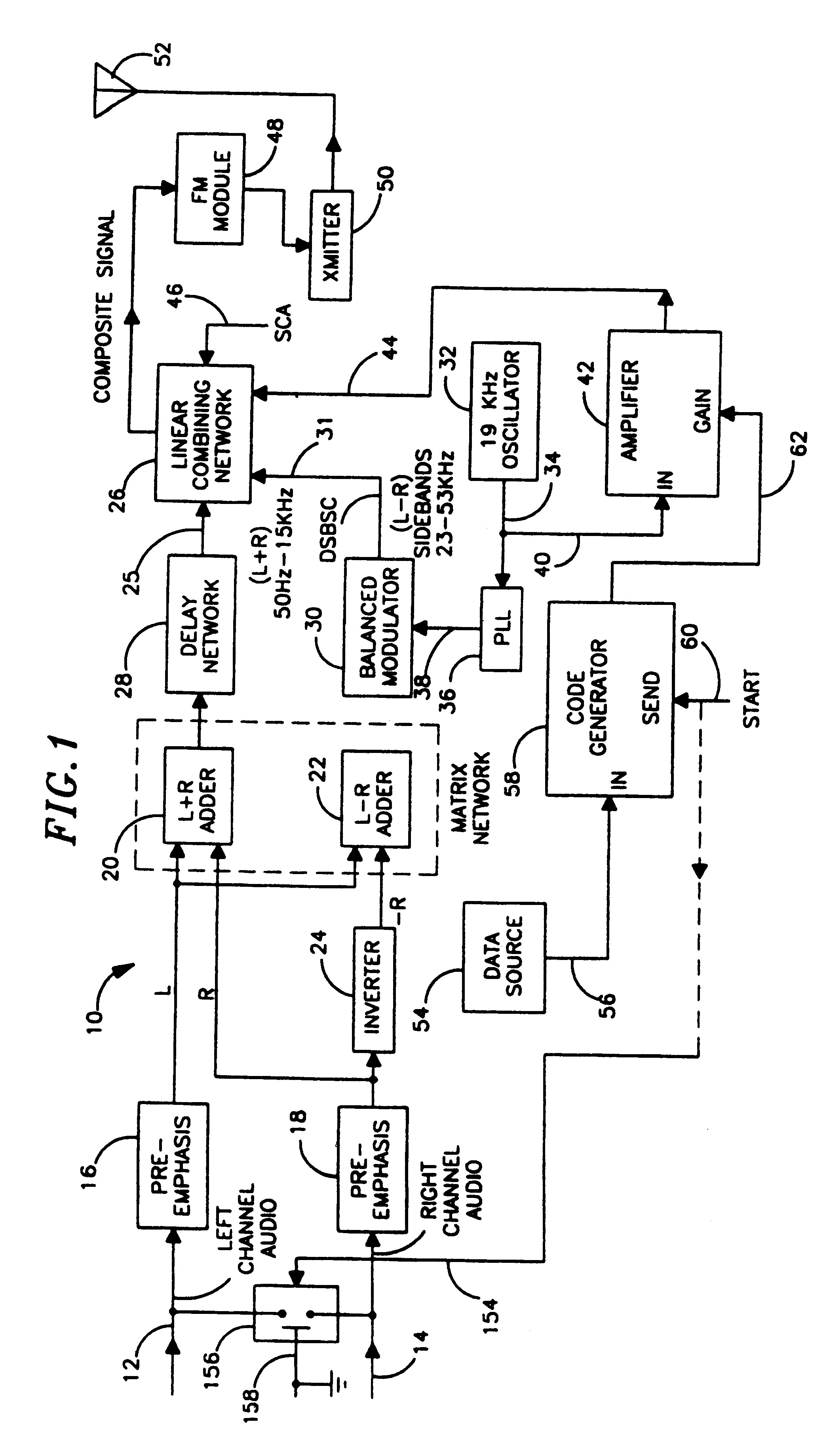

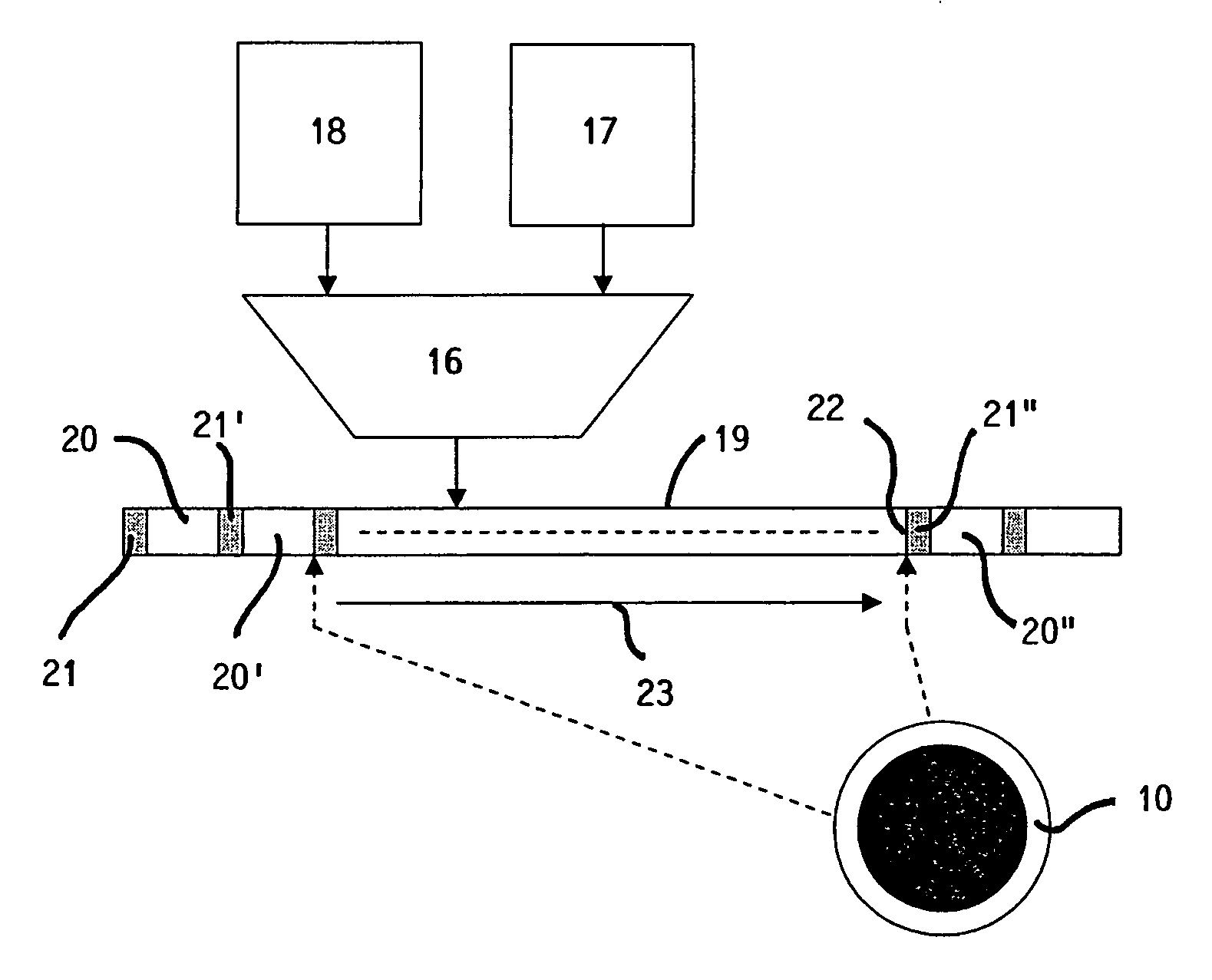

A system for broadcasting audio music and broadcasting lyrics for display and highlighting substantially simultaneously with the occurrence of the lyrics in the accompanying audio music is provided. The system includes an audio music source that provides a data output and an analog audio signal output. A computer receives the data output by the music source and generates lyric text data and lyric timing commands. A subcarrier generator generates a subcarrier signal carrying the lyric text data and lyric timing commands. An FM transmitter broadcasts a composite signal that combines the analog output of the music source with the subcarrier signal. A lyric display unit receives the composite signal, separates and decodes the subcarrier signal, and displays and highlights lyrics according to the lyric text data and lyric timing commands decoded from the subcarrier signal.

Owner:DIGIMEDIA HLDG

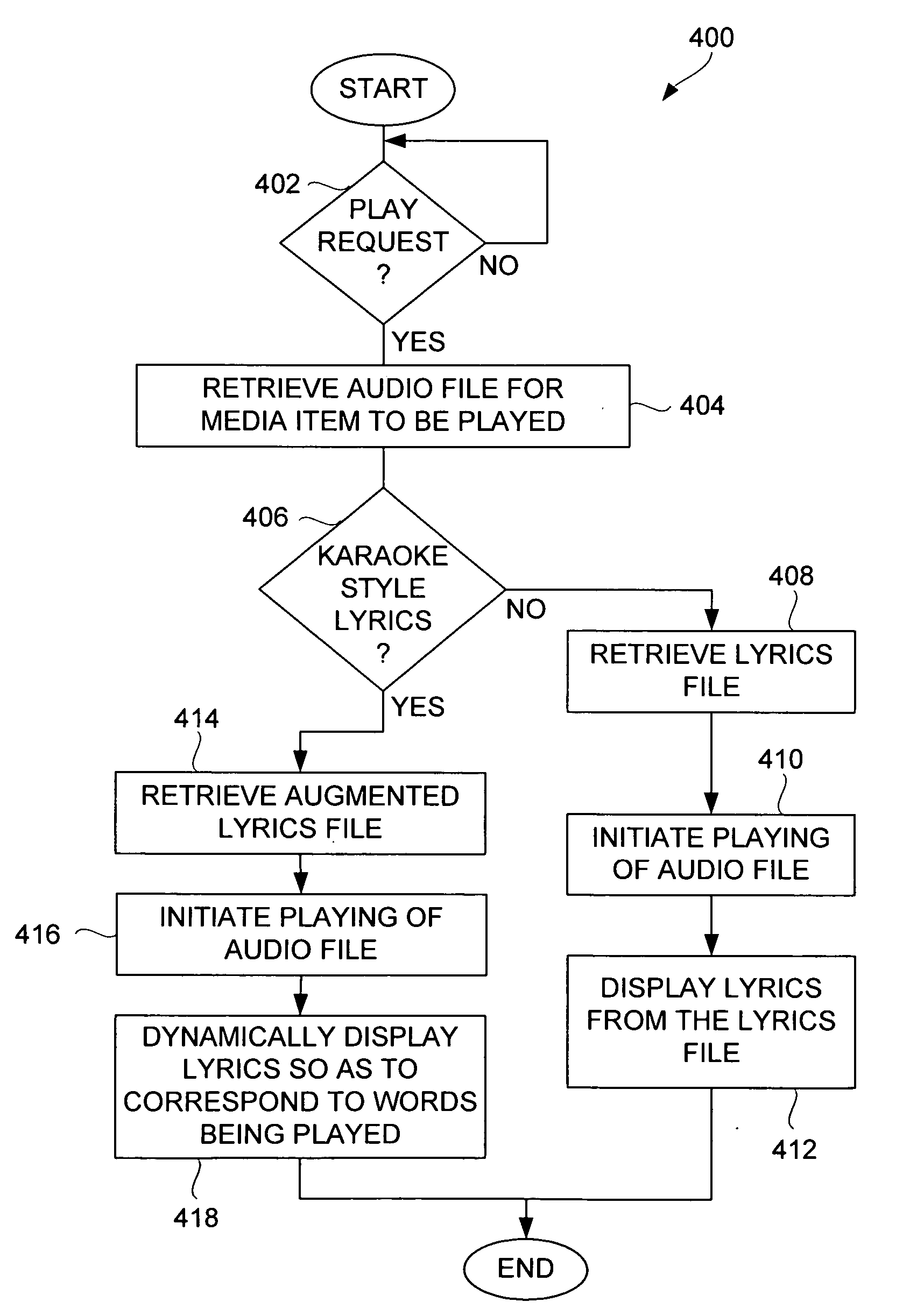

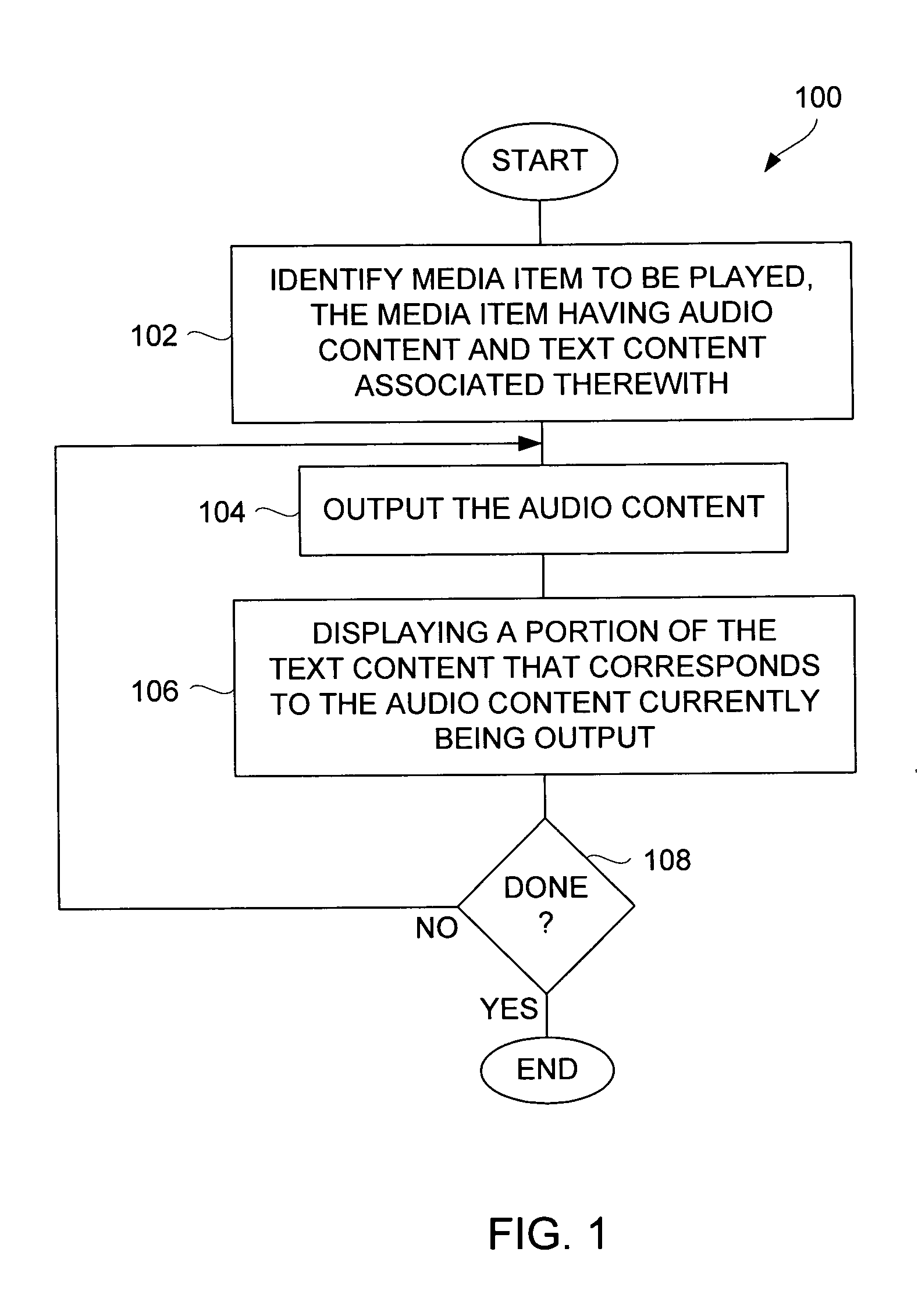

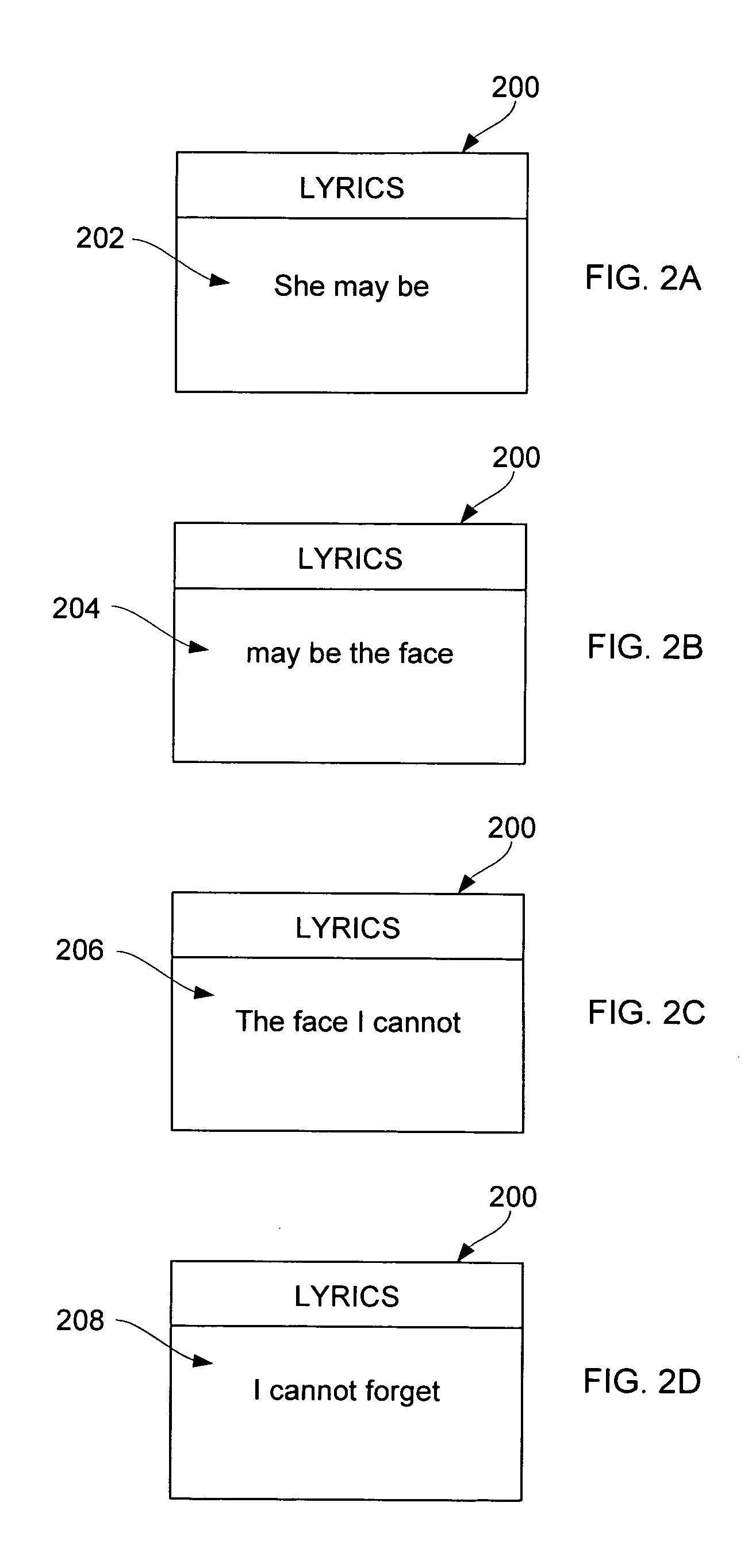

Dynamic lyrics display for portable media devices

Status: Inactive; Publication: US20070166683A1; Tags: Electrophonic musical instruments, Electrical appliances, Dynamic display, Media content

Improved techniques for dynamically displaying text on a display screen of a portable media device while presenting media content are disclosed. The text being displayed is associated with and synchronized to the media content being presented. In one implementation, the text can scroll dynamically across the display screen of the portable media device. In another implementation, a part of the text being displayed can be displayed distinguishably from other parts. In one embodiment, the media content is music and the text is lyrics, so that the portable media device can not only play music but also output synchronized lyrics.

Owner:APPLE INC

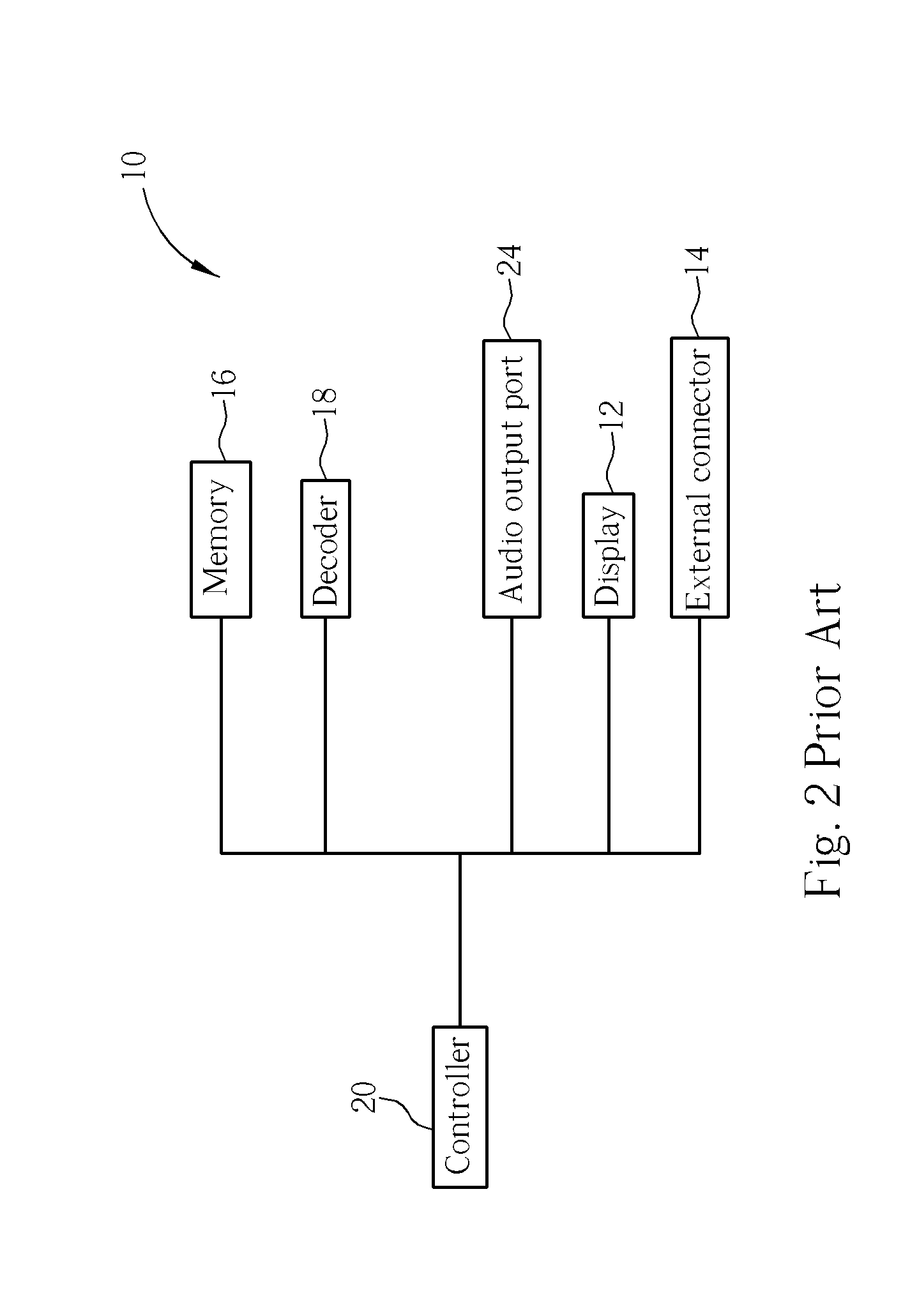

Personal audio player

Status: Inactive; Publication: US20070016314A1; Tags: Improved display and user interface scheme, Improve user experience, Record information storage, Special data processing applications, Output device, Operation mode

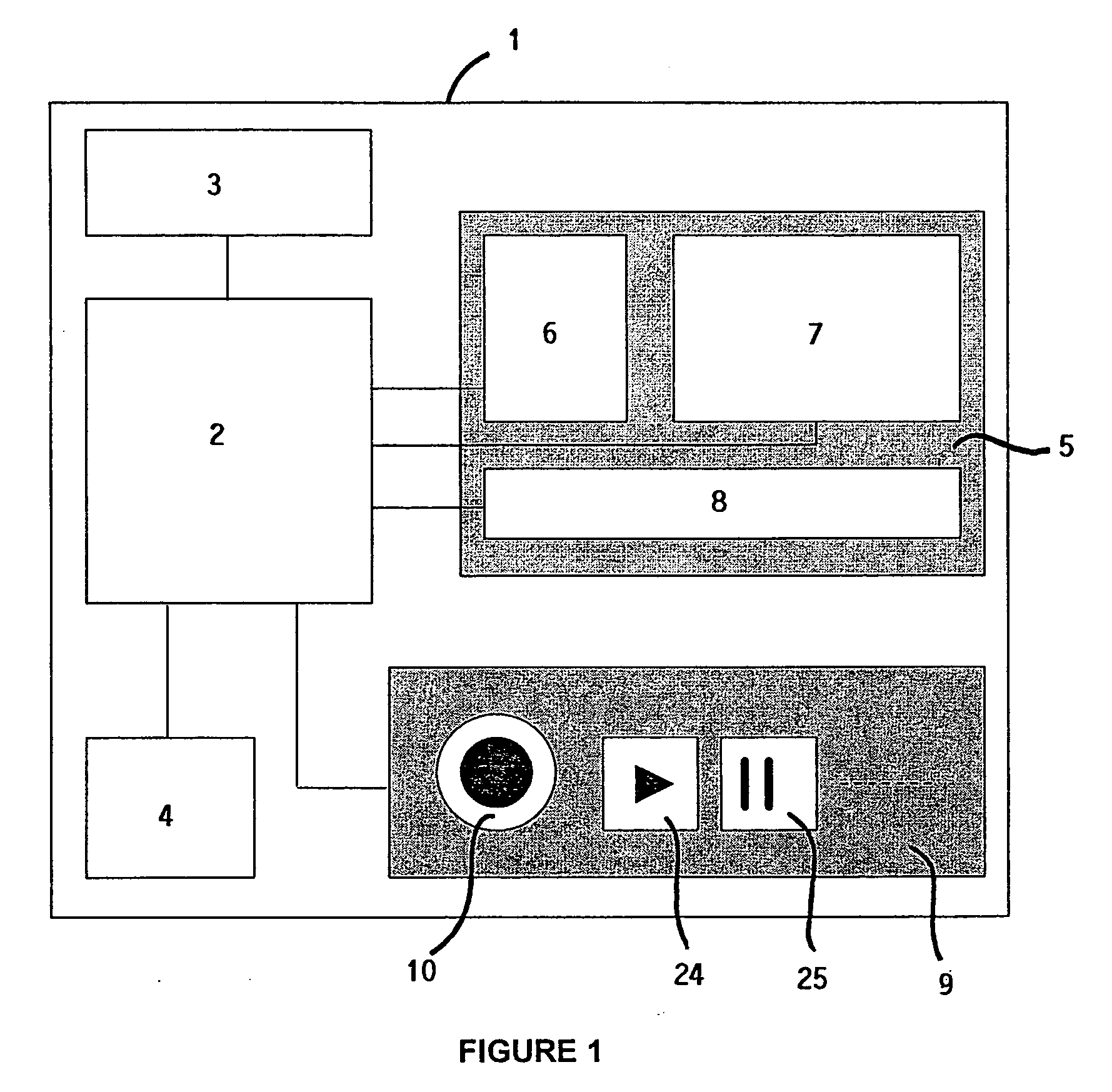

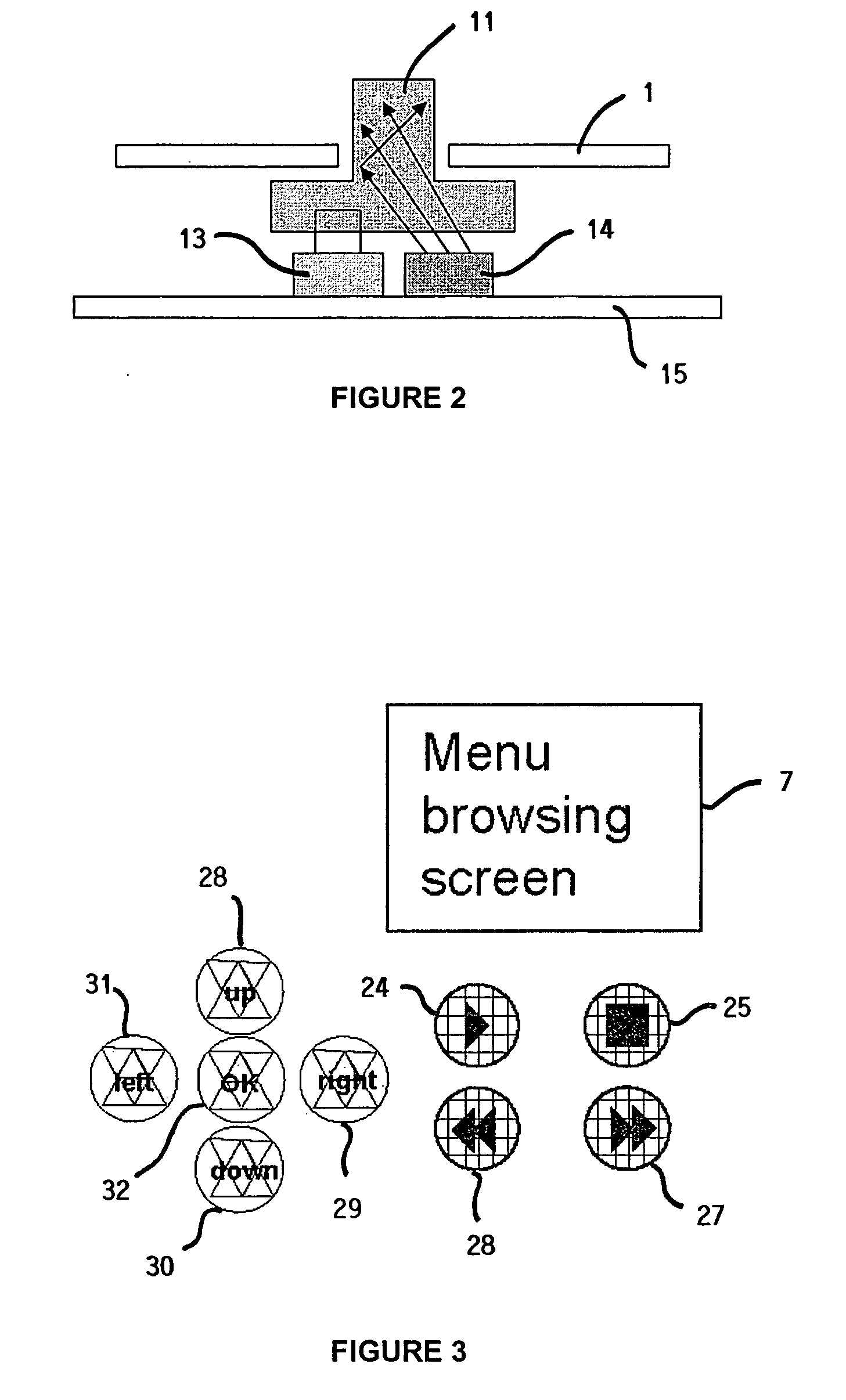

A personal audio player has a housing, an audio output device in the housing and an audio player in the housing and coupled with the output device for storing and playing audio files. A plurality of different display panels are located on the housing. Each display panel is controlled to have a unique presentation format for presenting different information to a user. A user interface on the housing has a plurality of different illumination colors corresponding to different operation modes of the audio player. A lyrics processor outputs lyric text to one of the display panels in synchronisation with playing of a corresponding audio file.

Owner:PERCEPTION DIGITAL

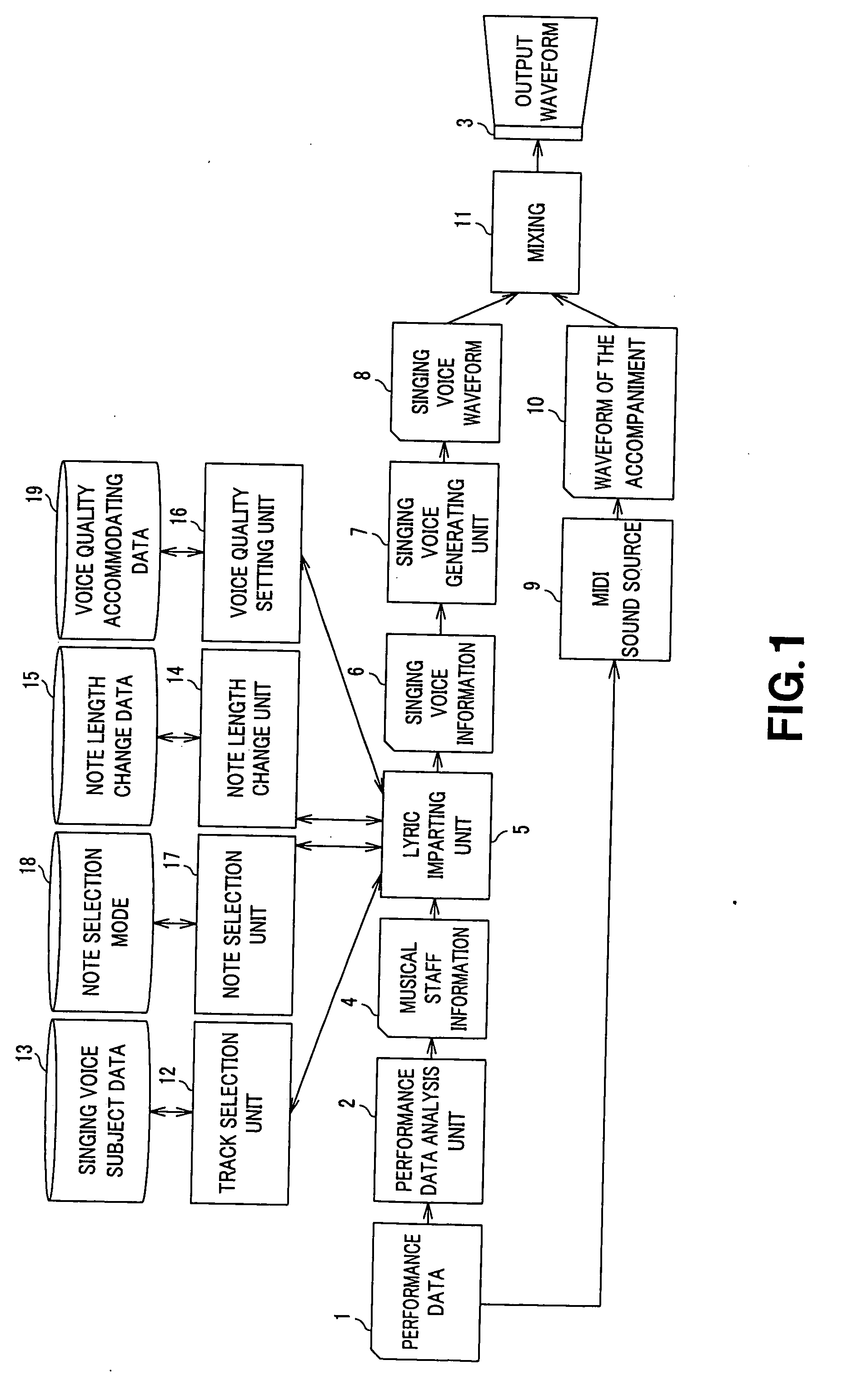

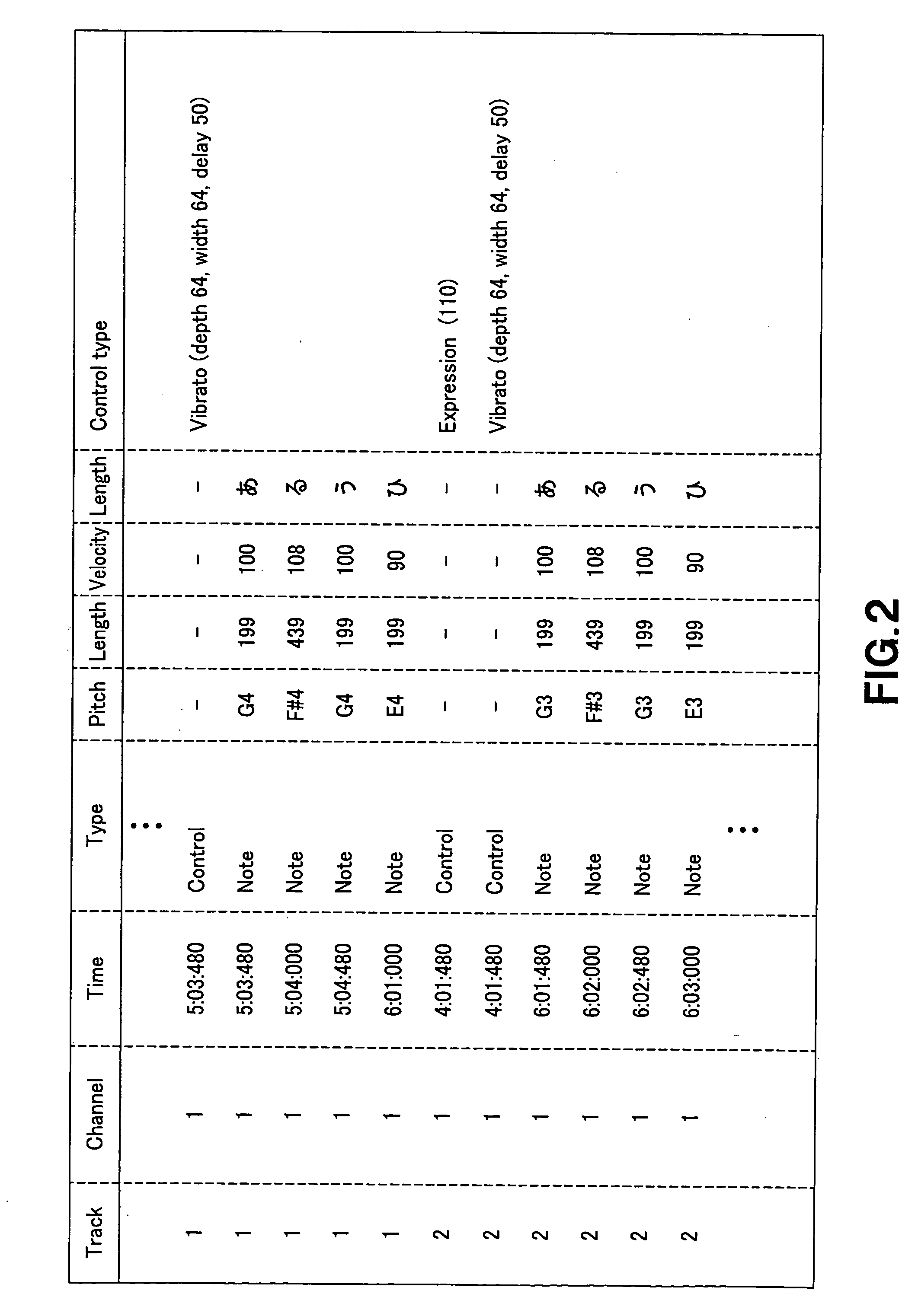

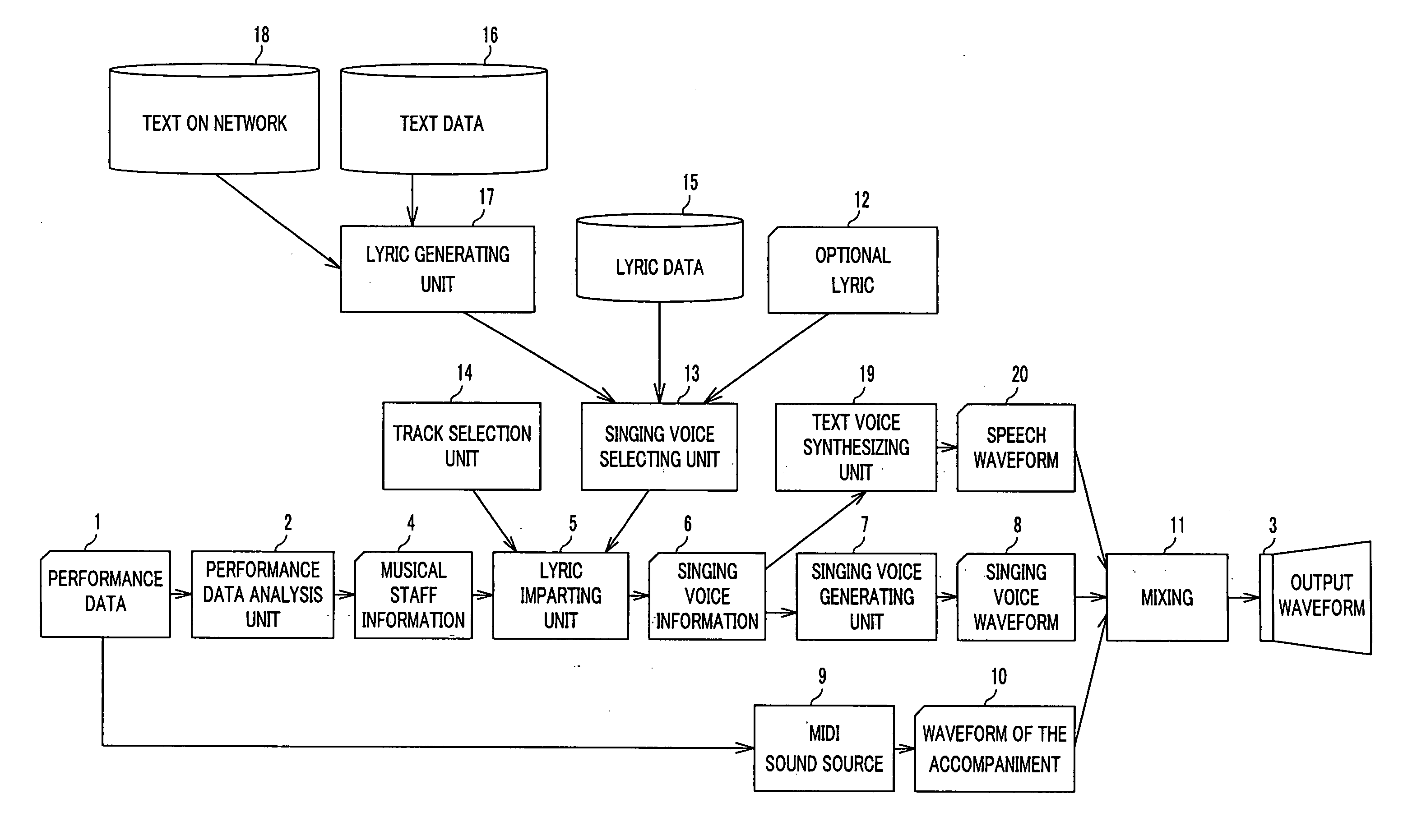

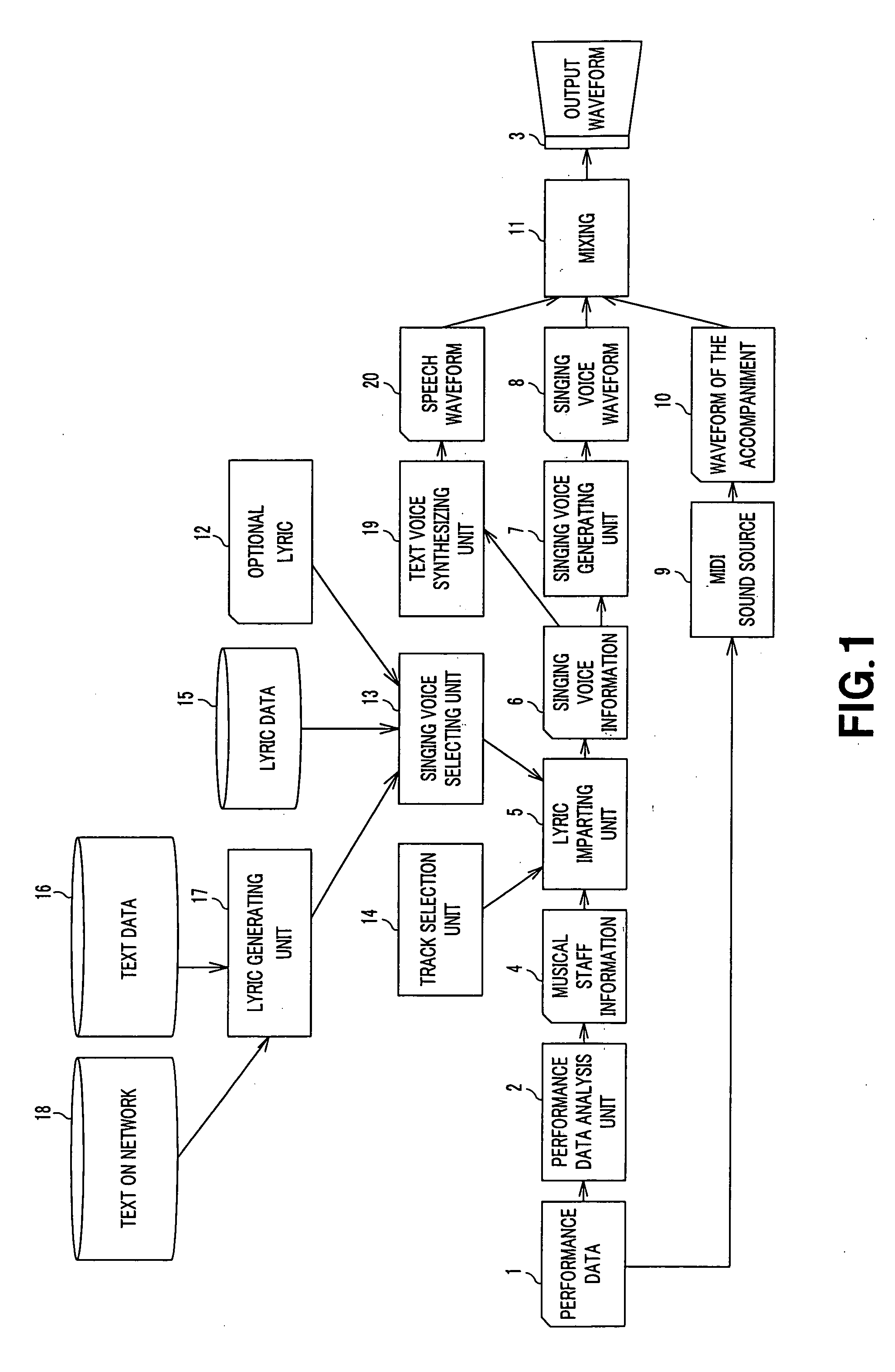

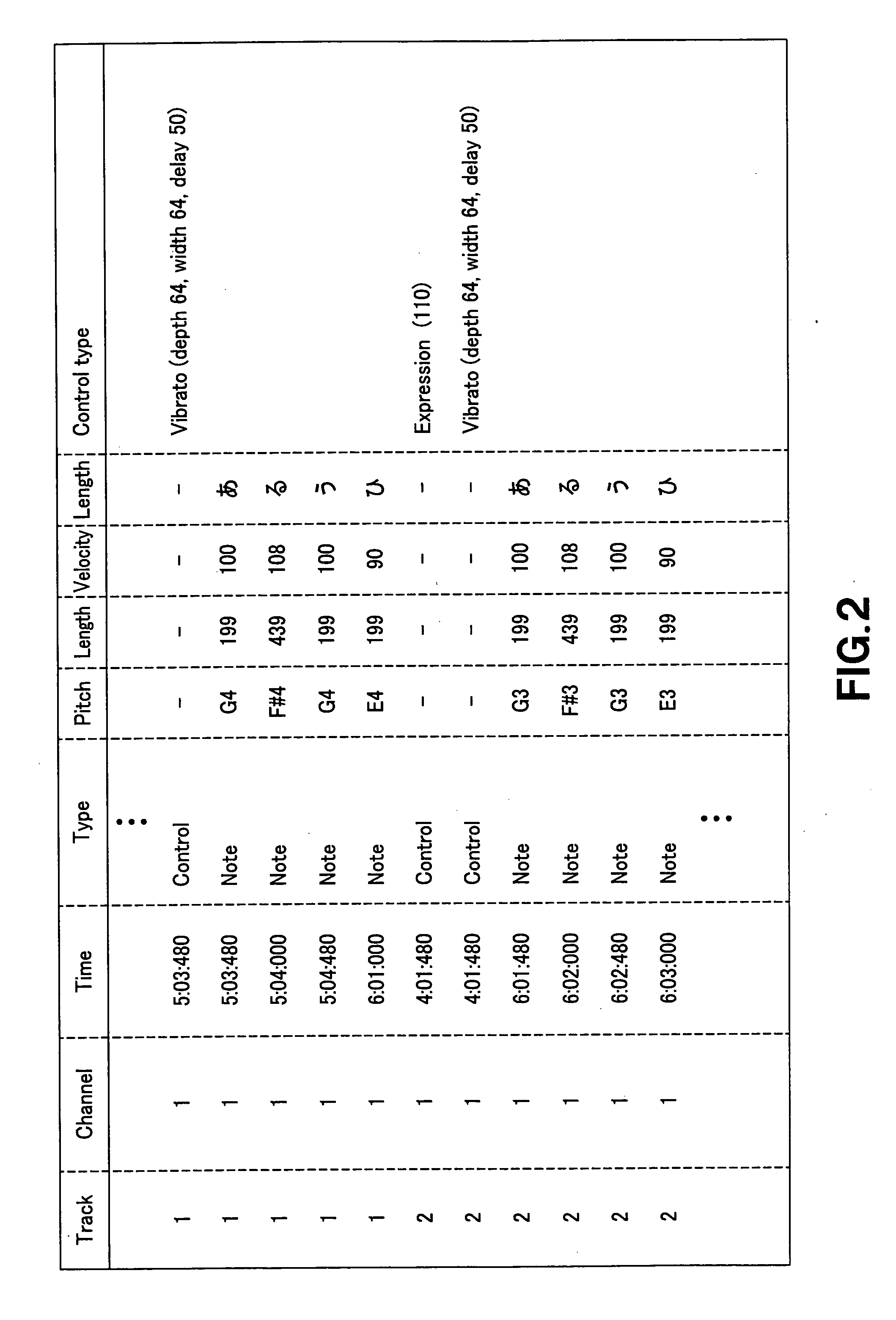

Singing voice synthesizing method, singing voice synthesizing device, program, recording medium, and robot

Status: Active; Publication: US20060185504A1; Tags: Improve properties, Overcome problems, Musical toys, Electrophonic musical instruments, Synthesis methods, Sound quality

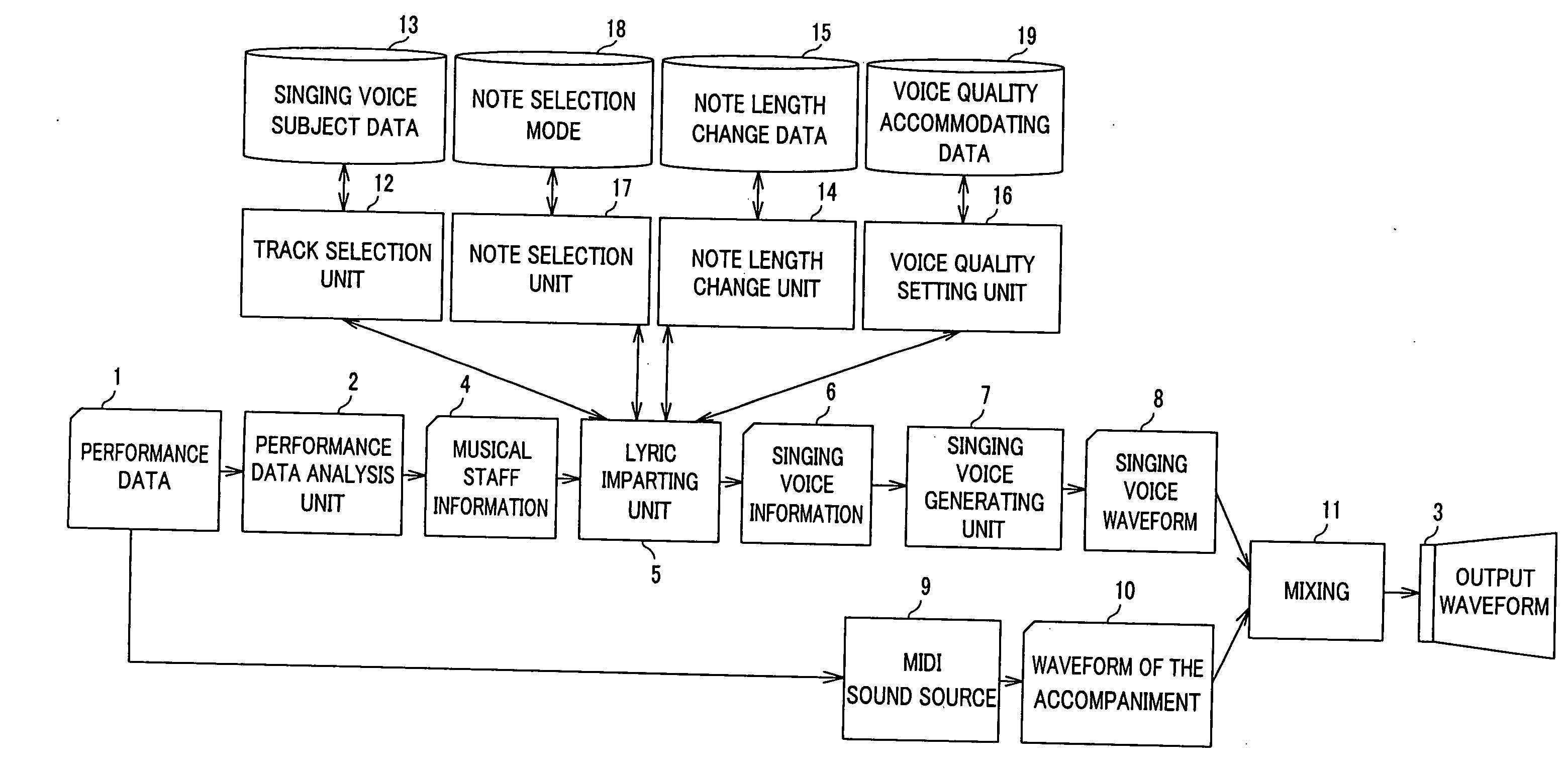

A singing voice synthesizing method for synthesizing a singing voice from performance data is disclosed. The input performance data are analyzed as musical information comprising the pitch and length of the sounds and the lyric (S2 and S3). A track to carry the lyric is selected from the analyzed musical information (S5). The note to which the singing voice is allocated is selected from the track (S6). The length of the note is changed to suit the song being sung (S7). A voice quality suited to the singing is selected based on, e.g., the track name or sequence name (S8), and singing voice data are prepared (S9). The singing voice is generated based on the singing voice data (S10).

Owner:SONY CORP

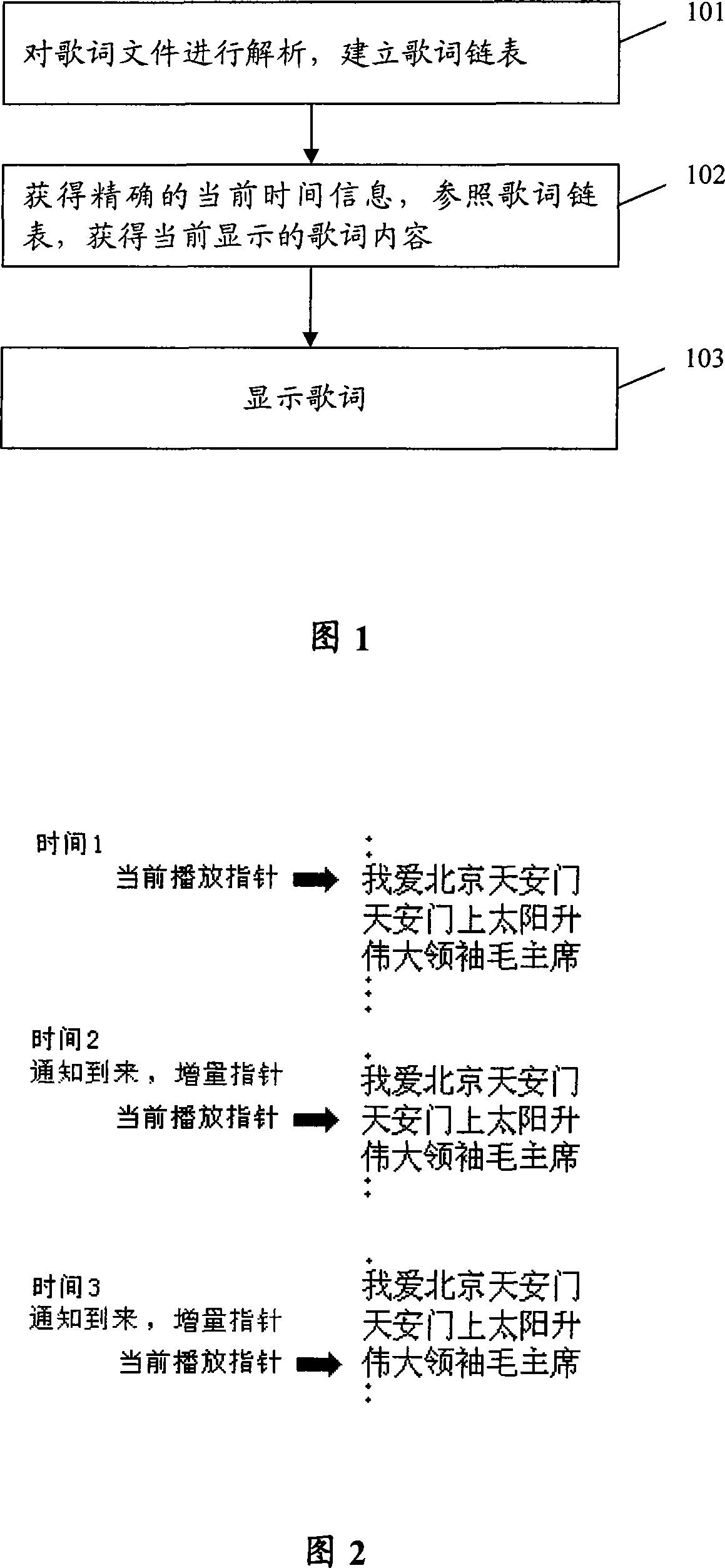

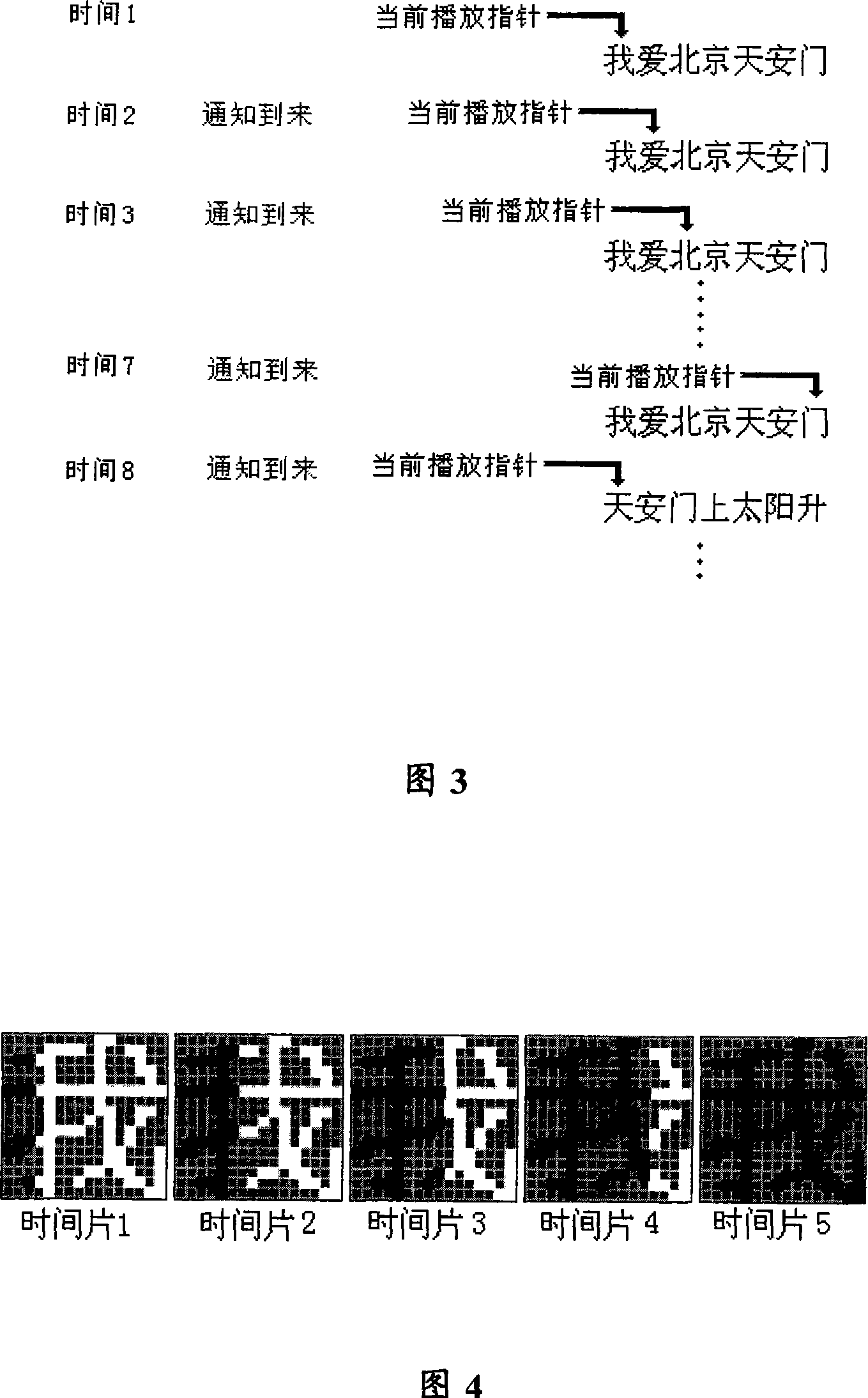

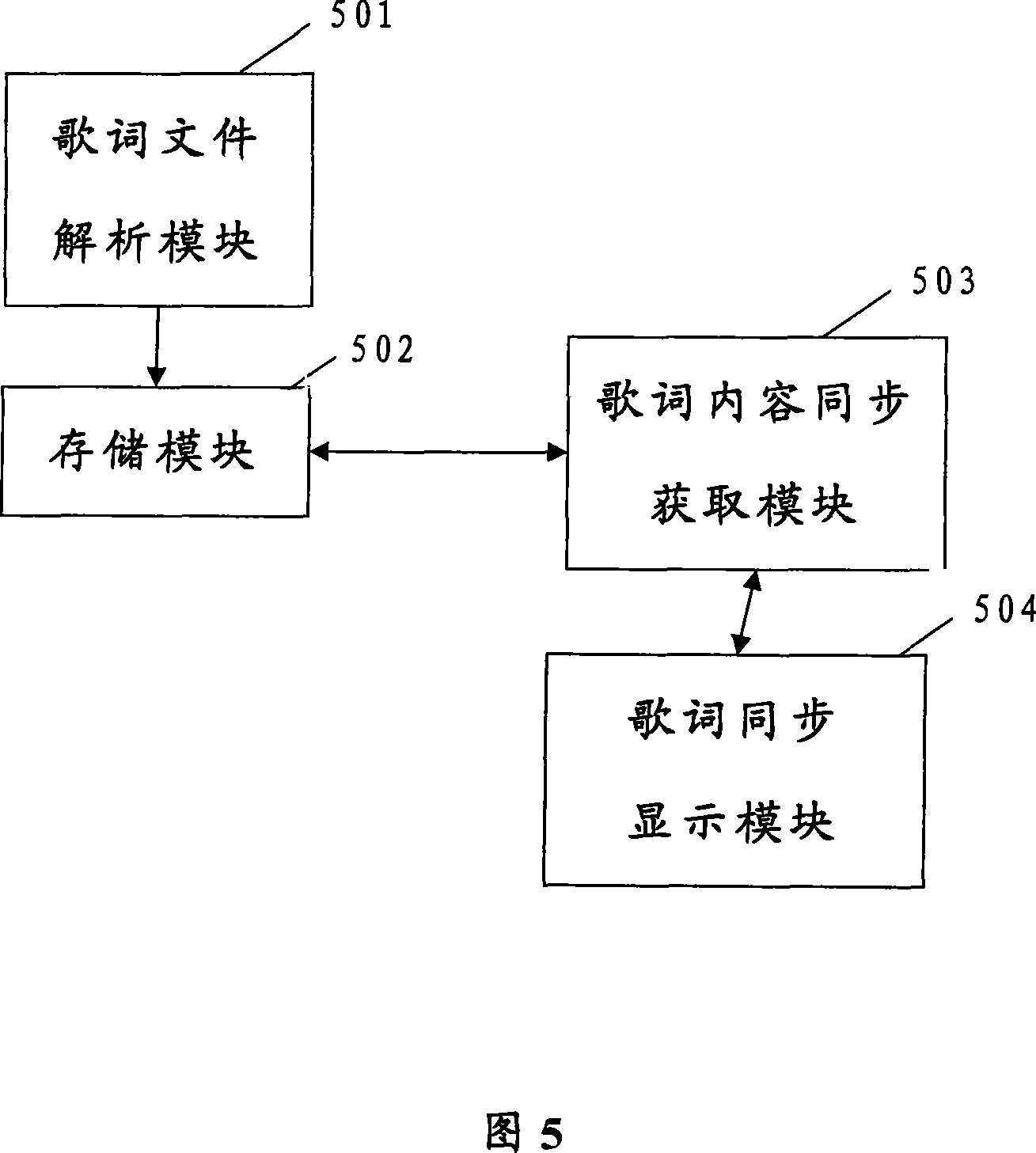

Method and device for implementing lyric synchronization when broadcasting song

Status: Inactive; Publication: CN101127210A; Tags: Achieve synchronization, Overcome the defect that the lyrics cannot be displayed synchronously, Electrophonic musical instruments, Speech analysis, Engineering, Lyrics

The utility model discloses a method and a device for synchronizing lyrics during song playback. A lyrics file is parsed to obtain the time information and text of the lyrics, which are stored correspondingly in a lyrics linked list. During playback, the current playing time is obtained, the correspondence between the time information and the lyric text in the linked list is used to determine the lyric text matching the current time, and that text is displayed. The utility model does not depend on high-end decoder chips and can provide synchronized lyrics on an ordinary digital terminal during song playback.

Owner:UTSTARCOM TELECOM CO LTD
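A minimal Python sketch of the parse-then-look-up idea, assuming the lyrics file uses the common LRC convention of `[mm:ss.xx]` time tags (the abstract does not name a format). A sorted Python list stands in for the patent's linked list, and a binary search with `bisect` finds the line for the current playing time; the function names are hypothetical.

```python
import bisect
import re

# Matches an LRC-style time tag such as "[01:02.50]"; metadata tags like "[ti:...]" do not match.
TAG = re.compile(r"\[(\d+):(\d+(?:\.\d+)?)\]")

def parse_lrc(text):
    """Return a list of (time_in_seconds, lyric_text) sorted by time."""
    entries = []
    for line in text.splitlines():
        tags = TAG.findall(line)
        lyric = TAG.sub("", line).strip()
        # A line may carry several time tags when it repeats (e.g. a chorus).
        for minutes, seconds in tags:
            entries.append((int(minutes) * 60 + float(seconds), lyric))
    entries.sort(key=lambda entry: entry[0])
    return entries

def current_line(entries, position):
    """Return the lyric whose timestamp is the latest one not after `position`."""
    times = [t for t, _ in entries]
    i = bisect.bisect_right(times, position) - 1
    return entries[i][1] if i >= 0 else ""
```

A player would call `current_line` on each display refresh with the decoder's current position; before the first time tag it returns an empty string.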

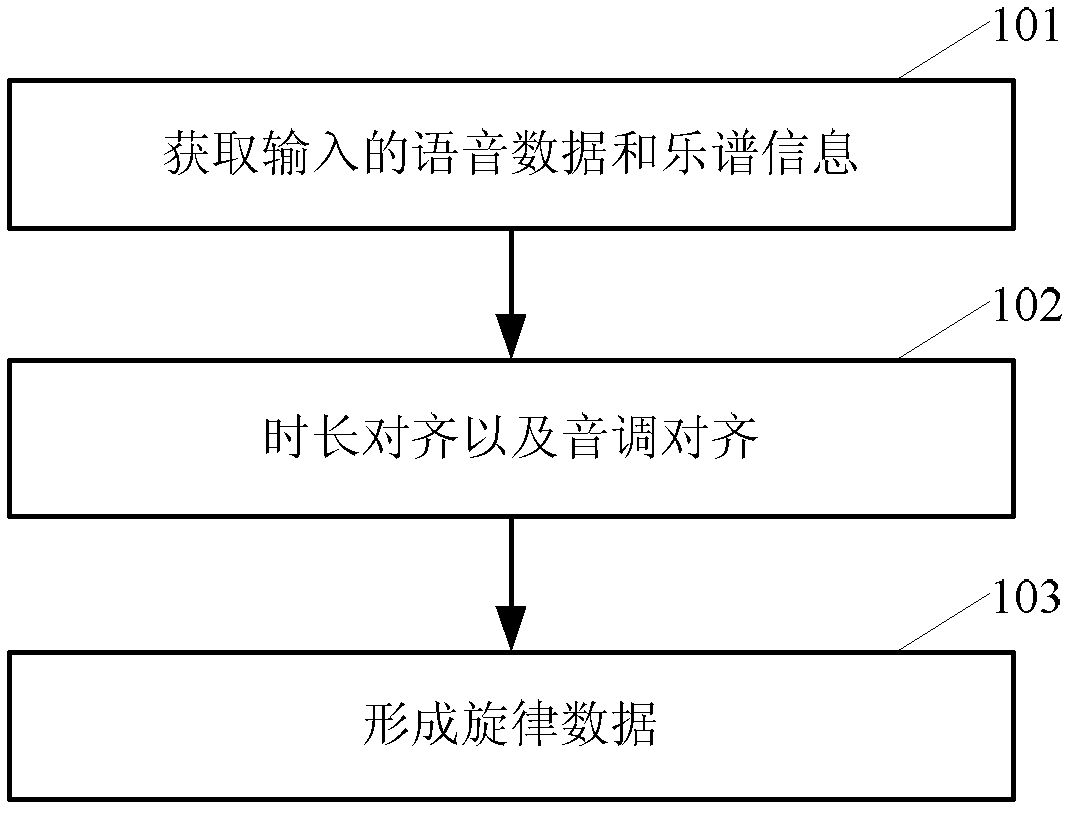

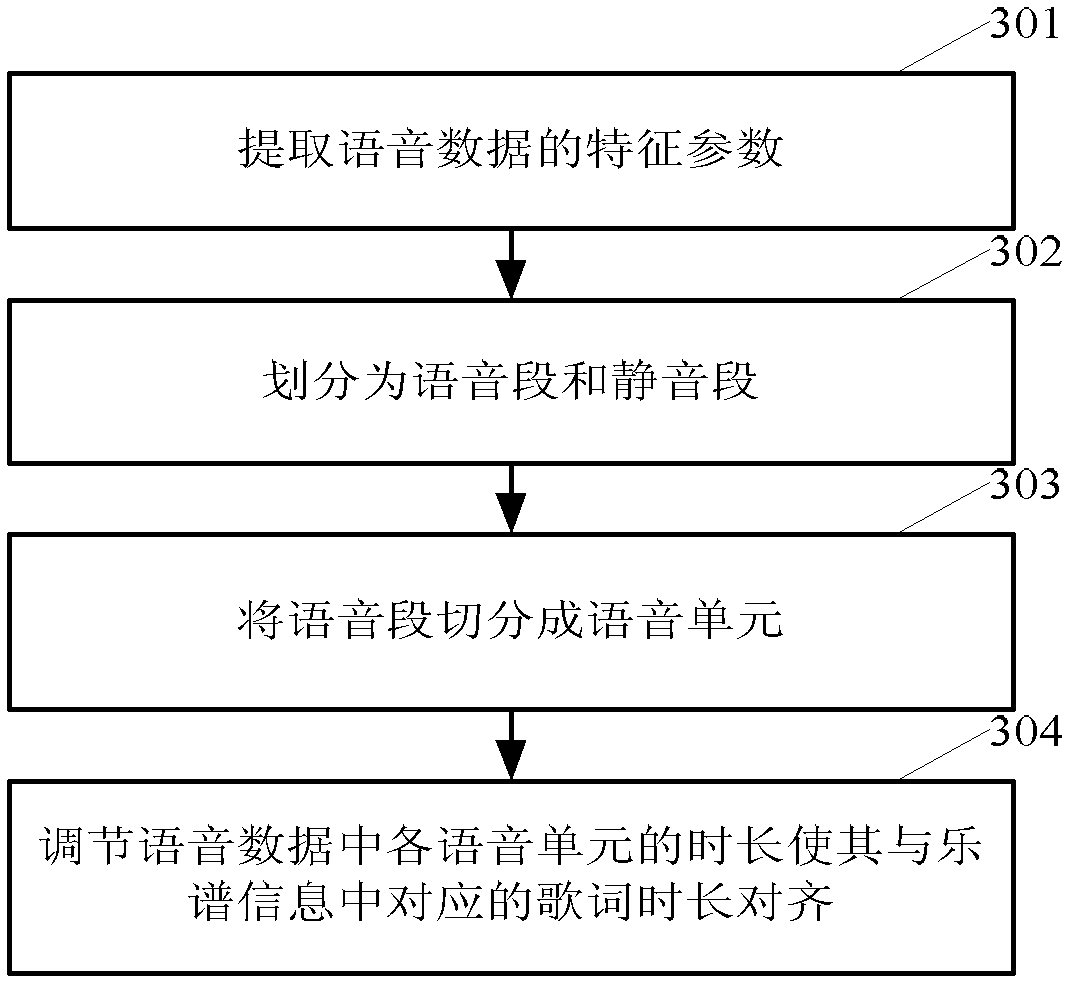

Method and device for transforming voice into melody

The invention provides a method and a device for transforming voice into melody. The method includes: acquiring input voice data and music information; adjusting the duration of each syllable in the voice data so that it is aligned with the duration of the corresponding lyric in the music information; adjusting the fundamental frequency of the voice data according to the pitch of each note in the music information so that each fundamental-frequency point is aligned with the pitch of the corresponding note; and combining the pitch-adjusted fundamental-frequency points and the duration-adjusted notes to form melody data.

Owner:SIEMENS AG
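The two alignment steps reduce to a pair of ratios per syllable: how much to stretch it in time and how much to shift its pitch. The sketch below computes only those ratios, assuming one syllable per note, MIDI note numbers in the music information, and equal temperament with A4 = 440 Hz; the actual time-stretching and pitch-shifting DSP is not shown, and all names are hypothetical.

```python
def midi_to_hz(note):
    """Equal-tempered frequency of a MIDI note number (A4 = note 69 = 440 Hz)."""
    return 440.0 * 2 ** ((note - 69) / 12)

def alignment_plan(syllables, notes):
    """Per-syllable (time-stretch ratio, pitch-shift ratio) needed to follow the melody.

    syllables: list of (spoken_duration_s, mean_f0_hz) measured from the voice.
    notes:     list of (midi_note, note_duration_s) from the music information.
    """
    if len(syllables) != len(notes):
        raise ValueError("expected exactly one syllable per note")
    plan = []
    for (spoken_dur, spoken_f0), (midi_note, note_dur) in zip(syllables, notes):
        stretch = note_dur / spoken_dur            # > 1 lengthens the syllable
        shift = midi_to_hz(midi_note) / spoken_f0  # > 1 raises the pitch
        plan.append((stretch, shift))
    return plan
```

For example, a 0.25 s syllable spoken at 220 Hz that must fill a 0.5 s A4 note gets a stretch ratio of 2 and a pitch-shift ratio of 2 (one octave up).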

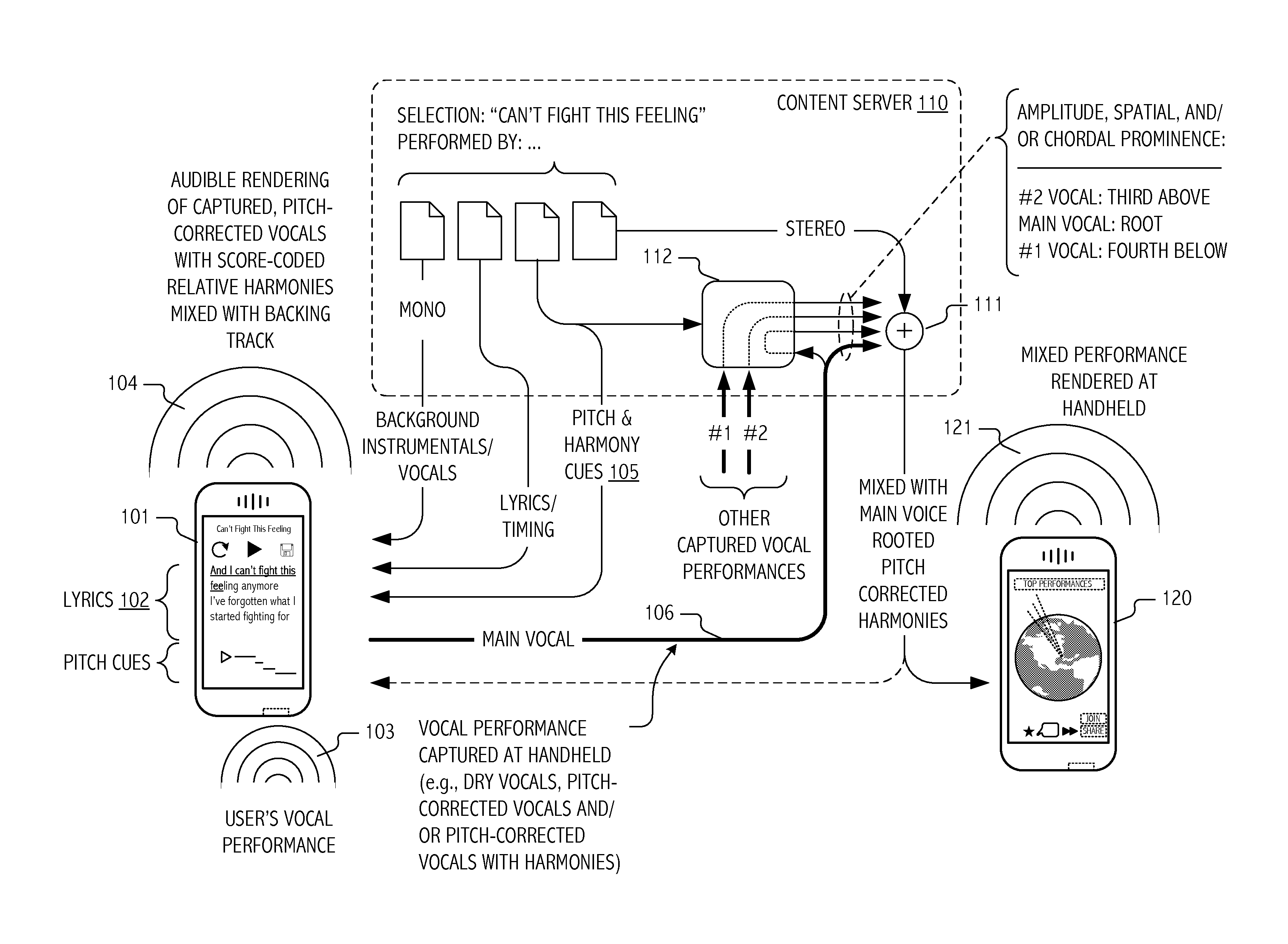

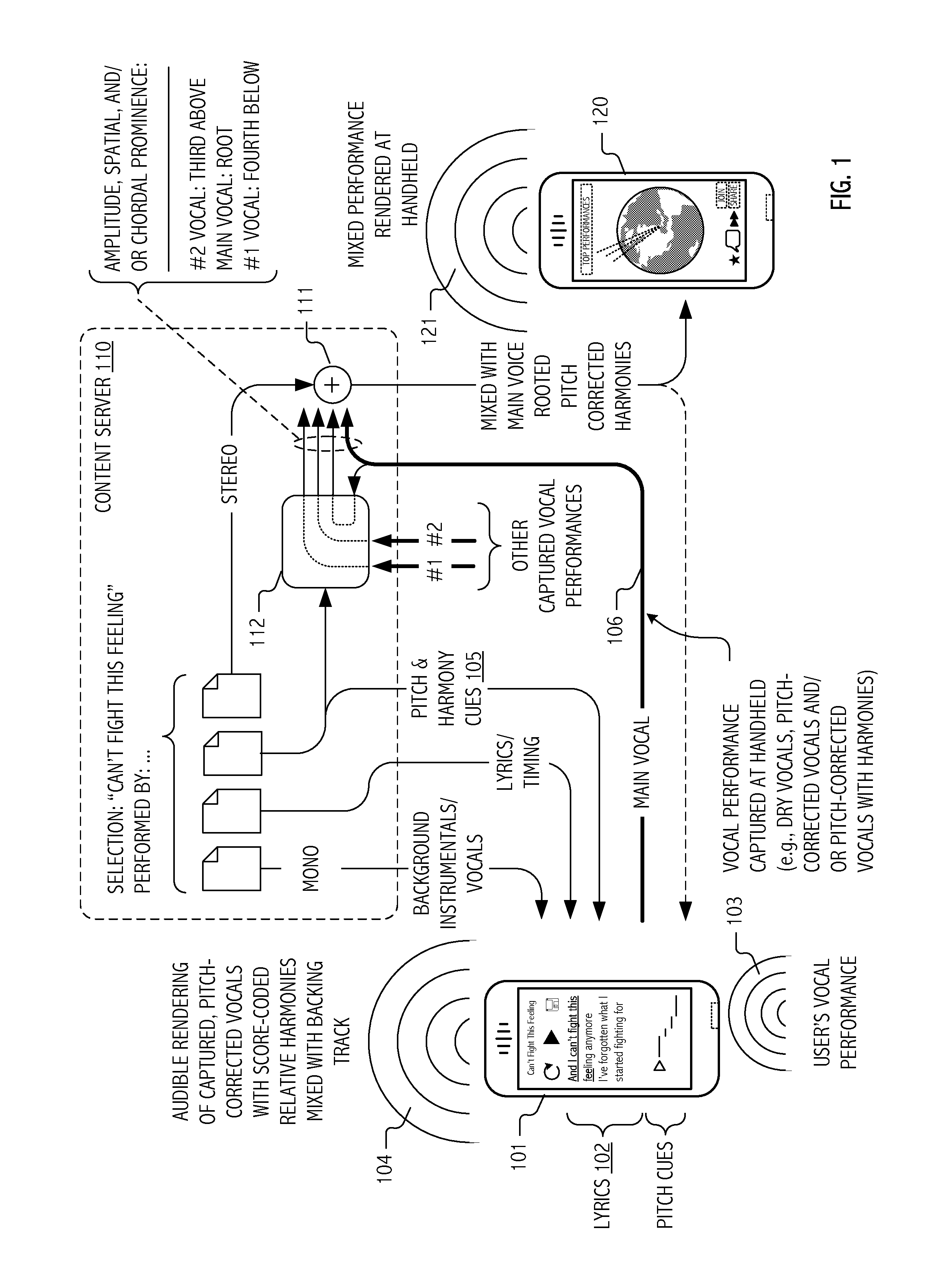

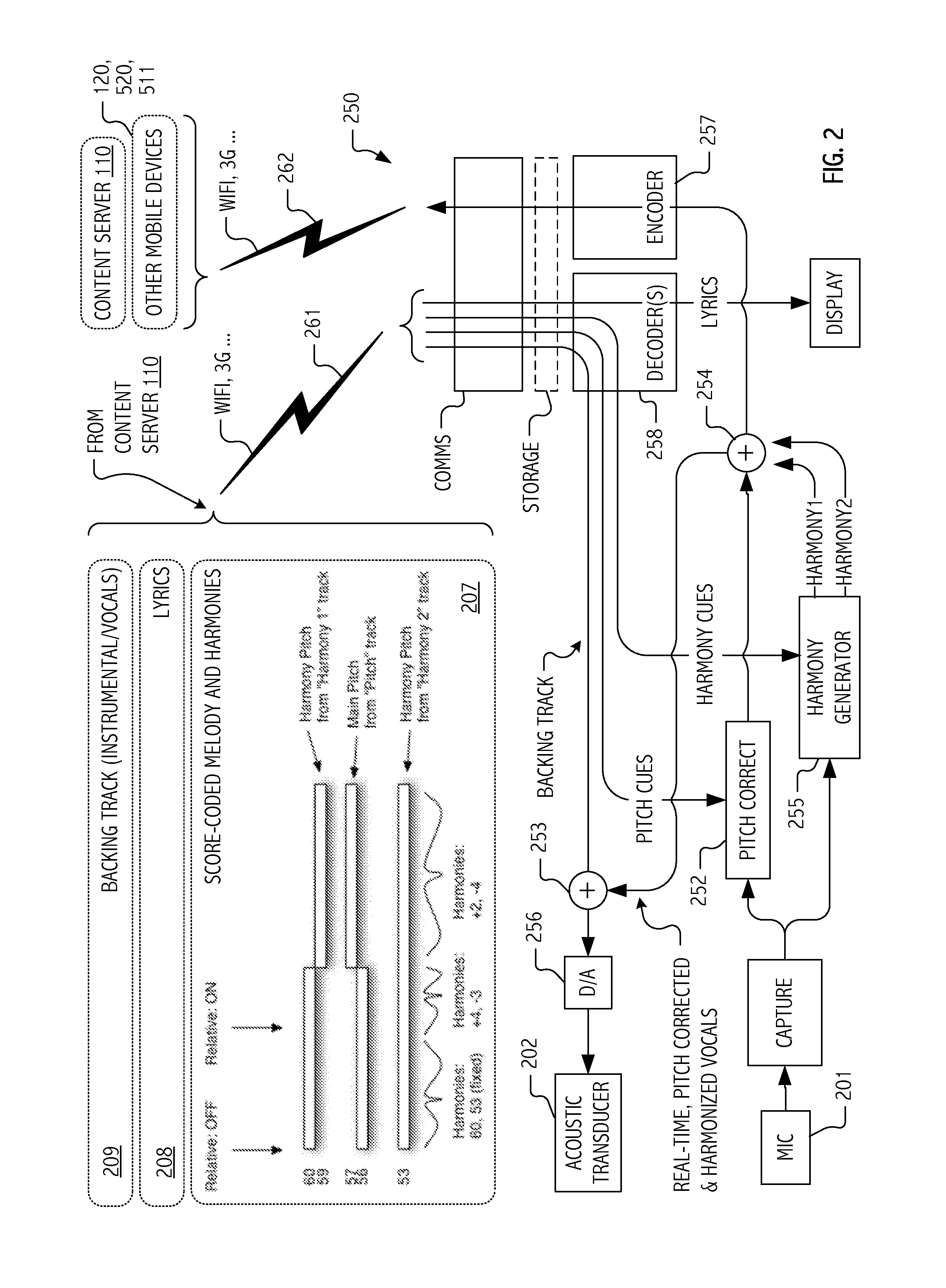

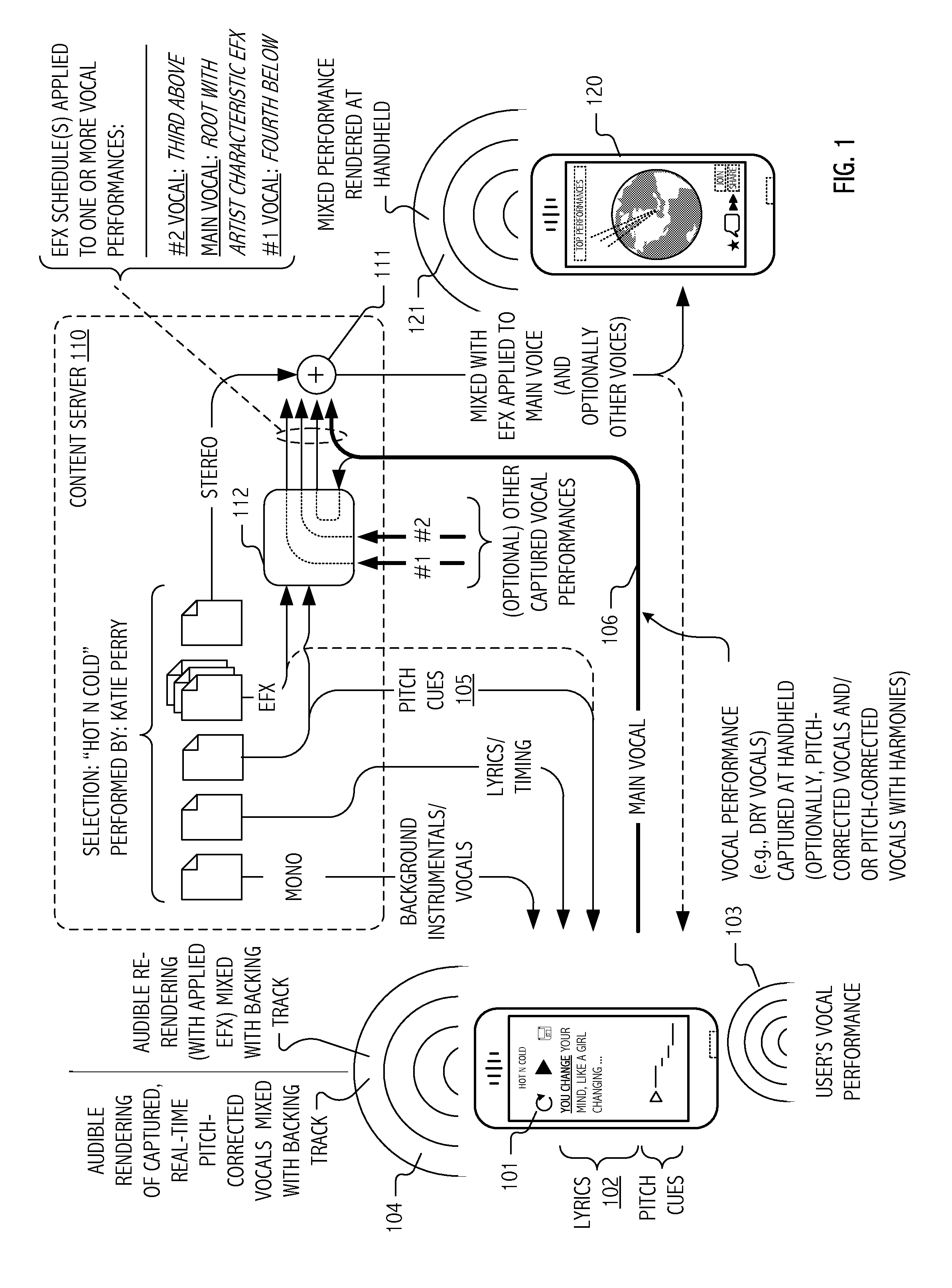

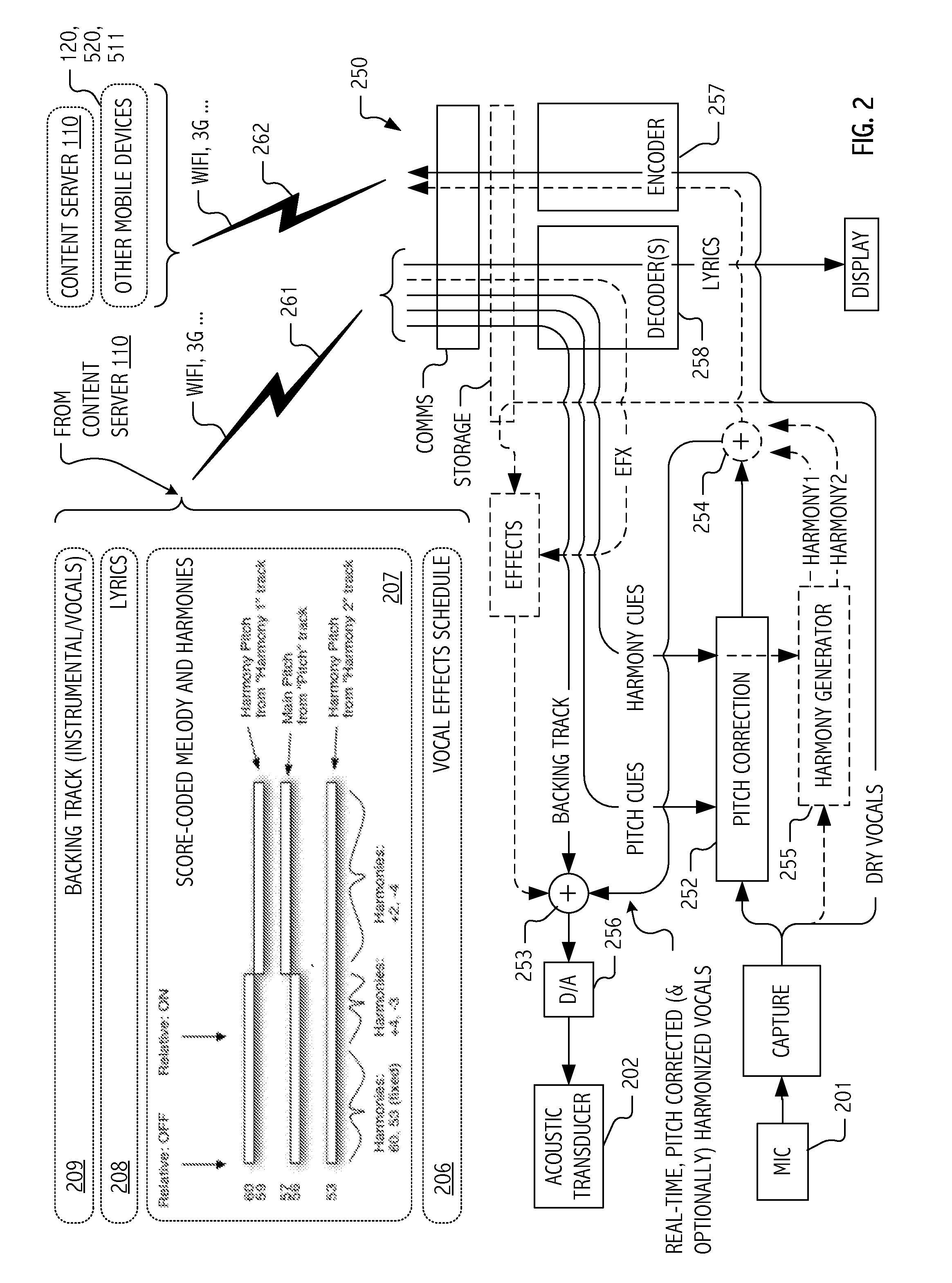

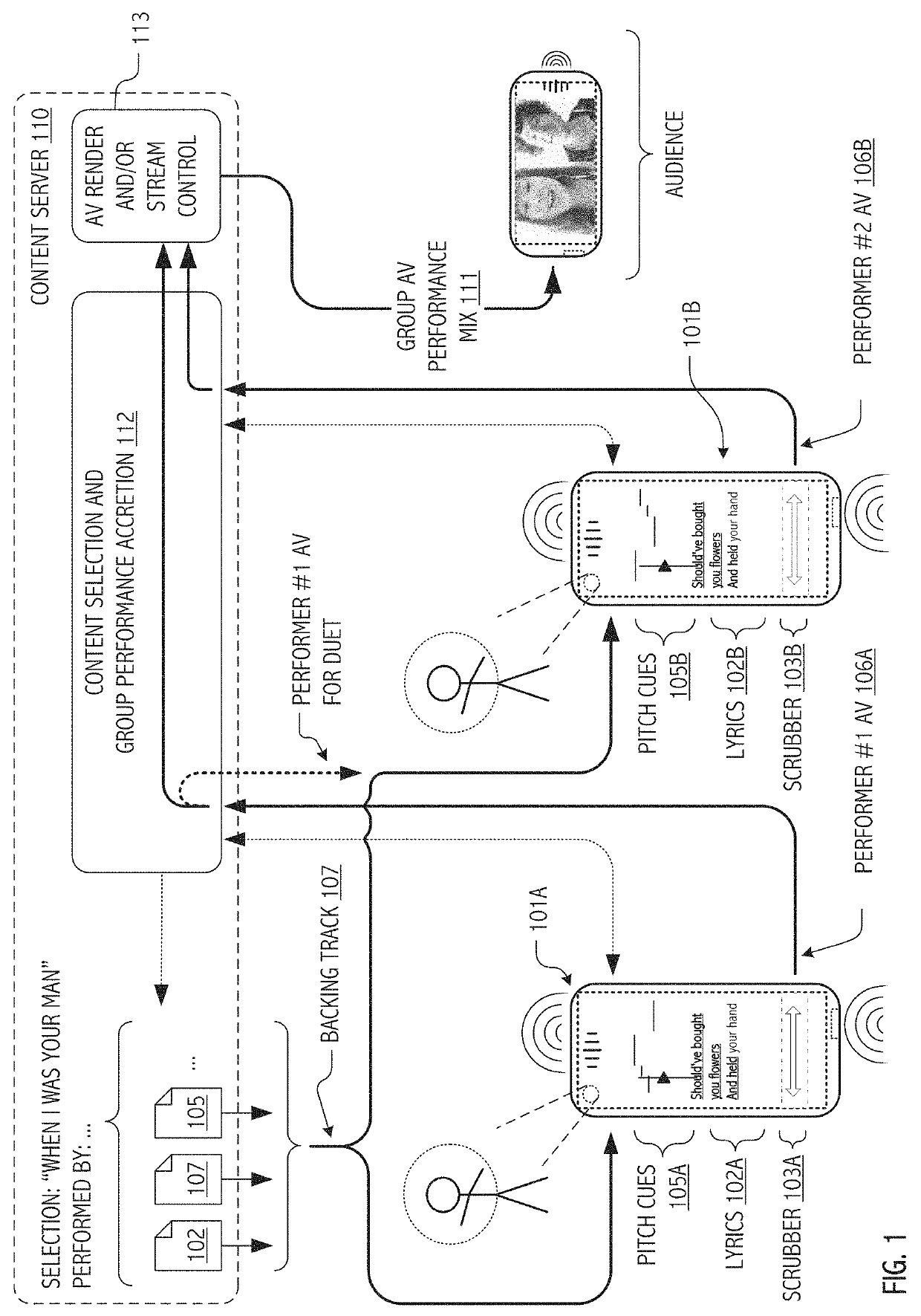

Pitch-correction of vocal performance in accord with score-coded harmonies

Status: Active; Publication: US8868411B2; Tags: Improve sound quality, Facilitate ad-libbing, Electrophonic musical instruments, Stereophonic systems, Chord (music), Engineering

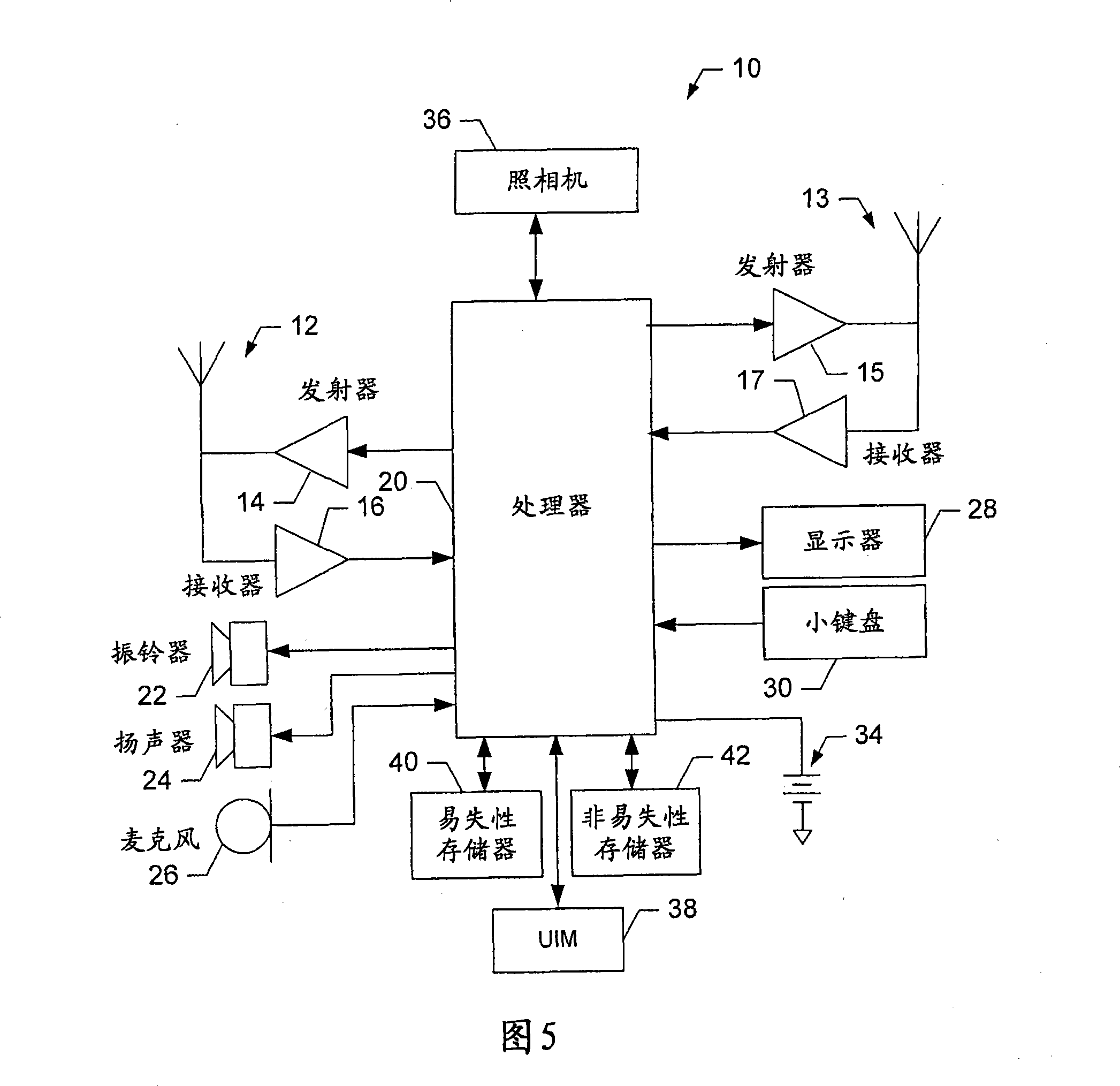

Despite many practical limitations imposed by mobile device platforms and application execution environments, vocal musical performances may be captured and continuously pitch-corrected for mixing and rendering with backing tracks in ways that create compelling user experiences. In some cases, the vocal performances of individual users are captured on mobile devices in the context of a karaoke-style presentation of lyrics in correspondence with audible renderings of a backing track. Such performances can be pitch-corrected in real time at a portable computing device (such as a mobile phone, personal digital assistant, laptop computer, notebook computer, pad-type computer or netbook) in accord with pitch correction settings. In some cases, pitch correction settings include a score-coded melody and/or harmonies supplied with, or for association with, the lyrics and backing tracks. Harmony notes or chords may be coded as explicit targets, relative to the score-coded melody, or even relative to actual pitches sounded by a vocalist, if desired.

Owner:SMULE
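The core arithmetic of correcting toward a score-coded target can be sketched as follows, assuming a pitch detector has already estimated the sung frequency and that the score gives MIDI note numbers. Harmonies are coded here as semitone offsets from the melody, one of the options the abstract mentions. This is an illustrative sketch, not Smule's implementation; the function names are hypothetical.

```python
import math

A4_MIDI, A4_HZ = 69, 440.0  # equal-temperament reference pitch

def hz_to_midi(freq_hz):
    """Fractional MIDI note number for a detected frequency."""
    return A4_MIDI + 12 * math.log2(freq_hz / A4_HZ)

def midi_to_hz(note):
    """Frequency in Hz of a (possibly fractional) MIDI note number."""
    return A4_HZ * 2 ** ((note - A4_MIDI) / 12)

def correction_cents(sung_hz, target_note):
    """Shift in cents that moves the sung pitch onto the target note (positive = up)."""
    return 100 * (target_note - hz_to_midi(sung_hz))

def harmony_notes(melody_note, intervals):
    """Harmony targets coded relative to the score melody as semitone offsets."""
    return [melody_note + step for step in intervals]
```

A voice sung a quarter-tone flat of A4 needs a correction of about +50 cents, and a major triad over middle C (note 60) gives harmony targets 64 and 67. Real-time use would run a pitch tracker on each audio frame, which is omitted here.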

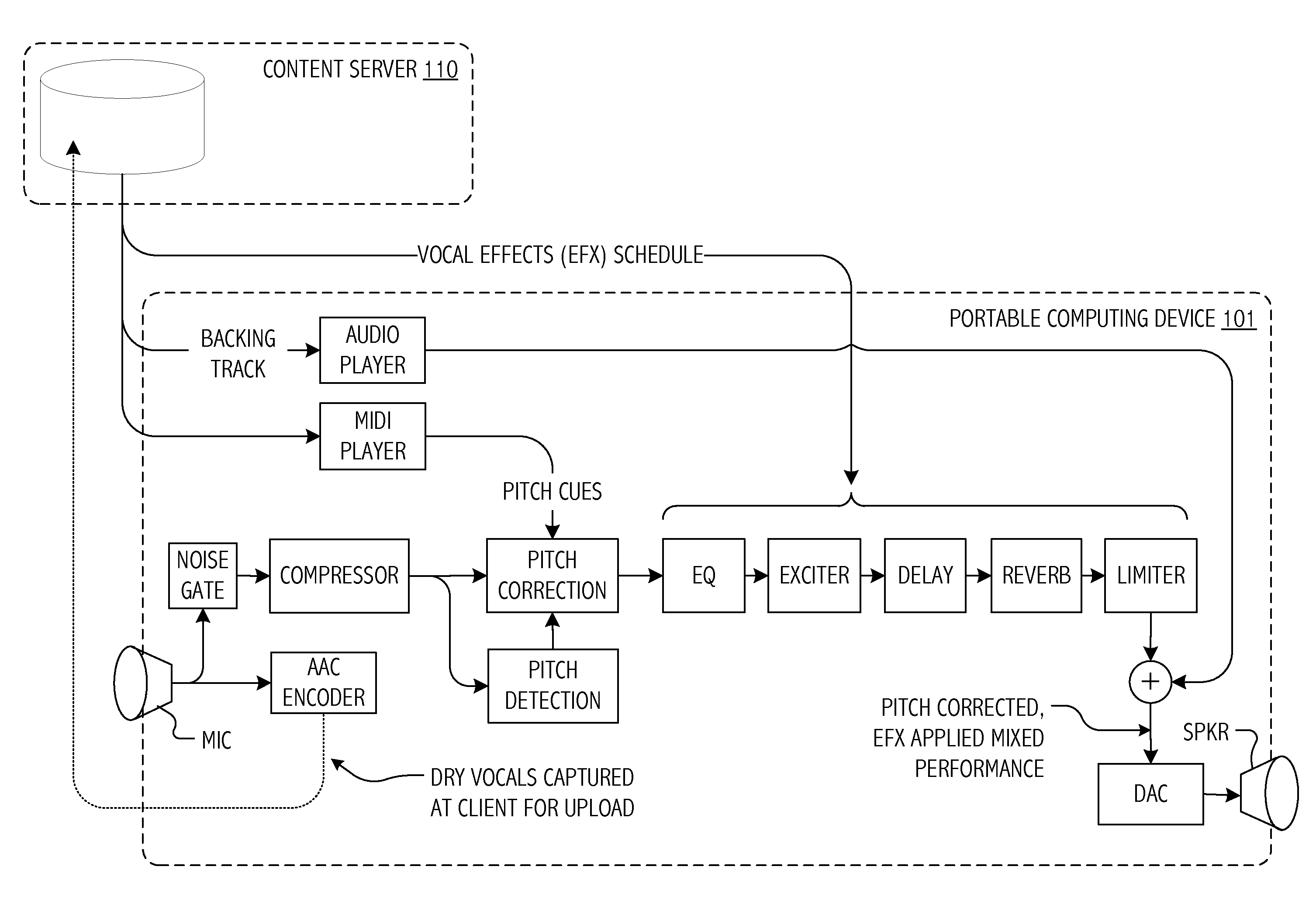

Social music system and method with continuous, real-time pitch correction of vocal performance and dry vocal capture for subsequent re-rendering based on selectively applicable vocal effect(s) schedule(s)

Status: Active; Publication: US20140039883A1; Tags: Compelling user experience, Facilitates musical collaboration, Electrophonic musical instruments, Speech analysis, Engineering, Social network

Vocal musical performances may be captured and, in some cases or embodiments, pitch-corrected and / or processed in accord with a user selectable vocal effects schedule for mixing and rendering with backing tracks in ways that create compelling user experiences. In some cases, the vocal performances of individual users are captured on mobile devices in the context of a karaoke-style presentation of lyrics in correspondence with audible renderings of a backing track. Such performances can be pitch-corrected in real-time at the mobile device in accord with pitch correction settings. Vocal effects schedules may also be selectively applied to such performances. In these ways, even amateur user / performers with imperfect pitch are encouraged to take a shot at “stardom” and / or take part in a game play, social network or vocal achievement application architecture that facilitates musical collaboration on a global scale and / or, in some cases or embodiments, to initiate revenue generating in-application transactions.

Owner:SMULE

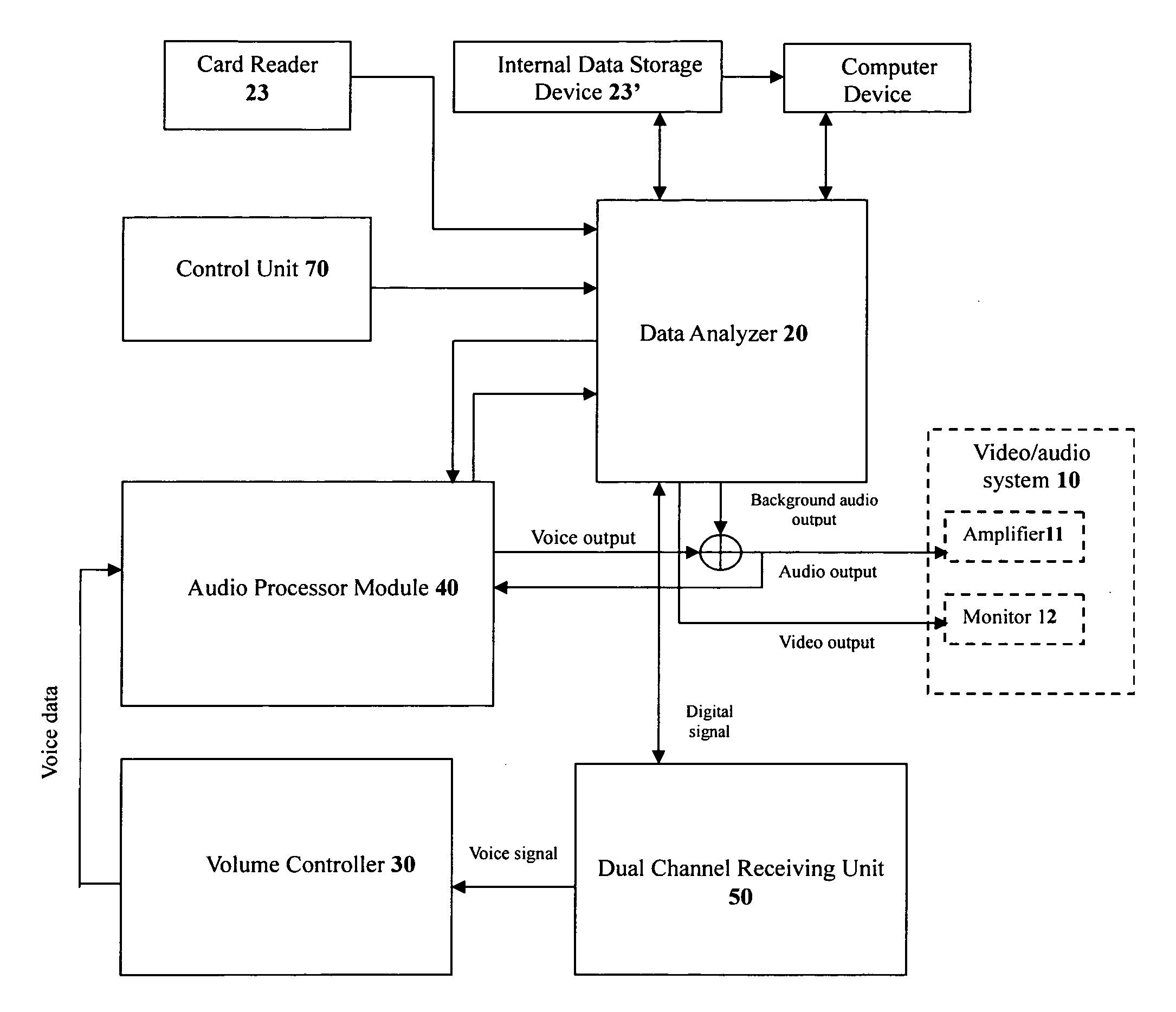

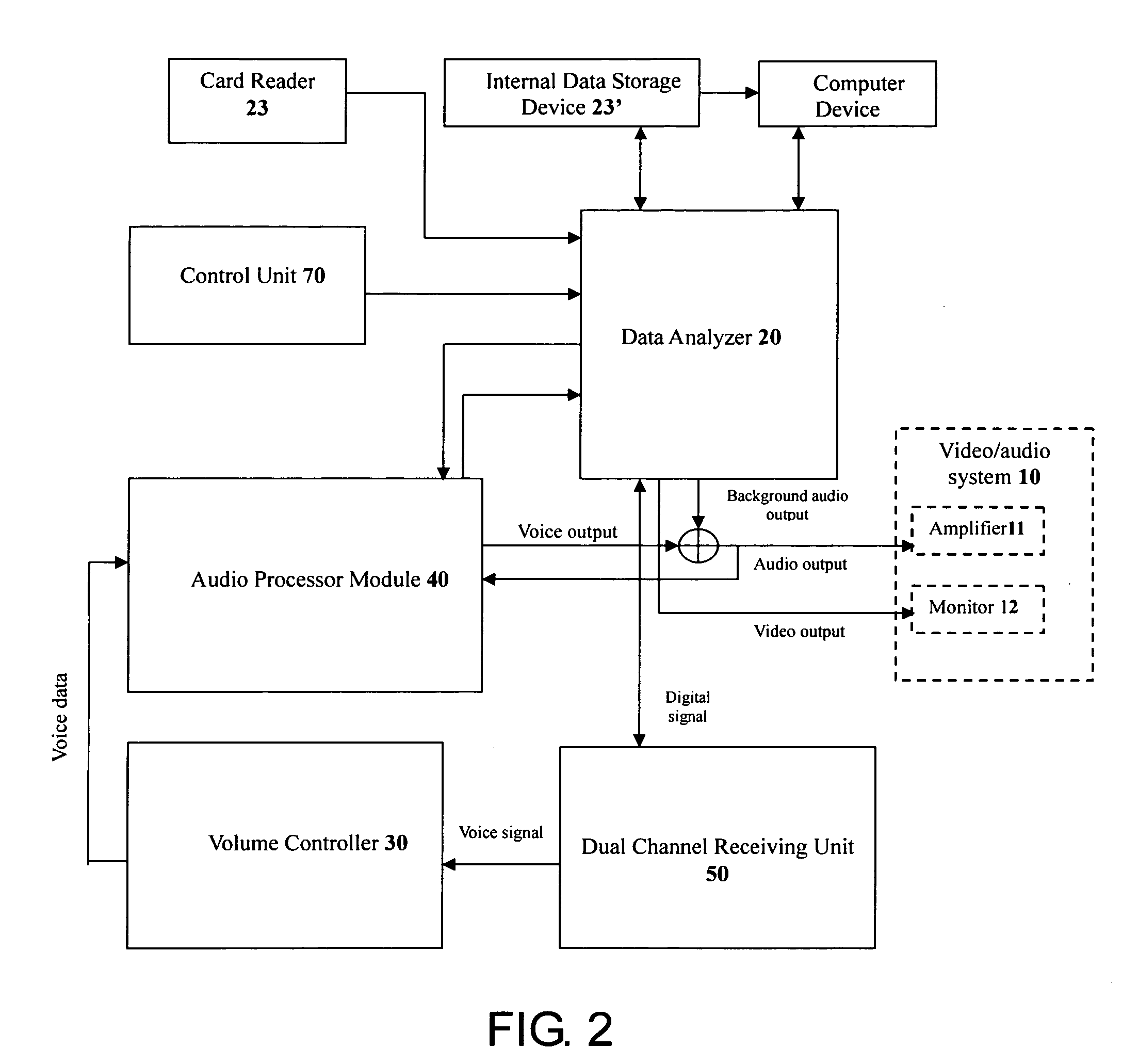

Receiver device for karaoke

Status: Inactive; Publication: US20060246408A1; Tags: Eliminates connecting cable, Small size, Electrophonic musical instruments, Electrical appliances, Wireless microphone, Voice data

The present invention provides a karaoke receiving device that can simultaneously receive voice and data signals wirelessly from two wireless microphones. The singer's voice data are processed, mixed with the background music, and then transferred to an amplifier, a television, or another similar audio device. Song selection, playback, recording, and similar karaoke features can also be controlled from the wireless microphone keypad. After encoding, the image and lyric signals are transferred from the video encoder to a display monitor. In addition, the present invention eliminates the connecting cable between the karaoke microphone and the television and reduces the size of the microphone, making it more convenient and enjoyable for users.

Owner:SHANGHAI MULTAK TECH DEV

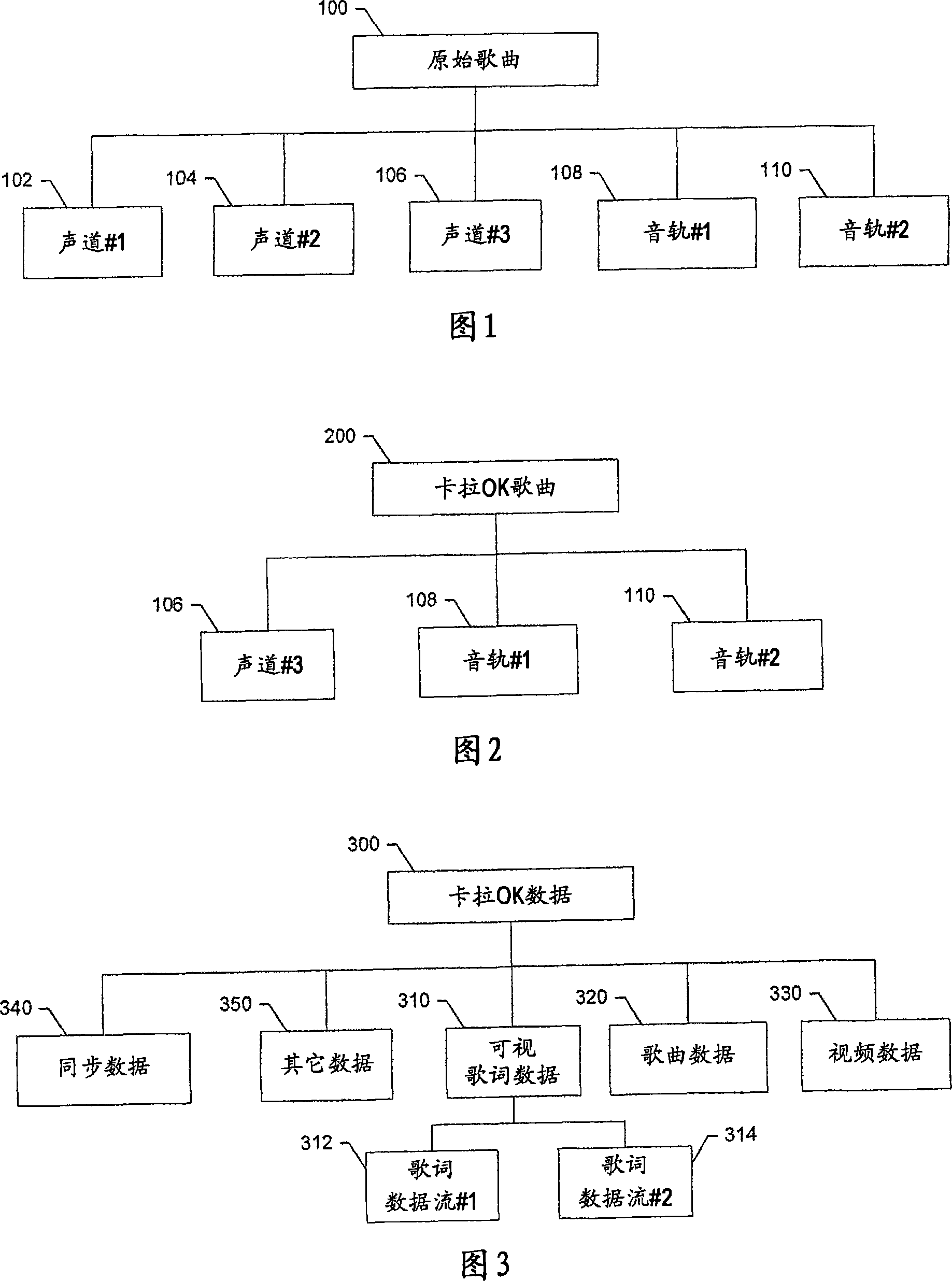

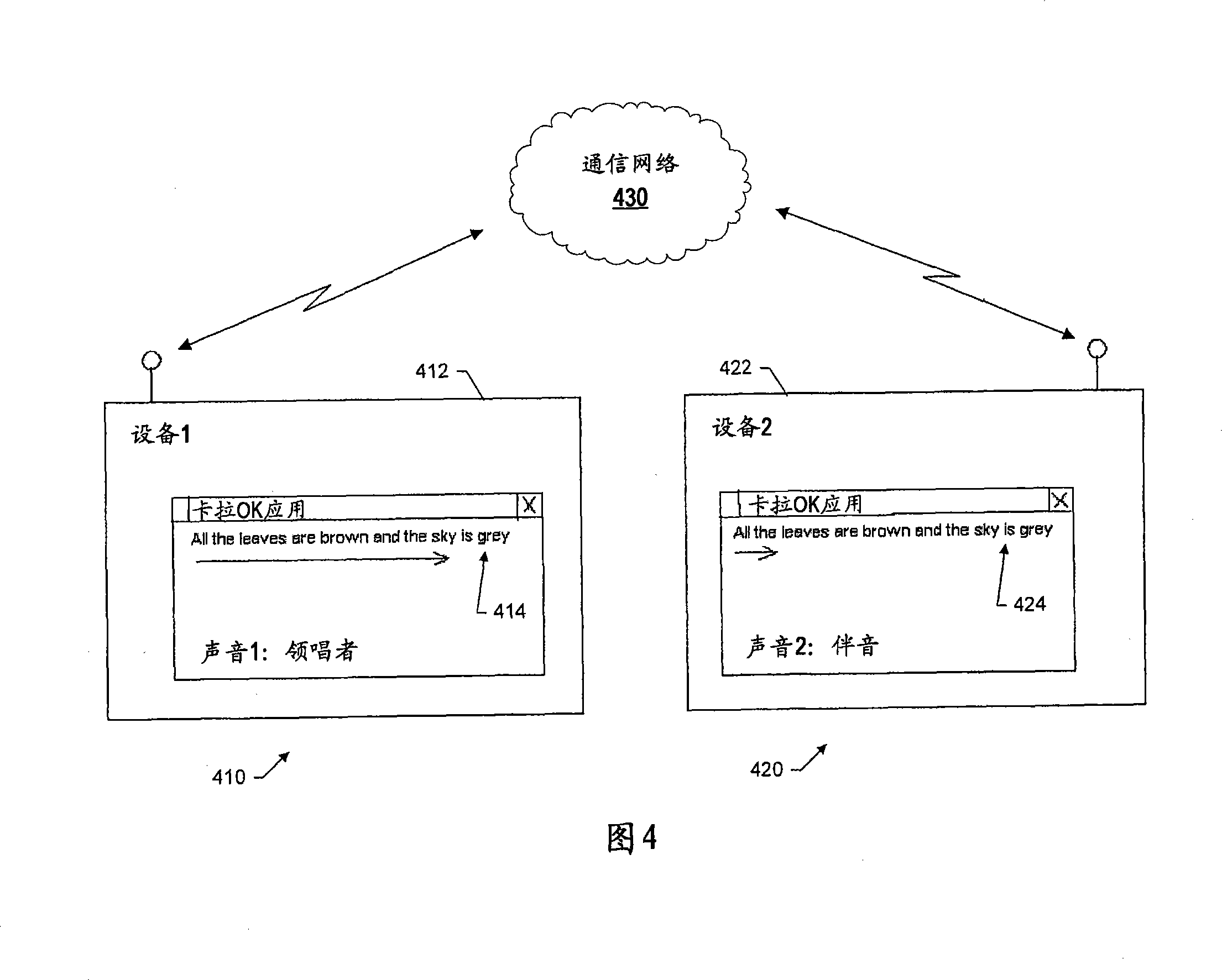

System, method and device for providing a multiple-lyric karaoke system

The invention provides a system, method, device and computer program product for facilitating karaoke performance by a group. Typically, the system includes at least two devices, each of which includes a memory and a monitor operatively coupled to a processor. The memory of each device is configured to store, at least temporarily, karaoke data comprising a visual song-lyric data stream corresponding to one of the voice parts of the song. The processor of each device is configured to display the visual song-lyric information on the monitor according to the visual song-lyric data stream stored in the memory. At least two of the devices are synchronized so that they display the visual song-lyric information at substantially the same time. One device displays the visual song-lyric information for one voice part of the song, and the other device displays the visual song-lyric information for a different voice part.

Owner:NOKIA CORP

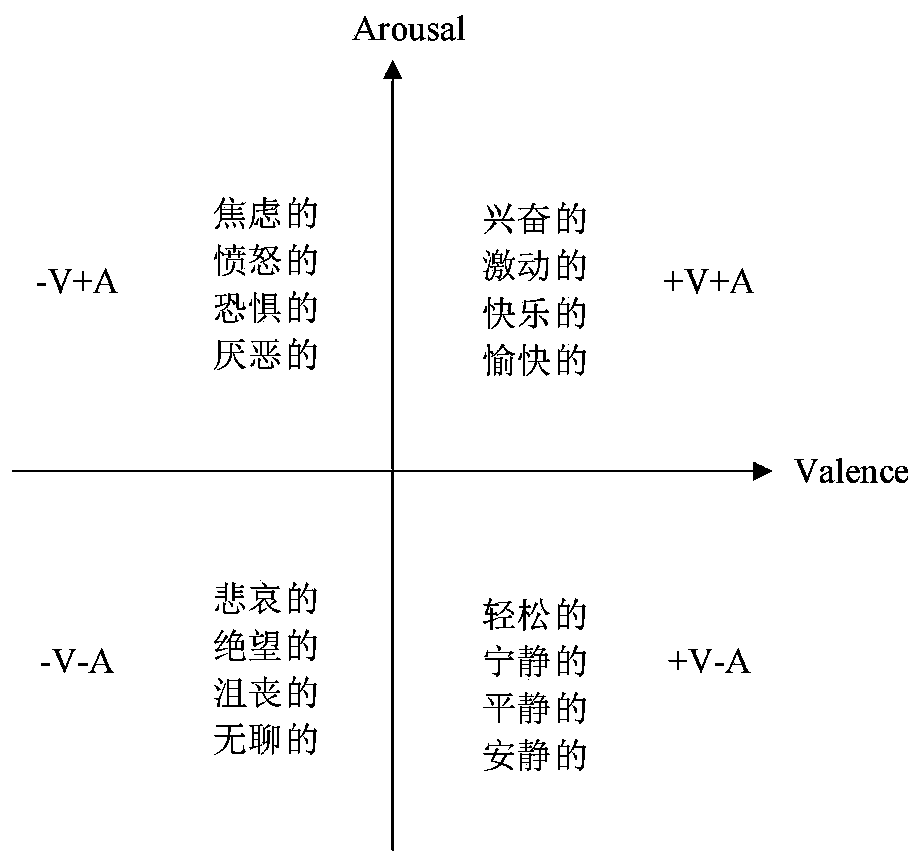

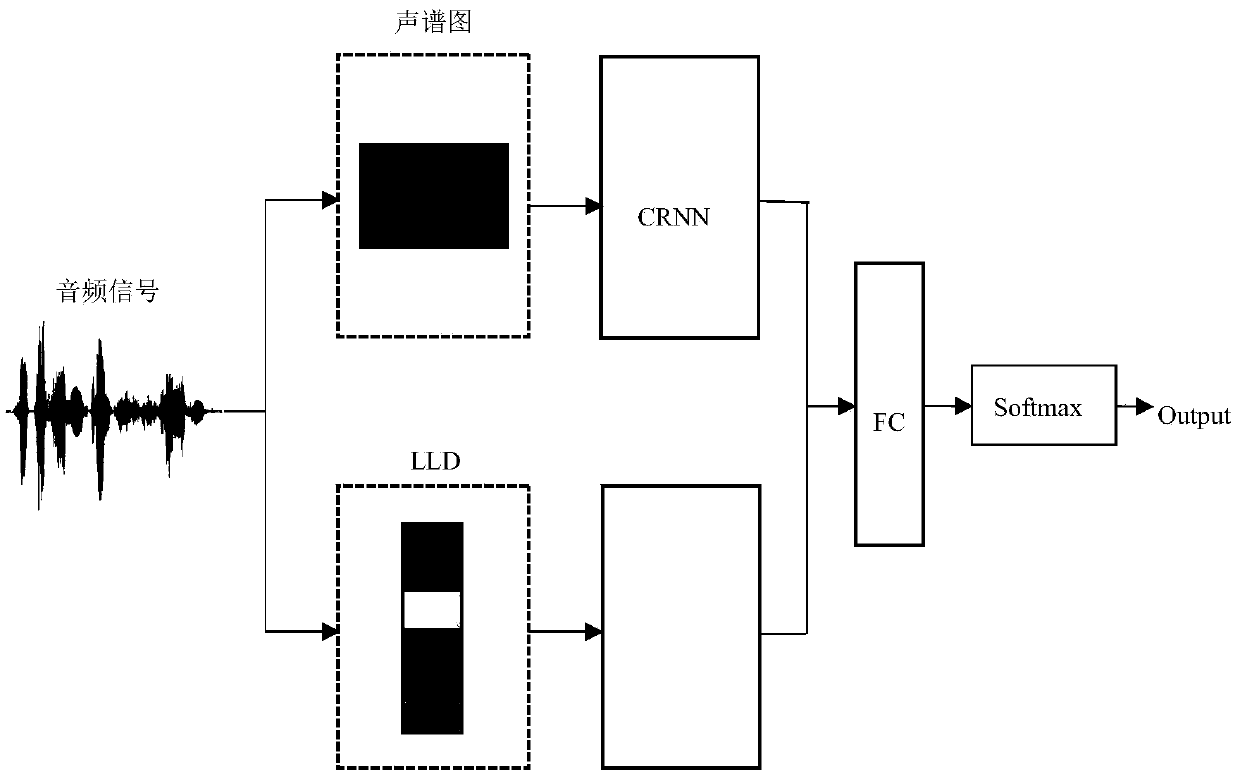

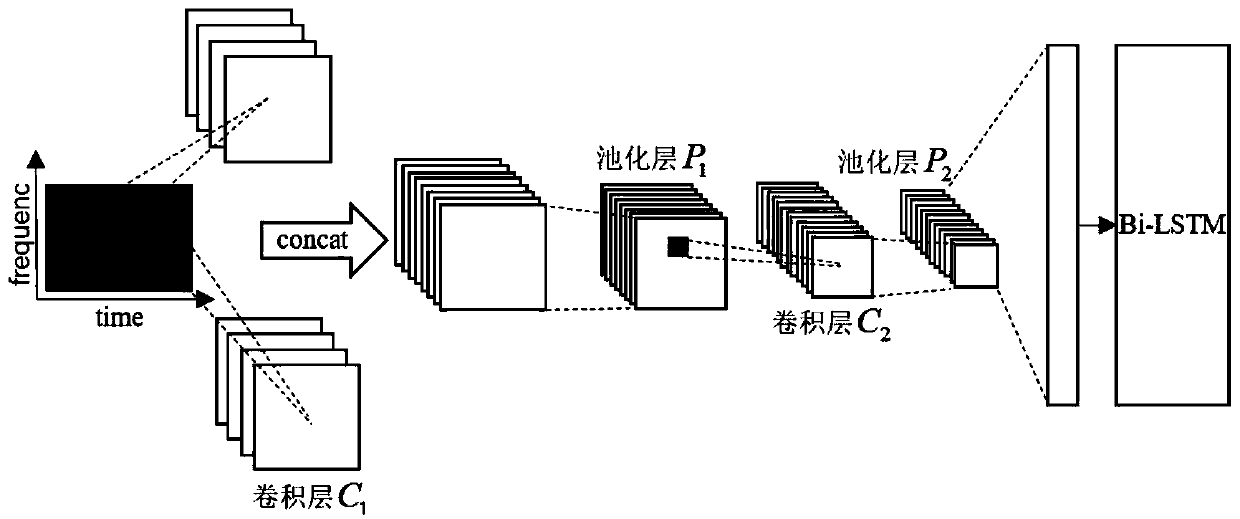

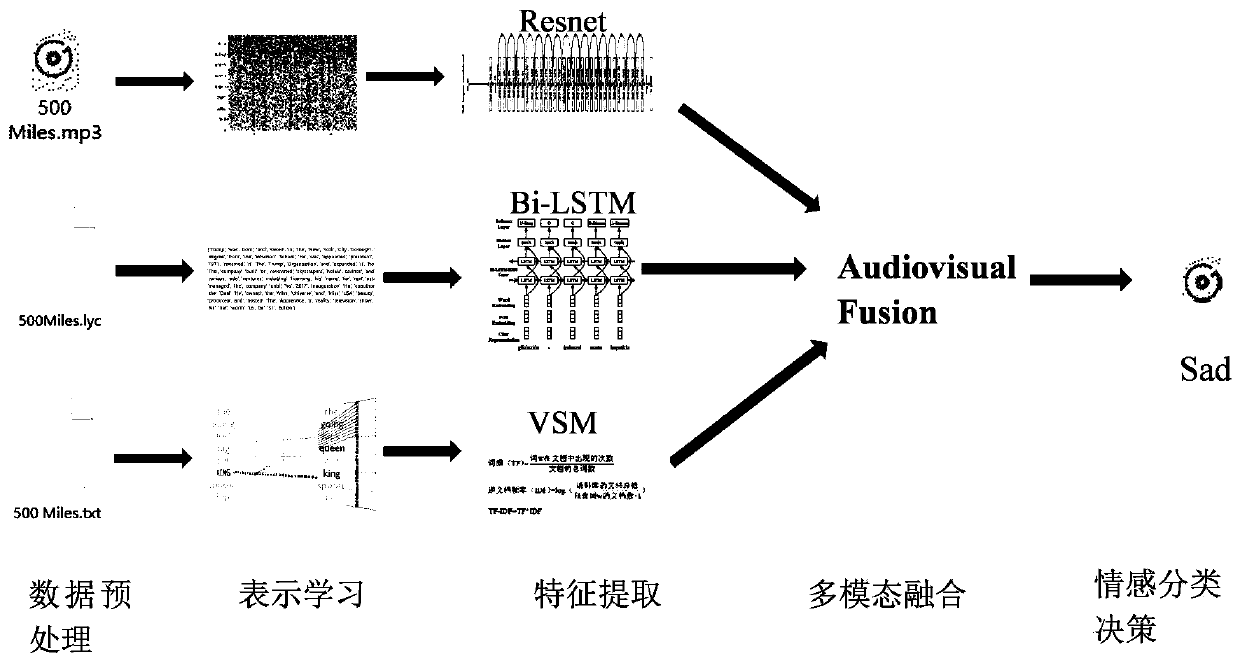

Chinese song emotion classification method based on multi-modal fusion

Status: Active; Publication: CN110674339A; Tags: Strong complementarity, Dig rich, Neural architectures, Special data processing applications, Time domain, Emotion classification

The invention discloses a Chinese song emotion classification method based on multi-modal fusion. The method first obtains a spectrogram from the audio signal and extracts audio low-level features (LLDs), then performs audio feature learning with an LLD-CRNN model to obtain the audio features of a Chinese song. For the lyrics and comment information, a music emotion dictionary is first constructed, and emotion vectors based on emotion intensity and part of speech are then built on the basis of that dictionary to obtain the text features of the song. Finally, multi-modal fusion is performed using both a decision fusion method and a feature fusion method to obtain the emotion category of the song. The LLD-CRNN music emotion classification model takes the spectrogram and the audio low-level features as its input sequence. The LLDs are concentrated in either the time domain or the frequency domain, whereas the spectrogram is a two-dimensional representation of the audio signal over time and frequency that loses less information for signals whose time and frequency characteristics change together; the LLDs and the spectrogram therefore complement each other.

Owner:BEIJING UNIV OF TECH
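Decision fusion, one of the two fusion strategies named above, can be sketched as a weighted average of the class probabilities produced by each modality's classifier. The emotion labels, weights, and function name below are hypothetical; the patent does not specify how its decision fusion combines the modalities.

```python
def decision_fusion(prob_sets, weights):
    """Late (decision-level) fusion: weighted average of per-modality class probabilities.

    prob_sets: one dict per modality mapping emotion label -> probability.
    weights:   one non-negative weight per modality.
    Returns the winning label and the fused probabilities.
    """
    if not prob_sets or len(prob_sets) != len(weights):
        raise ValueError("need at least one modality and one weight per modality")
    total = sum(weights)
    labels = prob_sets[0].keys()
    fused = {
        label: sum(w * probs[label] for w, probs in zip(weights, prob_sets)) / total
        for label in labels
    }
    return max(fused, key=fused.get), fused
```

With an audio classifier giving `{"happy": 0.6, "sad": 0.4}` and a lyrics classifier giving `{"happy": 0.2, "sad": 0.8}`, equal weights yield "sad", while weighting audio at 0.8 tips the result to "happy". Feature fusion, by contrast, combines the feature vectors before a single classifier sees them.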

Non-linear media segment capture and edit platform

Status: Active; Publication: US20190355337A1; Tags: Facilitate animation, Facilitate display artifact, Electrophonic musical instruments, Electronic editing digitised analogue information signals, Recording duration, Scrolling

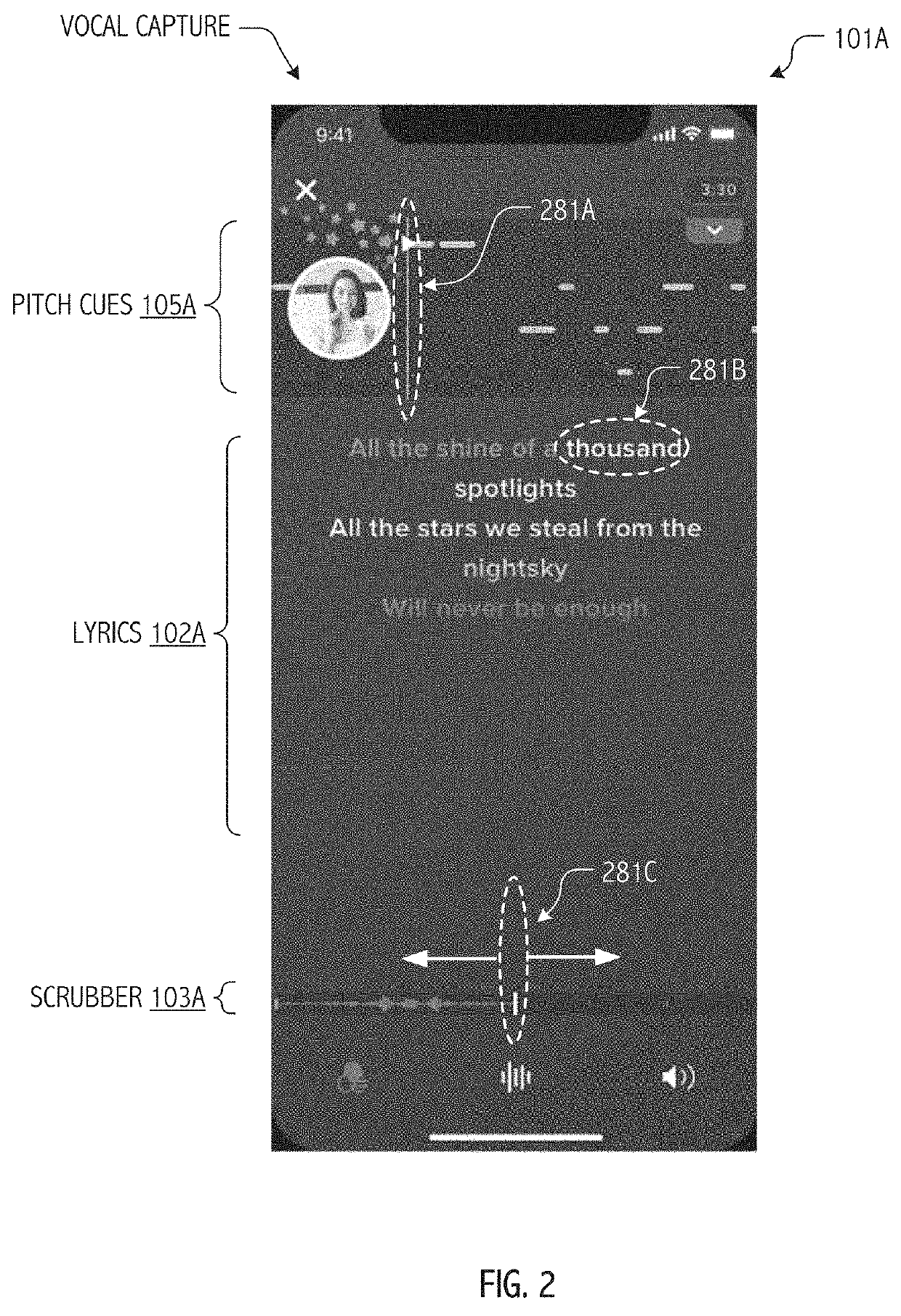

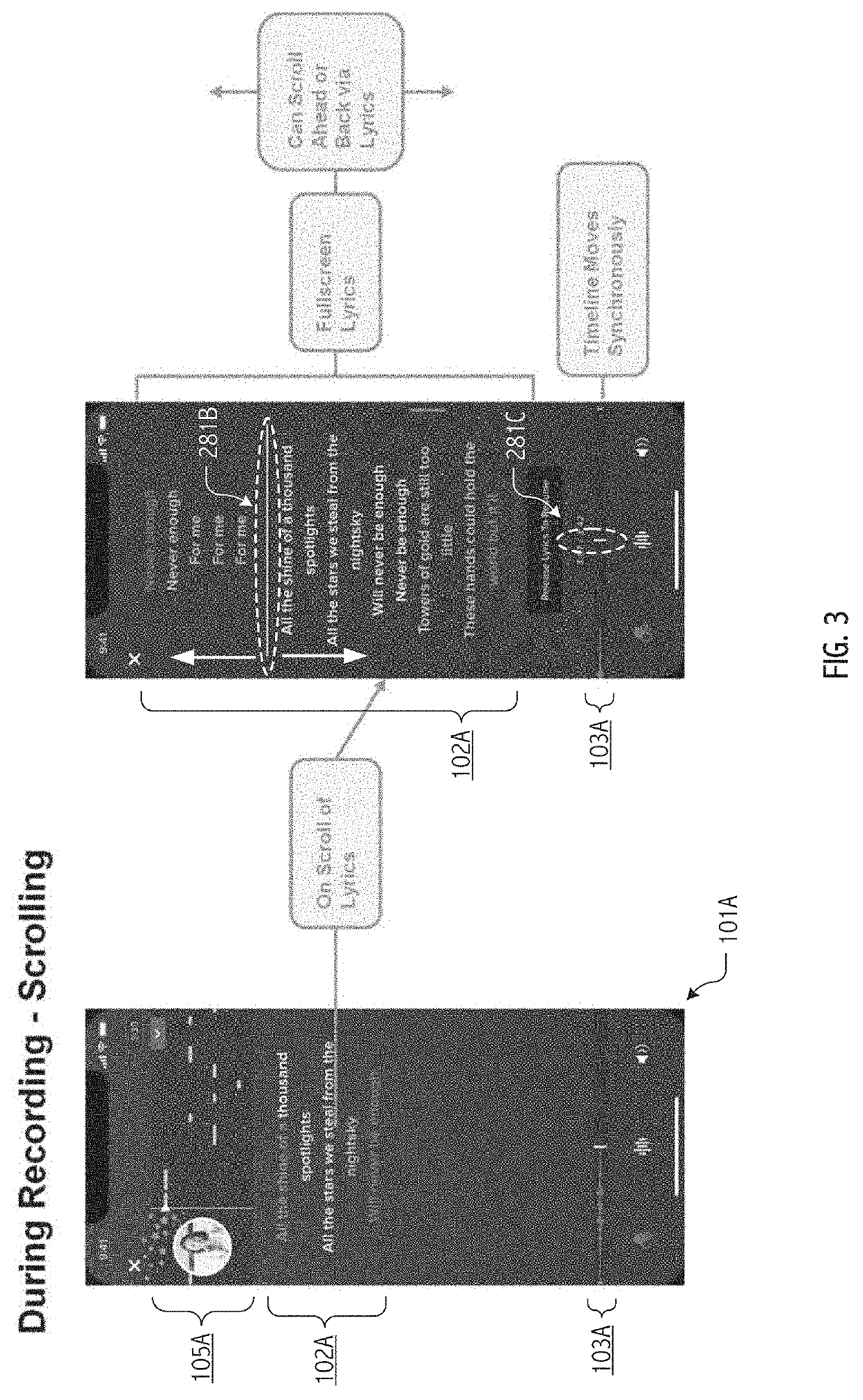

User interface techniques provide user vocalists with mechanisms for forward and backward traversal of audiovisual content, including pitch cues, waveform- or envelope-type performance timelines, lyrics and/or other temporally-synchronized content at record-time, during edits, and/or in playback. Recapture of selected performance portions, coordination of group parts, and overdubbing may all be facilitated. Direct scrolling to arbitrary points in the performance timeline, lyrics, pitch cues and other temporally-synchronized content allows users to move conveniently through a capture or audiovisual edit session. In some cases, a user vocalist may be guided through the performance timeline, lyrics, pitch cues and other temporally-synchronized content in correspondence with group part information, such as in a guided short-form capture for a duet. A scrubber allows user vocalists to move conveniently forward and backward through the temporally-synchronized content.

Owner:SMULE

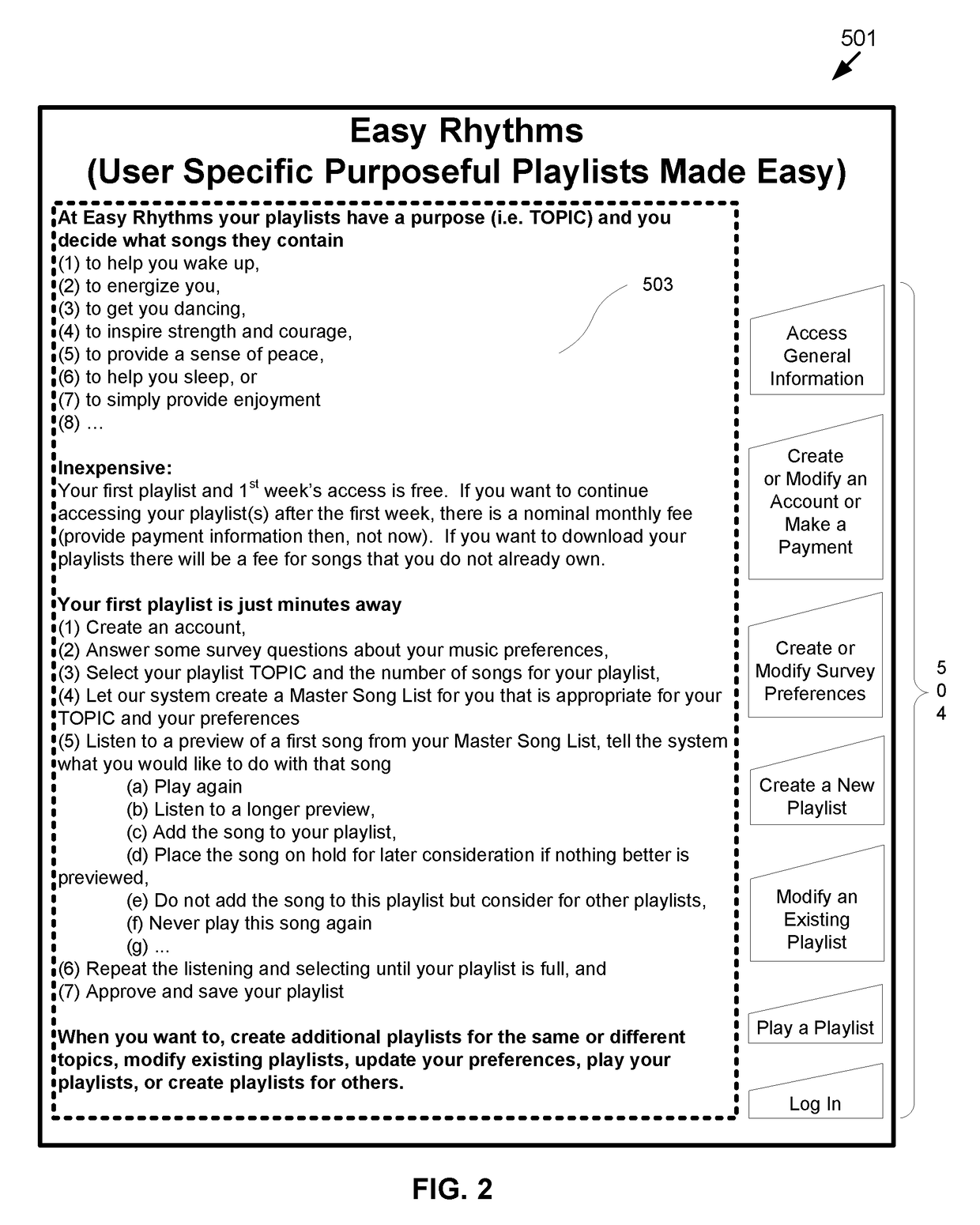

Methods and Systems for Purposeful Playlist Music Selection or Purposeful Purchase List Music Selection

Status: Inactive; Publication: US20180197158A1; Tags: Simple method, Payment architecture, Buying/selling/leasing transactions, Personalization, Database

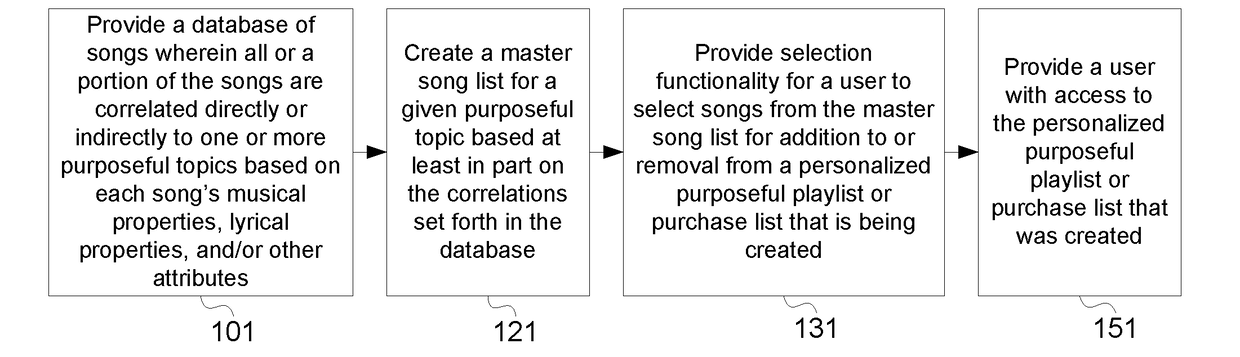

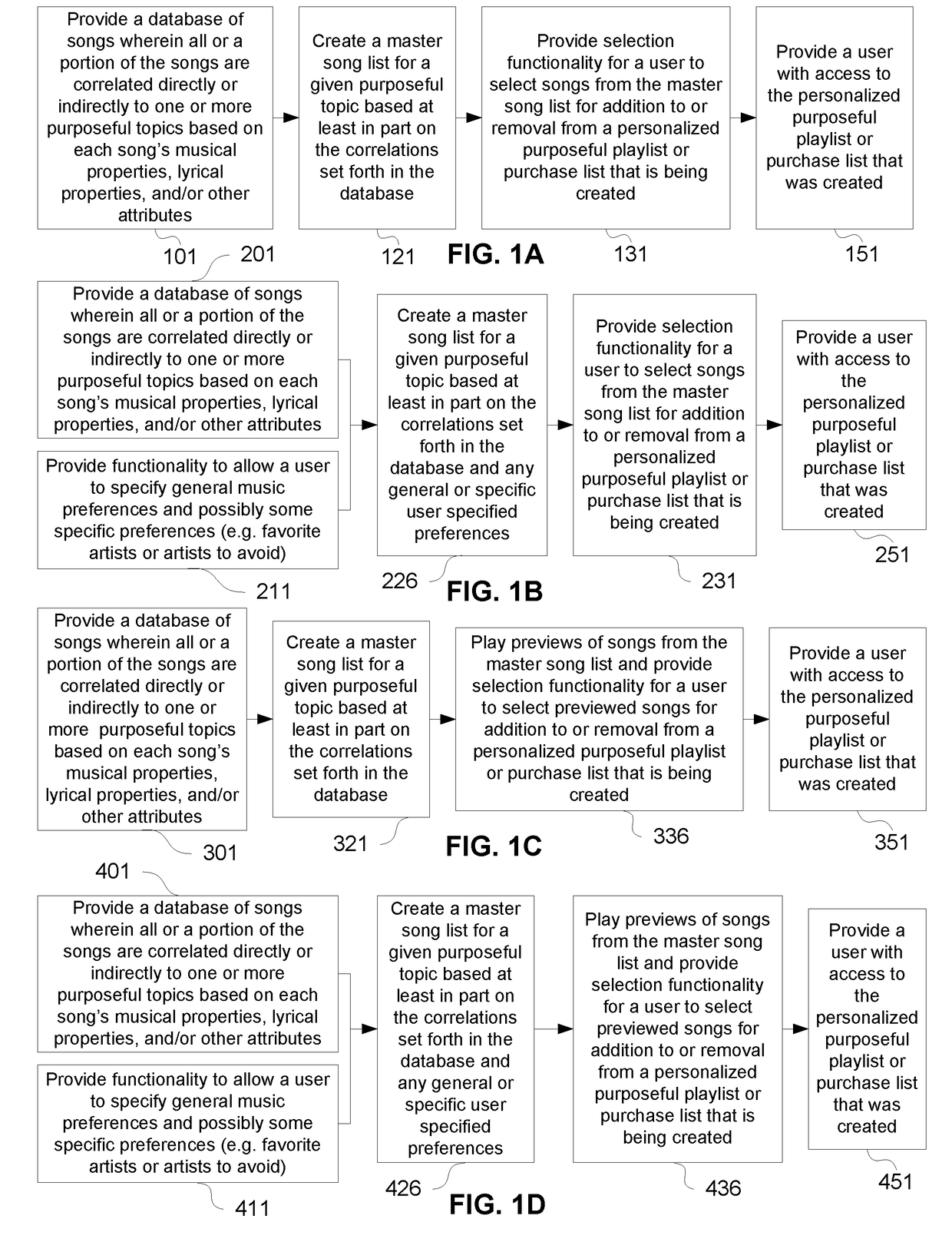

Embodiments provide purposeful playlists or purchase lists of music from master song or preview lists that are created by filtering, intersecting, or weighting the musical and/or lyrical features of database songs against their anticipated usefulness or inappropriateness for the given topic. Creation of master song or preview lists might also involve filtering, intersecting, or weighting database songs against a user's general musical preferences. Songs in the master song list may go directly into a purposeful playlist, while songs in a master preview list may be previewed and then selected or rejected for inclusion by a user while creating a playlist or purchase list, so that the list is both fully personalized and purposeful.

Owner:SMALLEY DENNIS R

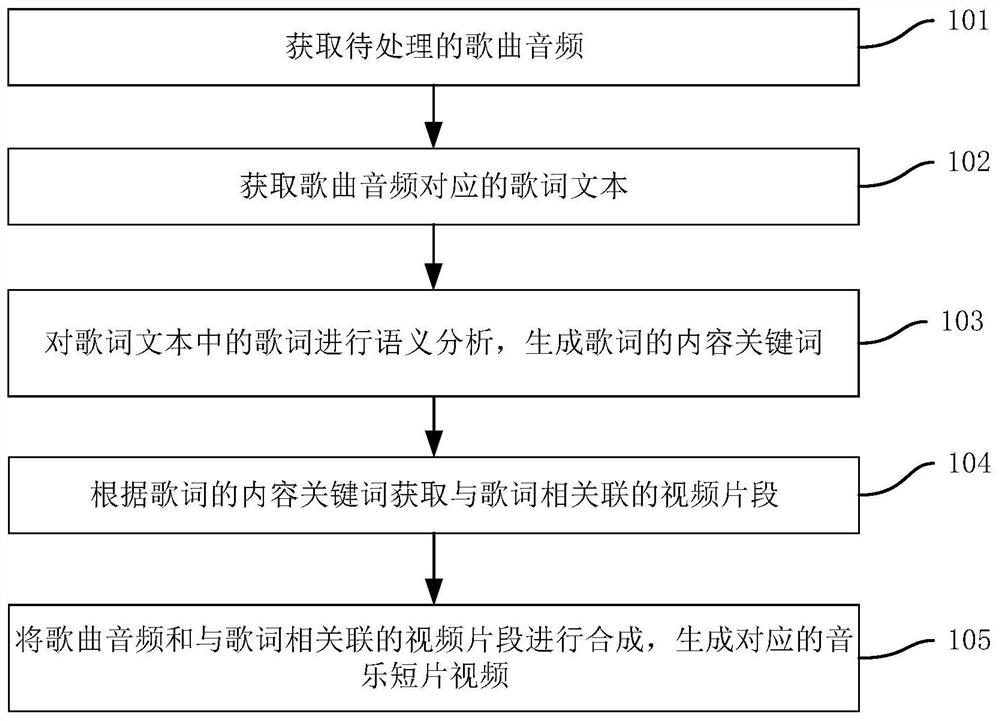

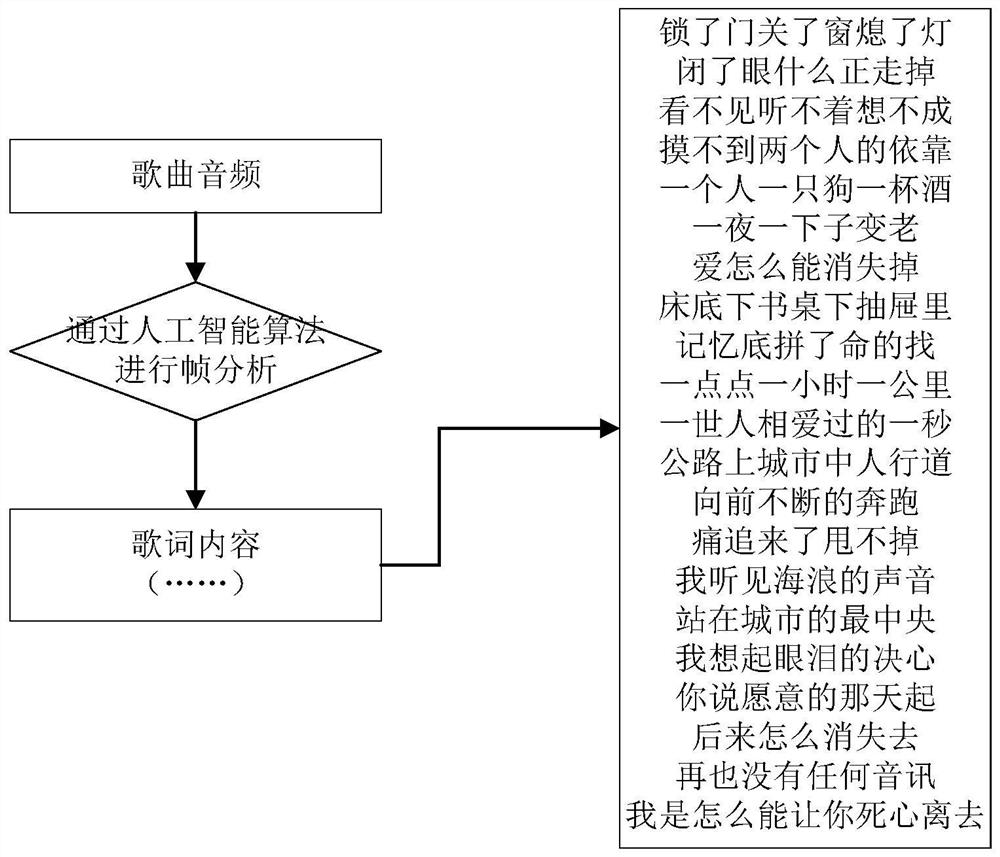

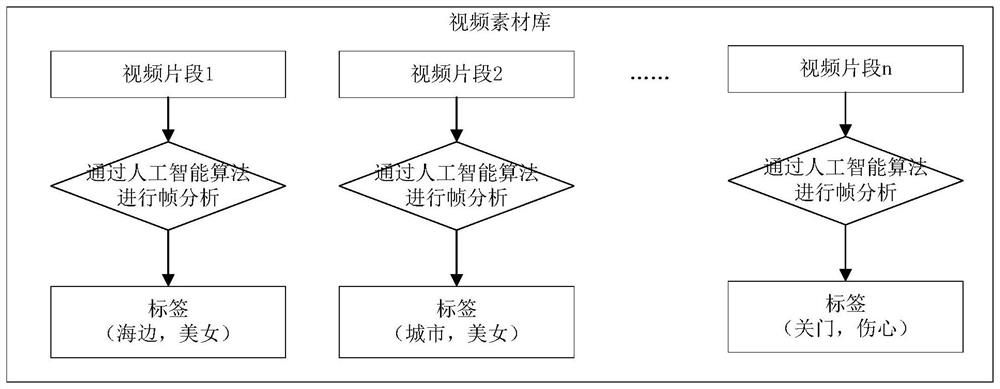

Music short video generation method and device, electronic equipment and storage medium

The invention discloses a music short video generation method and device, electronic equipment, and a storage medium, relating to the fields of artificial intelligence and video processing. In the specific implementation, the method comprises: obtaining the song audio to be processed; obtaining the lyric text corresponding to the song audio; performing semantic analysis on the lyrics in the lyric text to generate content keywords for the lyrics; obtaining video clips associated with the lyrics according to those content keywords; and synthesizing the song audio with the associated video clips to generate a corresponding music short video. By analyzing the video material and the song audio content and establishing label information to associate them, the video material associated with a song is found automatically from its labels and automatically synthesized with the song audio. This reduces the difficulty of making music short videos and automates the production of MV-style music short videos.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

Singing voice synthesizing method, singing voice synthesizing device, program, recording medium, and robot

Status: Inactive; Publication: US20060156909A1; Tags: Improve properties, Overcome problems, Musical toys, Electrophonic musical instruments, Recording media, MIDI

A singing voice synthesizing method synthesizes a singing voice from performance data such as MIDI data. The input performance data are analyzed as musical information including the pitch and length of the sounds and the lyric (S2 and S3). If the analyzed musical information lacks lyric information, an arbitrary lyric is assigned to an arbitrary string of notes (S9, S11, S12 and S15). The singing voice is then generated based on the assigned lyric (S17).

Owner:SONY CORP

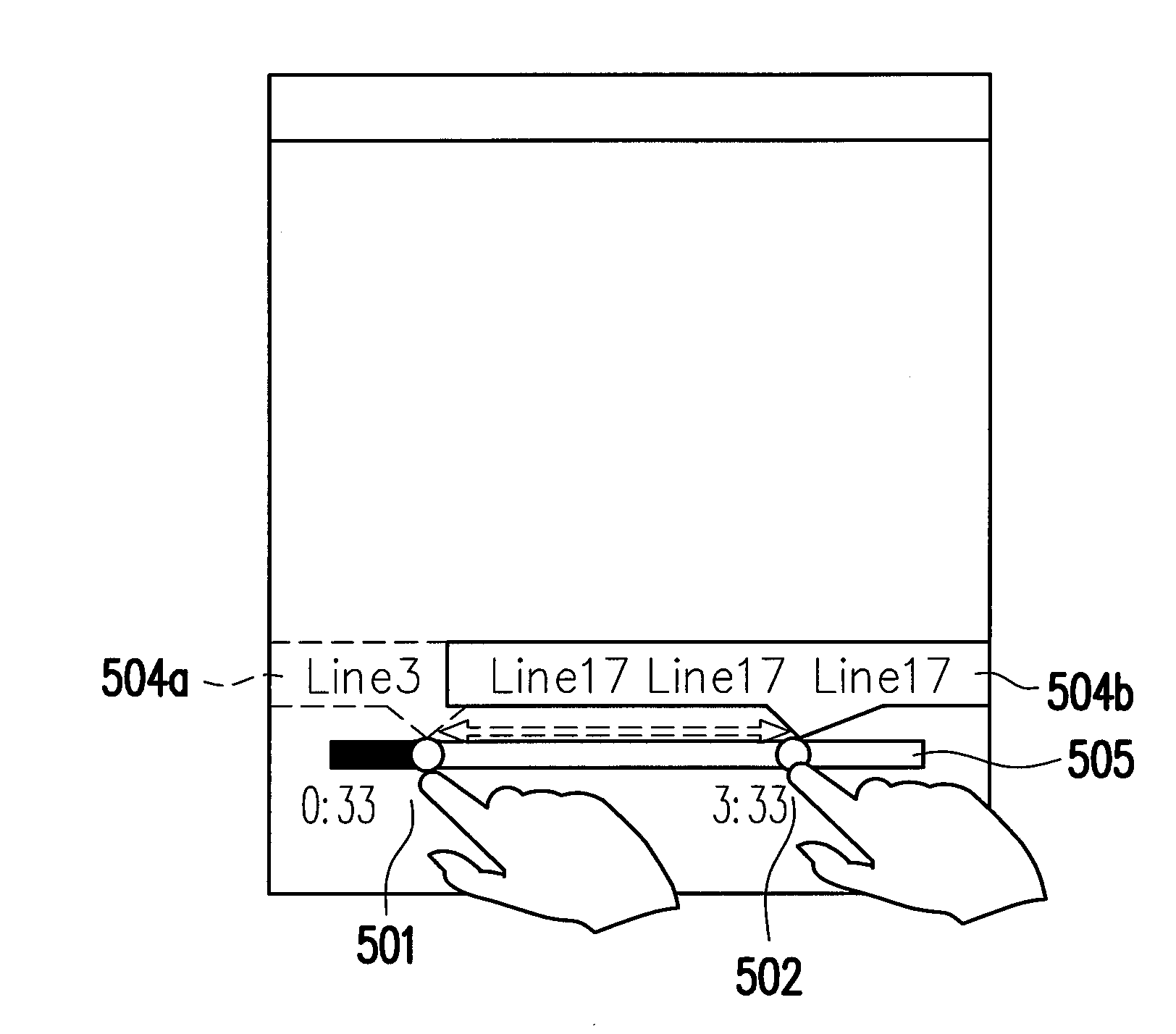

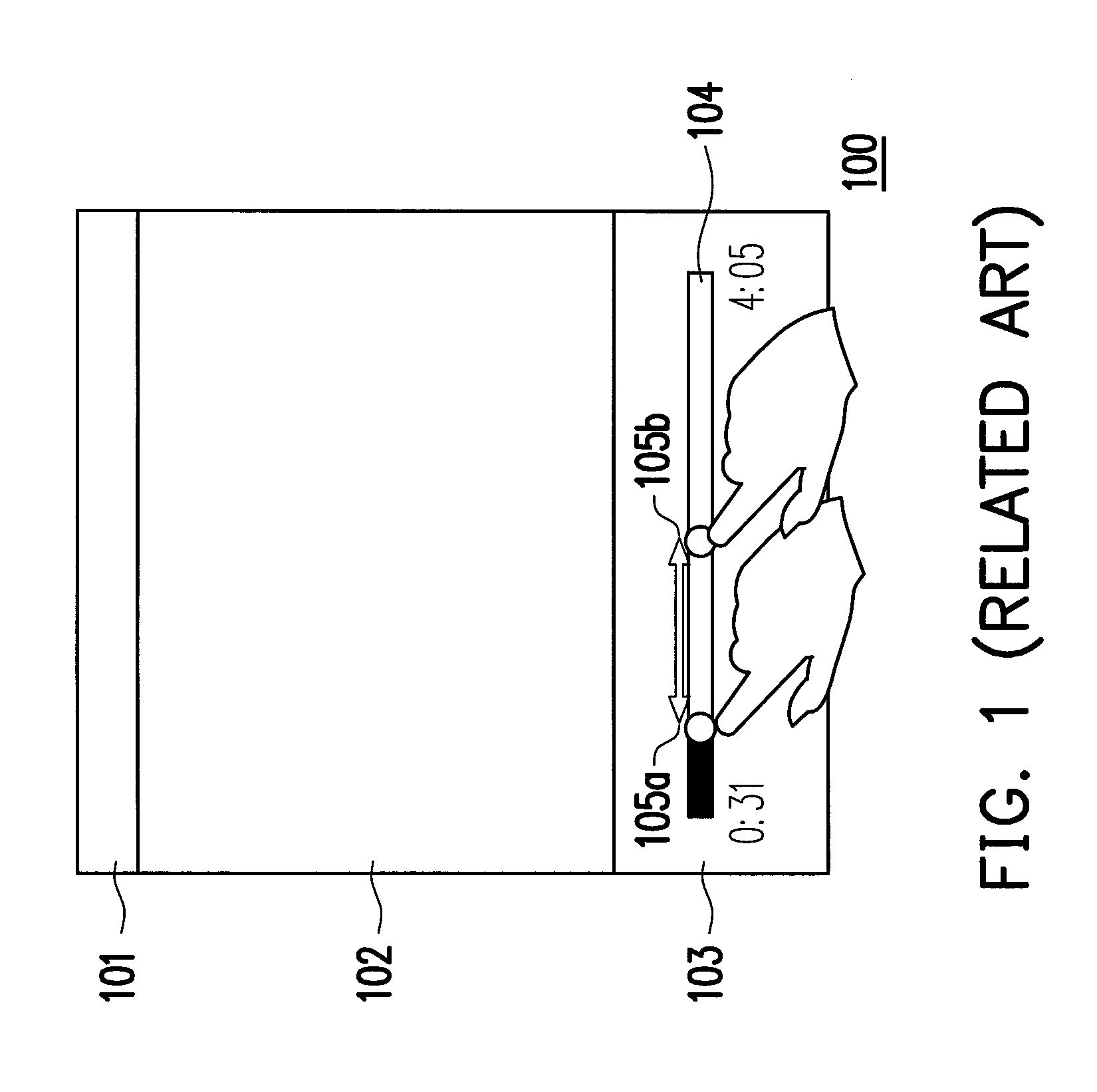

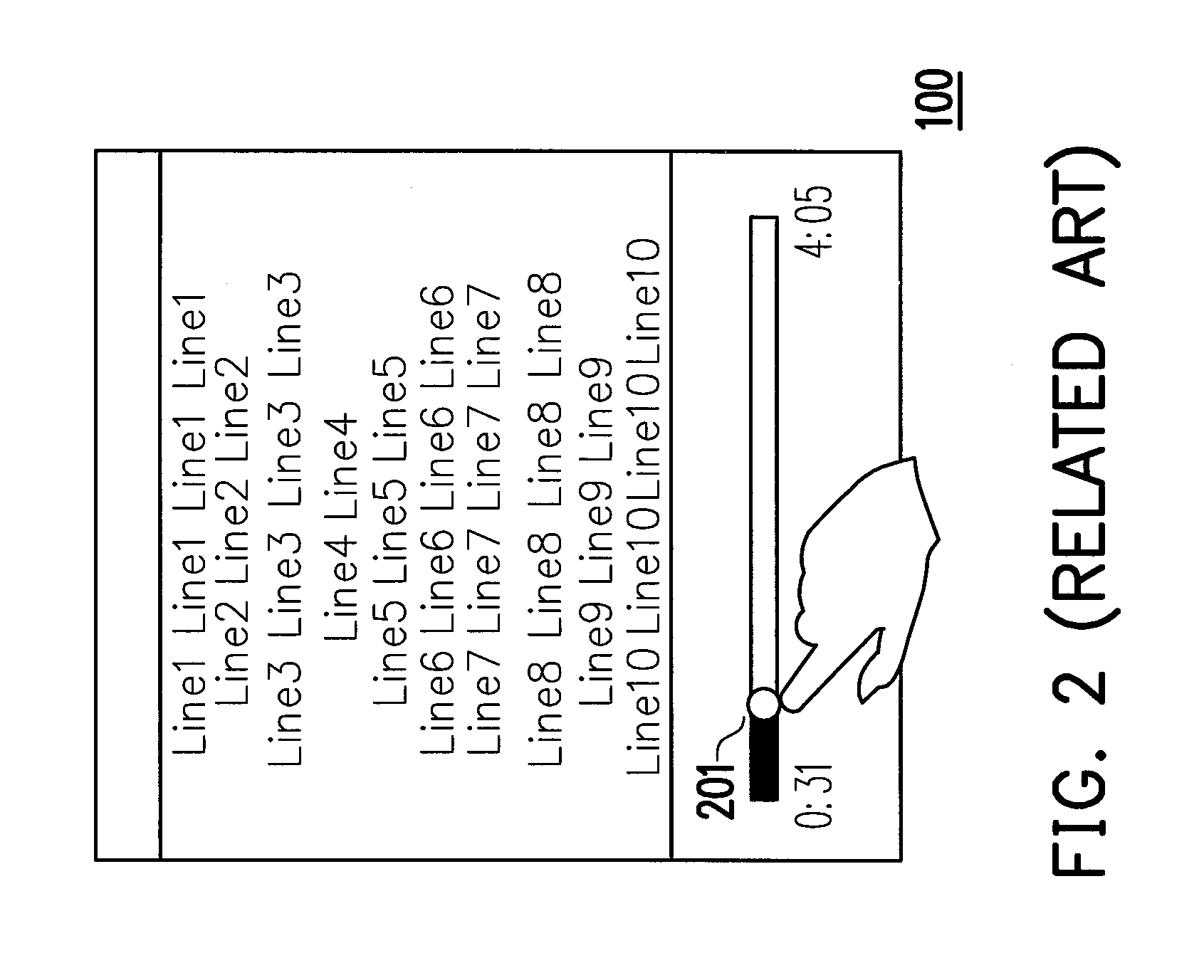

Method of displaying music lyrics and device using the same

Status: Inactive; Publication: US20140149861A1; Tags: Locate music, Precise positioning, Input/output processes for data processing, Signal on, Touchscreen

The present disclosure proposes a method of displaying and seeking lyrics and a portable handheld electronic device using the same. The method includes selecting an audio recording and displaying a progress indicator for the audio recording. After a touch signal is received on the progress indicator, the device locates the segment of the lyrics that corresponds to the location of the touch on the progress indicator. A pop-up window then emerges from the progress indicator on the touch screen, and the lyrics are displayed within the frame of the pop-up window; the lyrics shown may be only one or two lines. The audio recording and/or the lyrics can then be played from the beginning of the line corresponding to the location of the touch on the progress indicator.

Owner:HTC CORP
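Locating the lyric segment comes down to converting the touch coordinate into a playback time and then finding the lyric line that covers that time. The sketch below assumes a horizontal progress bar and a sorted list of line start times; the geometry parameters and function names are hypothetical, not HTC's implementation.

```python
import bisect

def touch_to_time(touch_x, bar_left, bar_width, duration):
    """Convert a touch x-coordinate on the progress bar to a playback time in seconds."""
    fraction = (touch_x - bar_left) / bar_width
    fraction = min(max(fraction, 0.0), 1.0)  # clamp touches that land past either end
    return fraction * duration

def lyric_at(line_starts, lines, t):
    """Return (start_time, line) for the lyric line covering time t.

    line_starts must be sorted; times before the first line map to the first line.
    """
    i = max(bisect.bisect_right(line_starts, t) - 1, 0)
    return line_starts[i], lines[i]
```

Returning the line's start time lets playback resume "from the beginning of the line", as the abstract describes, rather than from the exact touch position.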

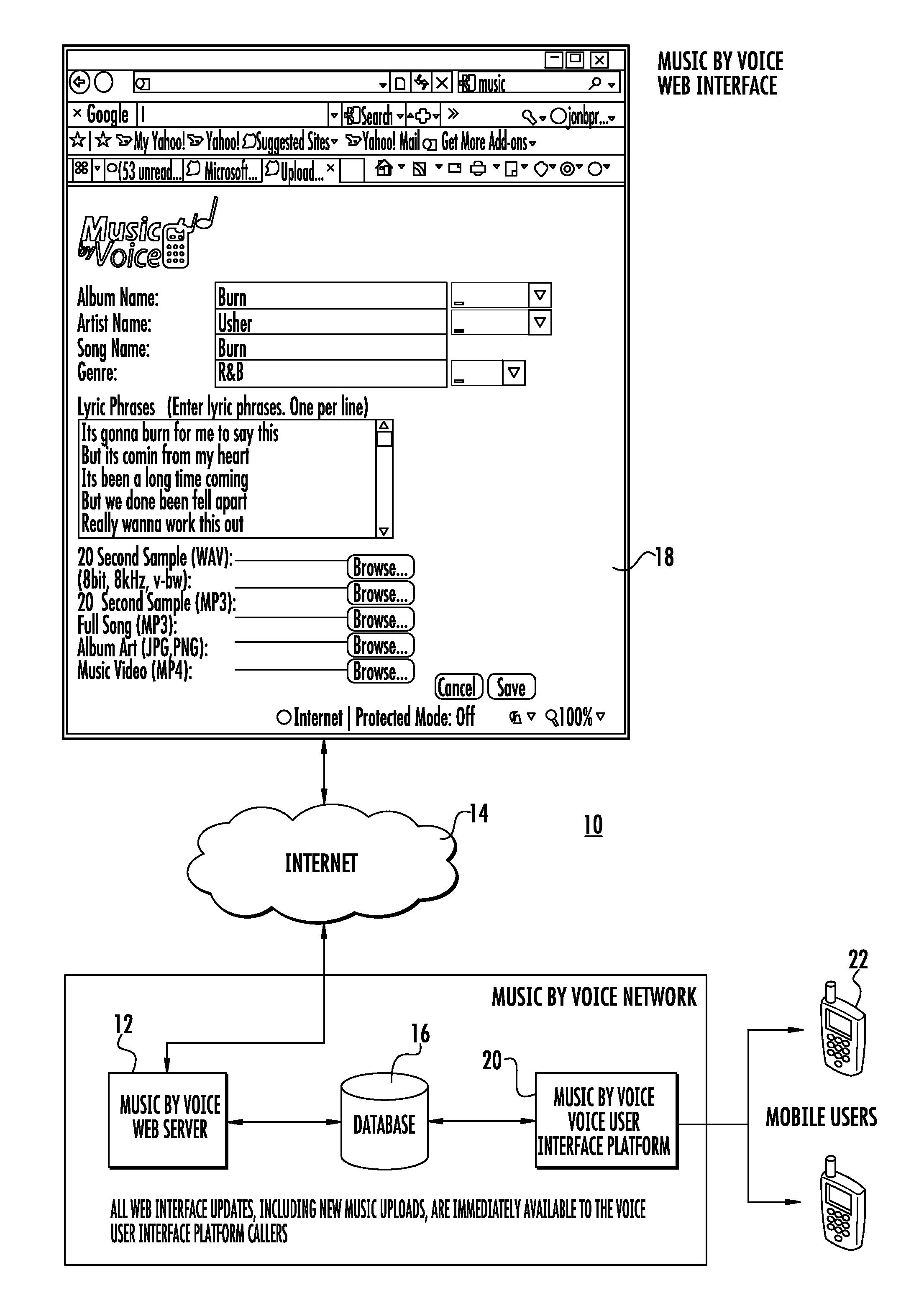

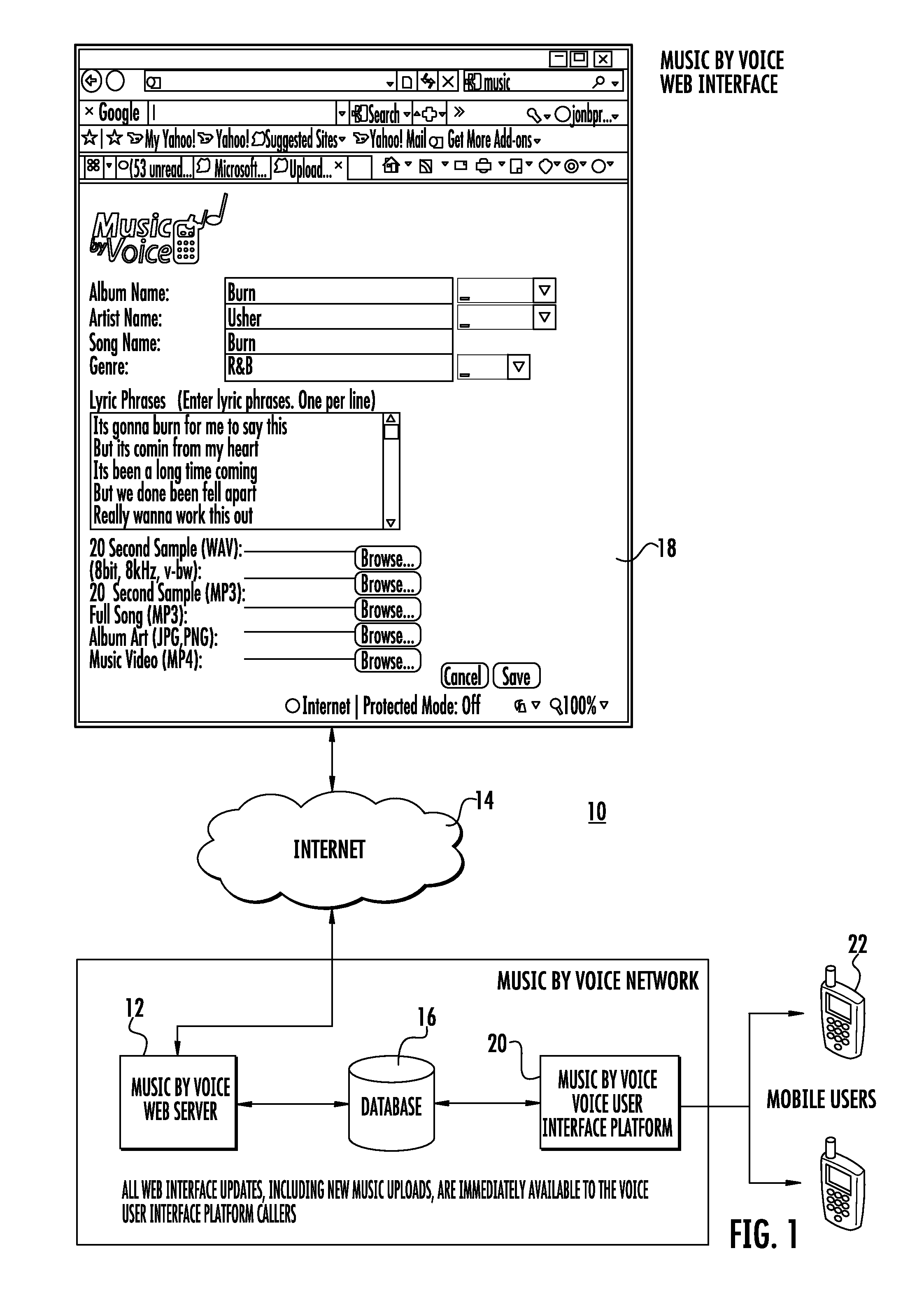

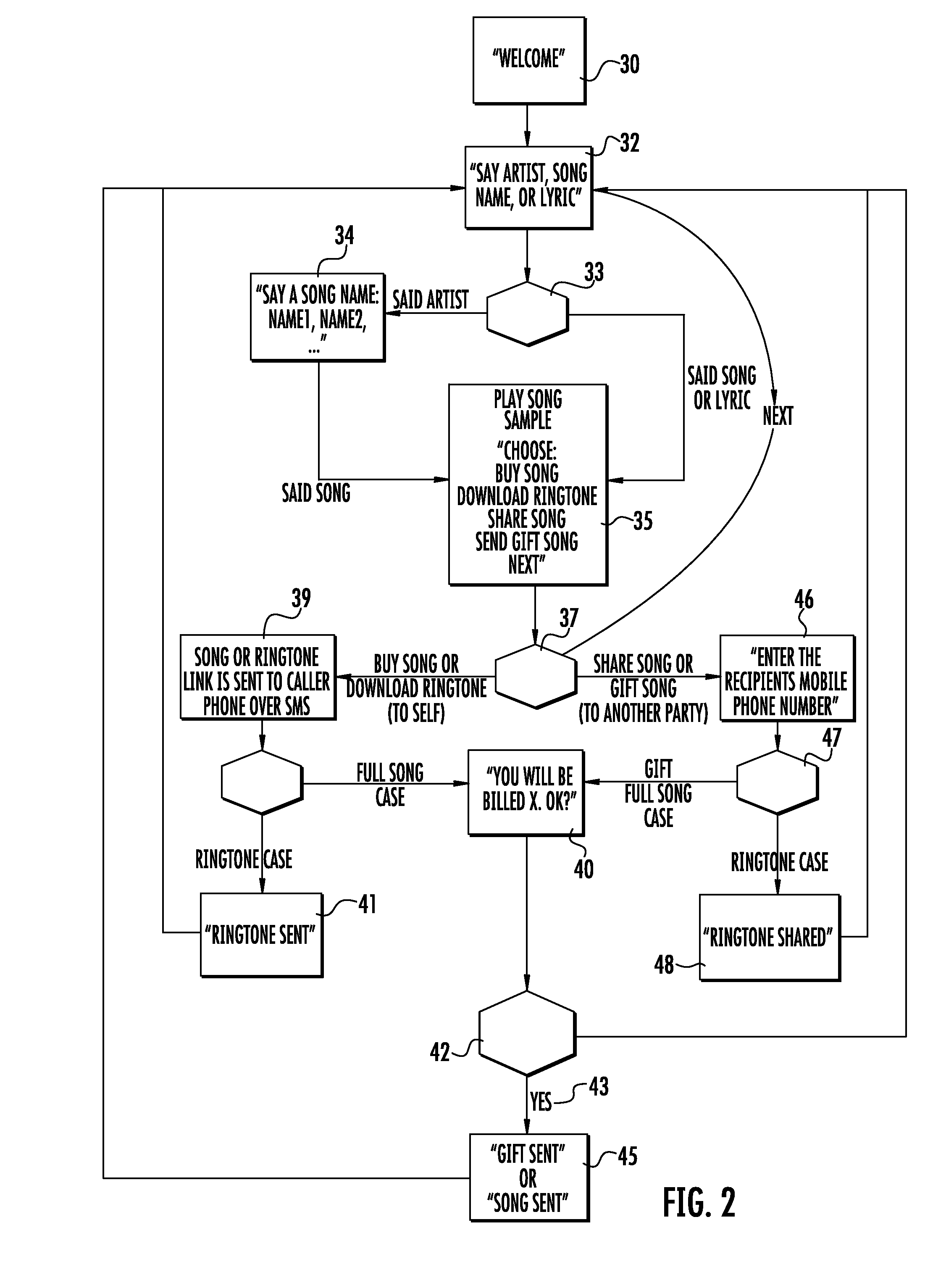

Method and system for selecting music

Status: Inactive; Publication: US20120096018A1; Tags: Digital data information retrieval, Digital data processing details, Service provision, Speech sound

A method for selecting music and directing its disposal includes a step of providing a service with a database having music information stored therein and a user interface platform coupled to the database and accessible through a telecommunication device. A communication including a request for music is received from an external telecommunication device. The external telecommunication device is prompted for a song designator, such as a title, artist and/or lyric, supplied as voiced information, typed information, or a music source. The song designator is received from the external telecommunication device and compared with the music information stored in the database to determine the music requested. The telecommunication device is then queried to determine the desired disposal of the requested music. The method can also be applied to videos (search, upload, share, watch/listen).

Owner:METCALF MICHAEL D
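Comparing a song designator against stored music information could be done with approximate string matching, so that a misspelled title or artist still finds the song. The sketch below uses Python's standard `difflib.SequenceMatcher` as a swapped-in matching technique; the catalogue, the 0.6 cutoff, and `find_song` are hypothetical, and the patent does not say how its comparison works.

```python
import difflib

# Hypothetical catalogue with invented titles, artists and lyric snippets.
CATALOG = [
    {"title": "Midnight Train", "artist": "Blue Harbor",
     "lyric": "we ride the midnight line till dawn"},
    {"title": "Paper Moon River", "artist": "Nora Vale",
     "lyric": "float me down the paper moon river"},
]

def find_song(designator, catalog, cutoff=0.6):
    """Return the entry whose title, artist or lyric best matches the designator, or None."""
    query = designator.lower()
    best, best_score = None, cutoff
    for song in catalog:
        for field in ("title", "artist", "lyric"):
            # ratio() is 1.0 for identical strings and falls toward 0.0 as they diverge.
            score = difflib.SequenceMatcher(None, query, song[field].lower()).ratio()
            if score >= best_score:
                best, best_score = song, score
    return best
```

A typed designator such as "midnight trian" still resolves to "Midnight Train", while unrelated input returns `None`. Voiced designators would first need speech-to-text, which is outside this sketch.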

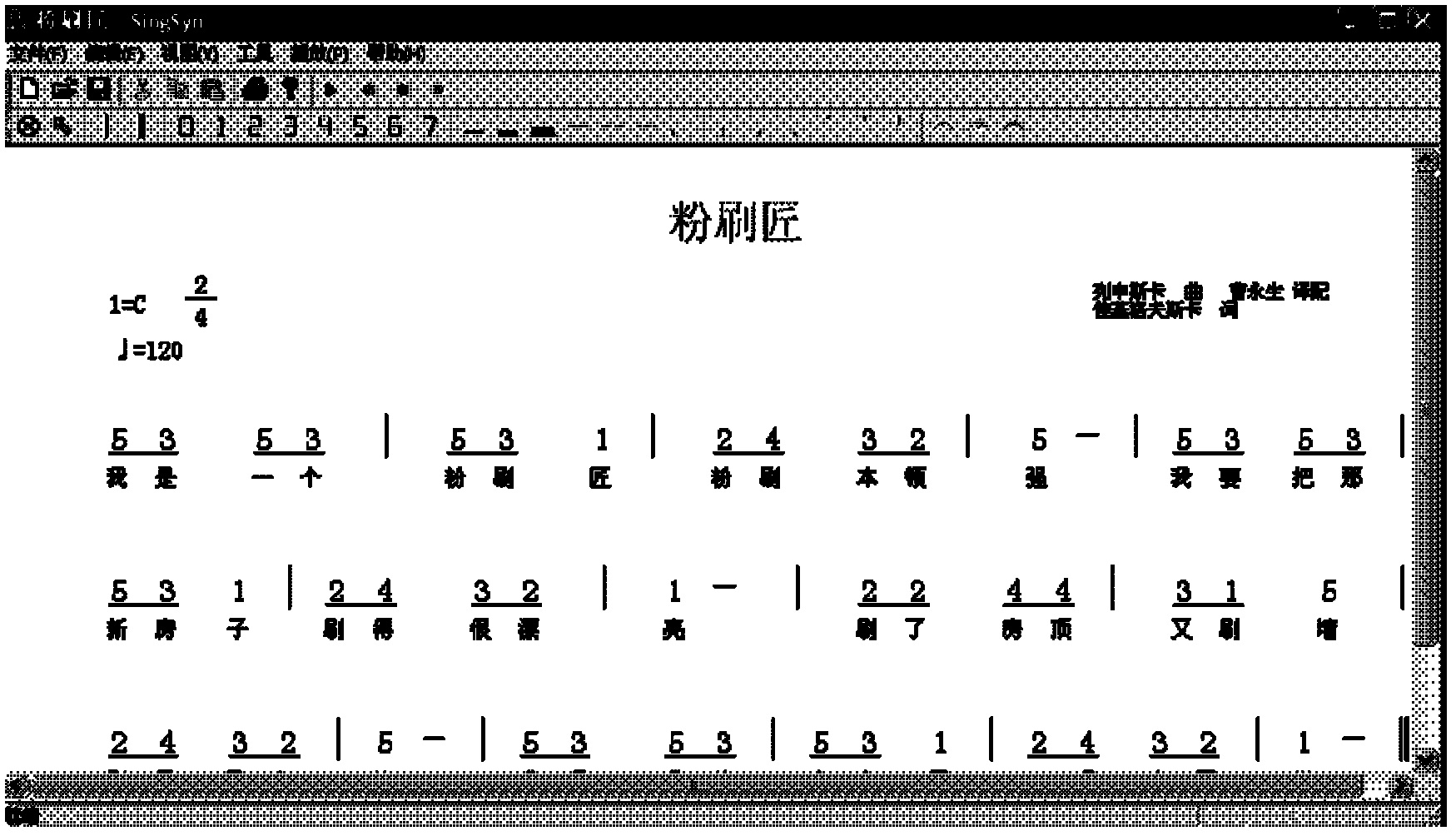

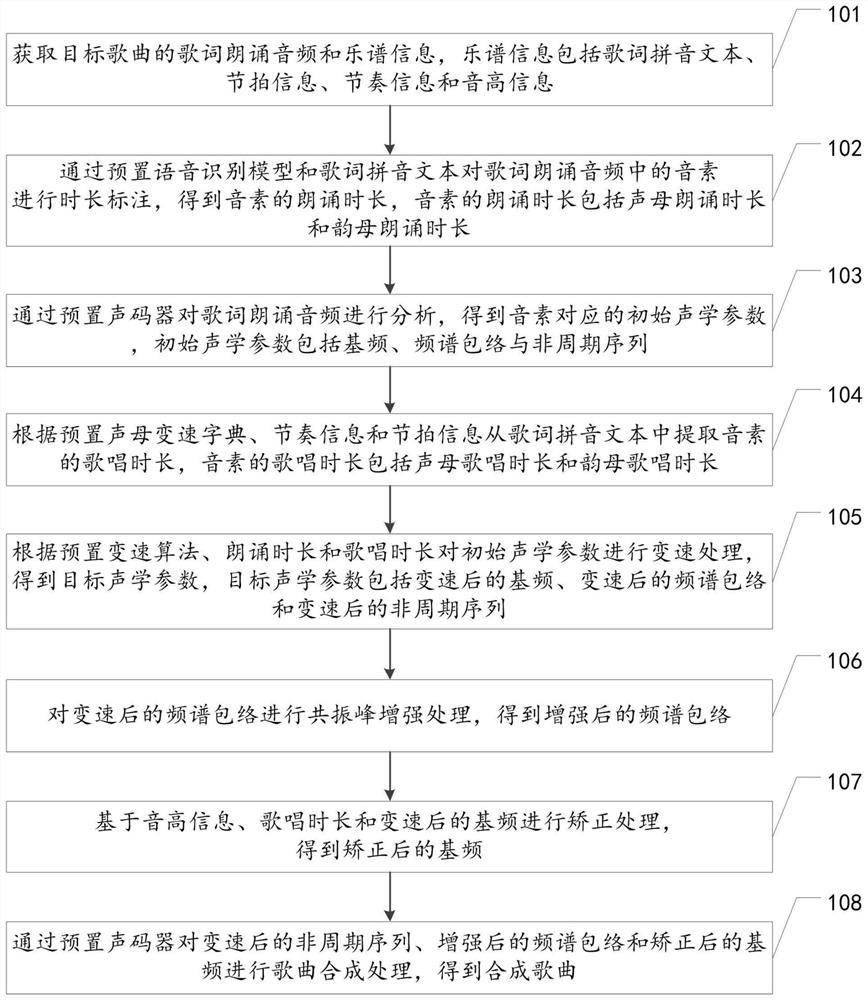

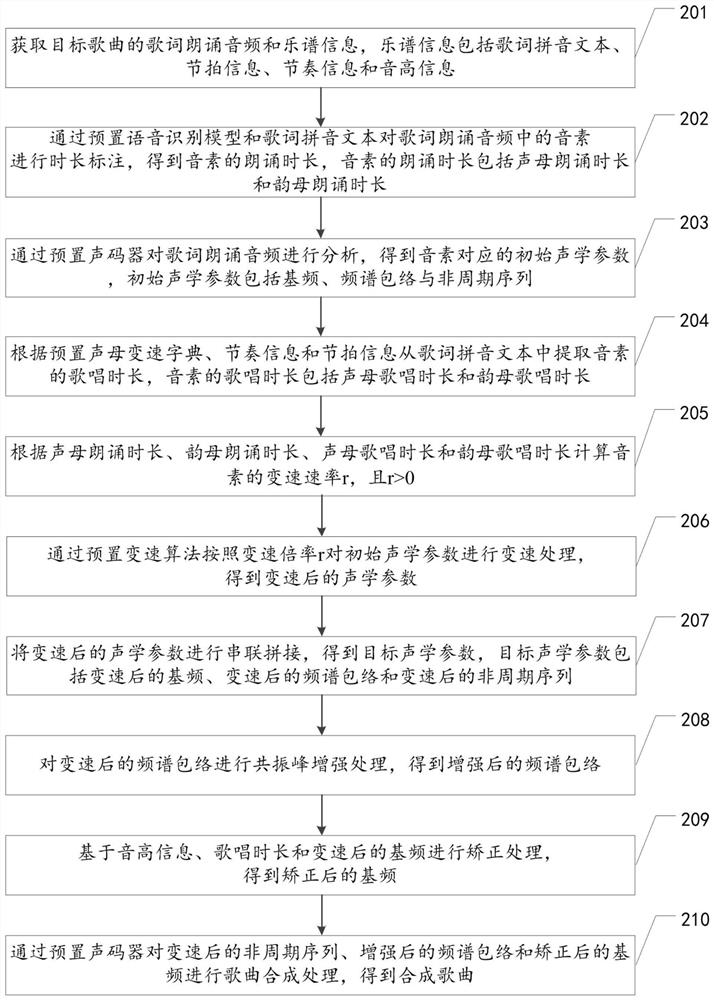

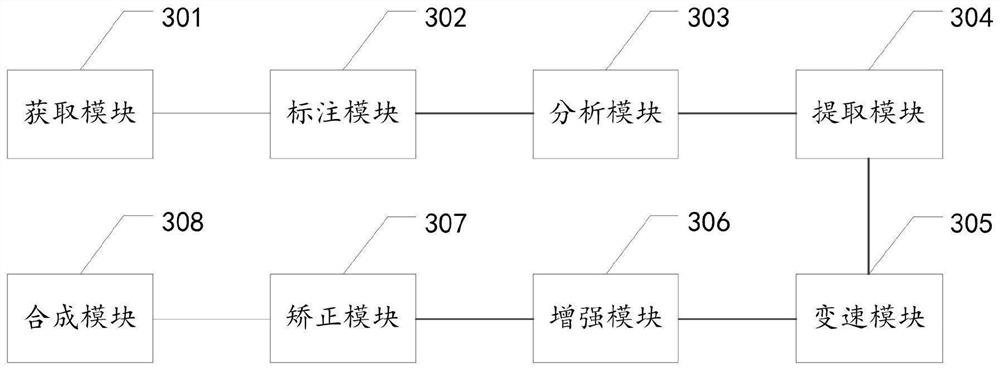

Song synthesis method, device and equipment and storage medium

Status: Pending; Publication: CN111681637A; Tags: Improve naturalness, Reduce data collection costs, Speech synthesis, Frequency spectrum, Synthesis methods

The invention relates to artificial intelligence, and discloses a song synthesis method which comprises the steps: obtaining lyric recitation audio and music score information; performing duration labeling on the lyric recitation audio through a preset voice recognition model and a lyric pinyin text to obtain recitation duration; analyzing initial acoustic parameters from the lyric recitation audio through a preset vocoder; extracting singing duration from the lyric pinyin text according to a preset initial consonant variable speed dictionary, the rhythm information and the beat information; performing speed change processing on the initial acoustic parameters according to a preset speed change algorithm, the recitation duration and the singing duration; performing formant enhancement processing on the frequency spectrum envelope after speed change to obtain an enhanced frequency spectrum envelope; performing correction processing based on the pitch information, the singing duration and the fundamental frequency after speed change to obtain a corrected fundamental frequency; and performing song synthesis processing on the processed acoustic parameters through the preset vocoder. The invention also relates to a blockchain, and the synthesized song is stored in the blockchain.

Owner:PING AN TECH (SHENZHEN) CO LTD

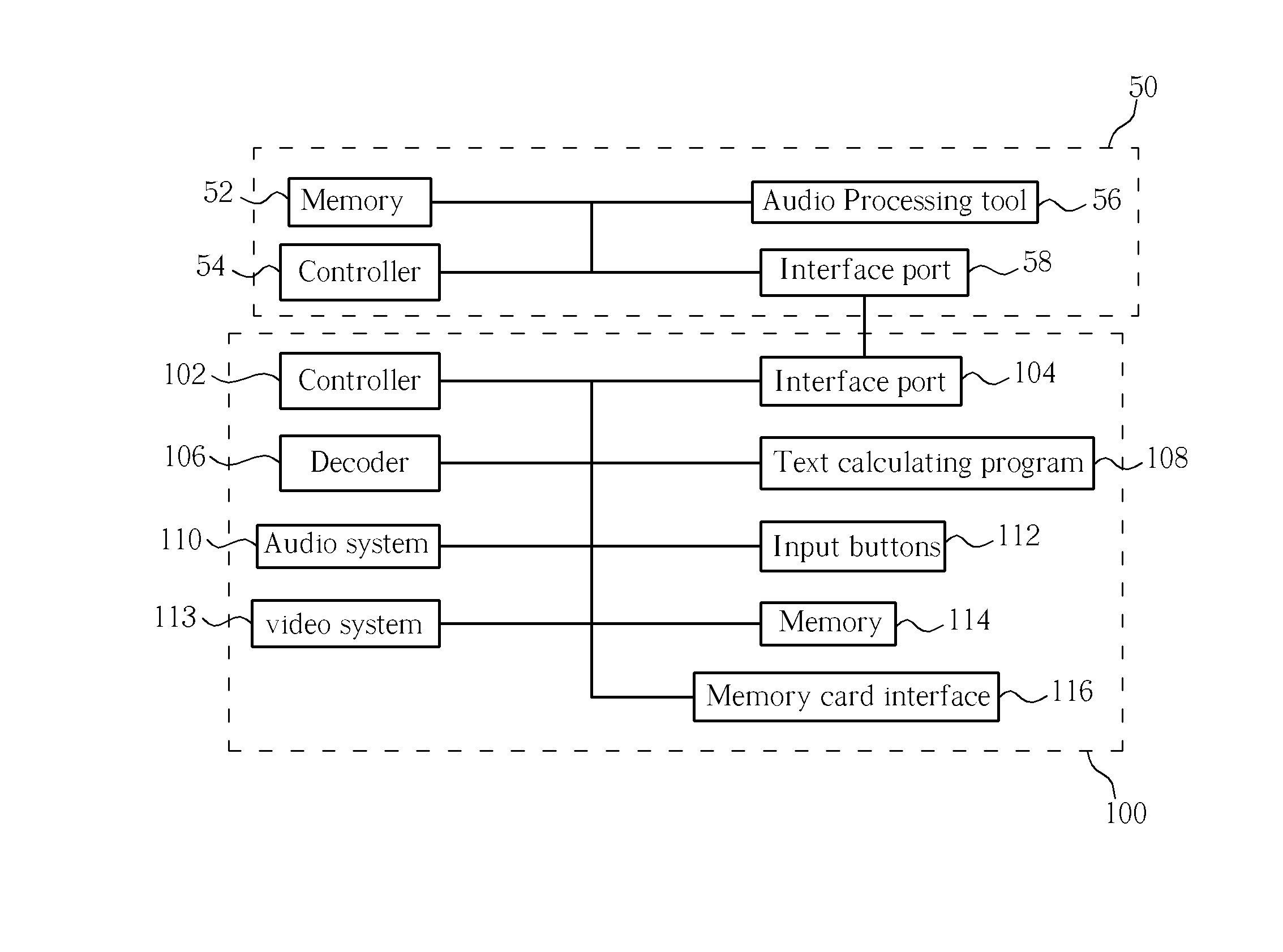

Audio Player with Lyrics Display

Status: Inactive; Publication: US20080022207A1; Tags: Easy to read, Save users the trouble, Digital computer details, Cathode-ray tube indicators, Display device, Text file

A method of displaying text corresponding to an audio file on an audio player includes selecting a first audio file and loading a first text file corresponding to the first audio file. A character set file is generated that contains a list of only those characters included in all text files stored in the audio player. The method further includes calculating the rate at which text is displayed on a display device electrically coupled to the audio player, according to a predetermined relationship between the duration of the first audio file and the size of the first text file. Next, the text stored in the first text file is displayed on the display device at the calculated rate while audio signals decoded from the first audio file are output simultaneously.

Owner:BENQ CORP
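The two computations in this abstract can be sketched briefly. Two assumptions are made here: the character set is read as the union of characters across the stored text files (one plausible reading of "included in all text files"), and the "predetermined relationship" is taken to be simple proportionality, spreading the whole text evenly over the track. The function names are hypothetical.

```python
def build_charset(text_files):
    """Characters that occur in any stored text file (the union), sorted."""
    chars = set()
    for text in text_files:
        chars.update(text)
    return "".join(sorted(chars))

def display_rate(text, duration_s):
    """Characters per second so the whole text is shown over the track's duration."""
    return len(text) / duration_s

def visible_text(text, rate, elapsed_s):
    """Portion of the text that should be on screen after `elapsed_s` seconds."""
    return text[: min(len(text), int(elapsed_s * rate))]
```

For a 120-character lyric file paired with a 60-second track, the rate is 2 characters per second, so 2.5 seconds in, the first 5 characters are shown.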

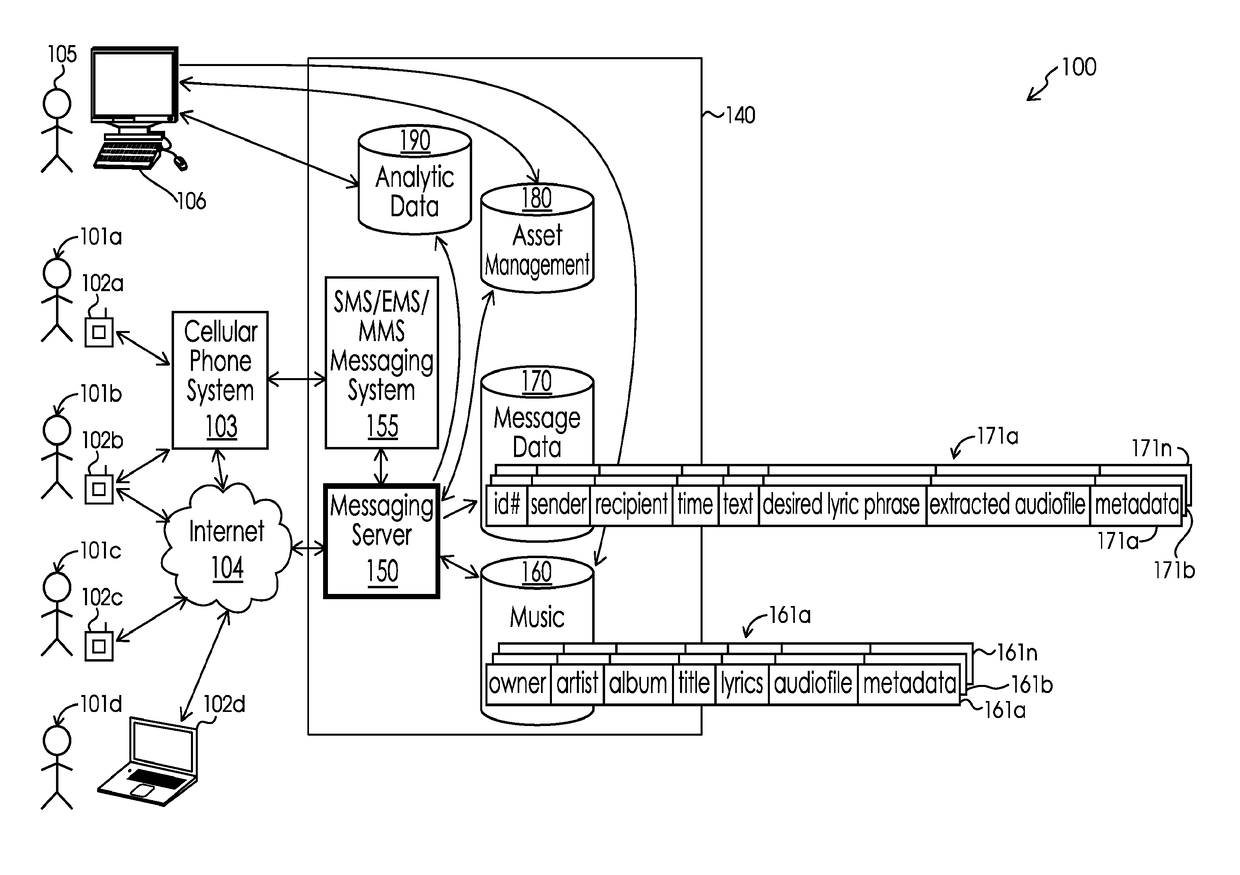

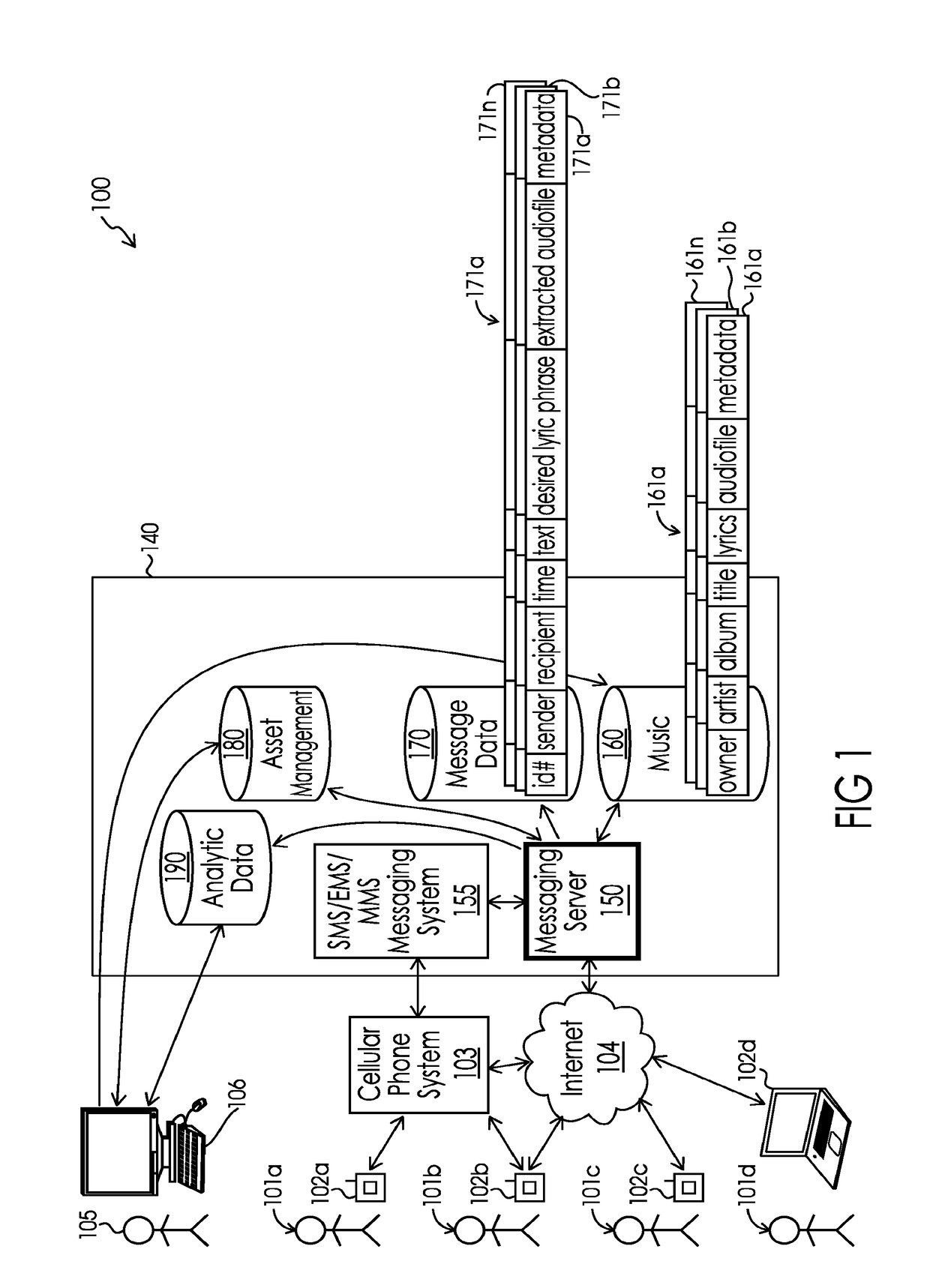

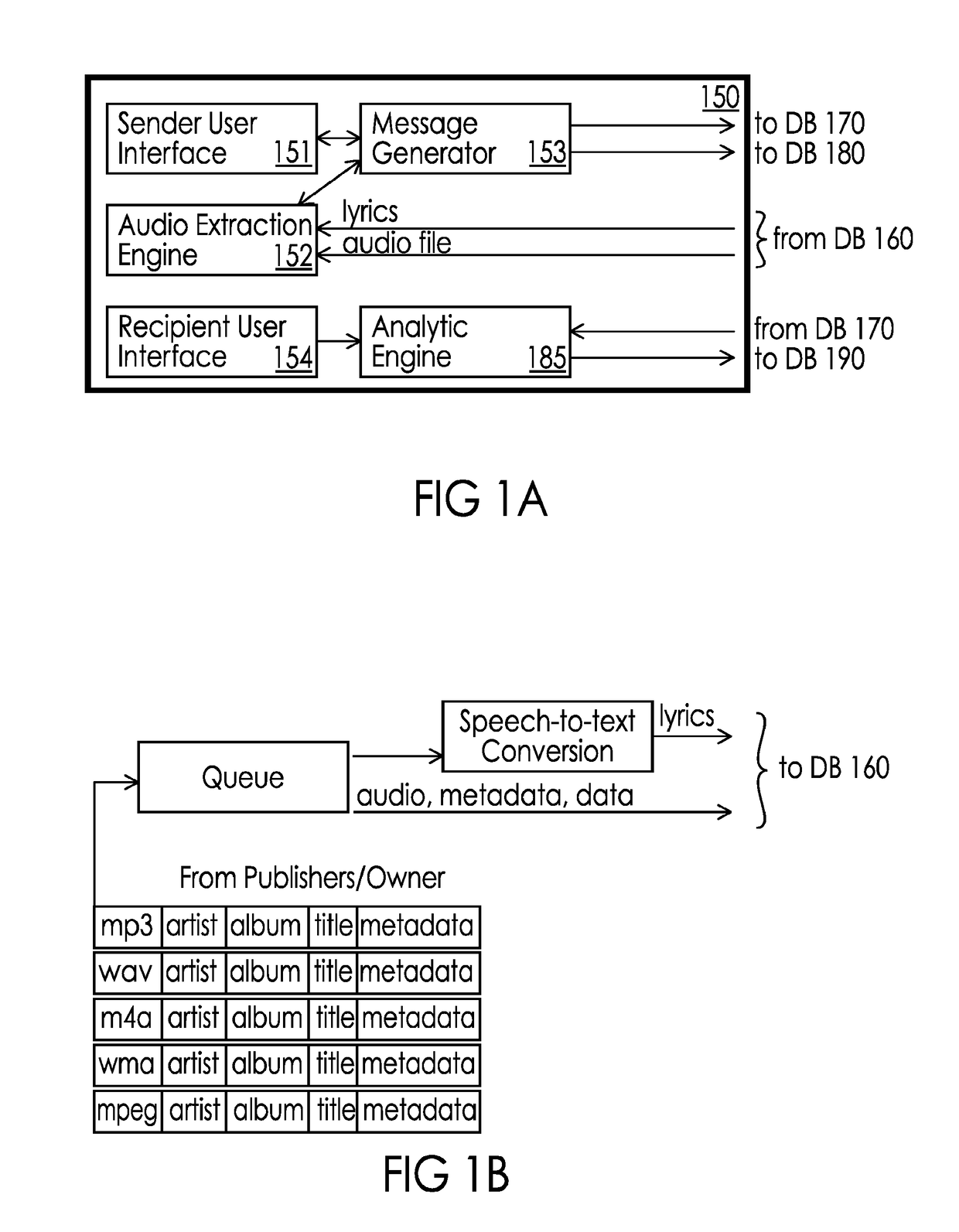

Method and System for Communicating Between a Sender and a Recipient Via a Personalized Message Including an Audio Clip Extracted from a Pre-Existing Recording

A method of communicating between a sender and a recipient via a personalized message includes the steps of: (a) identifying, via the user interface of a communication device, the text of a desired lyric phrase from within a pre-existing audio recording; (b) selecting visual data, such as an image or video, to be paired with the desired lyric phrase; (c) extracting audio substantially associated with the desired lyric phrase from the pre-existing recording into a desired audio clip; (d) inputting personalized text via the user interface; (e) creating the personalized message with the sender identification, the personalized text, and access to the desired audio clip; and (f) sending an electronic message to the electronic address of the recipient. Clips may be generated automatically based on a relevance score. The electronic message may be a text message, instant message, or email message; alternatively, it may contain a link to the personalized message.

Owner:AUDIOBYTE LLC

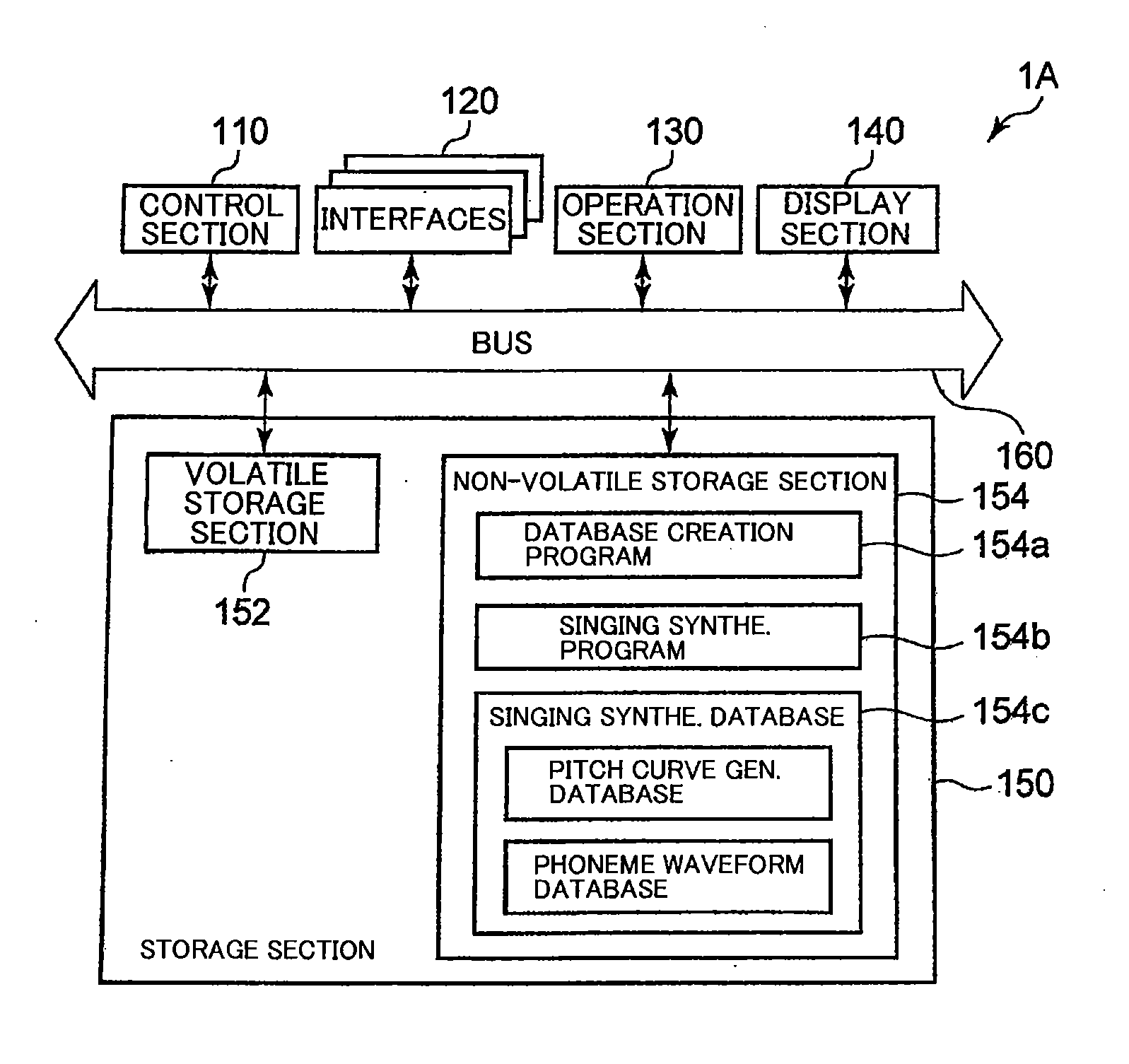

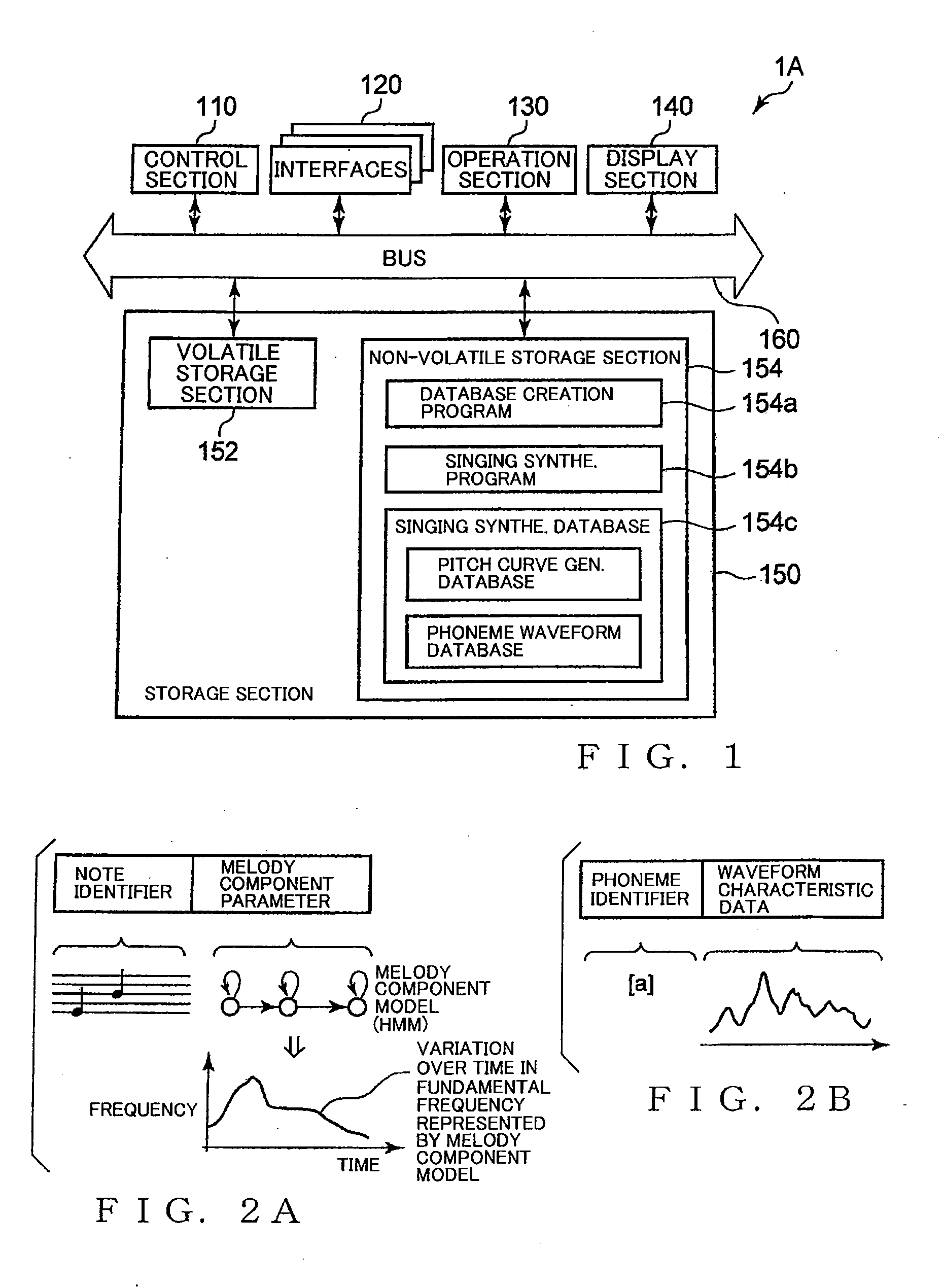

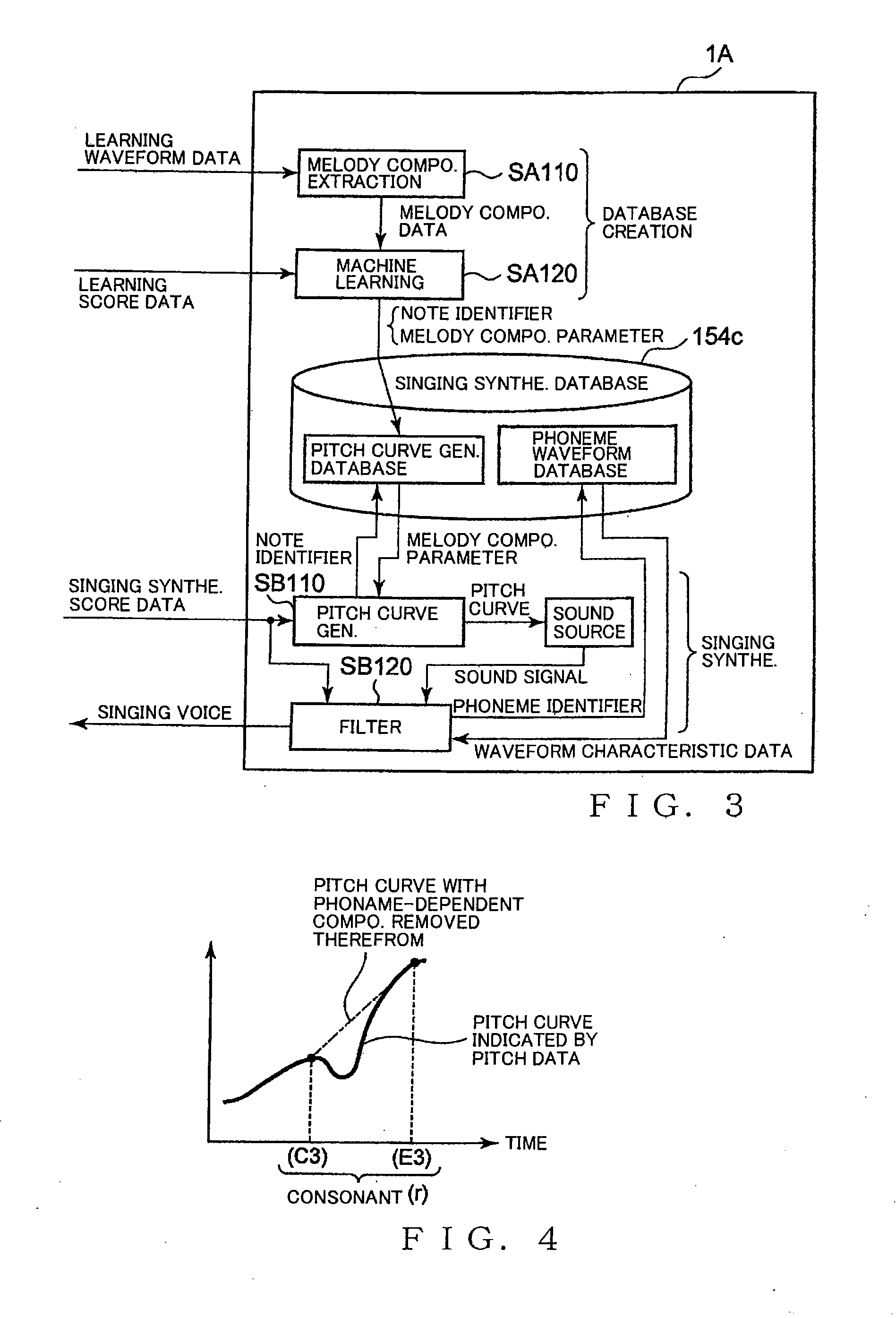

Apparatus and Method for Creating Singing Synthesizing Database, and Pitch Curve Generation Apparatus and Method

Status: Active; Publication: US20110004476A1; Tags: Accurately model singing expression, Natural sound, Electrophonic musical instruments, Speech synthesis, Pitch variation, Fundamental frequency

Variation over time in fundamental frequency in singing voices is separated into a melody-dependent component and a phoneme-dependent component, modeled for each of the components and stored into a singing synthesizing database. In execution of singing synthesis, a pitch curve indicative of variation over time in fundamental frequency of the melody is synthesized in accordance with an arrangement of notes represented by a singing synthesizing score and the melody-dependent component, and the pitch curve is corrected, for each of pitch curve sections corresponding to phonemes constituting lyrics, using a phoneme-dependent component model corresponding to the phoneme. Such arrangements can accurately model a singing expression, unique to a singing person and appearing in a melody singing style of the person, while taking into account phoneme-dependent pitch variation, and thereby permits synthesis of singing voices that sound more natural.

Owner:YAMAHA CORP

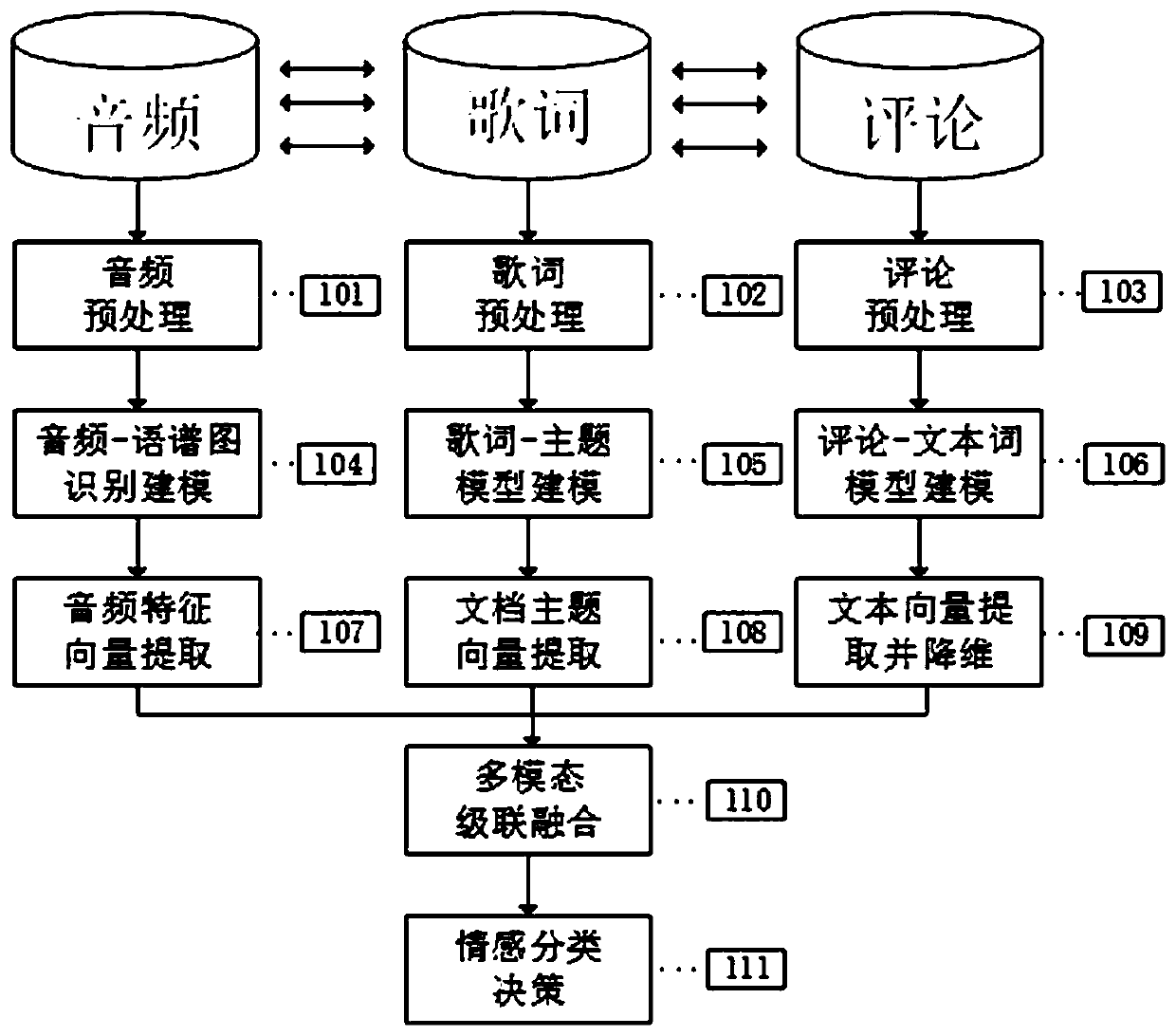

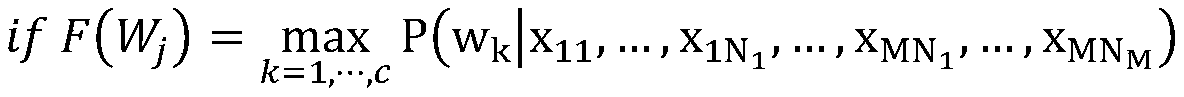

Music emotion classification method based on multi-modal learning

Status: Active; Publication: CN111460213A; Tags: Unified classification standards, Increase openness, Speech analysis, Character and pattern recognition, Feature vector, Feature extraction

The invention discloses a music emotion classification method based on multi-modal learning, comprising the following steps. Data preprocessing: the audio, lyrics, and comments of the music are preprocessed according to the required modality information to obtain valid model inputs. Representation learning: each modality is mapped to its own representation space using a different modeling approach. Feature extraction: the feature vectors of each modality are extracted after mapping and reduced to a common dimension. Multi-modal fusion: the features of the three modalities are combined by cascaded early fusion to build a more comprehensive feature representation. Emotion classification: supervised emotion classification is performed on the music using the fused features. This multi-modal joint learning approach effectively mitigates the noise and missing-data problems of current mainstream single-modal models and improves the accuracy and stability of music emotion classification.

Owner:HOHAI UNIV
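Cascaded early fusion can be sketched as bringing every modality's feature vector to the same dimension, normalizing it, and concatenating the results into one vector for the classifier. The patent reduces dimensions with learned mappings. In the sketch below, averaging over equal-size chunks is a deliberately simple, swapped-in stand-in for that reduction, and L2 normalization is an added assumption to keep one modality from dominating. The function names are hypothetical.

```python
import math

def pool_to(vec, dim):
    """Reduce a feature vector to `dim` values by averaging consecutive equal chunks."""
    if len(vec) % dim:
        raise ValueError("length must be a multiple of dim in this sketch")
    step = len(vec) // dim
    return [sum(vec[i:i + step]) / step for i in range(0, len(vec), step)]

def l2_normalize(vec):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else list(vec)

def early_fusion(modalities, dim):
    """Concatenate the reduced, normalized features of every modality (audio, lyrics, comments...)."""
    fused = []
    for vec in modalities:
        fused.extend(l2_normalize(pool_to(vec, dim)))
    return fused
```

With three modalities each reduced to 2 dimensions, the fused vector has 6 entries, which a single supervised classifier then consumes.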

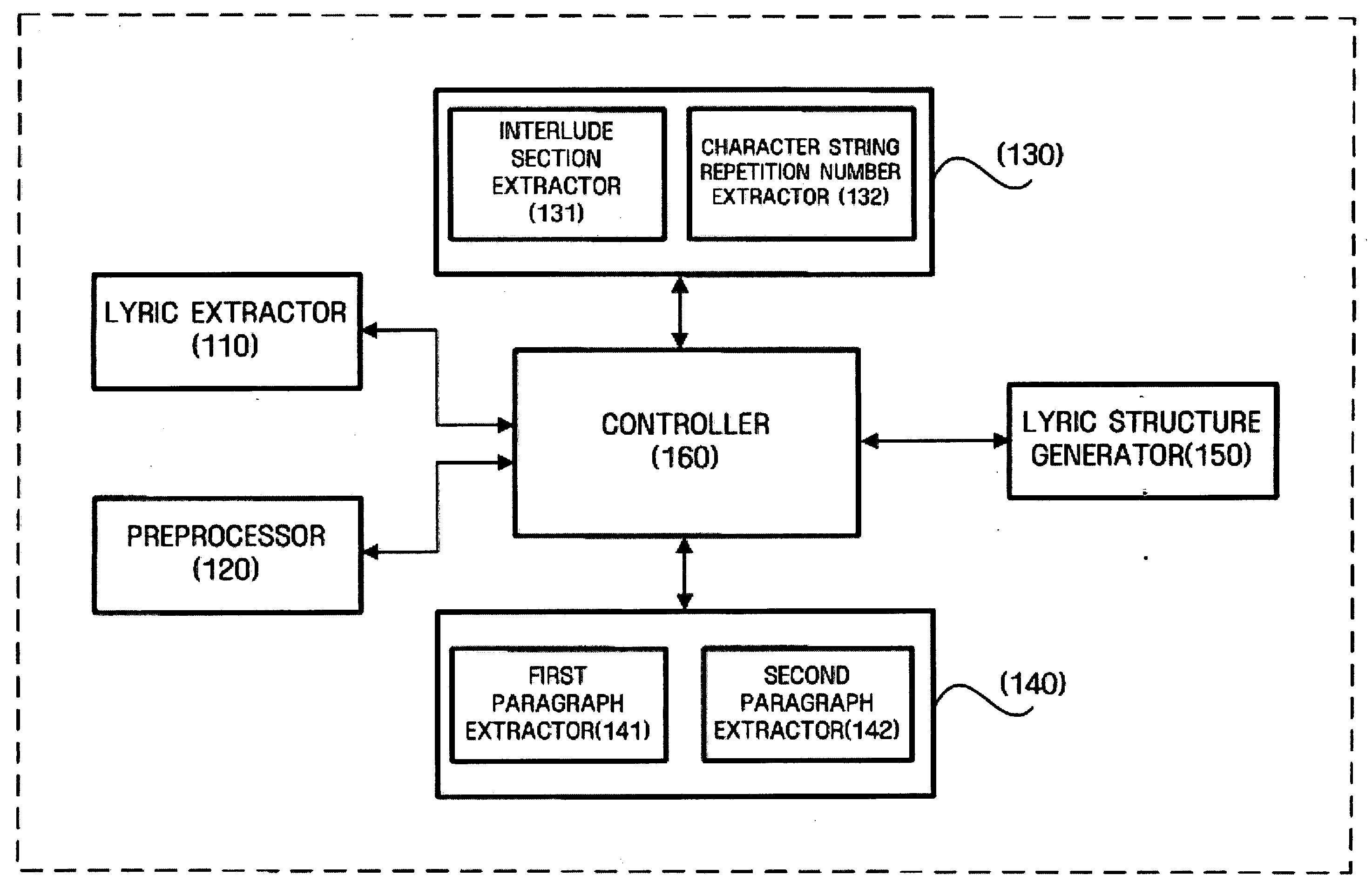

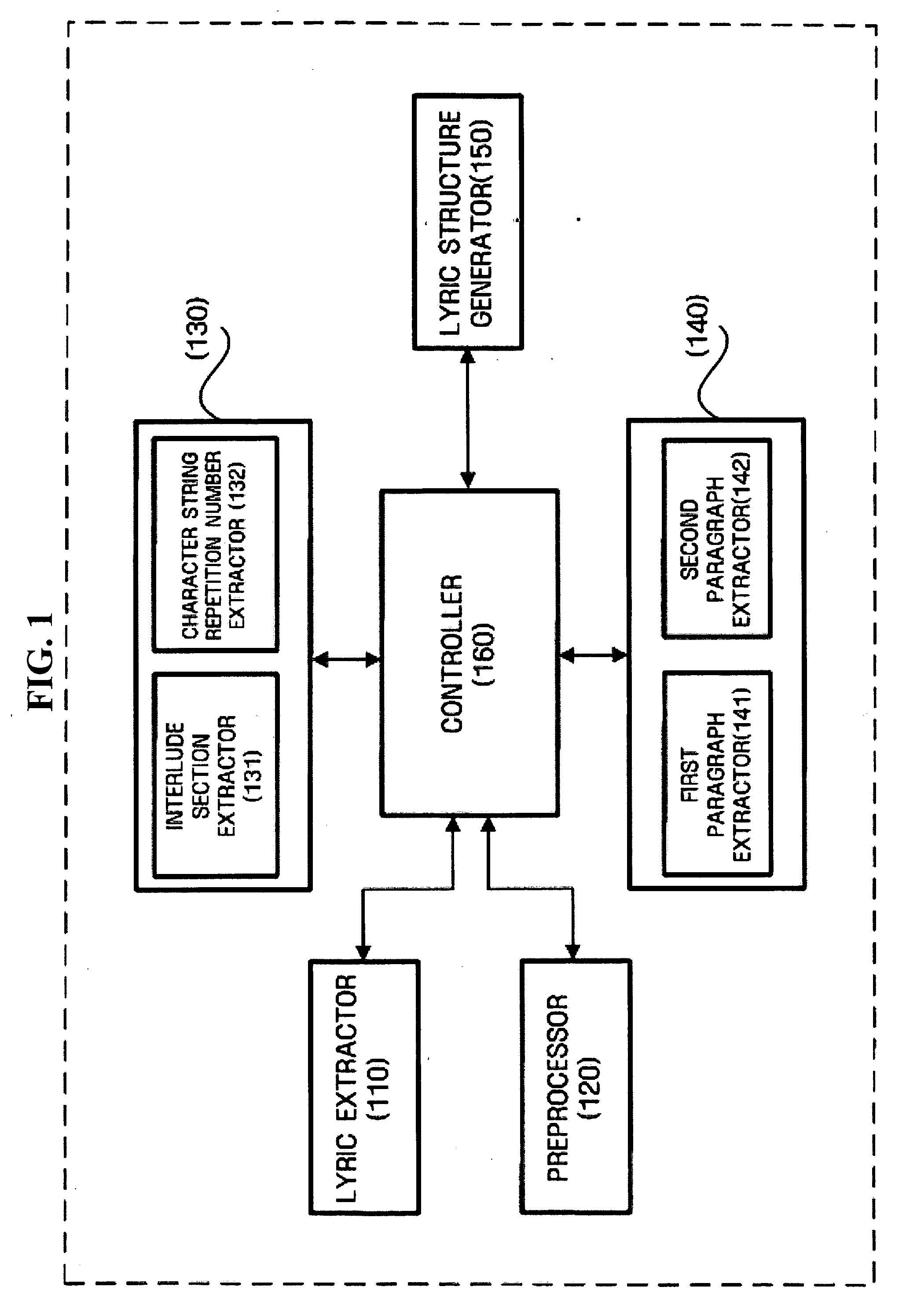

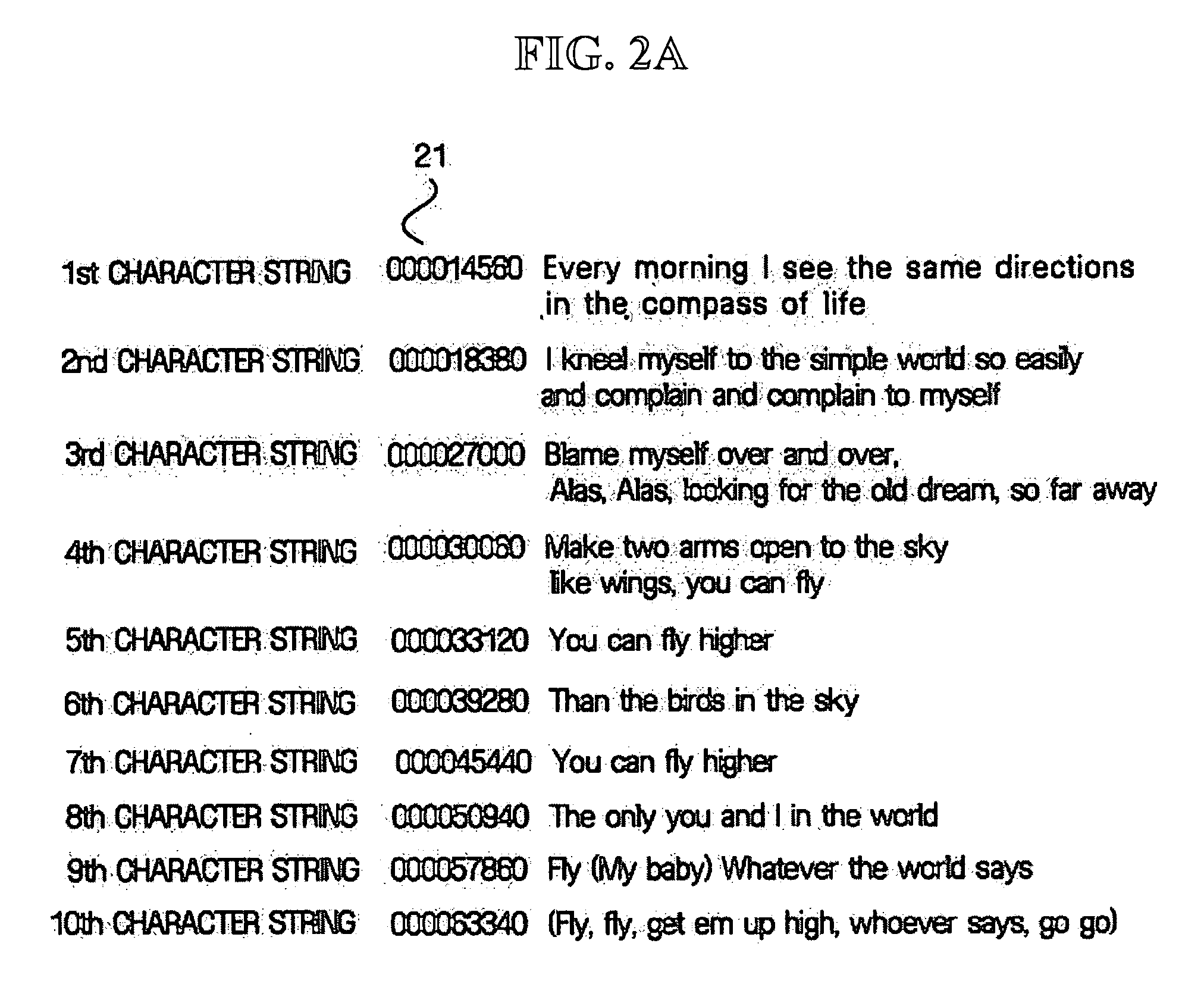

Apparatus, system and method for extracting structure of song lyrics using repeated pattern thereof

Status: Active; Publication: US20070186754A1; Tags: Reduce the amount required, Electrophonic musical instruments, Data processing applications, Pattern recognition, Repeat pattern

An apparatus, system, and method for extracting the structure of song lyrics using a repeated pattern thereof are provided. The apparatus includes a lyric extractor extracting lyric information from metadata related to an audio file, a character string information extractor extracting an interlude section and a repeated character string based on the extracted lyric information, a paragraph extractor extracting a paragraph based on the repeated character string and then a set of paragraphs having the same repeated pattern among the extracted paragraphs, and a lyric structure generator arranging an interlude section, a character string, and a paragraph related to the audio file in a tree structure.

Owner:SAMSUNG ELECTRONICS CO LTD
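A much-simplified version of repeated-pattern structure extraction is sketched below. It assumes paragraphs are separated by blank lines and treats only exact paragraph repeats as the repeated pattern. It labels repeated paragraphs "chorus" and the rest "verse" (a heuristic, not the patent's rule), returns a flat label-to-positions mapping instead of a full tree, and does not detect interludes. `lyric_structure` is a hypothetical name.

```python
from collections import Counter

def lyric_structure(lyrics):
    """Label blank-line-separated paragraphs; identical paragraphs share one label.

    Returns a dict mapping label -> list of paragraph positions. Paragraphs that
    occur more than once are labelled "chorus", the rest "verse" (a heuristic).
    """
    paragraphs = [p.strip() for p in lyrics.strip().split("\n\n") if p.strip()]
    counts = Counter(paragraphs)
    next_id = Counter()
    labels, tree = {}, {}
    for pos, para in enumerate(paragraphs):
        if para not in labels:
            kind = "chorus" if counts[para] > 1 else "verse"
            next_id[kind] += 1
            labels[para] = f"{kind}{next_id[kind]}"
        tree.setdefault(labels[para], []).append(pos)
    return tree
```

For a verse, chorus, verse, chorus song, this yields `{"verse1": [0], "chorus1": [1, 3], "verse2": [2]}`.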

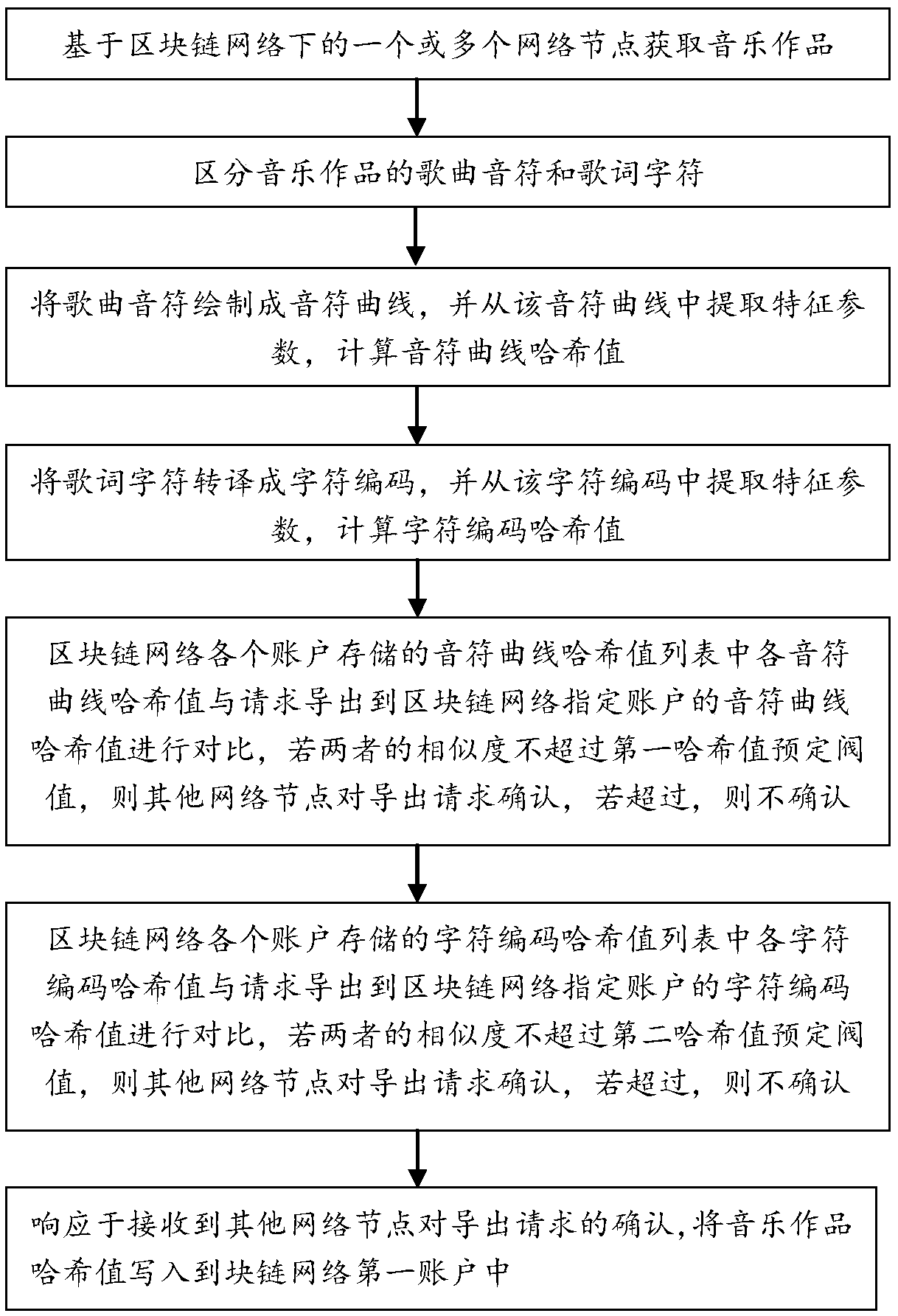

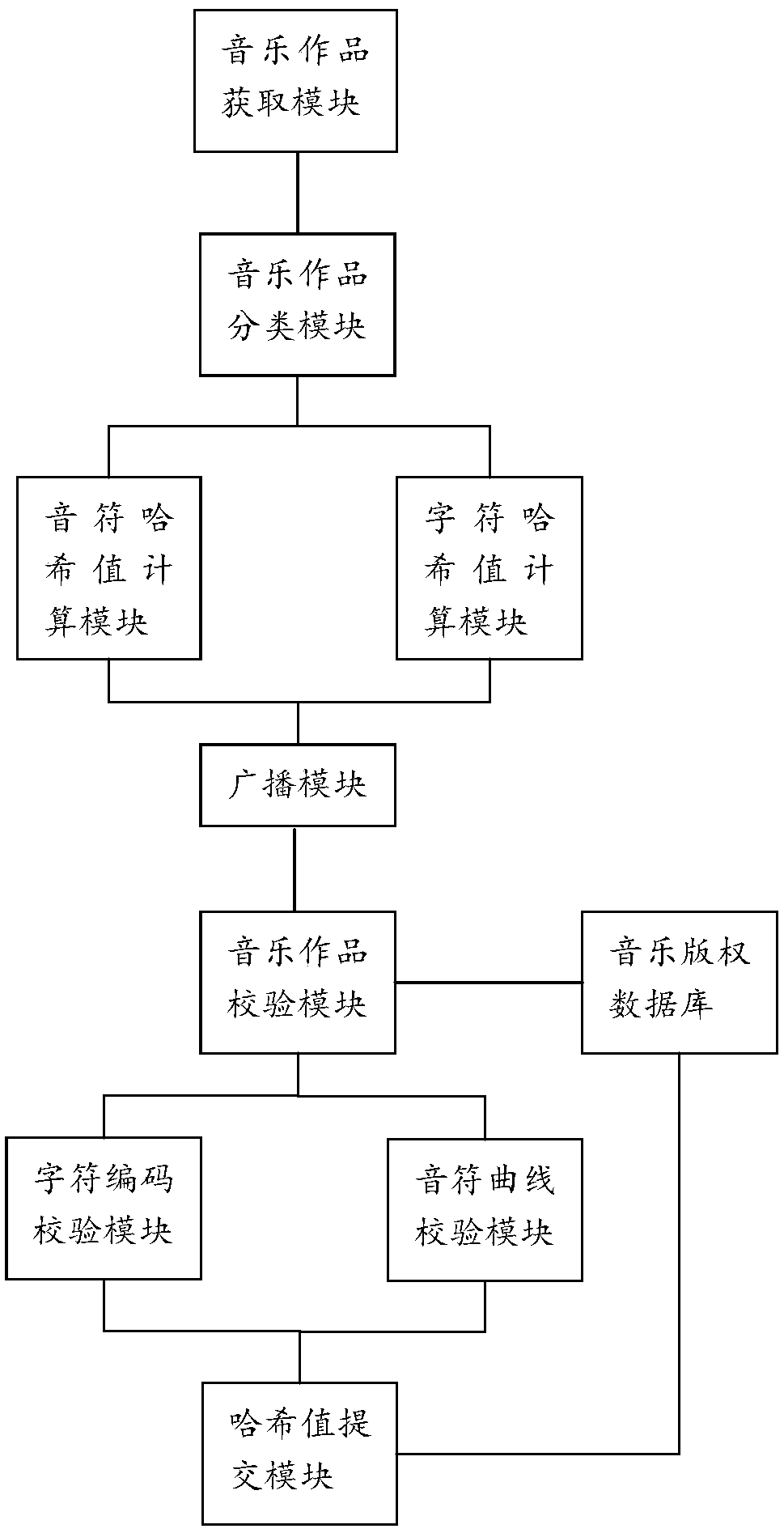

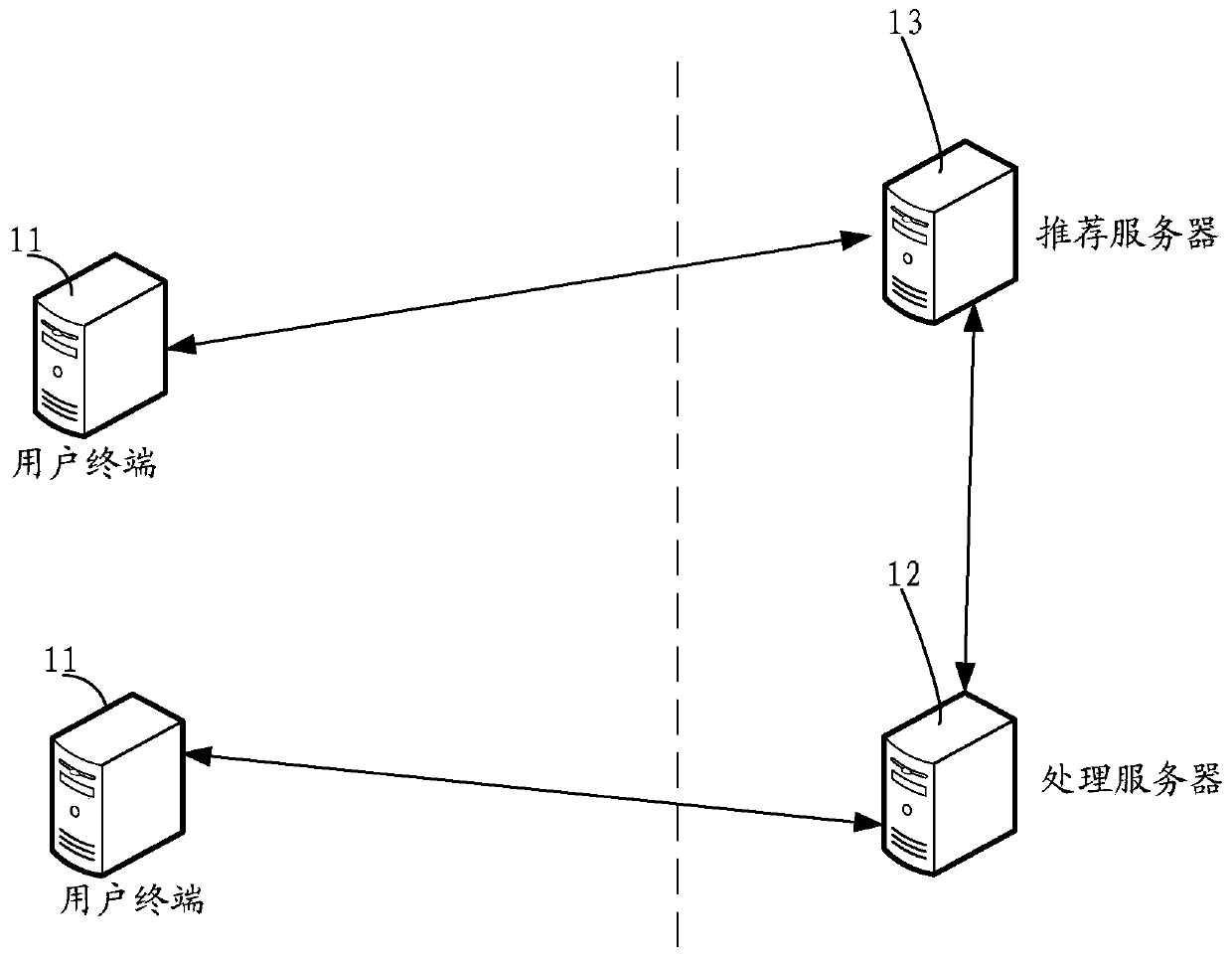

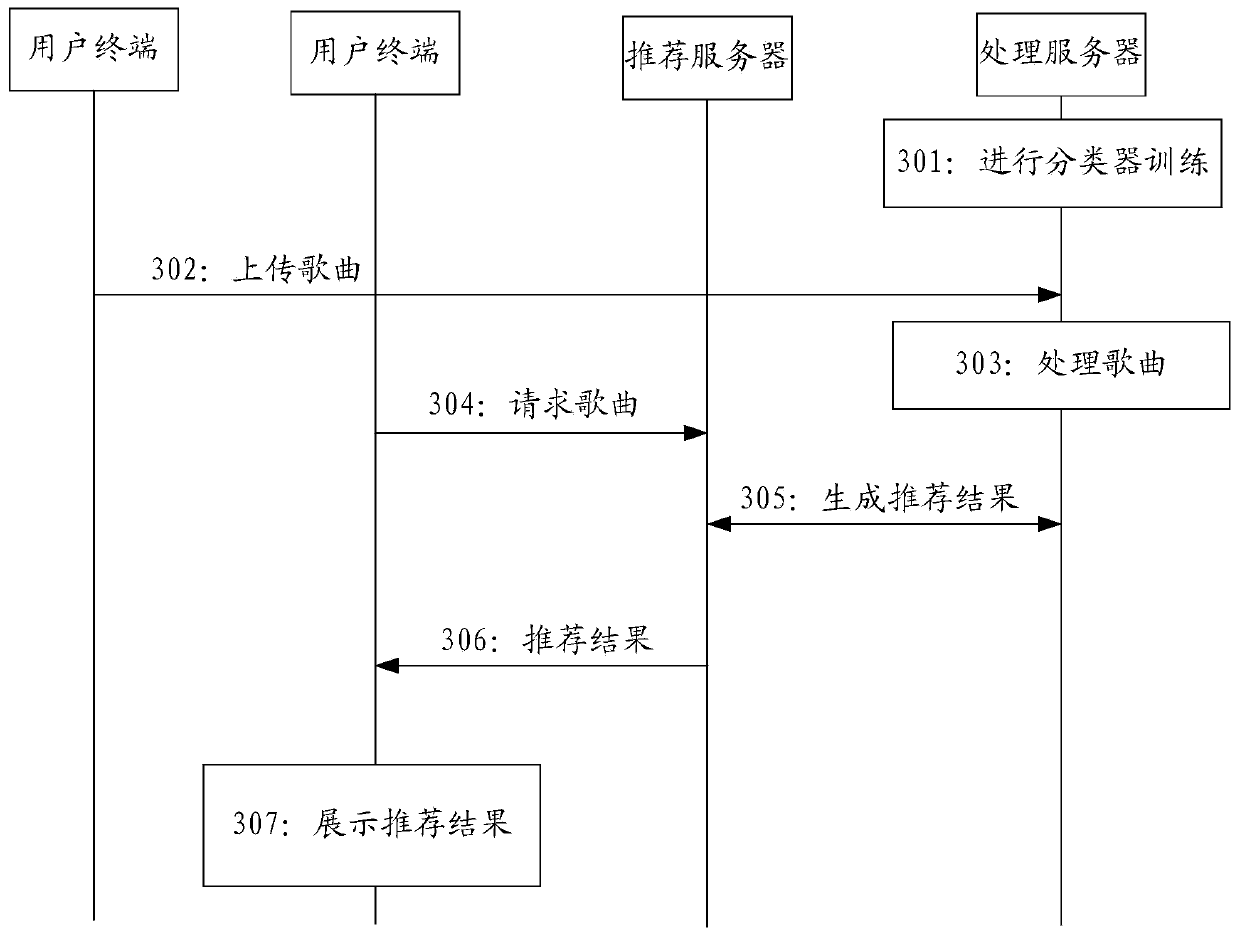

Music copyright recognition authentication method and authentication system based on block chain

Status: Inactive; Publication: CN109033760A; Tags: Realize immutable, Quickly determine copyright ownership, Digital data protection, Program/content distribution protection, Chain network, Authentication system

The invention relates to a blockchain-based music copyright identification and authentication method and system. The authentication method comprises the following steps: obtaining musical works from one or more network nodes of the blockchain network; separating each musical composition into song notes and lyric characters; plotting the song notes as a note curve, extracting characteristic parameters from the note curve, and calculating a hash value of the note curve; converting the lyric characters into character codes, extracting characteristic parameters from the character codes, and calculating a character-code hash value; broadcasting to the other network nodes an export request for exporting the note-curve hash value or the character-code hash value to a designated account on the blockchain network; and, in response to receiving acknowledgement of the export request from the other network nodes, writing the note-curve or character-code hash value to the designated account. By broadcasting and submitting the hash value of a musical work to the network nodes of the blockchain network, the invention protects the copyright of the work from the time of submission.

Owner:北京创声者文化传媒有限公司
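
The sketch below is a rough Python illustration of the two hashing steps, using SHA-256 from the standard library. Several choices are assumptions made for illustration only:

- the hash function;
- UTF-8 as the "character code";
- the note-curve features, taken here as inter-onset times and pitch intervals.

The function names and the request format are hypothetical, and no actual blockchain interaction is shown.

```python
import hashlib
import json


def lyric_hash(lyrics: str) -> str:
    # Assumed "character code": UTF-8 bytes of whitespace-normalized lyrics.
    normalized = " ".join(lyrics.split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def note_curve_hash(notes: list[tuple[float, int]]) -> str:
    # notes: (onset in seconds, MIDI pitch) points on the note curve.
    # Assumed feature parameters: inter-onset time and pitch interval between
    # consecutive notes, so a transposed or time-shifted copy hashes the same.
    features = [
        (round(float(t2 - t1), 3), int(p2 - p1))
        for (t1, p1), (t2, p2) in zip(notes, notes[1:])
    ]
    payload = json.dumps(features, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def export_request(account: str, lyrics: str, notes: list[tuple[float, int]]) -> dict:
    # Hypothetical message a node would broadcast before writing to the chain.
    return {
        "account": account,
        "lyric_hash": lyric_hash(lyrics),
        "note_curve_hash": note_curve_hash(notes),
    }
```

Hashing derived features rather than raw audio means a simple transposition leaves the fingerprint unchanged. Whether that behavior is desirable for copyright purposes is a design decision the abstract leaves open.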

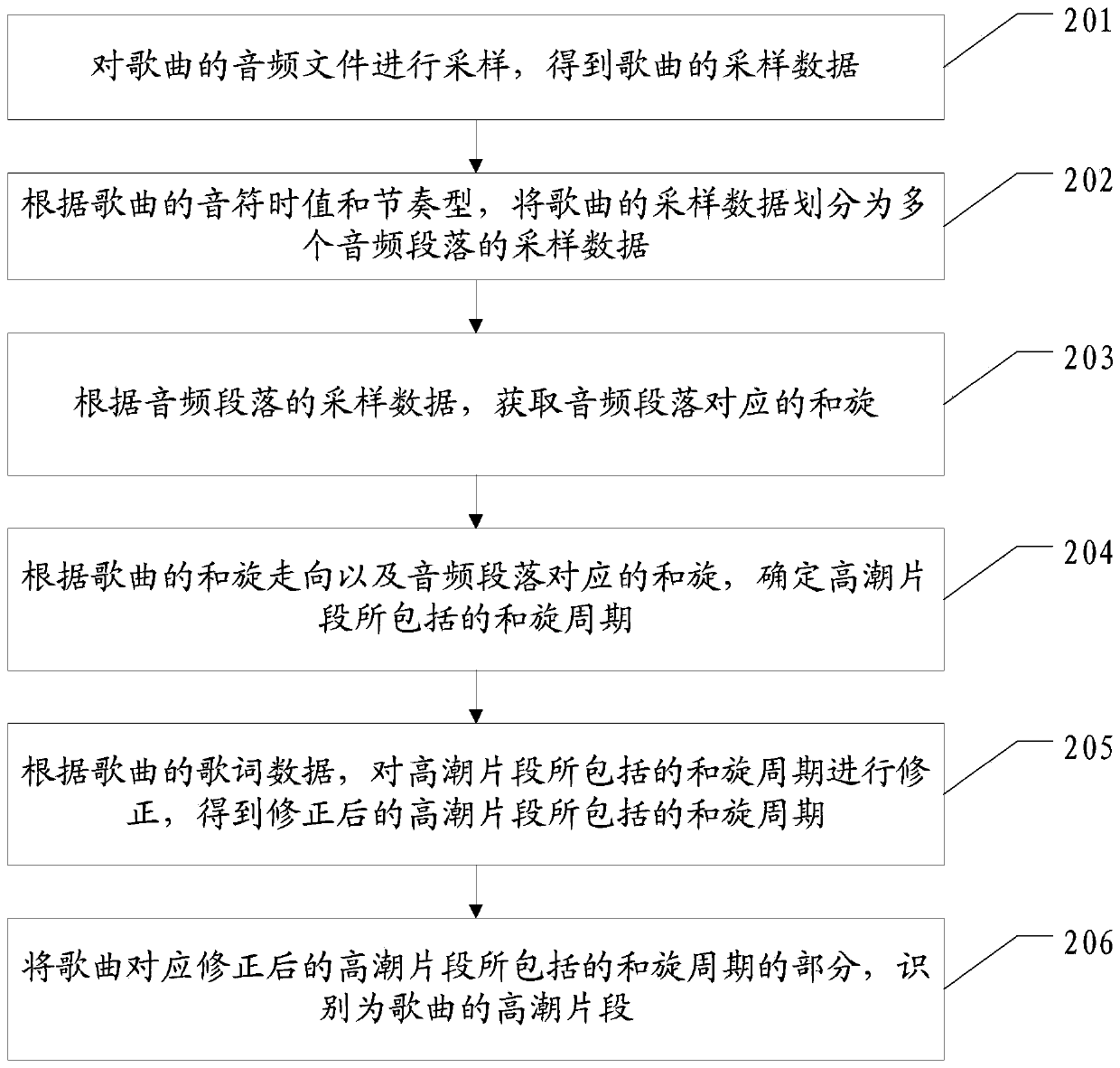

Song climax fragment identification method and device

Pending · CN111081272A · Improve recognition accuracy · Improve user experience · Electrophonic musical instruments · Speech analysis · Note value · Audio frequency

The embodiment of the invention discloses a method and device for identifying the climax fragment of a song. The method comprises the following steps:

- sampling an audio file of the song;
- dividing the sampled data into the sampled data of a plurality of audio paragraphs according to the note durations and rhythm type of the song;
- obtaining the chord corresponding to each audio paragraph from its sampled data;
- determining the chord period contained in the climax fragment according to the chord progression of the song and the chords of the audio paragraphs;
- correcting that chord period according to the lyric data of the song;
- identifying the part of the song corresponding to the corrected chord period as the climax fragment.

Because the climax fragment is identified from essential musical factors of the song, such as note durations, rhythm types, and chord progression, identification accuracy is improved.

Owner:TENCENT TECH (SHENZHEN) CO LTD
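
The abstract does not give the segmentation or correction rules. The sketch below covers two of the steps and rests on three assumptions: a fixed tempo in beats per minute, a fixed number of beats per paragraph, and line-level lyric timestamps. Chord recognition and the chord-progression analysis are omitted, and all names are hypothetical.

```python
def paragraph_length(sample_rate: int, bpm: float, beats_per_paragraph: int) -> int:
    # One beat lasts 60 / bpm seconds.
    return round(sample_rate * 60.0 / bpm * beats_per_paragraph)


def split_into_paragraphs(samples: list[float], sample_rate: int, bpm: float,
                          beats_per_paragraph: int = 16) -> list[list[float]]:
    # Cut the sample stream into consecutive, equally long audio paragraphs;
    # the last one may be shorter.
    n = paragraph_length(sample_rate, bpm, beats_per_paragraph)
    return [samples[i:i + n] for i in range(0, len(samples), n)]


def snap_to_lyric_lines(start: float, end: float,
                        line_starts: list[float]) -> tuple[float, float]:
    # Assumed correction: move each boundary of the chord-based estimate
    # to the nearest lyric line start time (e.g. from LRC timestamps).
    def nearest(t: float) -> float:
        return min(line_starts, key=lambda s: abs(s - t))
    return nearest(start), nearest(end)


print(paragraph_length(44100, 120, 16))  # 352800 samples = 8 seconds
print(snap_to_lyric_lines(61.2, 92.7, [0.0, 30.5, 60.0, 75.0, 93.0]))  # (60.0, 93.0)
```

Snapping to lyric line starts is one plausible reading of "correcting ... according to the lyric data". It keeps the detected climax from starting or ending in the middle of a sung line.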

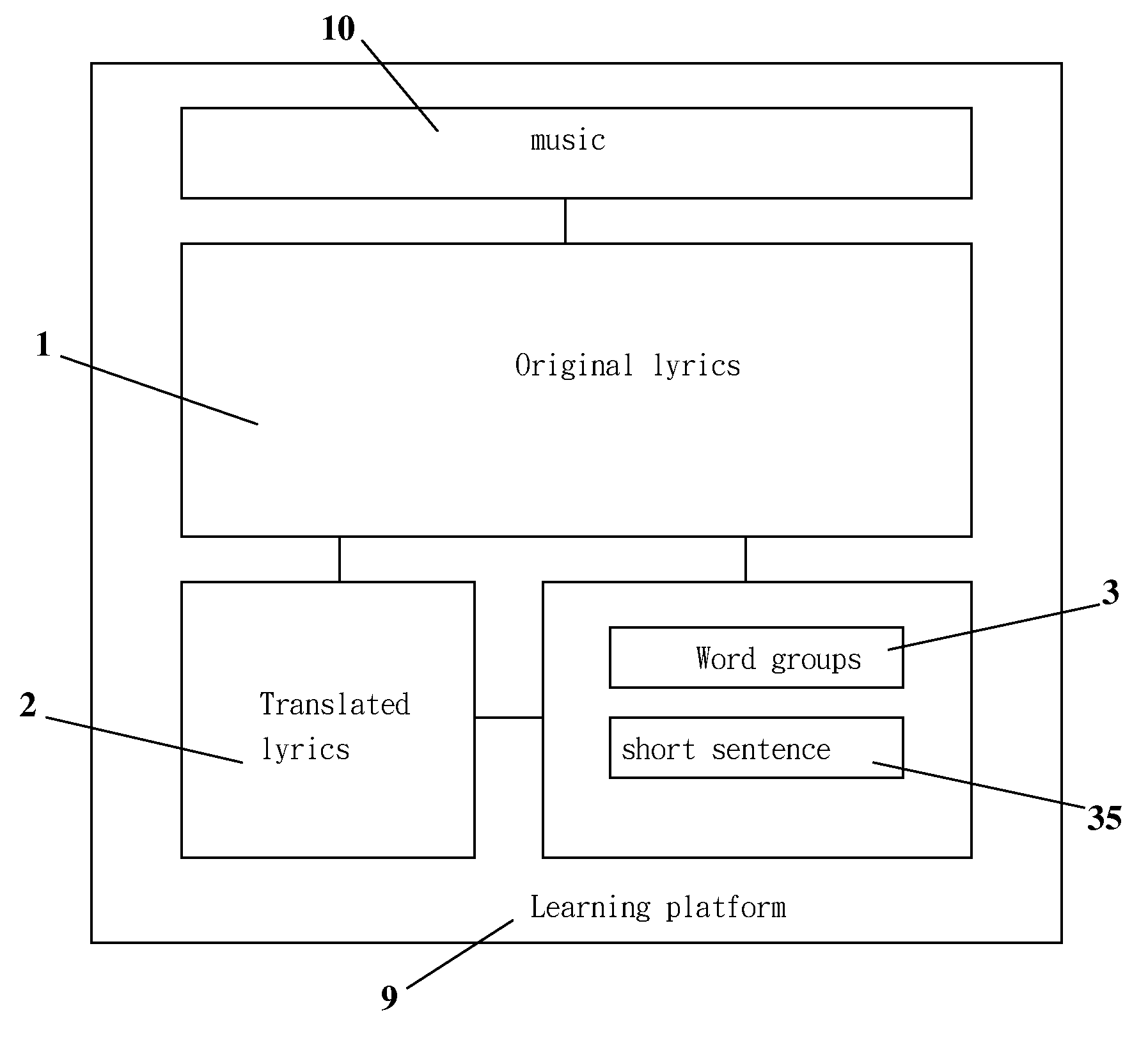

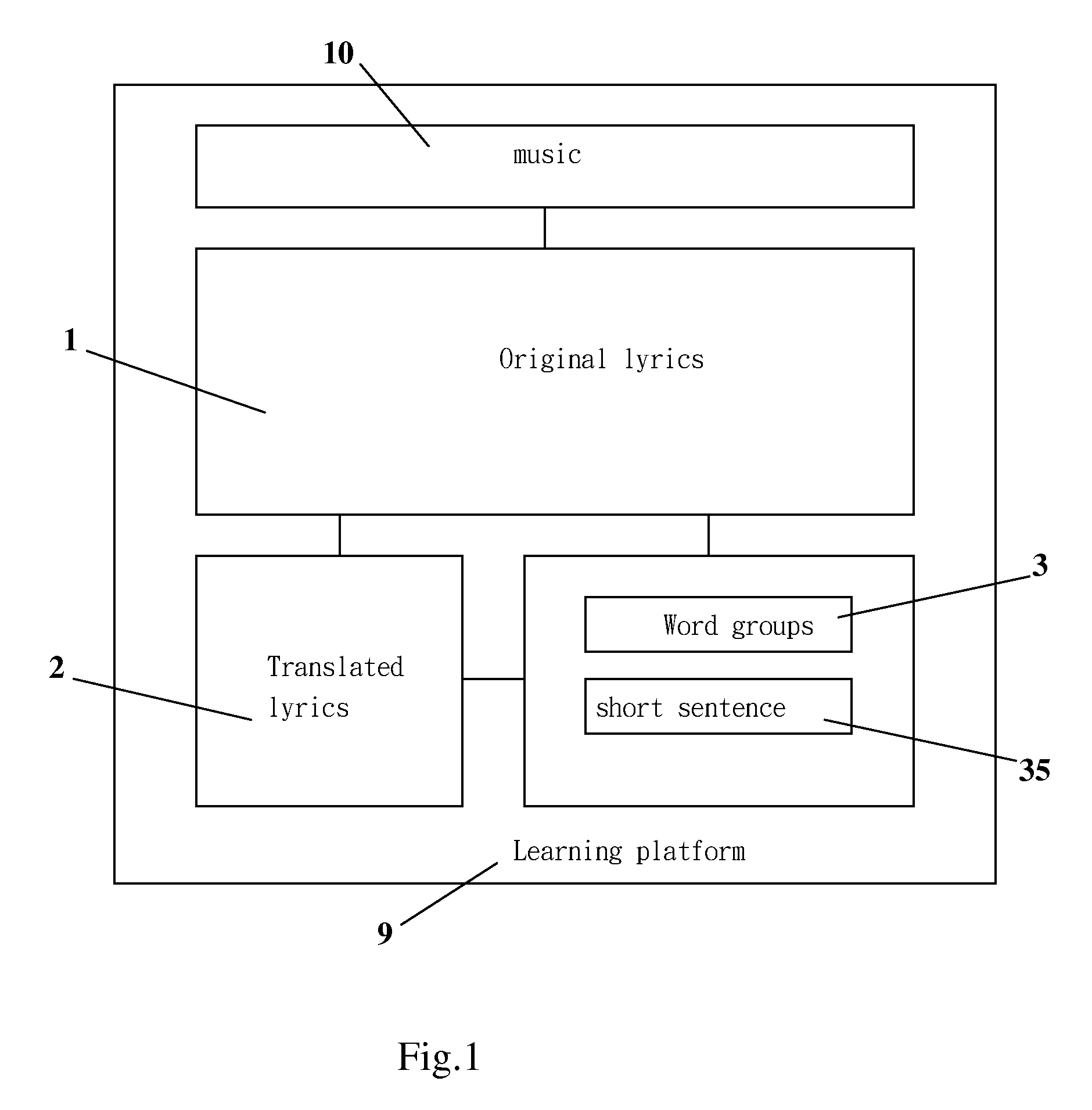

Language learning device for expanding vocabulary with lyrics

Inactive · US20120290285A1 · Electrical appliances · Special data processing applications · Information networks · Personal computer

A language learning device for expanding vocabulary with lyrics has a learning platform selected from an information network, a personal computer, an electronic dictionary apparatus, a multimedia apparatus, a personal digital assistant (PDA), a cell phone, a paper sheet, a book, a memory card, and an optical disk. The learning platform further comprises an original lyric database, a translated lyric database, a word group expansion database, and a correlated short sentence database. The words of the original lyrics appear in sequence. Each word is matched with short sentences built from the original word groups and with its translated meaning in the second language, so that a batch of expanded words can be learned. In addition to bilingual learning, the device can be applied to trilingual and even multilingual learning.

Owner:WANG GAO PENG +1
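
The sketch below shows one possible data layout for the four databases, using in-memory Python dictionaries. The class name, the field names, and the word-by-word lookup are hypothetical. The abstract does not specify how the databases are stored or queried.

```python
from dataclasses import dataclass


@dataclass
class LyricVocabularyDB:
    # The four databases named in the abstract, modeled as in-memory dicts.
    original_lyrics: dict[str, list[str]]            # song id -> lyric lines
    translated_lyrics: dict[str, list[str]]          # song id -> translated lines
    word_groups: dict[str, list[str]]                # word -> expanded word group
    short_sentences: dict[str, list[tuple[str, str]]]  # word -> (sentence, translation)

    def study_line(self, song_id: str, line_no: int) -> dict:
        """Walk the words of one lyric line in order and attach expansions."""
        line = self.original_lyrics[song_id][line_no]
        cards = []
        for raw in line.split():
            # Strip surrounding punctuation and normalize case before lookup.
            word = raw.strip(".,!?;:\"'").lower()
            if word in self.word_groups:
                cards.append({
                    "word": word,
                    "expanded": self.word_groups[word],
                    "examples": self.short_sentences.get(word, []),
                })
        return {
            "line": line,
            "translation": self.translated_lyrics[song_id][line_no],
            "cards": cards,
        }
```

For example, with the line "Let it shine!" and an entry for "shine" in `word_groups`, `study_line` returns the translated line plus one card listing the expanded words and example sentences for "shine".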

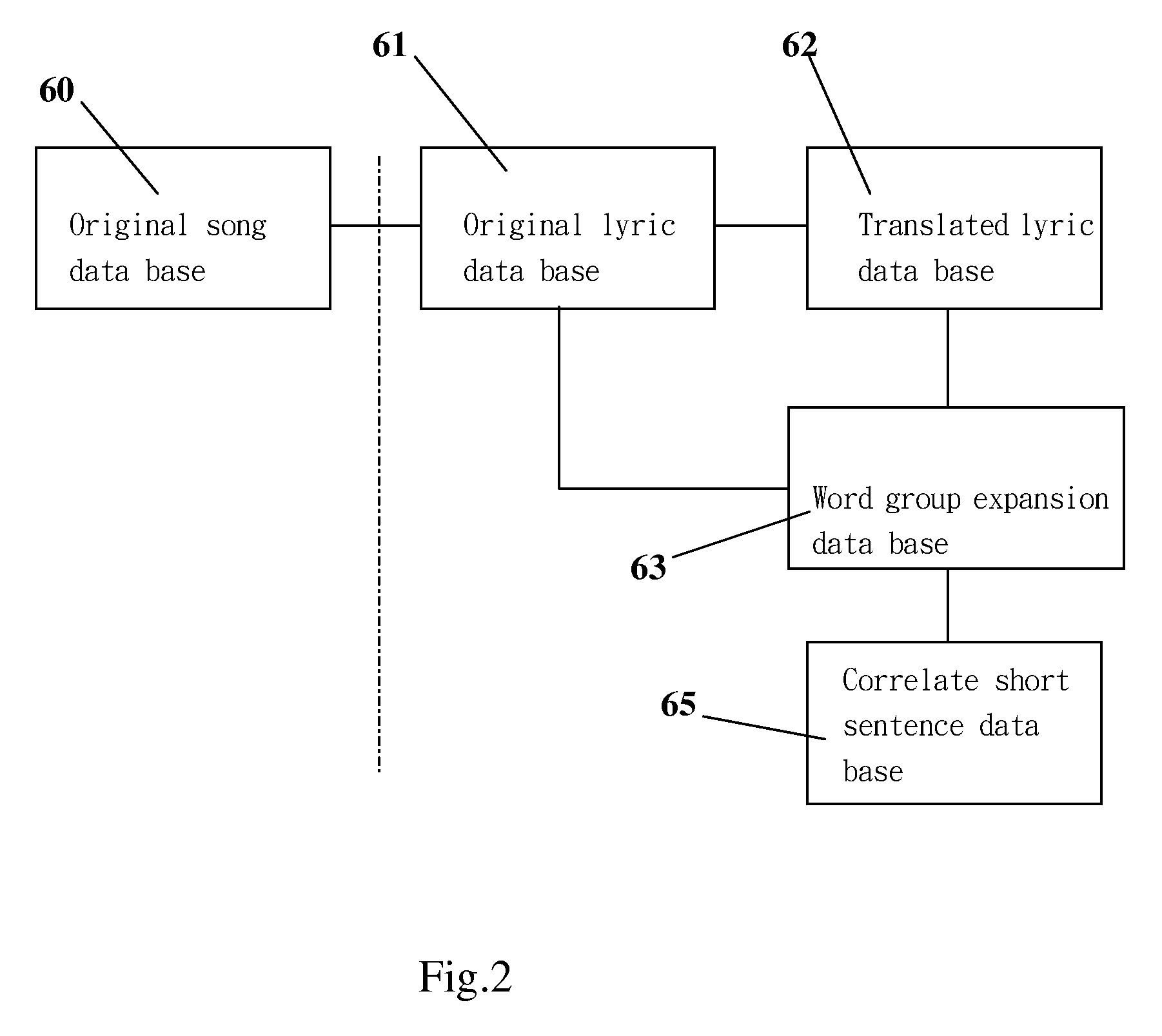

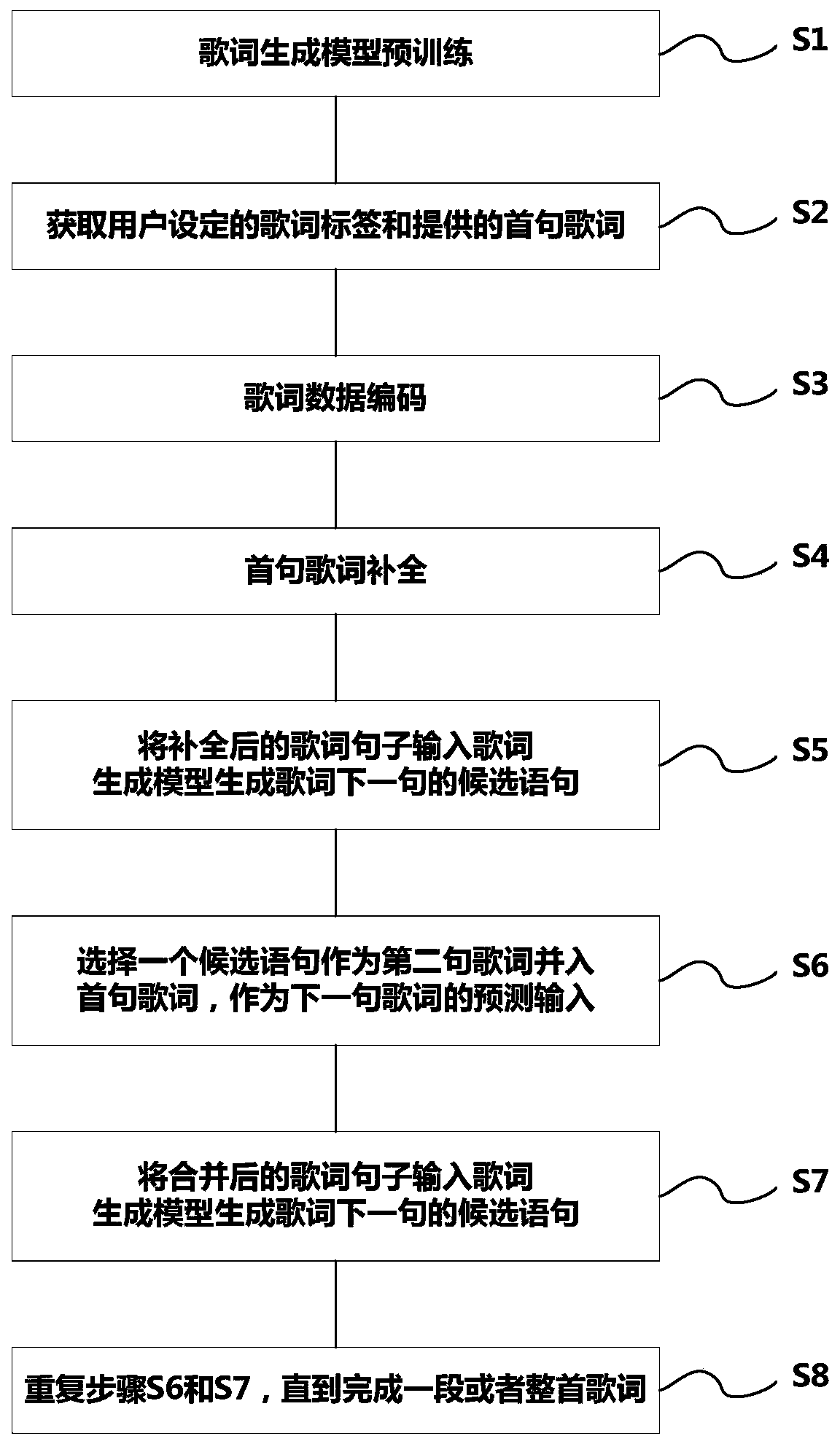

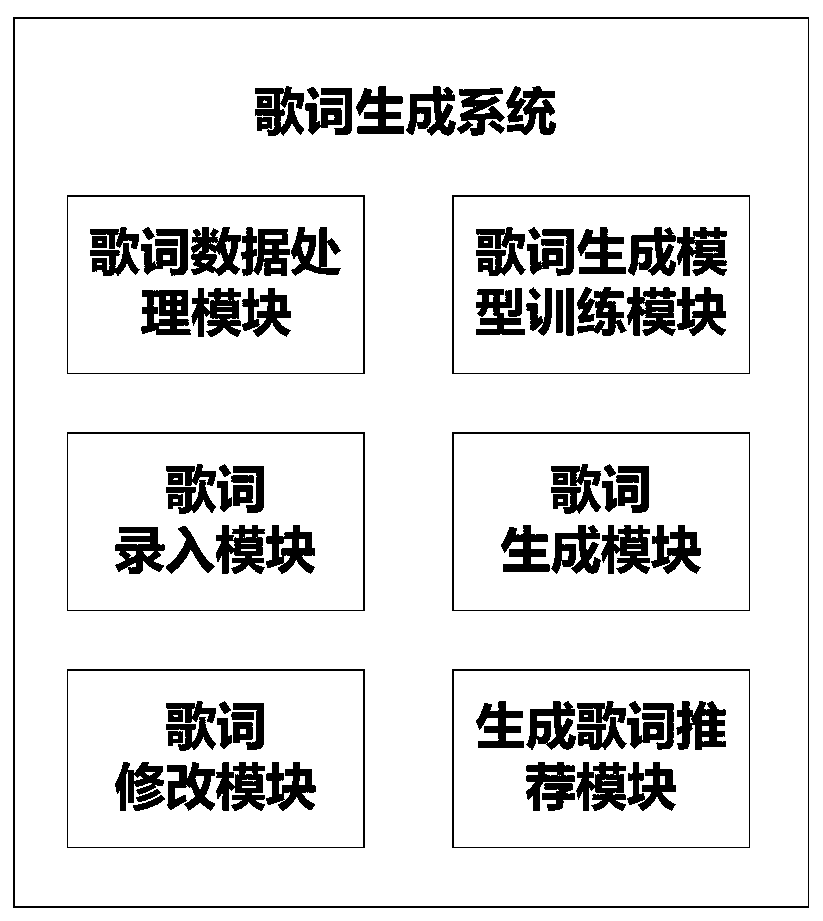

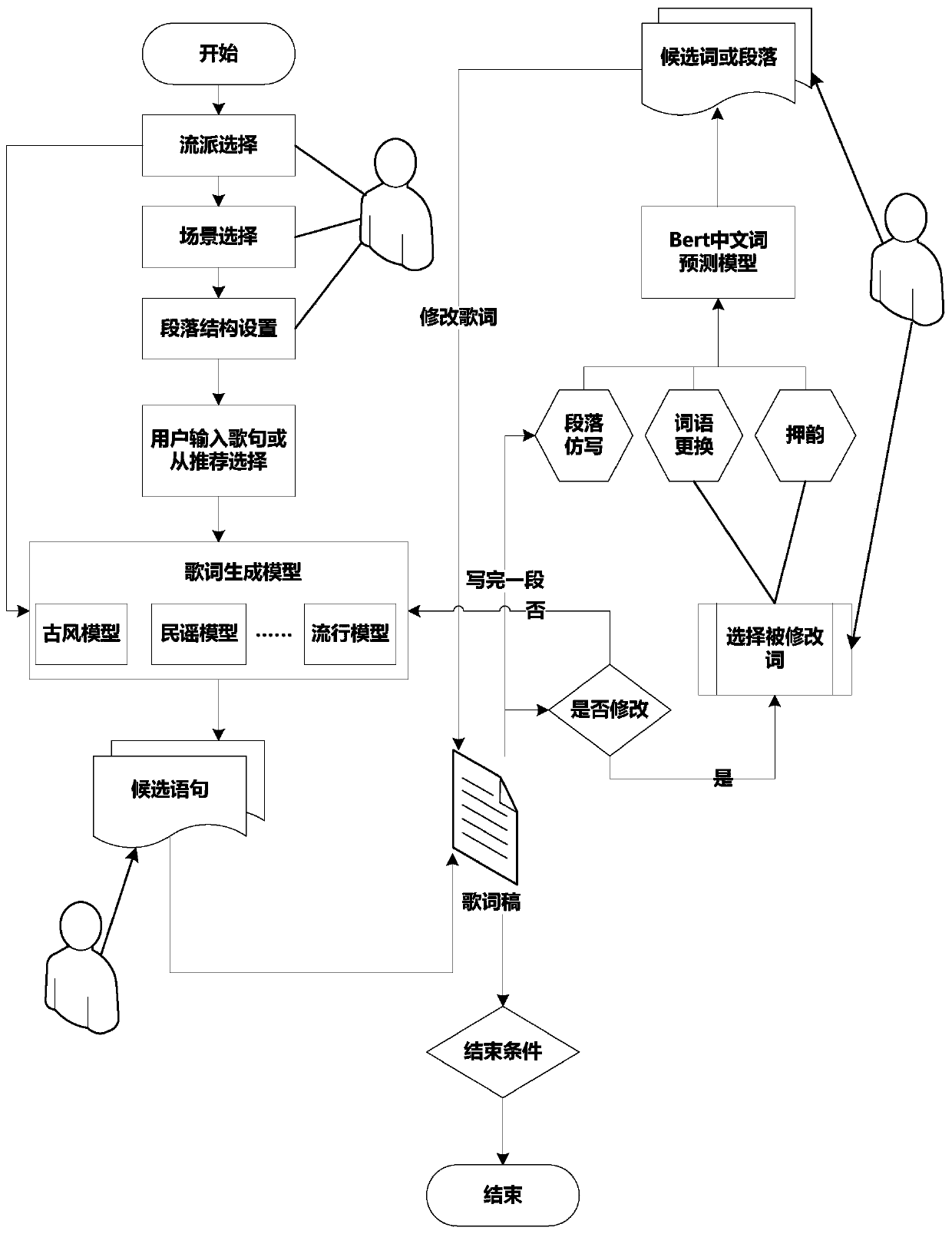

Interactive lyric generation method and system based on neural network

Active · CN111259665A · Improve interactivity · Achieving Thematic Consistency · Digital data information retrieval · Natural language data processing · Generation process · Algorithm

The invention discloses an interactive lyric generation method based on a neural network, and a system based on the method. The method comprises the following steps:

- pre-training a lyric generation model by feeding preprocessed lyric training data into a base training model;
- acquiring the lyric labels set by a user and the first lyric line the user provides;
- encoding the lyric data;
- completing the first line by feeding the encoded user-provided lyrics into the lyric generation model;
- feeding the completed line into the model to generate candidates for the next line;
- selecting one candidate as the second line and merging it with the first line to form the prediction input for the following line;
- feeding the merged lines into the model to generate candidates for the next line;
- repeating these steps until a section or the whole lyric is complete.

Because the model generates several candidate lines for the user to choose from at each step, the generation process is more interactive.

Owner:成都潜在人工智能科技有限公司
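
The control flow of the interactive loop can be sketched in Python independently of the model itself. Here `complete`, `propose`, and `choose` are hypothetical callables. The first two stand in for the trained lyric generation model and the third for the user's selection. The sketch makes no claim about the network architecture or encoding used in the patent.

```python
from typing import Callable

Labels = list[str]


def interactive_lyrics(
    complete: Callable[[Labels, str], str],
    propose: Callable[[Labels, list[str], int], list[str]],
    choose: Callable[[list[str]], str],
    labels: Labels,
    first_fragment: str,
    n_lines: int,
    n_candidates: int = 3,
) -> list[str]:
    # Step 1: the model completes the user's partial first line.
    lines = [complete(labels, first_fragment)]
    while len(lines) < n_lines:
        # Step 2: generate candidates conditioned on all accepted lines.
        candidates = propose(labels, lines, n_candidates)
        # Step 3: the chosen line is merged into the context for the next step.
        lines.append(choose(candidates))
    return lines


demo = interactive_lyrics(
    complete=lambda labels, frag: frag + " tonight",
    propose=lambda labels, ctx, k: [f"line {len(ctx) + 1} option {i}" for i in range(k)],
    choose=lambda candidates: candidates[0],
    labels=["romance"],
    first_fragment="under the stars",
    n_lines=3,
)
print(demo)  # ['under the stars tonight', 'line 2 option 0', 'line 3 option 0']
```

Passing the whole list of accepted lines to `propose` mirrors the abstract's "merging" step: every user choice becomes part of the prediction input for the next line.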

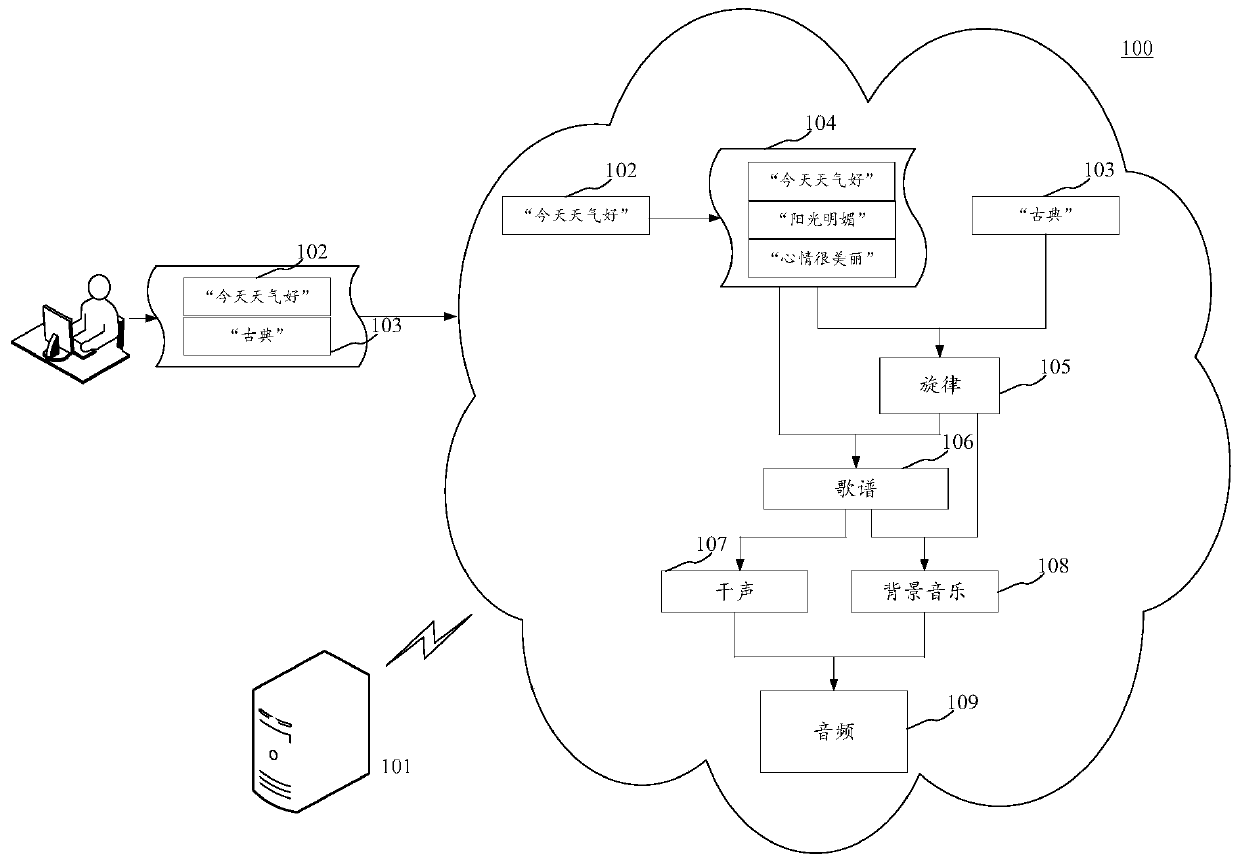

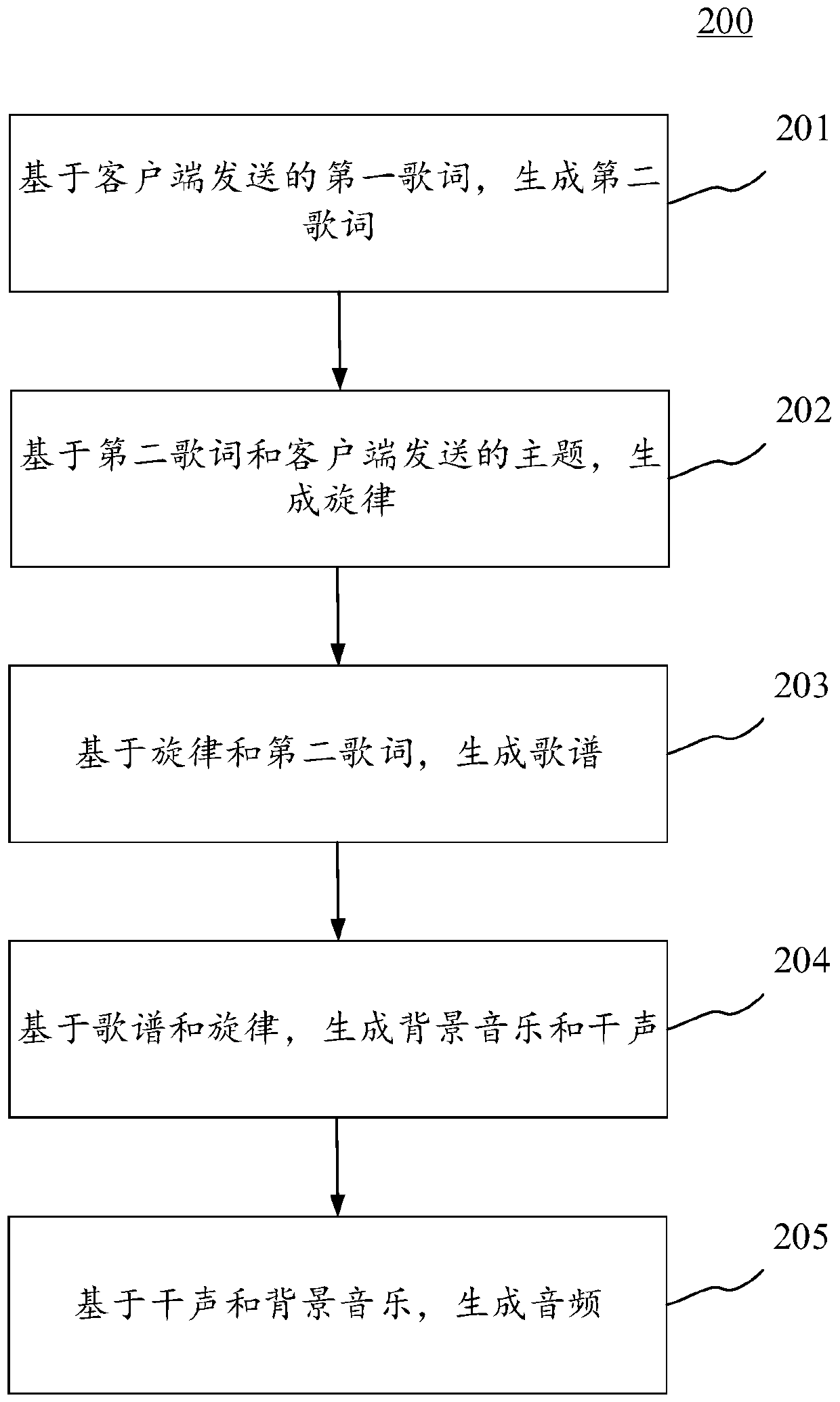

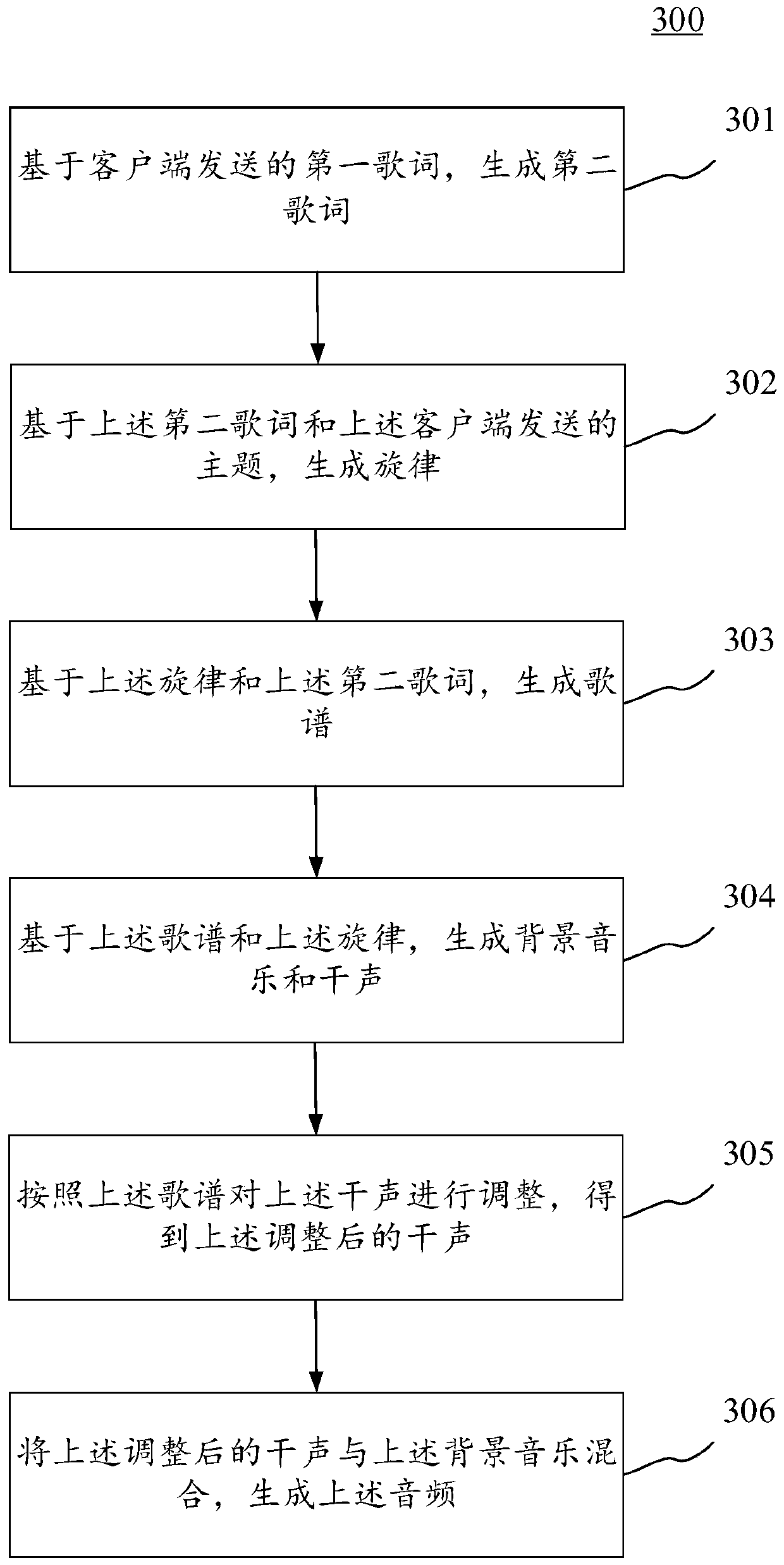

Audio synthesis method and device, electronic equipment and computer readable medium

Pending · CN111554267A · Improve experience · Improve creation efficiency · Electrophonic musical instruments · Speech synthesis · Audio synthesis · Engineering

The embodiment of the invention discloses an audio synthesis method and device, electronic equipment, and a computer-readable medium. A specific embodiment of the method comprises the following steps:

- generating second lyrics based on first lyrics sent by a client;
- generating a melody based on the second lyrics and a theme sent by the client;
- generating a lyric score based on the melody and the second lyrics;
- generating background music and a dry vocal (the unprocessed singing voice) based on the score and the melody;
- generating audio based on the dry vocal and the background music.

This embodiment achieves efficient song synthesis and lets the client's user take part in the singing synthesis process, which makes participation more engaging and improves the user experience.

Owner:BEIJING BYTEDANCE NETWORK TECH CO LTD
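
The final step, combining the dry vocal with the background music, is at its simplest a per-sample weighted sum. The sketch below assumes both tracks are mono float samples in [-1.0, 1.0] at the same sample rate. The function name, the gain values, and the hard clipping are illustrative choices, not details from the abstract.

```python
def mix(dry_vocal: list[float], backing: list[float],
        vocal_gain: float = 1.0, backing_gain: float = 0.5) -> list[float]:
    """Sum two mono tracks sample by sample and hard-clip to [-1.0, 1.0]."""
    n = max(len(dry_vocal), len(backing))
    out = []
    for i in range(n):
        # The shorter track is padded with silence.
        v = dry_vocal[i] if i < len(dry_vocal) else 0.0
        b = backing[i] if i < len(backing) else 0.0
        out.append(max(-1.0, min(1.0, vocal_gain * v + backing_gain * b)))
    return out


print(mix([0.5, 0.9], [0.2, 0.4, 0.6]))  # roughly [0.6, 1.0, 0.3]; the second sample is clipped
```

Hard clipping is used here only to keep the sketch short. A smoother approach would lower the gains or apply a limiter so that loud passages are not distorted.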

© 2025 PatSnap. All rights reserved.