257 results about "Audio synthesis" patented technology

Digital wavetable audio synthesizer with delay-based effects processing

Inactive · US6047073A · Electrophonic musical instruments, Counting chain pulse counters, Audio synthesis, Noise

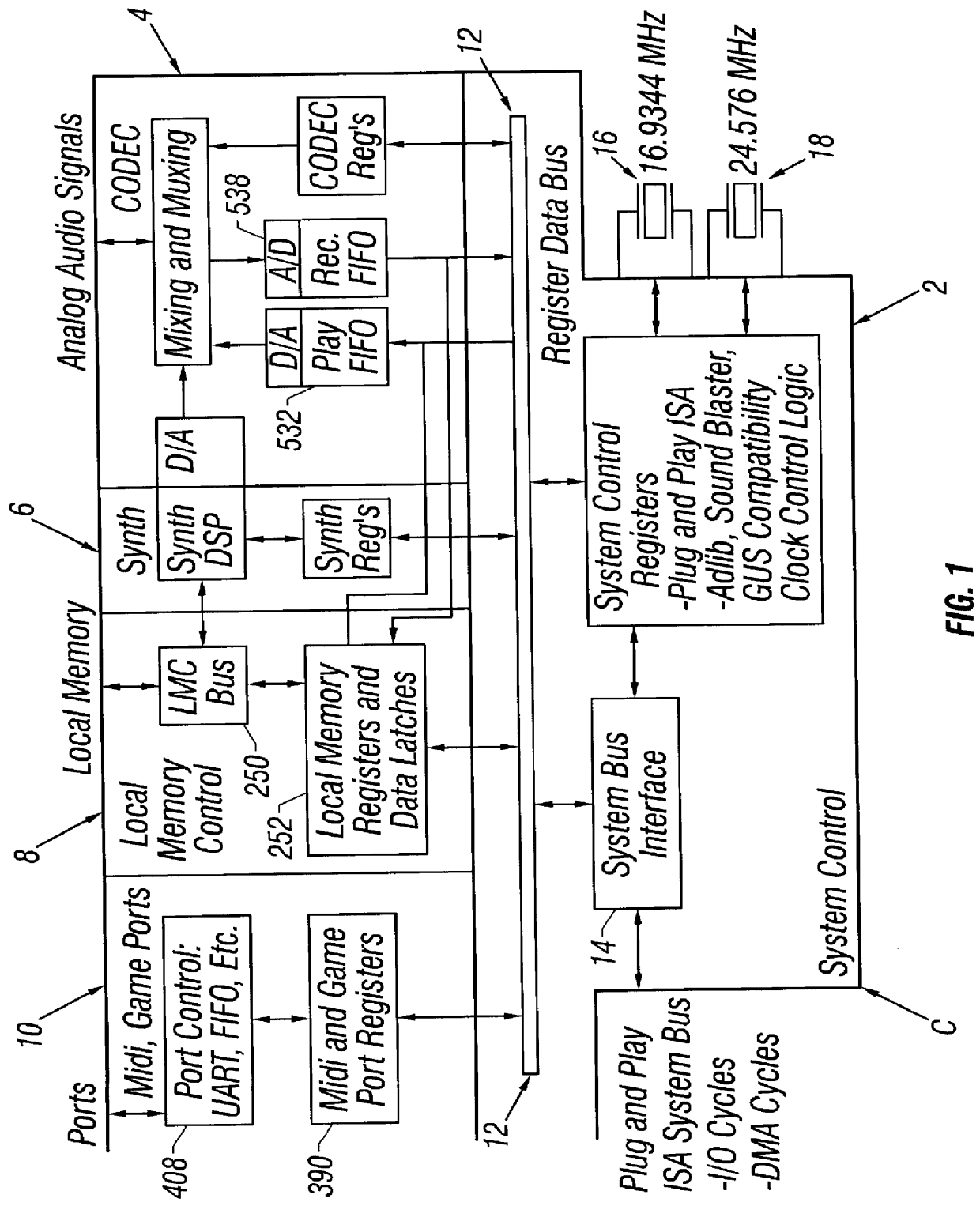

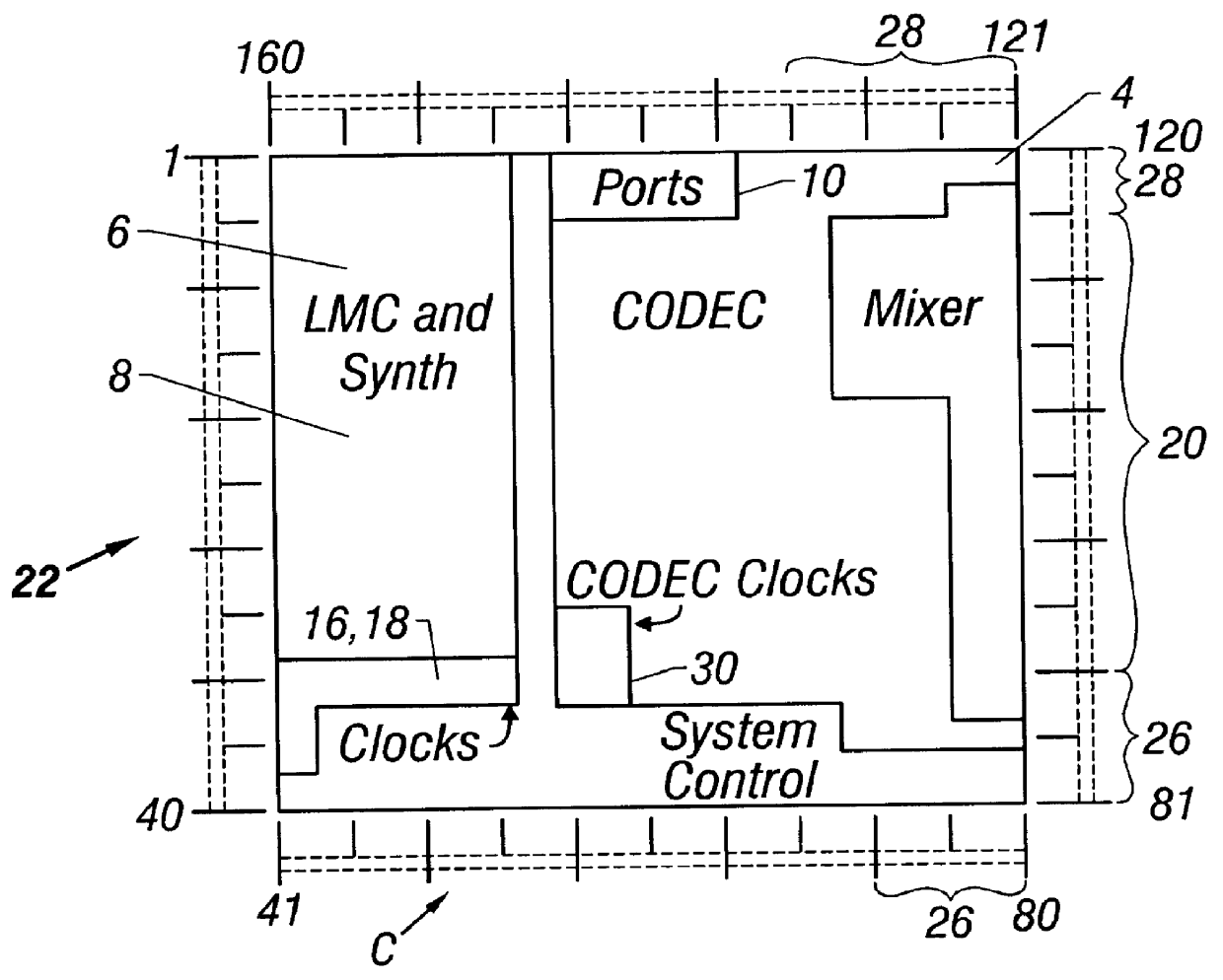

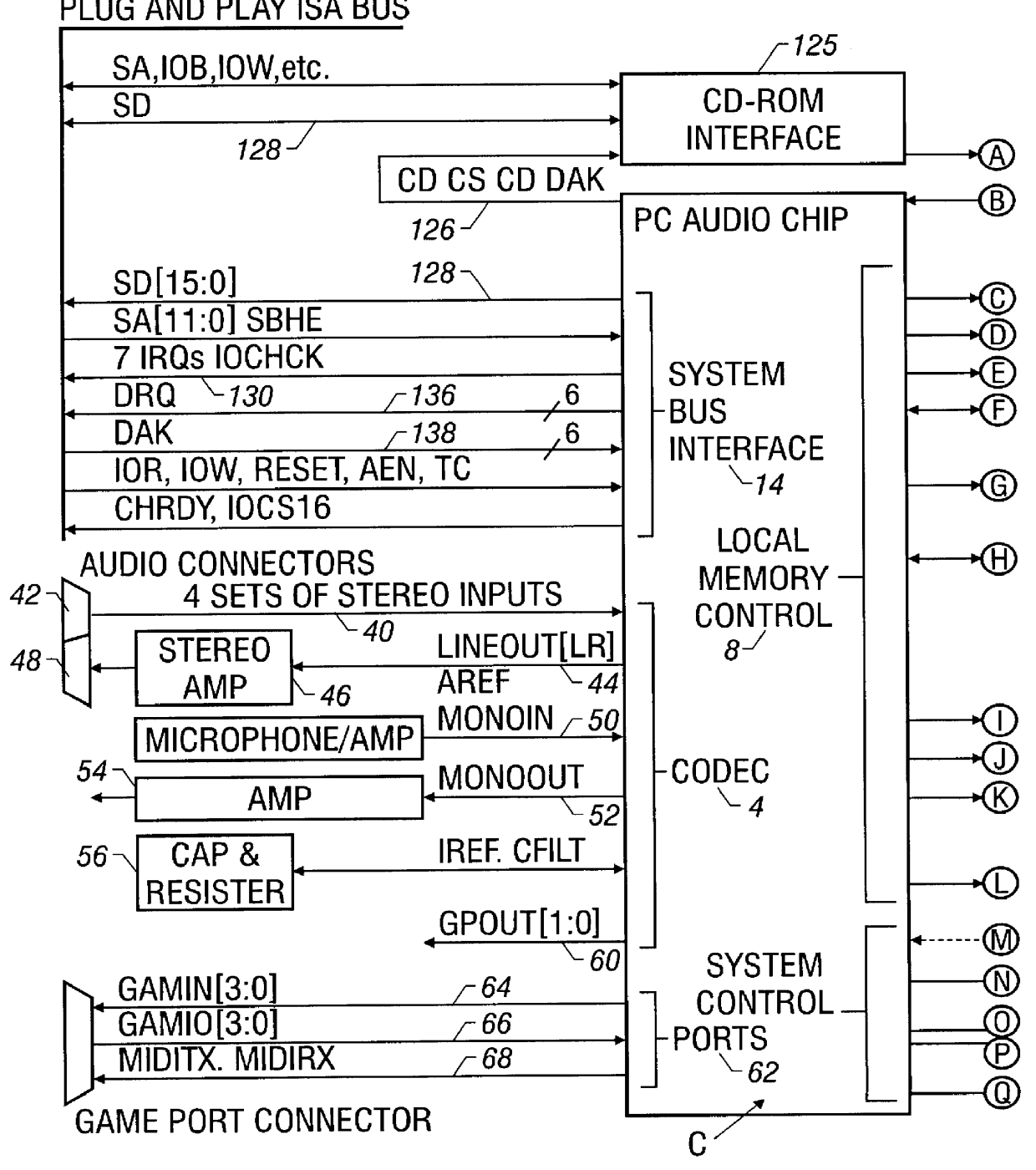

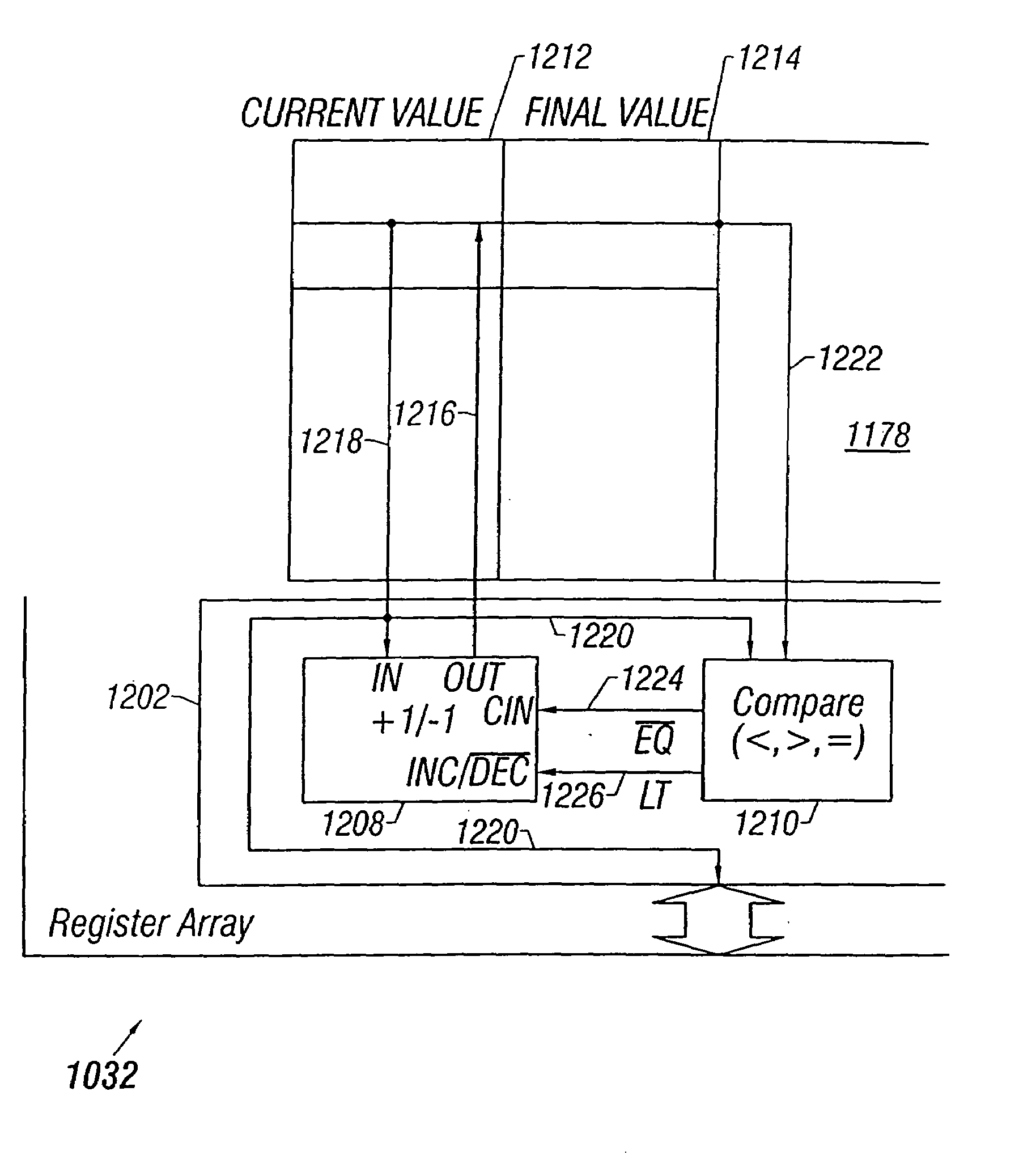

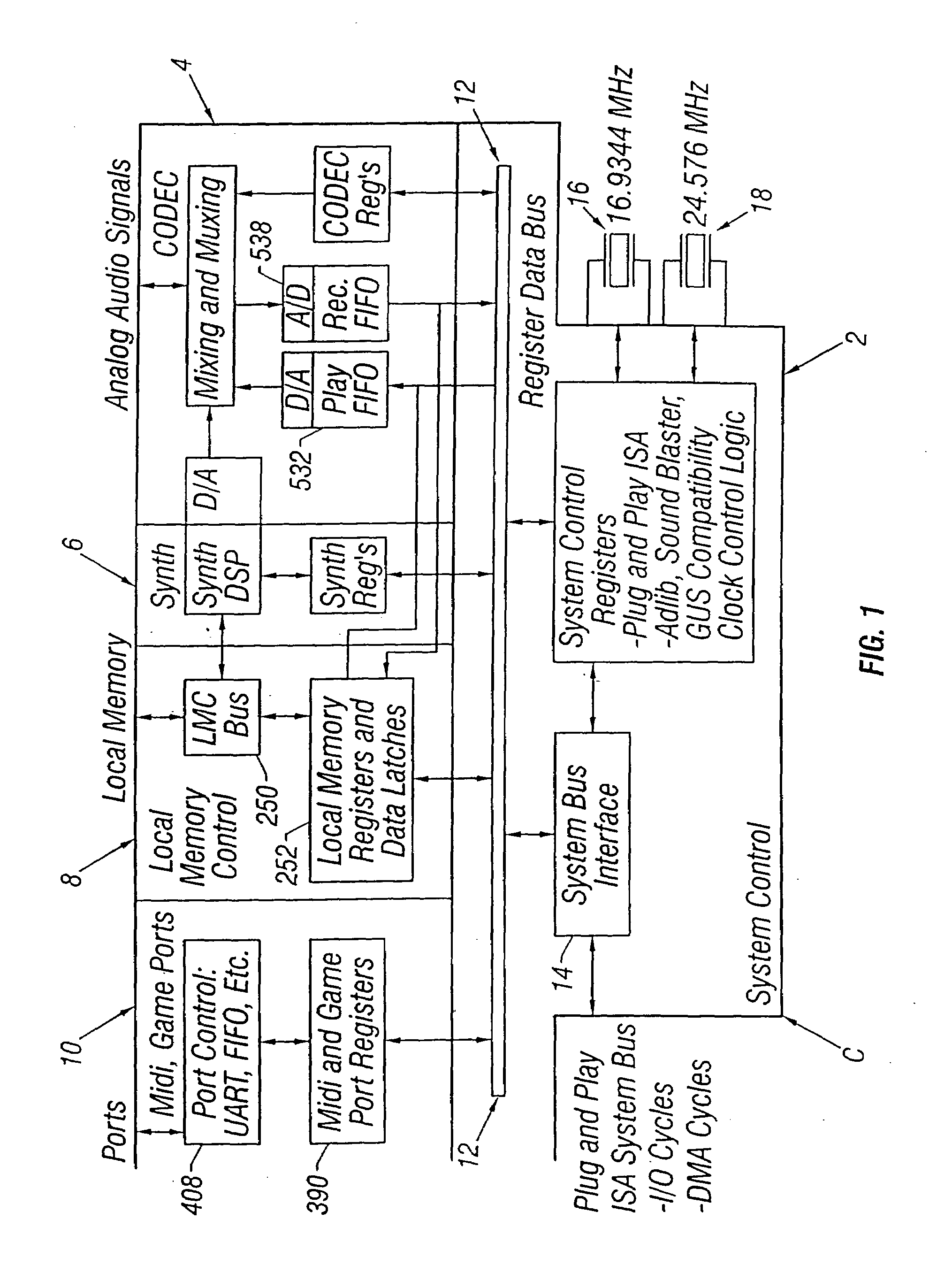

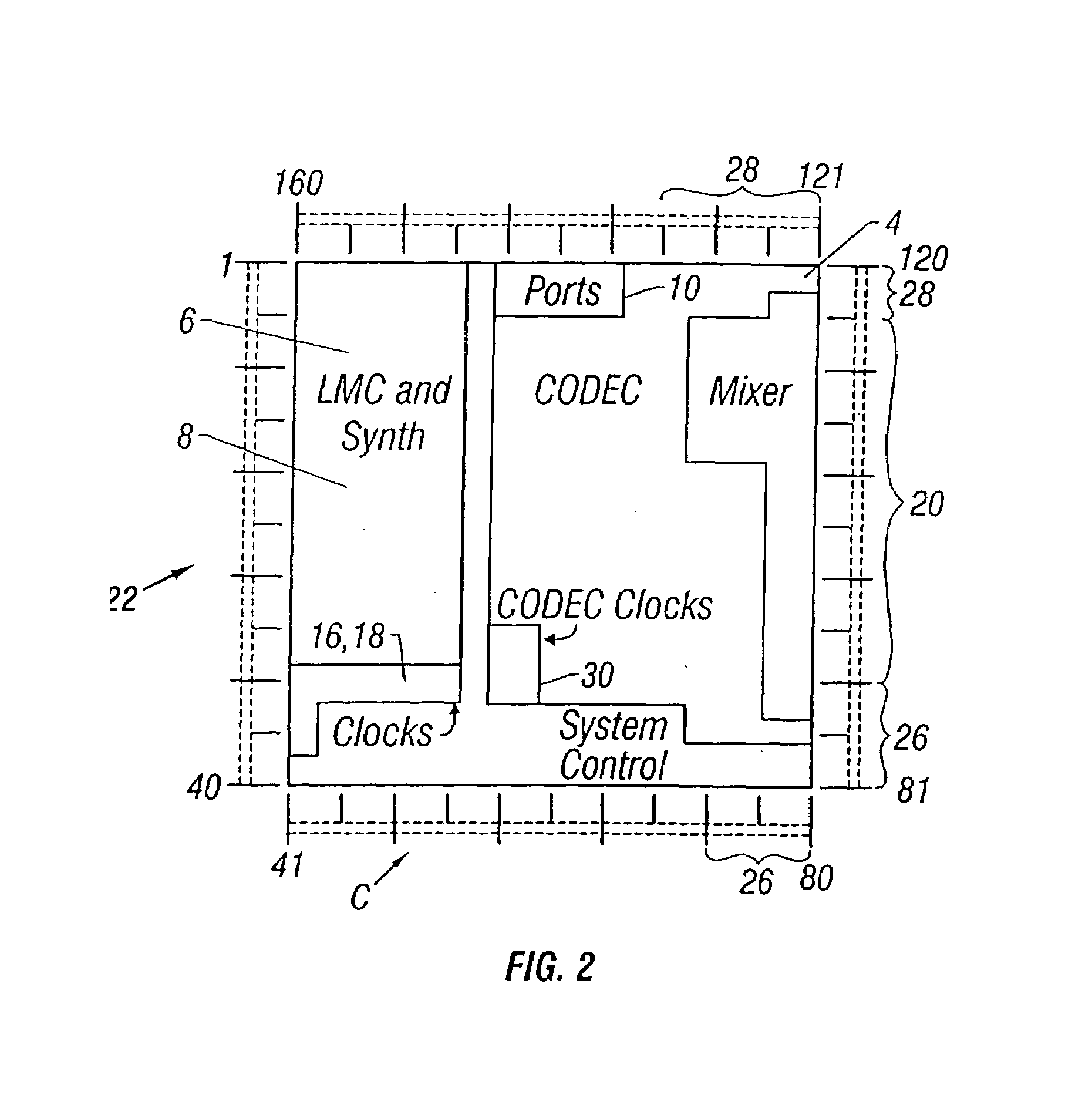

A digital wavetable audio synthesizer is described. The synthesizer can generate up to 32 high-quality audio digital signals or voices, including delay-based effects, at either a 44.1 kHz sample rate or at sample rates compatible with a prior art wavetable synthesizer. The synthesizer includes an address generator which has several modes of addressing wavetable data. The address generator's addressing rate controls the pitch of the synthesizer's output signal. The synthesizer performs a 10-bit interpolation, using the wavetable data addressed by the address generator, to interpolate additional data samples. When the address generator loops through a block of data, the signal path interpolates between the data at the end and start addresses of the block of data to prevent discontinuities in the generated signal. A synthesizer volume generator, which has several modes of controlling the volume, adds envelope, right offset, left offset, and effects volume to the data. The data can be placed in one of sixteen fixed stereo pan positions, or left and right offsets can be programmed to place the data anywhere in the stereo field. The left and right offset values can also be programmed to control the overall volume. Zipper noise is prevented by controlling the volume increment. A synthesizer LFO generator can add LFO variation to: (i) the wavetable data addressing rate, for creating a vibrato effect; and (ii) a voice's volume, for creating a tremolo effect. Generated data to be output from the synthesizer is stored in left and right accumulators. However, when creating delay-based effects, data is stored in one of several effects accumulators. This data is then written to a wavetable. The difference between the wavetable write and read addresses for this data provides a delay for echo and reverb effects. LFO variations added to the read address create chorus and flange effects.
The volume of the delay-based effects data can be attenuated to provide volume decay for an echo effect. After the delay-based effects processing, the data can be provided with left and right offset volume components which determine how much of the effect is heard and its stereo position. The data is then stored in the left and right accumulators.
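
The addressing, interpolation, and delay-effect mechanisms described above can be sketched in a few lines of Python. This is a minimal software model under stated assumptions, not the patented hardware: `render_voice` and `echo` are hypothetical names, the loop end is treated as exclusive, the 10-bit interpolation is modelled as quantizing the fractional address to 1024 steps, and the echo uses a simple feedback delay line whose read address trails the write address by `delay` samples.

```python
import math


def render_voice(table, loop_start, loop_end, increment, n_samples):
    """Read `table` at a fractional address that advances by `increment` per
    output sample (larger increment -> higher pitch), linearly interpolating
    between neighbouring samples. Addresses wrap inside [loop_start, loop_end).
    """
    out = []
    addr = 0.0
    loop_len = loop_end - loop_start
    for _ in range(n_samples):
        i = int(addr)
        # Assumption: quantize the fractional address to 10 bits (1024 steps)
        # as one reading of the "10-bit interpolation" in the abstract.
        frac = math.floor((addr - i) * 1024) / 1024
        # At the last sample of the loop block, interpolate toward the block's
        # start address instead of past the block, so the seam has no jump.
        j = i + 1 if i + 1 < loop_end else loop_start
        out.append(table[i] + (table[j] - table[i]) * frac)
        addr += increment
        while addr >= loop_end:
            addr -= loop_len
    return out


def echo(signal, delay, feedback):
    """Feedback delay line: y[n] = x[n] + feedback * y[n - delay].

    The buffer holds exactly `delay` samples, so the slot at the write index
    still holds the value written `delay` samples ago; that read/write
    address difference is what sets the echo time.
    """
    buf = [0.0] * delay
    write = 0
    out = []
    for x in signal:
        y = x + feedback * buf[write]
        buf[write] = y
        write = (write + 1) % delay
        out.append(y)
    return out


print(render_voice([0.0, 1.0, 2.0, 3.0], 0, 4, 0.5, 8))
# -> [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 1.5]
print(echo([1.0, 0, 0, 0, 0], delay=2, feedback=0.5))
# -> [1.0, 0.0, 0.5, 0.0, 0.25]
```

Raising `increment` raises the pitch, and a `feedback` below 1.0 gives the decaying echo the abstract mentions. Adding a slowly varying LFO offset to the read position would move toward chorus or flange, which is not shown here.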

Owner:MICROSEMI SEMICON U S

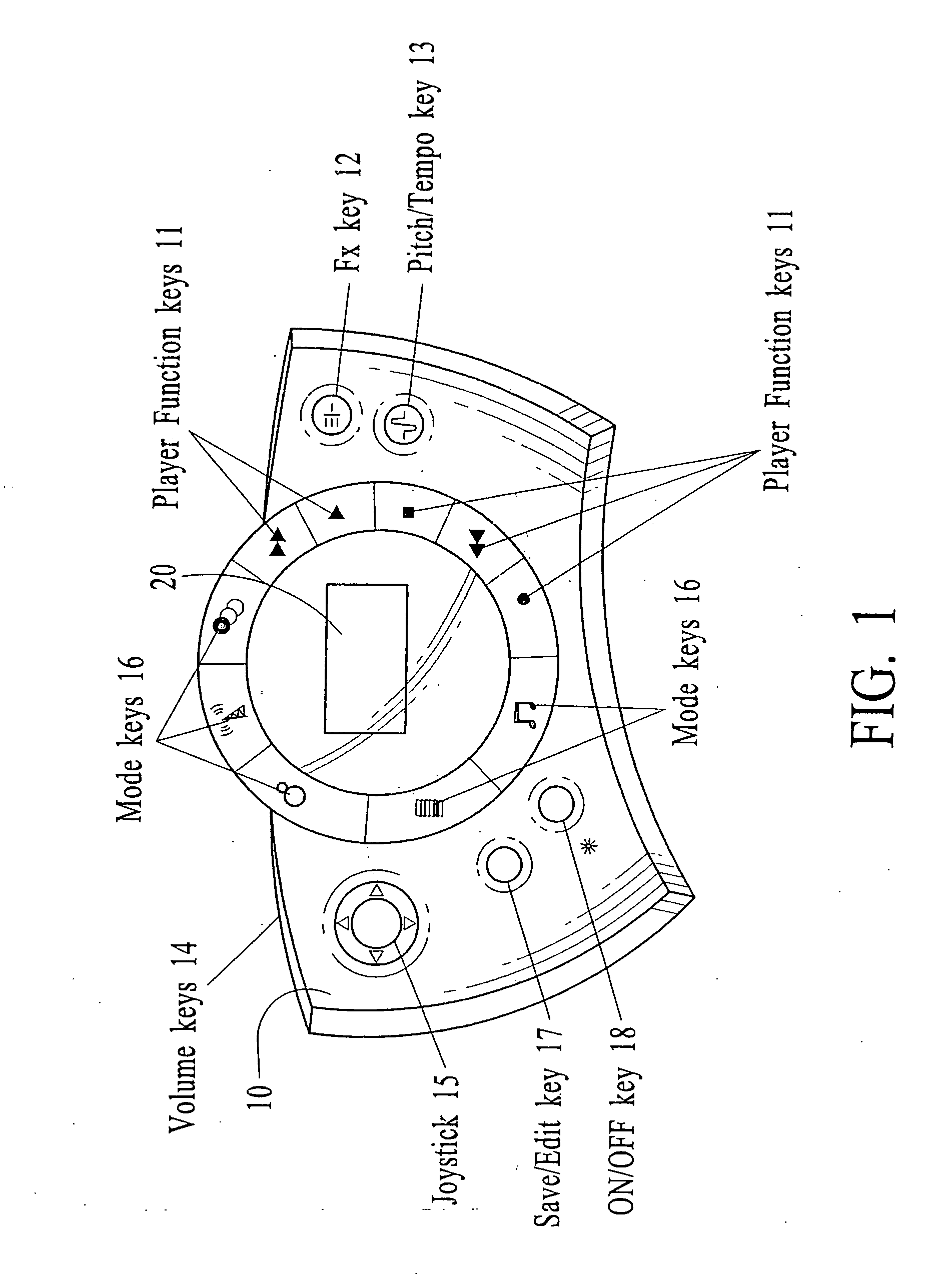

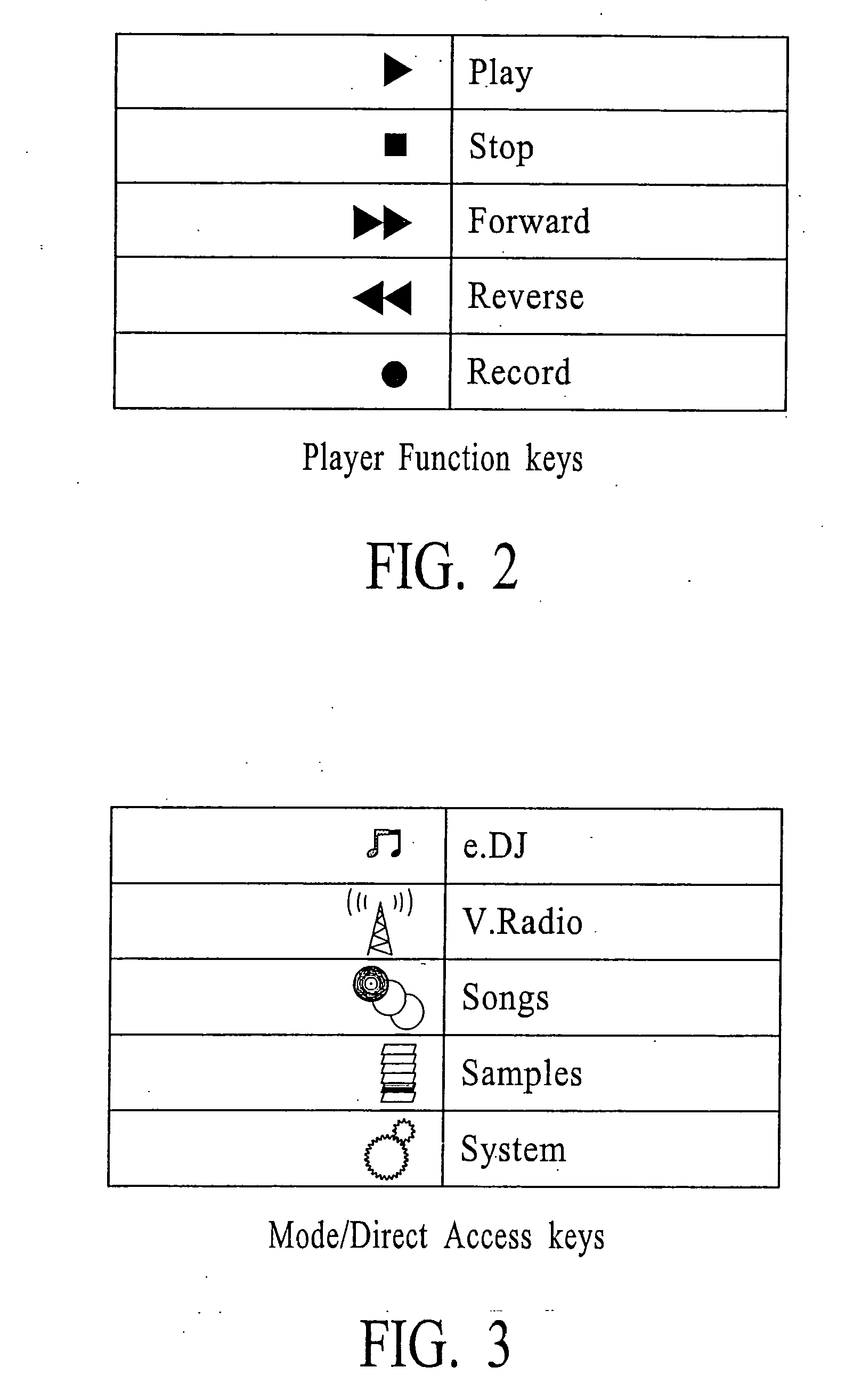

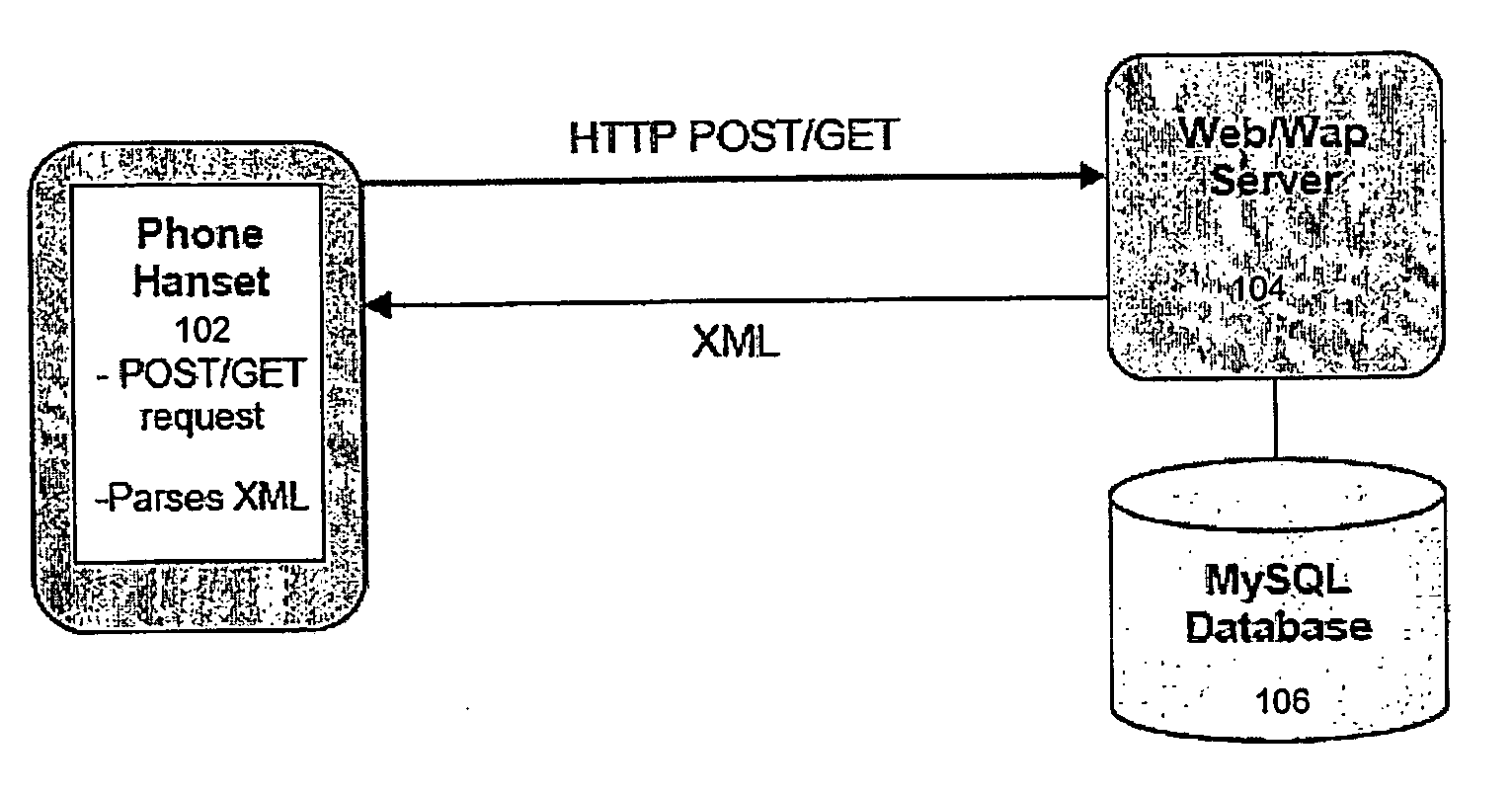

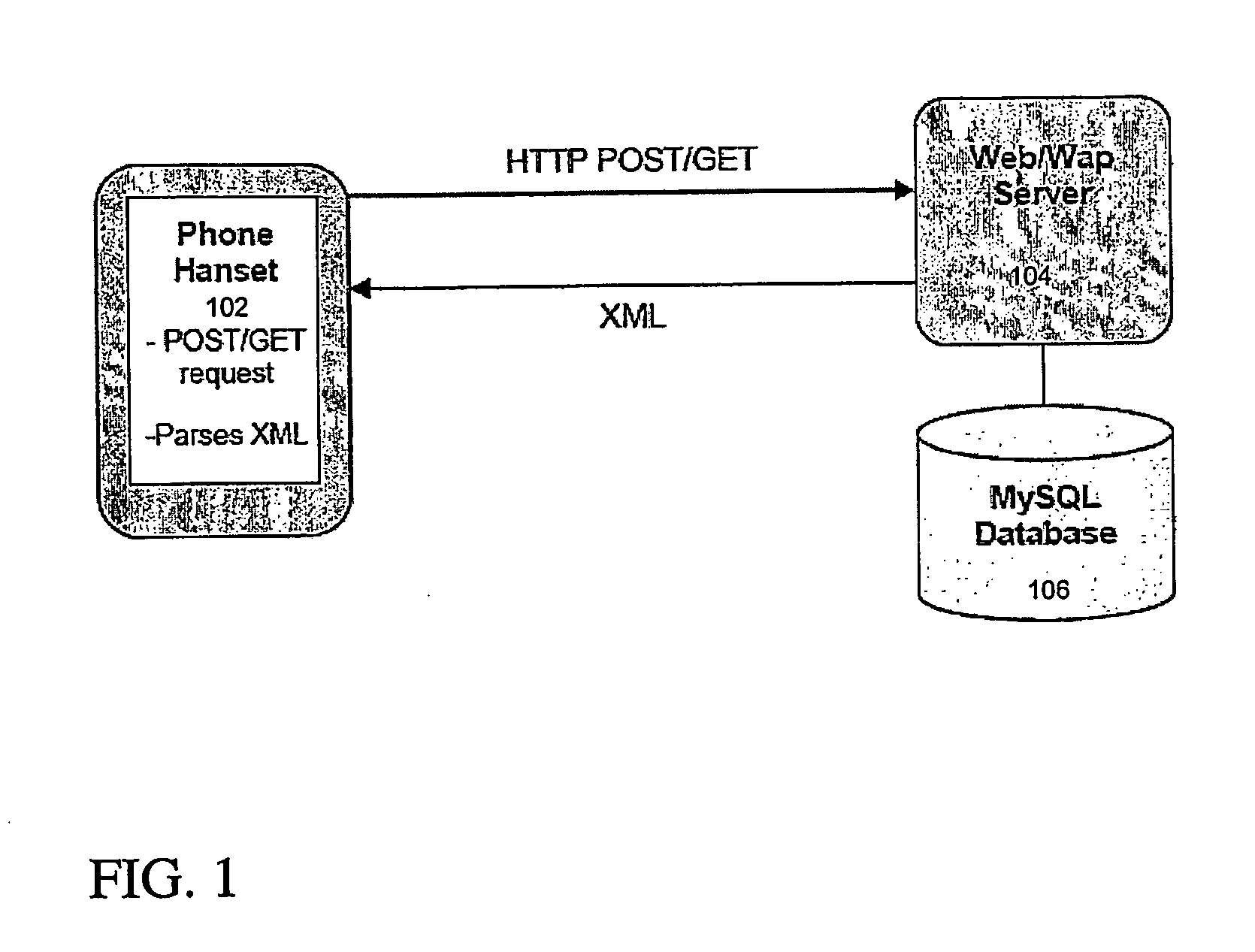

Systems and Methods for Portable Audio Synthesis

Inactive · US20080156178A1 · Create efficiently, Efficiently stored and/processed, Gearworks, Musical toys, Audio synthesis, Display device

Systems and methods for creating, modifying, interacting with and playing music are provided, particularly systems and methods employing a top-down process, where the user is provided with a musical composition that may be modified, interacted with, played and / or stored (for later play). The system preferably is provided in a handheld form factor, and a graphical display is provided to display status information and graphical representations of musical lanes or components, which preferably vary in shape as musical parameters and the like are changed for particular instruments or musical components such as a microphone input or audio samples. An interactive auto-composition process preferably is utilized that employs musical rules and, preferably, a pseudo-random number generator, which may also incorporate randomness introduced by the timing of user input or the like. The user may then quickly begin creating desirable music in accordance with one or a variety of musical styles, modifying the auto-composed (or previously created) musical composition either for a real-time performance and / or for storing and subsequent playback. In addition, an analysis process flow is described for using pre-existing music as input(s) to an algorithm to derive music rules that may be used as part of a music style in a subsequent auto-composition process. The present invention also makes use of node-based music generation as part of a system and method to broadcast and receive music data files, which are then used to generate and play music. By incorporating the music generation process into a node / subscriber unit, the bandwidth-intensive systems of conventional techniques can be avoided. Consequently, the bandwidth can preferably also be used for additional features such as node-to-node and node-to-base music data transmission.
The present invention is characterized by the broadcast of relatively small data files that contain various parameters sufficient to describe the music to the node / subscriber music generator. In addition, problems associated with audio synthesis in a portable environment are addressed in the present invention by providing systems and methods for performing audio synthesis in a manner that simplifies design requirements and / or minimizes cost, while still providing quality audio synthesis features targeted for a portable system (e.g., portable telephone). In addition, problems associated with the tradeoff between overall sound quality and memory requirements in a MIDI sound bank are addressed in the present invention by providing systems and methods for a reduced memory size footprint MIDI sound bank.
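
The rule-plus-PRNG idea behind the auto-composition process can be illustrated with a toy generator. Everything here is an assumption for illustration: the scale, the two rules (limited leaps, ending on the tonic), and the helpers `auto_compose` and `seed_from_taps`, which stand in for randomness derived from the timing of user input. The abstract does not describe the actual rule sets or styles.

```python
import random

# Hypothetical "style": a C-major scale as MIDI note numbers, plus two rules.
SCALE = [60, 62, 64, 65, 67, 69, 71, 72]
MAX_LEAP = 2  # rule 1: move at most two scale steps between notes


def auto_compose(n_notes, seed):
    """Generate a melody from simple musical rules driven by a seeded PRNG."""
    rng = random.Random(seed)  # same seed -> same melody
    idx = rng.randrange(len(SCALE))
    melody = [SCALE[idx]]
    for _ in range(n_notes - 1):
        idx += rng.randint(-MAX_LEAP, MAX_LEAP)
        idx = min(max(idx, 0), len(SCALE) - 1)  # stay inside the scale
        melody.append(SCALE[idx])
    melody[-1] = SCALE[0]  # rule 2: resolve to the tonic
    return melody


def seed_from_taps(tap_times_ns):
    """Fold the timing of user key presses into a seed (illustrative only)."""
    seed = 0
    for t in tap_times_ns:
        seed = (seed * 1_000_003 + t) % (2**63)
    return seed


print(auto_compose(8, seed_from_taps([120_000_000, 480_000_000, 910_000_000])))
```

Because the generator is seeded, a small parameter set (seed, style, length) is enough to regenerate the same melody elsewhere, which is in the spirit of broadcasting small music-description files instead of audio.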

Owner:MEDIALAB SOLUTIONS

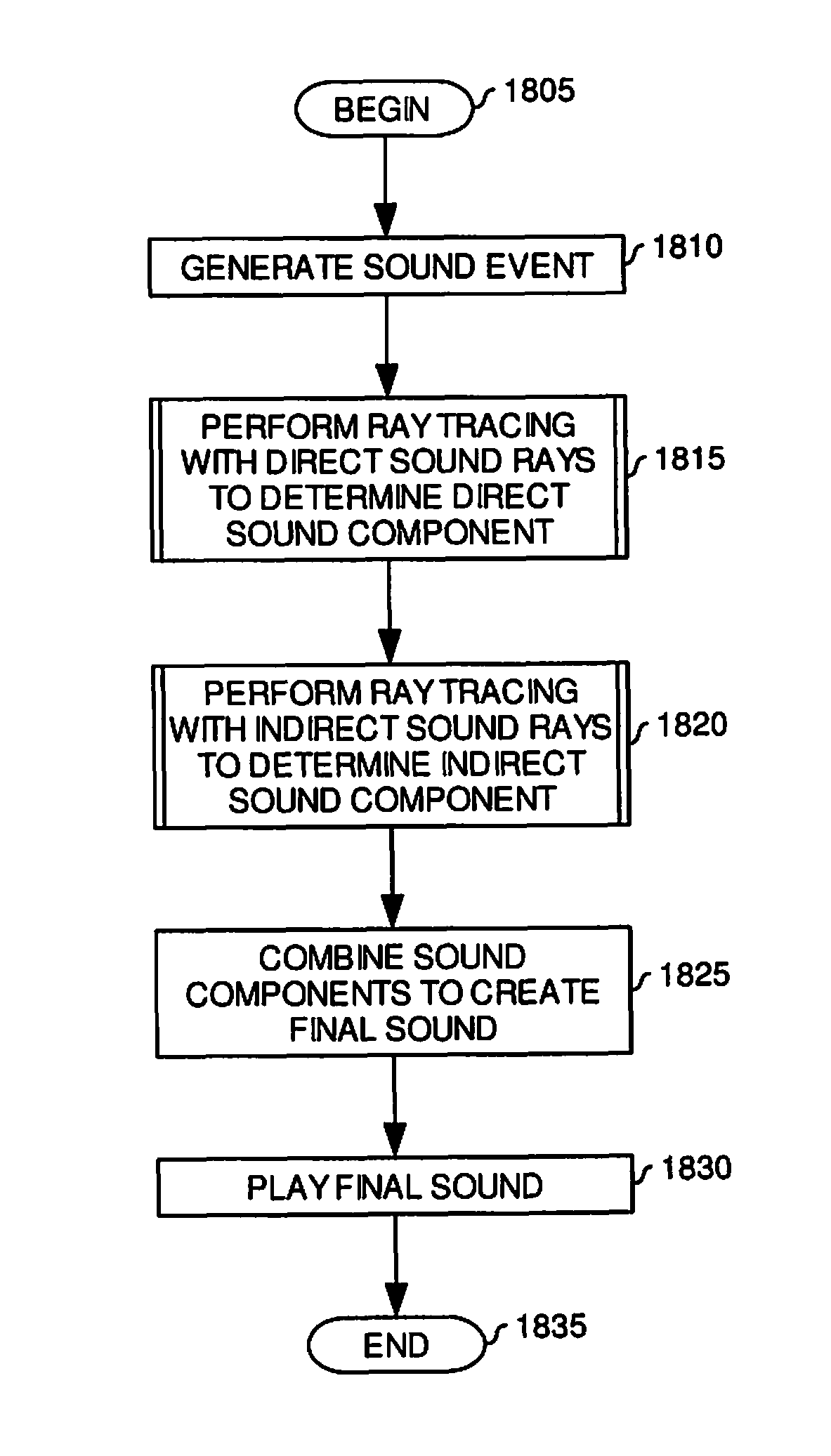

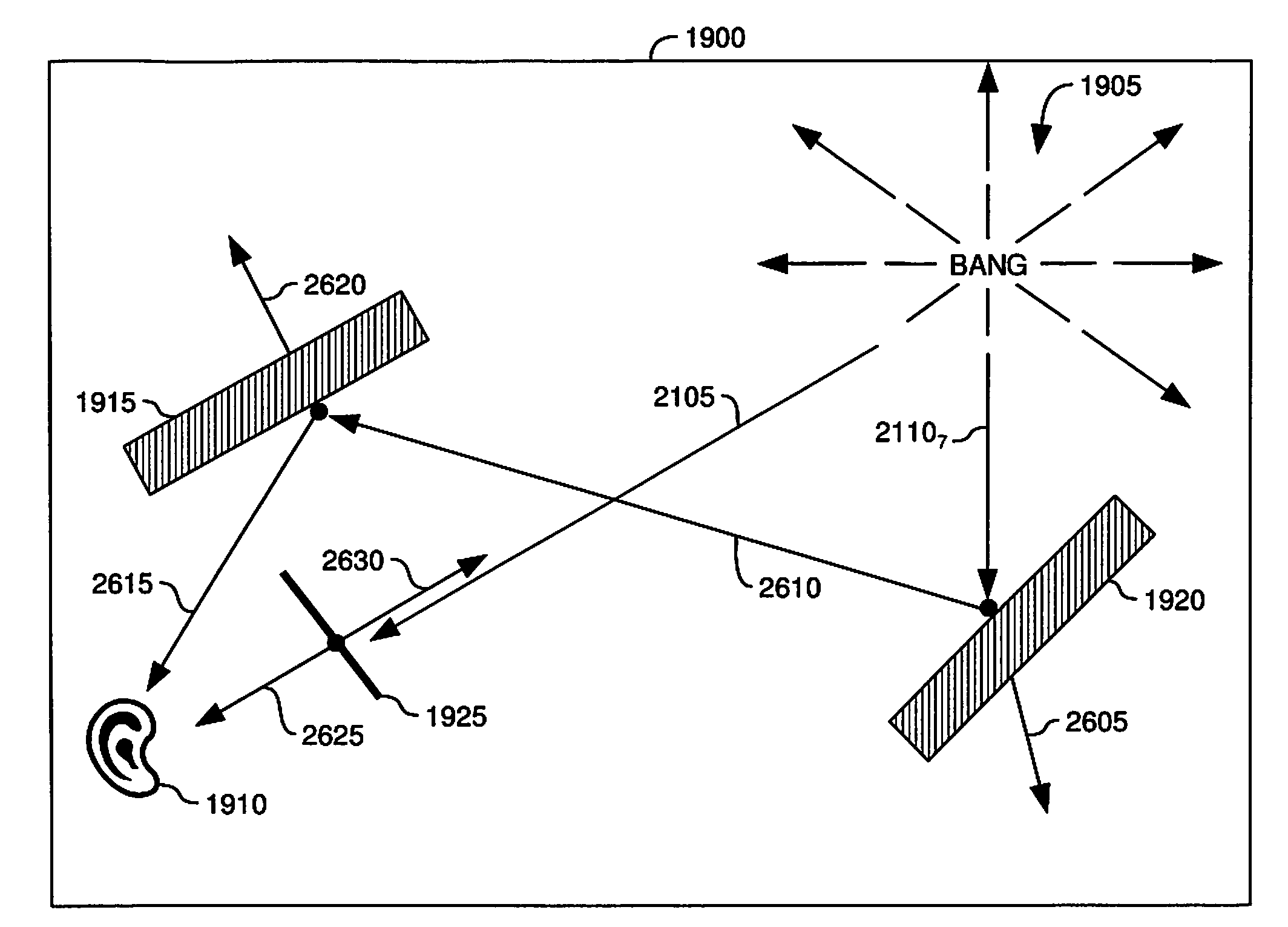

Using ray tracing for real time audio synthesis

According to embodiments of the invention, a sound engine may determine a final sound at a listener location by emulating sound waves within a three-dimensional scene. The sound engine may emulate sound waves by issuing rays from a location of a sound event and tracing the rays through the three-dimensional scene. The rays may intersect objects within the three-dimensional scene which have sound modification factors. The sound modification factors and other factors (e.g., distance traveled by the ray, angle of intersection with the object, etc.) may be applied to the sound event to determine a final sound which is heard by the listener.
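
The per-ray bookkeeping can be sketched as follows, assuming each traced ray has already been reduced to its segment lengths and the modification factors of the objects it hit. The names `ray_contribution` and `render`, the inverse-distance spreading law, and the treatment of each factor as a plain amplitude multiplier are assumptions; the angle-of-intersection factor mentioned in the abstract is left out.

```python
SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 °C


def ray_contribution(segment_lengths, surface_factors):
    """Gain and delay for one ray path from the sound event to the listener.

    segment_lengths: lengths (m) of the straight pieces of the ray path.
    surface_factors: hypothetical per-object amplitude factors in [0, 1],
    one per object the ray intersected (1.0 = perfectly reflective).
    """
    path = sum(segment_lengths)
    gain = 1.0 / max(path, 1.0)  # inverse-distance spreading, clamped near 1 m
    for f in surface_factors:
        gain *= f
    return gain, path / SPEED_OF_SOUND


def render(event, contributions, sample_rate):
    """Sum delayed, scaled copies of the dry sound event at the listener."""
    shifts = [(g, round(d * sample_rate)) for g, d in contributions]
    out = [0.0] * (len(event) + max(s for _, s in shifts))
    for g, s in shifts:
        for n, x in enumerate(event):
            out[n + s] += g * x
    return out


direct = ray_contribution([10.0], [])          # straight 10 m path
bounce = ray_contribution([4.0, 9.0], [0.6])   # one reflection off a surface
print(render([1.0, 0.5], [direct, bounce], 8000)[:4])
```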

Owner:ACTIVISION PUBLISHING

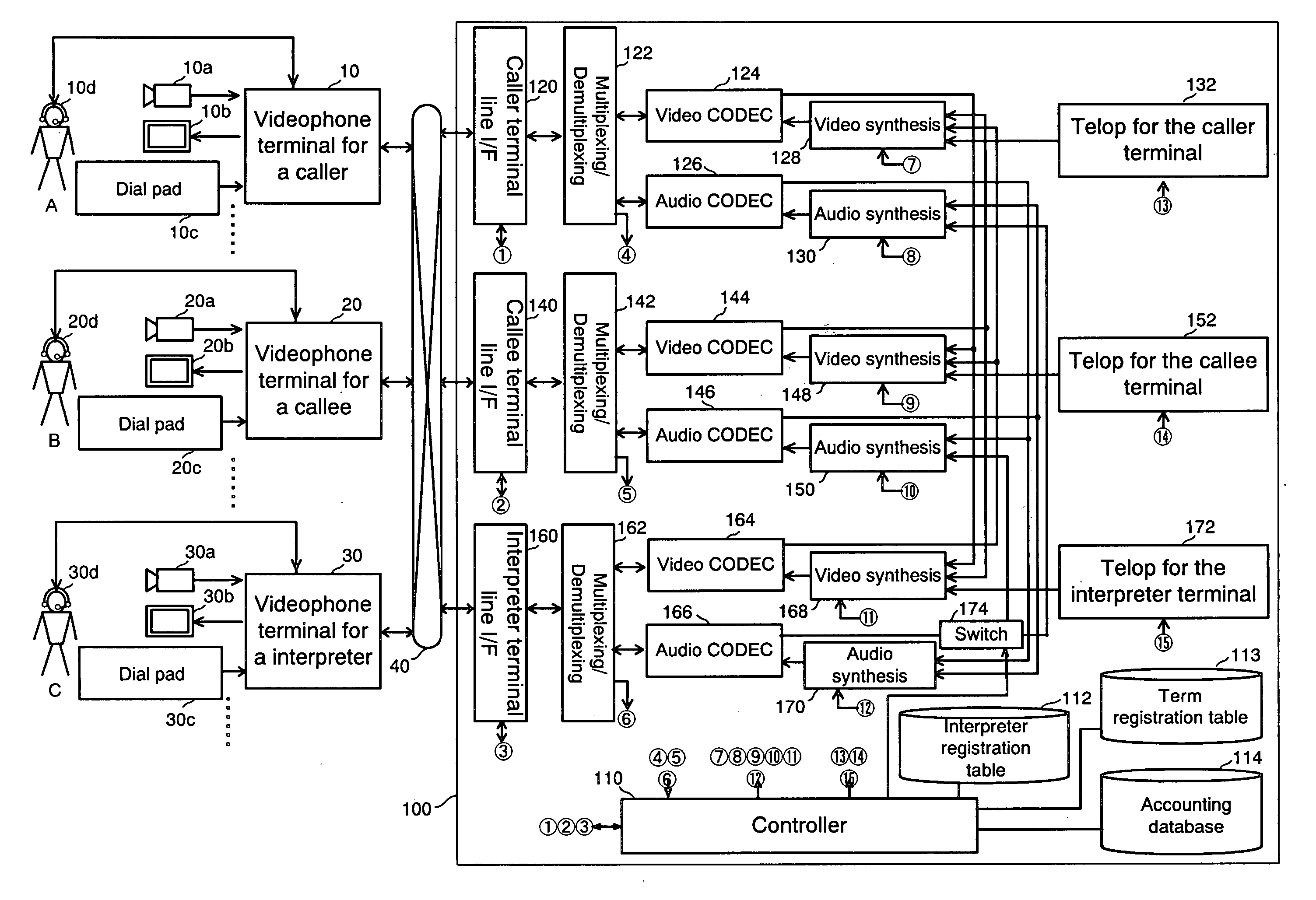

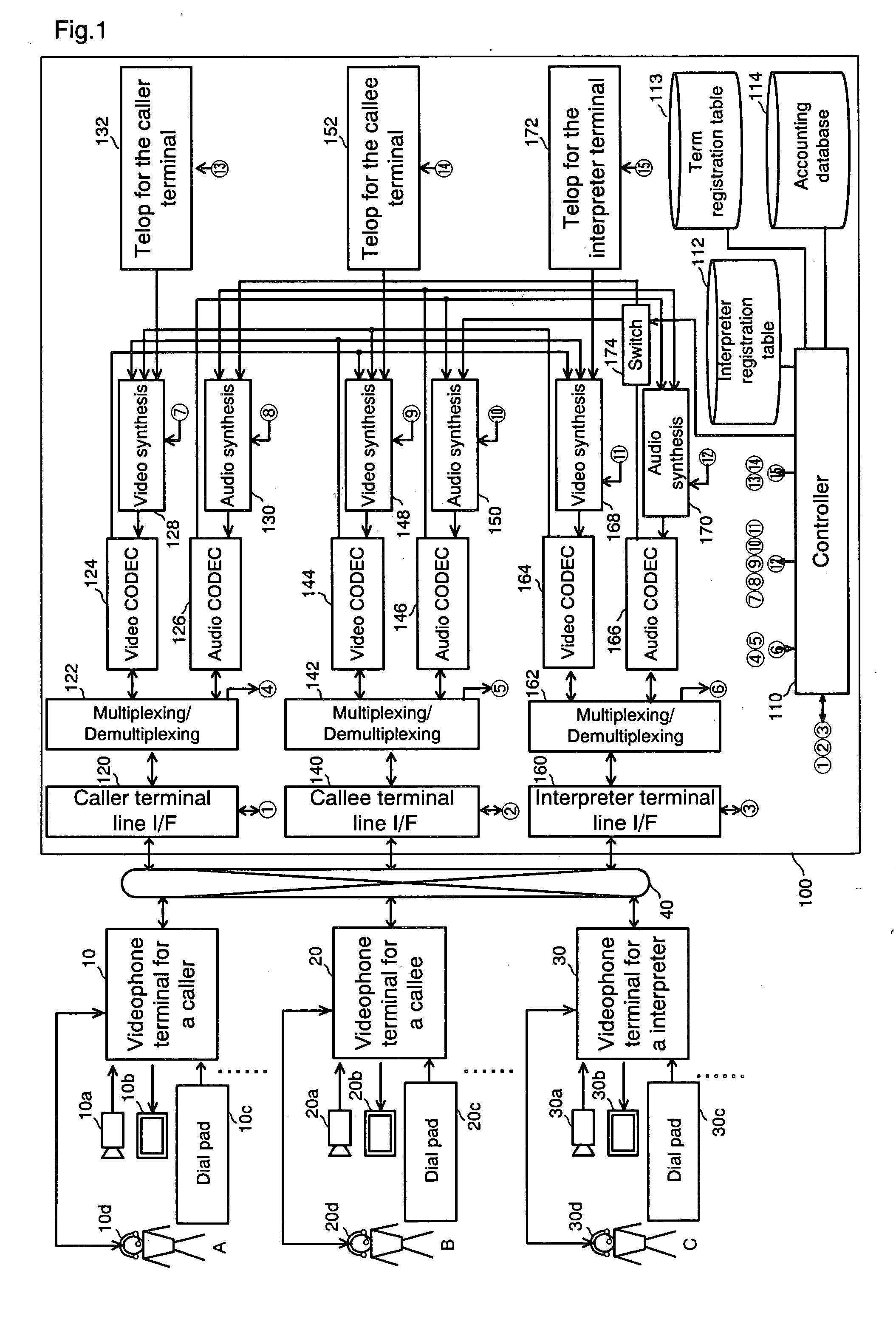

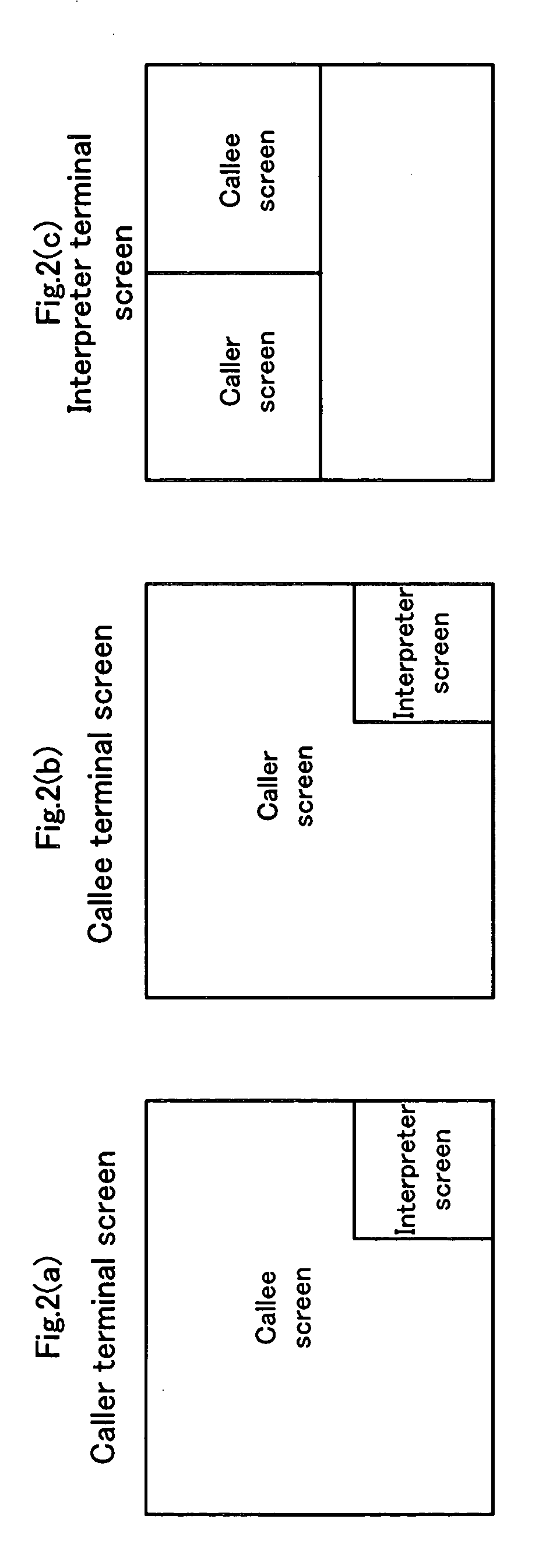

Video telephone interpretation system and a video telephone interpretation method

Inactive · US20060120307A1 · Minimize time, Low cost, Multiplex system selection arrangements, Special service provision for substation, Audio synthesis, Human language

A videophone interpretation system accepts a call from a caller terminal, refers to an interpreter registration table to extract the terminal number of an interpreter capable of interpreting between the caller's language and the callee's language, and connects the caller terminal, the callee terminal and the interpreter terminal. The system includes a function for communicating the video and audio necessary for interpretation between the terminals. The interpreter's audio is transmitted to either the caller or the callee, as specified by the interpreter terminal. When the interpreter's audio is detected by an audio synthesizer, the conversation partner's audio is suppressed or interrupted, thereby providing a quick and precise interpretation service.
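
A minimal sketch of the suppress-while-the-interpreter-speaks behaviour, assuming a simple frame-energy detector stands in for the audio detection the abstract attributes to the audio synthesizer. `duck_partner`, the frame size (160 samples, about 20 ms at 8 kHz), the threshold, and `duck_gain` are hypothetical; `duck_gain=0.0` corresponds to interrupting the partner's audio, and a small positive value to suppressing it.

```python
def duck_partner(partner, interpreter, frame=160, threshold=1e-3, duck_gain=0.1):
    """Attenuate the conversation partner's audio in every frame where the
    interpreter's mean energy exceeds `threshold`."""
    out = []
    for start in range(0, len(partner), frame):
        p = partner[start:start + frame]
        i = interpreter[start:start + frame]
        # Mean energy of the interpreter's frame is the (assumed) detector.
        energy = sum(s * s for s in i) / len(i) if i else 0.0
        gain = duck_gain if energy > threshold else 1.0
        out.extend(s * gain for s in p)
    return out


print(duck_partner([1.0] * 4, [0.0, 0.0, 0.5, 0.5], frame=2, threshold=0.01, duck_gain=0.0))
# -> [1.0, 1.0, 0.0, 0.0]
```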

Owner:GINGANET CORP

Audio signal synthesizing

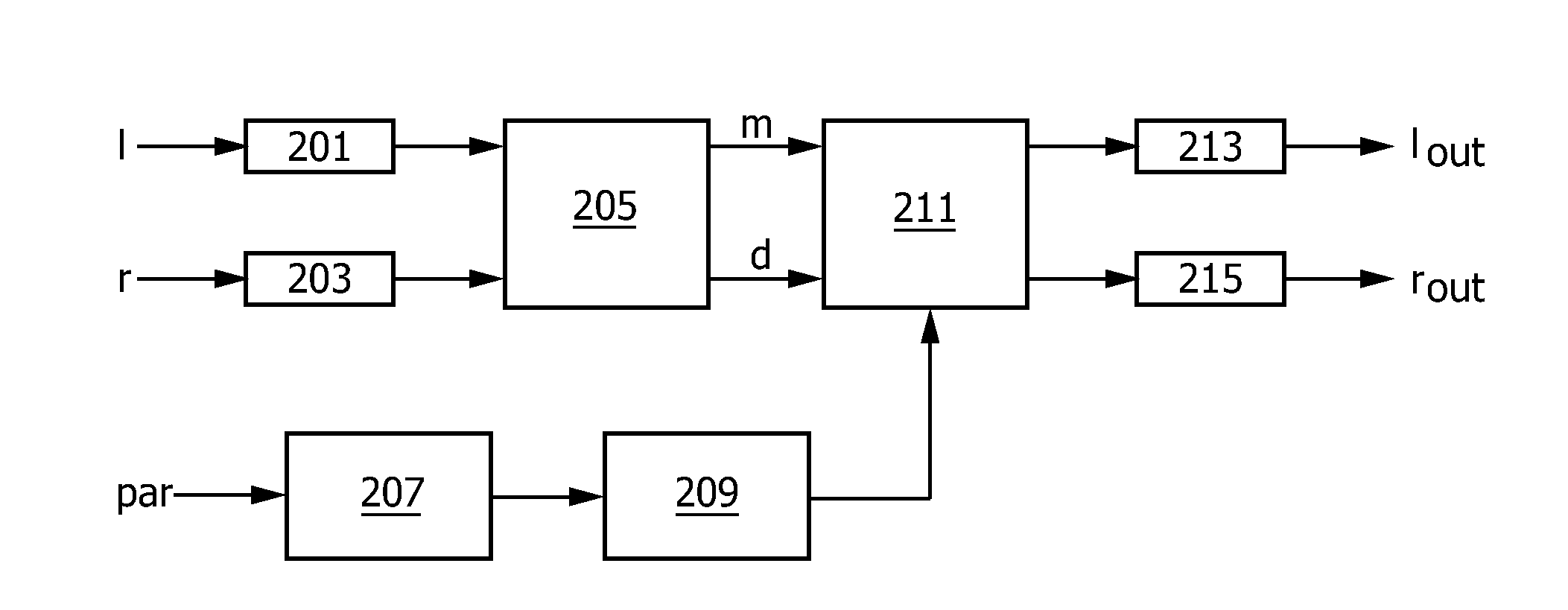

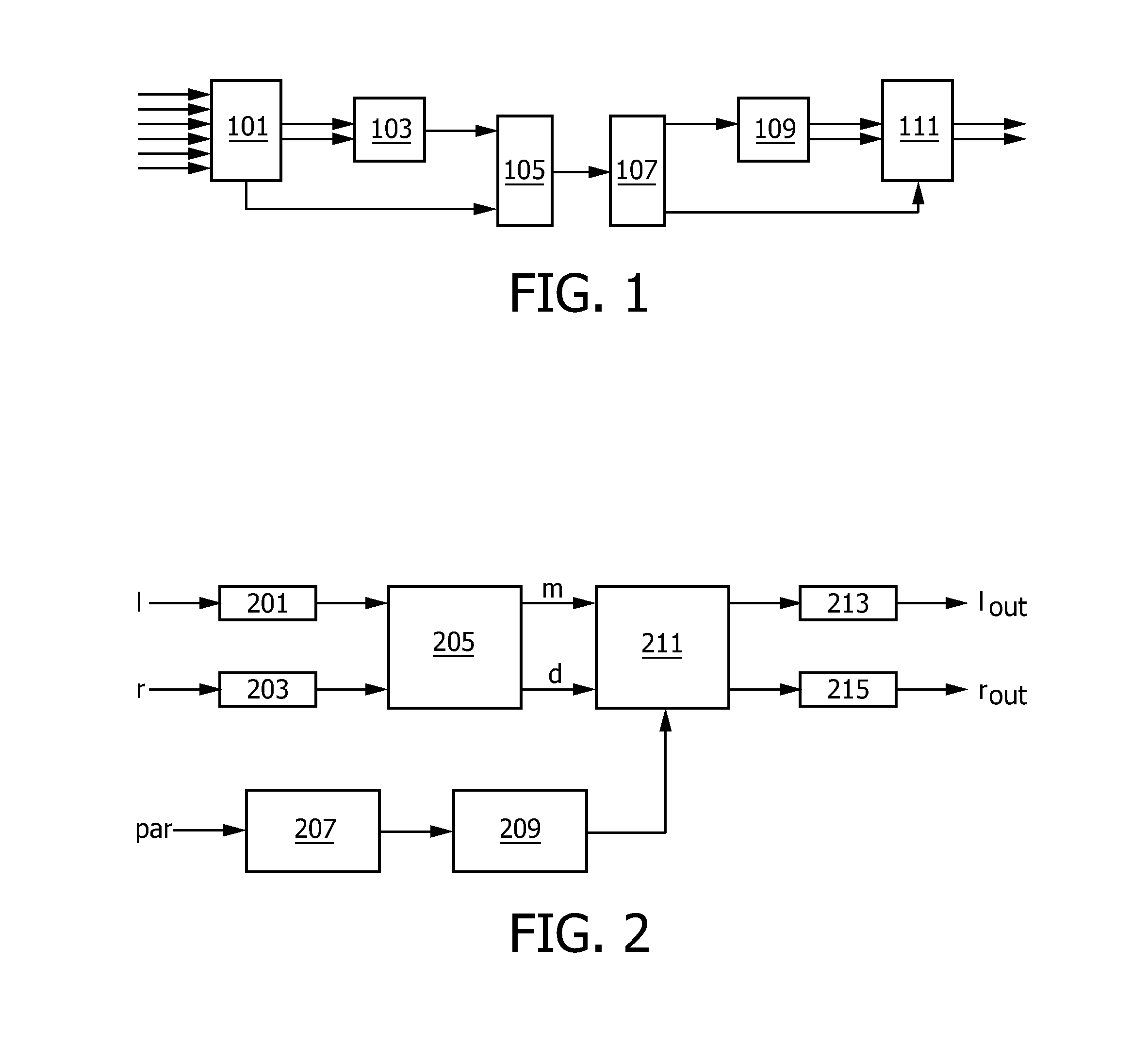

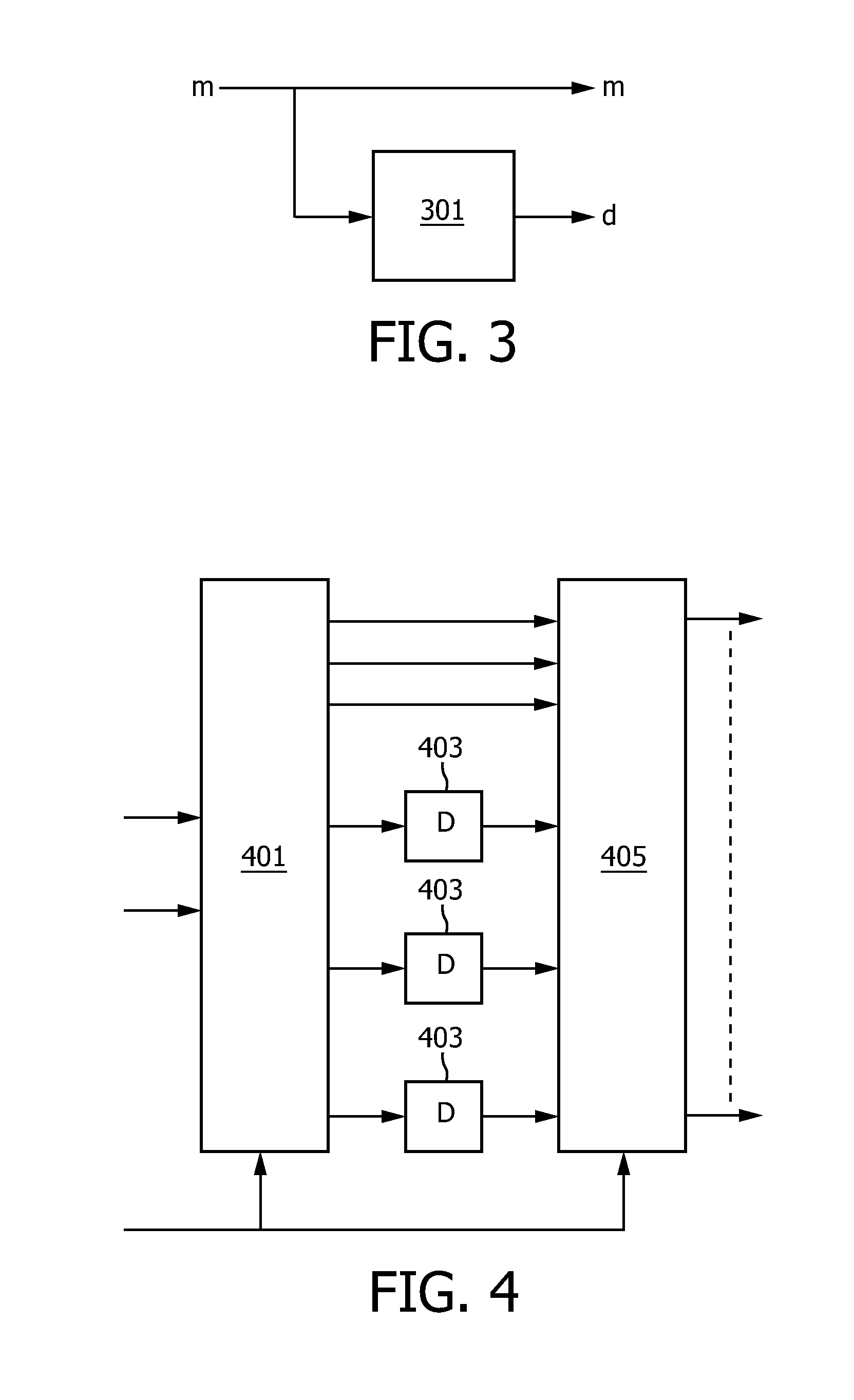

Inactive · US20120039477A1 · Improve audio performance, Easy to operate, Headphones for stereophonic communication, Speech analysis, Audio synthesis, Sound sources

An audio synthesizing apparatus receives an encoded signal comprising a downmix signal and parametric extension data for expanding the downmix signal to a multi-sound-source signal. A decomposition processor (205) performs a signal decomposition of the downmix signal to generate at least a first signal component and a second signal component, the second signal component being at least partially decorrelated with the first. A position processor (207) determines a first spatial position indication for the first signal component in response to the parametric extension data, and a binaural processor (211) synthesizes the first signal component based on the first spatial position indication and synthesizes the second signal component to originate from a different direction. The invention may provide an improved spatial experience, e.g. over headphones, by directly synthesizing a main directional signal from the appropriate position rather than as a combination of signals from virtual loudspeaker positions.

Owner:KONINKLIJKE PHILIPS ELECTRONICS NV

Wavetable audio synthesizer with left offset, right offset and effects volume control

Inactive · US7088835B1 · Avoid noise, Lower the volume, Electrophonic musical instruments, Gain control, Audio synthesis, Data placement

A digital wavetable audio synthesizer is described. A synthesizer volume generator, which has several modes of controlling the volume, adds envelope, right offset, left offset, and effects volume to the data. The data can be placed in one of sixteen fixed stereo pan positions, or left and right offsets can be programmed to place the data anywhere in the stereo field. The left and right offset values can also be programmed to control the overall volume. Zipper noise is prevented by controlling the volume increment. A synthesizer LFO generator can add LFO variation to: (i) the wavetable data addressing rate, for creating a vibrato effect; and (ii) a voice's volume, for creating a tremolo effect. Generated data to be output from the synthesizer is stored in left and right accumulators. However, when creating delay-based effects, data is stored in one of several effects accumulators. This data is then written to a wavetable. The difference between the wavetable write and read addresses for this data provides a delay for echo and reverb effects. LFO variations added to the read address create chorus and flange effects. The volume of the delay-based effects data can be attenuated to provide volume decay for an echo effect. After the delay-based effects processing, the data can be provided with left and right offset volume components which determine how much of the effect is heard and its stereo position. The data is then stored in the left and right accumulators.
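
Two of the volume-generator ideas, a rate-limited volume change to avoid zipper noise and a set of sixteen fixed pan positions, can be sketched as below. `ramp_volume` and `pan_gains` are hypothetical names, and the constant-power sine/cosine pan law is an assumption; the abstract does not say how the sixteen positions are weighted.

```python
import math


def ramp_volume(samples, gain, target, max_step):
    """Apply a volume change without zipper noise by limiting how far the
    gain may move per sample. Returns (output, final_gain)."""
    out = []
    for s in samples:
        # Step toward the target, but never by more than max_step at once.
        gain += max(-max_step, min(max_step, target - gain))
        out.append(s * gain)
    return out, gain


def pan_gains(position, positions=16):
    """Left/right gains for one of `positions` fixed pan slots (0 = hard left),
    using an assumed constant-power (sine/cosine) pan law."""
    theta = position / (positions - 1) * math.pi / 2
    return math.cos(theta), math.sin(theta)


print(ramp_volume([1.0] * 4, 0.0, 1.0, 0.25))
# -> ([0.25, 0.5, 0.75, 1.0], 1.0)
```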

Owner:MICROSEMI SEMICON U S

Sound scene manipulation

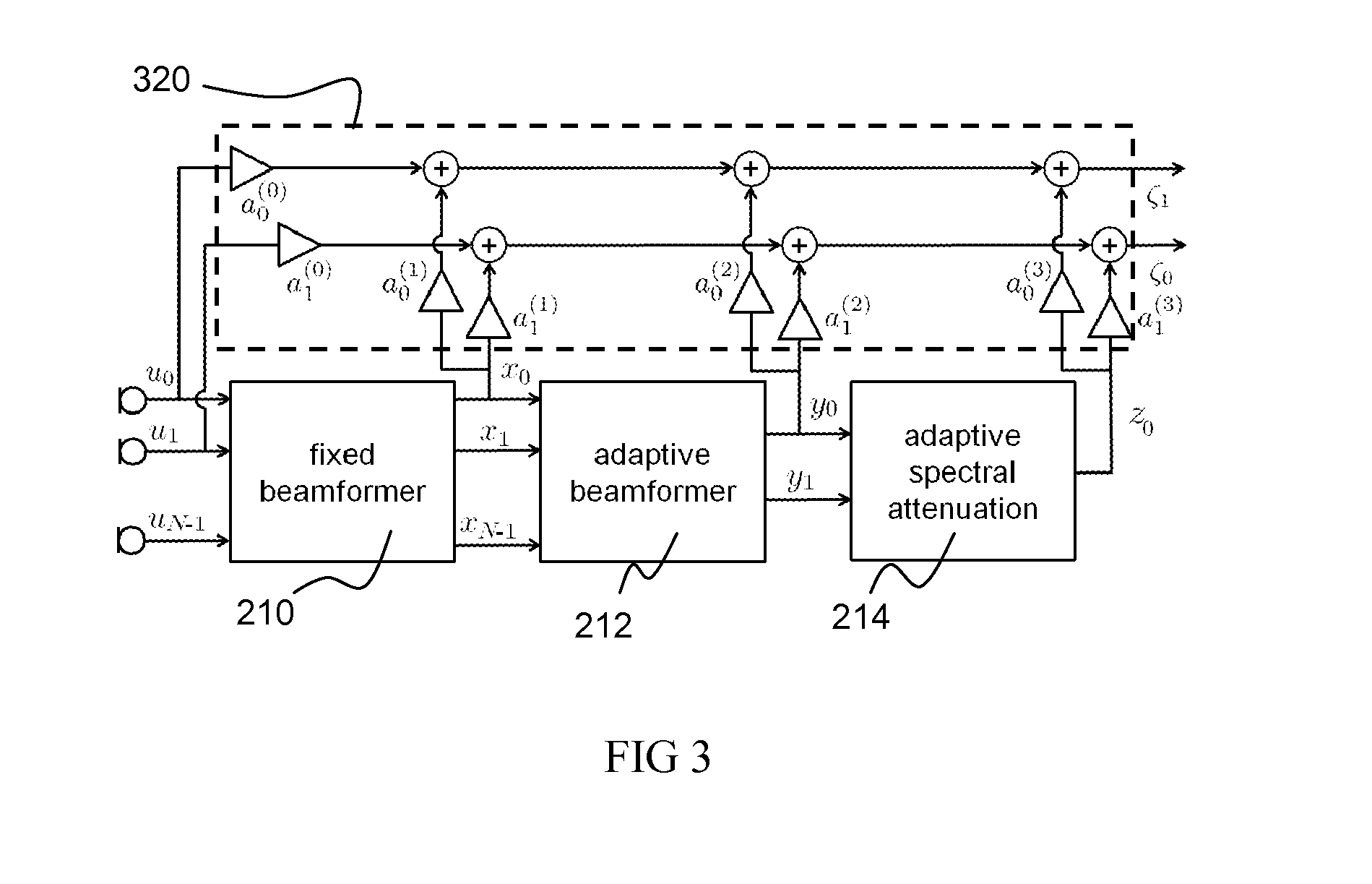

Inactive · US20120082322A1 · Light demand, Reduce power consumption, Gain control, Speech analysis, Audio synthesis, Sound sources

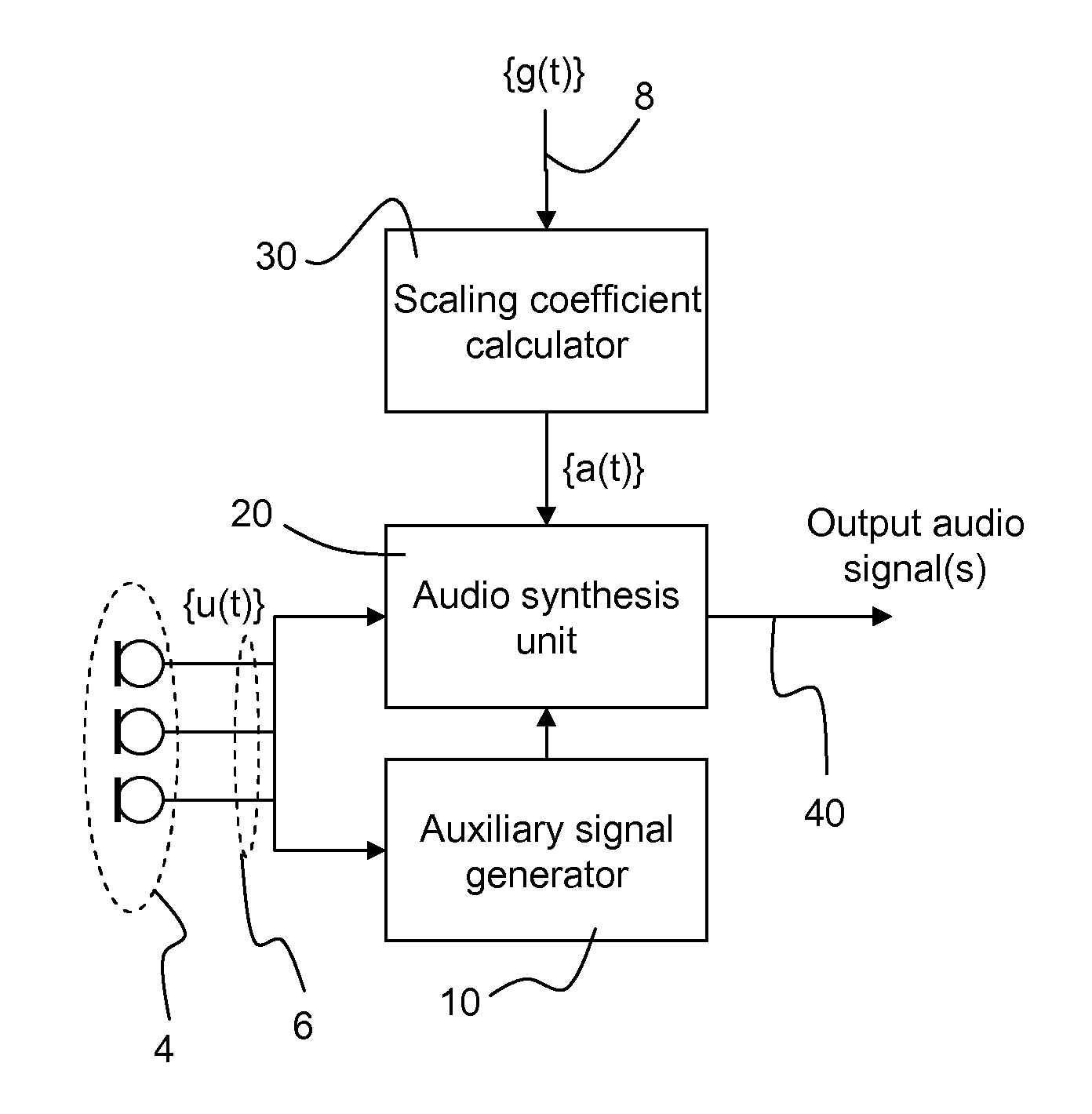

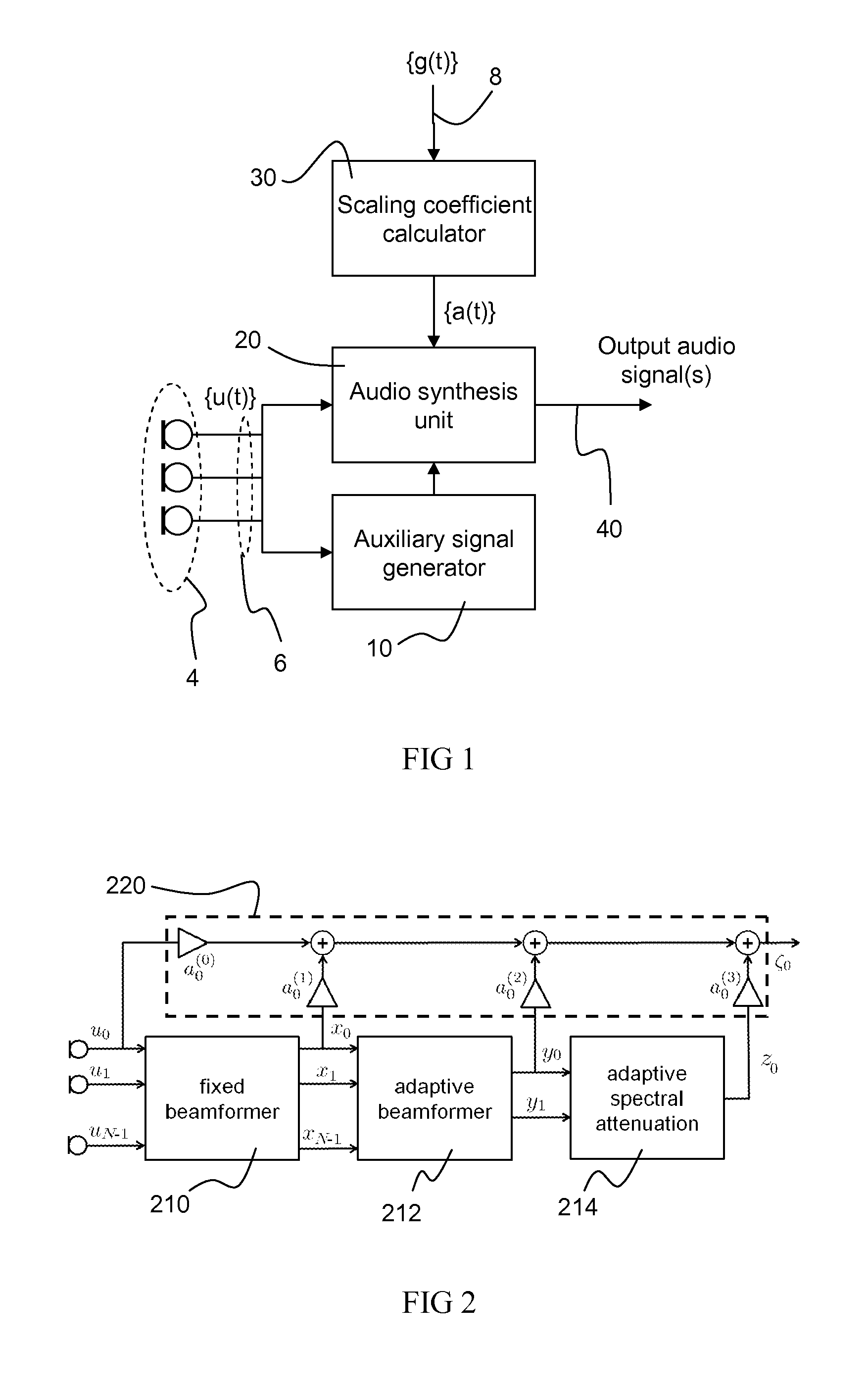

An audio-processing device has: an audio input for receiving audio signals, each audio signal containing a mixture of components that each correspond to a sound source; a control input for receiving, for each sound source, a desired gain factor by which the corresponding component is to be amplified; an auxiliary signal generator for generating, from the audio signals, at least one auxiliary signal whose mixture of components differs from that of a reference audio signal; a scaling coefficient calculator for calculating scaling coefficients based on the desired gain factors and on parameters of the different mixture, each scaling coefficient being associated with the auxiliary signal or, optionally, the reference audio signal; and an audio synthesis unit for synthesizing an output audio signal by applying the scaling coefficients to the auxiliary signal and, optionally, the reference audio signal and combining the results.
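
The core of the scaling-coefficient calculation can be shown for the smallest case: two sources, one reference signal and one auxiliary signal whose mixing weights are known. This is a sketch under that assumption (the abstract does not say how the auxiliary signal or its mixture parameters are obtained), and `scaling_coefficients` and `synthesize` are hypothetical names. With weights `(a1, a2)` for the reference and `(b1, b2)` for the auxiliary, the coefficients solve `c_ref*a1 + c_aux*b1 = g1` and `c_ref*a2 + c_aux*b2 = g2`, so the combined output equals `g1*s1 + g2*s2`.

```python
def scaling_coefficients(mix_ref, mix_aux, gains):
    """Solve the 2x2 system (Cramer's rule) for the two scaling coefficients."""
    a1, a2 = mix_ref  # weights of sources s1, s2 in the reference signal
    b1, b2 = mix_aux  # weights of s1, s2 in the auxiliary signal
    g1, g2 = gains    # desired gain per source
    det = a1 * b2 - a2 * b1
    if abs(det) < 1e-12:
        raise ValueError("auxiliary mixture must differ from the reference mixture")
    c_ref = (g1 * b2 - g2 * b1) / det
    c_aux = (a1 * g2 - a2 * g1) / det
    return c_ref, c_aux


def synthesize(ref, aux, c_ref, c_aux):
    """Apply the coefficients and combine the scaled signals sample by sample."""
    return [c_ref * x + c_aux * y for x, y in zip(ref, aux)]


s1, s2 = [1.0, 2.0], [3.0, -1.0]
ref = [x + y for x, y in zip(s1, s2)]  # reference = s1 + s2
aux = [x - y for x, y in zip(s1, s2)]  # auxiliary = s1 - s2
print(synthesize(ref, aux, *scaling_coefficients((1, 1), (1, -1), (2, 0))))
# -> [2.0, 4.0]  (source 1 doubled, source 2 removed)
```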

Owner:NXP BV

Systems and methods for portable audio synthesis

Owner:MEDIALAB SOLUTIONS

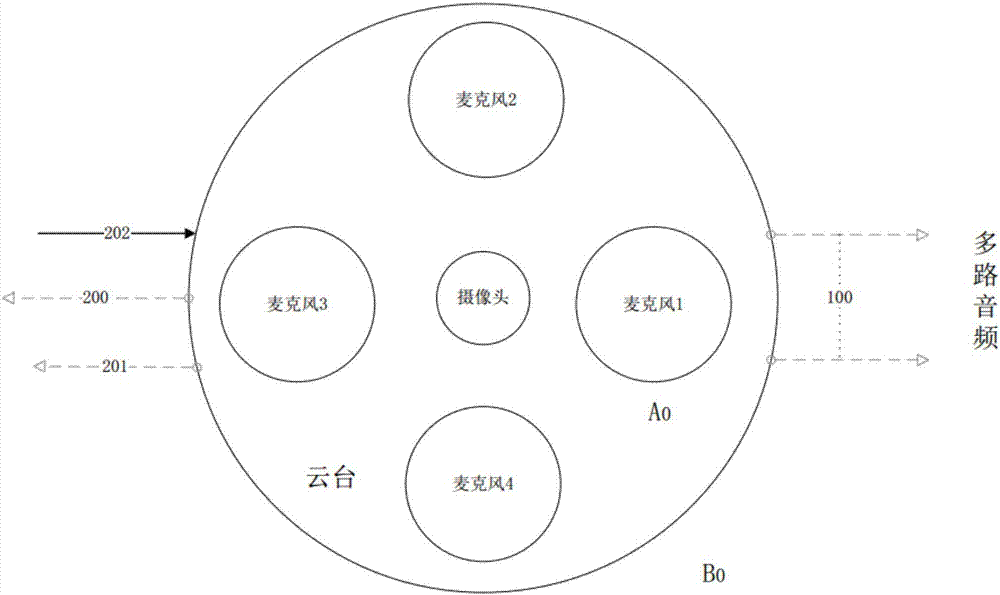

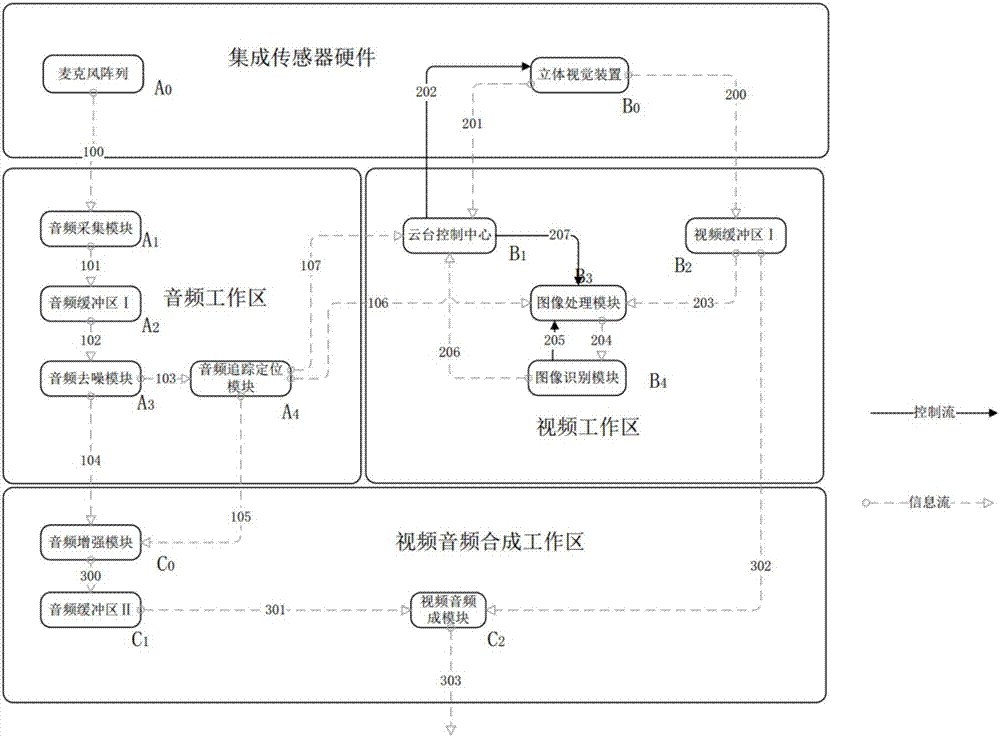

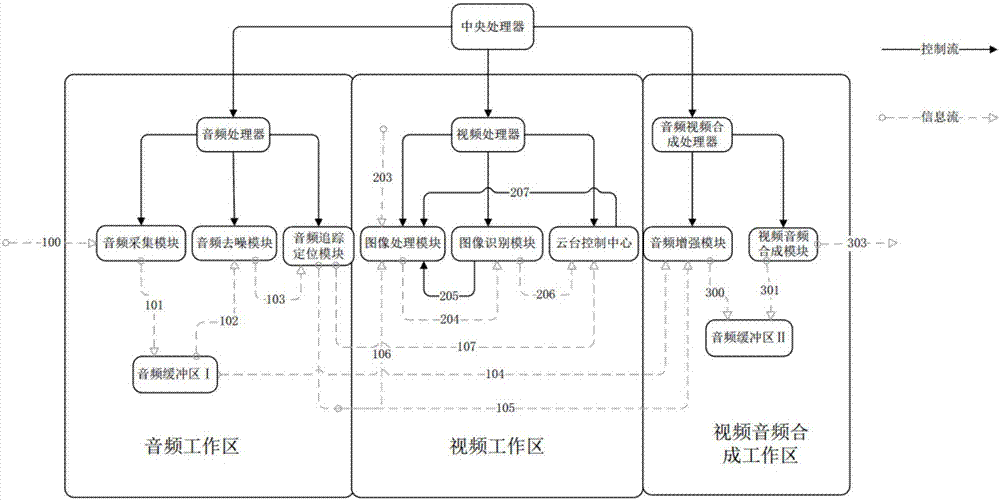

Integrated sensor based on microphone array and stereoscopic vision

Active · CN107333120A · Reduce the range of estimates, Small amount of calculation, Television system details, Television conference systems, Audio synthesis, Visual perception

The invention discloses an integrated sensor based on a microphone array and stereoscopic vision. The integrated sensor comprises a hardware system and a control system. The hardware system comprises the microphone array and a stereoscopic vision device. The microphone array is responsible for receiving audio information in an environment and generating multichannel analog audios. The stereoscopic vision device is responsible for collecting environment images. The control system comprises an audio working area, a video working area and a video / audio synthesis working area. The audio working area is responsible for denoising audios, positioning audio sources, and tracking the audio sources (through audio processing). The video working area is responsible for positioning and tracking target audio sources, namely calibrating, positioning and precisely tracking the target audio sources through image processing and image identification. The video / audio synthesis working area is responsible for enhancing audio signals (improving a signal-to-noise ratio of the audio signals) and obtaining and outputting an integrated signal of the video / audio signals.
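
Localizing a source with a microphone array commonly starts from the time difference of arrival between microphones. The sketch below shows that generic step for one microphone pair using plain cross-correlation and a far-field angle formula; it is not the patent's algorithm, does not use the stereoscopic camera, and the names `estimate_lag` and `bearing_deg` are hypothetical.

```python
import math


def estimate_lag(ref, other, max_lag):
    """Lag (in samples) at which `other` best matches `ref` by cross-correlation;
    positive means the sound reached the `other` microphone later."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = sum(ref[i] * other[i + lag] for i in range(len(ref))
                    if 0 <= i + lag < len(other))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def bearing_deg(lag, sample_rate, mic_spacing_m, c=343.0):
    """Far-field direction of arrival from a time difference: sin(theta) = c*tau/d."""
    s = c * (lag / sample_rate) / mic_spacing_m
    return math.degrees(math.asin(max(-1.0, min(1.0, s))))


lag = estimate_lag([0, 0, 1, 0, 0, 0, 0, 0], [0, 0, 0, 0, 1, 0, 0, 0], max_lag=3)
print(lag, bearing_deg(lag, 48000, 0.1))
```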

Owner:JILIN UNIV

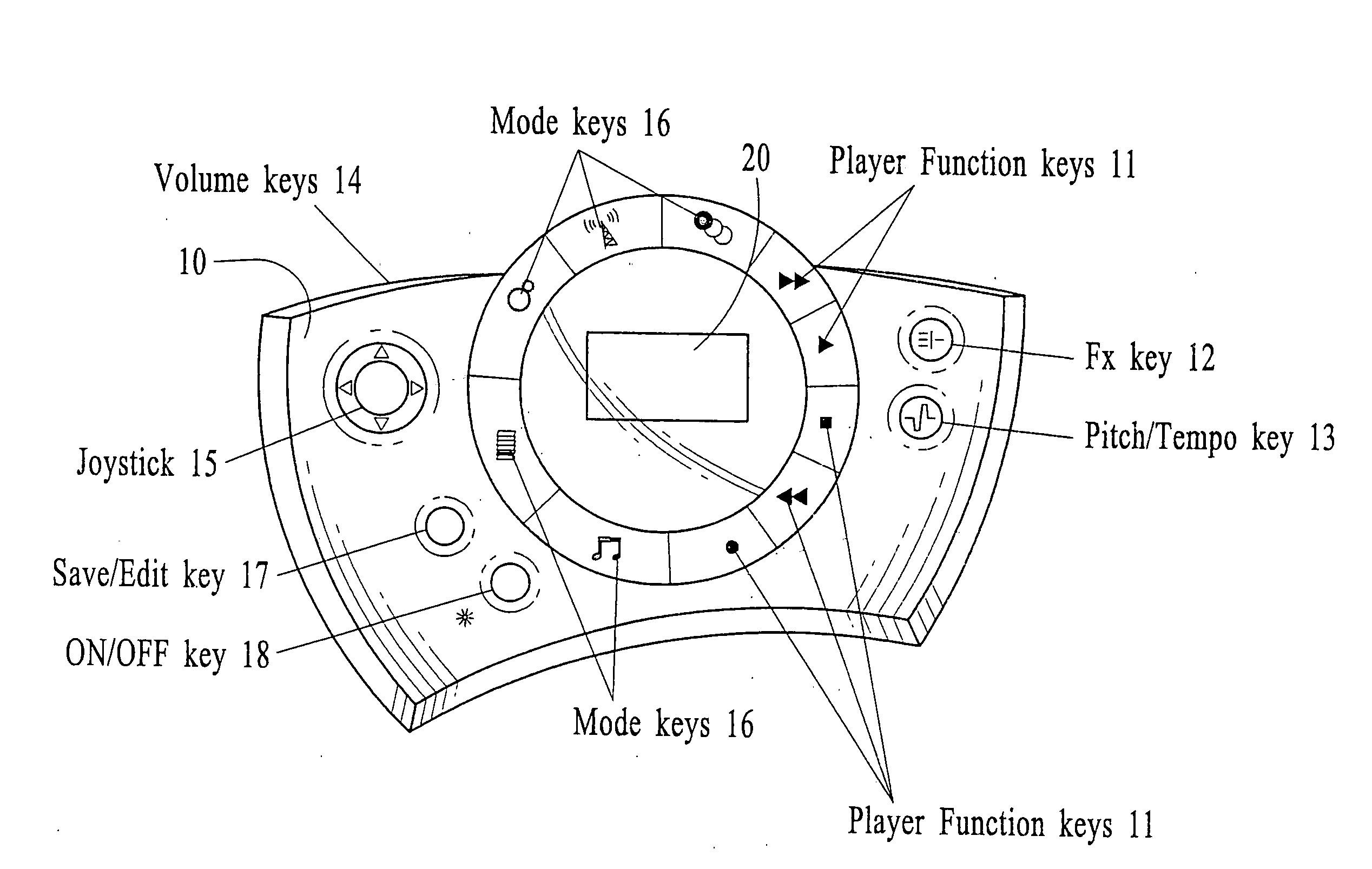

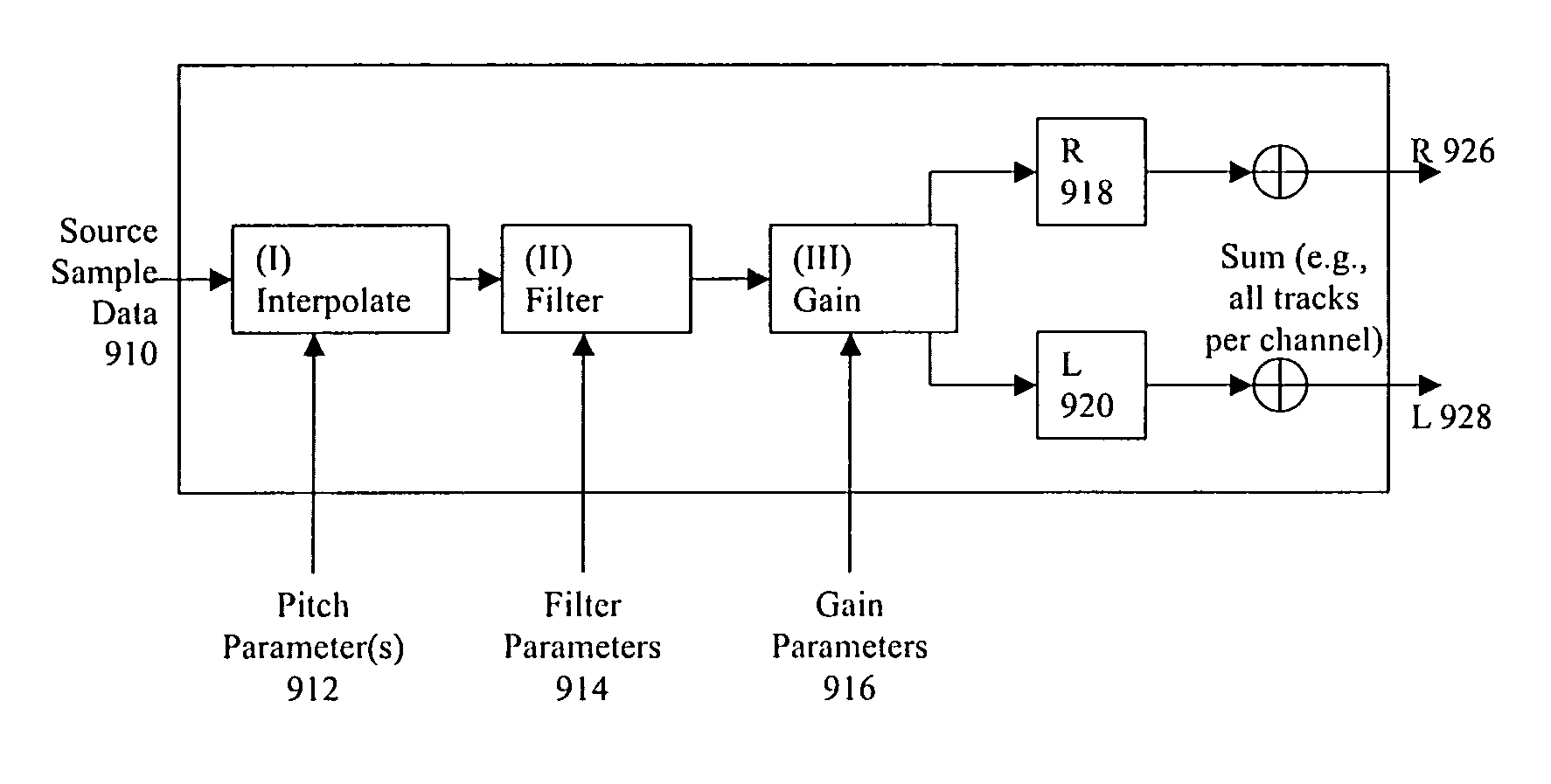

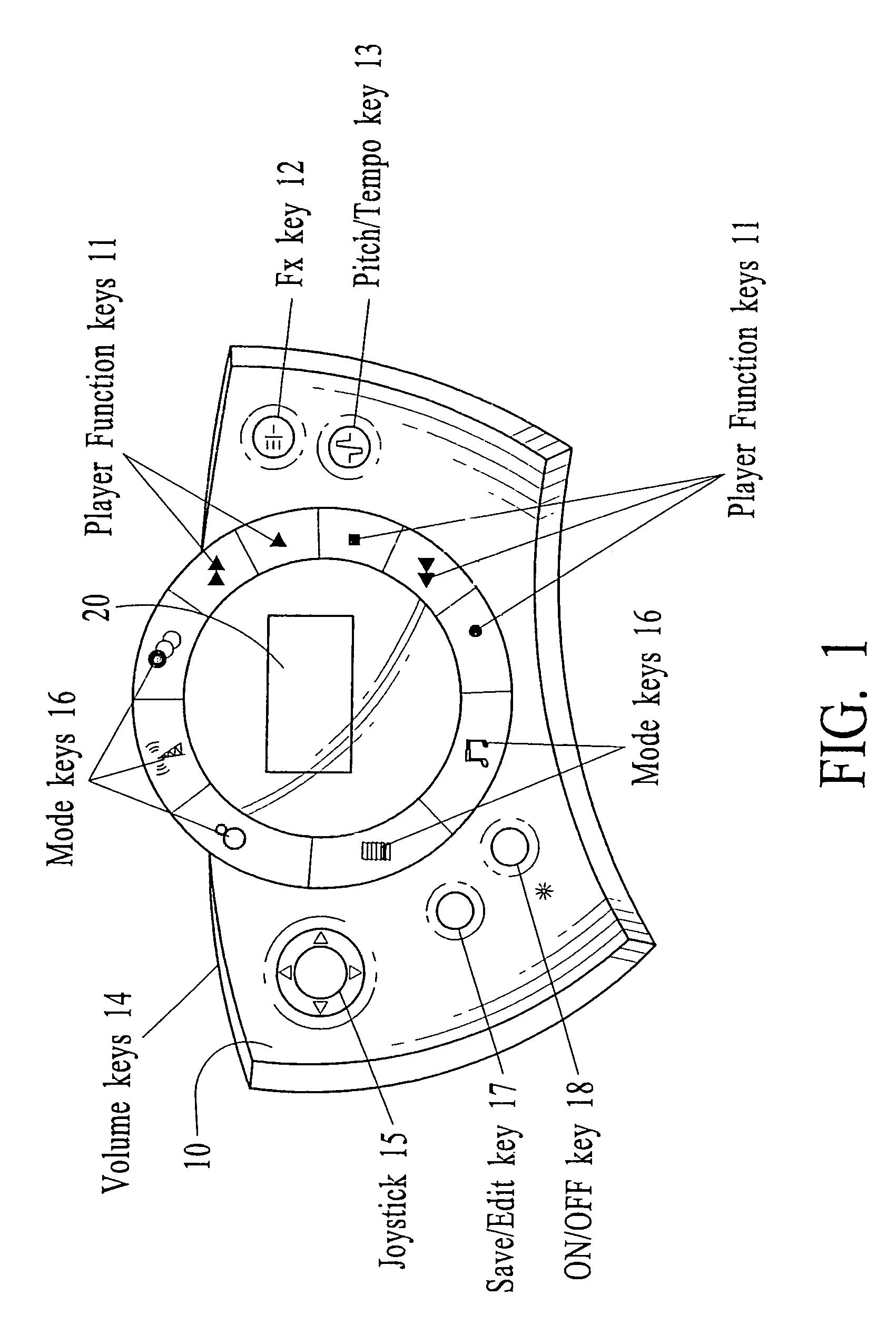

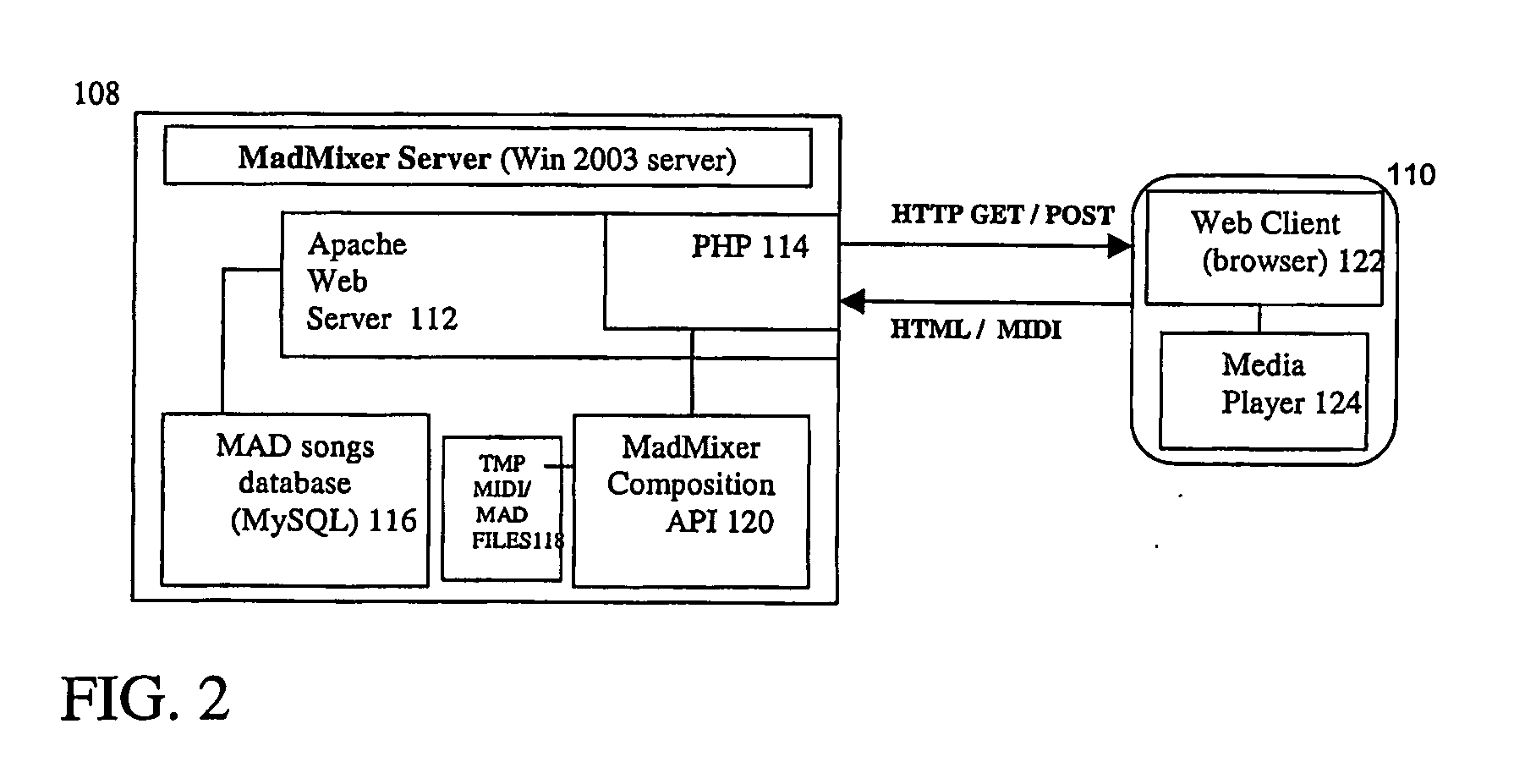

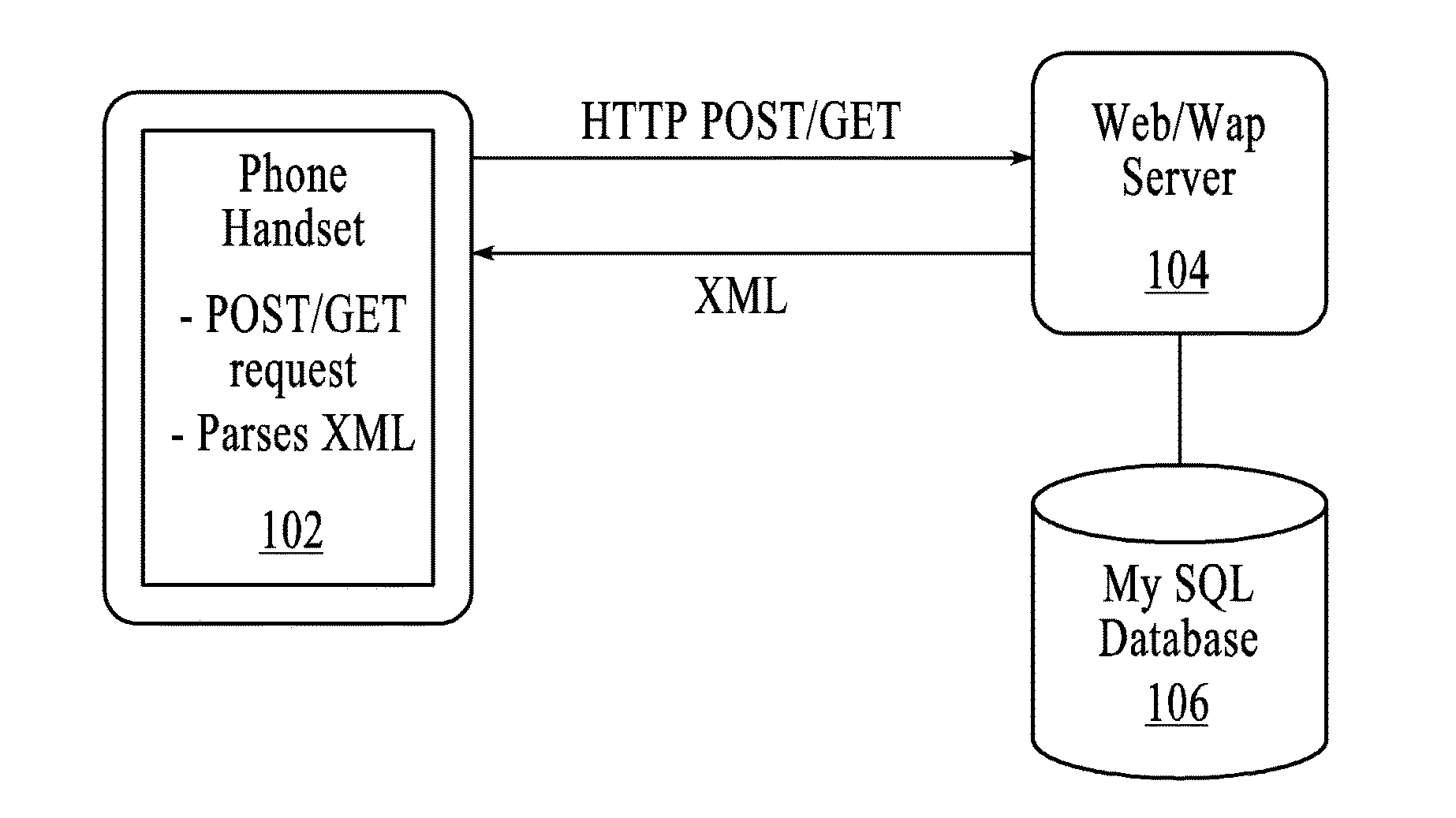

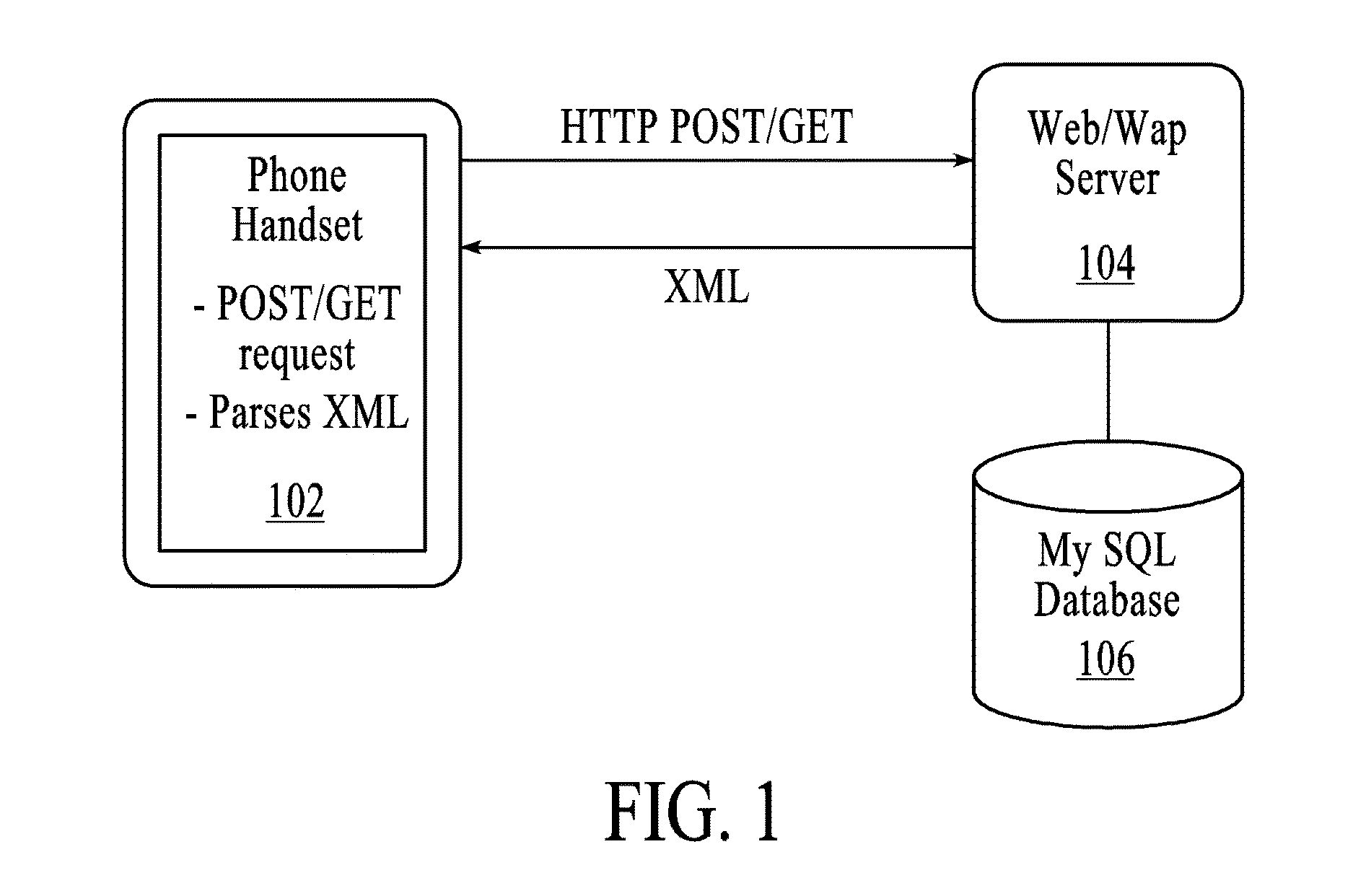

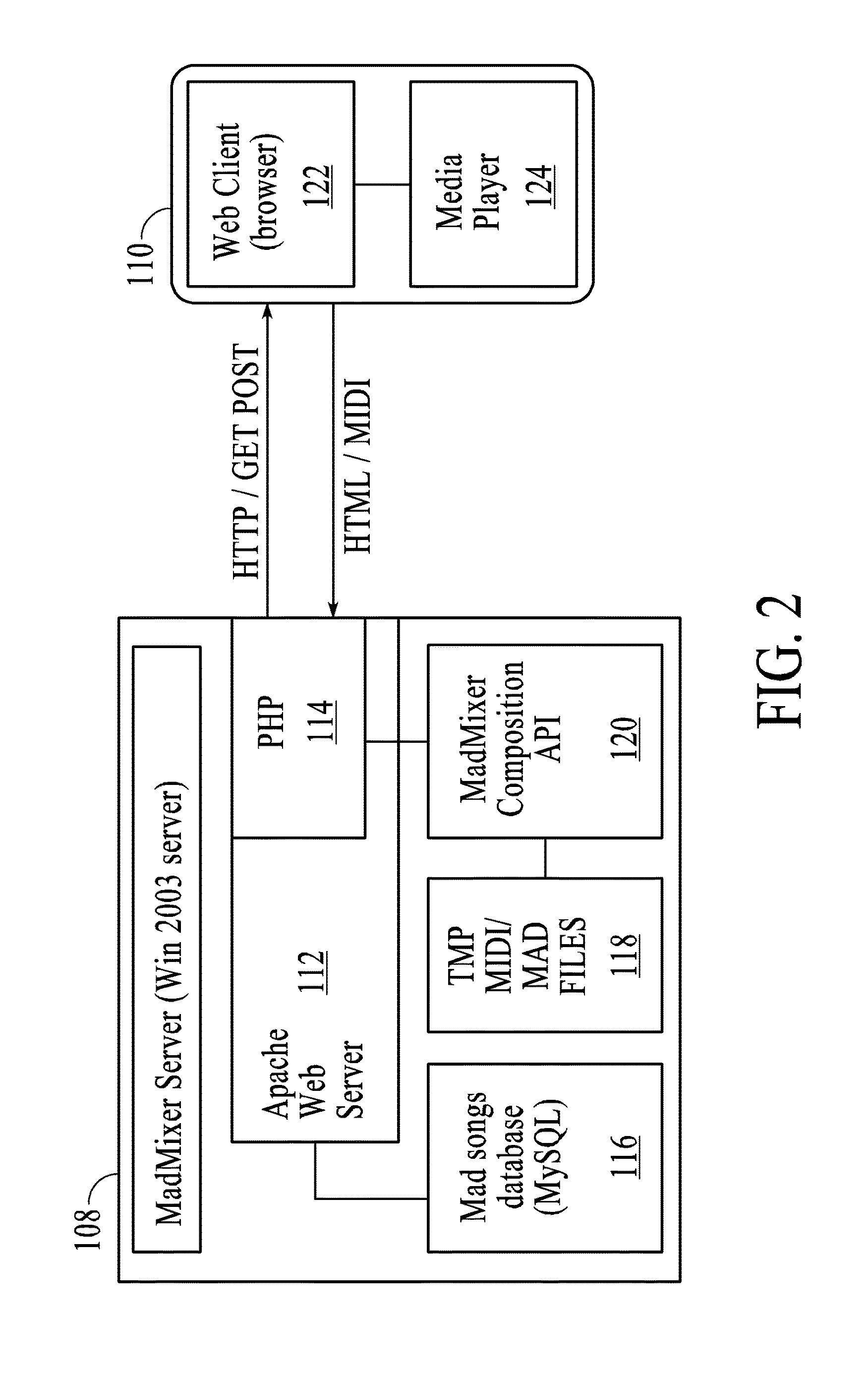

Systems and methods for portable audio synthesis

Inactive · US20090272251A1 · Create quickly, Easy to create, Gearworks, Musical toys, Audio synthesis, Composition process

Systems and methods for creating, modifying, interacting with and playing music are provided, preferably employing a top-down process, where the user is provided with a musical composition that may be modified and interacted with and played and / or stored (for later play). The system preferably is provided in a handheld form factor, and a graphical display is provided to display status information, graphical representations of musical lanes or components which preferably vary in shape as musical parameters and the like are changed for particular instruments or musical components such as a microphone input or audio samples. An interactive auto-composition process preferably employs musical rules and a pseudo random number generator, which may also incorporate randomness introduced by timing of user input or the like. The user may quickly begin creating desirable music in accordance with one or a variety of musical styles, with the user modifying the auto-composed (or previously created) musical composition, either for a real time performance and / or for storing and subsequent playback. An analysis process flow also is disclosed for using pre-existing music as input(s) to an algorithm to derive music rules that may be used as part of a music style in a subsequent auto-composition process. In addition, the present invention makes use of node-based music generation as part of a system and method to broadcast and receive music data files, which are then used to generate and play music. By incorporating the music generation process into a node / subscriber unit, bandwidth requirements are lowered, and consequently the bandwidth can preferably be used for additional features such as node-to-node and node-to-base music data transmission. The present invention is characterized by the broadcast of relatively small data files that contain various parameters sufficient to describe the music to the node / subscriber music generator. 
In addition, improved audio synthesis in a portable environment is provided with the present invention by performing audio synthesis in a manner that simplifies design requirements and / or minimizes cost, while still providing quality audio synthesis features targeted for a portable system (e.g., portable telephone). In addition, problems associated with the tradeoff between overall sound quality and memory requirements in a MIDI sound bank are addressed in the present invention by providing systems and methods for a reduced memory size footprint MIDI sound bank. In addition, music ringtone alert tone remixing, navigation, and purchasing capabilities are disclosed that are particularly advantageous in the context of a portable communications device, such as a cellular telephone, in connection with a communications network.

Owner:MEDIALAB SOLUTIONS

Systems and Method for Music Remixing

Inactive · US20160379611A1 · Create quickly, Easy to create, Electrophonic musical instruments, Current supply arrangements, Audio synthesis, Composition process

Systems and methods for creating, modifying, interacting with and playing music are provided, preferably employing a top-down process, where the user is provided with a musical composition that may be modified and interacted with and played and / or stored (for later play). The system preferably is provided in a handheld form factor, and a graphical display is provided to display status information, graphical representations of musical lanes or components which preferably vary in shape as musical parameters and the like are changed for particular instruments or musical components such as a microphone input or audio samples. An interactive auto-composition process preferably employs musical rules and a pseudo random number generator, which may also incorporate randomness introduced by timing of user input or the like. The user may quickly begin creating desirable music in accordance with one or a variety of musical styles, with the user modifying the auto-composed (or previously created) musical composition, either for a real time performance and / or for storing and subsequent playback. An analysis process flow also is disclosed for using pre-existing music as input(s) to an algorithm to derive music rules that may be used as part of a music style in a subsequent auto-composition process. In addition, the present invention makes use of node-based music generation as part of a system and method to broadcast and receive music data files, which are then used to generate and play music. By incorporating the music generation process into a node / subscriber unit, bandwidth requirements are lowered, and consequently the bandwidth can preferably be used for additional features such as node-to-node and node-to-base music data transmission. The present invention is characterized by the broadcast of relatively small data files that contain various parameters sufficient to describe the music to the node / subscriber music generator. 
In addition, improved audio synthesis in a portable environment is provided with the present invention by performing audio synthesis in a manner that simplifies design requirements and / or minimizes cost, while still providing quality audio synthesis features targeted for a portable system (e.g., portable telephone). In addition, problems associated with the tradeoff between overall sound quality and memory requirements in a MIDI sound bank are addressed in the present invention by providing systems and methods for a reduced memory size footprint MIDI sound bank. In addition, music ringtone alert tone remixing, navigation, and purchasing capabilities are disclosed that are particularly advantageous in the context of a portable communications device, such as a cellular telephone, in connection with a communications network.

Owner:MEDIALAB SOLUTIONS

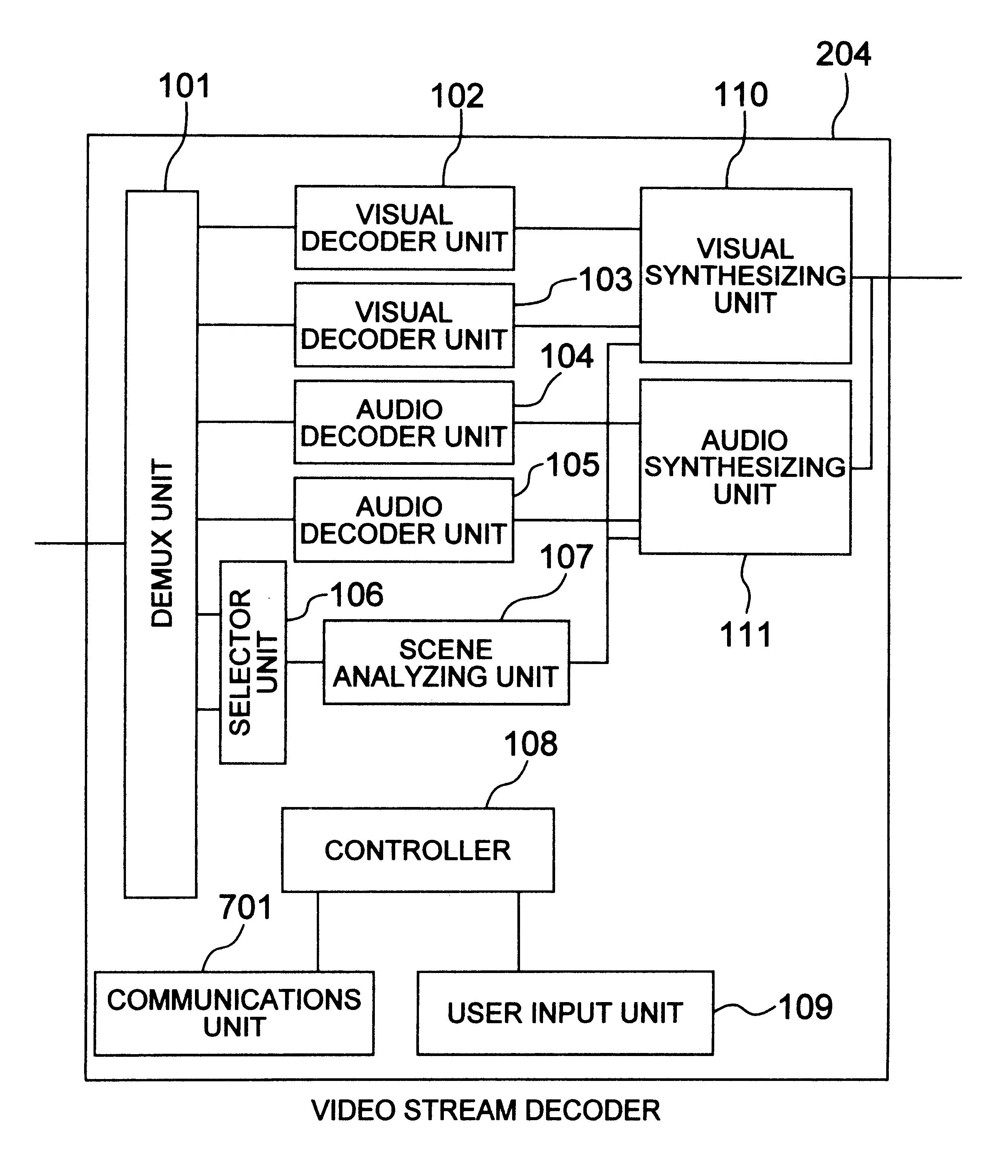

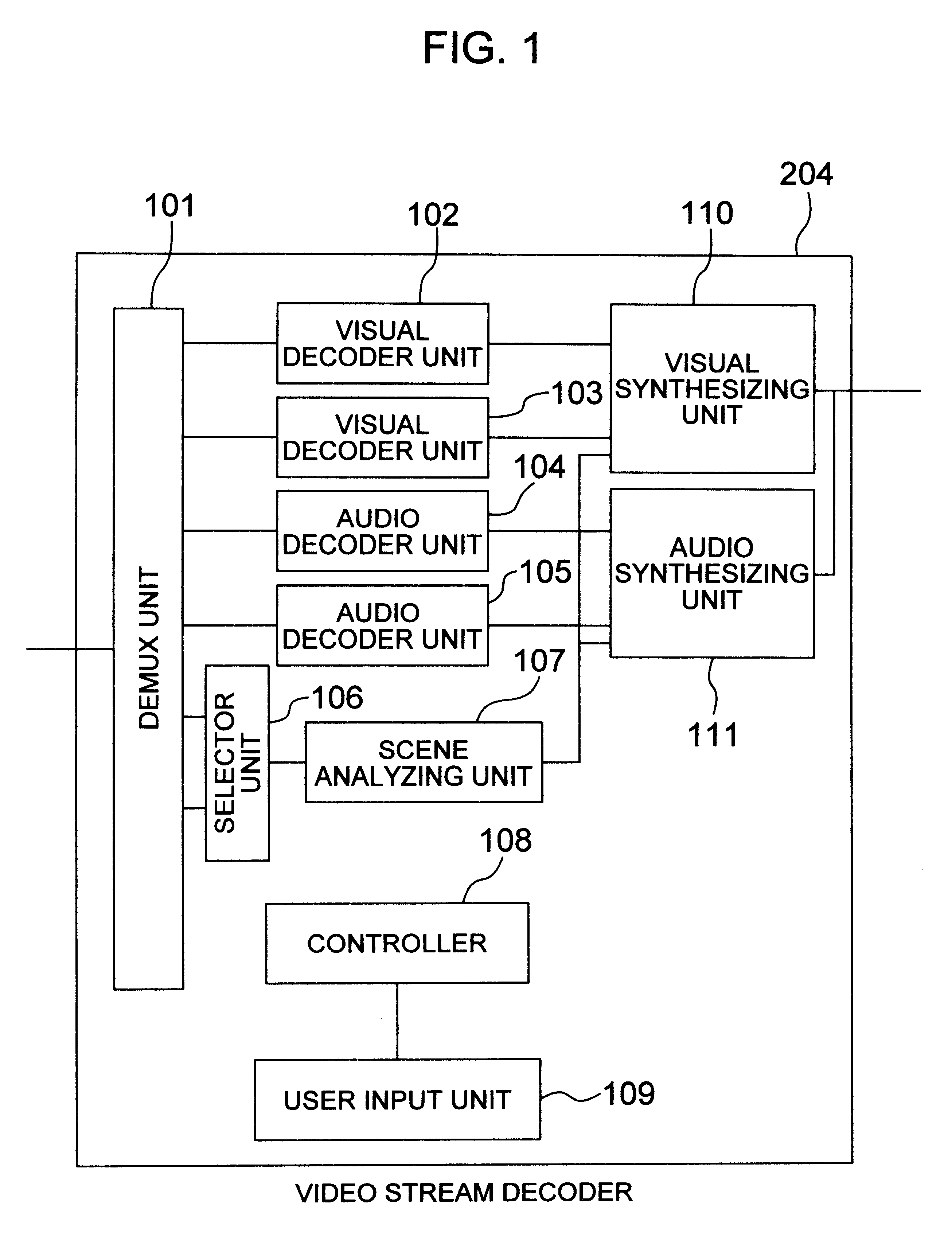

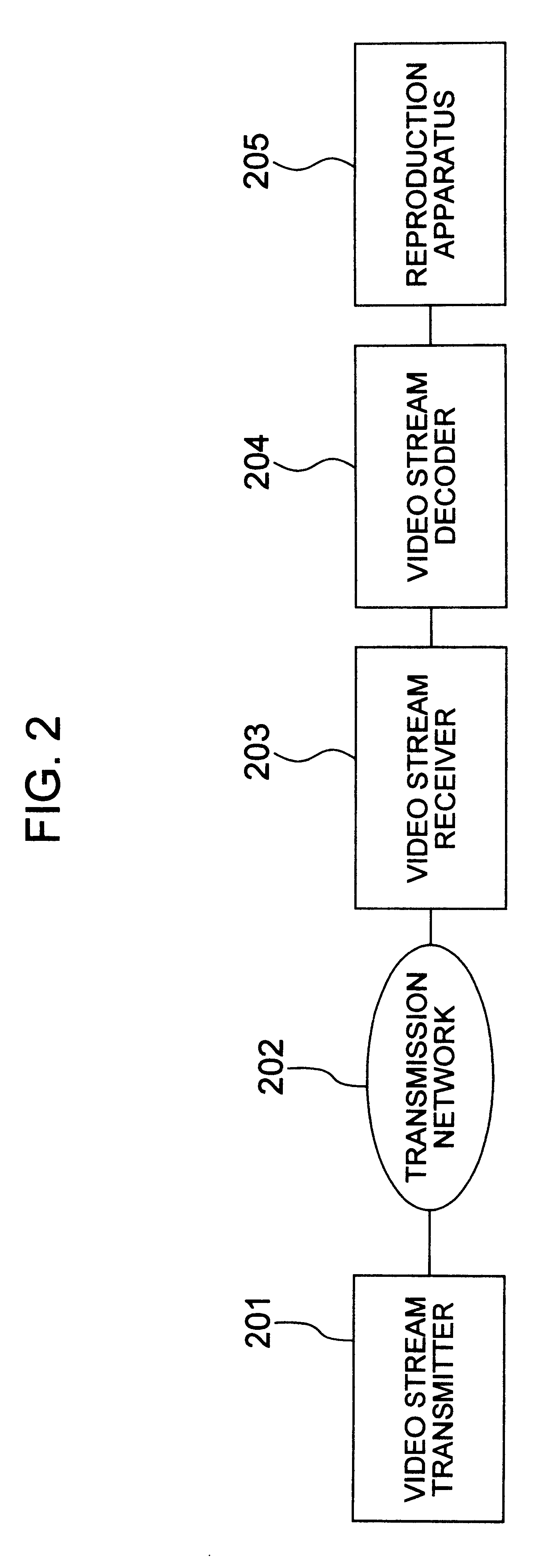

Stream decoder

Inactive · US6665318B1 · Easy to control, Television system details, Time-division multiplex, Pattern recognition, Audio synthesis

A video stream decoder includes: a demultiplexing unit for demultiplexing a video stream containing one or more items of object-encoded visual or audio data and one or more scene descriptions that express scene contents in terms of the object-encoded data; a decoder unit for decoding the object-encoded visual data; a decoder unit for decoding the object-encoded audio data; a visual synthesizing unit for synthesizing images corresponding to the object-encoded visual data; an audio synthesizing unit for synthesizing sounds corresponding to the object-encoded audio data; an analyzing unit for analyzing each scene description; and a selector for selecting one of two or more scene descriptions contained in the video stream.

Owner:HITACHI LTD

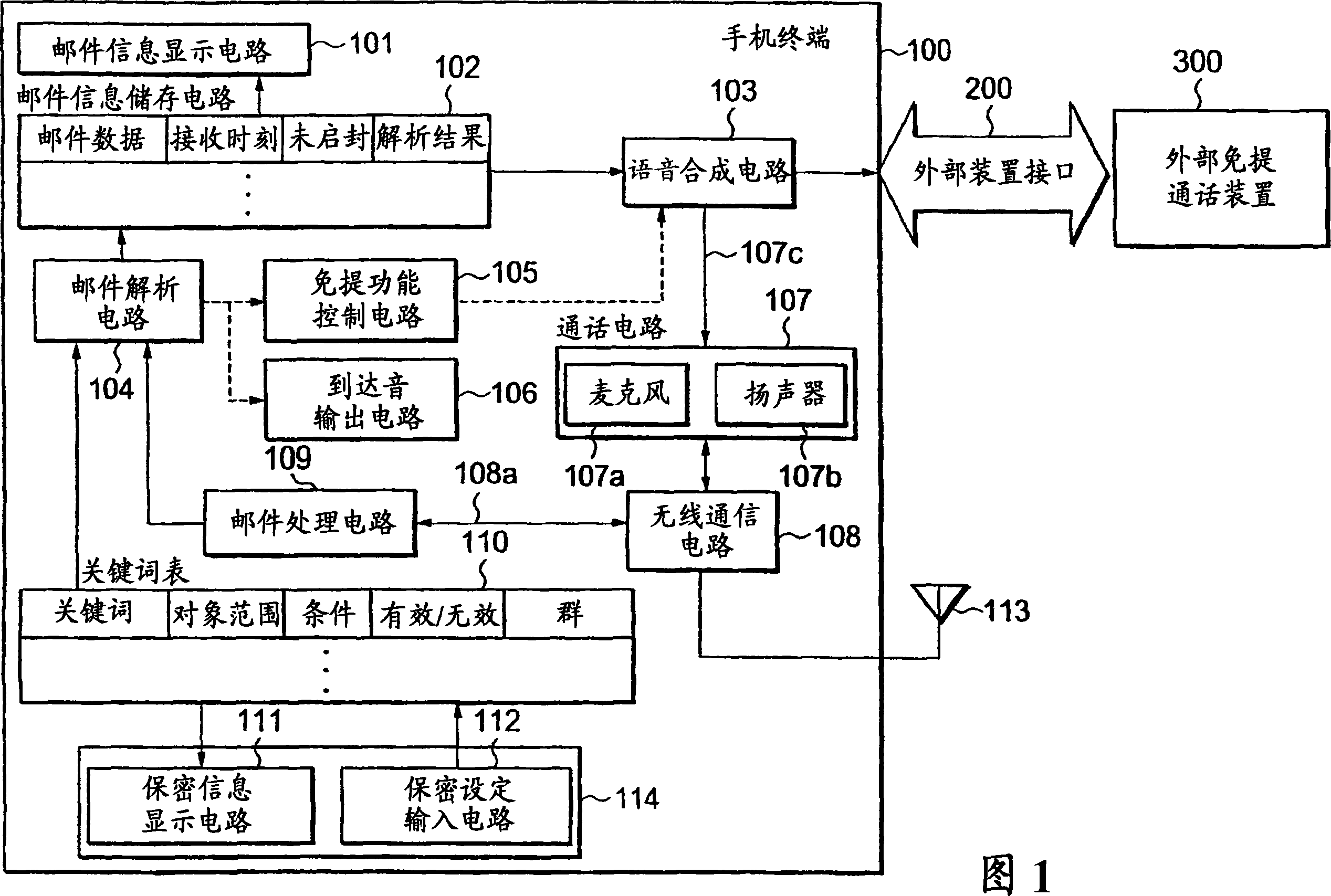

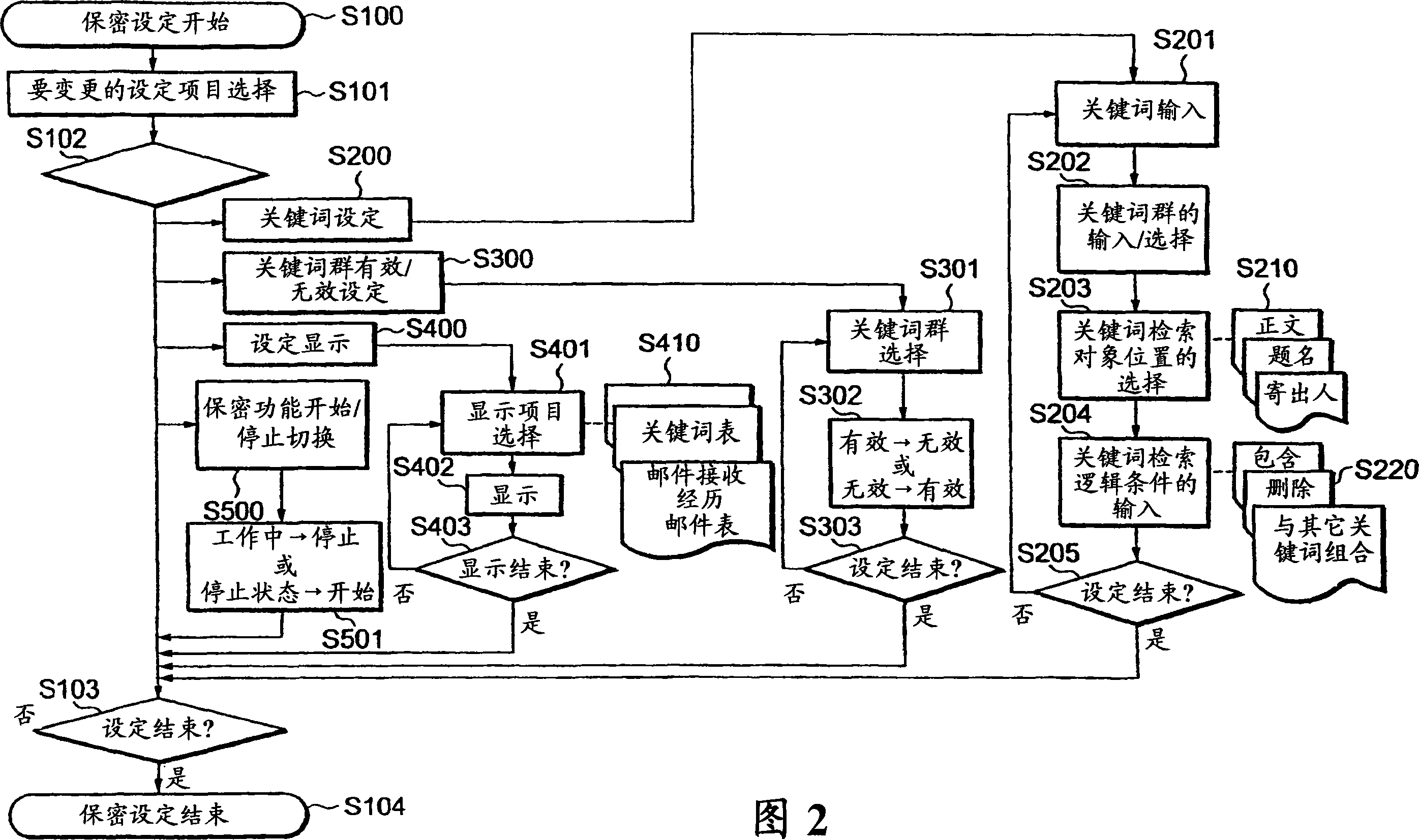

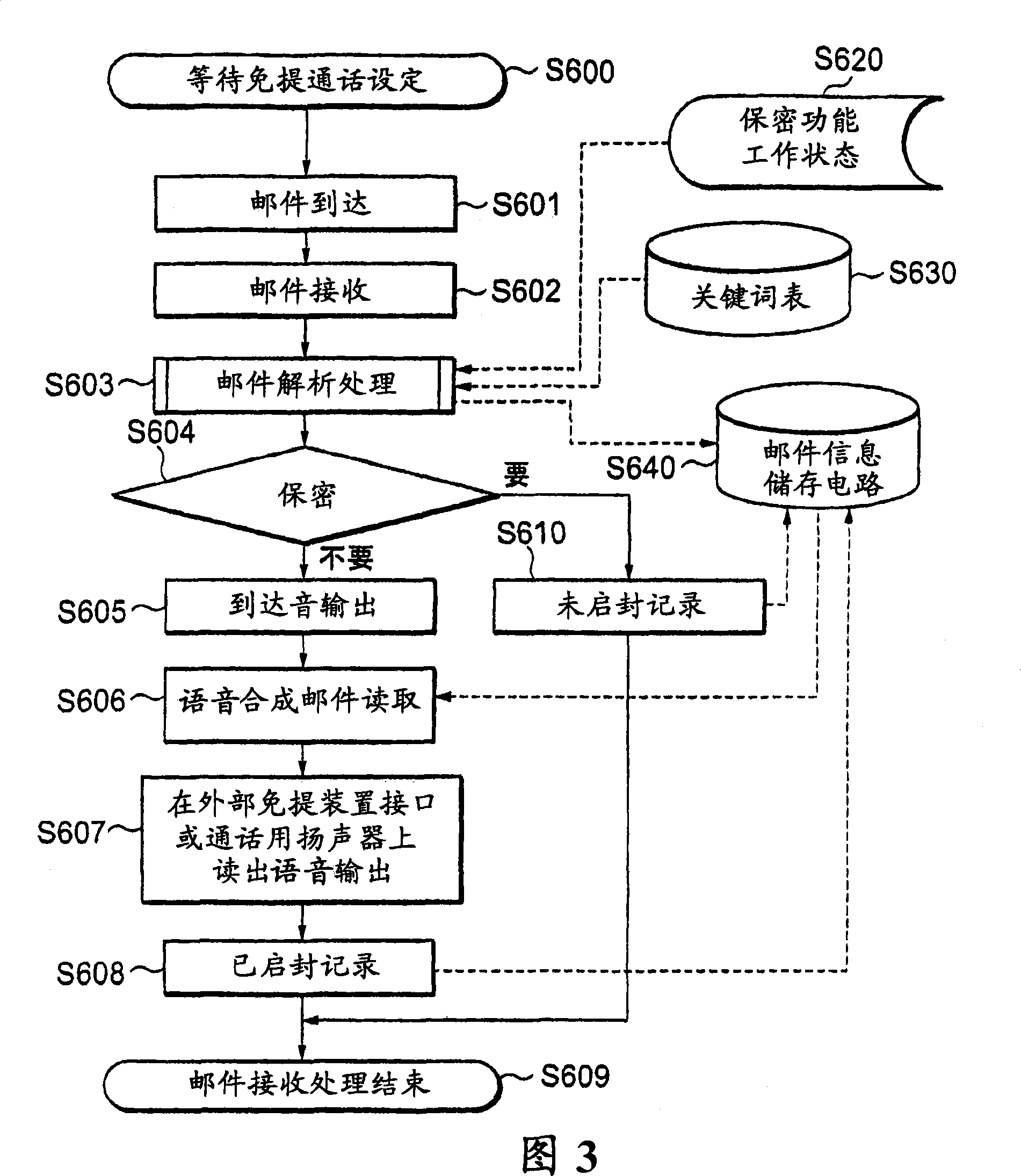

Privacy protection device for hands-free function

Inactive · CN101112072A · Unauthorised/fraudulent call prevention, Substation speech amplifiers, Audio synthesis, Privacy protection

Owner:LENOVO INNOVATIONS LTD HONG KONG

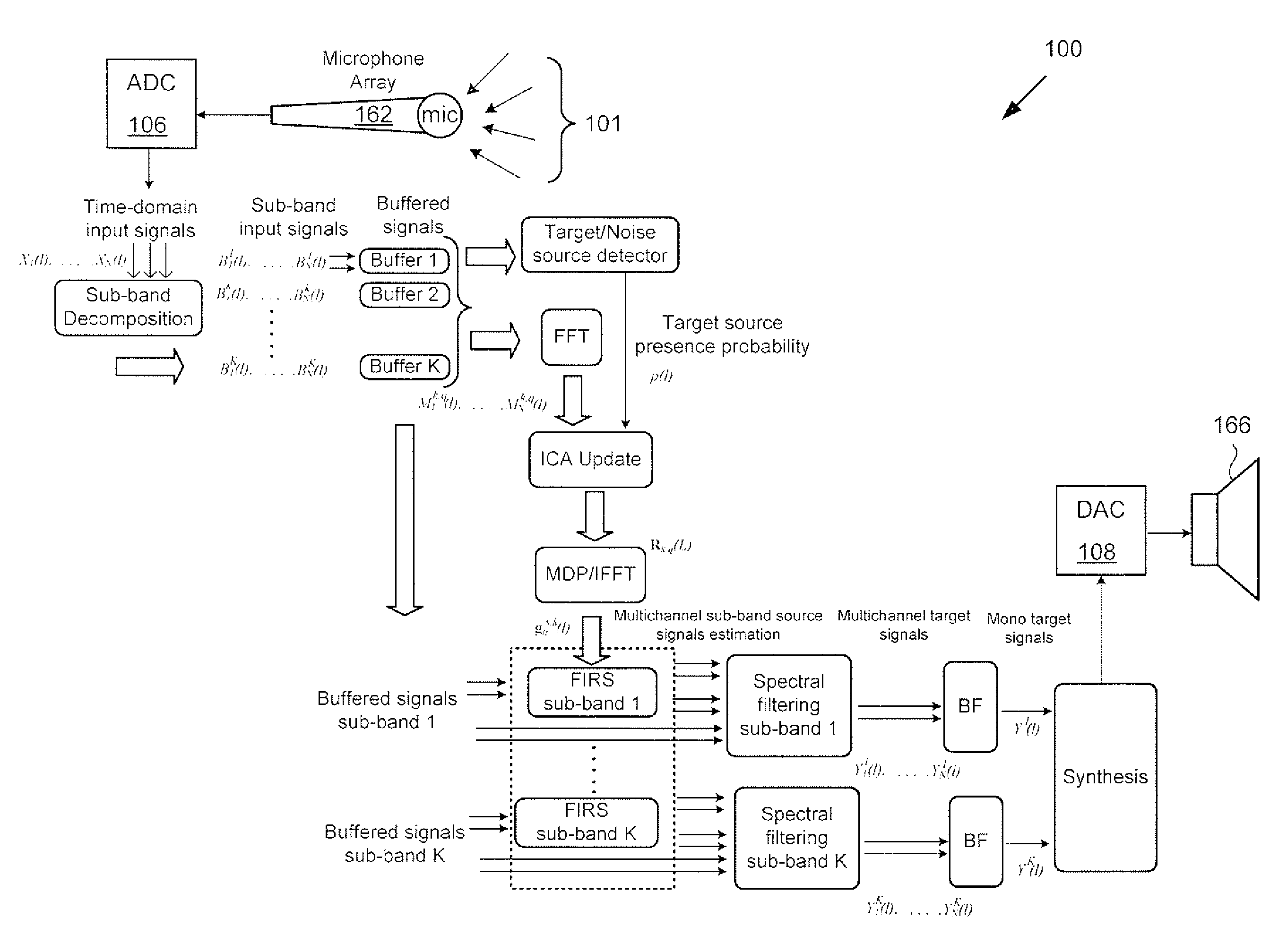

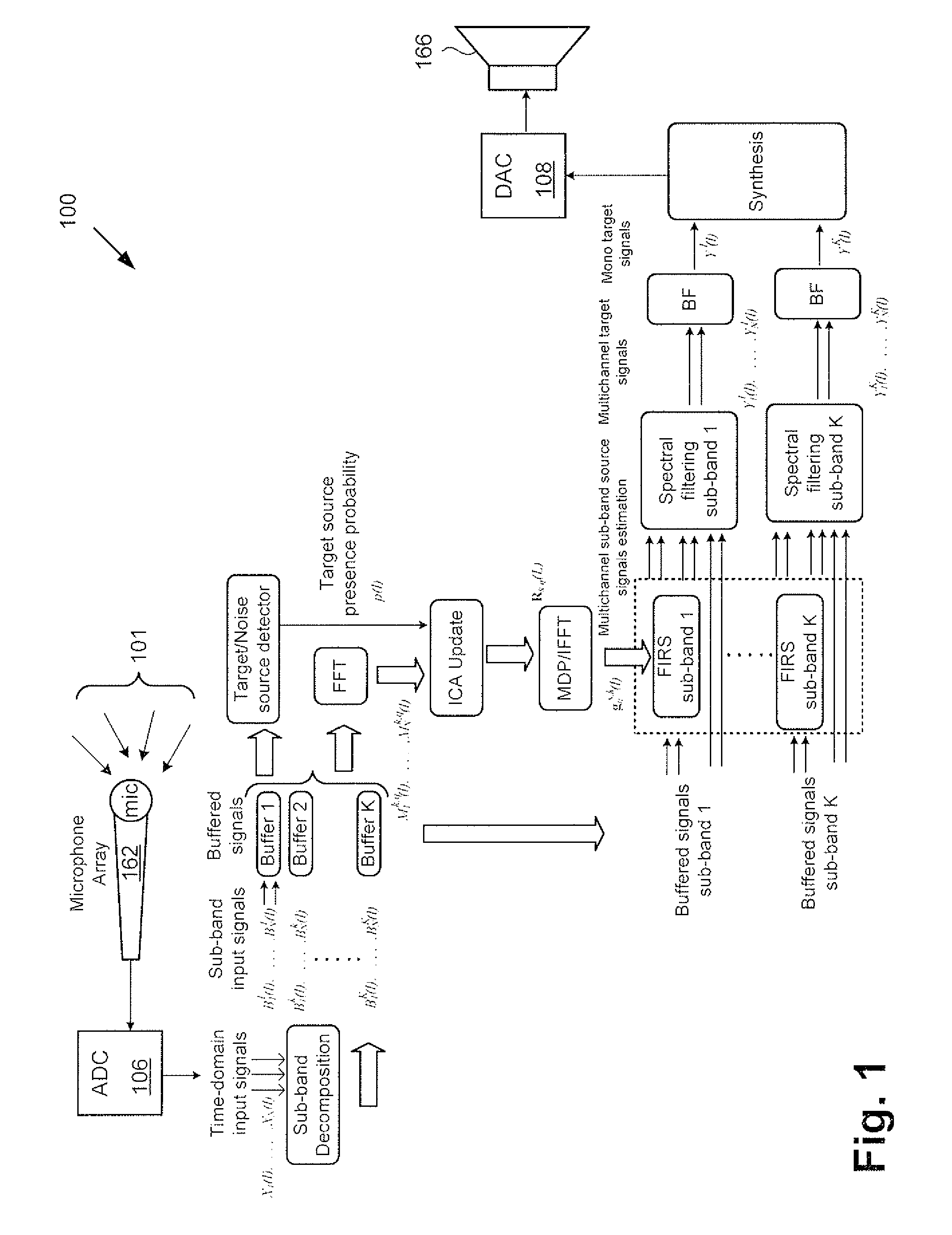

Selective Audio Source Enhancement

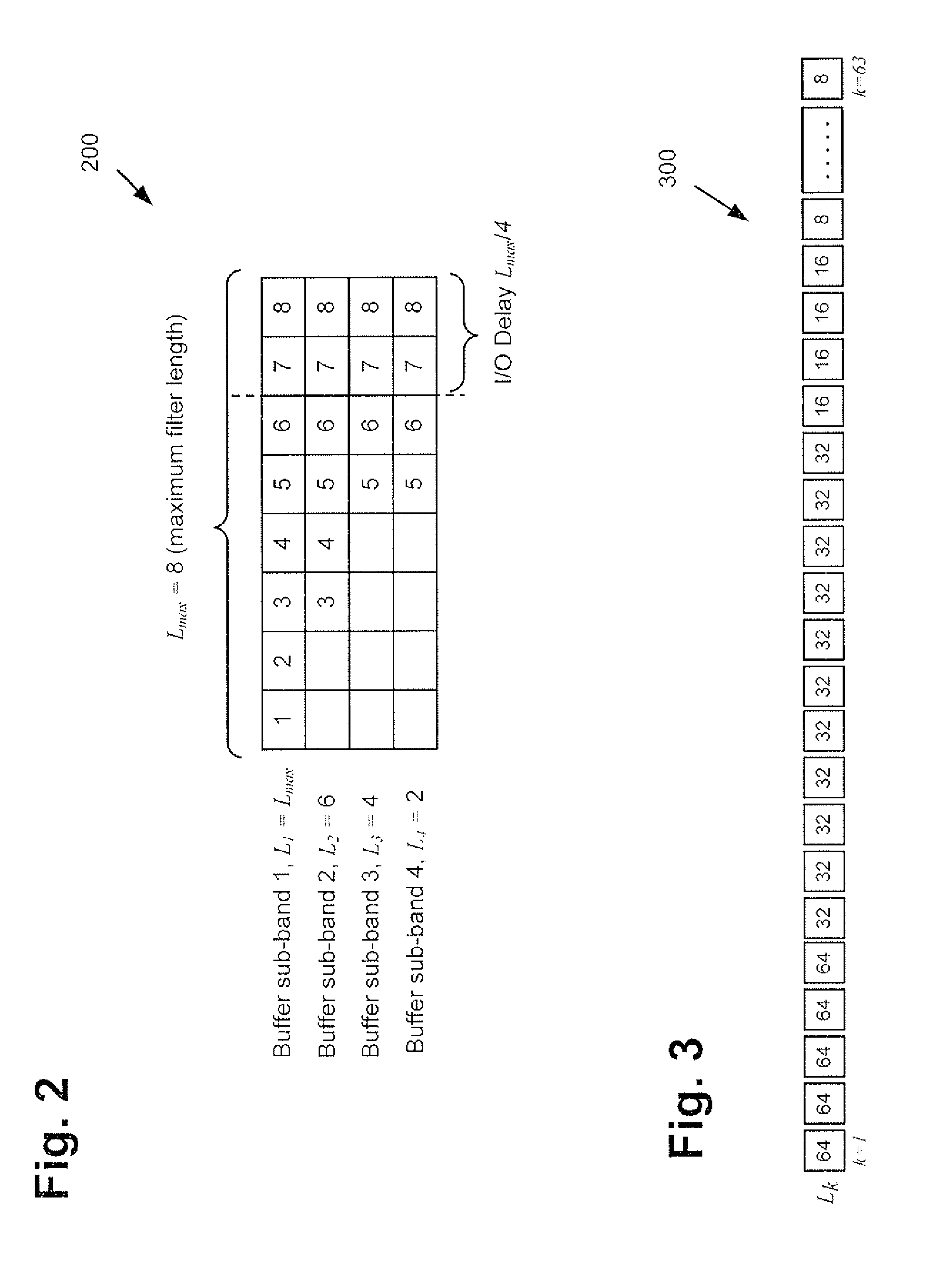

Active · US20150117649A1 · Increase choice, Signal processing, Stereophonic circuit arrangements, Audio synthesis, Decomposition

A selective audio source enhancement system includes a processor and a memory, and a pre-processing unit configured to receive audio data including a target audio signal, and to perform sub-band domain decomposition of the audio data to generate buffered outputs. In addition, the system includes a target source detection unit configured to receive the buffered outputs, and to generate a target presence probability corresponding to the target audio signal, as well as a spatial filter estimation unit configured to receive the target presence probability, and to transform frames buffered in each sub-band into a higher resolution frequency-domain. The system also includes a spectral filtering unit configured to retrieve a multichannel image of the target audio signal and noise signals associated with the target audio signal, and an audio synthesis unit configured to extract an enhanced mono signal corresponding to the target audio signal from the multichannel image.

Owner:SYNAPTICS INC

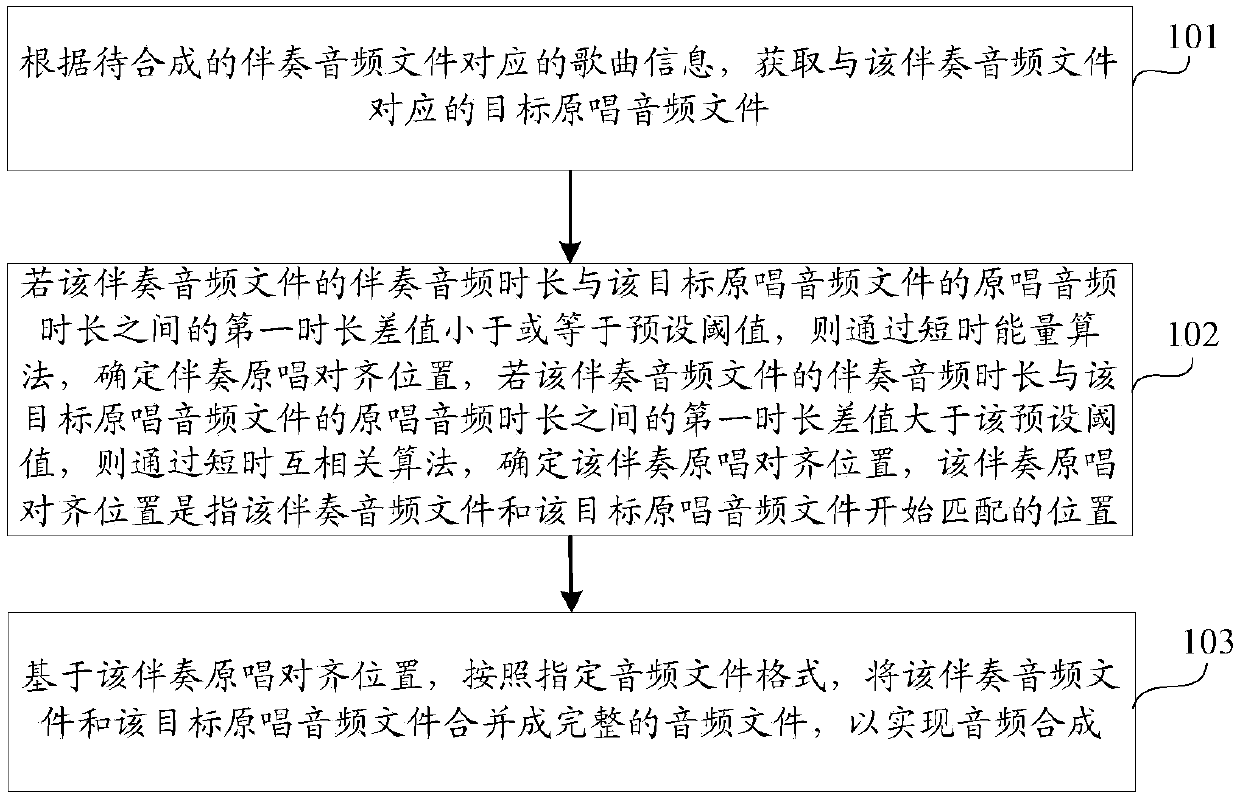

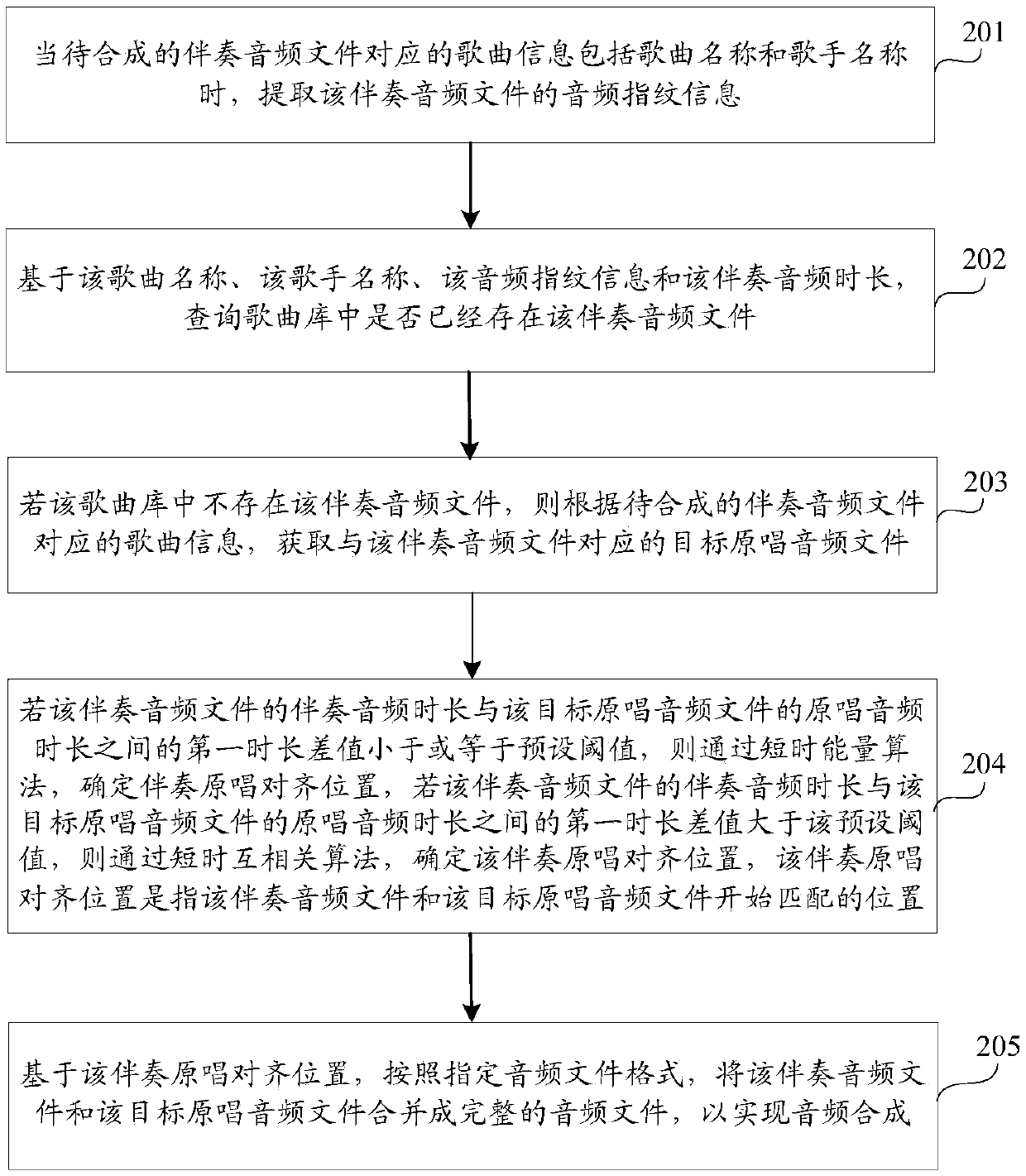

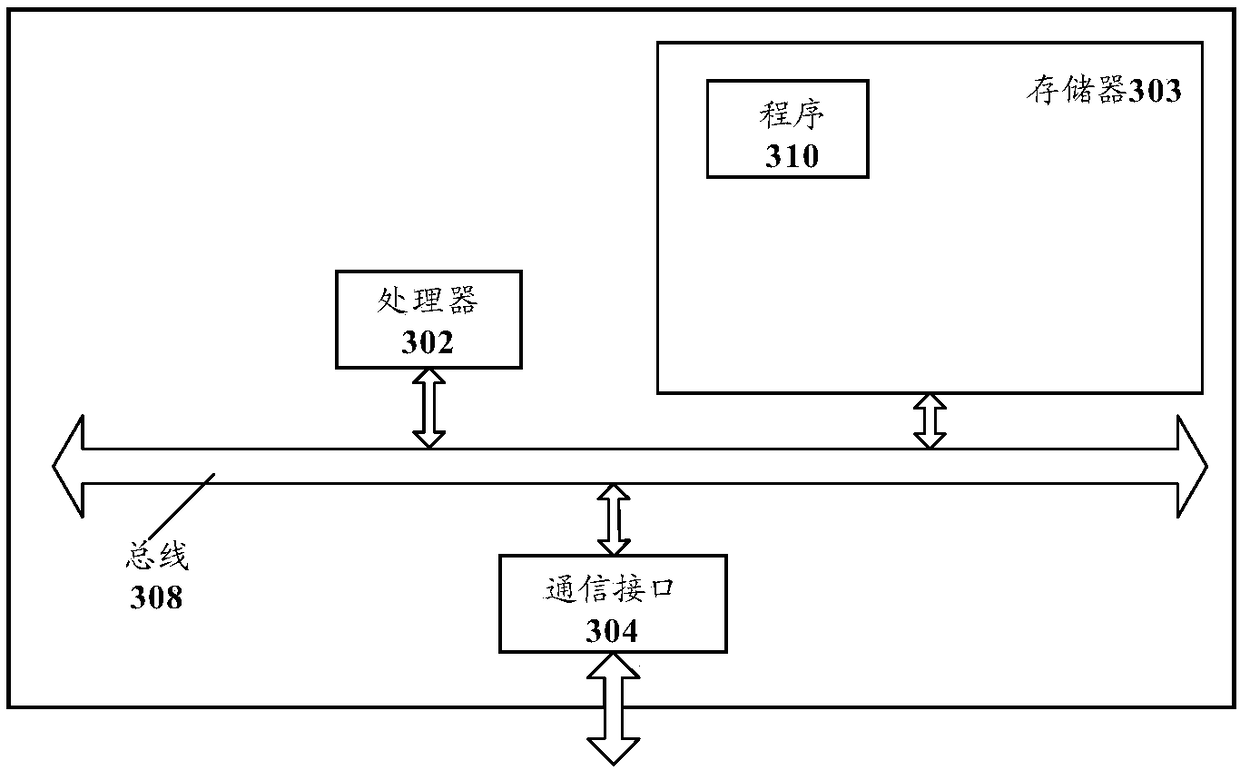

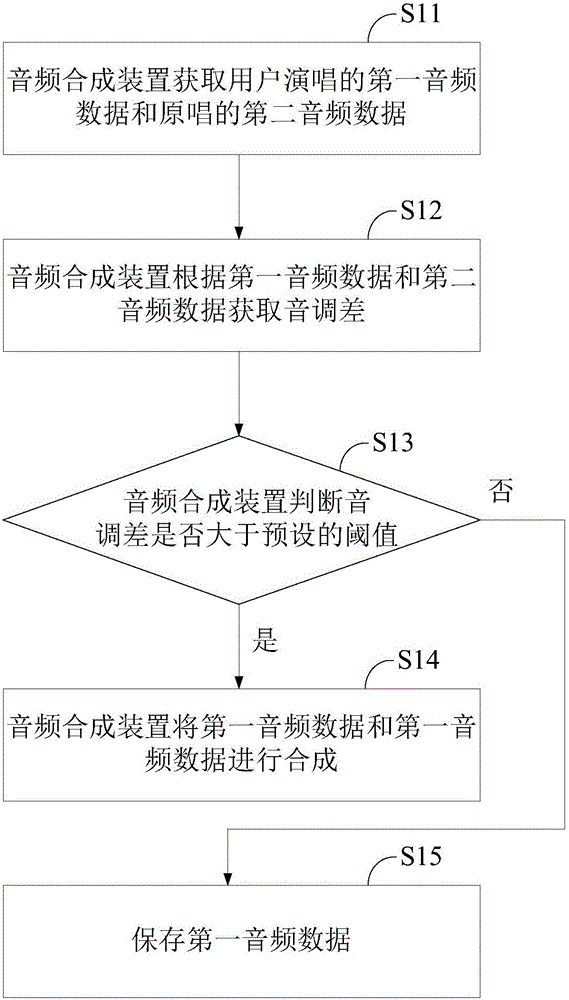

Audio synthesis method and device, and storage medium

Active · CN107591149A · Achieve synthesis, Synthesis is automatically implemented, Electrophonic musical instruments, Speech synthesis, Computer hardware, Audio synthesis

The present invention discloses an audio synthesis method, an audio synthesis device, and a storage medium, belonging to the field of multimedia technology. The method comprises: acquiring, according to song information of an accompaniment audio file to be synthesized, a target original audio file corresponding to that accompaniment audio file; if a first duration difference between the accompaniment audio duration of the accompaniment audio file and the original audio duration of the target original audio file is smaller than or equal to a preset threshold, determining an accompaniment-original alignment position through a short-time energy algorithm, and if the first duration difference is larger than the preset threshold, determining the accompaniment-original alignment position through the short-time energy algorithm; and, based on the alignment position, combining the accompaniment audio file and the target original audio file into a complete audio file in an assigned audio file format. The method, device and storage medium thus perform audio synthesis automatically, without manual operation, which improves audio synthesis efficiency.
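
The abstract names a short-time energy algorithm for finding the alignment position but does not describe it. One plausible reading, sketched here as an assumption, is to compute a short-time energy envelope for each file and pick the shift at which the envelopes correlate best. `alignment_offset`, `mix_aligned`, the frame sizes, and the 0.5 mixing gain are all hypothetical, and the duration-threshold branching is not modelled.

```python
def short_time_energy(x, frame, hop):
    """Energy of each analysis frame: the sum of its squared samples."""
    return [sum(s * s for s in x[i:i + frame])
            for i in range(0, len(x) - frame + 1, hop)]


def alignment_offset(accomp, original, frame=1024, hop=512, max_shift=200):
    """Samples by which `original` lags `accomp`, found by sliding the two
    short-time energy envelopes against each other and keeping the shift
    with the largest correlation."""
    ea = short_time_energy(accomp, frame, hop)
    eo = short_time_energy(original, frame, hop)
    if not ea or not eo:
        return 0  # too short to analyse
    best_k, best_score = 0, float("-inf")
    for k in range(-max_shift, max_shift + 1):
        score = sum(ea[i] * eo[i + k] for i in range(len(ea))
                    if 0 <= i + k < len(eo))
        if score > best_score:
            best_k, best_score = k, score
    return best_k * hop


def mix_aligned(accomp, original, offset, original_gain=0.5):
    """Overlay the original on the accompaniment after removing the offset."""
    out = []
    for n, a in enumerate(accomp):
        m = n + offset
        o = original[m] if 0 <= m < len(original) else 0.0
        out.append(a + original_gain * o)
    return out


accomp = [0.0] * 4 + [1.0] * 4 + [0.0] * 12
original = [0.0] * 8 + [1.0] * 4 + [0.0] * 8
print(alignment_offset(accomp, original, frame=4, hop=2, max_shift=5))  # -> 4
```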

Owner:TENCENT MUSIC ENTERTAINMENT TECH SHENZHEN CO LTD

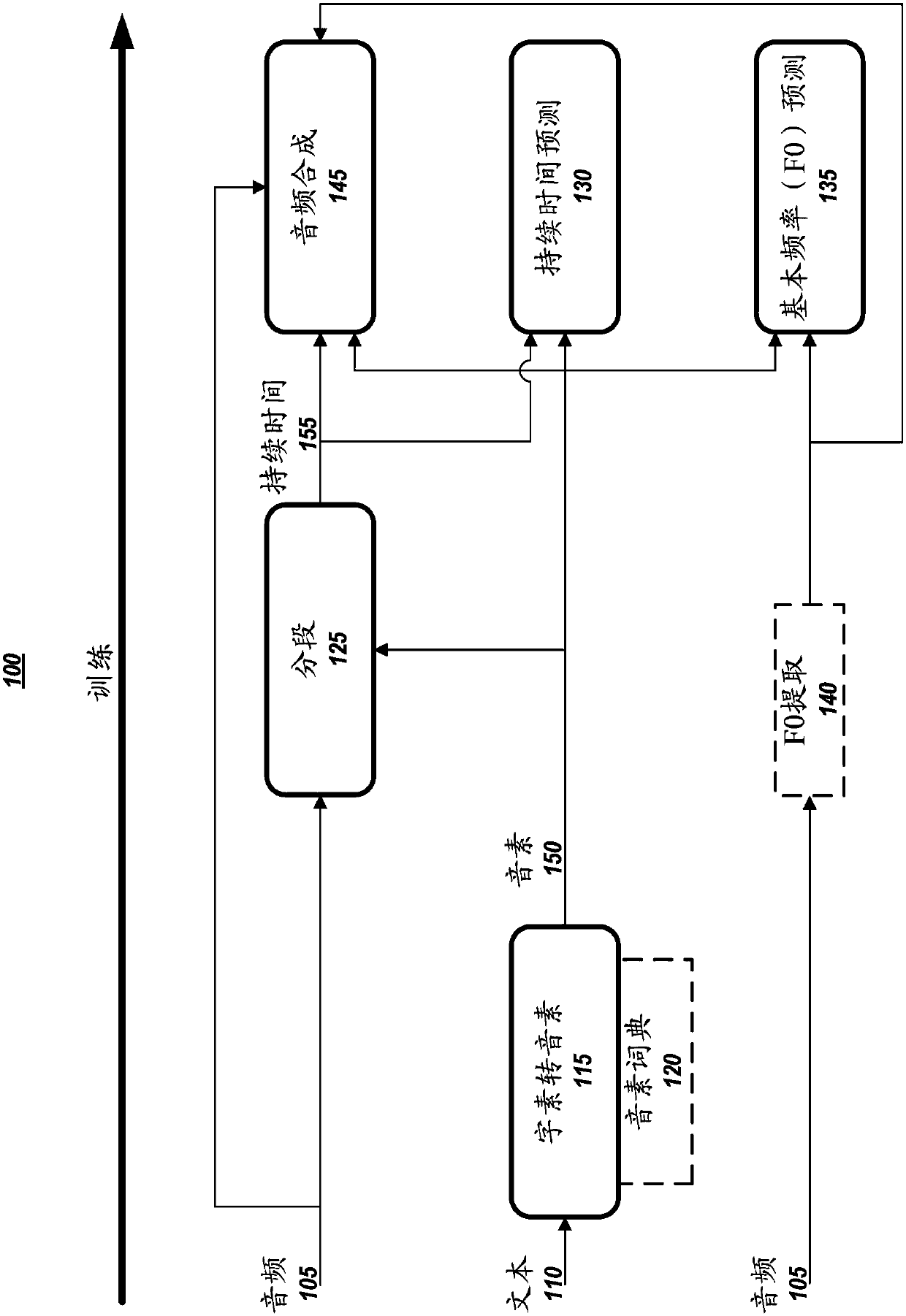

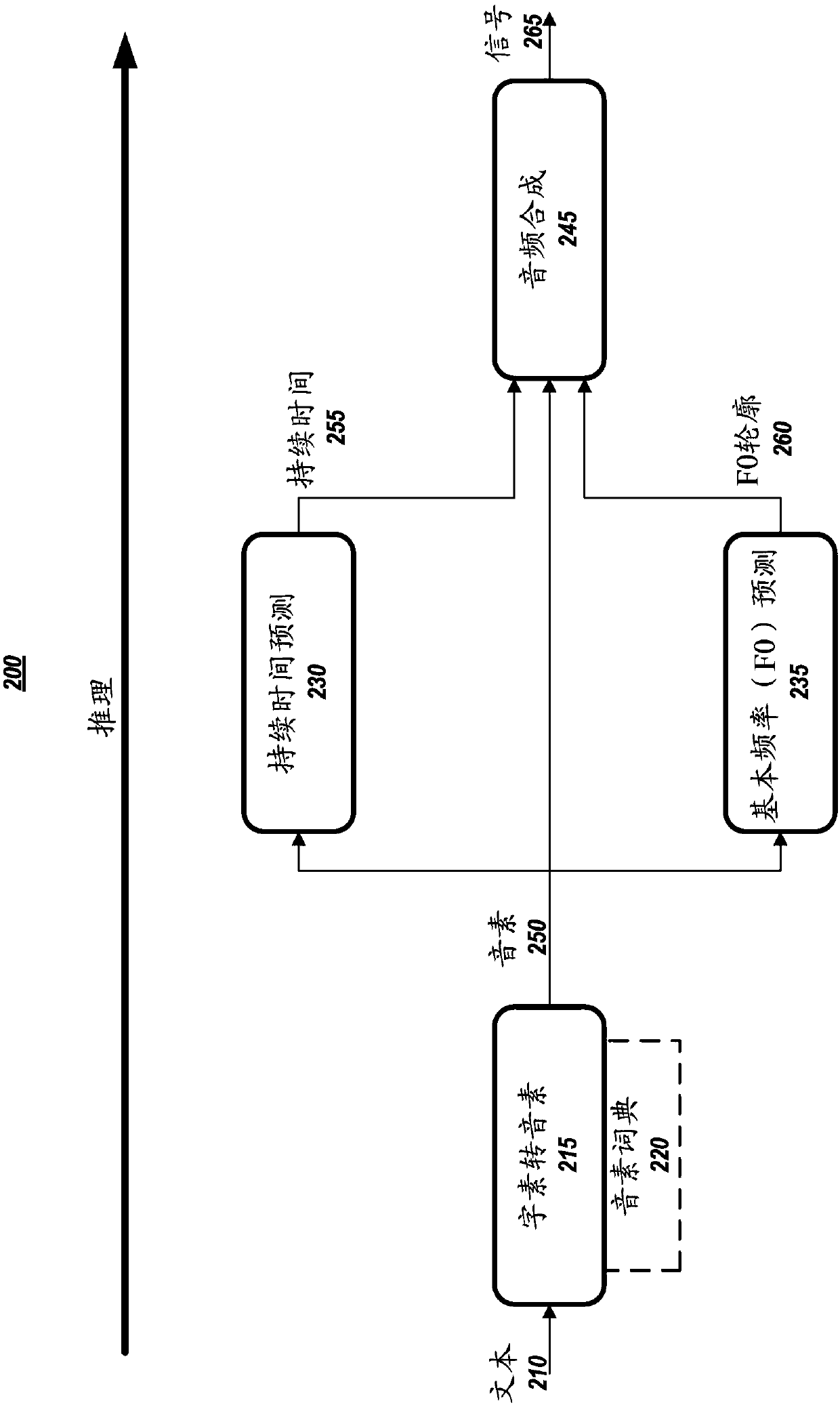

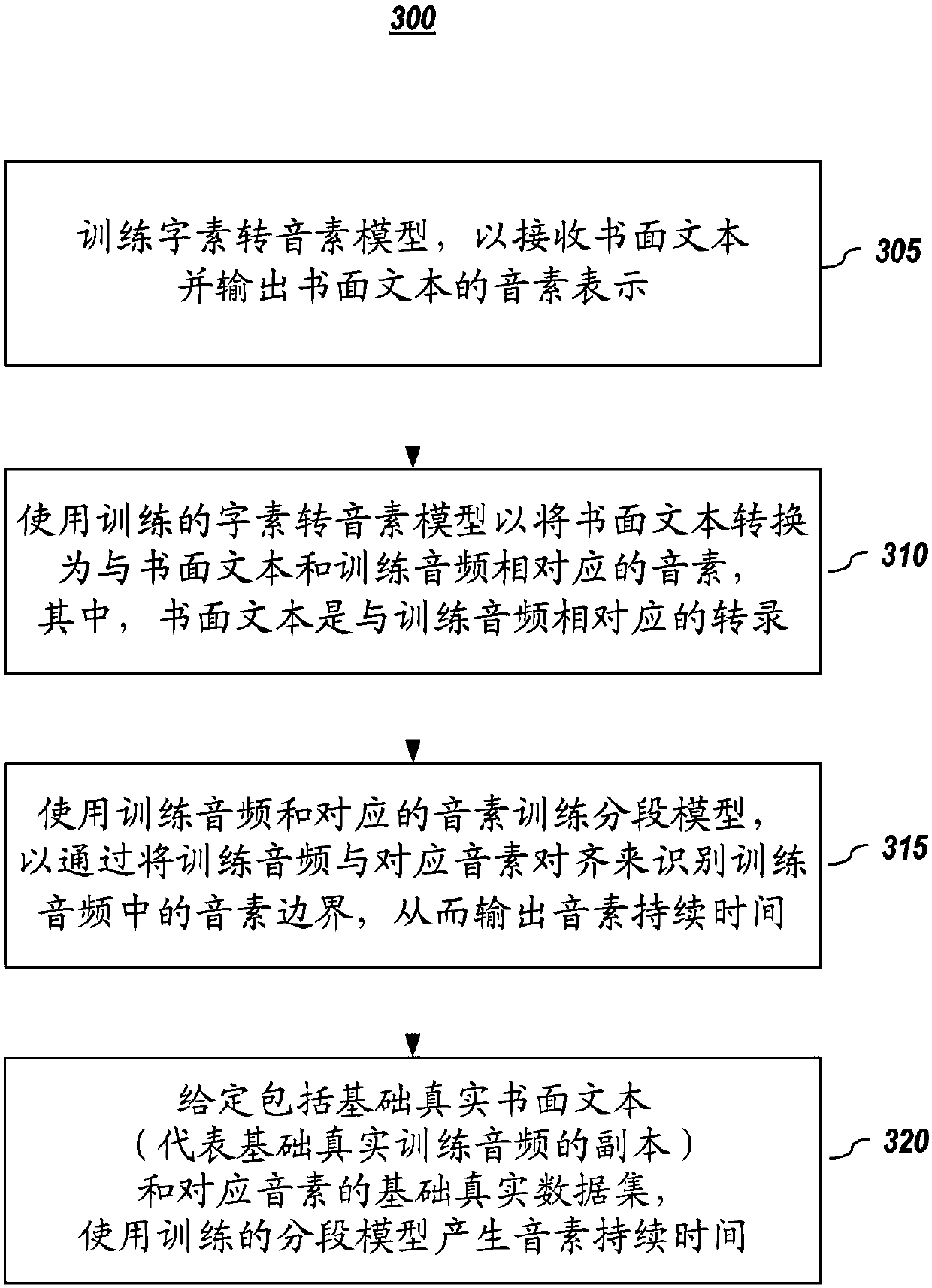

Systems and methods for real-time neural text-to-speech

Embodiments of a production-quality text-to-speech (TTS) system constructed from deep neural networks are described. System embodiments comprise five major building blocks: a segmentation model for locating phoneme boundaries, a grapheme-to-phoneme conversion model, a phoneme duration prediction model, a fundamental frequency prediction model, and an audio synthesis model. For embodiments of the segmentation model, phoneme boundary detection was performed with deep neural networks using Connectionist Temporal Classification (CTC) loss. For embodiments of the audio synthesis model, a variant of WaveNet was created that requires fewer parameters and trains faster than the original. By using a neural network for each component, system embodiments are simpler and more flexible than traditional TTS systems, in which each component requires laborious feature engineering and extensive domain expertise. Inference with system embodiments may be performed faster than real time.

Owner:BAIDU USA LLC

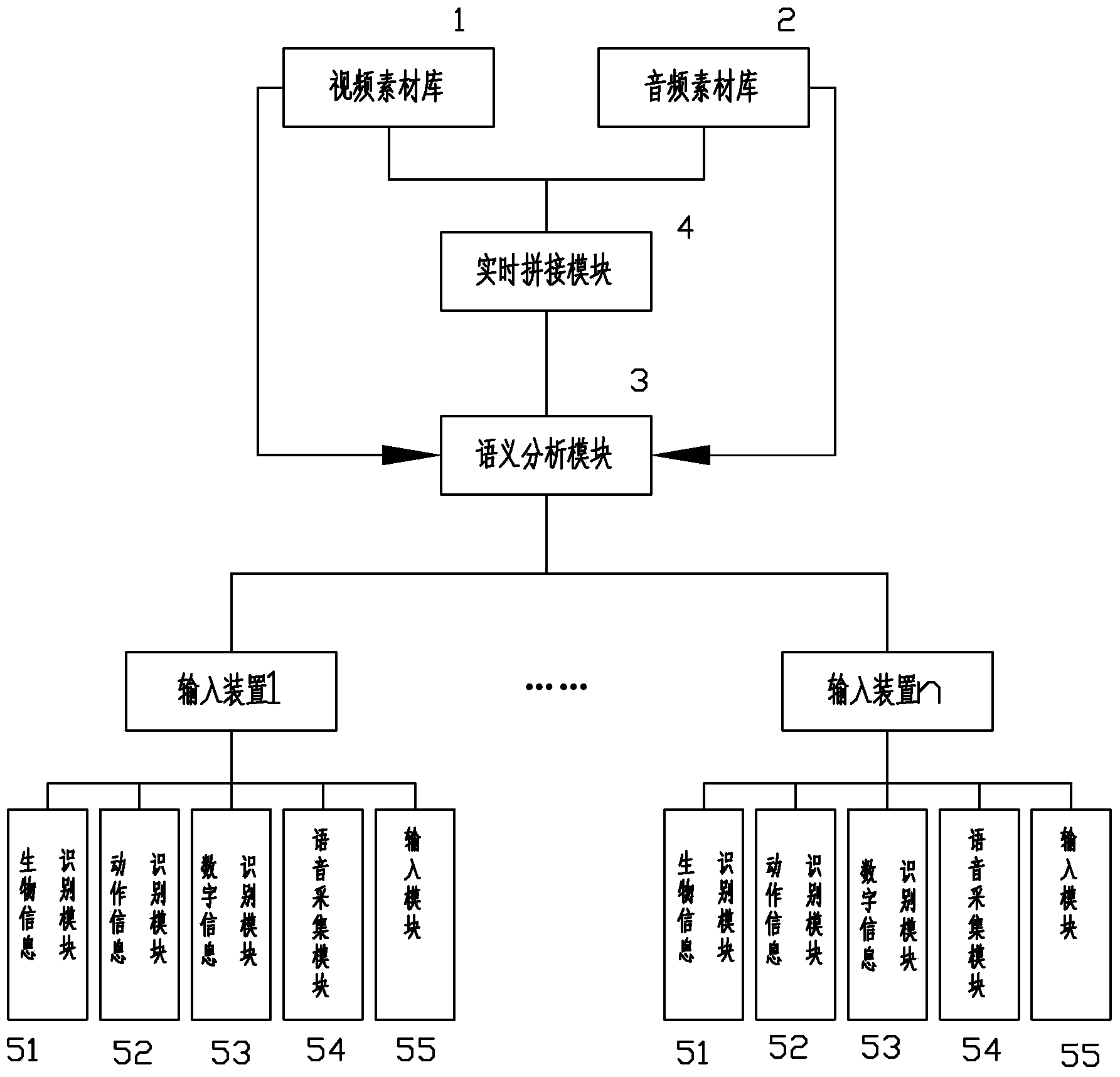

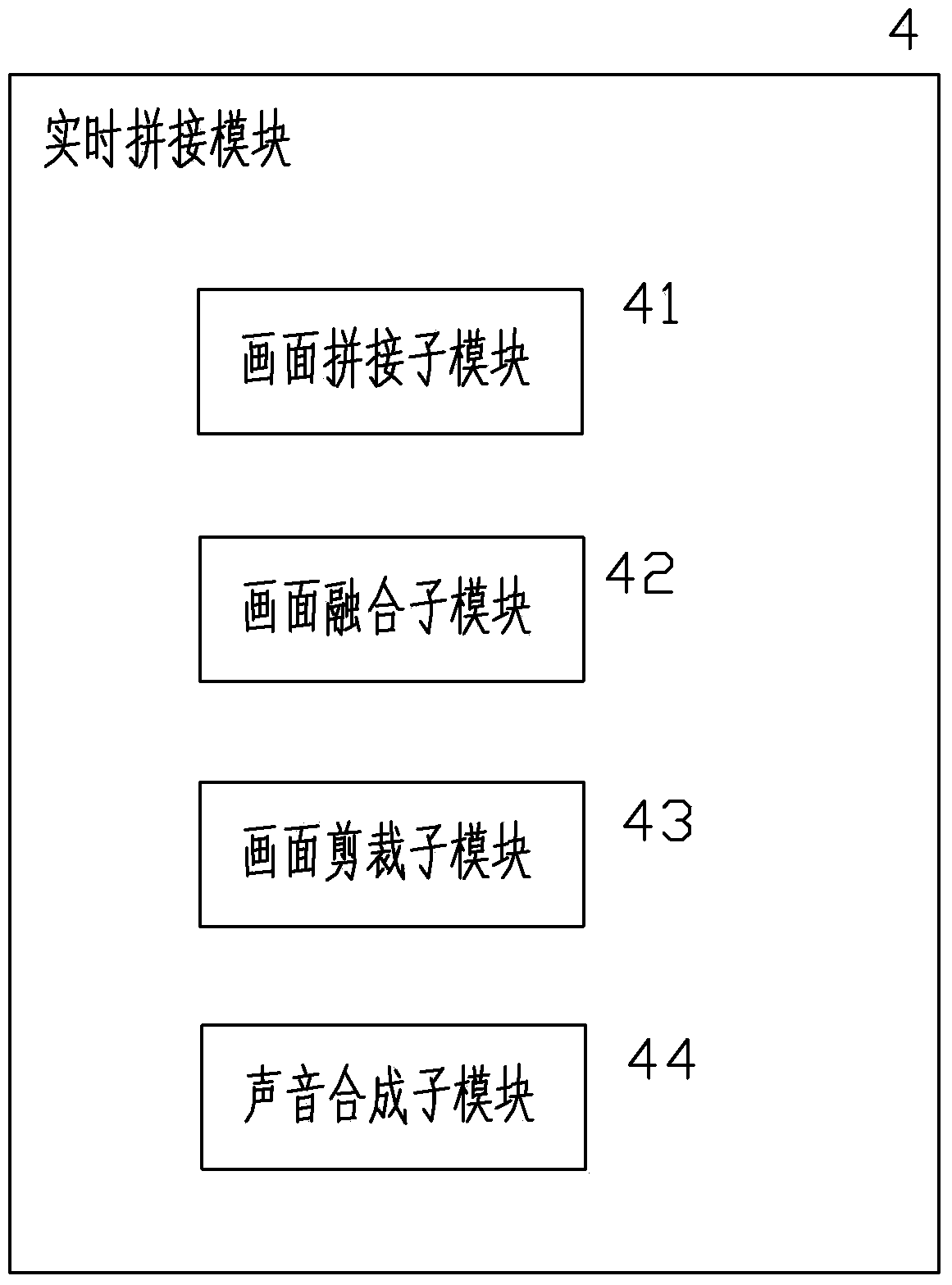

Video real-time splicing device and method based on real-time conversation semantic analysis

Inactive · CN104244086A · Enable real-time interaction, Improve publicity, Selective content distribution, Audio synthesis, Semantics

The invention discloses a device and method for real-time video splicing based on semantic analysis of a live conversation. In the method, a video material library and an audio material library associated with standard semantics are established; a semantic analysis module analyzes the input, determines from that analysis which video units and audio units should be used, and generates corresponding commands that are sent to a real-time splicing module. According to these commands, the real-time splicing module retrieves the corresponding video and audio units from the video and audio material libraries, performs picture splicing and audio synthesis as the commands require, and forms complete audio and video segments that are passed to a player for playback. Because video and audio are spliced in real time according to the semantics, the device and method can interact in real time with on-site visitors and promptly show, in the played audio and video, the content those visitors want to know, which greatly improves the advertising effect.

Owner:陈飞 +1

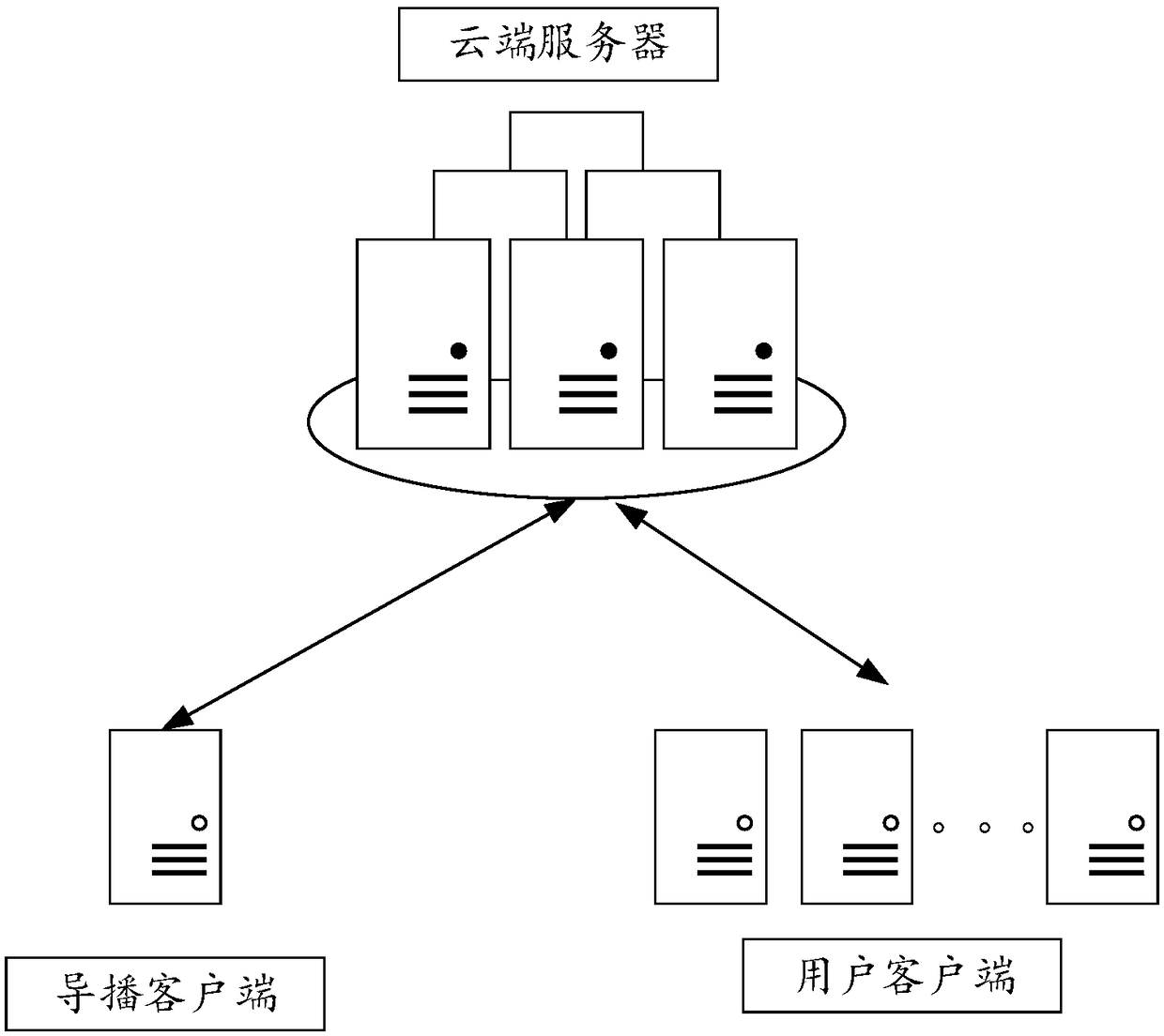

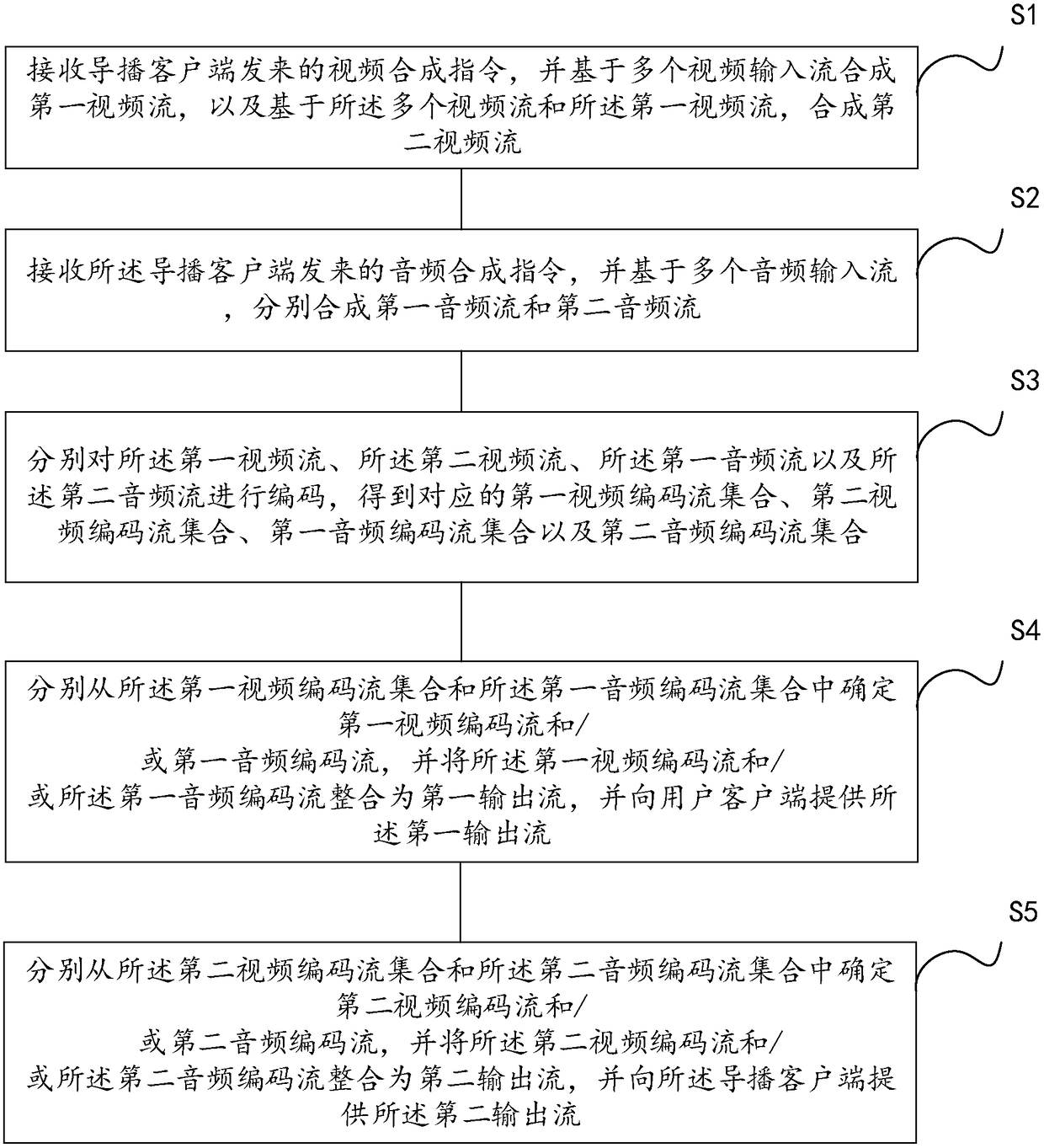

Audio and video synthesis method and system

Active · CN108495141A · Easy to observe in real time, Reduce waiting time, Television system details, Digital video signal modification, Audio synthesis, Synthesis methods

The invention discloses an audio and video synthesis method and an audio and video synthesis system. The method comprises the steps of receiving a video synthesis command sent by a director client, synthesizing a first video stream based on a plurality of video input streams, and synthesizing a second video stream based on the plurality of video streams and the first video stream; receiving an audio synthesis command sent by the director client, and separately synthesizing a first audio stream and a second audio stream based on a plurality of audio input streams; separately coding the audio and video streams to acquire a first corresponding video coded stream set, a second corresponding video coded stream set, a first corresponding audio coded stream set and a second corresponding audio coded stream set; and acquiring a first output stream and a second output stream based on integration of the coded stream sets, and separately providing the first output stream and the second output stream to a user client and the director client. According to the technical scheme provided by the invention, the cost during an audio and video synthesis process can be reduced.

Owner:CHINANETCENT TECH

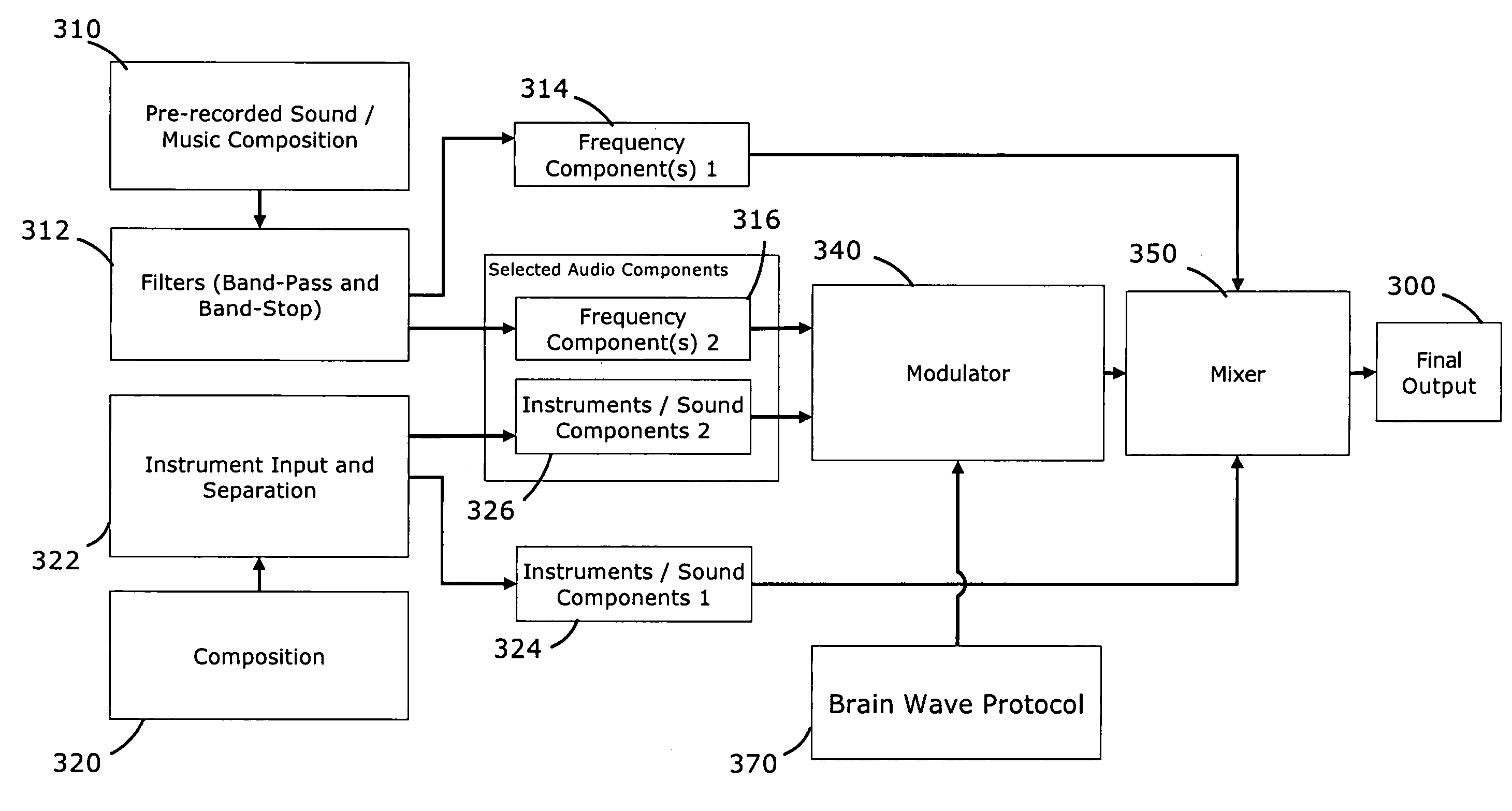

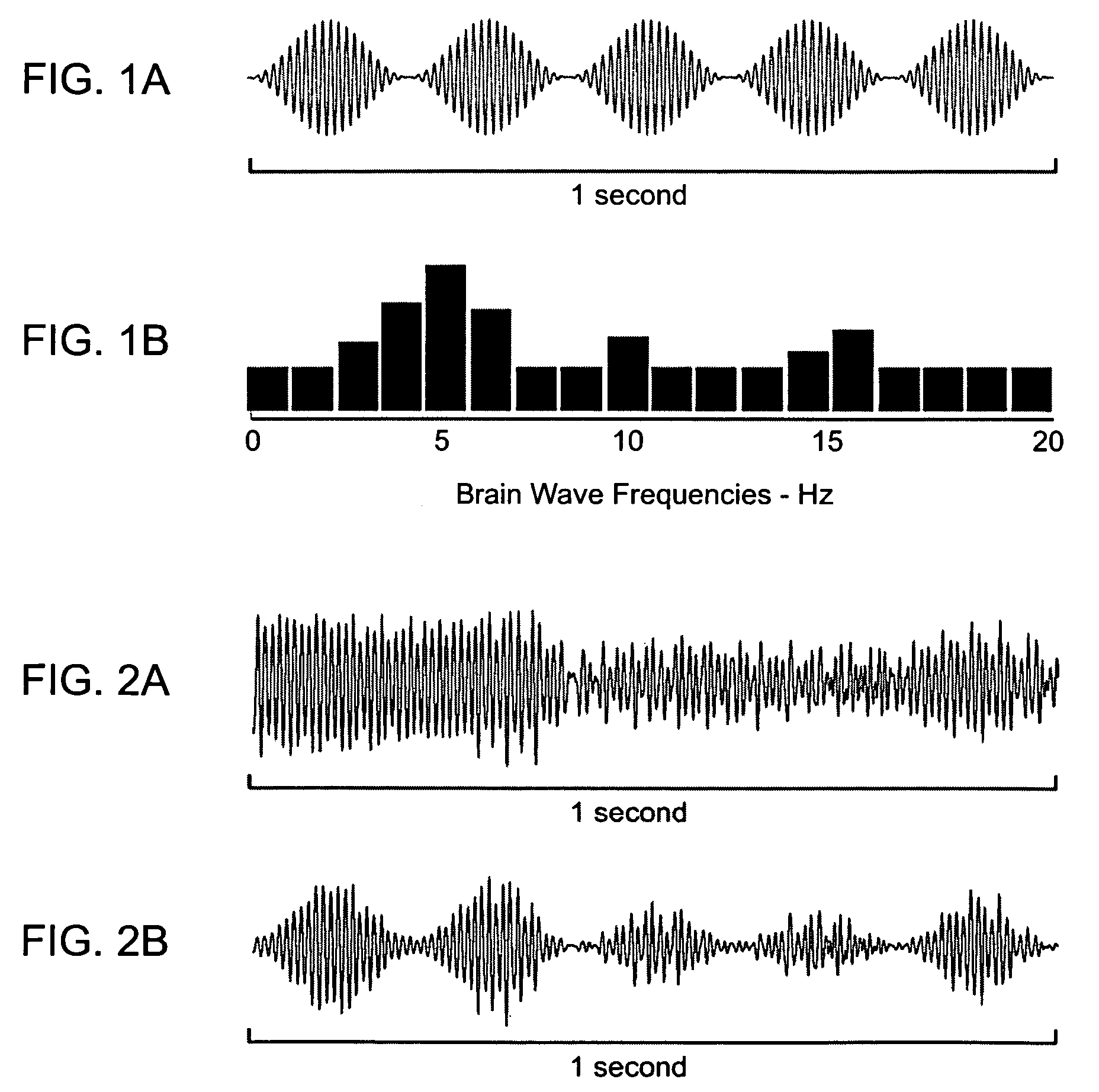

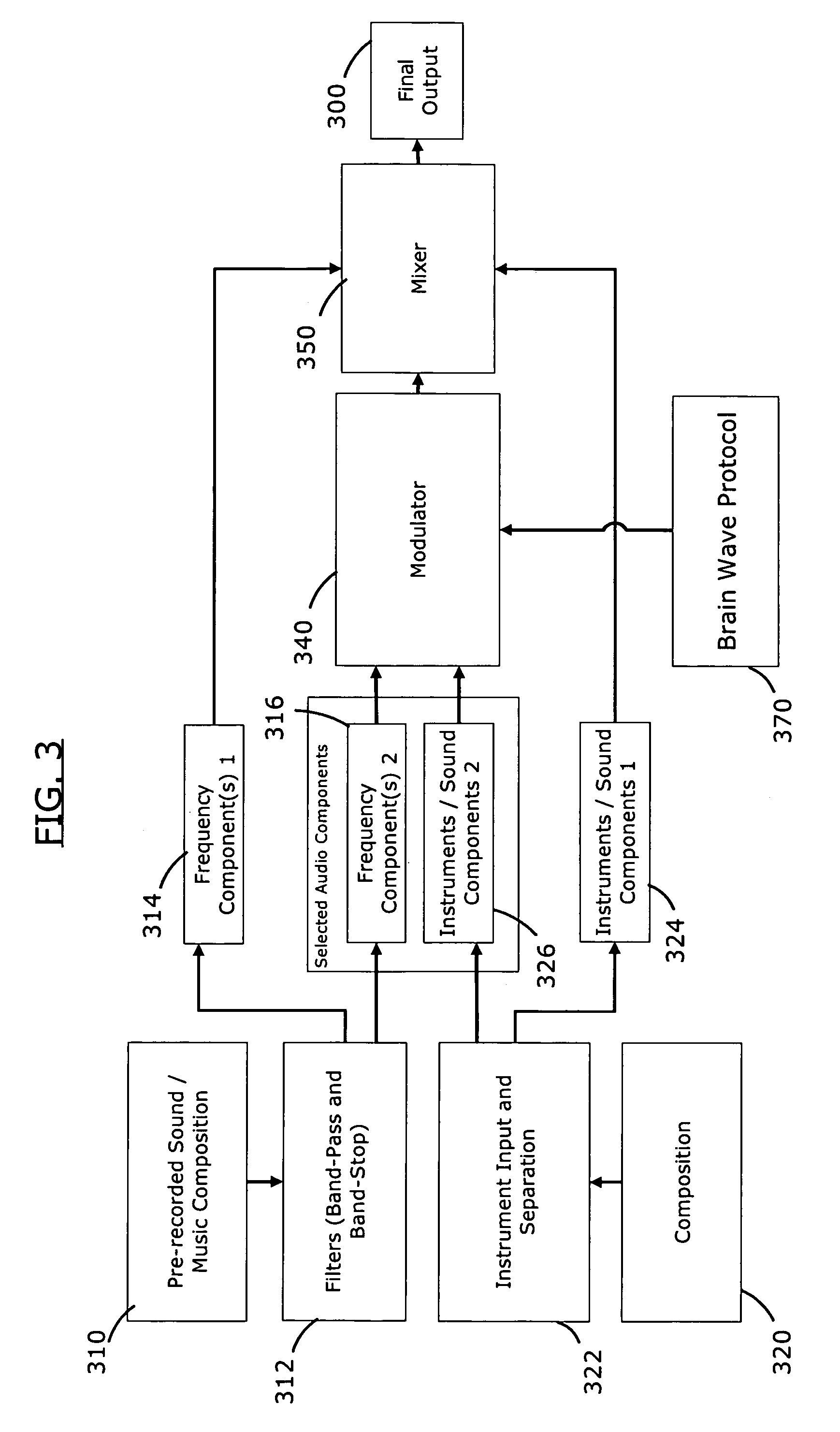

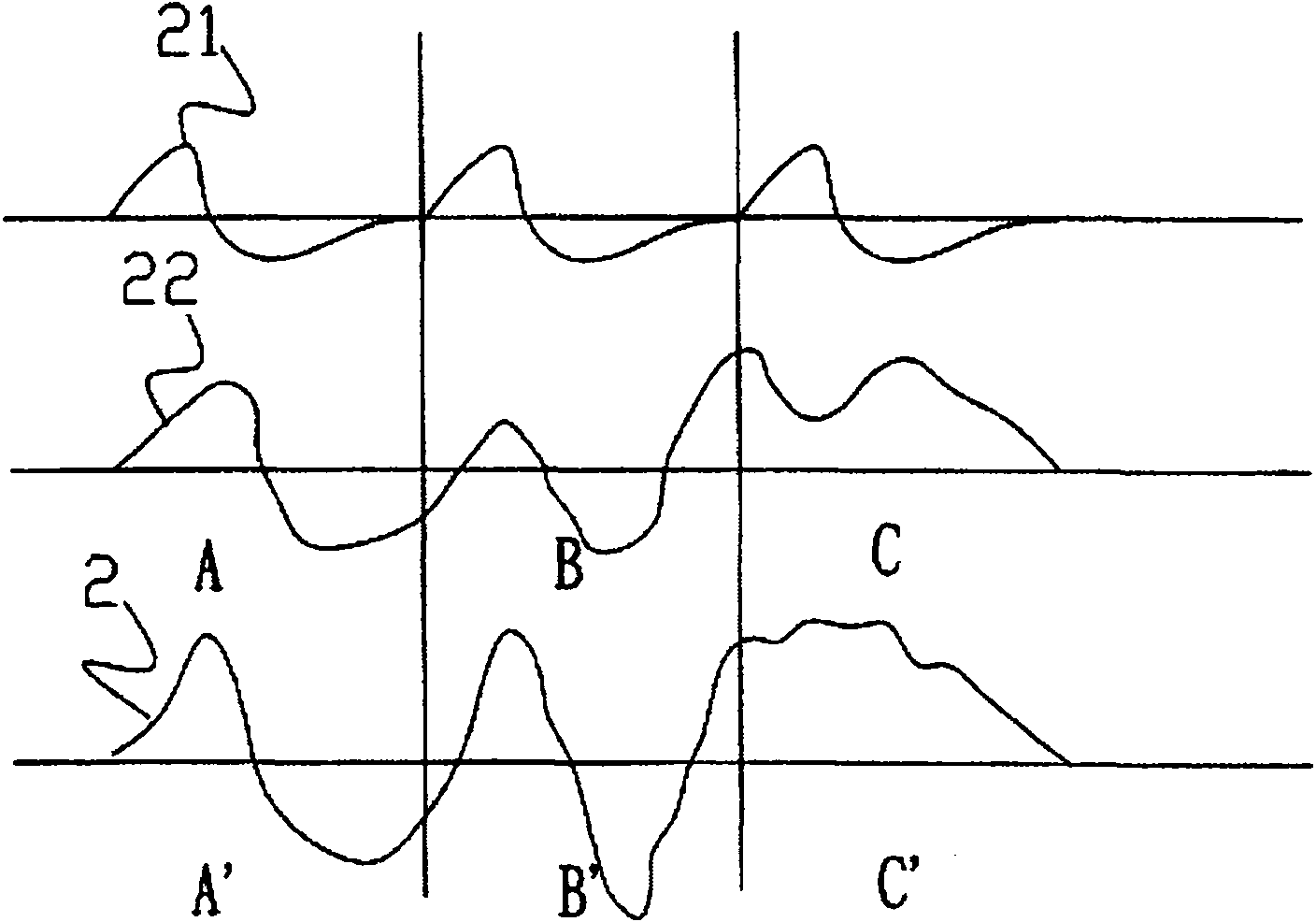

Method for incorporating brain wave entrainment into sound production

Active · US7674224B2 · Efficient production, Surgery, Diagnostic recording/measuring, Audio synthesis, Engineering

A method for incorporating brain wave entrainment into an audio composition by selectively modulating musical elements within the composition. The invention provides a way to specify and modulate individual frequency components in an audio composition, according to the desired brain wave state, allowing brain wave entrainment to be easily and subtly incorporated into an audio composition by disguising the modulations as natural instrumental qualities such as vibrato or reverberation.
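
Modulating a selected component at a target brain-wave rate can be illustrated with plain amplitude modulation. This is a sketch, not the patented method: `entrain` and its parameters are hypothetical, and choosing which components to modulate, and how to disguise the result as vibrato or reverberation, is left out.

```python
import math


def entrain(component, sample_rate, beat_hz, depth):
    """Amplitude-modulate one musical component so its loudness pulses at
    `beat_hz`. depth in [0, 1]: the gain swings between 1.0 and 1.0 - depth."""
    out = []
    for n, s in enumerate(component):
        phase = 2 * math.pi * beat_hz * n / sample_rate
        gain = 1.0 - depth * (0.5 - 0.5 * math.cos(phase))
        out.append(s * gain)
    return out


print(entrain([1.0] * 5, sample_rate=40, beat_hz=10, depth=0.5))
```

For example, `beat_hz=10.0` would pulse the component at a rate in the commonly cited alpha range, and a small `depth` keeps the effect closer to a natural tremolo.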

Owner:BRAINFM INC

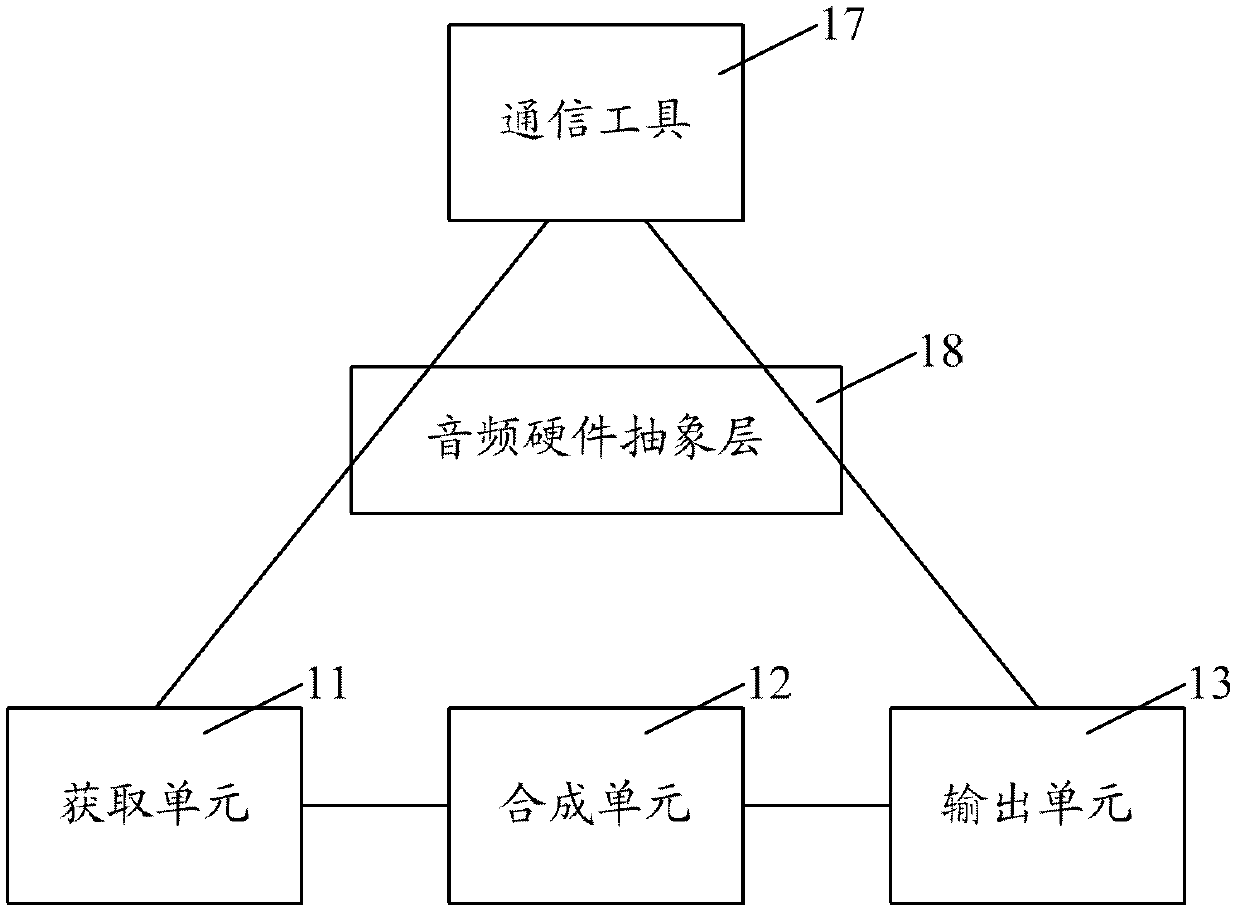

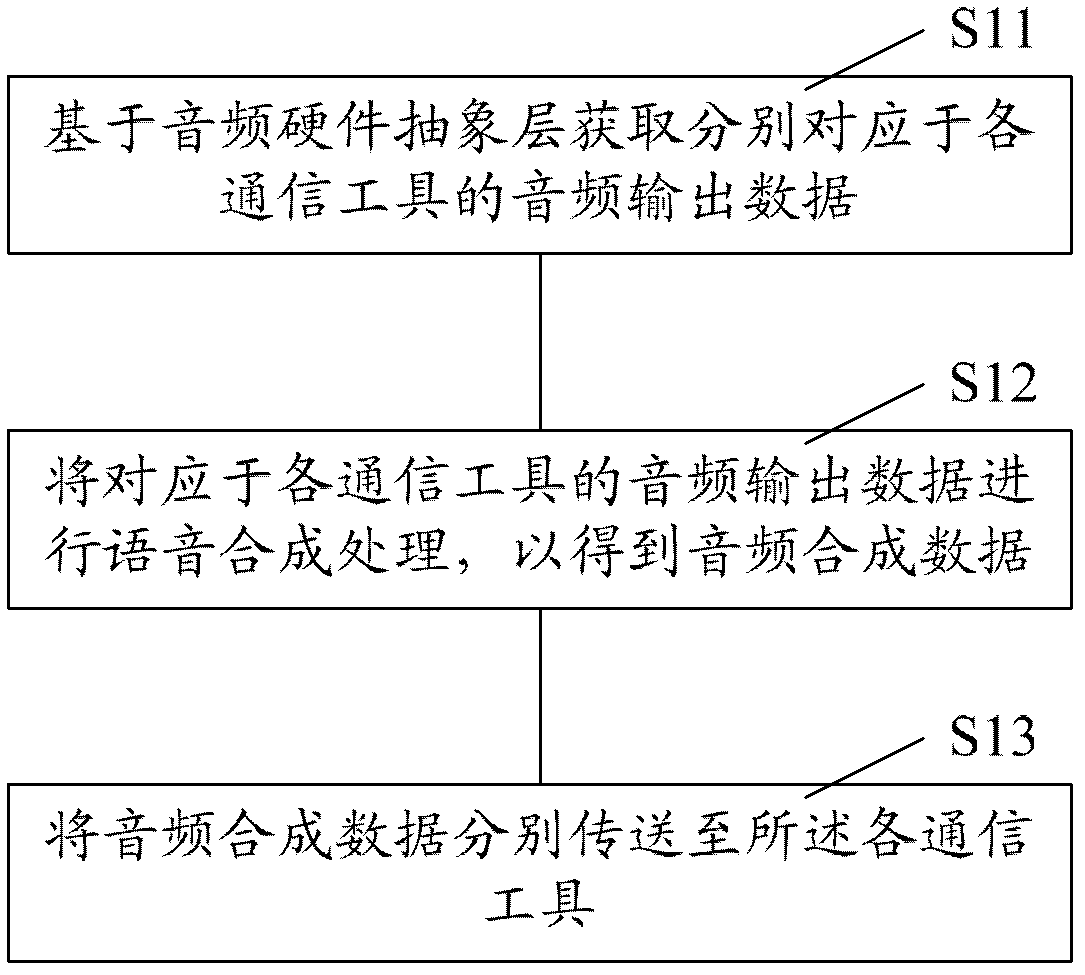

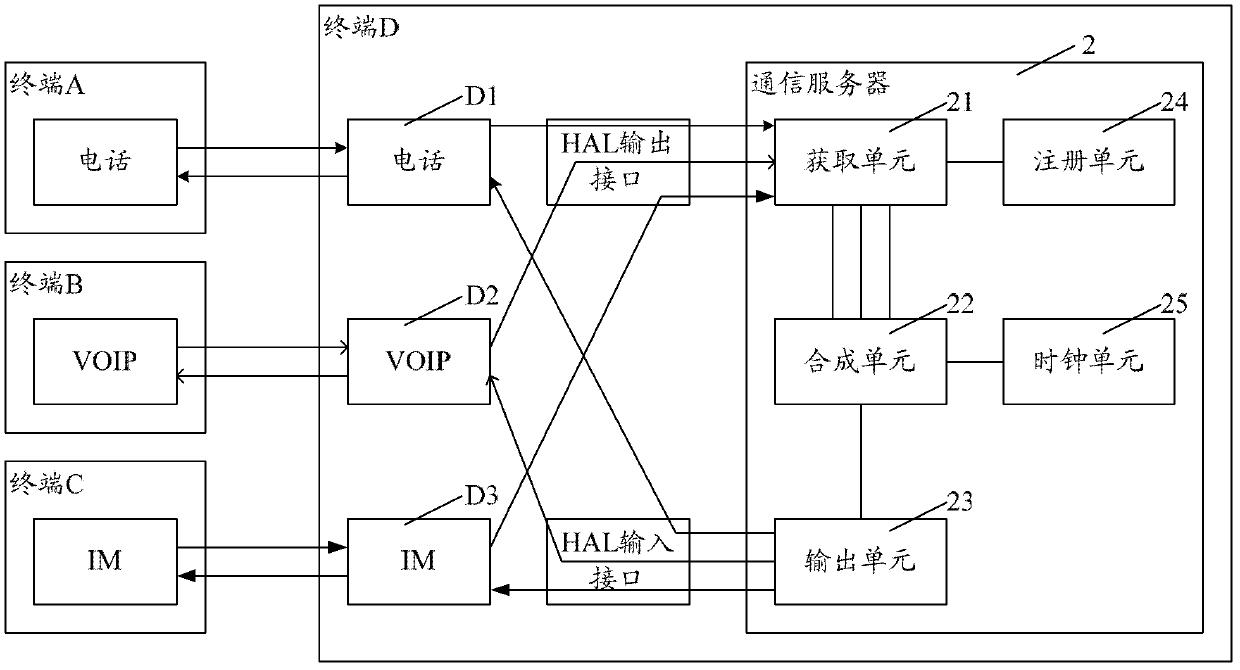

Communication server, communication terminal and voice communication method

Active · CN103379232A · Realize interconnection, Practical, Interconnection arrangements, Speech synthesis, Audio synthesis, Voice communication

Disclosed are a communication server, a communication terminal and a voice communication method. The communication server includes an acquisition unit adapted to acquire, based on an audio hardware abstraction layer, audio output data corresponding respectively to different communication tools; a synthesis unit adapted to perform audio synthesis processing on the audio output data of the different communication tools to obtain audio synthesis data; and an output unit adapted to send the audio synthesis data to each of the different communication tools. Because the communication server combines and forwards the multi-voice data, interconnection and internetworking between communication terminals using different communication tools are achieved, as are multi-party calls.
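
The server-side combining step can be sketched as a per-participant mix. Sending each participant the sum of everyone else's audio (a "mix-minus", so nobody hears their own voice echoed back) is an assumption drawn from common conferencing practice rather than from the abstract; `mix_minus` and the clipping range are hypothetical.

```python
def mix_minus(streams):
    """Return, for each participant, the sum of all other participants'
    audio, clipped to [-1.0, 1.0]. streams: name -> list of float samples."""
    n = min(len(s) for s in streams.values())
    total = [sum(s[i] for s in streams.values()) for i in range(n)]
    return {
        name: [max(-1.0, min(1.0, total[i] - s[i])) for i in range(n)]
        for name, s in streams.items()
    }


mixes = mix_minus({"tool_a": [0.1, 0.2], "tool_b": [0.3, 0.0], "tool_c": [0.5, 0.5]})
print(mixes["tool_a"])  # roughly [0.8, 0.5]: tool_b + tool_c
```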

Owner:SPREADTRUM COMM (SHANGHAI) CO LTD

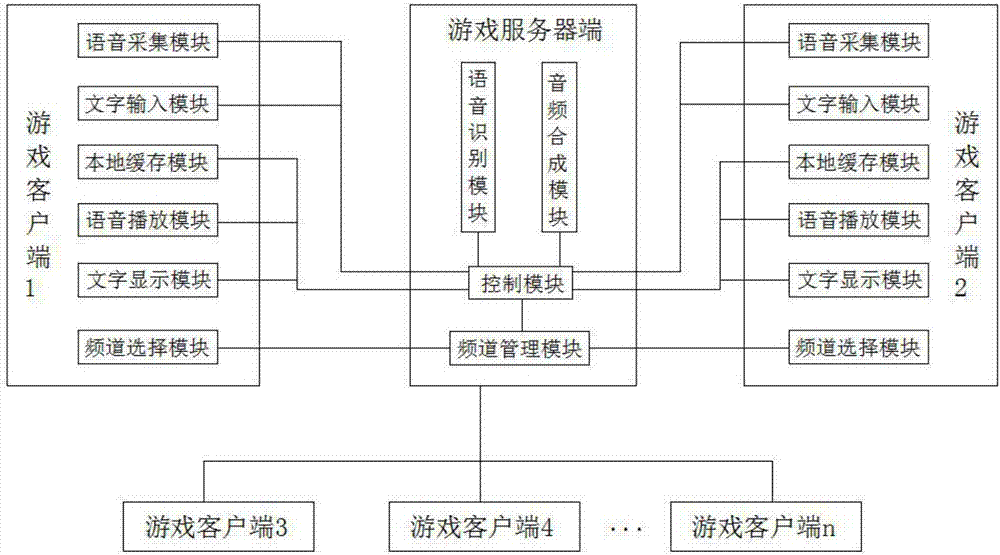

Voice recognition-based network game online interaction system

Inactive · CN107115668A · Facilitate communication, Humanized design, Speech recognition, Video games, Interaction systems, Text display

The invention discloses a voice recognition-based online interaction system for network games, comprising a game server side and a plurality of game clients connected to it. The game server side comprises a voice acquisition module, a text input module, a channel selection module, a voice playing module, a text display module and a local cache module; each game client comprises a channel management module, a control module, a voice recognition module and an audio synthesis module. A player inputs voice or text through a game client; the input is converted from voice into text or from text into voice through the game server side and output to the game clients of the other players in the same chat channel. Those players can therefore receive the voice or text information at the same time regardless of whether voice or text was used for input, and each player can choose to communicate by voice or by text as needed, which makes communication convenient and the design more user-friendly.

Owner:南京丹鹏信息科技有限公司 (Nanjing Danpeng Information Technology Co., Ltd.)
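One way to picture the channel behaviour described above is a router that delivers each message to the other members of a chat channel, converting between voice and text according to each recipient's choice. In this sketch, `speech_to_text` and `text_to_speech` are hypothetical placeholders for real recognition and synthesis engines, audio is represented by an opaque string, and the per-player preference is an assumption about how "each player can select voice or text" might be modelled.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

@dataclass
class Message:
    sender: str
    channel: str
    kind: str      # "voice" or "text"
    payload: str   # message text, or an opaque string standing in for audio

def speech_to_text(audio: str) -> str:
    # Hypothetical placeholder for a real speech recognizer.
    return f"<transcript of {audio}>"

def text_to_speech(text: str) -> str:
    # Hypothetical placeholder for a real speech synthesizer.
    return f"<audio of {text}>"

class ChatServer:
    def __init__(self) -> None:
        # channel -> {player: preferred kind ("voice" or "text")}
        self.channels: Dict[str, Dict[str, str]] = {}

    def join(self, channel: str, player: str, prefers: str) -> None:
        self.channels.setdefault(channel, {})[player] = prefers

    def route(self, msg: Message) -> List[Tuple[str, str, str]]:
        """Return (recipient, kind, payload) for every other player in the channel."""
        deliveries = []
        for player, prefers in self.channels.get(msg.channel, {}).items():
            if player == msg.sender:
                continue  # do not echo the message back to its sender
            if prefers == msg.kind:
                payload = msg.payload
            elif prefers == "text":
                payload = speech_to_text(msg.payload)
            else:
                payload = text_to_speech(msg.payload)
            deliveries.append((player, prefers, payload))
        return deliveries
```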

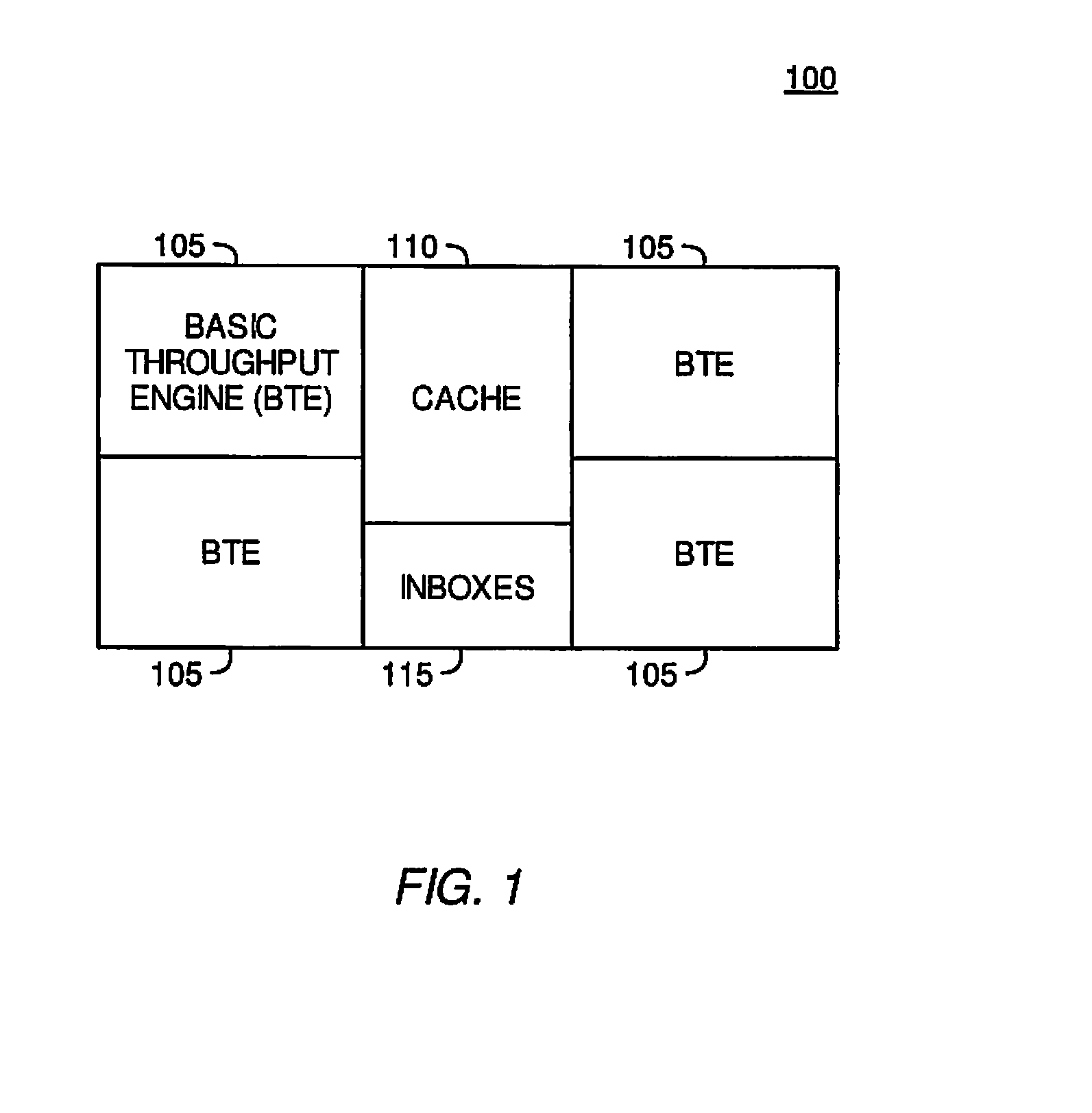

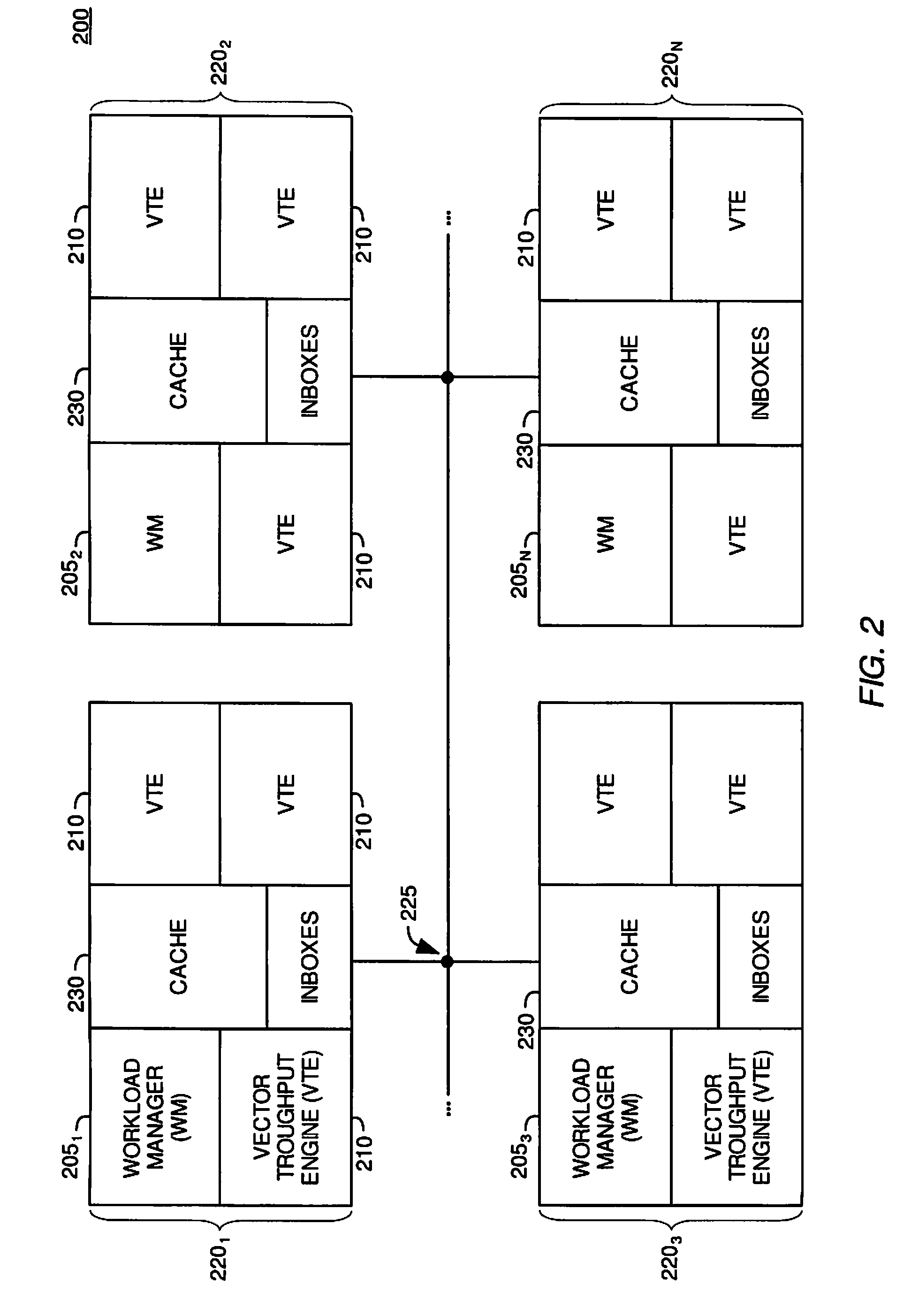

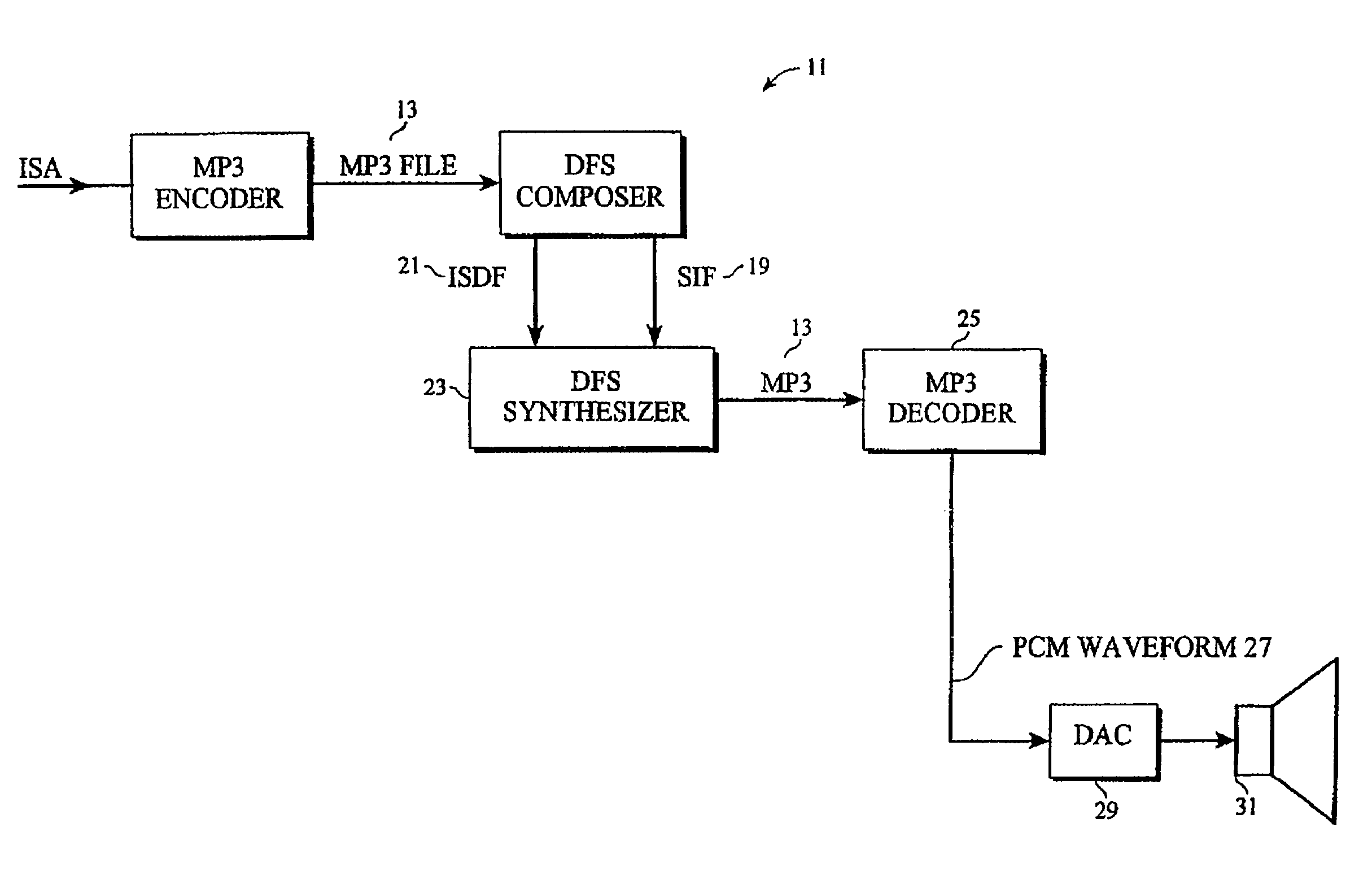

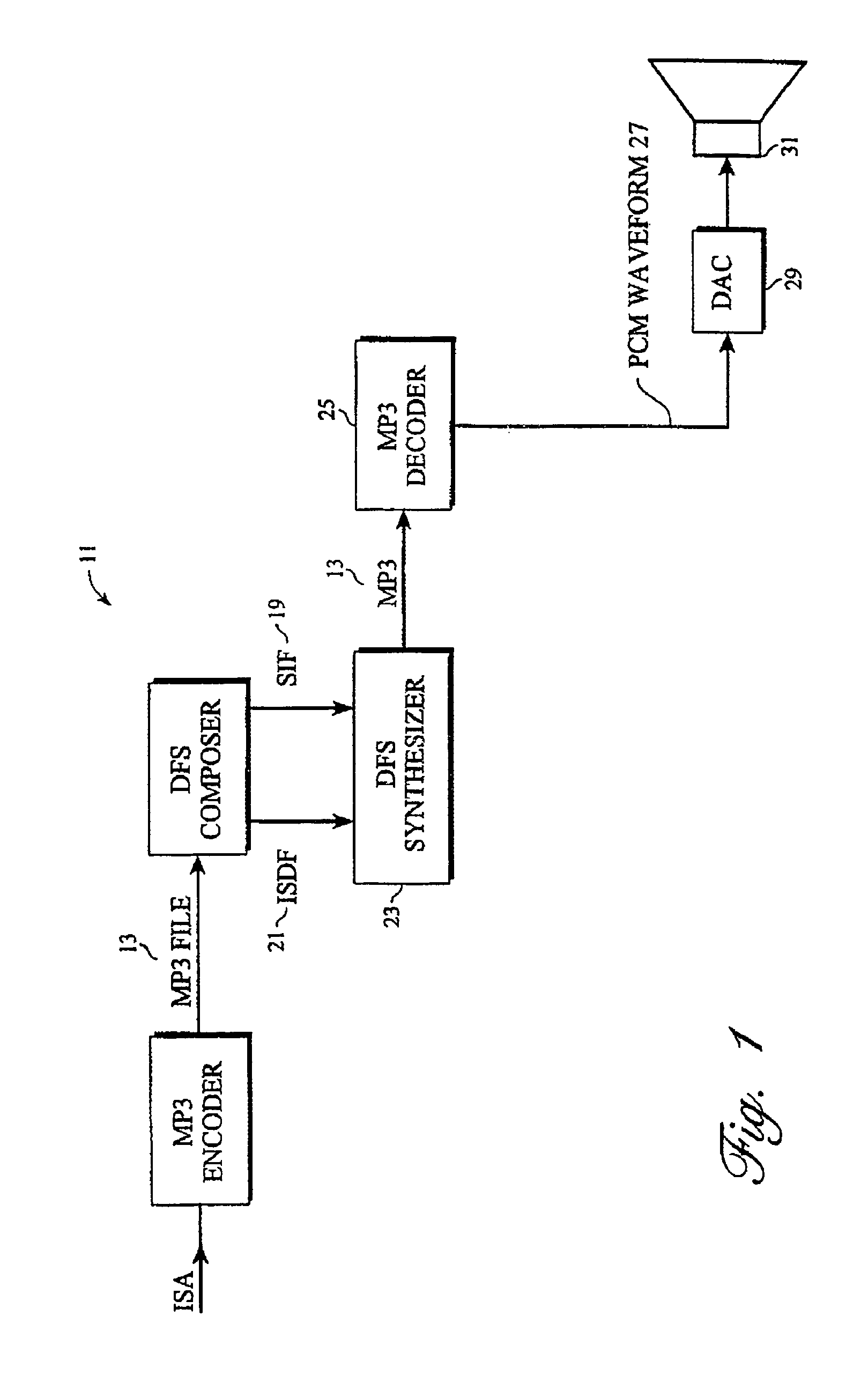

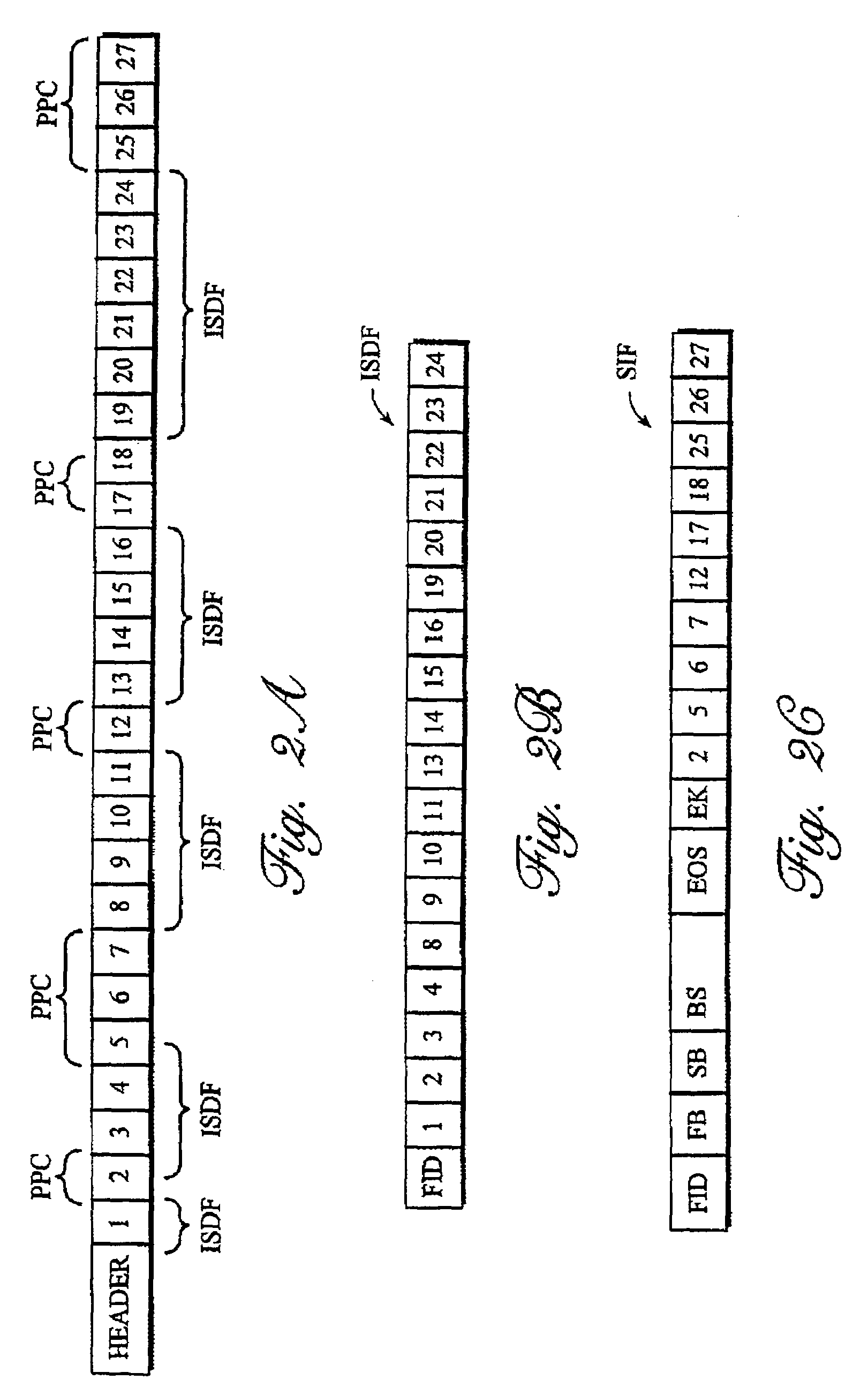

Audio synthesis using digital sampling of coded waveforms

Inactive | US7099848B1 | Exact reproduction | Television system details | Electrophonic musical instruments | Digital data | Audio synthesis

Method and system for audio synthesis of a digital data file representing an assembly of information-bearing sounds in digital form. One or more spaced-apart data segments are designated as key blocks and removed from the original data file. The remainder of the data file is encrypted or otherwise encoded and communicated to a selected recipient on a first channel. The locations and sizes of the key blocks, their separation distances within the original data file, and a selected portion of the encoding or encryption key are placed in a data supplement. The removed segments and the (optional) data supplement are communicated to the selected recipient on a second channel and/or at another time. The original data file is recovered by using the data supplement information, or information already available, to decode or decrypt the encoded or encrypted data file and to reinsert the removed segments into the data file remainder. Neither the remainder data file nor the removed segments plus data supplement is sufficient by itself to reproduce the original data file. The remainder data file and the removed segments plus data supplement can each be distributed separately and combined later, once authorization or a license to reproduce the sounds has been obtained.

Owner:INTEL CORP
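The key-block idea can be illustrated with a minimal sketch that removes byte ranges from a file, records their offsets and lengths as the data supplement, and later reinserts them. The encryption or encoding of the remainder described in the abstract is deliberately left out, and the function names and the `(offset, length)` supplement format are assumptions for illustration.

```python
from typing import List, Tuple

Supplement = List[Tuple[int, int]]  # (offset, length) of each key block in the original

def split_key_blocks(data: bytes, blocks: Supplement) -> Tuple[bytes, List[bytes], Supplement]:
    """Remove key blocks from data; return (remainder, removed segments, supplement)."""
    blocks = sorted(blocks)
    remainder = bytearray()
    segments: List[bytes] = []
    pos = 0
    for offset, length in blocks:
        if offset < pos or length < 0 or offset + length > len(data):
            raise ValueError("key blocks must be non-overlapping and inside the data")
        remainder += data[pos:offset]              # keep bytes before this block
        segments.append(data[offset:offset + length])
        pos = offset + length
    remainder += data[pos:]
    return bytes(remainder), segments, blocks

def restore(remainder: bytes, segments: List[bytes], supplement: Supplement) -> bytes:
    """Reinsert removed segments at their original offsets."""
    out = bytearray()
    pos = 0  # read position in the remainder
    for (offset, length), seg in zip(supplement, segments):
        if len(seg) != length:
            raise ValueError("segment length does not match supplement")
        gap = offset - len(out)                    # remainder bytes preceding this block
        out += remainder[pos:pos + gap]
        pos += gap
        out += seg
    out += remainder[pos:]
    return bytes(out)
```

Neither `remainder` nor `segments` alone reproduces the original bytes, which mirrors the split-distribution property the abstract describes.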

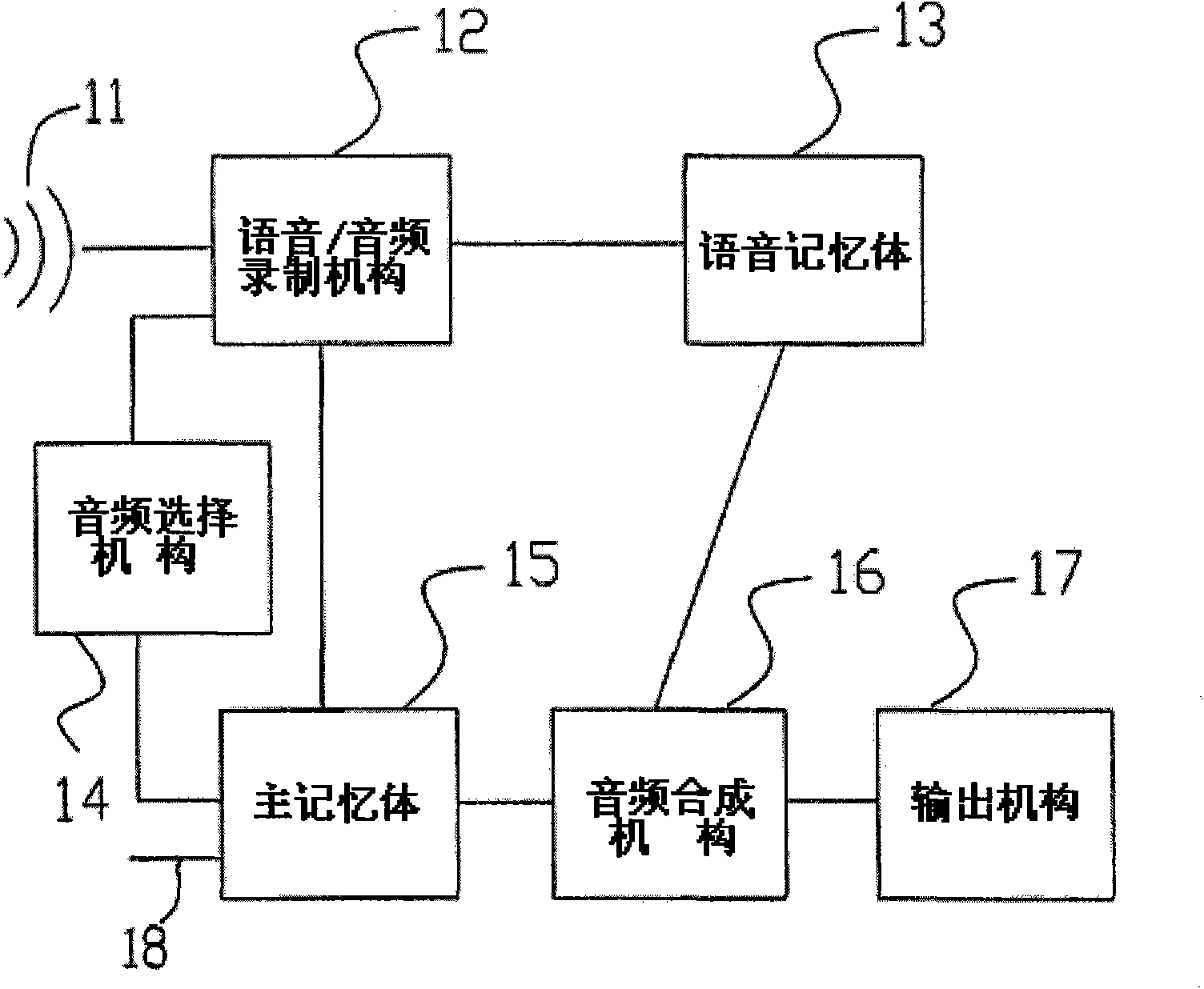

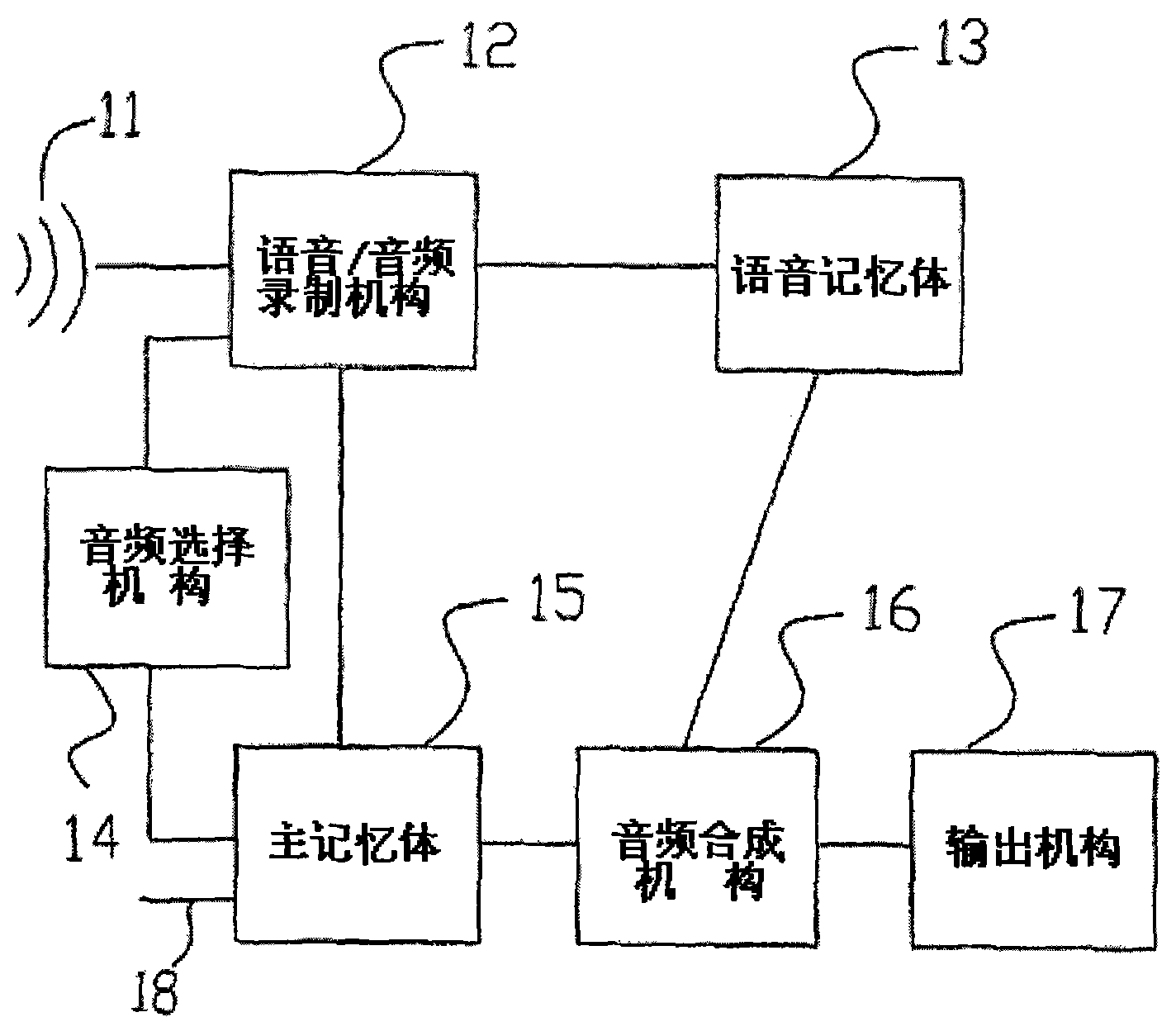

Audio synthesizer capable of converting voices to songs

The invention provides an audio synthesizer capable of converting voices to songs, comprising: a voice/audio recording mechanism that records voices input by a user, digitally converts a preset voice, a downloaded voice or the input voice, and stores it as a voice sample; an audio selection mechanism that selects audio from preset audio, audio recorded from external sources and downloaded audio, and digitally converts and stores it as an audio object; an audio synthesis mechanism that cuts the audio object into a plurality of syllables, taking the duration of the voice sample as the length of one syllable, and synthesizes the syllables of the voice sample and the audio object into synthesized audio; and an output mechanism that outputs the resulting synthesized audio clips.

Owner:傅可庭 (Fu Keting)
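A minimal sketch of the cutting step, assuming both the voice sample and the audio object are lists of floating-point samples at the same rate: the audio object is cut into pieces as long as the voice sample, and each piece is combined with the voice sample. The abstract does not specify how the two are synthesized, so the weighted mix controlled by the hypothetical `voice_gain` parameter is a stand-in, not the patented method.

```python
from typing import List

def cut_into_syllables(audio: List[float], syllable_len: int) -> List[List[float]]:
    """Cut the audio object into consecutive pieces of syllable_len samples."""
    if syllable_len <= 0:
        raise ValueError("syllable_len must be positive")
    return [audio[i:i + syllable_len] for i in range(0, len(audio), syllable_len)]

def synthesize(voice: List[float], audio: List[float], voice_gain: float = 0.5) -> List[float]:
    """Combine the voice sample with every syllable of the audio object.

    The syllable length equals the voice sample's duration; the combination
    rule (a weighted sum) is an assumption made for illustration.
    """
    out: List[float] = []
    for syllable in cut_into_syllables(audio, len(voice)):
        for v, a in zip(voice, syllable):  # the last syllable may be shorter
            out.append(voice_gain * v + (1.0 - voice_gain) * a)
    return out
```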

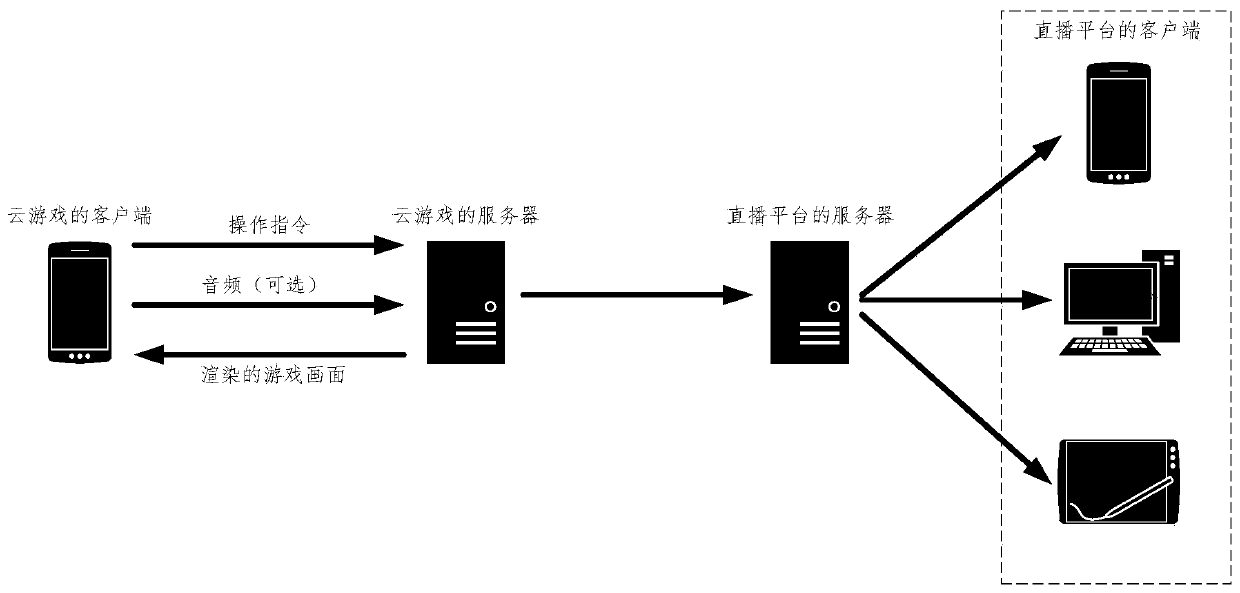

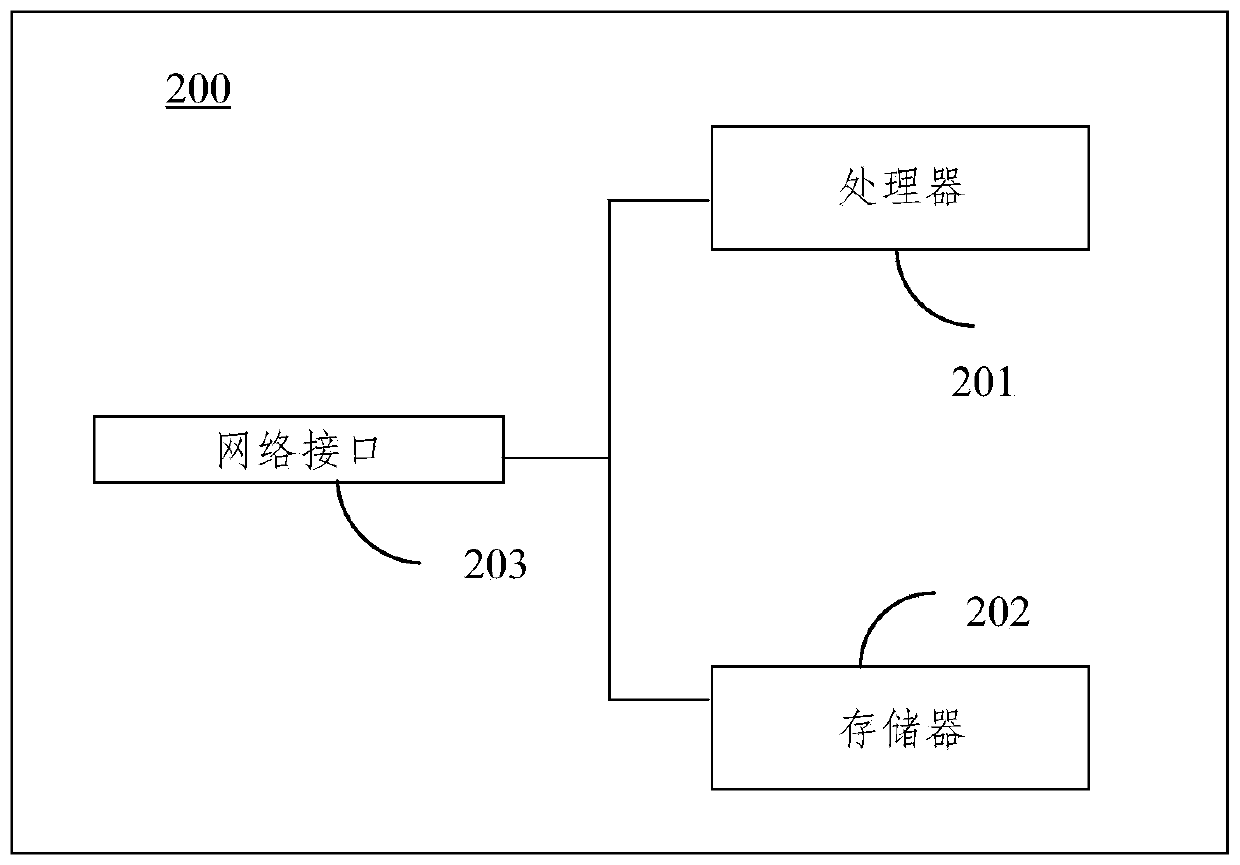

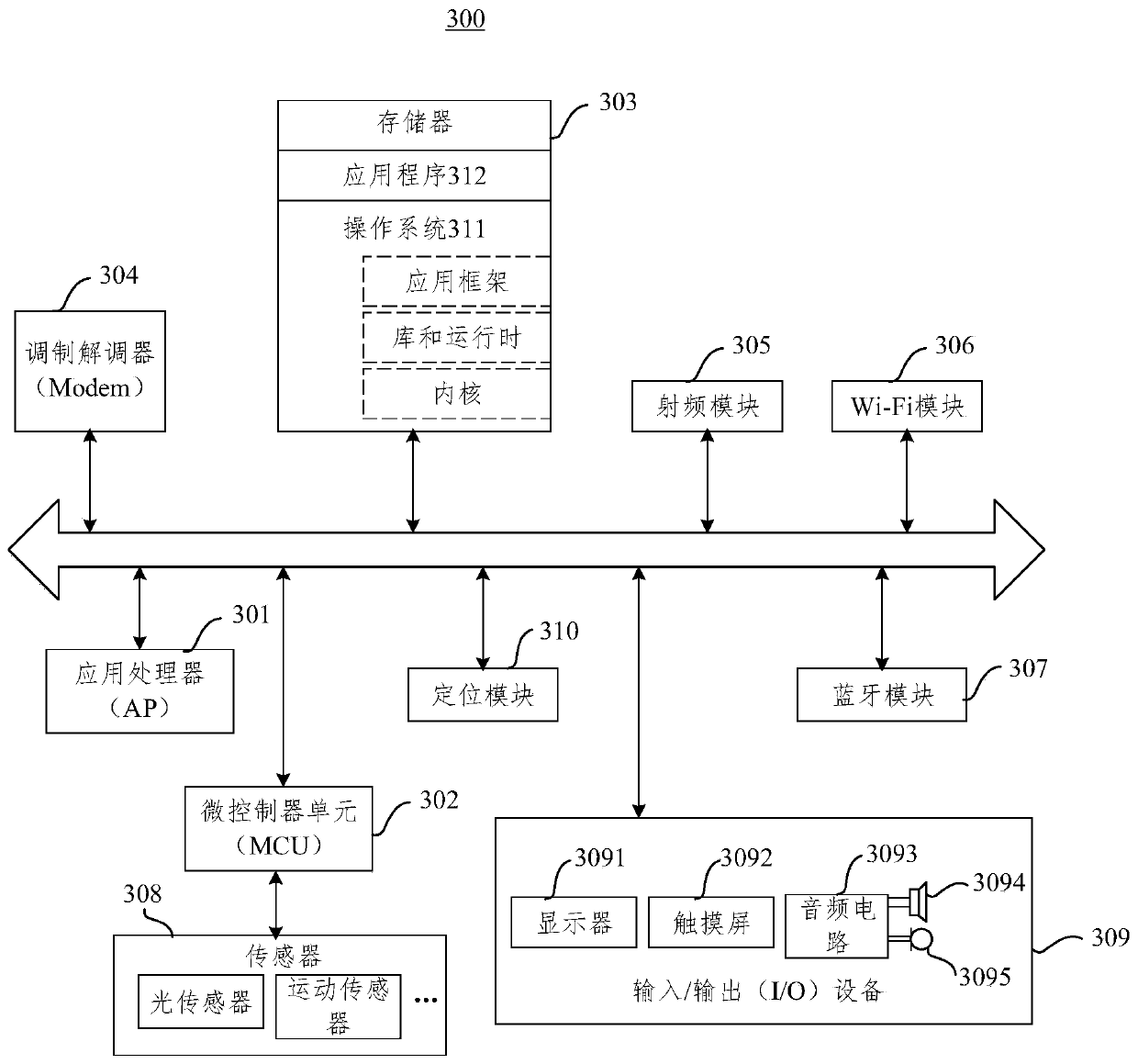

Cloud game live broadcast method and device

Active | CN111314724A | Reduce upload operations | Reduce power consumption | Video games | Selective content distribution | Noise (video) | Audio synthesis

The invention provides a cloud game live broadcast method and device. The method comprises: generating a video that records the operation process of a cloud game application (APP); receiving audio from a terminal device, the audio recording the user's voice while the user operates the cloud game APP; synthesizing the video and the audio into a combined audio-video stream; and encoding the audio-video stream and sending it to a server of a live broadcast platform. The invention enables live broadcast of clear game pictures with noise-free sound, reduces the power consumption of the terminal device, and provides a lag-free game service.

Owner:HUAWEI TECH CO LTD
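Combining the game video with the user's audio is essentially multiplexing two timestamped streams. A minimal sketch, assuming each stream is already a list of packets sorted by presentation timestamp (the `Packet` type is hypothetical), merges them into a single timestamp-ordered stream; encoding and upload to the live broadcast platform are outside its scope.

```python
import heapq
from dataclasses import dataclass
from typing import Iterable, List

@dataclass(frozen=True)
class Packet:
    pts_ms: int   # presentation timestamp in milliseconds
    stream: str   # "video" or "audio"
    data: bytes   # encoded payload (opaque here)

def interleave(video: Iterable[Packet], audio: Iterable[Packet]) -> List[Packet]:
    """Merge two pts-sorted packet streams into one combined A/V stream ordered by pts."""
    return list(heapq.merge(video, audio, key=lambda p: p.pts_ms))
```

`heapq.merge` avoids re-sorting the already ordered inputs and, on equal timestamps, emits the packet from the first argument (here the video stream) first.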

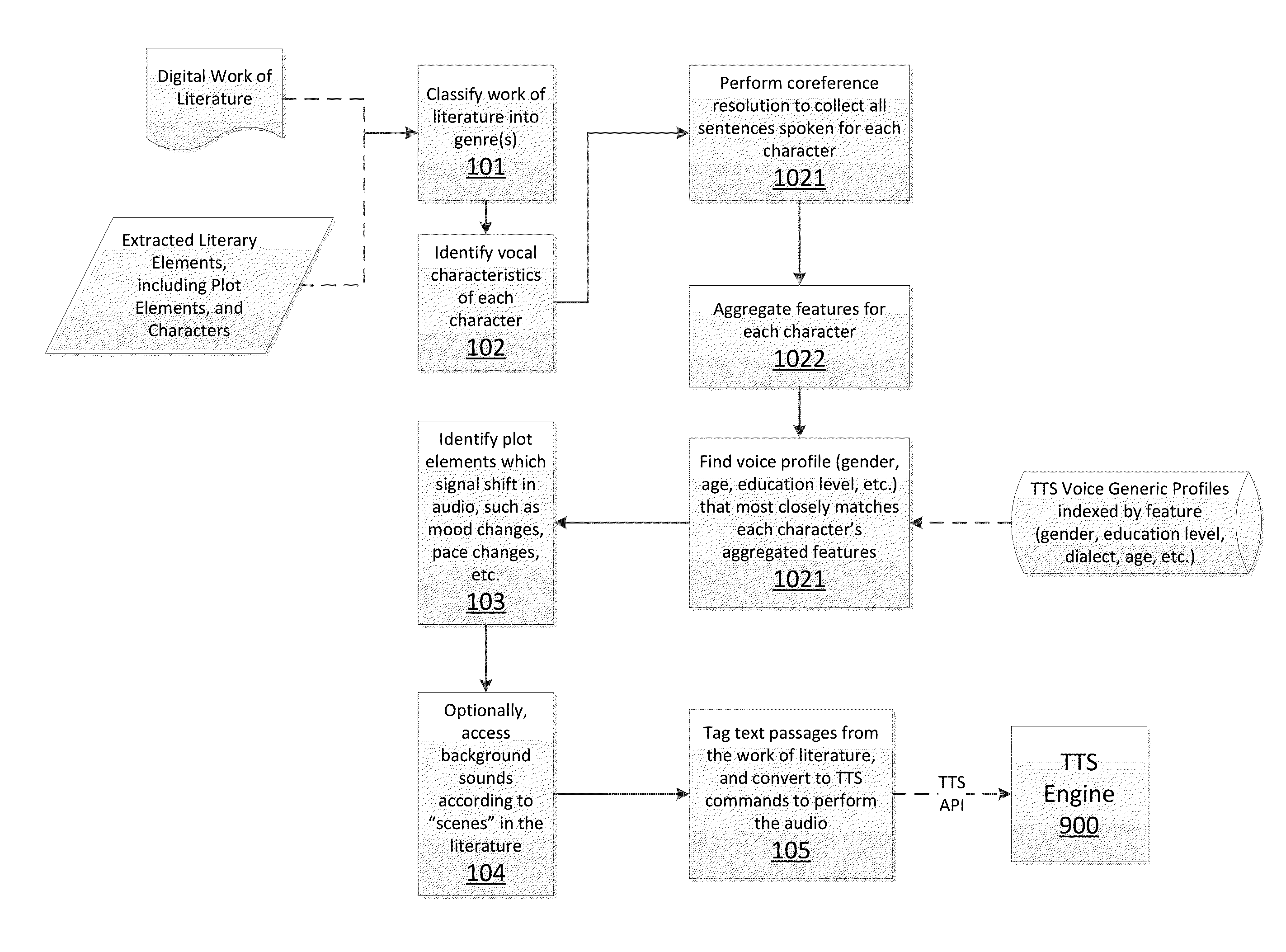

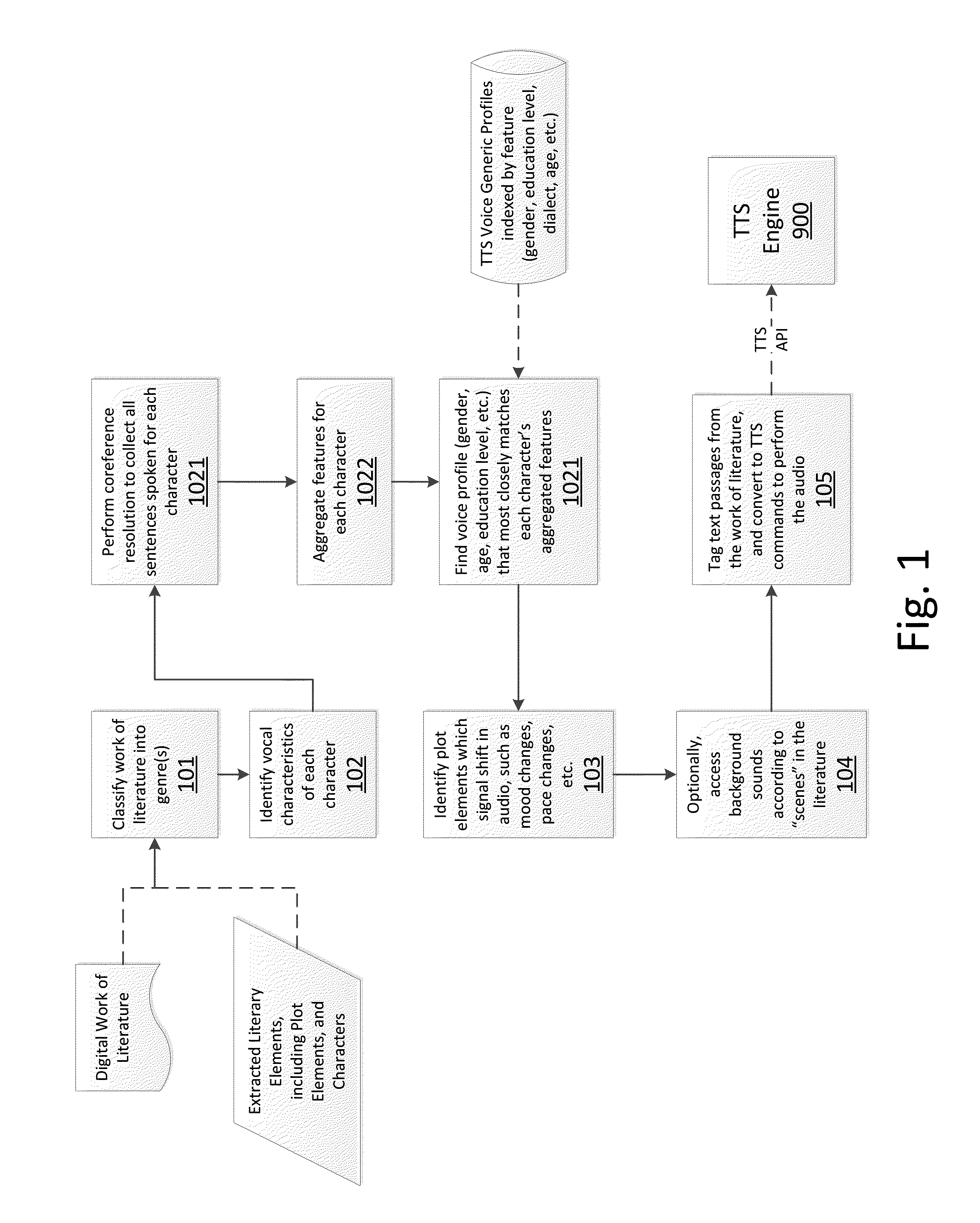

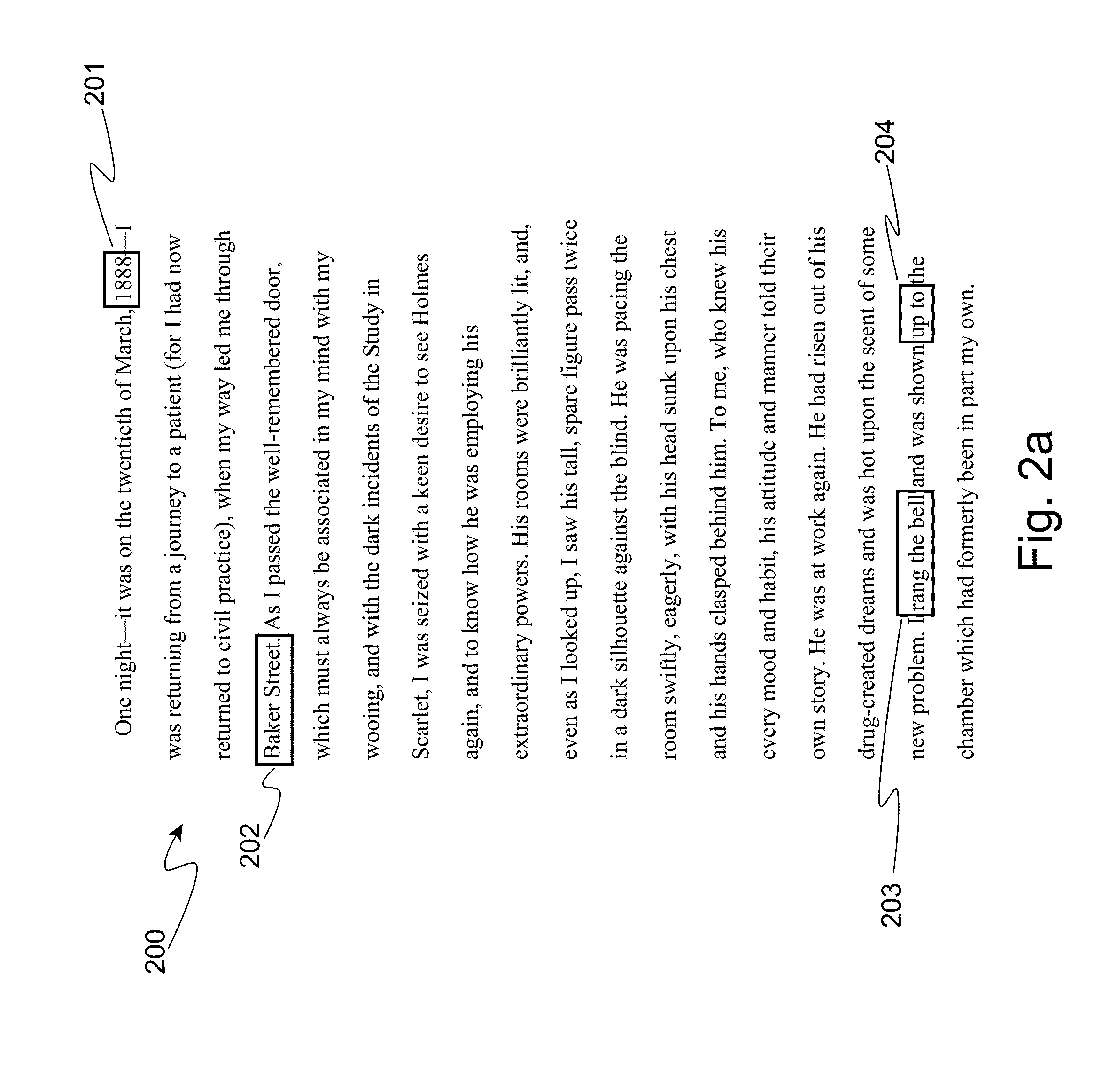

Text-to-Speech for Digital Literature

A digital work of literature is vocalized using enhanced text-to-speech (TTS) controls by: analyzing the digital work of literature with natural language processing to identify the voice characteristics of the speaking character associated with the context of each quote extracted from the work; converting the character voice characteristics into audio metadata that controls text-to-speech audio synthesis for each quote; transforming the audio metadata into text-to-speech engine commands, so that each quote is associated with audio synthesis control parameters for the TTS engine in the context of that quote within the work; and inputting the commands to a text-to-speech engine to vocalize the work of literature according to the words of each quote, the character voice characteristics corresponding to each quote, and the context corresponding to each quote.

Owner:IBM CORP
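The patent does not name a command format for the TTS engine. One widely supported option is SSML (Speech Synthesis Markup Language), in which per-character voice characteristics map naturally onto `<voice>` and `<prosody>` elements. The sketch below assumes the natural language processing step has already attributed each quote to a character; `VoiceProfile`, the voice names and the prosody values are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List
from xml.sax.saxutils import escape, quoteattr

@dataclass
class VoiceProfile:
    voice_name: str  # engine-specific voice identifier (hypothetical values)
    pitch: str       # SSML prosody pitch, e.g. "+10%"
    rate: str        # SSML prosody rate, e.g. "slow"

@dataclass
class Quote:
    character: str
    text: str

def quotes_to_ssml(quotes: List[Quote], profiles: Dict[str, VoiceProfile], lang: str = "en-US") -> str:
    """Turn attributed quotes into one SSML document, one <voice> element per quote."""
    parts = [
        '<speak version="1.1" xmlns="http://www.w3.org/2001/10/synthesis" '
        f"xml:lang={quoteattr(lang)}>"
    ]
    for q in quotes:
        p = profiles[q.character]  # character voice characteristics -> audio metadata
        parts.append(
            f"<voice name={quoteattr(p.voice_name)}>"
            f"<prosody pitch={quoteattr(p.pitch)} rate={quoteattr(p.rate)}>"
            f"{escape(q.text)}</prosody></voice>"  # escape &, <, > in the quote text
        )
    parts.append("</speak>")
    return "".join(parts)
```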

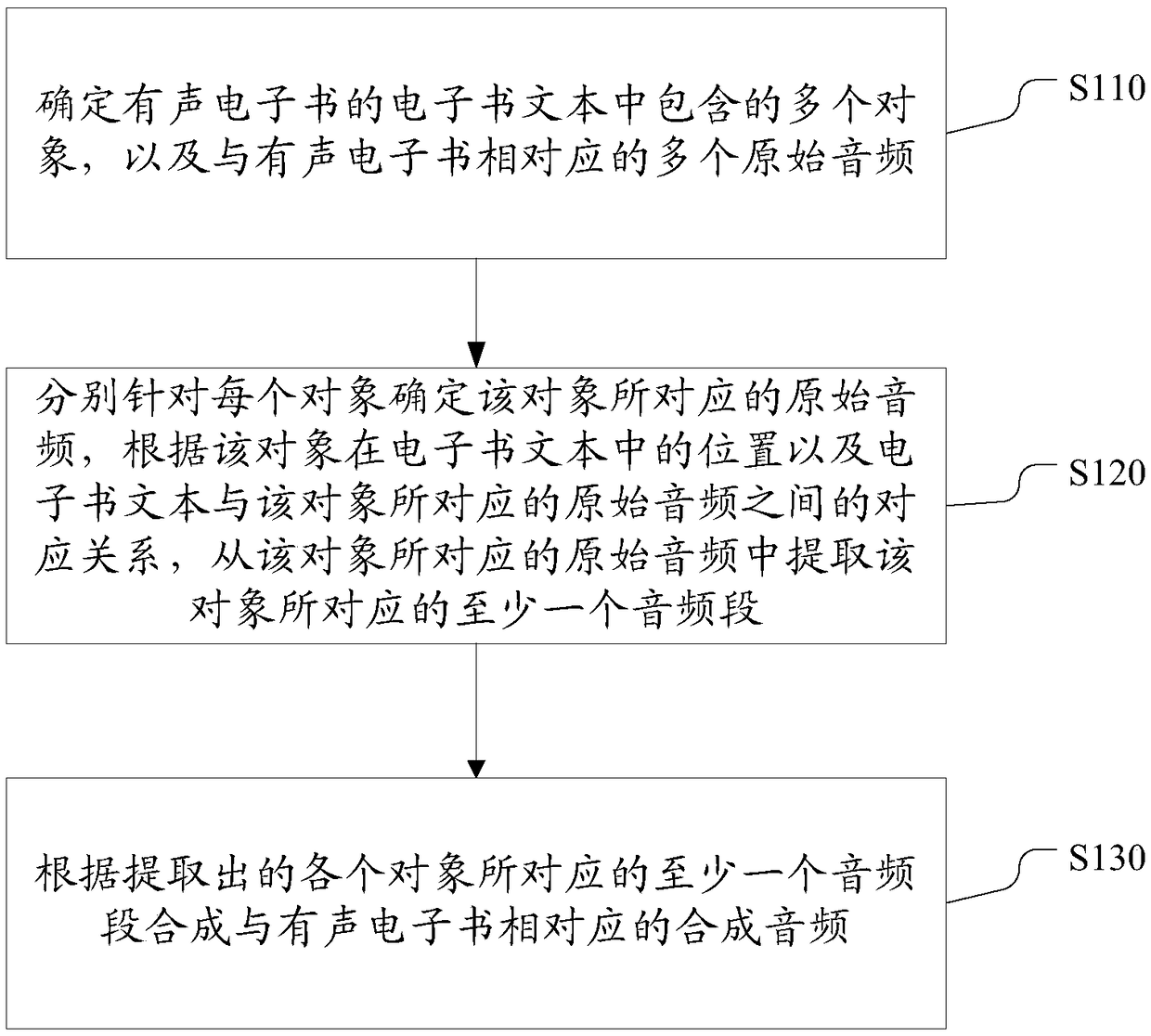

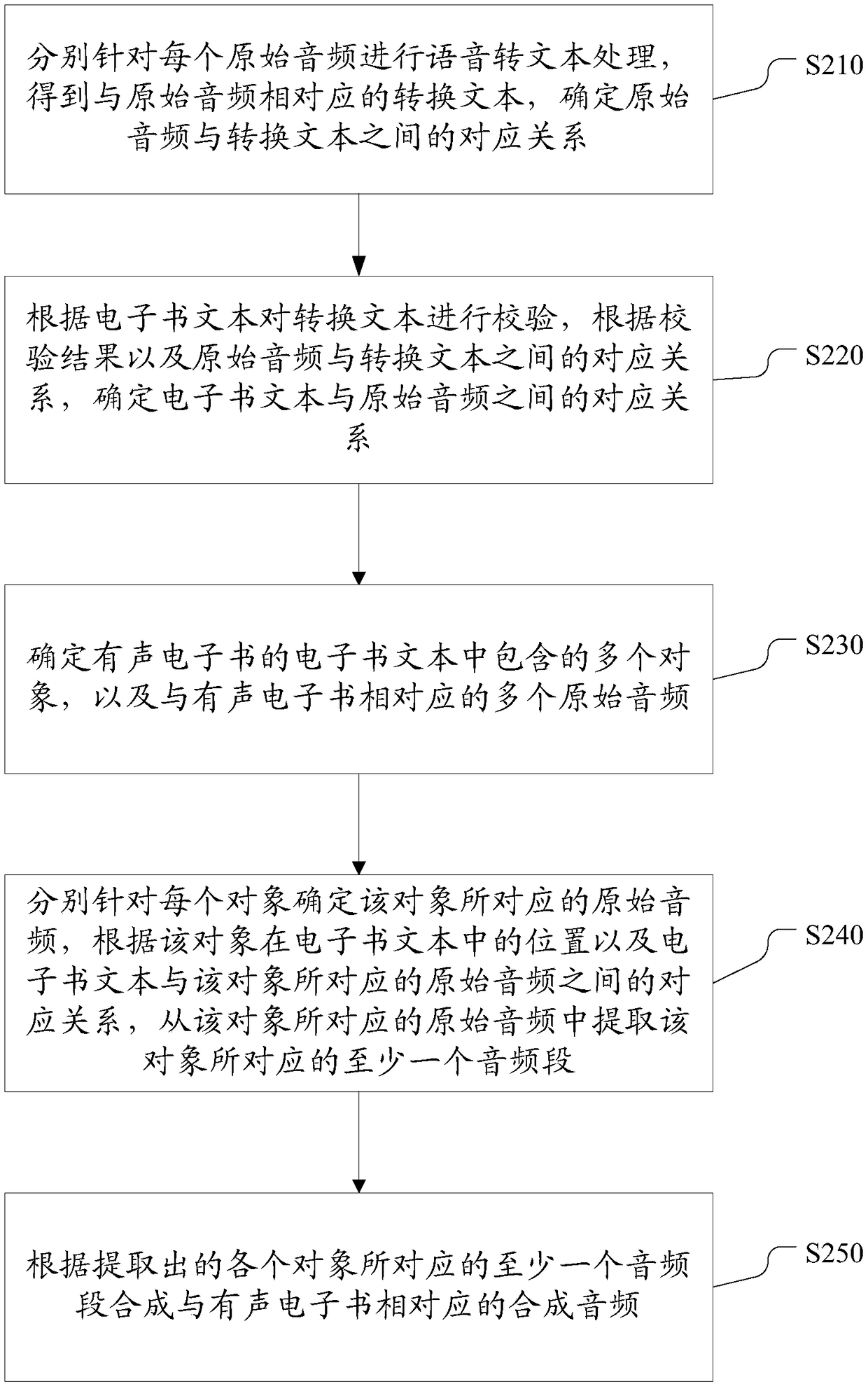

Audio synthesis method for audio electronic book, electronic device and computer storage medium

Active | CN108877764A | Improve experience | Improve participation | Digital computer details | Electric digital data processing | Audio synthesis | Electronic book

The invention discloses an audio synthesis method for an audio electronic book, an electronic device and a computer storage medium. The method comprises: determining a plurality of objects included in the electronic book text of the audio electronic book, and a plurality of original audio files corresponding to the audio electronic book; for each object, determining the original audio file corresponding to that object and extracting at least one audio segment for the object from that file, according to the position of the object in the electronic book text and the correspondence between the electronic book text and the original audio file; and synthesizing an audio file for the audio electronic book from the at least one audio segment extracted for each object. With this method, a user listening to the electronic book can choose, according to his or her preference, different people to read the same book, thereby improving the user experience.

Owner:ZHANGYUE TECH CO LTD
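The extraction step can be sketched as follows, assuming each original recording comes with a hypothetical alignment table that maps character ranges of the book text to sample ranges in that recording, and that each object (for example a paragraph) names the recording, such as a particular reader, from which its audio is taken. Neither the alignment format nor the object representation comes from the patent.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# (text_start, text_end, sample_start, sample_end); end indices are exclusive
Alignment = List[Tuple[int, int, int, int]]

@dataclass
class TextObject:
    text_start: int
    text_end: int
    source: str  # which original recording (e.g. which reader) to use

def extract_segment(samples: List[float], alignment: Alignment,
                    text_start: int, text_end: int) -> List[float]:
    """Return the samples whose aligned text span lies inside [text_start, text_end)."""
    out: List[float] = []
    for t0, t1, s0, s1 in alignment:
        if t0 >= text_start and t1 <= text_end:
            out.extend(samples[s0:s1])
    return out

def synthesize_book(objects: List[TextObject], recordings: Dict[str, List[float]],
                    alignments: Dict[str, Alignment]) -> List[float]:
    """Concatenate each object's segment, in text order, into one audio book."""
    book: List[float] = []
    for obj in sorted(objects, key=lambda o: o.text_start):
        book.extend(extract_segment(recordings[obj.source], alignments[obj.source],
                                    obj.text_start, obj.text_end))
    return book
```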

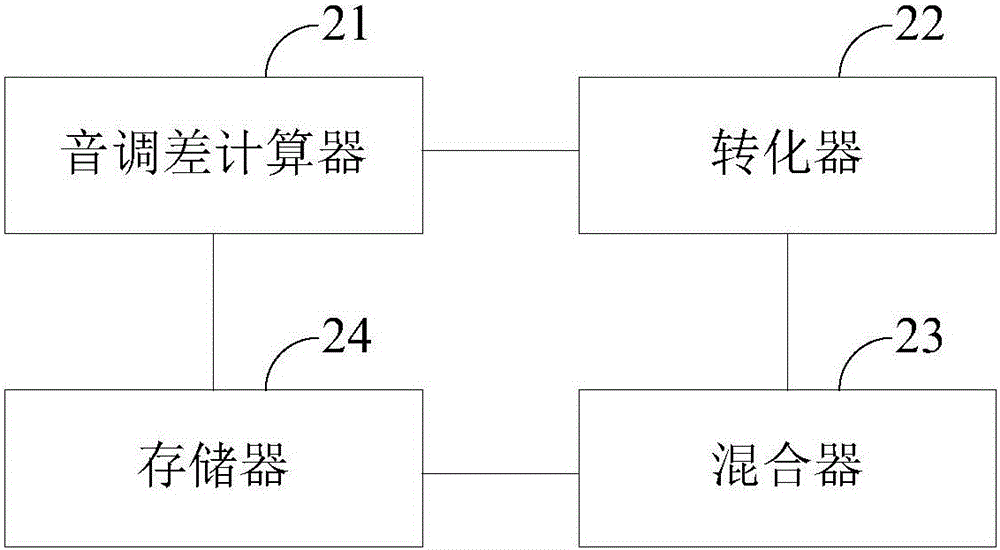

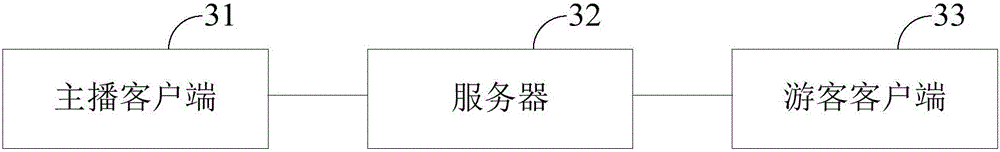

Audio synthesizing device and audio synthesizing method applied to same

Owner:GUANGZHOU HUADUO NETWORK TECH

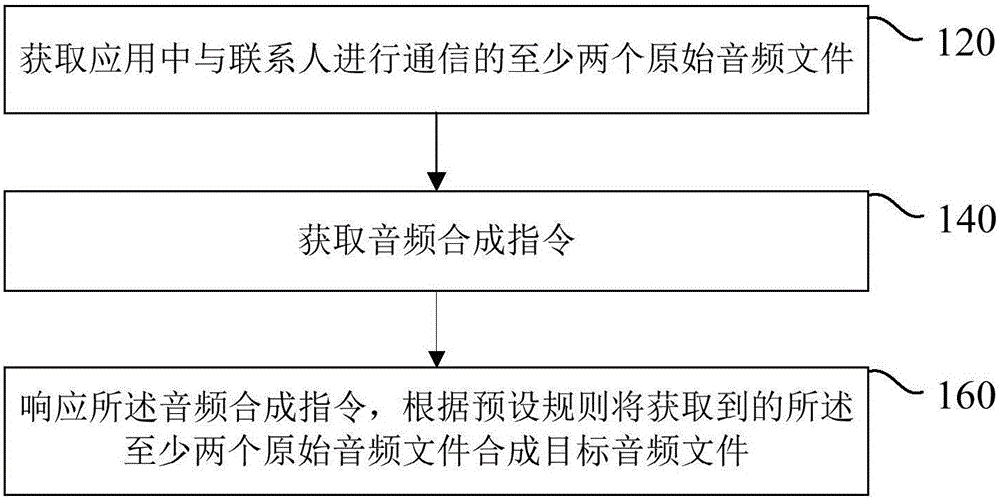

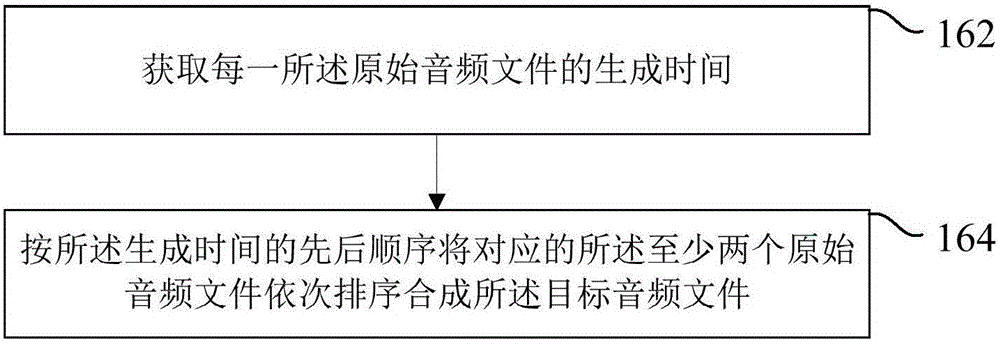

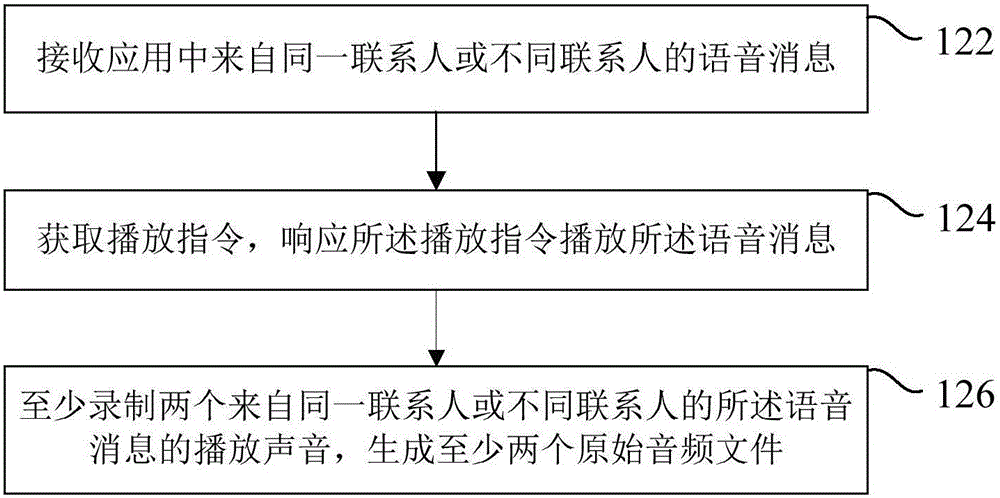

Audio processing method and apparatus

Inactive | CN105939250A | Easy to manage | Easy to store | Data switching networks | Speech synthesis | Audio synthesis | Audio frequency

The invention relates to an audio processing method and apparatus. The audio processing method comprises: acquiring at least two original audio files used to communicate with contacts in an application; acquiring an audio synthesis instruction; and, in response to the audio synthesis instruction, synthesizing the acquired original audio files into a target audio file according to a preset rule. Because multiple audio files in an instant messaging application are combined into one target audio file, the meaningful voice messages are concentrated in a single file, which makes them easier for the user to manage and store.

Owner:MEIZU TECH CO LTD
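The abstract leaves the "preset rule" unspecified. A minimal sketch, assuming the rule is to join the voice messages in chronological order with a short silence between them and that every message is a WAV file in the same format, can use Python's standard `wave` module; the function name and the `gap_ms` parameter are hypothetical.

```python
import wave
from typing import BinaryIO, List, Tuple

def merge_voice_messages(messages: List[Tuple[float, BinaryIO]], out: BinaryIO,
                         gap_ms: int = 300) -> None:
    """Concatenate WAV voice messages in timestamp order, separated by silence.

    messages: (timestamp, WAV file object) pairs sharing one audio format.
    """
    params = None
    chunks: List[bytes] = []
    for _, f in sorted(messages, key=lambda m: m[0]):  # assumed rule: chronological
        with wave.open(f, "rb") as r:
            p = r.getparams()
            if params is None:
                params = p
            elif (p.nchannels, p.sampwidth, p.framerate) != (
                    params.nchannels, params.sampwidth, params.framerate):
                raise ValueError("all messages must share channels, sample width and rate")
            chunks.append(r.readframes(r.getnframes()))
    if params is None:
        raise ValueError("no messages to merge")
    # 8-bit WAV is unsigned (silence = 0x80); wider sample widths are signed (silence = 0).
    fill = b"\x80" if params.sampwidth == 1 else b"\x00"
    gap_frames = params.framerate * gap_ms // 1000
    silence = fill * (gap_frames * params.nchannels * params.sampwidth)
    with wave.open(out, "wb") as w:
        w.setnchannels(params.nchannels)
        w.setsampwidth(params.sampwidth)
        w.setframerate(params.framerate)
        w.writeframes(silence.join(chunks))
```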

© 2025 PatSnap. All rights reserved.