Music emotion classification method based on multi-modal learning

A music emotion classification method using multimodal technology, applied in the field of emotion recognition. It addresses the problems of information overload, the lack of openness of music labels, and the neglect of music features. Its stated effects are avoiding noise or sparseness, eliminating ambiguity and uncertainty, and unifying classification standards.

Embodiment Construction

[0034] The present invention is further illustrated below with reference to the accompanying drawings and specific embodiments. It should be understood that these embodiments only illustrate the present invention and are not intended to limit its scope. After reading the present invention, modifications in various equivalent forms made by those skilled in the art all fall within the scope defined by the appended claims of this application.

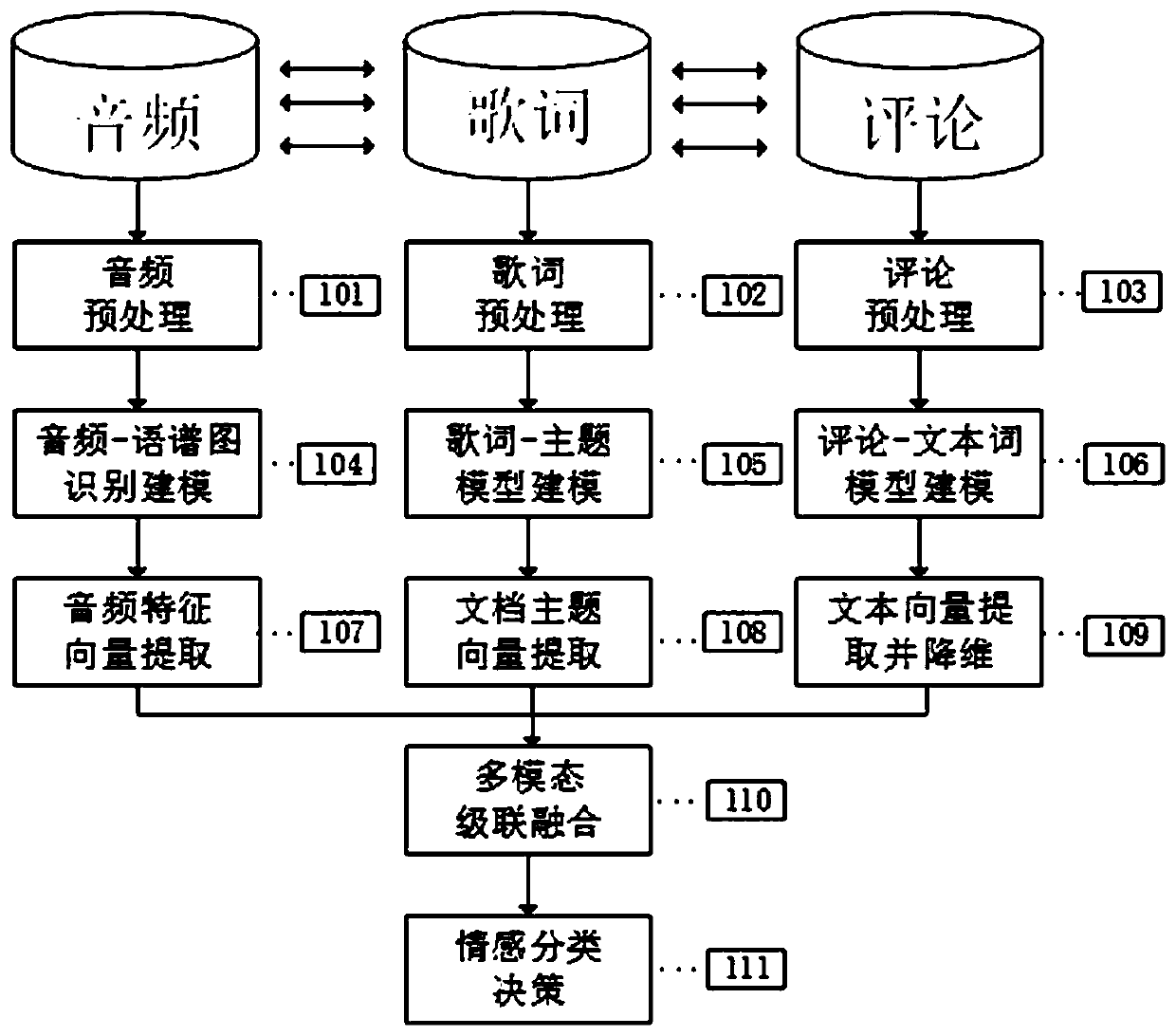

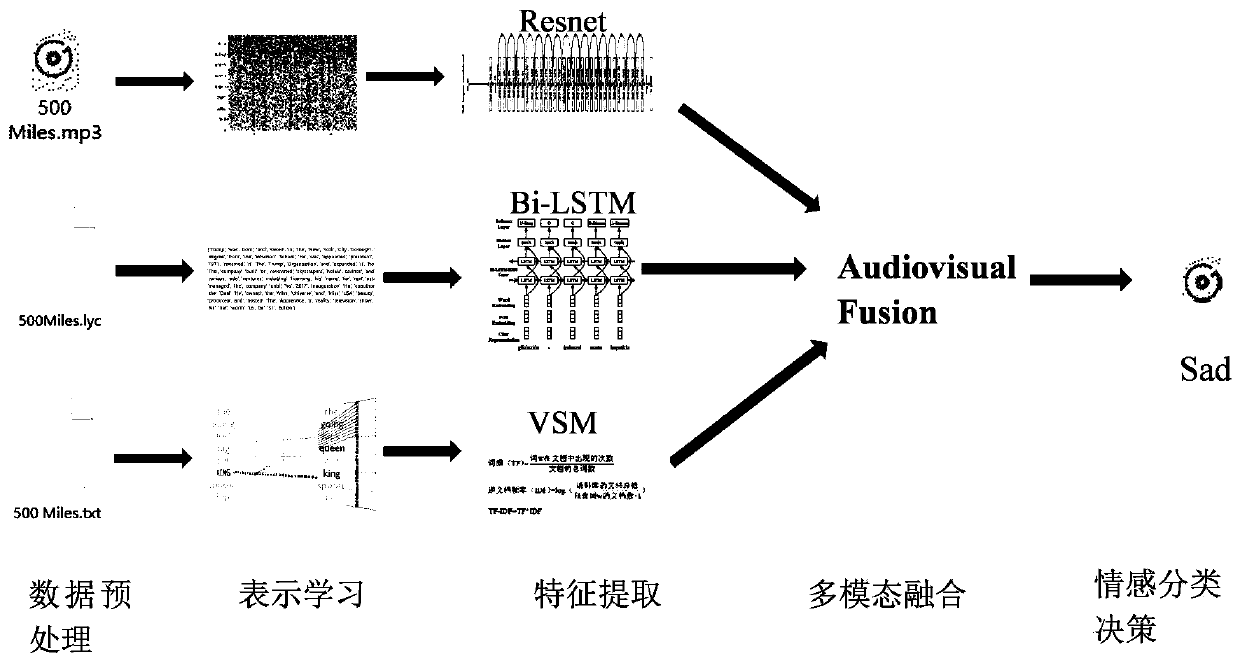

[0035] The music emotion classification method based on multimodal learning of the present invention is shown in Figure 1, which gives the algorithm flow of the embodiment; Figure 2 is a schematic diagram of the present invention. The method specifically comprises the following steps:

[0036] S101, audio preprocessing: the audio data is converted from MP3 format to WAV format, and each song is divided into 5-second audio segments with a sampling freq...
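
The segmentation part of step S101 can be sketched in Python as follows. This is a minimal illustration, not the patent's implementation. The function name `split_into_segments`, its parameters, and the choice to drop a trailing partial segment are assumptions. The sampling frequency is left as a caller-supplied argument because the source text is truncated before stating it. MP3-to-WAV decoding is assumed to have been done beforehand with an external tool and is not shown.

```python
from typing import List, Sequence


def split_into_segments(
    samples: Sequence[float],
    sample_rate: int,
    segment_seconds: float = 5.0,
    keep_remainder: bool = False,
) -> List[Sequence[float]]:
    """Split a mono sample sequence into fixed-length segments.

    sample_rate is supplied by the caller; the source does not give a
    recoverable value. keep_remainder=False drops a trailing partial
    segment, which is an assumption: the source does not say how
    leftover audio is handled.
    """
    if sample_rate <= 0 or segment_seconds <= 0:
        raise ValueError("sample_rate and segment_seconds must be positive")

    # Number of samples in one segment (5 seconds by default).
    segment_len = int(round(sample_rate * segment_seconds))
    if segment_len < 1:
        raise ValueError("segment length must be at least one sample")

    # Slice the signal into consecutive, non-overlapping windows.
    segments = [
        samples[start:start + segment_len]
        for start in range(0, len(samples), segment_len)
    ]

    # Optionally discard the last window if it is shorter than the rest.
    if segments and not keep_remainder and len(segments[-1]) < segment_len:
        segments.pop()
    return segments
```

For example, a 12-second signal with a hypothetical rate of 100 samples per second yields two full 5-second segments when the remainder is dropped, and a third, 2-second segment when `keep_remainder=True`.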
