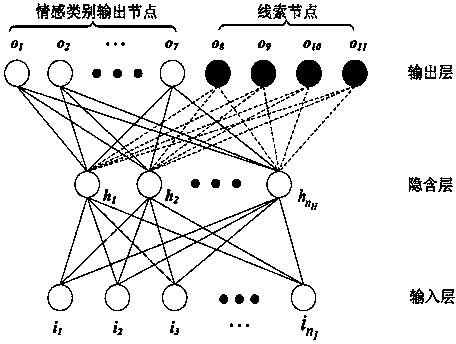

Voice-vision fusion emotion recognition method based on hint neural networks

A neural network and emotion recognition technology, applied to biological neural network models, character and pattern recognition, instruments, etc., that addresses the problem of low recognition rates.


AI Technical Summary

Problems solved by technology

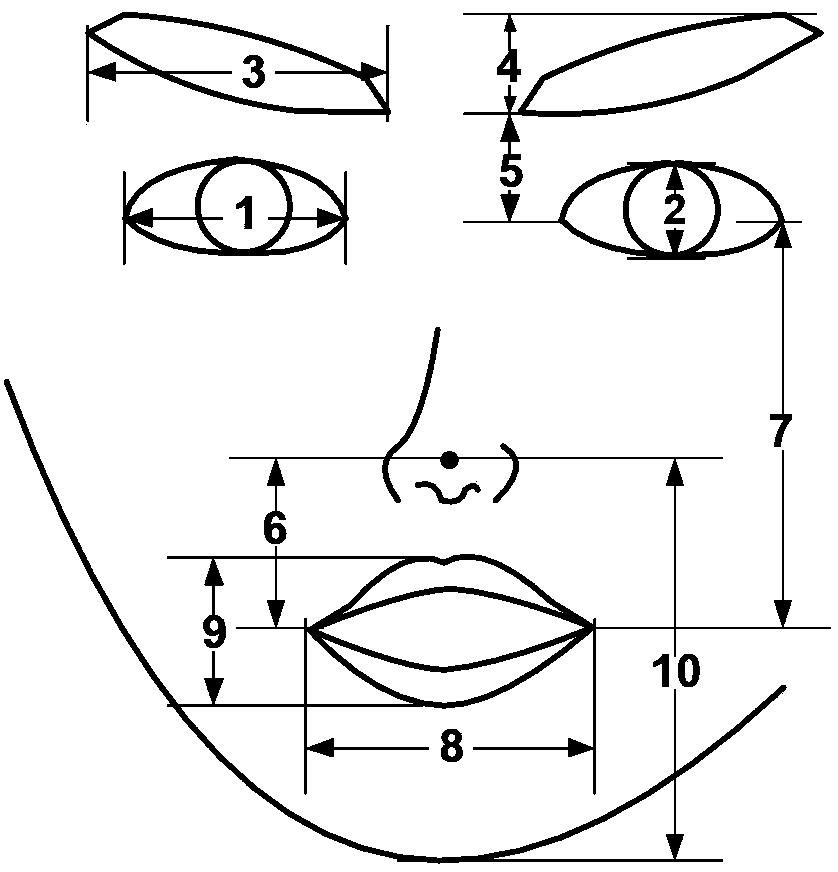

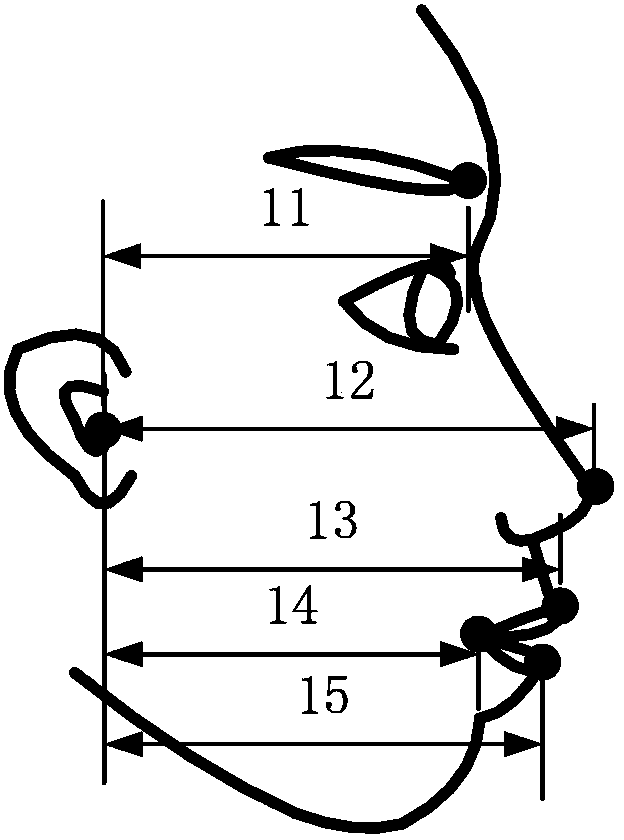


Embodiment Construction

[0092] The implementation of the method of the present invention will be described in detail below in conjunction with the accompanying drawings and specific examples.

[0093] In this example, 6 experimenters (3 males and 3 females) read sentences aloud while expressing 7 discrete basic emotions (happy, sad, angry, disgusted, fearful, surprised and neutral) in a guided (Wizard of Oz) scenario. Two cameras simultaneously capture front-view and side-view face video, and voice data are captured at the same time. The scenario script contains 3 different sentences for each emotion, and each person repeats each sentence 5 times.
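The size of the recorded corpus follows directly from the protocol above: 7 emotions × 3 sentences × 5 repetitions gives 105 utterances per person, or 630 utterances across 6 people. The sketch below enumerates the recording plan to make that arithmetic explicit. The integer subject IDs and the tuple layout are illustrative assumptions, not part of the patent.

```python
from itertools import product

# Protocol parameters taken from paragraph [0093].
EMOTIONS = ("happy", "sad", "angry", "disgusted", "fearful", "surprised", "neutral")
SENTENCES_PER_EMOTION = 3
REPETITIONS = 5
NUM_SUBJECTS = 6


def recording_plan(subject_ids):
    """Return one (subject, emotion, sentence_idx, repetition) tuple per utterance.

    Subject IDs are hypothetical placeholders; each utterance would be recorded
    as front-view face video, side-view face video, and voice at the same time.
    """
    return list(product(subject_ids, EMOTIONS,
                        range(SENTENCES_PER_EMOTION), range(REPETITIONS)))


plan = recording_plan(range(NUM_SUBJECTS))
per_subject = len(EMOTIONS) * SENTENCES_PER_EMOTION * REPETITIONS
print(per_subject, len(plan))  # 105 630
```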

[0094] The emotion data of three people are randomly selected as the first training data set, which is used to train three neural networks, each on a single-channel feature data stream. Then the emotion data of two people are randomly selected as the second training data set for training the multimodal fusion neural network. Using the emotion data of the remaining pe...
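The split described above is by person rather than by utterance, so no speaker appears in more than one set. A minimal sketch of that partition, assuming integer subject IDs and a seeded `random.Random` for reproducibility (both hypothetical choices), might look like this. The role of the remaining person is cut off in the source text; it is kept here only as a held-out group.

```python
import random


def split_subjects(subject_ids, seed=None):
    """Randomly partition subjects into three disjoint groups: 3 / 2 / remaining.

    - stage1: first training set, for the three single-channel networks
    - stage2: second training set, for the multimodal fusion network
    - held_out: the remaining subject(s); their use is truncated in the source
    """
    rng = random.Random(seed)
    shuffled = list(subject_ids)
    rng.shuffle(shuffled)
    stage1 = shuffled[:3]
    stage2 = shuffled[3:5]
    held_out = shuffled[5:]
    return stage1, stage2, held_out


stage1, stage2, held_out = split_subjects(range(6), seed=0)
print(stage1, stage2, held_out)
```

Splitting by person, instead of shuffling all utterances together, keeps the fusion network from being trained on speakers whose data already shaped the single-channel networks.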
