Straight line feature matching method for oblique images constrained by view-invariant local regions

A technique based on straight line features and local regions, applied in fields such as character and pattern recognition, that addresses problems such as the difficulty of matching straight line feature intersections.

Embodiment Construction

[0069] The present invention will be described in detail below in conjunction with the accompanying drawings.

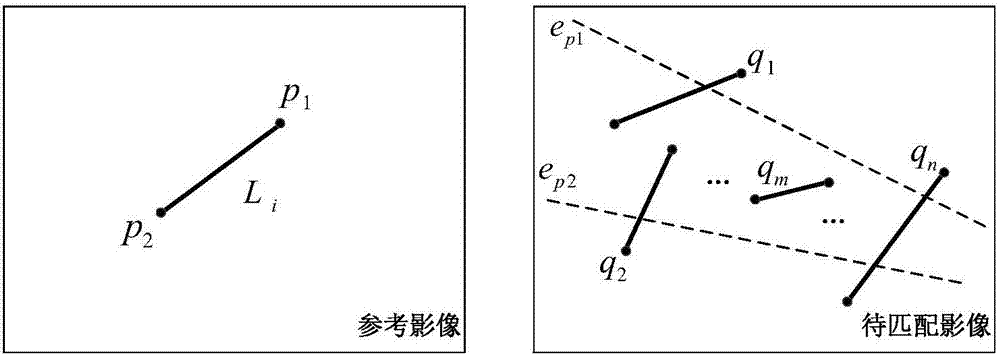

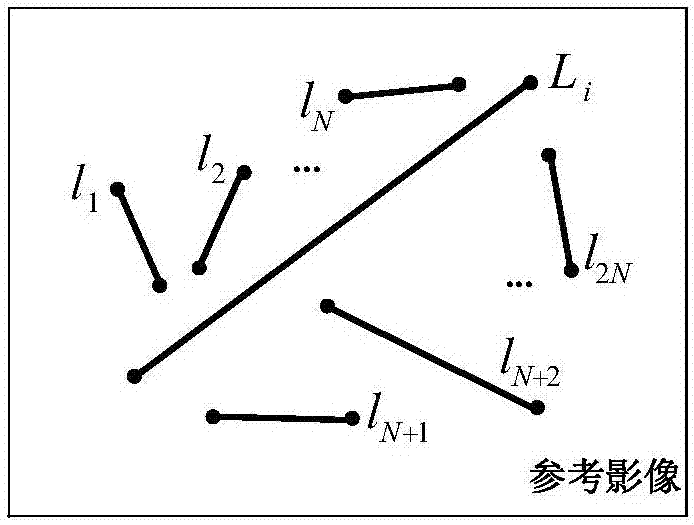

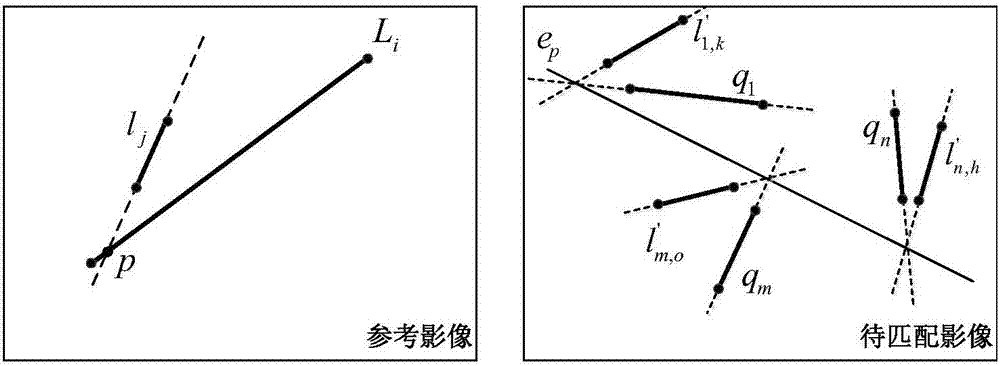

[0070] As shown in Figure 7, a straight line feature matching method for oblique images constrained by view-invariant local regions comprises the following steps in sequence:

[0071] Step 1: Extract straight line features from the reference image and from the image to be matched, and calculate the saliency of each straight line feature according to formula (1):

[0072]

[0073] In formula (1), saliency denotes the saliency value of the straight line feature; l denotes the length of the straight line feature; the mean gradient magnitude is taken over all pixels on the line feature; and a and b are weight coefficients that control the relative importance of the line length and the mean gradient magnitude in the saliency calculation. In a specific implementation, parameters a and b may take empir...
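As an illustration of Step 1, the following is a minimal sketch of a saliency calculation for a single line segment. Formula (1) itself is not reproduced in this text, so the weighted-sum form `a * length + b * mean_gradient` used here is an assumption inferred from the description of a and b as weights on the two quantities; it is not confirmed as the patent's formula. The function name `line_saliency`, the default weights, and the nearest-pixel sampling along the segment are likewise hypothetical choices made only for the example.

```python
import numpy as np


def line_saliency(image, p0, p1, a=0.5, b=0.5):
    """Saliency of the segment p0 -> p1, with points given as (x, y).

    ASSUMPTION: saliency = a * length + b * mean gradient magnitude.
    The source does not show formula (1), so this form is illustrative only.
    """
    img = np.asarray(image, dtype=float)

    # Gradient magnitude at every pixel; np.gradient returns the derivative
    # along rows (y) first, then along columns (x).
    gy, gx = np.gradient(img)
    magnitude = np.hypot(gx, gy)

    (x0, y0), (x1, y1) = p0, p1
    length = float(np.hypot(x1 - x0, y1 - y0))

    # Sample roughly one point per pixel along the segment and snap each
    # sample to the nearest pixel, clipped to the image bounds.
    n = max(int(np.ceil(length)) + 1, 2)
    xs = np.clip(np.rint(np.linspace(x0, x1, n)).astype(int), 0, img.shape[1] - 1)
    ys = np.clip(np.rint(np.linspace(y0, y1, n)).astype(int), 0, img.shape[0] - 1)
    mean_gradient = float(magnitude[ys, xs].mean())

    return a * length + b * mean_gradient
```

Under this assumed form, two segments of equal length are ranked by how strong the image gradient is along them, and raising a relative to b shifts the ranking toward longer segments. Because length is measured in pixels and gradient magnitude in intensity units, any real choice of a and b would need to account for the difference in scale.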
